734 results about How to "Good segmentation effect" patented technology

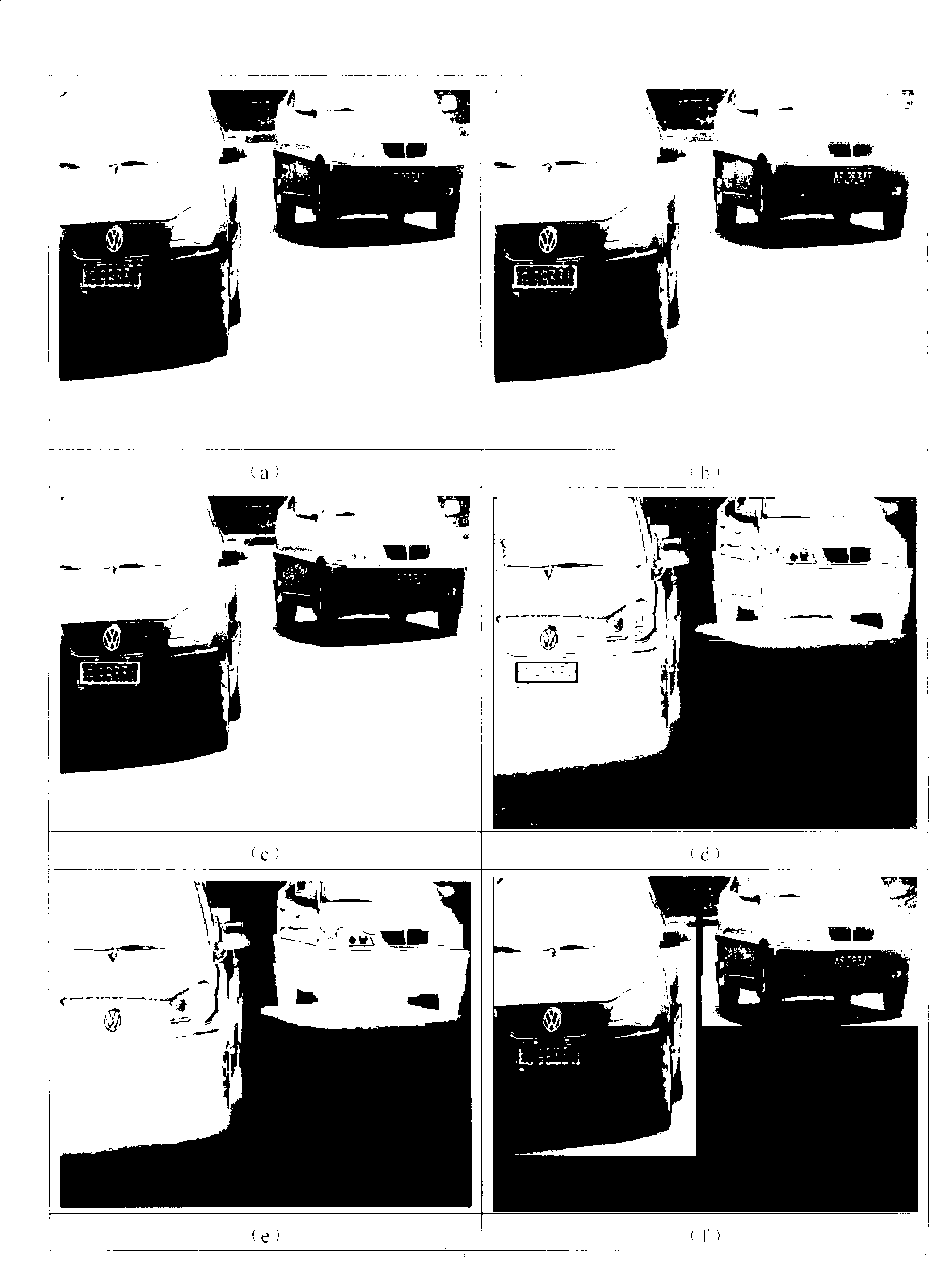

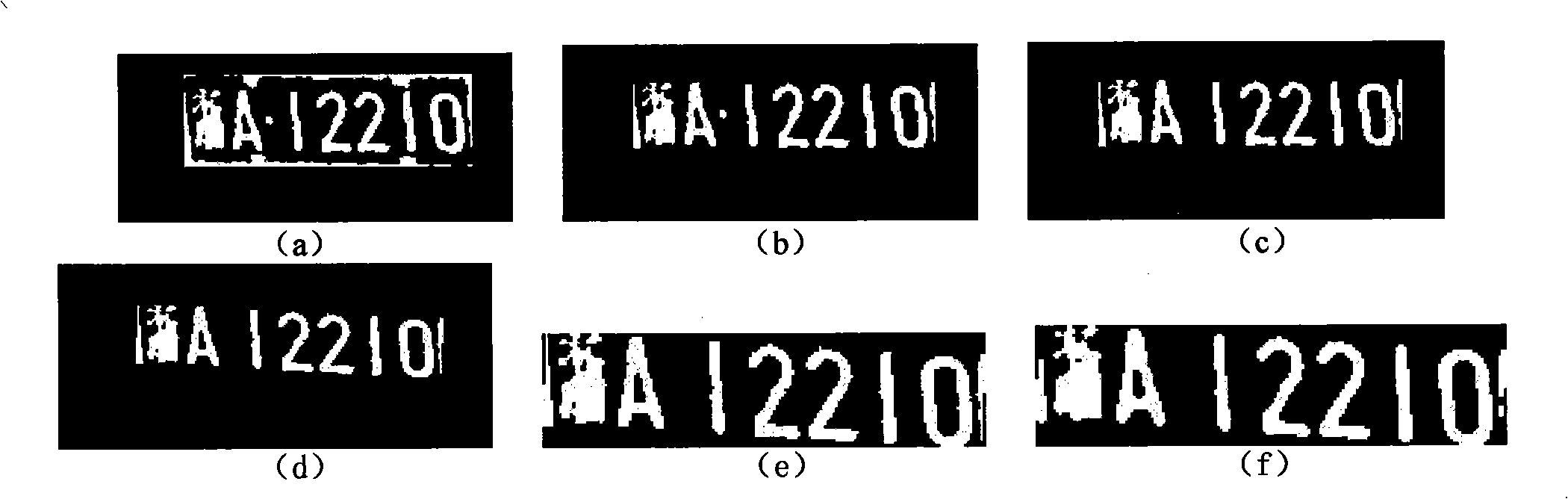

Automobile license plate automatic recognition method and implementing device thereof

Inactive | CN101398894A | Lower quality requirements | Scientific and reasonable design | Image analysis | Character and pattern recognition | Pattern recognition | Chinese characters

The invention discloses a method and an implementing device for automatically recognizing motor-vehicle license plates in video shot in real time against a complex background. The recognition steps are: (1) locating the body regions of multiple moving vehicles; (2) capturing a candidate license plate region image; (3) capturing the true license plate edge points; (4) capturing the smallest license plate region; (5) segmenting the characters and removing non-character images; (6) normalizing the character images and extracting their original coarse-grid features; (7) using a trained BP neural network to recognize the license plate characters accurately. The implementing device comprises an image pick-up device and a computer, connected by an IEEE 1394 cable. The method and device are suited to segmenting and recognizing license plates against a complex background without being affected by the quality of the captured traffic video, and they recognize the characters well.

Owner:ZHEJIANG NORMAL UNIVERSITY

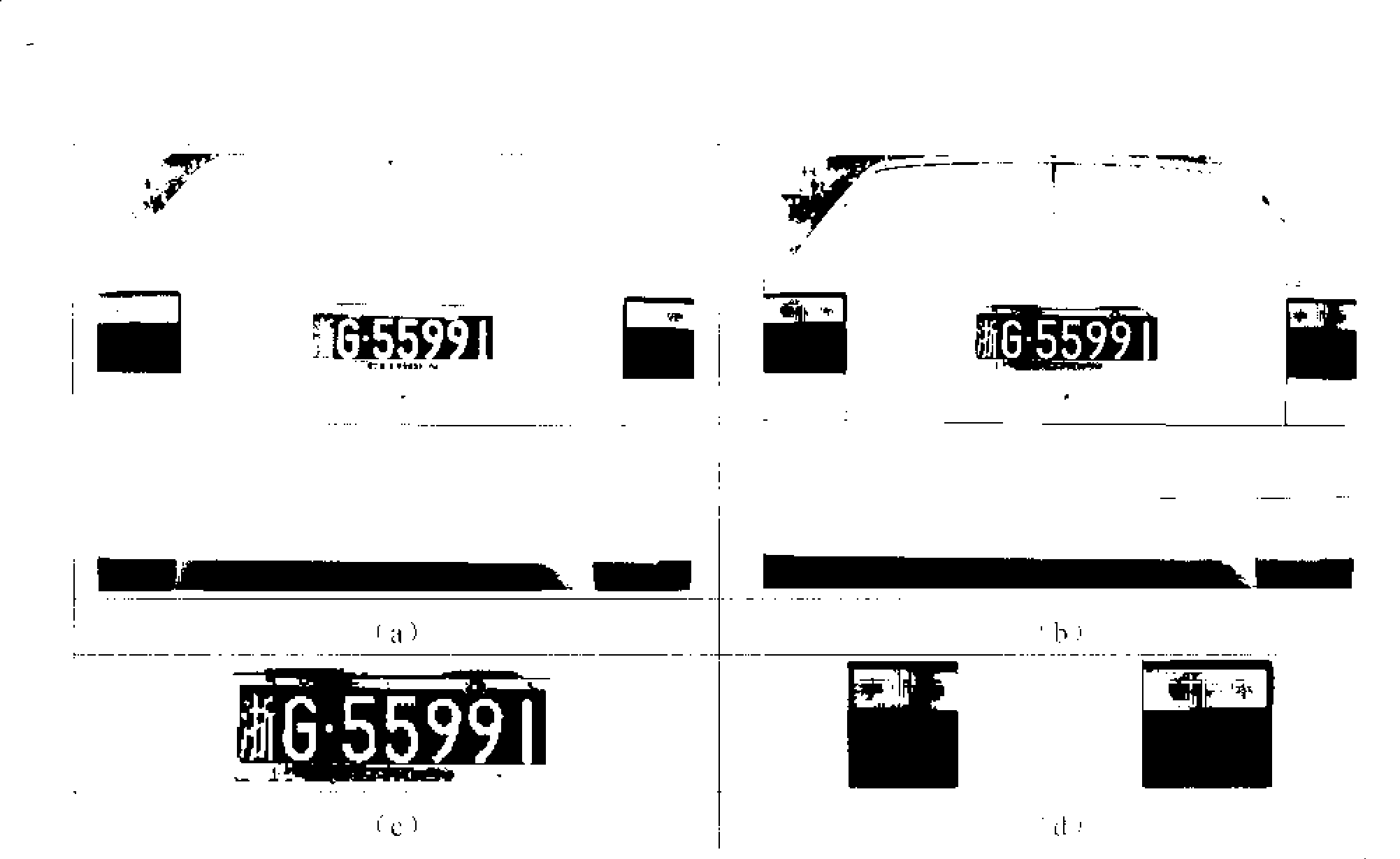

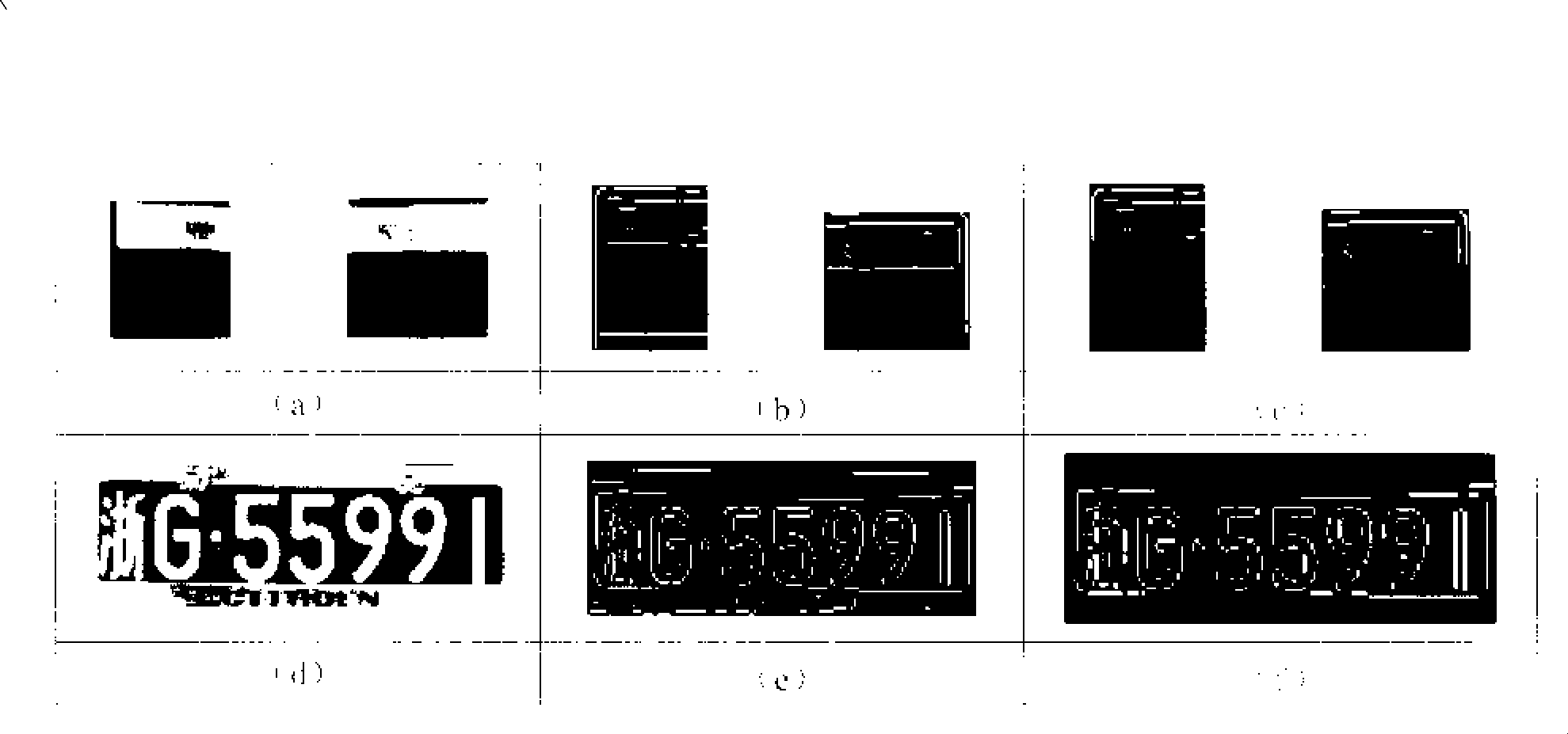

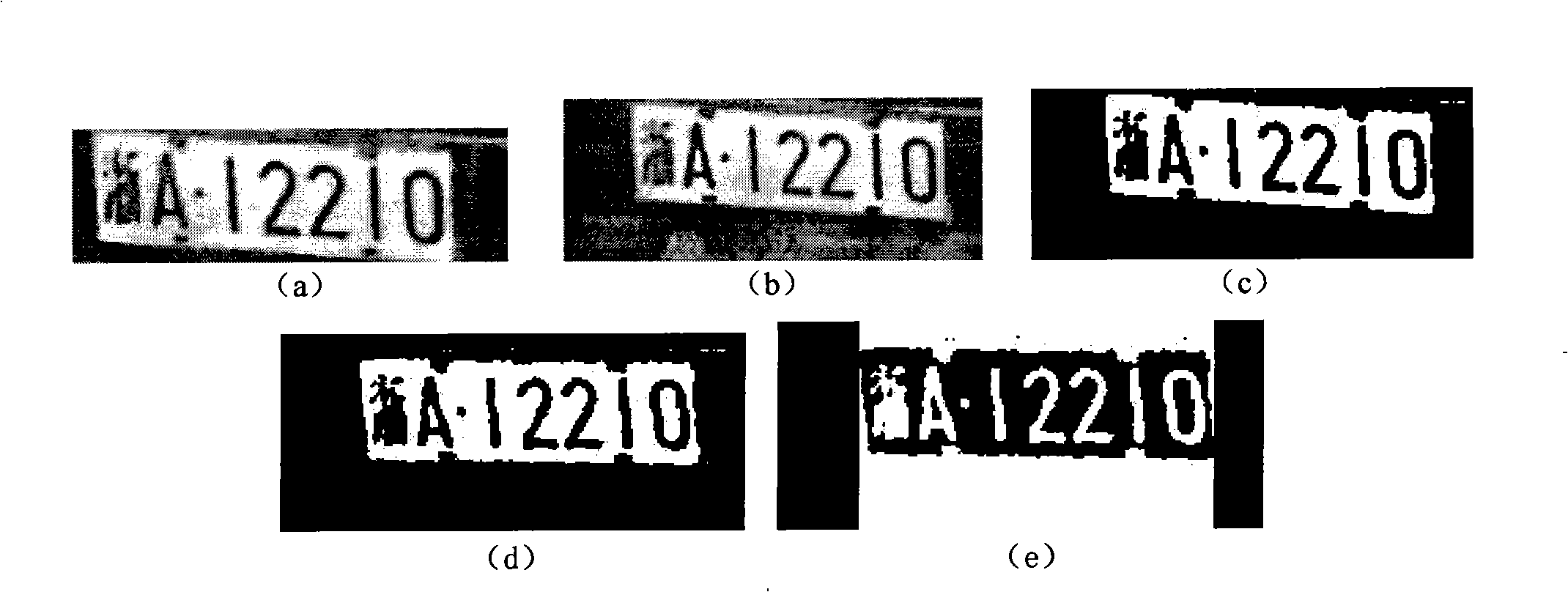

Method for recognizing license plate character based on wide gridding characteristic extraction and BP neural network

Inactive | CN101408933A | Eliminate distractions | Improve robustness | Character and pattern recognition | Physical realisation | Vertical projection | Dot matrix

The invention discloses a vehicle license plate character recognition method based on rough grid feature extraction and a BP neural network. The method comprises the following steps: (1). preprocessing a vehicle license plate image to eliminate various interferences and obtain a minimum vehicle license plate region; (2). segmenting the vehicle license plate characters by combining vertical projection and a drop-fall algorithm; (3). screening the segmentation results to eliminate interferences of vertical frames, separators, rivets and the like; (4). normalizing the characters according to the position of a centre of mass; (5). taking each pixel of a normalized characters dot-matrix as a grid to extract the original features of the characters; (6). designing the BP neural network with a secondary classifier according to the situation of the vehicle license plate; and (7). reasonably constructing a training sample database to train the neural network, and adjusting the training samples by the recognition effect to realize accurate recognition of the network. The method effectively eliminates the noise interference, quickly and accurately segments the characters, steadily and effectively recognizes Chinese characters, and realizes the balance between real-time property and accuracy in the whole recognition course.
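Step (2) of the abstract above relies on vertical projection. As a minimal sketch, assuming the plate has already been binarized into rows of 0/1 values, the columns can be summed and the image split wherever the sum drops to zero. The function name and the `min_width` parameter are hypothetical, and the drop-fall algorithm that the patent combines with projection (for touching characters) is not implemented here.

```python
def vertical_projection_segments(binary, min_width=1):
    """Return (start, end) column ranges that contain foreground pixels.

    `binary` is a list of rows holding 0 (background) or 1 (foreground).
    `min_width` is an illustrative filter for very narrow runs such as noise.
    """
    if not binary:
        return []
    width = len(binary[0])
    # Vertical projection: count foreground pixels in every column.
    profile = [sum(row[x] for row in binary) for x in range(width)]

    segments, start = [], None
    for x, count in enumerate(profile):
        if count > 0 and start is None:
            start = x                      # a run of inked columns begins
        elif count == 0 and start is not None:
            if x - start >= min_width:
                segments.append((start, x))  # end is exclusive
            start = None
    # Close a run that touches the right border of the image.
    if start is not None and width - start >= min_width:
        segments.append((start, width))
    return segments
```

Raising `min_width` is one simple way to discard thin artifacts such as vertical frames or rivets, although the patent describes a separate screening step for those.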

Owner:ZHEJIANG NORMAL UNIVERSITY

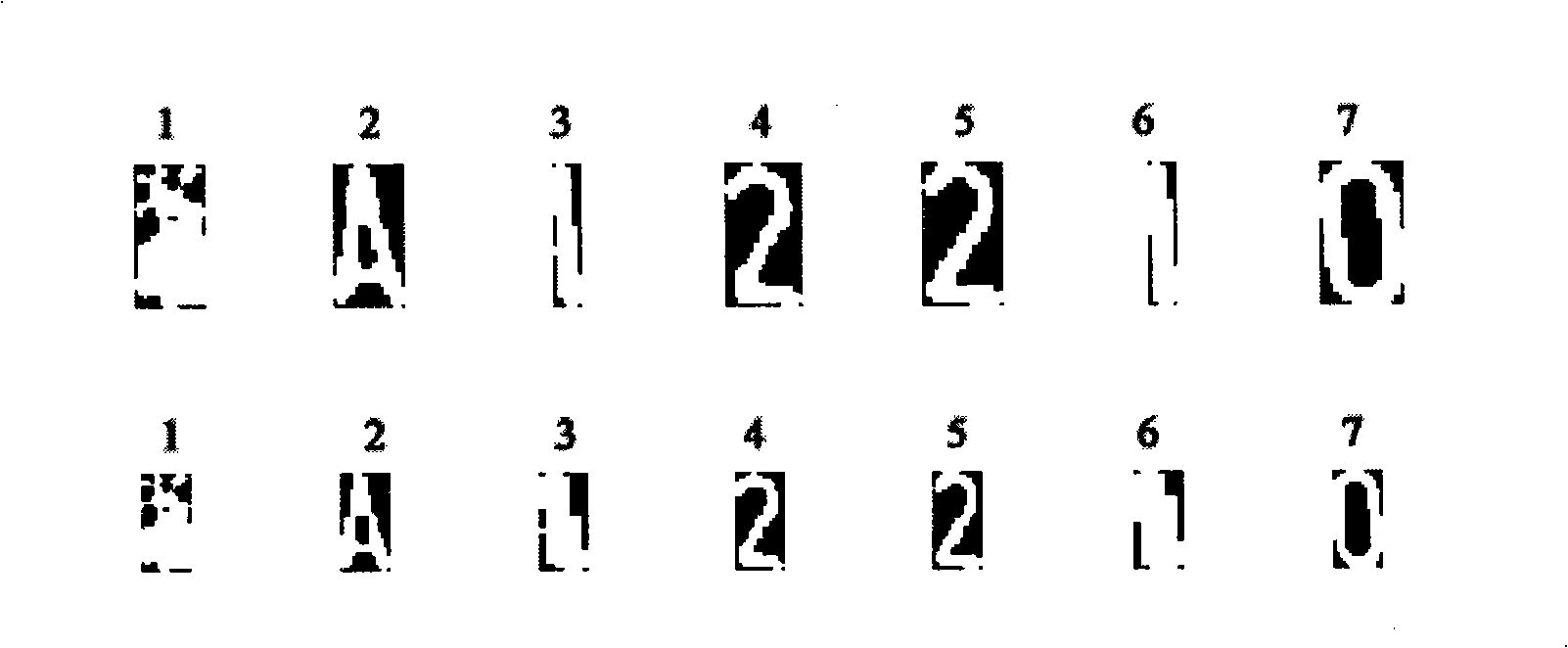

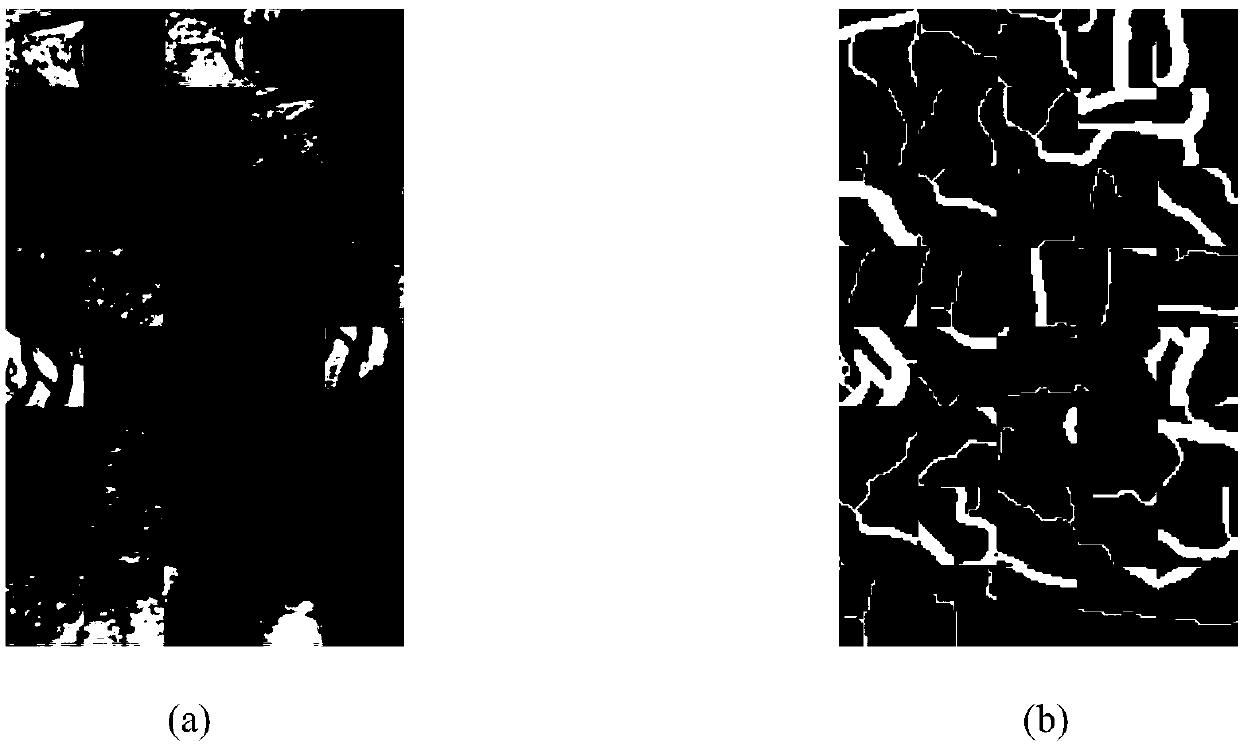

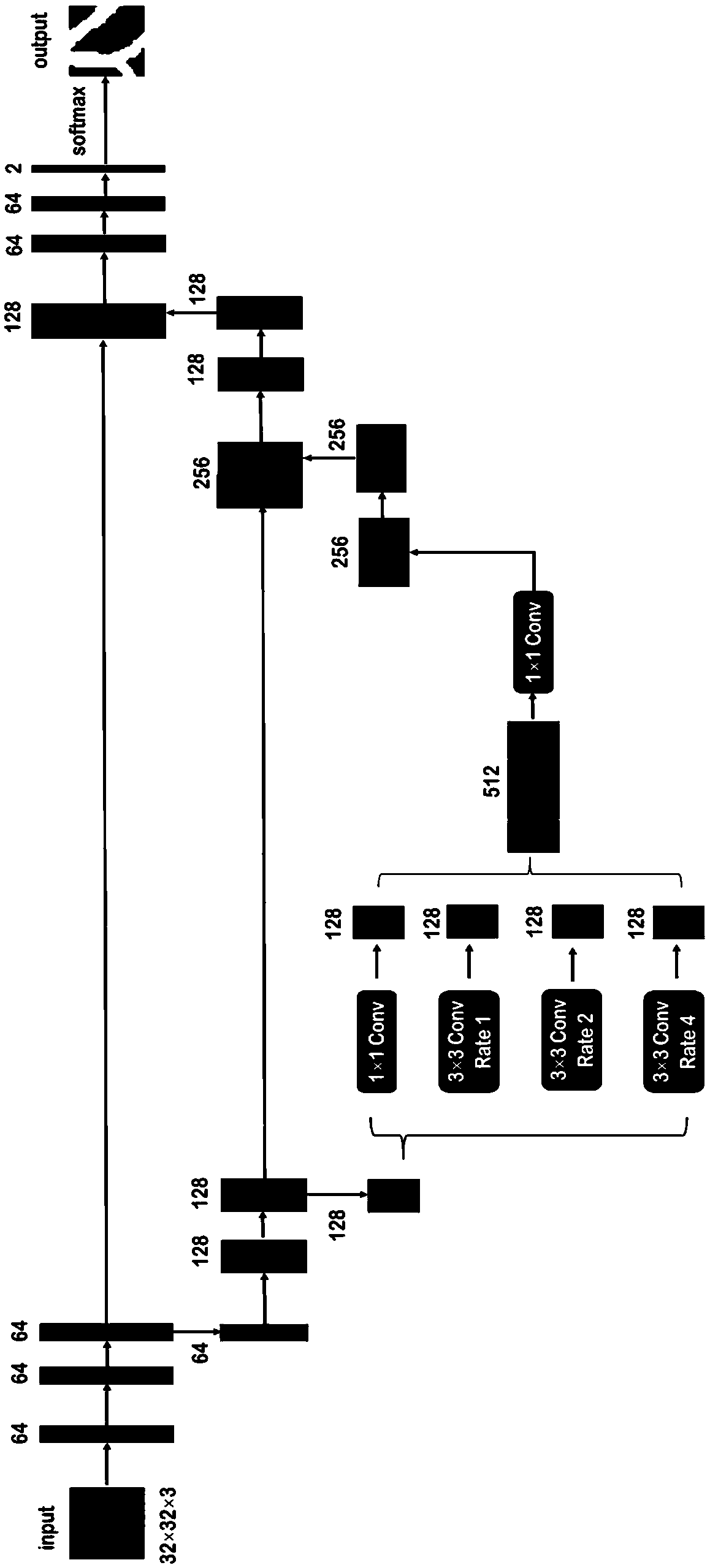

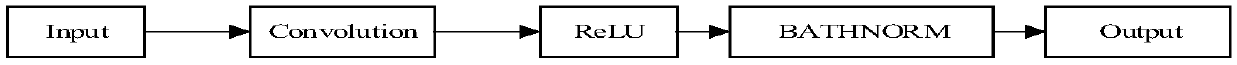

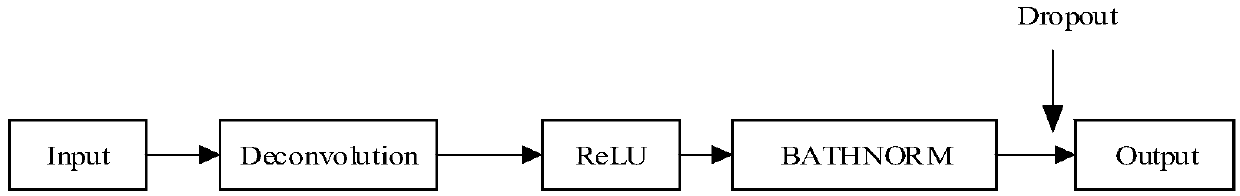

A retinal blood vessel image segmentation method based on a multi-scale feature convolutional neural network

Inactive | CN108986124A | Expand the receptive field | Reduce training parameters | Image enhancement | Image analysis | Adaptive histogram equalization | Histogram

The invention belongs to the technical field of image processing. It aims to extract and segment retinal blood vessels automatically, to improve robustness to interference such as blood vessel shadows and tissue deformation, and to raise the average accuracy of the vessel segmentation result. The invention relates to a retinal blood vessel image segmentation method based on a multi-scale feature convolutional neural network. First, the retinal images are pre-processed with adaptive histogram equalization and gamma brightness adjustment. At the same time, because retinal image data are scarce, the data are augmented: the experimental images are cropped and divided into blocks. Second, a multi-scale retinal vessel segmentation network is constructed by introducing atrous spatial pyramid pooling into a convolutional neural network with an encoder-decoder structure; the model parameters are optimized autonomously over many iterations to achieve pixel-level automatic segmentation of the retinal blood vessels and obtain the retinal blood vessel segmentation map. The invention is mainly applied to the design and manufacture of medical devices.
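The gamma brightness adjustment mentioned in the pre-processing step can be illustrated with a lookup table. This is a minimal sketch under the assumption of 8-bit grayscale input and the convention `out = 255 * (in / 255) ** gamma`, so that `gamma < 1` brightens; the abstract does not state which convention or gamma value the patent uses, and the function name is hypothetical.

```python
def gamma_adjust(image, gamma):
    """Apply gamma correction to an 8-bit grayscale image (list of rows)."""
    # Precompute the mapping once for all 256 possible gray levels.
    lut = [round(255 * (v / 255) ** gamma) for v in range(256)]
    return [[lut[p] for p in row] for row in image]
```

For example, `gamma_adjust(image, 0.5)` lifts dark regions, which can make faint vessels easier to see before the image is passed to a network.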

Owner:TIANJIN UNIV

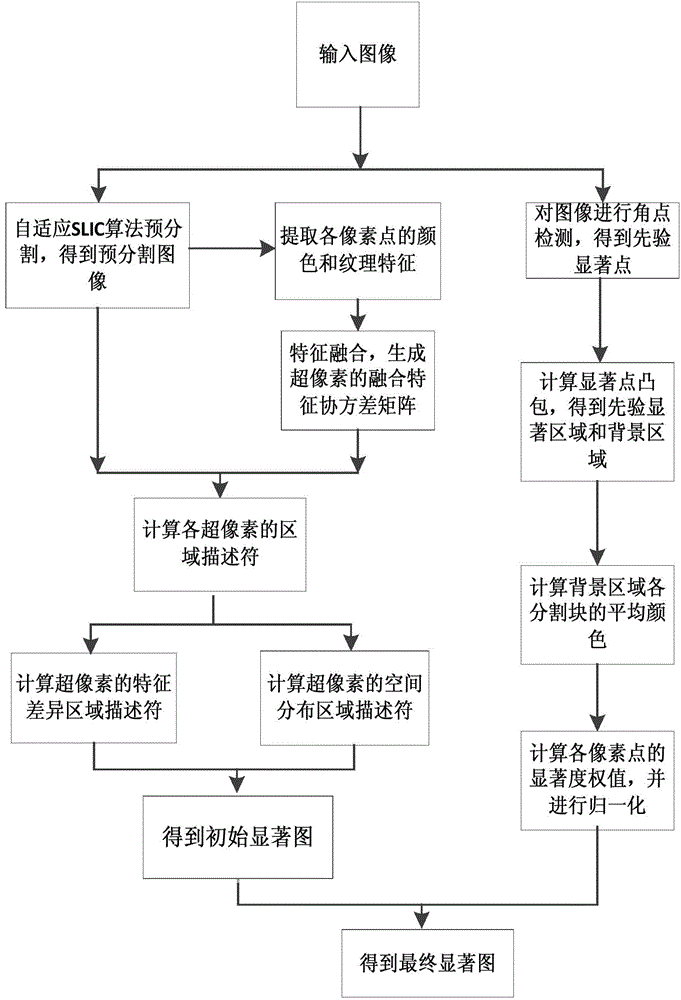

Image saliency detection method based on region description and priori knowledge

Inactive | CN104103082A | Preserve edge detail | Significance detection is good | Image analysis | Pattern recognition | Saliency map

The invention discloses an image saliency detection method based on region description and priori knowledge. The method comprises the following steps that: (1) an image to be detected is subjected to pre-segmentation, superpixels are generated, and a pre-segmentation image is obtained; (2) a fusion feature covariance matrix of the superpixels is generated; (3) feature different region descriptors and space distribution region descriptors of each superpixel are calculated; (4) the initial saliency value of each pixel point of the image to be detected is calculated; (5) a priori saliency region and a background region of the image are obtained; (6) the saliency weight of each pixel point of the image to be detected is calculated; and (7) the final saliency value of each pixel point is calculated. The image saliency detection method has the advantages that the saliency region can be uniformly highlighted in an obtained final saliency map; the background noise interference is inhibited; a good saliency detection effect can be achieved in ordinary images, and the processing on the saliency detection of complicated images can also be realized; and the processing on subsequent image key region extraction and the like can also be favorably carried out.

Owner:SOUTH CHINA UNIV OF TECH

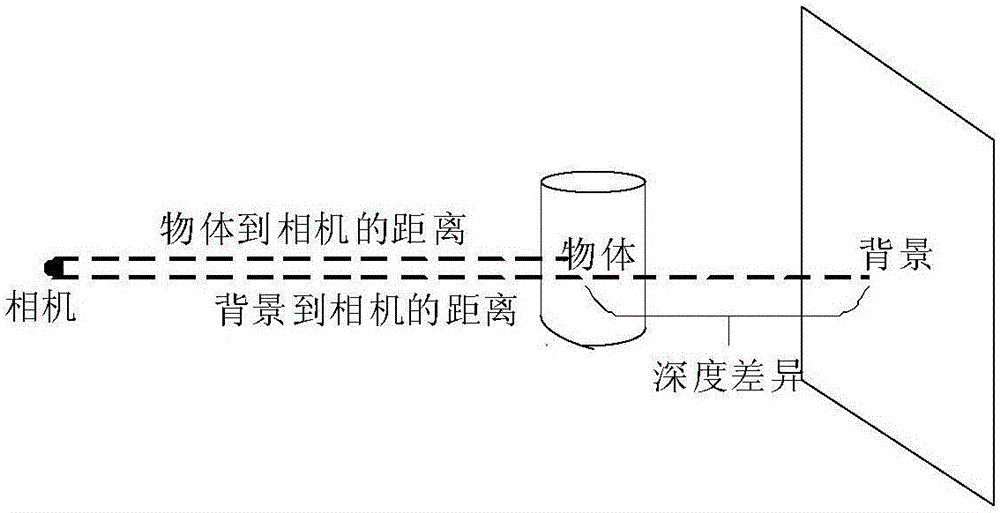

Segmentation method for image with depth image information

Active | CN102903110A | Reduced execution time | Improve Segmentation Accuracy | Image analysis | Image segmentation algorithm | Energy functional

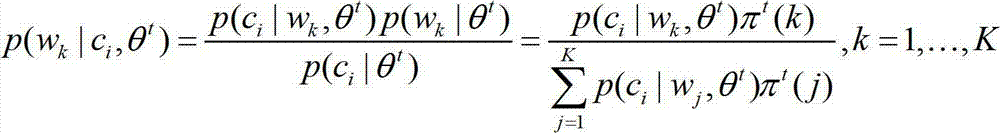

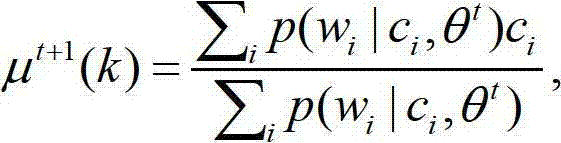

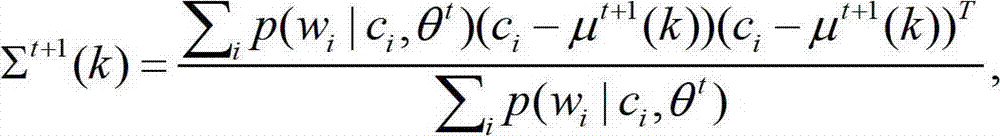

The invention discloses a segmentation method for an image with depth image information. The method has high segmentation accuracy and still achieves a good segmentation effect when the foreground and background are very similar. It comprises the steps of: (1) obtaining the image with depth image information via Kinect; (2) building a probability model of the foreground and background color information and the depth image information; (3) estimating the model parameters with the EM (Expectation-Maximization) algorithm; and (4) after the first image segmentation, segmenting the image again with an image segmentation algorithm, wherein the energy function is the formula shown in the abstract, and the minimum cut of that energy function is computed with a maximum-flow algorithm to obtain the final segmented object.

Owner:NINGBO UNIV

Generative adversarial network-based pixel-level portrait cutout method

Active | CN107945204A | Improve Segmentation Accuracy | Good segmentation effect | Image enhancement | Image analysis | Conditional random field | Data set

The invention discloses a generative adversarial network-based pixel-level portrait cutout method. It addresses the problem that, in the field of machine cutout, training and optimizing a network requires massive data sets that are very costly to produce. The method comprises the steps of: presetting a generative network and a judgment (discriminator) network that learn adversarially, wherein the generative network is a deep neural network with skip connections; inputting a real image containing a portrait to the generative network, which outputs a person and scene segmentation image; inputting first and second image pairs to the judgment network, which outputs a judgment probability, and determining the loss functions of the generative network and the judgment network; adjusting the configuration parameters of the two networks so as to minimize the values of their loss functions, thereby completing training of the generative network; and inputting a test image to the trained generative network to generate the person and scene segmentation image, randomizing the generated image, and finally inputting a probability matrix to a conditional random field for further optimization. The method greatly reduces the number of training images required and improves both efficiency and segmentation precision.

Owner:XIDIAN UNIV

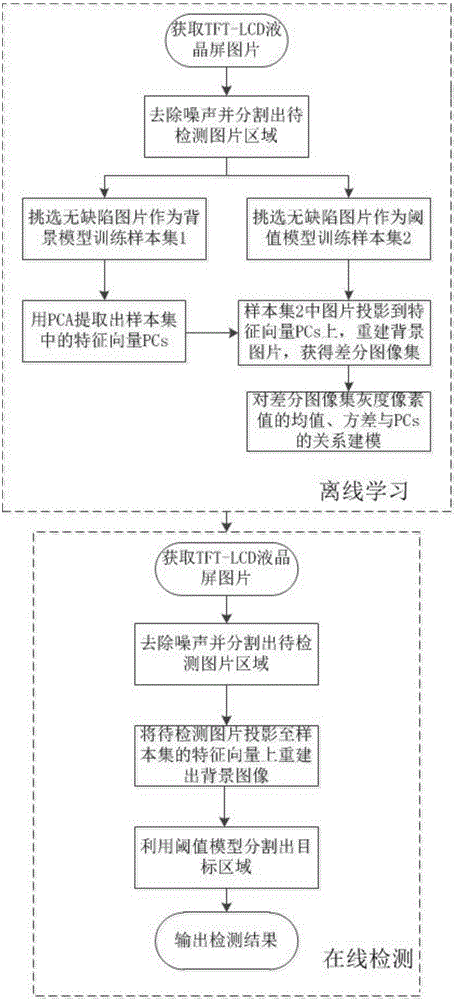

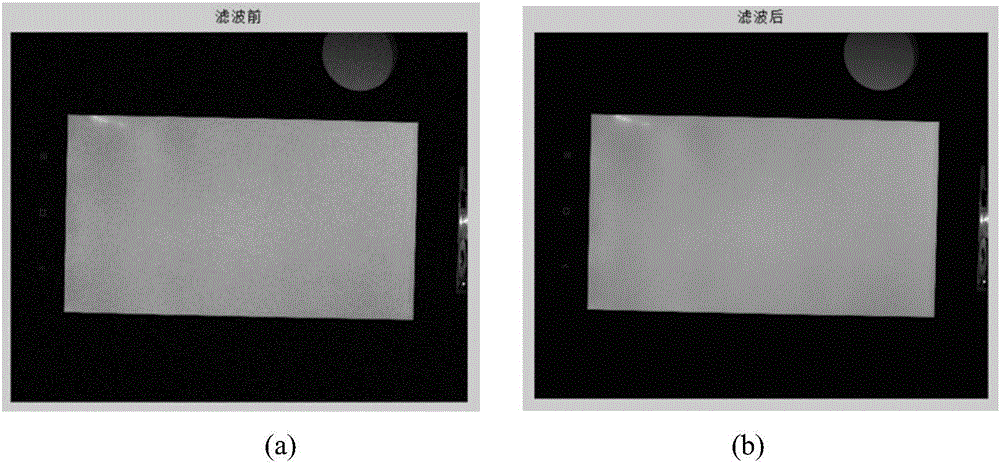

Mura defect detection method based on sample learning and human visual characteristics

Active | CN106650770A | Accurate segmentation | Efficient removal | Character and pattern recognition | Hough transform | Relational model

The invention discloses a mura defect detection method based on sample learning and human visual characteristics, which belongs to the TFT-LCD display defect detection field. According to the invention, the method comprises the following steps: firstly, utilizing the Gaussian filter smoothing and Hough transform rectangle to preprocess the TFT-LCD display image, removing a large amount of noise and segmenting the image areas to be detected; then, using the PCA algorithm to conduct learning to a large amount of defect-free samples; automatically extracting the differential characteristics between the background and the target and re-constructing a background image; and then, thresholding the differential characteristics between a testing image and the background; through the reconstructing of the background and the threshold calculating, jointly creating a model. According to the invention, based on the training sample learning, a relationship model between the background structure information and the threshold value is established; and a self-adaptive segmentation algorithm based on human visual characteristics is proposed. The main purpose of the invention is to detect different mura defects in a TFT-LCD, to raise the qualification rate and to increase accuracy for the detection of mura defects.

Owner:NANJING UNIV

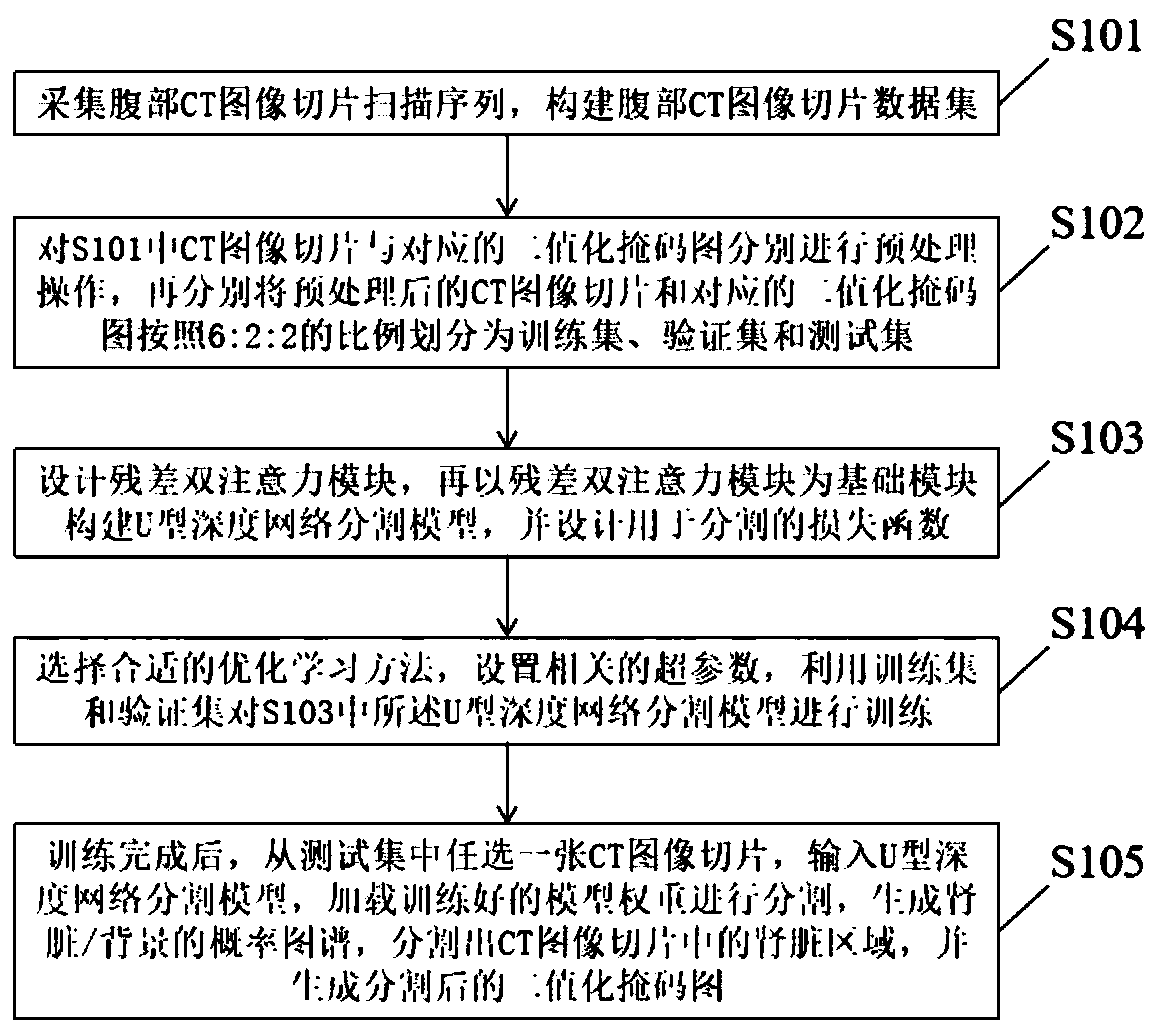

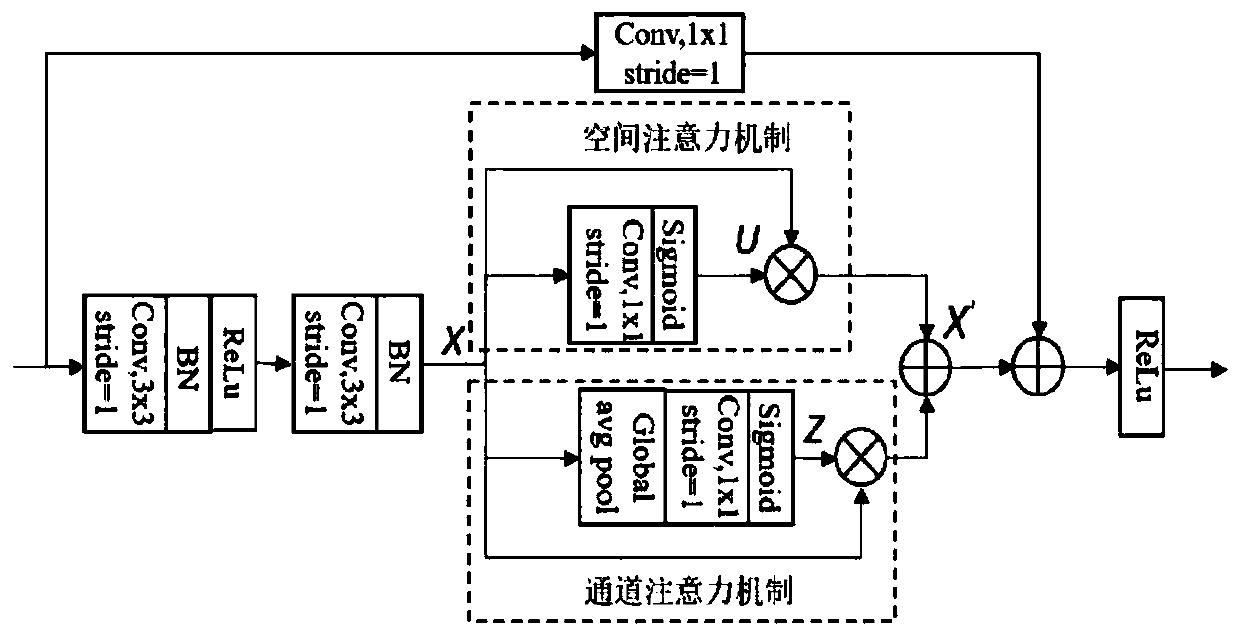

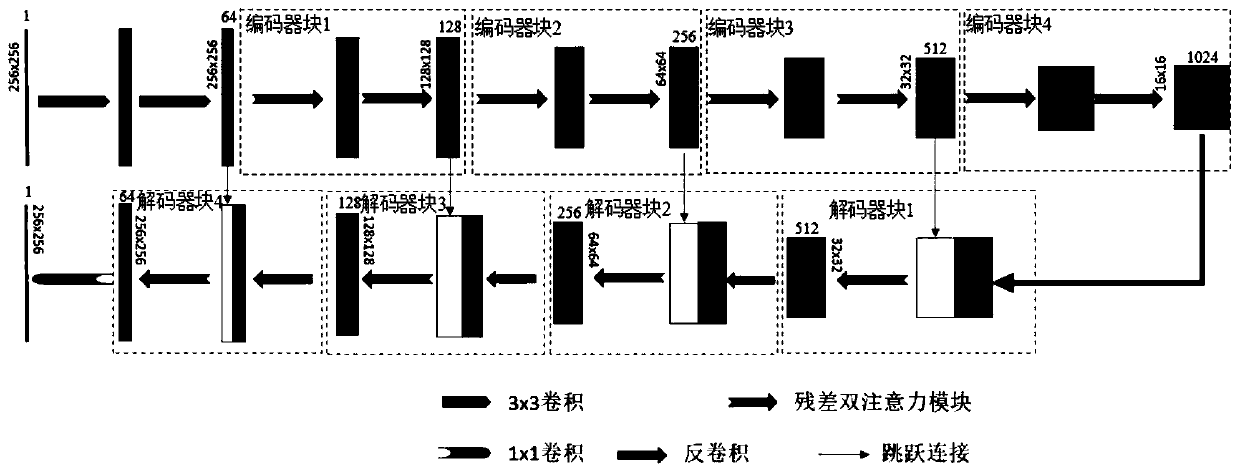

CT image kidney segmentation algorithm based on residual double-attention deep network

Pending | CN110675406A | Responds effectively to shape changes | Robust to shape changes | Image enhancement | Image analysis | Automatic segmentation | Feature learning

The invention discloses a CT image kidney segmentation algorithm based on a residual double-attention deep network. The method combines the residual unit's ability to reuse features with the strong feature learning capability of the double attention mechanism to design a residual double-attention module. This module serves as the basic building block of a U-shaped deep segmentation network, and a loss function for segmentation is designed alongside it, so that the network pays more attention to kidney region features and copes effectively and robustly with the shape changes of kidneys with cystic lesions. As a result, the boundary of the kidney region is located accurately, the kidney region in the CT image is segmented automatically, and a good segmentation effect is achieved.

Owner:NANJING UNIV OF INFORMATION SCI & TECH

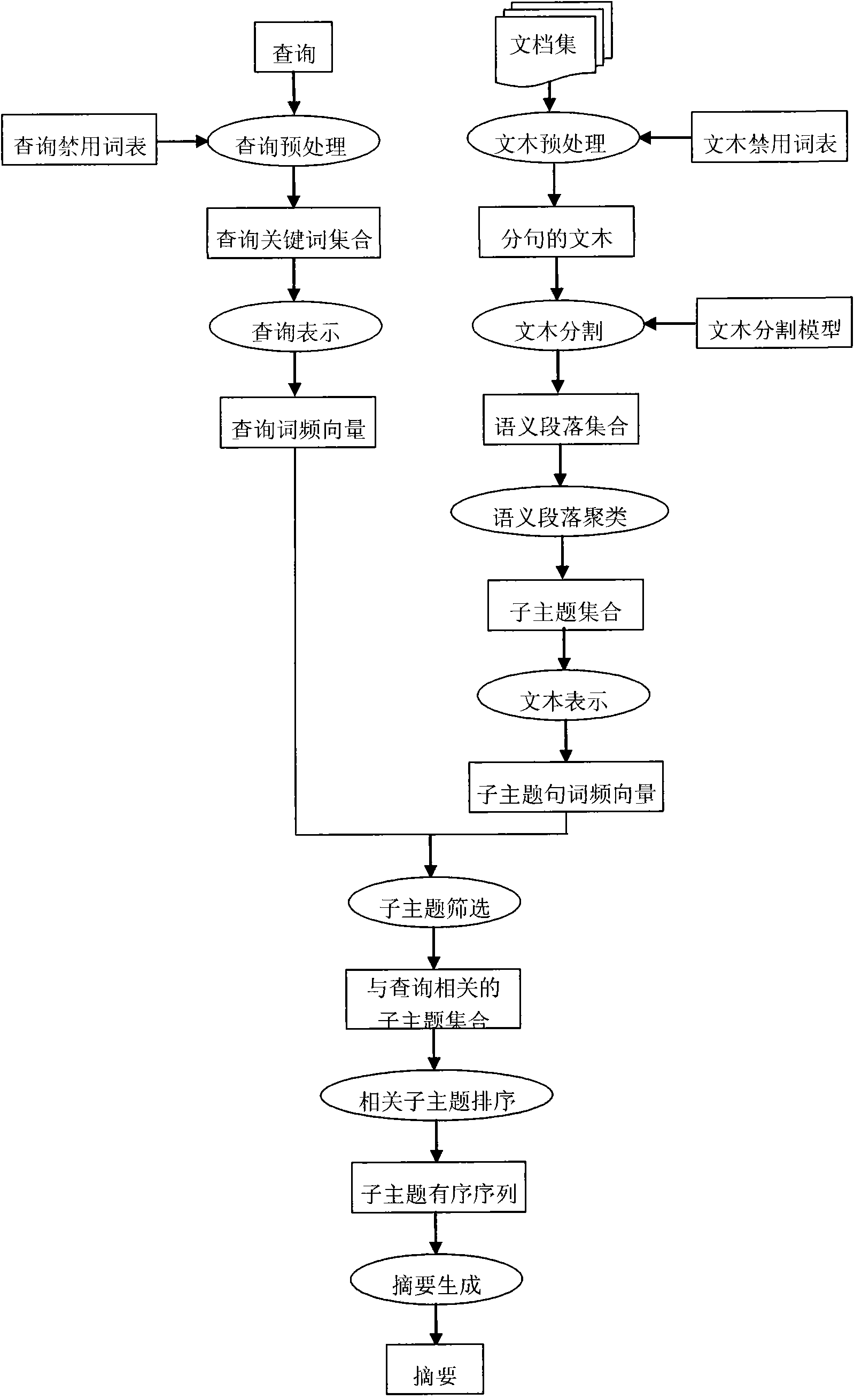

Multi-document auto-abstracting method facing to inquiry

Inactive | CN101620596A | Solve problems | Meet individual requirements | Special data processing applications | Frequency vector | Document preparation

The invention relates to a multi-document auto-abstracting method facing to inquiry, which comprises the following steps: performing preprocessing on the inquiry and documents; performing topic segmentation and semantic paragraph clustering on the preprocessed documents to obtain subtopics; expressing the inquiry and the sentences in each of the subtopics in the form of a word frequency vector, and calculating the correlation measurement of the inquiry and the subtopics; screening the subtopics according to the correlation measurement of the inquiry and the subtopics, sequencing the subtopics according to the importance of the subtopics, and selecting the front T important subtopics to obtain an ordered sequence of the subtopics correlative with the inquiry; and circularly obtaining representative sentences from the subtopic sequence in turn, and connecting the representative sentences together to generate an abstract. The method uses the topic segmentation technique so that the abstract is in a limited length range and comprises the important information in a document set as much as possible, provides more targeted services, can adjust the content of the abstract according to a user inquiry topic, and can achieve the interactions with users.
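The step of expressing the inquiry and the subtopics as word frequency vectors and measuring their correlation can be sketched as follows. This is an illustration only: whitespace tokenization, cosine similarity as the correlation measure, and reuse of that score as the importance ranking are all assumptions, since the abstract does not name the measure. The function names are hypothetical.

```python
import math
from collections import Counter


def term_frequency(text):
    """Word frequency vector of a text, using naive whitespace tokenization."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse frequency vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_subtopics(query, subtopics, top_t):
    """Return indices of the top_t subtopics most correlated with the query.

    `subtopics` is a list of subtopics, each a list of sentences.
    Subtopics with zero correlation are screened out.
    """
    q = term_frequency(query)
    scored = []
    for index, sentences in enumerate(subtopics):
        score = cosine(q, term_frequency(" ".join(sentences)))
        if score > 0:
            scored.append((score, index))
    # Highest score first; ties keep the original subtopic order.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [index for _, index in scored[:top_t]]
```

Representative sentences would then be drawn from the returned subtopics in turn until the length limit of the abstract is reached.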

Owner:NORTHEASTERN UNIV

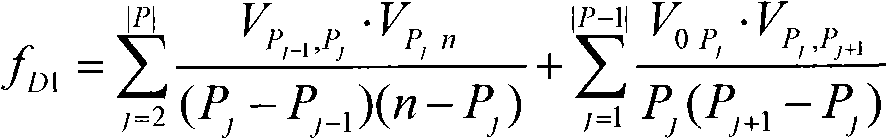

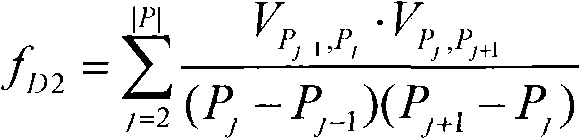

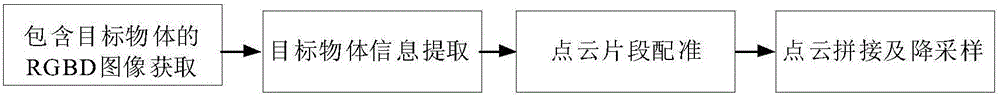

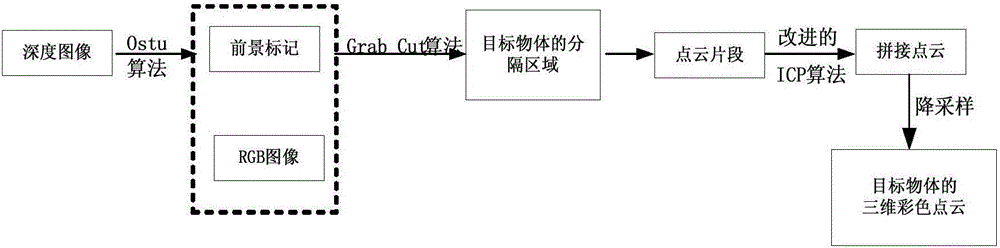

Target object three-dimensional color point cloud generation method based on KINECT

Inactive | CN105989604A | Reduce memory space requirements | Low price | Image enhancement | Image analysis | Point cloud | Rgb image

The invention discloses a target object three-dimensional color point cloud generation method based on Kinect. First, a set of RGBD images is photographed around a target object so that together the images contain complete information about the object. Then, for each RGBD image, Otsu segmentation is performed on the depth image to obtain a foreground mark, which serves as the input of the GrabCut algorithm; the RGB image is segmented again to obtain the accurate area of the target object, and background information is removed. Adjacent point cloud fragments are registered with an improved ICP algorithm to obtain the transformation matrix between them. Finally, the point clouds are spliced using these transformation matrices and down-sampled to reduce redundancy, yielding the complete three-dimensional color point cloud data of the target object.
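The Otsu segmentation applied to the depth image picks the threshold that maximizes the between-class variance of the histogram. The sketch below is a plain-Python version of that standard technique, assuming integer depth values in `[0, levels)`; it is not the patent's code, and how the resulting mask is passed to GrabCut is not shown.

```python
def otsu_threshold(values, levels=256):
    """Return the threshold t maximizing between-class variance.

    Values <= t form one class and values > t the other.
    `values` is a flat list of integers in the range [0, levels).
    """
    hist = [0] * levels
    for v in values:
        hist[v] += 1

    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0        # number of values in the lower class
    sum0 = 0      # sum of values in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue          # lower class still empty
        w1 = total - w0
        if w1 == 0:
            break             # upper class empty from here on
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        # Between-class variance, up to a constant factor 1 / total**2.
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_var:
            best_var, best_t = between, t
    return best_t
```

Whether the foreground is the class at or below the threshold (nearer to the sensor) or above it depends on how depth is encoded, so that choice is left to the caller.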

Owner:HEFEI UNIV OF TECH

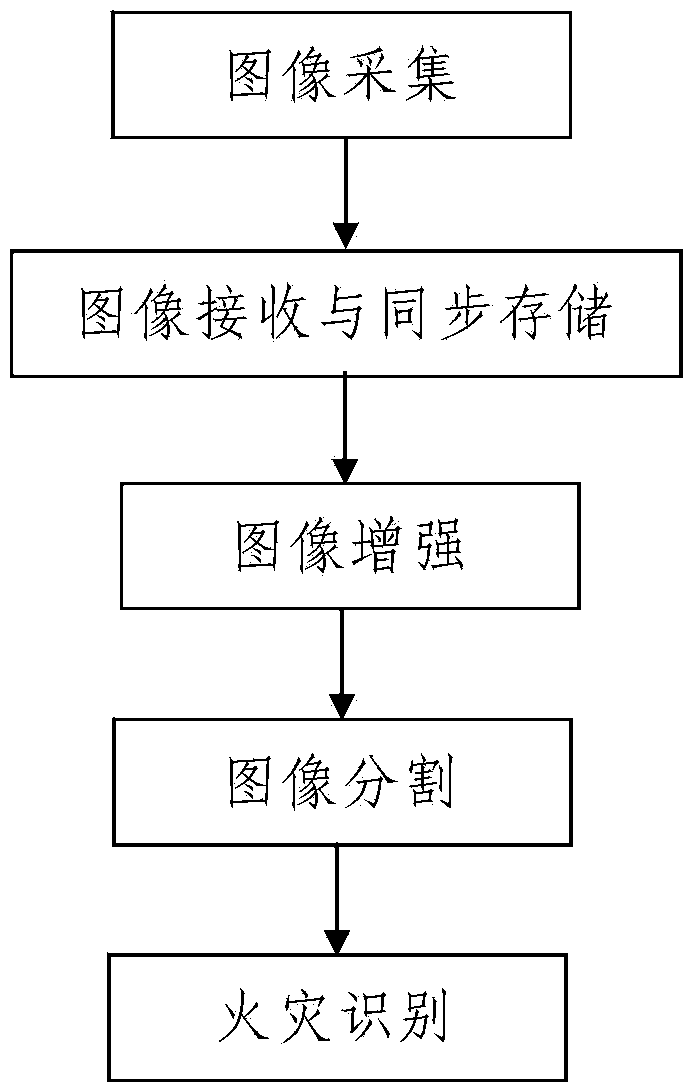

Image type fire flame identification method

Active | CN103886344A | The method steps are simple | Reasonable design | Character and pattern recognition | Imaging processing | Feature extraction

The invention discloses an image type fire flame identification method. The method comprises the following steps: 1, image capturing; 2, image processing. The image processing comprises: 201, image preprocessing; 202, fire identifying. Fire identifying is performed with a prebuilt binary classification model, which is a support vector machine model that classifies flame and non-flame situations. The model is built as follows: I, image information capturing; II, feature extracting; III, training sample acquiring; IV, binary classification model building, comprising IV-1, kernel function selecting, and IV-2, classification function determining, in which parameter C and parameter D are optimized with the conjugate gradient method and the optimized parameters are converted into gamma and sigma^2; V, binary classification model training. The method has simple steps, is simple and convenient to operate, is highly reliable and works well in use, and it effectively solves the problems of existing video fire detection systems in complex environments, such as low reliability, a high false or missed alarm rate and poor performance.

Owner:东开数科(山东)产业园有限公司

Face detection method based on Gaussian model and minimum mean-square deviation

Inactive | CN102096823A | Accurate segmentation | Quick split | Character and pattern recognition | Pattern recognition | Face detection

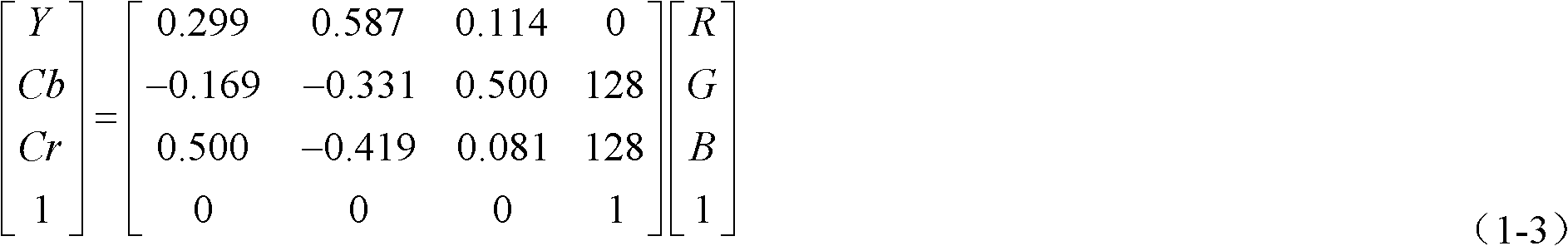

The invention provides a face detection method based on a Gaussian model and the minimum mean-square deviation, and relates to face recognition technology. The method detects faces under a complex background, with side faces, occlusions and multiple faces. It comprises the following steps: building a YCbCr Gaussian model of the face skin color distribution from collected skin color sample data and performing lighting compensation on the image, where Y is the brightness component, Cb the blue chroma component and Cr the red chroma component; performing skin color segmentation on the image using the YCbCr Gaussian model and the minimum mean-square deviation; binarizing the skin color region and applying a morphological opening to the binary image to eliminate small bridges and discrete points; rejecting detected non-face regions that are skin-colored or nearly skin-colored according to prior knowledge of the face; and finally marking the face position with a rectangular frame.
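A Gaussian skin color model of the kind described can be sketched by fitting a two-dimensional Gaussian to (Cb, Cr) samples and scoring each pixel by its Mahalanobis distance from the mean. This is an illustration under assumptions: only the chroma components are modelled, the covariance is estimated with the population formula, and the minimum mean-square deviation step and lighting compensation are not covered. The function names are hypothetical.

```python
import math


def fit_gaussian(samples):
    """Estimate mean and covariance of (Cb, Cr) skin samples.

    Returns ((mean_cb, mean_cr), (var_cb, cov_cbcr, var_cr)).
    """
    n = len(samples)
    mean_cb = sum(cb for cb, _ in samples) / n
    mean_cr = sum(cr for _, cr in samples) / n
    var_cb = sum((cb - mean_cb) ** 2 for cb, _ in samples) / n
    var_cr = sum((cr - mean_cr) ** 2 for _, cr in samples) / n
    cov = sum((cb - mean_cb) * (cr - mean_cr) for cb, cr in samples) / n
    return (mean_cb, mean_cr), (var_cb, cov, var_cr)


def skin_likelihood(cb, cr, mean, cov):
    """Unnormalized Gaussian likelihood in (0, 1]; 1.0 at the model mean.

    Assumes the covariance matrix is non-singular (det != 0).
    """
    (mean_cb, mean_cr), (var_cb, cov_cbcr, var_cr) = mean, cov
    det = var_cb * var_cr - cov_cbcr ** 2
    d_cb, d_cr = cb - mean_cb, cr - mean_cr
    # Squared Mahalanobis distance using the closed-form 2x2 inverse.
    dist2 = (var_cr * d_cb ** 2 - 2 * cov_cbcr * d_cb * d_cr
             + var_cb * d_cr ** 2) / det
    return math.exp(-0.5 * dist2)
```

Thresholding the likelihood map gives a binary skin mask, which is the input to the binarization and opening steps described above.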

Owner:XIAMEN UNIV

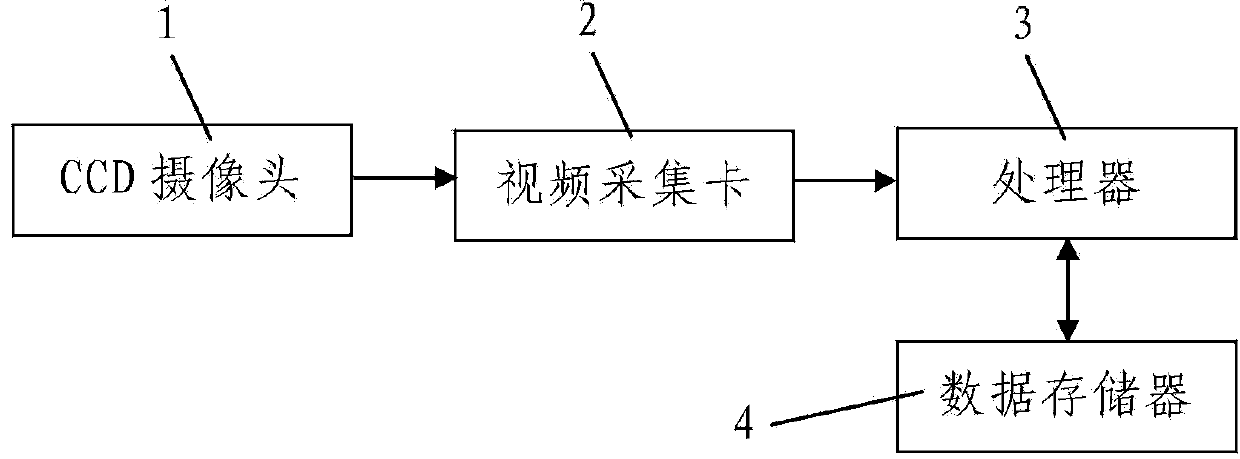

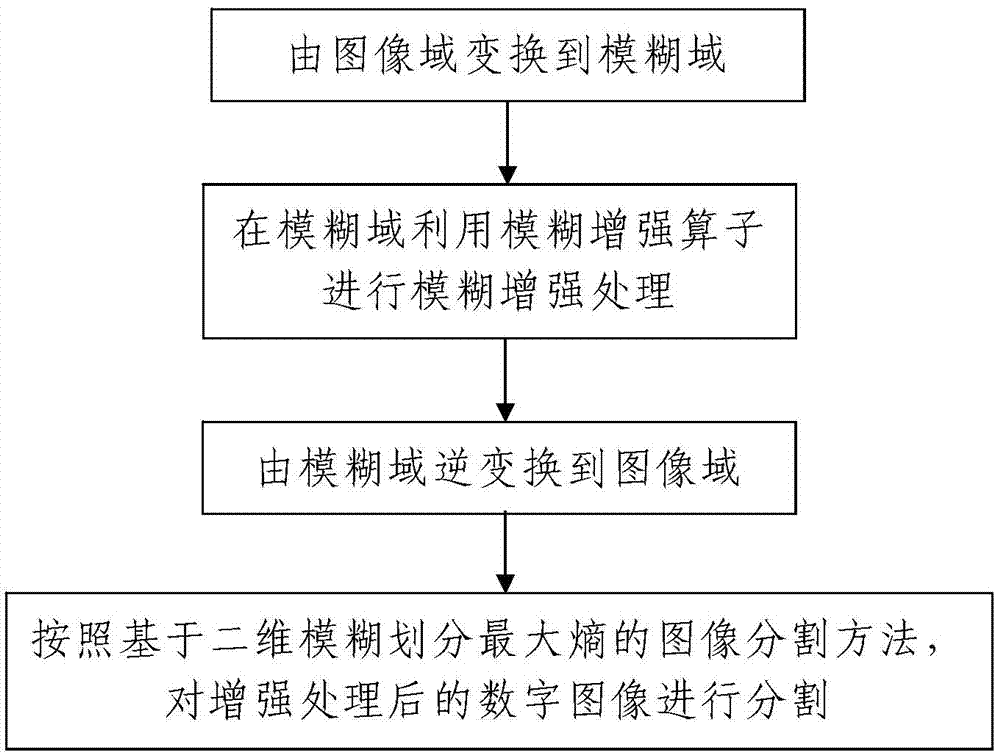

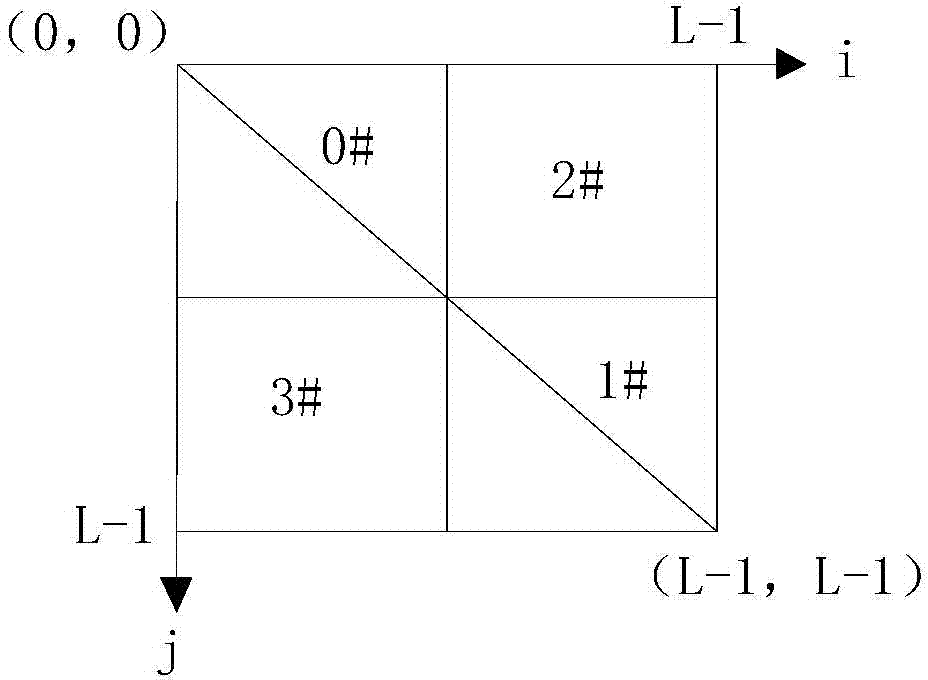

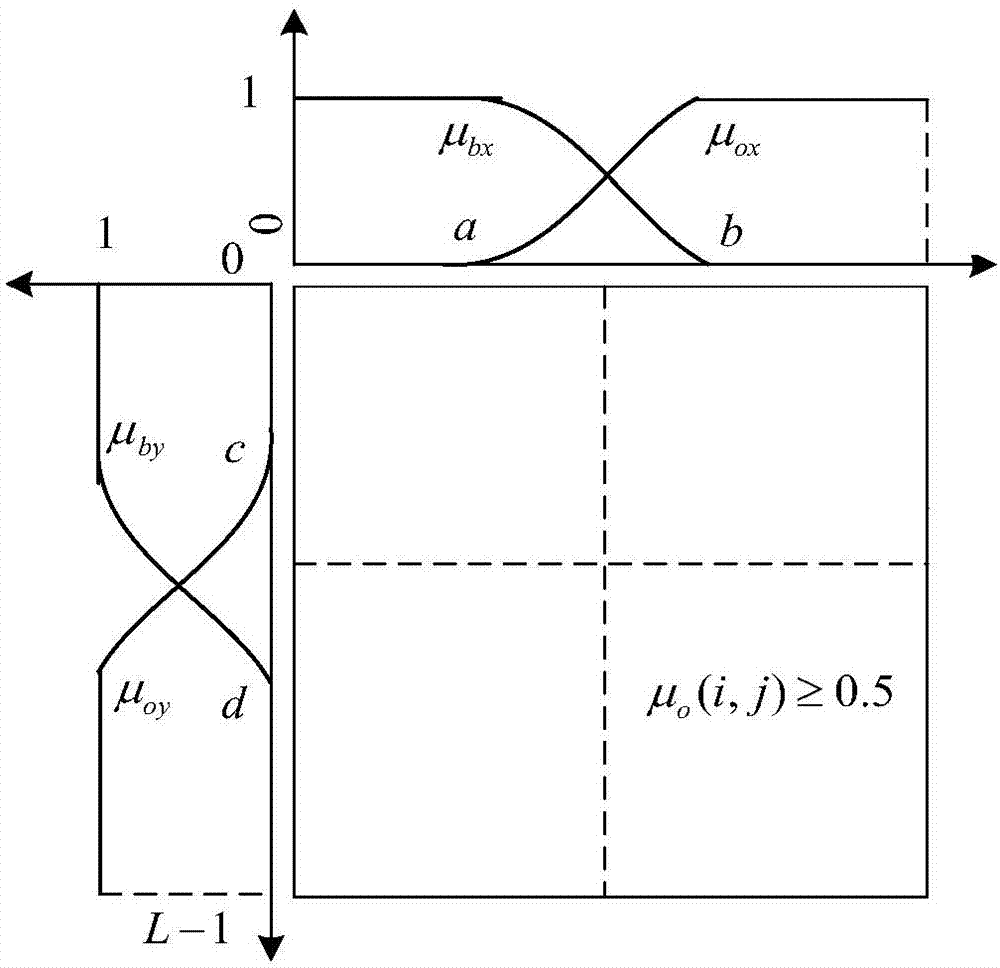

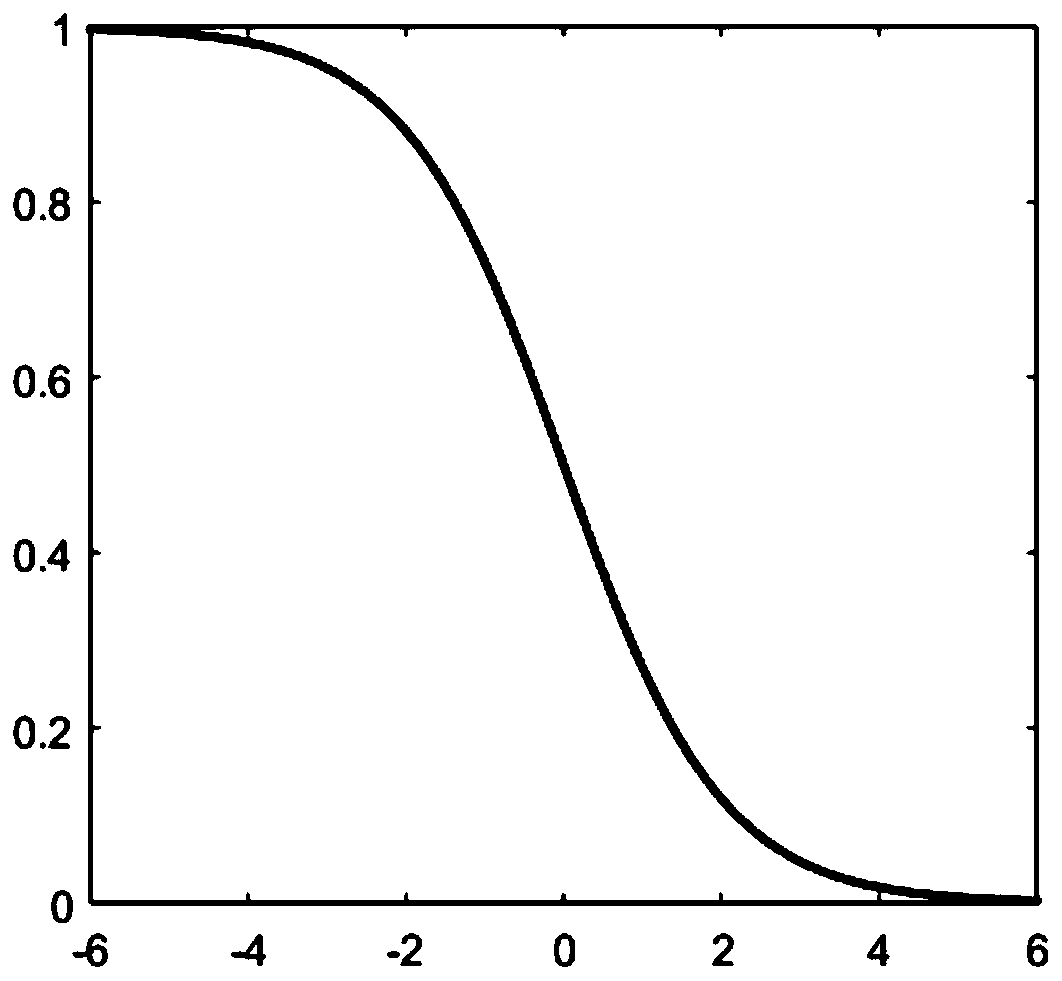

Image enhancement and partition method

Inactive | CN103871029A | The method steps are simple | Reasonable design | Image enhancement | Image analysis | Gradation | Image segmentation

The invention discloses an image enhancement and partition method, which comprises the following steps of I, image enhancement: a processor and an image enhancement method based on fuzzy logic are adopted for performing enhancement processing on an image needing to be processed, and the process is as follows: i, converting from an image domain to a fuzzy domain: mapping the grey value of each pixel point of the image needing to be processed to a fuzzy membership degree of a fuzzy set according to a membership function (shown in the specifications); ii, performing fuzzy enhancement processing by utilizing a fuzzy enhancement operator in the fuzzy domain; iii, converting from the fuzzy domain to the image domain; II, image partition: partitioning a digital image subjected to enhancement processing, i.e. a to-be-partition image, according to an image partition method based on a three-dimensional fuzzy division maximum entropy. The method is simple in steps, reasonable in design, convenient to realize, good in processing effect and high in practical value, and an image enhancement and partition process can be simply, conveniently and quickly completed with high quality.
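The three enhancement sub-steps (image domain to fuzzy domain, fuzzy enhancement, fuzzy domain back to image domain) can be sketched as below. The patent's membership function is given only in its specification, so this sketch assumes a simple linear membership `mu = g / max_gray` and the classic contrast intensification operator; both are stand-ins, not the patented formulas, and the three-dimensional fuzzy partition maximum entropy segmentation is not shown.

```python
def intensify(mu):
    """Contrast intensification operator on a membership degree in [0, 1]."""
    if mu <= 0.5:
        return 2 * mu * mu
    return 1 - 2 * (1 - mu) ** 2


def fuzzy_enhance(image, max_gray=255, passes=1):
    """Enhance a grayscale image (list of rows) in the fuzzy domain."""
    result = []
    for row in image:
        new_row = []
        for gray in row:
            mu = gray / max_gray              # i: image domain -> fuzzy domain
            for _ in range(passes):
                mu = intensify(mu)            # ii: fuzzy enhancement
            new_row.append(round(mu * max_gray))  # iii: back to image domain
        result.append(new_row)
    return result
```

The operator pushes memberships below 0.5 toward 0 and those above 0.5 toward 1, which stretches contrast around the mid-gray level; applying more `passes` strengthens the effect.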

Owner:XIAN UNIV OF SCI & TECH

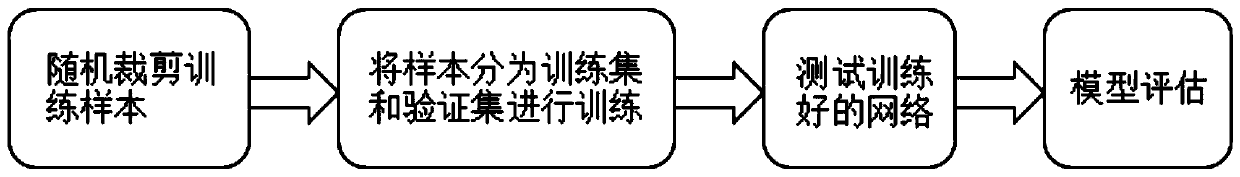

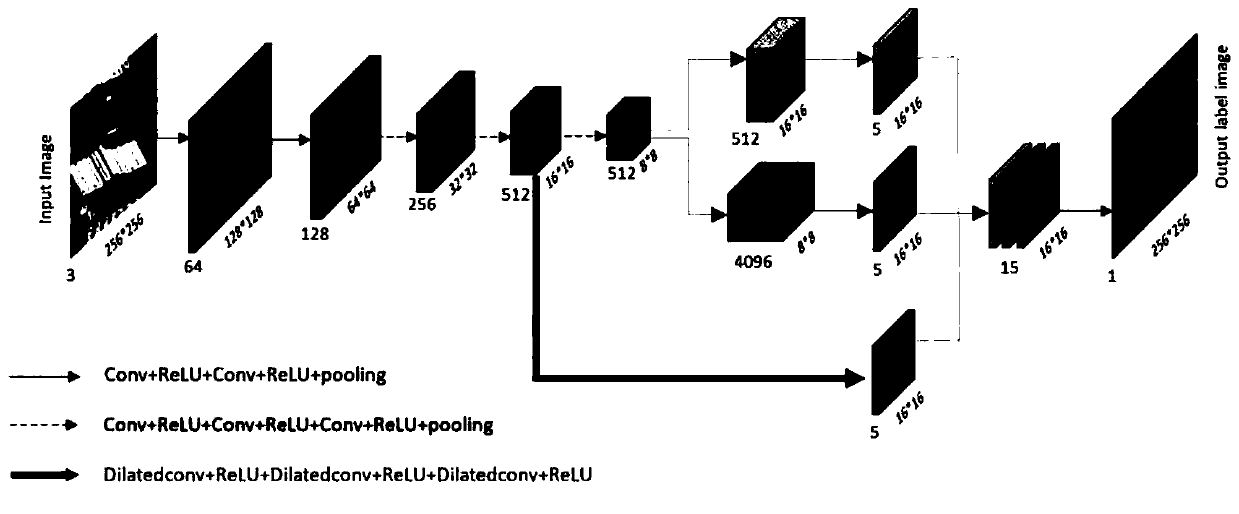

Remote sensing image semantic segmentation method based on migration VGG network

Active | CN110059772A | Good segmentation effect | Short training time | Character and pattern recognition | Neural architectures | Pattern recognition | Nerve network

The invention discloses a remote sensing image semantic segmentation technology based on a VGG network. The method comprises the following steps: randomly cutting the high-resolution remote sensing images used for training and the corresponding label images into small images; dividing the network structure into an encoding part and a decoding part; using an unpooling path and a deconvolution path to double the resolution of the encoded information; concatenating the feature map with the result of atrous convolution along the channel dimension, restoring the feature map to the original size through deconvolution upsampling, feeding the output label image into a PPB module for multi-scale aggregation, and finally updating the network parameters by stochastic gradient descent with cross entropy as the loss function; then feeding the small images obtained by sequentially cutting the test images into the neural network to predict the corresponding label images, and splicing the label images back to the original size. The technical scheme improves the segmentation precision of the model, reduces the complexity of the network, and saves training time.
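The test-time procedure of cutting an image sequentially into small tiles and splicing the predicted label tiles back to the original size can be sketched as follows. The helper names are hypothetical, tiles do not overlap, and the network prediction itself is omitted; in practice each patch would be replaced by its predicted label patch before stitching.

```python
def cut_tiles(image, tile):
    """Cut an image (list of rows) into non-overlapping tile x tile patches.

    Returns a list of (top, left, patch); patches on the right and bottom
    borders may be smaller than `tile`.
    """
    height, width = len(image), len(image[0])
    tiles = []
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            patch = [row[left:left + tile] for row in image[top:top + tile]]
            tiles.append((top, left, patch))
    return tiles


def stitch_tiles(tiles, height, width):
    """Splice patches back into a height x width image."""
    canvas = [[None] * width for _ in range(height)]
    for top, left, patch in tiles:
        for dy, row in enumerate(patch):
            # Same-length slice assignment writes the patch row in place.
            canvas[top + dy][left:left + len(row)] = row
    return canvas
```

Stitching the unmodified tiles reproduces the input exactly, which is a convenient sanity check before a model is placed between the two calls.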

Owner:WENZHOU UNIVERSITY

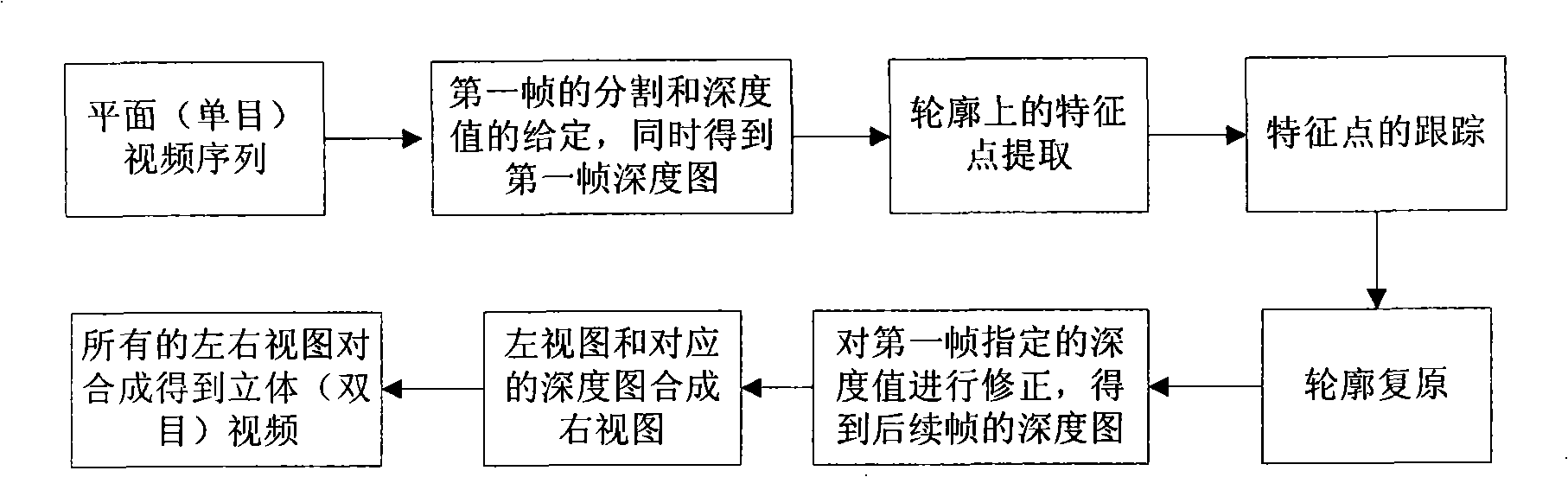

Method for converting plane video into stereoscopic video based on human-machine interaction

Inactive | CN101257641A | Depth value is accurate | Good segmentation effect | Image enhancement | Image analysis | Recovery method | Stereoscopic video

The present invention relates to a method for converting plane video to stereo video based on human-machine interaction, and belongs to the field of computer multimedia technology. The method comprises: segmenting the foreground objects of the first frame of the plane video sequence and assigning them depth values, assigning a depth value to the background region, and generating a depth map of the first frame; selecting a plurality of feature points with the KLT method on the contours of the foreground objects segmented from the first frame and tracking them to obtain their positions in subsequent frames; generating closed contour curves of the objects in each frame using a contour recovery method; generating the depth map; taking the original sequence as the left view and synthesizing a right view from the left view and the corresponding depth map to obtain the stereo video frame; and composing the stereo video sequence from all the stereo video frames. The advantage of the invention is that an accurate depth map is obtained for each frame, which enables the conversion from plane video to stereo video, and the method minimizes the users' workload.

Owner:TSINGHUA UNIV

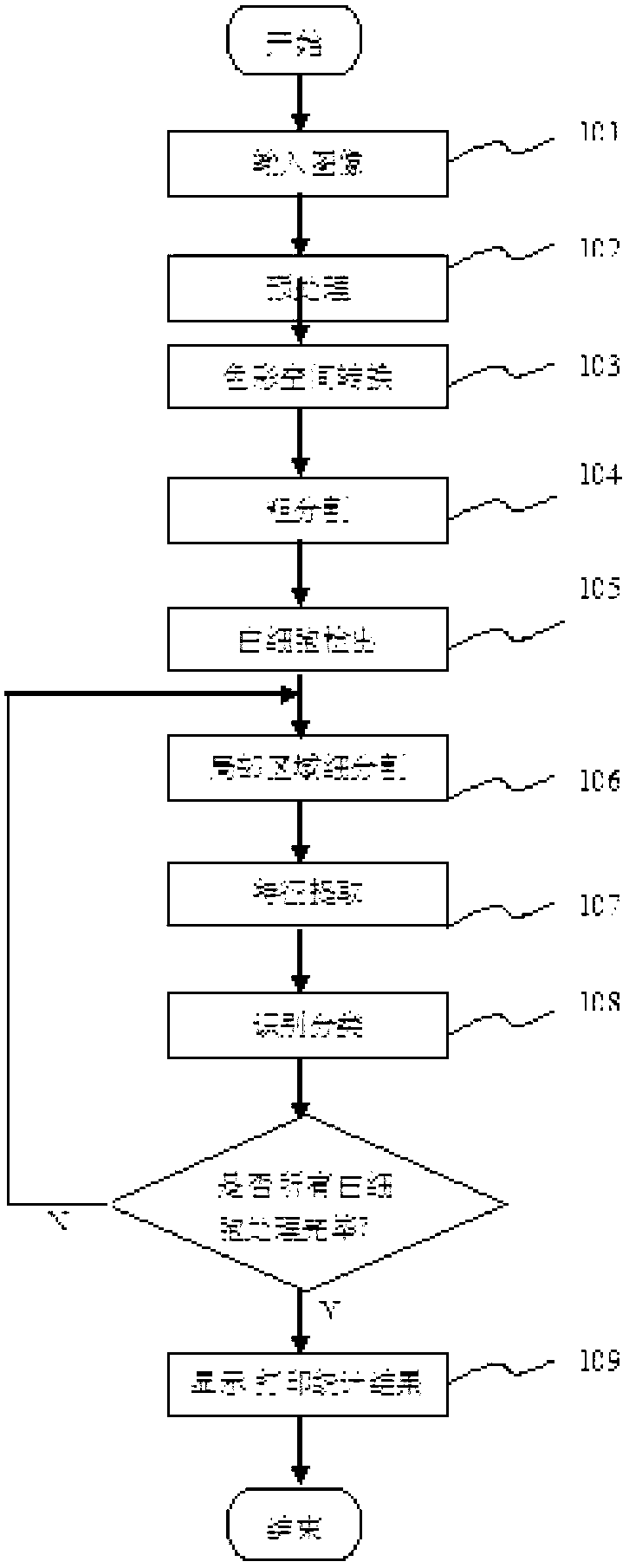

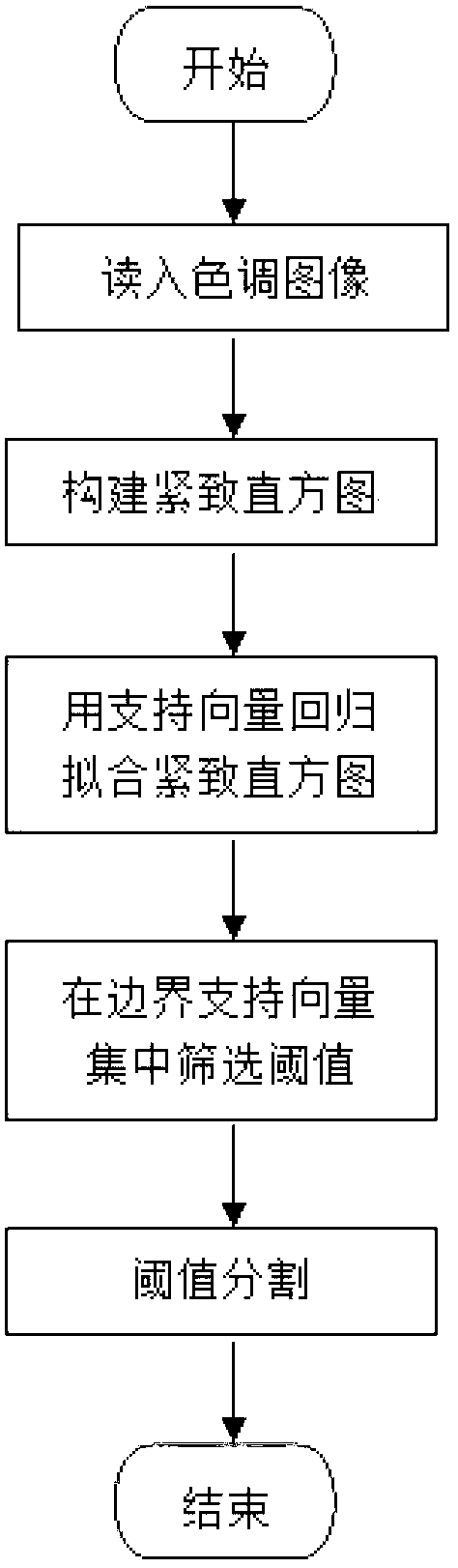

Method for automatically identifying and counting white blood cells

Inactive | CN103020639A | Implement classification | Improve classification efficiency | Character and pattern recognition | Color/spectral properties measurements | Support vector machine | White blood cell

The invention provides a method for automatically identifying and counting white blood cells. The method comprises the following steps of: preprocessing, color space conversion, coarse segmentation, checking of white blood cells, fine segmentation, characteristic parameter extraction, identification and classification, result output and the like. The method can be used for automatically identifying and classifying the white blood cells in blood by extracting a group of cell characteristic parameters and utilizing a support vector machine, and has the advantages of high classification efficiency, good effect, high accuracy, high stability and high robustness.

Owner:HOHAI UNIV

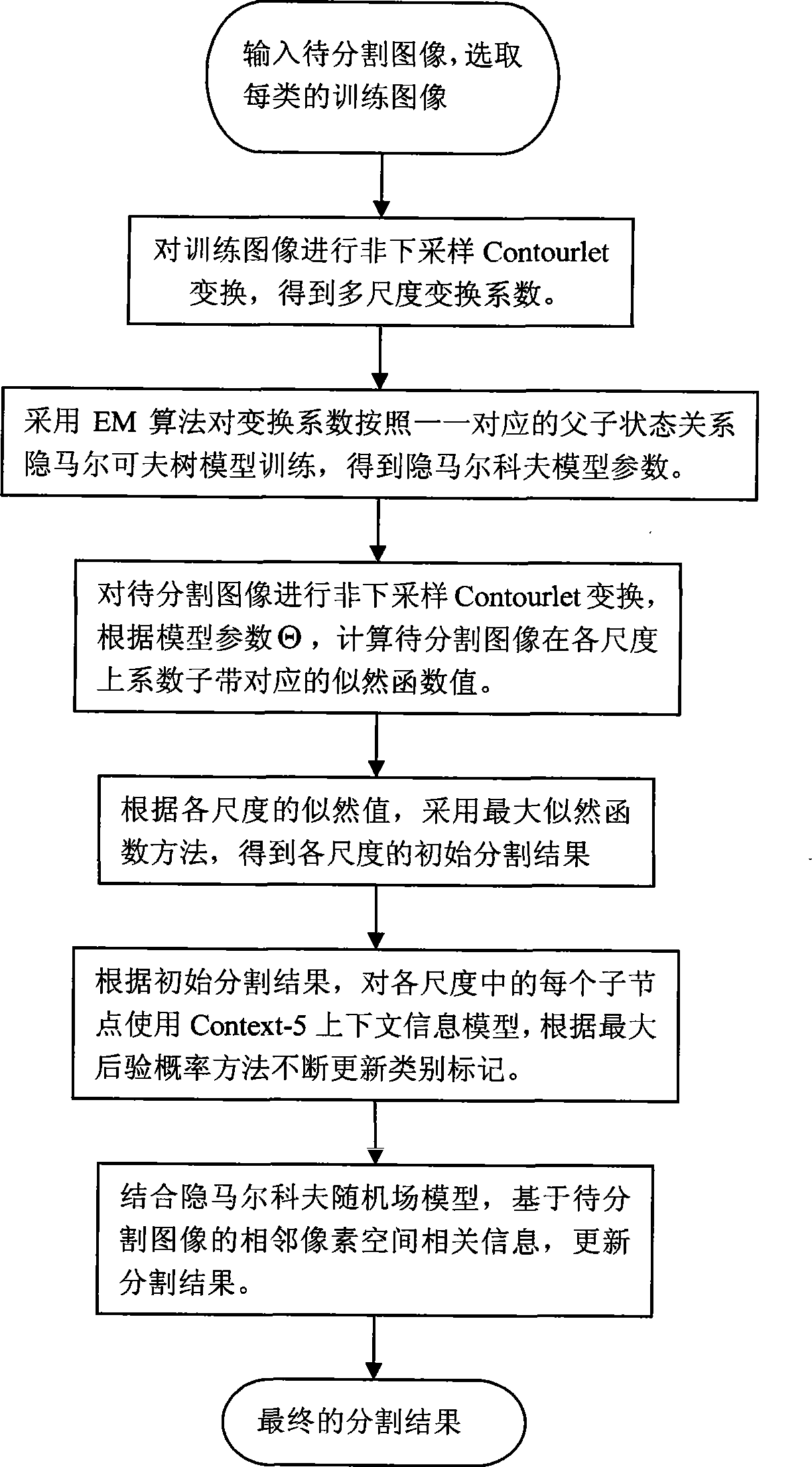

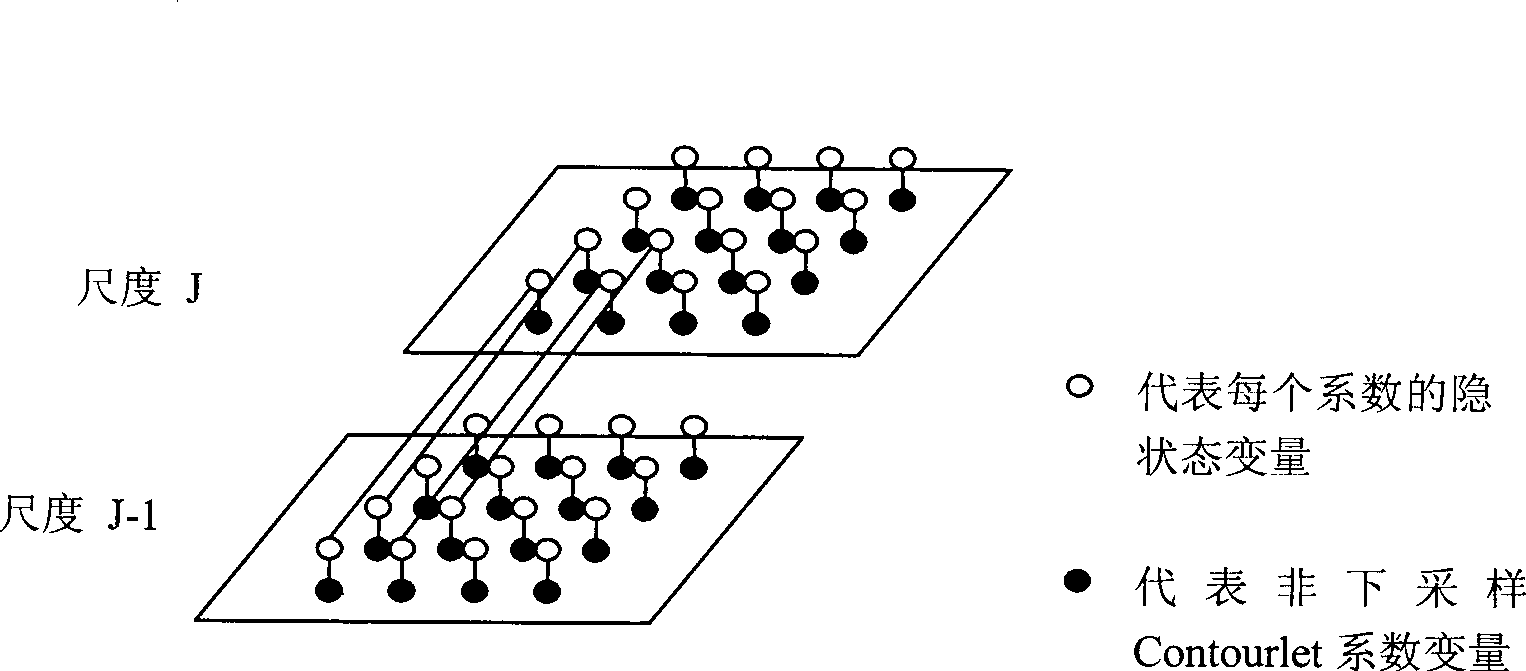

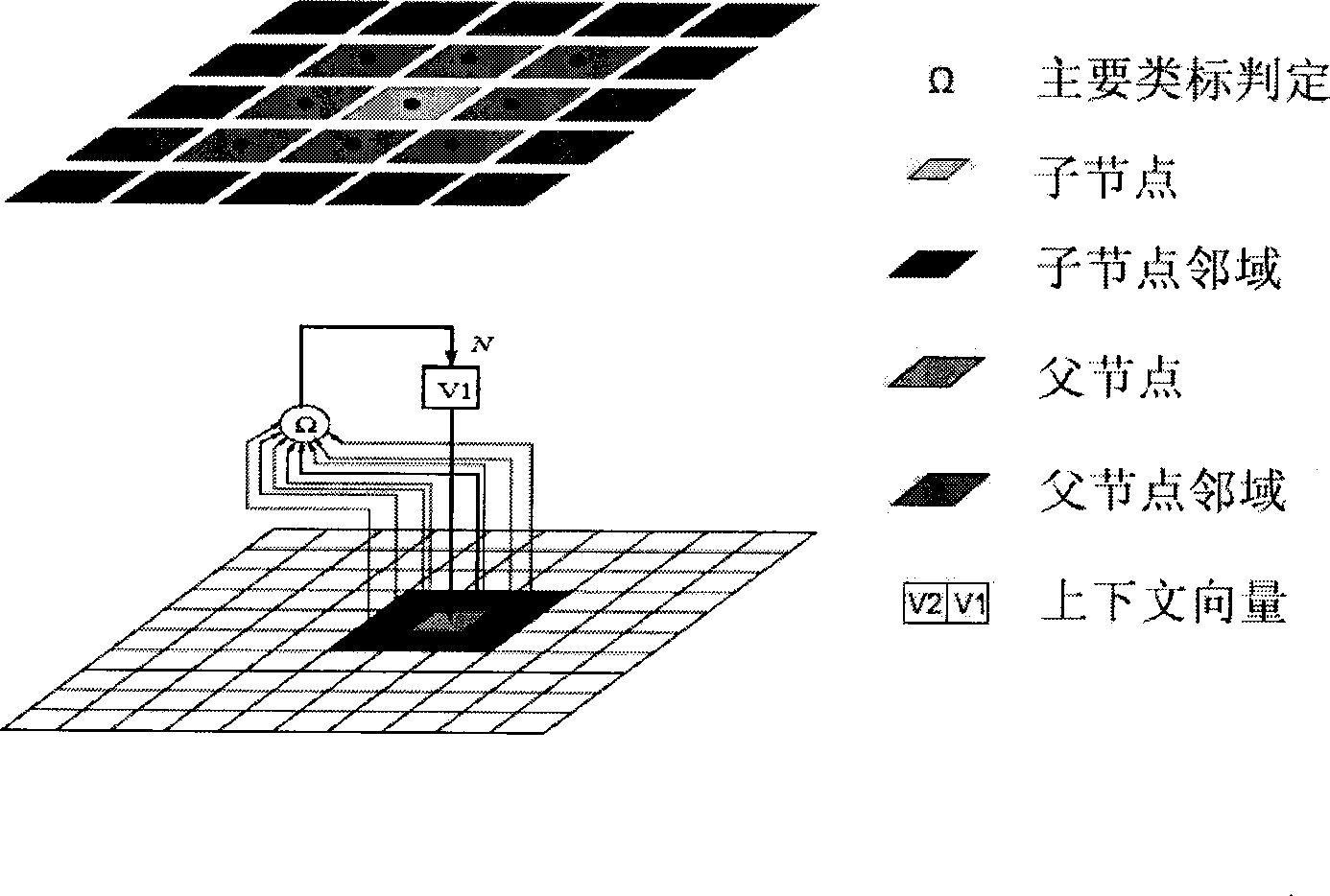

Method for segmenting HMT image on the basis of nonsubsampled Contourlet transformation

Active | CN101447080A | Good segmentation effect | Avoid the Gibbs Phenomenon | Image analysis | Relationship - Father | Maximum a posteriori estimation

The invention discloses a method for segmenting HMT images based on the nonsubsampled Contourlet transformation. The method mainly solves the problem that prior segmentation methods have poor area consistency and edge preservation, and comprises the following steps: (1) applying the nonsubsampled Contourlet transformation to the images to be segmented and to the training images of all categories to obtain multi-scale transformation coefficients; (2) estimating the model parameters from the nonsubsampled Contourlet transformation coefficients of the training images and the hidden Markov tree that represents the one-to-one parent-child state relationship; (3) calculating the likelihood values of the images to be segmented in the coefficient subbands at all scales, and combining a label tree with the multi-scale likelihood function to obtain the multi-scale maximum a posteriori classification; (4) updating the category labels at each scale based on the context-5 context information model; and (5) updating the category labels, taking into account the Markov random field model and the spatial correlation between adjacent pixels in the images to be segmented, to obtain the final segmentation results. The invention has the advantages of good area consistency and edge preservation, and can be applied to the segmentation of synthetic grainy (texture) images.

Owner:探知图灵科技(西安)有限公司

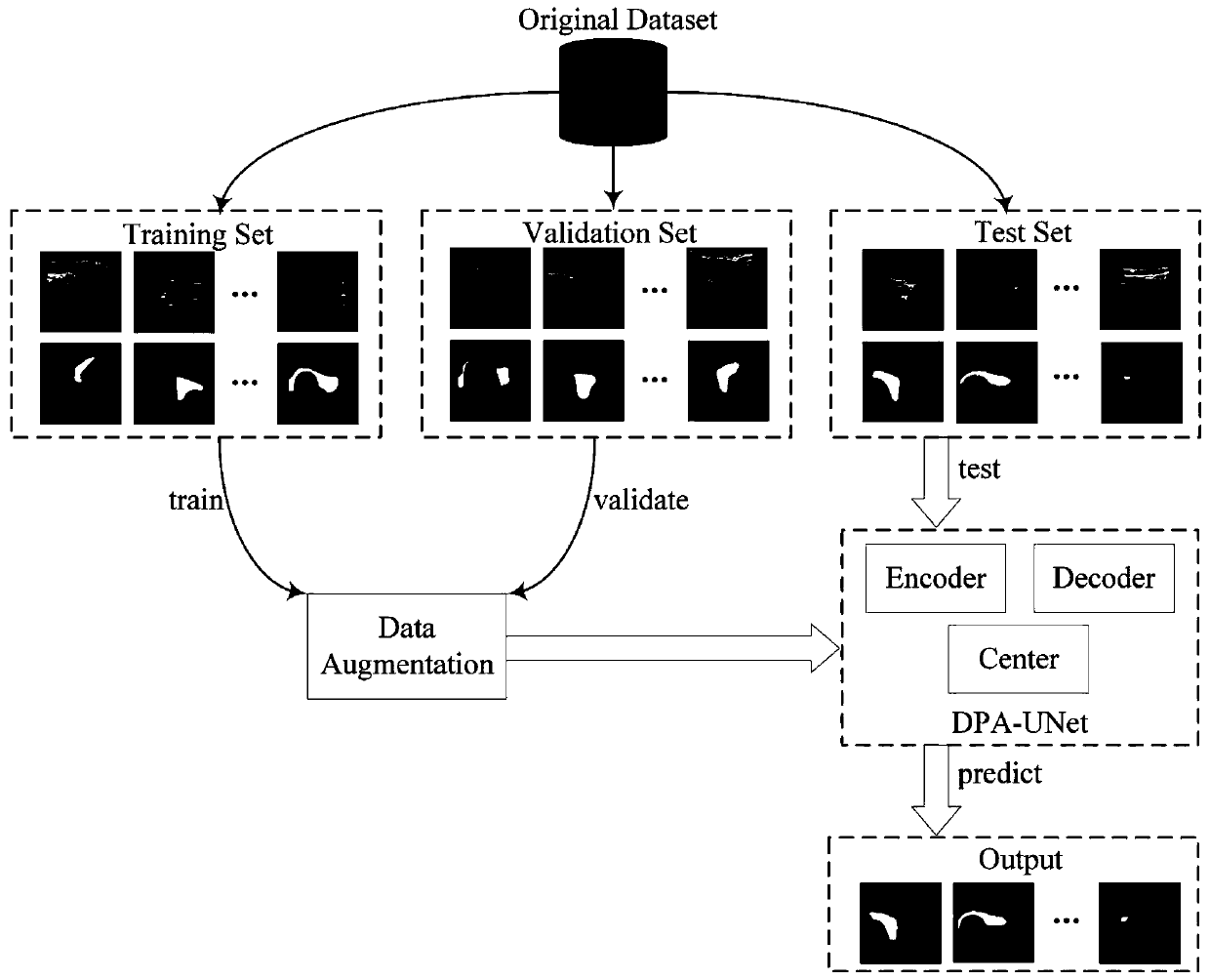

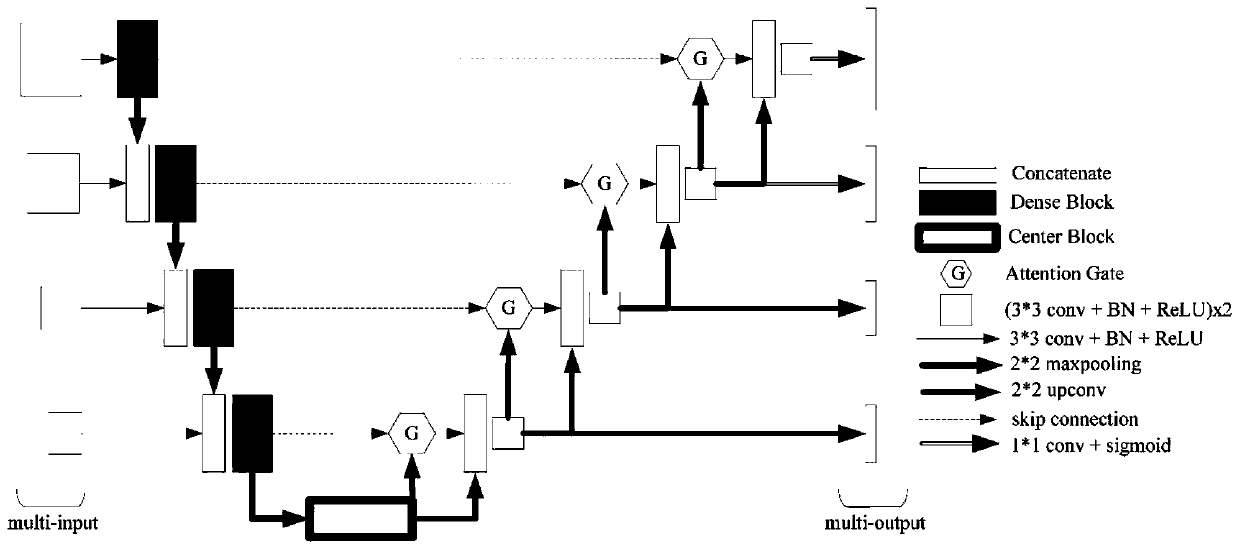

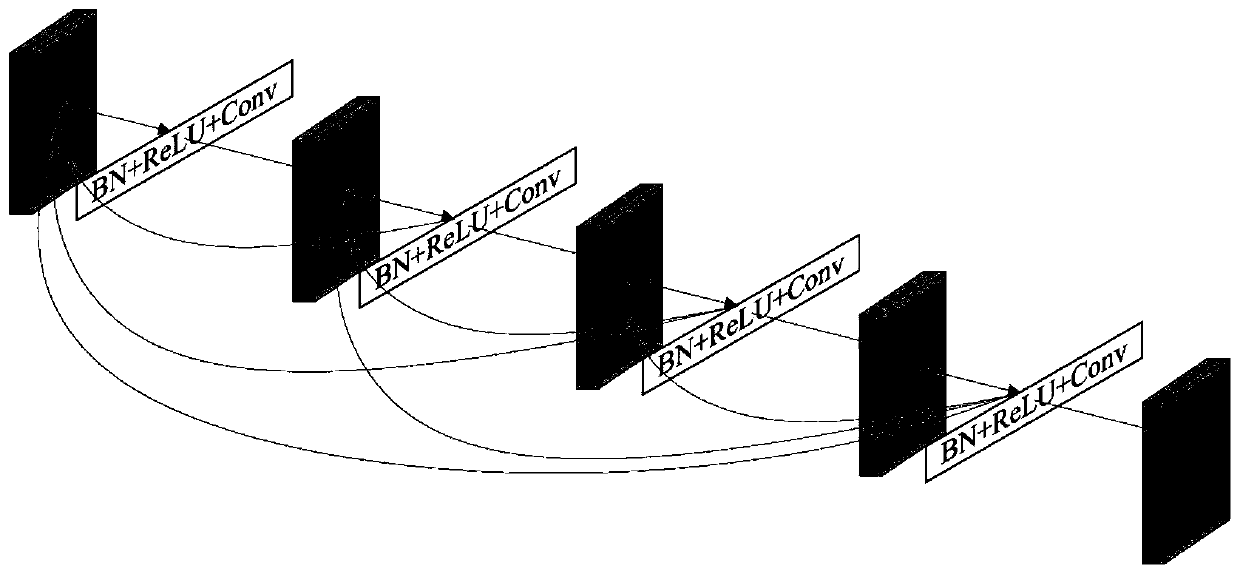

Medical image segmentation method based on deep learning

Active | CN111145170A | Improve performance | Inhibitory response | Image enhancement | Image analysis | Pattern recognition | Visual technology

The invention belongs to the technical field of medical image processing and computer vision, and particularly relates to a medical image segmentation method based on deep learning. According to the method, on the basis of U-Net Baseline, multiple technologies such as a multi-scale framework, a dense convolutional network, an attention mechanism, a pyramid model and small sample enhancement are fused, the method is helpful for realizing feature reuse, recovering lost context information, inhibiting response of an unrelated region and improving the performance of a small ROI, solves the problems of few ultrasonic image samples, low pixel, fuzzy boundary, large difference and other pain points, and obtains an optimal segmentation effect.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA +1

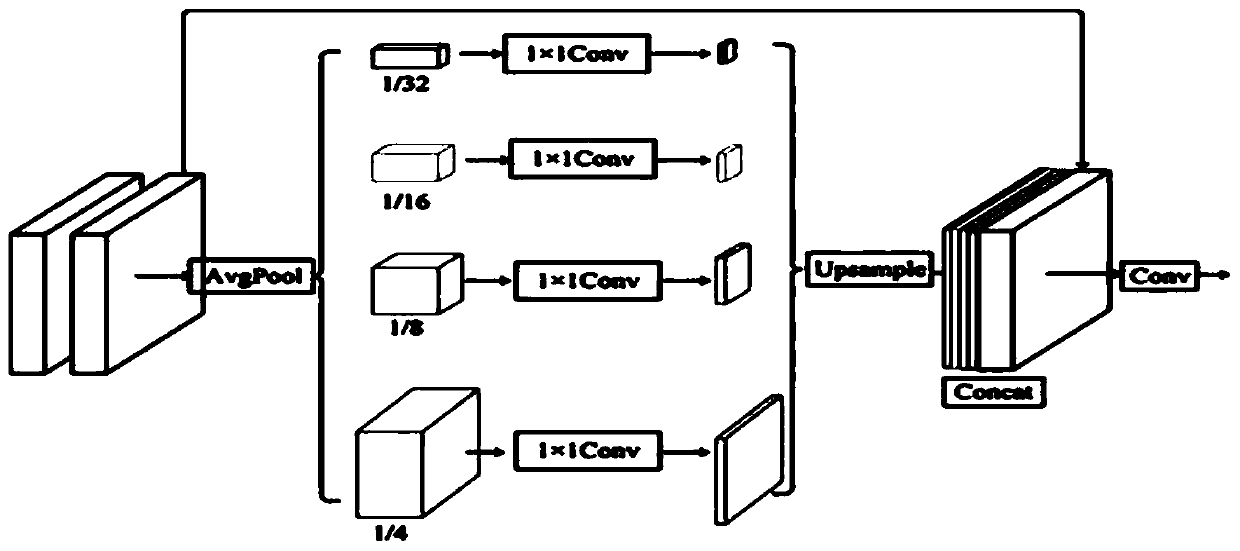

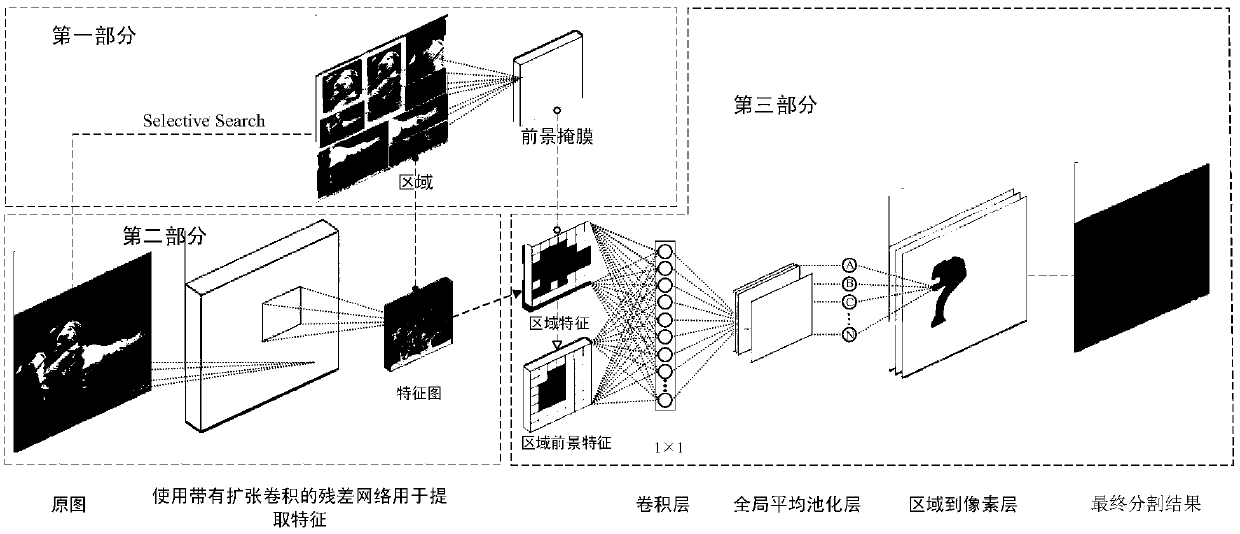

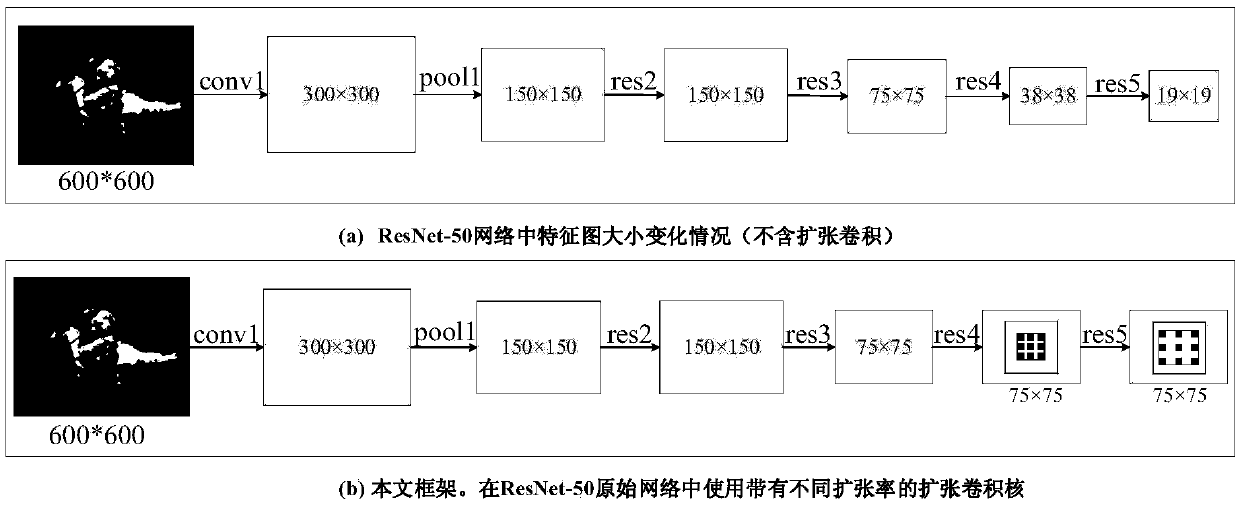

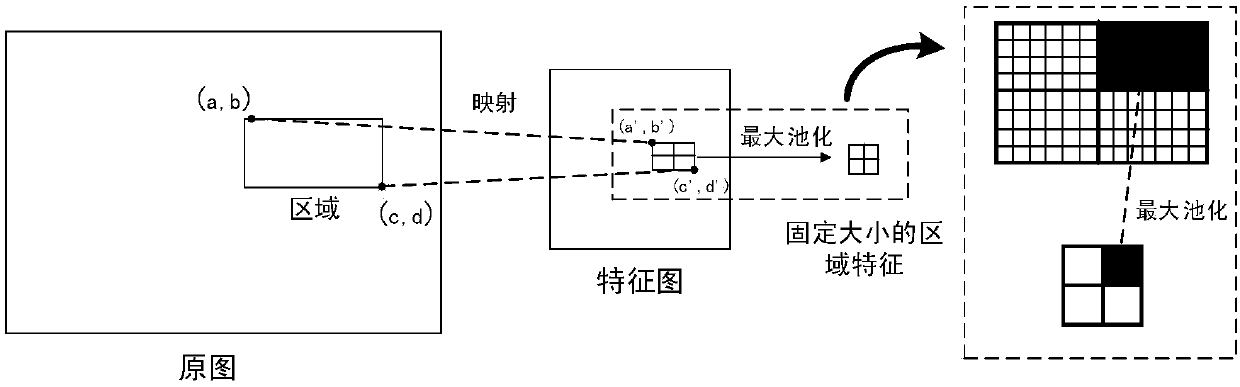

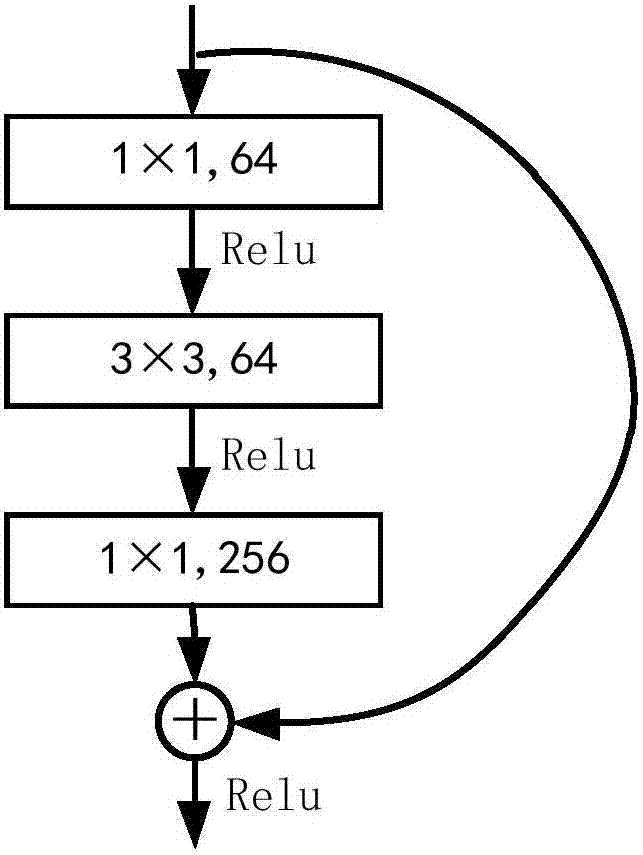

An image semantic segmentation method based on an area and depth residual error network

Active | CN109685067A | Solve the disadvantages of prone to rough segmentation boundaries | Good segmentation effect | Character and pattern recognition | Network model | Pixel classification

The invention discloses an image semantic segmentation method based on a region and a deep residual network. Region-based semantic segmentation methods extract mutually overlapping regions at multiple scales, so they can identify targets of multiple scales and obtain fine object segmentation boundaries. Methods based on the fully convolutional network use a convolutional neural network to learn features autonomously and can be trained end to end on a pixel-by-pixel classification task, but they usually produce rough segmentation boundaries. The invention combines the advantages of the two: first, candidate regions are generated in the image by a region generation network; then features are extracted from the image by a deep residual network with dilated convolution to obtain a feature map; the features of each region are obtained by combining the candidate region with the feature map and are mapped to every pixel in the region; and finally, pixel-by-pixel classification is carried out using the global average pooling layer. In addition, a multi-model fusion method is used: different inputs are set in the same network model for training to obtain a plurality of models, and feature fusion is then carried out at the classification layer to obtain the final segmentation result. Experimental results on the SIFT FLOW and PASCAL Context data sets show that the proposed algorithm has relatively high average accuracy.

Owner:JIANGXI UNIV OF SCI & TECH

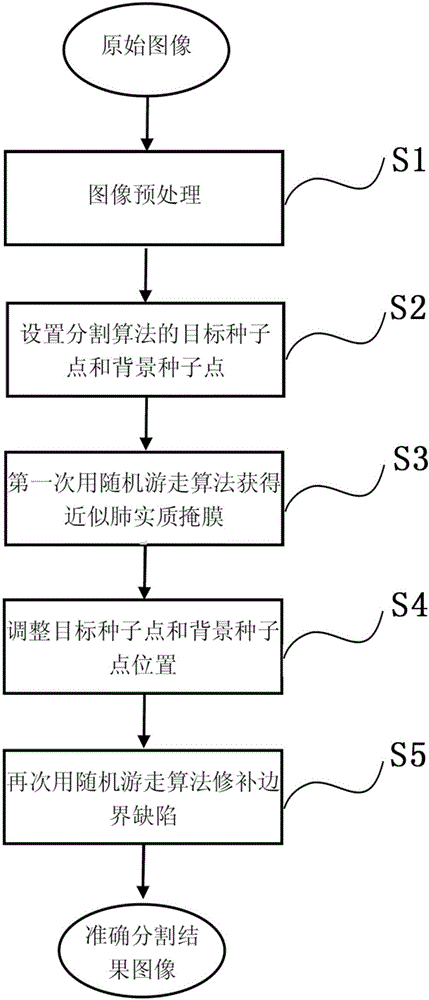

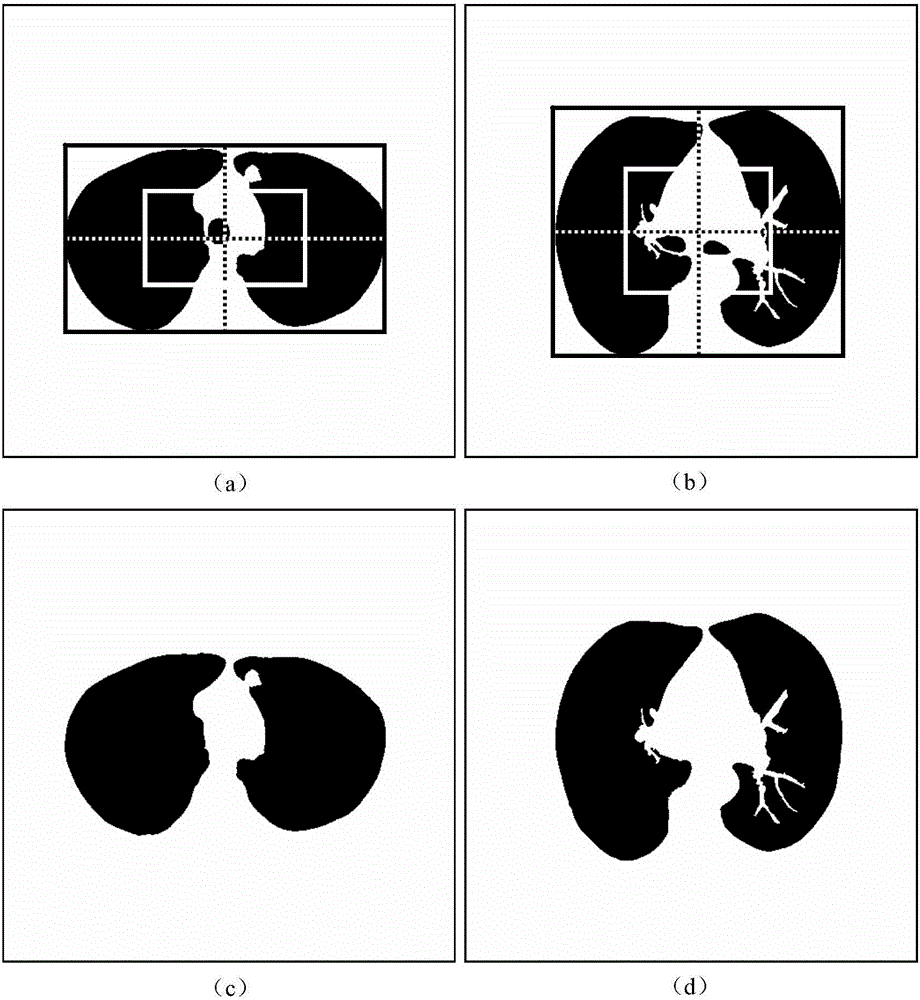

Automatic division method for pulmonary parenchyma of CT image

Inactive | CN104992445A | The segmentation result is accurate | Improve applicability | Image enhancement | Image analysis | Pulmonary parenchyma | Natural science

The invention provides an automatic division method for the pulmonary parenchyma of a CT image. The CT image is divided by running a random walk algorithm twice to obtain the accurate pulmonary parenchyma: the first pass yields an approximate pulmonary parenchyma mask, and the second pass repairs defects at the periphery of the lung and yields the accurate pulmonary parenchyma result. The seed points required by the random walk algorithm are obtained rapidly and automatically through methods including Otsu thresholding and mathematical morphology, so no manual calibration is needed and the workload and operation time of doctors are greatly reduced. The method provides a process of "selecting the seed points twice and dividing twice", which is an automatic coarse-to-fine dividing process; it reduces the dependence of the dividing result on the selection of the initial seed points, and thereby ensures the accuracy, integrity, real-time performance and robustness of the dividing result. The automatic division method provided by the invention is funded by the Natural Science Foundation of China (NO: 61375075).

Owner:HEBEI UNIVERSITY

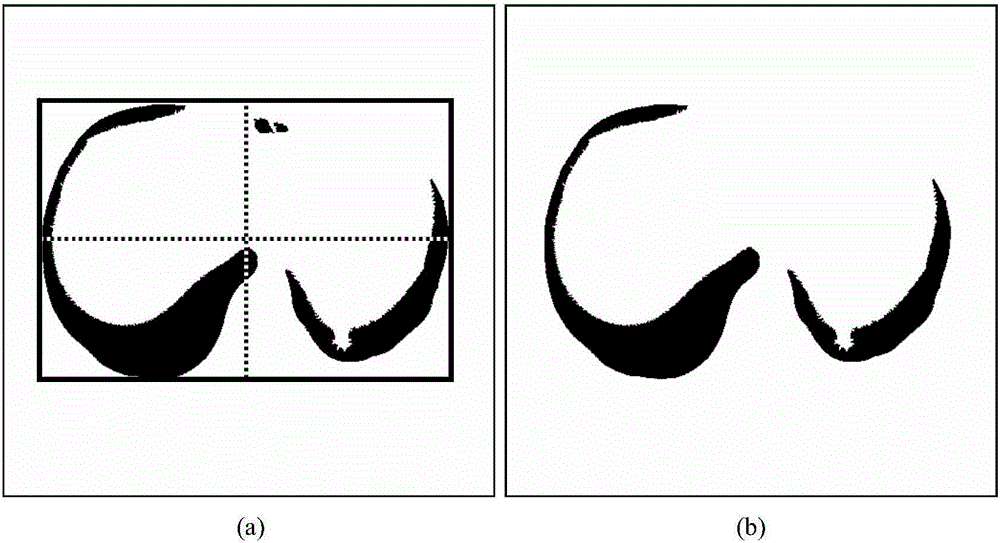

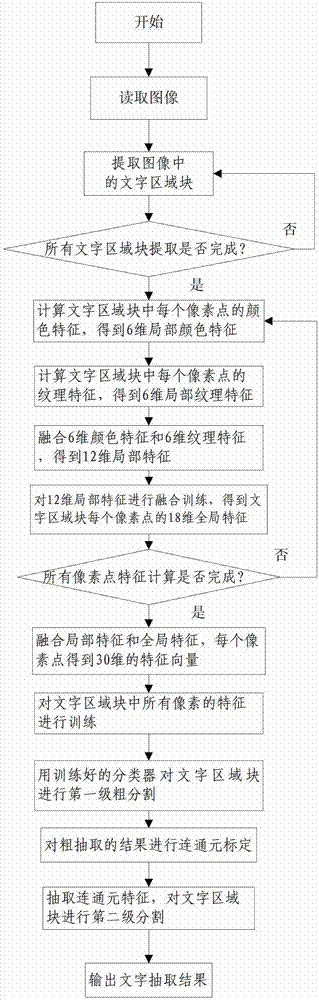

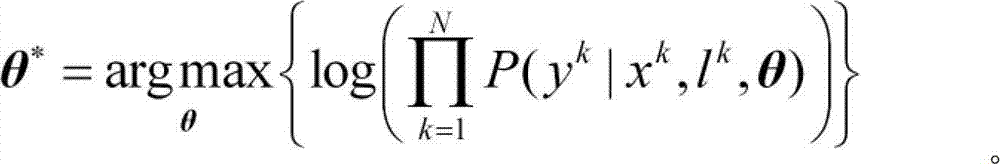

Complicated background image and character division method

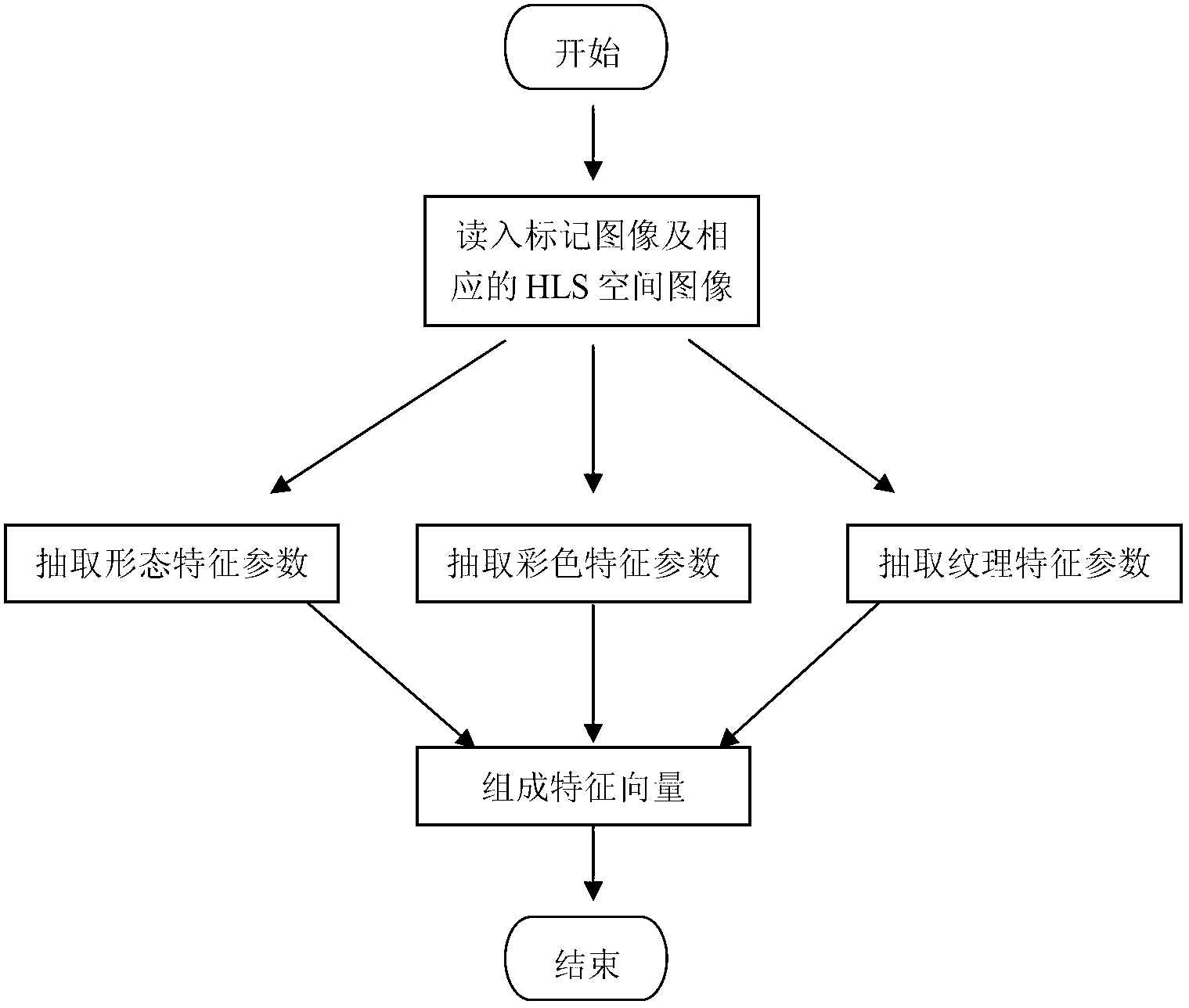

Inactive | CN102968637A | Improve accuracy | Good segmentation effect | Character and pattern recognition | Feature vector | Background image

The invention discloses a complicated background image and character division method. The complicated background image and character division method mainly comprises the following steps of: reading an image, reading a character area of the read image, extracting a bottom layer color feature and a bottom layer texture feature of the character area, fusing the extracted bottom layer color feature and the bottom layer texture feature to obtain a bottom layer local feature, extracting a label layer global feature of the character area, fusing the bottom layer local feature and the label layer global feature of the character area to obtain feature vectors of all the pixels in the character area, training the feature vectors of all the pixels in the character area to obtain a first-stage division classifier, carrying out first-stage character division by using the trained classifier, carrying out connected element calibration on the result of the first-stage division, extracting connected element features to carry out second-stage character division, and outputting the result of the character division. The complicated background image and character division method is capable of improving the division correctness of the characters in complicated background images and has certain generality and practicability.

Owner:SHANDONG UNIV OF SCI & TECH

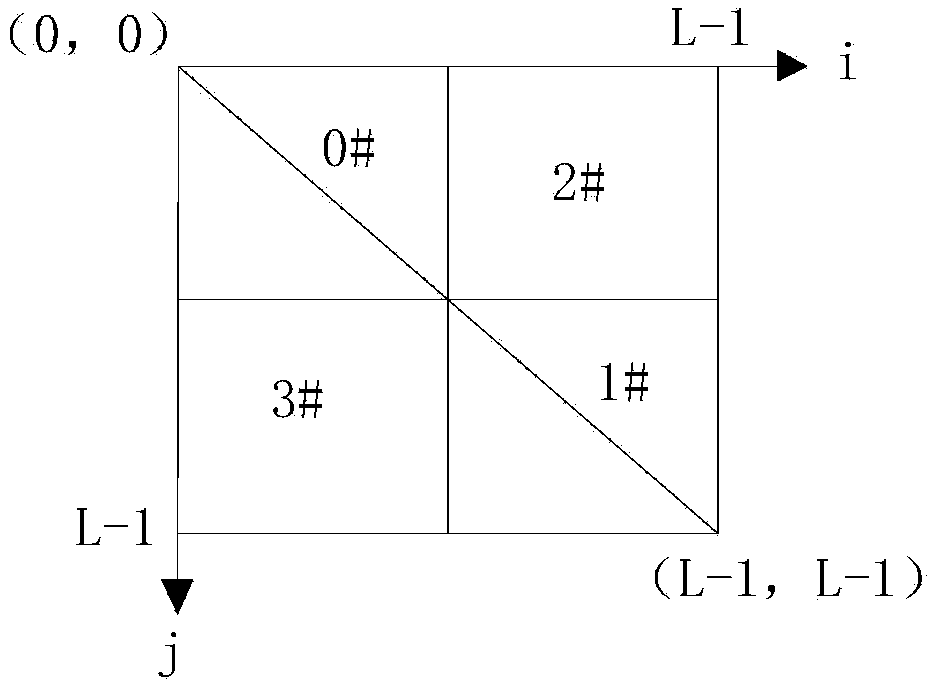

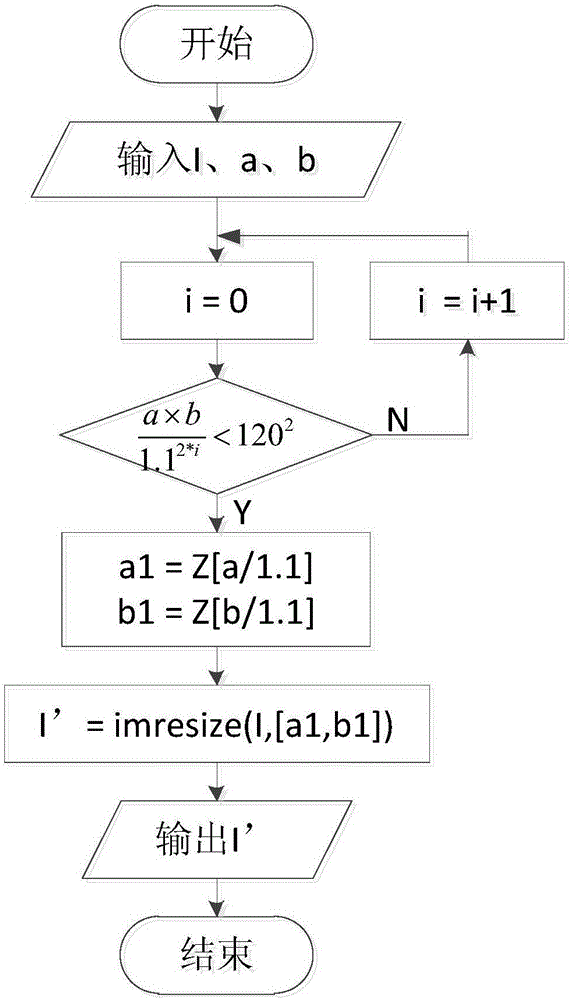

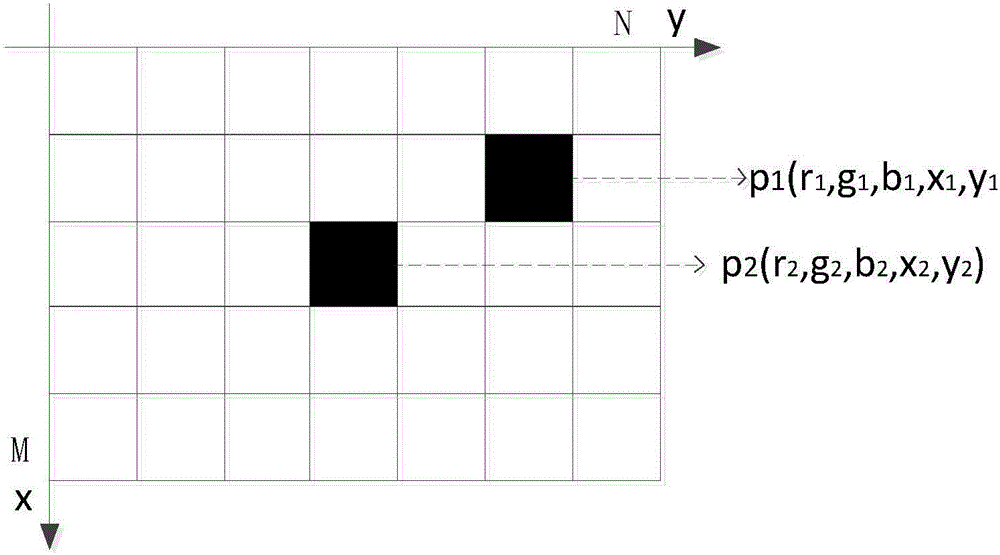

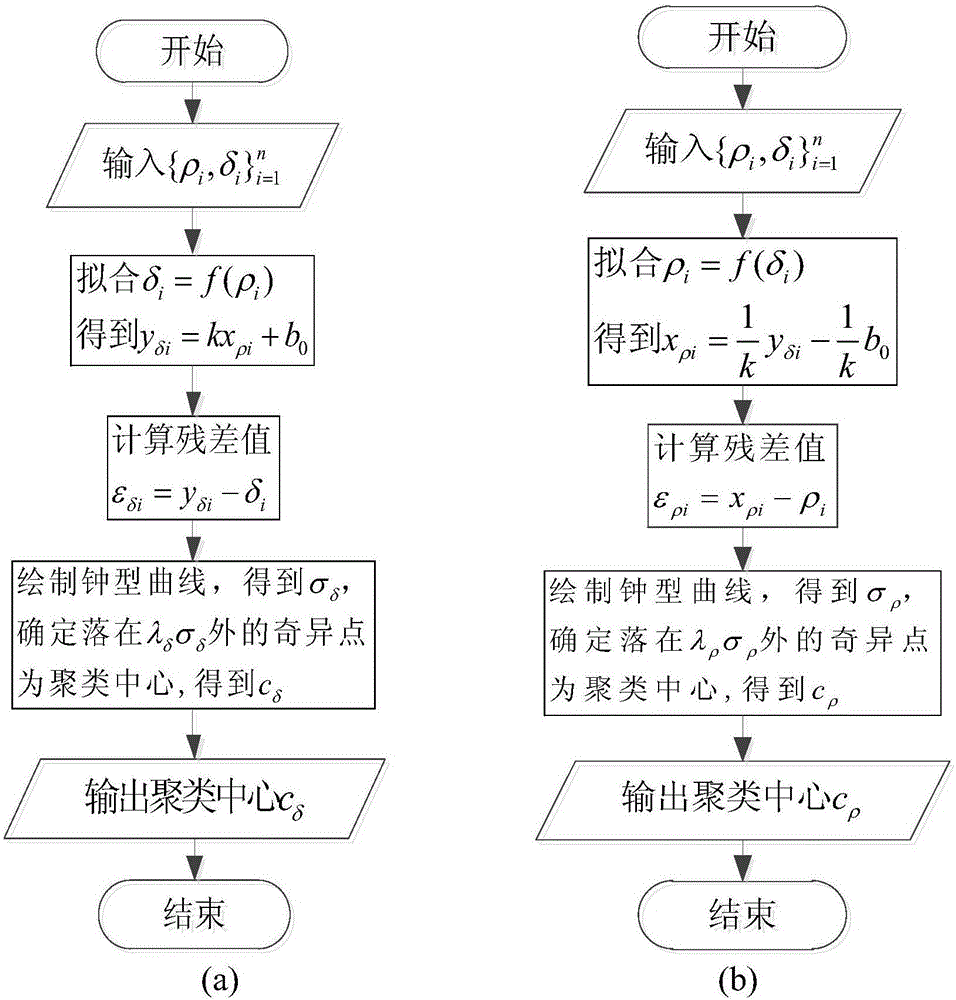

Image segmentation method based on rapid density clustering algorithm

Active | CN106447676A | Efficient Adaptive Segmentation | Improve accuracy | Image analysis | Pattern recognition | Scale variation

The invention discloses an image segmentation method based on a rapid density clustering algorithm. The method comprises the following steps: 1) preprocessing and initializing the natural image to be processed, including filtering for noise reduction, gray-level registration, area division and scale zooming; 2) calculating the similarity distance between data points on the scaled sub-images to obtain the correlation between pixels; 3) segmenting each sub-image in parallel, which comprises drawing a decision graph based on the density clustering algorithm, carrying out residual analysis on the decision graph to determine the cluster centers, and classifying the remaining points on the original-scale sub-image by comparing similarity distances; and 4) merging the segmented sub-images and carrying out secondary re-clustering to obtain a segmentation result at the original image size. The method is robust to parameter settings, automatically determines the number of segmentation classes, and achieves a relatively high segmentation accuracy.

Owner:ZHEJIANG UNIV OF TECH
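The decision graph in step 3 plots, for each point, a local density against the distance to the nearest point of higher density. The sketch below computes both quantities and clusters a small point set, under two stated assumptions: a simple cutoff-count density with a hypothetical cutoff `dc`, and centers chosen as the `n_clusters` largest density-times-distance products instead of the residual analysis the patent describes.

```python
import math


def density_peaks(points, dc, n_clusters):
    """Cluster points by density peaks. Returns (labels, rho, delta)."""
    n = len(points)
    dist = [[math.dist(p, q) for q in points] for p in points]
    # rho: number of other points closer than the cutoff distance dc.
    rho = [sum(1 for j in range(n) if j != i and dist[i][j] < dc)
           for i in range(n)]
    # Visit points from densest to sparsest; ties are broken by index.
    order = sorted(range(n), key=lambda i: (-rho[i], i))
    rank = {i: r for r, i in enumerate(order)}
    delta = [0.0] * n   # distance to the nearest denser point
    parent = [-1] * n   # index of that nearest denser point
    for r, i in enumerate(order):
        if r == 0:
            delta[i] = max(dist[i])  # densest point: use its farthest distance
            continue
        j = min(order[:r], key=lambda k: dist[i][k])
        delta[i] = dist[i][j]
        parent[i] = j
    # Assumed center rule: largest rho * delta (not the patent's residual analysis).
    centers = sorted(range(n),
                     key=lambda i: (-rho[i] * delta[i], rank[i]))[:n_clusters]
    labels = [-1] * n
    for c, i in enumerate(centers):
        labels[i] = c
    # Remaining points inherit the label of their nearest denser point.
    for i in order:
        if labels[i] == -1:
            labels[i] = labels[parent[i]]
    return labels, rho, delta
```

Plotting `rho` against `delta` gives the decision graph; cluster centers stand out as points where both values are large.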

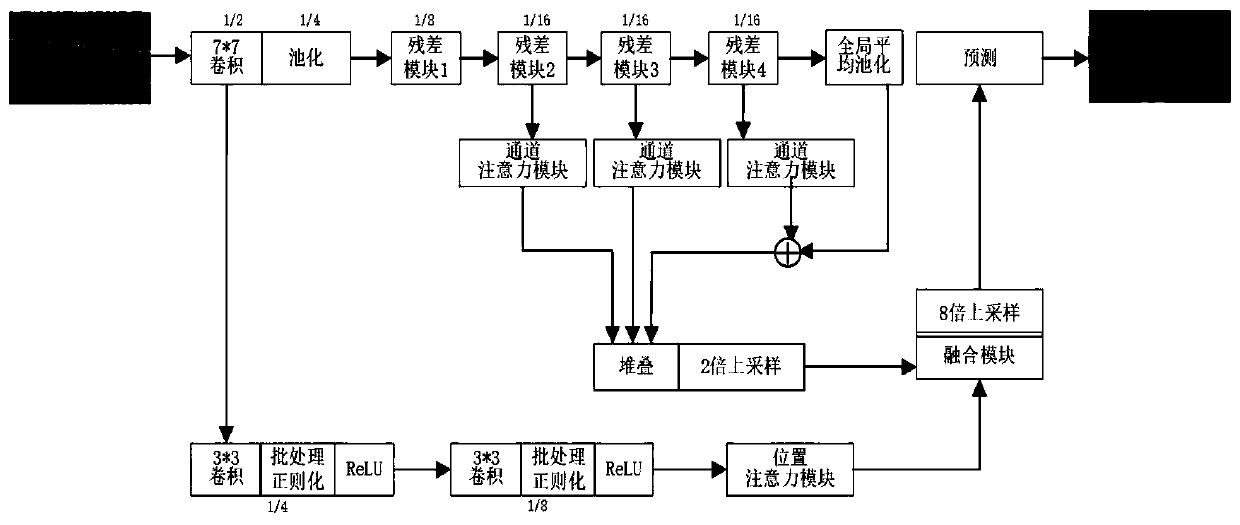

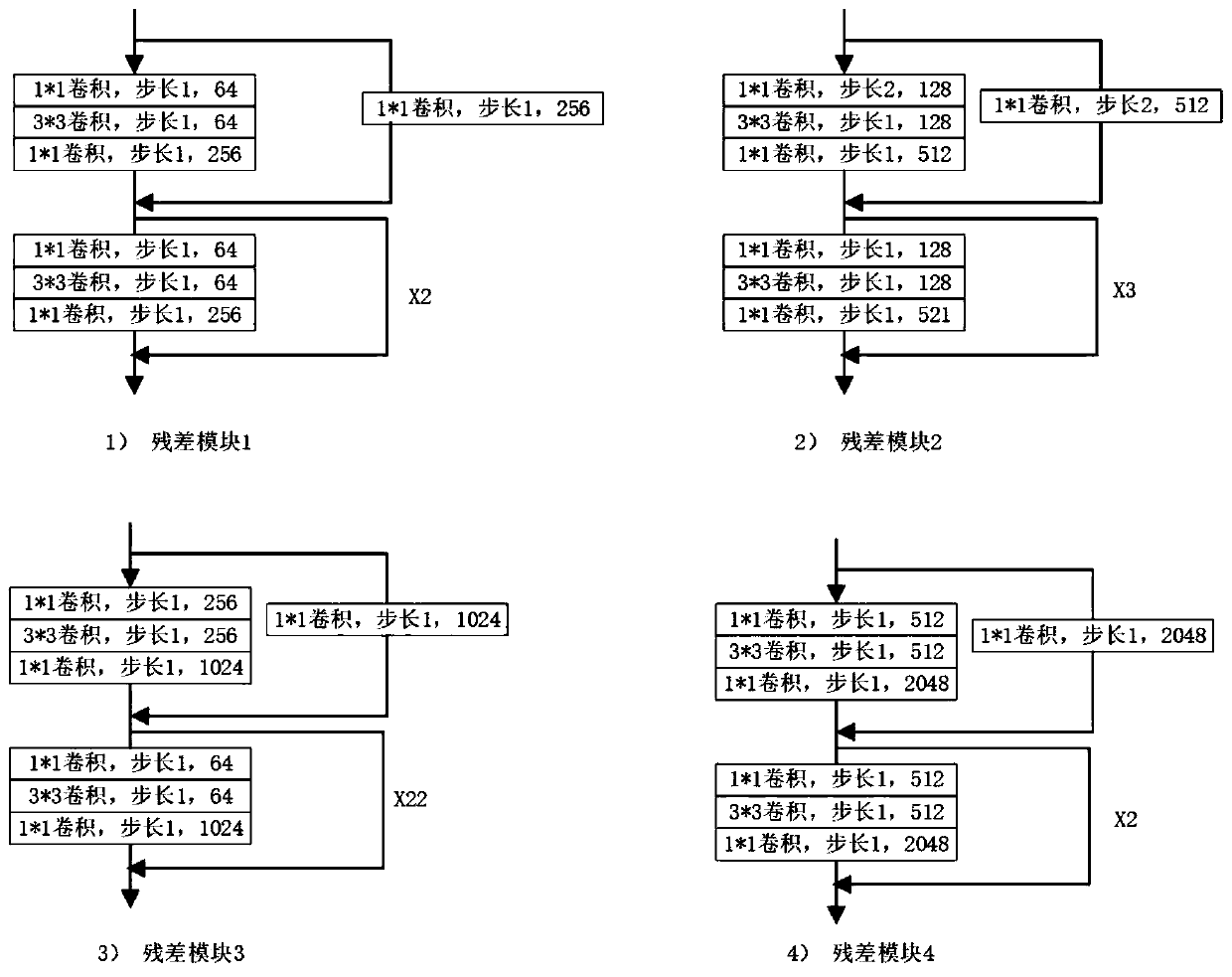

Yellow River ice semantic segmentation method based on multi-attention mechanism double-flow fusion network

Active | CN111160311A | High resolution | Good segmentation effect | Character and pattern recognition | Neural architectures | Data set | Algorithm

The invention discloses a Yellow River ice semantic segmentation method based on a multi-attention mechanism double-flow fusion network, which addresses the poor accuracy of existing Yellow River ice detection methods. First, data sets are collected and labeled, and the labeled data are divided into a training set and a test set. A segmentation network is then constructed that comprises a shallow branch and a deep branch: a channel attention module is added to the deep branch, a position attention module is added to the shallow branch, and a fusion module fuses the two branches. The training data are fed into the network in batches, and the network is trained with cross-entropy loss and an RMSprop optimizer. Finally, a to-be-tested image is input and tested with the trained model. The method selectively carries out multi-level and multi-scale feature fusion and captures context information through the attention mechanism, so that a higher-resolution feature map and a better segmentation effect are obtained.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
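The training step above pairs a cross-entropy loss with an RMSprop optimizer. The toy sketch below shows how those two pieces interact for a single pixel, assuming the "parameters" are just the pixel's class logits; the two-branch attention network itself is not modeled, and the learning rate, decay and `fit_pixel` helper are illustrative assumptions.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy(logits, target):
    """Cross-entropy loss of one pixel whose true class index is target."""
    return -math.log(softmax(logits)[target])


def rmsprop_step(params, grads, cache, lr=0.01, decay=0.9, eps=1e-8):
    """One in-place RMSprop update: scale each gradient by the root of a
    running average of its squared values."""
    for i, g in enumerate(grads):
        cache[i] = decay * cache[i] + (1 - decay) * g * g
        params[i] -= lr * g / (math.sqrt(cache[i]) + eps)


def fit_pixel(target, num_classes=3, steps=100):
    """Toy loop: optimize the logits of a single pixel directly."""
    logits = [0.0] * num_classes
    cache = [0.0] * num_classes
    for _ in range(steps):
        probs = softmax(logits)
        # Gradient of cross-entropy w.r.t. logits: softmax minus one-hot.
        grads = [p - (1.0 if c == target else 0.0)
                 for c, p in enumerate(probs)]
        rmsprop_step(logits, grads, cache)
    return logits
```

In an actual network the same update would be applied to the convolution weights, with gradients obtained by backpropagation over each training batch.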

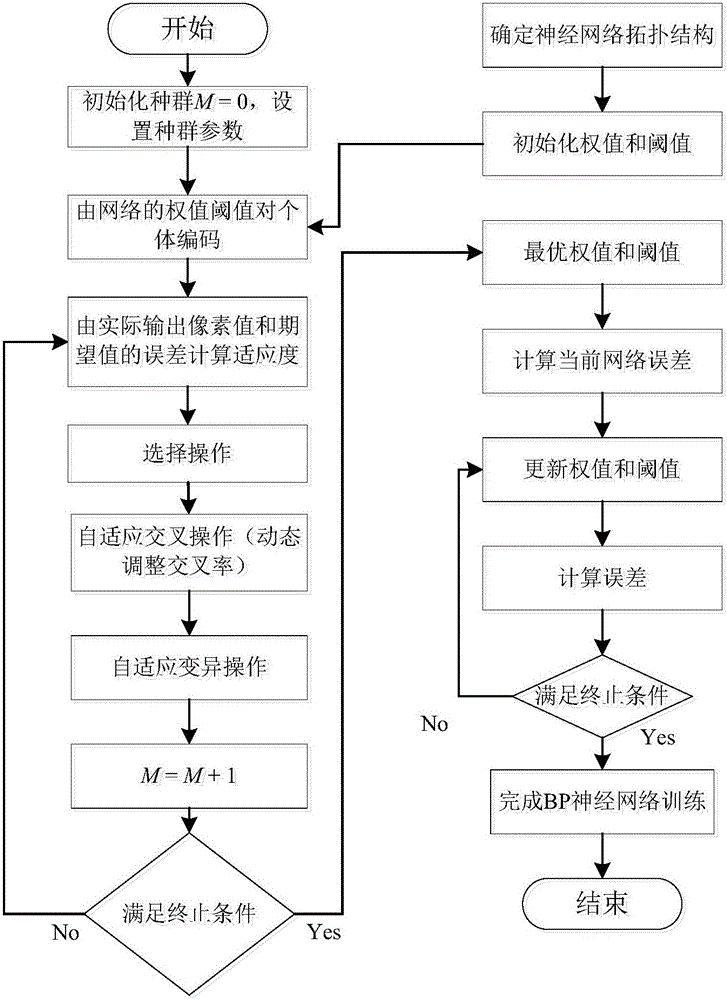

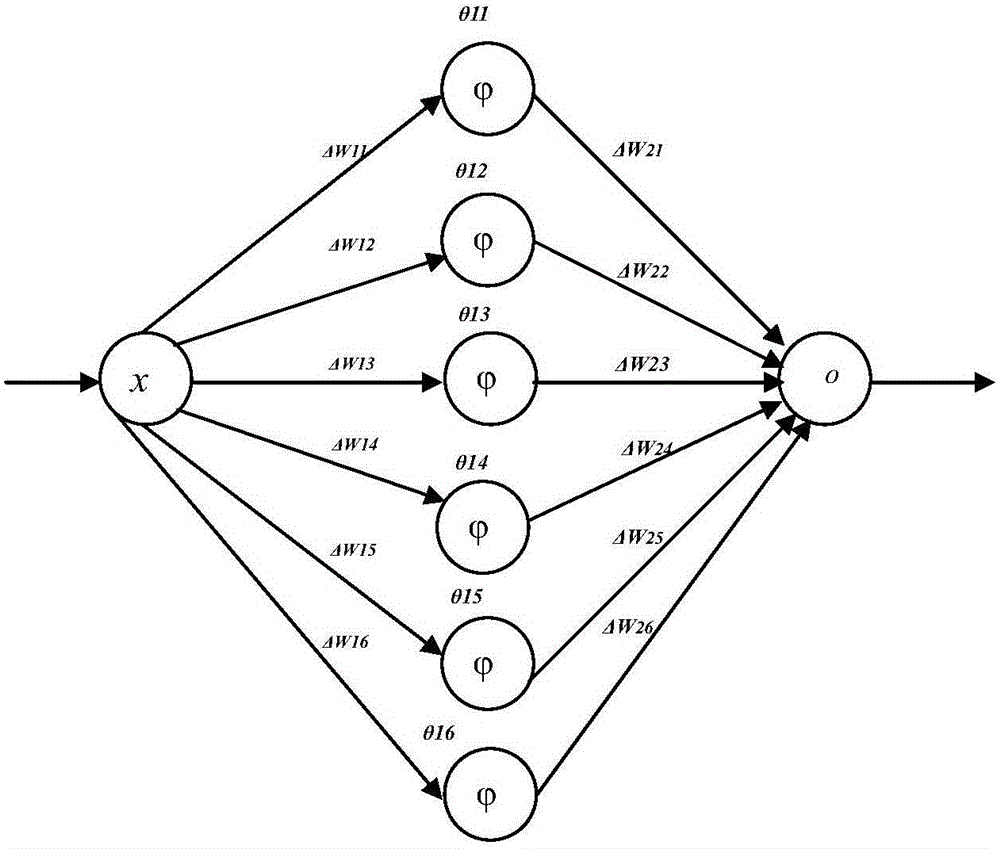

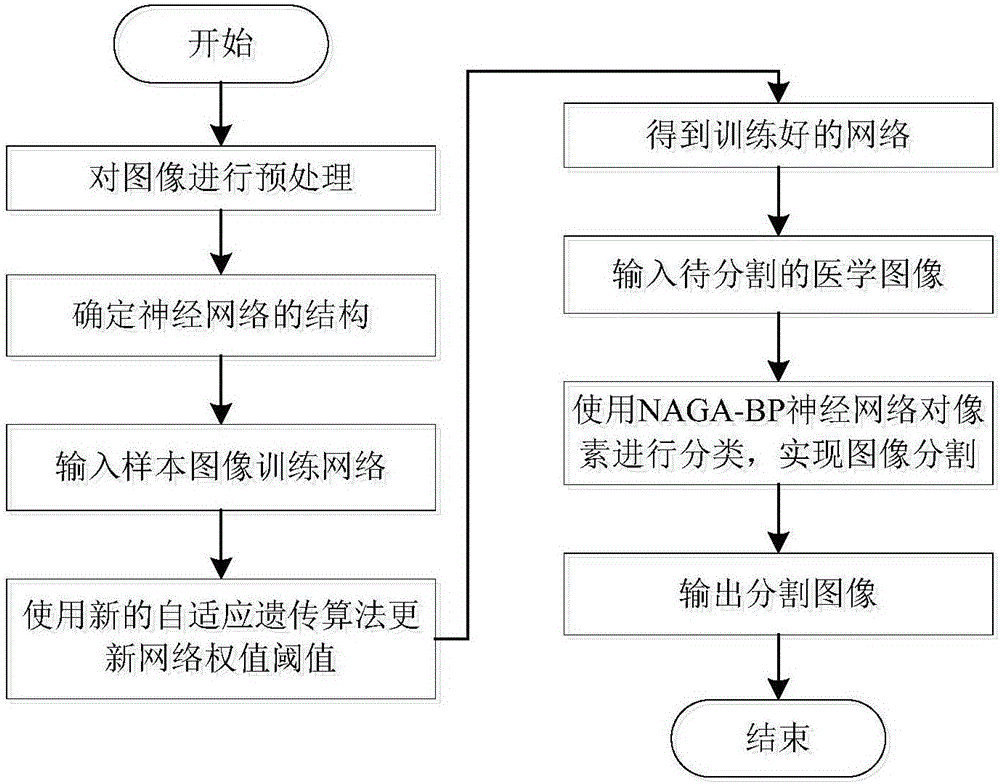

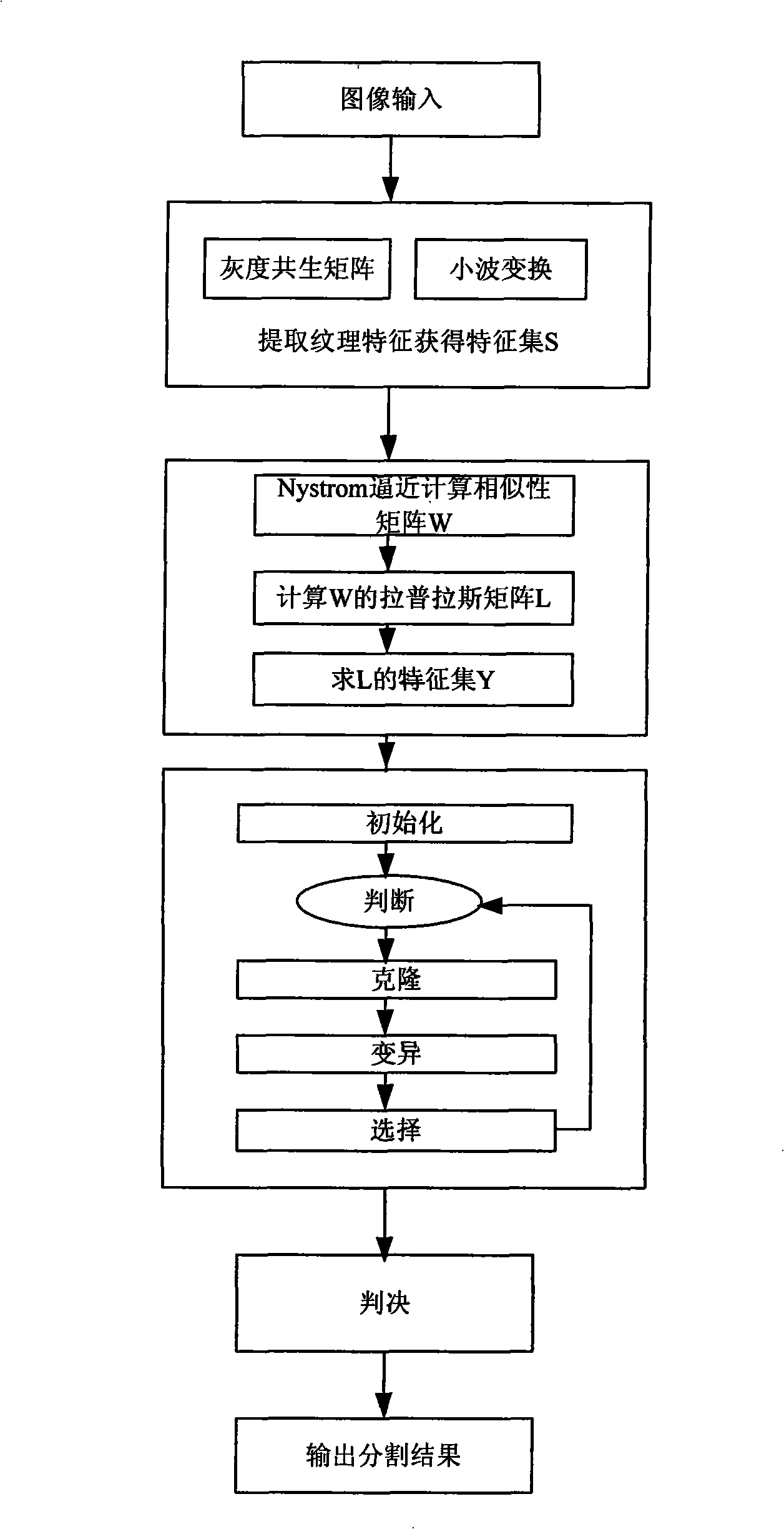

BP neural network image segmentation method and device based on adaptive genetic algorithm

Active | CN106023195A | Solve the problem of evolutionary stagnation | Avoid local convergence | Image enhancement | Image analysis | Mutation | Chromosome encoding

The invention relates to a BP neural network image segmentation method and device based on an adaptive genetic algorithm. The method comprises the following steps: 1) analyzing the to-be-segmented image and generating training samples for the neural network; 2) setting the neural network parameters and population parameters and carrying out chromosome encoding; 3) inputting the training samples to train the network, optimizing the network weights and thresholds with a new adaptive genetic algorithm in which the crossover and mutation operations are adaptive and an adjustment coefficient is introduced; 4) inputting the to-be-segmented image and classifying it with the trained neural network to achieve image segmentation. The device comprises a training sample generation module, a neural network structure determining module, a network training module and an image segmentation module. The adjustment coefficient is related to the evolution generation; it solves the problem that individual evolution stagnates at the initial stage of population evolution as well as the problem of local convergence that arises when individual fitness values are close, thereby yielding a neural network that best represents the image features and achieving more precise image segmentation.

Owner:HENAN NORMAL UNIV
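The abstract does not give the formula for the adaptive crossover and mutation rates or for the adjustment coefficient. The sketch below is therefore only one plausible shape for such a rule, loosely following the common fitness-scaled adaptive scheme; the function name, the linear generation-dependent coefficient, and the `base` and `floor` constants are all assumptions.

```python
def adaptive_rate(f, f_avg, f_max, generation, max_generations,
                  base=0.9, floor=0.1):
    """Hypothetical adaptive operator probability for one individual.

    f        fitness of the individual
    f_avg    mean fitness of the population
    f_max    best fitness in the population
    The rate shrinks for fitter individuals but never drops below a
    floor, and an assumed generation-dependent coefficient lowers all
    rates as evolution proceeds.
    """
    # Assumed adjustment coefficient: 1.0 at generation 0, 0.5 at the end.
    adjust = 1.0 - 0.5 * generation / max_generations
    # Below-average individuals, or a population whose fitness values
    # have collapsed together, get the full base rate to keep exploring.
    if f_max - f_avg < 1e-12 or f < f_avg:
        return base * adjust
    scale = (f_max - f) / (f_max - f_avg)
    # The floor keeps the best individual from getting a zero rate,
    # one way to avoid early stagnation.
    return max(floor, base * scale) * adjust
```

The same function could serve for both crossover and mutation by passing different `base` and `floor` values, for example a much smaller `base` for mutation.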

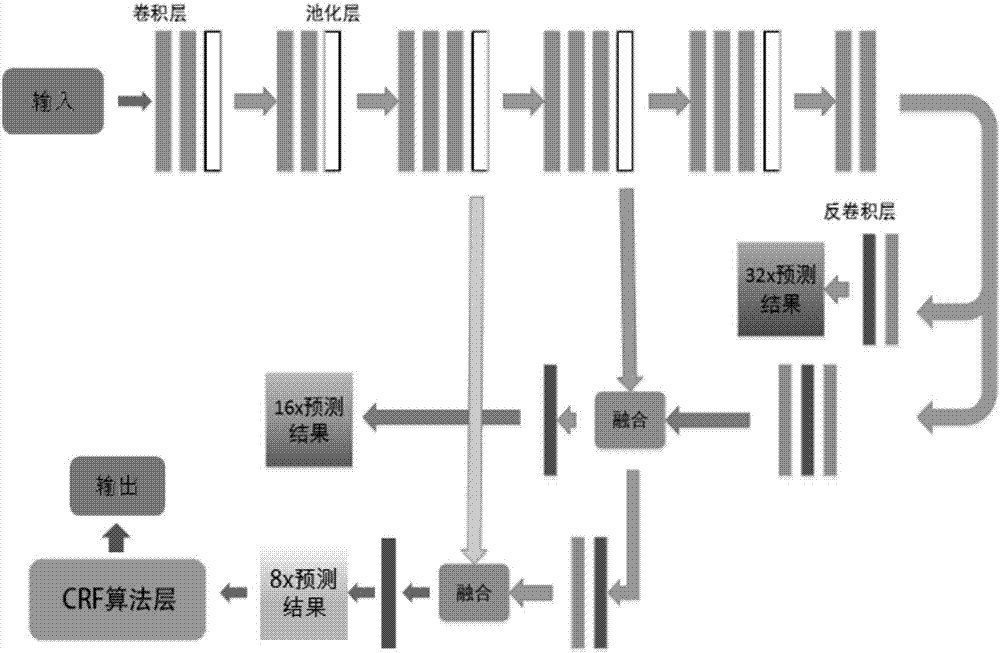

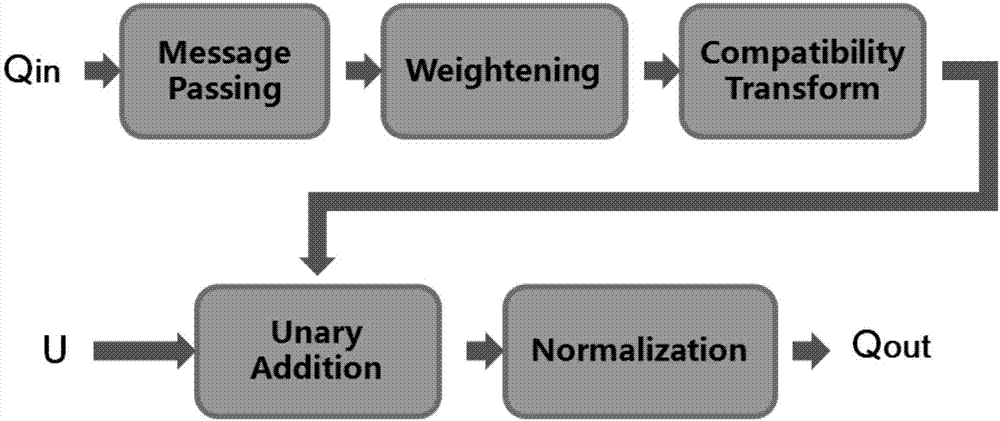

Method of using fully convolutional neural network to segment human hand area in stop-motion animation

Inactive | CN108010049A | Good segmentation effect | Improve noise immunity | Image enhancement | Image analysis | Conditional random field | Feature extraction

The invention discloses a method of using a fully convolutional neural network to segment the human hand area in a stop-motion animation. The method comprises the following steps: 1) data are input; 2) the fully convolutional network is used for feature extraction and preliminary segmentation; 3) a conditional random field (CRF) algorithm is used to optimize the segmentation result; 4) the network model is trained; and 5) the trained model is used to segment an input picture. The method builds its own picture data set containing the human hand area, builds the network model, and trains the model on that data set; after training, the network model can segment the human hand area with high accuracy. The method has the advantages of high accuracy, good anti-noise performance, simple use, high efficiency and high speed.

Owner:SOUTH CHINA UNIV OF TECH

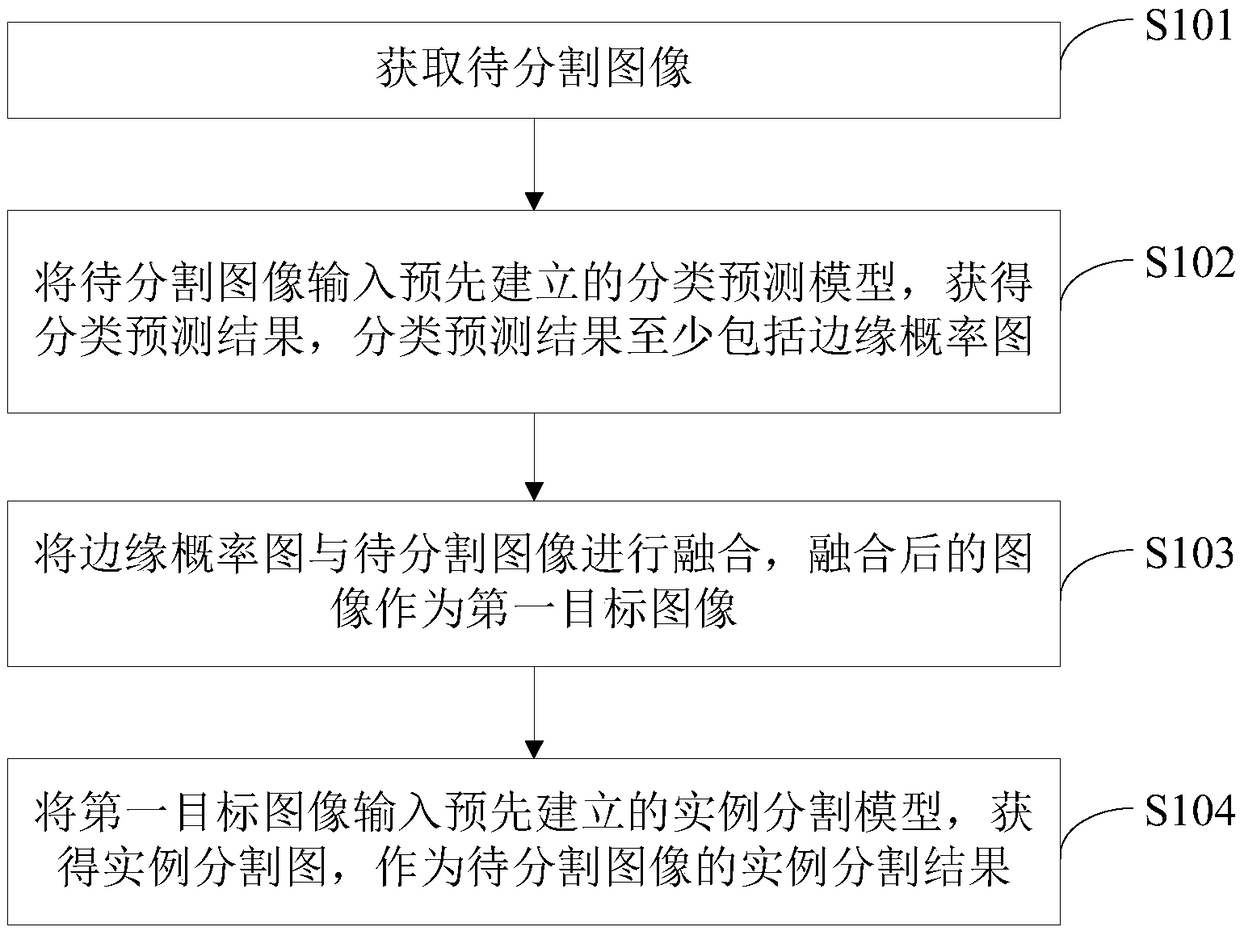

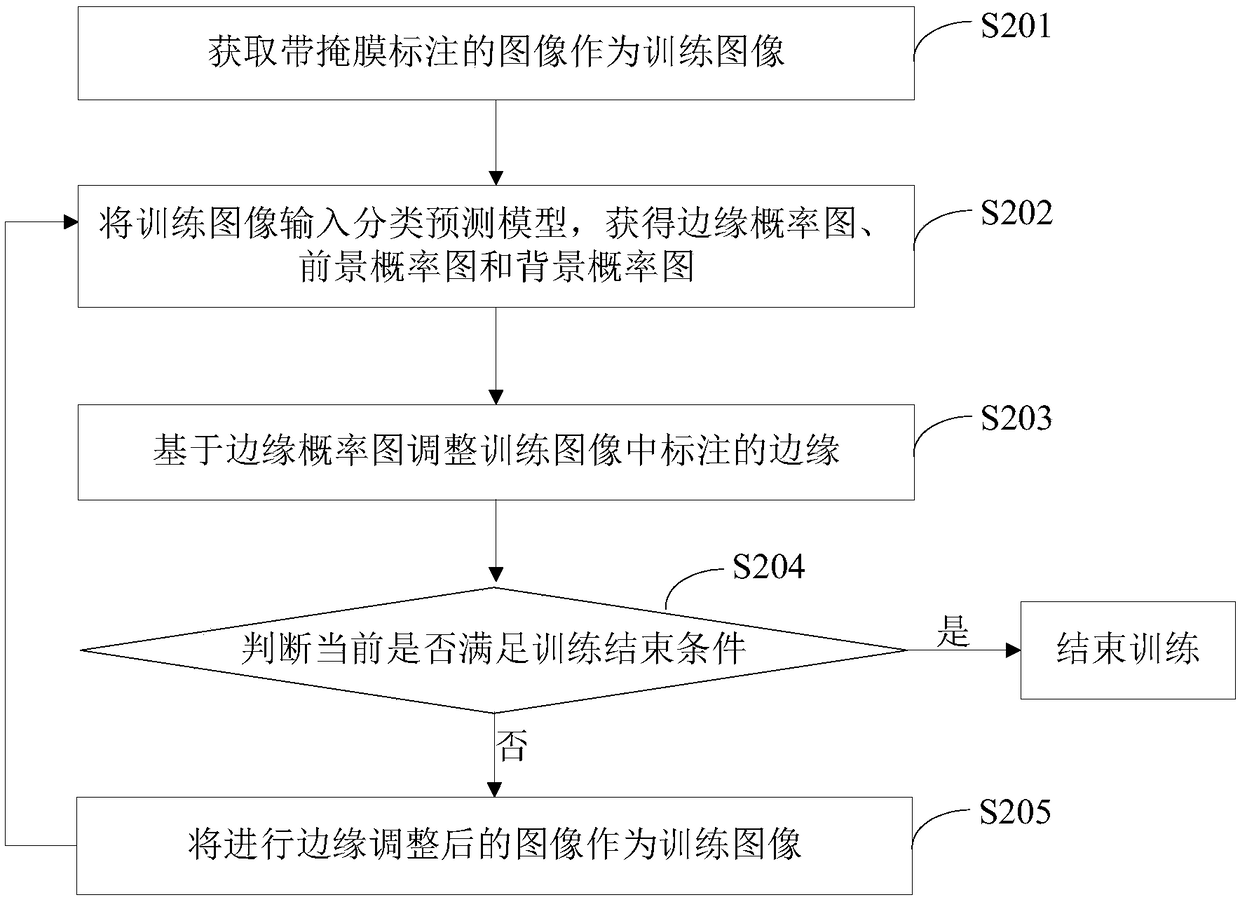

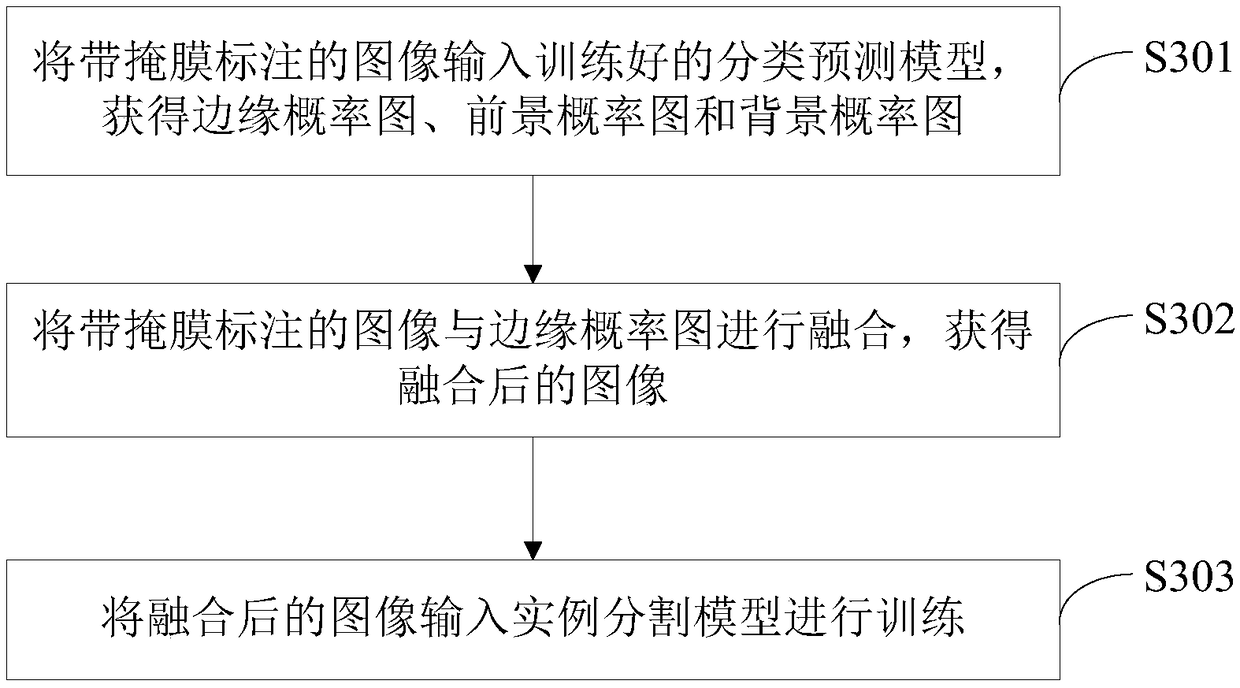

Image instance segmentation method, device, apparatus, and storage medium

Active | CN109242869A | Good segmentation effect | Accurate detection | Image enhancement | Image analysis | Computer vision

Owner:IFLYTEK CO LTD

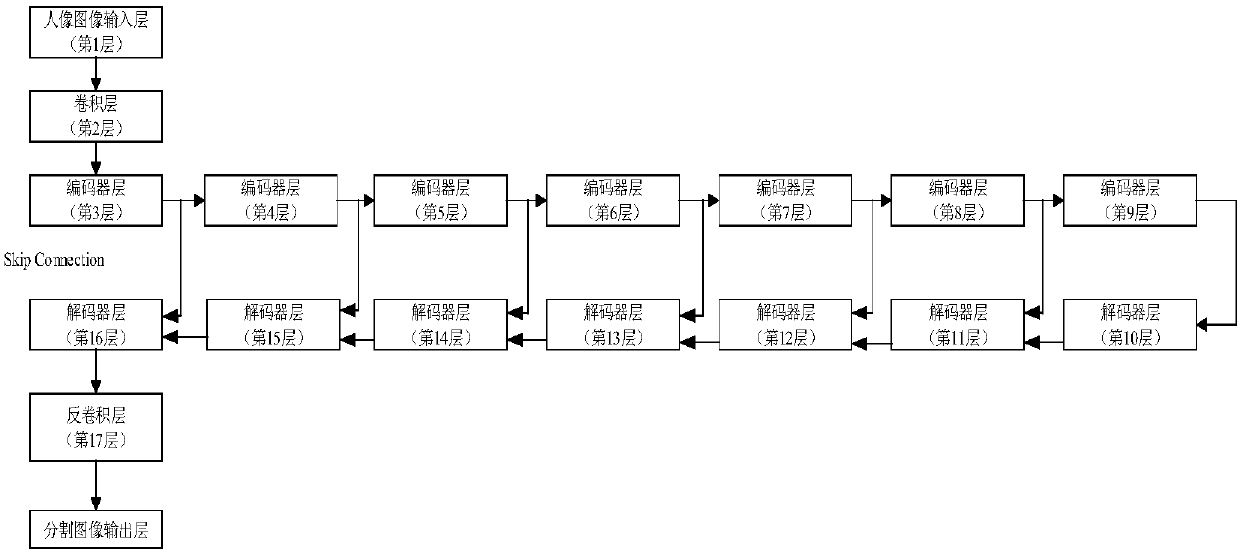

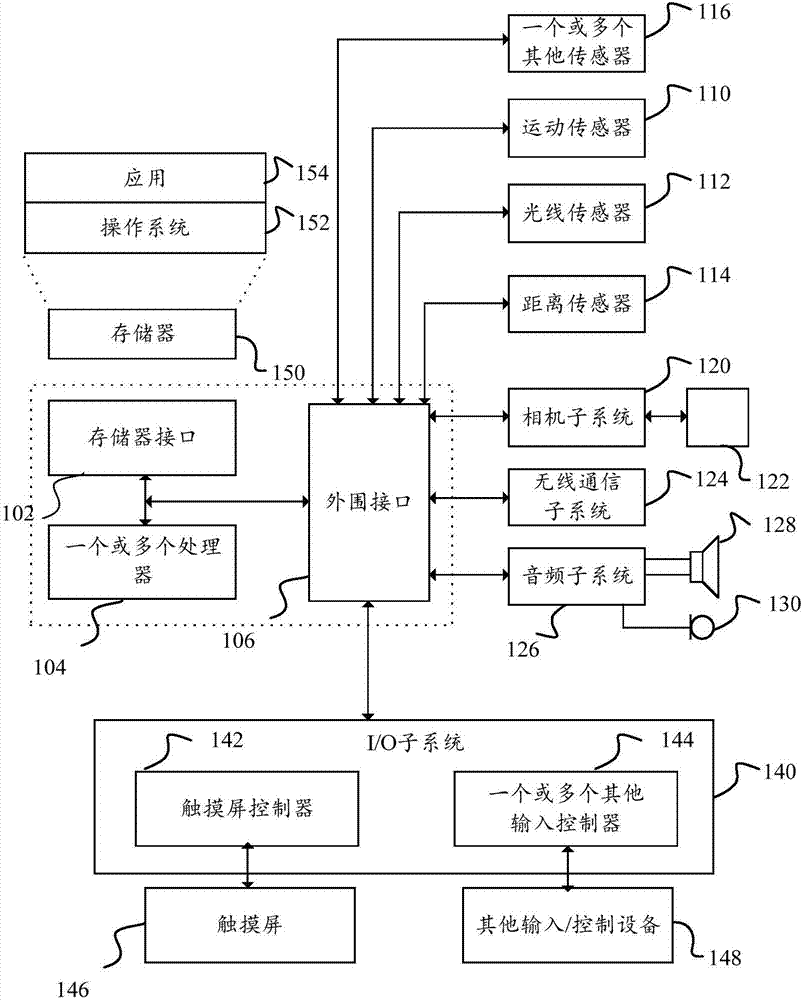

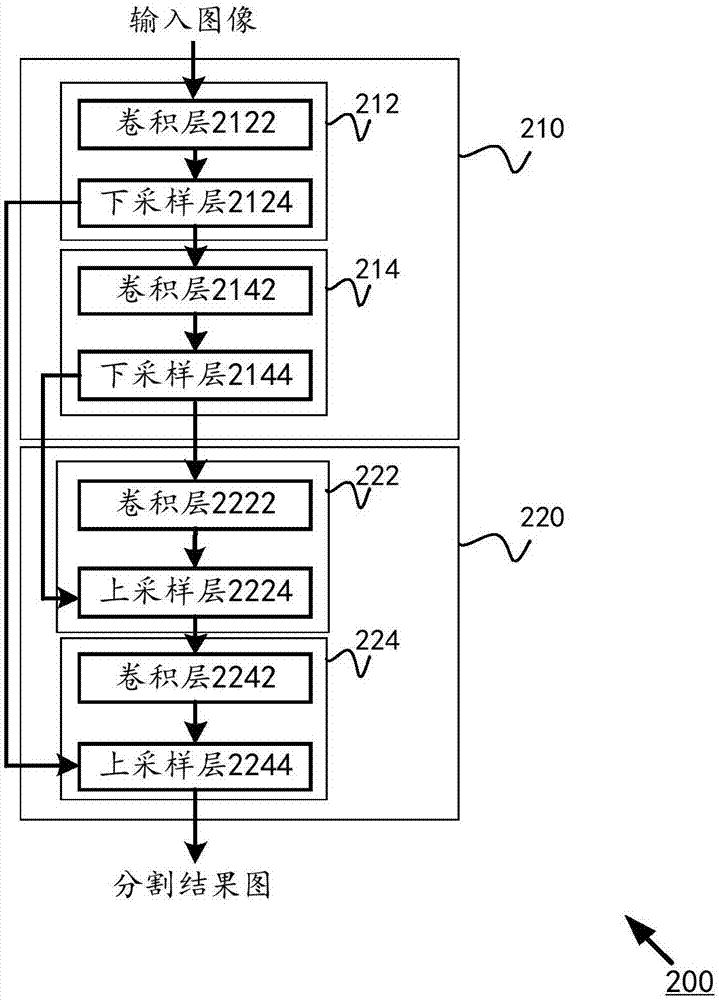

Human image segmentation method and mobile terminal

Active | CN108010031A | Good segmentation effect | Reduce running time | Image enhancement | Image analysis | Algorithm | Image segmentation

The invention discloses a human image segmentation method suitable for execution in a mobile terminal. The method comprises the following steps: segmenting a to-be-processed image with a predetermined segmentation network that comprises an encoding stage and a decoding stage, wherein the encoding stage comprises a first number of pairs of convolution layers and down-sampling layers connected in sequence, each pair forming a convolution-down-sampling pair; outputting the down-sampled feature map of the encoding stage after iterating through the first number of convolution-down-sampling pairs, wherein the decoding stage comprises a first number of pairs of convolution layers and up-sampling layers connected in sequence, each pair forming a convolution-up-sampling pair; and outputting a segmentation result map after iterating through the first number of convolution-up-sampling pairs. The invention furthermore discloses the corresponding mobile terminal.

Owner:XIAMEN MEITUZHIJIA TECH
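Because the encoder and decoder use the same number of pairs, the output map can return to the input resolution. The sketch below traces feature-map sizes through such a network, assuming size-preserving convolutions and a sampling factor of 2 per pair; neither assumption is stated in the abstract, and `trace_shapes` is a hypothetical helper.

```python
def trace_shapes(height, width, num_pairs, factor=2):
    """Return the spatial size after each convolution-sampling pair.

    The first entry is the input size. Each encoder pair divides the
    size by factor; each decoder pair multiplies it by factor.
    Convolutions are assumed to preserve spatial size.
    """
    shapes = [(height, width)]
    h, w = height, width
    for _ in range(num_pairs):  # encoding stage
        h, w = h // factor, w // factor
        shapes.append((h, w))
    for _ in range(num_pairs):  # decoding stage
        h, w = h * factor, w * factor
        shapes.append((h, w))
    return shapes
```

With an input whose sides are divisible by `factor ** num_pairs`, the last entry equals the first, so the segmentation map aligns pixel for pixel with the input image.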

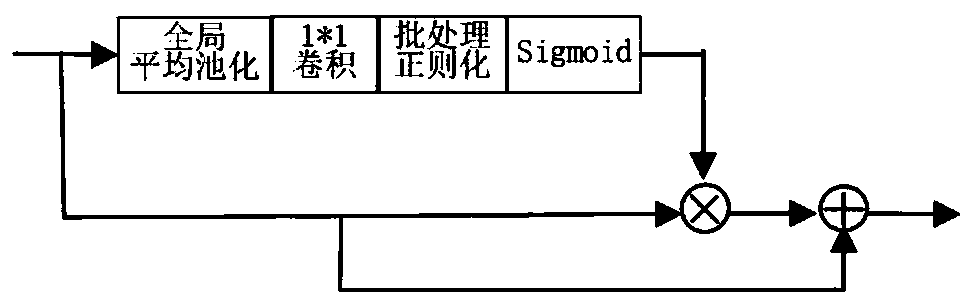

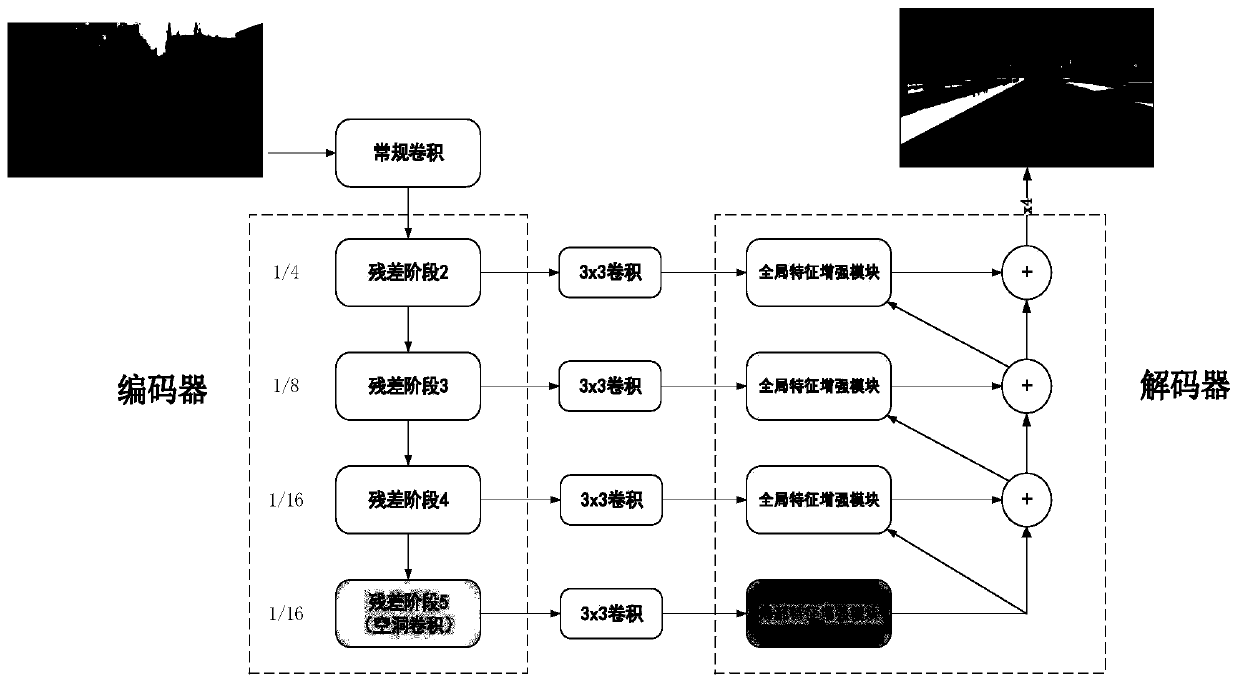

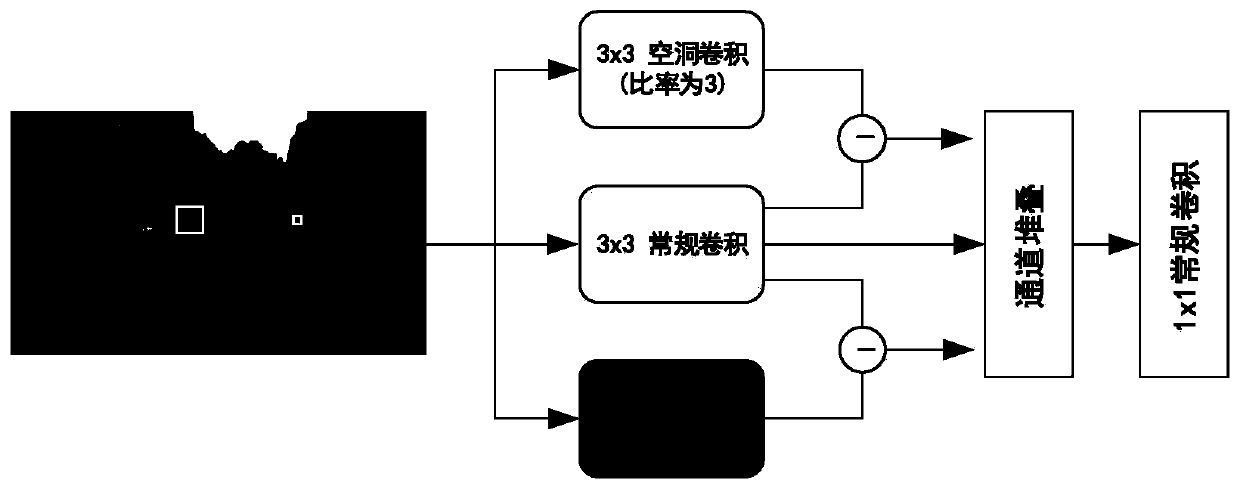

Image semantic segmentation method based on local and global feature enhancement modules

Active | CN111210435A | Improve Segmentation | Promote integration | Image enhancement | Image analysis | Sample image | Convolution

The invention discloses an image semantic segmentation method based on local and global feature enhancement modules. The method comprises the following steps: selecting and preparing the training set images and verification set images required by the semantic segmentation task, together with the corresponding label images; performing data enhancement on the training set images; standardizing the sample images in the training set and the verification set respectively; encoding the corresponding label images; designing a convolutional neural network that takes the processed data as input and outputs a multi-channel feature map; optimizing the parameters of the convolutional neural network; and inputting a real scene image into the optimized network for semantic segmentation to output an image with labeled pixels. The method provides important technical support for subsequent work in areas such as scene analysis and reinforcement learning, and can be applied in the fields of virtual reality, automatic driving and man-machine interaction.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
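Two of the preparation steps above, standardizing sample images and encoding label images, can be sketched briefly. The version below assumes zero-mean, unit-variance standardization over a flat list of pixel values and one-hot encoding of an integer label map; the abstract does not say which standardization or encoding the method actually uses.

```python
def standardize(pixels):
    """Shift and scale pixel values to zero mean and unit variance.
    A constant image is returned as all zeros."""
    n = len(pixels)
    mean = sum(pixels) / n
    var = sum((p - mean) ** 2 for p in pixels) / n
    std = var ** 0.5 or 1.0  # avoid dividing by zero for flat images
    return [(p - mean) / std for p in pixels]


def one_hot(label_map, num_classes):
    """Turn an H x W map of class indices into num_classes binary
    H x W channels, one channel per class."""
    return [[[1 if lab == c else 0 for lab in row] for row in label_map]
            for c in range(num_classes)]
```

The resulting channel layout matches a multi-channel network output, so a per-pixel loss can compare the two directly.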

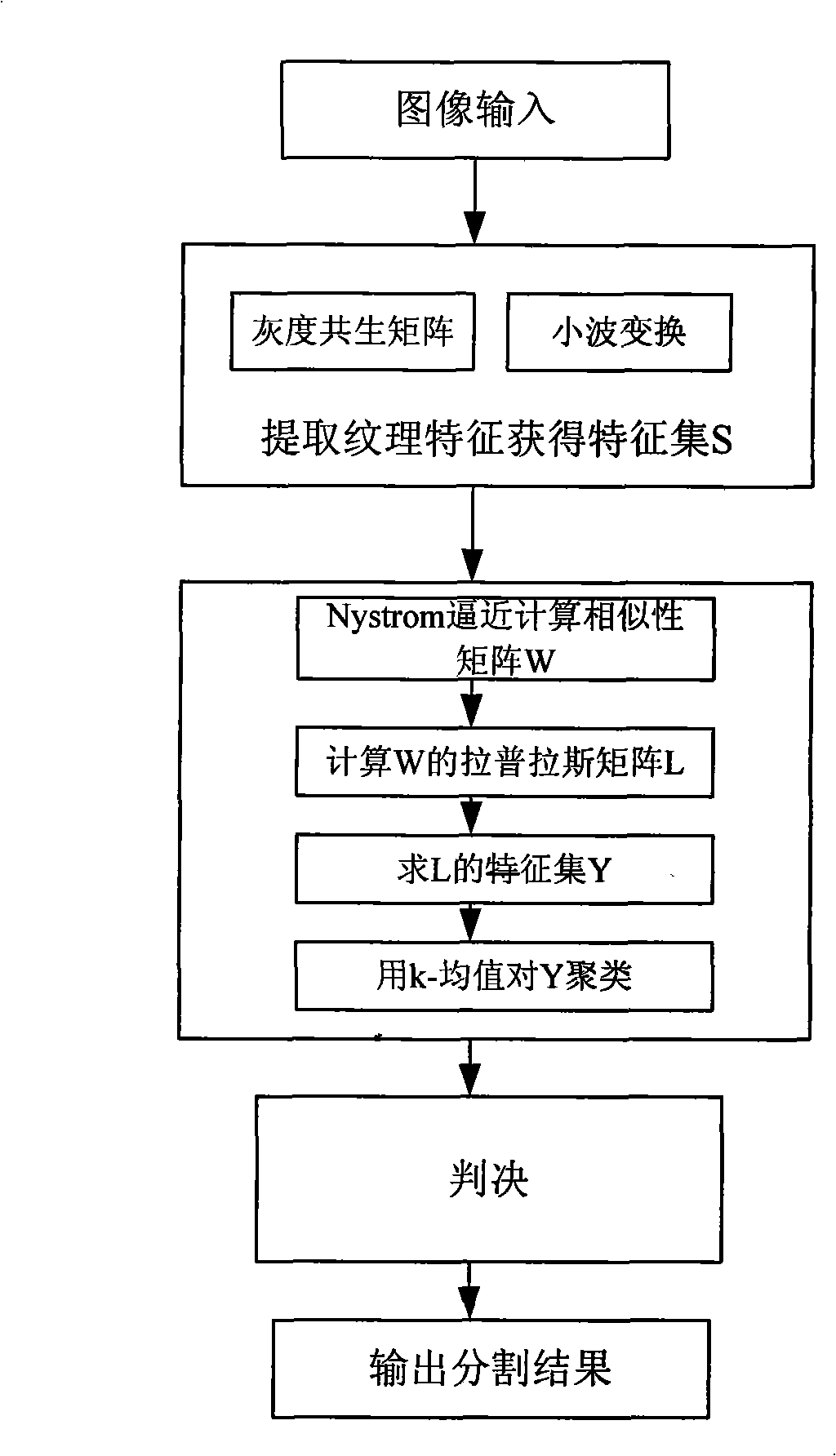

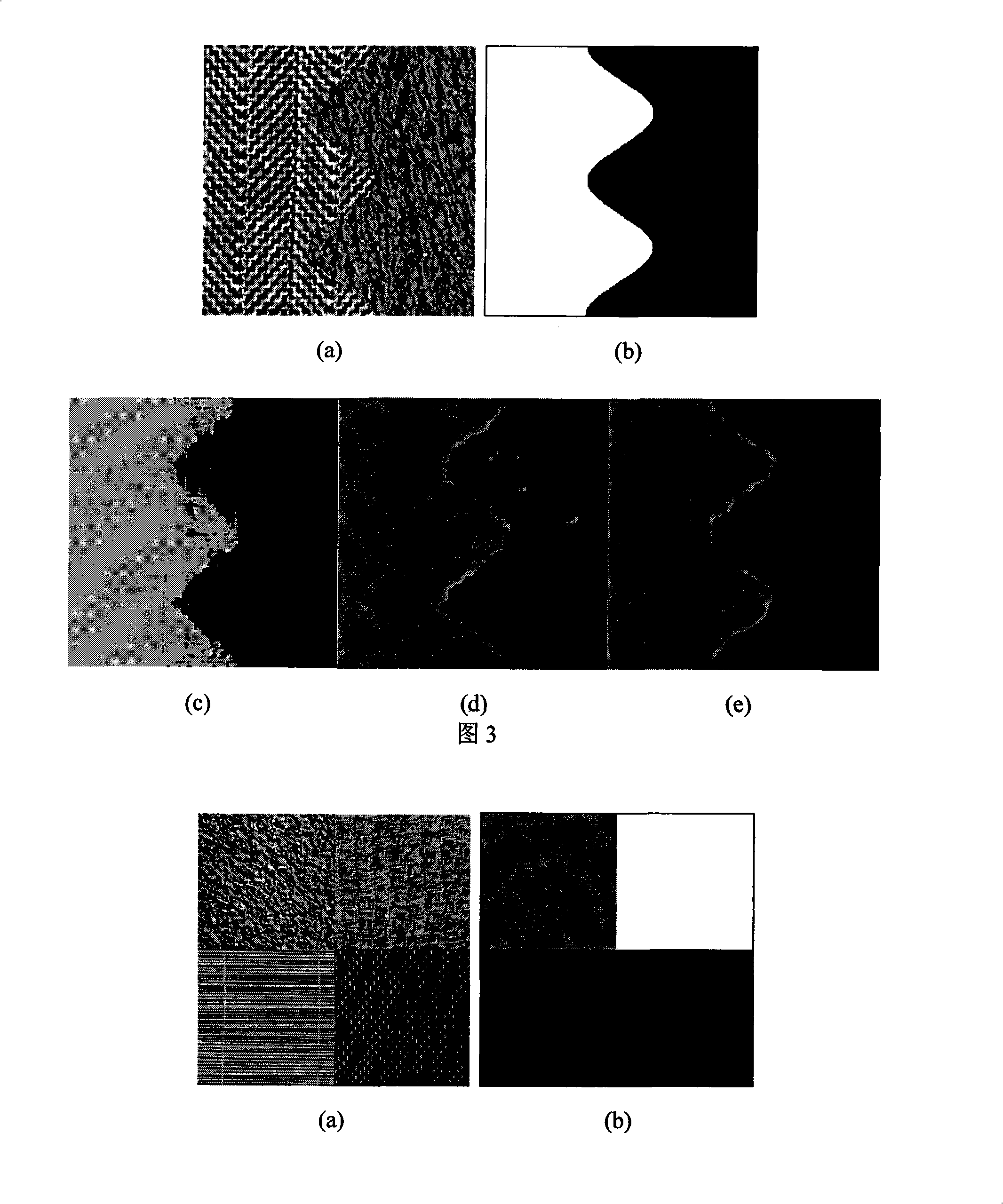

Method of image segmentation based on immune spectrum clustering

Inactive | CN101299243A | Fast convergence | Good segmentation effect | Image enhancement | Image analysis | Feature vector | Imaging processing

The invention discloses an image segmentation method based on immune spectrum clustering, which comprises: 1. extracting texture features of the input image and representing each pixel of the image with a feature vector to obtain a feature set; 2. mapping the feature set to a linear measure space by spectral clustering to obtain a mapping set; 3. according to the number of categories given for the image, randomly selecting the corresponding number of data points from the mapping set as the initial cluster centers and executing cloning, mutation, selection and judgment in sequence to find optimal cluster centers equal in number to the initial cluster centers; 4. assigning every pixel of the feature set to its nearest optimal cluster center and giving each pixel a category label according to the category of that center, which completes the image segmentation. Compared with the prior art, the invention is insensitive to initialization, converges quickly to the global optimum and achieves high segmentation accuracy, and it can be used for image segmentation in SAR image processing and computer vision.

Owner:XIDIAN UNIV
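Steps 3 and 4 above can be pictured as a clone, mutate and select loop over candidate cluster centers followed by nearest-center labeling. The sketch below is a heavily simplified illustration: it keeps a single candidate set of centers, uses Gaussian mutation with a hypothetical `sigma`, and scores candidates by summed squared distance, none of which is specified in the abstract. The spectral mapping of step 2 is assumed to have been applied already.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def cost(points, centers):
    """Total squared distance from each point to its nearest center."""
    return sum(min(sq_dist(p, c) for c in centers) for p in points)


def assign(points, centers):
    """Step 4: label each point with the index of its nearest center."""
    return [min(range(len(centers)), key=lambda c: sq_dist(p, centers[c]))
            for p in points]


def clonal_search(points, k, generations=50, clones=5, sigma=0.5, seed=0):
    """Step 3, simplified: start from k randomly chosen points, then
    repeatedly clone the current centers, mutate each clone, and keep
    a clone only if it lowers the clustering cost."""
    rng = random.Random(seed)
    best = rng.sample(points, k)
    best_cost = cost(points, best)
    for _ in range(generations):
        for _ in range(clones):
            cand = [tuple(x + rng.gauss(0, sigma) for x in c) for c in best]
            cand_cost = cost(points, cand)
            if cand_cost < best_cost:  # selection
                best, best_cost = cand, cand_cost
    return best
```

Because a mutated clone replaces the current centers only when it improves the cost, the result is never worse than the random initialization it started from.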

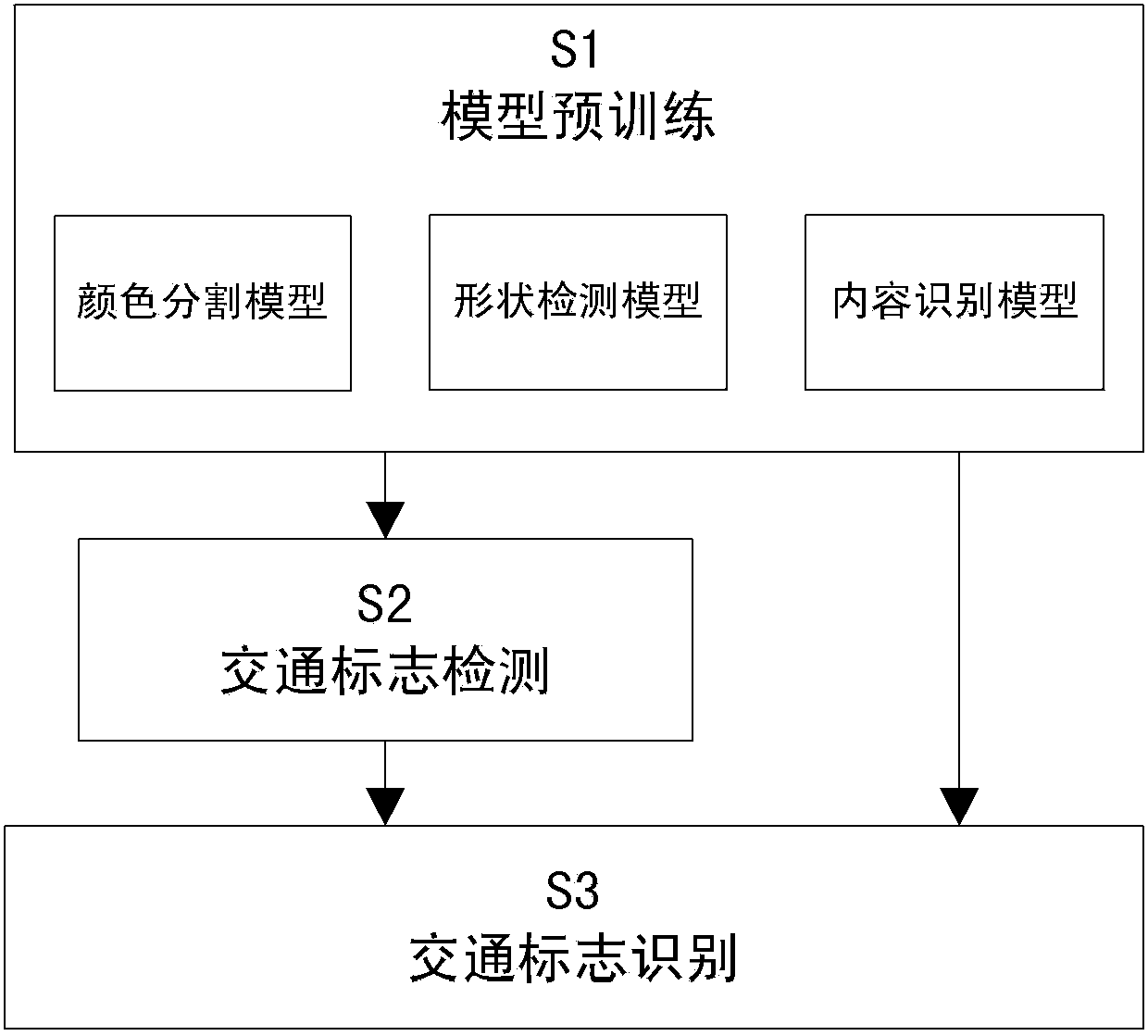

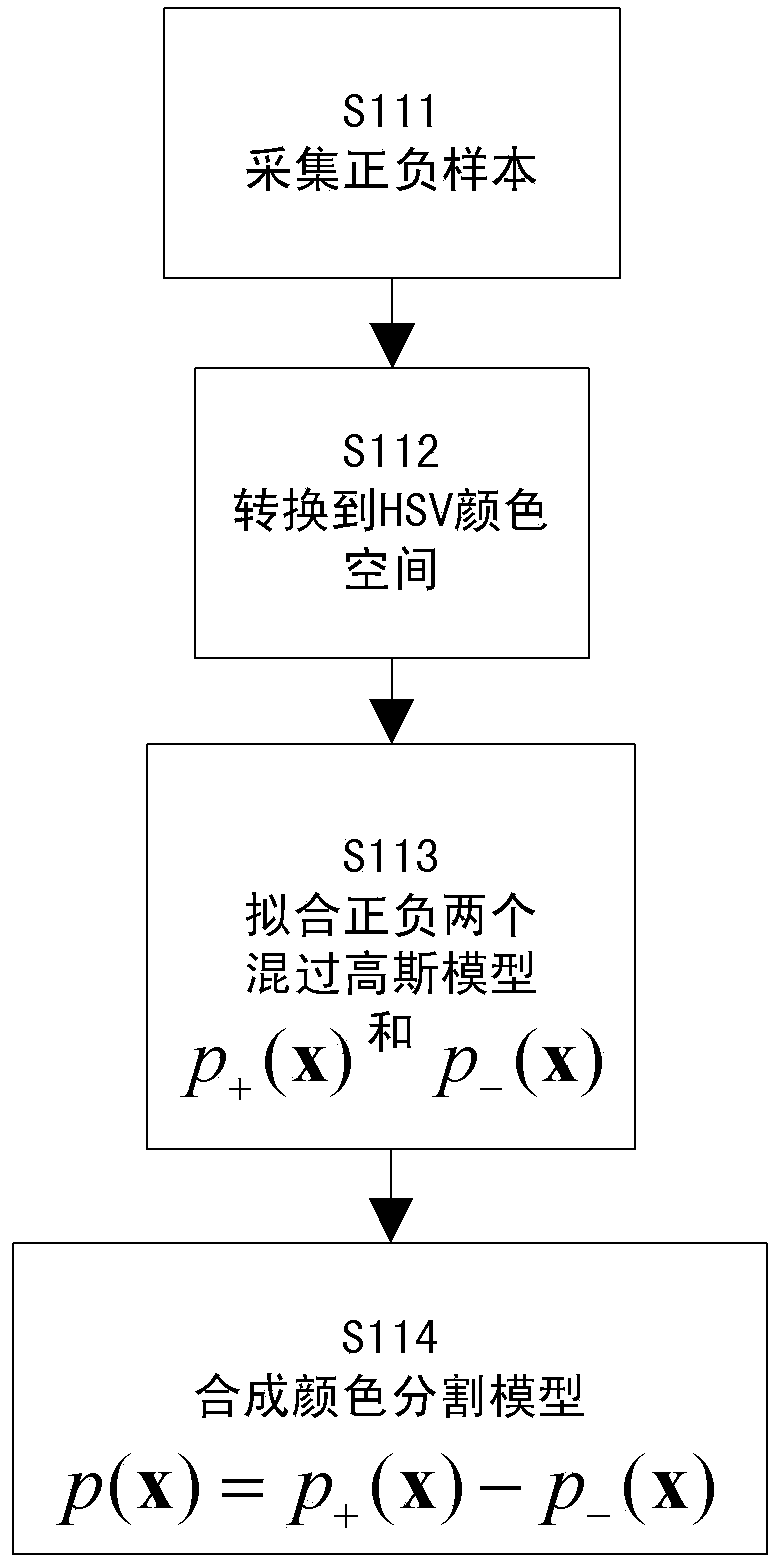

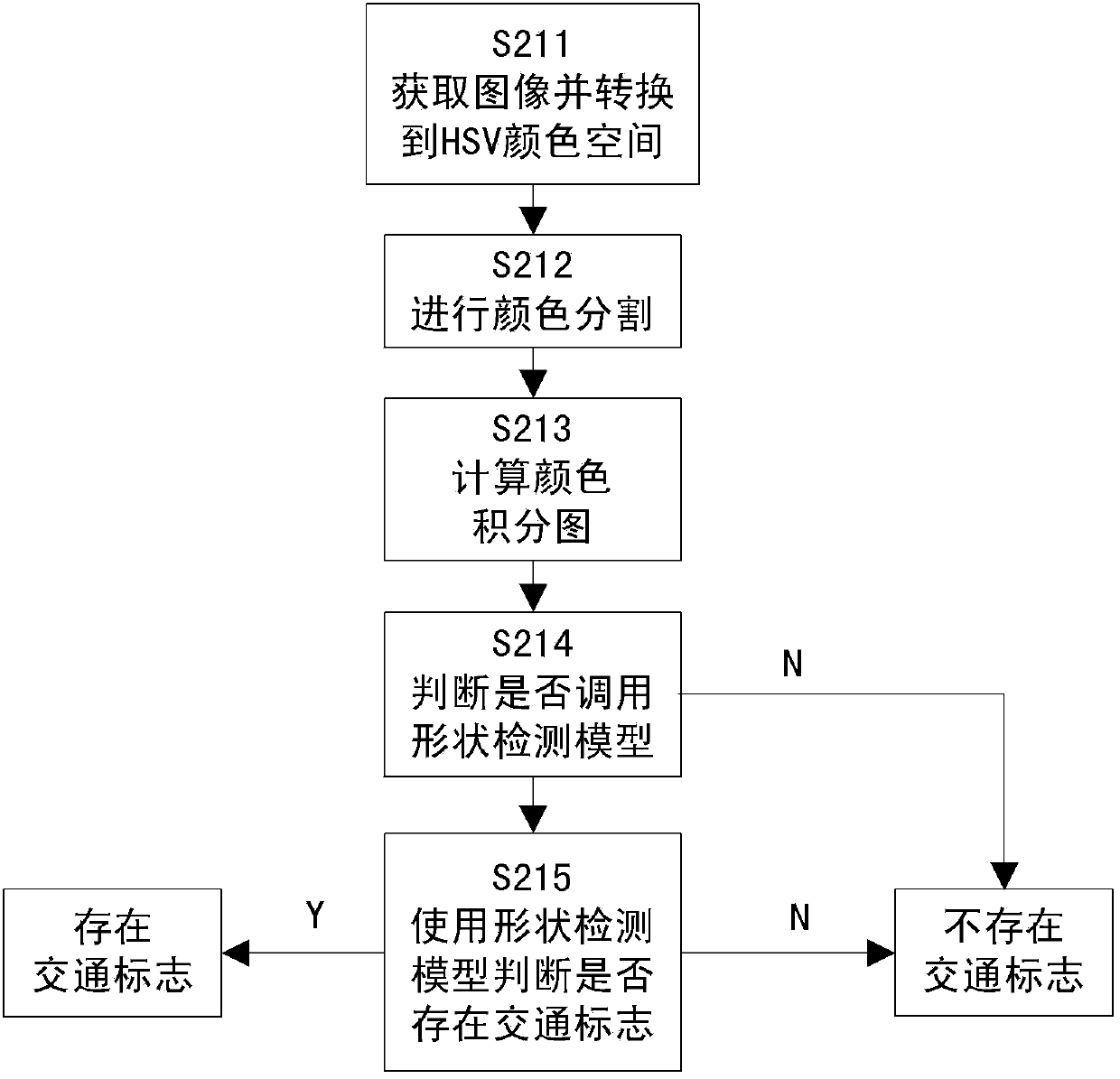

Method for identifying traffic sign

Active | CN103366190A | Small amount of calculation | Improve stability | Character and pattern recognition | Slide window | Pattern recognition

The invention discloses a method for identifying a traffic sign. The method comprises the following steps: 1. generating the color segmentation model, shape detection model and content identification model required for identifying traffic signs; 2. segmenting the original image with the color segmentation template that corresponds to the color segmentation model to obtain a segmented image, sliding a window over the original image, and judging whether the proportions of the various colors in the window meet preset conditions; if they do not, determining that no traffic sign exists in the image; if they do, provisionally determining that a traffic sign exists and calling the shape detection model, then confirming that a traffic sign exists in the image if the detection result of the shape detection model meets the preset shape condition of a traffic sign, and otherwise determining that no traffic sign exists; and 3. for an image in which a traffic sign exists, calling the corresponding content identification model, chosen according to the color and shape information obtained during detection, to judge the type of the traffic sign.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
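The color test in step 2 can be sketched as a sliding window that measures color proportions and keeps only windows passing a preset condition. The example below assumes the color-segmented image is a grid of color names and that the condition is a minimum share of one color; the real preset conditions, the shape detection model and the content identification model are not described in the abstract and are not modeled here.

```python
def window_color_ratios(seg, top, left, size):
    """Return the fraction of each color label inside a square window."""
    counts = {}
    for y in range(top, top + size):
        for x in range(left, left + size):
            counts[seg[y][x]] = counts.get(seg[y][x], 0) + 1
    total = size * size
    return {color: n / total for color, n in counts.items()}


def candidate_windows(seg, size, color, min_ratio, stride=1):
    """Slide a size x size window over the segmented image and return
    the (top, left) corners where the given color covers at least
    min_ratio of the window (a hypothetical preset condition)."""
    h, w = len(seg), len(seg[0])
    hits = []
    for top in range(0, h - size + 1, stride):
        for left in range(0, w - size + 1, stride):
            ratios = window_color_ratios(seg, top, left, size)
            if ratios.get(color, 0.0) >= min_ratio:
                hits.append((top, left))
    return hits
```

Only the windows returned here would need to be passed on to the more expensive shape check, which is consistent with the small amount of calculation the listing claims for the method.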
