341 patent results for "Pixel classification"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

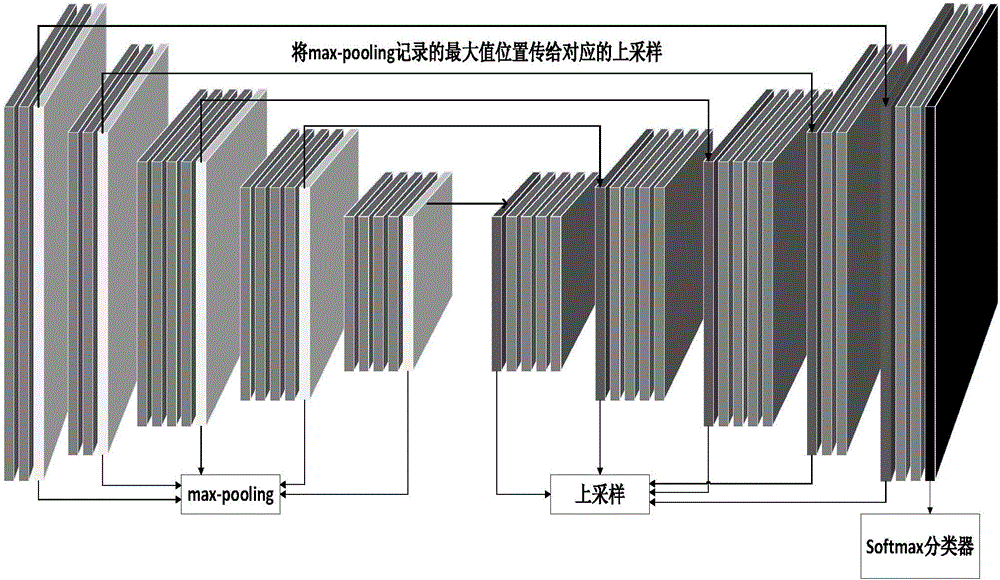

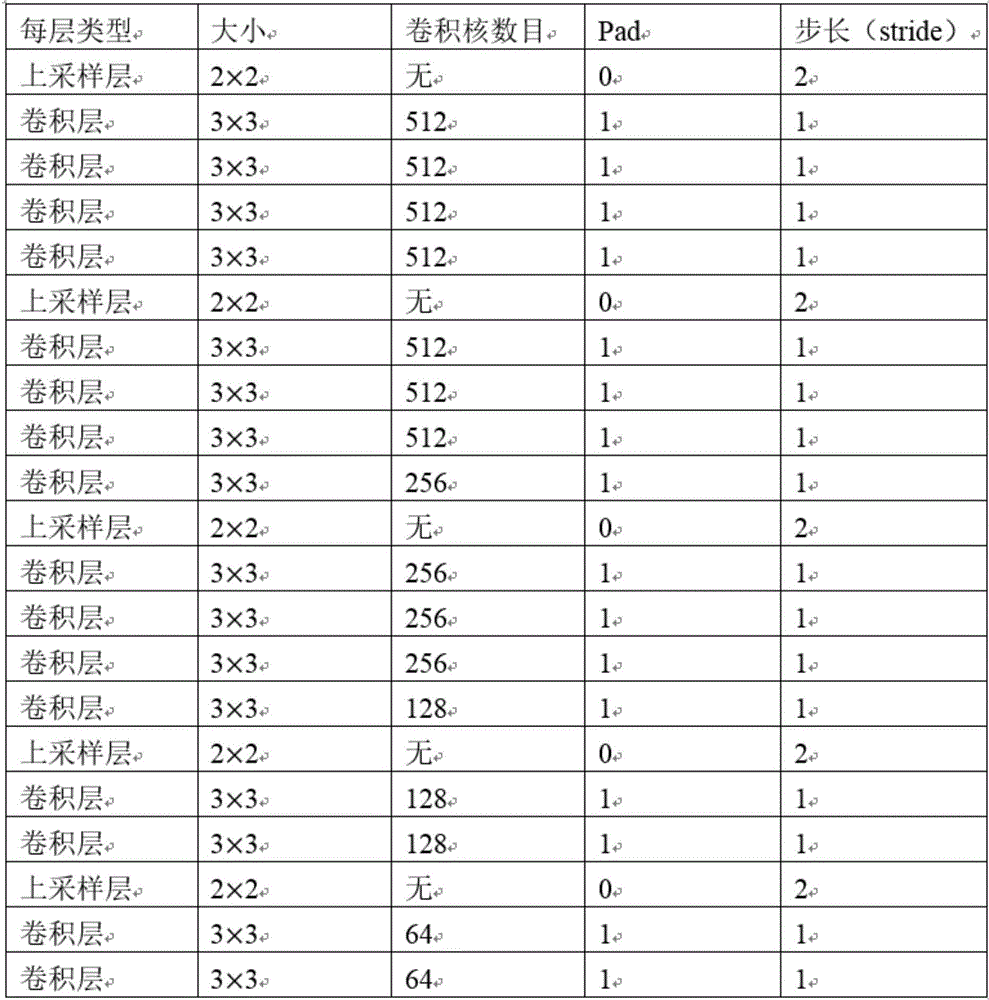

Fundus image retinal vessel segmentation method and system based on deep learning

Status: Active | Publication: CN106408562A | Keywords: Easy to classify, Improve accuracy, Image enhancement, Image analysis, Segmentation system, Blood vessel

The invention discloses a deep-learning-based method and system for segmenting retinal vessels in fundus images. The method comprises: augmenting the training set; enhancing the images; training a convolutional neural network (CNN) on the training set; segmenting the image with the trained CNN segmentation model to obtain a first segmentation result; extracting the output of the last convolutional layer of the CNN and using it as the input features of a random forest classifier, which is trained to classify pixels and yields a second segmentation result; and fusing the two segmentation results to obtain the final segmentation image. Compared with traditional vessel segmentation methods, using a deep CNN for feature extraction yields richer features and higher segmentation accuracy and efficiency.

Owner:SOUTH CHINA UNIV OF TECH

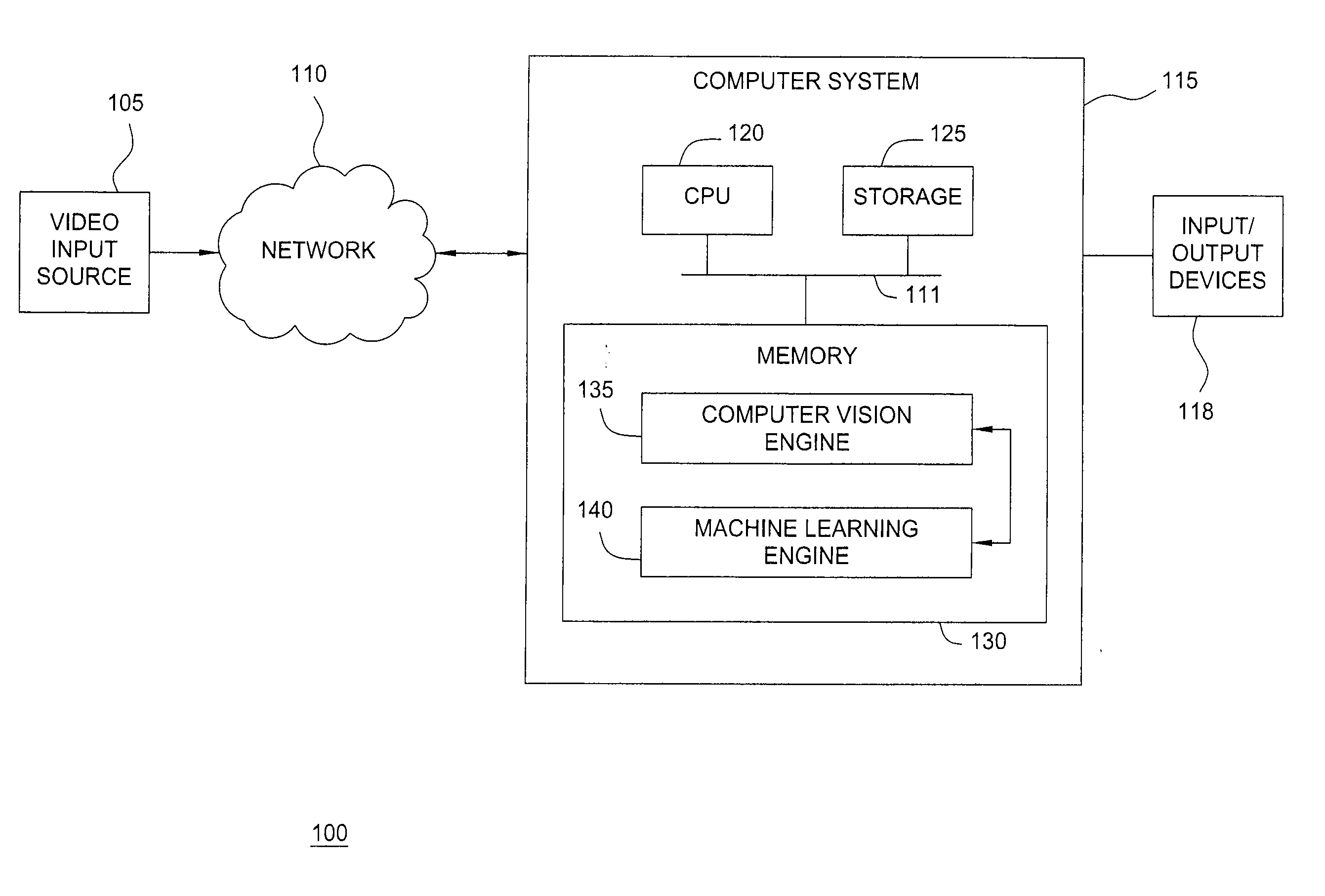

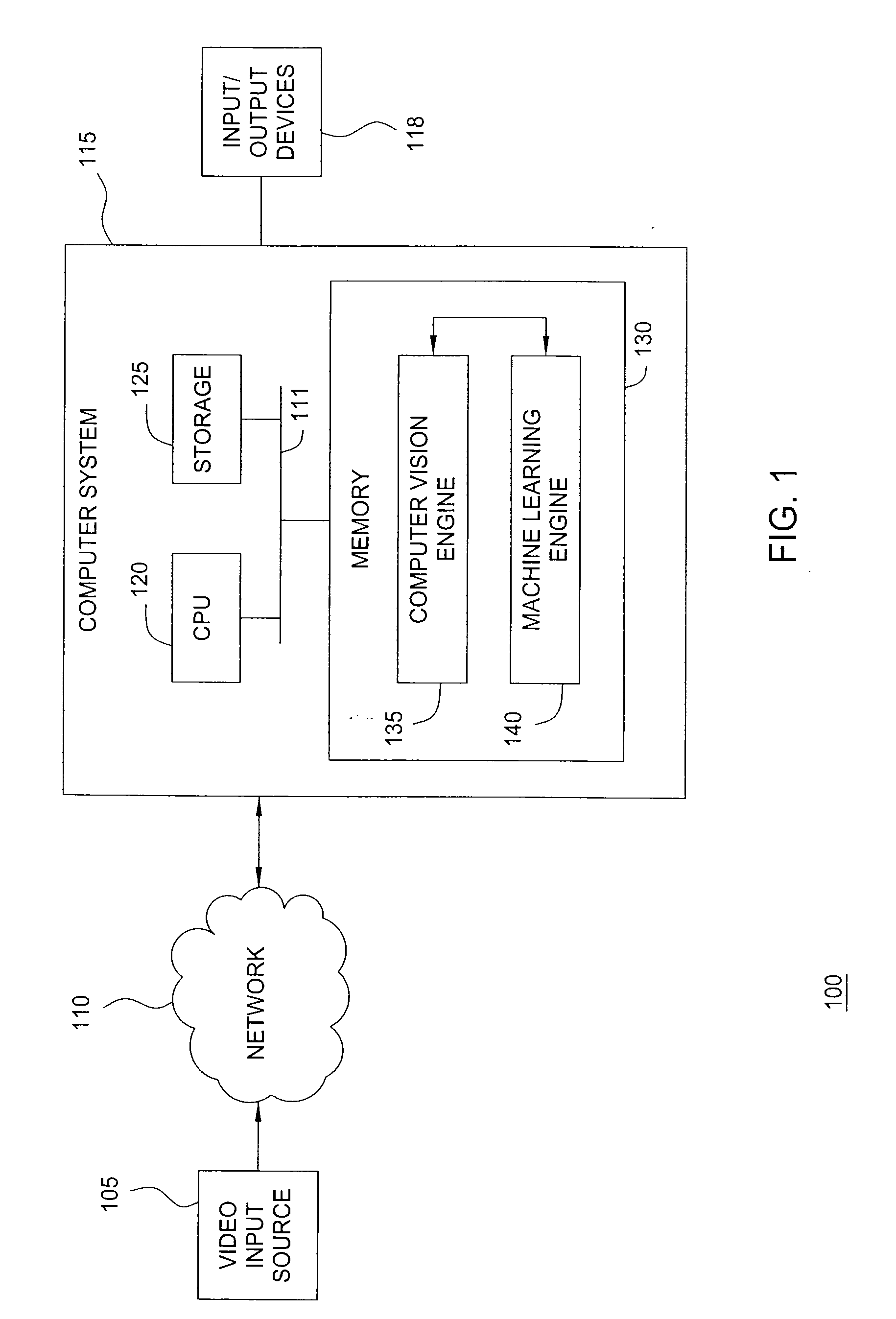

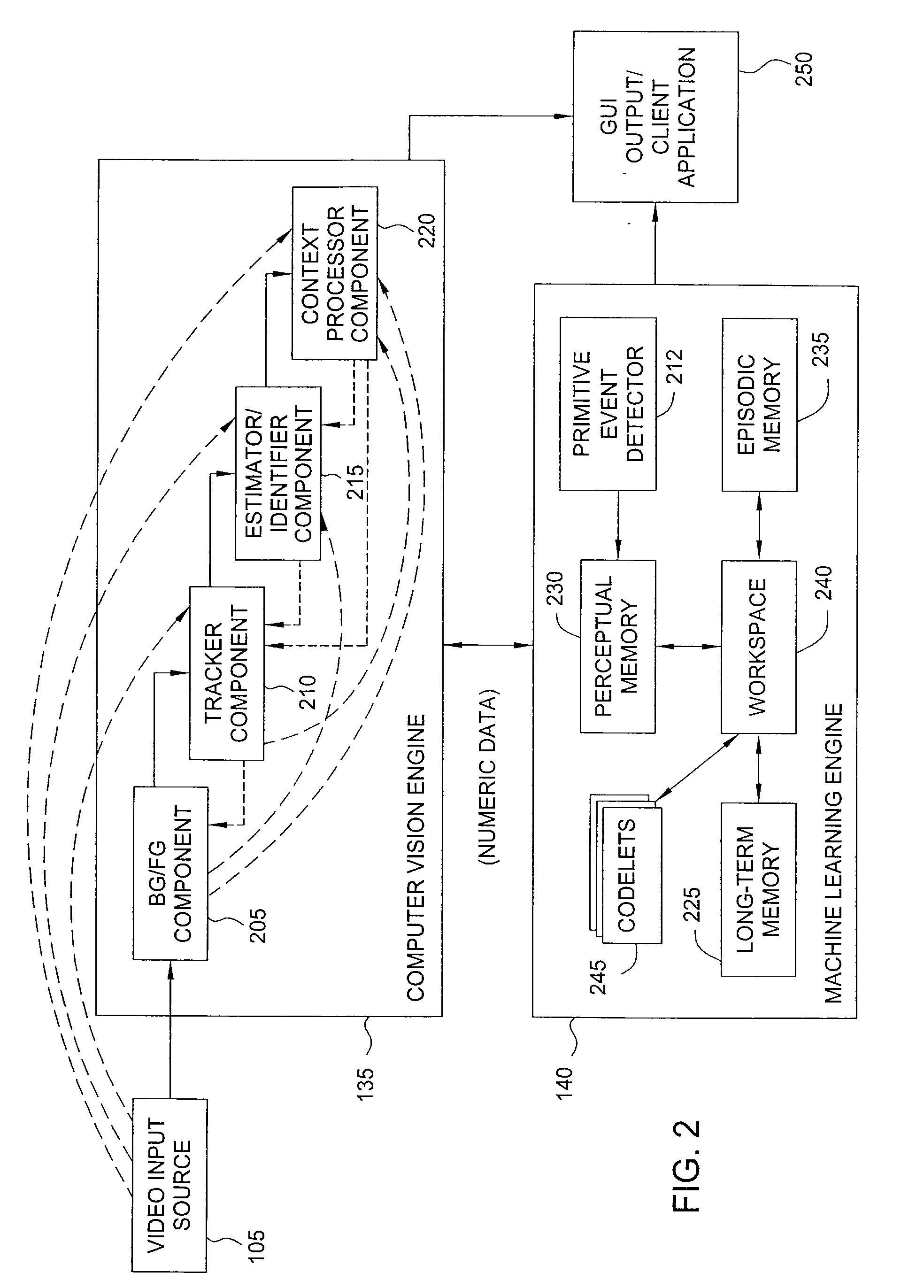

Adaptive update of background pixel thresholds using sudden illumination change detection

Techniques are disclosed for a computer vision engine to update both a background model and the thresholds used to classify pixels as depicting scene foreground or background in response to detecting that a sudden illumination change has occurred in a sequence of video frames. The threshold values may be used to specify how much a given pixel may differ from the corresponding values in the background model before being classified as depicting foreground. When a sudden illumination change is detected, the values of the pixels affected by it may be used to update the background image to reflect each pixel's value following the change, as well as to update the threshold for classifying that pixel as foreground or background in subsequent video frames.

Owner:PEPPERL FUCHS GMBH +1
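
A minimal sketch of the per-pixel update described above, assuming a grayscale background model with one threshold per pixel. The detector rule (a large fraction of pixels turning foreground at once) and the threshold-widening rule (`scale`, `floor`) are hypothetical choices for illustration, not the patented method.

```python
from dataclasses import dataclass


@dataclass
class PixelModel:
    background: float  # current background estimate for this pixel
    threshold: float   # max deviation before the pixel counts as foreground


def classify(model: PixelModel, value: float) -> str:
    """Foreground if the pixel differs from its background by more than its threshold."""
    return "foreground" if abs(value - model.background) > model.threshold else "background"


def sudden_change_detected(models, frame, fraction=0.5):
    # Hypothetical detector: a sudden illumination change is assumed when more
    # than `fraction` of all pixels turn foreground in a single frame.
    changed = sum(classify(m, v) == "foreground" for m, v in zip(models, frame))
    return changed > fraction * len(frame)


def update_on_illumination_change(models, frame, scale=0.5, floor=5.0):
    """Re-seed background values and thresholds for pixels affected by the change."""
    for m, v in zip(models, frame):
        if classify(m, v) != "foreground":
            continue  # pixel not affected by the change; keep its model
        shift = abs(v - m.background)
        m.background = v  # background now reflects the post-change value
        # Hypothetical rule: widen the threshold in proportion to the shift.
        m.threshold = max(m.threshold, floor, scale * shift)


models = [PixelModel(100.0, 10.0) for _ in range(4)]
frame = [160.0, 158.0, 162.0, 105.0]
if sudden_change_detected(models, frame):
    update_on_illumination_change(models, frame)
print([(m.background, m.threshold) for m in models])
# [(160.0, 30.0), (158.0, 29.0), (162.0, 31.0), (100.0, 10.0)]
```

After the update, small post-change flicker around the new background level is classified as background instead of triggering a flood of false foreground detections.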

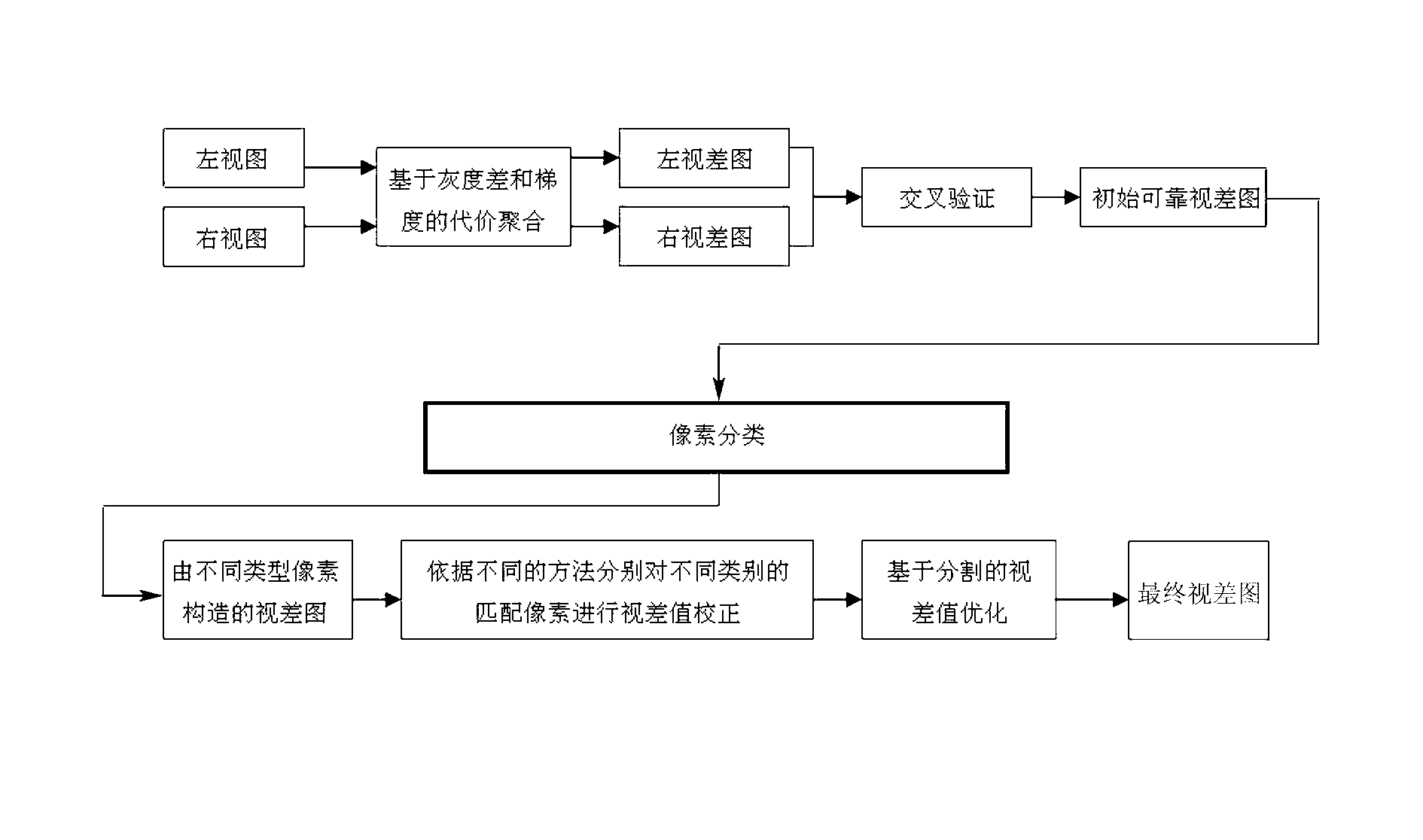

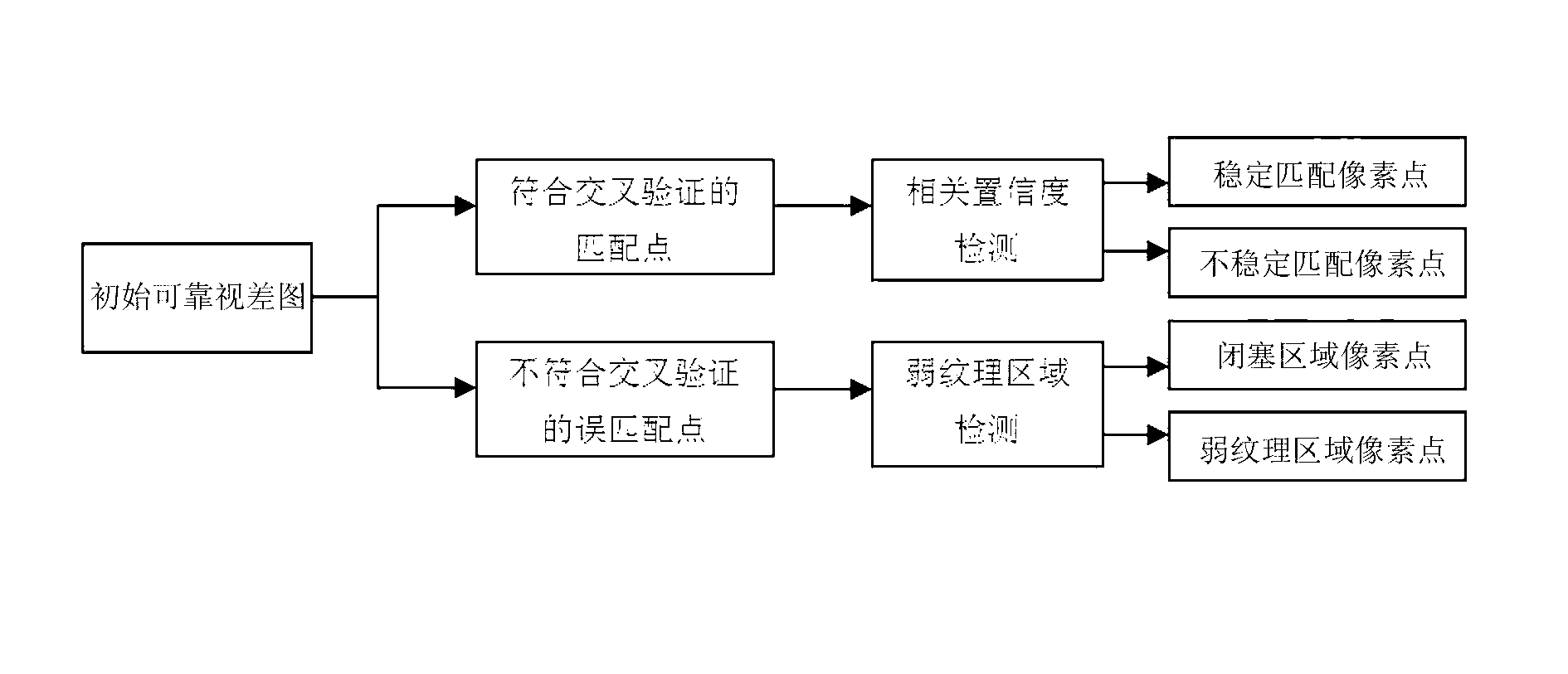

Stereo matching method based on disparity map pixel classification correction optimization

Status: Inactive | Publication: CN103226821A | Keywords: Match Pixel Thinning, Accurate matching accuracy, Image enhancement, Image analysis, Cost aggregation, Parallax

The invention relates to the technical field of stereo vision, in particular to a stereo matching method, and addresses the insufficient accuracy of disparity correction and optimization in existing stereo matching methods. The stereo matching method based on disparity map pixel classification correction optimization comprises the following steps: (I) cost aggregation is performed with the left view and the right view each taken as the reference, using a measure that combines gray-level difference with gradient, and the resulting left and right disparity maps are subjected to a left-right consistency check to generate an initial reliable disparity map; (II) correlation credibility detection and weak-texture region detection are performed, and pixels are classified as stable matching points, unstable matching points, occluded-region points, or weak-texture-region points; (III) the unstable matching points are corrected with an improved adaptive-weight algorithm, and the occluded-region and weak-texture-region points are corrected with a mismatched-pixel correction method; and (IV) the corrected disparity maps are optimized with a segmentation-based algorithm to obtain dense disparity maps.

Owner:SHANXI UNIV

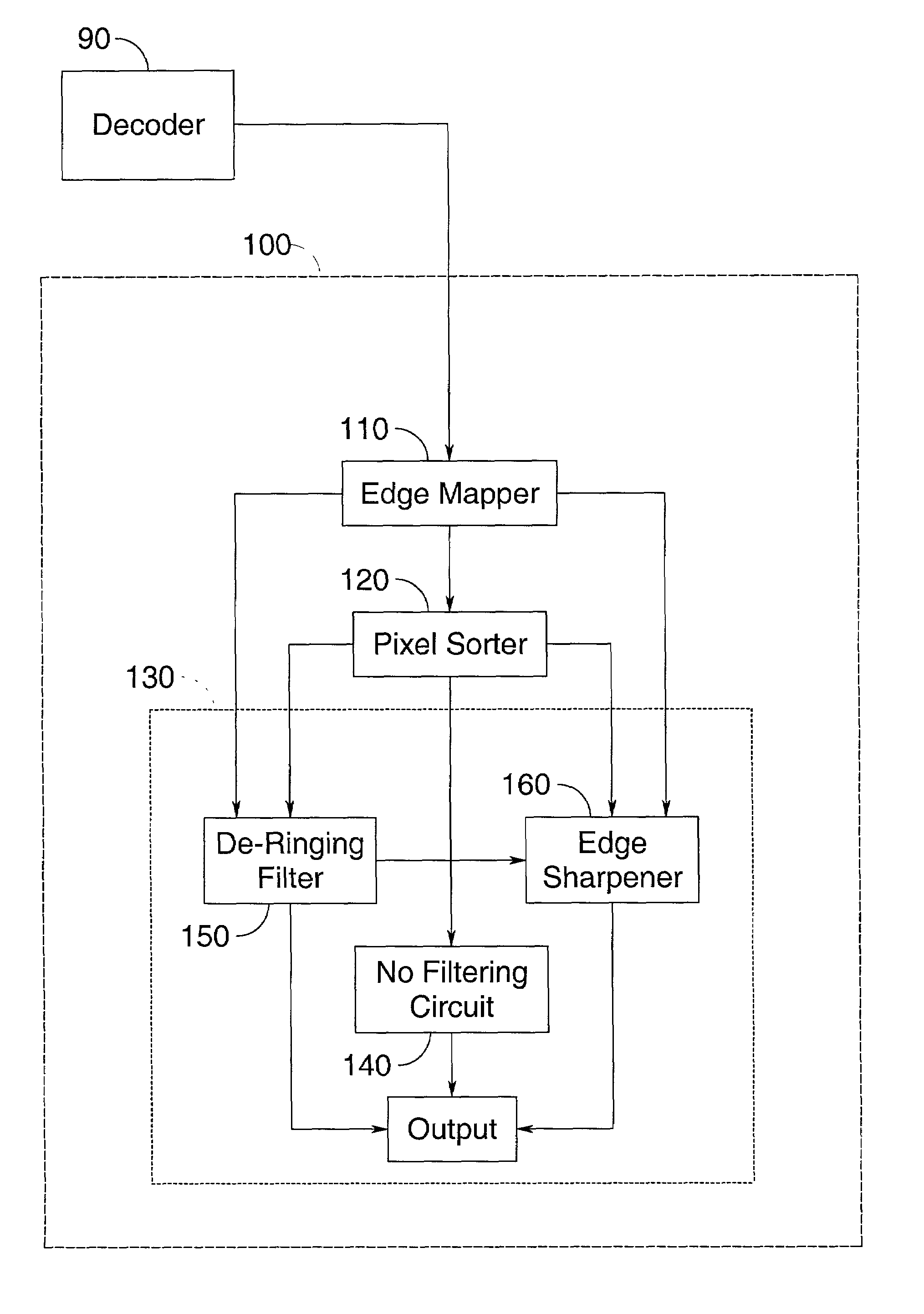

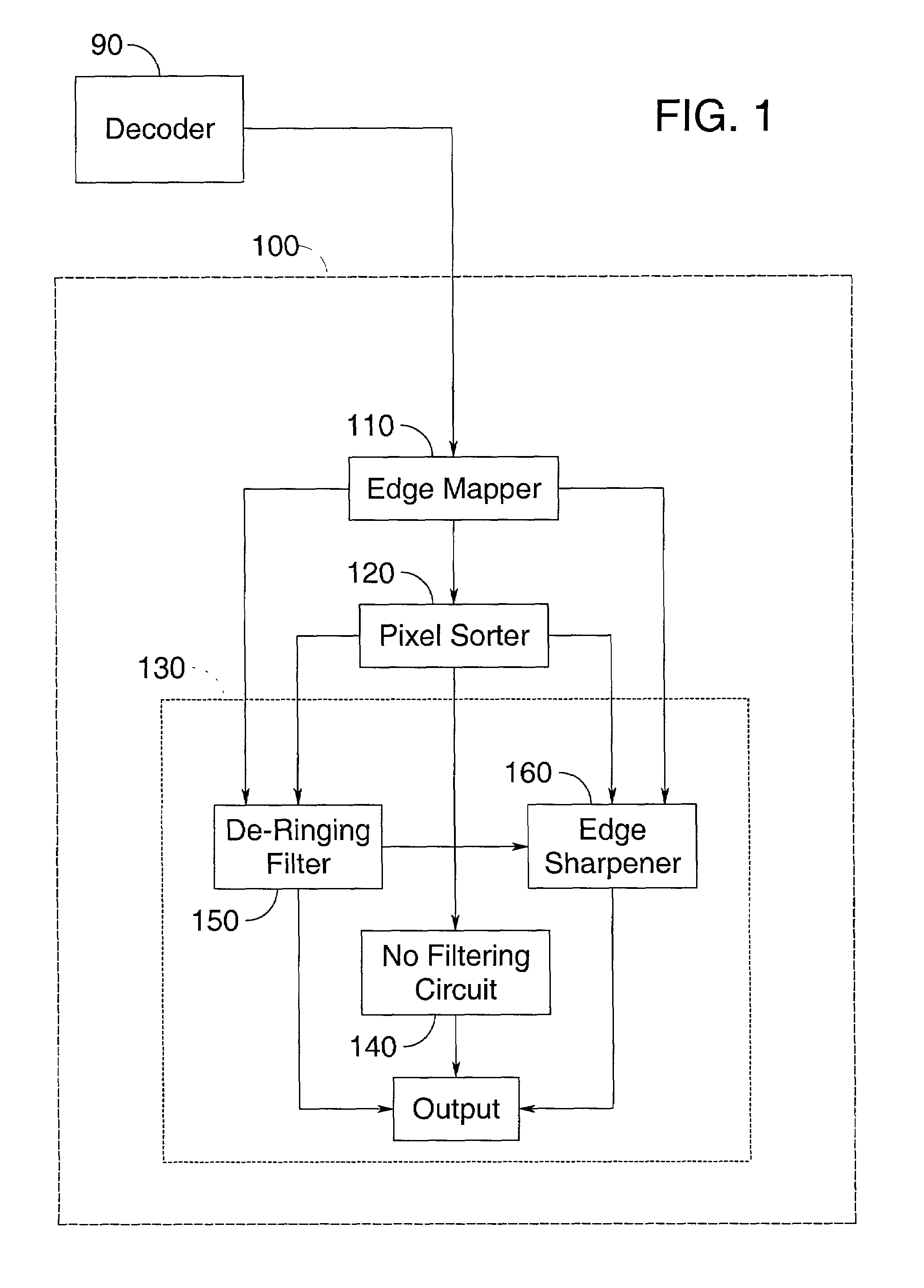

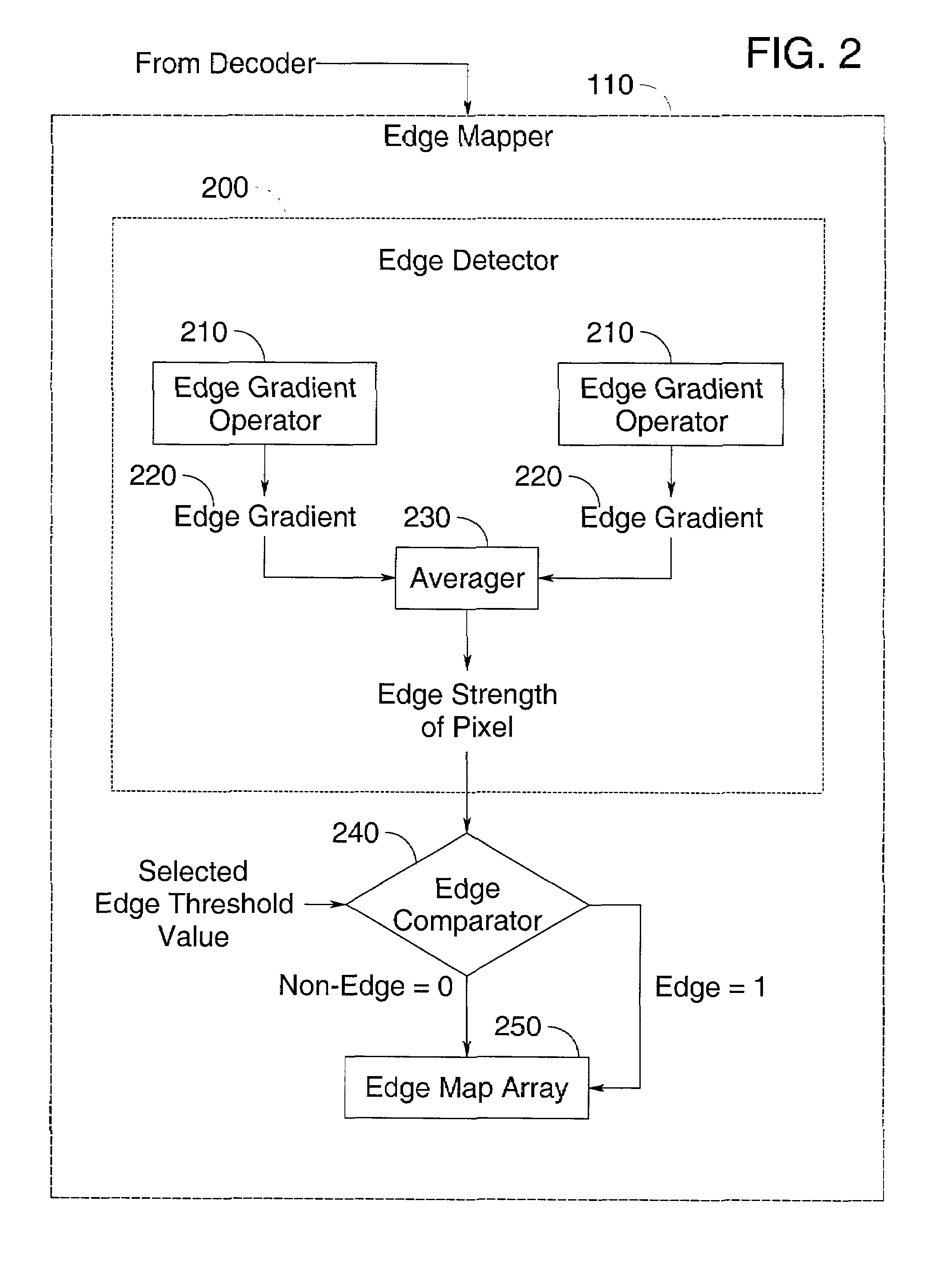

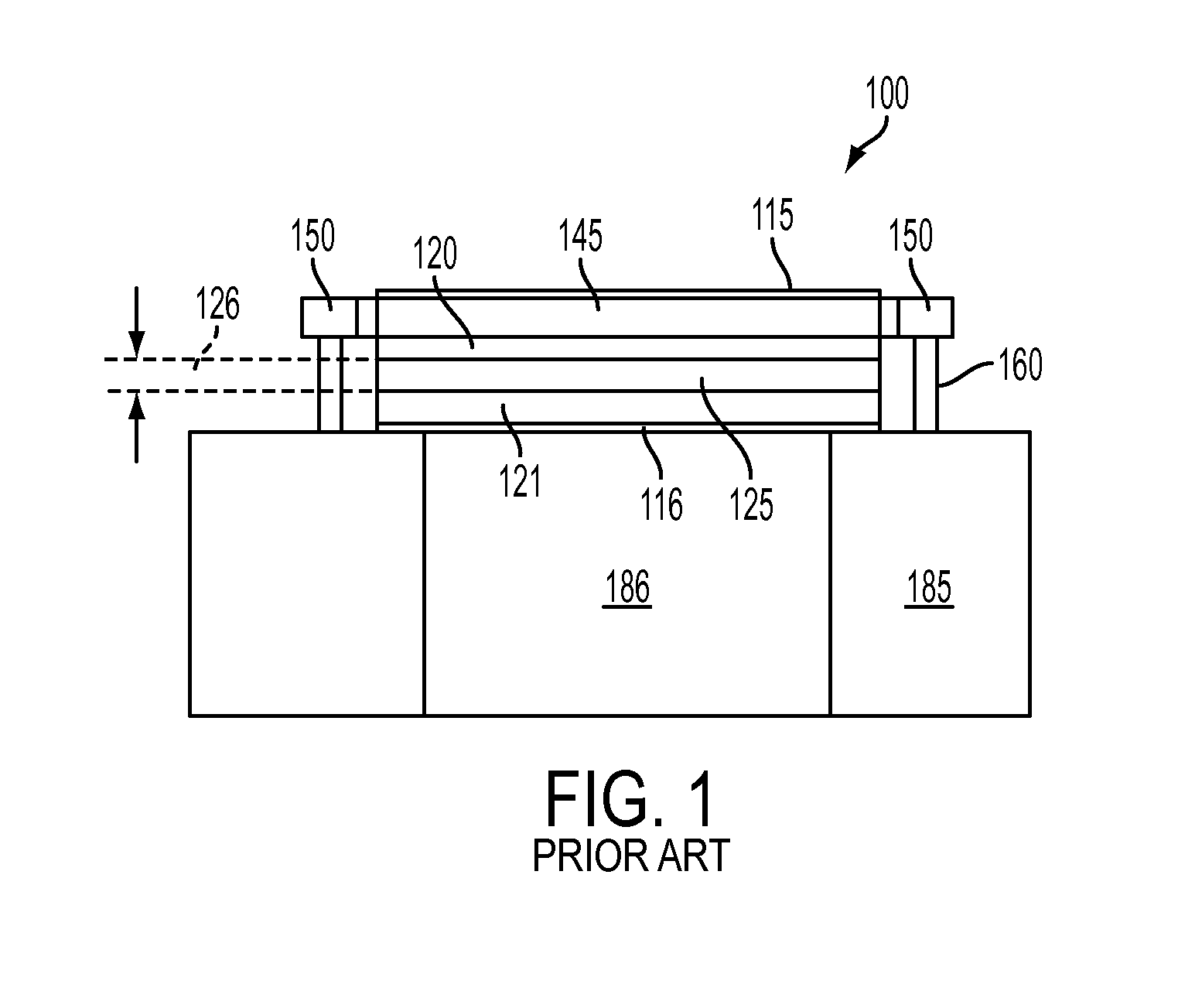

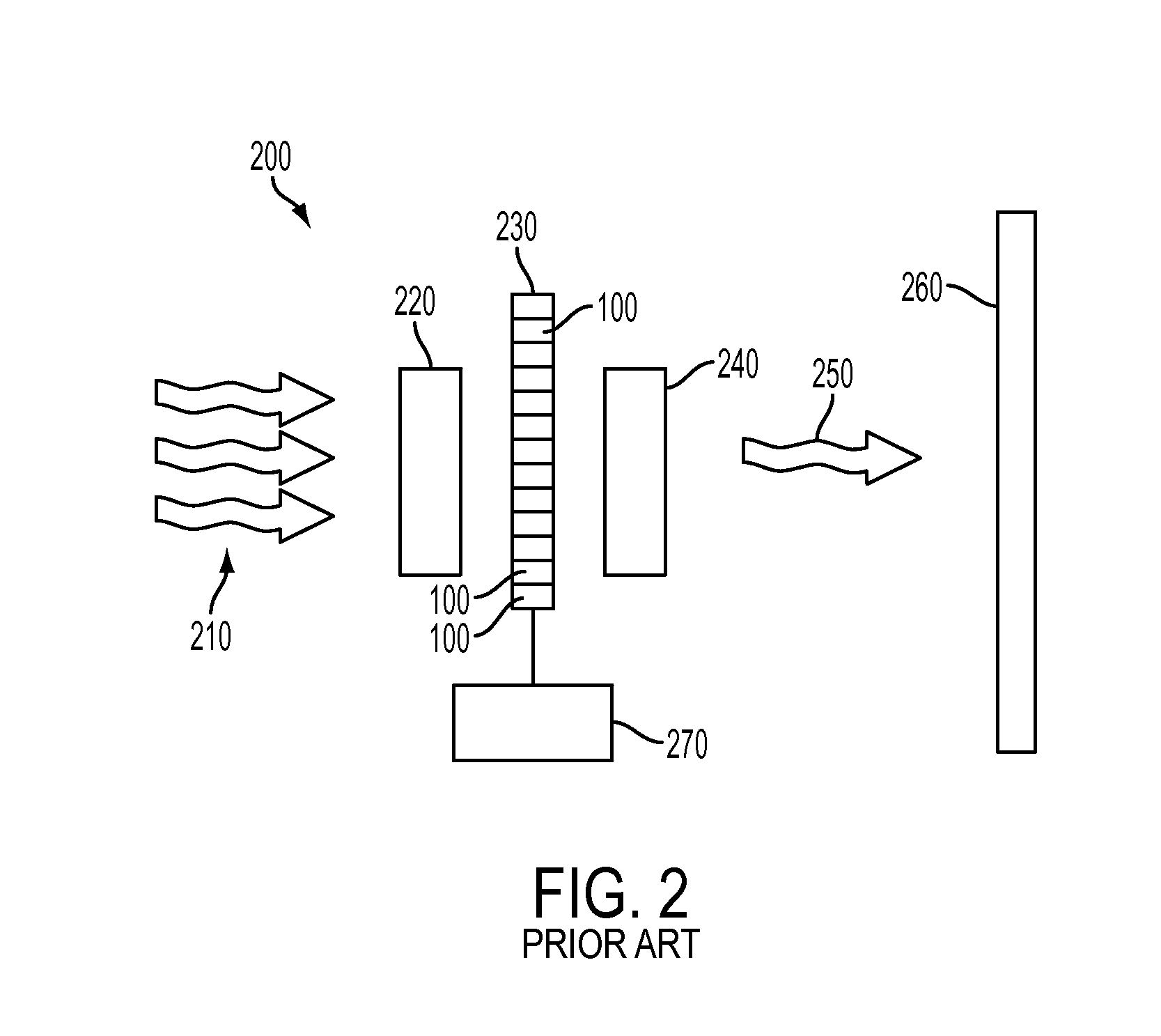

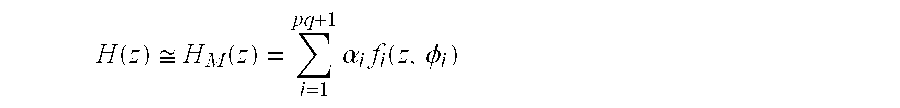

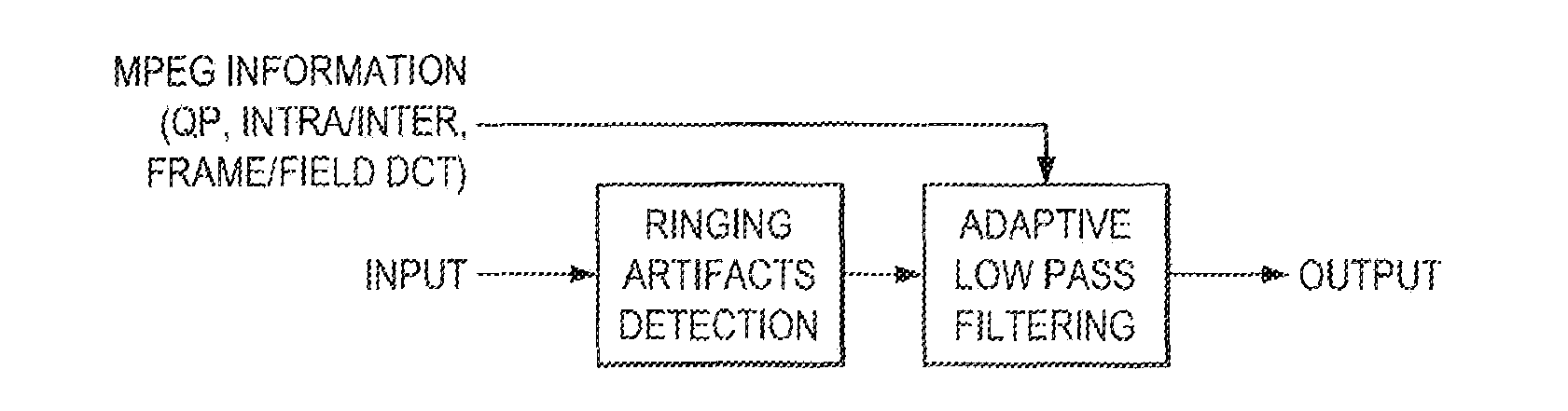

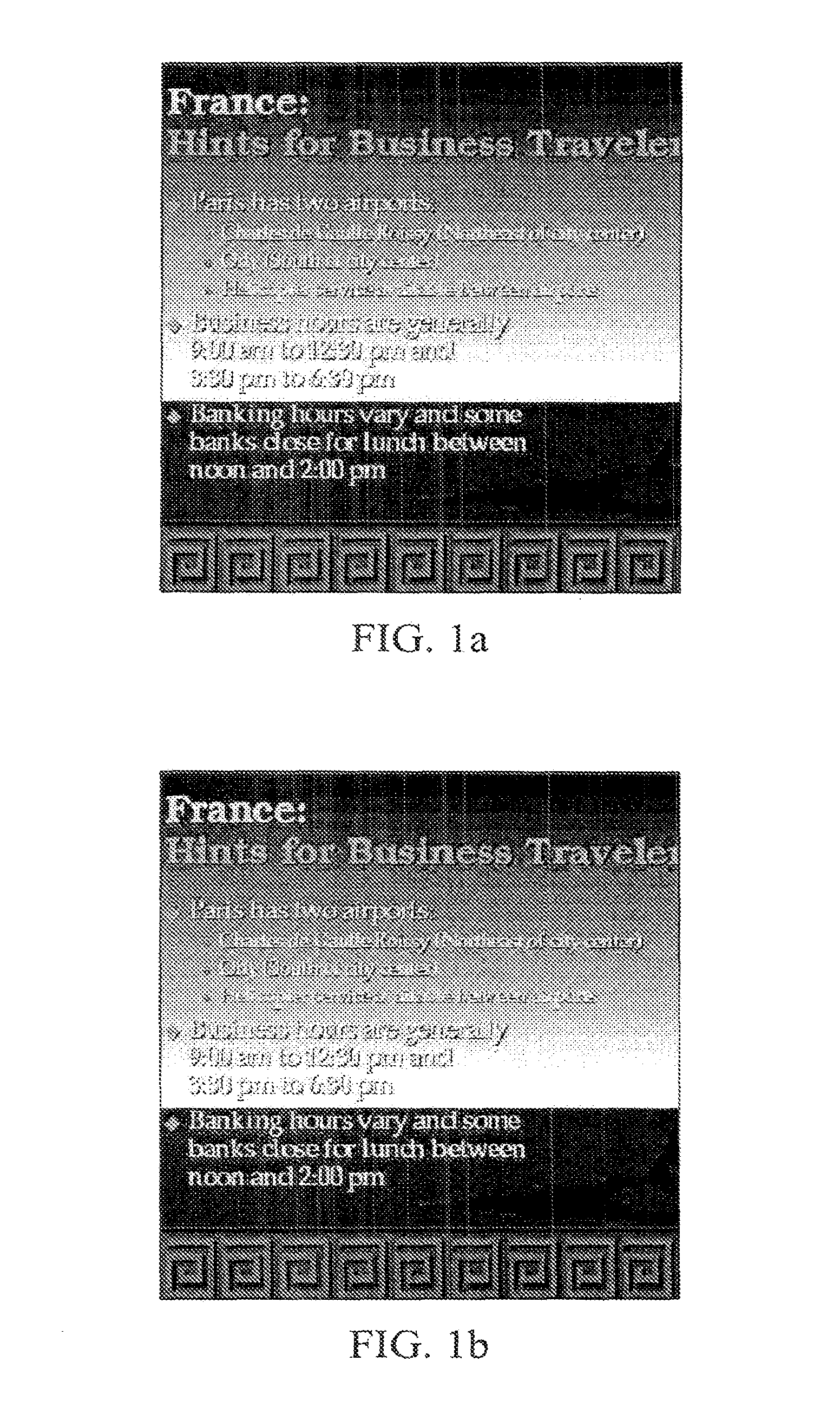

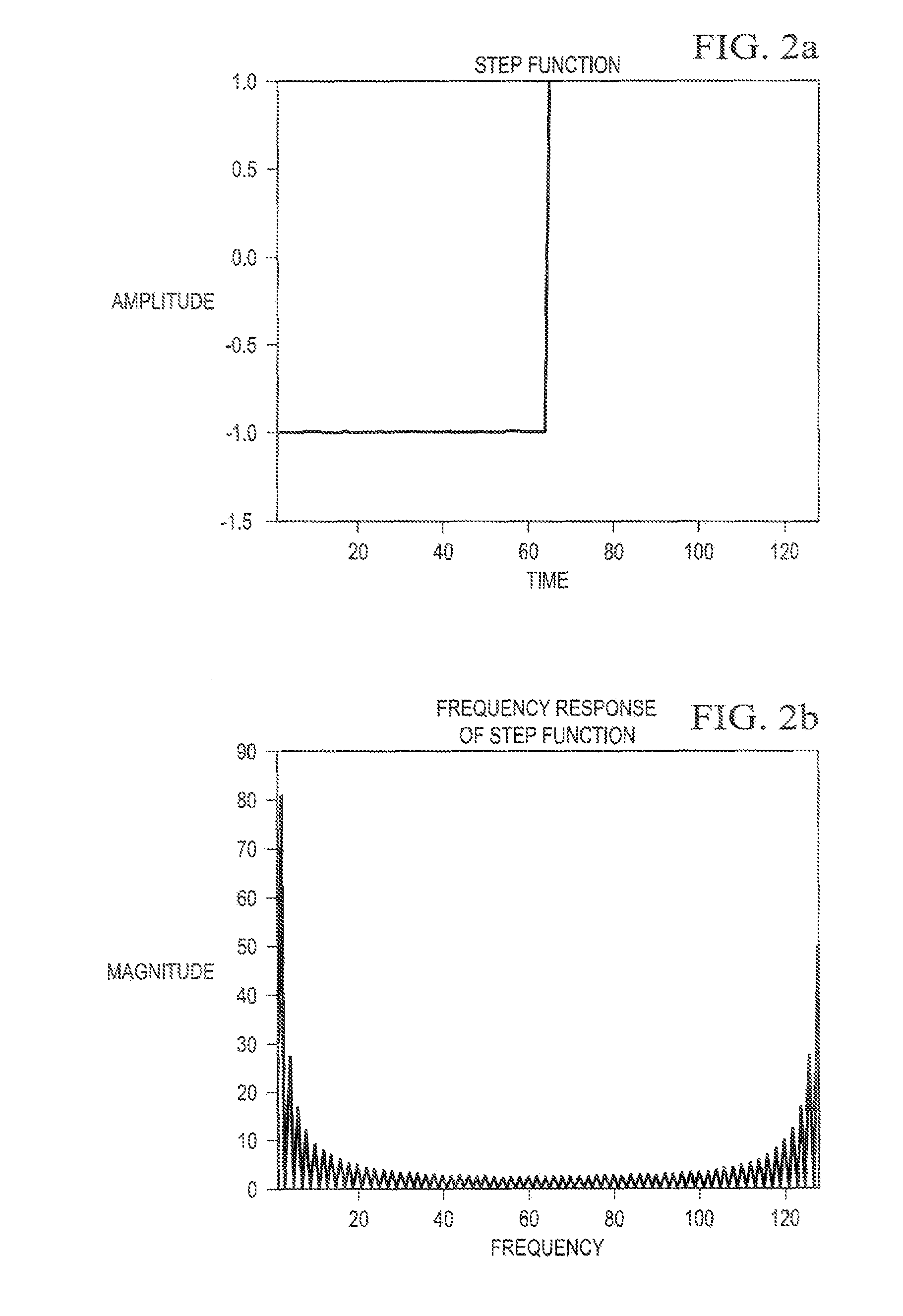

Filter for combined de-ringing and edge sharpening

Status: Inactive | Publication: US7003173B2 | Keywords: Reduce redundancy, Computationally less-expensive, Television system details, Image enhancement, Adaptive filter, Sharpening

A filter for post-processing digital images and videos, having an edge mapper, a pixel sorter, and an adaptive filter that simultaneously performs de-ringing and edge sharpening. The combined filter is computationally simpler than known methods and can achieve removal of ringing artifacts and sharpening of true edges at the same time. The present invention also includes a preferred method of simultaneously de-ringing and edge sharpening digital images and videos.

Owner:SHARP KK

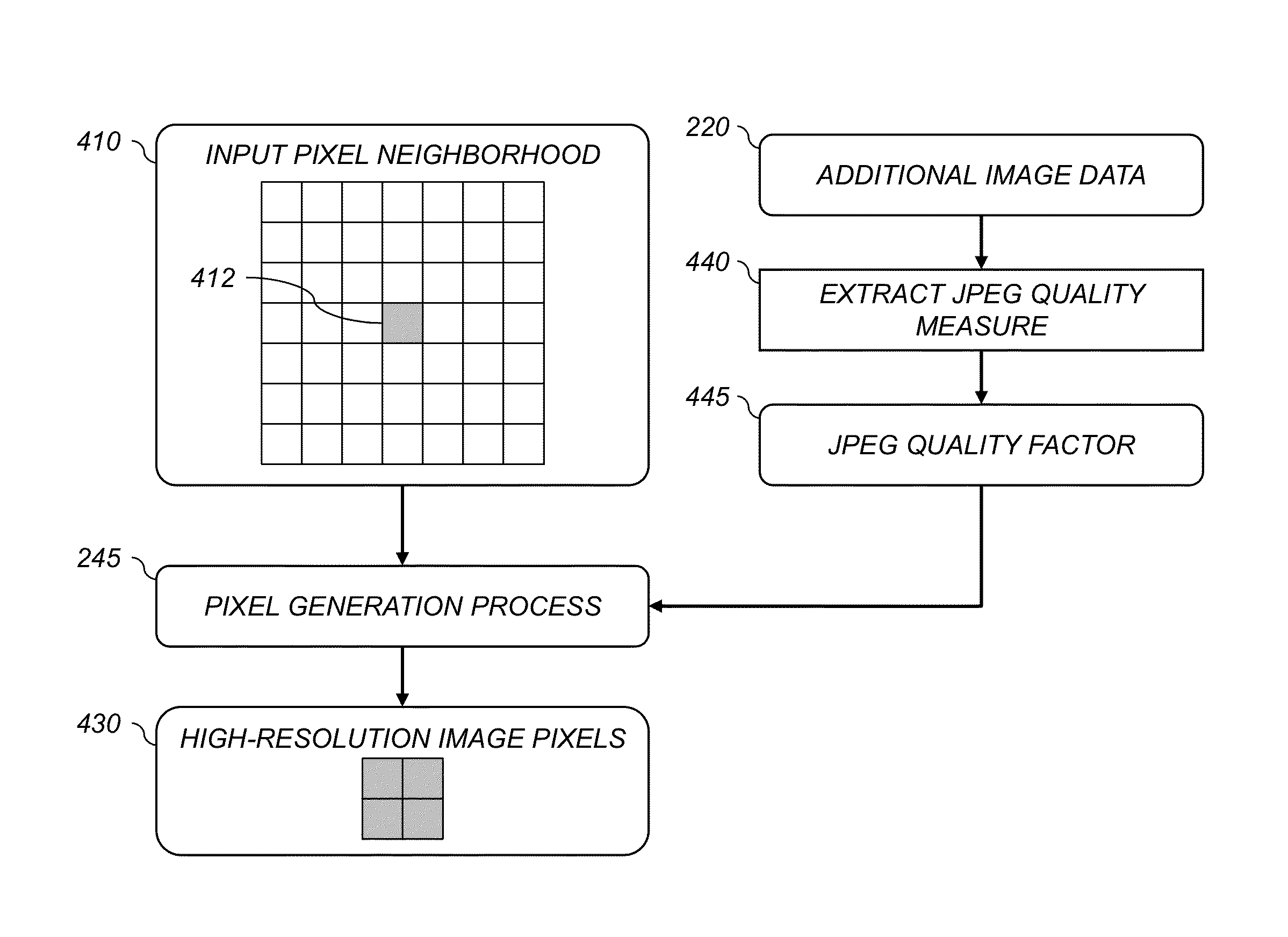

Method for increasing image resolution

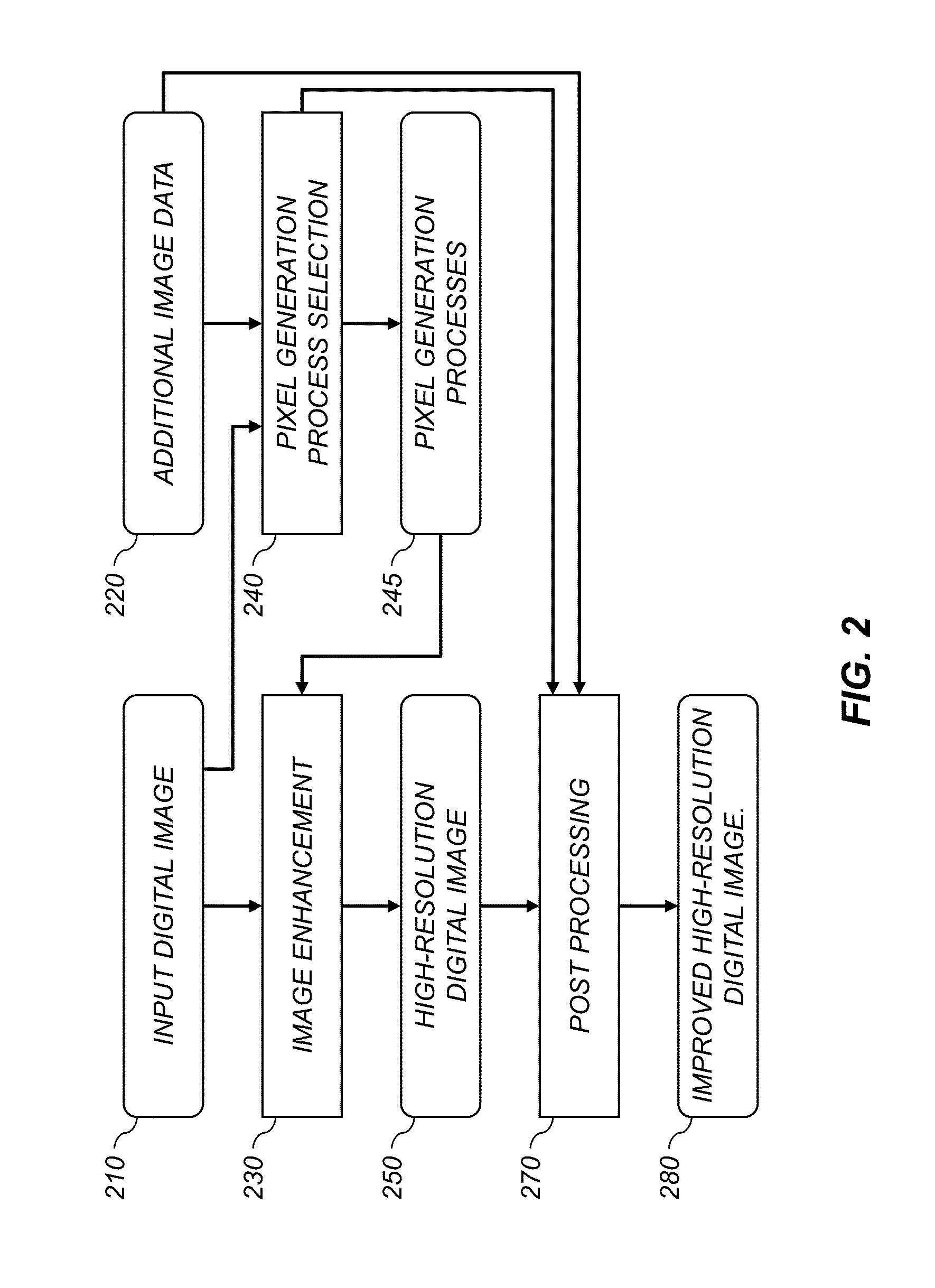

Status: Inactive | Publication: US20140072242A1 | Keywords: Improve imaging resolution, Improved and more consistent result, Geometric image transformation, Character and pattern recognition, Generation process, Image resolution

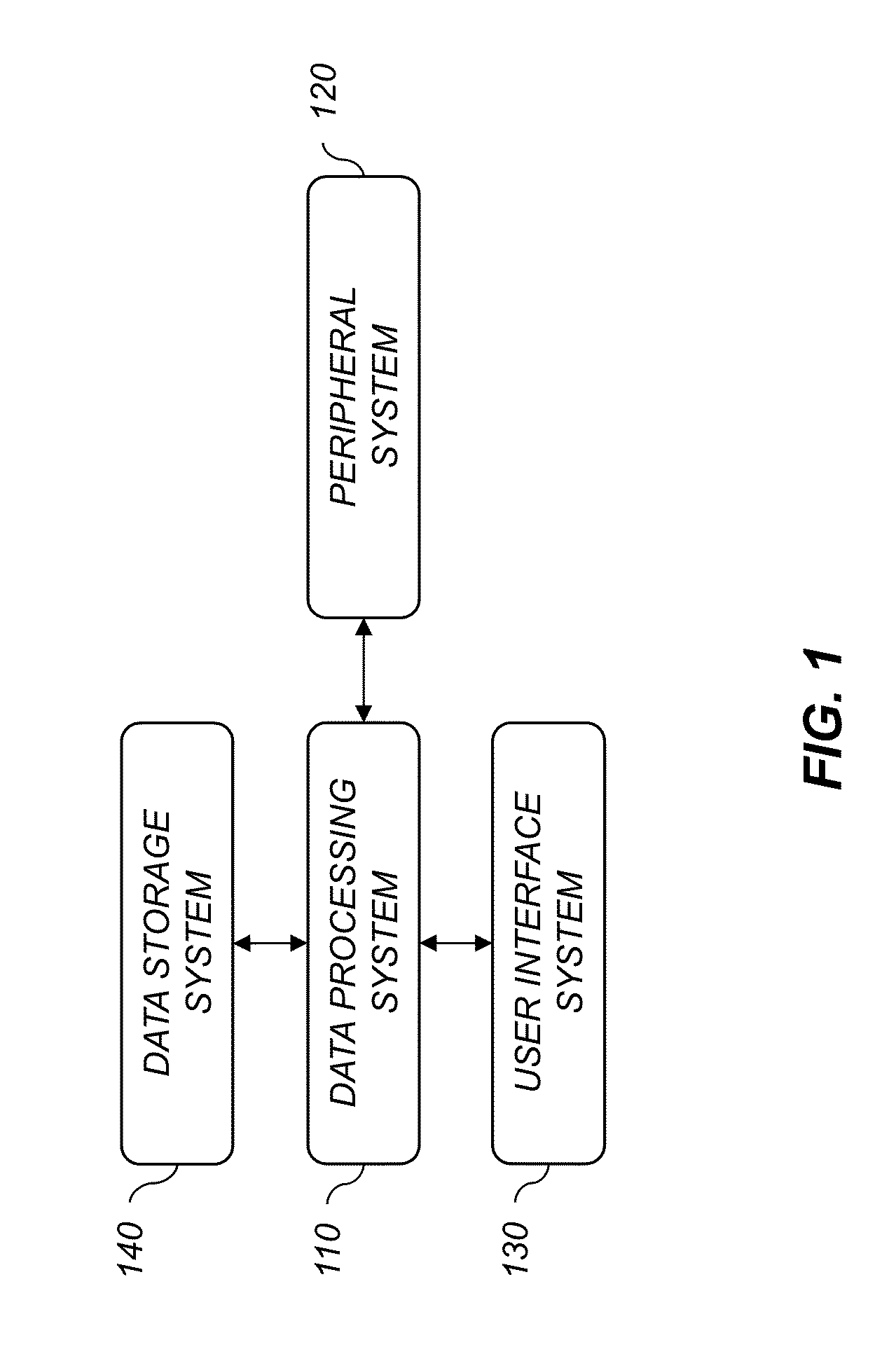

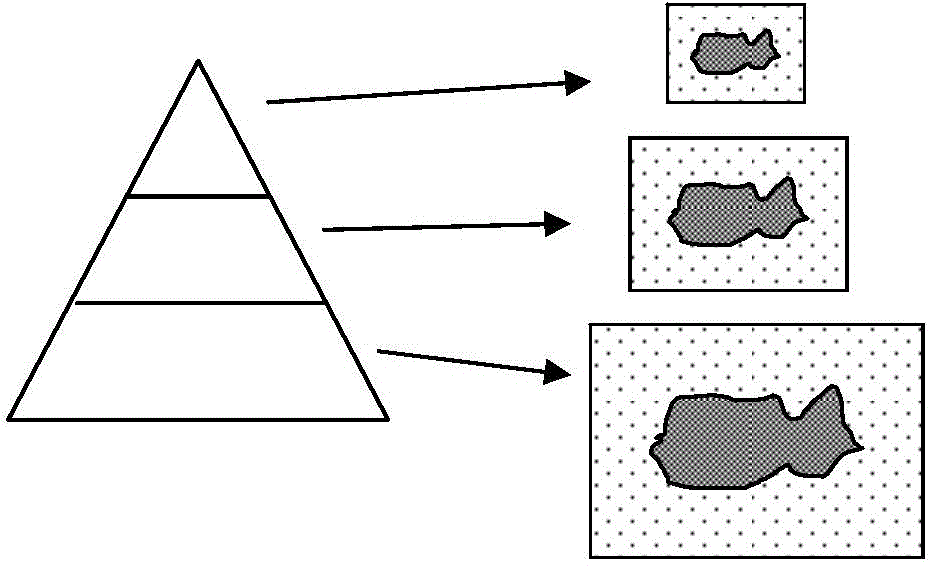

A method for resizing an input digital image to provide a high-resolution output digital image having a larger number of image pixels. The input image pixels in the input digital image are analyzed and assigned to different pixel classifications. A plurality of pixel generation processes are provided, each pixel generation process being associated with a different pixel classification and being adapted to operate on a neighborhood of input image pixels around a particular input image pixel to provide a plurality of output image pixels. Each input image pixel is processed using a pixel generation process that is selected in accordance with the corresponding pixel classification to produce the high-resolution output digital image.

Owner:EASTMAN KODAK CO

Method and system for forming very low noise imagery using pixel classification

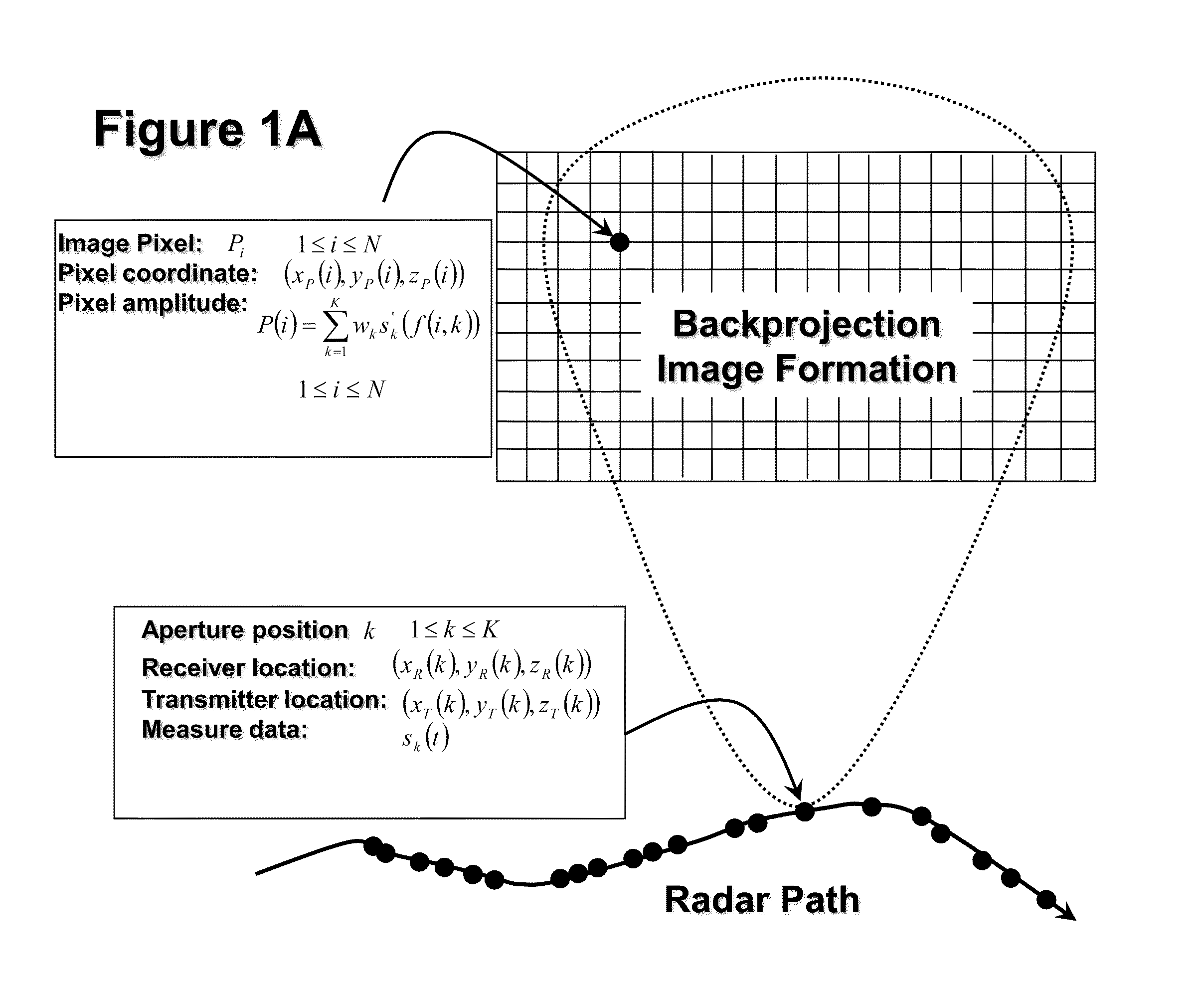

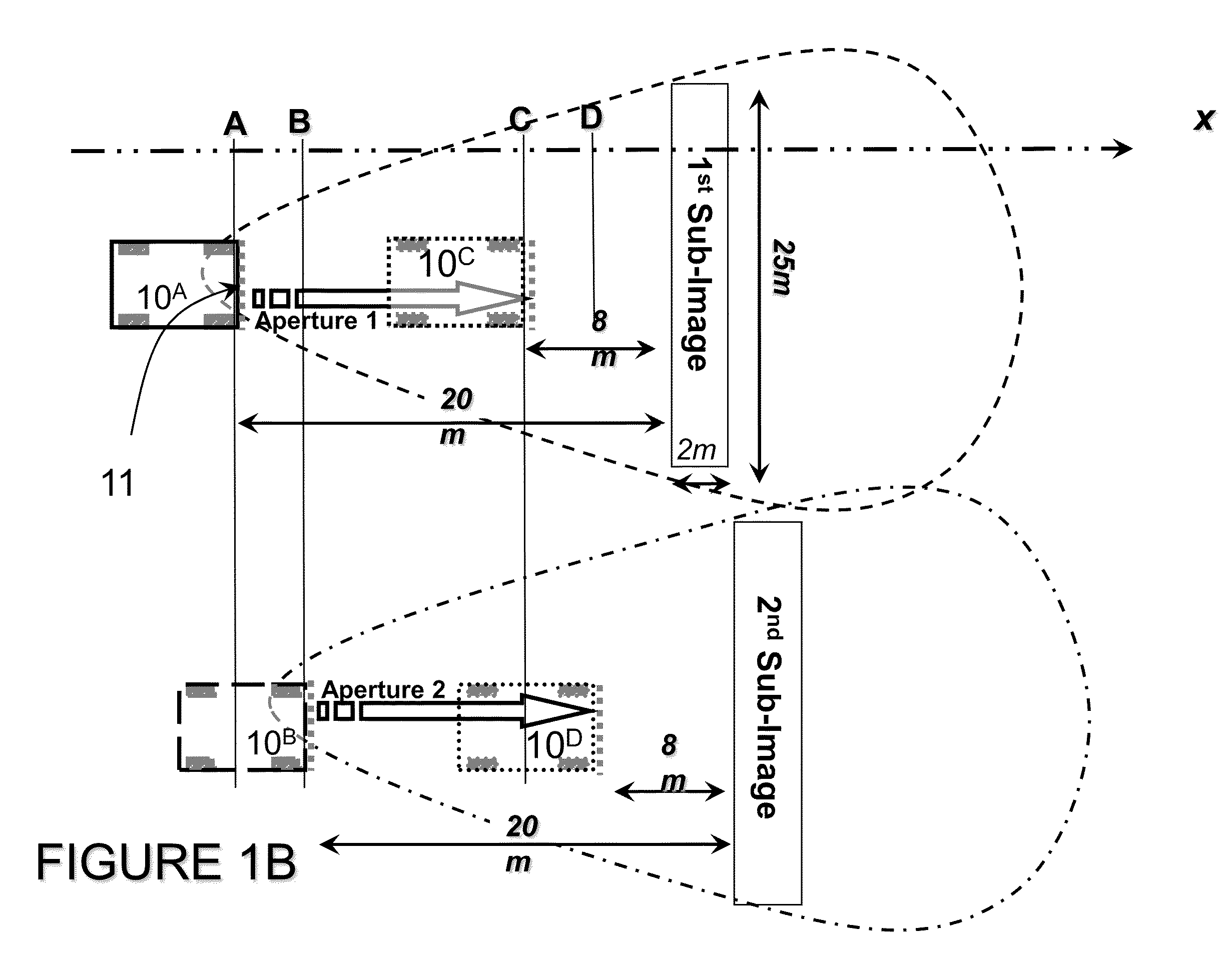

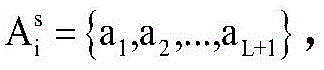

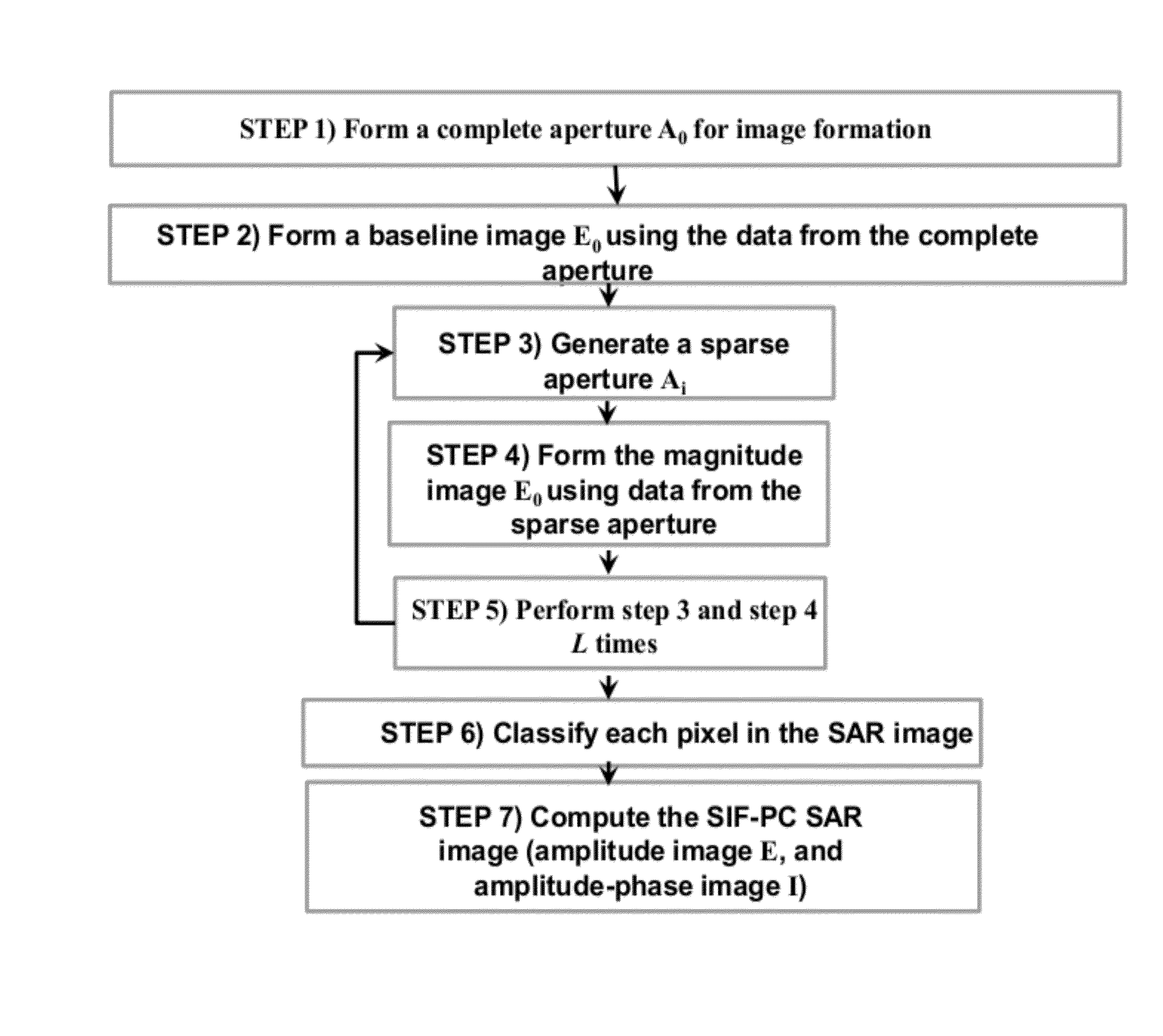

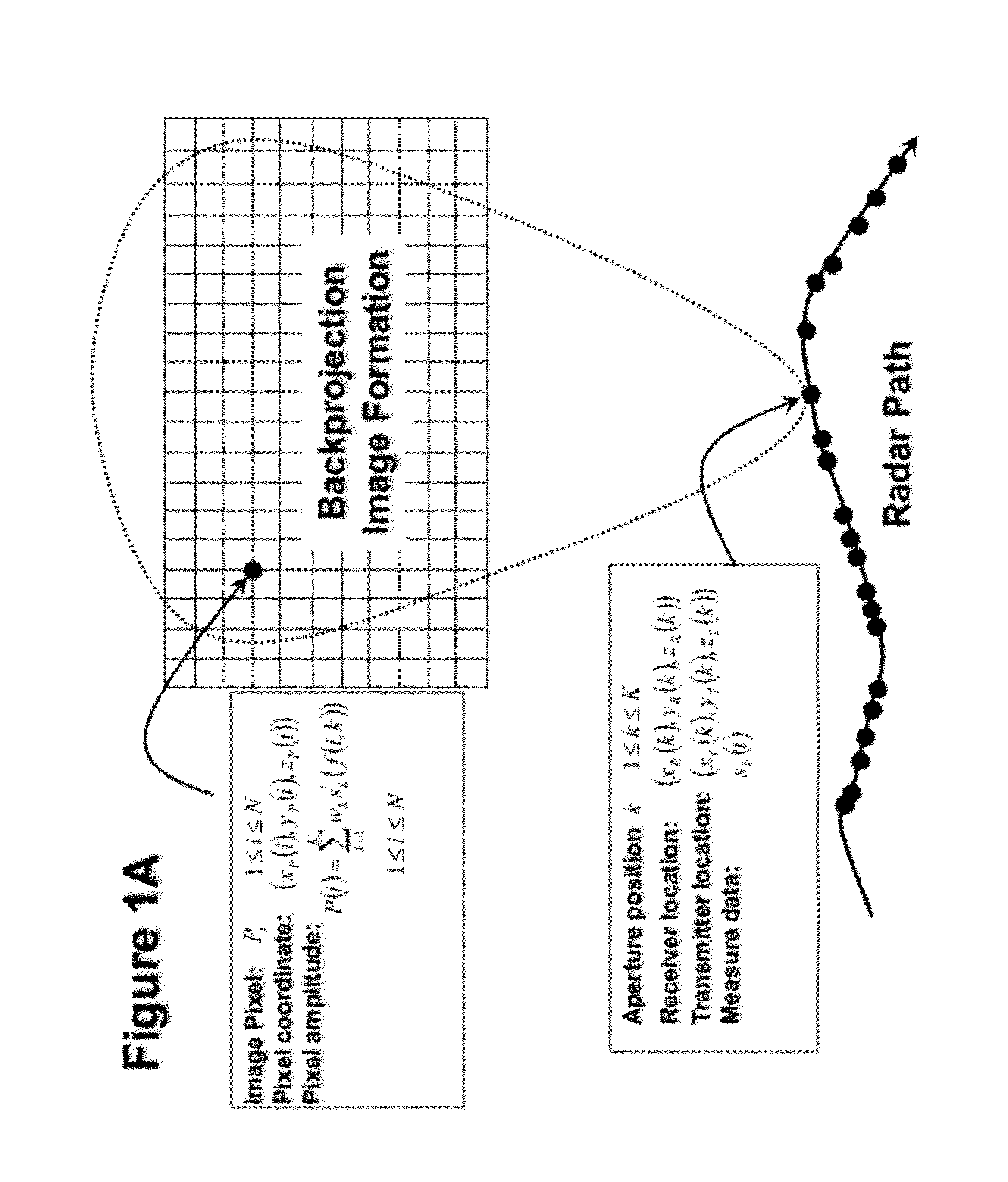

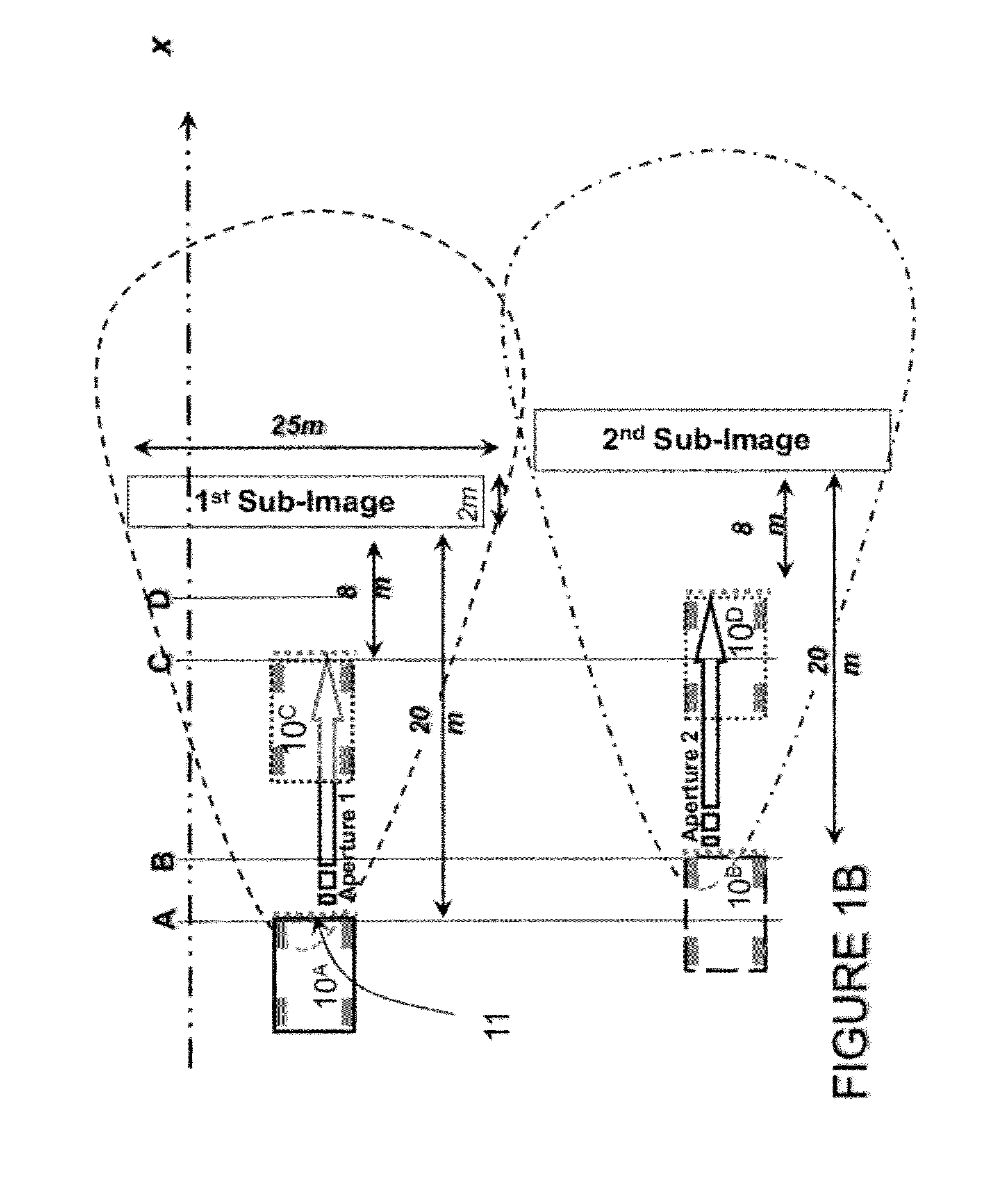

Status: Inactive | Publication: US20110012778A1 | Keywords: High contrast image, Reduce false alarm rate, Radio wave reradiation/reflection, Low noise, Range migration

A method and system for generating images from projection data. First values representing correlated positional and recorded data are input from at least one data-receiving element; each first value forms a point in an array of k data points. An image is formed by processing the projection data with a pixel-characterization imaging subsystem that combines the positional and recorded data to form SAR imagery using either a back-projection algorithm or a range migration algorithm. Positional and recorded data from many aperture positions are integrated by: forming the complete aperture A0 for SAR image formation, which comprises collecting the return radar data and the coordinates of the receiver and of the transmitter for each position k along an aperture of N positions; forming an imaging grid of M image pixels, where each pixel Pi is located at coordinate (xP(i), yP(i), zP(i)); selecting and removing a substantial number of aperture positions to form a sparse aperture Ai, repeating this step for L iterations (1 ≤ i ≤ L); and classifying each pixel in the image into either a target class or a non-target class based on the statistical distribution of its amplitude across the L iterations. If an image pixel is classified as being associated with a physical object, its value is computed from its statistics; otherwise, the pixel is assumed to come from a non-physical object and is set to zero.

Owner:US SEC THE ARMY THE +1
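
The sparse-aperture and classification steps can be sketched as below, assuming the L sparse-aperture images have already been formed (the back-projection itself is not shown). The classification statistic used here, a coefficient-of-variation threshold with the mean as the output value, is a hypothetical stand-in for whatever statistics the patent specifies: real scatterers are expected to keep a stable amplitude across random apertures, while sidelobes and noise fluctuate.

```python
import random
import statistics


def sparse_apertures(n_positions, n_remove, iterations, seed=0):
    """Return L index sets, each the full aperture with `n_remove` random positions dropped."""
    rng = random.Random(seed)
    full = range(n_positions)
    apertures = []
    for _ in range(iterations):
        dropped = set(rng.sample(full, n_remove))
        apertures.append([k for k in full if k not in dropped])
    return apertures


def classify_and_form(stacks, cv_threshold=0.3):
    """stacks[i] holds pixel i's amplitude in each of the L sparse-aperture images.

    Hypothetical rule: a pixel whose amplitude is stable across apertures
    (low coefficient of variation) is treated as a physical scatterer and
    given its mean amplitude; anything else is set to zero.
    """
    image = []
    for amps in stacks:
        mean = statistics.fmean(amps)
        cv = statistics.pstdev(amps) / mean if mean > 0 else float("inf")
        image.append(mean if cv <= cv_threshold else 0.0)
    return image


apertures = sparse_apertures(n_positions=100, n_remove=20, iterations=5)
print([len(a) for a in apertures])  # [80, 80, 80, 80, 80]
print(classify_and_form([[10, 10, 10], [0, 20, 0]]))  # [10.0, 0.0]
```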

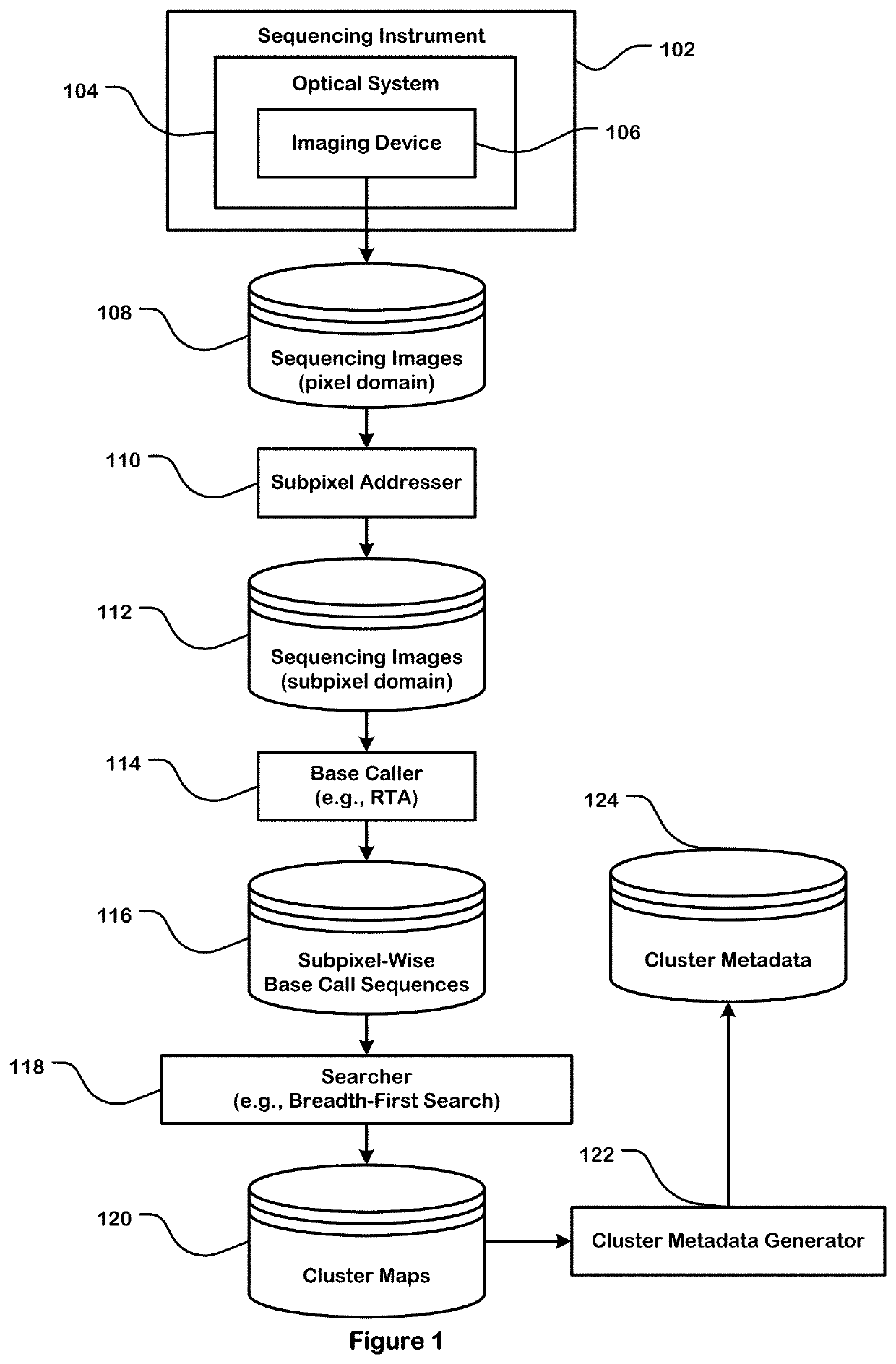

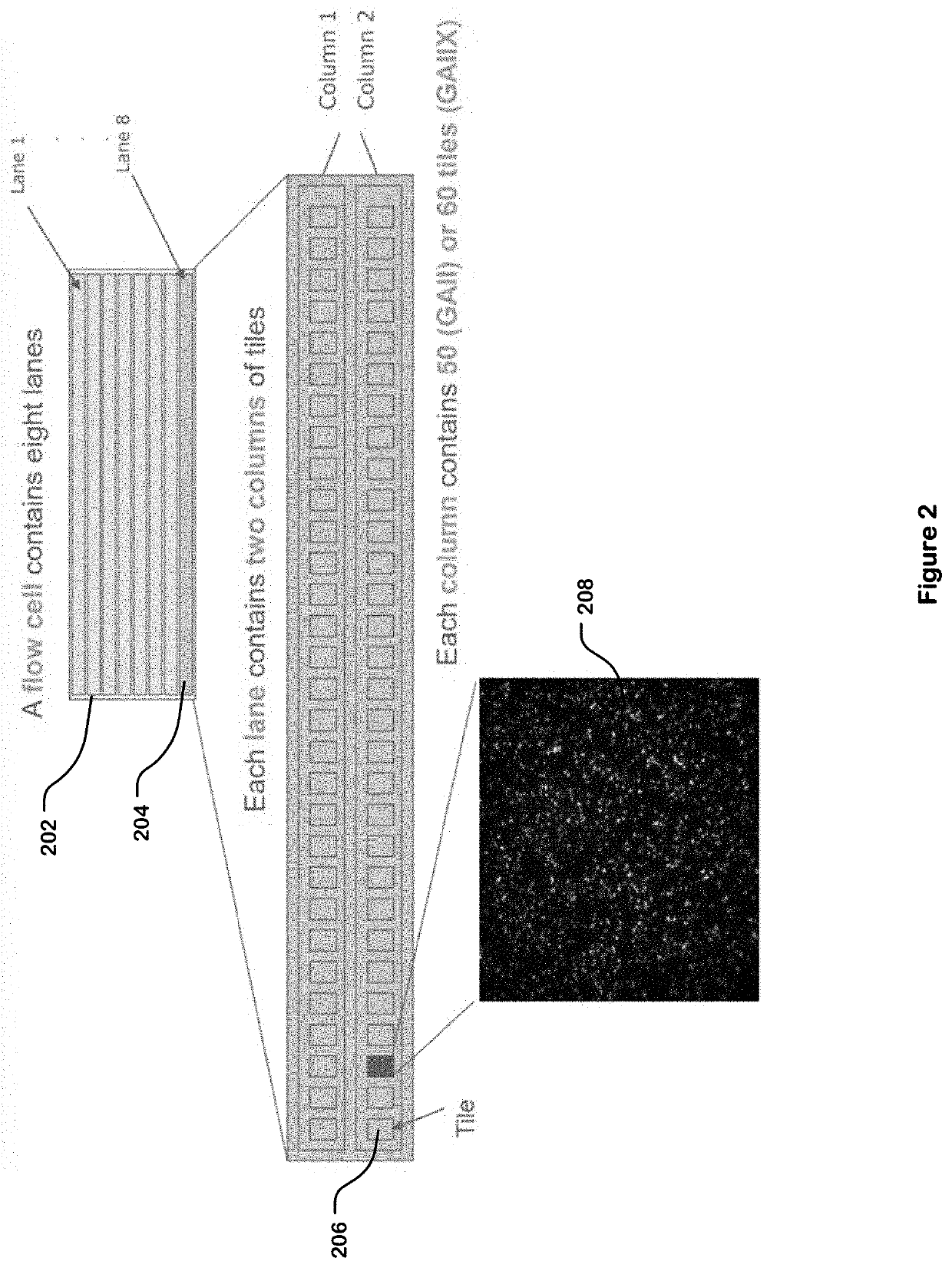

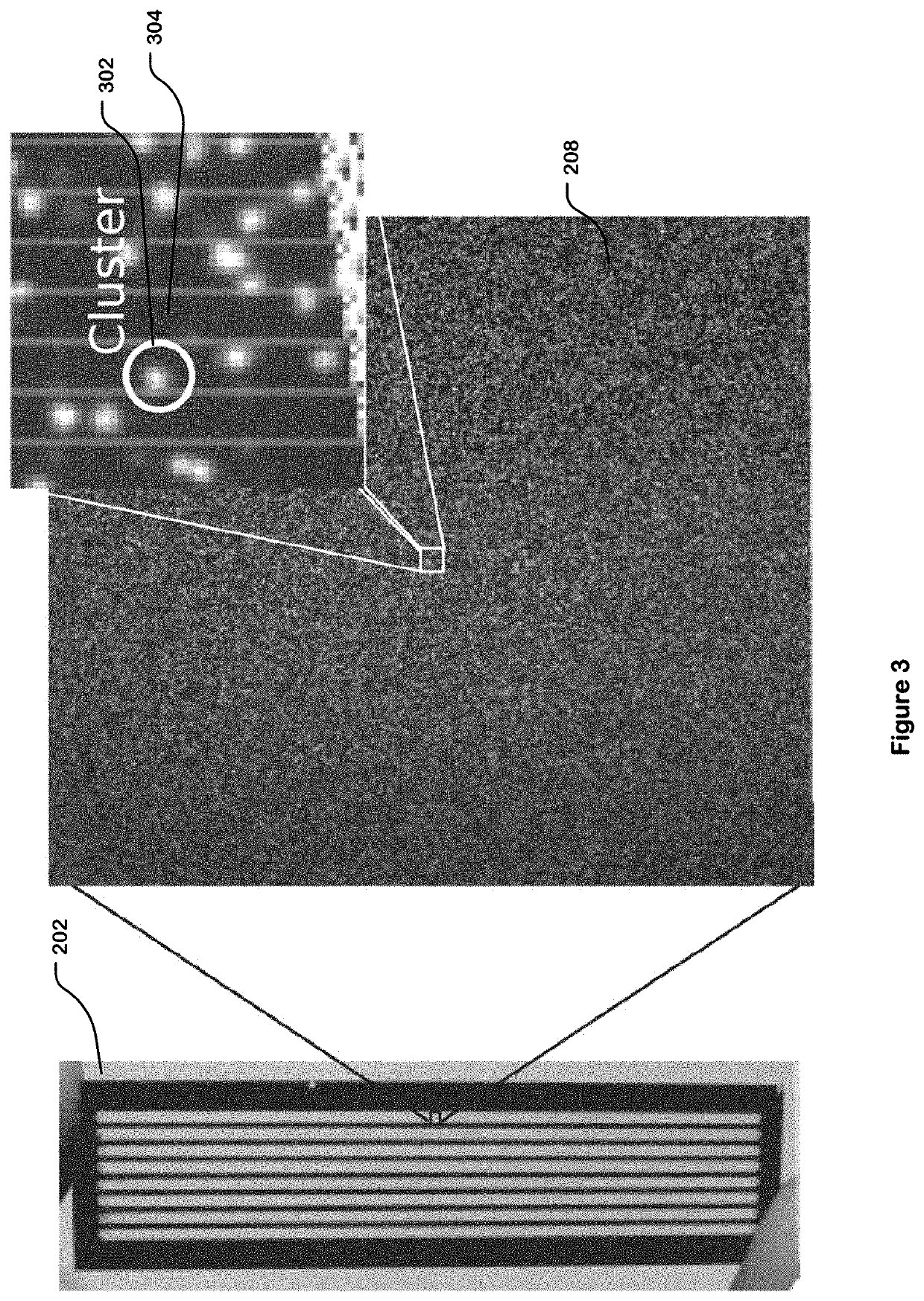

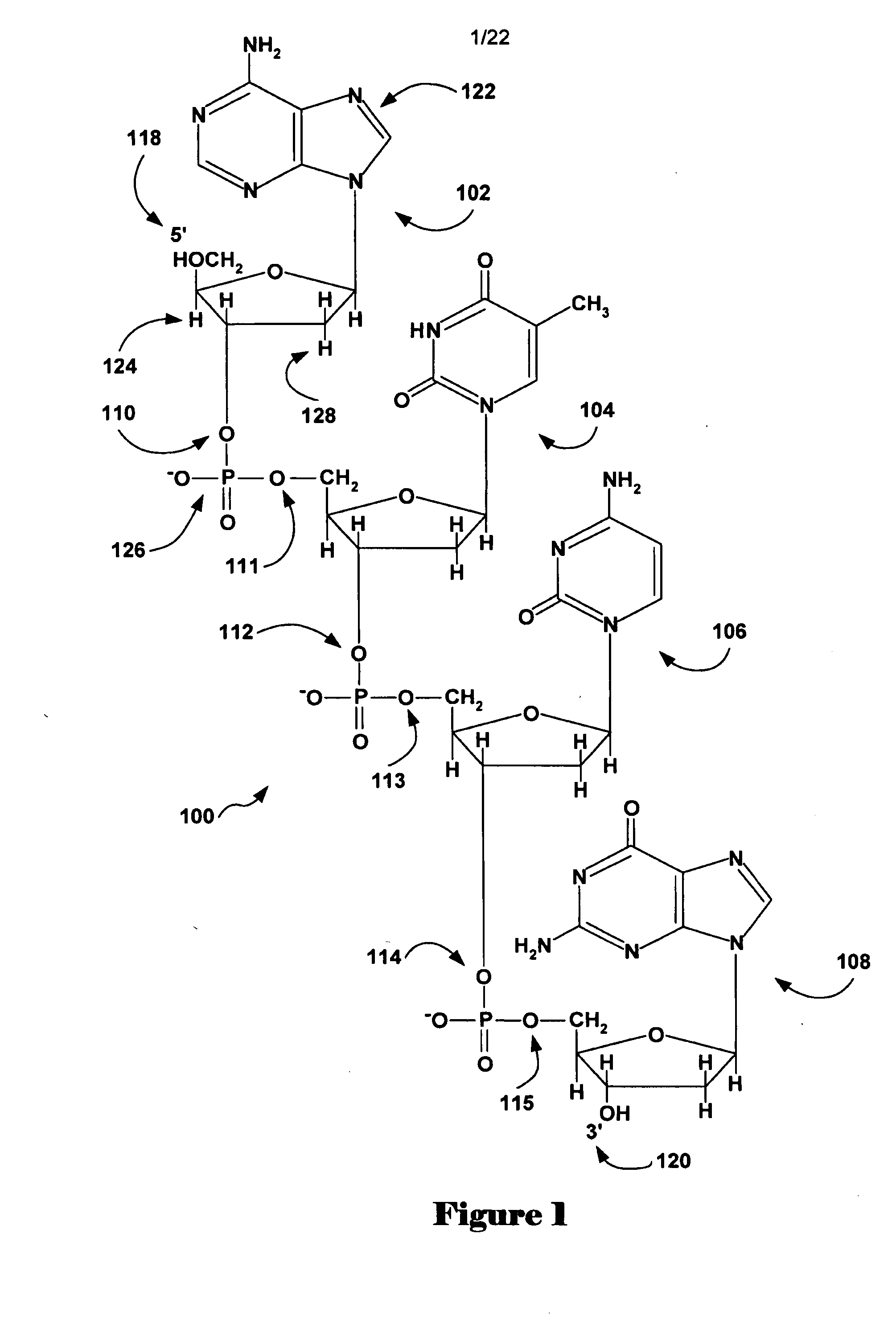

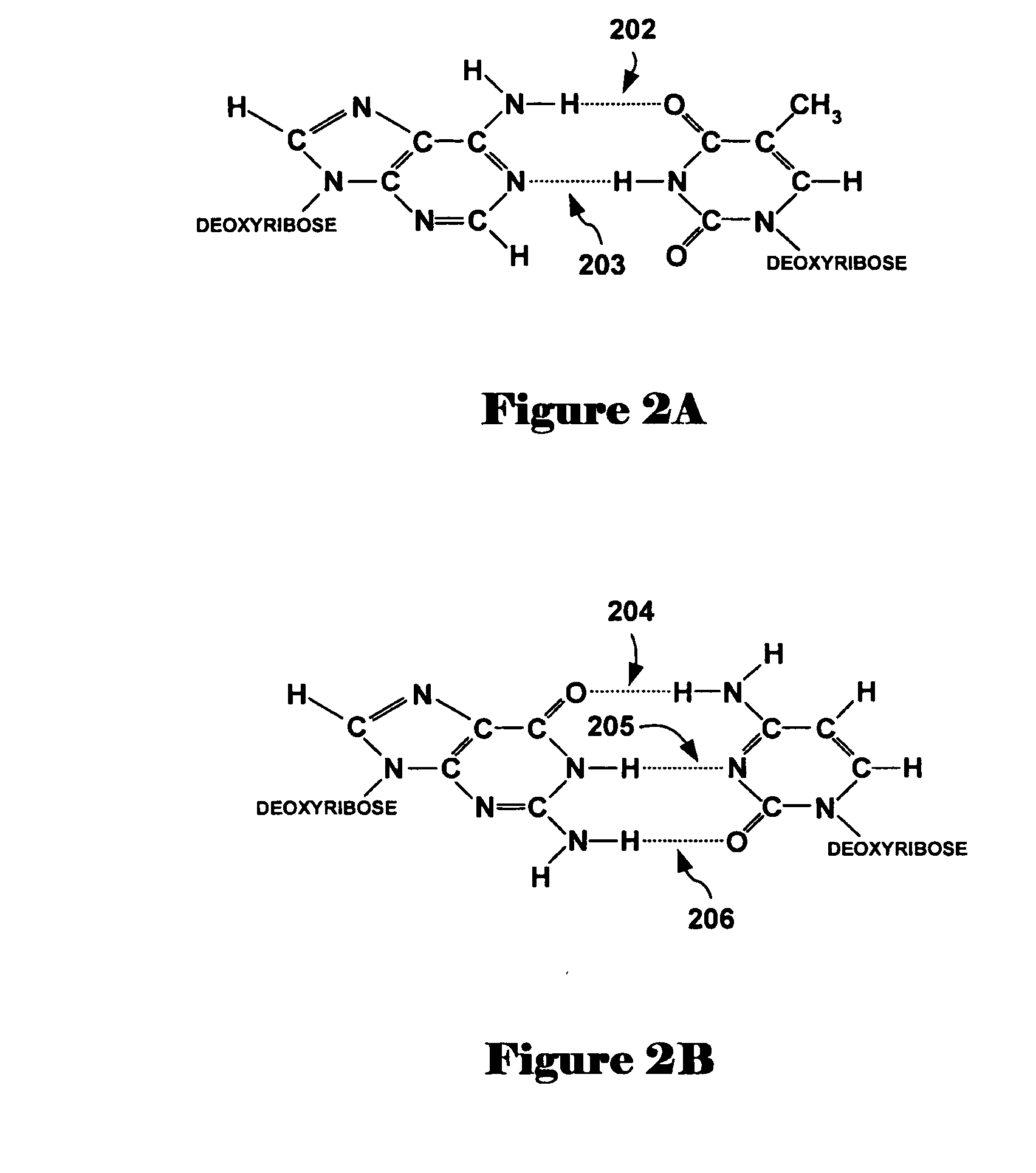

Training Data Generation for Artificial Intelligence-Based Sequencing

The technology disclosed relates to generating ground-truth training data to train a neural-network-based template generator for a cluster metadata determination task. In particular, it relates to accessing sequencing images; obtaining from a base caller a base call that classifies each subpixel in the sequencing images as one of four bases (A, C, T, and G); generating a cluster map that identifies clusters as disjoint regions of contiguous subpixels sharing a substantially matching base-call sequence; determining cluster metadata based on the disjoint regions in the cluster map; and using the cluster metadata to generate the ground-truth training data for training the template generator for the cluster metadata determination task.

Owner:ILLUMINA INC
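
The cluster-map step can be sketched as a flood fill over the subpixel grid, assuming each subpixel carries its base-call sequence as a string (or `None` where no call was made). Interpreting "substantially matching" as a Hamming distance of at most `max_mismatch` from the region's seed is an assumption; size and centroid stand in for the cluster metadata.

```python
from collections import deque


def mismatches(a, b):
    """Hamming distance between two base-call sequences (length difference counts too)."""
    return sum(x != y for x, y in zip(a, b)) + abs(len(a) - len(b))


def cluster_map(calls, max_mismatch=0):
    """Label 4-connected regions of subpixels whose sequences match the region's seed.

    Returns a grid of cluster ids; -1 marks background subpixels.
    """
    h, w = len(calls), len(calls[0])
    labels = [[-1] * w for _ in range(h)]
    next_id = 0
    for sy in range(h):
        for sx in range(w):
            if labels[sy][sx] != -1 or calls[sy][sx] is None:
                continue
            seed = calls[sy][sx]
            labels[sy][sx] = next_id
            queue = deque([(sy, sx)])
            while queue:  # breadth-first flood fill
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w and labels[ny][nx] == -1
                            and calls[ny][nx] is not None
                            and mismatches(calls[ny][nx], seed) <= max_mismatch):
                        labels[ny][nx] = next_id
                        queue.append((ny, nx))
            next_id += 1
    return labels


def cluster_metadata(labels):
    """Per-cluster size and centroid (row, col), as simple examples of cluster metadata."""
    acc = {}
    for y, row in enumerate(labels):
        for x, cid in enumerate(row):
            if cid >= 0:
                n, sum_y, sum_x = acc.get(cid, (0, 0, 0))
                acc[cid] = (n + 1, sum_y + y, sum_x + x)
    return {cid: {"size": n, "centroid": (sy / n, sx / n)} for cid, (n, sy, sx) in acc.items()}


calls = [["AC", "AC", None],
         ["GT", "AC", None],
         ["GT", None, "AC"]]
labels = cluster_map(calls)
print(labels)  # [[0, 0, -1], [1, 0, -1], [1, -1, 2]]
```

Note that the two "AC" regions receive different ids because they are not contiguous, which is what makes the regions disjoint.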

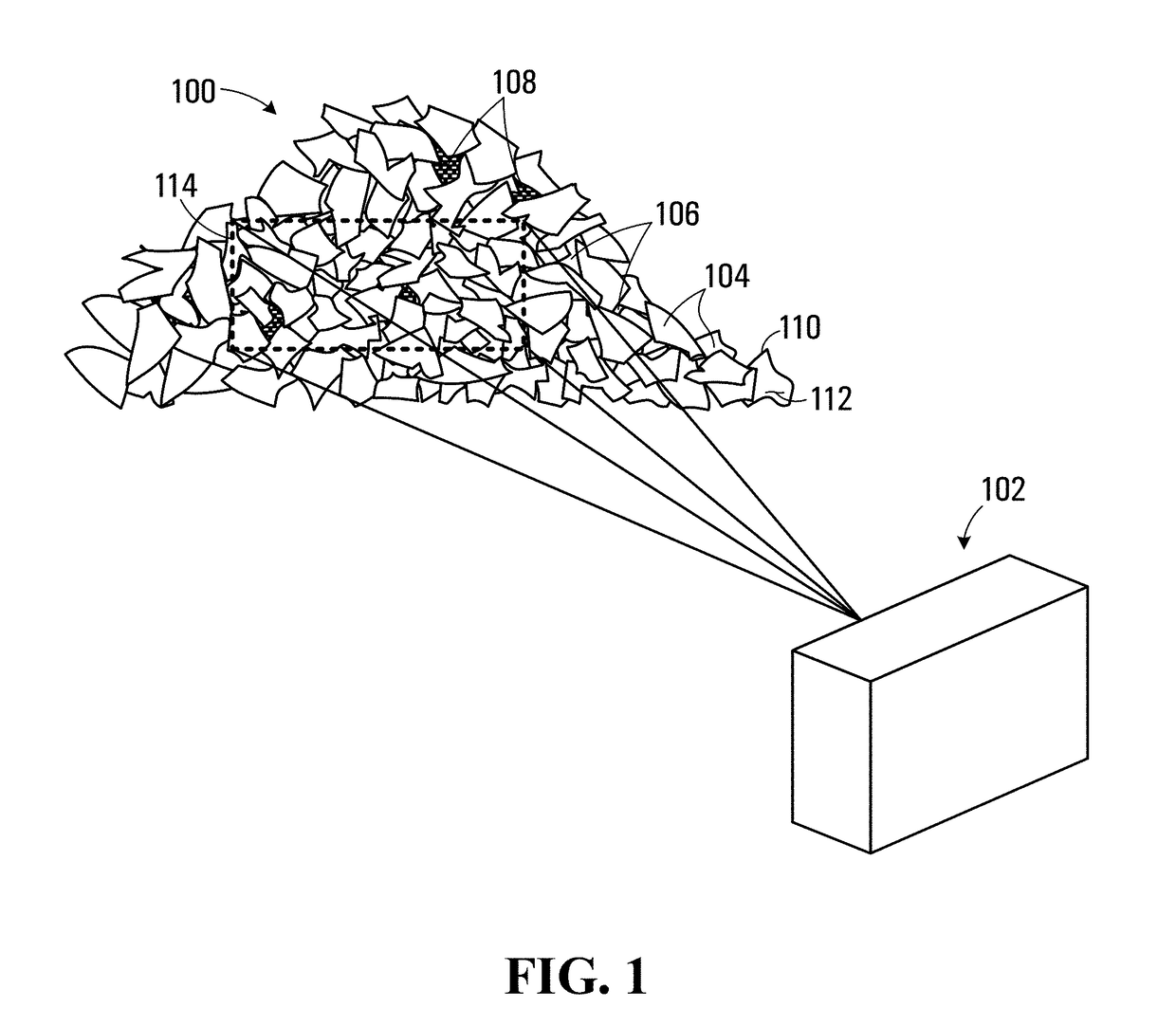

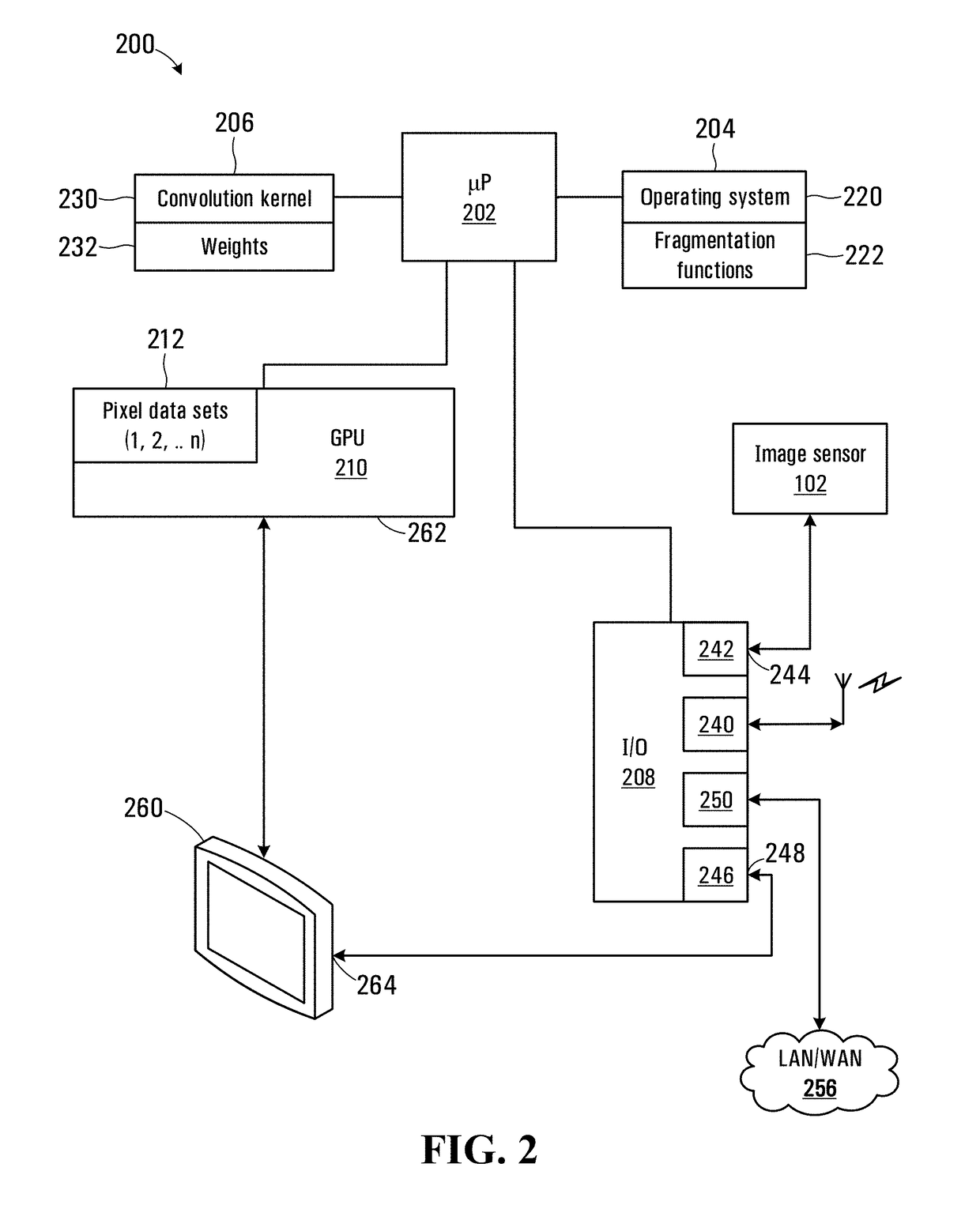

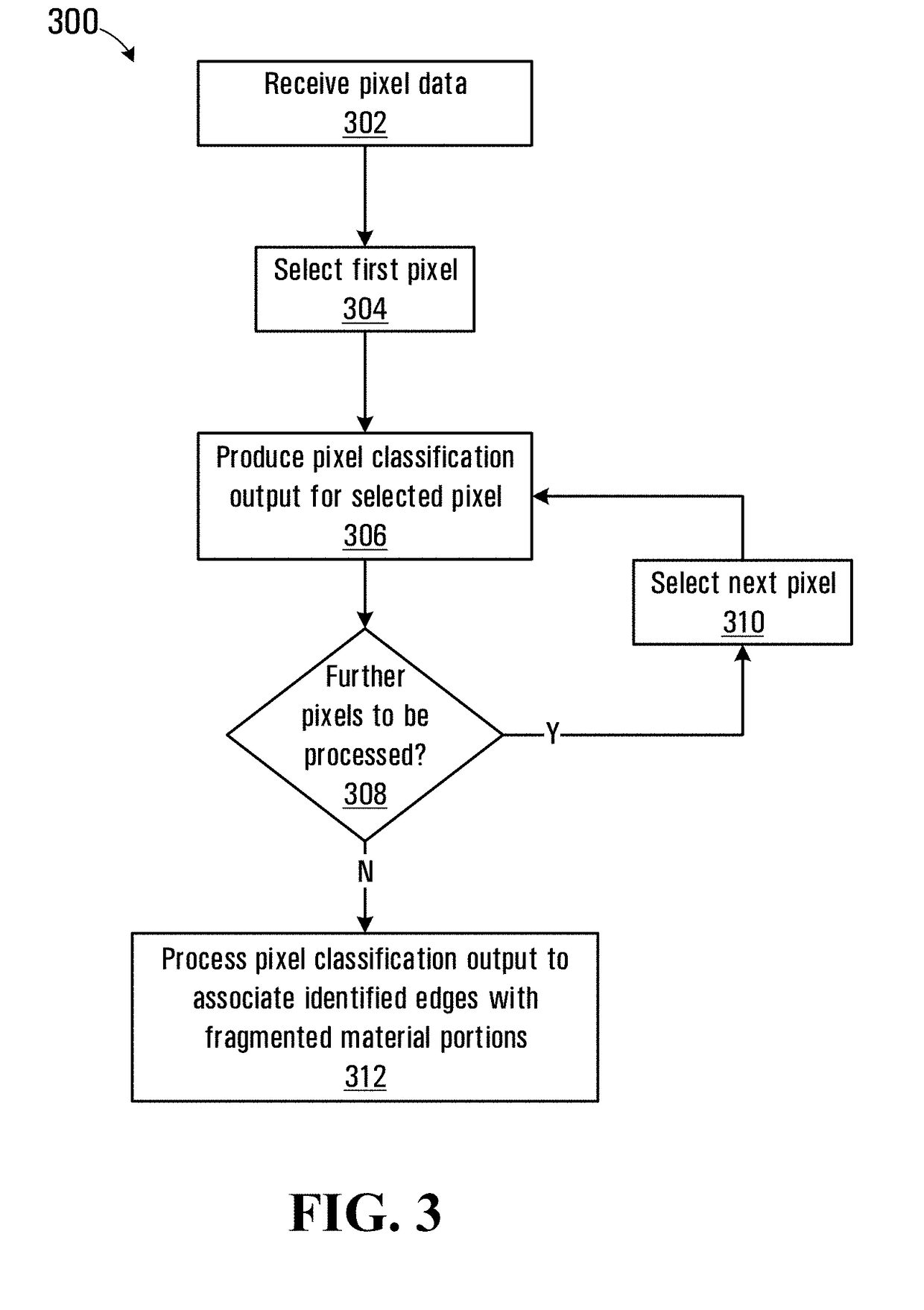

Method and apparatus for identifying fragmented material portions within an image

Status: Active | Publication: US20190012768A1 | Keywords: Improve spatial resolution, Image enhancement, Image analysis, Computer vision, Convolution

A method and apparatus for processing an image of fragmented material to identify fragmented material portions within the image is disclosed. The method involves receiving pixel data associated with an input plurality of pixels representing the image of the fragmented material. The method also involves processing the pixel data using a convolutional neural network that has a plurality of layers and produces a pixel classification output indicating whether each pixel in the input plurality of pixels is located at an edge of a fragmented material portion, inward from the edge, or at an interstice between fragmented material portions. The convolutional neural network includes at least one convolution layer configured to produce a convolution of the input plurality of pixels and has been previously trained using a plurality of training images that include previously identified fragmented material portions. The method further involves processing the pixel classification output to associate identified edges with fragmented material portions.

Owner:MOTION METRICS INT CORP

Method for classifying a pixel of a hyperspectral image in a remote sensing application

What is disclosed is a novel system and method for simultaneous spectral decomposition suitable for image object identification and categorization for scenes and objects under analysis. The present system captures different spectral planes simultaneously using a Fabry-Perot multi-filter grid each tuned to a specific wavelength. A method for classifying pixels in the captured image is provided. The present system and method finds its uses in a wide array of applications such as, for example, occupancy detection in a transportation management system and in medical imaging and diagnosis for healthcare management. The teachings hereof further find their uses in other applications where there is a need to capture a two dimensional view of a scene and decompose the scene into its spectral bands such that objects in the image can be appropriately identified.

Owner:CONDUENT BUSINESS SERVICES LLC

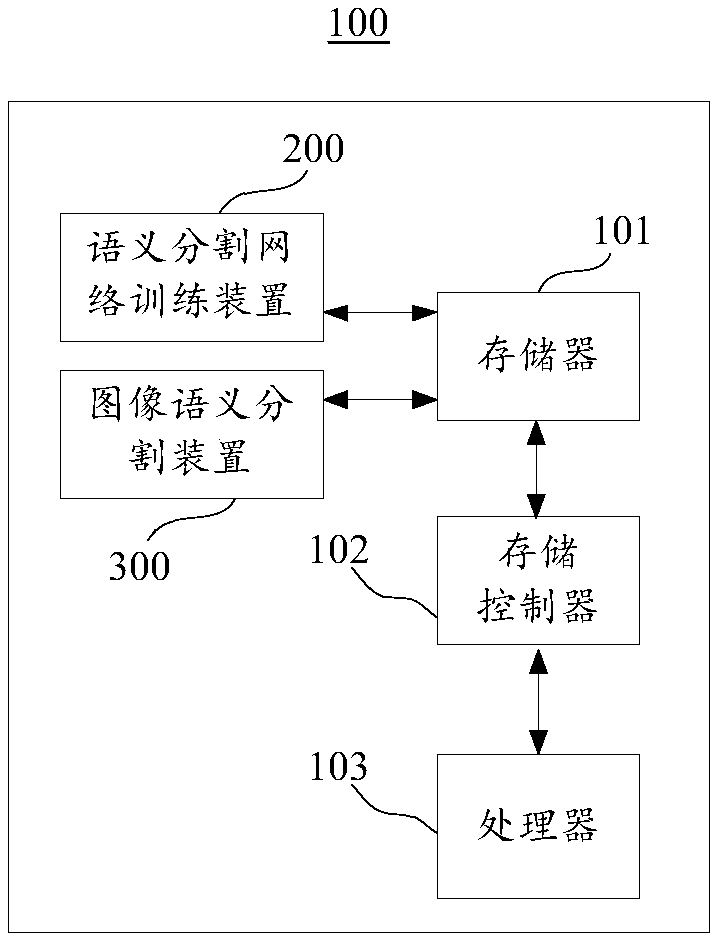

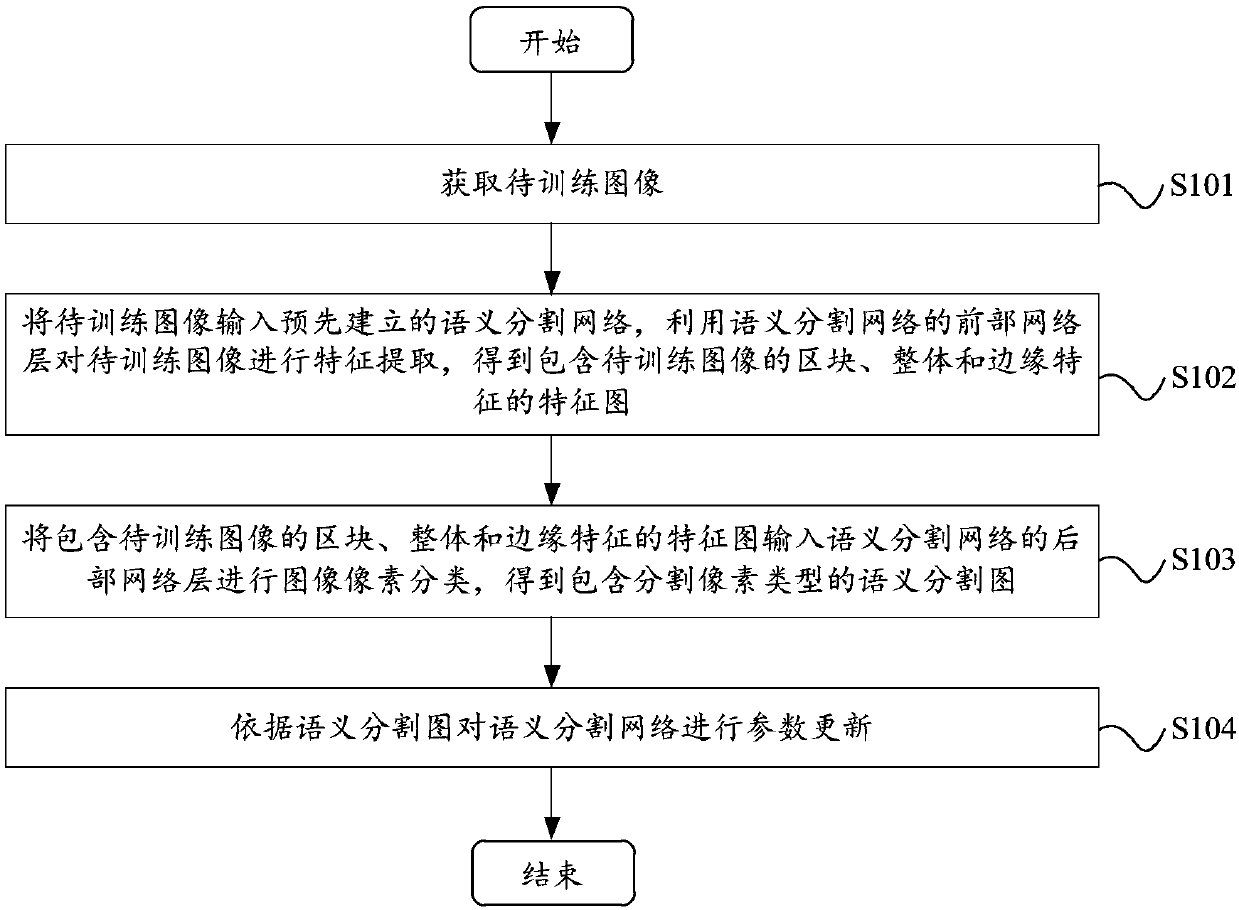

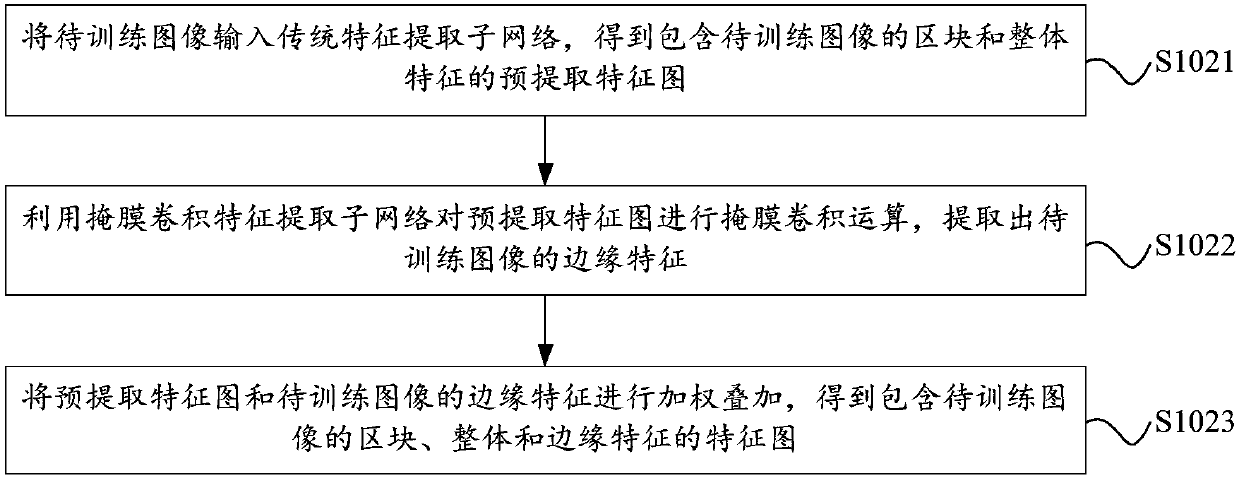

Semantic segmentation network training method, image semantic segmentation method and devices

Status: Inactive | Publication: CN108537292A | Keywords: Improve training recognition effect, Make up for edge feature loss, Character and pattern recognition, Neural architectures, Feature extraction, Visual perception

The embodiments of the present invention belong to the field of computer vision and provide a semantic segmentation network training method, an image semantic segmentation method, and corresponding devices. The training method includes the following steps: a to-be-trained image is acquired; the image is input into a pre-established semantic segmentation network, whose front network layers extract its features to obtain a feature map containing the block, global, and edge features of the image; this feature map is input to the rear network layers of the network for image pixel classification, yielding a semantic segmentation image containing the segmentation pixel classes; and the parameters of the semantic segmentation network are updated according to the semantic segmentation image. Compared with the prior art, the method separately extracts and restores the edge features of the to-be-trained image, thereby improving the recognition of segmentation-region edges during training.

Owner:Shanghai Baize Network Technology Co., Ltd. (上海白泽网络科技有限公司)

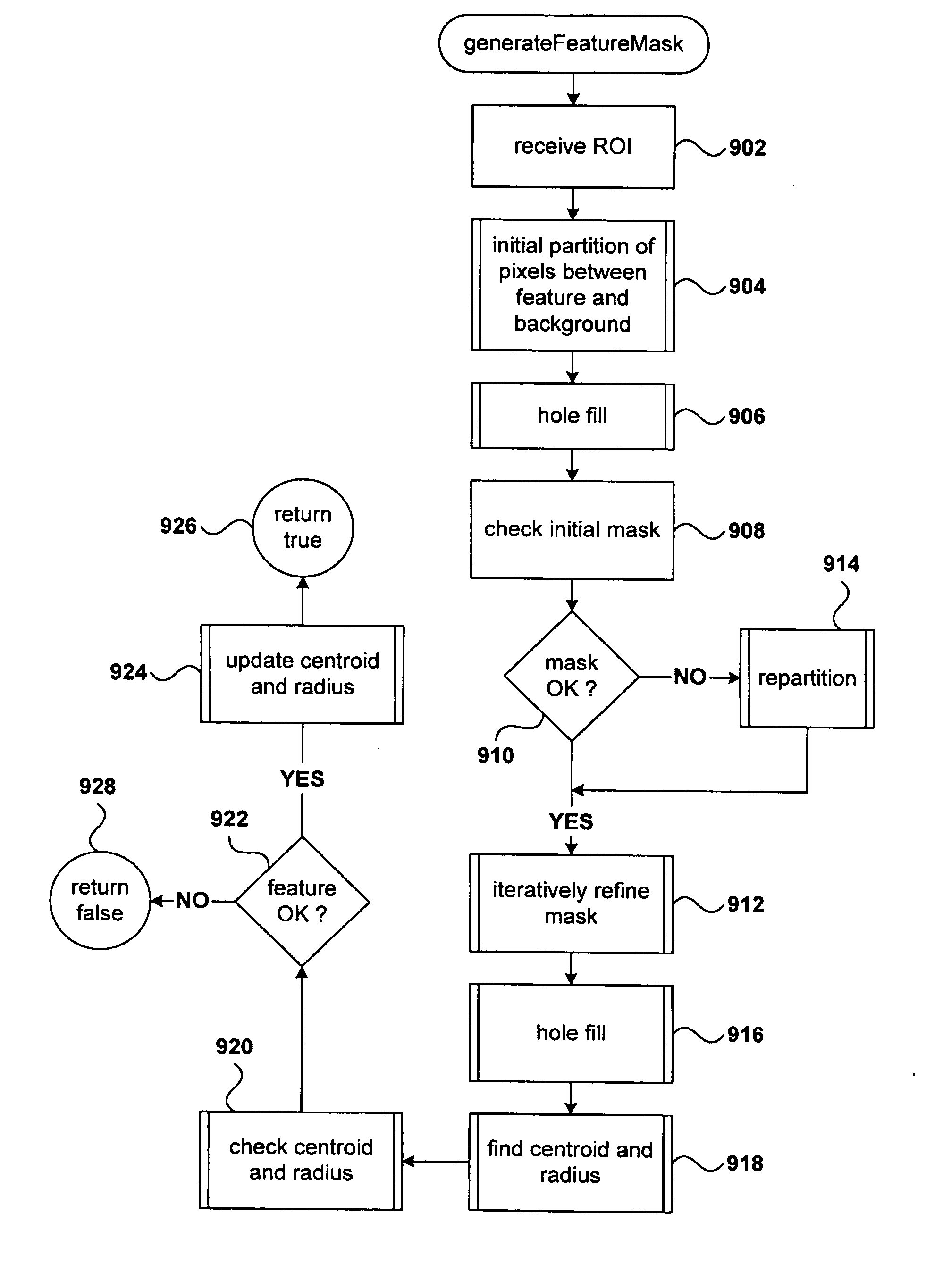

Classification of pixels in a microarray image based on pixel intensities and a preview mode facilitated by pixel-intensity-based pixel classification

One disclosed embodiment is a method based on an iteratively employed Bayesian-probability-based pixel classification, used to refine an initial feature mask that specifies those pixels in a region of interest, including and surrounding a feature in the scanned image of a microarray, that together compose a pixel-based image of the feature within the region of interest. In a described embodiment, a feature mask is prepared using only the pixel-based intensity data for a region of interest, a putative position and size of the feature within the region of interest, and mathematical models of the probability distribution of background-pixel and feature-pixel signal noise and mathematical models of the probabilities of finding feature pixels and background pixels at various distances from the putative feature position. In a described embodiment, preparation of a feature mask allows a feature-extraction system to display feature sizes and locations to a user prior to undertaking the computationally intensive and time-consuming task of feature-signal extraction from the pixel-based intensity data obtained by scanning a microarray.

Owner:AGILENT TECH INC
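
The iterative Bayesian refinement described above can be sketched as follows, assuming one Gaussian noise model per class re-estimated from the current mask, and a hypothetical logistic spatial prior that lowers the probability of a feature pixel beyond the putative radius. The parameter names (`softness`, `min_sigma`) are illustrative, not from the patent. All probabilities are compared in log space to avoid underflow.

```python
import math
import statistics


def log_gauss(x, mu, sigma):
    """Log of a normal density, used as the per-class noise model."""
    return -math.log(sigma * math.sqrt(2 * math.pi)) - 0.5 * ((x - mu) / sigma) ** 2


def refine_feature_mask(intensity, center, radius, iterations=10, softness=1.0, min_sigma=1.0):
    """Iteratively classify region-of-interest pixels as feature (True) or background."""
    h, w = len(intensity), len(intensity[0])
    cy, cx = center
    dist = [[math.hypot(y - cy, x - cx) for x in range(w)] for y in range(h)]
    mask = [[d <= radius for d in row] for row in dist]  # initial mask: putative feature disc
    for _ in range(iterations):
        fg = [intensity[y][x] for y in range(h) for x in range(w) if mask[y][x]]
        bg = [intensity[y][x] for y in range(h) for x in range(w) if not mask[y][x]]
        if len(fg) < 2 or len(bg) < 2:
            break
        mu_f, sd_f = statistics.fmean(fg), max(statistics.pstdev(fg), min_sigma)
        mu_b, sd_b = statistics.fmean(bg), max(statistics.pstdev(bg), min_sigma)
        new_mask = []
        for y in range(h):
            row = []
            for x in range(w):
                # Hypothetical spatial prior: logistic fall-off of P(feature) beyond the radius.
                z = max(-30.0, min((dist[y][x] - radius) / softness, 30.0))
                log_prior_f = -math.log1p(math.exp(z))
                log_prior_b = z - math.log1p(math.exp(z))
                v = intensity[y][x]
                row.append(log_prior_f + log_gauss(v, mu_f, sd_f)
                           > log_prior_b + log_gauss(v, mu_b, sd_b))
            new_mask.append(row)
        if new_mask == mask:
            break  # converged
        mask = new_mask
    return mask


roi = [[10] * 5, [10, 100, 100, 100, 10], [10, 100, 100, 100, 10],
       [10, 100, 100, 100, 10], [10] * 5]
mask = refine_feature_mask(roi, center=(2, 2), radius=1.0)
print(sum(map(sum, mask)))  # 9
```

Here the putative disc of radius 1 initially misses the four corner pixels of the bright 3x3 spot; the first iteration adds them because their intensities fit the feature noise model far better than the background one.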

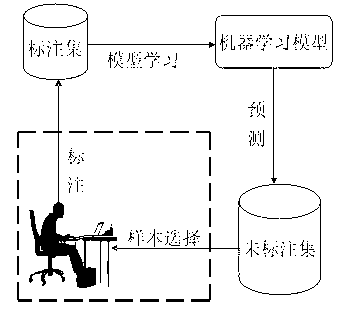

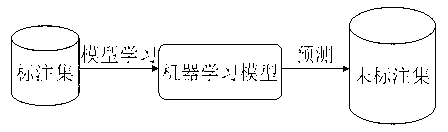

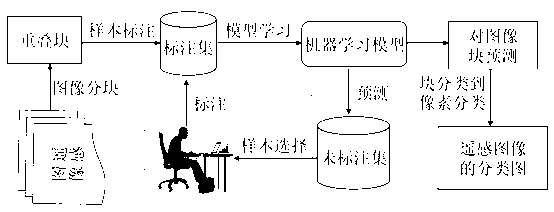

Remote sensing image classification method based on image block active learning

Status: Inactive | Publication: CN103258214A | Keywords: Improve structural performance, Improve visual effects, Character and pattern recognition, Information processing, Research Object

The invention discloses a remote sensing image classification method based on image-block active learning, in the technical field of image information processing. The method comprises the following steps: dividing the remote sensing image into blocks; selecting initial samples; training a classifier model; selecting samples by active learning; updating the training sample set and the classifier model; iterating the classification process; predicting block classes; and converting the block classification result into a pixel classification result. The method treats image blocks, rather than individual pixels, as the unit of analysis. Compared with traditional remote sensing classification methods based on active learning over individual pixels, under the same experimental conditions the classification result is more accurate, the block samples selected by active learning can be annotated manually more quickly and accurately, the classified image is more structurally coherent, the isolated spots produced by per-pixel classification are greatly reduced, and the result is visually better.

Owner:Nanjing Ailite Energy-Saving Technology Co., Ltd. (南京艾利特节能科技有限公司)
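
Two of the steps above, active-learning sample selection and conversion of the block classification into a pixel classification, can be sketched as follows. The abstract does not name a selection criterion, so margin sampling (querying the blocks whose two most likely classes are closest) is an assumption, as are the block ids and the dictionary layout of the predicted probabilities.

```python
def margin(probs):
    """Gap between the two most likely classes; a small gap means an uncertain block."""
    top = sorted(probs, reverse=True)
    return top[0] - top[1]


def select_blocks_for_labeling(block_probs, k):
    """Pick the k unlabeled blocks the current classifier is least sure about."""
    return sorted(block_probs, key=lambda b: margin(block_probs[b]))[:k]


def blocks_to_pixels(block_labels, block_size, height, width):
    """Convert a block-level classification into a pixel-level map:
    every pixel inherits the class of the block that contains it."""
    return [[block_labels[y // block_size][x // block_size] for x in range(width)]
            for y in range(height)]


probs = {"b00": [0.9, 0.1], "b01": [0.55, 0.45], "b10": [0.6, 0.4]}
print(select_blocks_for_labeling(probs, k=2))  # ['b01', 'b10']
print(blocks_to_pixels([[1, 2]], block_size=2, height=2, width=4))
# [[1, 1, 2, 2], [1, 1, 2, 2]]
```

Because every pixel in a block shares one label, isolated single-pixel misclassifications cannot occur, which is the source of the reduced speckle the abstract mentions.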

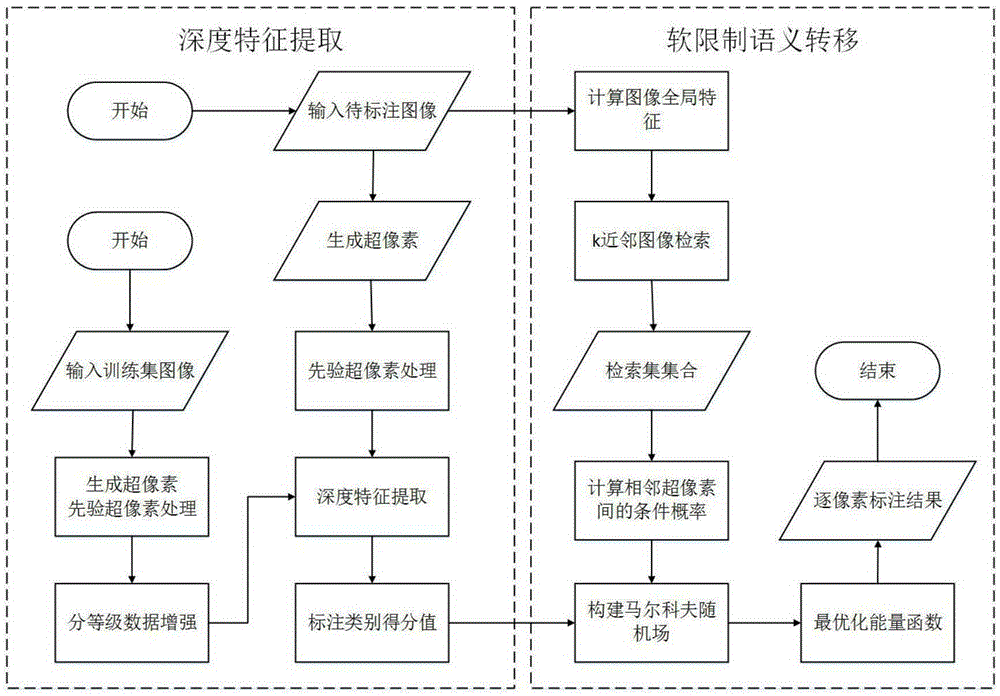

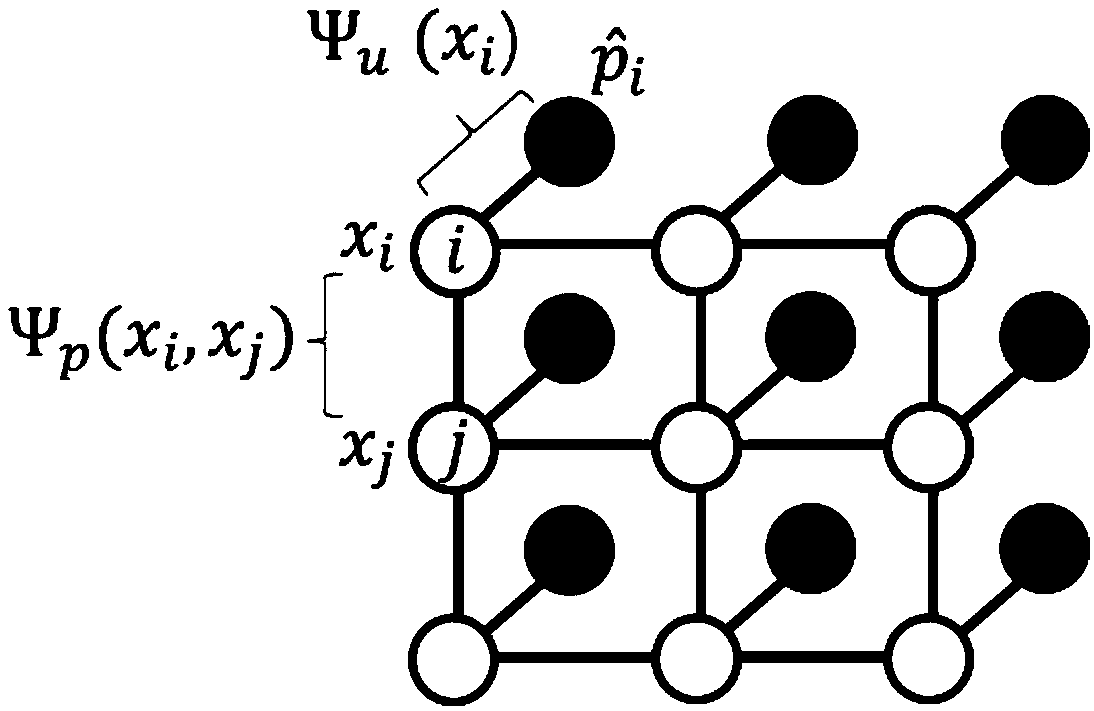

Streetscape semantic annotation method based on convolutional neural network and semantic transfer conjunctive model

Status: Active | Publication: CN105389584A | Keywords: Fully excavated, Improve labeling accuracy, Character and pattern recognition, Pixel classification, Algorithm

The invention relates to a streetscape semantic annotation method based on a convolutional neural network and a semantic transfer conjunctive model. The device used by the method comprises a deep feature extraction part and a soft-limited semantic transfer part. A more balanced training set is constructed, and a superpixel classification deep model incorporating prior information is trained on it. The method can fully mine the prior information of a scene and learn a more discriminative feature representation, which greatly improves the labeling accuracy of superpixels. A Markov random field model then optimizes the initial result and removes unnecessary noise, further improving the labeling result. The final per-pixel labeling accuracy and mean class accuracy exceed 77% and 53%, respectively.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

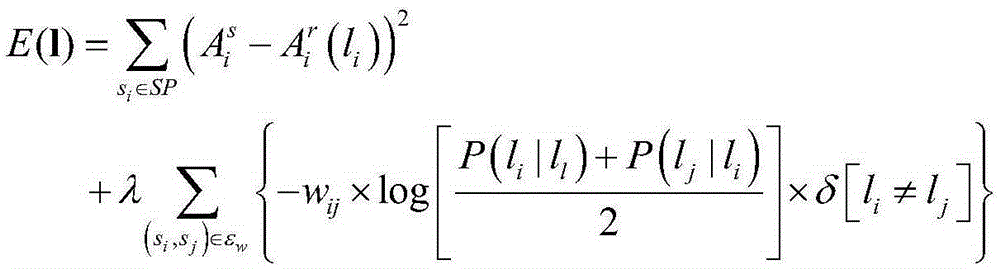

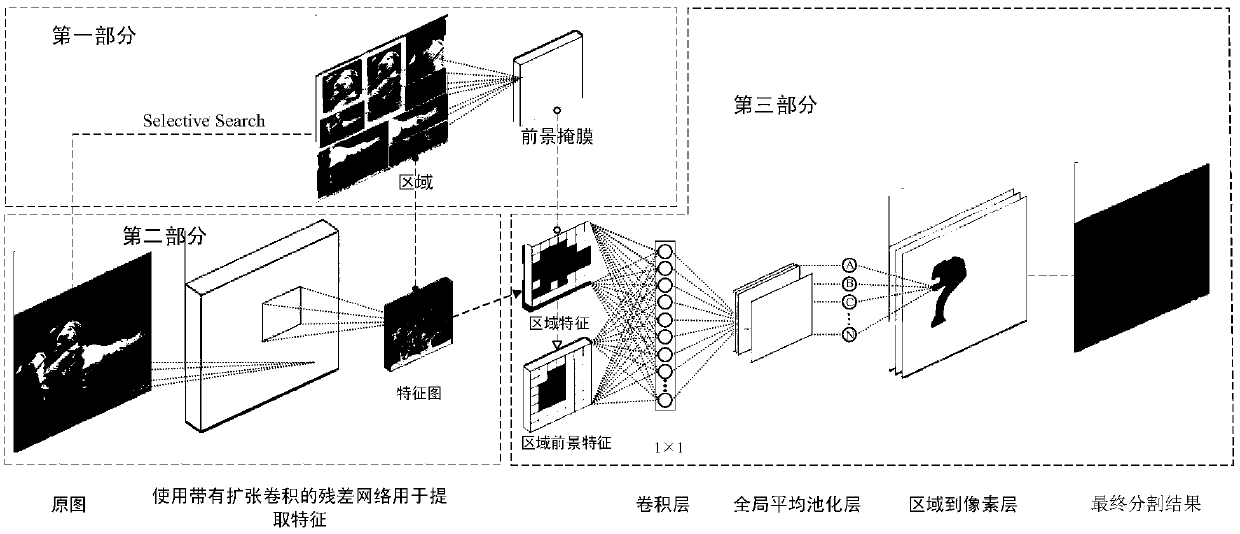

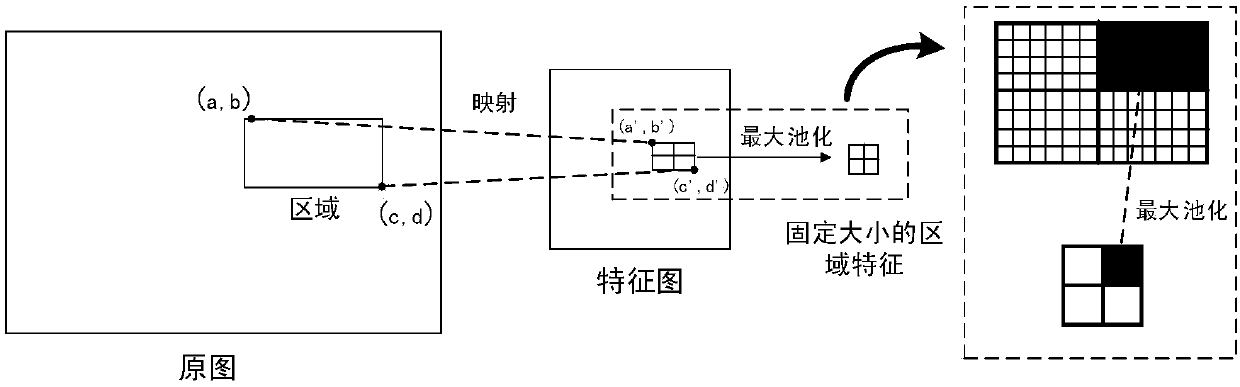

An image semantic segmentation method based on an area and depth residual error network

Status: Active | Publication: CN109685067A | Keywords: Solve the disadvantages of prone to rough segmentation boundaries, Good segmentation effect, Character and pattern recognition, Network model, Pixel classification

The invention discloses an image semantic segmentation method based on regions and a deep residual network. Region-based semantic segmentation methods extract mutually overlapping regions at multiple scales, can identify targets of multiple scales, and obtain fine object segmentation boundaries. Methods based on fully convolutional networks use a convolutional neural network to learn features autonomously and can be trained end to end on a pixel-by-pixel classification task, but they usually produce rough segmentation boundaries. The invention combines the advantages of the two: first, candidate regions are generated in the image with a region proposal network; then features are extracted from the image by a deep residual network with dilated convolution to obtain a feature map; region features are obtained by combining the candidate regions with the feature map and are mapped to each pixel in the region; finally, pixel-by-pixel classification is performed with a global average pooling layer. In addition, a multi-model fusion method is used: the same network model is trained with different inputs to obtain several models, and their features are fused at the classification layer to obtain the final segmentation result. Experimental results on the SIFT Flow and PASCAL Context datasets show that the proposed algorithm achieves relatively high average accuracy.

Owner:JIANGXI UNIV OF SCI & TECH

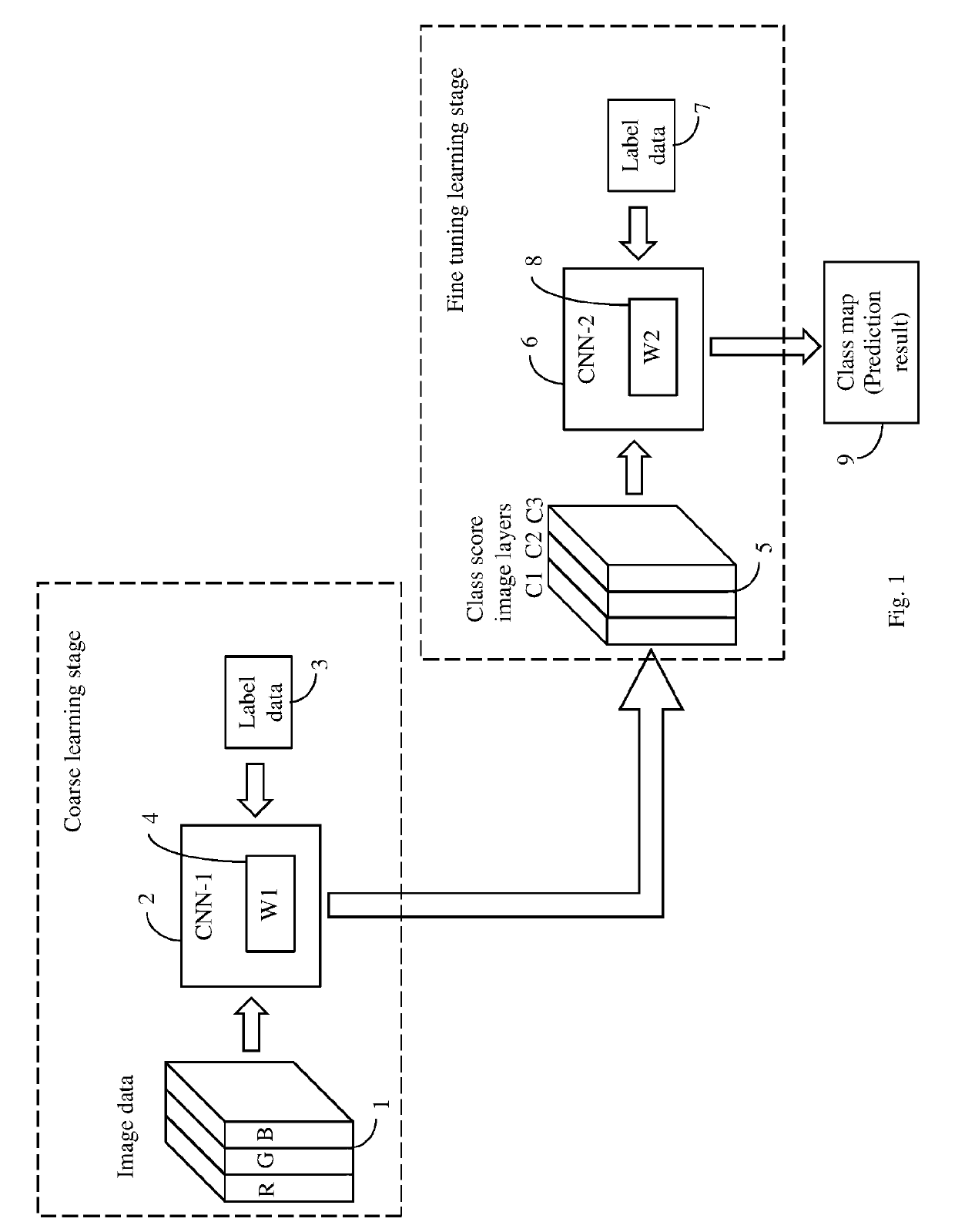

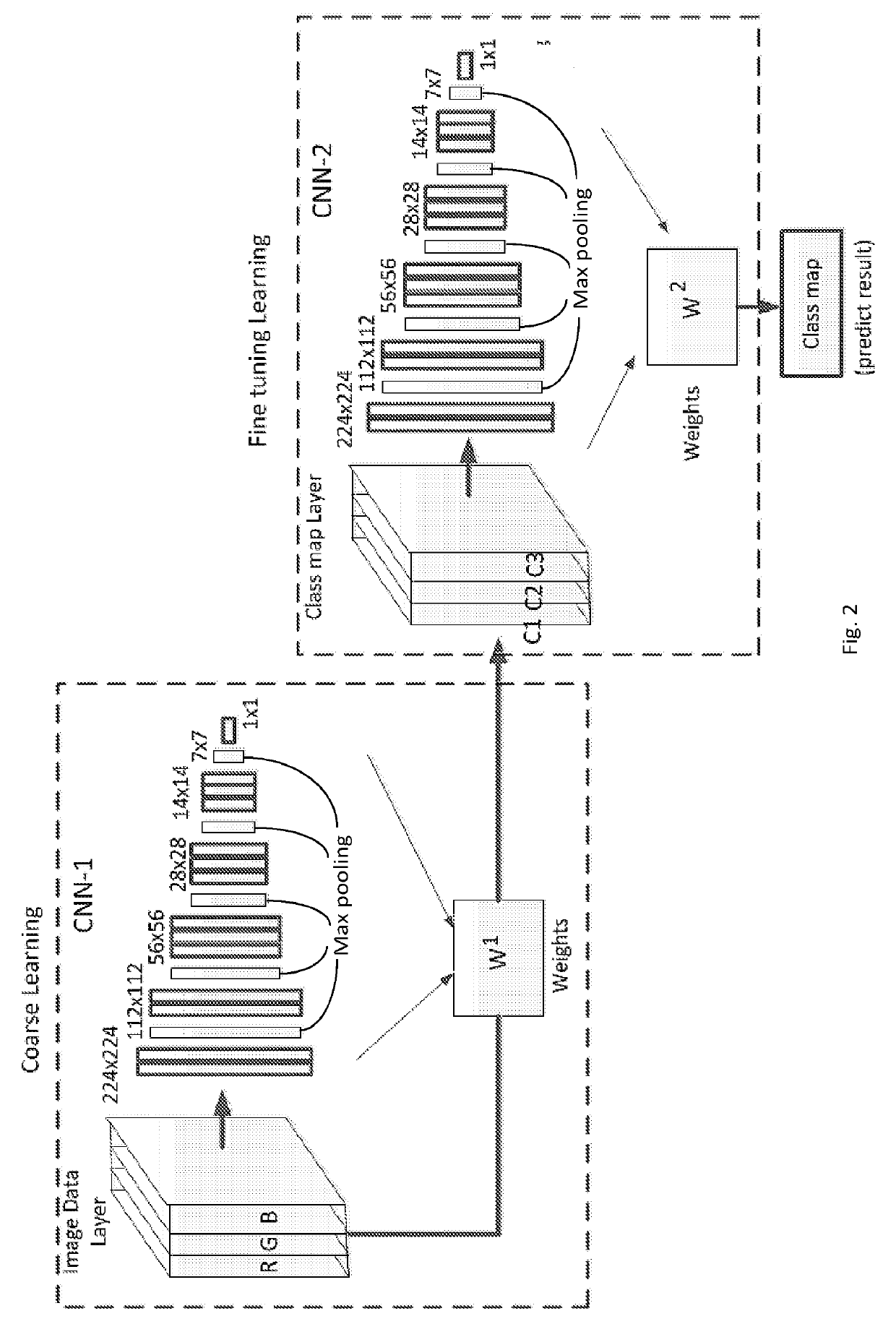

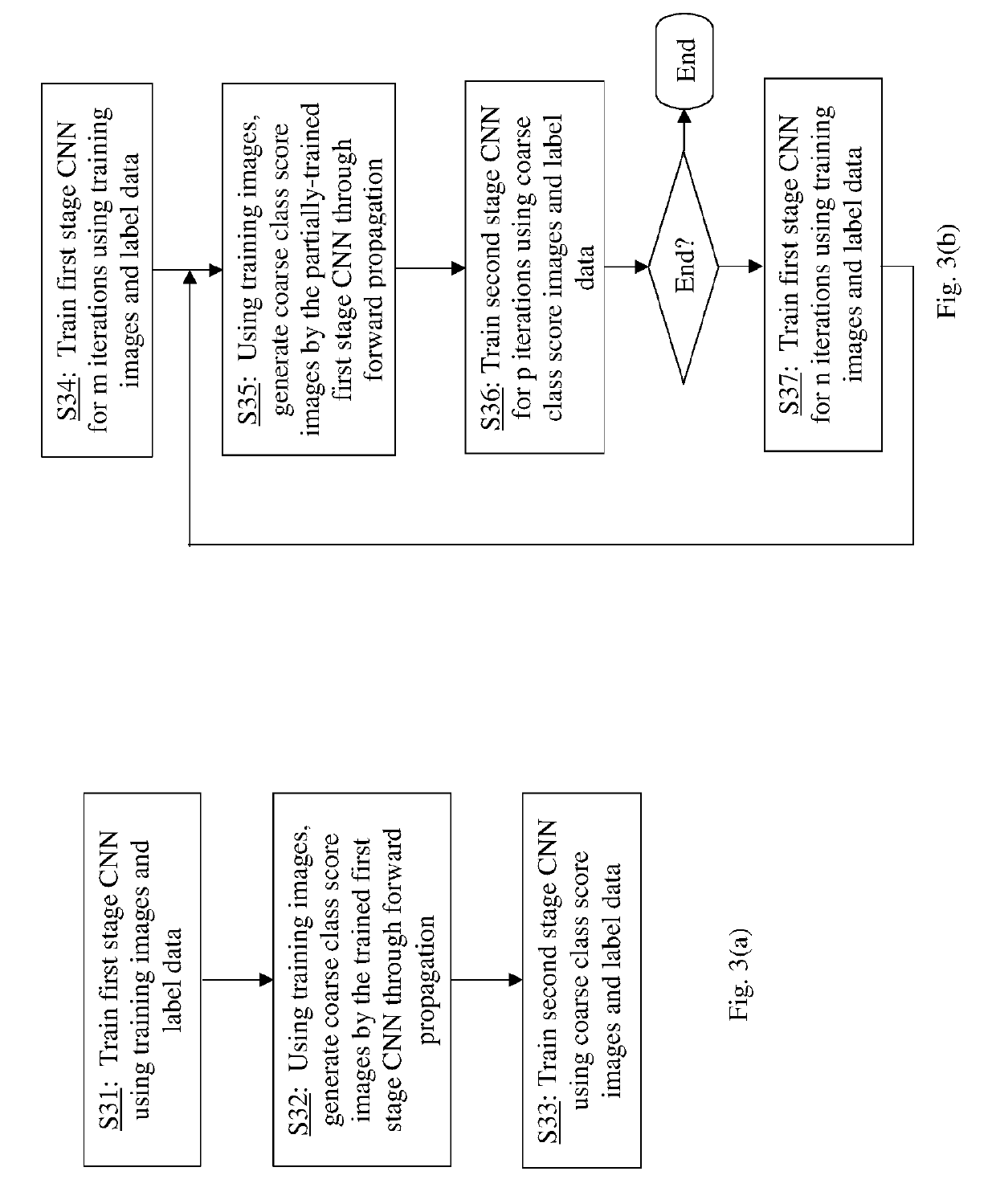

Method and system for cell image segmentation using multi-stage convolutional neural networks

Status: Inactive | Publication: US20190228268A1 | Keywords: Character and pattern recognition, Neural architectures, Image segmentation, Labeled data

An artificial neural network system for image classification, including multiple independent individual convolutional neural networks (CNNs) connected in multiple stages, each CNN configured to process an input image to calculate a pixelwise classification. The output of an earlier stage CNN, which is a class score image having identical height and width as its input image and a depth of N representing the probabilities of each pixel of the input image belonging to each of N classes, is input into the next stage CNN as input image. When training the network system, the first stage CNN is trained using first training images and corresponding label data; then second training images are forward propagated by the trained first stage CNN to generate corresponding class score images, which are used along with label data corresponding to the second training images to train the second stage CNN.

Owner:KONICA MINOLTA LAB U S A INC

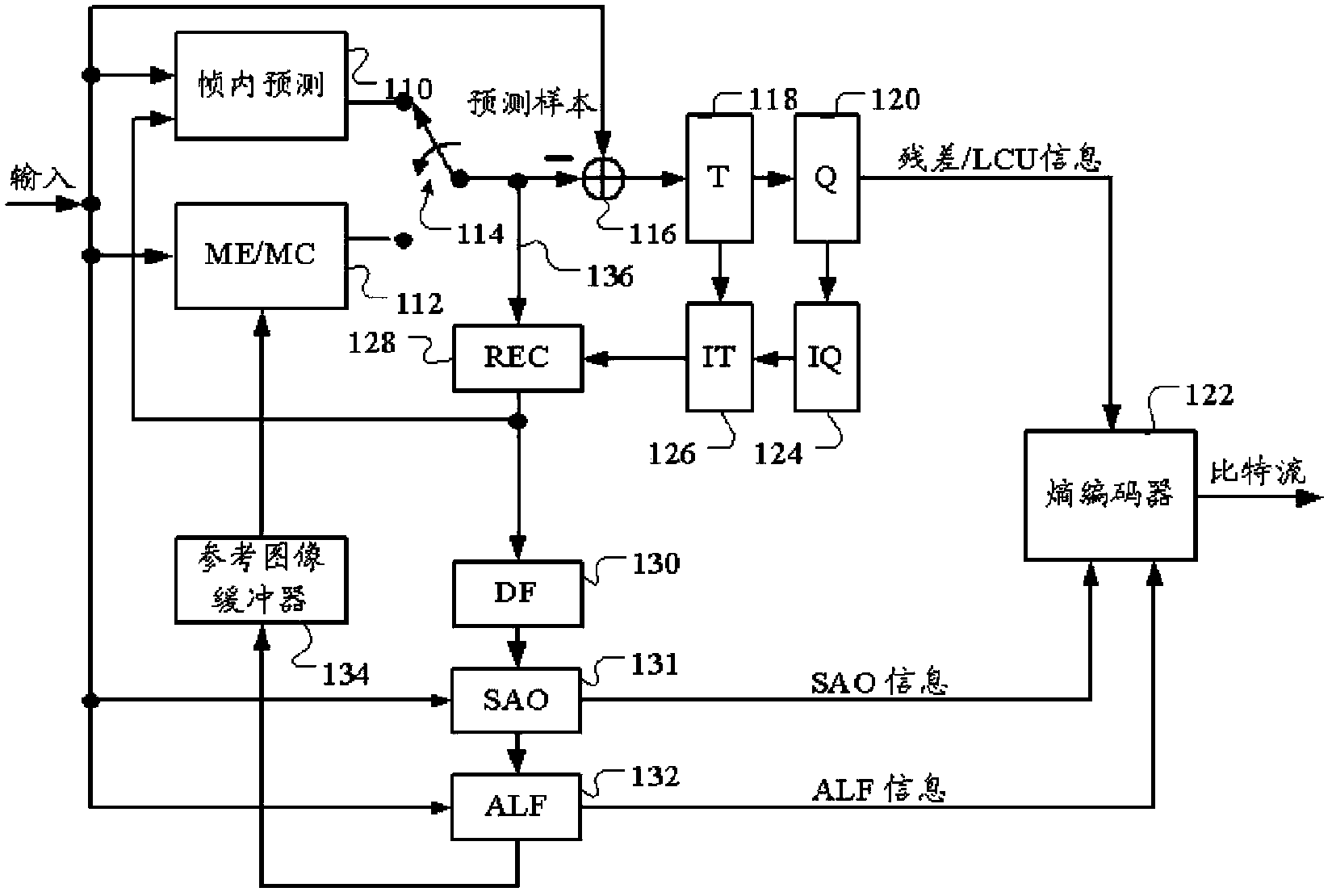

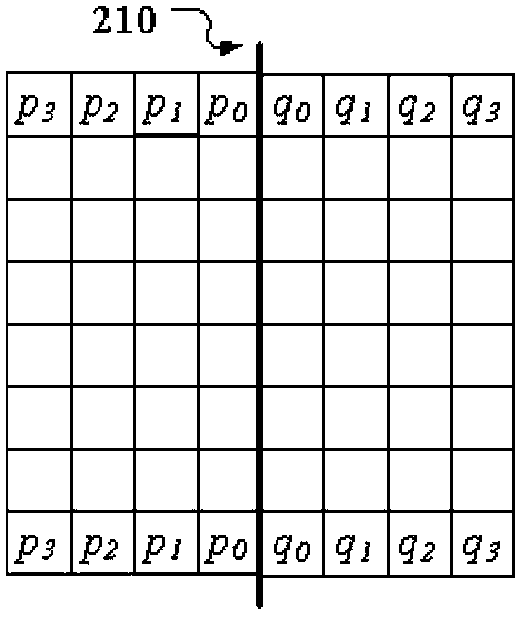

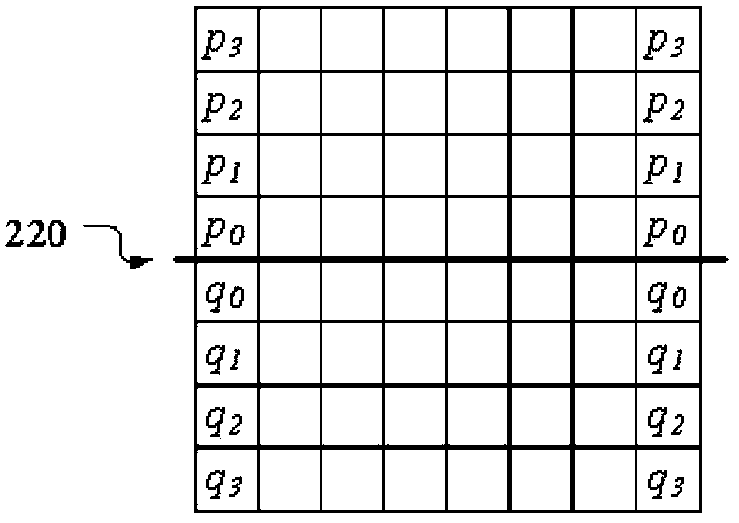

Method and apparatus for non-cross-tile loop filtering

A method and apparatus for loop filter processing of video data are disclosed. Embodiments according to the present invention eliminate the data dependency associated with loop processing across tile boundaries. According to one embodiment, loop processing is reconfigured to eliminate data dependency across tile boundaries if cross-tile loop processing is disabled. The loop filter processing corresponds to DF (deblocking filter), SAO (Sample Adaptive Offset), or ALF (Adaptive Loop Filter) processing. The processing can be skipped for at least one tile boundary. In another embodiment, data padding based on the pixels of the current tile, or modification of the pixel classification footprint, is used to eliminate data dependency across the tile boundary. Whether cross-tile loop processing is disabled can be indicated by a flag coded at the sequence, picture, or slice level, indicating whether data dependency across the at least one tile boundary is allowed.

Owner:HFI INNOVATION INC
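
The padding embodiment can be illustrated with SAO edge-offset pixel classification along one row. The category rules follow the HEVC edge-offset definition (local minimum, two corner cases, local maximum, otherwise none); the tile layout, function names, and the choice to pad picture edges as well (HEVC itself handles picture-boundary samples differently) are simplifying assumptions for this sketch.

```python
def eo_category(a, c, b):
    """HEVC SAO edge-offset category of sample c given its two neighbours a and b."""
    if c < a and c < b:
        return 1  # local minimum
    if (c < a and c == b) or (c == a and c < b):
        return 2  # concave corner
    if (c > a and c == b) or (c == a and c > b):
        return 3  # convex corner
    if c > a and c > b:
        return 4  # local maximum
    return 0      # monotonic or flat: no offset applied


def classify_row(row, tile_starts, cross_tile=True):
    """Horizontal (0-degree) edge-offset classification of one row of samples.

    tile_starts: sorted column indices at which tiles begin (must include 0).
    When cross_tile is False, a neighbour outside the current tile is replaced by
    the nearest sample of the current tile (padding), so no adjacent-tile data is read.
    """
    n = len(row)
    cats = []
    for x in range(n):
        lo = max(s for s in tile_starts if s <= x)
        hi = min((s for s in tile_starts if s > x), default=n)  # exclusive tile end
        if cross_tile:
            left, right = max(x - 1, 0), min(x + 1, n - 1)
        else:
            left, right = max(x - 1, lo), min(x + 1, hi - 1)
        cats.append(eo_category(row[left], row[x], row[right]))
    return cats


row = [5, 3, 5, 5, 9, 7, 9, 9]
print(classify_row(row, [0, 4], cross_tile=True))   # [3, 1, 3, 2, 4, 1, 3, 0]
print(classify_row(row, [0, 4], cross_tile=False))  # [3, 1, 3, 0, 3, 1, 3, 0]
```

Only the two samples adjacent to the tile boundary (columns 3 and 4) change category, which shows that padding confines the effect of removing the cross-tile dependency to the boundary itself.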

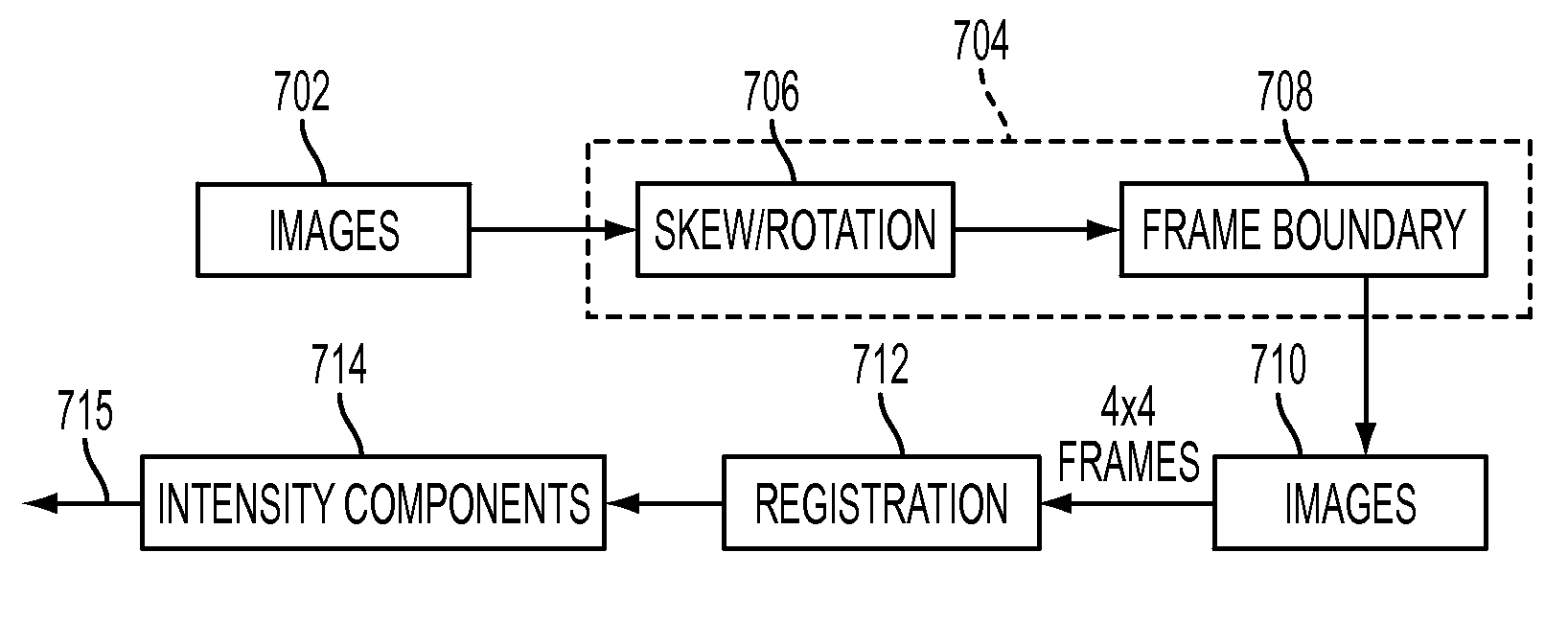

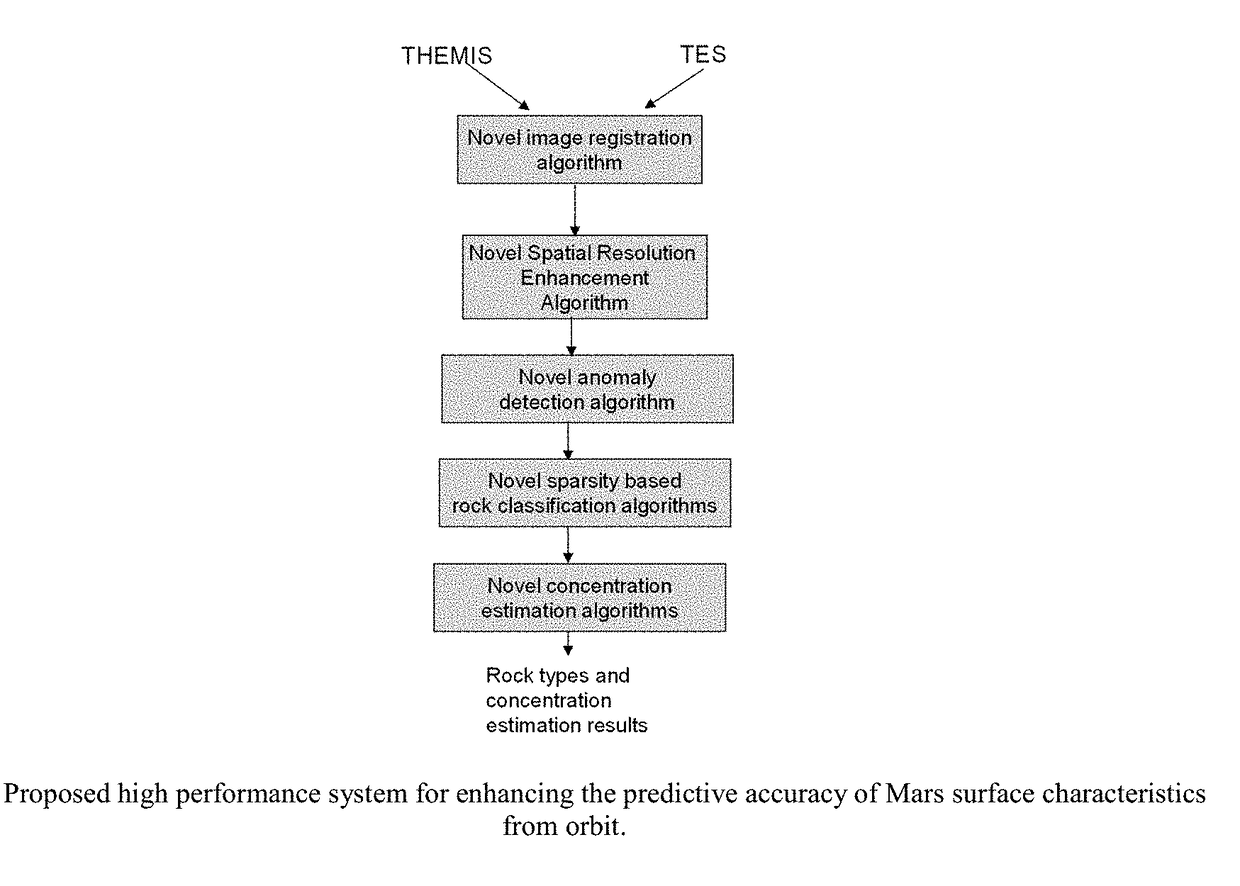

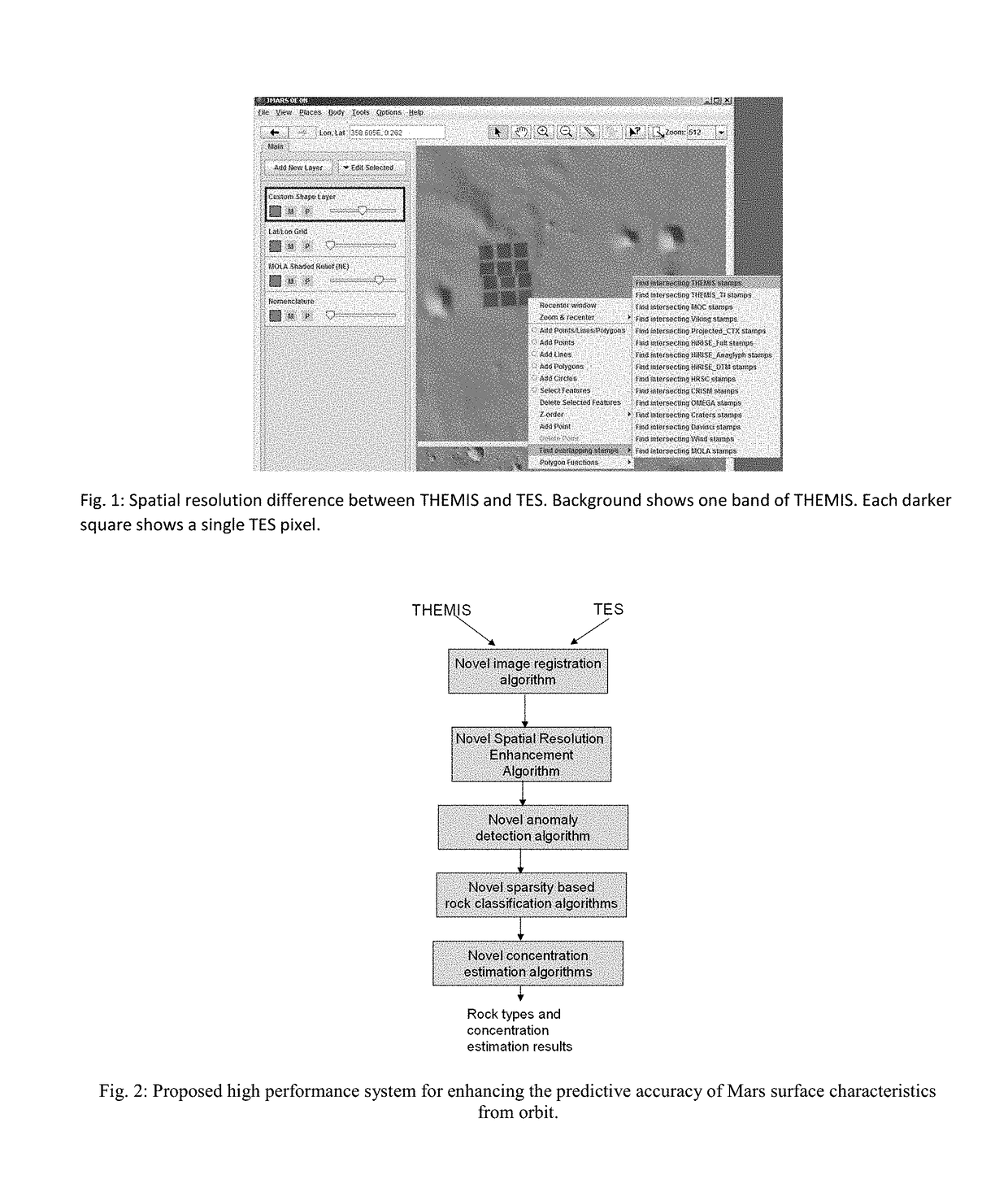

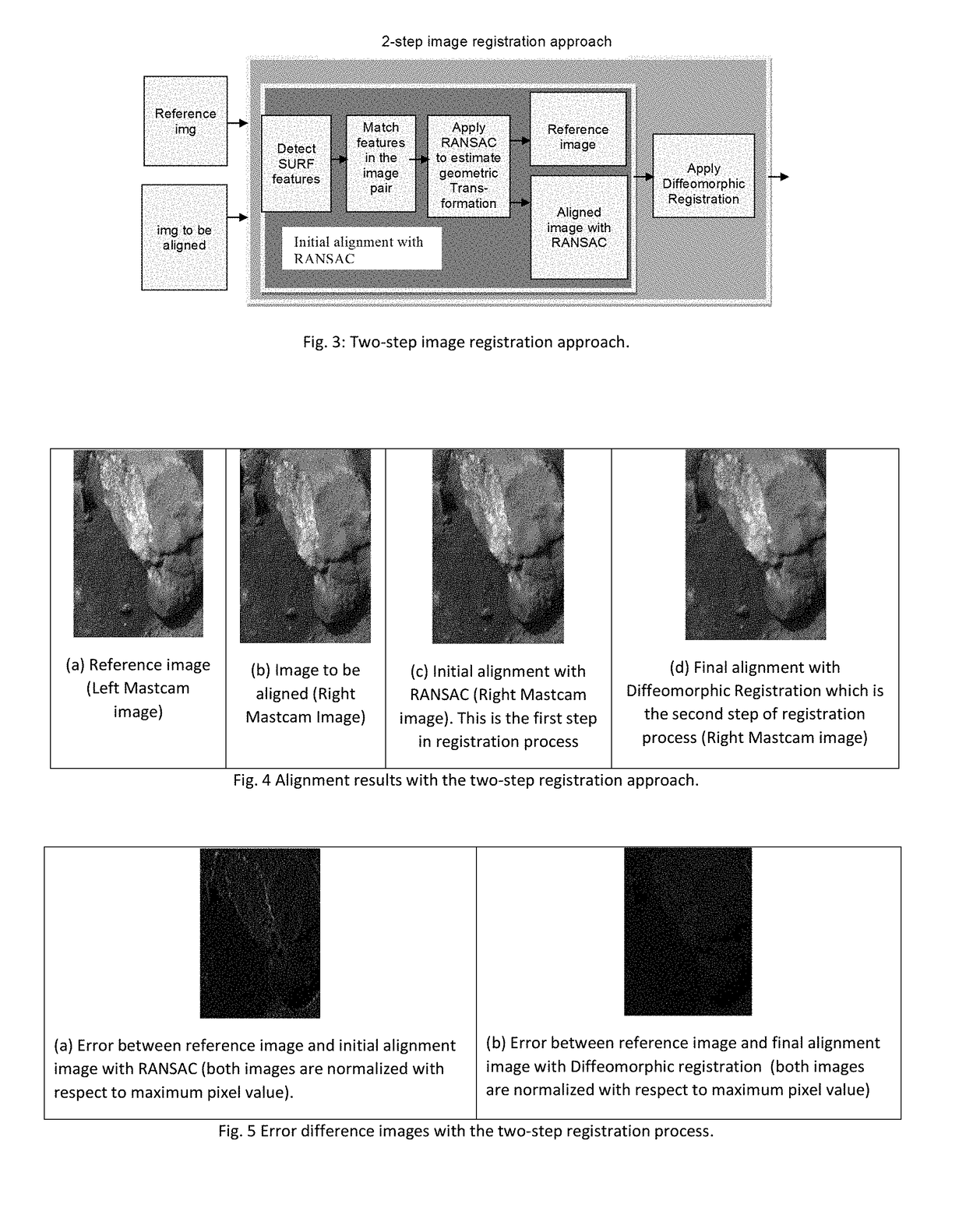

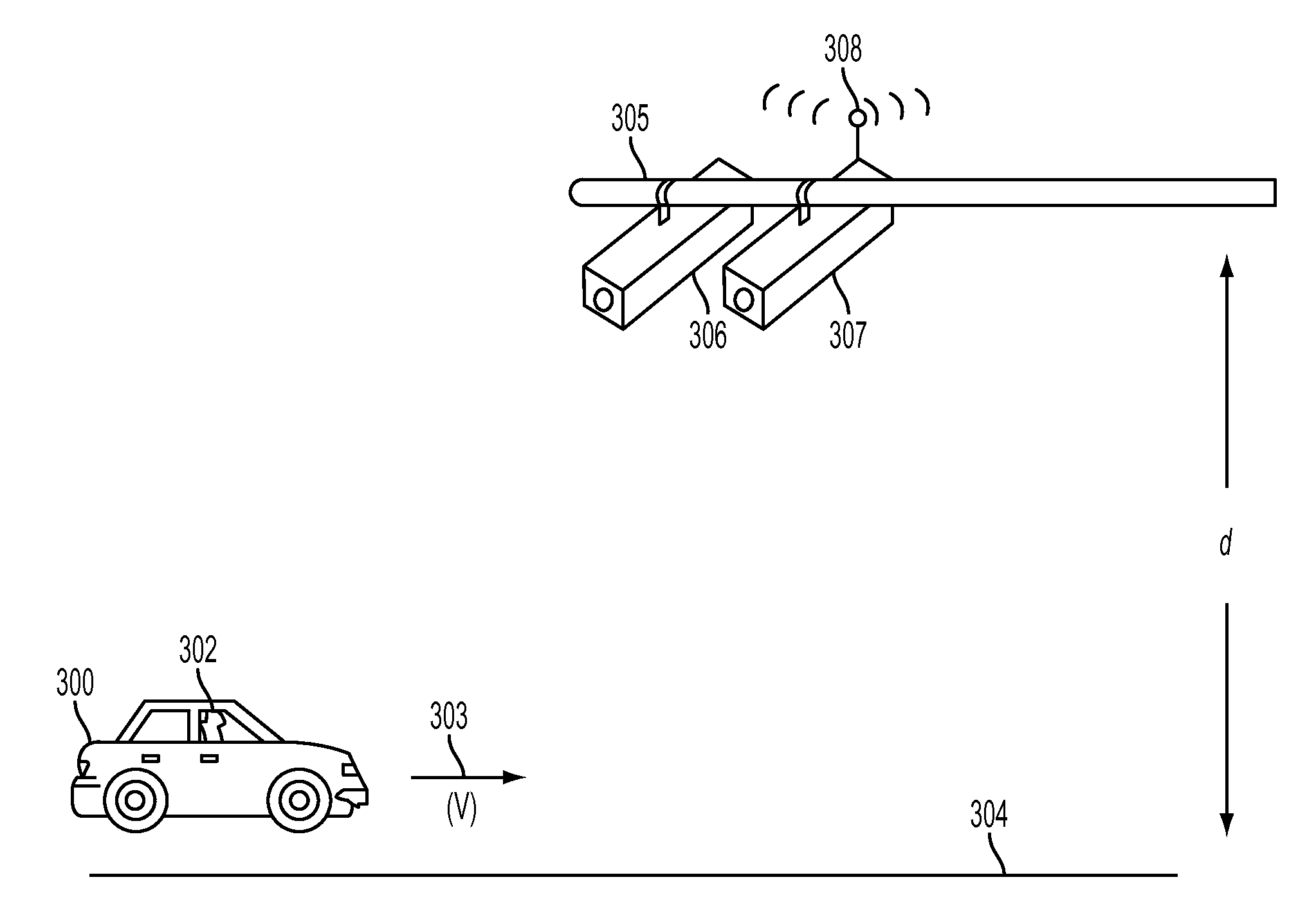

Method and System for Enhancing Predictive Accuracy of Planet Surface Characteristics from Orbit

Status: Active | Publication: US20180218197A1 | Keywords: Accurate image alignment, Achieve accuracy, Image enhancement, Image analysis, Sharpening, Spectral image

A method and system for enhancing the predictive accuracy of planet surface characteristics from orbit is disclosed, using an extended pan-sharpening approach in which multiple high-resolution bands are used to reconstruct a high-resolution hyperspectral image. A sparsity-based classification algorithm is applied to rock type classification; the Extended Yale B face database is used for performance evaluation; and deep neural networks are used for pixel classification. The system, which uses complementary images collected by imagers onboard satellites, can significantly enhance the predictive accuracy of surface characteristics from orbit. It generates images with both high spatial and high spectral resolution; accurately detects anomalous regions on the surfaces of Mars, Earth, or other planets; accurately classifies rocks and materials from orbital data, with surface characterization performance comparable to in-situ results; and accurately estimates the chemical concentrations of rocks.

Owner:SIGNAL PROCESSING

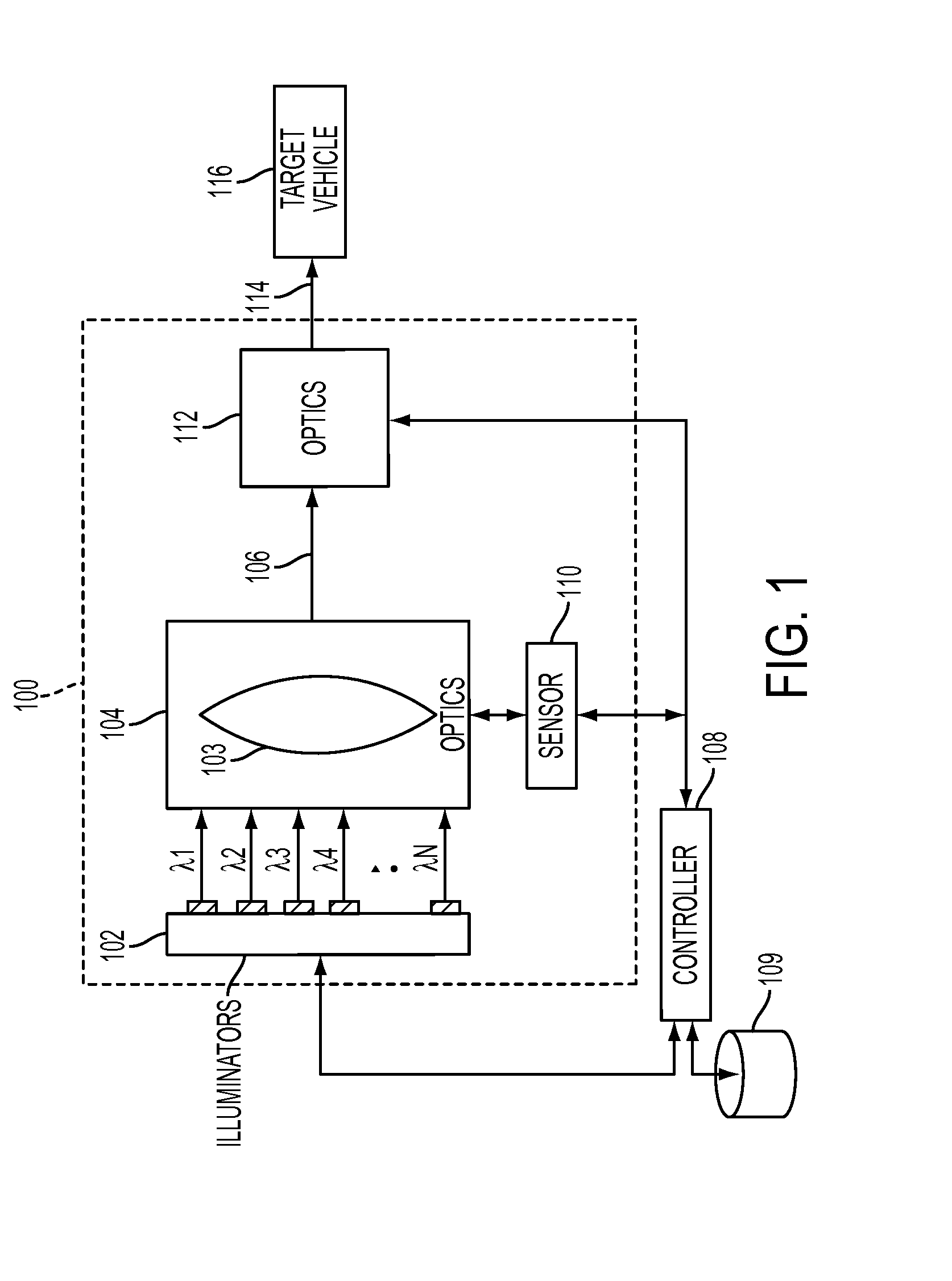

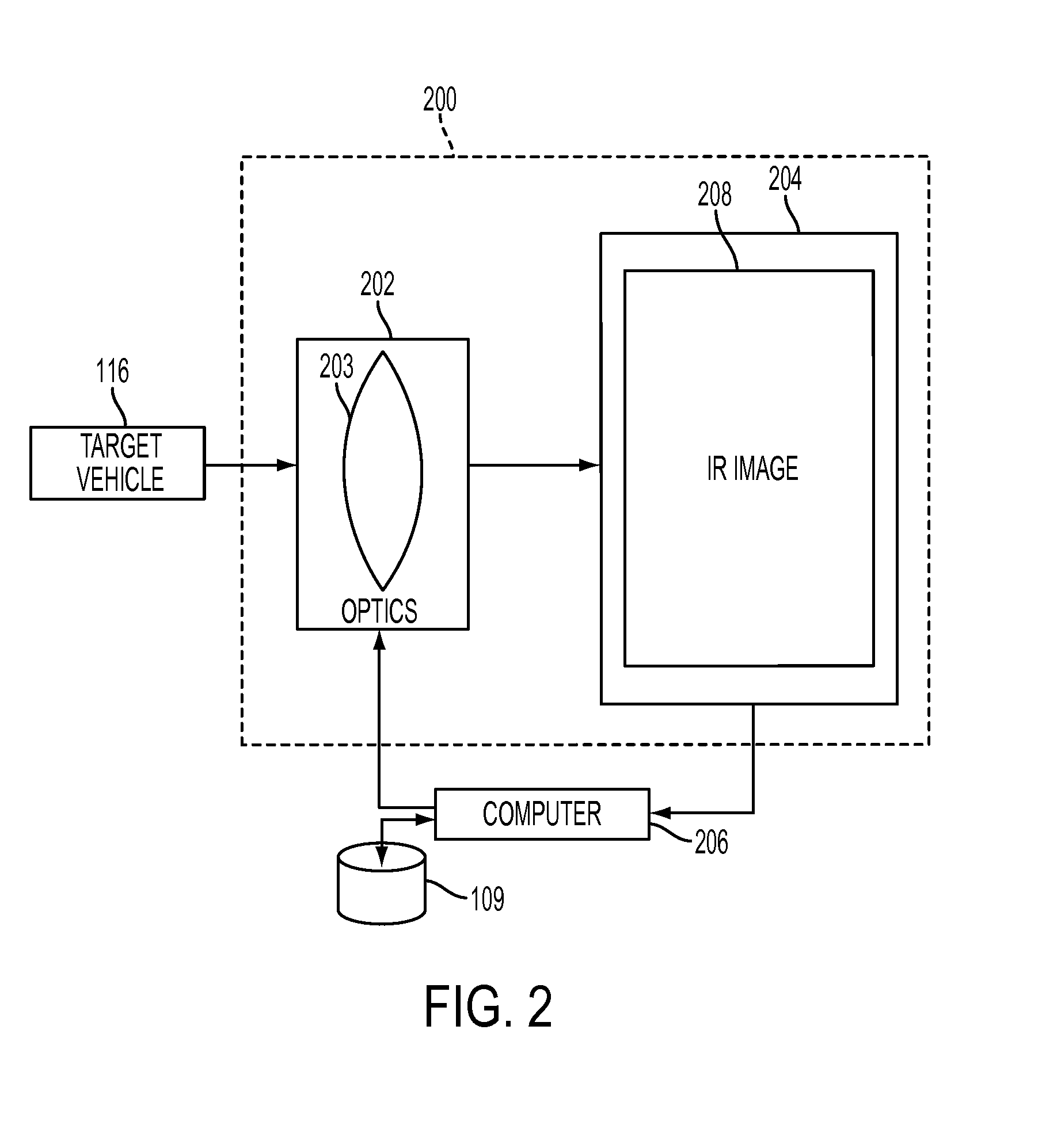

Determining a pixel classification threshold for vehicle occupancy detection

Status: Active | Publication: US20130147959A1 | Keywords: Easy to classify, Readily apparent, Image analysis, Pedestrian/occupant safety arrangement, Mobile vehicle, Multi band

What is disclosed is a system and method for determining a pixel classification threshold for vehicle occupancy determination. An IR image of a moving vehicle is captured using a multi-band IR imaging system. A driver's face is detected using a face recognition algorithm. Multi-spectral information extracted from pixels identified as human tissue of the driver's face is used to determine a pixel classification threshold. This threshold is then used to facilitate a classification of pixels of a remainder of the IR image. Once pixels in the remainder of the image have been classified, a determination can be made whether the vehicle contains additional human occupants other than the driver. An authority is alerted in the instance where the vehicle is found to be traveling in a HOV / HOT lane requiring two or more human occupants and a determination has been made that the vehicle contains an insufficient number of human occupants.

Owner:CONDUENT BUSINESS SERVICES LLC
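
A minimal sketch of deriving the threshold from the driver's face and applying it to the rest of the image, assuming each pixel is a tuple of multi-band IR intensities. The distance measure and the rule "threshold = mean + k × standard deviation of the face pixels' distances to their mean spectrum", as well as the `min_pixels` occupancy rule, are hypothetical; the abstract does not say how the threshold is computed.

```python
import math
import statistics


def face_reference(face_pixels, k=2.0):
    """Derive a reference spectrum and a pixel classification threshold from face pixels."""
    ref = tuple(statistics.fmean(band) for band in zip(*face_pixels))
    dists = [math.dist(p, ref) for p in face_pixels]
    # Hypothetical rule: mean + k * std of the face pixels' distances to the reference.
    return ref, statistics.fmean(dists) + k * statistics.pstdev(dists)


def classify_remainder(pixels, ref, threshold):
    """True where a pixel's spectrum is close enough to the driver's skin to count as human tissue."""
    return [math.dist(p, ref) <= threshold for p in pixels]


def has_passenger(tissue_mask, min_pixels=50):
    # Hypothetical decision rule: enough tissue pixels outside the driver region imply an occupant.
    return sum(tissue_mask) >= min_pixels


face = [(10, 20, 30), (12, 20, 30), (8, 20, 30)]
ref, threshold = face_reference(face)
mask = classify_remainder([(11, 21, 30), (50, 5, 0)], ref, threshold)
print(ref, mask)  # (10.0, 20.0, 30.0) [True, False]
```

Calibrating the threshold on each captured driver, rather than fixing it globally, lets the classification adapt to the illumination and skin reflectance in that particular image.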

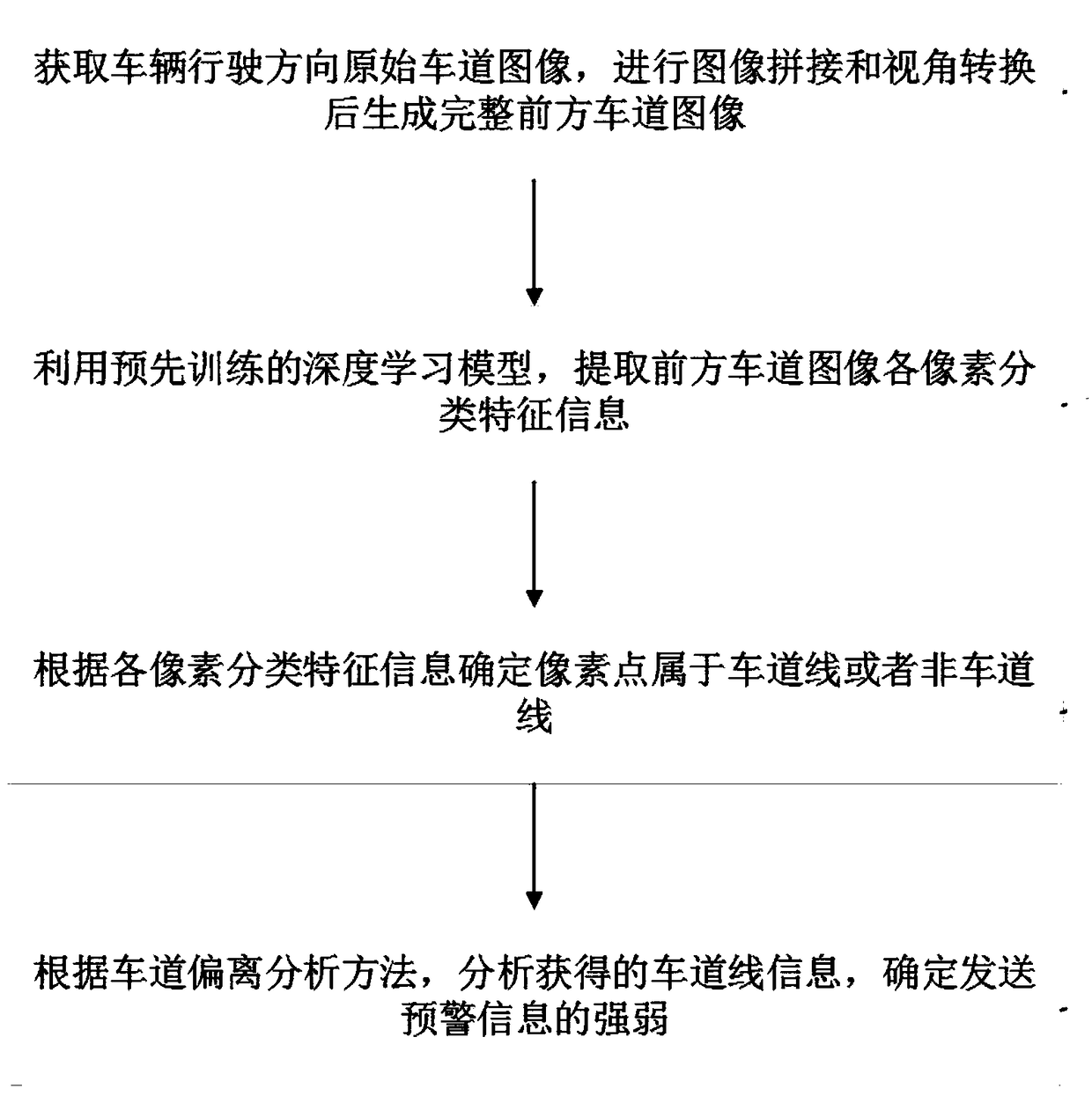

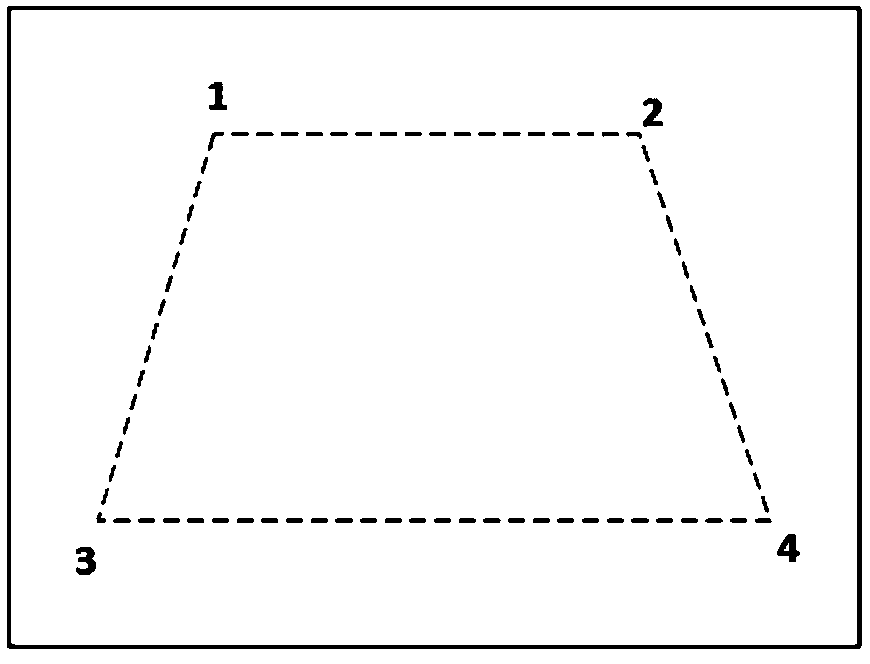

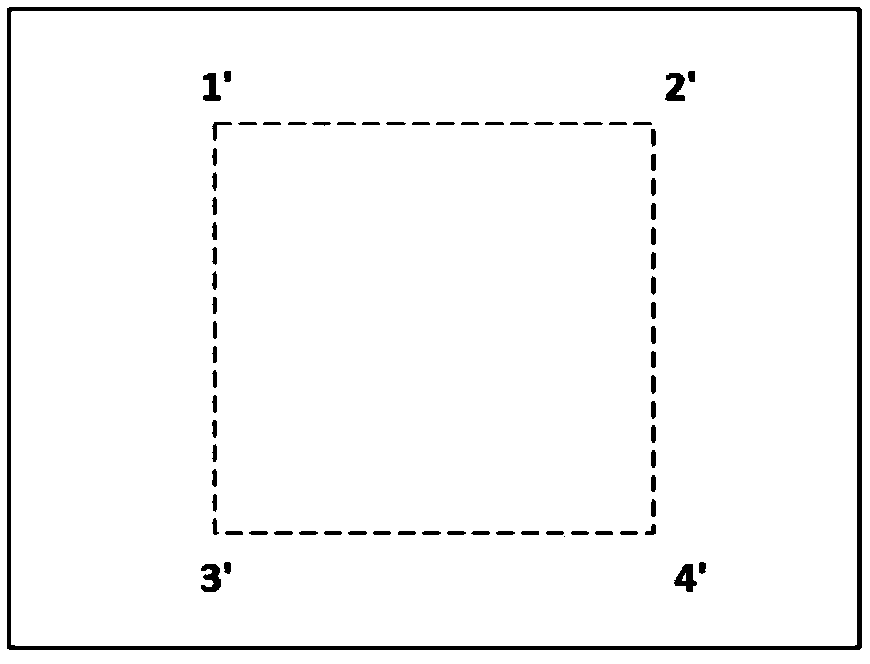

Deep learning based lane line detecting and early-warning device and method

Status: Active | Publication: CN108537197A | Keywords: Optimizing the Lane Line Detection Method, Improve recognition accuracy and robustness, Image enhancement, Image analysis, Driving safety, Image based

The invention relates to a deep-learning-based lane line detection and early-warning device and method. The method comprises the following steps: acquiring original lane images in the vehicle's direction of travel; stitching the images and transforming the viewing angle to obtain a complete image of the lane ahead; extracting classification attribute information for each pixel of the front lane image with a pre-trained deep learning model; determining from this per-pixel classification information whether each pixel belongs to a lane line; analyzing the resulting lane line information with a lane departure analysis method; and determining the intensity of the early warning to issue. The method optimizes current lane line detection; solves the problem, in current methods, that the camera viewing angle during image acquisition affects both the detection of the actual lane lines and the lane line curvature to be computed; improves recognition accuracy and robustness; and improves driving safety.

Owner:JILIN UNIV

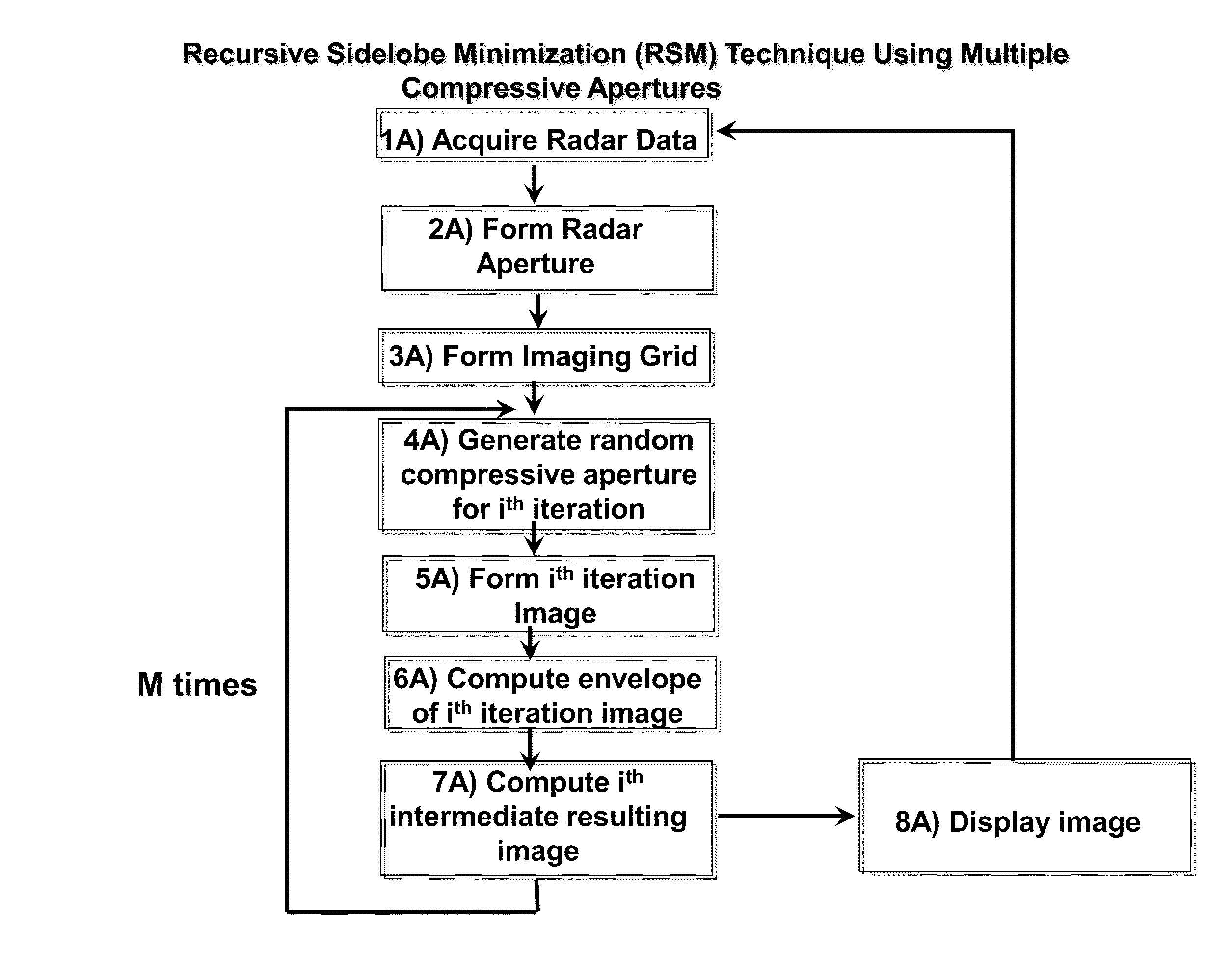

Method and system for forming very low noise imagery using pixel classification

Status: Inactive | Publication: US8193967B2 | Keywords: High contrast image, Reduce false alarm rate, Radio wave reradiation/reflection, Low noise, Range migration

A method and system for generating images from projection data, comprising: inputting first values representing correlated positional and recorded data; forming an image by processing the projection data with a pixel-characterization imaging subsystem to form SAR imagery using either a back-projection algorithm or a range migration algorithm; and integrating positional and recorded data from many aperture positions, which comprises forming the complete aperture A0 by collecting the return radar data and the coordinates of the receiver and of the transmitter for each position k along an aperture of N positions, forming an imaging grid of M image pixels, selecting and removing a substantial number of aperture positions to form a sparse aperture Ai for each of L iterations, and classifying each pixel in the image into a target class based on the statistical distribution of its amplitude across the L iterations. Pixels not classified as targets are given the value zero.

Owner:US SEC THE ARMY THE +1

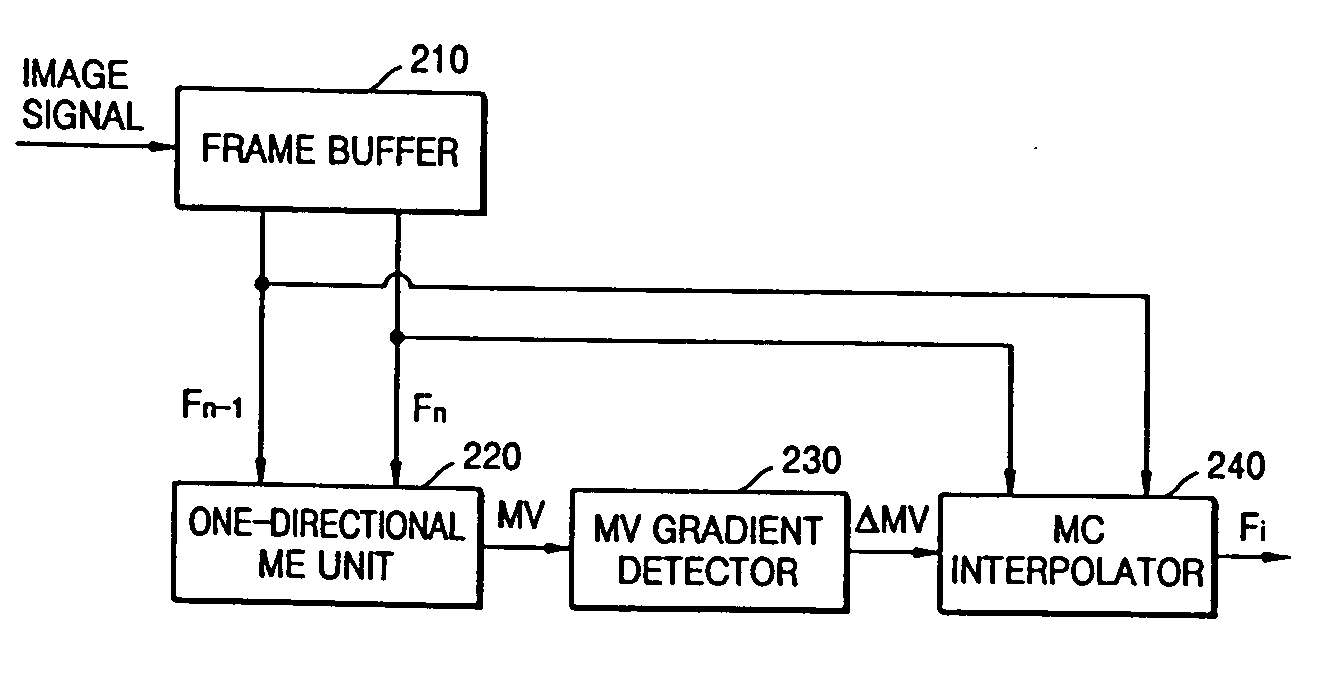

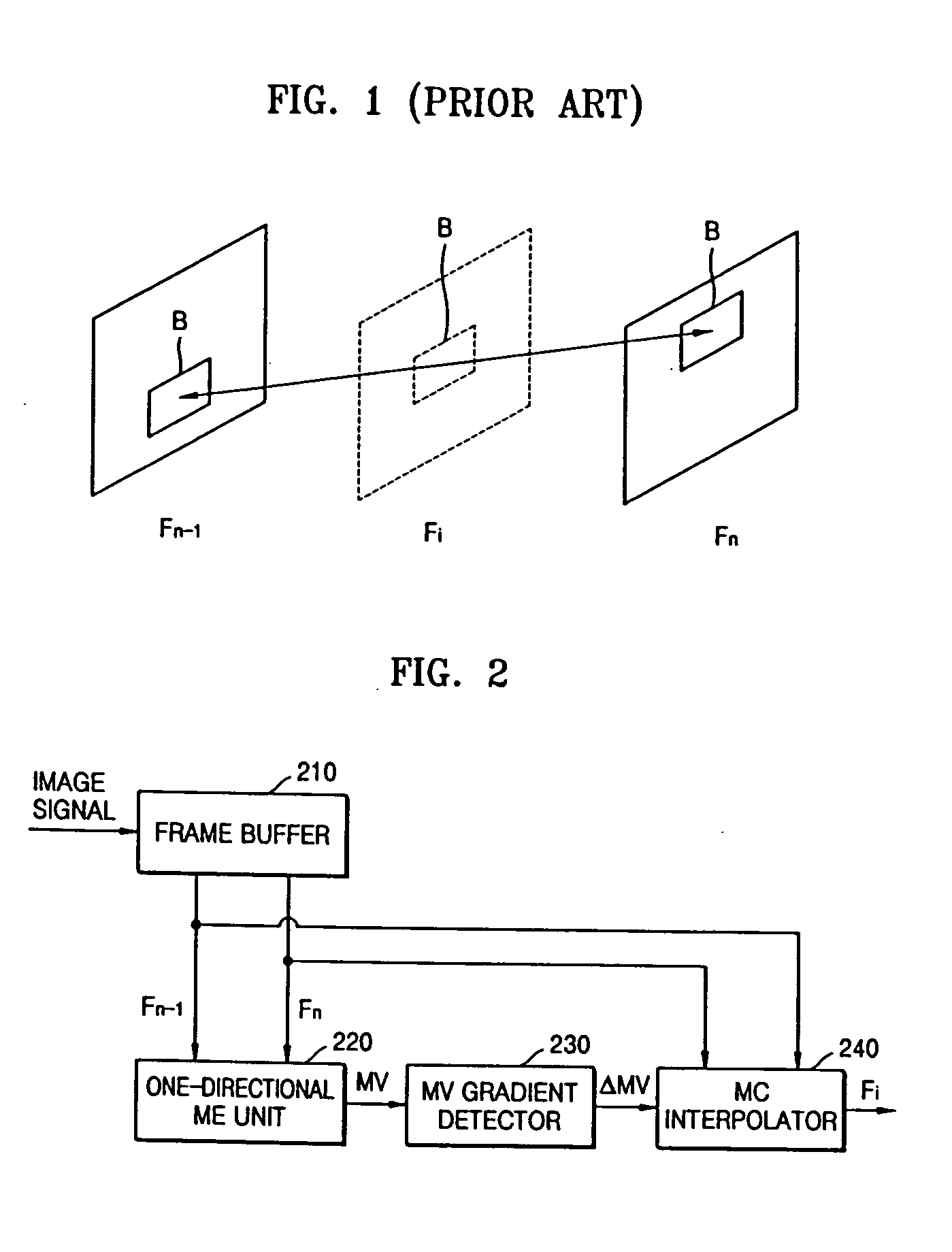

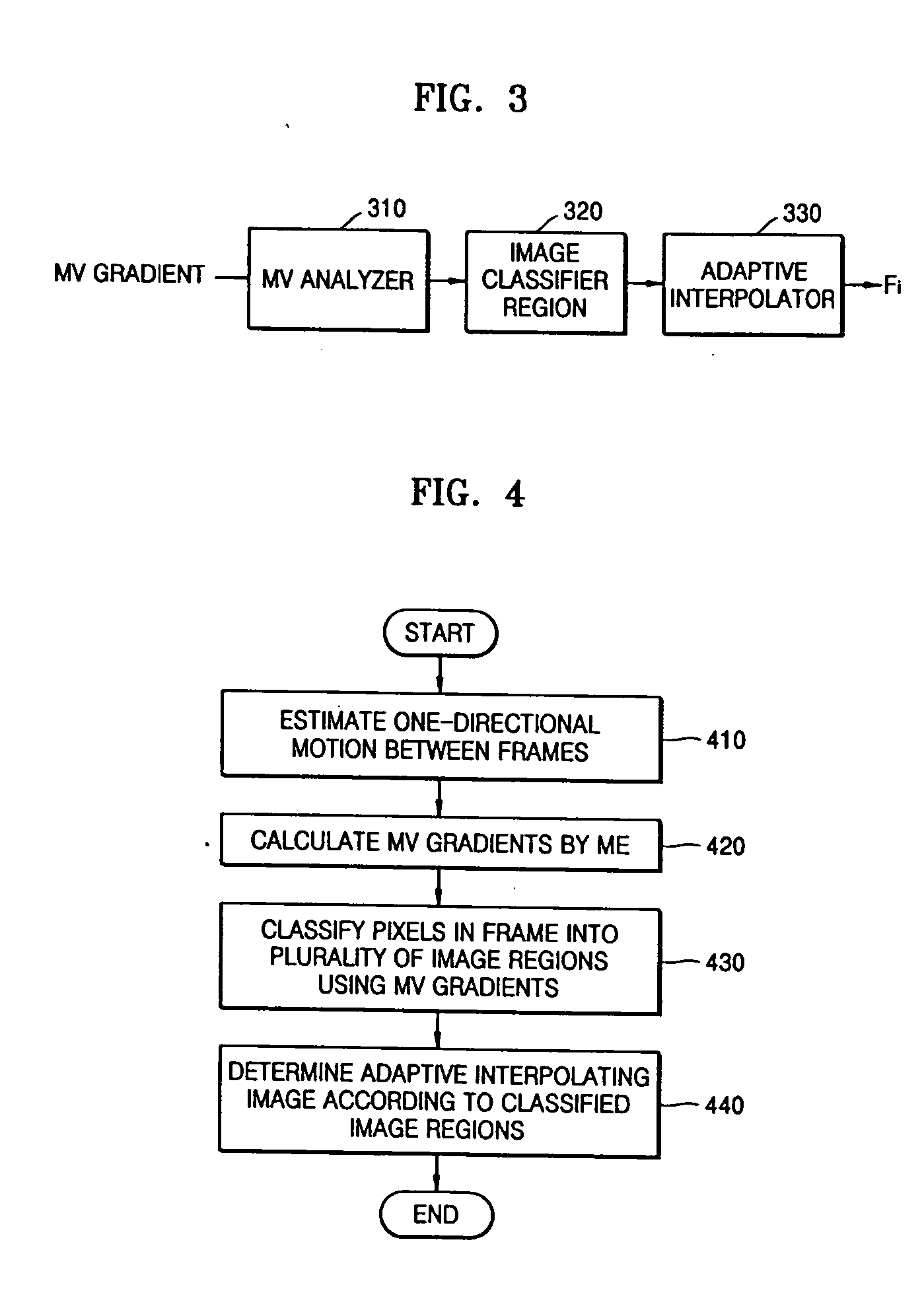

Adaptive motion compensated interpolating method and apparatus

Status: Inactive | Publication: US20050129124A1 | Keywords: Quality improvement, Television system details, Image analysis, Motion vector, Self adaptive

A motion compensated interpolating method of adaptively generating a frame to be obtained by interpolating two frames according to features of a motion vector and an apparatus therefor. The adaptive motion compensated interpolating method includes estimating motion vectors (MV) by performing block-based motion estimation (ME) between adjacent frames, calculating gradients of the estimated MVs, classifying pixels of the adjacent frames into a plurality of image regions according to the gradients of the estimated MVs, and determining pixel values to be obtained by interpolating the adjacent frames by adaptively selecting pixels matched between the adjacent frames or the MV estimated between the adjacent frames for each classified image region.

Owner:SAMSUNG ELECTRONICS CO LTD

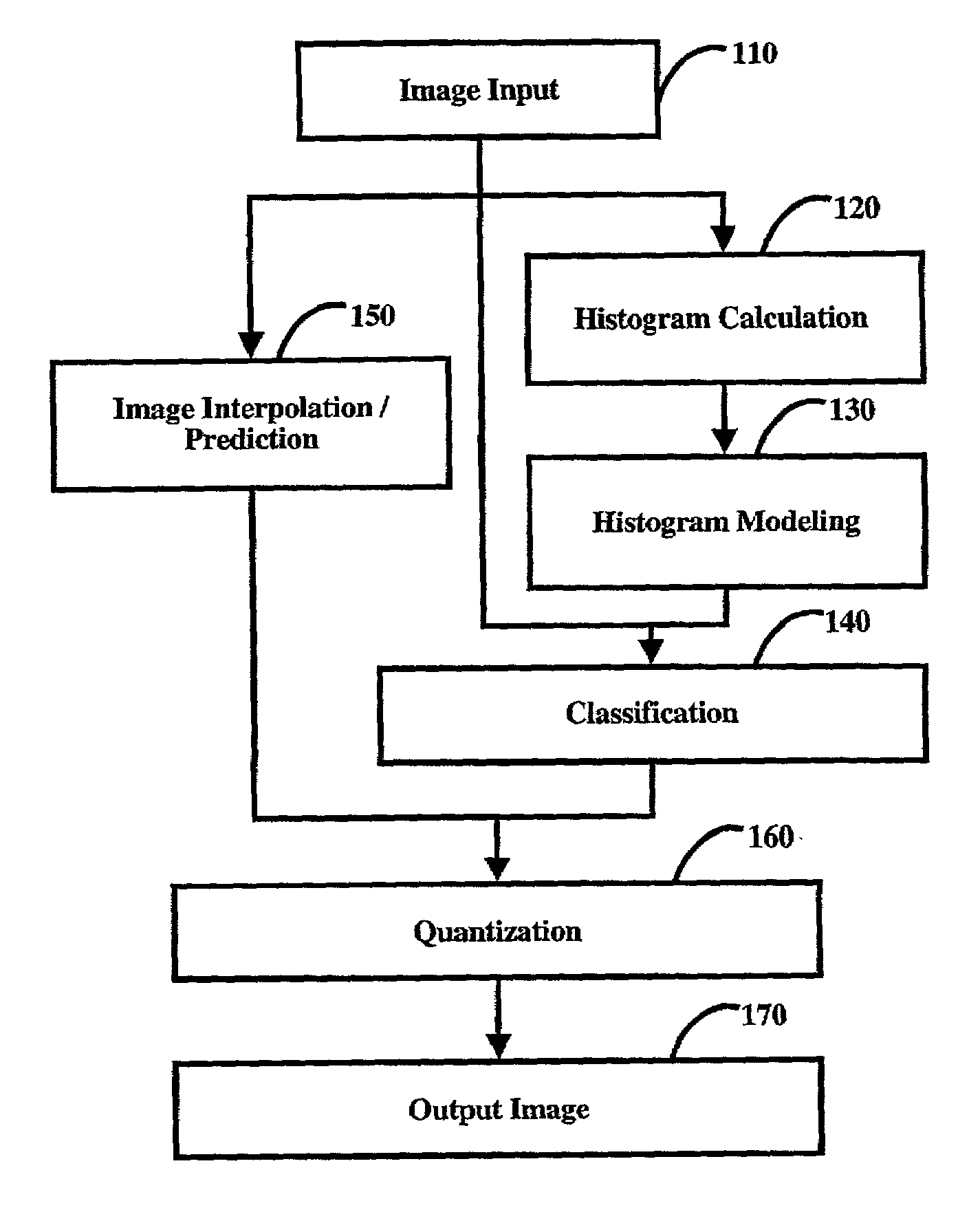

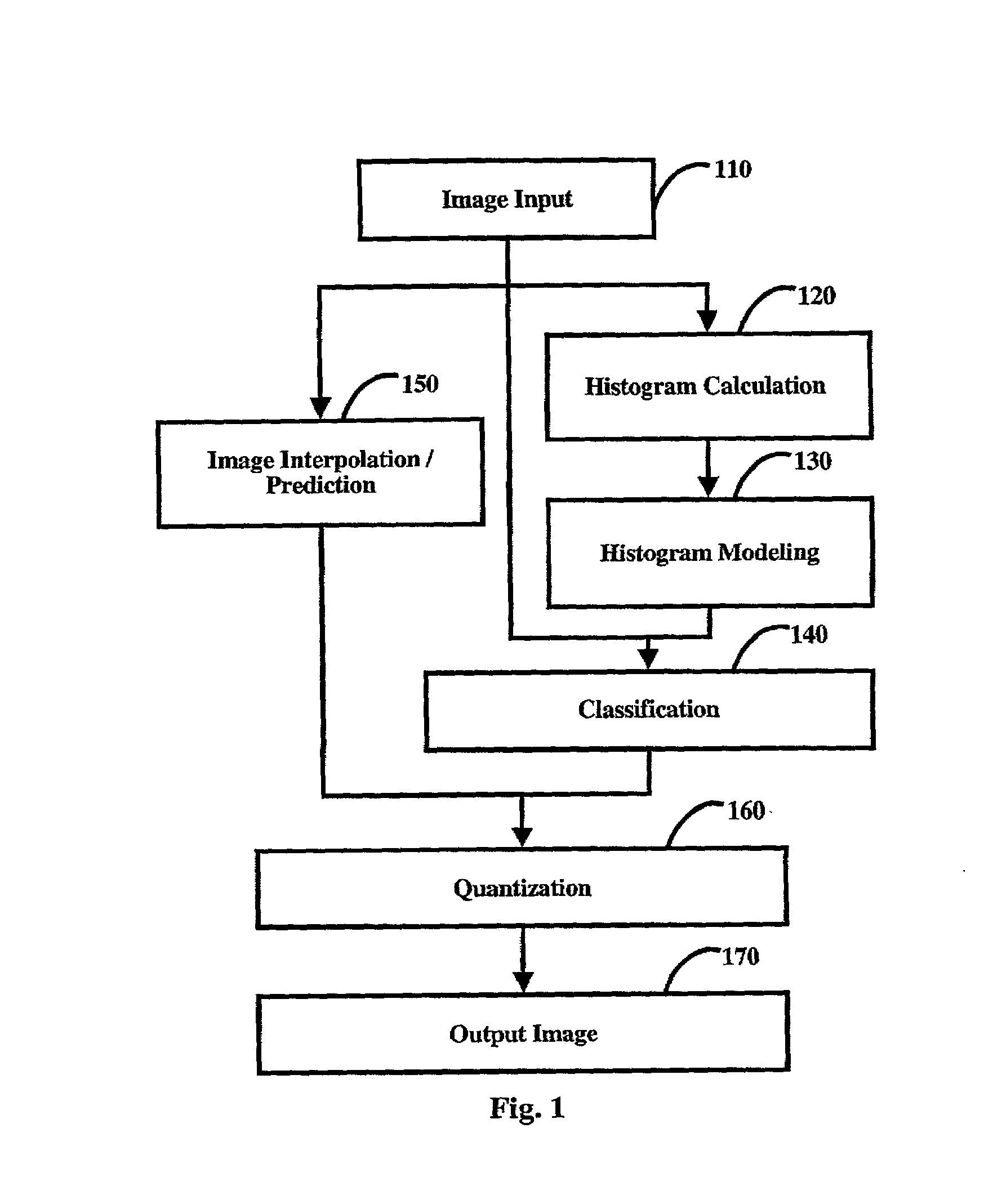

Method of image binarization using histogram modeling

Status: Inactive | Publication: US6941013B1 | Keywords: Simple method, Expanding horizontal and vertical spatial resolution, Image enhancement, Geometric image transformation, Image resolution, Method of images

Method of image binarization using histogram modeling, which combines spatial resolution expansion with binarization in a single integrated process using a combination of spatial expansion, histogram modeling, classification, and quantization. Each pixel of the input image is expanded into a higher resolution image, and a count of the number of times each distinct gray scale intensity value occurs in the input image is calculated from pixel values of the input image and then modeled with an approximate histogram that is computed as the sum of weighted modeling functions. The input pixel values are then classified using the modeling functions and the results of the pixel classification are used to quantize the high resolution gray scale image to create a binary output image.

Owner:THE GOVERNMENT OF THE US REPRESENTED BY THE DIRECTOR NAT SECURITY AGENCY
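
The combined expand-model-classify-quantize pipeline can be sketched as follows, assuming 8-bit grayscale input as nested lists. Modeling the histogram with two weighted Gaussians fitted by expectation-maximization, and expanding by 2x bilinear interpolation, are illustrative choices; the abstract only specifies "a sum of weighted modeling functions" and a spatial expansion. Each expanded gray value is assigned to whichever weighted modeling function is more probable.

```python
import math


def log_gauss(x, mu, var):
    return -0.5 * math.log(2 * math.pi * var) - (x - mu) ** 2 / (2 * var)


def responsibilities(x, w, mu, var):
    """Posterior probability of each weighted modeling function at gray value x (log-space)."""
    logs = [math.log(w[k]) + log_gauss(x, mu[k], var[k]) for k in (0, 1)]
    top = max(logs)
    e = [math.exp(v - top) for v in logs]
    return [v / sum(e) for v in e]


def fit_histogram(hist, iters=50, min_var=1.0):
    """Approximate a gray-level histogram by two weighted Gaussians (EM on the counts)."""
    levels = [g for g, c in enumerate(hist) if c]
    total = sum(hist)
    mu = [float(levels[0]), float(levels[-1])]  # start at darkest / brightest occupied level
    var = [max(((levels[-1] - levels[0]) / 4) ** 2, min_var)] * 2
    w = [0.5, 0.5]
    for _ in range(iters):
        resp = {g: responsibilities(g, w, mu, var) for g in levels}
        for k in (0, 1):
            nk = sum(hist[g] * resp[g][k] for g in levels)
            if nk <= 0:
                continue
            w[k] = max(nk / total, 1e-12)
            mu[k] = sum(hist[g] * resp[g][k] * g for g in levels) / nk
            var[k] = max(sum(hist[g] * resp[g][k] * (g - mu[k]) ** 2 for g in levels) / nk, min_var)
    return w, mu, var


def upsample2(img):
    """Bilinear 2x expansion: original samples on even coordinates, midpoints averaged."""
    h, w = len(img), len(img[0])
    out = []
    for Y in range(2 * h - 1):
        ys = {Y // 2, (Y + 1) // 2}
        row = []
        for X in range(2 * w - 1):
            xs = {X // 2, (X + 1) // 2}
            vals = [img[y][x] for y in ys for x in xs]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


def binarize(img, levels=256):
    hist = [0] * levels
    for row in img:
        for v in row:
            hist[v] += 1
    w, mu, var = fit_histogram(hist)
    bright = 0 if mu[0] > mu[1] else 1
    # Quantize the expanded gray image using the fitted modeling functions.
    return [[1 if responsibilities(v, w, mu, var)[bright] > 0.5 else 0 for v in row]
            for row in upsample2(img)]


img = [[10, 10, 200],
       [10, 200, 200]]
out = binarize(img)
for row in out:
    print(row)
```

Classifying the interpolated gray values, rather than binarizing first and then enlarging, is what lets the binary edges fall between the original pixel positions.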

Method and apparatus for reducing ringing artifacts

A method and apparatus for reducing ringing artifacts in compressed video signals. The method includes receiving luma data at the digital signal processor; calculating the sum of gradients of the luma data; calculating the SAD of the luma data; performing pixel classification based on the calculated SAD and sum of gradients; performing erosion on the detected edge pixel indicators and the detected flat pixel indicators; determining at least one of the strength or weakness of an edge based on the edge erosion; performing horizontal dilation on the detected edge pixel indicators and edge strength; and applying at least one of sigma filtering or bilateral filtering to the luma data according to the detected edge pixel indicators, flat pixel indicators, edge strength, and the number of very flat pixels in the block.

Owner:TEXAS INSTR INC
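
The pixel classification and horizontal dilation steps can be sketched as follows, assuming a luma block as nested lists of integers. Defining the gradient sum as absolute central differences, the SAD as the sum of absolute differences from the 3x3 neighbourhood mean, and the two thresholds are all assumptions made for this illustration.

```python
def classify_pixels(luma, edge_thresh=60, flat_thresh=8):
    """Label each interior pixel of a luma block 'edge', 'flat', or 'other'.

    grad: sum of absolute central differences (horizontal + vertical).
    sad:  sum of absolute differences from the 3x3 neighbourhood mean.
    Border pixels are labelled 'other' because their 3x3 window is incomplete.
    """
    h, w = len(luma), len(luma[0])
    labels = [["other"] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            grad = abs(luma[y][x + 1] - luma[y][x - 1]) + abs(luma[y + 1][x] - luma[y - 1][x])
            window = [luma[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            mean = sum(window) / 9
            sad = sum(abs(v - mean) for v in window)
            if grad >= edge_thresh:
                labels[y][x] = "edge"
            elif sad <= flat_thresh:
                labels[y][x] = "flat"
    return labels


def dilate_horizontal(mask):
    """Mark a pixel if it or its left/right neighbour is marked (1x3 dilation)."""
    return [[any(row[j] for j in range(max(x - 1, 0), min(x + 2, len(row))))
             for x in range(len(row))] for row in mask]


luma = [[0, 0, 100, 100, 100] for _ in range(5)]
labels = classify_pixels(luma)
edges = [[lab == "edge" for lab in row] for row in labels]
print(labels[2])                    # ['other', 'edge', 'edge', 'flat', 'other']
print(dilate_horizontal(edges)[2])  # [True, True, True, True, False]
```

Dilating the edge map widens the band around each edge in which ringing (which appears next to edges) can be filtered, while flat pixels away from edges are left for gentler treatment.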

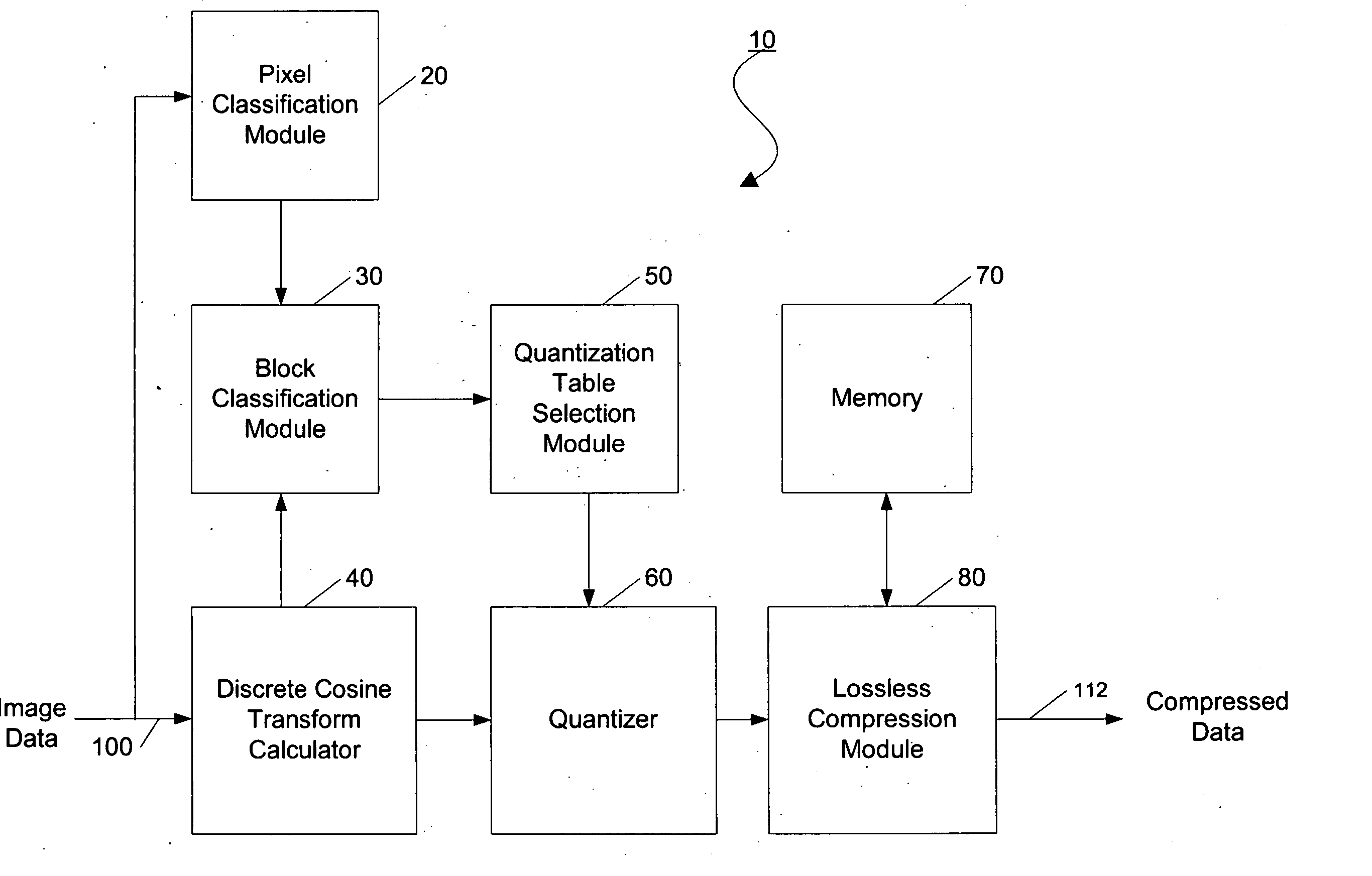

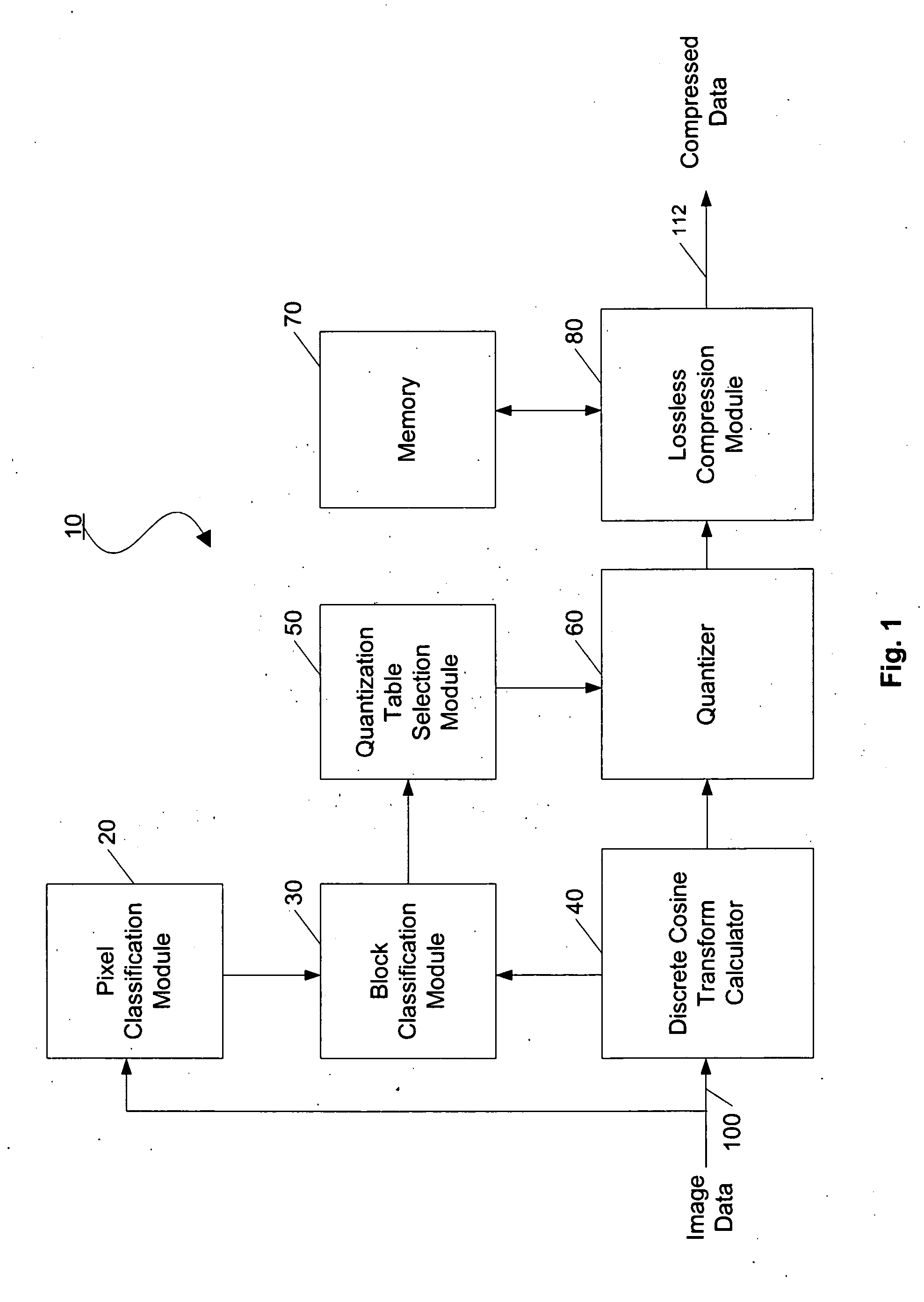

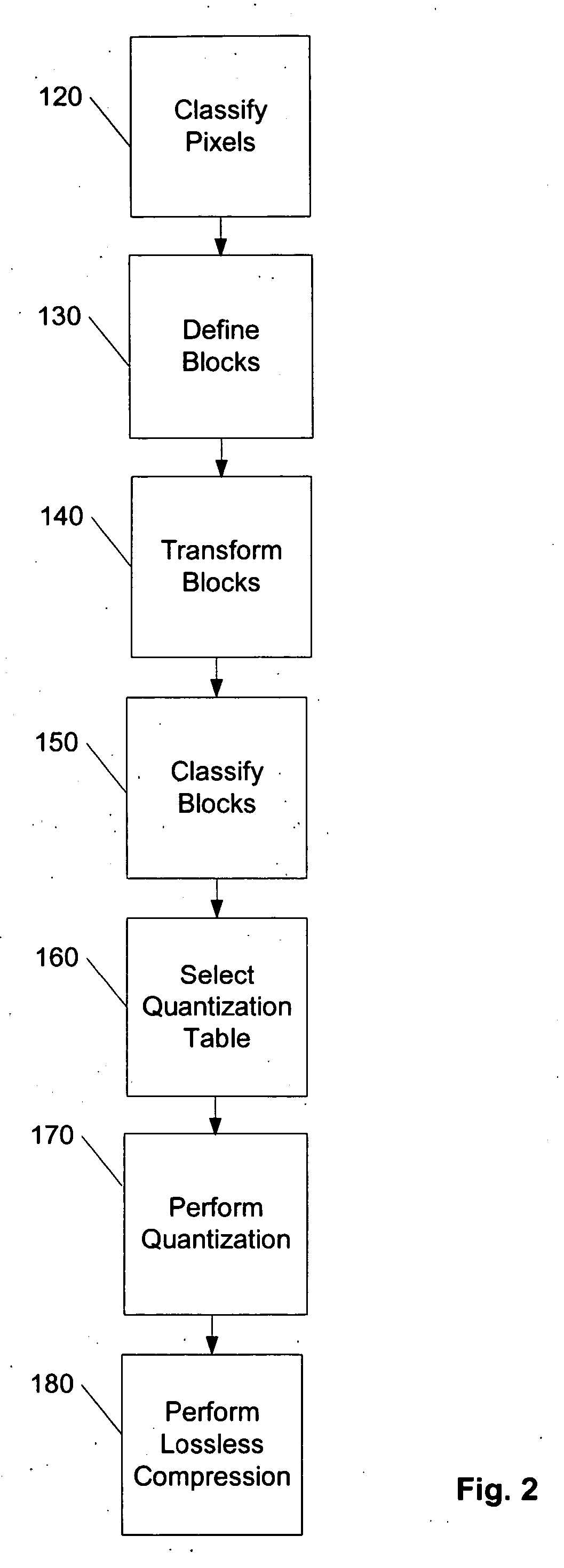

JPEG encoding for document images using pixel classification

Status: Active | Publication: US20050135693A1 | Keywords: Color television with pulse code modulation, Color television with bandwidth reduction, Pattern recognition, JPEG

Owner:LEXMARK INT INC

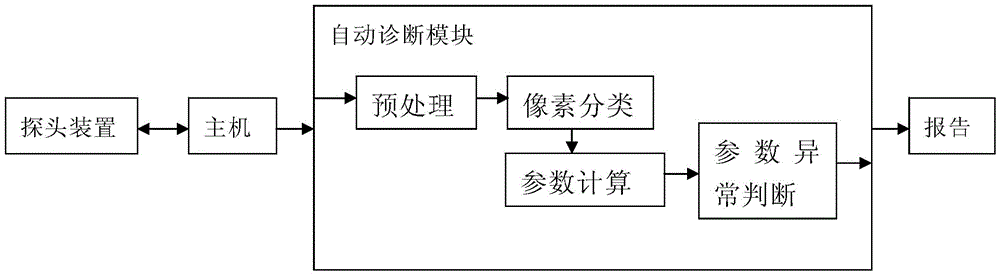

Medical ultrasound assisted automatic diagnosis device and medical ultrasound assisted automatic diagnosis method

Status: Inactive | Publication: CN105232081A | Keywords: Timely extraction, Timely analysis, Ultrasonic/sonic/infrasonic diagnostics, Infrasonic diagnostics, Disease, Ultrasonography

The invention relates to a medical ultrasound assisted automatic diagnosis device and method. The method comprises the following steps: obtaining an ultrasound echo signal from the examined part of the human body through an ultrasound probe; obtaining a grayscale ultrasound image for diagnosis through host processing; inputting the grayscale ultrasound image into an automatic diagnosis module; and performing computer analysis of the image in the automatic diagnosis module, including pixel classification, parameter calculation, and parameter abnormality judgment. Pixel classification divides the image's pixel data into suspected-lesion pixels and normal-tissue pixels; parameter calculation computes geometric and gray-scale parameters of the suspected-lesion pixels and their surrounding pixels; and parameter abnormality judgment determines whether each geometric and gray-scale parameter is abnormal, grades disease severity according to the abnormal parameters, and finally outputs a detection report. The device and method apply computer processing of ultrasound images to provide equipment for assisted automatic diagnosis of gland lesions, improving the efficiency and accuracy of the gland ultrasound examinations performed by users.

Owner:CHISON MEDICAL TECH CO LTD
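The abstract names three analysis stages but not how each one is computed. The sketch below is one possible reading under stated assumptions: pixels darker than a hypothetical `LESION_THRESHOLD` are treated as suspected lesion (a plain intensity threshold, not necessarily the patented classifier), the parameters are an illustrative area, bounding-box aspect ratio, and lesion-to-periphery contrast, and `REFERENCE_RANGES` holds made-up limits. The count of abnormal parameters stands in for the severity grading.

```python
# Minimal sketch of: pixel classification -> parameter calculation -> abnormality judgment.
# LESION_THRESHOLD and REFERENCE_RANGES are hypothetical placeholders, not values from the patent.

LESION_THRESHOLD = 60  # hypothetical: darker pixels are treated as suspected lesion

# Hypothetical reference ranges (low, high) for each parameter.
REFERENCE_RANGES = {
    "area": (0, 50),
    "aspect_ratio": (0.0, 1.5),
    "contrast": (0.0, 150.0),
}


def classify_pixels(image, threshold=LESION_THRESHOLD):
    """Return a mask: True marks a suspected-lesion pixel, False normal tissue."""
    return [[value < threshold for value in row] for row in image]


def compute_parameters(image, mask):
    """Geometric and gray-scale parameters of the suspected-lesion pixels and
    their peripheral (4-connected, normal-tissue) neighbours."""
    h, w = len(image), len(image[0])
    lesion = [(r, c) for r in range(h) for c in range(w) if mask[r][c]]
    if not lesion:
        return None
    rows = [r for r, _ in lesion]
    cols = [c for _, c in lesion]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1

    periphery = set()
    for r, c in lesion:
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < h and 0 <= cc < w and not mask[rr][cc]:
                periphery.add((rr, cc))

    lesion_mean = sum(image[r][c] for r, c in lesion) / len(lesion)
    periphery_mean = (
        sum(image[r][c] for r, c in periphery) / len(periphery)
        if periphery else lesion_mean
    )
    return {
        "area": len(lesion),                       # geometric
        "aspect_ratio": height / width,            # geometric (> 1 means taller than wide)
        "contrast": periphery_mean - lesion_mean,  # gray-scale
    }


def judge(parameters, ranges=REFERENCE_RANGES):
    """List parameters outside their reference range; the count is used as a
    crude, hypothetical severity grade."""
    abnormal = [name for name, value in parameters.items()
                if not ranges[name][0] <= value <= ranges[name][1]]
    return abnormal, len(abnormal)


image = [[200] * 5 for _ in range(5)]
for r in (1, 2, 3):
    image[r][2] = 30  # a dark, vertically elongated region
mask = classify_pixels(image)
params = compute_parameters(image, mask)
abnormal, grade = judge(params)
print(abnormal, grade)  # ['aspect_ratio', 'contrast'] 2
```

A real system would replace the threshold with a trained classifier and calibrate the ranges clinically; the stage boundaries are what the sketch is meant to show.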

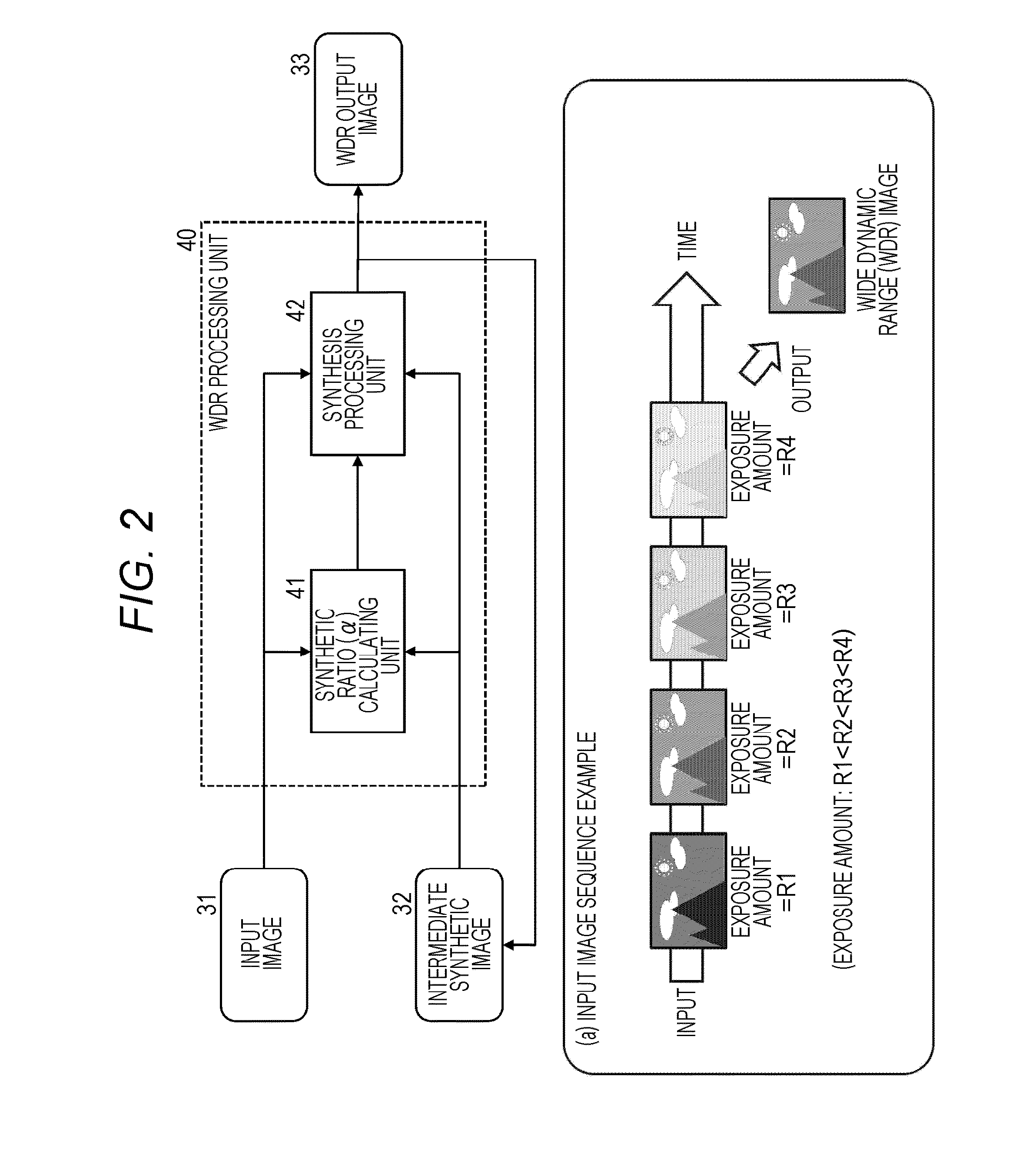

Image processing device, image processing method, and program

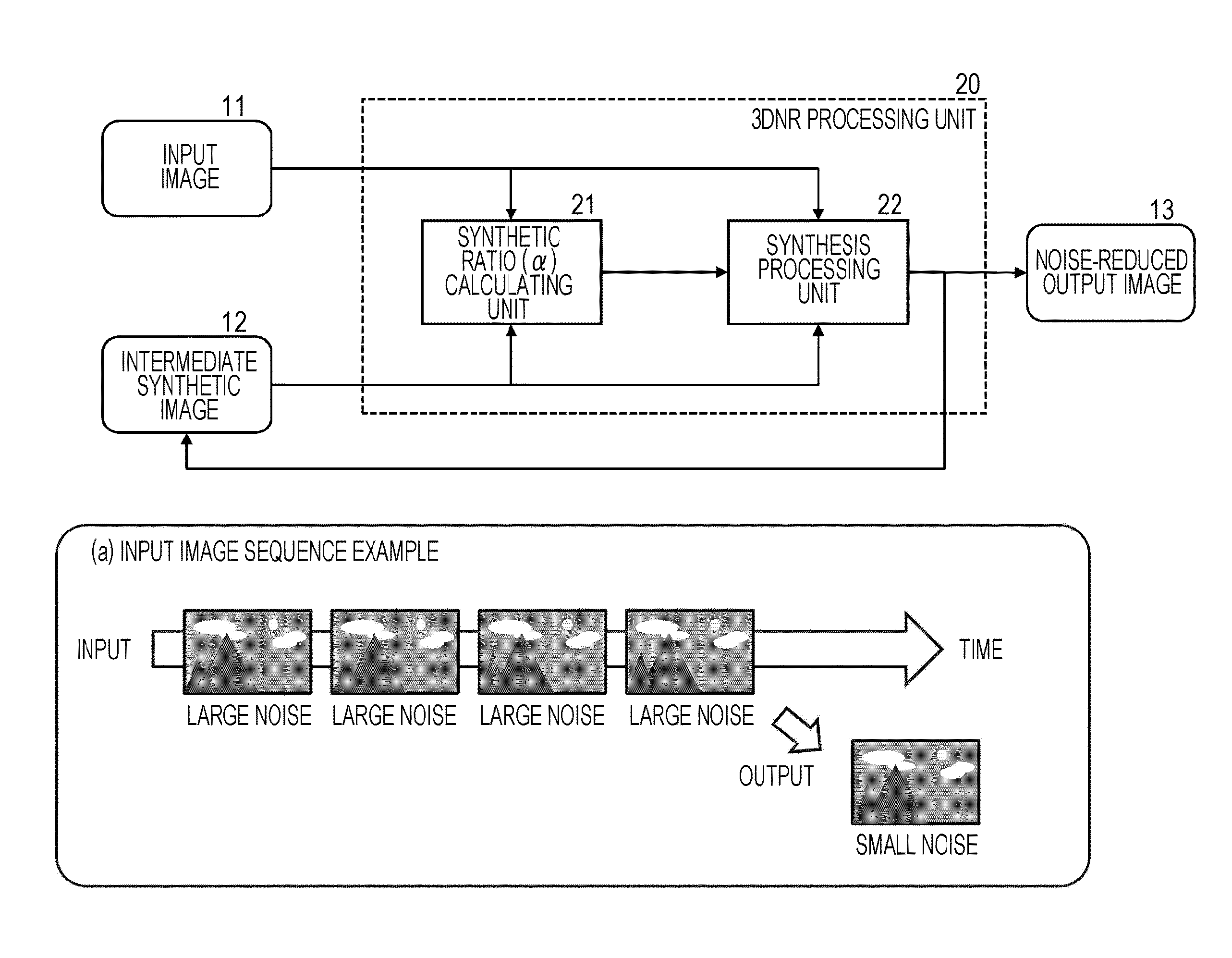

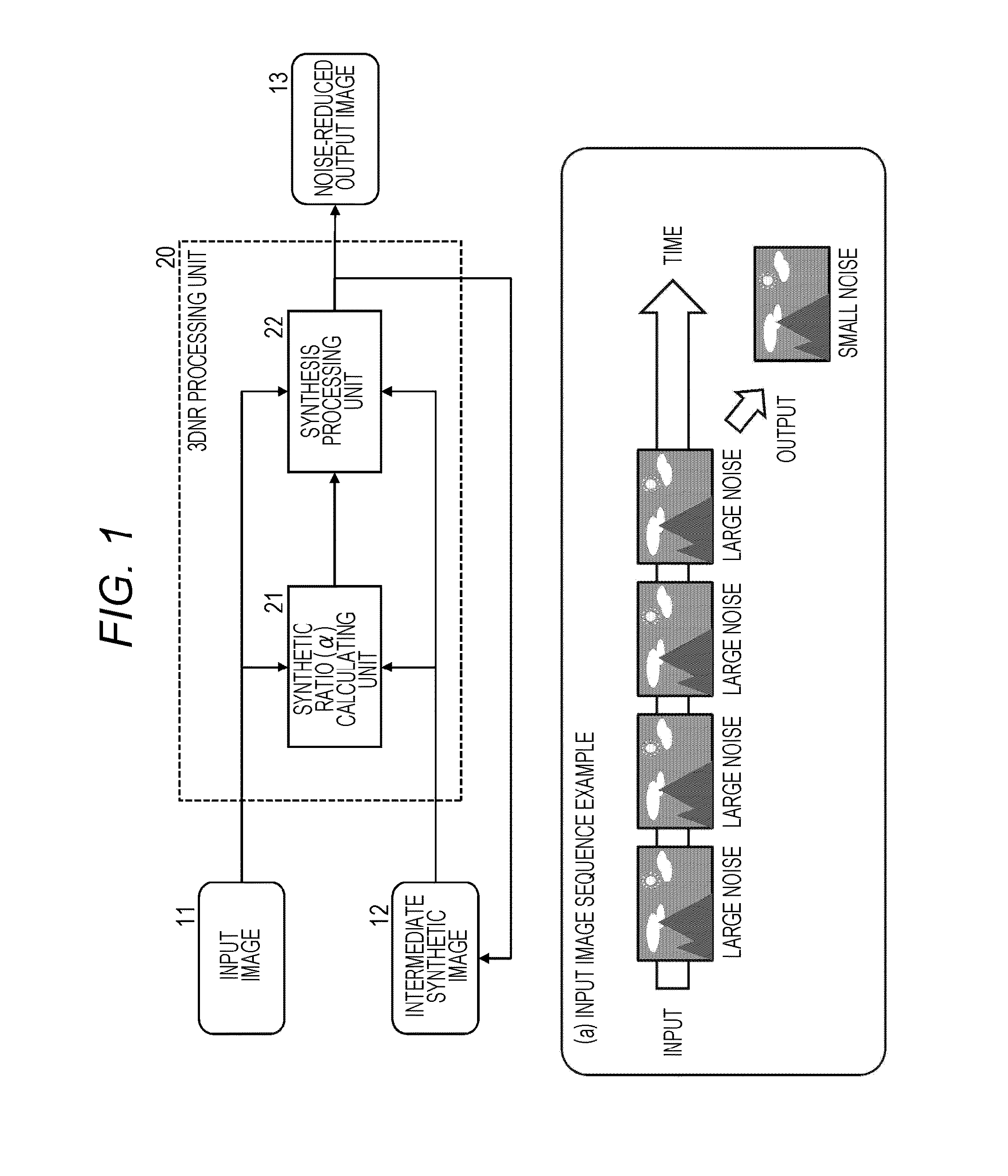

An image on which noise reduction and dynamic range expansion have been performed is generated by a sequential synthesis process that uses continuously photographed images captured under different exposure conditions. The continuously photographed images are input, and each constituent pixel of the input image and of an intermediate synthetic image is classified as a noise pixel, an effective pixel, or a saturation pixel. A per-pixel synthesis method is selected according to the combination of classification results for the corresponding pixels, and the intermediate synthetic image is updated using the selected method. A 3DNR process is performed when at least one of the corresponding pixels of the input image and the intermediate synthetic image is an effective pixel; the pixel value of the intermediate synthetic image is output unchanged when both corresponding pixels are saturation pixels or both are noise pixels; and when one is a saturation pixel and the other is a noise pixel, either the 3DNR process is performed or the pixel value of the intermediate synthetic image is output.

Owner:SONY SEMICON SOLUTIONS CORP
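The per-pixel decision rule in the abstract can be written as a small lookup on the two classification results. This is a minimal sketch: the `classify` thresholds (`noise_level`, `saturation_level`) are hypothetical, the 3DNR blend itself is not modelled, and because the abstract allows either action for the saturation/noise combination, that case is exposed as a caller-chosen `mixed_action` parameter with an arbitrary default.

```python
from enum import Enum


class PixelClass(Enum):
    NOISE = "noise"
    EFFECTIVE = "effective"
    SATURATION = "saturation"


class Action(Enum):
    APPLY_3DNR = "apply_3dnr"                # blend the input pixel into the intermediate image
    KEEP_INTERMEDIATE = "keep_intermediate"  # output the intermediate pixel unchanged


def classify(value, noise_level, saturation_level):
    """Hypothetical thresholds: at/above saturation_level -> SATURATION,
    at/below noise_level -> NOISE, otherwise EFFECTIVE."""
    if value >= saturation_level:
        return PixelClass.SATURATION
    if value <= noise_level:
        return PixelClass.NOISE
    return PixelClass.EFFECTIVE


def decide(input_class, intermediate_class, mixed_action=Action.KEEP_INTERMEDIATE):
    """Select the synthesis method from the combination of the two classes."""
    if PixelClass.EFFECTIVE in (input_class, intermediate_class):
        return Action.APPLY_3DNR
    if input_class == intermediate_class:
        # Both saturation pixels, or both noise pixels.
        return Action.KEEP_INTERMEDIATE
    # One saturation pixel and one noise pixel: the abstract permits either
    # action, so the caller chooses (the default here is arbitrary).
    return mixed_action


a = classify(250, noise_level=10, saturation_level=240)  # saturation pixel
b = classify(5, noise_level=10, saturation_level=240)    # noise pixel
action = decide(a, b)  # mixed case, resolved by the default mixed_action
```

Keeping the rule as a pure function of the two classes makes it easy to apply independently at every pixel position while the intermediate image is updated frame by frame.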

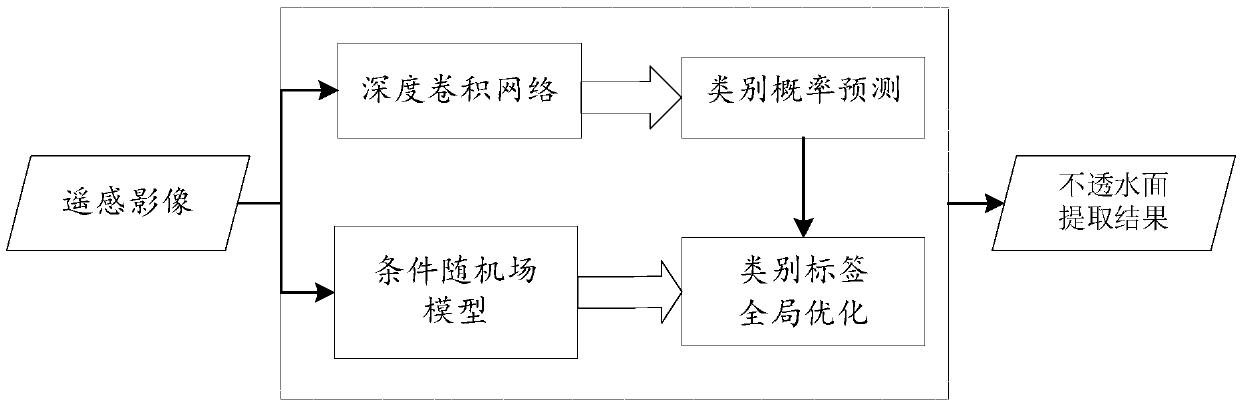

High-resolution remote sensing image impervious surface extraction method and system based on deep learning and semantic probability

Active | CN108985238A | Get good, Reasonable impervious surface extraction results, Mathematical models, Ensemble learning, Conditional random field, Sample image

A high-resolution remote sensing image impervious surface extraction method and system based on deep learning and semantic probability. The method includes: obtaining a high-resolution remote sensing image of a target region, normalizing the image data, and dividing it into sample images and test images; constructing a deep convolutional network composed of multiple convolution layers, pooling layers, and the corresponding deconvolution layers, and extracting the image features of each sample image; predicting each sample image pixel by pixel, constructing a loss function from the error between the predicted and true values, and updating the network parameters through training; extracting the test image features with the deep convolutional network and performing pixel-by-pixel classification prediction; then constructing a conditional random field model of the test image from the semantic association information between pixels, globally optimizing the test image prediction results, and obtaining the extraction results. The invention can accurately and automatically extract impervious surfaces from remote sensing images and meets the practical application requirements of urban planning.

Owner:WUHAN UNIV
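The abstract does not give the form of its conditional random field. As a minimal sketch, assuming a 4-connected grid CRF with a Potts pairwise term, the network's per-pixel probabilities become unary costs and neighbouring pixels are encouraged to share a label. Iterated conditional modes (ICM) is swapped in here as a simple approximate optimizer; dense CRFs with mean-field inference are a common alternative. `icm_refine` and `pairwise_weight` are hypothetical names.

```python
import math


def icm_refine(probs, pairwise_weight=1.0, iterations=5):
    """Refine per-pixel class probabilities with a grid CRF.

    probs[r][c][k] is the network's probability of class k at pixel (r, c).
    Local energy of label j = -log p(j) + pairwise_weight * (number of
    4-connected neighbours with a different label), i.e. a Potts model.
    ICM greedily lowers the total energy one pixel at a time.
    """
    h, w, k = len(probs), len(probs[0]), len(probs[0][0])
    eps = 1e-9  # avoid log(0)
    unary = [[[-math.log(probs[r][c][j] + eps) for j in range(k)]
              for c in range(w)] for r in range(h)]

    # Start from the plain pixel-by-pixel prediction (argmax of probabilities).
    labels = [[min(range(k), key=lambda j: unary[r][c][j]) for c in range(w)]
              for r in range(h)]

    for _ in range(iterations):
        changed = False
        for r in range(h):
            for c in range(w):
                neighbours = [labels[rr][cc]
                              for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                              if 0 <= rr < h and 0 <= cc < w]

                def energy(j):
                    disagreements = sum(1 for n in neighbours if n != j)
                    return unary[r][c][j] + pairwise_weight * disagreements

                best = min(range(k), key=energy)
                if best != labels[r][c]:
                    labels[r][c] = best
                    changed = True
        if not changed:  # reached a local minimum
            break
    return labels


# 3x3 patch, class 1 = impervious (hypothetical labelling). The centre pixel
# weakly prefers class 0, but all of its neighbours are confidently class 1.
probs = [[[0.1, 0.9] for _ in range(3)] for _ in range(3)]
probs[1][1] = [0.6, 0.4]
print(icm_refine(probs, pairwise_weight=0.0)[1][1])  # 0: plain per-pixel argmax
print(icm_refine(probs, pairwise_weight=1.0)[1][1])  # 1: smoothed by its neighbours
```

A larger `pairwise_weight` favours spatially smoother extraction maps at the cost of small isolated structures.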

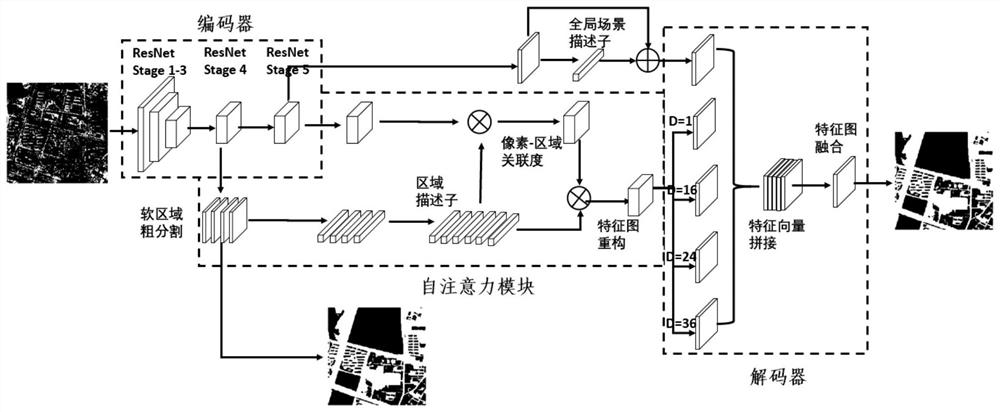

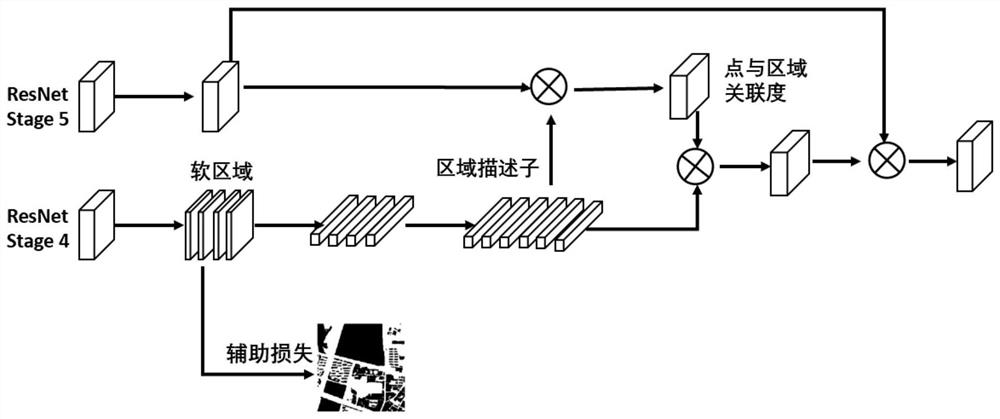

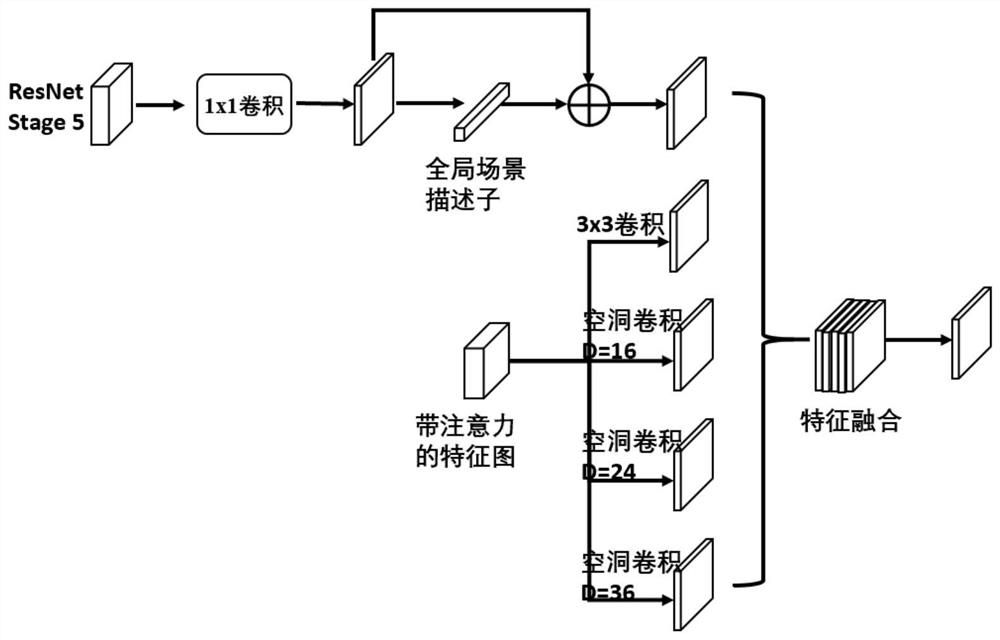

Remote sensing image semantic segmentation method based on region description self-attention mechanism

Active | CN111932553A | Add self-attention module, Expand the receptive field, Image enhancement, Image analysis, Feature extraction, Remote sensing

The invention discloses a remote sensing image semantic segmentation method based on a region description self-attention mechanism. A visible-light remote sensing image is input into an encoder, which extracts high-level semantic features and produces feature maps at different levels. Based on these feature maps, global scene extraction and essential feature extraction are performed with a self-attention module, yielding a scene-guided feature map and a noiseless feature map, respectively. The scene-guided feature map and the noiseless feature map are then input into a decoder, upsampled back to the size of the original image, and classified pixel by pixel to obtain the semantic segmentation result of the remote sensing image. The encoder extracts the semantic features, the self-attention module strengthens the internal relations within the image, and the decoder maps the attention-weighted semantic features back to the original space for pixel-by-pixel classification. Together they enlarge the receptive field of the model, let the model adapt to scale changes in the data, and can solve the problem of category imbalance.

Owner:BEIHANG UNIV
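The abstract does not detail its region description self-attention module. The sketch below shows generic scaled dot-product self-attention with a residual connection over the positions of a flattened feature map, followed by nearest-neighbour upsampling and pixel-by-pixel argmax classification. It is an illustration of the general mechanism, not the patented module; the projection matrices `wq`, `wk`, `wv` and all function names are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def self_attention(features, wq, wk, wv):
    """Scaled dot-product self-attention over the N positions of a flattened
    H x W feature map. Each output is its input plus an attention-weighted
    sum over all positions (residual), so every position draws on context
    from the whole image. wv must map back to the feature dimension."""
    q = [matvec(wq, f) for f in features]
    k = [matvec(wk, f) for f in features]
    v = [matvec(wv, f) for f in features]
    scale = math.sqrt(len(q[0]))
    out = []
    for i, f in enumerate(features):
        weights = softmax([sum(a * b for a, b in zip(q[i], kj)) / scale for kj in k])
        attended = [sum(wgt * vj[d] for wgt, vj in zip(weights, v))
                    for d in range(len(f))]
        out.append([x + a for x, a in zip(f, attended)])
    return out


def upsample_and_classify(scores, factor):
    """Nearest-neighbour upsampling of a grid of per-class scores by an
    integer factor, then pixel-by-pixel argmax. With nearest-neighbour
    upsampling, taking the argmax first yields the same labels."""
    labels = []
    for row in scores:
        label_row = []
        for s in row:
            label_row.extend([max(range(len(s)), key=s.__getitem__)] * factor)
        labels.extend([list(label_row) for _ in range(factor)])
    return labels


# Hypothetical 2-D features for a 1 x 3 feature map, identity projections.
identity = [[1.0, 0.0], [0.0, 1.0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
attended = self_attention(features, identity, identity, identity)

# Hypothetical decoder output: per-class scores on a 1 x 2 grid, upsampled by 2.
print(upsample_and_classify([[[0.2, 0.8], [0.9, 0.1]]], factor=2))
# [[1, 1, 0, 0], [1, 1, 0, 0]]
```

Because every position attends to every other, the effective receptive field covers the whole feature map, which is the property the abstract attributes to its attention module.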

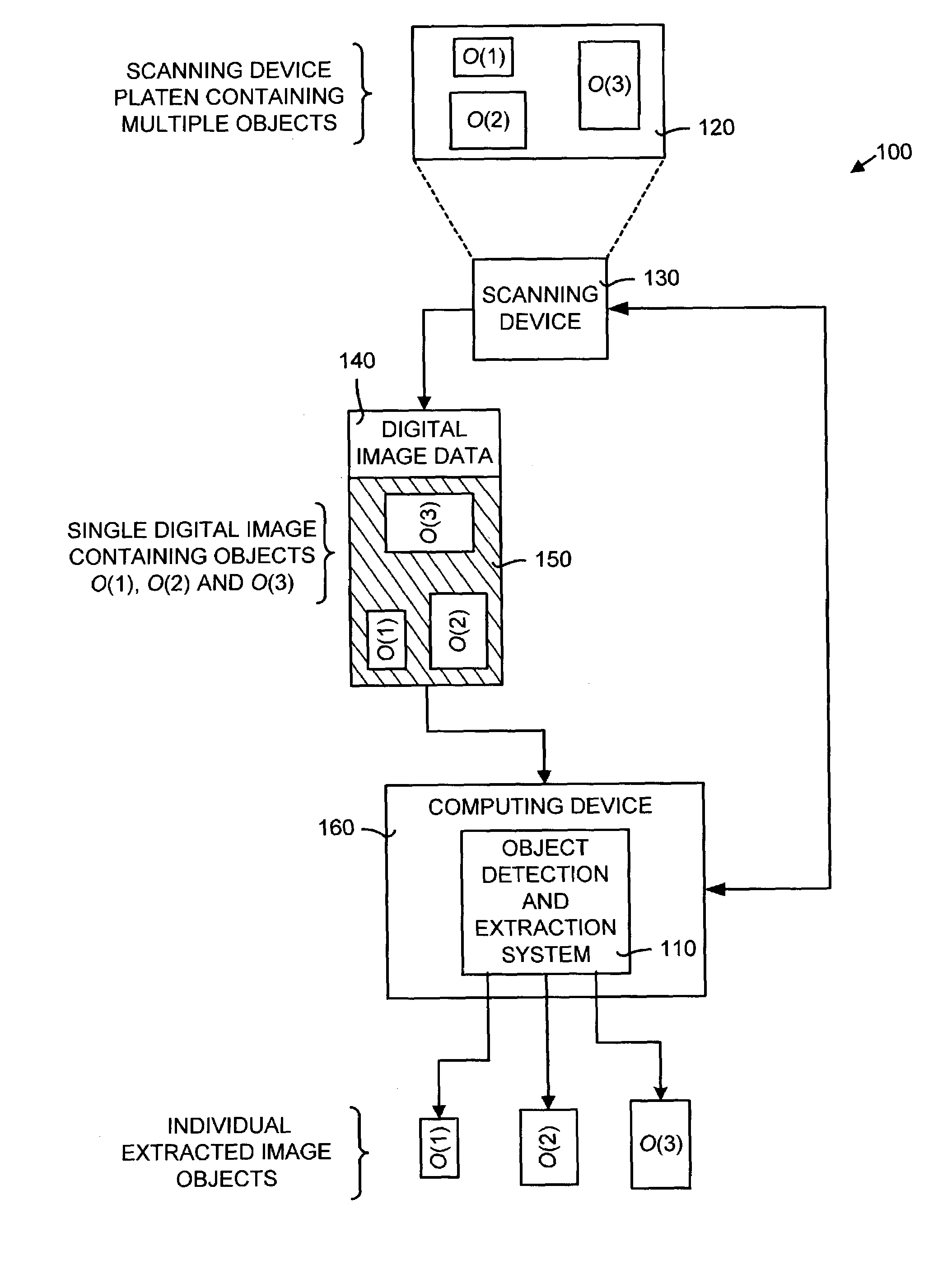

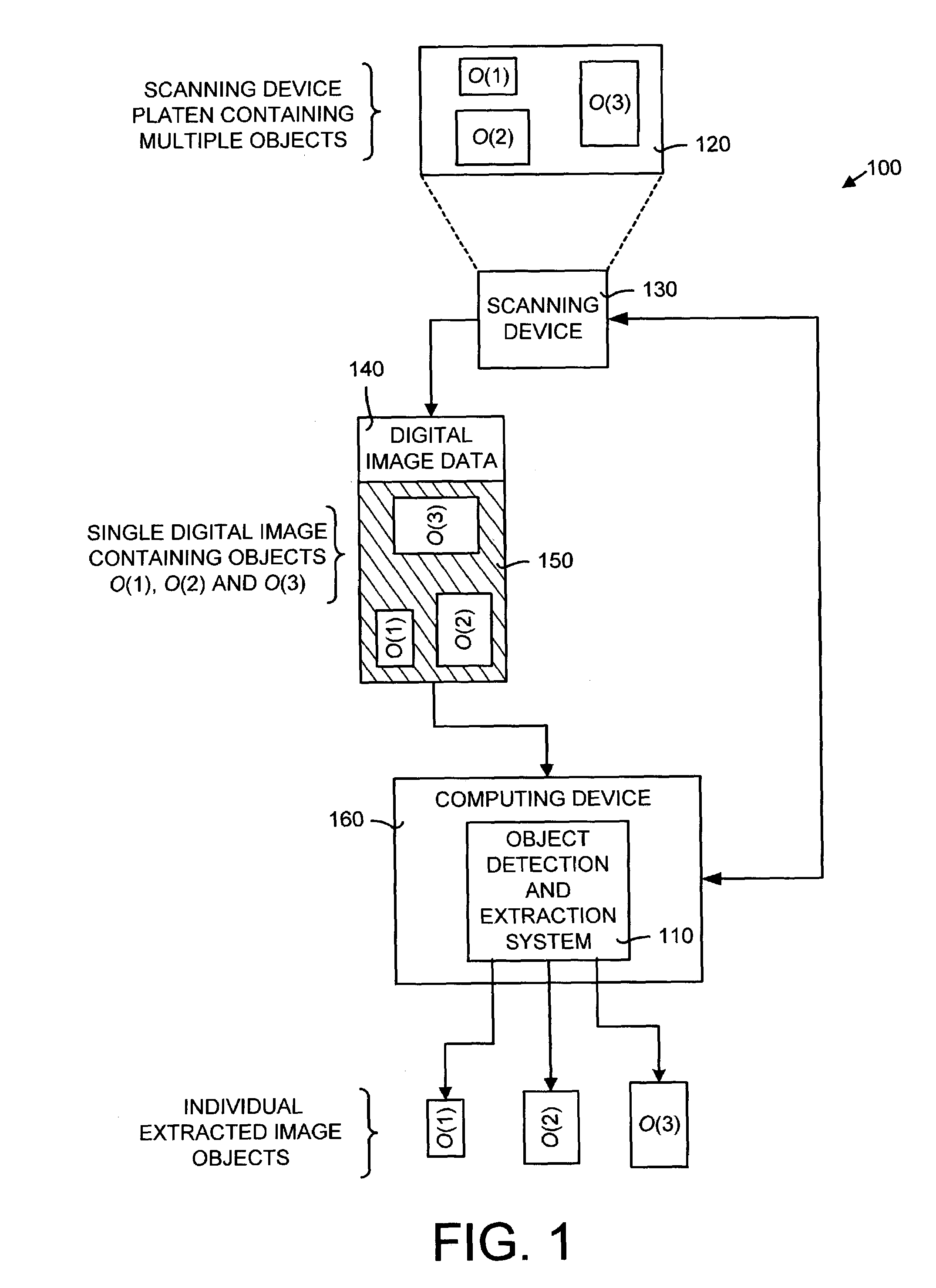

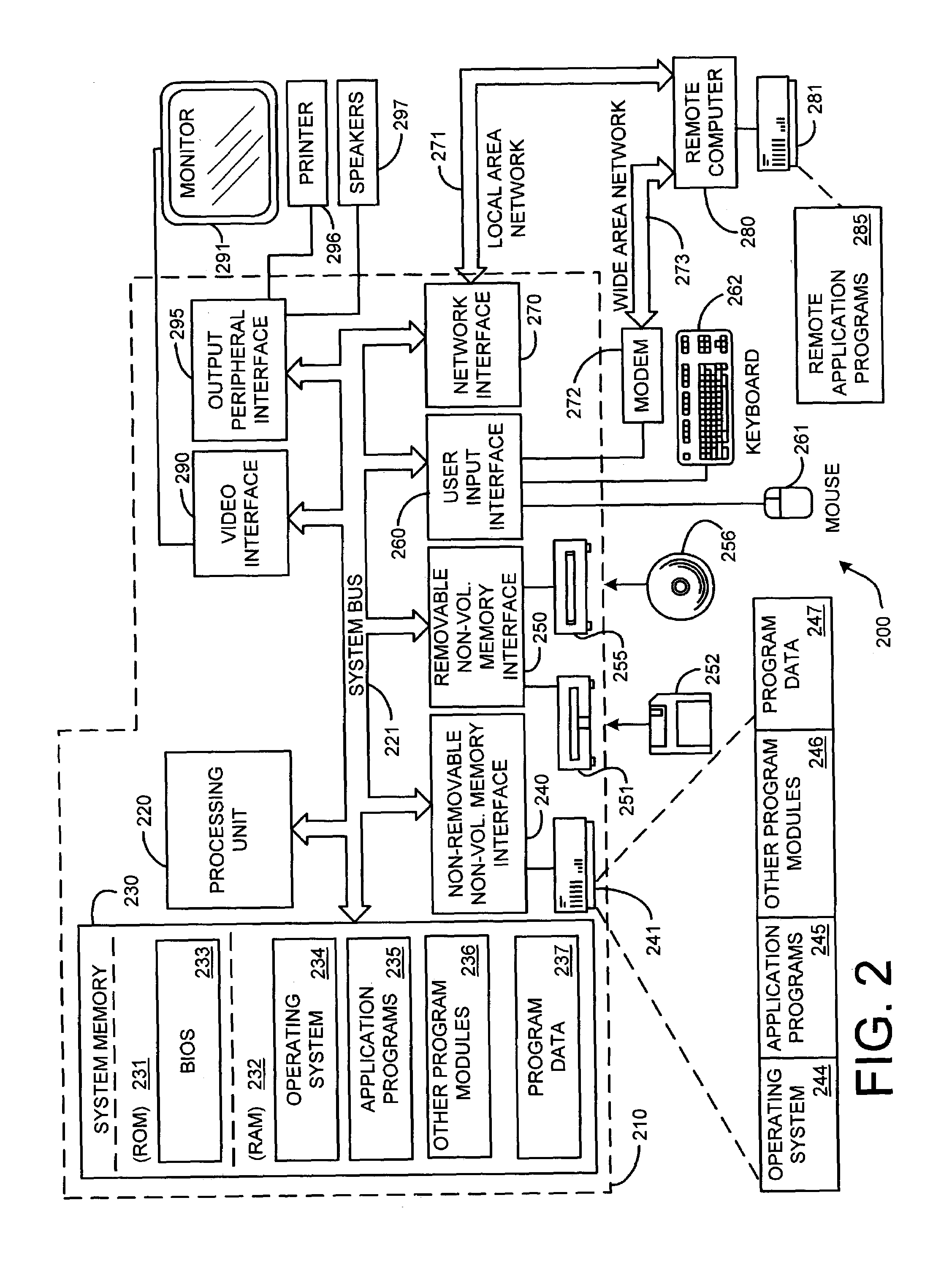

System and method for automatically detecting and extracting objects in digital image data

An object detection and extraction system and method for processing digital image data. The system and method segregate the objects contained within a single image so that each can be treated as an individual object. In general, the object detection and extraction method takes an image containing one or more objects of known shape (such as rectangular objects) and finds the number of objects along with their size, orientation, and position. In particular, the method includes classifying each pixel in an image containing one or more objects to obtain pixel classification data, defining an image function to process the pixel classification data, and dividing the image into sub-images based on disparities or gaps in the image function. Each sub-image is processed to determine the size and orientation of each object. The object detection and extraction system uses the above method.

Owner:ZHIGU HLDG
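The abstract leaves the pixel classifier and the image function unspecified. This minimal sketch assumes a light background (pixels darker than a hypothetical `background_level` are object pixels) and uses the column and row projections of the object mask as the image function: zero-valued gaps in a projection separate sub-images. Size is reported as a bounding box and orientation comes from second-order central moments, a standard technique swapped in for illustration.

```python
import math


def classify_pixels(image, background_level=200):
    """Hypothetical rule: pixels darker than background_level are object
    pixels (True); brighter ones are background (False)."""
    return [[value < background_level for value in row] for row in image]


def runs(profile):
    """Return (start, end) index pairs of the non-zero runs in a 1-D image
    function; zero-valued gaps separate neighbouring objects."""
    segments, start = [], None
    for i, value in enumerate(profile):
        if value and start is None:
            start = i
        elif not value and start is not None:
            segments.append((start, i - 1))
            start = None
    if start is not None:
        segments.append((start, len(profile) - 1))
    return segments


def split_into_subimages(mask):
    """Split on gaps in the column projection, then on gaps in the row
    projection inside each column band. Returns (r0, r1, c0, c1) boxes."""
    h, w = len(mask), len(mask[0])
    column_profile = [sum(mask[r][c] for r in range(h)) for c in range(w)]
    boxes = []
    for c0, c1 in runs(column_profile):
        row_profile = [sum(mask[r][c] for c in range(c0, c1 + 1)) for r in range(h)]
        for r0, r1 in runs(row_profile):
            boxes.append((r0, r1, c0, c1))
    return boxes


def size_and_orientation(mask, box):
    """Pixel count, bounding-box size, and principal-axis orientation (from
    second-order central moments) of the object pixels in one sub-image.
    Rows grow downward, so positive angles are clockwise on screen."""
    r0, r1, c0, c1 = box
    points = [(c, r) for r in range(r0, r1 + 1) for c in range(c0, c1 + 1) if mask[r][c]]
    n = len(points)
    x_mean = sum(x for x, _ in points) / n
    y_mean = sum(y for _, y in points) / n
    mu20 = sum((x - x_mean) ** 2 for x, _ in points)
    mu02 = sum((y - y_mean) ** 2 for _, y in points)
    mu11 = sum((x - x_mean) * (y - y_mean) for x, y in points)
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return {
        "pixels": n,
        "width": c1 - c0 + 1,
        "height": r1 - r0 + 1,
        "orientation_deg": math.degrees(theta),
    }


image = [[255] * 10 for _ in range(6)]
for r in (1, 2):
    for c in (1, 2, 3):
        image[r][c] = 0  # a wide rectangle
for r in (1, 2, 3, 4):
    image[r][6] = 0      # a tall, thin rectangle
mask = classify_pixels(image)
boxes = split_into_subimages(mask)
for box in boxes:
    print(box, size_and_orientation(mask, box))
```

Splitting only where a projection drops to zero assumes the objects do not overlap in both projections; for a rotated rectangle, the bounding box overstates its size, and the extent along the principal axes would be the better measure.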

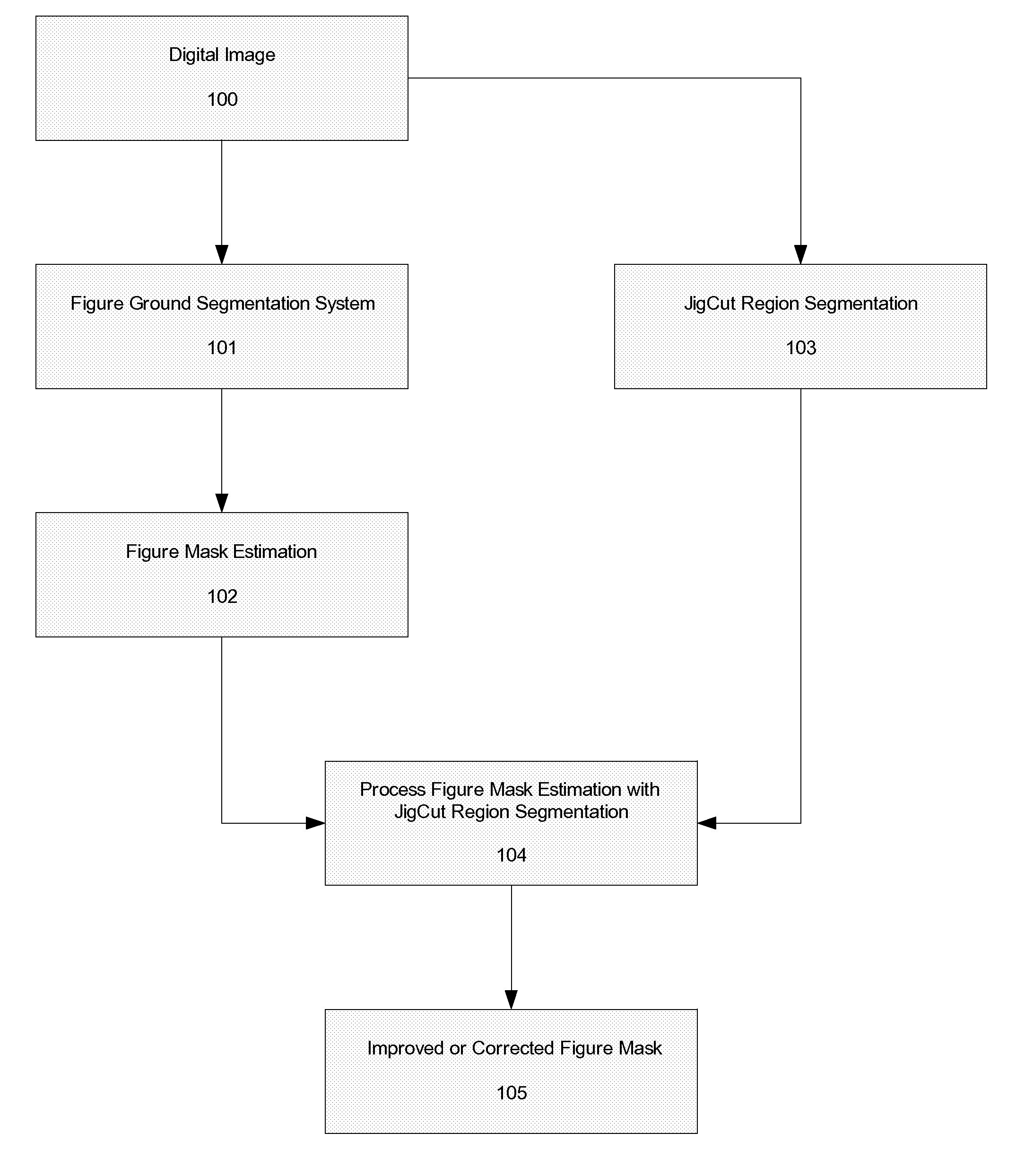

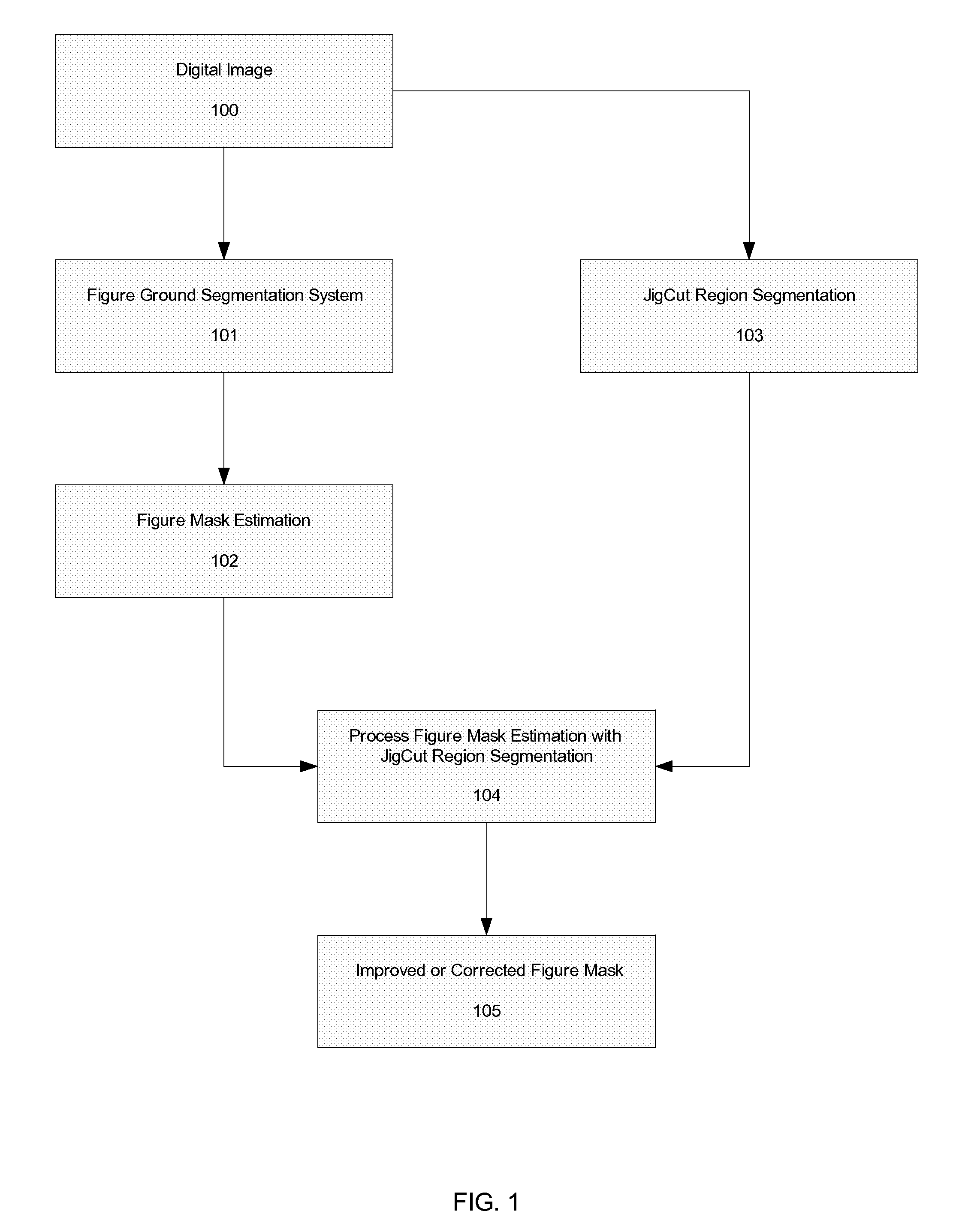

Systems and methods for unsupervised local boundary or region refinement of figure masks using over and under segmentation of regions

Inactive | US20090148041A1 | Alleviate time and cost, Reduces computational expense, Image enhancement, Image analysis, Graphics, Pattern recognition

An initial figure mask estimate of an image is generated using a figure-ground segmentation system, thereby initially assigning each pixel in the image a first attribute value or a second attribute value. A JigCut region segmentation of the image is generated. The figure mask estimate is then processed with the JigCut region segmentation by (i) classifying the pixels in each JigCut region with the first attribute value when a predetermined number or a predetermined percentage of the pixels within that region were initially assigned the first attribute value by the initial figure mask estimate, and (ii) classifying the pixels in the region with the second attribute value otherwise.

Owner:FLASHFOTO
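The region-level relabelling rule in the abstract can be sketched directly, assuming the JigCut region segmentation is already available as a map of region ids (generating that segmentation is not shown). `refine_mask`, `min_fraction`, and `min_count` are hypothetical names; the two thresholds correspond to the abstract's "predetermined percentage" and "predetermined number".

```python
from collections import defaultdict

FIGURE, BACKGROUND = 1, 0  # the "first" and "second" attribute values


def refine_mask(initial_mask, regions, min_fraction=0.5, min_count=None):
    """Relabel every region wholesale.

    initial_mask[r][c] is FIGURE or BACKGROUND (the initial figure mask
    estimate); regions[r][c] is the id of the region containing that pixel.
    A region becomes FIGURE if at least min_count of its pixels (when given)
    or at least min_fraction of them were initially FIGURE; otherwise it
    becomes BACKGROUND.
    """
    figure_counts = defaultdict(int)
    totals = defaultdict(int)
    for mask_row, region_row in zip(initial_mask, regions):
        for label, region in zip(mask_row, region_row):
            totals[region] += 1
            if label == FIGURE:
                figure_counts[region] += 1

    decision = {}
    for region, total in totals.items():
        figure = figure_counts[region]
        if min_count is not None:
            keep = figure >= min_count
        else:
            keep = figure / total >= min_fraction
        decision[region] = FIGURE if keep else BACKGROUND

    return [[decision[region] for region in region_row] for region_row in regions]


initial = [[1, 1, 0, 0],
           [1, 0, 0, 1]]
regions = [[0, 0, 1, 1],
           [0, 0, 1, 1]]  # two hypothetical regions
print(refine_mask(initial, regions))  # [[1, 1, 0, 0], [1, 1, 0, 0]]
```

Because each region is relabelled as a unit, isolated errors in the initial mask are absorbed by the majority of their region, and the mask boundary snaps to region boundaries.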

© 2025 PatSnap. All rights reserved.