487 results about "Image prediction" patented technology

Method and device for image prediction

Active · CN105163116A · Guaranteed complexity · Improve forecast accuracy · Digital video signal modification · Motion vector · Image prediction

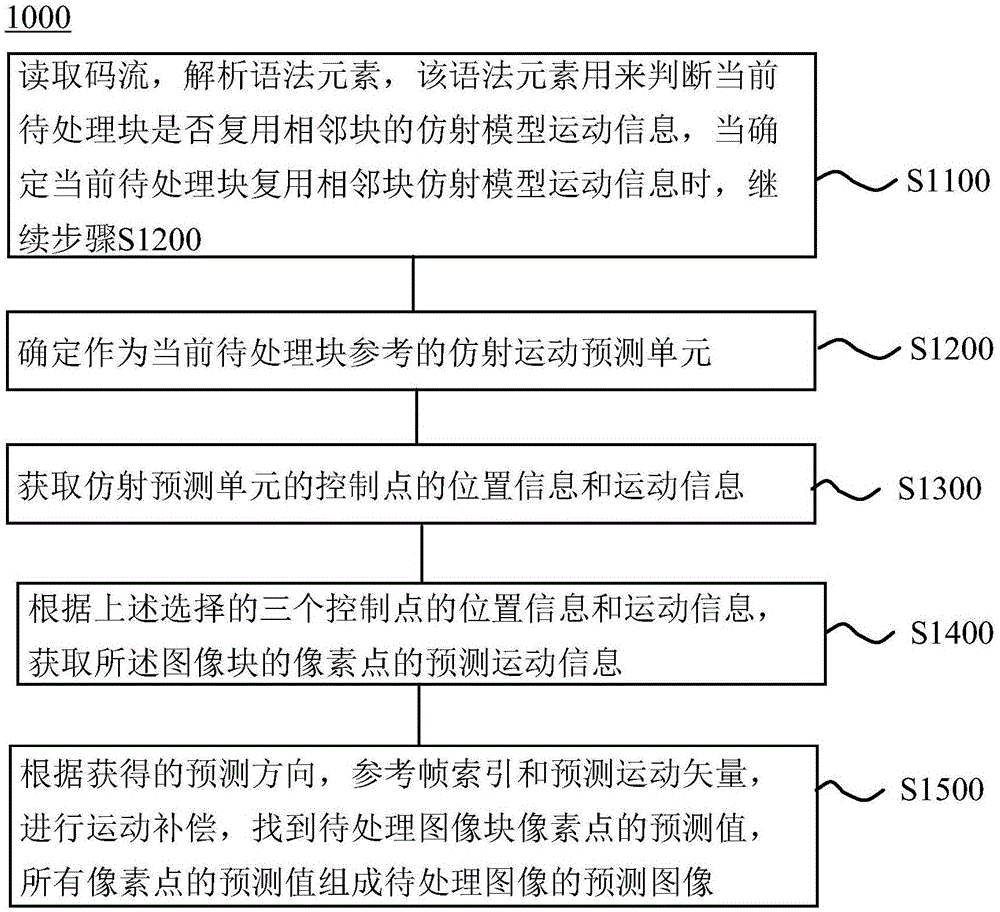

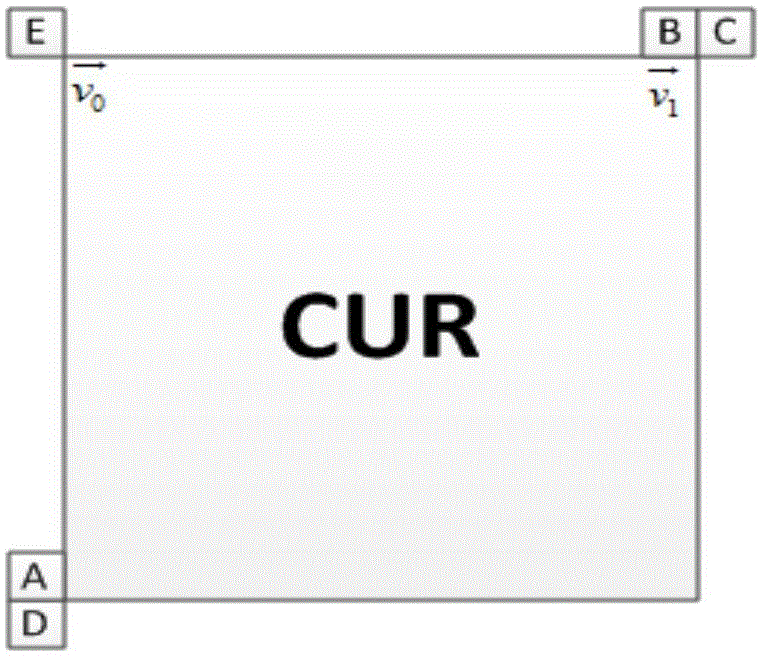

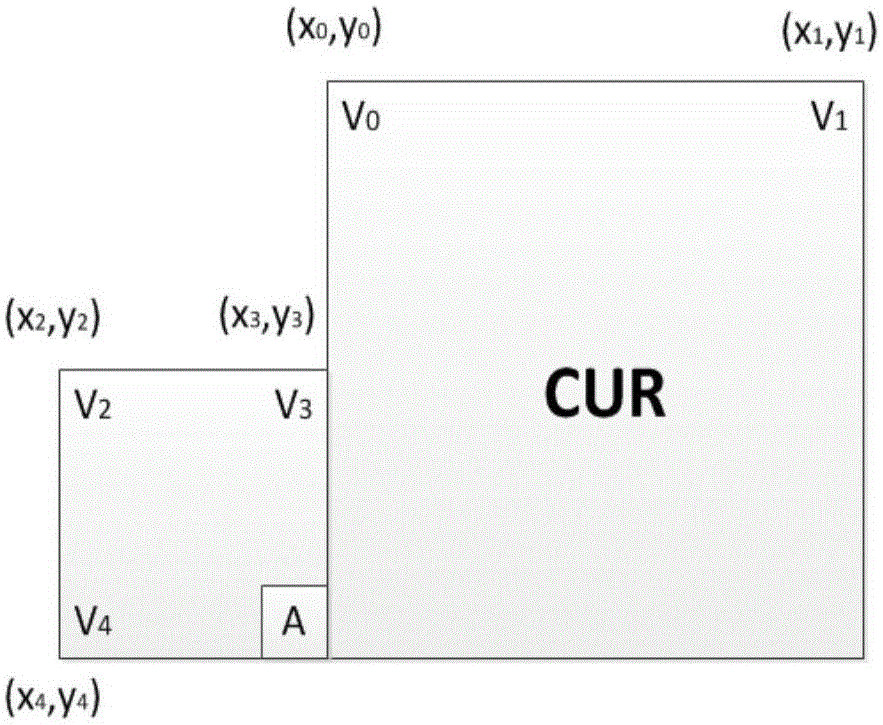

The invention provides a method and a device for image prediction. The method comprises the steps of: obtaining a first reference unit of an image unit, wherein the image unit and the first reference unit obtain their respective predicted images by using the same affine model; obtaining motion information of basic motion compensation units at at least two preset positions of the first reference unit; and obtaining, from that motion information, the motion information of the basic motion compensation units of the image unit. By reusing the motion information of the first reference unit, which adopts the same affine prediction model, a more accurate motion vector of the current image unit is obtained, prediction accuracy is improved without increasing coding and decoding complexity, and coding and decoding performance is improved.

Owner:HUAWEI TECH CO LTD +1
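
As a rough illustration of how motion vectors at a few preset positions can determine the motion of a whole block, the sketch below assumes the common four-parameter affine model with control-point vectors at the top-left and top-right corners. The abstract above does not say which model or positions the patent uses, so the function name `affine_mv` and its parameters are hypothetical.

```python
def affine_mv(v0, v1, width, x, y):
    """Motion vector at position (x, y) inside a block.

    v0: (vx, vy) at the top-left corner (assumed control point).
    v1: (vx, vy) at the top-right corner (assumed control point).
    width: block width in pixels.
    """
    # Scaling/rotation terms of the assumed four-parameter affine model.
    a = (v1[0] - v0[0]) / width
    b = (v1[1] - v0[1]) / width
    mvx = a * x - b * y + v0[0]
    mvy = b * x + a * y + v0[1]
    return (mvx, mvy)
```

When both control-point vectors are equal, the model degenerates to pure translation and every position receives the same vector.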

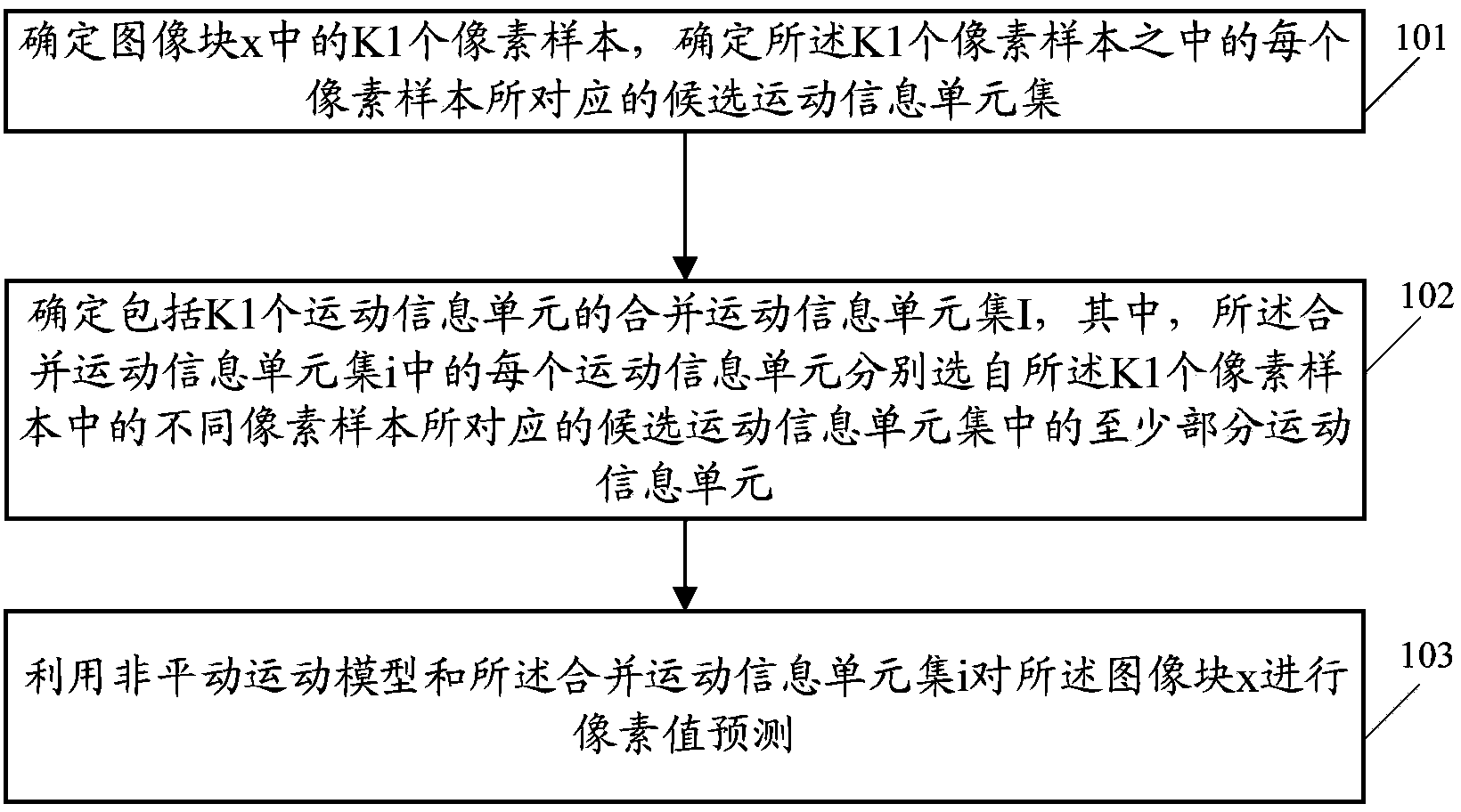

Image forecasting method and related device

Active · CN104363451A · Accurate description · Improve coding efficiency · Digital video signal modification · Pattern recognition · Computation complexity

The invention discloses an image forecasting method and a related device. The image forecasting method includes: determining K1 pixel samples in an image block x, and determining a candidate motion information unit set corresponding to each of the K1 pixel samples, wherein each candidate motion information unit set includes at least one candidate motion information unit; determining a merged motion information unit set i that includes K1 motion information units, wherein the motion information units in the merged set i are respectively selected from at least some of the motion information units in the candidate sets corresponding to the different pixel samples among the K1 pixel samples; and predicting pixel values of the image block x by using a non-translational motion model and the merged motion information unit set i. The image forecasting method and the related device help reduce the computational complexity of image forecasting based on the non-translational motion model.

Owner:HUAWEI TECH CO LTD

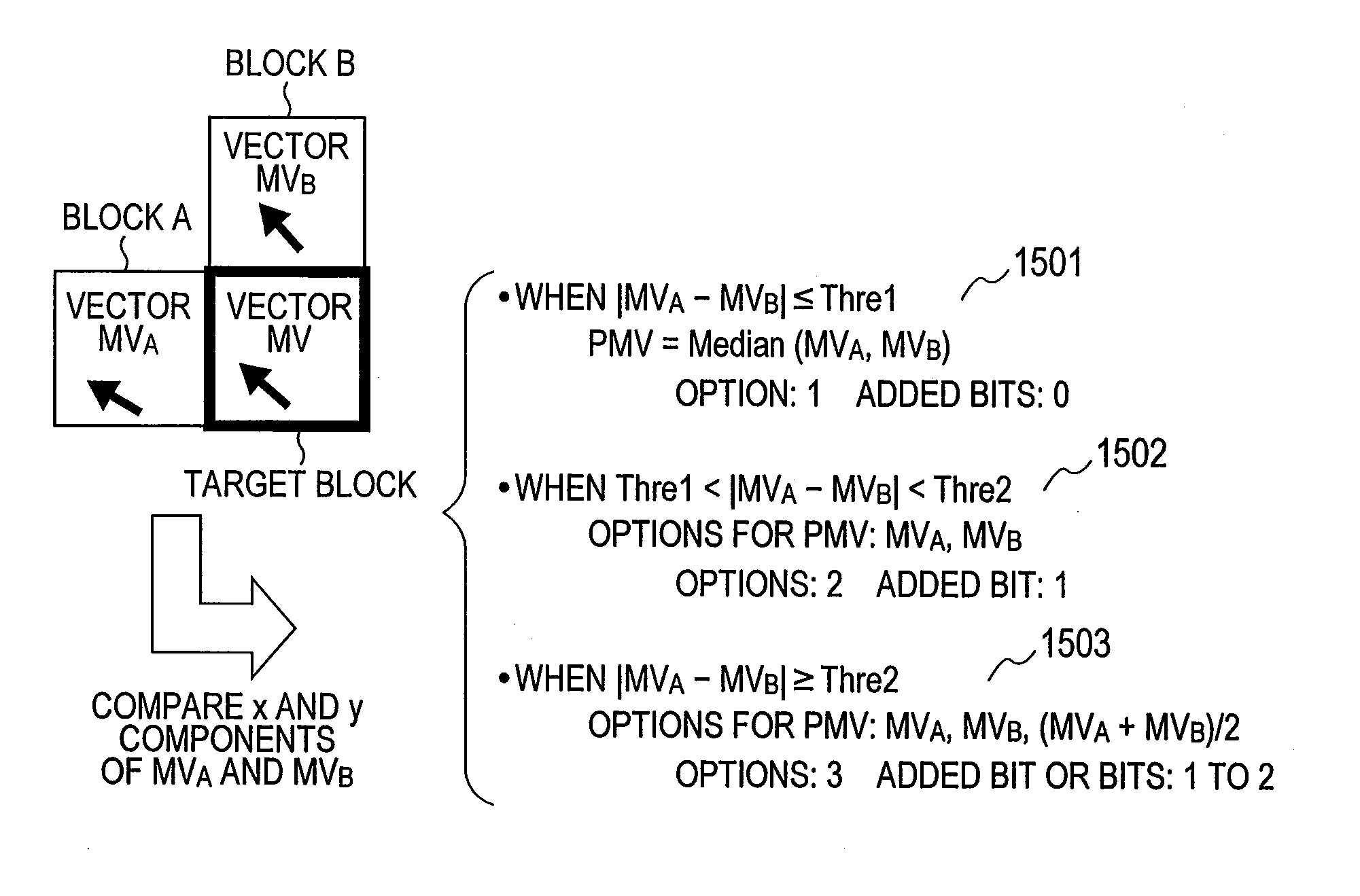

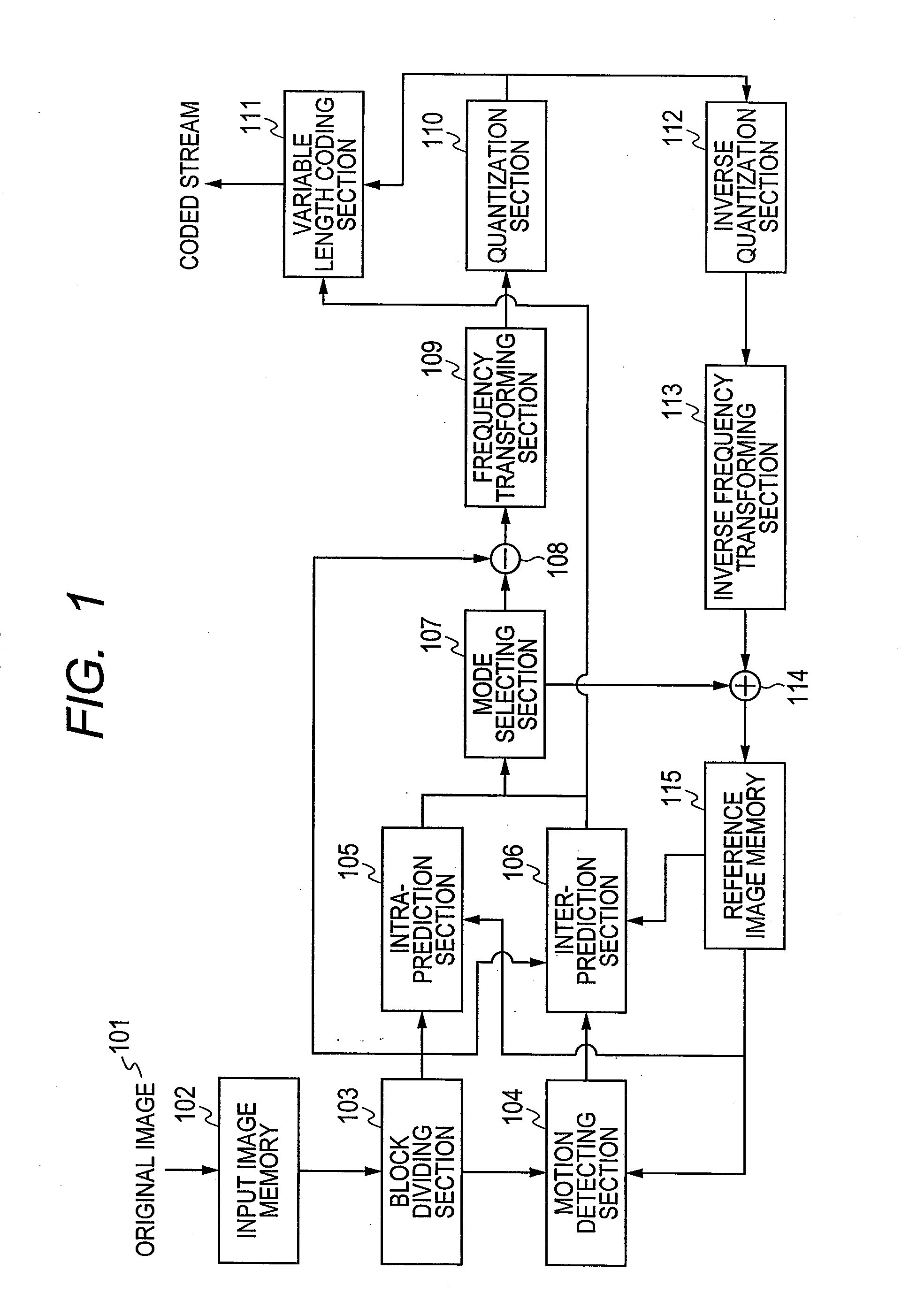

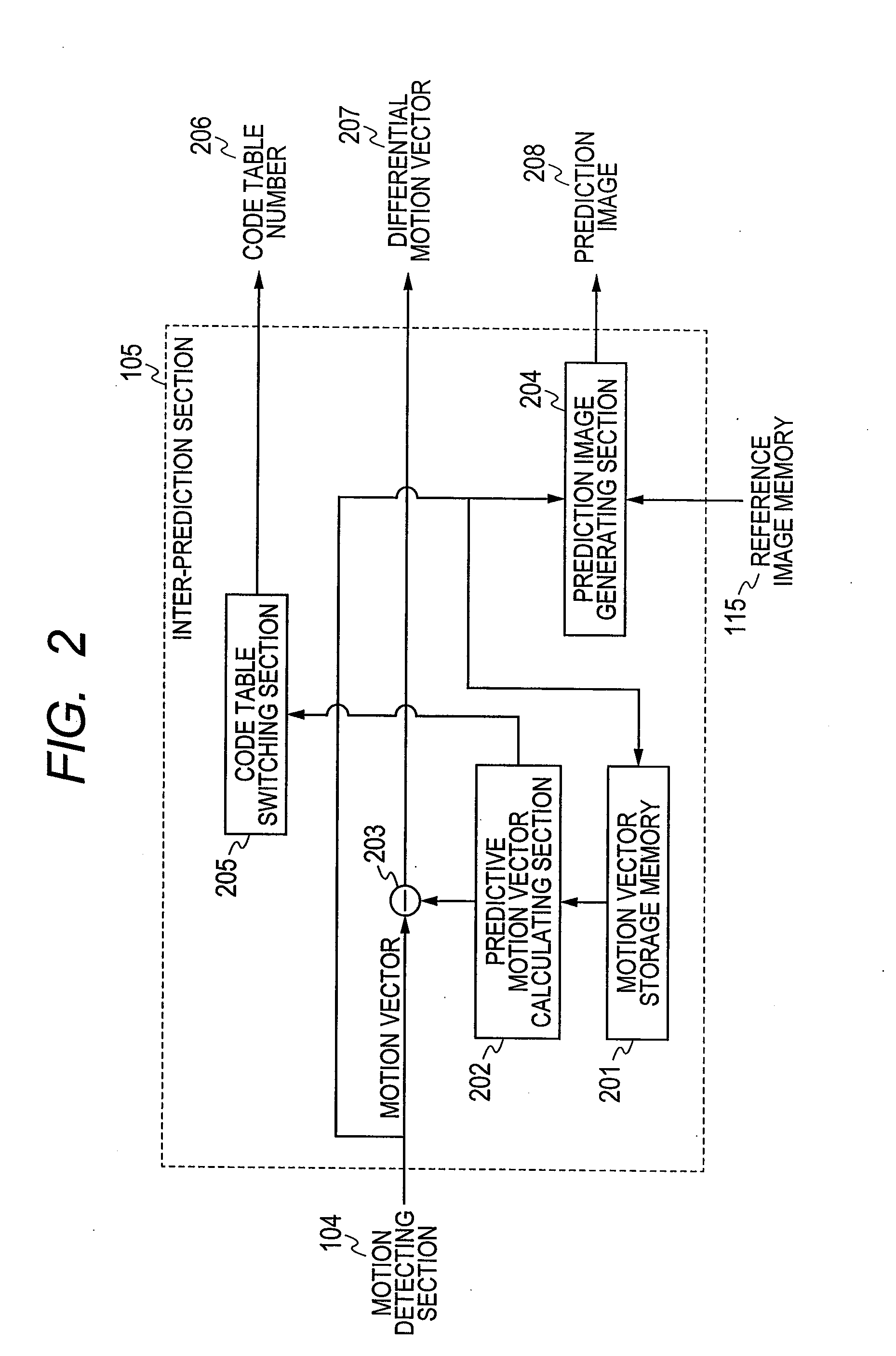

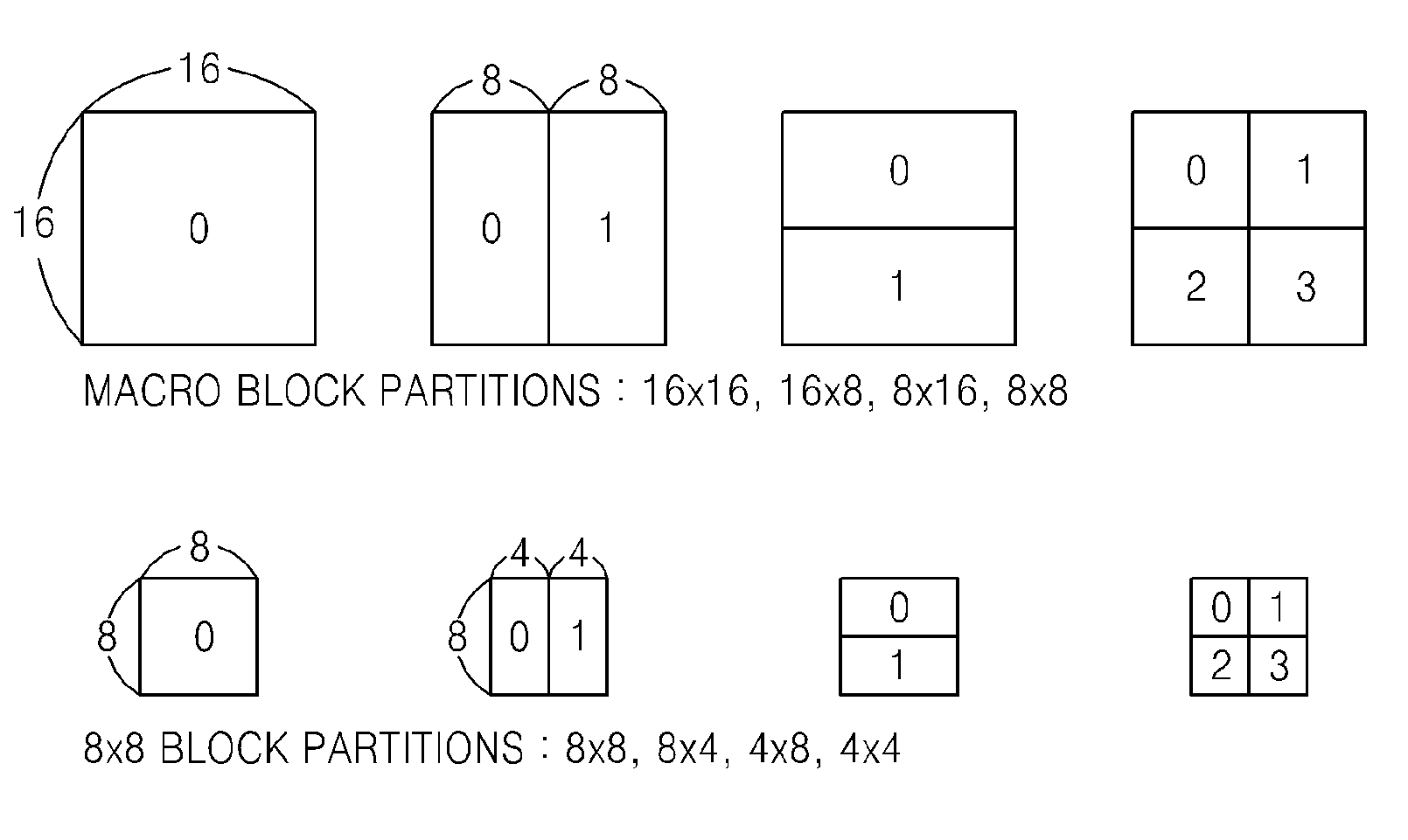

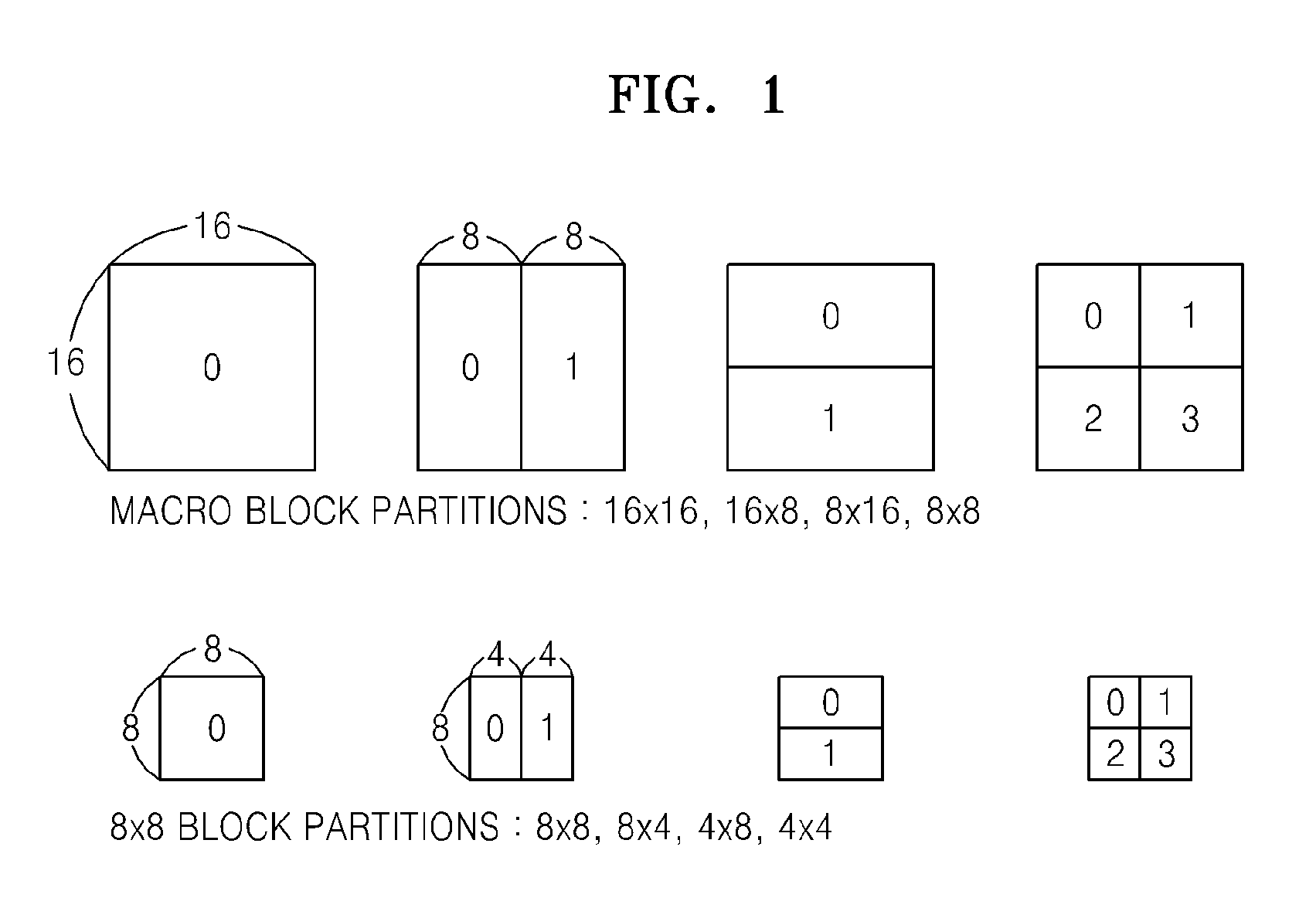

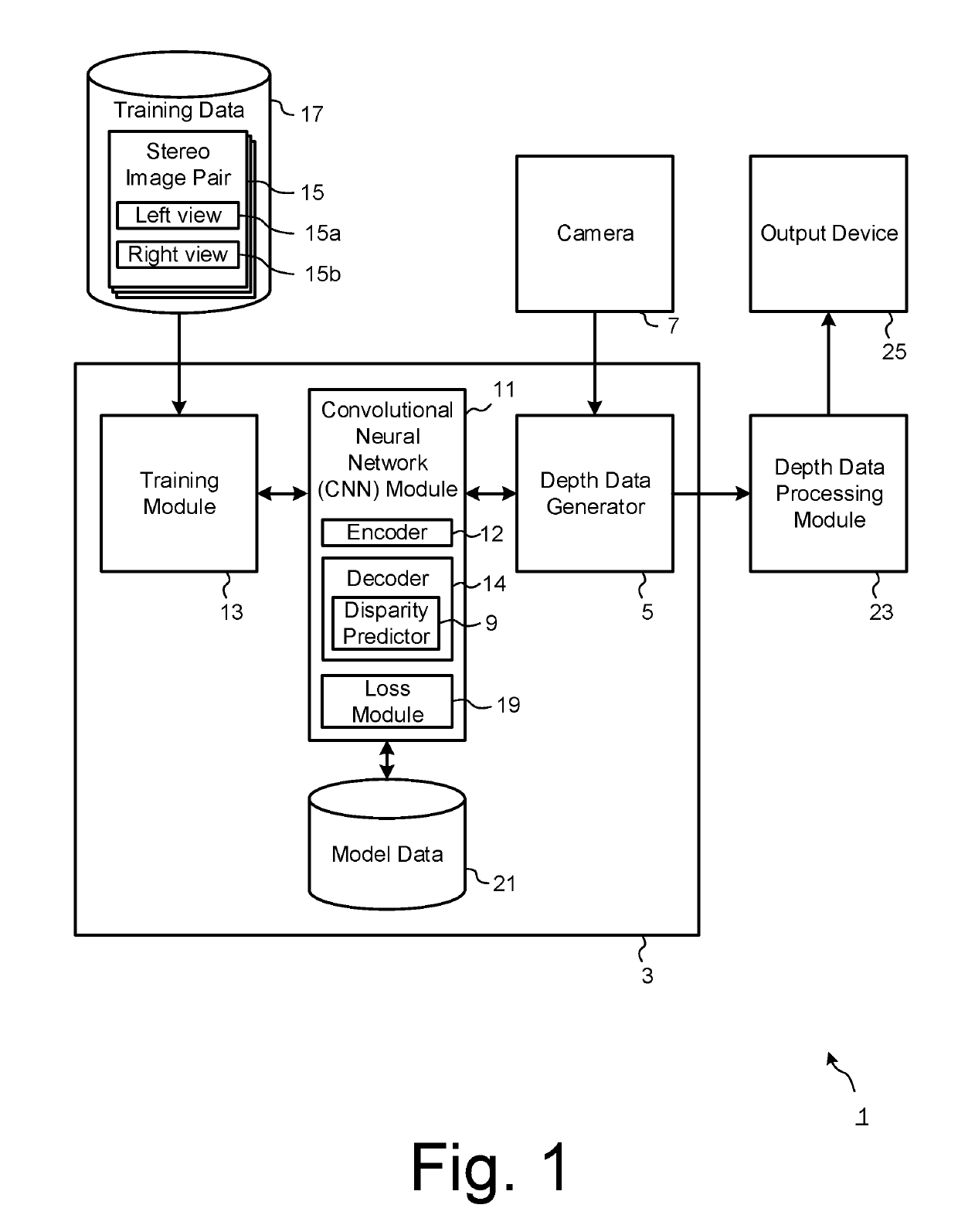

Video encoding method and video decoding method

Active · US20110142133A1 · Reduce the amount required · Improve compression efficiency · Picture reproducers using cathode ray tubes · Picture reproducers with optical-mechanical scanning · Video encoding · Motion vector

Provided is a video encoding / decoding technique that improves compression efficiency by reducing the motion vector code amount. In the video decoding process, the prediction vector calculation method is switched according to the difference between predetermined motion vectors among the motion vectors of already-decoded blocks peripheral to the block to be decoded. The calculated prediction vector is added to a difference vector decoded from the encoded stream to obtain the motion vector, which is then used to execute the inter-image prediction process.

Owner:MAXELL HLDG LTD
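
The decoding flow in the abstract above can be sketched as follows. The abstract does not specify which calculation methods are switched between or how the difference is measured, so the median predictor, the fallback to neighbour A, the L1 distance, and all names here (`predict_mv`, `decode_mv`, `threshold`) are assumptions made purely for illustration.

```python
def median3(a, b, c):
    """Median of three scalars."""
    return sorted((a, b, c))[1]


def predict_mv(mv_a, mv_b, mv_c, threshold):
    """Choose a prediction vector from three neighbouring motion vectors.

    Assumed rule: if neighbours A and B are close (L1 distance within
    `threshold`), use the component-wise median of A, B and C;
    otherwise fall back to neighbour A alone.
    """
    spread = abs(mv_a[0] - mv_b[0]) + abs(mv_a[1] - mv_b[1])
    if spread <= threshold:
        return tuple(median3(mv_a[i], mv_b[i], mv_c[i]) for i in range(2))
    return tuple(mv_a)


def decode_mv(prediction, difference):
    """Motion vector = prediction vector + decoded difference vector."""
    return (prediction[0] + difference[0], prediction[1] + difference[1])
```

Because the switching rule depends only on already-decoded neighbours, an encoder and decoder applying the same rule would pick the same predictor without extra signalling.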

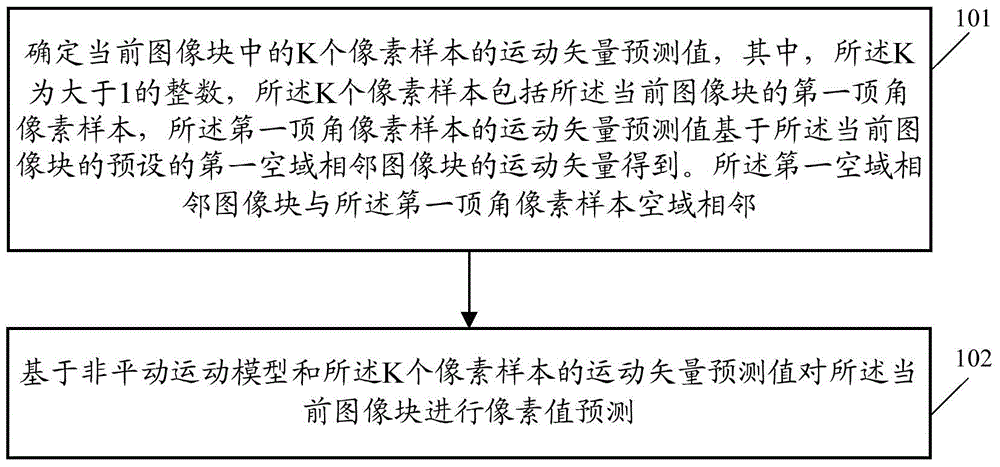

Image prediction method and relevant device

Active · CN104539966A · Reduce computational complexity · Avoid passing · Digital video signal modification · Computation complexity · Motion vector

The embodiment of the invention discloses an image prediction method and a relevant device. The image prediction method comprises the following steps: determining the motion vector predictors of K pixel samples in a current image block, wherein K is an integer greater than 1, the K pixel samples comprise a first vertex angle pixel sample of the current image block, the motion vector predictor of the first vertex angle pixel sample is obtained on the basis of the motion vector of a preset first spatially adjacent image block of the current image block, and the first spatially adjacent image block is spatially adjacent to the first vertex angle pixel sample; and predicting the pixel values of the current image block on the basis of a non-translational motion model and the motion vector predictors of the K pixel samples. The scheme lowers the computational complexity of image prediction based on the non-translational motion model.

Owner:HUAWEI TECH CO LTD +1

Method and apparatus for determining a prediction mode

Inactive · US20100054334A1 · Enhance the image · Reduce in quantity · Color television with pulse code modulation · Color television with bandwidth reduction · Algorithm · Image prediction

A method and apparatus for determining a prediction mode which is used during encoding of a current block is provided. In the method and apparatus, a prediction mode candidate group to be used for the current block is determined based on the prediction mode of the block located at the same position in a reference picture as the current block, and predictive coding is performed on the current block by using the prediction modes included in the prediction mode candidate group. Thus, image prediction efficiency is improved, and the time and number of calculations required for prediction mode determination are reduced.

Owner:SAMSUNG ELECTRONICS CO LTD

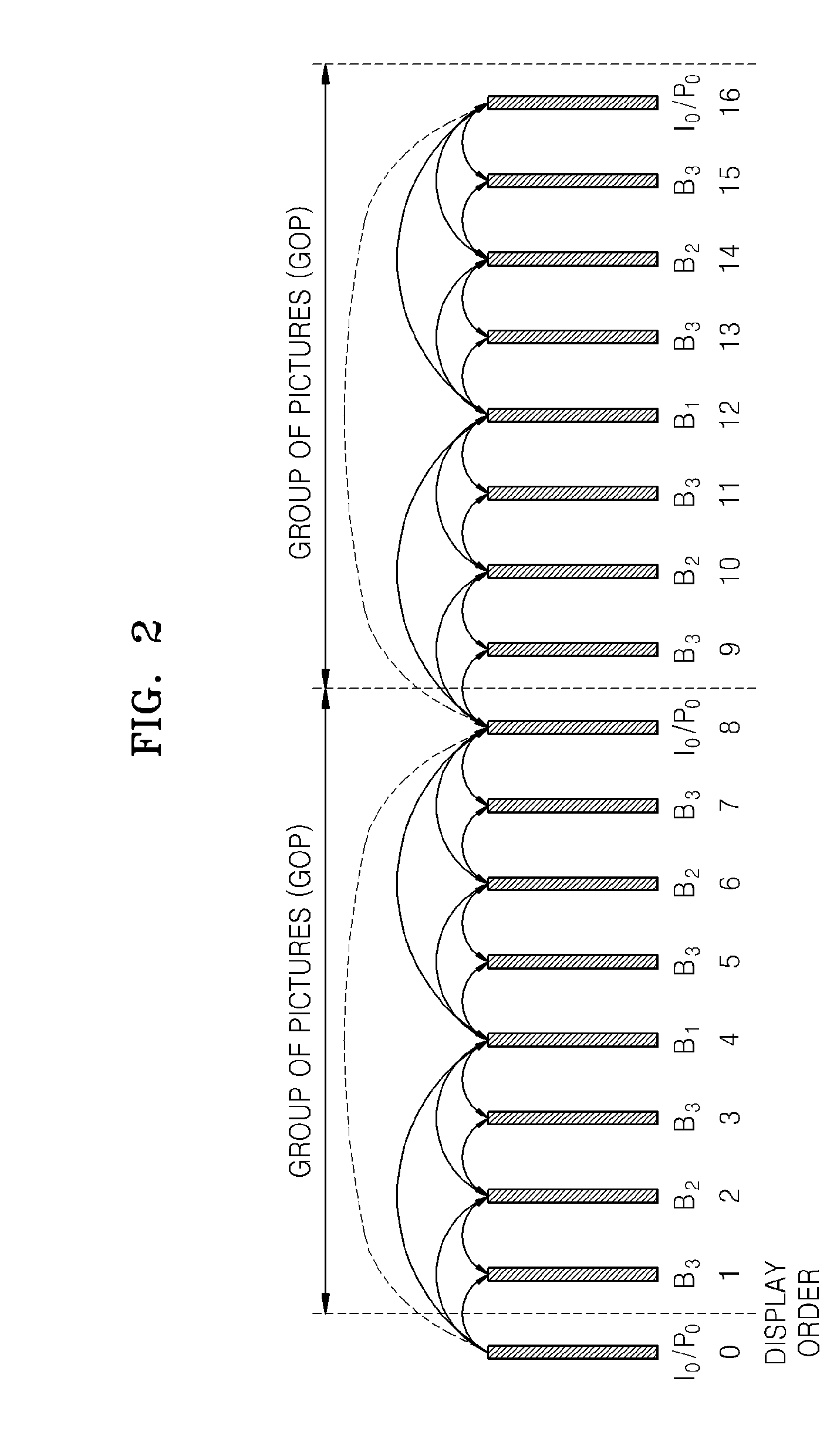

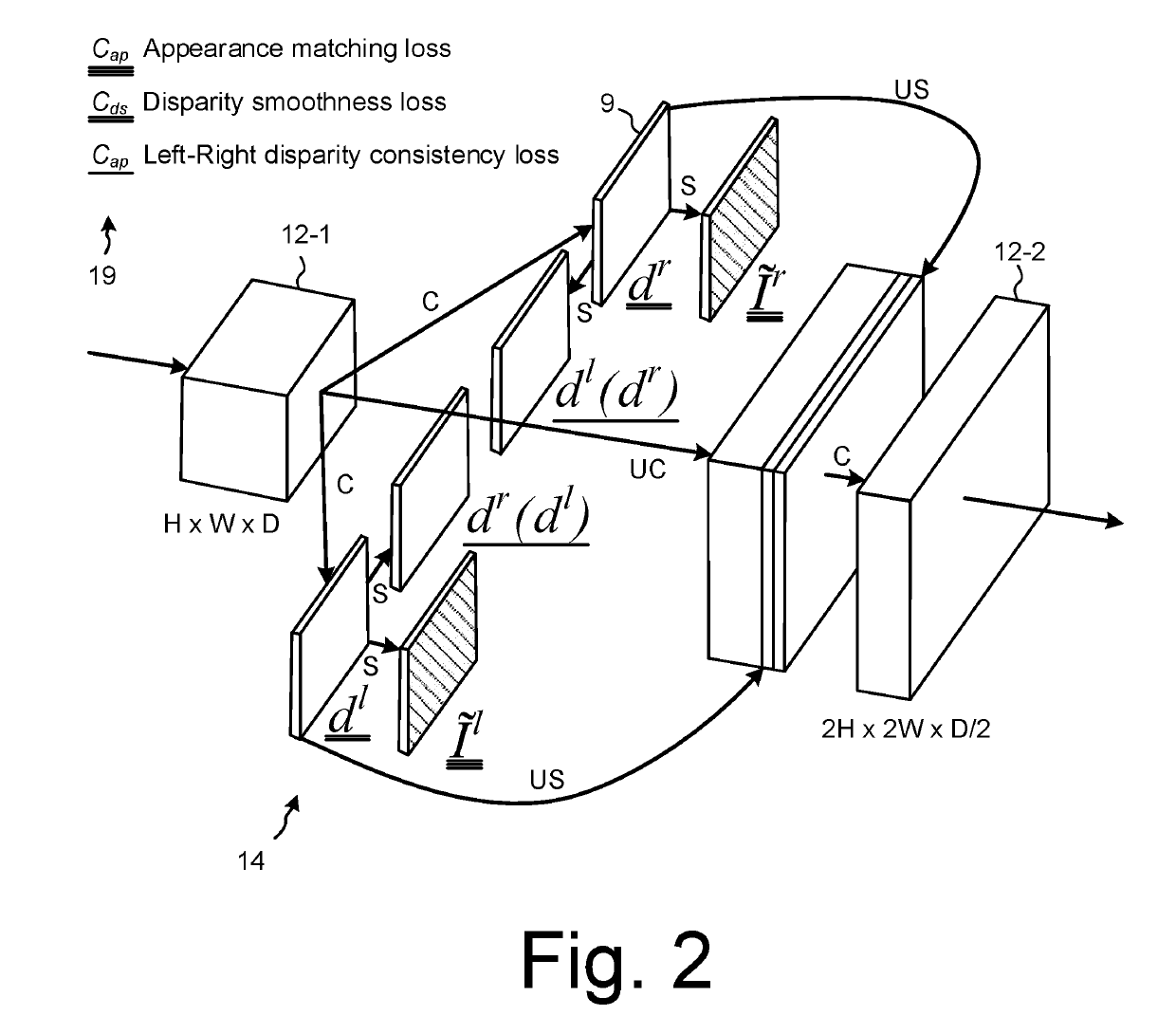

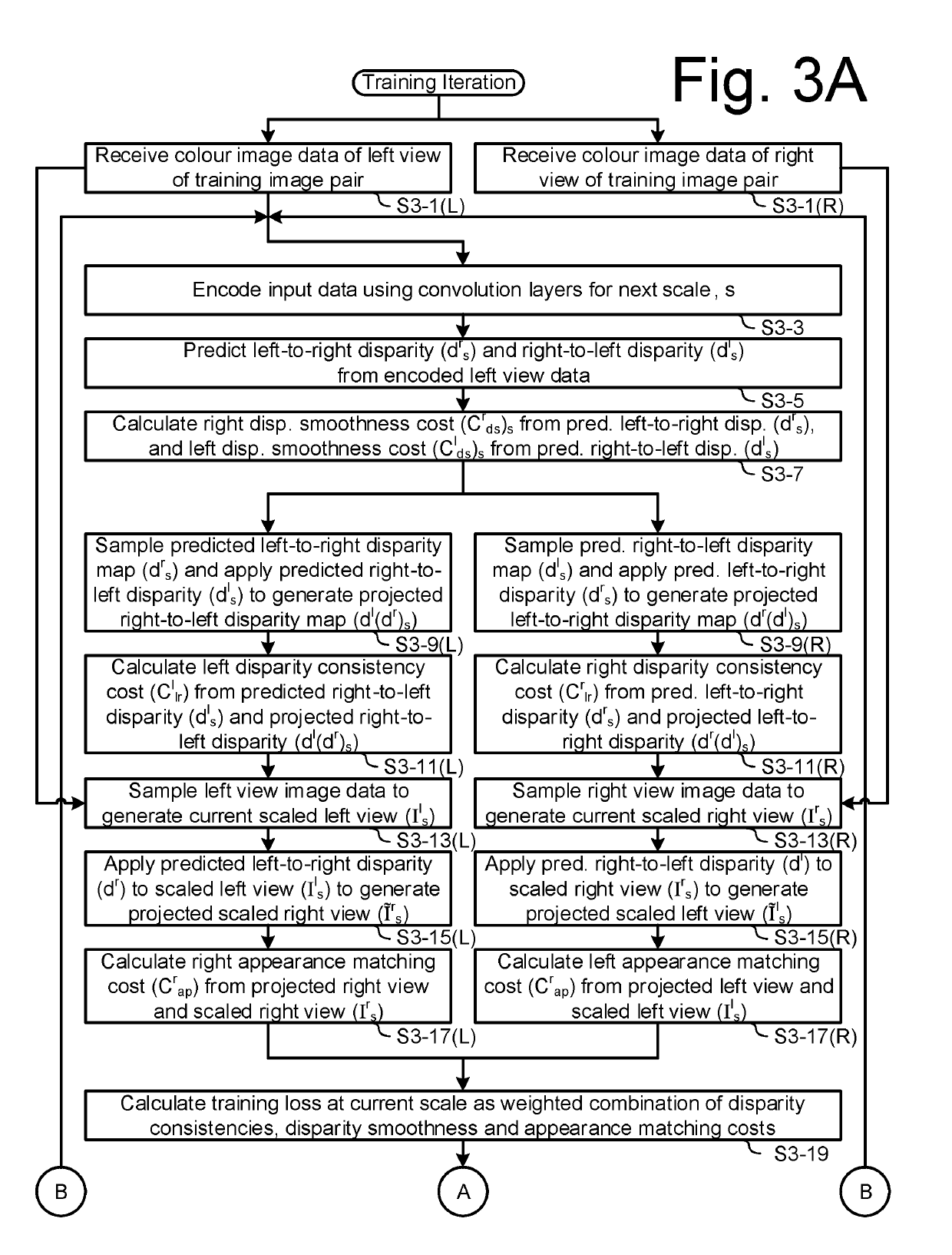

Predicting depth from image data using a statistical model

Active · US20190213481A1 · Minimize image reconstruction · Image enhancement · Image analysis · Stereo pair · Binocular stereo

Systems and methods are described for predicting depth from colour image data using a statistical model such as a convolutional neural network (CNN). The model is trained on binocular stereo pairs of images, enabling depth data to be predicted from a single source colour image. The model is trained to predict, for each image of an input binocular stereo pair, corresponding disparity values that enable reconstruction of the other image when applied to the image. The model is updated based on a cost function that enforces consistency between the predicted disparity values for each image in the stereo pair.

Owner:NIANTIC INC

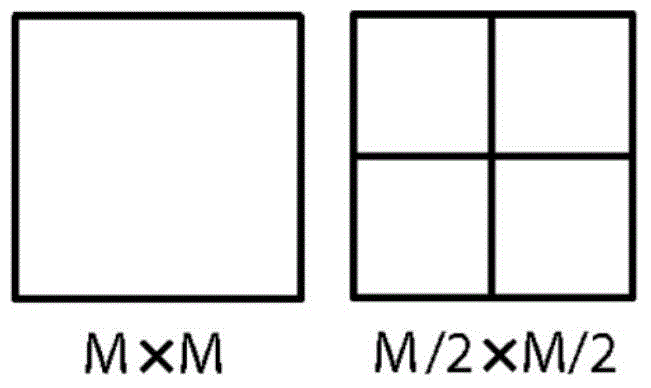

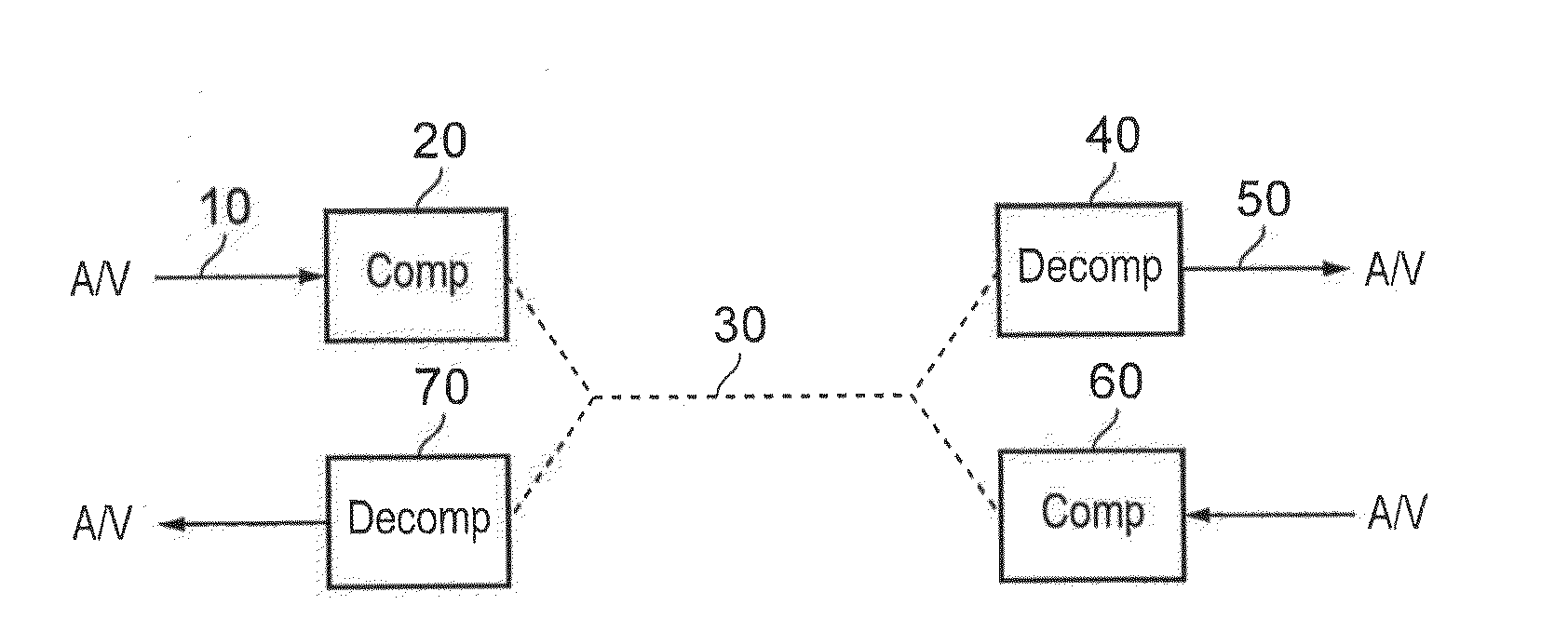

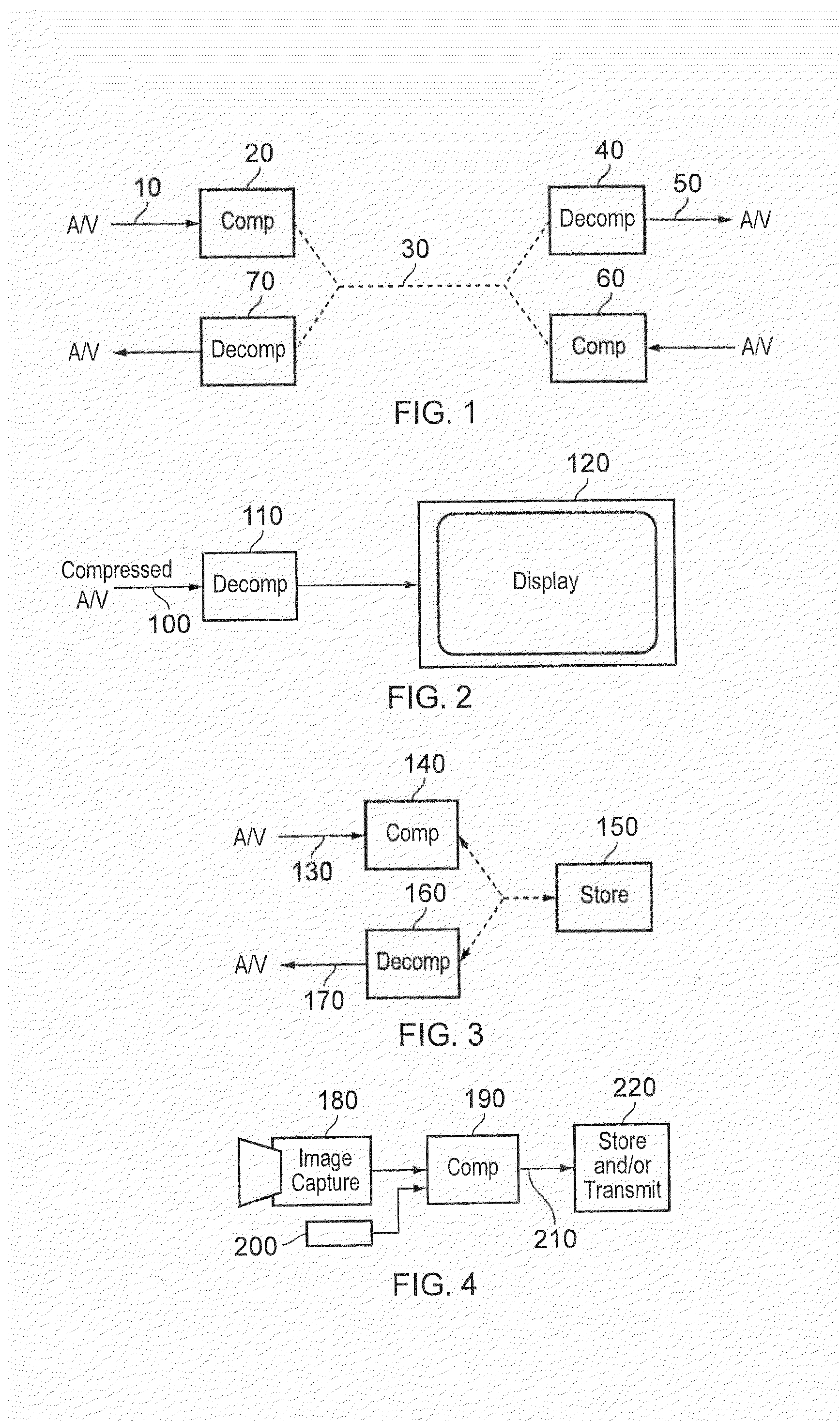

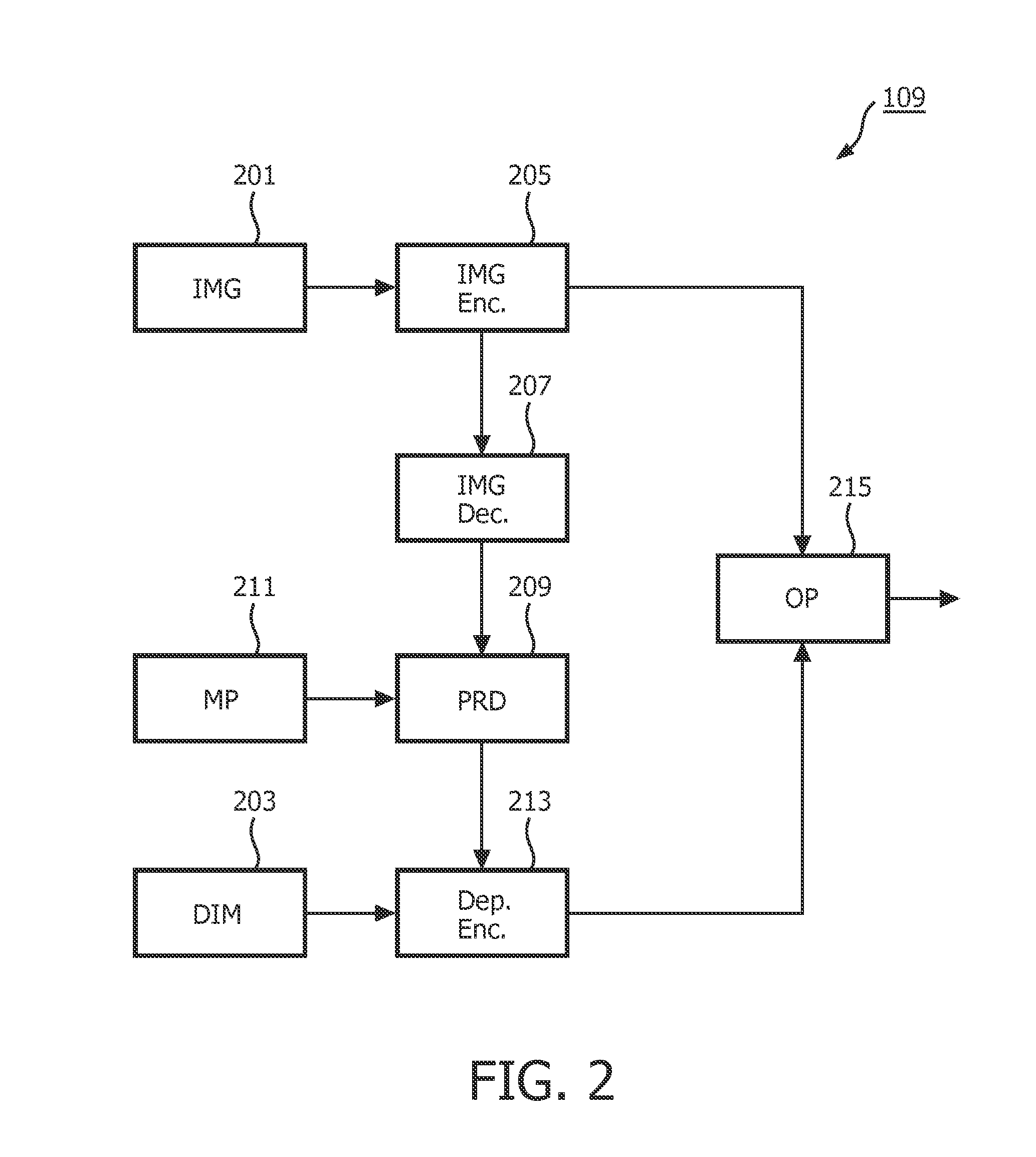

Data encoding and decoding

Active · US20150043641A1 · Reduce decrease · Color television with pulse code modulation · High-definition color television with bandwidth reduction · Image resolution · Video encoding

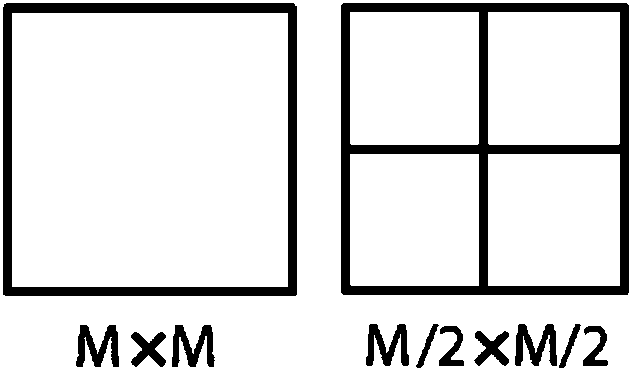

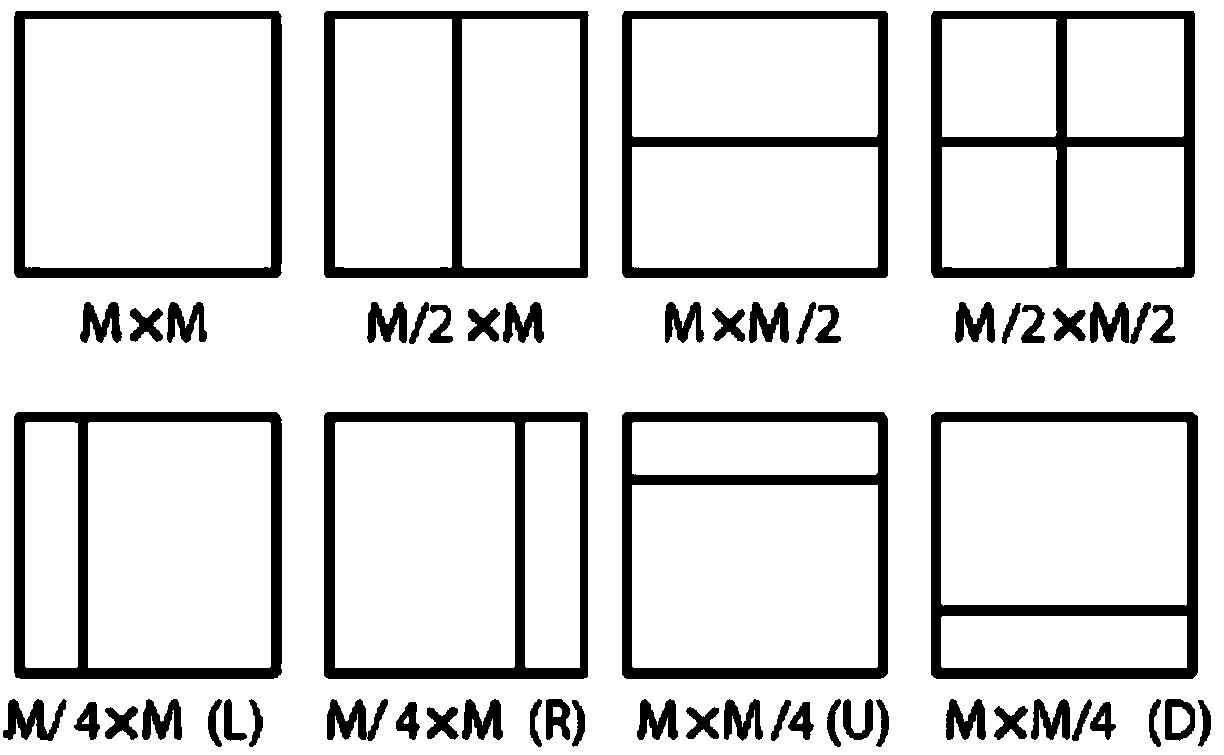

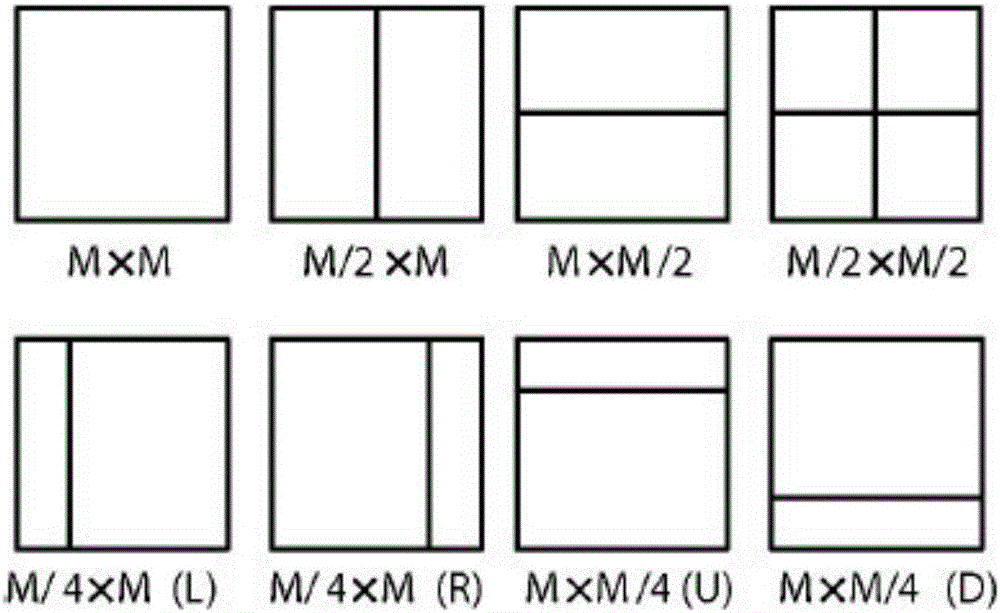

A video coding or decoding method using inter-image prediction to encode input video data in which each chrominance component has 1 / Mth of the horizontal resolution of the luminance component and 1 / Nth of the vertical resolution of the luminance component, where M and N are integers equal to 1 or more, comprises: storing one or more images preceding a current image; interpolating a higher resolution version of prediction units of the stored images so that the luminance component of an interpolated prediction unit has a horizontal resolution P times that of the corresponding portion of the stored image and a vertical resolution Q times that of the corresponding portion of the stored image, where P and Q are integers greater than 1; detecting inter-image motion between a current image and the one or more interpolated stored images so as to generate motion vectors between a prediction unit of the current image and areas of the one or more preceding images; and generating a motion compensated prediction of the prediction unit of the current image with respect to an area of an interpolated stored image pointed to by a respective motion vector; in which the interpolating step comprises: applying a ×R horizontal and ×S vertical interpolation filter to the chrominance components of a stored image to generate an interpolated chrominance prediction unit, where R is equal to (U×M×P) and S is equal to (V×N×Q), U and V being integers equal to 1 or more; and subsampling the interpolated chrominance prediction unit, such that its horizontal resolution is divided by a factor of U and its vertical resolution is divided by a factor of V, thereby resulting in a block of MP×NQ samples.

Owner:SONY CORP
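
The filter-factor arithmetic in the abstract above (R = U×M×P, S = V×N×Q, followed by subsampling by U and V) can be checked with a small helper. The function name `chroma_filter_factors` is hypothetical, and the example values in the usage note (4:2:0 video, so M = N = 2, with P = Q = 4) are chosen only for illustration.

```python
def chroma_filter_factors(M, N, P, Q, U=1, V=1):
    """Return (R, S, effective_h, effective_v) for chroma interpolation.

    M, N: horizontal/vertical chroma subsampling ratios relative to luma.
    P, Q: horizontal/vertical luma interpolation factors.
    U, V: extra oversampling factors that are removed again by subsampling.
    """
    R = U * M * P  # horizontal chroma interpolation factor
    S = V * N * Q  # vertical chroma interpolation factor
    # After subsampling by U and V, the net factors are M*P and N*Q.
    return R, S, R // U, S // V
```

For example, `chroma_filter_factors(2, 2, 4, 4)` gives ×8 filters in both directions, while `chroma_filter_factors(2, 1, 4, 4, U=2)` gives a ×16 horizontal filter whose output is subsampled by 2 back to a net ×8.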

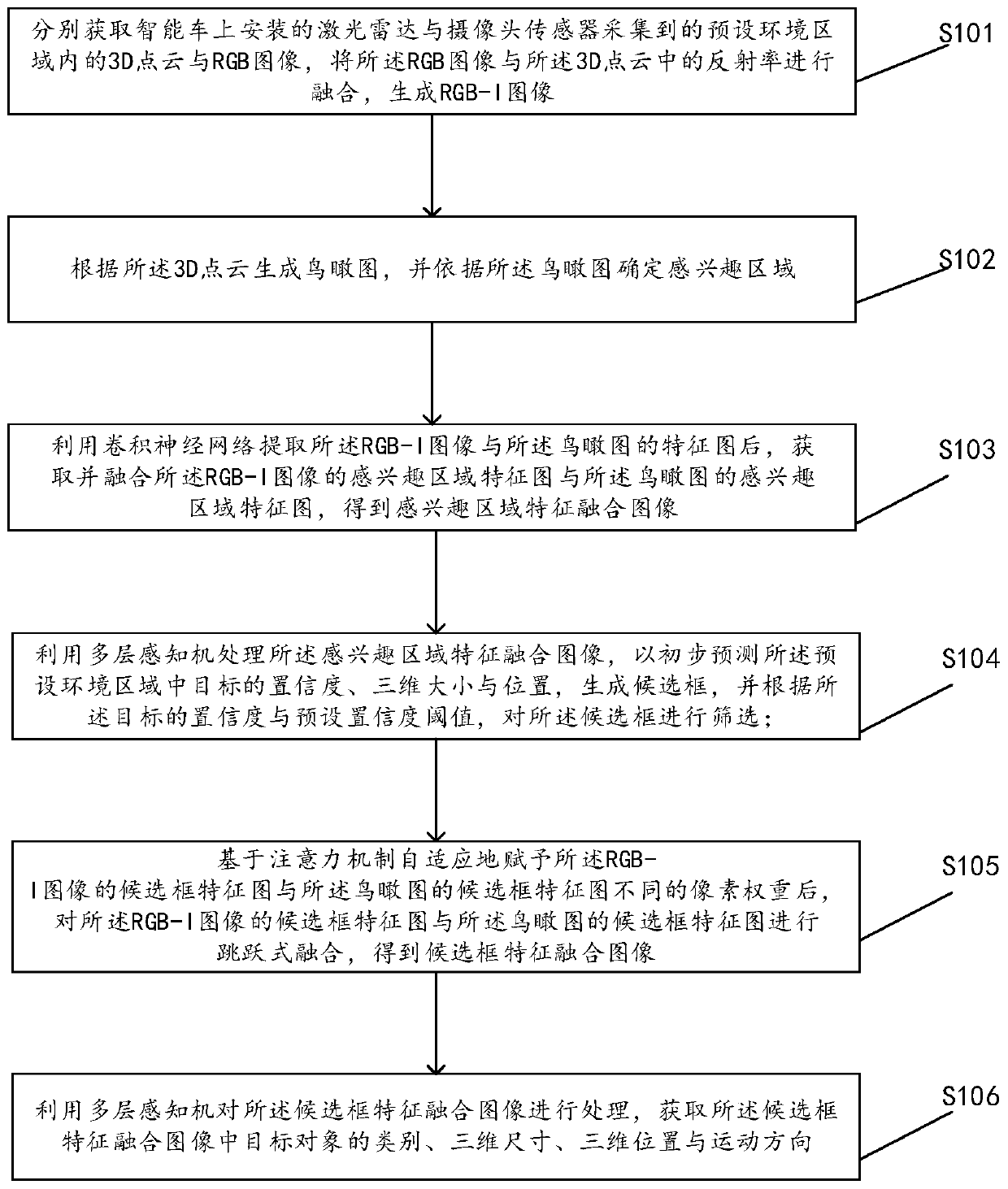

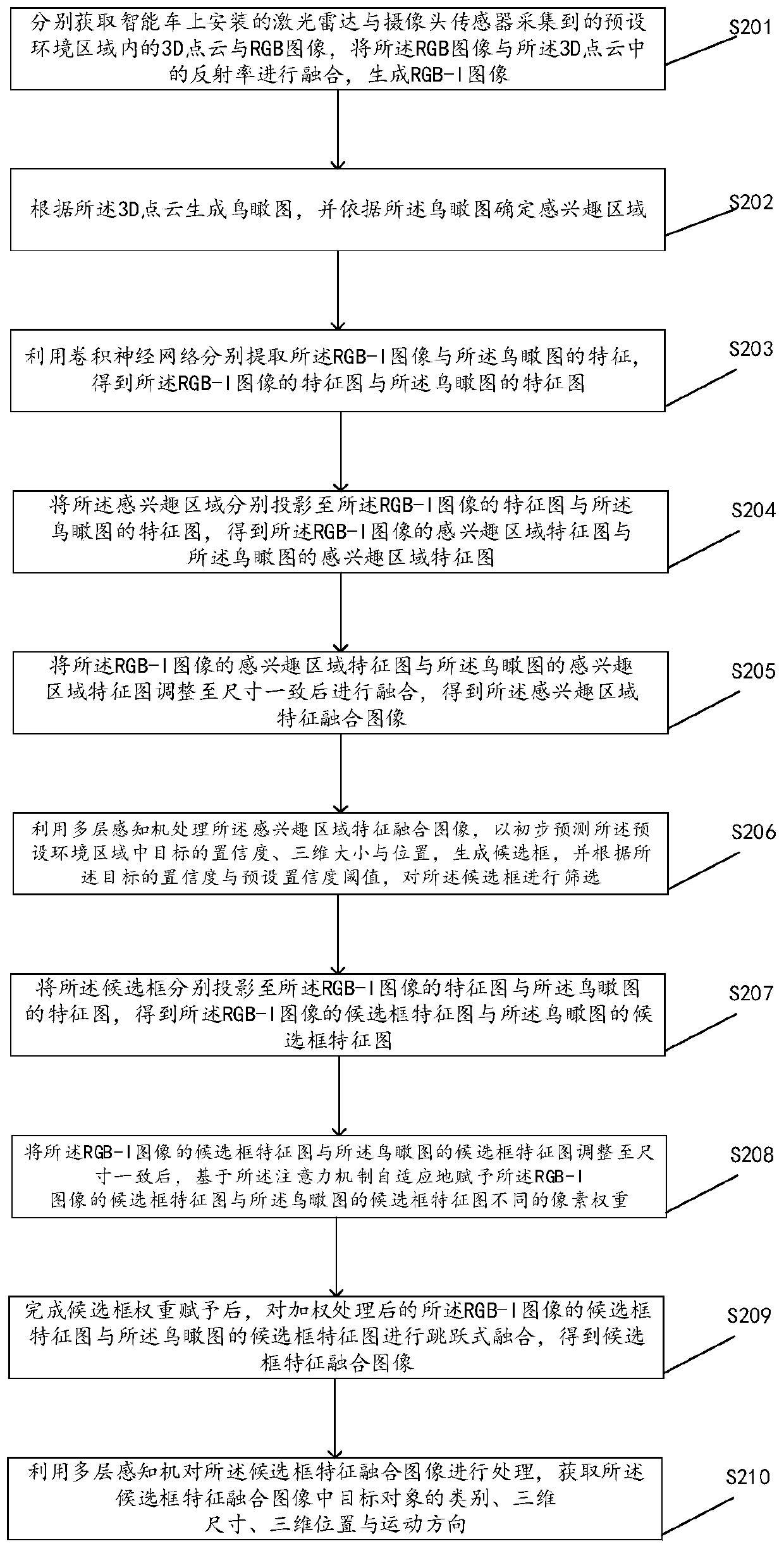

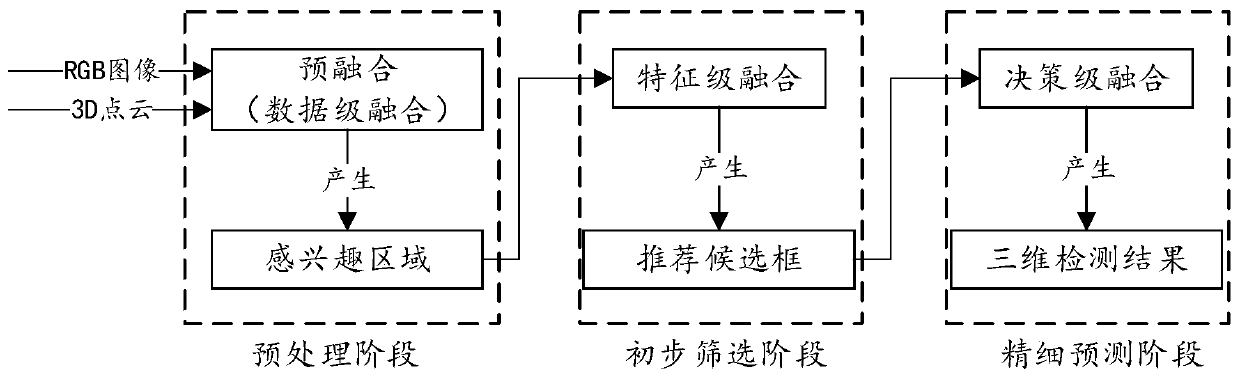

Three-dimensional target detection method and device based on multi-sensor information fusion

Active · CN110929692A · Easy to identify · High positioning accuracy · Character and pattern recognition · View based · RGB image

The invention discloses a three-dimensional target detection method, apparatus and device based on multi-sensor information fusion, and a computer readable storage medium. The three-dimensional target detection method comprises the steps of: fusing a 3D point cloud and an RGB image collected by a laser radar and a camera sensor, and generating an RGB-I image; generating a multi-channel aerial view according to the 3D point cloud so as to determine a region of interest; respectively extracting and fusing region-of-interest features of the RGB-I image and the aerial view based on a convolutional neural network; utilizing a multi-layer perceptron to fuse the confidence coefficient, the approximate position and the size of the image prediction target based on the features of the region of interest, and determining a candidate box; adaptively endowing different pixel weights to different sensor candidate box feature maps based on an attention mechanism, and carrying out skip fusion; and processing the candidate frame feature fusion image by using a multi-layer perceptron, and outputting a three-dimensional detection result. According to the three-dimensional target detection method, apparatus and device, and the computer readable storage medium provided by the invention, the target recognition rate is improved, and the target can be accurately positioned.

Owner:CHANGCHUN INST OF OPTICS FINE MECHANICS & PHYSICS CHINESE ACAD OF SCI

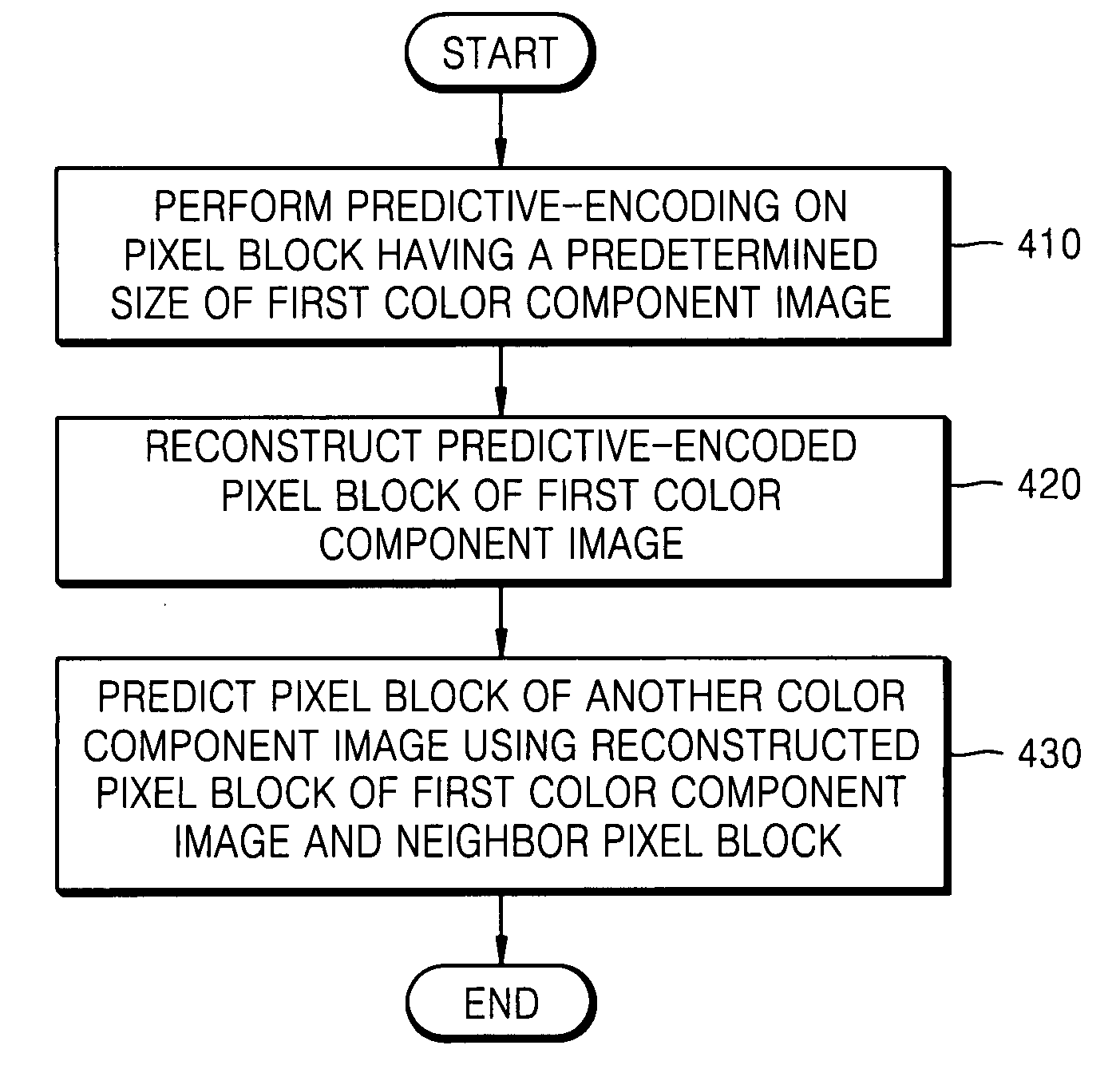

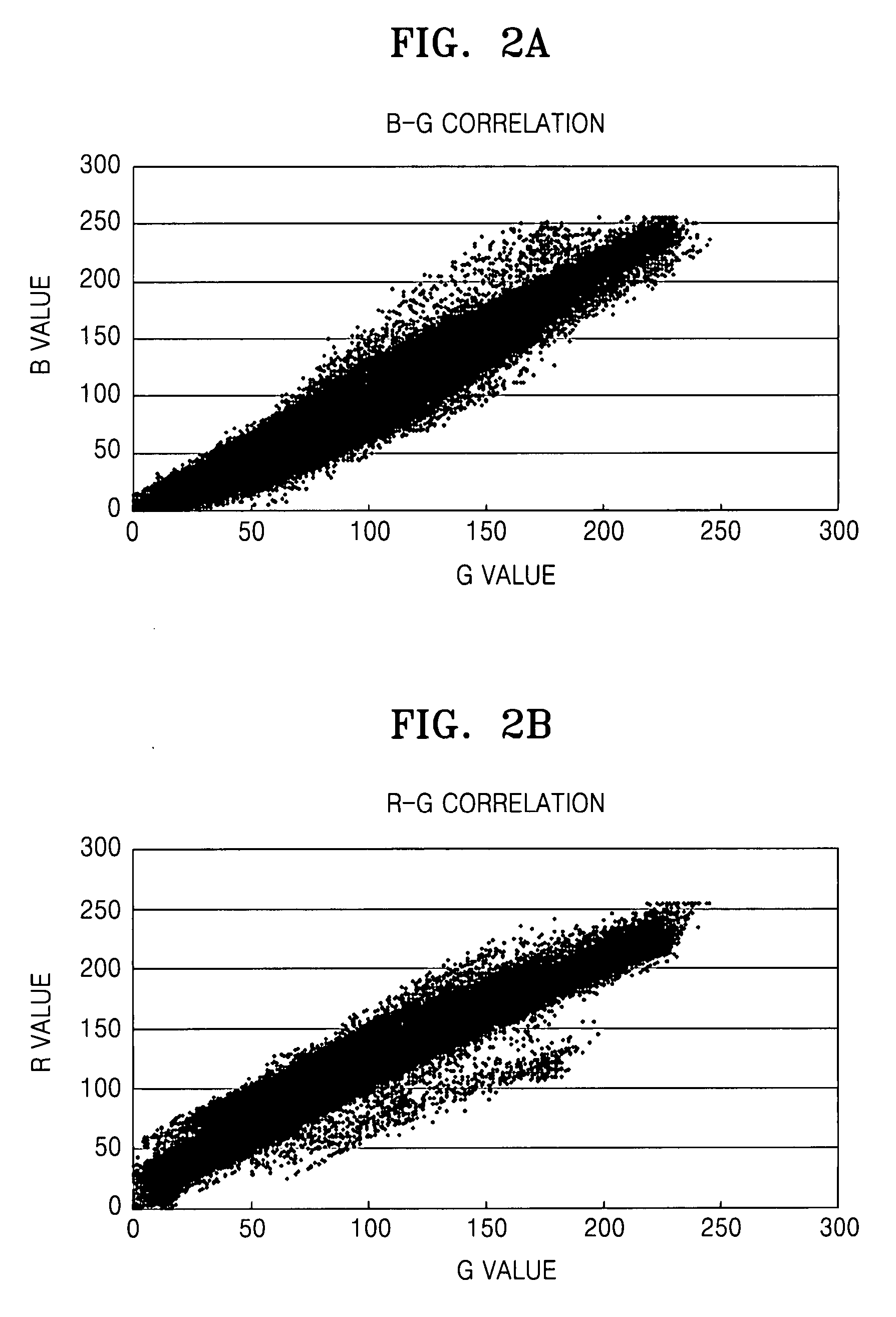

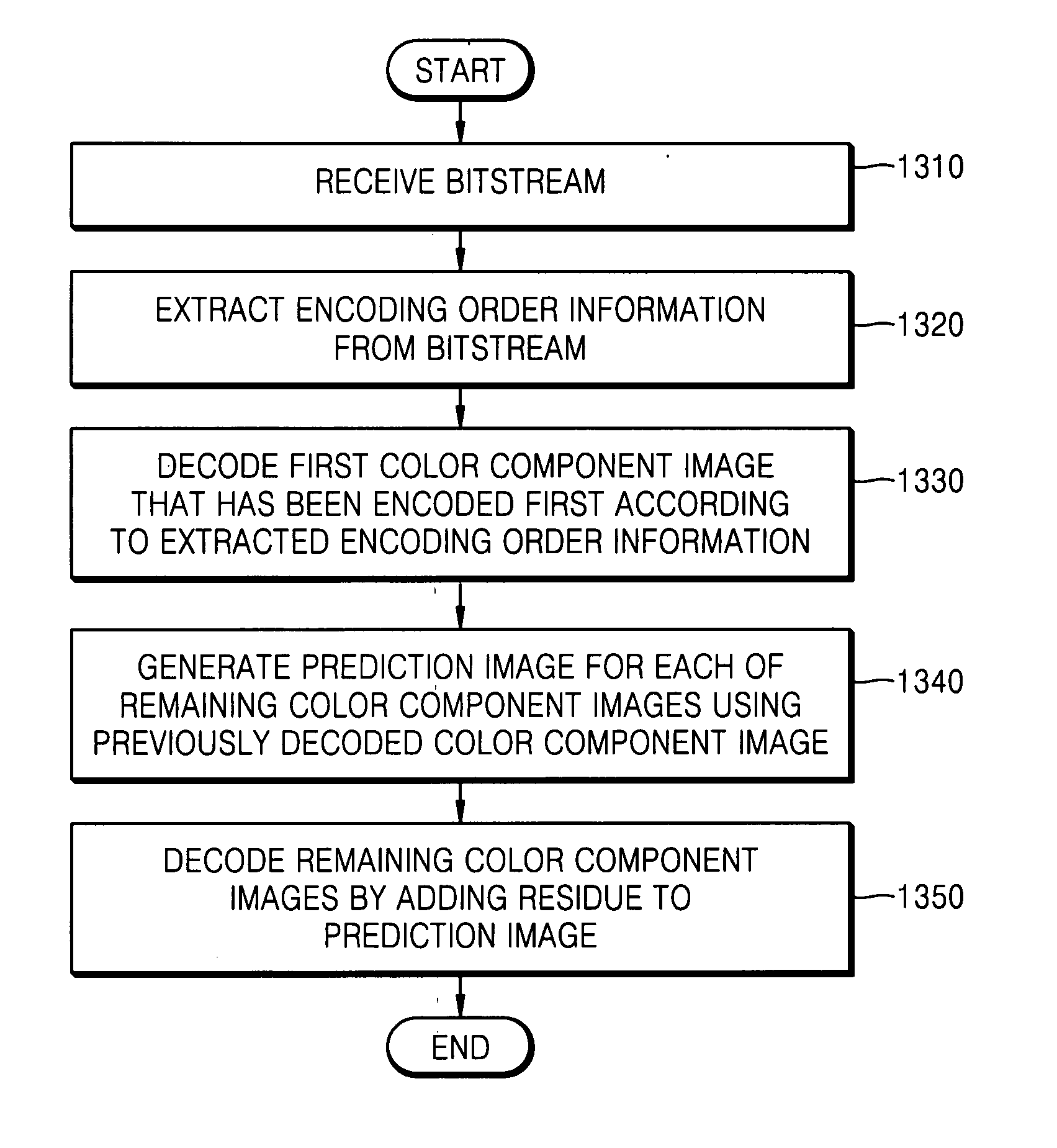

Image encoding/decoding method and apparatus

Inactive · US20080019597A1 · Encoding efficiency can be improved · Improve efficiency · Color television with pulse code modulation · Character and pattern recognition · Color image · Monochromatic color

Provided are an image encoding / decoding method and apparatus, in which one of a plurality of color component images is predicted from a different color component image reconstructed using a correlation between the plurality of color component images. Using a reconstructed image of a first color component image selected from among the plurality of color component images forming a single color image, the other color component images are predicted.

Owner:SAMSUNG ELECTRONICS CO LTD

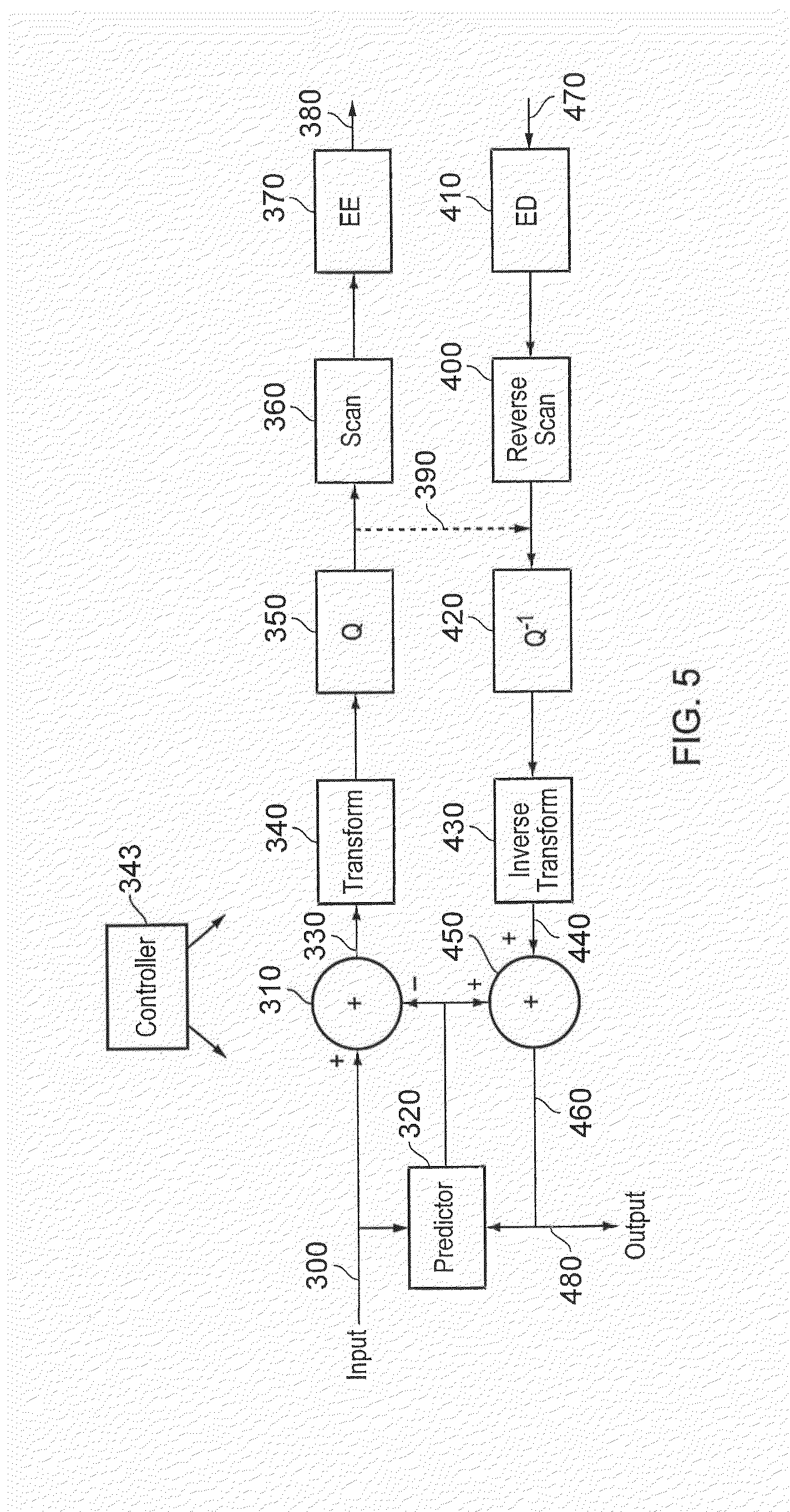

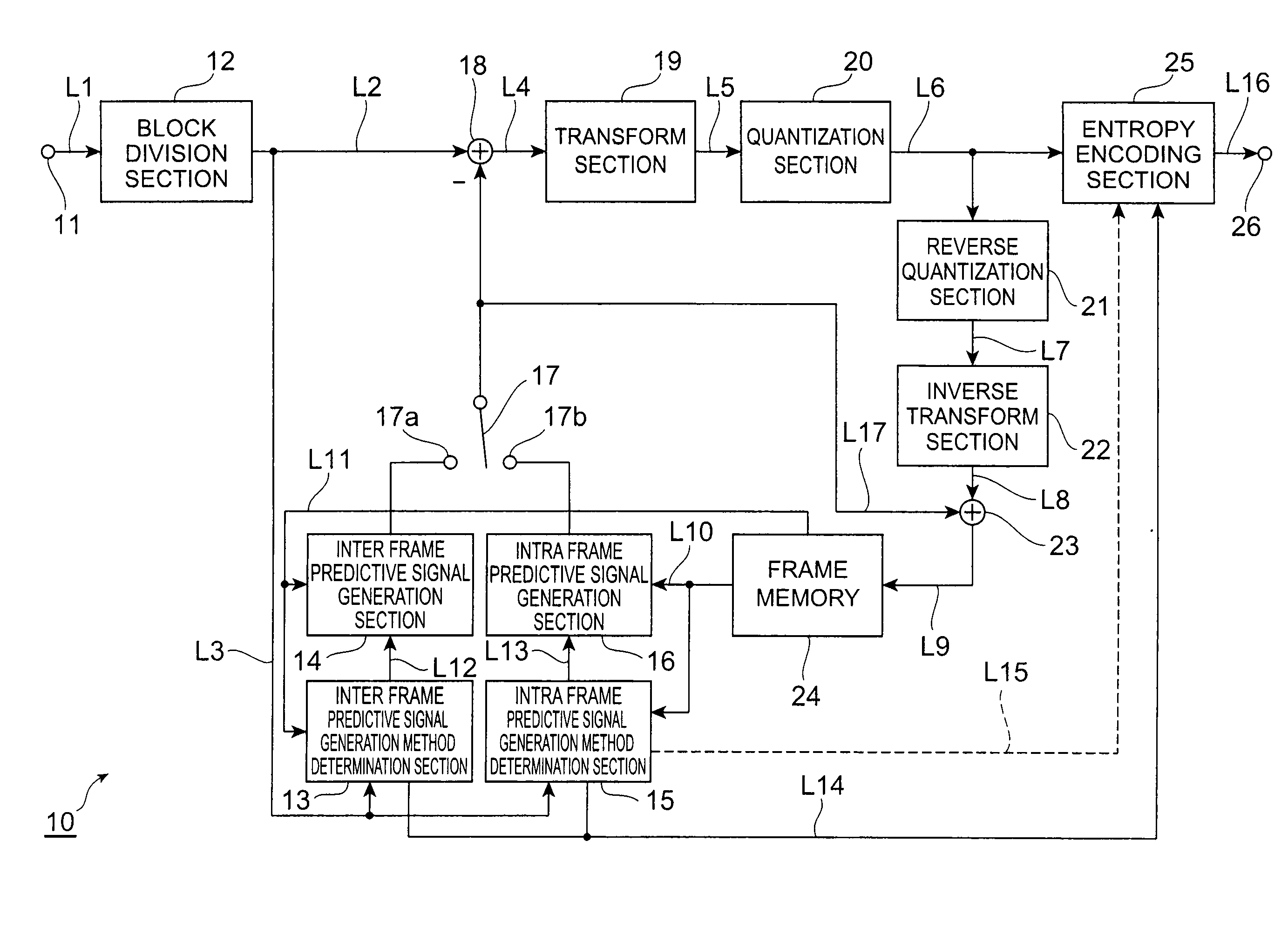

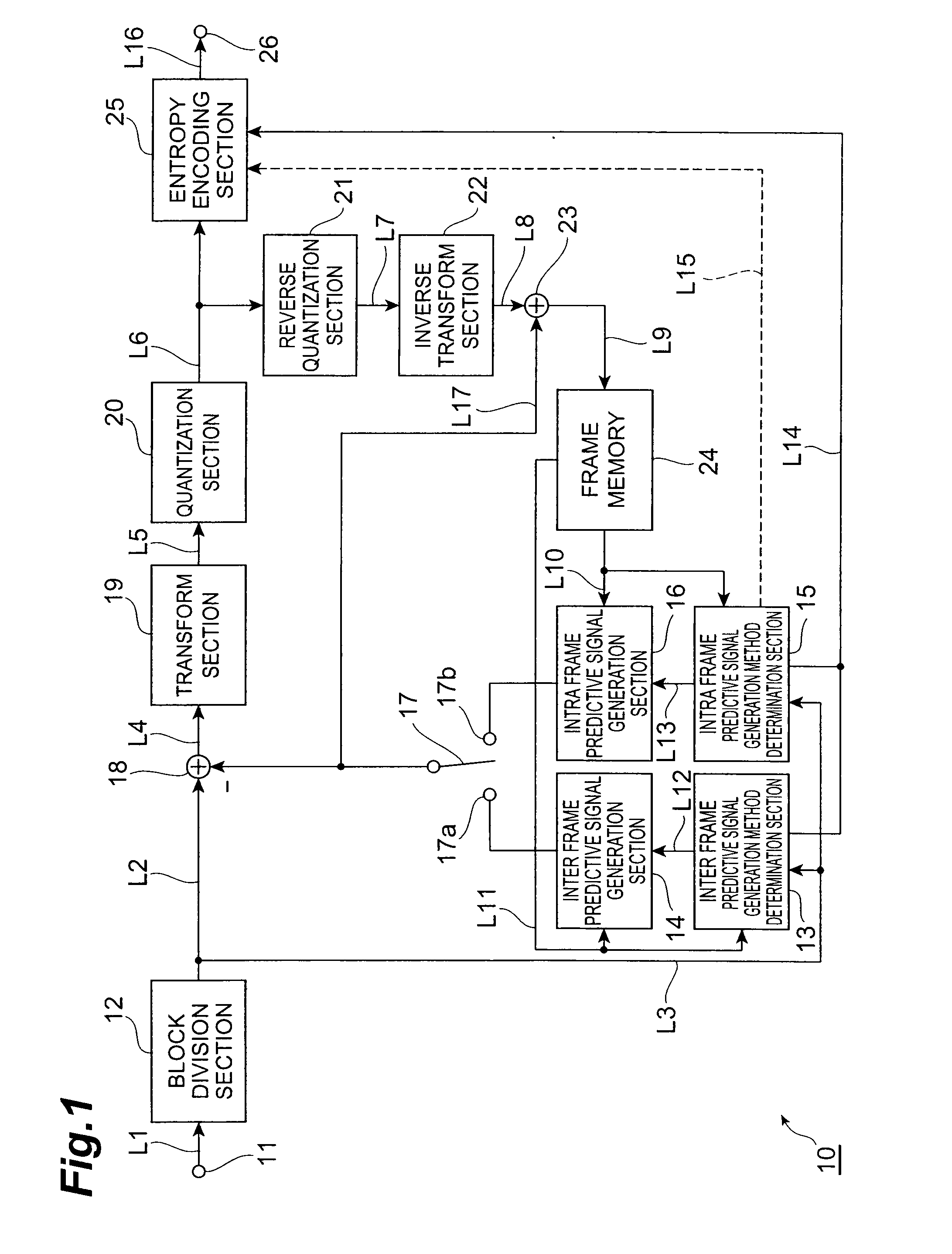

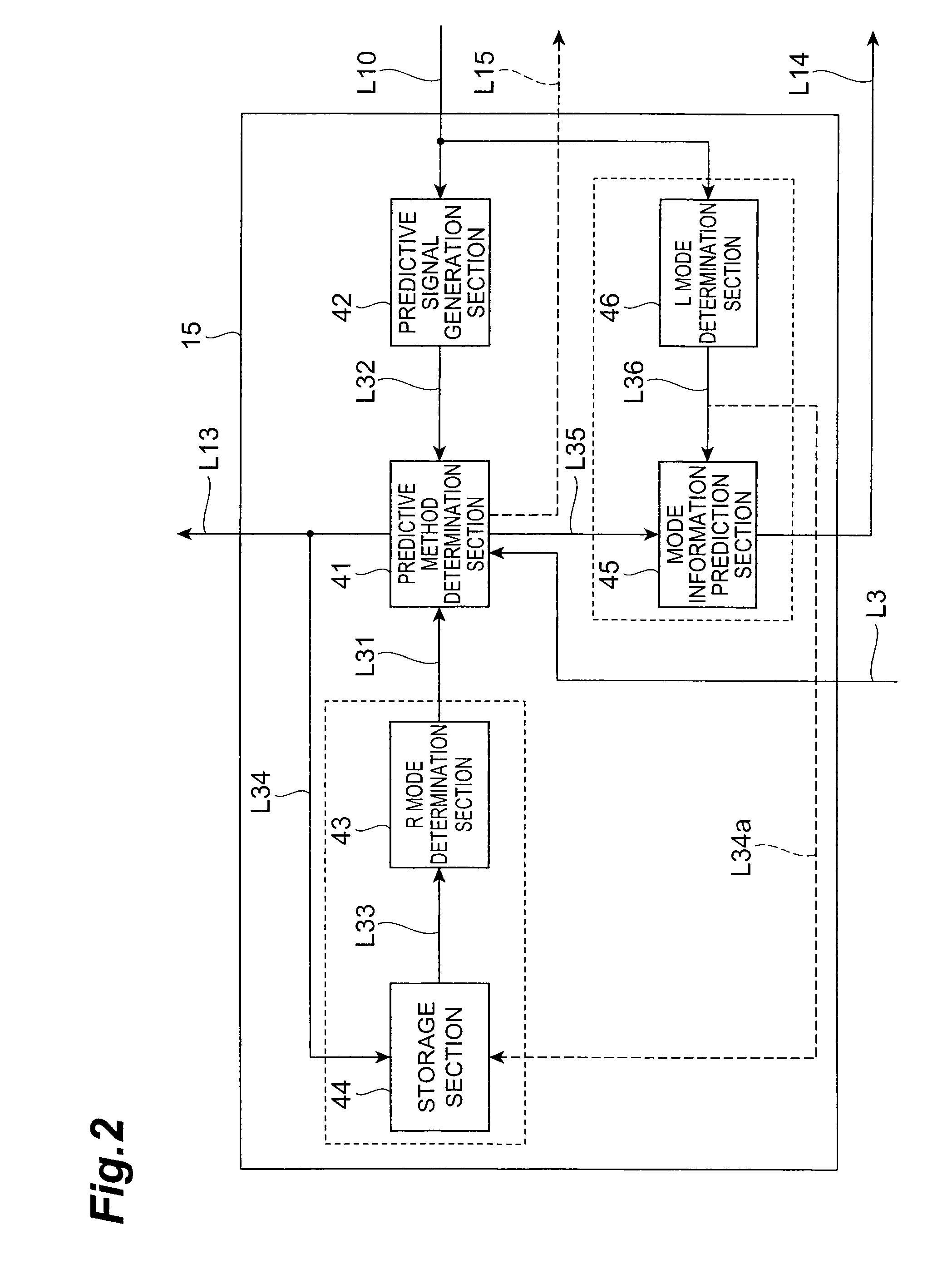

Image prediction encoding device, image prediction decoding device, image prediction encoding method, image prediction decoding method, image prediction encoding program, and image prediction decoding program

Inactive · US20090232206A1 · Reducing predictive method-related mode information · Easy to handle · Color television with pulse code modulation · Color television with bandwidth reduction · Signal on · Predictive methods

An image predictive encoding device including an intra frame predictive signal generation method determination section that determines, for adjacent areas including regenerated pixel signals and adjacent to the target area, a predictive method derived on the basis of data corresponding to the adjacent areas as an R mode predictive method or an L mode predictive method, an intra frame predictive signal generation section that generates an intra frame predictive signal on the basis of the R mode predictive method thus determined, and a subtractor, a transform section, a quantization section, and an entropy encoding section that encode a residual signal of a pixel signal of the target area on the basis of the generated intra frame predictive signal.

Owner:NTT DOCOMO INC

Data encoding and decoding

Active · US20150063457A1 · Color television with pulse code modulation · High-definition color television with bandwidth reduction · Image resolution · Video encoding

A video coding or decoding method using inter-image prediction to encode input video data in which each chrominance component has 1 / Mth of the horizontal resolution of the luminance component and 1 / Nth of the vertical resolution of the luminance component, where M and N are integers equal to 1 or more, comprises: storing one or more images preceding a current image; interpolating a higher resolution version of prediction units of the stored images so that the luminance component of an interpolated prediction unit has a horizontal resolution P times that of the corresponding portion of the stored image and a vertical resolution Q times that of the corresponding portion of the stored image, where P and Q are integers greater than 1; detecting inter-image motion between a current image and the one or more interpolated stored images so as to generate motion vectors between a prediction unit of the current image and areas of the one or more preceding images; and generating a motion compensated prediction of the prediction unit of the current image with respect to an area of an interpolated stored image pointed to by a respective motion vector; in which the interpolating step comprises: applying a ×R horizontal and ×S vertical interpolation filter to the chrominance components of a stored image to generate an interpolated chrominance prediction unit, where R is equal to (U×M×P) and S is equal to (V×N×Q), U and V being integers equal to 1 or more; and subsampling the interpolated chrominance prediction unit, such that its horizontal resolution is divided by a factor of U and its vertical resolution is divided by a factor of V, thereby resulting in a block of MP×NQ samples.

Owner:SONY CORP

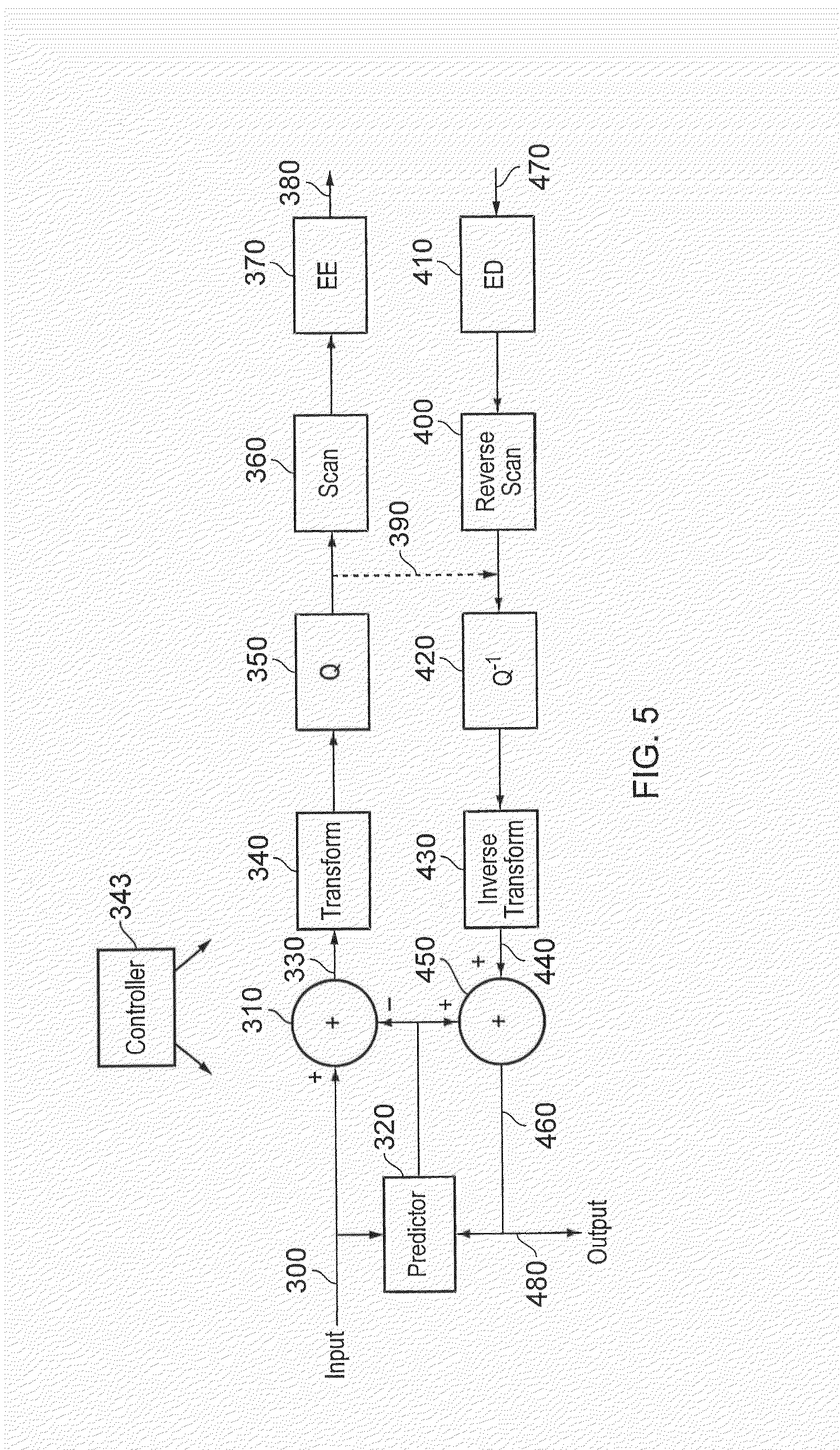

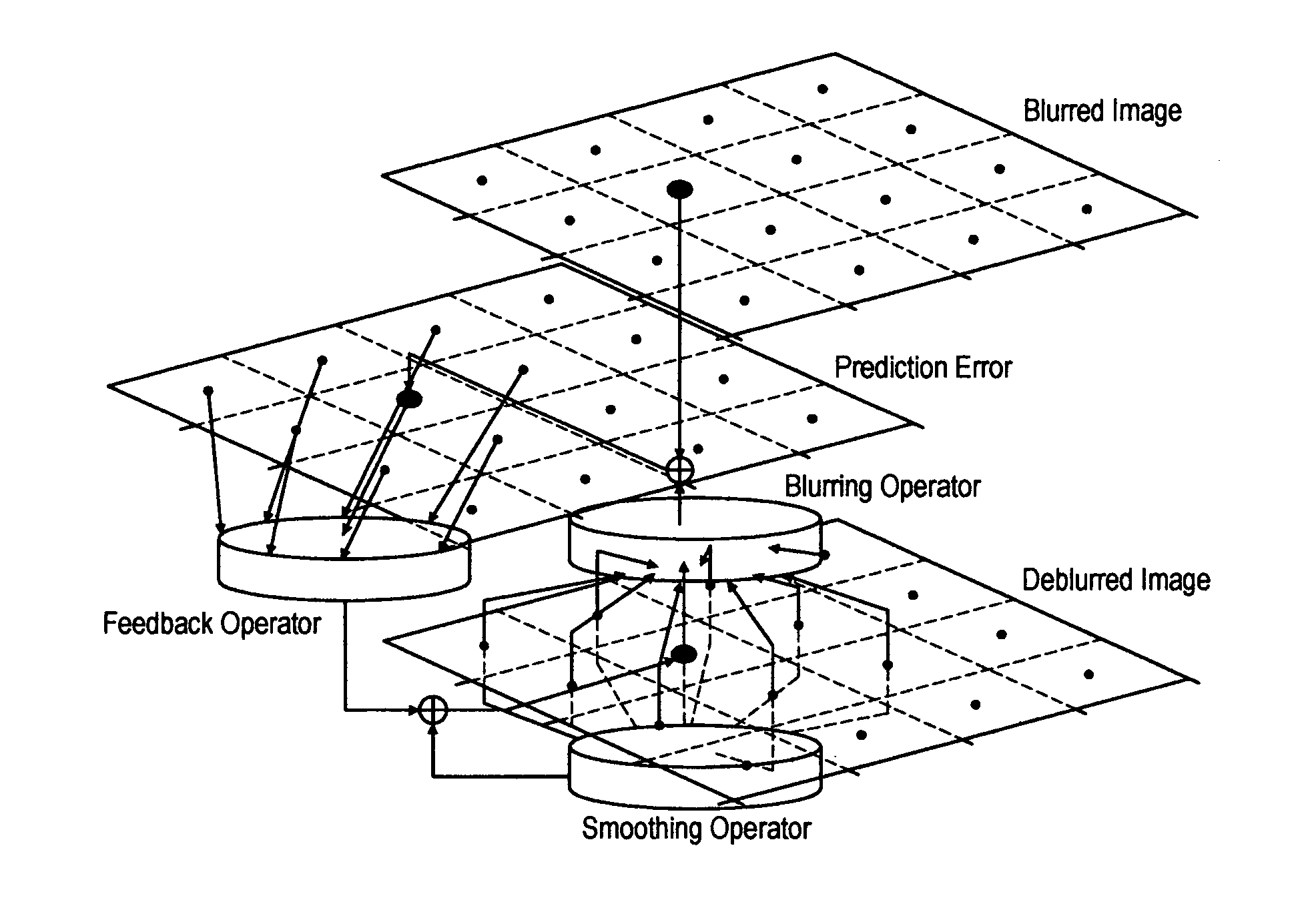

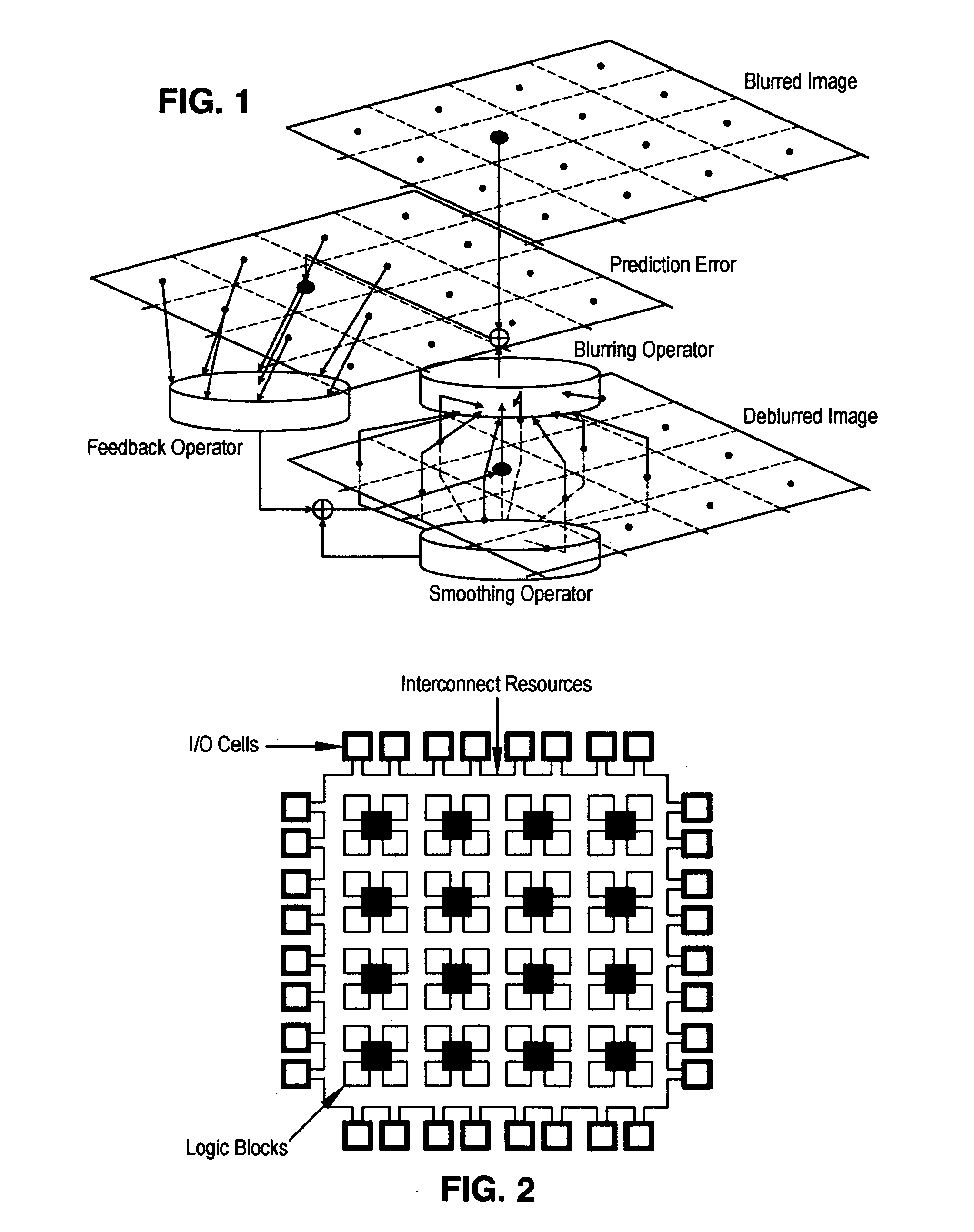

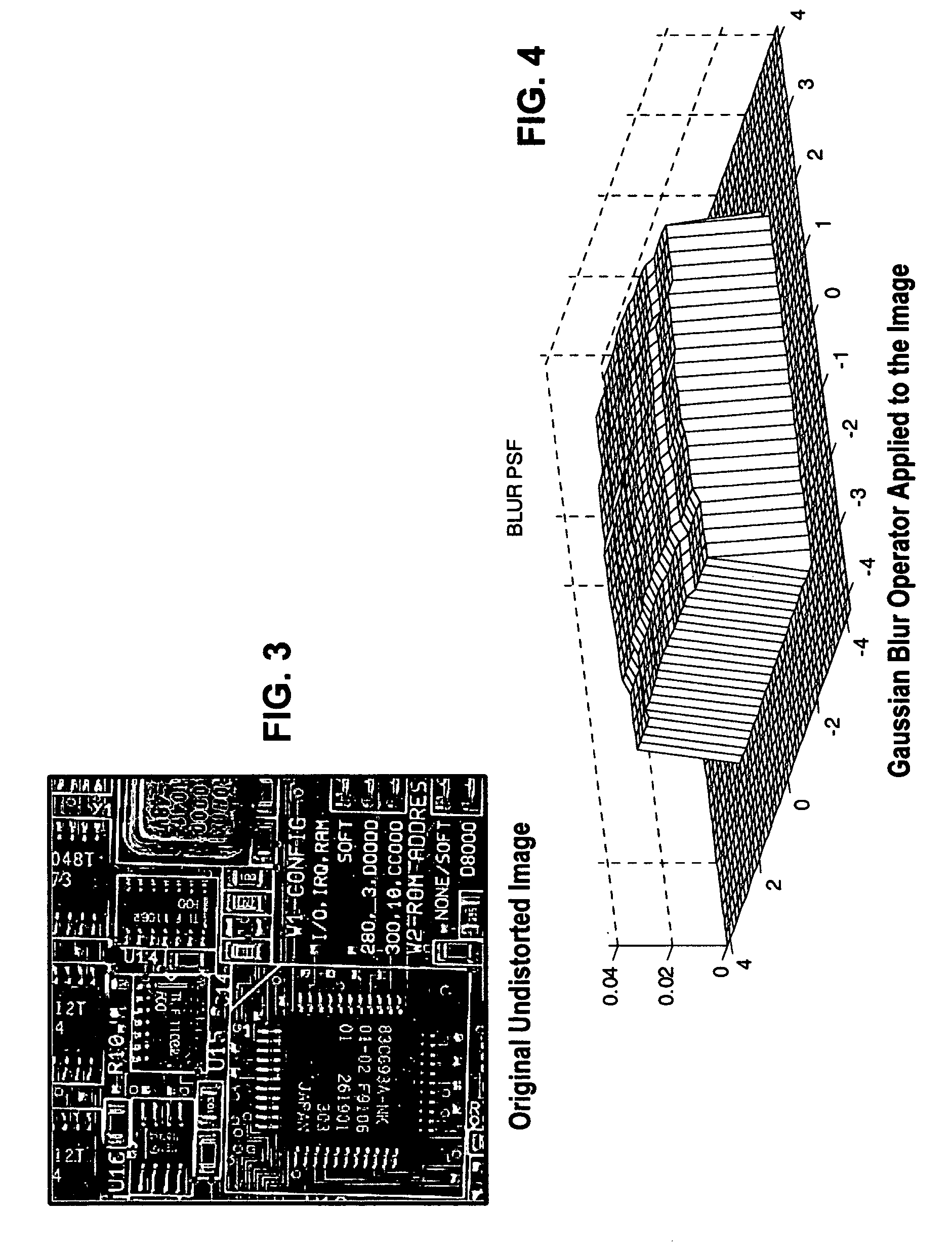

Image deblurring with a systolic array processor

Inactive · US20050147313A1 · Simple multiplication · Simple addition operation · Image enhancement · General purpose stored program computer · Systolic array · Image prediction

A method and device for deblurring an image having pixels. A blurred image is downloaded into a systolic array processor having an array of processing logic blocks such that each pixel arrives in a respective processing logic block of one pixel or small groups of pixels. Data is sequentially exchanged between processing logic blocks by interconnecting each processing logic block with a predefined number of adjacent processing logic blocks, followed by uploading the deblurred image. The processing logic blocks provide an iterative update of the blurred image by (i) providing feedback of the blurred image prediction error using the deblurred image and (ii) providing feedback of the past deblurred image estimate. The iterative update is implemented in the processing logic blocks by u(n+1)=u(n)−K*(H*u(n)−yb)−S*u(n).

Owner:HONEYWELL INT INC
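
The iterative update u(n+1)=u(n)−K*(H*u(n)−yb)−S*u(n) from the abstract above can be tried out in software. The sketch below is a plain NumPy version on a 1-D signal, not a systolic-array implementation; it assumes that `*` denotes convolution, that H is the blur kernel, K the feedback kernel, and S the smoothing kernel, and that each kernel is no longer than the signal. The function names are hypothetical.

```python
import numpy as np


def deblur_step(u, yb, H, K, S):
    """One update: u_next = u - K*(H*u - yb) - S*u, with * as convolution."""
    # Prediction error of the blurred image given the current estimate.
    residual = np.convolve(u, H, mode="same") - yb
    correction = np.convolve(residual, K, mode="same")
    regulariser = np.convolve(u, S, mode="same")
    return u - correction - regulariser


def deblur(yb, H, K, S, iterations):
    """Iterate the update, starting from the blurred observation yb."""
    u = np.asarray(yb, dtype=float).copy()
    for _ in range(iterations):
        u = deblur_step(u, yb, H, K, S)
    return u
```

With an identity blur (`H = [1.0]`) and no smoothing term (`S = [0.0]`), the observation itself is a fixed point of the iteration, which is a convenient sanity check before trying real kernels.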

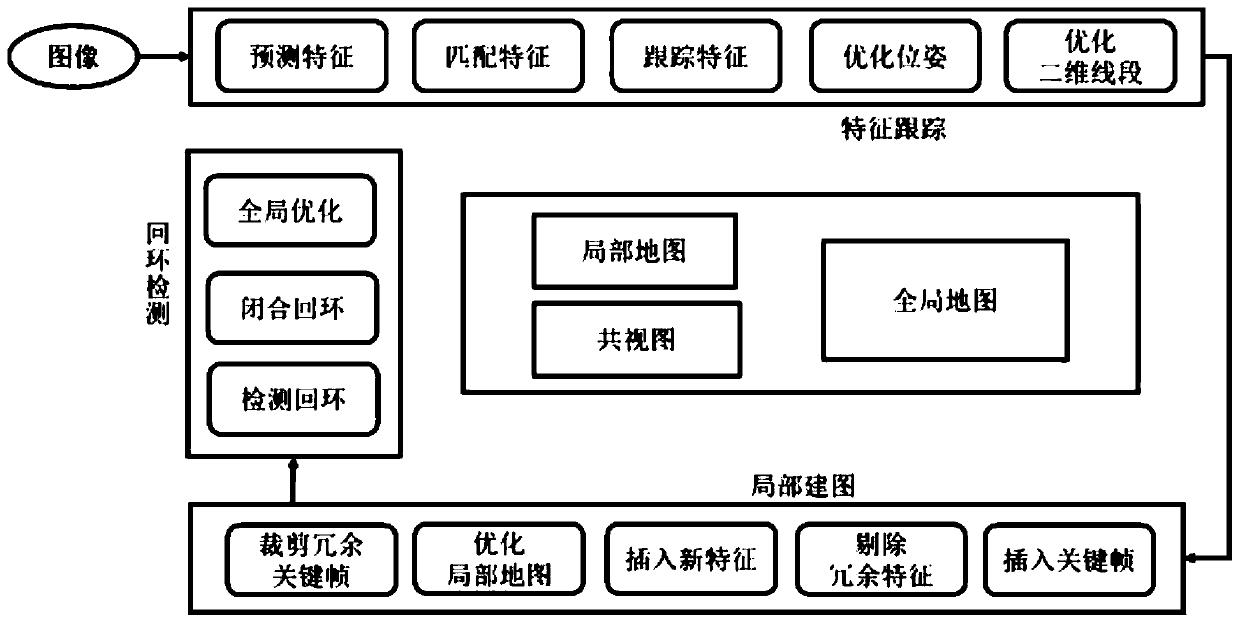

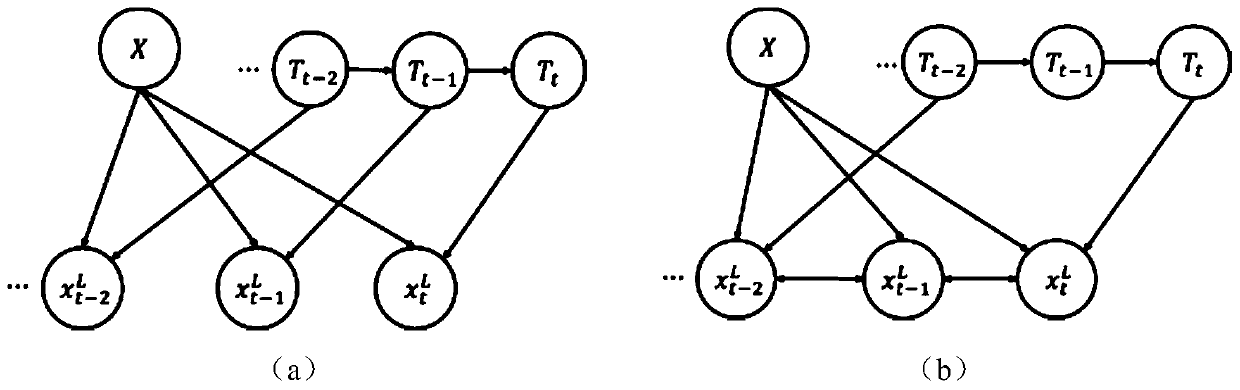

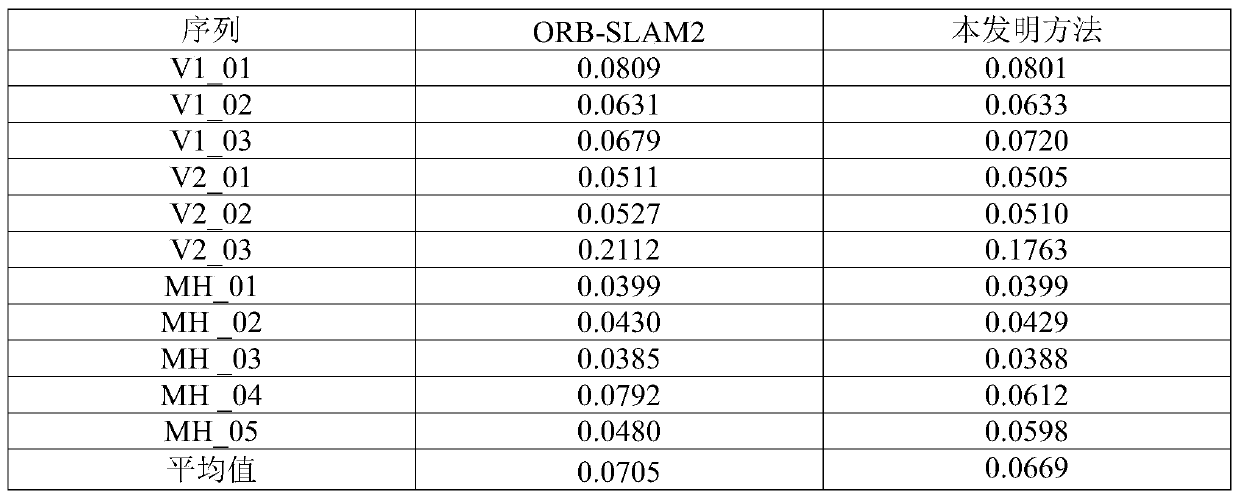

Visual SLAM method based on point-line fusion

Pending · CN110782494A · Extract complete · Fast extraction · Image analysis · 3D modelling · Image extraction · Radiology

The invention discloses a visual SLAM method based on point-line fusion. The method comprises the steps of: first inputting an image, predicting the pose of the camera, extracting the feature points of the image, and estimating and extracting feature lines through the time sequence information among a plurality of visual angles; matching the feature points and the feature lines, tracking the features in the preceding and following frames, establishing inter-frame association, optimizing the pose of the current frame, and optimizing the two-dimensional feature lines to improve the integrity of the feature lines; judging whether the current frame is a key frame and, if yes, adding the key frame into the map, updating three-dimensional points and lines in the map, performing joint optimization on the current key frame and the adjacent key frames, optimizing the pose and three-dimensional features of the camera, and removing a part of the outlier points and redundant key frames; and finally, performing loopback detection on the key frame and, if the current key frame and a previous frame are similar scenes, closing the loop and performing global optimization once to eliminate accumulated errors. Under a SLAM system framework based on points and lines, the line extraction speed and the feature line integrity are improved by utilizing the sequential relationship of multiple view angle images, so that the pose precision and the map reconstruction effect are improved.

Owner:BEIJING UNIV OF TECH

Image encoding/decoding method and apparatus

Inactive · US20080043840A1 · Encoding efficiency can be improved · Easy to code · Color television with pulse code modulation · Color television with bandwidth reduction · Pattern recognition · Decoding methods

Owner:SAMSUNG ELECTRONICS CO LTD

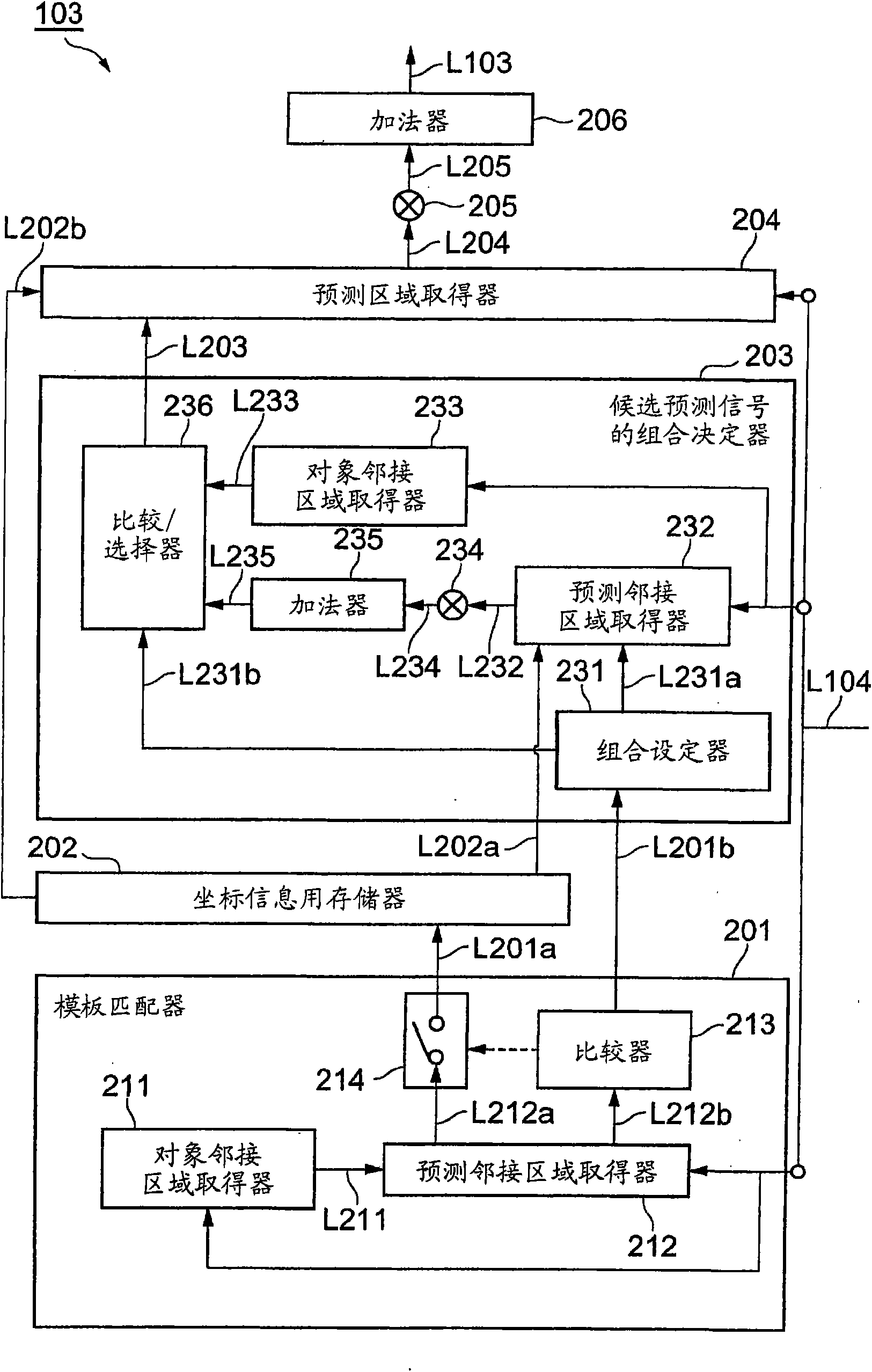

Image prediction/encoding device, image prediction/encoding method, image prediction/encoding program, image prediction/decoding device, image prediction/decoding method, and image prediction decoding

Active · CN101653009A · Combination suitable for · Generate efficiently · Television systems · Digital video signal modification · Pattern recognition · Synthesis methods

It is possible to provide an image prediction / encoding device, an image prediction / encoding method, an image prediction / encoding program, an image prediction / decoding device, an image prediction / decoding method, and an image prediction / decoding program which can select a plurality of candidate prediction signals without increasing an information amount. A weighting device (234) and an adder (235) perform a process (averaging, for example) of pixel signals extracted by a prediction adjacent region acquisition device (232) by using a predetermined synthesis method so as to generate a comparative signal for an adjacent pixel signal for each of combinations. A comparison / selection device (236) selects a combination having a high correlation between a comparison signal generated by the weighting device (234) and an adjacent pixel signal acquired by an object adjacent region acquisition unit (233). A prediction region acquisition unit (204), a weighting unit (205), and an adder (206) generate a candidate prediction signal and process it by using the predetermined synthesis method so as to generate a prediction signal.

Owner:NTT DOCOMO INC

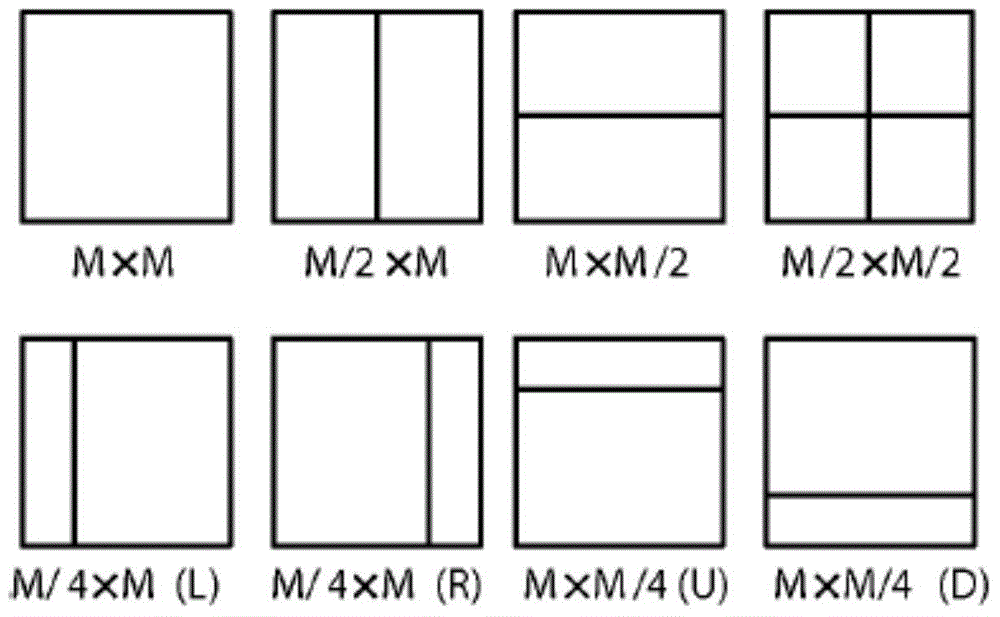

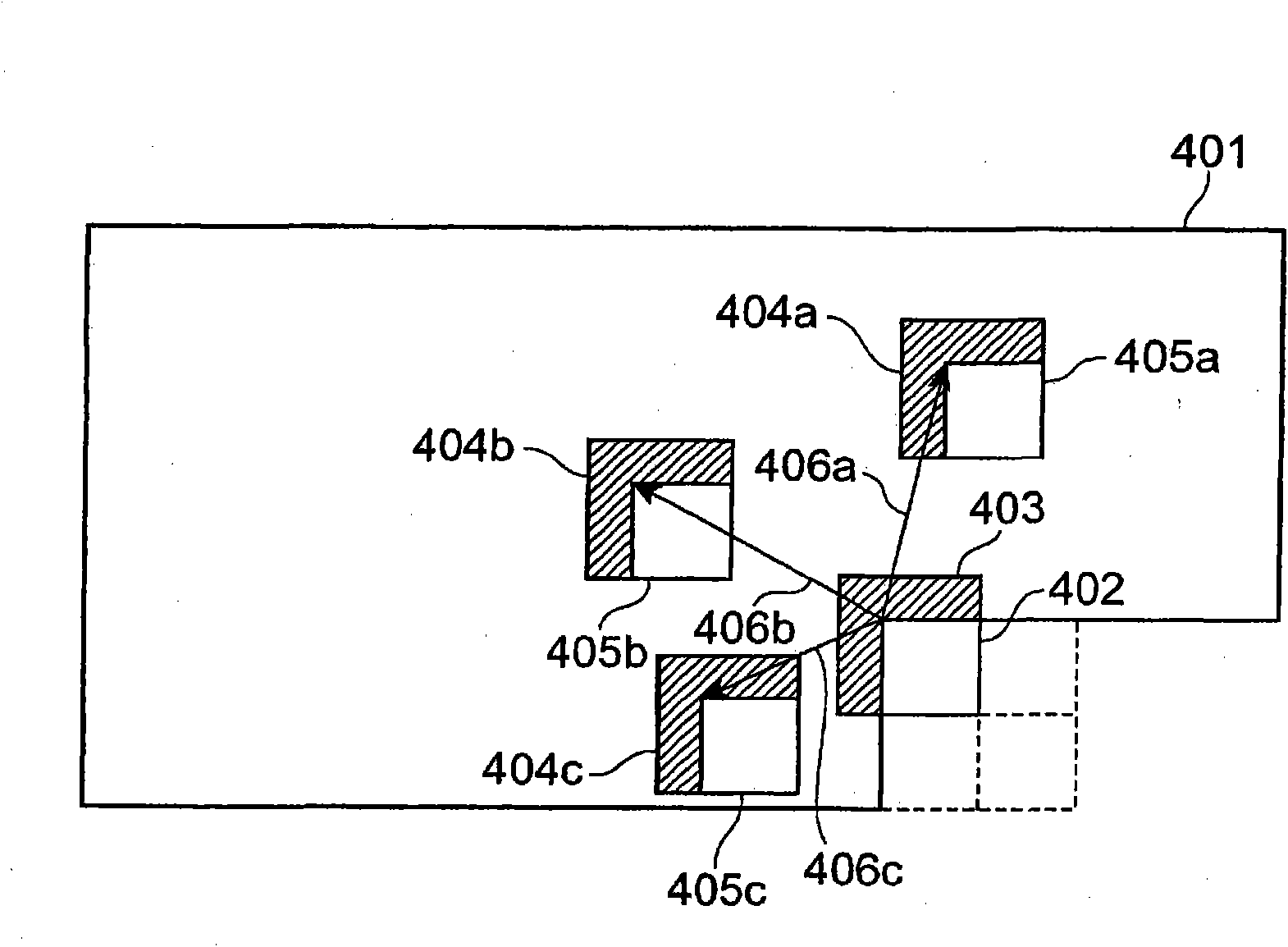

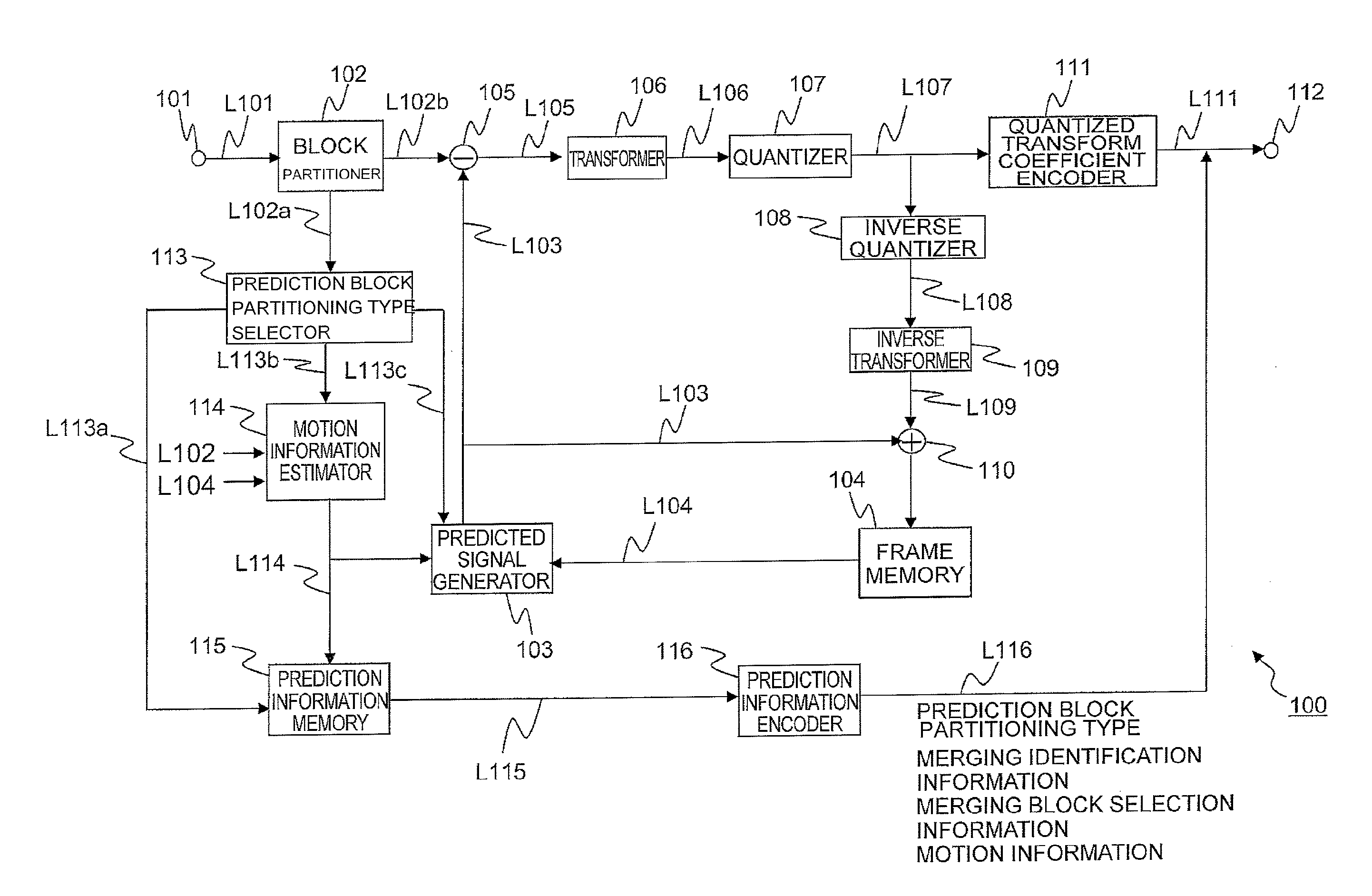

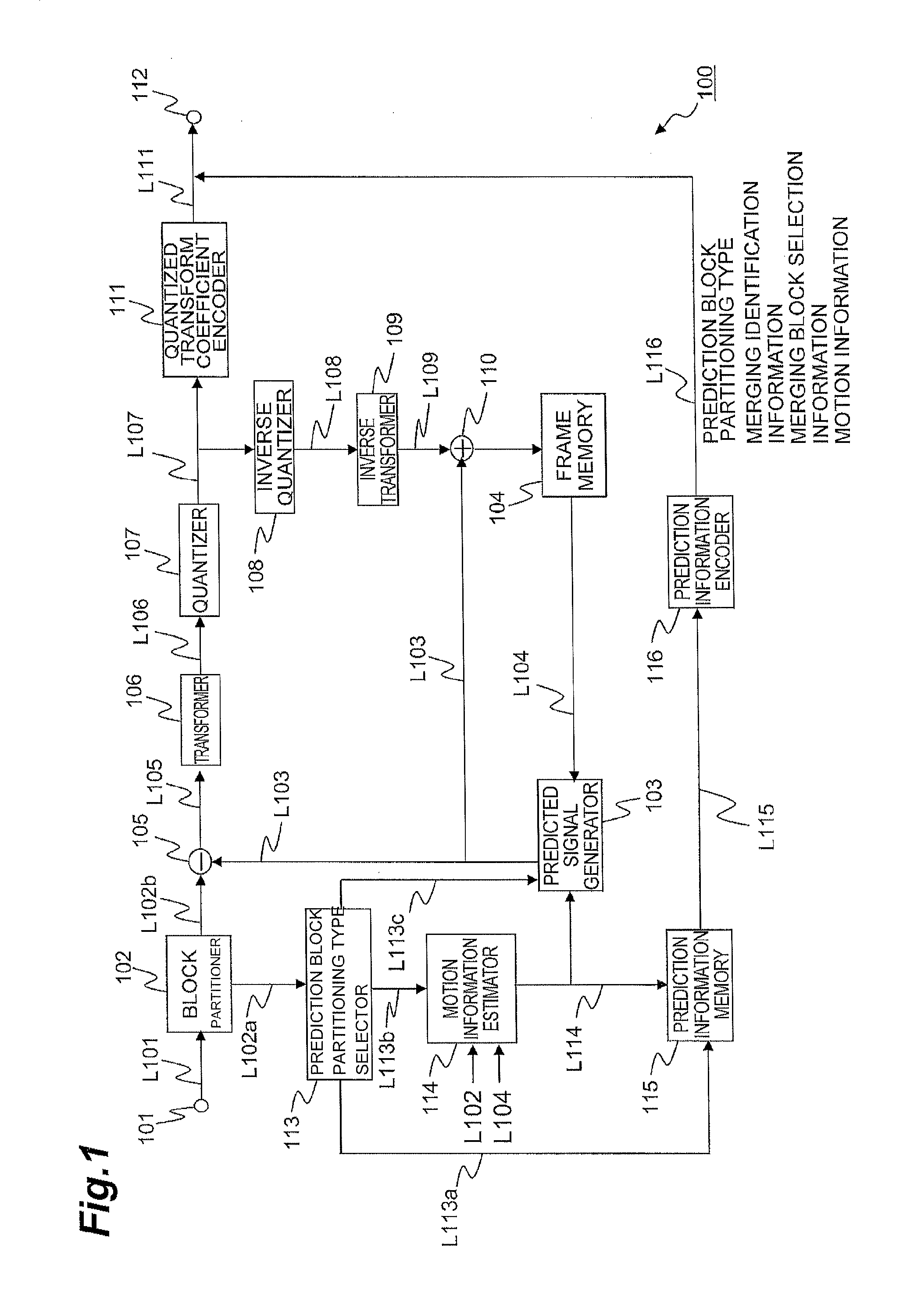

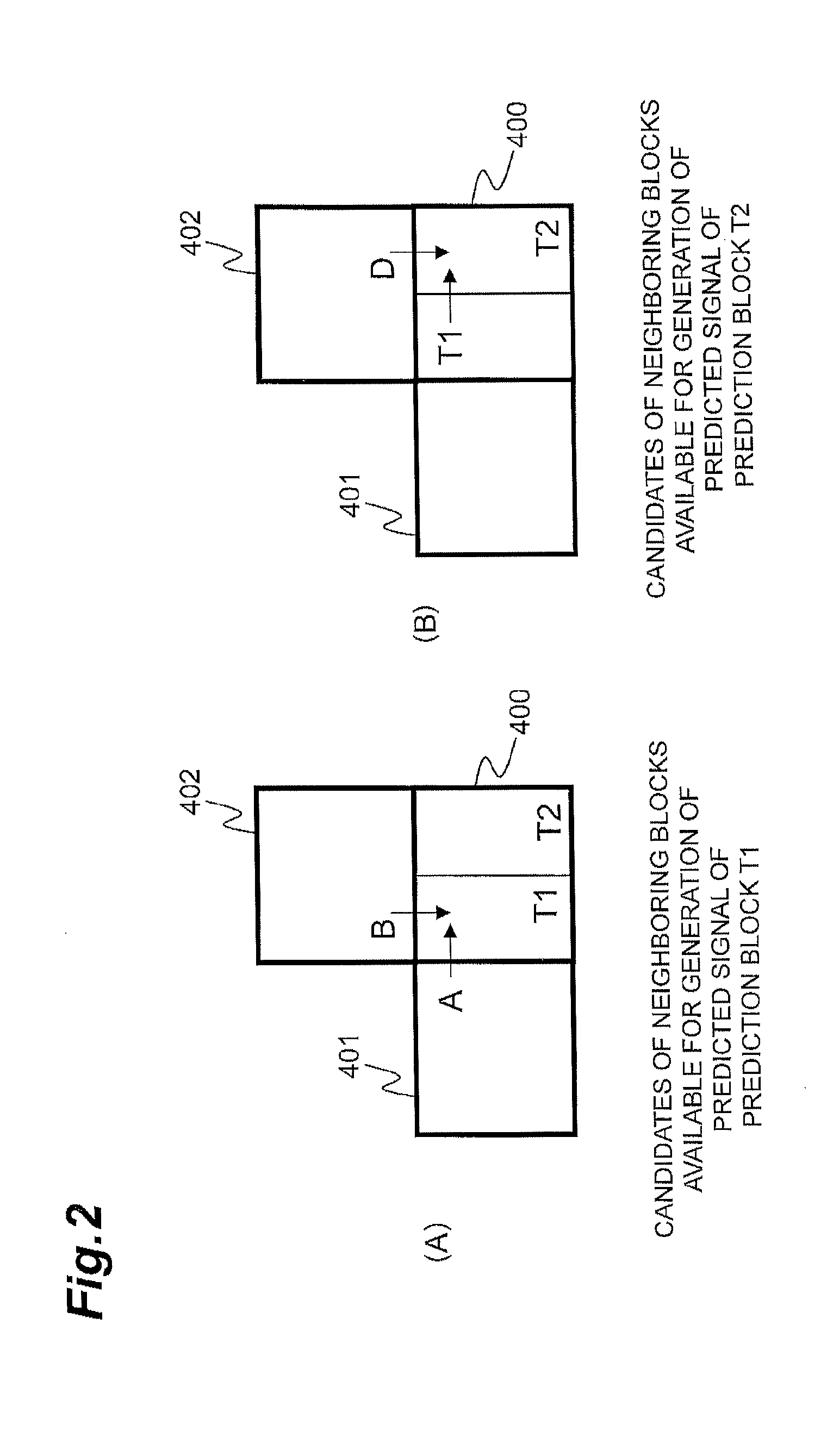

Image prediction encoding/decoding system

Active · US20130136184A1 · Efficient decoding · High movement precision · Color television with pulse code modulation · Color television with bandwidth reduction · Algorithm · Image prediction

An encoding target region in an image can be partitioned into a plurality of prediction regions. Based on prediction information of a neighboring region neighboring a target region, the number of previously-encoded prediction regions in the target region, and previously-encoded prediction information of the target region, a candidate for motion information to be used in generation of a predicted signal of the target prediction region as a next prediction region is selected from previously-encoded motion information of regions neighboring the target prediction region. According to the number of candidates for motion information selected, merging block information to indicate generation of the predicted signal of the target prediction region using the selected candidate for motion information and motion information detected by prediction information estimation means, or either one of the merging block information or the motion information is encoded.

Owner:NTT DOCOMO INC

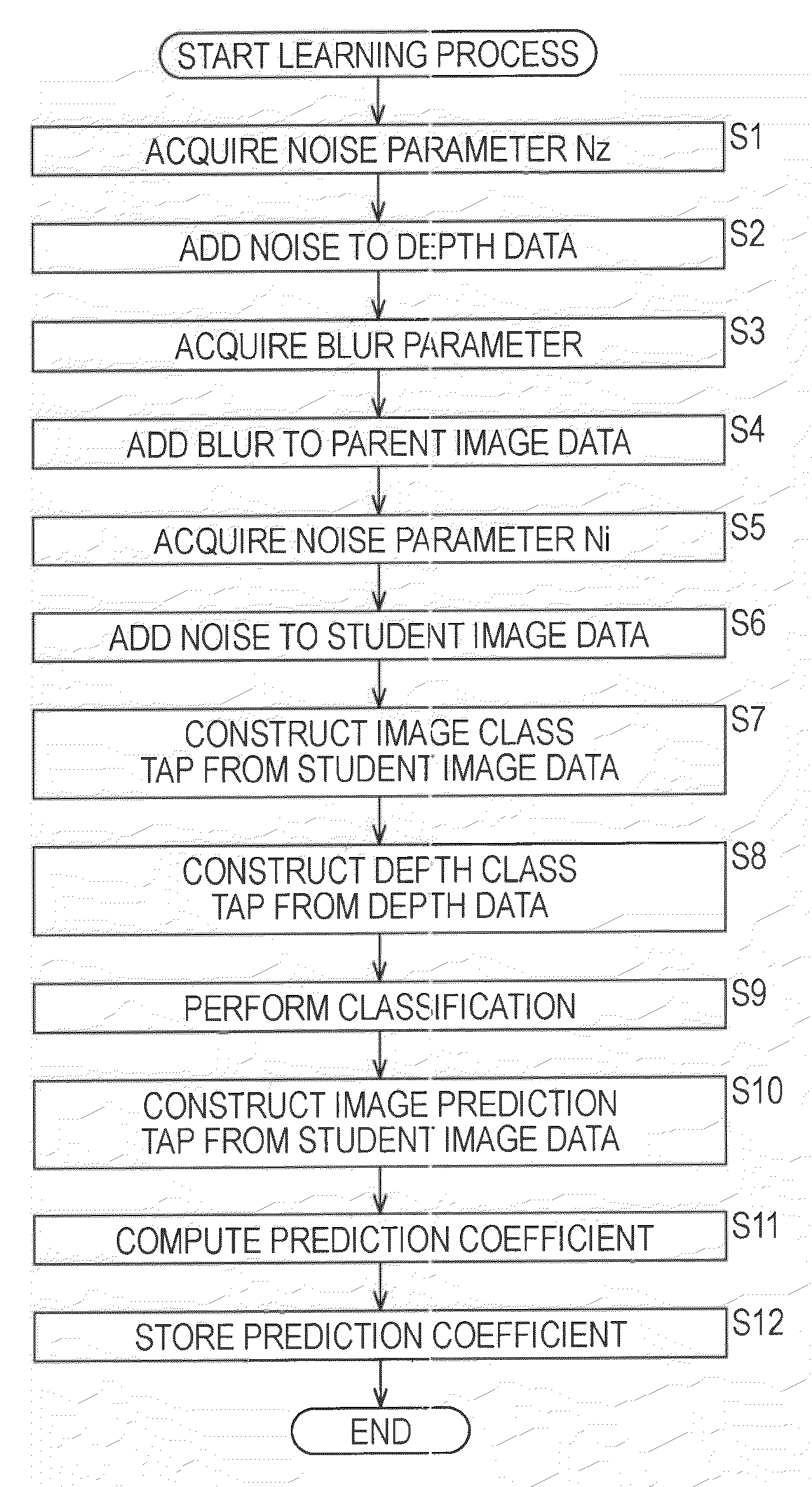

Prediction coefficient operation device and method, image data operation device and method, program, and recording medium

Inactive · US20100061642A1 · Correcting blurring · Accurate imaging · Image enhancement · Image analysis · Pattern recognition · Imaging processing

The present invention relates to a prediction coefficient computing device and method, an image data computing device and method, a program, and a recording medium which make it possible to accurately correct blurring of an image. A blur adding unit 11 adds blur to parent image data on the basis of blur data of a blur model to generate student image data. A tap constructing unit 17 constructs an image prediction tap from the student image data. A prediction coefficient computing unit 18 computes a prediction coefficient for generating image data corresponding to the parent image data, from image data corresponding to the student image data, on the basis of the parent image data and the image prediction tap. The present invention can be applied to an image processing device.

Owner:SONY CORP
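
The learning flow in the abstract above (blur the parent data to obtain student data, build prediction taps from the student data, then compute coefficients that map taps back to the parent data) resembles an ordinary least-squares fit. The sketch below assumes exactly that, on a 1-D signal with a fixed tap radius; the abstract does not state the fitting method or tap shape, so `build_taps`, `learn_coefficients`, and their parameters are hypothetical.

```python
import numpy as np


def build_taps(signal, radius=1):
    """Stack the neighbourhood of each interior sample as one row of taps."""
    n = len(signal)
    return np.array([signal[i - radius:i + radius + 1]
                     for i in range(radius, n - radius)])


def learn_coefficients(parent, blur_kernel, radius=1):
    """Fit coefficients that predict parent samples from blurred taps."""
    parent = np.asarray(parent, dtype=float)
    # "Student" data: the parent signal with the assumed blur model applied.
    student = np.convolve(parent, blur_kernel, mode="same")
    taps = build_taps(student, radius)
    targets = parent[radius:len(parent) - radius]
    # Least-squares solution of taps @ coeffs ~= targets (assumed method).
    coeffs, *_ = np.linalg.lstsq(taps, targets, rcond=None)
    return coeffs
```

With an identity blur kernel the student equals the parent, so the fitted coefficients reduce to selecting the centre tap, which makes a simple check that the pipeline is wired correctly.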

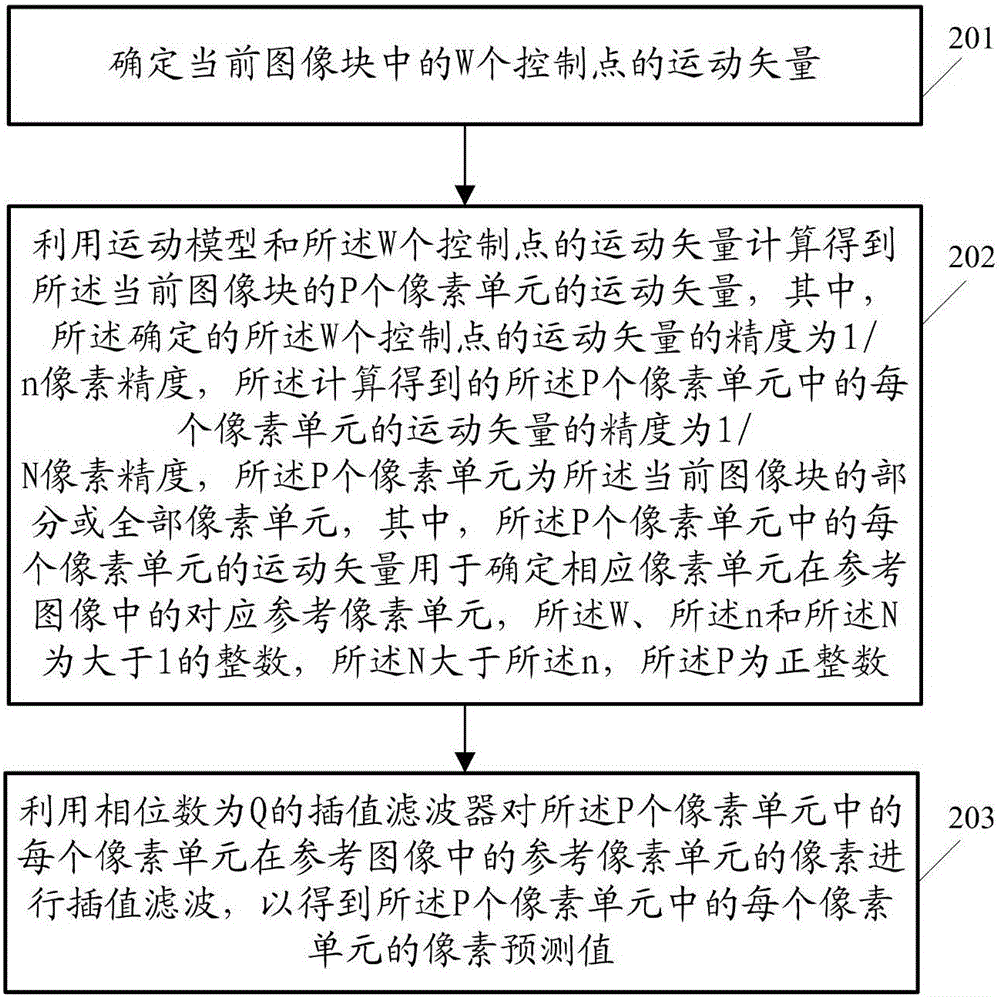

Image prediction method and associated device

Active · CN106331722A · Easy to operate · Reduce the number of interpolation filtering · Image analysis · Image coding · Computation complexity · Motion vector

The embodiment of the invention discloses an image prediction method and an associated device. The image prediction method comprises the following steps: determining motion vectors of W control points in a current image block; calculating the motion vectors of P pixel units of the current image block by using a motion model and the motion vectors of the W control points, wherein the precision of the determined motion vectors of the W control points is 1 / n pixel precision, the precision of the calculated motion vector of each pixel unit in the P pixel units is 1 / N pixel precision, the P pixel units are a part of or all pixel units of the current image block, and N is greater than n; and carrying out interpolation filtering on the pixels of the corresponding pixel units in a reference image of each pixel unit in the P pixel units by using an interpolation filter with a phase number Q to obtain a pixel prediction value of each pixel unit in the P pixel units, wherein Q is greater than n. The technical scheme provided by the embodiment of the invention is conducive to reducing the computational complexity of the image prediction process.

Owner:HUAWEI TECH CO LTD

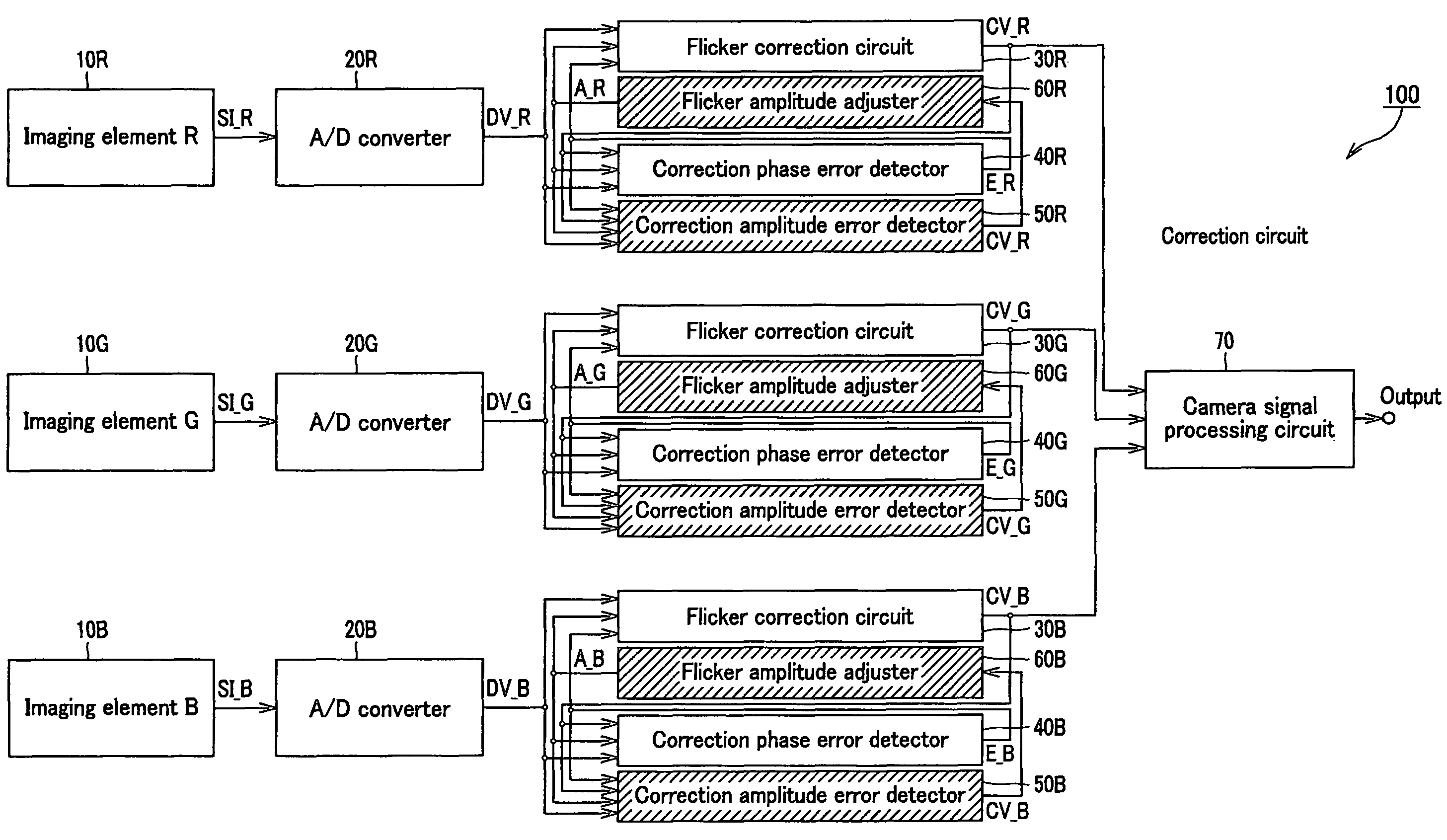

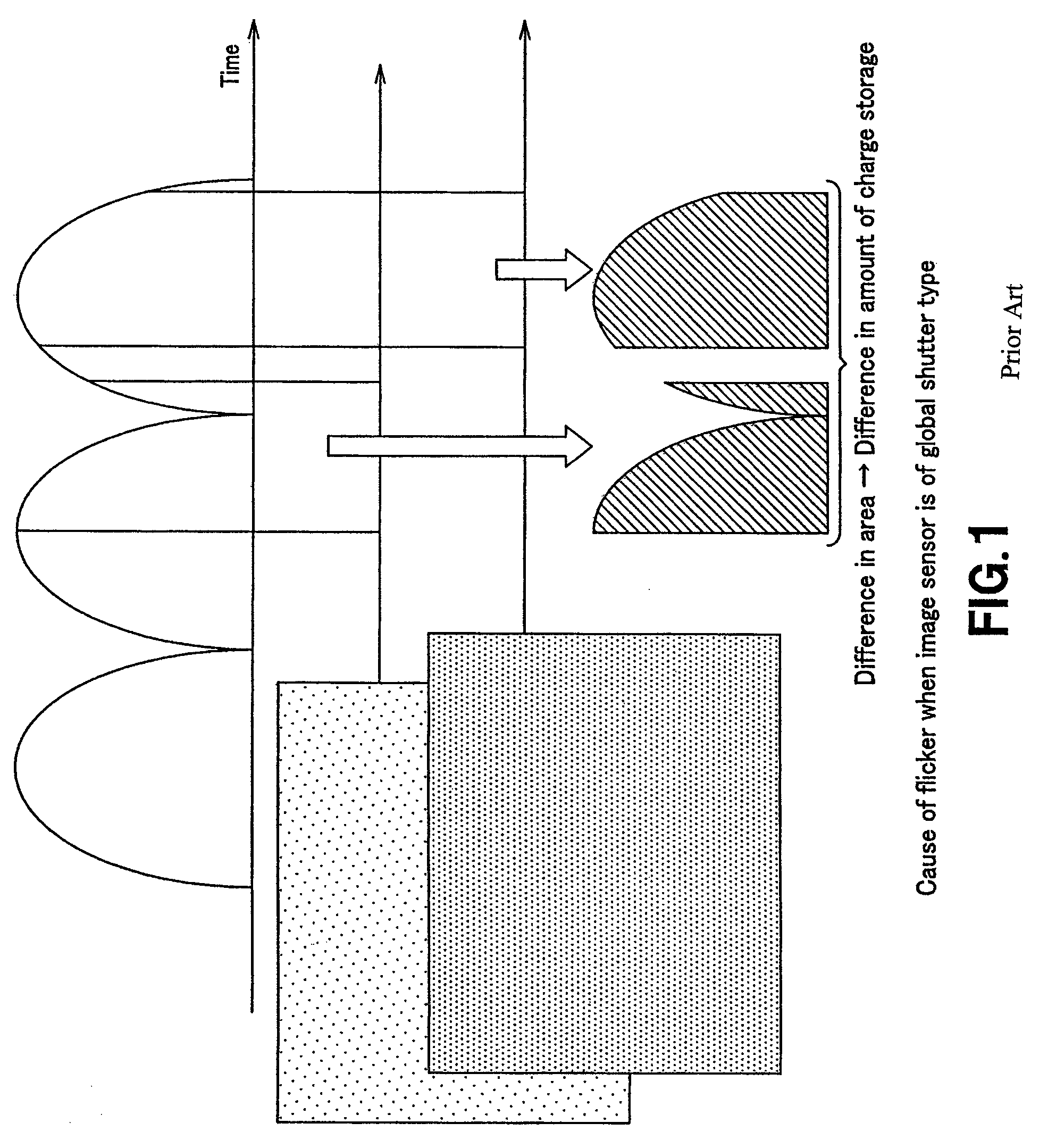

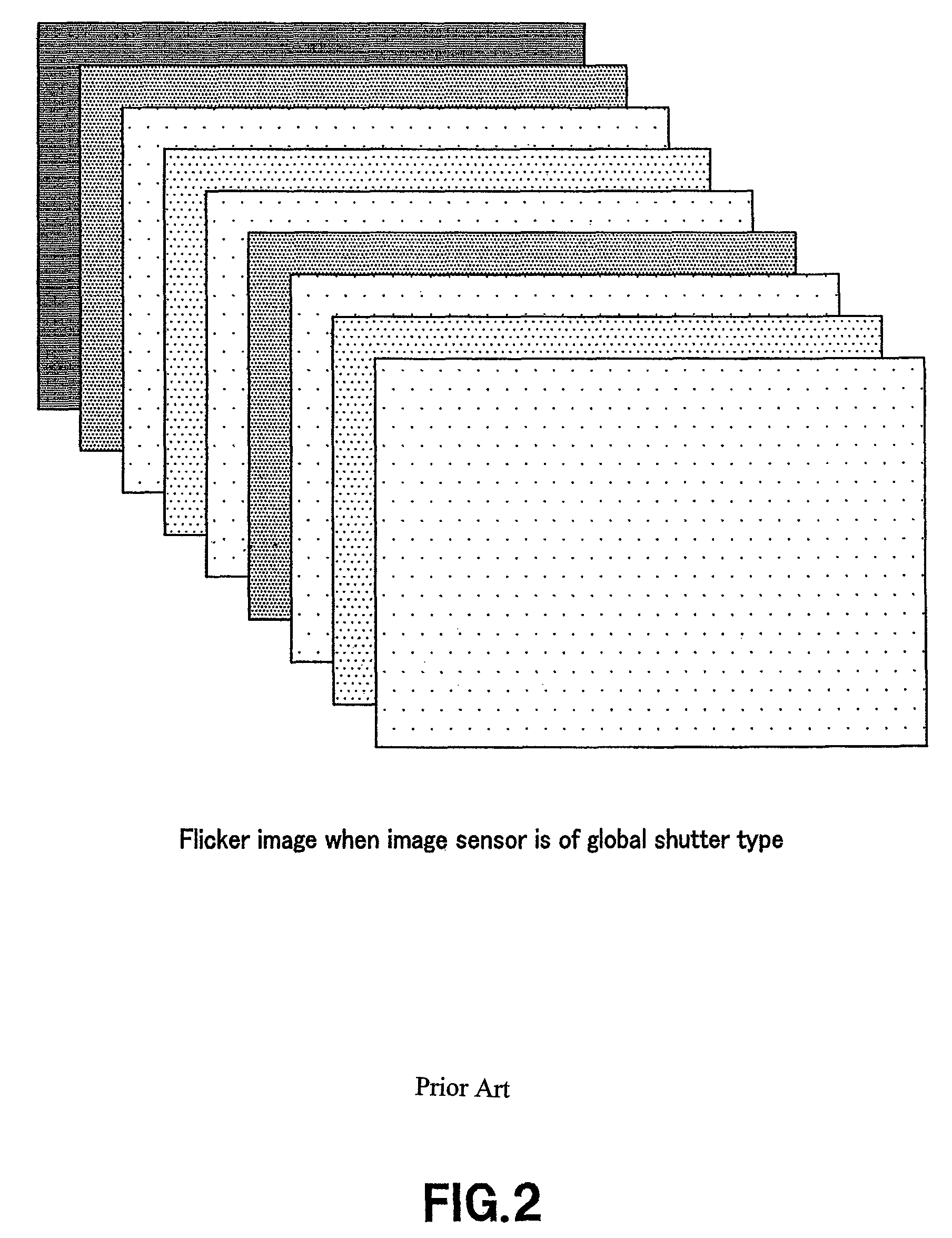

Flicker correction method and device, and imaging device

Inactive · US7764312B2 · Television system details · Color signal processing circuits · Radiology · Image prediction

Even when the light intensity of the light source varies, the flicker correction can thus be made flexibly. The present invention provides a flicker correction method comprising the steps of predicting, from an image of a present flicker-corrected frame, a flicker of an image of a next frame to generate two types of flicker images having flickers different in level from each other added thereto, detecting a flicker component through comparison between the generated two types of flicker images and an image of an input next frame, generating a flicker correction value on the basis of the detected flicker component, and making flicker correction by adding the generated flicker correction value to an input image frame by frame.

Owner:SONY CORP

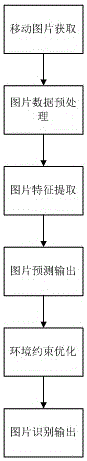

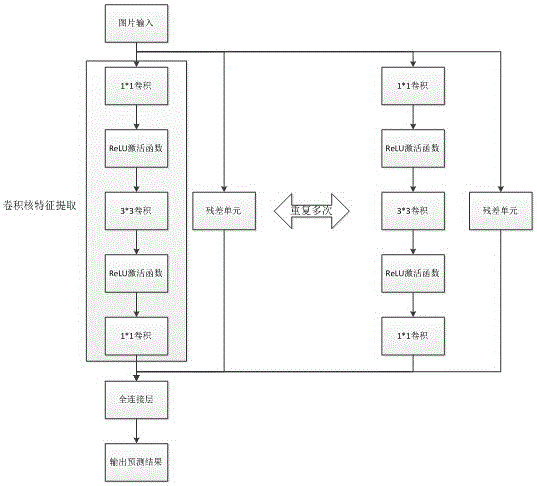

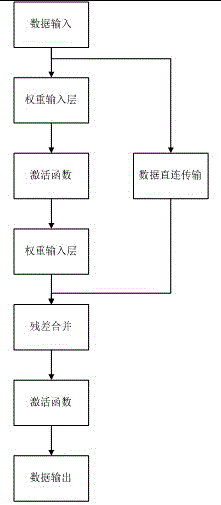

Rapid object recognition method for mobile robot based on deep learning

Active · CN106228162A · Overcoming complexity · Overcome instability · Character and pattern recognition · Feature extraction · Instability

The invention discloses a rapid object recognition method for a mobile robot based on deep learning. The method comprises the following steps: 1) obtaining a mobile image; 2) preprocessing the image data; 3) extracting the features of the image; 4) image prediction output; 5) environment constrained optimization; 6) image recognition output. Through the unified integration of detection and recognition results, the method overcomes the complexity and instability of a conventional object recognition system which needs to employ an object. Through a multilayer residual error network design and the generation of environment gravity constraint conditions, the method remedies the poor accuracy of an integrated object recognition system. Integrating the detection and recognition tasks guarantees the processing efficiency of the system and improves the sensing capability of the robot while it is moving.

Owner:王威

Image processing device and image processing method

Inactive · US20120114260A1 · Effective imaging · Improve efficiency · Image enhancement · Character and pattern recognition · Imaging processing · 3D image

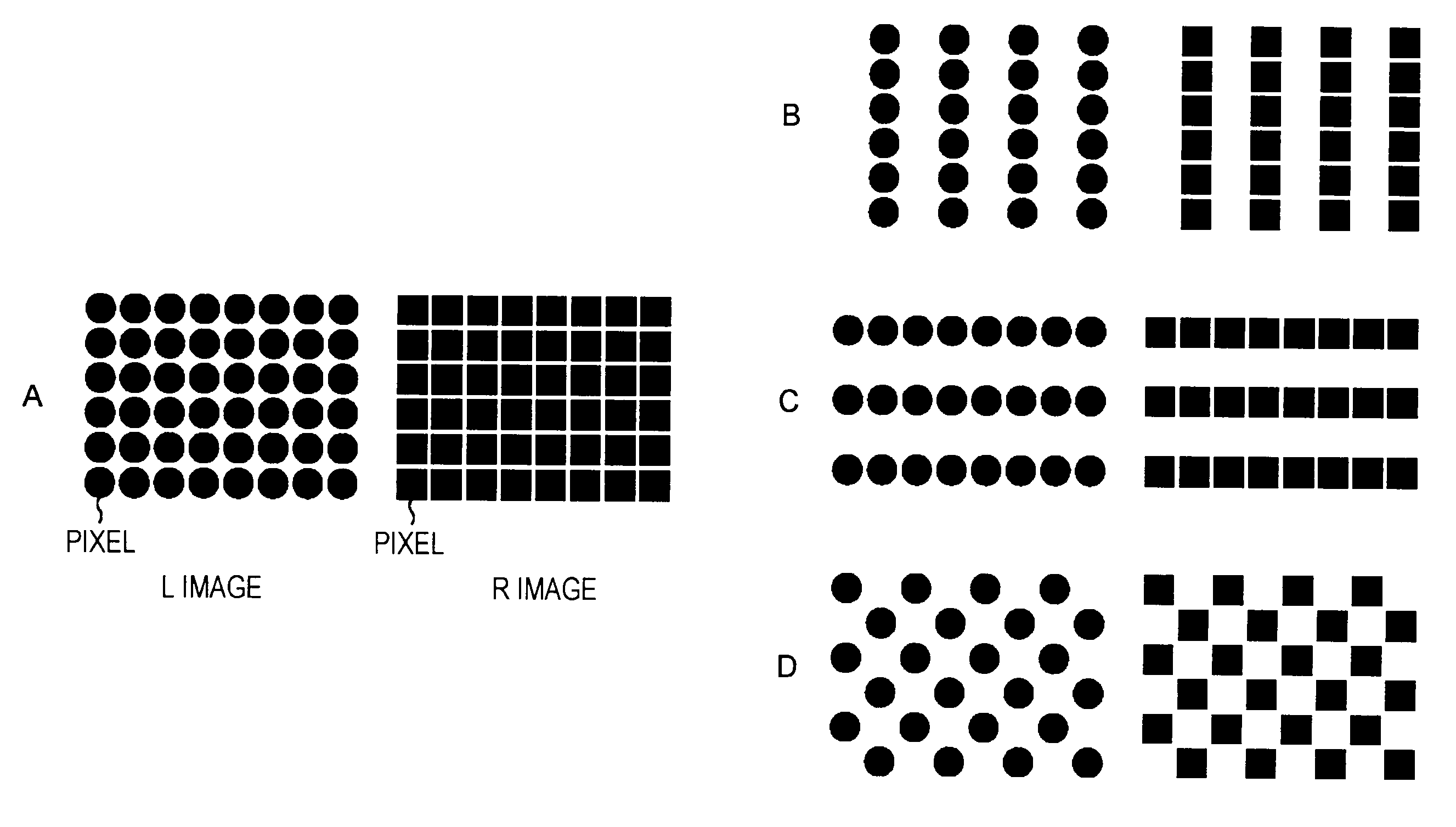

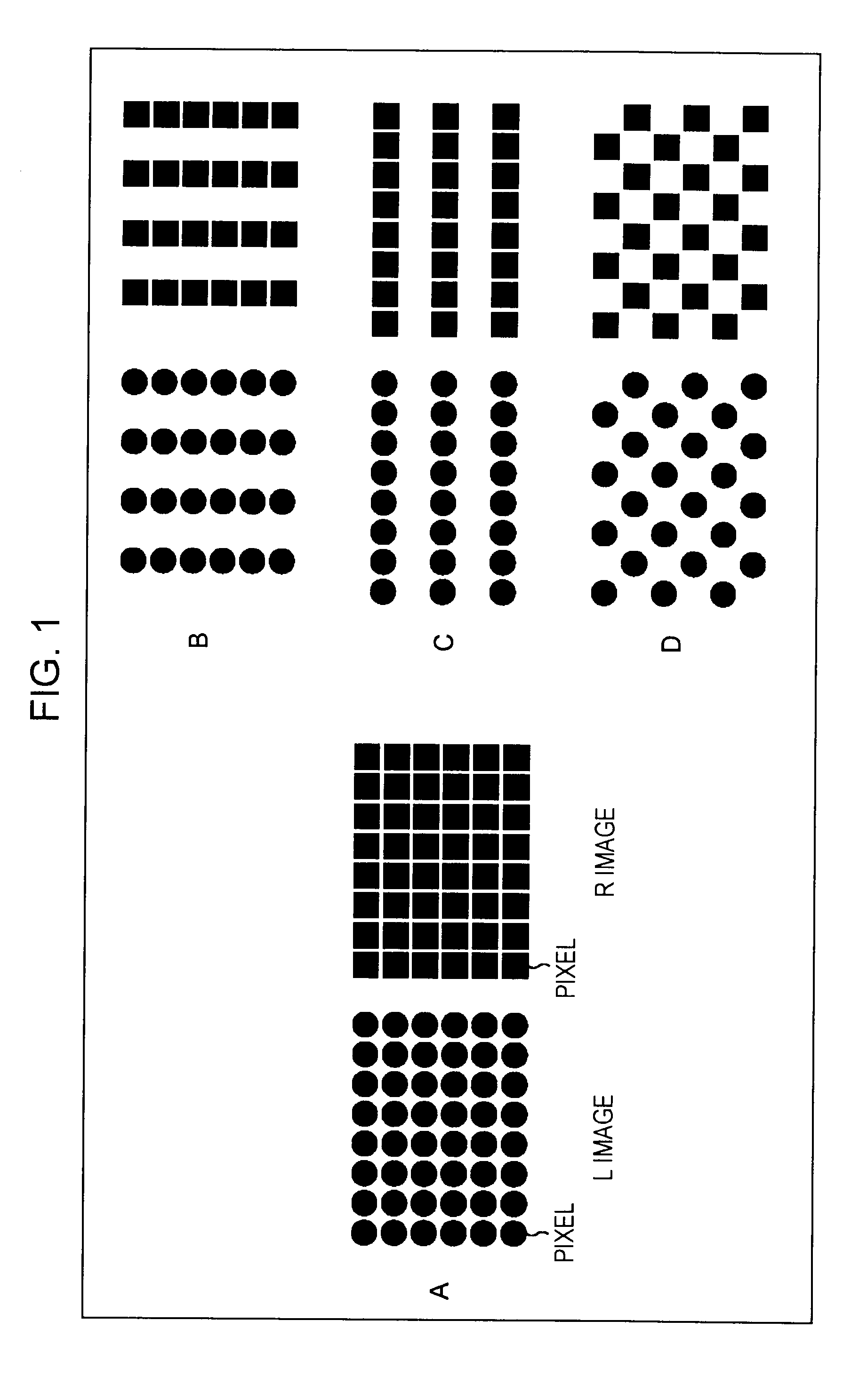

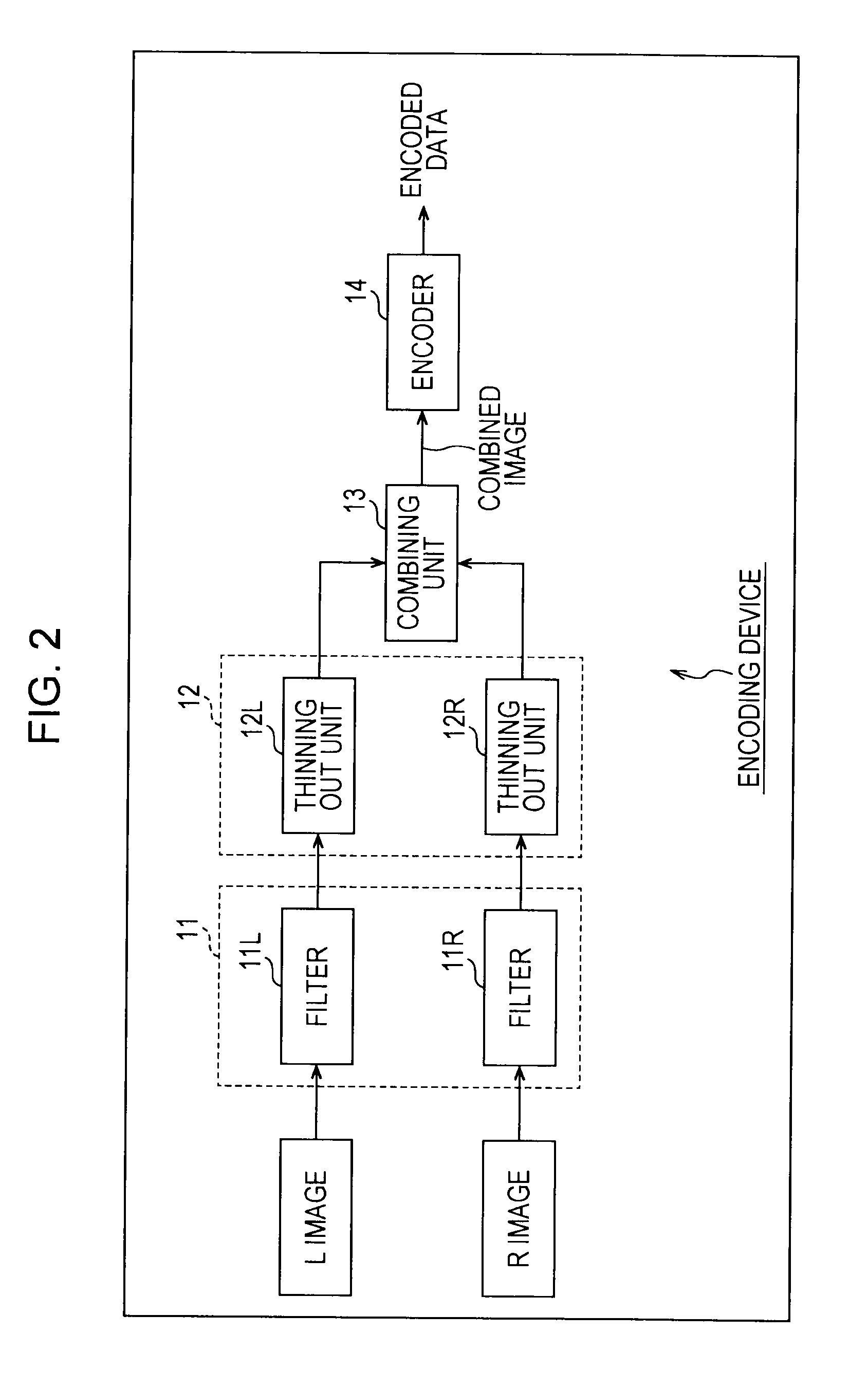

The present invention relates to an image processing device and an image processing method whereby encoding efficiency of image prediction encoding can be improved. A horizontal processing unit 31 performs horizontal packing as horizontal processing to manipulate the horizontal-direction array of pixels of each of a first thinned-out image and a second thinned-out image arrayed in checkerboard fashion, obtained by thinning out the pixels of each of a first image and a second image different from the first image every other line in an oblique direction, wherein pixels of first and second thinned-out images are packed in the horizontal direction. A combining unit 33 generates a combined image which is combined by adjacently arraying the post-horizontal processing first and second thinned-out images after horizontal processing, as an image to serve as the object of prediction encoding. The present invention can be applied to a case of performing prediction encoding on a first and second image, such as an L (Left) image and R (Right) image making up a 3D image or the like, for example.

Owner:SONY CORP

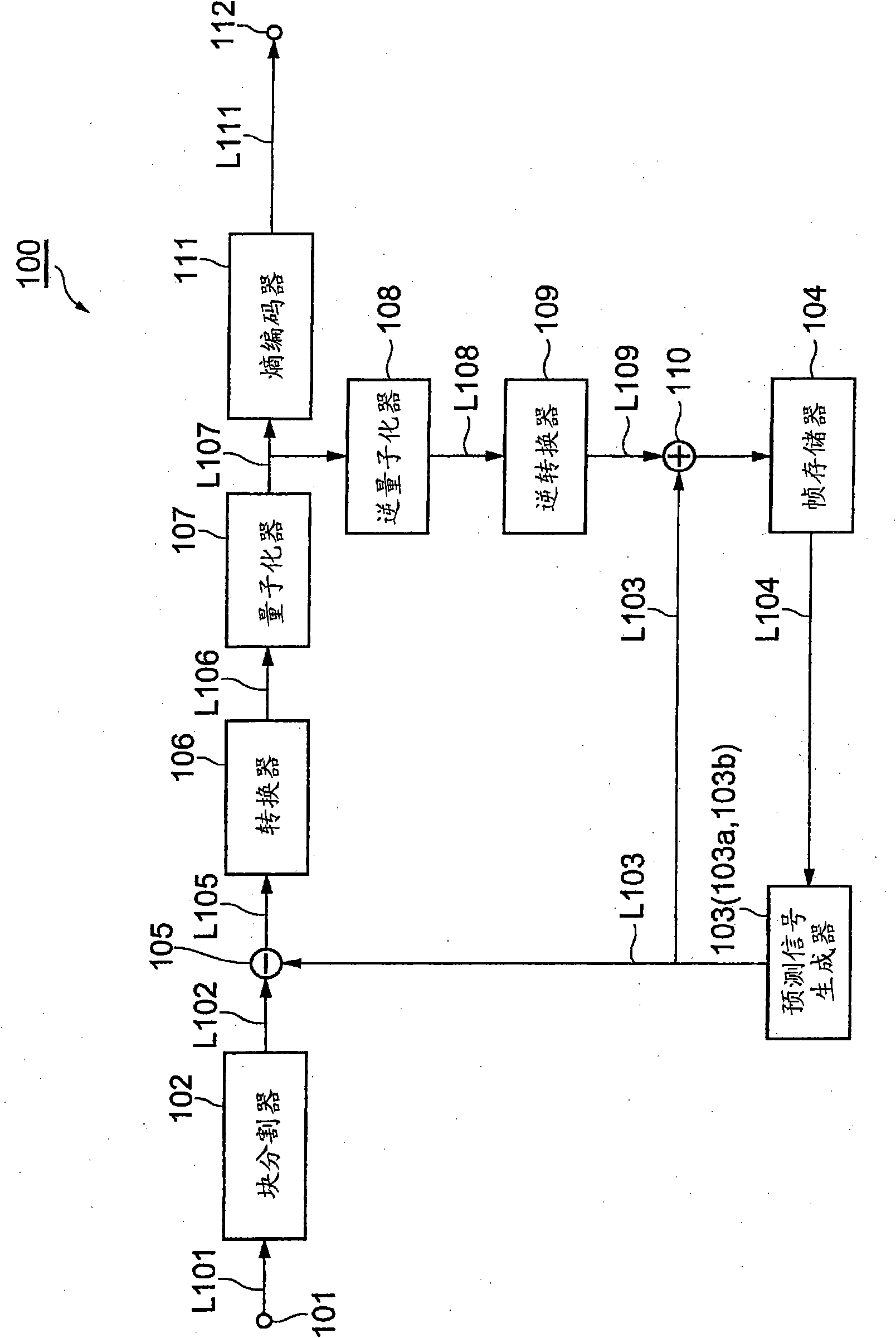

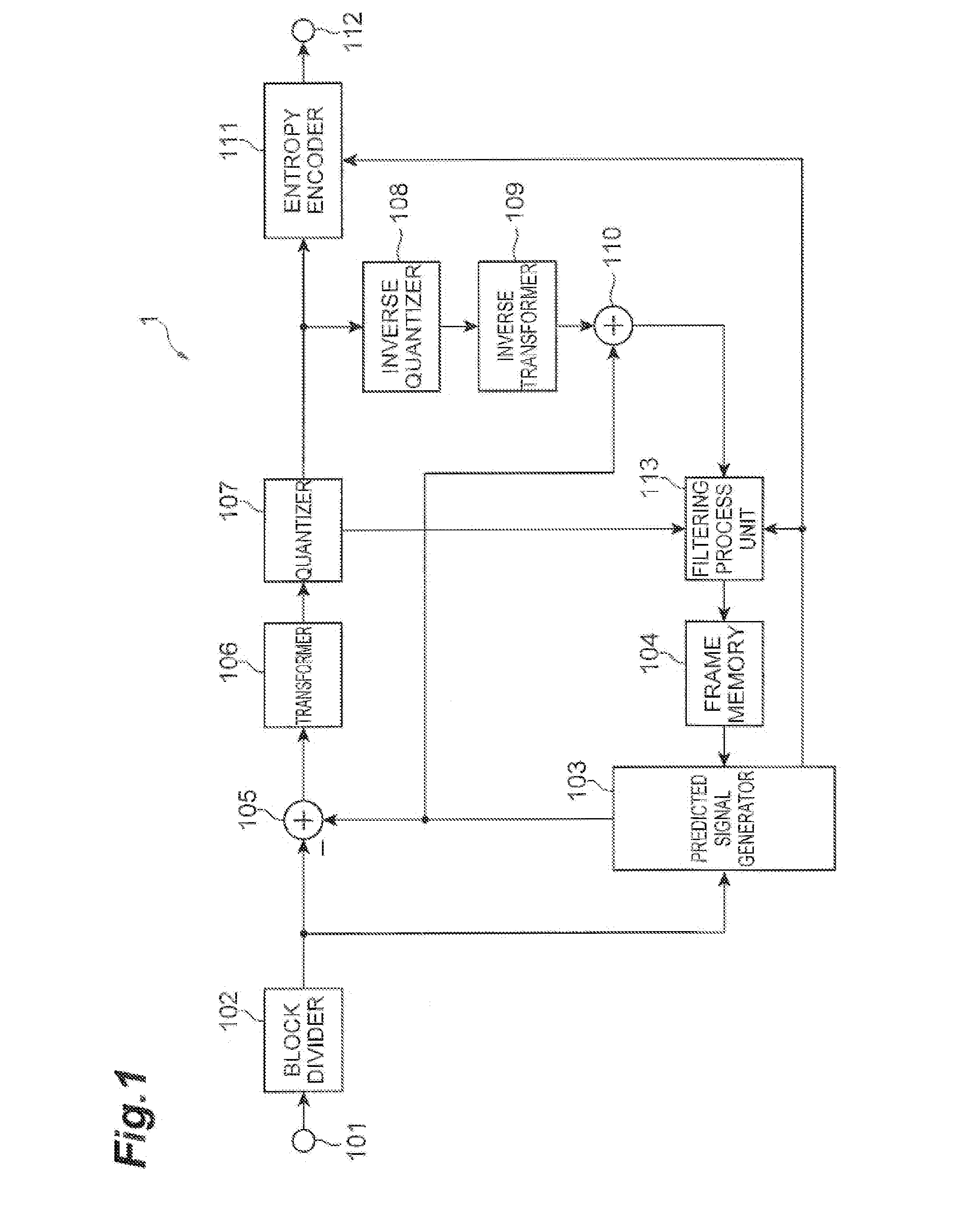

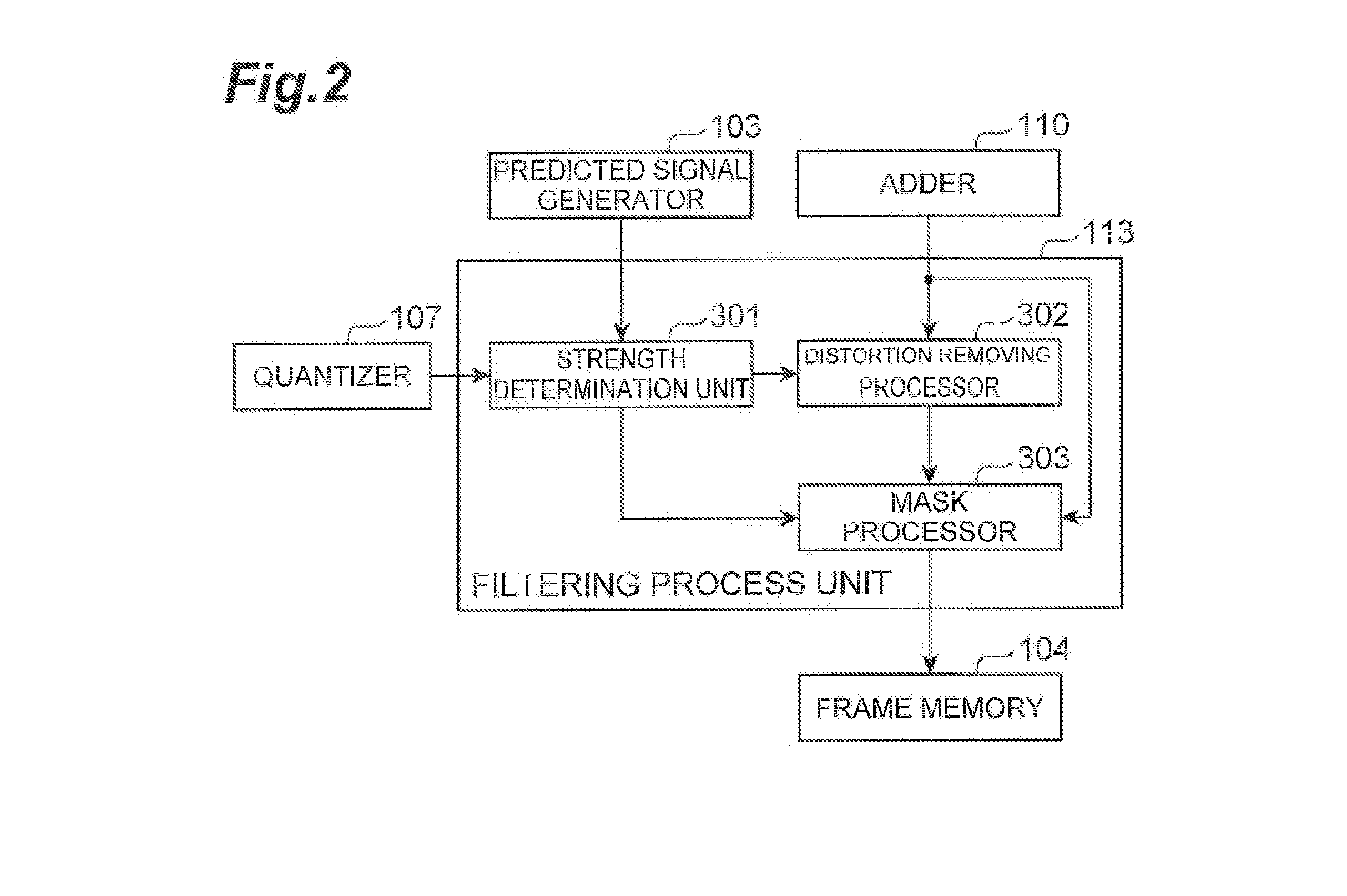

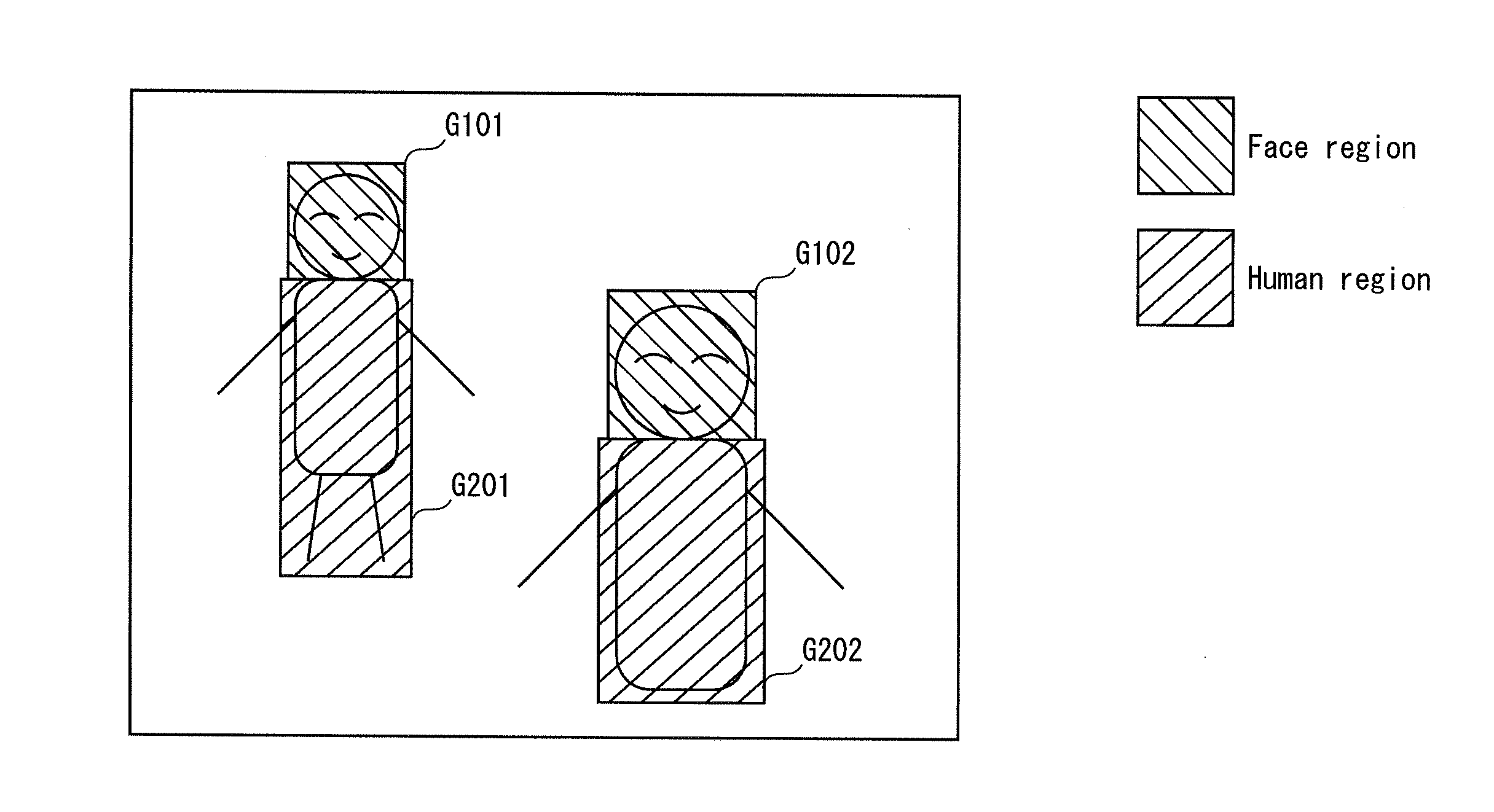

Moving image prediction encoder, moving image prediction decoder, moving image prediction encoding method, and moving image prediction decoding method

Inactive · US20130028322A1 · Improve efficiency · High reproduction quality · Color television with pulse code modulation · Color television with bandwidth reduction · Restoration device · Reference image

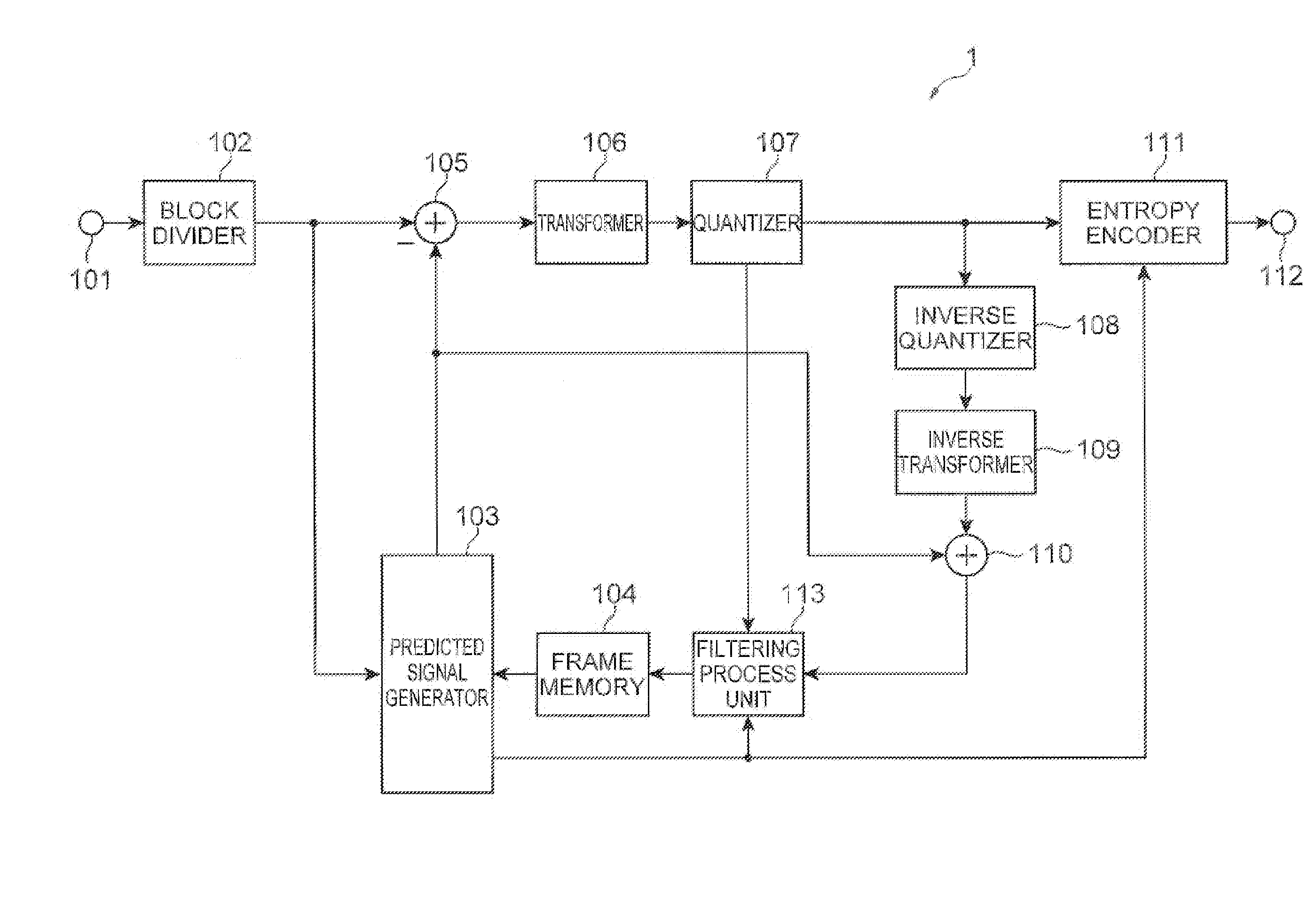

An object is to improve the quality of a reproduced picture and improve the efficiency of predicting a picture using the reproduced picture as a reference picture. For this object, a video prediction encoder 1 comprises an input terminal 101 which receives a plurality of pictures in a video sequence; an encoder which encodes an input picture by intra-frame prediction or inter-frame prediction to generate compressed data and encodes parameters for luminance compensation prediction between blocks in the picture; a restoration device which decodes the compressed data to restore a reproduced picture; a filtering processor 113 which determines a filtering strength and a target region to be filtered, using the parameters for luminance compensation prediction between the blocks, and performs filtering on the reproduced picture according to the filtering strength and the target region to be filtered; and a frame memory 104 which stores the filtered reproduced picture as a reference picture.

Owner:NTT DOCOMO INC

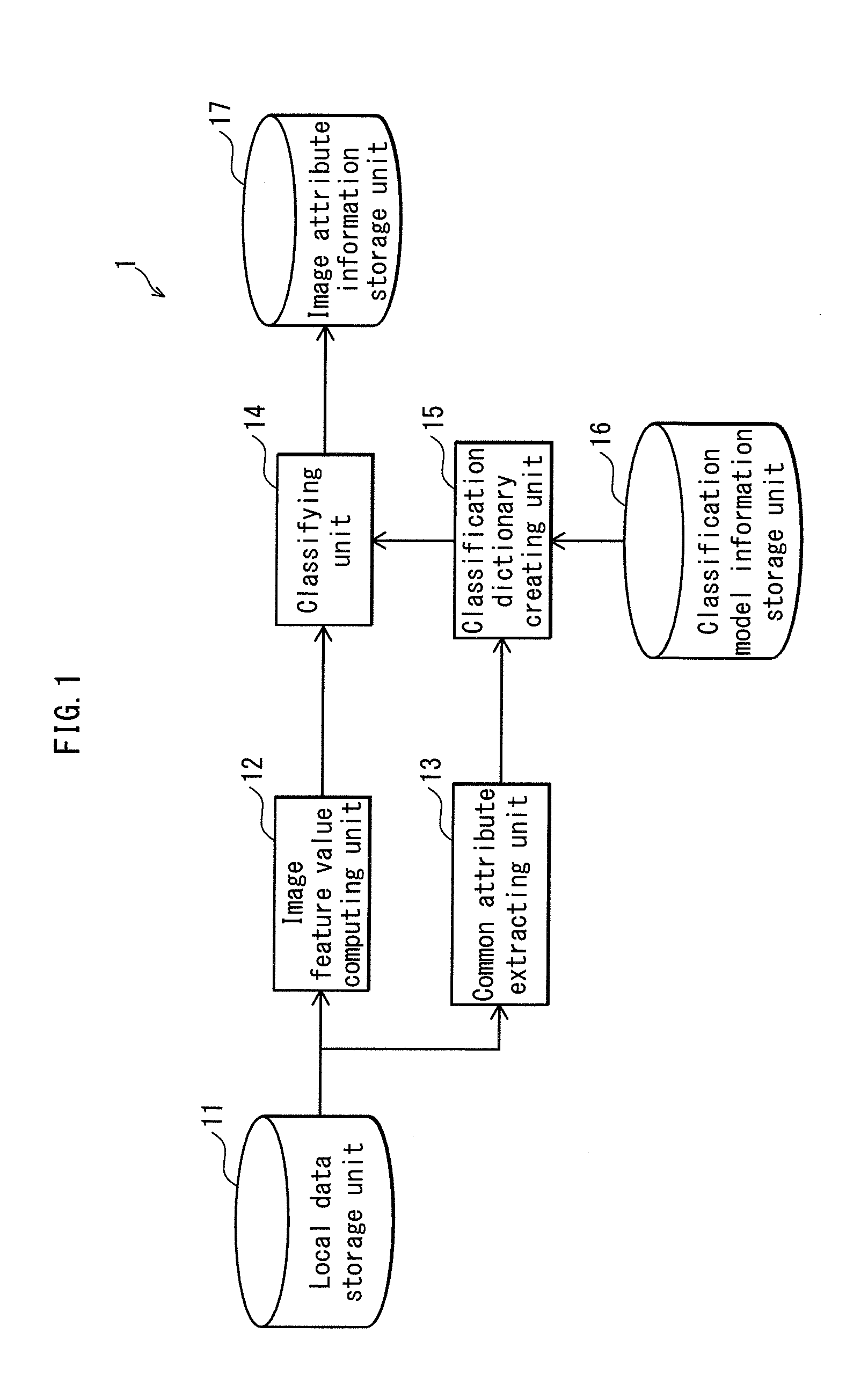

Image processing device

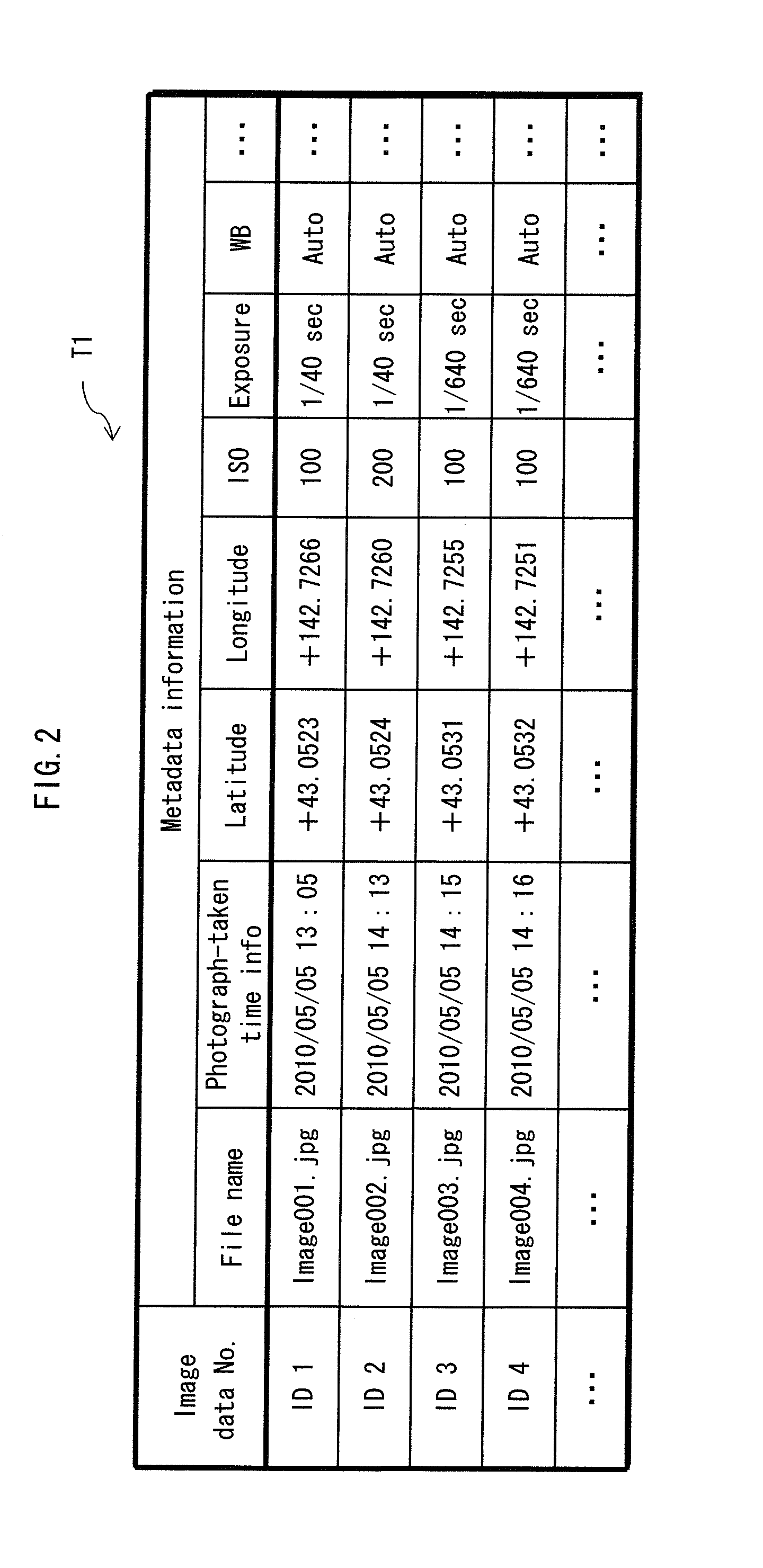

Active · US20130101223A1 · Reduce trouble · Television system details · Character and pattern recognition · Relevant information · Imaging processing

Provided is an image processing device for associating images with objects appearing in the images, while reducing burden on the user. The image processing device: stores, for each of events, a photographic attribute indicating a photographic condition predicted to be met with respect to an image photographed in the event; stores an object predicted to appear in an image photographed in the event; extracts from a collection of photographed images a photographic attribute that is common among a predetermined number of photographed images in the collection, based on pieces of photography-related information of the respective photographed images; specifies an object stored for an event corresponding to the extracted photographic attribute; and conducts a process on the collection of photographed images to associate each photographed image containing the specified object with the object.

Owner:PANASONIC INTELLECTUAL PROPERTY CORP OF AMERICA

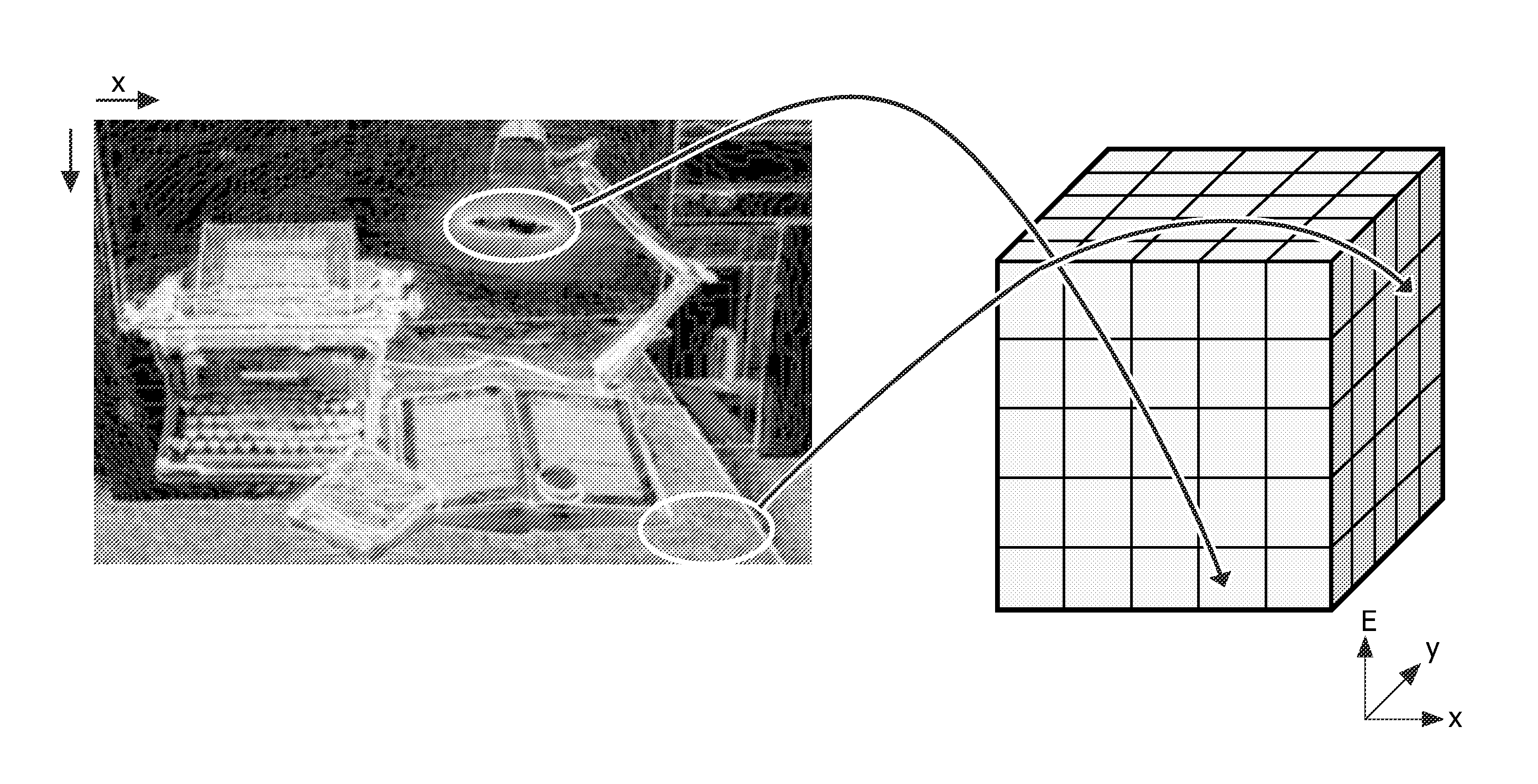

Generation of depth indication maps

Inactive · US20130222377A1 · Easy to code · Add depth · Digital video signal modification · Stereoscopic systems · Reference image · Image prediction

An approach is provided for generating a depth indication map from an image. The generation is performed using a mapping relating input data in the form of input sets of image spatial positions and a combination of color coordinates of pixel values associated with the image spatial positions to output data in the form of depth indication values. The mapping is generated from a reference image and a corresponding reference depth indication map. Thus, a mapping from the image to a depth indication map is generated on the basis of corresponding reference images. The approach may be used for prediction of depth indication maps from images in an encoder and decoder. In particular, it may be used to generate predictions for a depth indication map allowing a residual image to be generated and used to provide improved encoding of depth indication maps.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

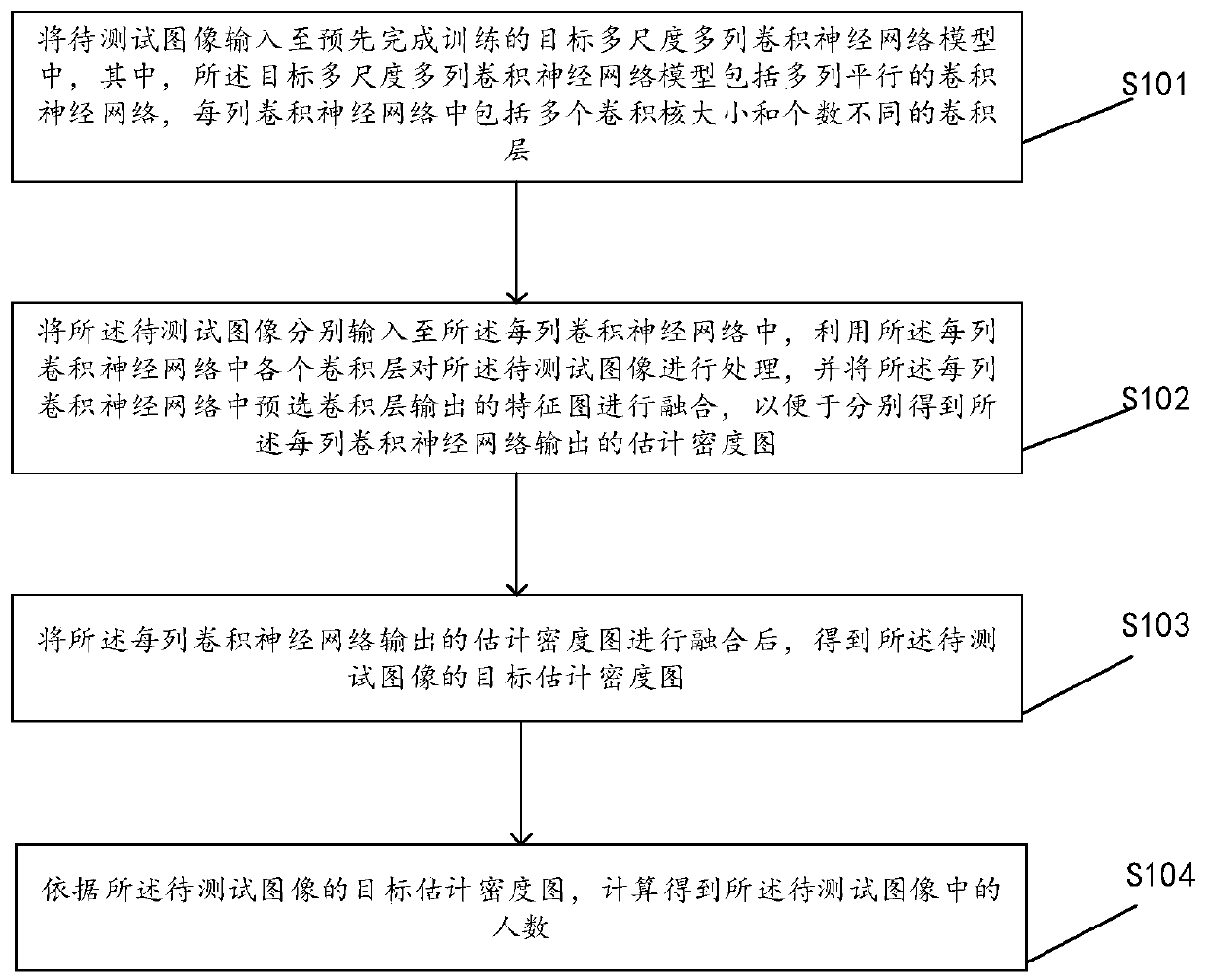

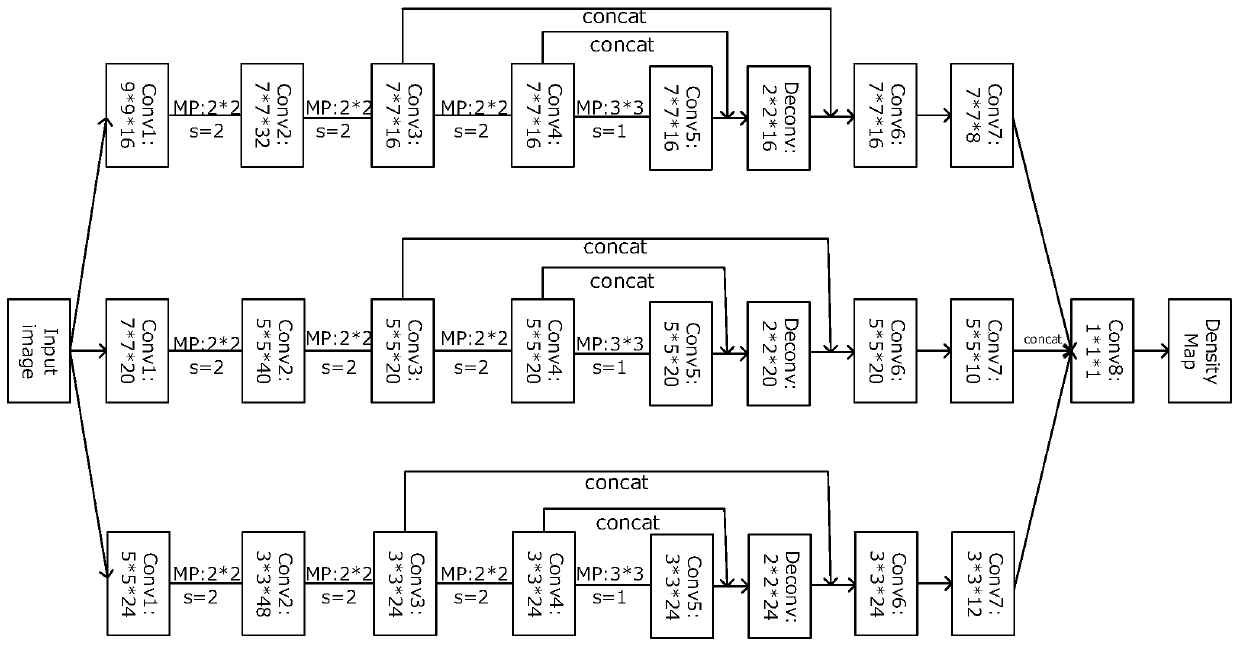

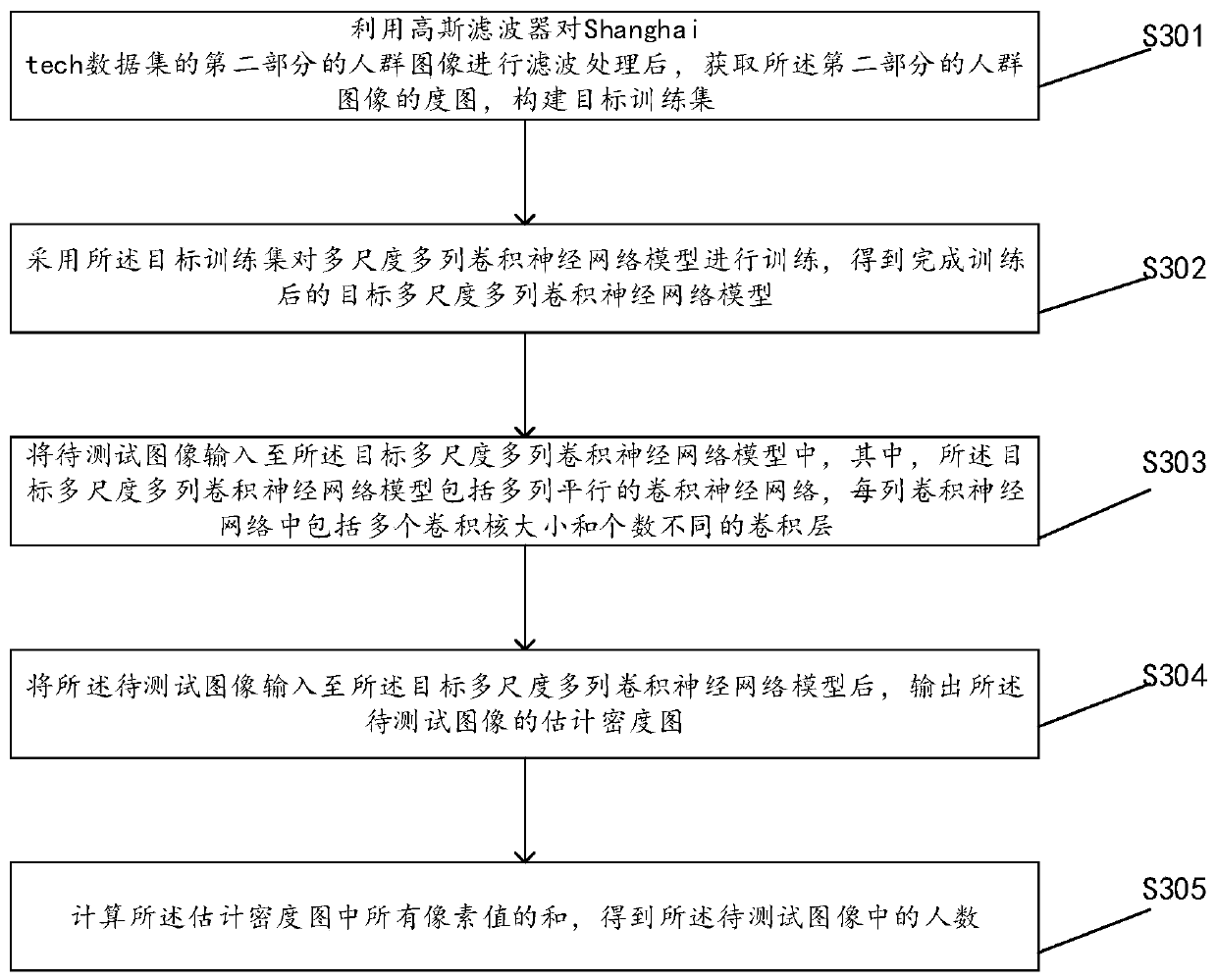

Dense crowd counting method, device and equipment and storage medium

Active · CN109858461A · Improve performance · Improve accuracy · Internal combustion piston engines · Character and pattern recognition · Crowd counting · Algorithm

The invention discloses a dense crowd counting method and device, equipment, and a computer readable storage medium. The method comprises the steps of: inputting a to-be-tested image into a target multi-scale multi-column convolutional neural network model comprising a multi-column parallel convolutional neural network, wherein each column of convolutional neural network comprises a plurality of convolutional layers with different convolutional kernel sizes and numbers; processing the to-be-tested image by using each convolution layer in each column of convolutional neural networks, and fusing the feature maps output by the pre-selected convolution layers in each column of convolutional neural networks to obtain an estimated density map output by each column of convolutional neural networks; fusing the estimated density maps output by each column of convolutional neural networks to obtain a target estimated density map of the to-be-tested image; and calculating the number of people in the to-be-tested image according to the target estimated density map. According to the method, the device, the equipment and the computer readable storage medium provided by the invention, the accuracy of the dense crowd image prediction result is improved.

Owner:SUZHOU UNIV

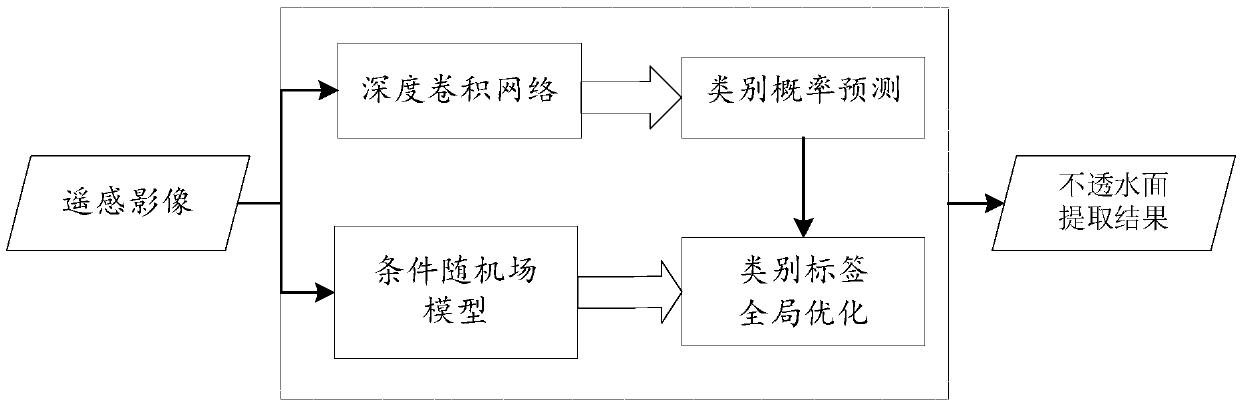

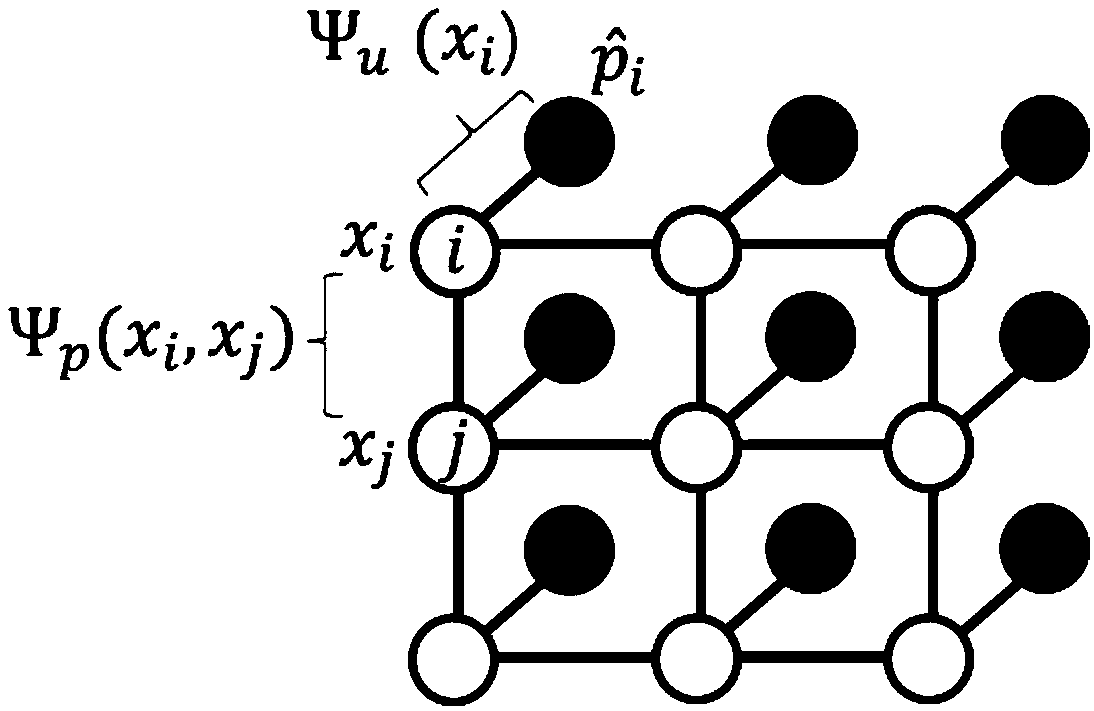

High-resolution remote sensing image impervious surface extraction method and system based on deep learning and semantic probability

ActiveCN108985238AGet goodReasonable impervious surface extraction resultsMathematical modelsEnsemble learningConditional random fieldSample image

A high-resolution remote sensing image impervious surface extraction method and system based on deep learning and semantic probability. The method includes: obtaining a high-resolution remote sensing image of a target region, normalizing the image data, and dividing the image data into sample images and test images; constructing a deep convolutional network composed of multiple convolution layers, pooling layers, and corresponding deconvolution layers, and extracting the image features of each sample image; predicting each sample image pixel by pixel, constructing a loss function from the error between the predicted values and the true values, and training the network by updating its parameters; extracting the features of the test image with the deep convolutional network and carrying out pixel-by-pixel classification prediction; then constructing a conditional random field model of the test image from the semantic association information between pixels, optimizing the test image prediction results globally, and obtaining the extraction results. The invention can accurately and automatically extract the impervious surface from a remote sensing image and meets the practical requirements of urban planning.
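The training step above builds a loss from the per-pixel error between predicted and true labels. The abstract does not name the loss, so the sketch below assumes the common choice for pixel-wise classification, a softmax cross-entropy averaged over all pixels; the function name and array layout are hypothetical.

```python
import numpy as np

def pixelwise_cross_entropy(logits, labels):
    """Mean softmax cross-entropy over all pixels.

    logits: float array of shape (H, W, C) with one score per class per pixel.
    labels: int array of shape (H, W) holding the true class index per pixel.
    """
    # Subtract the per-pixel maximum for numerical stability.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    rows, cols = np.indices(labels.shape)
    return float(-log_probs[rows, cols, labels].mean())
```

The conditional random field refinement applied at test time is a separate step and is not shown here.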

Owner:WUHAN UNIV

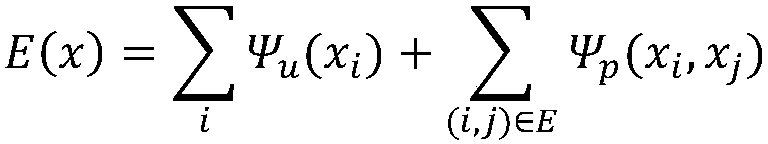

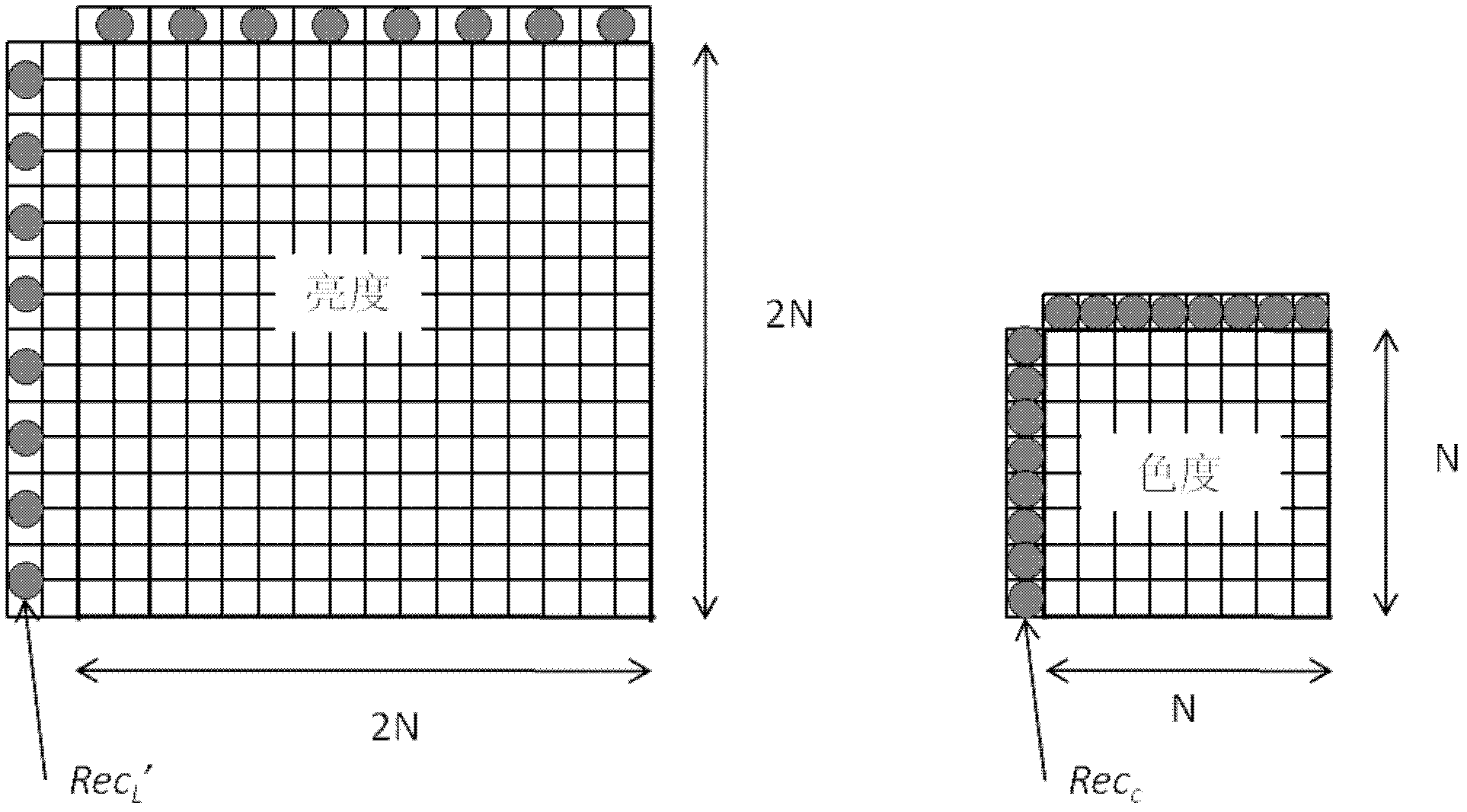

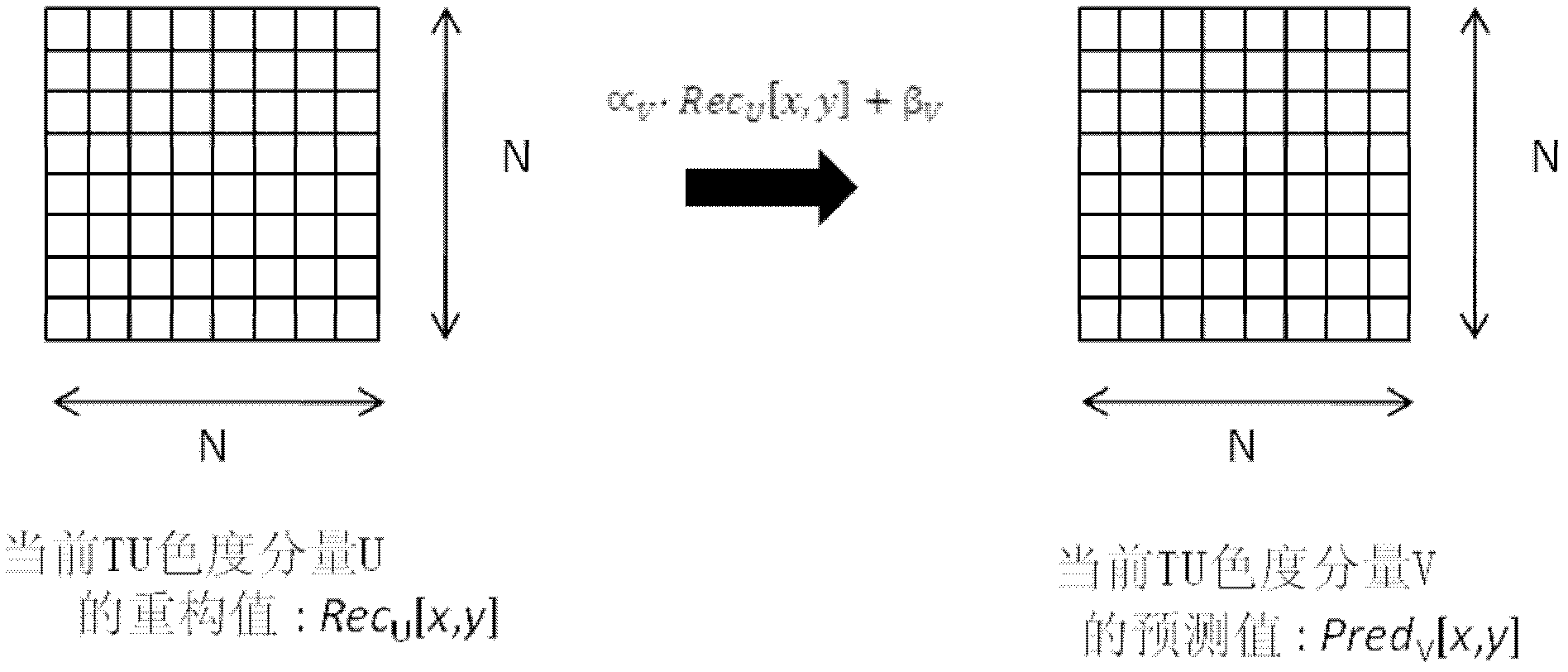

Intra-frame image predictive encoding and decoding method and video codec

InactiveCN103260018AImprove predictive codec efficiencyColor television with bandwidth reductionTelevision systemsPattern recognitionTransformation unit

The invention discloses an intra-frame image predictive encoding and decoding method and a video codec. The method comprises the following steps: intra-frame image predictive encoding is conducted using a chromaticity intra-frame prediction mode; a reconstruction value of the luminance component Y of the current transformation unit (TU) is acquired; the chromaticity component U of the current TU is predicted from the reconstruction value of the luminance component Y of the current TU; a reconstruction value of the chromaticity component U of the current TU is acquired; and the chromaticity component V of the current TU is predicted from the reconstruction value of the chromaticity component U of the current TU. The method and the video codec make full use of the correlation between the chromaticity components V and U, and thereby improve the efficiency of predictive encoding and decoding of intra-frame images.
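The Y-to-U-to-V prediction chain can be illustrated with a simple linear model fitted on already-reconstructed neighboring samples. The abstract does not state the form of the predictor, so the least-squares linear fit below is an assumption, as are the function names and the simplification that all components share the same sampling grid (no chroma subsampling).

```python
import numpy as np

def fit_linear(src, dst):
    """Least-squares alpha, beta such that dst is approximately alpha * src + beta."""
    src = np.asarray(src, dtype=float).ravel()
    dst = np.asarray(dst, dtype=float).ravel()
    var = src.var()
    if var == 0:
        # Flat source samples carry no slope information; predict the mean.
        return 0.0, float(dst.mean())
    alpha = ((src - src.mean()) * (dst - dst.mean())).mean() / var
    return float(alpha), float(dst.mean() - alpha * src.mean())

def predict_component(recon_src, neighbor_src, neighbor_dst):
    """Predict one component of the current TU from another reconstructed one.

    neighbor_src / neighbor_dst are reconstructed samples bordering the TU,
    used only to fit the model; recon_src is the reconstructed source block.
    """
    alpha, beta = fit_linear(neighbor_src, neighbor_dst)
    return alpha * np.asarray(recon_src, dtype=float) + beta
```

Under these assumptions the chain would be `u_pred = predict_component(y_recon, y_neighbors, u_neighbors)`, then U is reconstructed by adding its decoded residual, and `v_pred = predict_component(u_recon, u_neighbors, v_neighbors)`.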

Owner:LG ELECTRONICS (CHINA) R&D CENT CO LTD

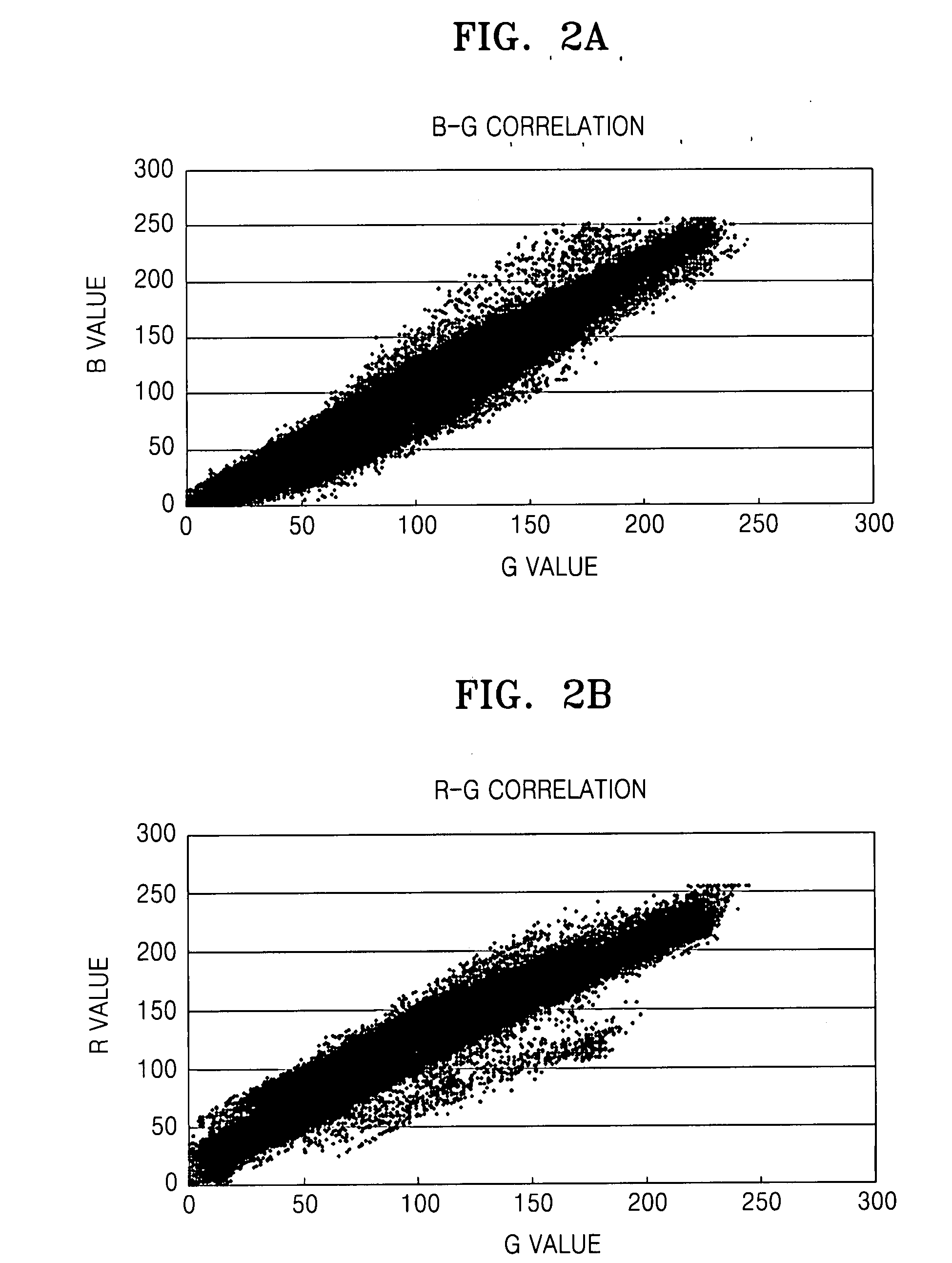

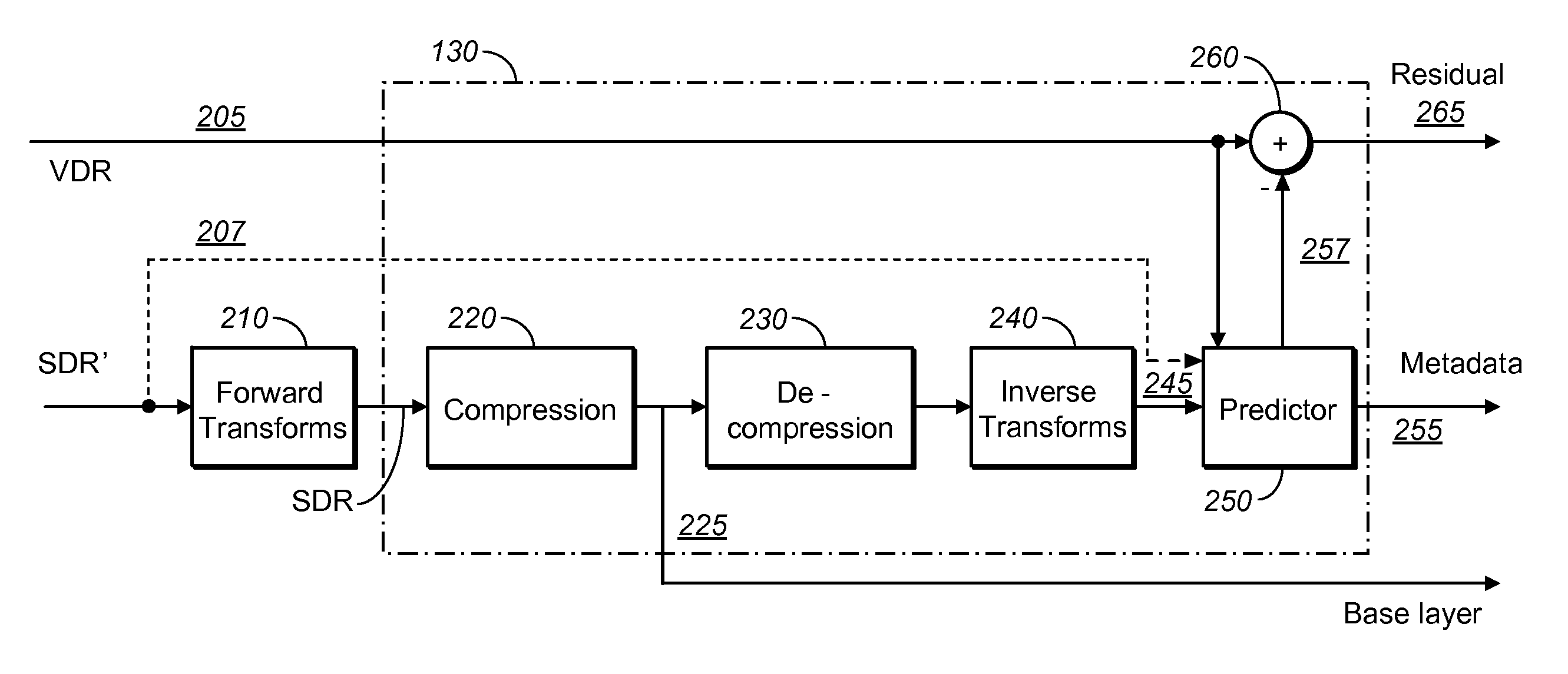

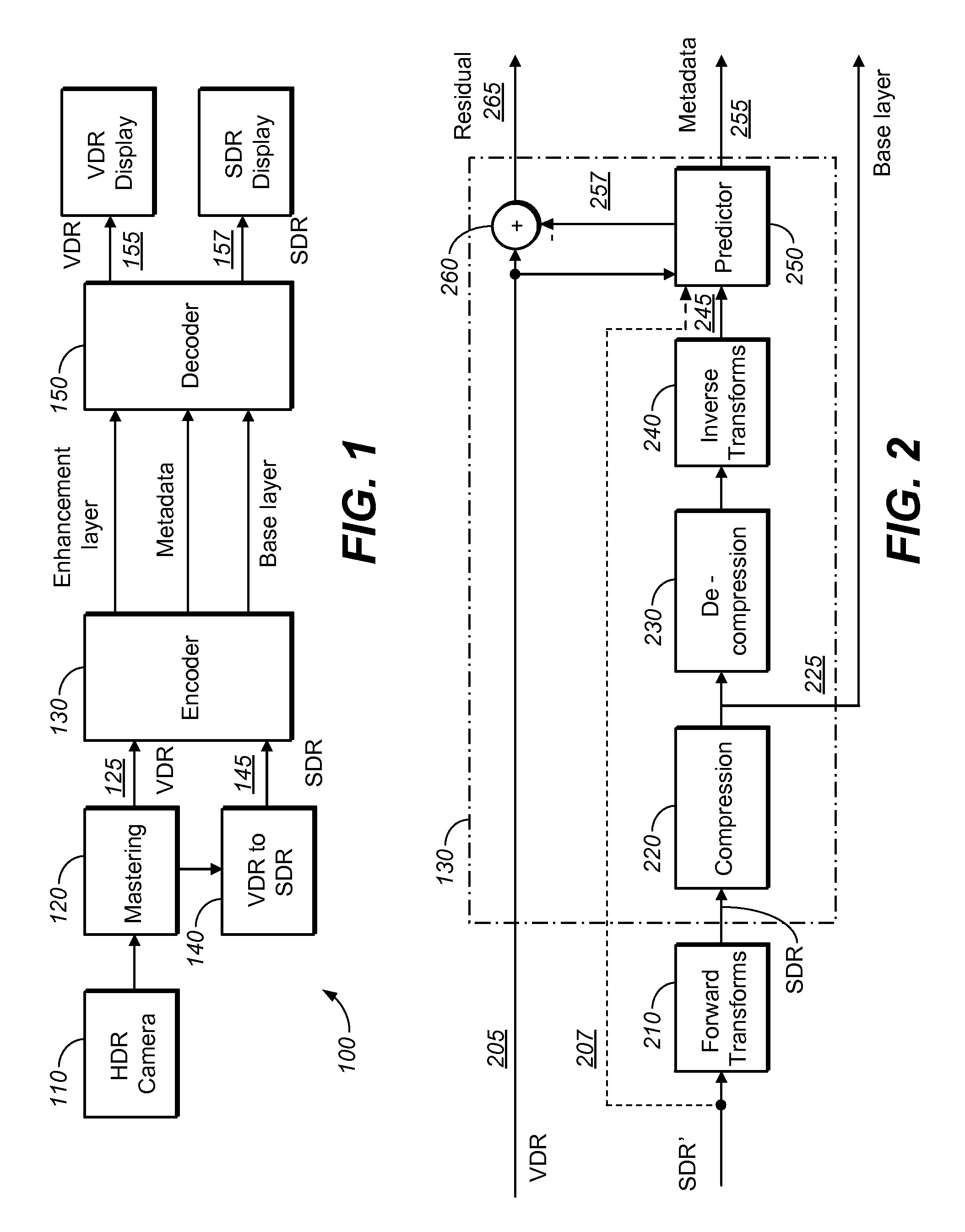

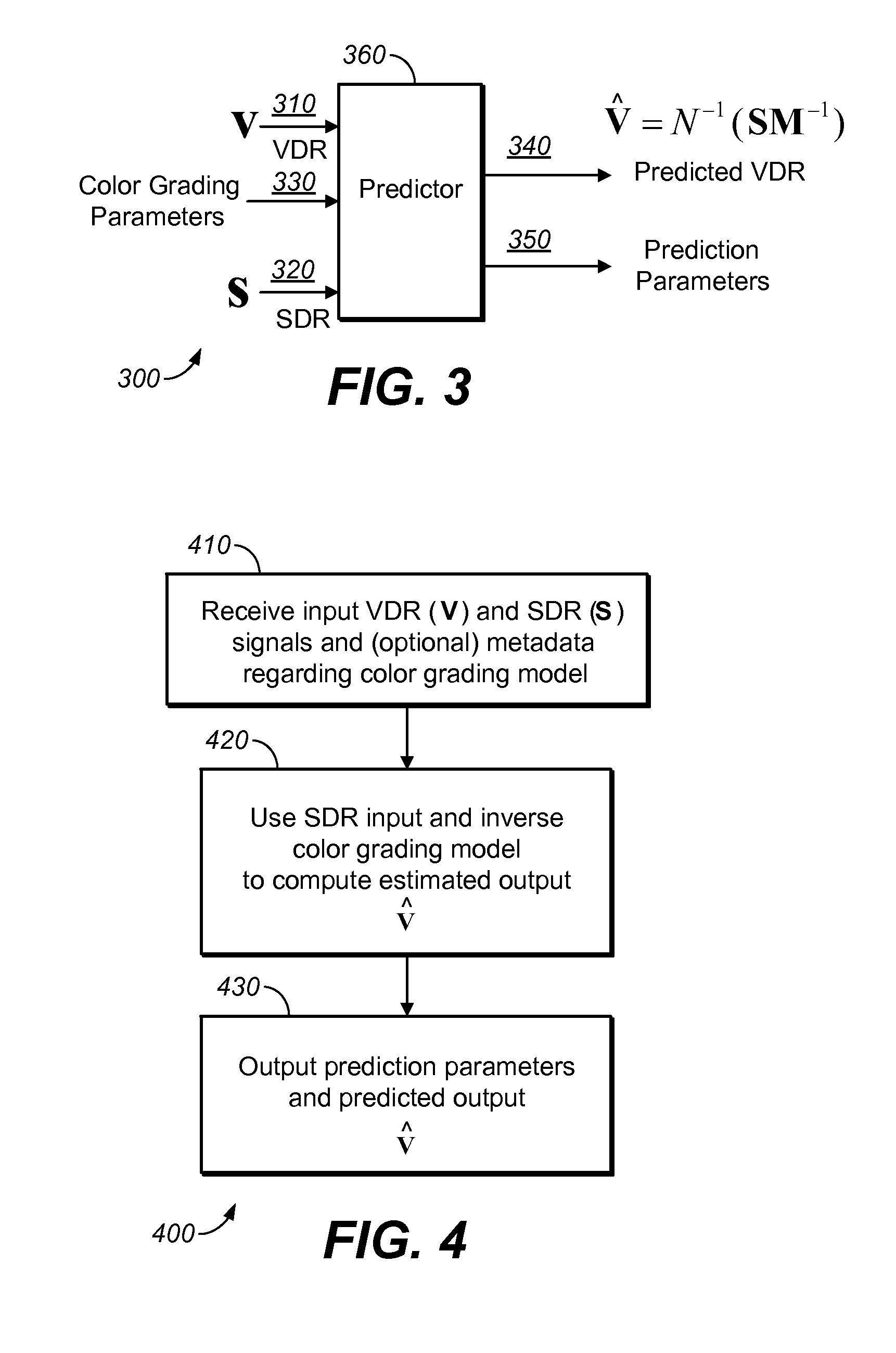

Image Prediction Based on Primary Color Grading Model

ActiveUS20140037205A1Image enhancementCharacter and pattern recognitionColor imagePattern recognition

Inter-color image prediction is based on color grading modeling. Prediction is applied to the efficient coding of images and video signals of high dynamic range. Prediction models may include a color transformation matrix that models hue and saturation color changes and a non-linear function that models color correction changes. Under the assumption that the color grading process uses slope, offset, and power (SOP) operations, an example non-linear prediction model is presented.
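A slope, offset, and power operation is conventionally written as out = (in × slope + offset)^power, with the intermediate value clamped to the valid range. The sketch below combines such a function with a 3×3 color matrix. It is only an illustration: applying the matrix before the SOP step, normalizing values to [0, 1], and the function names are assumptions not taken from the abstract.

```python
import numpy as np

def sop(x, slope, offset, power):
    """Slope/offset/power transfer: clamp(x * slope + offset) ** power.

    Assumption: values are normalized to [0, 1]; slope, offset, and power may
    be scalars or per-channel arrays that broadcast against x.
    """
    return np.clip(x * slope + offset, 0.0, 1.0) ** power

def predict_color(rgb, matrix, slope, offset, power):
    """Predict graded colors: 3x3 matrix (hue/saturation), then SOP.

    rgb has shape (..., 3); matrix has shape (3, 3).
    """
    mixed = rgb @ matrix.T
    return sop(mixed, slope, offset, power)
```

For example, `predict_color(pixels, np.eye(3), 1.0, 0.0, 1.0)` leaves in-range pixels unchanged, which makes the identity setting a convenient sanity check when fitting the model parameters.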

Owner:DOLBY LAB LICENSING CORP

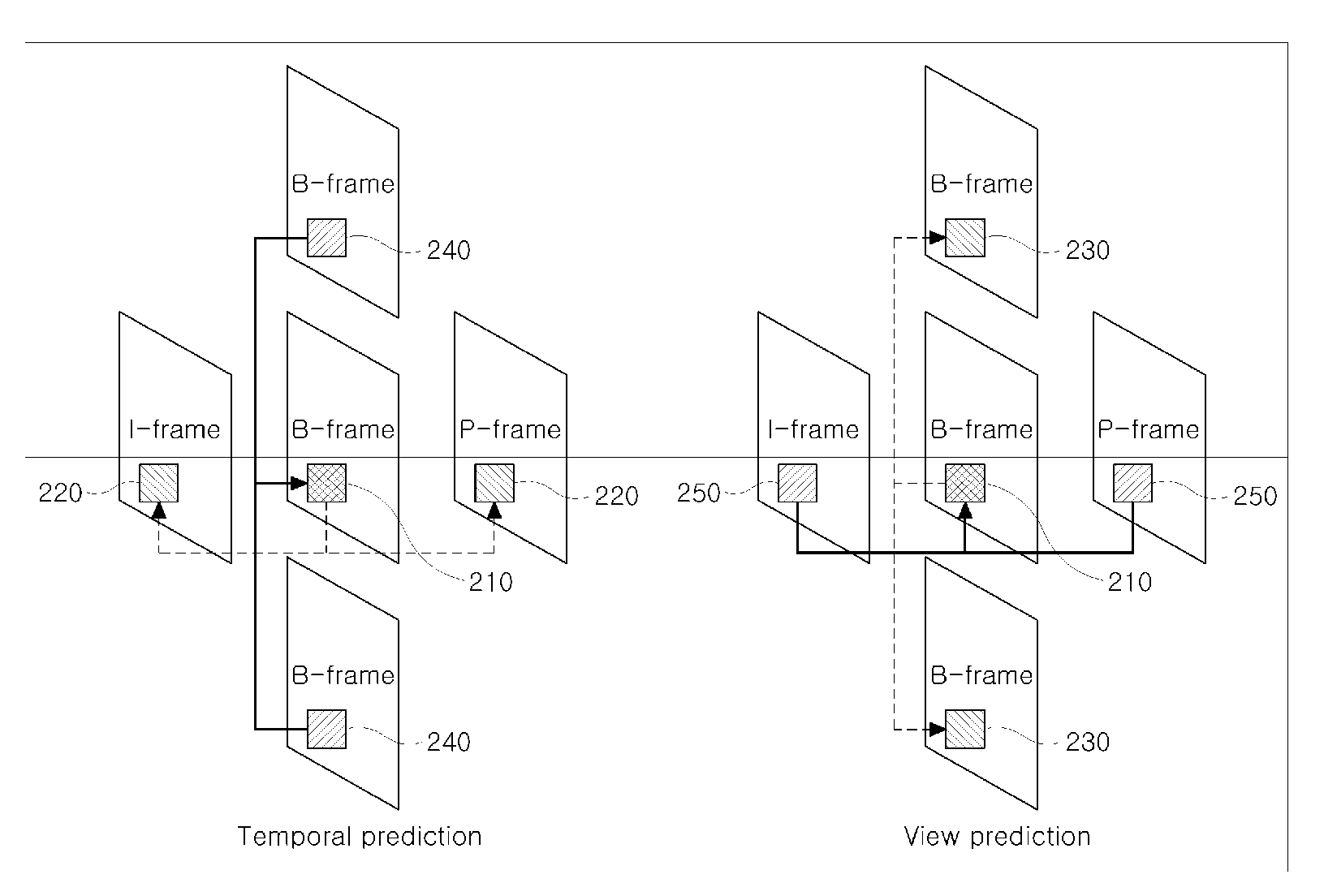

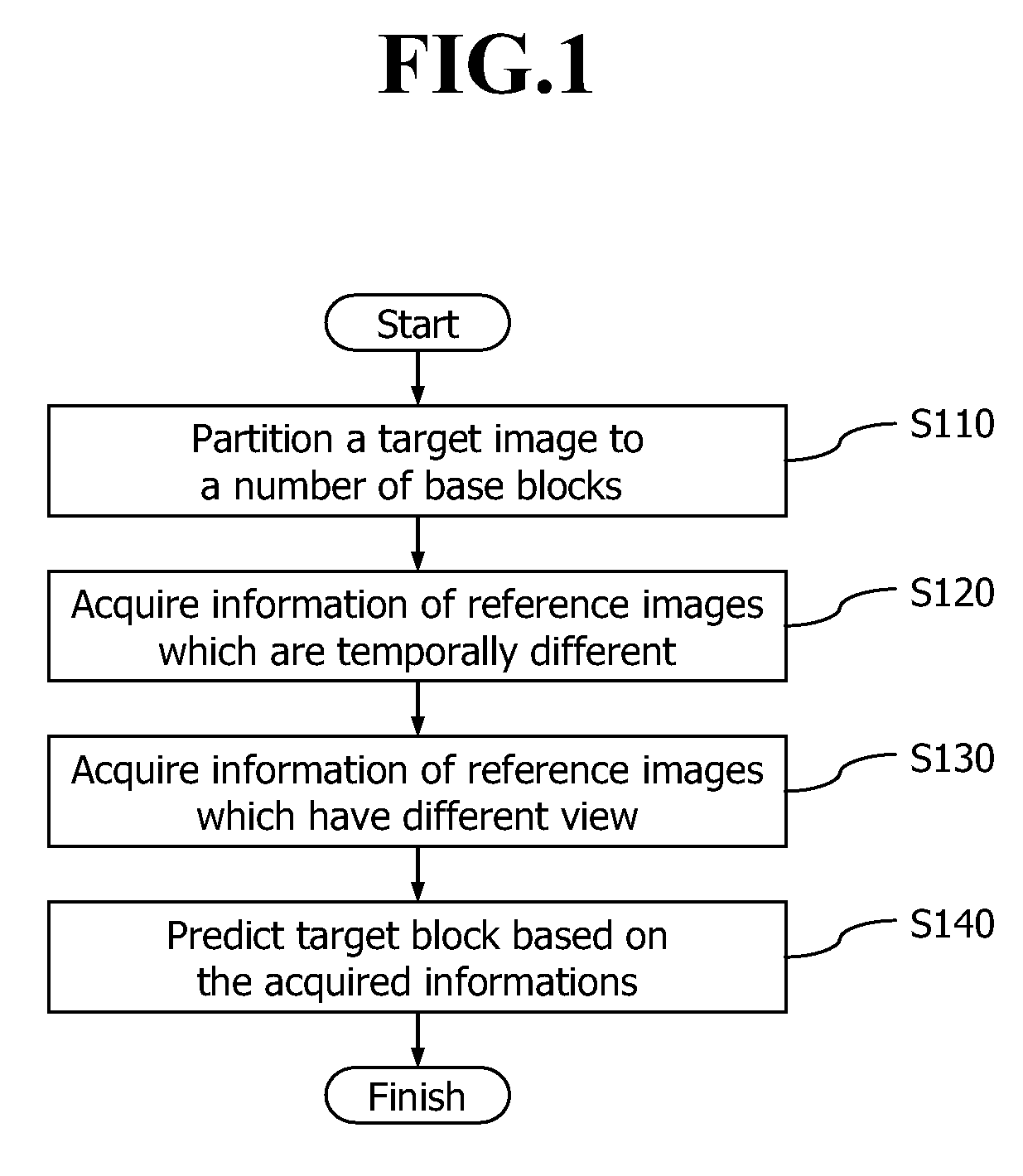

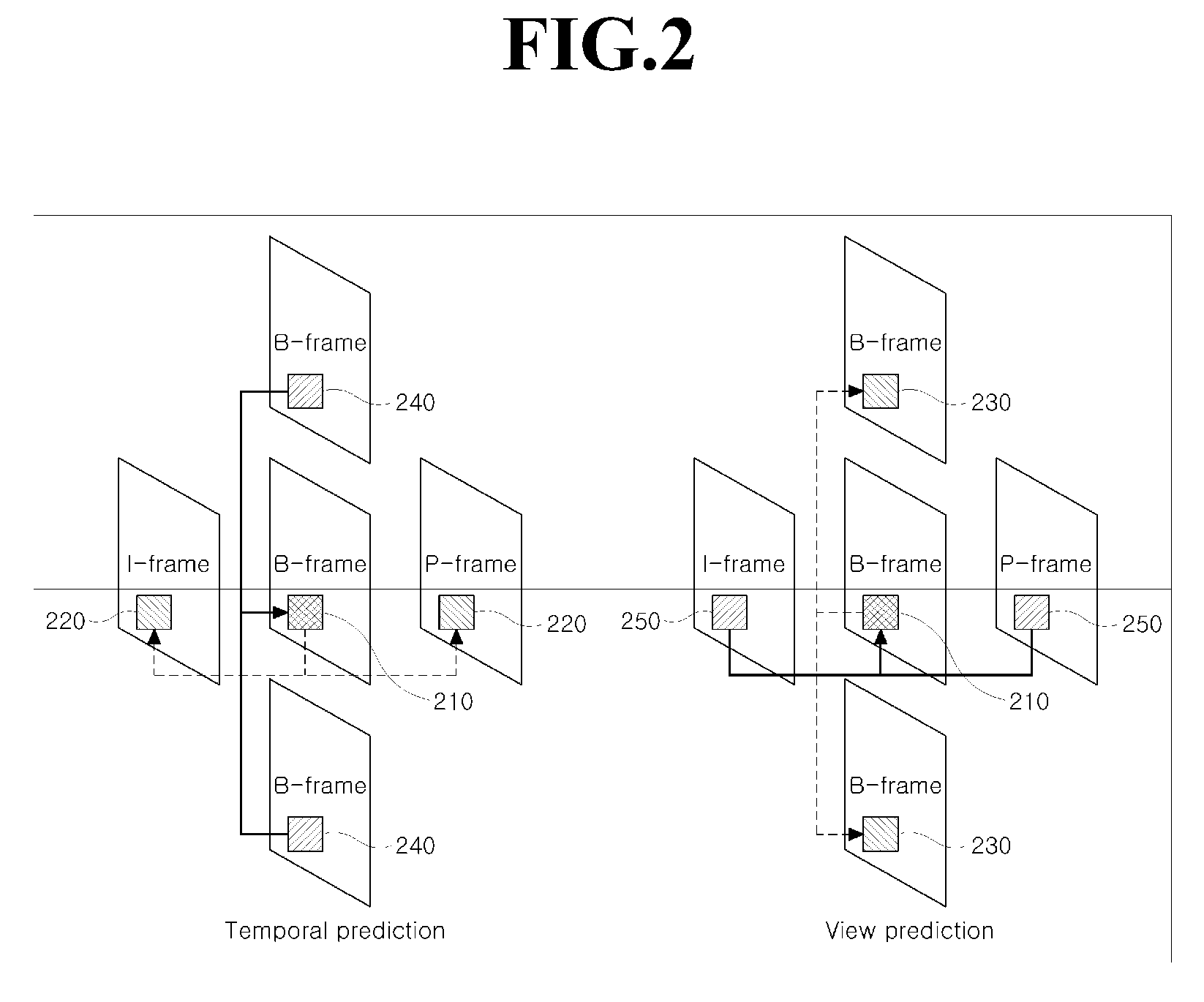

Method for Image Prediction of Multi-View Video Codec and Computer Readable Recording Medium Therefor

InactiveUS20080170753A1Improve coding efficiencyEffectively using imageCharacter and pattern recognitionDigital video signal modificationReference imageImage prediction

Provided are a method for image prediction of a multi-view video codec capable of improving coding efficiency, and a computer readable recording medium therefor. The method includes partitioning an image into a plurality of base blocks, acquiring information on reference images that are temporally different, acquiring information on reference images that have different views, and predicting a target block based on the acquired information. Accordingly, the image most similar to the image of the view currently being compressed is generated from multiple images of different views, so that coding efficiency can be improved.
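One simple way to picture predicting a target block from both temporal and inter-view references is to pick whichever candidate block has the smallest sum of absolute differences (SAD). This is a minimal sketch under that assumption: the abstract does not specify the selection criterion, the candidates here are co-located blocks with no motion or disparity search, and the names `sad` and `best_reference` are hypothetical.

```python
import numpy as np

def sad(a, b):
    """Sum of absolute differences between two equally sized blocks."""
    return int(np.abs(a.astype(np.int64) - b.astype(np.int64)).sum())

def best_reference(target_block, temporal_refs, view_refs):
    """Return (kind, index, block) of the candidate closest to target_block.

    temporal_refs: candidate blocks from frames at other time instants.
    view_refs: candidate blocks from other views at the same time instant.
    """
    candidates = [("temporal", i, r) for i, r in enumerate(temporal_refs)]
    candidates += [("view", i, r) for i, r in enumerate(view_refs)]
    return min(candidates, key=lambda c: sad(target_block, c[2]))
```

An encoder following this sketch would signal the chosen kind and index, then code only the residual between the target block and the selected reference block.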

Owner:KOREA ELECTRONICS TECH INST

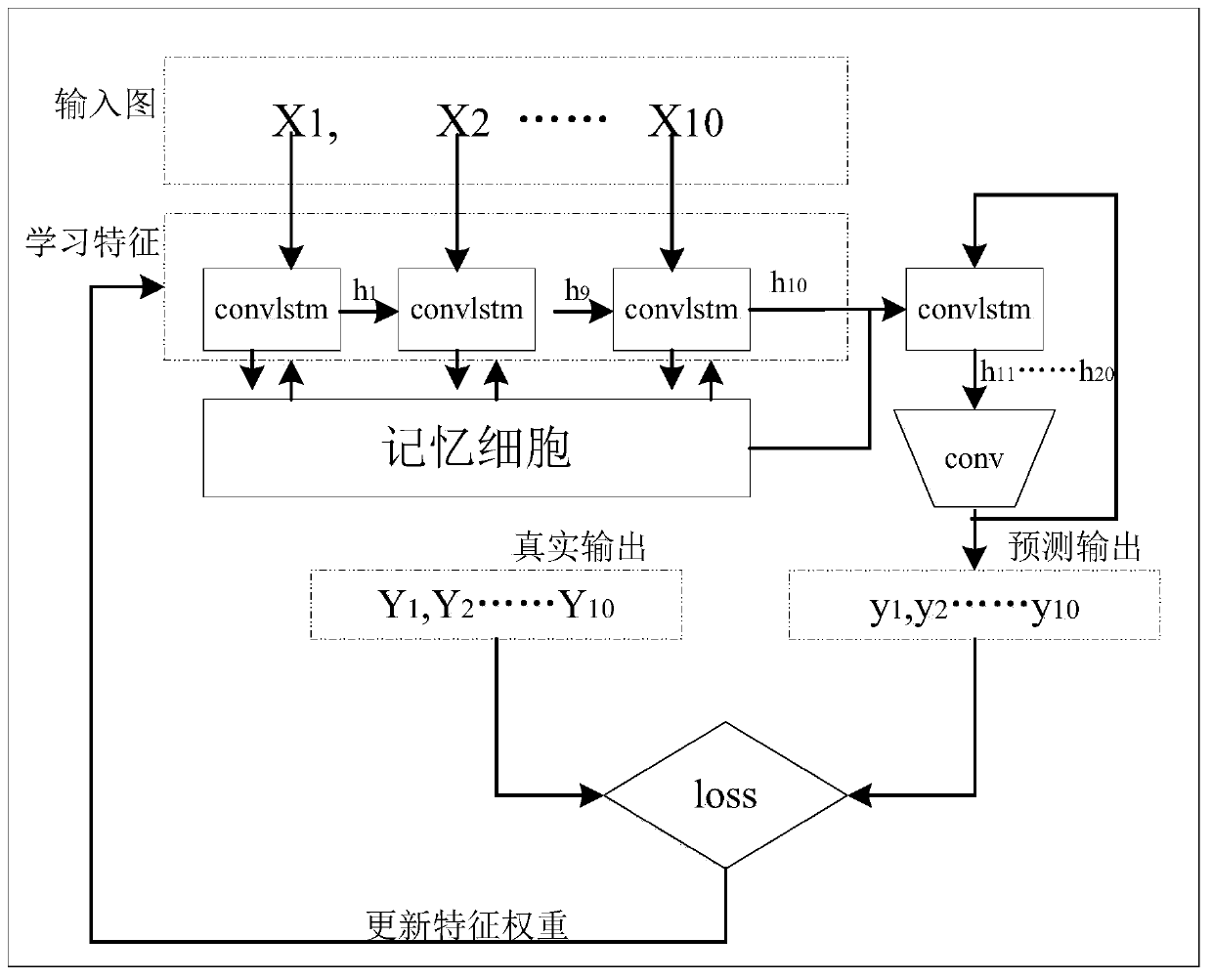

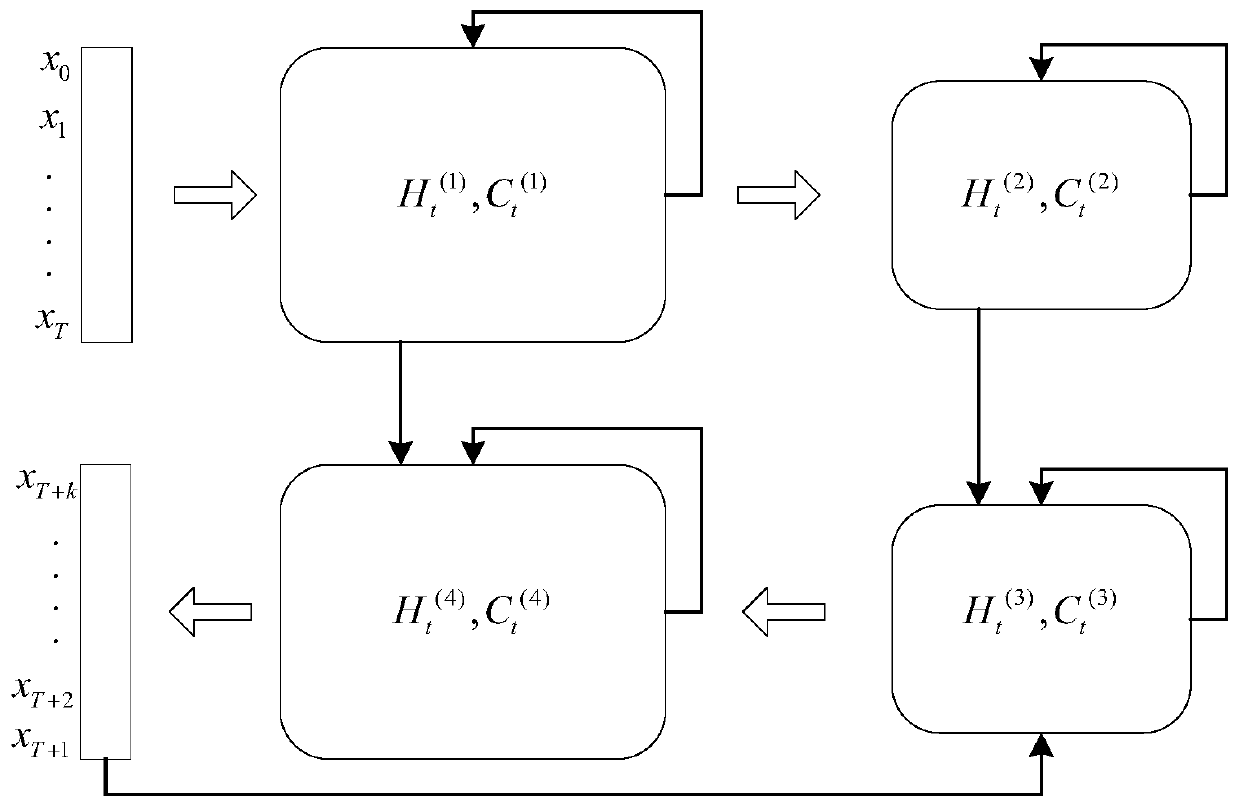

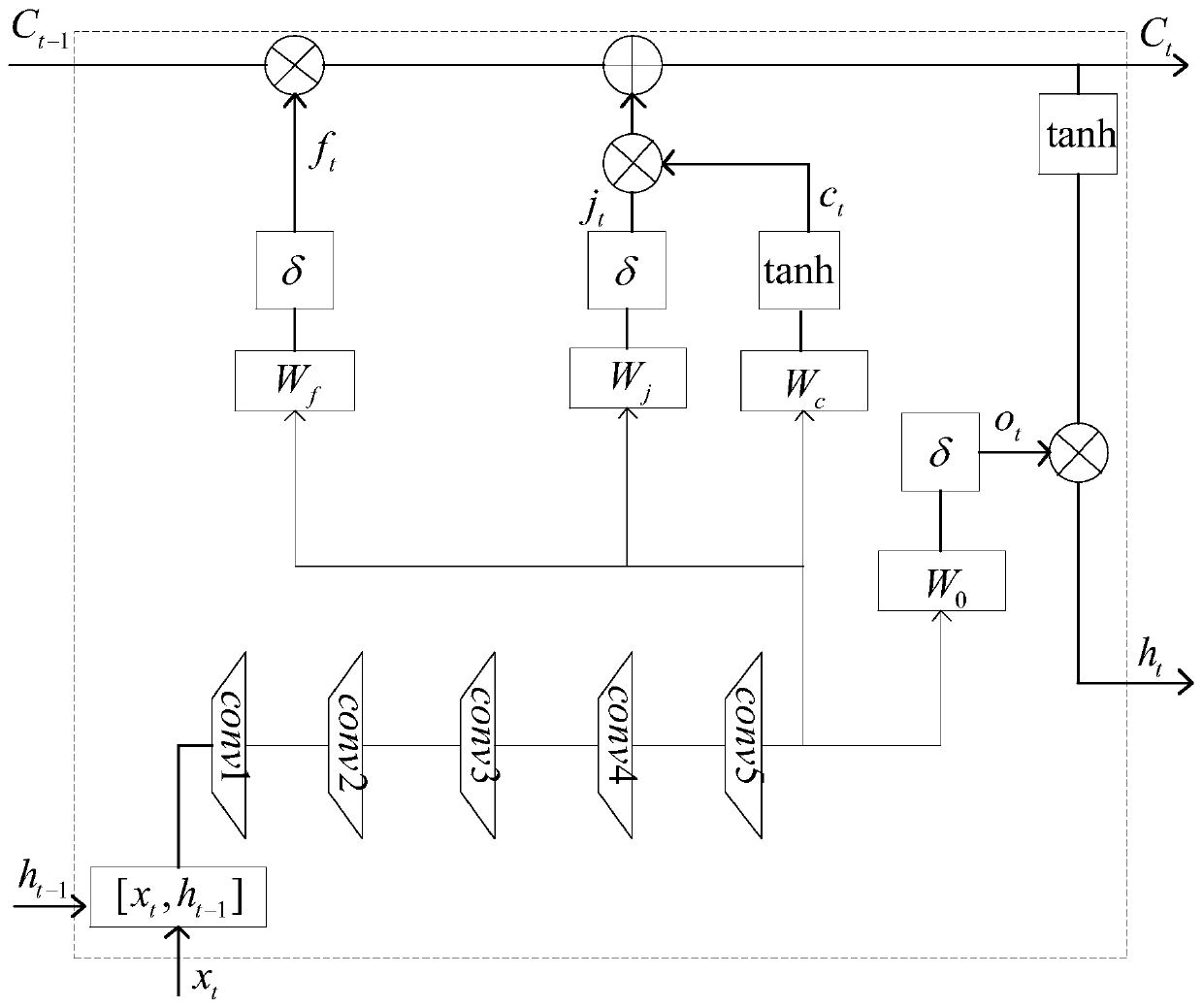

Image prediction method based on multi-layer convolution long-short-term memory neural network

ActiveCN110322009AImprove fitting abilityEnhance the ability to express image feature detailsForecastingNeural architecturesFeature extractionNerve network

The invention relates to an image prediction method based on a multi-layer convolutional long short-term memory (LSTM) neural network. The method extracts the change features of an input image sequence through a multi-layer convolutional LSTM module; deepening the single-layer convolution extracts more abstract features and thereby improves expressive capability. During prediction, the extracted change features are used to predict the next image, and the predicted image is fed back into the prediction module to obtain the image after it. The single-layer convolutional LSTM network of the original method extracts the change features of sequence images poorly, so the structural details of its predicted images are blurred. By deepening the convolution layers, this method extracts more accurate change features and markedly reduces the blurring of structural details in the predicted images.
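The feedback loop described above, in which each predicted image is fed back in to produce the next one, can be sketched independently of the network itself. In the sketch below, `step` stands for any one-step predictor that maps (frame, state) to (next frame, new state); the `toy_step` function is a hypothetical stand-in used only to make the example runnable and is not a convolutional LSTM.

```python
import numpy as np

def rollout(step, frames, state, n_future):
    """Autoregressively predict n_future frames after an observed sequence.

    step: callable (frame, state) -> (predicted_next_frame, new_state).
    frames: non-empty list of observed frames, oldest first.
    """
    # Warm up the recurrent state on all observed frames except the last.
    for frame in frames[:-1]:
        _, state = step(frame, state)
    current = frames[-1]
    outputs = []
    for _ in range(n_future):
        # Each prediction becomes the input for the following step.
        current, state = step(current, state)
        outputs.append(current)
    return outputs

def toy_step(frame, state):
    """Hypothetical stand-in predictor: brightens the frame by one unit."""
    return frame + 1.0, state
```

Because every step consumes the previous prediction, errors accumulate over the rollout, which is one reason blurred structural detail in a single prediction degrades later frames as well.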

Owner:南京梅花软件系统股份有限公司

