18806 results about "Point cloud" patented technology

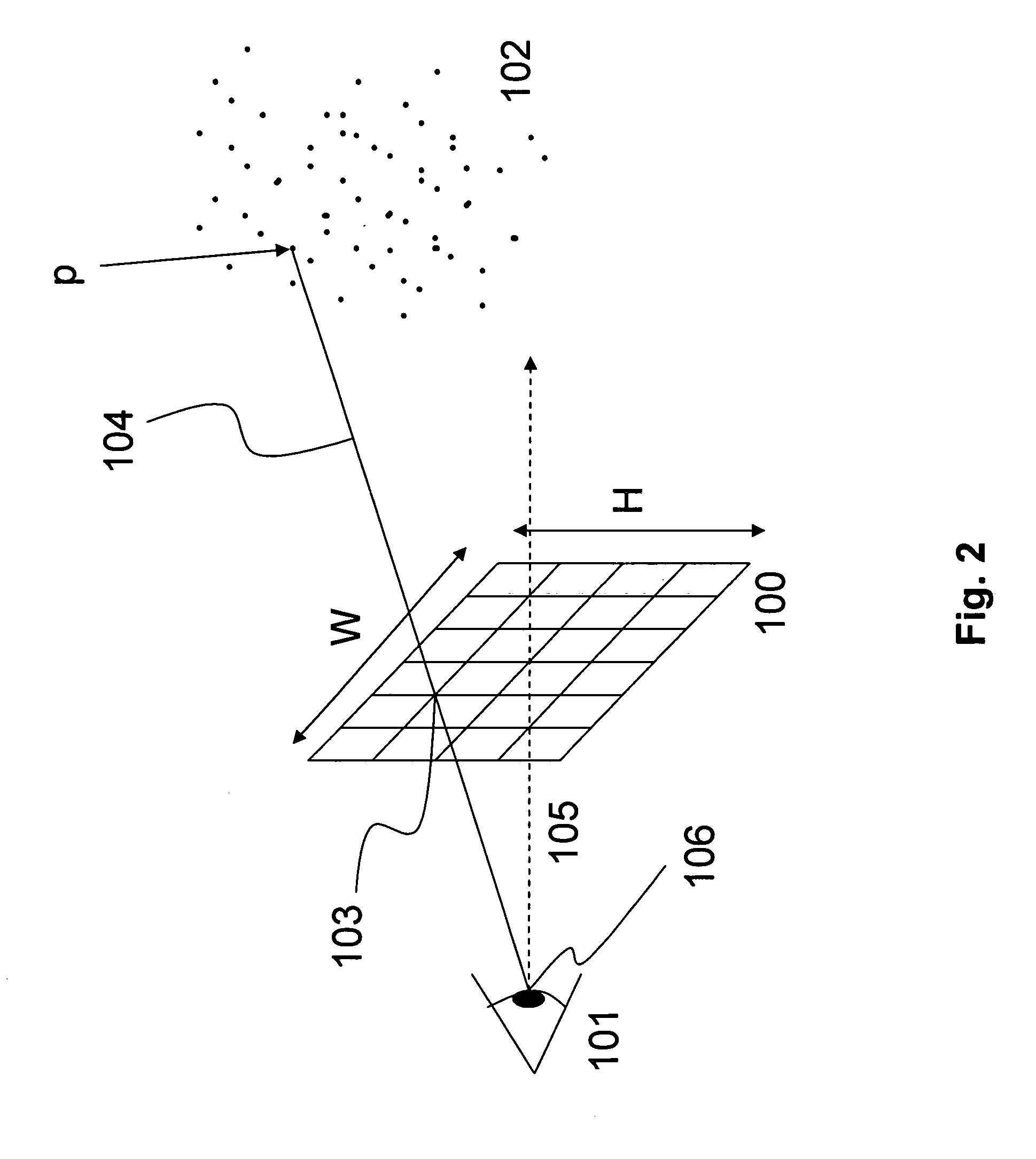

A point cloud is a set of data points in space. Point clouds are generally produced by 3D scanners, which measure many points on the external surfaces of objects around them. As the output of 3D scanning processes, point clouds are used for many purposes, including to create 3D CAD models for manufactured parts, for metrology and quality inspection, and for a multitude of visualization, animation, rendering and mass customization applications.

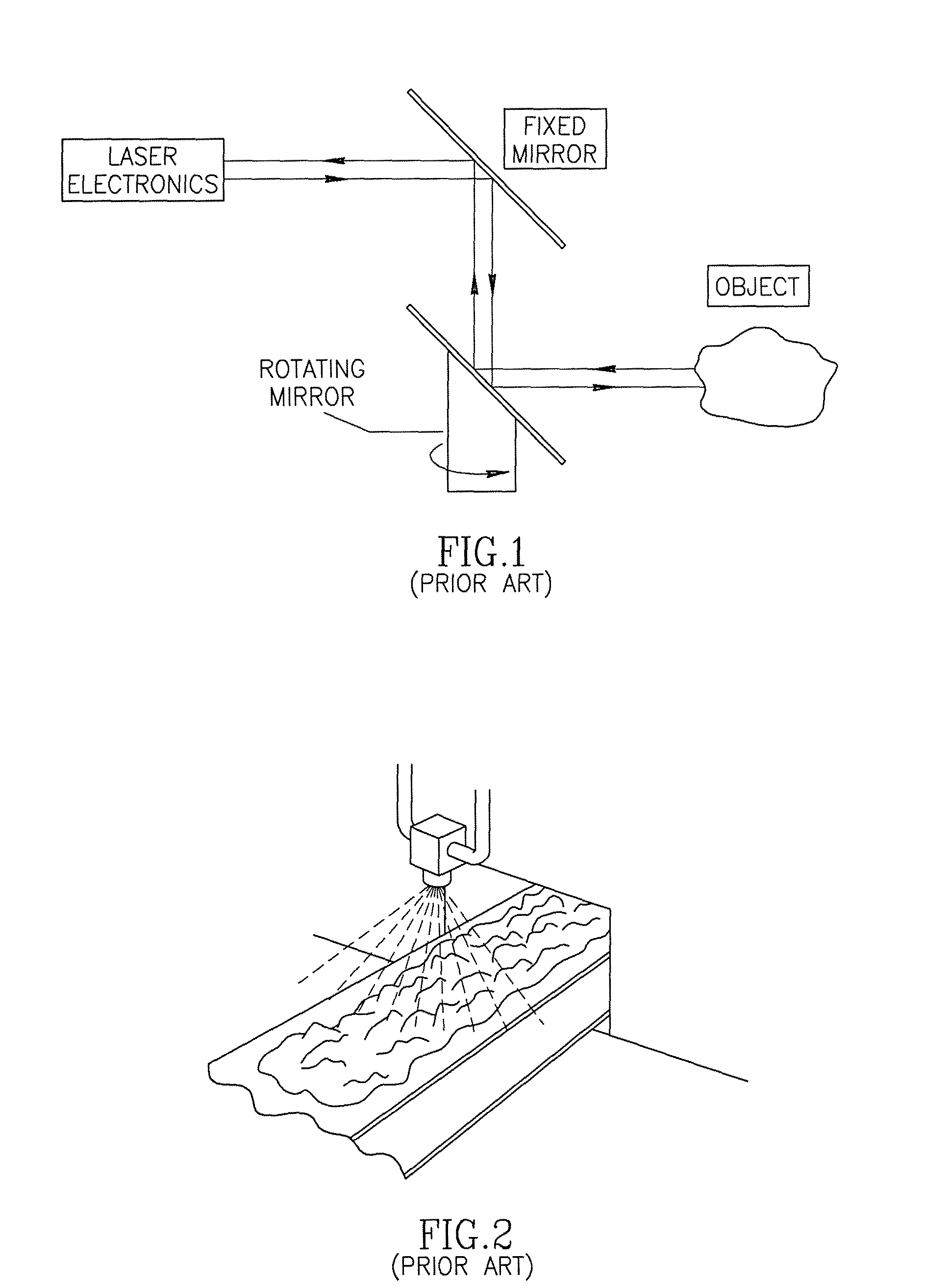

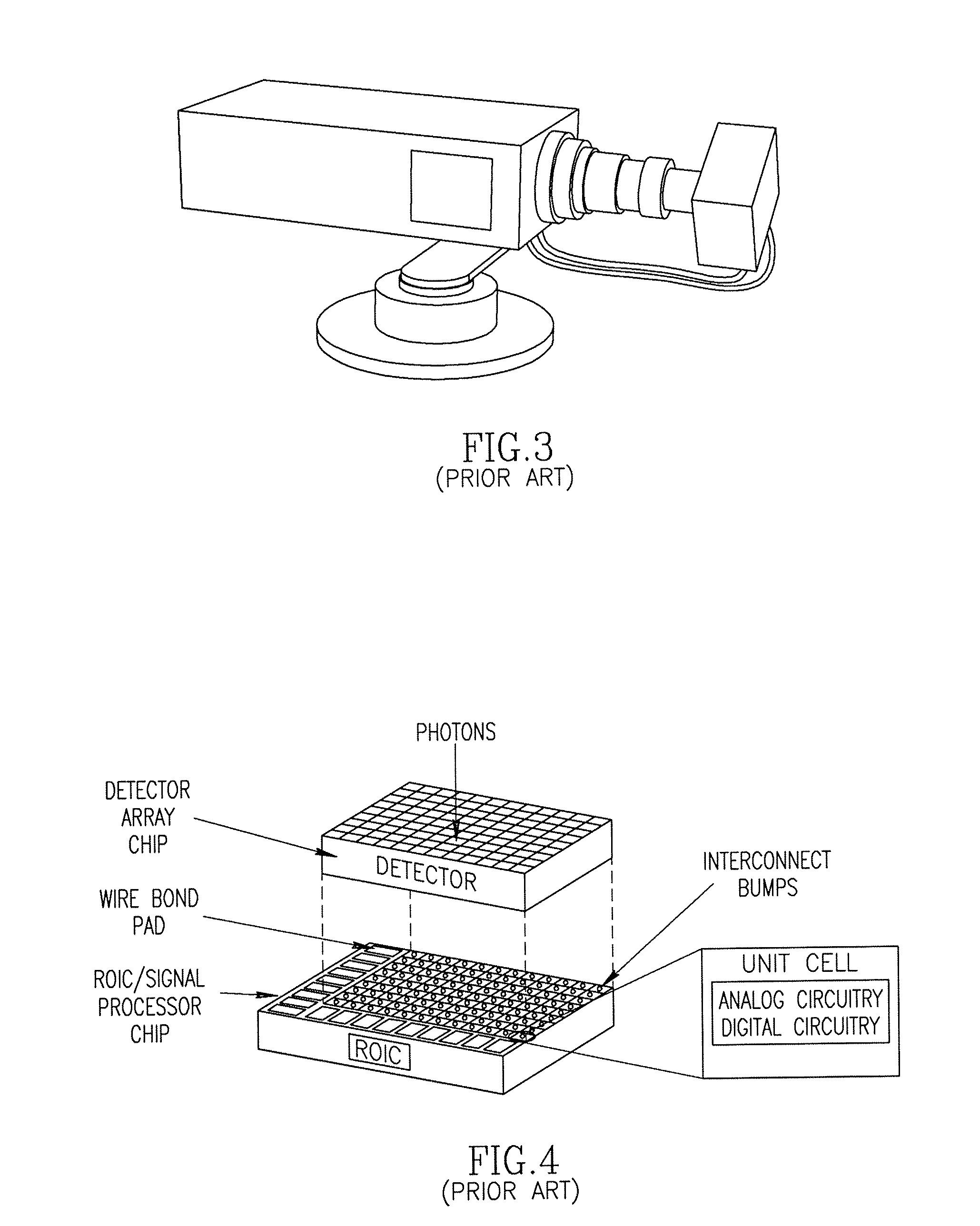

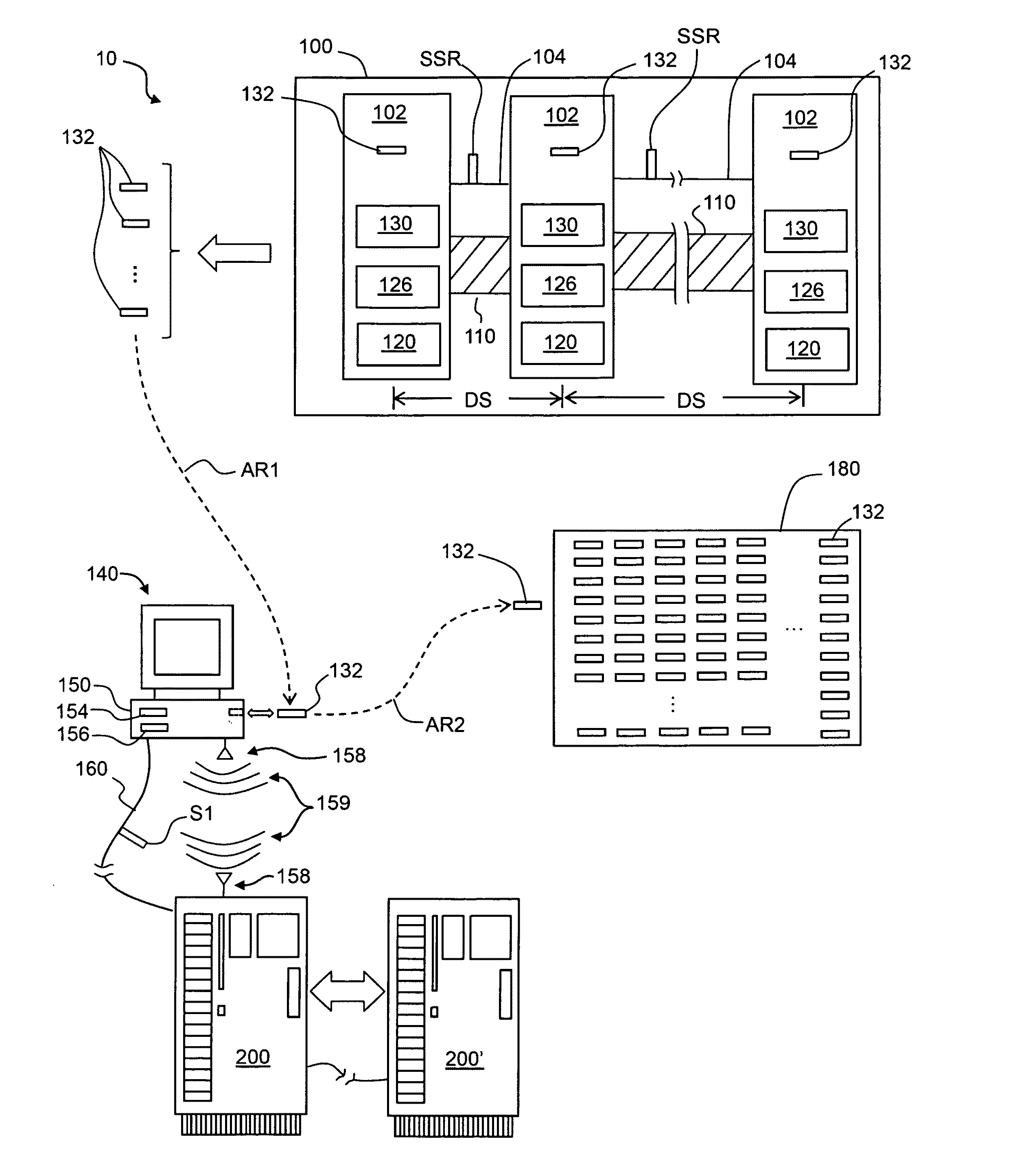

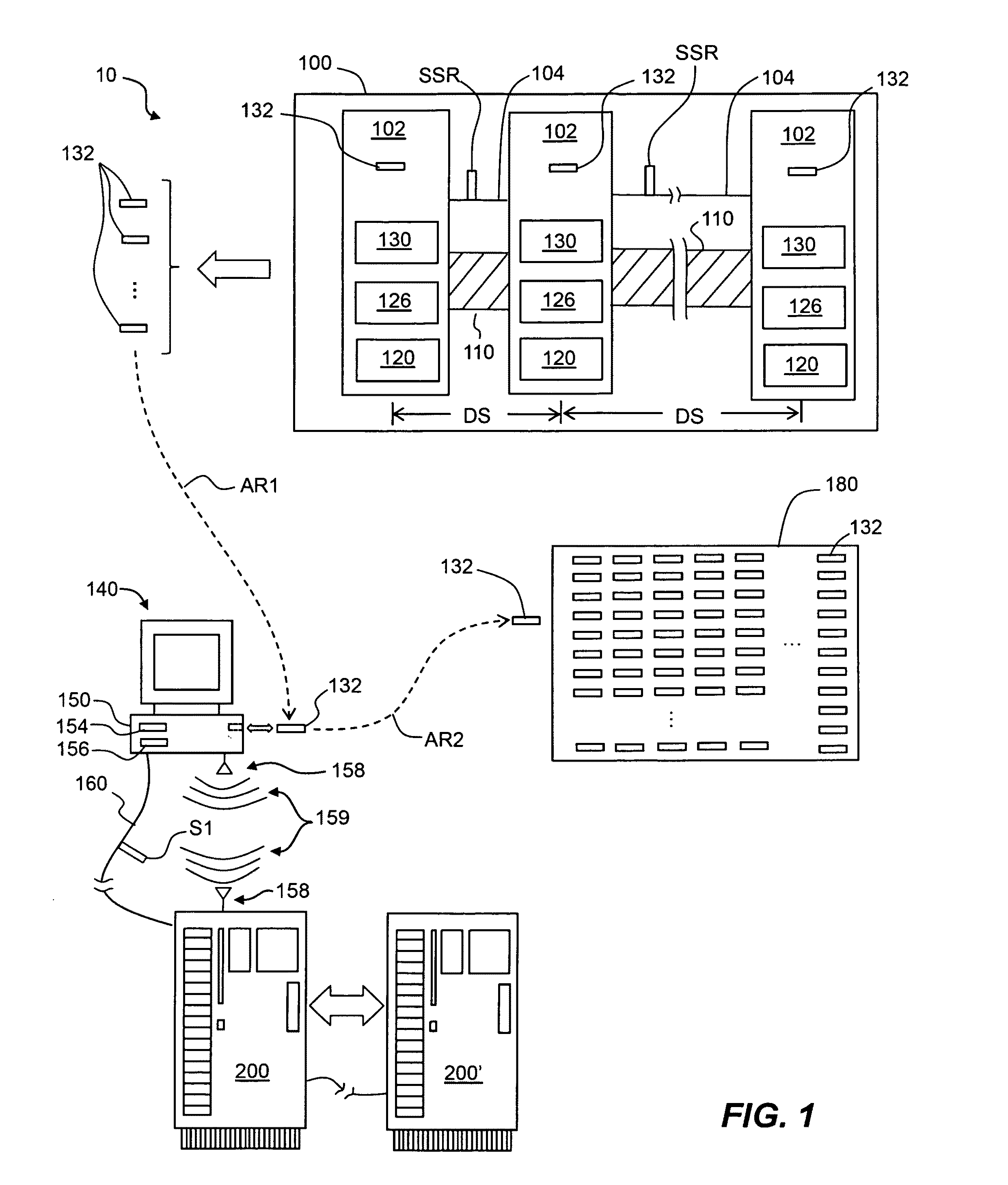

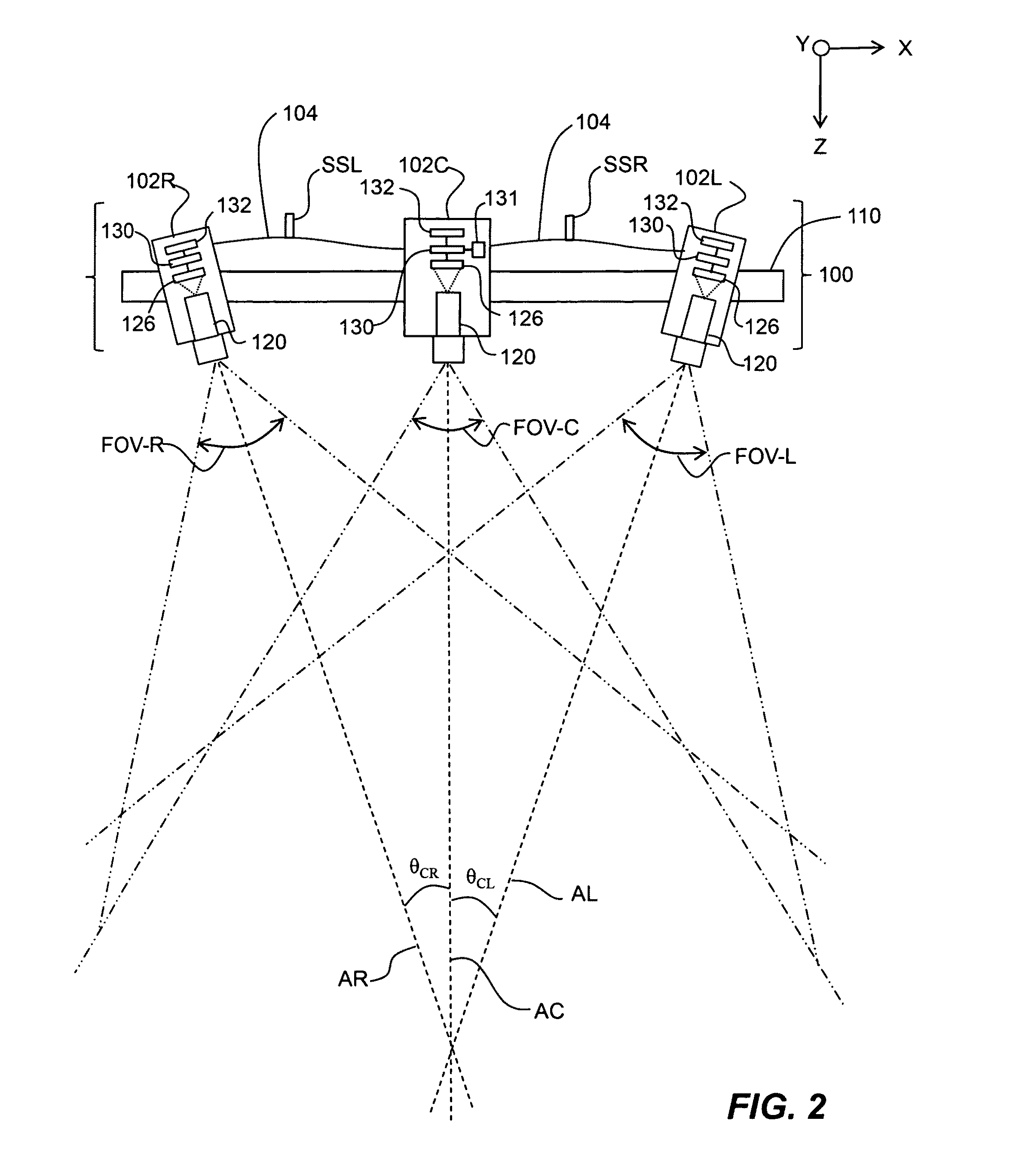

High definition lidar system

Active | US7969558B2 | More compact | More rugged | Angle measurement | Optical rangefinders | High definition tv | Power coupling

Owner:VELODYNE LIDAR USA INC

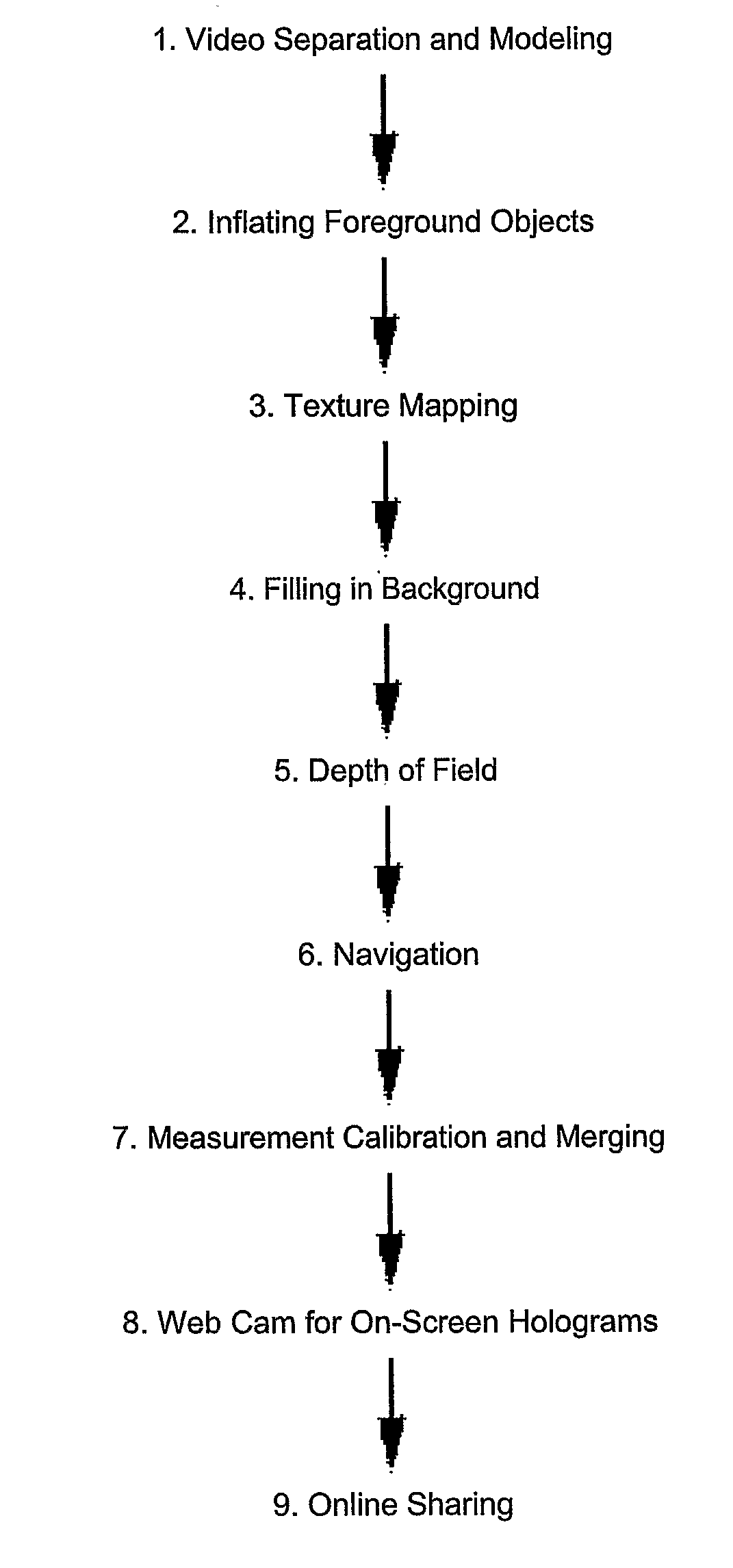

Automatic Scene Modeling for the 3D Camera and 3D Video

Inactive | US20080246759A1 | Reduce video bandwidth | Increase frame rate | Television system details | Image enhancement | Automatic control | Viewpoints

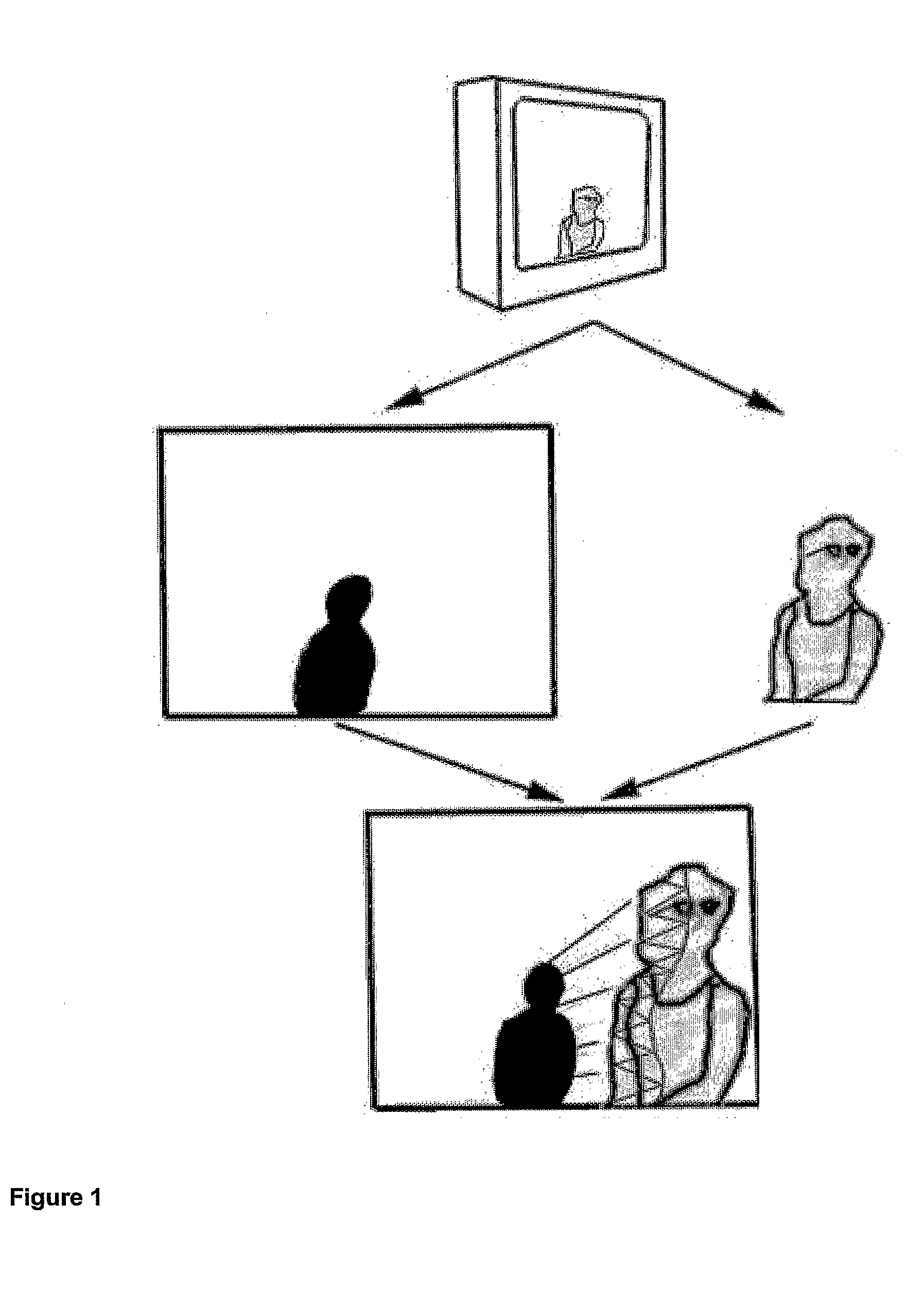

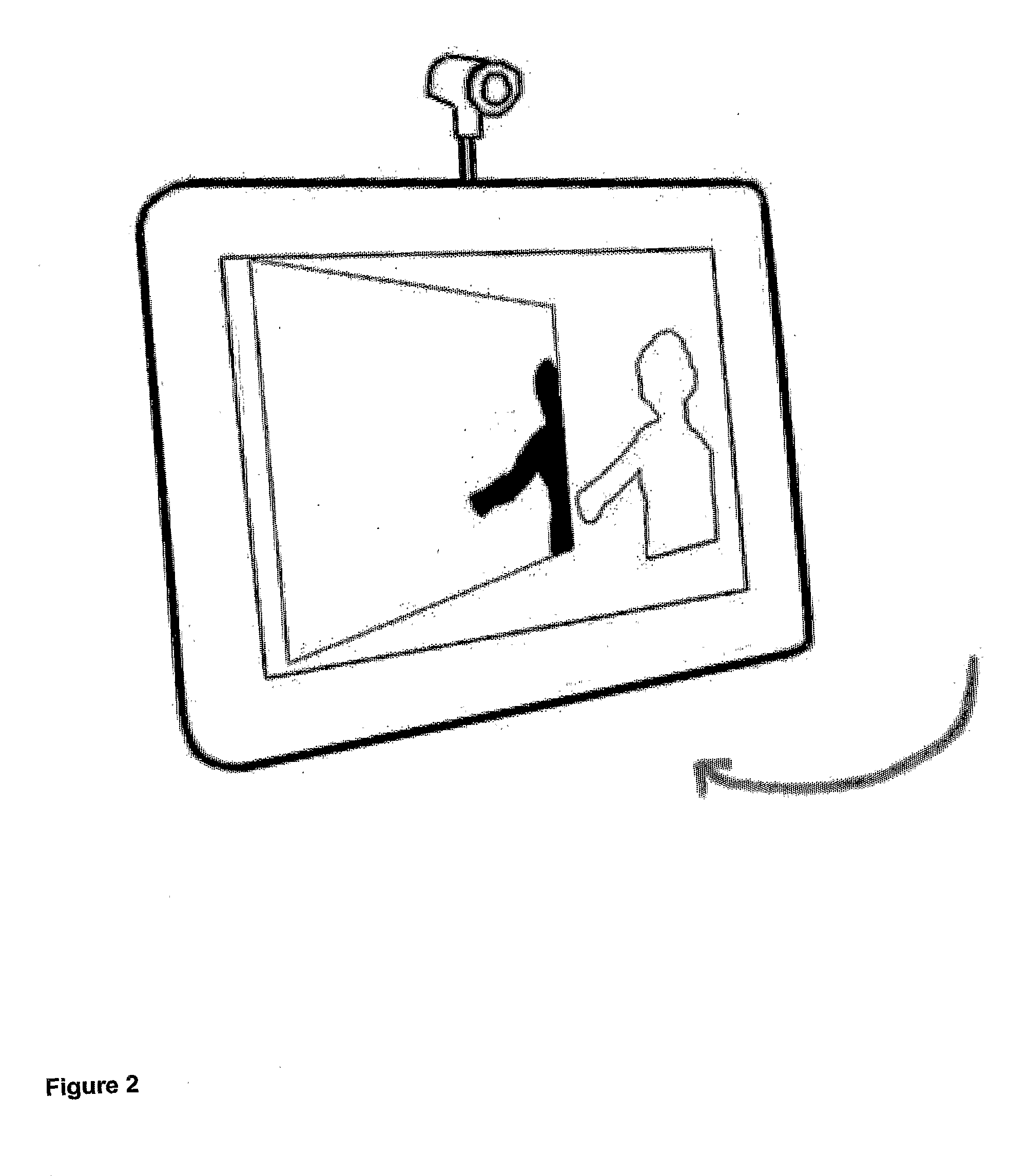

Single-camera image processing methods are disclosed for 3D navigation within ordinary moving video. Along with color and brightness, XYZ coordinates can be defined for every pixel. The resulting geometric models can be used to obtain measurements from digital images, as an alternative to on-site surveying and equipment such as laser range-finders. Motion parallax is used to separate foreground objects from the background. This provides a convenient method for placing video elements within different backgrounds, for product placement, and for merging video elements with computer-aided design (CAD) models and point clouds from other sources. If home users can save video fly-throughs or specific 3D elements from video, this method provides an opportunity for proactive, branded media sharing. When this image processing is used with a videoconferencing camera, the user's movements can automatically control the viewpoint, creating 3D hologram effects on ordinary televisions and computer screens.

Owner:SUMMERS

3D imaging system

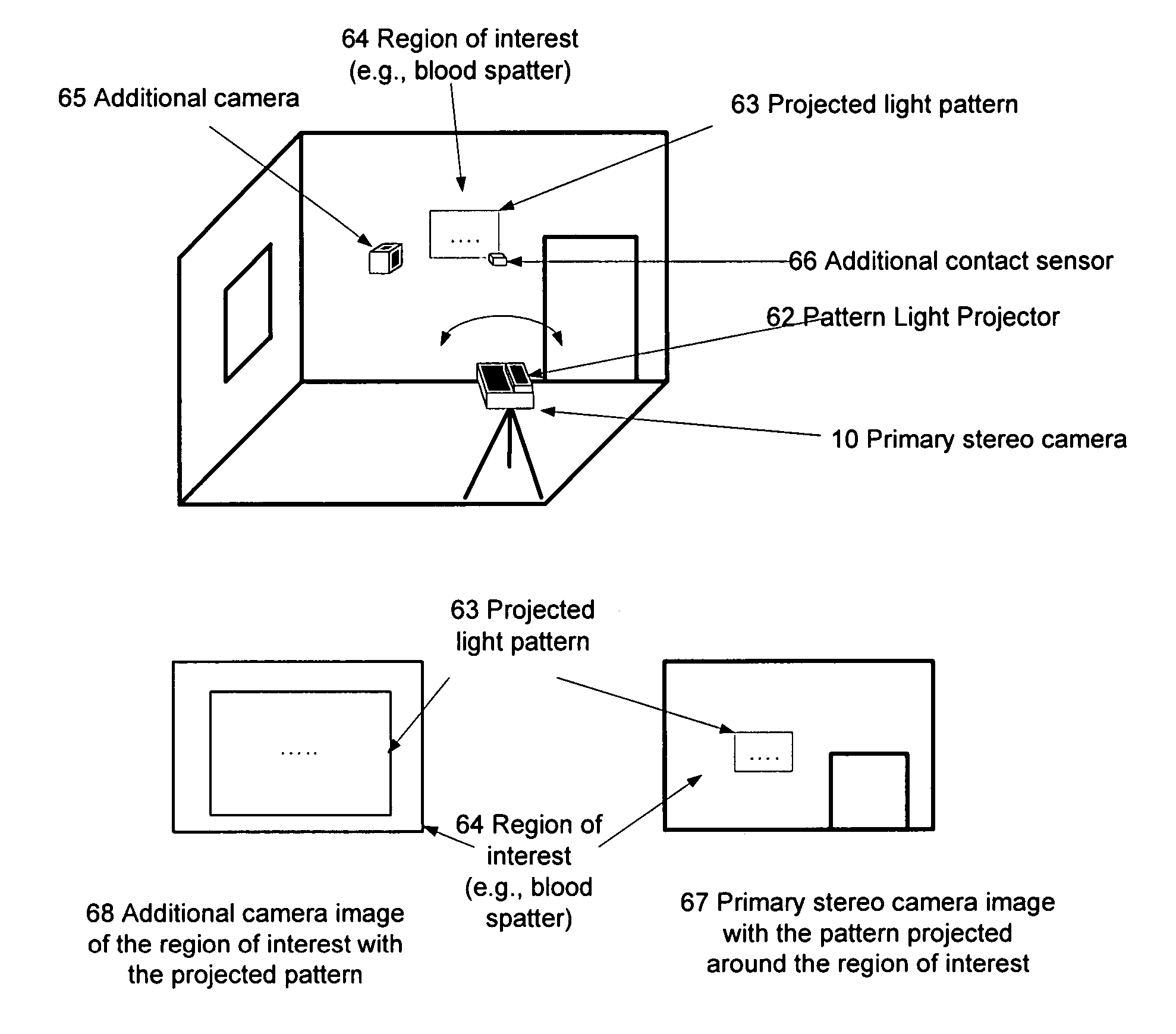

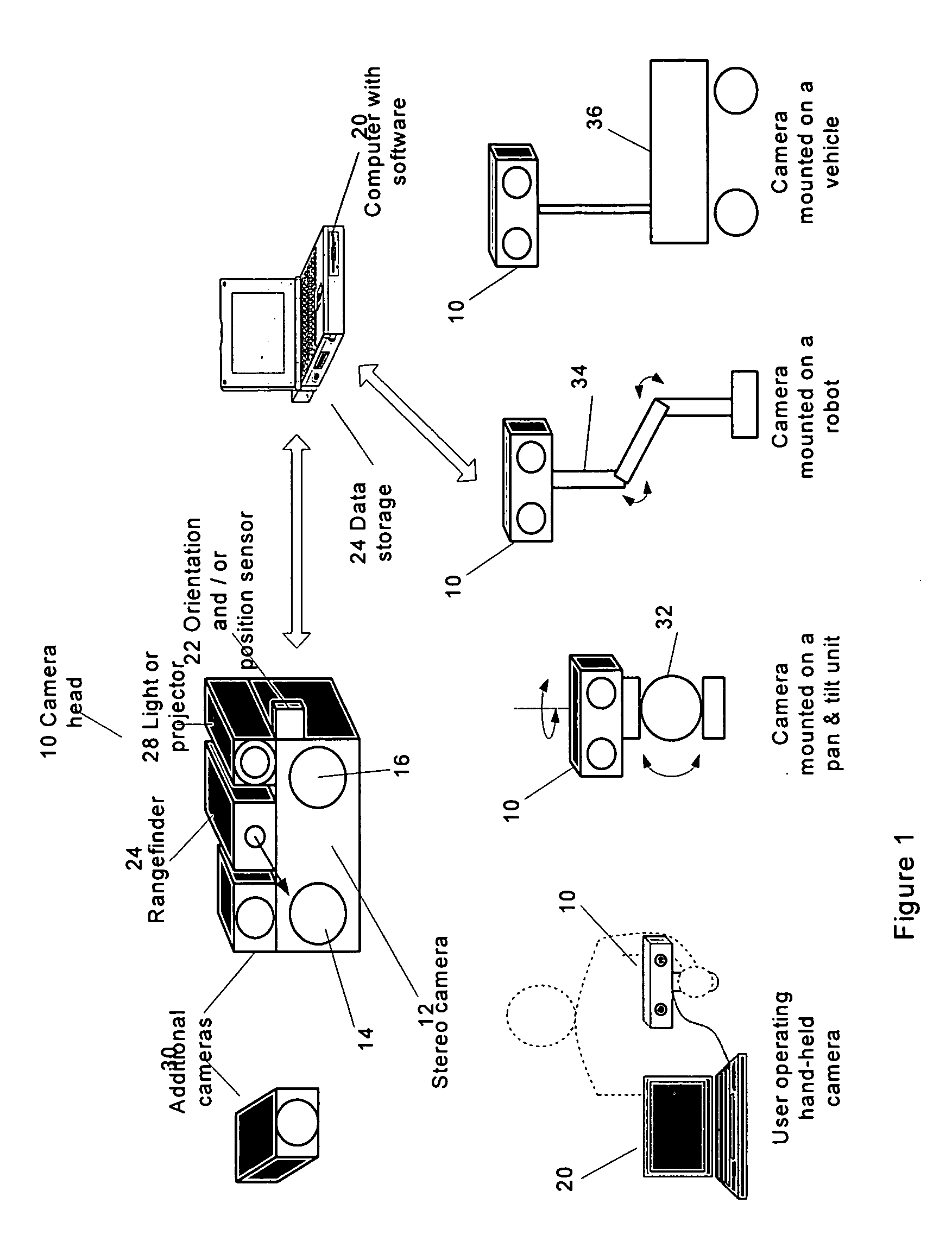

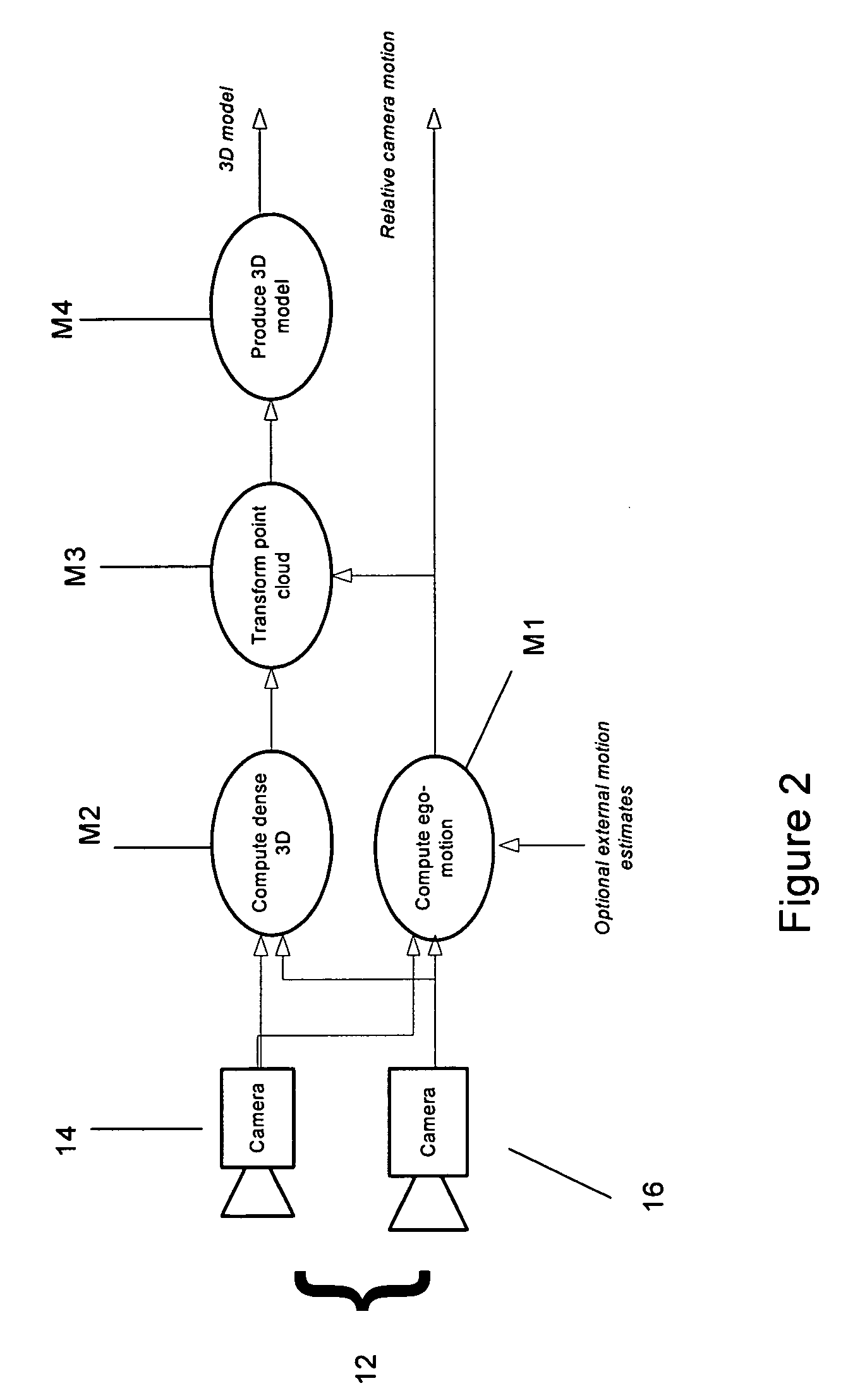

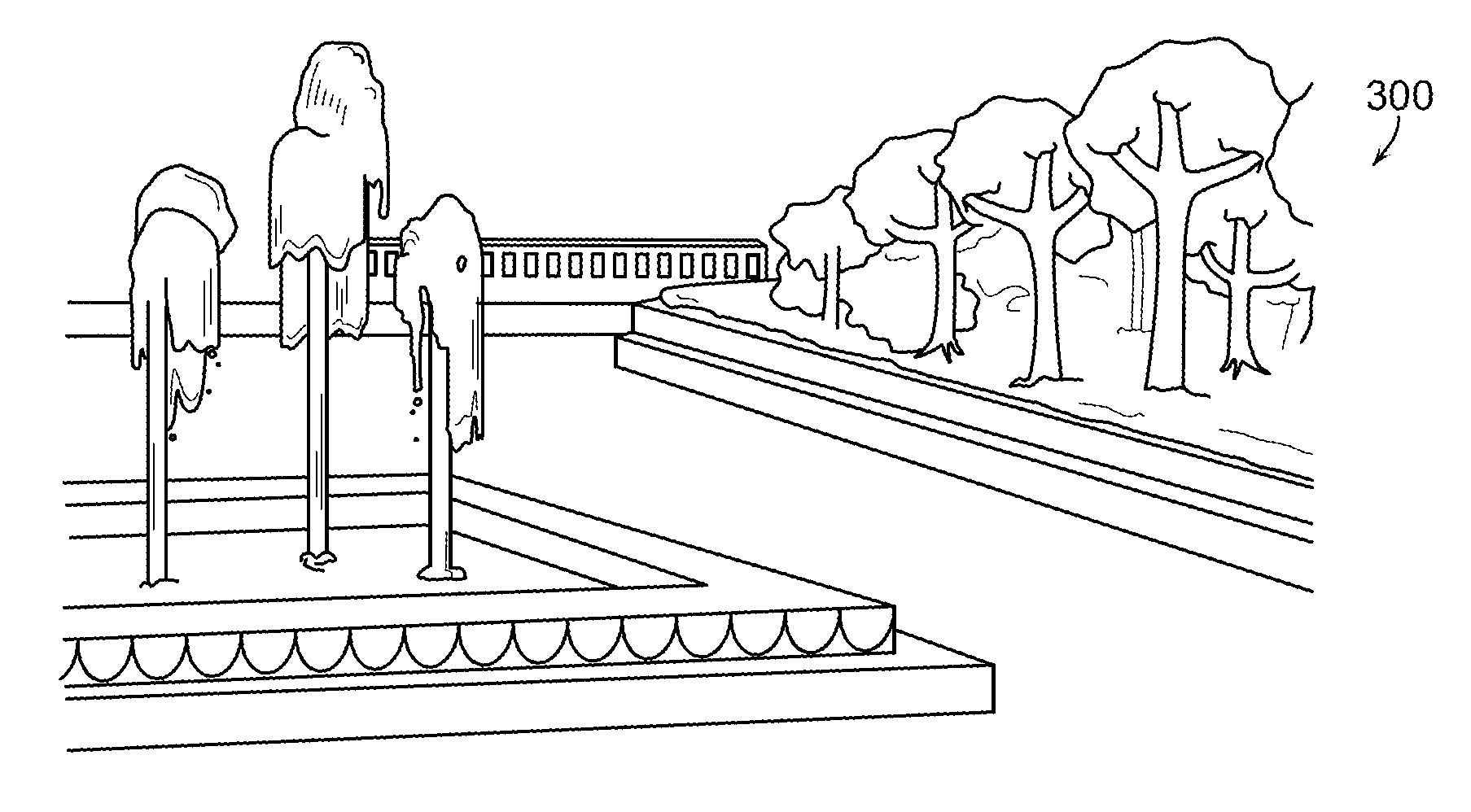

The present invention provides a system (method and apparatus) for creating photorealistic 3D models of environments and / or objects from a plurality of stereo images obtained from a mobile stereo camera and optional monocular cameras. The cameras may be handheld, mounted on a mobile platform, manipulator or a positioning device. The system automatically detects and tracks features in image sequences and self-references the stereo camera in 6 degrees of freedom by matching the features to a database to track the camera motion, while building the database simultaneously. A motion estimate may be also provided from external sensors and fused with the motion computed from the images. Individual stereo pairs are processed to compute dense 3D data representing the scene and are transformed, using the estimated camera motion, into a common reference and fused together. The resulting 3D data is represented as point clouds, surfaces, or volumes. The present invention also provides a system (method and apparatus) for enhancing 3D models of environments or objects by registering information from additional sensors to improve model fidelity or to augment it with supplementary information by using a light pattern projector. The present invention also provides a system (method and apparatus) for generating photo-realistic 3D models of underground environments such as tunnels, mines, voids and caves, including automatic registration of the 3D models with pre-existing underground maps.
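The fusion step described above, in which per-frame 3D data is transformed into a common reference using the estimated camera motion, can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented system: the names `to_common_frame` and `fuse_frames` are hypothetical, and each camera pose is assumed to be given as a 3x3 rotation matrix `R` and a translation `t`.

```python
def to_common_frame(points, rotation, translation):
    """Apply p' = R p + t to every point of one frame (assumed pose layout)."""
    transformed = []
    for p in points:
        transformed.append(tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        ))
    return transformed


def fuse_frames(frames):
    """Merge per-frame clouds, each given as (points, (R, t)), into one cloud."""
    merged = []
    for points, (rotation, translation) in frames:
        merged.extend(to_common_frame(points, rotation, translation))
    return merged


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
quarter_turn = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]  # 90 degrees about the z axis
frames = [
    ([(1.0, 0.0, 0.0)], (identity, (0.0, 0.0, 0.0))),
    ([(1.0, 0.0, 0.0)], (quarter_turn, (1.0, 0.0, 0.0))),
]
print(fuse_frames(frames))
```

A real pipeline would estimate `R` and `t` from tracked features and would also merge or filter overlapping points; here the clouds are simply concatenated.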

Owner:MACDONALD DETTWILER & ASSOC INC

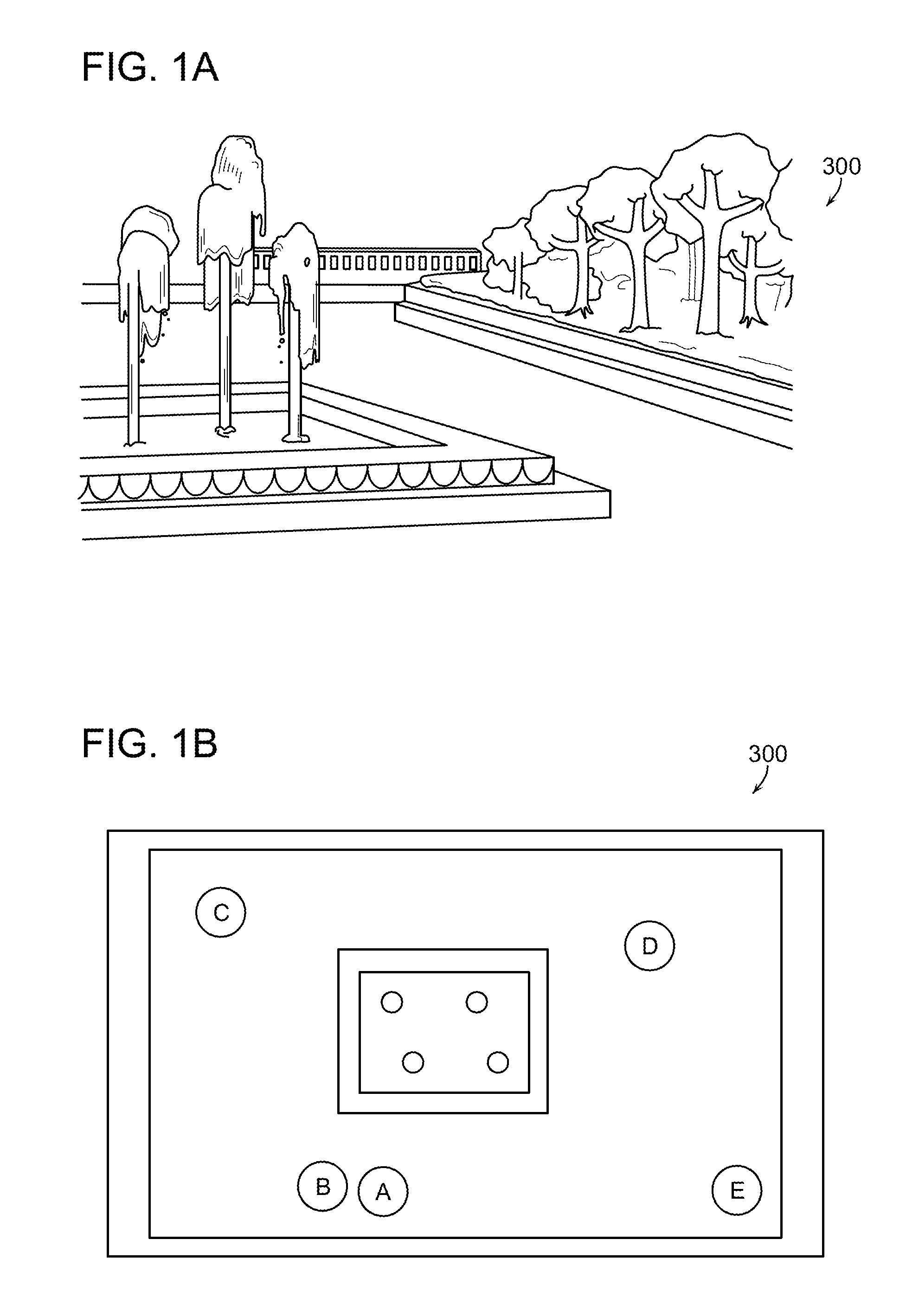

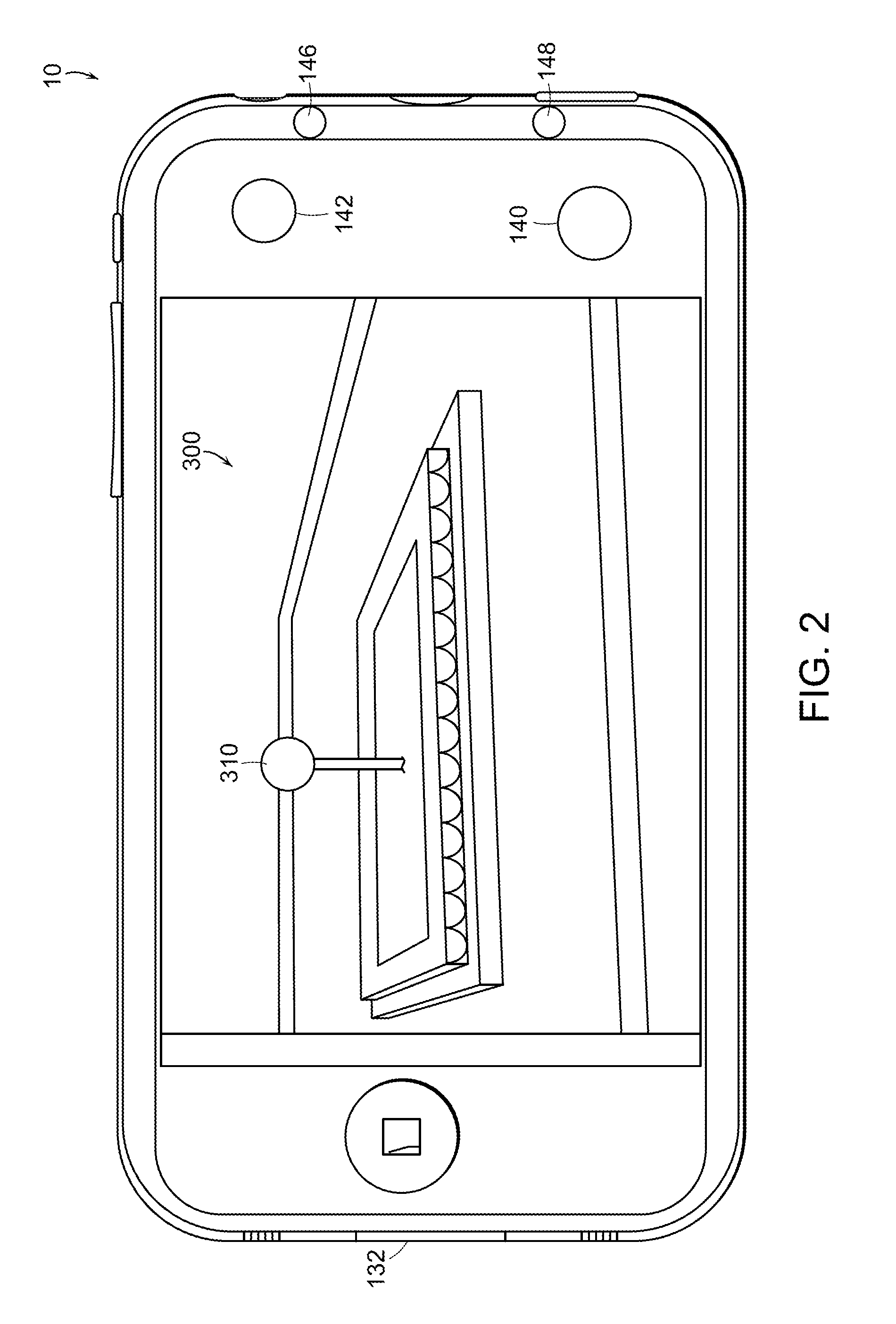

System and Method for Creating an Environment and for Sharing a Location Based Experience in an Environment

Inactive | US20130222369A1 | Color television details | Web data navigation | Point cloud | Computer graphics (images)

A system for creating an environment and for sharing an experience based on the environment includes a plurality of mobile devices having a camera employed near a point of interest to capture random, crowdsourced images and associated metadata near said point of interest, wherein the metadata for each image includes location of the mobile device and the orientation of the camera. Preferably, the images include depth camera information. A wireless network communicates with the mobile devices to accept the images and metadata and to build and store a point cloud or 3D model of the region. Users connect to this experience platform to view the 3D model from a user selected location and orientation and to participate in experiences with, for example, a social network.

Owner:HUSTON CHARLES D +1

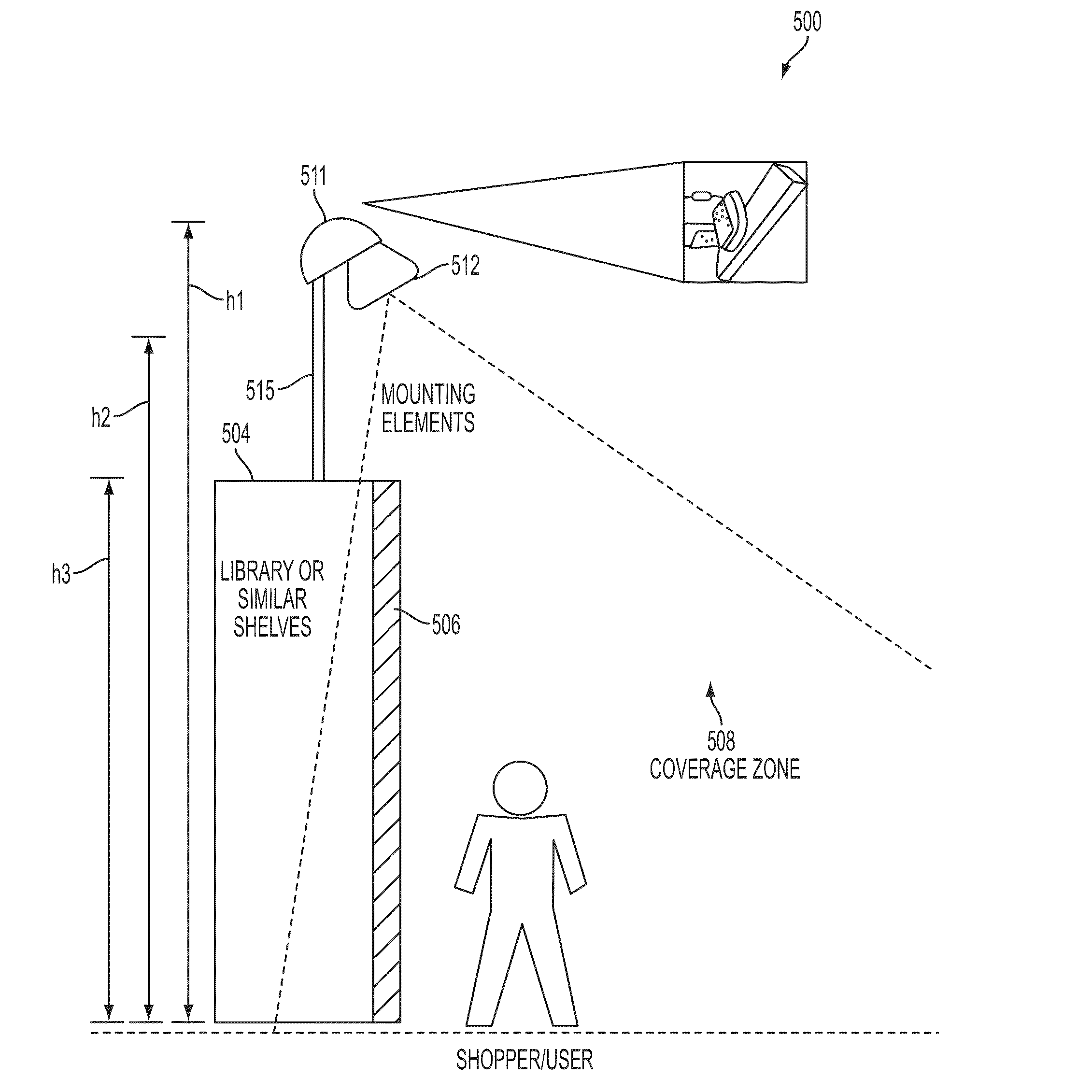

Methods and systems for measuring human interaction

Inactive | US20140132728A1 | Character and pattern recognition | Stereoscopic systems | Human interaction | Point cloud

A method and system are disclosed for measuring and reacting to human interaction with elements in a space, such as a public place (retail store, showroom, etc.). The system may determine information about an interaction of a three dimensional object of interest within a three dimensional zone of interest using a point cloud 3D scanner. An image frame generator of the scanner generates a point cloud 3D scanner frame comprising an array of depth coordinates for respective two dimensional coordinates of at least part of a surface of the object of interest within the three dimensional zone of interest, which comprises a three dimensional coverage zone encompassing a three dimensional engagement zone. A computing device compares respective frames to determine the time and location of a collision between the object of interest and a surface of at least one of the three dimensional coverage zone or the three dimensional engagement zone encompassed by the three dimensional coverage zone.

Owner:SHOPPERCEPTION

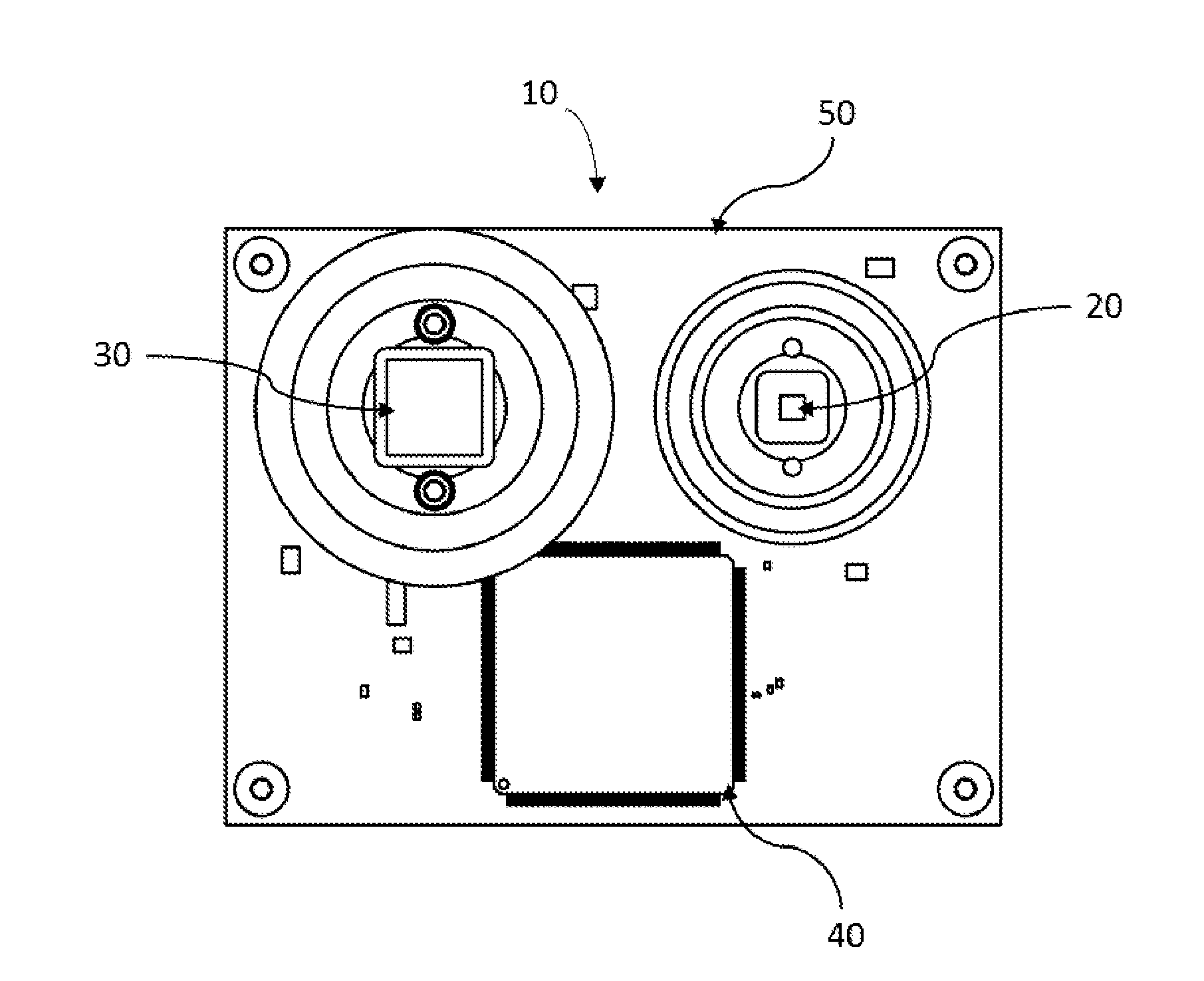

Optical phased array lidar system and method of using same

A lidar-based system and method are used for the solid state beamforming and steering of laser beams using optical phased array (OPA) photonic integrated circuits (PICs) and the detection of laser beams using photodetectors. Transmitter and receiver electronics, power management electronics, control electronics, data conversion electronics and processing electronics are also included in the system and used in the method. Laser pulses beamformed by the OPA PIC reflect from objects in the field of view (FOV) of said OPA, and are detected by a detector or a set of detectors. A lidar system includes at least one lidar, and any subset and any number of complementary sensors, data processing / communication / storage modules, and a balance of system for supplying power, protecting, connecting, and mounting the components of said system. Direct correlation between the 3D point cloud generated by the lidar and the color images captured by an RGB (Red, Green, Blue) video camera can be achieved by using an optical beam splitter that sends optical signals simultaneously to both sensors. A lidar system may contain a plurality of lidar sensors, a lidar sensor may contain a plurality of optical transmitters, and an optical transmitter may contain a plurality of OPA PICs.

Owner:QUANERGY SOLUTIONS INC

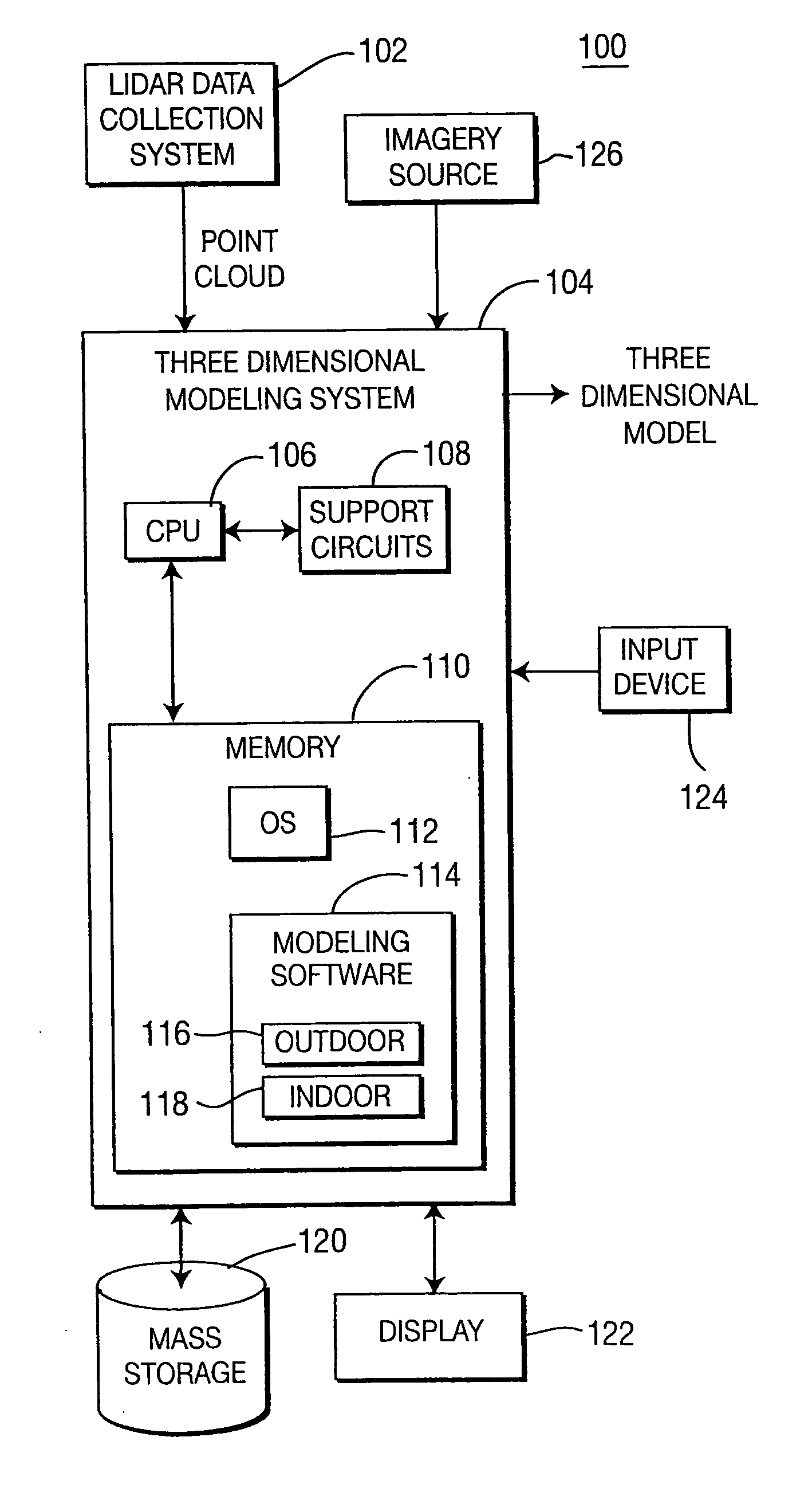

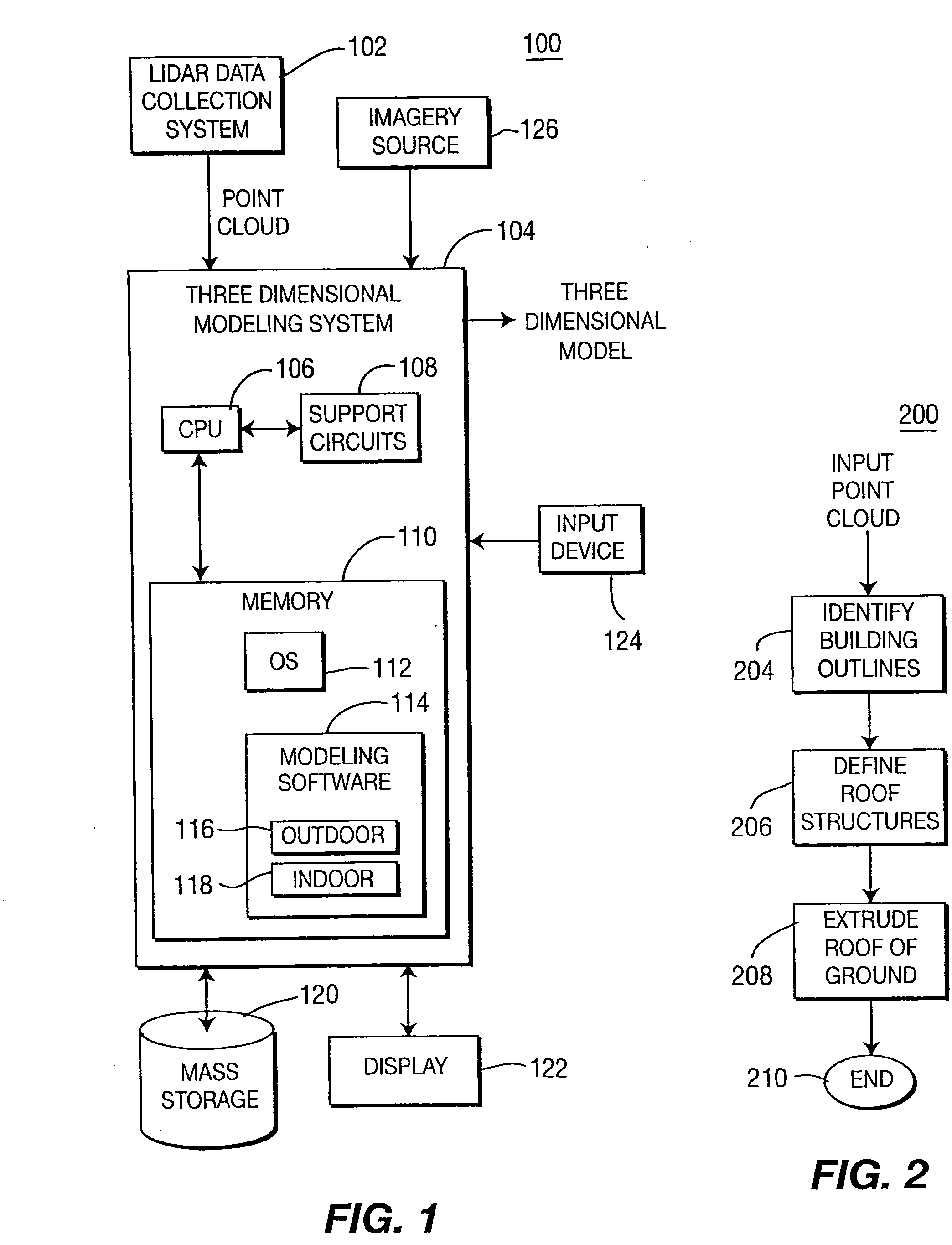

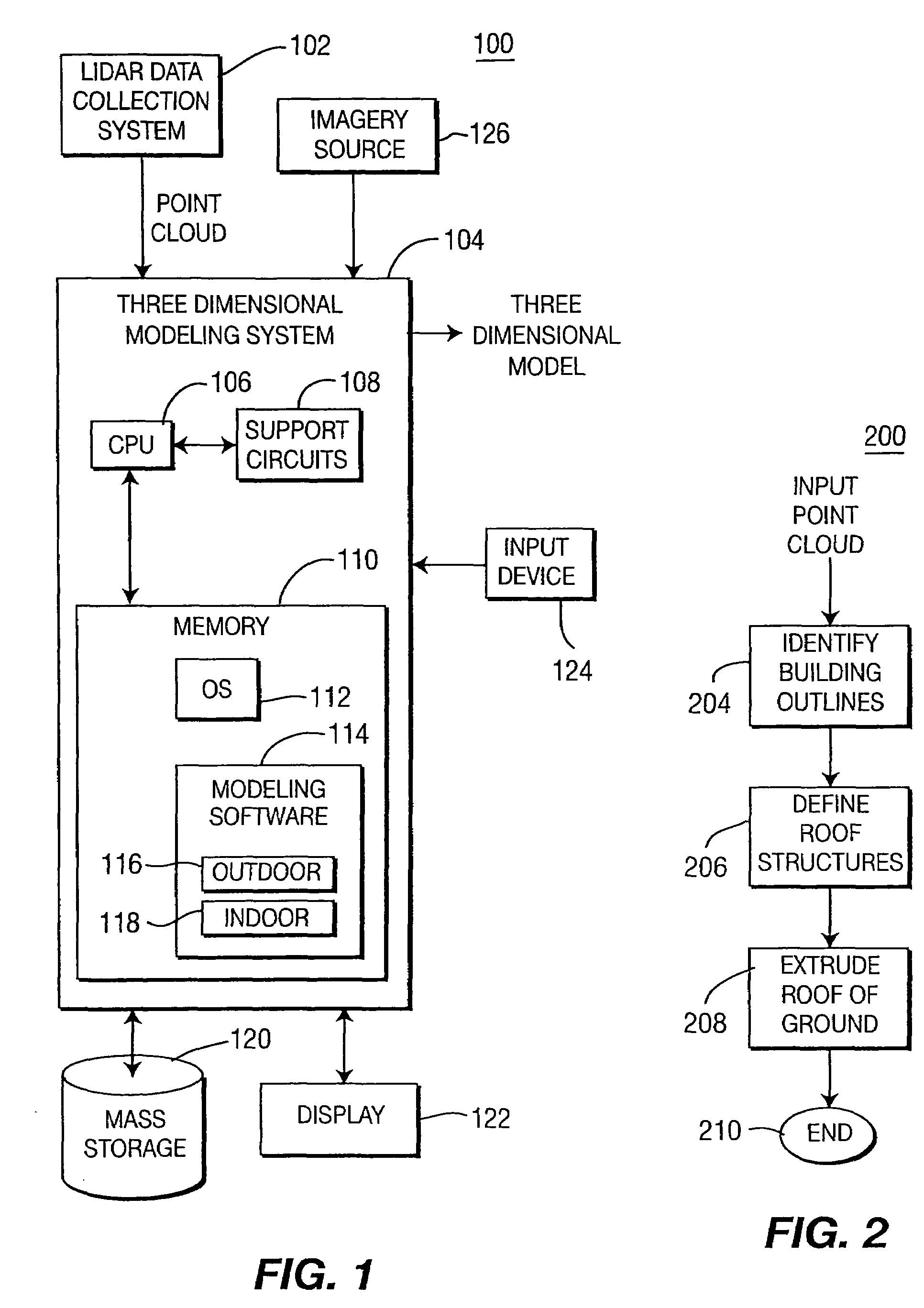

Method and apparatus for performing three-dimensional computer modeling

A method and apparatus for automatically generating a three-dimensional computer model from a “point cloud” of a scene produced by a laser radar (LIDAR) system. Given a point cloud of an indoor or outdoor scene, the method extracts certain structures from the imaged scene, i.e., ceiling, floor, furniture, rooftops, ground, and the like, and models these structures with planes and / or prismatic structures to achieve a three-dimensional computer model of the scene. The method may then add photographic and / or synthetic texturing to the model to achieve a realistic model.

Owner:SRI INTERNATIONAL

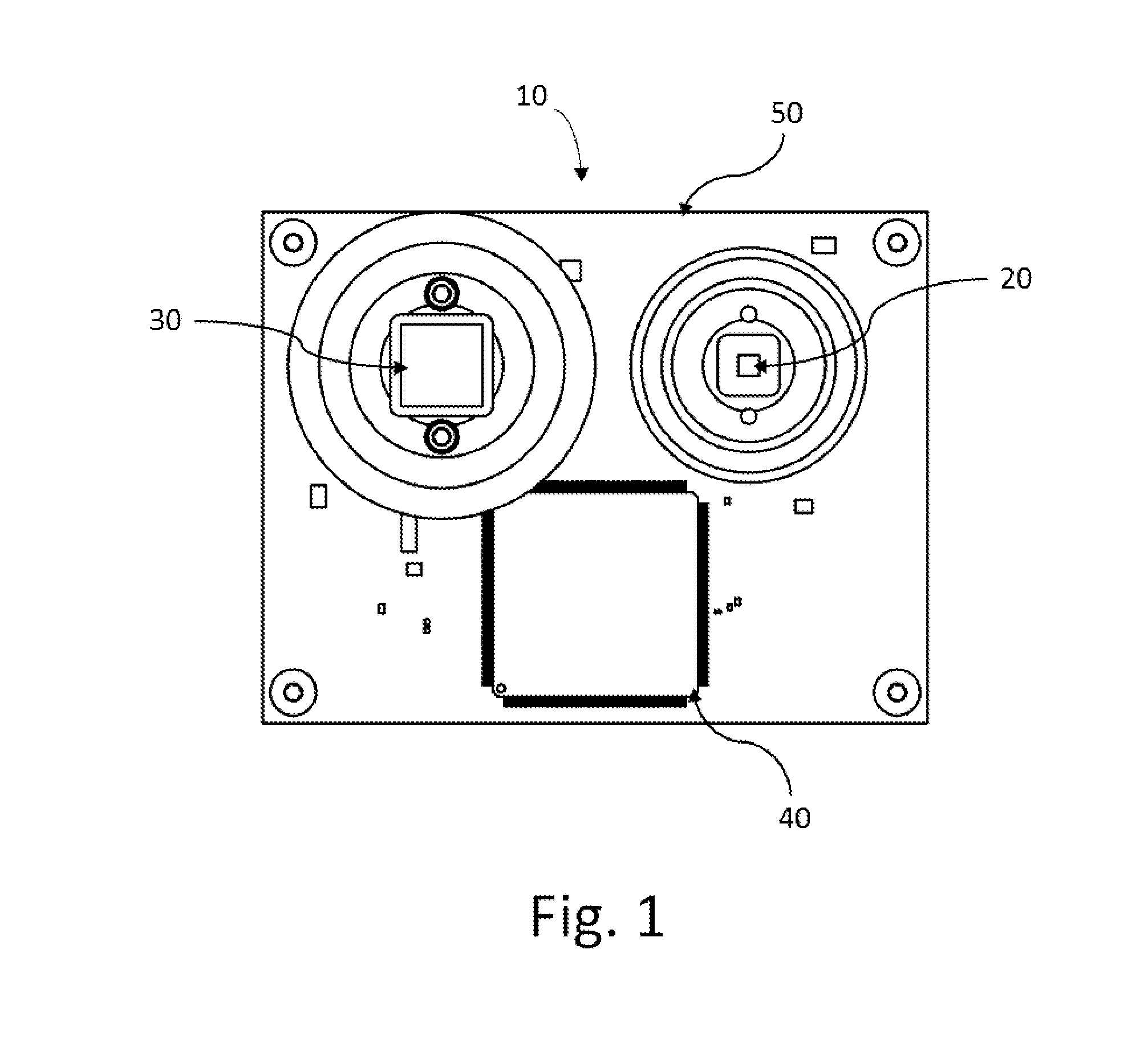

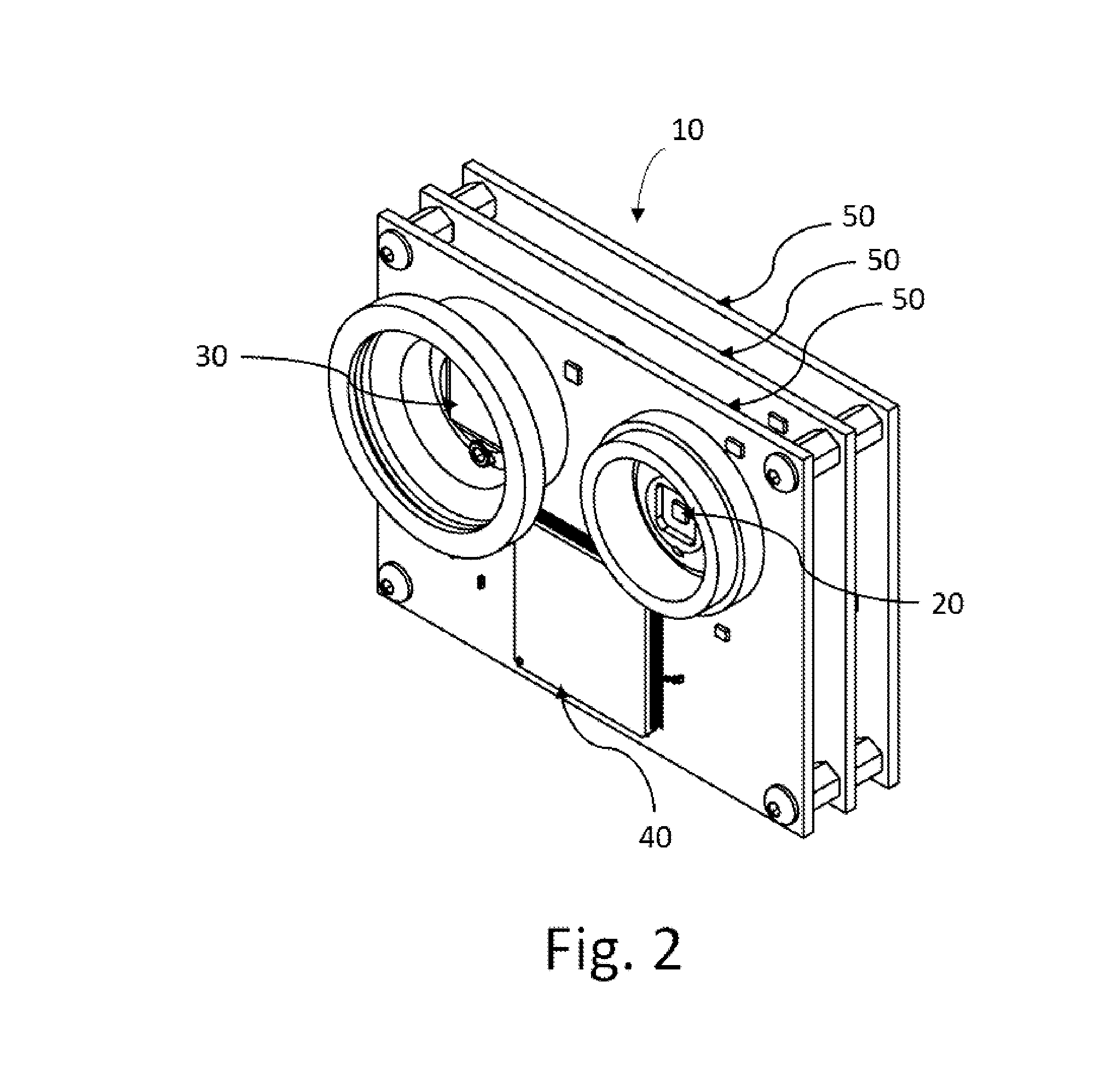

Systems and methods for 2D image and spatial data capture for 3D stereo imaging

Inactive | US20110222757A1 | Facilitate post-production | Large separation | Image enhancement | Image analysis | Virtual camera | Movie camera

Systems and methods for 2D image and spatial data capture for 3D stereo imaging are disclosed. The system utilizes a cinematography camera and at least one reference or “witness” camera spaced apart from the cinematography camera at a distance much greater than the interocular separation to capture 2D images over an overlapping volume associated with a scene having one or more objects. The captured image data is post-processed to create a depth map, and a point cloud is created from the depth map. The robustness of the depth map and the point cloud allows for dual virtual cameras to be placed substantially arbitrarily in the resulting virtual 3D space, which greatly simplifies the addition of computer-generated graphics, animation and other special effects in cinematographic post-processing.

Owner:SHAPEQUEST

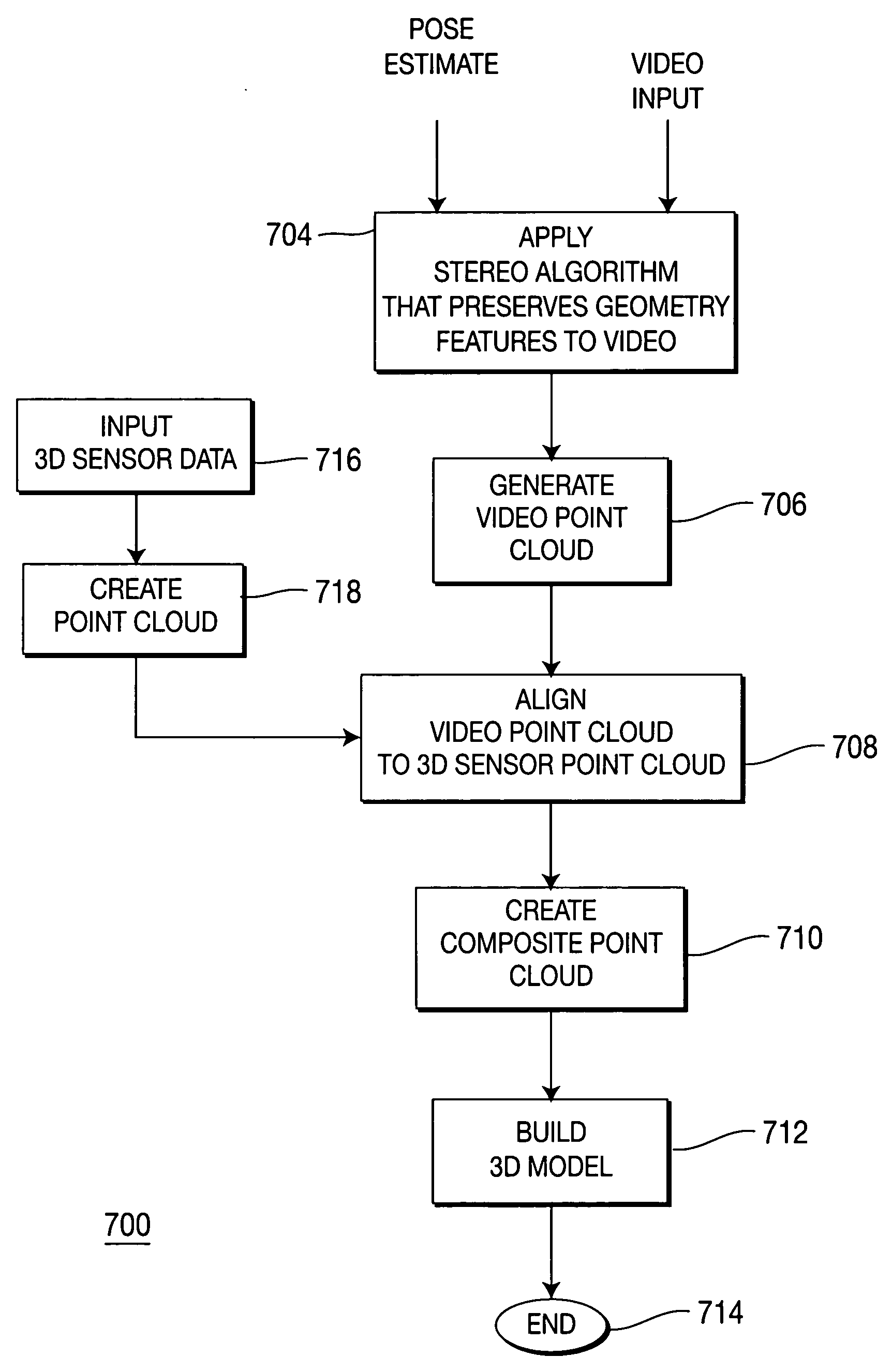

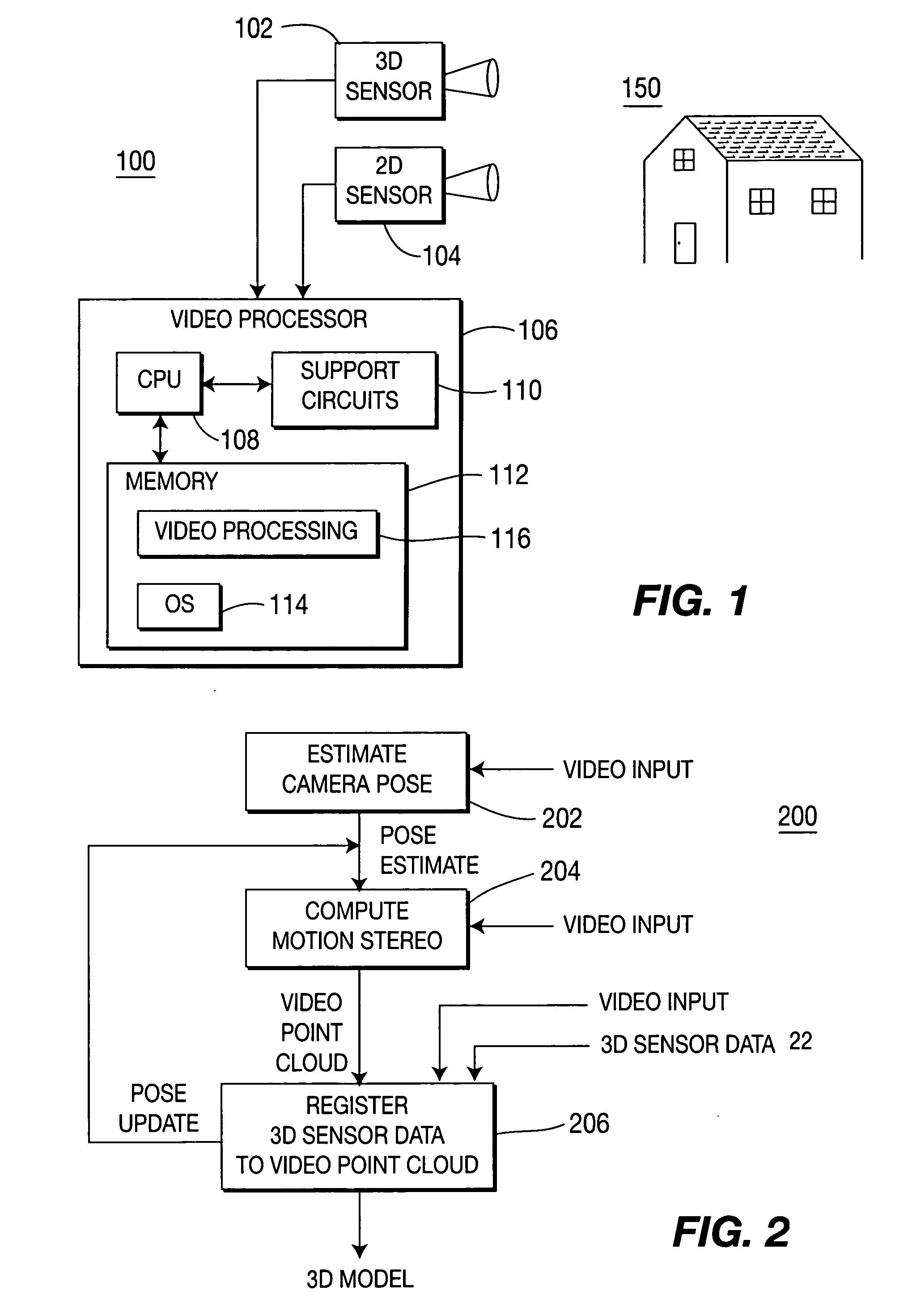

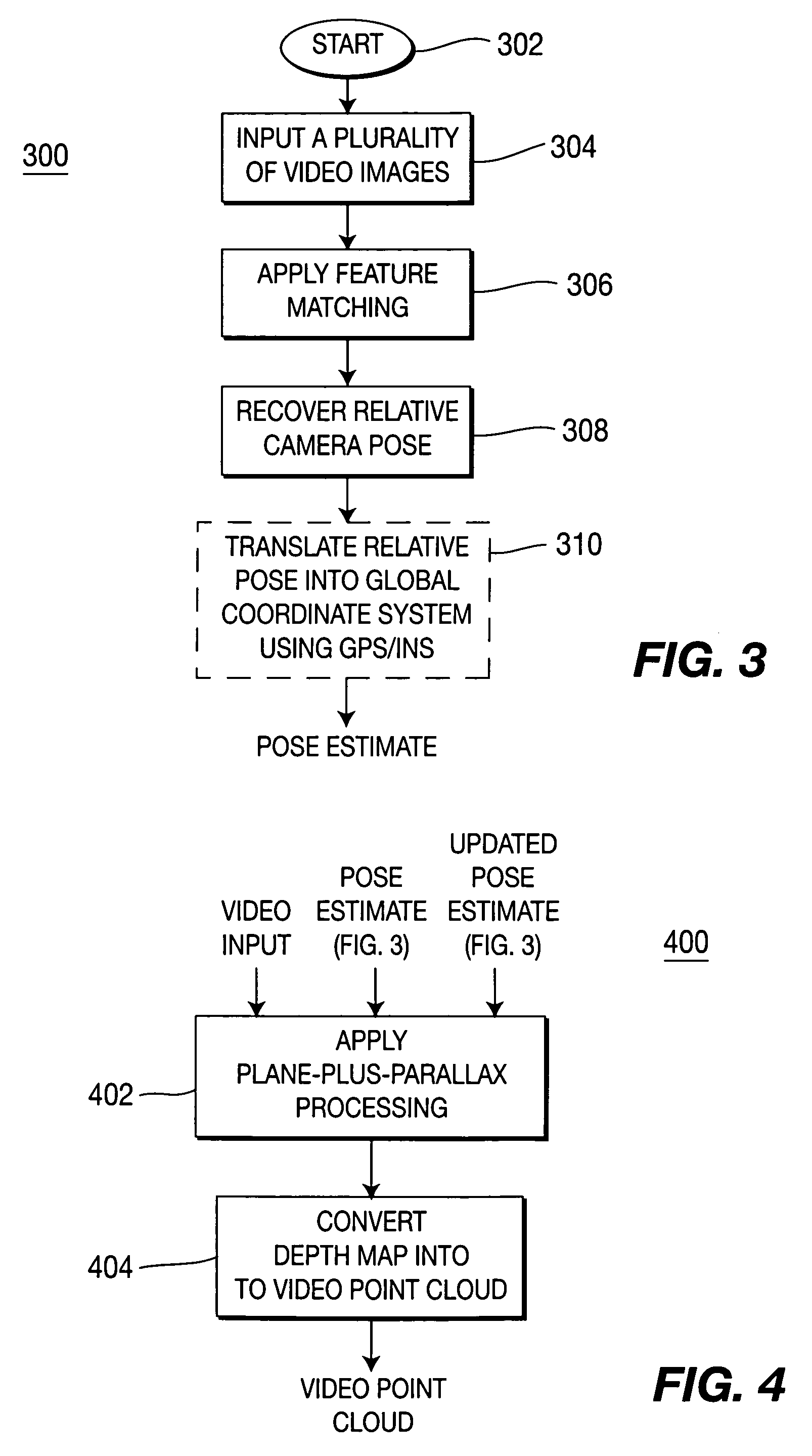

Method and apparatus for aligning video to three-dimensional point clouds

A method and apparatus for performing two-dimensional video alignment onto three-dimensional point clouds. The system recovers camera pose from camera video, determines a depth map, converts the depth map to a Euclidean video point cloud, and registers two-dimensional video to the three-dimensional point clouds.
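The depth-map-to-point-cloud conversion mentioned above is commonly done by back-projecting each pixel through a pinhole camera model. The sketch below illustrates that standard step only; the abstract does not say which camera model is used, and the function name `depth_map_to_points` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions made for this example.

```python
def depth_map_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (rows of depths in metres) into camera-frame points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # non-positive depth is treated as "no measurement"
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


depth = [[2.0, 0.0],
         [2.0, 4.0]]
cloud = depth_map_to_points(depth, fx=2.0, fy=2.0, cx=0.0, cy=0.0)
print(cloud)
```

Registering the video to an existing point cloud would then require the recovered camera pose to move these camera-frame points into the cloud's coordinate system.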

Owner:SRI INTERNATIONAL

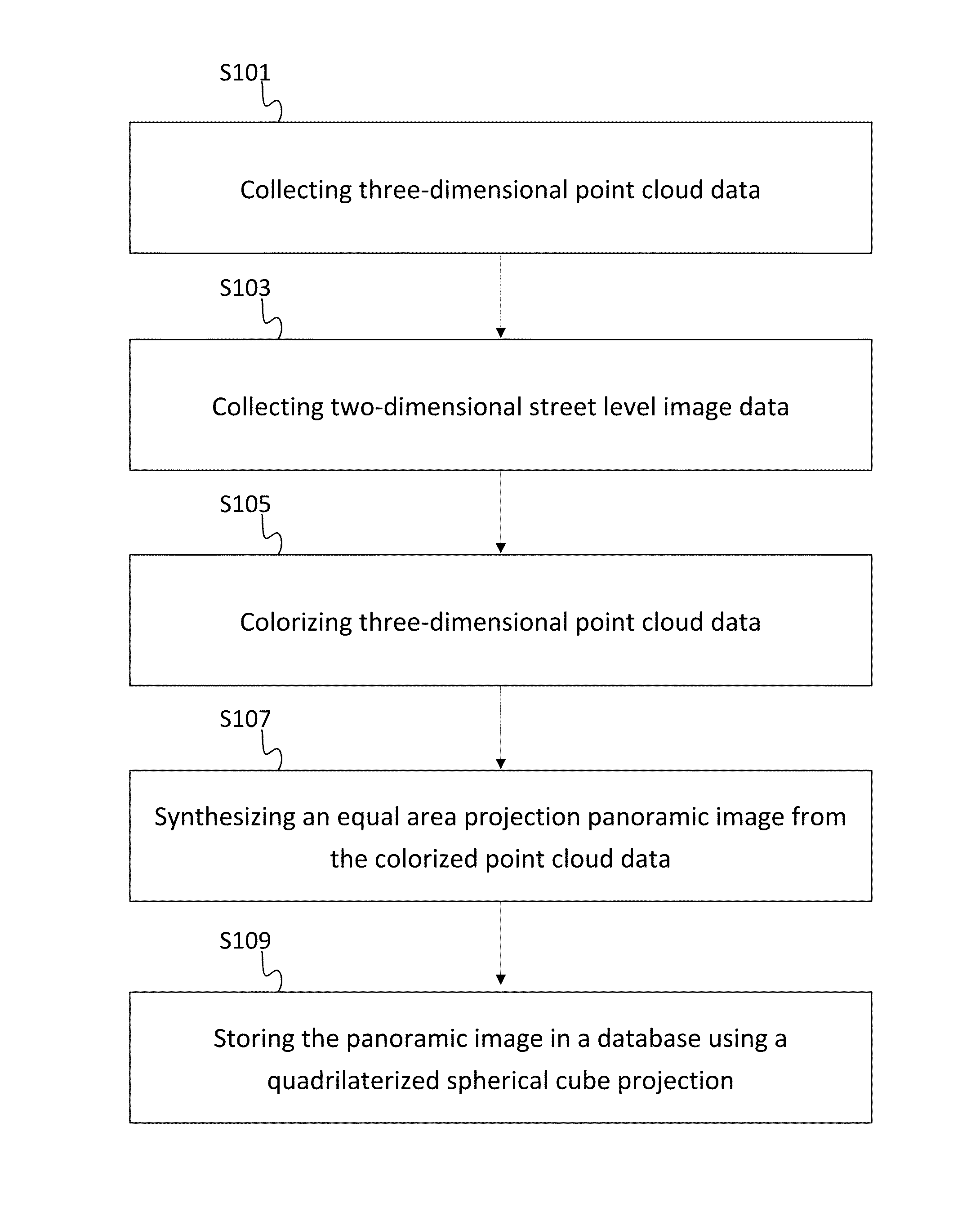

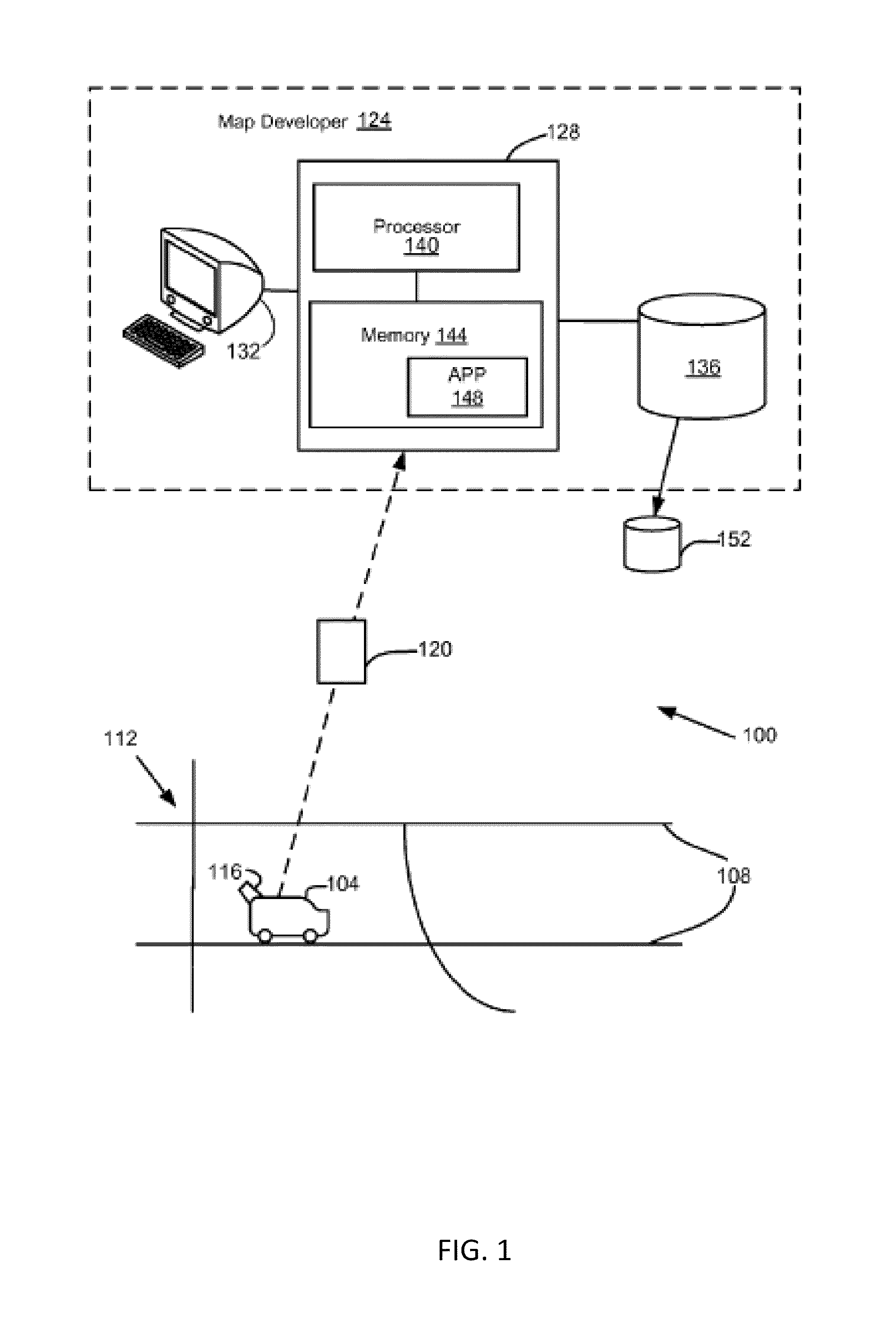

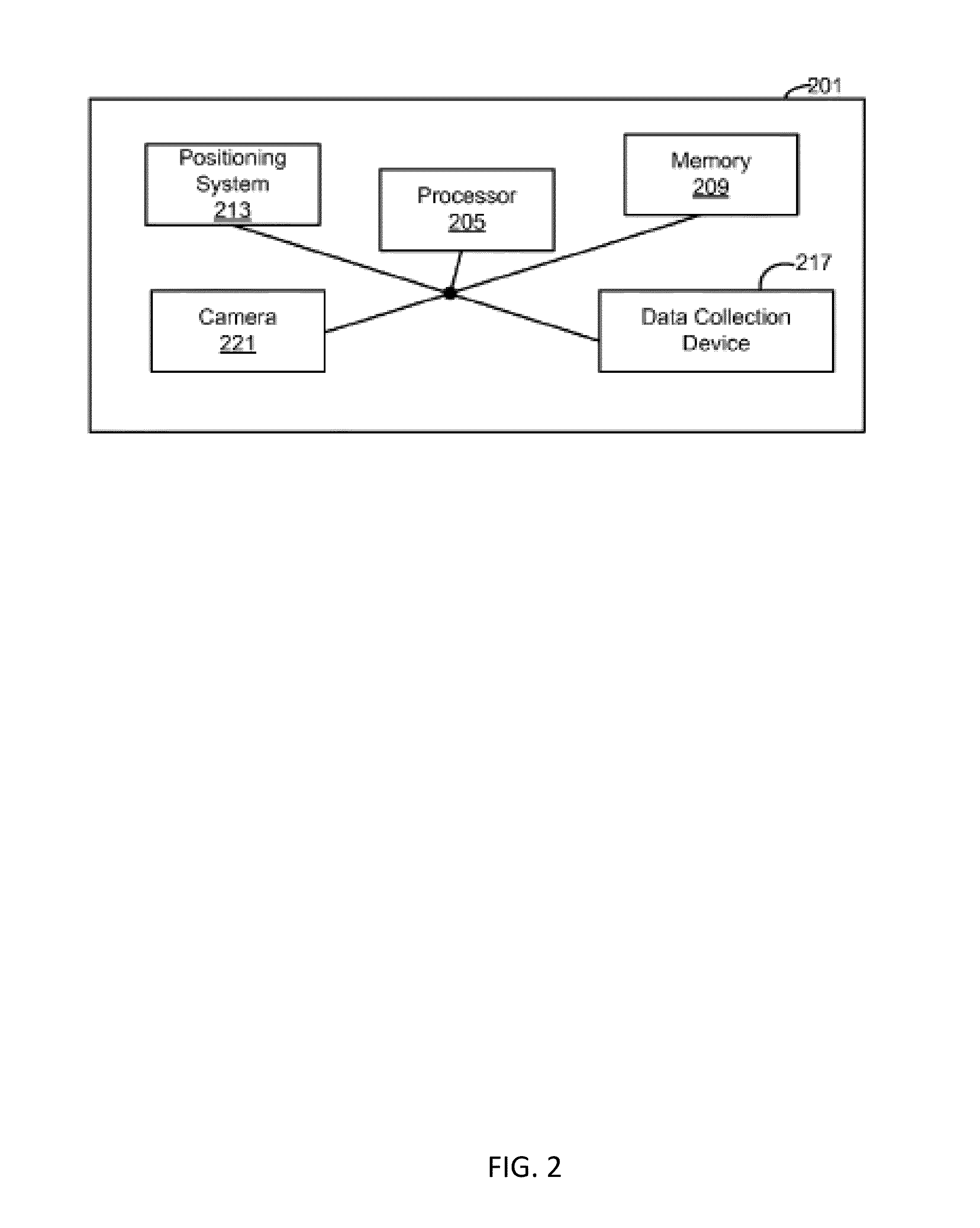

Developing a Panoramic Image

Systems, apparatus, and methods are provided for producing an improved panoramic image. A three-dimensional point cloud image is generated from an optical distancing system. Additionally, at least one two-dimensional image is generated from at least one camera. The three-dimensional point cloud image is colorized with the at least one two-dimensional image, thereby forming a colorized three-dimensional point cloud image. An equal area projection panoramic image is synthesized from the colorized three-dimensional point cloud, wherein the panoramic image comprises a plurality of pixels, and each pixel in the plurality of pixels represents a same amount of area.
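One way to obtain a panorama in which every pixel covers the same area of the viewing sphere is the Lambert cylindrical equal-area projection, where the column is proportional to azimuth and the row is proportional to the sine of the elevation. The abstract does not name a specific projection, so this choice, the function name `equal_area_pixel`, and the axis convention (sensor at the origin, z up) are assumptions for illustration.

```python
import math


def equal_area_pixel(x, y, z, width, height):
    """Map a 3D point to (row, col) in a Lambert cylindrical equal-area panorama."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("the sensor origin has no viewing direction")
    azimuth = math.atan2(y, x)   # angle around the vertical axis, in [-pi, pi]
    sin_elevation = z / r        # in [-1, 1]; equal steps give equal solid angle
    col = int((azimuth + math.pi) / (2.0 * math.pi) * width) % width
    row = min(int((1.0 - sin_elevation) / 2.0 * height), height - 1)
    return row, col


print(equal_area_pixel(1.0, 0.0, 0.0, width=360, height=180))
```

A colorized cloud could be rendered by writing each point's color to its `(row, col)` pixel and keeping the nearest point when several land in the same pixel.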

Owner:HERE GLOBAL BV

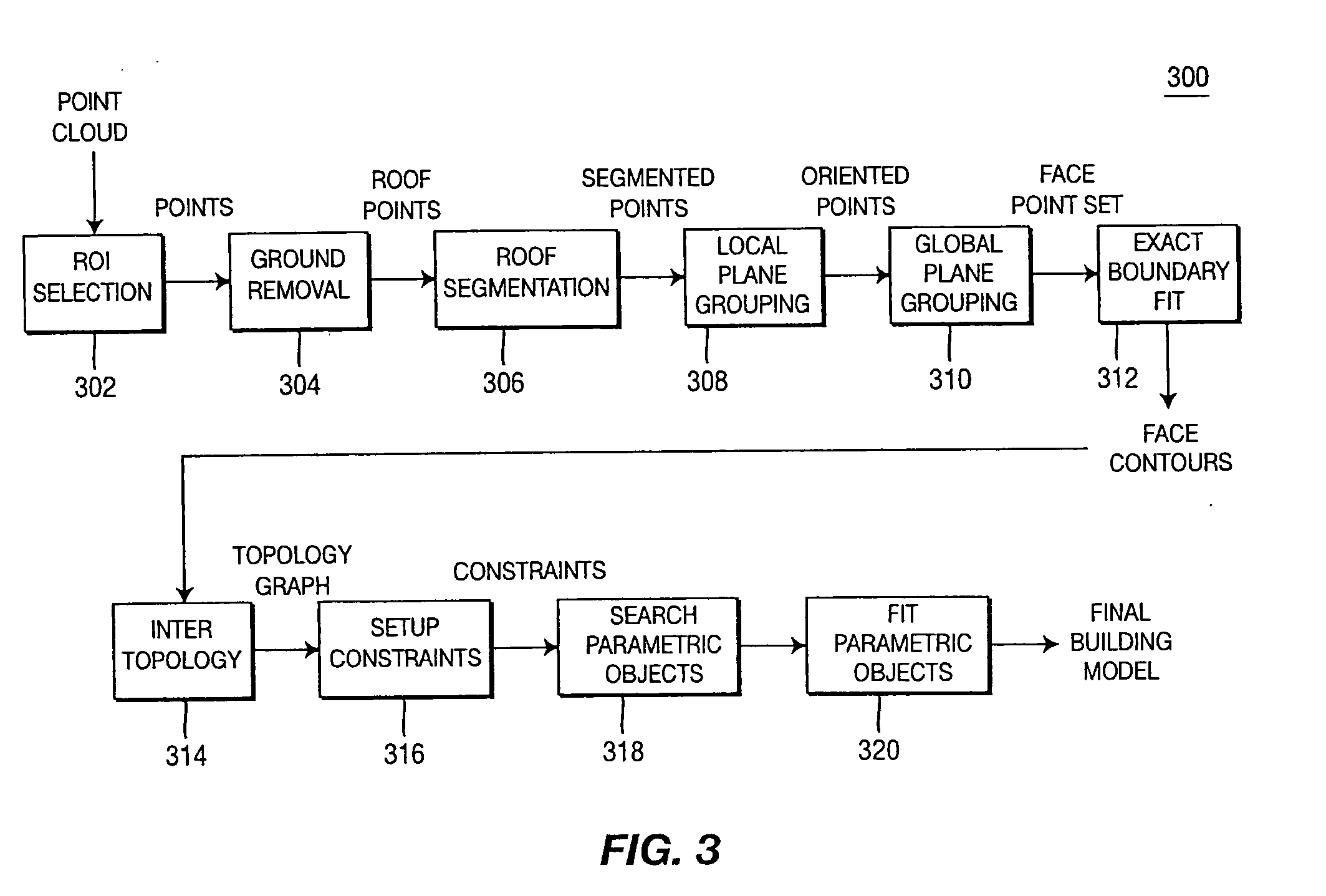

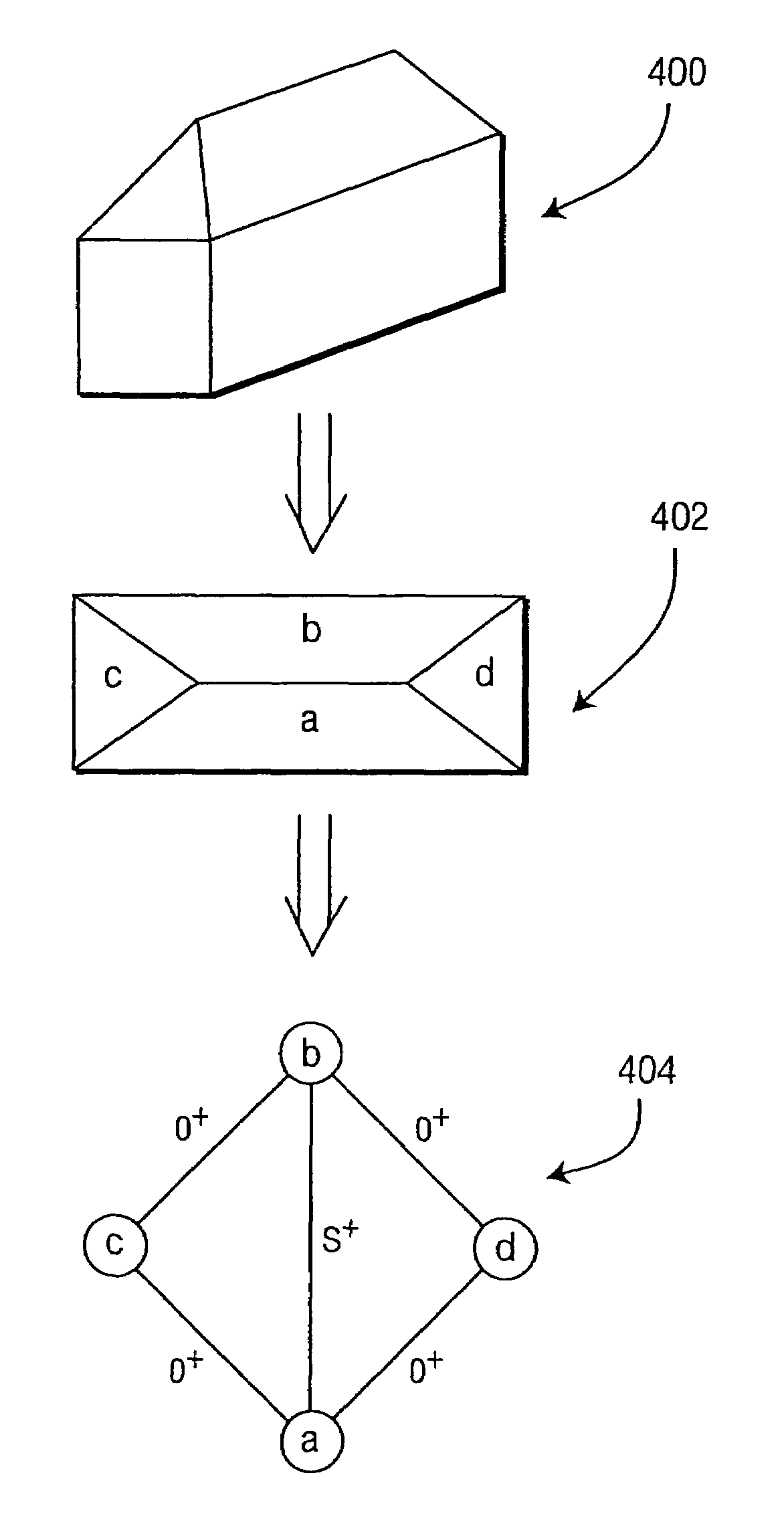

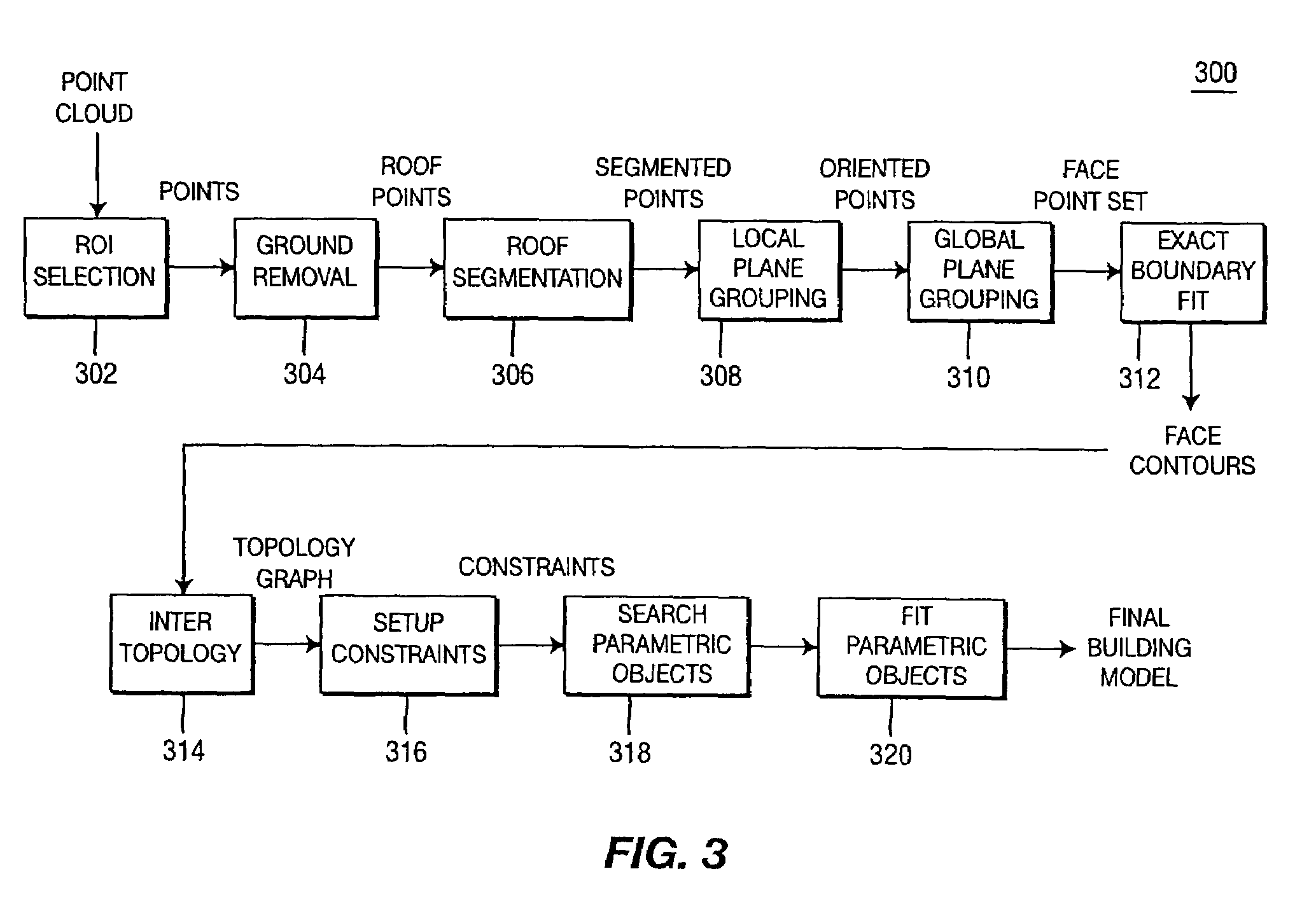

Method for generating a three-dimensional model of a roof structure

A method and apparatus for automatically generating a three-dimensional computer model from a “point cloud” of a scene produced by a laser radar (LIDAR) system. Given a point cloud of an indoor or outdoor scene, the method extracts certain structures from the imaged scene, i.e., ceiling, floor, furniture, rooftops, ground, and the like, and models these structures with planes and / or prismatic structures to achieve a three-dimensional computer model of the scene. The method may then add photographic and / or synthetic texturing to the model to achieve a realistic model.

Owner:SRI INTERNATIONAL

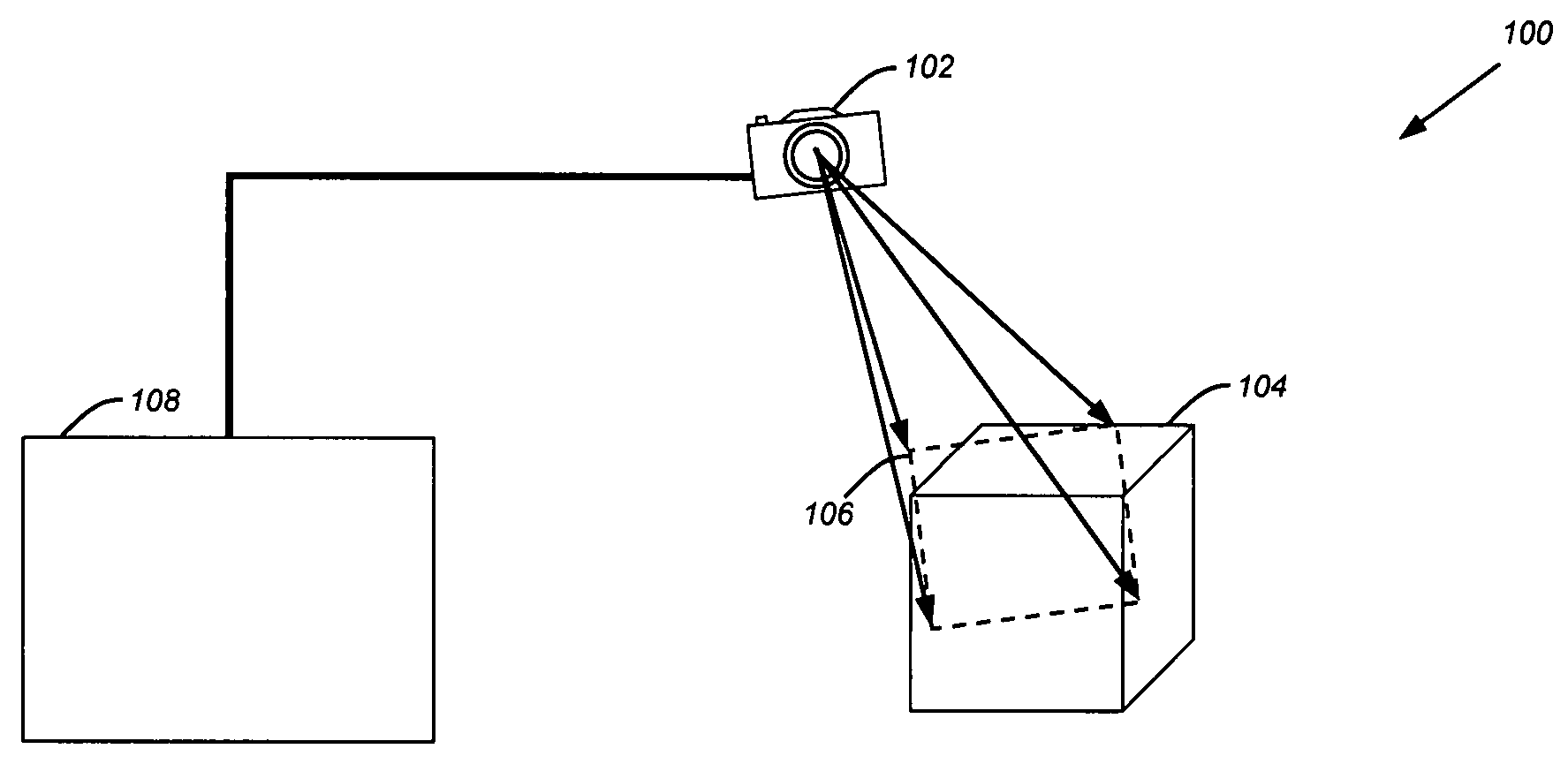

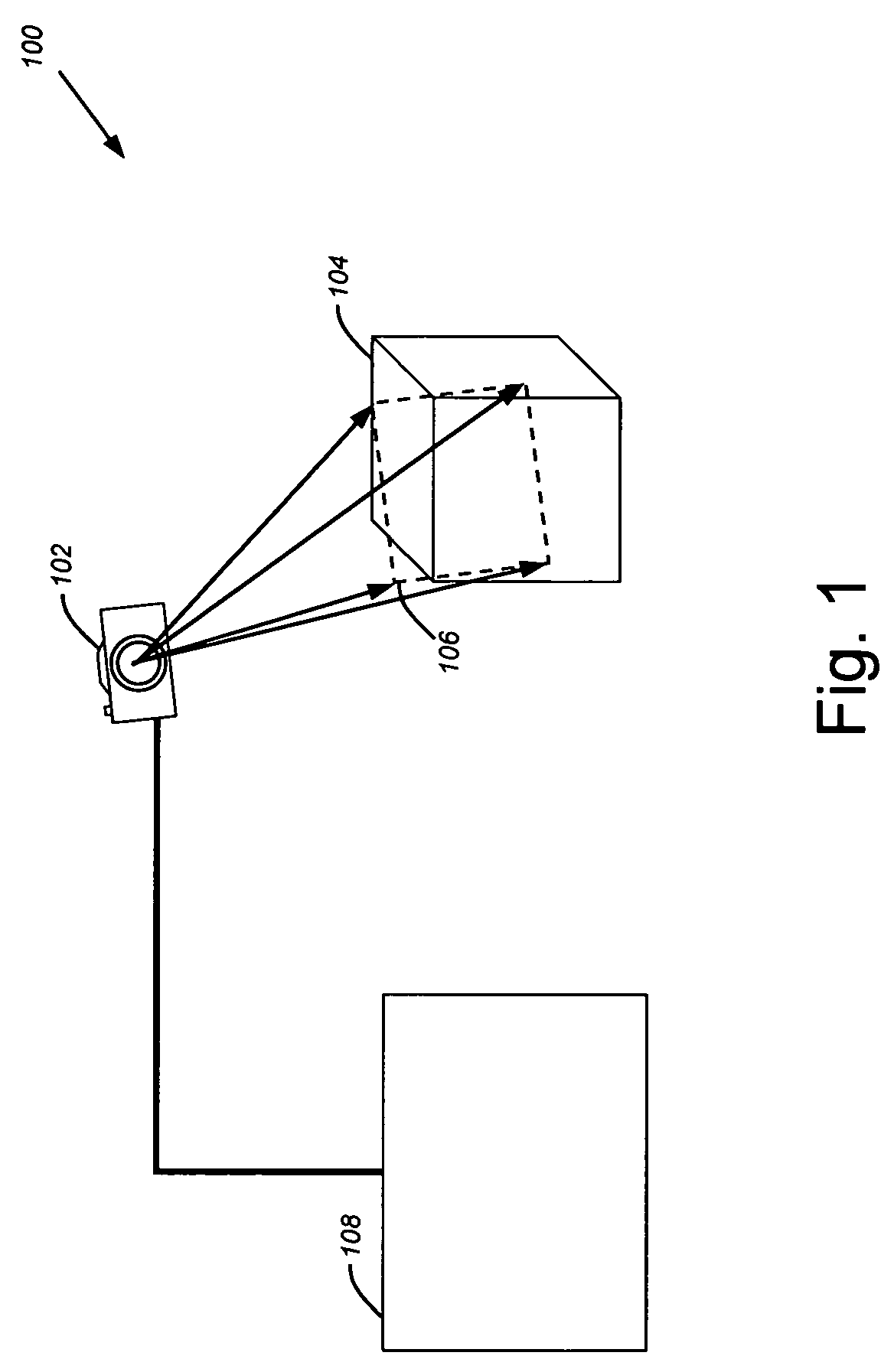

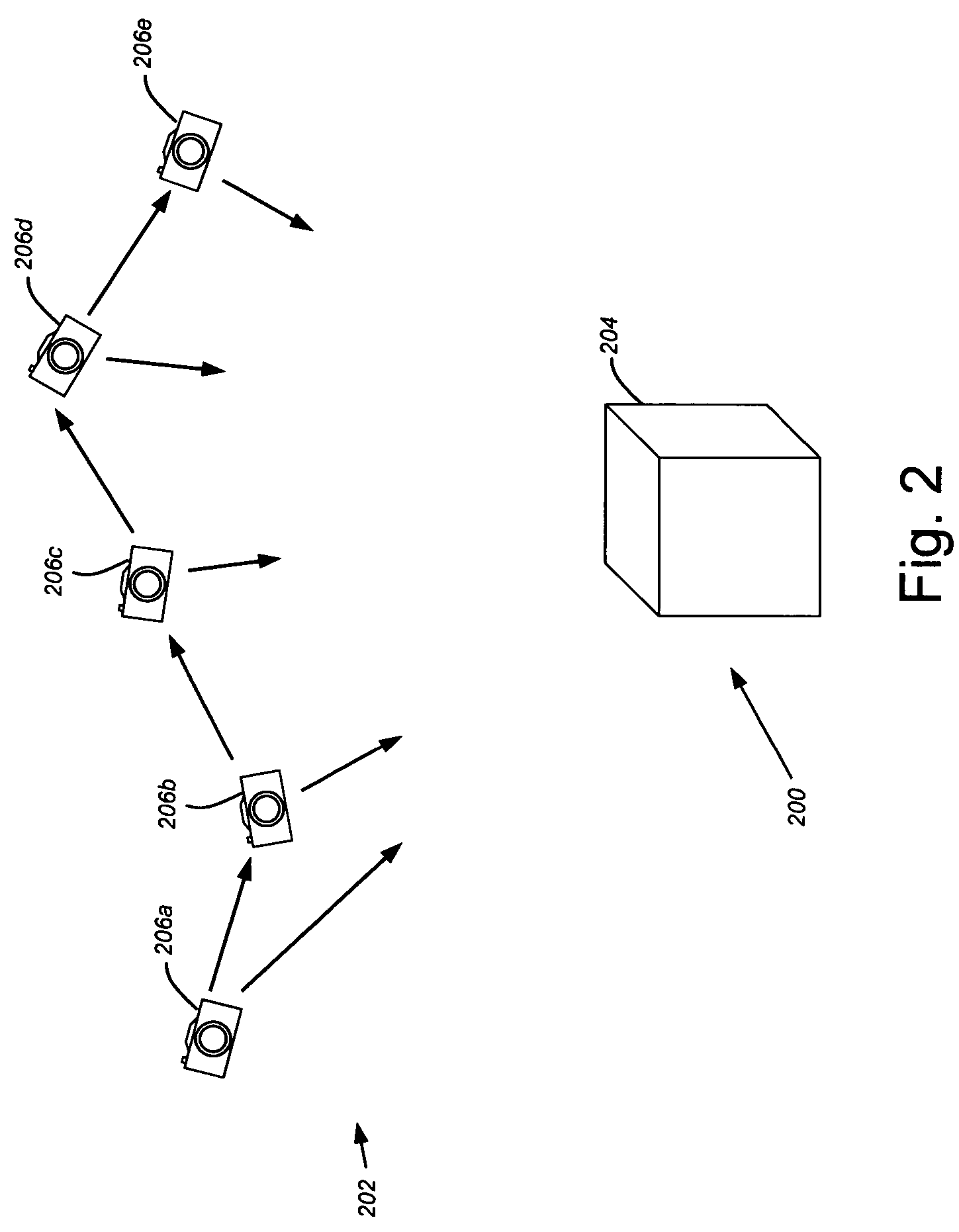

Determining camera motion

Active | US7605817B2 | Efficiently parameterized | Television system details | Image analysis | Point cloud | Source image

Camera motion is determined in a three-dimensional image capture system using a combination of two-dimensional image data and three-dimensional point cloud data available from a stereoscopic, multi-aperture, or similar camera system. More specifically, a rigid transformation of point cloud data between two three-dimensional point clouds may be more efficiently parameterized using point correspondence established between two-dimensional pixels in source images for the three-dimensional point clouds.

Owner:MEDIT CORP

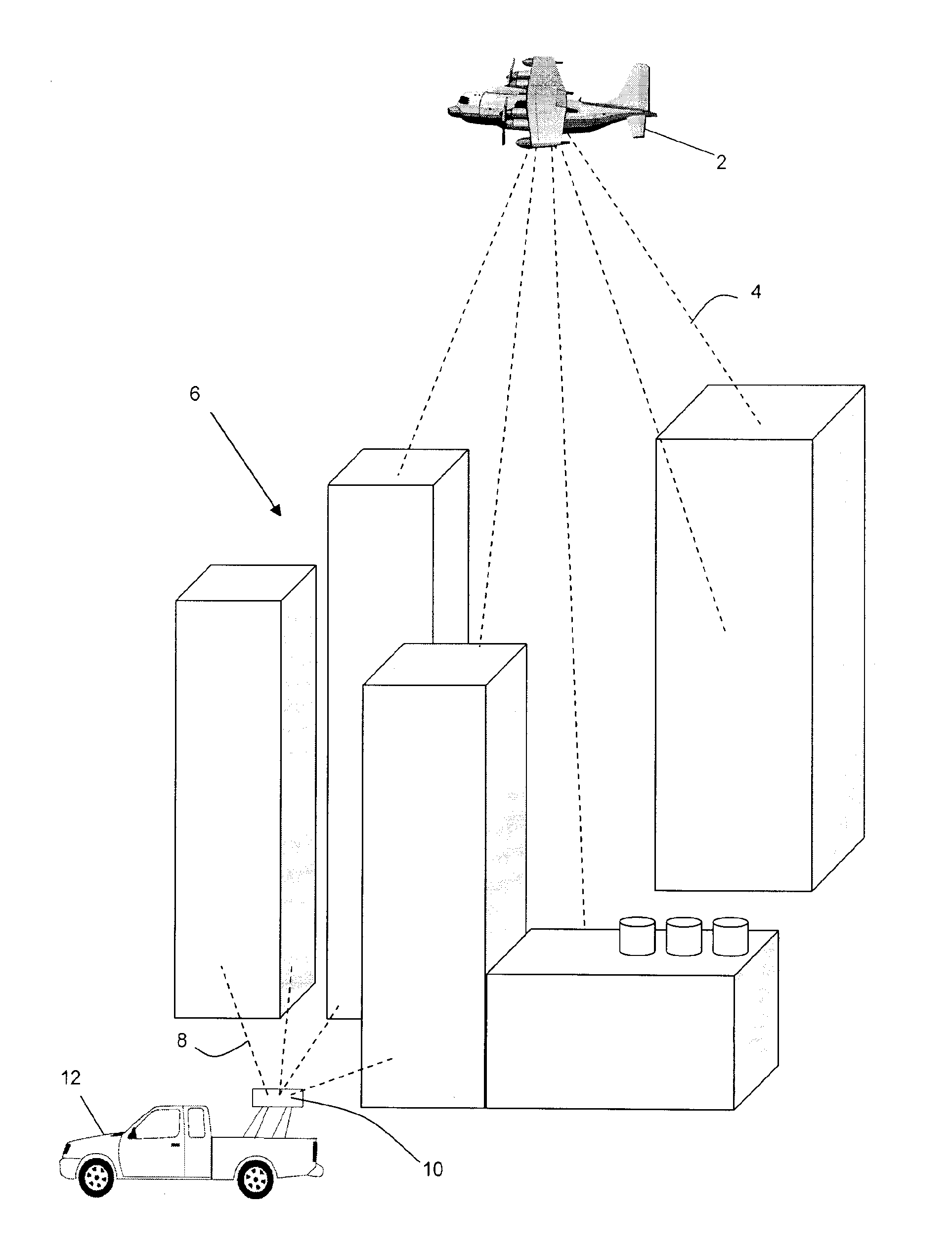

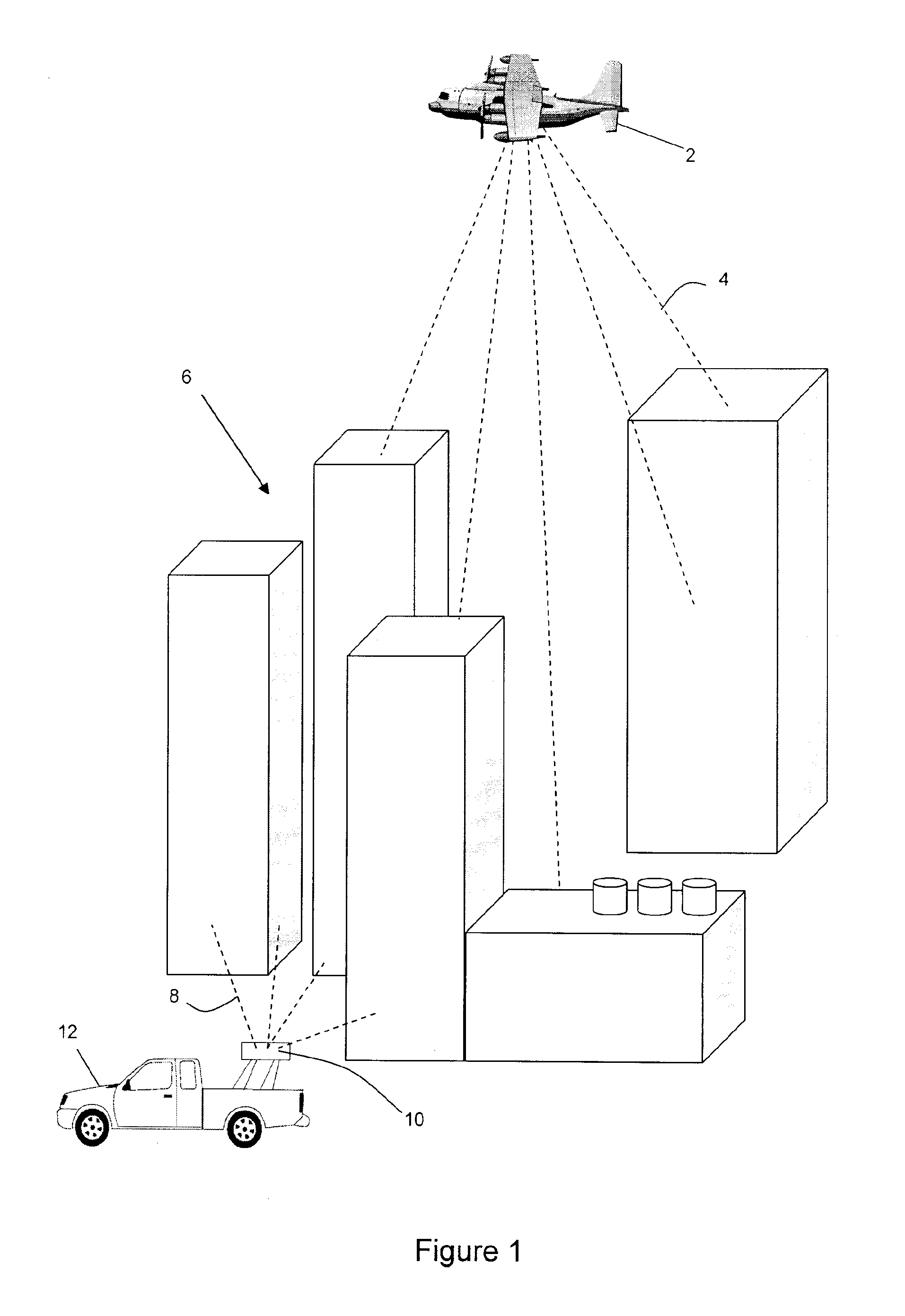

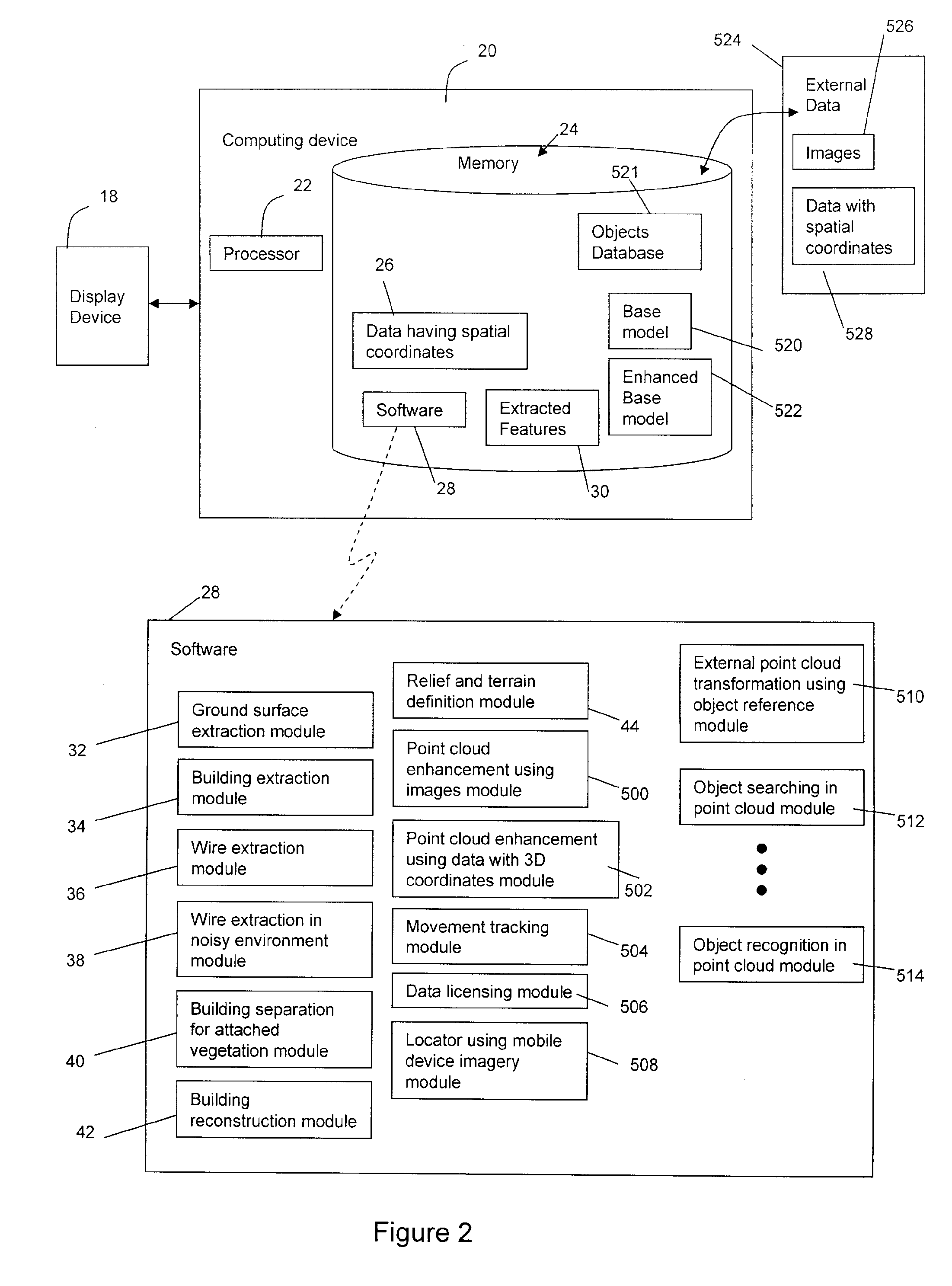

System and Method for Manipulating Data Having Spatial Co-ordinates

Systems and methods are provided for extracting various features from data having spatial coordinates. The systems and methods may identify and extract data points from a point cloud, where the data points are considered to be part of the ground surface, a building, or a wire (e.g. power lines). Systems and methods are also provided for enhancing a point cloud using external data (e.g. images and other point clouds), and for tracking a moving object by comparing images with a point cloud. An objects database is also provided which can be used to scale point clouds to be of similar size. The objects database can also be used to search for certain objects in a point cloud, as well as recognize unidentified objects in a point cloud.

Owner:REELER EDMUND COCHRANE +8

Object recognition in a 3D scene

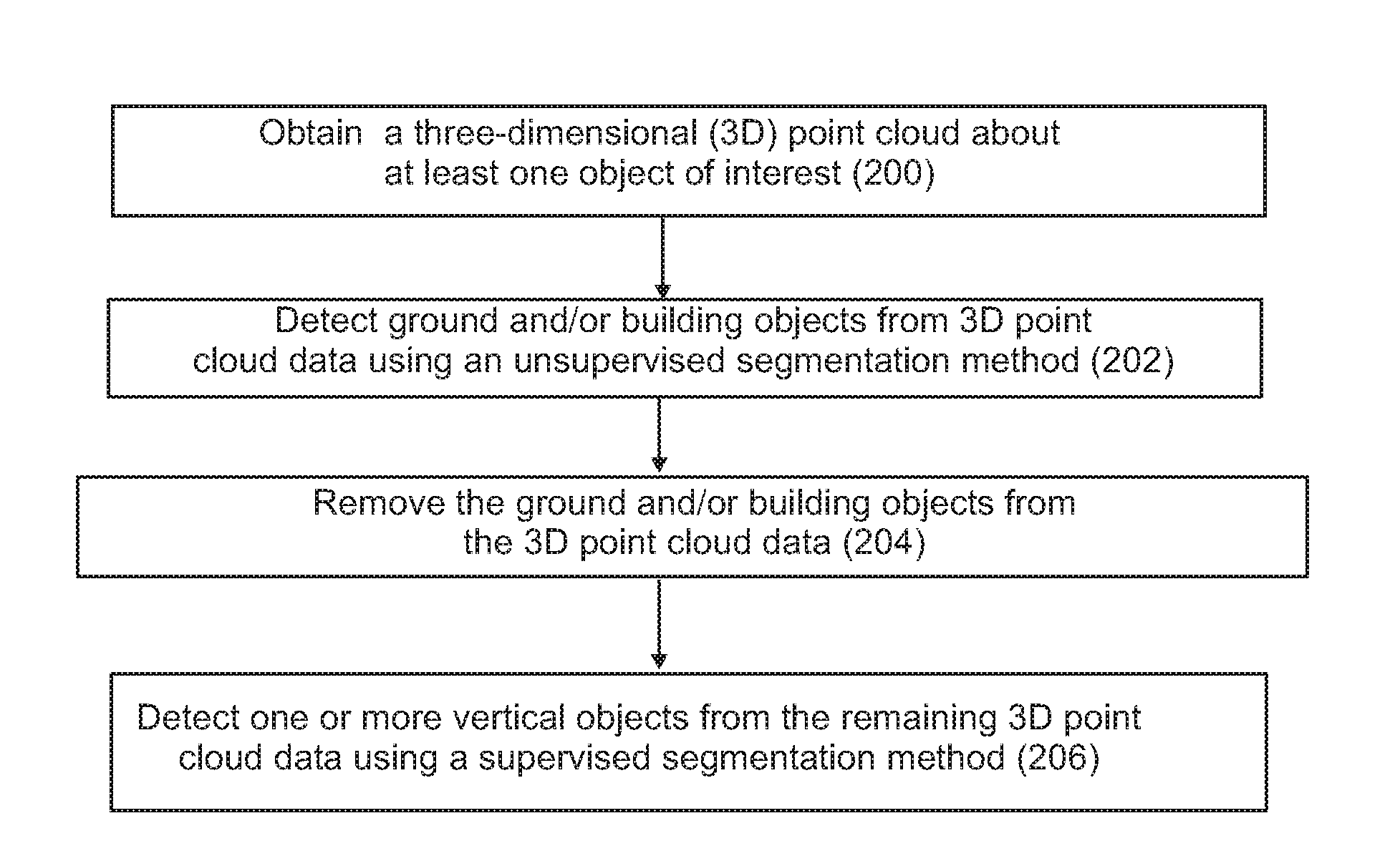

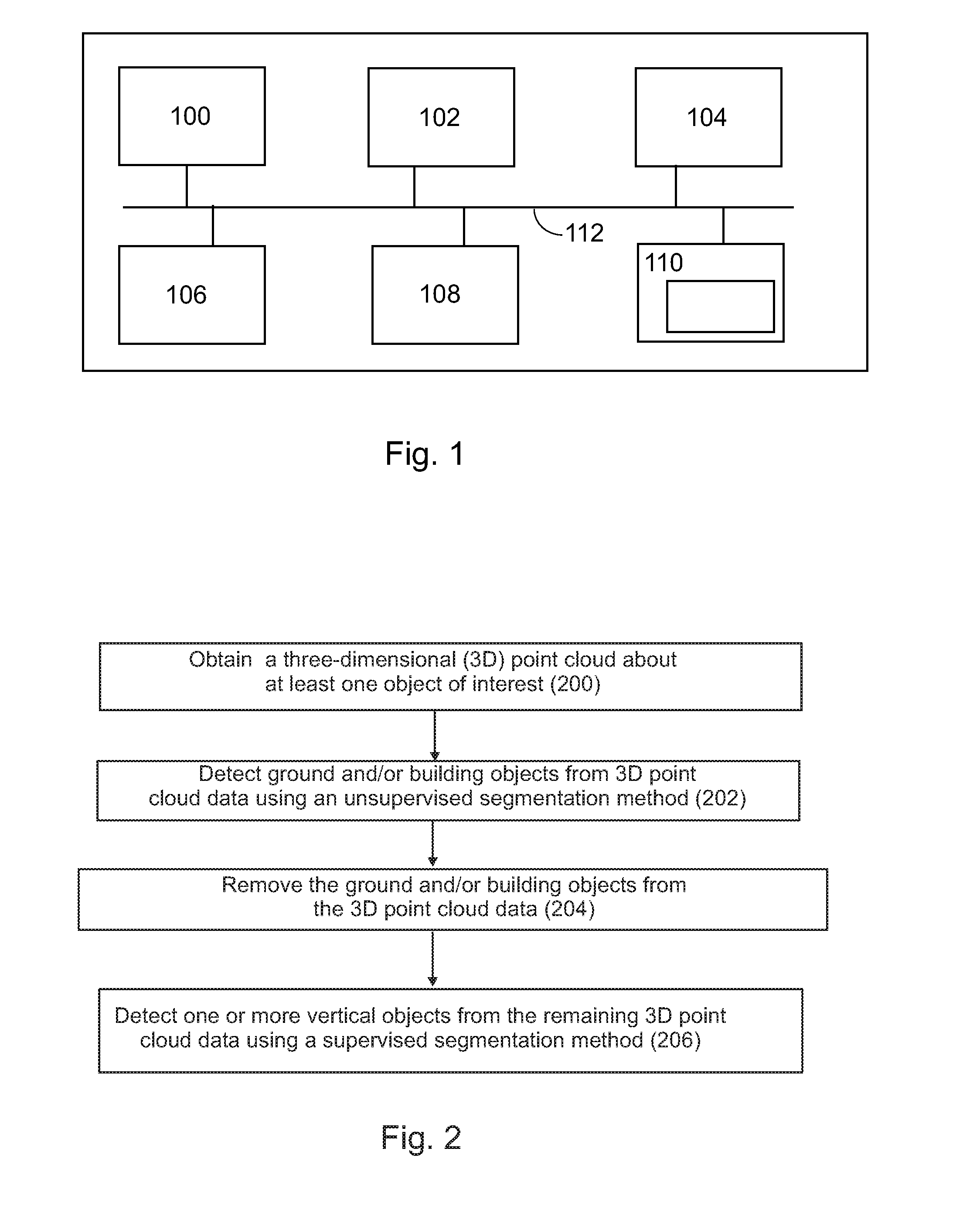

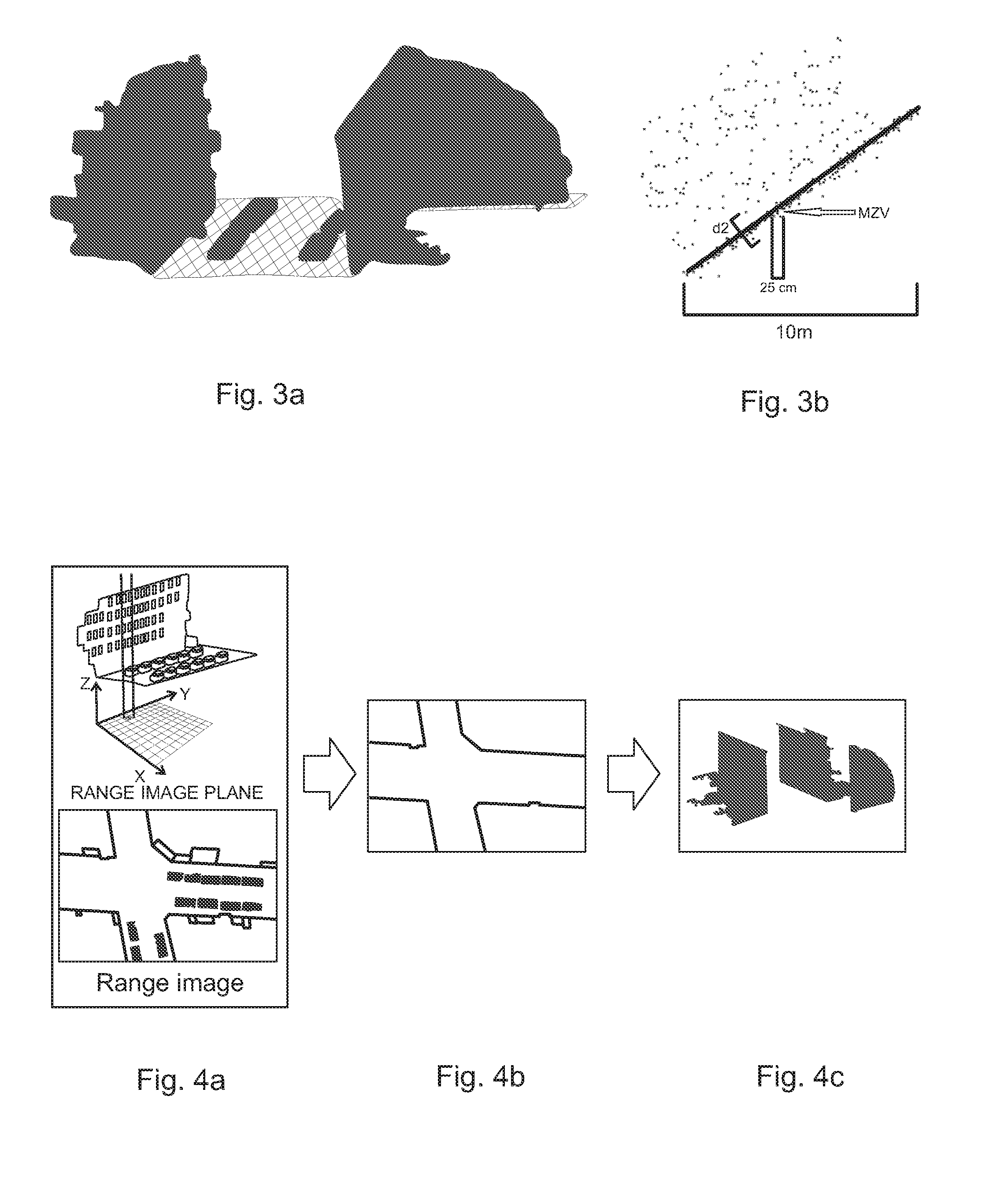

Active | US20160154999A1 | Reduce decrease | Image enhancement | Image analysis | Point cloud | Unsupervised segmentation

A method comprising: obtaining a three-dimensional (3D) point cloud about at least one object of interest; detecting ground and / or building objects from 3D point cloud data using an unsupervised segmentation method; removing the ground and / or building objects from the 3D point cloud data; and detecting one or more vertical objects from the remaining 3D point cloud data using a supervised segmentation method.
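The ground-removal stage can be illustrated with a very simple stand-in. The abstract does not specify its unsupervised segmentation method, so the sketch below swaps in a grid-minimum height filter: points close to the lowest point of their grid cell are treated as ground and dropped. The function name `remove_ground` and the parameters `cell` and `tol` are hypothetical.

```python
def remove_ground(points, cell=1.0, tol=0.2):
    """Drop points within `tol` of the lowest point in their (x, y) grid cell."""
    def cell_of(x, y):
        return (int(x // cell), int(y // cell))

    lowest = {}
    for x, y, z in points:
        key = cell_of(x, y)
        lowest[key] = min(z, lowest.get(key, z))

    return [(x, y, z) for x, y, z in points
            if z - lowest[cell_of(x, y)] > tol]


points = [(0.1, 0.1, 0.0), (0.2, 0.3, 0.05), (0.5, 0.5, 2.0), (1.5, 0.5, 0.1)]
print(remove_ground(points))
```

The points that survive this filter would be the input to the later vertical-object detection step; this heuristic fails on slopes steeper than `tol` per cell and is only meant to show the data flow.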

Owner:VIVO MOBILE COMM CO LTD

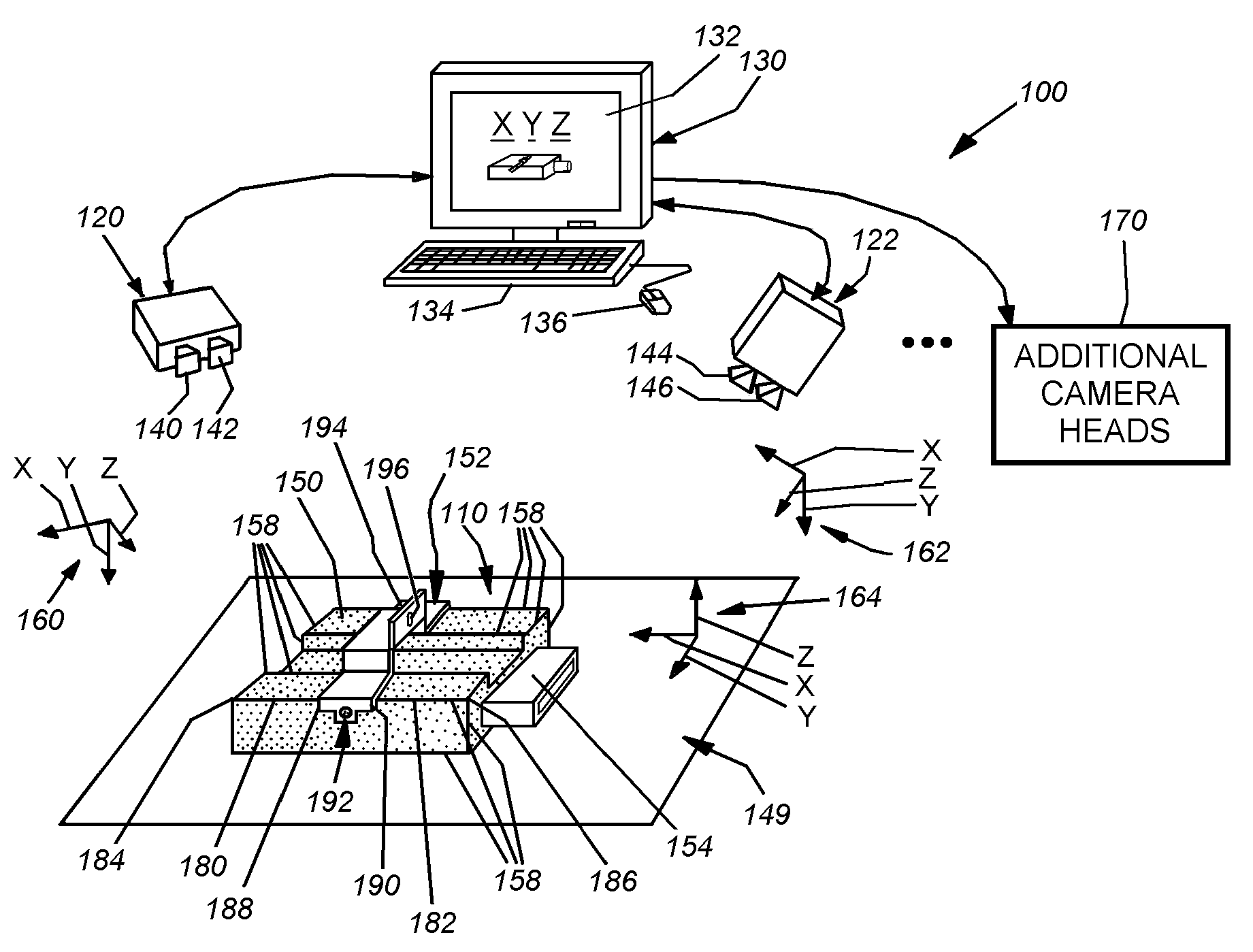

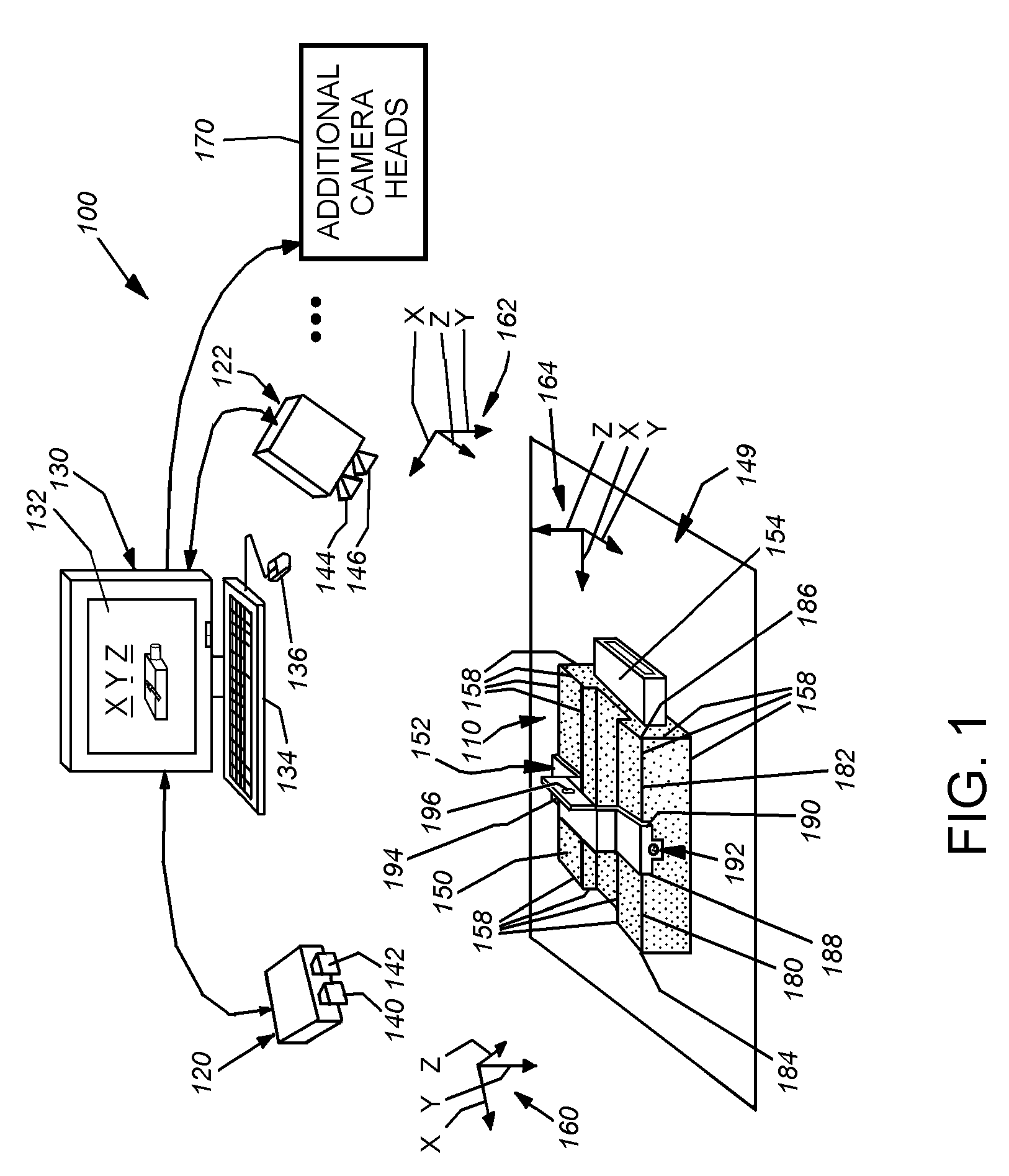

System and method for three-dimensional alignment of objects using machine vision

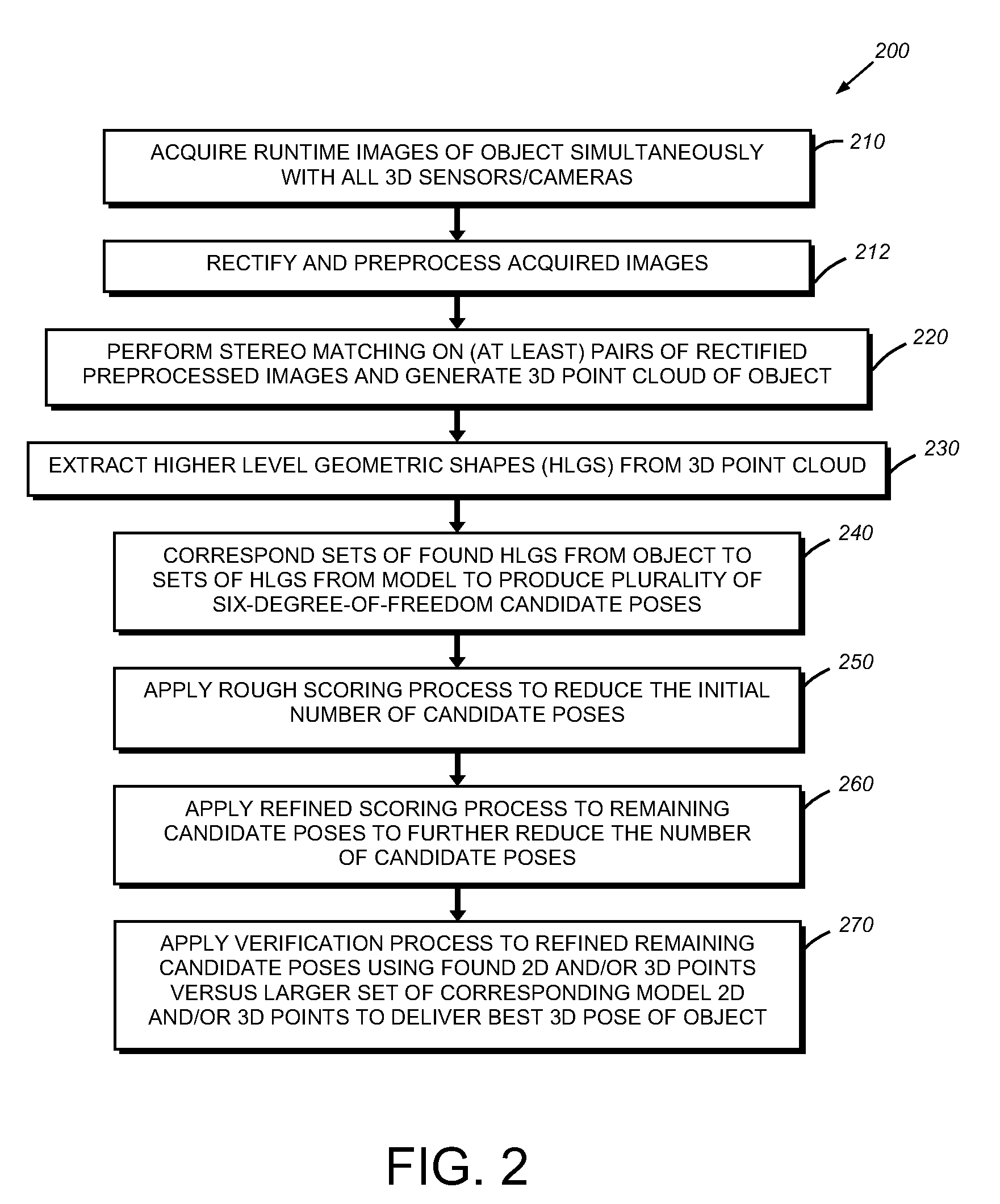

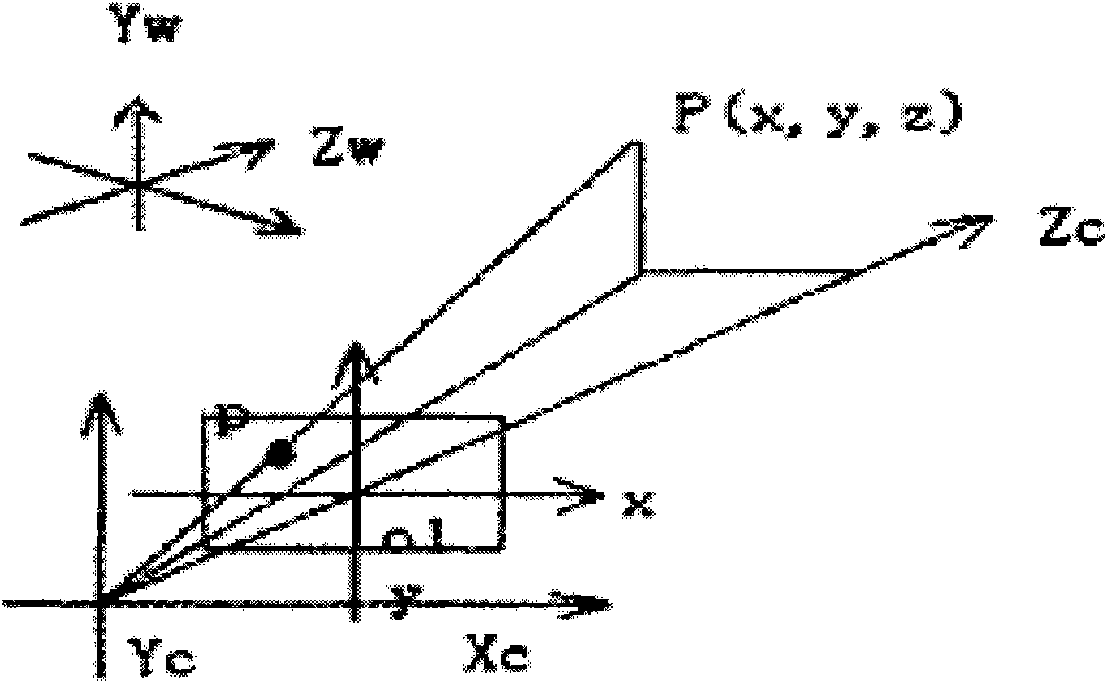

This invention provides a system and method for determining the three-dimensional alignment of a modeled object or scene. After calibration, a 3D (stereo) sensor system views the object to derive a runtime 3D representation of the scene containing the object. Rectified images from each stereo head are preprocessed to enhance their edge features. A stereo matching process is then performed on at least two (a pair) of the rectified preprocessed images at a time by locating a predetermined feature on a first image and then locating the same feature in the other image. 3D points are computed for each pair of cameras to derive a 3D point cloud. The 3D point cloud is generated by transforming the 3D points of each camera pair into the world 3D space from the world calibration. The amount of 3D data from the point cloud is reduced by extracting higher-level geometric shapes (HLGS), such as line segments. Found HLGS from runtime are corresponded to HLGS on the model to produce candidate 3D poses. A coarse scoring process prunes the number of poses. The remaining candidate poses are then subjected to a further more-refined scoring process. These surviving candidate poses are then verified by, for example, fitting found 3D or 2D points of the candidate poses to a larger set of corresponding three-dimensional or two-dimensional model points, whereby the closest match is the best refined three-dimensional pose.

Owner:COGNEX CORP

Ladar Point Cloud Compression

Active | US20160047903A1 | Reduce data footprint | High energy | Optical rangefinders | Electromagnetic wave reradiation | Point cloud | Radar

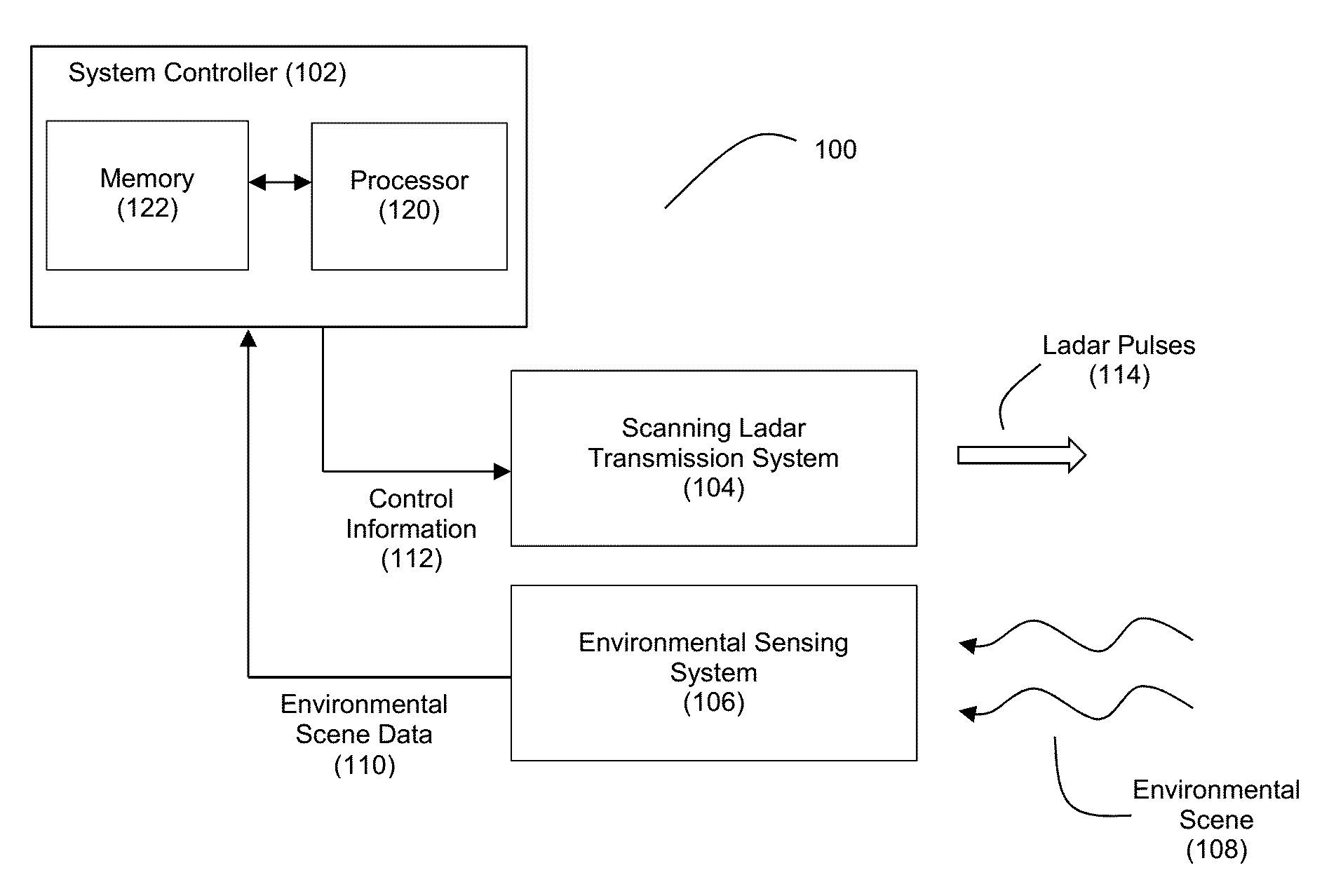

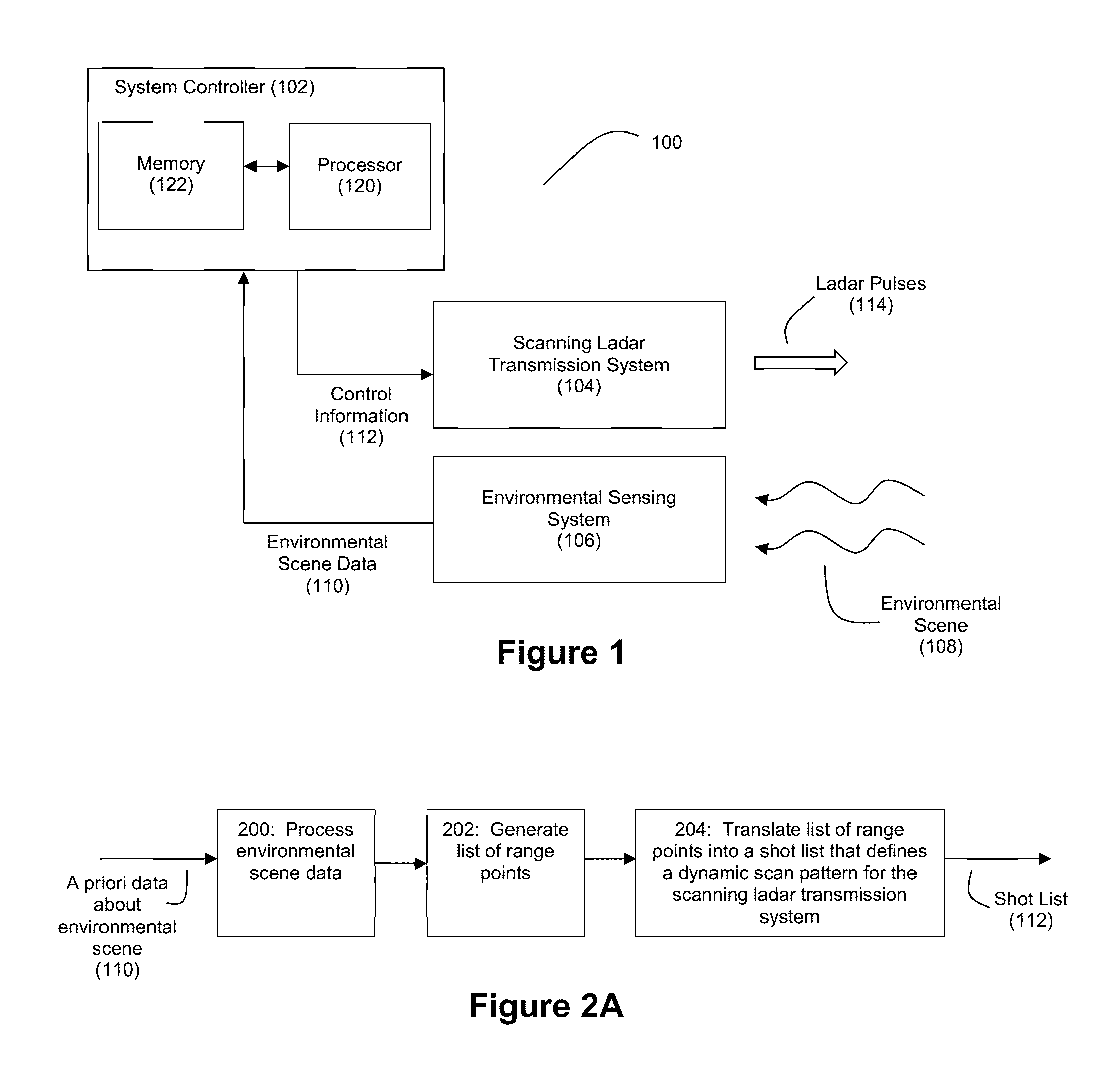

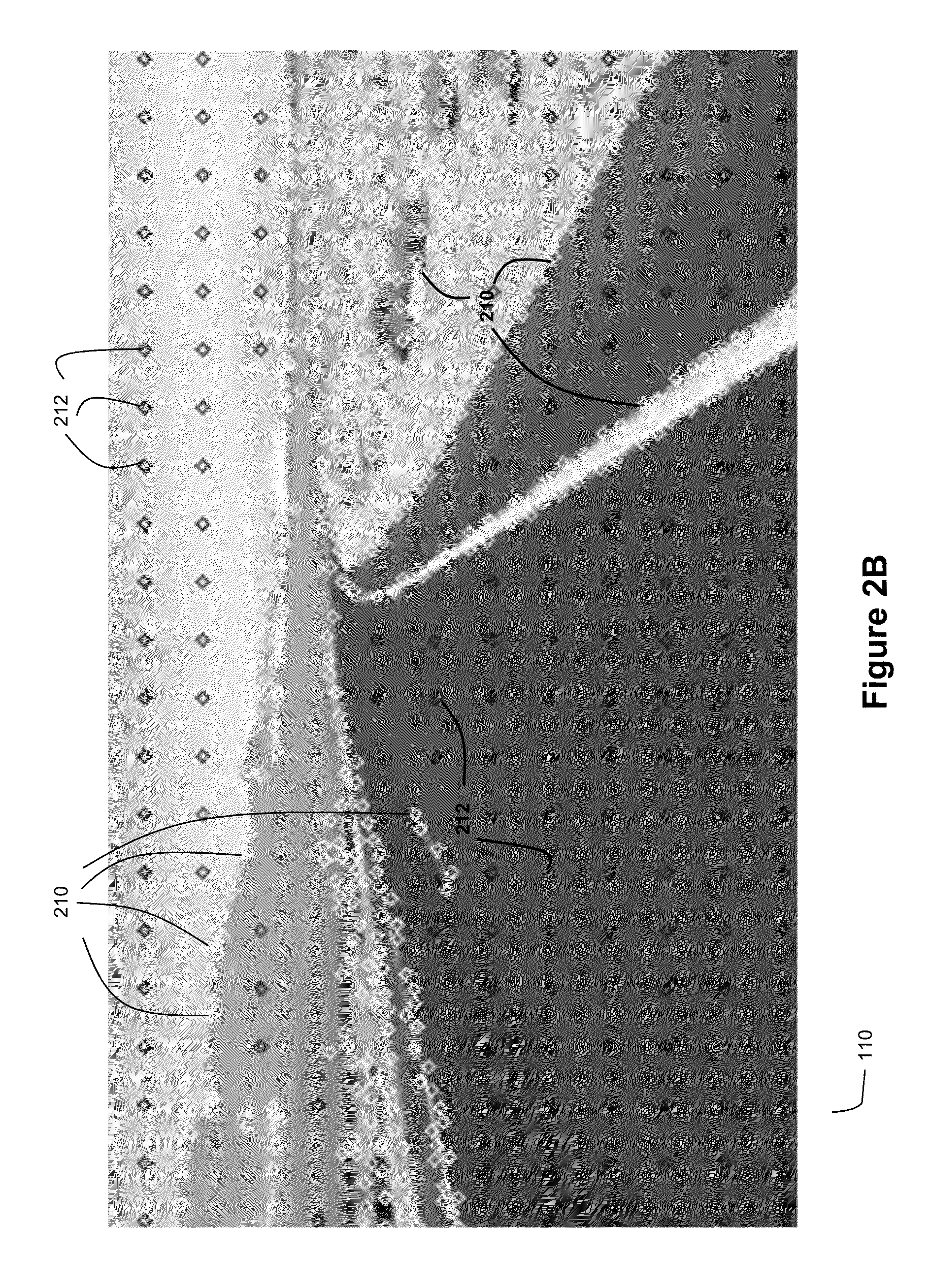

Various embodiments are disclosed for ladar point cloud compression. For example, a processor can be used to analyze data representative of an environmental scene to intelligently select a subset of range points within a frame to target with ladar pulses via a scanning ladar transmission system. In another example embodiment, a processor can perform ladar point cloud compression by (1) processing data representative of an environmental scene, and (2) based on the processing, selecting a plurality of the range points in the point cloud for retention in a compressed point cloud, the compressed point cloud comprising fewer range points than the generated point cloud.
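Compression by retaining only a subset of range points can be illustrated with a generic voxel-grid selection, which keeps one point per occupied voxel. This is a stand-in, not the scene-aware selection the abstract describes; the function name `select_range_points` and the `voxel` size parameter are assumptions.

```python
def select_range_points(points, voxel):
    """Keep the first point seen in each voxel of edge length `voxel`."""
    kept = {}
    for p in points:
        key = tuple(int(c // voxel) for c in p)
        kept.setdefault(key, p)  # later points in the same voxel are discarded
    return list(kept.values())


points = [(0.1, 0.1, 0.1), (0.2, 0.2, 0.2), (1.5, 0.1, 0.1)]
compressed = select_range_points(points, voxel=1.0)
print(len(points), "->", len(compressed))
```

A scene-aware selector would vary the retention density by region, for example keeping more points near edges or moving objects, rather than using a uniform grid.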

Owner:AEYE INC

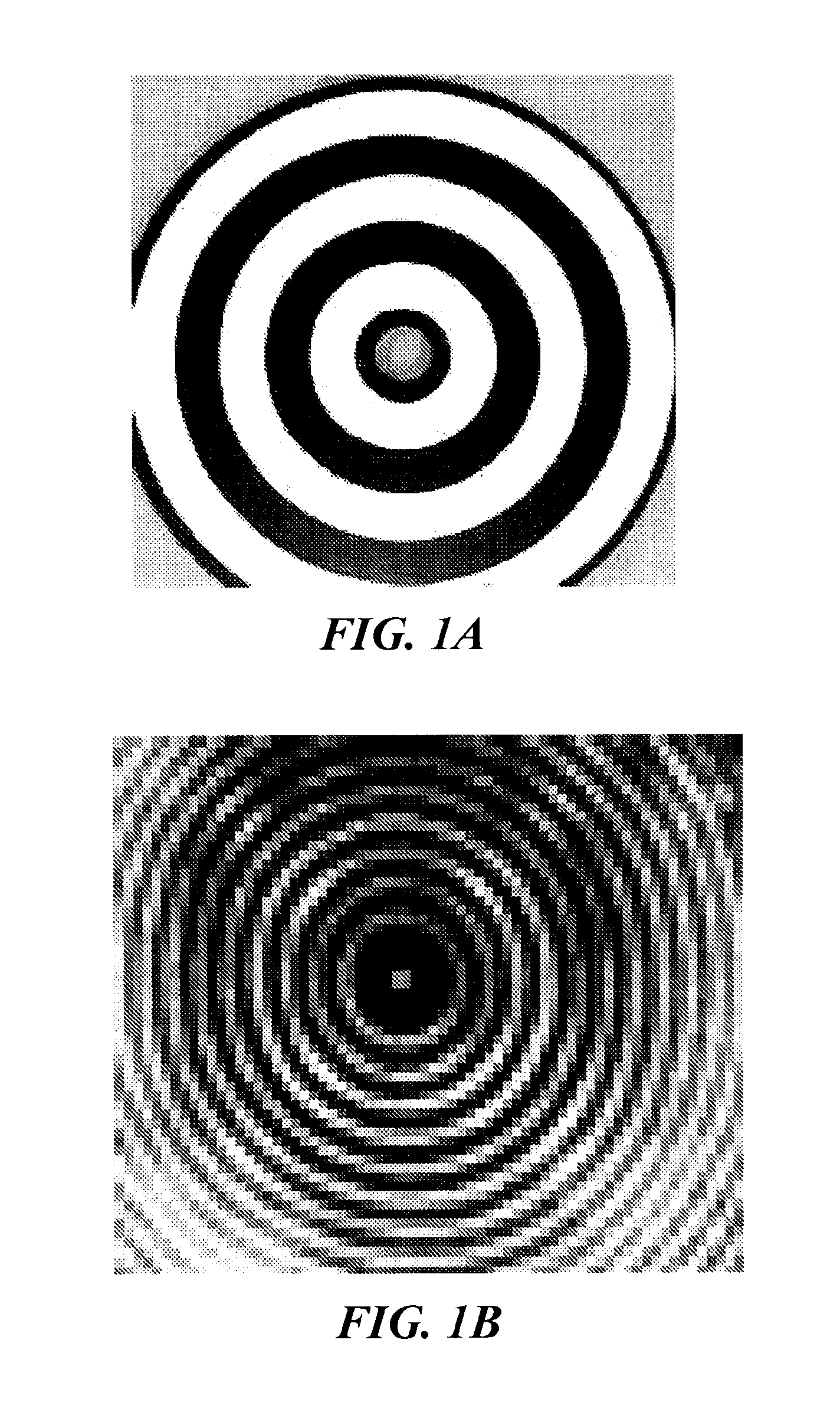

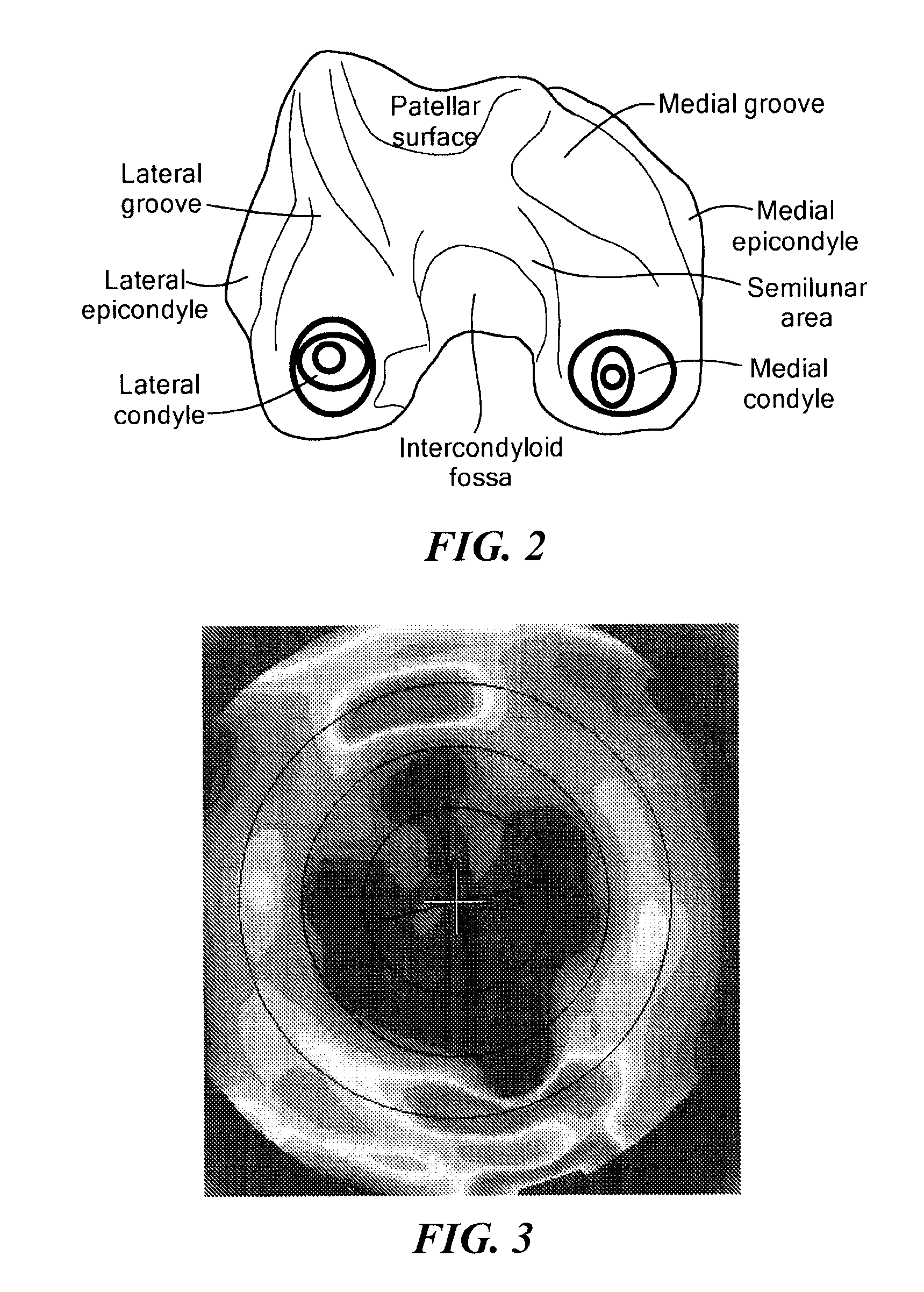

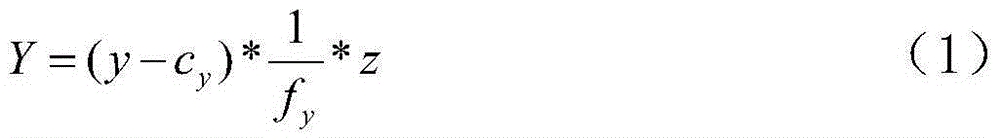

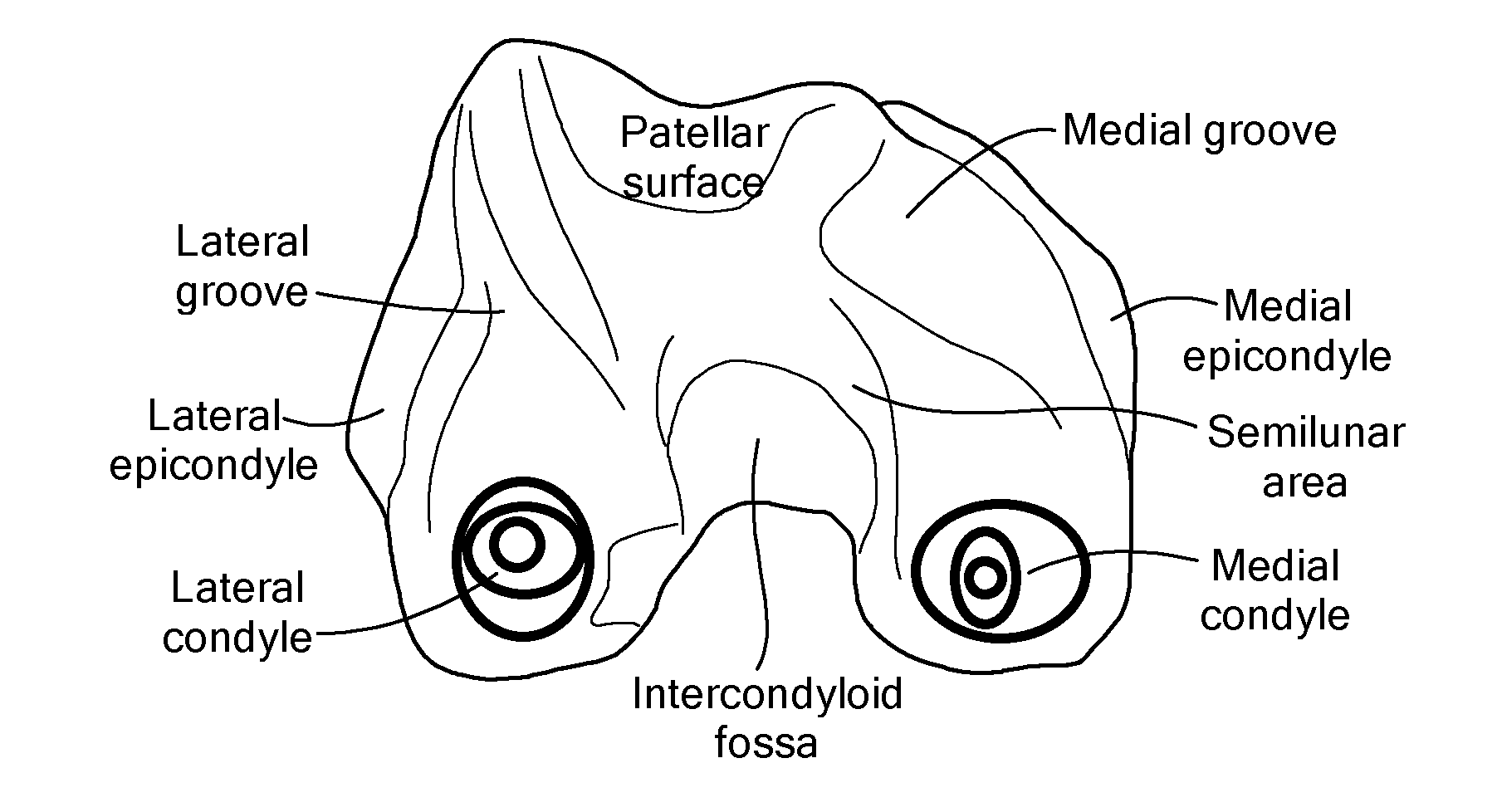

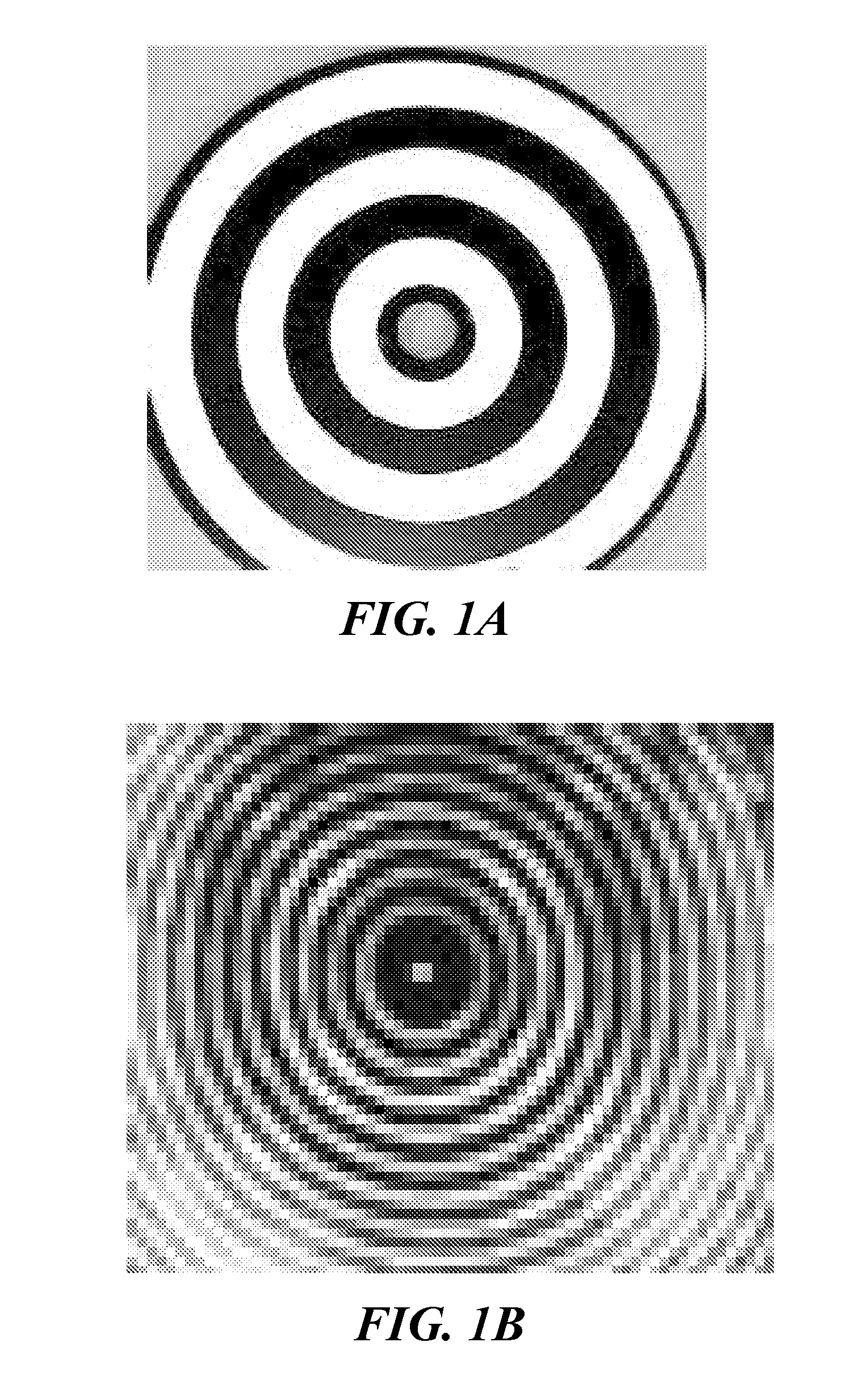

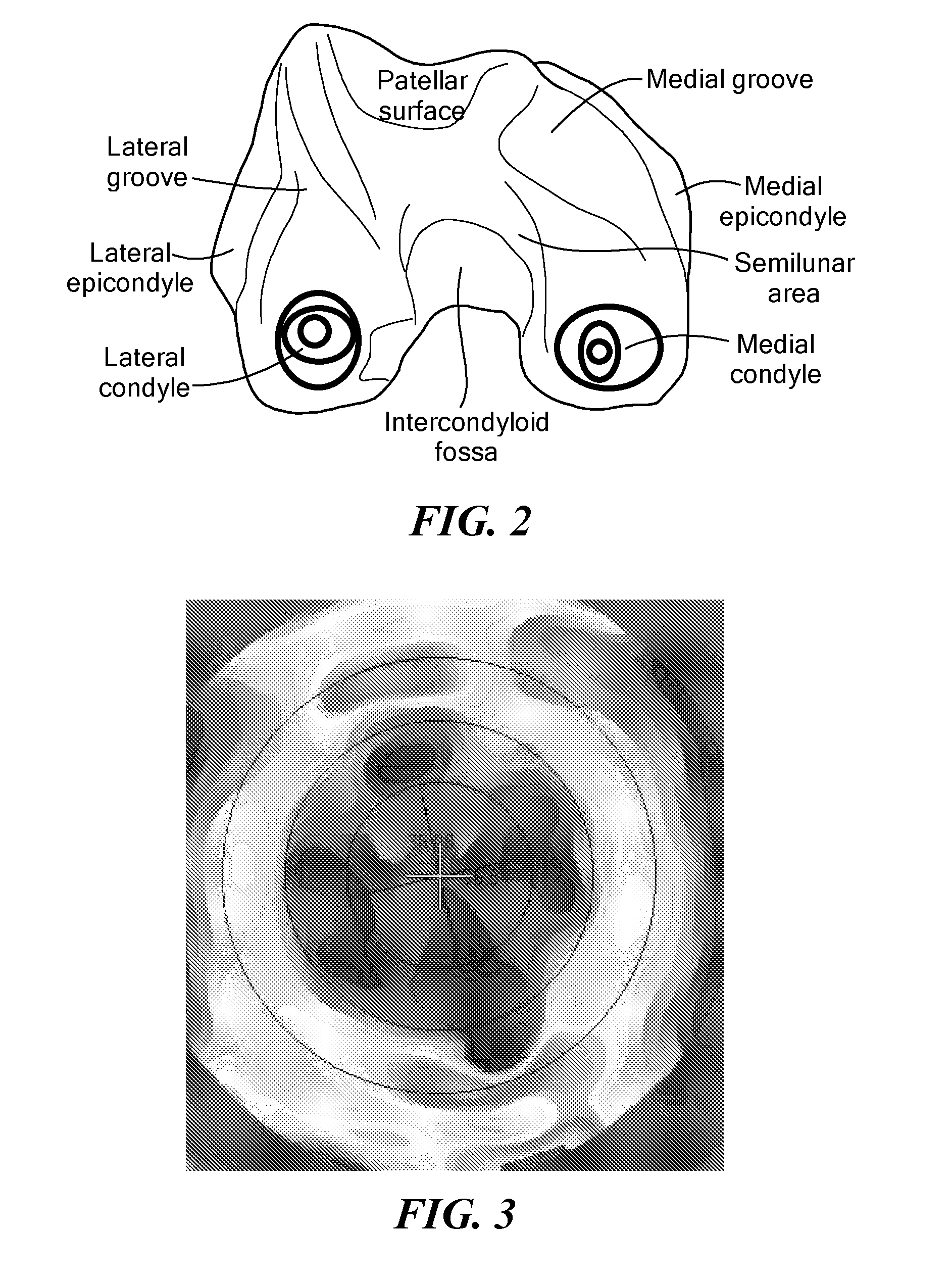

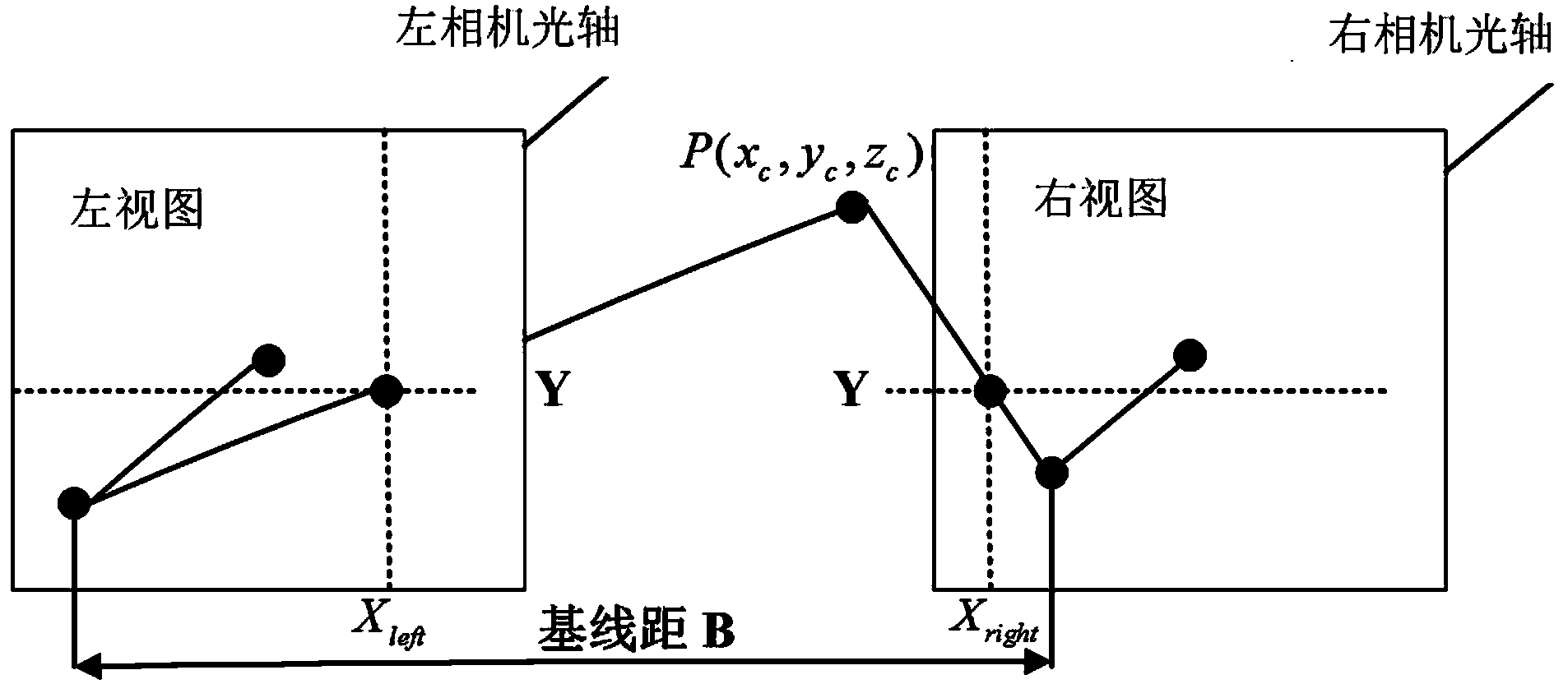

Methods for determining meniscal size and shape and for devising treatment

The present invention relates to methods for determining meniscal size and shape for use in designing therapies for the treatment of various joint diseases. The invention uses an image of a joint that is processed for analysis. Analysis can include, for example, generating a thickness map, a cartilage curve, or a point cloud. This information is used to determine the extent of the cartilage defect or damage and to design an appropriate therapy, including, for example, an implant. Adjustments to the designed therapy are made to account for the materials used.

Owner:CONFORMIS

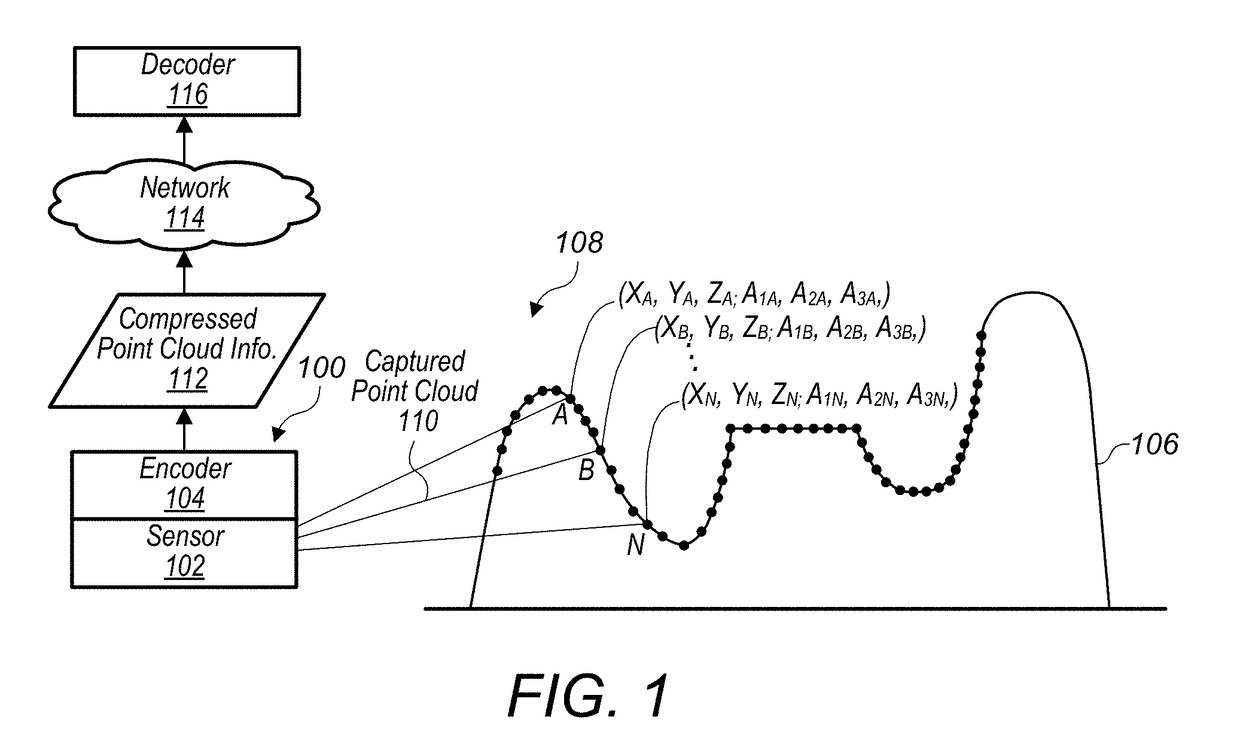

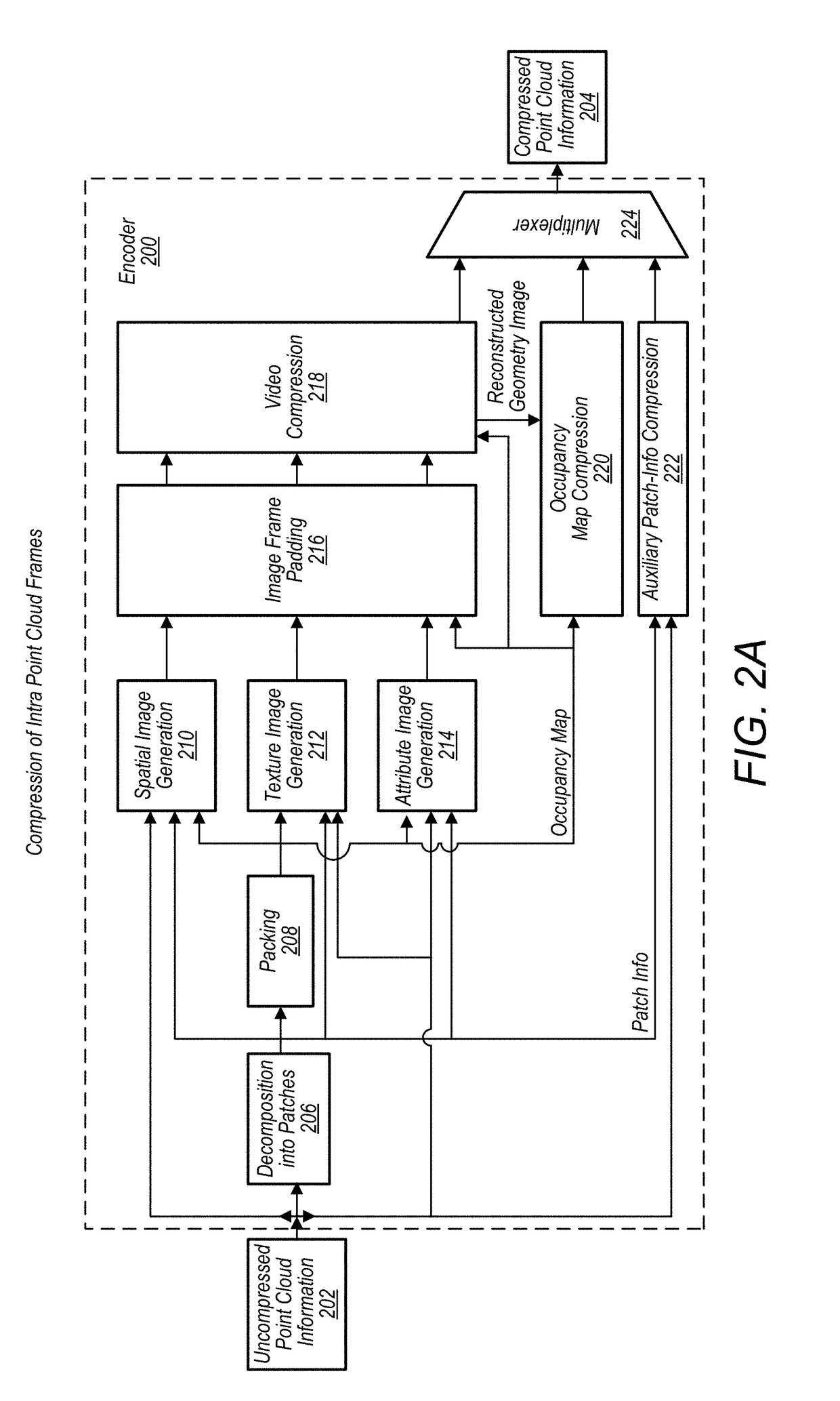

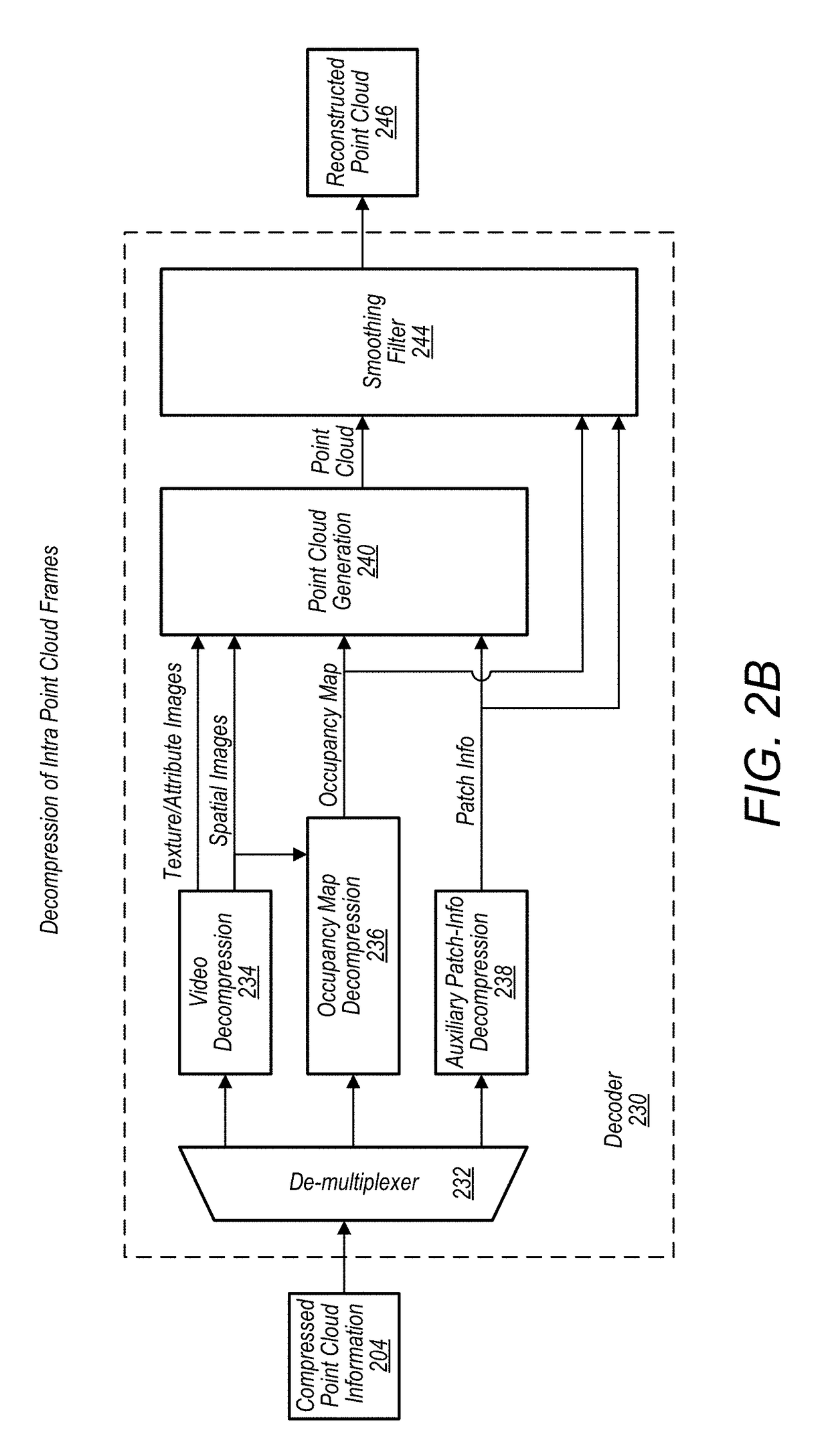

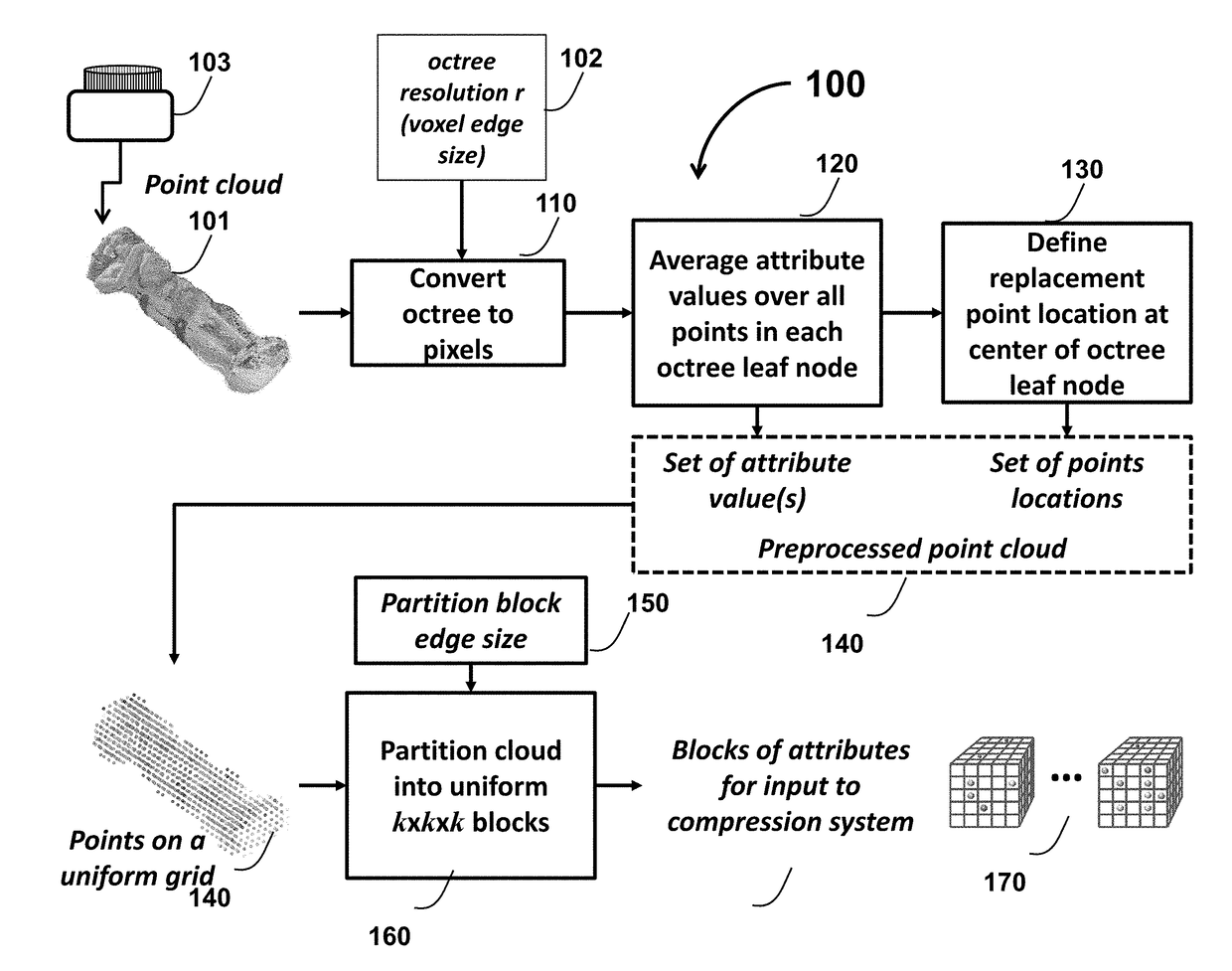

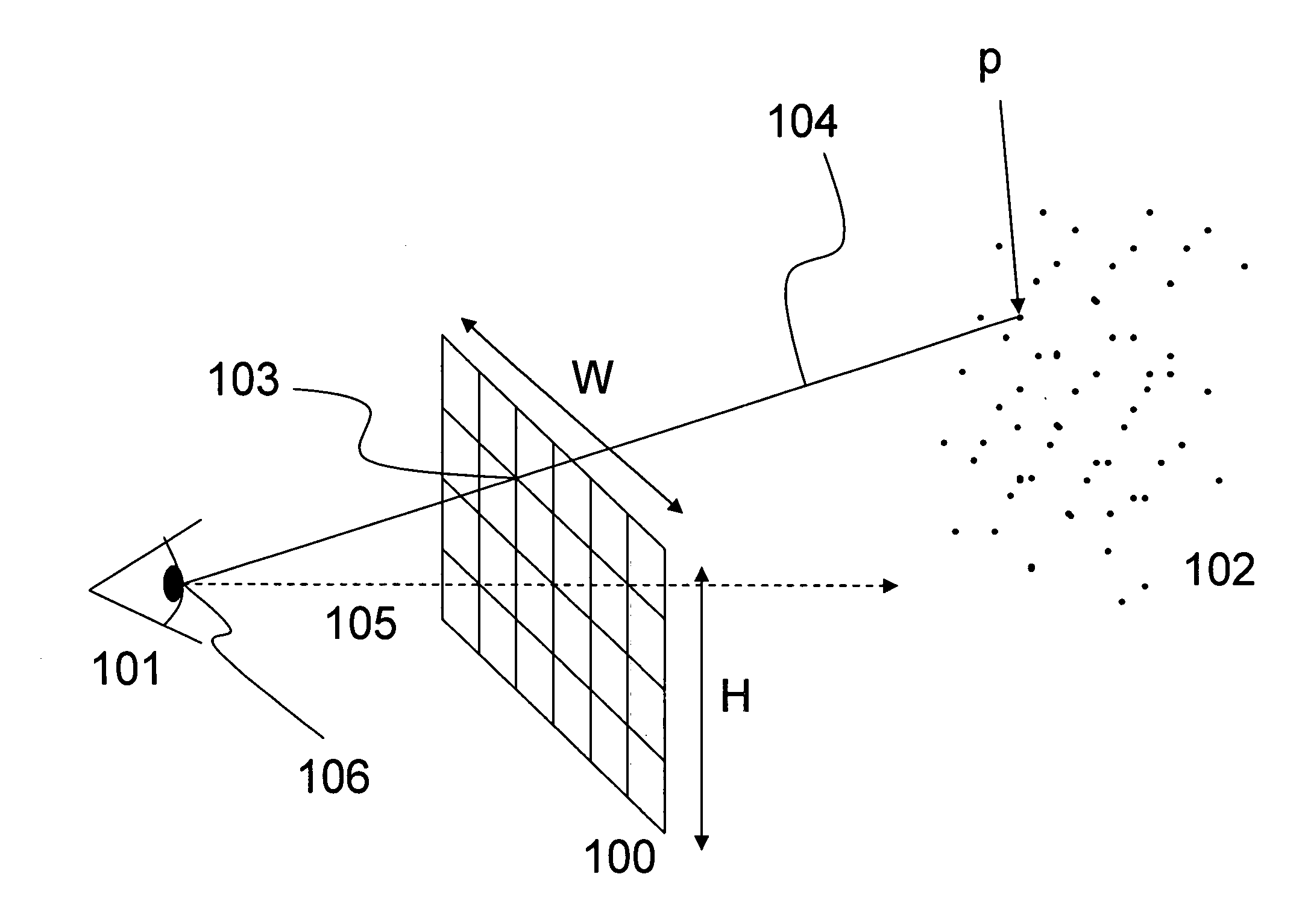

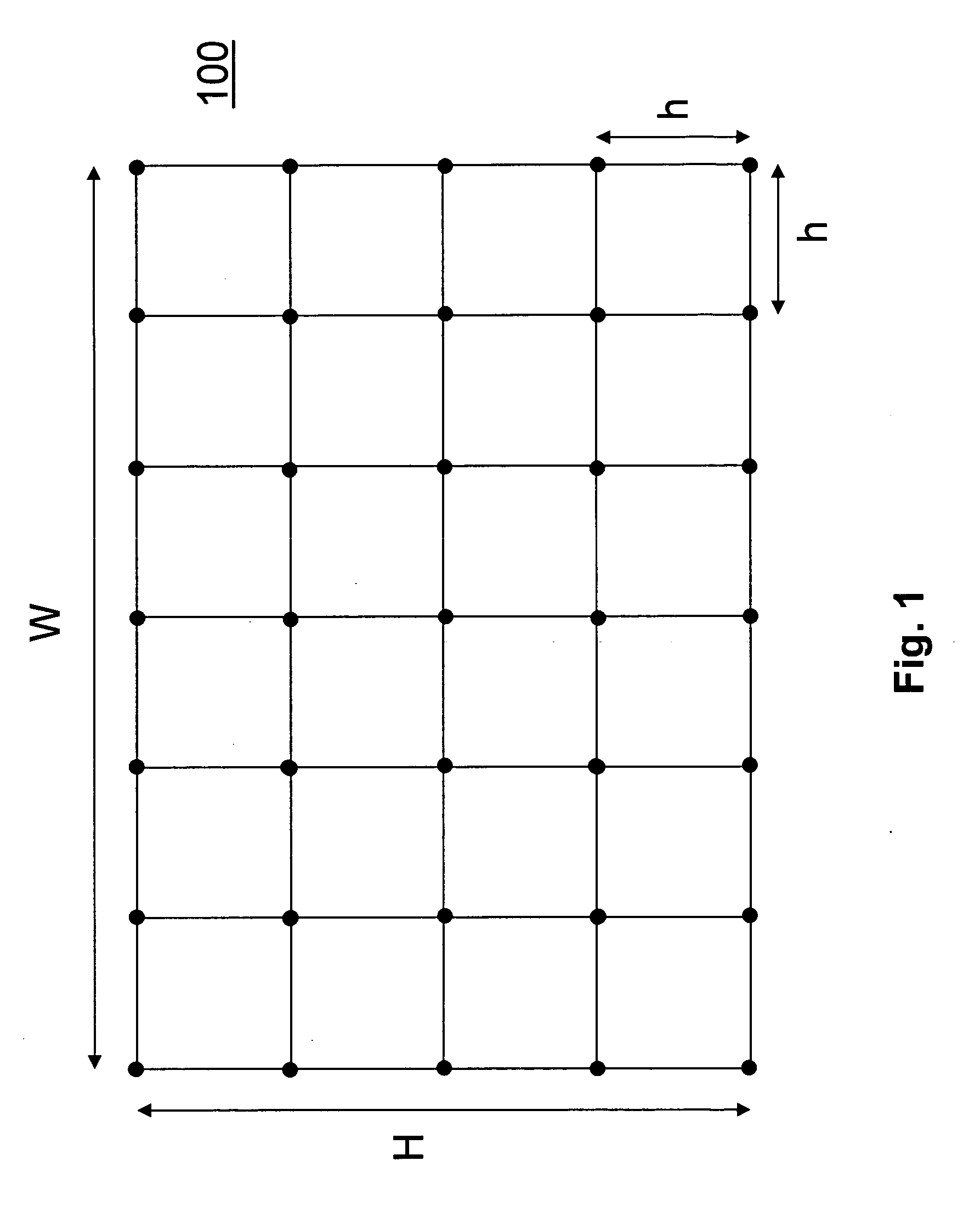

Point cloud compression

A system comprises an encoder configured to compress attribute and / or spatial information for a point cloud and / or a decoder configured to decompress compressed attribute and / or spatial information for the point cloud. To compress the attribute and / or spatial information, the encoder is configured to convert a point cloud into an image based representation. Also, the decoder is configured to generate a decompressed point cloud based on an image based representation of a point cloud.

Owner:APPLE INC

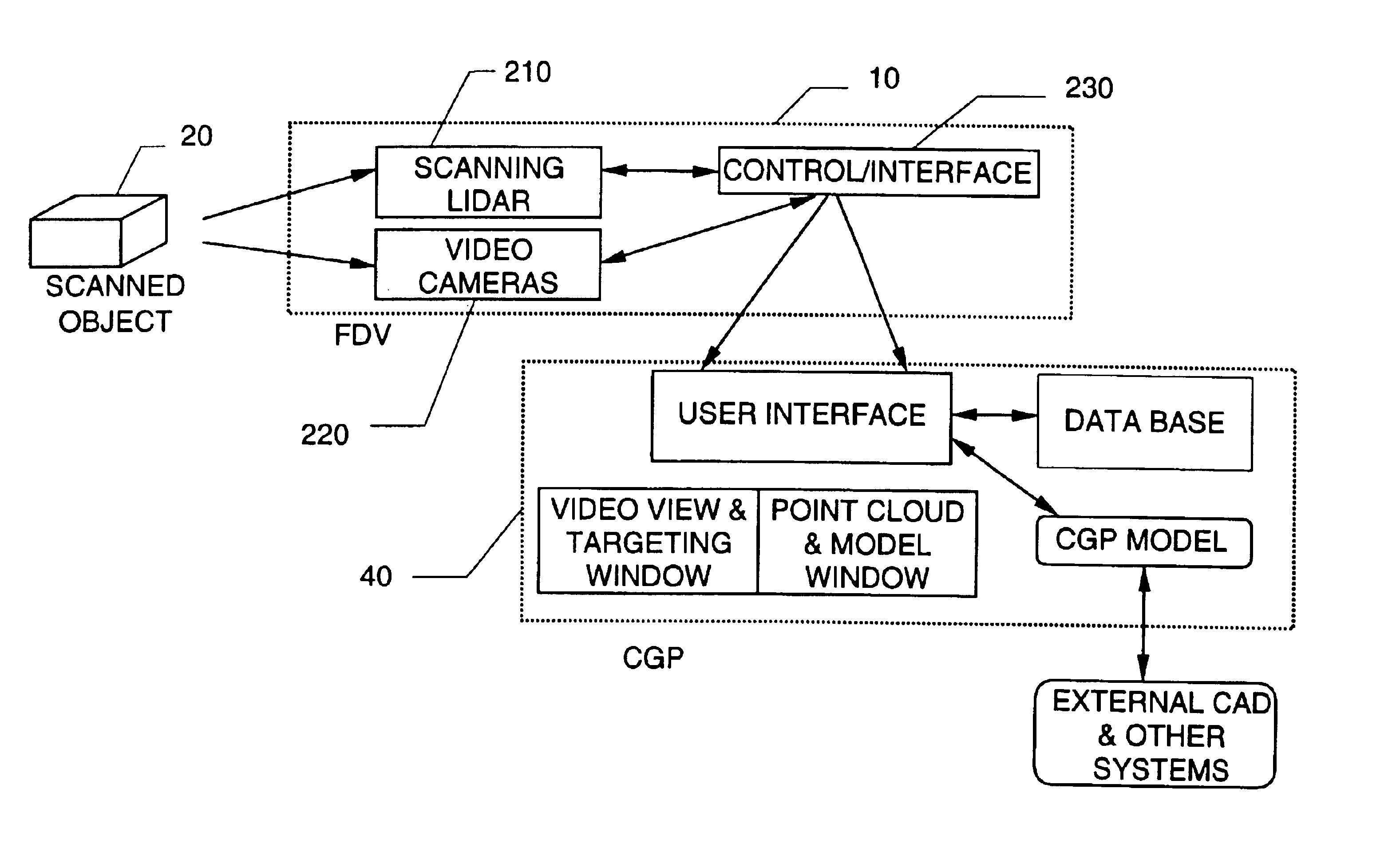

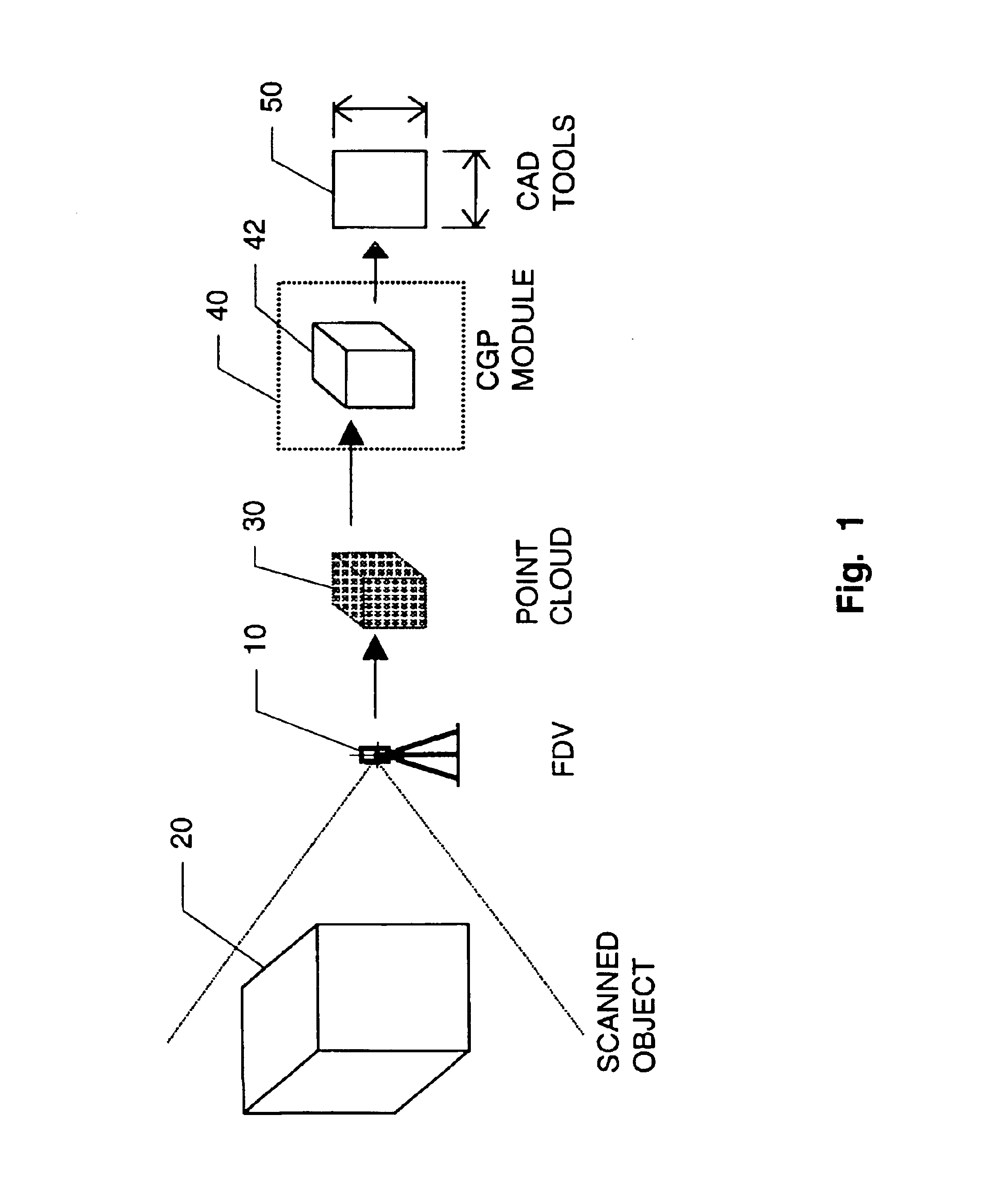

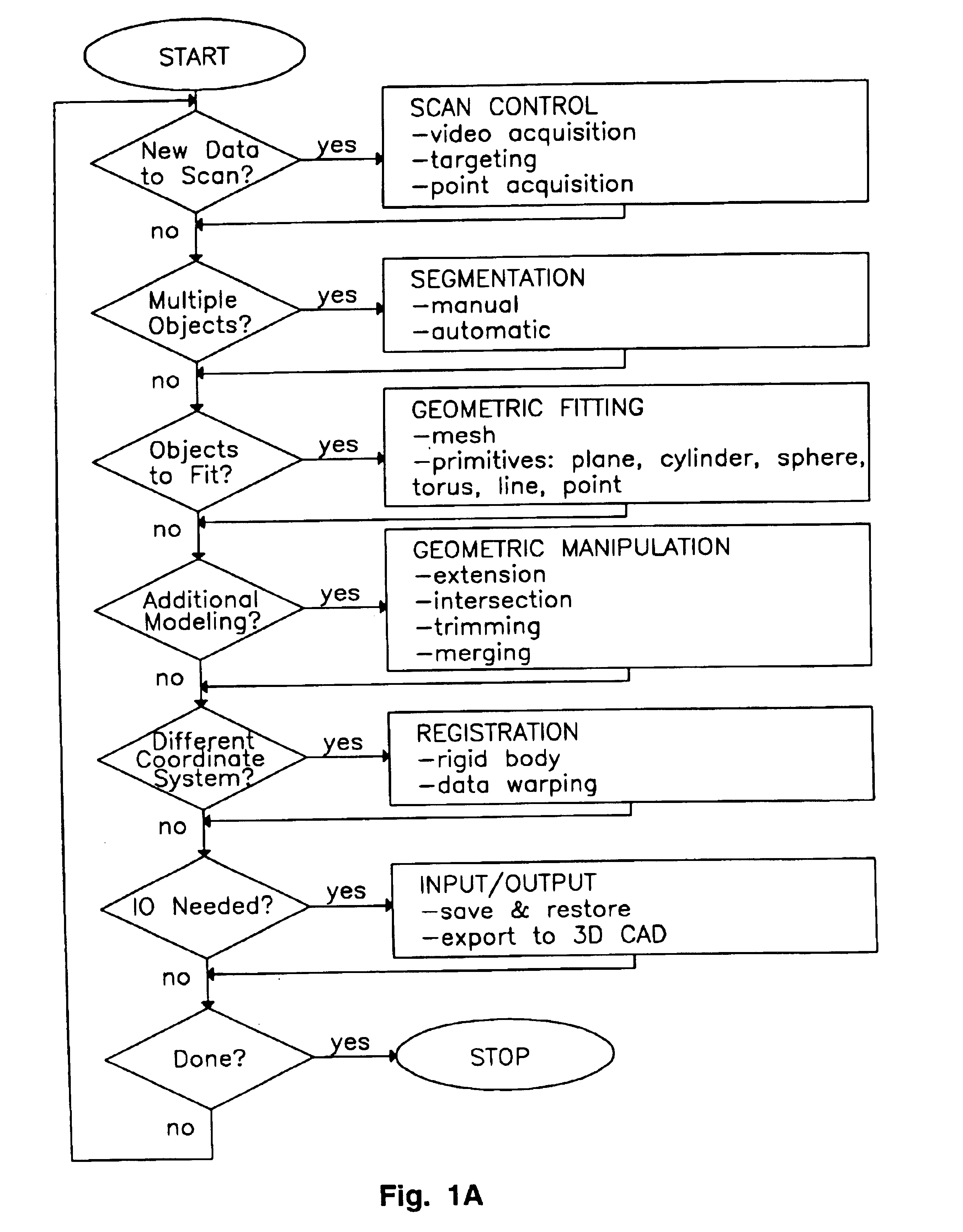

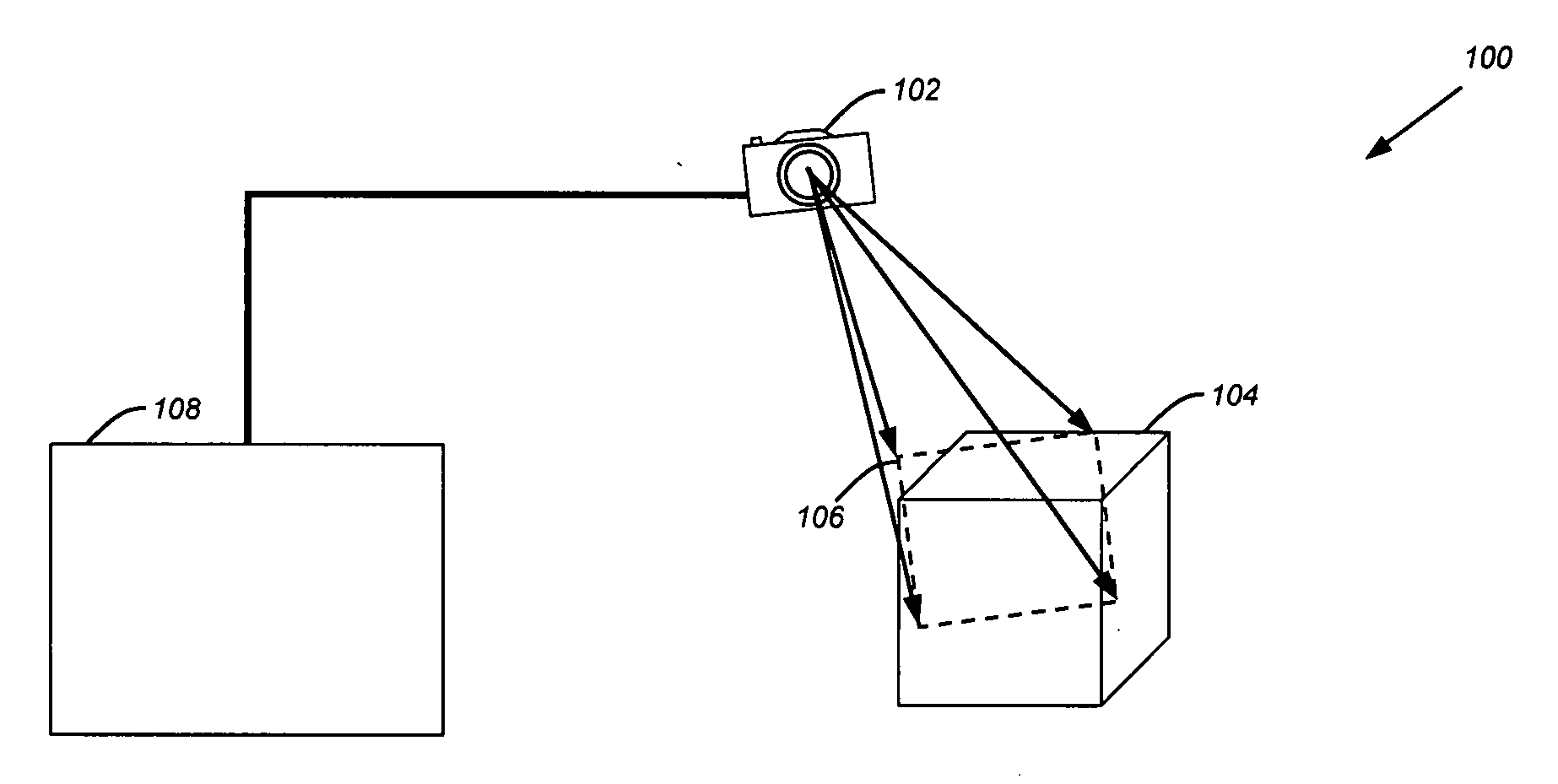

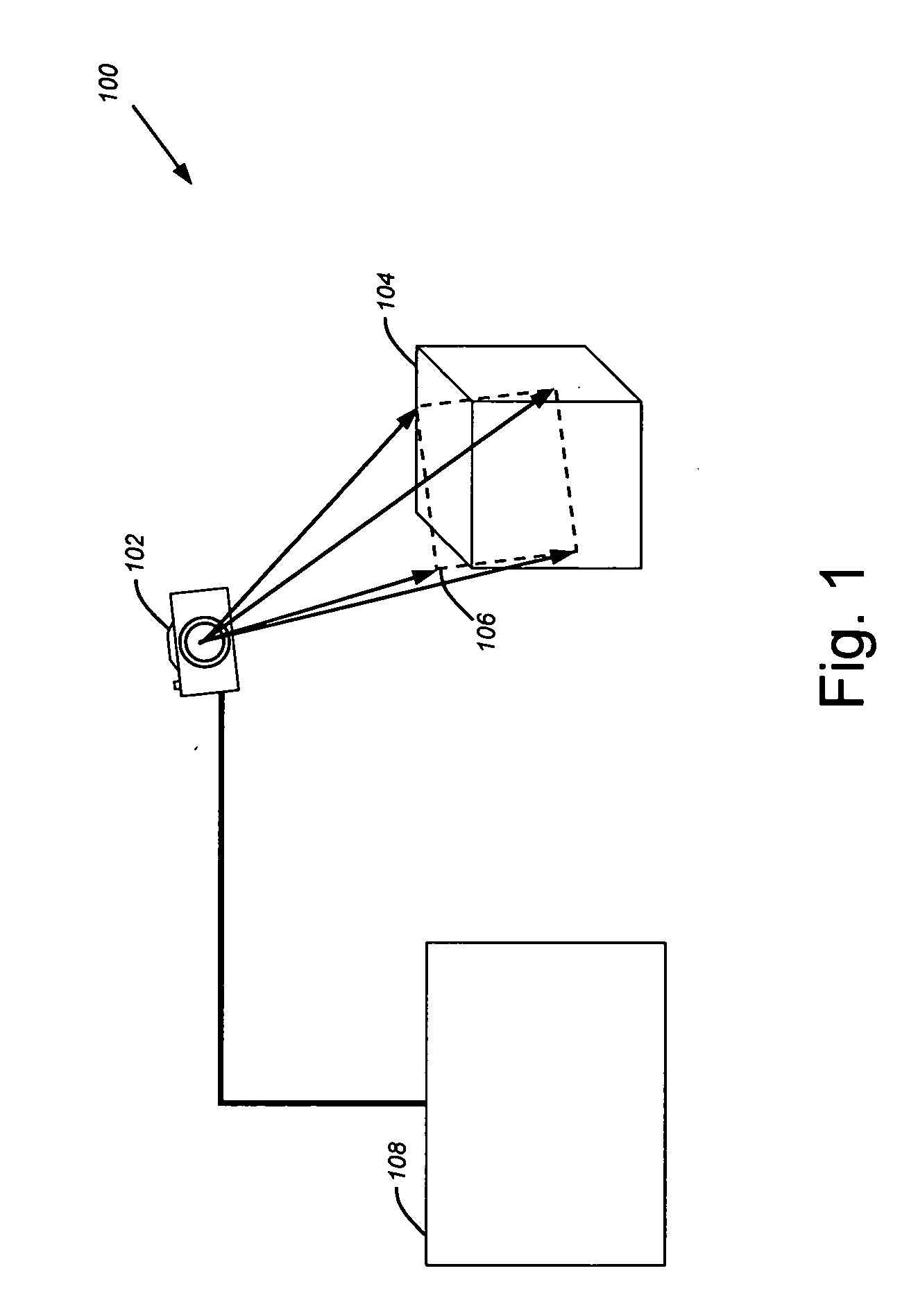

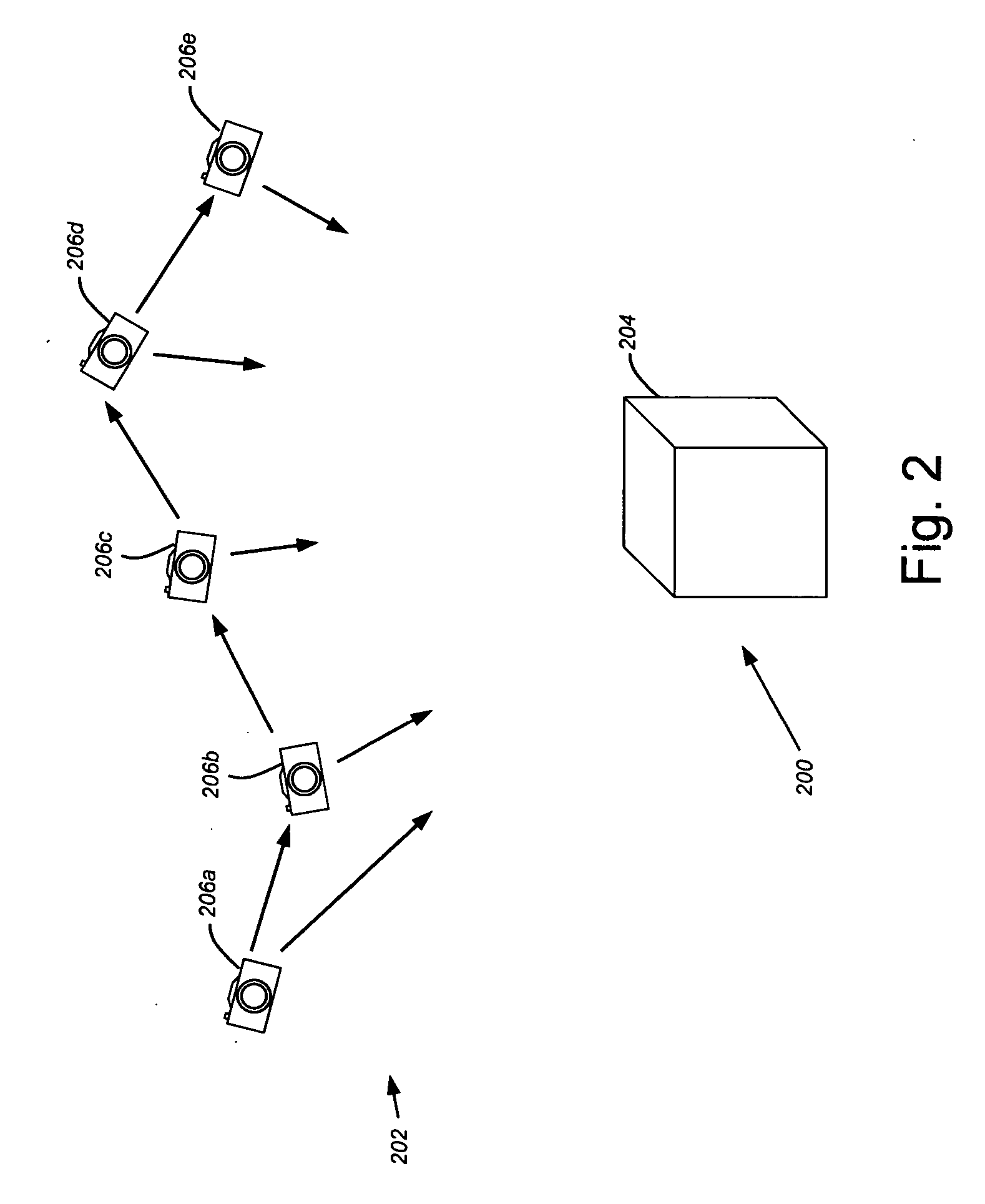

Integrated system for quickly and accurately imaging and modeling three-dimensional objects

Inactive | US6847462B1 | Improve fit | Optical rangefinders | Beam/ray focussing/reflecting arrangements | Computer Aided Design | Point cloud

An integrated system generates a model of a three-dimensional object. A scanning laser device scans the three-dimensional object and generates a point cloud. The points of the point cloud each indicate a location of a corresponding point on a surface of the object. A first model, representing constituent geometric shapes of the object, is generated responsive to the point cloud. A data file is generated, responsive to the first model, that can be inputted to a computer-aided design system.

Owner:LEICA GEOSYSTEMS AG

Determining camera motion

Active | US20070103460A1 | Efficiently parameterized | Television system details | Image analysis | Point cloud | 3d image

Camera motion is determined in a three-dimensional image capture system using a combination of two-dimensional image data and three-dimensional point cloud data available from a stereoscopic, multi-aperture, or similar camera system. More specifically, a rigid transformation of point cloud data between two three-dimensional point clouds may be more efficiently parameterized using point correspondence established between two-dimensional pixels in source images for the three-dimensional point clouds.

Owner:MEDIT CORP

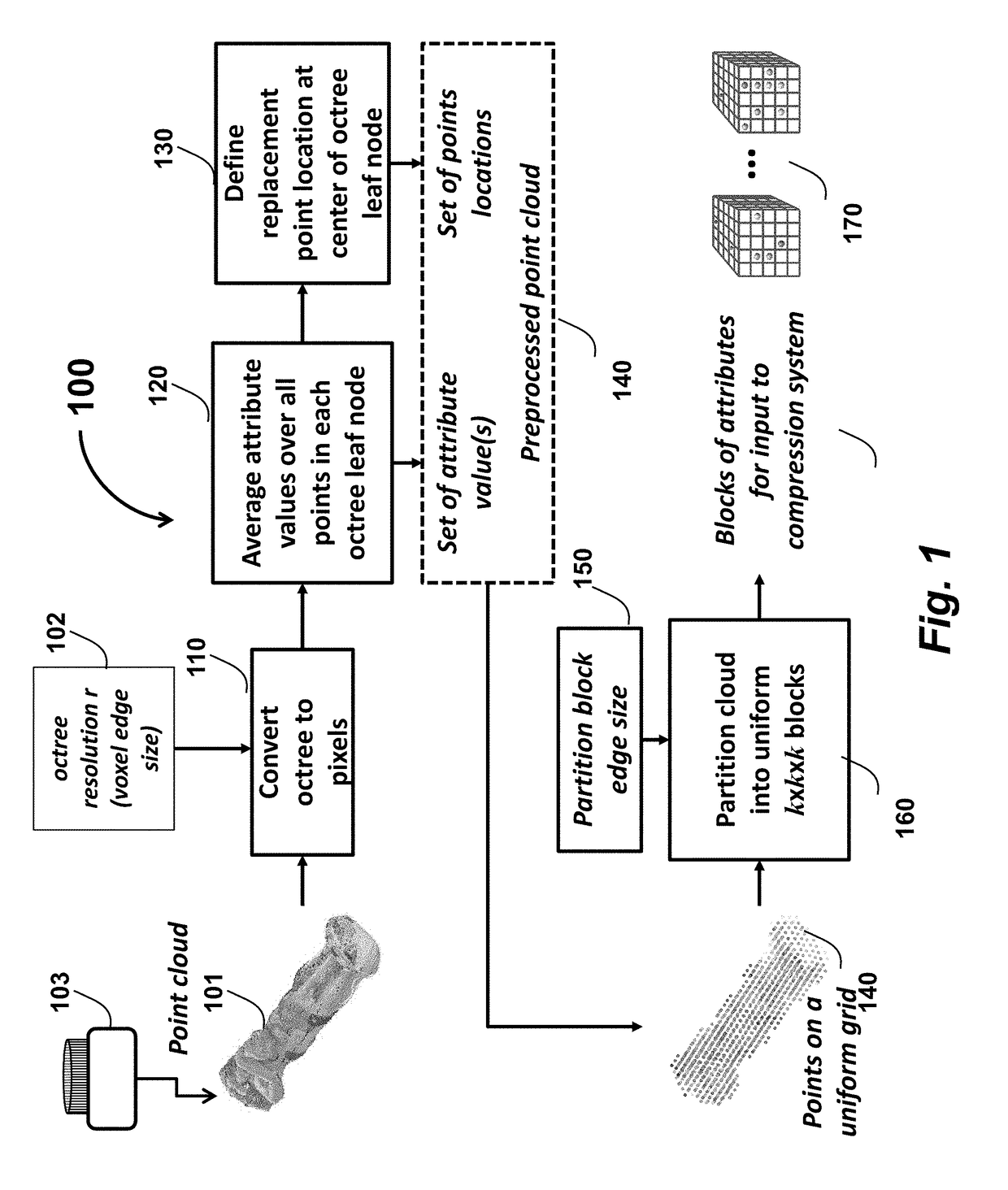

Point Cloud Compression using Prediction and Shape-Adaptive Transforms

Inactive | US20170214943A1 | Geometric image transformation | Image coding | Point cloud | Computer graphics (images)

A method compresses a point cloud composed of a plurality of points in a three-dimensional (3D) space by first acquiring the point cloud with a sensor, wherein each point is associated with a 3D coordinate and at least one attribute. The point cloud is partitioned into an array of 3D blocks of elements, wherein some of the elements in the 3D blocks have missing points. For each 3D block, attribute values for the 3D block are predicted based on the attribute values of neighboring 3D blocks, resulting in a 3D residual block. A 3D transform is applied to each 3D residual block using locations of occupied elements to produce transform coefficients, wherein the transform coefficients have a magnitude and sign. The transform coefficients are entropy encoded according to the magnitudes and sign bits to produce a bitstream.
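The first two stages, partitioning into 3D blocks with missing elements and forming a residual block from a prediction, can be sketched as below. This is a simplified illustration under assumptions: points are taken to be already quantized to integer voxel coordinates, the prediction is just the mean attribute of one neighboring block, and the shape-adaptive transform and entropy coding are omitted. The names `partition_into_blocks` and `residual_block` are hypothetical.

```python
from collections import defaultdict


def partition_into_blocks(voxels, block_size):
    """Group {(x, y, z): attribute} into blocks; unoccupied elements stay absent."""
    blocks = defaultdict(dict)
    for (x, y, z), attr in voxels.items():
        block = (x // block_size, y // block_size, z // block_size)
        local = (x % block_size, y % block_size, z % block_size)
        blocks[block][local] = attr
    return dict(blocks)


def residual_block(block, neighbor):
    """Subtract a mean-of-neighbor prediction from every occupied element."""
    prediction = sum(neighbor.values()) / len(neighbor) if neighbor else 0.0
    return {local: attr - prediction for local, attr in block.items()}


voxels = {(0, 0, 0): 10.0, (1, 0, 0): 12.0, (4, 0, 0): 13.0}
blocks = partition_into_blocks(voxels, block_size=4)
residual = residual_block(blocks[(1, 0, 0)], blocks[(0, 0, 0)])
print(residual)
```

Storing each block as a sparse dictionary keeps the occupied-element locations explicit, which is the information a shape-adaptive transform would need.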

Owner:MITSUBISHI ELECTRIC RES LAB INC

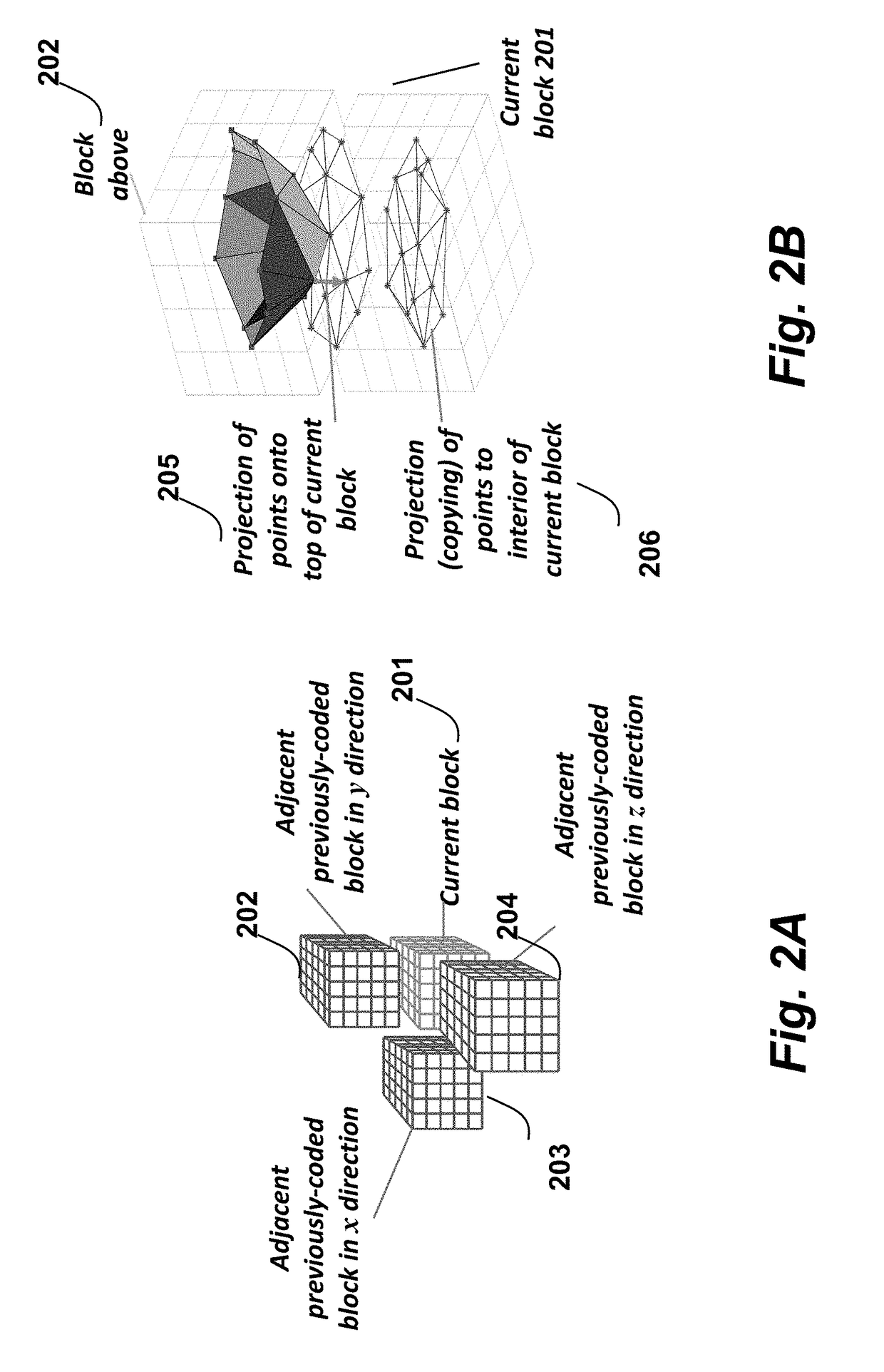

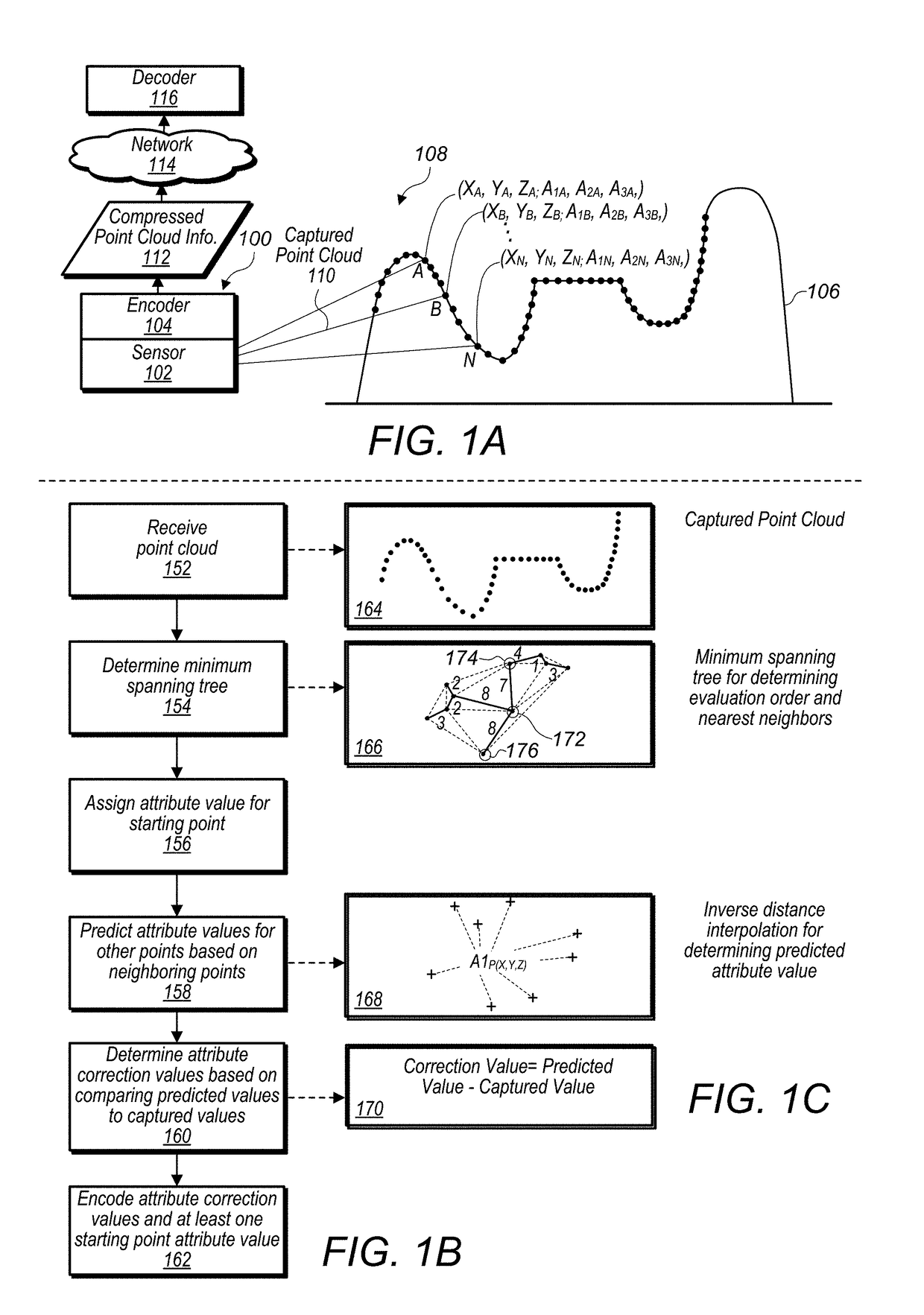

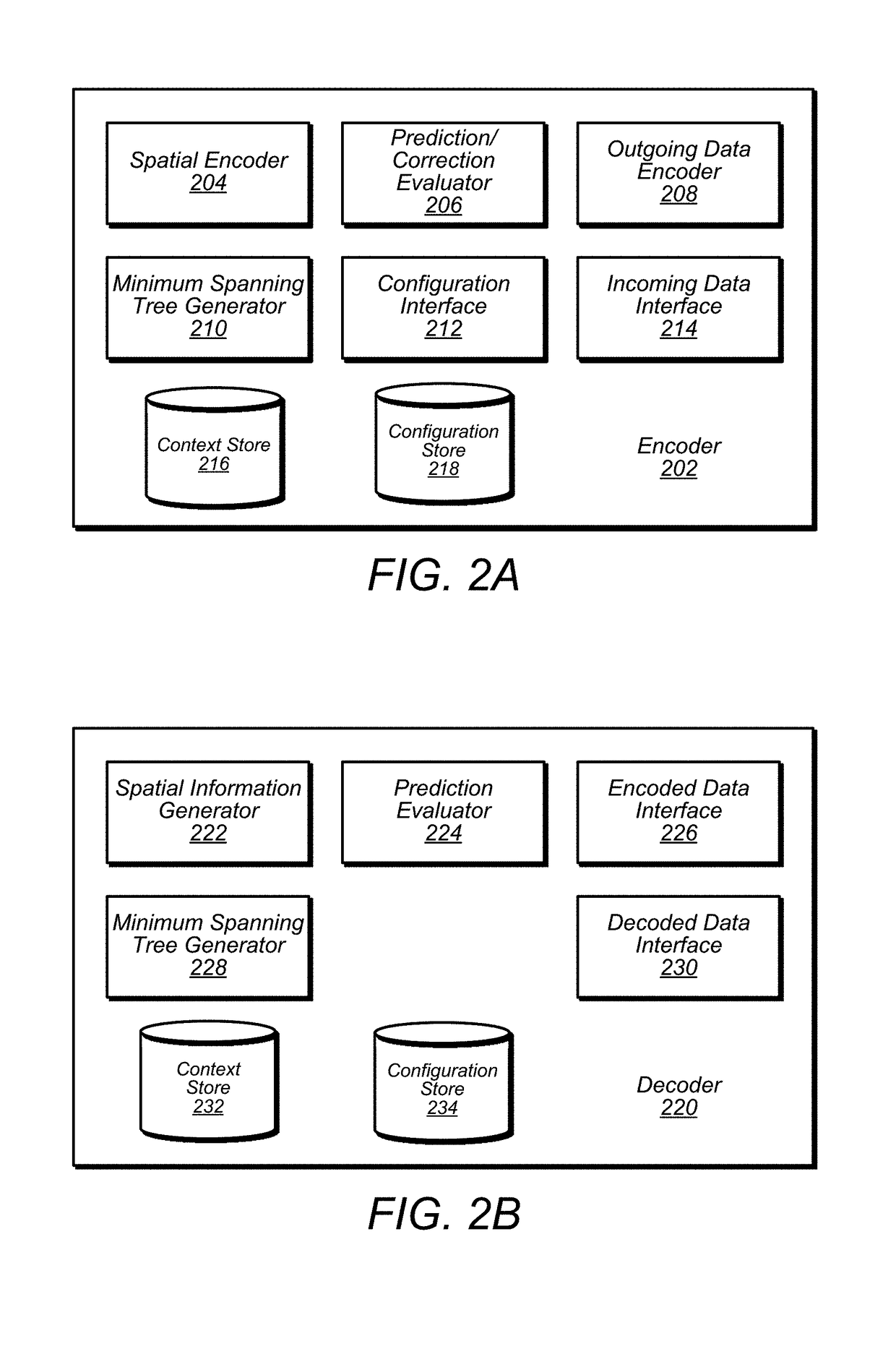

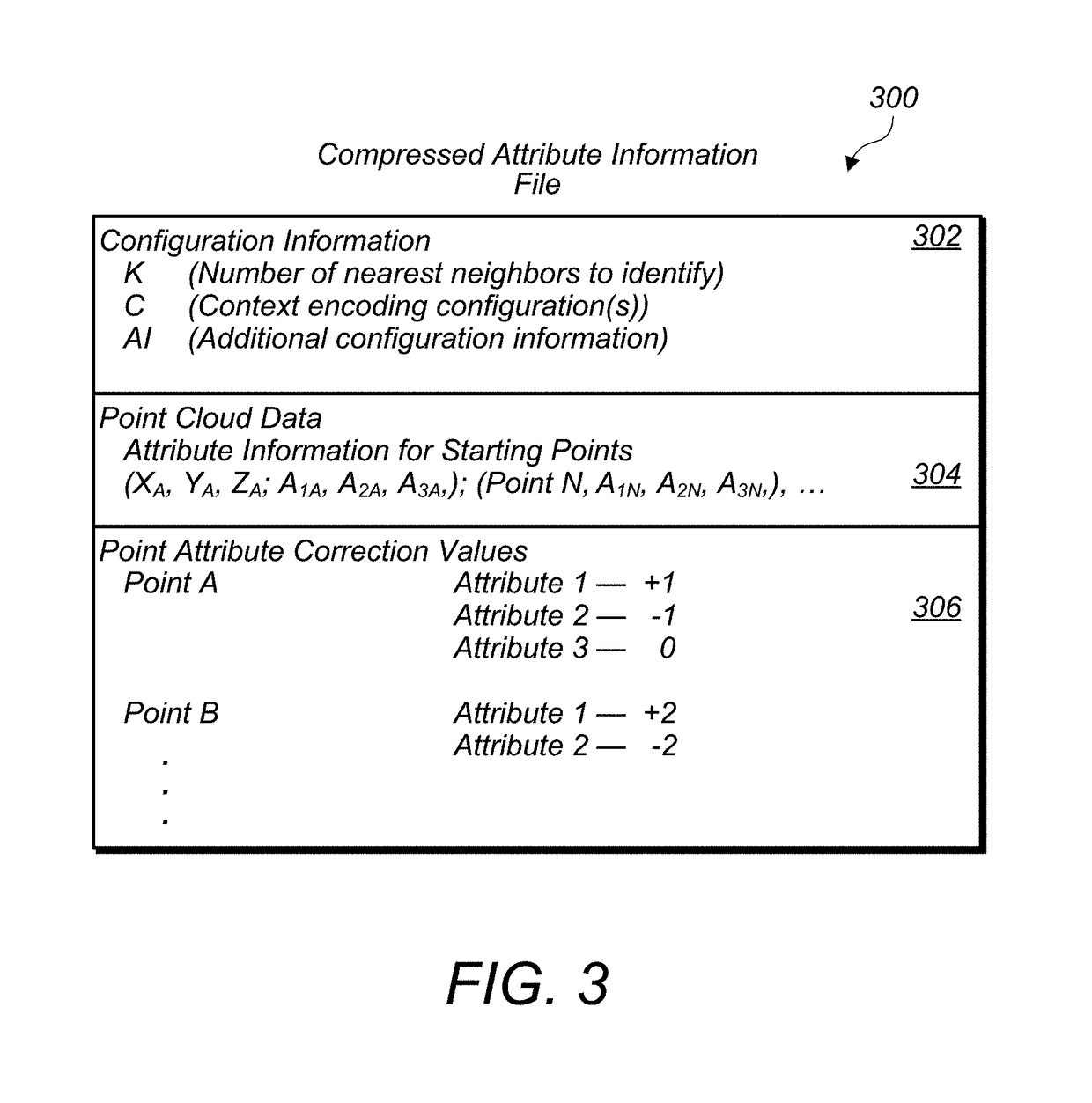

Point Cloud Compression

A system comprises an encoder configured to compress attribute information for a point cloud and / or a decoder configured to decompress compressed attribute information for the point cloud. Attribute values for at least one starting point are included in a compressed attribute information file and attribute correction values used to correct predicted attribute values are included in the compressed attribute information file. Attribute values are predicted based, at least in part, on attribute values of neighboring points and distances between a particular point for which an attribute value is being predicted and the neighboring points. The predicted attribute values are compared to attribute values of a point cloud prior to compression to determine attribute correction values. A decoder follows a similar prediction process as an encoder and corrects predicted values using attribute correction values included in a compressed attribute information file.
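The predict-and-correct scheme described above can be sketched with inverse-distance weighting over previously coded neighbors. This is an illustrative toy, not the patented codec: the point ordering, the choice of `k` nearest prior points, the weighting formula, and the names `predict`, `encode` and `decode` are all assumptions, and no quantization or entropy coding is applied.

```python
import math


def nearest_prior(points, i, k):
    """Indices of the k already-coded points (index < i) closest to point i."""
    return sorted(range(i), key=lambda j: math.dist(points[i], points[j]))[:k]


def predict(points, attrs, i, k):
    """Inverse-distance-weighted average of the neighbors' attribute values."""
    num = den = 0.0
    for j in nearest_prior(points, i, k):
        d = math.dist(points[i], points[j])
        if d == 0.0:
            return attrs[j]  # coincident point: reuse its value directly
        num += attrs[j] / d
        den += 1.0 / d
    return num / den


def encode(points, attrs, k=2):
    """Return the starting attribute value and one correction per later point."""
    corrections = [attrs[i] - predict(points, attrs, i, k)
                   for i in range(1, len(points))]
    return attrs[0], corrections


def decode(points, start, corrections, k=2):
    """Repeat the encoder's predictions and apply the stored corrections."""
    attrs = [start]
    for i in range(1, len(points)):
        attrs.append(predict(points, attrs, i, k) + corrections[i - 1])
    return attrs


points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
attrs = [10.0, 12.0, 15.0, 15.5]
start, corrections = encode(points, attrs)
print(decode(points, start, corrections))
```

Because the decoder predicts from its own reconstructed values, the round trip here matches the input only up to floating-point rounding; a lossy codec would quantize the corrections and have the encoder predict from reconstructed values as well.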

Owner:APPLE INC

Method of generating surface defined by boundary of three-dimensional point cloud

Disclosed is a method of generating a three-dimensional (3D) surface defined by a boundary of a 3D point cloud. The method comprises generating density and depth maps from the 3D point cloud, constructing a 2D mesh from the depth and density maps, transforming the 2D mesh into a 3D mesh, and rendering 3D polygons defined by the 3D mesh.
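The first step, generating density and depth maps from the 3D point cloud, might look like the sketch below. It assumes an orthographic projection along the z axis onto a regular grid, which the abstract does not state; the function name `density_and_depth_maps` and its parameters are hypothetical, and the later meshing and rendering steps are not shown.

```python
def density_and_depth_maps(points, cell, width, height):
    """Count points per grid cell and record the nearest (smallest z) depth."""
    density = [[0] * width for _ in range(height)]
    depth = [[None] * width for _ in range(height)]  # None marks an empty cell
    for x, y, z in points:
        col, row = int(x // cell), int(y // cell)
        if 0 <= col < width and 0 <= row < height:
            density[row][col] += 1
            if depth[row][col] is None or z < depth[row][col]:
                depth[row][col] = z
    return density, depth


points = [(0.1, 0.1, 5.0), (0.4, 0.2, 3.0), (1.5, 0.5, 7.0)]
density, depth = density_and_depth_maps(points, cell=1.0, width=2, height=1)
print(density, depth)
```

Cells with a density of zero mark where the cloud's boundary lies in the projection, which is the information a 2D mesh construction would rely on.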

Owner:NVIDIA CORP

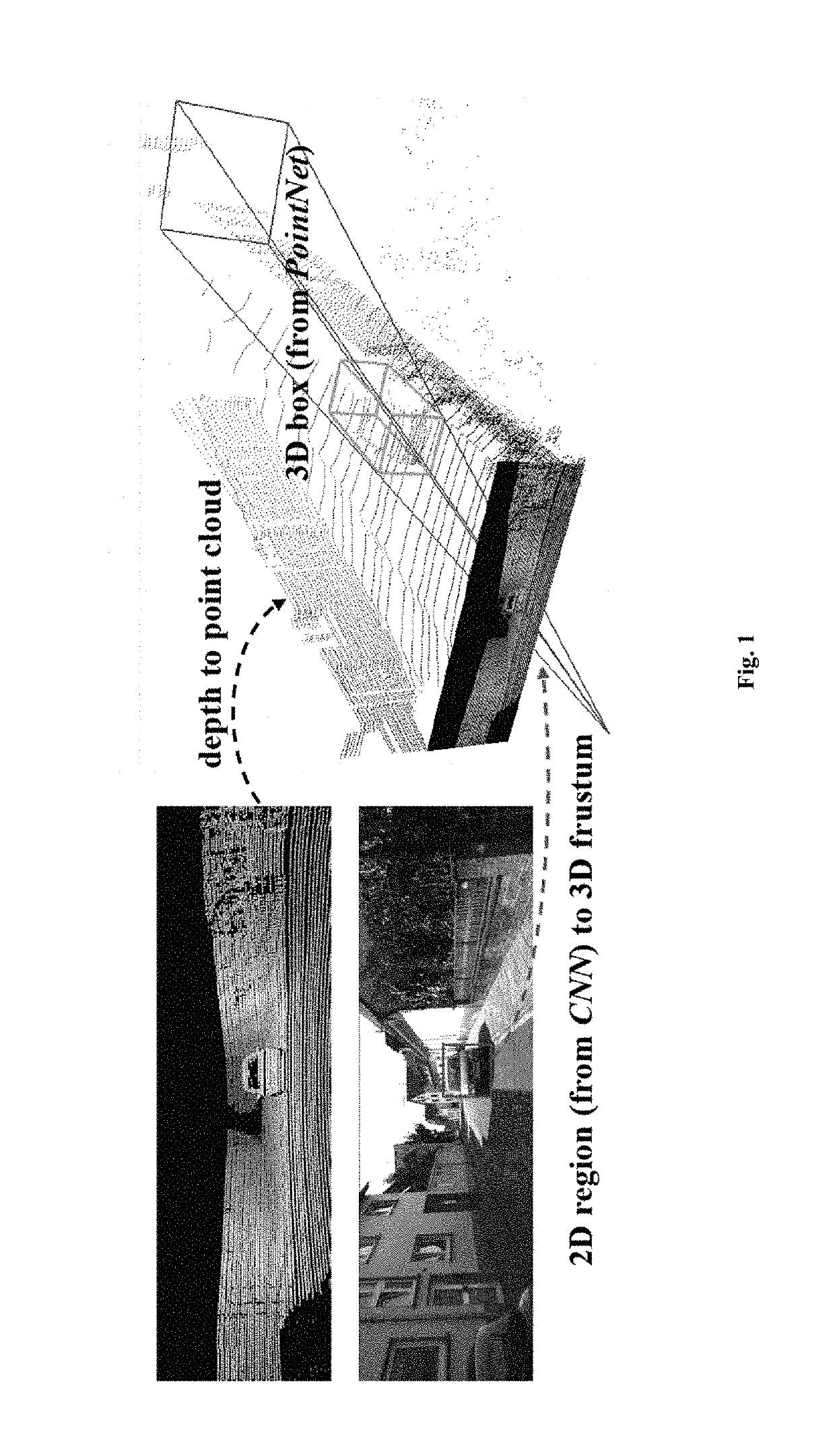

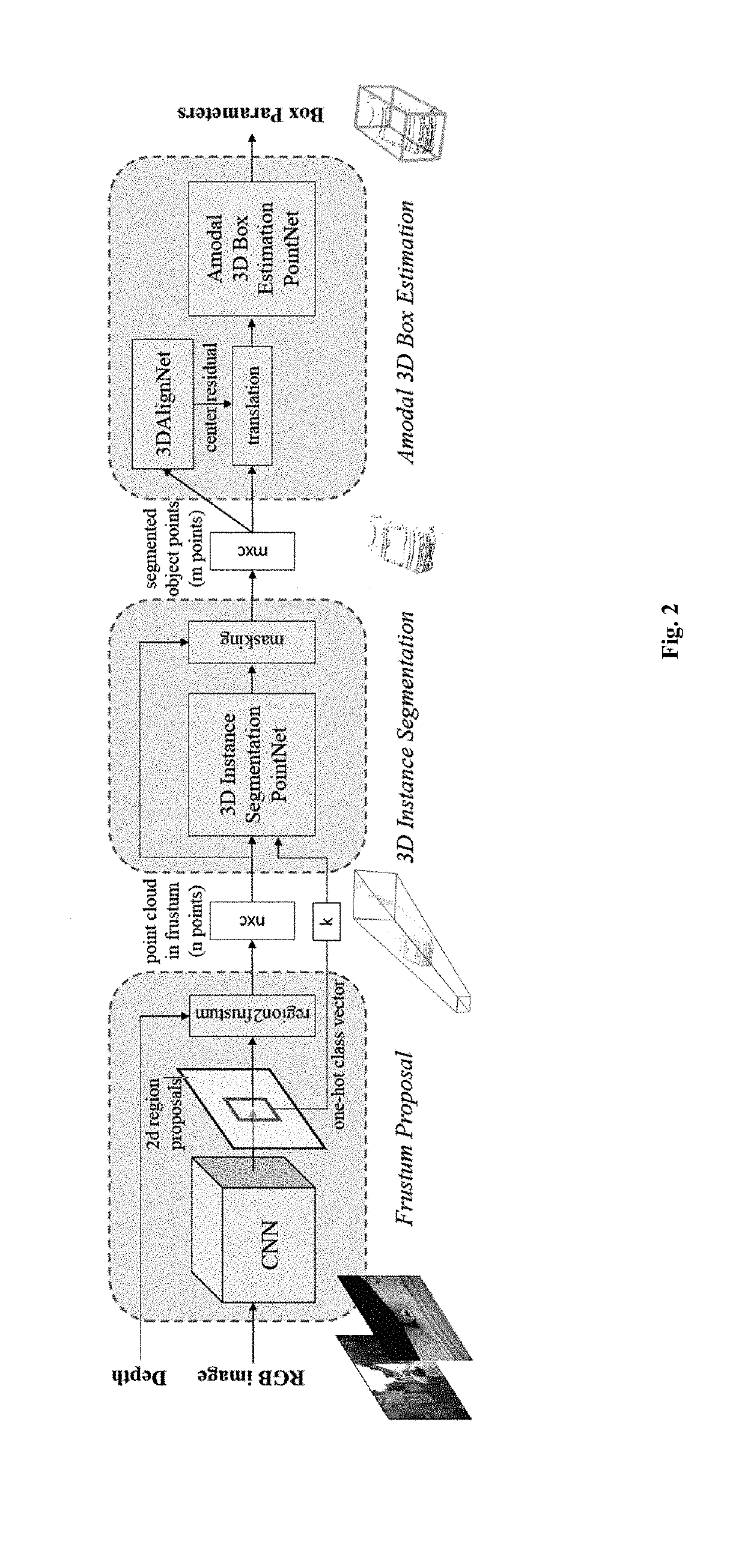

Three-dimensional object detection for autonomous robotic systems using image proposals

Provided herein are methods and systems for implementing three-dimensional perception in an autonomous robotic system comprising an end-to-end neural network architecture that directly consumes large-scale raw sparse point cloud data and performs such tasks as object localization, boundary estimation, object classification, and segmentation of individual shapes or fused complete point cloud shapes.

Owner:NURO INC

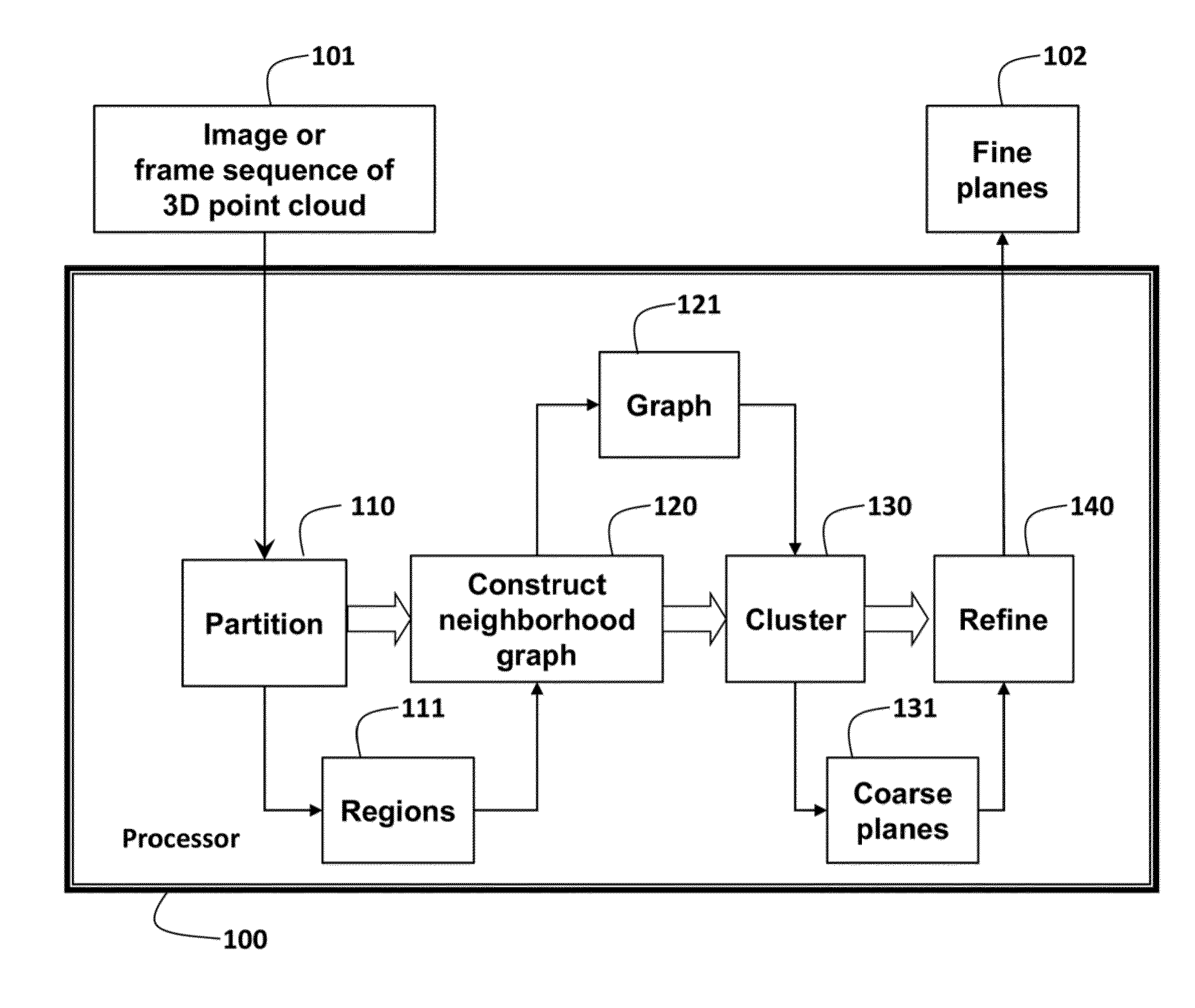

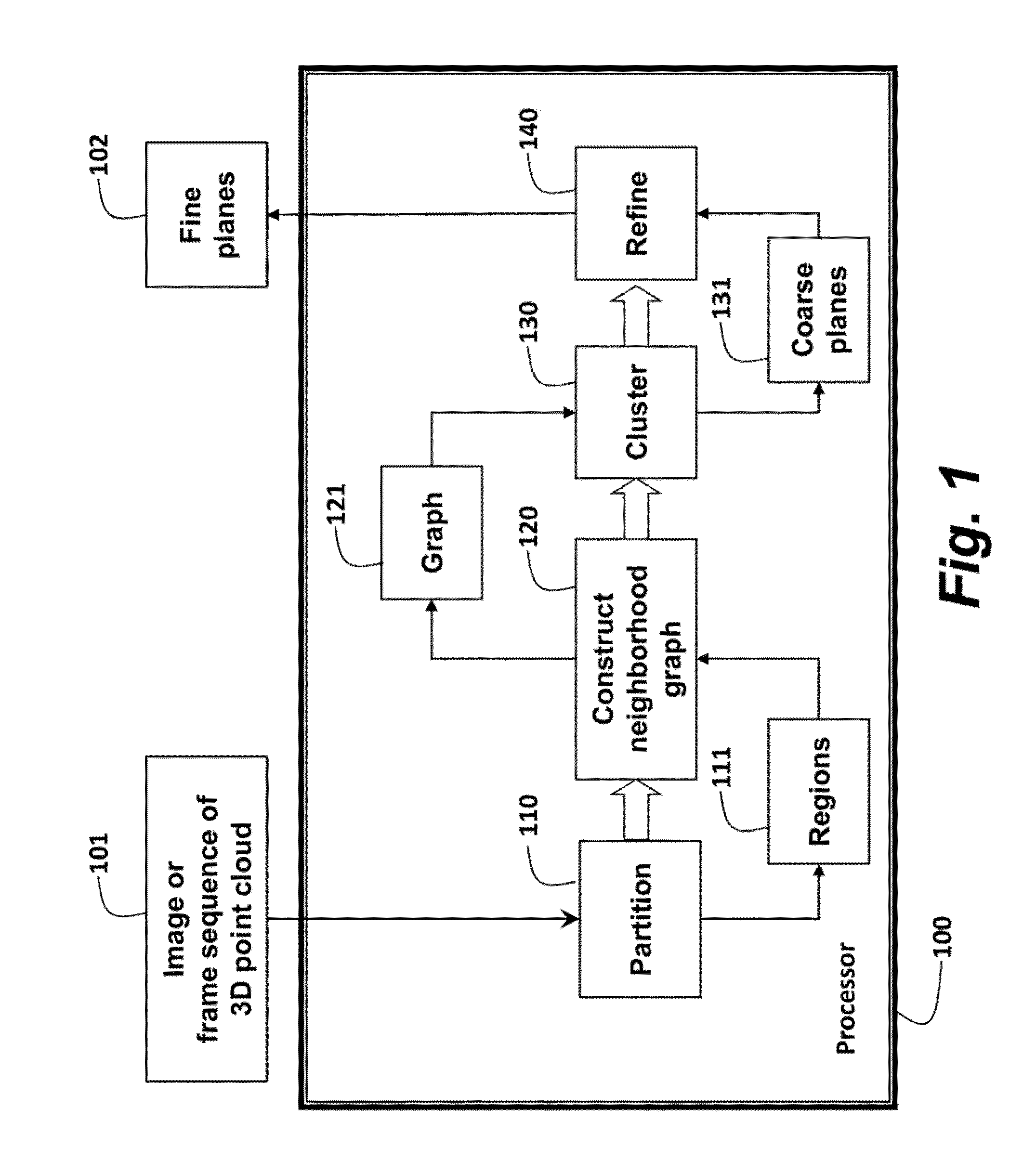

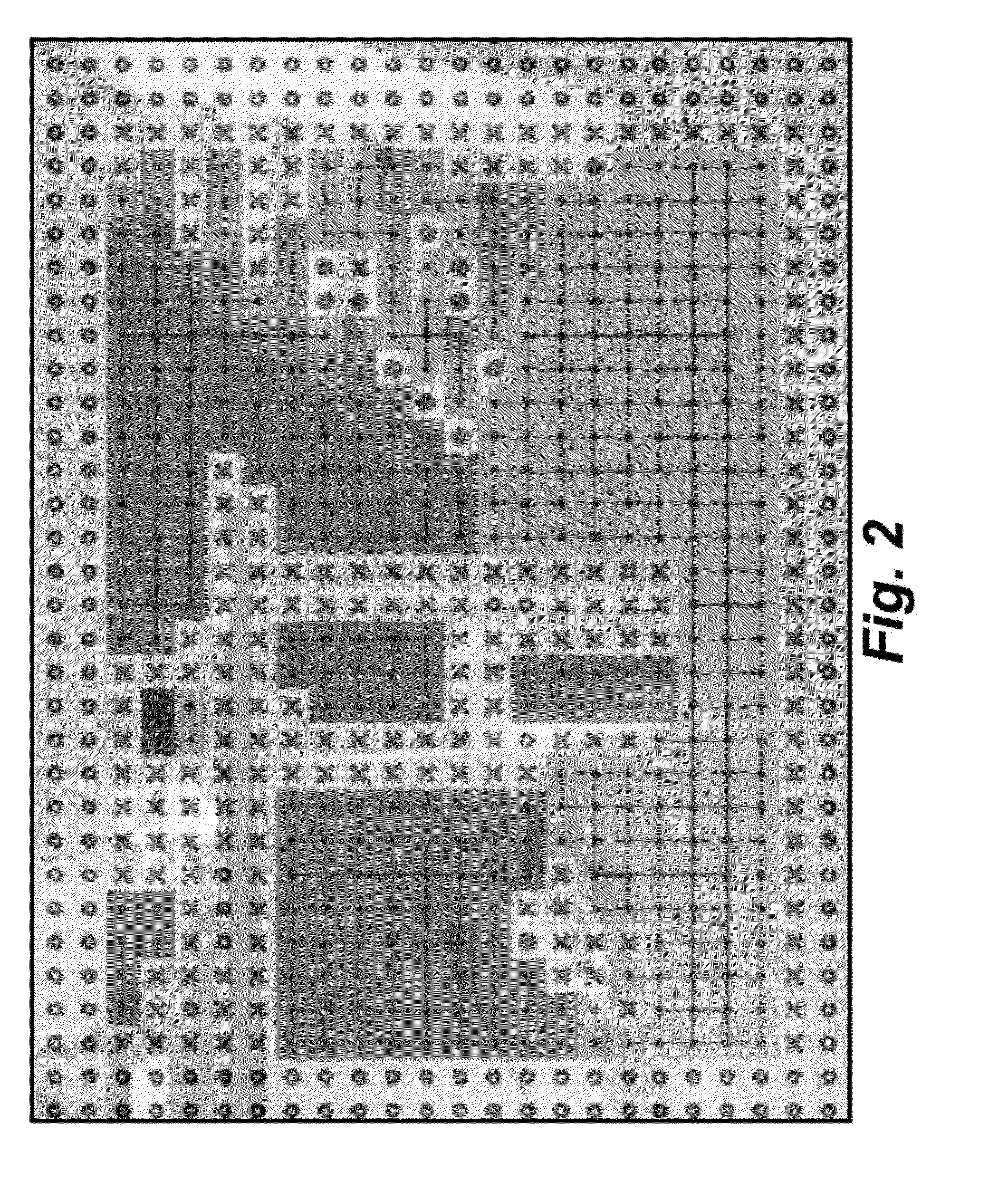

Method for Extracting Planes from 3D Point Cloud Sensor Data

A method extracts planes from three-dimensional (3D) points by first partitioning the 3D points into disjoint regions. A graph of nodes and edges is then constructed, wherein the nodes represent the regions and the edges represent neighborhood relationships of the regions. Finally, agglomerative hierarchical clustering is applied to the graph to merge regions belonging to the same plane.

Owner:MITSUBISHI ELECTRIC RES LAB INC

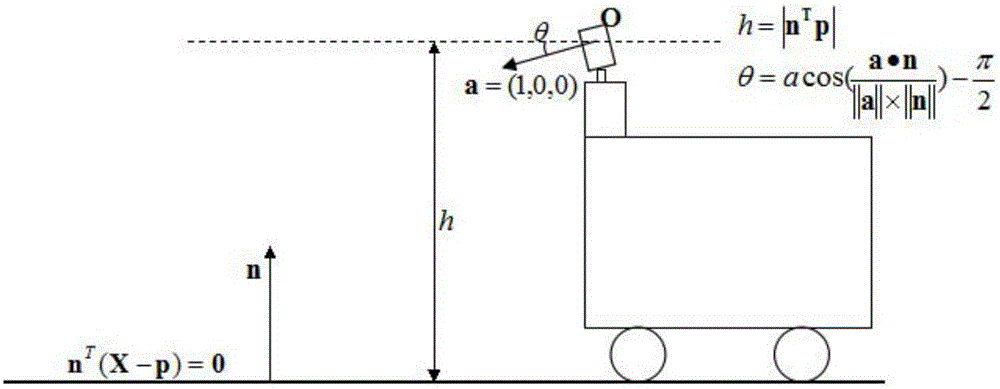

Kinect-based robot self-positioning method

Active | CN105045263A | Realize self-positioning | Independent positioning and stability | Position/course control in two dimensions | Point cloud | Rgb image

The invention discloses a Kinect-based robot self-positioning method. The method includes the following steps. The RGB image and depth image of an environment are acquired through the Kinect, the relative motion of the robot is estimated by fusing visual information with a physical speedometer, and pose tracking is performed from the pose of the robot at the previous time point. Depth information is converted into a three-dimensional point cloud, and a ground surface is extracted from the point cloud. The height and pitch angle of the Kinect relative to the ground surface are automatically calibrated from the extracted ground surface, so that the three-dimensional point cloud can be projected onto the ground surface to obtain a two-dimensional point cloud similar to laser data. The two-dimensional point cloud is matched with a pre-constructed environment raster map, which corrects accumulated errors in the robot tracking process so that the pose of the robot can be estimated accurately. Because the Kinect replaces a laser for positioning, cost is low; because image and depth information are fused, the method can achieve high precision; and because the method is compatible with a laser map and does not require the mounting height and pose of the Kinect to be calibrated in advance, it is convenient to use and can satisfy requirements for autonomous positioning and navigation of the robot.
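Projecting the 3D cloud onto the ground to obtain laser-like 2D data can be sketched as follows. The example assumes the points are already expressed in a robot frame with z up and the ground at z = 0 (that is, after the height and pitch calibration described above), and it keeps the nearest obstacle in each bearing bin. The function name `cloud_to_scan` and the parameters `num_beams`, `min_h` and `max_h` are hypothetical.

```python
import math


def cloud_to_scan(points, num_beams=360, min_h=0.05, max_h=1.0):
    """Flatten obstacle points into one range per bearing bin (inf if empty)."""
    scan = [math.inf] * num_beams
    for x, y, z in points:
        if not (min_h <= z <= max_h):  # skip ground returns and overhead points
            continue
        bearing = math.atan2(y, x) % (2.0 * math.pi)
        beam = int(bearing / (2.0 * math.pi) * num_beams) % num_beams
        scan[beam] = min(scan[beam], math.hypot(x, y))
    return scan


points = [(1.0, 0.2, 0.5), (2.0, 0.2, 0.5), (-0.5, 3.0, 0.3), (1.0, 0.2, 0.0)]
print(cloud_to_scan(points, num_beams=4))
```

The resulting list has the same shape as a planar laser scan, so it could be passed to a scan-to-map matcher written for laser data.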

Owner:HANGZHOU JIAZHI TECH CO LTD

Methods for Determining Meniscal Size and Shape and for Devising Treatment

The present invention relates to methods for determining meniscal size and shape for use in designing therapies for the treatment of various joint diseases. The invention uses an image of a joint that is processed for analysis. Analysis can include, for example, generating a thickness map, a cartilage curve, or a point cloud. This information is used to determine the extent of the cartilage defect or damage and to design an appropriate therapy, including, for example, an implant. Adjustments to the designed therapy are made to account for the materials used.

Owner:CONFORMIS

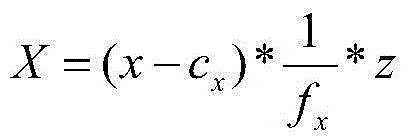

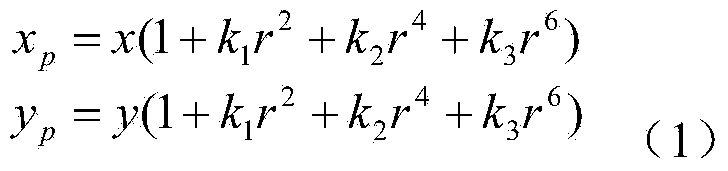

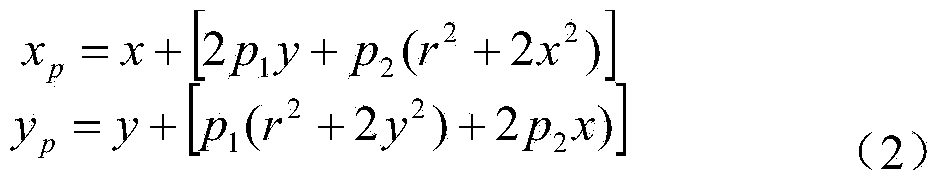

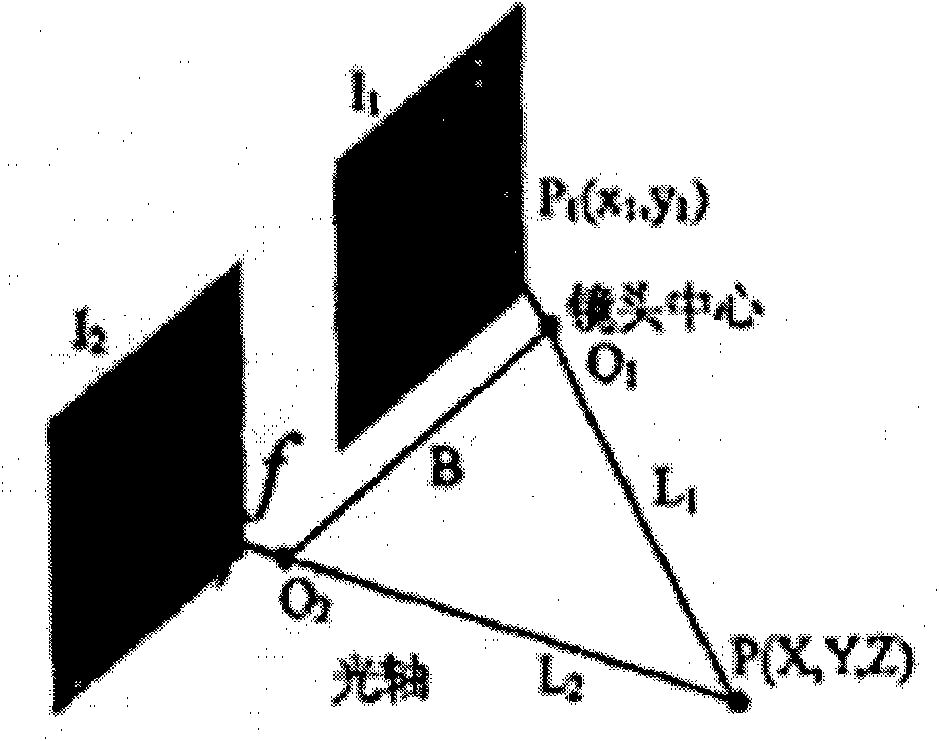

Parallax optimization algorithm-based binocular stereo vision automatic measurement method

Inactive | CN103868460A | Accurate and automatic acquisition | Complete 3D point cloud information | Image analysis | Using optical means | Binocular stereo | Non targeted

The invention discloses a parallax optimization algorithm-based binocular stereo vision automatic measurement method. The method comprises the steps of: (1) obtaining a corrected binocular view; (2) matching by using a stereo matching algorithm and taking a left view as a base map to obtain a preliminary disparity map; (3) for the corrected left view, enabling a target object area to be a colorized master map and other non-target areas to be wholly black; (4) acquiring a complete disparity map of the target object area according to the target object area; (5) for the complete disparity map, obtaining a three-dimensional point cloud according to a projection model; (6) performing coordinate reprojection on the three-dimensional point cloud to compound a coordinate related pixel map; (7) using a morphology method to automatically measure the length and width of a target object. By adopting the method, a binocular measuring operation process is simplified, the influence of specular reflection, foreshortening, perspective distortion, low textures and repeated textures on a smooth surface is reduced, automatic and intelligent measuring is realized, the application range of binocular measuring is widened, and technical support is provided for subsequent robot binocular vision.
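Step 5, obtaining a 3D point cloud from the disparity map, is usually done for a rectified stereo pair with the relation Z = f * B / d, where f is the focal length in pixels, B the baseline and d the disparity. The sketch below uses that standard relation; the abstract only says "a projection model", so the formula choice, the function name `disparity_to_points`, and the parameters `f`, `baseline`, `cx` and `cy` are assumptions.

```python
def disparity_to_points(disparity, f, baseline, cx, cy):
    """Triangulate left-view pixels with positive disparity into 3D points."""
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:  # masked-out (non-target) or unmatched pixel
                continue
            z = f * baseline / d
            points.append(((u - cx) * z / f, (v - cy) * z / f, z))
    return points


disparity = [[0.0, 0.0, 0.0, 0.0, 8.0]]
cloud = disparity_to_points(disparity, f=400.0, baseline=0.5, cx=0.0, cy=0.0)
print(cloud)
```

Setting the disparity of non-target pixels to zero, as in the blacked-out regions of step 3, makes them drop out of the cloud automatically.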

Owner:GUILIN UNIV OF ELECTRONIC TECH

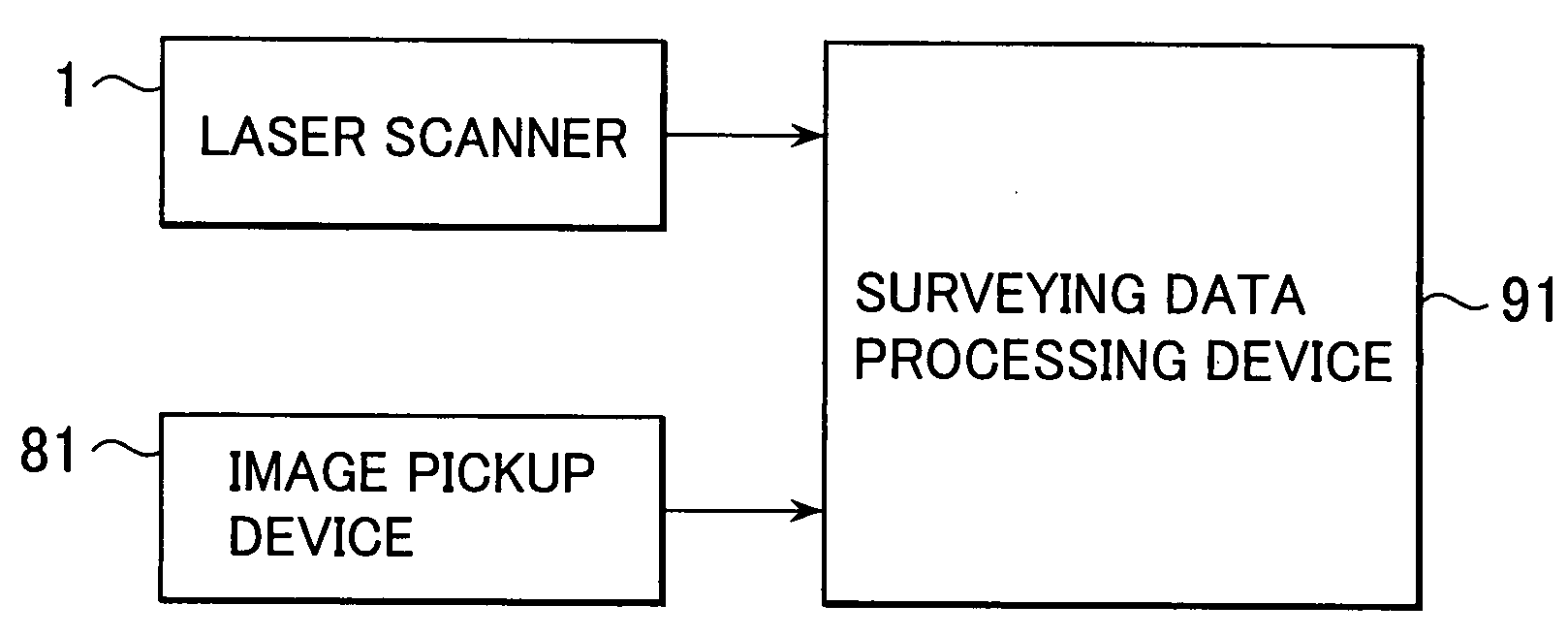

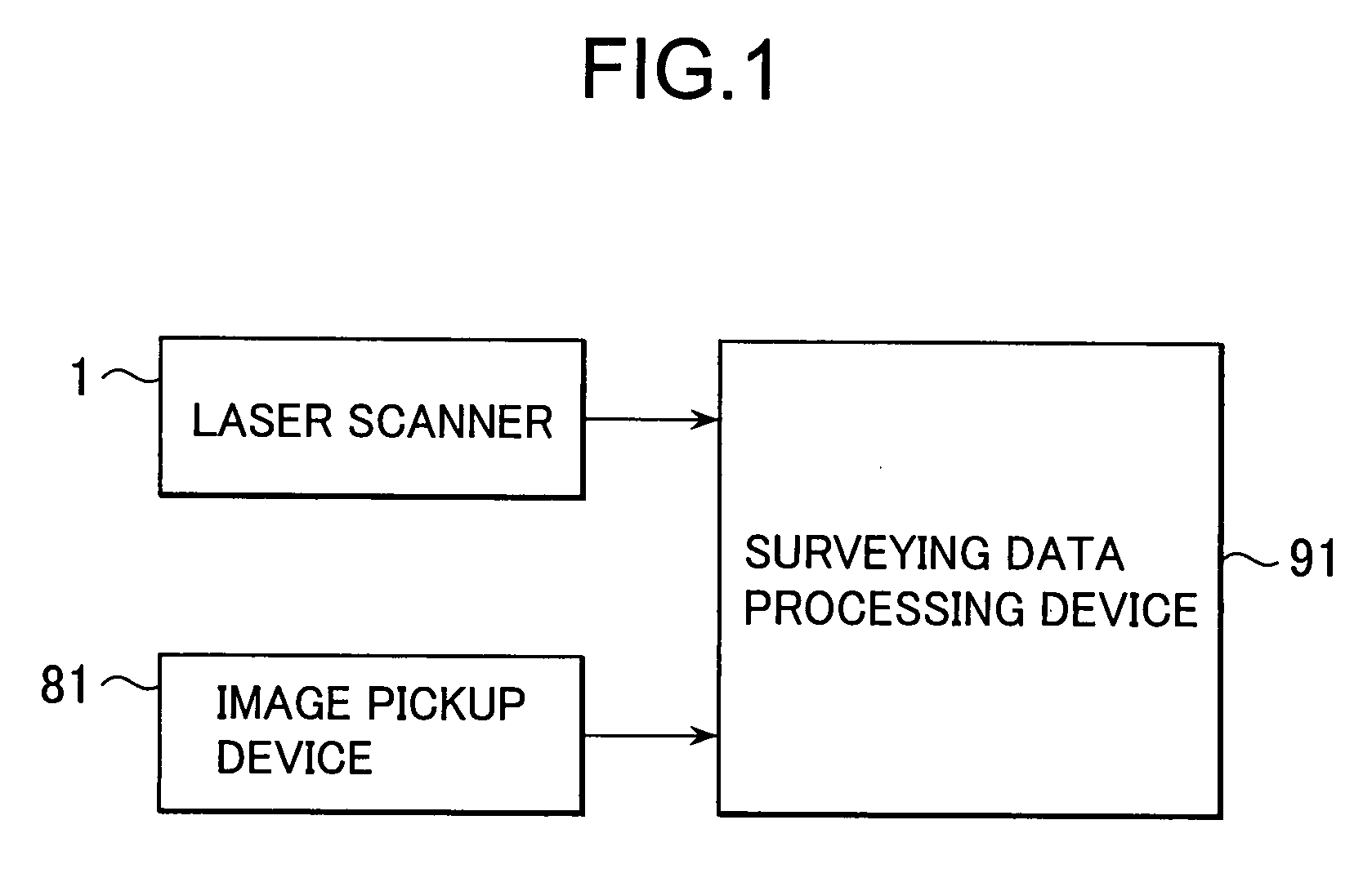

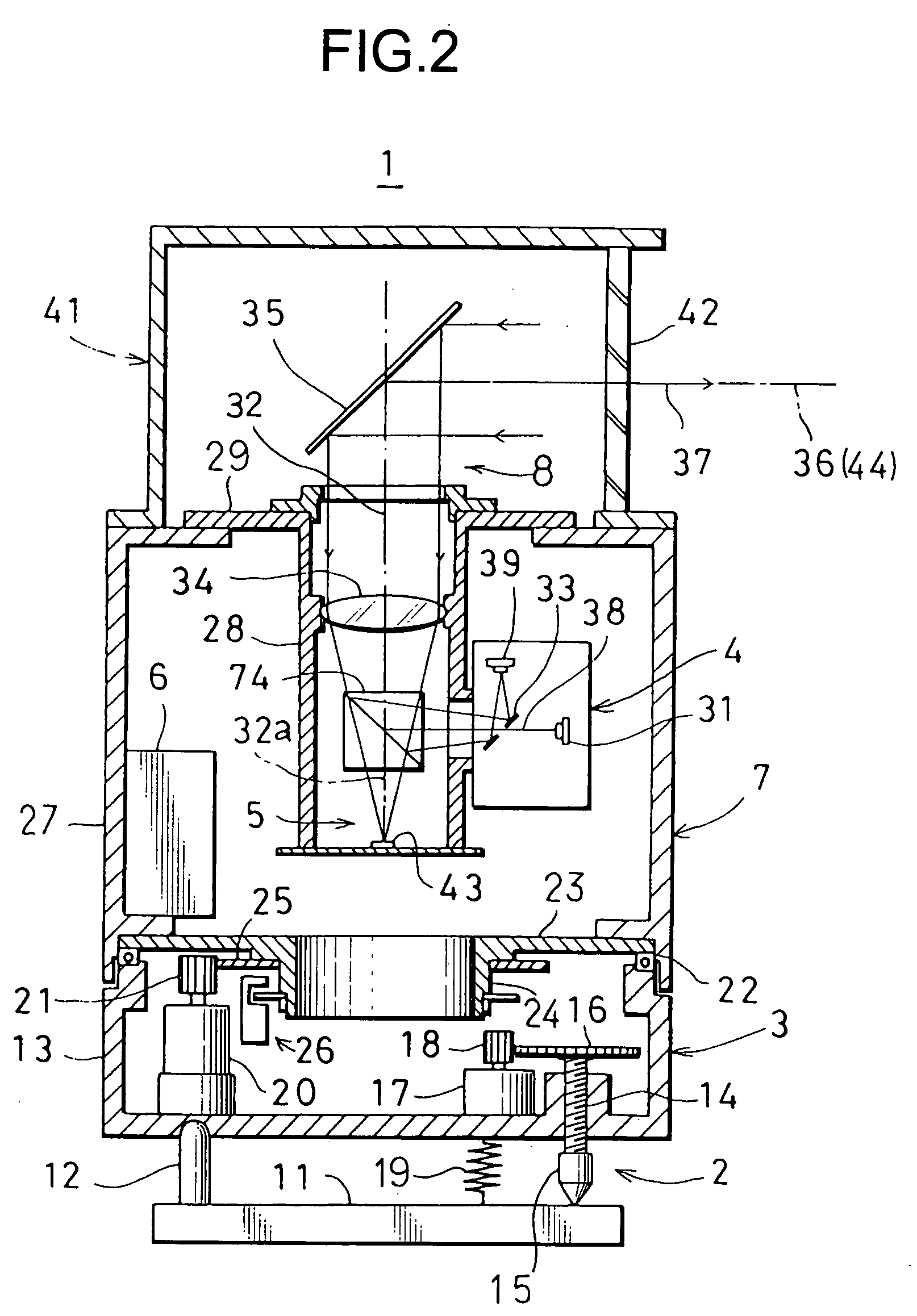

Surveying method, surveying system and surveying data processing program

The method comprises a step of acquiring main point cloud data on a predetermined measurement range by a laser scanner, a step of detecting a range with no data acquired, a step of acquiring supplementary image data of the range with no data acquired by an auxiliary image pickup device, a step of preparing a stereoscopic image based on the supplementary image data obtained by the auxiliary image pickup device, a step of acquiring supplementary point cloud data from the stereoscopic image, and a step of supplementing the range with no data acquired of the main point cloud data by matching the main point cloud data and the supplementary point cloud data.

Owner:KK TOPCON

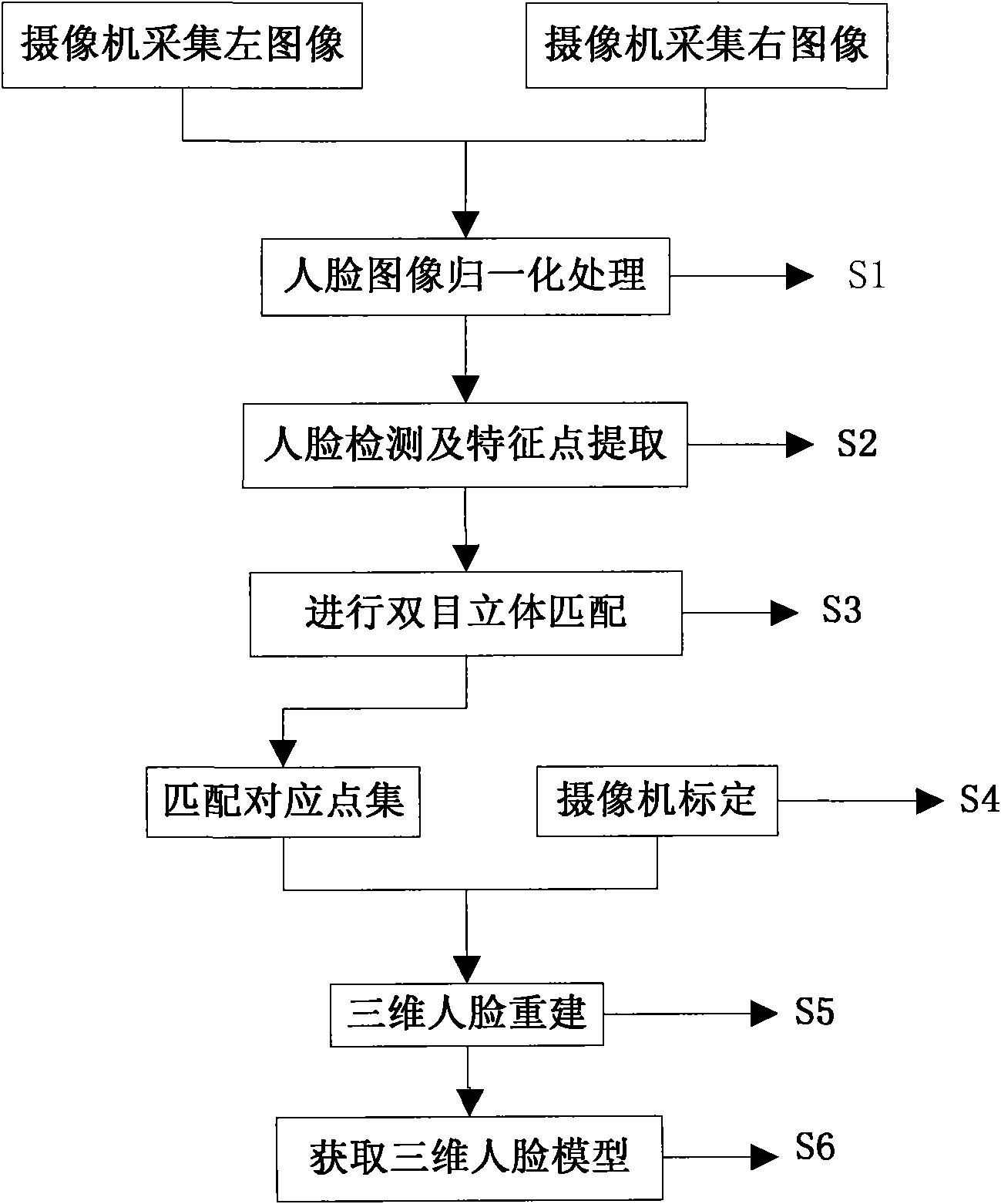

Binocular stereo vision based intelligent three-dimensional human face rebuilding method and system

The invention discloses a binocular stereo vision based intelligent three-dimensional human face rebuilding method and system. The method comprises: carrying out preprocessing operations on a human face image, including image normalization, brightness normalization and image correction; obtaining the human face area in the preprocessed human face image and extracting human face characteristic points; rebuilding the object by projection matrix so as to obtain the internal and external parameters of the camera; based on the human face characteristic points, expanding gray level cross-correlation matching operators to color information, and calculating a parallax image generated by stereo matching according to information including epipolar line constraints, human face area constraints and human face geometric conditions; and calculating the three-dimensional coordinates of a human face spatial hashing point cloud according to the camera calibration result and the parallax image generated by stereo matching, so as to generate a three-dimensional human face model. By adopting these steps, the invention rebuilds a smoother and more vivid three-dimensional human face model.

Owner:BEIJING JIAOTONG UNIV

© 2025 PatSnap. All rights reserved.