342 results for "Projection model" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

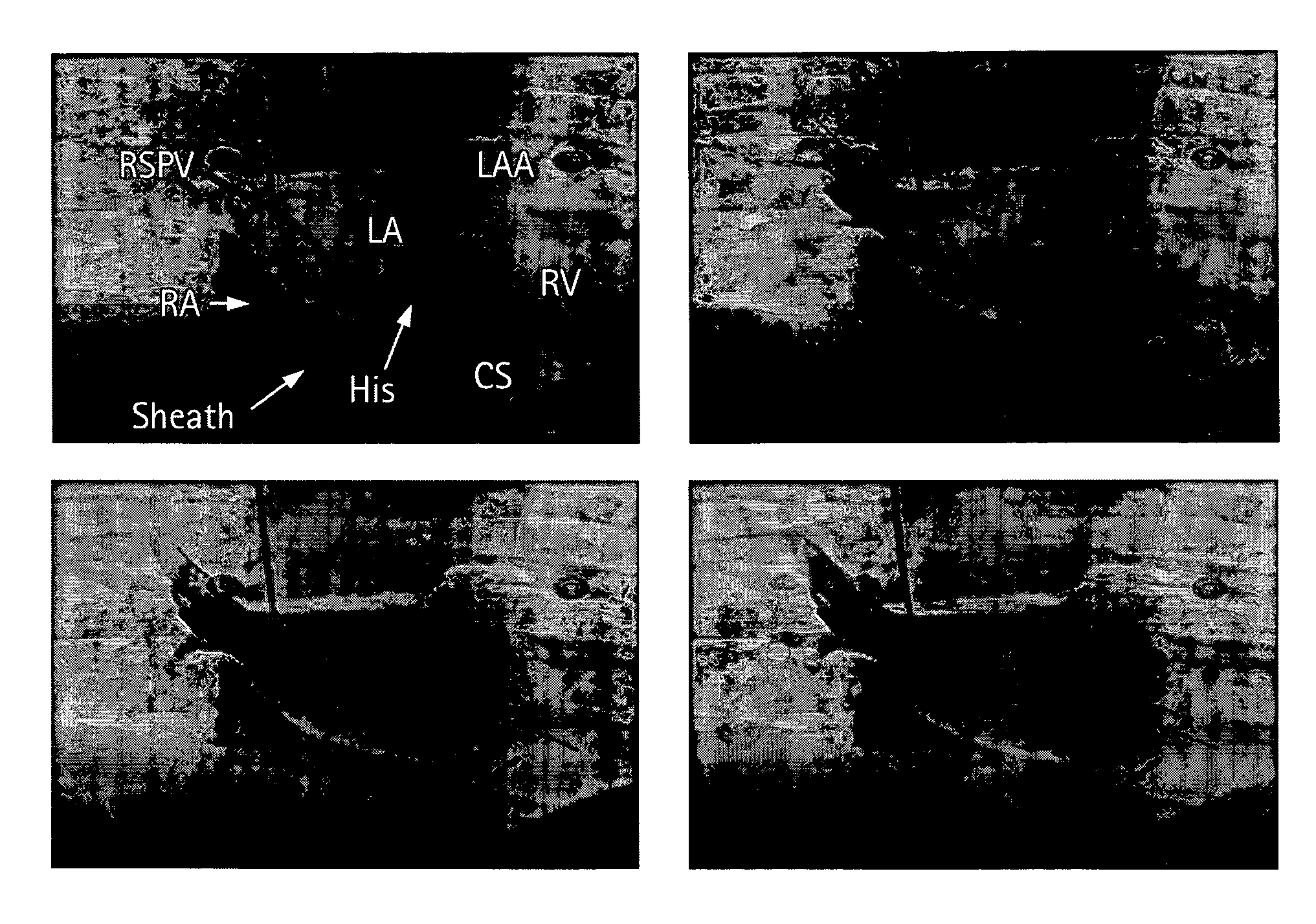

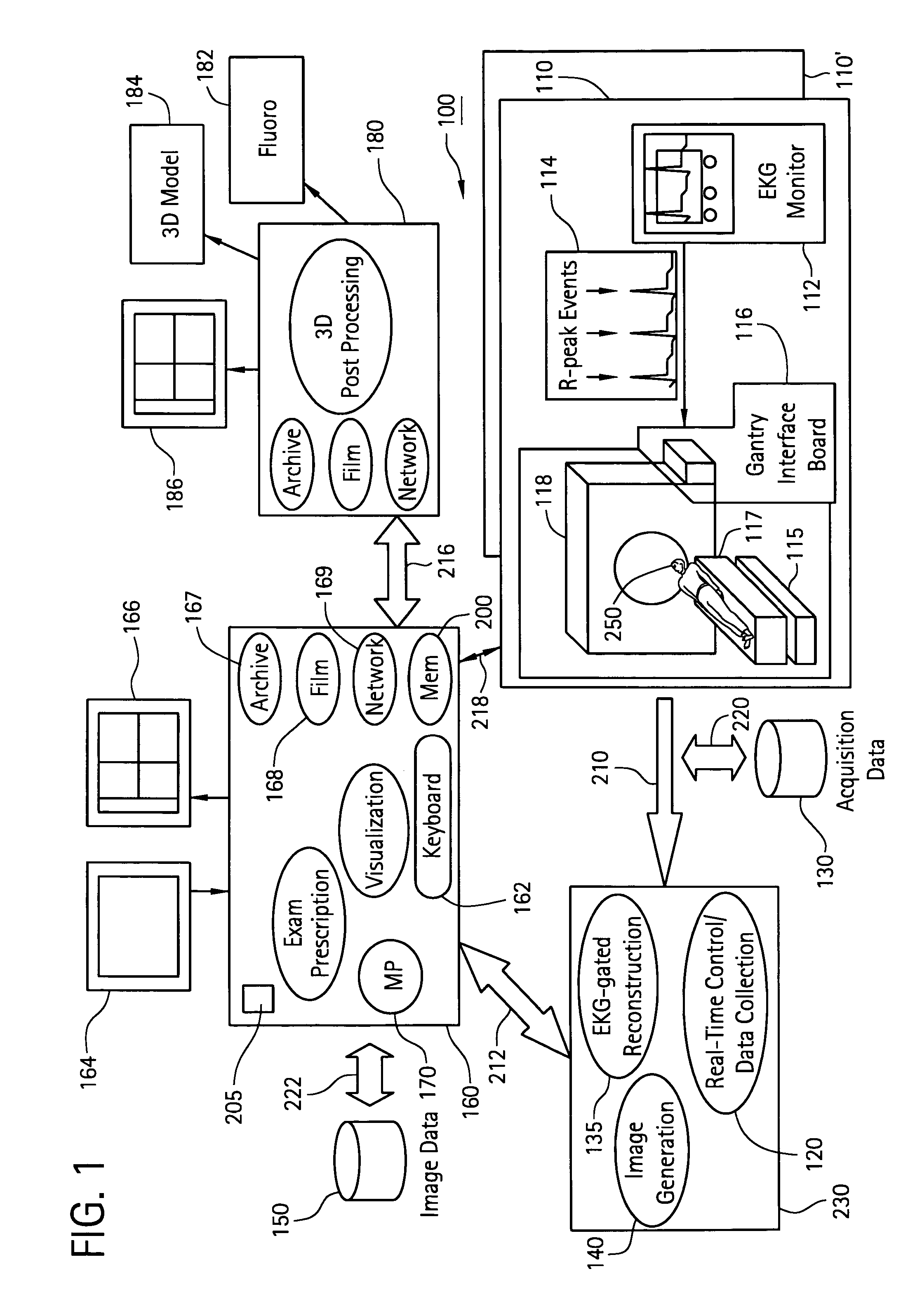

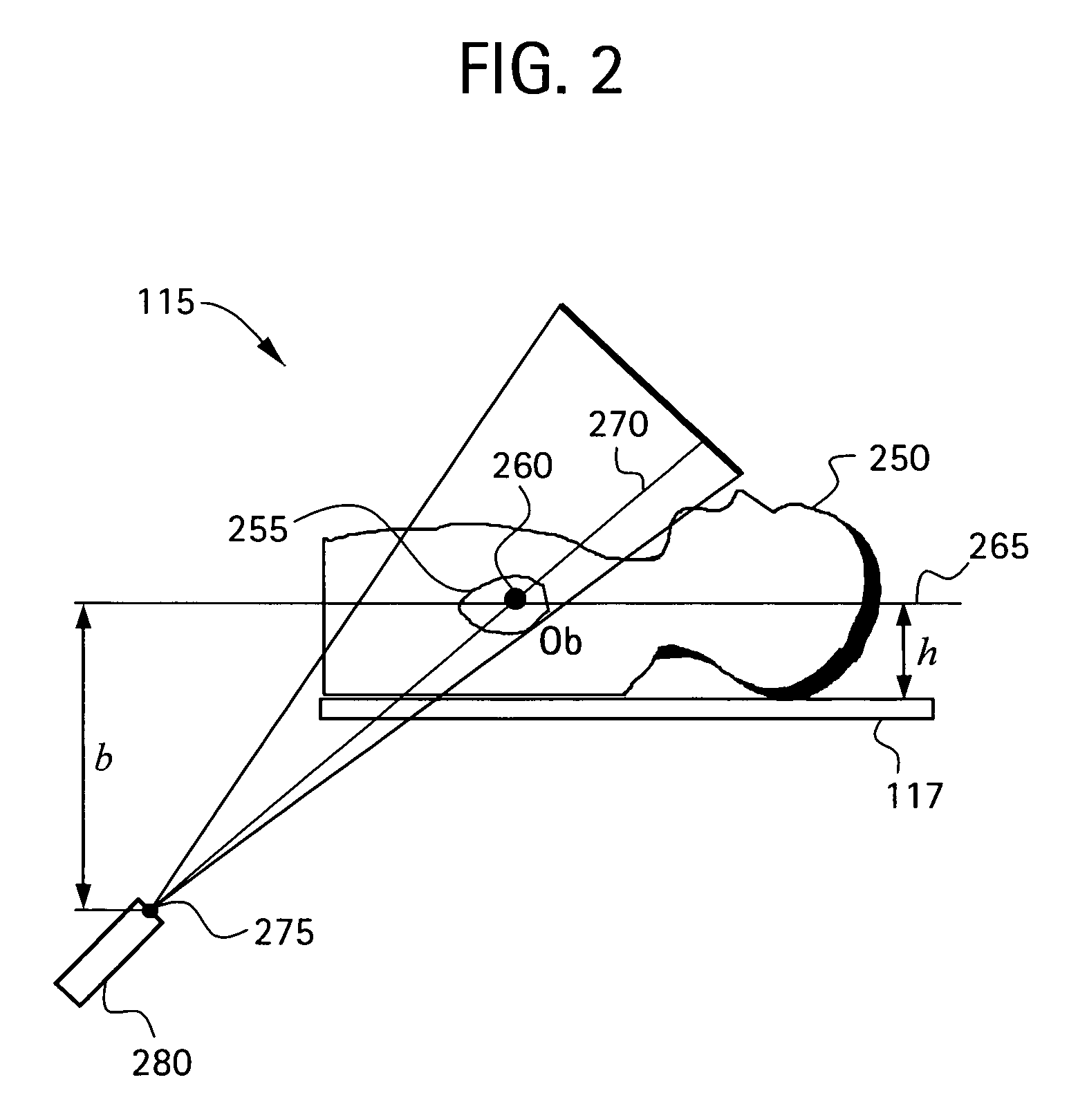

Method and system for registering 3D models of anatomical regions with projection images of the same

Owner: APN HEALTH +1

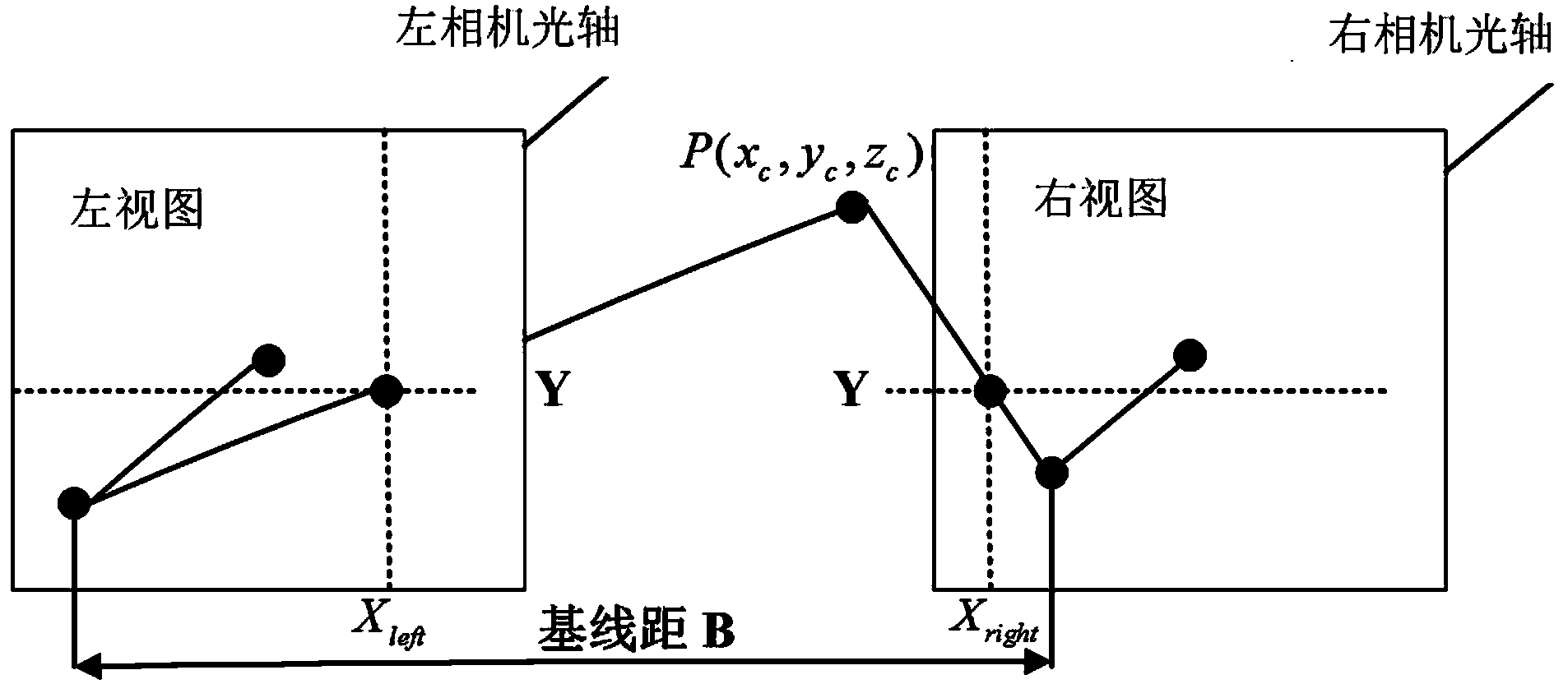

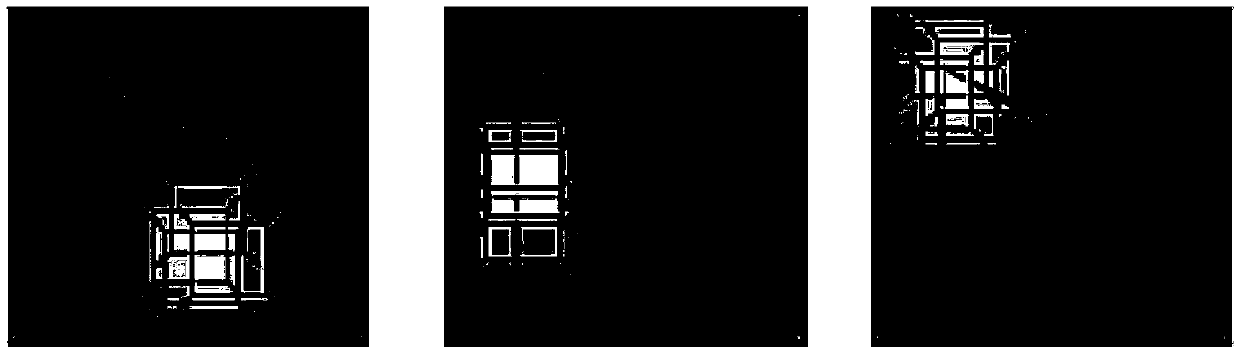

Parallax optimization algorithm-based binocular stereo vision automatic measurement method

Status: Inactive | Publication: CN103868460A | Tags: Accurate and automatic acquisition; Complete 3D point cloud information; Image analysis; Using optical means; Binocular stereo; Non targeted

The invention discloses a binocular stereo vision automatic measurement method based on a parallax optimization algorithm. The method comprises the following steps: 1, obtaining a rectified binocular image pair; 2, performing stereo matching with the left view as the reference image to obtain a preliminary disparity map; 3, in the rectified left view, keeping the target object region in color as the master map and setting all non-target regions to black; 4, obtaining a complete disparity map of the target object region; 5, computing a three-dimensional point cloud from the complete disparity map according to a projection model; 6, reprojecting the coordinates of the three-dimensional point cloud to compose a coordinate-related pixel map; and 7, automatically measuring the length and width of the target object with a morphological method. The method simplifies the binocular measurement process; reduces the influence of specular reflection, foreshortening, perspective distortion, low texture, and repeated texture on smooth surfaces; realizes automatic, intelligent measurement; widens the application range of binocular measurement; and provides technical support for subsequent robot binocular vision.

Owner: GUILIN UNIV OF ELECTRONIC TECH
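
Step 5 of the Guilin method turns the disparity map into a point cloud "according to a projection model" without naming the model. The sketch below assumes the standard rectified pinhole stereo model, where depth is Z = f·B/d; the optional mask stands in for the blacked-out non-target region of step 3. The function and parameter names are hypothetical.

```python
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]


def disparity_to_points(
    disparity: List[List[float]],
    focal_px: float,
    baseline: float,
    cx: float,
    cy: float,
    mask: Optional[List[List[bool]]] = None,
) -> List[Point3]:
    """Back-project a left-view disparity map into (X, Y, Z) points.

    Assumes a rectified pinhole stereo pair: Z = f * B / d, and
    X = (u - cx) * Z / f, Y = (v - cy) * Z / f in the left-camera frame.
    """
    points: List[Point3] = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # no valid stereo match at this pixel
            if mask is not None and not mask[v][u]:
                continue  # pixel lies outside the target-object region
            z = focal_px * baseline / d
            points.append(((u - cx) * z / focal_px, (v - cy) * z / focal_px, z))
    return points


# Tiny example: baseline in metres, focal length and principal point in pixels.
cloud = disparity_to_points([[0.0, 40.0], [20.0, 0.0]], 800.0, 0.1, 0.0, 0.0)
```

Larger disparities map to nearer points, so depth resolution degrades quadratically with distance; this is one reason a dense, hole-free disparity map of the target (step 4) matters before measuring.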

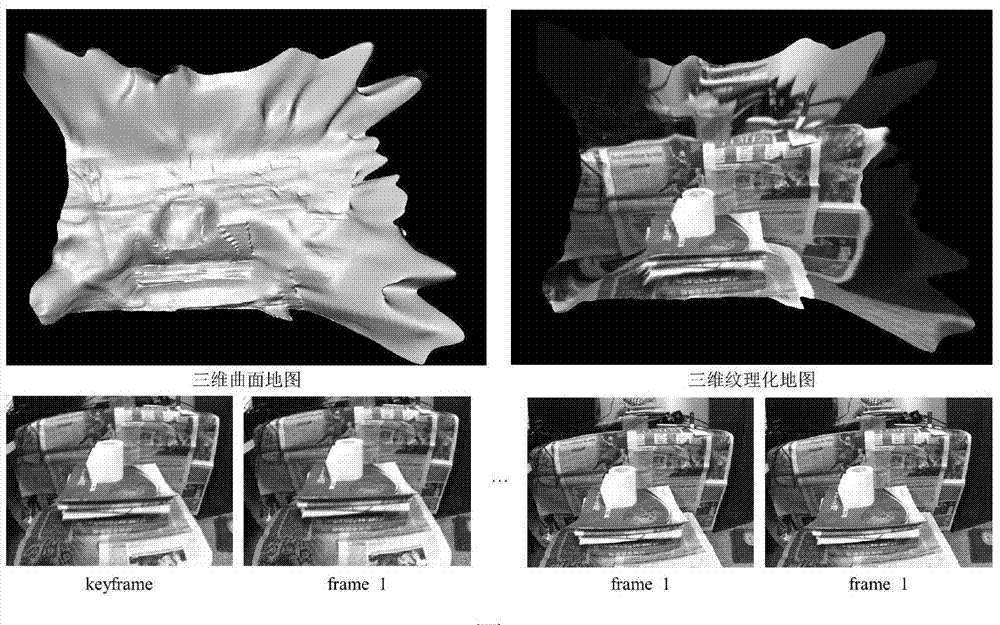

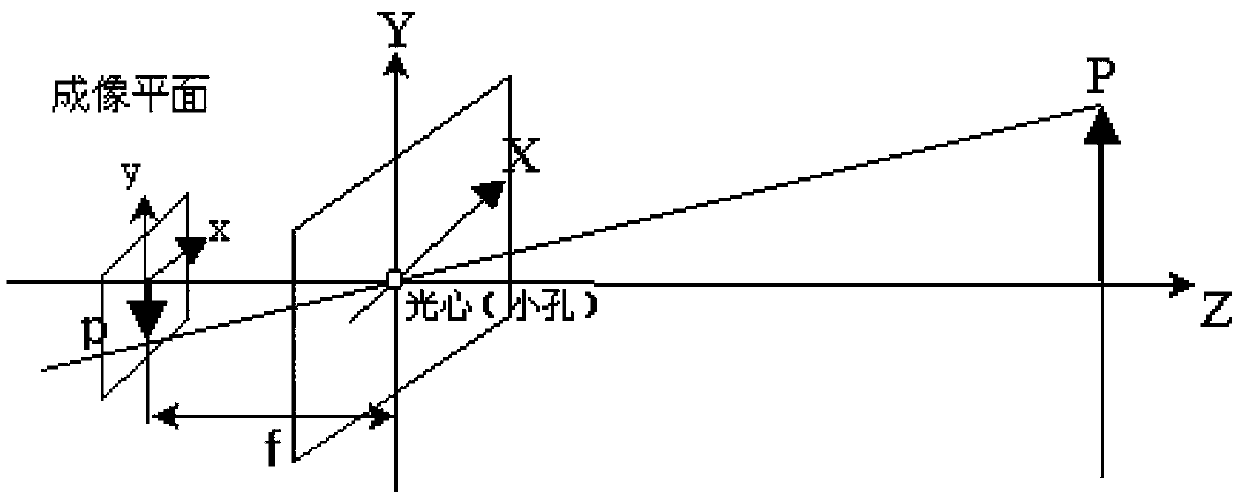

Variational mechanism-based indoor scene three-dimensional reconstruction method

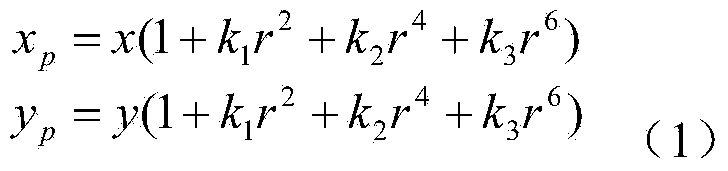

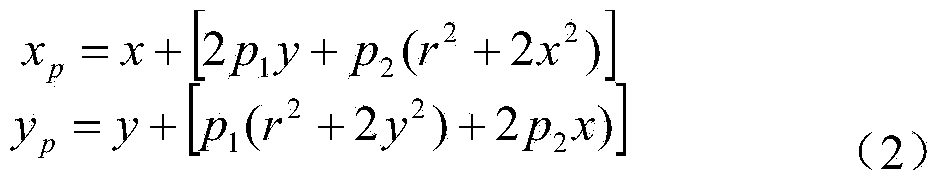

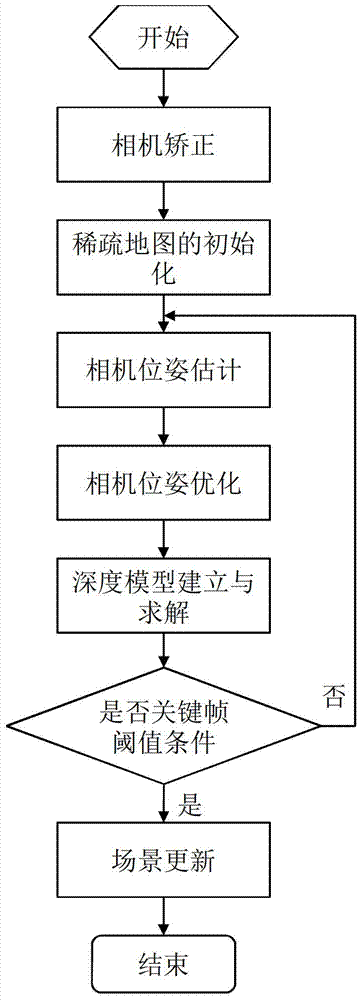

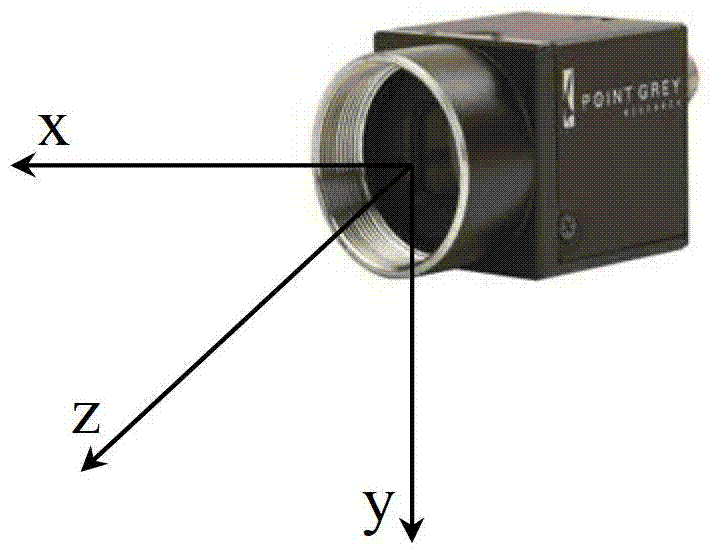

Status: Inactive | Publication: CN103247075A | Tags: Solve the cost; Solve real-time; Image analysis; 3D modelling; Reconstruction method; Reconstruction algorithm

The invention belongs to the intersection of computer vision and intelligent robotics and discloses a variational large-area indoor scene reconstruction method. The method comprises the following steps: step 1, acquiring the calibration parameters of the camera and building a distortion correction model; step 2, building a camera pose representation and a camera projection model; step 3, estimating the camera pose with a monocular SfM (structure from motion) algorithm; step 4, building and solving a variational depth map estimation model; and step 5, building a key frame selection mechanism to update the three-dimensional scene. The invention uses an RGB (red, green, blue) camera to acquire environmental data and, building on a high-precision monocular localization algorithm, proposes a variational depth map generation method, so that large indoor three-dimensional scenes are reconstructed quickly and the cost and real-time performance problems of three-dimensional reconstruction algorithms are effectively addressed.

Owner: BEIJING UNIV OF TECH
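
Steps 1 and 2 of the Beijing University of Technology method combine a distortion model, a camera pose, and a camera projection model. A minimal sketch of how these usually fit together, assuming a pinhole camera with a two-term radial distortion polynomial (coefficients `k1`, `k2`); the abstract does not state which distortion model the patent uses, and the helper names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def world_to_camera(rotation: Sequence[Sequence[float]], translation: Vec3, point: Vec3) -> Vec3:
    """Apply the camera pose: X_cam = R * X_world + t."""
    cam = [sum(rotation[r][k] * point[k] for k in range(3)) + translation[r] for r in range(3)]
    return cam[0], cam[1], cam[2]


def project_point(point_cam: Vec3, fx: float, fy: float, cx: float, cy: float,
                  k1: float = 0.0, k2: float = 0.0) -> Tuple[float, float]:
    """Pinhole projection with a two-term radial distortion polynomial."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    xn, yn = x / z, y / z                  # normalized image coordinates
    r2 = xn * xn + yn * yn
    radial = 1.0 + k1 * r2 + k2 * r2 * r2  # radial distortion factor
    return fx * xn * radial + cx, fy * yn * radial + cy
```

Pose estimation (step 3) then amounts to finding the rotation and translation that make `project_point(world_to_camera(...))` agree with the observed feature positions.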

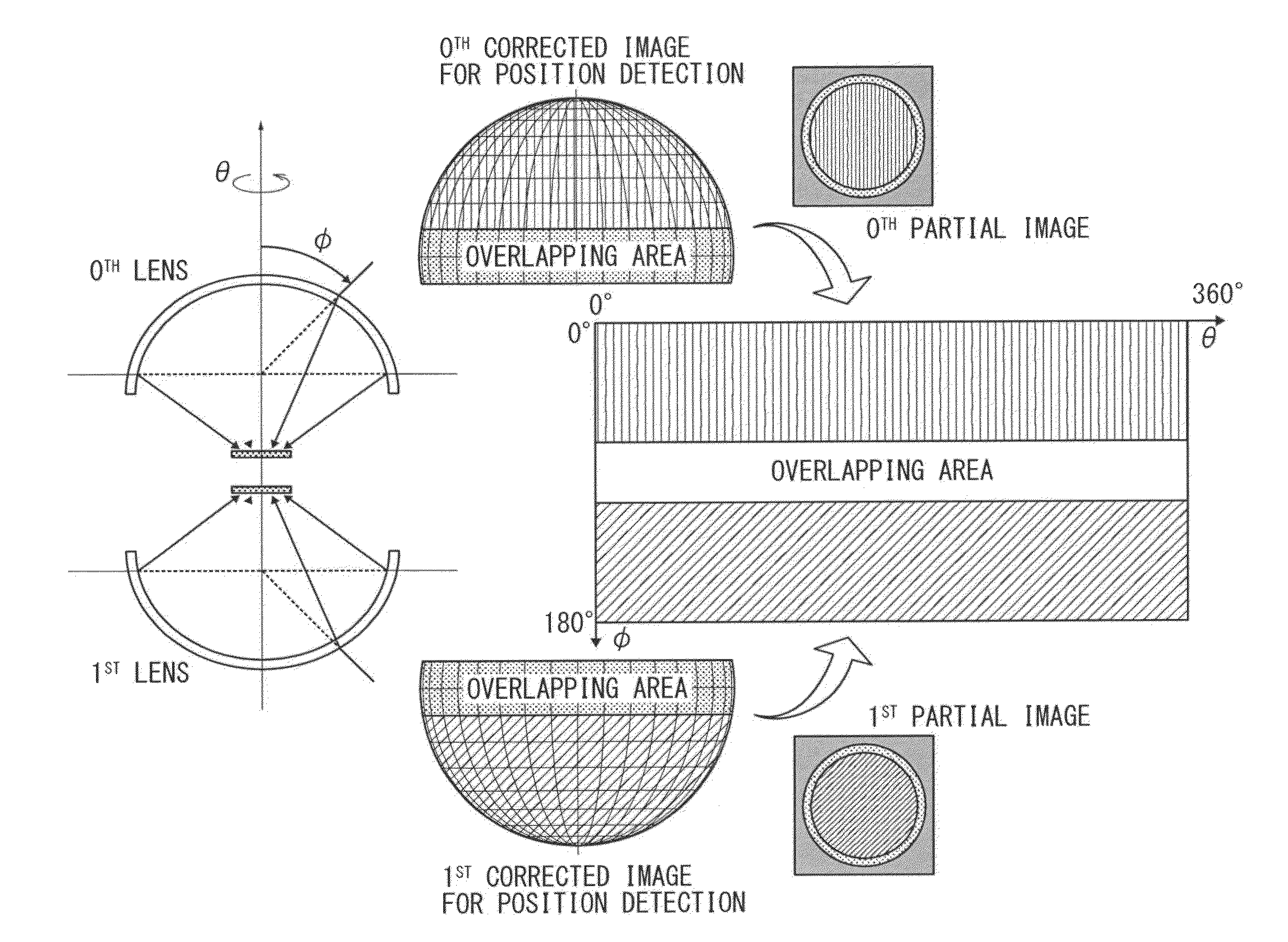

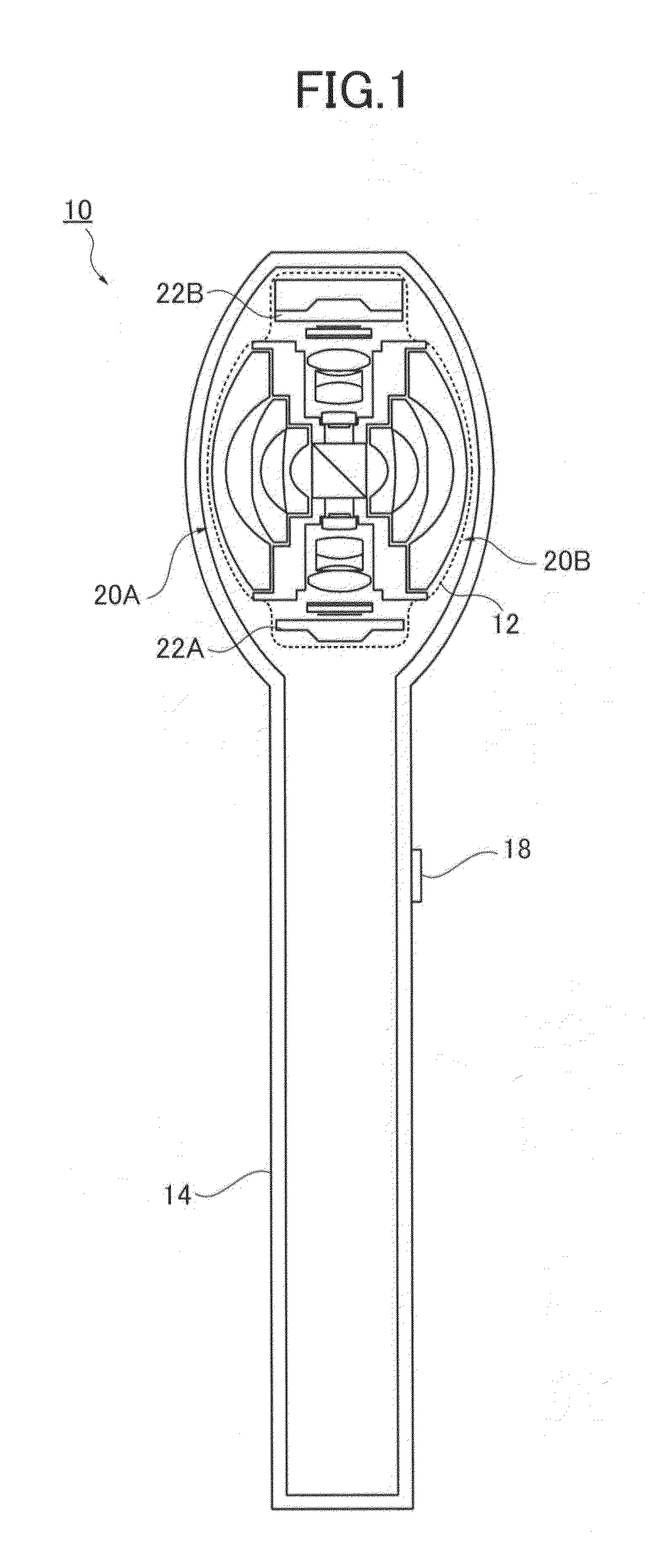

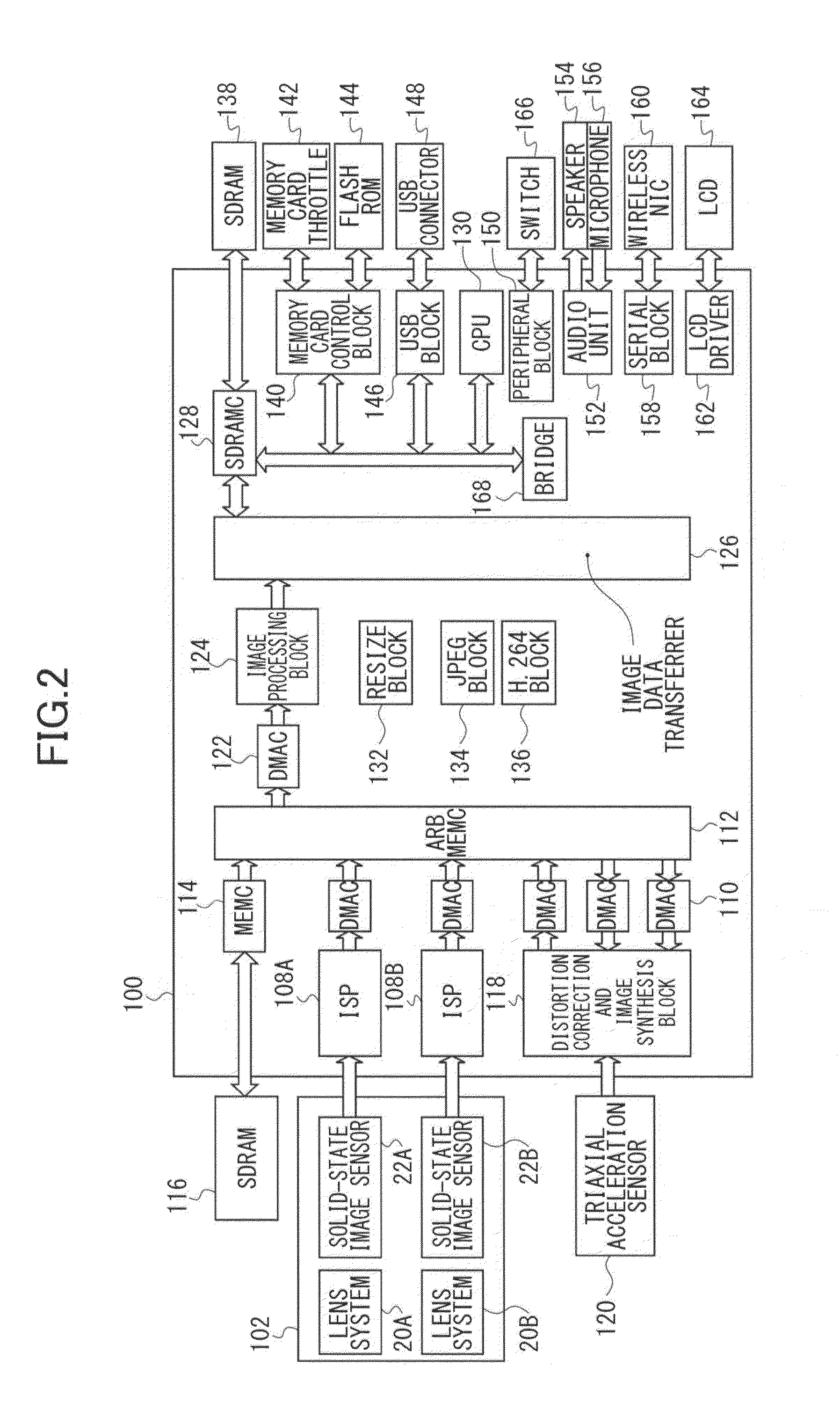

Image processor, image processing method and program, and imaging system

Status: Active | Publication: US20140071227A1 | Tags: Accurate connection; Television system details; Character and pattern recognition; Imaging processing; Computer graphics (images)

An image processor includes a first converter to convert input images into images in a different coordinate system from that of the input images according to first conversion data based on a projection model, a position detector to detect a connecting position of the images converted by the converter, a corrector to correct the first conversion data on the basis of a result of the detection by the position detector, and a data generator to generate second conversion data for image synthesis from the conversion data corrected by the corrector on the basis of coordinate conversion, the second conversion data defining the conversion of the input images.

Owner: RICOH KK

Method for exploring viewpoint and focal length of camera

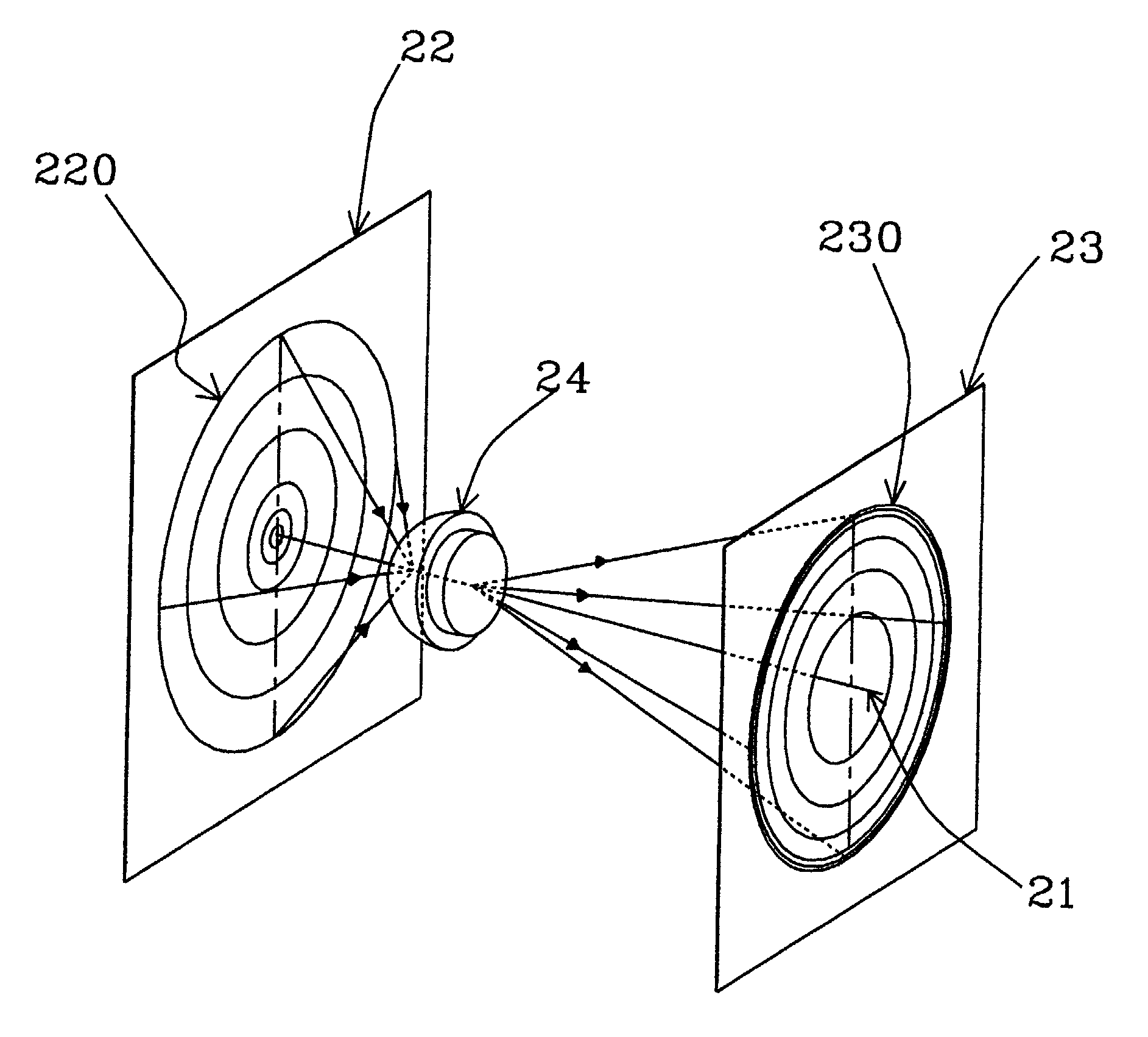

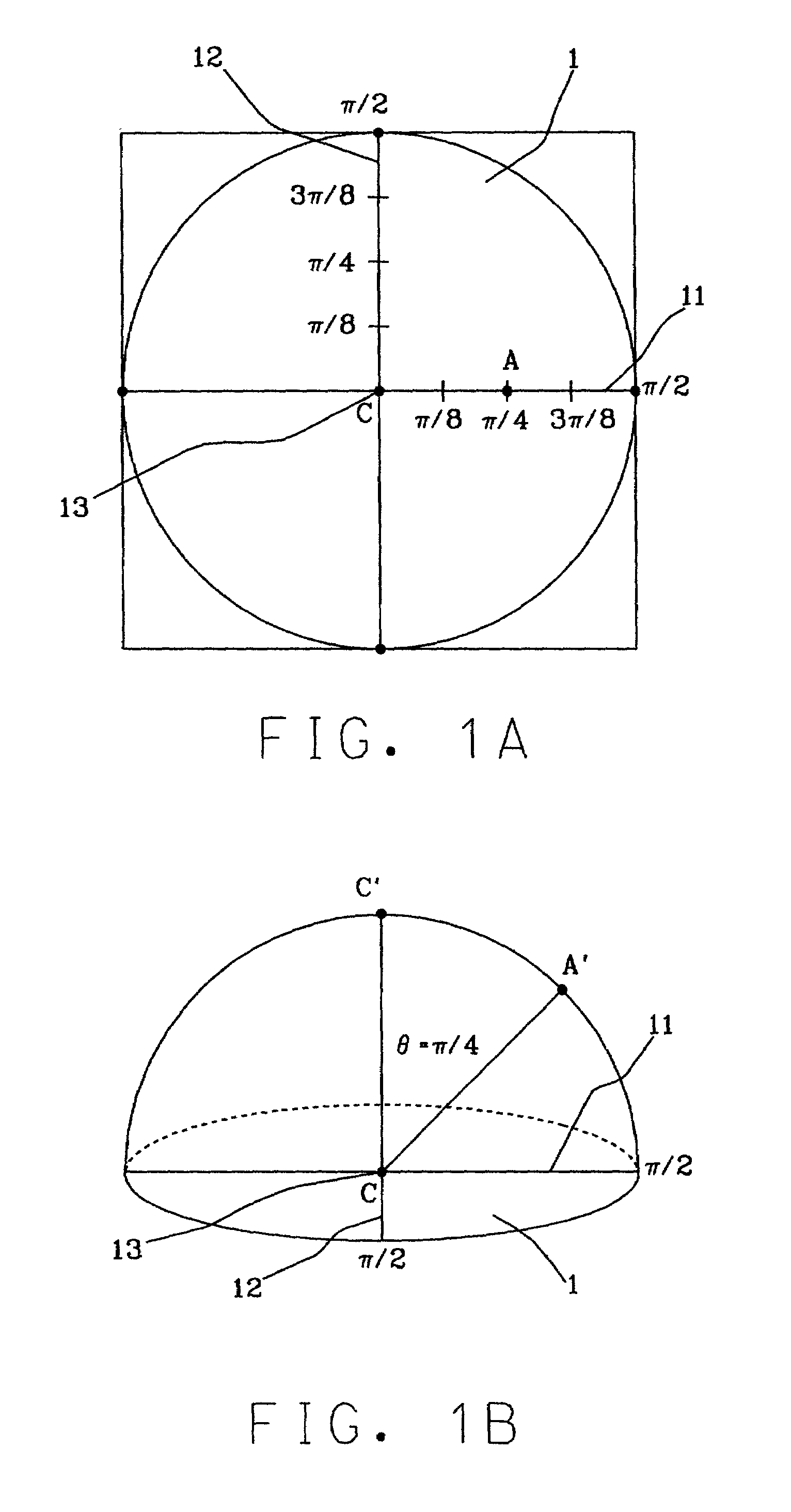

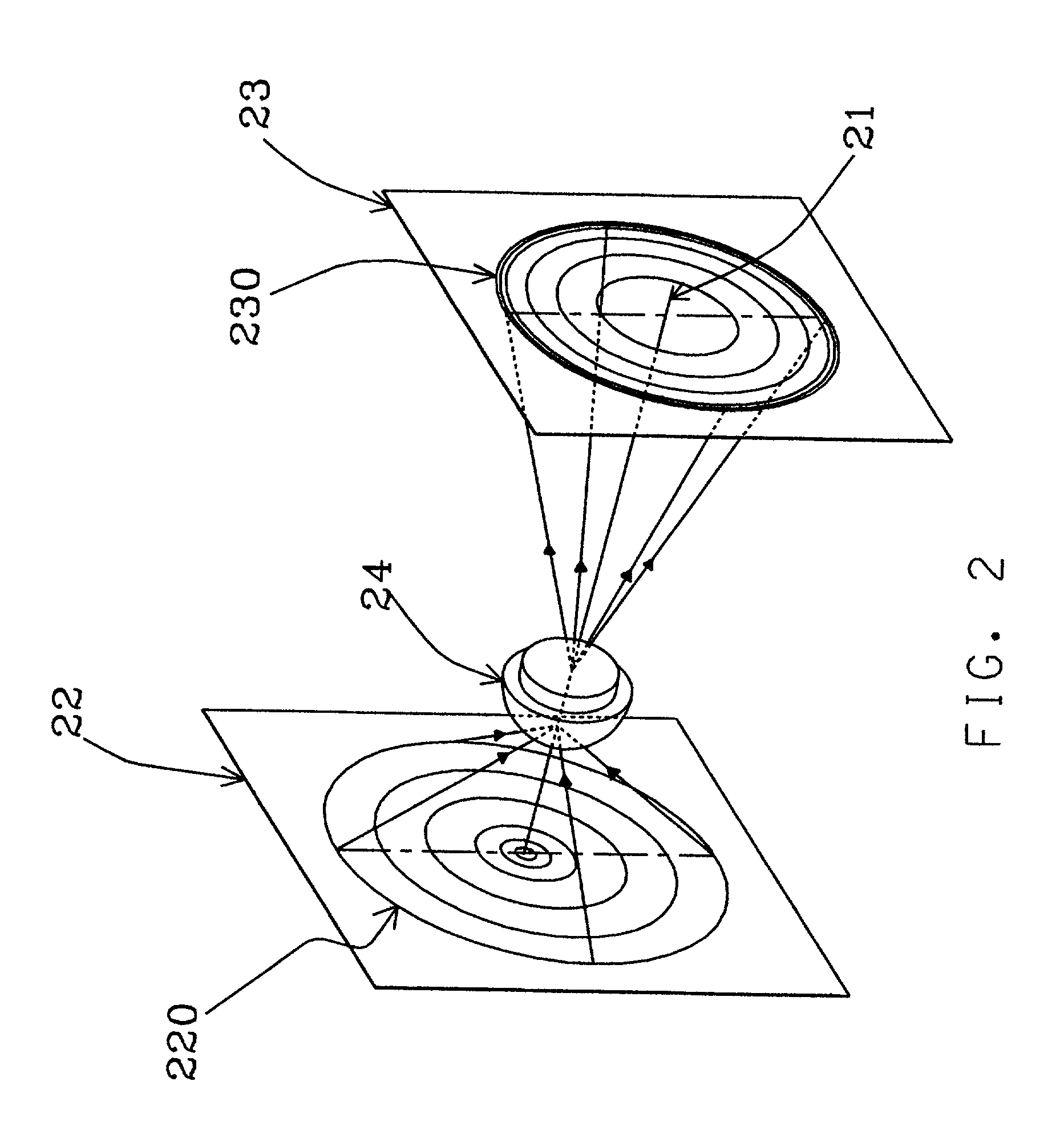

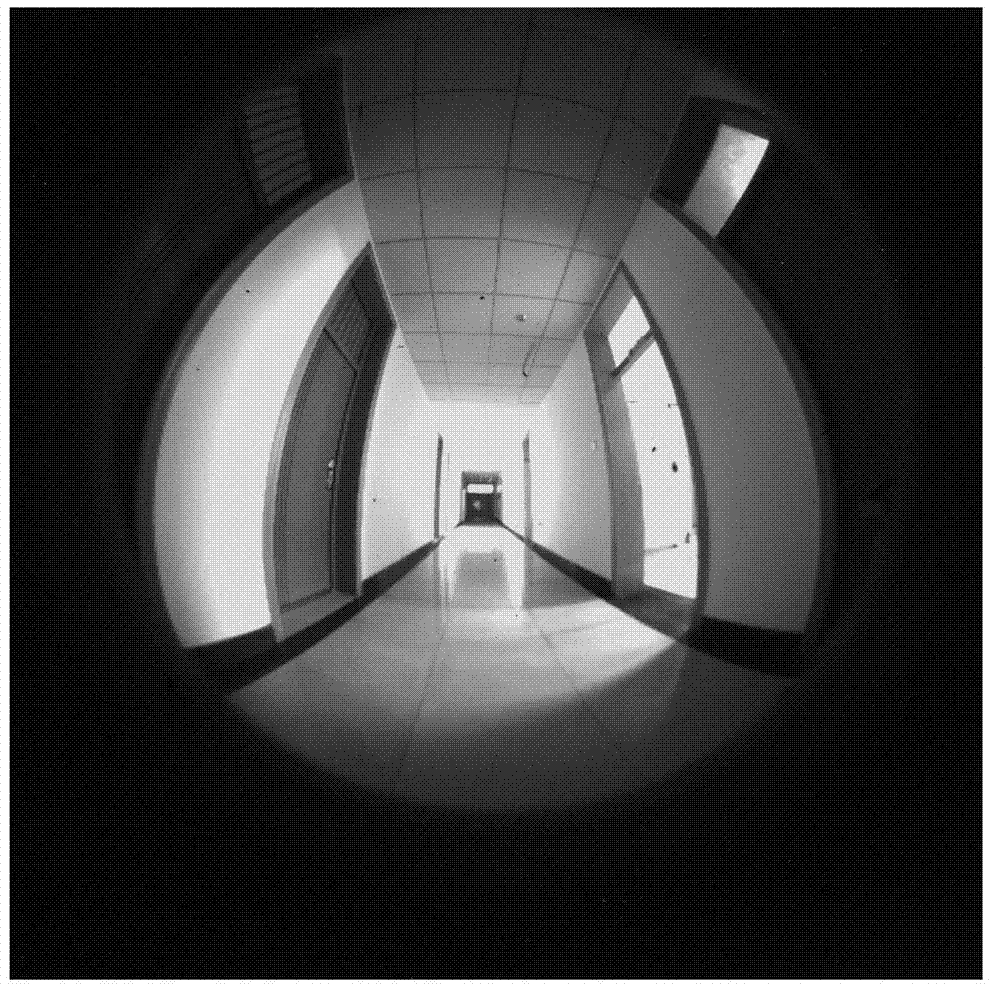

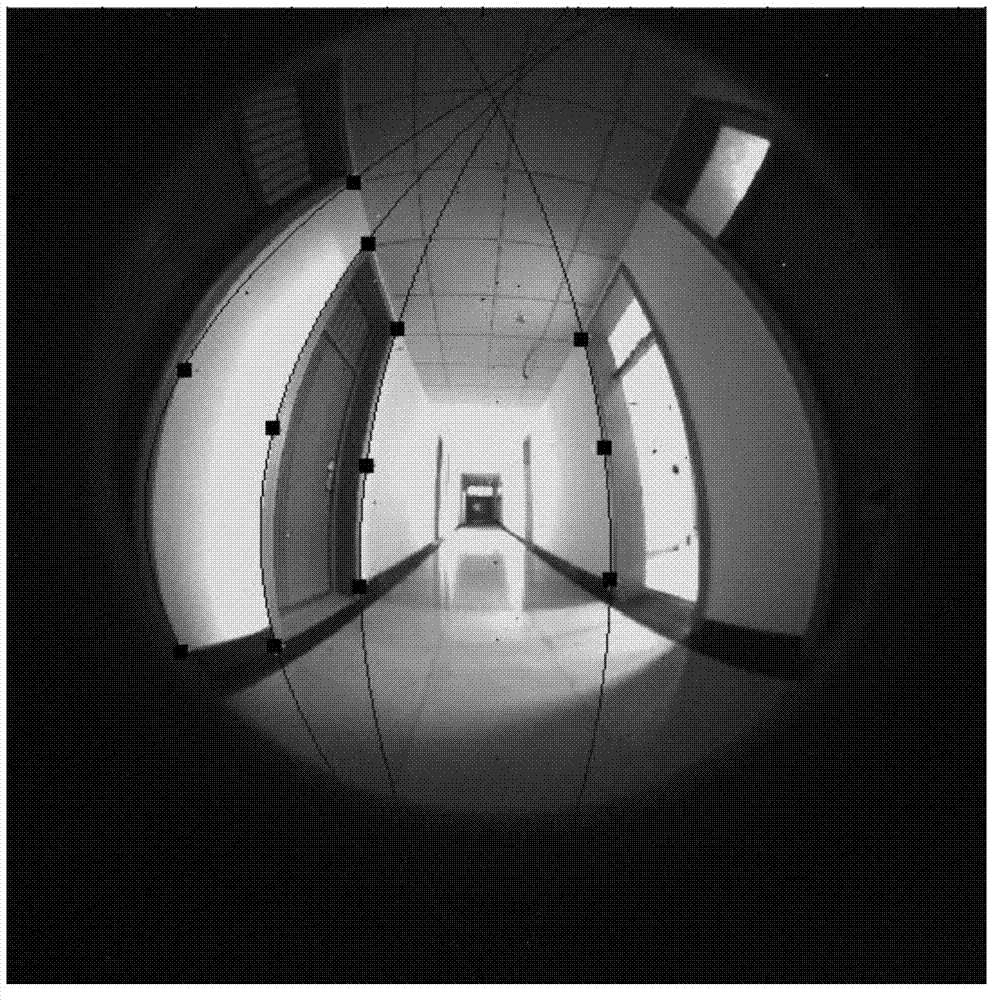

Status: Inactive | Publication: US6985183B2 | Tags: Calibrate-distort; Large viewing angle; Television system details; Telemetry/telecontrol selection arrangements; Viewpoints; Fisheye lens

The present invention is a method for determining the viewpoint and focal length of a fisheye lens camera (FELC). It exploits the central symmetry of fisheye lens (FEL) distortion to locate the optic axis by means of a calibration target carrying a plurality of symmetric concentric figures. Once the optic axis is fixed, the viewpoint (VP) of the FELC is located along the optic axis through a trial-and-error procedure, its effective focal length is calculated, and the lens is classified into one of the primitive projection models. Because the invention can determine both the internal and external parameters of the FELC, and the calibration method is easy, low-cost, applicable to any projection model, and becomes more sensitive as image distortion increases, distorted images can easily be transformed into normal images that conform to central perspective projection. The invention is also well suited to quality identification of fisheye lenses and to wide-view 3-D metering.

Owner: APPRO TECH
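
The APPRO TECH method ends by classifying the lens into its projection model after finding the effective focal length. A minimal sketch of such a classification, assuming the incidence angle θ and image radius r of each calibration point are already known (for example, once the optic axis and viewpoint have been located). The five candidate models and the least-squares criterion are our assumptions, not details from the patent.

```python
import math
from typing import Callable, Dict, List, Tuple

# Radial mapping r = f * g(theta) for common lens models (theta = incidence angle).
PROJECTION_MODELS: Dict[str, Callable[[float], float]] = {
    "perspective": math.tan,
    "equidistant": lambda t: t,
    "equisolid": lambda t: 2.0 * math.sin(t / 2.0),
    "orthographic": math.sin,
    "stereographic": lambda t: 2.0 * math.tan(t / 2.0),
}


def fit_focal(g: Callable[[float], float], samples: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares focal length for r = f * g(theta), plus the RMS residual."""
    f = sum(g(t) * r for t, r in samples) / sum(g(t) ** 2 for t, _ in samples)
    rms = math.sqrt(sum((r - f * g(t)) ** 2 for t, r in samples) / len(samples))
    return f, rms


def classify_projection(samples: List[Tuple[float, float]]) -> Tuple[str, float, float]:
    """Return (model name, focal length, RMS residual) of the best-fitting model."""
    best = ("", 0.0, math.inf)
    for name, g in PROJECTION_MODELS.items():
        f, rms = fit_focal(g, samples)
        if rms < best[2]:
            best = (name, f, rms)
    return best
```

Samples should span a wide range of angles: near the optic axis all five models reduce to r ≈ fθ and cannot be told apart.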

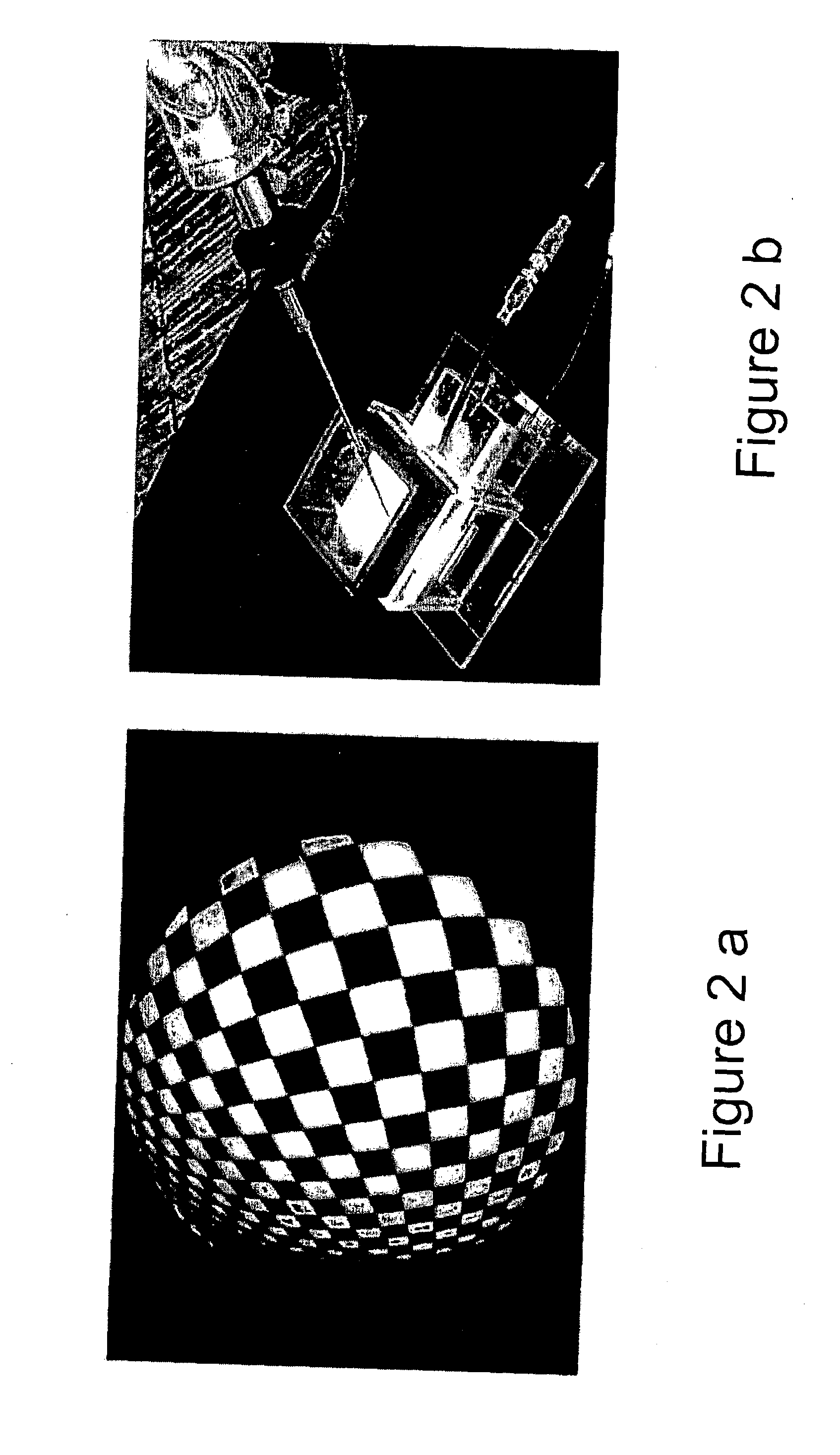

Method and apparatus for automatic camera calibration using one or more images of a checkerboard pattern

Status: Active | Publication: US20140285676A1 | Tags: Accurate imaging; Practical implementation; Television system details; Image enhancement; Imaging quality; Checkerboard pattern

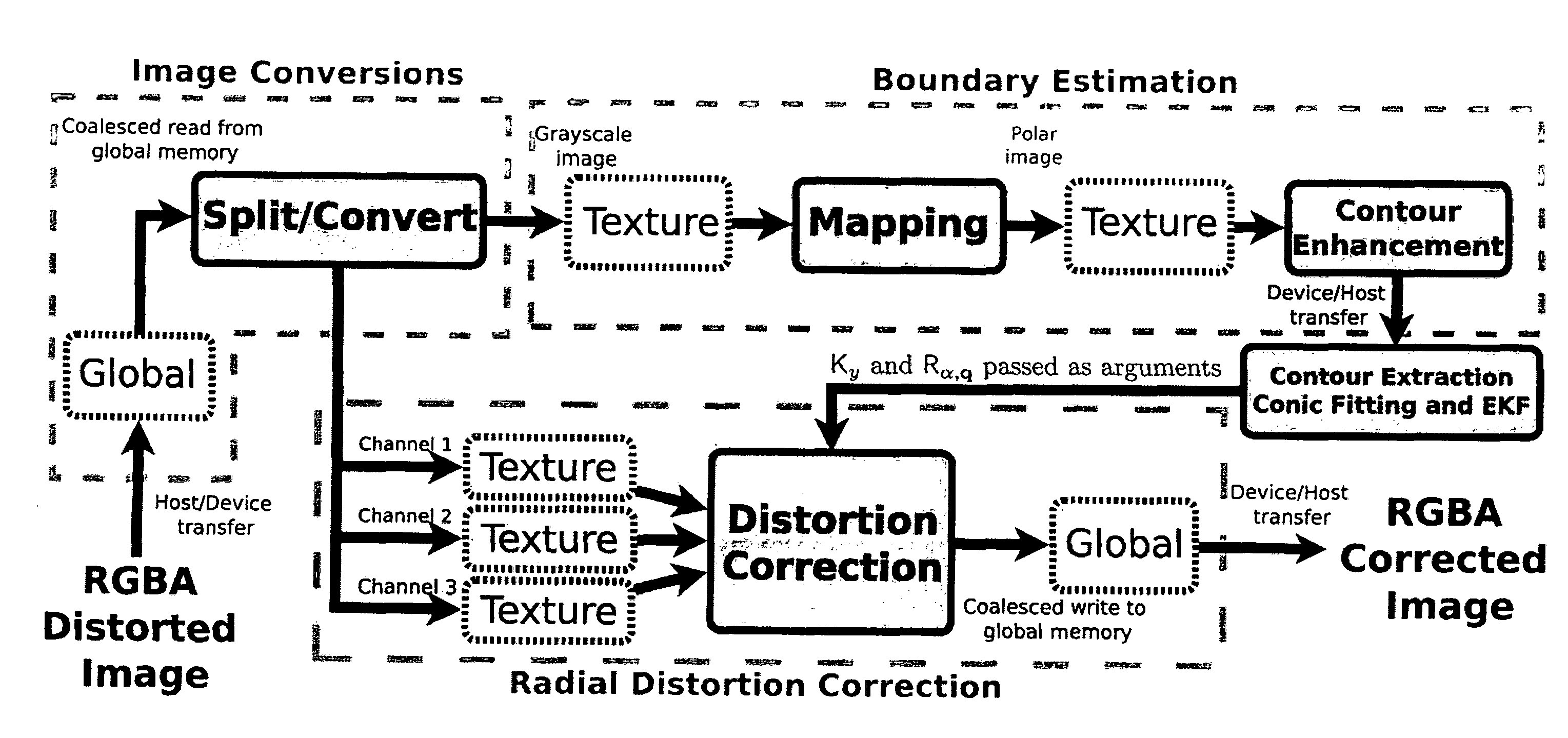

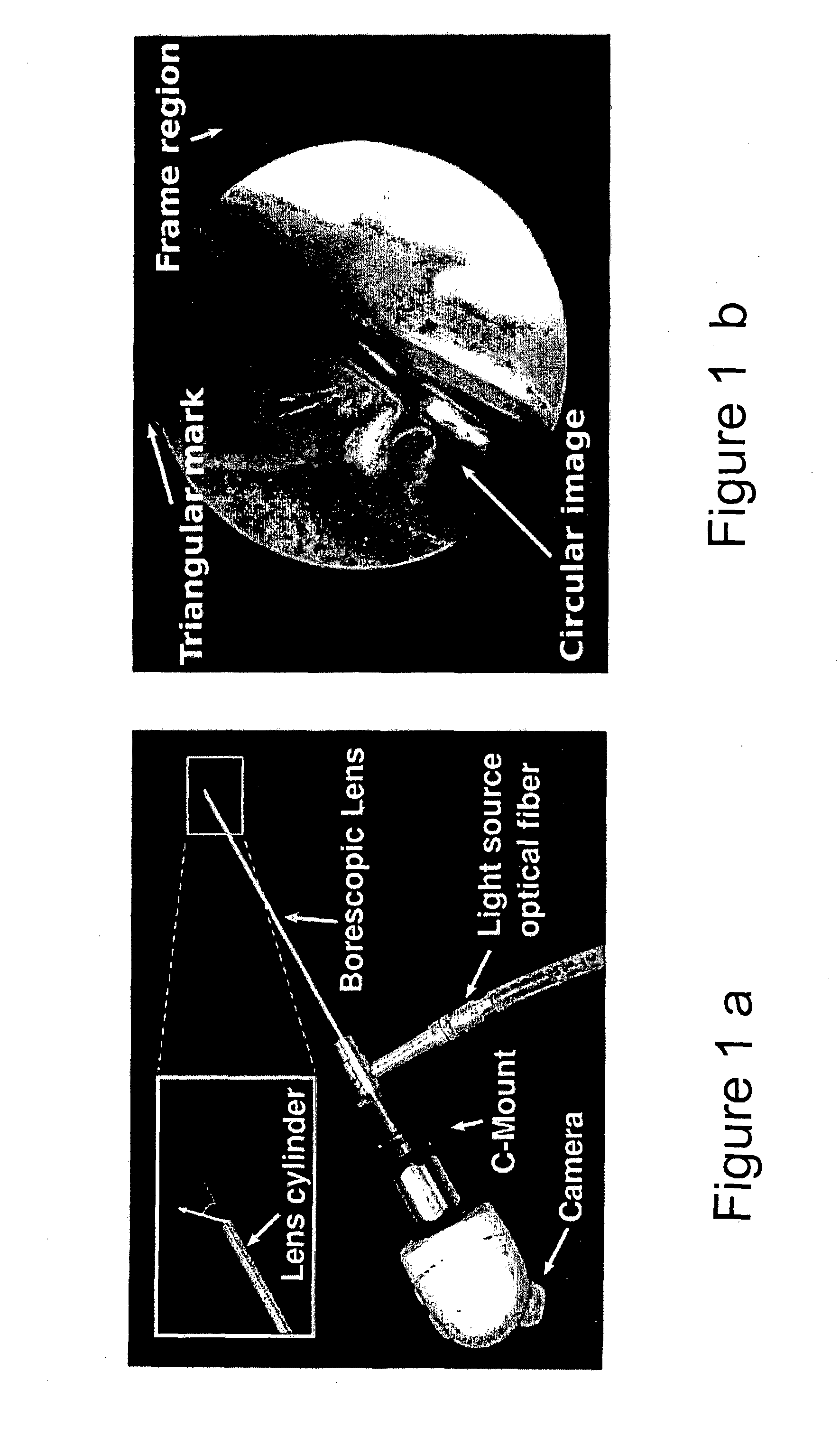

The present invention relates to a high-precision method, model, and apparatus for calibrating a camera, determining the rotation of the lens scope around its symmetry axis, updating the projection model accordingly, and correcting radial image distortion in real time using parallel processing for the best image quality. The solution relies on a complete geometric calibration of optical devices, such as cameras commonly used in medicine and in industry in general, followed by real-time rendering of perspective-correct images. The calibration consists of determining the parameters of a suitable mapping function that assigns each pixel to the 3D direction of the corresponding incident light. The practical implementation is straightforward: the camera needs to capture only a single view of a readily available calibration target, which may be mounted inside a specially designed calibration apparatus, and a computer-implemented processing pipeline runs in real time using the parallel execution capabilities of the computational platform.

Owner: UNIVE DE COIMBRA
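
The Coimbra abstract describes calibration as estimating "a suitable mapping function that assigns each pixel to the 3D direction of the corresponding incident light", without naming the function. As an illustration only, the sketch below assumes a first-order division model for radial distortion, applied in focal-length-normalized coordinates; the parameter `xi` and the function name are hypothetical.

```python
import math
from typing import Tuple


def pixel_to_ray(u: float, v: float, f: float, cx: float, cy: float, xi: float) -> Tuple[float, float, float]:
    """Map a distorted pixel to a unit 3-D viewing direction.

    Assumes a first-order division model, x_u = x_d / (1 + xi * r_d^2),
    in focal-length-normalized coordinates (xi < 0 gives barrel distortion).
    """
    xd, yd = (u - cx) / f, (v - cy) / f
    scale = 1.0 / (1.0 + xi * (xd * xd + yd * yd))  # undo radial distortion
    x, y = xd * scale, yd * scale
    norm = math.sqrt(x * x + y * y + 1.0)
    return x / norm, y / norm, 1.0 / norm
```

Because every pixel is handled independently, a mapping of this kind parallelizes trivially, which fits the real-time parallel pipeline the abstract describes.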

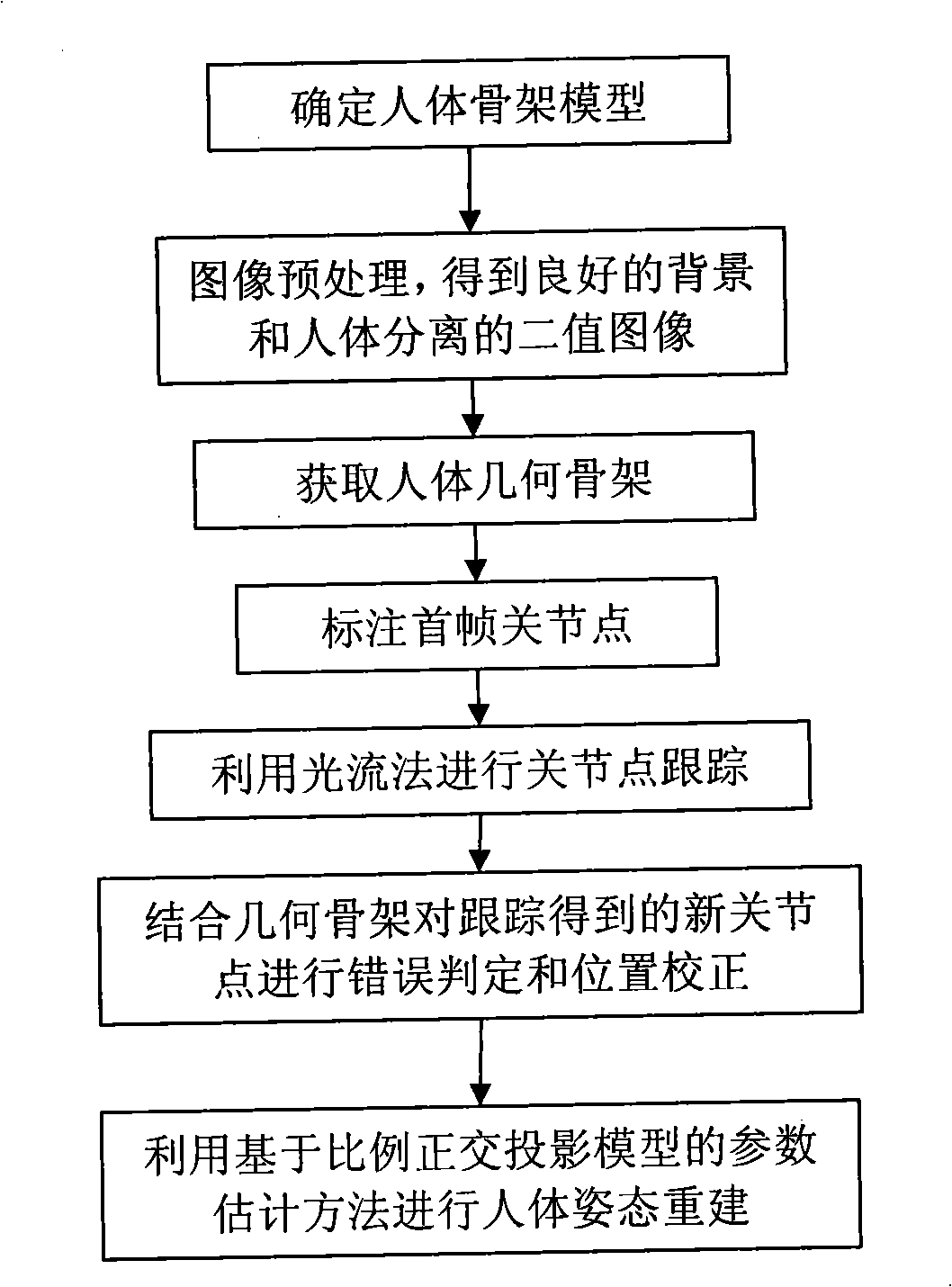

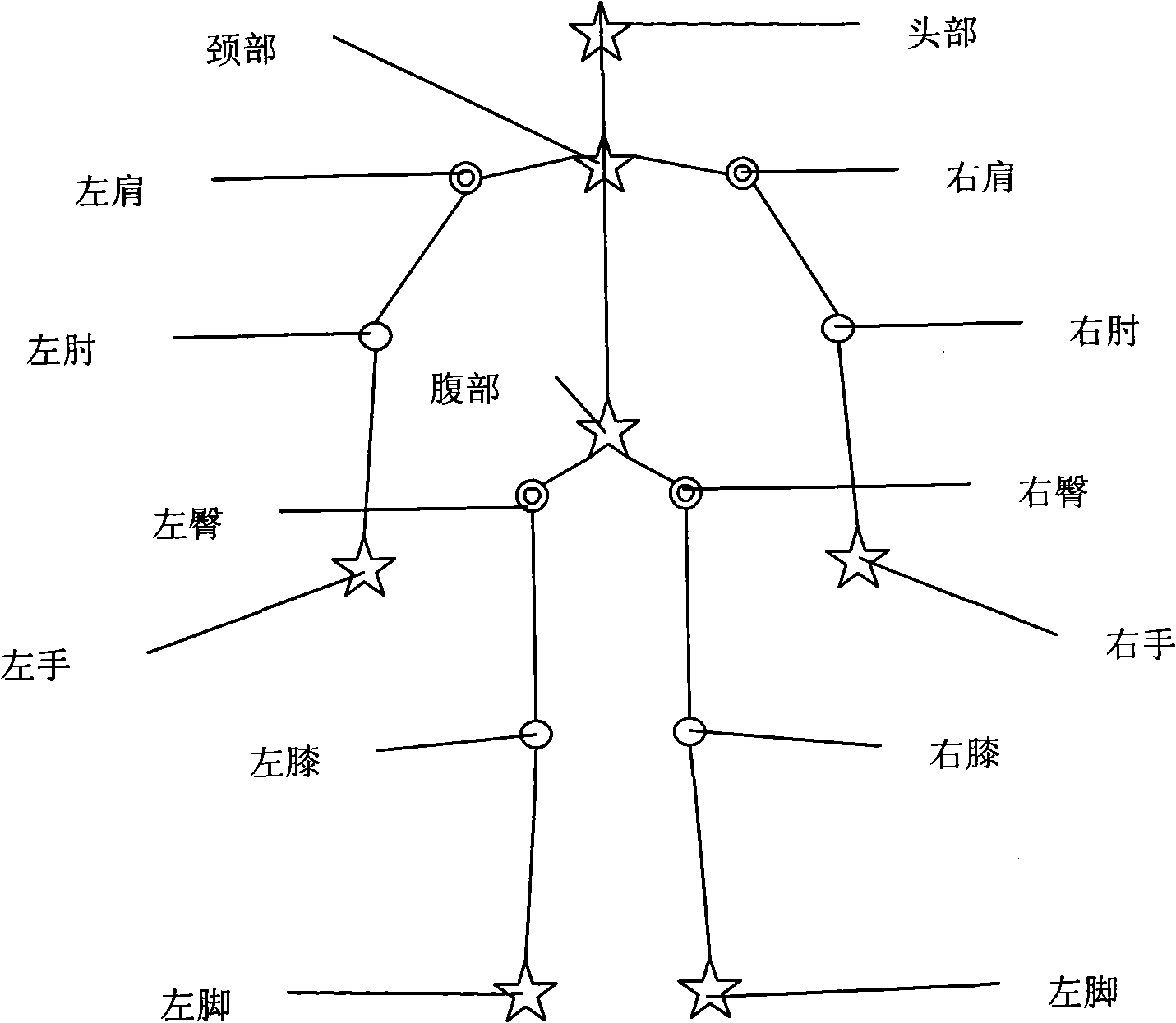

Human body posture reconstruction method based on geometry backbone

Status: Inactive | Publication: CN101246602A | Tags: Improve accuracy; Improve operational efficiency; Character and pattern recognition; Diagnostic recording/measuring; Human body; NODAL

The present invention provides a human posture reconstruction method based on a geometric skeleton, comprising: determining a human skeleton model; obtaining a 3D human skeleton model; pre-processing the image; obtaining a binary image that separates the human from the background; extracting the human geometric skeleton; obtaining a linear geometric skeleton; labelling the joint points in the start frame; snapping the manually labelled joint points onto the geometric skeleton to reduce the initial error; tracking the joint points with an optical flow method to obtain their new positions; performing error checking and position correction on the tracked joint points using the geometric skeleton; correcting the lines computed by the optical flow method; and reconstructing the human posture by converting the two-dimensional joint coordinates into the 3D skeleton model through parameter estimation under a scaled orthographic projection model. The invention improves the accuracy of posture reconstruction, achieves high processing efficiency, and enables stable and effective human posture reconstruction in intelligent video surveillance systems.

Owner: DONGHUA UNIV
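
The Donghua method lifts 2-D joints to the 3-D skeleton under a scaled orthographic ("proportion orthogonal") projection model, but the abstract does not give the estimation step. A minimal sketch of the textbook relation, assuming known bone lengths and a known scale `s` (u = s·X, v = s·Y): the depth difference along a bone is ±sqrt(L² − (Δu² + Δv²)/s²). The sign of each bone is not observable under this model, so the sketch takes it as input; all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def relative_depth(p1: Tuple[float, float], p2: Tuple[float, float], bone_length: float, scale: float) -> float:
    """|Z1 - Z2| for a bone of known length under u = s*X, v = s*Y."""
    du = (p1[0] - p2[0]) / scale
    dv = (p1[1] - p2[1]) / scale
    dz2 = bone_length ** 2 - (du * du + dv * dv)
    return math.sqrt(max(dz2, 0.0))  # clamp: noise can make the 2-D bone too long


def reconstruct_chain(joints_2d: Sequence[Tuple[float, float]], bone_lengths: Sequence[float],
                      scale: float, signs: Sequence[int]) -> List[Tuple[float, float, float]]:
    """Lift a kinematic chain of 2-D joints to 3-D.

    signs[i] = +1 or -1 picks which joint of bone i is farther from the camera;
    scaled orthography cannot resolve this, so it must come from elsewhere.
    """
    x0, y0 = joints_2d[0]
    joints_3d = [(x0 / scale, y0 / scale, 0.0)]  # root joint at depth 0
    for i, (length, sign) in enumerate(zip(bone_lengths, signs)):
        dz = relative_depth(joints_2d[i], joints_2d[i + 1], length, scale)
        u, v = joints_2d[i + 1]
        joints_3d.append((u / scale, v / scale, joints_3d[-1][2] + sign * dz))
    return joints_3d
```

A common way to choose `s` is the largest ratio of 2-D bone length to true bone length over all bones, which keeps every square root real.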

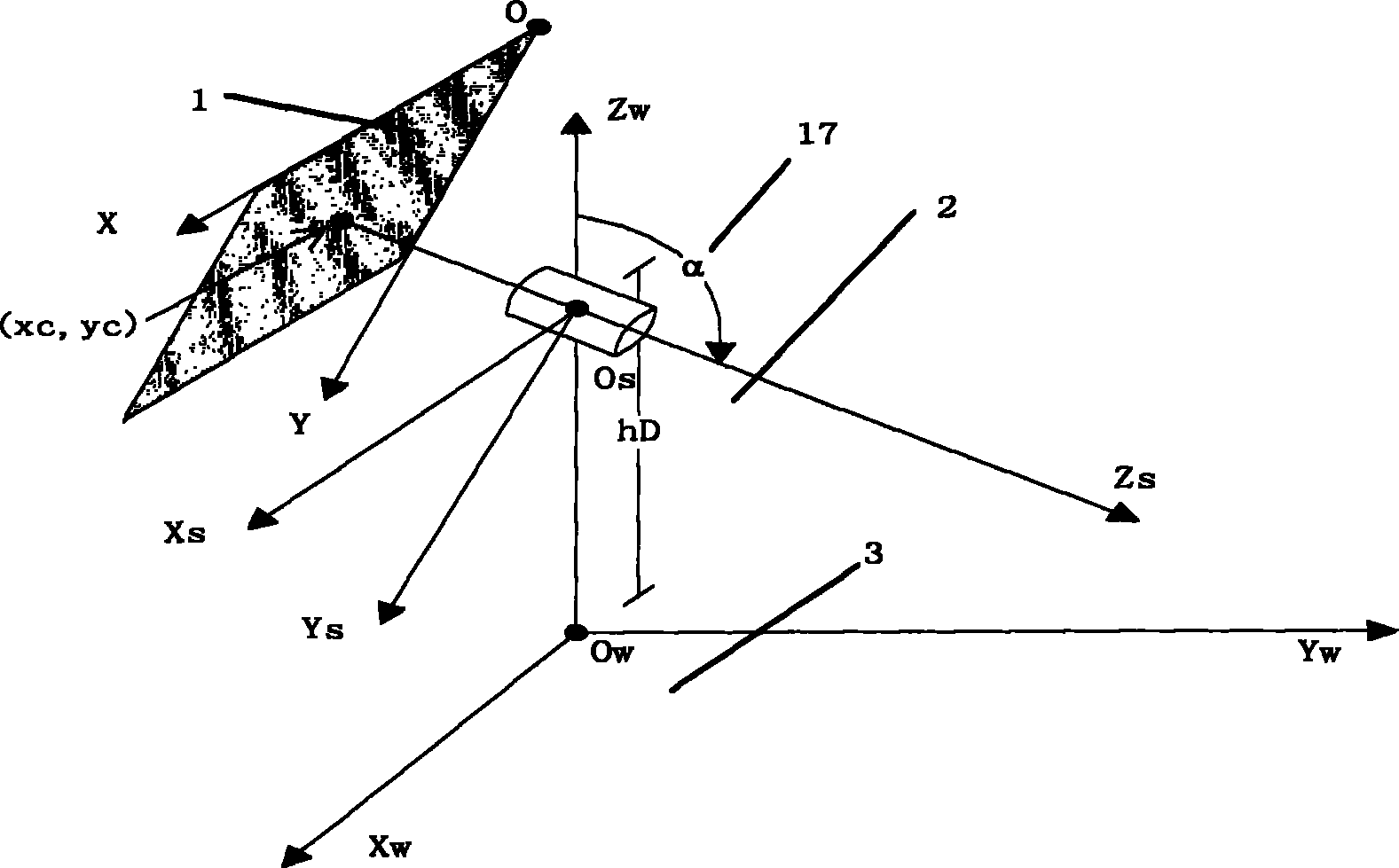

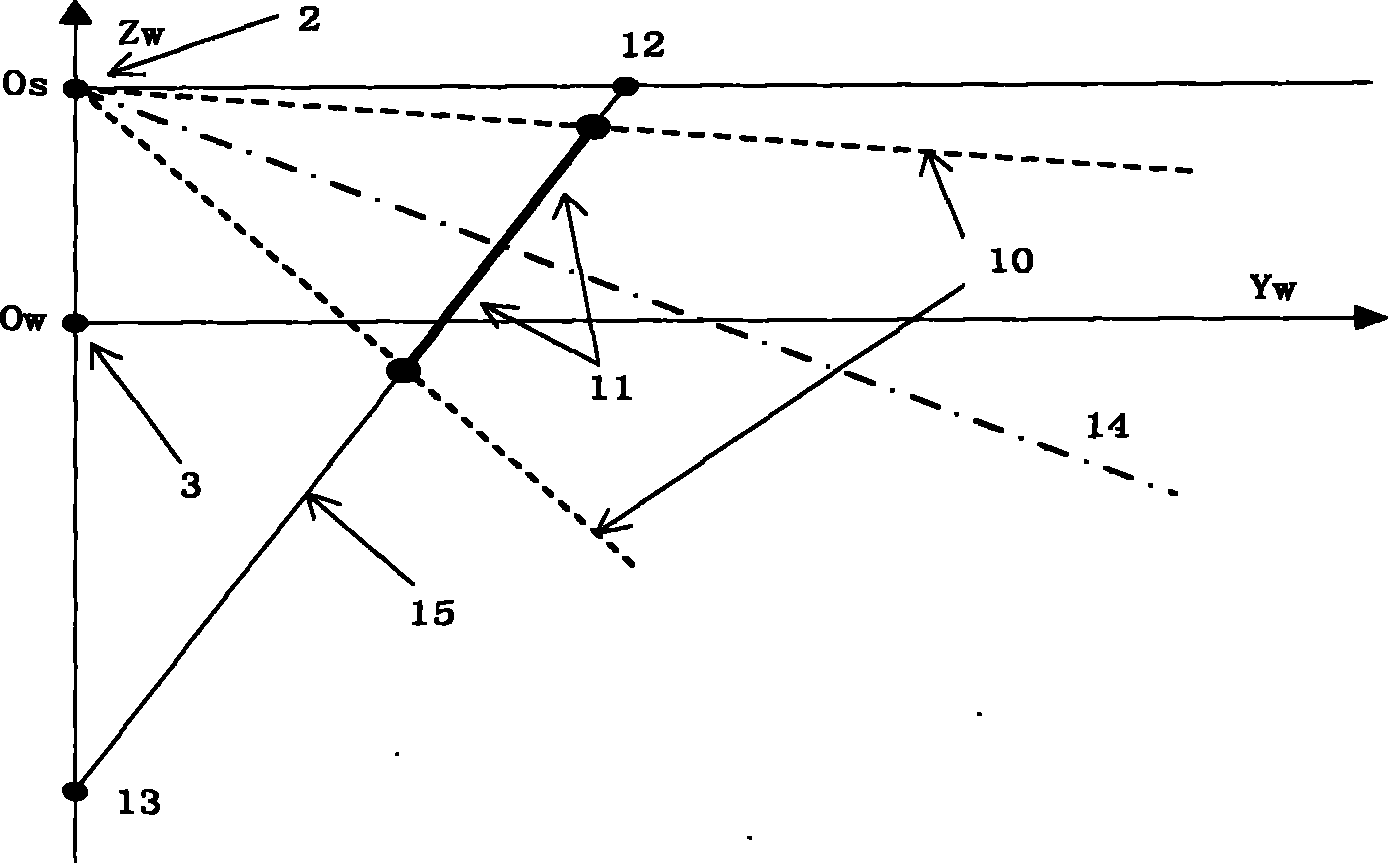

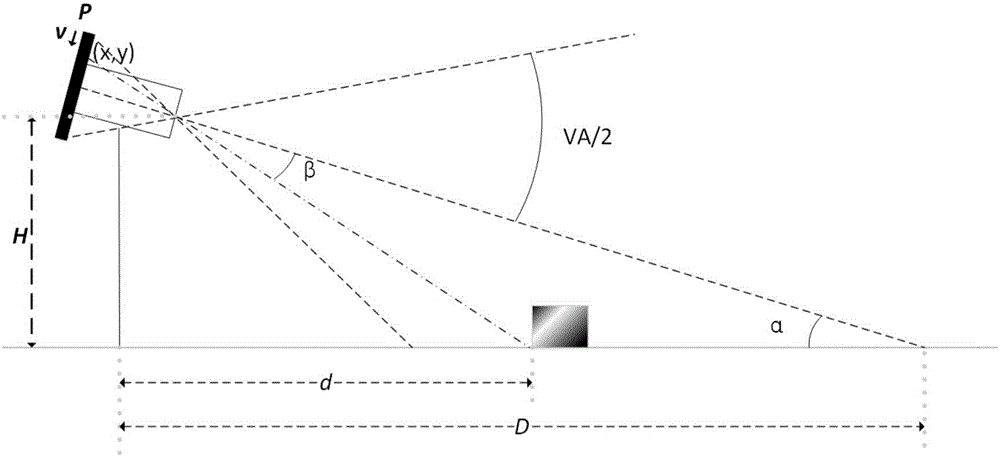

Method for calibrating external parameters of monitoring camera by adopting reference height

Status: Inactive | Publication: CN102103747A | Tags: Meet application needs; Guaranteed calibration measurement accuracy; Image analysis; Terrain; Horizon

The invention discloses a method for calibrating the external parameters of a surveillance camera using a reference height, which comprises the following steps: describing a vision model based on the far vanishing point and the lower vanishing point, and establishing the related coordinate systems; calculating the projected image-plane coordinates of the far and lower vanishing points from reference plumb-line information; calculating the camera height, overhead view angle, and magnification factor by calibration against the reference height; designing a horizon inclination calibration tool based on the reference plumb direction; and designing and using three-dimensional measurement software based on a perspective projection model, together with three measurement tools: an equal-height change scale, a ground-plane distance scale, and a depth-of-field reconstruction frame. The calibration method is simple to operate, fast to compute, and highly accurate. The reference can be a pedestrian, a piece of furniture, or an automobile, and no special ground marking is needed. The camera can be mounted low, and may even point slightly upward, as long as the bottom of the reference is clearly visible in the video and the ground-plane coordinate system is well defined.

Owner: INST OF ELECTRONICS CHINESE ACAD OF SCI
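
The reference-height idea can be seen in its simplest special case. The sketch below assumes a level pinhole camera (horizontal optical axis, no roll), which the patent does not require. In that case a ground point at depth Z images at row v_h + f·H_c/Z and the top of a reference of height H at v_h + f·(H_c − H)/Z, so f and Z cancel and H_c = H·(v_b − v_h)/(v_b − v_t). The function names are hypothetical.

```python
def camera_height(ref_height: float, v_bottom: float, v_top: float, v_horizon: float) -> float:
    """Camera height from a vertical reference object of known height.

    Assumes a level pinhole camera (horizontal optical axis, no roll) with image
    rows increasing downward; v_horizon is the row of the horizon line.
    """
    if v_bottom <= v_top:
        raise ValueError("the top of the reference must appear above its base")
    return ref_height * (v_bottom - v_horizon) / (v_bottom - v_top)


def object_height(cam_height: float, v_bottom: float, v_top: float, v_horizon: float) -> float:
    """Inverse relation: height of another object standing on the ground plane."""
    if v_bottom <= v_horizon:
        raise ValueError("the base of the object must appear below the horizon")
    return cam_height * (v_bottom - v_top) / (v_bottom - v_horizon)
```

For example, a 1.7 m pedestrian whose feet are 300 rows below the horizon and who spans 170 rows implies a camera height of 3.0 m.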

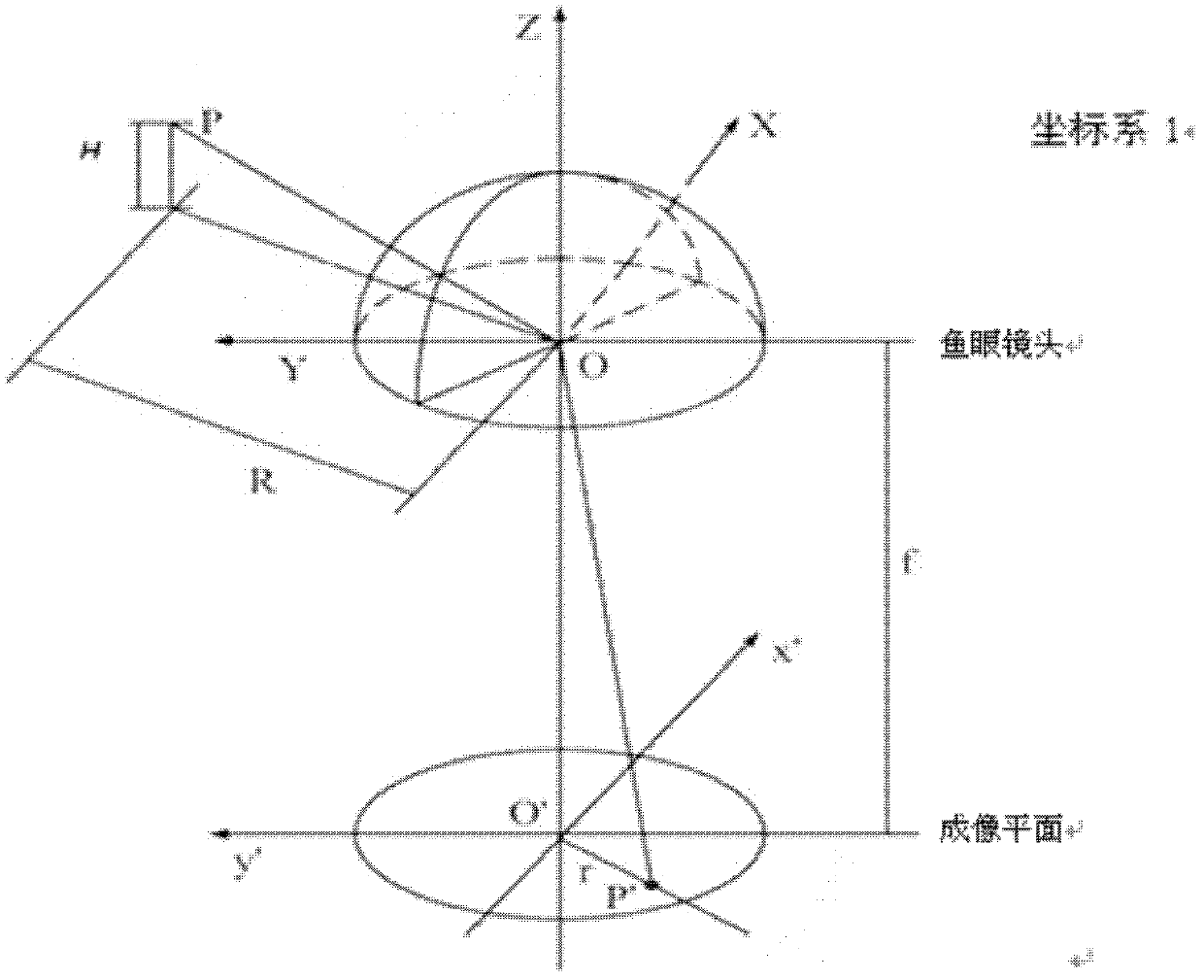

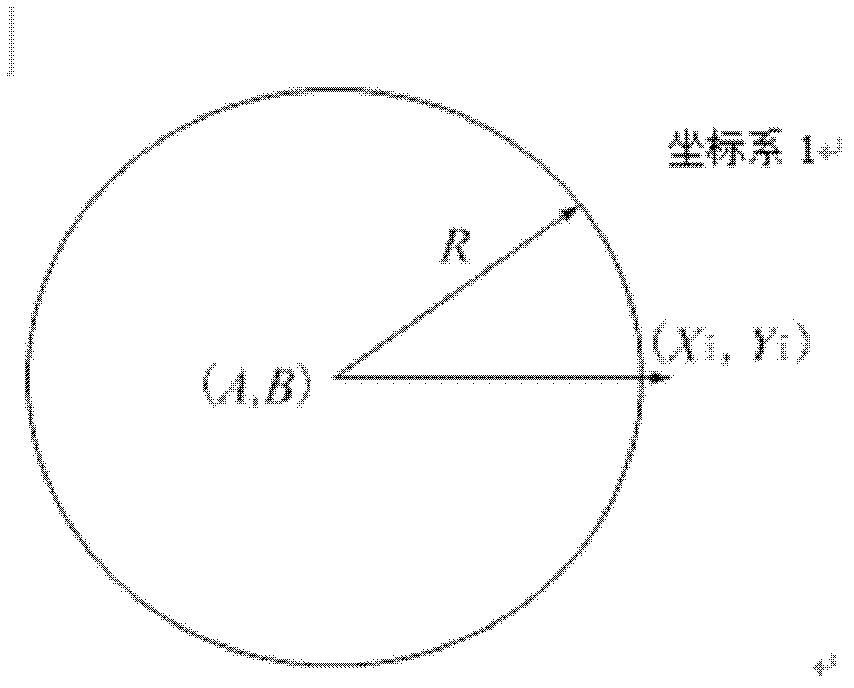

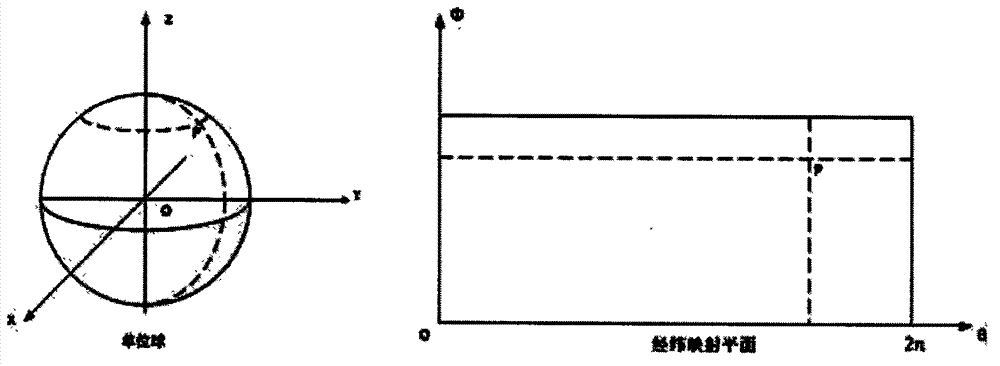

Fish eye lens calibration and fish eye image distortion correction method

Status: Inactive | Publication: CN102663734A | Tags: Quick correction; Promote recovery; Image enhancement; Image analysis; Digital signal processing; Vertical projection

The invention relates to a fisheye lens calibration and fisheye image distortion correction method in the field of digital image processing, well suited to applications such as visual navigation and mobile surveillance. Because wide-angle imaging causes severe distortion, the image usually needs to be corrected for human viewing. The main technical features of the method are: establishing a space coordinate system 1 and calibrating the fisheye image with a least-squares curve-fitting method to determine the center and radius of the fisheye lens image; establishing a cylindrical projection model in a space coordinate system UV; and setting up one-to-one relationships among an image point q2 of the fisheye image, a point q1 on the object sphere, and a point q projected on the cylinder, where q1 and q2 are related by vertical projection onto the base plane containing q2 and line q1q is parallel to line oq2, thereby correcting the fisheye image.

Owner: TIANJIN UNIVERSITY OF TECHNOLOGY
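
The Tianjin method determines the center and radius of the circular fisheye image by least-squares curve fitting. A minimal sketch, assuming boundary points of the circular image area have already been extracted (for example, by thresholding). It uses the algebraic (Kasa) circle fit, solved with Cramer's rule so it needs only the standard library; the choice of this particular fit is ours.

```python
import math
from typing import Sequence, Tuple


def _det3(m: Sequence[Sequence[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_circle(points: Sequence[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Algebraic (Kasa) least-squares circle fit: x^2 + y^2 + D*x + E*y + F = 0."""
    if len(points) < 3:
        raise ValueError("need at least three points")
    ata = [[0.0] * 3 for _ in range(3)]  # normal matrix A^T A
    atb = [0.0] * 3                      # right-hand side A^T b
    for x, y in points:
        row, rhs = (x, y, 1.0), -(x * x + y * y)
        for i in range(3):
            atb[i] += row[i] * rhs
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    det = _det3(ata)
    if abs(det) < 1e-12:
        raise ValueError("points are degenerate (e.g. collinear)")
    coeffs = []
    for k in range(3):  # Cramer's rule: replace column k with the right-hand side
        mk = [r[:] for r in ata]
        for i in range(3):
            mk[i][k] = atb[i]
        coeffs.append(_det3(mk) / det)
    d, e, f = coeffs
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)
```

The algebraic fit is linear and fast; a geometric (orthogonal-distance) refinement can follow if the boundary points are noisy or cover only part of the circle.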

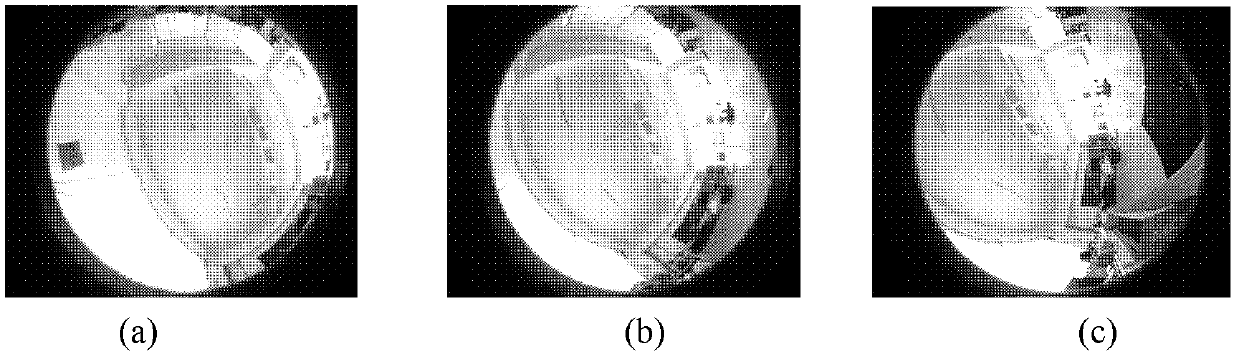

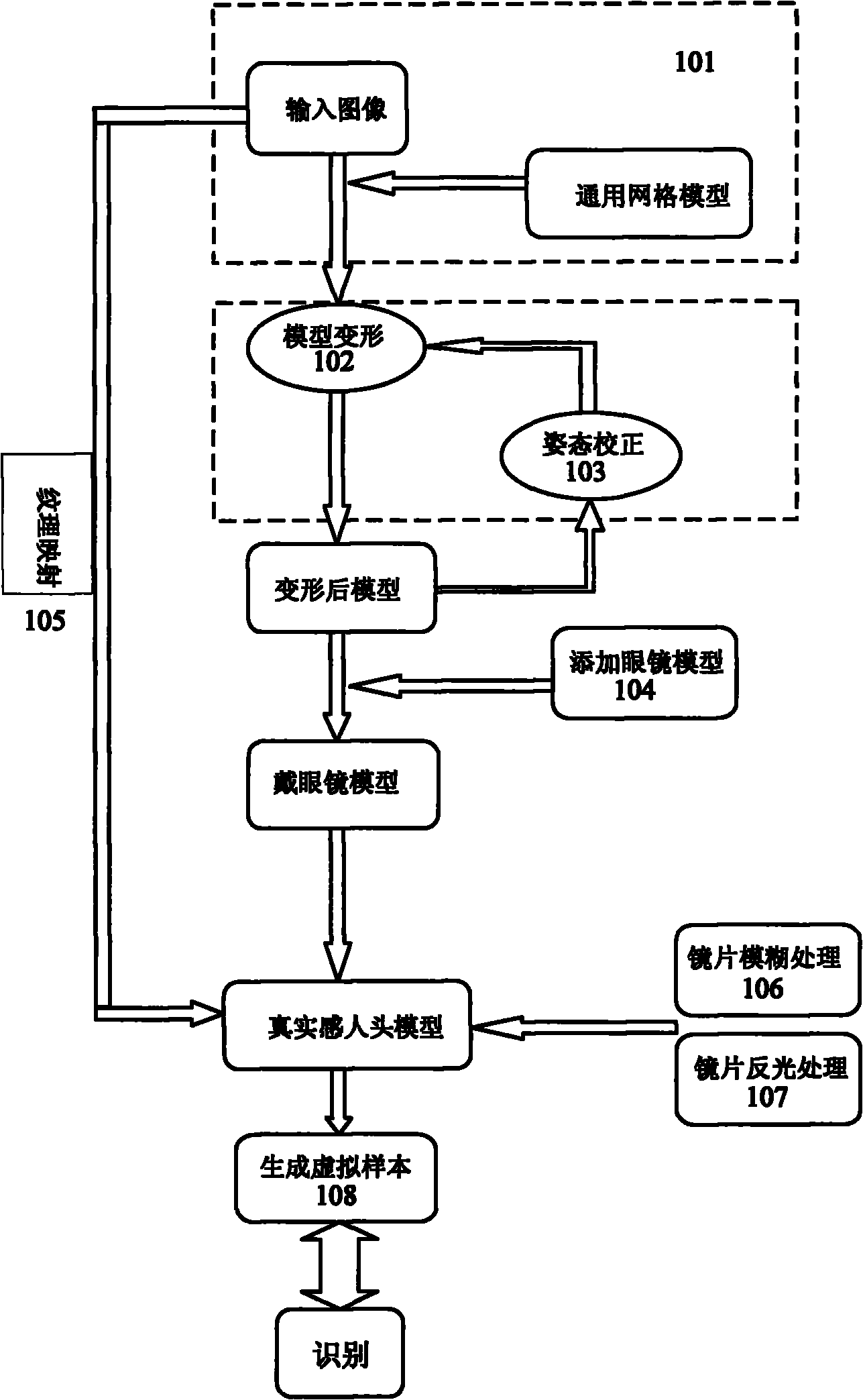

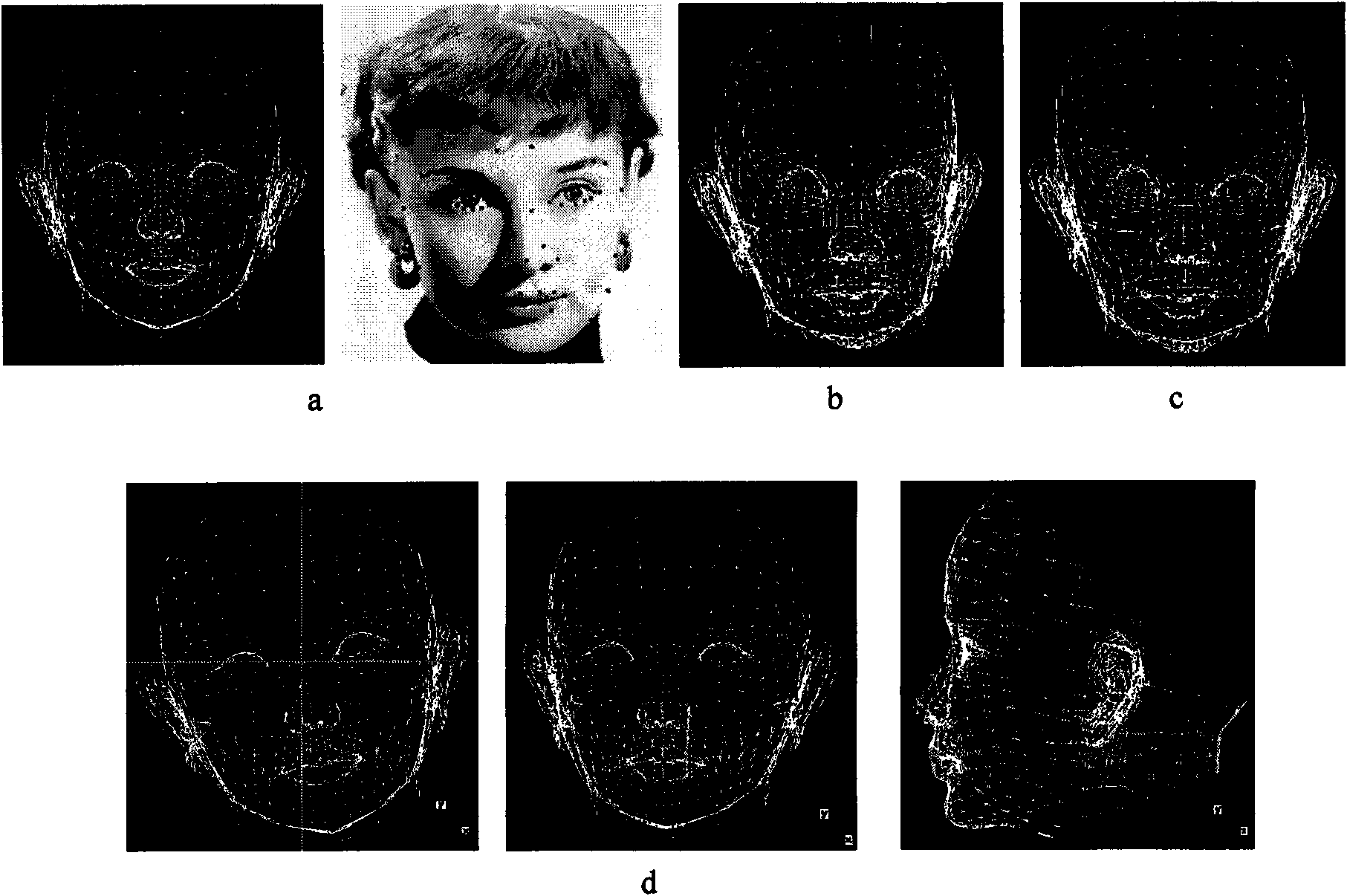

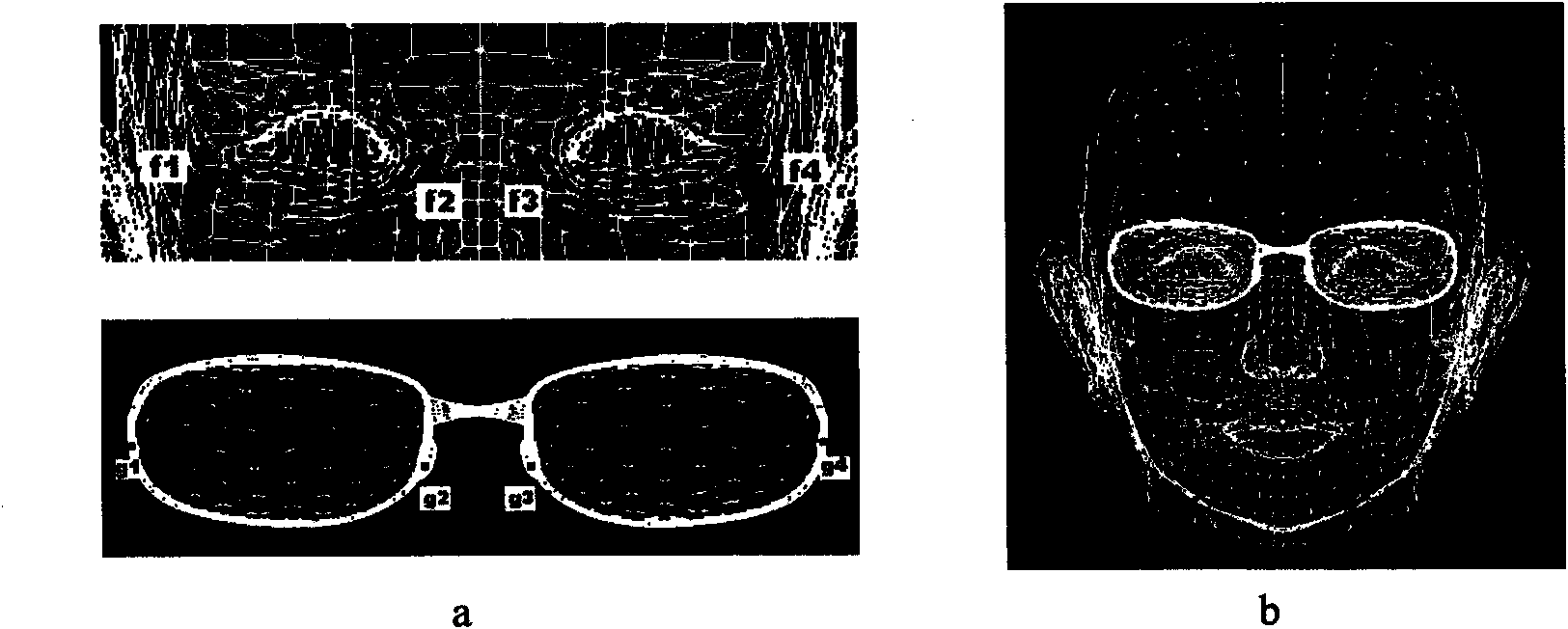

Method and system for identifying faces shaded by eyeglasses

Status: Active | Publication: CN102034079A | Tags: Improve rebuild efficiency; No loss; Character and pattern recognition; Eyewear; Virtual sample

The invention discloses a method for recognizing faces occluded by eyeglasses, which comprises the following steps: inputting an image of a face without eyeglasses and a generic face model, acquiring corresponding points on the face image and the face model, and transforming the coordinates of all vertices into the same coordinate system; processing the model to obtain a pose-corrected frontal head model; adding an eyeglass model to the head model and mapping textures onto it; applying blur and reflection effects to the eyeglass lenses; and generating, with a projection model, virtual samples of the face wearing eyeglasses under different conditions. The invention also provides a corresponding face recognition system. The method and system cover a wide simulation range, are highly general, greatly improve recognition performance, run in real time, are highly practical, achieve a high recognition rate, can accommodate many eyeglass variations, and are fast and easy to operate.

Owner: HANVON CORP

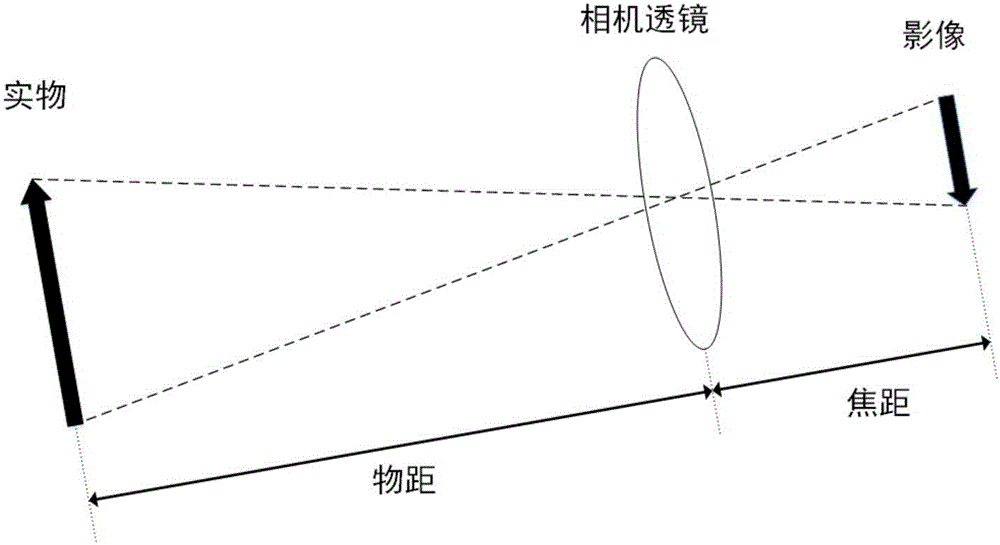

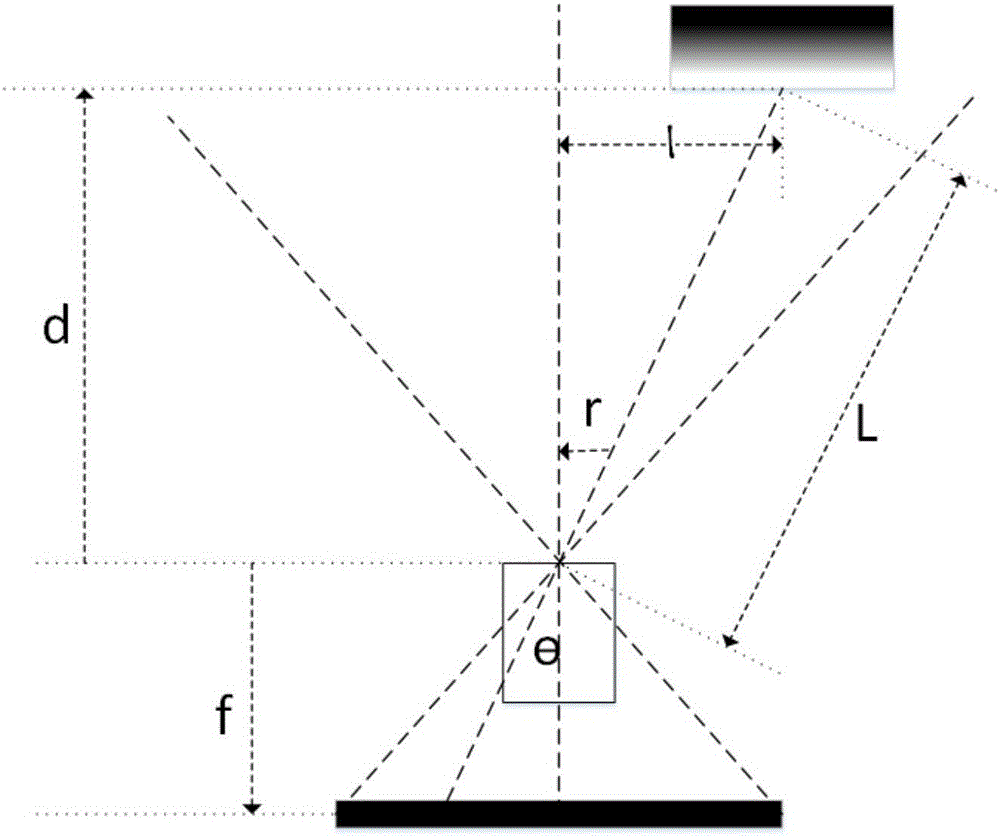

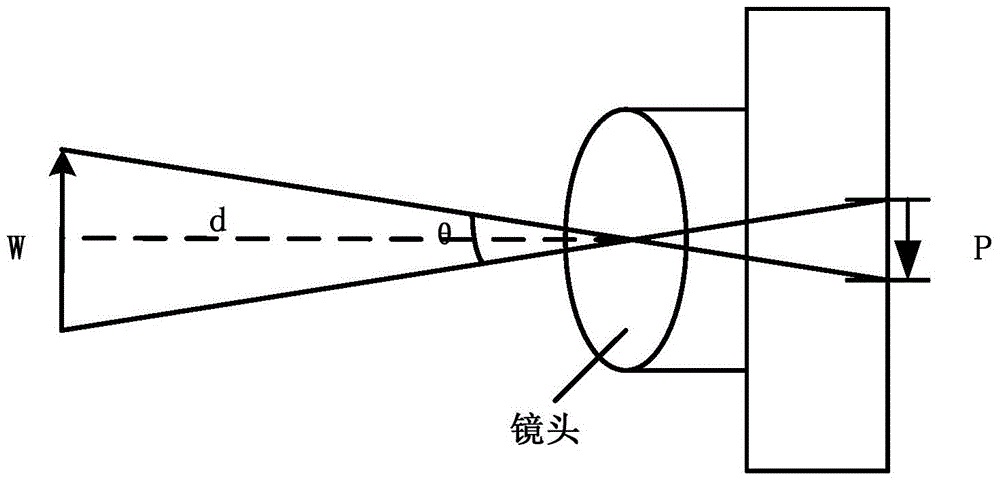

Monocular vision range finding method based on geometric relation

Status: Inactive | Publication: CN106443650A | Tags: Lower requirement; Simple calculation; Optical rangefinders; Using reradiation; Camera lens; Computation complexity

The invention discloses a monocular vision range finding method based on geometric relations, comprising the following steps: S1, calculating, in the horizontal plane, the distance between the camera lens and a cross-section line of the target object; and S2, calculating the distance between the target object and the optical axis of the monocular lens using the similar-triangles principle. Starting from the camera projection model, the method derives the relation between the road-surface coordinate system and the image coordinate system geometrically; the calculation is simple, has low computational complexity, and places low demands on hardware. The method also achieves good accuracy, with an error below 0.5 m. It can effectively compute the distance to vehicles or other obstacles ahead with a very simple hardware configuration and almost no additional load or power consumption, thereby enabling effective obstacle avoidance. The method has good application prospects in fields such as autonomous driving and intelligent robot navigation.

Owner: CHENGDU RES INST OF UESTC
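
The Chengdu abstract outlines two steps: the distance in the horizontal plane, then the offset from the optical axis by similar triangles. A minimal sketch of that geometry, assuming a flat road, a pinhole camera at known height `cam_height` pitched down by a known angle `pitch`, and image rows increasing downward. These are our assumptions, and the function names are hypothetical.

```python
import math


def ground_distance(v: float, cam_height: float, pitch: float, f_px: float, v0: float) -> float:
    """Horizontal distance to the ground point imaged at row v.

    Assumes a flat road, a pinhole camera at height cam_height pitched down by
    `pitch` radians, and image rows increasing downward.
    """
    angle = pitch + math.atan2(v - v0, f_px)  # ray angle below the horizontal
    if angle <= 0:
        raise ValueError("row is at or above the horizon: no ground intersection")
    return cam_height / math.tan(angle)


def lateral_offset(u: float, v: float, cam_height: float, pitch: float,
                   f_px: float, u0: float, v0: float) -> float:
    """Sideways offset from the optical axis via similar triangles, X = (u - u0) * Zc / f."""
    d = ground_distance(v, cam_height, pitch, f_px, v0)
    z_cam = d * math.cos(pitch) + cam_height * math.sin(pitch)  # depth along the optical axis
    return (u - u0) * z_cam / f_px
```

With a level camera 1.5 m above the road and f = 800 px, a point imaged 120 rows below the principal point lies 1.5 / (120 / 800) = 10 m ahead. Errors in the assumed pitch dominate at long range, where the ray meets the road at a grazing angle.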

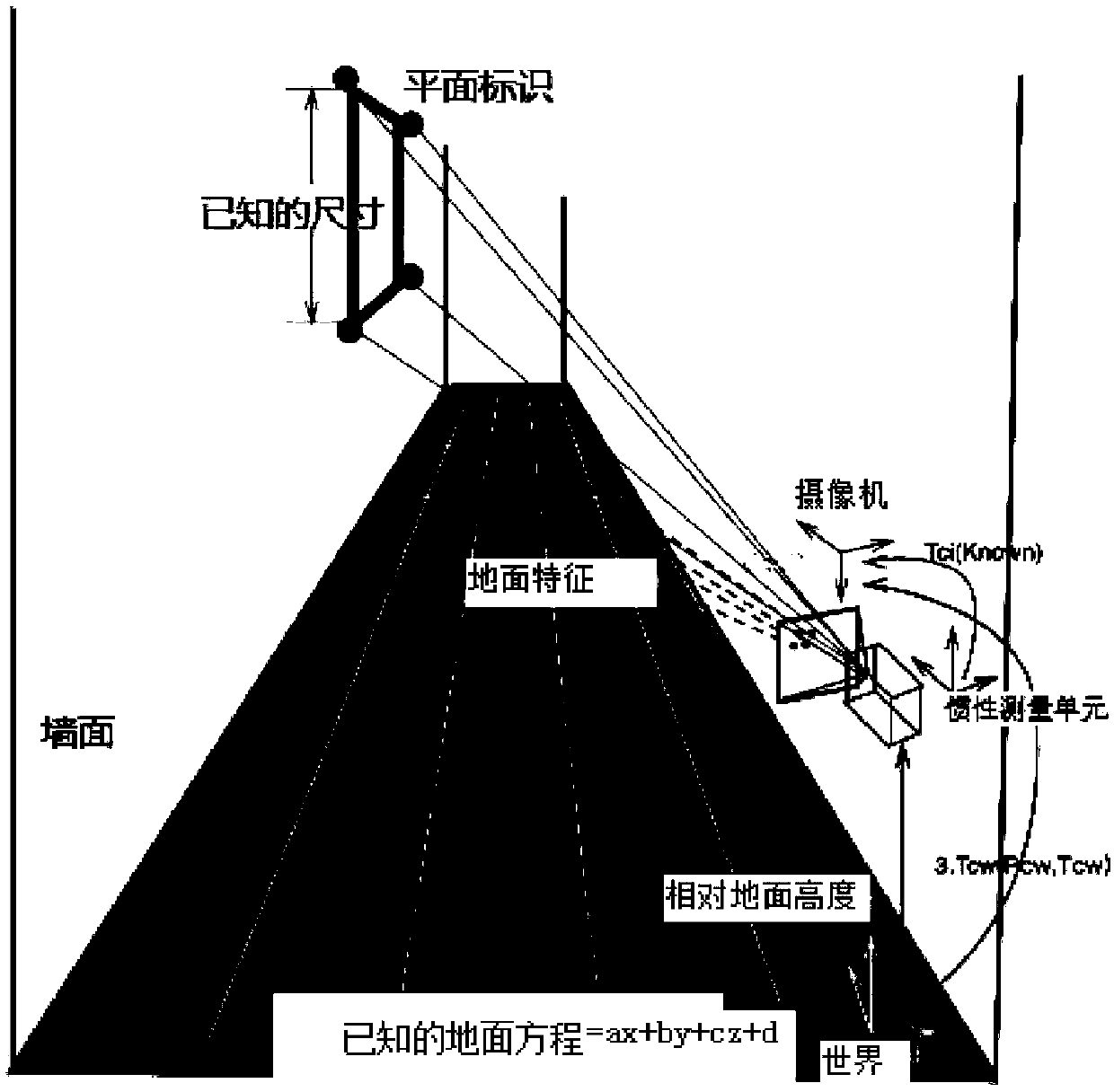

Visual inertial navigation SLAM method based on ground plane hypothesis

Status: Active | Publication: CN108717712A | Tags: Get real depth in real time; Eliminate cumulative errors; Image analysis; Point cloud; Ground plane

The invention relates to a visual-inertial SLAM method based on a ground plane hypothesis. In the method, feature points are extracted from images and IMU pre-integration is performed, a camera projection model is established, and the camera intrinsic parameters and the camera-IMU extrinsic parameters are calibrated. The system is then initialized: the visually observed point cloud and the camera poses are aligned with the IMU pre-integration, and the ground equation and camera pose are recovered. During ground initialization, the ground equation is obtained, expressed in the current camera pose, and back-projected into the image coordinate system to obtain a more accurate ground region. For state estimation, observation models for all sensors are derived; camera observations, IMU observations, and ground-feature observations are fused in a graph optimization model, and sparse graph optimization with gradient descent is used for global optimization. Compared with previous algorithms, the method greatly improves accuracy because the camera pose estimate can be constrained globally.

Owner: NORTHEASTERN UNIV
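
In the Northeastern University method, the ground equation expressed in the current camera pose links image pixels to metric depth. A minimal sketch of the basic operation, assuming pinhole intrinsics and a plane given in the camera frame as n·X + d = 0: intersect the viewing ray of a pixel with that plane. This is only the geometric building block, not the patent's estimator, and the names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def backproject_to_plane(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                         normal: Sequence[float], offset: float) -> Optional[Vec3]:
    """Intersect the viewing ray of pixel (u, v) with the plane n . X + offset = 0.

    The plane is expressed in the current camera frame (x right, y down, z forward).
    Returns None when the ray is parallel to the plane or meets it behind the camera.
    """
    ray = ((u - cx) / fx, (v - cy) / fy, 1.0)  # K^-1 [u, v, 1]^T
    denom = sum(n * r for n, r in zip(normal, ray))
    if abs(denom) < 1e-12:
        return None
    t = -offset / denom                        # ray parameter, equal to the depth z
    if t <= 0:
        return None
    return t * ray[0], t * ray[1], t * ray[2]
```

For a camera 1.5 m above flat ground, the plane is (0, 1, 0)·X − 1.5 = 0, and pixels above the horizon correctly return None.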

Picture composing apparatus and method

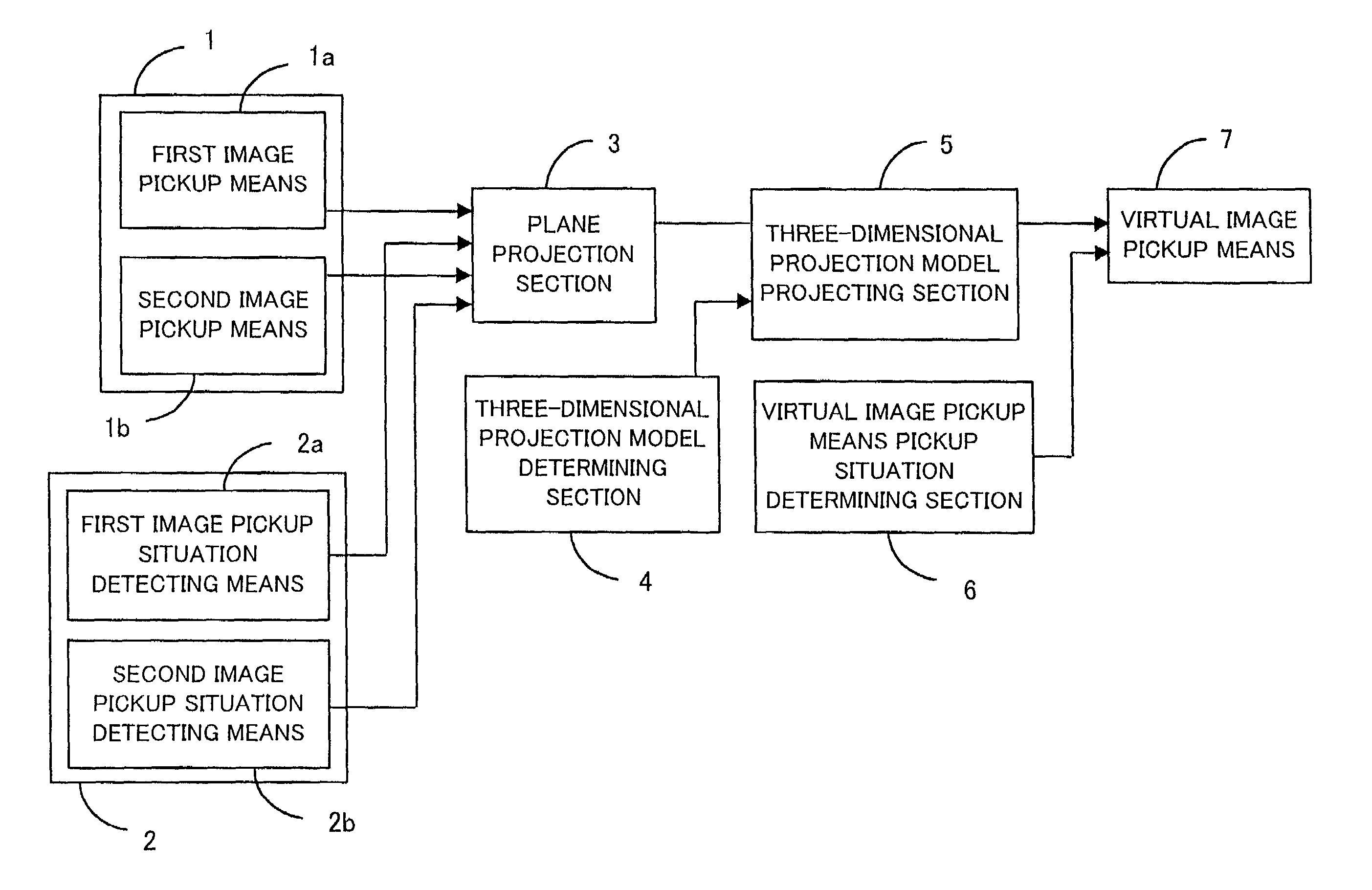

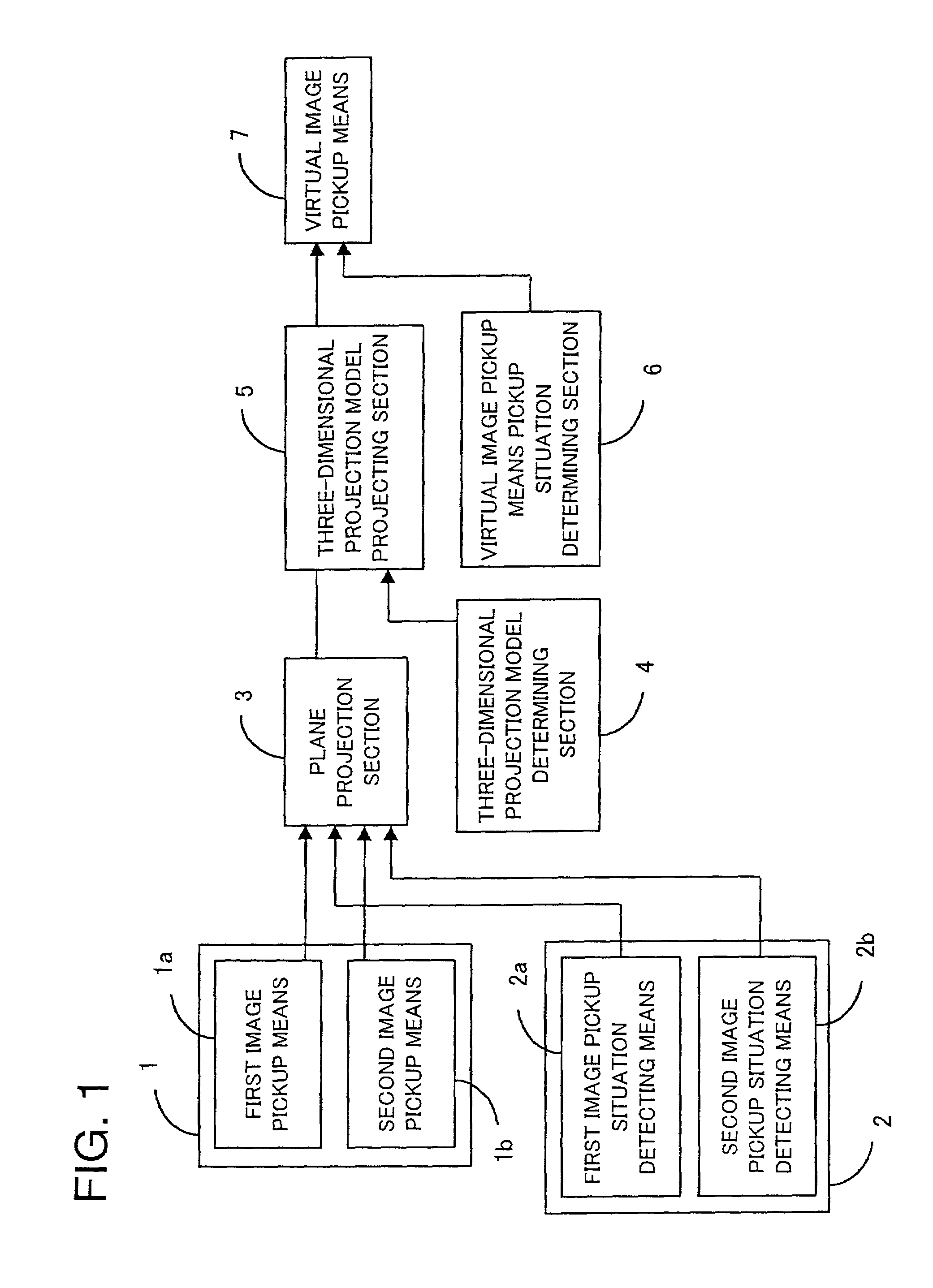

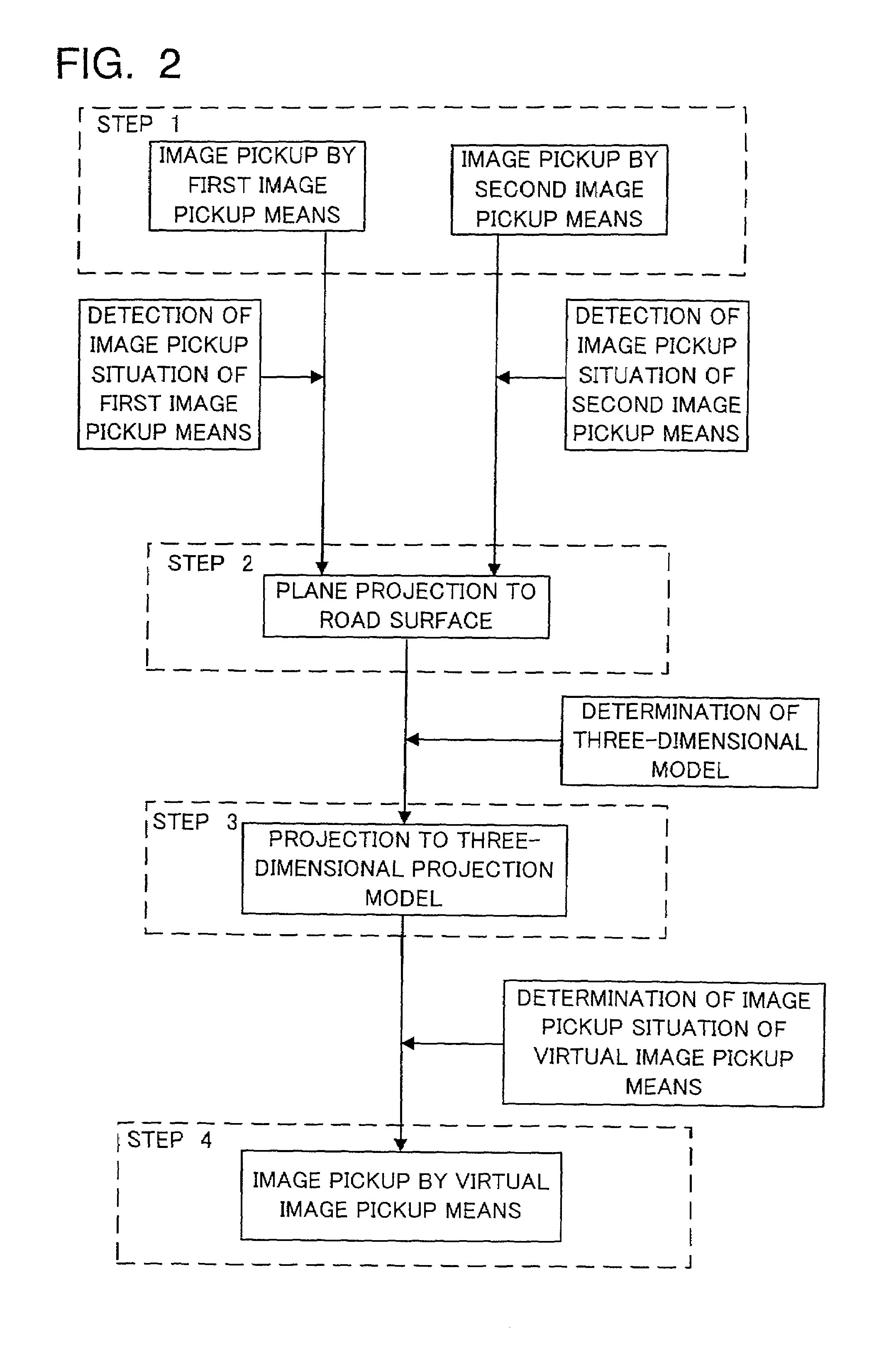

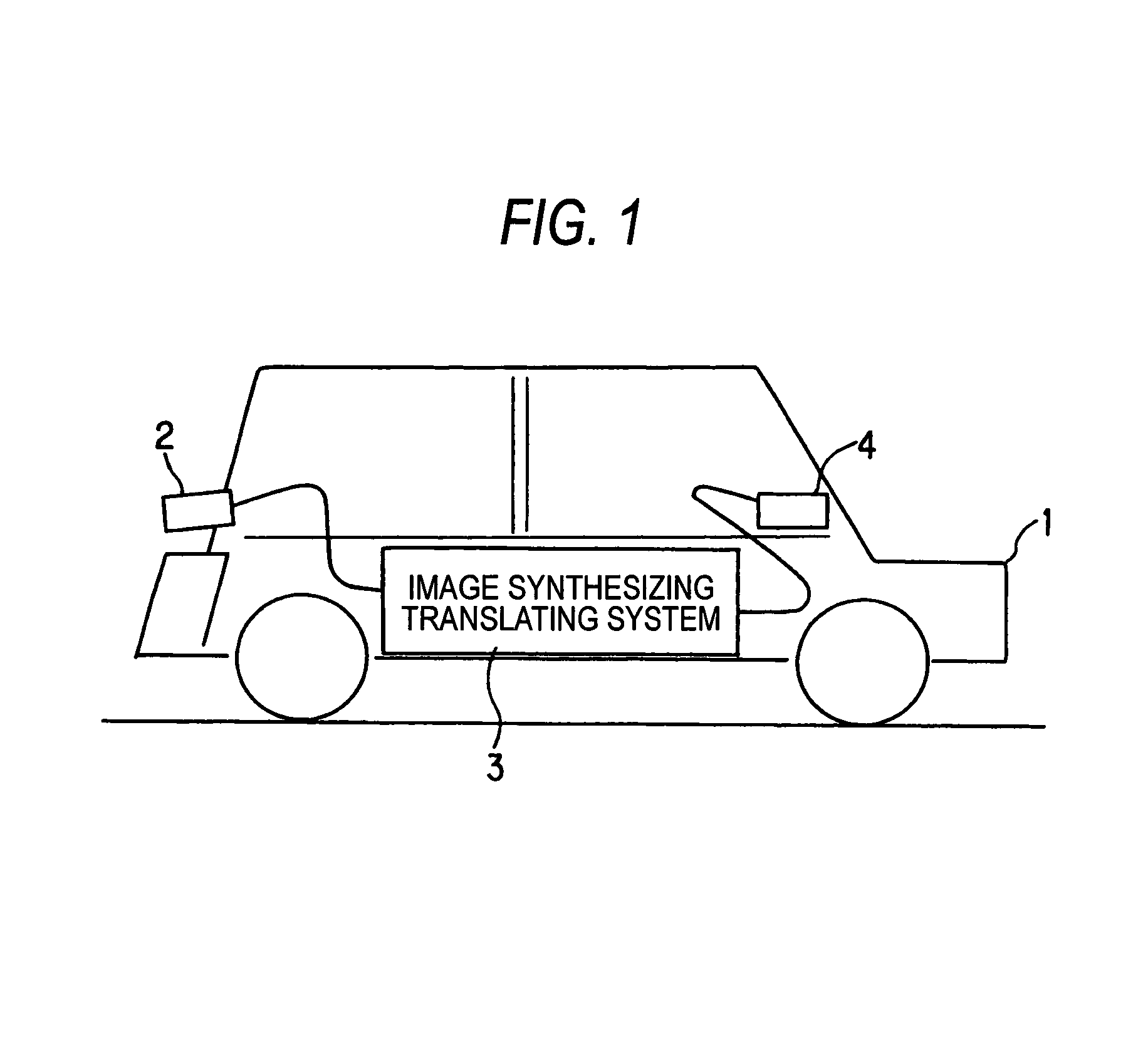

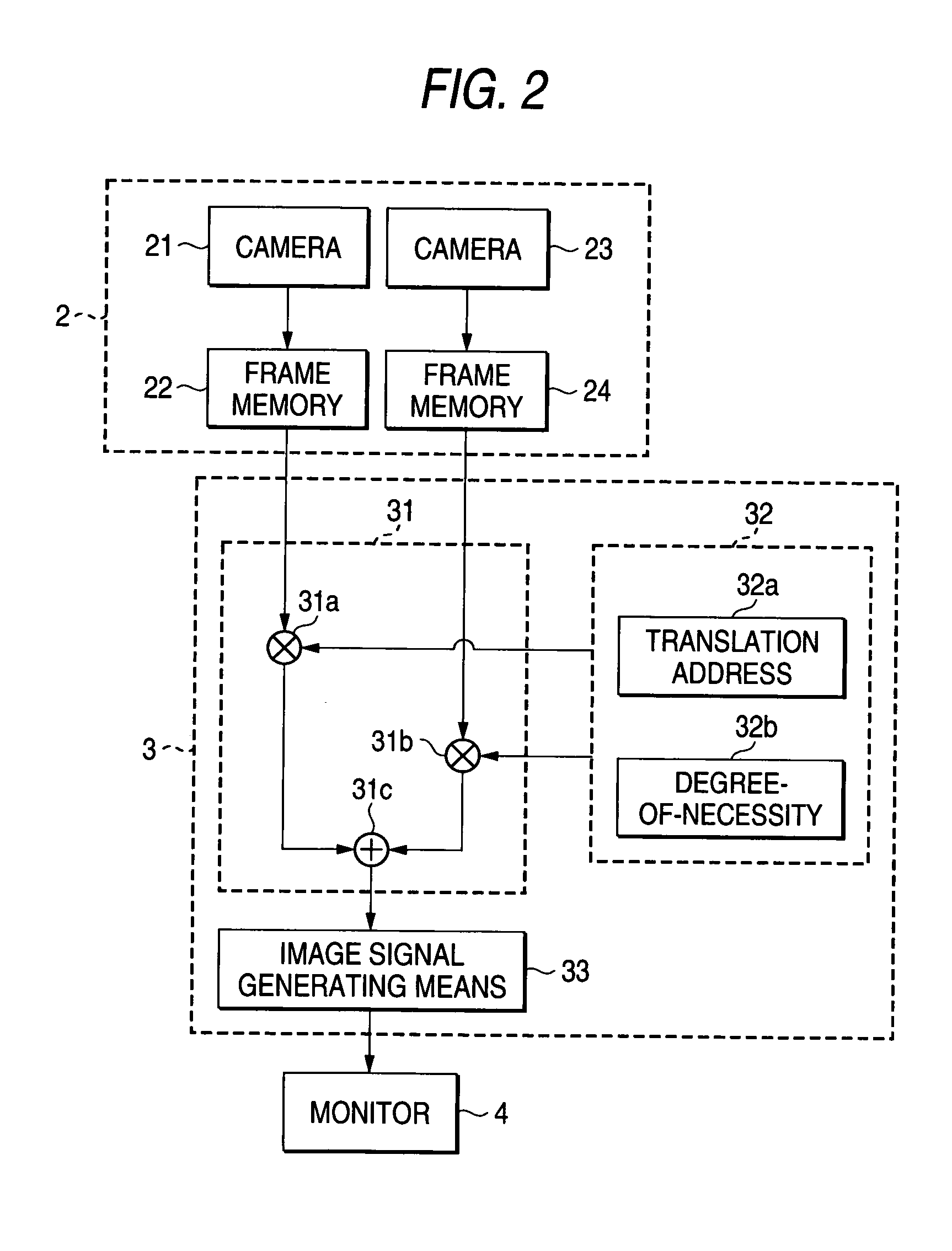

Status: Active | Publication: US7034861B2 | Tags: Improve visibility; Easily seized; Image enhancement; Image analysis; Mobile vehicle; Projection model

A picture composing apparatus designed to combine a plurality of images taken by a plurality of image pickup devices. In the apparatus, a first projecting unit projects the images taken by the image pickup devices onto a projection section in accordance with the image pickup situation of the image pickup devices to generate a plurality of first projected images, and a second projecting unit projects the first projected images onto a three-dimensional projection model to generate a second projected image. The apparatus also includes a virtual image pickup device that virtually picks up the second projected image and an image pickup situation determining unit that determines the image pickup situation of the virtual image pickup device; the virtual image pickup device picks up the second projected image in the image pickup situation determined by this unit, which combines the images taken by the plurality of image pickup devices into a high-quality composite picture. The apparatus can offer a natural composite picture in which the joints between images taken by different image pickup devices do not stand out. In addition, when mounted on a motor vehicle, the apparatus allows the driver to easily grasp the surroundings and the position of the vehicle relative to other objects.

Owner: PANASONIC CORP

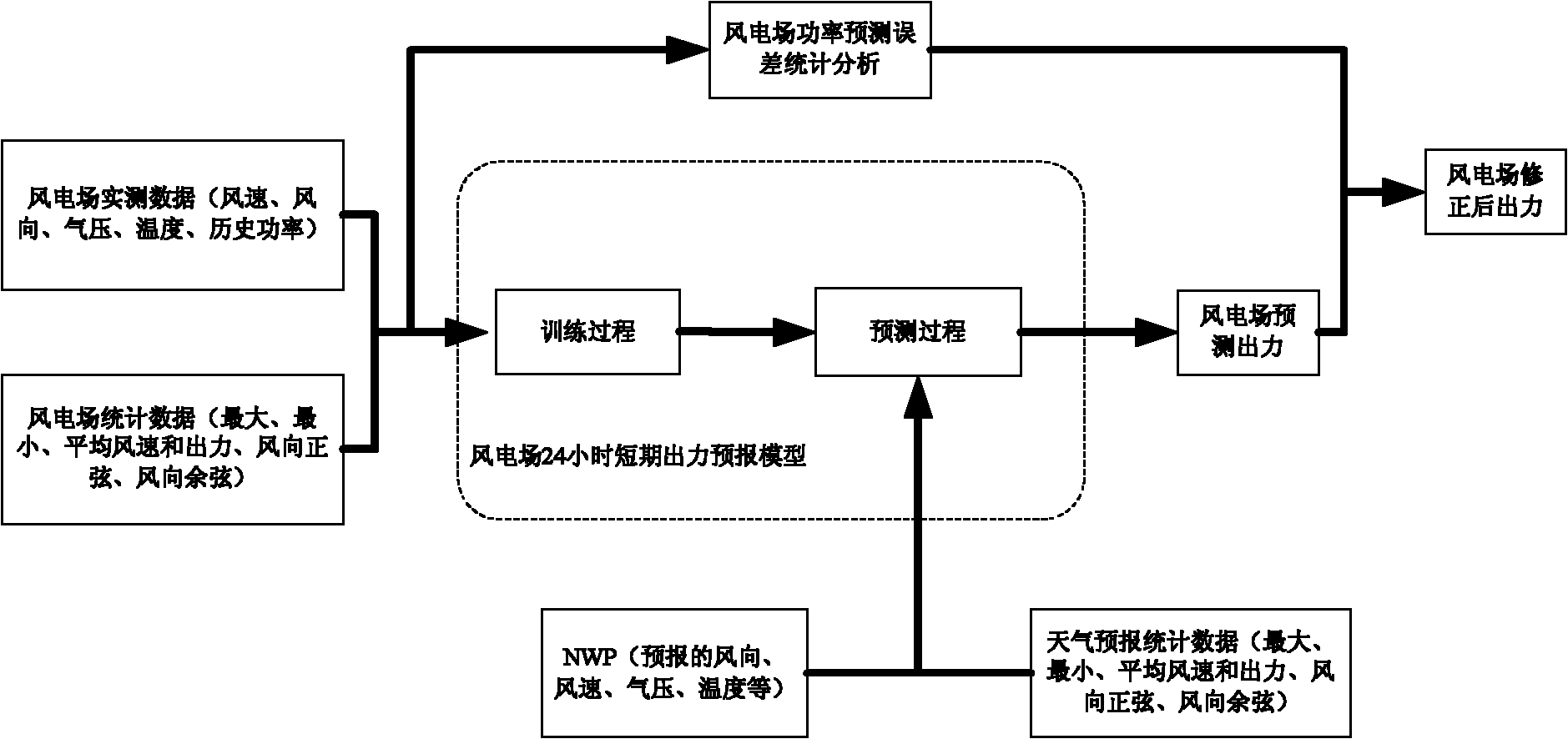

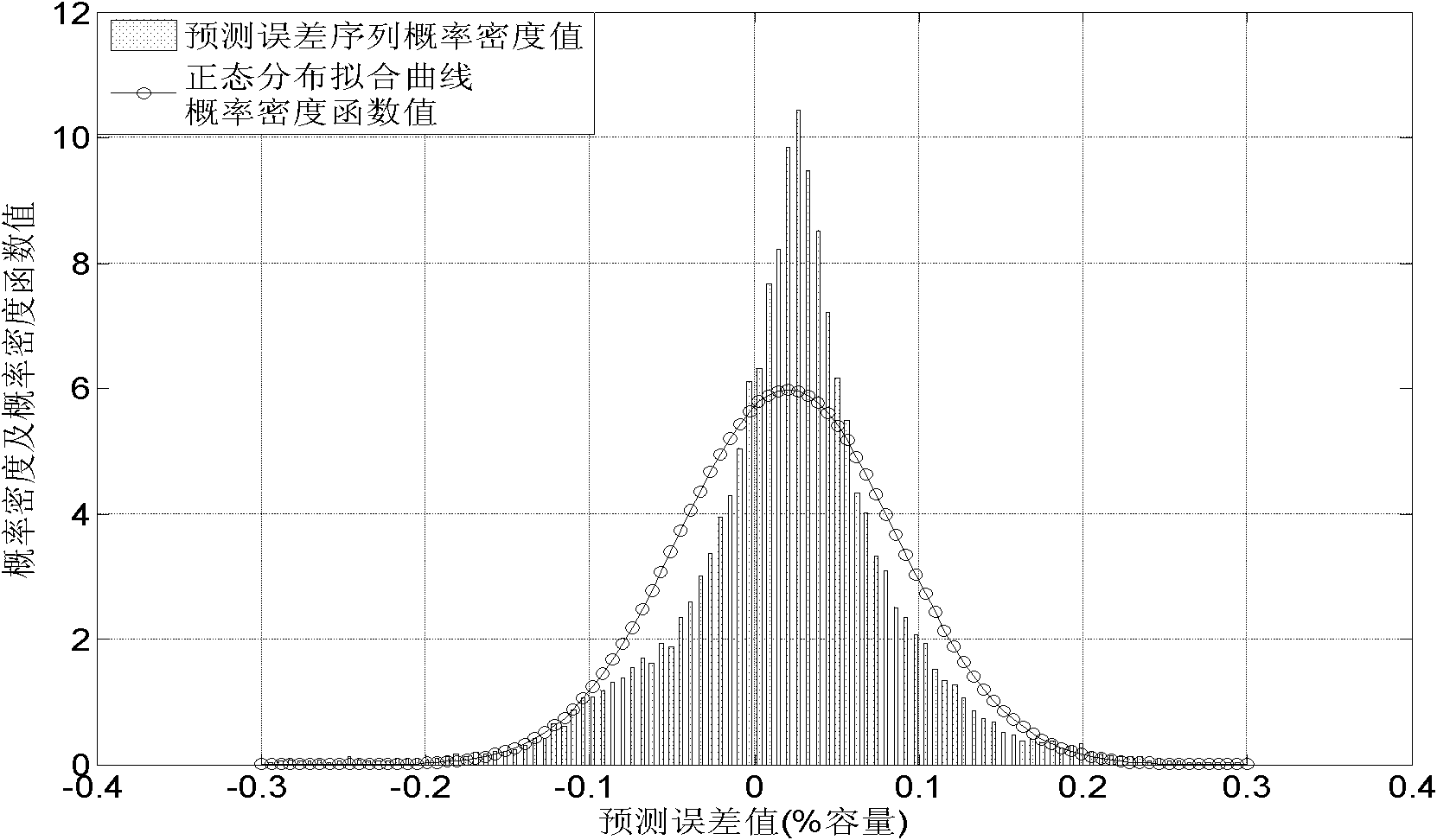

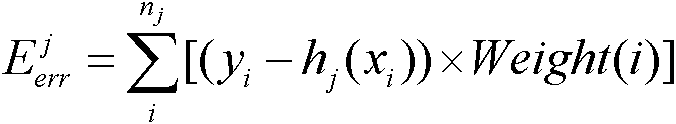

Wind power station power projection method based on error statistics modification

Status: Inactive | Publication: CN102496927A | Tags: Optimize forecast results; Meet the actual needs of the project; Climate change adaptation; Special data processing applications; Electricity; Terrain

The invention discloses a wind power station power projection (forecasting) method based on error-statistics correction. The procedure is as follows. First, the data are preprocessed: the forecast data, measured data, and statistical data of the wind power station are divided into several sample sets according to terrain height. Second, the sample sets are used to train a wind power projection model, forming a 24-hour short-term output forecasting model of the wind power station; the distribution of the power forecast error is obtained by comparing forecast and measured power values, giving the expected value of the station's power forecast error. Third, the forecast power of the wind power station is obtained from numerical weather prediction data, weather forecast data, and the trained model. Finally, the forecast power is corrected according to the error statistics to obtain the final corrected power value of the wind power station.

Owner: CHINA ELECTRIC POWER RES INST +1
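
The final step of the China Electric Power Research Institute method corrects each forecast with the expected forecast error learned from history, but the abstract gives no formula. A minimal sketch of one such correction, assuming the error statistics are grouped by forecast power level. The binning scheme, bin width, and clamp at zero are our assumptions; the patent groups its training data by terrain height instead.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def expected_error_by_bin(forecast: Sequence[float], measured: Sequence[float],
                          bin_width: float) -> Dict[int, float]:
    """Mean forecast error (forecast - measured) for each forecast-power bin."""
    sums: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for p, m in zip(forecast, measured):
        b = int(p // bin_width)
        sums[b] += p - m
        counts[b] += 1
    return {b: sums[b] / counts[b] for b in sums}


def correct_forecast(forecast: Sequence[float], expected_error: Dict[int, float],
                     bin_width: float) -> List[float]:
    """Subtract the expected error of each value's bin; unseen bins are left unchanged."""
    corrected = []
    for p in forecast:
        bias = expected_error.get(int(p // bin_width), 0.0)
        corrected.append(max(0.0, p - bias))  # wind power output cannot be negative
    return corrected
```

Subtracting the mean error removes systematic bias only; it does not reduce the random part of the forecast error.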

Correction method of fisheye image distortion on basis of cubic projection

Status: Inactive | Publication: CN101726855A | Tags: Overcome geometric deformation; In line with intuitive feeling; Optical elements; Viewpoints; Fisheye lens

The invention belongs to the field of advanced manufacturing technology and relates to a fisheye image distortion correction method based on cubic projection. The method comprises the following steps: 1, establishing the imaging model of the fisheye lens and calibrating the lens according to this model to obtain its calibration parameters; 2, determining a viewpoint from the captured fisheye image, taking the viewpoint as the origin of a coordinate system, and building a cubic perspective projection model to obtain the cubic perspective projection of each spatial point; 3, building the mapping between the cubic perspective projection of a spatial point and the fisheye image; and 4, performing distortion correction of the fisheye image by bilinear interpolation. By correcting distorted fisheye images with a cubic projection model, the invention effectively overcomes the geometric distortion of the original fisheye images, and the corrected images match human visual intuition and look highly realistic.

Owner: HEBEI UNIV OF TECH
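
Steps 2 to 4 of the Hebei University of Technology method map each cube-face pixel back into the fisheye image and resample it with bilinear interpolation. A minimal sketch, assuming the fisheye follows the equidistant model r = fθ (the patent calibrates its own imaging model), the viewpoint sits at the origin, and a unit cube is used. The face orientation table is a hypothetical convention, and the back face is omitted because a fisheye of at most 180 degrees does not see it.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Image = Sequence[Sequence[float]]

# Ray direction for cube-face coordinates (a, b) in [-1, 1]; camera frame x right, y down, z forward.
CUBE_FACES: Dict[str, Callable[[float, float], Tuple[float, float, float]]] = {
    "front": lambda a, b: (a, b, 1.0),
    "right": lambda a, b: (1.0, b, -a),
    "left": lambda a, b: (-1.0, b, a),
    "top": lambda a, b: (a, -1.0, b),
    "bottom": lambda a, b: (a, 1.0, -b),
}


def bilinear(img: Image, x: float, y: float) -> float:
    """Bilinear interpolation; returns 0.0 outside the image."""
    h, w = len(img), len(img[0])
    if not (0.0 <= x <= w - 1 and 0.0 <= y <= h - 1):
        return 0.0
    x0, y0 = int(x), int(y)  # floor, since x and y are non-negative here
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    top = img[y0][x0] * (1 - ax) + img[y0][x1] * ax
    bottom = img[y1][x0] * (1 - ax) + img[y1][x1] * ax
    return top * (1 - ay) + bottom * ay


def render_cube_face(fisheye: Image, face: str, size: int, f: float, cx: float, cy: float) -> List[List[float]]:
    """Resample one cube face from a fisheye image, assuming r = f * theta (equidistant)."""
    out = []
    for i in range(size):
        row = []
        for j in range(size):
            a, b = 2.0 * (j + 0.5) / size - 1.0, 2.0 * (i + 0.5) / size - 1.0
            x, y, z = CUBE_FACES[face](a, b)
            theta = math.acos(z / math.sqrt(x * x + y * y + z * z))  # angle from optical axis
            phi = math.atan2(y, x)
            r = f * theta
            row.append(bilinear(fisheye, cx + r * math.cos(phi), cy + r * math.sin(phi)))
        out.append(row)
    return out
```

Working backward from output pixels (inverse mapping) guarantees that every corrected pixel receives a value, which forward mapping of fisheye pixels does not.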

Fast 3-D point cloud generation on mobile devices

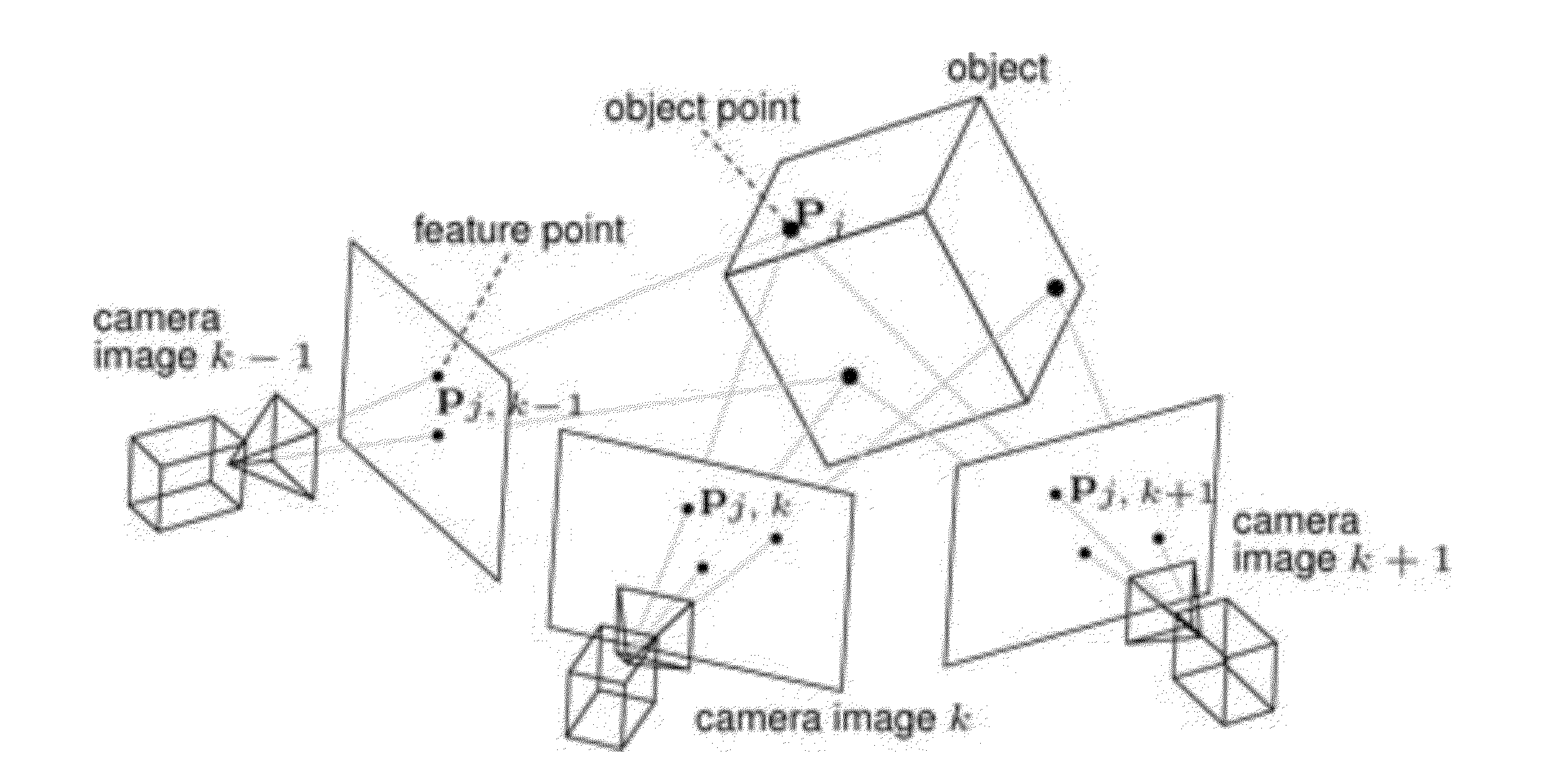

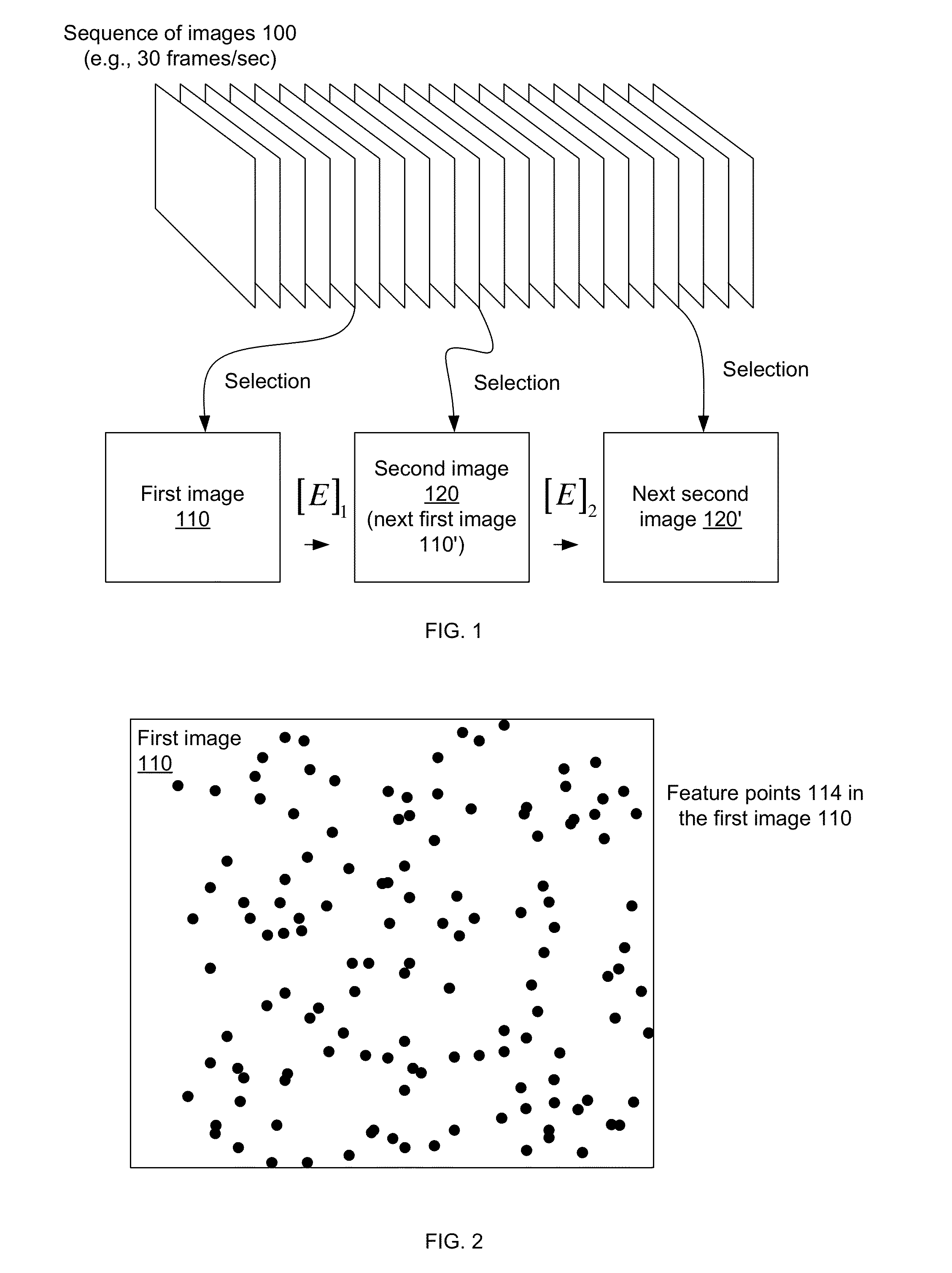

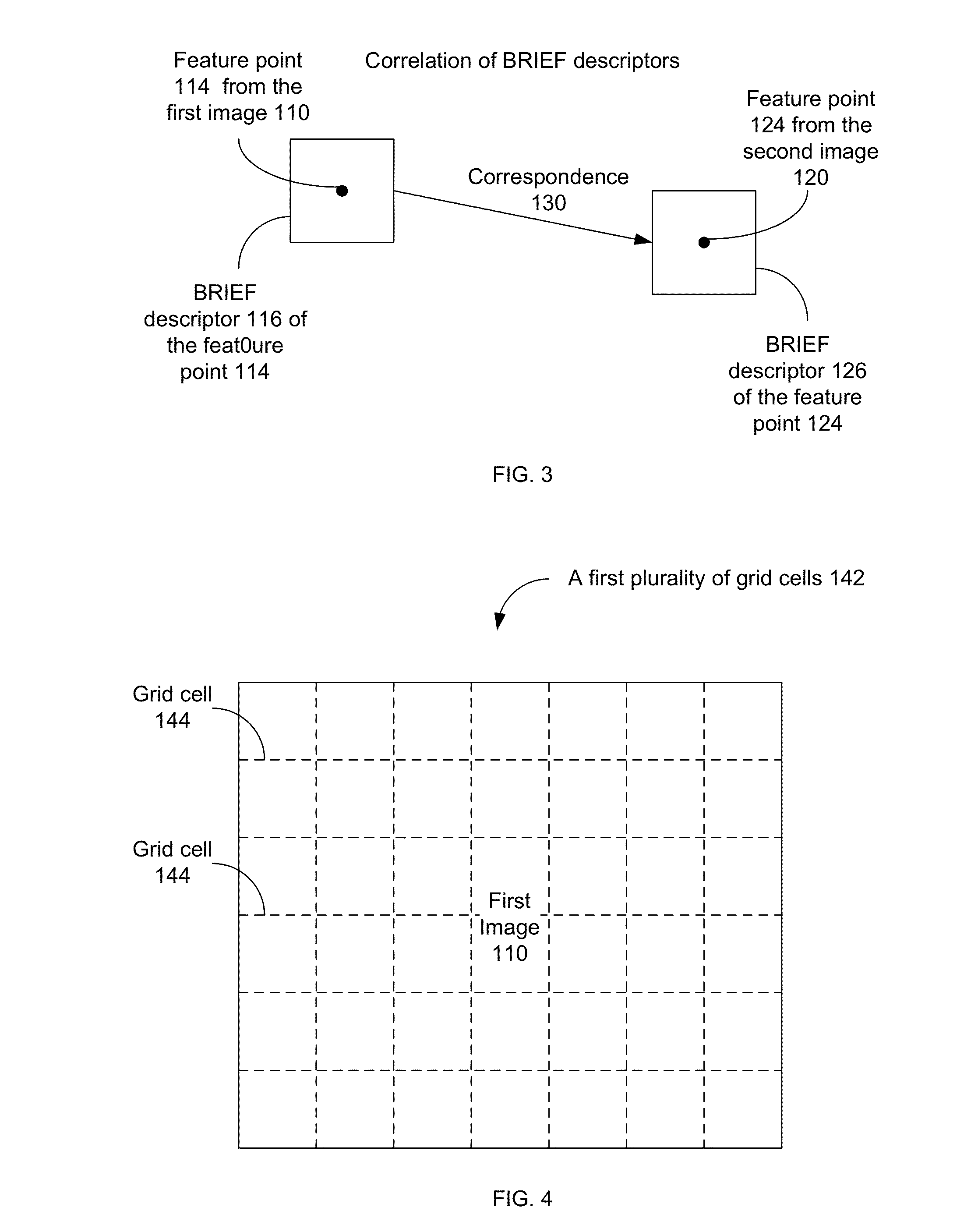

A system, apparatus, and method for determining a 3-D point cloud are presented. First, a processor detects feature points in the first 2-D image, in the second 2-D image, and so on. These feature points are matched across images using an efficient transitive matching scheme. The matches are pruned to remove outliers, first in a pass using projection models, such as a planar homography model computed on a grid placed on the images, and then in a second pass using an epipolar line constraint, yielding a set of matches across the images. This set of matches can be used to triangulate and form a 3-D point cloud of the 3-D object. The processor may recreate the 3-D object as a 3-D model from the 3-D point cloud.

Owner: QUALCOMM INC
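
The second pruning pass in the Qualcomm method uses an epipolar line constraint. A minimal sketch, assuming the fundamental matrix F between the two images has already been estimated (the abstract does not say how); the 1-pixel threshold is an arbitrary placeholder. Each match is kept only if the point in image 2 lies close to the epipolar line F·x1 of its partner.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Match = Tuple[Point, Point]


def epipolar_distance(F: Sequence[Sequence[float]], p1: Point, p2: Point) -> float:
    """Distance (pixels) from p2 to the epipolar line F @ [p1, 1] in image 2."""
    x1 = (p1[0], p1[1], 1.0)
    a, b, c = (sum(F[i][j] * x1[j] for j in range(3)) for i in range(3))
    norm = math.hypot(a, b)
    return abs(a * p2[0] + b * p2[1] + c) / norm if norm > 0 else math.inf


def filter_matches(F: Sequence[Sequence[float]], matches: Sequence[Match], max_dist: float = 1.0) -> List[Match]:
    """Keep matches that satisfy the epipolar constraint within max_dist pixels."""
    return [(p1, p2) for p1, p2 in matches if epipolar_distance(F, p1, p2) <= max_dist]
```

For a rectified pair with pure horizontal translation, F = [[0, 0, 0], [0, 0, −1], [0, 1, 0]] and the test reduces to checking that both points lie on the same image row.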

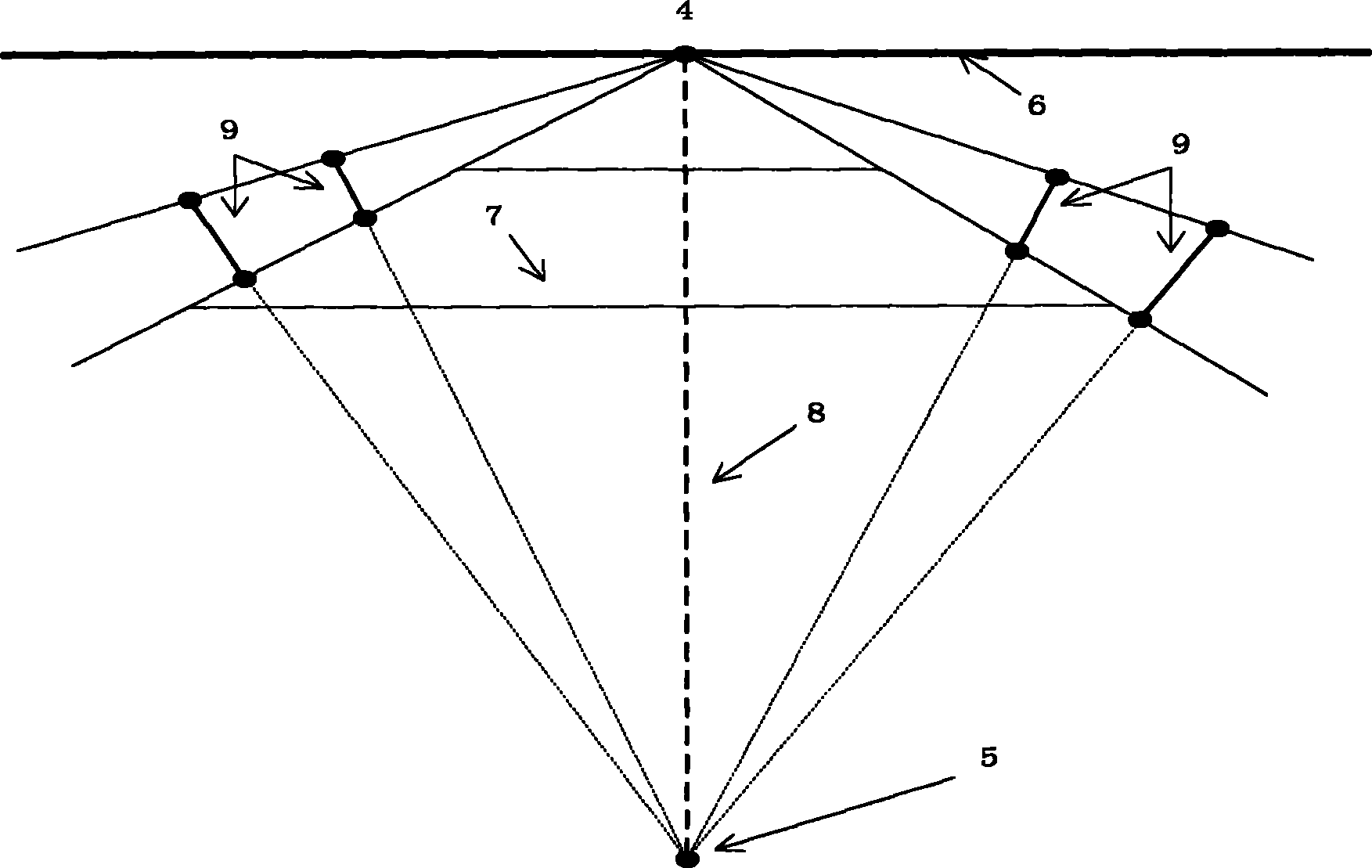

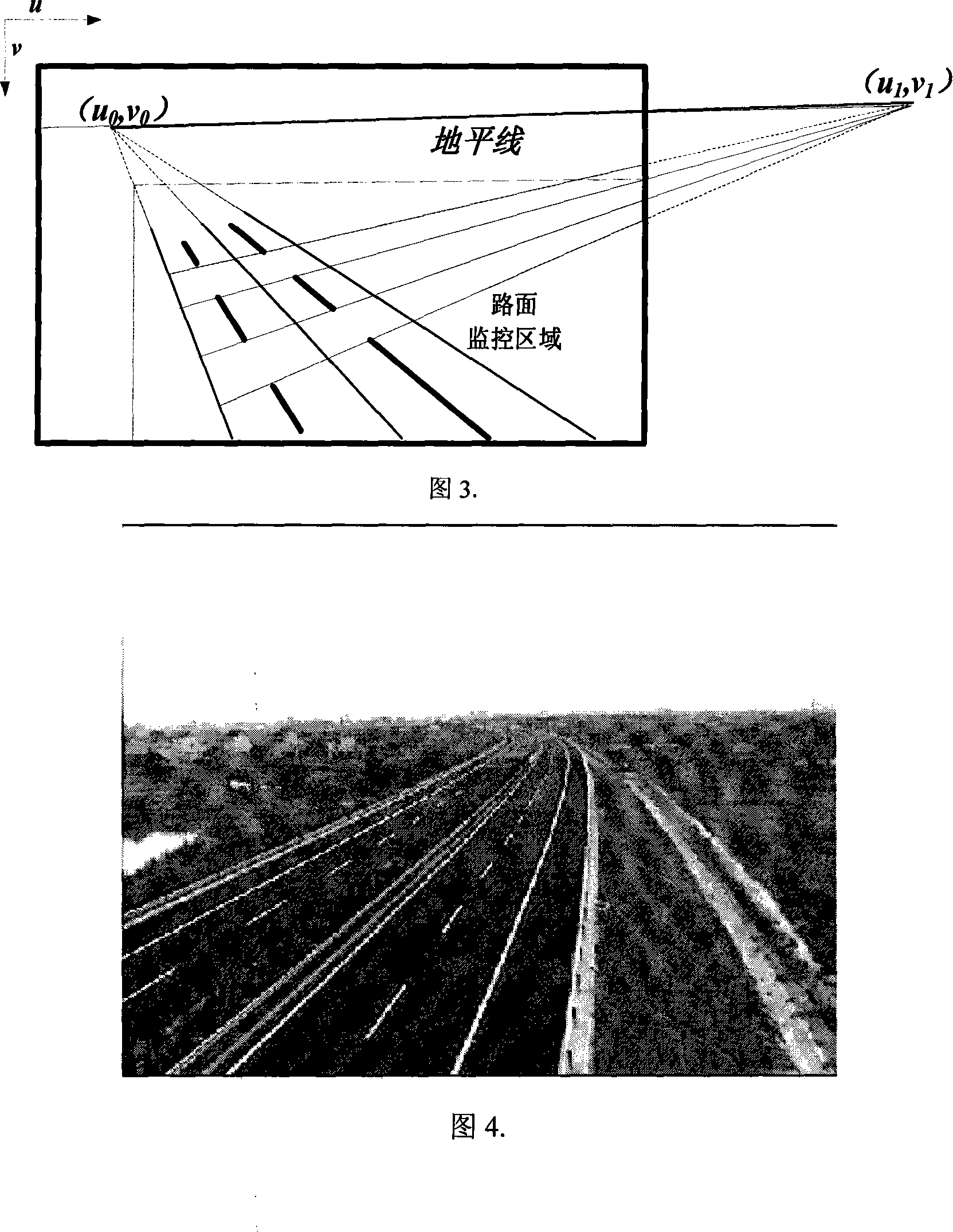

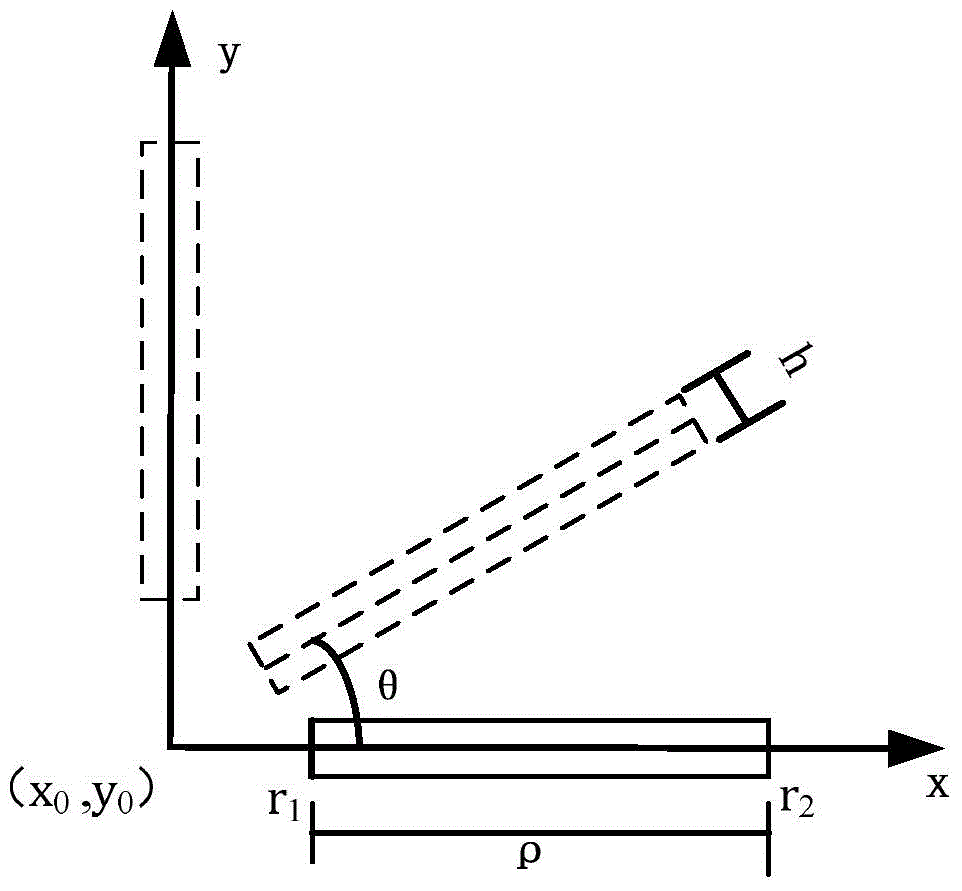

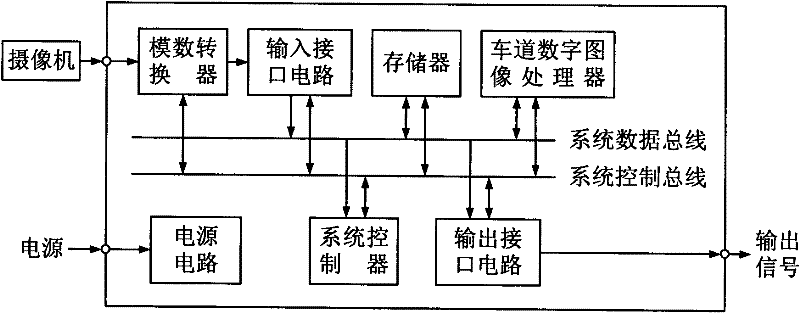

Method for detecting boundary of lane where vehicle is

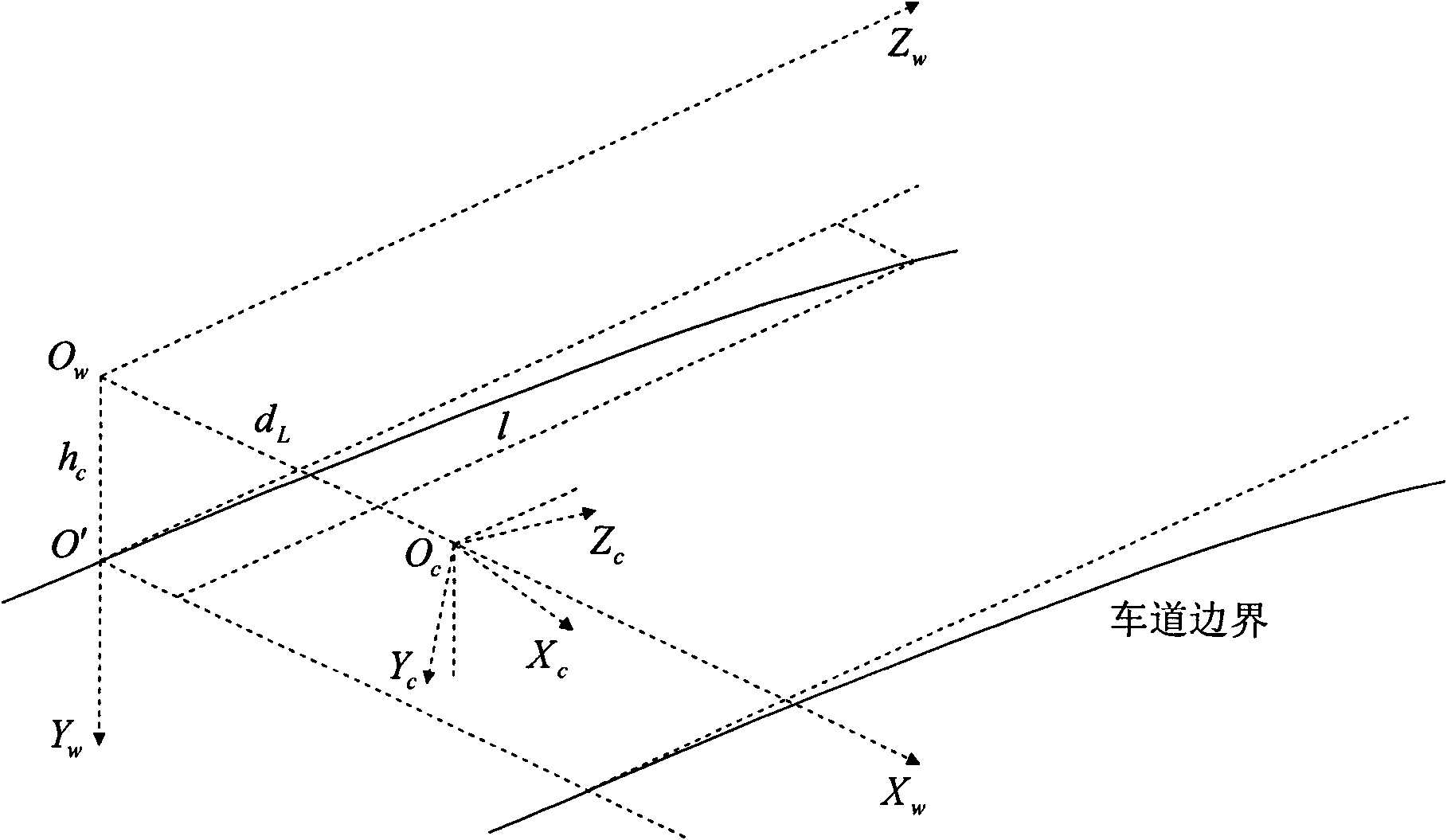

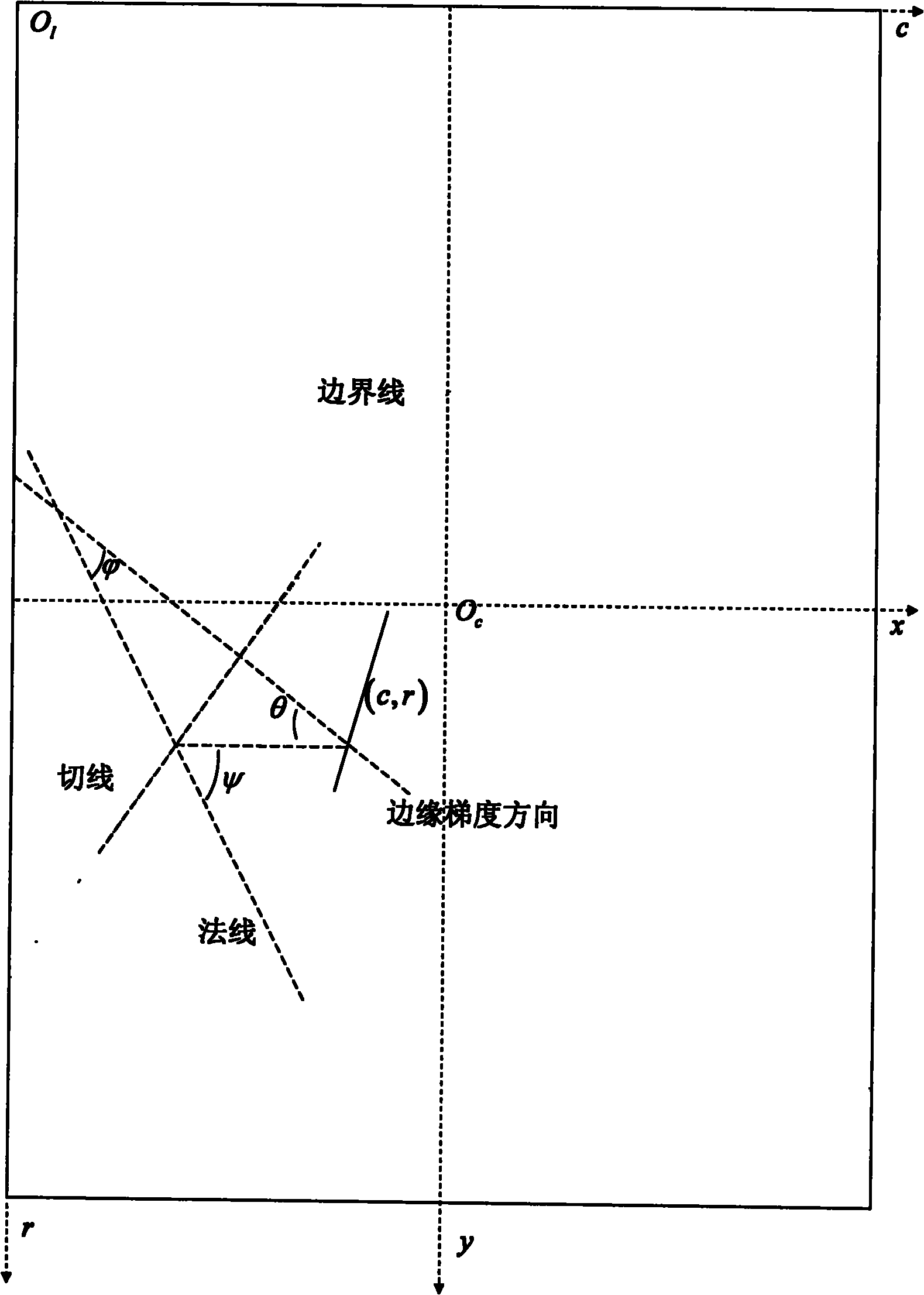

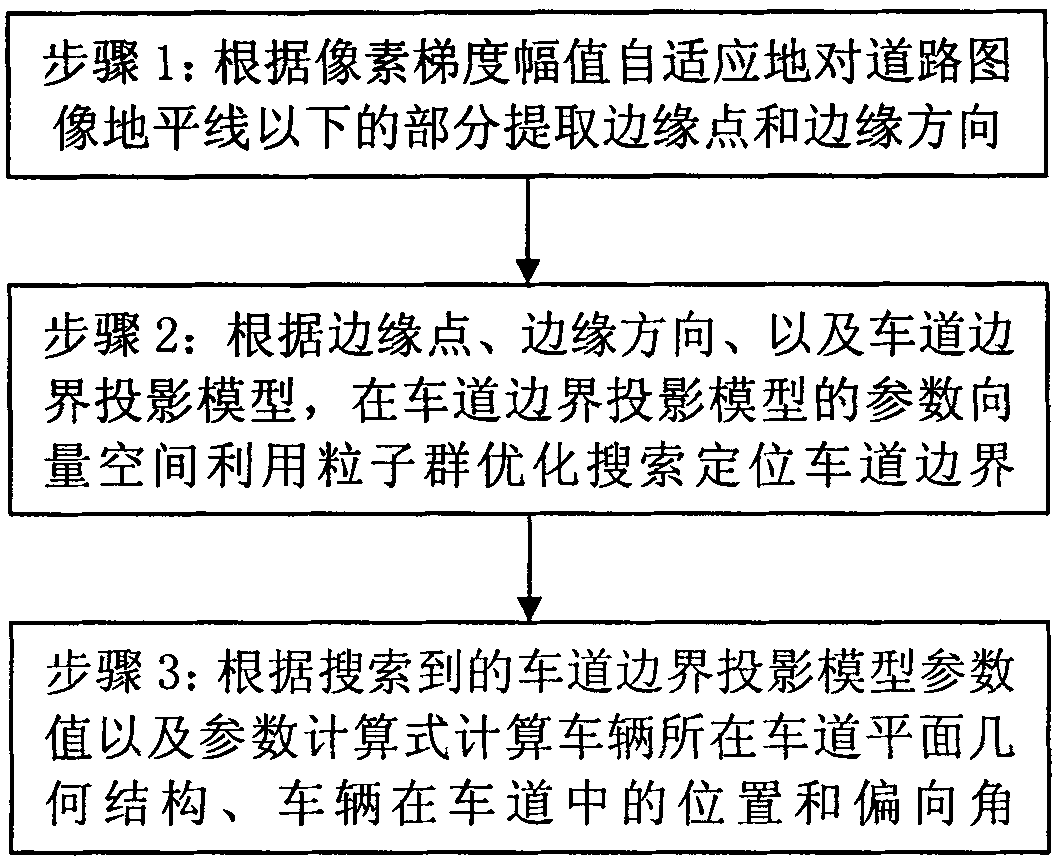

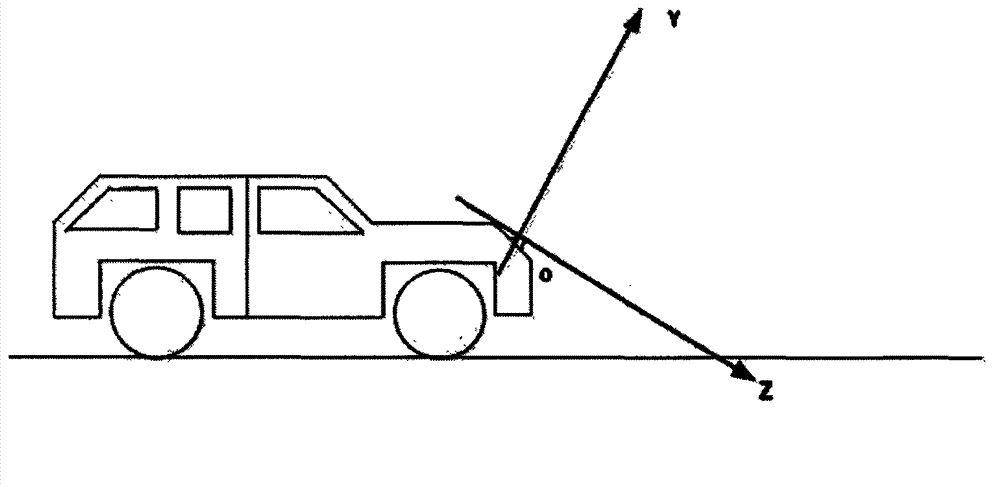

Status: Inactive | Publication: CN102184535A | Tags: Accurately reflect; Improve environmental adaptability; Image analysis; Road vehicles traffic control; Horizon; Computer graphics (images)

The invention discloses a method for detecting the boundaries of the lane in which a vehicle is traveling. The method first adaptively extracts edge points in the part of the road image below the horizon according to pixel gradient magnitudes and calculates the edge directions; it then searches for and locates the lane boundaries with particle swarm optimization in the parameter vector space of a lane boundary projection model, using the extracted edge points, the edge directions, and the lane boundary projection model; finally, it calculates the planar geometric structure of the lane, the vehicle's position in the lane, and its deviation angle from the lane boundary projection model parameters found by the search, using a parameter calculation method. The method reflects the actual lane boundary curve more accurately, adapts better to the environment, enhances the robustness of lane detection, and offers high reliability and strong resistance to interference.

Owner: NORTHWESTERN POLYTECHNICAL UNIV
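
The Northwestern Polytechnical method searches the parameter space of a lane boundary projection model with particle swarm optimization (PSO), but the abstract does not define the model. The sketch below uses a hypothetical stand-in: a straight boundary parameterized by its image column at a far row and at a near row. The fitness is the mean squared horizontal distance of the edge points; the patent also uses edge direction, which is omitted here. The PSO coefficients are common textbook values, not the patent's.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (u, v) edge point in pixels


def lane_u(params: Sequence[float], v: float, v_far: float, v_near: float) -> float:
    """Column of a straight lane boundary at row v, given its columns at rows v_far and v_near."""
    u_far, u_near = params
    t = (v - v_far) / (v_near - v_far)
    return u_far + t * (u_near - u_far)


def lane_cost(params: Sequence[float], points: Sequence[Point], v_far: float, v_near: float) -> float:
    """Mean squared horizontal distance between edge points and the lane model."""
    return sum((u - lane_u(params, v, v_far, v_near)) ** 2 for u, v in points) / len(points)


def pso_fit(points: Sequence[Point], v_far: float, v_near: float, bounds: Sequence[Tuple[float, float]],
            n_particles: int = 30, n_iter: int = 200, seed: int = 0) -> Tuple[List[float], float]:
    """Global-best particle swarm optimization over the lane-model parameters."""
    rng = random.Random(seed)
    w, c1, c2 = 0.72, 1.49, 1.49                   # inertia and acceleration weights
    vmax = [0.2 * (hi - lo) for lo, hi in bounds]  # velocity clamp per dimension
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * len(bounds) for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_cost = [lane_cost(p, points, v_far, v_near) for p in pos]
    g = min(range(n_particles), key=pbest_cost.__getitem__)
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d] + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                vel[i][d] = max(-vmax[d], min(vmax[d], vel[i][d]))
                pos[i][d] = max(lo, min(hi, pos[i][d] + vel[i][d]))
            cost = lane_cost(pos[i], points, v_far, v_near)
            if cost < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], cost
                if cost < gbest_cost:
                    gbest, gbest_cost = pos[i][:], cost
    return gbest, gbest_cost
```

PSO needs only cost evaluations, not gradients, so the same loop would work unchanged with a non-differentiable fitness such as an edge-direction vote.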

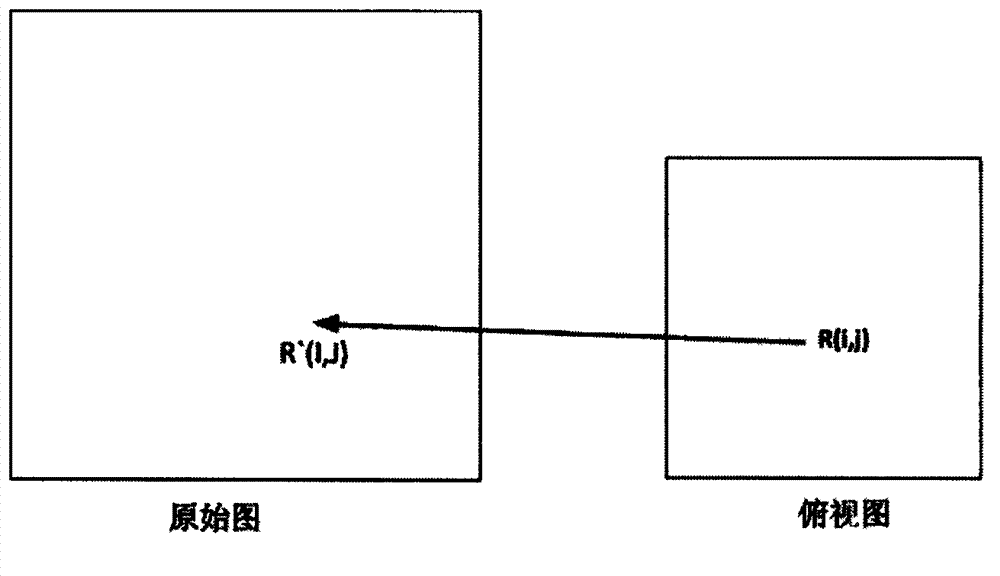

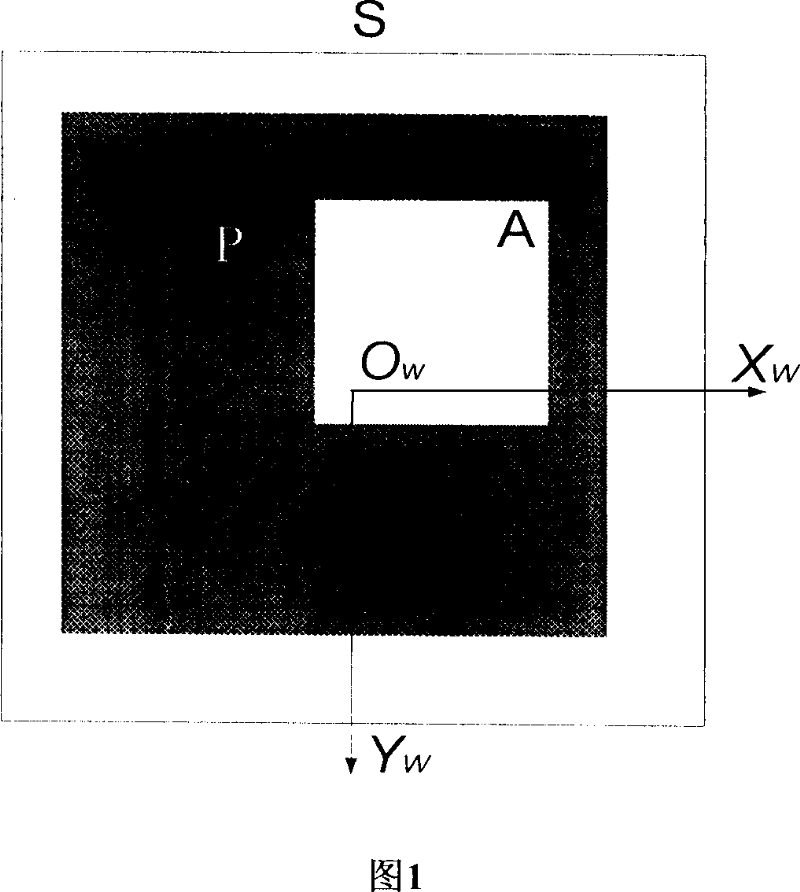

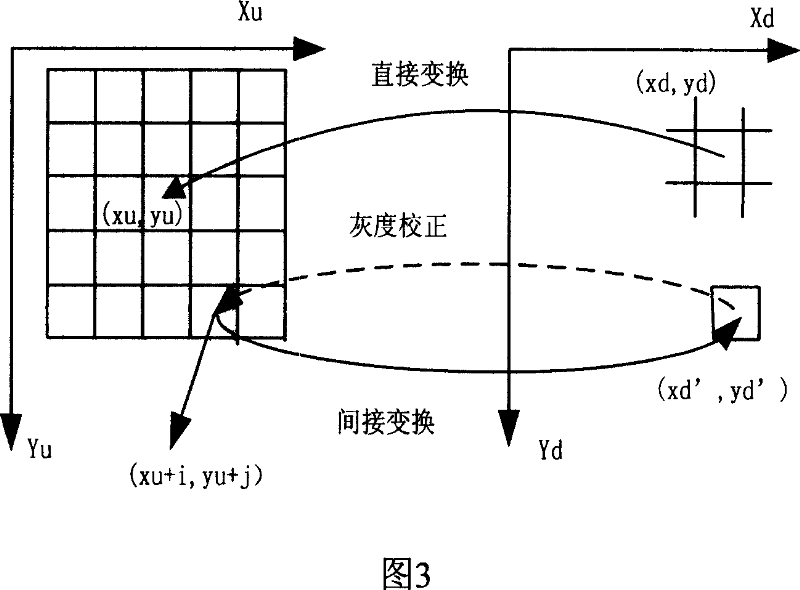

Fisheye image correction method of vehicle panoramic display system based on spherical projection model and inverse transformation model

Vehicle panoramic display systems need fisheye lenses to obtain a 180-degree field of view, but the resulting images are severely distorted. The invention discloses a fisheye image correction method for a vehicle panoramic display system based on a spherical projection model and an inverse transformation model. The method comprises the following steps: (1) determining the area A to be displayed in the aerial (bird's-eye) view and establishing a world coordinate system to locate the display area; (2) obtaining the coordinates B of that area in a spherical longitude-latitude mapping coordinate system according to the camera mounting parameters; (3) determining the position C corresponding to B in the original fisheye image according to the fisheye lens spherical projection model; (4) establishing the coordinate transformation from C to A through inverse projection transformation; and (5) interpolating non-integer points with bilinear interpolation to obtain a complete aerial view in a given direction around the vehicle. The algorithm is simple to implement, broadly applicable, and fast enough for real-time use.

Owner: 丹阳科美汽车部件有限公司 (Danyang Kemei Auto Parts Co., Ltd.)
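
Steps (2) to (4) of the Danyang Kemei method work backward from the display area to the fisheye image, so every bird's-eye pixel gets a source position that is then bilinearly interpolated. A minimal sketch under stated assumptions: the "spherical projection model" is treated as the equidistant mapping r = fθ, the camera pose is given as a world-to-camera rotation plus camera centre, and the ground is the plane Z = 0. The longitude/latitude intermediate coordinates are folded into the angles θ and φ, and all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def world_to_fisheye(p_world: Vec3, rotation: Sequence[Sequence[float]], cam_center: Vec3,
                     f: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a world point into the fisheye image (equidistant model r = f * theta)."""
    d = [p_world[k] - cam_center[k] for k in range(3)]
    x, y, z = (sum(rotation[r][k] * d[k] for k in range(3)) for r in range(3))
    norm = math.sqrt(x * x + y * y + z * z)
    theta = math.acos(max(-1.0, min(1.0, z / norm)))  # angle from the optical axis
    phi = math.atan2(y, x)                             # azimuth around the axis
    r = f * theta
    return cx + r * math.cos(phi), cy + r * math.sin(phi)


def birdseye_lookup(width: int, height: int, metres_per_px: float, origin: Tuple[float, float],
                    rotation: Sequence[Sequence[float]], cam_center: Vec3,
                    f: float, cx: float, cy: float) -> List[List[Tuple[float, float]]]:
    """For every bird's-eye pixel, the (generally non-integer) fisheye pixel to sample."""
    table = []
    for i in range(height):
        row = []
        for j in range(width):
            ground = (origin[0] + j * metres_per_px, origin[1] + i * metres_per_px, 0.0)
            row.append(world_to_fisheye(ground, rotation, cam_center, f, cx, cy))
        table.append(row)
    return table
```

The lookup table depends only on the mounting parameters, so it can be computed once offline. At run time each frame then needs only the bilinear sampling of step (5), which is one reason such methods can run in real time.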

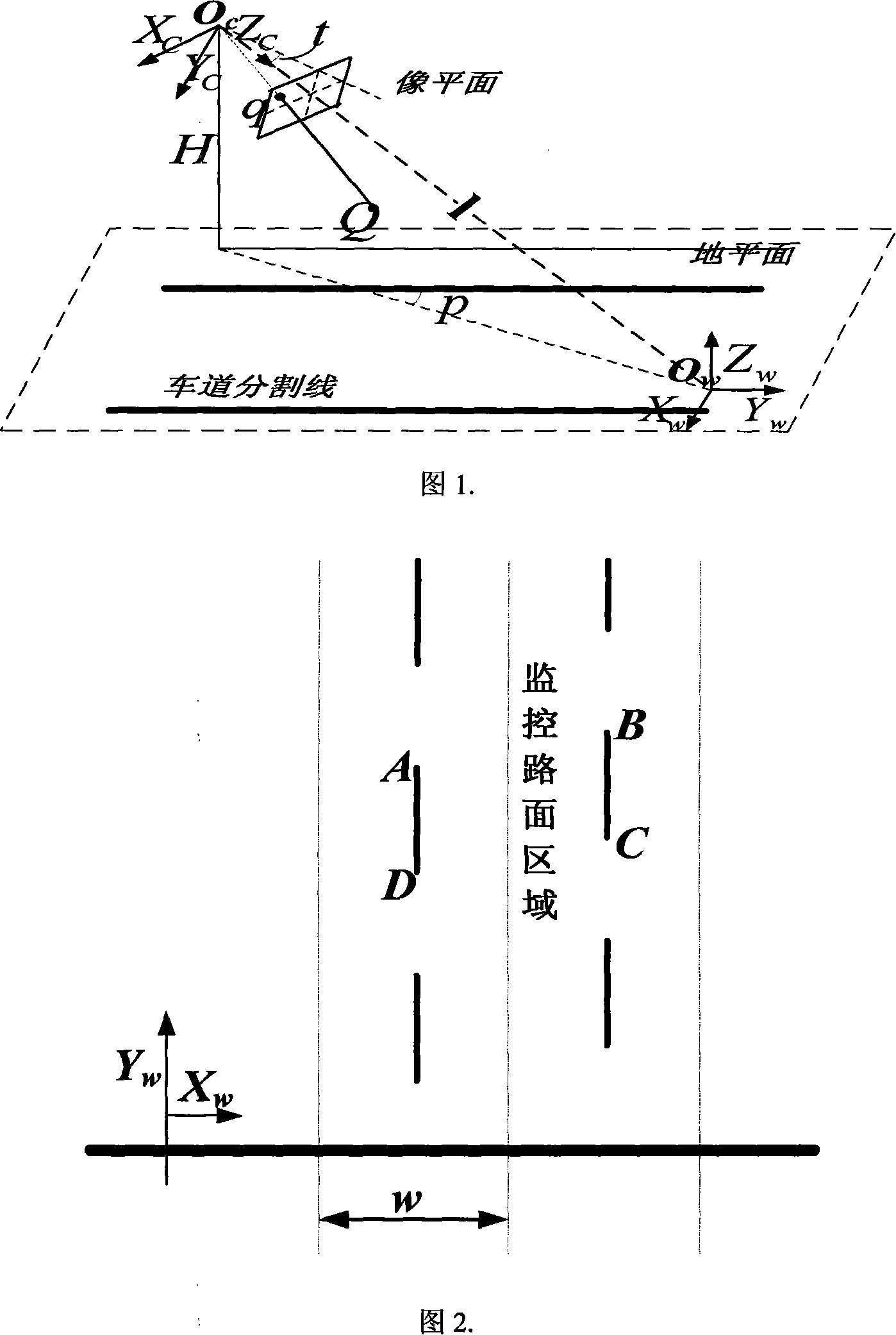

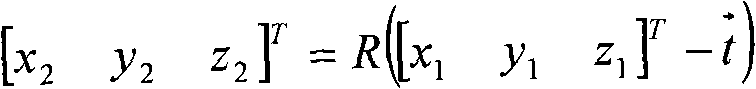

Road conditions video camera marking method under traffic monitoring surroundings

Status: Inactive | Publication: CN101118648A | Tags: Improve robustness; Meet the requirements of high-precision camera calibration; Television system details; Image analysis; Monitoring system; Model parameters

A method for calibrating road-condition video cameras in a traffic surveillance setting includes the following calibration steps. (1) Vision model description and coordinate system setup: based on the performance requirements of the surveillance system, the classical Tsai perspective projection model is taken as a reference and modified according to the characteristics of road-scene imaging to obtain a new vision model, and three coordinate systems are established. (2) Calibration of the camera principal point and zoom factor: the optical flow of the surveillance images is used as the basic calibration element; as the camera zooms, the difference between the optical flow fields of a predicted reference-frame image and a real-time sampled frame is used as a constraint, a constraint equation is established by least squares, and the principal point coordinates and the actual magnification factor of the camera are solved with Powell's direction-set method. (3) Selection of calibration objects and linear parameter estimation. (4) Refinement of the intrinsic and extrinsic parameters: using all corner points in the surveillance image and the corresponding world coordinates, the camera model parameters are refined with the Levenberg-Marquardt optimization algorithm, which completes the camera calibration.

Owner: NANJING UNIV

Four dimensional rebuilding method of coronary artery vessels axis

Status: Inactive | Publication: CN101283911A | Tags: Good repeatability; Improve operation accuracy; 3D-image rendering; Radiation diagnostics; Coronary arteries; Reconstruction method

A 4D reconstruction method for the vascular axis of coronary arteries, in the field of medical detection technology, comprises the following steps: constructing projection models of the X-ray angiography system at two angles from X-ray coronary angiogram sequences acquired at those angles; deriving the geometric transformation between the images at the two angles; reconstructing in 3D the sample points of the vessels of interest, selected manually in the image at the first time instant, and connecting the reconstructed points into a polyline; obtaining the 3D vessel axis at the first time instant by snake (active contour) deformation, using the polyline as the initial position; and obtaining the 3D vessel axis at each subsequent time instant in the image sequence by snake deformation, using the 3D vessel axis at the previous time instant as the initial position, thus completing the 4D reconstruction of the vessel axis over the entire sequence. The method greatly reduces the uncertainty and error caused by operator interaction, improving the repeatability of the results while remaining convenient and efficient.

Owner: NORTH CHINA ELECTRIC POWER UNIV (BAODING)
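
The North China Electric Power University method reconstructs manually picked vessel points in 3D from two angiographic views, but the abstract does not name the triangulation. A minimal sketch using midpoint triangulation, which we swap in as the simplest option. It assumes each view's projection model yields the X-ray source position and the direction of the ray through a picked image point. The snake refinement is not shown, and the names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2: X-ray source positions; d1, d2: directions towards the matched image points.
    """
    w0 = [p - q for p, q in zip(c1, c2)]
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    mid = [(x + y) / 2.0 for x, y in zip(p1, p2)]
    return mid[0], mid[1], mid[2]
```

The length of the shortest segment between the two rays is a useful sanity check: a large gap suggests that the two manually picked points do not correspond.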

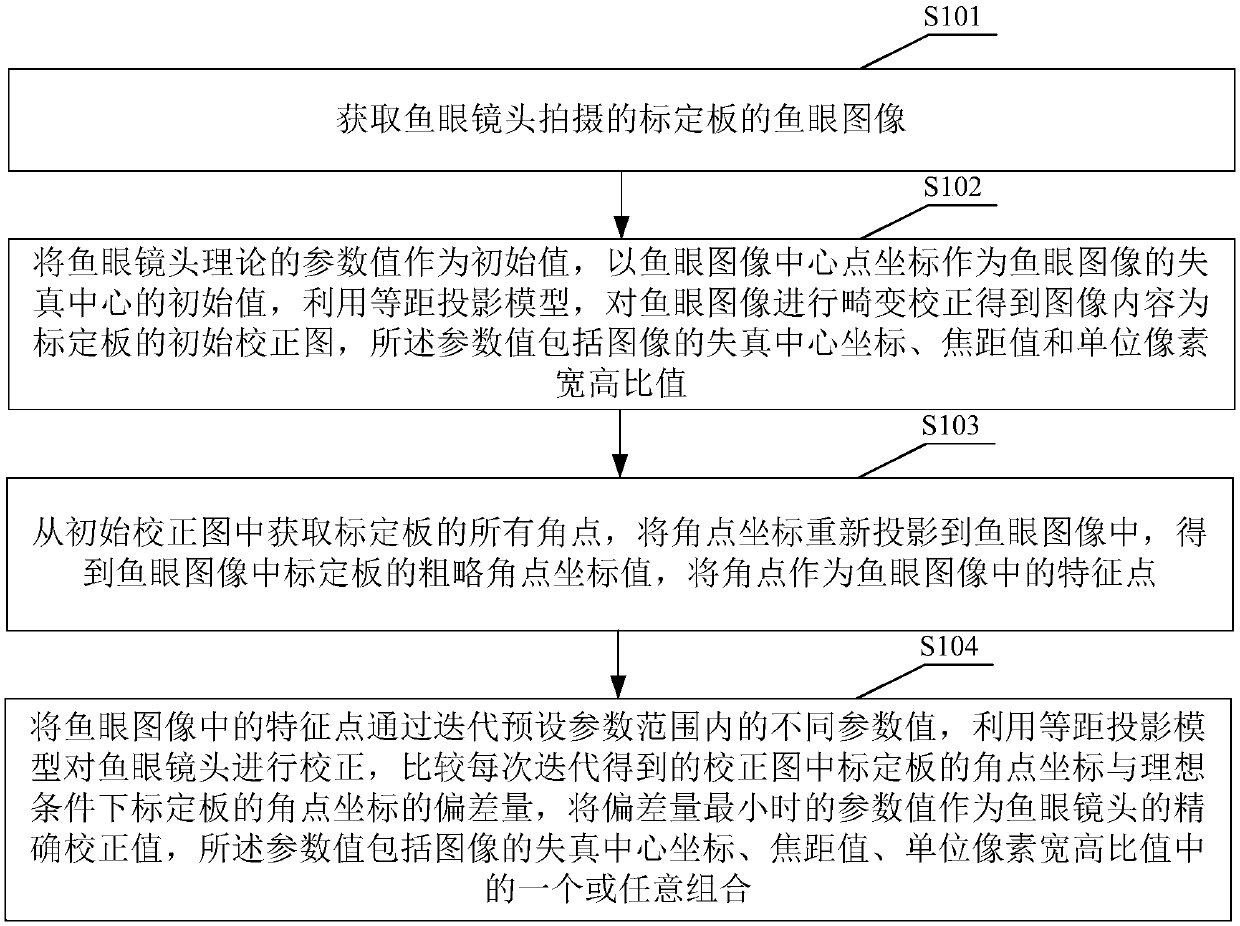

Fish-eye lens correction method and device and portable terminal

Status: Active | Publication: CN107767422A | Tags: The calibration result is accurate; Reduce in quantity; Image analysis; Camera lens; Fish eye lens

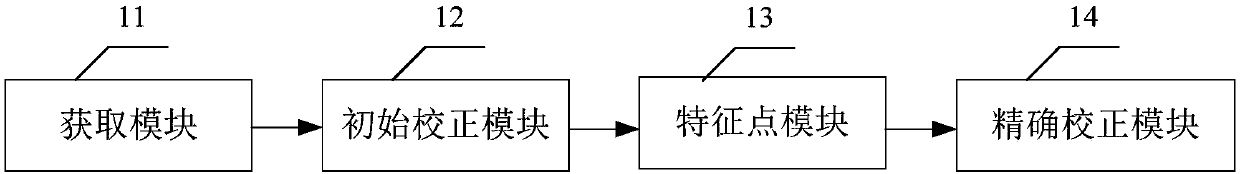

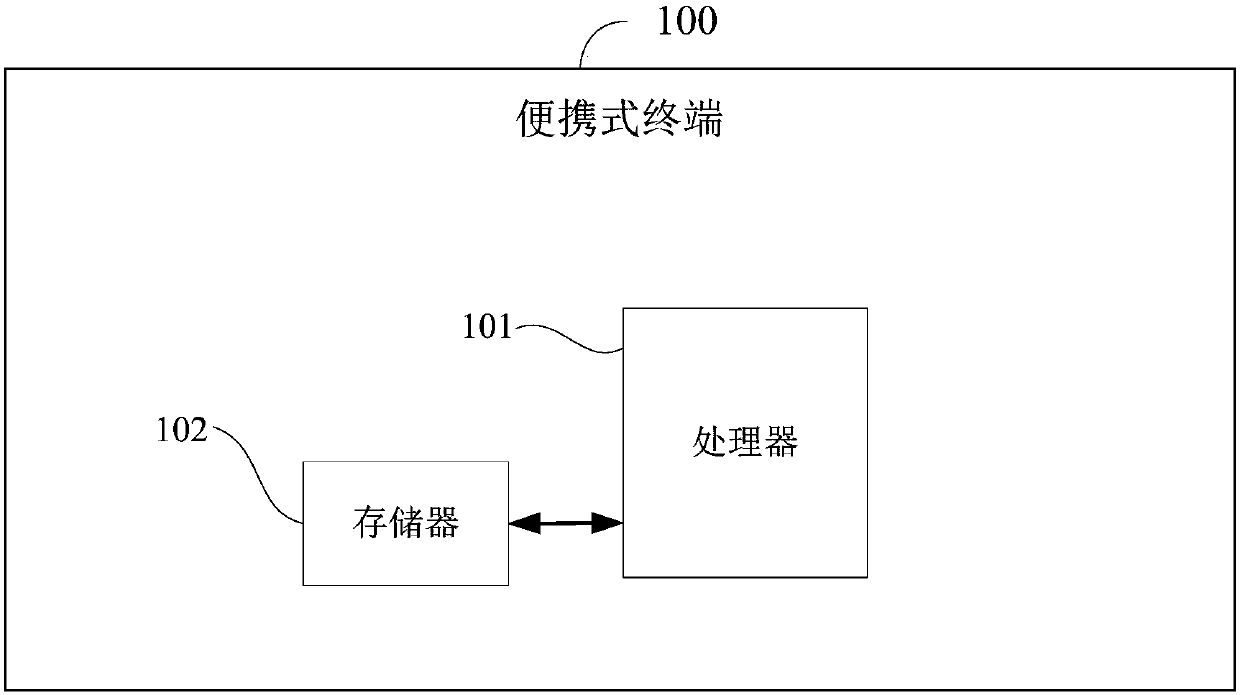

The invention belongs to the field of digital image processing and provides a fisheye lens correction method and device and a portable terminal. The method comprises the following steps: performing distortion correction on a fisheye image, whose content is a calibration board, using an equidistant projection model to obtain an initial corrected image; detecting all corner points of the calibration board in the initial corrected image and re-projecting their coordinates into the fisheye image to obtain rough corner coordinates of the calibration board in the fisheye image, which serve as feature points in the fisheye image; and iterating over parameter values within a preset range, correcting the fisheye lens with the equidistant projection model for each value using the feature points, and taking the parameter value that yields the minimum deviation as the accurate correction value of the fisheye lens. The method reduces preparatory work, is simple to operate, does not require the camera to change position or angle, has a wide range of application, needs few parameters to be set, and is computationally simple while still achieving accurate fisheye correction.

Owner: ARKMICRO TECH
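
The ARKMICRO method iterates a parameter over a preset range, corrects with the equidistant projection model, and keeps the value with the minimum deviation, but it does not define the deviation. In the sketch below we substitute a plumb-line criterion: after undistortion (r_u = f·tan(r_d/f)), corners from one row of the calibration board should be collinear. Only the focal length f is searched, the distortion centre is assumed known, and all names are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def undistort_equidistant(p: Point, f: float, cx: float, cy: float) -> Optional[Point]:
    """Fisheye pixel -> ideal perspective coordinates (relative to the centre), r_u = f * tan(r_d / f)."""
    dx, dy = p[0] - cx, p[1] - cy
    rd = math.hypot(dx, dy)
    if rd == 0.0:
        return 0.0, 0.0
    theta = rd / f
    if theta >= math.pi / 2:
        return None  # beyond 90 degrees: no perspective image exists
    s = f * math.tan(theta) / rd
    return dx * s, dy * s


def line_rms(points: Sequence[Point]) -> float:
    """RMS orthogonal distance of points to their total-least-squares line."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    lam_min = (sxx + syy) / 2 - math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    return math.sqrt(max(lam_min, 0.0))


def search_focal(lines: Sequence[Sequence[Point]], cx: float, cy: float,
                 f_min: float, f_max: float, step: float) -> Tuple[float, float]:
    """Grid-search f; the deviation is the summed straightness error of corner rows."""
    best_f, best_dev = f_min, math.inf
    for k in range(int(round((f_max - f_min) / step)) + 1):
        f = f_min + k * step
        dev = 0.0
        for line in lines:
            pts = [undistort_equidistant(p, f, cx, cy) for p in line]
            if any(q is None for q in pts):
                dev = math.inf
                break
            dev += line_rms([q for q in pts if q is not None])
        if dev < best_dev:
            best_f, best_dev = f, dev
    return best_f, best_dev
```

Rows that pass through the distortion centre stay straight for every f, so only off-centre rows carry information. A finer step, or a 1-D refinement around the best grid value, trades run time for precision.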

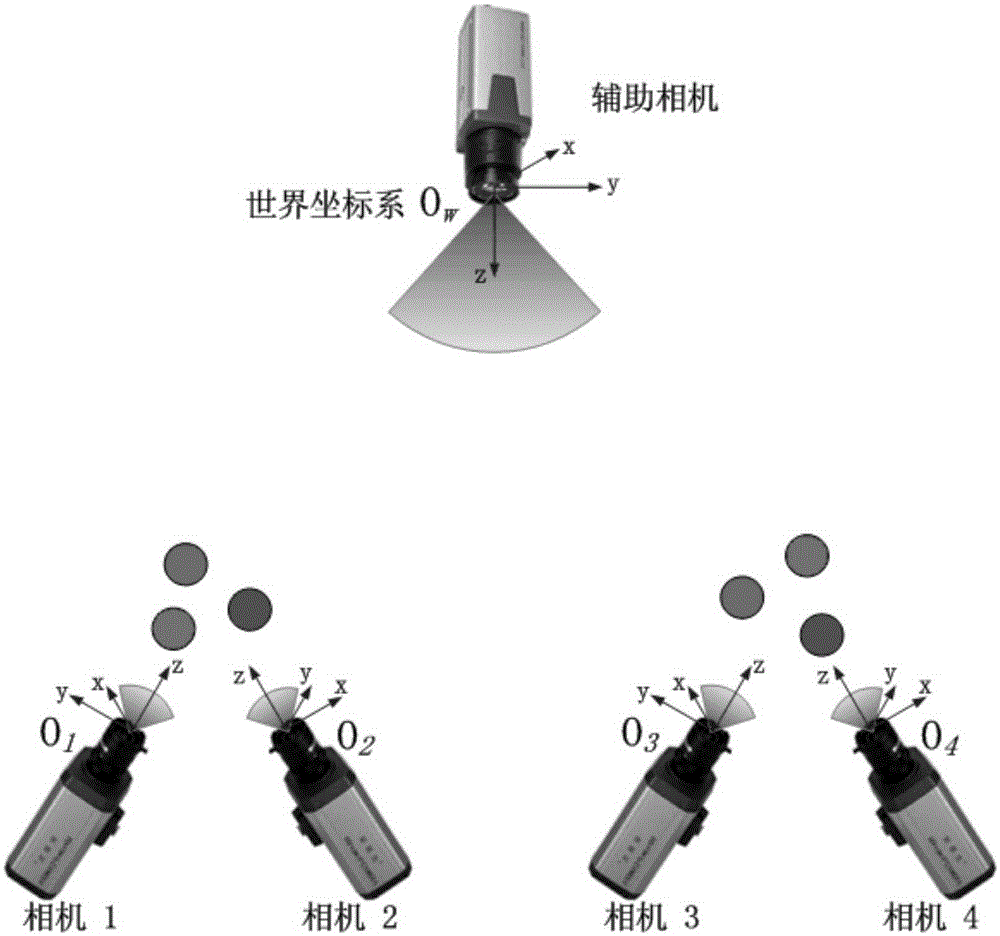

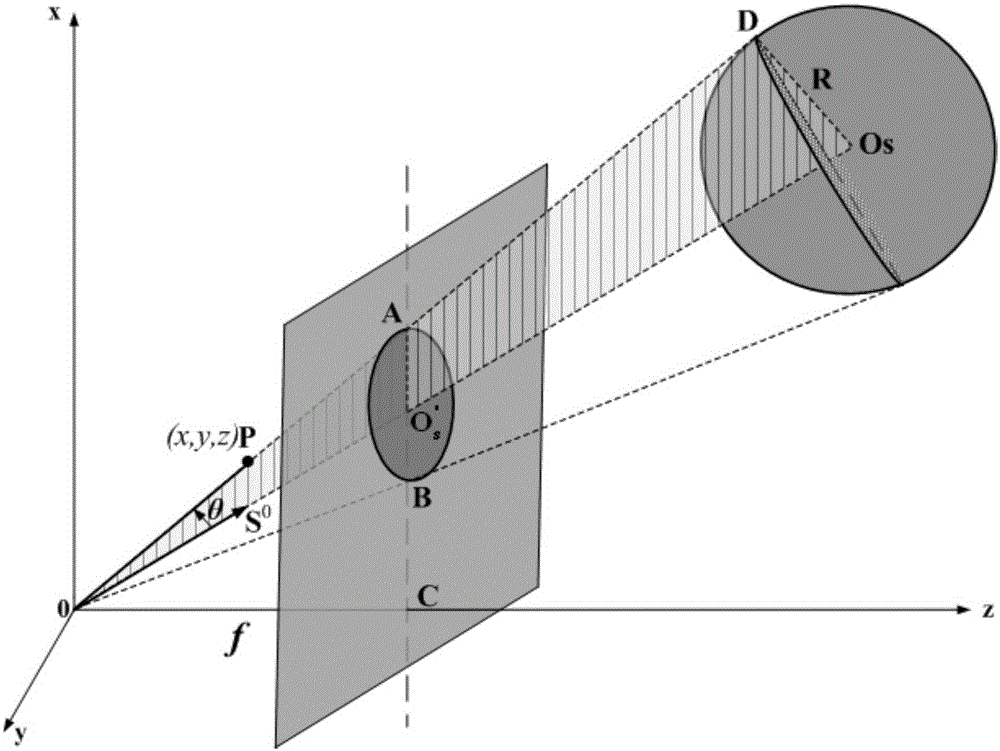

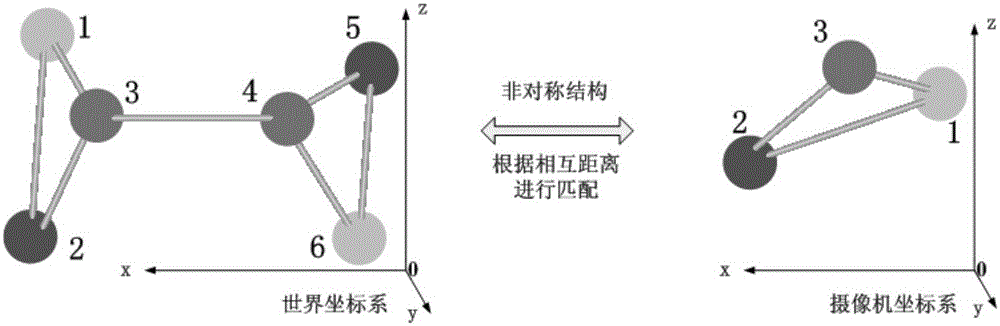

Multi-camera global calibration method based on high-precision auxiliary cameras and ball targets

Status: Active | Publication: CN105205824A | Tags: Simple and fast operation; High precision; Image enhancement; Image analysis; Multi camera; Transfer matrix

The invention discloses a multi-camera global calibration method based on high-precision auxiliary cameras and ball targets. For a multi-camera system with a complex layout and no common field of view, the coordinate system of a global camera serves as the world coordinate system. The three-dimensional coordinates of the sphere centers of a group of (at least three) ball targets are reconstructed in the respective local coordinate systems by a camera to be calibrated and by the global camera at the same time; the transformation matrix between the two coordinate systems is solved from these coordinates; and the transformation matrices from all camera coordinate systems to the world coordinate system are then obtained. The sphere centers are reconstructed with a single-view method in which a parametric equation represents the spherical projection model, which greatly improves the accuracy of sphere-center reconstruction. The method is easy to operate and highly flexible, and it conveniently calibrates multi-camera systems with complex layouts and no common field of view.

Owner: BEIHANG UNIV
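
The Beihang method solves the transfer matrix between a camera's local frame and the global camera's frame from at least three sphere centres reconstructed in both. A minimal sketch using the triad construction, which we swap in because it is exact for three noise-free, non-collinear correspondences. With more, noisy centres, a least-squares SVD solution (Kabsch/Umeyama) would be the usual choice. The names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def _sub(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return a[0] - b[0], a[1] - b[1], a[2] - b[2]


def _cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _unit(a: Sequence[float]) -> Vec3:
    n = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    if n < 1e-12:
        raise ValueError("degenerate configuration: points must not be collinear")
    return a[0] / n, a[1] / n, a[2] / n


def _triad(p1: Vec3, p2: Vec3, p3: Vec3) -> Tuple[Vec3, Vec3, Vec3]:
    """Orthonormal frame attached to three non-collinear points."""
    e1 = _unit(_sub(p2, p1))
    e3 = _unit(_cross(e1, _sub(p3, p1)))
    return e1, _cross(e3, e1), e3


def rigid_transform(src: Sequence[Vec3], dst: Sequence[Vec3]) -> Tuple[Mat3, Vec3]:
    """R, t with dst_i = R @ src_i + t, from the first three (noise-free) correspondences."""
    fa, fb = _triad(*src[:3]), _triad(*dst[:3])
    rot = [[sum(fb[k][i] * fa[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
    rs = [sum(rot[i][j] * src[0][j] for j in range(3)) for i in range(3)]
    return rot, (dst[0][0] - rs[0], dst[0][1] - rs[1], dst[0][2] - rs[2])


def apply(rot: Mat3, t: Vec3, p: Vec3) -> Vec3:
    q = [sum(rot[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
    return q[0], q[1], q[2]
```

Chaining such transforms (camera to global camera, and so on) gives each camera's transform to the world frame, matching the last step of the abstract.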

Method for rebuilding image of positron emission tomography

Status: Active | Publication: CN103164863A | Tags: Improve detection efficiency; Improve efficiency; 2D-image generation; Model parameters; Companion animal

The invention provides a method for reconstructing positron emission tomography (PET) images. Based on the principle of Compton scattering of scattered photon events, a probability model of scattered-event projection described by specified model parameters is established; a point spread function for scattered photon events is established from this probability model; a point spread function for unscattered photon events is established from the probability model and the scattered-event point spread function; and a PET image is reconstructed from both scattered and unscattered photon events by an iterative reconstruction algorithm using the two point spread functions. The method can improve detection efficiency; in clinical use it substantially reduces the radiation dose borne by the examined subject and the operator, shortens the scan time, improves usage efficiency, improves data sampling, simplifies the detector structure, and reduces detector cost.

Owner:INST OF HIGH ENERGY PHYSICS CHINESE ACAD OF SCI
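
The abstract names only "an iterative reconstruction algorithm". MLEM (maximum-likelihood expectation maximization) is one widely used iterative algorithm for PET, and the sketch below assumes that choice. It also assumes, hypothetically, that the scattered-event and unscattered-event point spread functions have already been folded into two system matrices whose rows are detector bins and whose columns are image voxels, and that both event classes are used by simply stacking their models and data. The names `mlem` and `stacked_system` are illustrative.

```python
import numpy as np

def mlem(A, y, n_iter=500, eps=1e-12):
    """MLEM for Poisson data y ~ Poisson(A @ x).

    A: (M, N) non-negative system matrix; y: (M,) measured counts.
    Returns a non-negative image estimate of length N.
    """
    A = np.asarray(A, dtype=float)
    y = np.asarray(y, dtype=float)
    sens = A.sum(axis=0)                      # sensitivity image, A^T 1
    x = np.ones(A.shape[1])                   # strictly positive start
    for _ in range(n_iter):
        proj = A @ x                          # forward projection
        ratio = y / np.maximum(proj, eps)     # measured / expected counts
        x *= (A.T @ ratio) / np.maximum(sens, eps)  # multiplicative update
    return x

def stacked_system(A_unscattered, A_scattered, y_unscattered, y_scattered):
    """Combine both event classes into one system (an assumed scheme)."""
    A = np.vstack([A_unscattered, A_scattered])
    y = np.concatenate([y_unscattered, y_scattered])
    return A, y
```

The multiplicative update keeps every voxel non-negative, which is one reason MLEM is popular for emission tomography; real system matrices are far larger and sparse.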

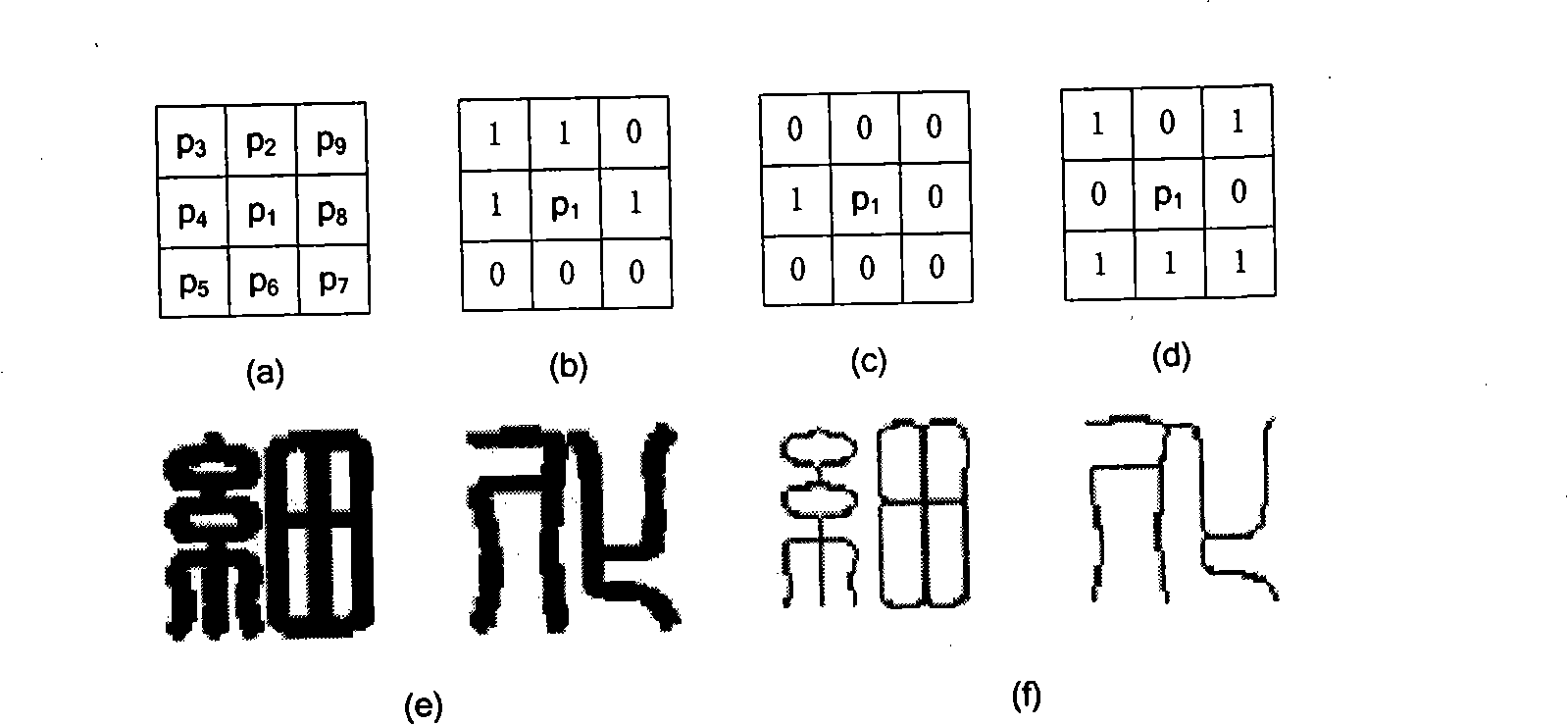

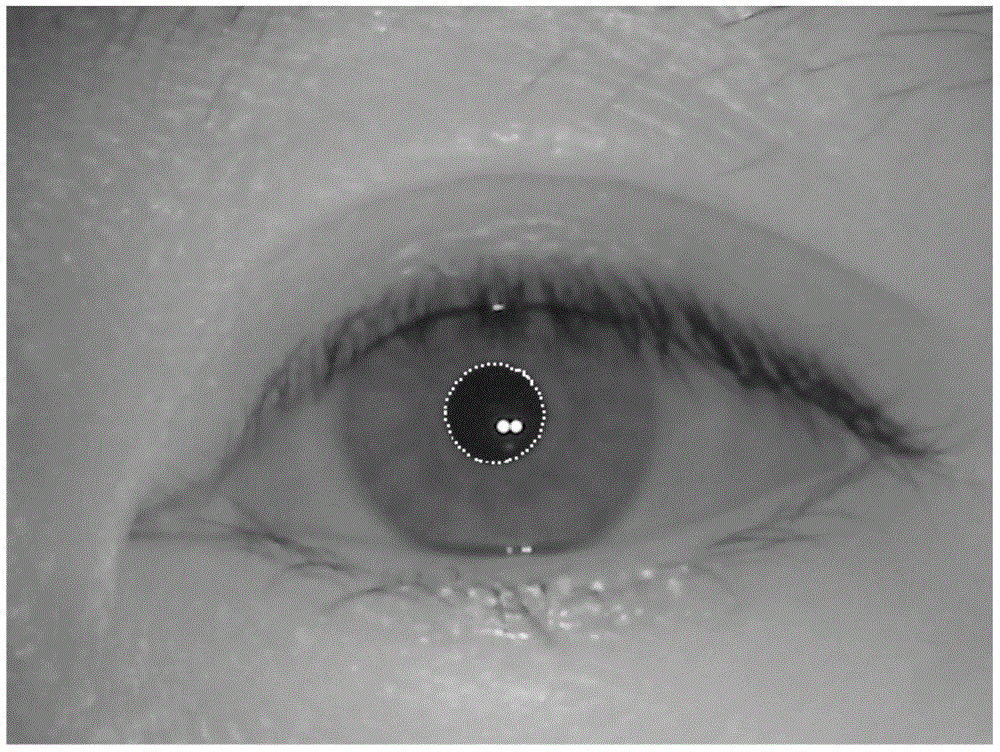

Image processing method and device of iris positioning

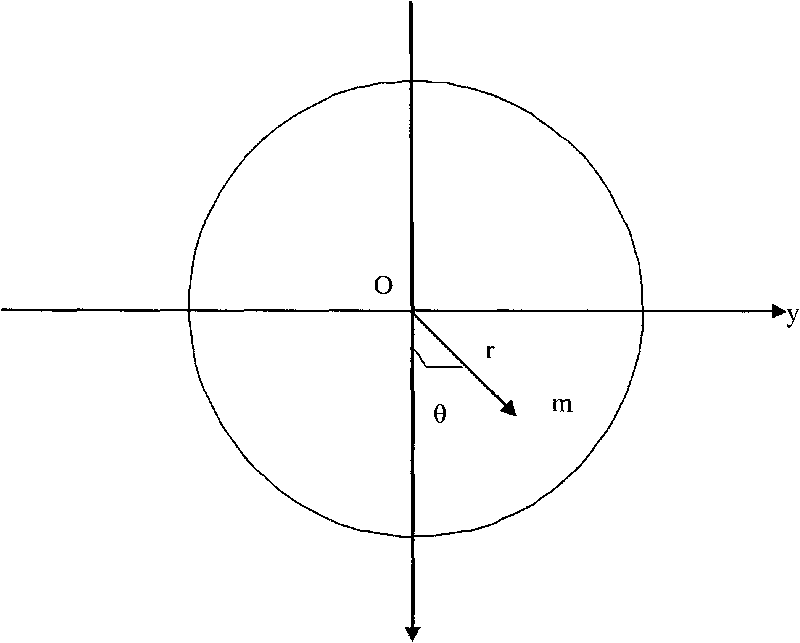

The invention discloses an image processing method and device for iris localization. A human-eye image containing the iris region is acquired; the pupil boundary is located in the image with an adaptive circular-arc projection model algorithm; the outer boundary of the iris is located with the same adaptive circular-arc projection model algorithm; the eyelid region is removed from the image, and the iris region of interest is extracted.

Owner:EYESMART TECH
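
The abstract does not define the "adaptive circular-arc projection model". As an illustrative stand-in only, the sketch below projects (averages) image intensity along left and right circular arcs, which skips the top and bottom of the circle where eyelids usually occlude the iris, and picks the radius at which the mean arc intensity jumps most. This is in the spirit of Daugman's integro-differential operator, not the patent's algorithm. The function names, the fixed known center, and the arc half-width are assumptions.

```python
import numpy as np

def arc_mean(img, cx, cy, r, angles):
    """Mean intensity on a circle of radius r, sampled at the given angles (nearest pixel)."""
    xs = np.clip(np.rint(cx + r * np.cos(angles)).astype(int), 0, img.shape[1] - 1)
    ys = np.clip(np.rint(cy + r * np.sin(angles)).astype(int), 0, img.shape[0] - 1)
    return img[ys, xs].mean()

def locate_circle_radius(img, cx, cy, r_min, r_max, half_width=np.pi / 4):
    """Radius in [r_min, r_max] with the largest jump in arc-projected intensity.

    Only arcs within +/- half_width of the horizontal are sampled, so the
    upper and lower parts of the circle (often covered by eyelids) are ignored.
    A dark-to-bright transition (pupil to iris, iris to sclera) gives a positive jump.
    """
    a = np.linspace(-half_width, half_width, 64)
    angles = np.concatenate([a, a + np.pi])          # right and left arcs
    radii = np.arange(r_min, r_max + 1)
    profile = np.array([arc_mean(img, cx, cy, r, angles) for r in radii])
    jumps = np.diff(profile)
    return int(radii[np.argmax(jumps) + 1])
```

Calling it once with a small radius range for the pupil and again with a larger range for the limbus mirrors the two localization steps in the abstract; a complete method would also search over candidate centers.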

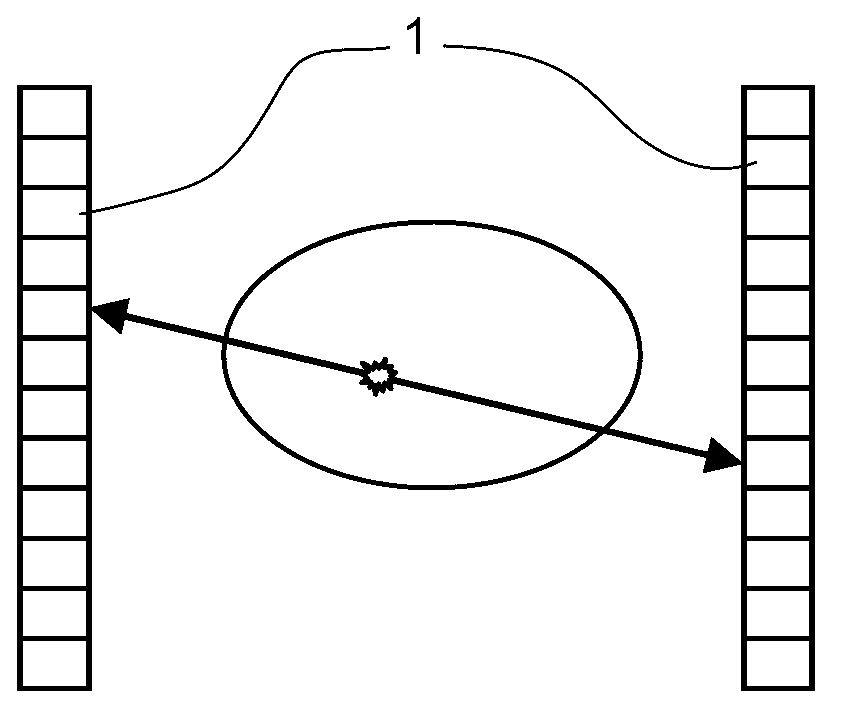

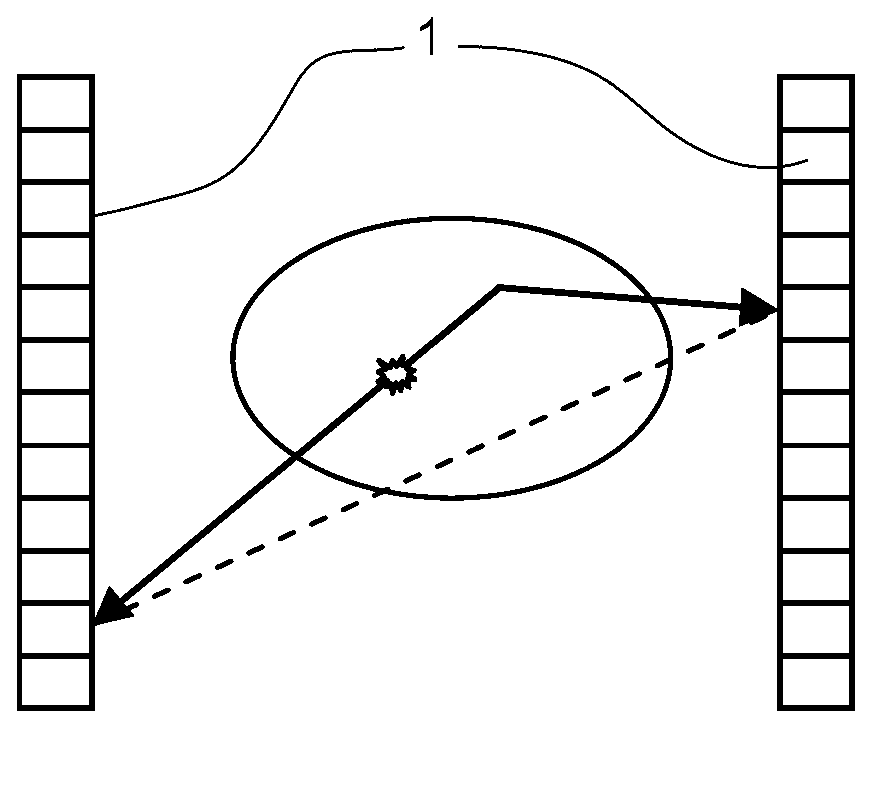

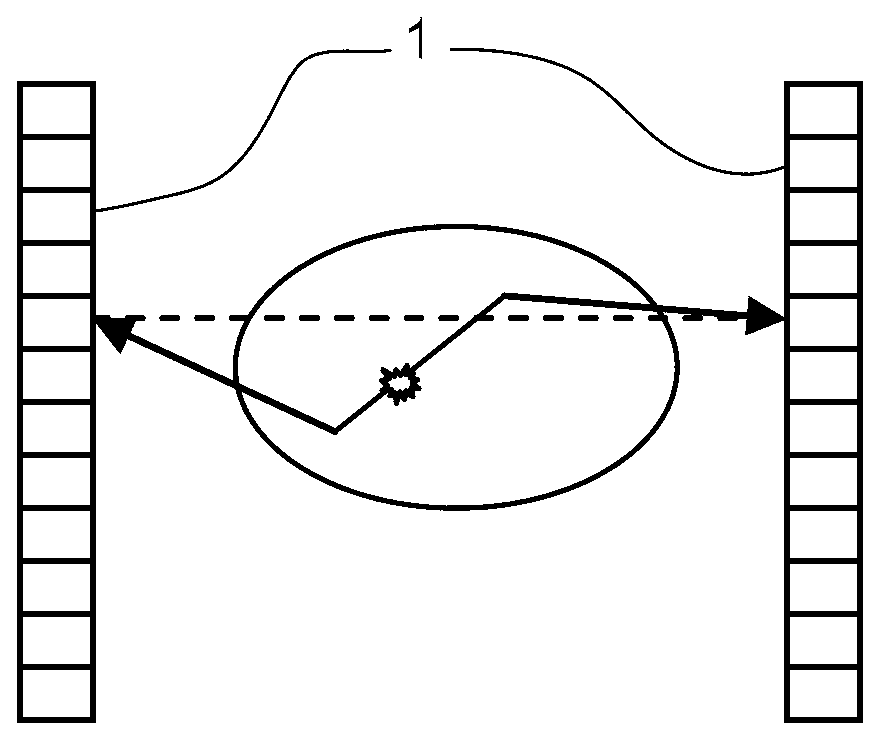

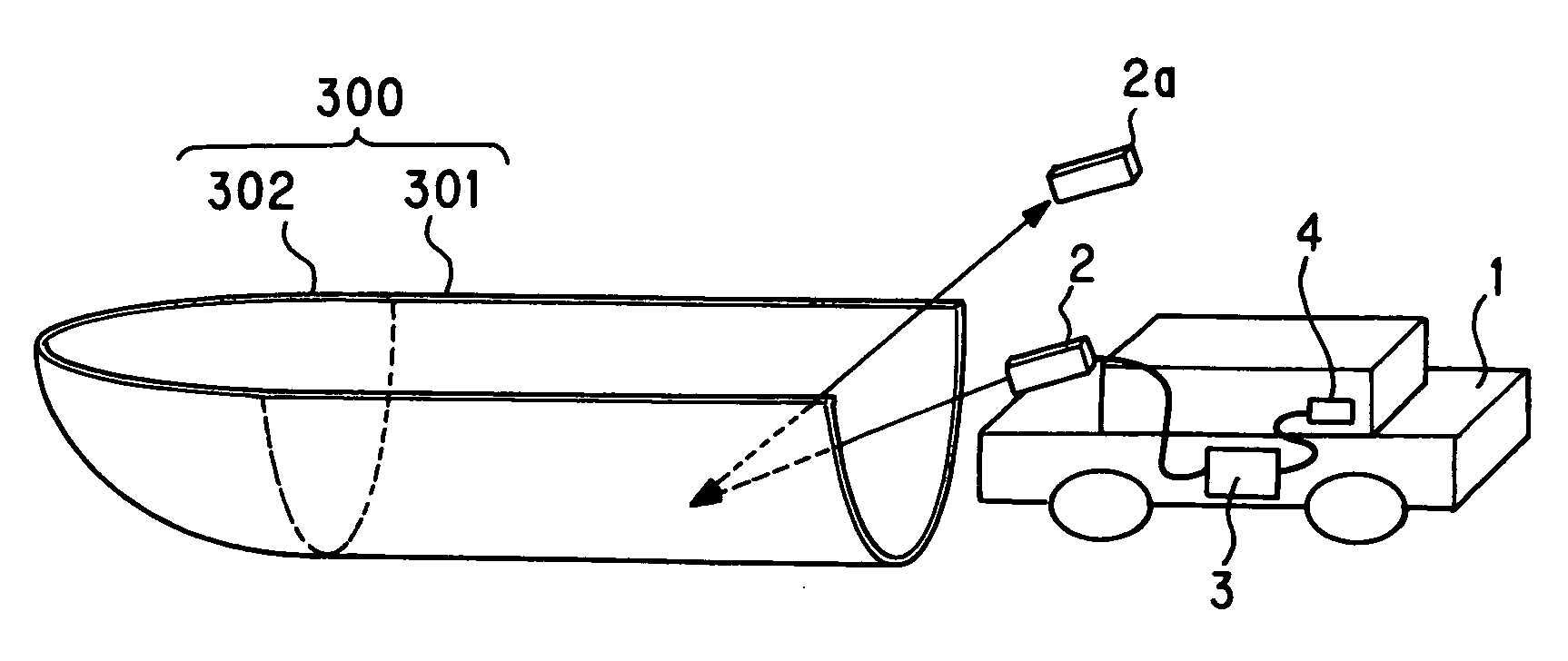

Drive assisting system

Status: Active · Publication: US7554573B2 · Tags: Reduction factor; Easy to confirm; Image enhancement; Geometric image transformation; Driver/operator; Engineering

An object of the present invention is to provide a driving assistance system capable of displaying a picked-up image of the vicinity of a vehicle as a less-distorted image on a monitor. A driving assistance system according to the present invention comprises an imaging means (2) for picking up an image of the surroundings of a vehicle (1) on a road surface; an image translating means (3) for executing an image translation by using a three-dimensional projection model (300), which is convex toward the road surface side and whose height from the road surface does not change within a predetermined range from a top end portion of the vehicle (1) in the traveling direction, to translate the image picked up by the imaging means (2) into an image viewed from a virtual camera (2a); and a displaying means (4) for displaying the image translated by the image translating means (3). The three-dimensional projection model (300) is configured by a cylindrical surface model (301) that is convex toward the road surface side, and a spherical surface model (302) connected to an end portion of the cylindrical surface model (301). Accordingly, a straight line on the road surface that is parallel to the center axis of the cylindrical surface model (301) is displayed as a straight line, so a clear, less-distorted screen can be provided to the driver.

Owner:PANASONIC CORP
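
The core geometric step of such an image translation is intersecting camera rays with the projection surface. The sketch below covers only the cylindrical surface model (301) and omits the spherical end model (302). It assumes a world frame with X along the traveling direction, Z up, and the road at Z = 0, a cylinder axis parallel to X above the vehicle centerline, and ideal pinhole cameras with OpenCV-style axes (x right, y down, z forward). All function names and numbers are hypothetical.

```python
import numpy as np

def pixel_ray(u, v, f, cx, cy, R_wc):
    """World-frame unit ray through pixel (u, v); R_wc maps camera axes to world axes."""
    d = R_wc @ np.array([(u - cx) / f, (v - cy) / f, 1.0])
    return d / np.linalg.norm(d)

def project(X, C, f, cx, cy, R_wc):
    """Pinhole projection of world point X into a camera centered at C."""
    p = R_wc.T @ (np.asarray(X, dtype=float) - C)
    return np.array([f * p[0] / p[2] + cx, f * p[1] / p[2] + cy])

def intersect_cylinder(origin, direction, z_axis, radius):
    """First positive hit of a ray with the cylinder Y^2 + (Z - z_axis)^2 = radius^2,
    whose axis is parallel to the world X axis (traveling direction).
    Returns None if the ray misses or runs parallel to the axis."""
    oy, oz = origin[1], origin[2] - z_axis
    dy, dz = direction[1], direction[2]
    a = dy * dy + dz * dz
    if a < 1e-12:
        return None
    b = 2.0 * (oy * dy + oz * dz)
    c = oy * oy + oz * oz - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None
    sq = np.sqrt(disc)
    roots = [t for t in ((-b - sq) / (2 * a), (-b + sq) / (2 * a)) if t > 0]
    return origin + min(roots) * direction if roots else None
```

For the viewpoint conversion, the calls run in sequence: cast a ray from each virtual-camera pixel with `pixel_ray`, intersect it with the model, and `project` the hit point into the real camera to fetch the color. Because the surface is a cylinder along the traveling direction, road lines parallel to its axis stay straight.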

Self-adaptive and rapid correcting method for fish-eye lens

The invention discloses a self-adaptive, rapid correction method for a fish-eye lens. The method comprises the following steps: an equidistant projection model is constructed, and straight lines at different positions are extracted to obtain a point set; the camera parameters are initialized and noise is filtered from the point set; the image plane is further optimized using the constraint that straight lines must be projected as straight lines; the optimized image is projected onto the six faces of the largest cube inscribed in the unit viewing sphere, the cube is unfolded, and the projection is output; finally, a secondary correction is applied to the initial calibration of the same device, and if the output projection deviates, the device is recalibrated. The method corrects fish-eye images quickly and accurately, avoids heavy computation, and is convenient to operate.

Owner:STATE GRID CORP OF CHINA +1
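
The equidistant model named in the abstract maps a ray at angle θ from the optical axis to image radius r = f·θ, whereas an ideal pinhole image uses r = f·tan θ. The sketch below inverts the equidistant model to get a viewing direction, re-maps it to a pinhole image, and centrally projects it onto a face of a cube centered on the viewing sphere. It assumes the focal length `f` and principal point (`cx`, `cy`) are already known and leaves out the straight-line-based parameter optimization; the function names are hypothetical.

```python
import numpy as np

def fisheye_pixel_to_ray(u, v, f, cx, cy):
    """Equidistant model r = f * theta: pixel -> unit viewing direction.
    theta is measured from the optical (+z) axis."""
    dx, dy = u - cx, v - cy
    theta = np.hypot(dx, dy) / f
    phi = np.arctan2(dy, dx)
    return np.array([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)])

def equidistant_to_perspective(u, v, f, cx, cy):
    """Re-map a fish-eye pixel to an ideal pinhole image with the same f.
    Only valid for rays less than 90 degrees off-axis."""
    d = fisheye_pixel_to_ray(u, v, f, cx, cy)
    return f * d[0] / d[2] + cx, f * d[1] / d[2] + cy

def ray_to_cube_face(d):
    """Central projection of a direction onto a cube face.

    Returns (face, a, b) with face in {'+x', '-x', '+y', '-y', '+z', '-z'}
    and a, b in [-1, 1]; scale a, b by 1/sqrt(3) for metric coordinates on
    the cube inscribed in the unit sphere."""
    d = np.asarray(d, dtype=float)
    k = int(np.argmax(np.abs(d)))              # dominant axis selects the face
    sign = '+' if d[k] > 0 else '-'
    others = [i for i in range(3) if i != k]
    a, b = d[others] / abs(d[k])
    return sign + 'xyz'[k], float(a), float(b)
```

Rays far off-axis land on side faces instead of being stretched toward infinity, which is why unfolding onto a cube suits wide fish-eye fields of view better than a single pinhole image.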

Single-vision measuring method of space three-dimensional attitude of variable-focus video camera

Status: Inactive · Publication: CN101038163A · Tags: Single-vision measurement implementation; Television system details; Photogrammetry/videogrammetry; Camera lens; Computer graphics (images)

The present invention provides a single-view method for measuring the three-dimensional pose in space of a zoom camera. A planar cooperative target consisting of three square patterns is provided, and an image of the target is captured at some position in space. The target image is then processed as follows: 1) because optical lens distortion introduces geometric distortion into the target image, the distortion of the target outline is corrected with a fast distortion-correction method based on sub-pixel edge-line points; 2) the sub-pixel parameters of the straight edge lines of the corrected target image are obtained. Finally, the single-view measurement of the zoom camera's three-dimensional pose relative to the planar target is performed with a principal-point search-table method based on the camera's perspective projection model, and the focal length and extrinsic parameters of the camera are obtained through a linear transformation.

Owner:BEIHANG UNIV
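
The last step, recovering focal length and extrinsics linearly from a planar target under the perspective projection model, can be illustrated with standard homography techniques. The sketch assumes the principal point is already known (for example from the search table the abstract mentions) and subtracted from the image coordinates, square pixels, and zero skew, so K = diag(f, f, 1). It then uses the direct linear transform (DLT) for the homography and the orthogonality of the first two rotation columns, f² = -(h11·h12 + h21·h22)/(h31·h32). This is a generic technique, not necessarily the patent's exact procedure, and the function names are hypothetical.

```python
import numpy as np

def homography_dlt(plane_xy, img_uv):
    """DLT estimate of H with img ~ H @ [X, Y, 1] from >= 4 correspondences.
    img_uv must already be expressed relative to the principal point."""
    rows = []
    for (X, Y), (u, v) in zip(plane_xy, img_uv):
        rows.append([X, Y, 1, 0, 0, 0, -u * X, -u * Y, -u])
        rows.append([0, 0, 0, X, Y, 1, -v * X, -v * Y, -v])
    _, _, Vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return Vt[-1].reshape(3, 3)              # null vector = stacked H entries

def focal_and_pose(H):
    """Focal length f, rotation R, translation t from H ~ K [r1 r2 t], K = diag(f, f, 1)."""
    h1, h2, h3 = H[:, 0], H[:, 1], H[:, 2]
    # r1 . r2 = 0  =>  (h11*h12 + h21*h22) / f^2 + h31*h32 = 0
    f2 = -(h1[0] * h2[0] + h1[1] * h2[1]) / (h1[2] * h2[2])
    if f2 <= 0:
        raise ValueError("degenerate view: focal length not recoverable")
    f = np.sqrt(f2)
    K_inv = np.diag([1.0 / f, 1.0 / f, 1.0])
    lam = 1.0 / np.linalg.norm(K_inv @ h1)   # fixes the unknown scale of H
    r1, r2, t = lam * (K_inv @ h1), lam * (K_inv @ h2), lam * (K_inv @ h3)
    if t[2] < 0:                              # the target must be in front of the camera
        r1, r2, t = -r1, -r2, -t
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)              # snap to the nearest rotation matrix
    return f, U @ Vt, t
```

The focal-length formula breaks down when h31·h32 is zero, for example when the target plane is fronto-parallel or tilted about only one image axis, so the target should be viewed obliquely.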

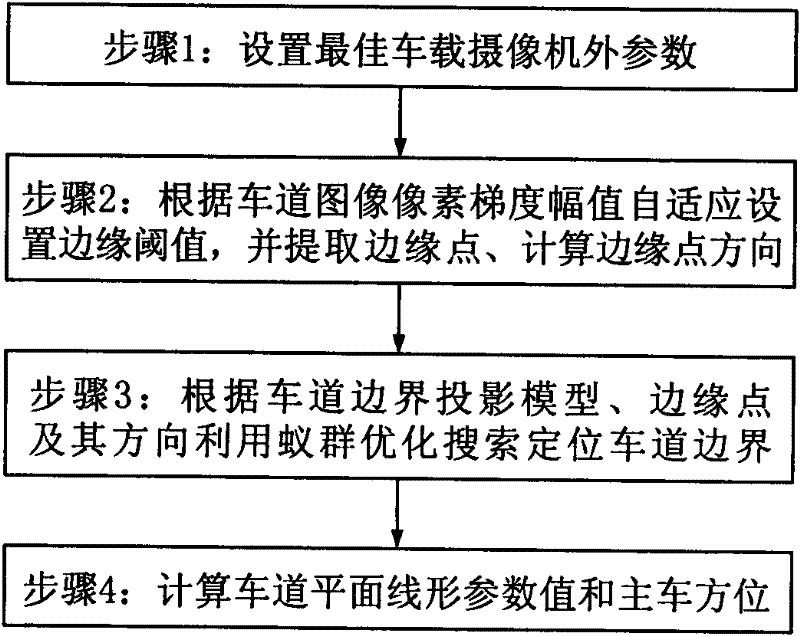

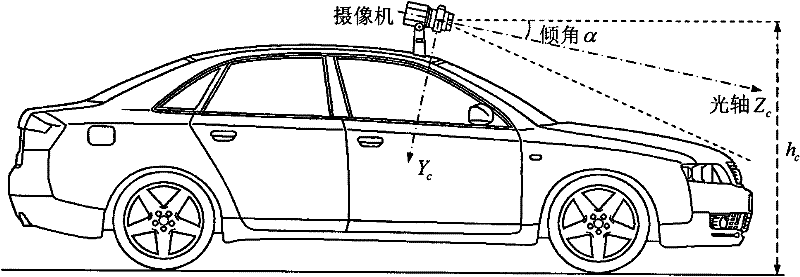

Detection method for lane boundary and main vehicle position

Status: Inactive · Publication: CN102509067A · Tags: Simple calculation; Increase content; Anti-collision systems; Character and pattern recognition; Projection model; Video camera

The invention discloses a method for detecting the lane boundary and the position of the host (main) vehicle. First, optimal extrinsic parameters are set for the vehicle-mounted camera. Next, an edge threshold is set adaptively according to the gradient magnitude of the lane-image pixels, edge points are extracted, and the edge-point directions are computed. The lane boundary is then searched for and located by ant colony optimization, based on a lane-boundary projection model, the edge points, and the edge-point directions. Finally, the linear parameters of the lane plane and the position of the host vehicle are calculated. The method searches for and locates the lane boundary quickly and effectively, measures the lane-plane line parameters as well as the deviation angle and position of the host vehicle within the lane, and adapts to various lane alignments and to changes in weather and illumination.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
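
The final step, computing the lane-plane line parameters and the host vehicle's position from located boundaries, depends on the camera's projection onto the road. The sketch below covers only that geometry and leaves out the ant colony search. It assumes a flat road, a camera at known height `h` pitched down by a known angle with no roll or yaw, straight lane boundaries, and two image points per boundary; every name is hypothetical.

```python
import numpy as np

def image_to_ground(u, v, f, cx, cy, h, pitch):
    """Back-project pixel (u, v) onto a flat road.
    Returns (X, Z): lateral offset (right positive) and forward distance, in meters."""
    xn, yn = (u - cx) / f, (v - cy) / f
    s, c = np.sin(pitch), np.cos(pitch)
    down = yn * c + s                        # downward component of the viewing ray
    if down <= 0:
        raise ValueError("pixel is at or above the horizon")
    t = h / down
    return t * xn, t * (c - yn * s)

def ground_to_image(X, Z, f, cx, cy, h, pitch):
    """Forward projection of a road point, e.g. to draw the lane-boundary model."""
    s, c = np.sin(pitch), np.cos(pitch)
    xc, yc, zc = X, h * c - Z * s, h * s + Z * c
    return cx + f * xc / zc, cy + f * yc / zc

def boundary_at(pts, z):
    """Lateral position at distance z and slope dX/dZ of a straight boundary through two road points."""
    (x1, z1), (x2, z2) = pts
    slope = (x2 - x1) / (z2 - z1)
    return x1 + (z - z1) * slope, slope

def lane_pose(left_uv, right_uv, f, cx, cy, h, pitch):
    """Lane width, camera offset from the lane center (right positive), and lane heading angle."""
    left = [image_to_ground(u, v, f, cx, cy, h, pitch) for u, v in left_uv]
    right = [image_to_ground(u, v, f, cx, cy, h, pitch) for u, v in right_uv]
    xl, sl = boundary_at(left, 0.0)          # extrapolate both boundaries to the camera (Z = 0)
    xr, sr = boundary_at(right, 0.0)
    width = xr - xl
    offset = -(xl + xr) / 2.0
    yaw = np.arctan((sl + sr) / 2.0)         # lane direction relative to the camera axis
    return width, offset, yaw
```

A negative `offset` means the camera sits left of the lane center; `yaw` corresponds to the deviation angle between the vehicle axis and the lane under the no-yaw mounting assumption.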

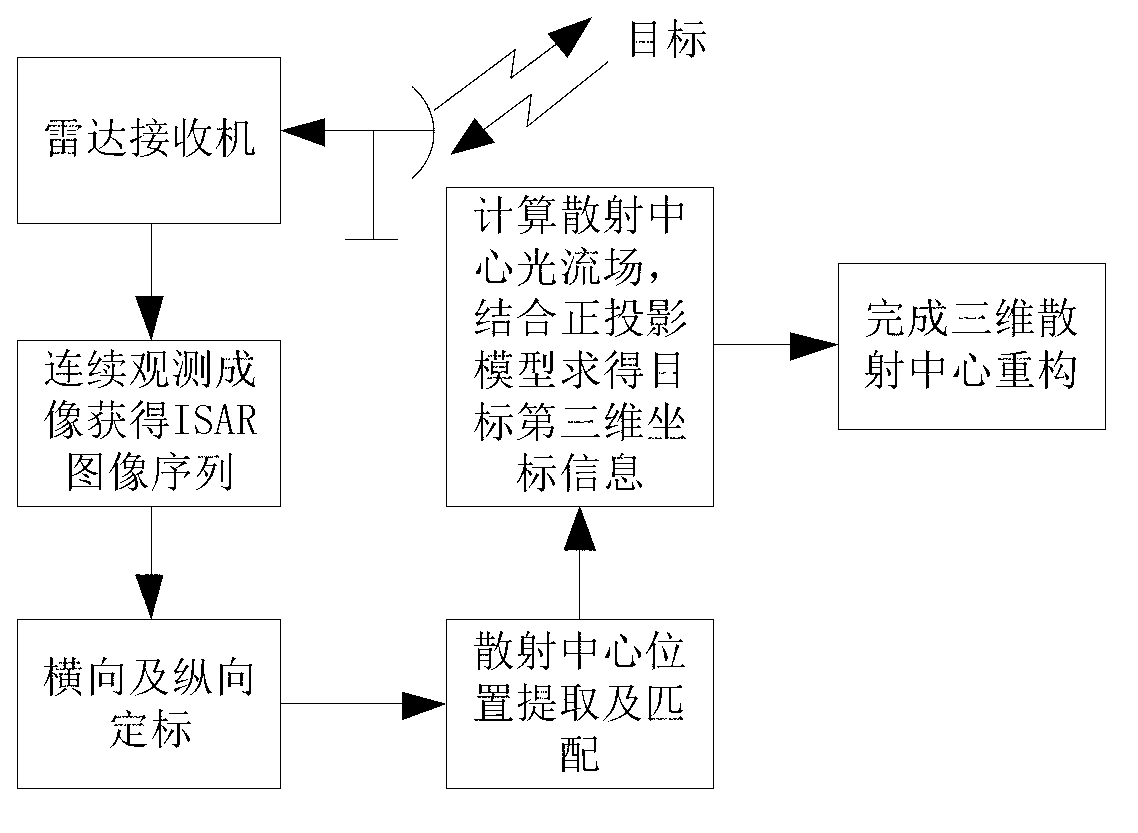

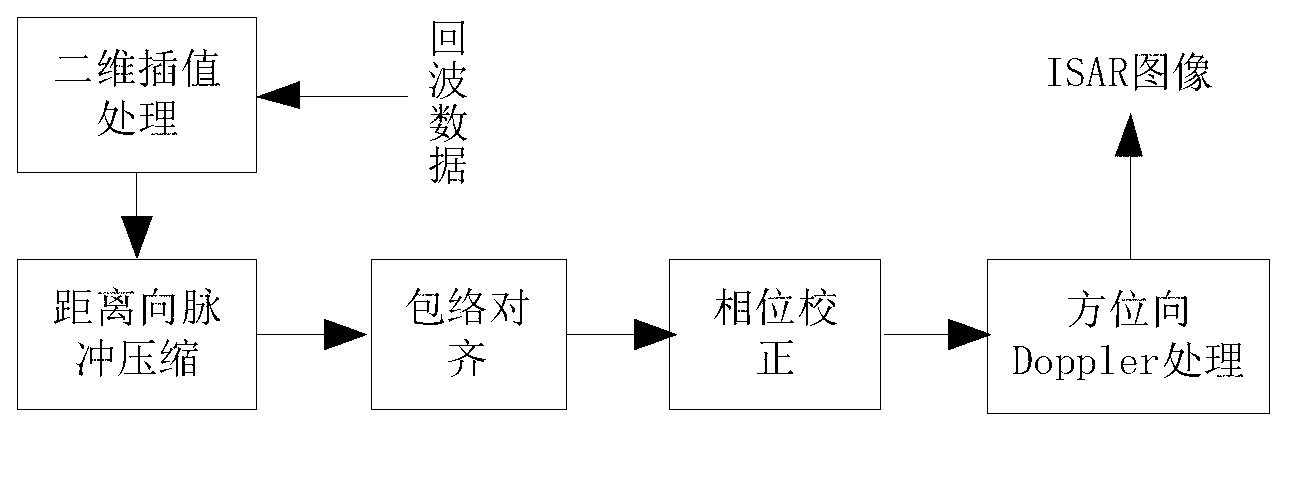

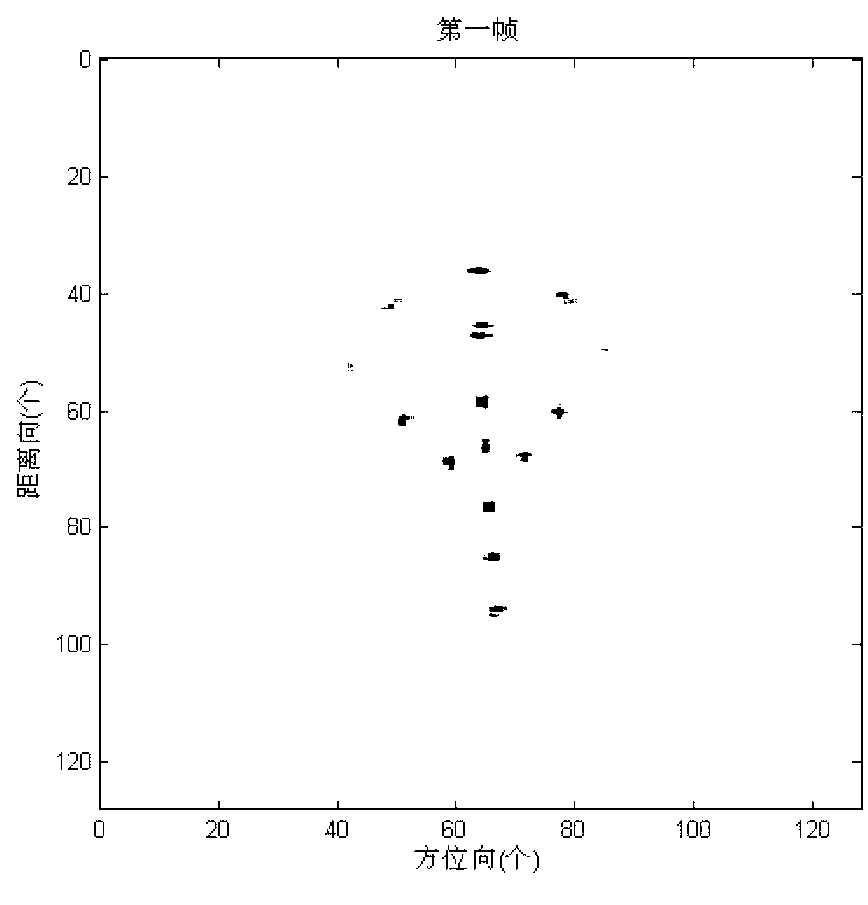

Method for reconstructing target three-dimensional scattering center of inverse synthetic aperture radar

Status: Inactive · Publication: CN103217674A · Tags: Resolve mismatch; Small amount of calculation; Radio wave reradiation/reflection; Interferometric synthetic aperture radar; Inverse synthetic aperture radar

The invention provides a method for reconstructing the three-dimensional scattering centers of a target in inverse synthetic aperture radar (ISAR). The method comprises the following steps: continuous imaging is performed on motion-compensated echo data to obtain a sequence of two-dimensional ISAR images; horizontal and vertical scaling are applied to the ISAR image sequence to obtain the position coordinates of the scattering centers; the position coordinates of the scattering centers are extracted from each frame, and the displacement velocity field of the scattering centers between every two adjacent frames is calculated; estimates of the third coordinate are obtained by combining the projection equation of an orthographic projection model with the target motion equation, and the multiple estimates are averaged to obtain the final third coordinate. The three-dimensional scattering centers of the target are thereby reconstructed directly. The method requires no additional system hardware, can distinguish scattering centers at different heights within the same range-azimuth resolution cell, does not need prior information such as the radar's observation viewing angle, and has a relatively small computational load.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
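
The abstract combines an orthographic projection equation with a target motion equation but does not give either. The sketch assumes the simplest such pair: the target rotates rigidly with a known, constant angular velocity ω, so dX/dt = ω × X; the image plane keeps the first two body axes (orthographic projection); and the scatterer has already been scaled and tracked across frames. Each adjacent-frame pair then yields a linear least-squares estimate of the missing coordinate, and the estimates are rotated back to the first frame and averaged. The function names are hypothetical, and real ISAR coordinates (range and cross-range) would first need the scaling step described in the abstract.

```python
import numpy as np

def rotation_matrix(omega, t):
    """Rodrigues' formula: rotation by |omega| * t about the axis of omega."""
    w = np.linalg.norm(omega)
    if w == 0.0:
        return np.eye(3)
    k = np.asarray(omega, dtype=float) / w
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    theta = w * t
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * (K @ K)

def depth_from_velocity(p, v, omega):
    """Least-squares third coordinate z of one scatterer from its projected
    position p = (x, y) and projected velocity v. From v = P (omega x X):
        v_x = omega_y * z - omega_z * y
        v_y = omega_z * x - omega_x * z
    Undefined if omega points along the line of sight (omega_x = omega_y = 0)."""
    x, y = p
    wx, wy, wz = omega
    a = np.array([wy, -wx])                      # coefficients of z
    b = np.array([v[0] + wz * y, v[1] - wz * x])
    return float(a @ b / (a @ a))

def reconstruct_position(track, times, omega):
    """Average the per-frame-pair estimates, expressed in the t = 0 frame.
    track: (N, 2) projected positions of one scatterer at the given times."""
    track = np.asarray(track, dtype=float)
    estimates = []
    for k in range(len(times) - 1):
        dt = times[k + 1] - times[k]
        v = (track[k + 1] - track[k]) / dt       # displacement velocity
        p = 0.5 * (track[k + 1] + track[k])      # midpoint position
        z = depth_from_velocity(p, v, omega)
        t_mid = 0.5 * (times[k] + times[k + 1])
        X_mid = np.array([p[0], p[1], z])
        estimates.append(rotation_matrix(omega, t_mid).T @ X_mid)
    return np.mean(estimates, axis=0)
```

Averaging over many frame pairs damps the finite-difference and tracking errors of individual estimates, which matches the averaging step in the abstract.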

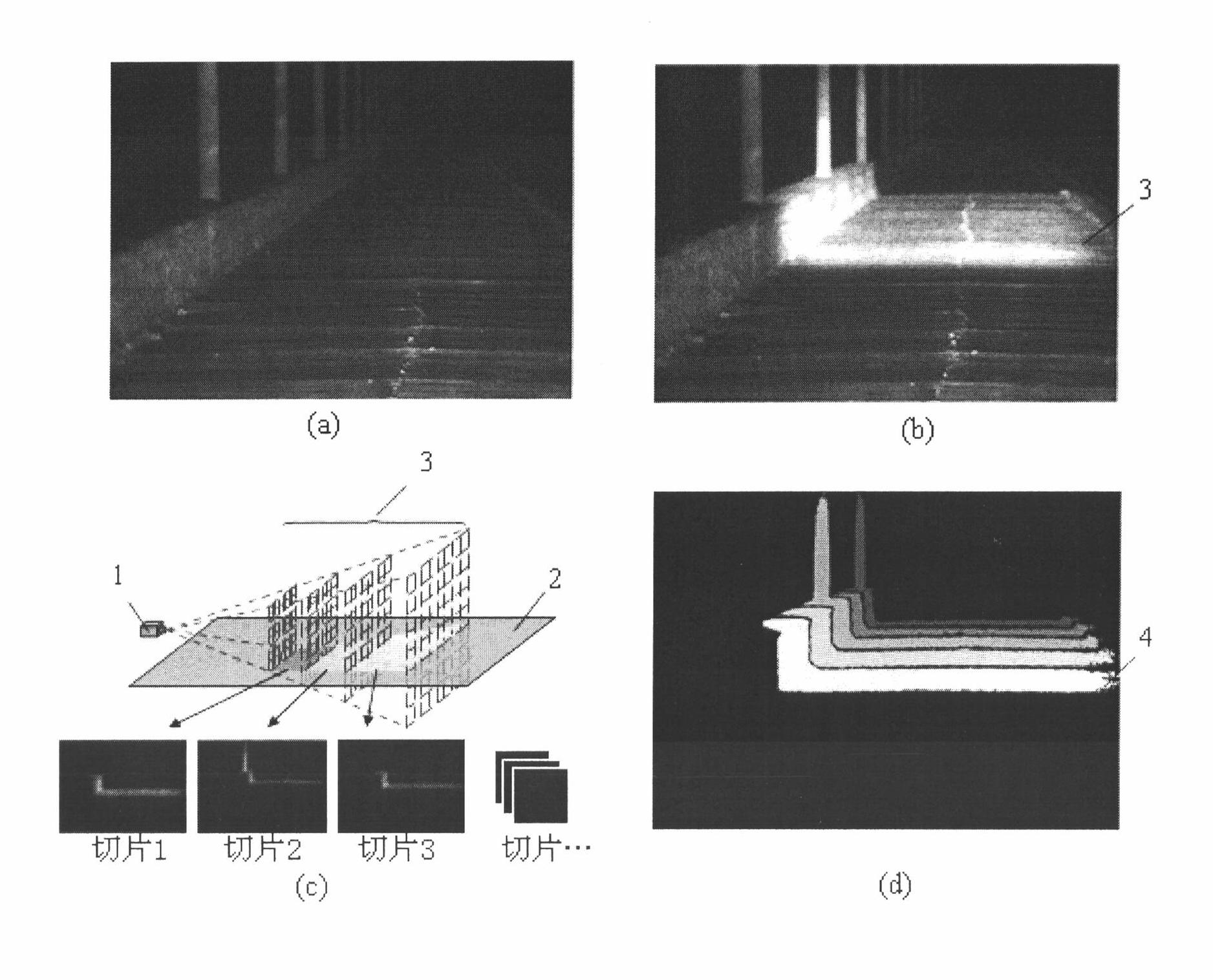

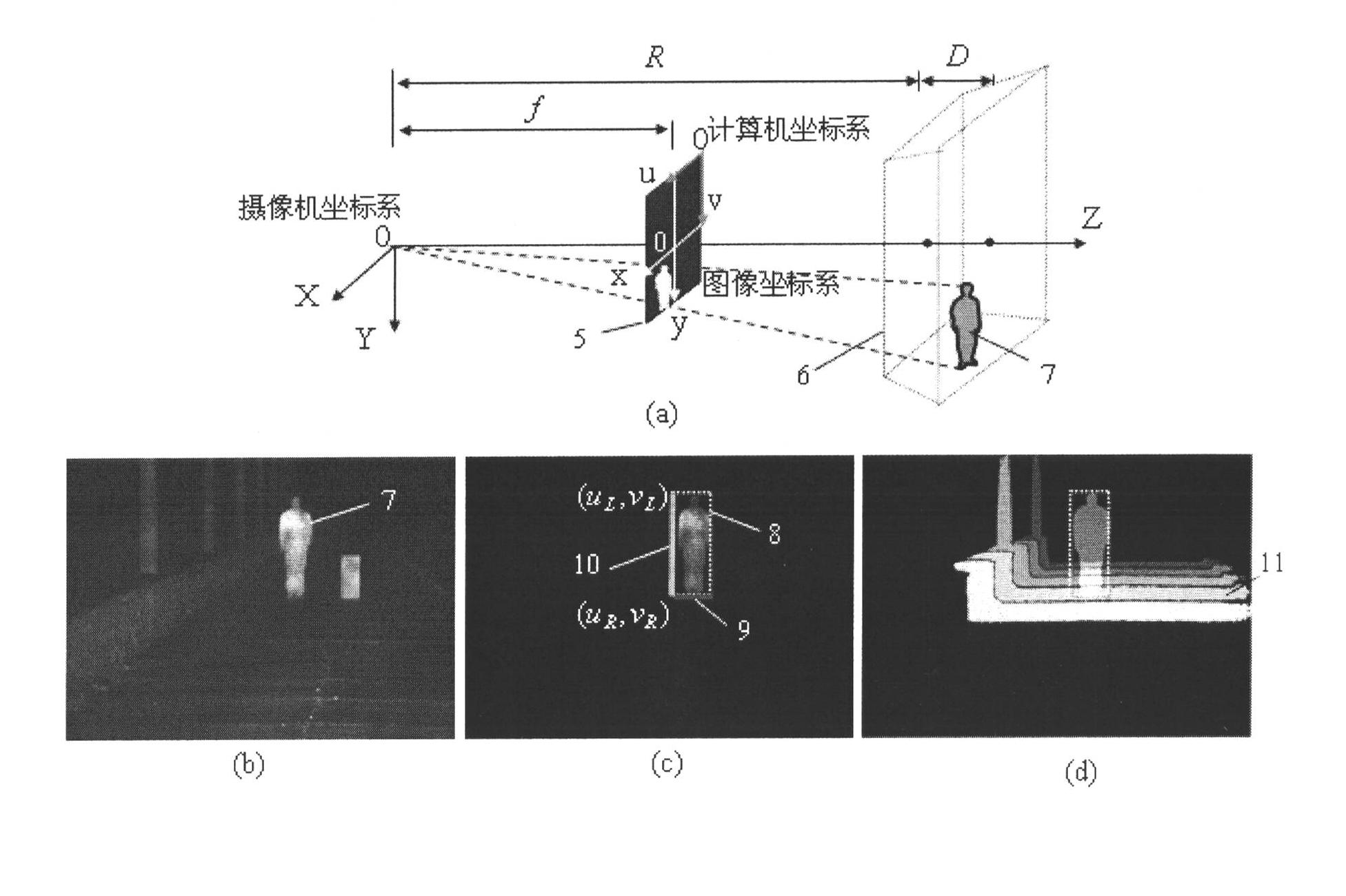

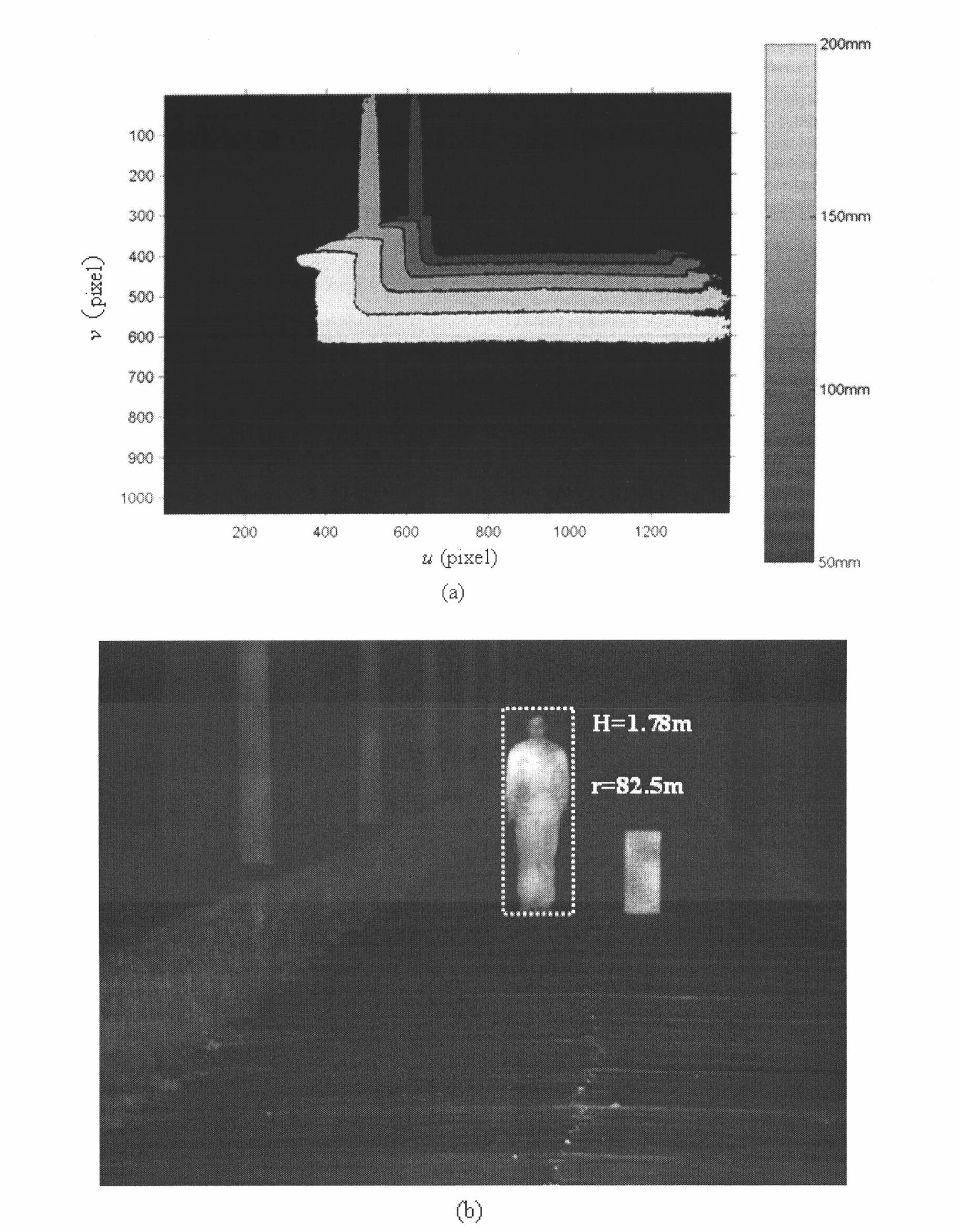

Method for acquiring characteristic size of remote video monitored target on basis of depth fingerprint

Status: Active · Publication: CN102073863A · Tags: Calibration is concise and accurate; Reduce hardware costs; Character and pattern recognition; Closed circuit television systems; Video monitoring; Three-dimensional space

The invention discloses a method for acquiring the characteristic size of a target under remote video surveillance on the basis of a depth fingerprint. Several slice images of a region of interest in the monitored scene of the target video surveillance system are acquired by a gated imaging technique and overlaid to obtain a depth fingerprint of the region of interest, which is embedded in the surveillance system. When a monitored target appears in the region of interest, it is extracted from the background and matched against the depth fingerprint, and the fingerprint lines to which the target's foot feature lines belong are determined; the spatial distance of those fingerprint lines is the target distance. Once the target distance is known, the characteristic size of the target is inverted from the pixel length of the target's characteristic segment in the monitoring image, using the mapping between three-dimensional space and the two-dimensional image plane under a perspective projection model. The method solves the problem that conventional remote video surveillance systems have difficulty acquiring the characteristic size of a target.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI
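
The last two steps can be sketched as a lookup followed by the pinhole relation object size = image size × distance / focal length. The sketch assumes, hypothetically, that the depth fingerprint is stored as sorted (image row, distance) pairs, one per fingerprint line, that the target's foot feature line is summarized by a single image row, and that the distance is measured along the optical axis with lens distortion ignored. The function names and numbers are illustrative only.

```python
from bisect import bisect_left

def distance_from_fingerprint(fingerprint, foot_row):
    """Target distance from the fingerprint line nearest the target's foot row.

    fingerprint: list of (image_row, distance_m) pairs sorted by image_row.
    """
    rows = [r for r, _ in fingerprint]
    i = bisect_left(rows, foot_row)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(rows)]
    j = min(candidates, key=lambda k: abs(rows[k] - foot_row))
    return fingerprint[j][1]

def feature_size(length_px, distance_m, focal_mm, pixel_pitch_um):
    """Perspective (pinhole) model: object size = image size * distance / focal length."""
    image_size_m = length_px * pixel_pitch_um * 1e-6
    return image_size_m * distance_m / (focal_mm * 1e-3)
```

For example, a 200-pixel segment on a sensor with 5 µm pixels behind a 50 mm lens, at a fingerprint distance of 100 m, corresponds to about 2 m.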

© 2025 PatSnap. All rights reserved.