182 results about "Image translation" patented technology

Image translation is a technology that lets a user translate the text in images or photographs of printed material (posters, banners, menus, signboards, documents, screenshots, etc.). Optical character recognition (OCR) is first applied to the image to extract any text it contains; the extracted text is then translated into a language of the user's choice; finally, digital image processing is applied to the original image to produce a translated image in the new language. Image translation is related to machine translation.

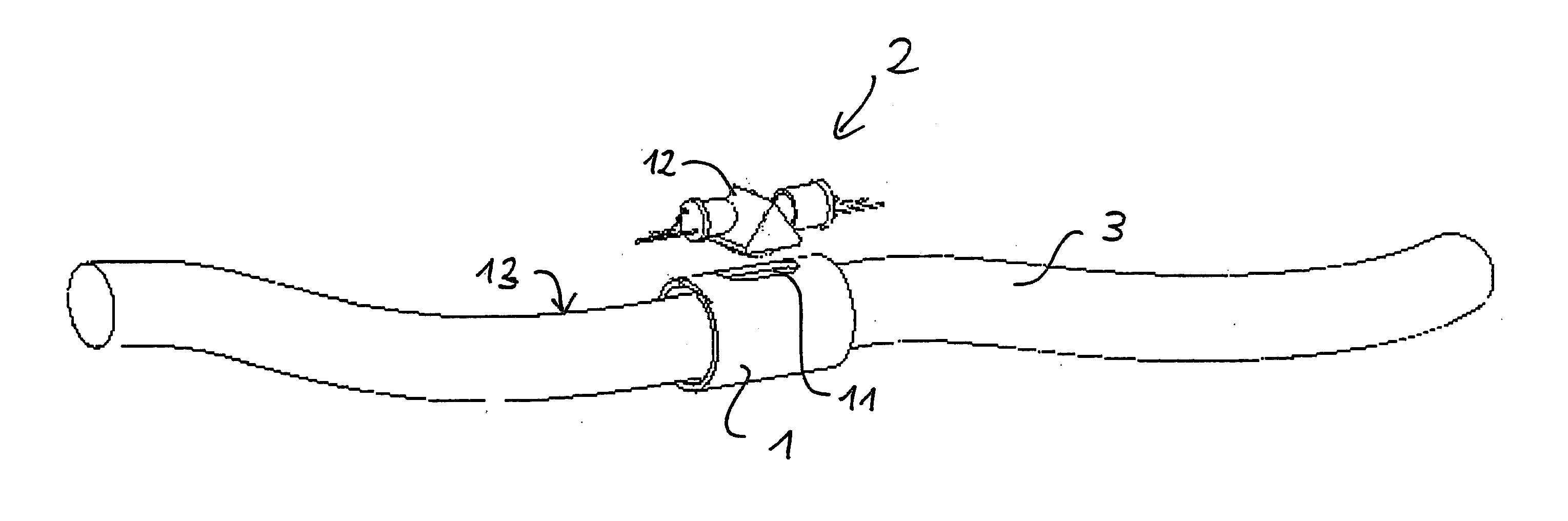

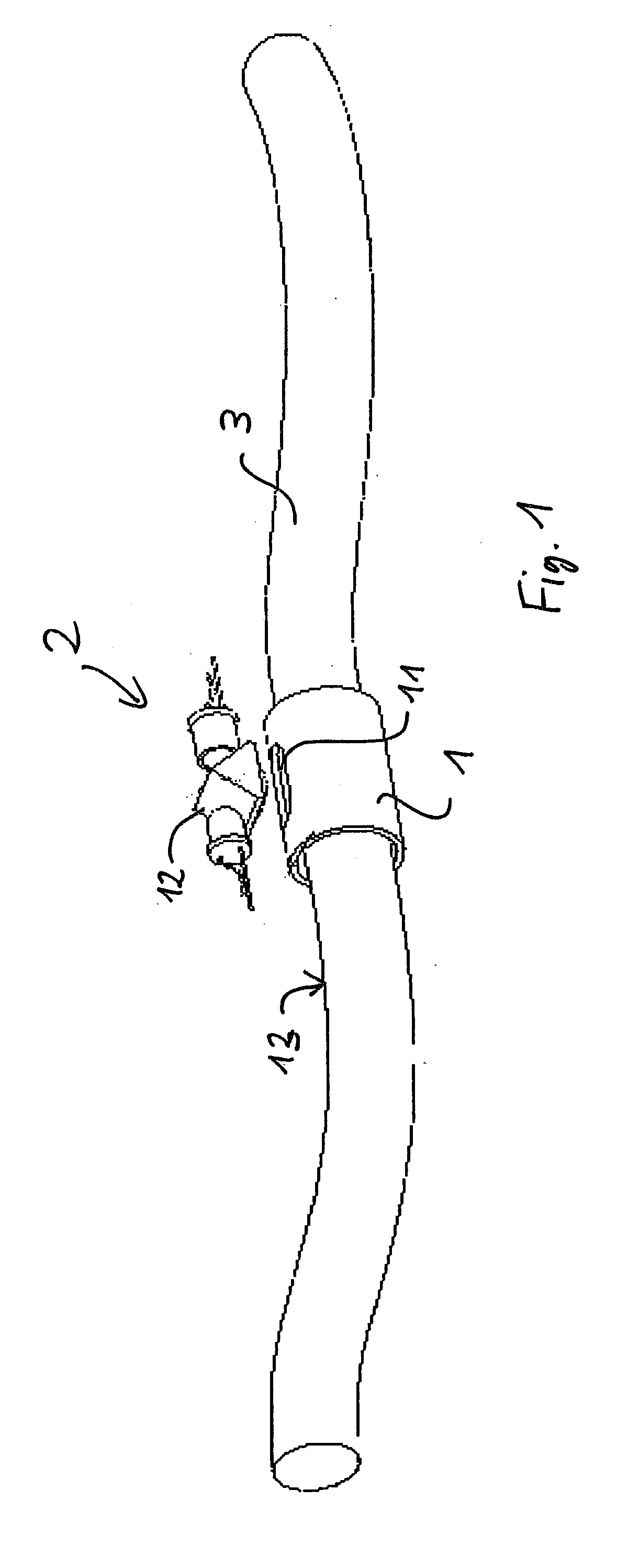

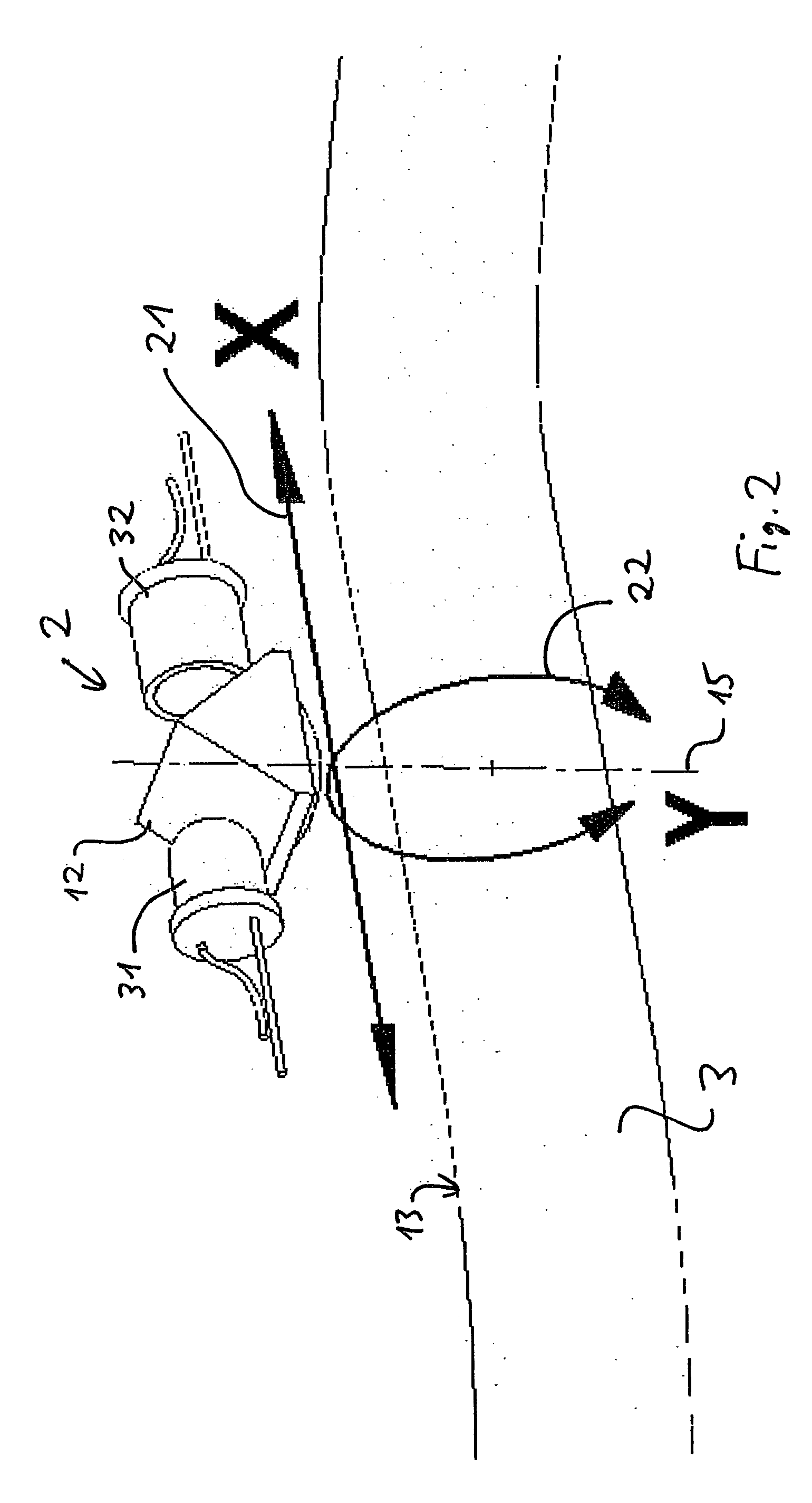

Device for determining the longitudinal and angular position of a rotationally symmetrical apparatus

Inactive · US20050075558A1 · Accurately determine · Easy to track · Diagnostic recording/measuring · Sensors · Measuring instrument · Image conversion

A device for determining the longitudinal and angular position of a rotationally symmetrical apparatus when guided inside a longitudinal element surrounding the same, including an imaging optical navigation sensor that measures the motion of the underlying surface by comparing successive images, and translates this image translation into a measurement of the longitudinal and rotational motion of the instrument. Features of the captured image are used to identify the insertion or withdrawal of the instrument, and to identify specific areas of the moving surface passing underneath the sensor, therefore allowing to establish the absolute position of the instrument, or to identify which instrument was inserted or in what cavity a tracking instrument was inserted. The device comprises a light source and a light detector. Light emitted by said light source is directed onto a surface of the rotationally symmetrical apparatus. Reflected light from said surface is detected by said light detector to produce a position signal showing a locally varying distribution in the longitudinal direction and in the peripheral direction to enable said precise position and angular measurement.

Owner:XITACT
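The conversion from a measured image translation to instrument motion can be illustrated with a minimal sketch. It assumes the sensor reports a surface displacement in pixels, that one pixel axis lies along the instrument axis and the other around its circumference, and that the pixel scale and instrument radius are known. The function name and all parameters are hypothetical and do not come from the patent.

```python
import math

def surface_motion_to_instrument_motion(dx_px, dy_px, mm_per_px, radius_mm):
    """Convert a surface displacement in pixels into instrument motion.

    dx_px: displacement along the instrument axis (assumed sensor x axis)
    dy_px: displacement around the circumference (assumed sensor y axis)
    """
    # Axial pixels map directly to longitudinal travel.
    longitudinal_mm = dx_px * mm_per_px
    # Circumferential pixels are an arc length; angle = arc / radius.
    arc_mm = dy_px * mm_per_px
    rotation_deg = math.degrees(arc_mm / radius_mm)
    return longitudinal_mm, rotation_deg

# Hypothetical reading: 40 px axial, 25 px circumferential,
# 0.05 mm per pixel, instrument radius 2.5 mm.
longitudinal, rotation = surface_motion_to_instrument_motion(
    dx_px=40, dy_px=25, mm_per_px=0.05, radius_mm=2.5)
print(longitudinal, rotation)
```

Accumulating these increments over successive frames gives a relative position; the abstract describes using image features to recover the absolute position, which this sketch does not attempt.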

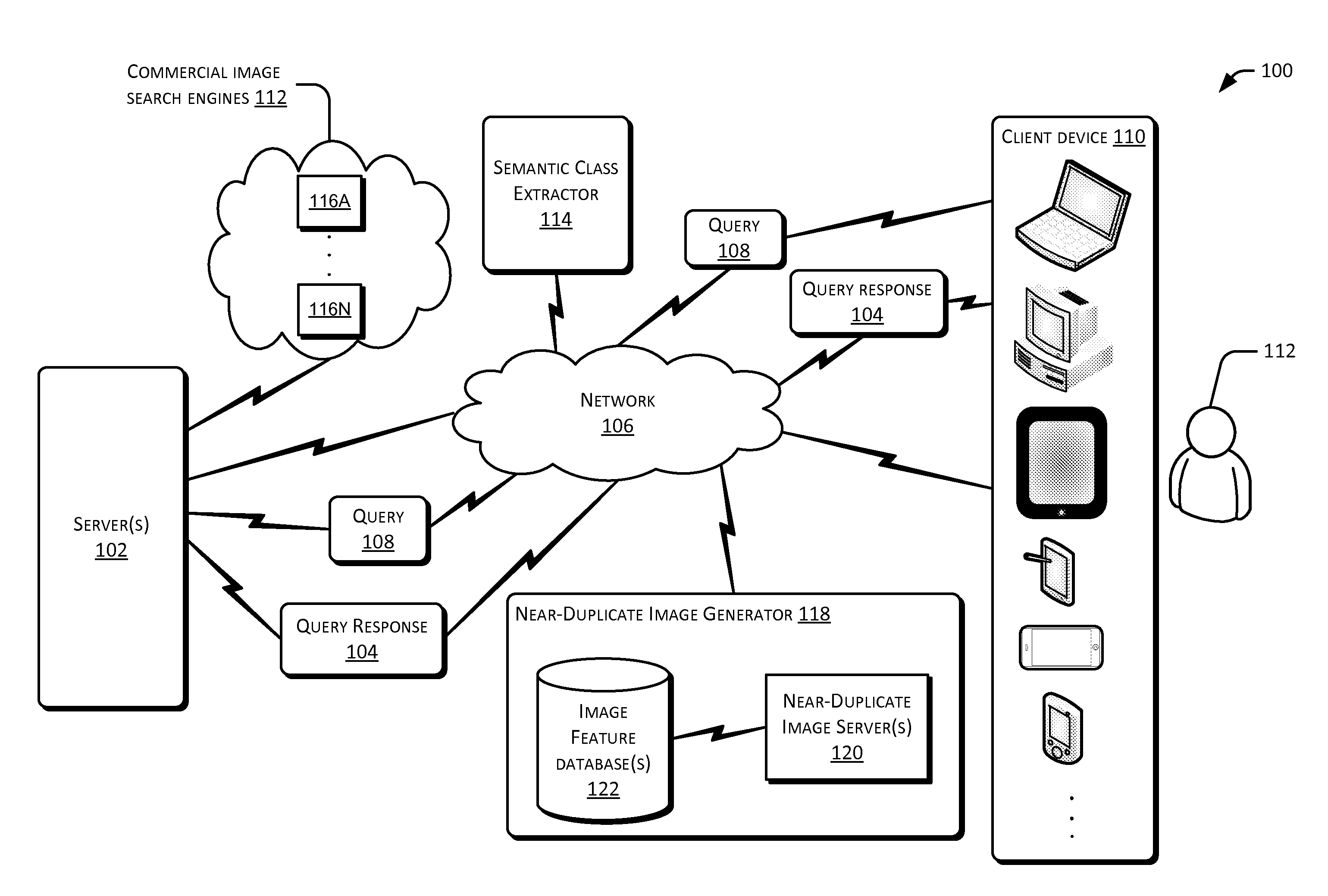

Text to Image Translation

Active · US20120296897A1 · Well formed · Digital data processing details · Machine learning · Timed text · Image translation

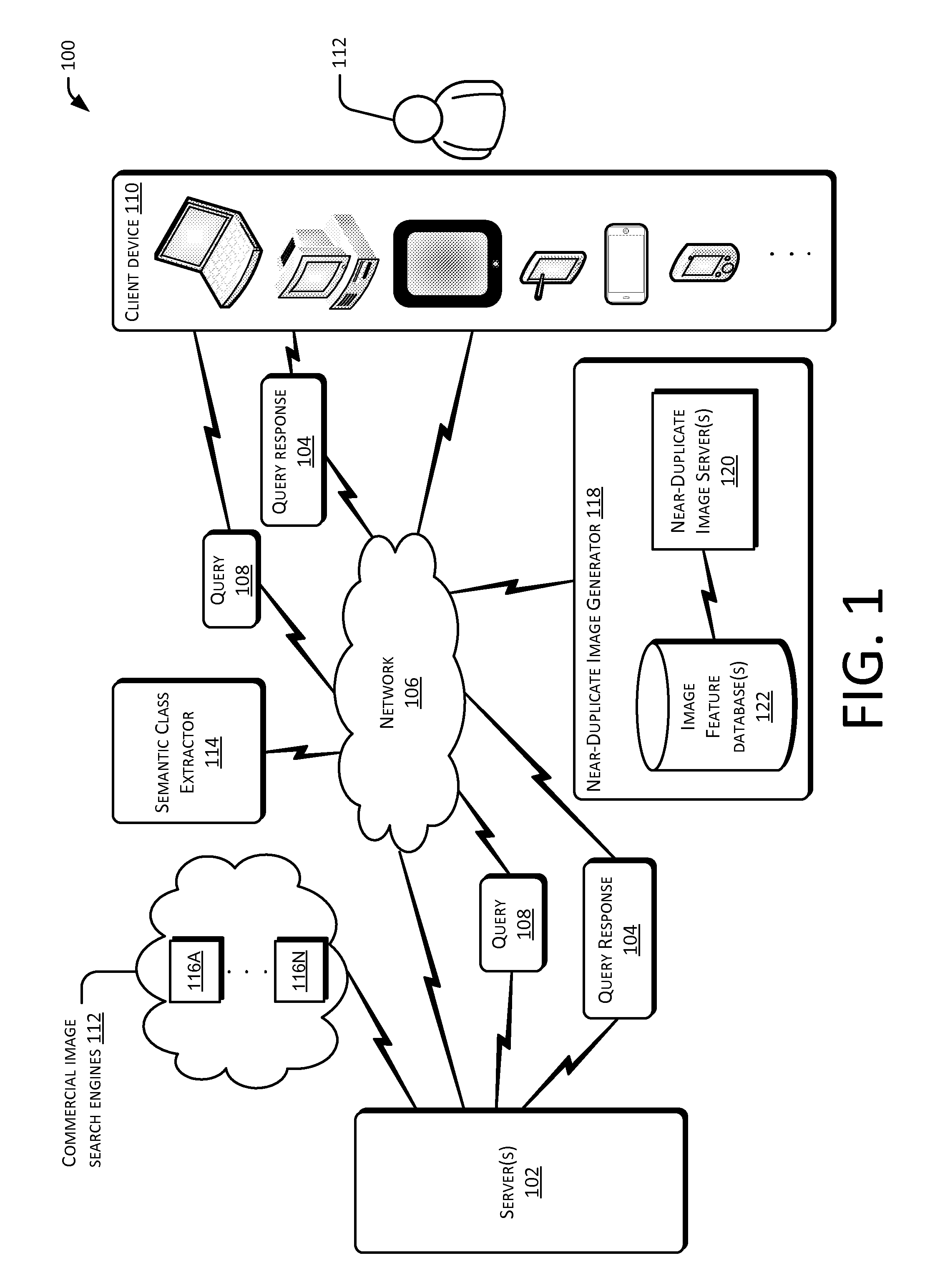

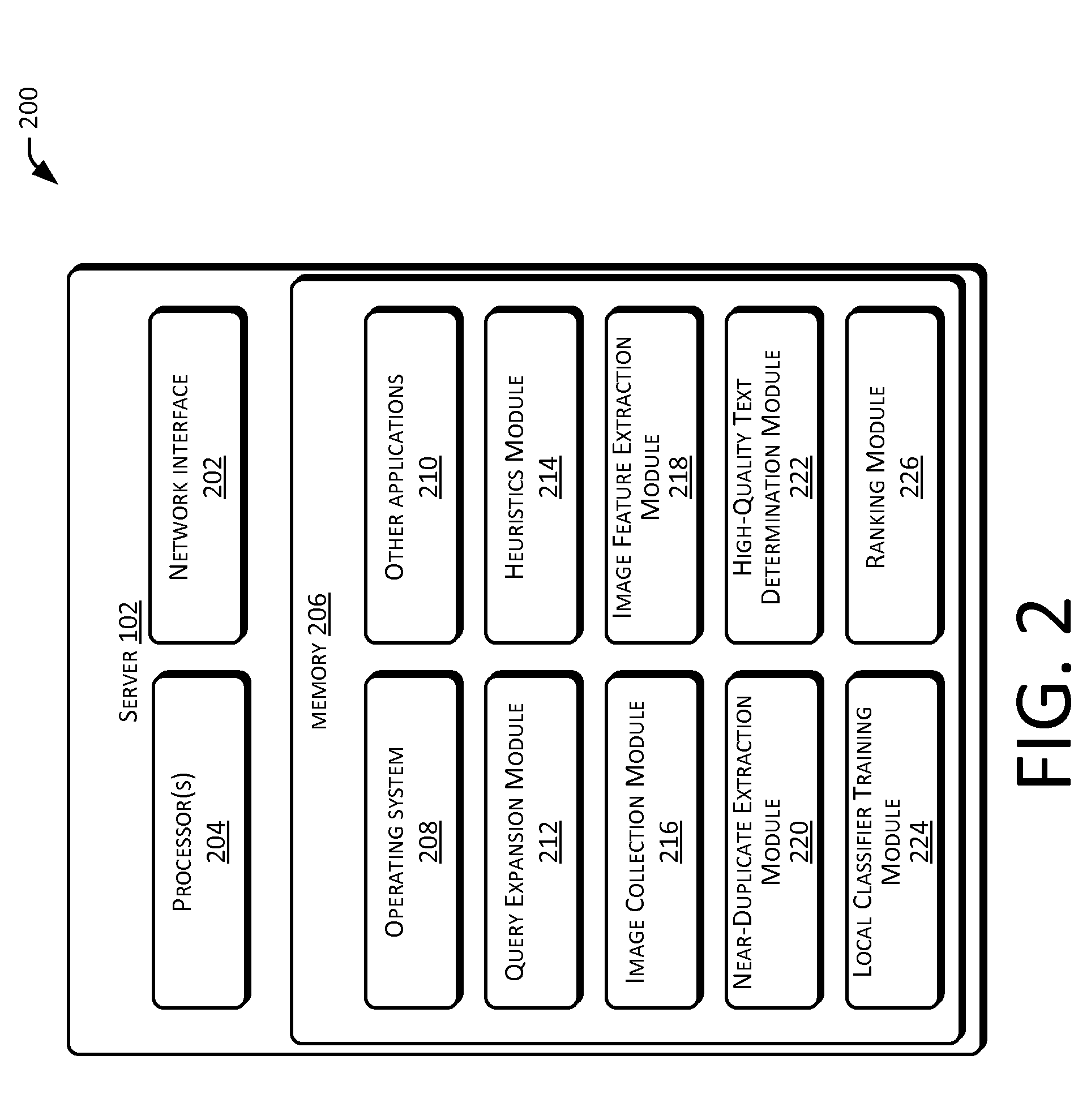

Techniques are described for online real time text to image translation suitable for virtually any submitted query. Semantic classes and associated analogous items for each of the semantic classes are determined for the submitted query. One or more requests are formulated that are associated with analogous items. The requests are used to obtain web based images and associated surrounding text. The web based images are used to obtain associated near-duplicate images. The surrounding text of images is analyzed to create high-quality text associated with each semantic class of the submitted query. One or more query dependent classifiers are trained online in real time to remove noisy images. A scoring function is used to score the images. The images with the highest score are returned as a query response.

Owner:MICROSOFT TECH LICENSING LLC

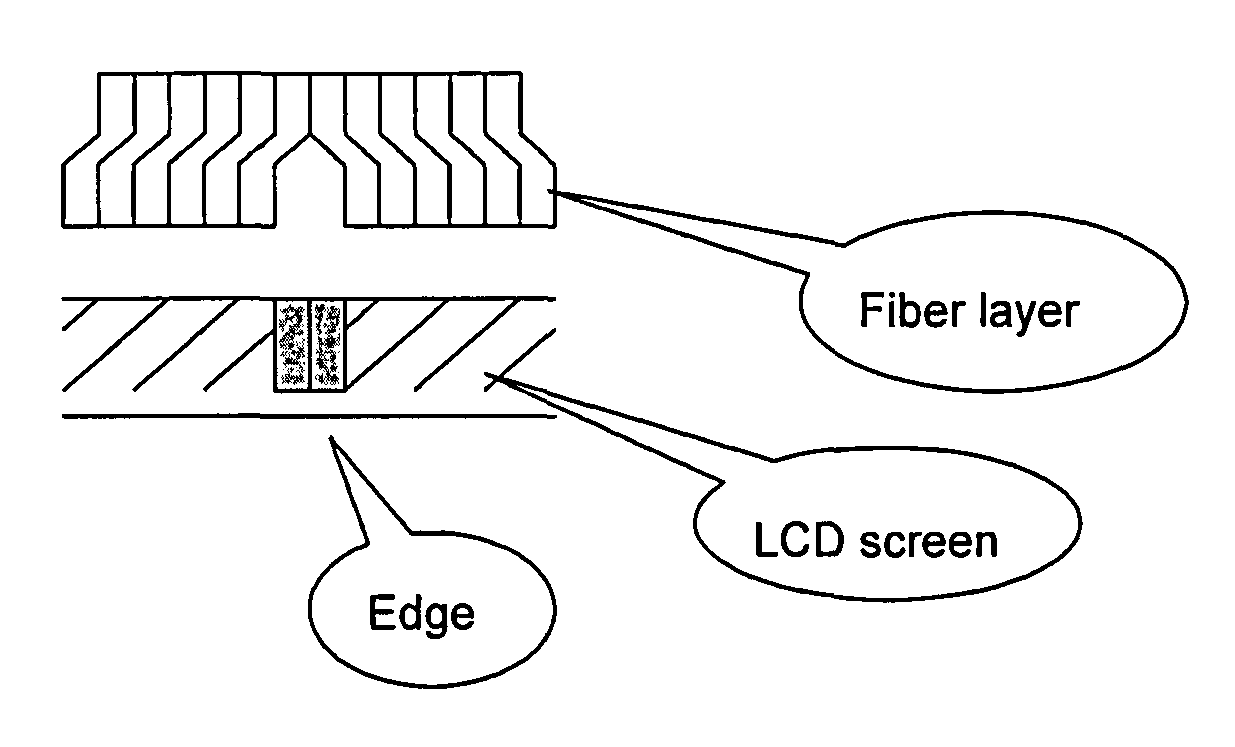

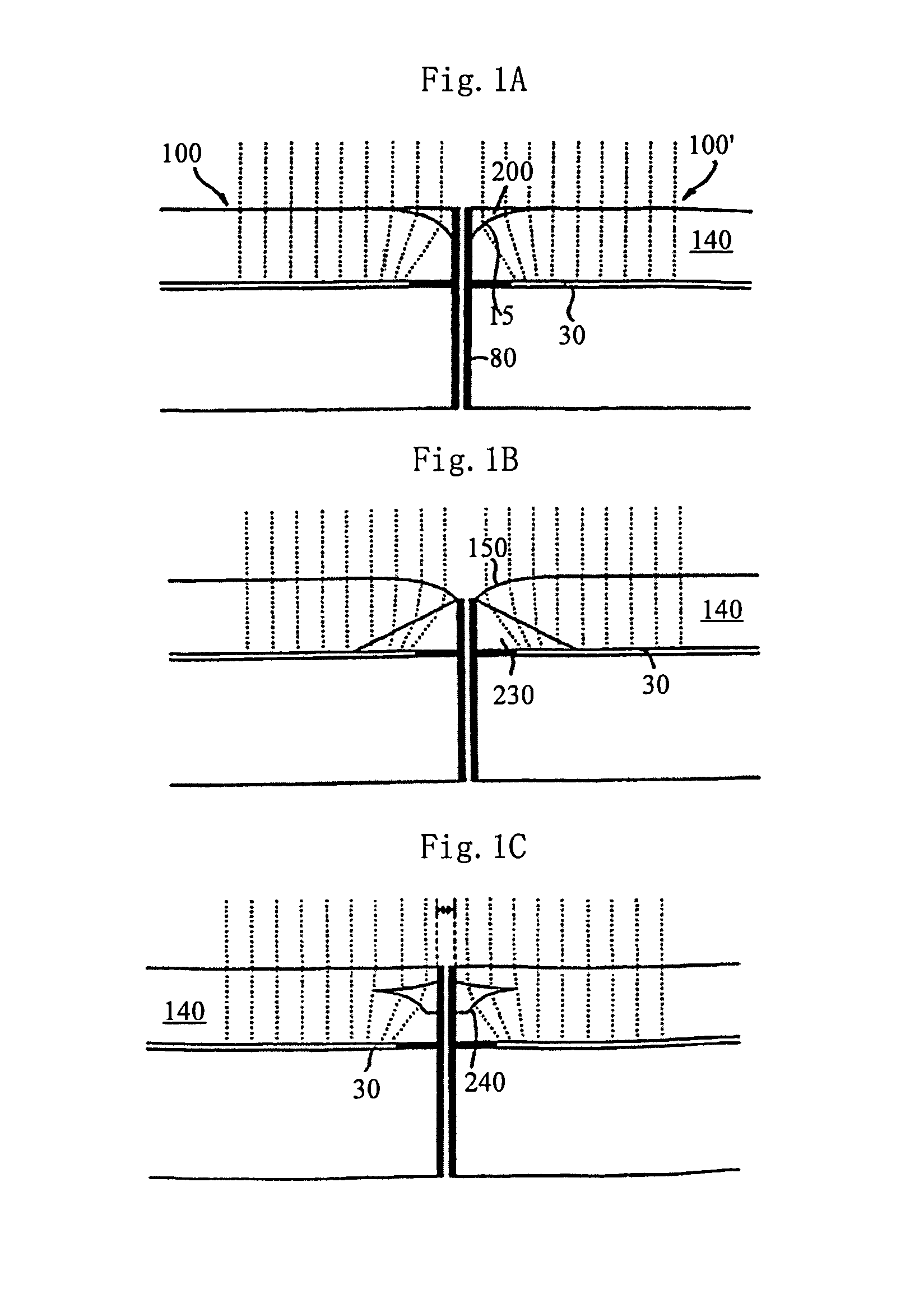

Method and Apparatus for Eliminating Seam Between Adjoined Screens

Active · US20080186252A1 · No damage to image quality · Massive and precise calculation · Television system details · Projectors · Image conversion · Computer science

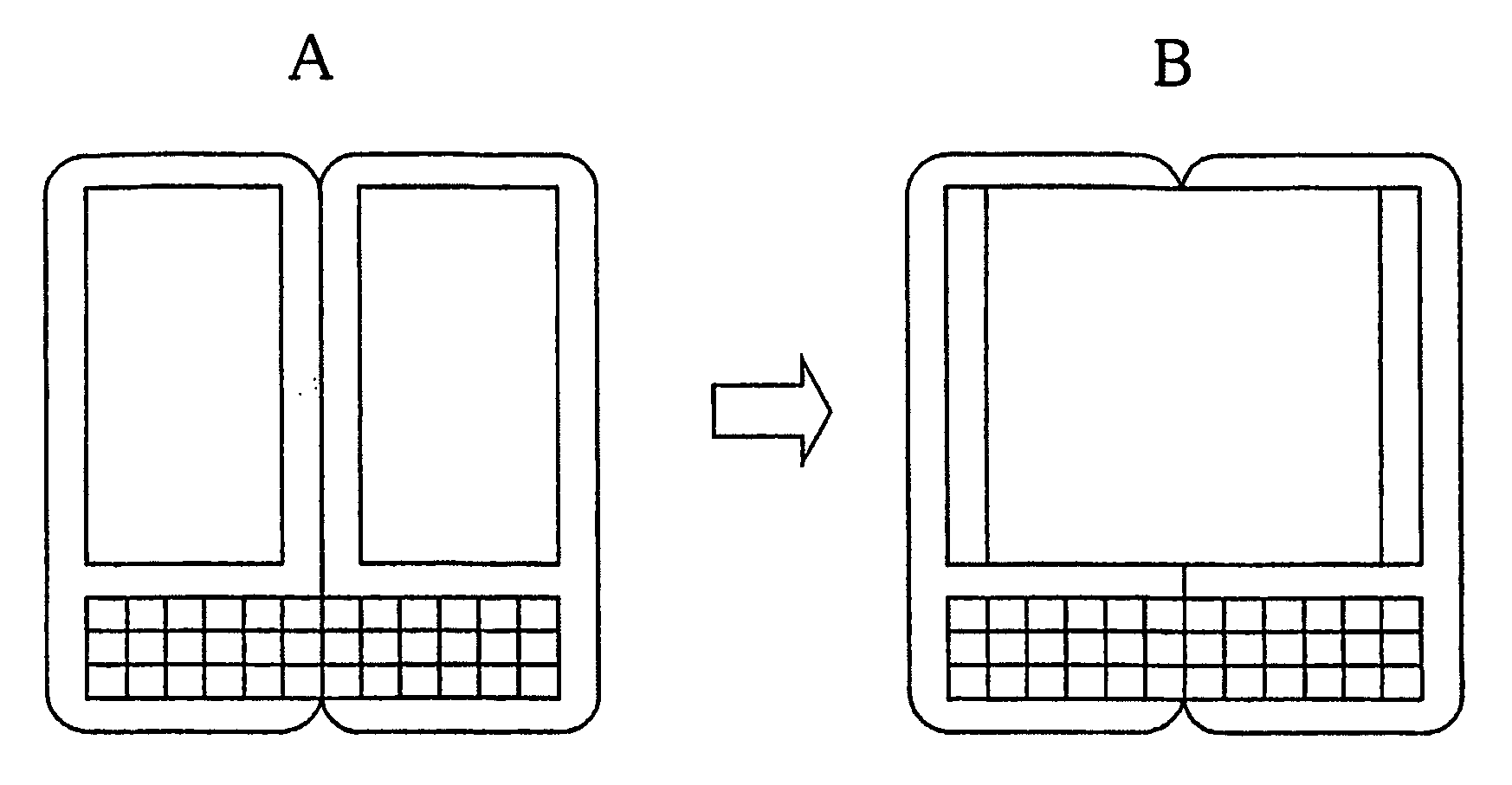

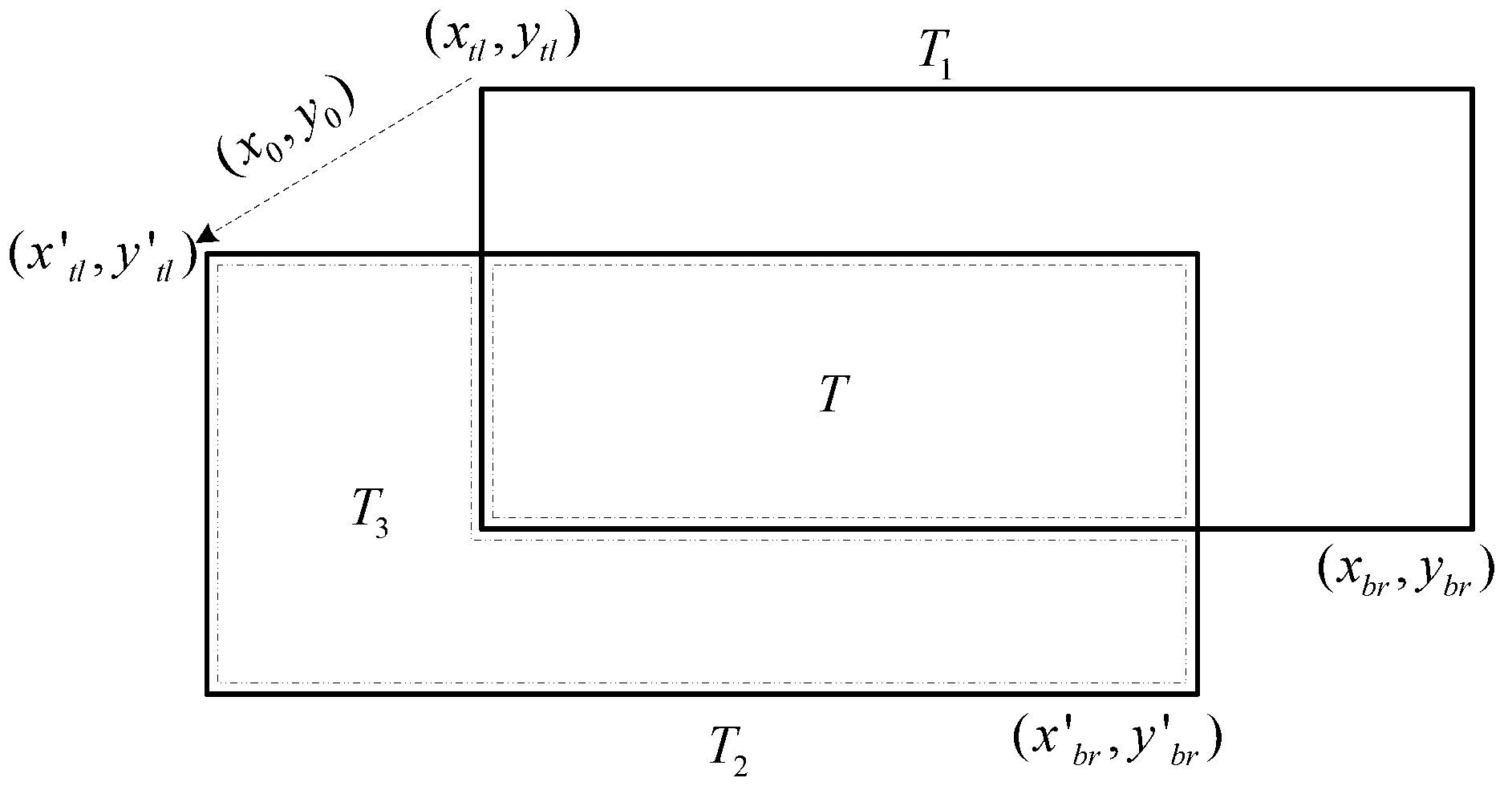

A method for eliminating a seam between adjoined screens includes step A: acquiring an original image and adapting the acquired image to an image translation in subsequent step B; step B: translating the acquired image toward the position of the seam; and step C: reverting the translated image to the original one. An apparatus for eliminating a seam between adjoined screens includes an image acquisition module, an image translation module and an image reversion module. The image acquisition module is configured for acquiring an original image and transmitting the acquired image to the image translation module. The image translation module is configured for translating the received image toward the position of the seam so as to cover the seam by the translated image. The image reversion module is configured for receiving the translated image from the image translation module and reverting the image to the original one.

Owner:LENOVO (BEIJING) CO LTD

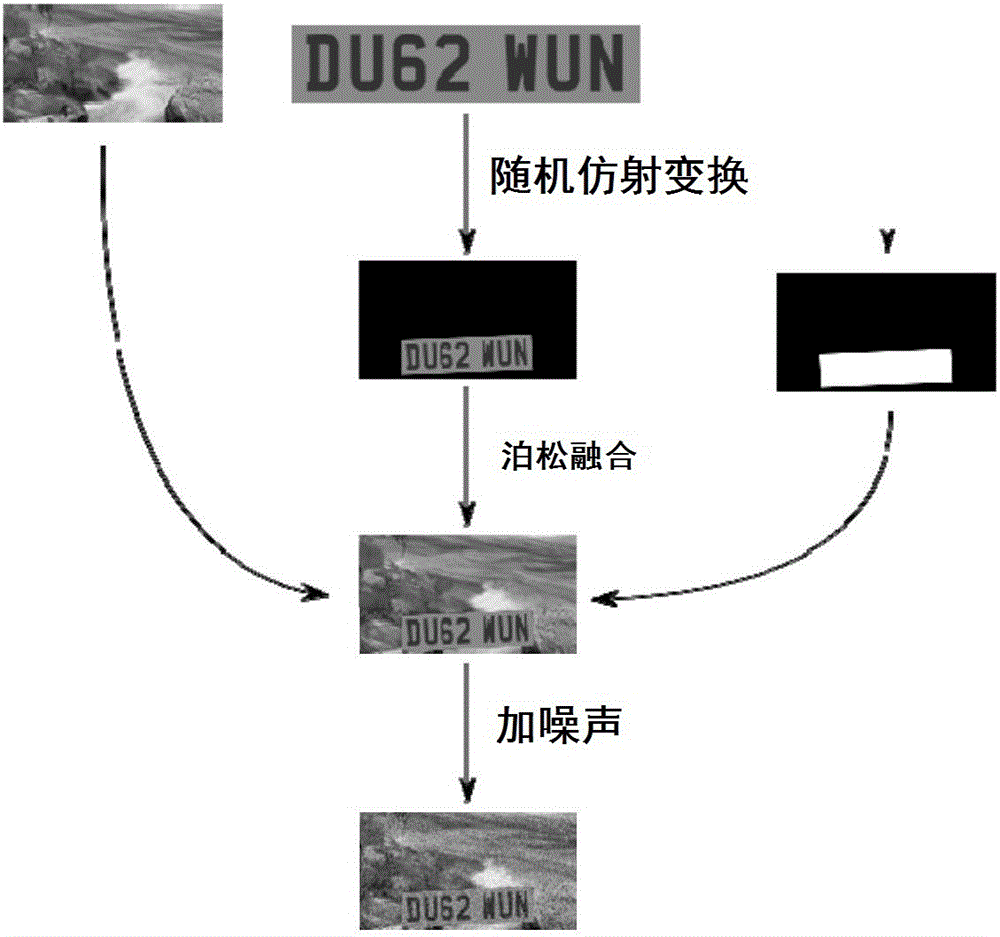

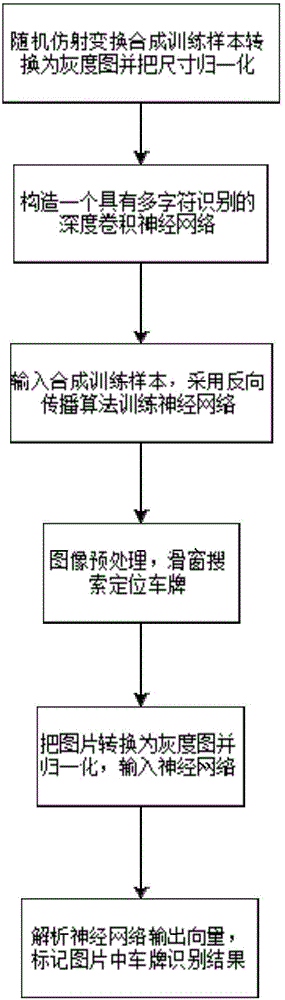

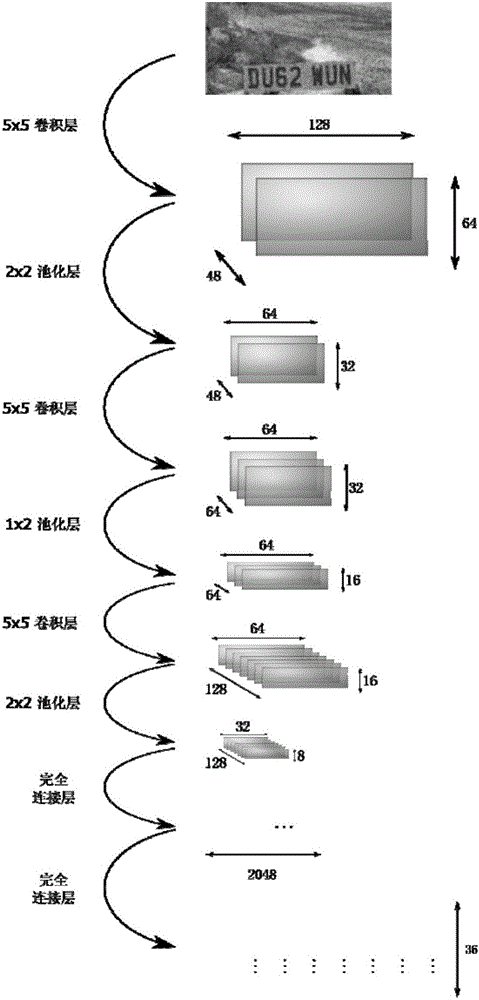

Automatic license plate identification method based on deep convolutional neural network

Inactive · CN106709486A · Avoid dependency · Easy to transplant · Image enhancement · Image analysis · Slide window · Network structure

The invention discloses an automatic license plate identification method based on a deep convolutional neural network. The automatic license plate identification method comprises the steps of: firstly, designing a network structure and an input format of the neural network; adopting random affine transformation to synthesize a training sample, synthesizing a real scene picture and a grey-scale image license plate, adding noise to simulate and generate a large number of license plate images in a real scene; subjecting the neural network to back-propagation training, and training the neural network by adopting a supervised back-propagation algorithm; conducting sliding window searching, positioning a license plate through sliding a window, segmenting a picture and converting the picture into grey-scale images, and standardizing the grey-scale images to the standard input format. The automatic license plate identification method can effectively handle the influence on identification caused by image translation and rotation, can avoid the dependence on the specific environment and font in the identification process, is simple in algorithm implementation and high in robustness, and is easy to transplant.

Owner:NANJING UNIV OF SCI & TECH

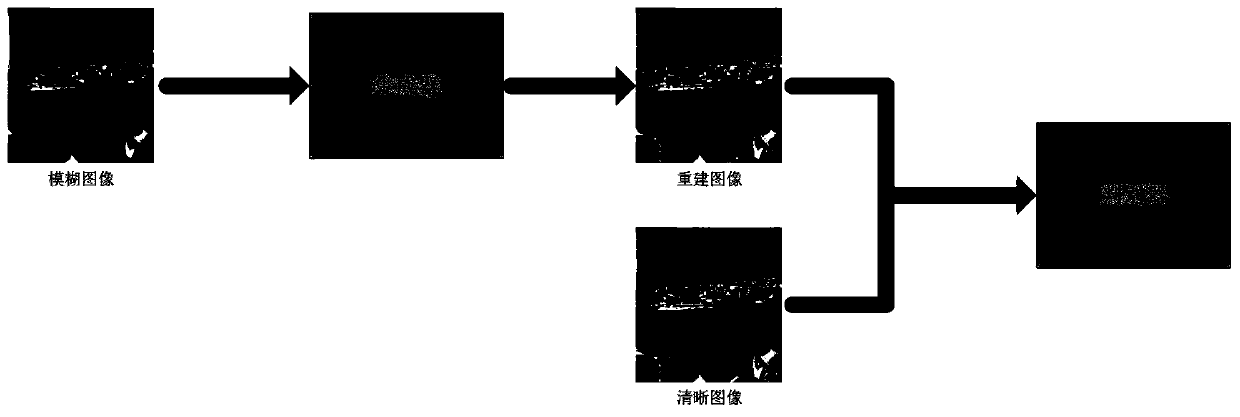

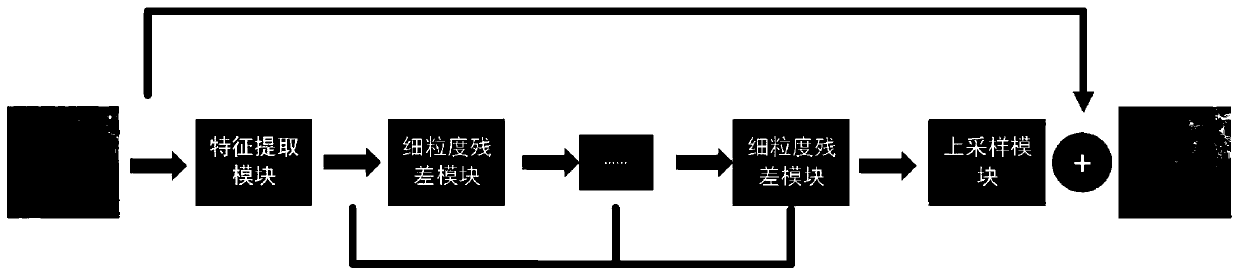

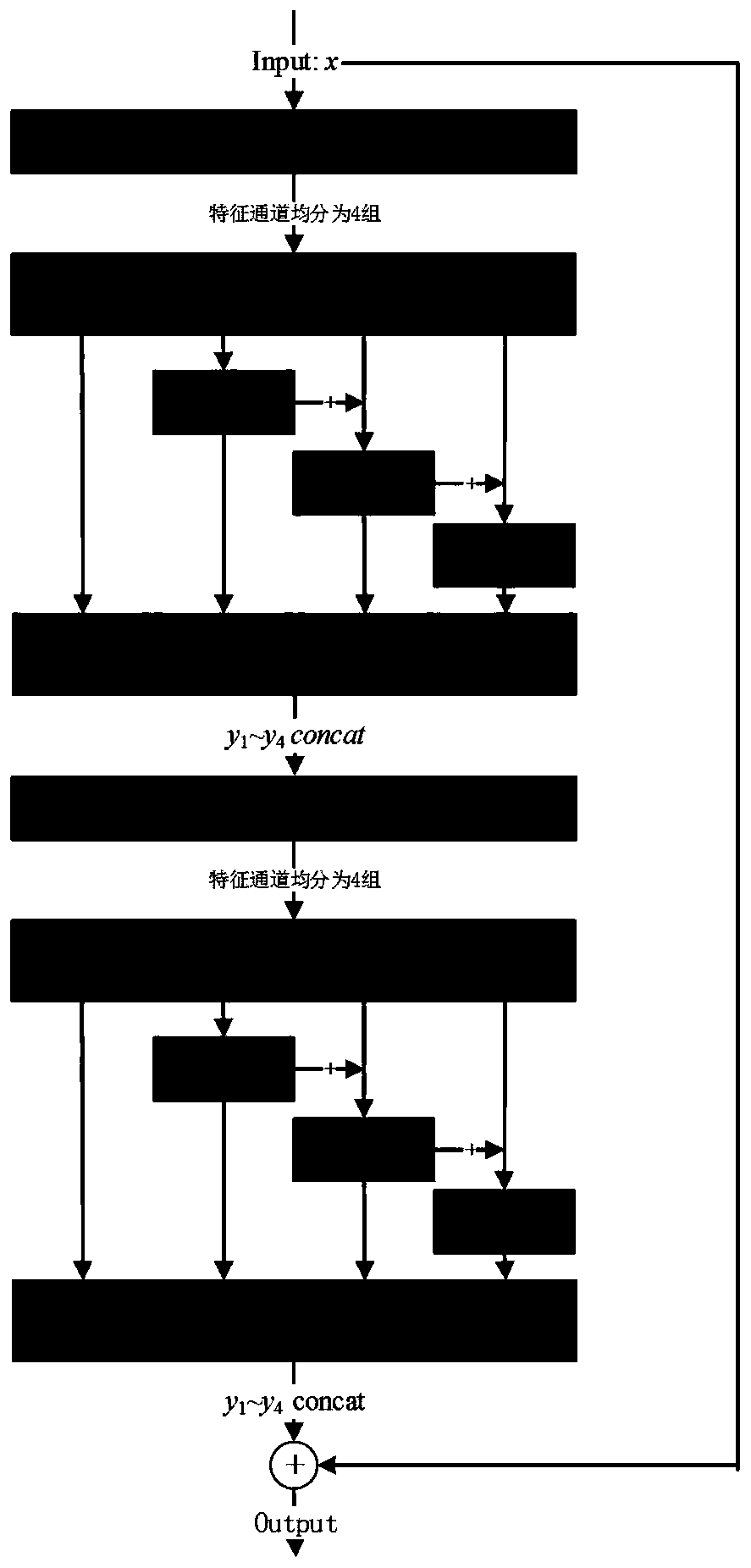

Single-image blind motion blur removing method based on multi-scale residual generative adversarial network

Pending · CN111199522A · Improve visual effects · Guaranteed accuracy · Image enhancement · Image analysis · Data set · Paired Data

The invention discloses a single-image blind motion blur removing method based on a multi-scale residual generative adversarial network. The method comprises the following steps: acquiring a GoPro paired data set, and connecting the GoPro paired data set to form image pairs in a blurred-sharp form; randomly cropping the training image into image patches with the size of 256 * 256; taking the standardized image as model training input data; designing a convolutional neural network, and outputting a deblurred image; calculating the peak signal-to-noise ratio and structural similarity between the output of the model and the corresponding sharp label image, and performing loss optimization; and deblurring a real-world picture containing motion blur by using the optimized model parameters to obtain a corresponding sharp picture. Based on the convolutional neural network, the conditional generative adversarial network is adopted as a backbone network, and the fine-grained residual module is adopted as a main body module, so that the image deblurring problem is converted into an image translation problem and solved as such, and important technical support is provided for subsequent image deblurring work.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
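The abstract names the peak signal-to-noise ratio (PSNR) as one of the quantities computed between the model output and the sharp label. As a minimal sketch, the standard PSNR definition for 8-bit greyscale images stored as nested lists is shown below; how the patent combines PSNR and structural similarity into a loss is not stated, so nothing here should be read as the patented training objective. The sample pixel values are made up for illustration.

```python
import math

def psnr(reference, estimate, max_value=255.0):
    """PSNR in dB between two equally sized greyscale images (lists of rows)."""
    squared_error = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        for r, e in zip(ref_row, est_row):
            squared_error += (r - e) ** 2
            count += 1
    mse = squared_error / count
    if mse == 0:
        # Identical images: PSNR is unbounded.
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)

# Illustrative 2x2 images: a sharp label and a slightly different output.
sharp = [[0, 255], [255, 0]]
deblurred = [[0, 250], [250, 0]]
print(psnr(sharp, deblurred))
```

A higher PSNR means the deblurred output is closer to the sharp label in the mean-squared-error sense.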

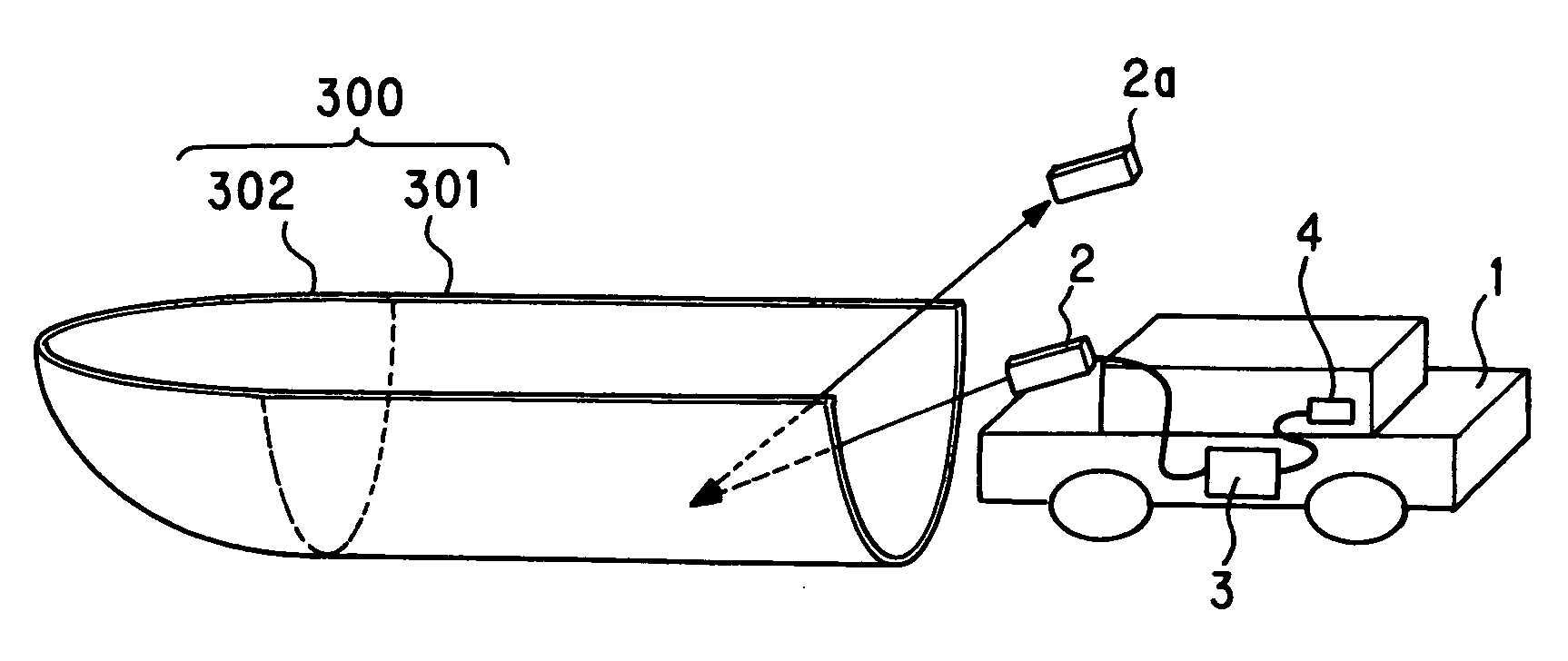

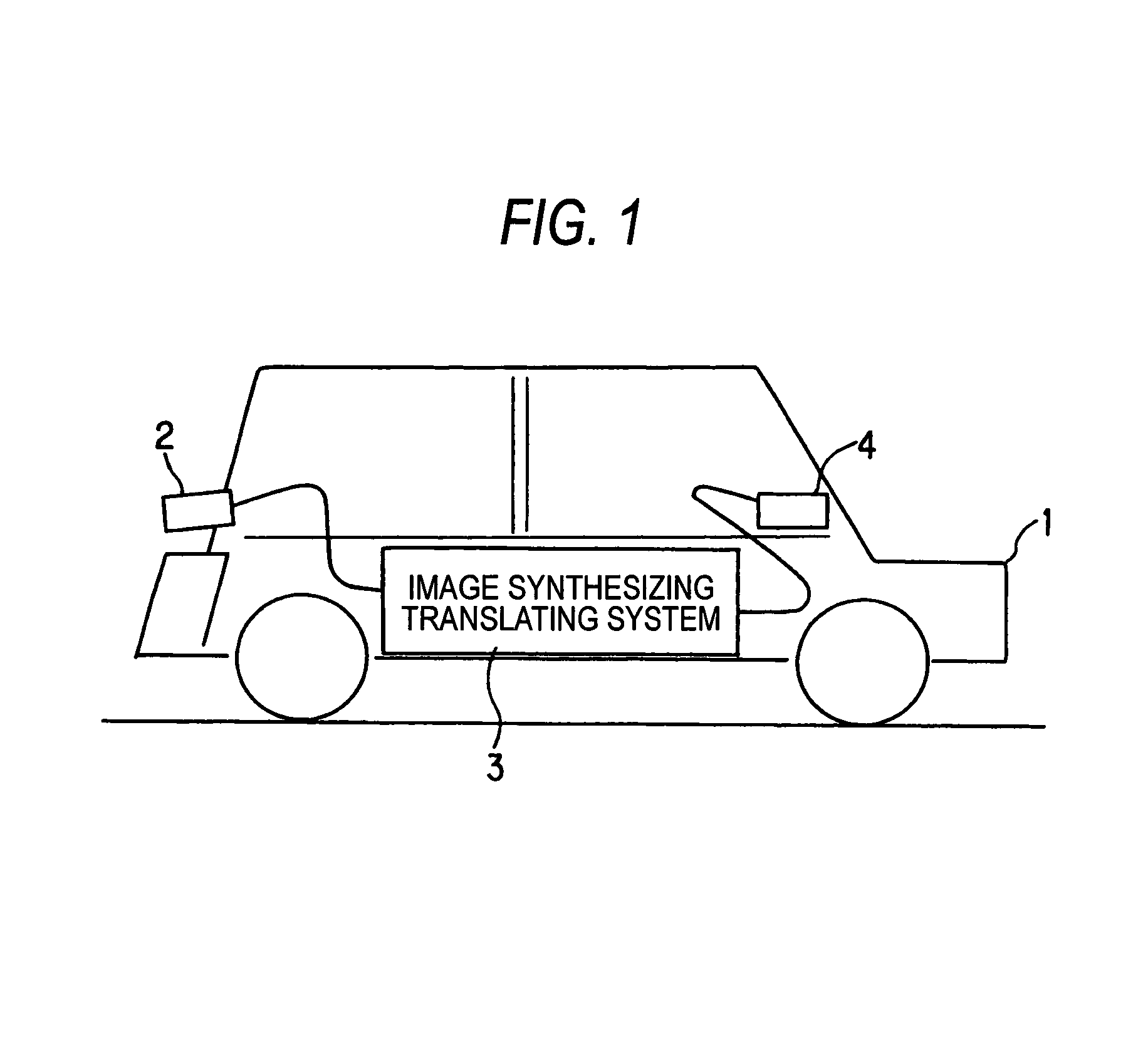

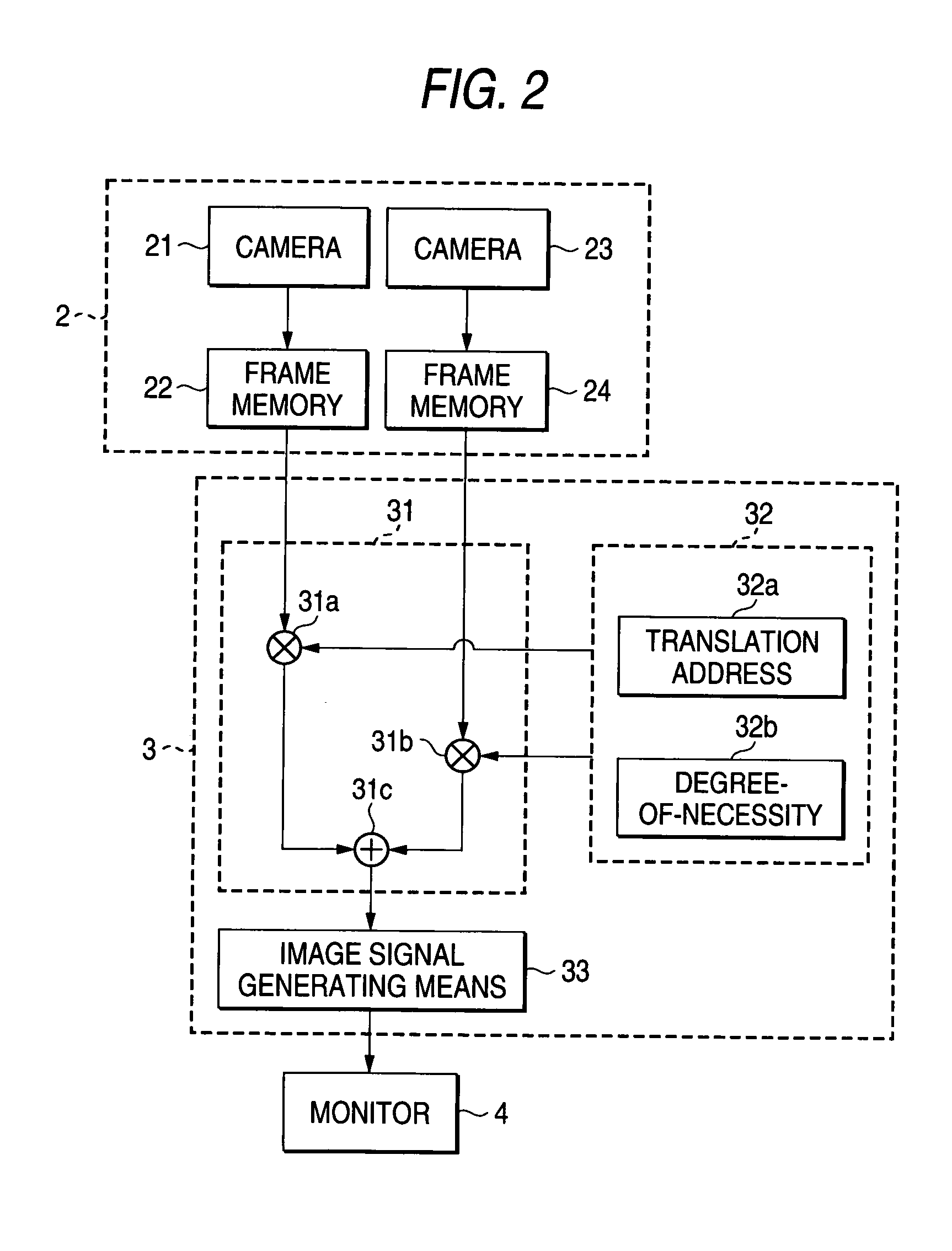

Drive assisting system

Active · US7554573B2 · Reduction factor · Easy to confirm · Image enhancement · Geometric image transformation · Driver/operator · Engineering

An object of the present invention is to provide a driving assistance system capable of displaying a picked-up image near a vehicle as a less-distorted image on a monitor. A driving assistance system according to the present invention comprises an imaging means (2) for picking up a surrounding image of a vehicle (1) on a road surface, an image translating means (3) for executing an image translation by using a three-dimensional projection model (300), which is convex toward a road surface side and whose height from the road surface is not changed within a predetermined range from a top end portion of the vehicle (1) in a traveling direction, to translate the image picked up by the imaging means (2) into an image viewed from a virtual camera (2a), and a displaying means (4) for displaying an image translated by the image translating means (3). The three-dimensional projection model (300) is configured by a cylindrical surface model (301) that is convex toward the road surface side, and a spherical surface model (302) connected to an end portion of the cylindrical surface model (301). Accordingly, a straight line on the road surface that is parallel to the center axis of the cylindrical surface model (301) is displayed as a straight line, and thus a clear and less-distorted screen can be provided to the driver.

Owner:PANASONIC CORP

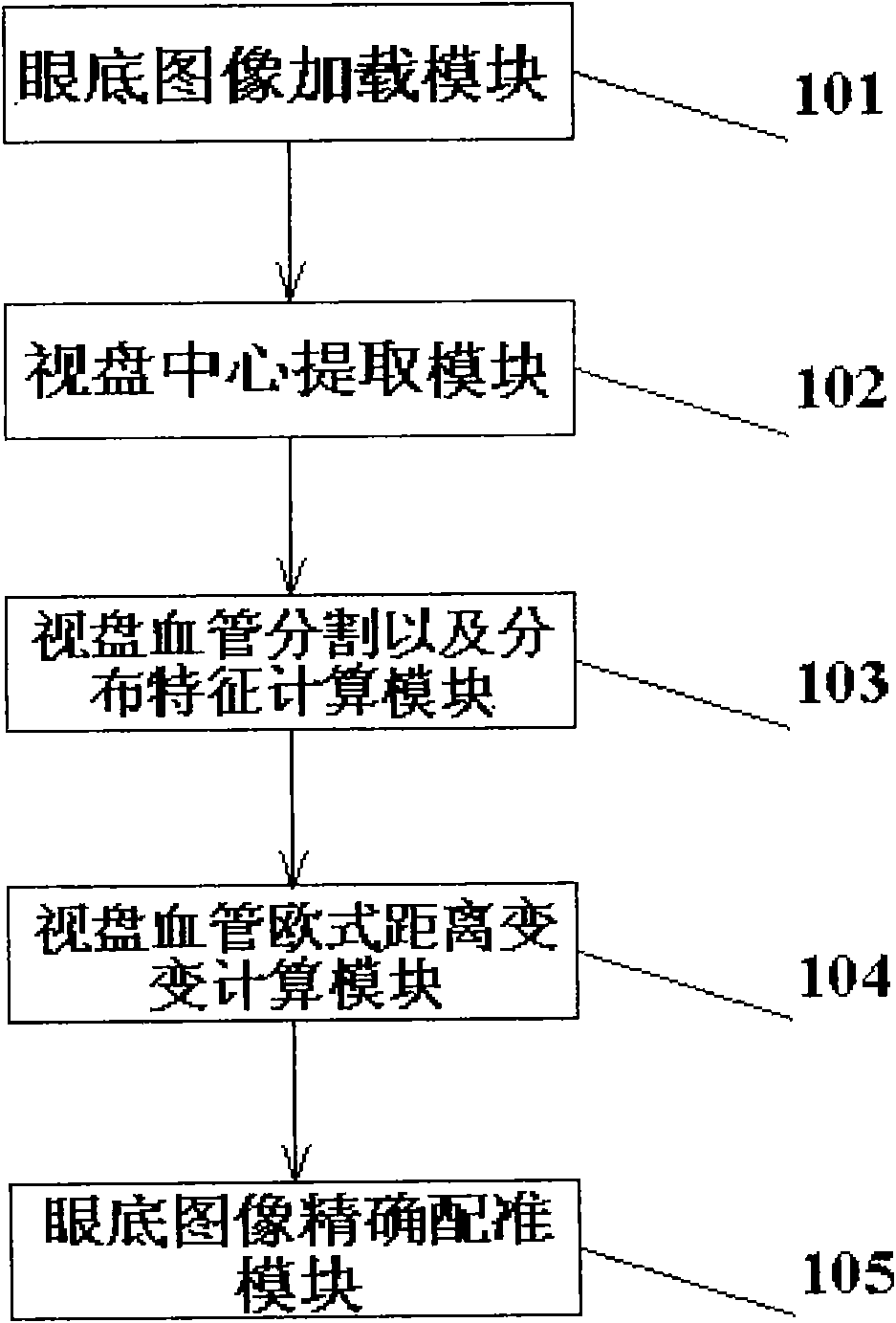

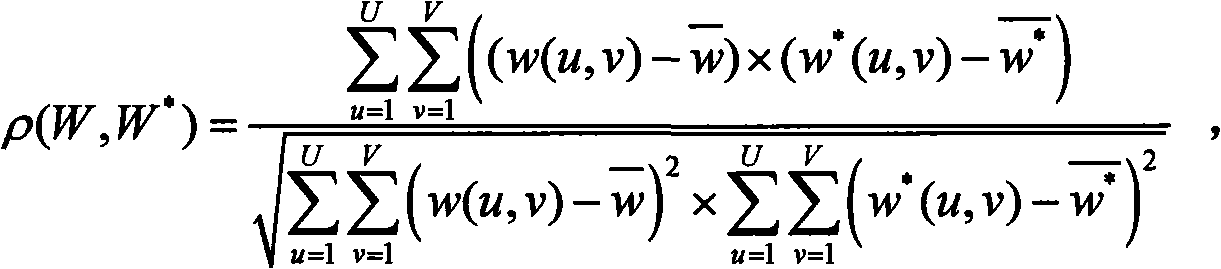

Ocular fundus image registration method estimated based on distance transformation parameter and rigid transformation parameter

Inactive · CN101593351A · Run fast · Improve robustness · Image analysis · Transformation parameter · Rigid transformation

The invention provides an ocular fundus image registration method estimated based on distance transformation parameter and rigid transformation parameter, comprising the following main steps: (1) ocular fundus images are loaded; (2) the optic disk center is extracted to estimate image translation parameters; (3) gradient vectors of pixel points in the adjacent zone of the optic disk are calculated, vessel segmentation is carried out, vessel distribution probability characteristics are calculated, and the estimation of image rotation parameters are obtained by minimizing two probability distribution relative entropies (Kullback-Leibler Divergence); (4) the Euclidean distance transformation of vessels segmented in step 3 is calculated; (5) accurate registration of images is carried out. The invention is a quick, precise, robust and automatic ocular fundus image registration algorithm, and has great application value on the aspect of ocular fundus image registration.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
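Steps (2) and (3) of the abstract can be sketched under simplifying assumptions: the translation is taken as the difference between the two optic disc centers, and the rotation is found by circularly shifting a vessel orientation histogram until the Kullback-Leibler divergence to the reference histogram is smallest. The histogram representation, the bin count and all function names are assumptions made for illustration; the patent does not spell out these details.

```python
import math

def kl_divergence(p, q, eps=1e-12):
    """Relative entropy D(p || q) of two discrete distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q) if pi > 0)

def estimate_translation(reference_center, moving_center):
    """Shift that moves the moving optic disc center onto the reference one."""
    (rx, ry), (mx, my) = reference_center, moving_center
    return rx - mx, ry - my

def estimate_rotation_bins(reference_hist, moving_hist):
    """Circular shift (in bins) of moving_hist that minimizes the KL divergence."""
    best_shift, best_kl = 0, math.inf
    for shift in range(len(reference_hist)):
        rotated = moving_hist[shift:] + moving_hist[:shift]
        kl = kl_divergence(reference_hist, rotated)
        if kl < best_kl:
            best_shift, best_kl = shift, kl
    return best_shift

# Hypothetical data: disc centers in pixels and 6-bin orientation histograms
# (60 degrees per bin); the moving histogram is the reference shifted by 2 bins.
translation = estimate_translation((120, 96), (112, 101))
reference = [0.4, 0.3, 0.1, 0.1, 0.05, 0.05]
moving = reference[-2:] + reference[:-2]
shift_bins = estimate_rotation_bins(reference, moving)
print(translation, shift_bins * 60)
```

The result is only a coarse estimate with a resolution of one bin; the abstract's later steps (distance transformation and accurate registration) would refine it.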

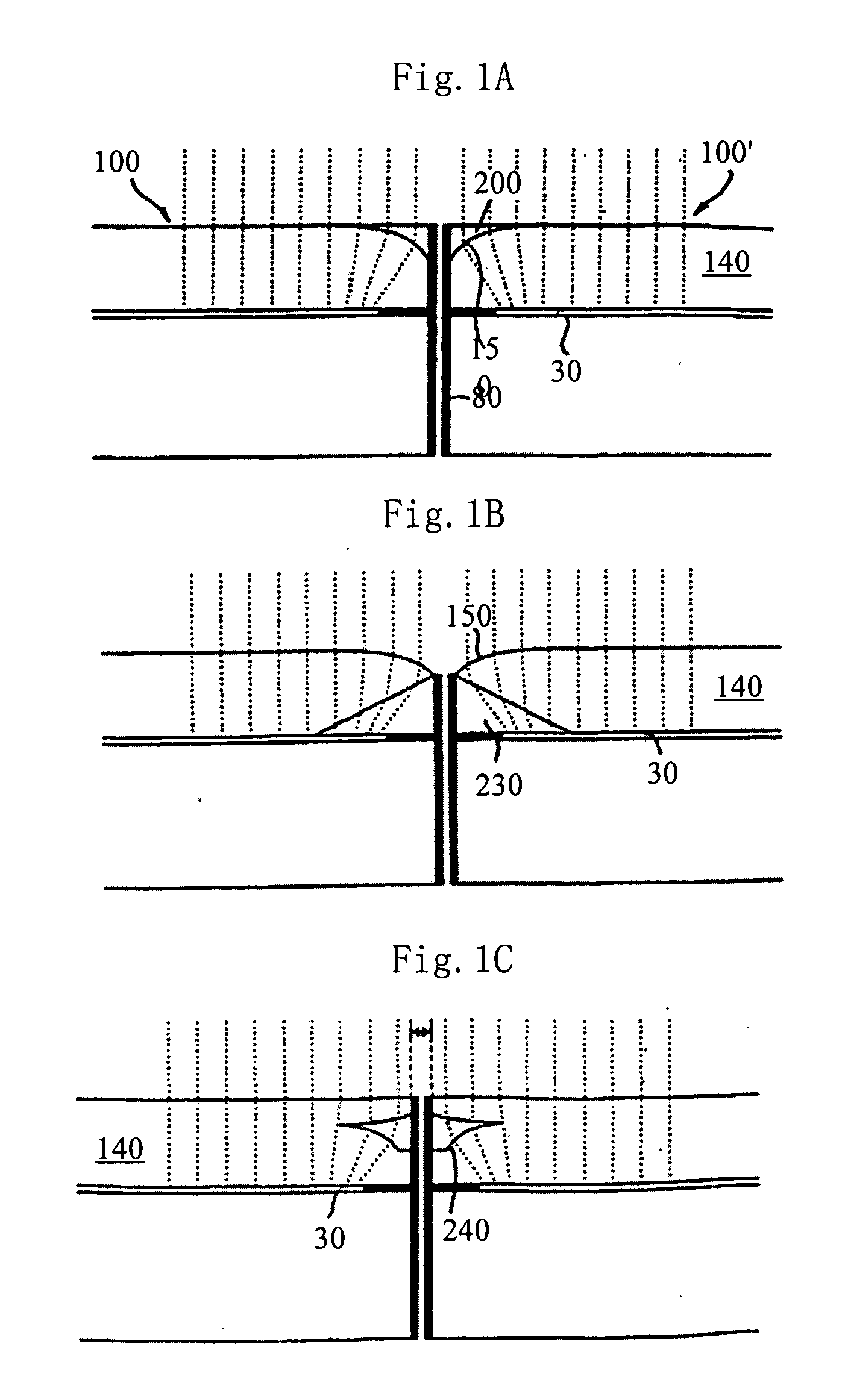

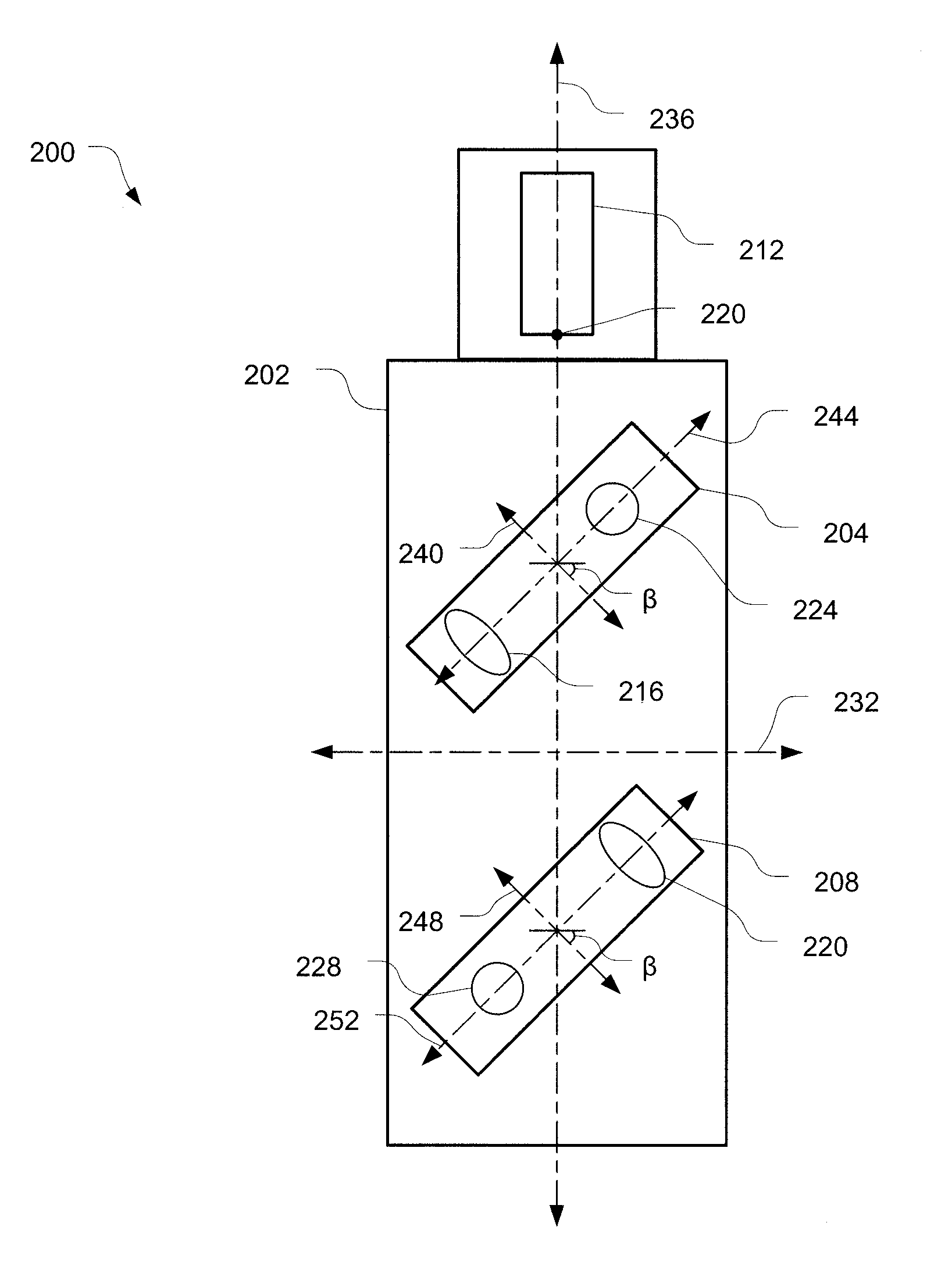

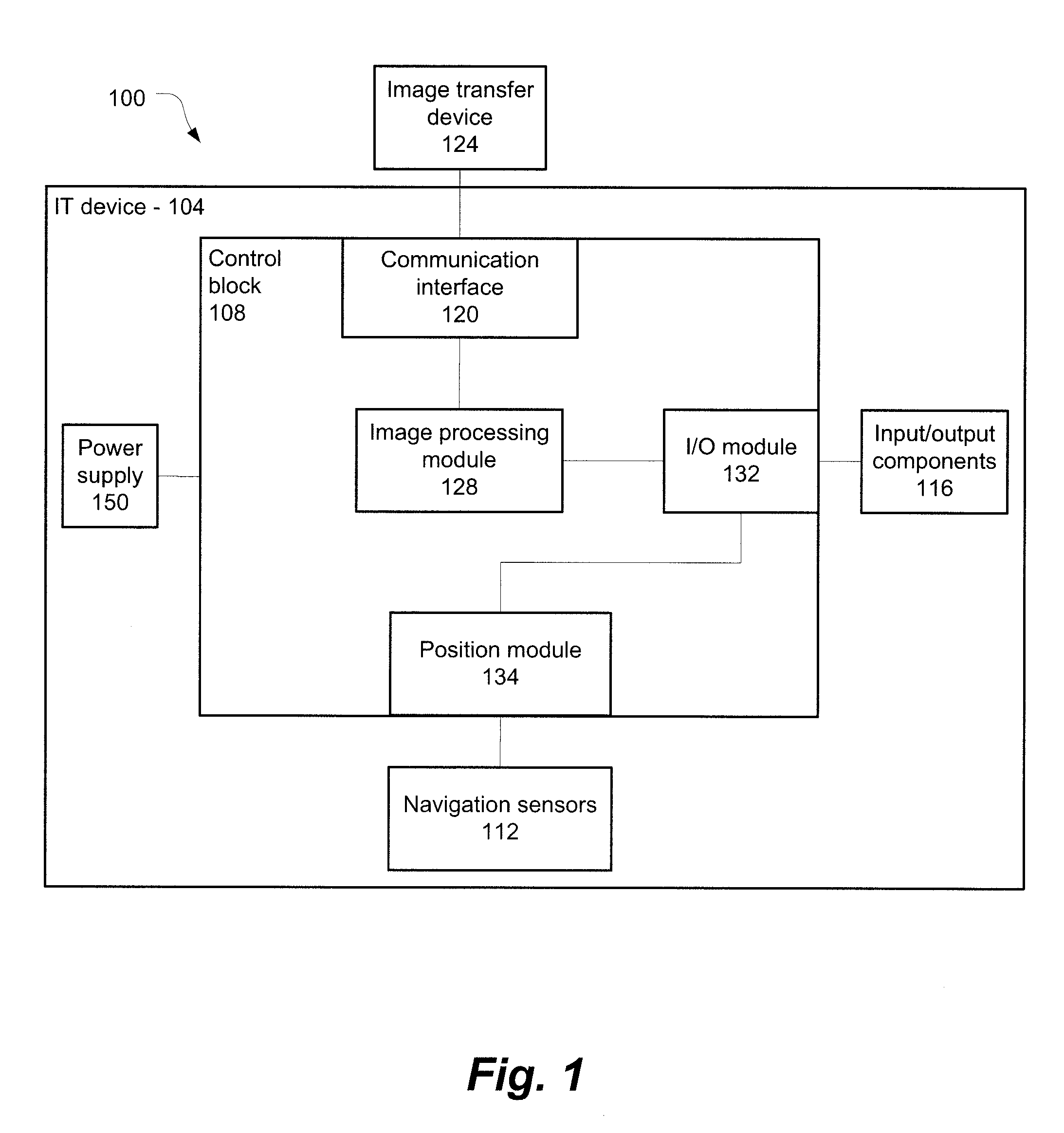

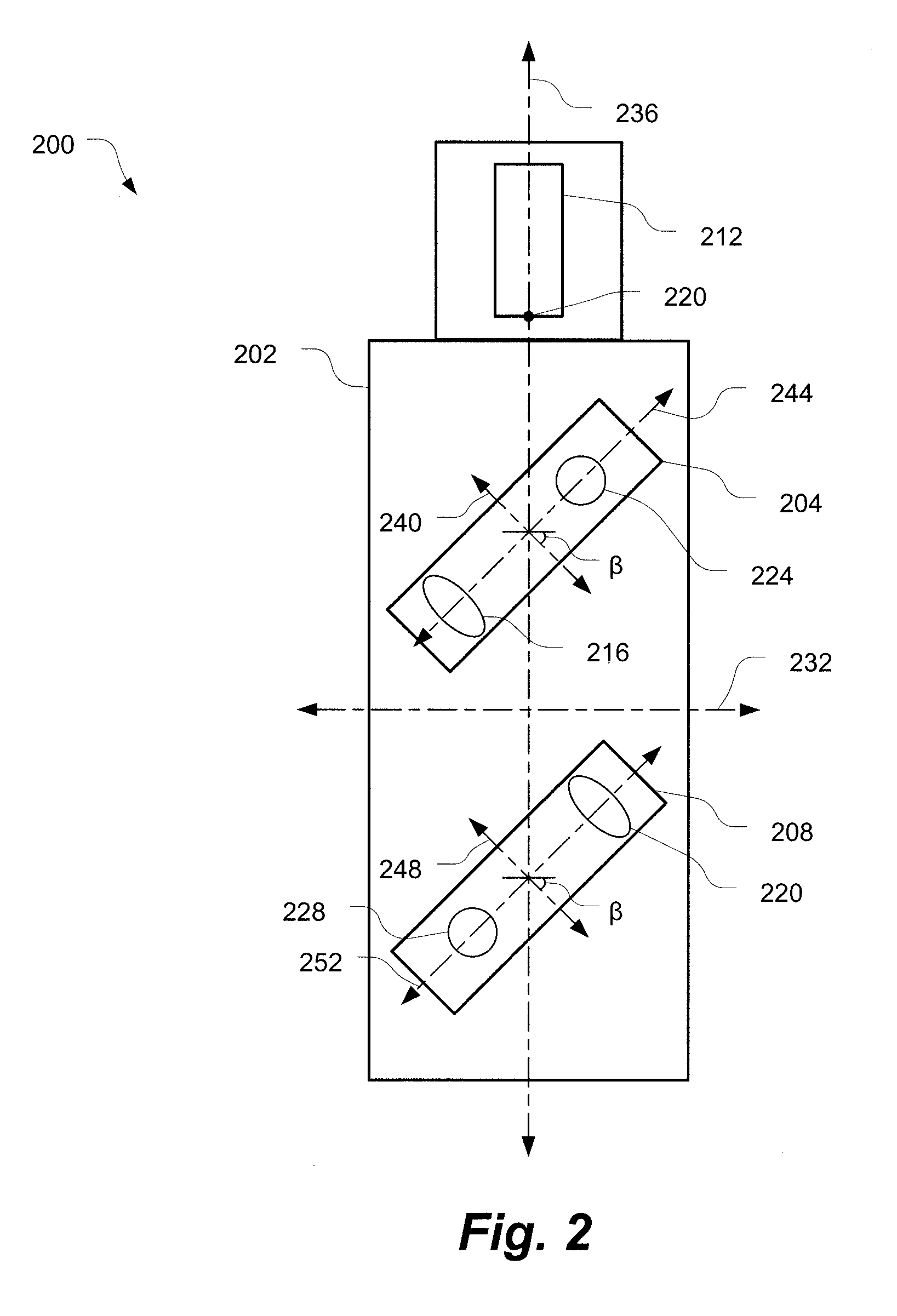

Sensor positioning in handheld image translation device

Inactive · US8396654B1 · Accurately determine · Television system details · Digitally marking record carriers · Marine navigation · Image transformation

Systems, apparatuses, and methods for an image translation device are described herein. The image translation device may include a navigation sensor defining a sensor coordinate system askew to a body coordinate system defined by a body of the image translation device. Other embodiments may be described and claimed.

Owner:MARVELL ASIA PTE LTD

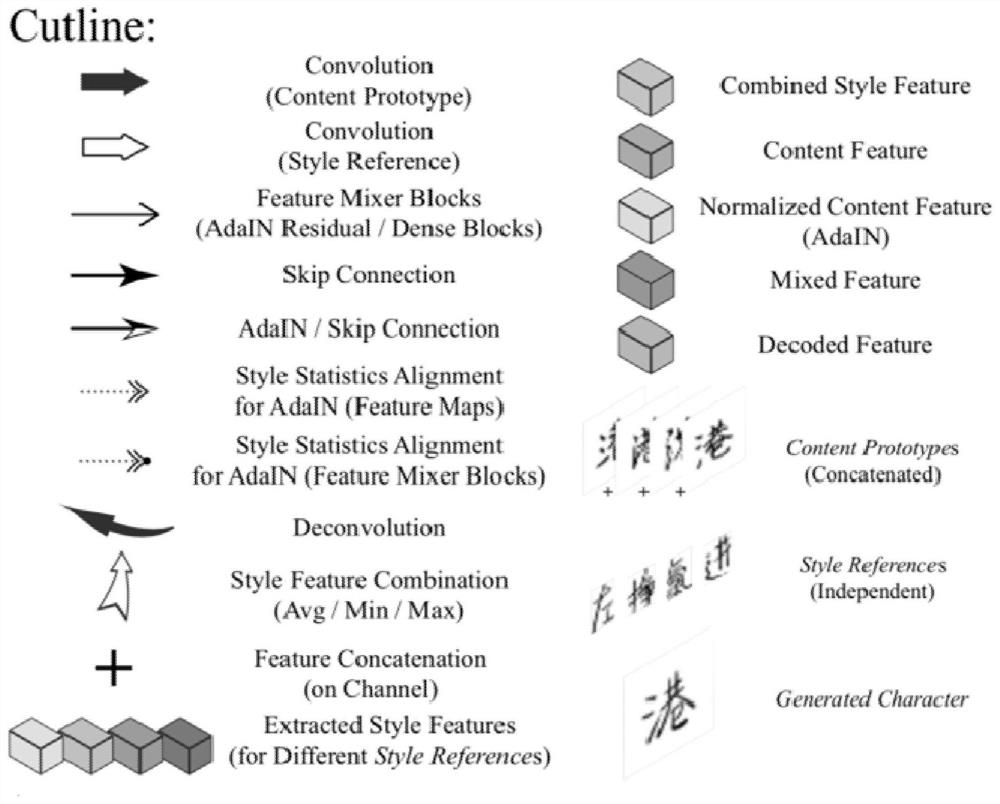

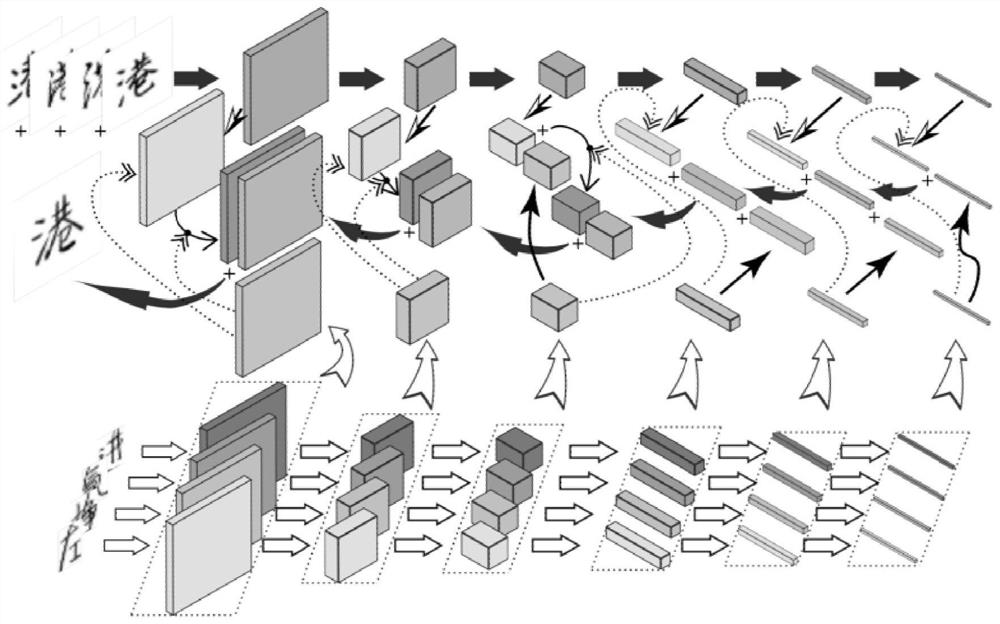

A style character generation method based on a small number of samples

Active · CN109165376A · Internal combustion piston engines · Natural language data processing · Handwriting · Data set

The invention discloses a style character generation method based on a small number of samples. A style reference character data set composed of several style characters (handwriting style or print style), together with characters of a standard font used as the character content prototype data source, is fed to an image translation model based on a deep generative adversarial network to train a character generation model for character style transfer. Given a few (or even one) characters in a certain style (handwritten or printed) as the style reference template, the model can generate arbitrary characters in the same writing or printing style. The content of the generated character is determined by the input content prototype (standard font).

Owner:XIAN JIAOTONG LIVERPOOL UNIV

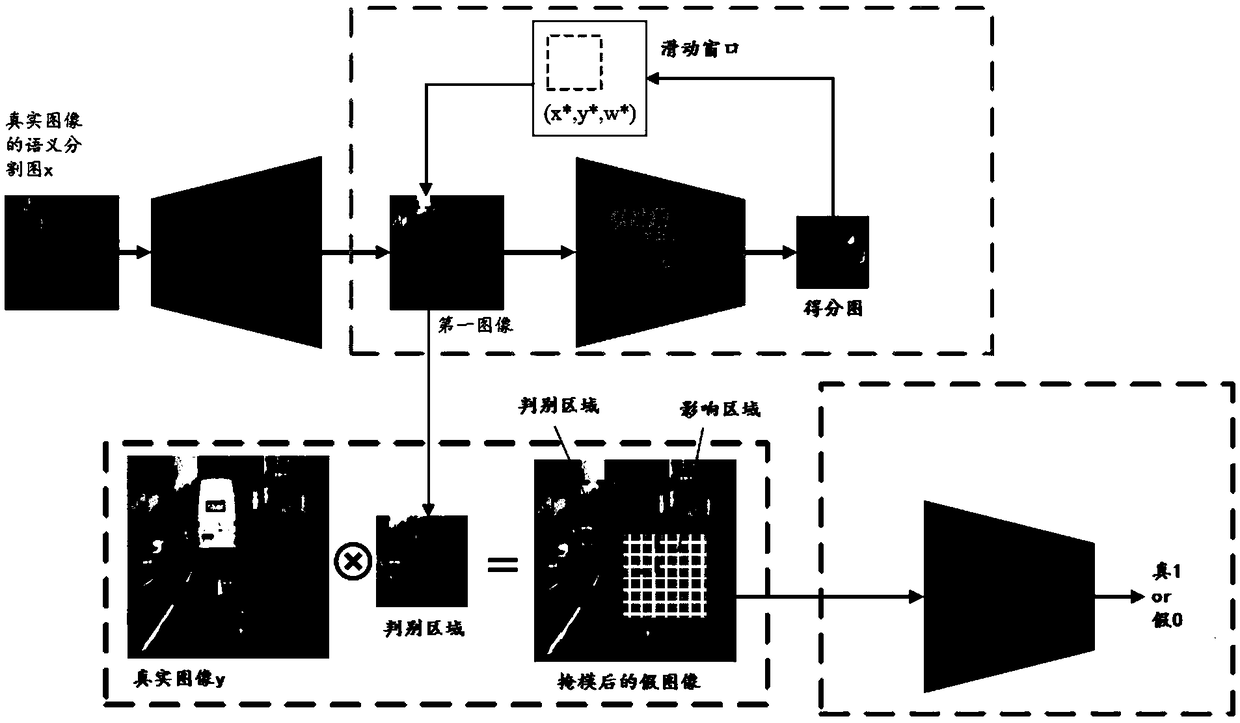

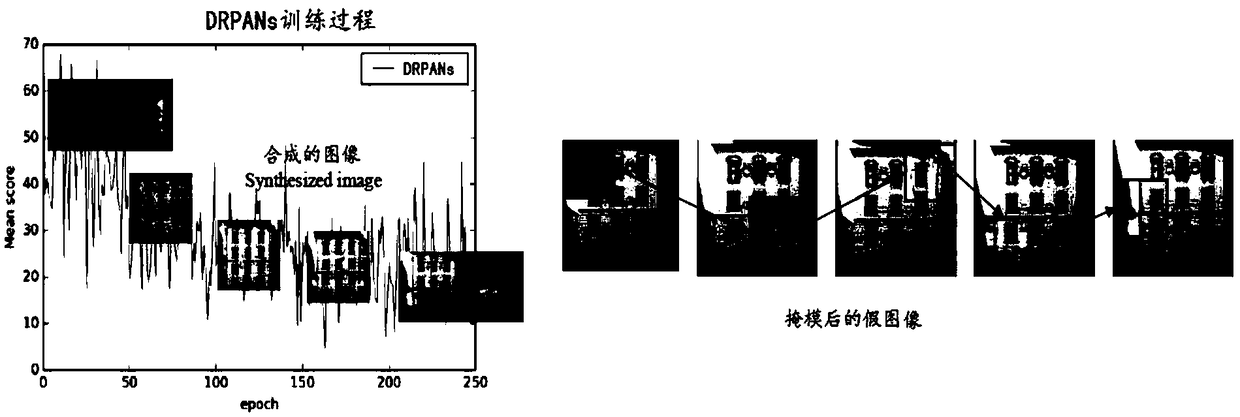

An image-to-image translation method based on a discriminant region candidate adversarial network

Inactive · CN109166102A · High resolution · Reduce artifacts · Image enhancement · Image analysis · Slide window · Adversarial network

The invention provides an image-to-image translation method based on a discriminant region candidate adversarial network. The method includes inputting a semantic segmentation map of a real image to the generator to generate a first image; inputting the first image into the image block discriminator, and predicting a score map by the image block discriminator; using a sliding window to find the most obvious artifact region image block in the score map, mapping the artifact region image block into the first image to obtain a discrimination region in the first image; performing a mask operation on the real image using the discrimination region to obtain a false image after the mask; inputting the real image and the false image after the mask into the corrector for judging whether the input image is true or false, wherein the generator generates an image closer to the real image based on the correction of the corrector. The method can synthesize high-quality images with high resolution, real details and fewer artifacts.

Owner:OCEAN UNIV OF CHINA

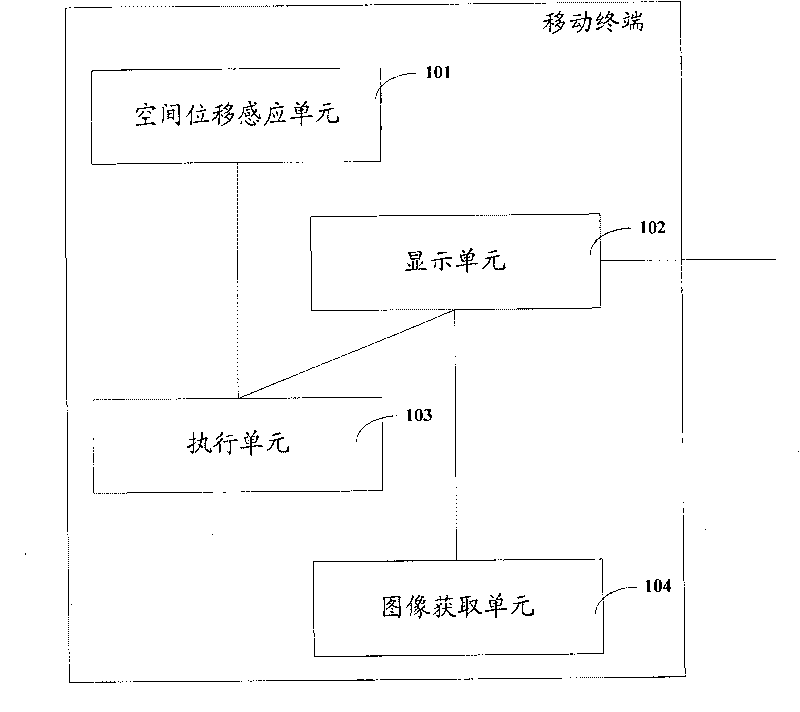

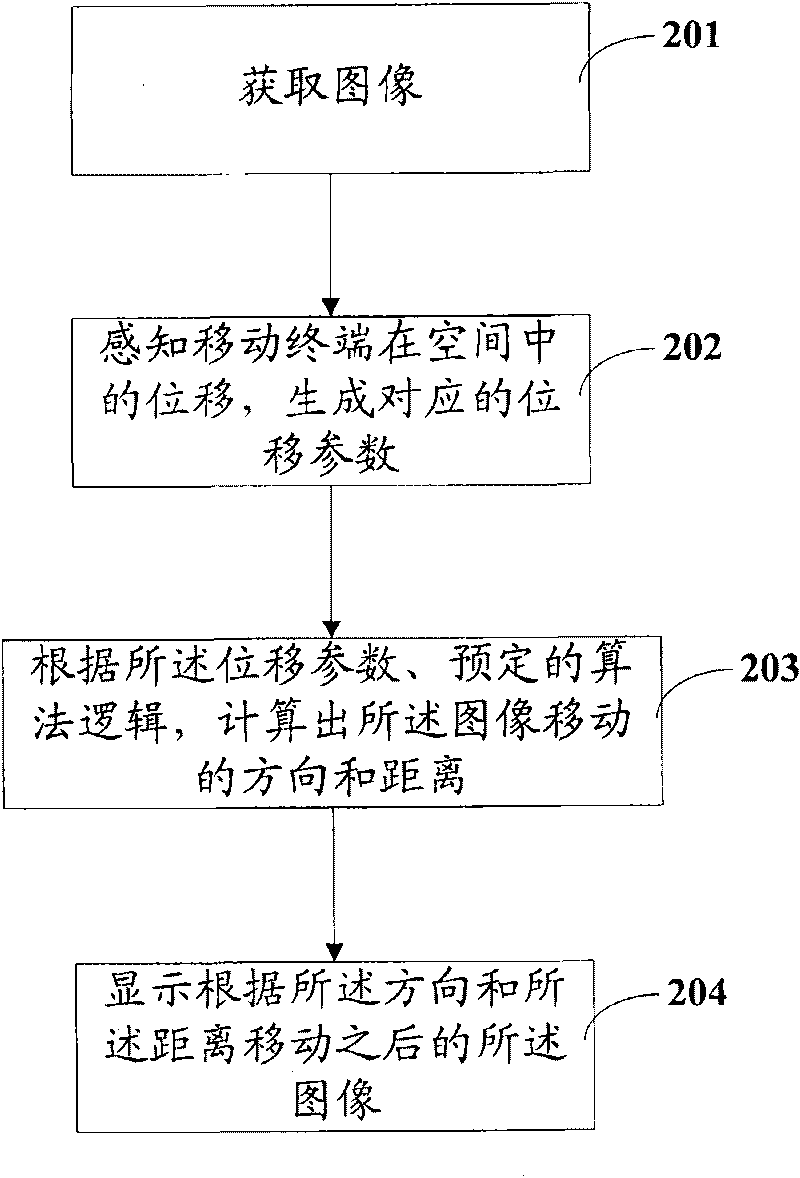

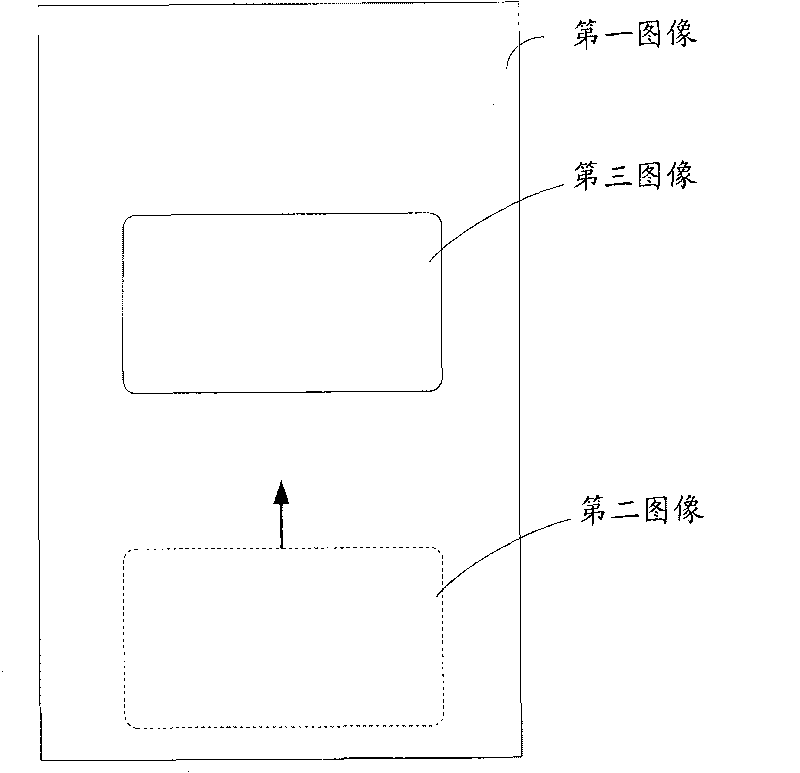

Mobile terminal and method for displaying images

Inactive · CN101739199A · Easy to browse · Input/output processes for data processing · Computer graphics (images) · Execution unit

The invention provides a mobile terminal and a method for displaying images, wherein the mobile terminal stores a first image, the display area of the first image is larger than the area of a display region, and the mobile terminal comprises a spatial displacement sensing unit, an actuating unit, an image acquisition unit and a display unit, wherein the spatial displacement sensing unit is used for obtaining spatial position adjustment parameters; the actuating unit is used for obtaining image translation parameters correspondingly according to the spatial position adjustment parameters; the image acquisition unit is used for acquiring a second image, the second image is the part for constituting the first image, the area of the second image is equal to the area of the display region, and a third image is obtained according to the image translation parameters and the relative position between the translation display region and the first image; and the display unit is used for displaying the second image and displaying the third image. The embodiment of the invention has the following benefits: a user can conveniently and rapidly browse information of the image out of the display region without a touch screen and buttons.

Owner:LENOVO (BEIJING) CO LTD

Robust digital image adaptive zero-watermarking method

Inactive · CN101908201A · Integrity guaranteed · No degradation of image quality · Image data processing details · Imaging processing · Wavelet approximation

The invention discloses a robust digital image adaptive zero-watermarking method. The method mainly comprises two parts, namely zero-watermark embedding and zero-watermark detection, wherein both the zero-watermark embedding and the zero-watermark detection are performed in a composite domain of discrete wavelet transform and discrete fourier transform; the characteristics of high stability of a wavelet approximation subgraph obtained by the discrete wavelet transform and translation invariance of an amplitude spectrum obtained by the discrete fourier transform are fully utilized, so that the method has high robustness, can resist common image processing and is completely immune from image translation attack; meanwhile, because a binary digital watermark is not embedded into the original digital image but is registered into a watermark database, no damage is caused to the original digital image data, the problem of quality reduction of the image does not exist, the embedded binary digital watermark is completely imperceptible, and the contradiction between the robustness and the imperceptibleness of the digital watermark can be balanced well.

Owner:NINGBO UNIV
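The translation invariance of the Fourier amplitude spectrum that the abstract relies on can be checked numerically. The sketch below uses a one-dimensional row of made-up pixel values and a naive discrete Fourier transform; the property holds exactly for circular shifts, which is the assumption made here. It illustrates the mathematical property only and is not the patented watermarking scheme.

```python
import cmath

def dft_magnitudes(signal):
    """Amplitude spectrum |X[k]| of a 1-D signal via the naive DFT."""
    n = len(signal)
    magnitudes = []
    for k in range(n):
        total = sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                    for t, x in enumerate(signal))
        magnitudes.append(abs(total))
    return magnitudes

def circular_shift(signal, shift):
    """Shift a list to the right by `shift` samples, wrapping around."""
    shift %= len(signal)
    return signal[-shift:] + signal[:-shift]

row = [3, 1, 4, 1, 5, 9, 2, 6]        # illustrative pixel row
shifted = circular_shift(row, 3)      # "translated" copy of the row

# A shift only multiplies each DFT coefficient by a unit-magnitude phase
# factor, so the amplitude spectra agree up to rounding error.
same = all(abs(a - b) < 1e-9
           for a, b in zip(dft_magnitudes(row), dft_magnitudes(shifted)))
print(same)
```

Features derived from the amplitude spectrum therefore do not change when the image is circularly shifted, which is why a translation attack leaves such features intact.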

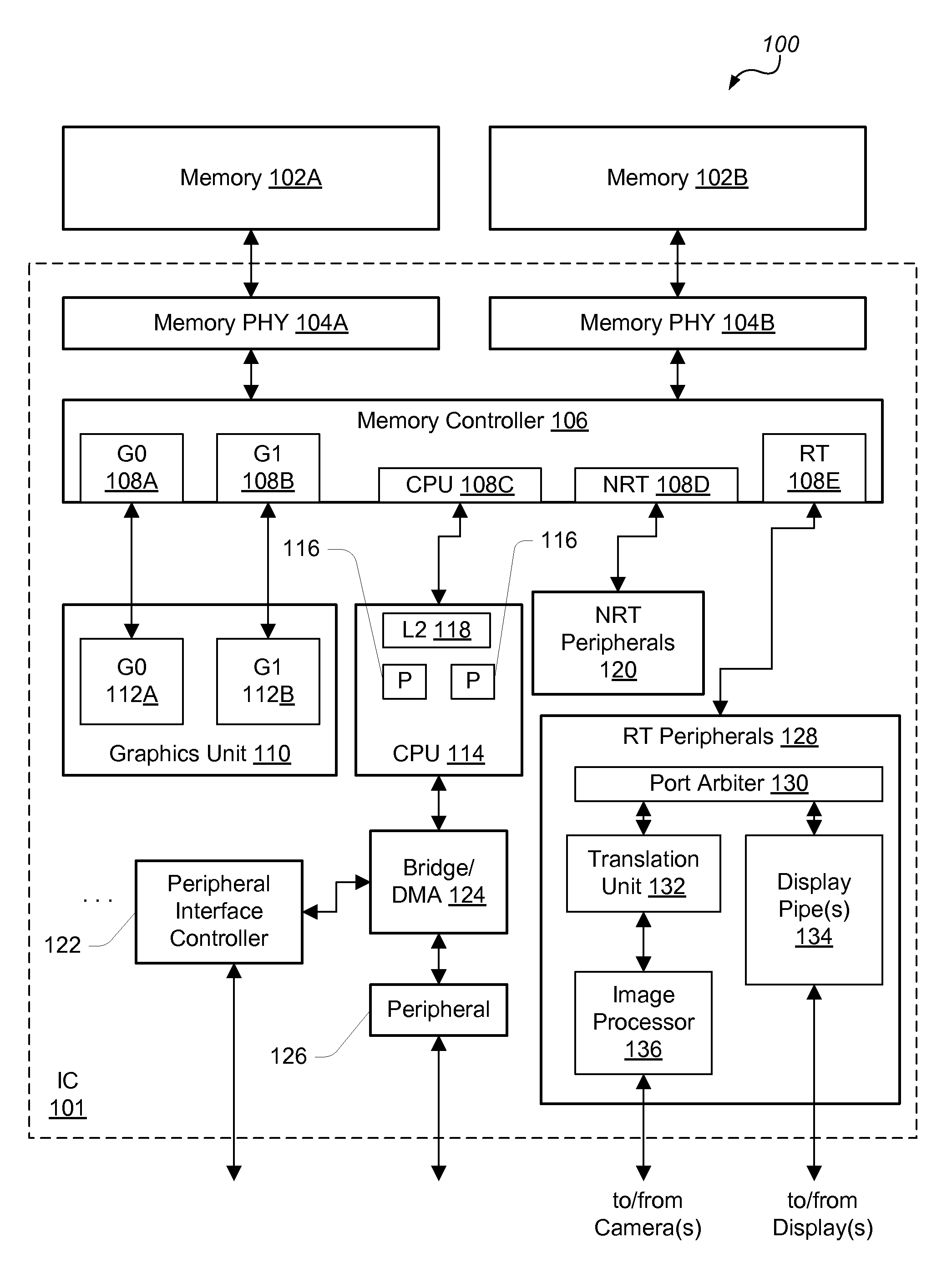

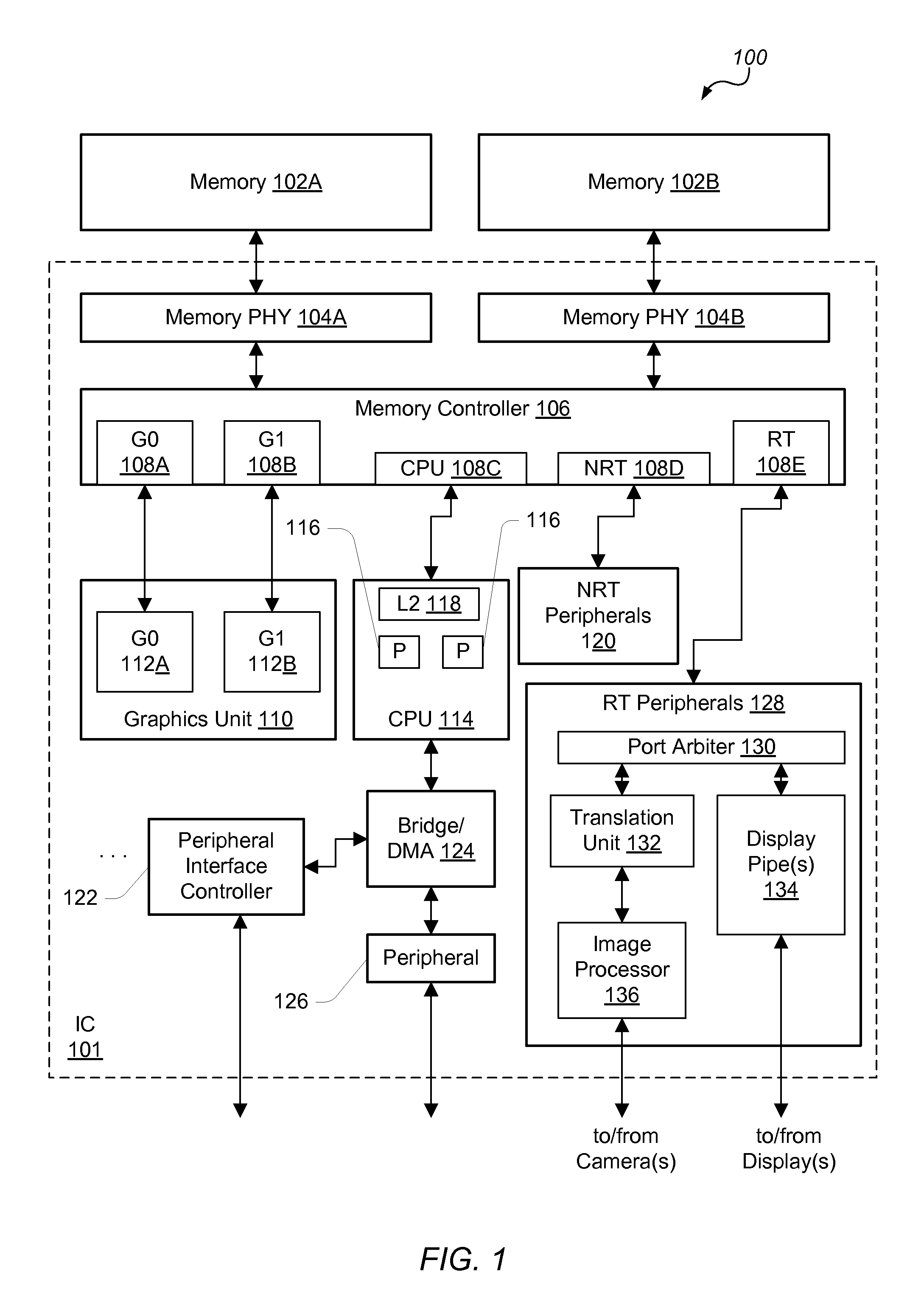

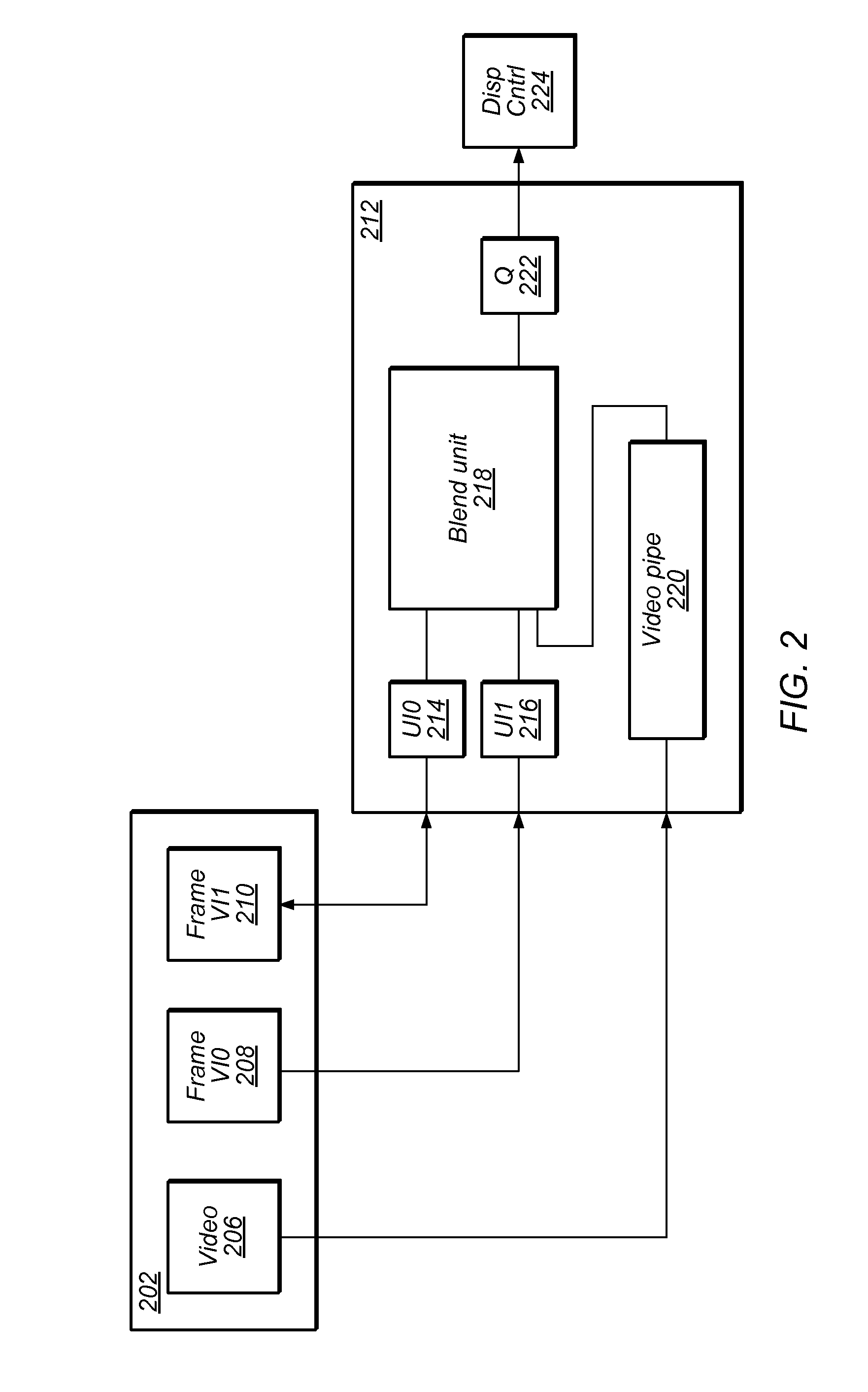

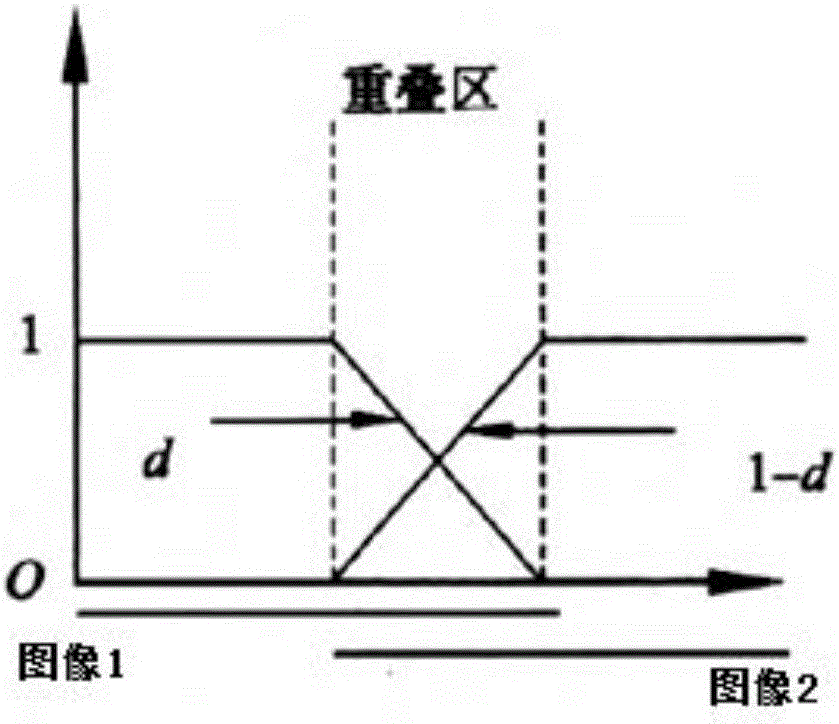

Edge Alphas for Image Translation

A video display pipe used for processing pixels of video and / or image frames may include edge Alpha registers for storing edge Alpha values corresponding to the edges of an image to be translated across a display screen. The edge Alpha values may be specified based on the fractional pixel value by which the image is to be moved in the current frame. The video pipe may copy the column and row of pixels that are in the direction of travel, and may apply the edge Alpha values to the copied column and row. The edge Alpha values may control blending of the additional column and row of the translated image with the adjacent pixels in the original frame, providing the effect of the partial pixel movement, simulating a sub-pixel rate of movement.

Owner:APPLE INC
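One way to read the edge Alpha idea is sketched below for a single row of pixels moving to the right. The interior pixels are written at the integer position, the trailing edge is blended with the background using the fractional offset, and a copy of the leading edge pixel is blended into the next column with the same fraction. This is an interpretation of the abstract under stated assumptions (non-negative position, image fully inside the frame, one row, one channel), and the function name is hypothetical.

```python
import math

def translate_row_with_edge_alpha(background, sprite, position):
    """Draw `sprite` on `background` at a fractional x position."""
    whole = math.floor(position)   # integer pixel offset
    frac = position - whole        # fractional part drives the edge Alpha
    out = list(background)
    # Interior pixels stay fully opaque.
    for j in range(1, len(sprite)):
        out[whole + j] = sprite[j]
    # Trailing edge: partly uncovered, so blend with the background.
    out[whole] = (1.0 - frac) * sprite[0] + frac * background[whole]
    # Leading edge: copy the edge pixel into the next column, weighted by frac.
    if frac > 0:
        lead = whole + len(sprite)
        out[lead] = frac * sprite[-1] + (1.0 - frac) * background[lead]
    return out

# A 3-pixel bright image on a dark 8-pixel row, moved to x = 2.25.
row = translate_row_with_edge_alpha([0.0] * 8, [100.0] * 3, 2.25)
print(row)
```

In this example the total brightness of the row stays equal to that of the unshifted image, which is what makes the motion appear to happen at a sub-pixel rate.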

Method and apparatus for eliminating seam between adjoined screens

Active · US8907863B2 · Increase in size · Increasing the thickness · Television system details · Projectors · Computer science · Image acquisition

A method for eliminating a seam between adjoined screens includes step A: acquiring an original image and adapting the acquired image to an image translation in subsequent step B; step B: translating the acquired image toward the position of the seam; and step C: reverting the translated image to the original one. An apparatus for eliminating a seam between adjoined screens includes an image acquisition module, an image translation module and an image reversion module. The image acquisition module is configured for acquiring an original image and transmitting the acquired image to the image translation module. The image translation module is configured for translating the received image toward the position of the seam so as to cover the seam by the translated image. The image reversion module is configured for receiving the translated image from the image translation module and reverting the image to the original one.

Owner:LENOVO (BEIJING) LTD

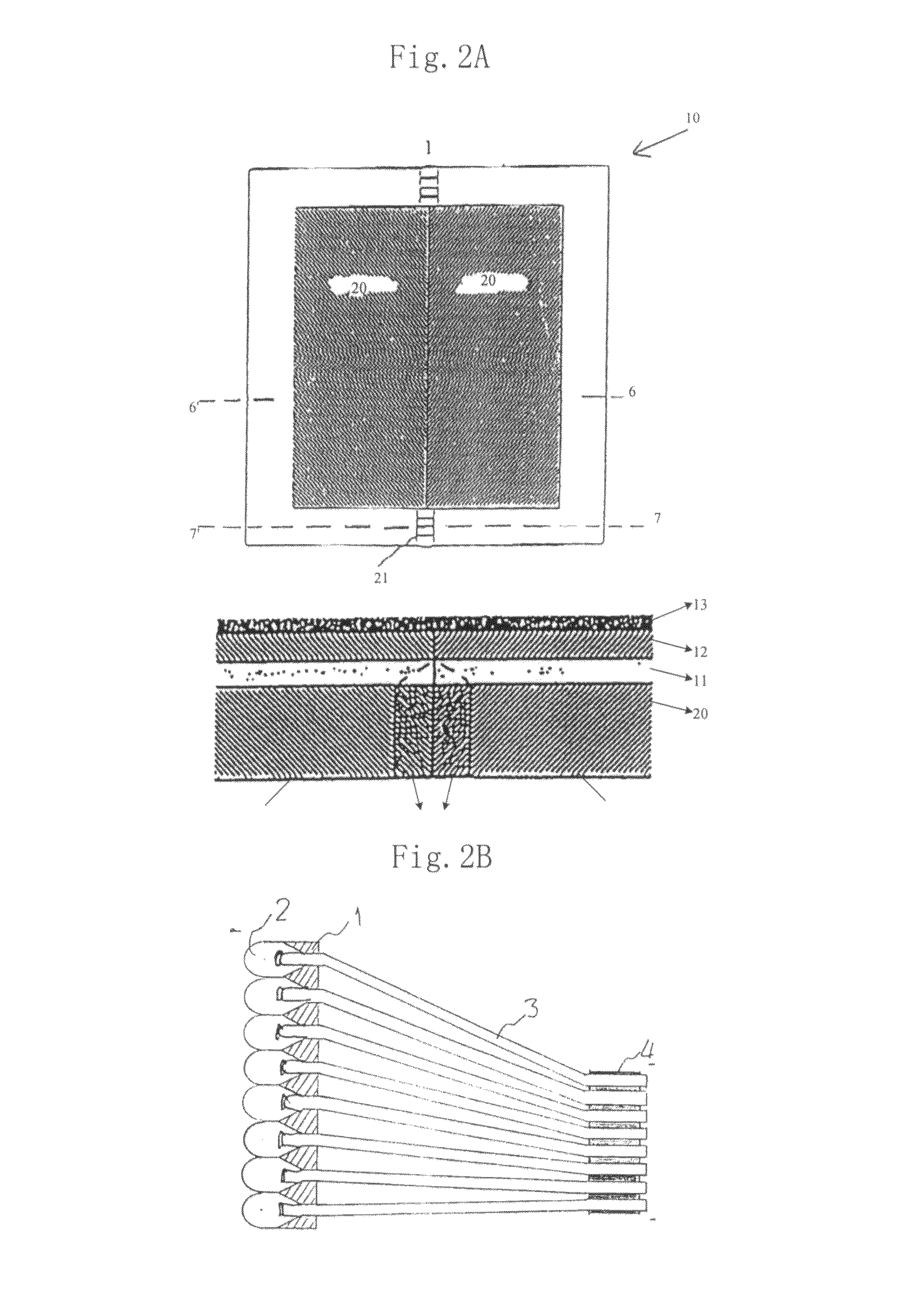

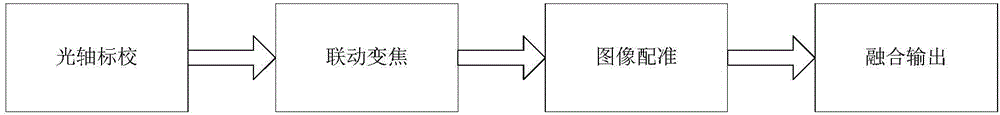

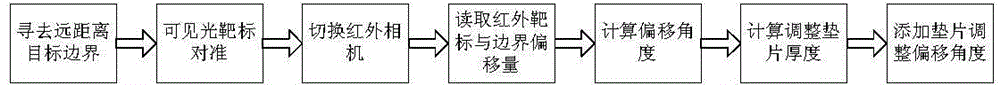

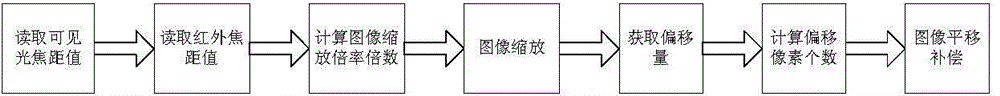

Image fusion method for airborne optoelectronic pod

An image fusion method for an airborne optoelectronic pod comprises steps as follows: (1) adjusting the optical axis of a visible light imaging system and the optical axis of an infrared imaging system to be in a parallel state; (2) performing linkage zoom calculation with the focal length of the visible light imaging system serving as the reference to acquire the corresponding focusing length of the infrared imaging system; (3) calculating respective optical amplification multiples according to the focusing length of the visible light imaging system and the focusing length of the infrared imaging system, performing image scaling, calculating deviation angles according to positions of focusing length sections where the focusing lengths are located, and performing image translation compensation; (4) fusing images of the visible light imaging system and the infrared imaging system after image registration. With the adoption of the method, the visible light imaging system and the infrared imaging system in the pod are associated, infrared image features of targets and visible light image features are fused into one way of image video to be output, and the requirement of the optoelectronic pod for all-weather day-and-light reconnaissance is met to the greatest extent.

Owner:BEIJING INST OF AEROSPACE CONTROL DEVICES

Image translation system and method

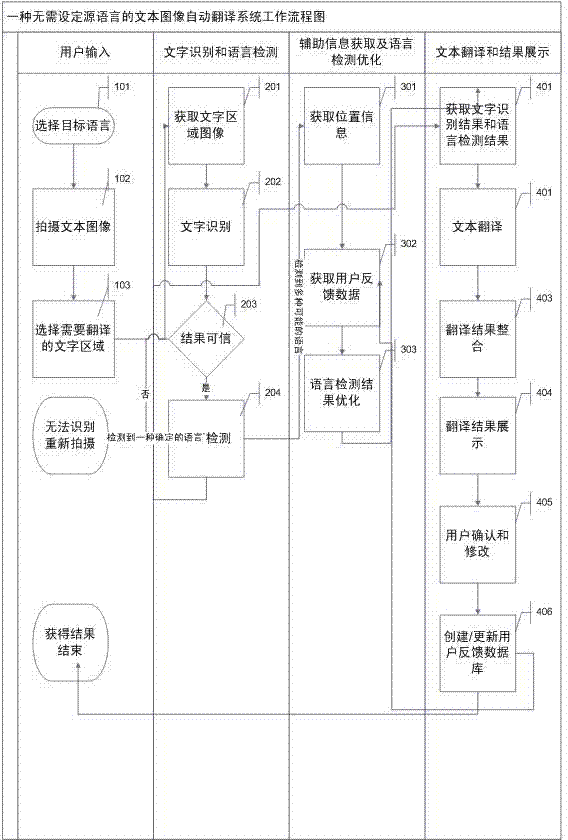

Inactive · CN103699527A · Easy to operate · Easy and natural operation · Character and pattern recognition · Special data processing applications · Machine translation · Translation system

The invention discloses an image translation system and method. The method comprises, firstly, identifying texts in test images through a character identification technology, then language-detecting the identified texts through a language detecting technology, then further optimizing the language detecting results through auxiliary information such as geographic information to determine the source language of the text image, and lastly, translating the texts into a target language through a machine translation technology. During the entire process, a user just needs to input the text images into the image translation system to obtain final translation results and the source language information of the text images.

Owner:SHANGHAI HEHE INFORMATION TECH DEV

Intelligent graph modification method based on asymmetric loop generation against loss

Active · CN109064423A · Good color · Increase brightness · Image enhancement · Image analysis · Network structure · Visual perception

The invention discloses an intelligent image retouching method based on an asymmetric cycle generative adversarial loss. It applies the cycle-consistency idea of CycleGAN, which is used for image translation, to the field of intelligent image retouching, proposes an asymmetric cycle generative adversarial loss, and applies WGAN to the training of the cycle generative adversarial network. The invention uses four sub-networks to form the whole network structure and trains it with the asymmetric cycle generative adversarial loss, so that the final forward generator improves the color, brightness, portrait effect and other characteristics of the unretouched image and improves its visual quality.

Owner:福建帝视信息科技有限公司

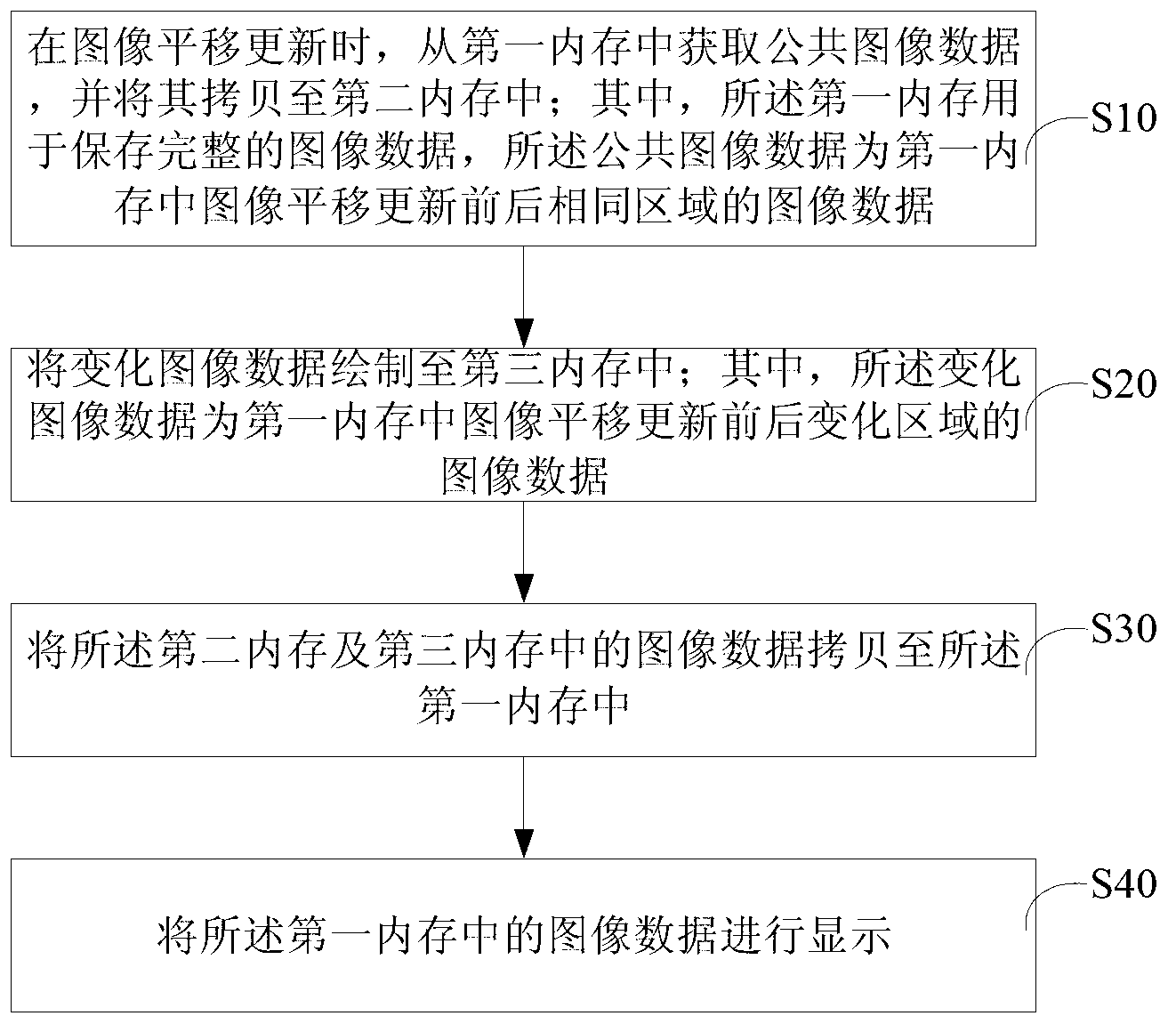

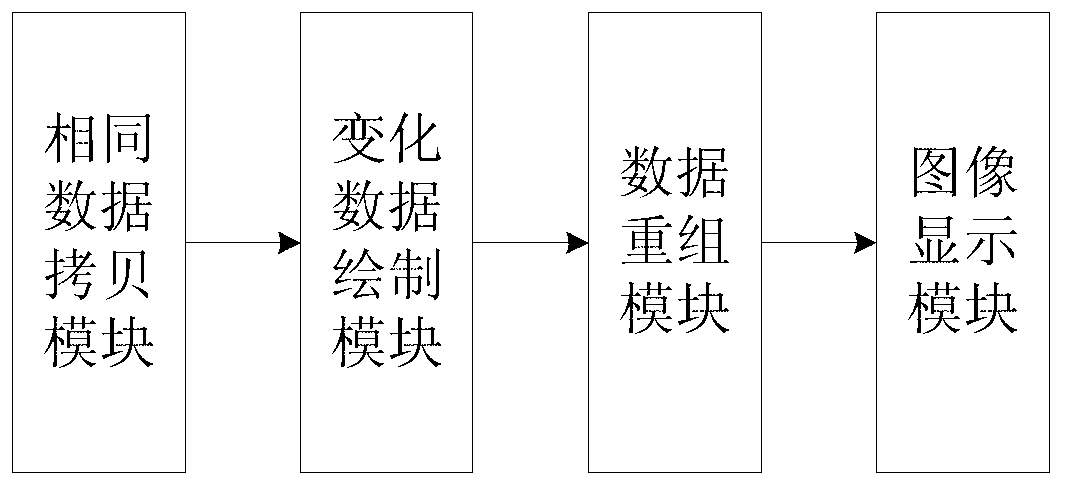

Image translation updating display method and system

Active · CN103020888A · Draw less · Improve display efficiency · Image data processing details · Internal memory · Computer graphics (images)

Owner:GUANGDONG VTRON TECH CO LTD

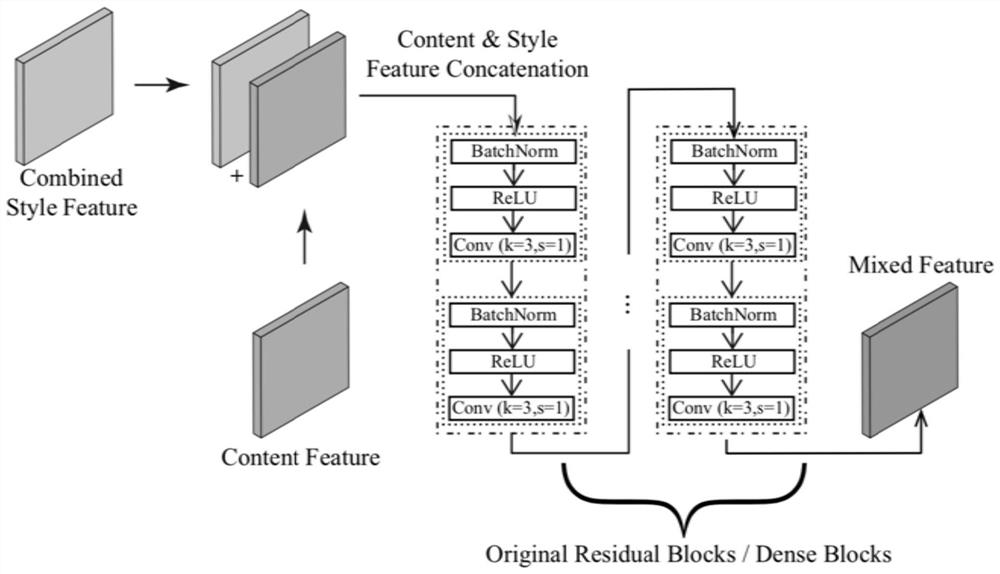

Style character generation method based on small number of samples and containing various normalization processing

Pending · CN111753493A · Fast training · Natural language data processing · Neural architectures · Data set · Data source

The invention discloses a style character generation method based on a small number of samples and containing various normalization processing. A style reference character data set is formed by using a plurality of style characters; a plurality of standard font characters with the same content are used as a character content prototype data source; an image translation model which is based on a deep generative adversarial network and comprises a mixer and multiple normalization modes is used, an adversarial loss function provided by the patent is used in training, and finally, a character generation model which is used for character style migration and contains multiple normalization processing can be trained. According to the fully trained model, any character with the same writing or printing style can be generated by taking a small number of or even one character with the same style as a style reference template, and the content of the generated character is determined by an input content prototype with a standard style.

Owner:XIAN JIAOTONG LIVERPOOL UNIV

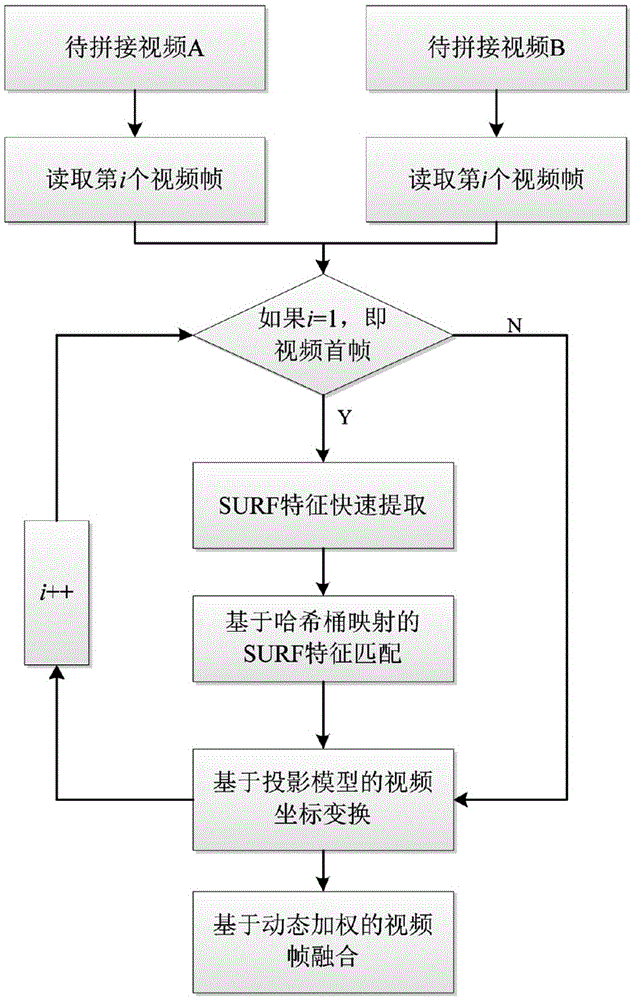

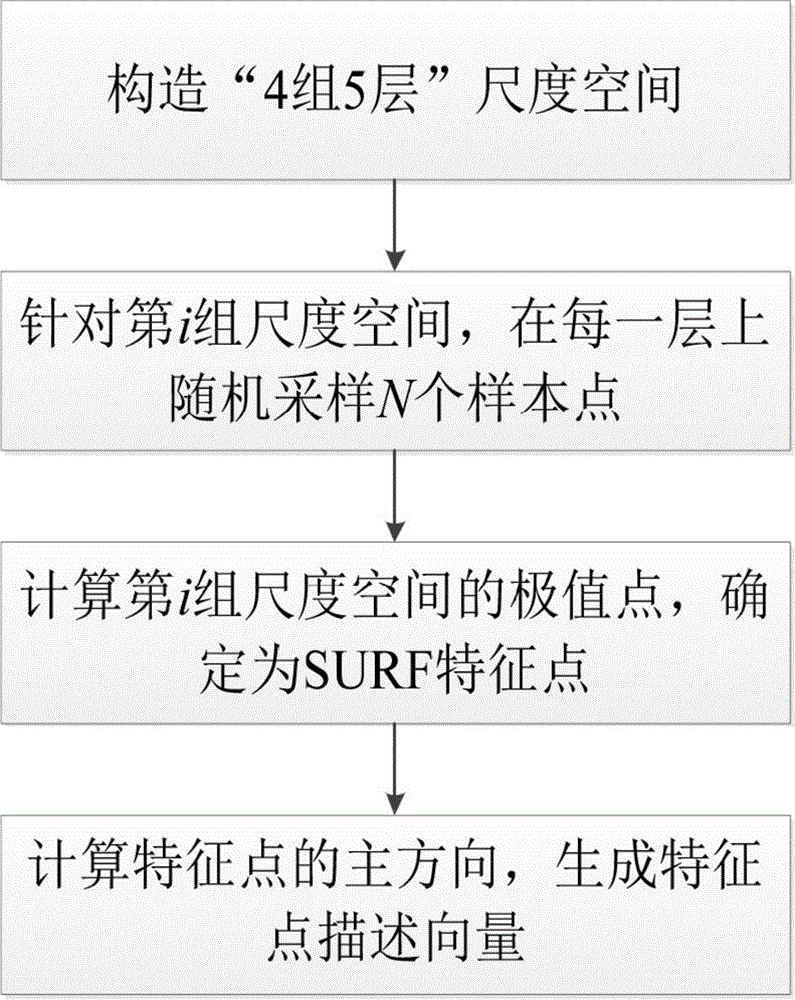

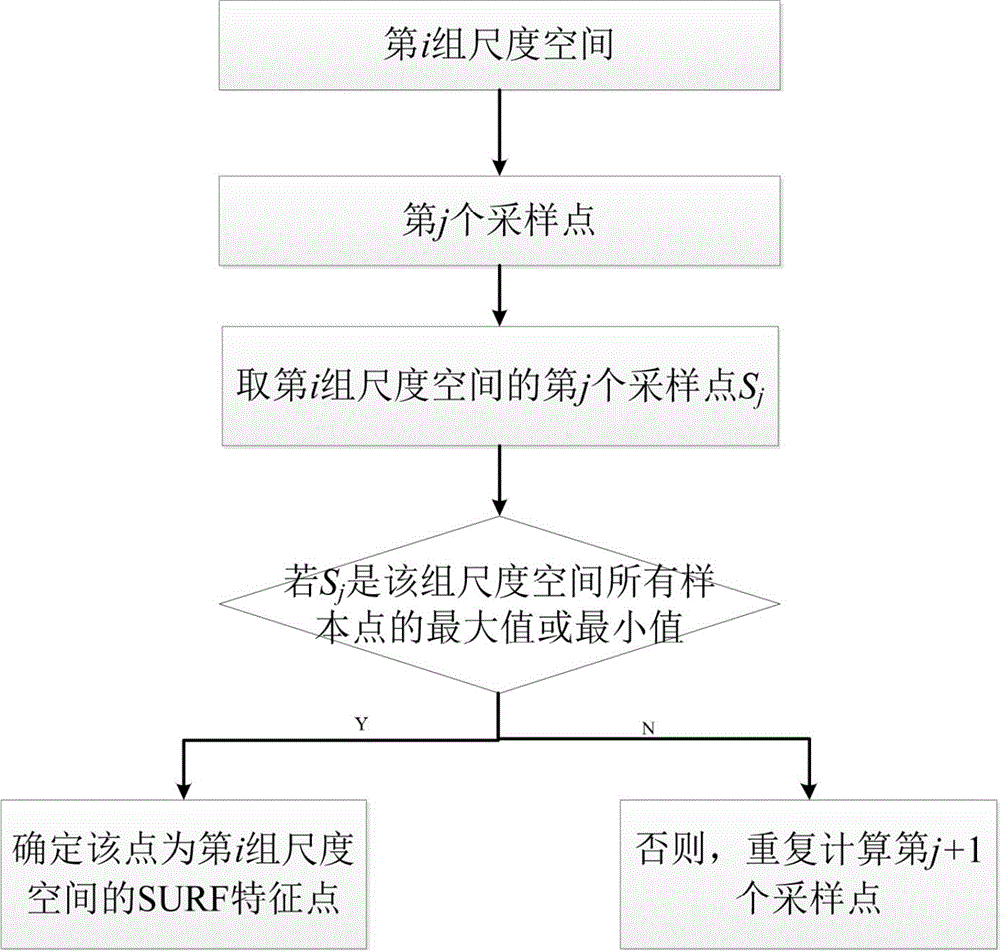

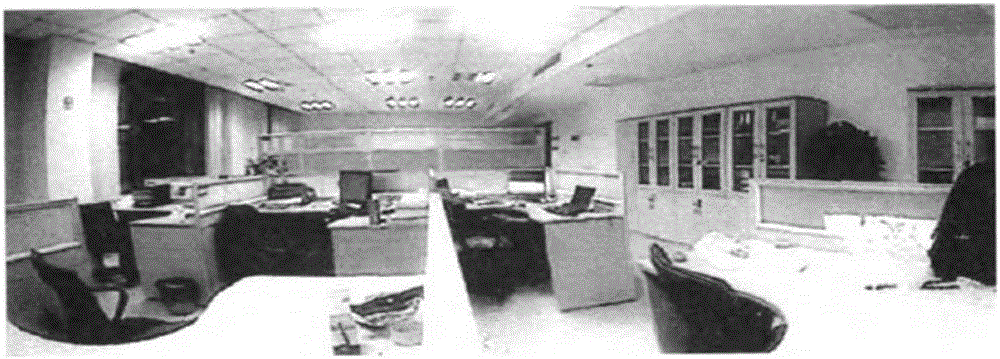

Panorama video automatic stitching method based on SURF feature tracking matching

Active · CN105787876A · Improve processing efficiency · Processing speed · Image enhancement · Image analysis · Stereoscopic video · Feature vector

The invention discloses a panorama video automatic stitching method based on SURF feature tracking matching. The method comprises the following steps: treating the first frame differently from later frames, with the first frame used to select a video reference coordinate system and video fusion performed directly on successive frames; extracting SURF feature points from the first frame of a video to be stitched and generating feature point description vectors; searching for similar SURF feature vector point pairs based on hash mapping and a bucket storage method to constitute a similar feature set; using the vector point pairs in the similar SURF feature set to resolve an optimal data relational degree coordinate system; and conducting dynamic weighted summation on the coordinate-converted pixel values of the video frames to be stitched to implement seamless stitching fusion of the video. The method can implement omnidirectional, multi-view, stereoscopic seamless video stitching and fusion; it not only overcomes the seams, blur and ghosting caused by image translation, rotation, zooming and affine transformation, but also increases the efficiency and accuracy of image calibration based on feature matching.

Owner:上海贵和软件技术有限公司

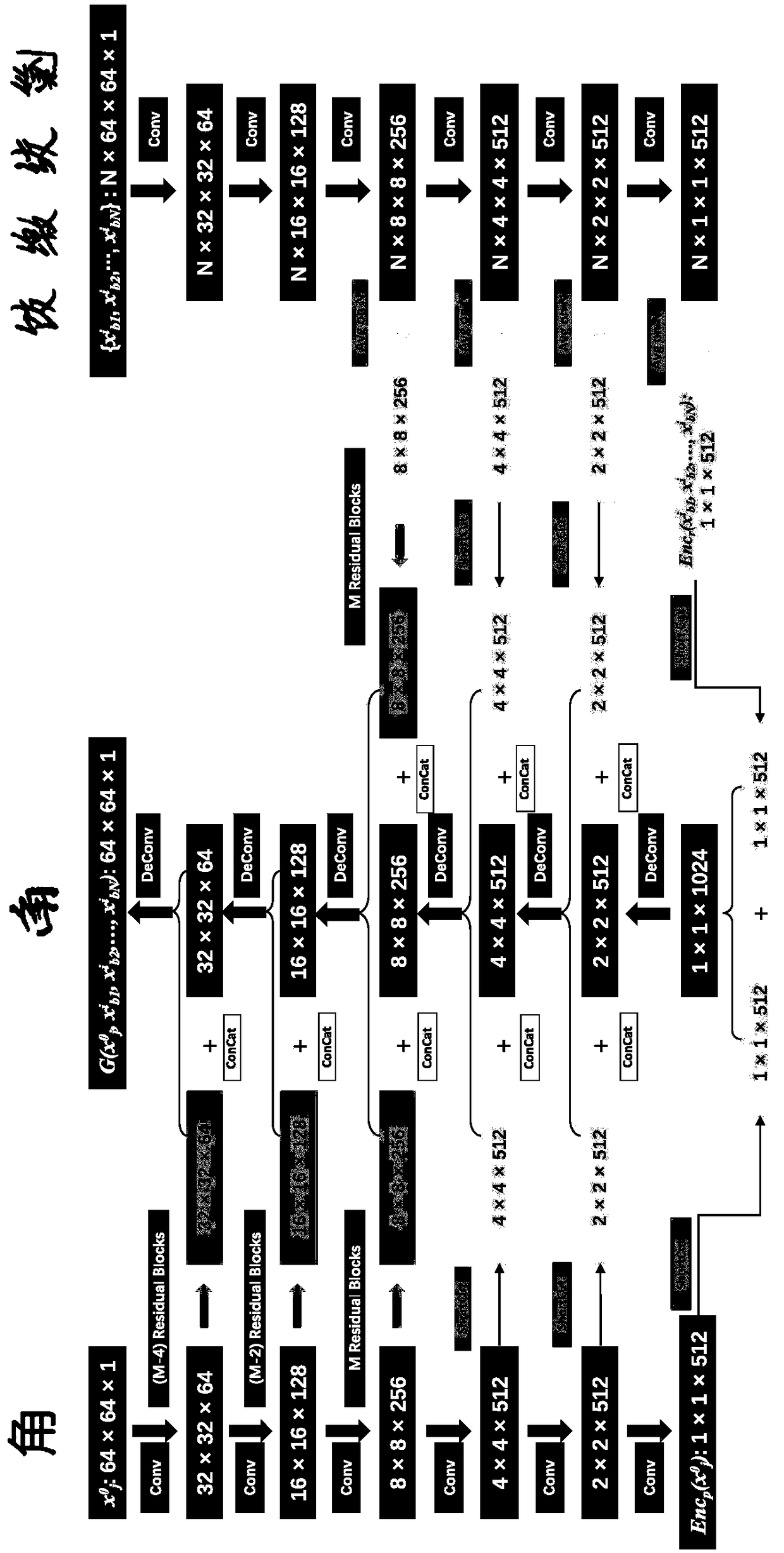

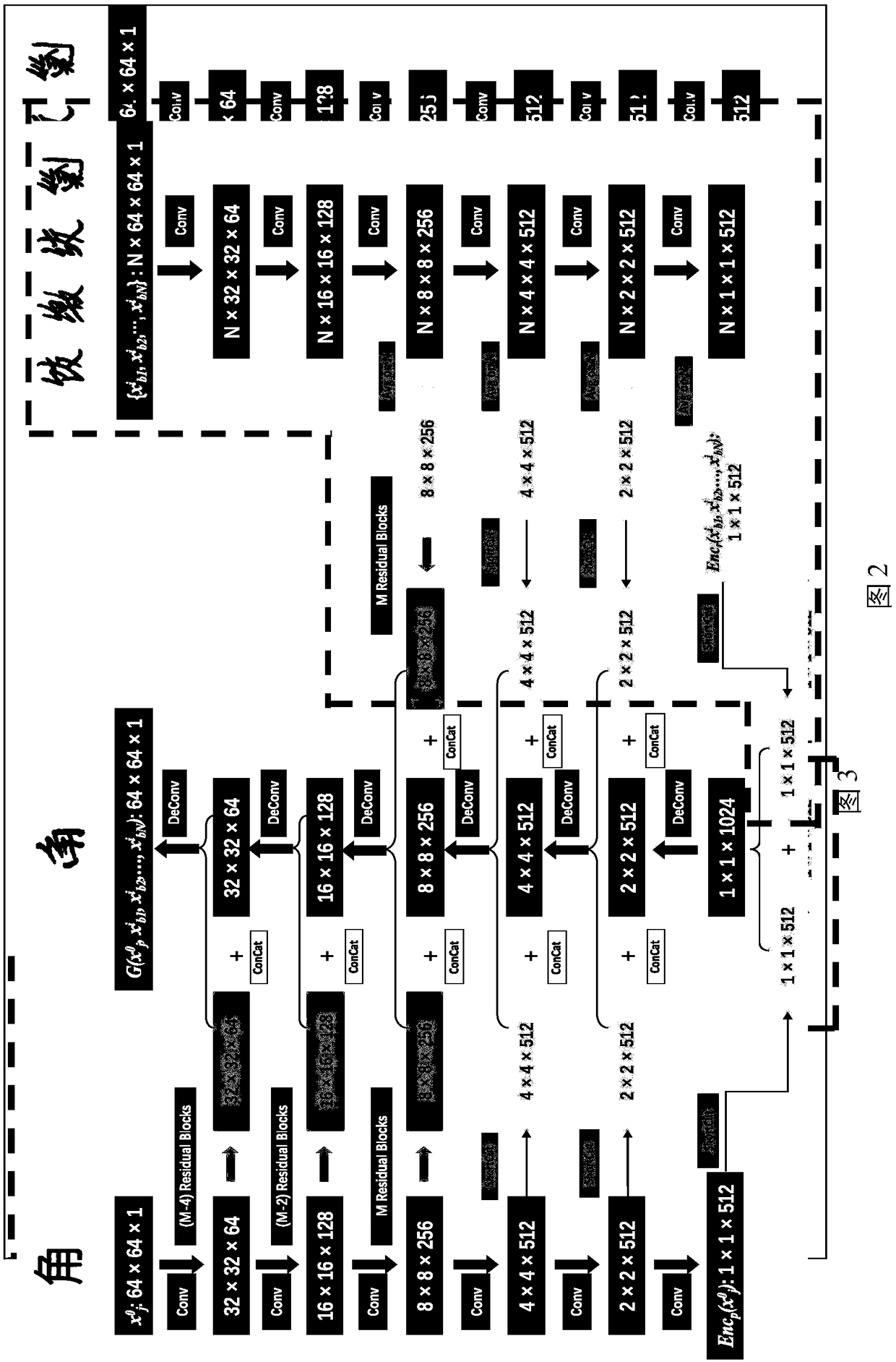

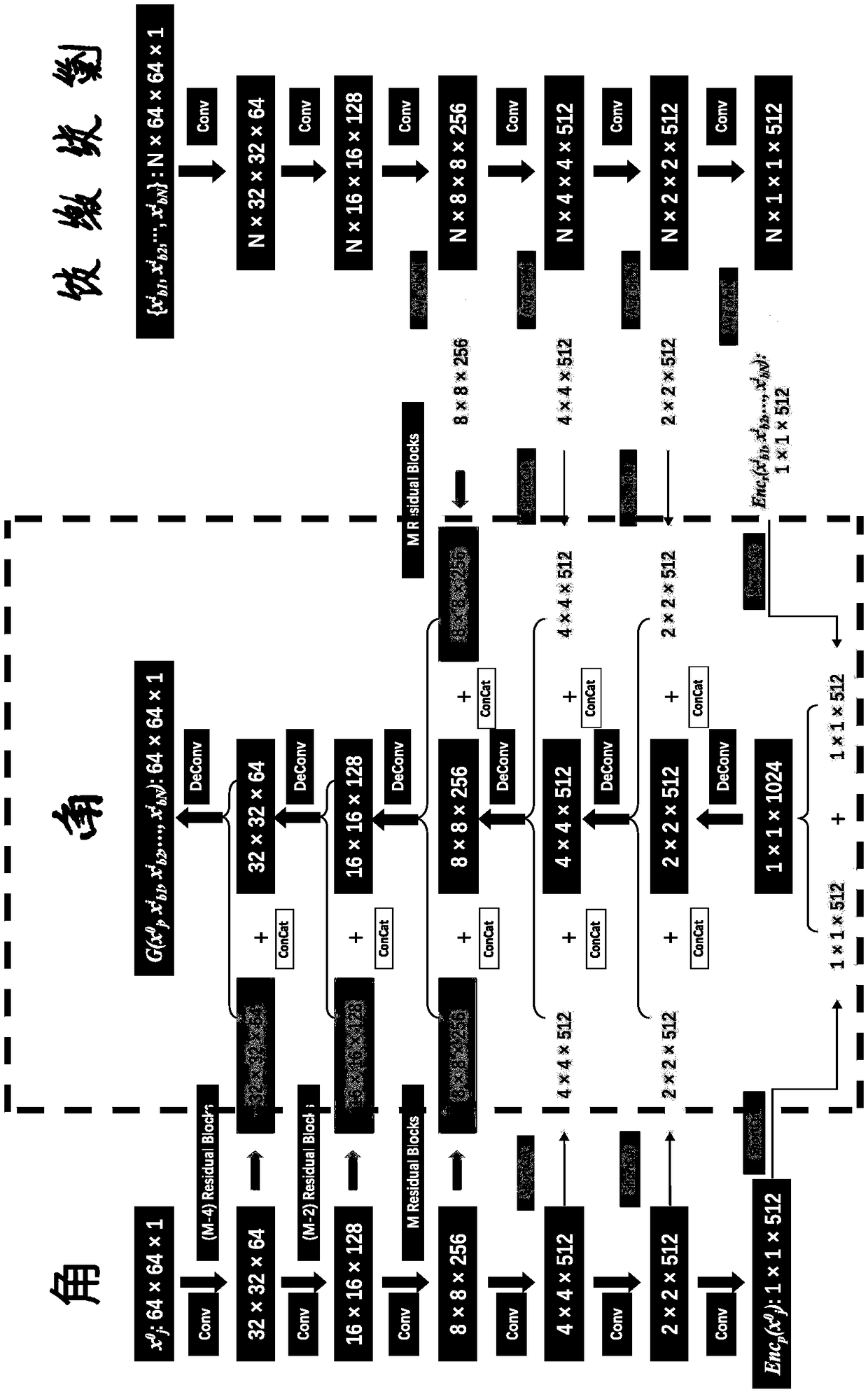

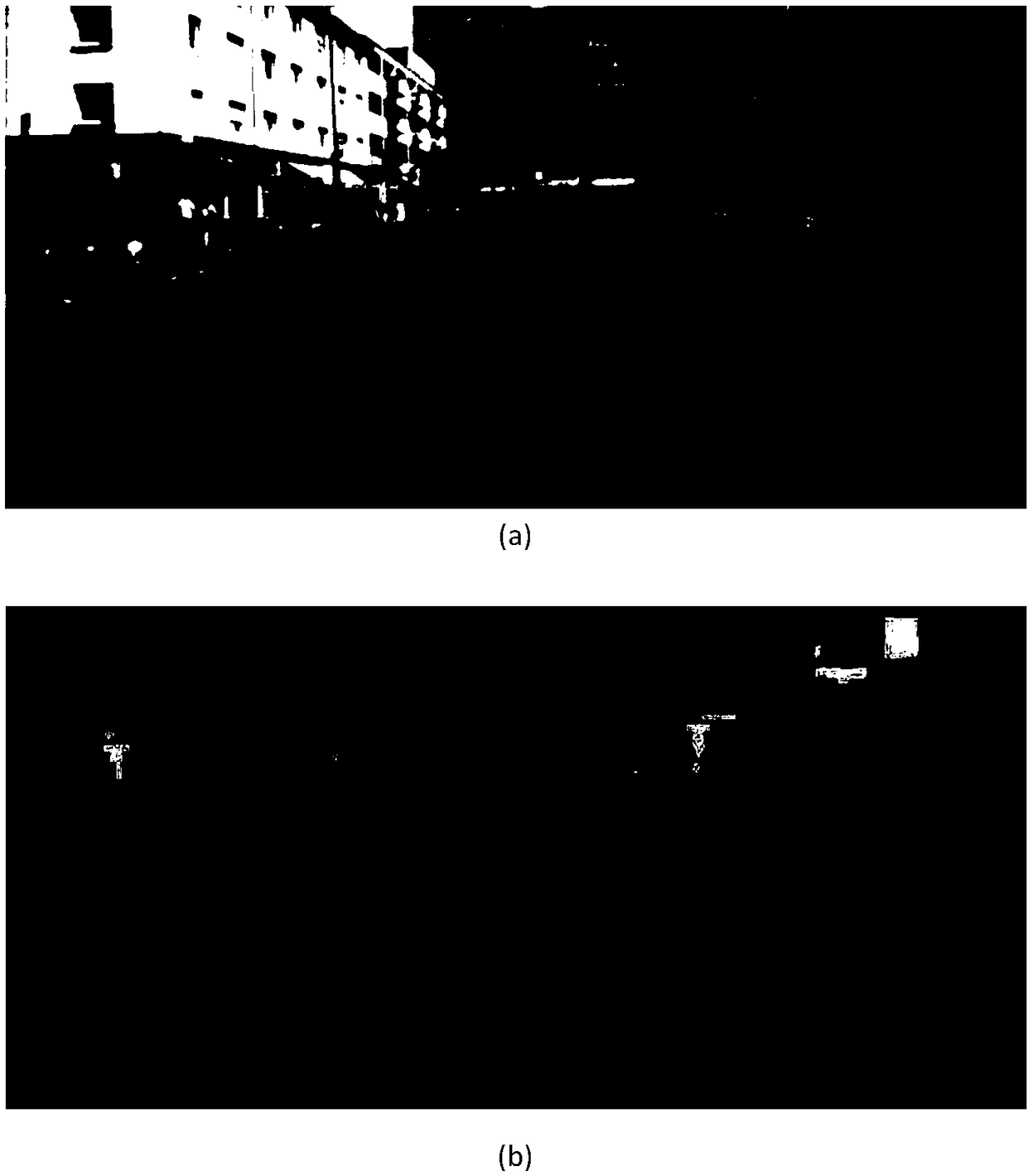

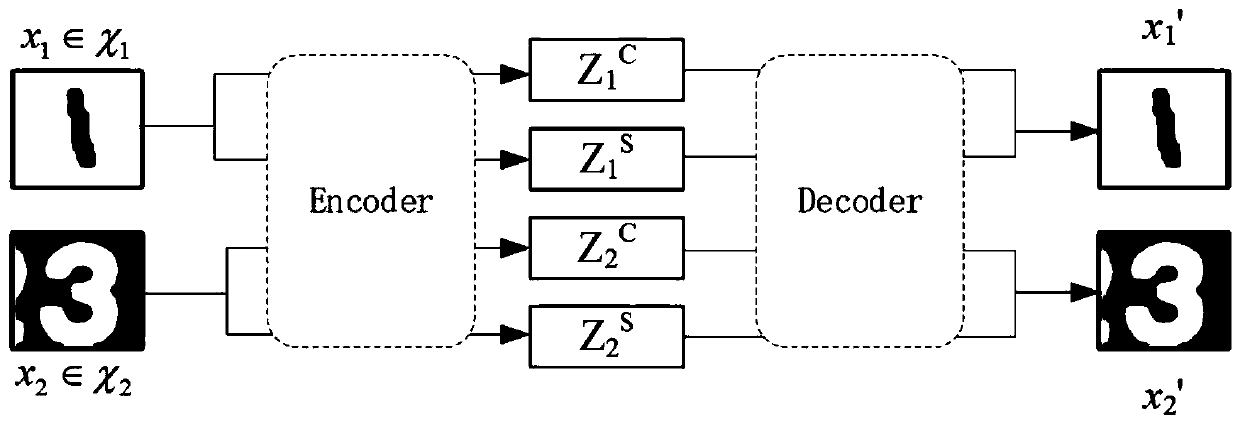

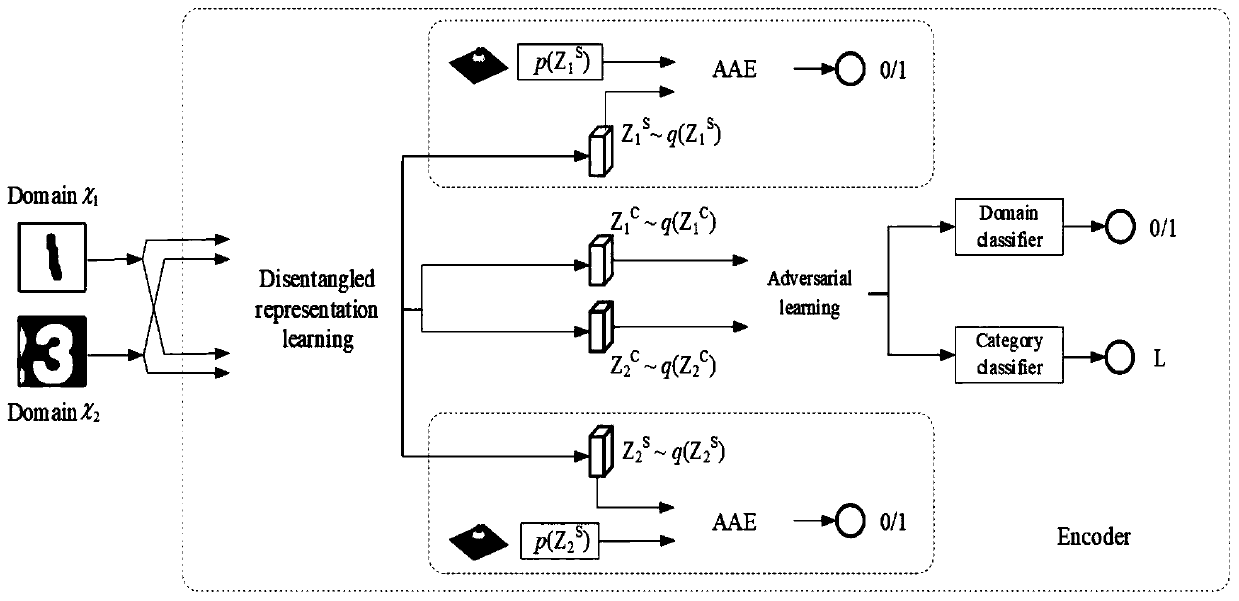

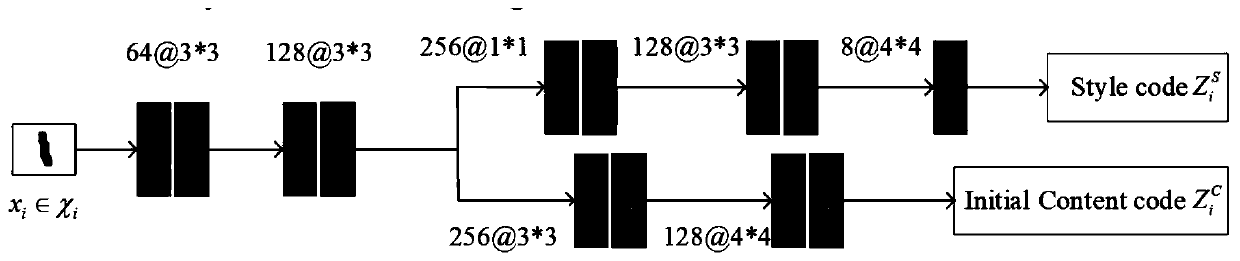

Semi-supervised multi-modal multi-class image translation method

Active · CN110263865A · Capture Semantic Properties · Quality improvement · Character and pattern recognition · Mode transformation · Learning network

The invention discloses a semi-supervised multi-modal multi-category image translation method. The method comprises the following steps of S1, inputting two images from different domains and a small number of labels; S2, inputting the input images and labels into the encoders, dividing the encoders into a content encoder and a style encoder, and respectively decoupling the images from the style encoder and the content encoder by using the decoupling representation learning to obtain the style codes and the content codes; S3, inputting the style codes into an adversarial auto-encoder to complete the image multi-class training; inputting the content codes into a content adversarial learning network to complete the image multi-mode transformation training; and S4, realizing the image reconstruction and multi-mode conversion through splicing the style codes and the content codes. According to the method, the problem caused by the requirement for image translation diversity is solved, and the multi-modal and multi-class cross-domain images can be generated by jointly decoding the potential content codes and the style codes.

Owner:BEIFANG UNIV OF NATITIES

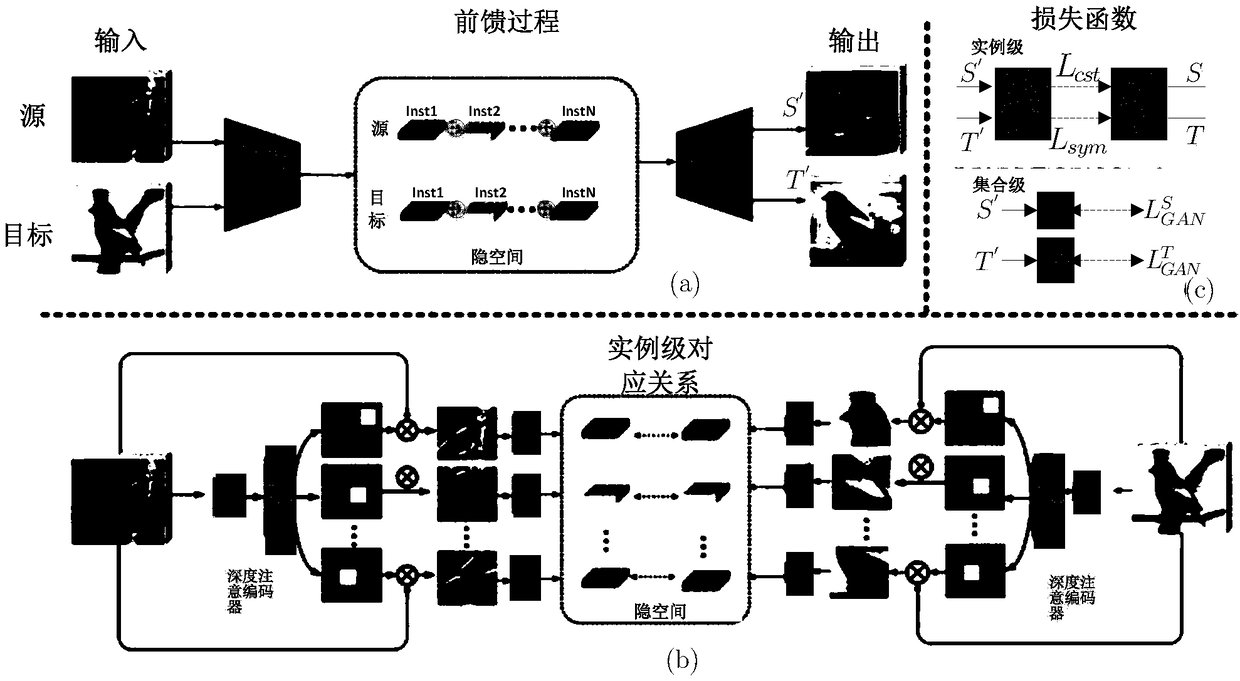

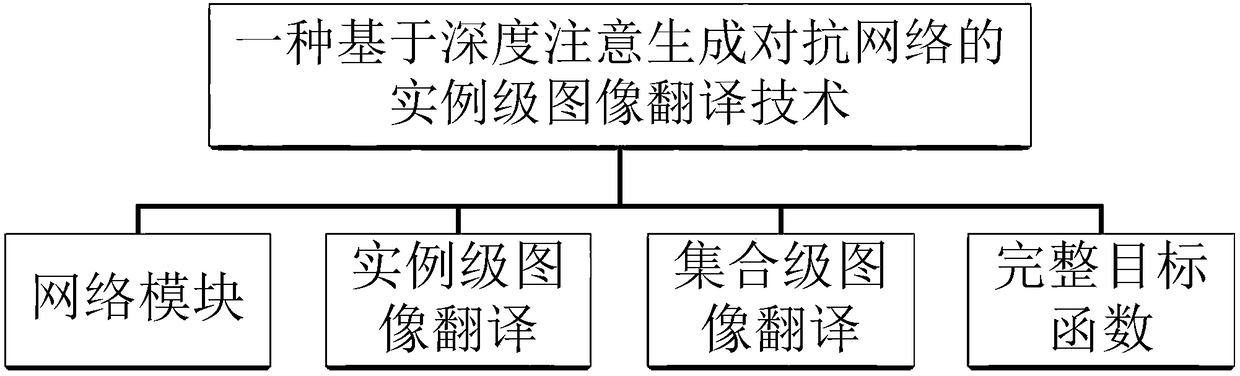

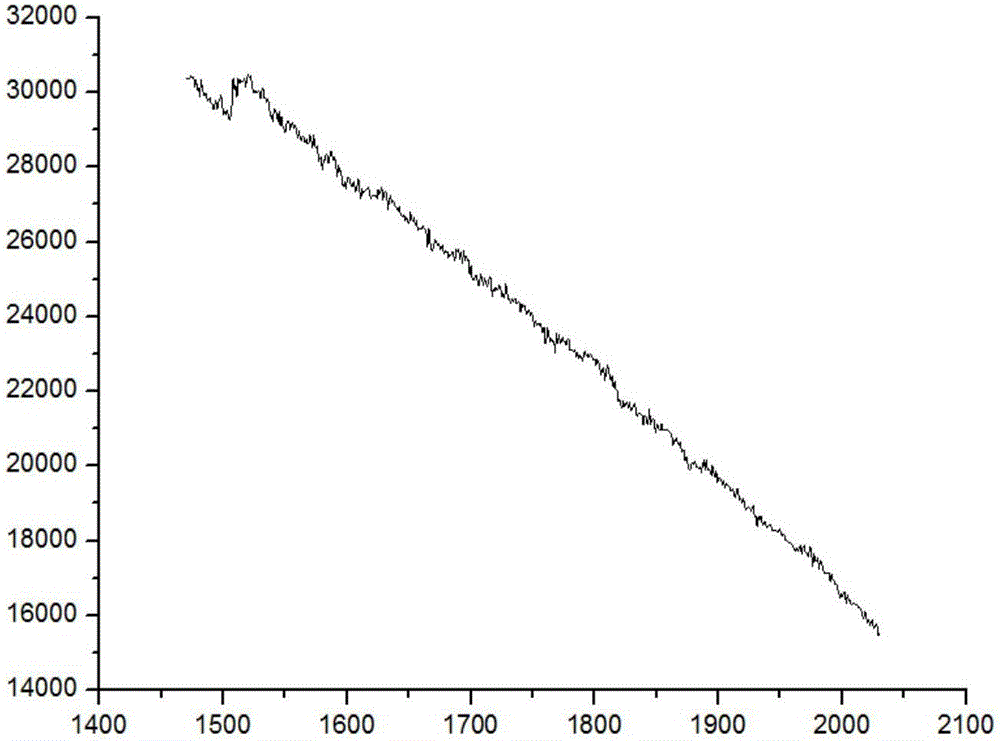

Instance level image translation technology based on deep attention generative adversarial network

Inactive · CN108509952A · Character and pattern recognition · Neural learning methods · Algorithm · Generative adversarial network

The invention proposes an instance-level image translation technique based on a deep attention generative adversarial network. Its main components are a network module, instance-level image translation, set-level image translation and an overall objective function. The procedure is as follows: first, a deep attention encoder, a generator and two discriminators are used to construct the deep attention generative adversarial network; then, for a given input image, a localization function predicts the position of the attention region and an attention mask is calculated; next, the generator receives a structured representation from a latent space and generates a translated sample; finally, the discriminators distinguish the translated sample from a real image. The technique can be applied under instance-level and set-level constraints simultaneously, addresses a large number of practical tasks and achieves better performance.

Owner:SHENZHEN WEITESHI TECH

Method of correcting projection background inconsistency in CT imaging

Inactive · CN106408616A · Easy to operate · Small amount of calculation · Image enhancement · Reconstruction from projection · Illuminance · Projection image

The invention relates to a method of correcting projection background inconsistency in CT imaging, and specifically relates to a CT projection background cross-correlation registration method based on image grayscale information. The method comprises the following steps: (1) before acquisition of a sample CT projection image, acquiring a bright field image, and determining the spot center of the bright field image; (2) calculating a compensation function of the bright field image; (3) compensating the illumination of the bright field image; (4) compensating the illumination of the projection image; (5) calculating the actual offset between the background of the projection image and the background of the bright field image; and (6) performing image translation registration. A CT projection is registered using the principle of cross-correlation based on image grayscale information. The method has the advantages of no need to know the specific deflection parameter of a detector in advance, simple operation, and high computation efficiency.

Owner:SHANXI UNIV
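Step (5), estimating the offset between the projection background and the bright field background from grayscale information, can be sketched as a brute-force cross-correlation over integer shifts. The sketch assumes small images stored as nested lists, a known maximum shift, and mean-subtracted correlation averaged over the overlapping area; these choices and the function name are assumptions for illustration, not details taken from the patent.

```python
def estimate_offset(reference, moving, max_shift):
    """Return (dy, dx) so that moving[y + dy][x + dx] best matches reference[y][x]."""
    h, w = len(reference), len(reference[0])
    ref_mean = sum(map(sum, reference)) / (h * w)
    mov_mean = sum(map(sum, moving)) / (h * w)
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            score, count = 0.0, 0
            # Only visit pixels where both images overlap for this shift.
            for y in range(max(0, -dy), min(h, h - dy)):
                for x in range(max(0, -dx), min(w, w - dx)):
                    score += ((reference[y][x] - ref_mean)
                              * (moving[y + dy][x + dx] - mov_mean))
                    count += 1
            score /= count
            if best is None or score > best[0]:
                best = (score, dy, dx)
    return best[1], best[2]

# Illustrative 6x6 backgrounds: the same bright spot, displaced by
# one row down and two columns right in the projection image.
bright_field = [[0] * 6 for _ in range(6)]
bright_field[2][2], bright_field[2][3] = 10, 5
projection = [[0] * 6 for _ in range(6)]
projection[3][4], projection[3][5] = 10, 5

offset = estimate_offset(bright_field, projection, max_shift=2)
print(offset)
```

Once the offset is known, step (6) amounts to translating one image by the opposite shift so that the two backgrounds line up. For realistic image sizes an FFT-based correlation would be the usual choice instead of this quadruple loop.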

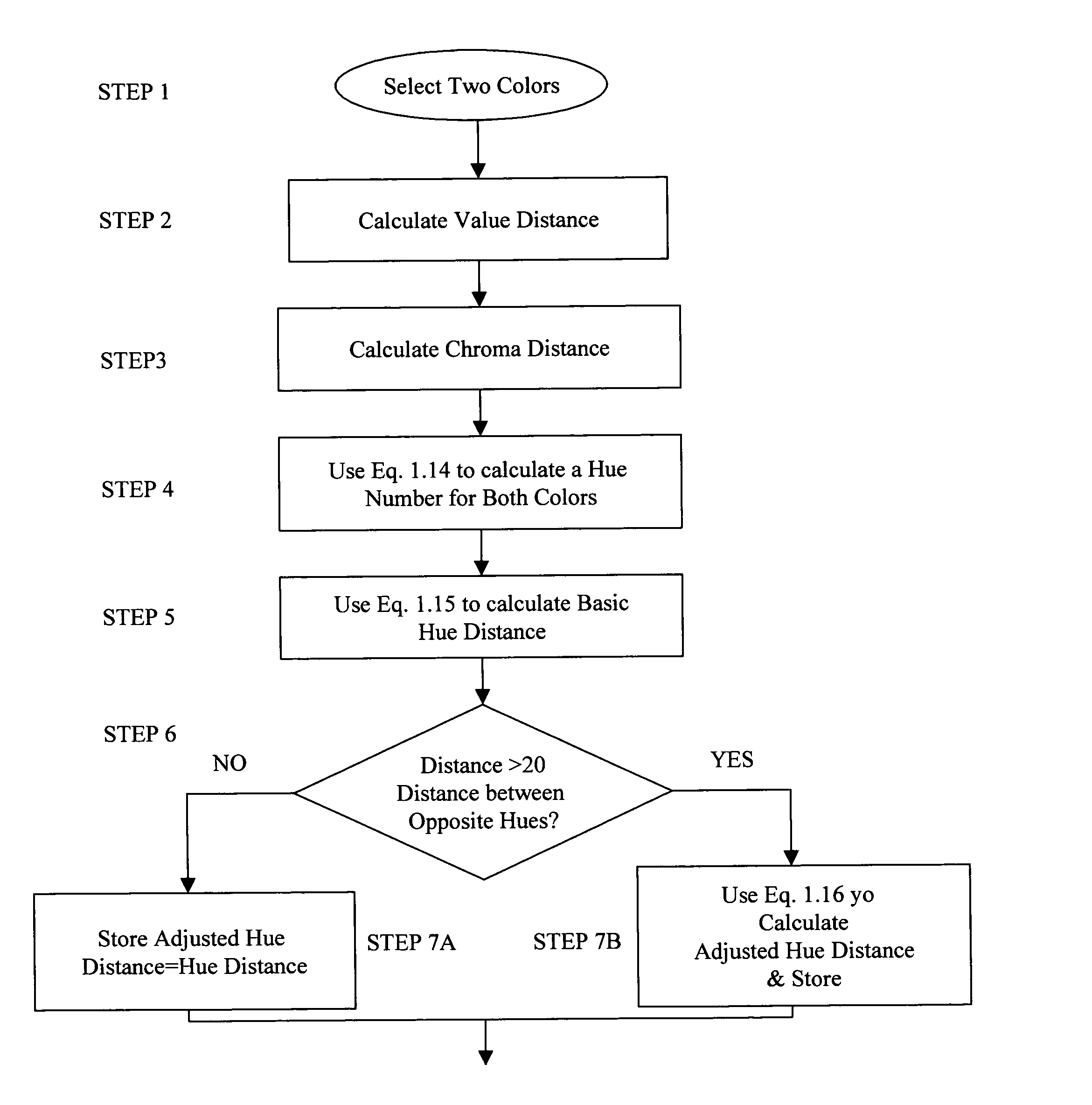

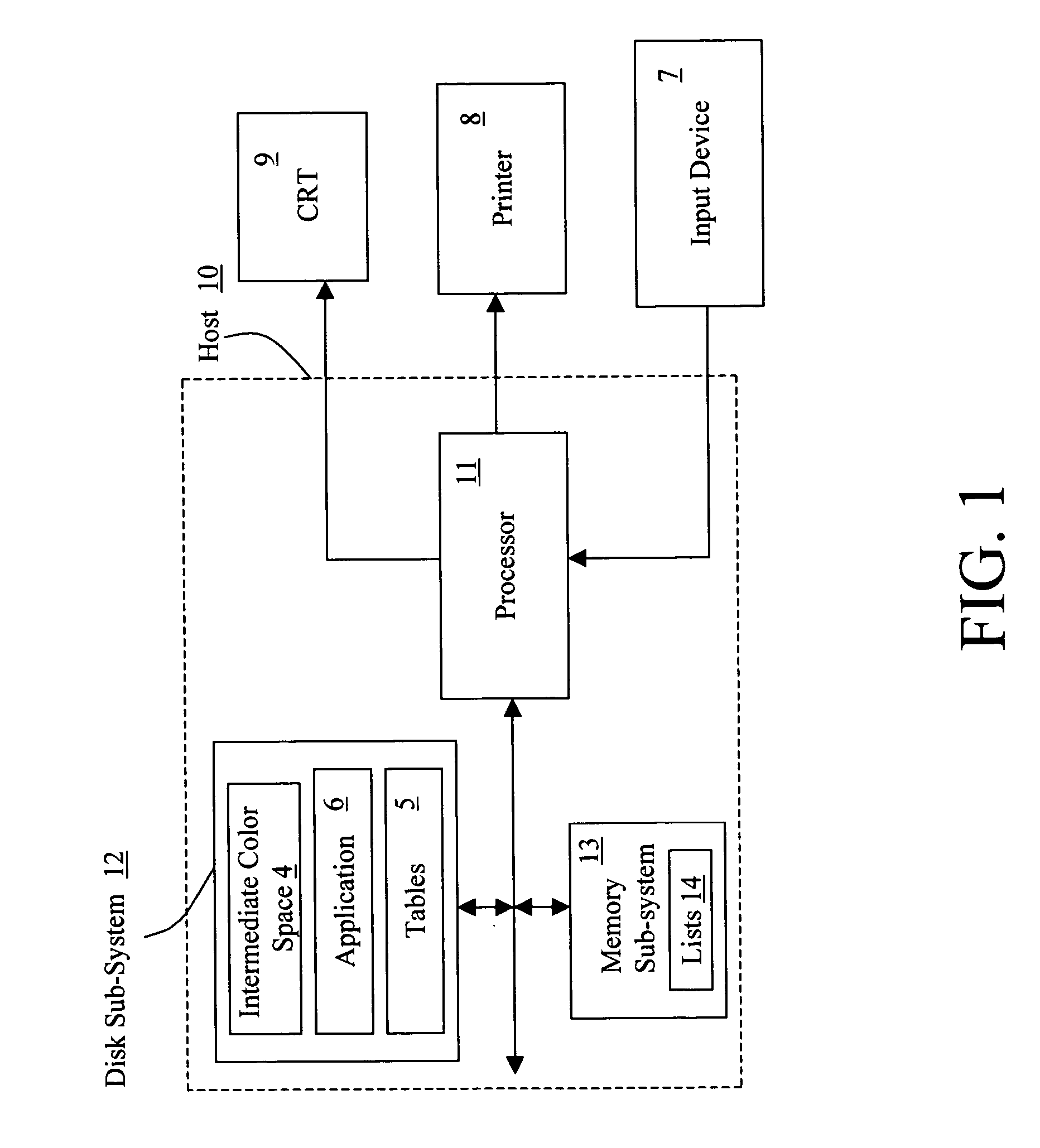

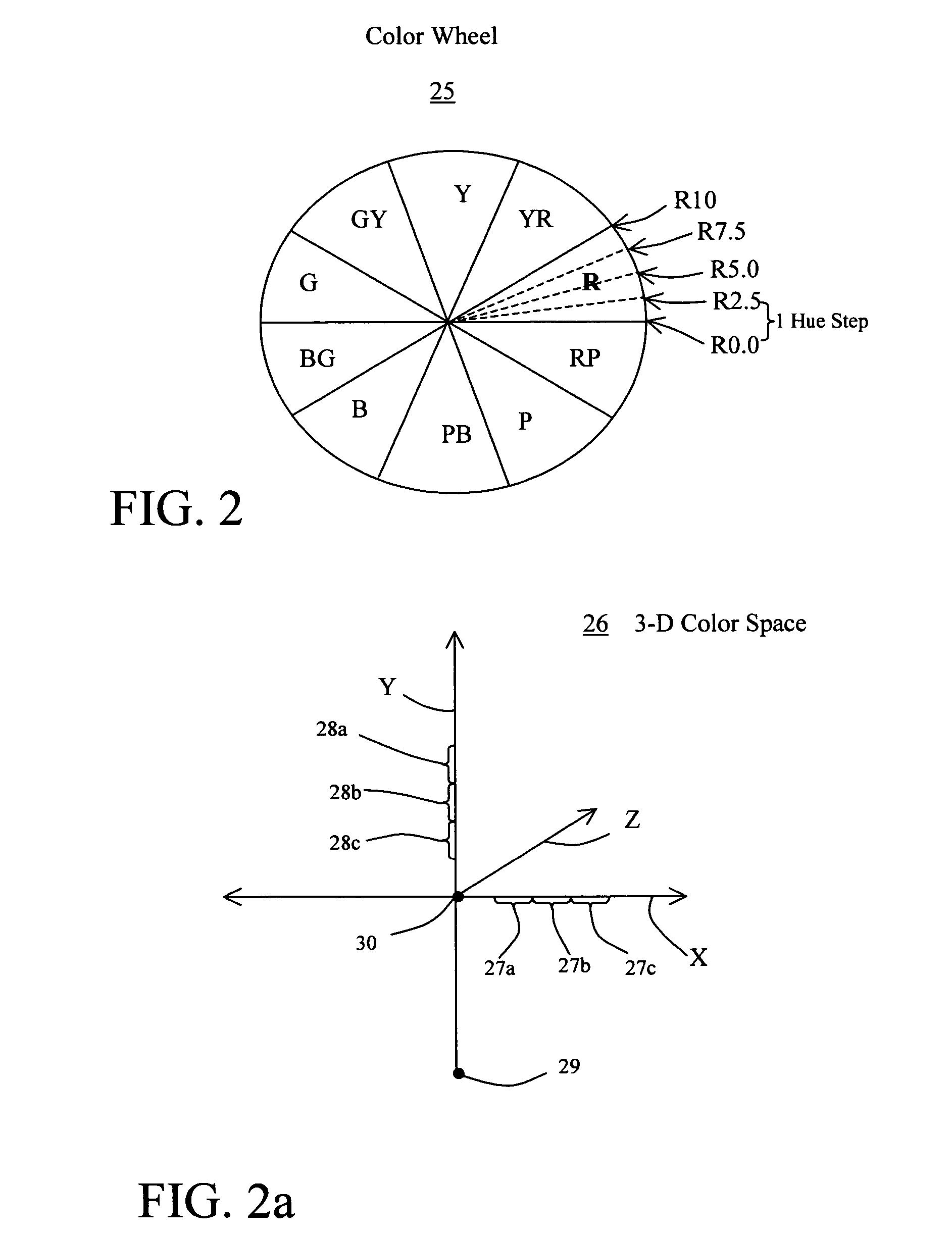

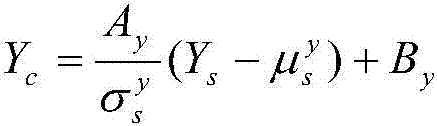

Color correction method with improved image translation accuracy

A color correction method utilizes a specially designed, perceptually ordered, intermediate color space and color value information from an original source device image and color value information from a repositioned source device image to quantify the accuracy of a color image translation process. The color correction method operates, under user control, to convert original source device image color values to intermediate space color values, where the color values can be analyzed to determine whether or not all of them are positioned within the gamut of a target device. If all of the original source device color values are not positioned within the gamut of the target device, the user can reposition these colors so that all or substantially all of the color values are contained within the gamut of the target device. After the color values are repositioned, an aggregate image closeness term and a proportion conservation term are calculated and the sum of these two terms is a scalar quantity equivalent to the accuracy of the image translation process.

Owner:MASTER COLORS

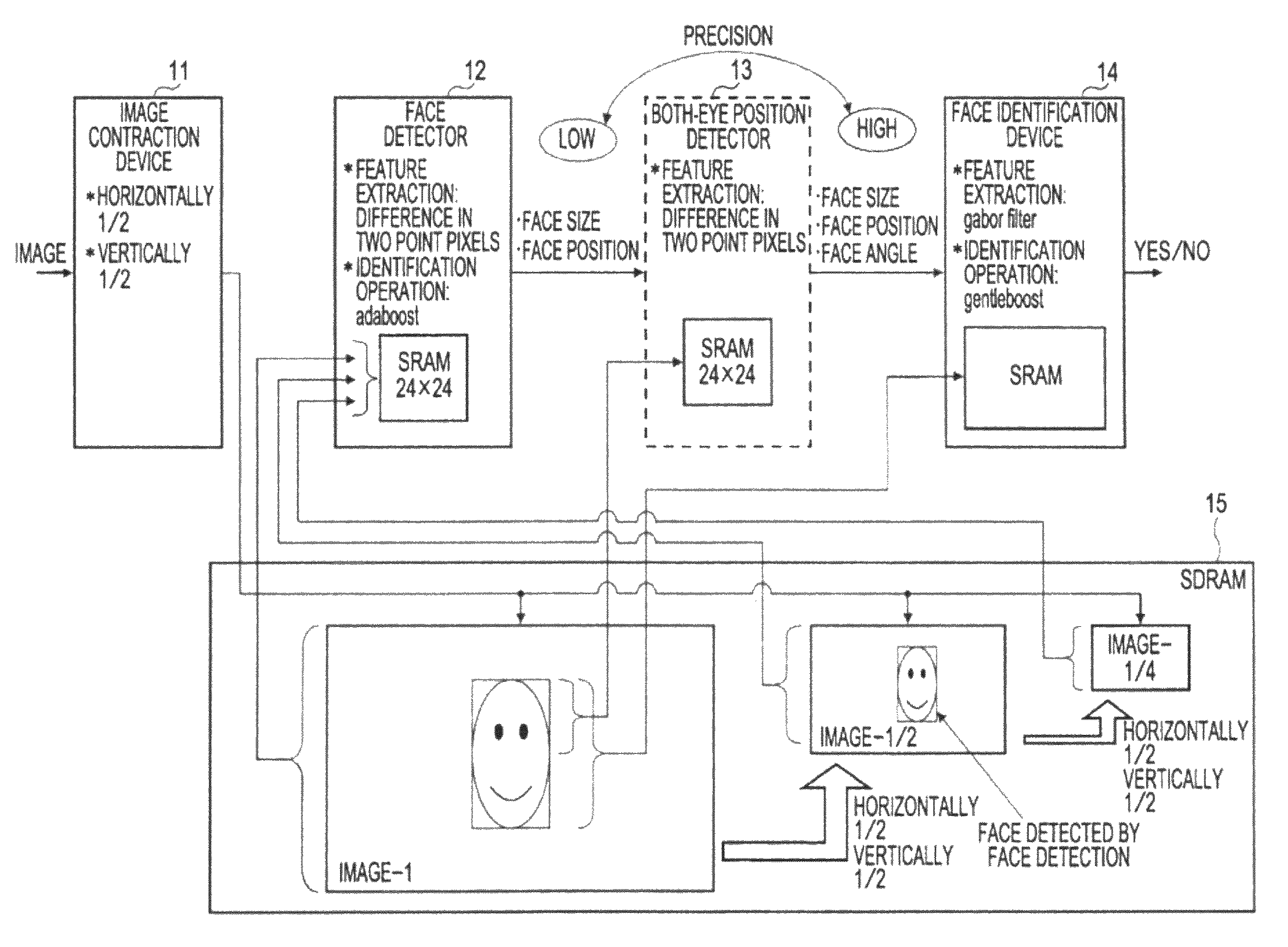

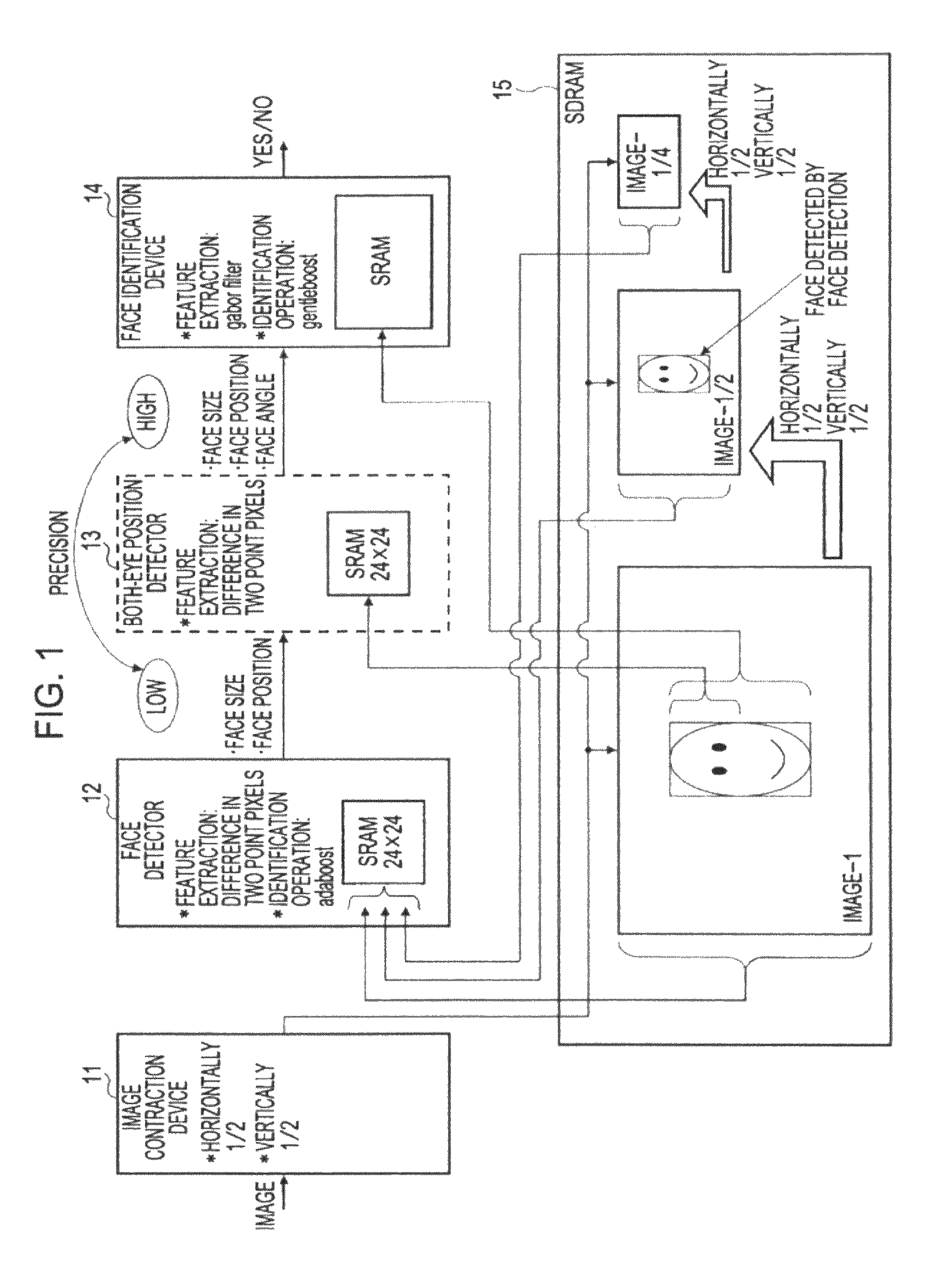

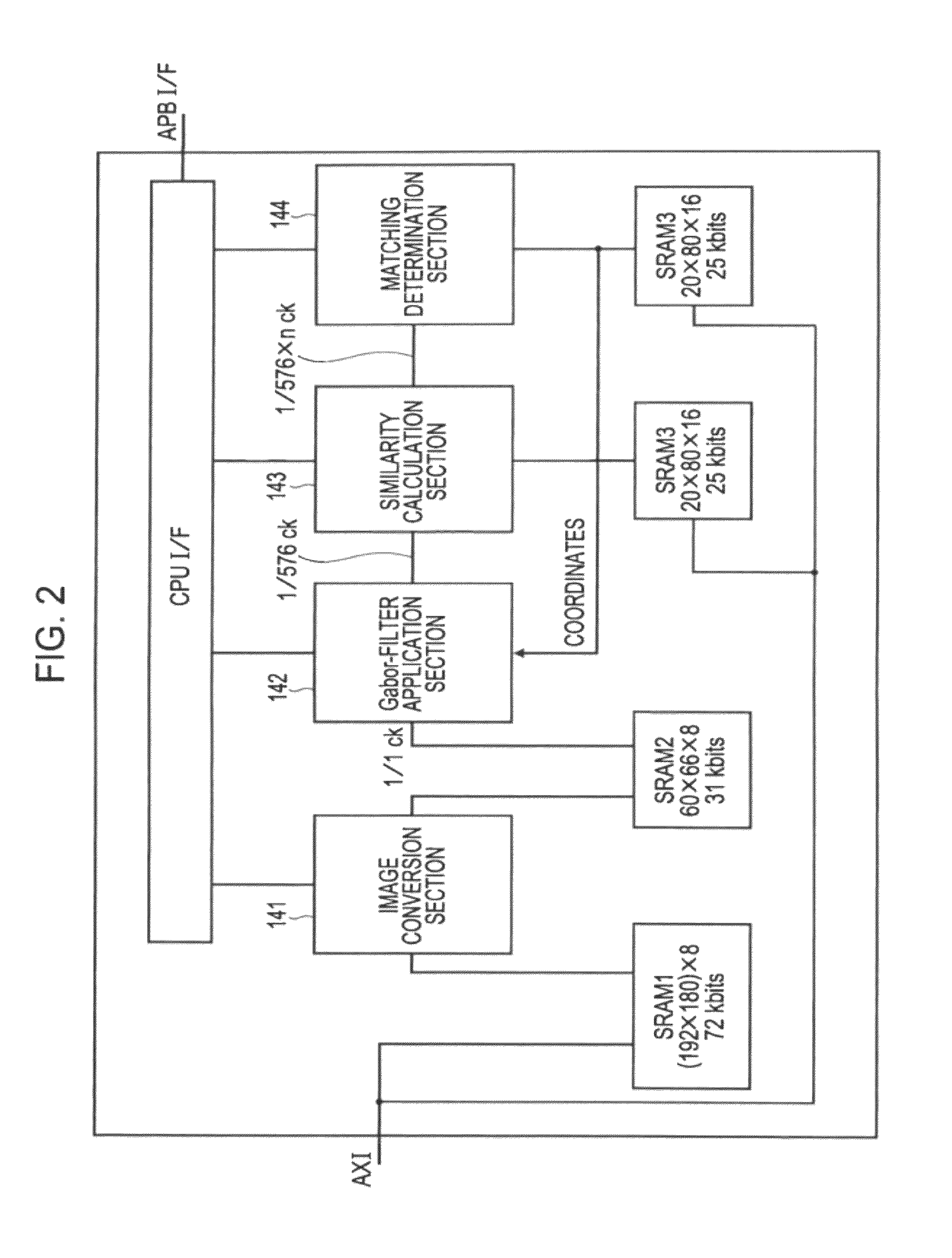

Image processing apparatus, image processing method and computer program

Inactive · US8155398B2 · Improve accuracy · Suppress error · Character and pattern recognition · Imaging processing · Computer graphics (images)

An image processing apparatus includes an image conversion section that receives an input of a face image to be identified, executes an image conversion on the input face image, and performs a normalization processing into an image. The image conversion section obtains a face image from a first memory storing the face image to be normalization processed, performs the normalization processing by an image conversion and stores the face image after the normalization processing into a second memory. The image processing apparatus includes a calculation section that calculates a conversion parameter for calculating a corresponding point in the first memory to each pixel position in the second memory. The conversion parameter defines one of an image contraction processing, an image rotation processing, or an image translation processing to be performed when the face image stored in the first memory is converted into the face image stored in the second memory.

Owner:SONY CORP

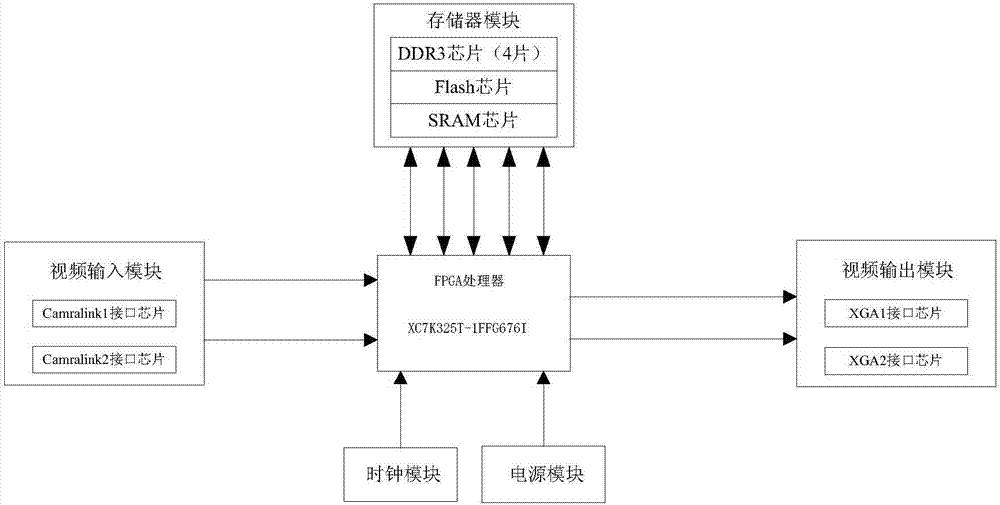

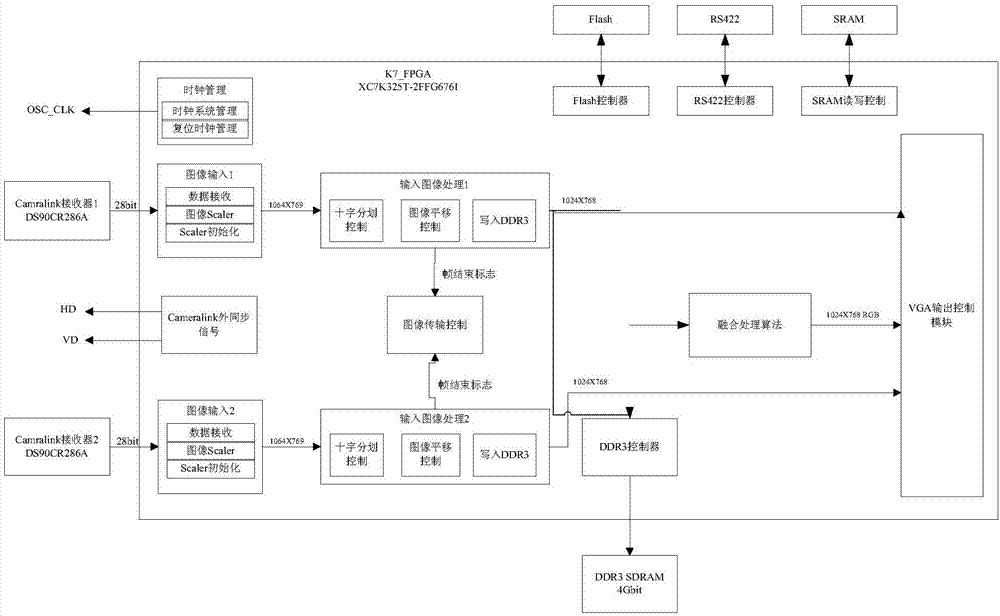

Fusion processing circuit of high-definition image

Inactive | CN107169950A | Achieve integration | High resolution | Image enhancement | Image analysis | High resolution image | Video image

The invention relates to a fusion processing circuit for high-definition images. The circuit comprises a core processor and, connected to it, a video input module, a video output module, a power supply module, a clock module and a memory module. The video input module comprises two channels of Camera Link interface chips and is used for decoding Camera Link data and transmitting the decoded data to the core processor. The core processor receives the real-time video image data and performs image scaling, character superposition, image translation, image storage and an image fusion algorithm on it to generate real-time video image data. The video output module outputs the real-time video image data generated by the core processor over VGA. The invention achieves fusion of high-resolution real-time digital images; compared with an analog video image fusion processing circuit, it processes data faster and in larger volume; and the resolution of the fused image is high.

Owner:江苏北方湖光光电有限公司

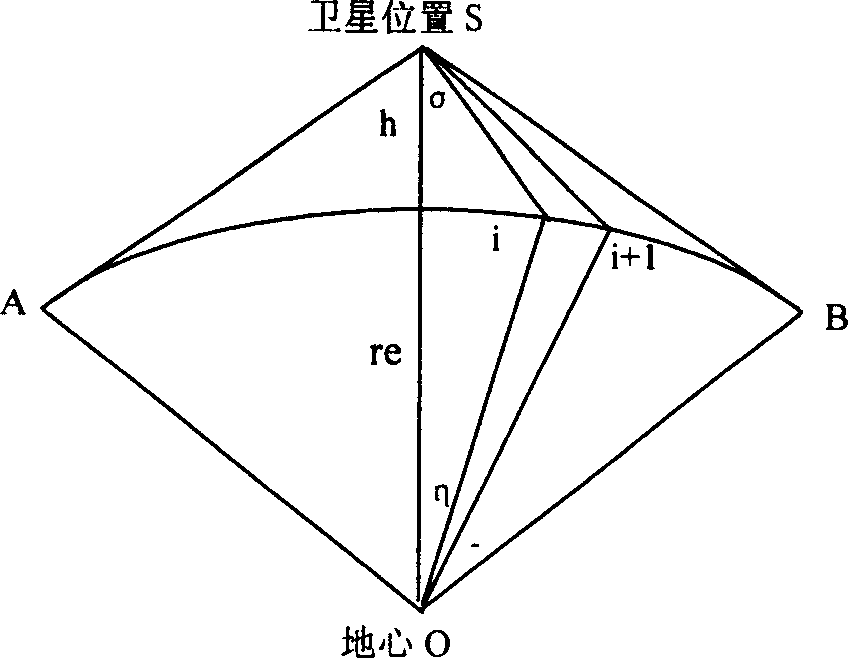

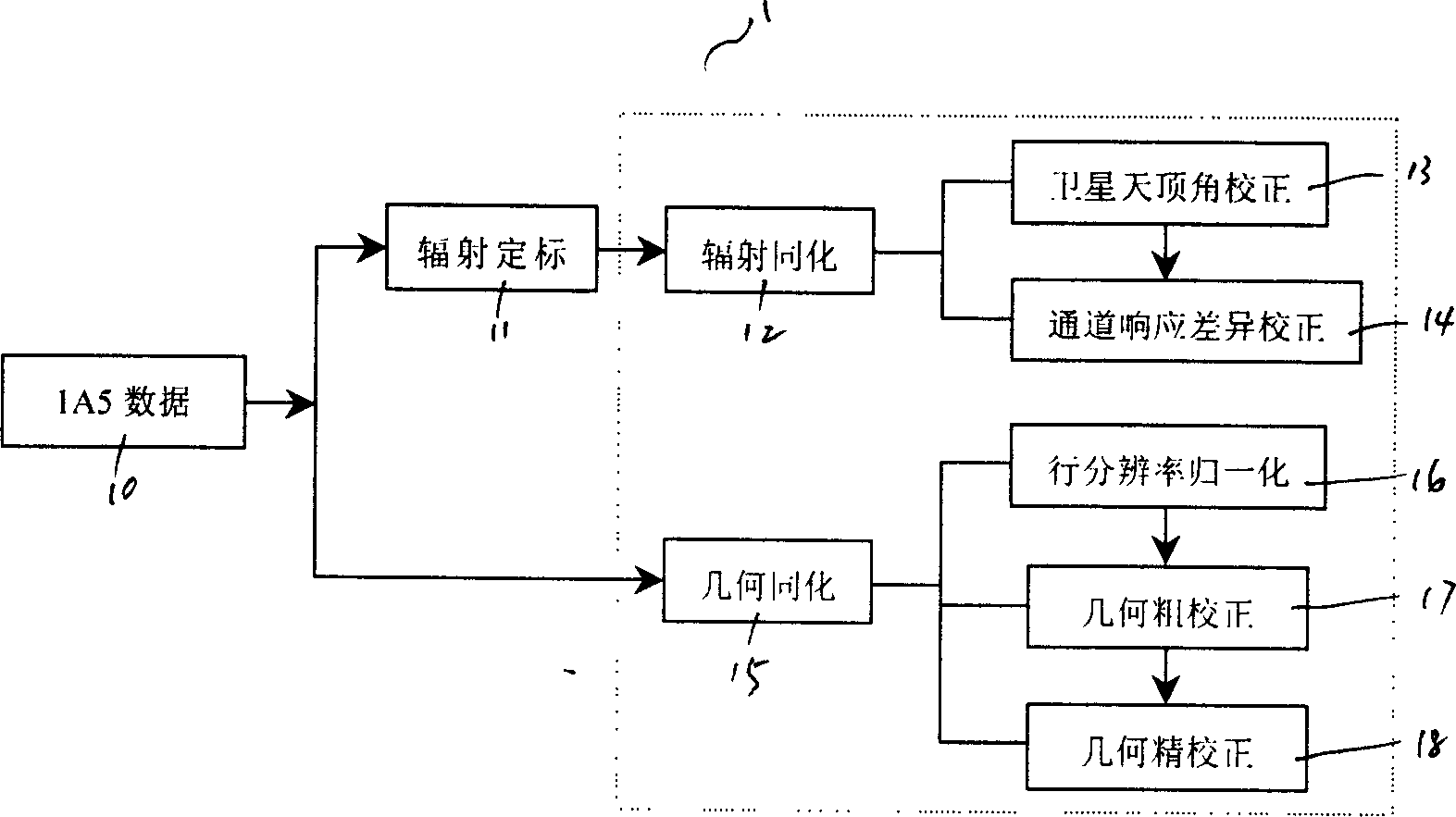

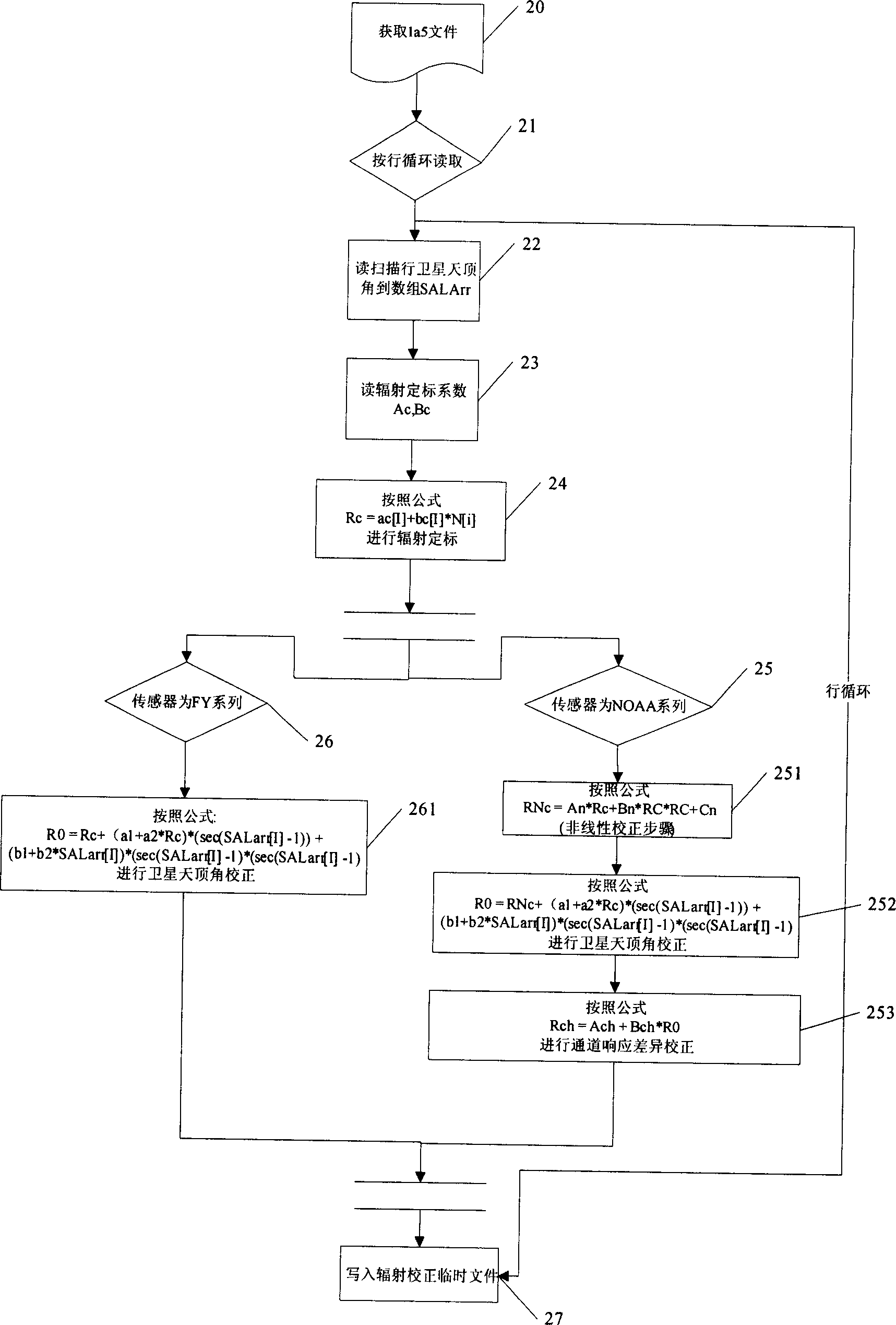

Automatic assimilation method for multi-source thermal infrared wave band data of polar-orbit meteorological satellite

Inactive | CN1790051A | Solve the assimilation preprocessing problem | Comparable | Electromagnetic wave reradiation | ICT adaptation | Natural satellite | Original data

The invention discloses an automatic assimilation method for multi-source thermal infrared band data from polar-orbit meteorological satellites, comprising a radiometric assimilation sub-process and a geometric assimilation sub-process. The raw data are radiometrically calibrated and then corrected for satellite zenith angle; radiometric assimilation is completed after the differences between corresponding channels are corrected. Geometric assimilation is accomplished through resolution normalization, projection transformation into a standard space, and image translation, with homologous (same-name) pixel points identified automatically through correlation matching. The method allows radiance values detected by different satellites to be compared directly without manual operation, improves the geometric positioning accuracy of multi-source data, and lays a foundation for processing and applying polar-orbit satellite remote sensing data.
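The abstract does not say how the matching step determines the image translation. One common way to estimate an integer shift between two images that already share resolution and projection is circular cross-correlation computed with the FFT. The sketch below assumes that approach; the function name `estimate_shift` and the synthetic test data are hypothetical.

```python
import numpy as np

def estimate_shift(reference, moving):
    """Return integer (dy, dx) such that
    np.roll(moving, (dy, dx), axis=(0, 1)) best matches `reference`
    under circular cross-correlation (an assumed matching criterion)."""
    f_ref = np.fft.fft2(reference - reference.mean())
    f_mov = np.fft.fft2(moving - moving.mean())
    # Correlation surface: peak position gives the circular shift.
    corr = np.fft.ifft2(f_ref * np.conj(f_mov)).real
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = corr.shape
    # Map peaks in the upper half of each axis to negative shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)

# Usage with synthetic data: displace a random image, then recover the shift.
rng = np.random.default_rng(0)
reference = rng.random((32, 32))
moving = np.roll(reference, (-3, 5), axis=(0, 1))
shift = estimate_shift(reference, moving)
aligned = np.roll(moving, shift, axis=(0, 1))
```

Real satellite swaths are not periodic, so a practical version would window or pad the images before correlating; the circular form is used here only to keep the example short.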

Owner:SHANGHAI INST OF TECHNICAL PHYSICS - CHINESE ACAD OF SCI

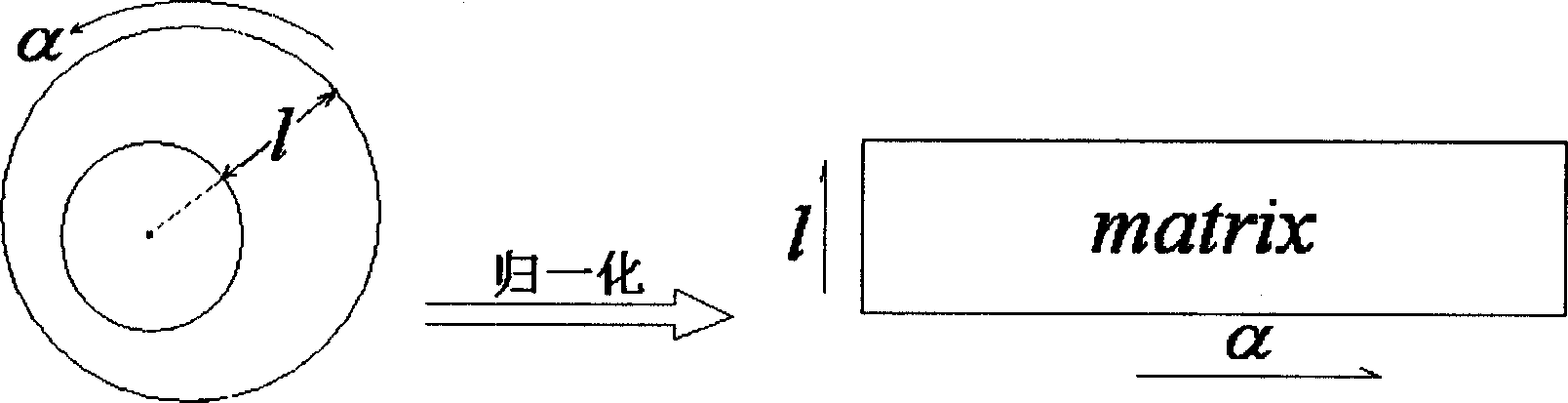

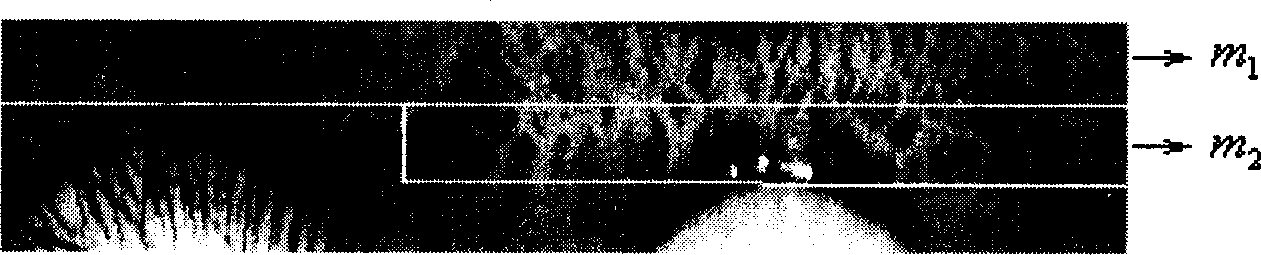

Iris recognition method based on wavelet transform and maximum detection

Inactive | CN1885313A | Increase contrast | Improve clarity | Character and pattern recognition | Size factor | Gaussian function

The invention relates to an iris recognition method comprising: normalizing the located iris texture into an image matrix m; cropping from m the sub-matrix M that contains the important texture features and applying histogram equalization to obtain the enhanced image matrix N; dividing matrix N into regions and calculating the average value of each; connecting the average values end to end to form a feature signal f(x); convolving f(x) with a wavelet given by the first-order derivative of a Gaussian function at scale factors S = 1, 2 to obtain vectors t1 and t2; connecting t1 and t2 end to end to form a vector v; performing local modulus maxima detection on v to obtain a binary vector V, the iris texture feature code used for matching and recognition; and comparing the Hamming distance between the feature codes V1 and V2 of two iris textures: if it is lower than a threshold T, the two iris textures match; otherwise they do not. The invention can overcome texture deformation, image translation and rotation caused by pupil dilation and contraction, while simplifying calculation and improving recognition speed.
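The encoding and matching steps can be sketched as follows, starting from a feature signal f(x) that is already available. Several details are assumptions not given in the abstract: the Gaussian width `sigma0`, the kernel truncation radius, the exact rule for a local maximum, and the use of a normalized Hamming distance (fraction of differing bits). The function names are hypothetical.

```python
import numpy as np

def gaussian_derivative(scale, sigma0=1.0):
    """First-order derivative of a Gaussian, sampled at integer positions.
    sigma0 and the 4-sigma truncation are illustrative assumptions."""
    sigma = sigma0 * scale
    radius = int(np.ceil(4 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    return -x / sigma**2 * np.exp(-x**2 / (2 * sigma**2))

def encode(signal, scales=(1, 2)):
    """Convolve the feature signal at each scale, concatenate the results,
    and mark local maxima of the magnitude with 1 (all other bits are 0).
    The signal should be longer than the largest kernel (17 samples here)."""
    parts = [np.convolve(signal, gaussian_derivative(s), mode="same")
             for s in scales]
    v = np.concatenate(parts)
    mag = np.abs(v)
    code = np.zeros(v.size, dtype=np.uint8)
    code[1:-1] = (mag[1:-1] > mag[:-2]) & (mag[1:-1] >= mag[2:])
    return code

def hamming_distance(a, b):
    """Fraction of positions at which two equal-length codes differ."""
    return np.count_nonzero(a != b) / a.size

def is_match(a, b, threshold):
    """Two codes match when their distance is below the threshold T."""
    return hamming_distance(a, b) < threshold

# Usage with a synthetic feature signal.
rng = np.random.default_rng(1)
f = rng.random(64)
V1 = encode(f)
V2 = encode(f)
same_iris = is_match(V1, V2, threshold=0.3)
```

The threshold value 0.3 is a placeholder; the abstract only states that a threshold T exists.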

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

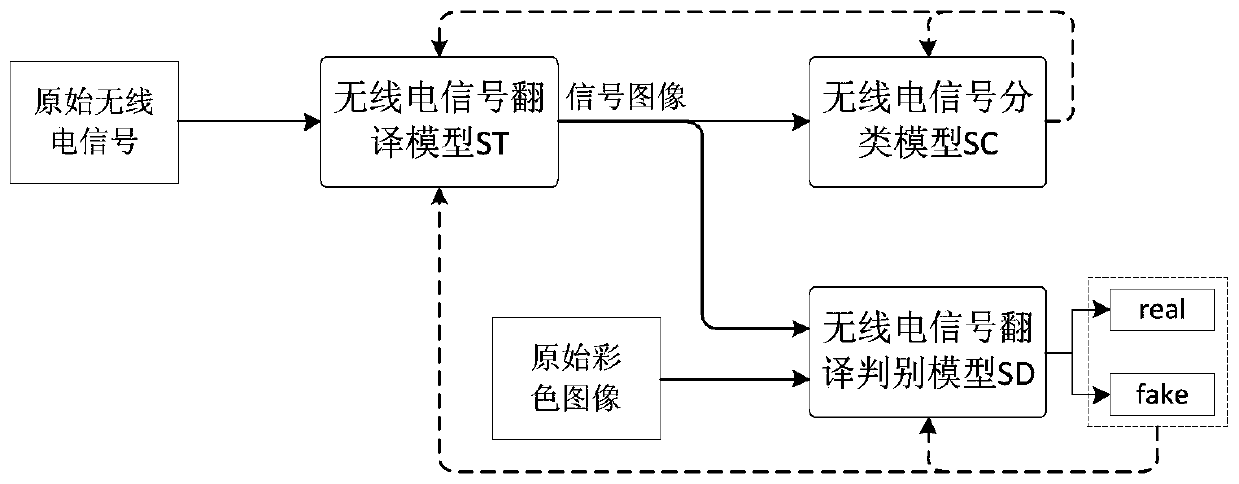

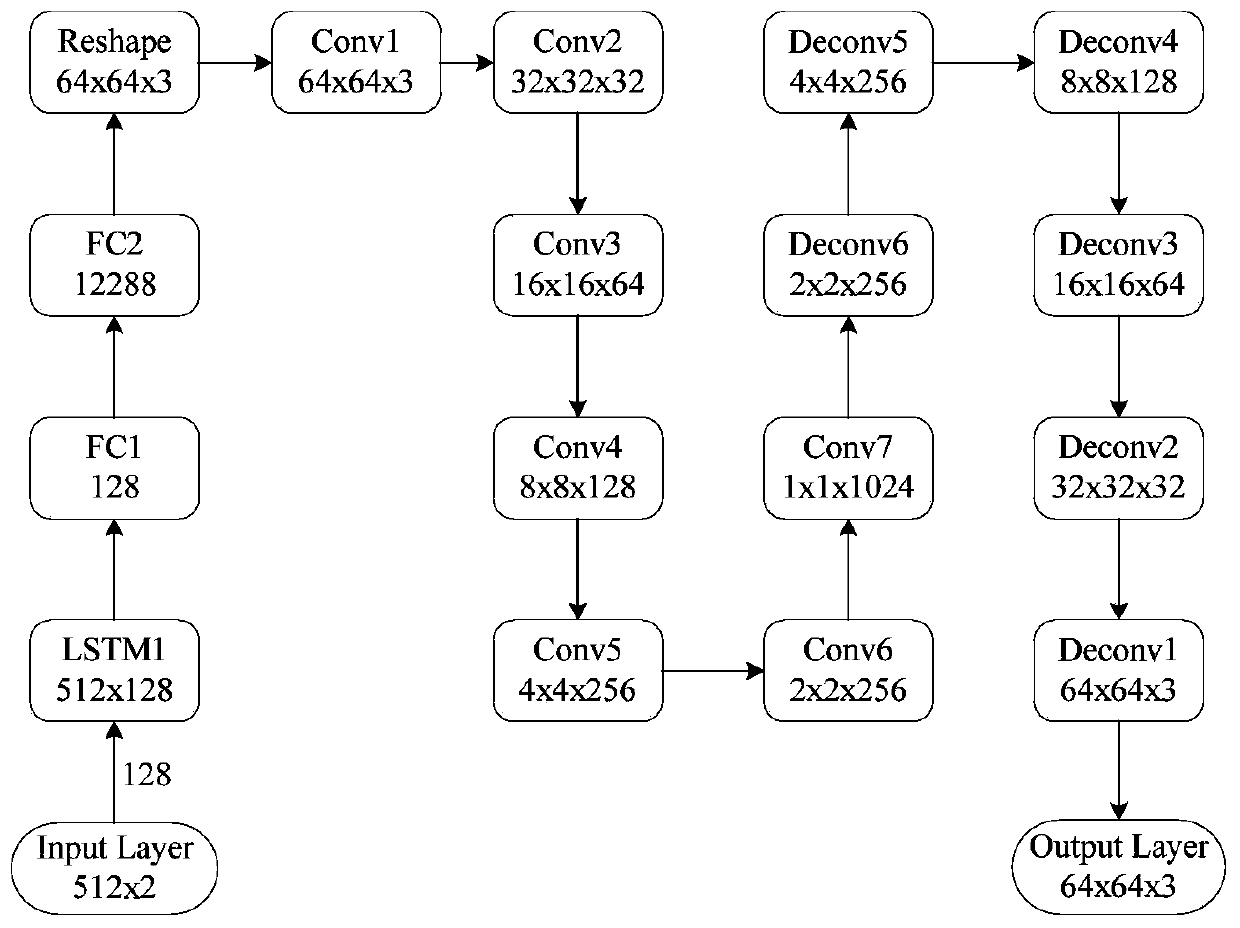

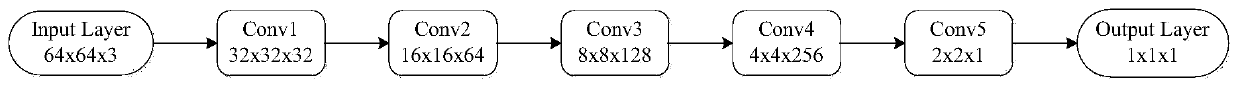

Signal-image translation method based on generative adversarial network

Active | CN109887047A | Concealment of information transmission | Information value intensive | Internal combustion piston engines | 2D-image generation | Color image | Information transmission

The invention discloses a signal-image translation method based on a generative adversarial network. The device implementing the method comprises a translation model ST, a discrimination model SD and a classification model SC. The method comprises the following steps: (1) pre-training the translation model and the classification model until the number of iterations reaches a set value; (2) inputting real color images and the signal images produced by the translation model into the discrimination model for adversarial training, and training the parameters of the discrimination model; (3) inputting the signal images produced by the translation model into the discrimination model for adversarial training, and training the parameters of the translation model; (4) jointly training the parameters of the translation model and the classification model; (5) repeating steps (2) to (4) until ST and SD reach Nash equilibrium or a preset number of training iterations is reached; and (6) inputting the radio signal to be translated into the translation model to obtain a translated signal image. The method enhances the diversity of translation results and ensures the security and concealment of information transmission.

Owner:ZHEJIANG UNIV OF TECH

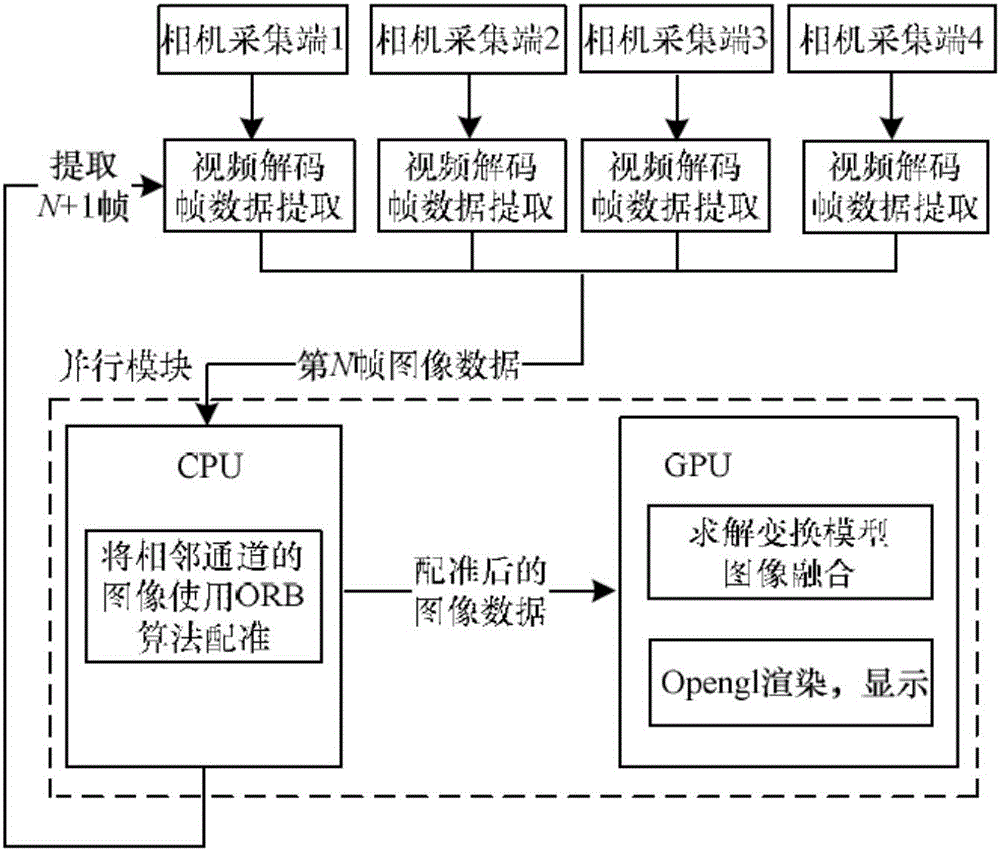

ORB algorithm based multipath rapid video splicing algorithm with image registering and image fusion in parallel

Inactive | CN106657816A | Improve computing efficiency | Small amount of calculation | Television system details | Color television details | Weighted average method | Image fusion

The invention relates to an ORB-based multi-channel rapid video stitching algorithm in which image registration and image fusion run in parallel, and belongs to the field of computer vision. The method comprises the steps of: S1) establishing an adaptive image registration model and adaptively calculating an optimal image translation parameter; S2) according to the optimal image translation parameter from S1), fusing the overlapping areas of two frames using a fade-in/fade-out weighted average method, so that the two target images are fused and stitched into a panoramic image and the target video is stitched; and S3) denoising the panoramic image obtained in S2) with a bilateral filter and outputting a clear, high-quality stitched image. The algorithm has high computational efficiency and low computational complexity, and satisfies real-time requirements.
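Step S2 can be illustrated with a minimal fade-in/fade-out blend for two grayscale frames of equal height. The sketch assumes that the translation parameter is a purely horizontal integer offset `dx` and that the weights fall linearly across the overlap; the abstract does not state either detail, and the function name `blend_horizontal` is hypothetical.

```python
import numpy as np

def blend_horizontal(left, right, dx):
    """Stitch two equal-height grayscale frames, where column 0 of `right`
    coincides with column `dx` of `left`. In the overlap the weight of
    `left` fades linearly from 1 to 0 while that of `right` rises from 0 to 1."""
    h, wl = left.shape
    wr = right.shape[1]
    overlap = wl - dx
    if dx < 0 or not 0 < overlap <= wr:
        raise ValueError("frames must overlap by at least one column")
    out = np.zeros((h, dx + wr), dtype=float)
    out[:, :dx] = left[:, :dx]            # region seen only by the left frame
    out[:, wl:] = right[:, overlap:]      # region seen only by the right frame
    w = np.linspace(1.0, 0.0, overlap)    # fade-out weights for the left frame
    out[:, dx:wl] = w * left[:, dx:] + (1.0 - w) * right[:, :overlap]
    return out

# Usage: two constant frames with a three-column overlap.
left = np.full((2, 6), 10.0)
right = np.full((2, 6), 20.0)
panorama = blend_horizontal(left, right, dx=3)
```

Because the weights change gradually, a brightness difference between the two frames turns into a smooth ramp instead of a visible seam.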

Owner:HUNAN VISION SPLEND PHOTOELECTRIC TECH
