3690 results about "Generative adversarial network" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

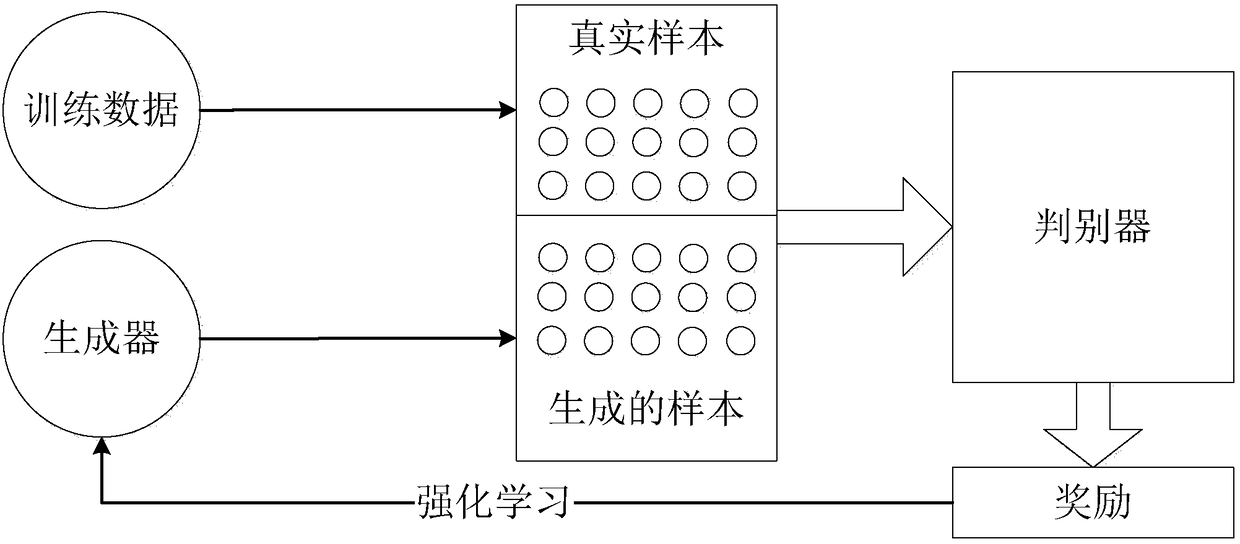

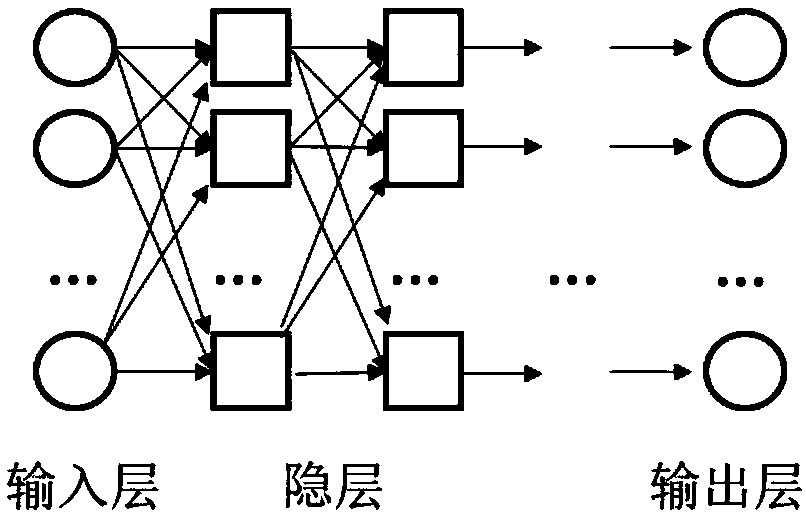

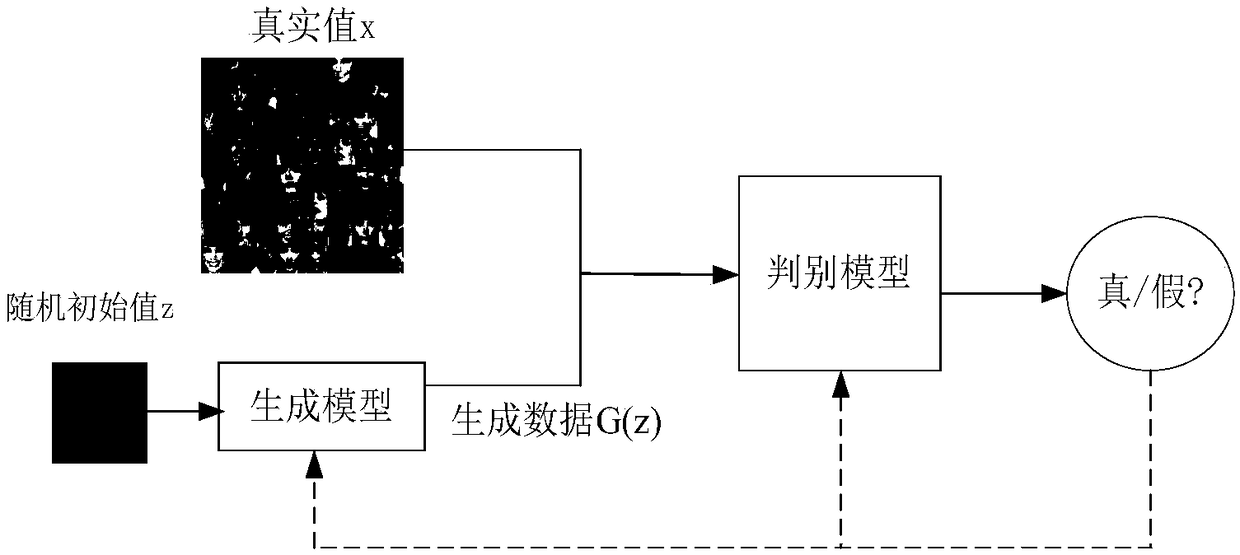

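A generative adversarial network (GAN) is a class of machine learning systems invented by Ian Goodfellow and his colleagues in 2014. Two neural networks, a generator and a discriminator, contest with each other in a game (in the sense of game theory, often but not always in the form of a zero-sum game): the generator produces candidate samples, and the discriminator tries to distinguish them from real data.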
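
The zero-sum game above is usually written as the minimax objective min over G, max over D of V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))], where x is real data and z is the generator's input noise. The following is a minimal Python sketch that estimates V from batches of discriminator outputs; the function names are illustrative, and the non-saturating generator loss is a common practical substitute rather than part of the objective itself.

```python
import math


def gan_value(d_real, d_fake):
    """Empirical estimate of the GAN value function V(D, G).

    d_real: discriminator probabilities D(x) on real samples.
    d_fake: discriminator probabilities D(G(z)) on generated samples.
    D tries to maximize this value; G tries to minimize it.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real, d_fake):
    # Minimizing the negated value is equivalent to D maximizing V.
    return -gan_value(d_real, d_fake)


def generator_loss_nonsaturating(d_fake):
    # Non-saturating variant: G maximizes log D(G(z)) instead of
    # minimizing log(1 - D(G(z))), which gives stronger early gradients.
    return -sum(math.log(p) for p in d_fake) / len(d_fake)
```

A discriminator that outputs 0.5 everywhere gives V = -2 log 2, the value at the game's theoretical equilibrium.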

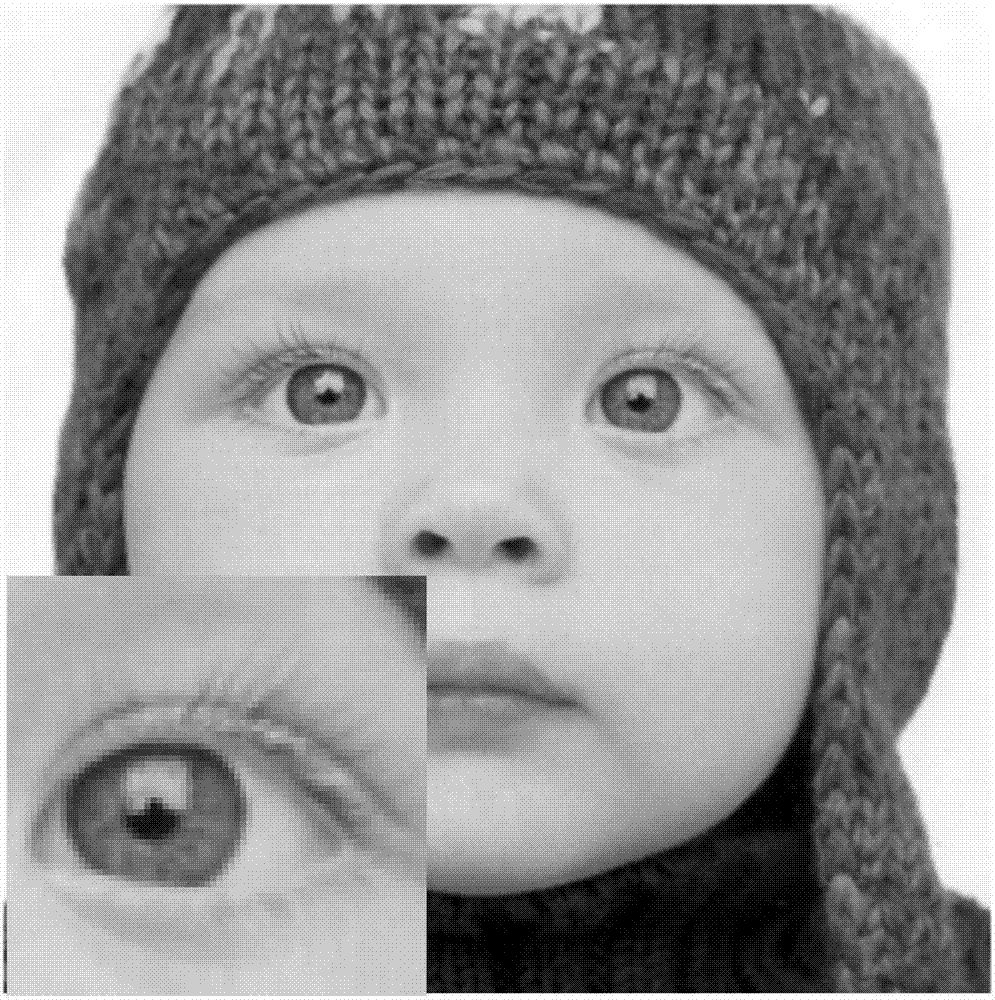

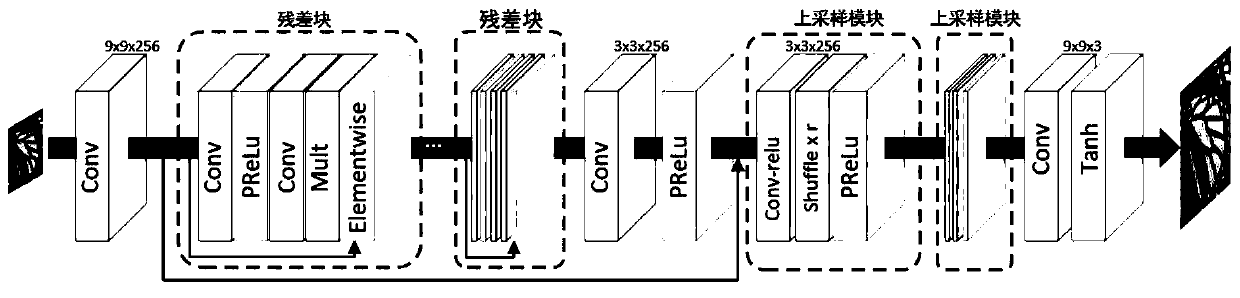

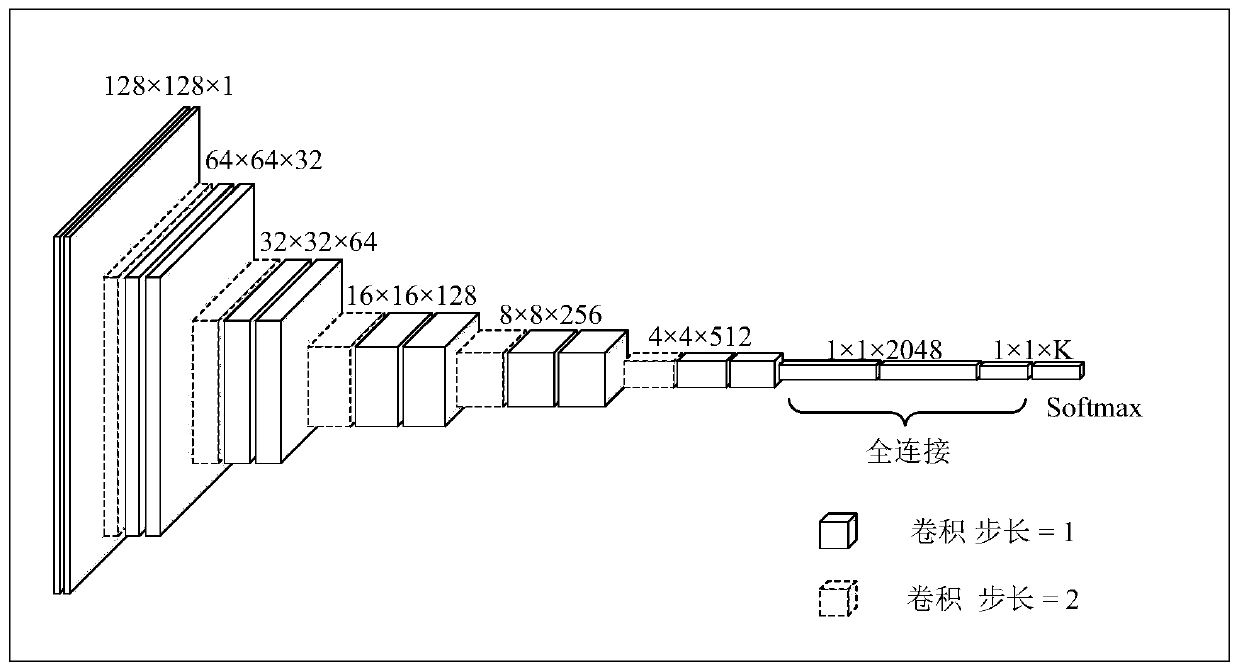

Human face super-resolution reconstruction method based on generative adversarial network and sub-pixel convolution

Active · CN107154023A · Improve recognition accuracy · Clear outline of the face · Geometric image transformation · Neural architectures · Data set · Image resolution

The invention discloses a human face super-resolution reconstruction method based on a generative adversarial network and sub-pixel convolution. The method comprises the following steps: A, preprocessing a commonly used public face data set to build a training set of low-resolution face images and their corresponding high-resolution images; B, constructing a generative adversarial network for training, adding sub-pixel convolution to the network to generate super-resolution images, and introducing a weighted loss function that includes a feature loss; C, feeding the training set from step A into the generative adversarial network model for training and adjusting the parameters until convergence; D, preprocessing a low-resolution face image to be processed, inputting it into the trained model, and obtaining a high-resolution image after super-resolution reconstruction. The method generates high-resolution images with clearer face contours, more specific details, and preserved facial features. It improves face recognition accuracy and gives better face super-resolution reconstruction results.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
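
Sub-pixel convolution, as used in step B above, typically pairs an ordinary convolution that outputs C·r² channels with a "pixel shuffle" that rearranges those channels into an image r times larger in each spatial dimension. A minimal pure-Python sketch of the rearrangement follows; the function name and nested-list layout are assumptions for illustration, and the patent abstract does not specify its exact channel ordering.

```python
def pixel_shuffle(x, r):
    """Rearrange a (C*r*r, H, W) feature map into (C, H*r, W*r).

    x is a nested list indexed as x[channel][row][col]. The channel
    ordering follows the common convention (also used by PyTorch's
    PixelShuffle): input channel c*r*r + i*r + j supplies output pixel
    (row*r + i, col*r + j) of output channel c.
    """
    in_channels = len(x)
    if in_channels % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c_out = in_channels // (r * r)
    h, w = len(x[0]), len(x[0][0])
    out = [[[0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):
            for j in range(r):
                src = x[c * r * r + i * r + j]
                for row in range(h):
                    for col in range(w):
                        out[c][row * r + i][col * r + j] = src[row][col]
    return out
```

Because the shuffle itself has no weights, the learnable upsampling lives entirely in the preceding convolution, which runs at low resolution and is therefore comparatively cheap.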

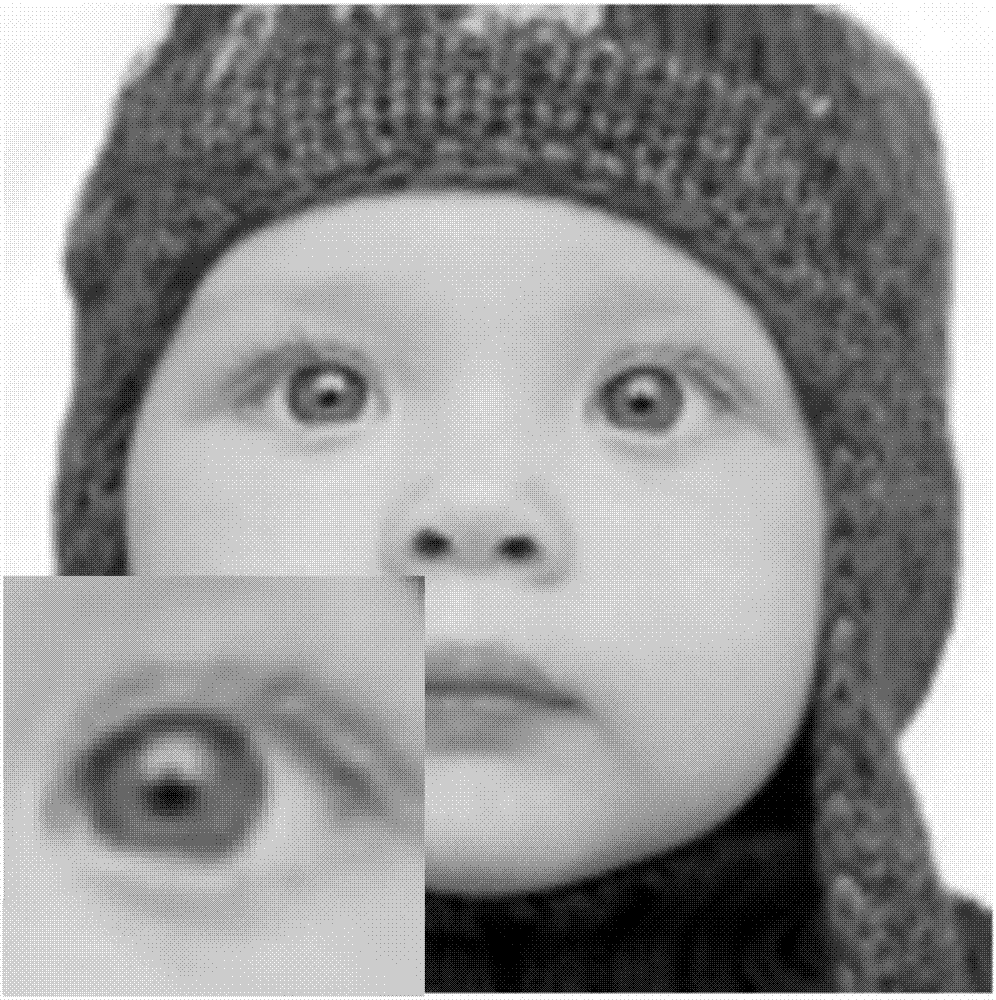

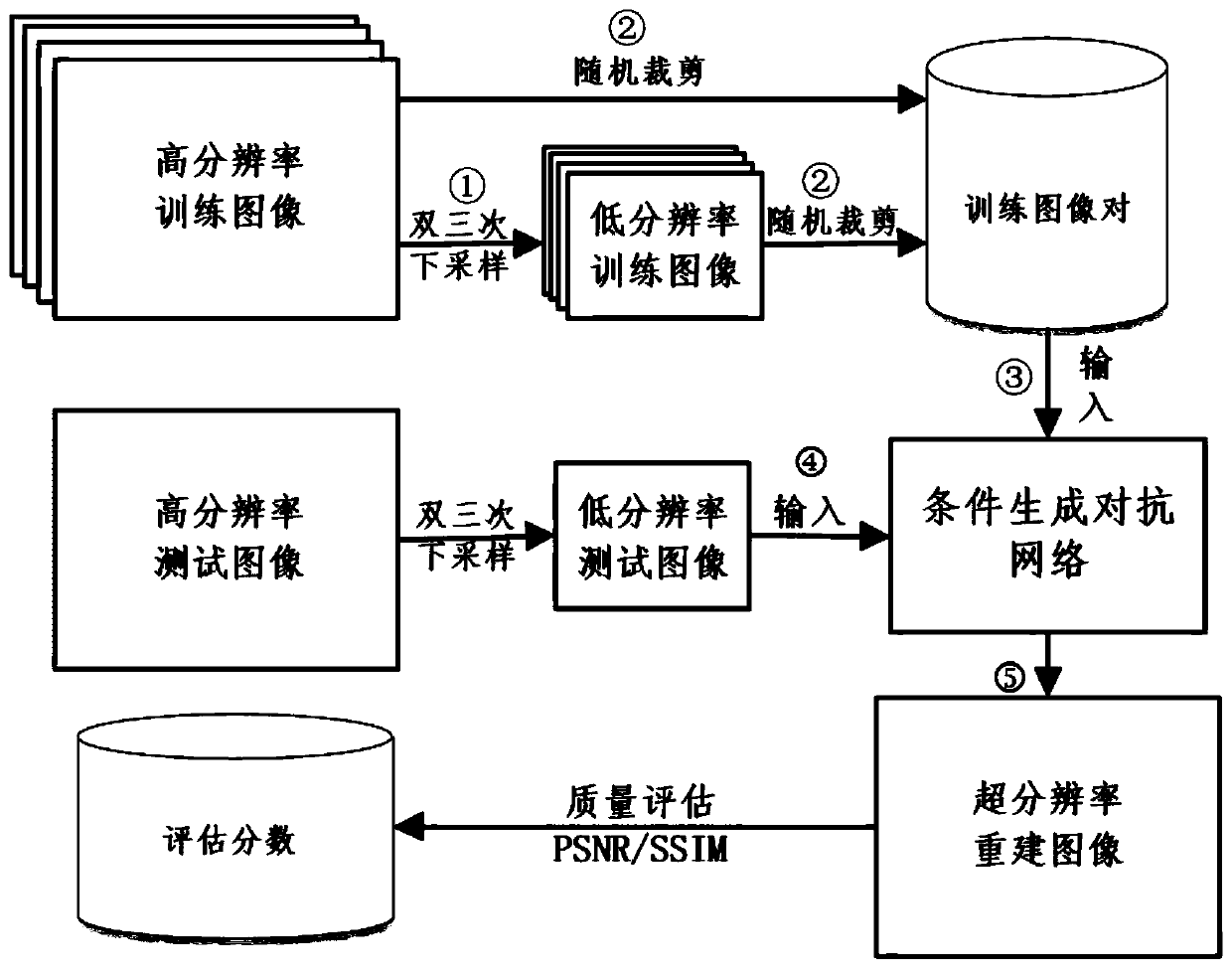

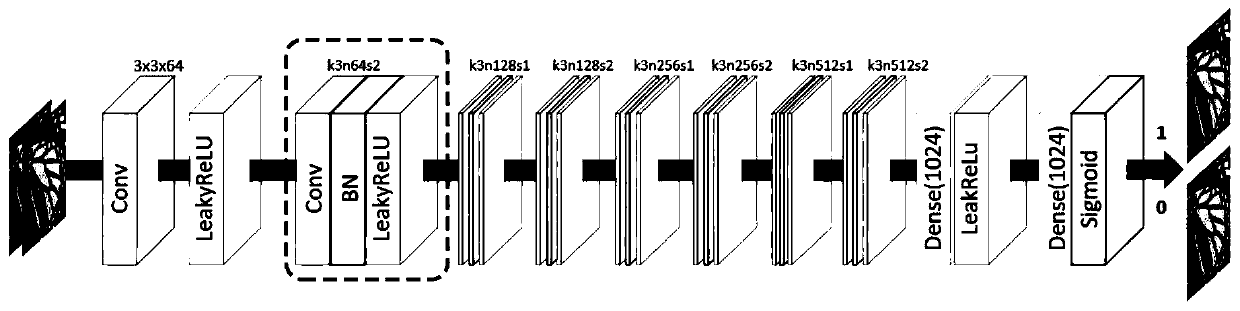

Single image super-resolution reconstruction method based on conditional generative adversarial network

Active · CN110136063A · Improve discrimination accuracy · Improve performance · Geometric image transformation · Neural architectures · Generative adversarial network · Reconstruction method

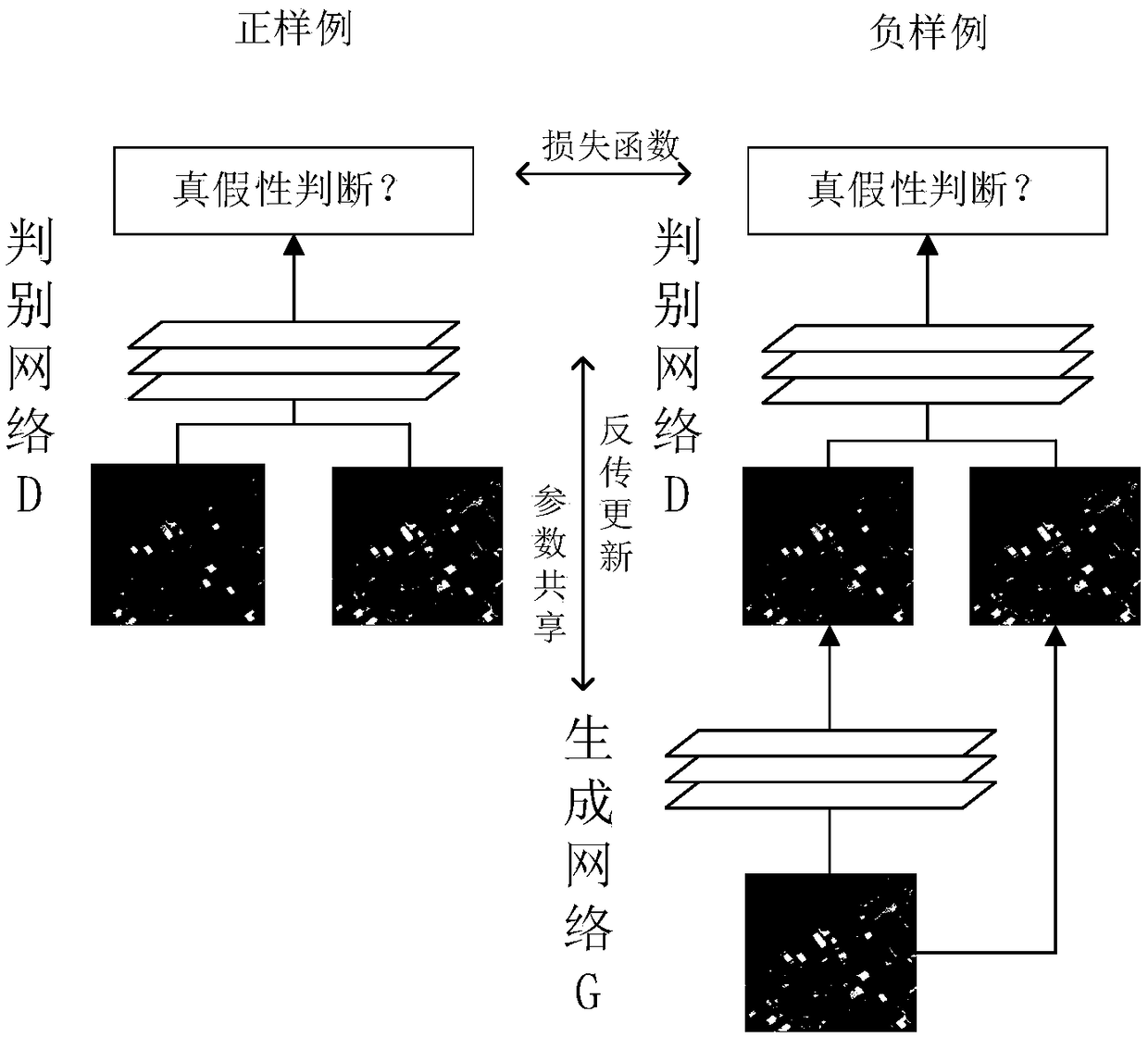

The invention discloses a single image super-resolution reconstruction method based on a conditional generative adversarial network. A judgment condition, namely the original real image, is added to the discriminator network of the generative adversarial network, and a deep residual learning module is added to the generator network to learn high-frequency information and alleviate the vanishing-gradient problem. A single low-resolution image to be reconstructed is input into the pre-trained conditional generative adversarial network, and super-resolution reconstruction yields a high-resolution image. Training the conditional generative adversarial network model comprises: inputting the high-resolution and low-resolution training sets into the model; using pre-trained model parameters as the initial parameters for training; judging convergence of the whole network through a loss function; and, once the loss function has converged, taking the resulting model as the final trained conditional generative adversarial network and storing its parameters.

Owner:NANJING UNIV OF INFORMATION SCI & TECH

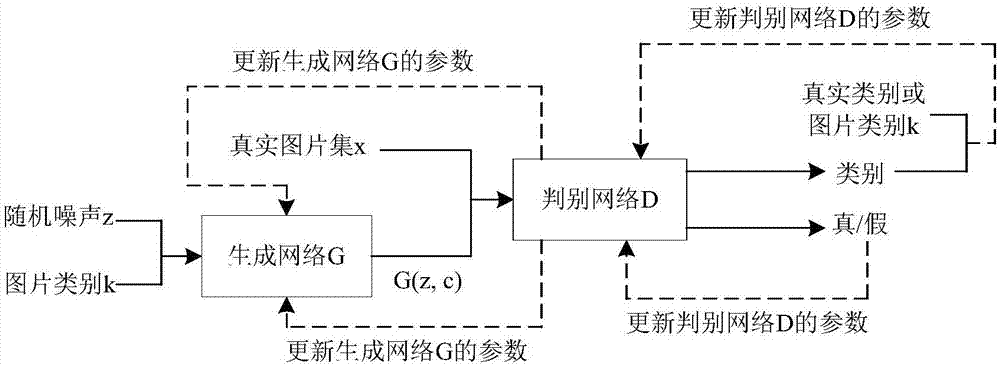

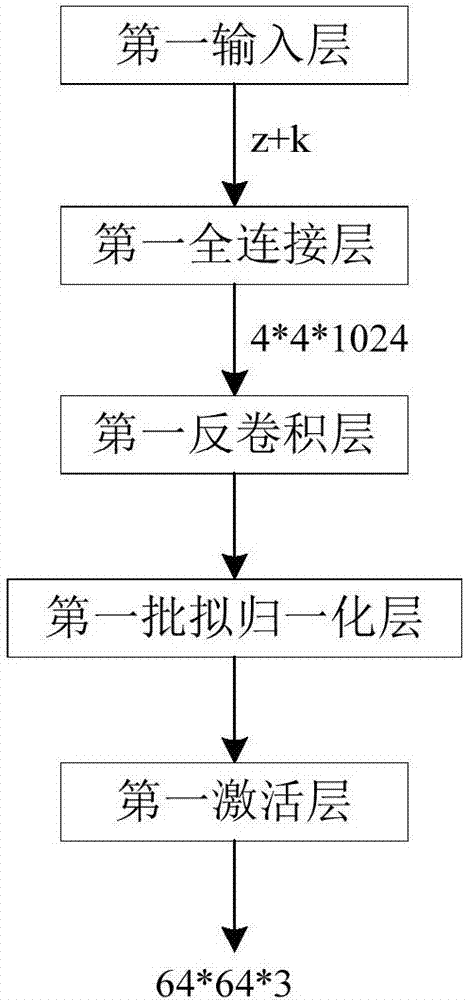

Picture generation method based on depth learning and generative adversarial network

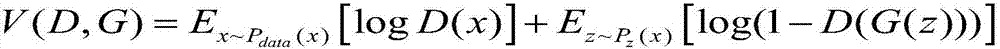

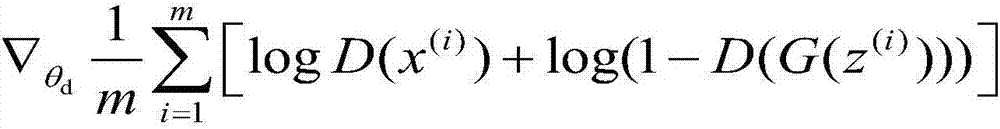

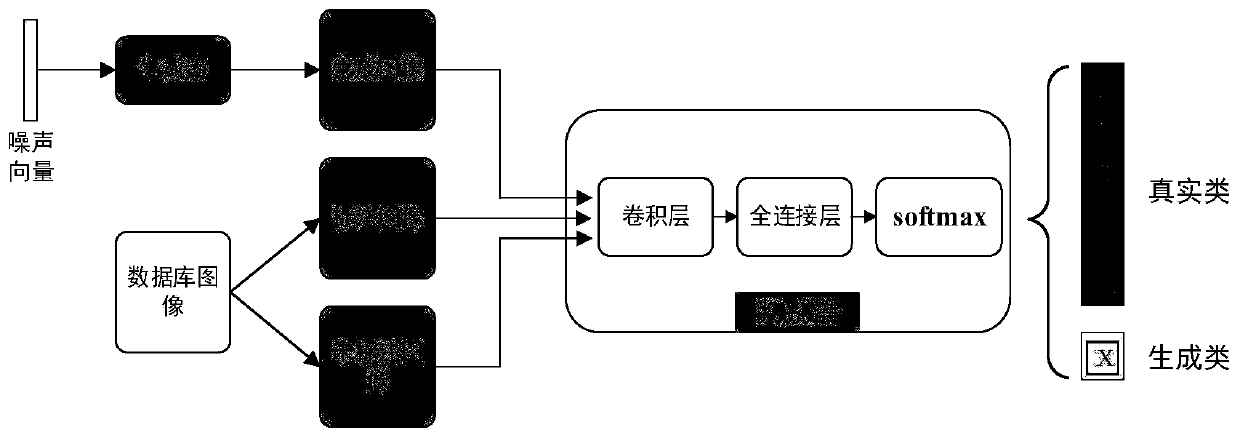

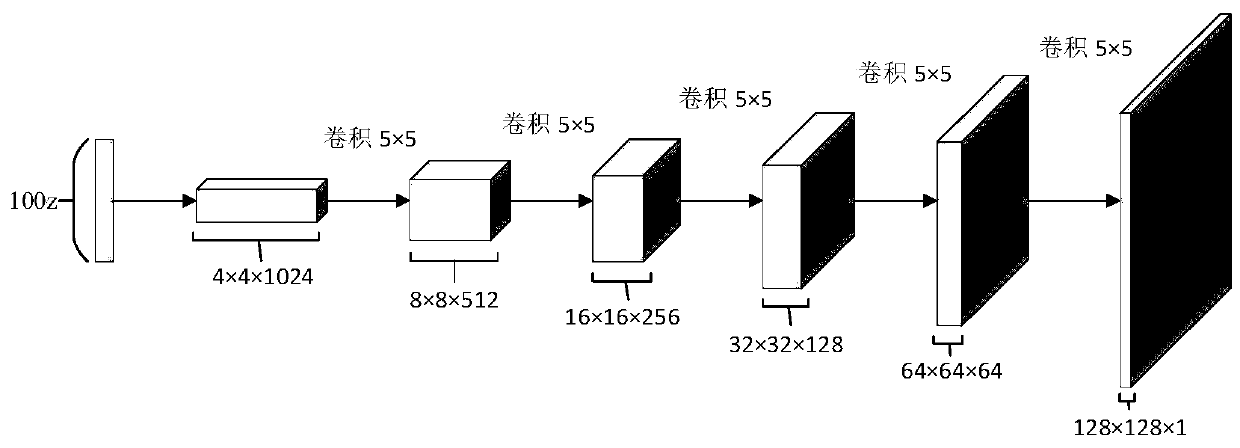

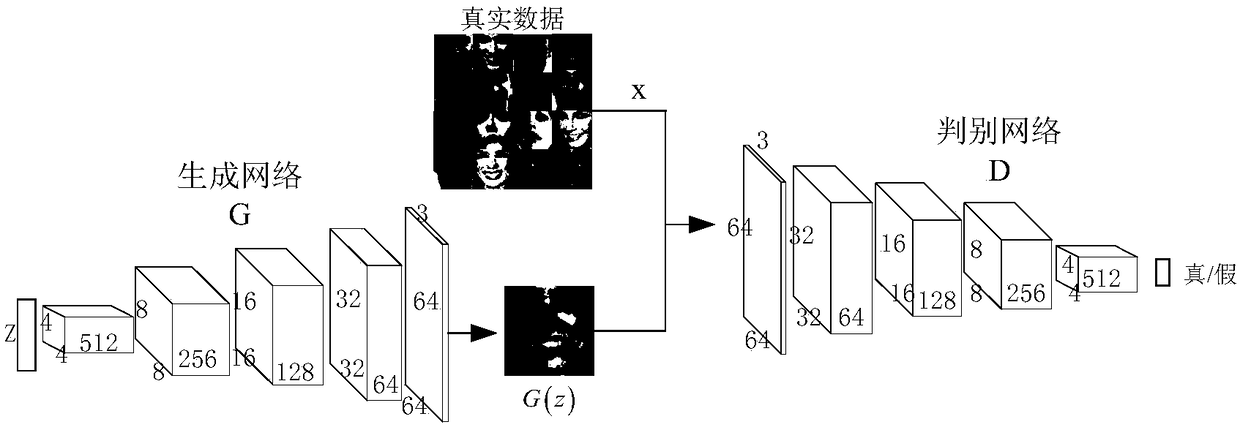

The invention discloses a picture generation method based on deep learning and a generative adversarial network. The method comprises the following steps: (1) establishing a picture database by collecting multiple real pictures and classifying and labeling them, so that each picture has a unique class label k; (2) constructing the generation network G, which takes as input a vector combining a random noise signal z and the class label k, and whose generated data serve as input to the discrimination network D; (3) constructing the discrimination network D, whose loss function comprises a first loss for distinguishing real from fake pictures and a second loss for determining the picture class; (4) training the generation network; (5) generating the required pictures by inputting a random noise signal z and a class label k into the generation network G trained in step (4), which yields pictures of the designated class. The advantage of the method is that it not only generates pictures but can also generate pictures of a designated class.

Owner:SHENZHEN GRADUATE SCHOOL TSINGHUA UNIV
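
Steps (2) and (3) describe a class-conditional GAN whose discriminator also predicts the class, similar in spirit to the auxiliary-classifier GAN (AC-GAN) formulation. The sketch below, in plain Python with hypothetical helper names, shows one way to build the generator input from z and k and to combine the two discriminator losses; the one-hot encoding of k and the equal weighting of the two loss terms are assumptions, not details from the abstract.

```python
import math


def one_hot(k, num_classes):
    vec = [0.0] * num_classes
    vec[k] = 1.0
    return vec


def generator_input(z, k, num_classes):
    """Concatenate a noise vector z with a one-hot encoding of class label k."""
    return list(z) + one_hot(k, num_classes)


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def discriminator_loss(p_real, is_real, class_logits, label):
    """First loss: binary cross-entropy on real vs. fake.
    Second loss: cross-entropy on the predicted picture class."""
    eps = 1e-12
    target = 1.0 if is_real else 0.0
    source_loss = -(target * math.log(p_real + eps)
                    + (1.0 - target) * math.log(1.0 - p_real + eps))
    class_loss = -math.log(softmax(class_logits)[label] + eps)
    return source_loss + class_loss
```

At generation time (step 5), the same `generator_input` call with a chosen k selects the class of the output picture.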

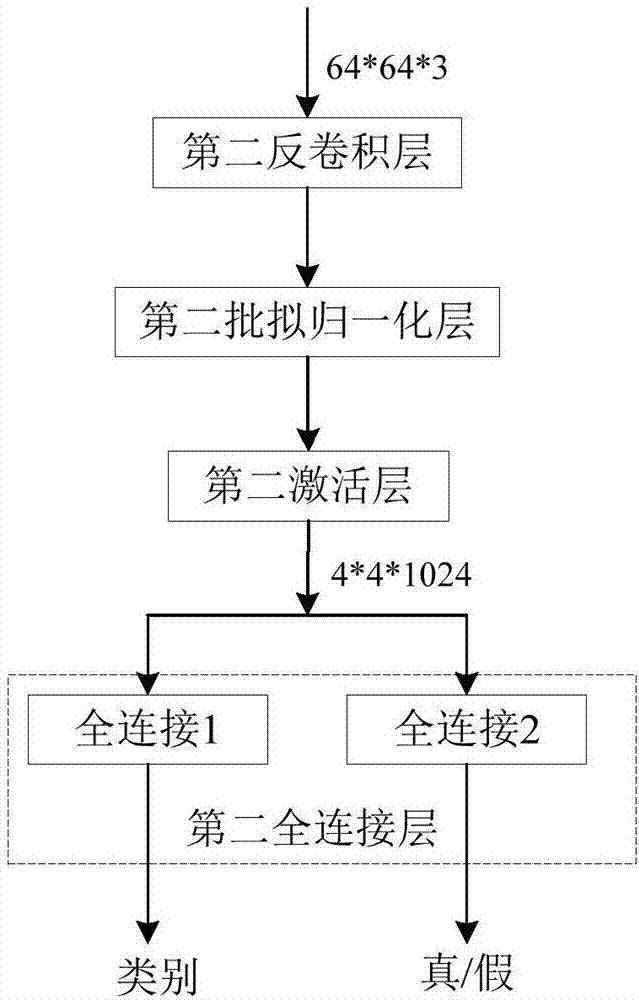

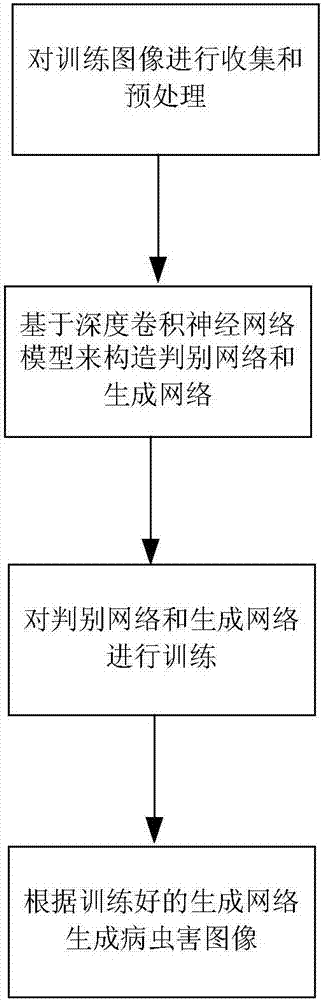

Pest and disease image generation method based on generative adversarial network

Inactive · CN107016406A · Addresses the scarcity and high acquisition cost of samples · Character and pattern recognition · Neural architectures · Disease · Generative adversarial network

The invention relates to a pest and disease image generation method based on a generative adversarial network, which overcomes the shortage of pest and disease sample images in the prior art. The method comprises the following steps: collecting and preprocessing training images; constructing a discrimination network and a generation network based on a deep convolutional neural network model; training the discrimination network and the generation network; and generating pest and disease images with the trained generation network. Starting from a small number of existing pest and disease images, the invention generates a large number of realistic pest and disease images, providing sample images for pest and disease image recognition and addressing the scarcity and high acquisition cost of such images in the field.

Owner:HEFEI INSTITUTES OF PHYSICAL SCIENCE - CHINESE ACAD OF SCI

Deep learning adversarial attack defense method based on generative adversarial network

Active · CN108322349A · Improve the ability to defend against different types of adversarial samples · Improve defense · Character and pattern recognition · Machine learning · Generative adversarial network · G-network

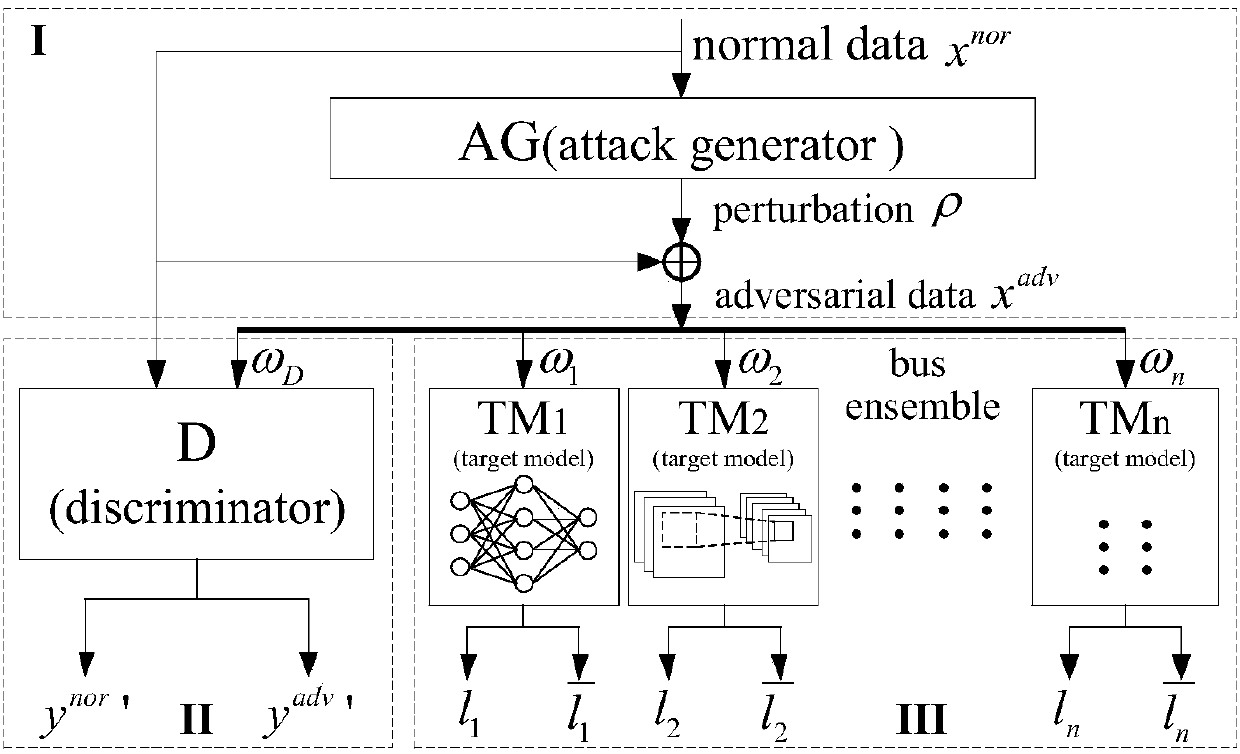

The invention provides a deep learning adversarial attack defense method based on a generative adversarial network. The method comprises the following steps: step 1), exploiting the strong performance of generative adversarial networks in learning sample distributions, designing a method for generating adversarial examples with a generative adversarial network; after a set of target model networks TMi is added, sample generation by the G network becomes a multi-objective optimization problem. Training the AG-GAN model mainly consists of training the parameters of the generative network G and the discrimination network D, and is divided into three modules. Step 2), using the adversarial examples generated by the AG-GAN to train the attacked deep learning model, so as to improve its ability to defend against different types of adversarial examples. The method effectively improves the security of deep learning models.

Owner:ZHEJIANG UNIV OF TECH

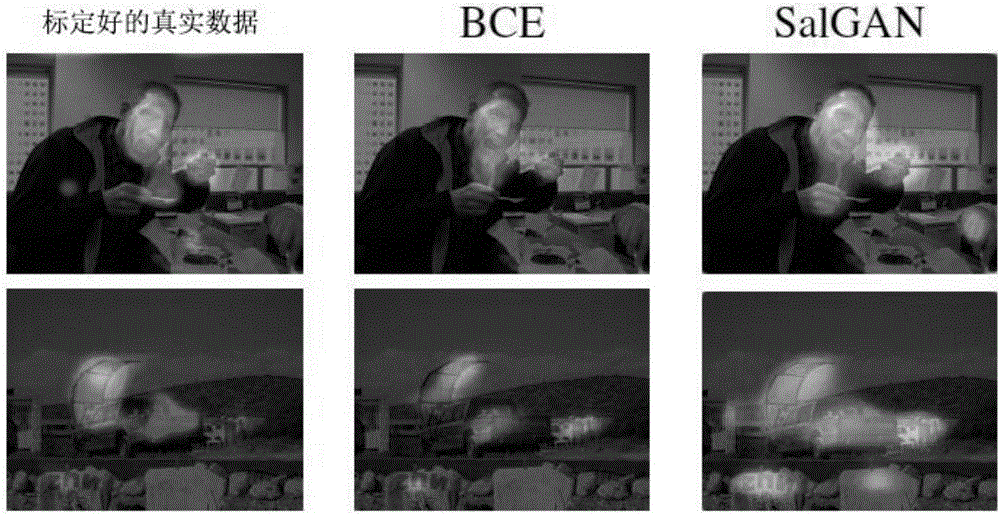

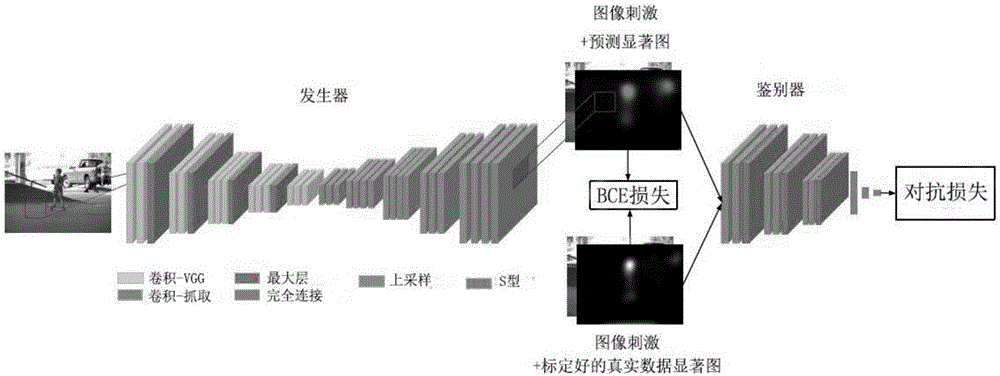

Visual significance prediction method based on generative adversarial network

Inactive · CN106845471A · Character and pattern recognition · Neural learning methods · Discriminator · Visual saliency

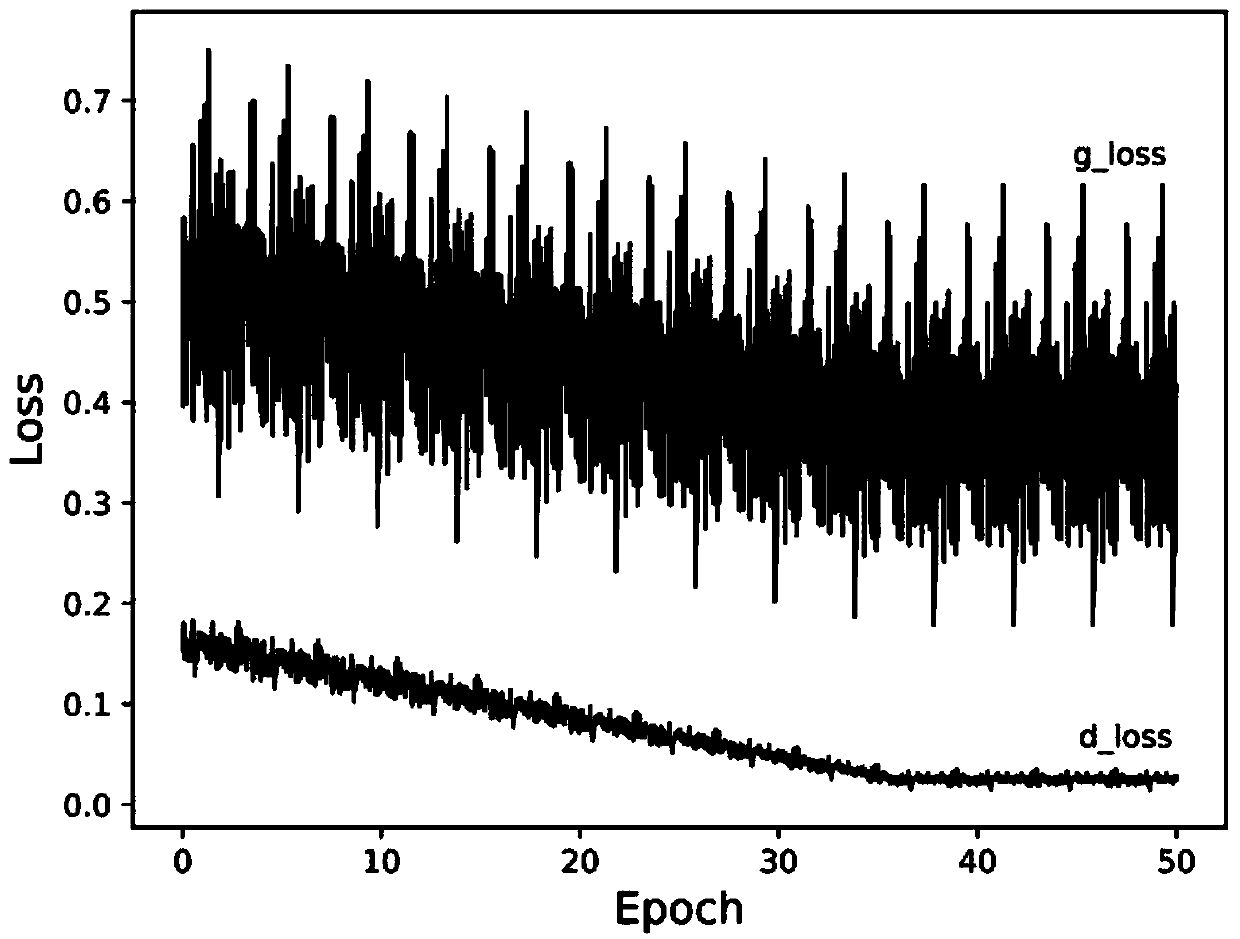

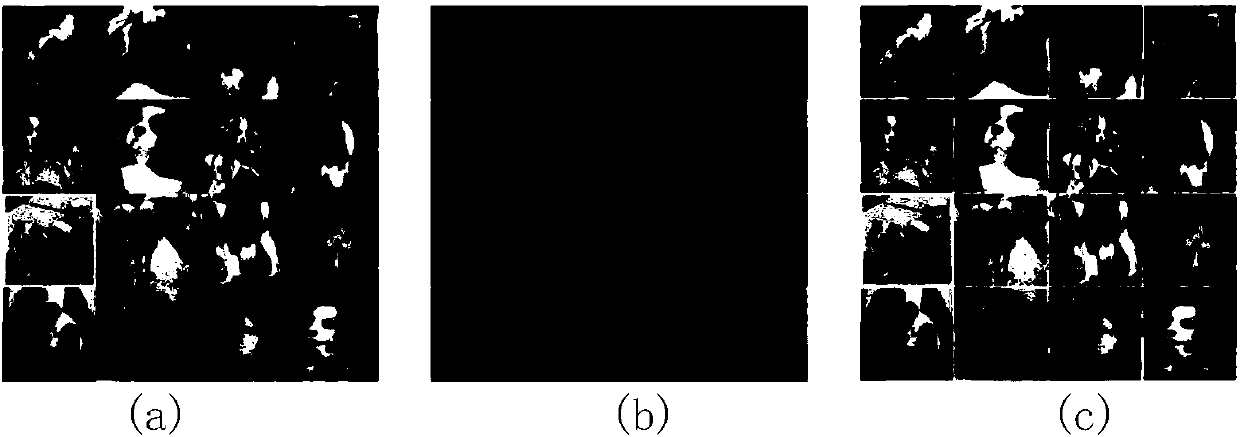

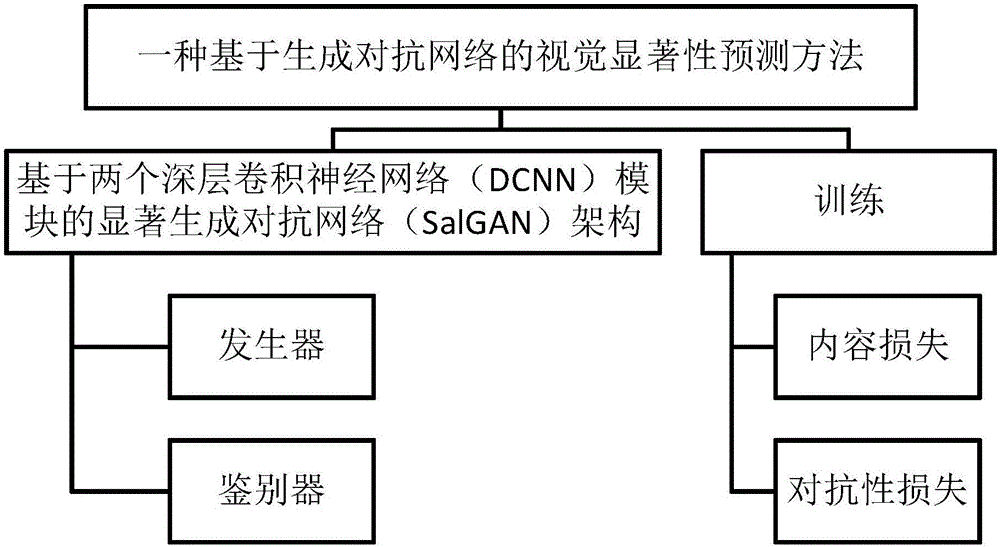

The invention provides a visual saliency prediction method based on a generative adversarial network, which mainly comprises constructing a saliency generative adversarial network (SalGAN) from two deep convolutional neural network (DCNN) modules and training it. Specifically, the SalGAN comprises a generator and a discriminator, which are combined to predict a visual saliency map for a given input image, and the filter weights of the SalGAN are trained with a perceptual loss that combines a content loss and an adversarial loss. The loss function combines the error from the discriminator with the cross-entropy with respect to the ground-truth data, which improves the stability and convergence rate of adversarial training. Compared with further training on cross-entropy alone, adversarial training improves performance, giving higher speed and efficiency.

Owner:SHENZHEN WEITESHI TECH
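
The SalGAN loss described above combines a content term (binary cross-entropy against the ground-truth saliency map) with an adversarial term from the discriminator. A minimal sketch follows, assuming saliency maps flattened to lists of values in [0, 1] and a weighting hyperparameter `alpha` whose value the abstract does not give; the function names are illustrative.

```python
import math


def bce(pred, target, eps=1e-12):
    """Mean binary cross-entropy between predicted and ground-truth
    saliency maps, both given as flat lists of values in [0, 1]."""
    total = 0.0
    for p, t in zip(pred, target):
        total += -(t * math.log(p + eps) + (1.0 - t) * math.log(1.0 - p + eps))
    return total / len(pred)


def generator_loss(pred_map, gt_map, d_score, alpha):
    """Content (BCE) loss weighted by alpha plus an adversarial loss.

    d_score is the discriminator's probability that the pair
    (input image, pred_map) is real; alpha is an assumed weight.
    """
    content = bce(pred_map, gt_map)
    adversarial = -math.log(d_score + 1e-12)
    return alpha * content + adversarial
```

Setting `alpha` large makes training behave like plain cross-entropy regression; the adversarial term is what the abstract credits for the improvement over cross-entropy alone.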

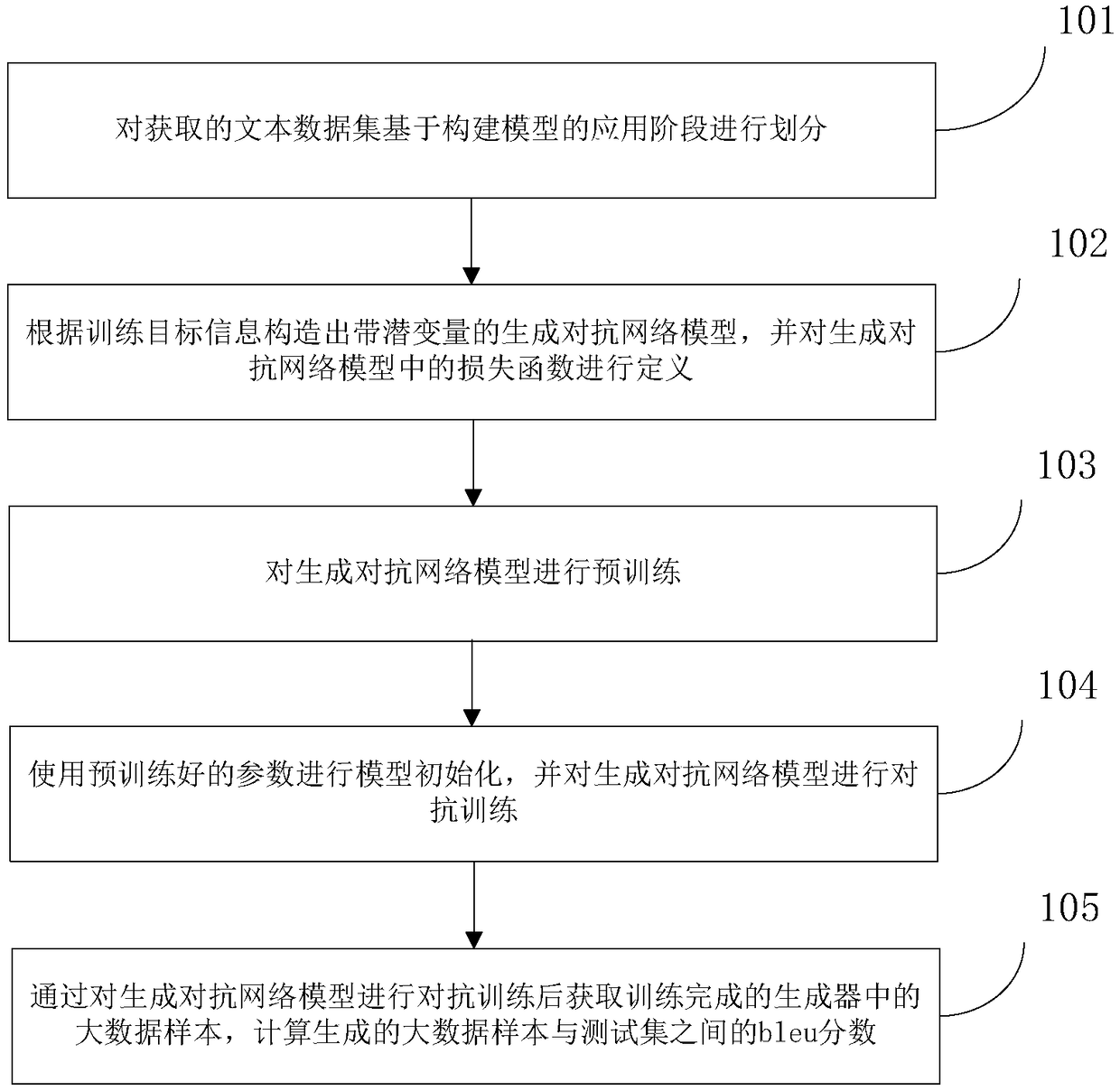

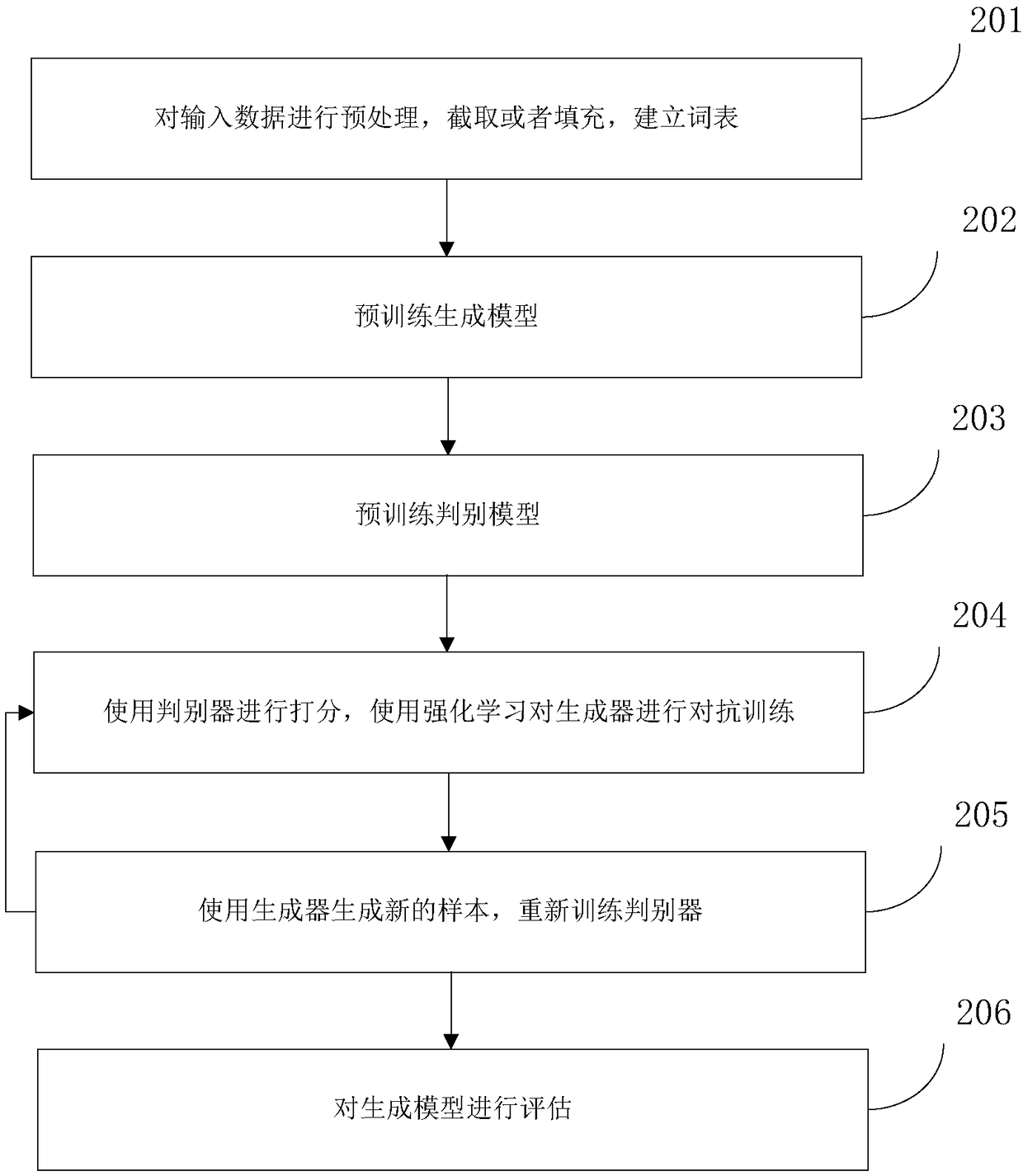

Automatic text generation method and device

Inactive · CN108334497A · Good effect · Easy to learn · Natural language data processing · Neural architectures · Algorithm · Generative adversarial network

Owner:BEIHANG UNIV

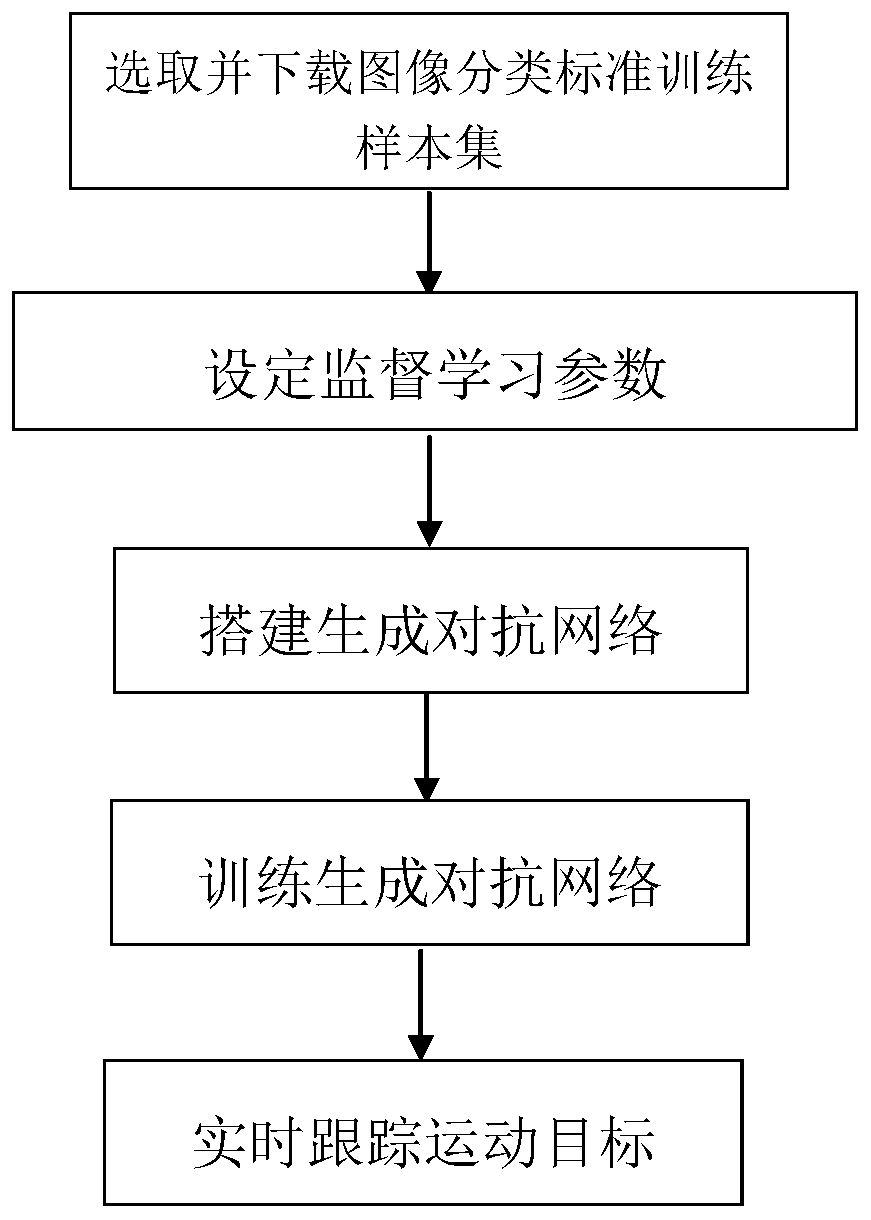

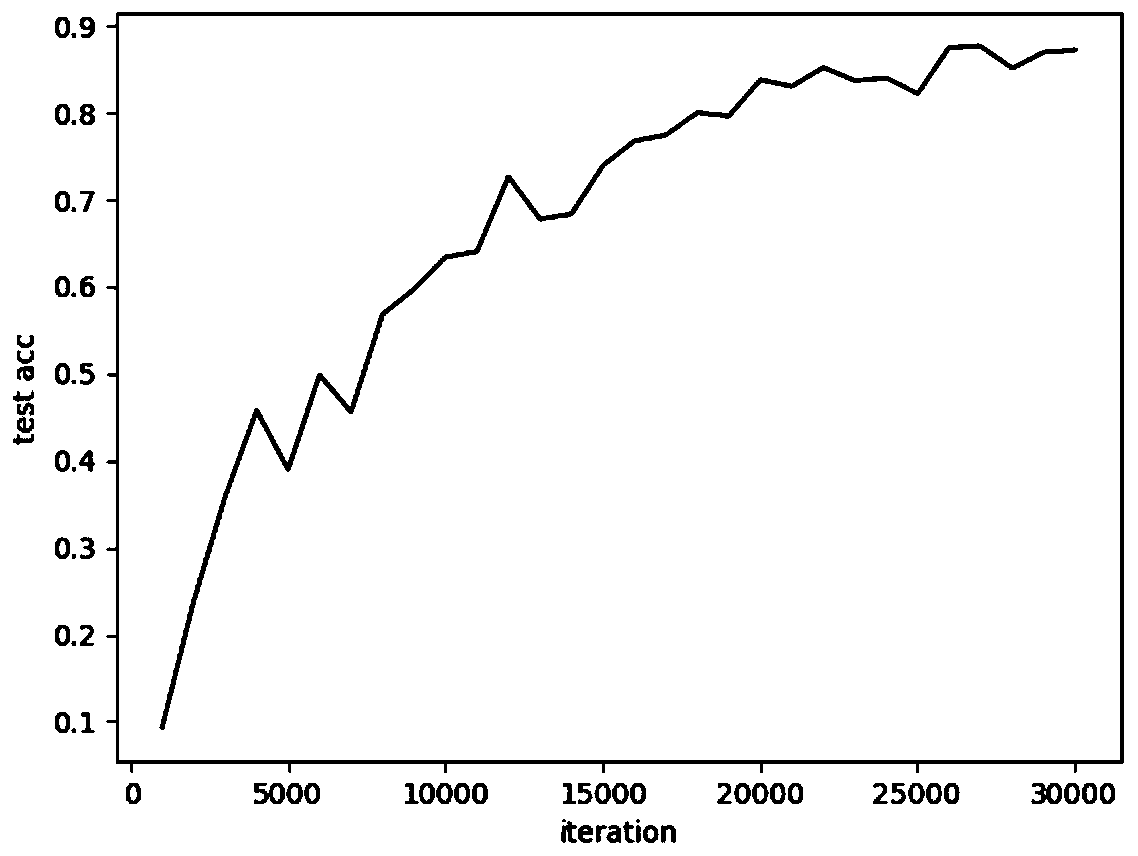

Semi-supervised image classification method based on generative adversarial network

Inactive · CN110097103A · Improve accuracy · Accurate classification accuracy · Character and pattern recognition · Discriminator · Stochastic gradient descent

The invention discloses a semi-supervised image classification method based on a generative adversarial network, which mainly addresses two problems: existing unsupervised classification has low precision, and semi-supervised learning needs a large number of accurate labels. The implementation steps are: 1) selecting and downloading standard image training and test samples; 2) setting the relevant parameters for supervised network learning and building a generative adversarial network consisting of a generator network, a discriminator network, and an auxiliary classifier in parallel; 3) training the generative adversarial network with stochastic gradient descent; and 4) inputting the test samples to be classified into the trained model and outputting the category of each image. The method improves the image classification precision of unsupervised learning, achieves good classification results on a sample set containing only a small number of accurately annotated samples, and can be used for target classification in real scenes.

Owner:XIDIAN UNIV

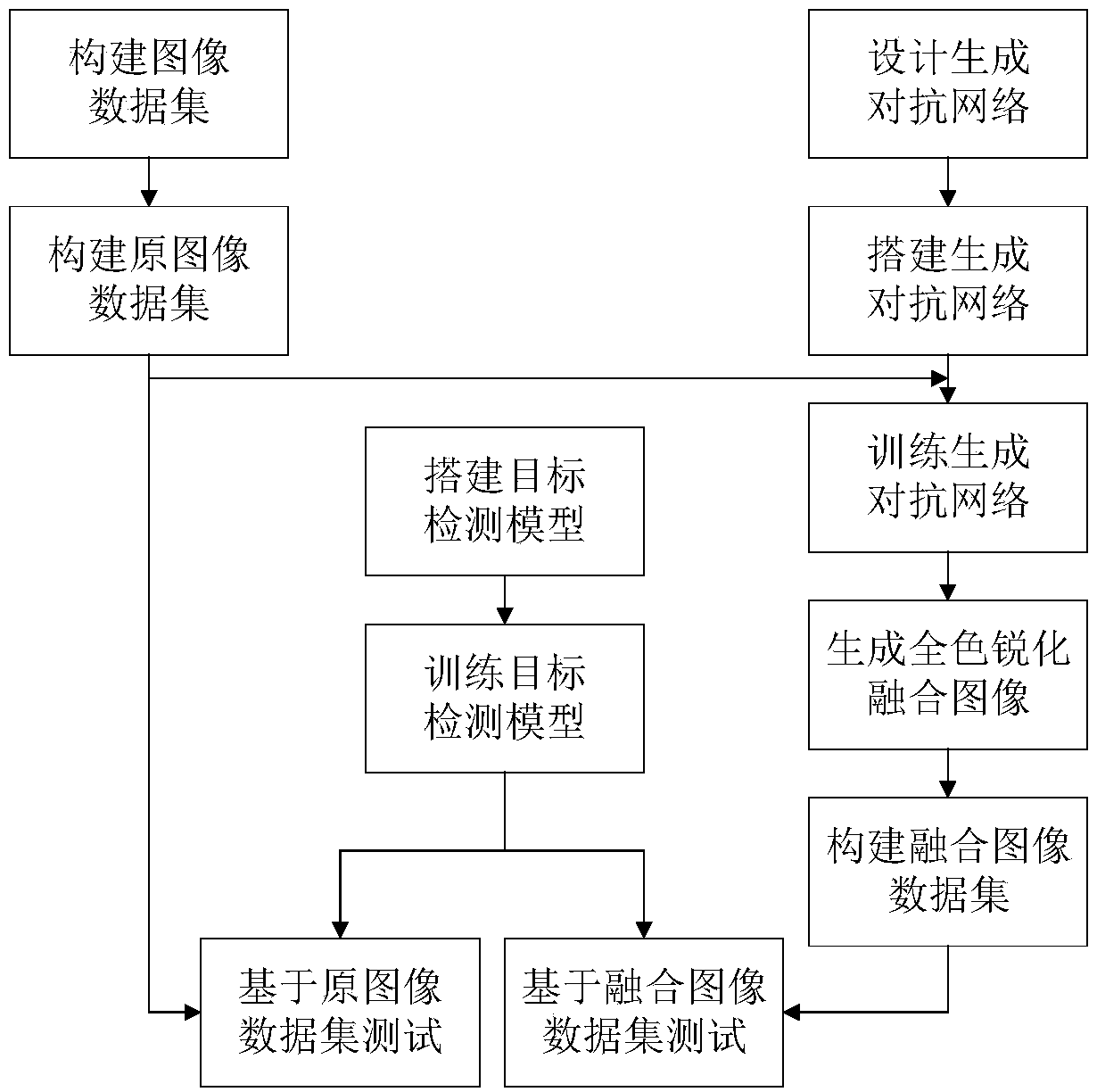

Remote-sensing image object detection method based on deep learning

Active · CN108564109A · Clear spatial details · Reduce losses · Character and pattern recognition · Neural architectures · Stochastic gradient descent · Data set

The invention relates to a remote-sensing image object detection method based on deep learning. The method comprises: constructing a data set from remote-sensing images, namely an image data set obtained by classifying and labeling the images, together with the class labels produced by the labeling work; building a panchromatic sharpening (pansharpening) model based on generative adversarial networks (GAN); building an object detection model based on a deep convolutional neural network and training it end to end with methods such as back propagation and stochastic gradient descent; and testing the built model end to end. The method has the advantage of high accuracy.

Owner:TIANJIN UNIV

Semi-supervised X-ray image automatic labeling based on generative adversarial network

Inactive · CN110110745A · Character and pattern recognition · Neural architectures · X-ray · Generative adversarial network

The invention provides a semi-supervised X-ray image automatic labeling method based on a generative adversarial network. It improves the traditional training procedure of existing generative adversarial network methods, using a semi-supervised training method that combines a supervised loss and an unsupervised loss to classify and recognize images from a small number of labeled samples, and it addresses the scarcity of annotated X-ray images. The method comprises: first, starting from a traditional unsupervised generative adversarial network, replacing the final output layer with a softmax; extending the network into a semi-supervised generative adversarial network for X-ray images by defining an additional category label for generated samples to guide training; optimizing the network parameters with semi-supervised training; and finally, automatically labeling X-ray images with the trained discriminator network. Compared with traditional supervised learning and other semi-supervised learning algorithms, the method improves performance on automatic labeling of medical X-ray images.

Owner:SHANGHAI MARITIME UNIVERSITY
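
Replacing the output layer with a softmax and adding an extra category for generated samples, as described above, is commonly implemented as a (K+1)-way classifier: K real classes plus one "generated" class. The sketch below assumes that layout (generated class last); the function names are hypothetical, and the loss definitions follow the usual semi-supervised GAN formulation rather than any detail stated in the patent.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    s = sum(exps)
    return [e / s for e in exps]


def supervised_loss(logits, label):
    """Cross-entropy of the true label under the (K+1)-way softmax,
    used for the small set of labeled samples."""
    return -math.log(softmax(logits)[label])


def unsupervised_loss(logits_real, logits_fake):
    """The last output is the extra 'generated' class.
    Unlabeled real samples should avoid it; generated samples should hit it."""
    p_gen_on_real = softmax(logits_real)[-1]
    p_gen_on_fake = softmax(logits_fake)[-1]
    return -math.log(1.0 - p_gen_on_real) - math.log(p_gen_on_fake)


def predict_class(logits):
    """Label an image with the most probable of the K real classes."""
    real_logits = logits[:-1]
    return max(range(len(real_logits)), key=lambda i: real_logits[i])
```

At labeling time only the K real-class outputs matter, which is why `predict_class` ignores the last logit.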

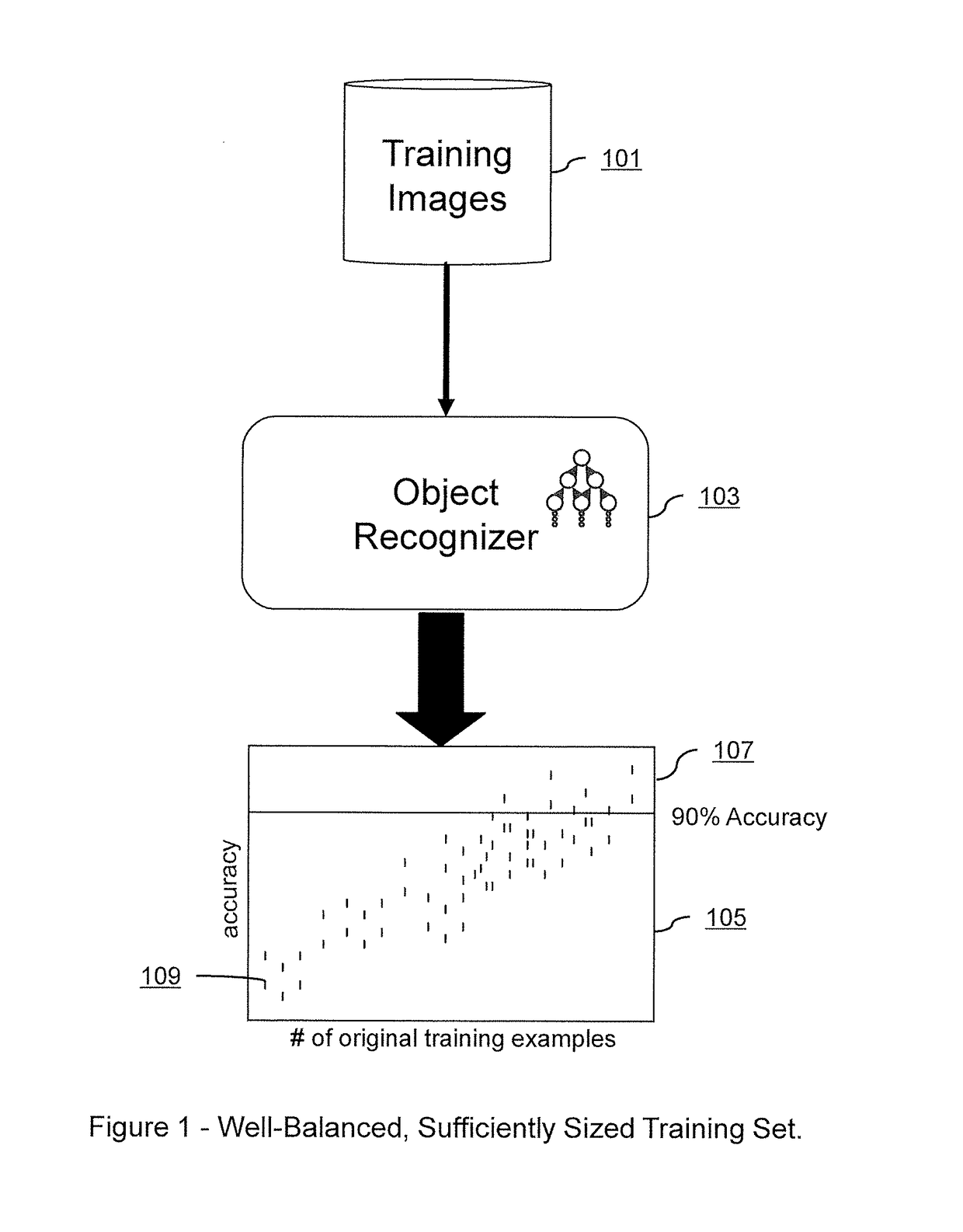

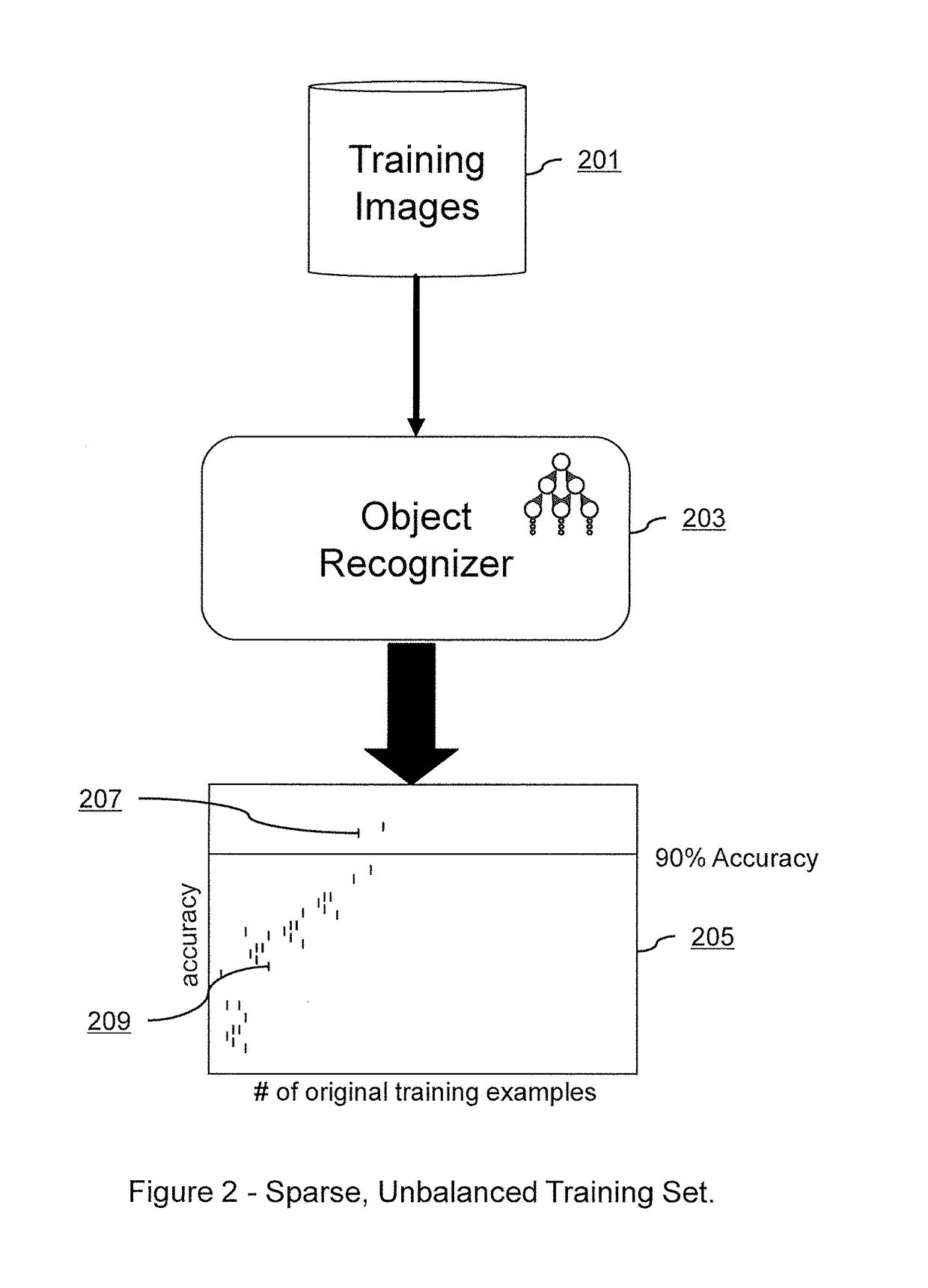

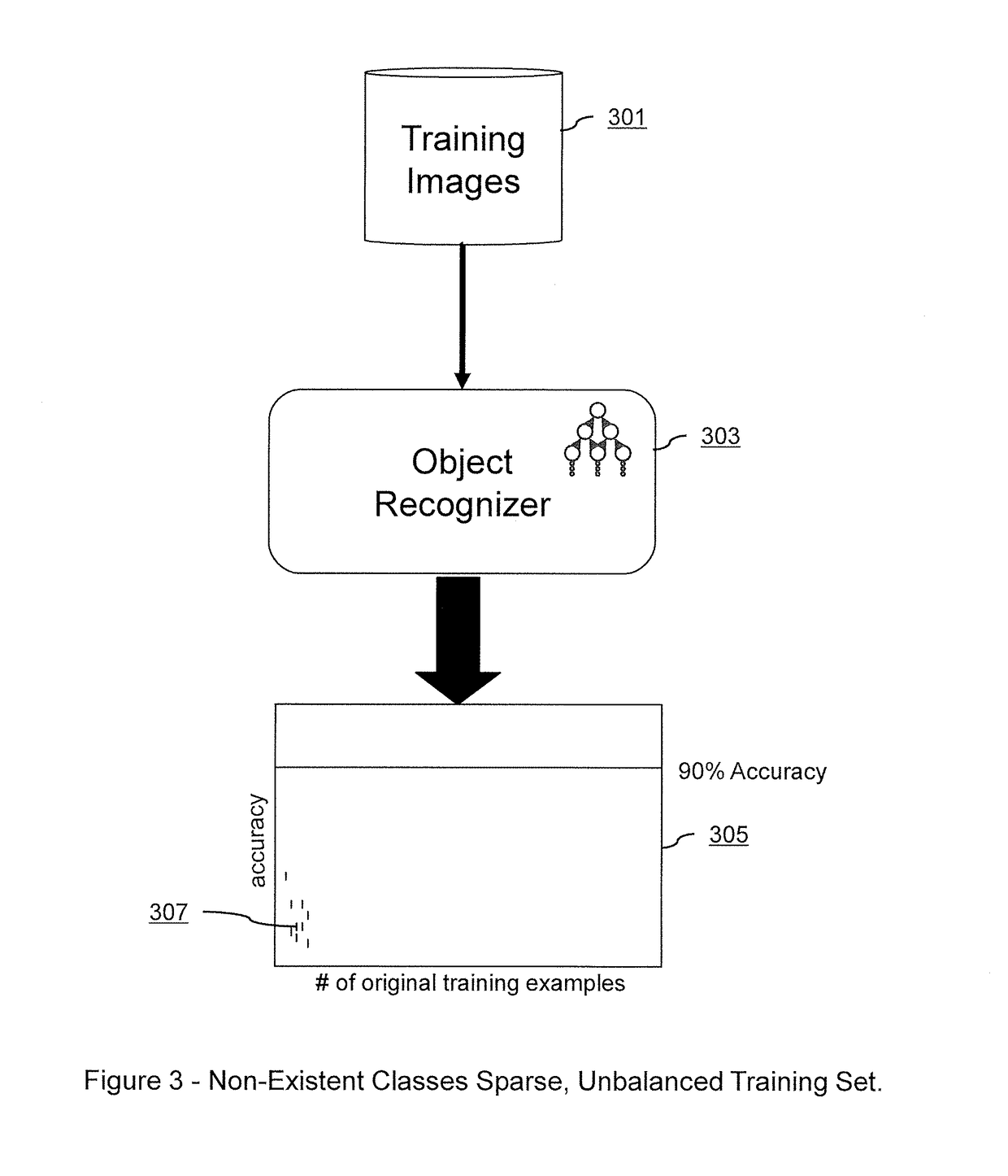

Systems and Methods for Deep Model Translation Generation

Active · US20190080205A1 · Low cost · Accelerated training · Character and pattern recognition · Neural architectures · Pattern recognition · Generative adversarial network

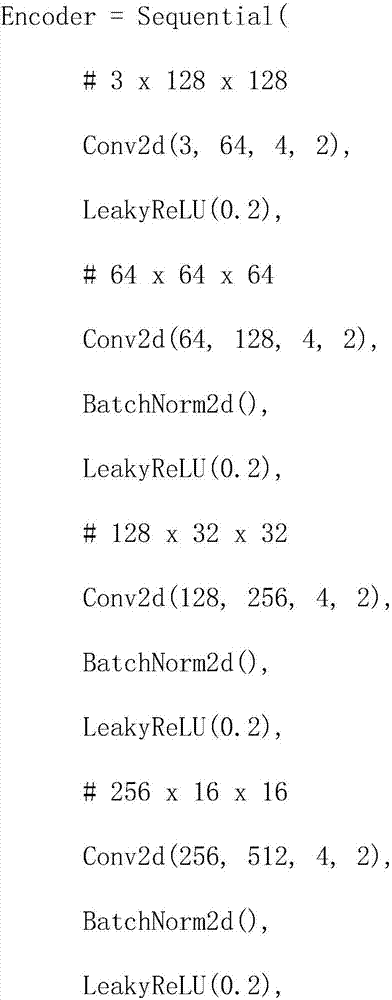

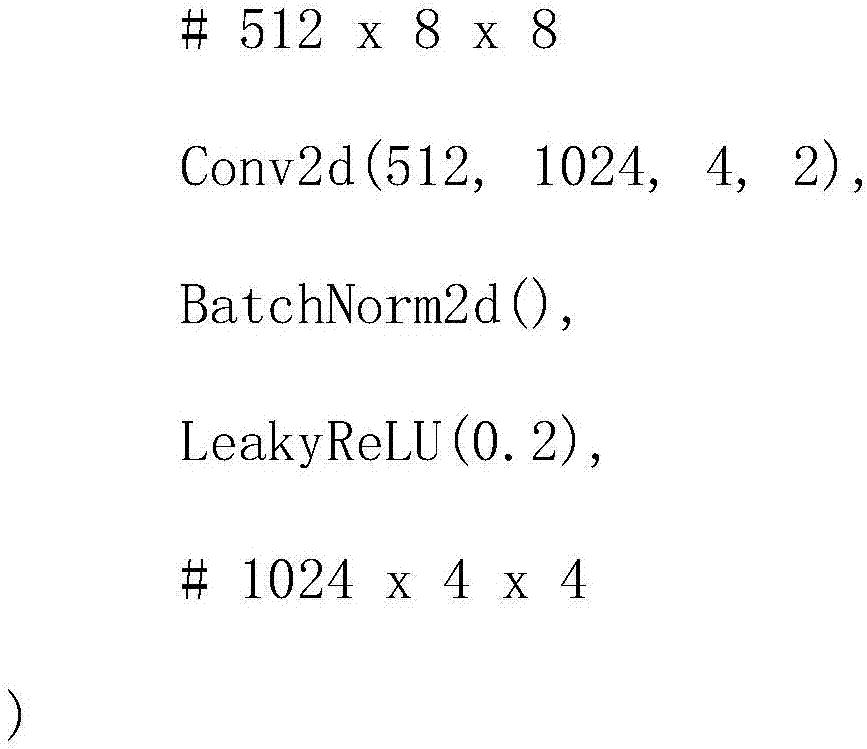

Embodiments of the present invention relate to systems and methods for improving the training of machine learning systems to recognize certain objects within a given image by supplementing an existing sparse set of real-world training images with a comparatively dense set of realistic training images. Embodiments may create such a dense set of realistic training images by training a machine learning translator with a convolutional autoencoder to translate a dense set of synthetic images of an object into more realistic training images. Embodiments may also create a dense set of realistic training images by training a generative adversarial network (“GAN”) to create realistic training images from a combination of the existing sparse set of real-world training images and either Gaussian noise, translated images, or synthetic images. The created dense set of realistic training images may then be used to more effectively train a machine learning object recognizer to recognize a target object in a newly presented digital image.

Owner:GENERAL DYNAMICS MISSION SYST INC

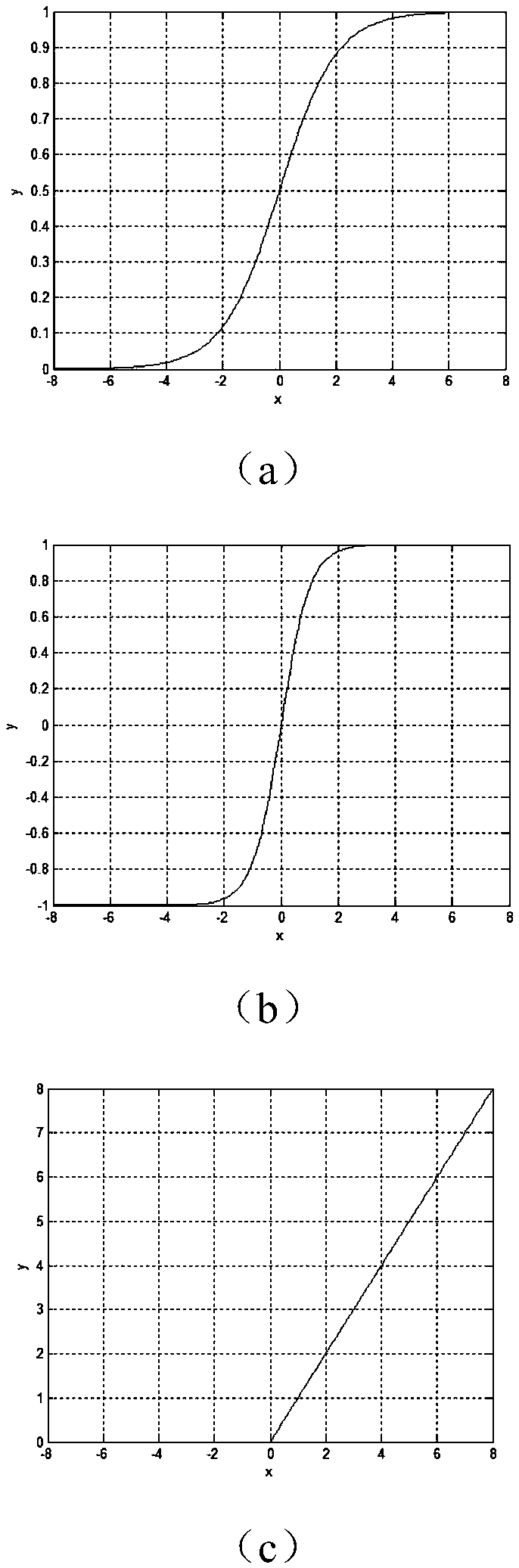

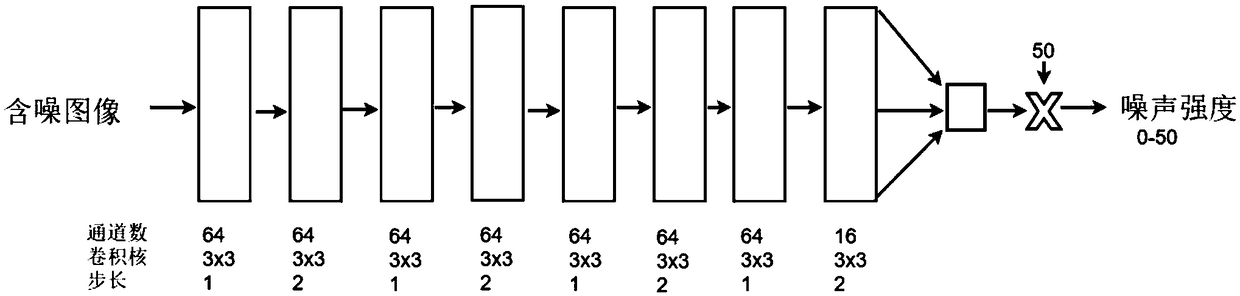

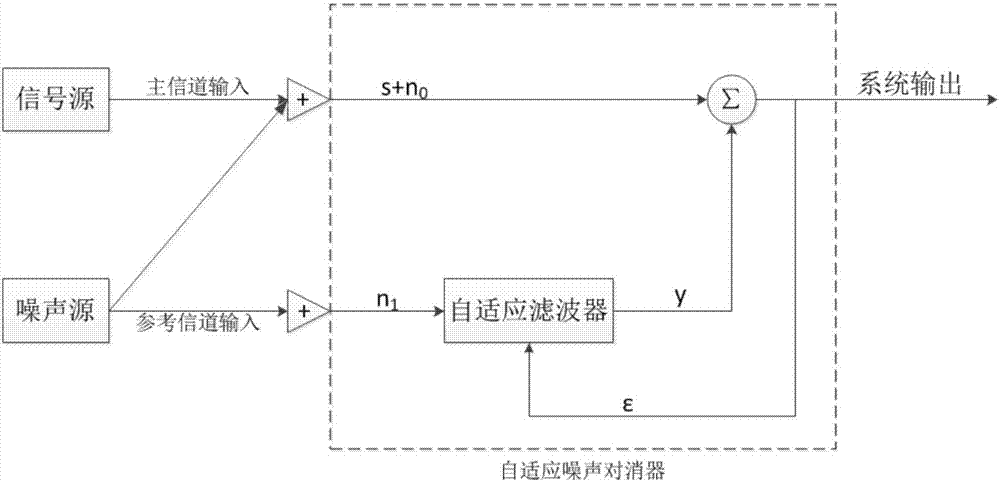

Image denoising method based on generative adversarial networks

Inactive · CN108765319A · Easy to train · Evenly distributed · Image enhancement · Image analysis · Generative adversarial network · Image denoising

The invention provides an image denoising method based on generative adversarial networks and belongs to the technical field of computer vision. The method comprises the following steps: (1) designing a neural network that estimates the noise intensity of a noisy image; (2) adding noise of the estimated intensity to image blocks from an image library and using them as training samples; (3) designing a new generation network and discrimination network and training them adversarially, alternately fixing the generation network while training the discrimination network and fixing the discrimination network parameters while training the generation network; and (4) using the trained generation network as the denoising network, selecting its parameters according to the result of the noise recognition network, and denoising the noisy image. The method improves the visual quality of the denoised image without manual parameter tuning and better restores image texture details.

Owner:DALIAN UNIV OF TECH
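
Step (3) alternates between training the discriminator with the generator fixed and training the generator with the discriminator fixed. The toy sketch below shows that schedule on one-dimensional data, assuming a linear generator G(z) = a·z + b, a logistic discriminator D(x) = sigmoid(w·x + c), hand-derived gradients, and the non-saturating generator loss; none of these choices comes from the patent, which uses image denoising networks.

```python
import math
import random


def sigmoid(u):
    # Numerically stable logistic function.
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def d_step(d, g, x_real, z, lr):
    """Update only the discriminator (w, c); the generator (a, b) stays fixed.
    Loss: -log D(x_real) - log(1 - D(G(z)))."""
    w, c = d
    a, b = g
    x_fake = a * z + b
    s_r = sigmoid(w * x_real + c)
    s_f = sigmoid(w * x_fake + c)
    grad_w = (s_r - 1.0) * x_real + s_f * x_fake
    grad_c = (s_r - 1.0) + s_f
    return (w - lr * grad_w, c - lr * grad_c)


def g_step(d, g, z, lr):
    """Update only the generator (a, b) against a fixed discriminator.
    Non-saturating loss: -log D(G(z))."""
    w, c = d
    a, b = g
    x_fake = a * z + b
    s_f = sigmoid(w * x_fake + c)
    upstream = (s_f - 1.0) * w  # derivative of the loss w.r.t. x_fake
    return (a - lr * upstream * z, b - lr * upstream)


def train(steps, lr=0.05, seed=0):
    rng = random.Random(seed)
    d, g = (0.1, 0.0), (1.0, 0.0)
    for _ in range(steps):
        x_real = rng.gauss(3.0, 1.0)  # toy "real" data drawn from N(3, 1)
        z = rng.gauss(0.0, 1.0)       # latent noise
        d = d_step(d, g, x_real, z, lr)  # phase 1: G fixed, train D
        g = g_step(d, g, z, lr)          # phase 2: D fixed, train G
    return d, g
```

Each step function returns new parameters only for the network being trained, so the other network is frozen by construction; in a deep learning framework the same effect is usually achieved by stepping only that network's optimizer.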

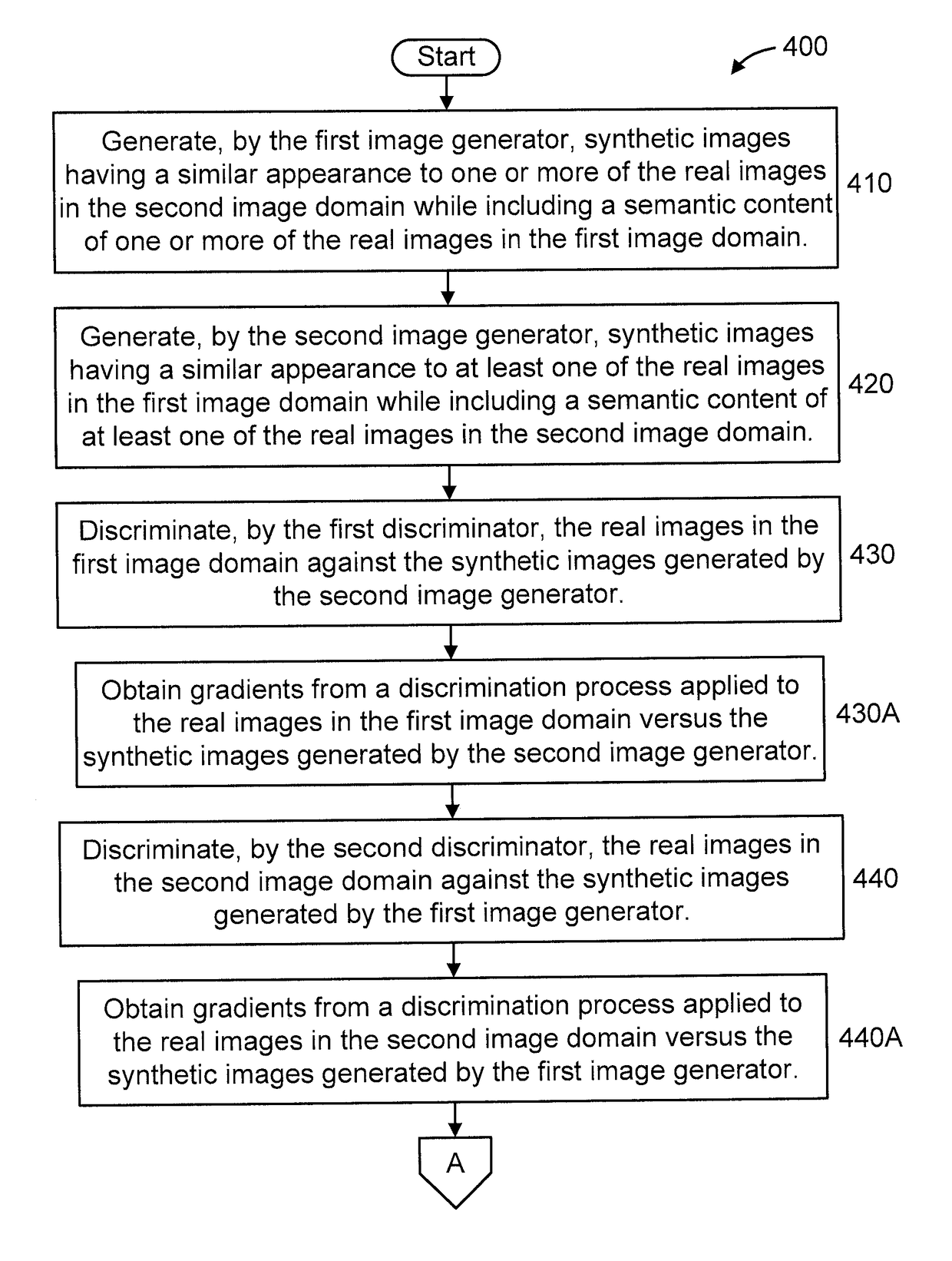

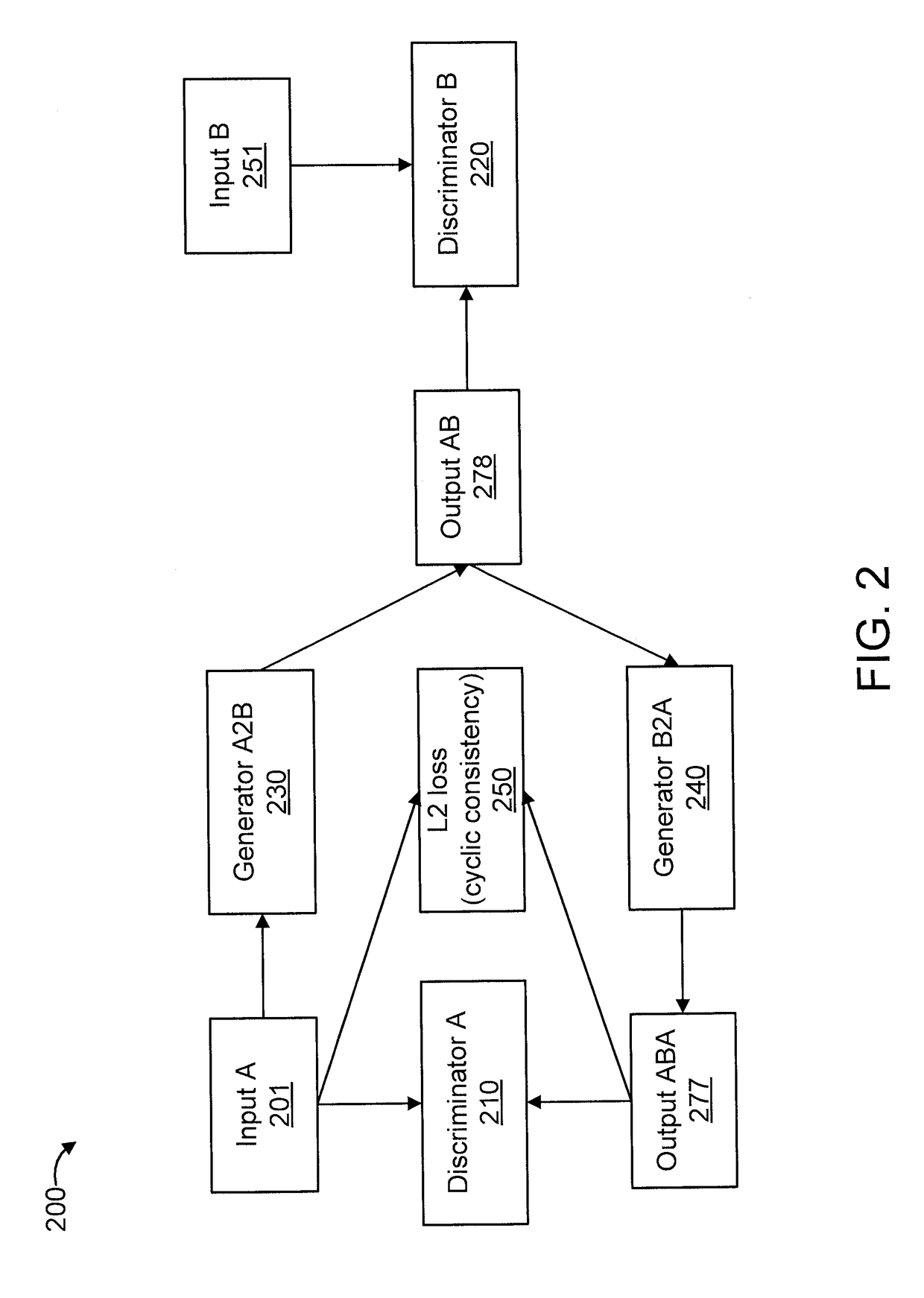

Cyclic generative adversarial network for unsupervised cross-domain image generation

Active · US20180307947A1 · Error rate of discriminative · Quality improvement · Texturing/coloring · Character and pattern recognition · Generative adversarial network · Adversarial network

A system is provided for unsupervised cross-domain image generation relative to a first and second image domain that each include real images. A first generator generates synthetic images similar to real images in the second domain while including a semantic content of real images in the first domain. A second generator generates synthetic images similar to real images in the first domain while including a semantic content of real images in the second domain. A first discriminator discriminates real images in the first domain against synthetic images generated by the second generator. A second discriminator discriminates real images in the second domain against synthetic images generated by the first generator. The discriminators and generators are deep neural networks and respectively form a generative network and a discriminative network in a cyclic GAN framework configured to increase an error rate of the discriminative network to improve synthetic image quality.

Owner:NEC CORP

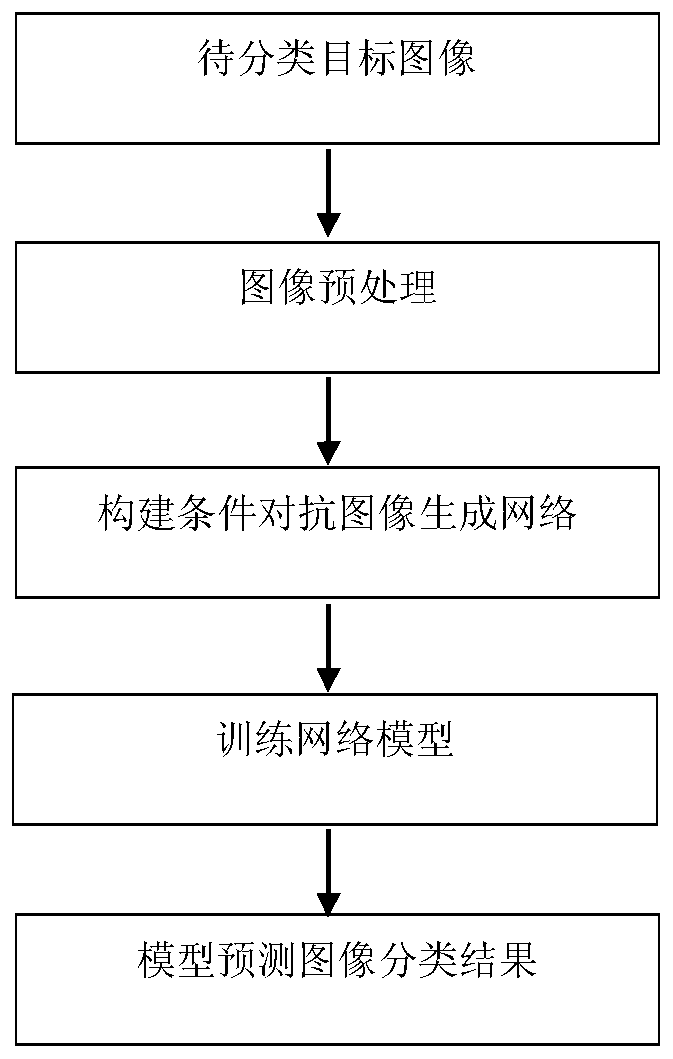

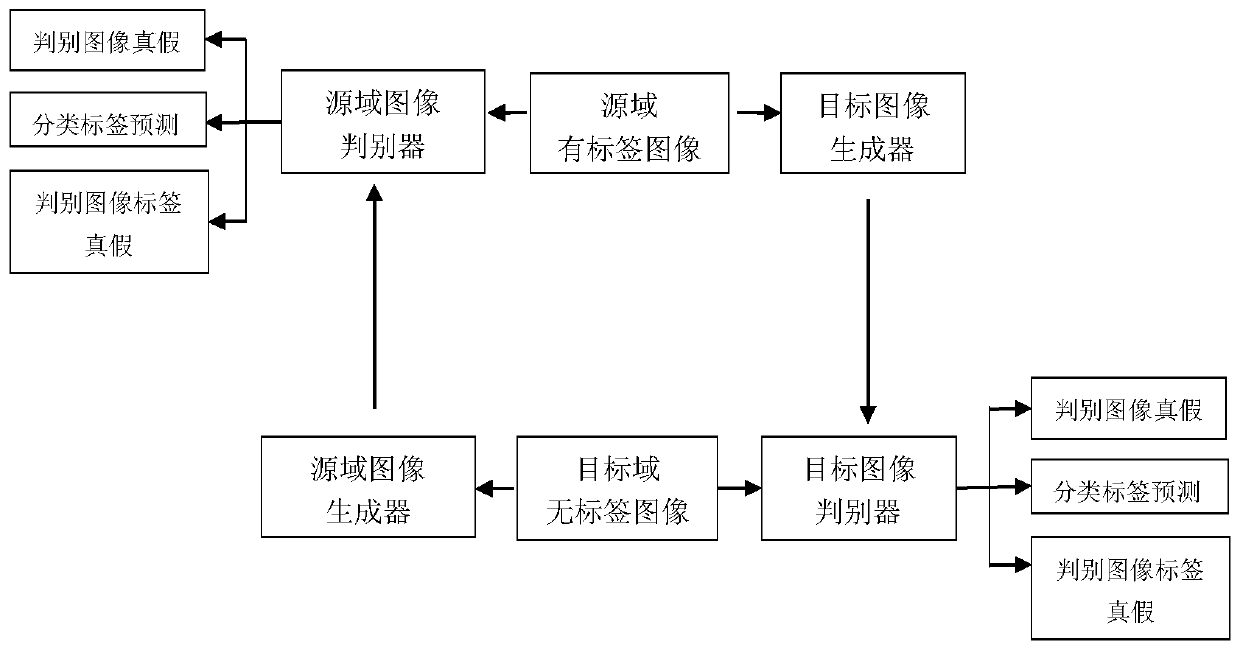

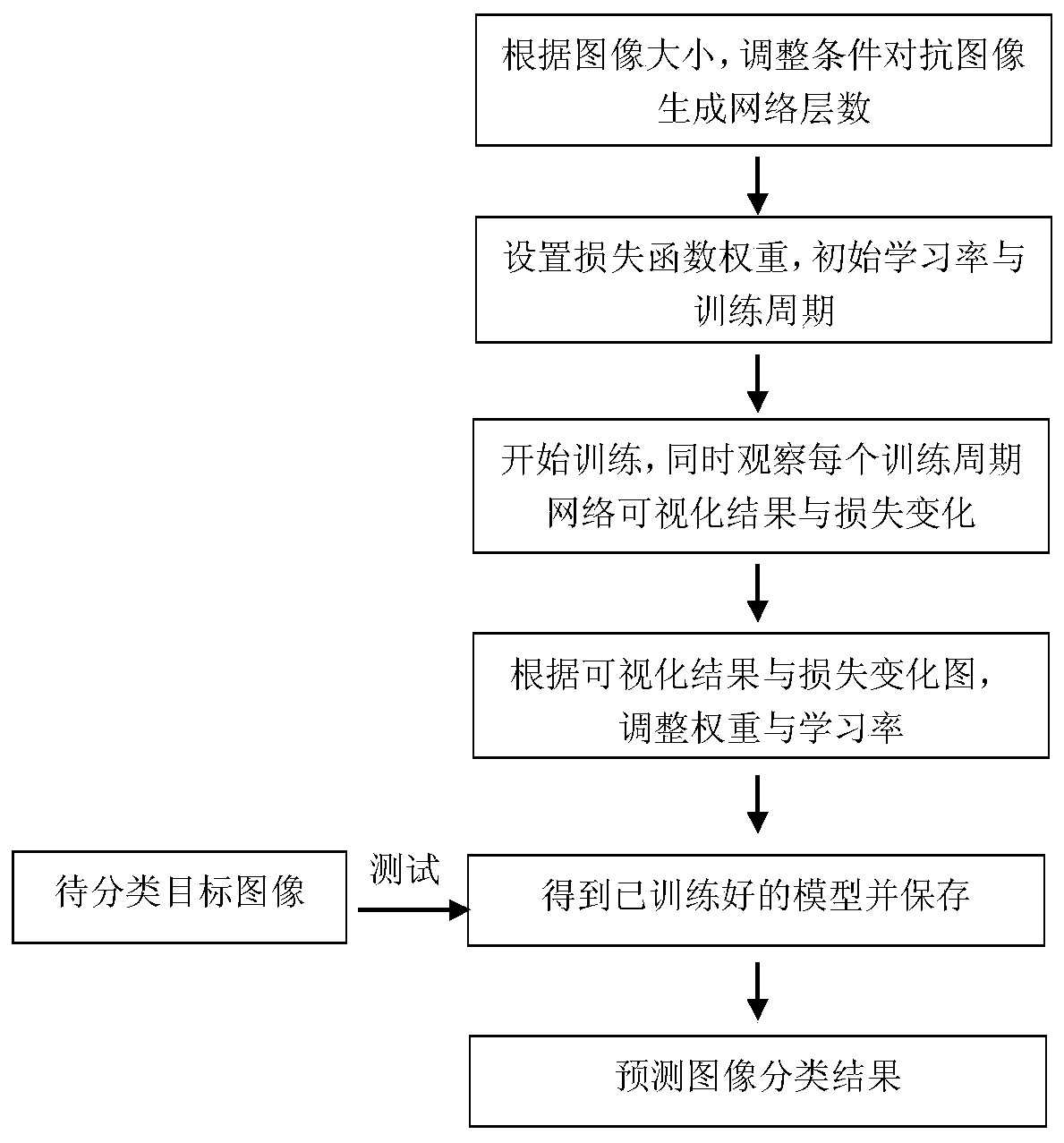

Unsupervised domain adaptive image classification method based on conditional generative adversarial network

Active · CN109753992A · Realize mutual conversion · Improve domain adaptability · Character and pattern recognition · Neural architectures · Data set · Classification methods

The invention discloses an unsupervised domain adaptive image classification method based on a conditional generative adversarial network. The method comprises the following steps: preprocessing an image data set; constructing a cross-domain conditional adversarial image generation network by adopting a cycle-consistent generative adversarial network and applying a constraint loss function; training the constructed network with the preprocessed image data set; and testing the target images to be classified with the trained network model to obtain the final classification result. The method uses a conditional adversarial cross-domain image translation algorithm to convert source-domain and target-domain image samples into each other, and applies a consistency loss constraint to the classification predictions of target images before and after conversion. Meanwhile, discriminative classification labels are used for conditional adversarial learning to align the joint distributions of source-domain and target-domain images and their labels, so that the labeled source-domain images can be used to train for the target domain, achieving classification of target images with improved precision.

Owner:NANJING NORMAL UNIVERSITY
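
The cycle-consistent GAN adopted above constrains two mappings, G_AB from domain A to B and G_BA from B back to A, so that translating a sample there and back reconstructs it. A minimal sketch of an L1 cycle-consistency loss follows; it assumes samples are flat lists of floats and that the generators are plain callables (both assumptions for illustration), and it covers only the cycle term, not the adversarial or classification-consistency losses the abstract also mentions.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(xs_a, xs_b, g_ab, g_ba):
    """L1 cycle loss: A -> B -> A and B -> A -> B should reconstruct the input.

    xs_a, xs_b: batches of samples from domains A and B.
    g_ab, g_ba: callables mapping A -> B and B -> A.
    """
    loss_a = sum(l1_distance(x, g_ba(g_ab(x))) for x in xs_a) / len(xs_a)
    loss_b = sum(l1_distance(y, g_ab(g_ba(y))) for y in xs_b) / len(xs_b)
    return loss_a + loss_b
```

A pair of mutually inverse generators drives this term to zero, which is what lets unpaired source and target images be translated without collapsing semantic content.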

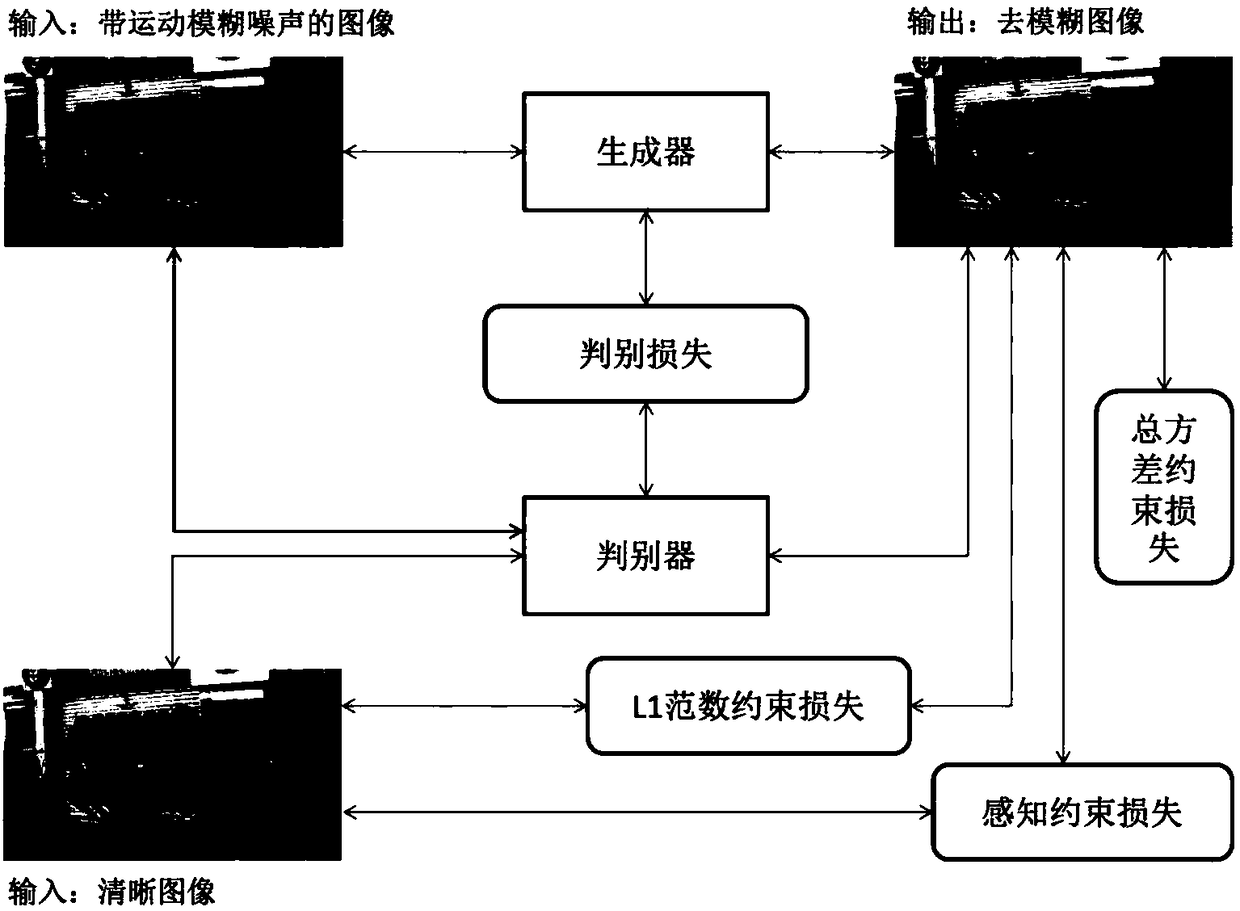

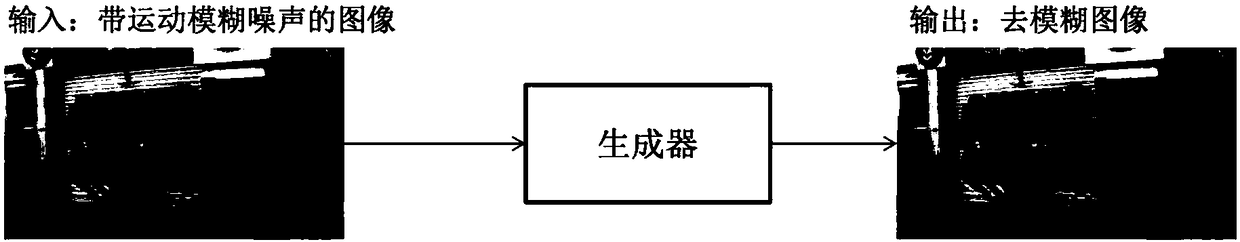

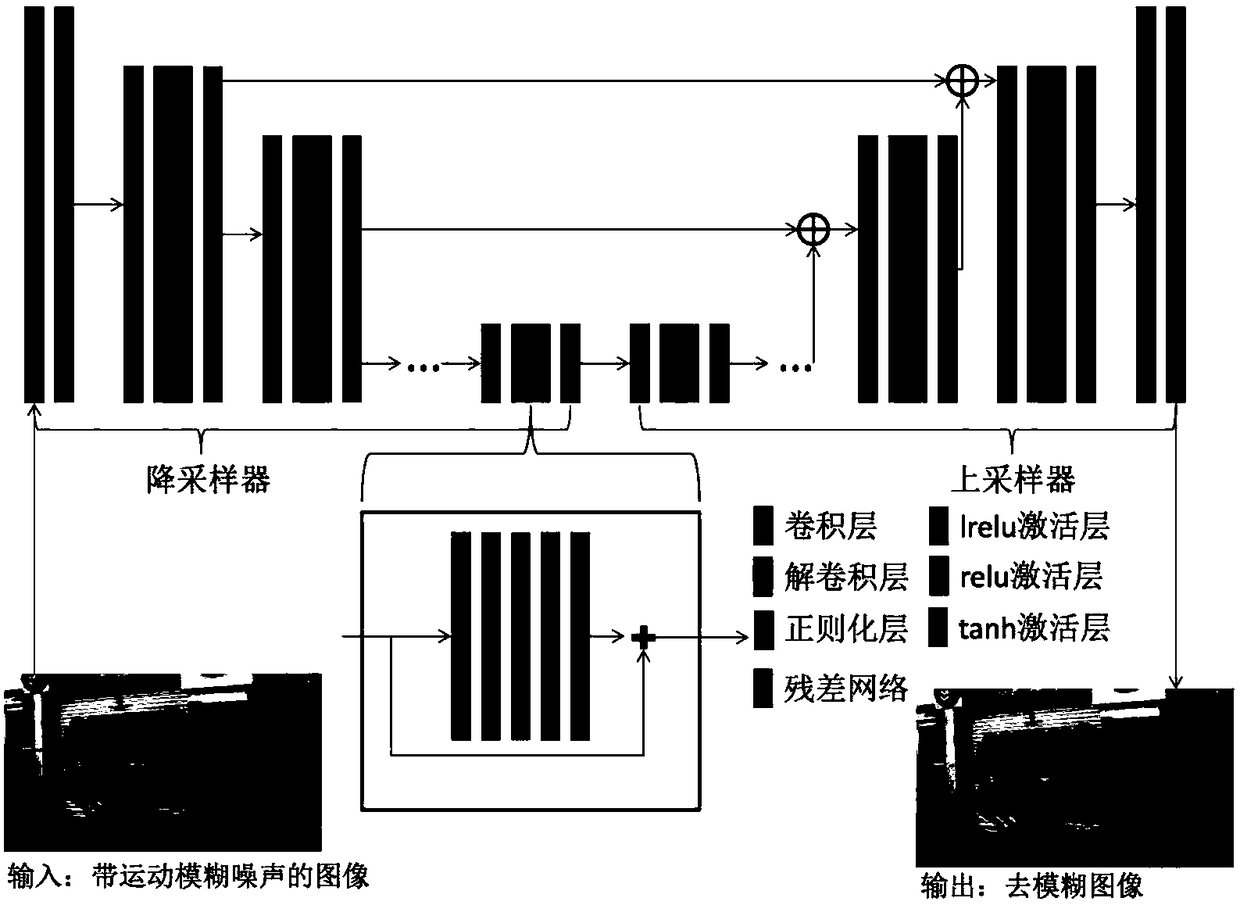

GAN (Generative Adversarial Network) based motion blur removing method of image

Active · CN108416752A · Improve efficiency · Easy to handle · Image enhancement · Image analysis · Discriminator · Generative adversarial network

The invention discloses a GAN-based method for removing motion blur from images, together with a motion-deblurring GAN model for the method. The method comprises designing the GAN model, training it, and applying it. The GAN model comprises a generator and a discriminator: the generator continuously optimizes its parameters so that generated images approach the distribution of sharp images, and the discriminator continuously optimizes its parameters to better determine whether an image comes from the deblurred image distribution or the sharp image distribution. The generator comprises a down-sampler and an up-sampler: the down-sampler convolves the image to extract semantic information, and the up-sampler deconvolves the image by combining the extracted semantic information with the structural information of the image. In this way, motion blur is removed effectively and a sharp image consistent with human perception is obtained.

Owner:SUN YAT SEN UNIV

Generative adversarial network-based multi-pose face generation method

Active · CN107292813A · Improve recognition rate · Improve the problem of lack of large-scale data · Geometric image transformation · Character and pattern recognition · Training phase · Attitude control

The present invention discloses a generative adversarial network-based multi-pose face generation method. In the training phase, face data in various poses are collected, and two deep neural networks G and D are trained as a generative adversarial network. After training, random samples and pose control parameters are input into the generative network G to obtain face images in various poses. The method can generate a large number of different face images in multiple poses, alleviating data shortage in multi-pose face recognition. The newly generated face images are used as training data to train an encoder that extracts the identity information of images. In the final testing process, an image in an arbitrary pose is input, identity features are obtained through the trained encoder, and face images of the same person in various poses are obtained through the trained generative network.

Owner:ZHEJIANG UNIV

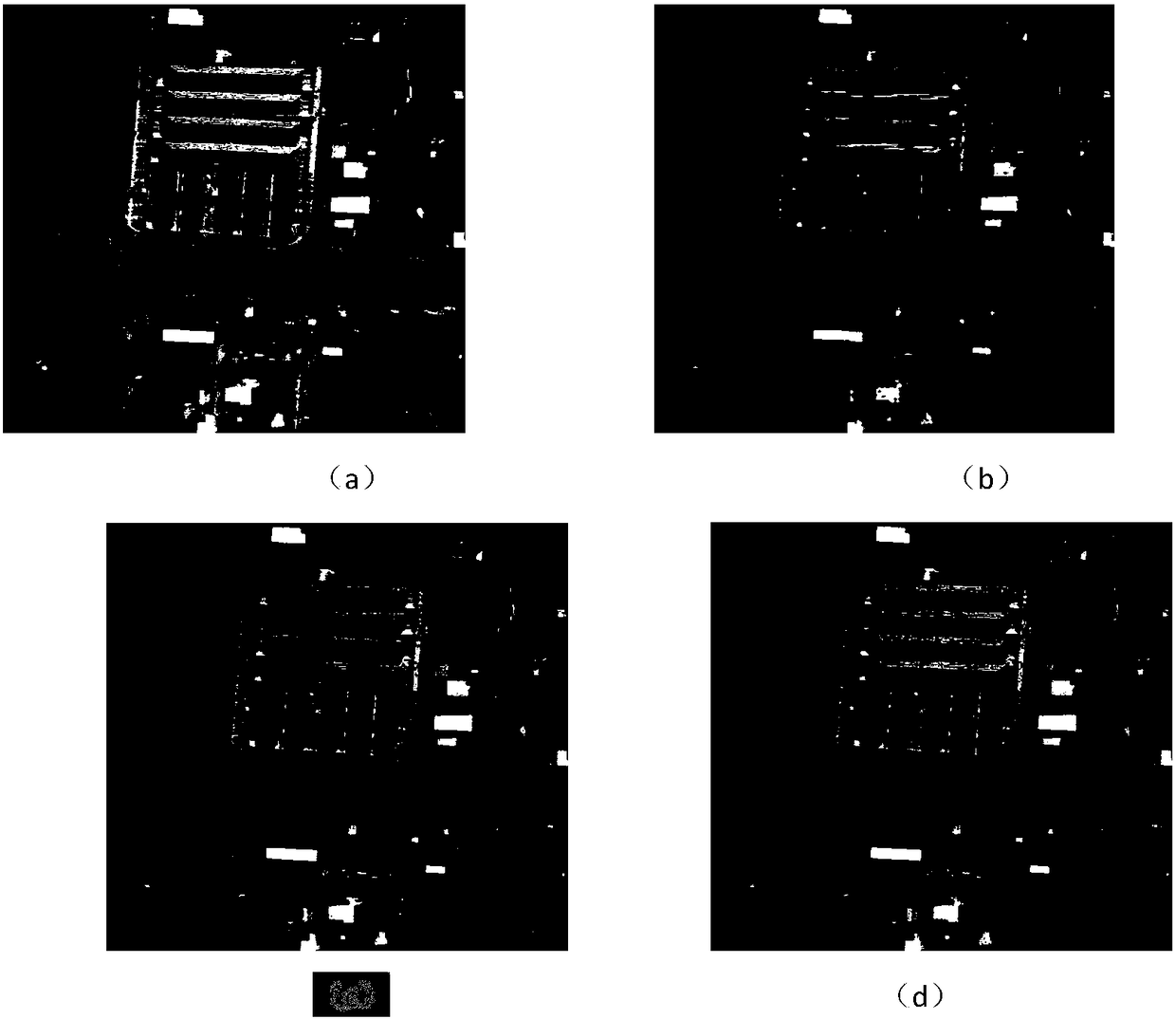

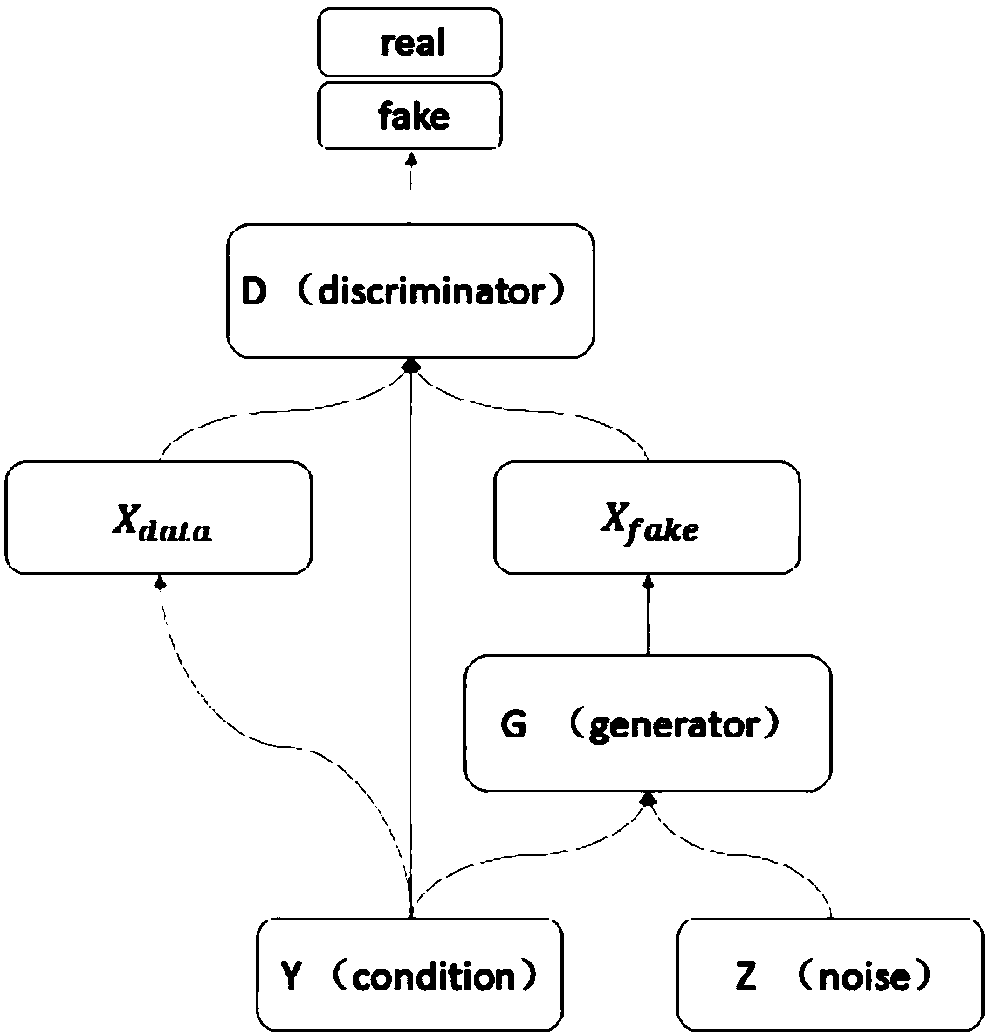

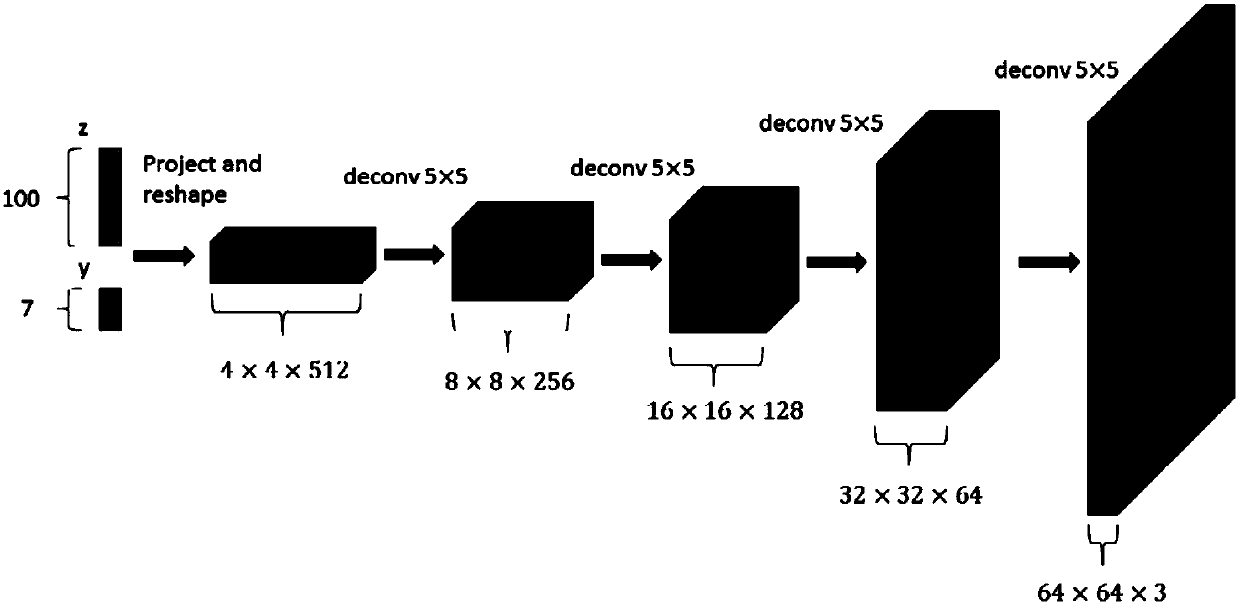

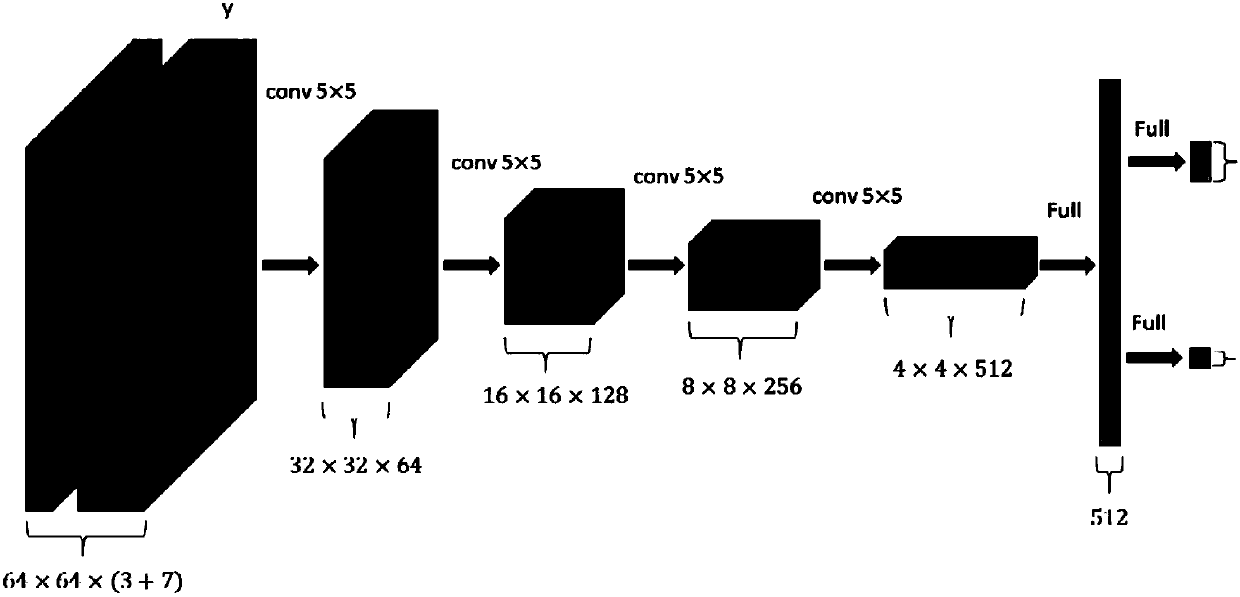

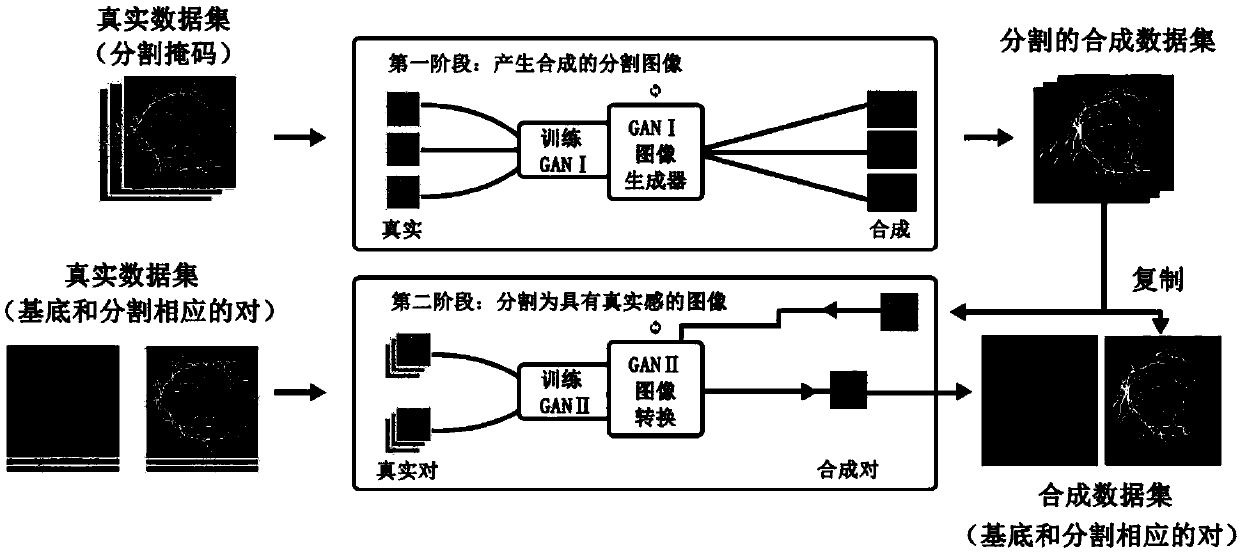

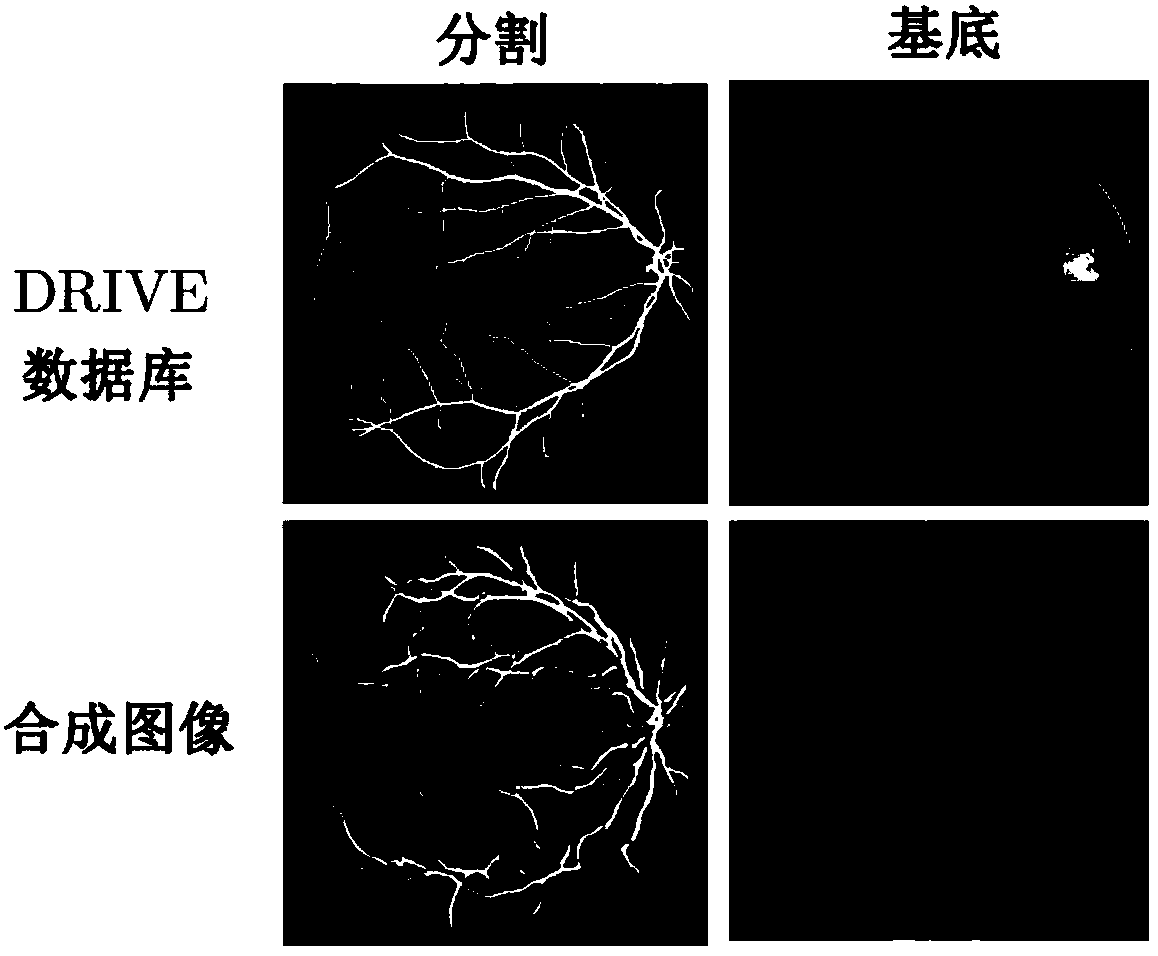

Medical image synthesis method based on double generative adversarial networks

The invention provides a medical image synthesis method based on double generative adversarial networks. The main content comprises data management, construction of the generative adversarial networks, training of a U-Net, establishment of assessment indexes, and obtaining the processed pictures. First, the DRIVE database is used for the first-stage GAN, which generates segmentation masks representing the variable geometry of the data set; then a second-stage GAN converts the masks produced in the first stage into realistic images, with the generator minimizing, through the discriminator, a loss function for classifying real data; next, a U-Net is trained to assess the reliability of the synthetic data; and finally, assessment indexes are established to measure the generative model. By using a pair of generative adversarial networks to create a new image generation path, the method avoids artifacts and noise in the synthetic images, improves image stability and realism, and makes image details clearer.

Owner:SHENZHEN WEITESHI TECH

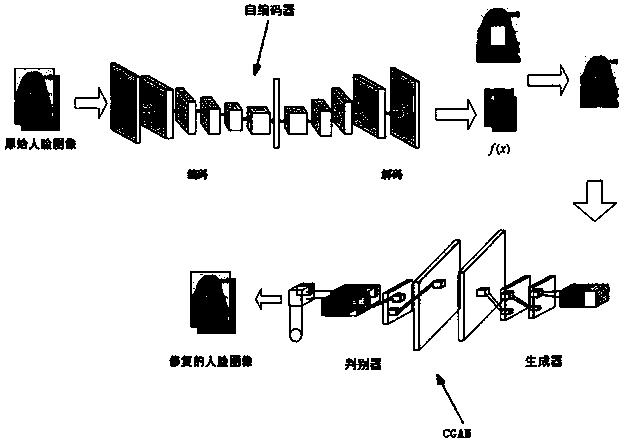

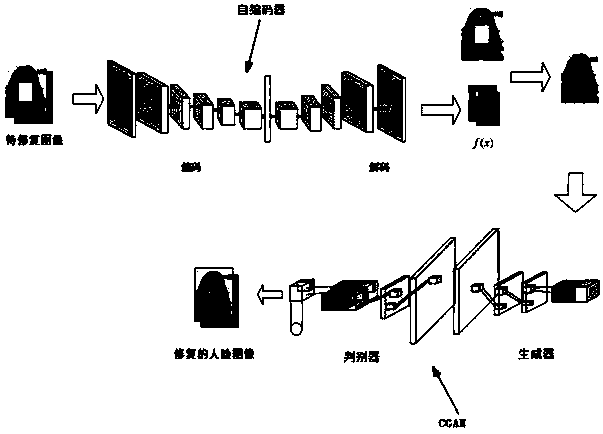

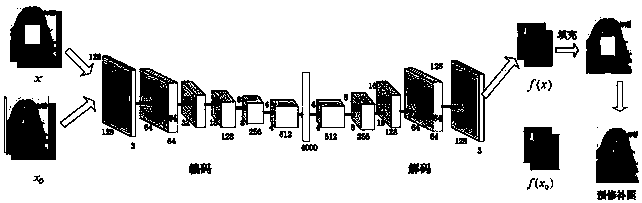

Method for repairing face defect images based on auto-encoder and generative adversarial networks

Active · CN108520503A · Improve clarity · Avoid generating · Image enhancement · Character and pattern recognition · Pattern recognition · Data set

The present invention provides a method for repairing defective face images by combining an auto-encoder and generative adversarial networks. The method comprises the following steps: (1) performing defect preprocessing on a face data set; (2) training the auto-encoder with the processed data set until it reaches its optimal state; (3) training the conditional generative adversarial networks with the processed data set until they reach their optimal state; (4) inputting a defective image into the trained encoder to generate a face image to be repaired; and (5) inputting that image into the conditional generative adversarial networks to generate a clearer and more natural restored face image. The method improves the sharpness of the restored defect region and the fidelity of its content, minimizes artifacts at the edges of the defect region, constrains the generation direction of the defect region, and produces clearer and more natural restoration results.

Owner:XIANGTAN UNIV

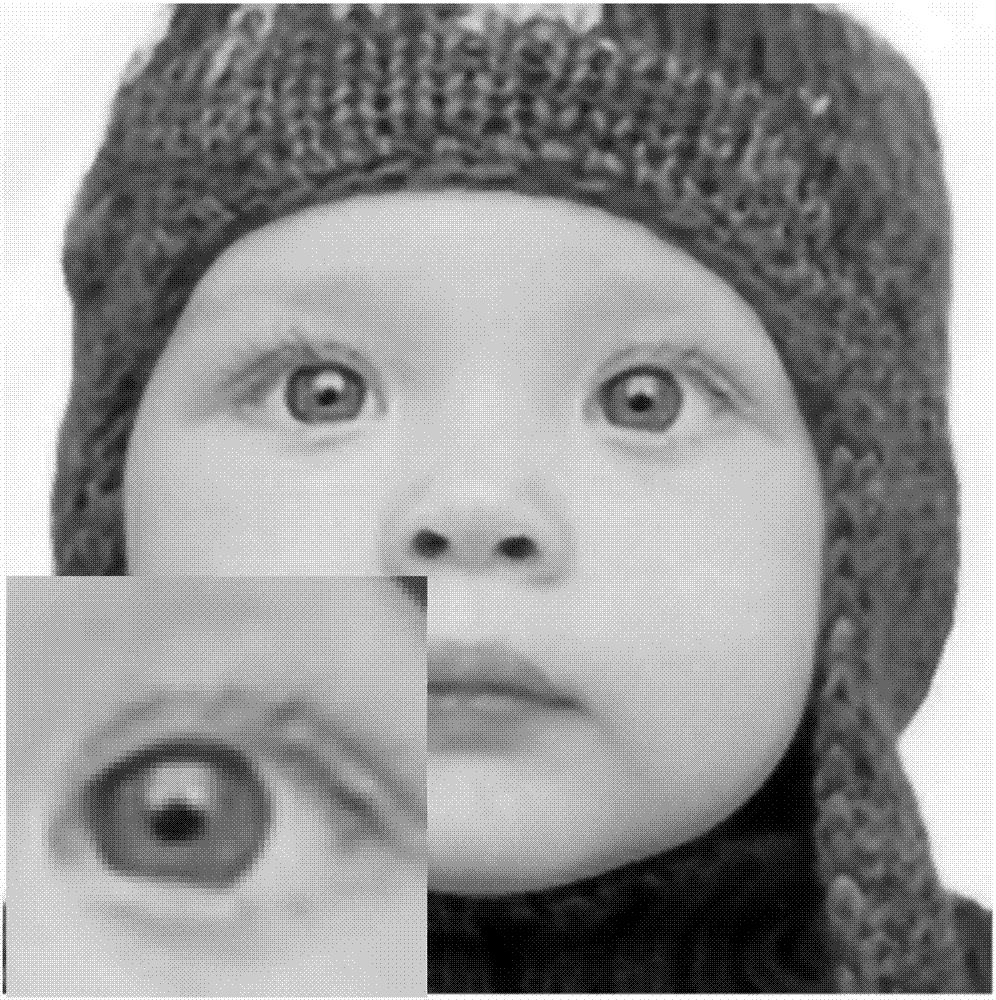

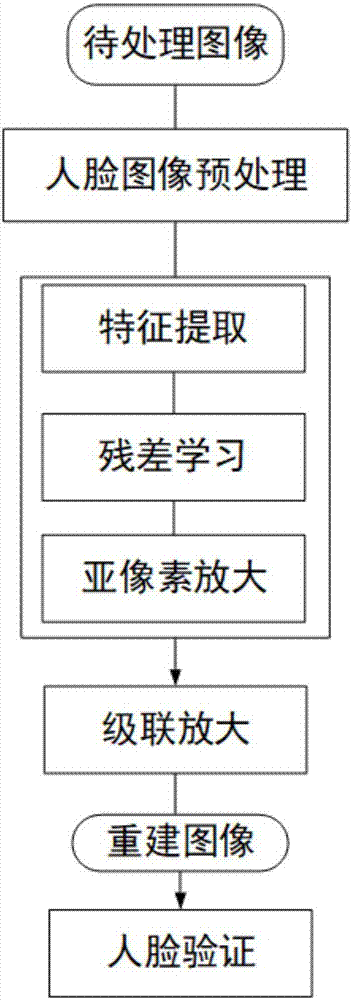

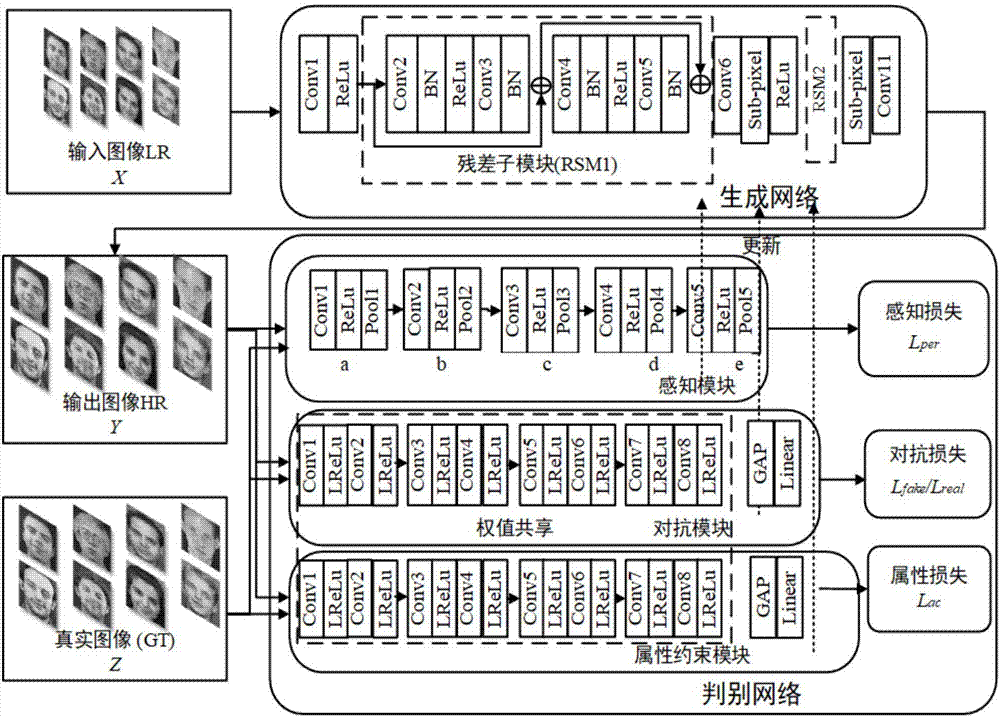

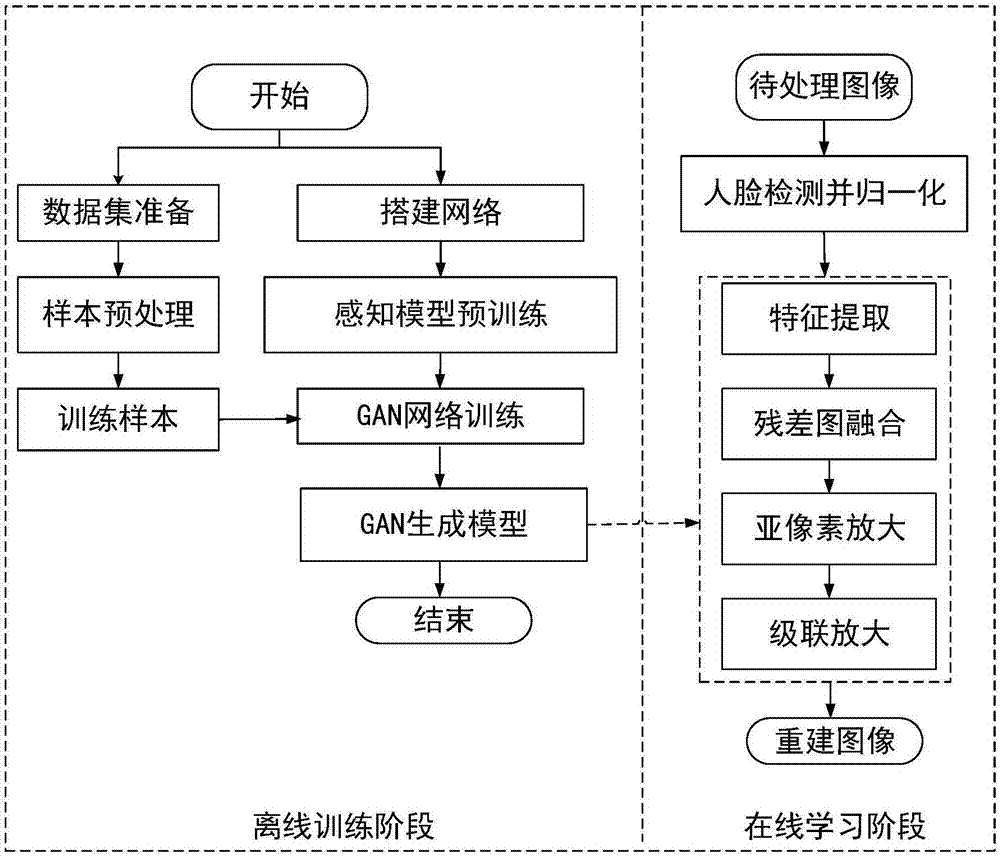

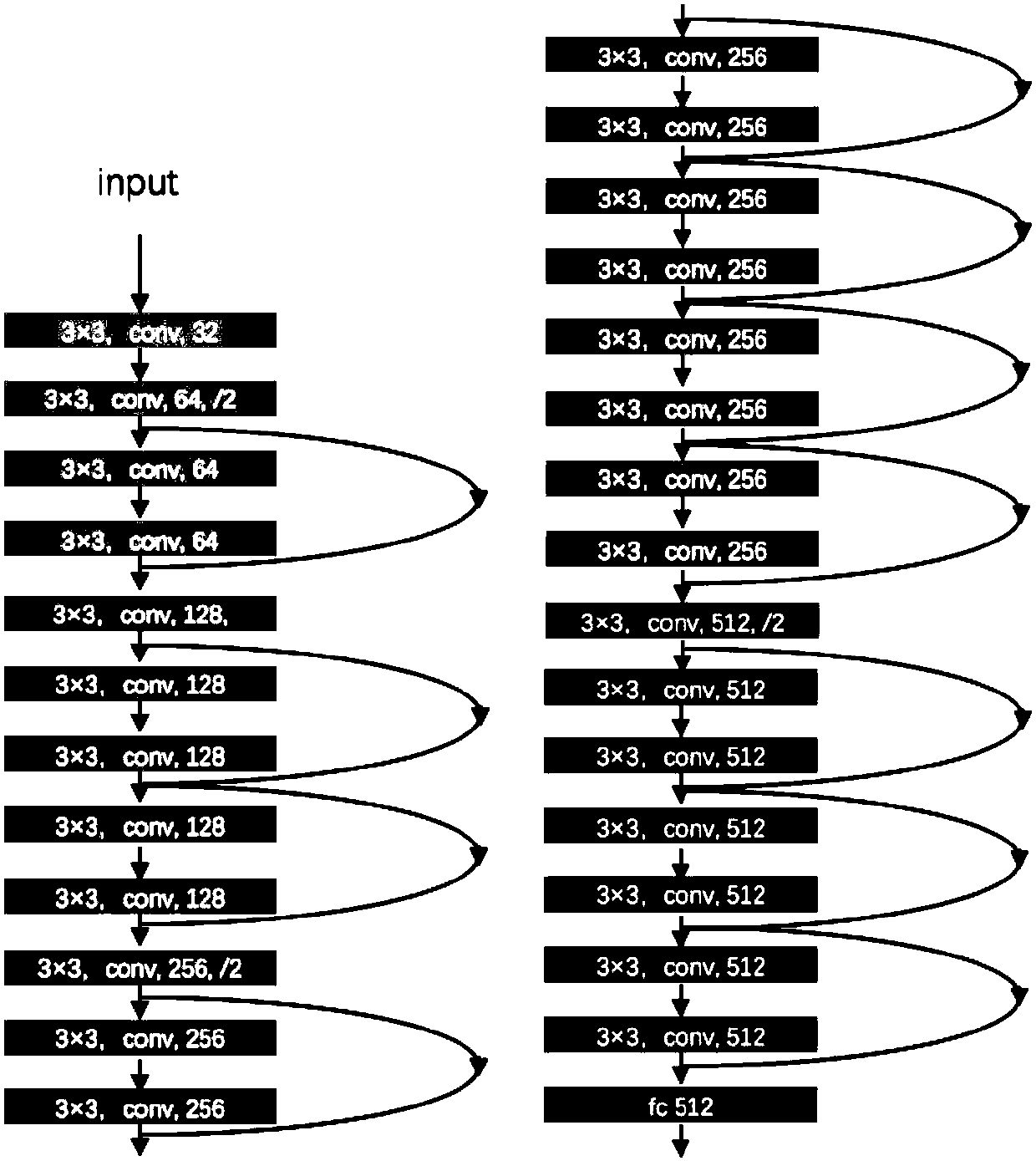

Face image super-resolution reconstruction method based on discriminable attribute constraint generative adversarial network

Active · CN107977932A · Improve learning effect · Improve performance · Image enhancement · Image analysis · Pattern recognition · Generative adversarial network

The invention discloses a face image super-resolution reconstruction method based on a discriminable attribute constraint generative adversarial network, belonging to the field of digital image/video signal processing. The method comprises the following steps: first, designing a processing flow for enhancing detailed face information; second, designing a network structure according to the flow and obtaining an HR image from an LR image through the network; and finally, evaluating face verification accuracy on the HR image with a face recognition network. The method enhances the detailed information of LR face images and increases face verification accuracy. The generative network first compensates for high-frequency image information, then enlarges the image by sub-pixel convolution, and finally performs stepwise image enlargement through a cascade structure, completing the enhancement of image details. An attribute constraint module is trained together with a perception module and an adversarial model to fine-tune the quality of the images reconstructed by the network. Finally, the image reconstructed by the generative network is input into a face verification network, which increases face verification accuracy.

Owner:BEIJING UNIV OF TECH

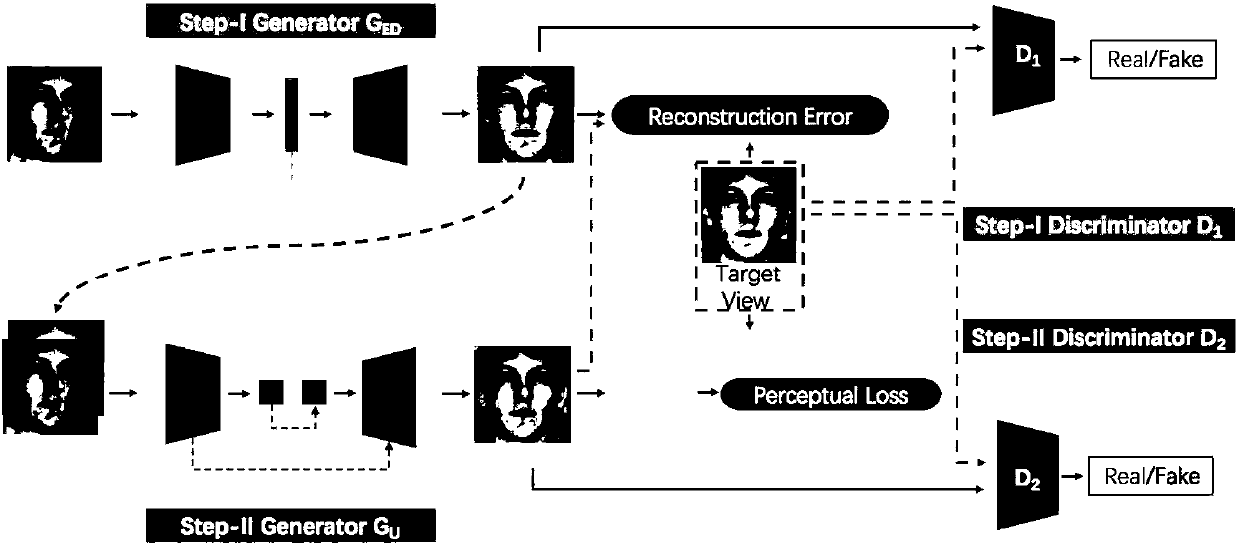

Face image enhancement method based on generative adversarial network

Active · CN108537743A · Easy to identify · Effective solution ideas · Image enhancement · Image analysis · Generative adversarial network · Model parameters

The invention discloses a face image enhancement method based on a generative adversarial network (GAN). The method comprises the following steps: 1, preprocessing face images in multiple poses with a 3D dense face alignment method; 2, designing a GAN-based face enhancement network that generates in two steps (Step-I and Step-II); 3, according to the task requirements, designing objective functions corresponding to Step-I and Step-II; 4, pre-training a recognition model on MS-1-celeb and pre-training a TS-GAN model on augmented data; and 5, using Multi-PIE as the training set and a back-propagation algorithm to train the pre-trained TS-GAN model parameters until convergence. The finally trained TS-GAN model produces a frontal face image corresponding to an input image; this frontal image retains the original illumination, is visually realistic, and retains the original identity information.

Owner:HANGZHOU DIANZI UNIV

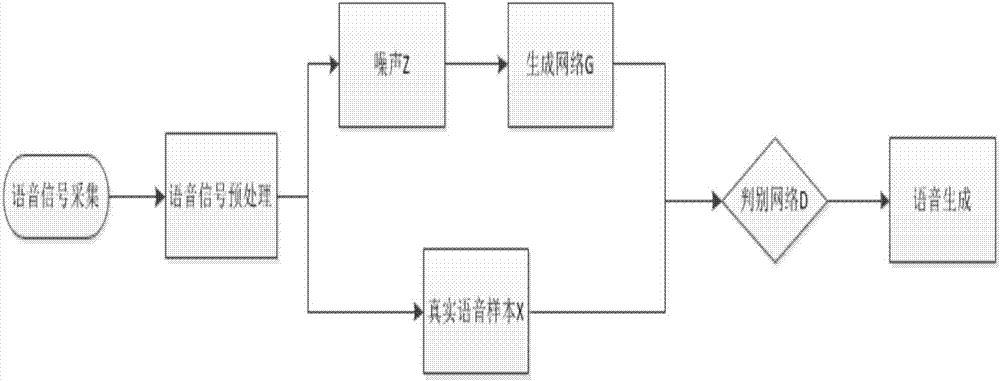

Voice production method based on deep convolutional generative adversarial network

Active · CN107293289A · Speak clearly · Speak naturally · Speech recognition · Man machine · Generative adversarial network

The invention discloses a voice production method based on a deep convolutional generative adversarial network. The steps are: (1) acquiring voice signal samples; (2) preprocessing the voice signal samples; (3) inputting the voice signal samples into a deep convolutional generative adversarial network; (4) training on the input voice signals; and (5) generating voice signals similar to real voice content. TensorFlow is used as the learning framework, and a deep convolutional generative adversarial network algorithm is trained on a large number of voice signals. The dynamic game between the discrimination network D and the generation network G finally produces natural voice signals close to the original training content. The method addresses the problem that, in face-to-face human-machine communication, intelligent devices rely too heavily on a fixed voice library, so their speech is monotonous, lacks variation, and is not natural enough.

Owner:NANJING MEDICAL UNIV

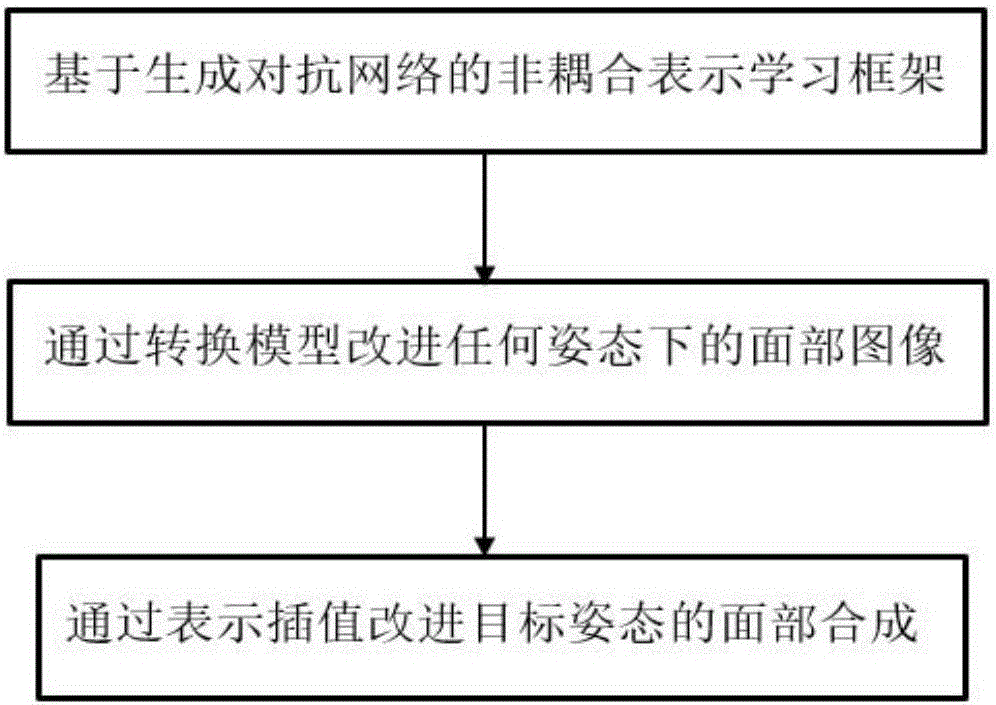

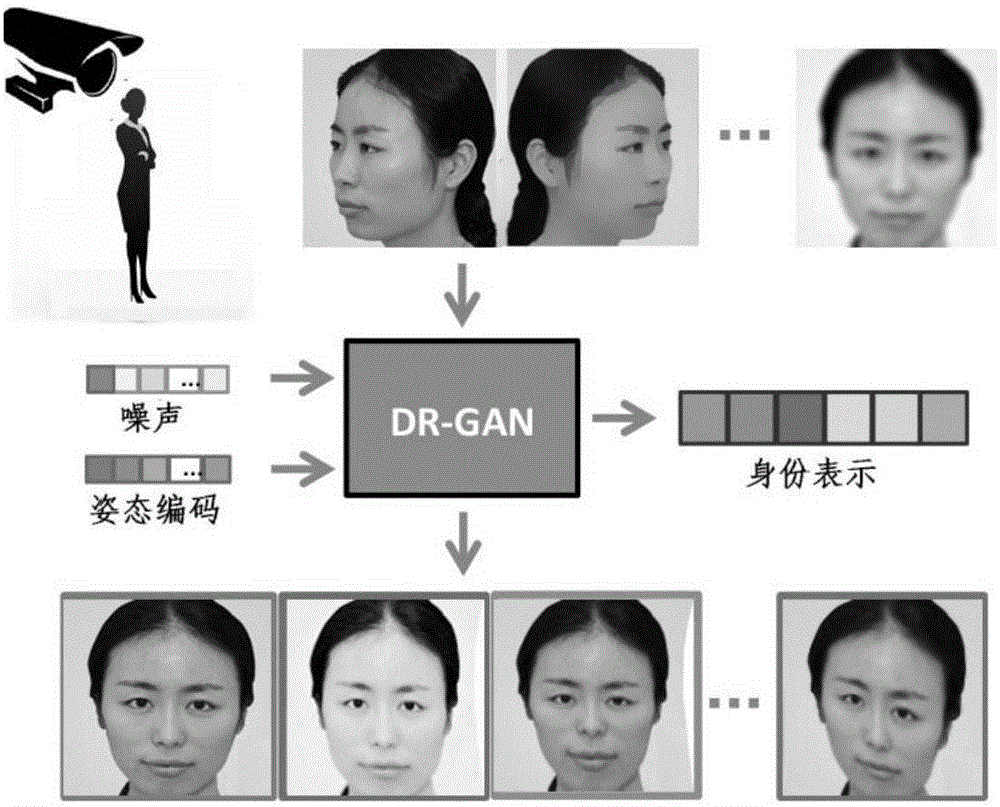

Rotary face expression learning method based on generative adversarial network

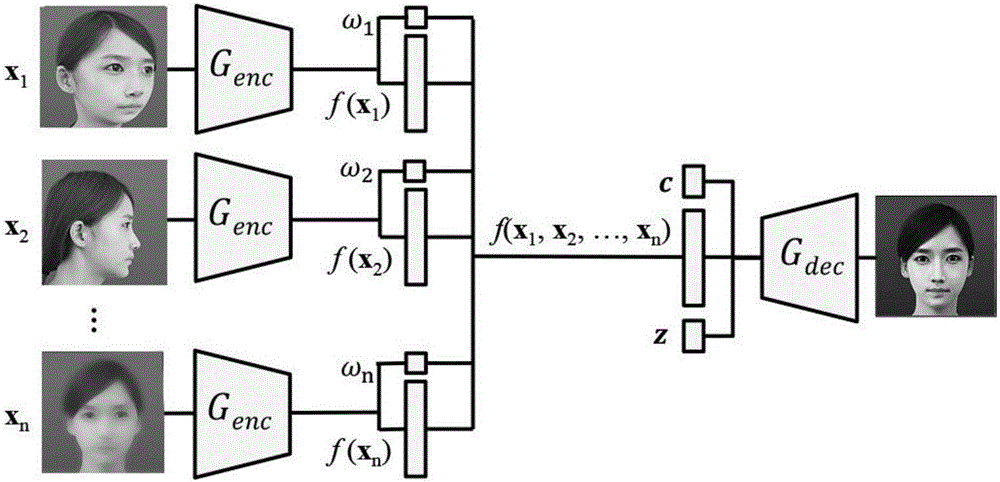

The invention relates to a rotated-face representation learning method based on a generative adversarial network. Its main contents are: a disentangled representation learning framework based on a generative adversarial network (DR-GAN); improving face images in arbitrary poses through a conversion model; and improving face synthesis in target poses by interpolating representations. The process includes providing a pose code to the decoder, adding a pose estimation constraint in the discriminator, and explicitly separating pose variation from the representation learned by DR-GAN; taking one or more face images of a person as input, generating a unified identity representation, and generating any number of synthesized images of that person in different poses. The invention proposes a disentangled representation learning framework based on a generative adversarial network for face rotation and face recognition, which contributes to new designs in the modeling field and to innovative solutions in the lie detection field.

Owner:SHENZHEN WEITESHI TECH

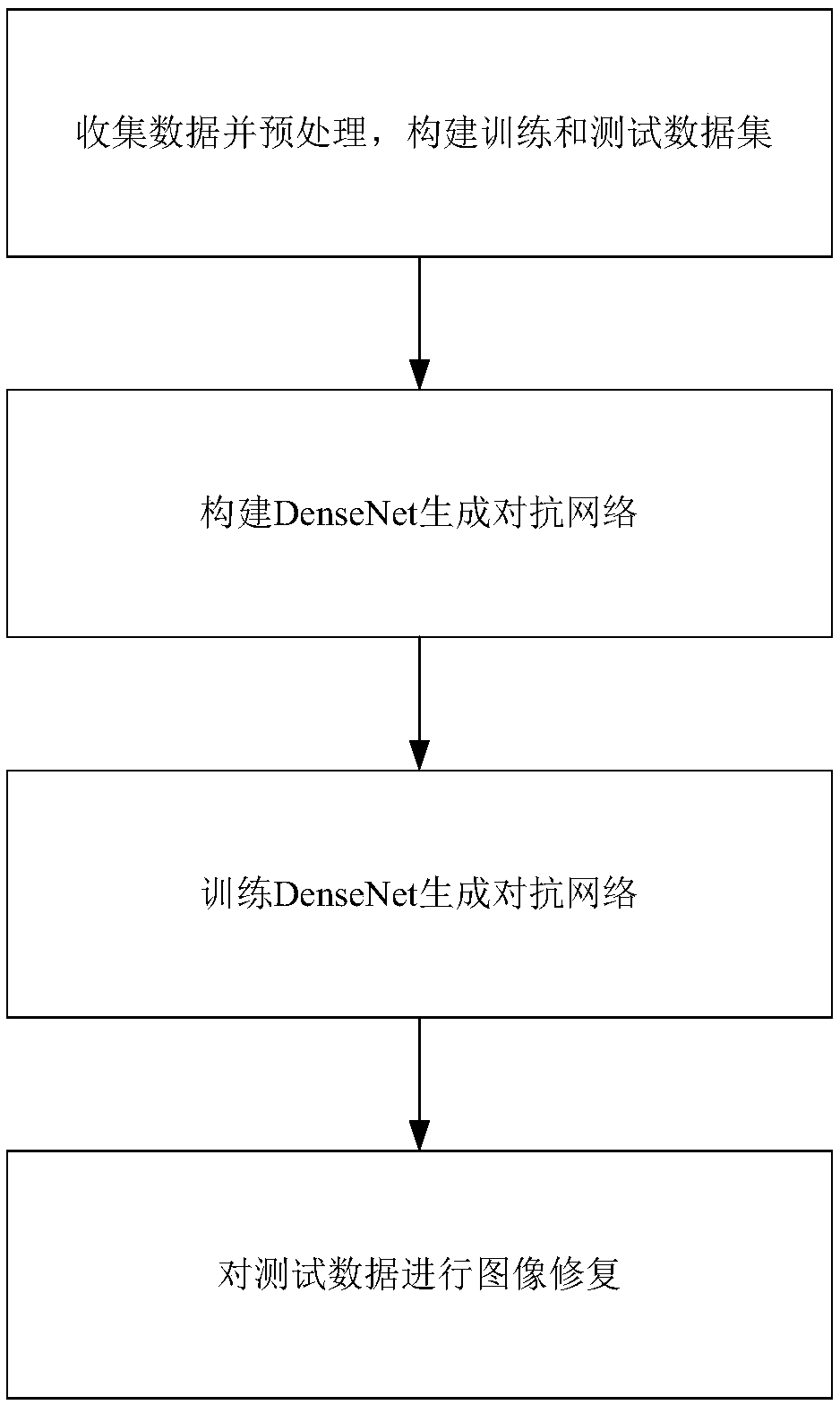

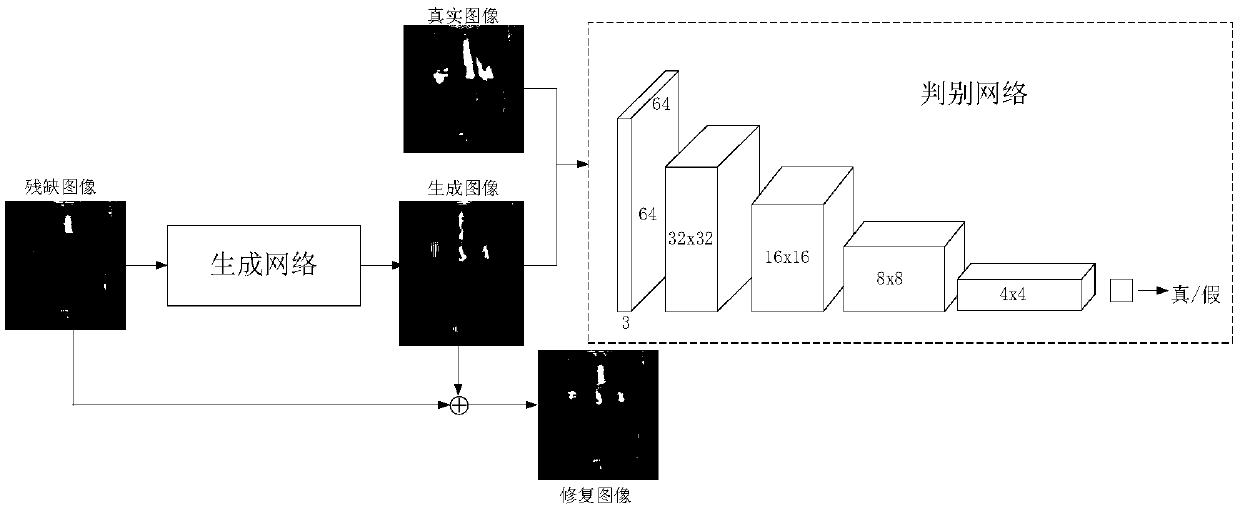

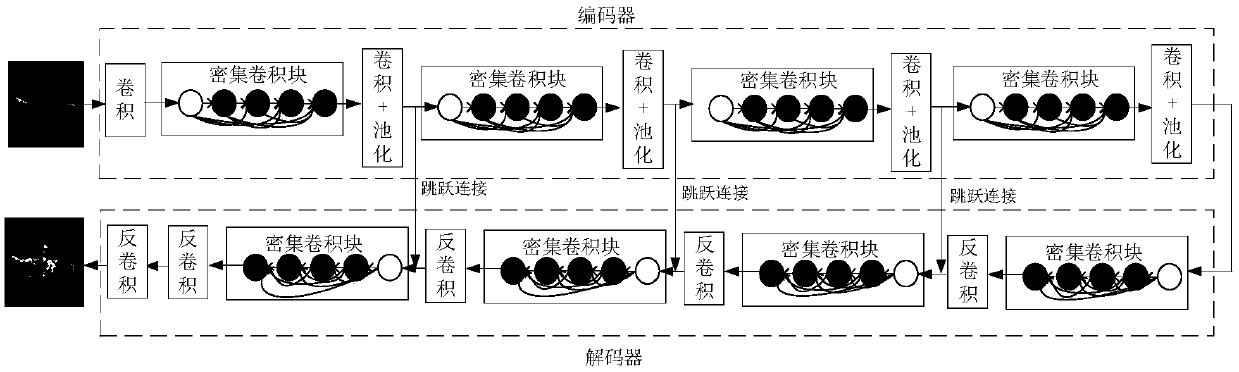

A semantic image restoration method based on a DenseNet generative adversarial network

The invention discloses a semantic image restoration method based on a DenseNet generative adversarial network. The method comprises the following steps: preprocessing the collected images and constructing training and test data sets; constructing a DenseNet generative adversarial network; training the DenseNet generative adversarial network; and finally, repairing defective images with the trained network. The DenseNet structure is introduced into the generative adversarial network framework, and a new loss function is constructed to optimize the network. This reduces vanishing gradients and the number of network parameters while improving the propagation and reuse of features, and it improves the similarity and visual quality of restored images with large areas of missing semantic information. Examples show that face images with severe missing information can be restored, and compared with other existing methods, the restoration results better match human visual perception.

Owner:BEIJING UNIV OF TECH
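The claims about feature reuse and fewer parameters rest on DenseNet's dense connectivity: each layer receives the concatenation of all earlier feature maps in its block. A minimal sketch of that wiring follows, using plain lists in place of feature-map channels. The toy layer is hypothetical and stands in for the BN-ReLU-convolution layers of a real DenseNet. The sketch shows how the block's output width grows by a fixed growth rate per layer.

```python
from typing import Callable, List, Sequence

Layer = Callable[[List[float]], List[float]]


def dense_block(x: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Each layer sees the block input plus every earlier layer's output."""
    features = list(x)
    for layer in layers:
        new = layer(features)       # the layer consumes *all* preceding features
        features = features + new   # its output is appended, never overwritten
    return features


def make_layer(growth_rate: int) -> Layer:
    """Hypothetical toy layer: emits `growth_rate` copies of ReLU(mean of its input)."""
    def layer(inp: List[float]) -> List[float]:
        m = max(0.0, sum(inp) / len(inp))
        return [m] * growth_rate
    return layer


out = dense_block([1.0, 2.0, 3.0, 2.0], [make_layer(2) for _ in range(3)])
# output width = 4 input channels + 3 layers * growth rate 2 = 10
```

Because each layer only adds a few new channels and reuses the rest, a dense block can stay narrow. This is the usual argument for DenseNet's parameter efficiency.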

Image reflection removal method based on a deep convolutional generative adversarial network

Active | CN107103590A | Flexibility to define | Averaging of dismantling results | Image enhancement | Character and pattern recognition | Pattern recognition | Generative adversarial network

The invention discloses an image reflection removal method based on a deep convolutional generative adversarial network. The method comprises the following steps: 1) data acquisition; 2) data processing; 3) model construction; 4) loss definition; 5) model training; and 6) model verification. The method combines two capabilities: a deep convolutional neural network's ability to extract high-level semantic information from images, and the flexibility a generative adversarial network offers in defining the loss function. In this way it overcomes the limitation of traditional methods that rely on low-level pixel information, and therefore adapts better to reflection removal in general images.

Owner:SOUTH CHINA UNIV OF TECH

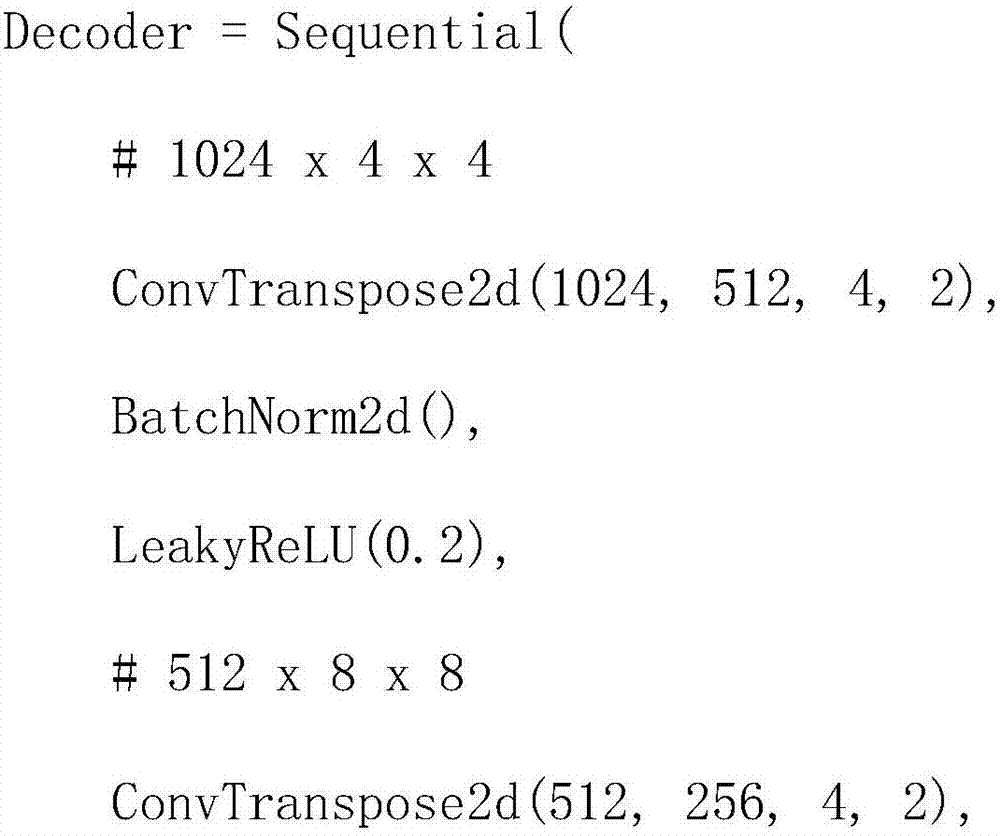

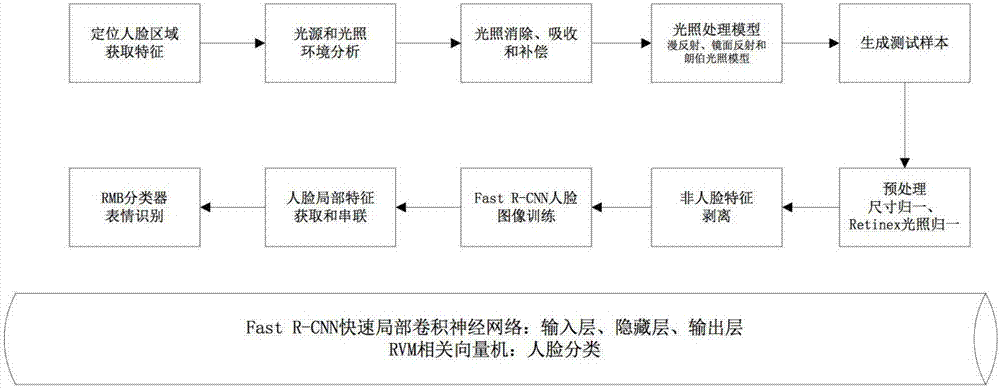

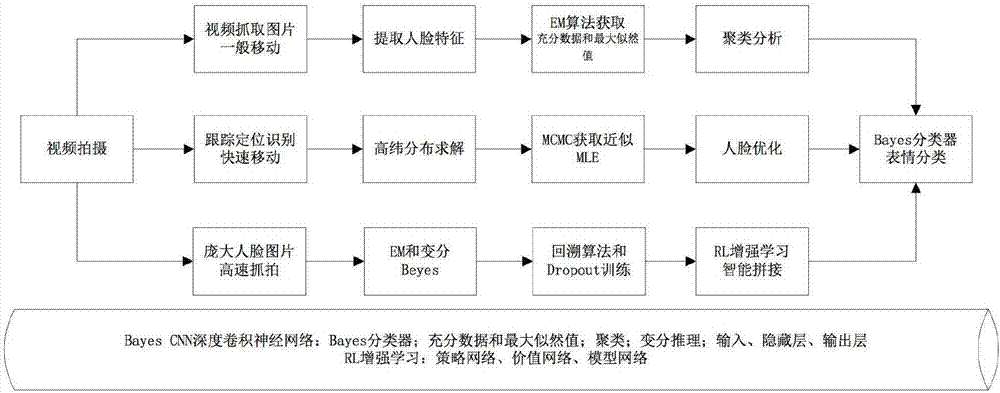

Human face emotion recognition method in complex environment

Inactive | CN107423707A | Real-time processing | Improve accuracy | Image enhancement | Image analysis | Neural network | Facial region

The invention discloses a human face emotion recognition method for complex mobile and embedded environments. In the method, the face is divided into main regions (forehead, eyebrows and eyes, cheeks, nose, mouth, and chin), which are further divided into 68 feature points. Based on these feature points, the method uses a different technique for each condition to achieve a good recognition rate, accuracy, and reliability across environments:

- Under normal conditions, a face and expression feature classification method is used.
- Under lighting, reflection, and shadow conditions, a method based on a Faster R-CNN facial-region convolutional neural network is used.
- Under complex motion conditions (jitter, shaking, and movement), a method combining a Bayesian network, a Markov chain, and variational inference is used.
- When the face is only partially visible, multiple faces are present, or the background is noisy, a method combining a deep convolutional neural network, a super-resolution generative adversarial network (SRGAN), reinforcement learning, the backpropagation algorithm, and the dropout algorithm is used.

In this way, the effectiveness, accuracy, and reliability of facial expression recognition can be improved.

Owner:深圳帕罗人工智能科技有限公司 (Shenzhen Paluo Artificial Intelligence Technology Co., Ltd.)

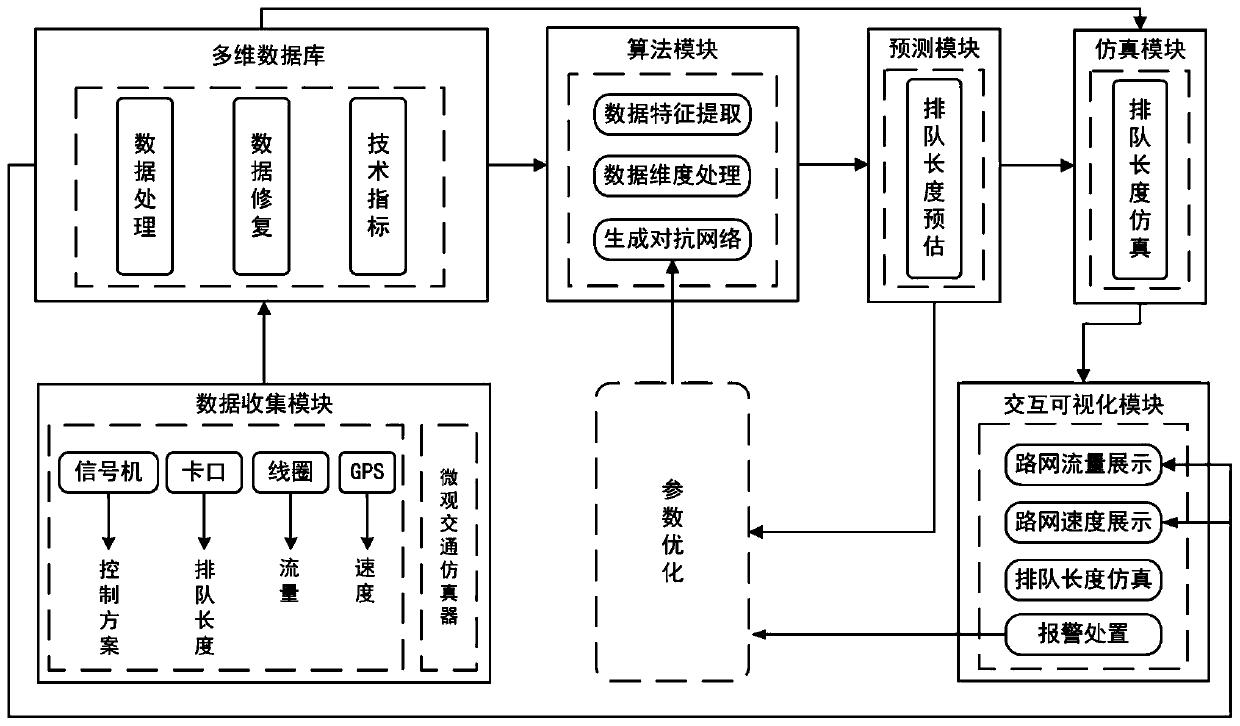

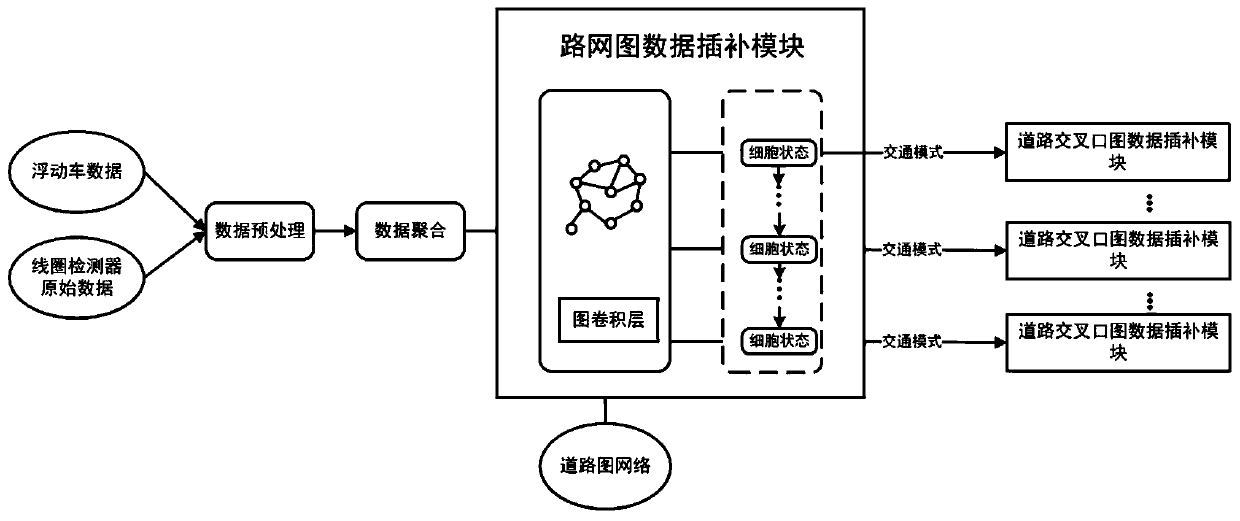

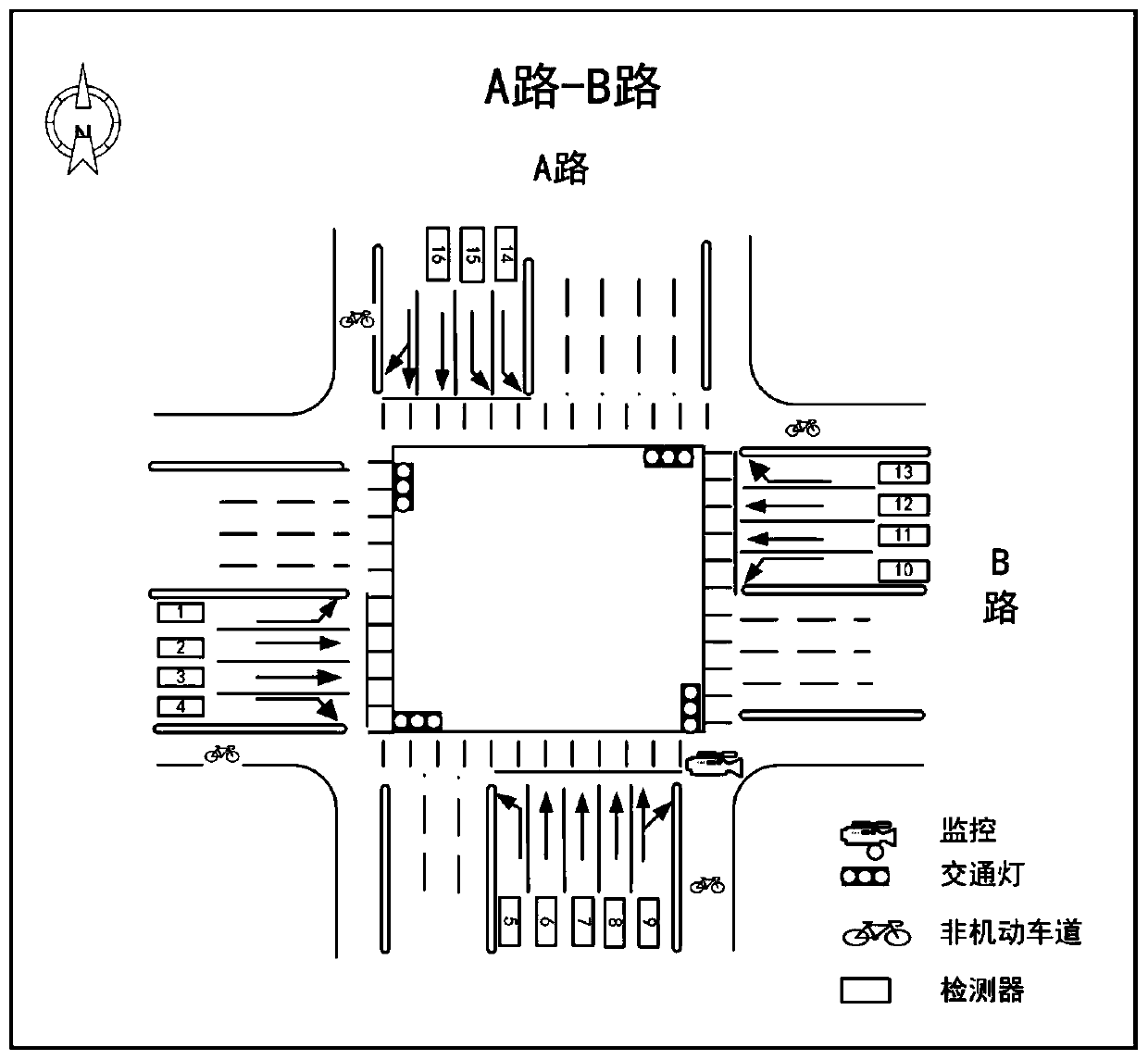

City-level intelligent traffic simulation system

Active | CN110164128A | Solve low work efficiency | Detection of traffic movement | Forecasting | State prediction | Feature extraction

The invention discloses a city-level intelligent traffic simulation system. Taking multi-source heterogeneous data as input, it predicts the vehicle queue length of each lane at an intersection and dynamically simulates how that queue length evolves. The system comprises a data collection module, a multidimensional database, an algorithm module, a traffic state prediction module, a traffic simulation derivation module, and an interactive visualization module. The data collection module collects both dynamic and static traffic data. The multidimensional database receives various dynamic traffic information in real time and stores road infrastructure configuration information. In the algorithm module, a generative adversarial network is used for data generation and for predicting vehicle queue length. The processing comprises data feature extraction, data dimension processing, generative adversarial network modeling, and hyperparameter optimization.

Owner:ENJOYOR COMPANY LIMITED

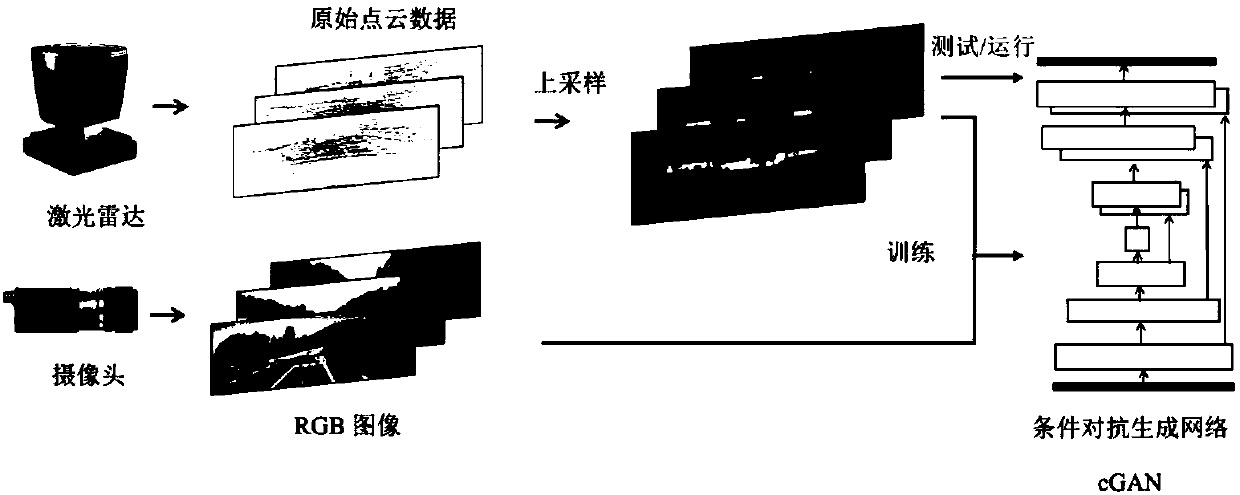

Radar-generated color semantic image system and method based on a conditional generative adversarial network

Active | CN107862293A | Avoid imaging uncertainty | Avoid instability | Character and pattern recognition | Neural architectures | Generative adversarial network | Network model

The invention discloses a system and method, based on a conditional generative adversarial network, for generating color semantic images from radar data. It belongs to the technical fields of sensors and artificial intelligence. The system includes four modules:

- a data acquisition module based on radar point clouds and a camera;
- an up-sampling module for the raw radar point cloud;
- a model training module based on a conditional generative adversarial network;
- a model application module based on a conditional generative adversarial network.

The method comprises the following steps:

1. Construct a training set of radar point cloud and RGB image pairs.
2. Construct a conditional generative adversarial network based on a convolutional neural network and train the model.
3. Using only sparse radar point cloud data and the trained network, generate semantically meaningful color road-scene images in real time in the vehicle environment, for use in autonomous-driving and driver-assistance analysis.

The network is efficient, and its parameters can be tuned faster to reach an optimal result. High accuracy and stability are ensured.

Owner:BEIHANG UNIV

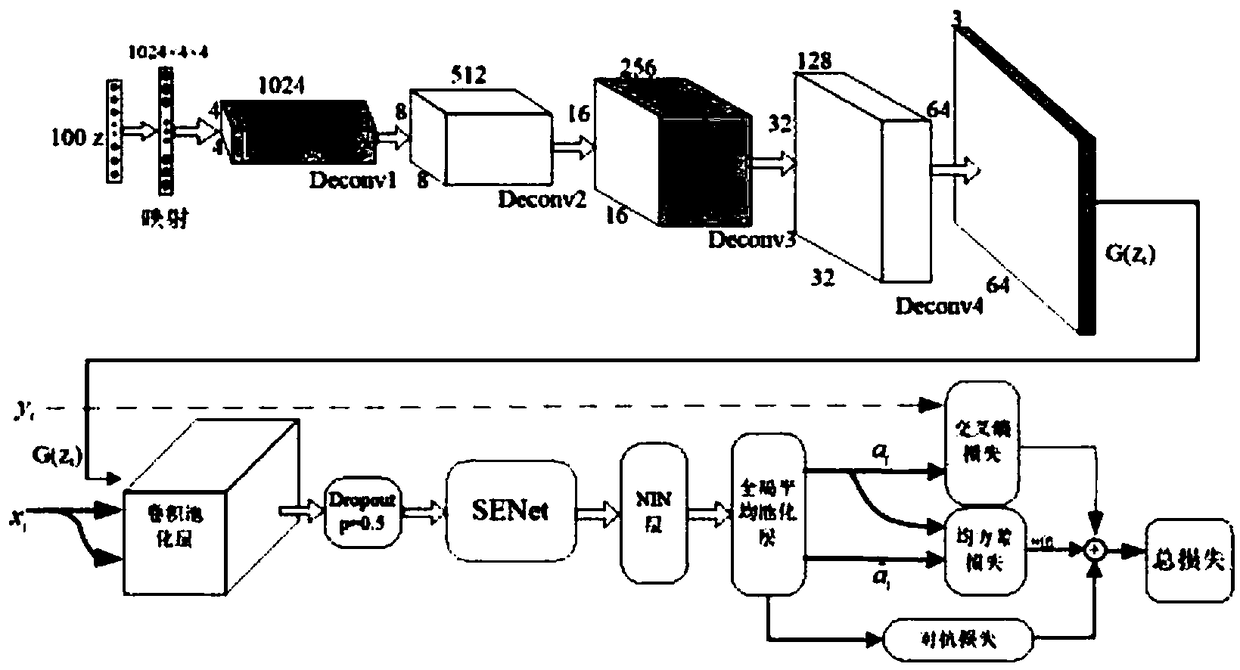

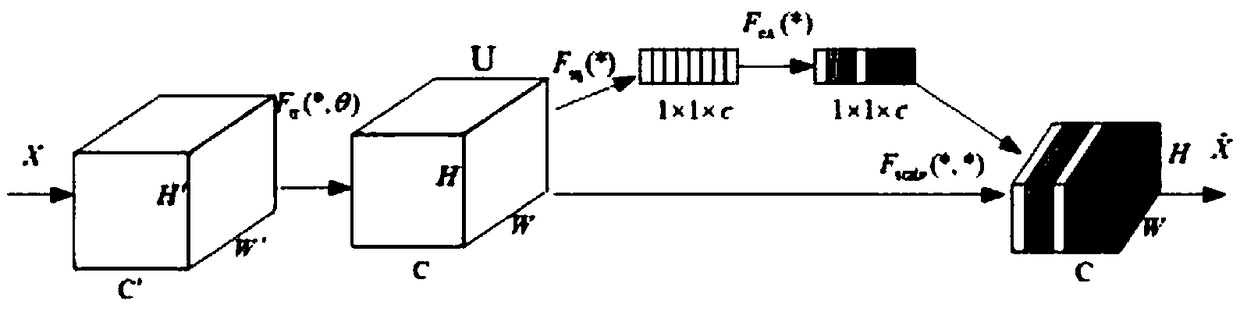

Image classification method based on a generative adversarial network with feature recalibration

Active | CN108805188A | Improve generalization ability | Classification task performance improvement | Character and pattern recognition | Neural architectures | Generative adversarial network | Classification methods

The invention discloses an image classification method based on a generative adversarial network with feature recalibration, suitable for the field of machine learning. The steps are as follows:

1. Input the image data to be classified into the adversarial network model for training.
2. Construct a generator and a discriminator, both built from convolutional networks.
3. Initialize random noise and feed it into the generator, where multiple deconvolution layers turn it into generated samples.
4. Input the generated samples and real samples into the discriminator, where convolution and pooling produce a feature map.
5. Insert a squeeze-and-excitation (SENet) module into an intermediate layer of the discriminator to recalibrate the feature map.
6. Apply global average pooling and output the image classification.

The SENet module in the discriminator's intermediate layer automatically learns the importance of each feature channel. This lets the network extract task-relevant features and suppress irrelevant ones, which improves semi-supervised learning performance.

Owner:JIANGSU YUNYI ELECTRIC
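The squeeze-and-excitation step described above can be sketched as follows. The sketch assumes the standard SENet formulation: global average pooling per channel, a two-layer bottleneck with ReLU and sigmoid, then channel-wise scaling. The feature map is a C x H x W nested list, and the weights are hand-picked for illustration. In the patent they would be learned inside the discriminator.

```python
import math
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def matvec(w: Matrix, v: Sequence[float]) -> List[float]:
    """Dense layer without bias: one output per row of w."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def se_recalibrate(fmap: Sequence[Matrix], w1: Matrix, w2: Matrix) -> List[List[List[float]]]:
    """Squeeze-and-excitation over a C x H x W feature map given as nested lists."""
    # Squeeze: global average pooling gives one descriptor per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: FC -> ReLU -> FC -> sigmoid yields a weight in (0, 1) per channel.
    hidden = [max(0.0, h) for h in matvec(w1, z)]
    s = [1.0 / (1.0 + math.exp(-a)) for a in matvec(w2, hidden)]
    # Scale: multiply every value of channel c by its weight s[c].
    return [[[v * s_c for v in row] for row in ch] for ch, s_c in zip(fmap, s)]


fmap = [[[1.0, 1.0], [1.0, 1.0]],   # channel 0
        [[3.0, 3.0], [3.0, 3.0]]]   # channel 1
w1 = [[1.0, 0.0]]                   # 2 channels -> 1 hidden unit (reduction ratio 2)
w2 = [[0.0], [-10.0]]               # 1 hidden unit -> 2 channel weights
out = se_recalibrate(fmap, w1, w2)
# channel 0 is scaled by sigmoid(0) = 0.5; channel 1 is nearly suppressed (about 4.5e-5)
```

The bottleneck (C to C/r to C) keeps the extra parameter count small. This is why such a module can be dropped into an intermediate layer without much cost.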

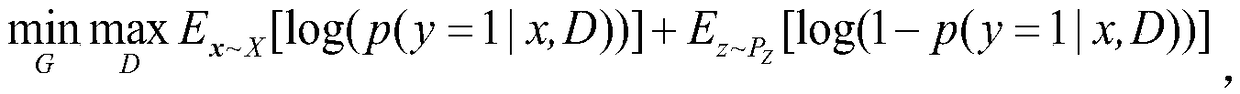

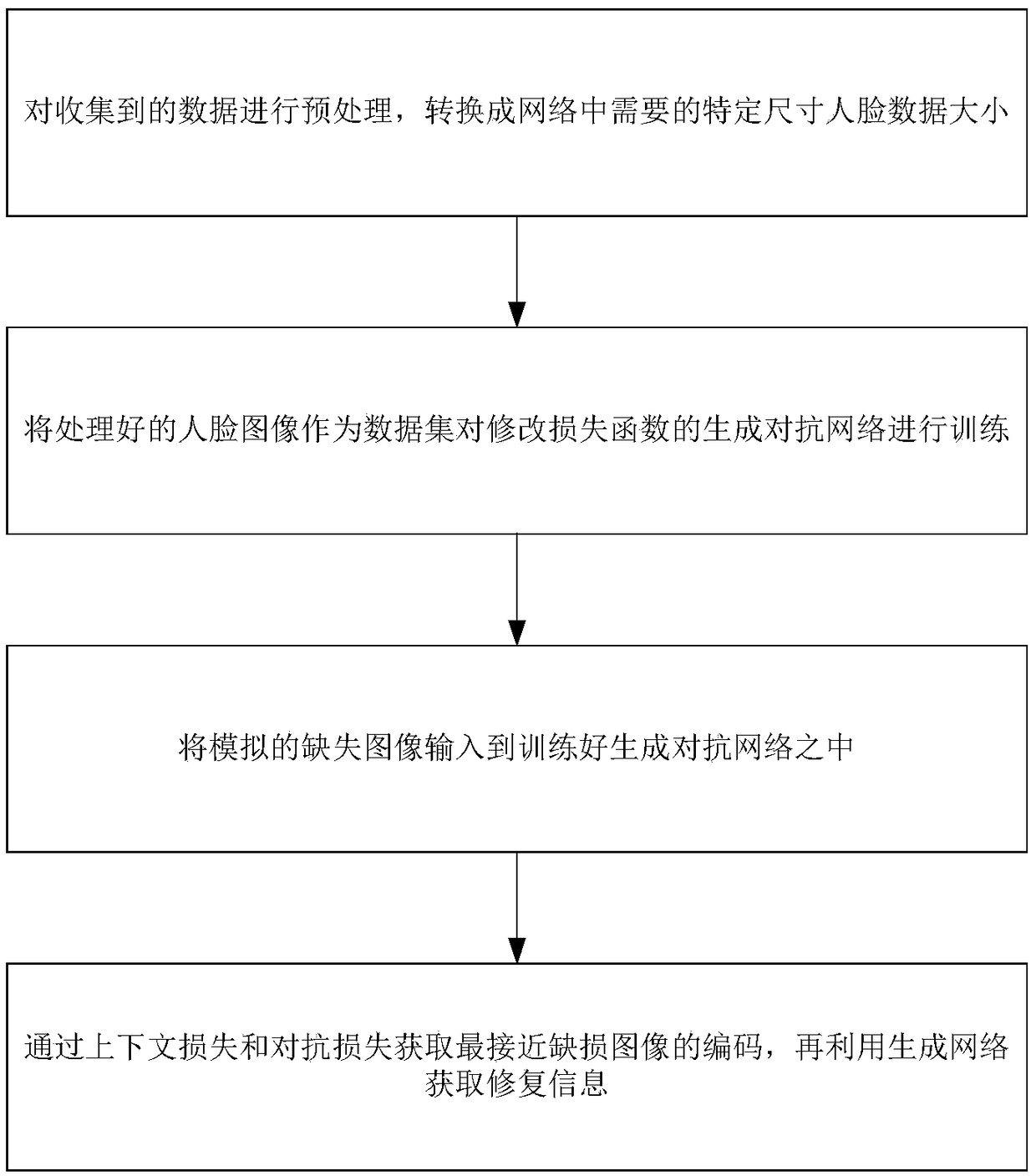

A face image restoration method based on a generative adversarial network

Active | CN109377448A | Improve stability | Guarantee authenticity | Image enhancement | Image analysis | Data set | Training phase

The invention discloses a face image restoration method based on a generative adversarial network. First, a face data set is preprocessed: face recognition is applied to the collected images to obtain face images of a specific size.

In the training phase, the collected face images are used as the data set to train the generator network and the discriminator network, so that the generator produces more realistic images. To address training instability and mode collapse, a least-squares loss is used as the loss function of the discriminator.

In the repair phase, a special mask is automatically applied to the original image to simulate a real missing region. The masked face image is then input into the optimized deep convolutional generative adversarial network. The relevant random input parameters are obtained through a context loss and two adversarial losses, and the repair content is then produced by the generator network.

The invention can not only repair face images with severely missing information, but also generate repaired face images that better match human visual perception.

Owner:BEIJING UNIV OF TECH
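The least-squares discriminator loss and the masked context loss mentioned in the face-restoration abstract can be sketched as below. Two assumptions apply. First, the targets a = 0 (fake), b = 1 (real) and c = 1 (generator) are one common LSGAN coding. Second, the context loss is taken as a plain L1 distance over the unmasked pixels, a simplification. The abstract does not say how the context loss and its two adversarial losses are weighted, so the sketch only shows the individual terms. All names are hypothetical.

```python
from typing import Sequence


def _mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


def lsgan_d_loss(real_scores: Sequence[float], fake_scores: Sequence[float],
                 a: float = 0.0, b: float = 1.0) -> float:
    """Least-squares discriminator loss: push D(real) toward b and D(fake) toward a."""
    return (0.5 * _mean([(d - b) ** 2 for d in real_scores])
            + 0.5 * _mean([(d - a) ** 2 for d in fake_scores]))


def lsgan_g_loss(fake_scores: Sequence[float], c: float = 1.0) -> float:
    """Least-squares generator loss: push D(fake) toward c."""
    return 0.5 * _mean([(d - c) ** 2 for d in fake_scores])


def context_loss(generated: Sequence[float], corrupted: Sequence[float],
                 mask: Sequence[int]) -> float:
    """L1 distance counted only where mask == 1, i.e. pixels that survived masking."""
    return sum(m * abs(g - y) for g, y, m in zip(generated, corrupted, mask))


d = lsgan_d_loss([1.0, 0.5], [0.0, 0.5])   # 0.125
g = lsgan_g_loss([0.0, 0.5])               # 0.3125
ctx = context_loss([0.2, 0.9, 0.5], [0.0, 1.0, 0.0], [1, 1, 0])  # about 0.3; masked pixel ignored
```

The squared penalty keeps pulling fake samples toward the real target even when the discriminator already classifies them correctly. This is the usual argument for why a least-squares loss trains more stably than the original log loss.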

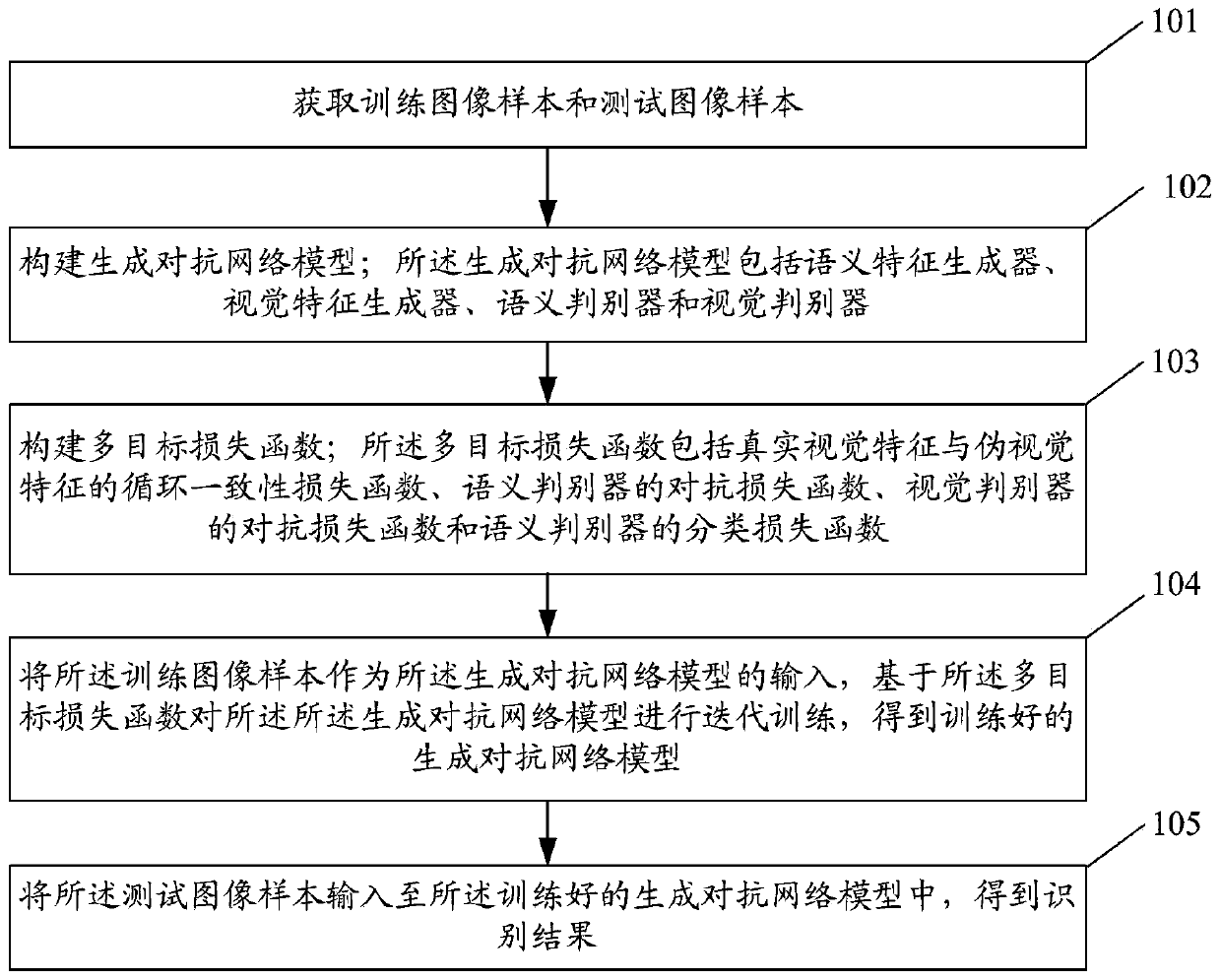

Zero-sample image recognition method and system based on generative adversarial network

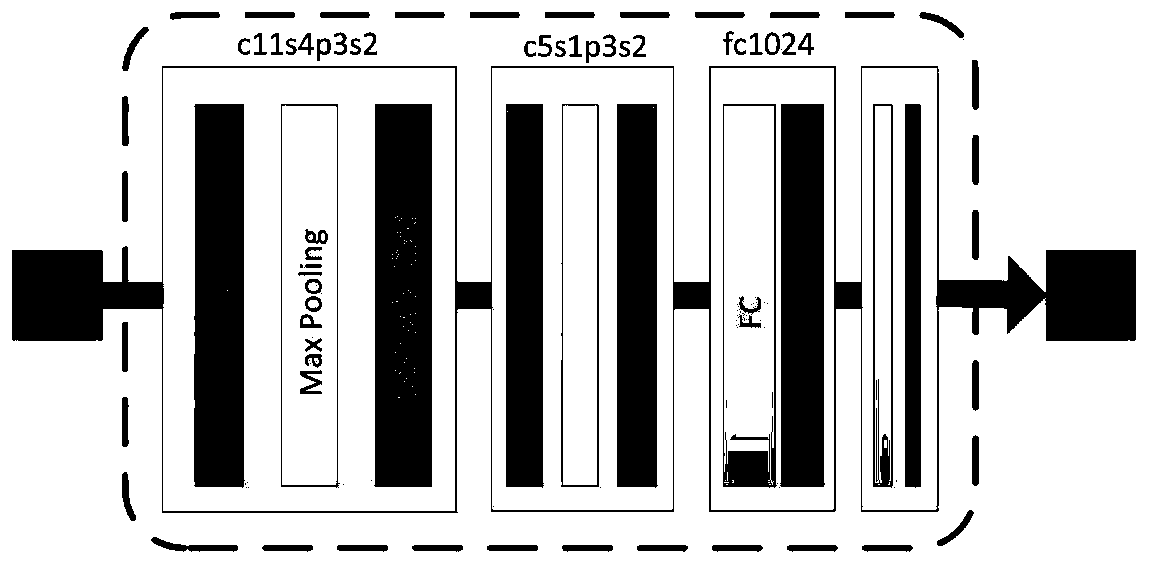

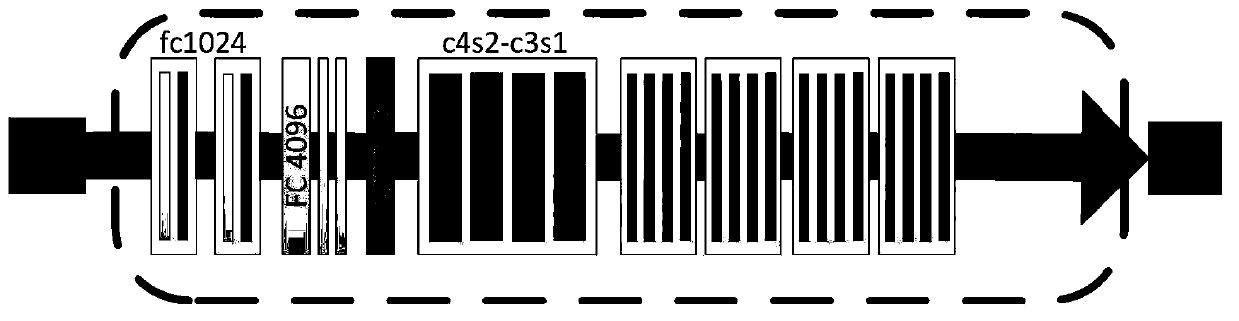

Active | CN111476294A | Improve generalization ability | High precision | Character and pattern recognition | Neural architectures | Generative adversarial network | Sample image

The invention discloses a zero-sample image recognition method and system based on a generative adversarial network. The method comprises the following steps:

1. Obtain training image samples with annotation information and test image samples without annotation information.
2. Construct a generative adversarial network model comprising a semantic feature generator, a visual feature generator, a semantic discriminator, and a visual discriminator.
3. Construct a multi-objective loss function comprising a cycle-consistency loss, an adversarial loss for the semantic discriminator, an adversarial loss for the visual discriminator, and a classification loss for the semantic discriminator.
4. Take the training image samples as the input of the model and train it iteratively on the multi-objective loss function to obtain a trained model.
5. Input the test image samples into the trained model to obtain a recognition result.

The invention can recognize sketches without annotation information and achieves high zero-sample recognition accuracy.

Owner:NANCHANG HANGKONG UNIVERSITY
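The cycle-consistency term and the multi-objective sum in the zero-sample abstract can be sketched as below. The abstract names the four loss terms but gives neither their distance functions nor their weights, so the sketch assumes L1 distances and a plain weighted sum. `lam_cyc`, `lam_cls` and the toy generators are hypothetical. `to_semantic` and `to_visual` stand in for the semantic and visual feature generators.

```python
from typing import Callable, List, Sequence

FeatureGen = Callable[[Sequence[float]], List[float]]


def l1(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(abs(a - b) for a, b in zip(u, v))


def cycle_loss(v: Sequence[float], s: Sequence[float],
               to_semantic: FeatureGen, to_visual: FeatureGen) -> float:
    """Both round trips (visual->semantic->visual, semantic->visual->semantic) should return home."""
    return l1(to_visual(to_semantic(v)), v) + l1(to_semantic(to_visual(s)), s)


def total_loss(l_cyc: float, l_adv_sem: float, l_adv_vis: float, l_cls: float,
               lam_cyc: float = 10.0, lam_cls: float = 1.0) -> float:
    """Weighted sum of the four objectives; the weights are hypothetical."""
    return lam_cyc * l_cyc + l_adv_sem + l_adv_vis + lam_cls * l_cls


def to_semantic(x: Sequence[float]) -> List[float]:      # toy visual -> semantic generator
    return [2.0 * a for a in x]


def to_visual(y: Sequence[float]) -> List[float]:        # its exact inverse
    return [a / 2.0 for a in y]


def drifted_visual(y: Sequence[float]) -> List[float]:   # an imperfect inverse
    return [a / 2.0 + 0.25 for a in y]


v, s = [1.0, 2.0], [0.5, 0.5]
perfect = cycle_loss(v, s, to_semantic, to_visual)          # 0.0
imperfect = cycle_loss(v, s, to_semantic, drifted_visual)   # 0.5 + 1.0 = 1.5
loss = total_loss(imperfect, 0.3, 0.2, 0.1)                 # about 15.6
```

The cycle term penalizes generator pairs that are not mutual inverses. This is what ties the two feature spaces together when test classes have no labeled samples.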


© 2025 PatSnap. All rights reserved.