520 results about "Facial region" patented technology

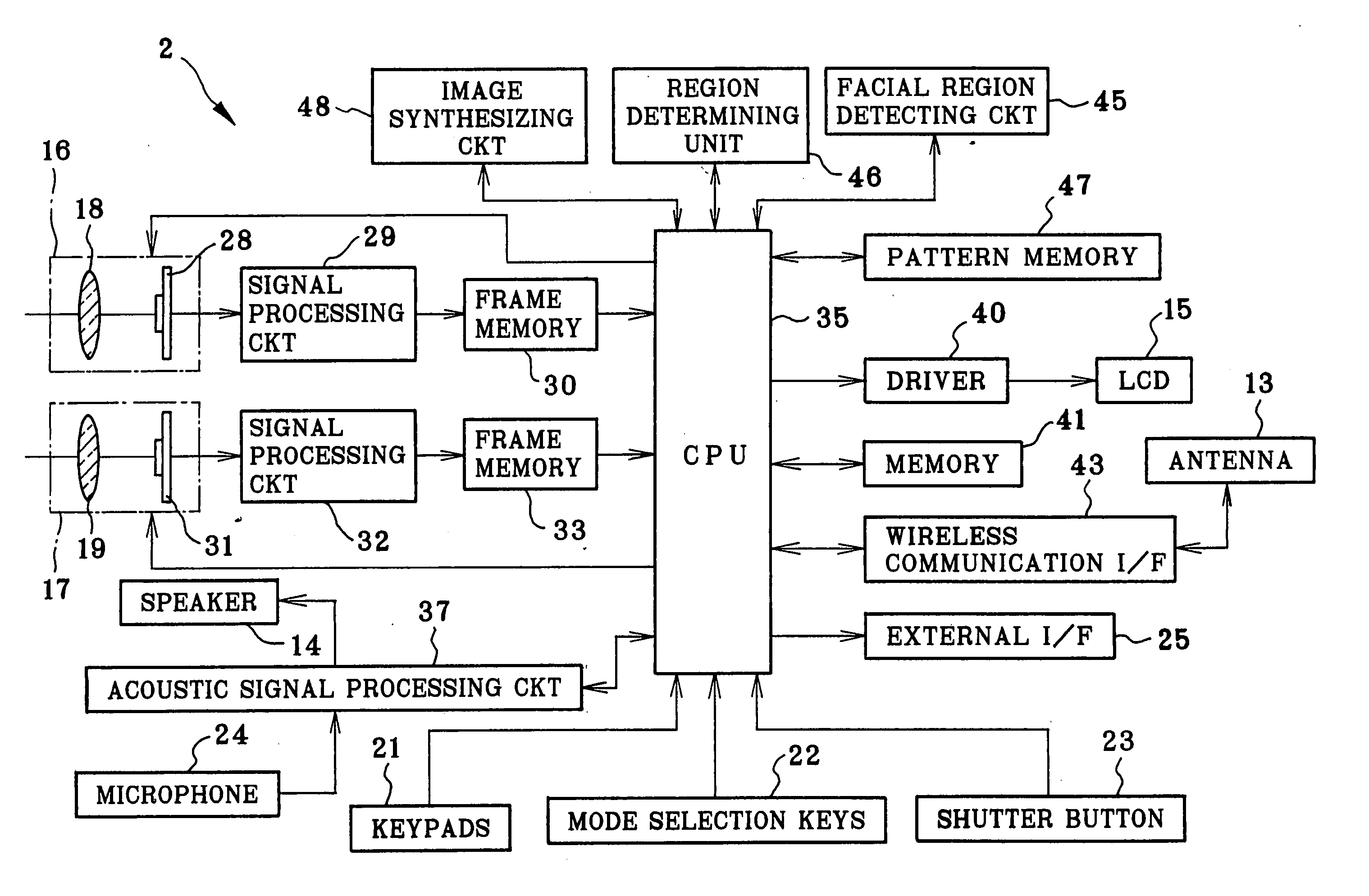

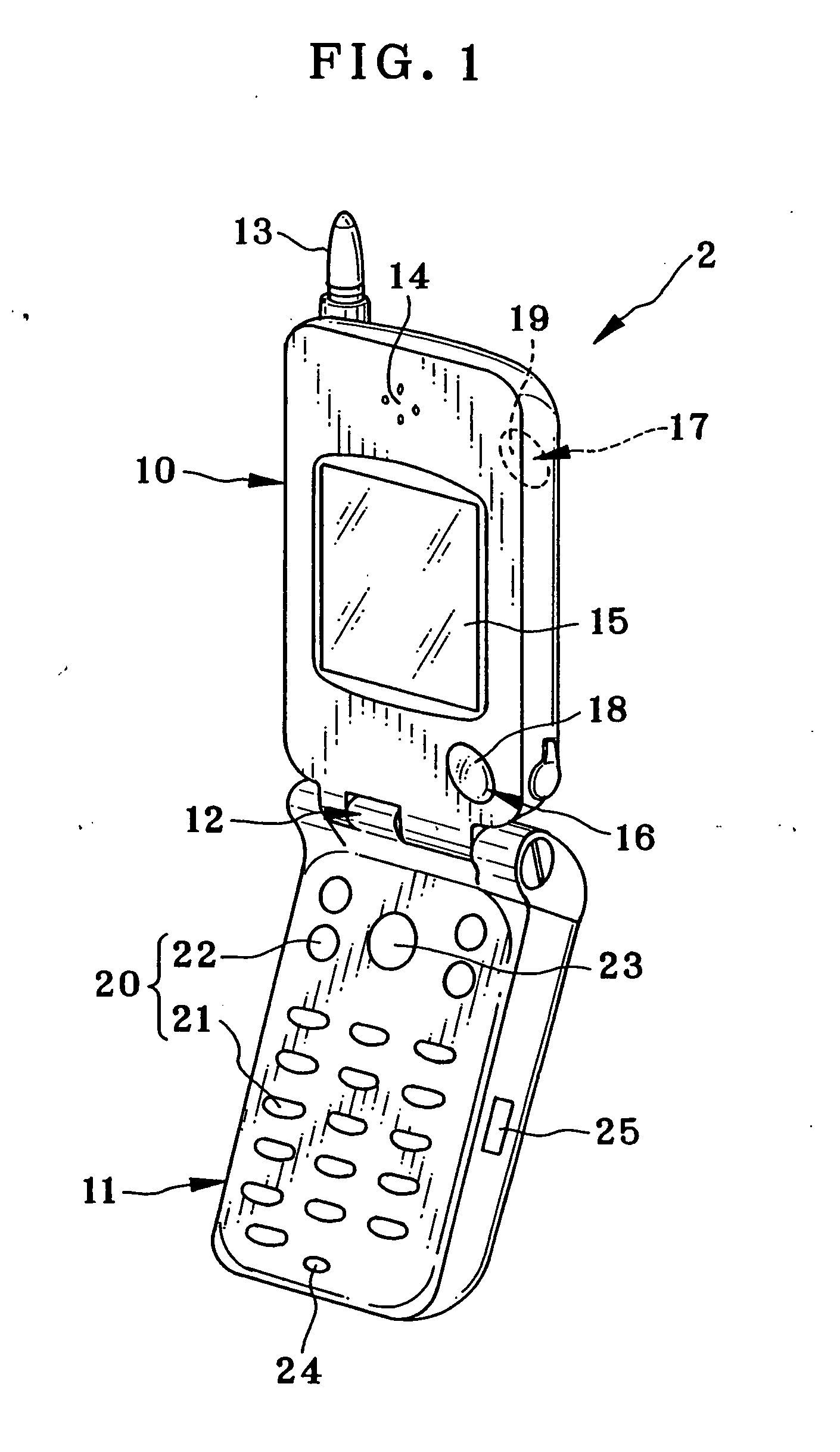

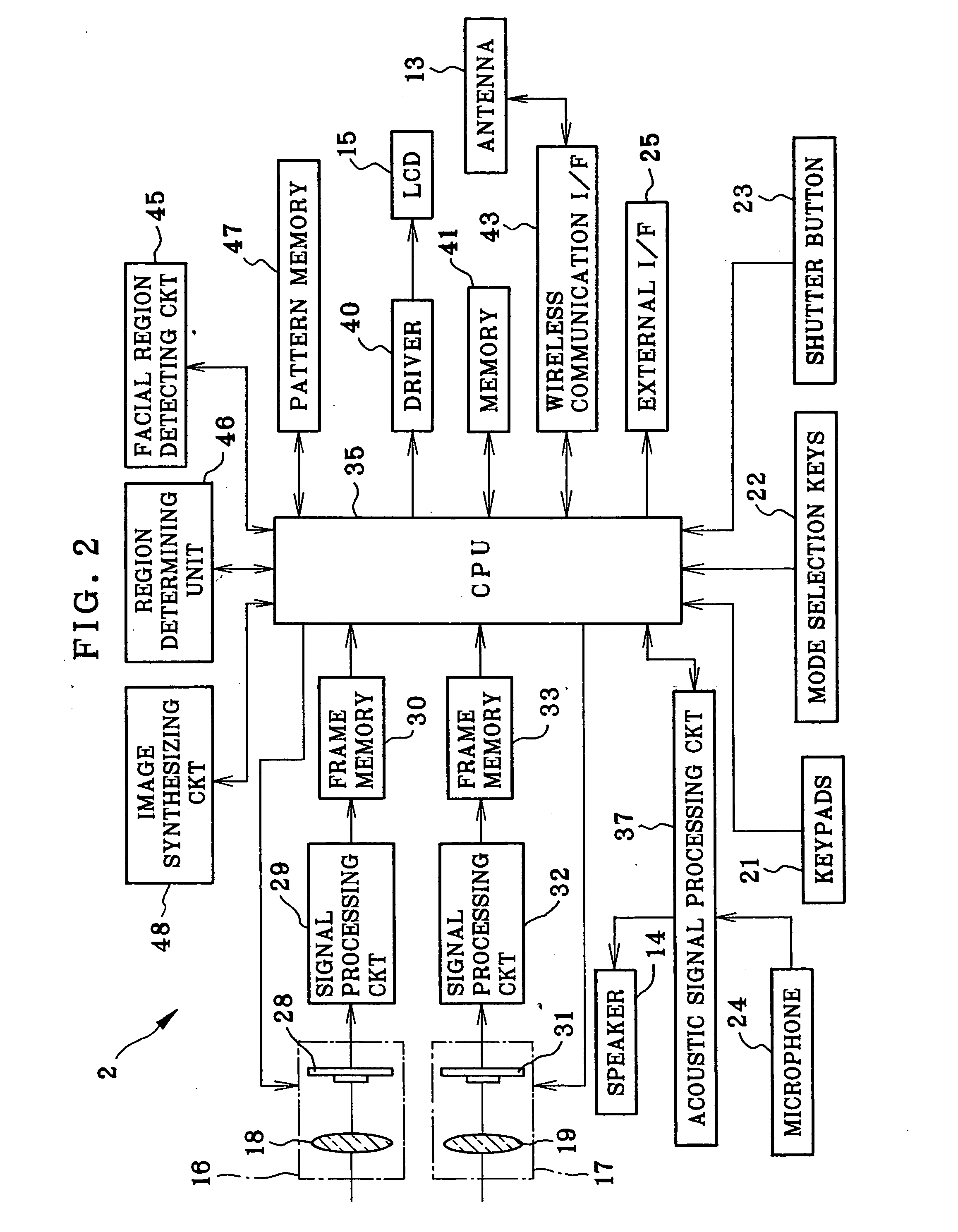

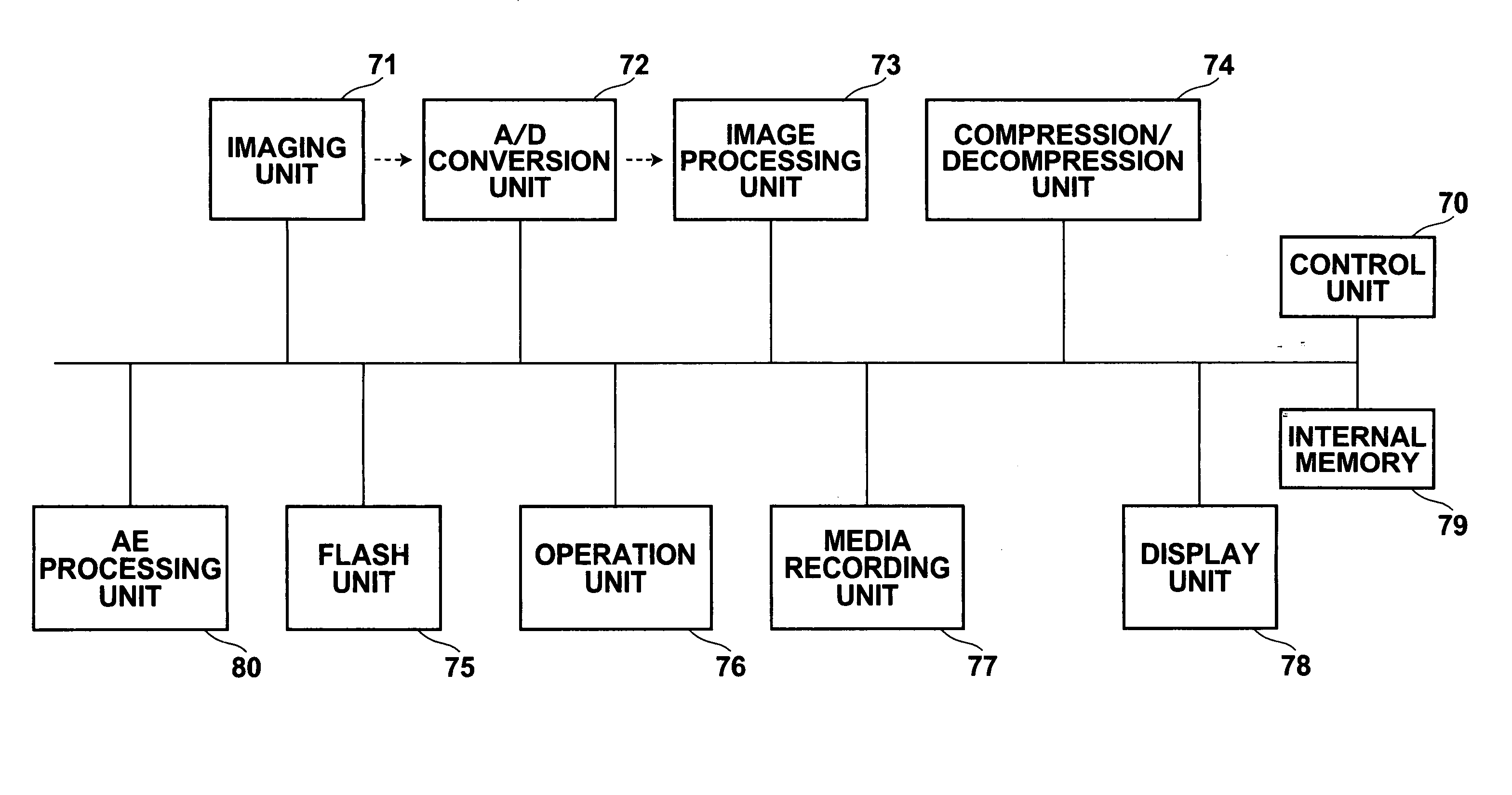

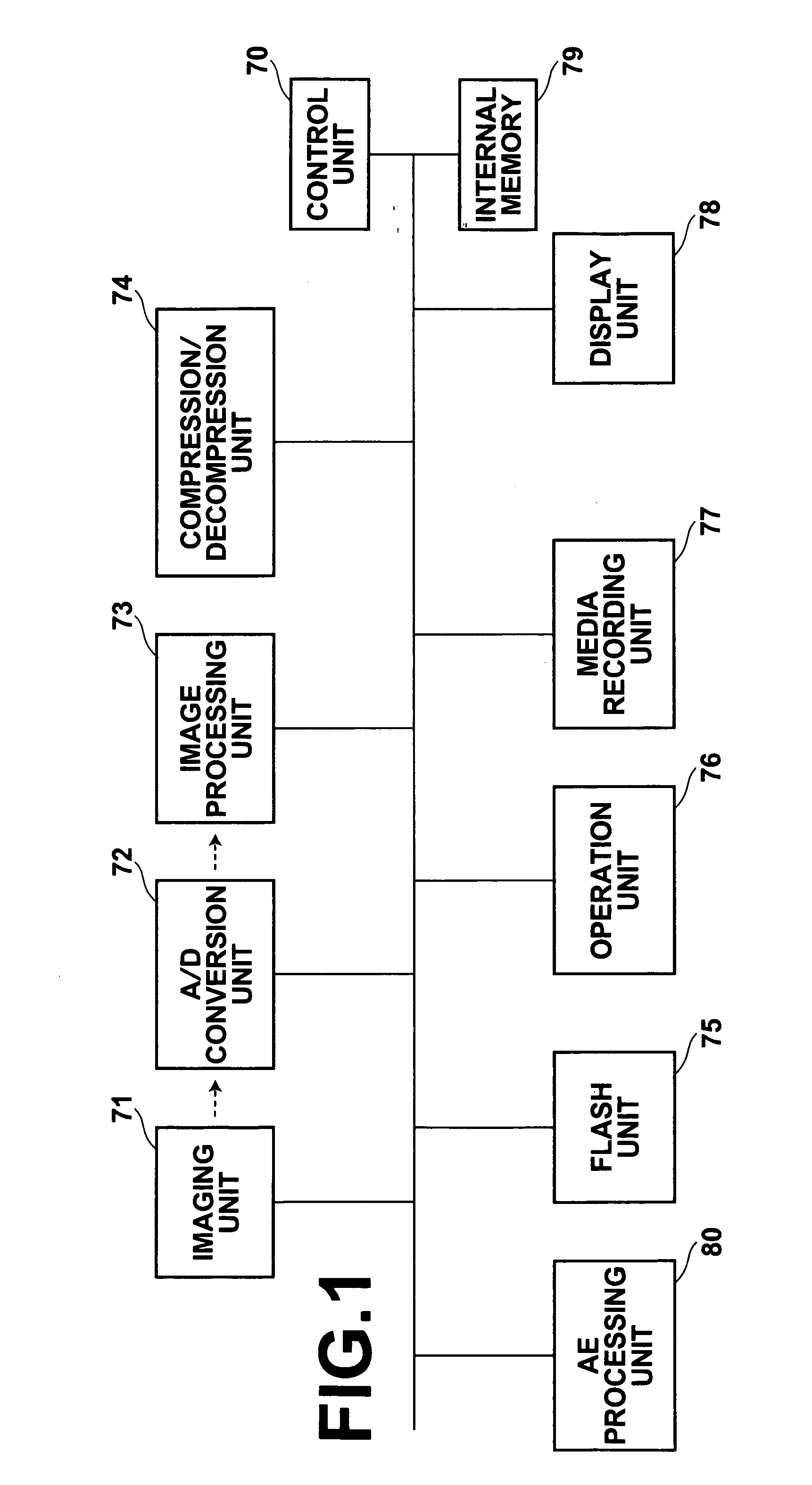

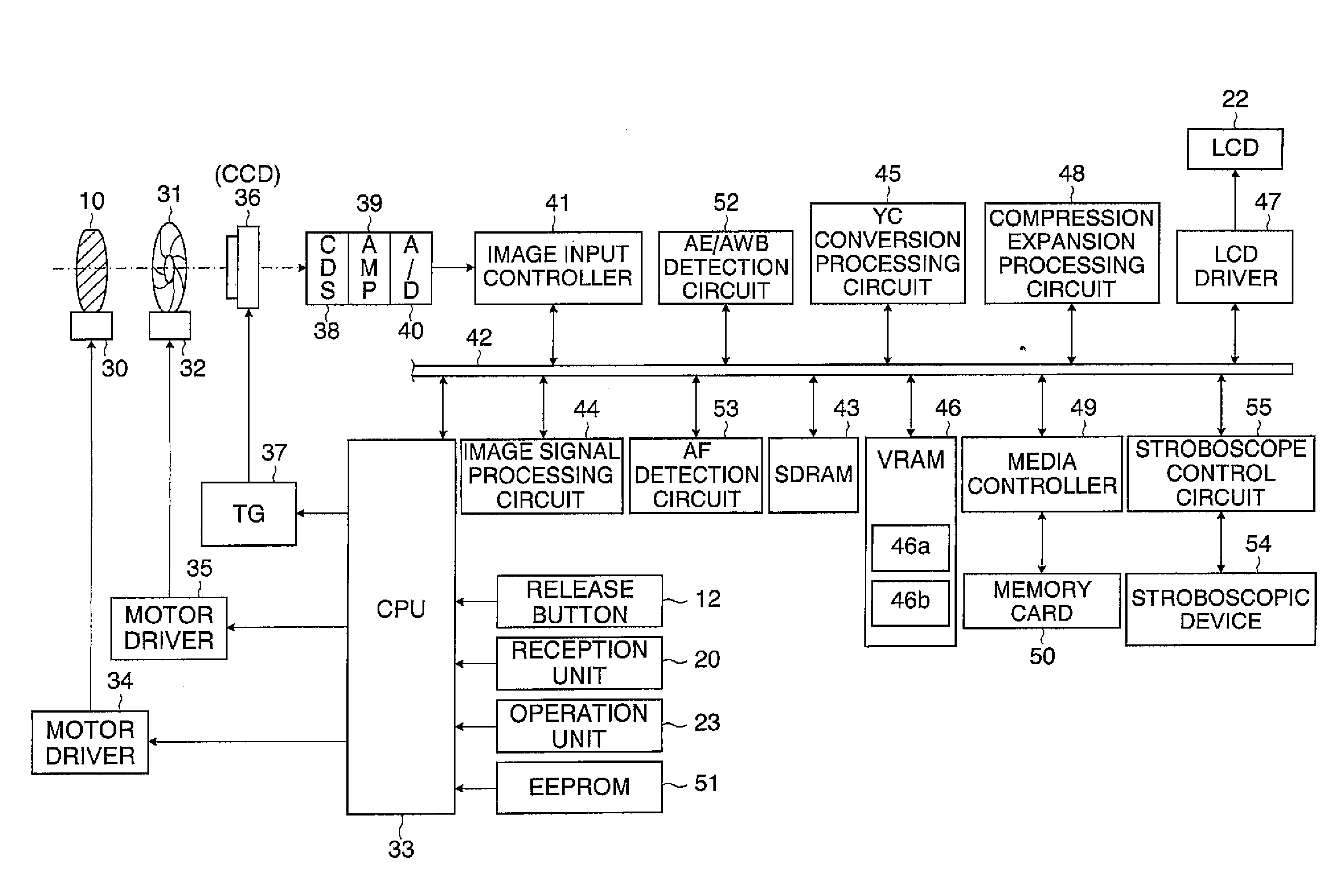

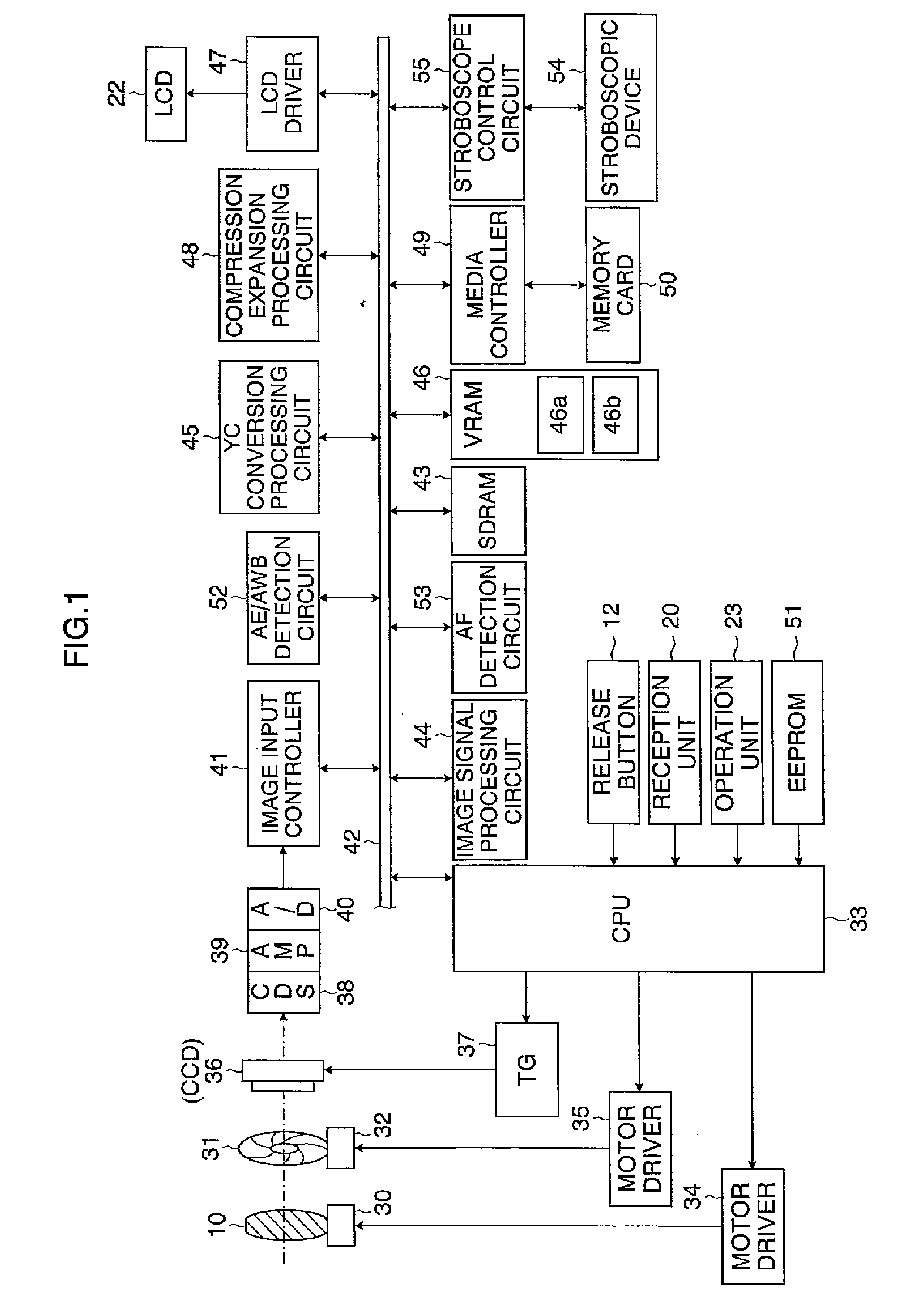

Image pickup device and image synthesizing method

Inactive | US20050036044A1 | Easy to synthesize | Edited readily and easily | Television system details | Image enhancement | Facial region | Imaging data

An image pickup device is constituted by a handset of a cellular phone, in which an image pickup unit photographs a user as a first object to output image data of a first image frame. A facial region detecting circuit retrieves a facial image portion of the user from the image data of the first image frame. An image synthesizing circuit is supplied with image data of a second image frame that includes persons as a second object. The facial image portion is synthesized into a background region defined outside the persons within the second image frame.

Owner:FUJIFILM CORP +1

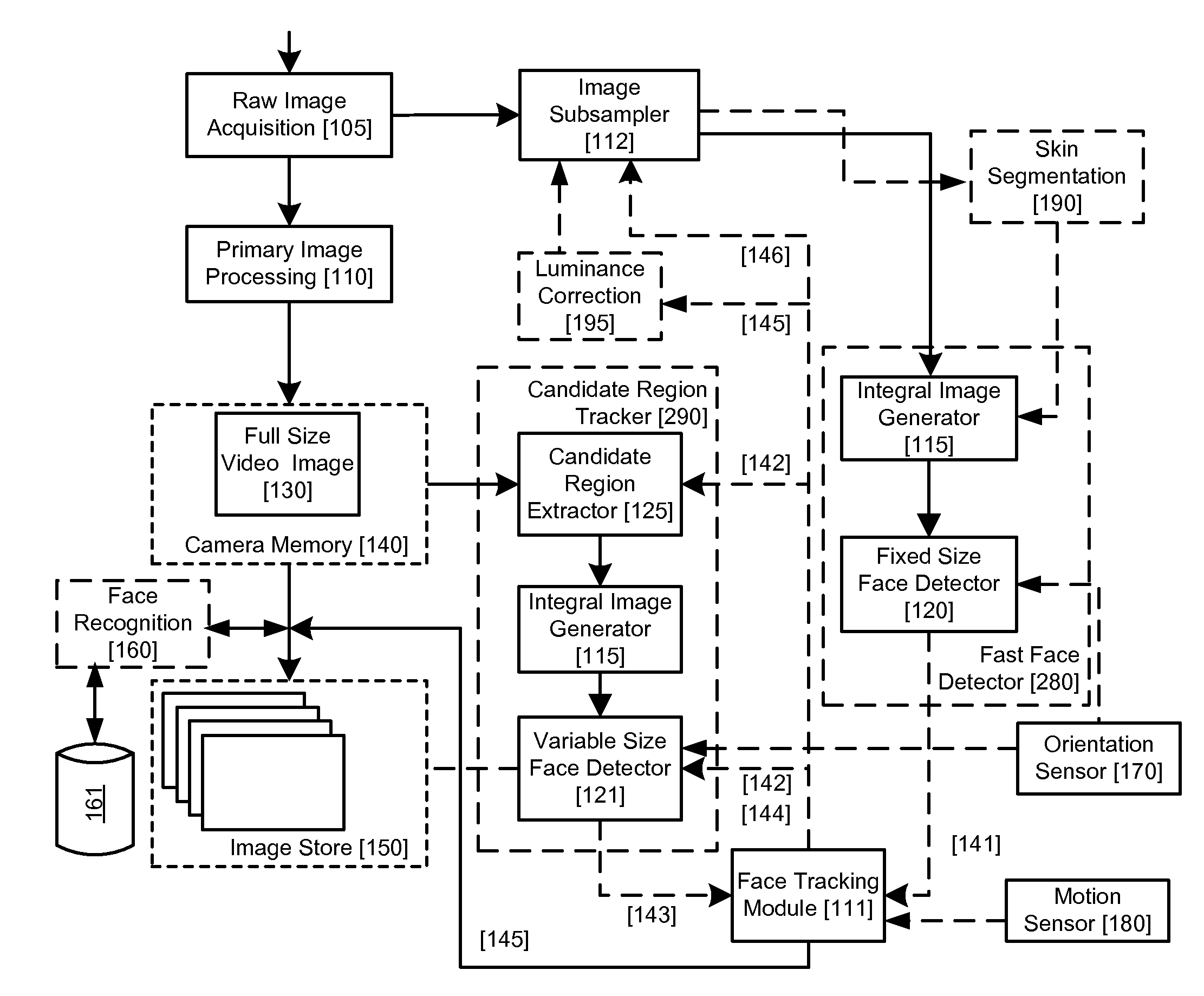

Real-time face tracking with reference images

A method of tracking a face in a reference image stream using a digital image acquisition device includes acquiring a full resolution main image and an image stream of relatively low resolution reference images each including one or more face regions. One or more face regions are identified within two or more of the reference images. A relative movement is determined between the two or more reference images. A size and location are determined of the one or more face regions within each of the two or more reference images. Concentrated face detection is applied to at least a portion of the full resolution main image in a predicted location for candidate face regions having a predicted size as a function of the determined relative movement and the size and location of the one or more face regions within the reference images, to provide a set of candidate face regions for the main image.

Owner:FOTONATION LTD

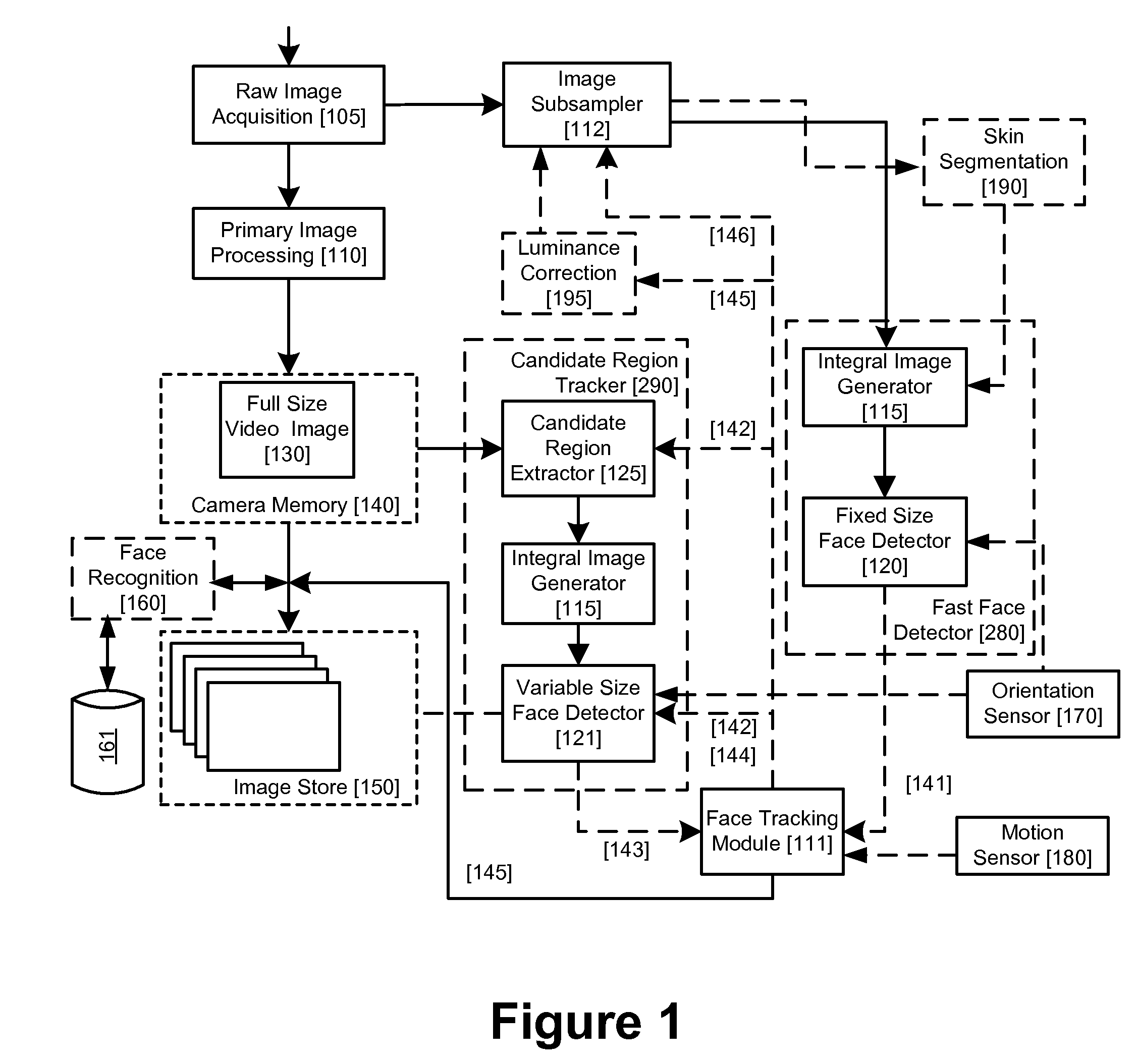

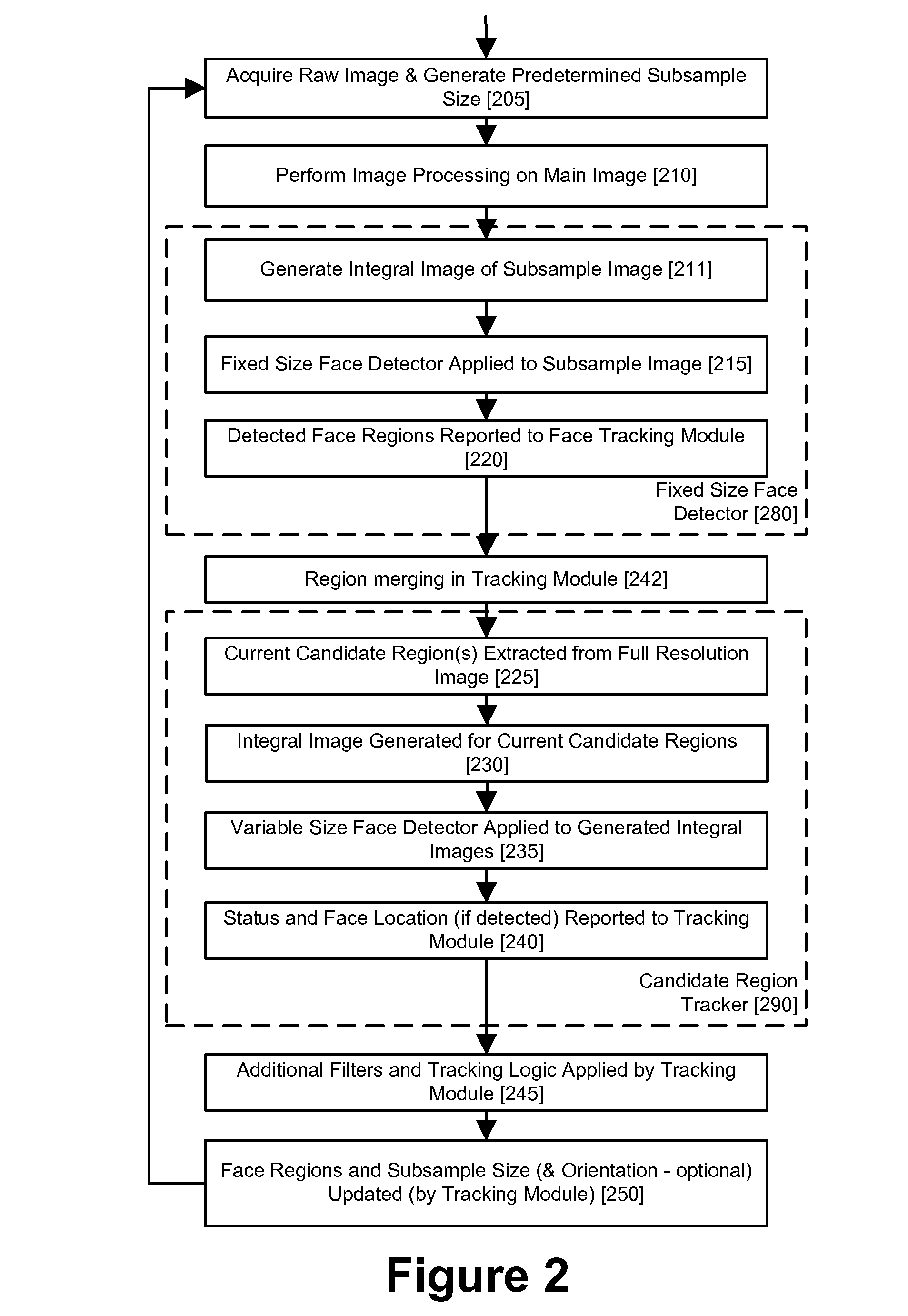

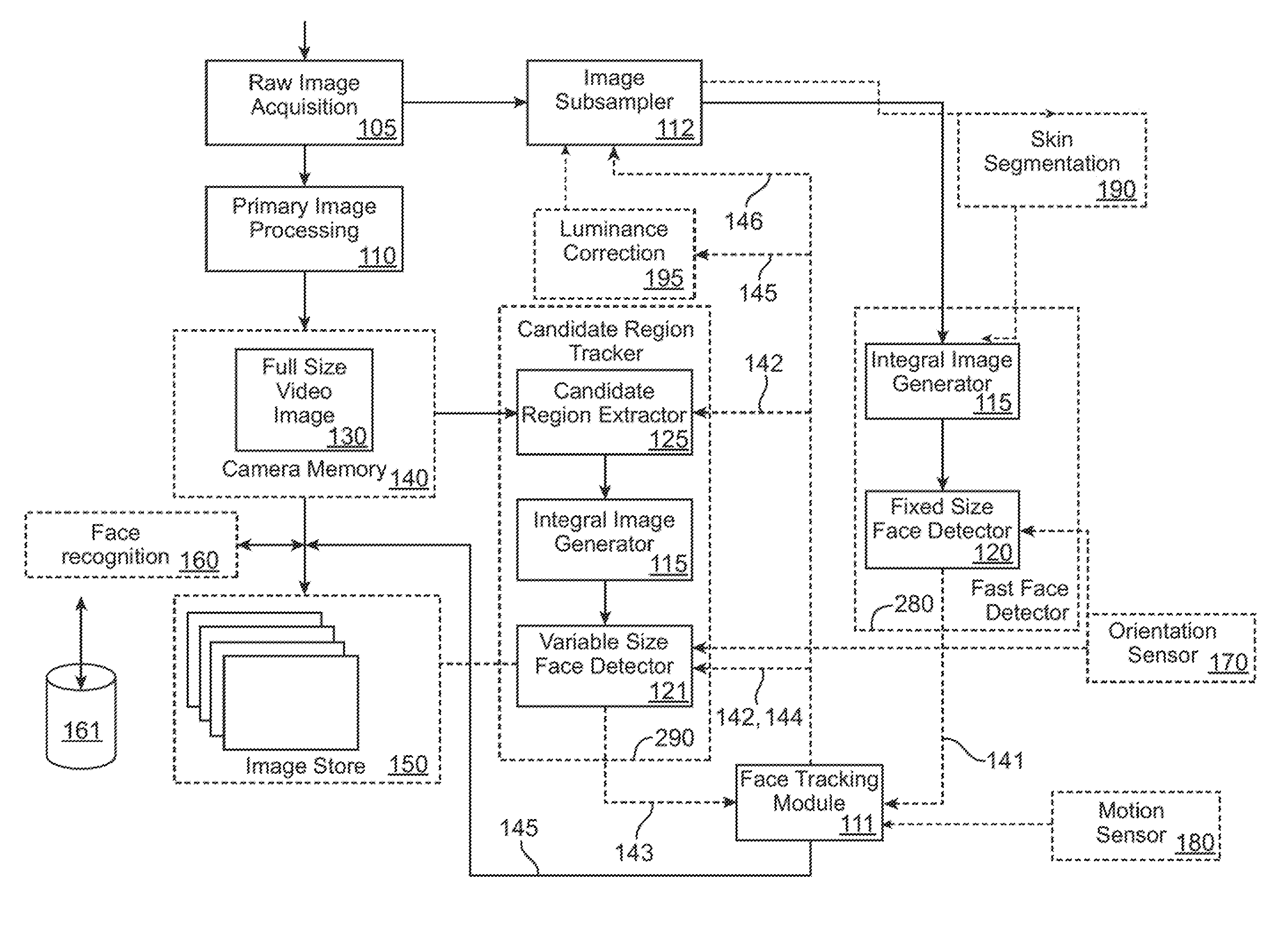

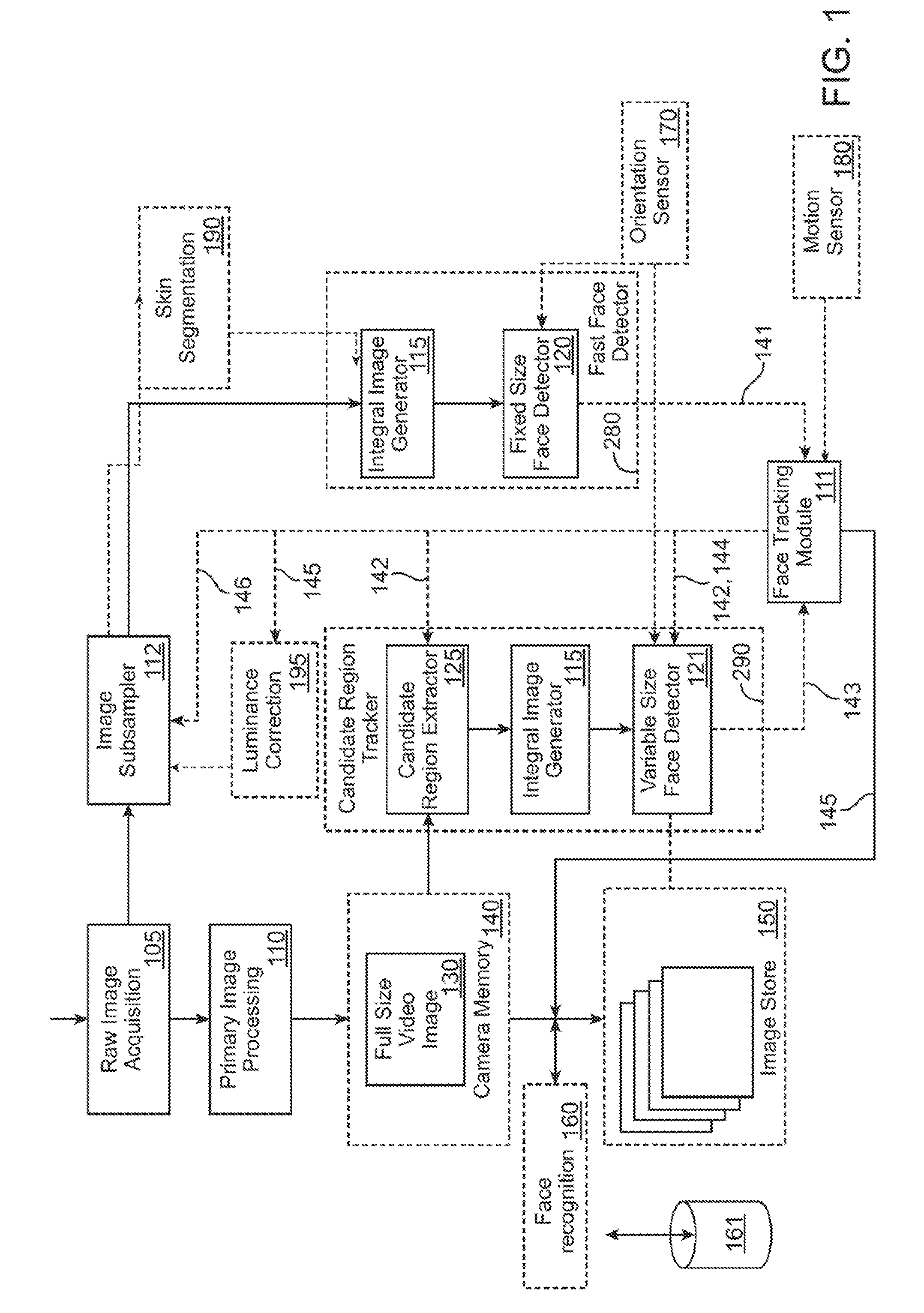

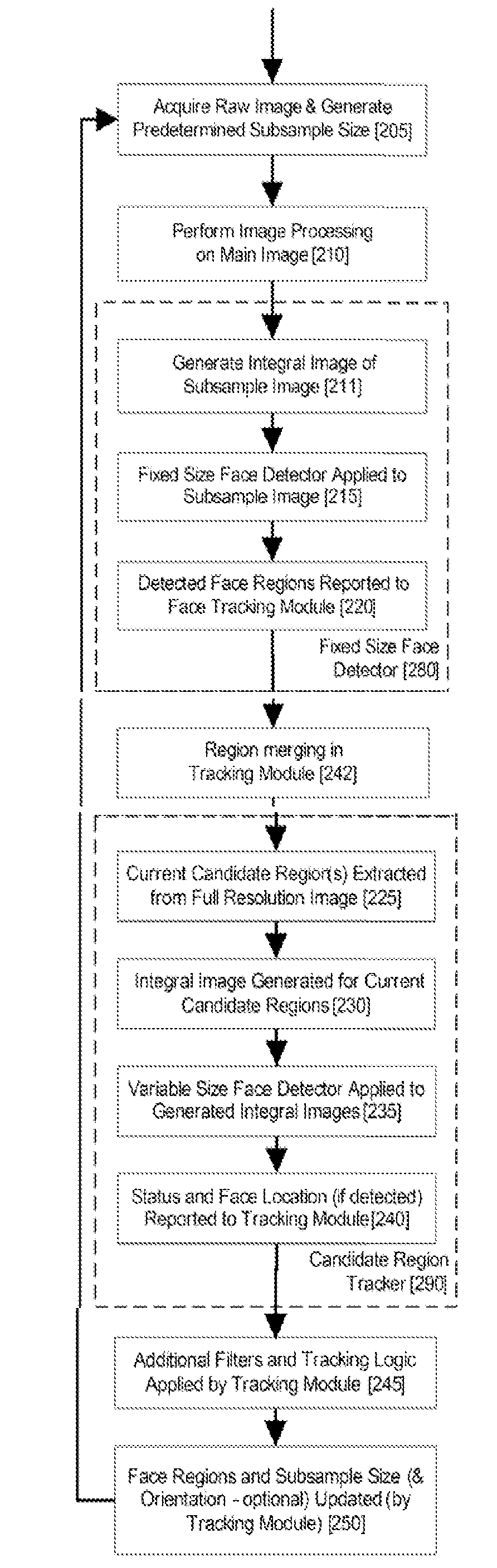

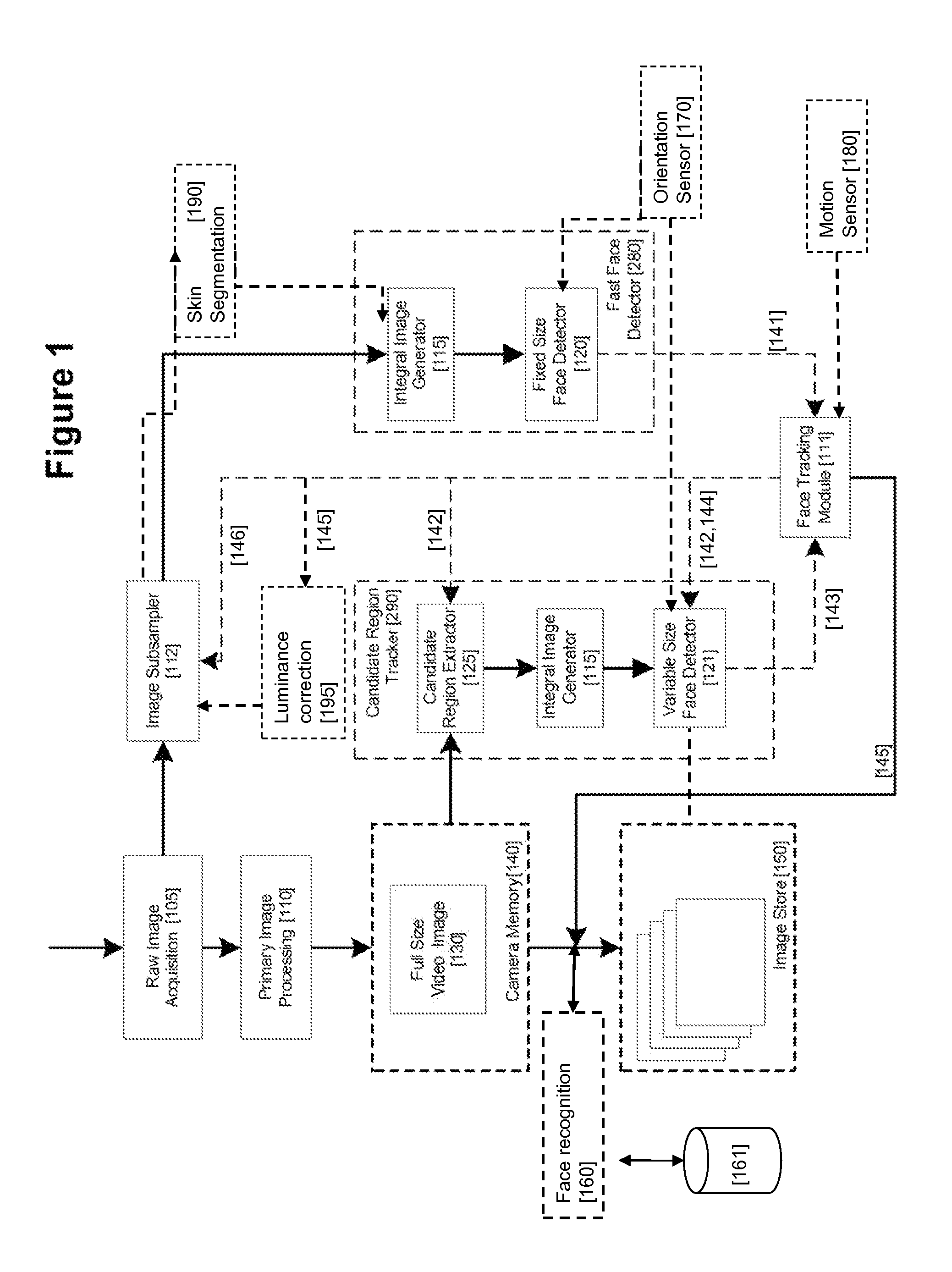

Face tracking for controlling imaging parameters

A method of tracking faces in an image stream with a digital image acquisition device includes receiving images from an image stream including faces, calculating corresponding integral images, and applying different subsets of face detection rectangles to the integral images to provide sets of candidate regions. The different subsets include candidate face regions of different sizes and / or locations within the images. The different candidate face regions from different images of the image stream are each tracked.

Owner:FOTONATION LTD
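
The abstract above relies on integral images so that the pixel sum of any detection rectangle can be read in constant time. The following is a minimal sketch of that idea in pure Python, assuming a grayscale image stored as a list of rows. The names `integral_image` and `rect_sum` are illustrative and do not come from the patent.

```python
def integral_image(img):
    """Return a (h+1) x (w+1) summed-area table with a zero top row and left column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above plus the running sum of this row.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y), width w, height h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


image = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
ii = integral_image(image)
whole = rect_sum(ii, 0, 0, 3, 3)         # all nine pixels
bottom_right = rect_sum(ii, 1, 1, 2, 2)  # the 2x2 block 5, 6, 8, 9
print(whole, bottom_right)
```

Because each rectangle sum needs only four table lookups, rectangle-based detectors of different sizes can be evaluated at many positions without re-reading the pixels.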

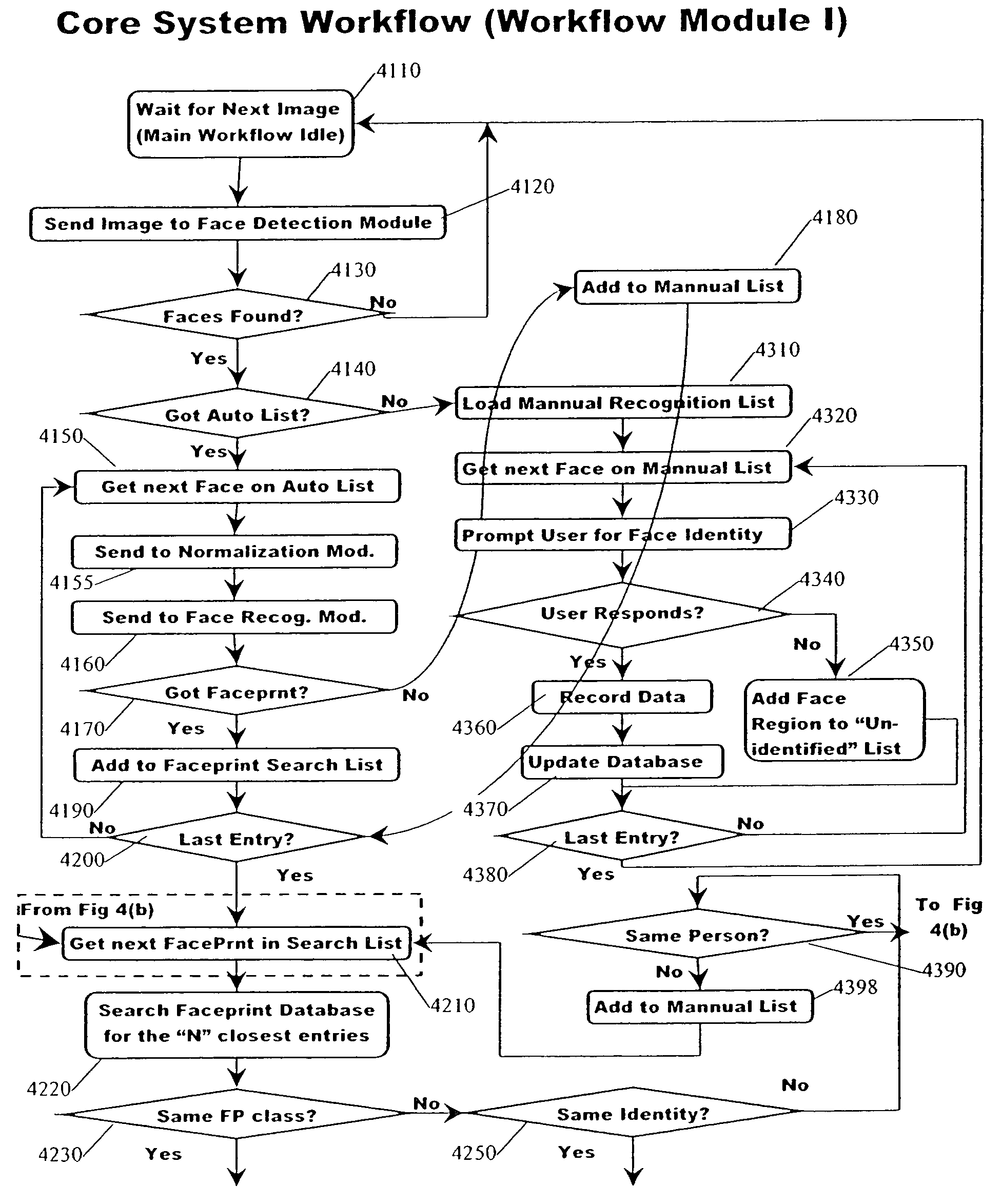

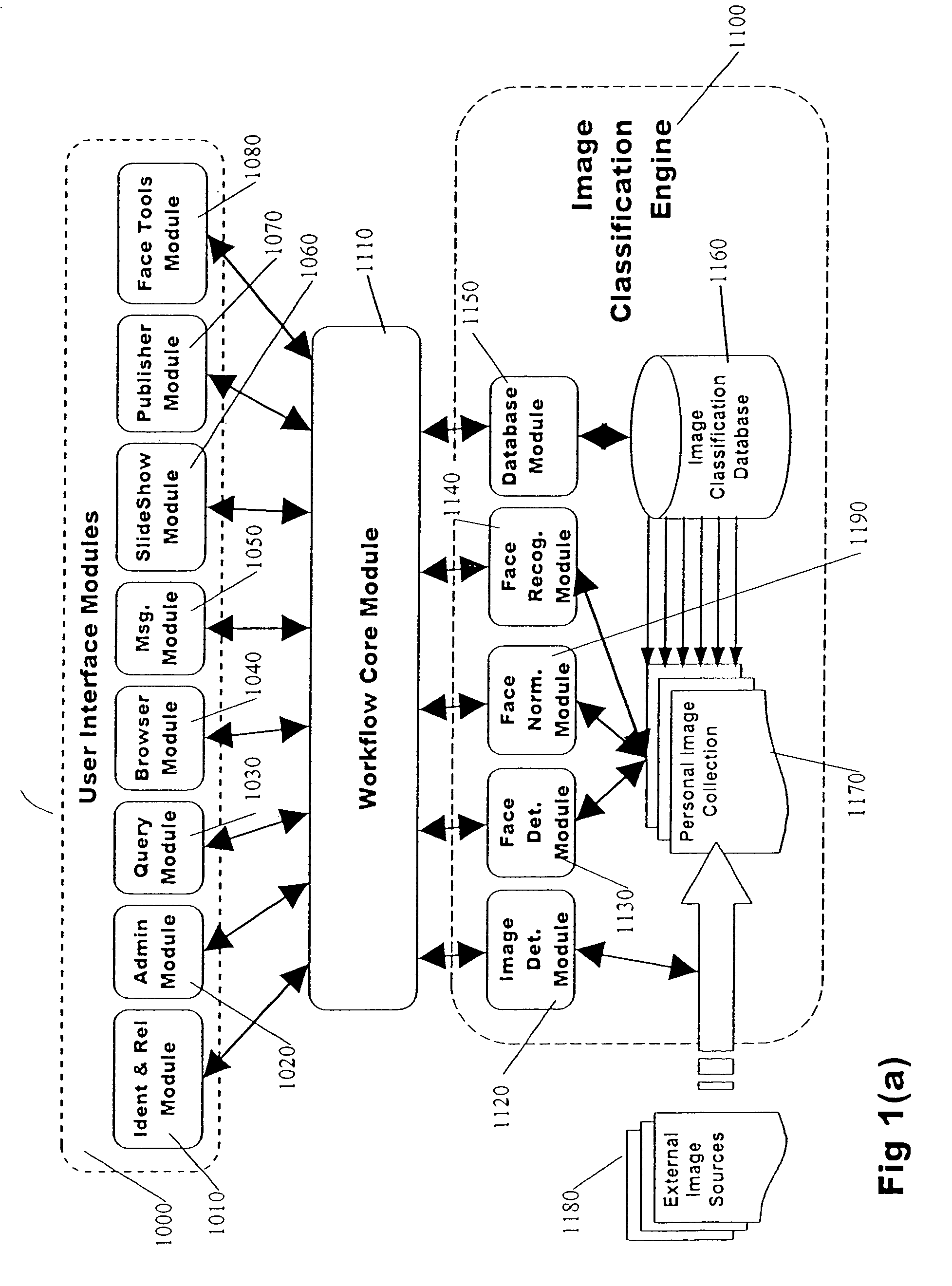

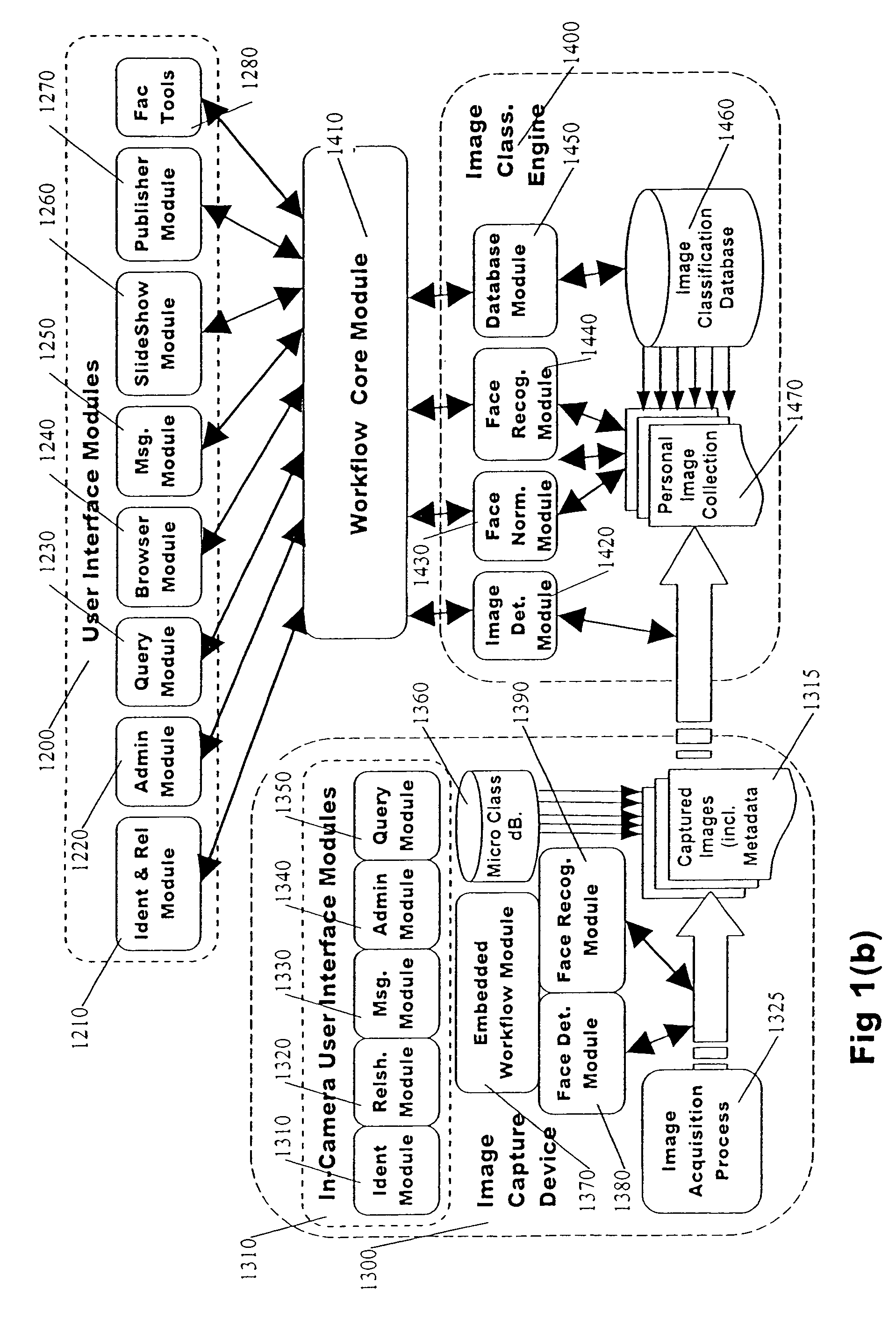

Classification system for consumer digital images using workflow, face detection, normalization, and face recognition

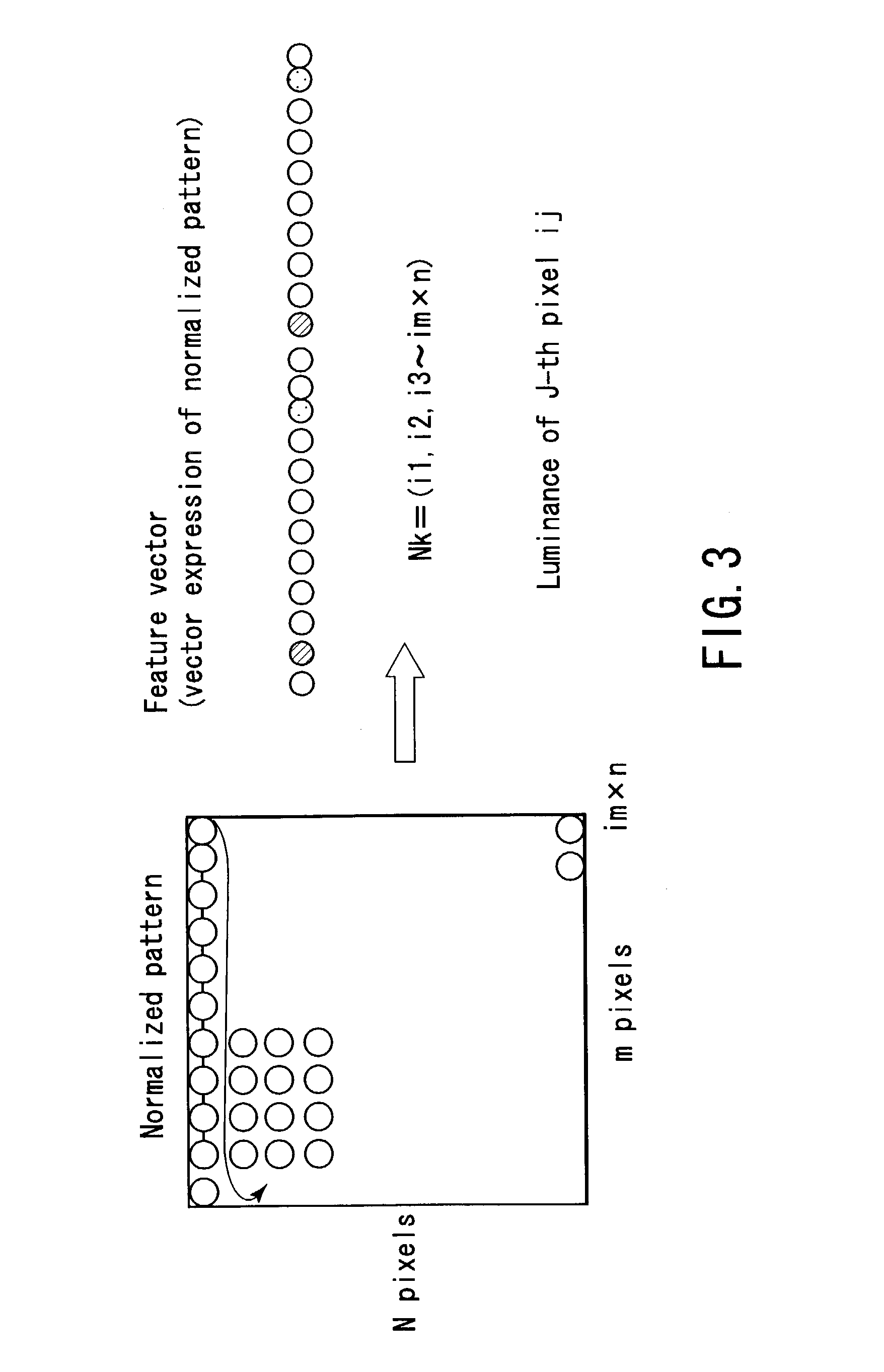

Active | US7555148B1 | Accurate identification | Electric signal transmission systems | Image analysis | Pattern recognition | Face detection

A processor-based system operating according to digitally-embedded programming instructions includes a face detection module for identifying face regions within digital images. A normalization module generates a normalized version of the face region that is at least pose normalized. A face recognition module extracts a set of face classifier parameter values from the normalized face region that are referred to as a faceprint. A workflow module compares the extracted faceprint to a database of archived faceprints previously determined to correspond to known identities. The workflow module determines based on the comparing whether the new faceprint corresponds to any of the known identities, and associates the new faceprint and normalized face region with a new or known identity within a database. A database module serves to archive data corresponding to the new faceprint and its associated parent image according to the associating by the workflow module within one or more digital data storage media.

Owner:FOTONATION LTD
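
The workflow step in the abstract above compares a new faceprint with archived faceprints and files it under a known or new identity. A minimal sketch of one way this could look, assuming faceprints are plain numeric vectors compared by Euclidean distance. The threshold value, the function names, and the `person_N` naming scheme are hypothetical and are not specified by the patent.

```python
import math


def match_faceprint(faceprint, archive, threshold=0.6):
    """Return the closest known identity, or None if nothing is within threshold.

    archive maps an identity name to a list of archived faceprint vectors.
    """
    best_id, best_dist = None, float("inf")
    for identity, known_prints in archive.items():
        for known in known_prints:
            dist = math.dist(faceprint, known)
            if dist < best_dist:
                best_id, best_dist = identity, dist
    return best_id if best_dist <= threshold else None


def classify_and_archive(faceprint, archive, threshold=0.6):
    """Associate the faceprint with a known or new identity and store it."""
    identity = match_faceprint(faceprint, archive, threshold)
    if identity is None:
        # Hypothetical naming scheme for previously unseen people.
        identity = f"person_{len(archive) + 1}"
    archive.setdefault(identity, []).append(faceprint)
    return identity


archive = {
    "alice": [(0.10, 0.20, 0.30)],
    "bob": [(0.90, 0.80, 0.70)],
}
print(classify_and_archive((0.12, 0.21, 0.29), archive))  # close to alice's print
print(classify_and_archive((5.0, 5.0, 5.0), archive))     # far from every print
```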

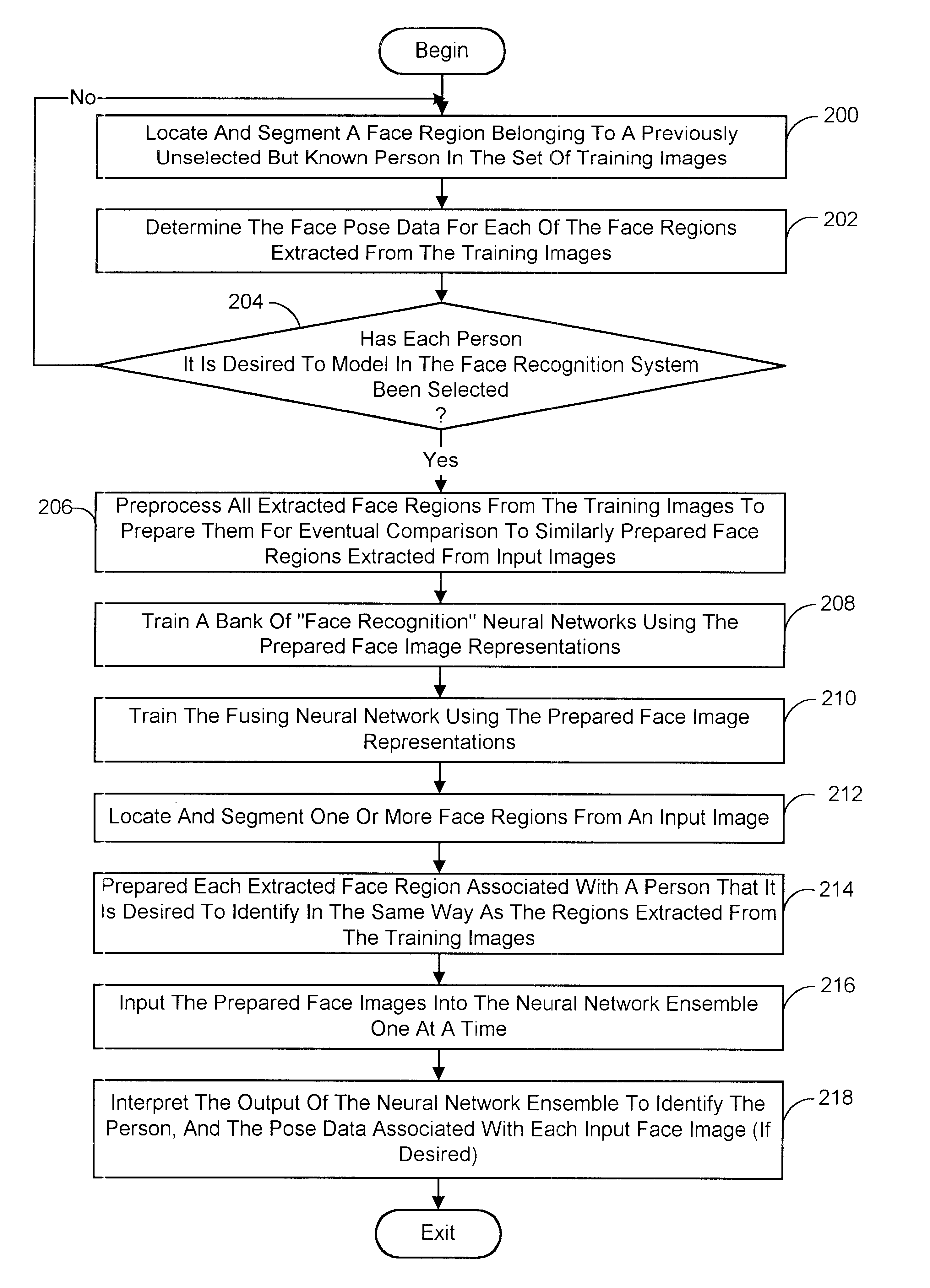

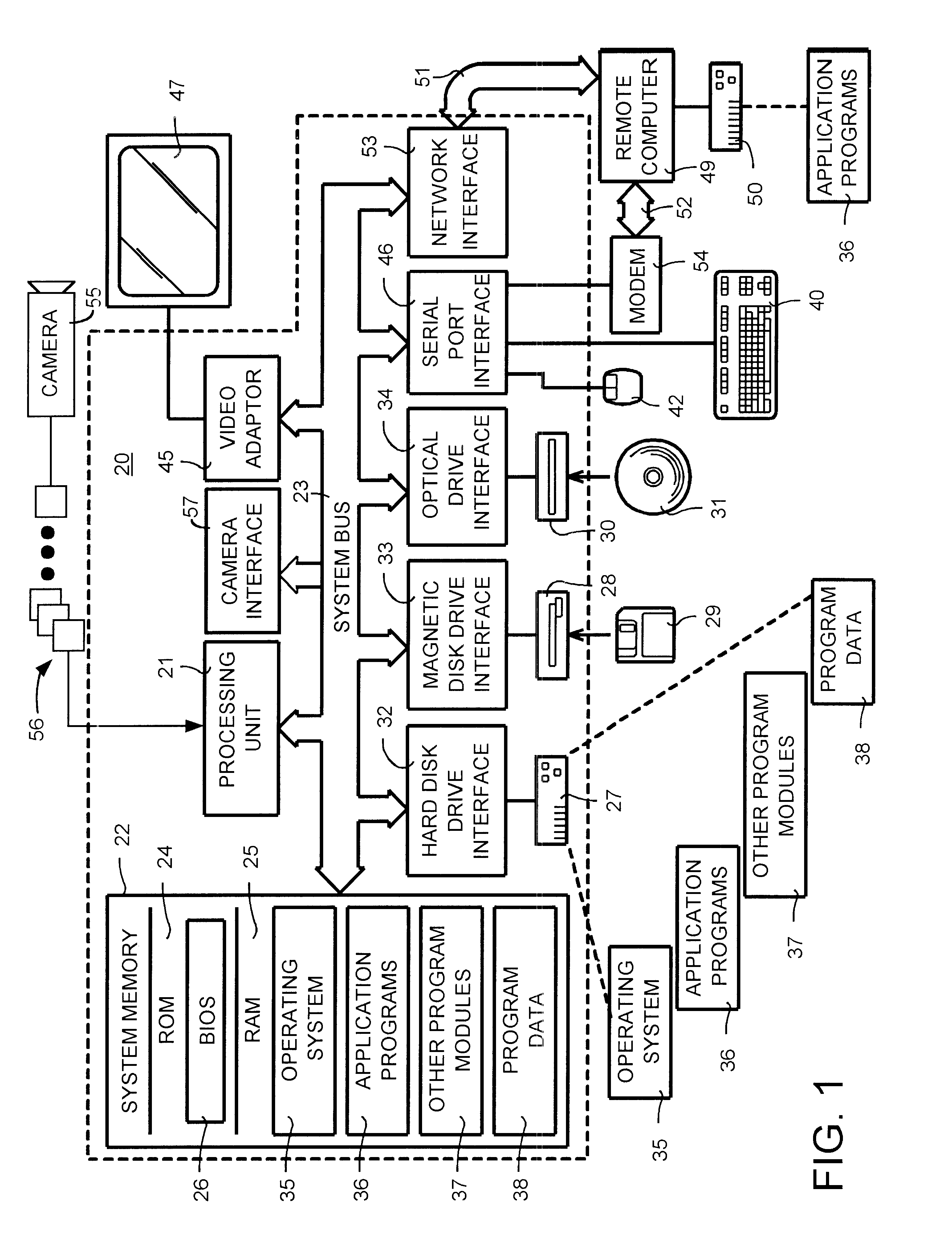

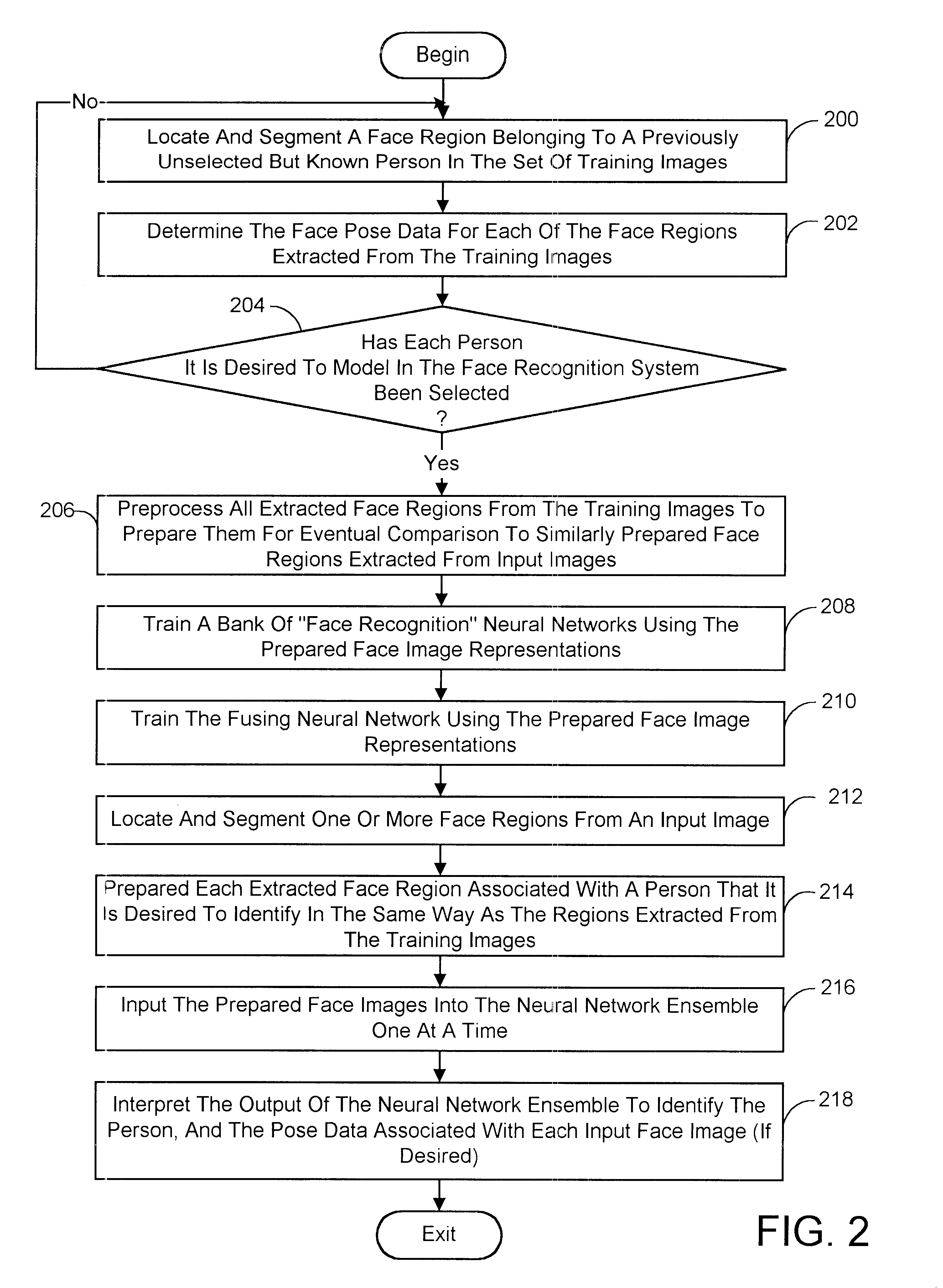

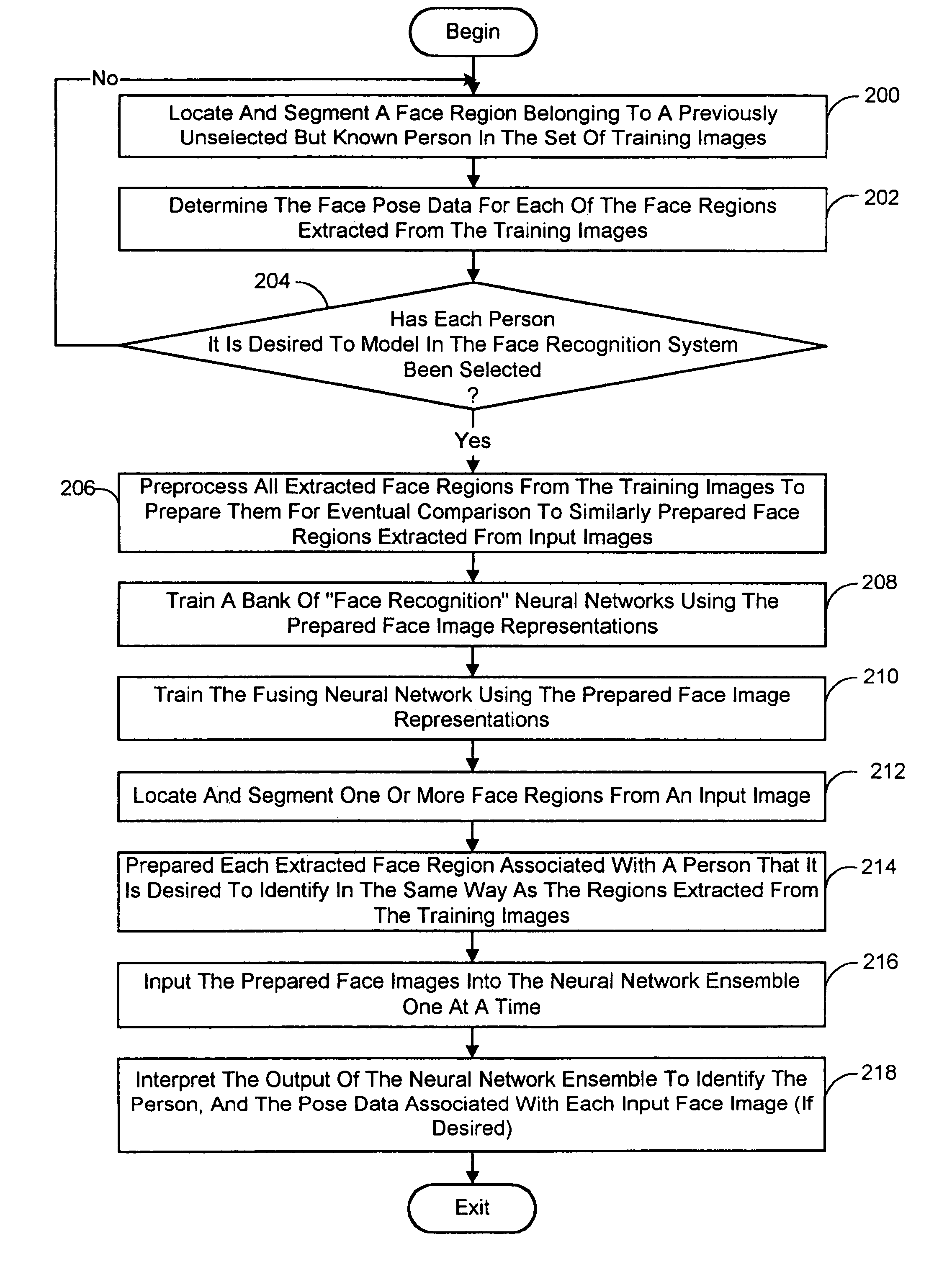

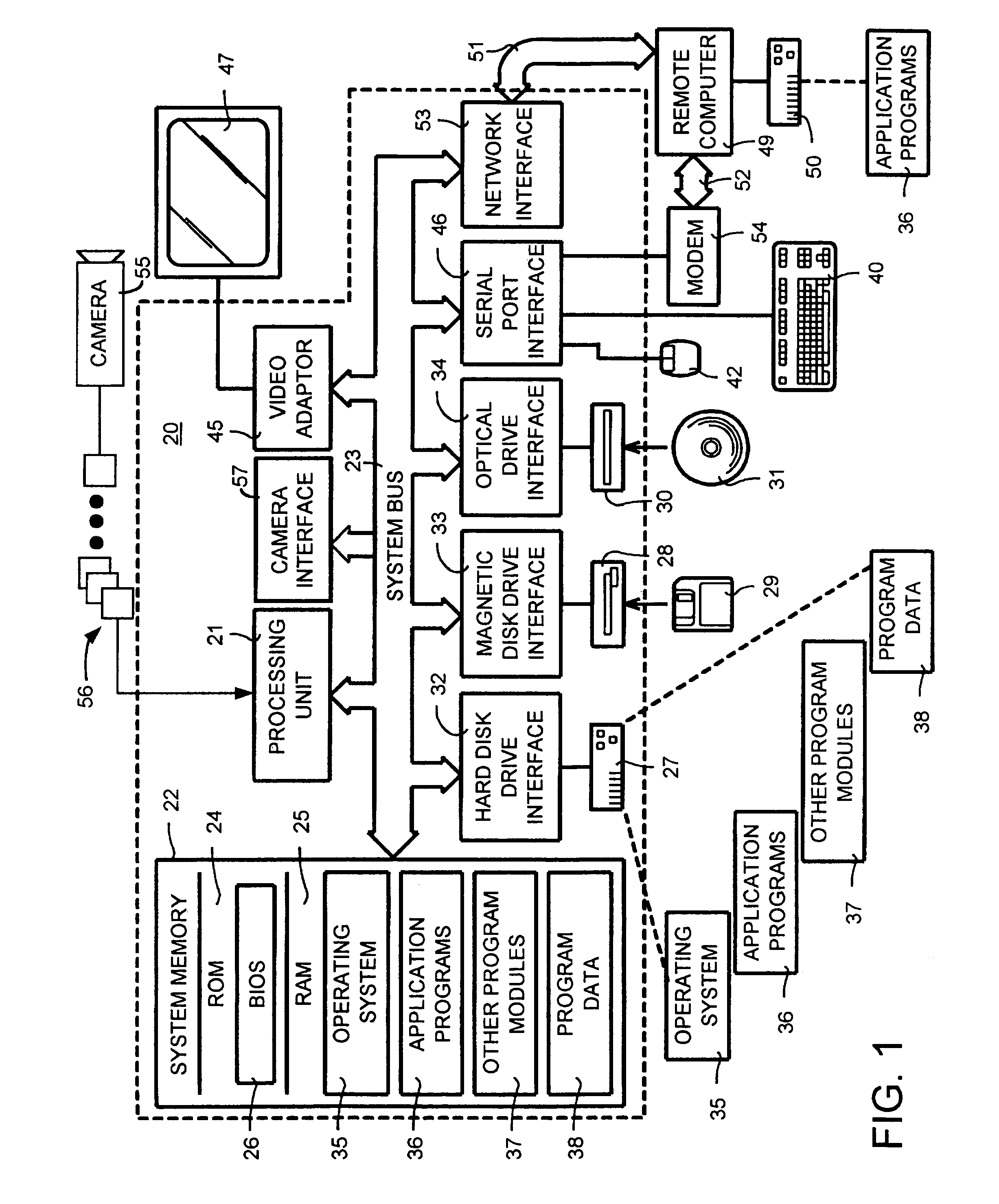

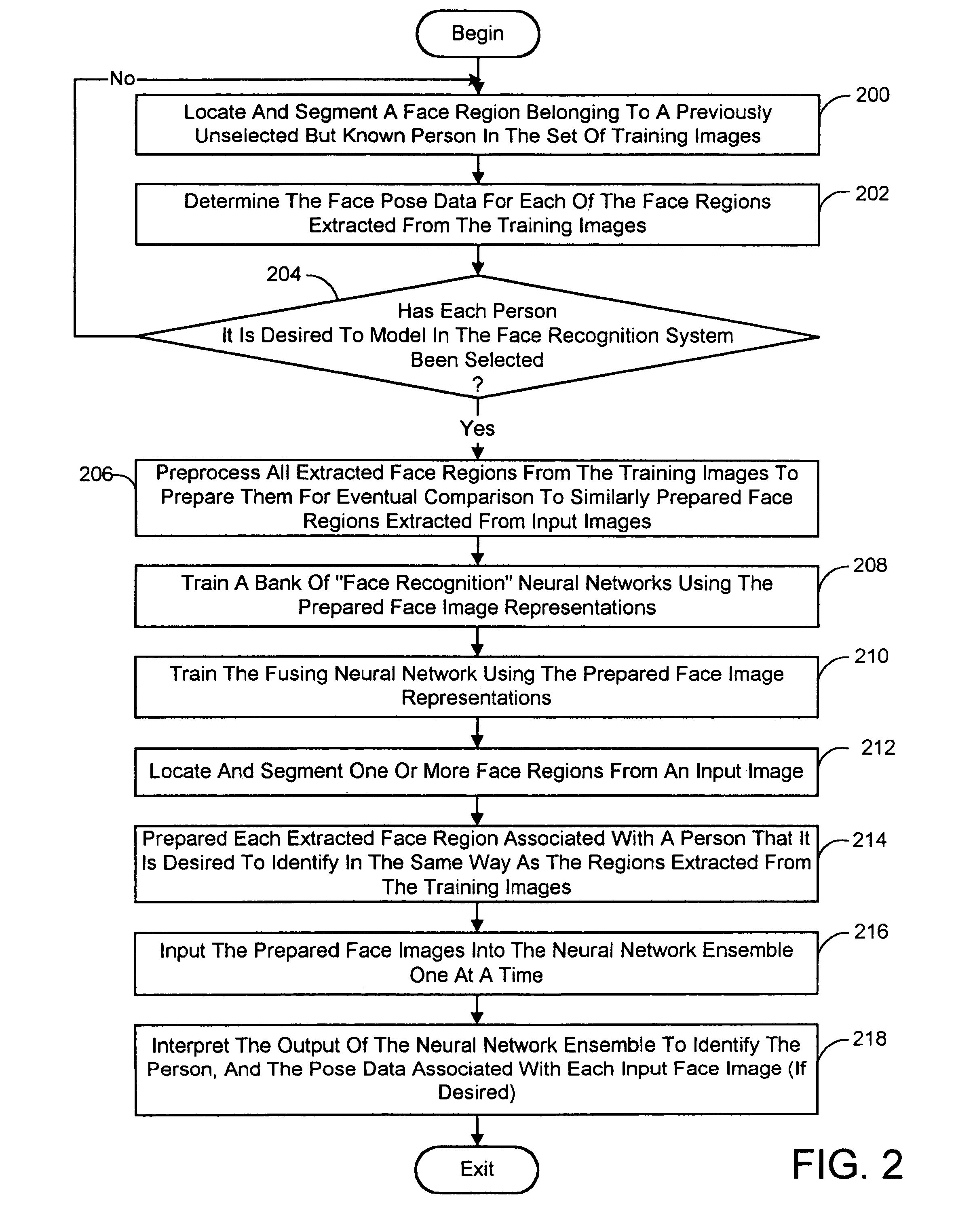

Pose-invariant face recognition system and process

A face recognition system and process for identifying a person depicted in an input image and their face pose. This system and process entails locating and extracting face regions belonging to known people from a set of model images, and determining the face pose for each of the face regions extracted. All the extracted face regions are preprocessed by normalizing, cropping, categorizing and finally abstracting them. More specifically, the images are normalized and cropped to show only a person's face, categorized according to the face pose of the depicted person's face by assigning them to one of a series of face pose ranges, and abstracted preferably via an eigenface approach. The preprocessed face images are preferably used to train a neural network ensemble having a first stage made up of a bank of face recognition neural networks each of which is dedicated to a particular pose range, and a second stage constituting a single fusing neural network that is used to combine the outputs from each of the first stage neural networks. Once trained, the input of a face region which has been extracted from an input image and preprocessed (i.e., normalized, cropped and abstracted) will cause just one of the output units of the fusing portion of the neural network ensemble to become active. The active output unit indicates either the identity of the person whose face was extracted from the input image and the associated face pose, or that the identity of the person is unknown to the system.

Owner:ZHIGU HLDG

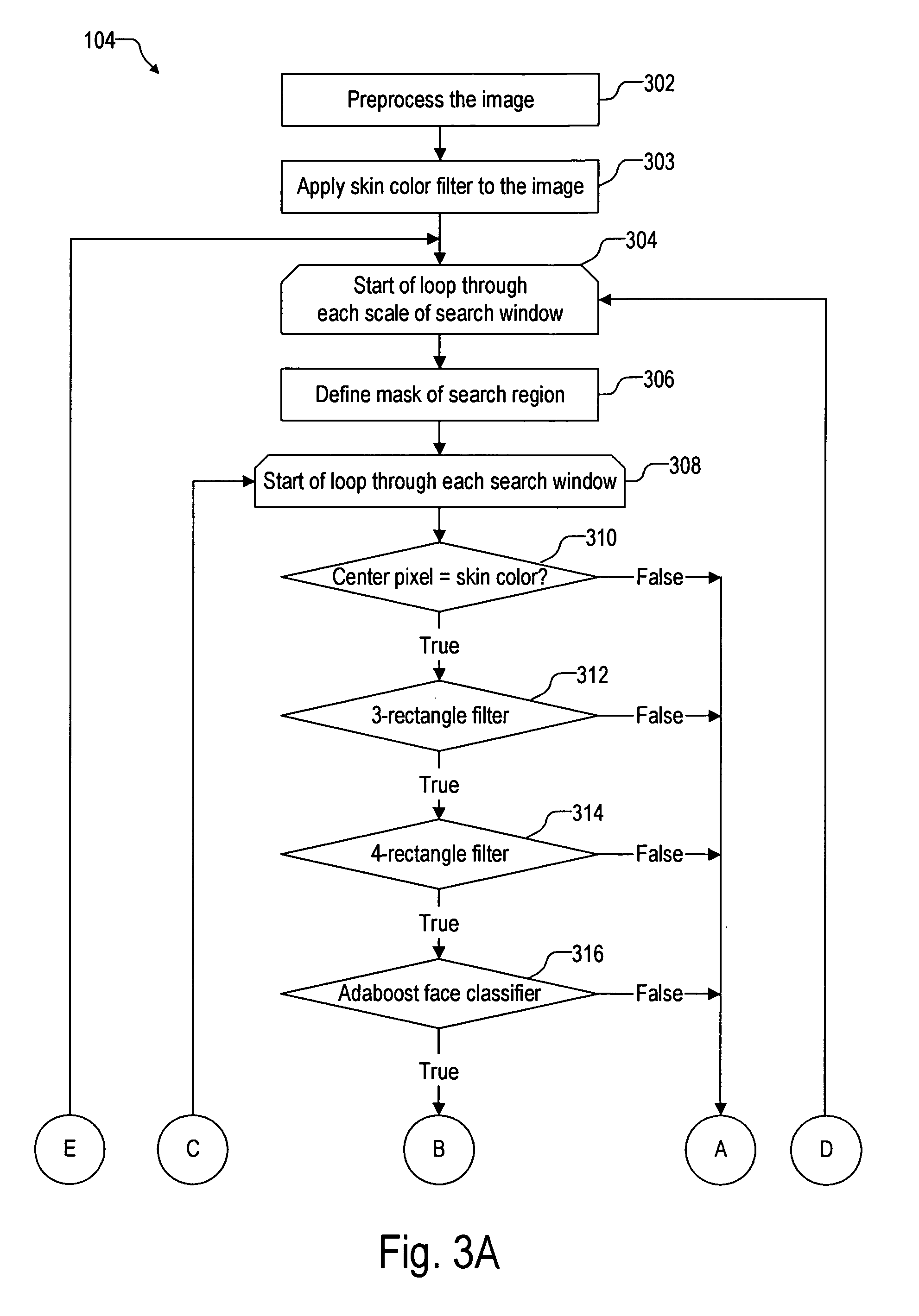

Face detection on mobile devices

Active | US20070154095A1 | Quick check | Simple process | Acquiring/recognising eyes | Pattern recognition | Face detection

A method for detecting a facial area on a color image includes (a) placing a search window on the color image, (b) determining if a center pixel of the search window is a skin color pixel, indicating that the search window is a possible facial area candidate, (c) applying a 3-rectangle filter to the search window to determine if the search window is a possible facial area candidate, (d) applying a 4-rectangle filter to the search window to determine if the search window is a possible facial area candidate, (e) if steps (b), (c), (d) all determine that the search window is a possible facial area candidate, applying an AdaBoost filter to the search window to determine if the search window is a facial area candidate, and (f) if step (e) determines that the search window is a facial area candidate, saving the location of the search window.

Owner:ARCSOFT
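
Steps (b) through (f) of the abstract above form a rejection cascade: cheap tests run first, and the costlier AdaBoost filter only sees windows that survived every earlier stage. A minimal sketch of that control flow follows. The four stage functions are hypothetical stand-ins that look only at window coordinates; real stages would inspect pixel data.

```python
from typing import Callable, List, Tuple

Window = Tuple[int, int, int]  # (x, y, size) of a square search window


def run_cascade(windows: List[Window],
                stages: List[Callable[[Window], bool]]) -> List[Window]:
    """Keep only the windows accepted by every stage, in the given order."""
    accepted = []
    for win in windows:
        # all() short-circuits, so later stages are skipped as soon as
        # one stage rejects the window.
        if all(stage(win) for stage in stages):
            accepted.append(win)
    return accepted


calls = []  # records which stages actually ran, for illustration


def skin_center(win):   # stand-in for the skin-color center-pixel test
    calls.append("skin")
    return win[0] % 2 == 0


def three_rect(win):    # stand-in for the 3-rectangle filter
    calls.append("3rect")
    return win[1] < 20


def four_rect(win):     # stand-in for the 4-rectangle filter
    calls.append("4rect")
    return True


def adaboost(win):      # stand-in for the AdaBoost filter
    calls.append("adaboost")
    return win[2] >= 24


windows = [(0, 0, 24), (1, 0, 24), (2, 30, 24), (4, 10, 16)]
faces = run_cascade(windows, [skin_center, three_rect, four_rect, adaboost])
print(faces)
print(calls.count("skin"), calls.count("adaboost"))
```

In this toy run the final stage is evaluated for only two of the four windows, which is the point of ordering the stages from cheapest to most expensive.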

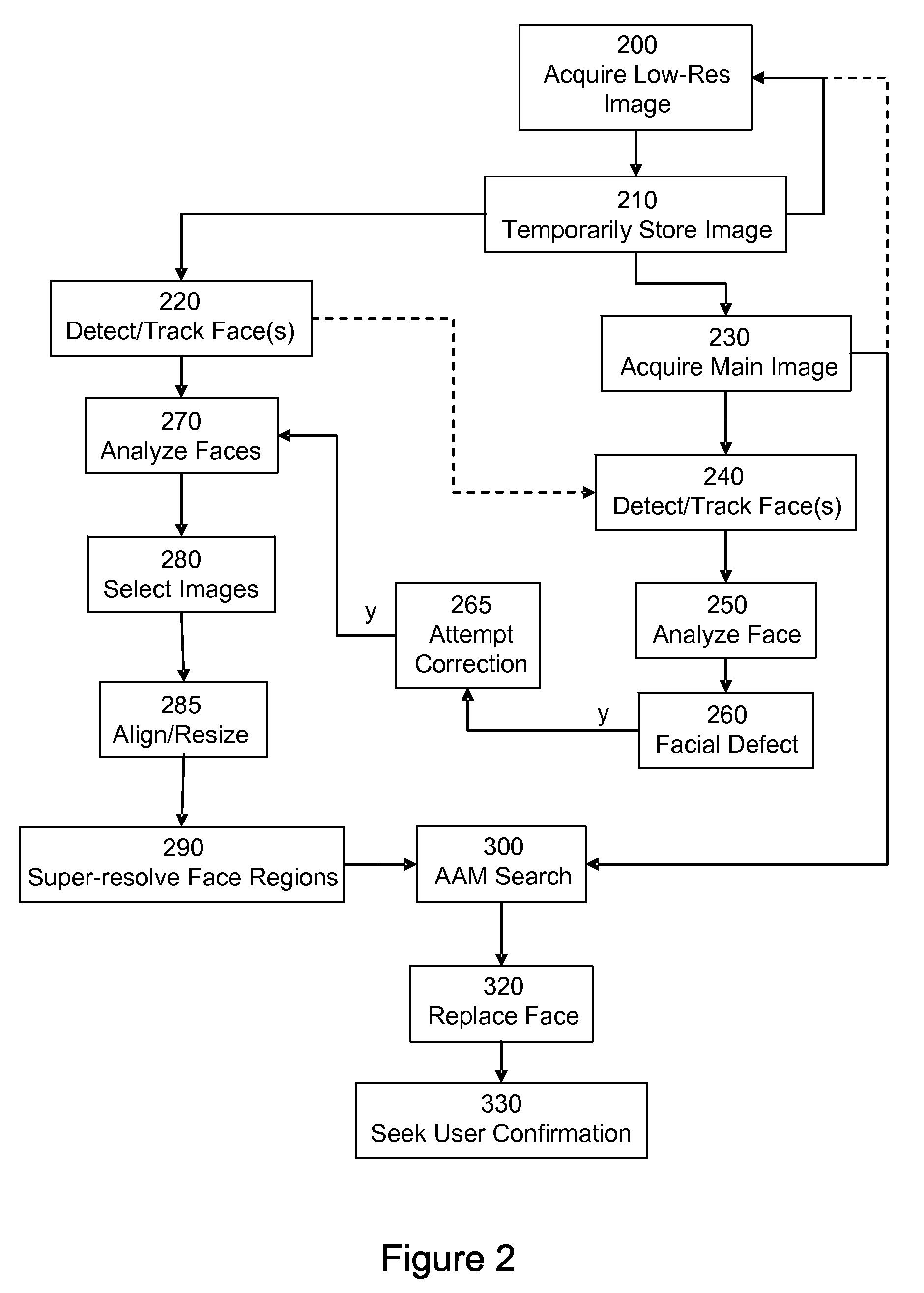

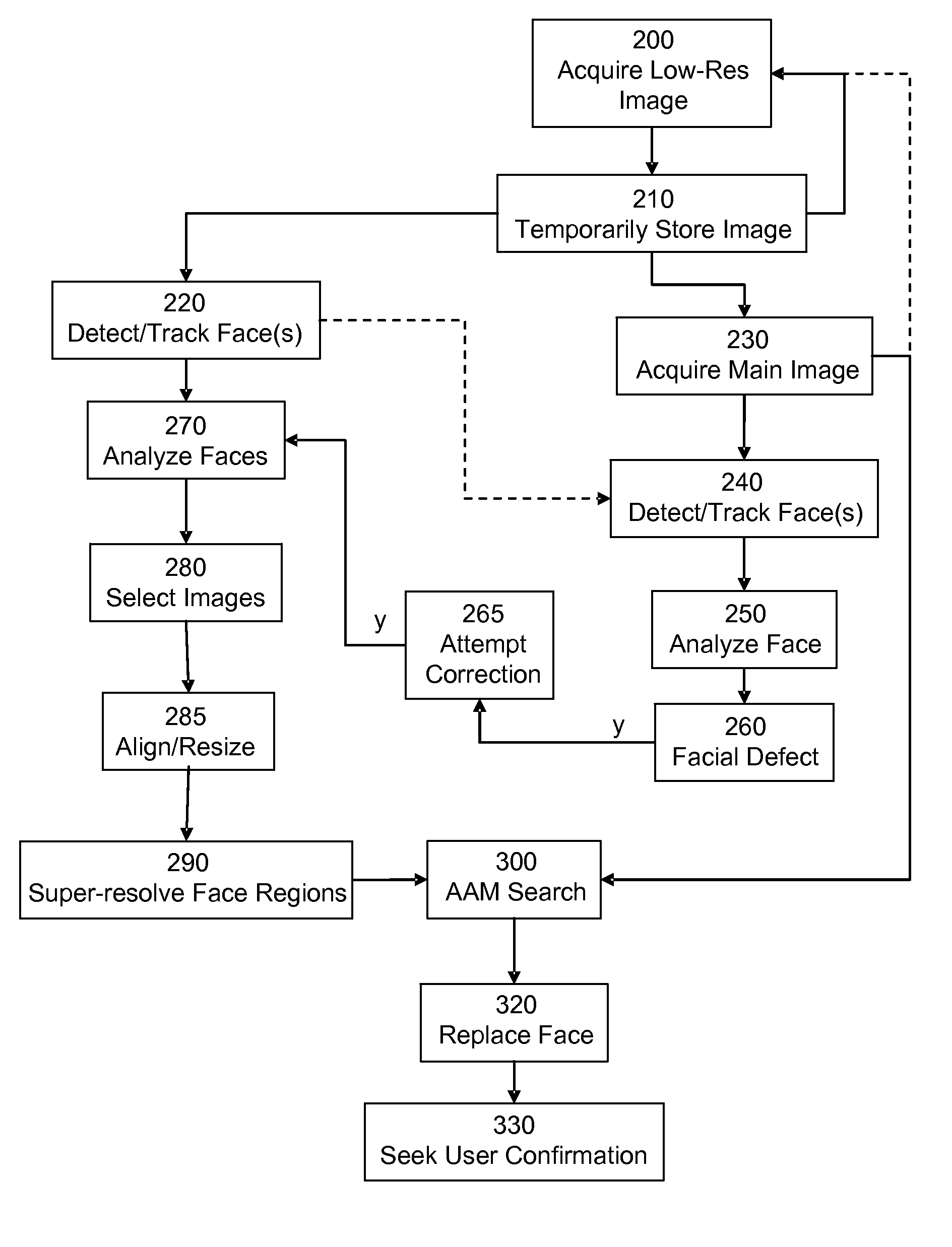

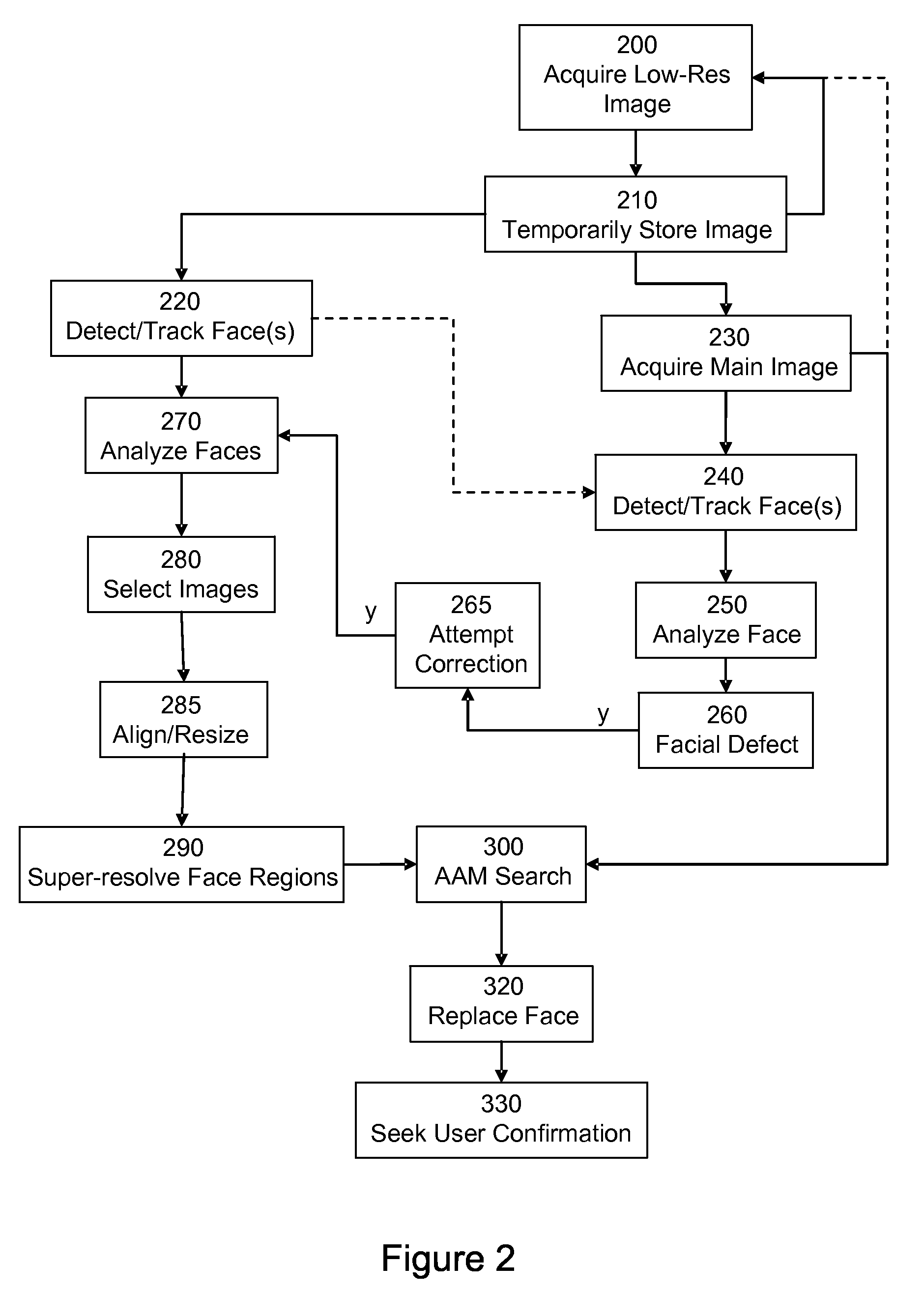

Image Processing Method and Apparatus

Active | US20080292193A1 | Television system details | Character and pattern recognition | Imaging processing | Image resolution

An image processing technique includes acquiring a main image of a scene and determining one or more facial regions in the main image. The facial regions are analysed to determine if any of the facial regions includes a defect. A sequence of relatively low resolution images nominally of the same scene is also acquired. One or more sets of low resolution facial regions in the sequence of low resolution images are determined and analysed for defects. Defect free facial regions of a set are combined to provide a high quality defect free facial region. At least a portion of any defective facial regions of the main image are corrected with image information from a corresponding high quality defect free facial region.

Owner:FOTONATION LTD

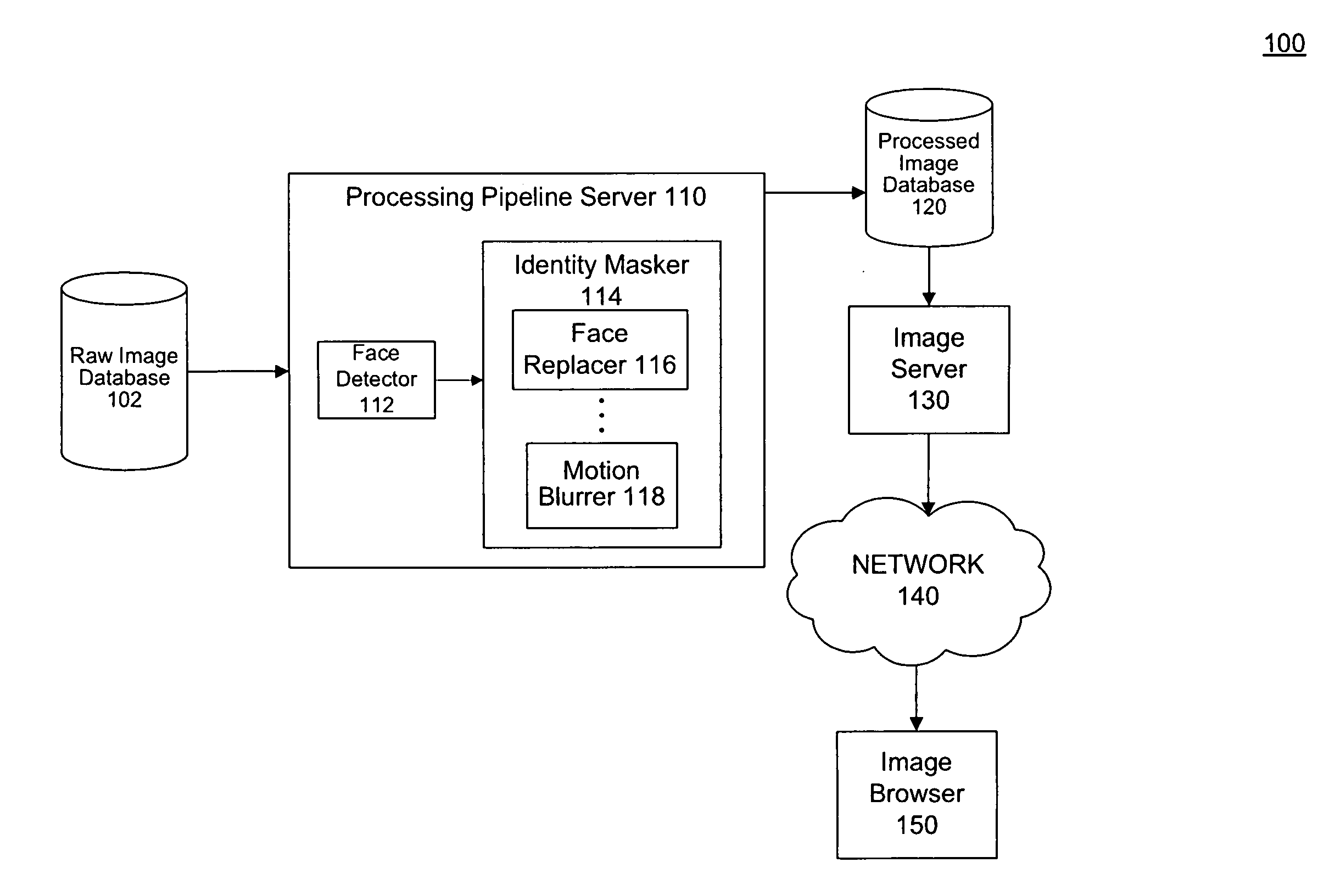

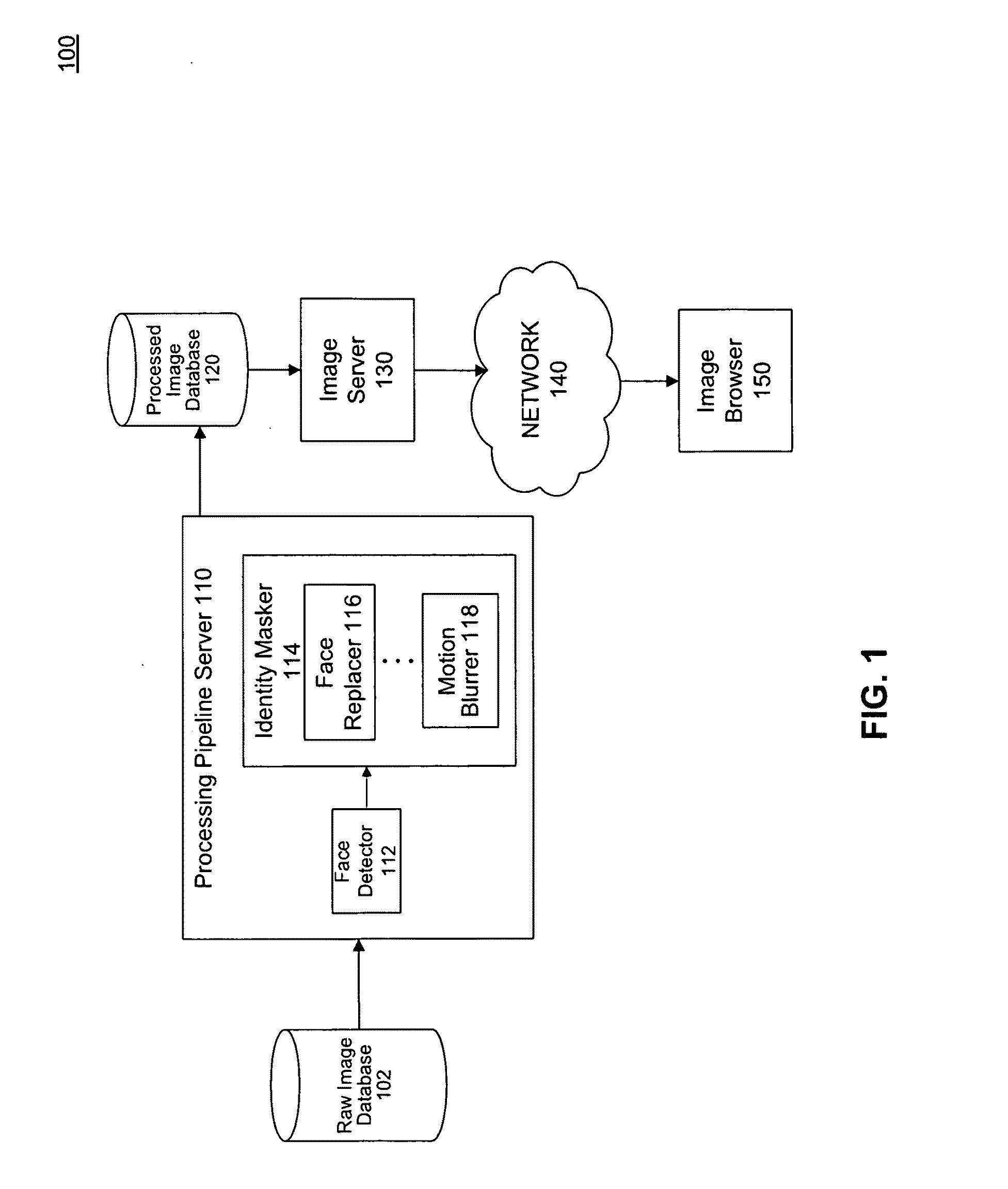

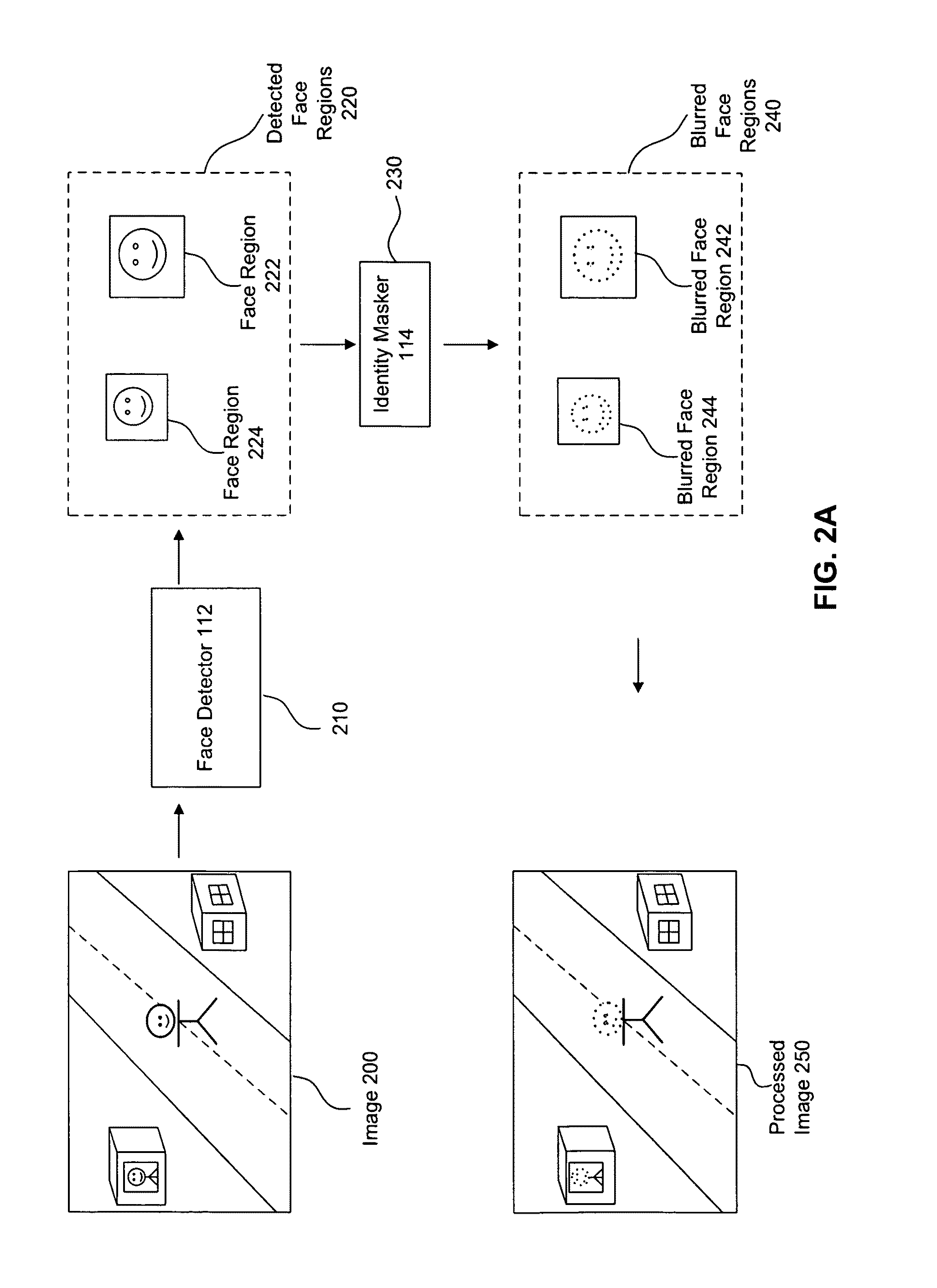

Automatic face detection and identity masking in images, and applications thereof

Active | US20090262987A1 | Increase probability | Great blurring | Image enhancement | Image analysis | Face detection | Motion blur

A method and system of identity masking to obscure identities corresponding to face regions in an image is disclosed. A face detector is applied to detect a set of possible face regions in the image. Then an identity masker is used to process the detected face regions by identity masking techniques in order to obscure identities corresponding to the regions. For example, a detected face region can be blurred as if it is in motion by a motion blur algorithm, such that the blurred region cannot be recognized as the original identity. Alternatively, the detected face region can be replaced by a substitute facial image by a face replacement algorithm to obscure the corresponding identity.

Owner:GOOGLE LLC
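
The motion-blur masking described in the abstract above can be illustrated with a very simple horizontal blur restricted to a detected face box. This is a sketch under stated assumptions: the image is a grayscale list of rows, the blur is a plain horizontal box average, and `motion_blur_region` is an illustrative name. The patent does not specify this particular kernel.

```python
def motion_blur_region(img, x0, y0, w, h, length=5):
    """Return a copy of img with a horizontal box blur applied inside one box.

    Each pixel in the box becomes the integer mean of the pixels within
    length // 2 columns of it, clipped to the box so that no pixels from
    outside the face region leak in.
    """
    out = [row[:] for row in img]
    half = length // 2
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            lo = max(x0, x - half)
            hi = min(x0 + w - 1, x + half)
            window = img[y][lo:hi + 1]
            out[y][x] = sum(window) // len(window)
    return out


frame = [
    [10, 10, 10, 10, 10, 10],
    [10, 0, 255, 0, 255, 10],
    [10, 255, 0, 255, 0, 10],
    [10, 10, 10, 10, 10, 10],
]
# Hypothetical detected face box: columns 1..4, rows 1..2.
masked = motion_blur_region(frame, x0=1, y0=1, w=4, h=2, length=3)
for row in masked:
    print(row)
```

Pixels outside the box are left untouched, so only the detected region loses detail.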

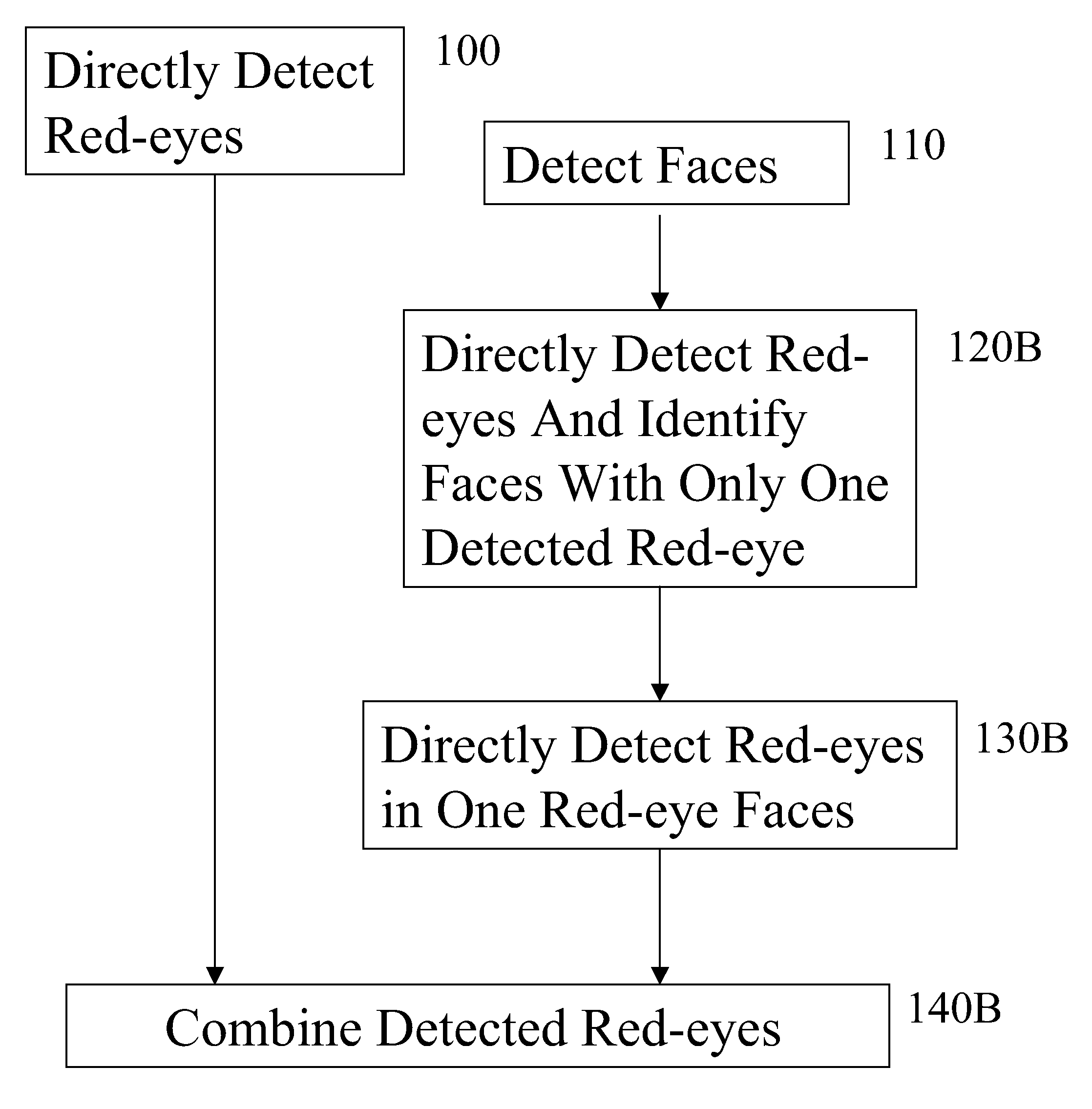

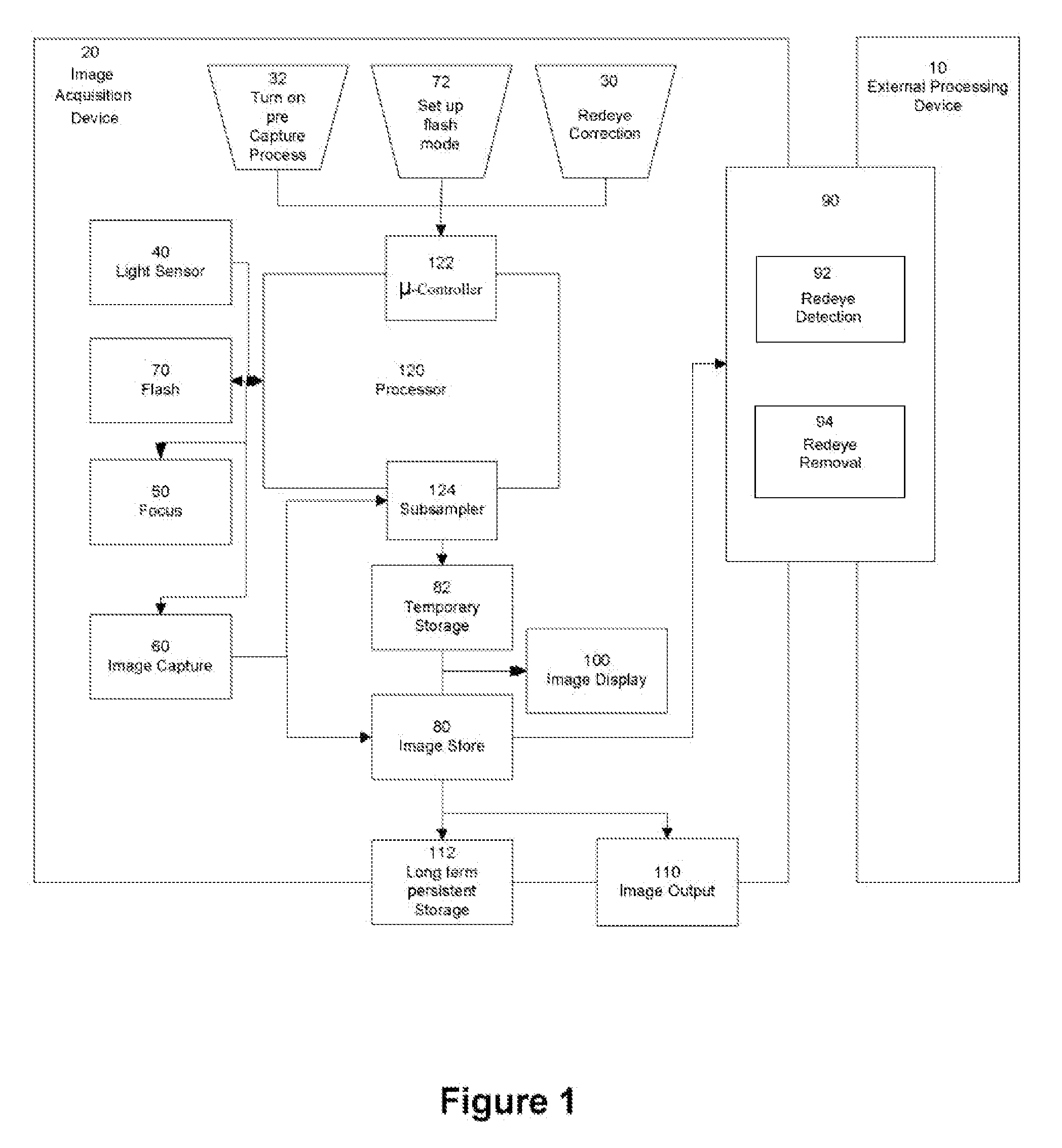

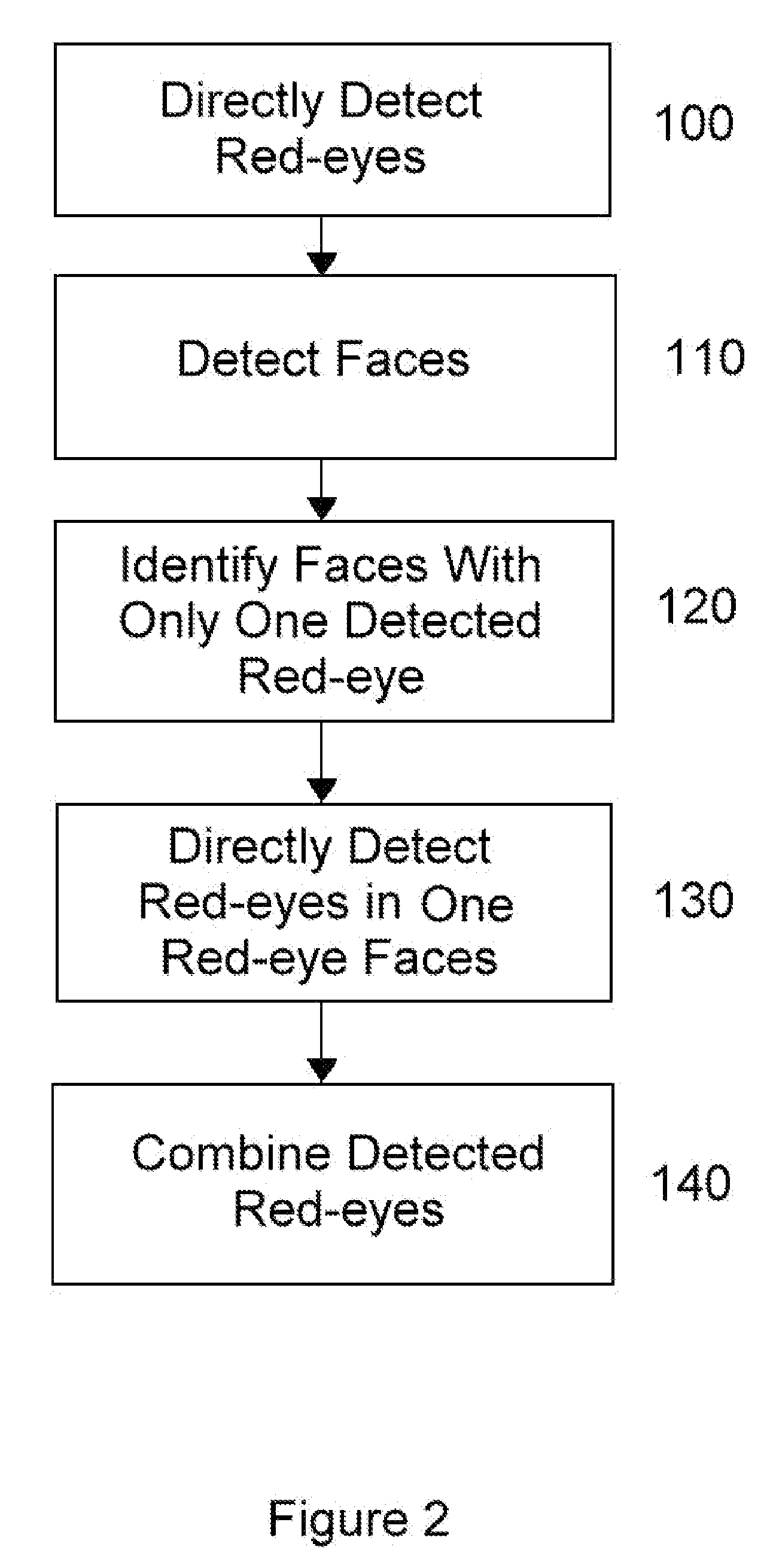

Method of detecting redeye in a digital image

A method for detecting redeye in a digital image comprises initially examining the image to detect redeyes, examining the image to detect face regions and, from the results of the preceding examinations, identifying those detected face regions each including only one detected redeye. Next, the identified face regions are examined, using less stringent search criteria than the initial examination, to detect additional redeyes in the face regions.

Owner:FOTONATION LTD
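
The two-pass logic in the abstract above can be sketched as follows: a strict pass over the whole image, then a relaxed pass only inside face regions where exactly one redeye was found. The candidate scores, the two thresholds, and the function names are assumptions made for illustration; the patent does not define them.

```python
def inside(point, box):
    """True if point (x, y) lies inside box (x, y, w, h)."""
    x, y = point
    bx, by, bw, bh = box
    return bx <= x < bx + bw and by <= y < by + bh


def detect_redeyes(candidates, faces, strict=0.8, relaxed=0.5):
    """candidates: list of ((x, y), score); faces: list of (x, y, w, h)."""
    # Pass 1: strict criteria over the whole image.
    first_pass = [pt for pt, score in candidates if score >= strict]
    found = list(first_pass)
    for face in faces:
        # Only faces with exactly one pass-1 redeye are re-examined.
        if sum(inside(pt, face) for pt in first_pass) != 1:
            continue
        # Pass 2: less stringent criteria, restricted to this face region.
        for pt, score in candidates:
            if pt not in found and inside(pt, face) and score >= relaxed:
                found.append(pt)
    return found


candidates = [
    ((12, 15), 0.90),   # strong redeye in face A
    ((30, 15), 0.60),   # weak redeye in face A
    ((70, 20), 0.60),   # weak candidate outside any face
    ((110, 15), 0.90),  # strong redeye in face B
    ((130, 15), 0.85),  # strong redeye in face B
]
faces = [(0, 0, 50, 50), (100, 0, 50, 50)]
result = detect_redeyes(candidates, faces)
print(sorted(result))
```

The weak candidate at (30, 15) is accepted because its face already holds one confident redeye, while the equally weak candidate at (70, 20) is not, since it lies outside every face region.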

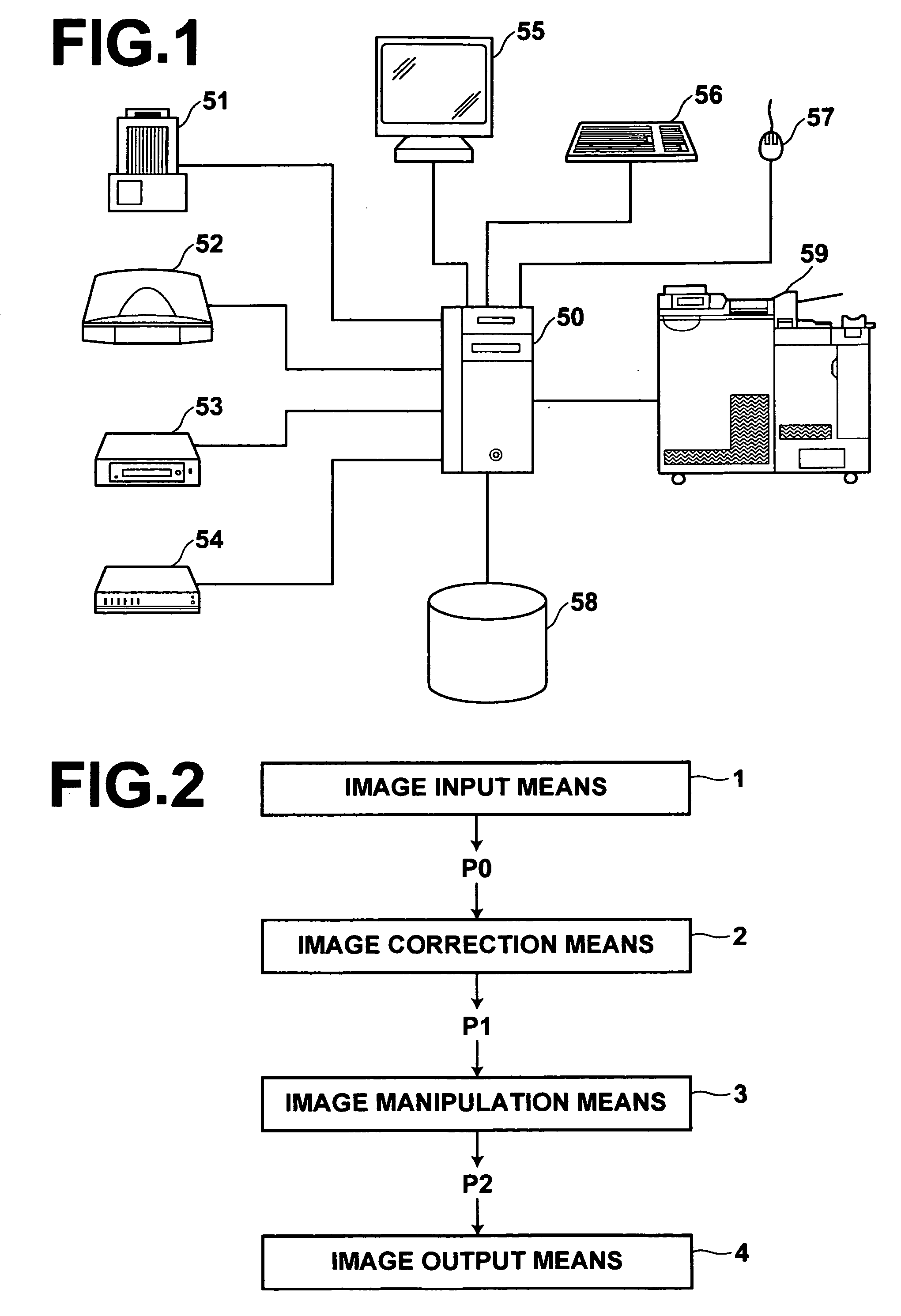

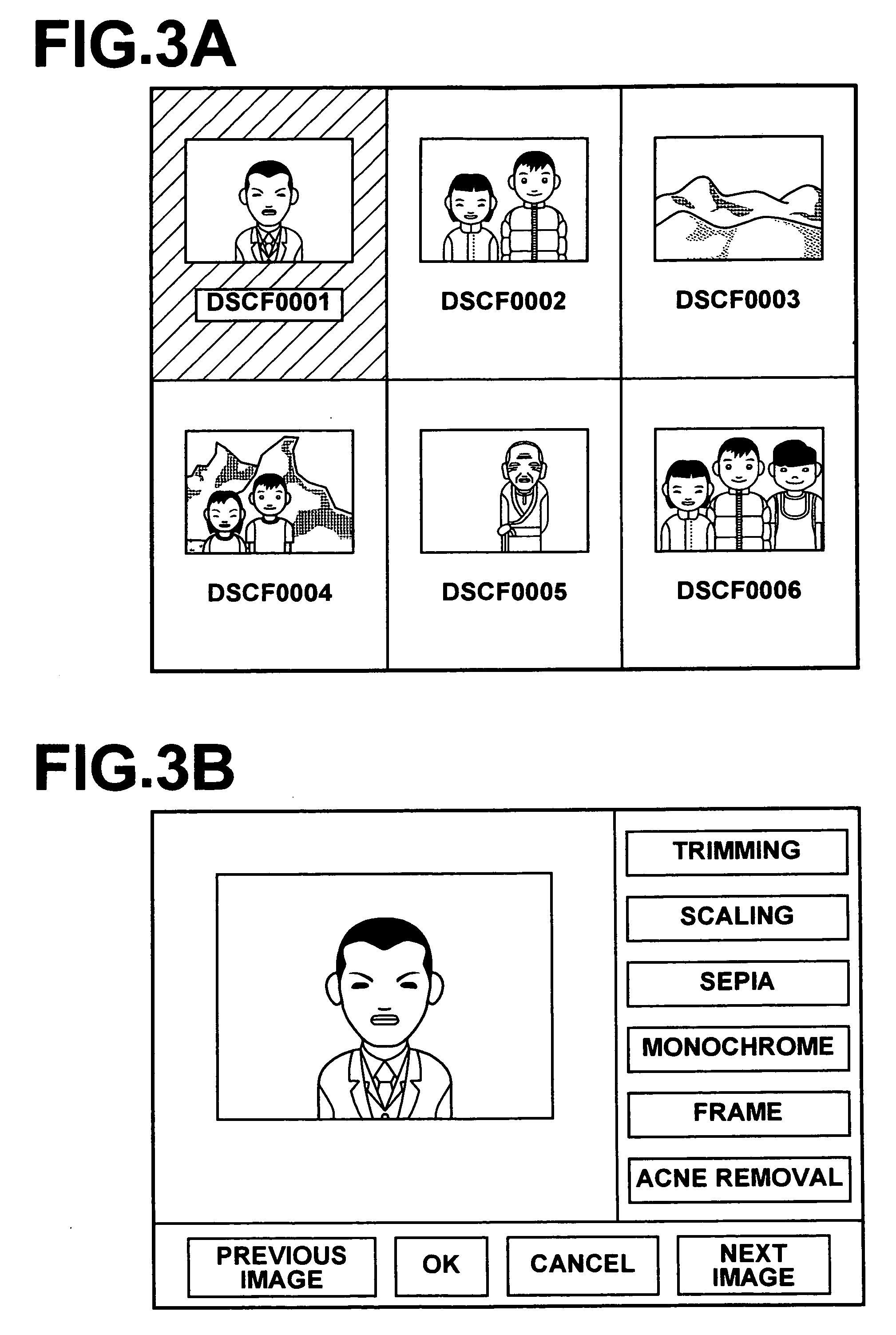

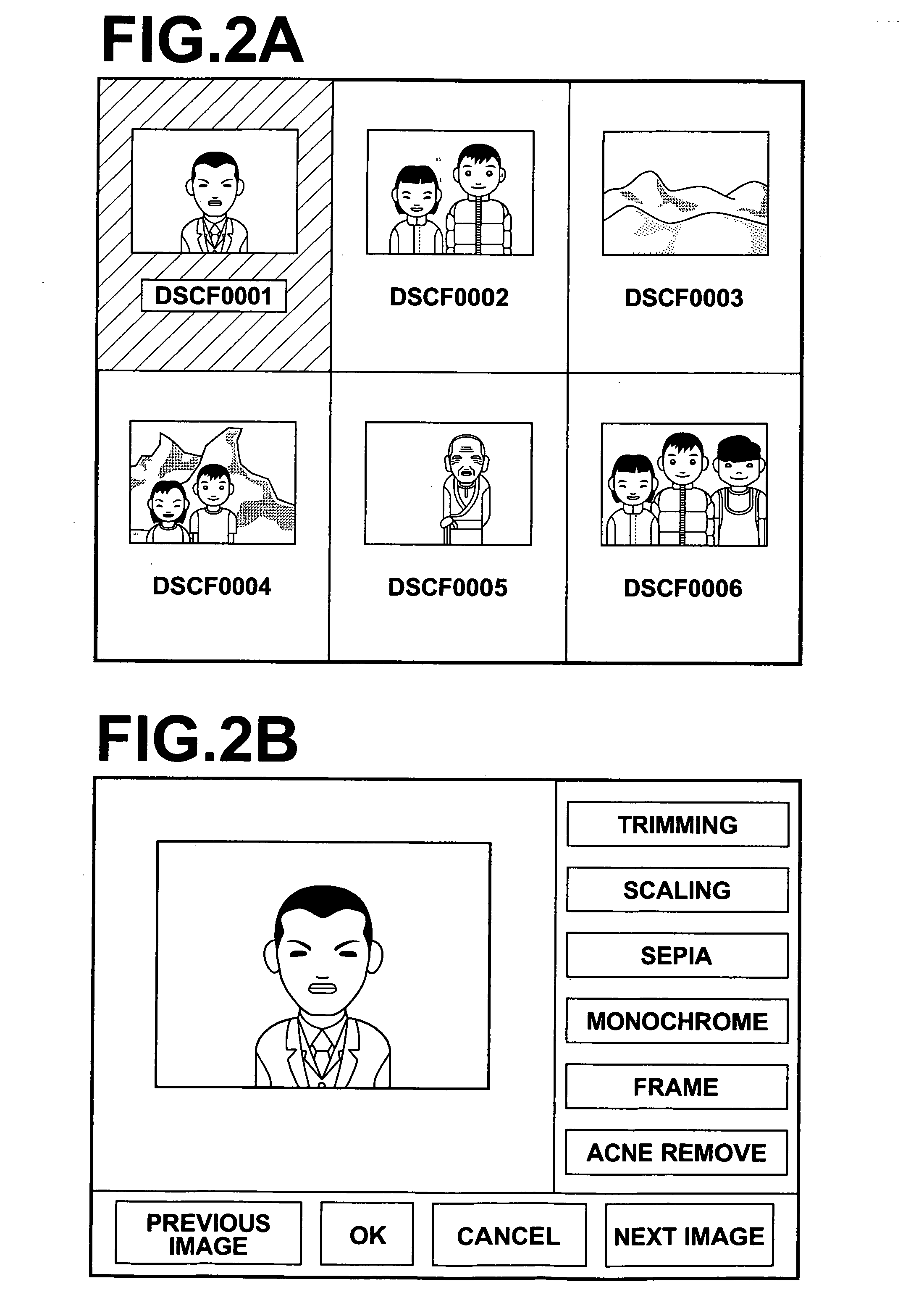

Image processing method, image processing apparatus, and computer-readable recording medium storing image processing program

Active | US20070070440A1 | Easy to operate | Quality improvement | Image enhancement | Character and pattern recognition | Face detection | Imaging processing

Sharpness is adjusted for more appropriately representing a predetermined structure in an image. A parameter acquisition unit obtains a weighting parameter for a principal component representing a degree of sharpness in a face region detected by a face detection unit as an example of the predetermined structure in the image, by fitting to the face region a mathematical model generated by a statistical method such as AAM based on a plurality of sample images representing human faces in different degrees of sharpness. Based on a value of the parameter, sharpness is adjusted in at least a part of the image. For example, a parameter changing unit changes the value of the parameter to a preset optimal face sharpness value, and an image reconstruction unit reconstructs the image based on the parameter having been changed and outputs the image having been subjected to the sharpness adjustment processing.

Owner:FUJIFILM CORP +1

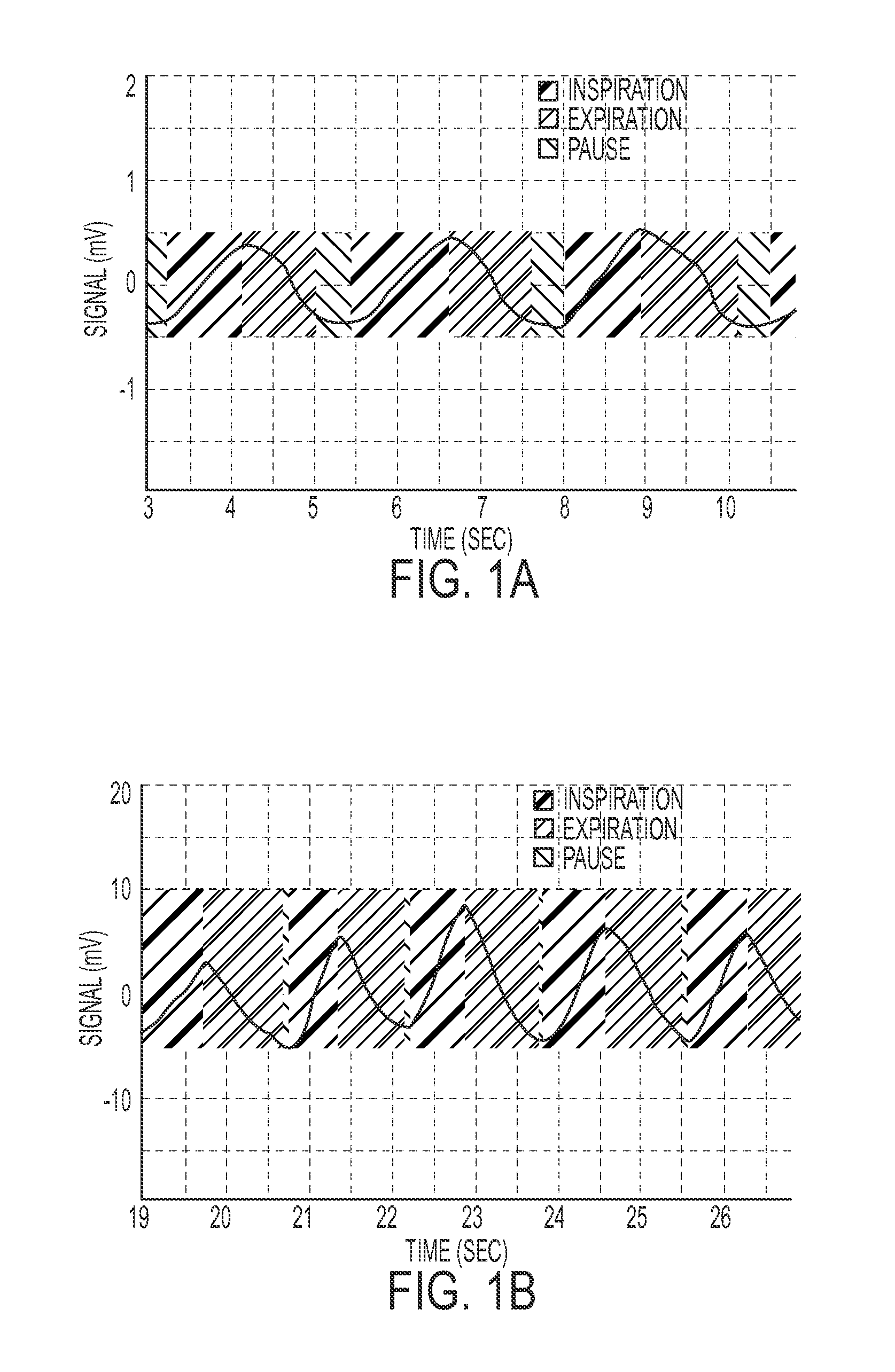

Monitoring respiration with a thermal imaging system

Active | US20120289850A1 | Reliable and accurate manner | Medical imaging | Respiratory organ evaluation | Communication interface | Spectral bands

What is disclosed is a system and method for monitoring respiration of a subject or subject of interest using a thermal imaging system with single or multiple spectral bands set to a temperature range of a facial region of that person. Temperatures of extremities of the head and face are used to locate facial features in the captured thermal images, i.e., nose and mouth, which are associated with respiration. The RGB signals obtained from the camera are plotted to obtain a respiration pattern. From the respiration pattern, a rate of respiration is obtained. The system includes display and communication interfaces wherein alerts can be activated if the respiration rate falls outside a level of acceptability. The teachings hereof find their uses in an array of devices such as, for example, devices which monitor the respiration of an infant to signal the onset of a respiratory problem or failure.

Owner:XEROX CORP
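
The abstract above derives a respiration rate from a respiration pattern. One simple way to do that, shown here only as a sketch, is to count how often the signal rises through its mean. The synthetic trace, the sampling rate, and the name `respiration_rate_bpm` are hypothetical; the patent does not state which rate estimator is used.

```python
import math


def respiration_rate_bpm(samples, sample_rate_hz):
    """Estimate breaths per minute by counting upward crossings of the mean."""
    mean = sum(samples) / len(samples)
    crossings = sum(
        1 for prev, cur in zip(samples, samples[1:]) if prev < mean <= cur
    )
    duration_s = len(samples) / sample_rate_hz
    return crossings * 60.0 / duration_s


# Synthetic nostril-region temperature trace (hypothetical values):
# 0.25 Hz breathing around 34 degrees C, sampled at 10 Hz for 60 s.
fs = 10
trace = [
    34.0 + 0.3 * math.sin(2 * math.pi * 0.25 * (i / fs) + 0.5)
    for i in range(600)
]
rate = respiration_rate_bpm(trace, fs)
print(rate)
```

A real signal would need smoothing before crossings are counted, since noise near the mean would otherwise produce spurious breaths.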

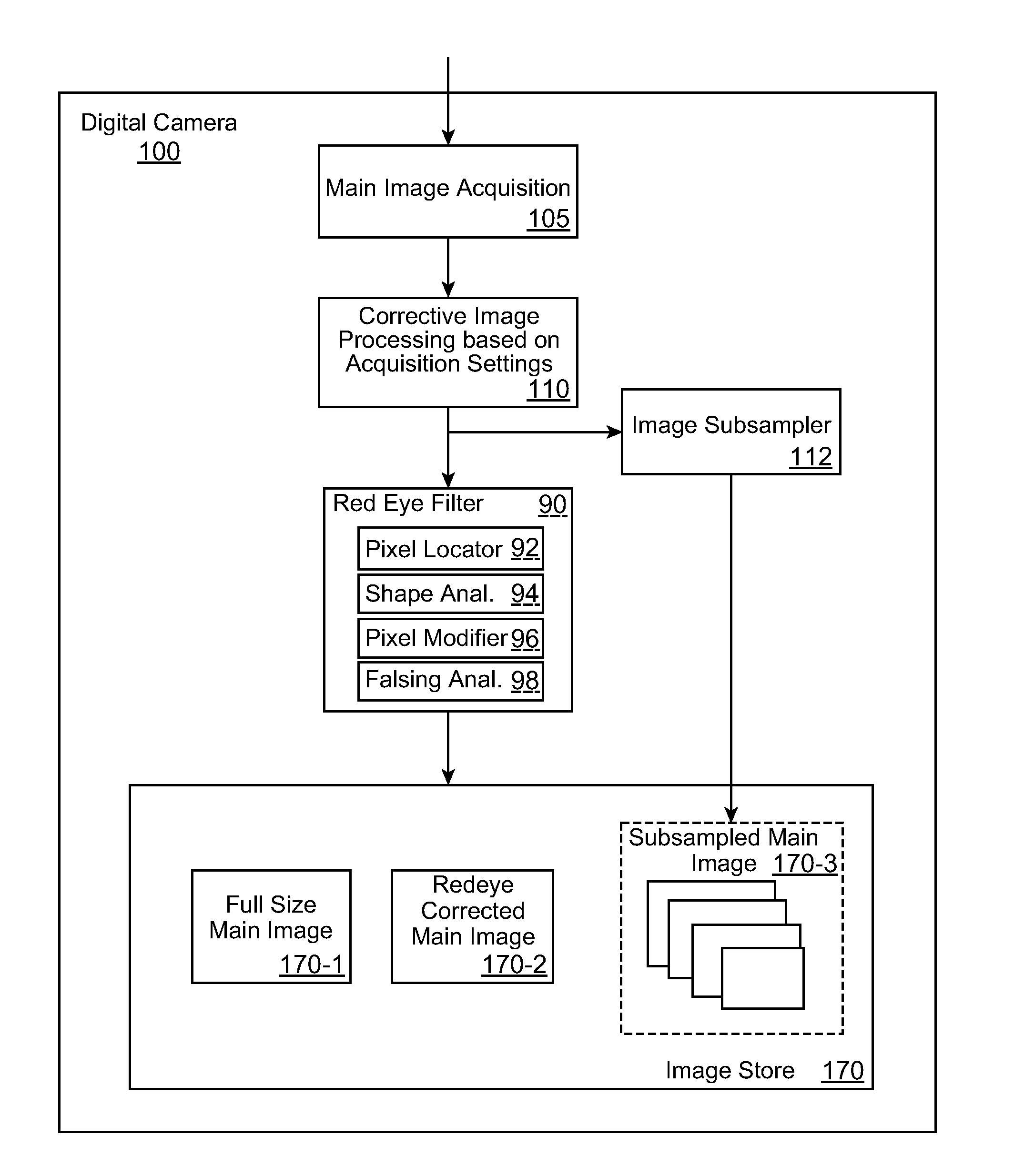

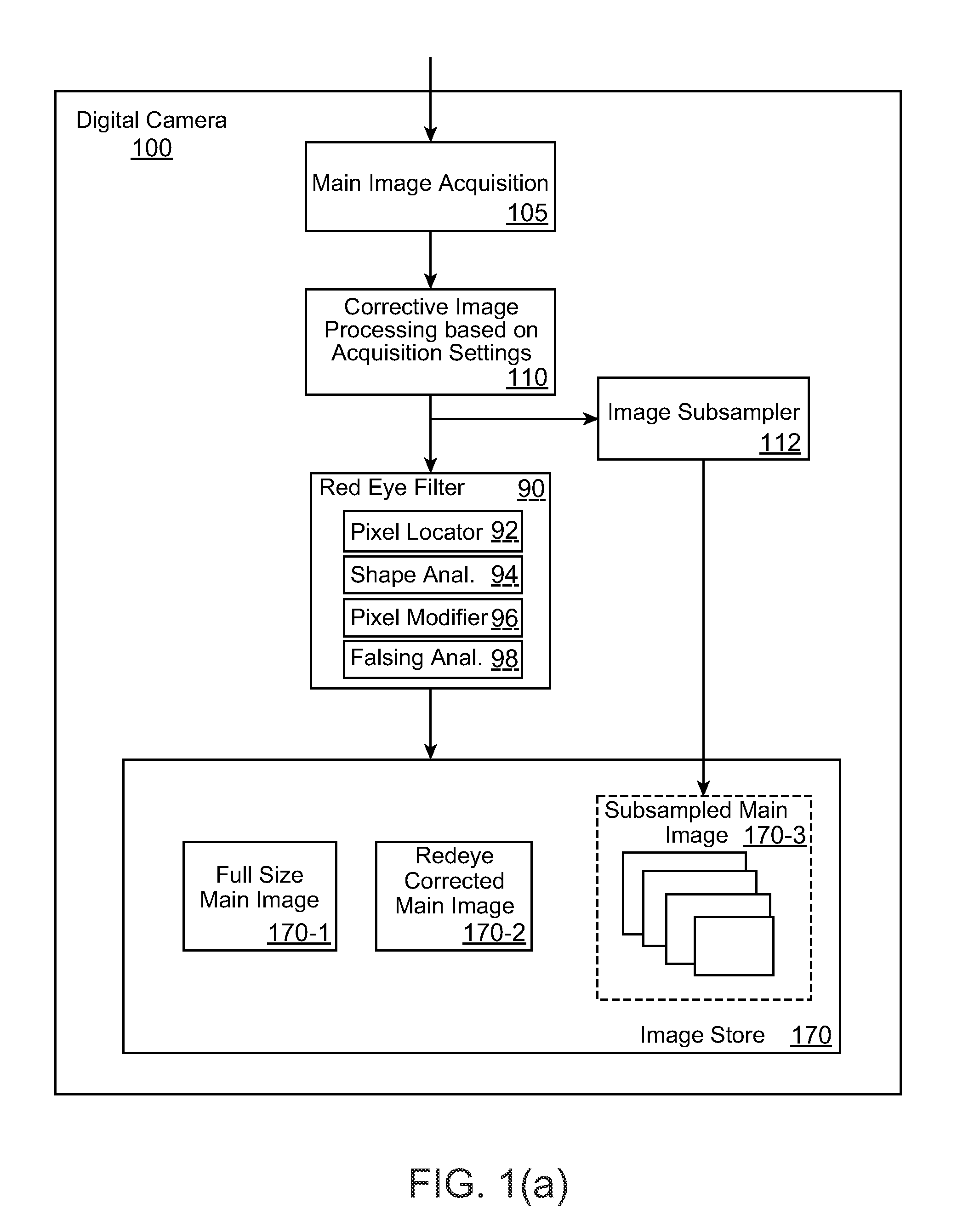

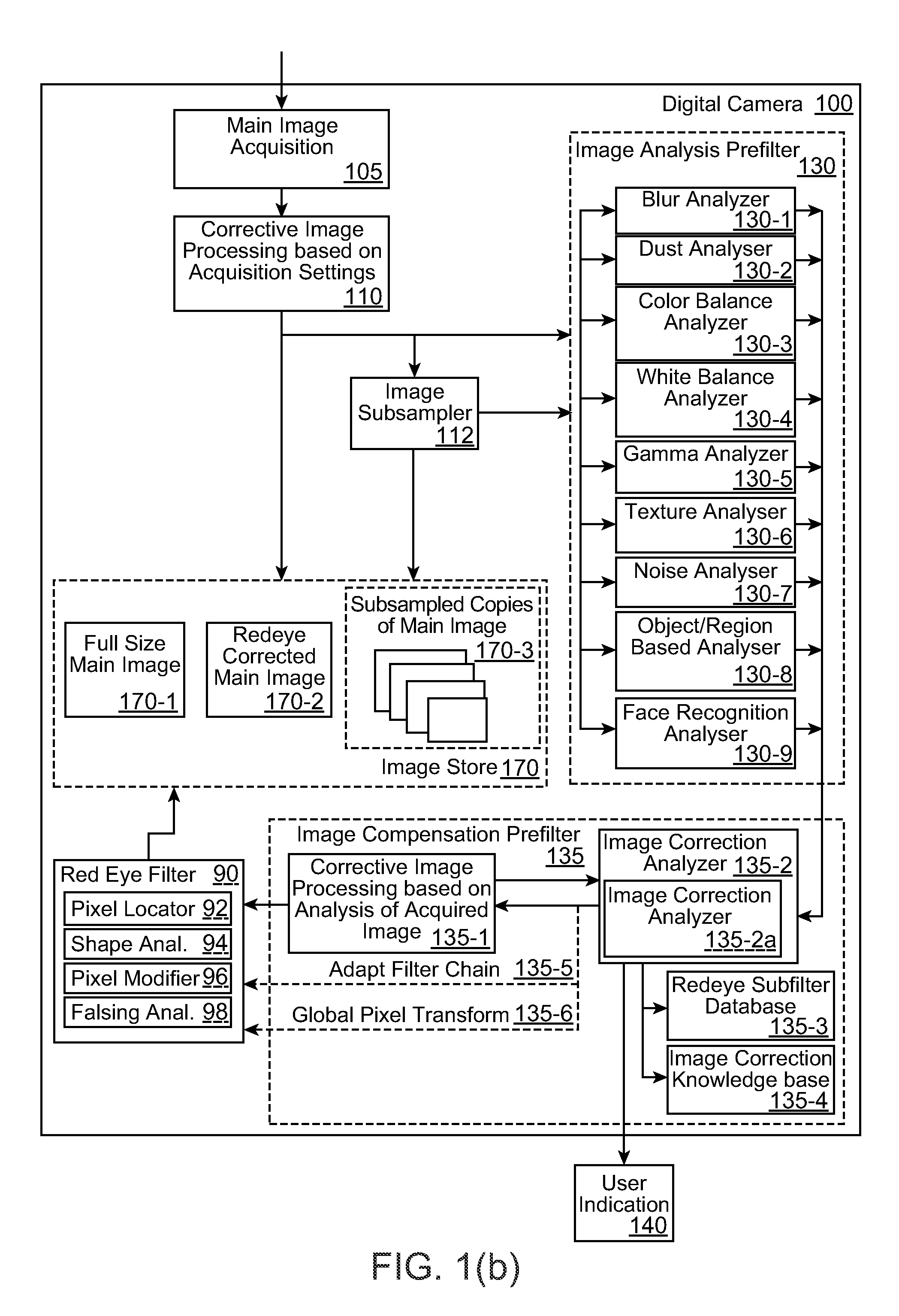

Analyzing partial face regions for red-eye detection in acquired digital images

A method for red-eye detection in an acquired digital image includes acquiring a first image, and analyzing one or more partial face regions within the first image. One or more characteristics of the first image are determined. One or more corrective processes are identified including red eye correction that can be beneficially applied to the first image according to the one or more characteristics. The one or more corrective processes are applied to the first image.

Owner:FOTONATION LTD

Photography apparatus, photography method, and photography program

Inactive | US20060268150A1 | Improve accuracy | High color reproduction | Television system details | Character and pattern recognition | Face detection | Mathematical model

An exposure value is determined based on a face region, with high accuracy and with less effect of a background region or density contrast caused by shadow. For this purpose, a face detection unit detects a face region from a face candidate region in a through image detected by a face candidate detection unit, by fitting to the face candidate region a mathematical model generated by a method of AAM using a plurality of sample images representing human faces. An exposure value determination unit then determines an exposure value for photography, based on the face region.

Owner:FUJIFILM CORP +1

Pose-invariant face recognition system and process

Inactive | US7127087B2 | Sure easy | Electric signal transmission systems | Image analysis | Eigenface | Recognition system

A face recognition system and process for identifying a person depicted in an input image and their face pose. This system and process entails locating and extracting face regions belonging to known people from a set of model images, and determining the face pose for each of the face regions extracted. All the extracted face regions are preprocessed by normalizing, cropping, categorizing and finally abstracting them. More specifically, the images are normalized and cropped to show only a person's face, categorized according to the face pose of the depicted person's face by assigning them to one of a series of face pose ranges, and abstracted preferably via an eigenface approach. The preprocessed face images are preferably used to train a neural network ensemble having a first stage made up of a bank of face recognition neural networks each of which is dedicated to a particular pose range, and a second stage constituting a single fusing neural network that is used to combine the outputs from each of the first stage neural networks. Once trained, the input of a face region which has been extracted from an input image and preprocessed (i.e., normalized, cropped and abstracted) will cause just one of the output units of the fusing portion of the neural network ensemble to become active. The active output unit indicates either the identity of the person whose face was extracted from the input image and the associated face pose, or that the identity of the person is unknown to the system.

Owner:ZHIGU HLDG

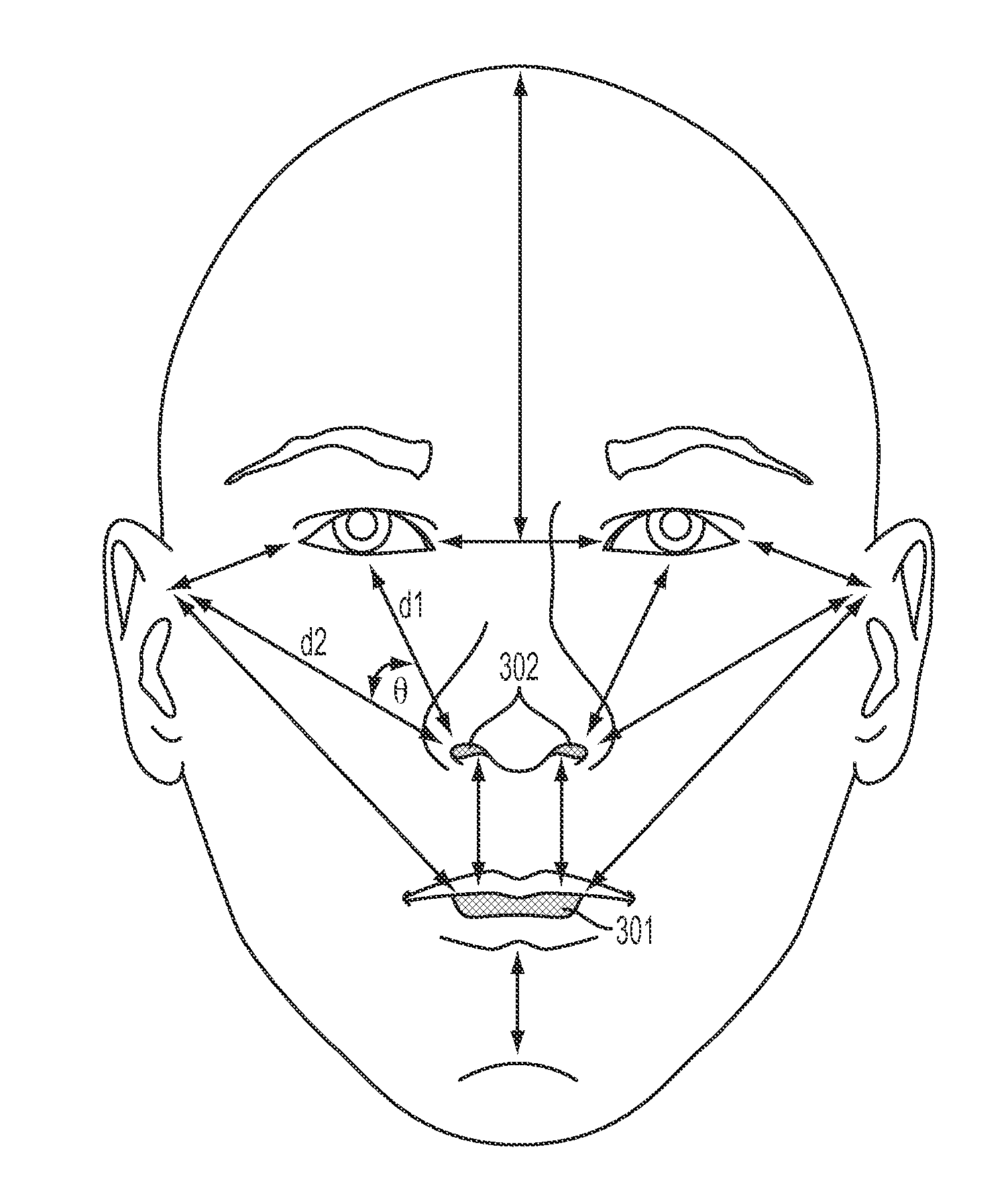

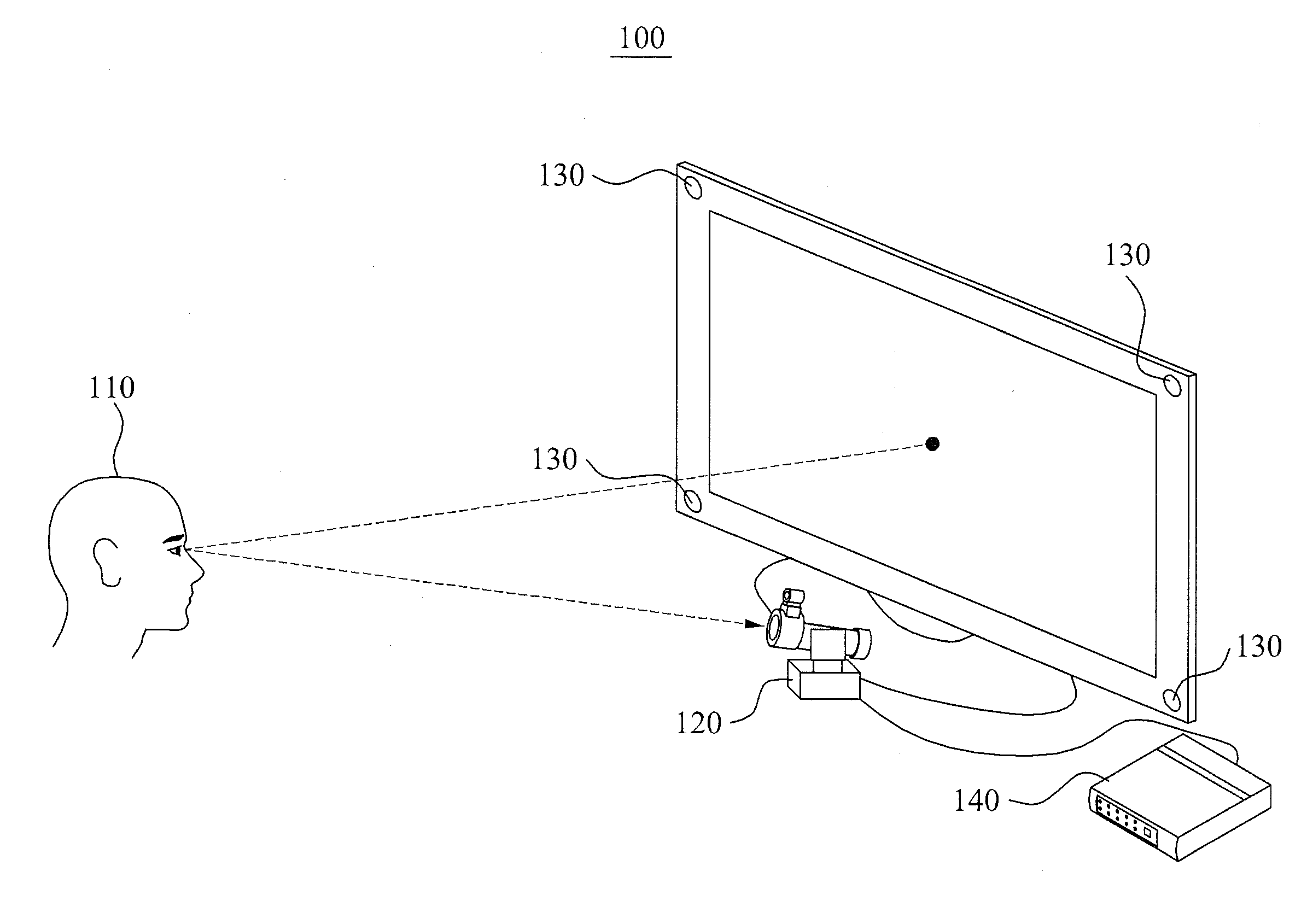

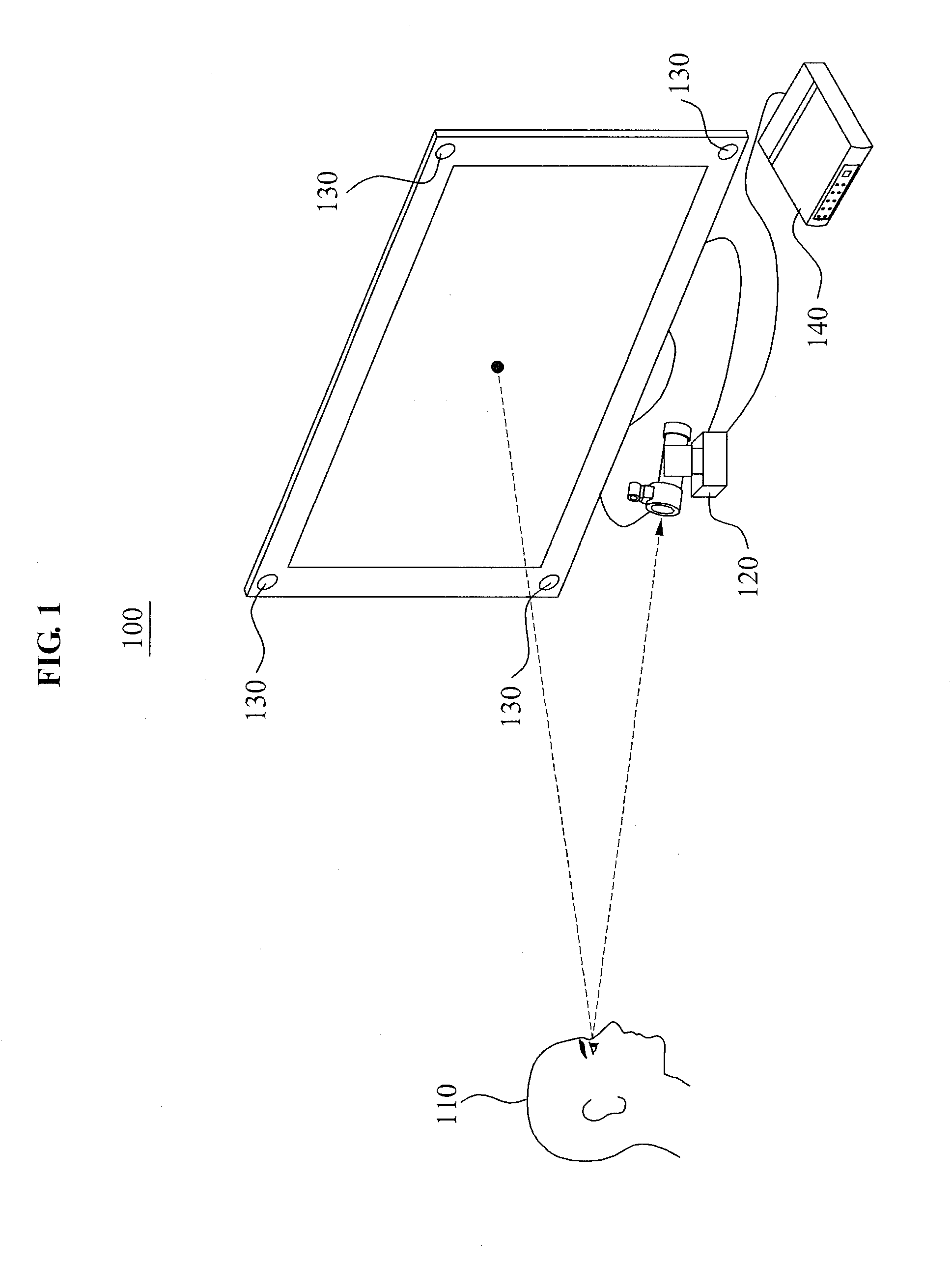

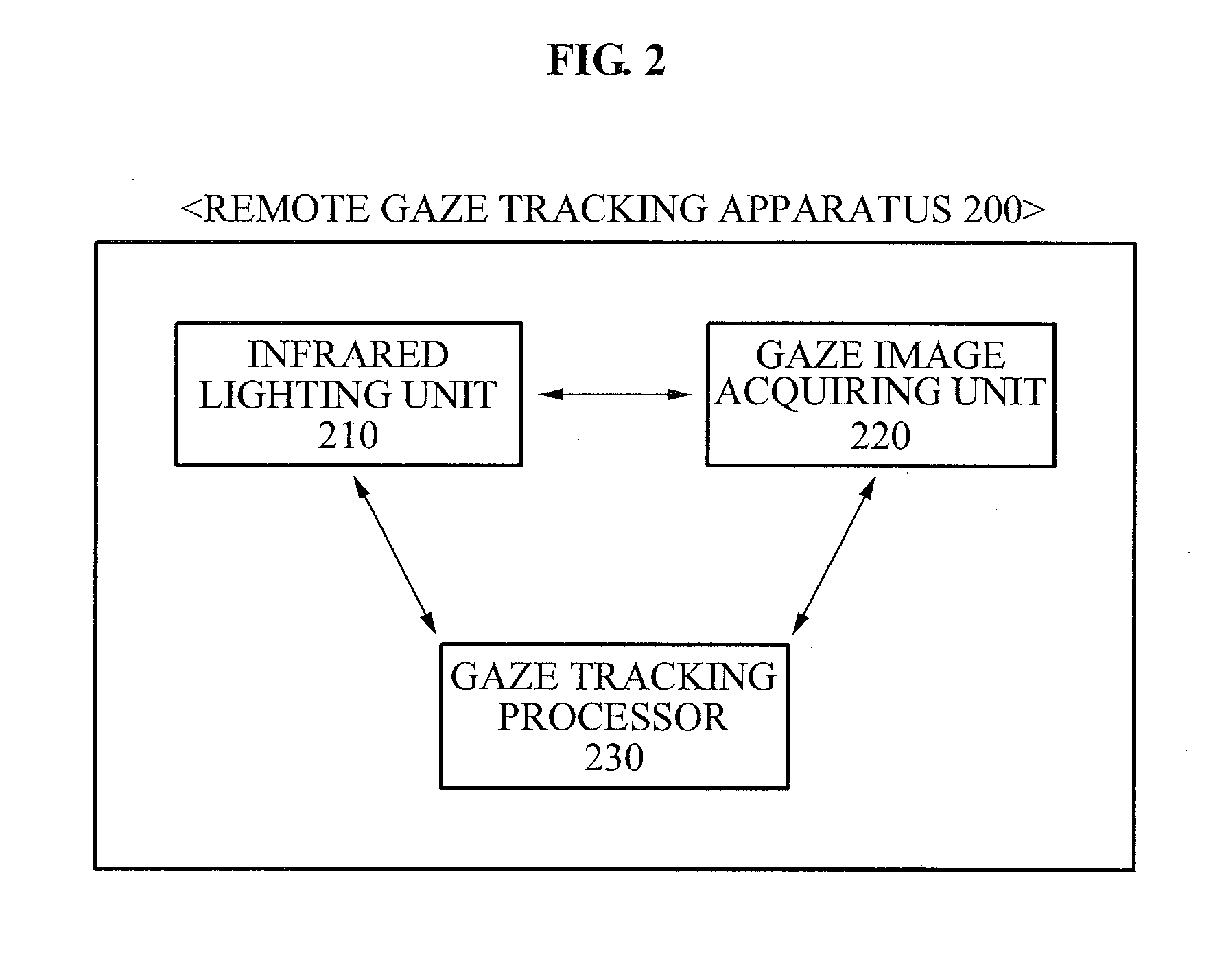

Gaze tracking system and method for controlling internet protocol TV at a distance

A remote gaze tracking apparatus and method for controlling an Internet Protocol Television (IPTV) are provided. An entire image including a facial region of a user may be acquired using a visible ray, the facial region may be detected from the acquired entire image, and a face width, a distance between eyes, and a distance between an eye and a screen may be acquired from the detected facial region. Additionally, an enlarged eye image corresponding to a face of the user may be acquired using at least one of the acquired face width, the acquired distance between the eyes, and the acquired distance between the eye and the screen, and an eye gaze of the user may be tracked using the acquired eye image.

Owner:ELECTRONICS & TELECOMM RES INST +1

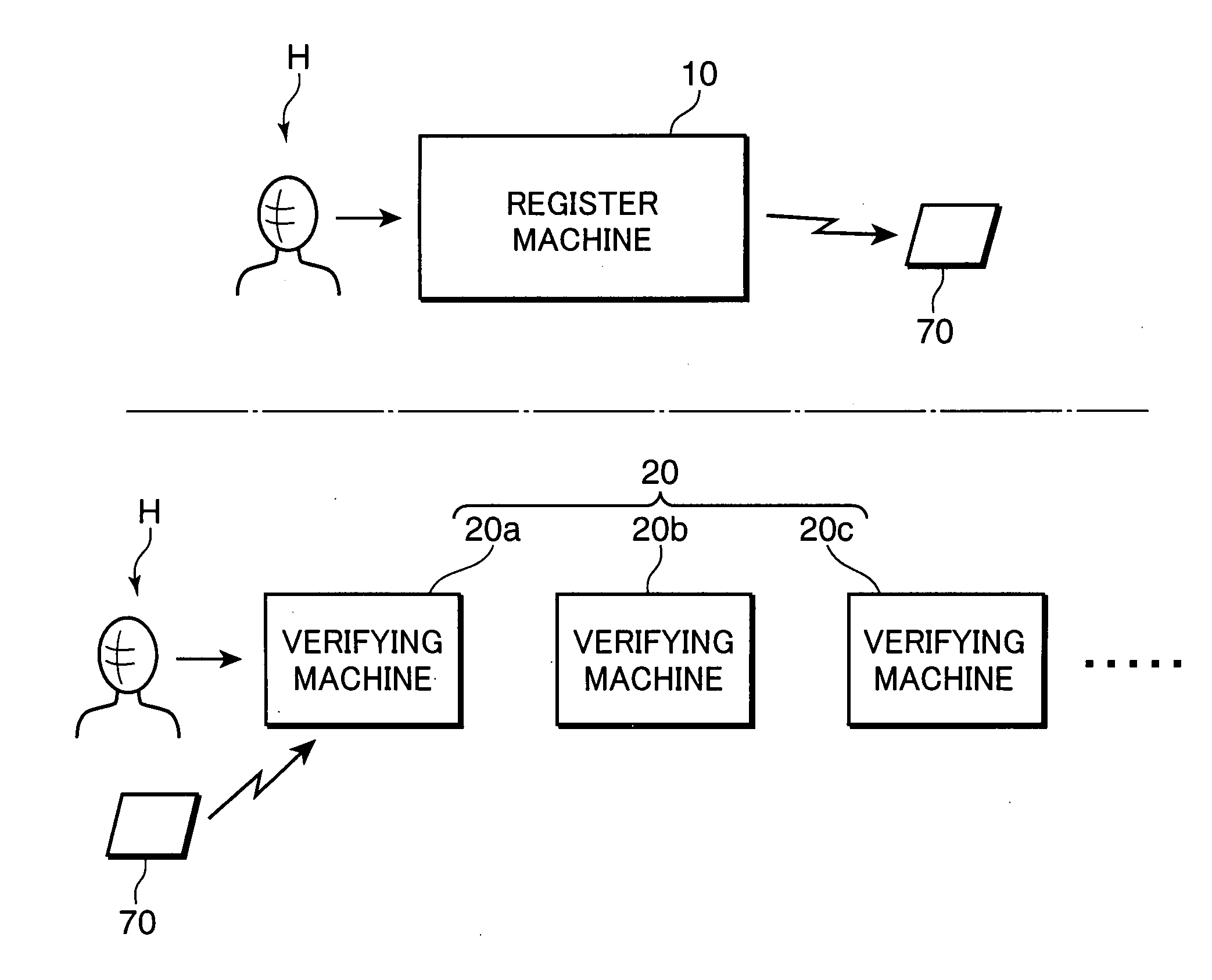

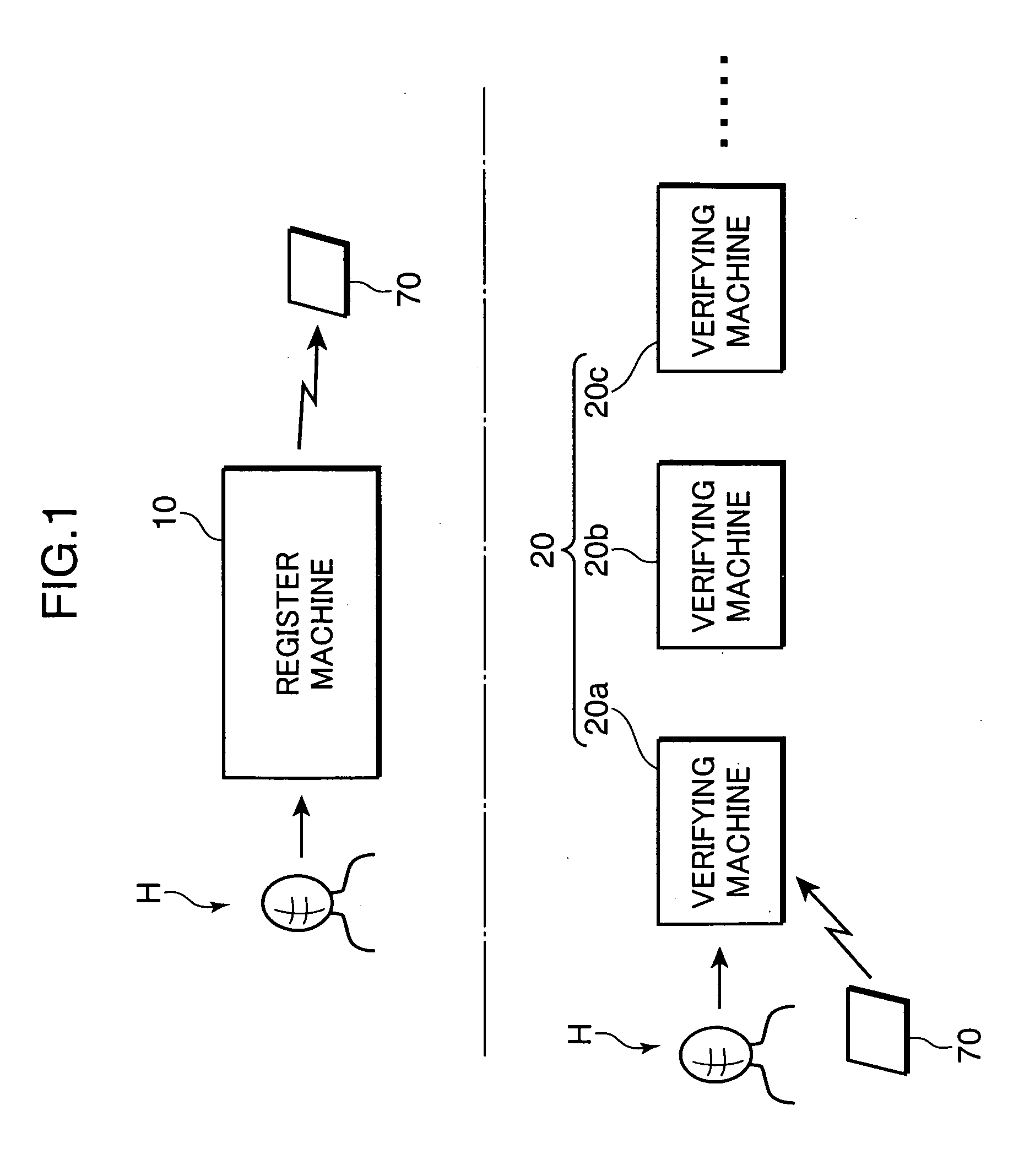

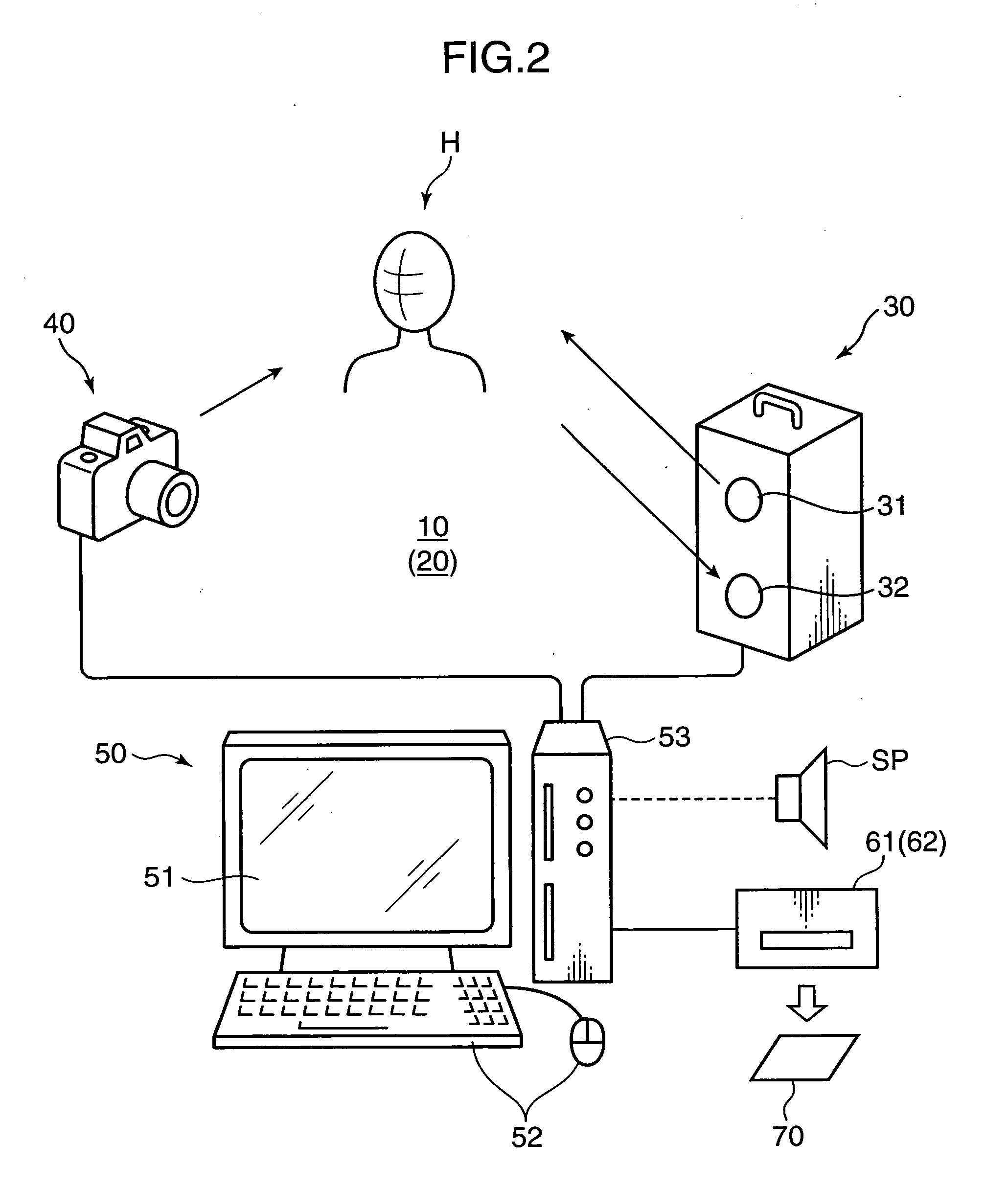

Face authentication system and face authentication method

Inactive | US20080175448A1 | Accurately rate quality | Three-dimensional object recognition | Authentication system | Three dimensional data

A face authentication system includes: a data processing section for performing a predetermined data processing operation; a first data input section for inputting three-dimensional data on a face area of a subject to the data processing section; and a second data input section for inputting two-dimensional image data on the face area of the subject to the data processing section, the two-dimensional image data corresponding to the three-dimensional data to be inputted to the data processing section, wherein the data processing section includes: a quality rating section for rating the quality of the three-dimensional data based on the two-dimensional image data, and generating quality data, and an authentication processing section for executing a registration process or a verification process of authentication data based on the three-dimensional data, if the quality data satisfies a predetermined requirement.

Owner:KONICA MINOLTA INC

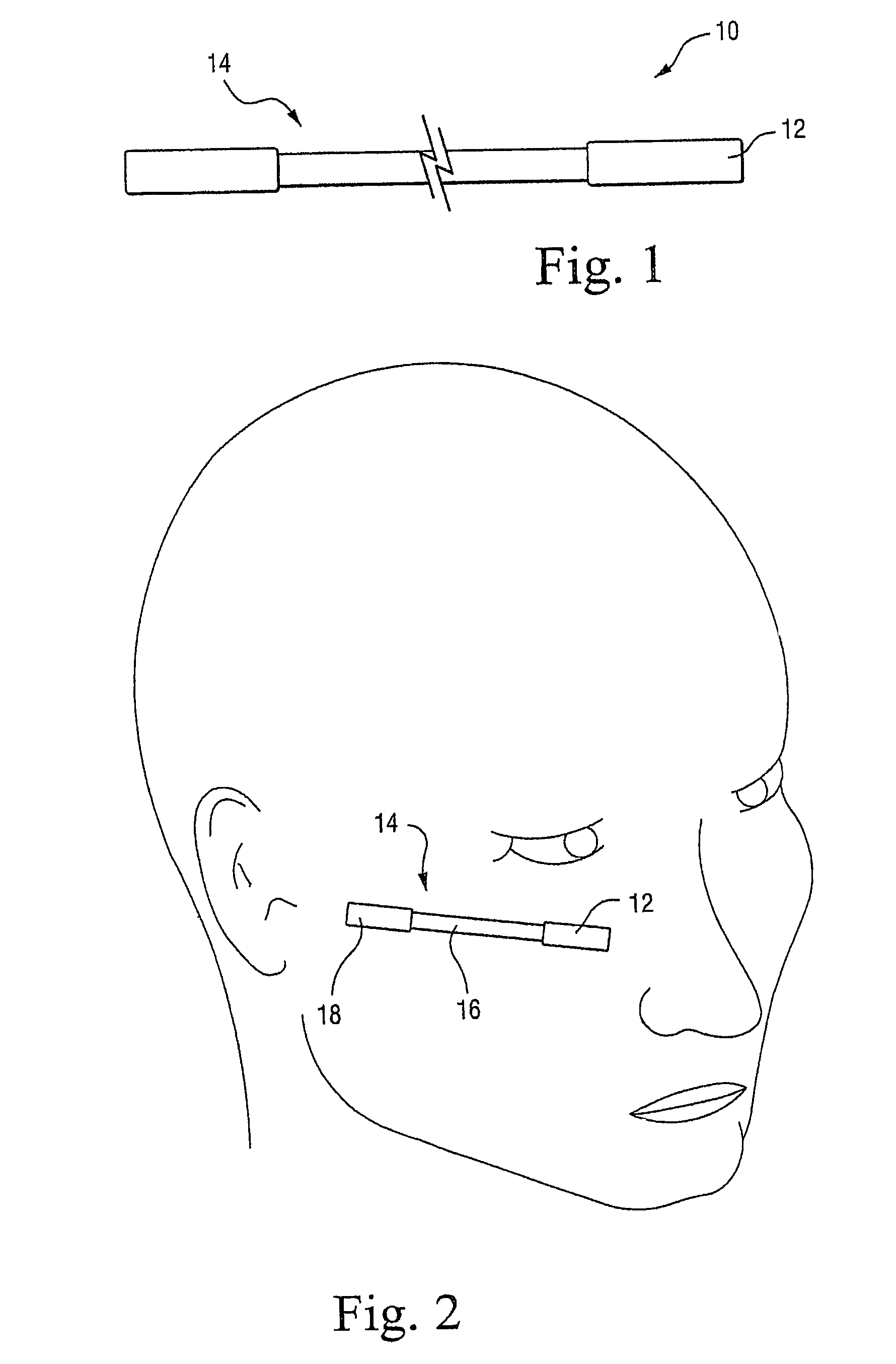

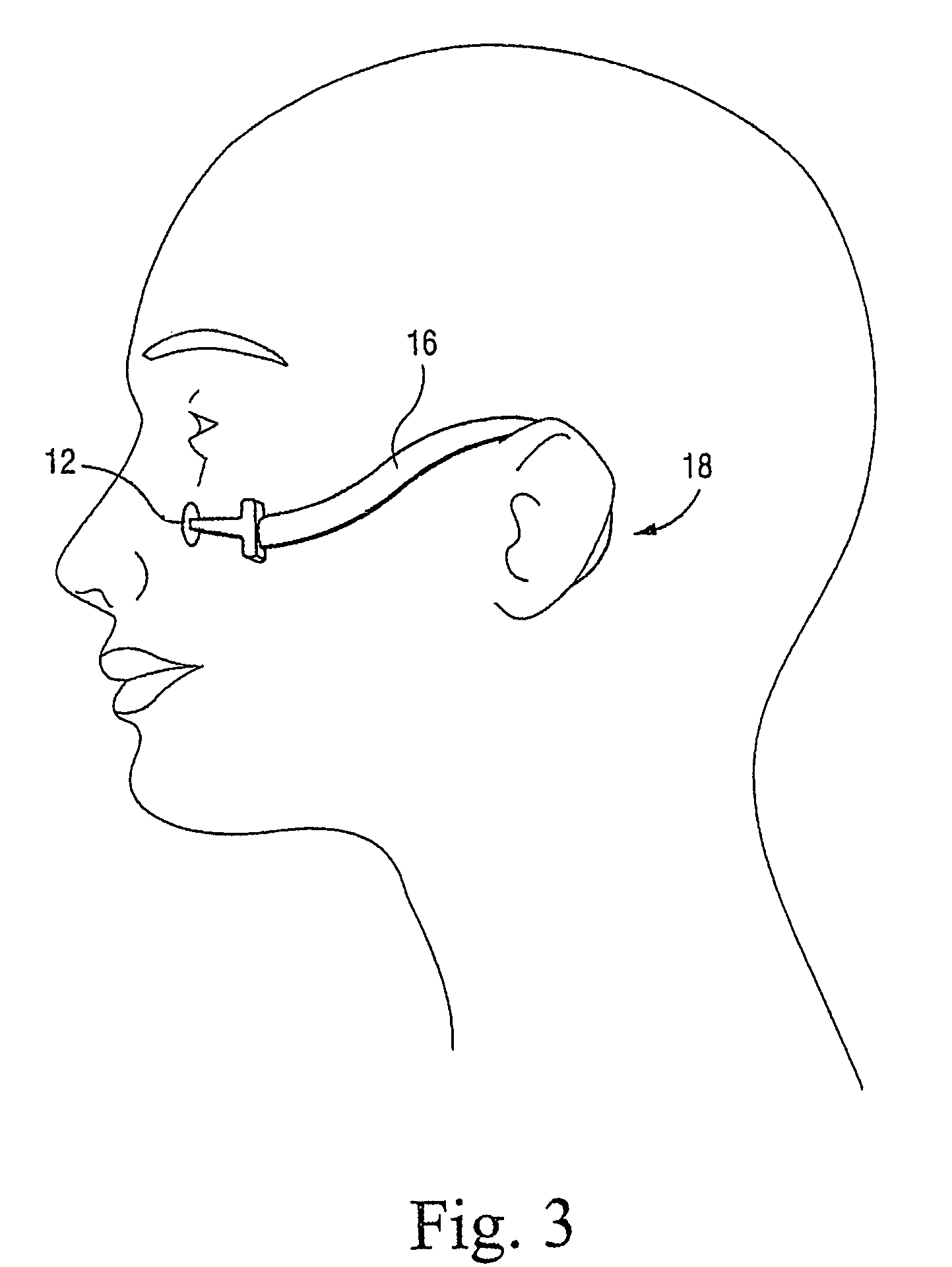

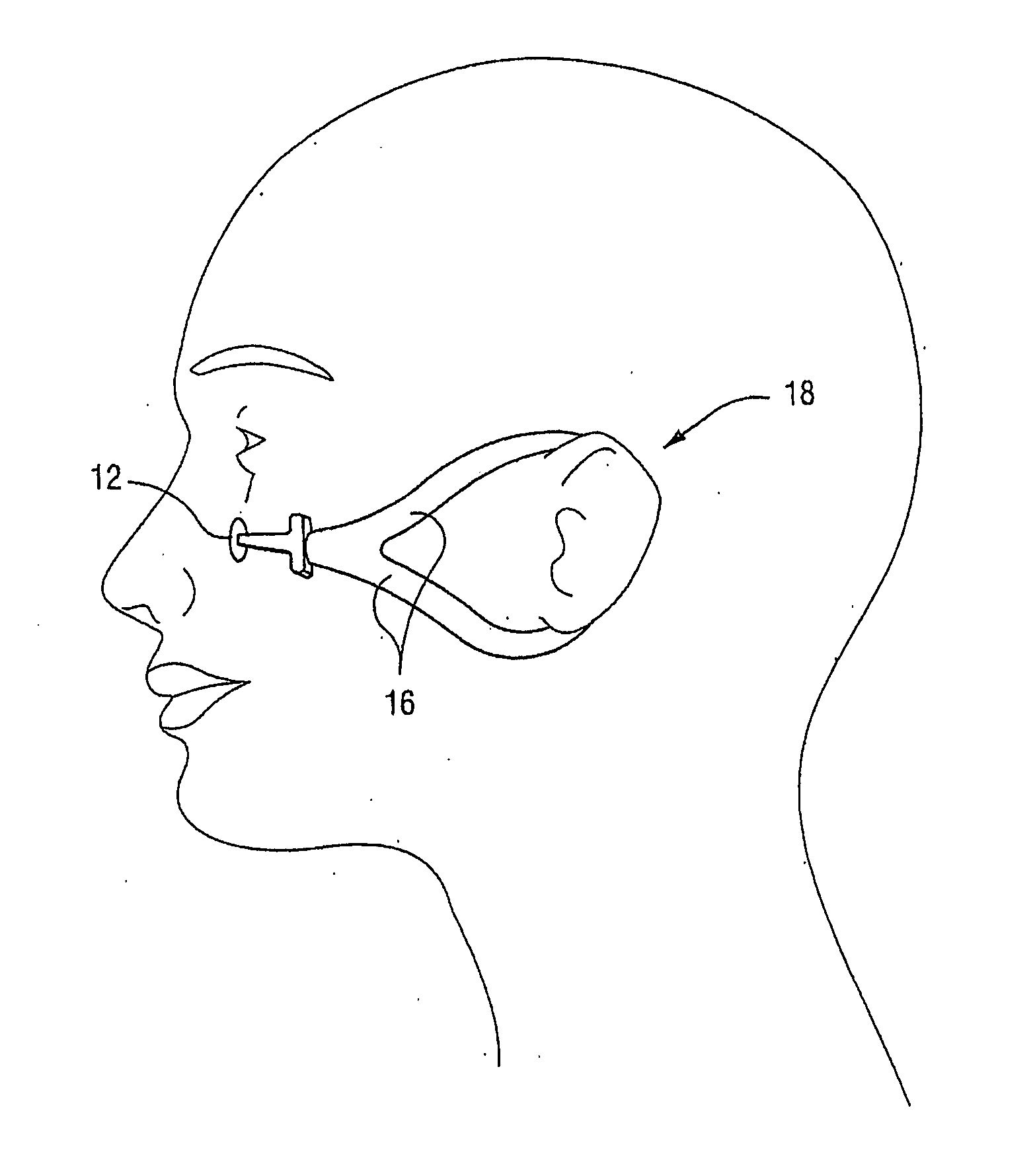

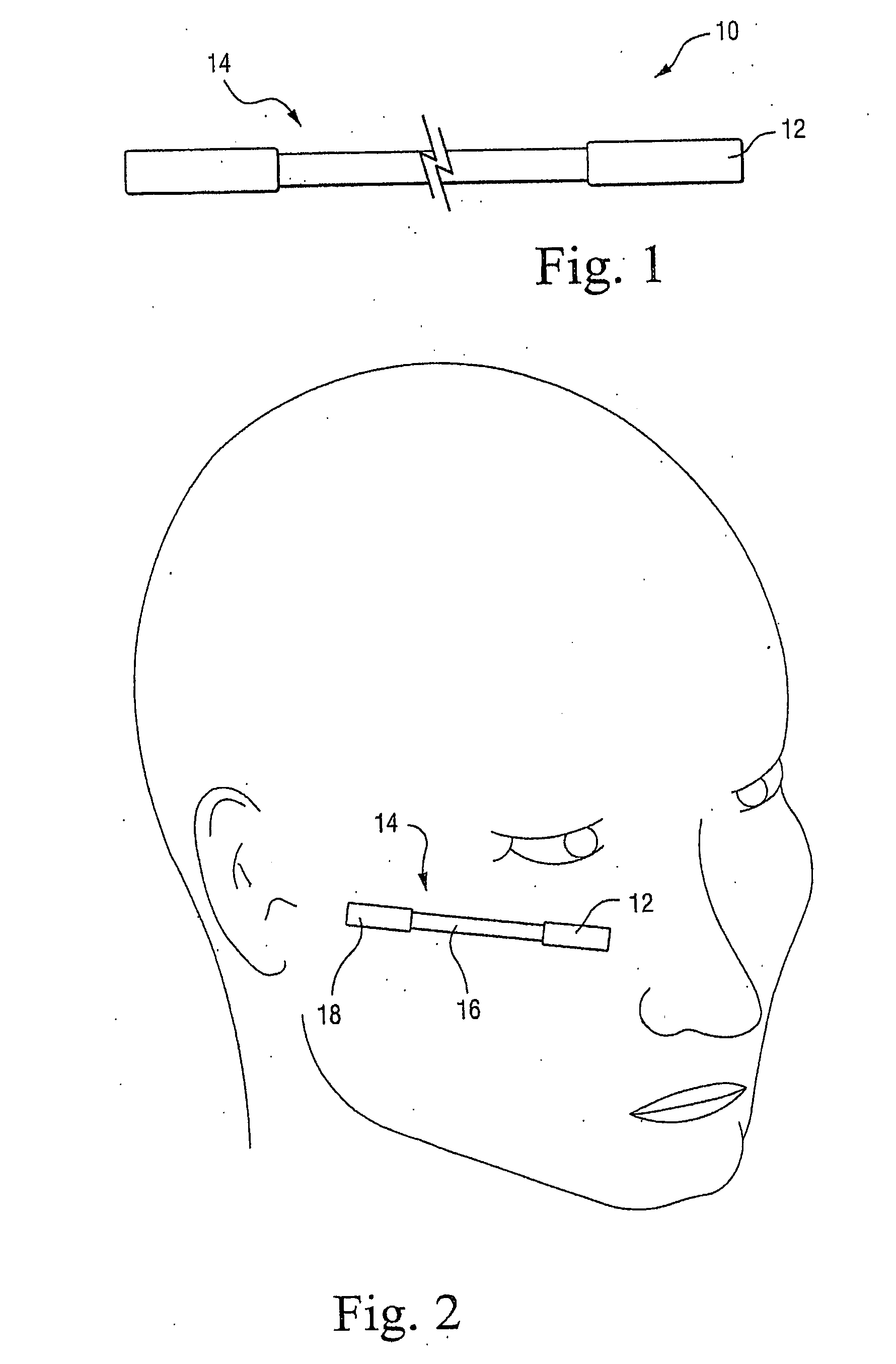

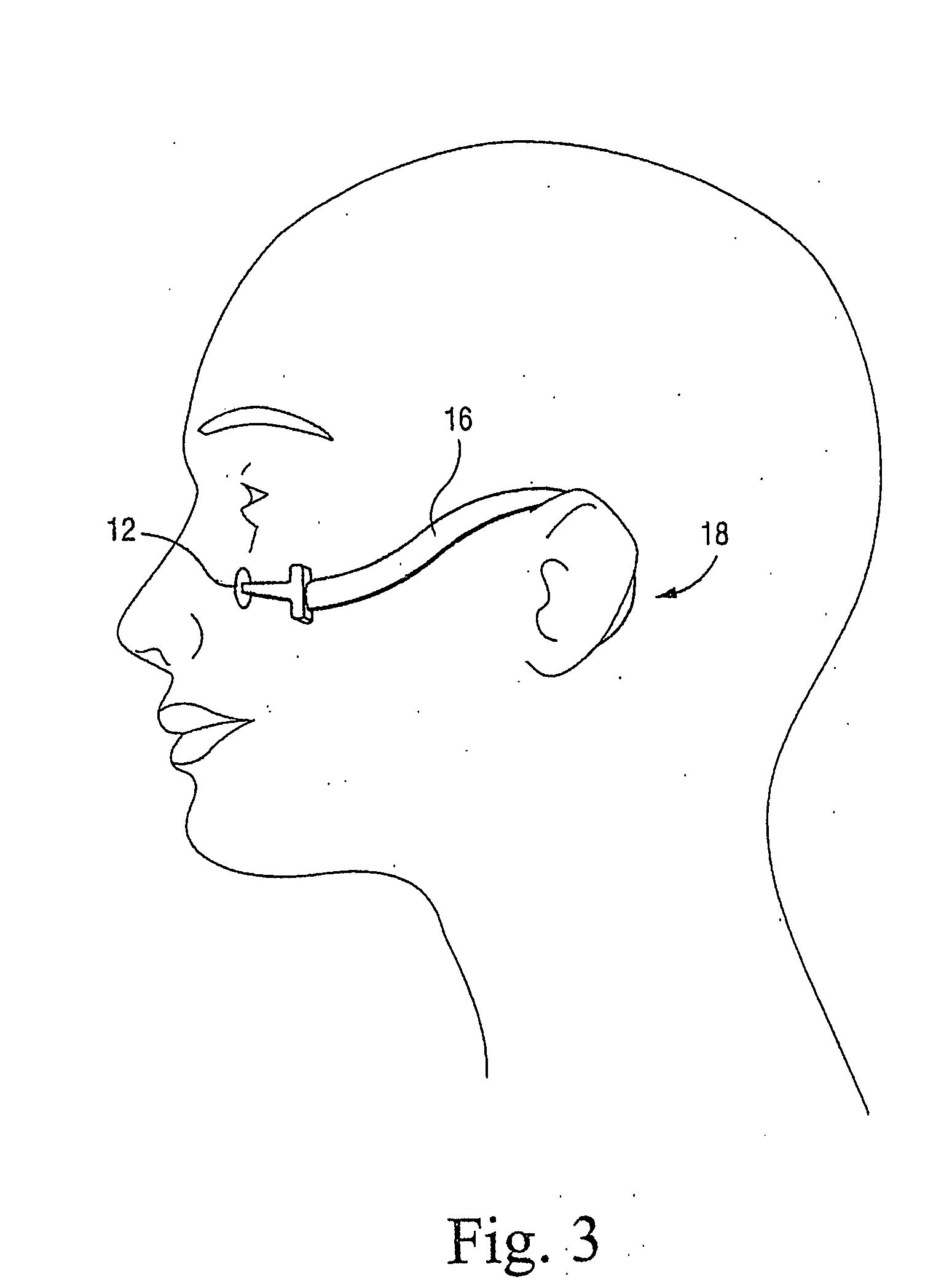

Nasal dilator

Inactive | US8051850B2 | Reduce flow resistance | Lower impedance | Respiratory masks | Breathing masks | Nasal passage | Nasal passages

A nasal dilator includes a contact pad attachable to a user's facial region below the user's eye and outboard from the user's nose. A tugging device is coupled with the contact pad and urges the contact pad in a direction away from the user's nose. With this structure, effective dilation of the nasal passages can be achieved in a comfortable manner. The dilator may also be incorporated into a CPAP mask and / or form part of an automated control system.

Owner:RESMED LTD

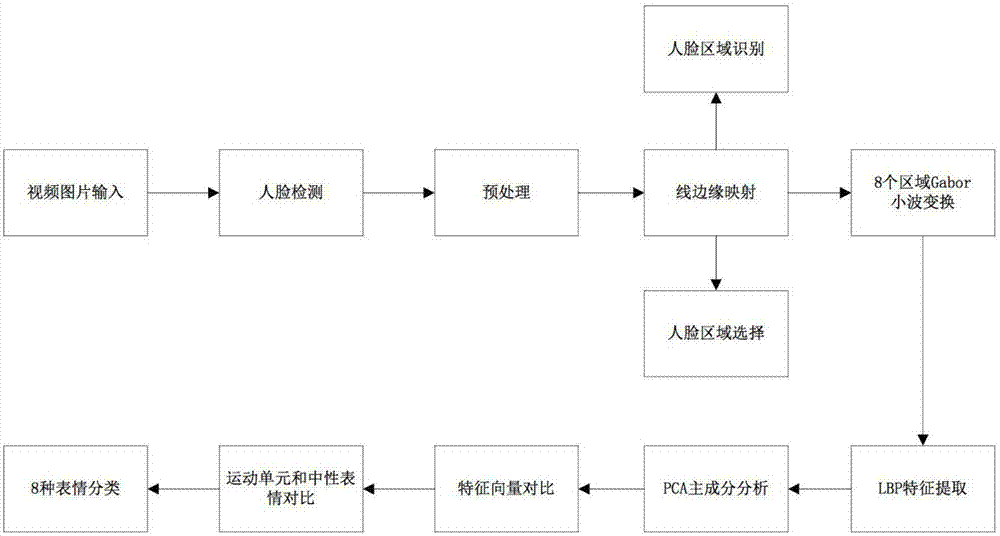

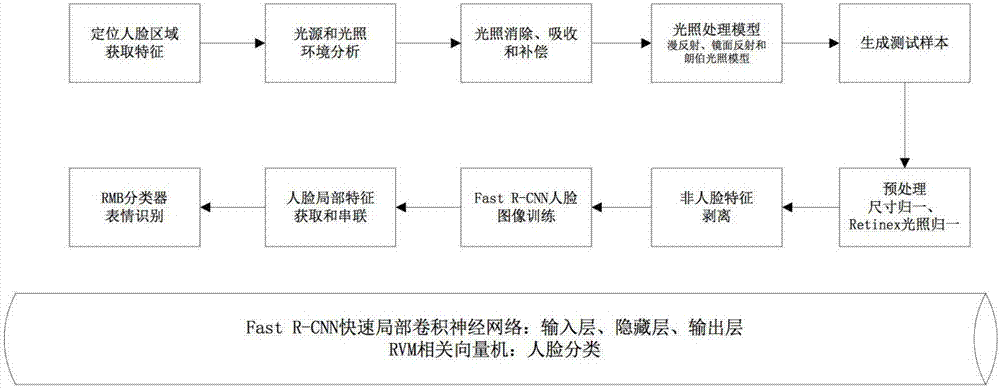

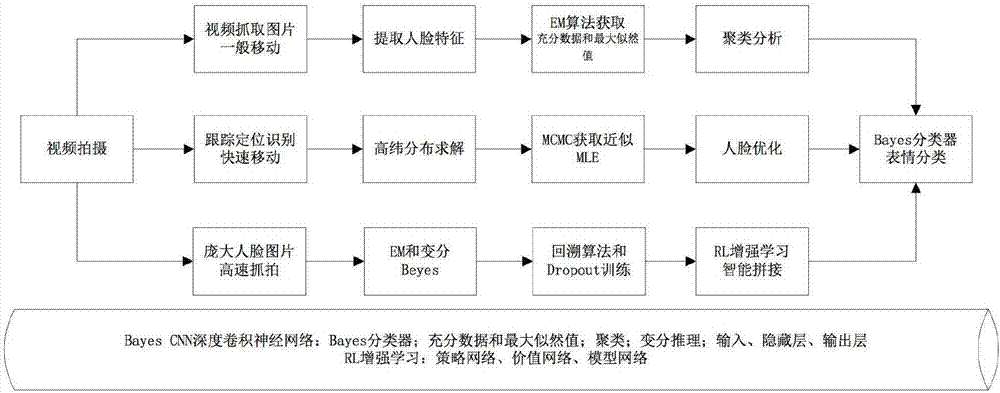

Human face emotion recognition method in complex environment

Inactive | CN107423707A | Real-time processing | Improve accuracy | Image enhancement | Image analysis | Nerve network | Facial region

The invention discloses a human face emotion recognition method for a complex mobile embedded environment. In the method, a human face is divided into main areas of a forehead, eyebrows and eyes, cheeks, a nose, a mouth and a chin, which are further divided into 68 feature points. Based on these feature points, and in order to improve the recognition rate, accuracy and reliability of human face emotion recognition in various environments, a human face and expression feature classification method is used under normal conditions; a method based on a Faster R-CNN face-area convolutional neural network is used under conditions of light, reflection and shadow; a method combining a Bayesian network, a Markov chain and variational inference is used under complex conditions of motion, jitter, shaking and movement; and a method combining a deep convolutional neural network, a super-resolution generative adversarial network (SRGAN), reinforcement learning, a backpropagation algorithm and a dropout algorithm is used under conditions of incomplete face display, multiple faces and noisy background. Thus, the effect, accuracy and reliability of human facial expression recognition can be effectively improved.

Owner:深圳帕罗人工智能科技有限公司

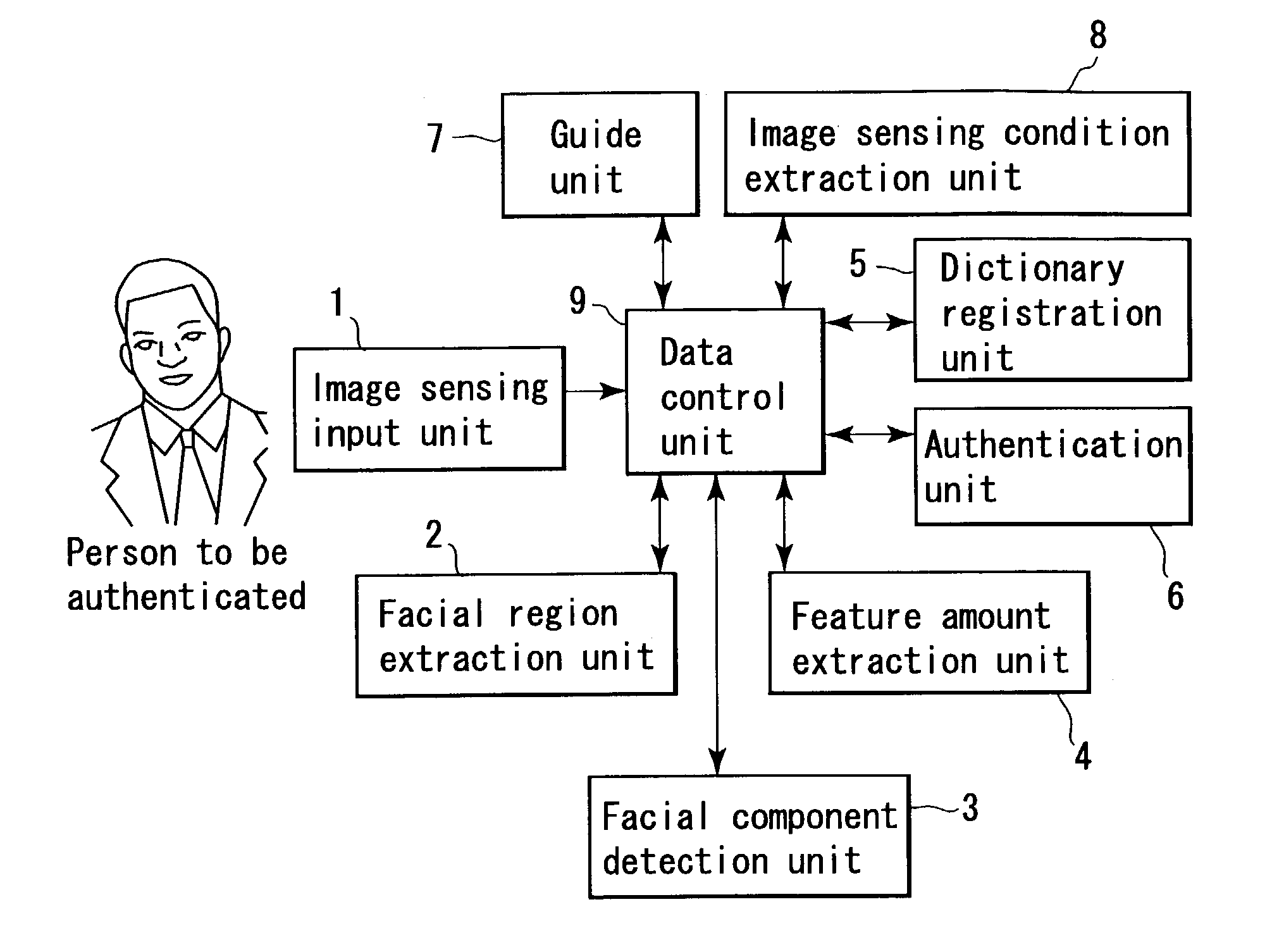

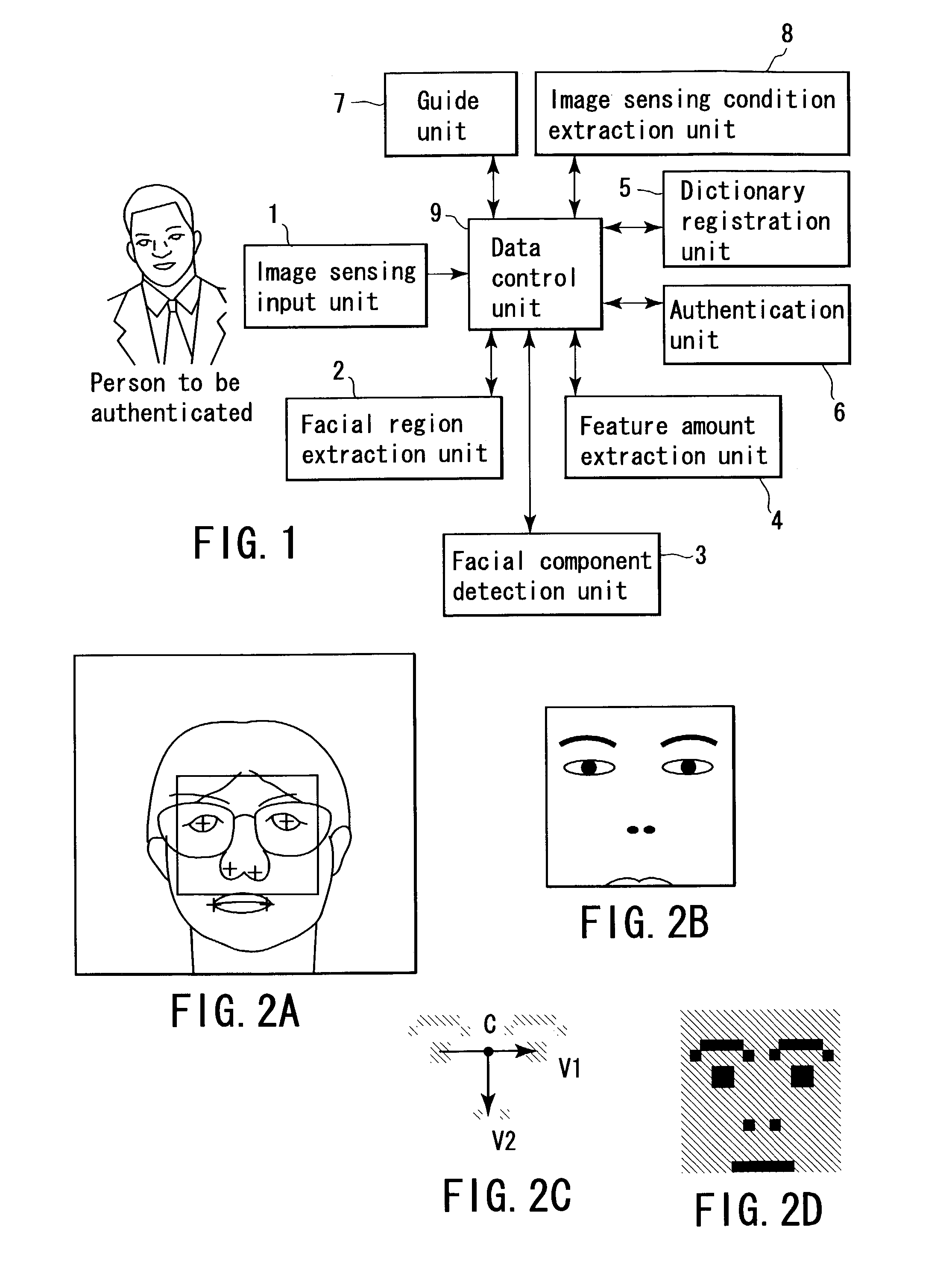

Personal authentication apparatus and personal authentication method

Inactive | US7412081B2 | Authentication precision is improved | Reduce load | Image analysis | Character and pattern recognition | Pattern recognition | Facial region

A personal authentication apparatus includes a facial region extraction unit which extracts an image of a facial region of a person of interest obtained from an image sensing input unit, a guide unit which guides motion of the person of interest, a feature amount extraction unit which extracts the feature amount of a face from the image of the facial region extracted by the facial region extraction unit while the motion is guided by the guide unit, a dictionary registration unit which registers the feature amount extracted by the feature amount extraction unit as a feature amount of the person of interest, and an authentication unit which authenticates the person of interest in accordance with the similarity between the feature amount extracted by the feature amount extraction unit and a feature amount registered by the dictionary registration unit.

Owner:KK TOSHIBA

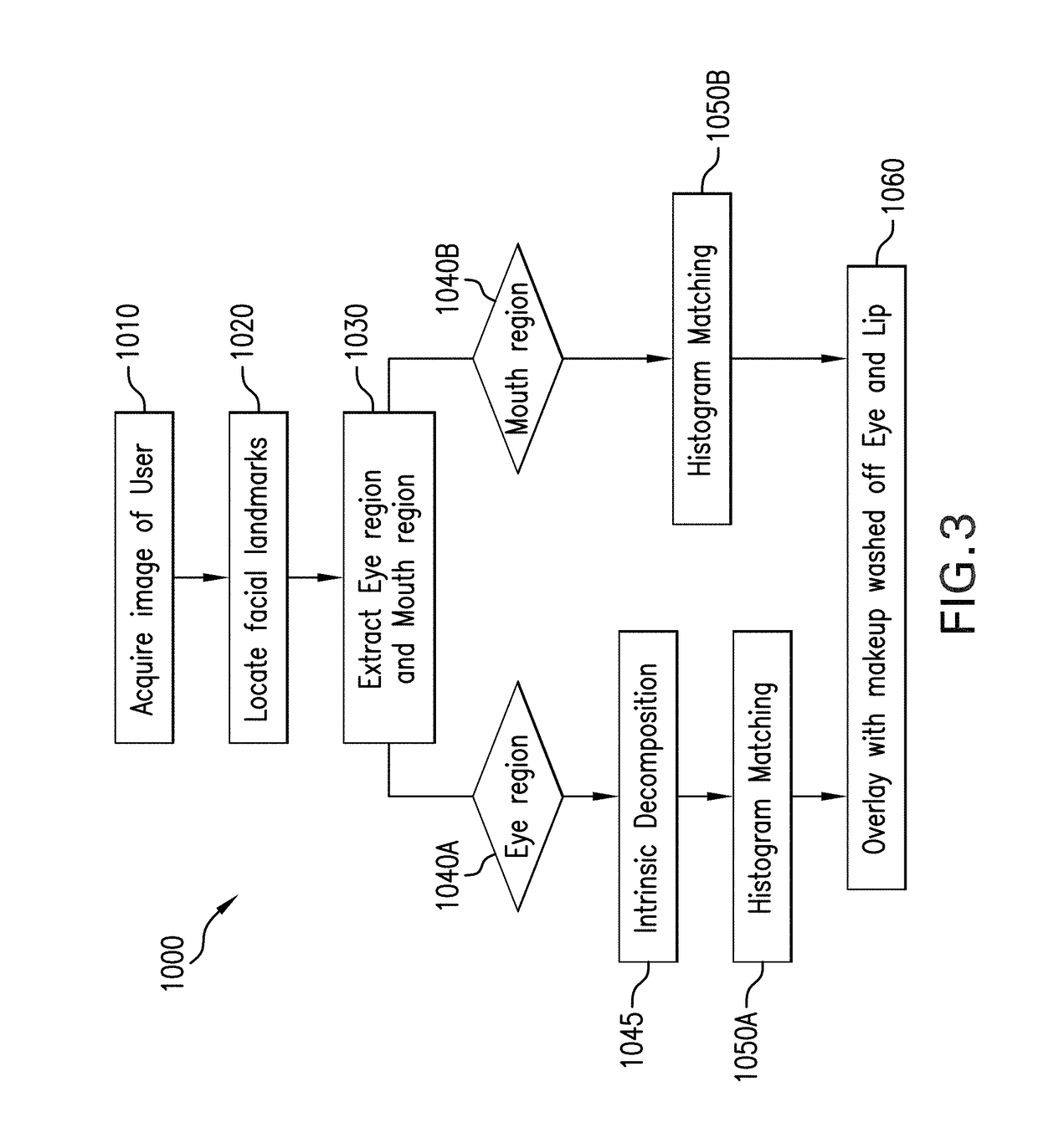

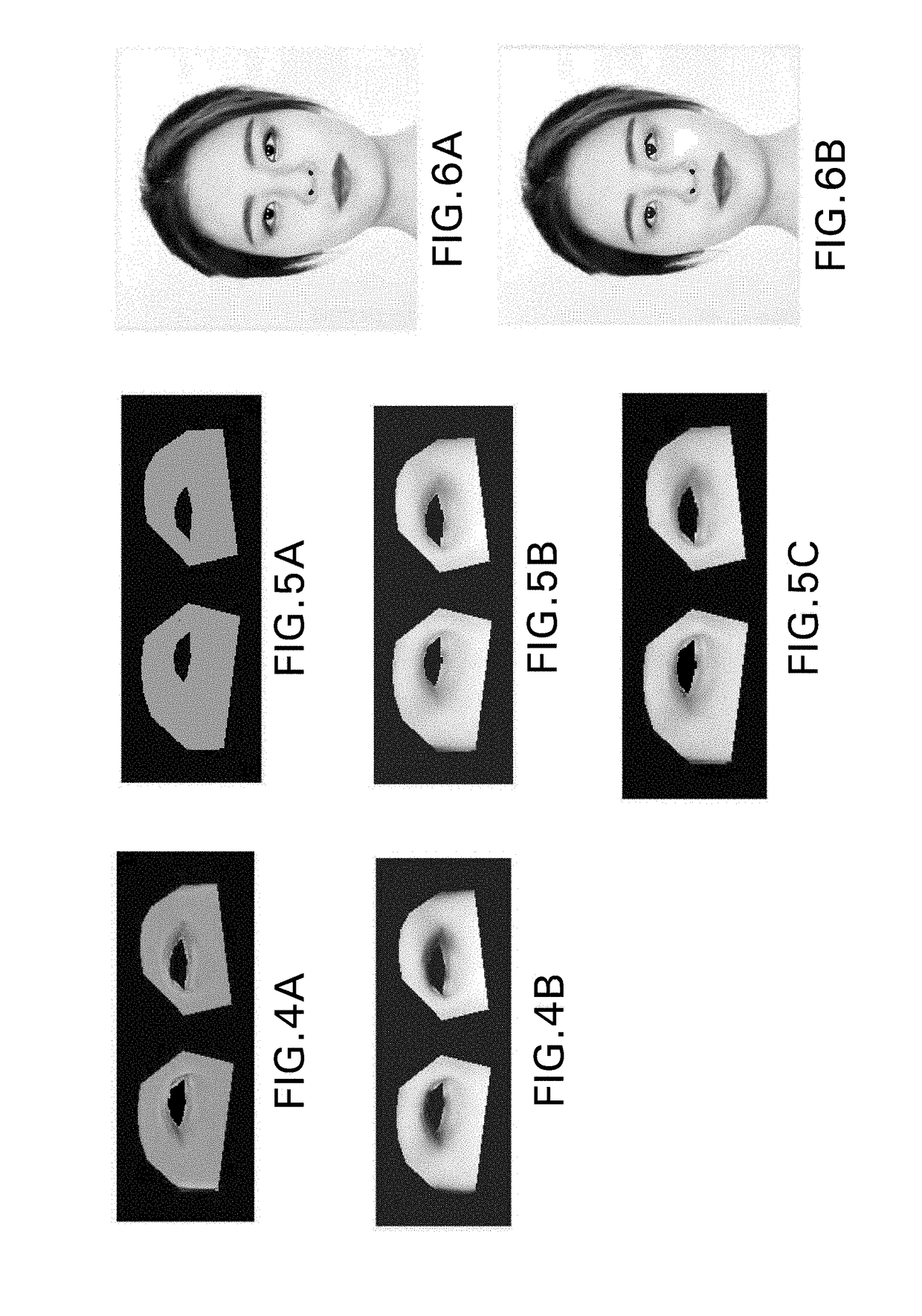

Systems and Methods for Virtual Facial Makeup Removal and Simulation, Fast Facial Detection and Landmark Tracking, Reduction in Input Video Lag and Shaking, and a Method for Recommending Makeup

Active | US20190014884A1 | High transparency | Improve flatness | Image enhancement | Image analysis | Facial region | Network model

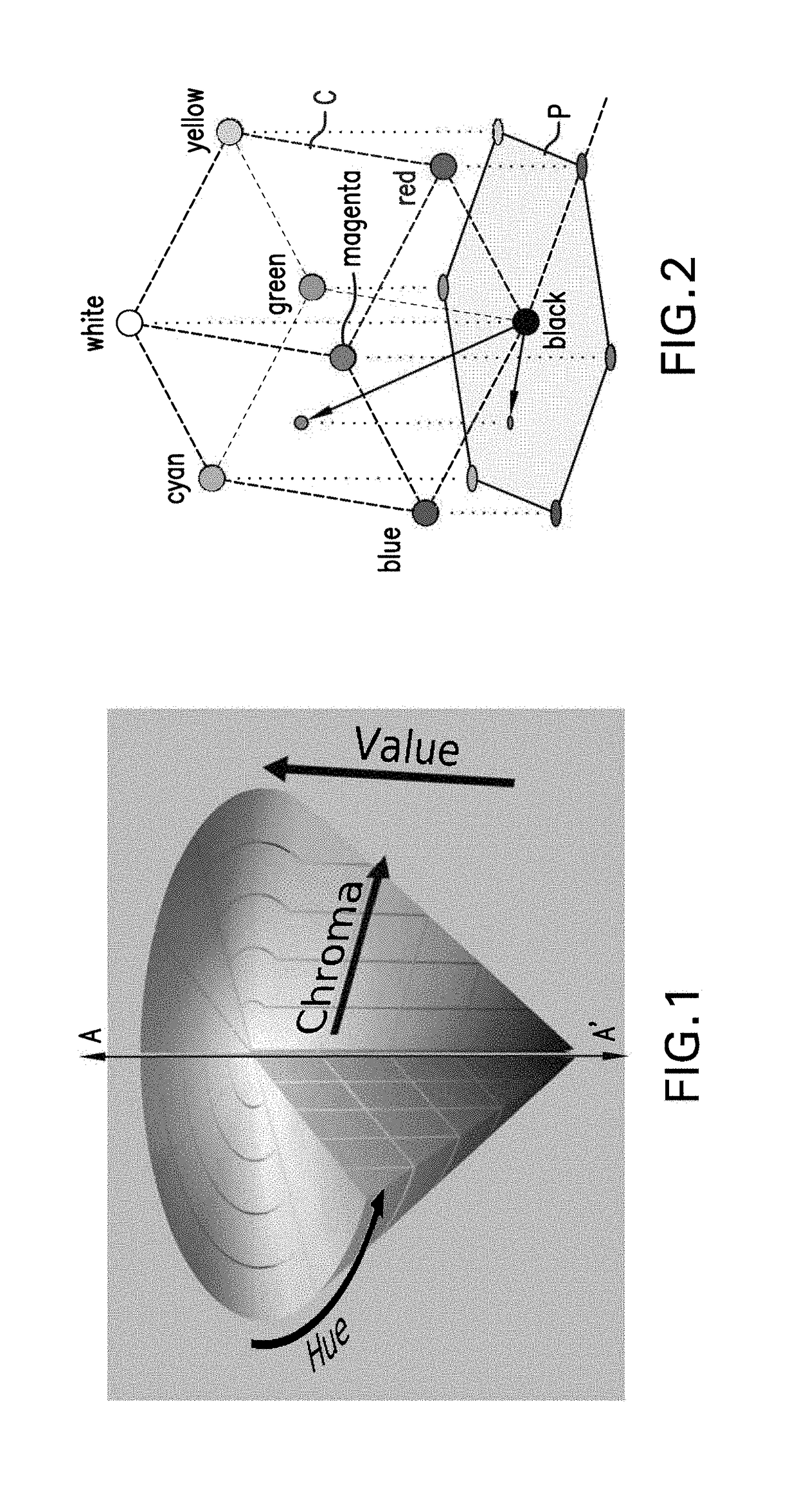

The present disclosure provides systems and methods for virtual facial makeup simulation through virtual makeup removal and virtual makeup add-ons, virtual end effects and simulated textures. In one aspect, the present disclosure provides a method for virtually removing facial makeup, the method comprising providing a facial image of a user with makeups being applied thereto, locating facial landmarks from the facial image of the user in one or more regions, decomposing some regions into first channels which are fed to histogram matching to obtain a first image without makeup in that region and transferring other regions into color channels which are fed into histogram matching under different lighting conditions to obtain a second image without makeup in that region, and combining the images to form a resultant image with makeups removed in the facial regions. The disclosure also provides systems and methods for virtually generating output effects on an input image having a face, for creating dynamic texturing to a lip region of a facial image, for a virtual eye makeup add-on that may include multiple layers, a makeup recommendation system based on a trained neural network model, a method for providing a virtual makeup tutorial, a method for fast facial detection and landmark tracking which may also reduce lag associated with fast movement and to reduce shaking from lack of movement, a method of adjusting brightness and of calibrating a color and a method for advanced landmark location and feature detection using a Gaussian mixture model.

Owner:SHISEIDO CO LTD

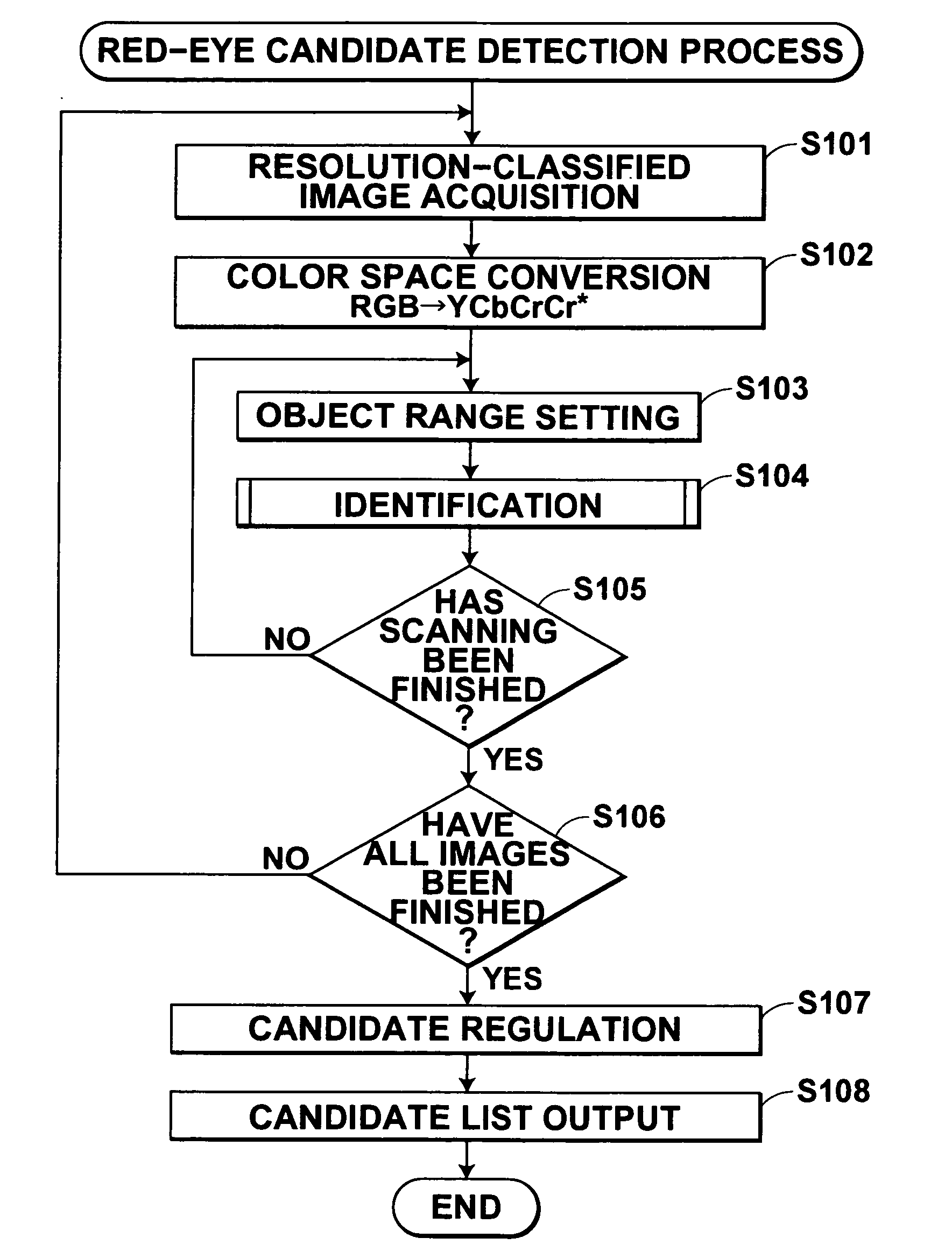

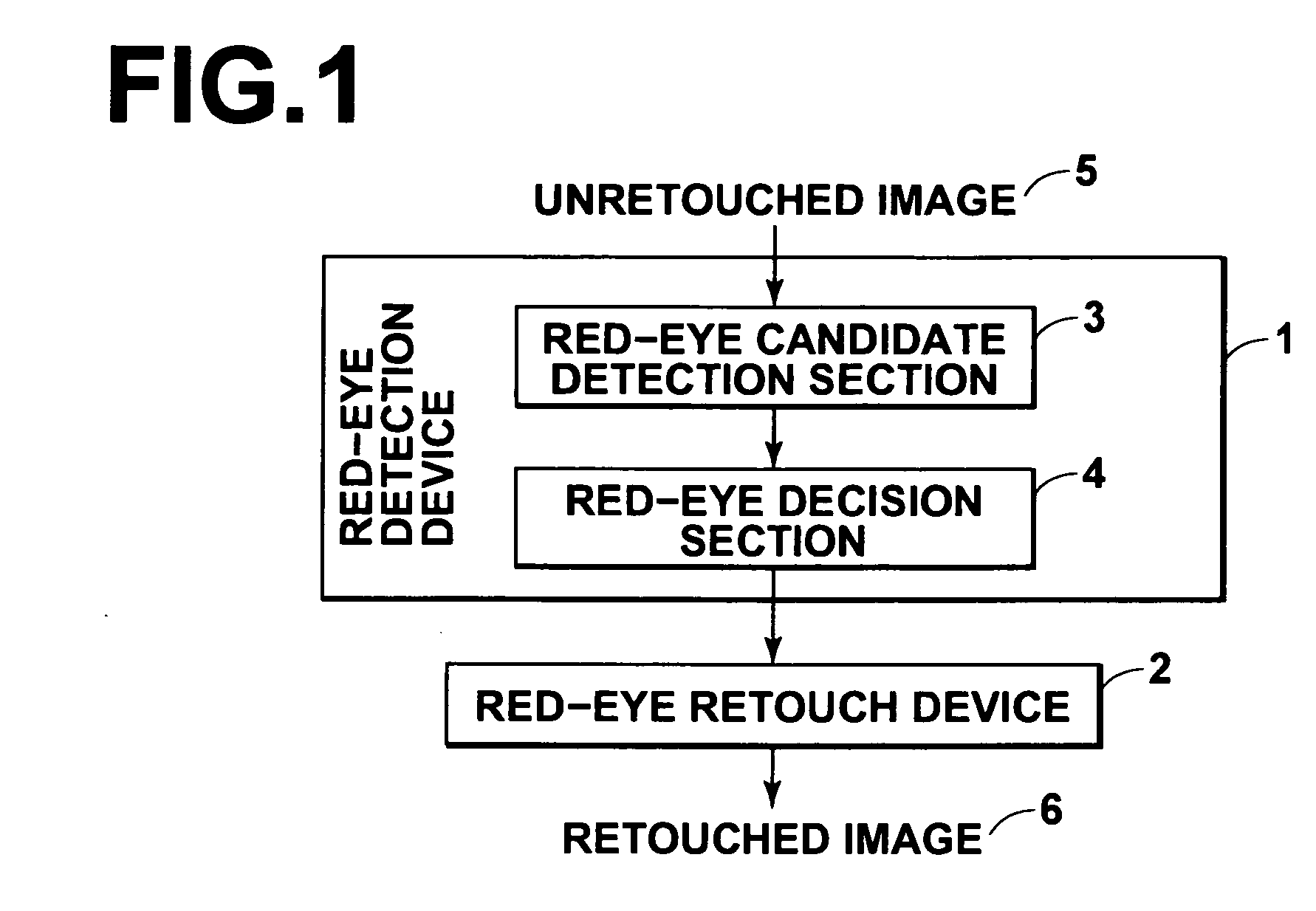

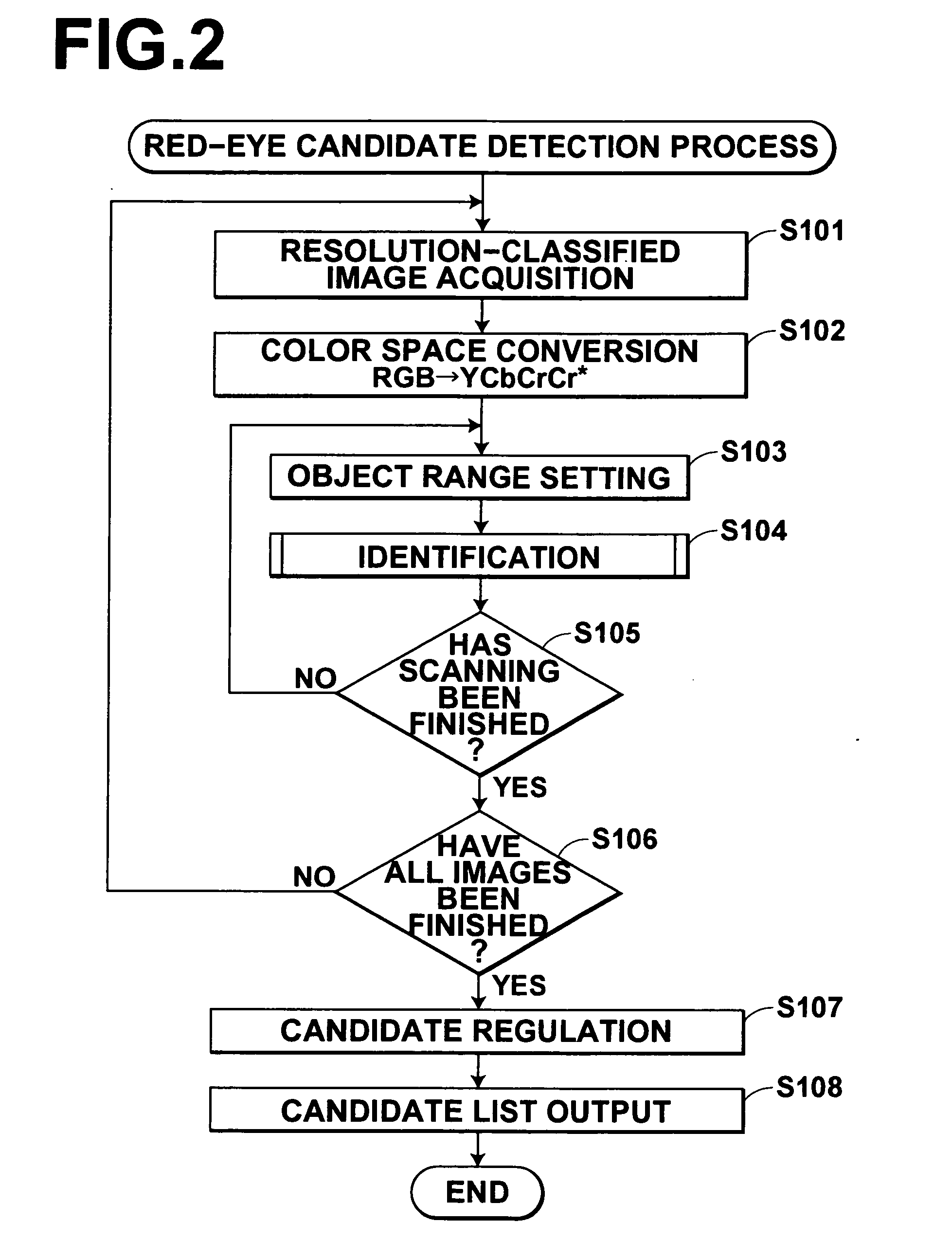

Red eye detection device, red eye detection method, and recording medium with red eye detection program

A red eye detection device detects, from an image that contains the pupil of an eye having a red eye region, the red eye region. One or more red eye candidate regions that can be estimated to be the red eye region are first detected by identifying at least one of the features of the pupil from among features of the image. Then, at least one of the features of a face region with a predetermined size that contains the pupil is identified from a region containing only one of the red eye candidate regions detected by the red eye candidate detection section and wider than the one red eye candidate region. Then, the red eye candidate region is confirmed as a red eye region, based on a result of the identification. Information on the confirmed red eye candidate region is output as information on the detected red eye region.

Owner:FUJIFILM CORP

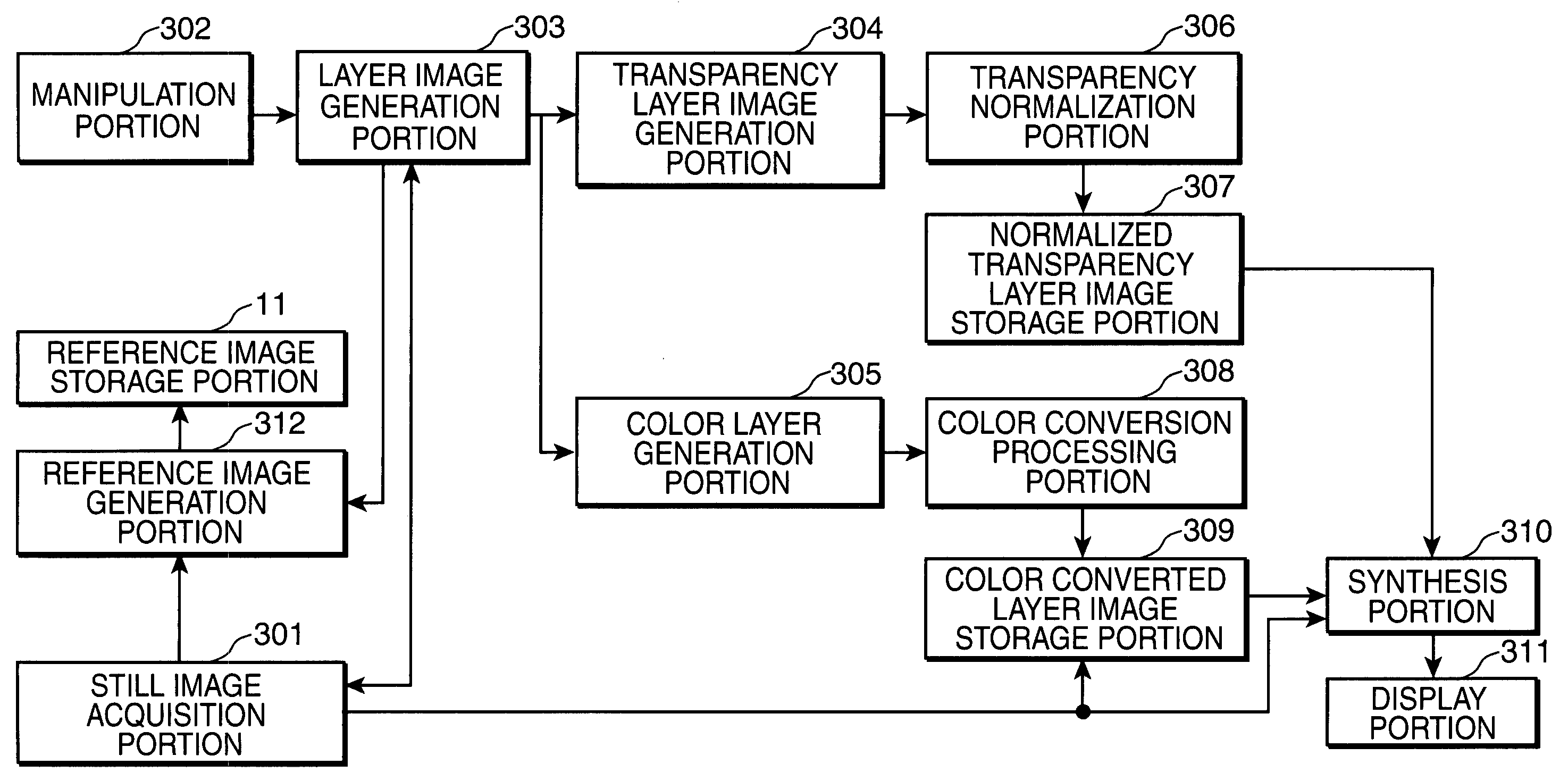

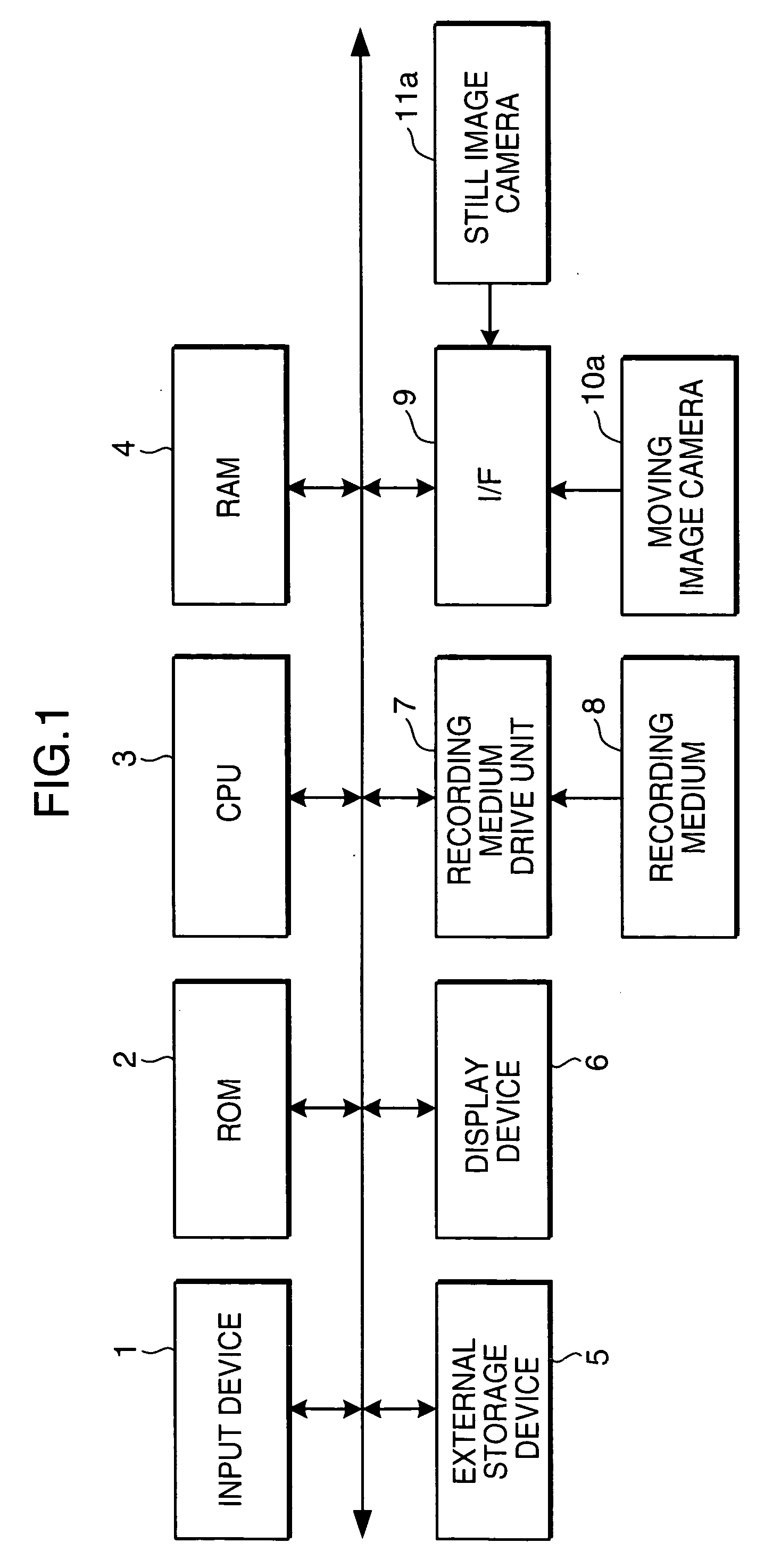

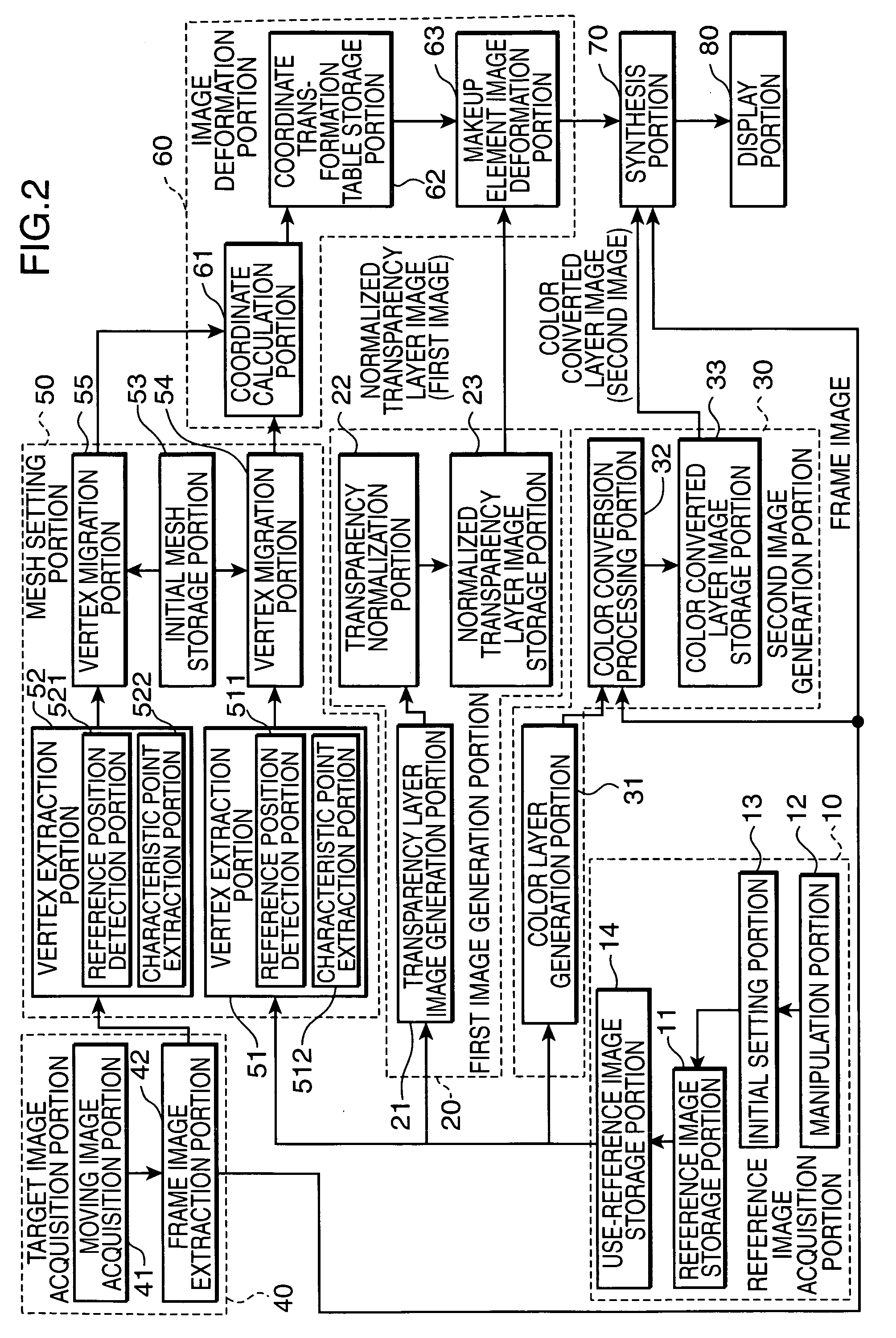

Makeup simulation program, makeup simulation device, and makeup simulation method

Inactive | US20070019882A1 | High quality imaging | Easy to operate | Character and pattern recognition | Image data processing details | Facial region | Reference image

A makeup simulation produces a high-quality simulation image quickly and simply. A first image generator portion 20 generates n transparency layer images in which the transparency of each pixel is normalized on the basis of n layer images in a use-reference image. A second image generator portion 30 generates n color converted layer images by applying a color conversion on a frame image using color components of the layer images. A mesh setting portion 50 sets a mesh in a facial region in each frame image and a makeup pattern image. An image deformer 60 calculates a difference between vertices of meshes in both images and deforms a makeup element image in each normalized transparency layer image based on this difference to fit in the facial region of the frame image. A synthesizer 70 synthesizes color converted layer images and each frame image by alpha blending.

Owner:DIGITAL FASHION
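
The final step in the abstract above combines a color converted layer with the frame by alpha blending. The standard per-pixel formula is `out = alpha * layer + (1 - alpha) * frame`. The sketch below applies it to a single RGB pixel; the function name and the sample colors are illustrative, and how the patent derives the per-pixel alpha from its transparency layers is not reproduced here.

```python
def alpha_blend(frame_px, layer_px, alpha):
    """Blend one RGB layer pixel over one RGB frame pixel.

    alpha is the layer opacity in [0, 1]: 0 keeps the frame, 1 keeps the layer.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return tuple(
        round(alpha * layer_c + (1.0 - alpha) * frame_c)
        for frame_c, layer_c in zip(frame_px, layer_px)
    )


skin = (200, 150, 100)    # hypothetical frame pixel
lipstick = (100, 50, 0)   # hypothetical color converted layer pixel
print(alpha_blend(skin, lipstick, 0.25))
print(alpha_blend(skin, lipstick, 0.0))
print(alpha_blend(skin, lipstick, 1.0))
```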

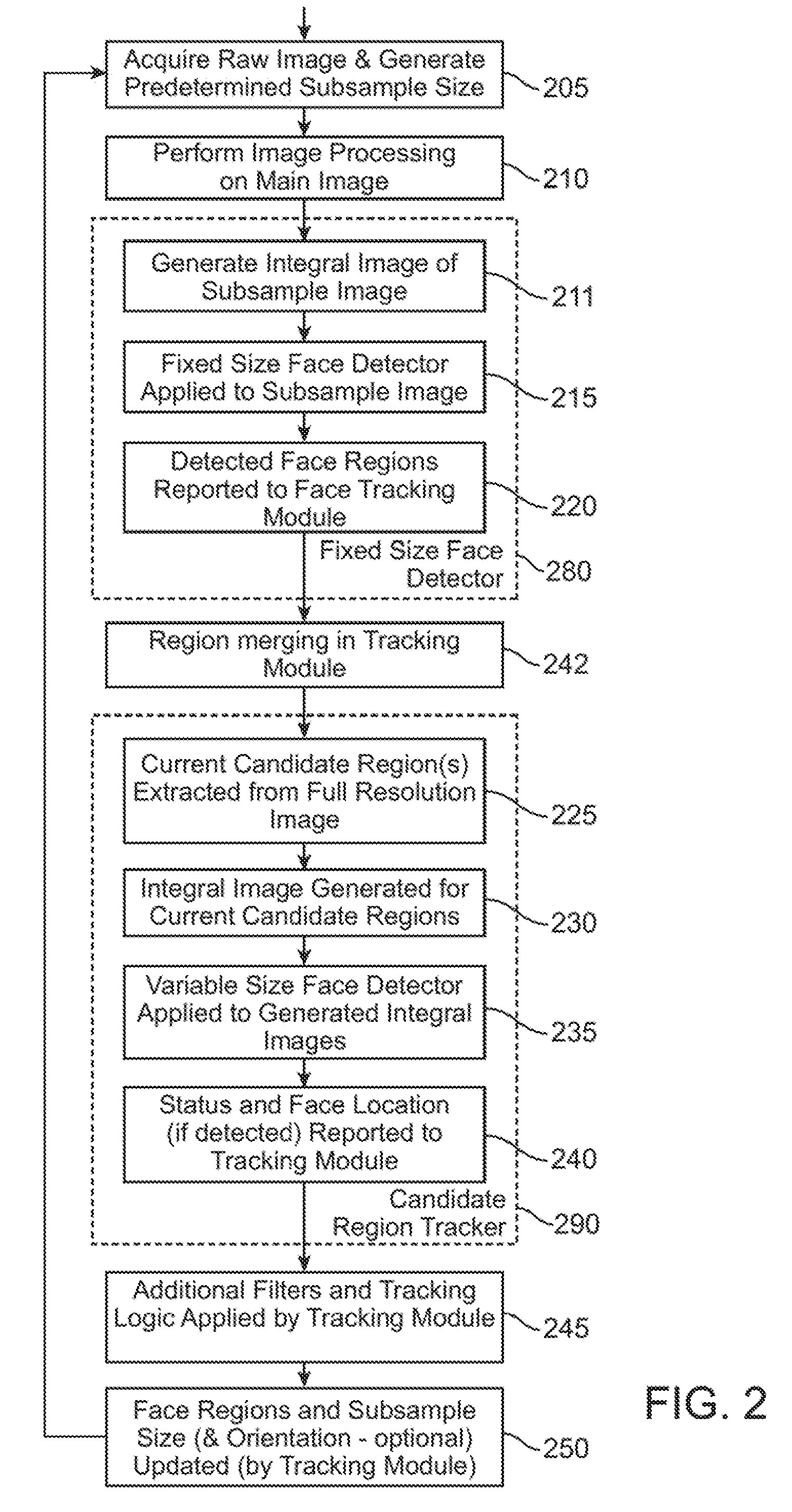

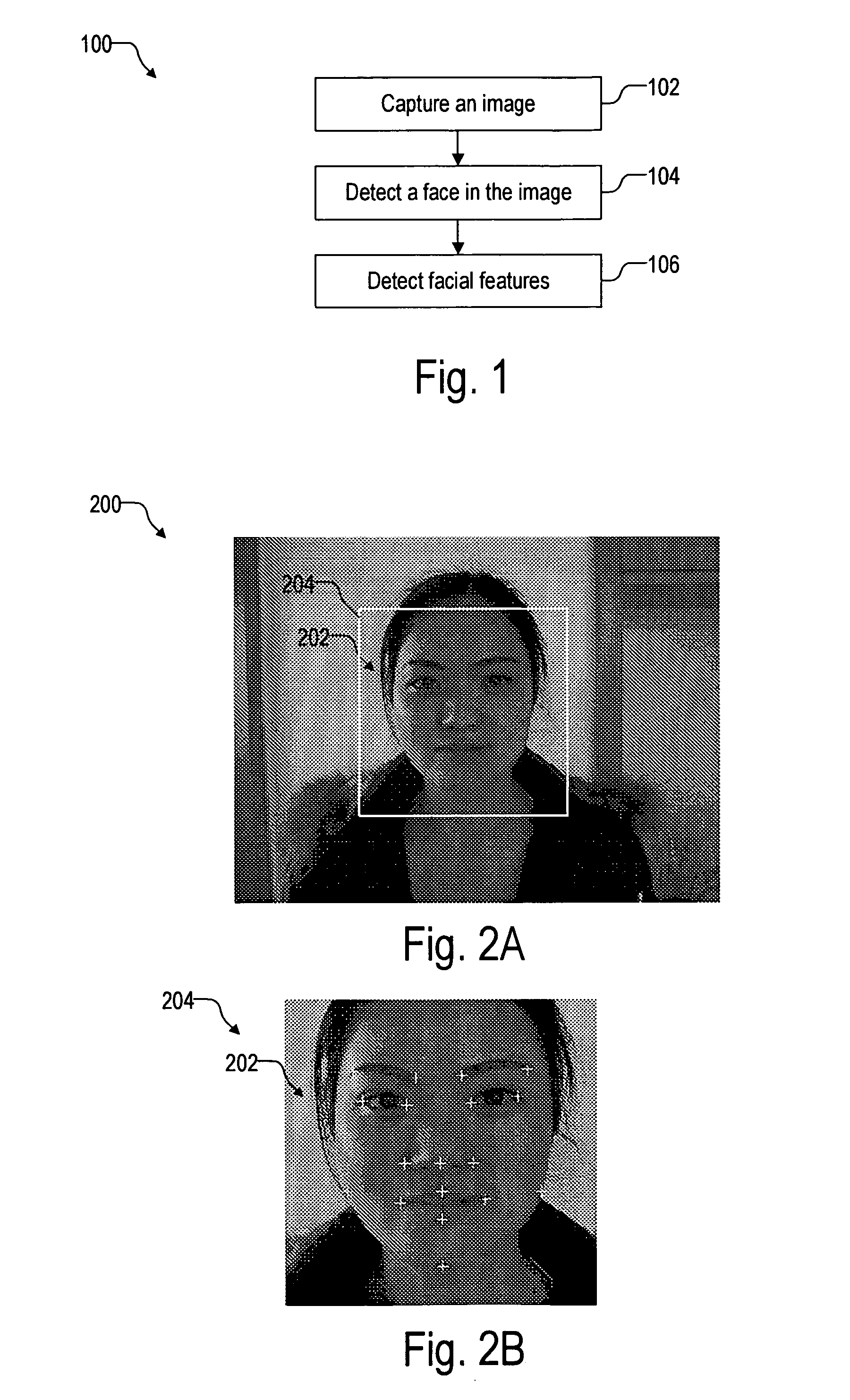

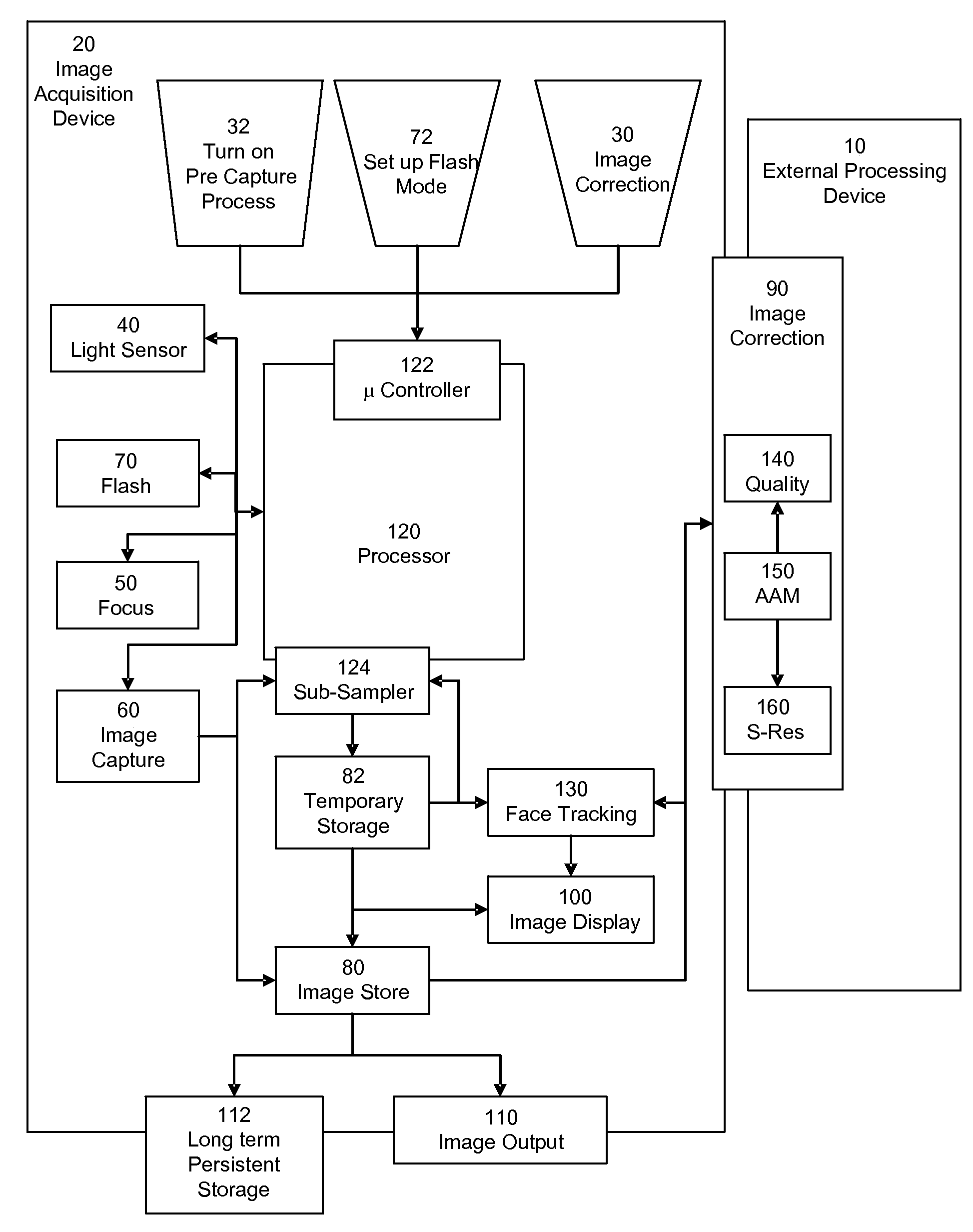

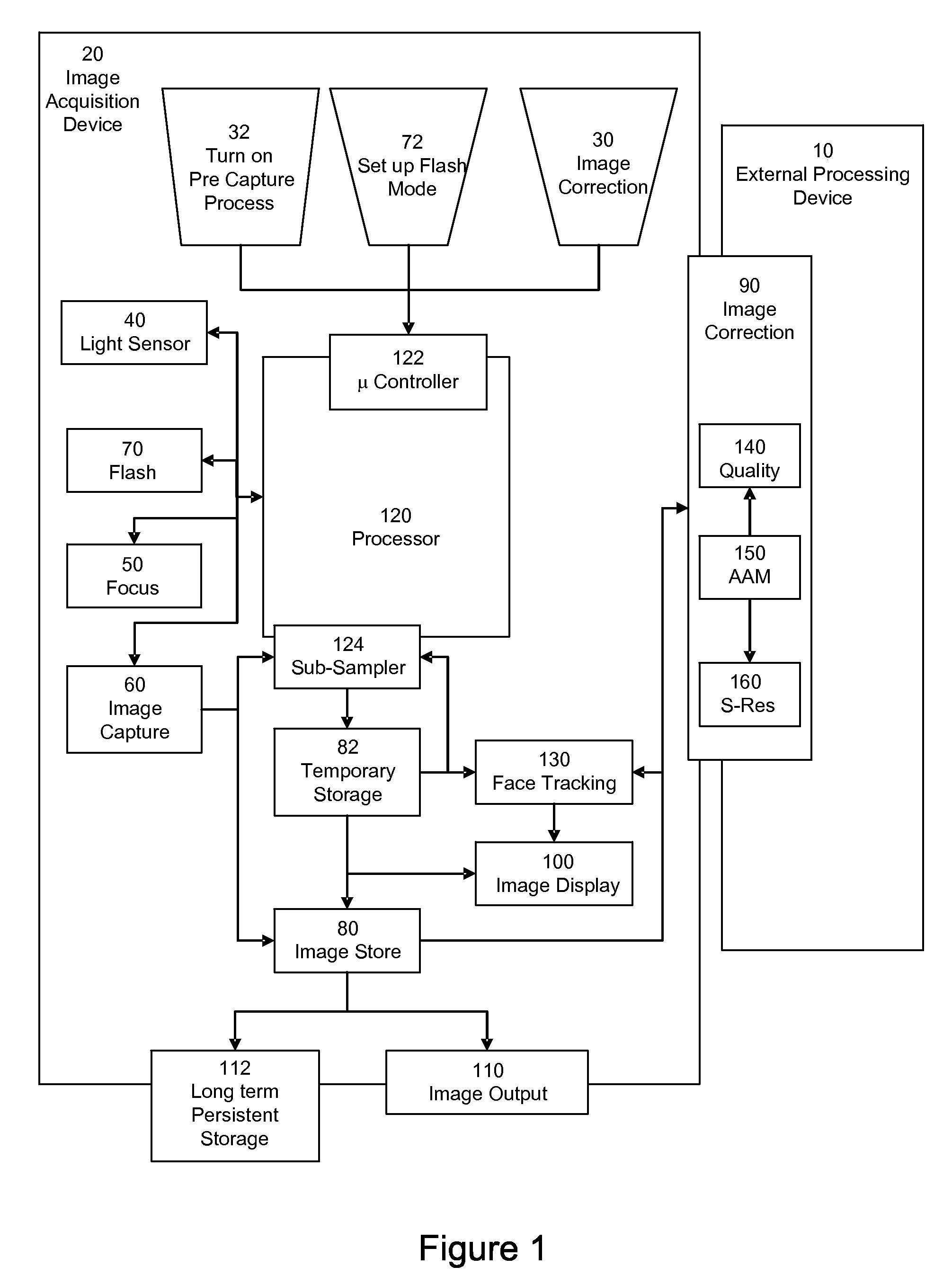

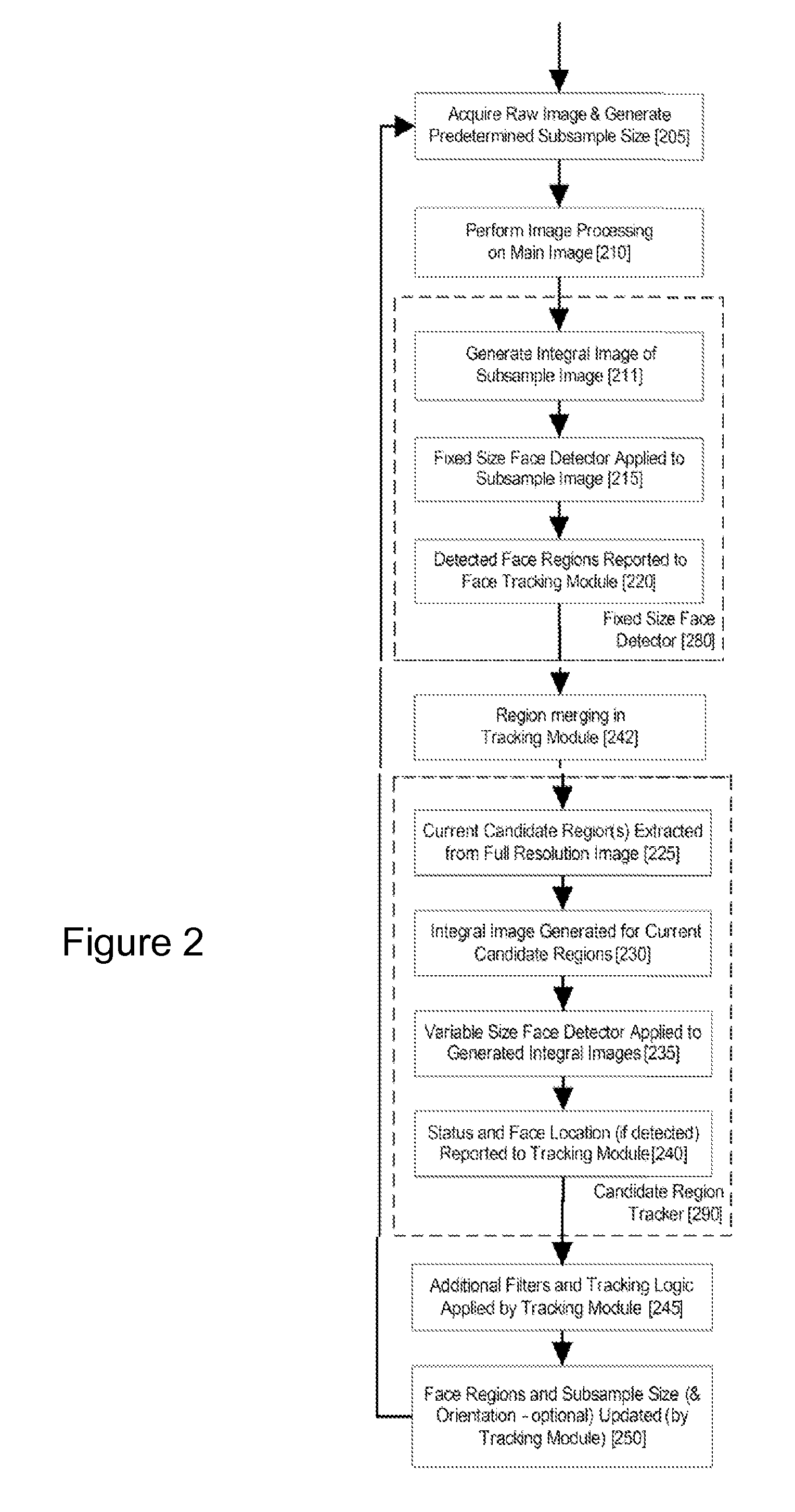

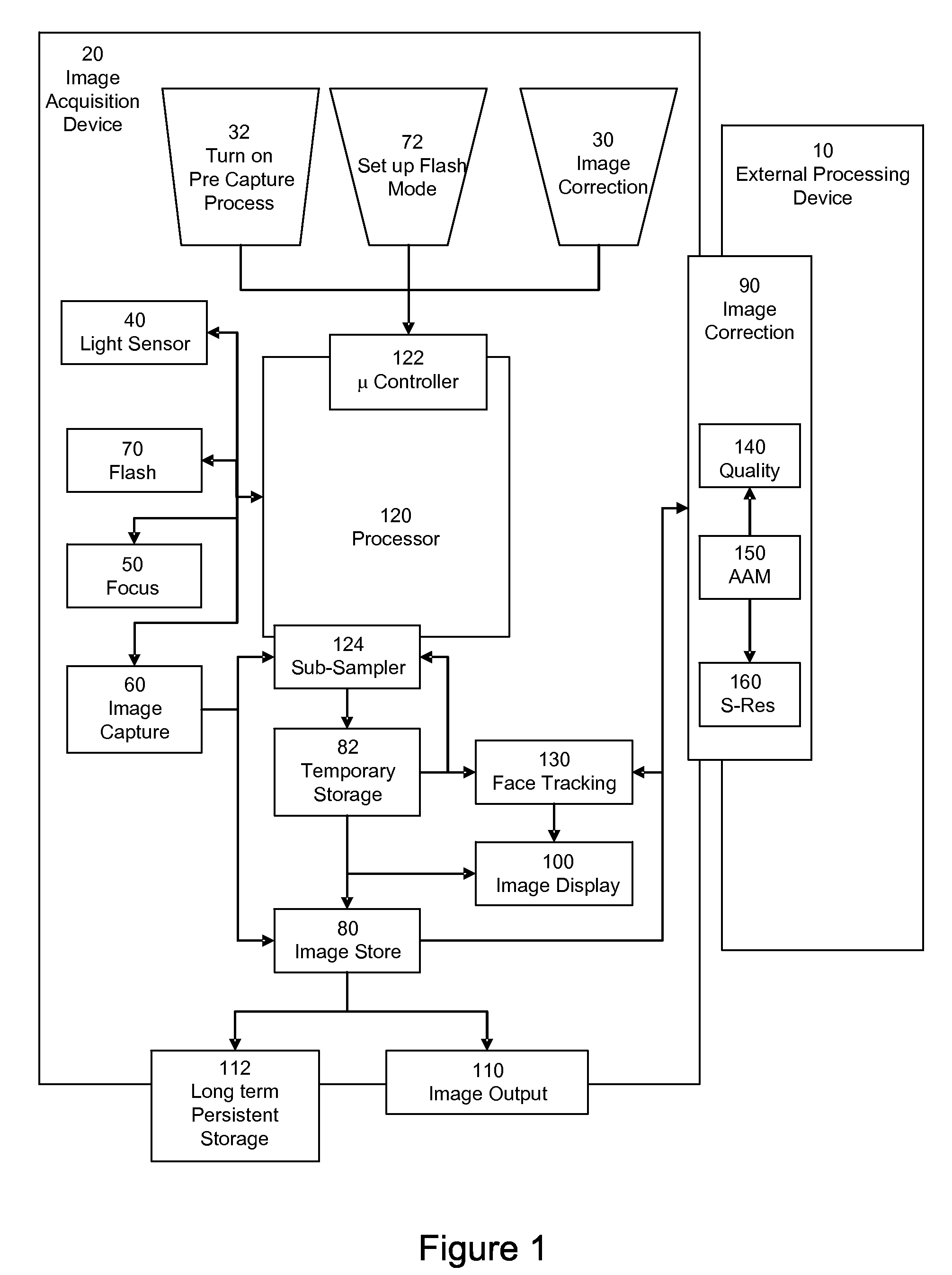

Real-time face tracking in a digital image acquisition device

Active | US7460694B2 | Improve performance accuracy | Reduce calculation | Television system details | Character and pattern recognition | Face detection | Imaging processing

An image processing apparatus for tracking faces in an image stream iteratively receives a new acquired image from the image stream, the image potentially including one or more face regions. The acquired image is sub-sampled (112) at a specified resolution to provide a sub-sampled image. An integral image is then calculated for at least a portion of the sub-sampled image. Fixed size face detection (20) is applied to at least a portion of the integral image to provide a set of candidate face regions. Responsive to the set of candidate face regions produced and any previously detected candidate face regions, the resolution at which a next acquired image is sub-sampled is adjusted.

Owner:FOTONATION LTD

Image processing method and apparatus

An image processing technique includes acquiring a main image of a scene and determining one or more facial regions in the main image. The facial regions are analysed to determine if any of the facial regions includes a defect. A sequence of relatively low resolution images nominally of the same scene is also acquired. One or more sets of low resolution facial regions in the sequence of low resolution images are determined and analysed for defects. Defect free facial regions of a set are combined to provide a high quality defect free facial region. At least a portion of any defective facial regions of the main image are corrected with image information from a corresponding high quality defect free facial region.

Owner:FOTONATION LTD

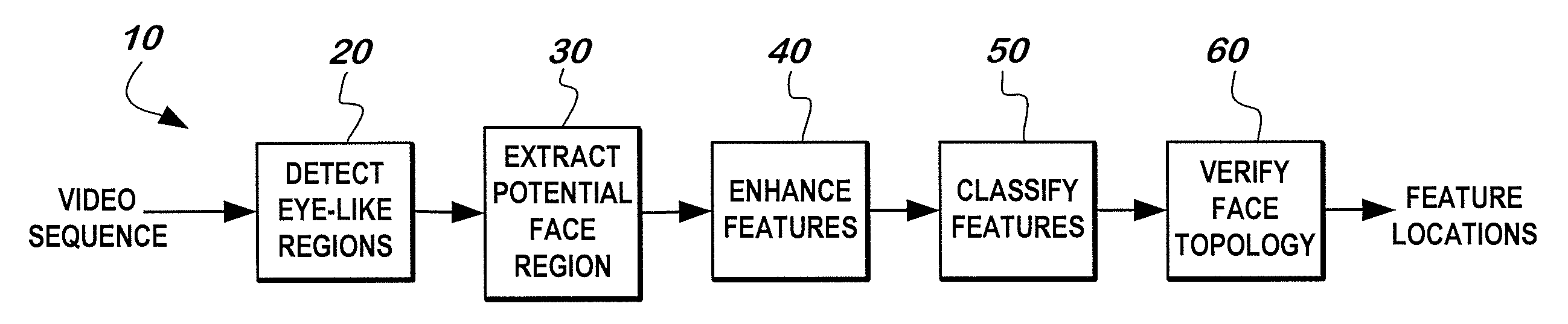

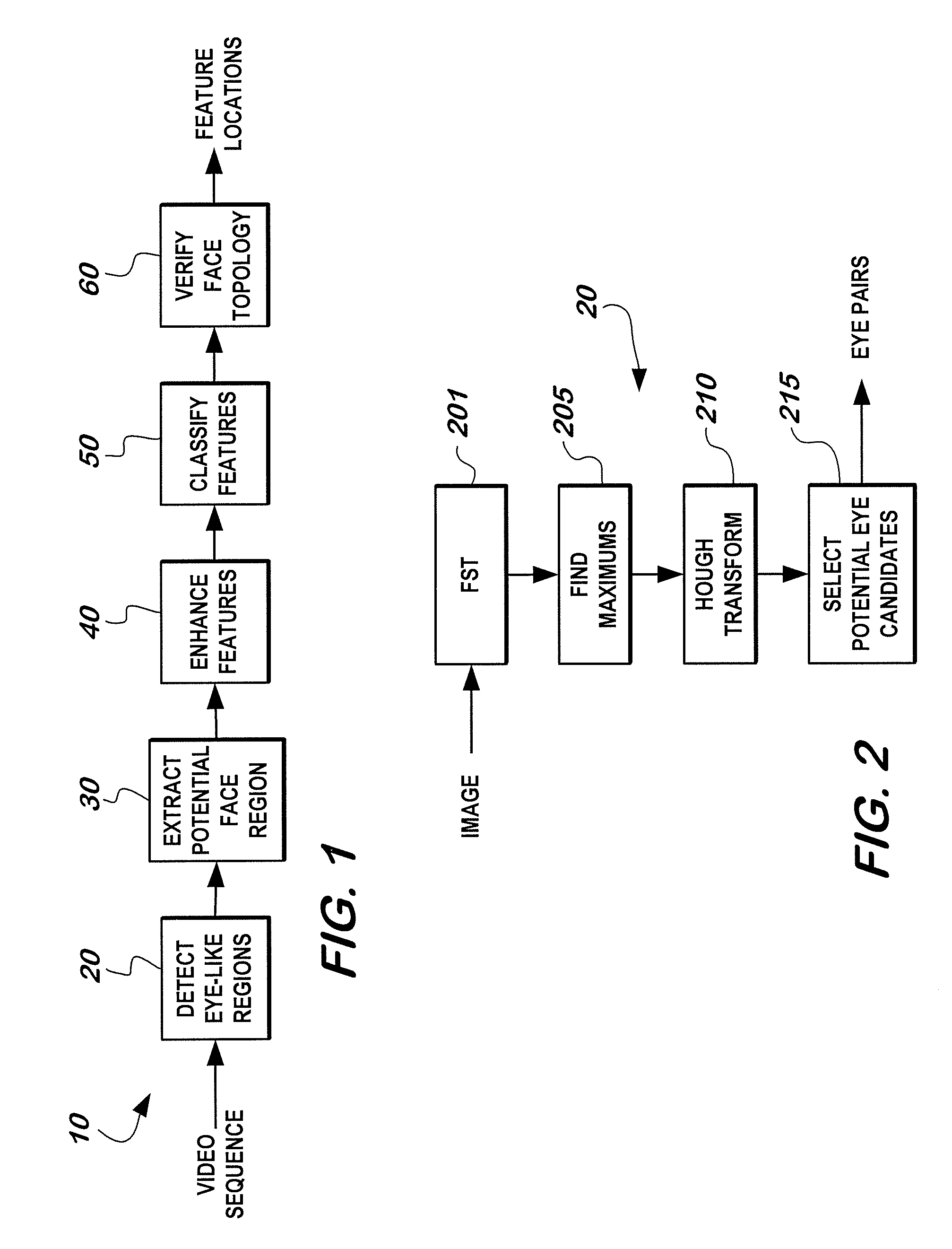

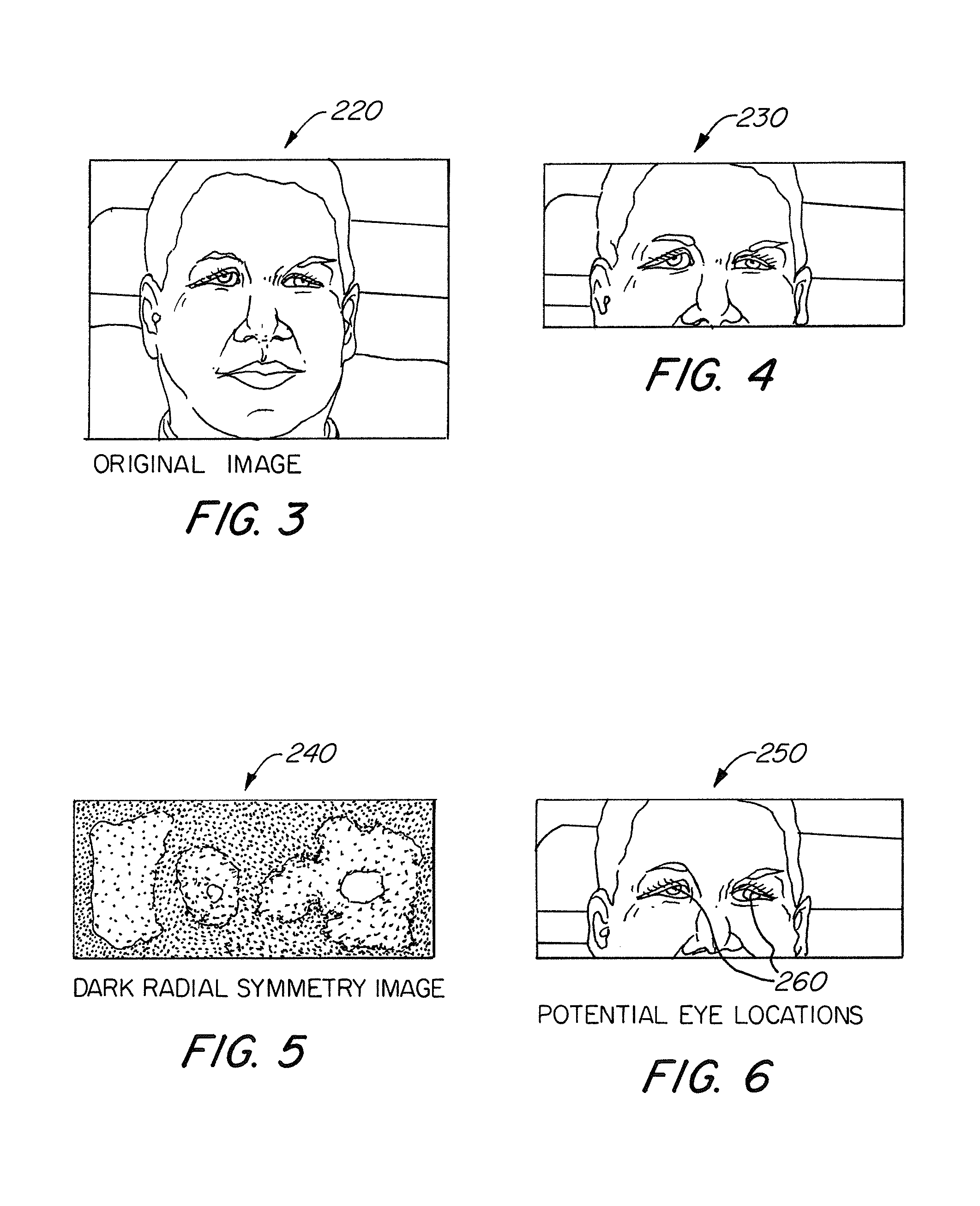

Method and apparatus for the automatic detection of facial features

Inactive | US7460693B2 | Image analysis | Character and pattern recognition | Pattern recognition | Computerized system

A method of utilizing a computer system to automatically detect the location of a face within a series of images, the method comprising the steps of: detecting eye like regions within the series of images; utilizing the eye like regions to extract potential face regions within the series of images; enhancing the facial features in the extracted potential face regions; classifying the features; and verifying the face topology within the potential face regions.

Owner:SEEING MACHINES

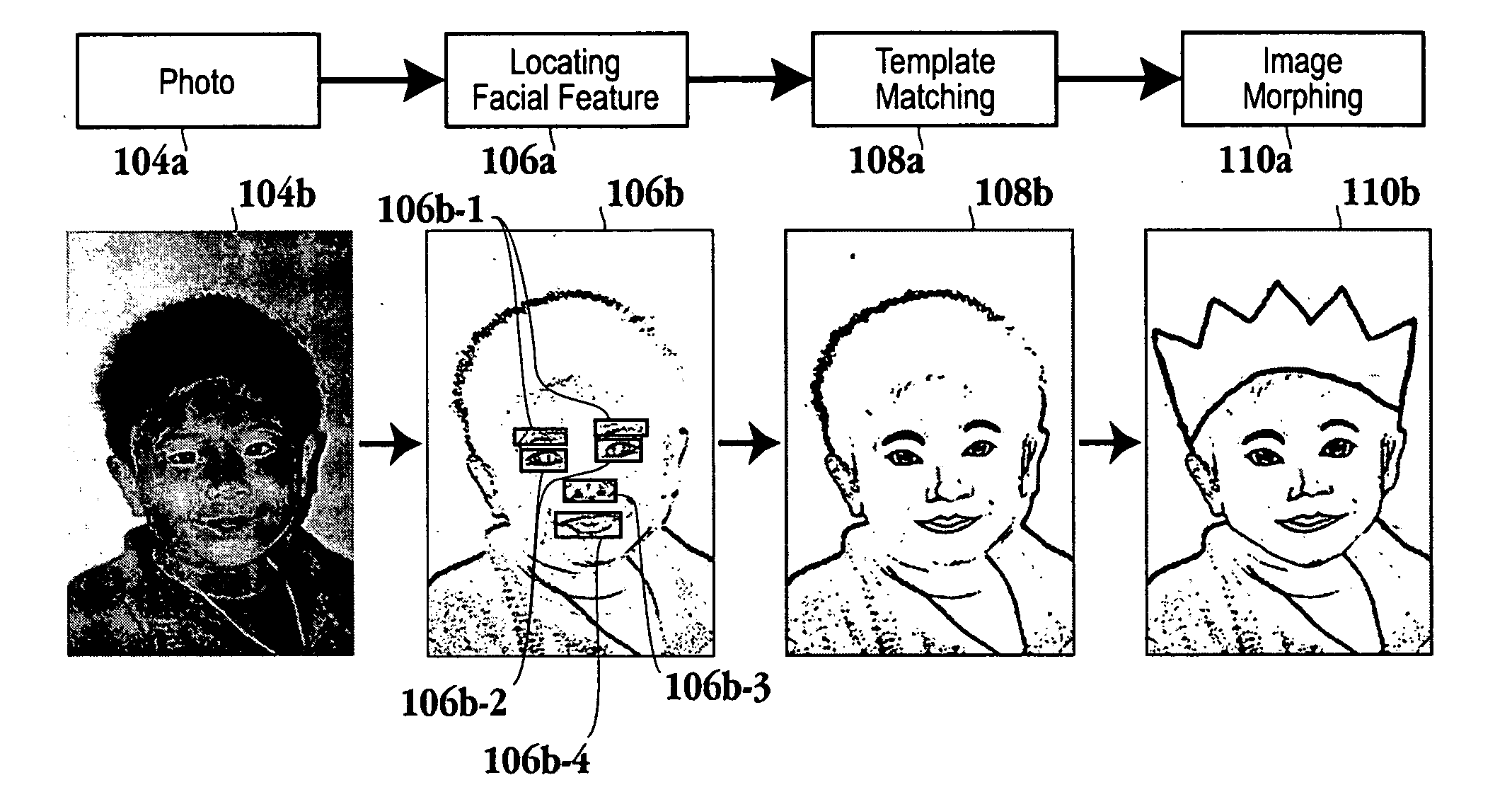

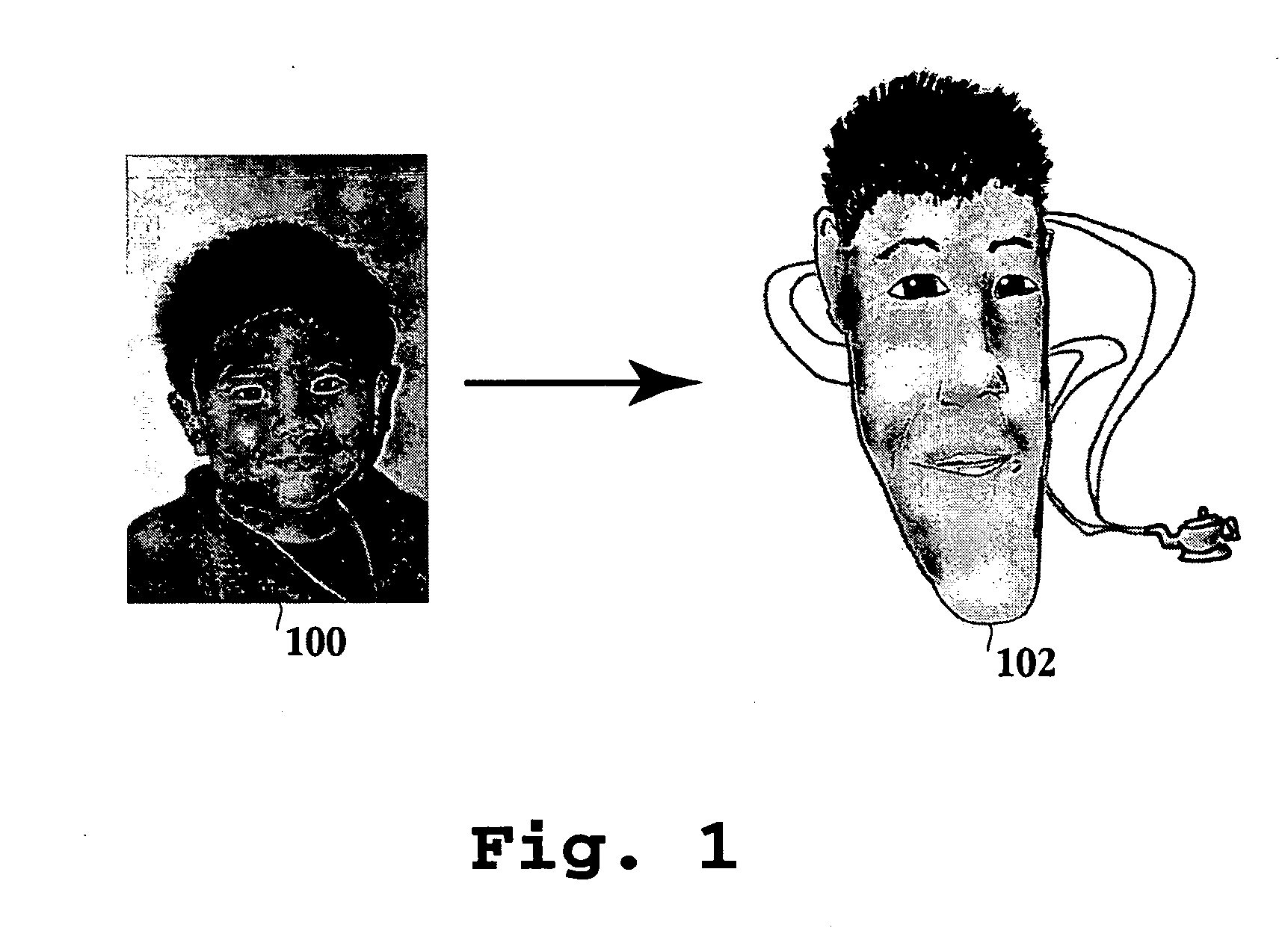

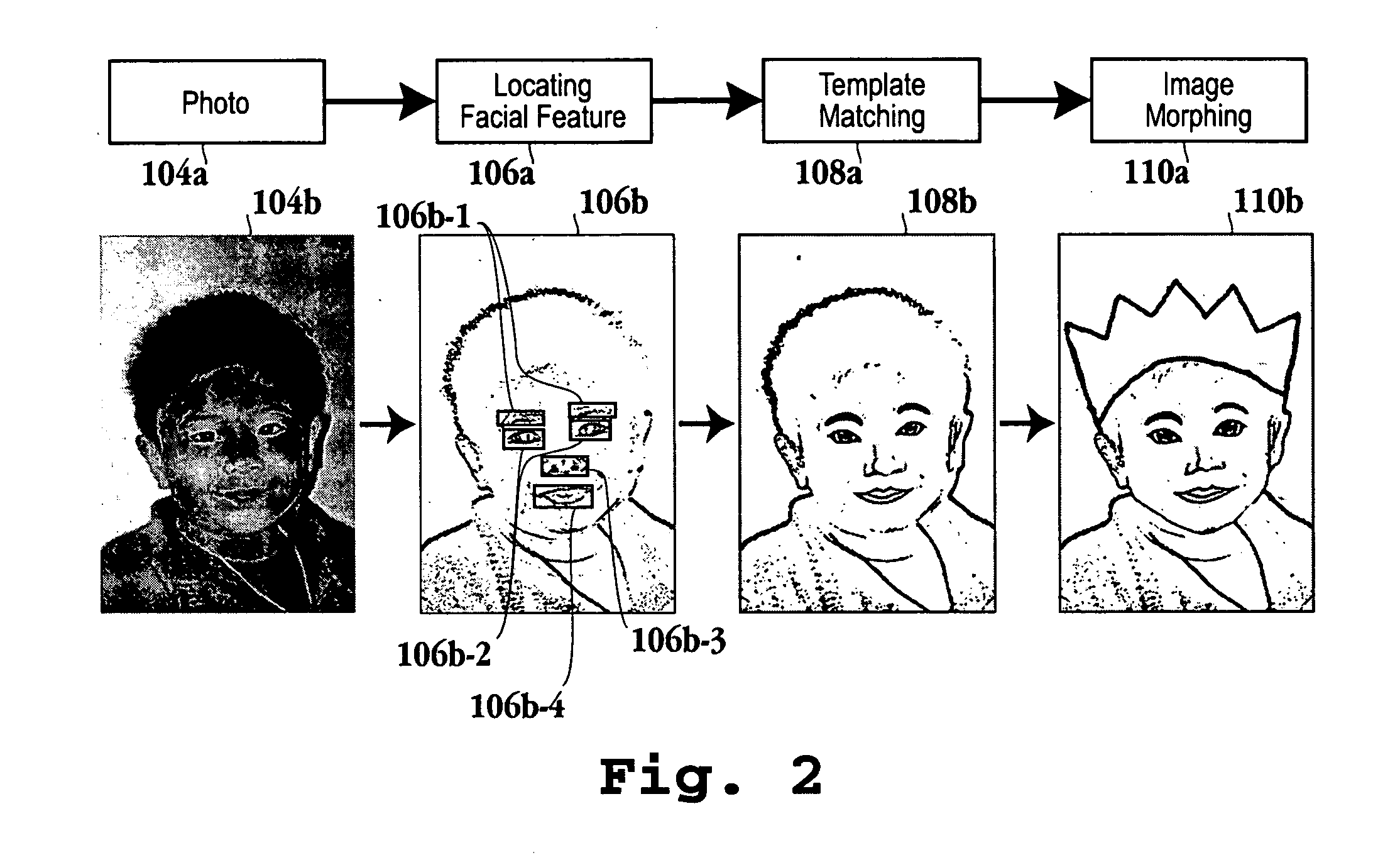

Method and apparatus for converting a photo to a caricature image

A method for creating a caricature image from a digital image is provided. The method initiates with capturing digital image data. The method includes locating a facial region within the captured image data. Then, a facial feature template matching a facial feature of the facial region is selected. Next, the facial feature template is substituted for the facial feature. Then, the facial feature template is transformed into a caricature or non-realistic image. A computer readable medium, an image capture device capable of creating a caricature from a captured image and an integrated circuit are also provided.

Owner:SEIKO EPSON CORP

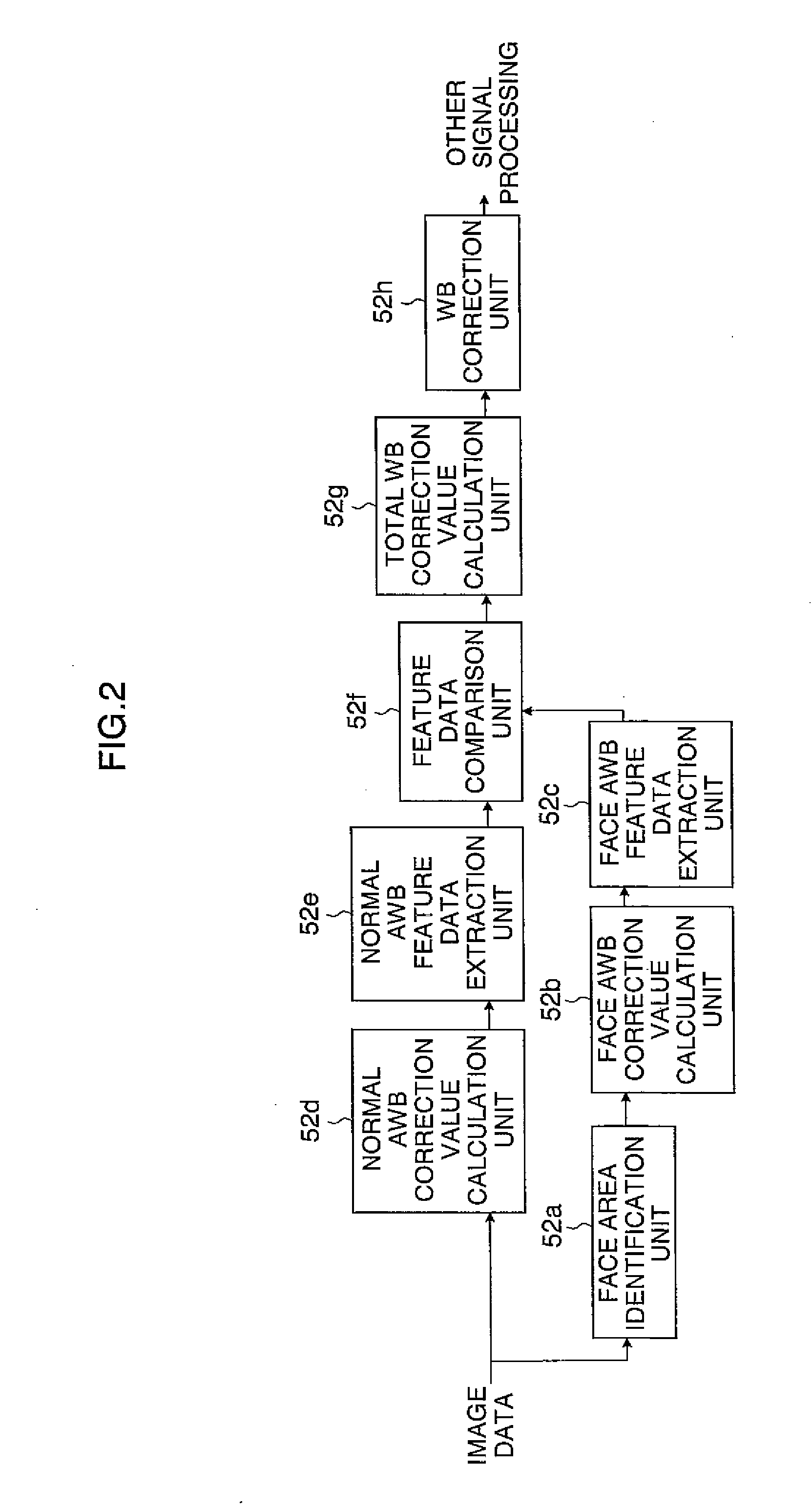

Auto white balance correction value calculation device, method, program, and image pickup device

Active | US20090021602A1 | Prevent an erroneous correction | Avoid balance | Television system details | Color signal processing circuits | Face detection | Facial region

A normal AWB (auto white balance) correction value is calculated based on inputted image data. Further, a face area is identified from the inputted image data and a face AWB correction value is calculated based on image data in the face area. Then, first feature data and second feature data are extracted from the inputted image data and image data in the face area, respectively. A total AWB correction value is calculated in accordance with at least one of the face AWB correction value and the normal AWB correction value based on a comparison result of the first feature data and the second feature data. Thus, an erroneous correction can be prevented in an AWB correction using a face detection function.

Owner:FUJIFILM CORP

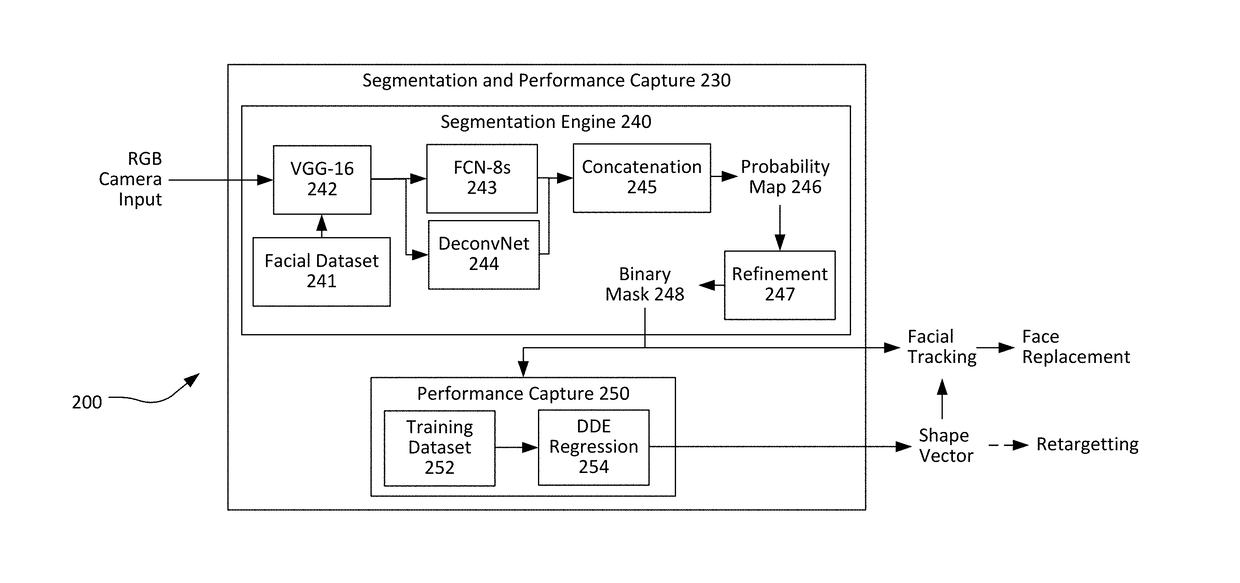

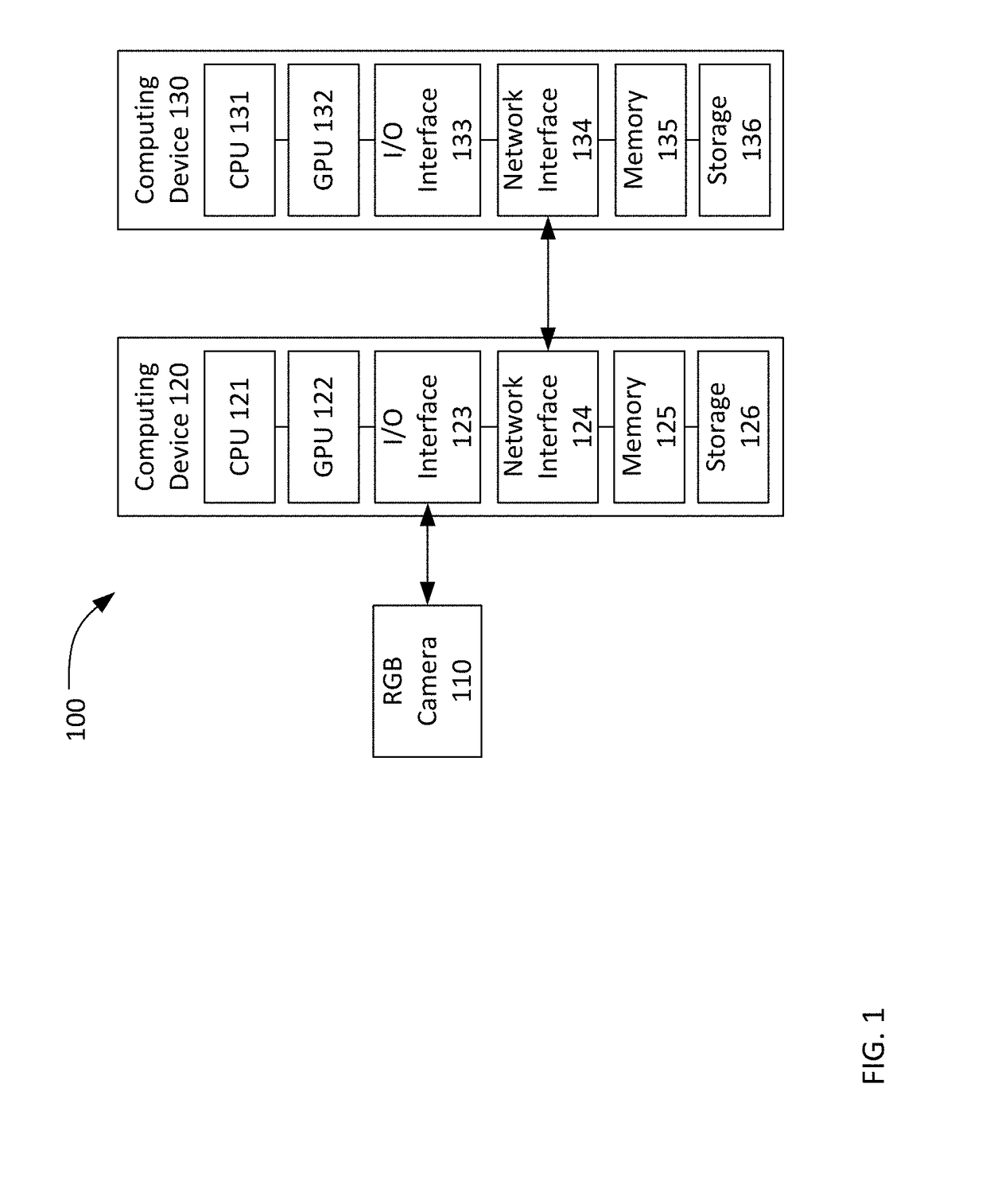

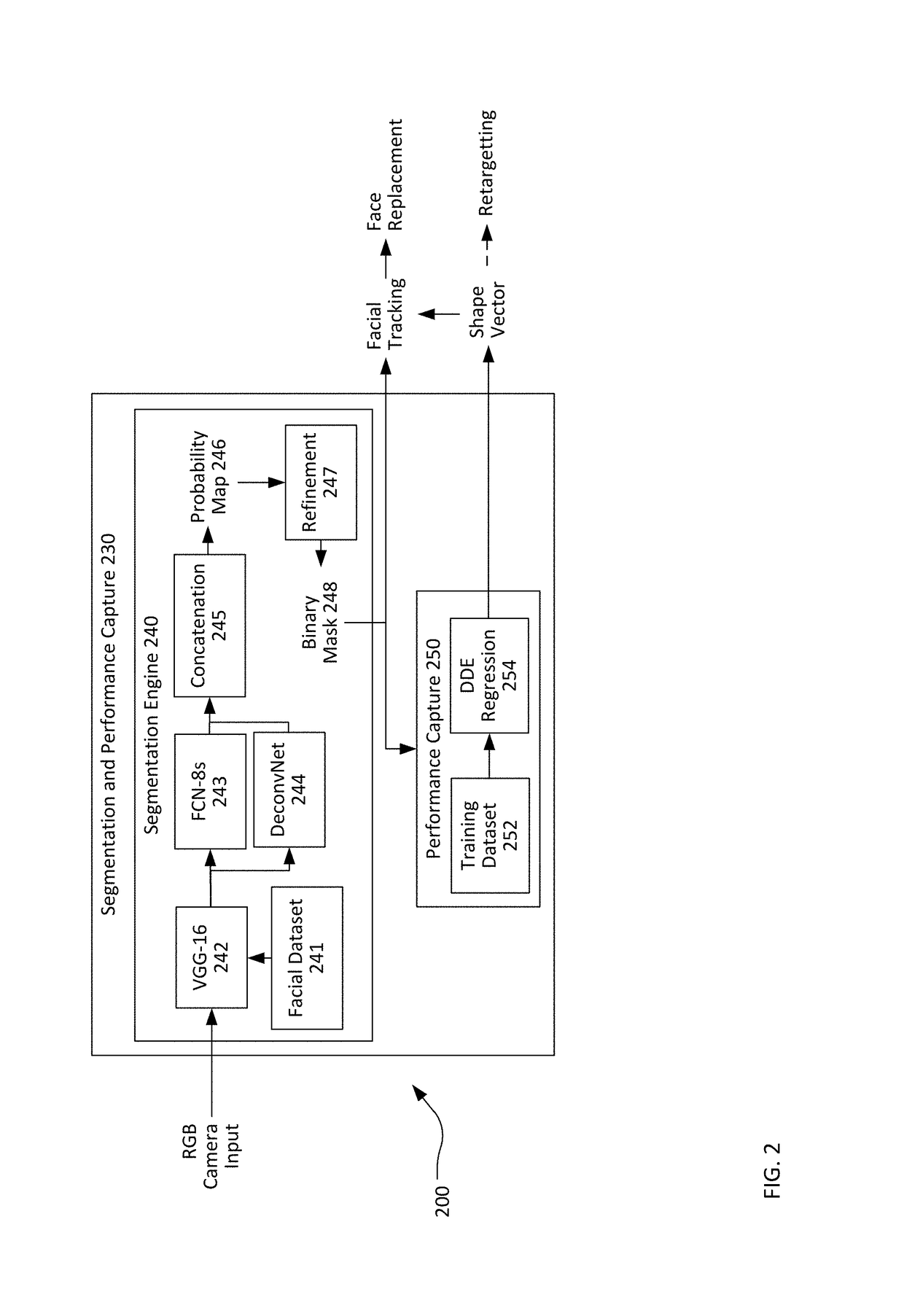

Real-time facial segmentation and performance capture from RGB input

There is disclosed a system and method of performing facial recognition from RGB image data. The method includes generating a lower-resolution image from the RGB image data, performing a convolution of the lower-resolution image data to derive a probability map identifying probable facial regions and probable non-facial regions, and performing a first deconvolution on the lower-resolution image using a bilinear interpolation layer to derive a set of coarse facial segments. The method further includes performing a second deconvolution on the lower-resolution image using a series of unpooling, deconvolution, and rectification layers to derive a set of fine facial segments, concatenating the set of coarse facial segments to the set of fine facial segments to create an image matrix made up of a set of facial segments, and generating a binary facial mask identifying probable facial regions and probable non-facial regions from the image matrix.

Owner:PINSCREEN INC

Nasal Dilator

Inactive | US20090183734A1 | Reduce flow resistance | Lower impedance | Respiratory masks | Breathing masks | Nasal cavity | Nasal passage

A nasal dilator includes a contact pad attachable to a user's facial region below the user's eye and outboard from the user's nose. A tugging device is coupled with the contact pad and urges the contact pad in a direction away from the user's nose. With this structure, effective dilation of the nasal passages can be achieved in a comfortable manner. The dilator may also be incorporated into a CPAP mask and / or form part of an automated control system.

Owner:RESMED LTD

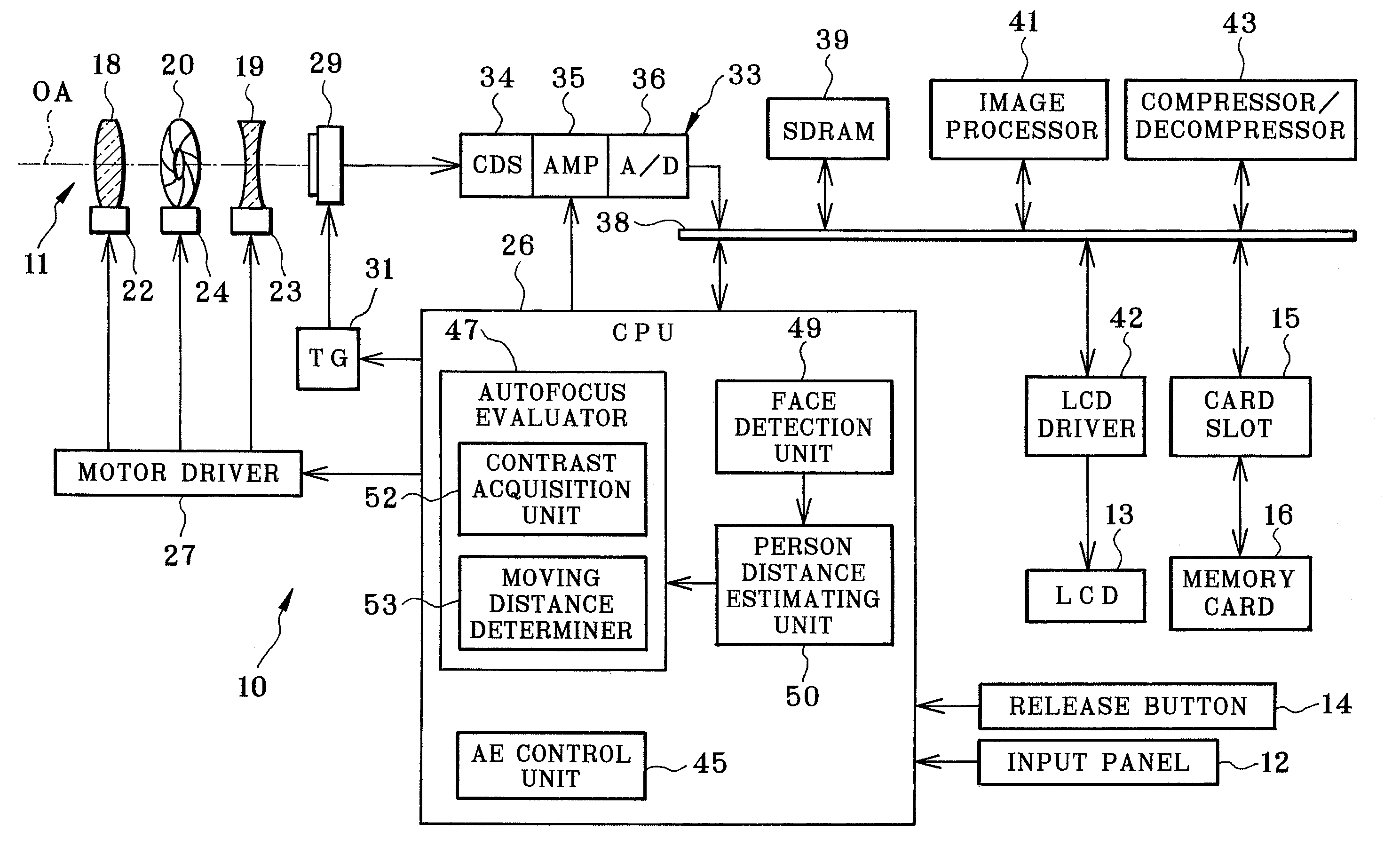

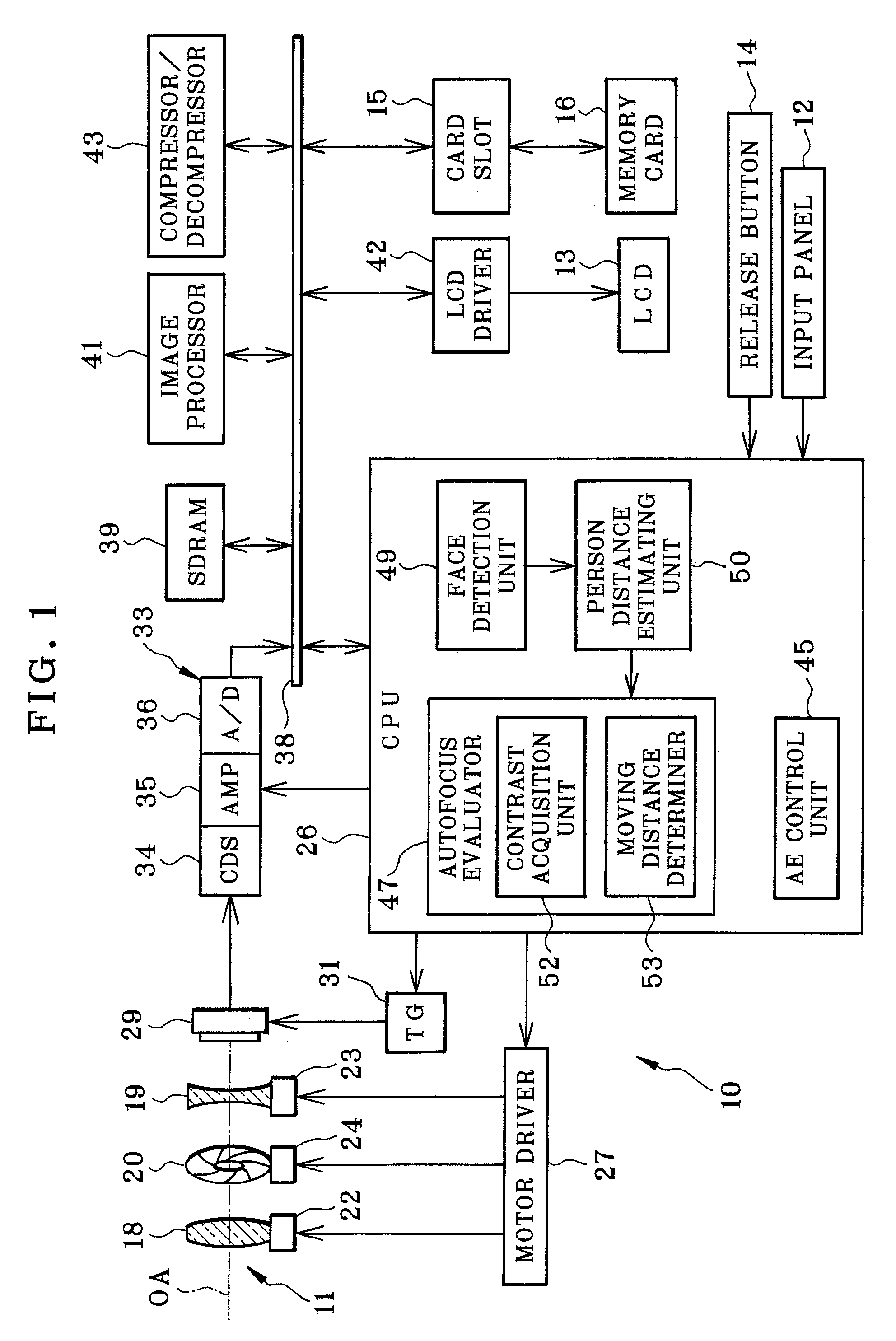

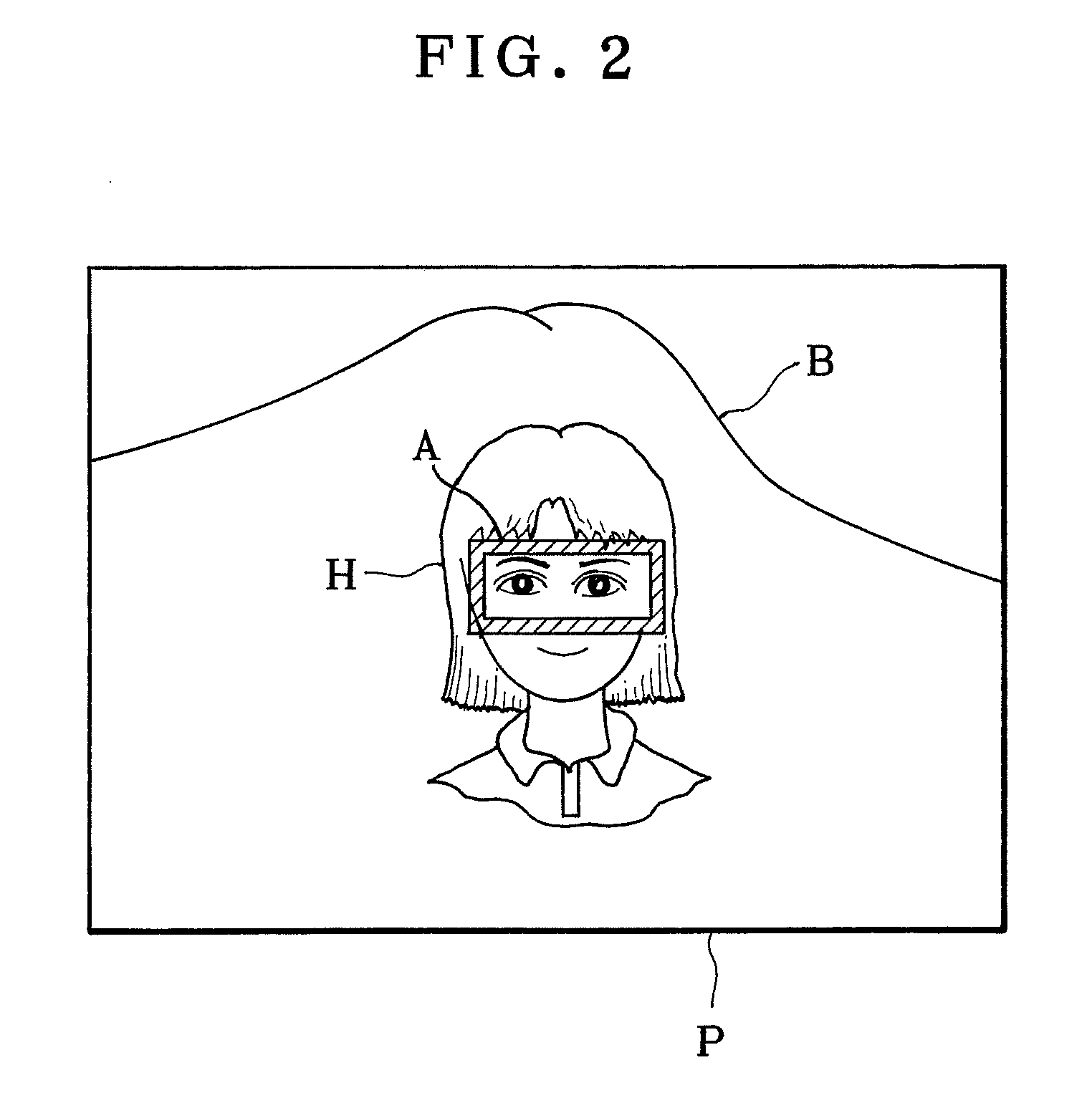

Image pickup apparatus, focusing control method and principal object detecting method

Inactive | US20080252773A1 | Increase reflection | Television system details | Projector focusing arrangement | Camera lens | Imaging analysis

In a digital still camera, an image is picked up by detecting object light from a lens system having a focus lens. A region of a human face is detected within the image by image analysis. An estimated distance of the facial region is determined according to the size of the facial region. A lens moving distance of the focus lens is determined according to the estimated distance. A contrast value of the image is acquired while moving the focus lens by the lens moving distance. An in-focus lens position is determined according to the contrast value, and the focus lens is set to the in-focus lens position. Furthermore, before the image pickup step, the aperture value of the lens system on the optical path is set large enough to enable image pickup of the facial region within the depth of field.

Owner:FUJIFILM CORP
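
The abstract above estimates subject distance from the size of the detected facial region. A common way to do this, shown here as a sketch rather than the patent's own formula, is the pinhole camera relation `distance = focal_length_px * real_width / width_px`. The assumed average face width of 150 mm, the focal length value, and the function name are all hypothetical.

```python
def estimate_distance_mm(face_width_px, focal_length_px,
                         real_face_width_mm=150.0):
    """Estimate subject distance from face width in pixels (pinhole model).

    real_face_width_mm is an assumed average face width, not a measured value.
    """
    if face_width_px <= 0:
        raise ValueError("face_width_px must be positive")
    return focal_length_px * real_face_width_mm / face_width_px


# With a hypothetical focal length of 1000 px, a face twice as wide in the
# image is estimated to be half as far away.
far = estimate_distance_mm(face_width_px=100, focal_length_px=1000.0)
near = estimate_distance_mm(face_width_px=200, focal_length_px=1000.0)
print(far, near)
```

An estimate of this kind only needs to be good enough to narrow the range over which the focus lens is moved, because the in-focus position is still refined from the contrast value.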
