4941 results for "Reinforcement learning" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

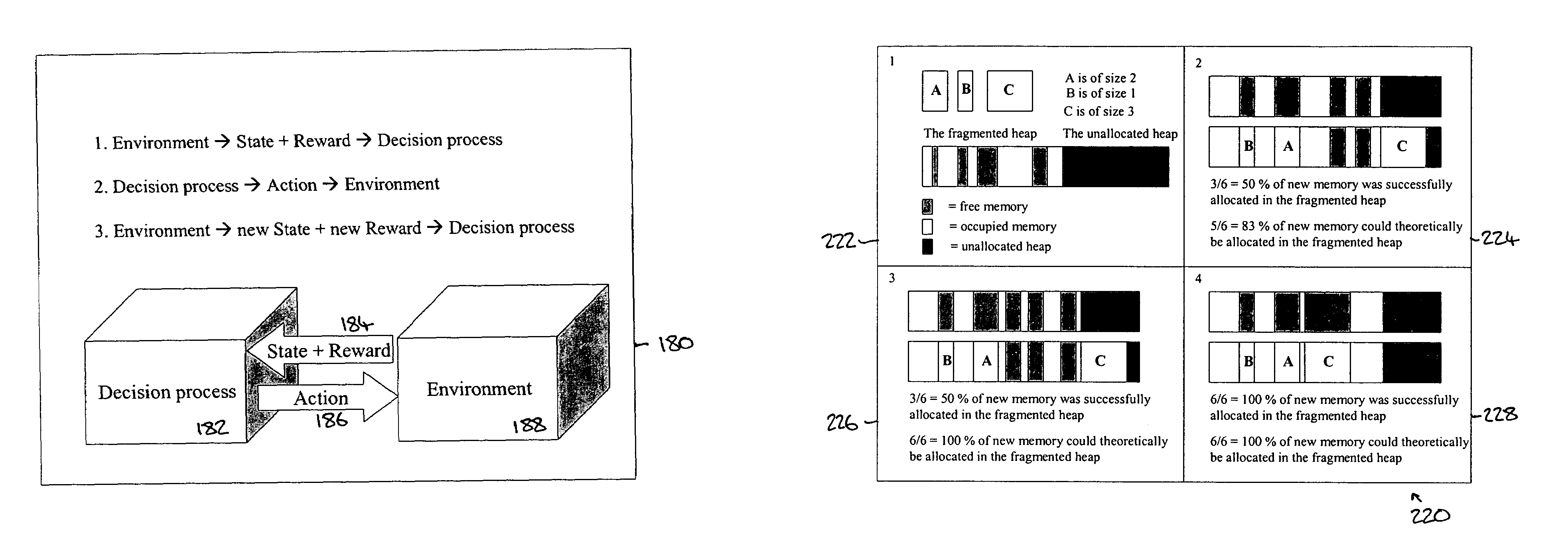

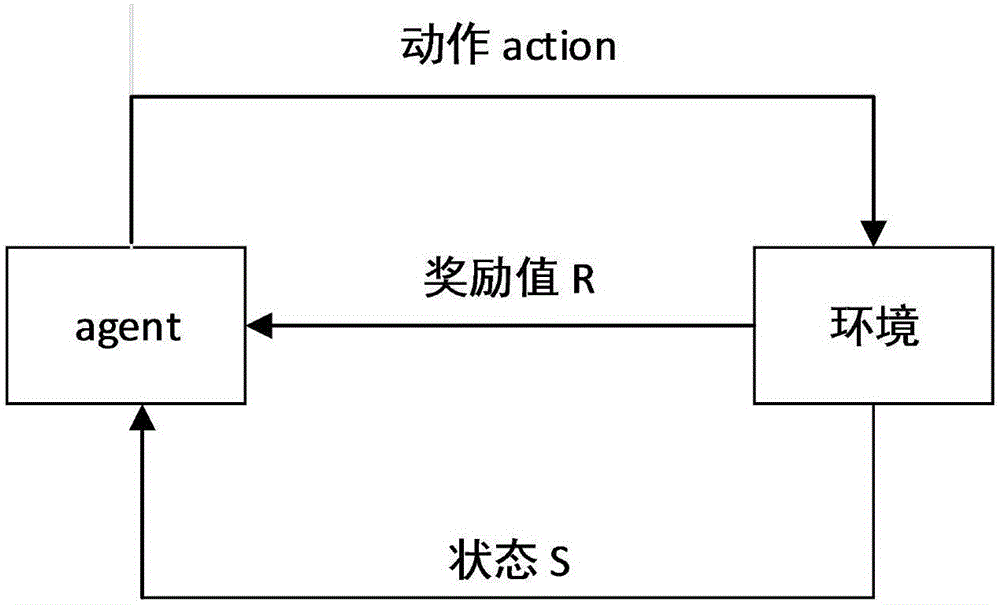

Reinforcement learning (RL) is an area of machine learning concerned with how software agents ought to take actions in an environment so as to maximize some notion of cumulative reward. Reinforcement learning is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning.
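"Cumulative reward" is most often made precise as a discounted return, G = sum over t of gamma^t * r_t, where a discount factor gamma between 0 and 1 weights near-term rewards more heavily. The minimal sketch below computes that return for one finite episode. The discount factor and the name `discounted_return` are illustrative assumptions, not part of the definition above.

```python
def discounted_return(rewards, gamma=0.9):
    """Discounted return G_0 = sum_t gamma**t * r_t of one finite episode."""
    g = 0.0
    # Walk backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g

print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))  # 1.5 = 1 + 0.5*0 + 0.25*2
```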

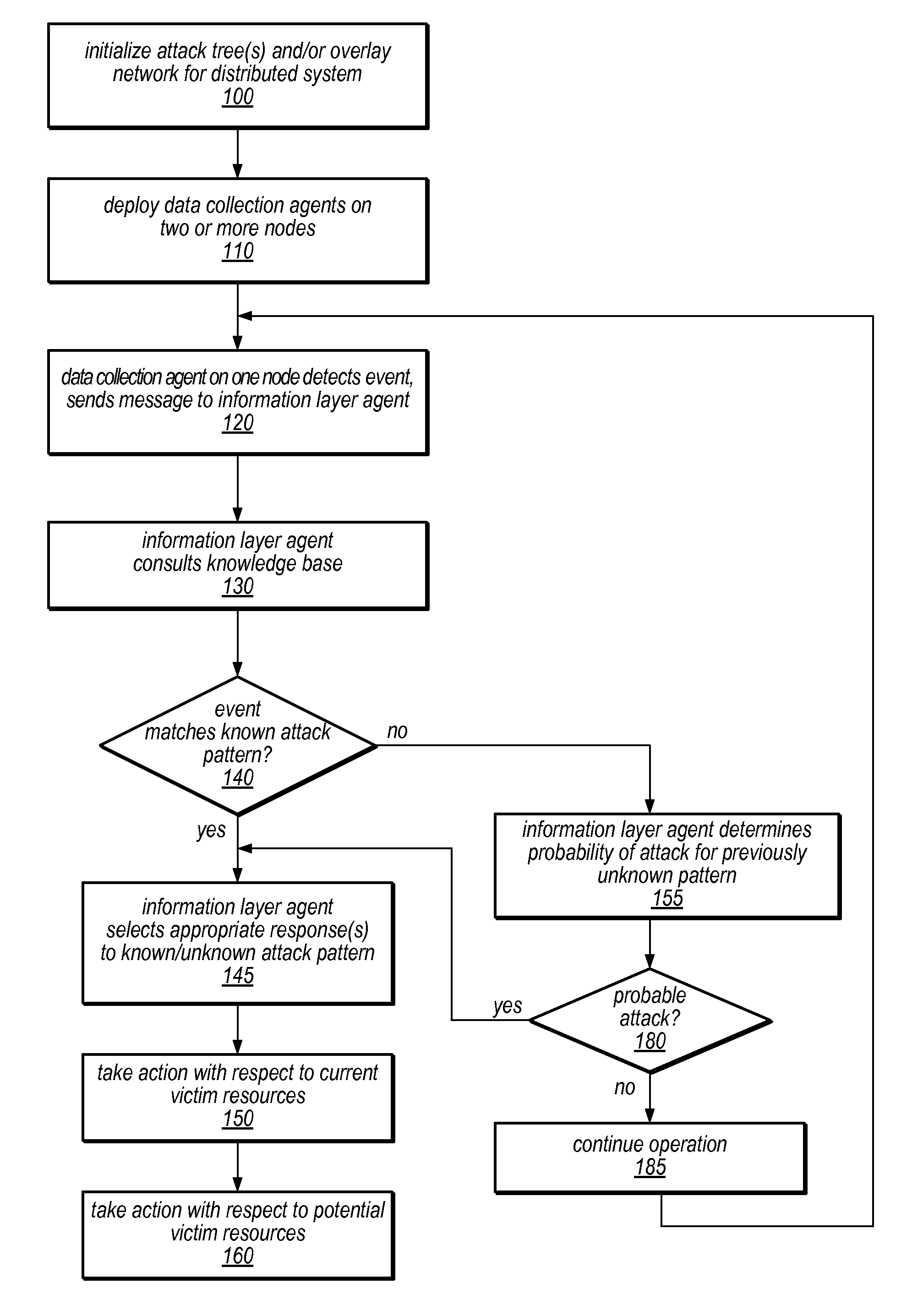

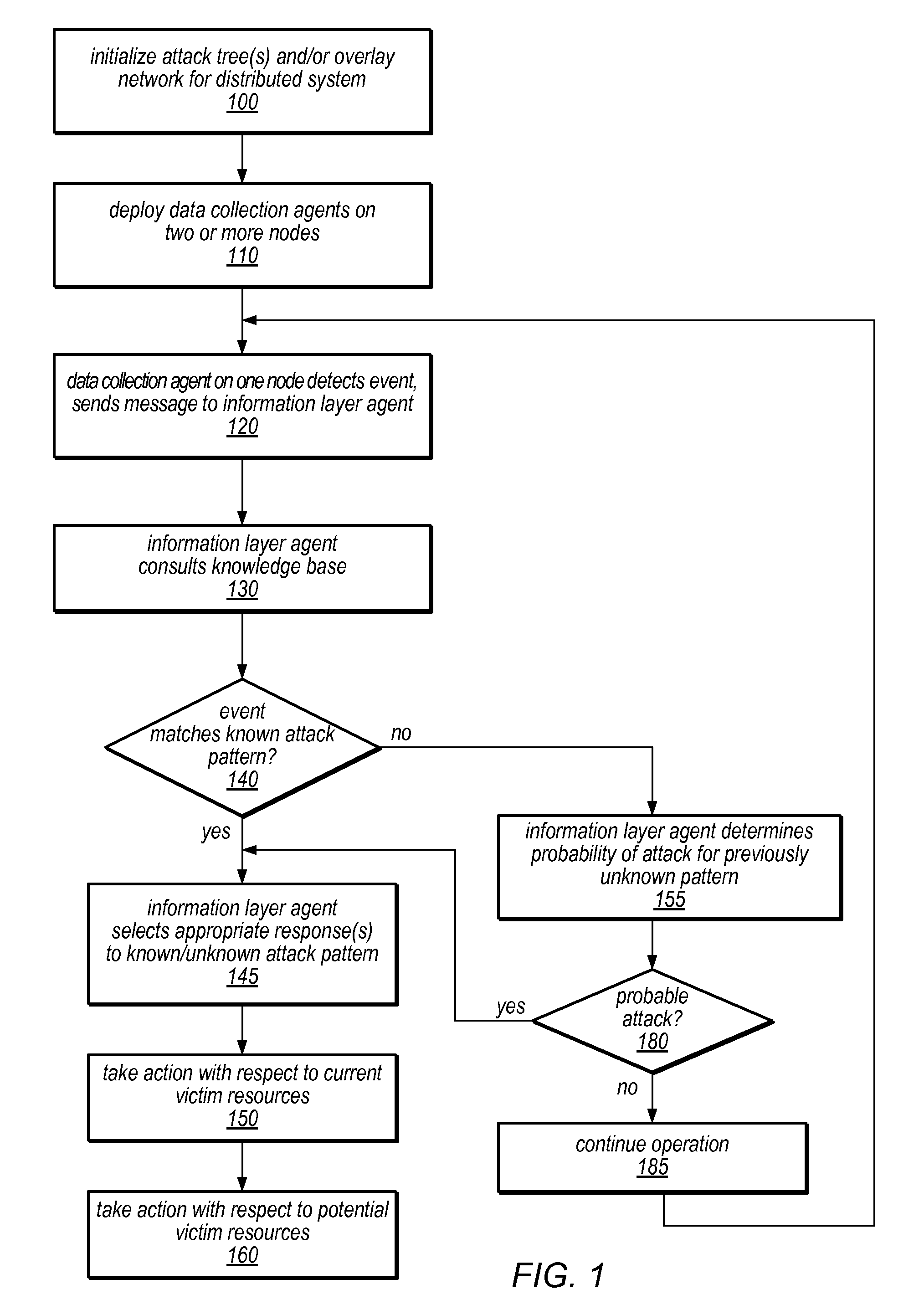

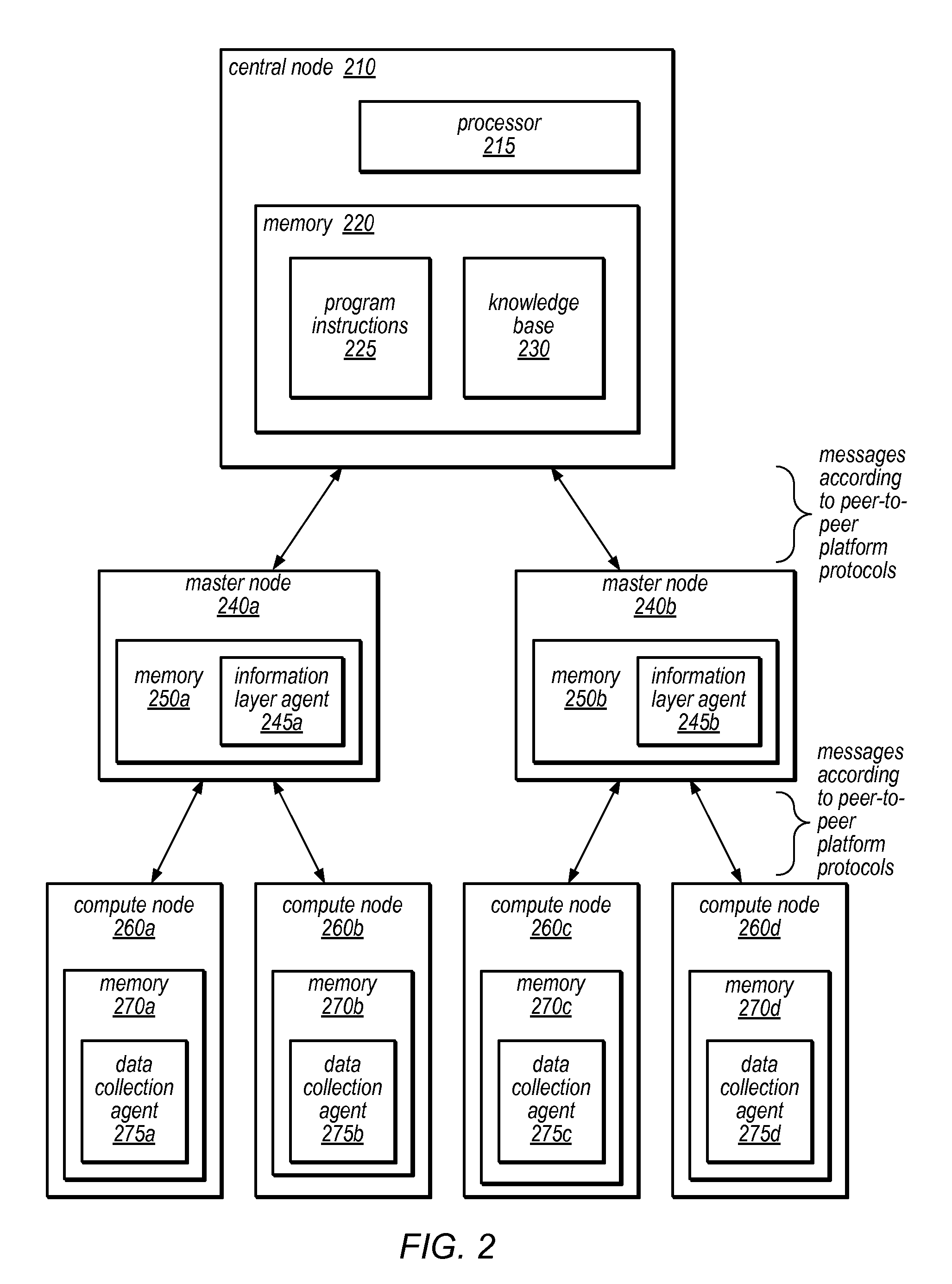

System and Method for Distributed Denial of Service Identification and Prevention

Systems and methods for discovery and classification of denial of service attacks in a distributed computing system may employ local agents on the system's nodes to detect resource-related events. An information layer agent may determine whether events indicate attacks, perform clustering analysis to determine whether they represent known or unknown attack patterns, classify the attacks, and initiate appropriate responses to prevent and/or mitigate the attack, including sending warnings and/or modifying resource pool(s). The information layer agent may consult a knowledge base comprising information associated with known attack patterns, including state-action mappings. An attack tree model and an overlay network (over which detection and/or response messages may be sent) may be constructed for the distributed system. Both may be dynamically modified in response to changes in system configuration, state, and/or workload. Reinforcement learning may be applied to tune the attack detection and classification techniques and to identify appropriate responses.

Owner:ORACLE INT CORP

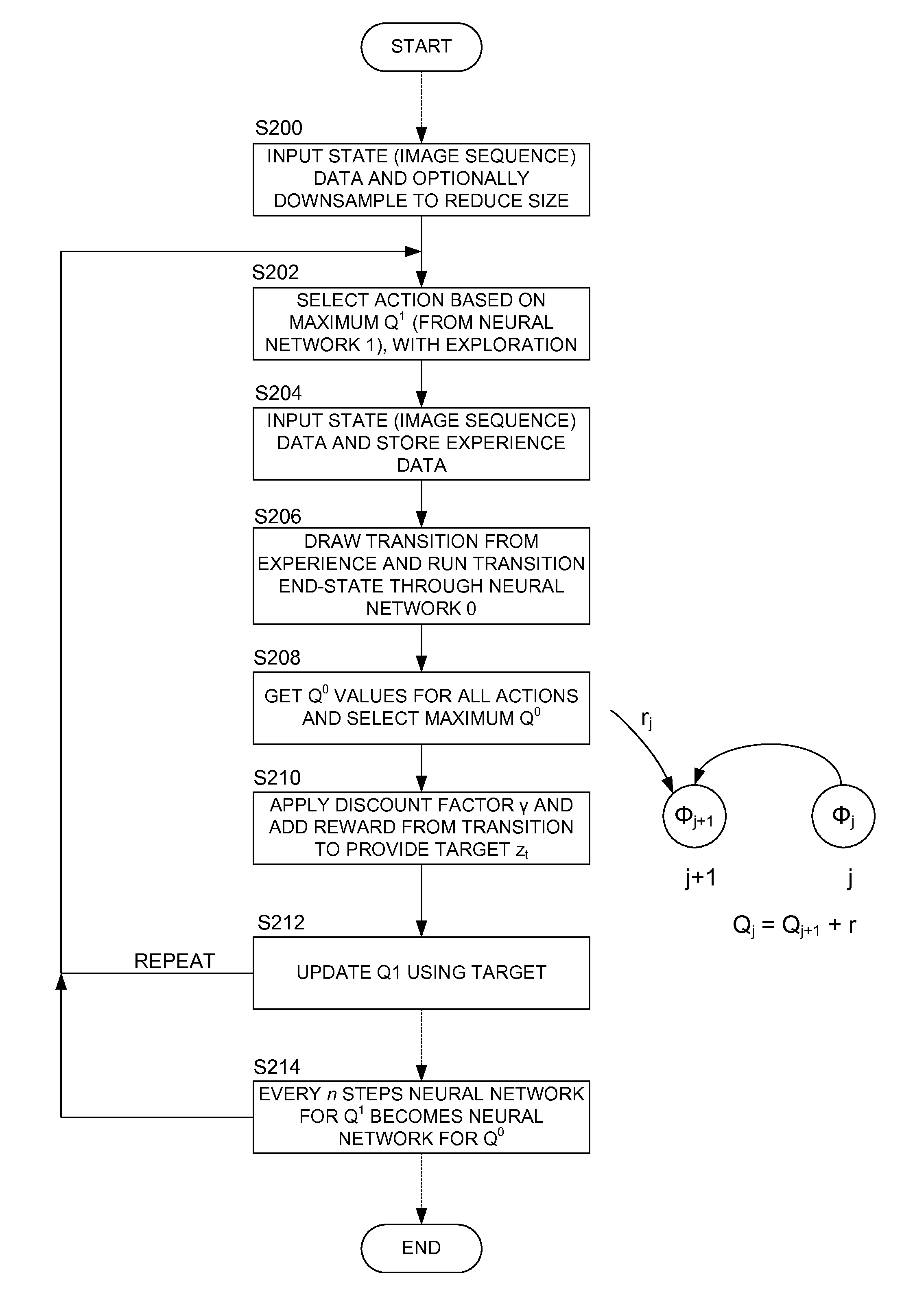

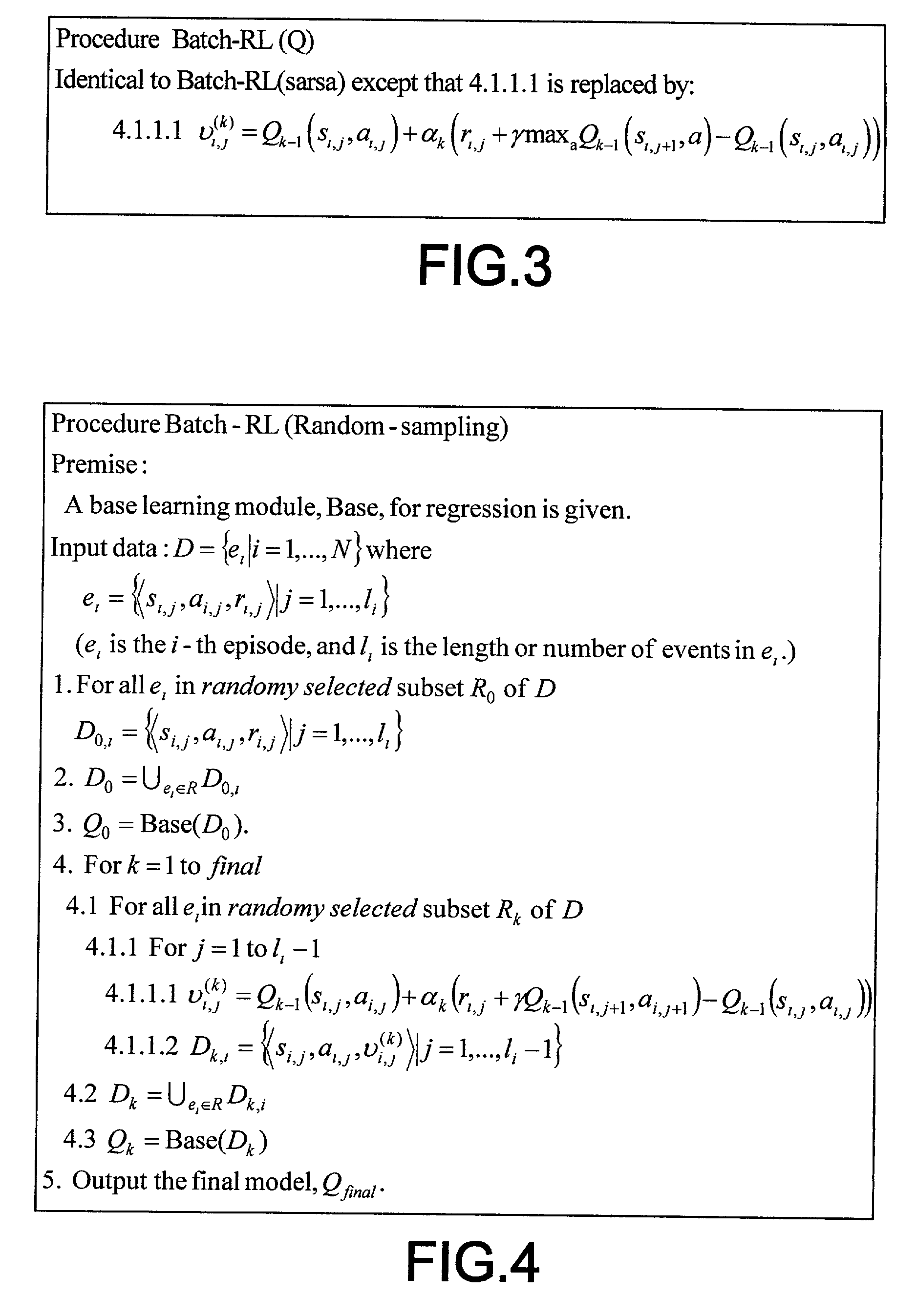

Methods and apparatus for reinforcement learning

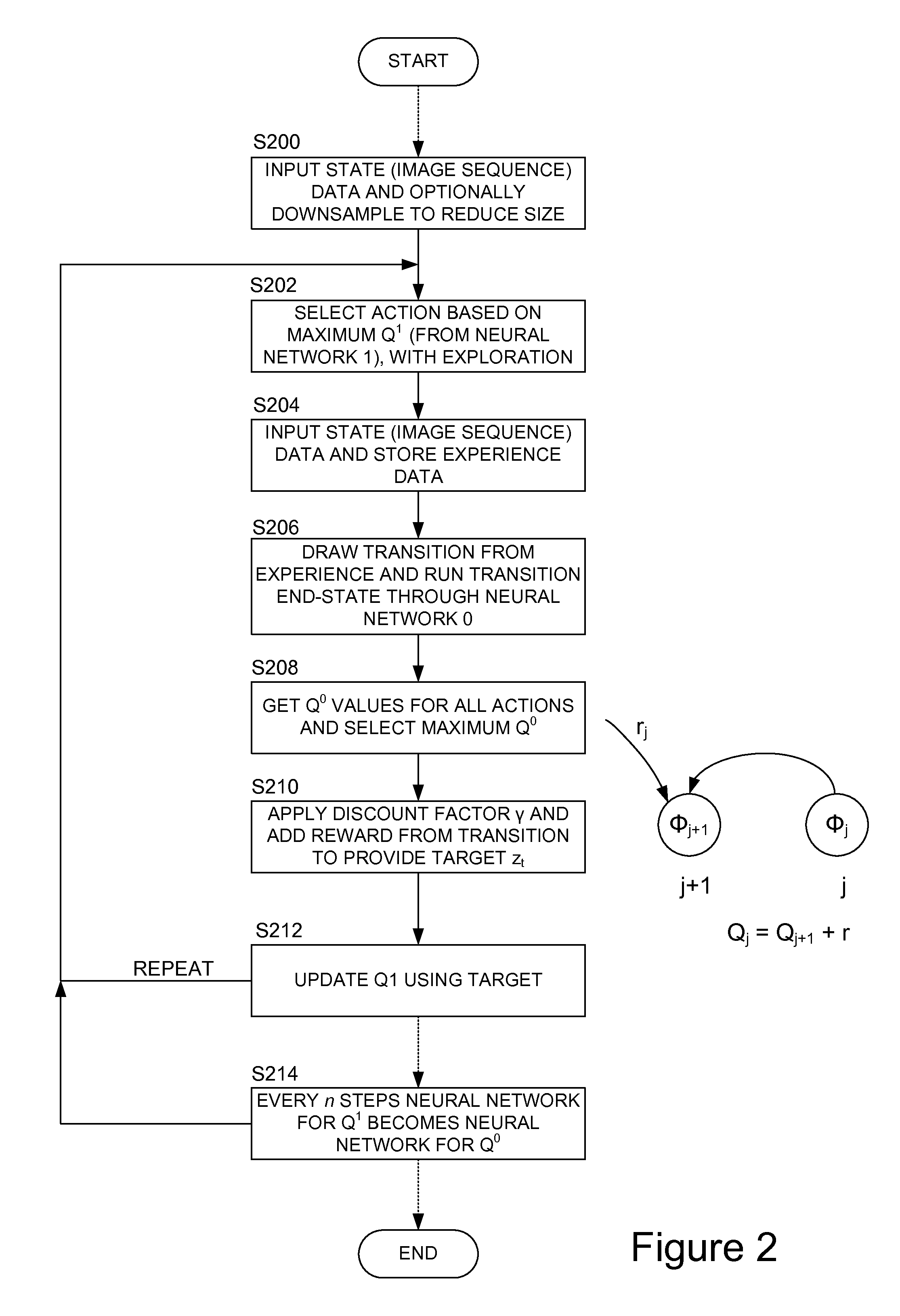

Active | US20150100530A1 | Reduce computing cost; Large data set; Digital computer details; Artificial life; Algorithm; Reinforcement learning

Owner:DEEPMIND TECH LTD

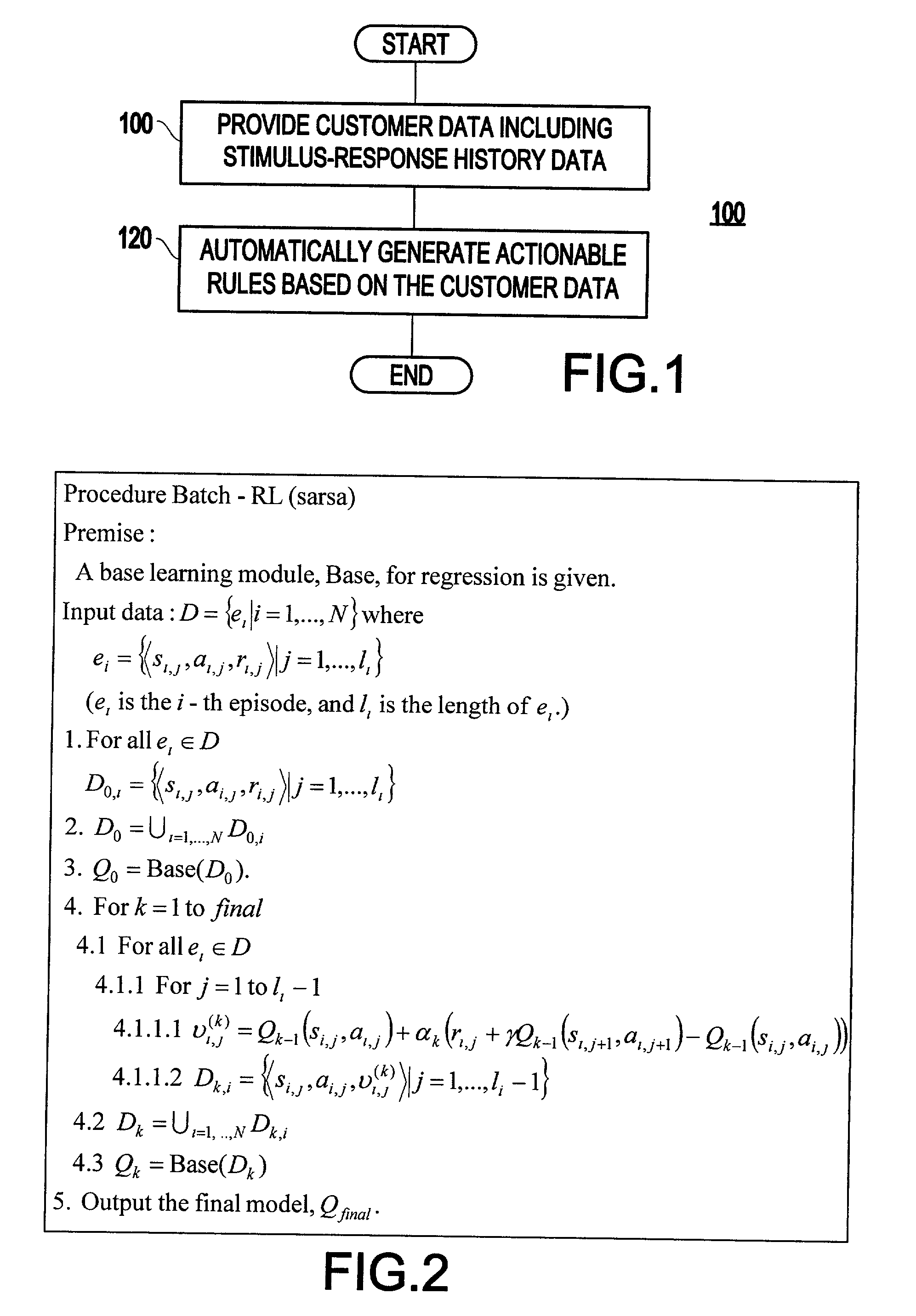

System and method for sequential decision making for customer relationship management

Inactive | US20040015386A1 | Market predictions; Digital computer details; Customer relationship management; Data mining

A system and method for sequential decision-making for customer relationship management includes providing customer data including stimulus-response history data, and automatically generating actionable rules based on the customer data. Further, automatically generating actionable rules may include estimating a value function using reinforcement learning.

Owner:IBM CORP
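A value function can be estimated from logged stimulus-response history alone, without further interaction with customers. The minimal sketch below uses batch TD(0): it repeatedly sweeps temporal-difference updates over a fixed set of logged transitions. Encoding the history as hypothetical `(state, reward, next_state)` tuples is an assumption, and so are the state names. The patent's own estimator may differ.

```python
from collections import defaultdict

def batch_td0(transitions, gamma=0.9, alpha=0.1, sweeps=200):
    """Estimate V(s) by repeatedly sweeping TD(0) updates over logged data.

    Each transition is (state, reward, next_state); next_state is None when
    the interaction ended after that step.
    """
    v = defaultdict(float)
    for _ in range(sweeps):
        for state, reward, next_state in transitions:
            bootstrap = 0.0 if next_state is None else gamma * v[next_state]
            v[state] += alpha * (reward + bootstrap - v[state])
    return dict(v)

# Hypothetical history: a mailing leads to a response, and a response yields revenue.
history = [("mailed", 0.0, "responded"), ("responded", 10.0, None)]
values = batch_td0(history)
print(round(values["mailed"], 3), round(values["responded"], 3))  # 9.0 10.0
```

Because the data set is fixed, the estimate reflects the policy that generated the log. Rules derived from it inherit that limitation.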

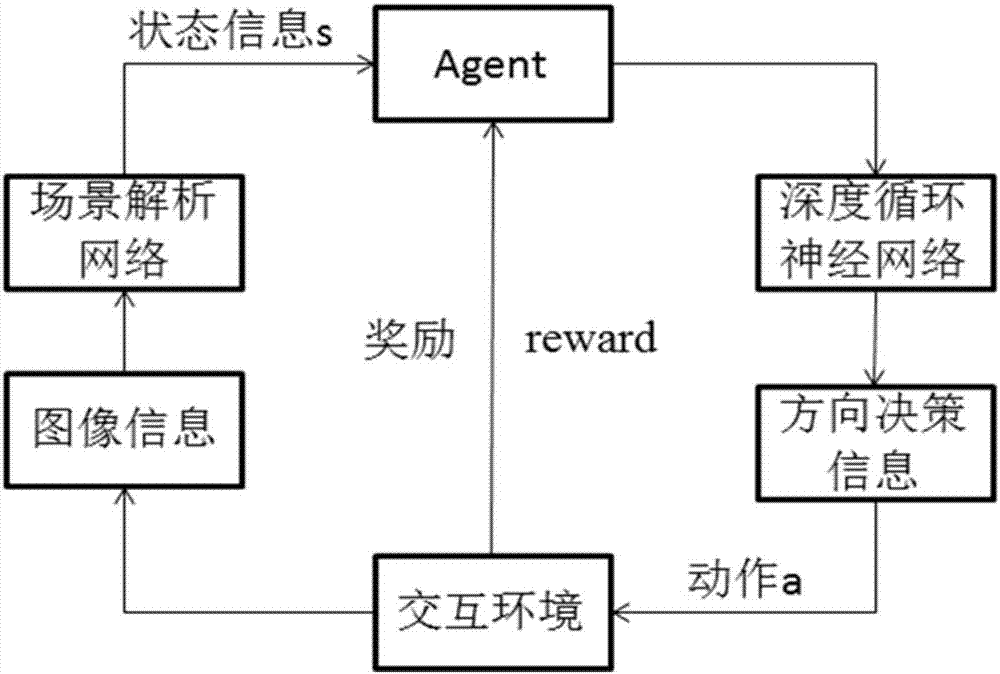

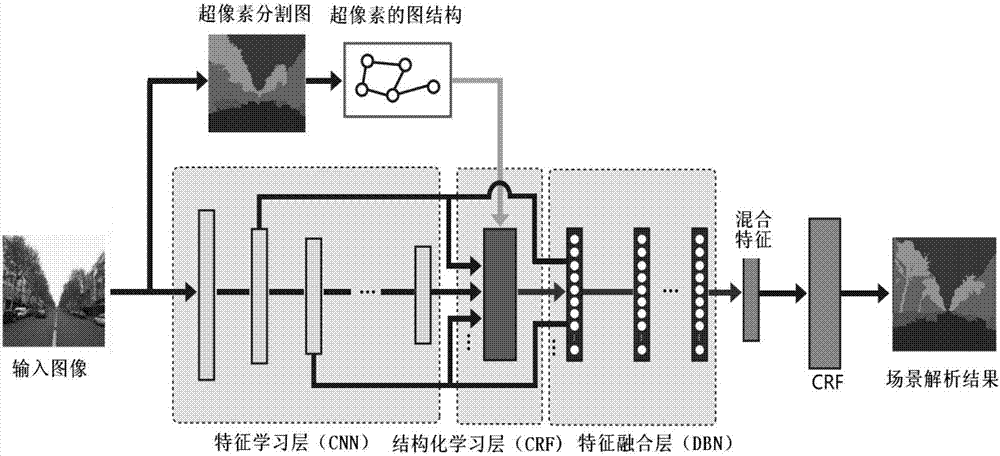

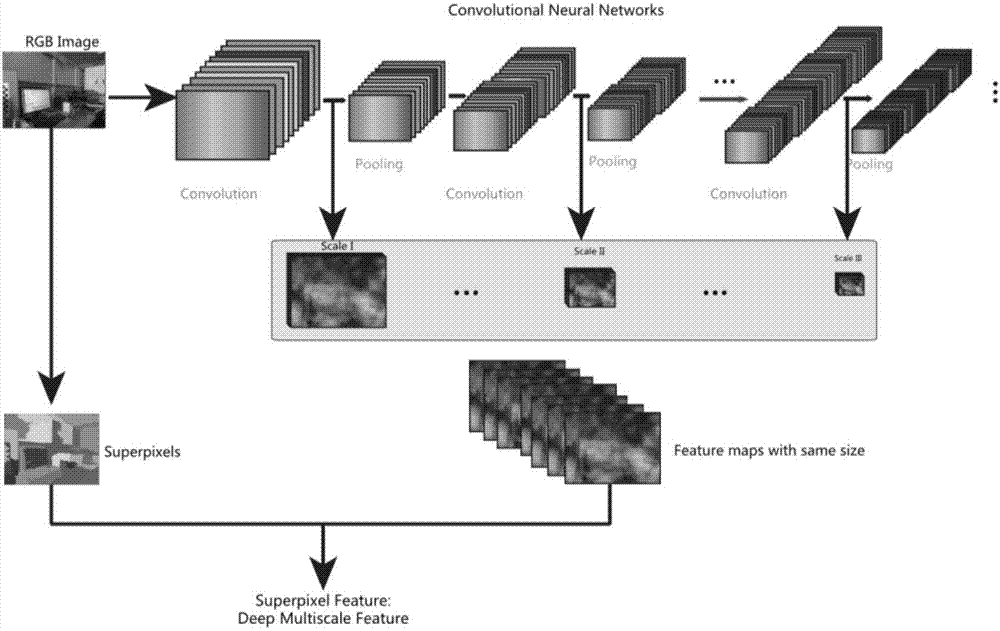

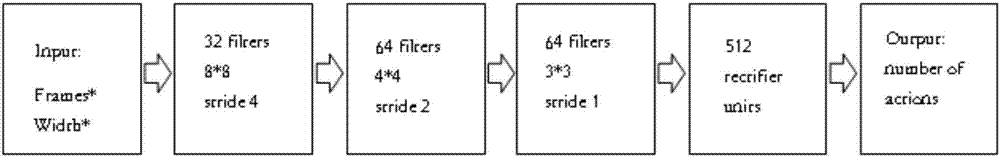

Deep and reinforcement learning-based real-time online path planning method of

Active | CN106970615A | Reasonable method design; Accurate path planning; Position/course control in two dimensions; Planning approach; Study methods

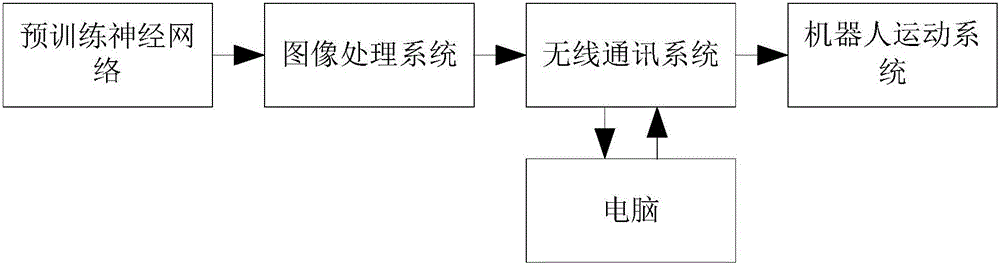

The present invention provides a deep and reinforcement learning-based real-time online path planning method. The method uses deep learning to extract high-level semantic information from an image. It then uses reinforcement learning to perform end-to-end, real-time path planning in the environment.

During training, image information collected in the environment is fed into a scene analysis network as the current state, which produces an analysis result. This result is input into a designed deep recurrent neural network. Training yields the decision action of the agent (intelligent body) at each step in a specific scene, and from these actions an optimal complete path is obtained.

In actual use, image information collected by a camera is input into the trained deep reinforcement learning network, which outputs the walking direction of the agent. The method makes maximal use of the acquired image information while remaining robust and depending only weakly on the environment. It achieves real-time path planning in the scene.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

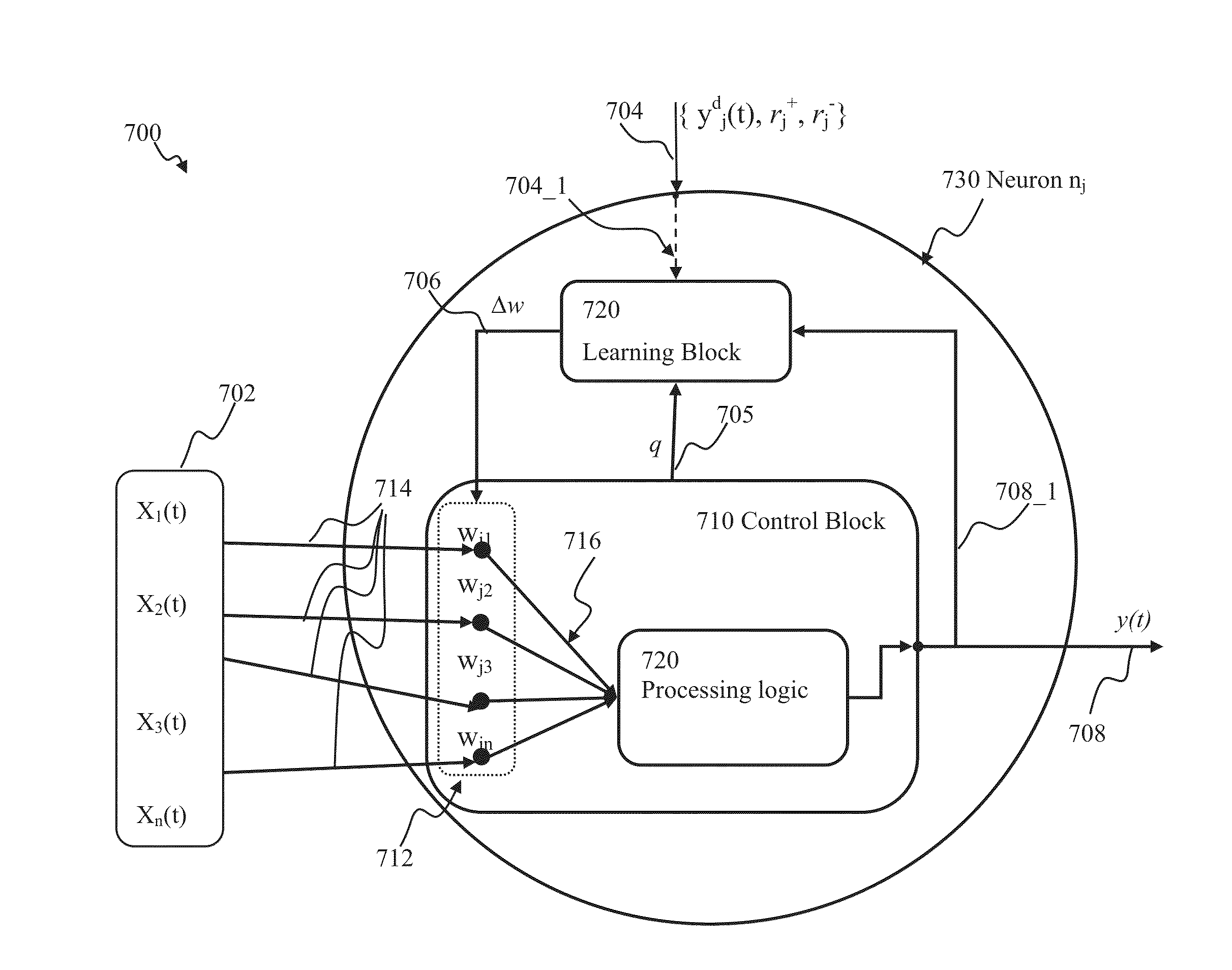

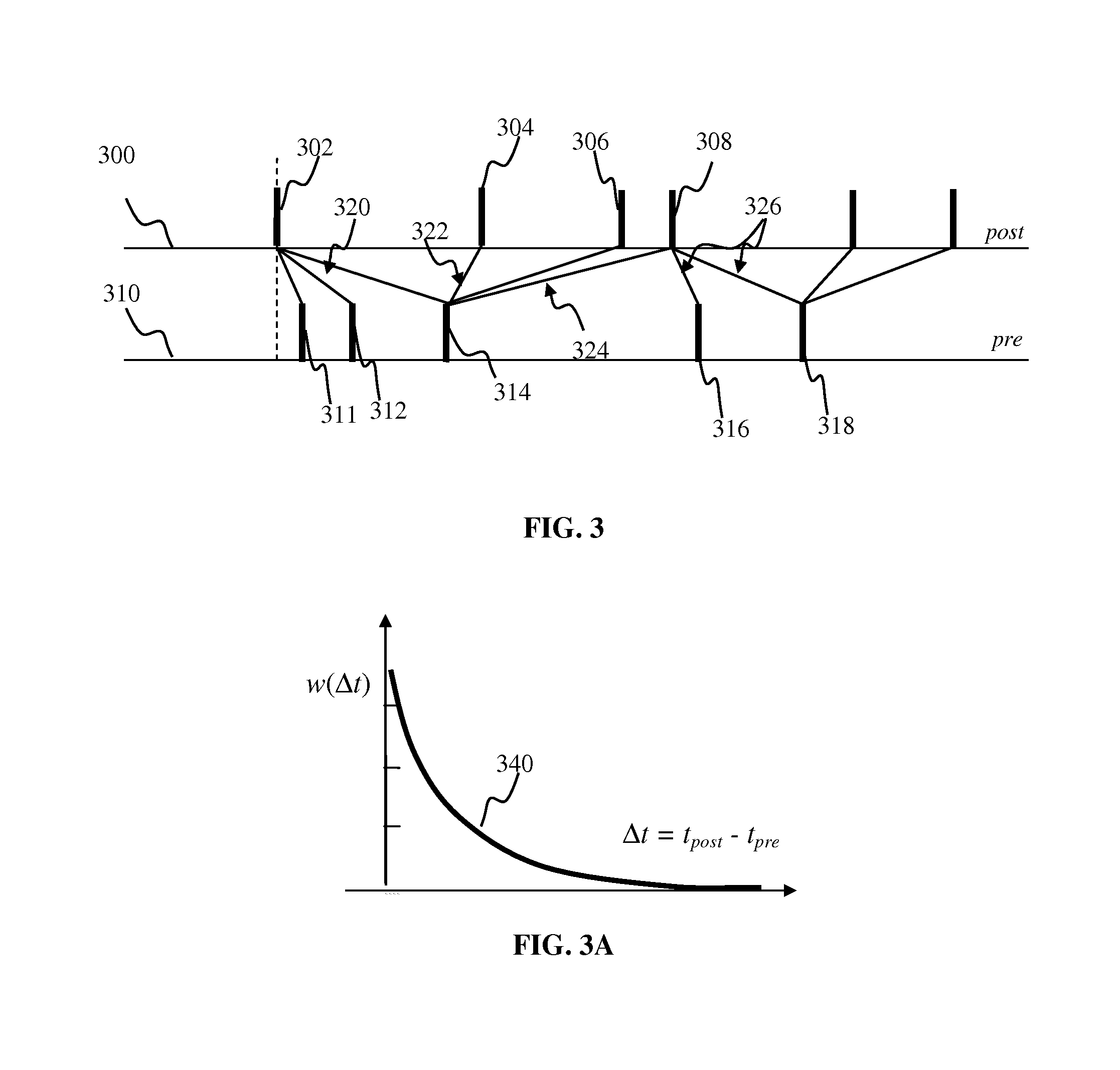

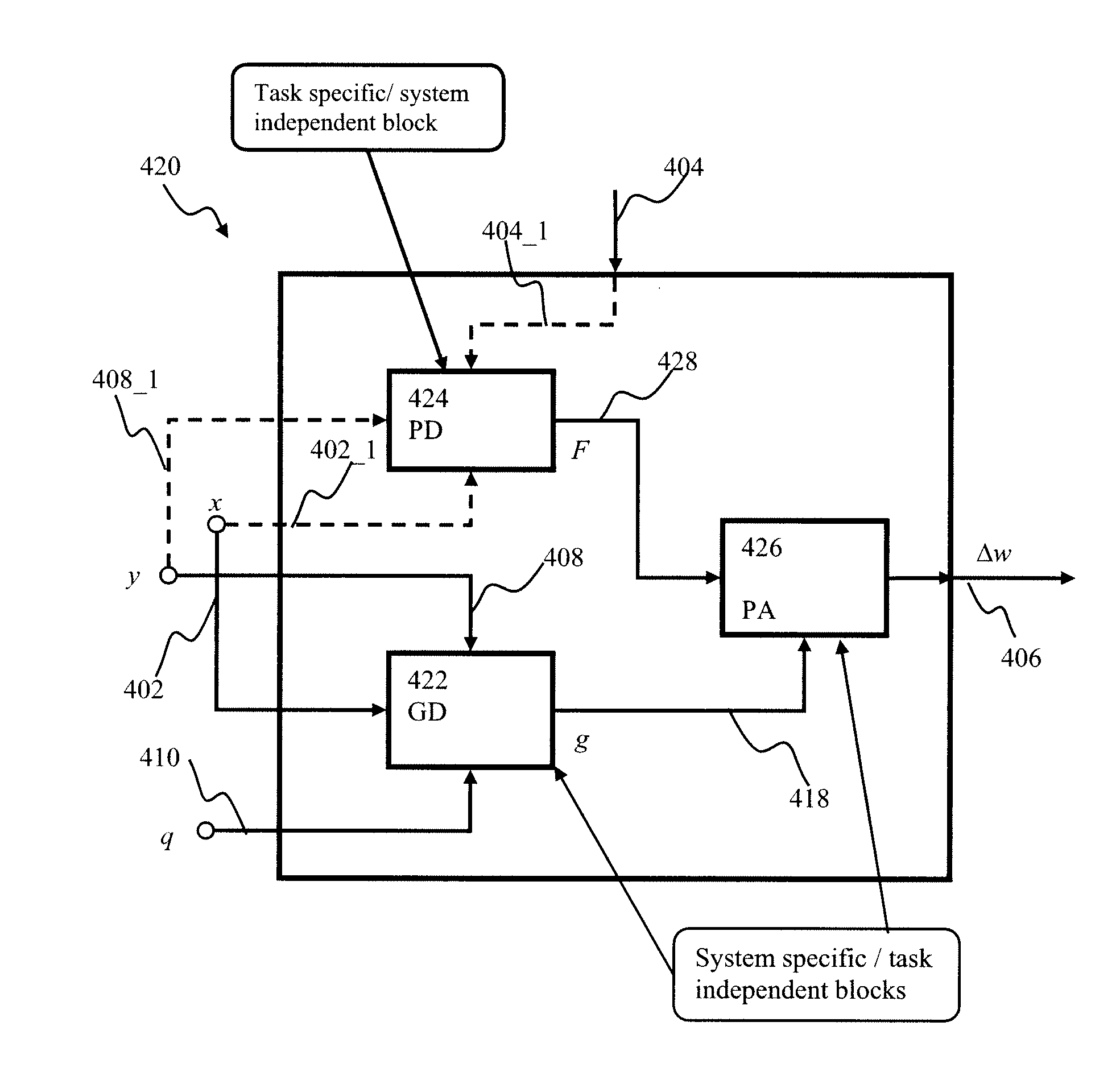

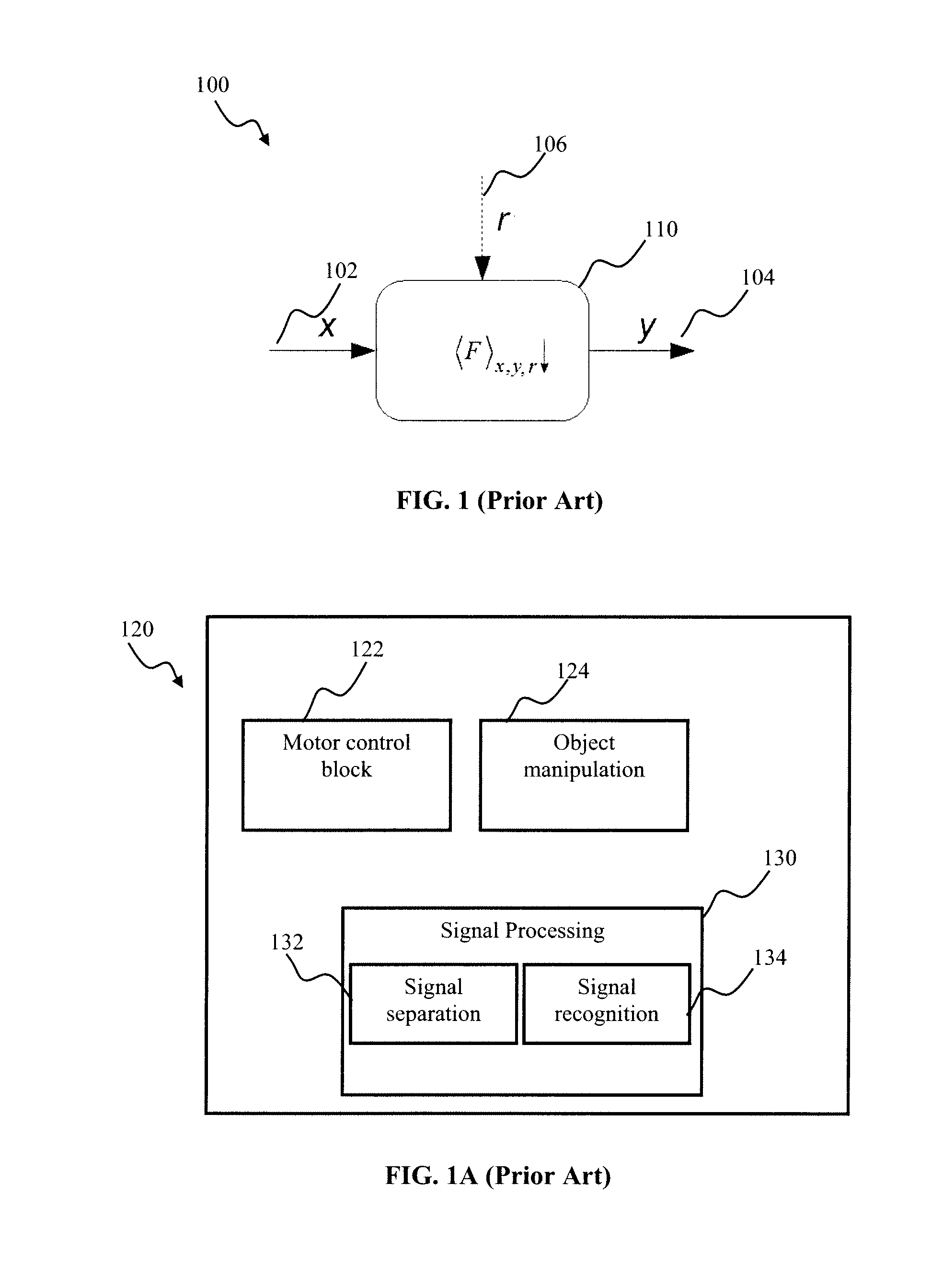

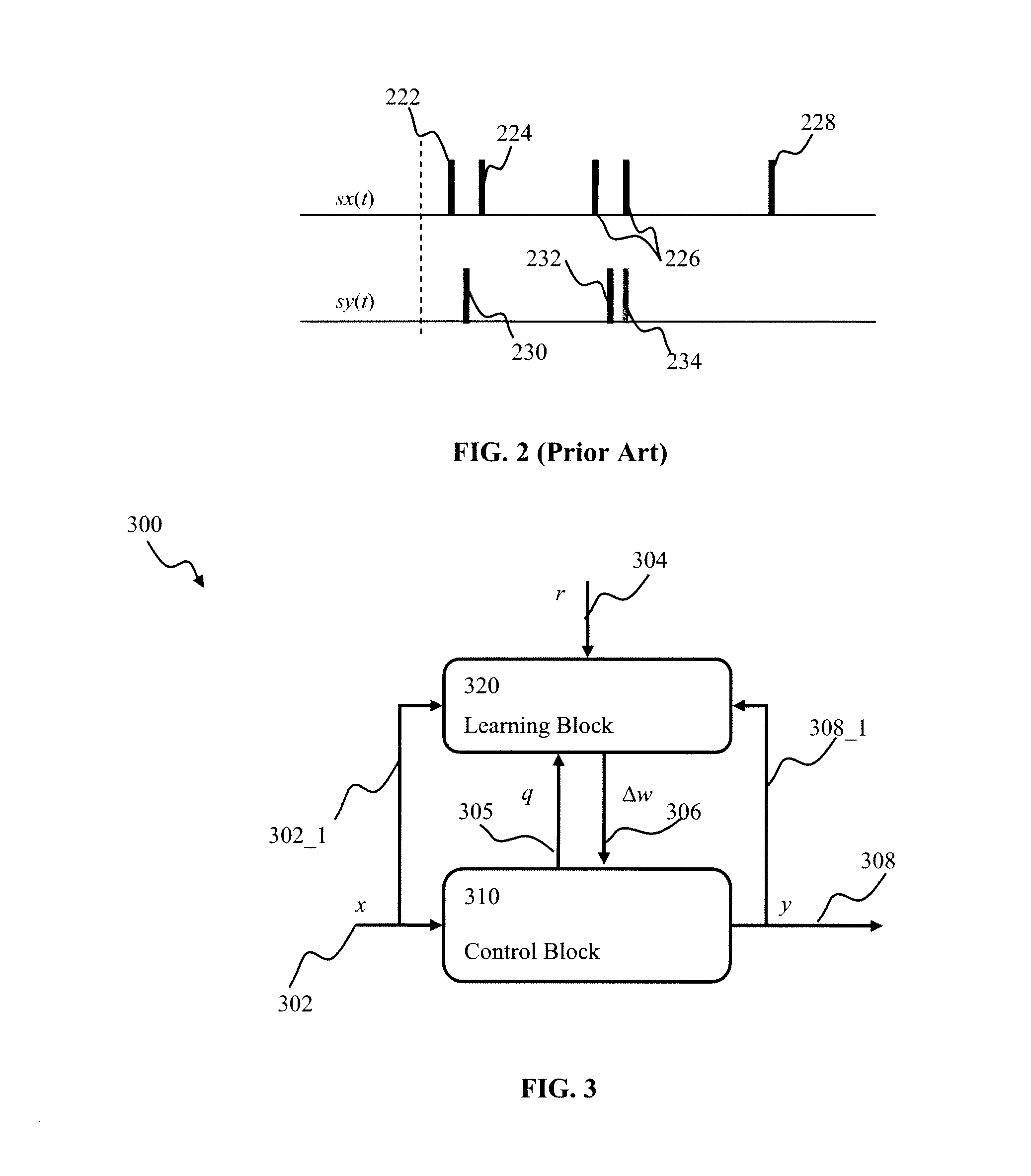

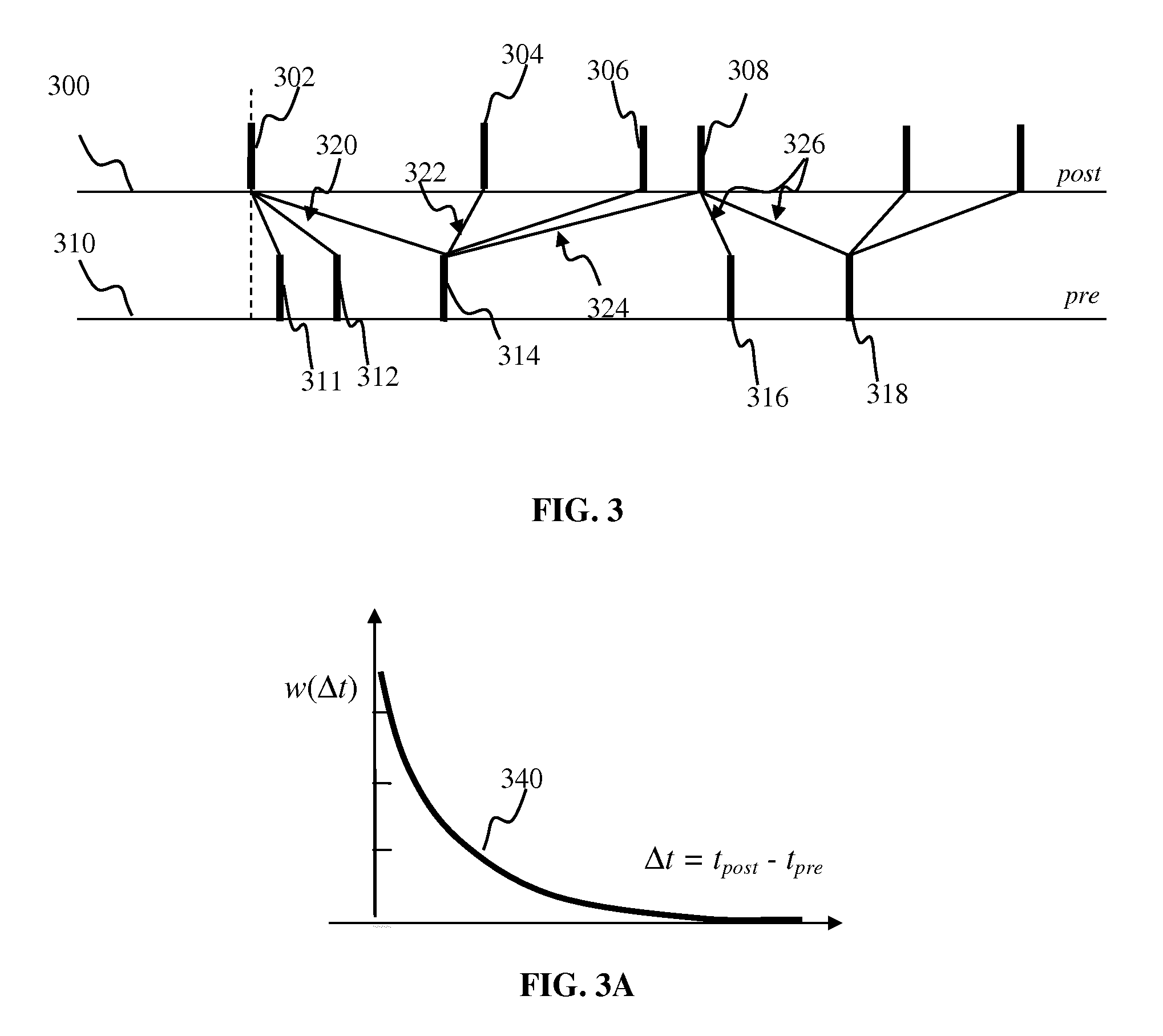

Stochastic spiking network learning apparatus and methods

Generalized learning rules may be implemented. A framework may be used to enable an adaptive spiking neuron signal processing system to flexibly combine different learning rules (supervised, unsupervised, reinforcement learning) with different methods (online or batch learning). The generalized learning framework may employ a time-averaged performance function as the learning measure. This enables a modular architecture in which learning tasks are separated from control tasks, so that changes in one module do not necessitate changes in the other. Separating the learning-task implementations from the control-task implementations may allow the learning block to be reconfigured dynamically, in real time, in response to a change of task or learning method. The generalized spiking neuron learning apparatus may implement several learning rules concurrently, based on the desired control application, without requiring users to explicitly identify the learning rule composition required for that task.

Owner:BRAIN CORP

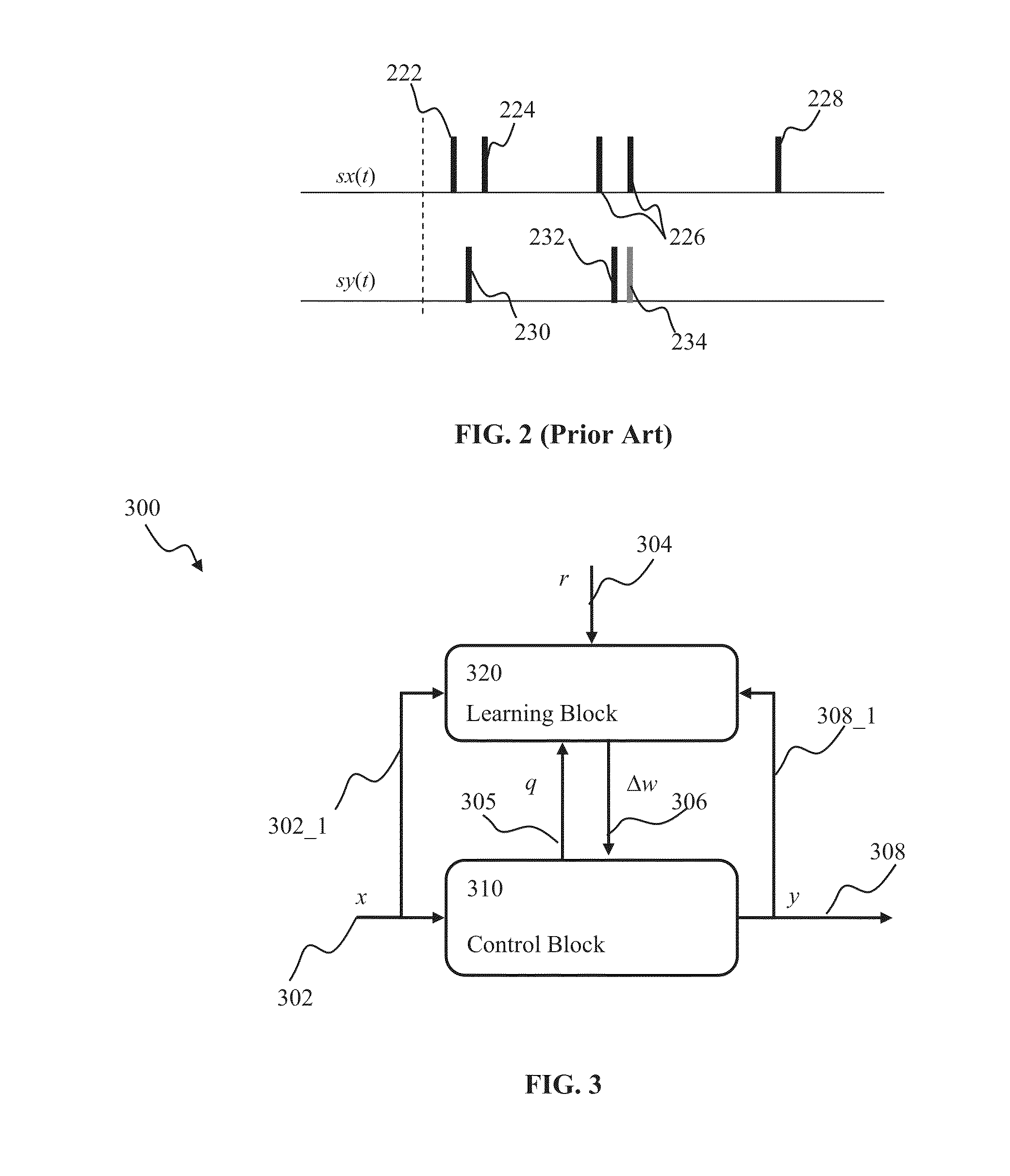

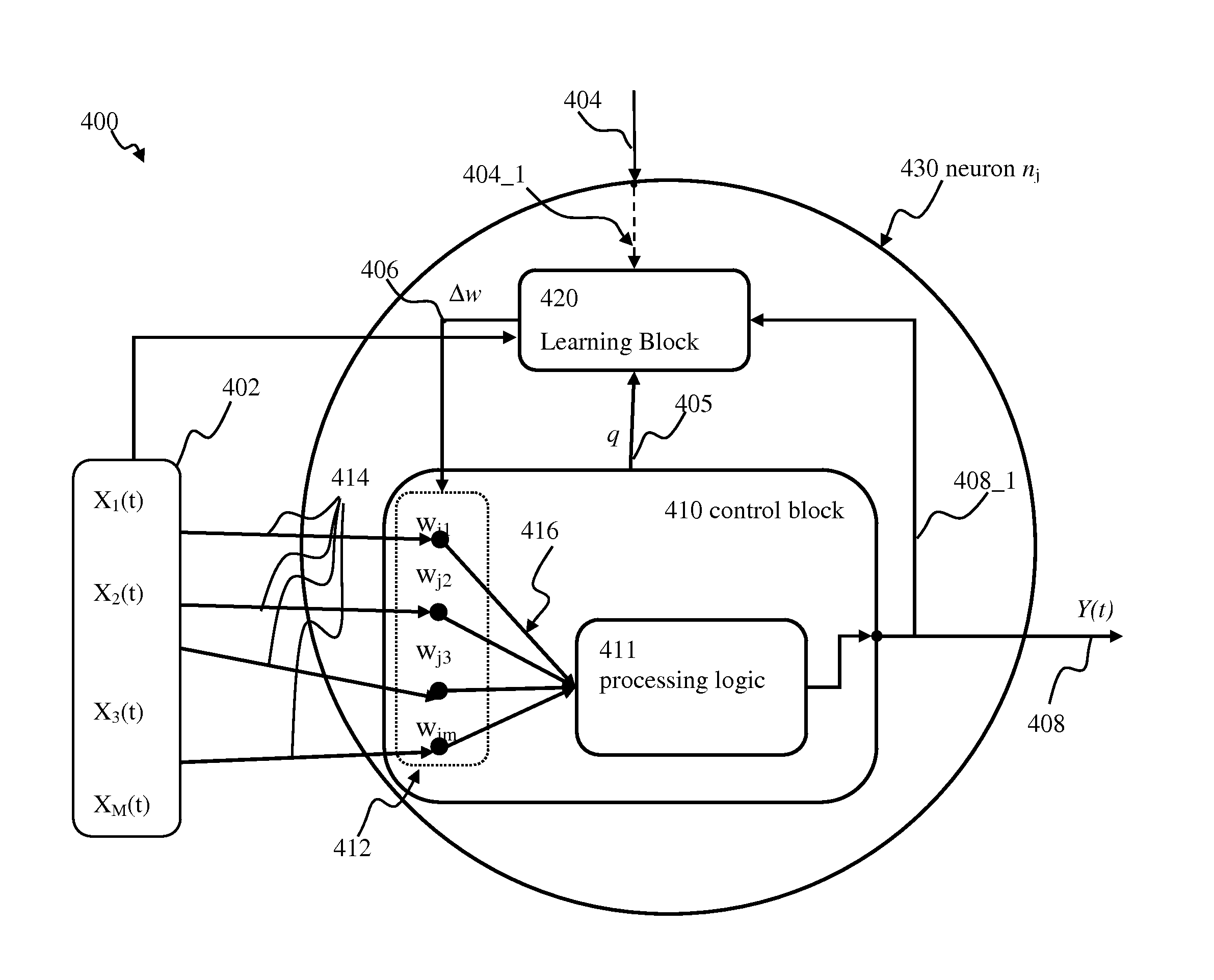

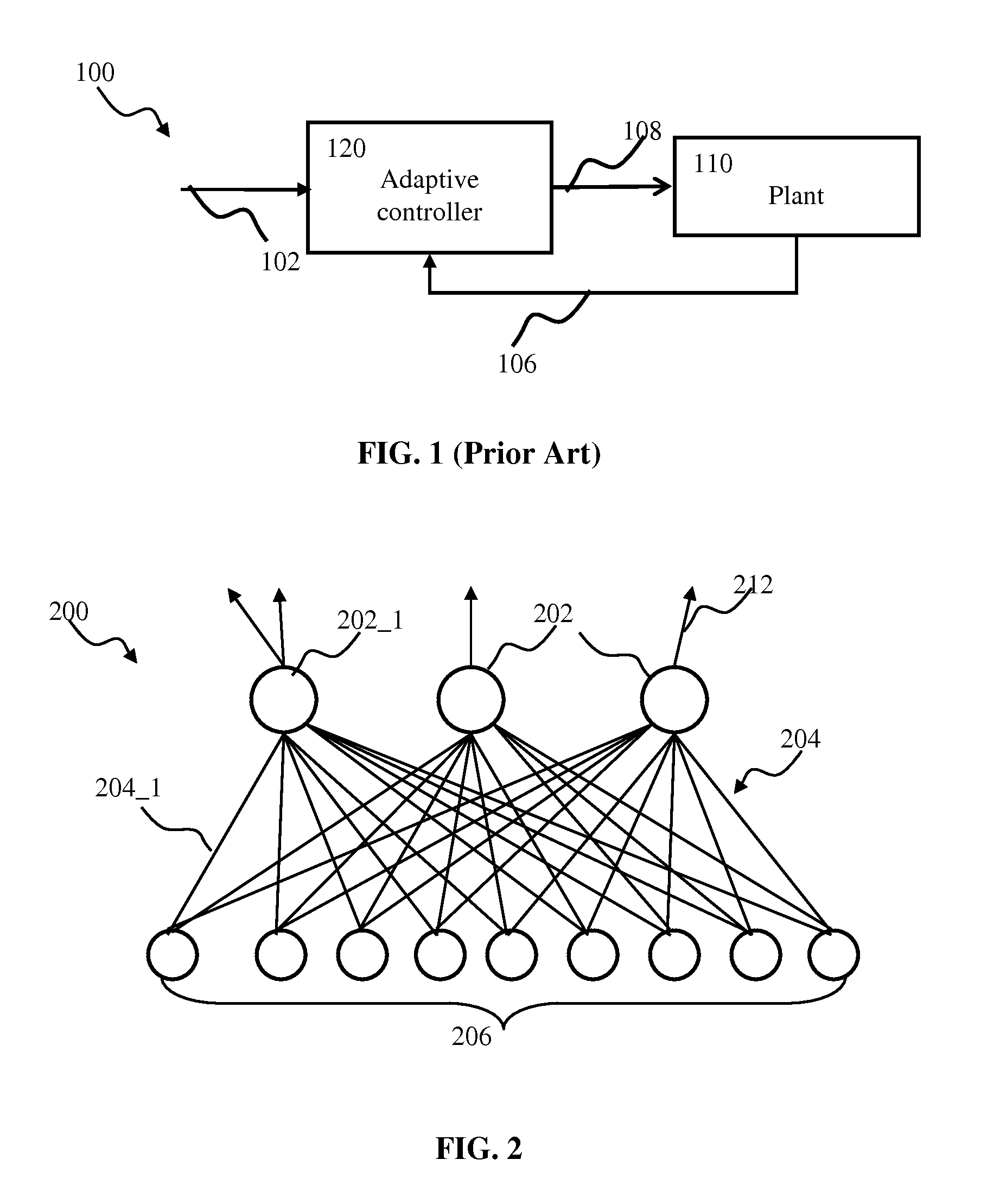

Spiking neuron network adaptive control apparatus and methods

Active | US20140081895A1 | Minimizing distance measure; Smooth connection; Digital computer details; Neural architectures; Neuron network; Spiking neural network

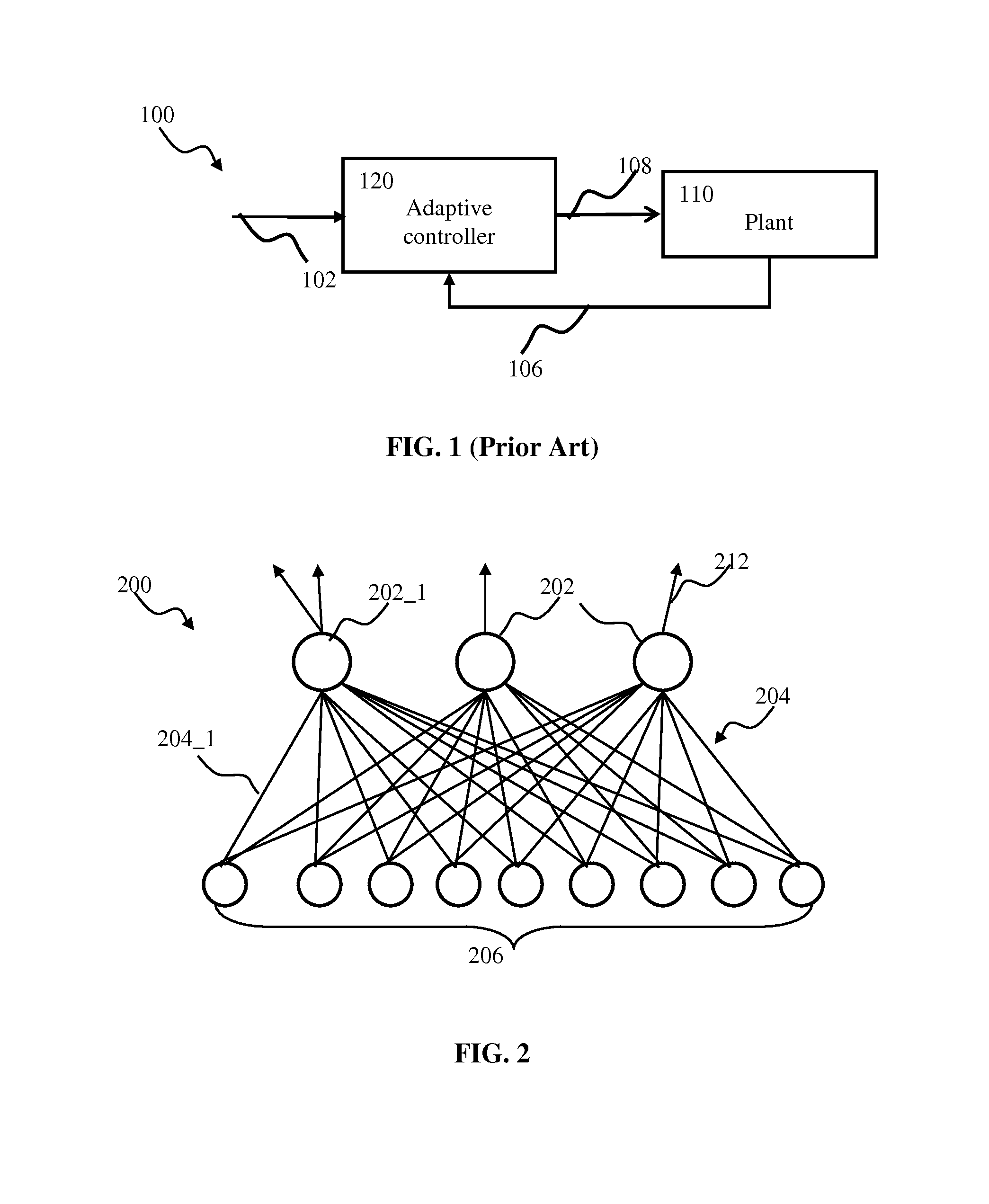

An adaptive controller apparatus for a plant may be implemented. The controller may comprise an encoder block and a control block. The encoder may use a basis function kernel expansion technique to encode an arbitrary combination of inputs into spike output. The controller may comprise a spiking neuron network operable according to a reinforcement learning process. The network may receive the encoder output via a plurality of plastic connections. The process may be configured to adaptively modify connection weights in order to maximize process performance associated with a target outcome. The relevant features of the input may be identified and used to enable the controlled plant to achieve the target outcome.

Owner:BRAIN CORP

Stochastic apparatus and methods for implementing generalized learning rules

Active | US20130325773A1 | Shorten the time; Digital computer details; Digital data; Performance function; Modularity

Generalized learning rules may be implemented. A framework may be used to enable an adaptive signal processing system to flexibly combine different learning rules (supervised, unsupervised, reinforcement learning) with different methods (online or batch learning). The generalized learning framework may employ a time-averaged performance function as the learning measure. This enables a modular architecture in which learning tasks are separated from control tasks, so that changes in one module do not necessitate changes in the other. The generalized learning apparatus may implement several learning rules concurrently, based on the desired control application, without requiring users to explicitly identify the learning rule composition required for that application.

Owner:BRAIN CORP

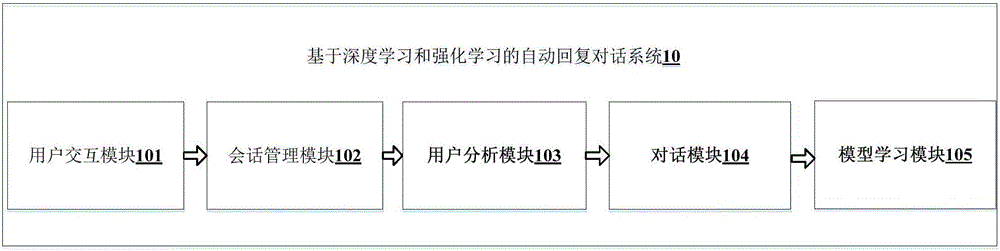

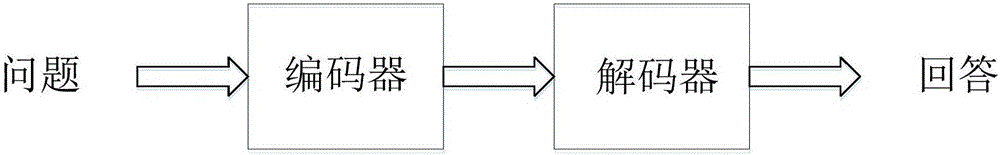

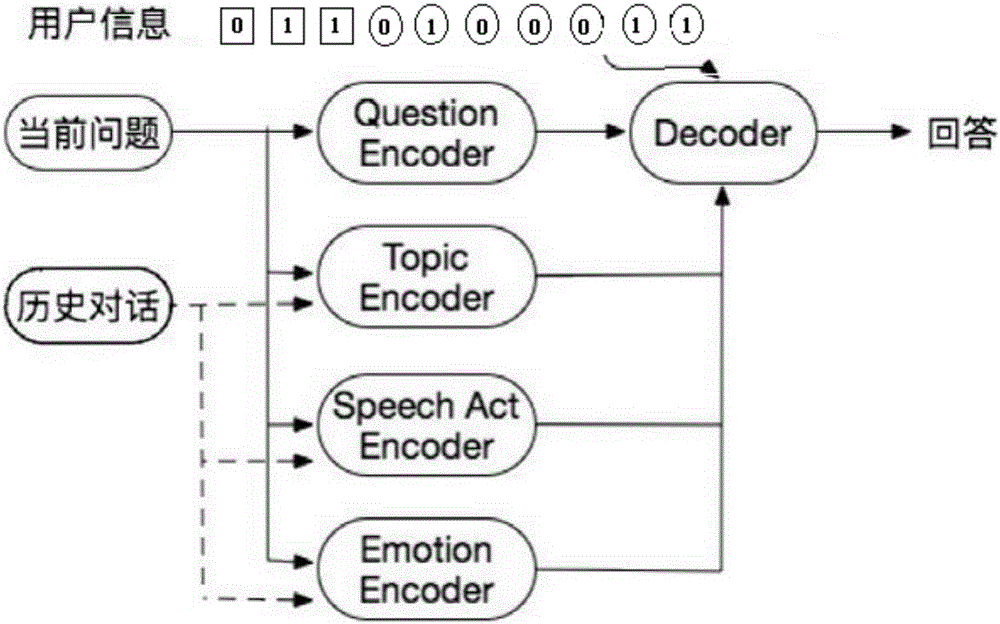

Dialogue automatic reply system based on deep learning and reinforcement learning

The invention discloses a dialogue automatic reply system based on deep learning and reinforcement learning. The system comprises the following modules:

- a user interaction module, which receives question information entered by a user in a dialogue system interface;
- a session management module, which records the user's active state, including historical dialogue information, changes in user location, and changes in user emotion;
- a user analysis module, which analyzes the user's registration information and active state to build a user portrait (profile);
- a dialogue module, which generates reply information through a language model from the user's question combined with the user portrait;
- a model learning module, which updates the language model through reinforcement learning based on the reply information the language model generates.

The system can give replies that fit the user's personality. It draws on the dialogue text the user enters, together with context information, the user's personality characteristics, and the intentions expressed in the dialogue.

Owner:EMOTIBOT TECH LTD

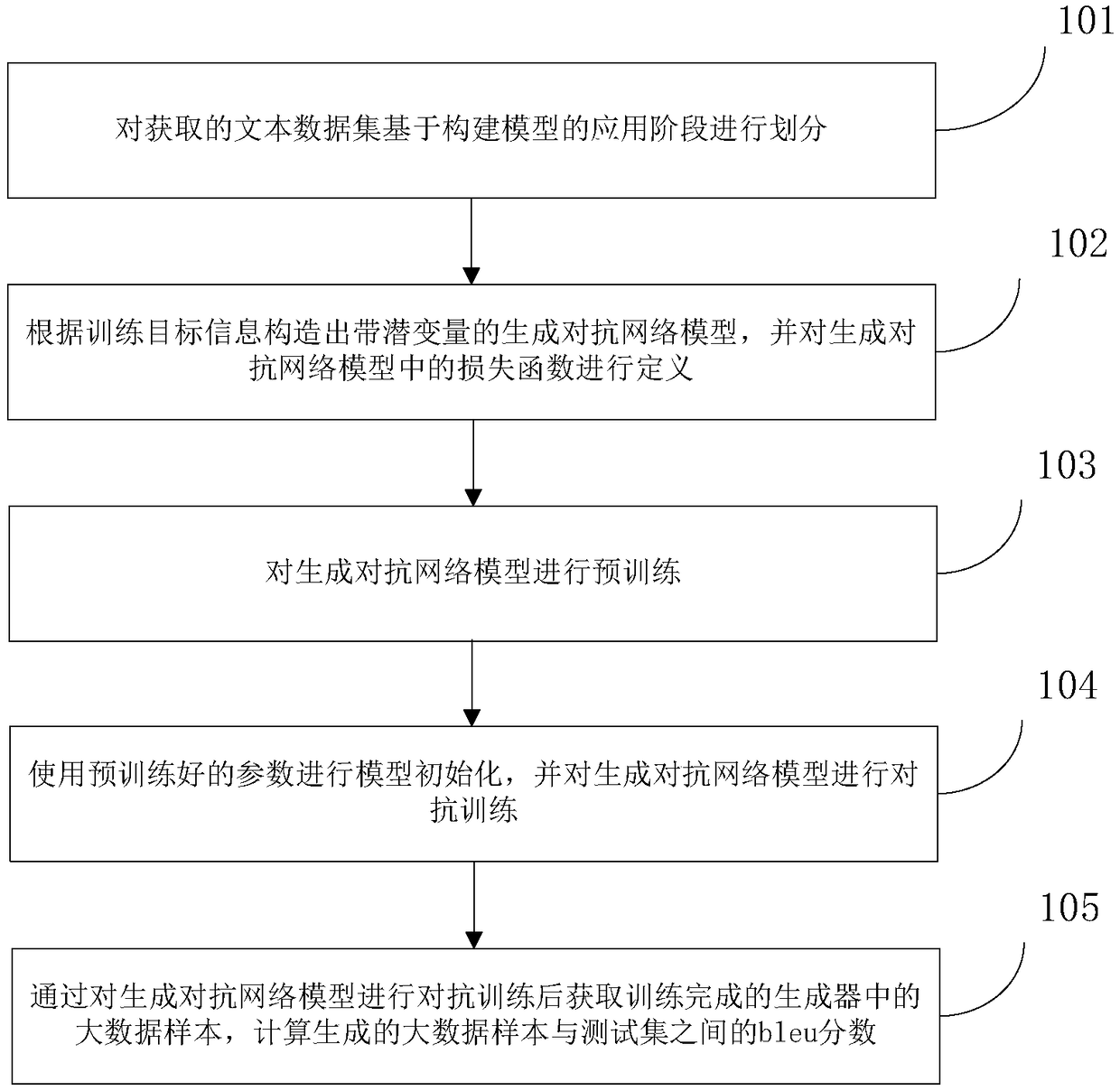

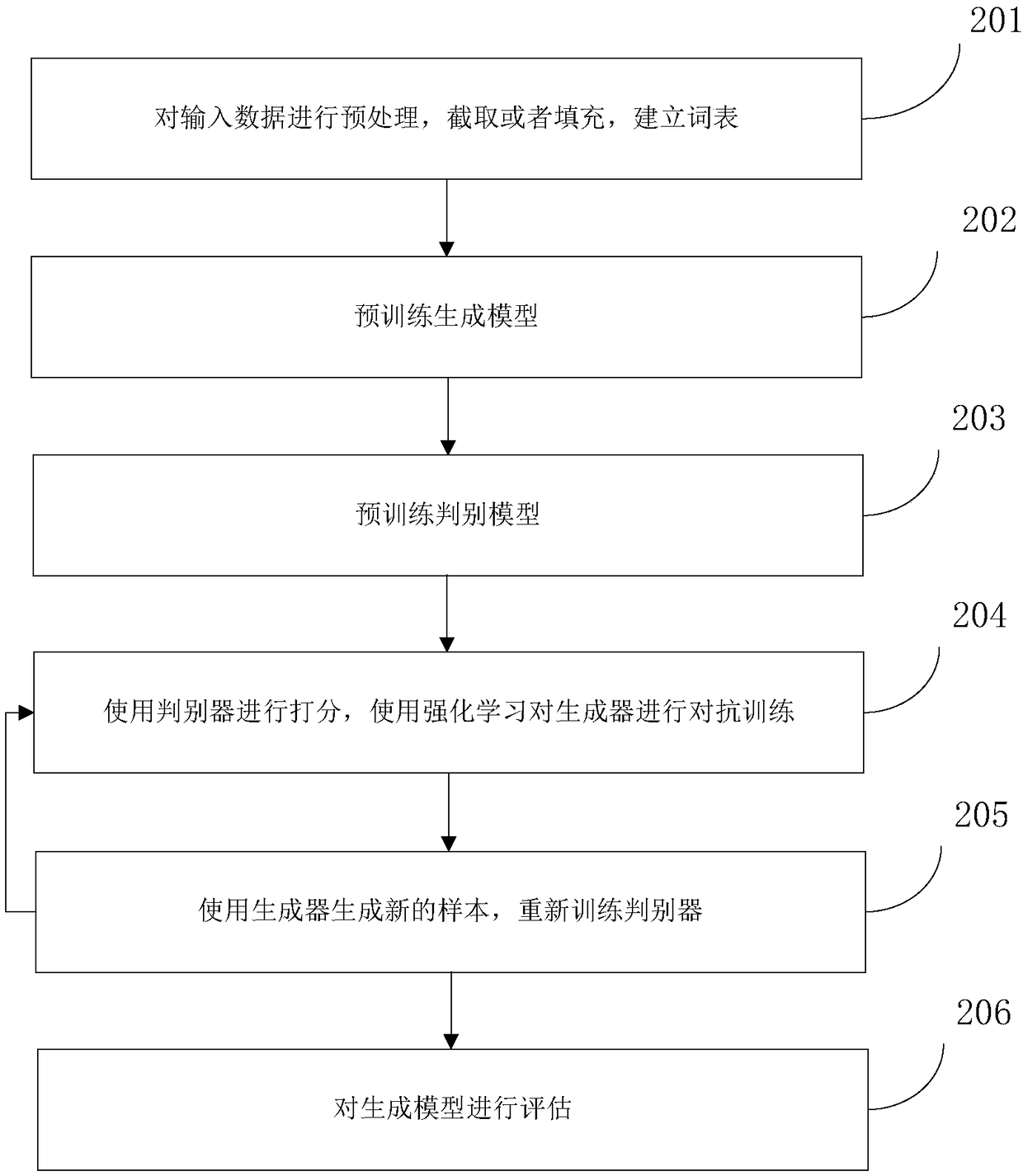

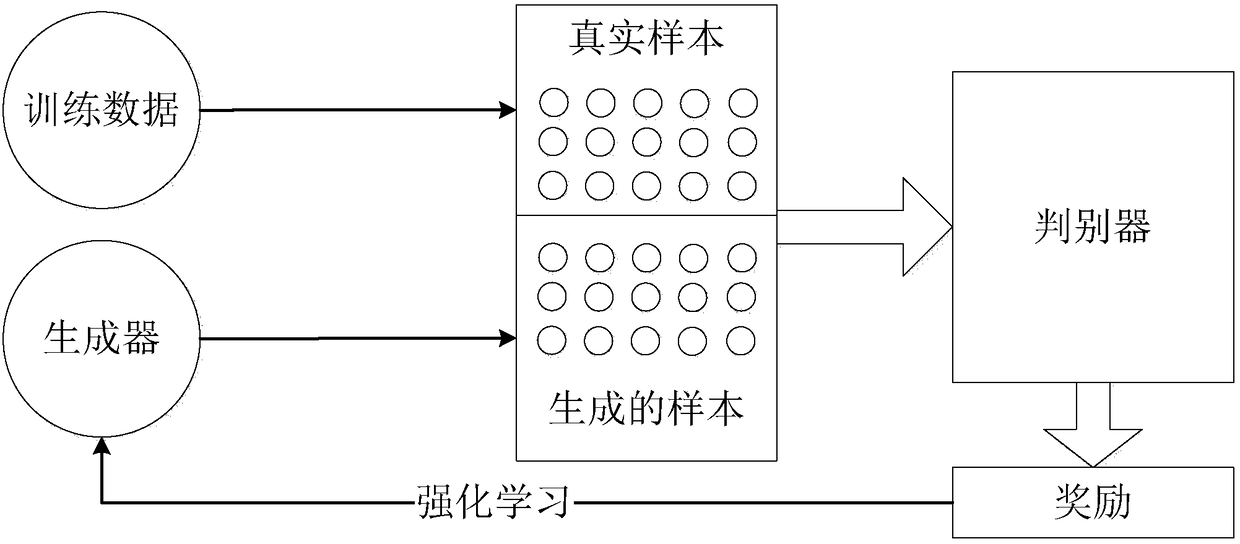

Automatic text generation method and device

Inactive | CN108334497A | Good effect; Easy to learn; Natural language data processing; Neural architectures; Algorithm; Generative adversarial network

Owner:BEIHANG UNIV

Autonomous underwater vehicle trajectory tracking control method based on deep reinforcement learning

Active | CN108803321A | Stabilize the learning process; Optimal target strategy; Adaptive control; Simulation; Intelligent control

The invention provides an autonomous underwater vehicle (AUV) trajectory tracking control method based on deep reinforcement learning, in the field of deep reinforcement learning and intelligent control. The method includes the following steps:

1. defining the AUV trajectory tracking control problem;
2. establishing a Markov decision process model of the AUV trajectory tracking problem;
3. constructing a hybrid policy-evaluation network consisting of multiple policy networks and multiple evaluation networks;
4. solving for the target policy of AUV trajectory tracking control with the constructed hybrid policy-evaluation network.

For the multiple evaluation networks, the performance of each is assessed by a defined expected Bellman absolute error, and only the evaluation network with the lowest performance is updated at each time step. For the multiple policy networks, one policy network is selected at random at each time step and updated using a deterministic policy gradient. The finally learned policy is the mean of all the policy networks. The method is less susceptible to poor historical AUV tracking trajectories and achieves high precision.

Owner:TSINGHUA UNIV
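The ensemble update rule described above can be sketched with critics (evaluation networks) and actors (policy networks) as plain functions. Everything here is an illustrative assumption:

- the transition layout `(s, a, r, s_next, a_next)`;
- the function names;
- reading "the mean of all the policy networks" as averaging the actors' outputs.

Network training itself is omitted.

```python
import random
from statistics import mean

def bellman_abs_error(critic, batch, gamma):
    """Mean |r + gamma * Q(s', a') - Q(s, a)| over a batch of transitions."""
    return mean(abs(r + gamma * critic(s2, a2) - critic(s, a))
                for s, a, r, s2, a2 in batch)

def critic_to_update(critics, batch, gamma=0.99):
    """Index of the worst-performing critic, i.e. the largest Bellman error."""
    errors = [bellman_abs_error(c, batch, gamma) for c in critics]
    return max(range(len(critics)), key=lambda i: errors[i])

def actor_to_update(actors, rng=random):
    """Pick one actor uniformly at random for this step's policy-gradient update."""
    return rng.randrange(len(actors))

def ensemble_action(actors, state):
    """Final policy output: the mean of all actors' outputs for the state."""
    return mean(actor(state) for actor in actors)

critics = [lambda s, a: 0.0, lambda s, a: 1.0]
batch = [(0, 0, 1.0, 1, 0)]
print(critic_to_update(critics, batch, gamma=0.5))               # 0
print(ensemble_action([lambda s: s, lambda s: 3 * s], state=2))  # 4
```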

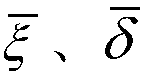

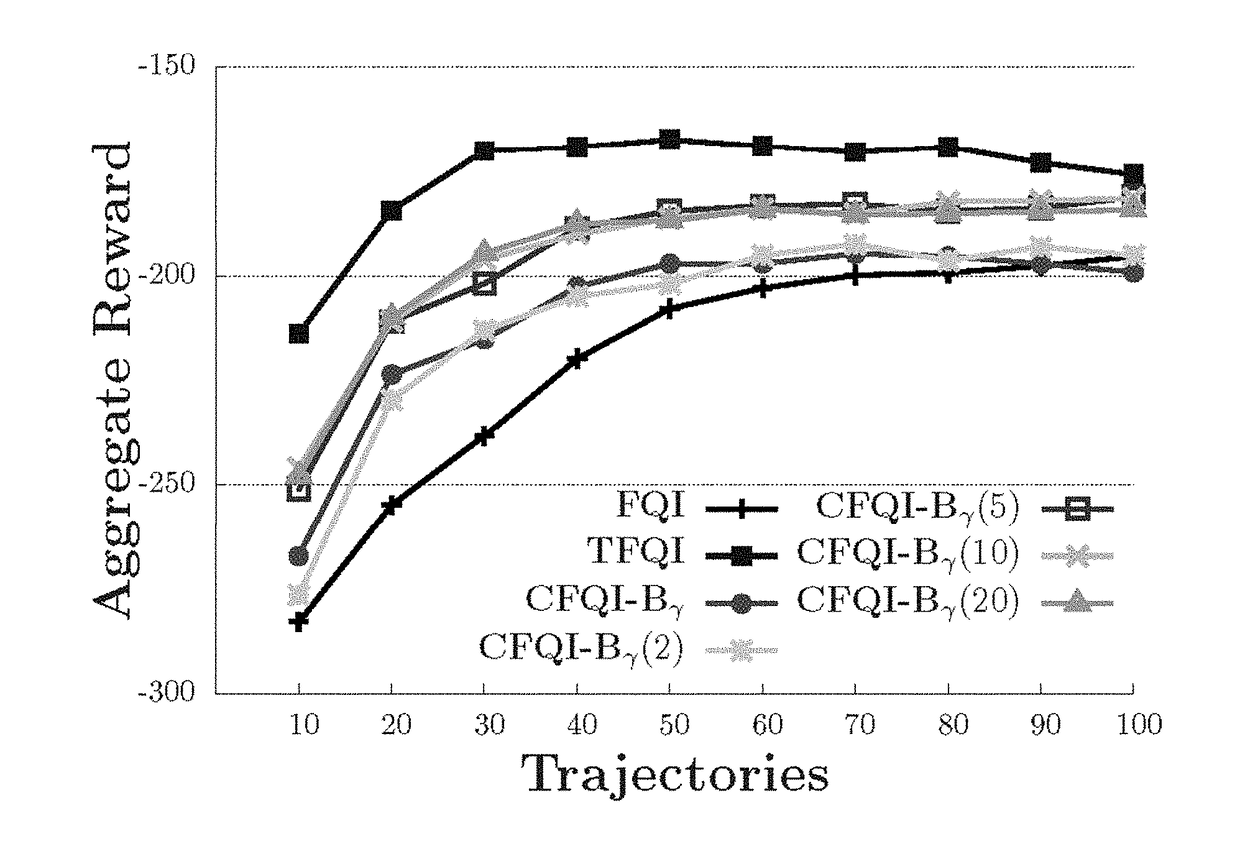

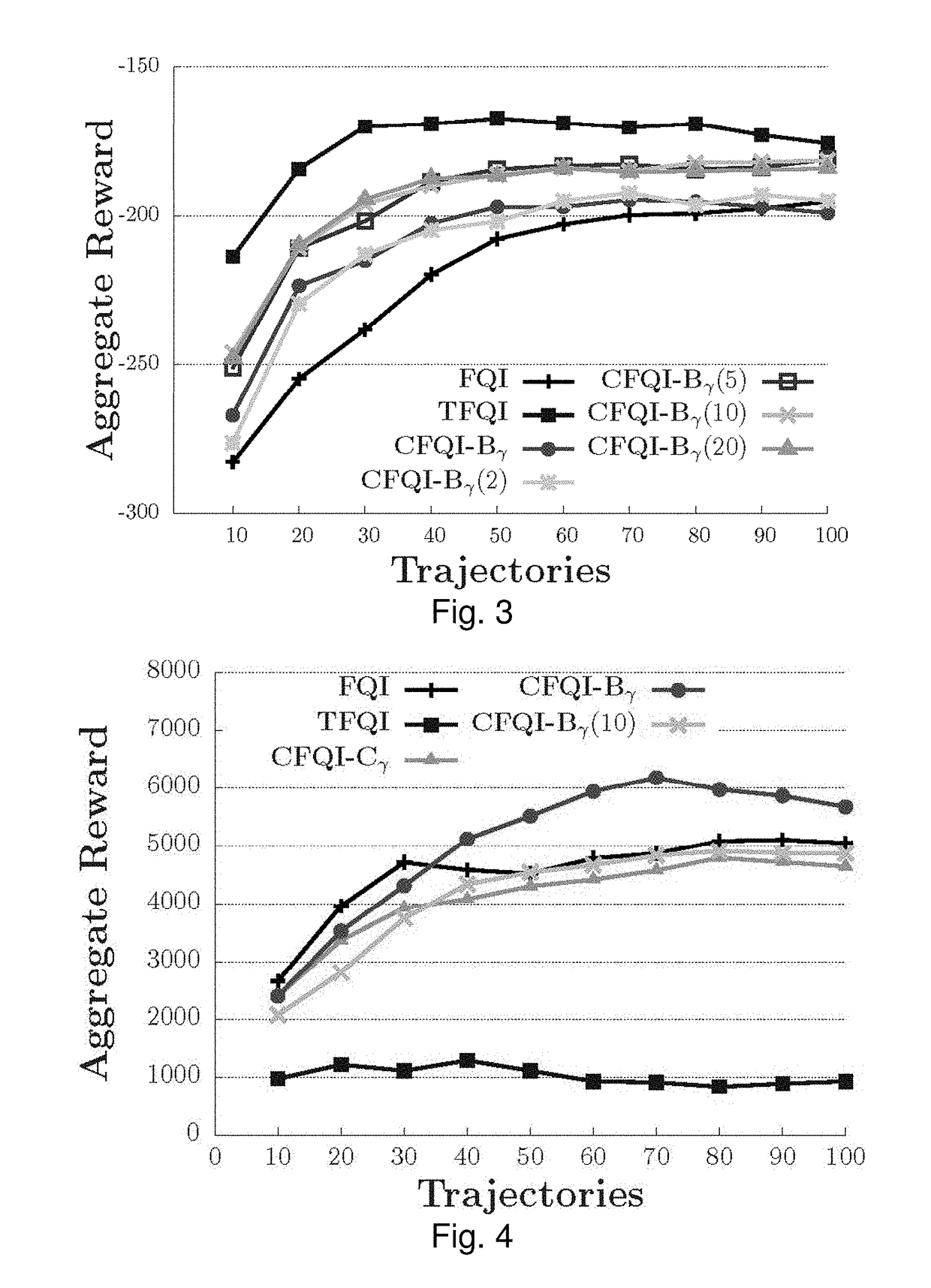

Approximate value iteration with complex returns by bounding

Active | US20180012137A1 | Reduce variance; Better estimator; Computer control; Probabilistic networks; Data set; Control system

A control system and method for controlling a system, which employs a data set representing a plurality of states and associated trajectories of an environment of the system; and which iteratively determines an estimate of an optimal control policy for the system. The iterative process performs the substeps, until convergence, of estimating a long term value for operation at a respective state of the environment over a series of predicted future environmental states; using a complex return of the data set to determine a bound to improve the estimated long term value; and producing an updated estimate of an optimal control policy dependent on the improved estimate of the long term value. The control system may produce an output signal to control the system directly, or output the optimized control policy. The system preferably is a reinforcement learning system which continually improves.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK
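One way to read "using a complex return of the data set to determine a bound" rests on a property of deterministic environments. There, the return actually observed after a state in the data cannot exceed that state's optimal value. The observed return can therefore serve as a lower bound that lifts an underestimated bootstrapped target. The sketch below uses the full observed discounted return as the simplest complex return. That choice, the deterministic-environment assumption, and the function names are illustrative, not the patent's exact procedure. In a stochastic environment a single observed return is not a valid bound.

```python
def tail_returns(rewards, gamma):
    """Observed discounted return from each step to the end of one trajectory."""
    out, g = [0.0] * len(rewards), 0.0
    for t in range(len(rewards) - 1, -1, -1):
        g = rewards[t] + gamma * g
        out[t] = g
    return out

def bounded_targets(rewards, next_values, gamma):
    """One-step bootstrapped targets, raised to the observed return where that is larger."""
    lower_bounds = tail_returns(rewards, gamma)
    return [max(r + gamma * v_next, bound)
            for r, v_next, bound in zip(rewards, next_values, lower_bounds)]

# A sparse-reward trajectory whose next-state value estimates are still all zero.
print(bounded_targets([0.0, 0.0, 1.0], next_values=[0.0, 0.0, 0.0], gamma=0.5))
# [0.25, 0.5, 1.0]
```

Without the bound, the first two targets would stay at 0. The bound propagates the final reward back in a single pass.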

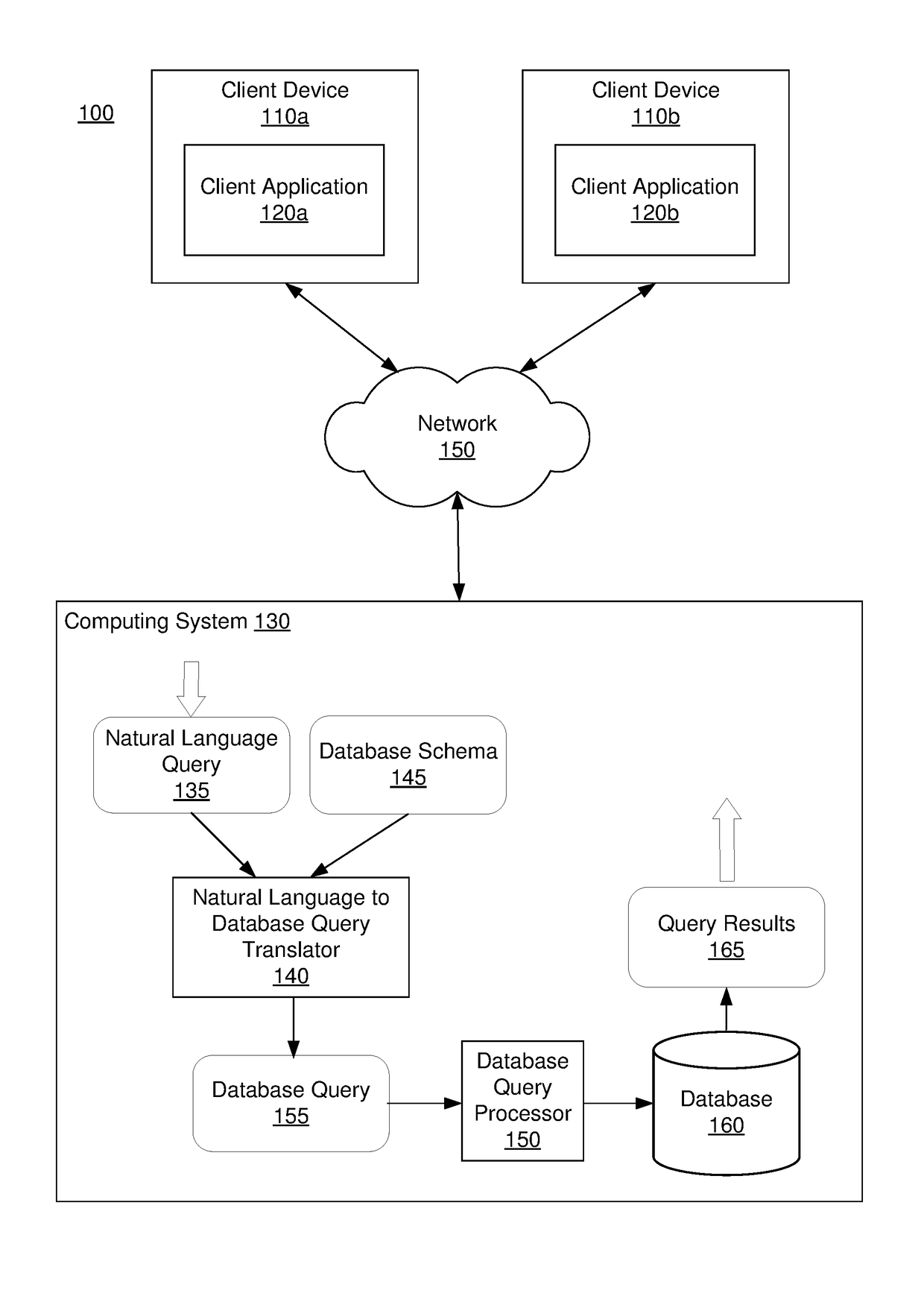

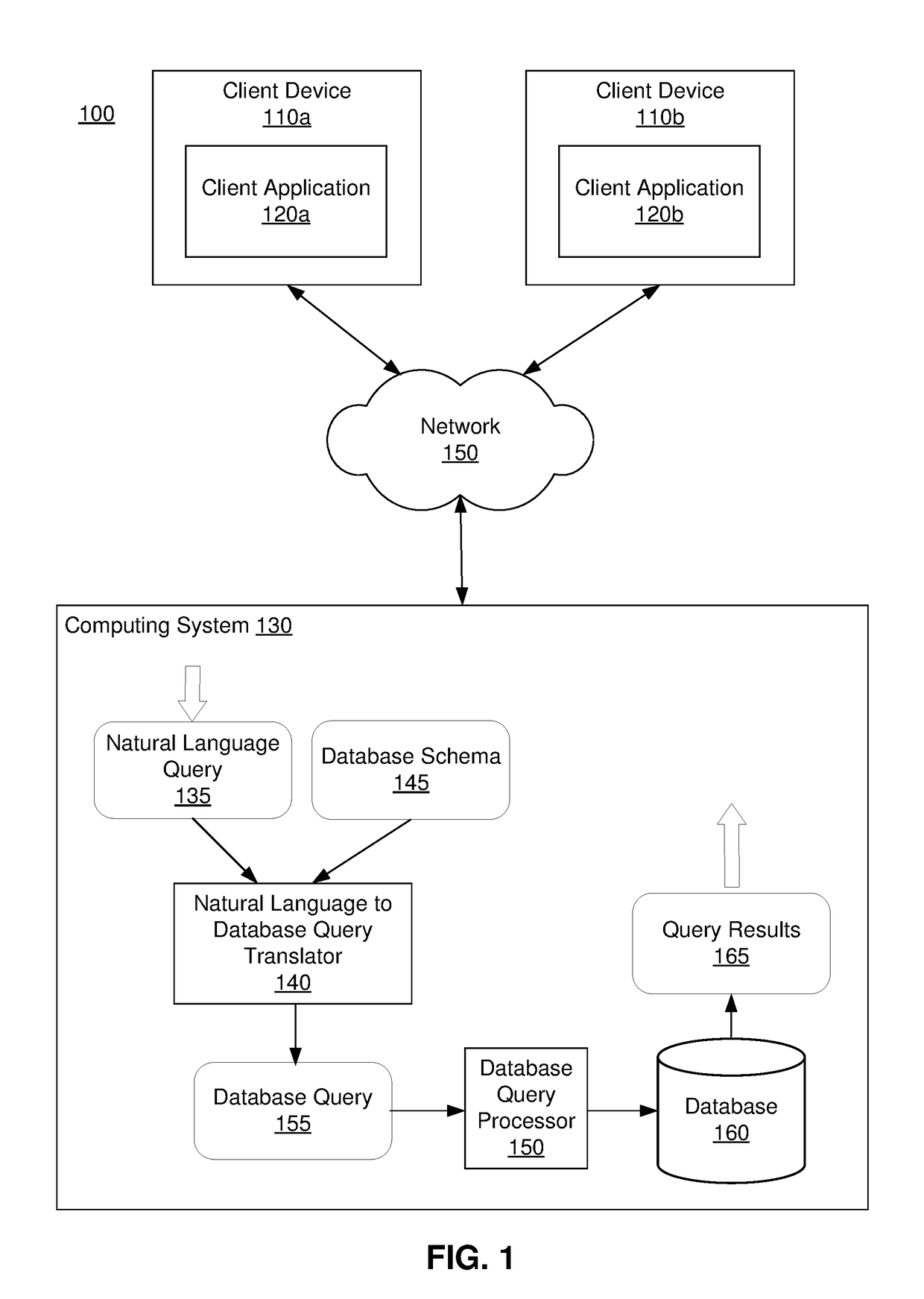

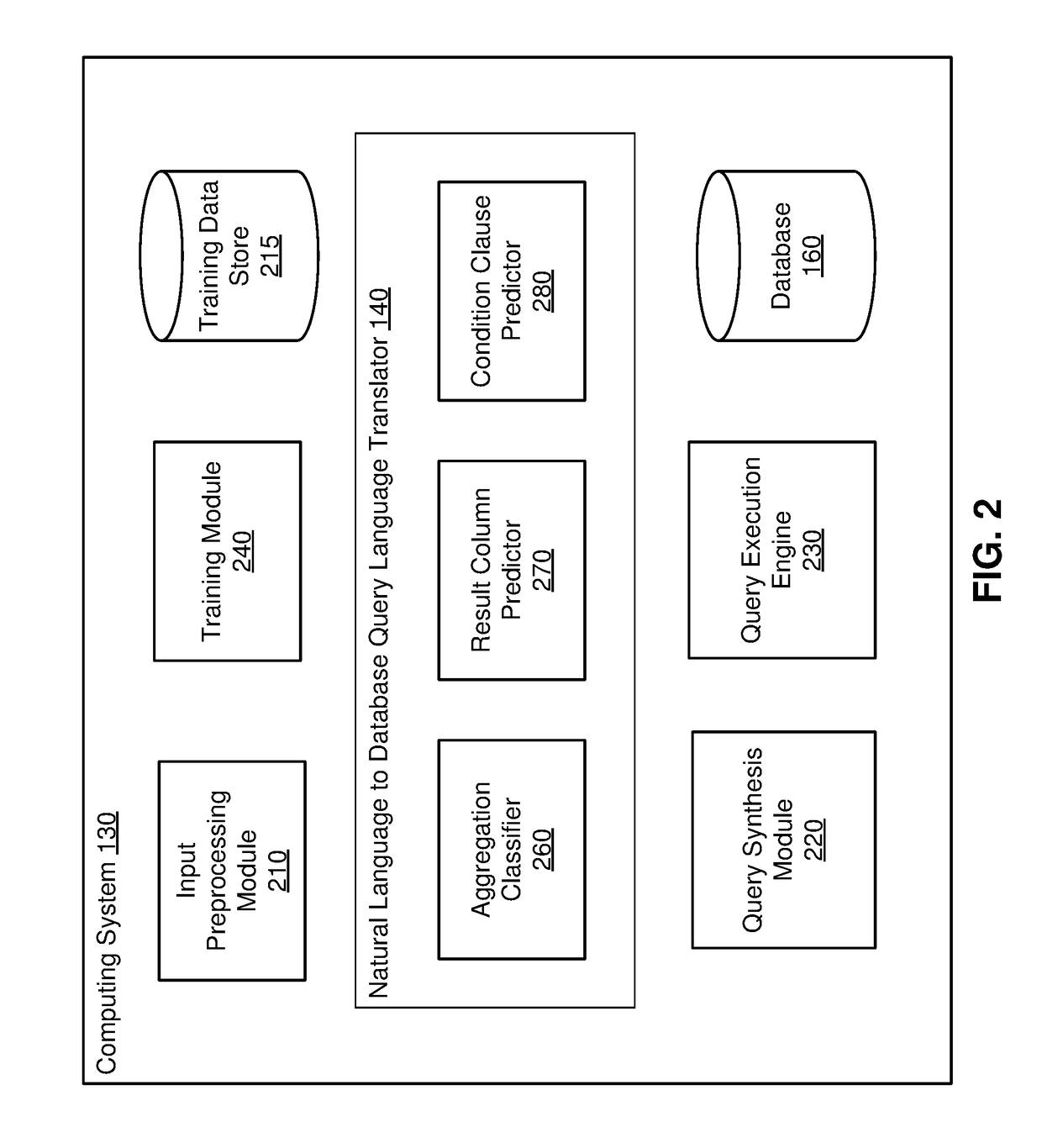

Neural network based translation of natural language queries to database queries

A computing system uses neural networks to translate natural language queries to database queries. The computing system uses a plurality of machine learning based models, each of which generates a portion of the database query. The models use an input representation generated from the terms of the input natural language query, a set of columns of the database schema, and the vocabulary of a database query language, for example, Structured Query Language (SQL). The plurality of machine learning based models may include:

- an aggregation classifier model for determining an aggregation operator in the database query;
- a result column predictor model for determining the result columns of the database query;
- a condition clause predictor model for determining the condition clause of the database query.

The condition clause predictor is based on reinforcement learning.

Owner:SALESFORCE COM INC

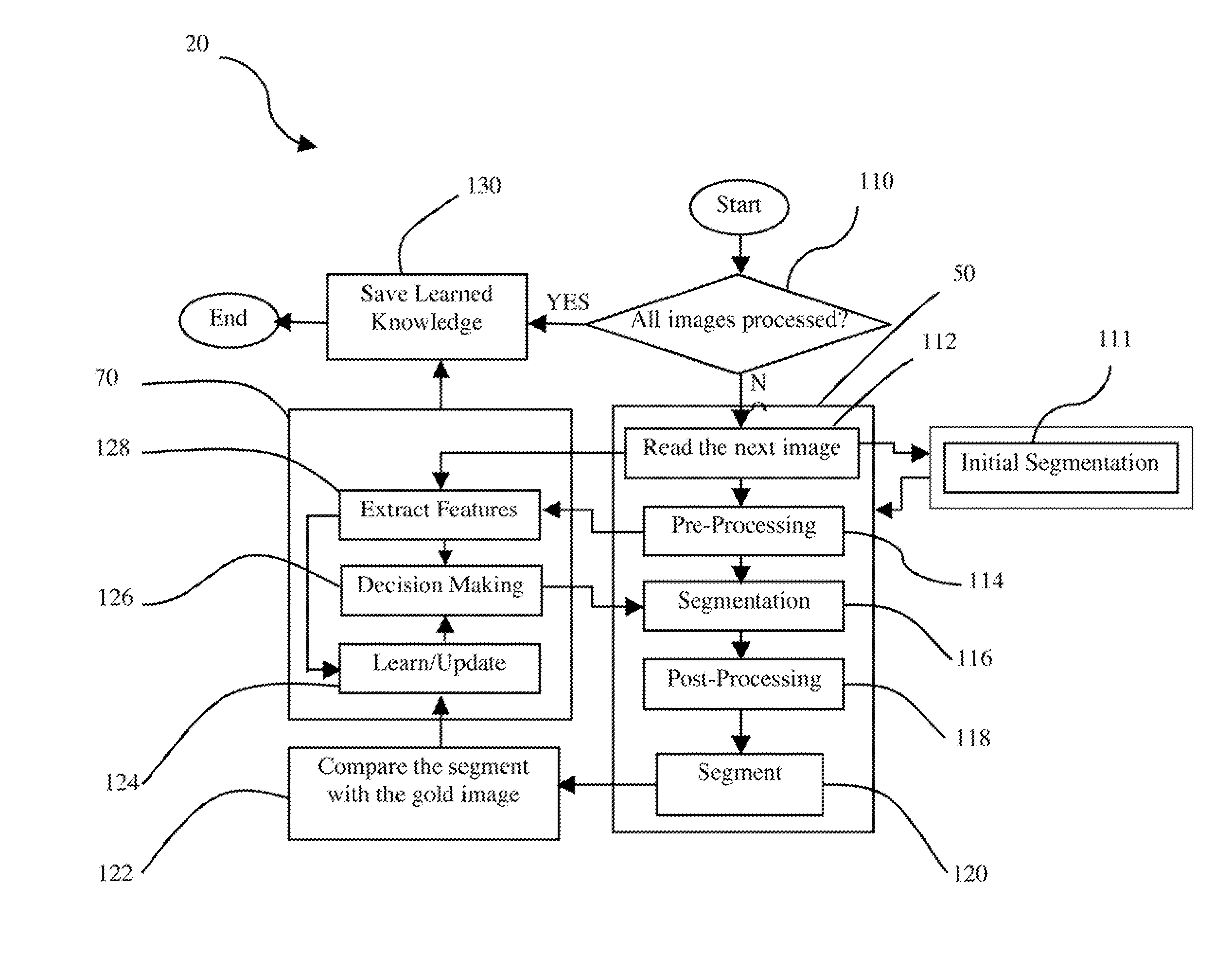

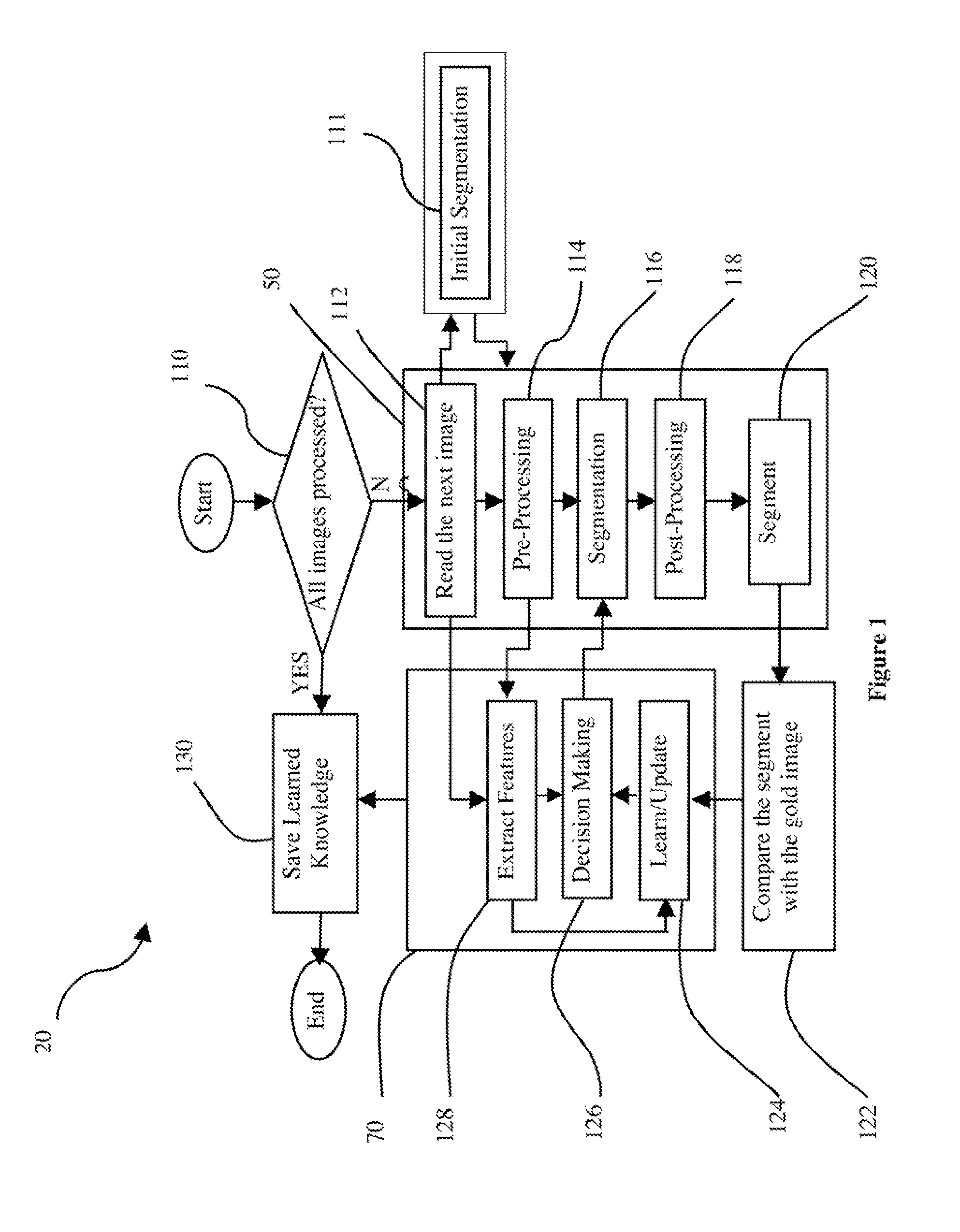

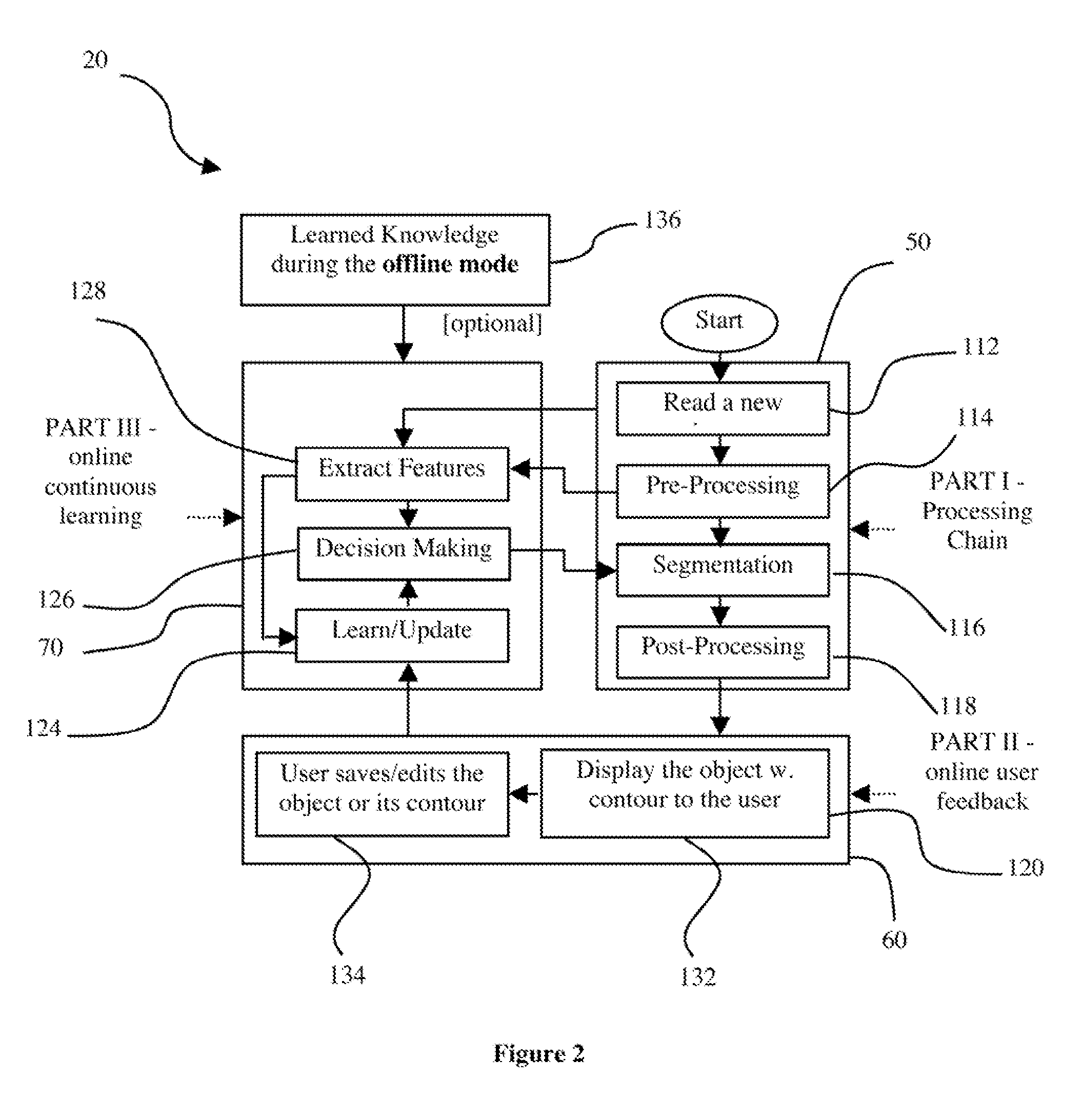

System and method for image segmentation

A method of segmenting images receives an image (such as a medical image) and a segment in relation to the image, displays them to an observer, receives a modification to the segment from the observer, and generates a second segment in relation to a second image, responsive to the modification. An image segmentation system includes a learning scheme or model to take input from an observer feedback interface and to communicate with a means for drawing an image segment to permit adjustment of at least one image segmentation parameter (such as a threshold value). The learning scheme is provided with a knowledge base which may initially be created by processing offline images. The learning scheme may use any scheme such as a reinforcement learning agent, a fuzzy inference system or a neural network.

Owner:UNIVERSITY OF WATERLOO

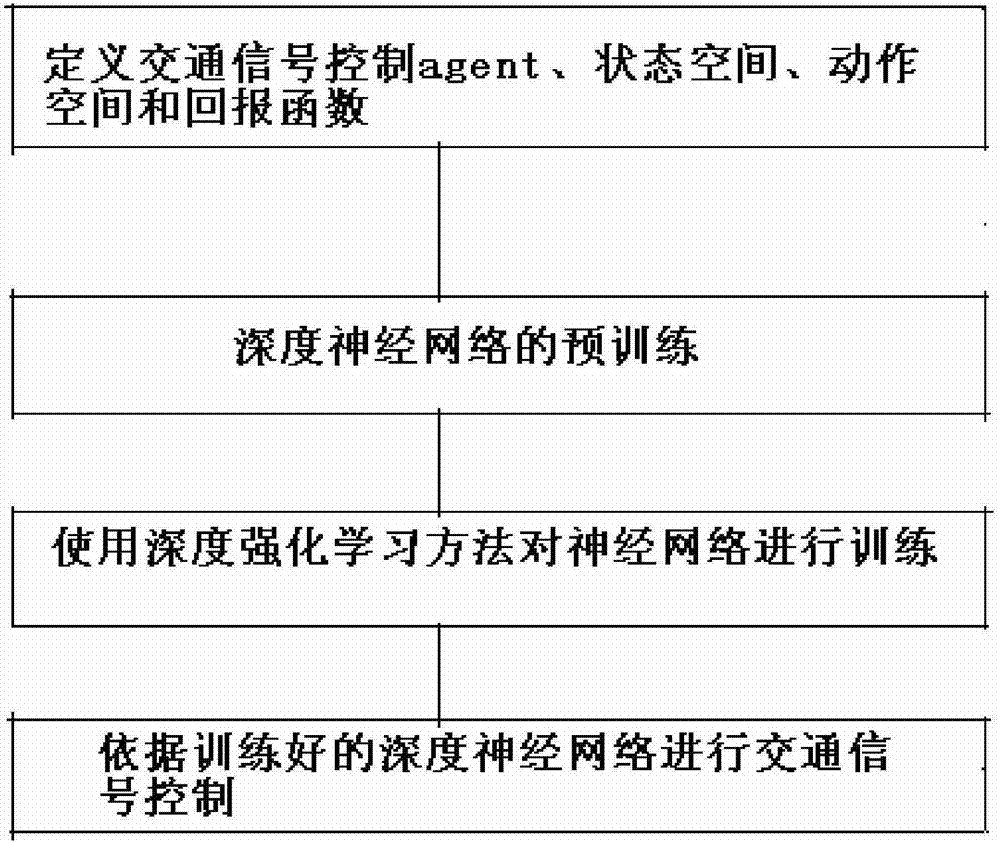

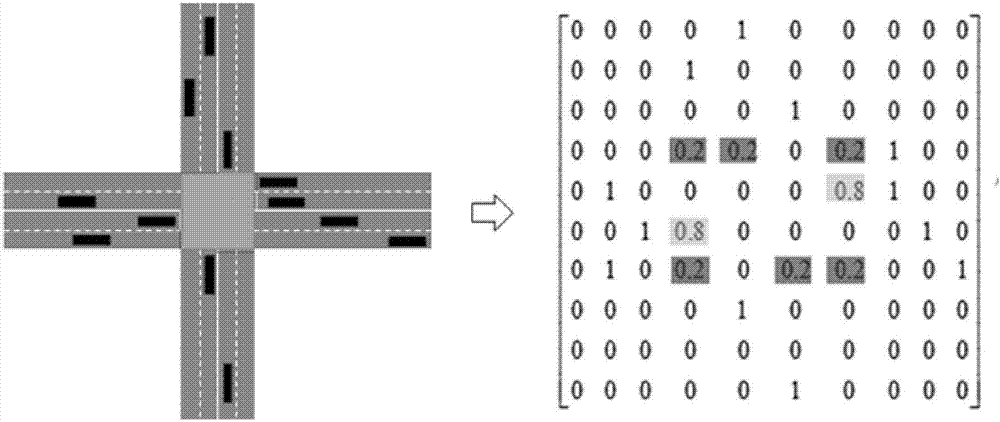

Traffic signal self-adaptive control method based on deep reinforcement learning

Inactive | CN106910351A | Realize precise perception; Solve the problem of inaccurate perception of traffic status; Controlling traffic signals; Neural architectures; Traffic signal; Return function

The invention relates to the technical fields of traffic control and artificial intelligence and provides a traffic signal self-adaptive control method based on deep reinforcement learning. The method includes the following steps:

1. defining a traffic signal control agent, a state space S, an action space A, and a return (reward) function r;
2. pre-training a deep neural network;
3. training the neural network with a deep reinforcement learning method;
4. performing traffic signal control with the trained deep neural network.

Traffic data are acquired through magnetic induction, video, RFID, the Internet of Vehicles, and similar sources. Preprocessing these data yields a low-level representation of the traffic state that contains vehicle position information. A deep-learning multilayer perceptron then perceives the traffic state and extracts high-level abstract features of the current traffic state. On this basis, the decision-making capability of reinforcement learning selects an appropriate timing plan from those features, achieving self-adaptive control of the traffic signals. This shortens vehicle travel time and helps ensure safe, smooth, orderly, and efficient traffic operation.

Owner:DALIAN UNIV OF TECH

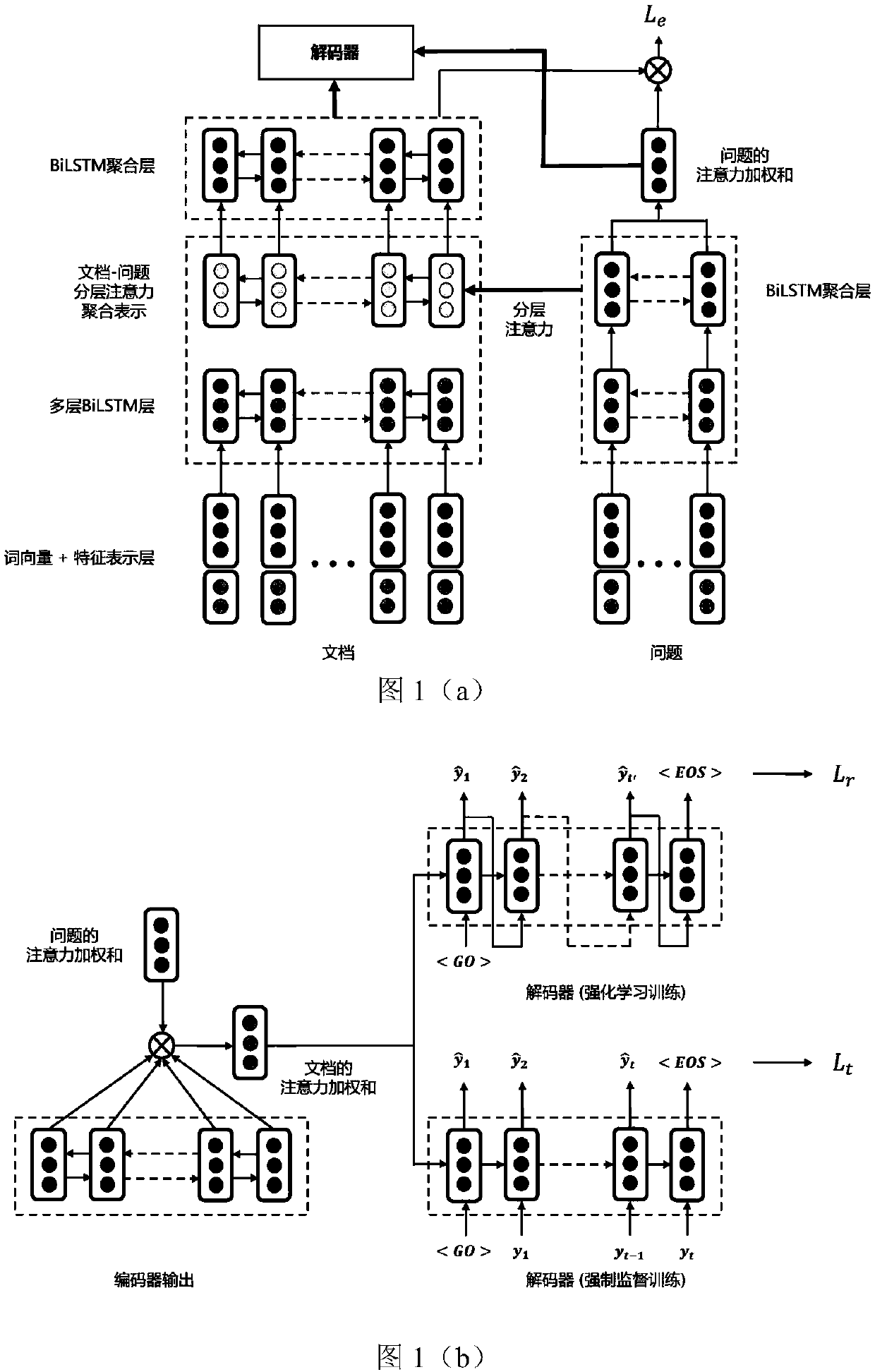

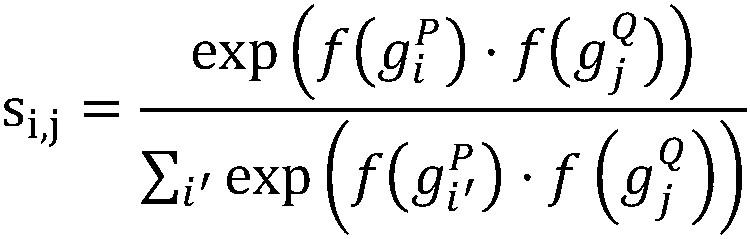

Deep neural network and reinforcement learning-based generative machine reading comprehension method

Active | CN108415977A | Easy extraction; Promote generation; Semantic analysis; Neural architectures; Study methods; Reinforcement learning

The invention discloses a generative machine reading comprehension method based on a deep neural network and reinforcement learning. Texts and questions are encoded by a deep neural network combined with an attention mechanism, forming text vector representations fused with question information. A unidirectional LSTM decoder then decodes these representations and generates the corresponding answer text step by step. The method combines the advantages of extractive and generative models and is trained with multi-task joint optimization. Reinforcement learning is used during training, which helps generate more correct and fluent answer texts.

Owner:SOUTH CHINA UNIV OF TECH

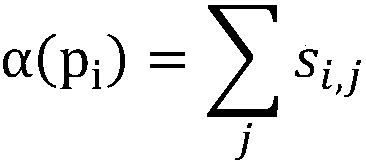

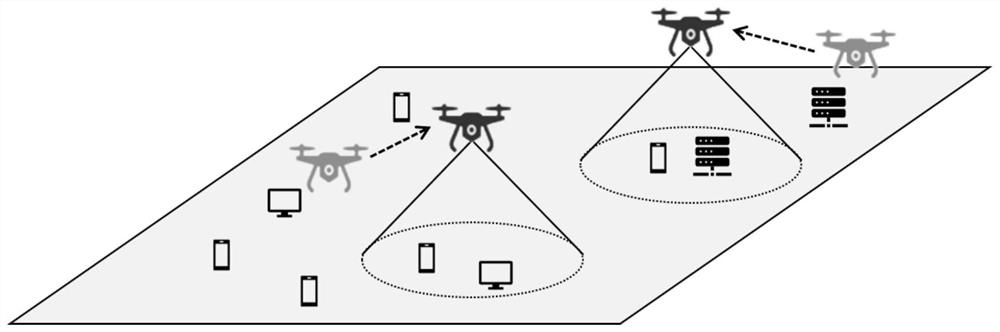

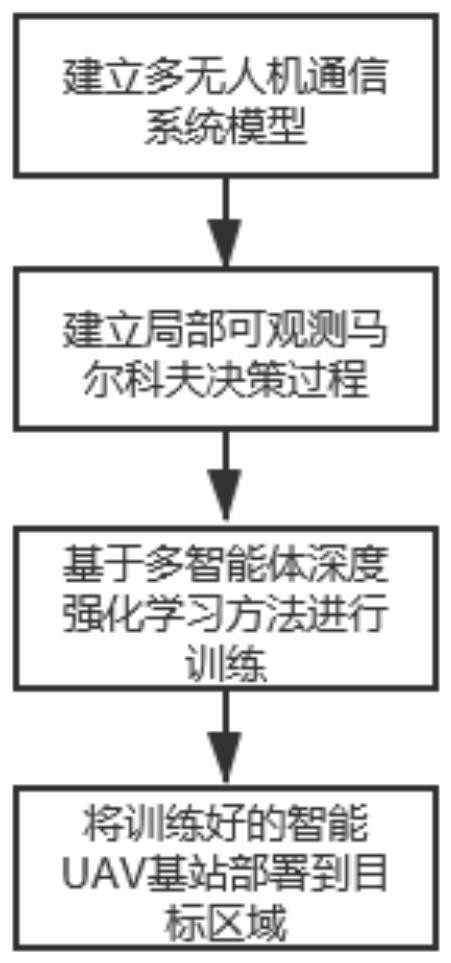

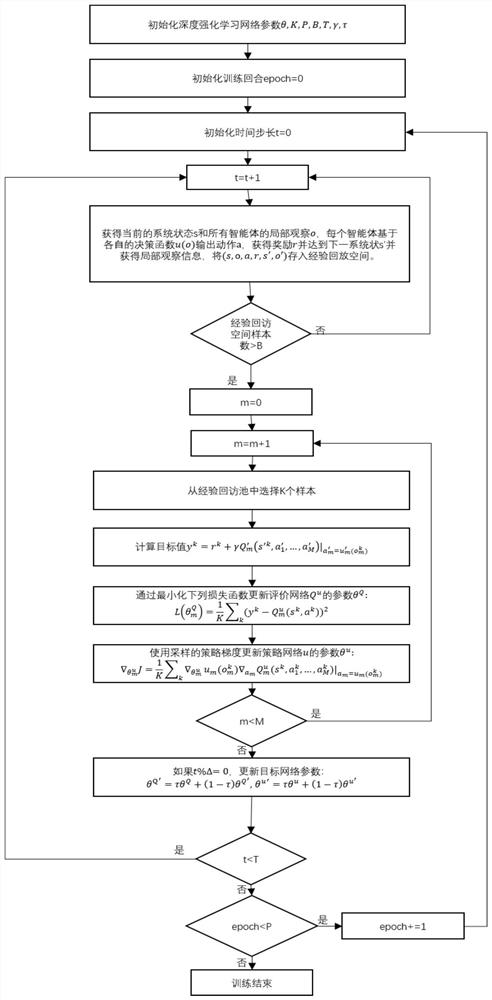

Unmanned aerial vehicle network hovering position optimization method based on multi-agent deep reinforcement learning

Active | CN111786713A | Reduce energy consumption; Ensure fairness; Radio transmission; Neural learning methods; Simulation; Uncrewed vehicle

The invention discloses an unmanned aerial vehicle (UAV) network hovering position optimization method based on multi-agent deep reinforcement learning. The method comprises the following steps:

1. modeling the channel, coverage, and energy loss in a UAV-to-ground communication scenario;
2. modeling the throughput maximization problem of the UAV-to-ground communication network as a partially observable Markov decision process;
3. obtaining local observations and instantaneous rewards through continuous interaction between the UAVs and the environment, and performing centralized training on this information to obtain a distributed policy network;
4. deploying the policy network on each UAV, so that each UAV decides a moving direction and moving distance from its own local observations, adjusts its hovering position, and cooperates in a distributed manner.

In addition, proportional fair scheduling and UAV energy consumption information are introduced into the instantaneous reward function. This guarantees fairness in the UAVs' service to ground users while throughput is improved and energy consumption is reduced. It also allows the UAV cluster to adapt to a dynamic environment.

Owner:DALIAN UNIV OF TECH
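The reward described above combines throughput, proportional fairness, and energy consumption. A hypothetical reward of that kind is the sum of the logarithms of each ground user's average throughput, which is the standard proportional-fairness utility, minus a weighted energy term. The functional form, the name `uav_reward`, and `energy_weight` are all assumptions. The patent's actual reward may combine these terms differently.

```python
import math

def uav_reward(avg_throughputs, energy_used, energy_weight=0.1):
    """Hypothetical instantaneous reward for one UAV step."""
    # Sum of log throughputs rewards total rate but heavily penalizes starving any user.
    fairness_utility = sum(math.log(max(x, 1e-9)) for x in avg_throughputs)
    return fairness_utility - energy_weight * energy_used

fair = uav_reward([2.0, 2.0], energy_used=0.0)
unfair = uav_reward([3.9, 0.1], energy_used=0.0)
print(fair > unfair)  # True
```

Both allocations deliver the same total throughput of 4.0, but the log utility prefers the even split. This is the behavior a fairness term is meant to reward.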

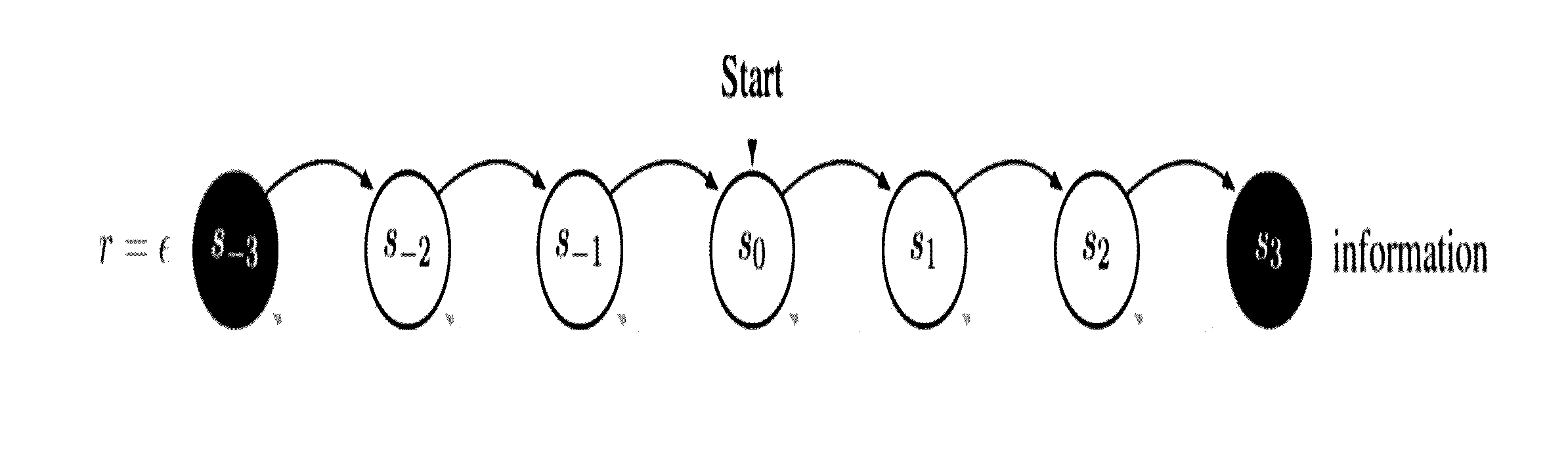

System and method for garbage collection in a computer system, which uses reinforcement learning to adjust the allocation of memory space, calculate a reward, and use the reward to determine further actions to be taken on the memory space

Active | US7174354B2 | Easy to collect and process; Simple design; Data processing applications; Memory addressing/allocation/relocation; Waste collection; Computerized system

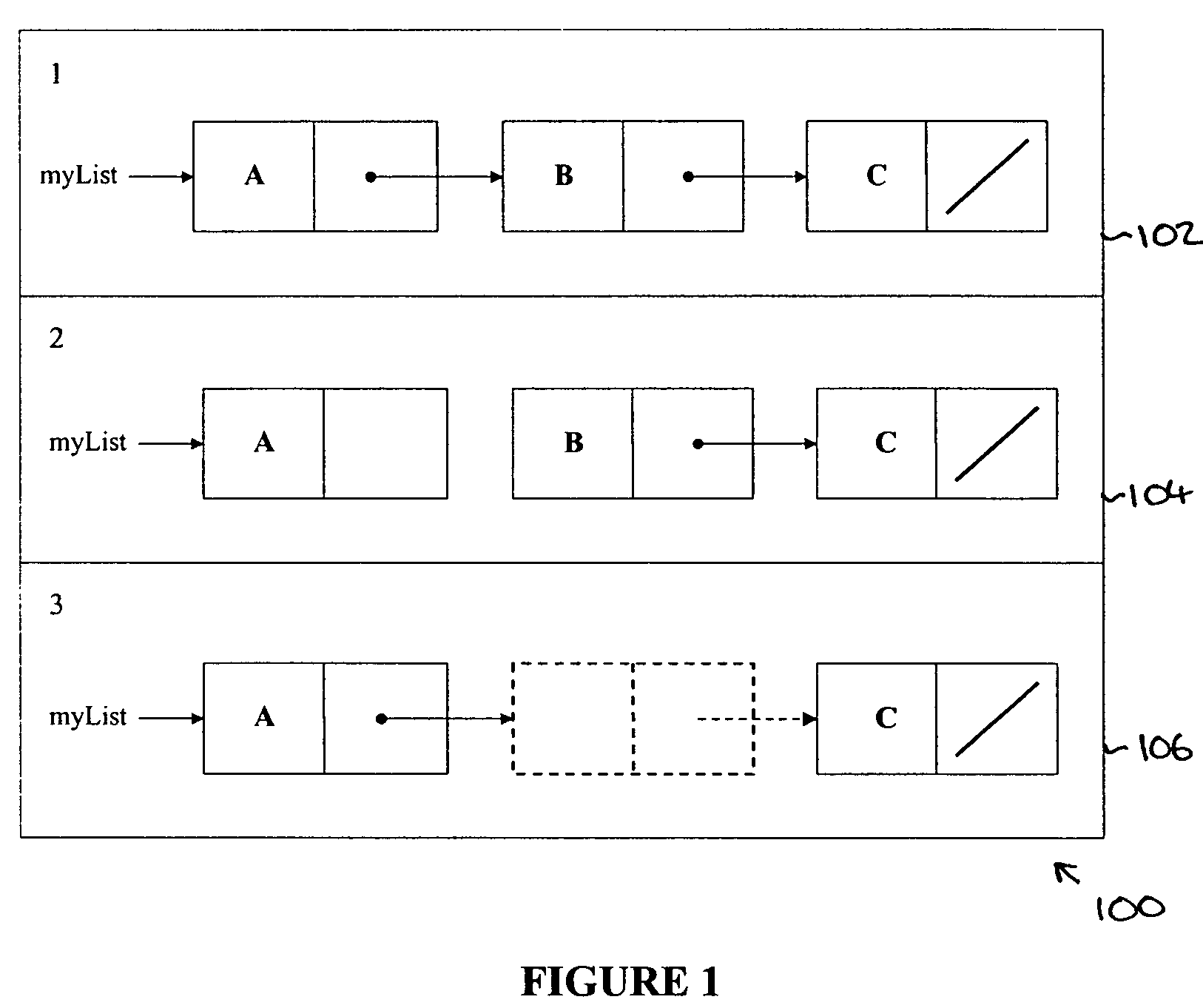

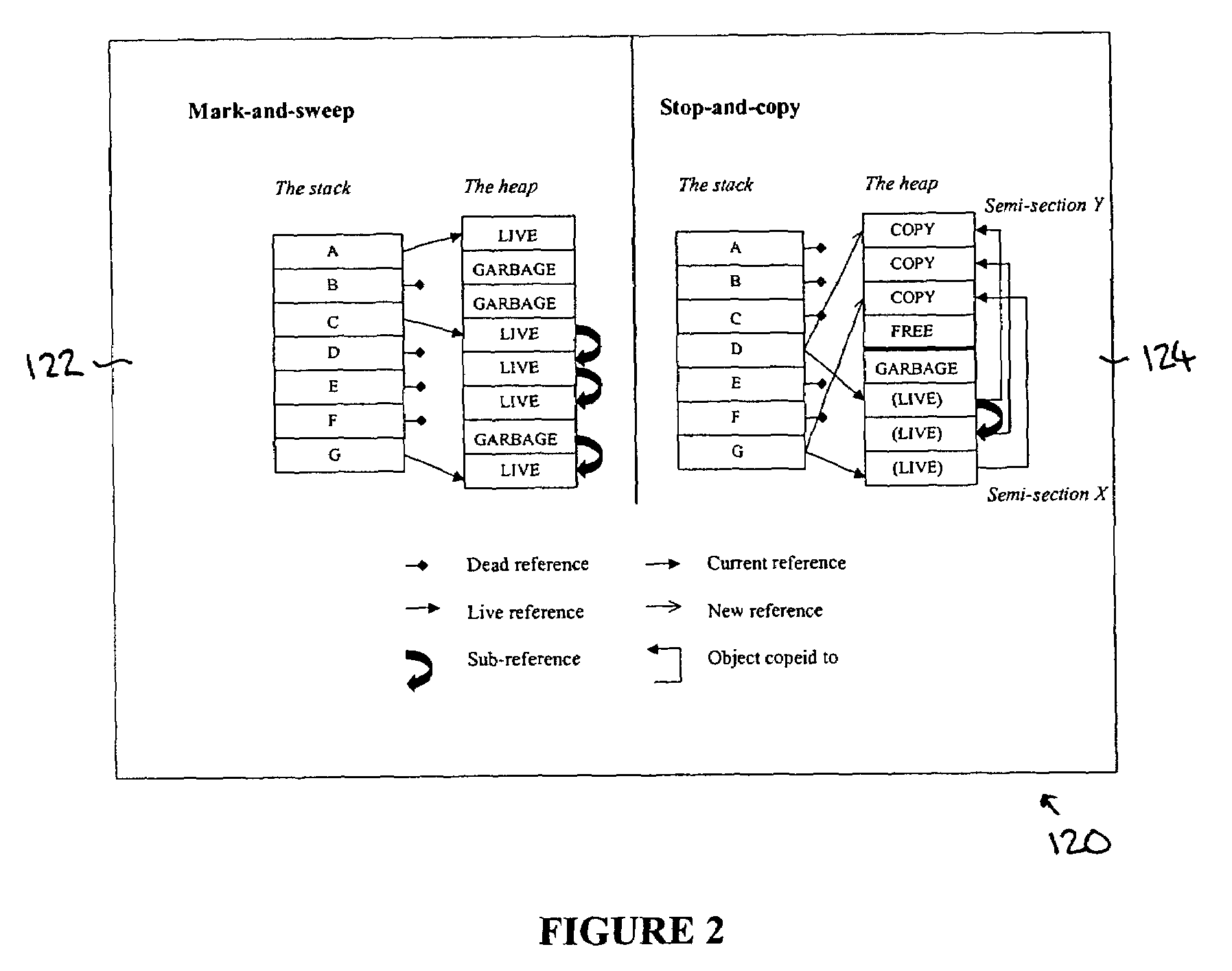

A system and method for use with a virtual machine, including an adaptive, automated memory management process that takes decisions regarding which garbage collector technique should be used, based on information extracted from the currently active applications. Reinforcement learning is used to decide under which circumstances to invoke the garbage collecting processing. The learning task is specified by rewards and penalties that indirectly tell the RLS agent what it is supposed to do instead of telling it how to accomplish the task. The decision is based on information about the memory allocation behavior of currently running applications. Embodiments of the system can be applied to the task of intelligent memory management in virtual machines, such as the Java Virtual Machine (JVM).

Owner:ORACLE INT CORP

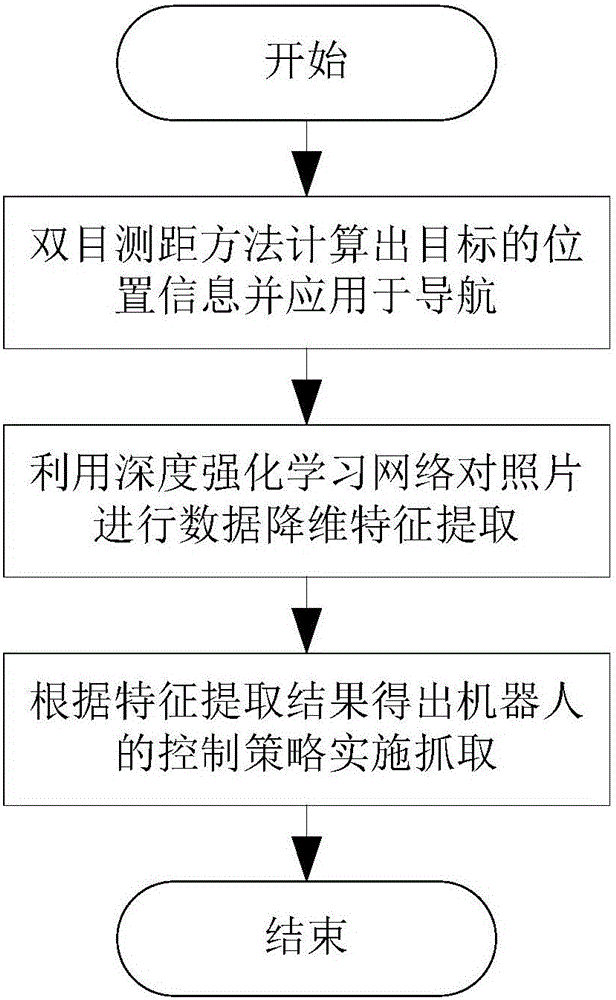

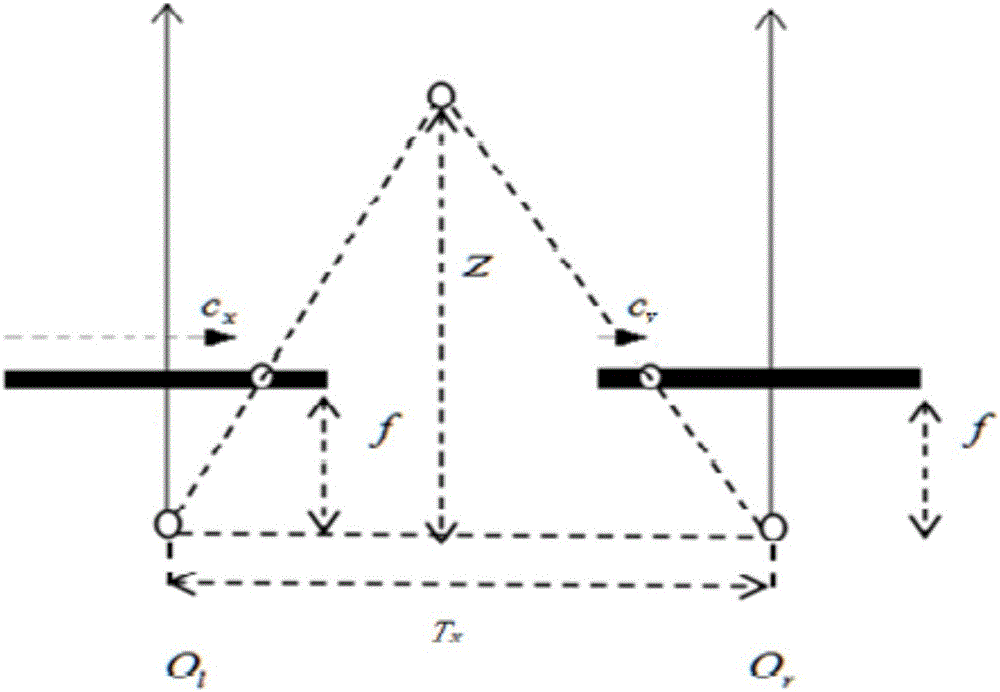

Robot adaptive grabbing method based on deep reinforcement learning

Inactive | CN106094516A | Guaranteed convergence; Solve problems that require each other independently; Adaptive control; Feature extraction; Robotic arm

The invention provides a robot adaptive grabbing method based on deep reinforcement learning. The method comprises the following steps:

1. When the robot is at a certain distance from the object to be grabbed, it captures an image of the target with its front-mounted camera. It computes the target's position with a binocular distance measurement method and uses that position for navigation.
2. When the target comes within reach of the manipulator, the robot captures another image of the target with the front camera. A pre-trained DDPG-based deep reinforcement learning network performs dimensionality-reducing feature extraction on this image.
3. From the extracted features, a control strategy for the robot is obtained. The robot uses this strategy to control the movement path and posture of the manipulator, achieving adaptive grabbing of the target.

The method can adaptively grab objects of different sizes and shapes that are not in fixed positions, and it has good market application prospects.

Owner:NANJING UNIV

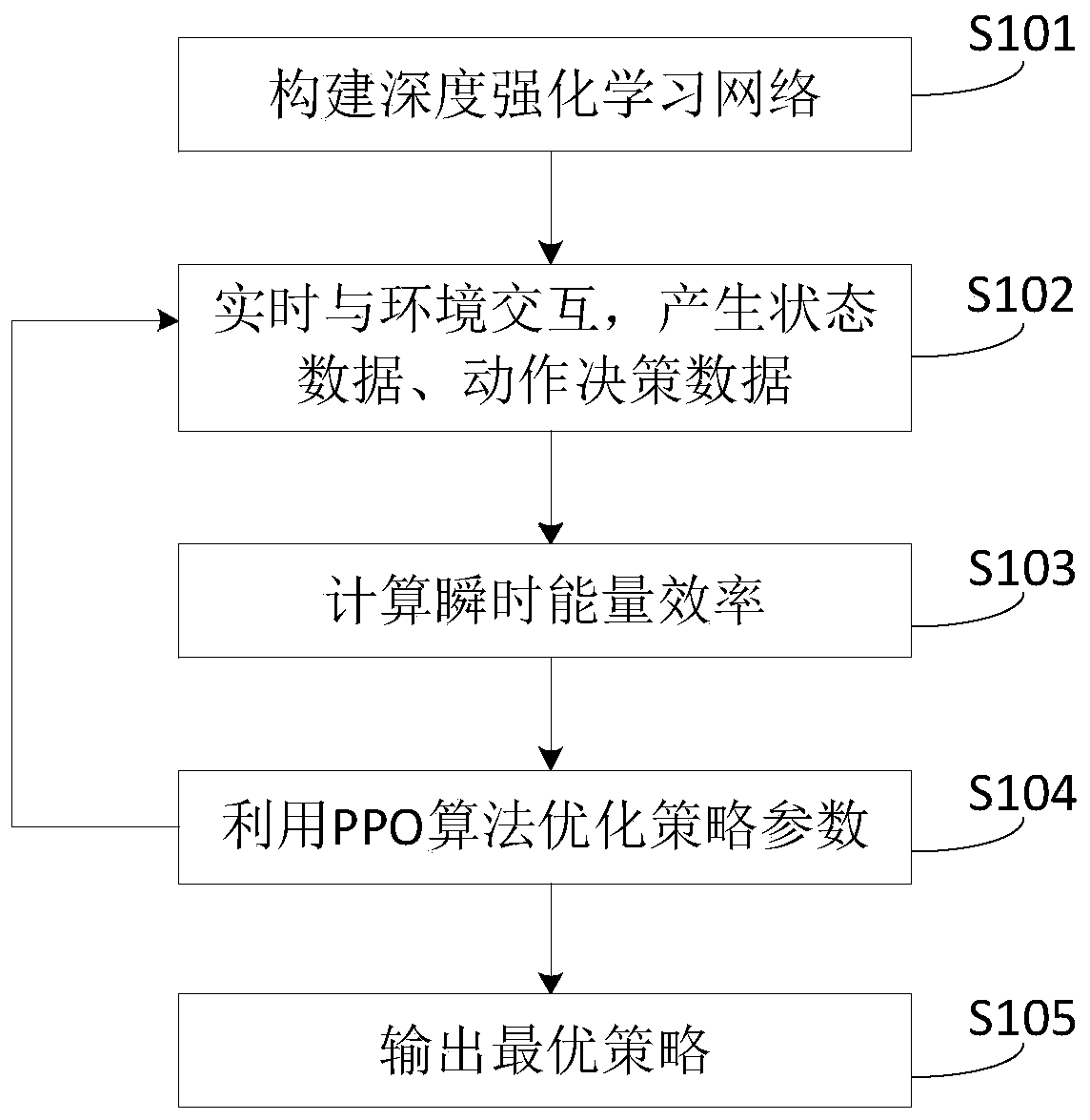

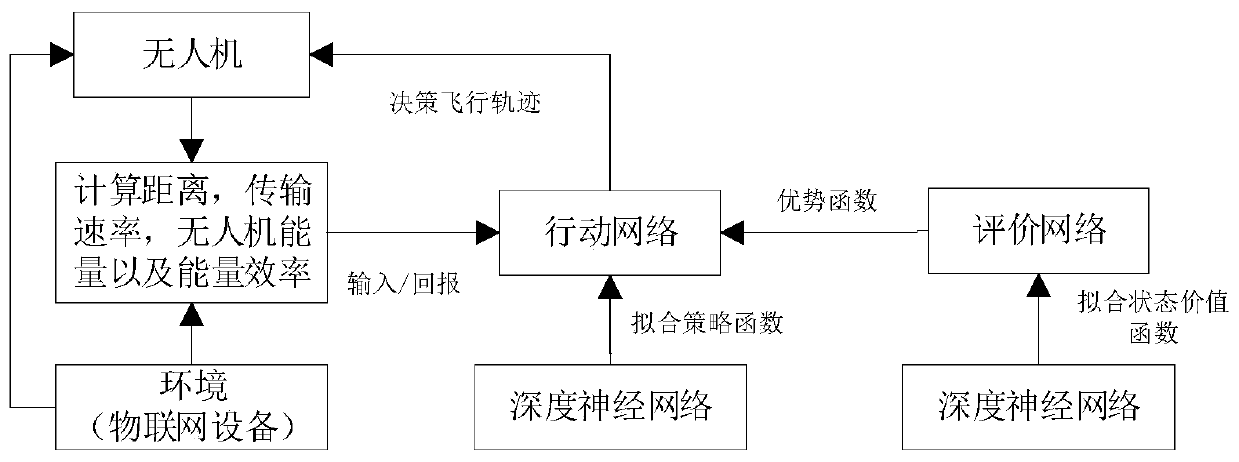

Unmanned aerial vehicle trajectory optimization method and device based on deep reinforcement learning and unmanned aerial vehicle

Active | CN110488861A | Achieve flight control optimization; Meet the needs of the actual flight environment; Position/course control in three dimensions; Flight direction; Trajectory optimization

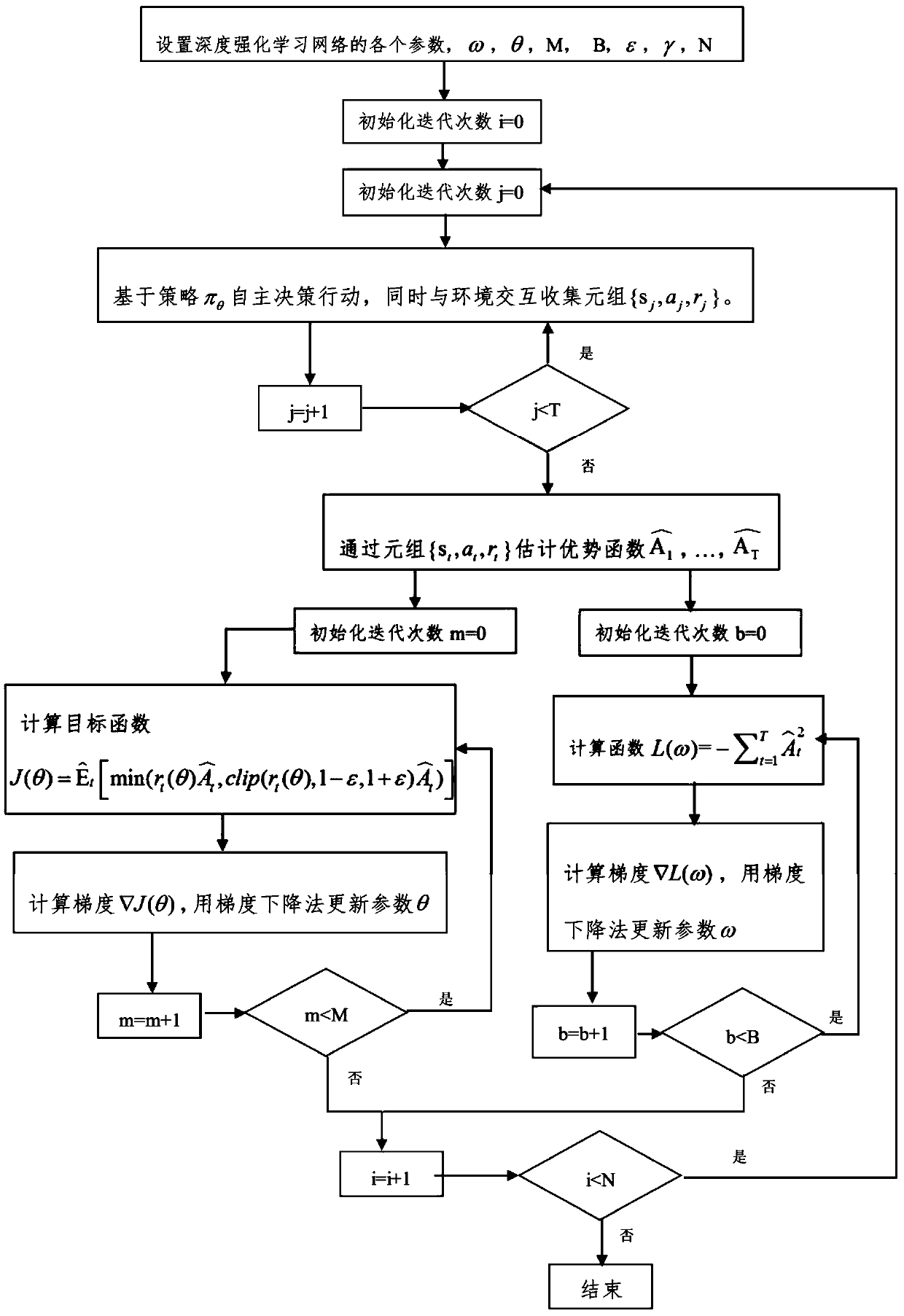

The invention discloses an unmanned aerial vehicle (UAV) trajectory optimization method and device based on deep reinforcement learning, and a UAV.

The method first constructs a reinforcement learning network in advance and generates state data and action decision data in real time during flight. It then takes the state data as input, the action decision data as output, and the instantaneous energy efficiency as the reward. Using these, it optimizes the policy parameters with the PPO algorithm and outputs an optimal policy.

The device comprises a construction module, a training data collection module, and a training module. The UAV comprises a processor configured to execute the trajectory optimization method.

The method can learn autonomously from accumulated flight data. In an unknown communication scenario it can intelligently determine the optimal flight speed, acceleration, flight direction, and return time, and it derives a flight strategy with optimal energy efficiency. It also offers stronger environmental adaptability and generalization capability.

Owner:BEIJING UNIV OF POSTS & TELECOMM
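PPO, named above, optimizes a clipped surrogate objective: the mean over samples of min(rho * A, clip(rho, 1 - eps, 1 + eps) * A). Here rho is the probability ratio between the new and old policies and A is the advantage estimate. The minimal sketch below computes that objective on precomputed ratios and advantages. Computing the ratios from a policy network and estimating the advantages are both omitted. The value `eps=0.2` is a common default, not a value taken from the patent.

```python
def ppo_clip_objective(ratios, advantages, eps=0.2):
    """Mean clipped surrogate objective (to be maximized)."""
    total = 0.0
    for ratio, adv in zip(ratios, advantages):
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        # Taking the minimum removes any incentive to push the ratio outside [1-eps, 1+eps].
        total += min(ratio * adv, clipped * adv)
    return total / len(ratios)

print(ppo_clip_objective([1.5], [1.0]))   # 1.2: the gain is capped at 1 + eps
print(ppo_clip_objective([0.5], [-1.0]))  # -0.8: the pessimistic (clipped) term is kept
```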

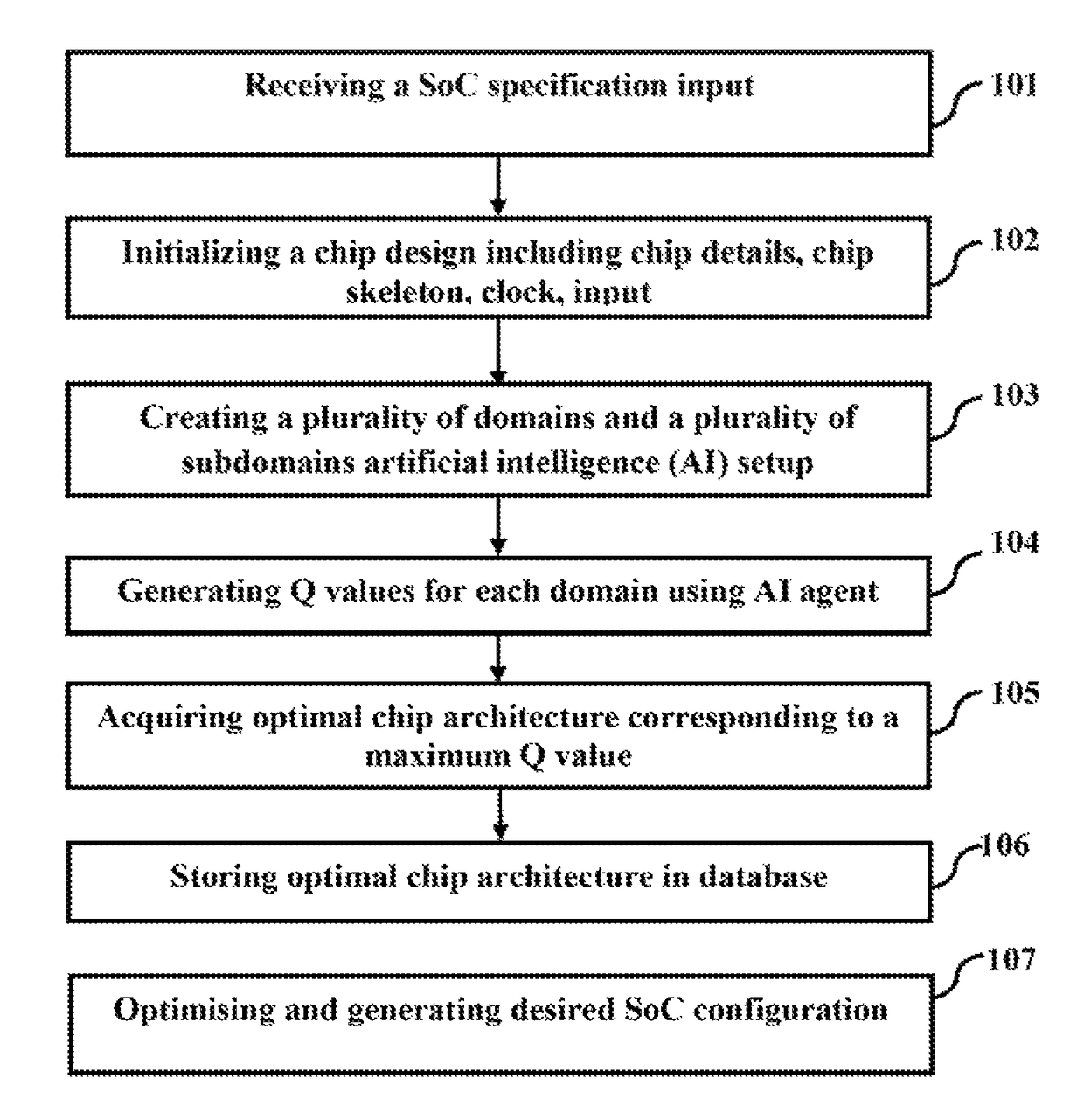

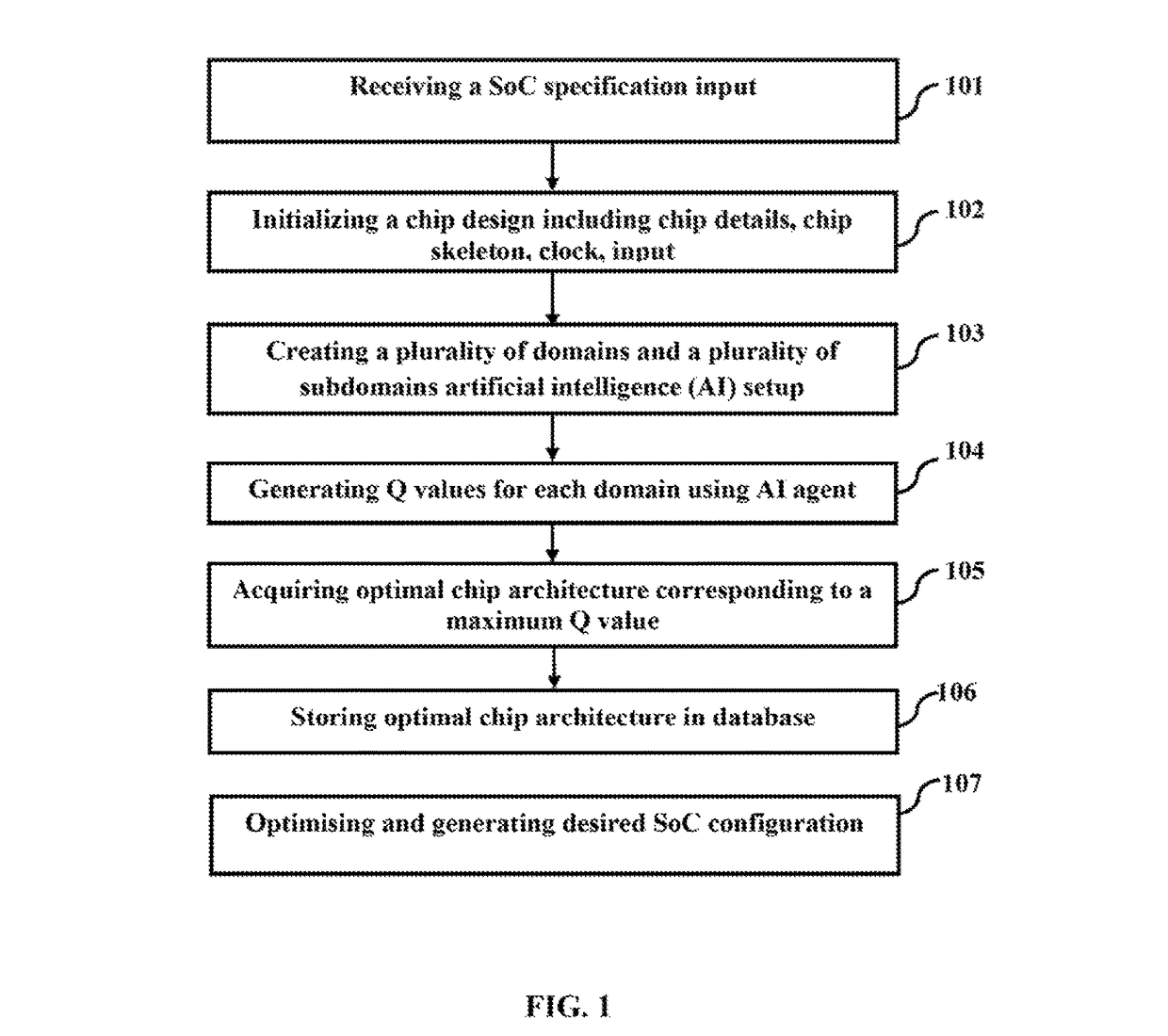

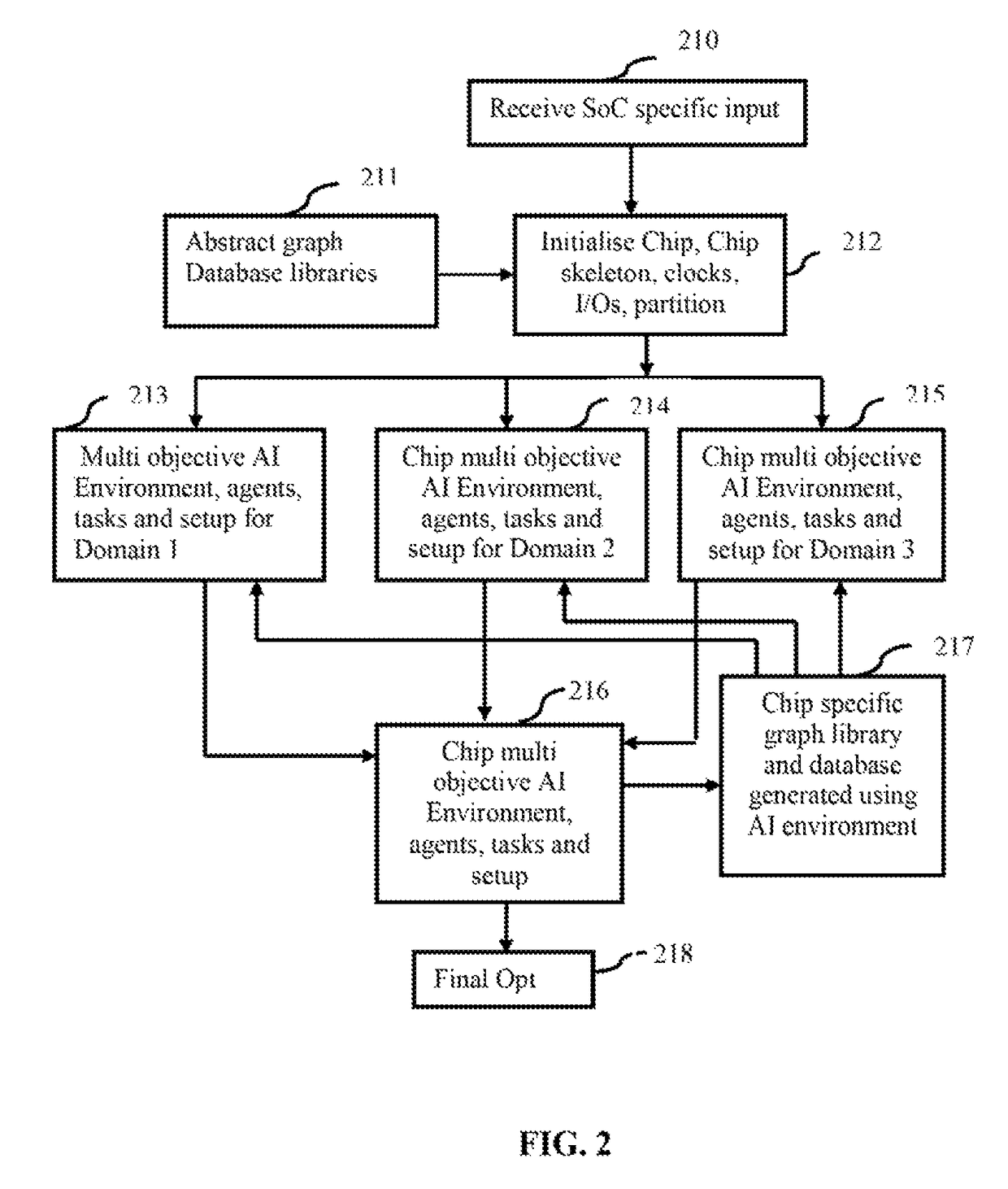

System and method for designing system on chip (SoC) circuits through artificial intelligence and reinforcement learning

Active | US9792397B1 | Reduce complexity; Good decision; Design optimisation/simulation; CAD circuit design; Computer architecture; Relevant information

The embodiments herein disclose a system and method for designing an SoC using AI and Reinforcement Learning (RL) techniques. Reinforcement learning is performed either hierarchically in several steps or in a single step comprising environment, tasks, agents, and experiments, with access to SoC (System on a Chip) related information. The AI agent is configured to learn from the interaction and plan the implementation of an SoC circuit design. Q values generated for each domain and subdomain are stored in a hierarchical SMDP structure, in the form of an SMDP Q table in a big data database. An optimal chip architecture corresponding to the maximum Q value at the top level of the SMDP Q table is acquired and stored in a database for learning and inference. The desired SoC configuration is optimized and generated based on the optimal chip architecture and the generated chip-specific graph library.

Owner:ALPHAICS CORP
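In an SMDP, an action (or option) can last several time steps. The Q-learning update therefore discounts the bootstrapped term by gamma raised to the action's duration tau:

Q(s, o) <- Q(s, o) + alpha * (R + gamma^tau * max over o' of Q(s', o') - Q(s, o))

Here R is the discounted reward accumulated while the option ran. The minimal dictionary-backed sketch below implements this update. It does not model the patent's hierarchy of domains and subdomains, and the state and option names are hypothetical.

```python
def smdp_q_update(q, state, option, reward, duration, next_state, options,
                  alpha=0.1, gamma=0.99):
    """One SMDP Q-learning update on a dict keyed by (state, option).

    `reward` is the discounted reward accumulated while the option ran for
    `duration` steps; missing table entries are treated as 0.
    """
    best_next = max(q.get((next_state, o), 0.0) for o in options)
    old = q.get((state, option), 0.0)
    target = reward + gamma ** duration * best_next
    q[(state, option)] = old + alpha * (target - old)
    return q[(state, option)]

# Hypothetical design-flow states and options.
table = {("floorplan_done", "route"): 10.0}
print(smdp_q_update(table, "spec", "floorplan", reward=1.0, duration=2,
                    next_state="floorplan_done", options=["route", "place"],
                    alpha=1.0, gamma=0.5))  # 3.5
```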

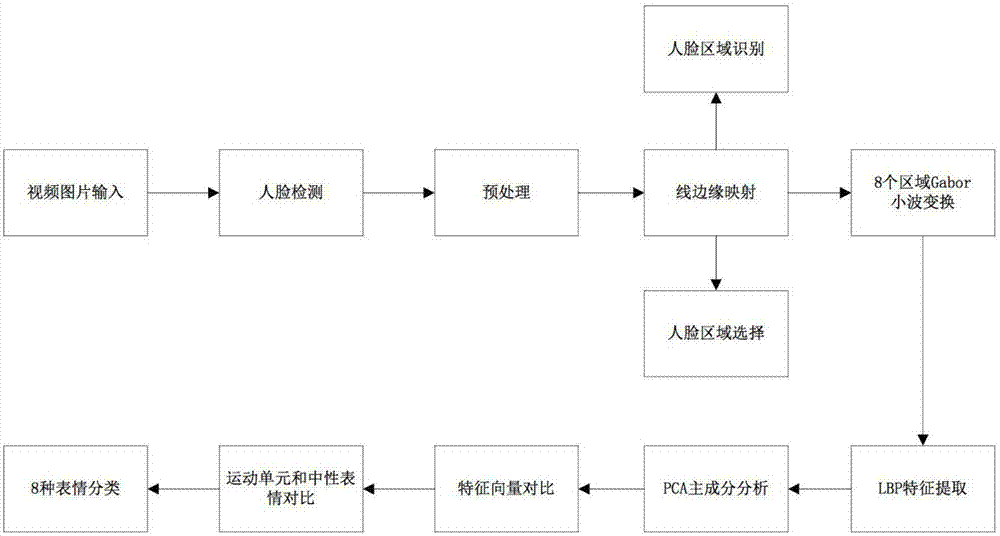

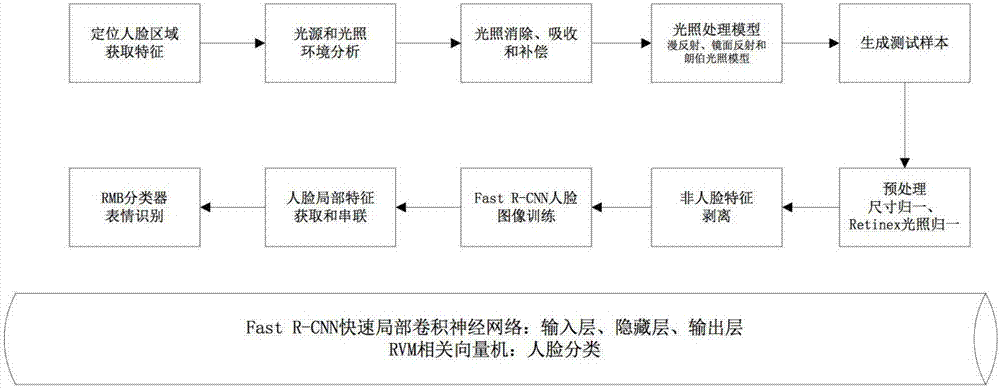

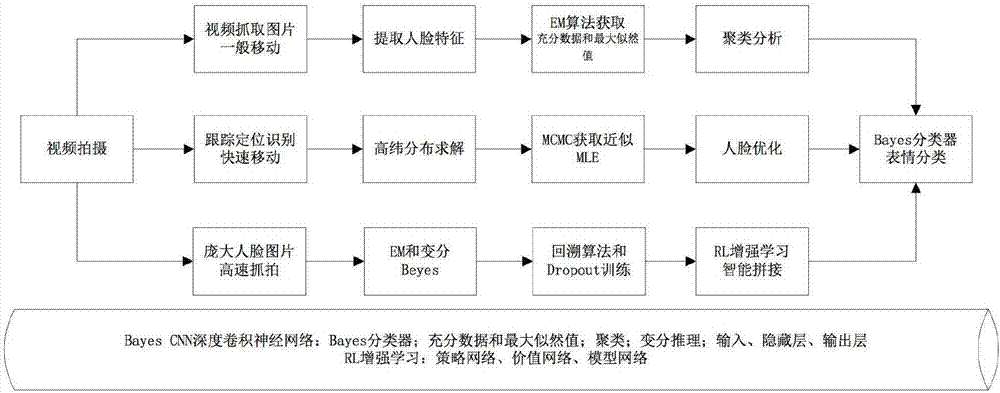

Human face emotion recognition method in complex environment

Inactive | CN107423707A | Real-time processing; Improve accuracy; Image enhancement; Image analysis; Nerve network; Facial region

The invention discloses a human face emotion recognition method for a mobile embedded complex environment. The face is divided into main regions (forehead, eyebrows and eyes, cheeks, nose, mouth, and chin), which are further divided into 68 feature points. Based on these feature points, and to improve recognition rate, accuracy, and reliability across environments, the method applies different techniques to different conditions:

- under normal conditions, a face and expression feature classification method;
- under lighting, reflection, and shadow conditions, a method based on a Faster R-CNN face region convolutional neural network;
- under complex conditions of motion, jitter, shaking, and movement, a method combining a Bayesian network, a Markov chain, and variational inference;
- when the face is only partly visible, when several faces are present, or when the background is noisy, a method combining a deep convolutional neural network, a super-resolution generative adversarial network (SRGAN), reinforcement learning, the backpropagation algorithm, and dropout.

This can effectively improve facial expression recognition performance, accuracy, and reliability.

Owner:深圳帕罗人工智能科技有限公司

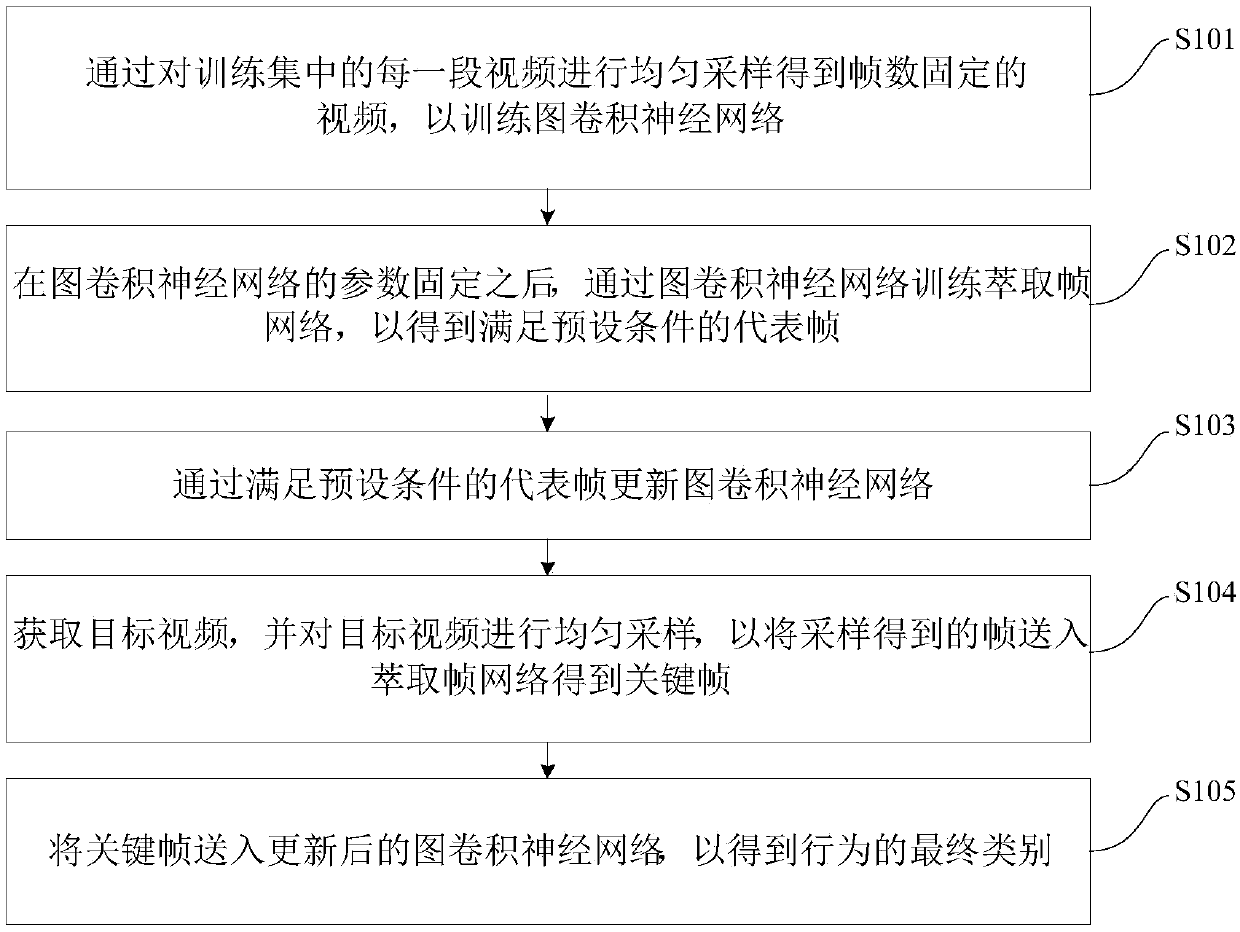

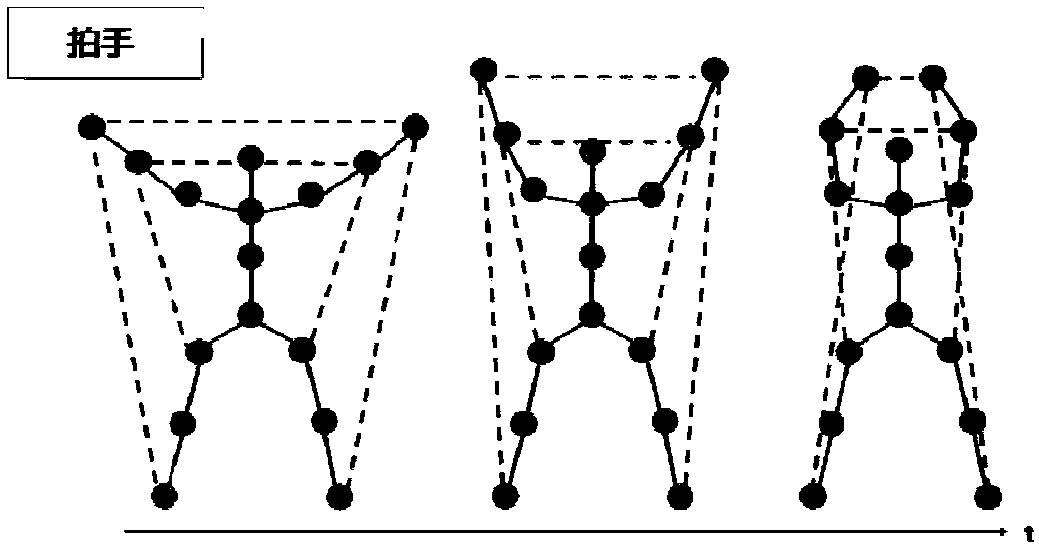

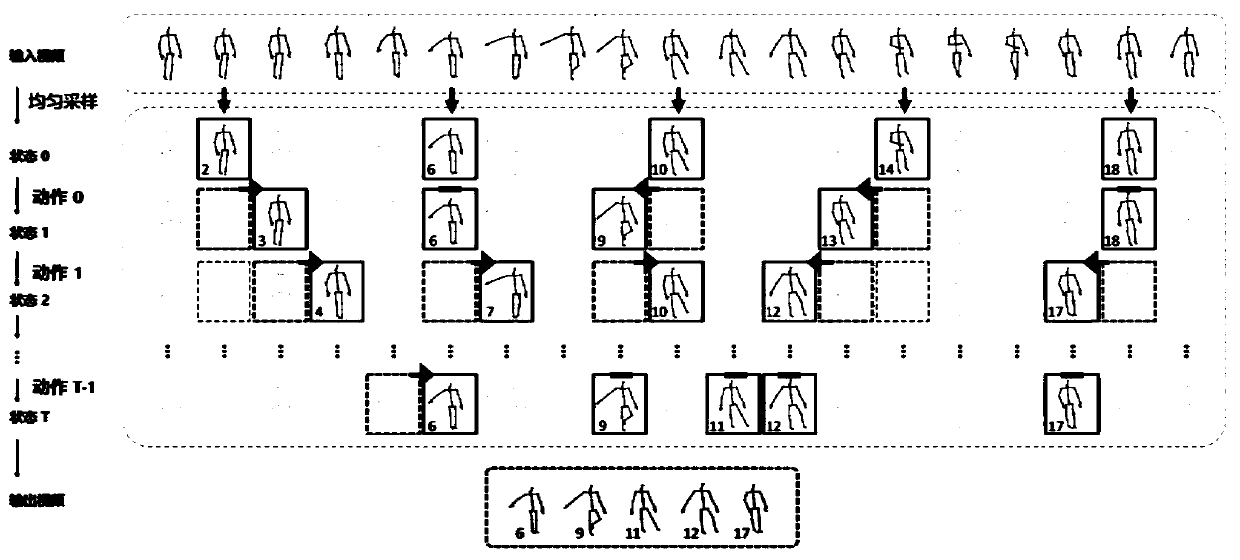

Human skeleton behavior recognition method and device based on deep reinforcement learning

Active | CN108304795A | Improve discrimination ability; Easy to identify; Character and pattern recognition; Neural architectures; Fixed frame; Key frame

The invention discloses a human skeleton behavior recognition method and device based on deep reinforcement learning. The method comprises the following steps:

1. uniformly sampling each video segment in a training set to obtain videos with a fixed number of frames, and using them to train a graph convolutional neural network;
2. after fixing the parameters of the graph convolutional neural network, using it to train a frame extraction network that selects representative frames meeting a preset condition;
3. updating the graph convolutional neural network with those representative frames;
4. obtaining a target video, uniformly sampling it, and sending the sampled frames to the frame extraction network to obtain key frames;
5. sending the key frames to the updated graph convolutional neural network to obtain the final behavior category.

This enhances the discriminability of the selected frames, removes redundant information, improves recognition performance, and reduces computation at test time. In addition, fully exploiting the topological relationships of the human skeleton improves behavior recognition performance.

Owner:TSINGHUA UNIV
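The first step, uniformly sampling each clip to a fixed number of frames, can be sketched as splitting the clip into equal-length segments and taking the centre frame of each. This is one common convention and is assumed here, since the abstract does not specify the sampling rule. Under this rule, clips shorter than the target repeat frames.

```python
def uniform_sample_indices(num_frames, target):
    """Pick `target` frame indices spread evenly over a clip of `num_frames` frames."""
    if num_frames <= 0 or target <= 0:
        return []
    step = num_frames / target
    # Take the centre of each of `target` equal-length segments, clamped to the last frame.
    return [min(int(step * i + step / 2), num_frames - 1) for i in range(target)]

print(uniform_sample_indices(10, 5))  # [1, 3, 5, 7, 9]
print(uniform_sample_indices(3, 6))   # [0, 0, 1, 1, 2, 2]
```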

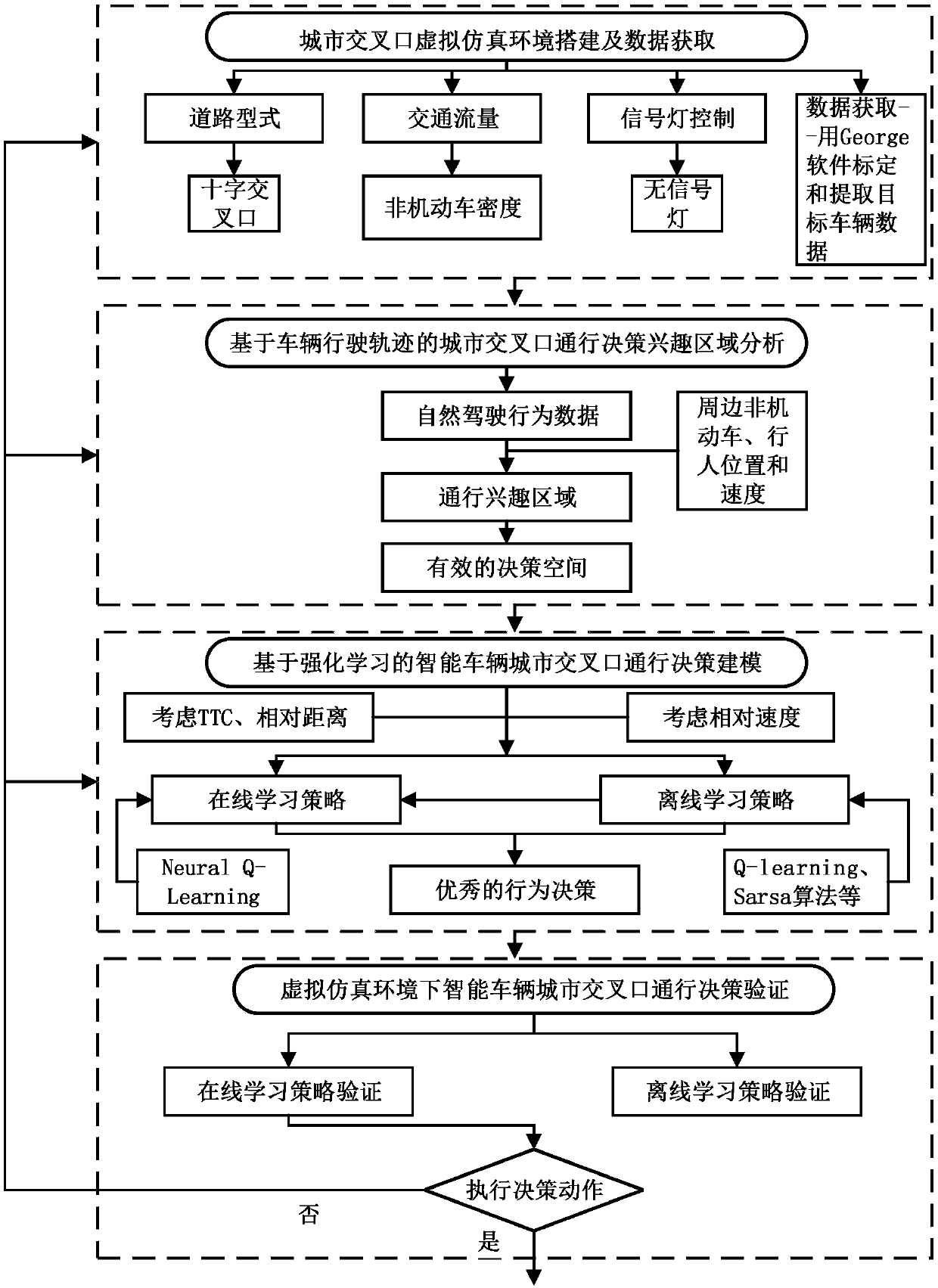

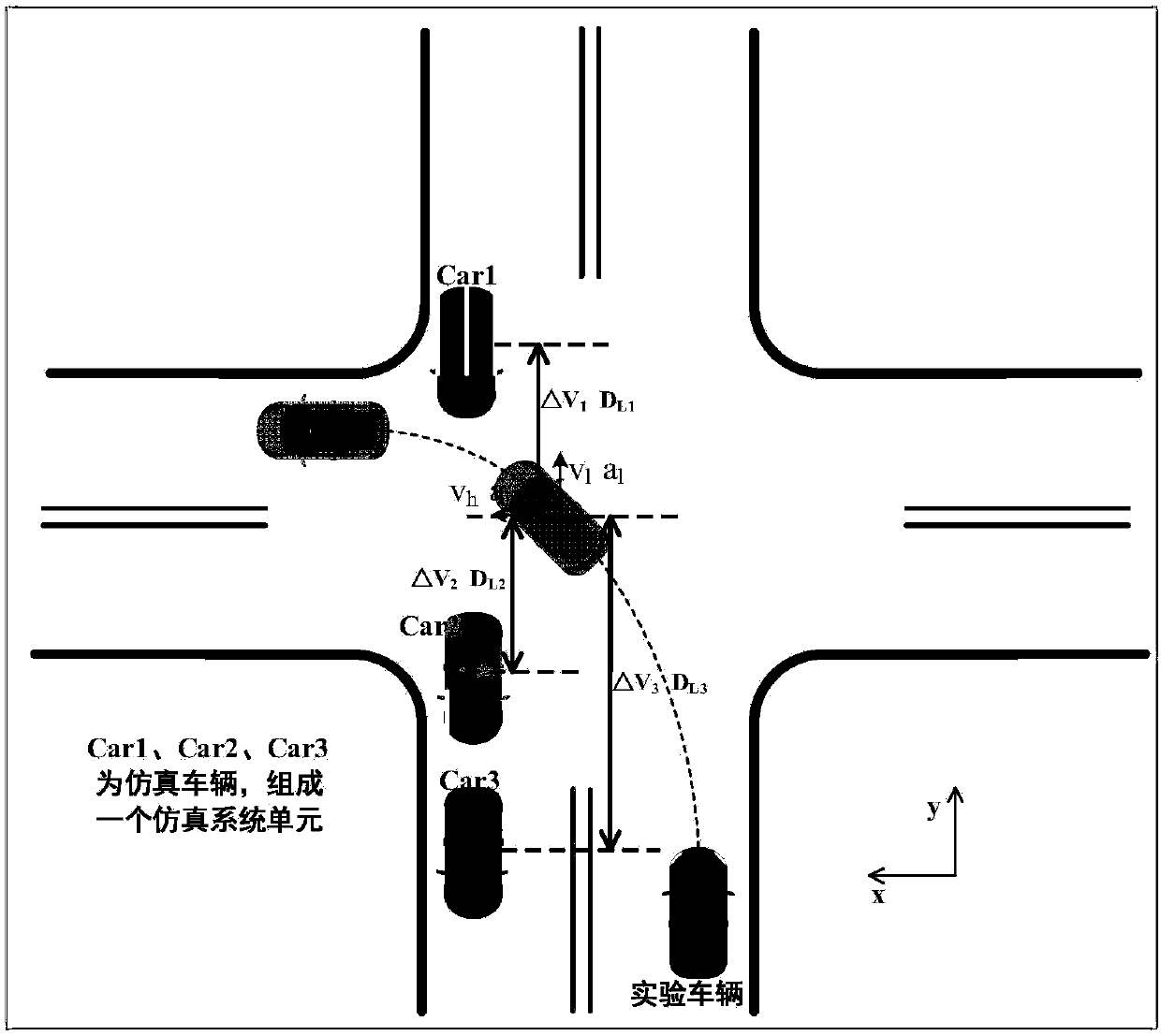

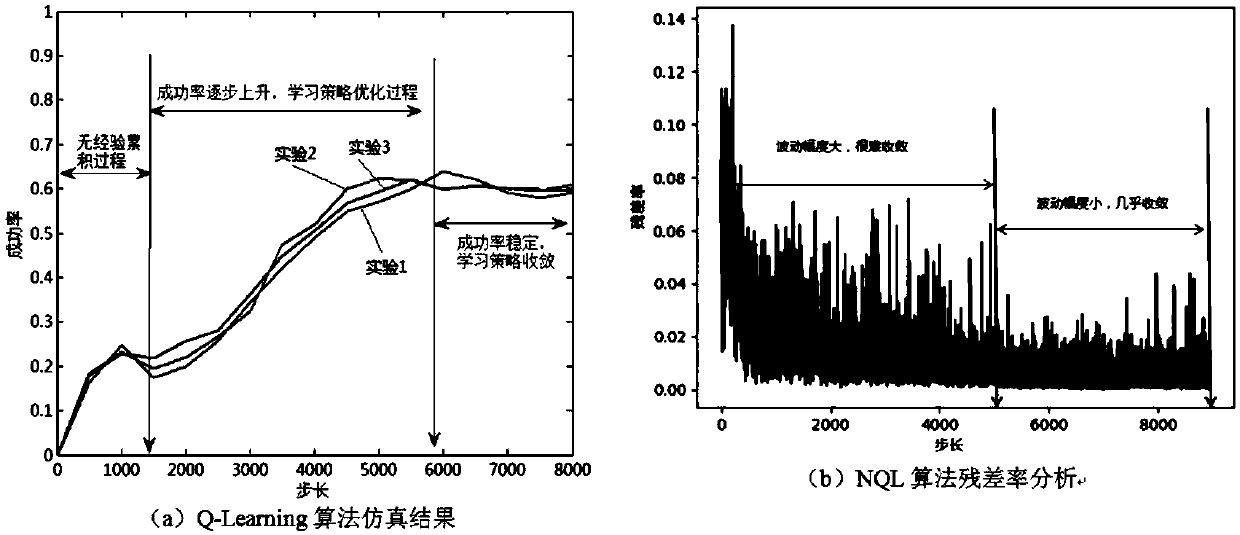

Reinforcement learning based urban intersection passing method for driverless vehicle

Active | CN108932840A | Improve real-time performance; Reduce the dimensionality of behavioral decision-making state space; Controlling traffic signals; Detection of traffic movement; Moving average; Learning based

The invention discloses a reinforcement learning based urban intersection passing method for a driverless vehicle. The method includes the following steps:

1. collecting continuous vehicle running-state information and position information by photography, including speed, lateral speed and acceleration, longitudinal speed and acceleration, trajectory curvature, accelerator opening, and brake pedal pressure;
2. obtaining characteristic motion trajectories and velocities from the actual data through clustering;
3. processing the raw data with an exponentially weighted moving average method;
4. implementing the interactive passing method with an NQL algorithm.

When handling complex intersection scenes, the NQL algorithm of the invention clearly outperforms a Q-learning algorithm in learning ability. It achieves a better training effect in shorter training time with less training data.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
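Step 3's exponentially weighted moving average smooths each raw signal with s_t = alpha * x_t + (1 - alpha) * s_{t-1}. The minimal sketch below assumes that the first sample seeds the average and that alpha defaults to 0.3. The abstract gives no smoothing factor, so that default is an assumption.

```python
def ewma(values, alpha=0.3):
    """Exponentially weighted moving average of a sequence."""
    smoothed, s = [], None
    for x in values:
        # The first sample seeds the average; later samples blend in with weight alpha.
        s = x if s is None else alpha * x + (1 - alpha) * s
        smoothed.append(s)
    return smoothed

print(ewma([10.0, 20.0, 20.0], alpha=0.5))  # [10.0, 15.0, 17.5]
```

A larger alpha tracks the raw signal more closely, and a smaller alpha suppresses more sensor noise at the cost of lag.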

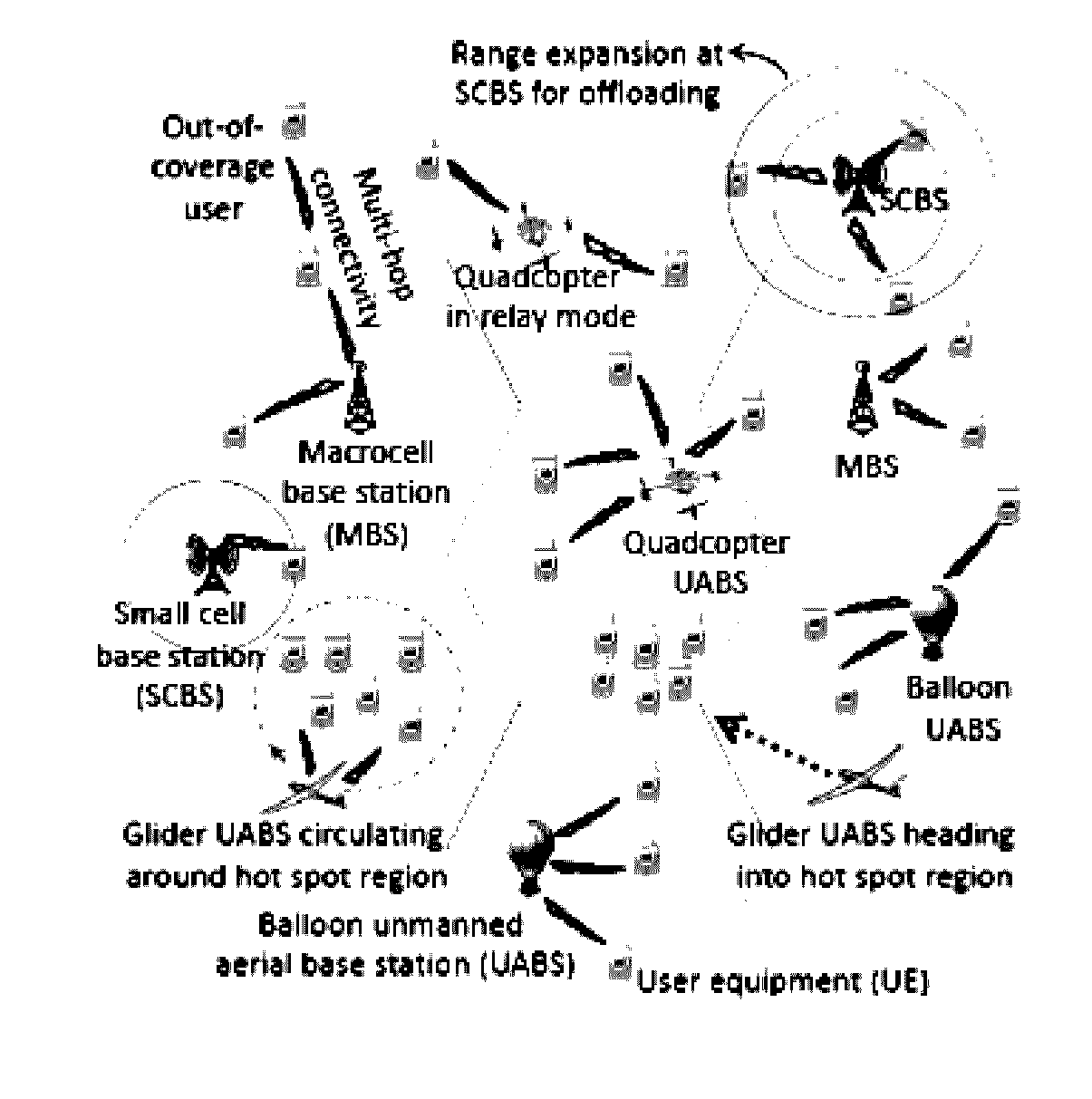

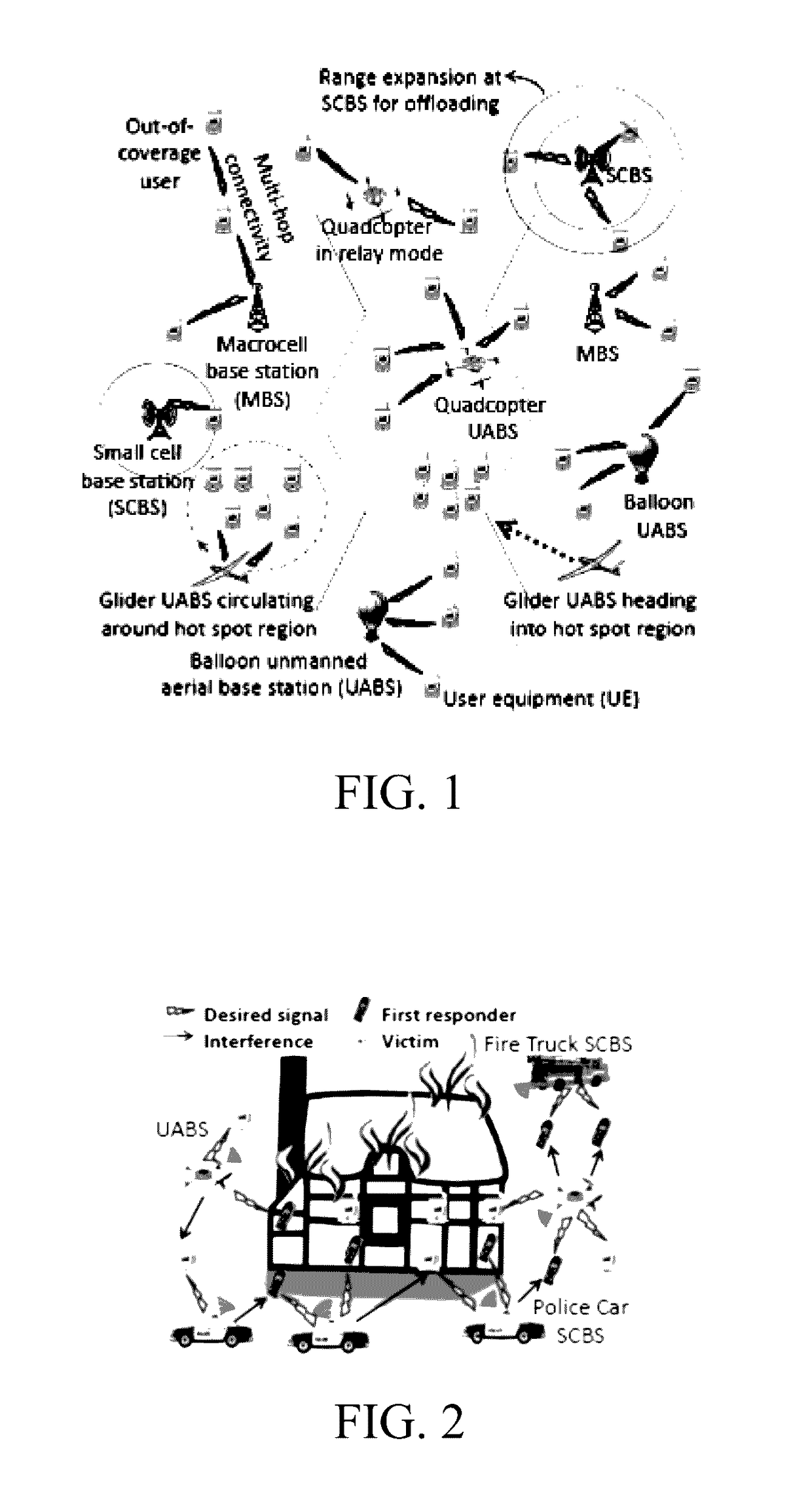

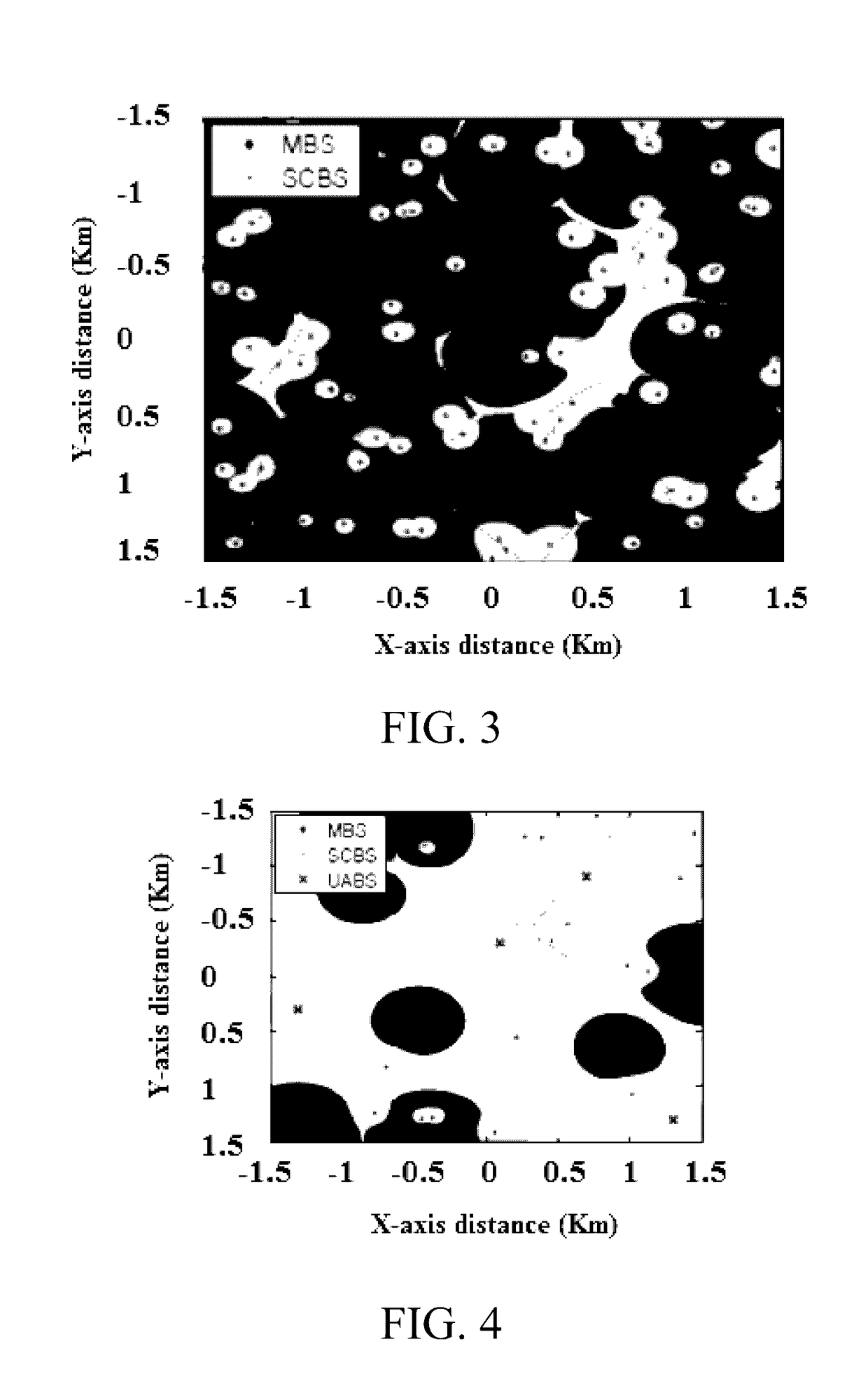

Interference and mobility management in UAV-assisted wireless networks

Active | US9622133B1 | High speed communication; Network topologies; Connection management; Communication interface; Wireless mesh network

Techniques and systems are disclosed for addressing the challenges in interference and mobility management in broadband, UAV-assisted heterogeneous network (BAHN) scenarios. Implementations include BAHN control components, for example, at a controlling network node of a BAHN. Generally, a component implementing techniques for managing interference and handover in a BAHN gathers state data from network nodes or devices in the BAHN, determines a candidate BAHN model that optimizes interference and handover metrics, and determines and performs model adjustments to the network parameters, BS parameters, and UAV-assisted base station (UABS) device locations and velocities to conform to the optimized candidate BAHN model. Also described is a UABS apparatus having a UAV, communications interface for communicating with a HetNet in accordance with wireless air interface standards, and a computing device suitable for implementing BAHN control or reinforcement learning components.

Owner:FLORIDA INTERNATIONAL UNIVERSITY

Mobile robot path planning method combining a deep autoencoder and the Q-learning algorithm

Active | CN105137967A | Achieve cognition; Improve the ability to process images; Biological neural network models; Position/course control in two dimensions; Algorithm; Reward value

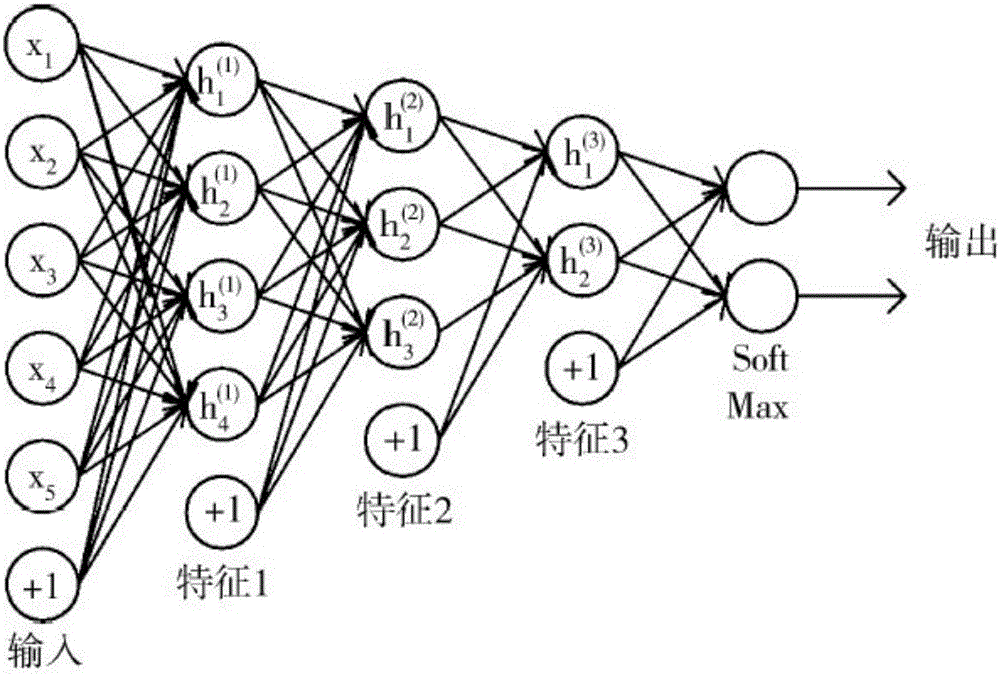

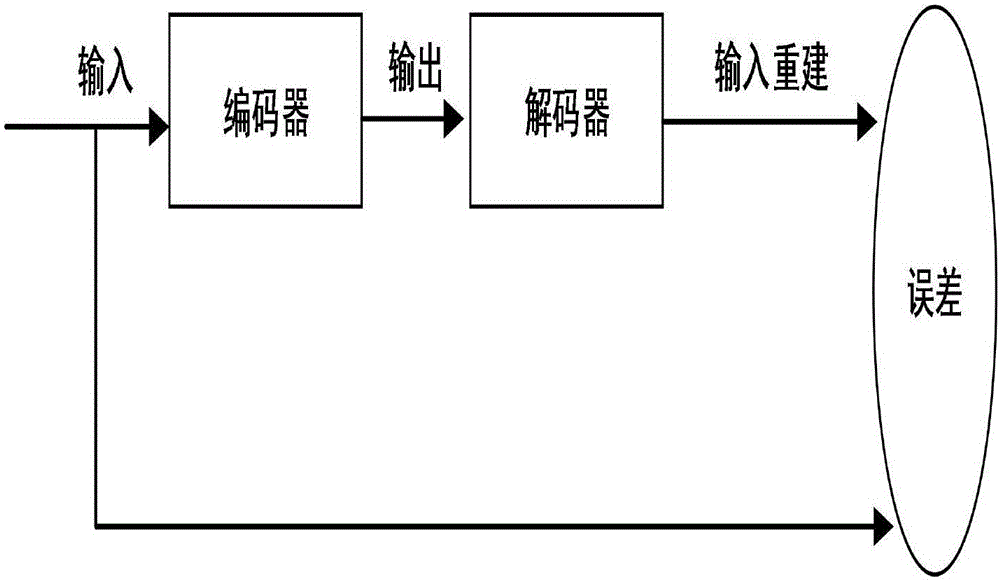

The invention provides a mobile robot path planning method that combines a deep autoencoder with the Q-learning algorithm. The method comprises three parts: a deep autoencoder, a BP neural network, and reinforcement learning.

- The deep autoencoder part processes images of the robot's environment to extract features of the image data, laying a foundation for subsequent environment cognition.
- The BP neural network part fits the reward values to the image feature data, which is what allows the deep autoencoder and reinforcement learning to be combined.
- With the Q-learning algorithm, knowledge is obtained through interactive learning with the environment in an action-evaluation setting, and the action scheme is improved to suit the environment and achieve the desired goal.

The robot interacts with the environment to learn autonomously and finally finds a feasible path from the start point to the end point. The combination of the deep autoencoder and the BP neural network enhances the system's image processing capability and enables environment cognition.

Owner:BEIJING UNIV OF TECH

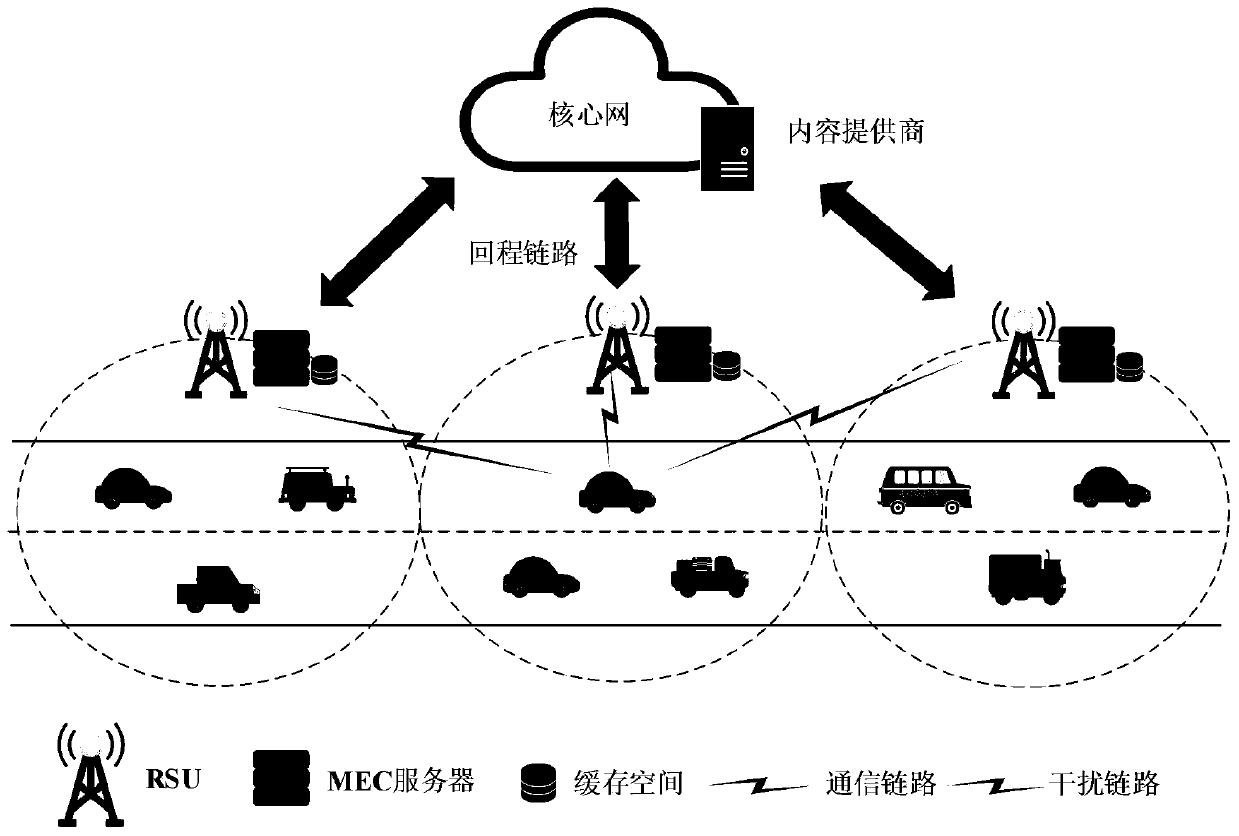

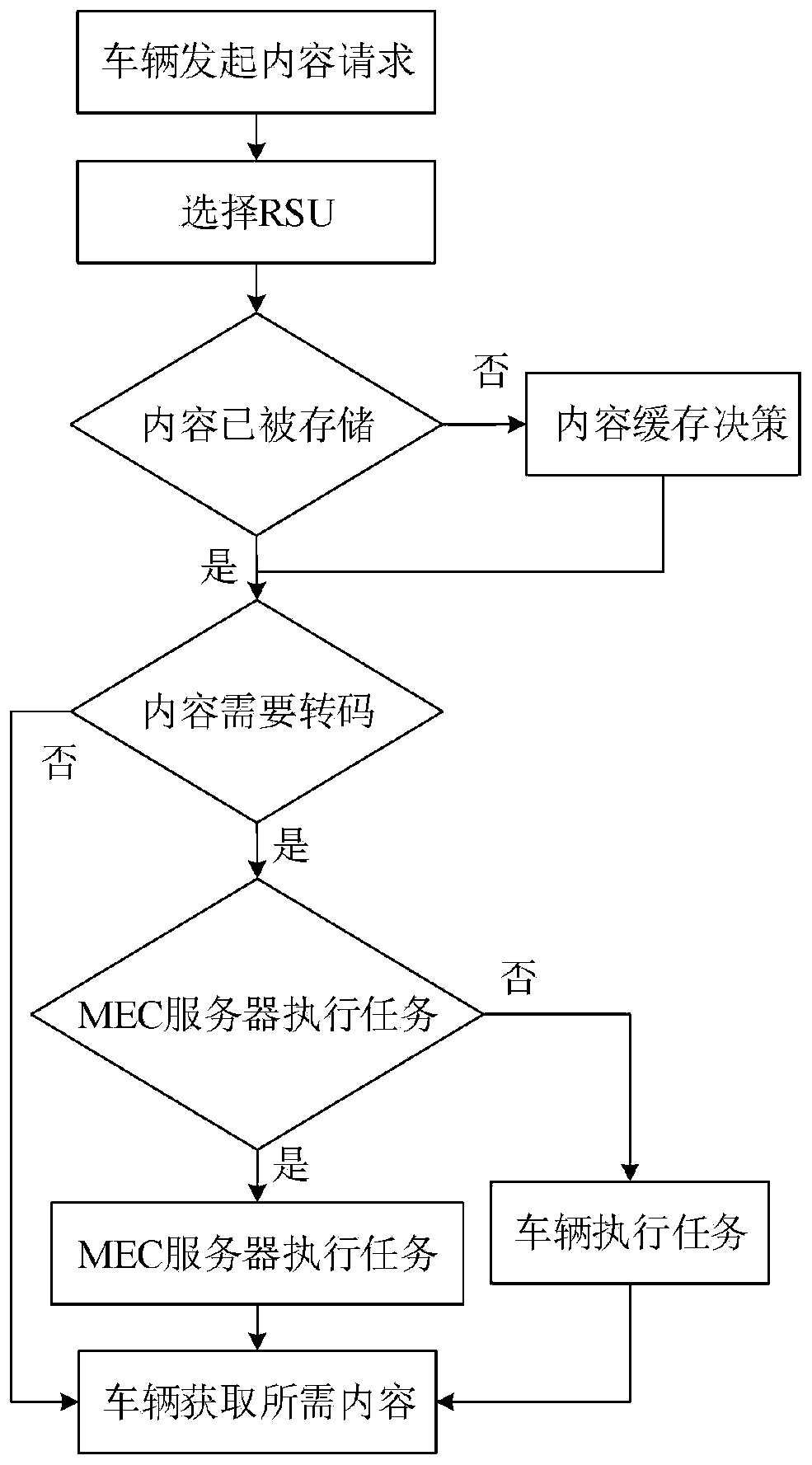

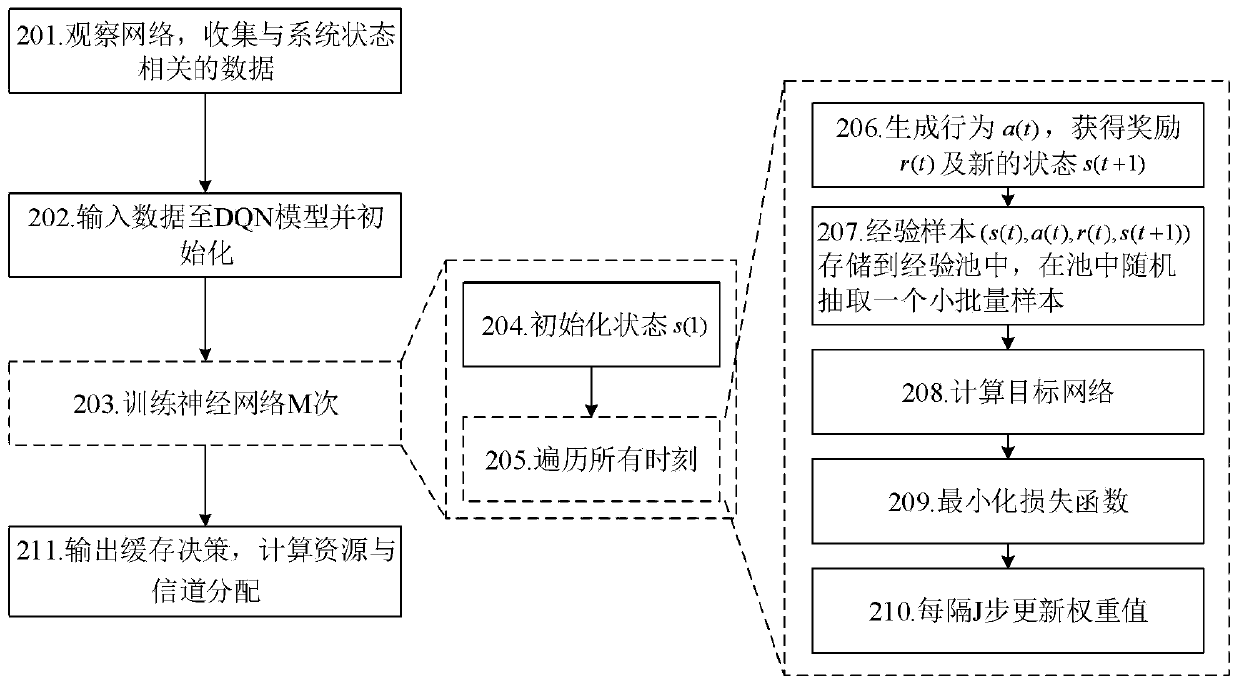

Content caching decision and resource allocation combined optimization method based on mobile edge computing in Internet of Vehicles

Active | CN110312231A | Particular environment based services | Vehicle infrastructure communication | Frequency spectrum | The Internet

The invention relates to a joint optimization method for content caching decisions and resource allocation based on mobile edge computing (MEC) in the Internet of Vehicles, and belongs to the technical field of mobile communication. MEC acts as front-end edge computing with strong computing and storage capabilities. The MEC server is deployed on the roadside unit (RSU) side and can provide storage space and computing resources to vehicle users. Although MEC provides services similar to cloud computing, problems such as caching, computing resource allocation, and spectrum resource allocation remain. The method aims to maximize network revenue while guaranteeing the delay requirement. The content caching decision, MEC server computing resource allocation, and spectrum resource allocation are jointly modeled as a Markov decision process (MDP), which is solved with a deep reinforcement learning method to obtain the optimal content caching decision, computing resource allocation, and spectrum allocation.
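The MDP formulation can be made concrete with a deliberately tiny, hypothetical example. The sketch keeps only the caching dimension (one cache slot, three content items with assumed popularities, an assumed replacement cost) and solves it with plain value iteration instead of the deep reinforcement learning used in the patent; computing and spectrum allocation and the delay constraint are left out.

```python
# Hypothetical toy values: request popularity of three content items.
POPULARITY = [0.5, 0.3, 0.2]
HIT_REVENUE = 1.0   # assumed revenue per unit of cache-hit probability
REPLACE_COST = 0.5  # assumed cost of fetching a different item into the MEC cache
GAMMA = 0.9         # discount factor

STATES = range(len(POPULARITY))   # state: which item is currently cached
ACTIONS = range(len(POPULARITY))  # action: which item to cache for the next slot


def reward(state, action):
    """Expected hit revenue of caching `action`, minus a cost if the cached item changes."""
    cost = REPLACE_COST if action != state else 0.0
    return HIT_REVENUE * POPULARITY[action] - cost


def value_iteration(tol=1e-9):
    v = [0.0 for _ in STATES]
    while True:
        # Transitions are deterministic: the next state is the item chosen for caching.
        new_v = [max(reward(s, a) + GAMMA * v[a] for a in ACTIONS) for s in STATES]
        if max(abs(x - y) for x, y in zip(new_v, v)) < tol:
            return new_v
        v = new_v


def greedy_policy(v):
    """Pick, for each state, the action with the highest one-step lookahead value."""
    return [max(ACTIONS, key=lambda a: reward(s, a) + GAMMA * v[a]) for s in STATES]
```

With these numbers, the greedy policy caches the most popular item from every state, because the one-off replacement cost is outweighed by the higher discounted hit revenue.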

Owner:CHONGQING UNIV OF POSTS & TELECOMM

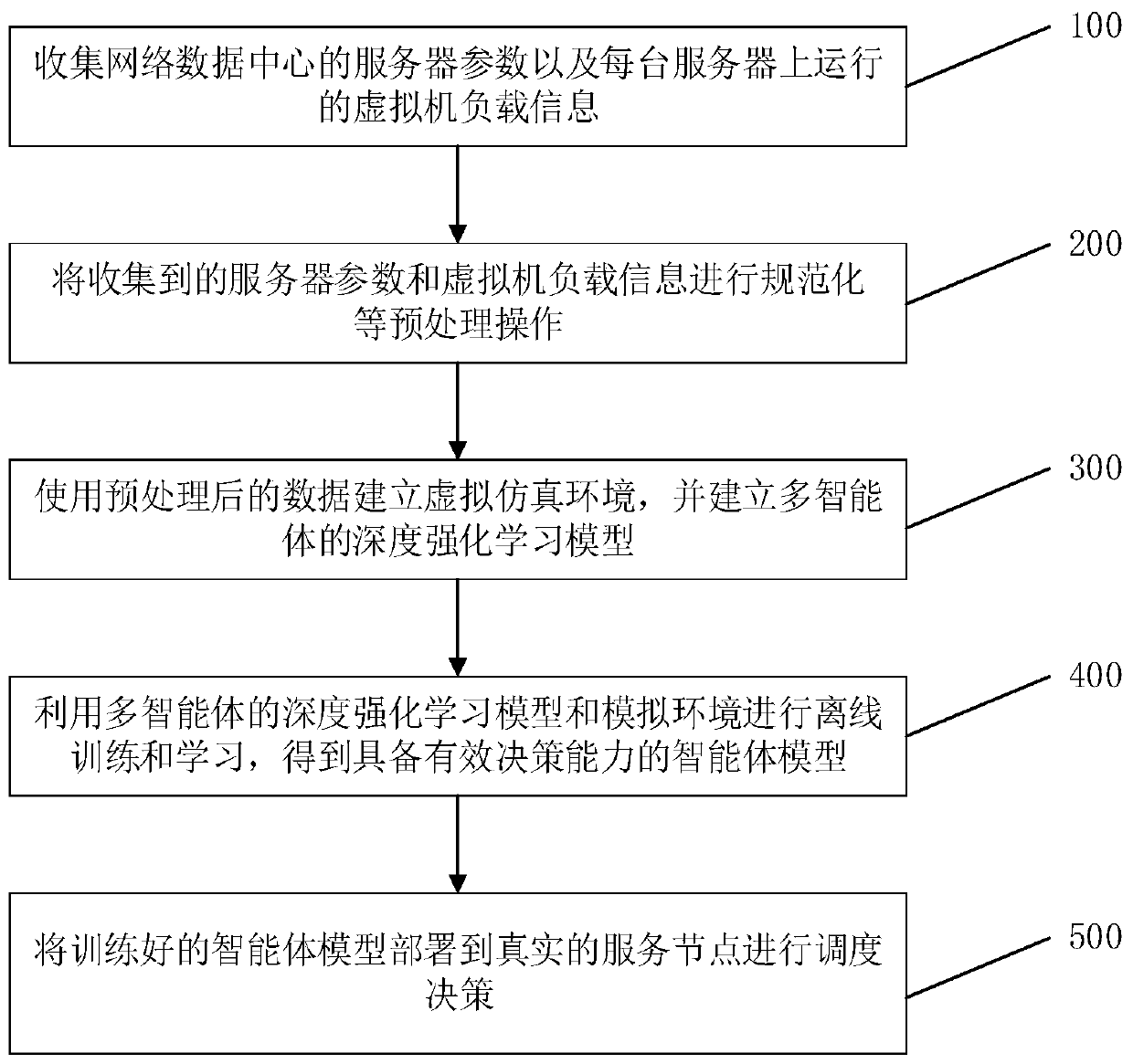

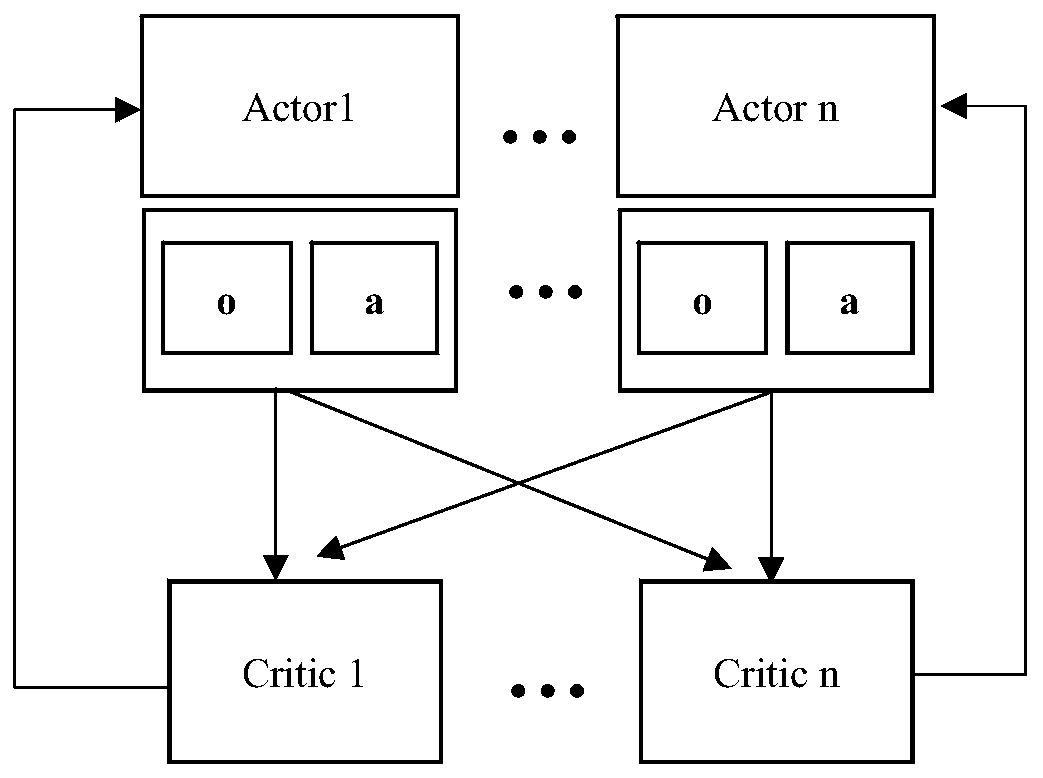

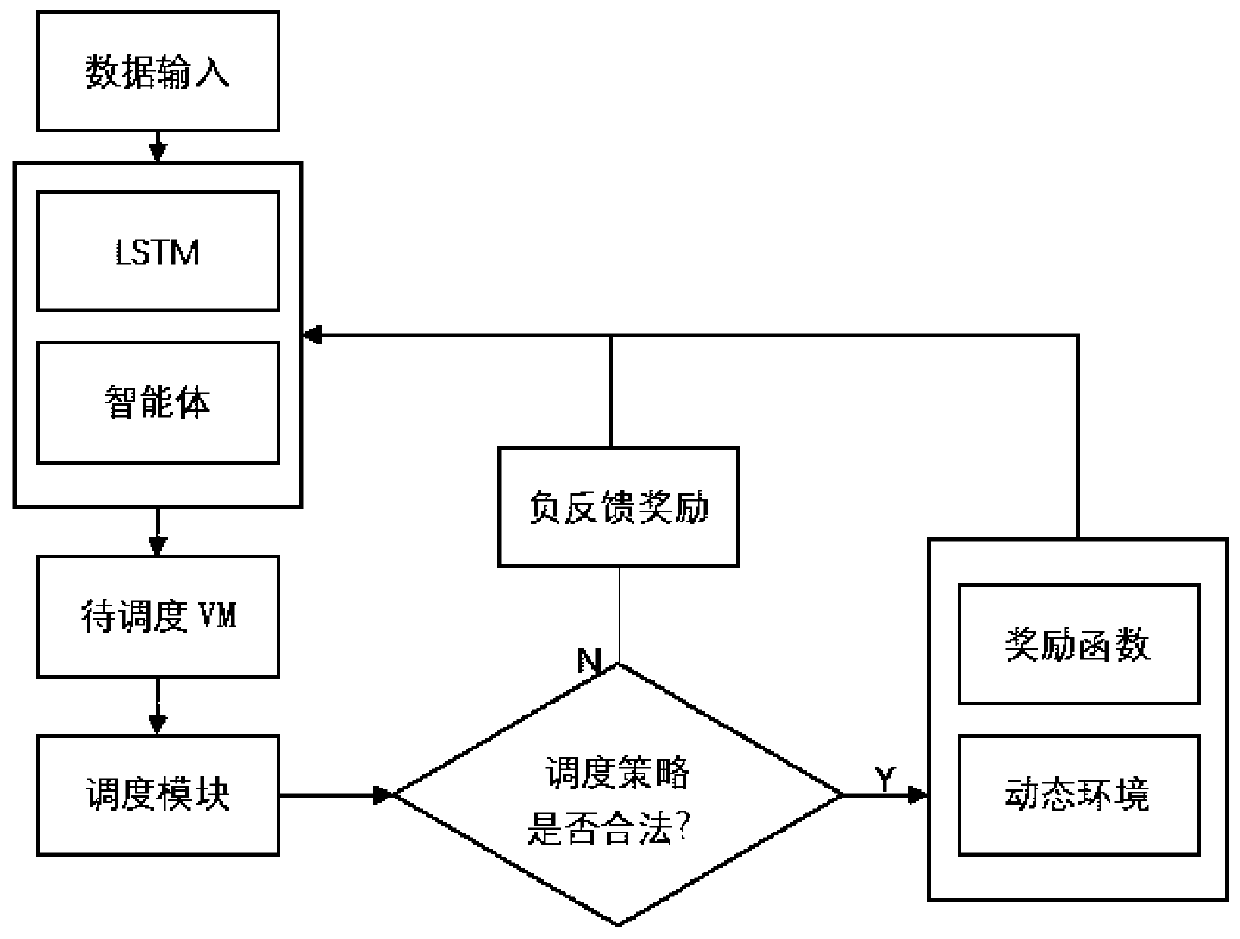

Multi-agent reinforcement learning scheduling method and system, and electronic device

Active | CN109947567A | Load balancing | Enhanced information | Program initiation/switching | Resource allocation | Data center | Network data

The invention relates to a multi-agent reinforcement learning scheduling method, a corresponding scheduling system, and electronic equipment. The method comprises the following steps: (a) collecting the server parameters of a network data center and the load information of the virtual machines running on each server; (b) building a virtual simulation environment from the server parameters and the virtual machine load information, and building a multi-agent deep reinforcement learning model; (c) performing offline training with the multi-agent deep reinforcement learning model in the simulation environment, training one agent model for each server; and (d) deploying the agent models to the real service nodes and scheduling according to the load of each service node. Services running on the servers are virtualized, and load balancing is performed by scheduling virtual machines, so resources are allocated at a more macroscopic level and the agents can learn cooperative strategies in a complex, dynamic environment.

Owner:SHENZHEN INST OF ADVANCED TECH

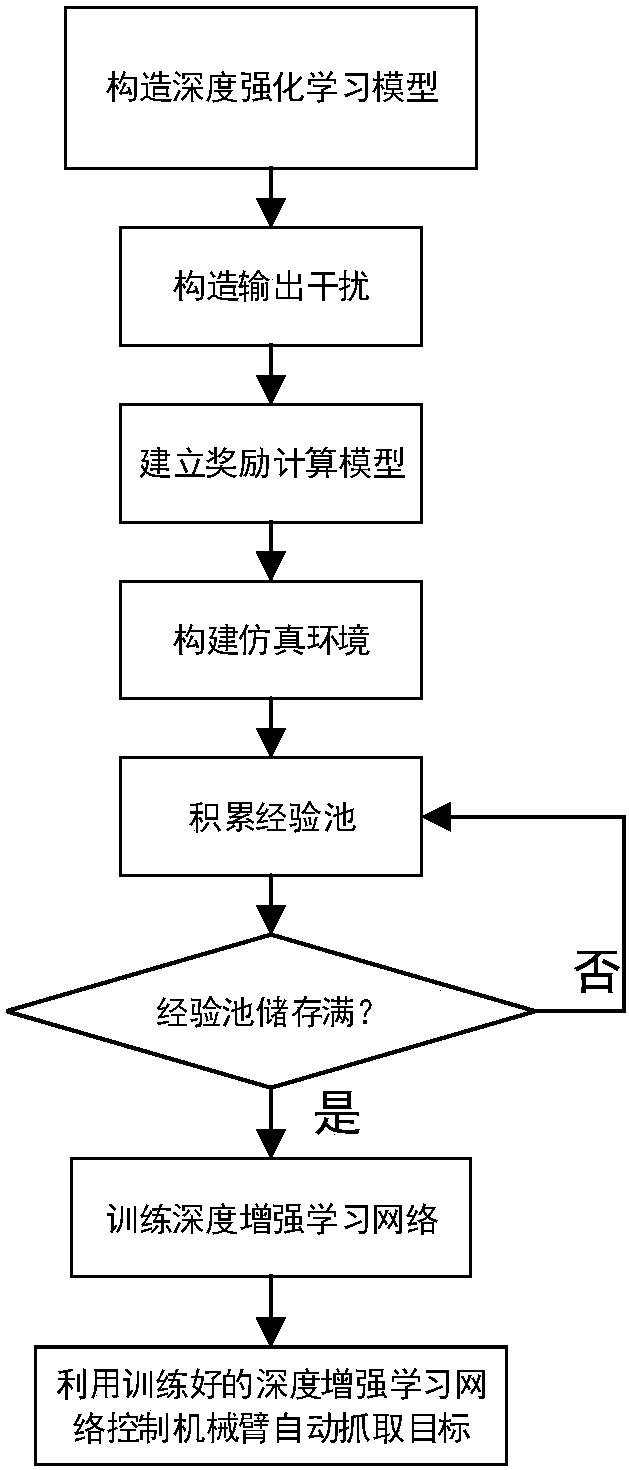

Industrial mechanical arm automatic control method based on deep reinforcement learning

Active | CN108052004A | Solve automatic control problems | Run fast | Programme-controlled manipulator | Design optimisation/simulation | Automatic control | Simulation

The invention relates to an automatic control method for industrial mechanical arms based on deep reinforcement learning. The method includes the following steps: constructing a deep reinforcement learning model, constructing output interference, establishing a calculation model for the reward r_t, constructing a simulation environment, accumulating an experience pool, training the deep reinforcement learning neural network, and using the trained model to control the motion of a real industrial mechanical arm. By introducing the deep reinforcement learning network, the method can solve the automatic control problem for an industrial mechanical arm in a complex environment, and after training the arm operates with high speed and accuracy.
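Two of the listed steps, the reward r_t and the experience pool, can be sketched in a few lines. The distance-based reward, its arrival bonus and tolerance, and the names `Transition`, `compute_reward`, and `ExperiencePool` are illustrative assumptions; the abstract does not say how r_t is computed or how the pool is sampled.

```python
import math
import random
from collections import deque
from typing import NamedTuple, Tuple


class Transition(NamedTuple):
    state: Tuple[float, ...]
    action: Tuple[float, ...]
    reward: float
    next_state: Tuple[float, ...]
    done: bool


def compute_reward(effector_pos, target_pos, tolerance=0.01):
    """Hypothetical r_t: negative Euclidean distance to the target, plus a bonus on arrival."""
    dist = math.dist(effector_pos, target_pos)
    return (10.0, True) if dist <= tolerance else (-dist, False)


class ExperiencePool:
    """Fixed-capacity replay buffer; once full, the oldest transitions are discarded first."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the correlation between consecutive steps.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)
```

During training, each simulated step would add one `Transition`, and each network update would draw a minibatch with `sample`, which is the usual role of an experience pool in deep reinforcement learning.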

Owner:HUBEI UNIV OF TECH

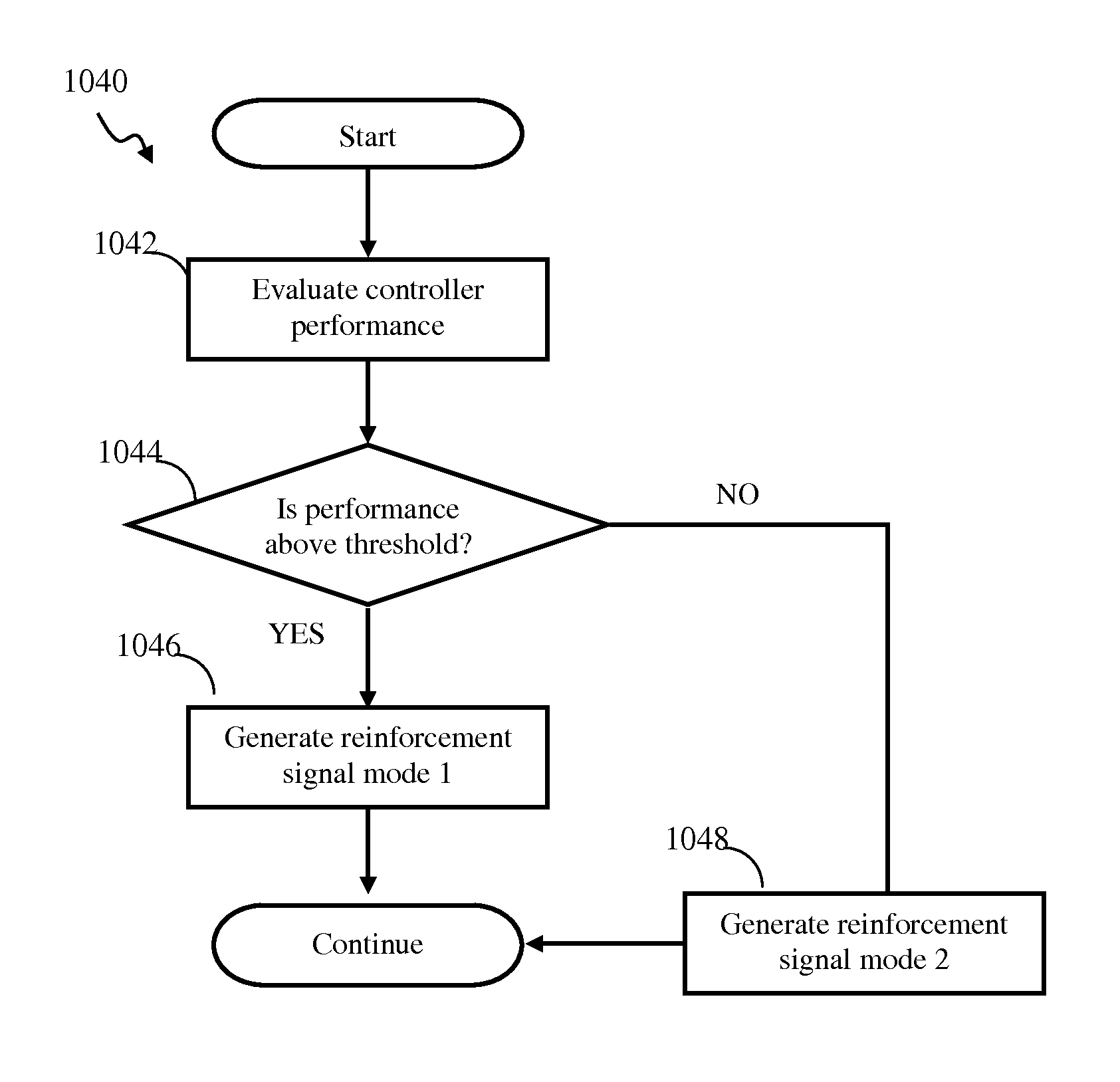

Modulated stochasticity spiking neuron network controller apparatus and methods

Active | US9189730B1 | Lower Level Requirements | Diminished rate of learning | Neural architectures | Neural learning methods | Neuron network | Neural network controller

An adaptive controller apparatus for a plant may be implemented. The controller may comprise an encoder block and a control block. The encoder may use a basis-function kernel expansion technique to encode an arbitrary combination of inputs into spike output. The controller may comprise a spiking neuron network operable according to a reinforcement learning process. The network may receive the encoder output via a plurality of plastic connections. The process may be configured to adaptively modify connection weights to maximize process performance associated with a target outcome. Relevant features of the input may be identified and used to enable the controlled plant to achieve the target outcome. The stochasticity of the learning process may be modulated: it may be increased during the initial stage of learning to encourage exploration, and reduced during subsequent controller operation to lower the controller's energy use.
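The idea of modulating stochasticity (explore more early, less later) can be illustrated outside a spiking network with Boltzmann (softmax) action selection whose temperature is annealed. This is a swapped-in, conventional technique, and the schedule parameters `t_start`, `t_end`, and `decay_steps` are assumptions; the patent modulates stochasticity inside a spiking neuron network.

```python
import math
import random


def temperature(step, t_start=5.0, t_end=0.05, decay_steps=1000):
    """Exponentially anneal the temperature from t_start to t_end over decay_steps, then hold it."""
    frac = min(step / decay_steps, 1.0)
    return t_start * (t_end / t_start) ** frac


def softmax_probs(values, temp):
    # Subtract the max before exponentiating for numerical stability.
    m = max(values)
    exps = [math.exp((v - m) / temp) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def select_action(values, step, rng=random):
    """Sample an action index; high temperature gives near-uniform choices, low gives near-greedy."""
    probs = softmax_probs(values, temperature(step))
    return rng.choices(range(len(values)), weights=probs, k=1)[0]
```

Early on, two actions valued 1.0 and 0.0 are chosen almost evenly; once the temperature has decayed, the better action is chosen nearly every time, so the controller stops spending effort on exploratory actions.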

Owner:BRAIN CORP

Systems and Methods for Providing Reinforcement Learning in a Deep Learning System

Systems and methods for providing reinforcement learning for a deep learning network are disclosed. A reinforcement learning process that provides deep exploration is achieved by applying a bootstrap to a sample of observed and artificial data, facilitating deep exploration via a Thompson sampling approach.
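A minimal sketch of the bootstrap-plus-artificial-data idea on a Bernoulli bandit follows. It is a simplification, not the disclosed deep learning system: there is no neural network, the per-arm histories are plain lists, and the single artificial success/failure pair per arm is an assumed prior. Each step resamples every arm's observed-plus-artificial history with replacement and acts greedily on the resampled means, which stands in for drawing from a posterior as in Thompson sampling.

```python
import random


class BootstrappedThompsonBandit:
    """Bootstrapped Thompson sampling for a Bernoulli bandit (illustrative simplification)."""

    def __init__(self, n_arms, prior_pairs=1, seed=None):
        self.rng = random.Random(seed)
        # Each arm's history starts with artificial data: prior_pairs fake successes (1.0)
        # and fake failures (0.0), so an arm with few real pulls stays uncertain.
        self.history = [[1.0, 0.0] * prior_pairs for _ in range(n_arms)]

    def select_arm(self):
        means = []
        for data in self.history:
            # Bootstrap: resample observed + artificial data with replacement.
            resample = self.rng.choices(data, k=len(data))
            means.append(sum(resample) / len(resample))
        best = max(means)
        # Act greedily on the resampled means, breaking ties at random.
        return self.rng.choice([i for i, m in enumerate(means) if m == best])

    def update(self, arm, reward):
        self.history[arm].append(float(reward))


def run(true_probs, steps=500, seed=0):
    """Simulate a Bernoulli bandit and count how often each arm is pulled."""
    env_rng = random.Random(seed + 1)
    agent = BootstrappedThompsonBandit(len(true_probs), seed=seed)
    pulls = [0] * len(true_probs)
    for _ in range(steps):
        arm = agent.select_arm()
        reward = 1 if env_rng.random() < true_probs[arm] else 0
        agent.update(arm, reward)
        pulls[arm] += 1
    return pulls
```

The artificial data matters: without it, an arm whose first real pulls all fail would always resample to a mean of 0 and might never be tried again.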

Owner:THE BOARD OF TRUSTEES OF THE LELAND STANFORD JUNIOR UNIV

© 2025 PatSnap. All rights reserved.