330 patent results for "Q-learning"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

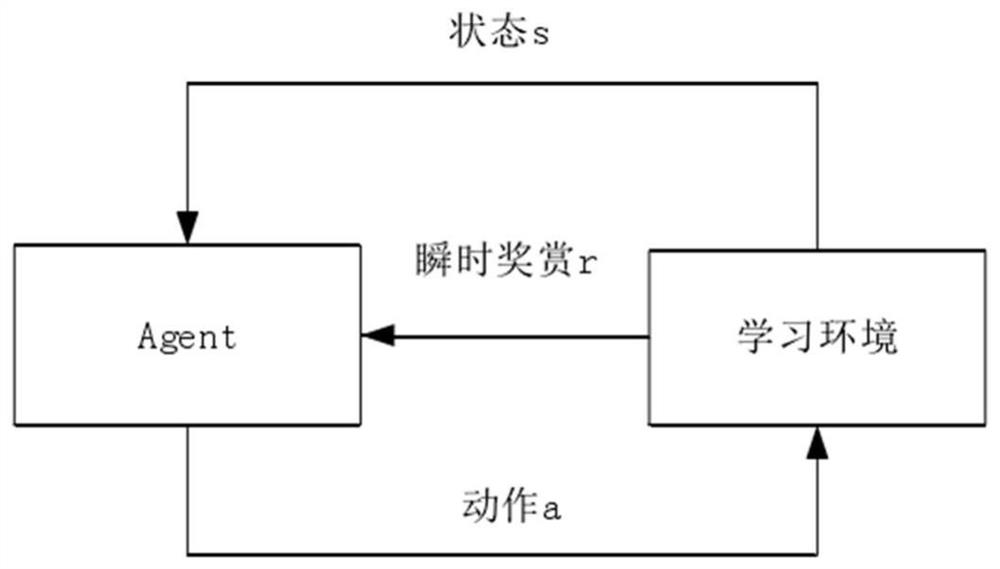

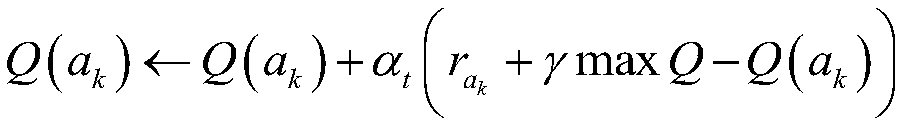

Q-learning is a model-free reinforcement learning algorithm. Its goal is to learn a policy that tells an agent which action to take in each situation. It does not require a model of the environment (hence "model-free"), and it can handle problems with stochastic transitions and rewards without special adaptations.
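
As a concrete illustration of this definition, here is a minimal tabular Q-learning sketch. The environment (a five-state chain where only the rightmost state pays a reward) and the hyperparameters `alpha`, `gamma` and `epsilon` are illustrative assumptions; the core is the update Q(s, a) ← Q(s, a) + α[r + γ max_a' Q(s', a') - Q(s, a)], which uses only observed transitions and no model of the dynamics.

```python
import random

def q_learning_chain(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Tabular Q-learning on a hypothetical 1-D chain of n_states states.

    Action 0 moves left (state 0 stays put), action 1 moves right.
    Entering the rightmost state pays reward 1 and ends the episode.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]   # Q[s][a], initialised to zero
    goal = n_states - 1
    for _ in range(episodes):
        s = 0
        while s != goal:
            # epsilon-greedy selection; exact ties are also broken at random
            if rng.random() < epsilon or q[s][0] == q[s][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] > q[s][1] else 1
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            future = 0.0 if s_next == goal else max(q[s_next])  # terminal: no future value
            # move Q(s, a) toward the target r + gamma * max_a' Q(s', a')
            q[s][a] += alpha * (r + gamma * future - q[s][a])
            s = s_next
    return q

q = q_learning_chain()
policy = [0 if left > right else 1 for left, right in q[:-1]]
print(policy)  # greedy action in each non-terminal state: [1, 1, 1, 1]
```

The learner is never told how the chain moves; it discovers that "right" is better purely from sampled rewards, which is what "model-free" means in practice.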

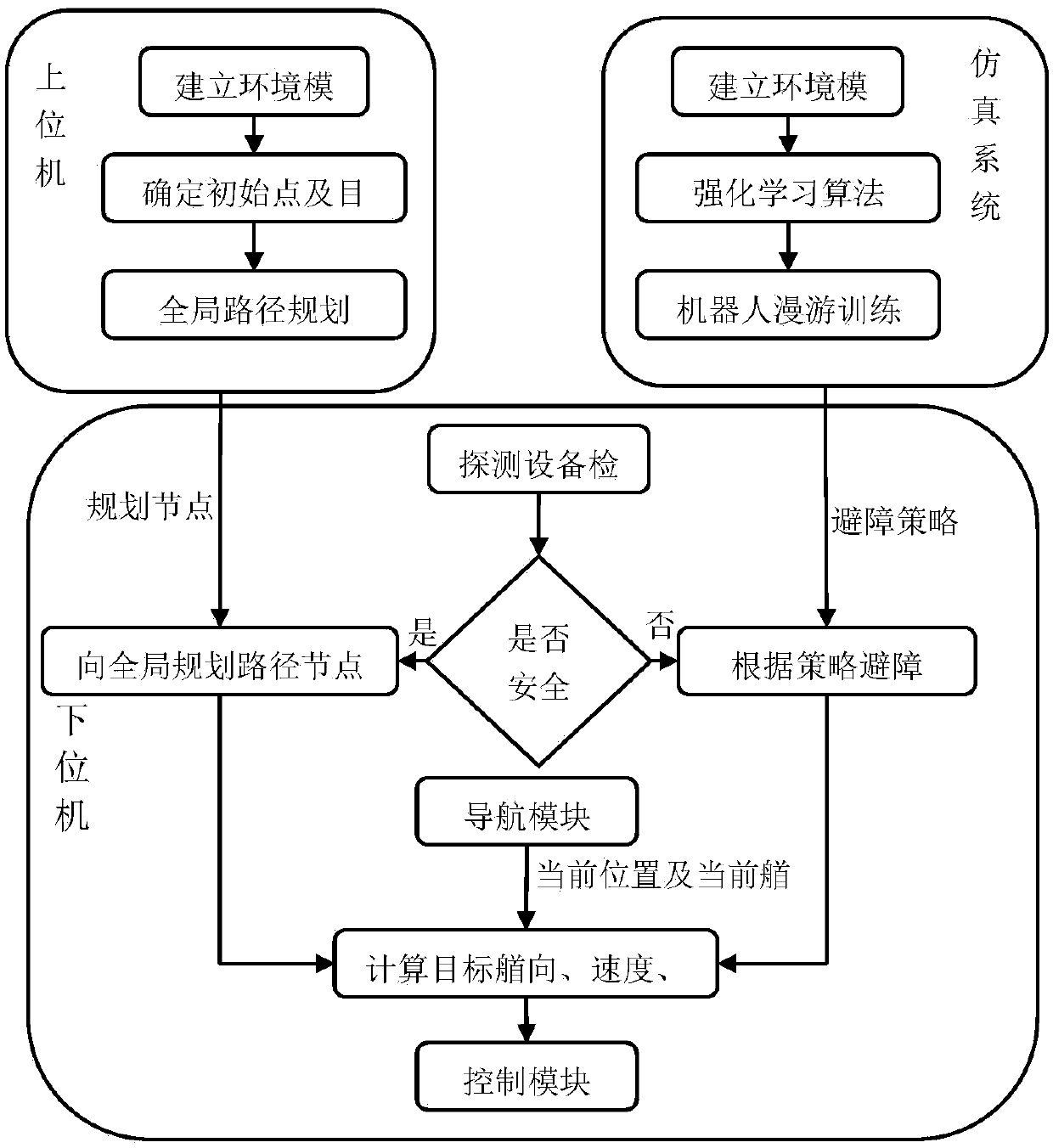

AUV (Autonomous Underwater Vehicle) three-dimensional path planning method based on reinforcement learning

Active | CN109540151A | Guaranteed optimality; Guaranteed economy; Navigational calculation instruments; Position/course control in three dimensions; NODAL; Simulation

The invention designs a three-dimensional path planning method for an AUV (Autonomous Underwater Vehicle) based on reinforcement learning. The method comprises the following steps: first, modeling a known underwater working environment and performing global path planning for the AUV; second, designing reward values specific to the working environment and planning objective of the AUV, training the AUV for obstacle avoidance with a Q-learning method improved on the basis of a self-organizing neural network, and writing the trained obstacle avoidance strategy into the robot's internal control system; and finally, after the robot enters the water, receiving the global path planning nodes, calculating a target heading with these nodes as target nodes for route planning, and avoiding emergent obstacles with the trained obstacle avoidance strategy. The method ensures both the economy of the AUV's route and its safety when emergent obstacles appear. It also improves route planning accuracy, shortens planning time, and enhances the environmental adaptability of the AUV. The method can be applied to an AUV that carries an obstacle avoidance sonar and is capable of autonomous routing.

Owner:HARBIN ENG UNIV
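
The abstract does not state the reward values it designs. Purely to illustrate that step, a shaped reward for an AUV moving on a 3-D grid might charge a small cost per step, reward progress toward the current planning node, and heavily penalize entering an obstacle cell. The function name, the weights (-100, +100, 10 * progress - 1) and `goal_radius` below are hypothetical, not taken from CN109540151A.

```python
import math

Point = tuple[float, float, float]

def shaped_reward(pos: Point, next_pos: Point, target: Point,
                  obstacles: set[tuple[int, int, int]],
                  goal_radius: float = 1.0) -> tuple[float, bool]:
    """Hypothetical reward for one AUV step on a 3-D grid; returns (reward, done)."""
    cell = tuple(round(c) for c in next_pos)    # grid cell the AUV moves into
    if cell in obstacles:
        return -100.0, True                     # collision ends the episode
    d_next = math.dist(next_pos, target)
    if d_next <= goal_radius:
        return 100.0, True                      # reached the planning node
    progress = math.dist(pos, target) - d_next  # positive when moving closer
    return 10.0 * progress - 1.0, False         # reward progress, charge each step
```

For example, `shaped_reward((0, 0, 0), (1, 0, 0), (5, 0, 0), set())` returns `(9.0, False)`: one unit of progress minus the step cost.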

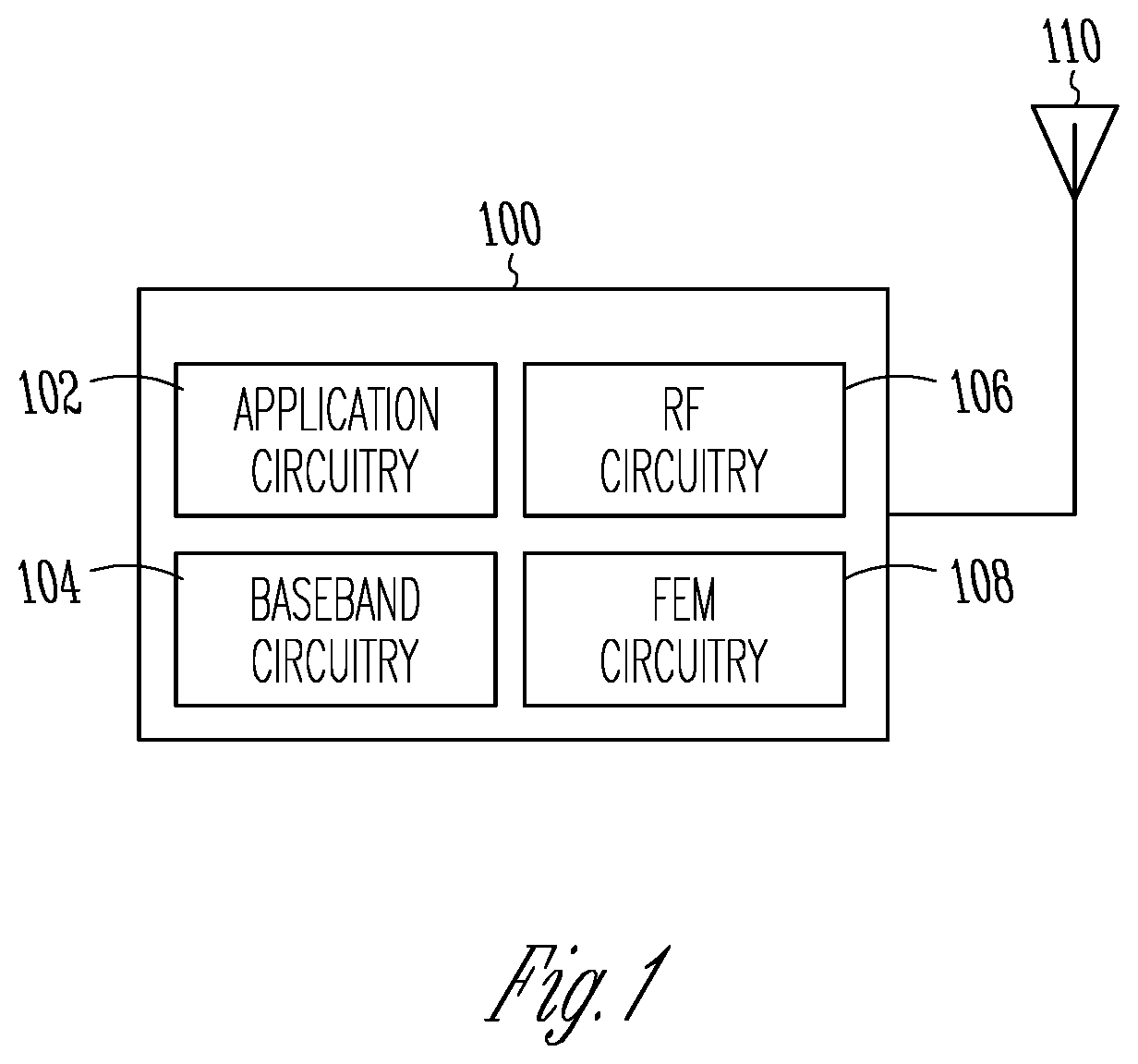

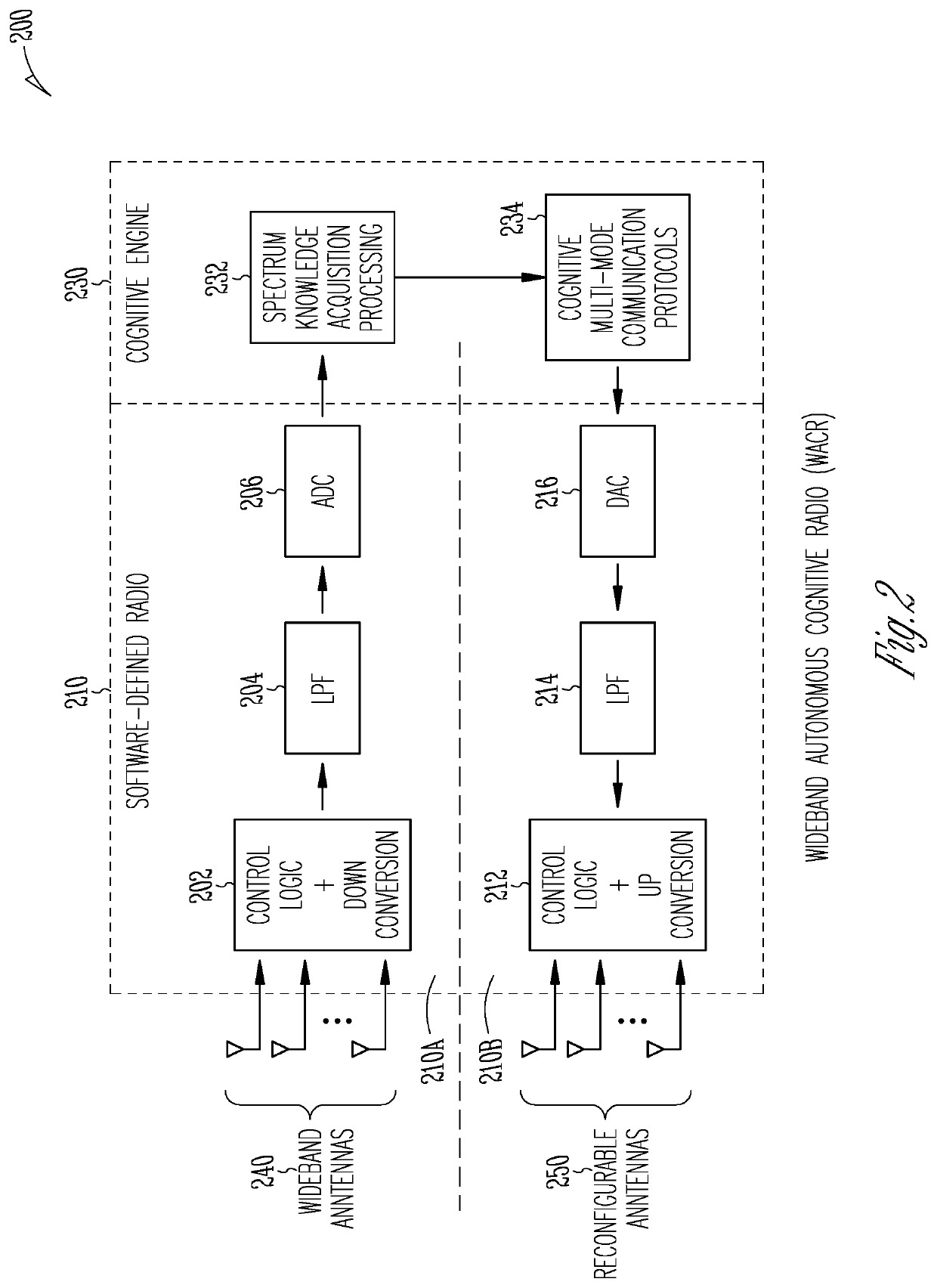

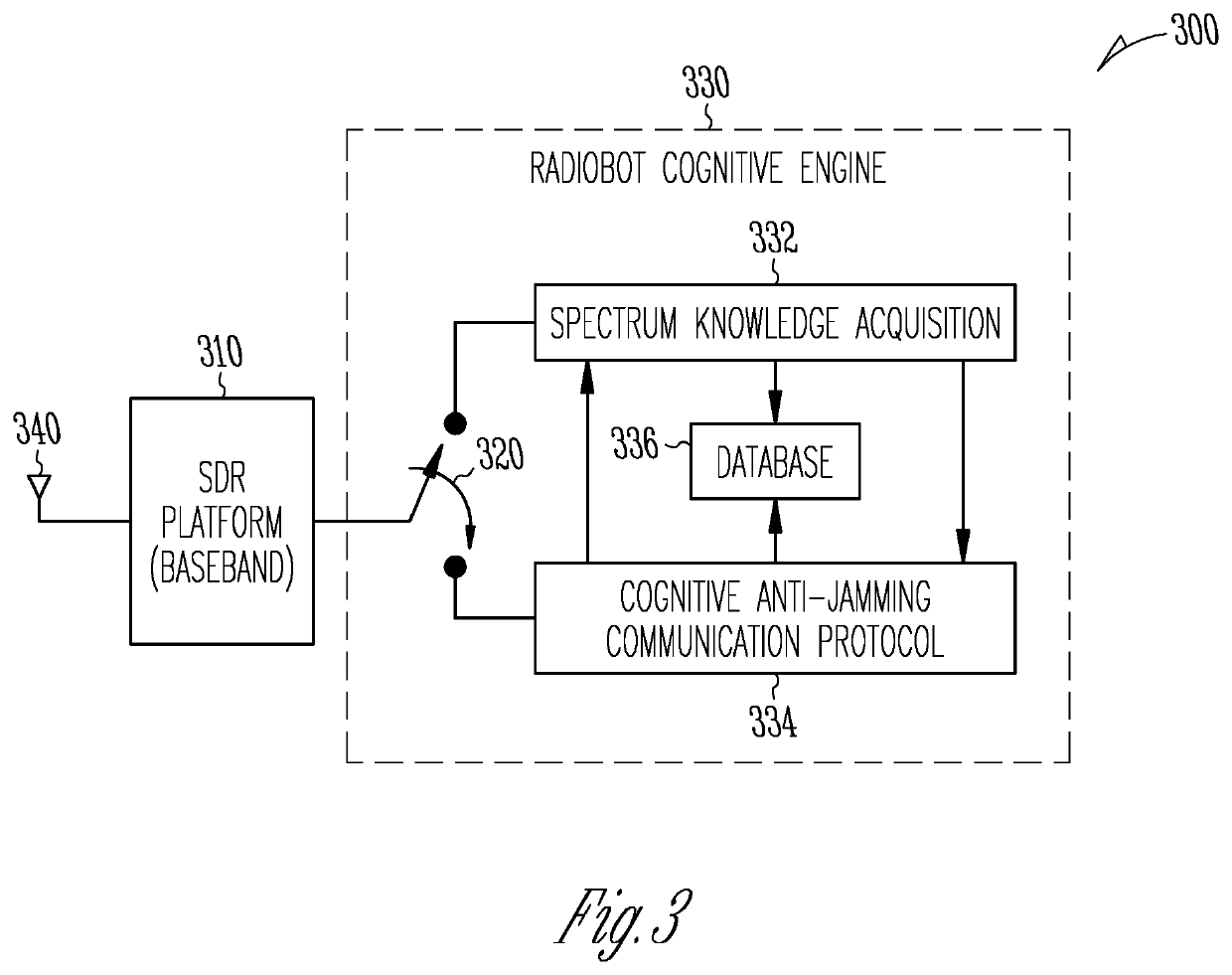

Reinforcement learning based cognitive Anti-jamming communications system and method

Inactive | US20200153535A1 | Random number generators; Character and pattern recognition; Anti jamming; Communications system

Systems and methods of using machine learning in a cognitive radio to avoid a jammer are described. Smoothed power spectral density is used to detect activity in a sub-band, and basic characteristics of the different signals in it are extracted. If the signals cannot be classified as either a valid signal or a jammer from these basic characteristics, ANN-based classification using cumulant features of the signals is applied. Multiple periods are used to train sensing and communications (S/C) policies that track and avoid the jammer using RL (e.g., Q-learning). The ANN has input neurons for the higher-order cumulants of a sensing channel and a single output neuron. The S/C policies are coupled during training and communication through negative or decreasing rewards based on the time the sensing policy takes to determine jammer presence and on whether the cognitive radio is jammed. A feedback channel provides a new communications channel to a radio transmitting to the cognitive radio.

Owner:BLUECOM SYST & CONSULTING LLC
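
The publication does not detail its Q-learning formulation. The toy sketch below shows only the general idea of learning which channel to transmit on so as to avoid a jammer. It assumes a jammer that sweeps channels cyclically, uses the jammer's previous channel as the state, and rewards +1 for a clear slot and -1 for a jammed one; the cumulant-based ANN classifier and the coupled S/C policies of US20200153535A1 are not modeled.

```python
import random

def train_channel_policy(n_channels=4, steps=5000, alpha=0.2, gamma=0.5,
                         epsilon=0.1, seed=1):
    """Q-learning against a hypothetical jammer that sweeps channels in order.

    State s: channel the jammer used in the previous slot.
    Action a: channel to transmit on in the current slot.
    Reward: +1 if the slot is clear, -1 if it is jammed.
    """
    rng = random.Random(seed)
    q = [[0.0] * n_channels for _ in range(n_channels)]
    jammer = 0
    for _ in range(steps):
        s = jammer
        if rng.random() < epsilon:
            a = rng.randrange(n_channels)                       # explore
        else:
            a = max(range(n_channels), key=lambda c: q[s][c])   # exploit
        jammer = (jammer + 1) % n_channels                      # assumed sweep pattern
        r = -1.0 if a == jammer else 1.0
        q[s][a] += alpha * (r + gamma * max(q[jammer]) - q[s][a])
    return q

q = train_channel_policy()
for s, row in enumerate(q):
    best = max(range(len(row)), key=row.__getitem__)
    print(f"jammer last on {s}: transmit on {best}")
```

Against this deterministic sweep, the greedy policy learns to avoid channel `(s + 1) % n_channels` in every state `s`; a real jammer would need a richer state than its last channel.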

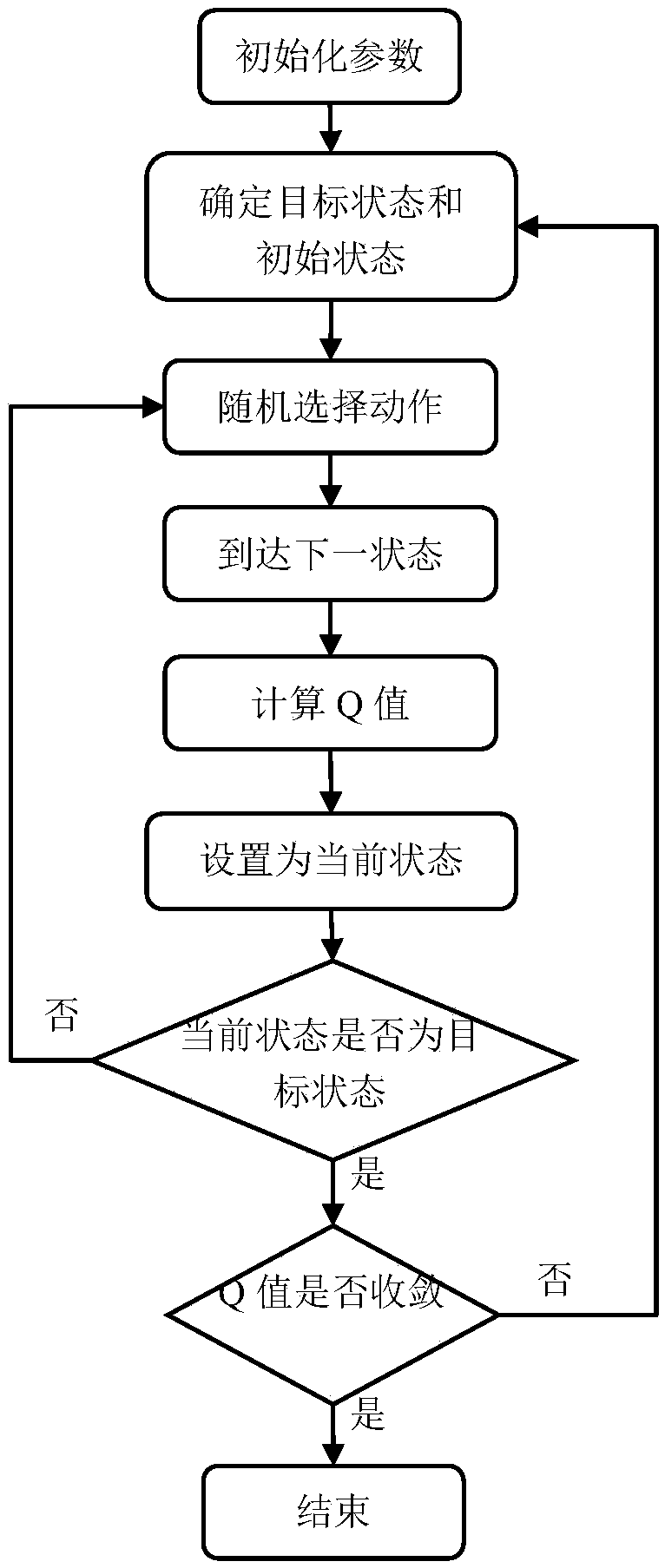

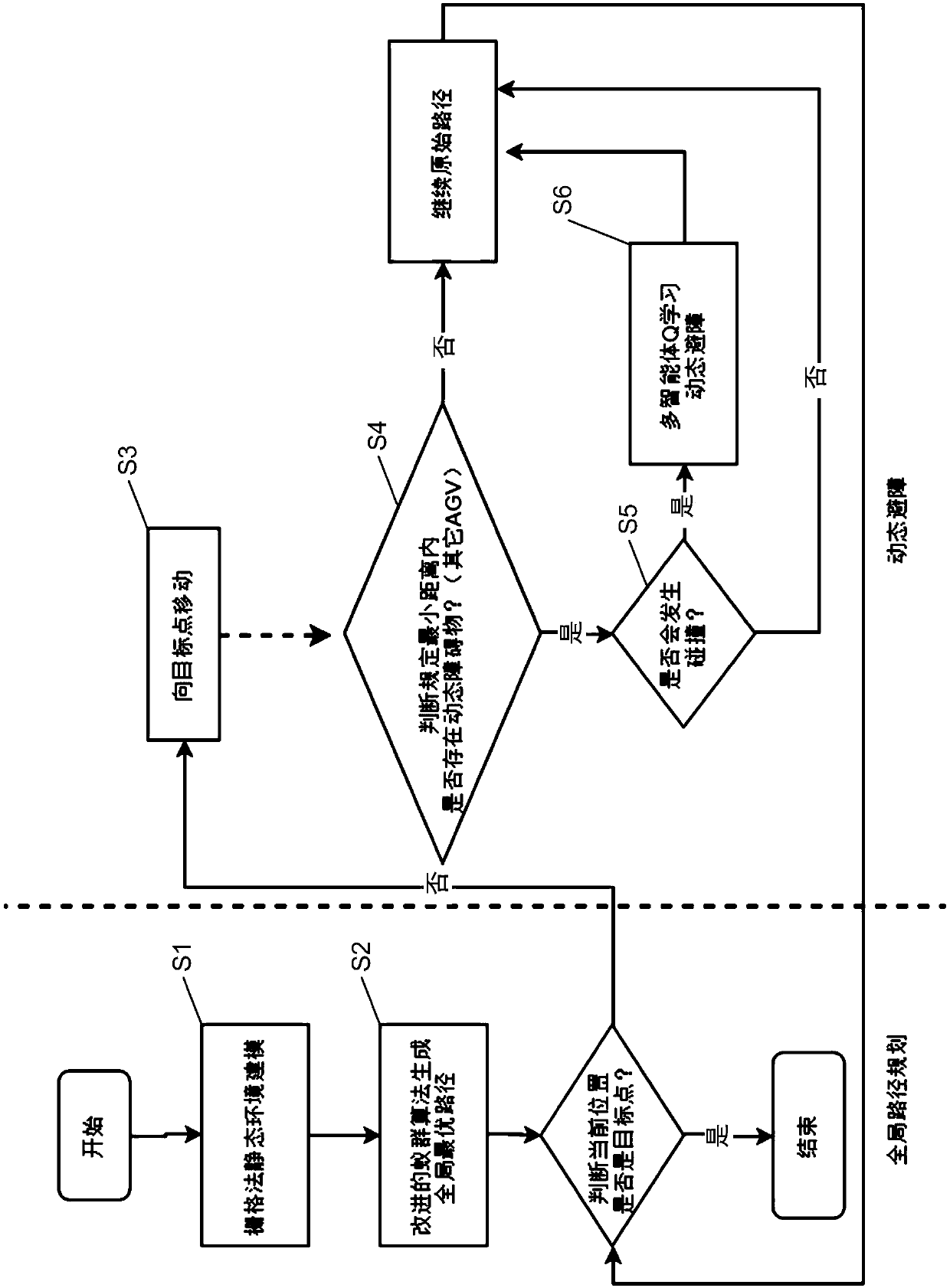

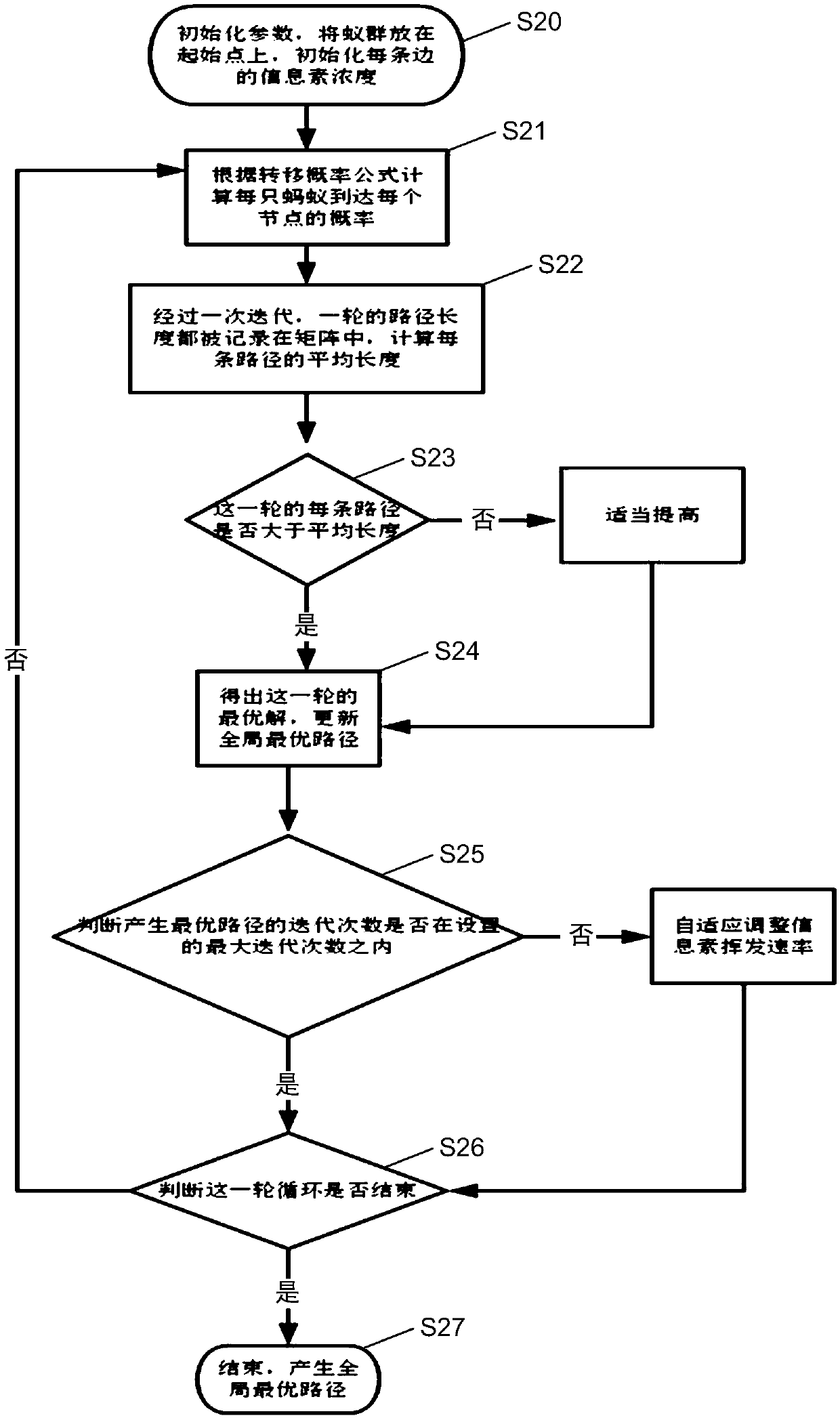

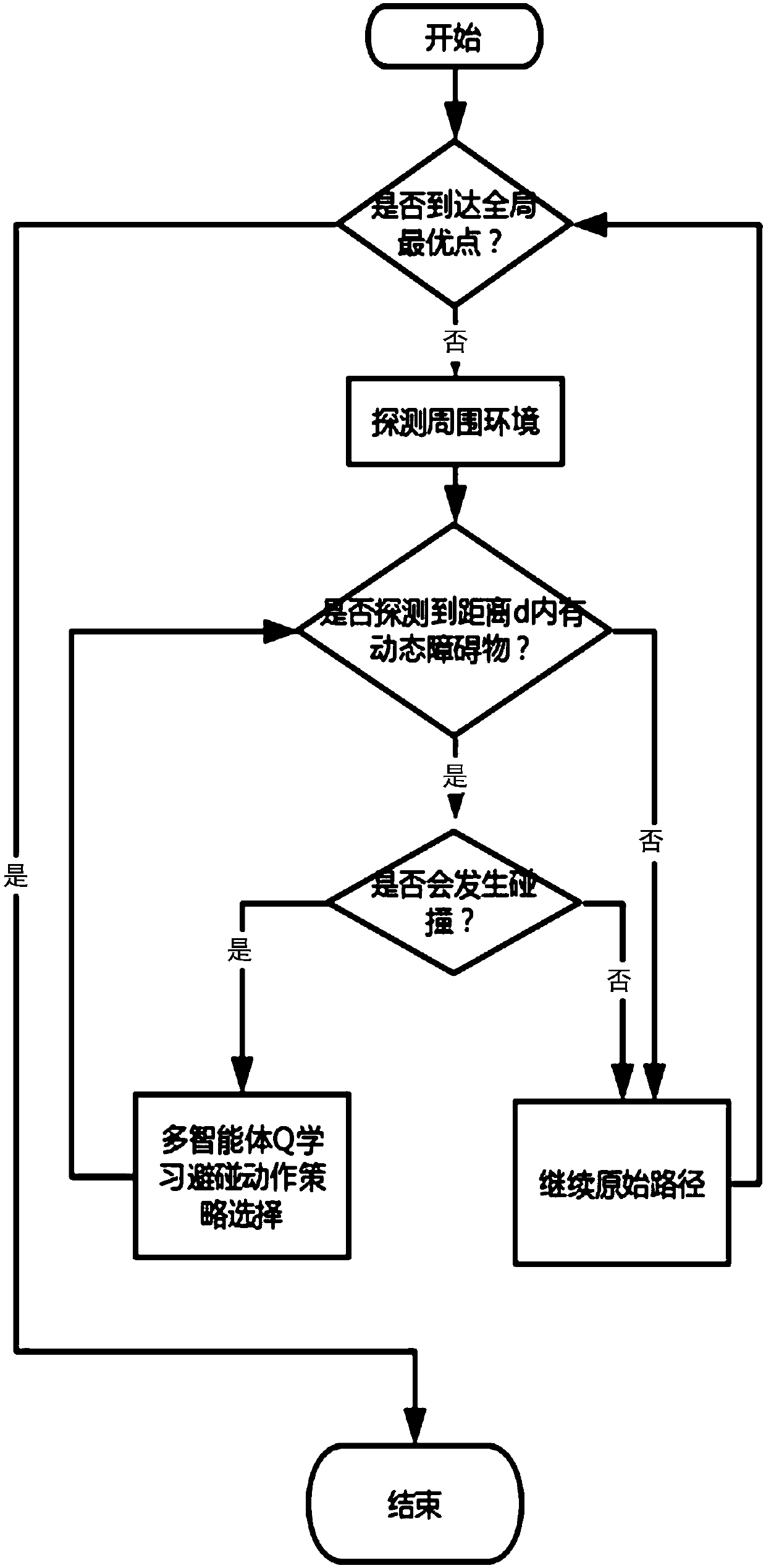

AGV (Automated Guided Vehicle) route planning method and system based on ant colony algorithm and multi-agent Q-learning

Active | CN108776483A | Optimize global search capabilities; Fast convergence; Position/course control in two dimensions; Automated guided vehicle; Engineering

The invention discloses an AGV (Automated Guided Vehicle) route planning method and system based on an ant colony algorithm and multi-agent Q-learning. By introducing multi-agent Q-learning into AGV route planning, it improves global optimization ability, lets the AGV learn to avoid obstacles through interaction with its environment, and better exploits the autonomy and learning capability of the AGV. The method and system work as follows: the AGV operating environment is modeled with a grid method according to the static environment, and an initial point and a target point are set; a globally optimal route is generated by the ant colony algorithm from the coordinates of the initial and target points; the AGV moves toward the target point along this route, and when a dynamic obstacle is detected within a minimum distance, an obstacle avoidance strategy is selected through multi-agent Q-learning according to the corresponding environment state and the matching avoidance action is taken; after avoiding the obstacle, the AGV returns to the original route and continues moving.

Owner:YTO EXPRESS CO LTD

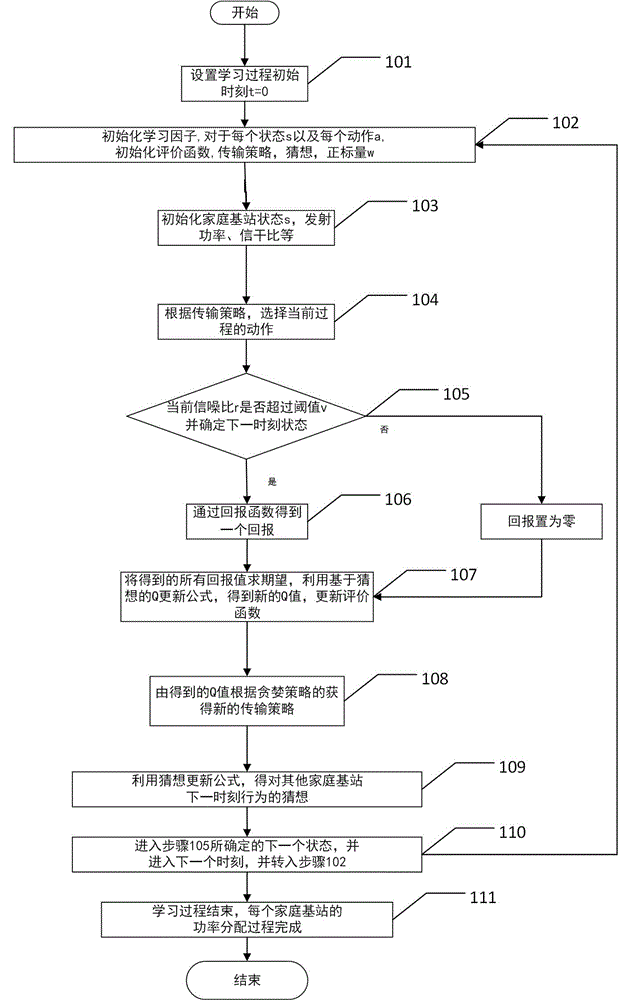

Resource allocation method based on reinforcement learning in ultra-dense networks

Inactive | CN106358308A | Improve performance; Power management; Network topologies; System capacity; Transmitted power

A resource allocation method based on reinforcement learning for an ultra-dense network is provided. The invention relates to ultra-dense networks in 5G (fifth-generation) mobile communications and provides a method for allocating resources between a home NodeB and a macro NodeB, between home NodeBs, and between a home NodeB and a mobile user in a densely deployed network. The method works through power control: each femtocell is treated as an agent, and the transmit powers of the home NodeBs are adjusted jointly so that densely deployed home NodeBs transmitting at maximum power do not cause severe interference to the macro NodeB and adjacent NodeBs, and system throughput is maximized. User delay QoS is considered by replacing the traditional 'Shannon capacity' with an 'available capacity' that can guarantee user delay. A supermodular game model is used so that the network-wide power allocation reaches a Nash equilibrium, and the reinforcement learning method Q-learning gives each home NodeB a learning function so that optimal power allocation can be achieved. The method can effectively improve the system capacity of an ultra-dense network while satisfying user delay requirements.

Owner:BEIJING UNIV OF CHEM TECH

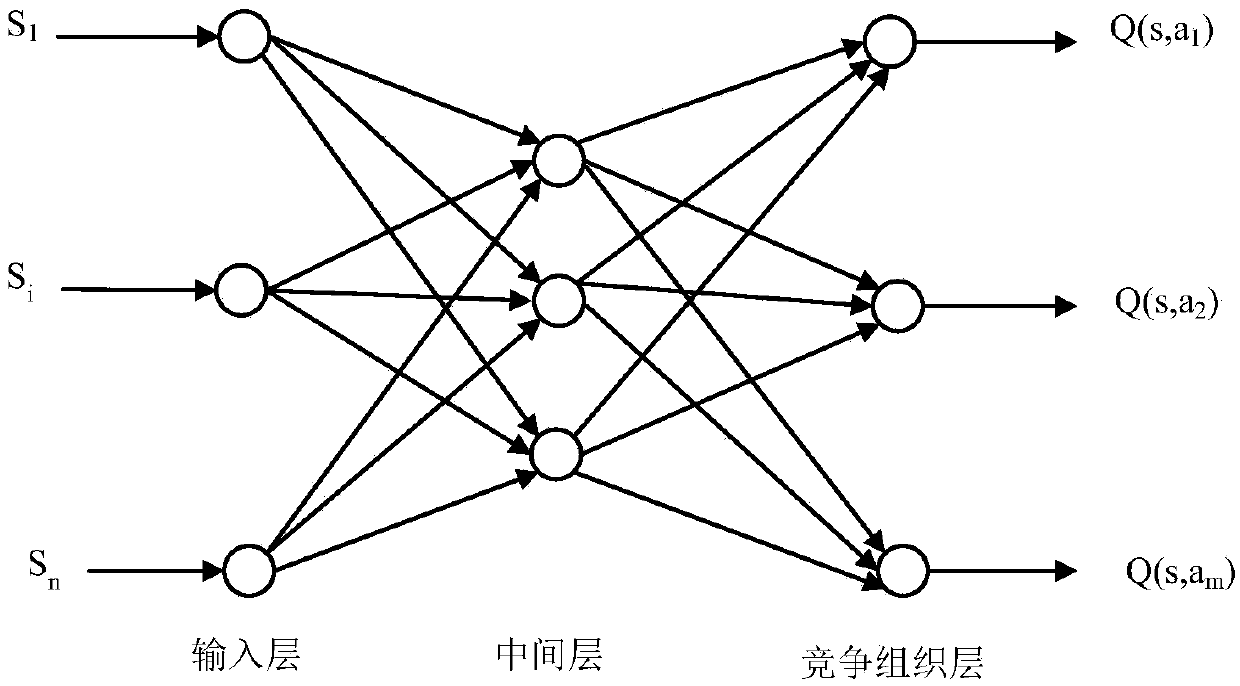

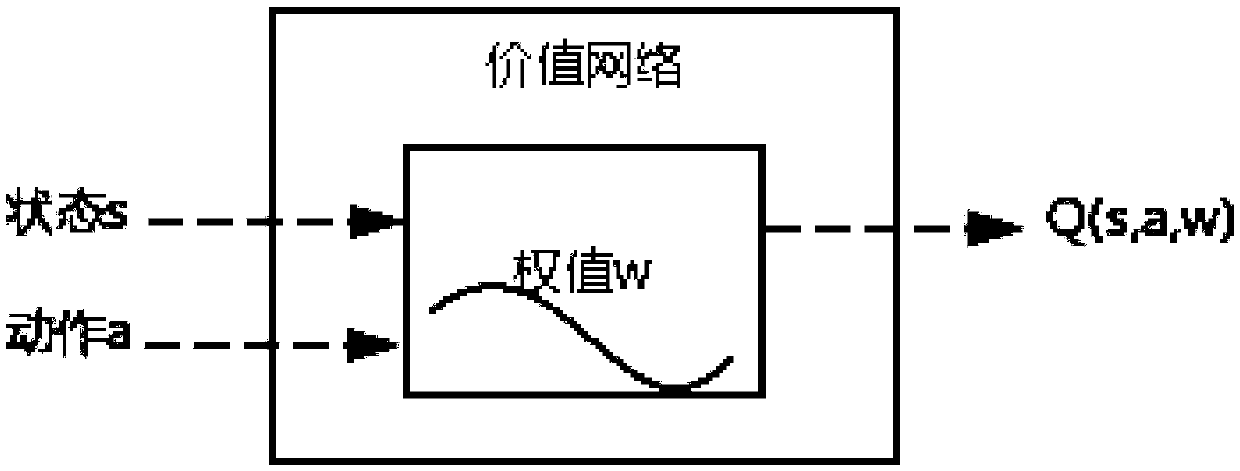

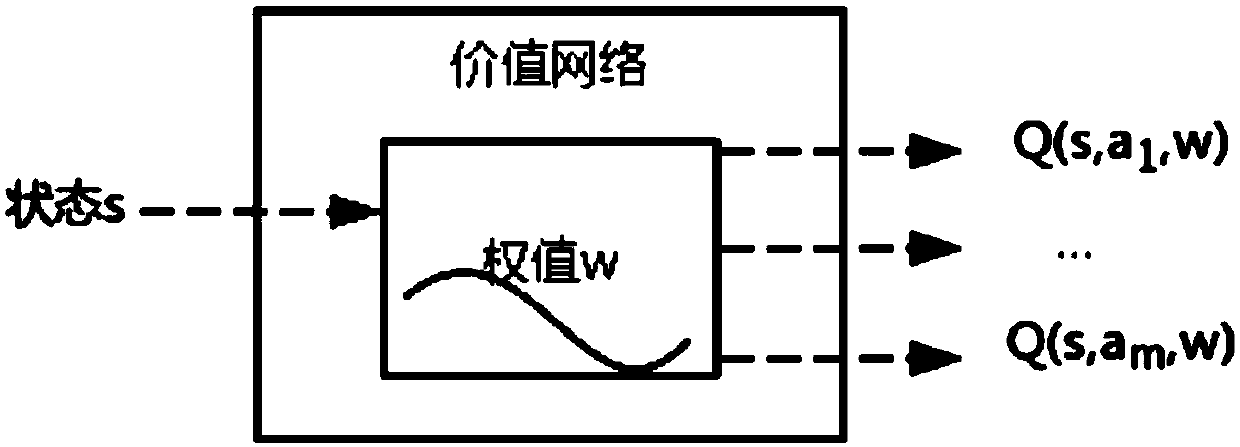

An unmanned ship path planning method based on a Q learning neural network

Inactive | CN109726866A | Solving autonomous navigation path planning problems; Short decision time; Forecasting; Neural learning methods; Simulation; Q-learning

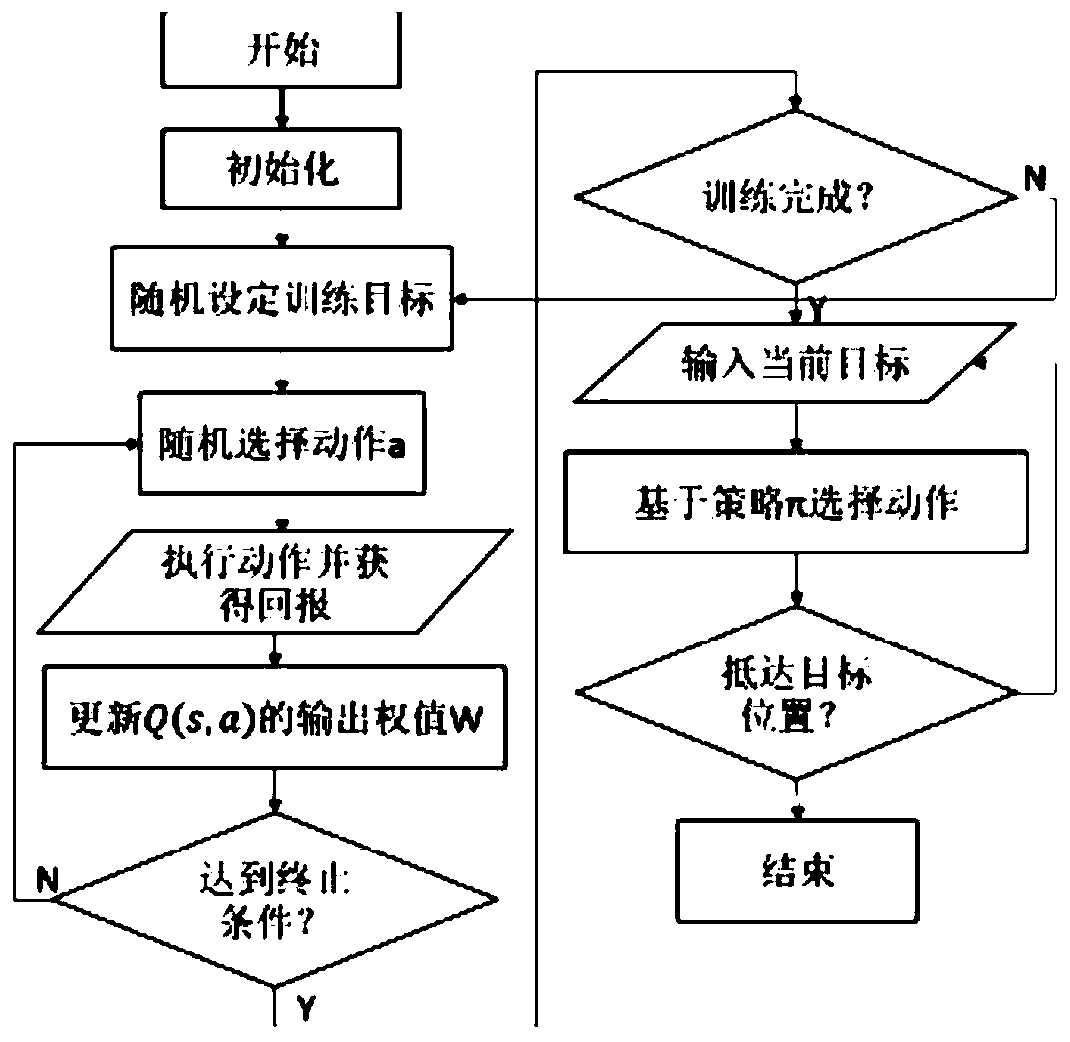

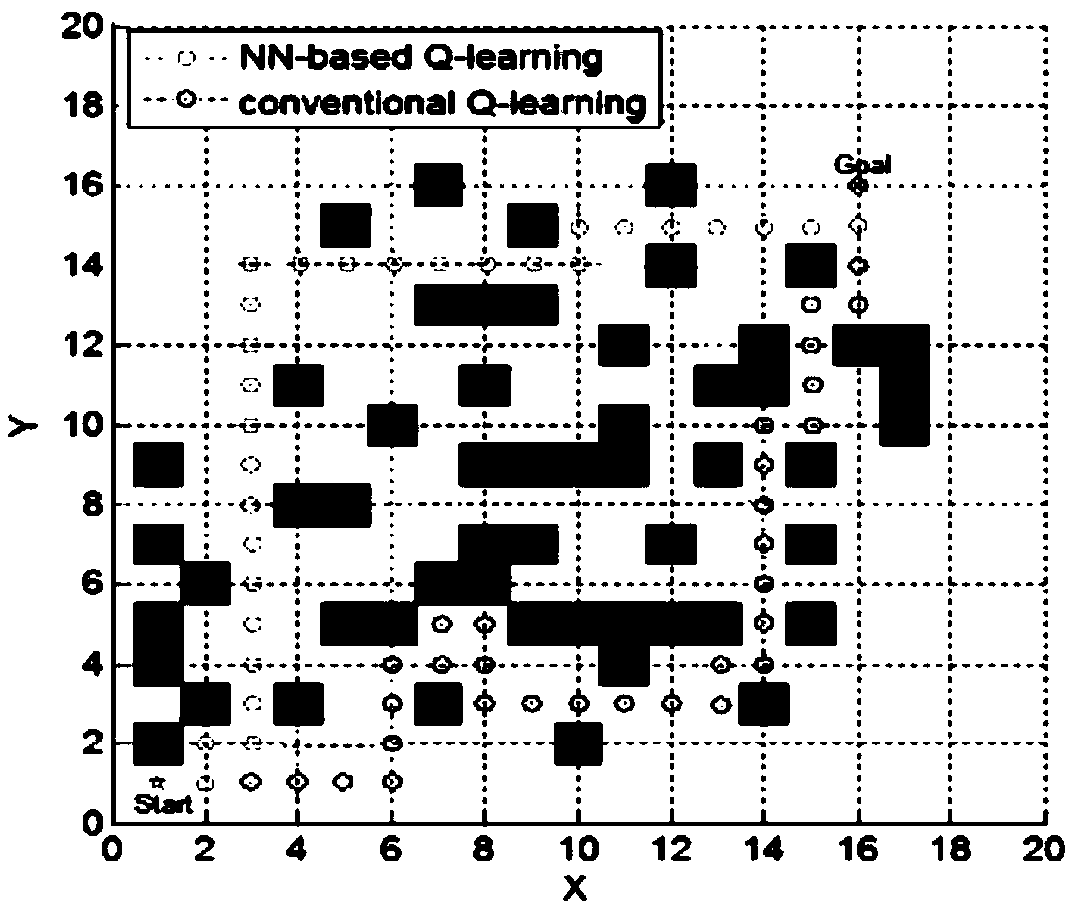

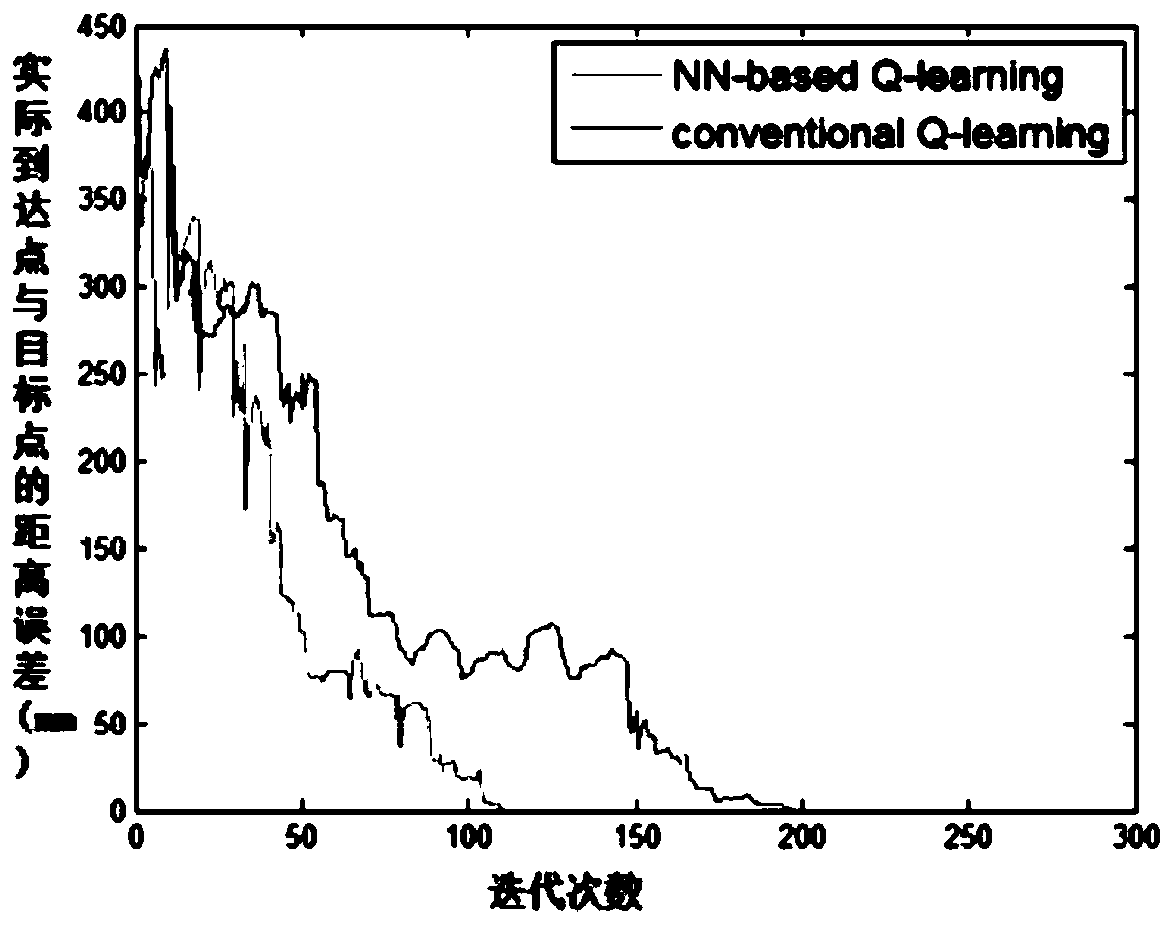

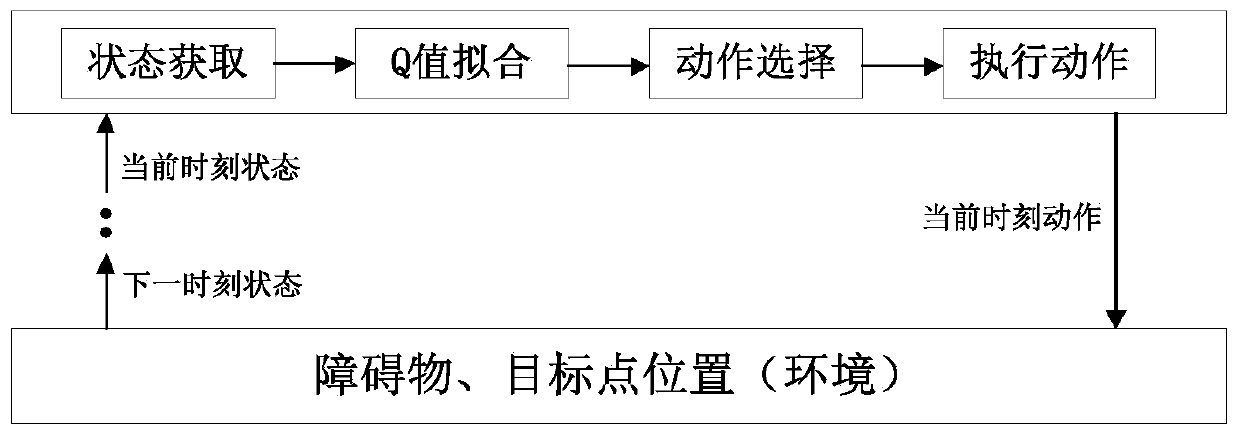

The invention discloses an unmanned ship path planning method based on a Q-learning neural network. The method comprises the following steps:
a) initializing a storage area D;
b) initializing a Q network and the initial values of the state and action;
c) randomly setting a training target;
d) randomly selecting an action a_t to obtain the current reward r_t and the next state s_{t+1}, and storing (s_t, a_t, r_t, s_{t+1}) in storage area D;
e) randomly sampling a batch of data (s_t, a_t, r_t, s_{t+1}) from storage area D for training, where the state in which the USV reaches the target position or exceeds the maximum time of a round is treated as the final state;
f) if s_{t+1} is not the final state, returning to step d); if s_{t+1} is the final state, updating the Q network parameters and returning to step d); repeating for n rounds to complete the algorithm;
g) setting a target and performing path planning with the trained Q network until the USV reaches the target position.
The decision time is short, the planned path is better optimized, and the real-time requirement of online planning can be met.

Owner:ZHEJIANG FORESTRY UNIVERSITY
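
Steps a), d) and e) describe experience replay: storing transitions and training on random minibatches drawn from them. A minimal plain-Python sketch of storage area D and of the regression targets for final and non-final transitions follows. The neural network is omitted, `q_next` is a hypothetical stand-in for the Q network's forward pass, and the class and function names are illustrative.

```python
import random
from collections import deque
from typing import NamedTuple

class Transition(NamedTuple):
    state: tuple
    action: int
    reward: float
    next_state: tuple
    done: bool  # True when the USV reached the target or ran out of time

class ReplayMemory:
    """Fixed-capacity storage area D for (s_t, a_t, r_t, s_{t+1}) tuples."""

    def __init__(self, capacity: int, seed: int = 0):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first
        self.rng = random.Random(seed)

    def push(self, t: Transition) -> None:
        self.buffer.append(t)

    def sample(self, batch_size: int) -> list[Transition]:
        # a uniform random minibatch breaks the correlation between consecutive steps
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)

def td_targets(batch, q_next, gamma=0.99):
    """Targets: r for final states, r + gamma * max_a Q(s', a) otherwise."""
    return [t.reward if t.done else t.reward + gamma * max(q_next(t.next_state))
            for t in batch]
```

In a full implementation these targets would be regressed against the Q network's outputs for the sampled actions.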

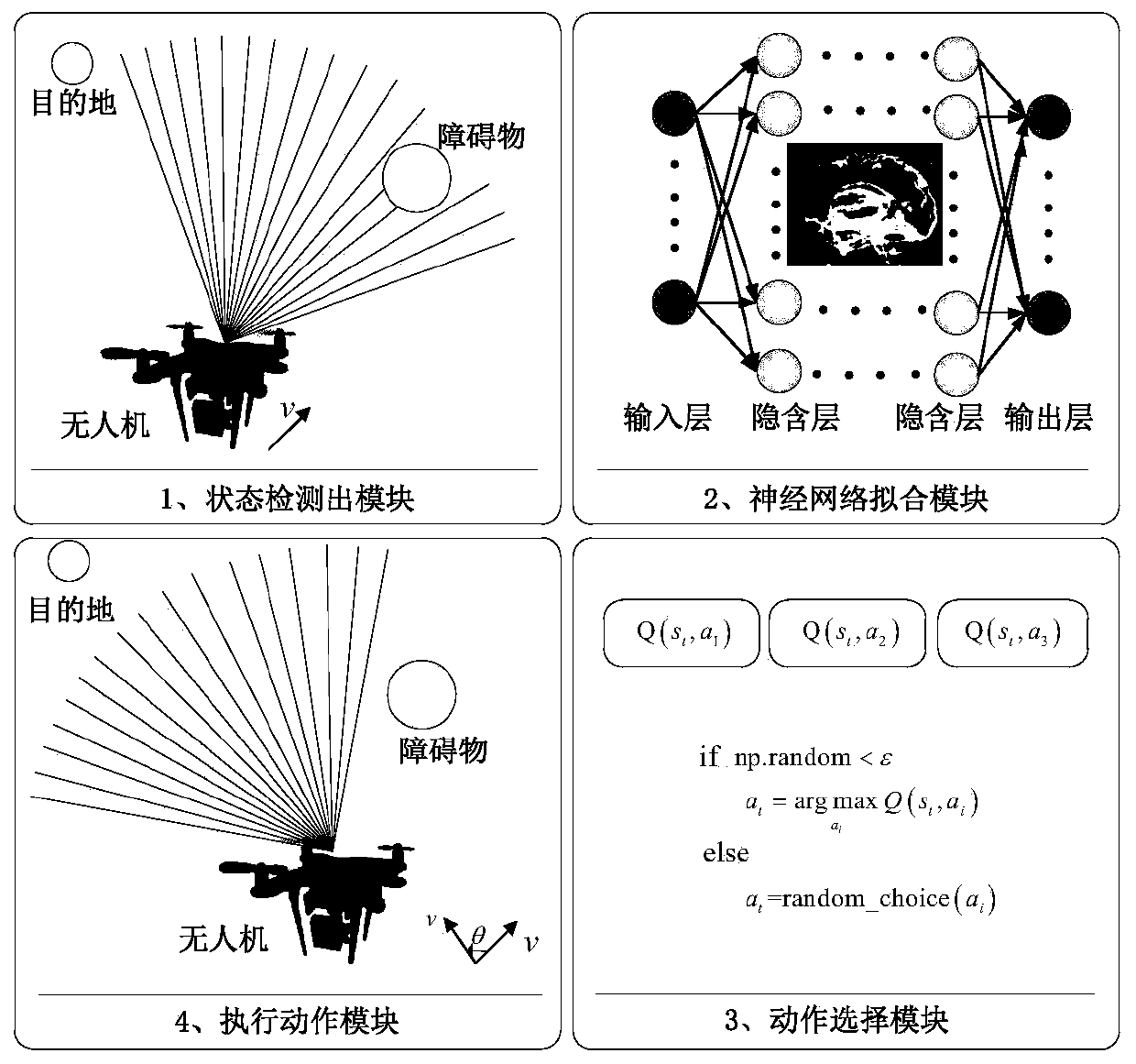

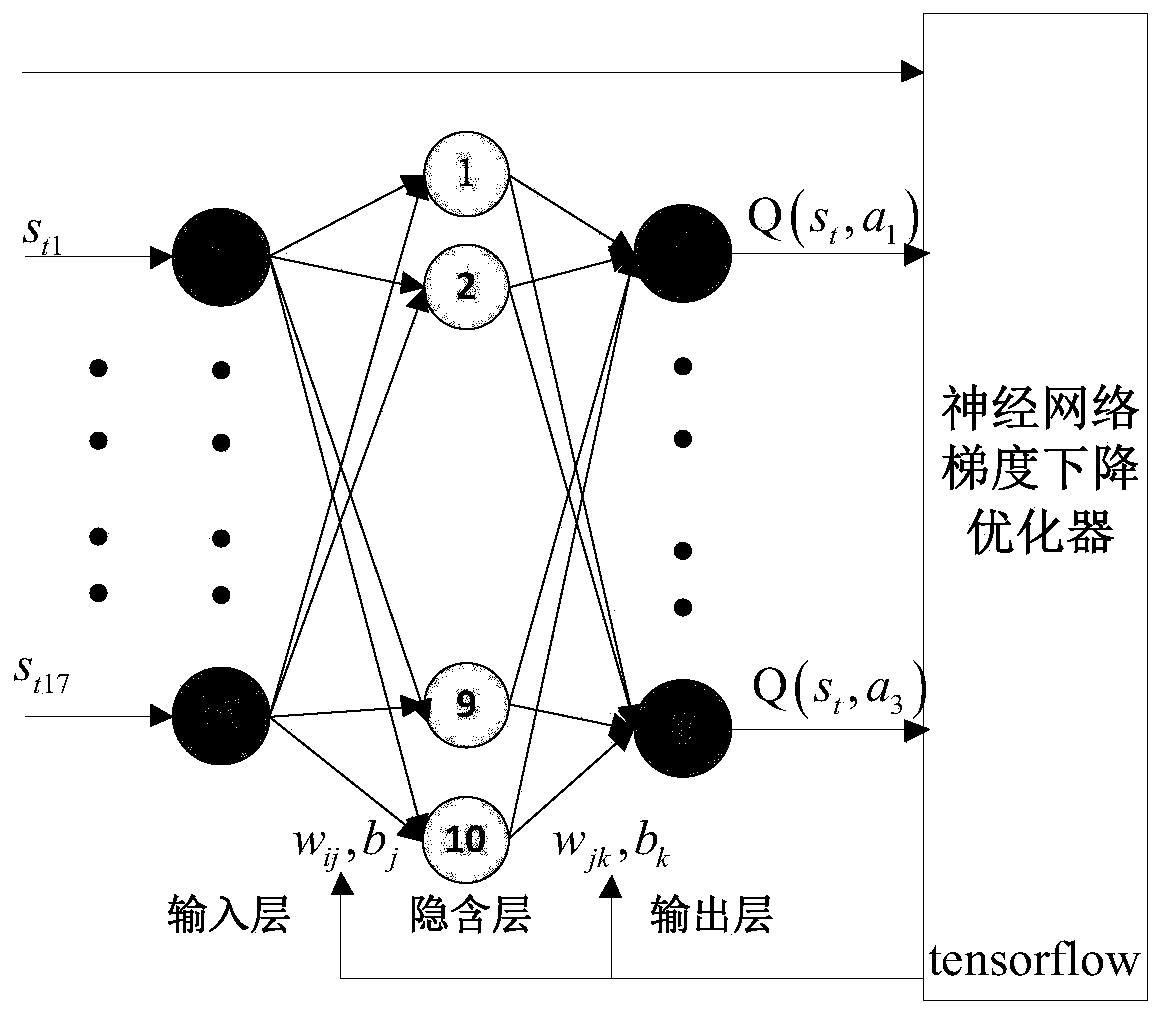

Deep Q-learning-based UAV (unmanned aerial vehicle) environment perception and autonomous obstacle avoidance method

Active | CN109933086A | Improve robustness; Avoid passing; Autonomous decision making process; Position/course control in three dimensions; Learning based; Radar

The invention belongs to the field of environment perception and autonomous obstacle avoidance for quadrotor unmanned aerial vehicles and relates to a deep Q-learning-based UAV environment perception and autonomous obstacle avoidance method. It aims to reduce resource loss and cost while meeting the real-time, robustness, and safety requirements of autonomous UAV obstacle avoidance. In the technical scheme, a radar detects the path within a certain distance in front of the UAV, yielding the distance from the radar to an obstacle and the distance from the radar to the target point, which are used as the UAV's current state. During training, a neural network approximates the Q value of each state-action pair of the UAV. When the training result gradually converges, a greedy algorithm selects the optimal action for the UAV in each specific state, thereby realizing autonomous obstacle avoidance. The method is mainly applied to UAV environment perception and autonomous obstacle avoidance control.

Owner:TIANJIN UNIV
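
The abstract switches to a greedy algorithm once training converges. A common way to get there, assumed here rather than taken from CN109933086A, is to anneal an ε-greedy exploration rate toward zero. The linear schedule and its defaults (`eps_start`, `eps_end`, `decay_steps`) are illustrative.

```python
import random

def epsilon_at(step: int, eps_start=1.0, eps_end=0.0, decay_steps=10_000) -> float:
    """Linearly anneal the exploration rate; with eps_end == 0 the policy
    becomes purely greedy once step >= decay_steps."""
    frac = min(step / decay_steps, 1.0)
    return eps_start + frac * (eps_end - eps_start)

def select_action(q_values: list[float], epsilon: float, rng: random.Random) -> int:
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                      # explore
    return max(range(len(q_values)), key=q_values.__getitem__)   # greedy: argmax Q
```

Here `q_values` would be the network's Q estimates for the current radar-derived state; `epsilon_at(5_000)` gives 0.5 and `epsilon_at(20_000)` gives 0.0.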

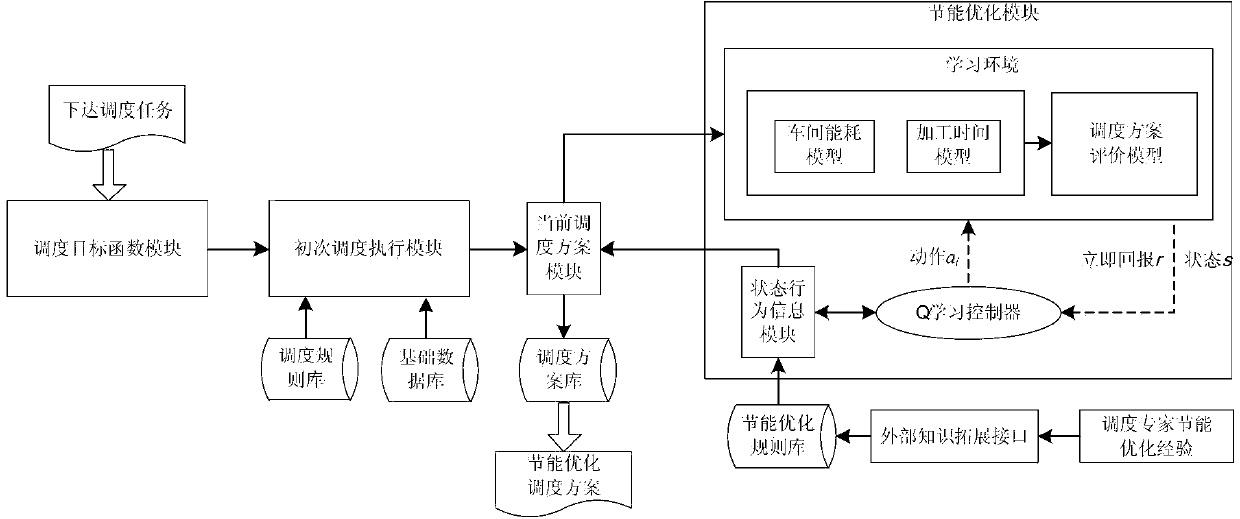

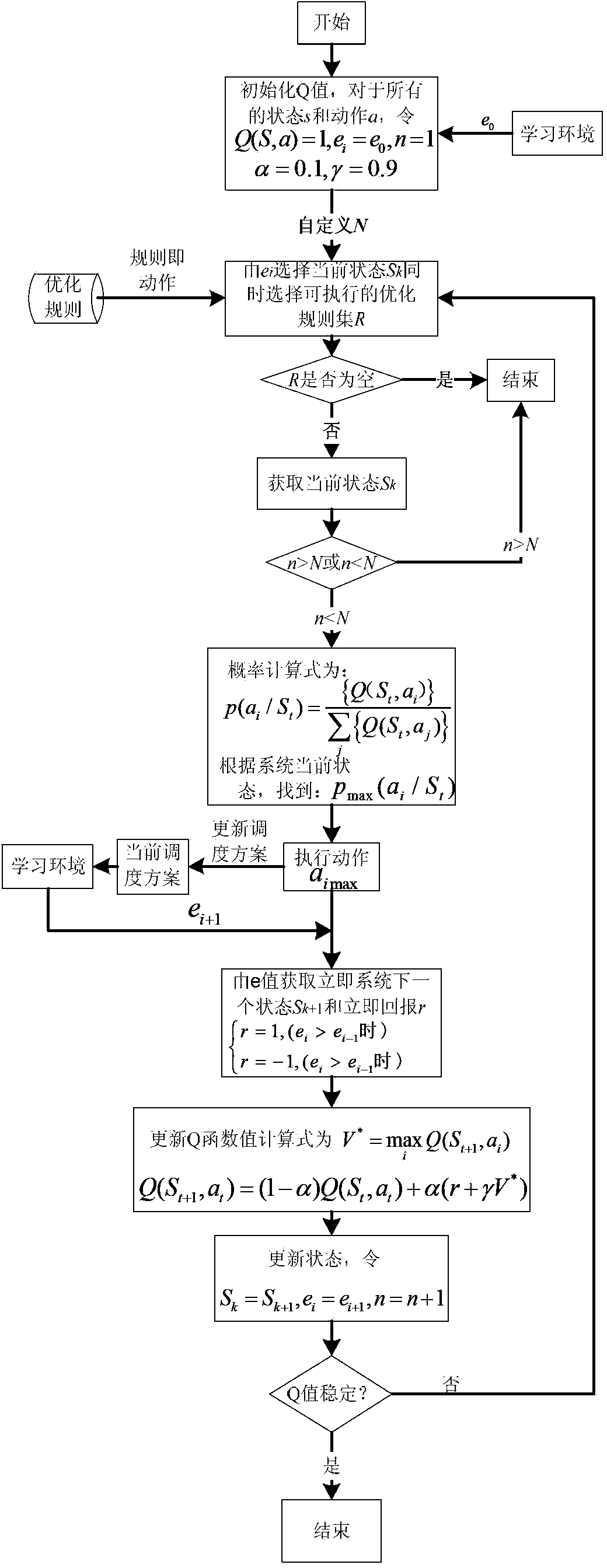

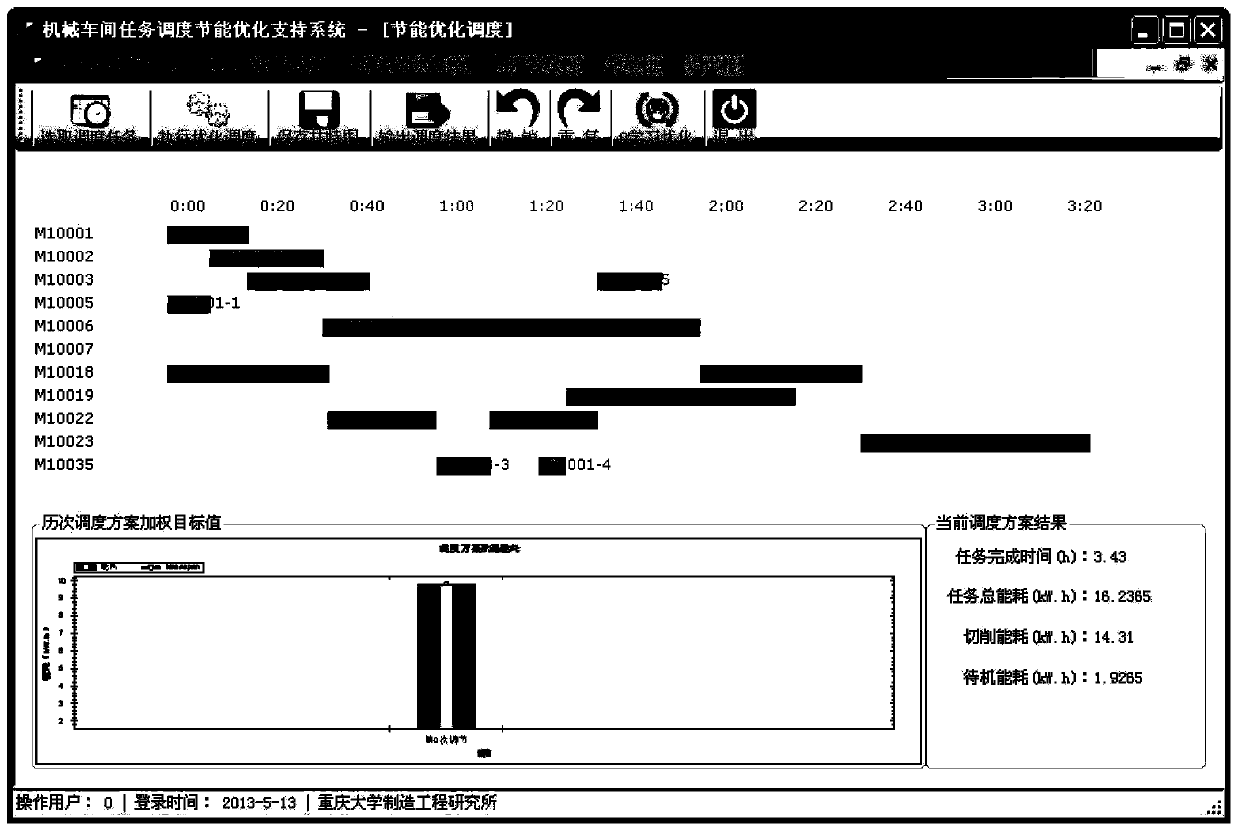

Machine workshop task scheduling energy-saving optimization system based on reinforcement learning

Active | CN103390195A | Reduce energy consumption; Reduce computational complexity; Forecasting; Manufacturing computing systems; Learning controller; Energy expenditure

The invention discloses a reinforcement learning-based energy-saving optimization system for machine workshop task scheduling. The system comprises a scheduling objective function module, a basic database, a scheduling rule library, a primary scheduling execution module, an energy-saving optimization rule library, an energy-saving optimization module, and a scheduling scheme library. First, scheduling objectives are set in the scheduling objective function module, and primary scheduling is executed in the primary scheduling execution module by selecting scheduling rules from the scheduling rule library and using the basic data in the basic database, yielding primary scheduling schemes. Then, experts' energy-saving optimization experience is entered through an external knowledge-expansion interface to obtain energy-saving optimization rules. Meanwhile, a learning environment comprising a workshop energy-consumption module, a processing time module, and a scheduling scheme evaluation model is built in the energy-saving optimization module. Finally, an energy-saving optimization strategy for the primary scheduling schemes is obtained through interaction between a Q-learning controller and the learning environment. The system can reduce energy consumption in machine workshop production through scheduling and is significant for energy saving and emission reduction in machine workshops.

Owner:CHONGQING UNIV

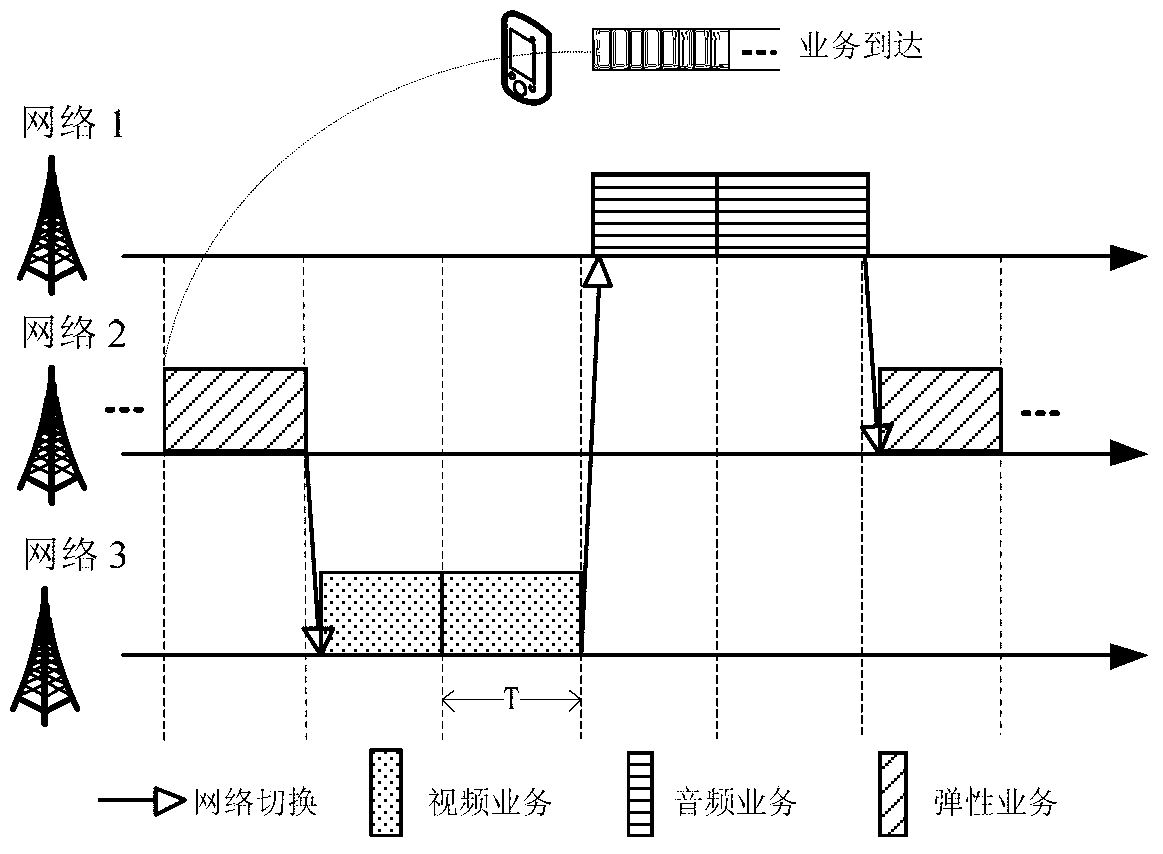

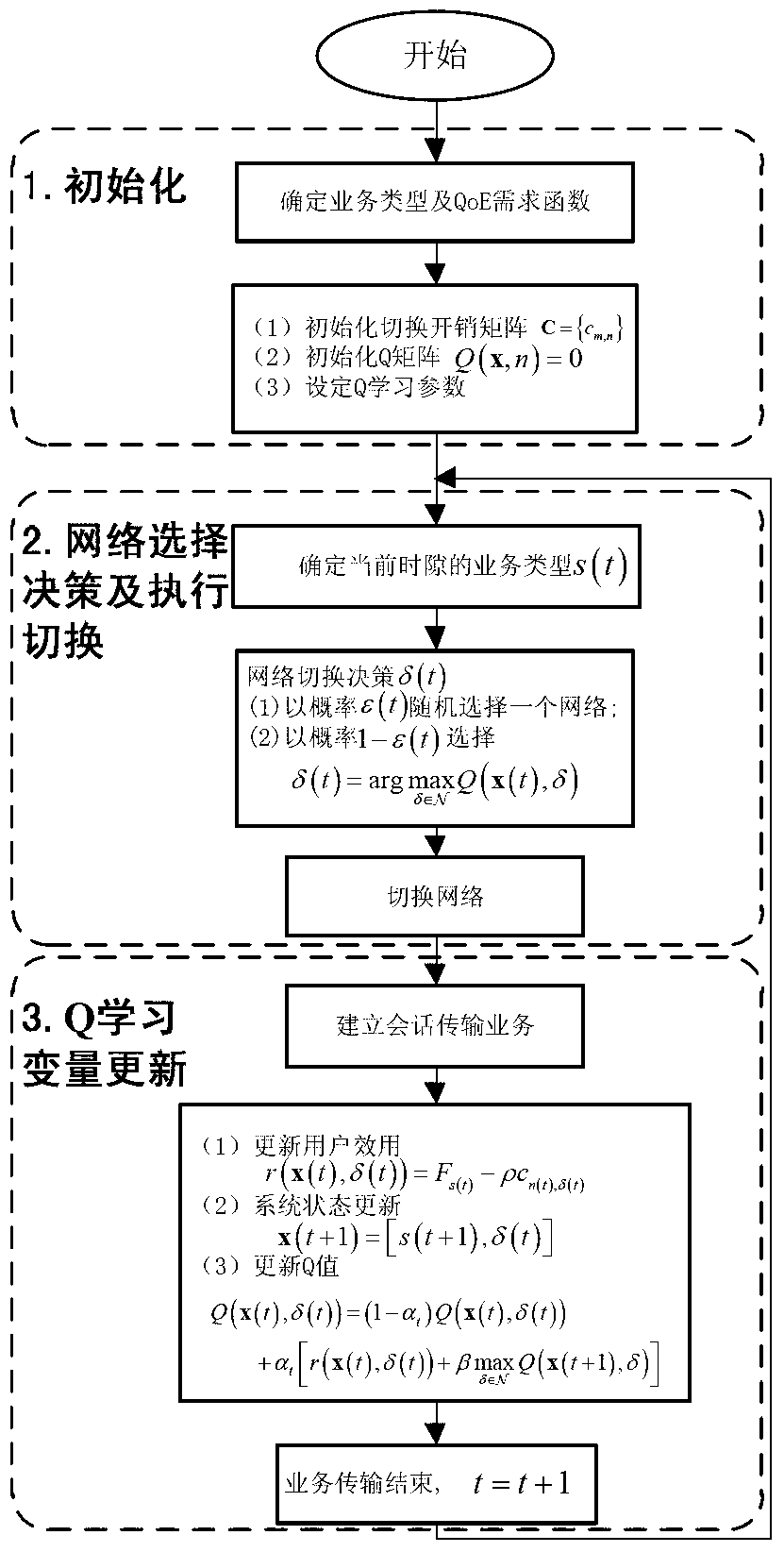

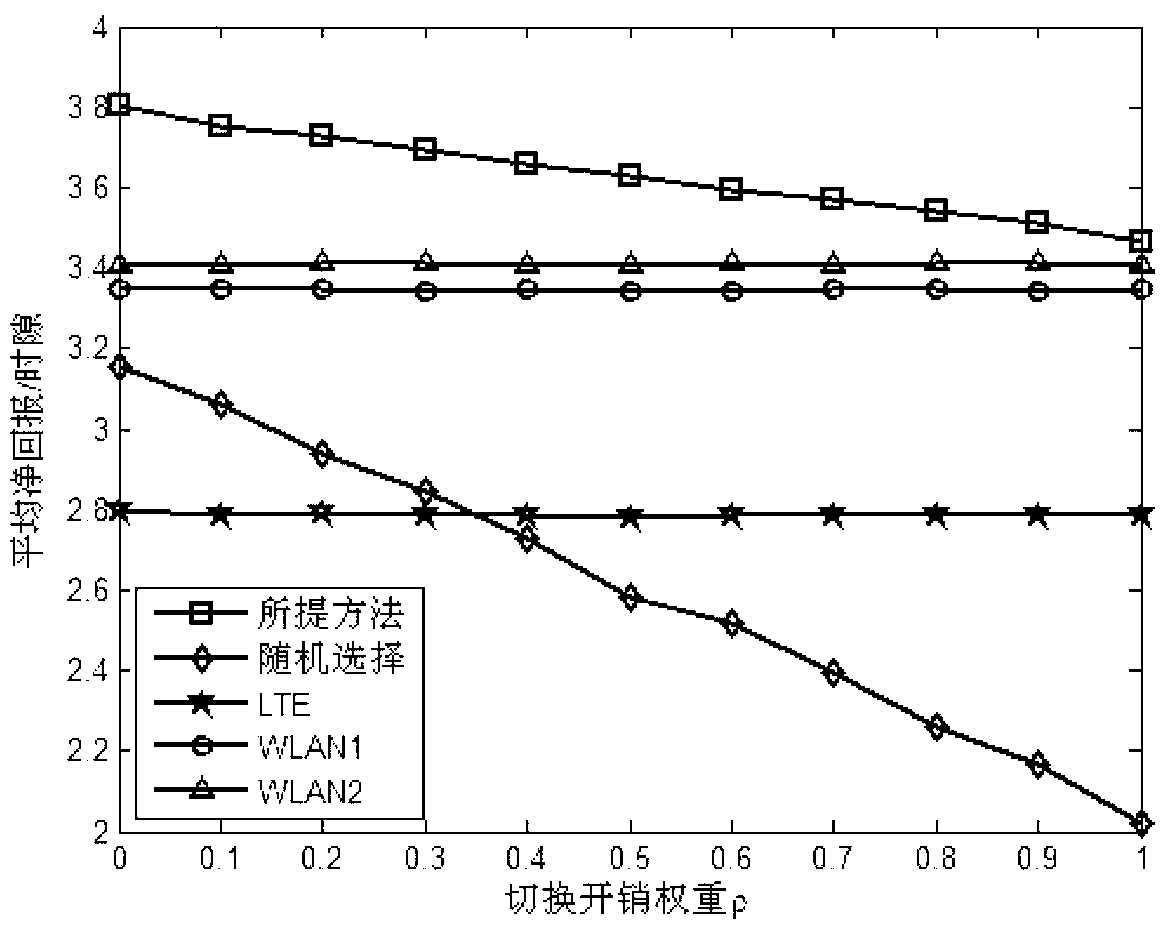

Dynamic network selection method for optimizing quality of experience (QoE) of user in heterogeneous wireless network

Active | CN103327556A | Optimize QoE; Efficient use of; Network traffic/resource management; Access network; Study methods

The invention discloses a dynamic network selection method for optimizing the quality of experience (QoE) of a user in a heterogeneous wireless network. The access network is periodically and dynamically updated according to the type of service being transmitted and the network to which the user is currently connected. The method comprises the following steps: constructing user QoE demand functions for three service types and initializing the Q-learning variables; making the network selection decision and executing the handover with Q-learning; and updating the Q-learning variables. Working from the user's perspective, the method distinguishes the characteristics of different services and optimizes user QoE. It uses heterogeneous wireless network resources efficiently; because it is based on reinforcement learning, it needs no a priori network state information; and it is flexible enough to suit a variety of dynamic network environments.

Owner:COMM ENG COLLEGE SCI & ENGINEERING UNIV PLA

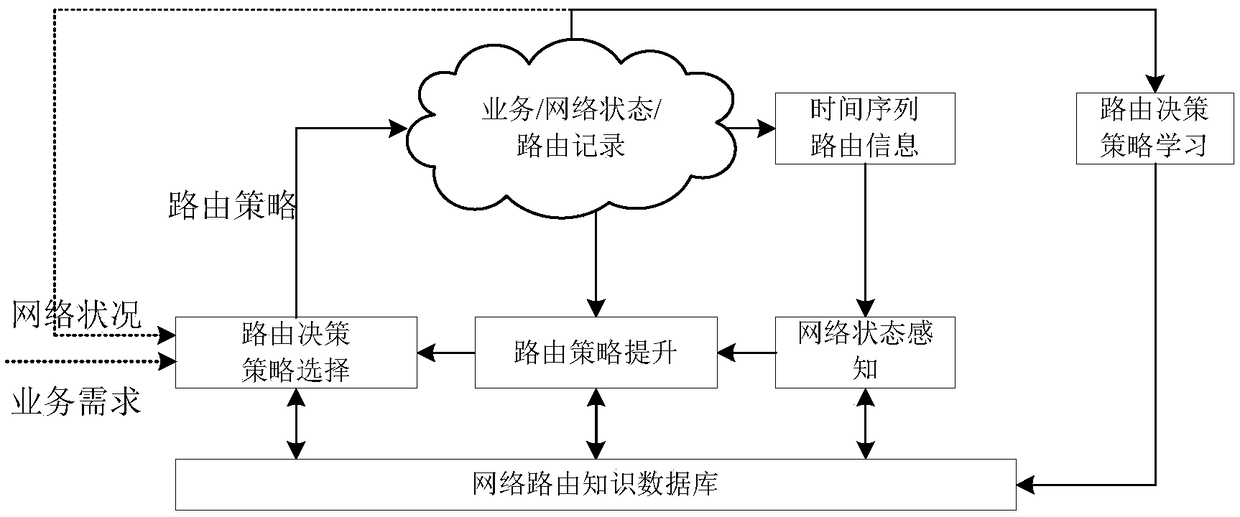

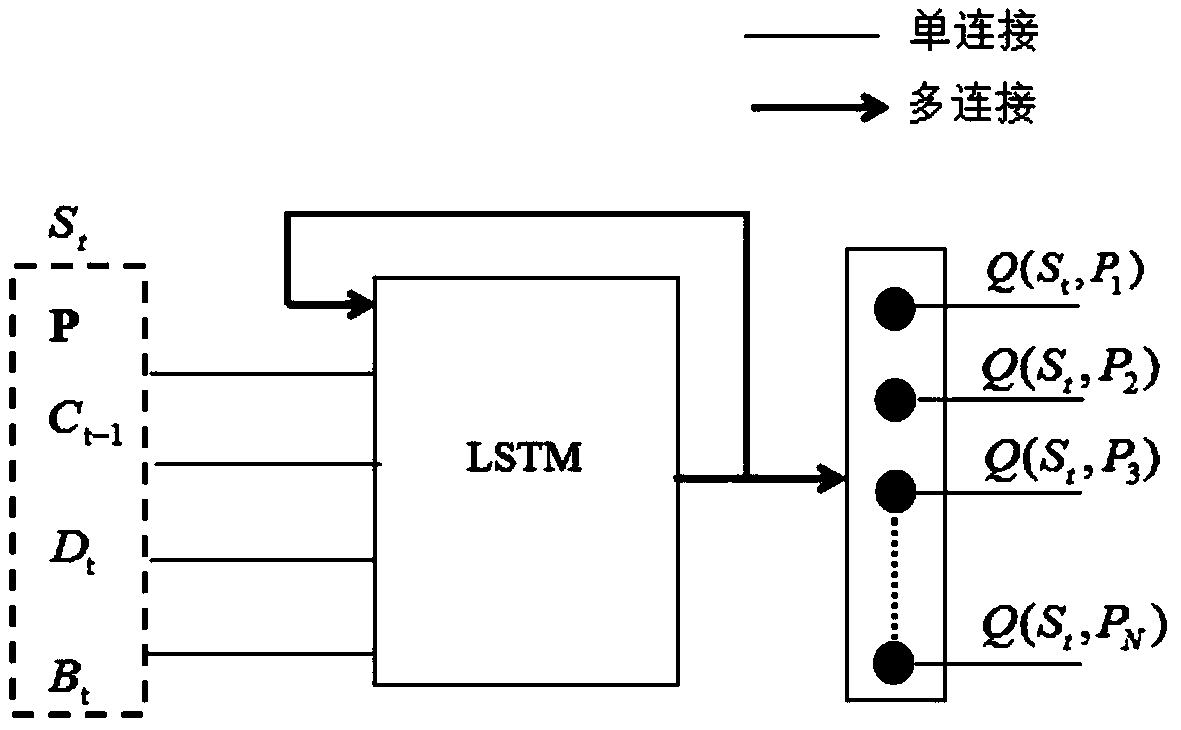

Fast routing decision algorithm based on Q learning and LSTM neural network

Active | CN108667734A | Solve the problem of slow convergence and long training process; Save time and cost; Data switching networks; Routing decision; Decision model

The invention discloses a fast routing decision algorithm based on Q-learning and an LSTM neural network. The algorithm has two main stages: model training and dynamic routing decision. In the model training stage, a heuristic algorithm computes an optimal or near-optimal path that satisfies the constraints of each QoS request. The inputs and corresponding outputs of the heuristic algorithm are then combined into a training set for a machine learning model, and this training set serves as the target Q values of different routes for training a decision model. When the controller receives a new QoS request, the model takes the current network state and the constraints in the request as input; the routing decision model combining LSTM and Q-learning quickly computes the corresponding Q values, completes the prediction, and outputs an optimal path. Compared with the heuristic algorithm, this greatly reduces decision time while producing very similar results.

Owner:NANJING UNIV OF POSTS & TELECOMM

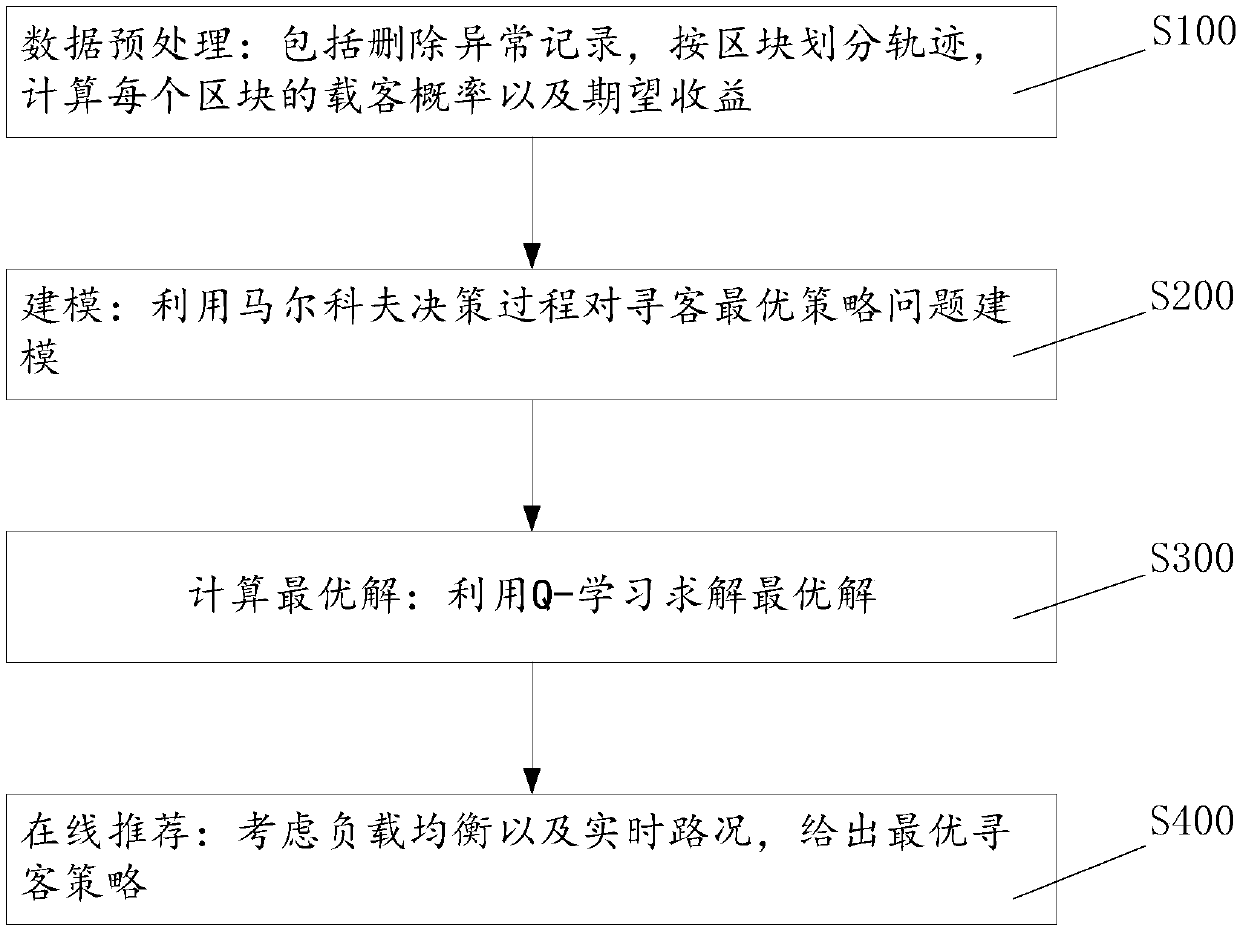

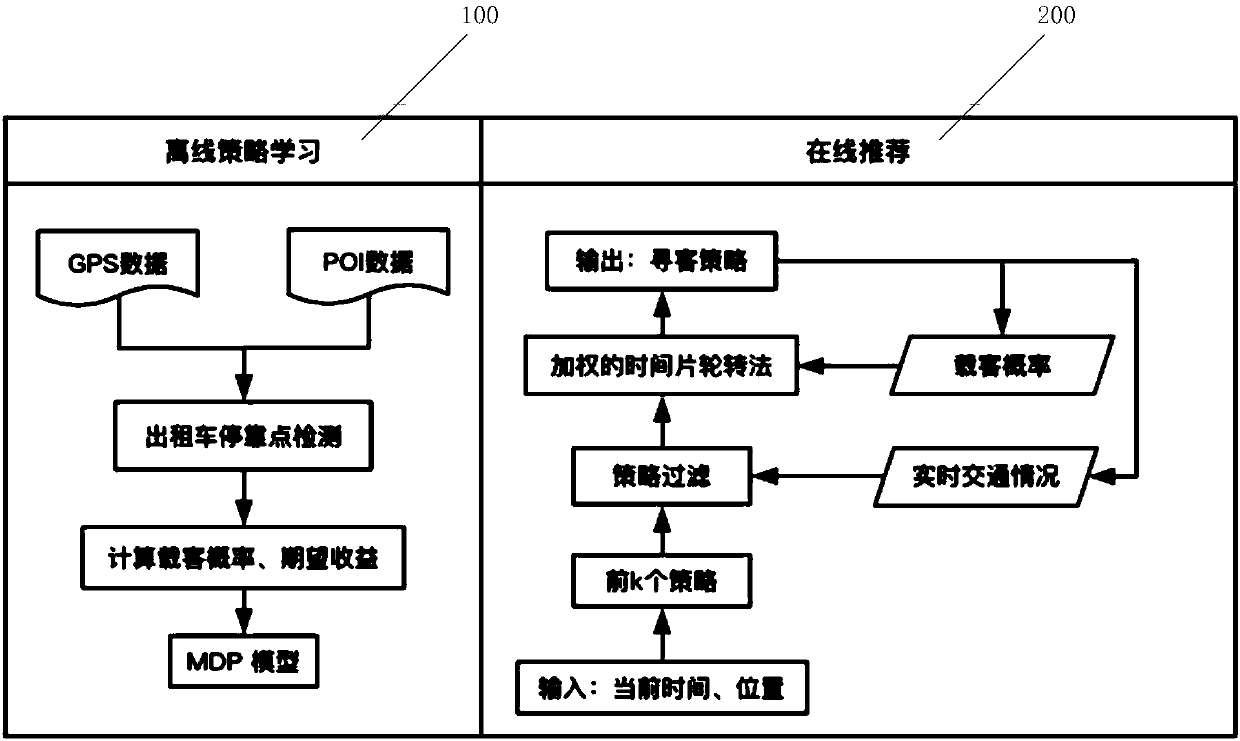

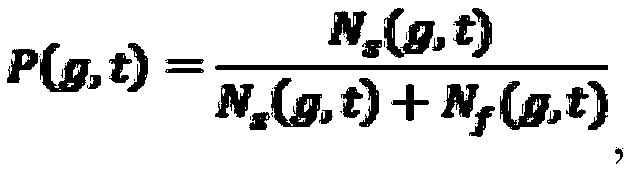

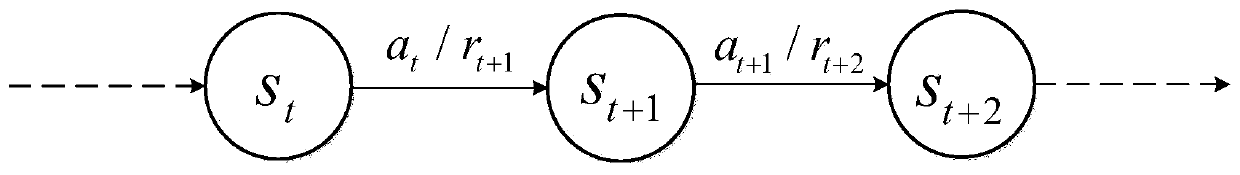

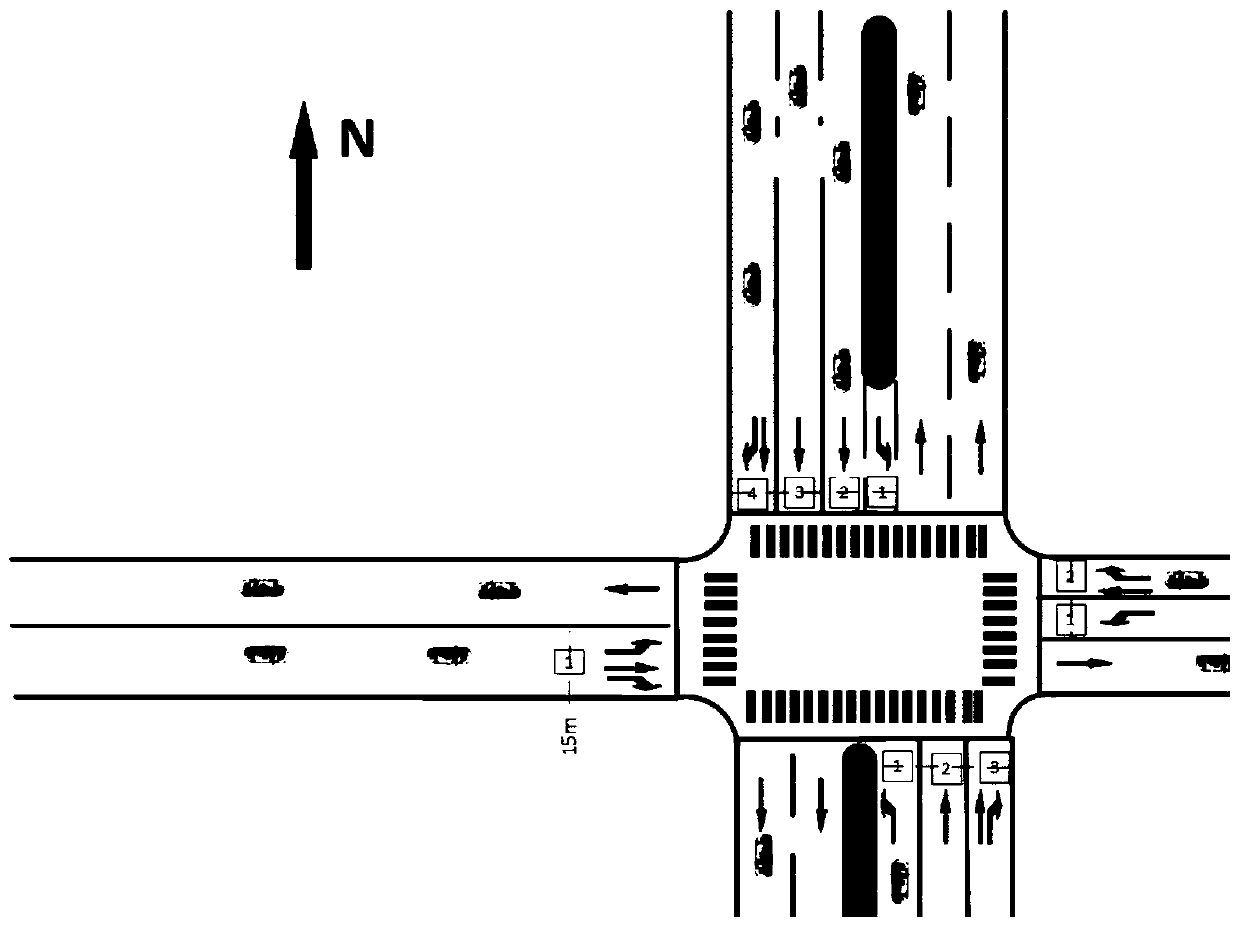

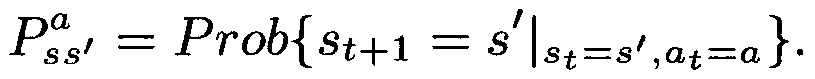

Method for recommending taxi passenger-searching strategy based on Markov decision process

Inactive | CN107832882A | Address competition; Avoid driving into; Forecasting; Buying/selling/leasing transactions; Q-learning; Traffic conditions

The invention provides a method for recommending a taxi passenger-searching strategy based on a Markov decision process. The method comprises the following steps: data preprocessing, including deleting abnormal records, determining trajectories by block, and calculating the passenger pickup probability and expected revenue of each block; modeling, using a Markov decision process to model the optimal passenger-searching strategy problem; solving, using Q-learning to compute the optimal solution; and online recommendation, giving the optimal passenger-searching strategy while taking load balancing and real-time traffic conditions into account. The method provides two different strategies, one for the waiting area and one for the searching areas, and can calculate the expected revenue of each strategy from historical trajectories. A dispatching system receives real-time traffic conditions and processes taxi recommendation requests, so that the optimal strategy can be recommended after a passenger gets out of the taxi.

Owner:SHANGHAI JIAO TONG UNIV

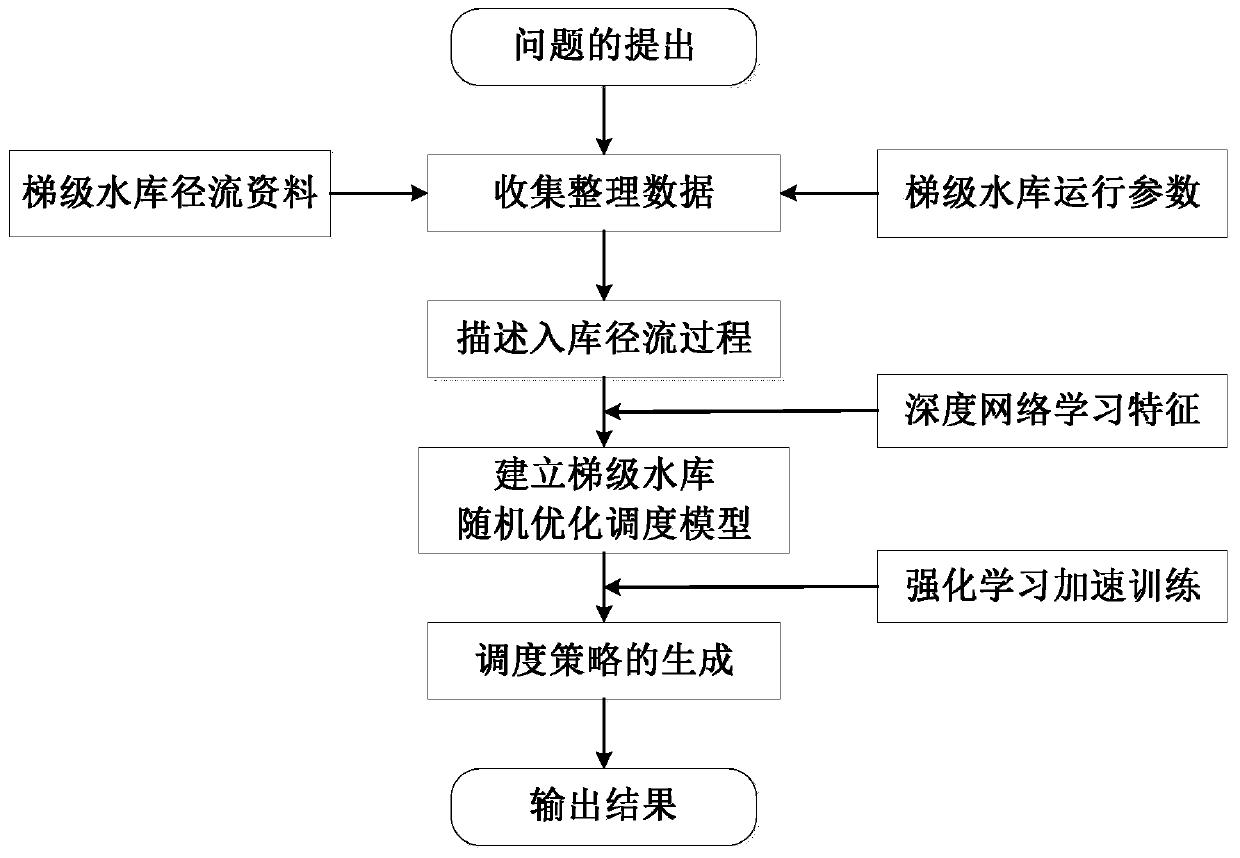

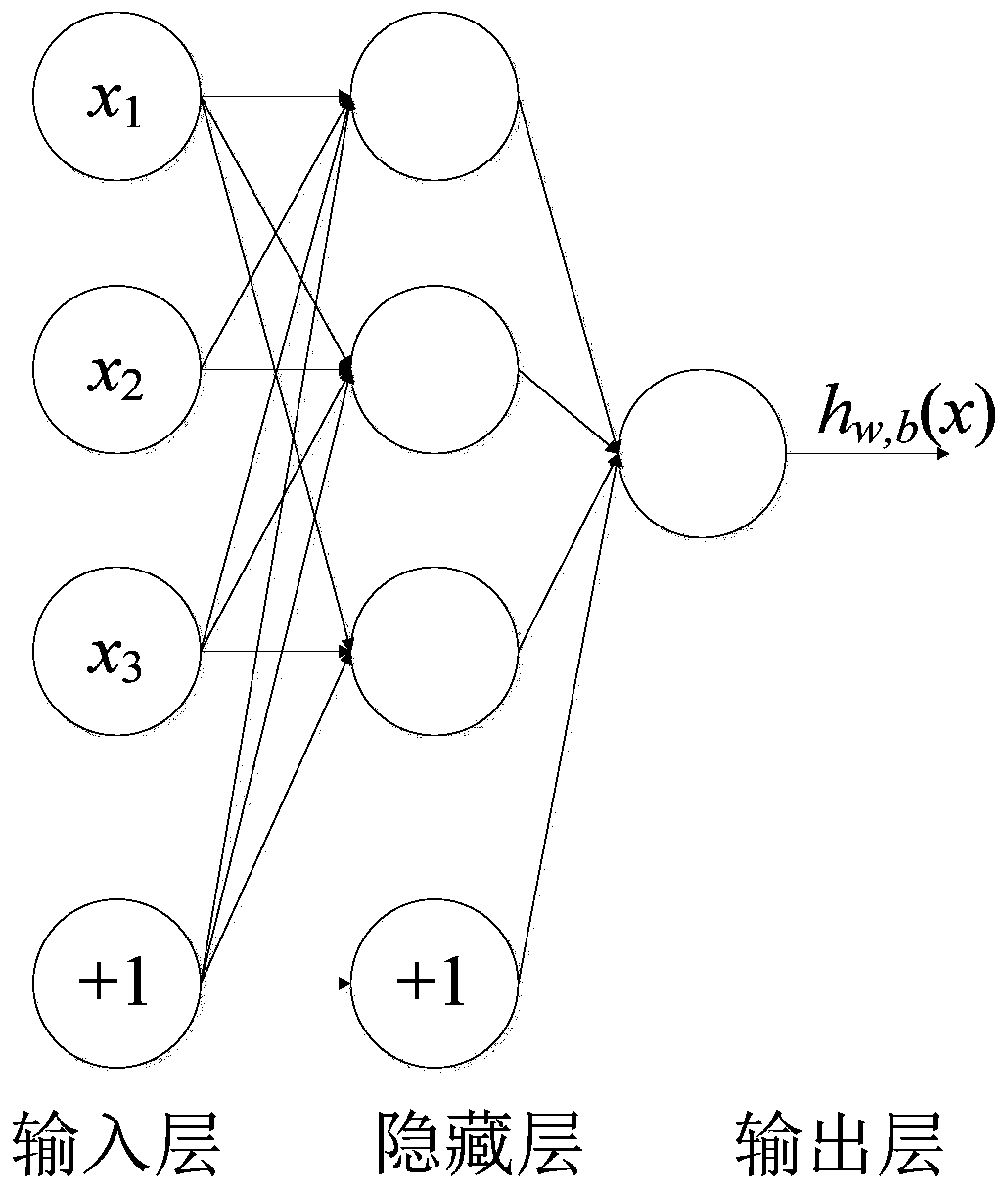

Cascade reservoir random optimization scheduling method based on deep Q learning

Pending | CN110930016A | Solve the fundamental instability problem of the approximation; Effectively deal with the "curse of dimensionality" problem; Forecasting; Design optimisation/simulation; Algorithm; Transition probability matrix

The invention discloses a cascade reservoir stochastic optimization scheduling method based on deep Q-learning. The method comprises the following steps: describing the runoff process of the reservoirs; establishing a Markov decision process (MDP) model; establishing a transition probability matrix; establishing a cascade reservoir stochastic optimization scheduling model; determining the constraint functions of the model; introducing a deep neural network to extract the runoff state features of the cascade reservoirs while approximating and optimizing the target value function of the scheduling model; applying reinforcement learning to stochastic reservoir scheduling; establishing a DQN model; and solving the cascade reservoir stochastic optimization scheduling model with a deep reinforcement learning algorithm. The method makes full use of the generating units during the scheduling period, meets the power demand and the various constraints, and maximizes the average annual power generation revenue.

Owner:CHINA THREE GORGES UNIV
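
For the step of establishing a transition probability matrix, one common approach, sketched here as an assumption rather than the patent's stated procedure, is a count-based maximum-likelihood estimate from a historical sequence of discretized inflow (runoff) classes. How inflows are binned into classes is left open, and the function name is illustrative.

```python
from collections import Counter

def estimate_transition_matrix(states: list[int], n_states: int) -> list[list[float]]:
    """Estimate P[i][j] = Pr(next inflow class j | current class i) from counts.

    `states` is a historical sequence of discretized inflow classes 0..n_states-1.
    Rows never observed fall back to a uniform distribution.
    """
    counts = Counter(zip(states, states[1:]))  # consecutive (current, next) pairs
    matrix = []
    for i in range(n_states):
        row_total = sum(counts[(i, j)] for j in range(n_states))
        if row_total == 0:
            matrix.append([1.0 / n_states] * n_states)
        else:
            matrix.append([counts[(i, j)] / row_total for j in range(n_states)])
    return matrix
```

For the sequence `[0, 0, 1, 2, 1, 0]` with three classes, the estimate is `[[0.5, 0.5, 0.0], [0.5, 0.0, 0.5], [0.0, 1.0, 0.0]]`; each row is a probability distribution over next-period inflow classes.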

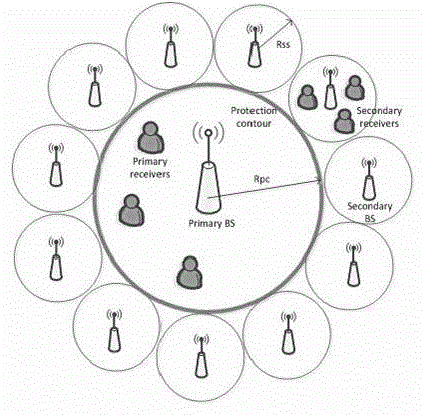

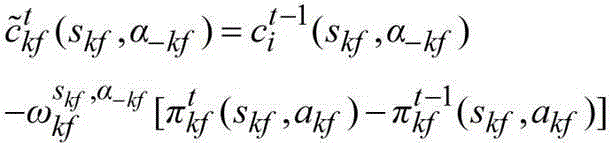

Resource allocation and power control combined optimization method based on reinforcement learning in heterogeneous network

Active | CN108521673A | Long-run system utility maximization; Efficient resource allocation; Wireless communication; Transmission channel; Heterogeneous network

The invention belongs to the technical field of wireless communication and specifically relates to a reinforcement learning-based joint optimization method for resource allocation and power control in a heterogeneous network. Targeting the dynamic, time-varying nature of factors such as transmission channels and transmit power, and taking into account user selfishness and operator benefit in the heterogeneous network, the method first builds a heterogeneous cellular network system model. It then establishes a multi-agent reinforcement learning framework that combines user satisfaction with the operator's pursuit of benefit, and uses distributed Q-learning to obtain an optimal joint strategy for resource allocation, user association, and power control, thereby maximizing the long-term system utility of the whole network.

Owner:CHINA CONSTR THIRD BUREAU FIRST CONSTR & INSTALLATION CO LTD

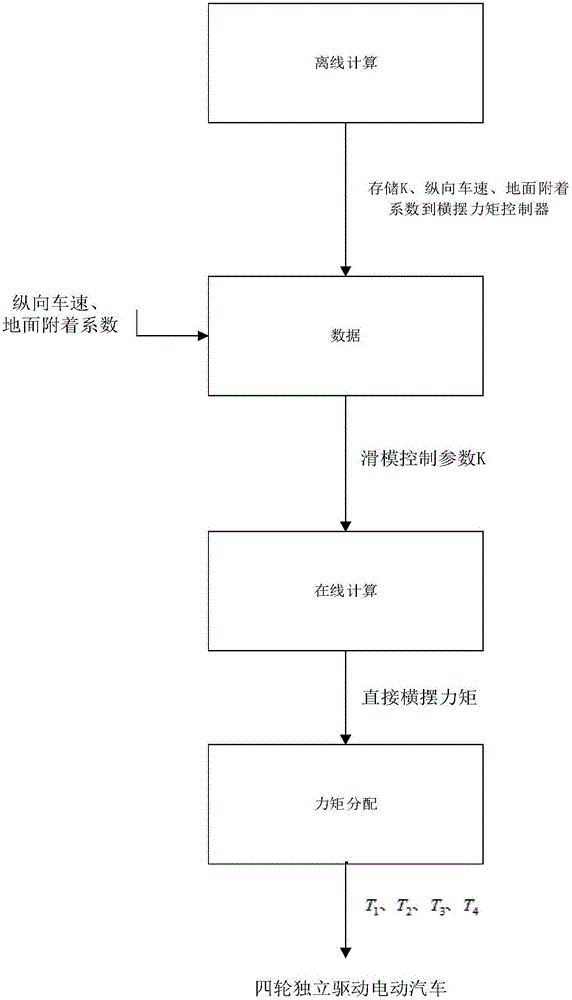

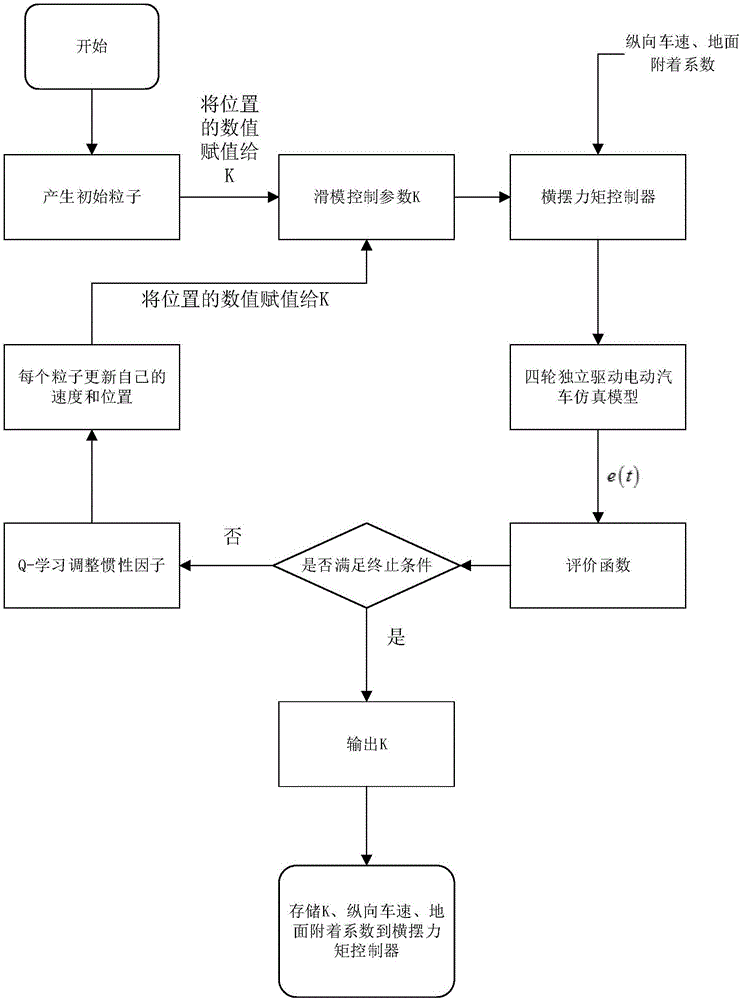

Stability control method for four-wheel independent driving electric automobile based on Q-learning

Inactive | CN106218633A | Reduce computing time; Improve real-time performance; Speed controller; Electric devices; Control system; Optimal control

The invention discloses a Q-learning-based stability control method for a four-wheel independent-drive electric vehicle. The control method comprises the following steps: selecting the optimal control parameters corresponding to the actual external conditions and using them to calculate the ideal control torque; calculating the sliding-mode control parameter K of the yaw torque controller under different external conditions, and storing each external condition together with its corresponding parameter K in the stability control system; and distributing the calculated ideal control torque appropriately among the four wheels. Because the control parameters that would otherwise have to be computed online are found in advance by Q-learning and stored in the yaw torque controller, the controller can retrieve them by table lookup during operation. This greatly shortens computation time and improves the real-time performance, robustness, and practicality of the vehicle's stability control system.

Owner:DALIAN UNIV OF TECH

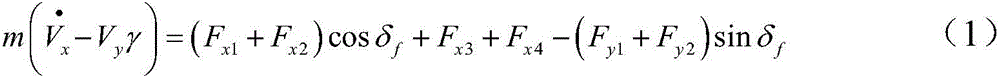

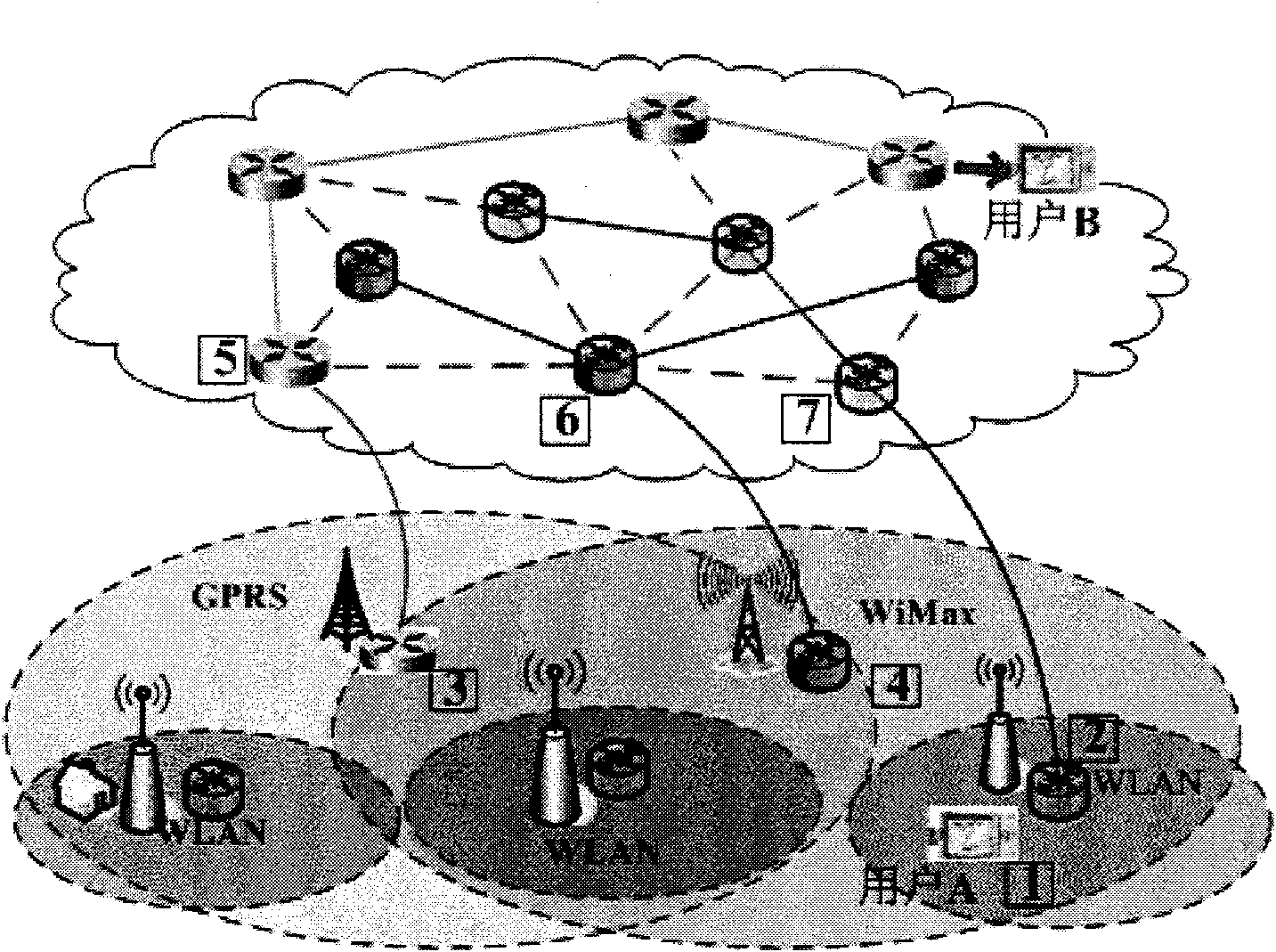

Routing method for heterogeneous network based on cognition

Inactive | CN101835235A | Reduce congestion; Utilization improvement and enhancement; Wireless communication; Cognition; Time delays

The invention discloses a cognition-based routing method for heterogeneous networks. It mainly addresses the problem that existing methods consider only some of the network's environmental factors and ignore the dynamic complexity of heterogeneous network environments, so they cannot perceive and predict the environment. The method comprises the following steps: first, determining the types of currently available networks, perceiving the network environment state, evaluating that state with Q-learning, and calculating the end-to-end delay and its estimated values; second, using a multi-parameter utility function to obtain the utility value of each network from the perceived environment state; then selecting the network with the largest utility value with probability P = 0.9, and one of the other available networks as the target network with probability 1 - P; and finally continuing to transmit services over the target network, performing network handover, and updating the network environment state. The method improves the utilization of network resources, balances network load, and can be used in heterogeneous network environments.

Owner:XIDIAN UNIV
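
The selection rule in this abstract (the best network with probability P = 0.9, otherwise another available network) resembles ε-greedy exploration with ε = 1 - P, except that the exploratory choice is drawn only from the other networks. A minimal sketch follows; the network names and utility values are hypothetical, and the multi-parameter utility function itself is not modeled.

```python
import random
from typing import Optional

def choose_network(utilities: dict[str, float], p_best: float = 0.9,
                   rng: Optional[random.Random] = None) -> str:
    """Return the highest-utility network with probability p_best; otherwise
    pick uniformly among the remaining available networks."""
    rng = rng or random.Random()
    best = max(utilities, key=utilities.get)
    others = [name for name in utilities if name != best]
    if not others or rng.random() < p_best:
        return best
    return rng.choice(others)

rng = random.Random(42)
utilities = {"WLAN": 0.8, "LTE": 0.6, "UMTS": 0.3}   # hypothetical utility values
picks = [choose_network(utilities, rng=rng) for _ in range(10_000)]
print(picks.count("WLAN") / len(picks))  # close to 0.9
```

Occasionally choosing a lower-utility network keeps its environment-state estimate fresh, which is what allows the load balancing the abstract describes.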

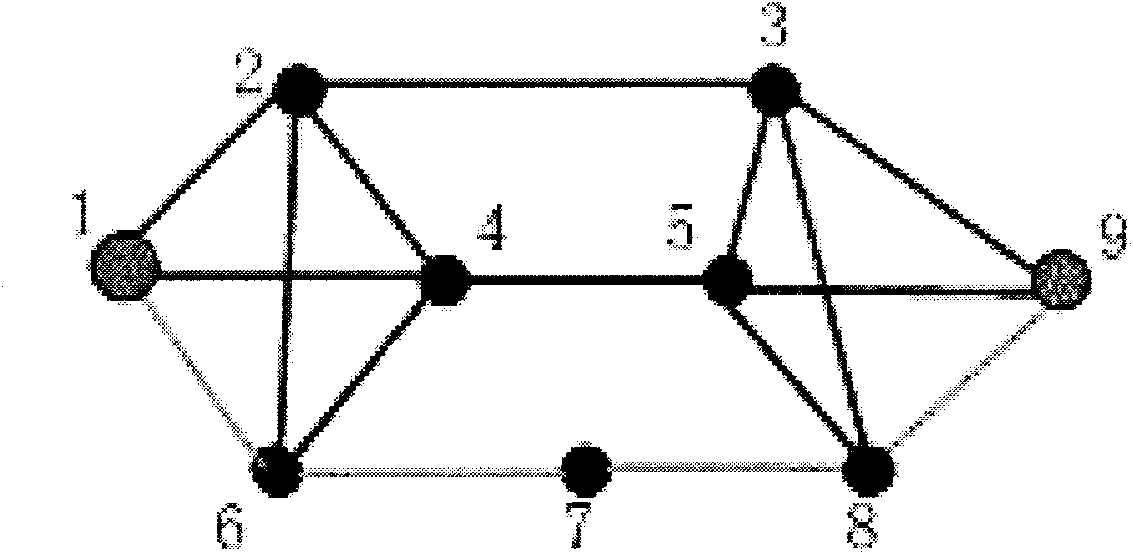

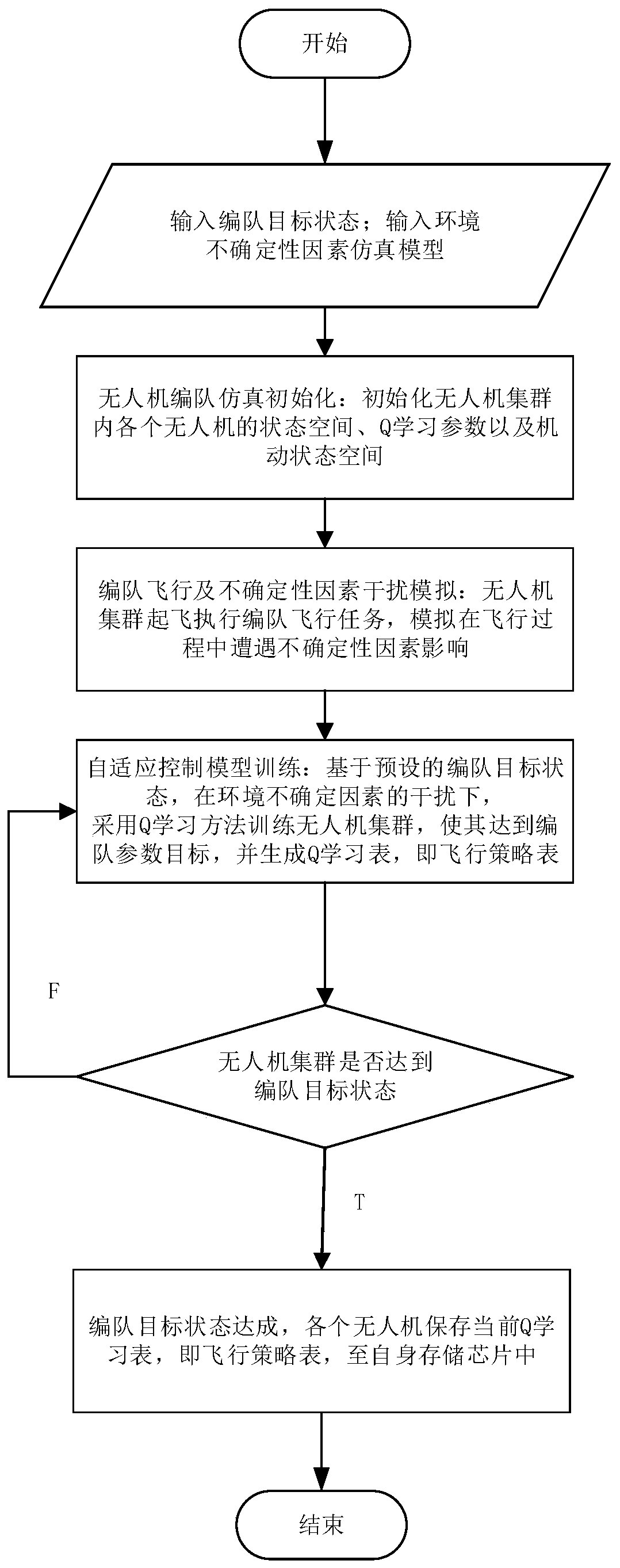

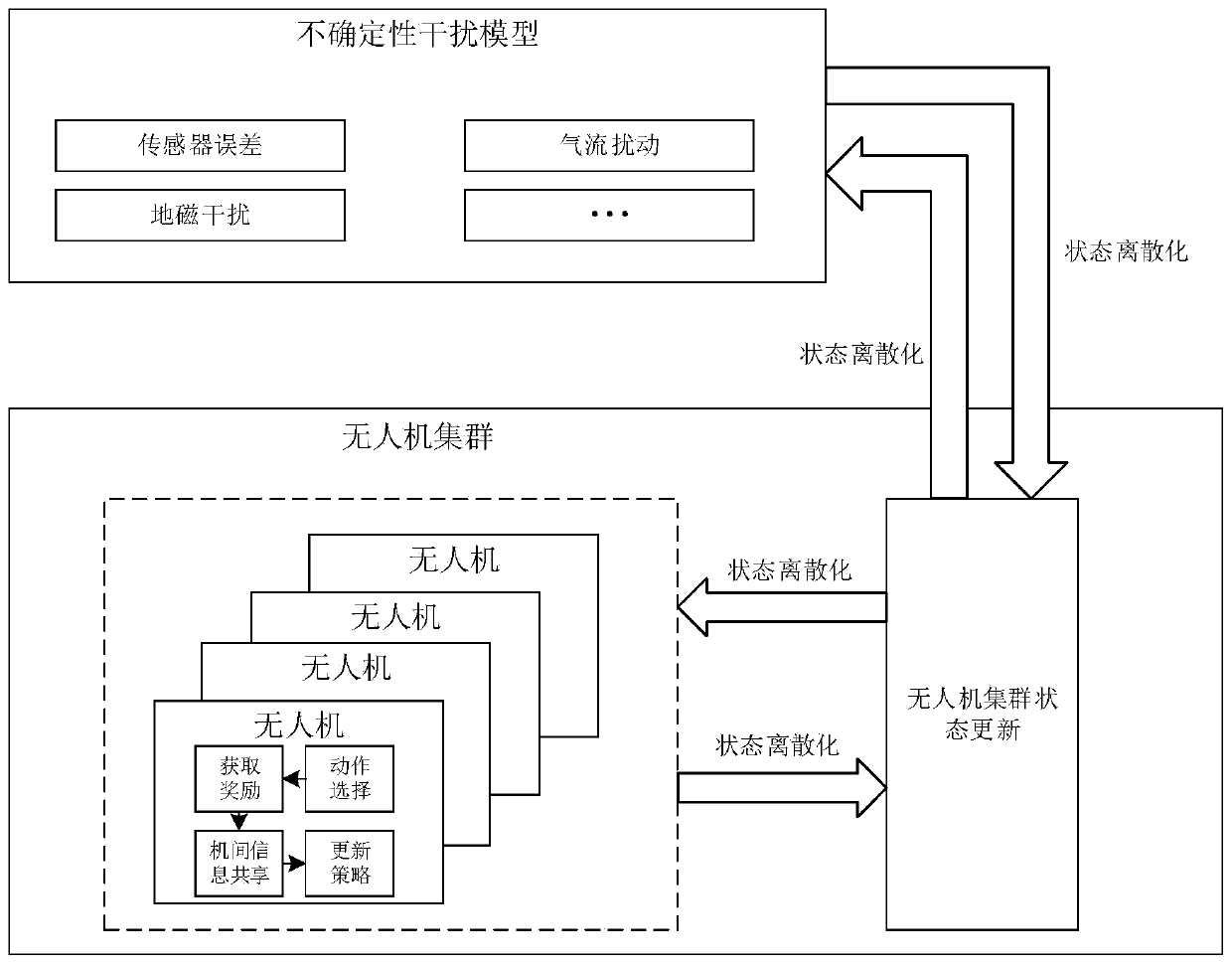

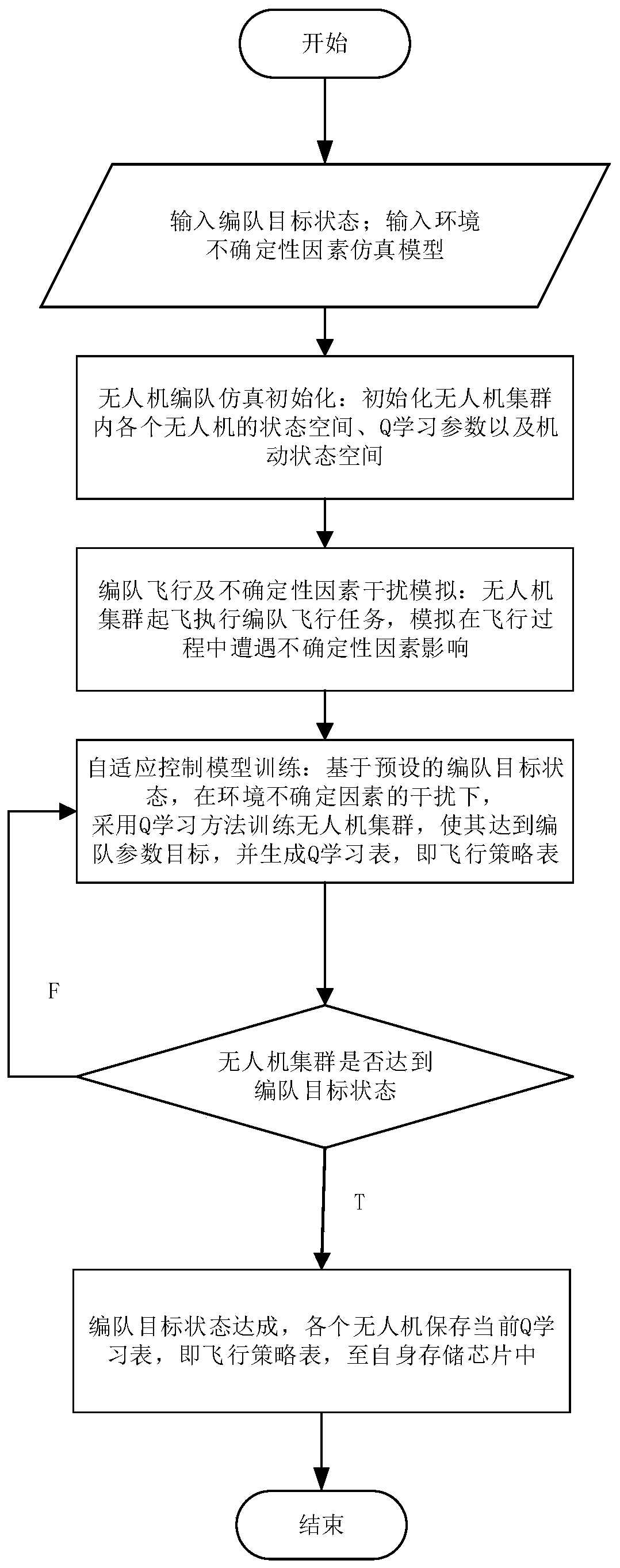

Distributed formation method of unmanned aerial vehicle cluster based on reinforcement learning

Active | CN110007688A | Guarantee stability; Guaranteed robustness; Position/course control in three dimensions; Q-learning; Certainty factor

The invention discloses a distributed formation method for an unmanned aerial vehicle cluster based on reinforcement learning. The method comprises the following steps: step (1), obtaining a formation target state function and a simulation model of environmental uncertainty factors, and establishing an unmanned aerial vehicle formation simulation model; step (2), under the interference of the environmental uncertainty factors and based on the simulation model established in step (1), training the cluster with Q-learning to update a flight strategy table; step (3), calculating the completion degree of the formation target state from the formation target state function, comparing it with a preset value, and judging from the result whether the formation target state has been reached: if so, going to step (4), otherwise returning to step (2); and step (4), saving the updated flight strategy table. The method provides the cluster with adaptive flight strategy parameters and guarantees the stability and robustness of the unmanned aerial vehicle cluster formation.

Owner:XIDIAN UNIV

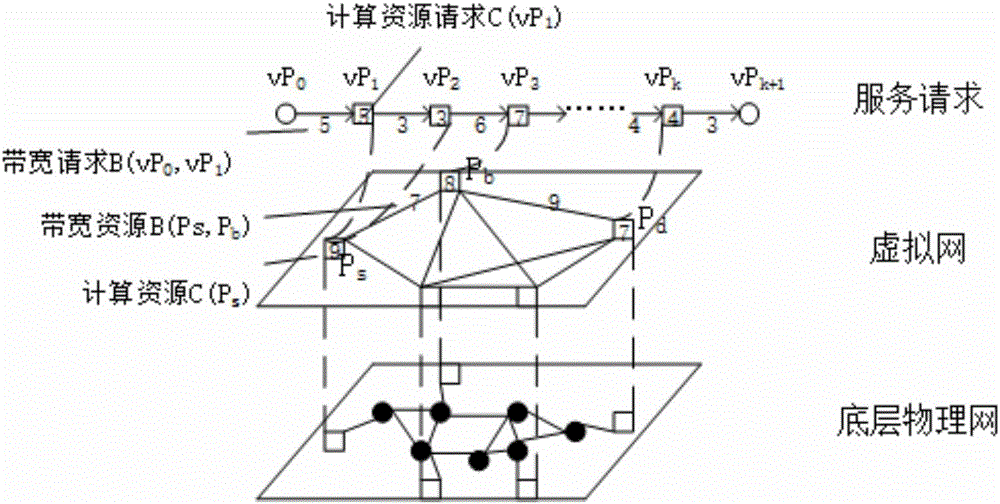

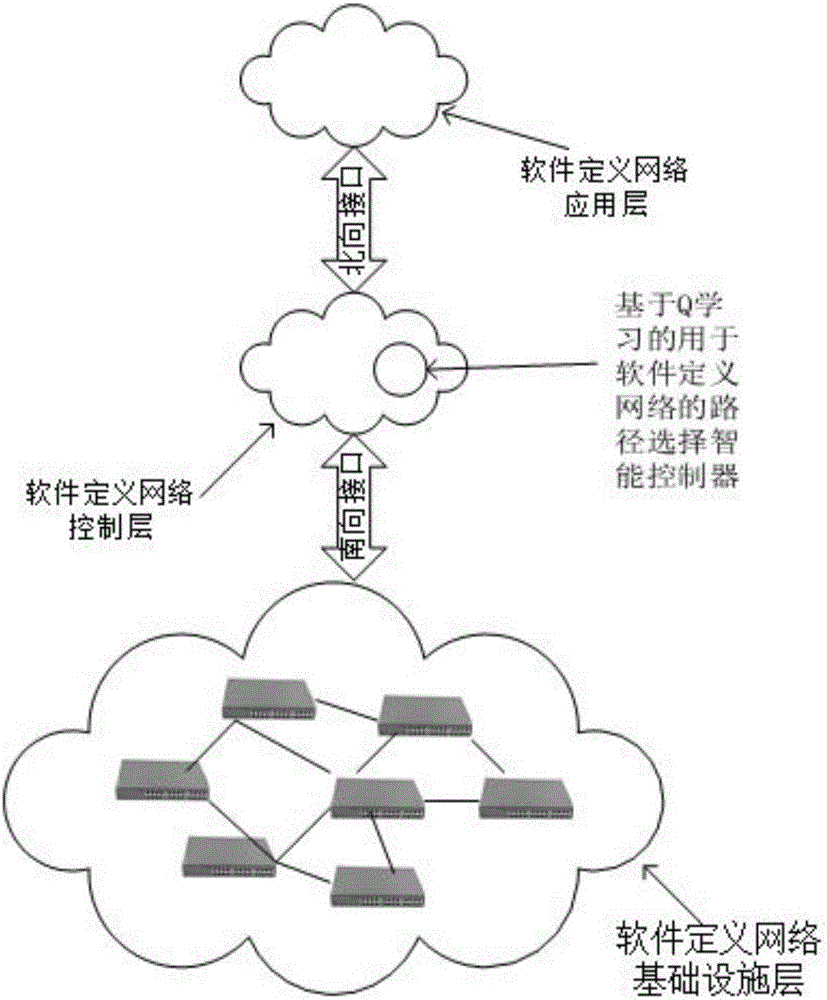

Path selection method for software defined network based on Q learning

Active | CN106411749A | Short forwarding path; Shorten the time; Data switching networks; Computer terminal; Q-learning

The invention discloses a Q-learning-based path selection method for a software defined network. The infrastructure layer of the software defined network receives a service request, constructs a virtual network, and allocates a suitable network path to complete the request; the method is characterized in that this path is obtained by Q-learning: (1) setting a number of service nodes P on the constructed virtual network and allocating corresponding bandwidth resources to each service node; (2) decomposing the received service request into available actions a and attempting, according to an eta-greedy strategy, to select a path that reaches the terminal; (3) recording the collected data as a Q-value table and updating the table; and (4) finding the suitable path from the data recorded in the Q-value table. With this method, Q-learning can find a network path that is short, takes little time, uses little bandwidth, and suits dynamic, complex networks, while satisfying as many other service requests as possible.

Owner:JIANGSU ELECTRIC POWER CO
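
Steps (1) to (4) amount to tabular Q-learning over a graph, with nodes as states and next hops as actions. The sketch below assumes a reward of -1 per hop and +10 on reaching the target, uses standard ε-greedy exploration in place of the abstract's "eta-greedy" strategy, and reads the path greedily out of the learned Q-value table. Per-node bandwidth resources and request decomposition from CN106411749A are not modeled, and the five-node topology is hypothetical.

```python
import random

def learn_path(graph, source, target, episodes=300, alpha=0.5, gamma=0.9,
               epsilon=0.2, max_hops=50, seed=0):
    """Q-learning over a topology given as {node: [neighbour, ...]}.

    State = current node, action = next hop; each hop costs -1 and
    reaching `target` pays +10 (illustrative reward design).
    """
    rng = random.Random(seed)
    q = {(u, v): 0.0 for u in graph for v in graph[u]}   # the Q-value table
    for _ in range(episodes):
        node = source
        for _ in range(max_hops):
            hops = graph[node]
            if rng.random() < epsilon:
                nxt = rng.choice(hops)                          # explore
            else:
                nxt = max(hops, key=lambda v: q[(node, v)])     # exploit
            reward = 10.0 if nxt == target else -1.0
            future = 0.0 if nxt == target else max(q[(nxt, w)] for w in graph[nxt])
            q[(node, nxt)] += alpha * (reward + gamma * future - q[(node, nxt)])
            node = nxt
            if node == target:
                break
    # read the greedy path out of the table (bounded to avoid cycling)
    path, node = [source], source
    while node != target and len(path) <= len(graph):
        node = max(graph[node], key=lambda v: q[(node, v)])
        path.append(node)
    return path

topology = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "E"],
            "D": ["B", "E"], "E": ["C", "D"]}
print(learn_path(topology, "A", "D"))  # → ['A', 'B', 'D']
```

The learned table prefers the two-hop route A, B, D over the three-hop route A, C, E, D because each extra hop costs reward and discounts the terminal payoff.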

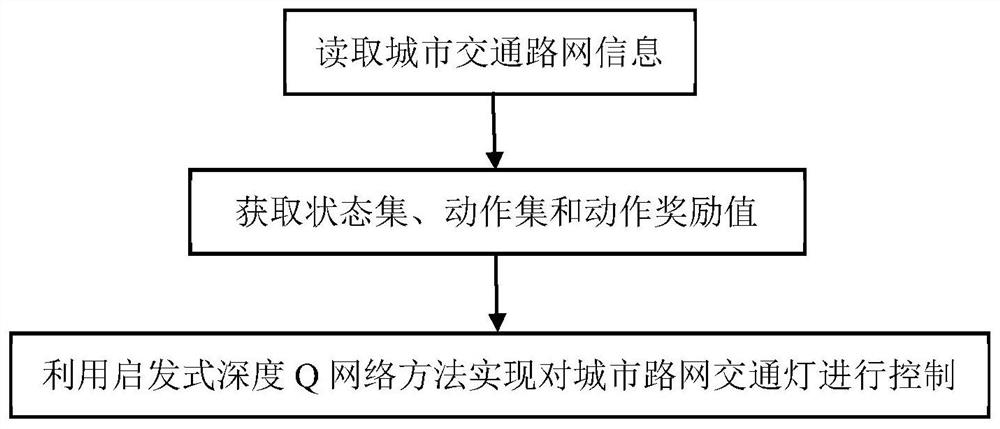

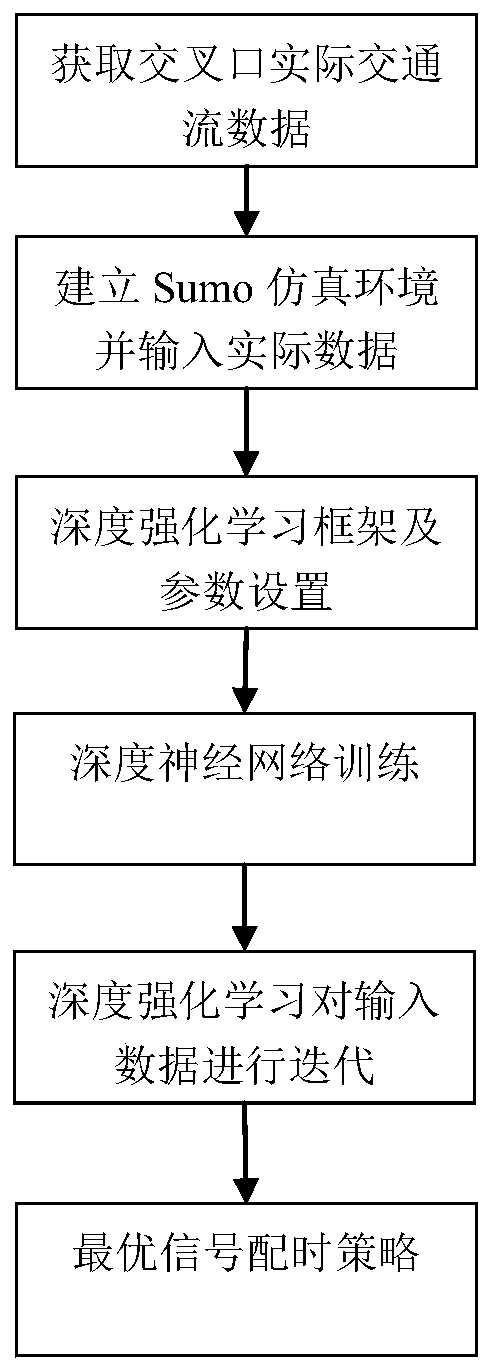

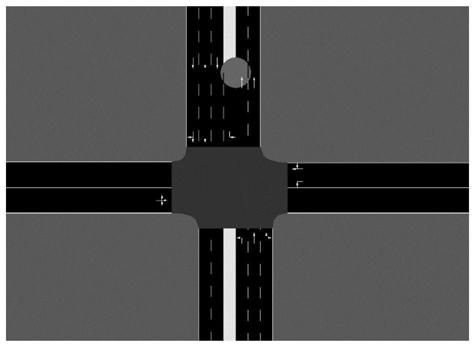

Traffic light control method based on heuristic deep Q network

Active | CN111696370A | Avoid correlation; Convincing training results; Controlling traffic signals; Character and pattern recognition; Traffic signal; Traffic network

The invention discloses a multi-intersection traffic signal control method based on heuristic deep Q-learning, which mainly addresses the correlation of training data, the inability of the traffic light control strategy to converge quickly, and the low control efficiency of existing methods. The method comprises the following steps: reading the urban traffic network information, establishing a vehicle traffic state set for each intersection, and converting the network information into an adjacency matrix for storage; obtaining the state set, action set, and action reward values of each intersection from its vehicle traffic state set; and, based on the state set, action set, reward values, and adjacency matrix, using a heuristic deep Q network to continuously execute actions according to the state of each intersection, obtain rewards, and move to the next state, thereby controlling the traffic lights of the urban road network. The method improves the control efficiency of intersection traffic lights and the performance of the multi-intersection traffic signal controller, can be used for urban traffic management, and reduces urban traffic congestion.

Owner:XIDIAN UNIV

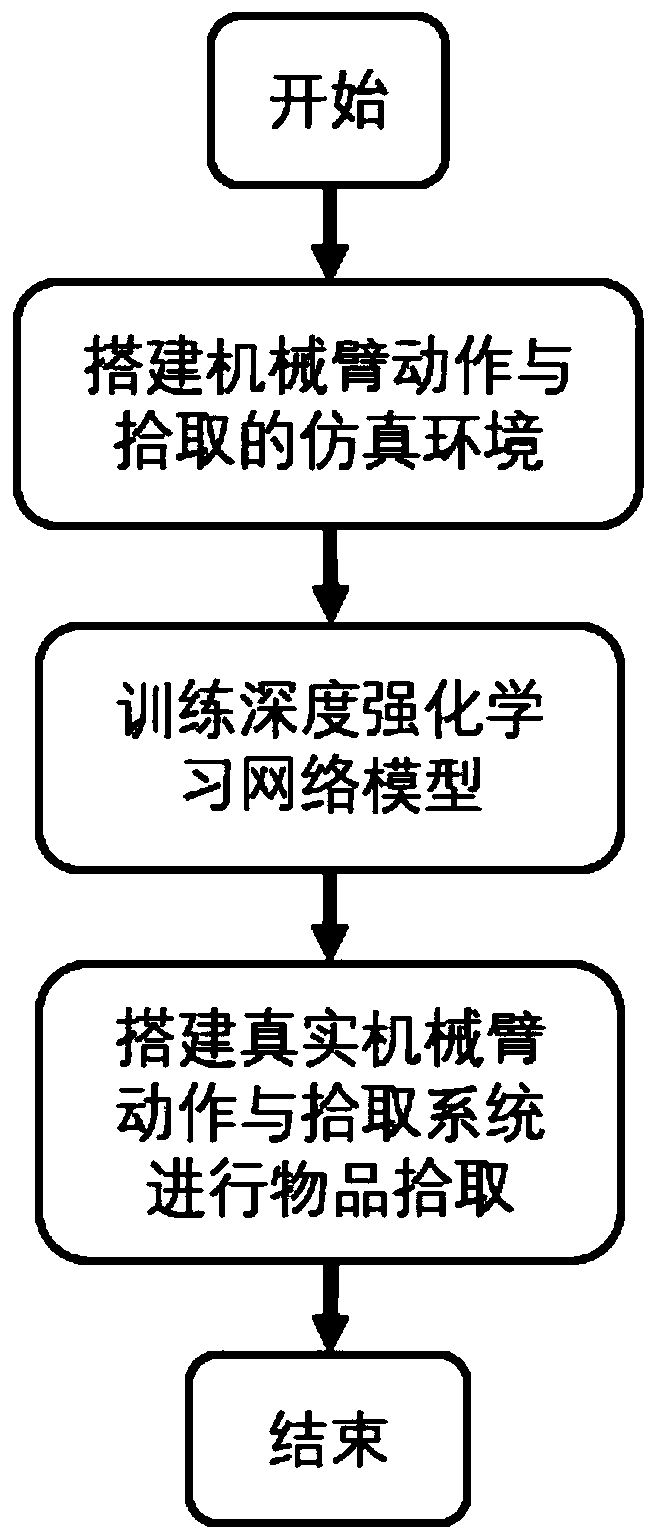

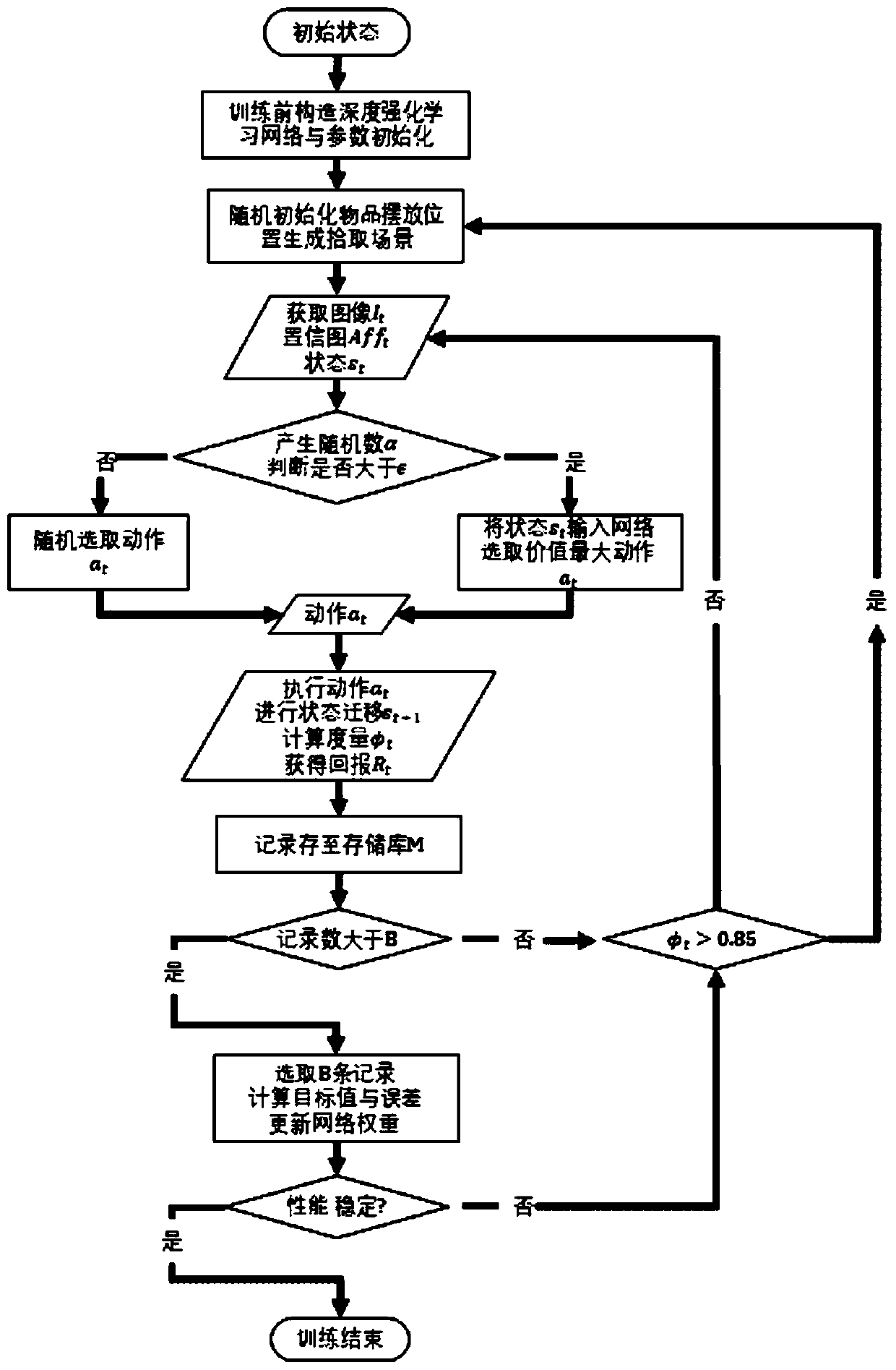

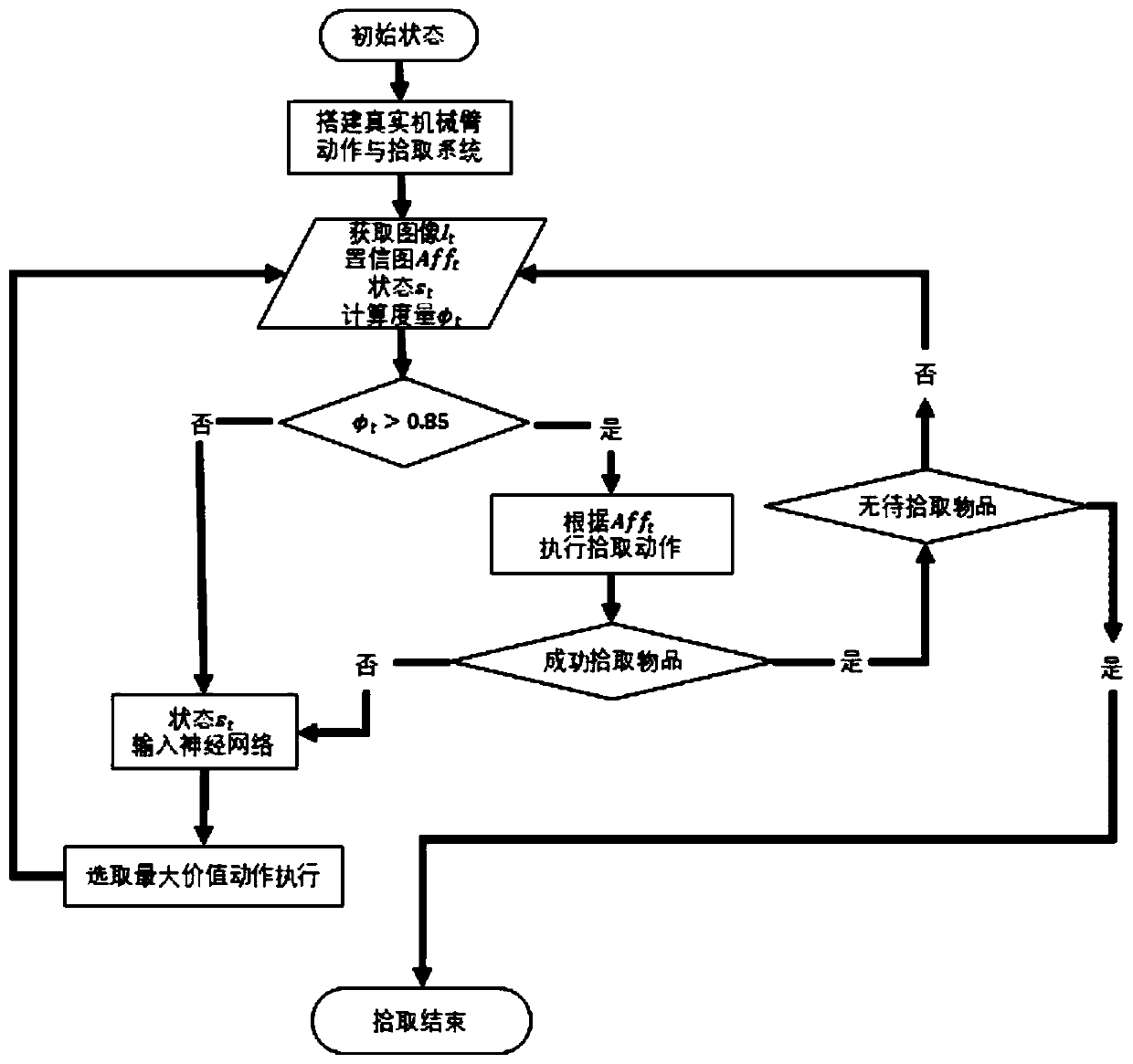

Item active pick-up method through mechanical arm based on deep reinforcement learning

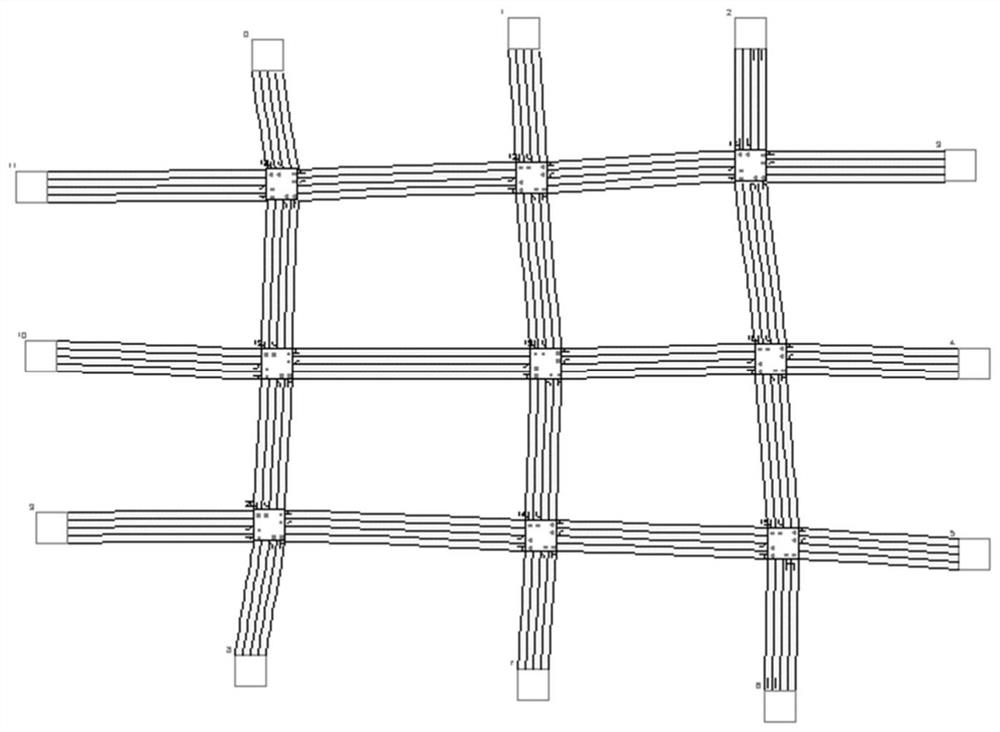

Active | CN110450153A | Efficient extraction; Pick up scene optimization; Programme-controlled manipulator; Robotics; Color image

The invention provides a method for actively picking up items with a mechanical arm based on deep reinforcement learning, and belongs to the field of artificial intelligence and robotics applications. The method comprises the following steps: first, establishing a simulation environment containing the mechanical arm and an item pick-up scene; establishing a deep Q-learning network NQ based on multiple parallel U-Nets; performing multiple robot grasping action policy tests in the simulation environment to train NQ, obtaining a trained deep learning network; and establishing an item pick-up system for actual use, in which the trained network takes depth and color images as input and, according to a measure defined on a confidence image, decides whether the mechanical arm should actively change the scene or pick up an item. By actively changing the pick-up environment with the mechanical arm and adapting to different pick-up conditions, the method achieves a high pick-up success rate.

Owner:TSINGHUA UNIV

Energy efficiency-oriented multi-agent deep reinforcement learning optimization method for unmanned aerial vehicle group

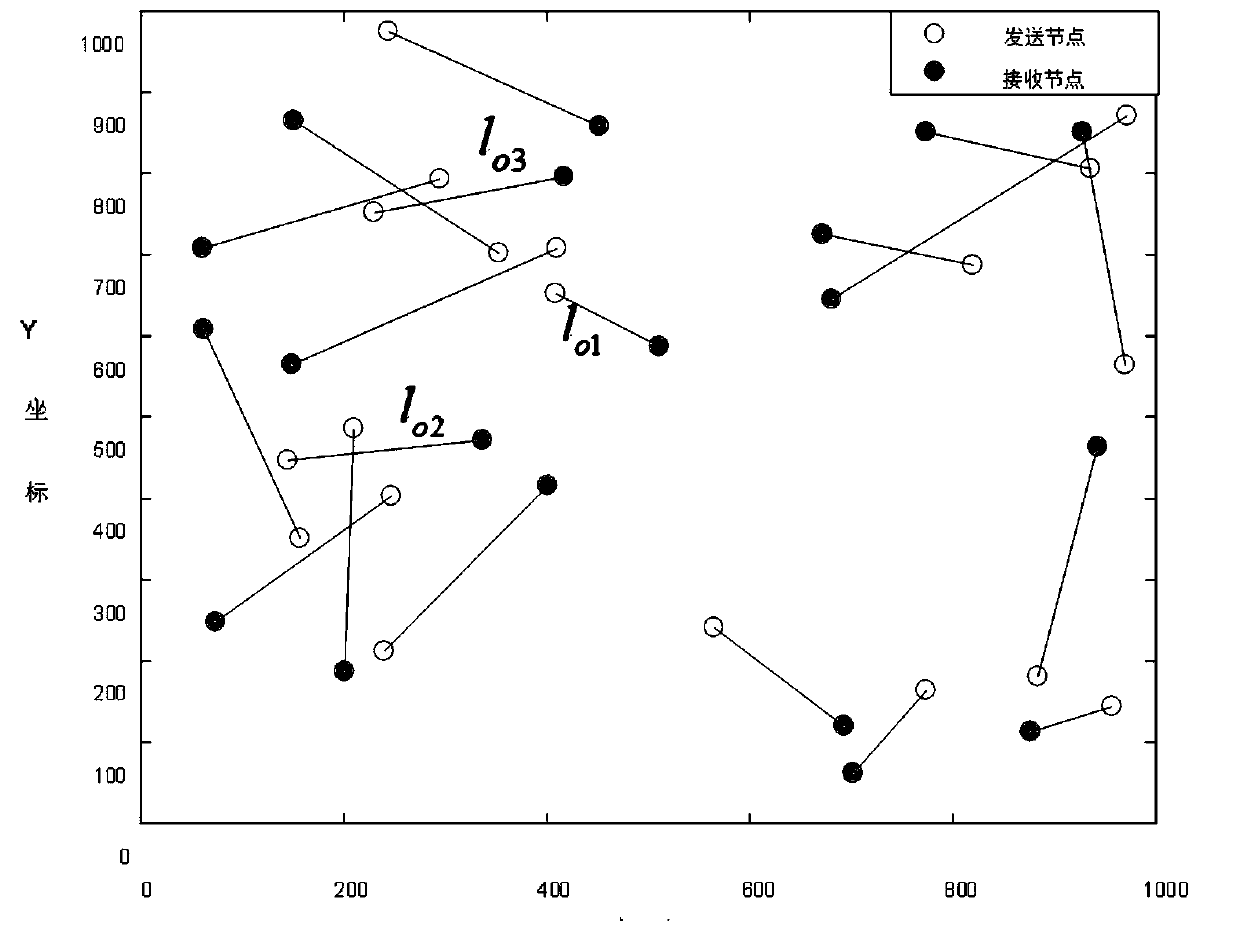

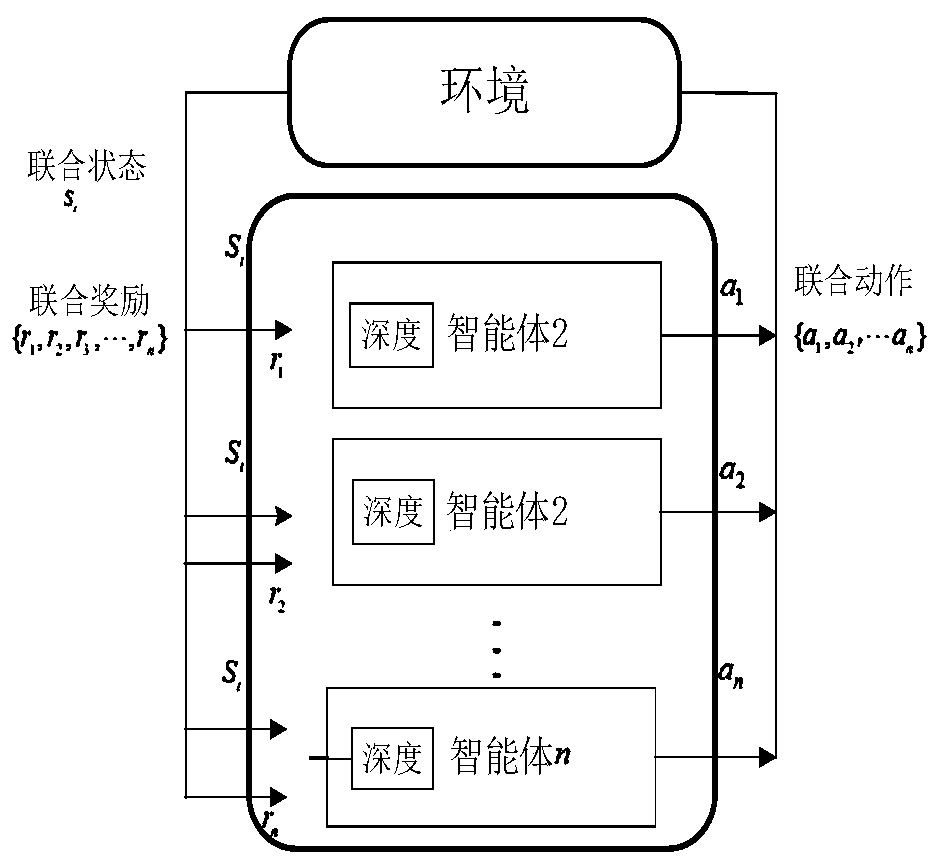

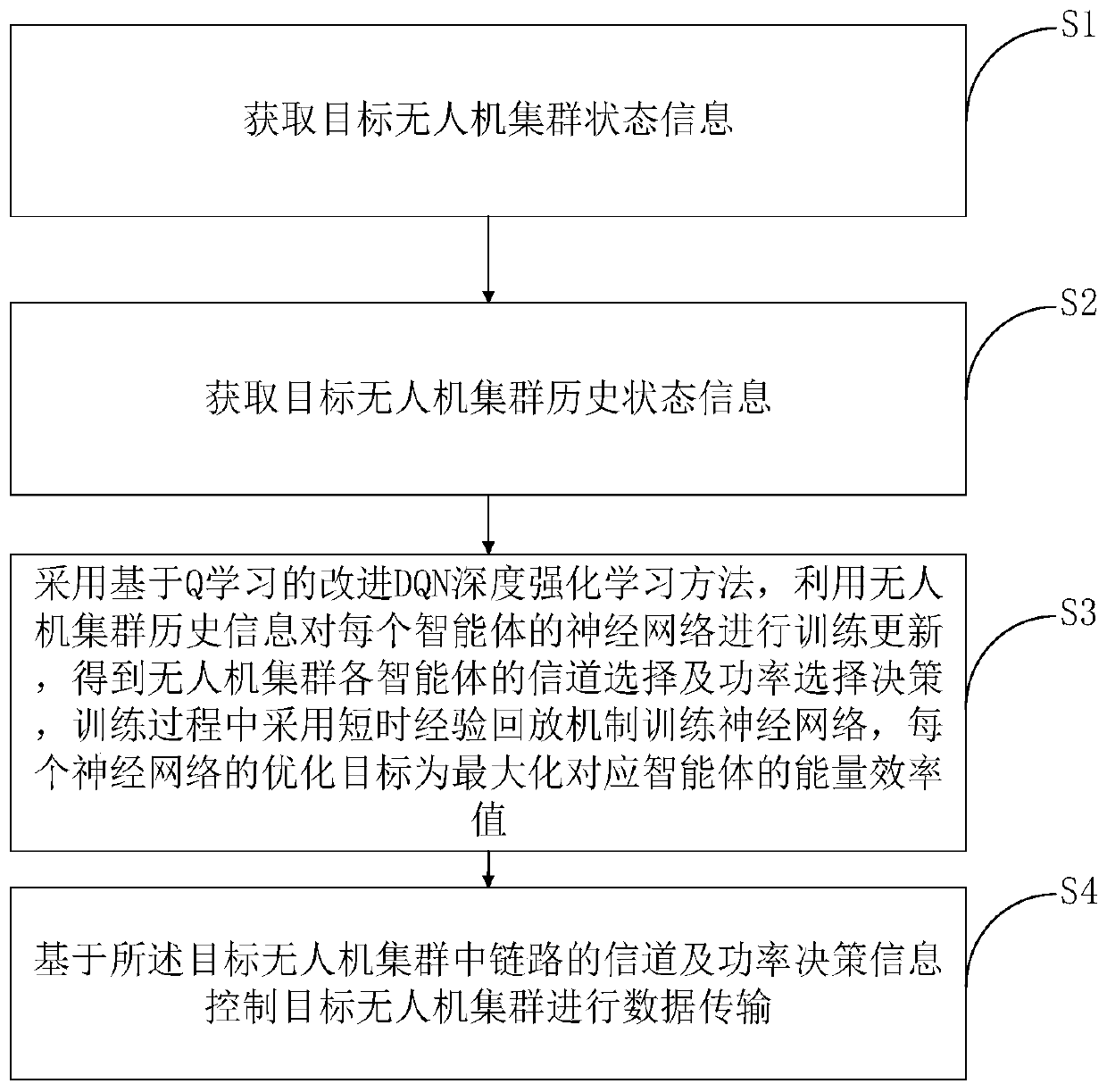

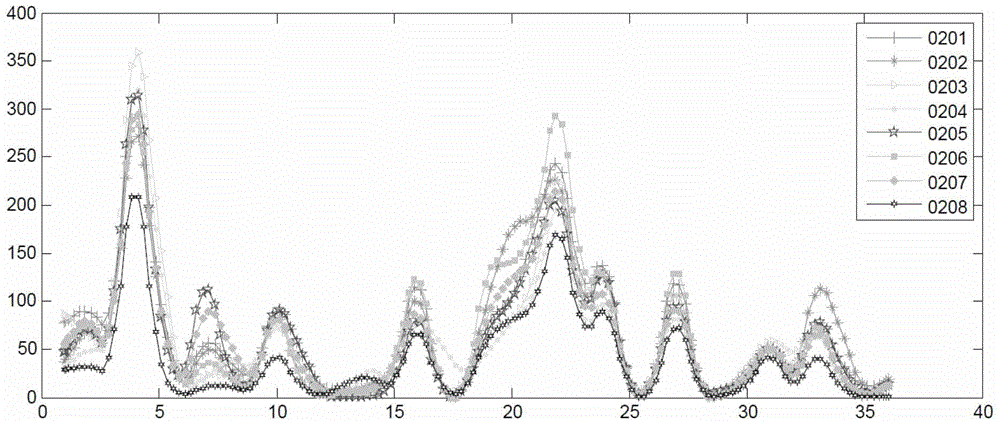

Active | CN110958680A | Extend the life cycle; Improve energy efficiency; Power management; Network topologies; Uncrewed vehicle; Engineering

The invention discloses an energy efficiency-oriented multi-agent deep reinforcement learning optimization method for an unmanned aerial vehicle group. The method adopts an improved DQN deep reinforcement learning method based on Q-learning: the neural network of each agent is trained and updated with historical information from the UAV cluster to obtain channel selection and power selection decisions for each agent, a short-time experience replay mechanism is used during training, and the optimization objective of each neural network is to maximize the energy efficiency of its agent. The invention adopts a distributed multi-agent deep reinforcement learning method with a short-time experience replay mechanism to train the neural networks to mine the patterns of change in a dynamic network environment. This solves the problem that traditional reinforcement learning cannot obtain a convergent solution in a large state space. Multi-agent distributed cooperative learning is achieved, the energy efficiency of UAV cluster communication is improved, the life cycle of the UAV cluster is extended, and the dynamic adaptability of the UAV cluster communication network is enhanced.

Owner:YANGTZE NORMAL UNIVERSITY

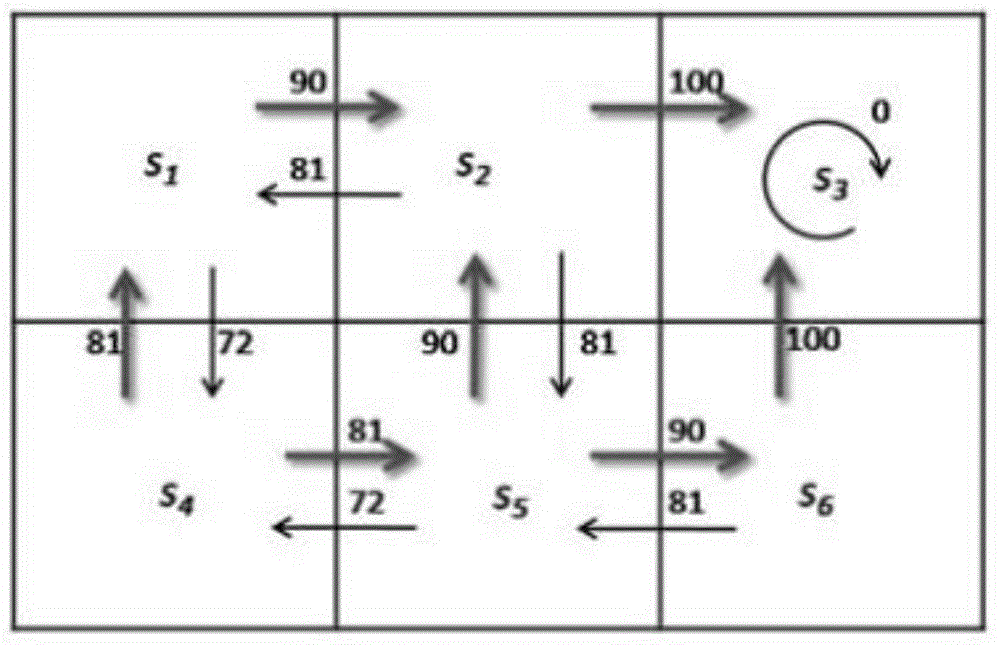

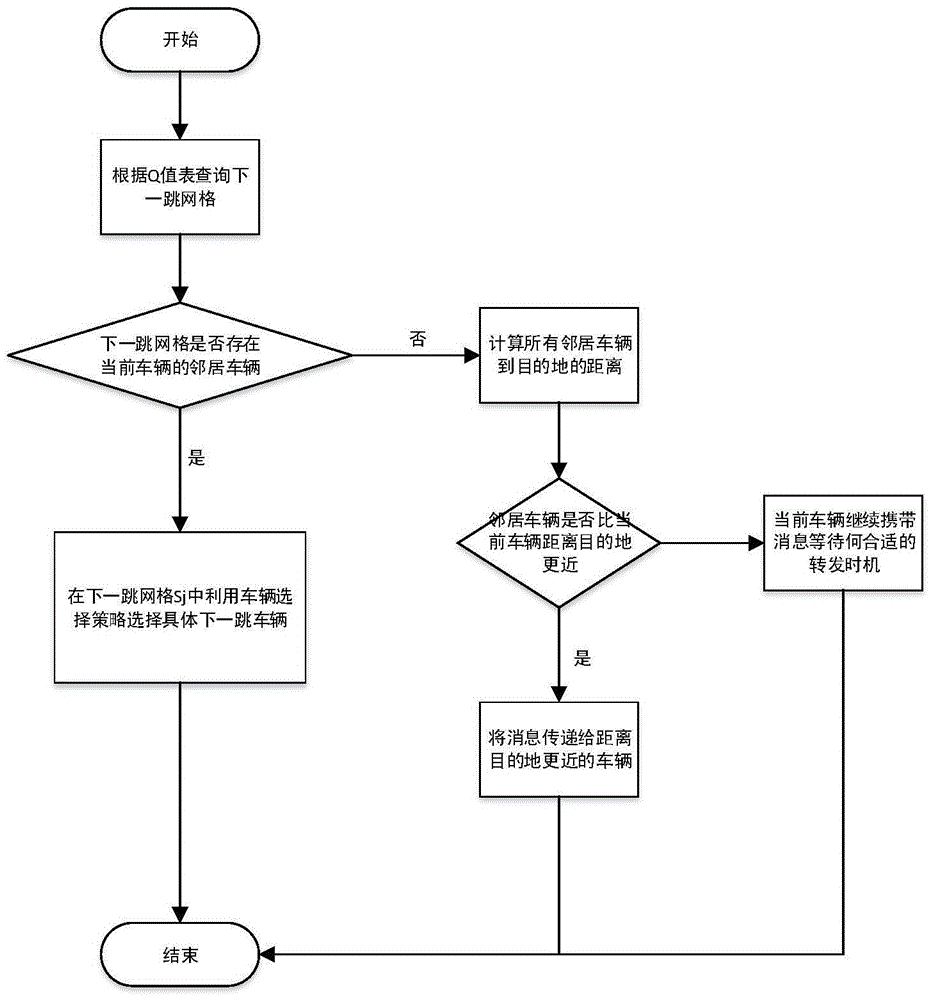

Q-learning based vehicular ad hoc network routing method

Inactive | CN104640168A | No congestion; Improve delivery success rate; Network topologies; Location information based service; Message passing; Global Positioning System

The invention relates to a Q-learning-based vehicular ad hoc network routing method and belongs to the technical field of Internet-of-Things communication. The method includes: (1) equipping each vehicle in the network with GPS (Global Positioning System), with vehicles acquiring neighbor node information by exchanging Hello messages; (2) dividing the city region into equal grids, where each grid position represents a different state and moving from one grid to an adjacent grid represents an action; (3) learning a Q-value table; (4) setting parameters; and (5) selecting the routing strategies QGrid_G and QGrid_M. Vehicles newly added to the network obtain the offline-learned Q-value table from neighboring vehicles, and by querying the Q-value table for a message's destination grid, a vehicle learns the optimal next-hop grid for passing the message. From a macroscopic point of view, the method considers the grid sequence that vehicles travel most frequently; from a microscopic point of view, it selects the vehicle most likely to reach the optimal next-hop grid. Combining the two effectively increases the message delivery success rate in urban traffic networks.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
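
Steps (2) and (3) of the vehicular routing abstract (grids as states, moves to adjacent grids as actions, and an offline-learned Q-value table queried for the best next-hop grid) can be illustrated with plain tabular Q-learning. This is a minimal sketch, not the QGrid_G or QGrid_M strategies themselves: the grid size, the reward of 1.0 for entering the destination grid, and the function names are all assumptions.

```python
import random
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
QTable = Dict[Tuple[Cell, Cell], float]
MOVES: List[Cell] = [(0, 1), (1, 0), (0, -1), (-1, 0)]  # the four adjacent grids


def neighbors(cell: Cell, width: int, height: int) -> List[Cell]:
    """Adjacent grids that lie inside the city map."""
    x, y = cell
    return [(x + dx, y + dy) for dx, dy in MOVES
            if 0 <= x + dx < width and 0 <= y + dy < height]


def learn_q_table(width: int, height: int, dest: Cell, episodes: int = 2000,
                  alpha: float = 0.5, gamma: float = 0.9, seed: int = 0) -> QTable:
    """Offline tabular Q-learning for one destination grid.

    State = grid holding the message, action = adjacent grid to forward to.
    Hypothetical reward: 1.0 when the message enters `dest`, else 0.0.
    """
    rng = random.Random(seed)
    q: QTable = {}
    starts = [(x, y) for x in range(width) for y in range(height) if (x, y) != dest]
    for _ in range(episodes):
        s = rng.choice(starts)
        for _ in range(4 * width * height):              # cap the episode length
            a = rng.choice(neighbors(s, width, height))  # random behaviour; Q-learning is off-policy
            r = 1.0 if a == dest else 0.0
            future = 0.0 if a == dest else max(
                q.get((a, n), 0.0) for n in neighbors(a, width, height))
            old = q.get((s, a), 0.0)
            q[(s, a)] = old + alpha * (r + gamma * future - old)
            if a == dest:
                break
            s = a
    return q


def best_next_hop(q: QTable, cell: Cell, width: int, height: int) -> Cell:
    """Query the table: the adjacent grid with the highest Q value."""
    return max(neighbors(cell, width, height), key=lambda n: q.get((cell, n), 0.0))
```

Because transitions here are deterministic, the learned value of moving into a grid k steps from the destination approaches `gamma**k`, so querying the table always points toward the destination. The patent additionally accounts for how often vehicles actually travel between grids, which this sketch omits.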

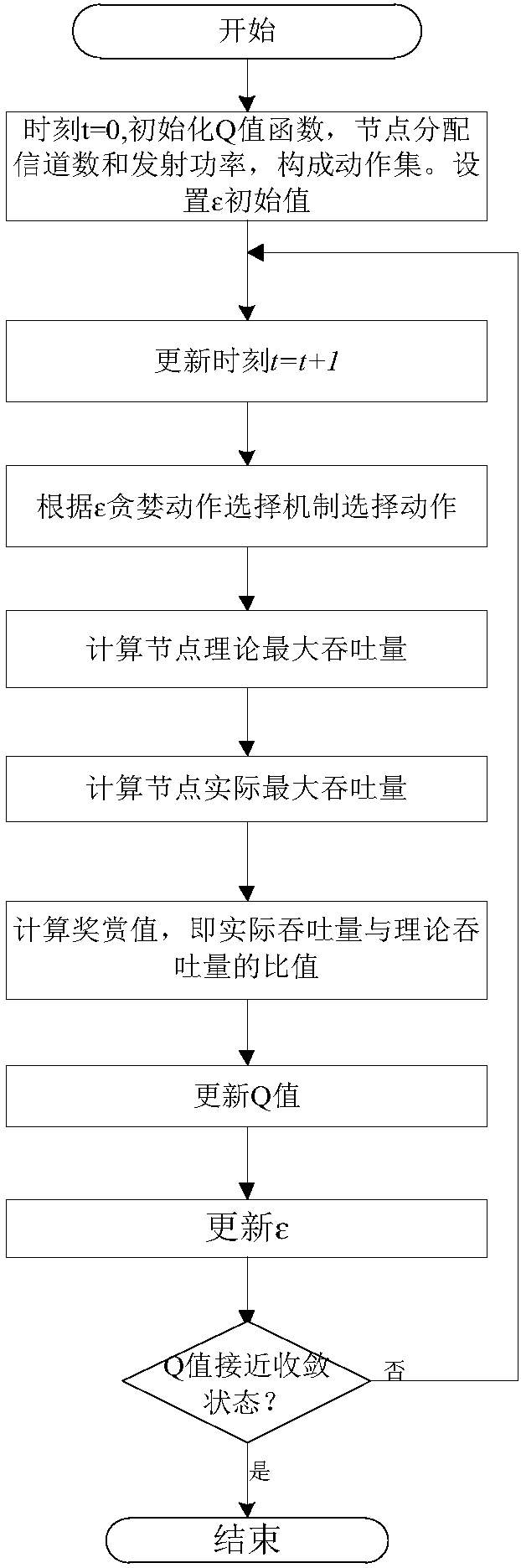

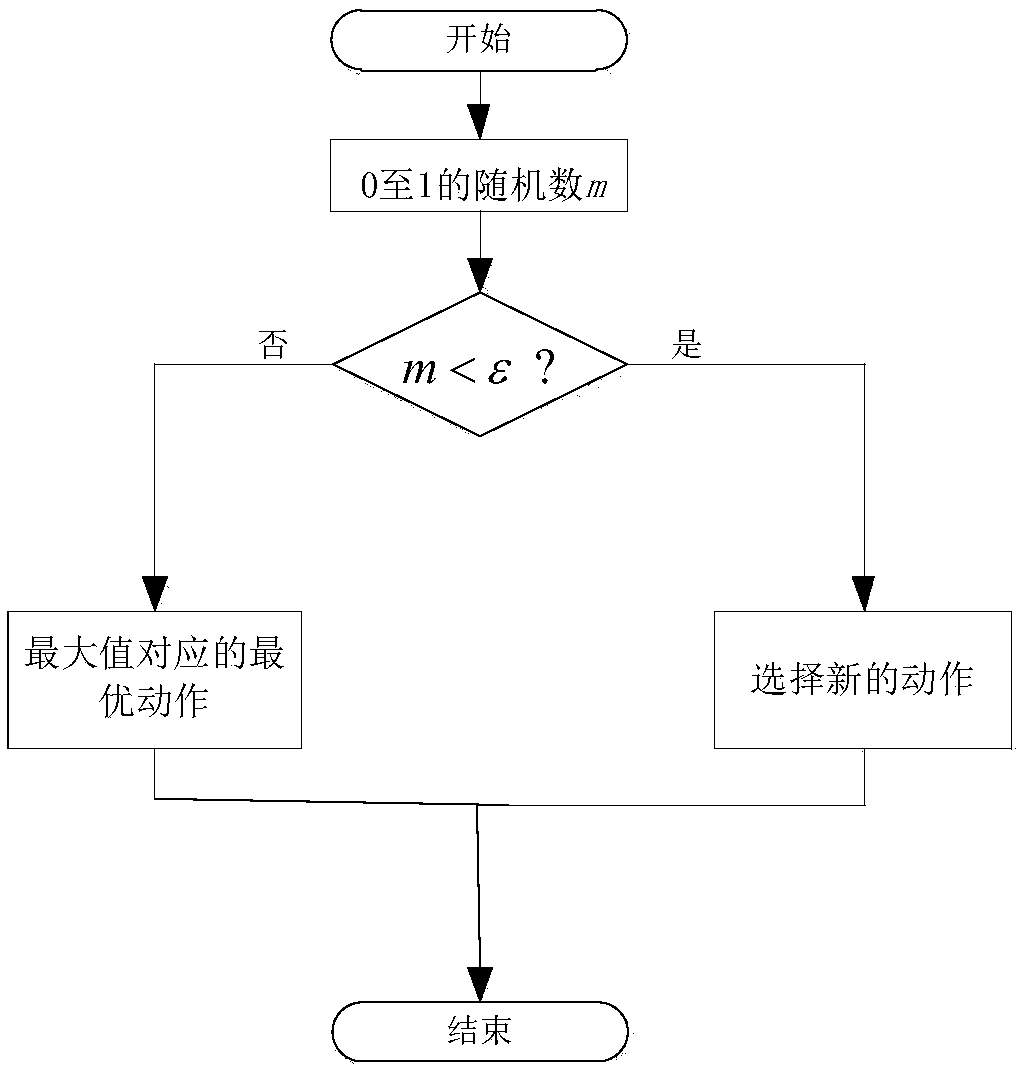

Wireless network distributed autonomous resource allocation method based on stateless Q learning

Status: Active | Publication: CN108112082A | Tags: Excellent throughput; High level techniques; Wireless communication; Wireless mesh network; Prior information

The invention discloses a wireless network distributed autonomous resource allocation method based on stateless Q-learning. First, each combination of channel number and transmit power is treated as an action, and an action is randomly selected to calculate the actual network throughput. Then the ratio of the actual network throughput to the theoretical throughput is taken as the reward for that action selection, and the action-value function is updated according to the reward. Finally, iteratively adjusting the actions finds the action that maximizes the cumulative reward, and that action yields the optimal performance of the wireless network. The method allows each node to autonomously perform channel allocation and transmit power control to maximize network throughput when prior information, such as the resource configuration of other nodes in the network, is unknown.

Owner:BEIJING UNIV OF TECH
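
The stateless Q-learning loop in the resource-allocation abstract, with (channel, power) pairs as actions and the actual-to-theoretical throughput ratio as reward, reduces to a single-state update Q(a) ← Q(a) + α(r − Q(a)). The sketch below assumes ε-greedy action selection and a toy, deterministic reward function standing in for the real throughput measurement; the names and numbers are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

Action = Tuple[int, float]  # hypothetical: (channel number, transmit power in mW)


def stateless_q_learning(actions: List[Action], reward_fn: Callable[[Action], float],
                         steps: int = 2000, alpha: float = 0.1, epsilon: float = 0.1,
                         q0: float = 1.0, seed: int = 0) -> Dict[Action, float]:
    """Single-state Q-learning: Q(a) <- Q(a) + alpha * (r - Q(a)).

    With no state there is no max over next states; each Q(a) is a running
    estimate of the reward that action a earns.
    """
    rng = random.Random(seed)
    q = {a: q0 for a in actions}         # optimistic start, assumes rewards in [0, 1]
    for _ in range(steps):
        if rng.random() < epsilon:
            a = rng.choice(actions)      # explore
        else:
            a = max(q, key=q.get)        # exploit the current best estimate
        r = reward_fn(a)                 # e.g. actual / theoretical throughput
        q[a] += alpha * (r - q[a])
    return q


def toy_throughput_ratio(a: Action) -> float:
    """Hypothetical stand-in for measuring actual / theoretical throughput."""
    channel, power_mw = a
    quality = {0: 0.3, 1: 0.5, 2: 0.8}[channel]  # channel 2 assumed least congested
    return quality * power_mw / 100.0
```

Initializing every Q value to 1.0 is an optimistic choice that relies on the reward being a ratio in [0, 1]: every action is tried at least once before the agent settles, which matters when a node has no prior information about the others.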

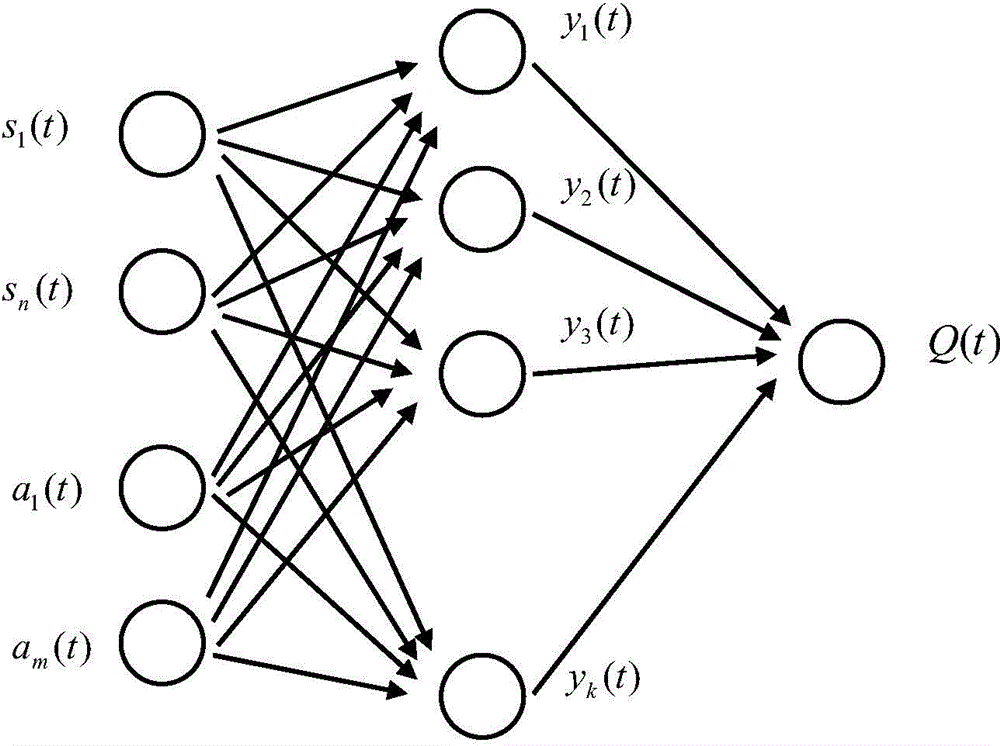

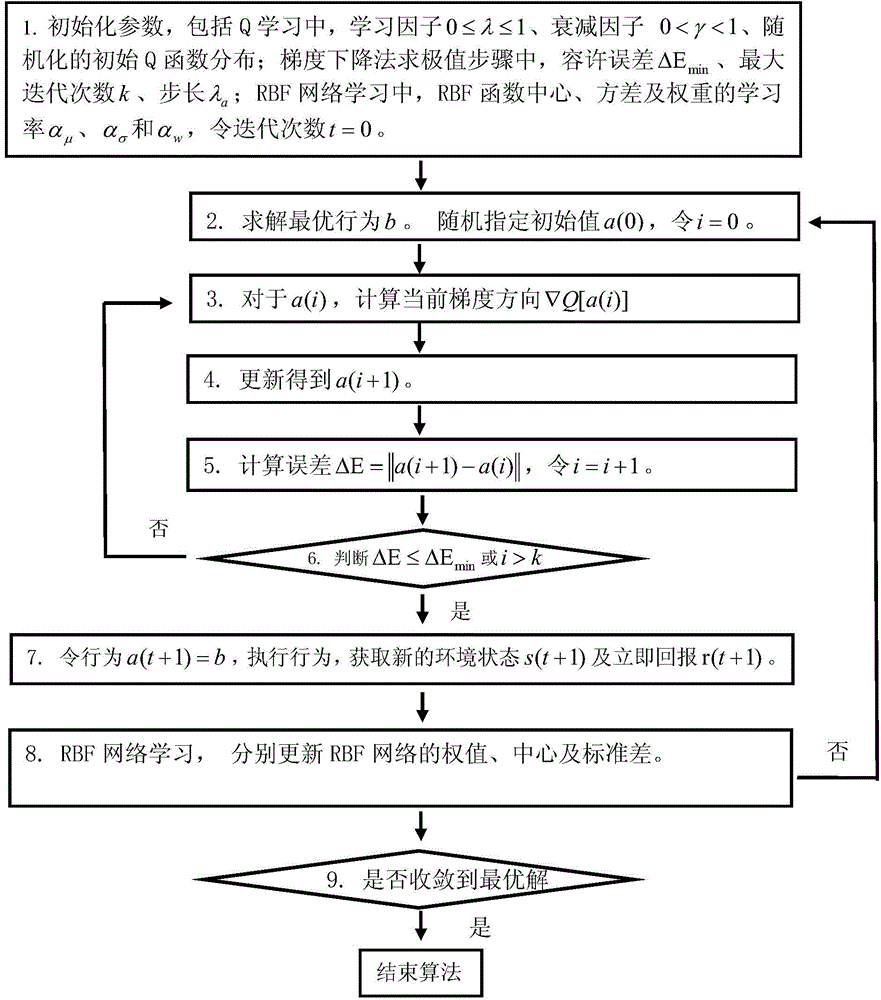

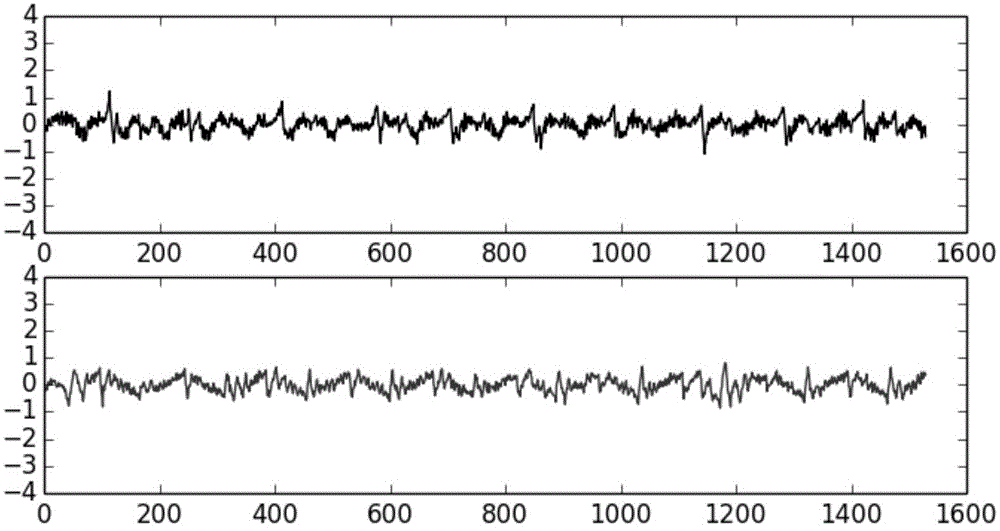

Humanoid robot stable control method of RBF-Q learning frame

Status: Active | Publication: CN104932264A | Tags: Strong global approximation capability; Improve generalization ability; Adaptive control; Knee Joint; Ankle

The invention discloses a humanoid robot stable control method using an RBF-Q learning framework. The method comprises the following steps: an RBF-Q learning framework is proposed that addresses the problems of continuous state spaces and continuous action spaces in Q-learning; an online motion-adjustment stable control algorithm based on RBF-Q learning is proposed, which generates trajectories for the hip, knee and ankle joints of the support leg and controls the humanoid robot to walk stably by calculating the angles of the other joints; finally, the feasibility and effectiveness of the RBF-Q learning framework are verified on the Vitruvian Man humanoid robot platform designed by the inventors' laboratory. The method can generate a stable walking gait for the humanoid robot through online learning.

Owner:SOUTH CHINA UNIV OF TECH
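
The RBF-Q idea of replacing the Q table with radial-basis-function features, so that Q-learning can operate on a continuous state space, can be sketched as a linear Q function over normalized Gaussian RBF activations, updated with a semi-gradient Q-learning step. This is an illustrative assumption, not the patent's network: the centers, width, learning rates and the restriction to discrete actions are hypothetical.

```python
import numpy as np


class RBFQ:
    """Linear Q function over normalized Gaussian RBF features: Q(s, a) = w[a] . phi(s).

    Sketch only: centers, width and step sizes are hypothetical, and actions are
    kept discrete (the patent also addresses continuous action spaces).
    """

    def __init__(self, centers, width: float, n_actions: int,
                 alpha: float = 0.1, gamma: float = 0.95) -> None:
        self.centers = np.asarray(centers, dtype=float)    # shape (k, state_dim)
        self.width = width
        self.w = np.zeros((n_actions, len(self.centers)))  # one weight vector per action
        self.alpha = alpha
        self.gamma = gamma

    def features(self, s) -> np.ndarray:
        d2 = np.sum((self.centers - np.asarray(s, dtype=float)) ** 2, axis=1)
        phi = np.exp(-d2 / (2.0 * self.width ** 2))
        return phi / phi.sum()                             # activations sum to 1

    def q_values(self, s) -> np.ndarray:
        return self.w @ self.features(s)

    def update(self, s, a: int, r: float, s_next, done: bool) -> float:
        """One semi-gradient Q-learning step; returns the TD error."""
        phi = self.features(s)
        target = r if done else r + self.gamma * float(np.max(self.q_values(s_next)))
        td_error = target - float(self.w[a] @ phi)
        self.w[a] += self.alpha * td_error * phi           # only action a's weights move
        return td_error
```

In a gait controller the state might be the support leg's joint angles and velocities. Normalizing the activations makes Q(s, a) a weighted average of action a's per-center weights.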

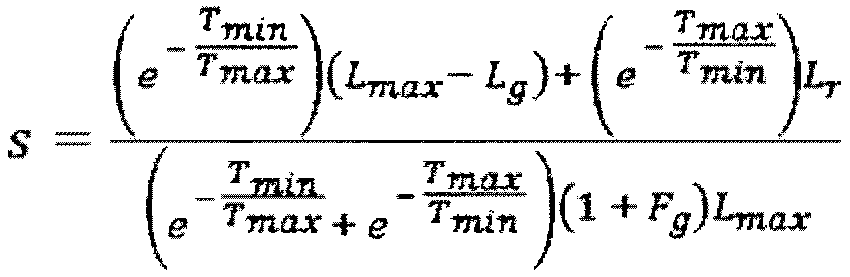

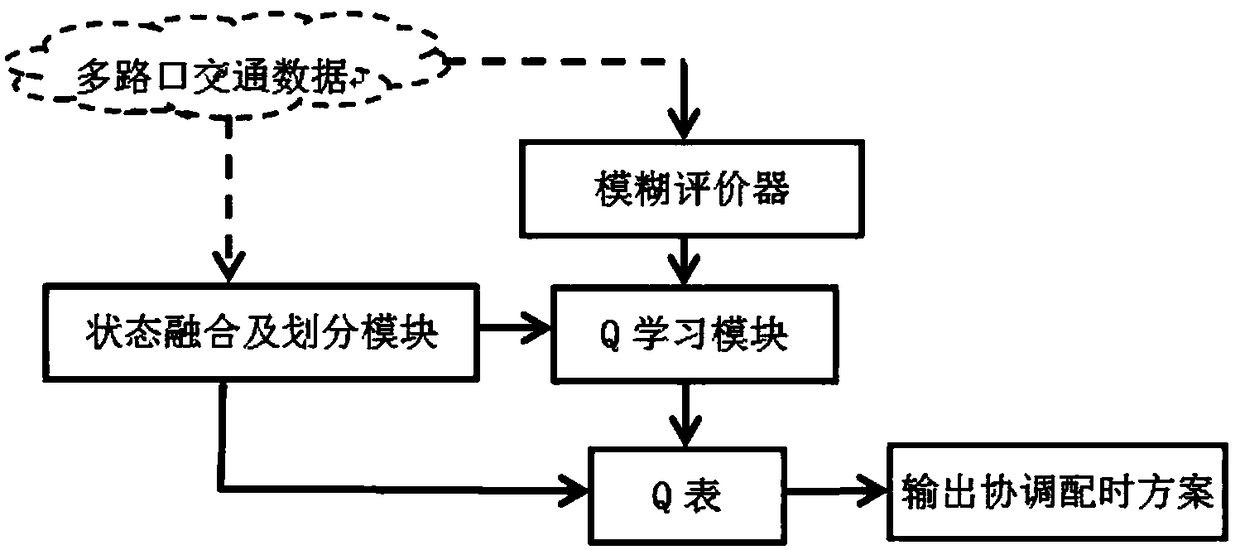

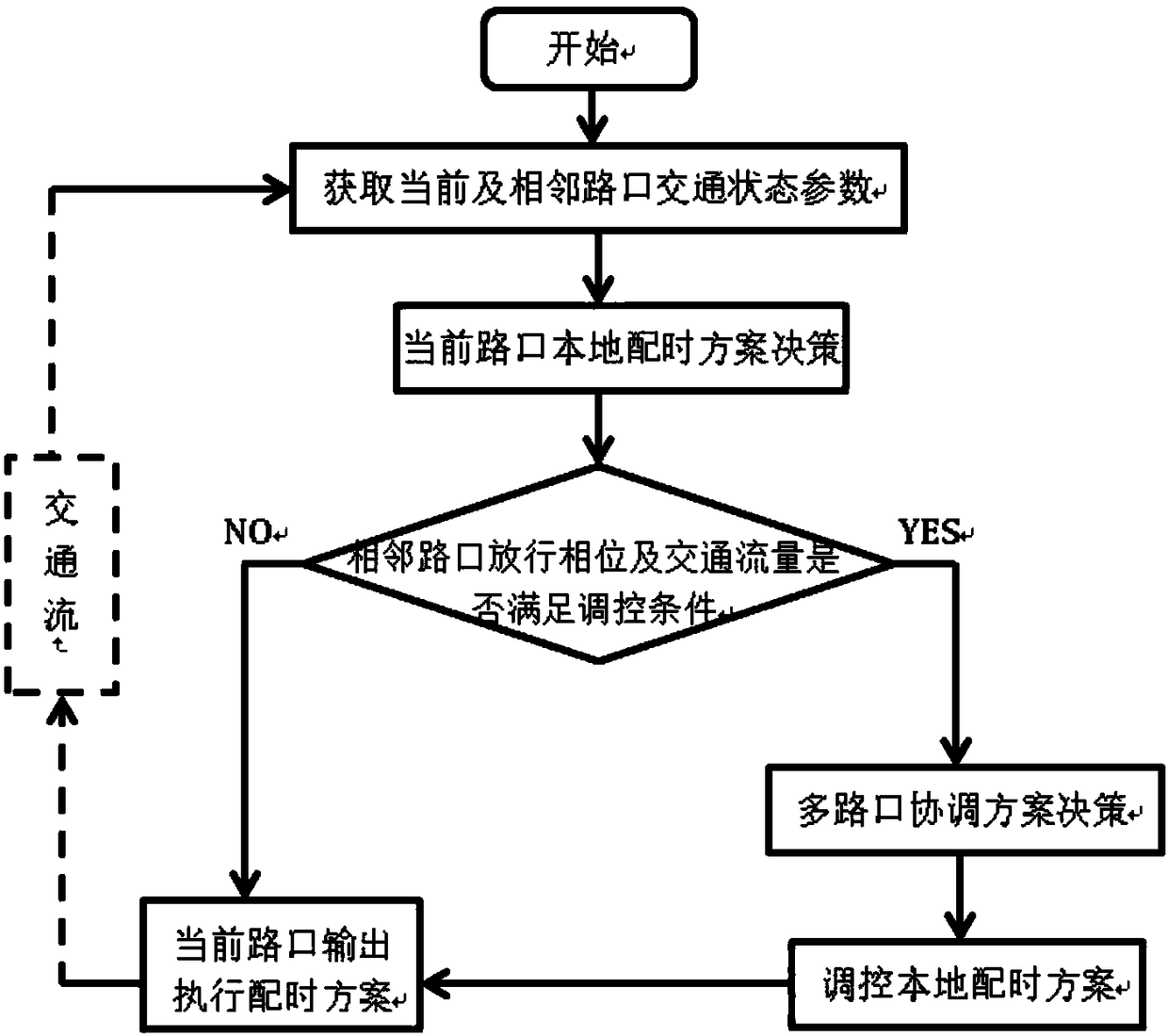

Multi-intersection self-adaptive phase difference coordinated-control system and method based on Q learning

Status: Pending | Publication: CN108510764A | Tags: Rapid Coordinated Signal Control; Improve traffic efficiency; Road vehicles traffic control; Adaptive control; Traffic capacity; Traffic signal

The invention discloses a multi-intersection self-adaptive phase difference (offset) coordinated-control system and method based on Q-learning. The system comprises an intersection control module, a coordinated-control module, a Q-learning control module, a regulation module and an output execution module. The intersection control module provides a reasonable single-intersection signal timing plan for the current phase according to the traffic state of the local intersection. The coordinated-control module judges whether the current phase needs phase difference coordination by analyzing the traffic states of the local intersection and adjacent intersections. The method can effectively shorten the response time to traffic congestion, quickly coordinate signal control across intersections and improve intersection throughput, and it generalizes well to adaptive traffic signal control. Through phase coordination, the system can produce accurate and reasonable green-light timing plans, and compared with coordination control without accurate timing, it is better suited to large intersections with heavy traffic flow.

Owner:NANJING UNIV OF POSTS & TELECOMM

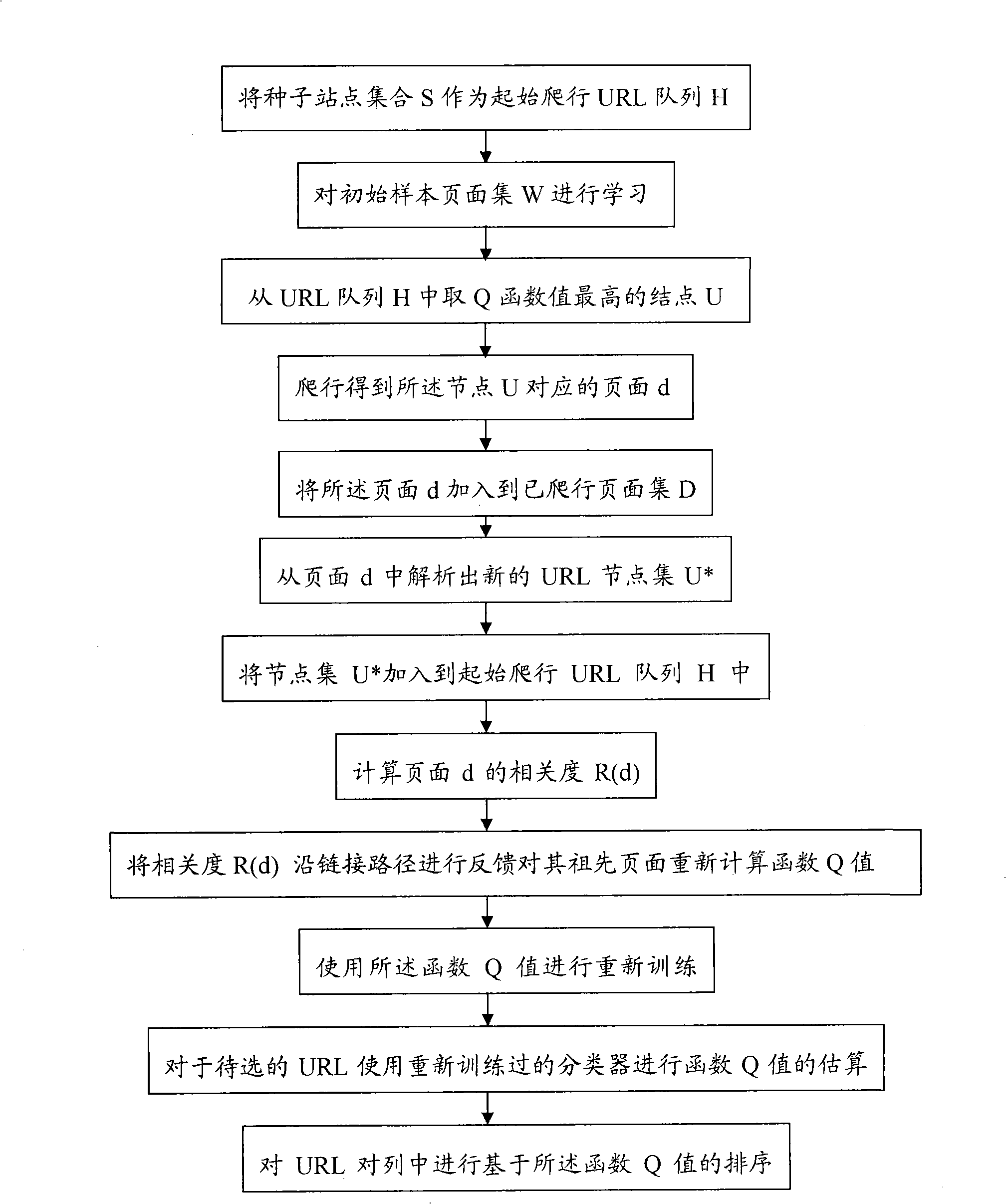

Studying method and system based on increment Q-Learning

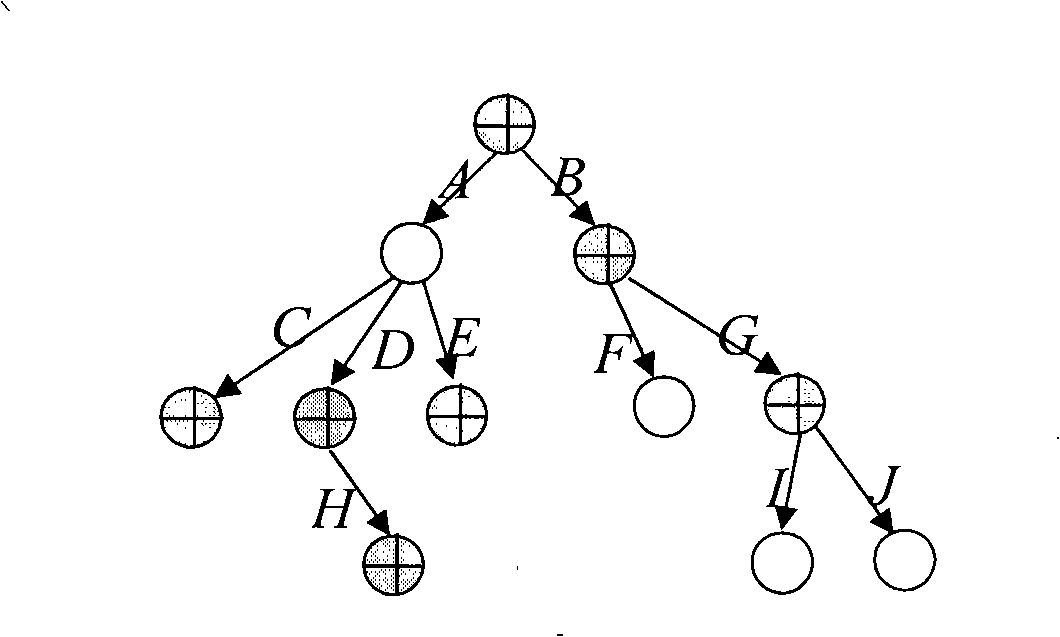

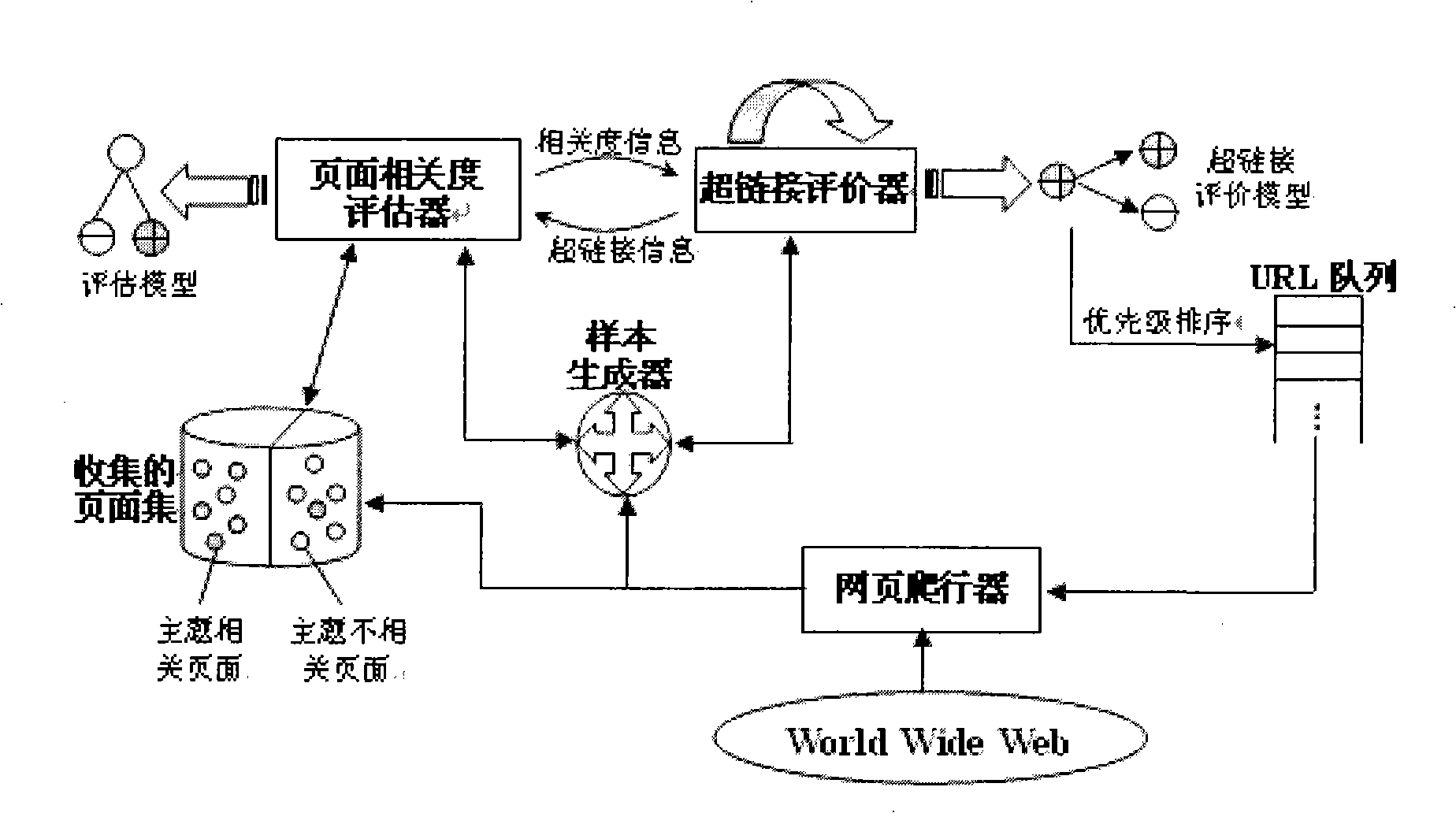

Status: Inactive | Publication: CN101261634A | Tags: Improve architecture; Adaptable; Computing models; Special data processing applications; Hyperlink; Categorical models

The invention relates to a learning method and system based on incremental Q-Learning. In the method, the system recalculates the Q values of the hyperlink nodes corresponding to a newly crawled web page; it re-discretizes the Q values according to the newly calculated values to form new samples; a naive Bayes (NB) classifier is then retrained to obtain a new Q-value classification model, which is used to recalculate the Q value of each candidate URL in the URL queue; finally, the IQ-Learning algorithm enables the page relevance evaluator to perform incremental learning. The innovation in the system architecture is the addition of an online Q-Learning sample generator, which analyzes and evaluates pages obtained by online crawling and generates new positive or negative samples, making incremental learning possible. The technique effectively improves the harvest rate of topic-focused crawlers.

Owner:HARBIN INST OF TECH SHENZHEN GRADUATE SCHOOL
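
The incremental crawler recomputes Q values for hyperlinks and then discretizes them into classes for the naive Bayes classifier. A minimal sketch, assuming the common focused-crawling formulation in which a link's Q value is the discounted relevance reachable by following it: `link_q_values` runs value iteration on a small crawled link graph, and `discretize` maps each value to a class label. The rewards, discount and bin edges are hypothetical.

```python
from typing import Dict, List


def link_q_values(out_links: Dict[str, List[str]], relevant: Dict[str, float],
                  gamma: float = 0.5, iters: int = 50) -> Dict[str, float]:
    """V(p) = r(p) + gamma * max over links p -> c of V(c).

    `relevant` gives the hypothetical immediate reward r(p) (e.g. 1.0 for an
    on-topic page); the Q value of a hyperlink pointing at p is taken as V(p).
    """
    v = {p: 0.0 for p in out_links}
    for _ in range(iters):  # simple value iteration on the crawled subgraph
        v = {p: relevant.get(p, 0.0)
                + gamma * max((v[c] for c in out_links[p] if c in v), default=0.0)
             for p in out_links}
    return v


def discretize(q: float, edges: List[float]) -> int:
    """Map a continuous Q value to a class label (number of edges it reaches)."""
    return sum(q >= e for e in edges)
```

The resulting (link context, class label) pairs would then serve as the new samples on which the NB classifier is retrained.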

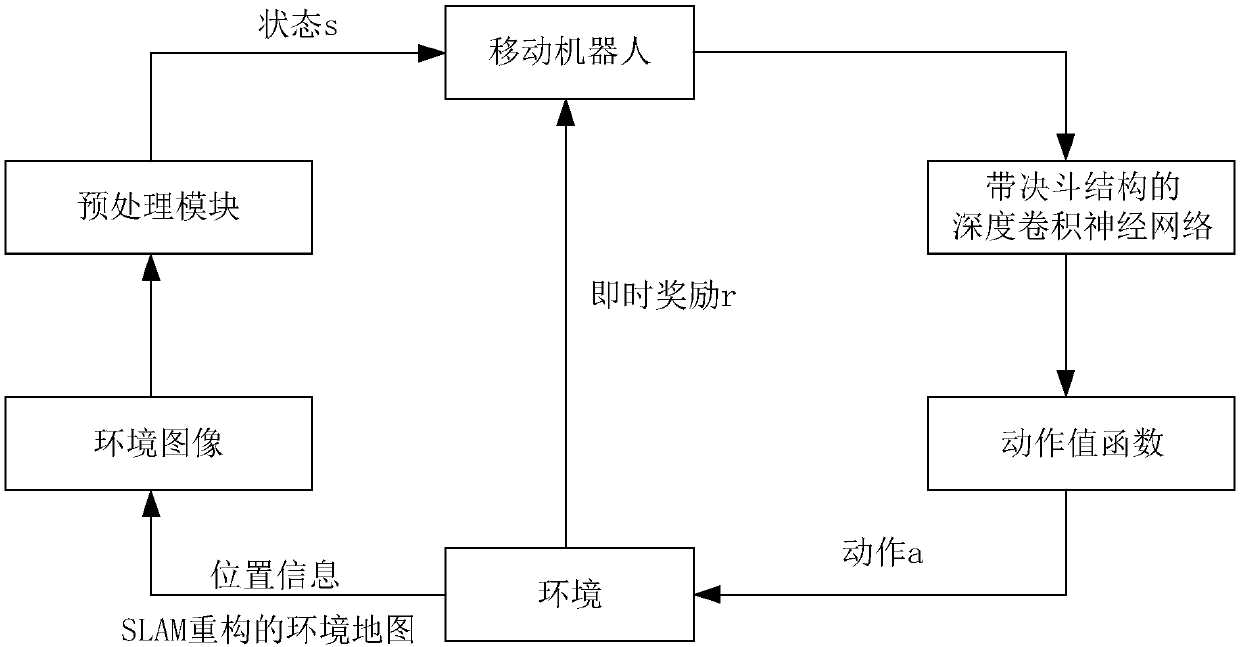

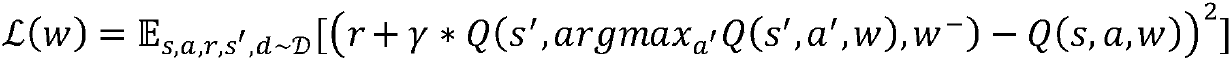

Rapid path planning method based on variant dual DQNs (deep Q-networks) and mobile robot

Status: Inactive | Publication: CN108375379A | Tags: Overcoming the problem of overestimation of action value; Fast path planning method; Instruments for road network navigation; Fast path; Estimation methods

The invention discloses a rapid path planning method based on variant dual (double) DQNs (deep Q-networks) and a mobile robot. The mobile robot samples mini-batches of transitions from an experience replay memory. According to first preset rules, one of two dueling deep convolutional neural networks is selected as the first online network, and the other serves as the first target network. The predicted online action-value function Q(s, a; w) and the greedy action a' are obtained, followed by the maximum value of the predicted target action-value function. The loss function at the current time step is calculated from this maximum value and the predicted online action-value function, and the online weight parameters w are updated with the adaptive moment estimation (Adam) method according to the loss function. By updating the weight parameters in a manner based on double Q-learning and dueling DQN, the method achieves more effective path planning for the mobile robot.

Owner:UNIV OF SHANGHAI FOR SCI & TECH
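
The core of the double/dueling update in the path-planning abstract is how the training target is built: the online network chooses the greedy action a', and the target network evaluates it. The NumPy sketch below shows the standard double-DQN target, the dueling aggregation Q = V + A − mean(A), and the mean-squared TD loss on a mini-batch. It is a generic illustration under those standard definitions; the patent's "variant" may differ in detail, and the Adam weight update is left to whatever framework trains the networks.

```python
import numpy as np


def dueling_q(value: np.ndarray, advantage: np.ndarray) -> np.ndarray:
    """Dueling aggregation: Q = V + (A - mean_a A); V is (B, 1), A is (B, n_actions)."""
    return value + advantage - advantage.mean(axis=1, keepdims=True)


def double_dqn_targets(q_online_next: np.ndarray, q_target_next: np.ndarray,
                       rewards: np.ndarray, dones: np.ndarray,
                       gamma: float = 0.99) -> np.ndarray:
    """Double-Q target: the online net selects a' = argmax_a Q(s', a; w) and the
    target net evaluates it, which reduces action-value overestimation."""
    greedy = np.argmax(q_online_next, axis=1)                  # a' from the online network
    evaluated = q_target_next[np.arange(len(greedy)), greedy]  # Q(s', a'; w-) from the target network
    return rewards + gamma * (1.0 - dones) * evaluated         # no bootstrap on terminal steps


def td_loss(q_online: np.ndarray, actions: np.ndarray, targets: np.ndarray) -> float:
    """Mean squared TD error over the sampled mini-batch."""
    chosen = q_online[np.arange(len(actions)), actions]
    return float(np.mean((targets - chosen) ** 2))
```

For example, with online next-state values [1, 3] and target values [5, 2], plain DQN would bootstrap from 5, while double DQN evaluates the online choice (action 1) with the target network and bootstraps from 2.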

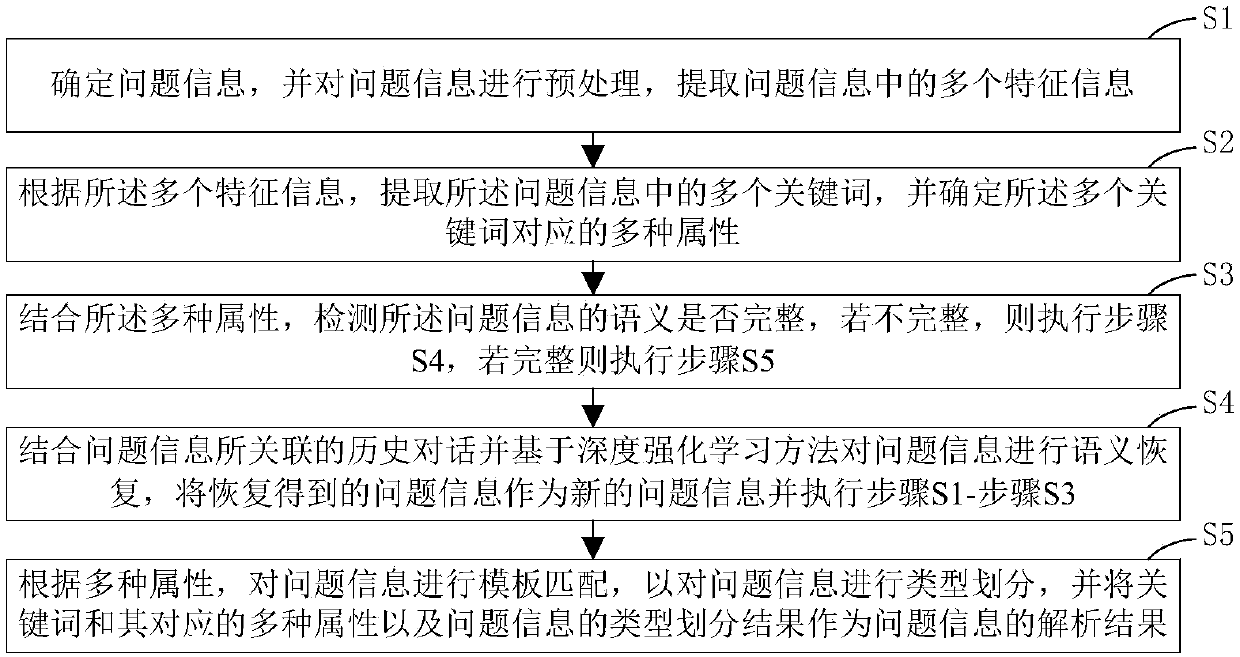

Question analyzing method and system for interactive question answering

Status: Inactive | Publication: CN107632979A | Tags: Improve the accuracy of semantic recovery; Meet the needs of industrial applications; Neural architectures; Special data processing applications; Template matching; Study methods

The invention discloses a question analysis method and system for interactive question answering. The method includes the following steps: S1, determining the question information, preprocessing it, and extracting multiple pieces of feature information from it; S2, extracting several keywords from the question information according to the feature information, and determining the properties corresponding to the keywords; S3, determining, in combination with the properties, whether the semantics of the question information are complete; if not, executing step S4, otherwise executing step S5; S4, restoring the semantics of the question information using the conversation history and a deep reinforcement learning method, taking the restored question as new question information, and executing steps S1 to S3 again; S5, performing template matching on the question information according to the properties and classifying it by type. Adding a deep Q-Learning method based on deep reinforcement learning improves the accuracy of semantic restoration and meets the requirements of industrial applications.

Owner:HUAZHONG UNIV OF SCI & TECH

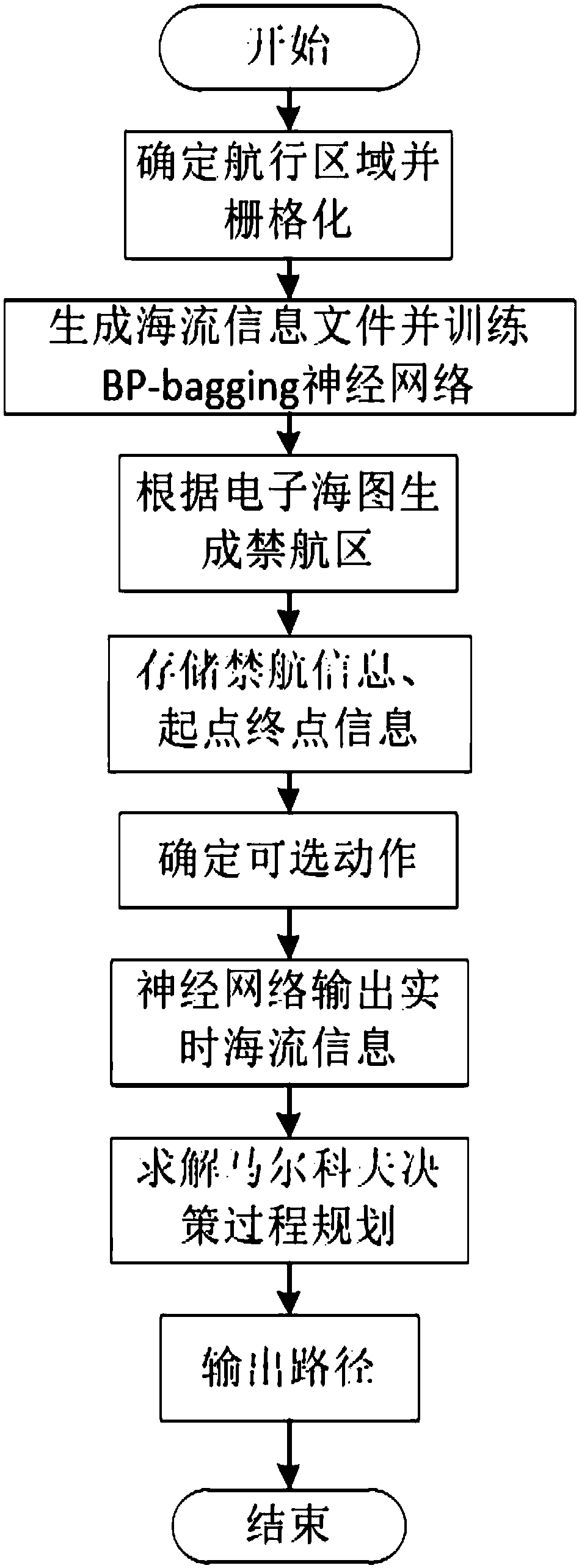

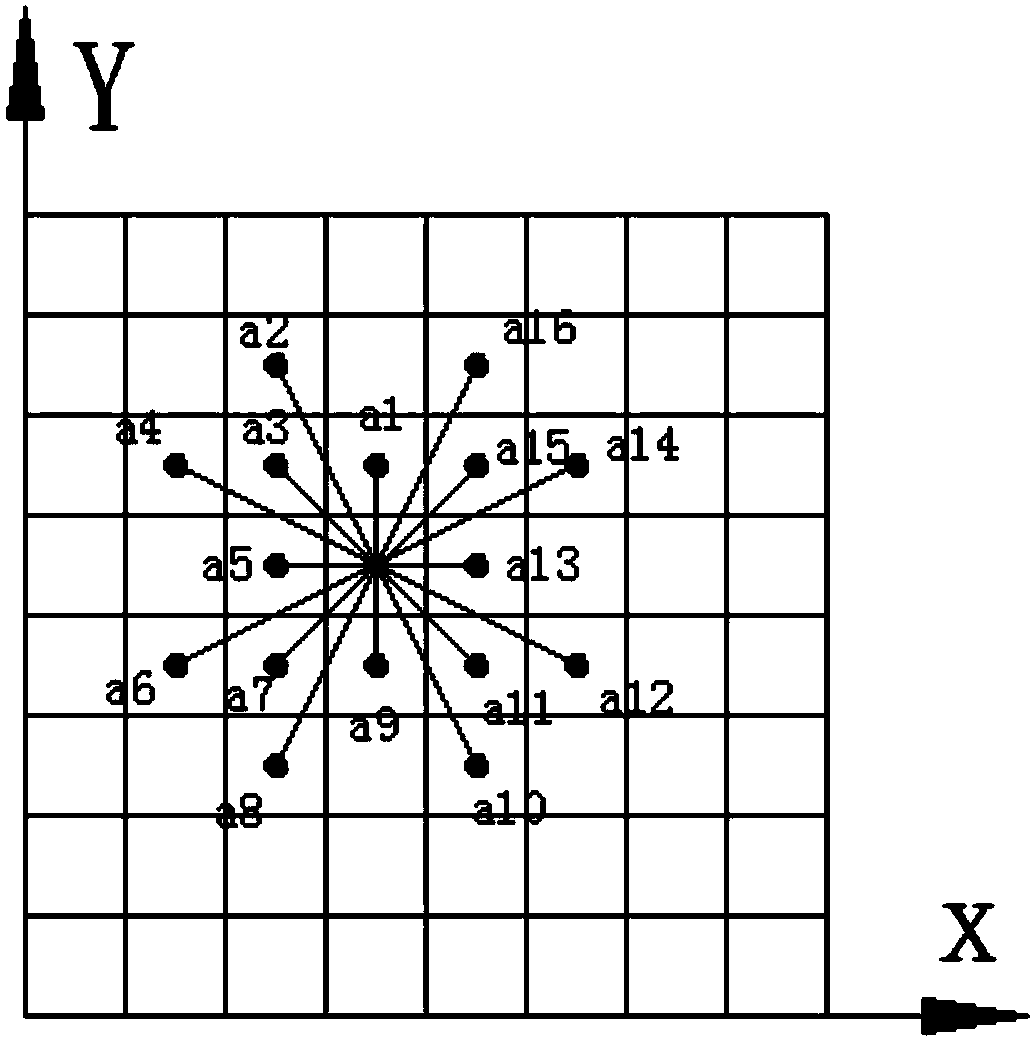

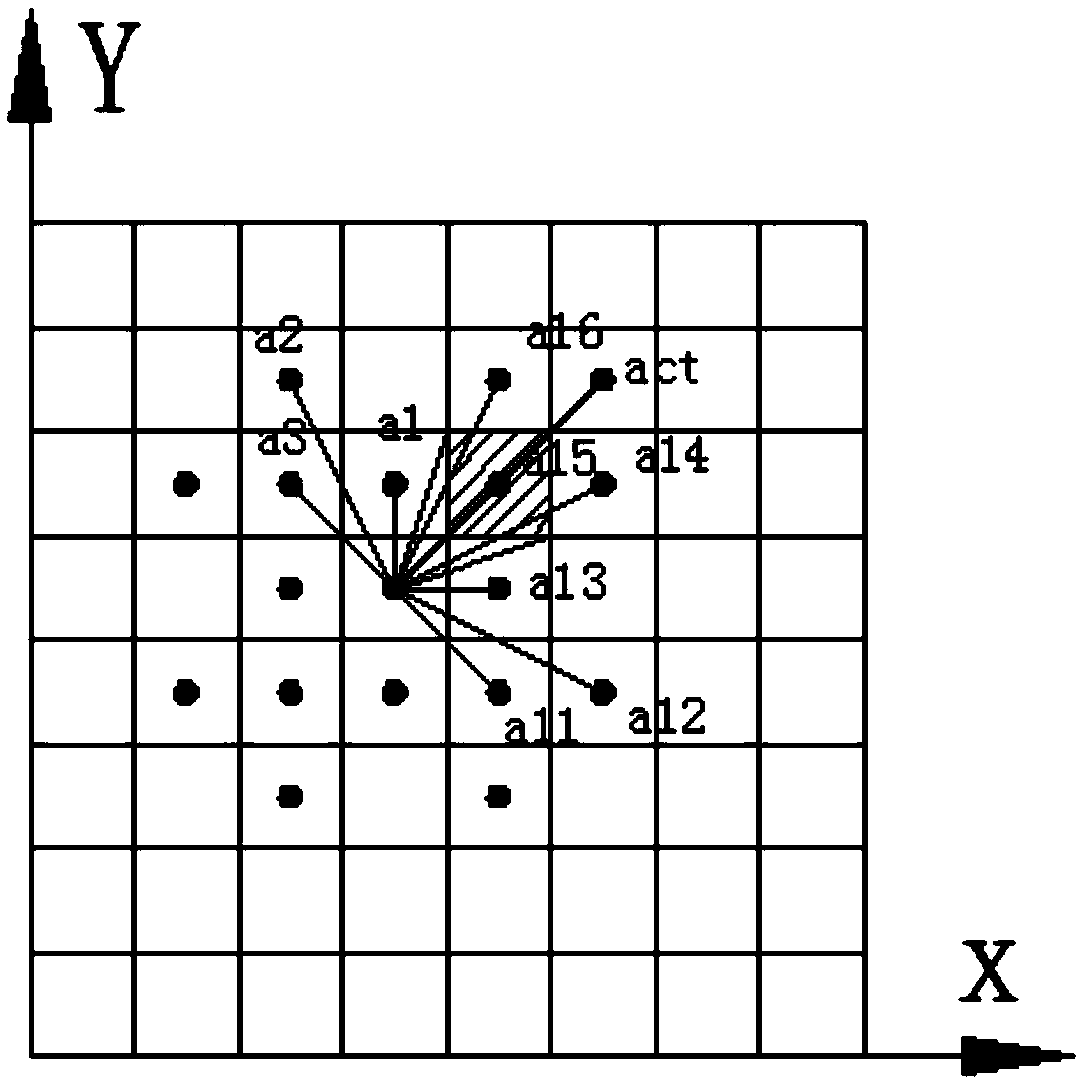

Method for planning paths on basis of ocean current prediction models

Status: Active | Publication: CN108803313A | Tags: Fast convergence; Reduce complexity; Position/course control in three dimensions; Adaptive control; Longitude; Q-learning

Owner:HARBIN ENG UNIV

Single-point intersection signal control method based on deep cyclic Q learning

Status: Inactive | Publication: CN111243271A | Tags: Improve traffic efficiency; Improve performance; Detection of traffic movement; Neural architectures; Engineering; Algorithms performance

The invention discloses a single-point intersection signal control method based on deep recurrent Q-learning. The method uses a deep recurrent Q-network (DRQN) algorithm to learn the optimal signal control strategy at a single intersection. DRQN adds an LSTM neural network to DQN and exploits the LSTM's ability to memorize information along the time axis: the current input state of the intersection is represented by combining the intersection states at several previous moments rather than only the state at the current moment, which reduces the impact of the intersection's partially observable (POMDP) characteristics on deep Q-learning performance. The improved DRQN algorithm outperforms both the DQN algorithm and traditional fixed-time intersection control. When traffic flow is near saturation or oversaturated, the DRQN algorithm can observe the state of the intersection at each moment and choose the optimal switching time, thereby improving the traffic efficiency of the intersection.

Owner:DUOLUN TECH CO LTD +1
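
The DRQN abstract's key point is that the Q-network reads a short history of intersection observations through an LSTM instead of a single snapshot. That forward pass can be sketched in NumPy as below; the observation size, hidden size, number of signal phases and the random, untrained weights are all hypothetical, and training (for example with the DQN loss) is omitted.

```python
import numpy as np


class TinyDRQN:
    """Minimal recurrent Q-network forward pass: an LSTM over the last T
    intersection observations, then a linear head with one Q value per
    signal phase. Weights are random and untrained; sizes are hypothetical."""

    def __init__(self, obs_dim: int, hidden: int, n_actions: int, seed: int = 0) -> None:
        rng = np.random.default_rng(seed)
        # One stacked matrix for the input, forget, candidate and output gates
        self.W = rng.normal(0.0, 0.1, size=(4 * hidden, obs_dim + hidden))
        self.b = np.zeros(4 * hidden)
        self.W_q = rng.normal(0.0, 0.1, size=(n_actions, hidden))
        self.hidden = hidden

    @staticmethod
    def _sigmoid(x: np.ndarray) -> np.ndarray:
        return 1.0 / (1.0 + np.exp(-x))

    def q_values(self, history: np.ndarray) -> np.ndarray:
        """history: shape (T, obs_dim), oldest observation first."""
        H = self.hidden
        h = np.zeros(H)
        c = np.zeros(H)
        for x_t in history:
            z = self.W @ np.concatenate([x_t, h]) + self.b
            i = self._sigmoid(z[:H])           # input gate
            f = self._sigmoid(z[H:2 * H])      # forget gate
            g = np.tanh(z[2 * H:3 * H])        # candidate cell state
            o = self._sigmoid(z[3 * H:])       # output gate
            c = f * c + i * g                  # cell state carries information across steps
            h = o * np.tanh(c)
        return self.W_q @ h                    # one Q value per signal phase
```

Because the cell state `c` is carried from one step to the next, changing an early observation in `history` changes the final Q values; this is how the network can compensate for a single observation not fully describing the intersection.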

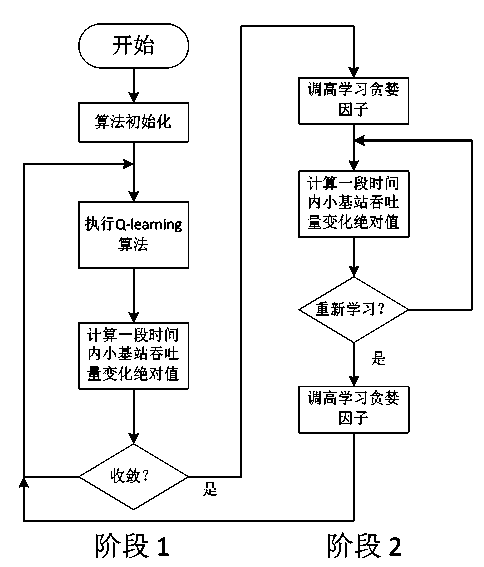

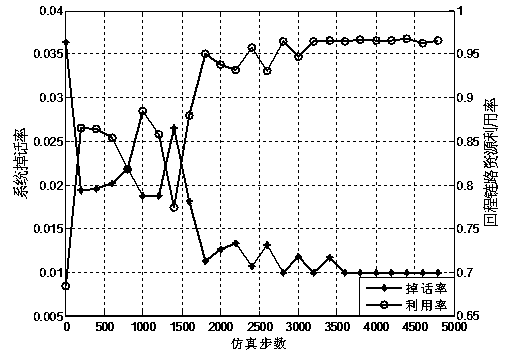

Distribution type method for adjusting small base station transmitting power bias values in self-adaptive mode

Status: Active | Publication: CN103906076A | Tags: Take advantage of; Increase capacity; Power management; Network planning; System capacity; Macro base stations

The invention discloses a distributed method for adaptively adjusting small base station transmit power bias values. When the backhaul link capacity of a small base station is limited, the Q-learning method from machine learning is used to adaptively adjust the small base station's transmit power bias so that it shares the macrocell load, improving the utilization of the small base station's backhaul resources. With Q-learning, each small base station can monitor the number of surrounding users and changes in user distribution in real time and promptly adjust its reference signal power bias value to obtain the optimal bias under current conditions, so that the small base station's resources are fully used to take load off the macro base station. The method can effectively improve system capacity and coverage, helps reduce the operating cost of the macro base station, and supports green communication.

Owner:CERTUS NETWORK TECH NANJING
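
Adjusting the small-cell bias with Q-learning can be illustrated with a toy tabular agent whose state is the load level near the small cell and whose action is a candidate bias value. Everything in this sketch is hypothetical: the bias set, the user counts, the backhaul limit, the reward that penalizes offloading beyond backhaul capacity, and the assumption that the load level cycles deterministically.

```python
import random
from typing import List

BIAS_DB: List[float] = [0.0, 3.0, 6.0, 9.0]  # hypothetical candidate bias values
USERS = [2, 6, 10]                           # hypothetical users near the small cell per load level
BACKHAUL = 5.0                               # hypothetical backhaul limit (users it can serve)


def toy_reward(state: int, action: int) -> float:
    """Hypothetical: a larger bias offloads more users, but traffic beyond
    the backhaul limit is penalized instead of served."""
    offloaded = USERS[state] * (action + 1) / len(BIAS_DB)
    return min(offloaded, BACKHAUL) - max(0.0, offloaded - BACKHAUL)


def train(steps: int = 3000, alpha: float = 0.5, gamma: float = 0.5,
          seed: int = 0) -> List[List[float]]:
    """Tabular Q-learning with uniformly random exploration."""
    rng = random.Random(seed)
    q = [[0.0] * len(BIAS_DB) for _ in USERS]
    s = 0
    for _ in range(steps):
        a = rng.randrange(len(BIAS_DB))
        r = toy_reward(s, a)
        s_next = (s + 1) % len(USERS)        # toy assumption: the load level cycles
        q[s][a] += alpha * (r + gamma * max(q[s_next]) - q[s][a])
        s = s_next
    return q


def greedy_policy(q: List[List[float]]) -> List[float]:
    """Bias value (dB) the trained agent would pick at each load level."""
    return [BIAS_DB[max(range(len(BIAS_DB)), key=lambda a: row[a])] for row in q]
```

Under these toy numbers, the greedy policy learns a 9 dB bias at light load, 6 dB at moderate load and 3 dB at heavy load: as more users gather near the small cell, a smaller bias avoids pushing more traffic than the limited backhaul can carry.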

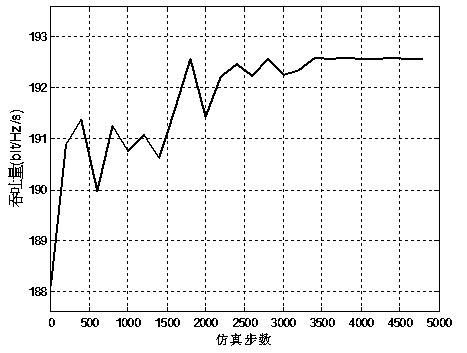

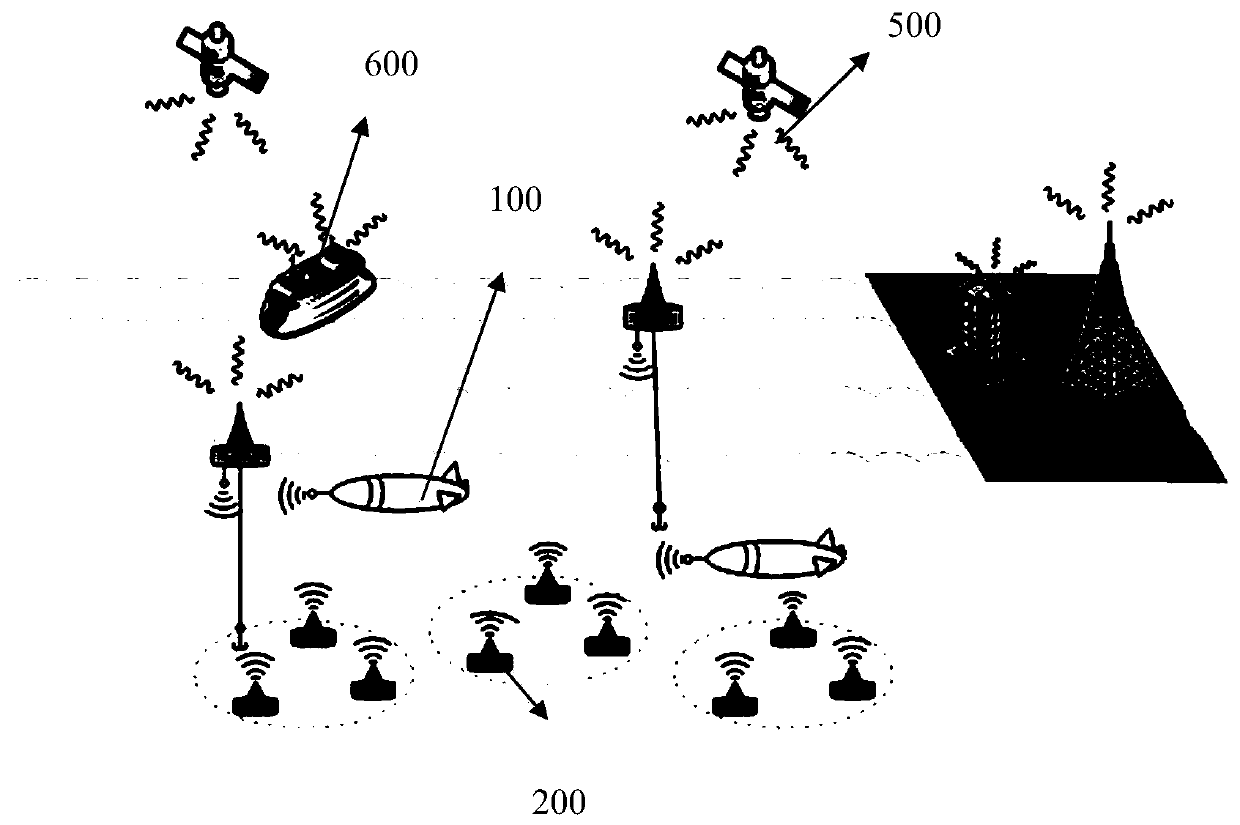

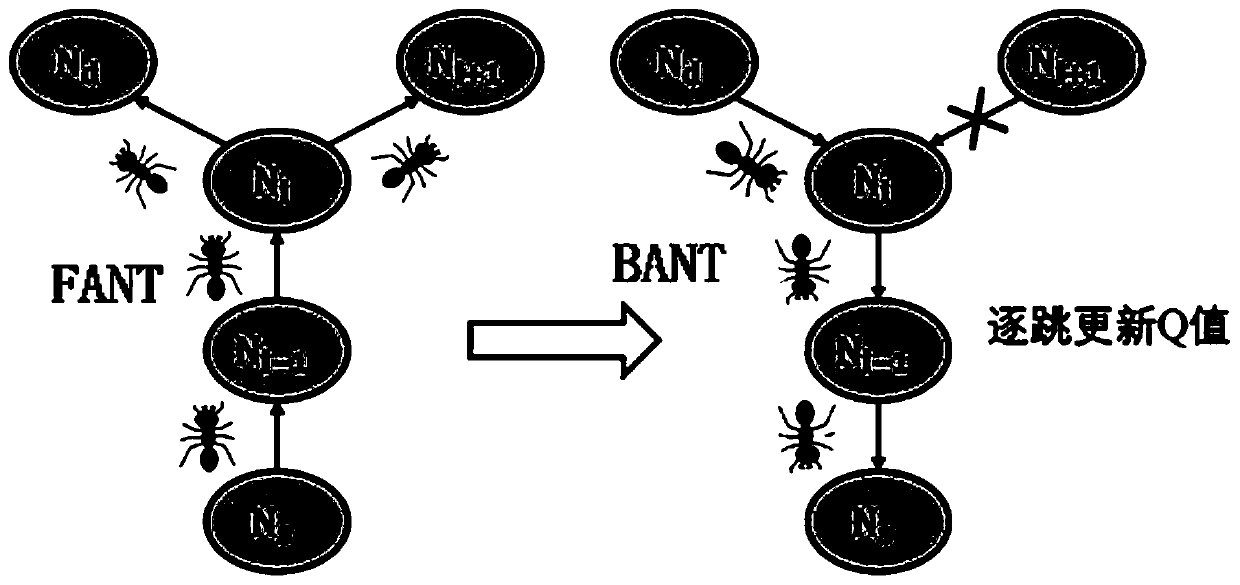

Underwater multi-agent-oriented Q learning ant colony routing method

Status: Active | Publication: CN111065145A | Tags: Improve convergence speed; Improve robustness; Network topologies; Engineering; Underwater wireless sensor networks

The invention provides a Q-learning ant colony routing method for underwater multi-agent systems, which combines reinforcement learning with the ant colony algorithm to adapt to and learn the characteristics of a dynamic underwater environment. It comprises a route discovery stage, a route maintenance stage and a routing hole handling mechanism. Pheromones in the ant colony algorithm are mapped to Q values in Q-learning. Link delay and bandwidth, together with node residual energy and throughput, are jointly considered in the Q-value function used to select the next-hop link. The routing protocol also implements a hole-sensing mechanism: nodes broadcast periodically and use a timer to record ACK return times, which are used to judge whether a node lies in a routing hole, and a Q-learning penalty function prevents the network from using nodes in the hole. By considering node energy, node depth and link stability, the method uses Q-learning to reduce end-to-end delay, increase the data delivery rate and prolong the lifetime of the underwater wireless sensor network.

Owner:TSINGHUA UNIV
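
The routing abstract maps ant-colony pheromones onto Q values, scores next hops by delay, bandwidth and residual energy, and penalizes nodes in routing holes. A minimal sketch of that combination follows, with an equal-weight reward and a fixed hole penalty chosen purely for illustration; the patent's actual Q-value function, including its throughput term, is not reproduced here, and all names are hypothetical.

```python
from typing import Dict, List

QTable = Dict[str, Dict[str, float]]  # q[node][next_hop], playing the role of pheromone


def link_reward(delay: float, bandwidth: float, residual_energy: float,
                in_hole: bool, hole_penalty: float = -1.0) -> float:
    """Hypothetical composite reward: favour short delay, high bandwidth and
    high residual energy (all normalized to [0, 1]); punish hole nodes."""
    if in_hole:
        return hole_penalty
    return ((1.0 - delay) + bandwidth + residual_energy) / 3.0


def update_q(q: QTable, node: str, next_hop: str, reward: float,
             alpha: float = 0.5, gamma: float = 0.8) -> None:
    """Q(node, hop) <- Q + alpha * (r + gamma * max_n Q(hop, n) - Q)."""
    future = max(q.get(next_hop, {}).values(), default=0.0)
    old = q[node].get(next_hop, 0.0)
    q[node][next_hop] = old + alpha * (reward + gamma * future - old)


def choose_next_hop(q: QTable, node: str, neighbors: List[str]) -> str:
    """Greedy selection over the current Q ("pheromone") values."""
    return max(neighbors, key=lambda n: q[node].get(n, 0.0))
```

A node that has detected a hole neighbor (for instance from missing ACKs) feeds the negative reward into the update, so greedy selection steers traffic away from that neighbor while the Q values keep adapting as link conditions change.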

© 2025 PatSnap. All rights reserved.