61576 results about "Utilization rate" patented technology

In business, the utilization rate is an important number for firms that charge their time to clients and for those that need to maximize the productive time of their employees. It can reflect the billing efficiency or the overall productive use of an individual or a firm. Looked at simply, there are two methods to calculate the utilization rate.

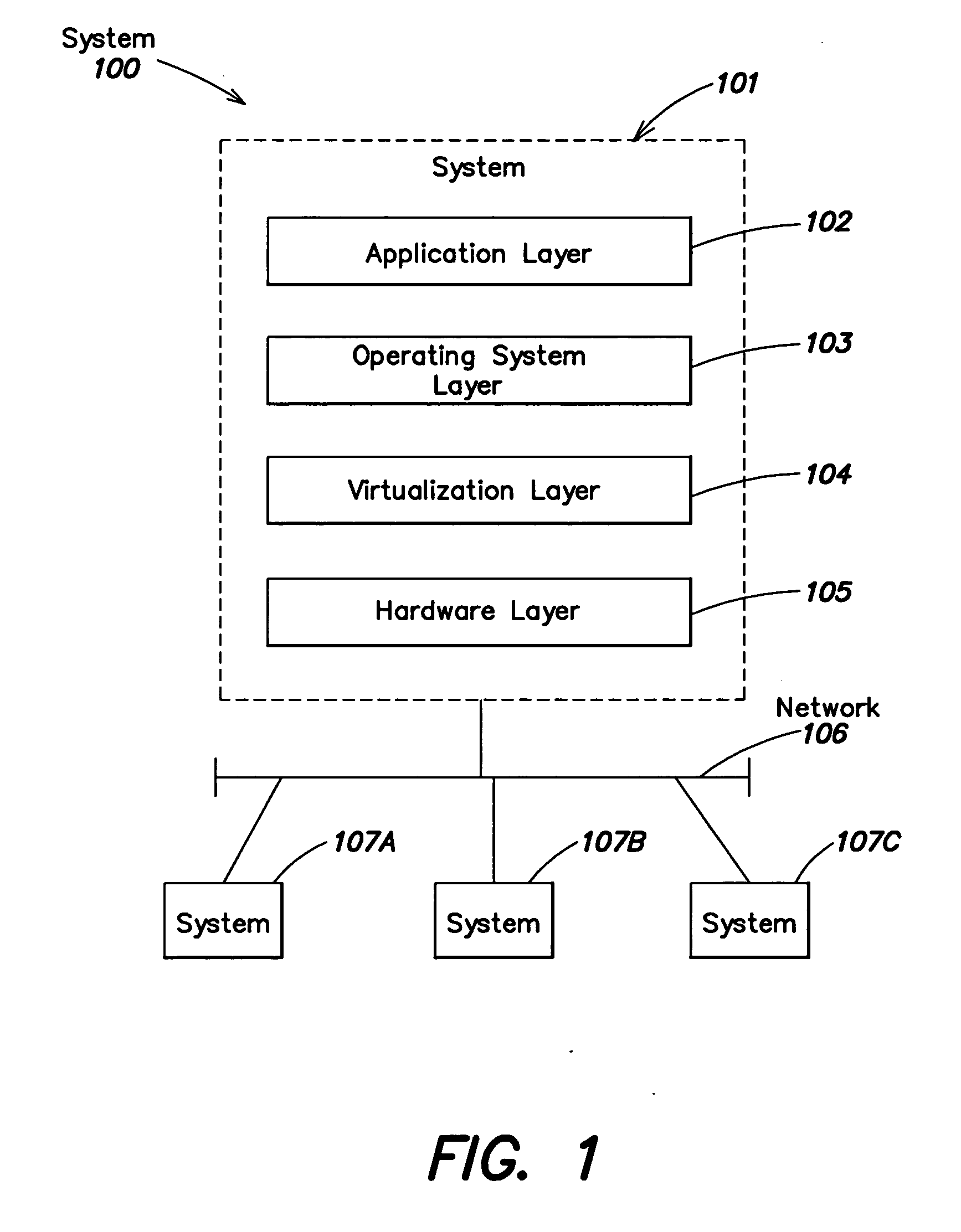

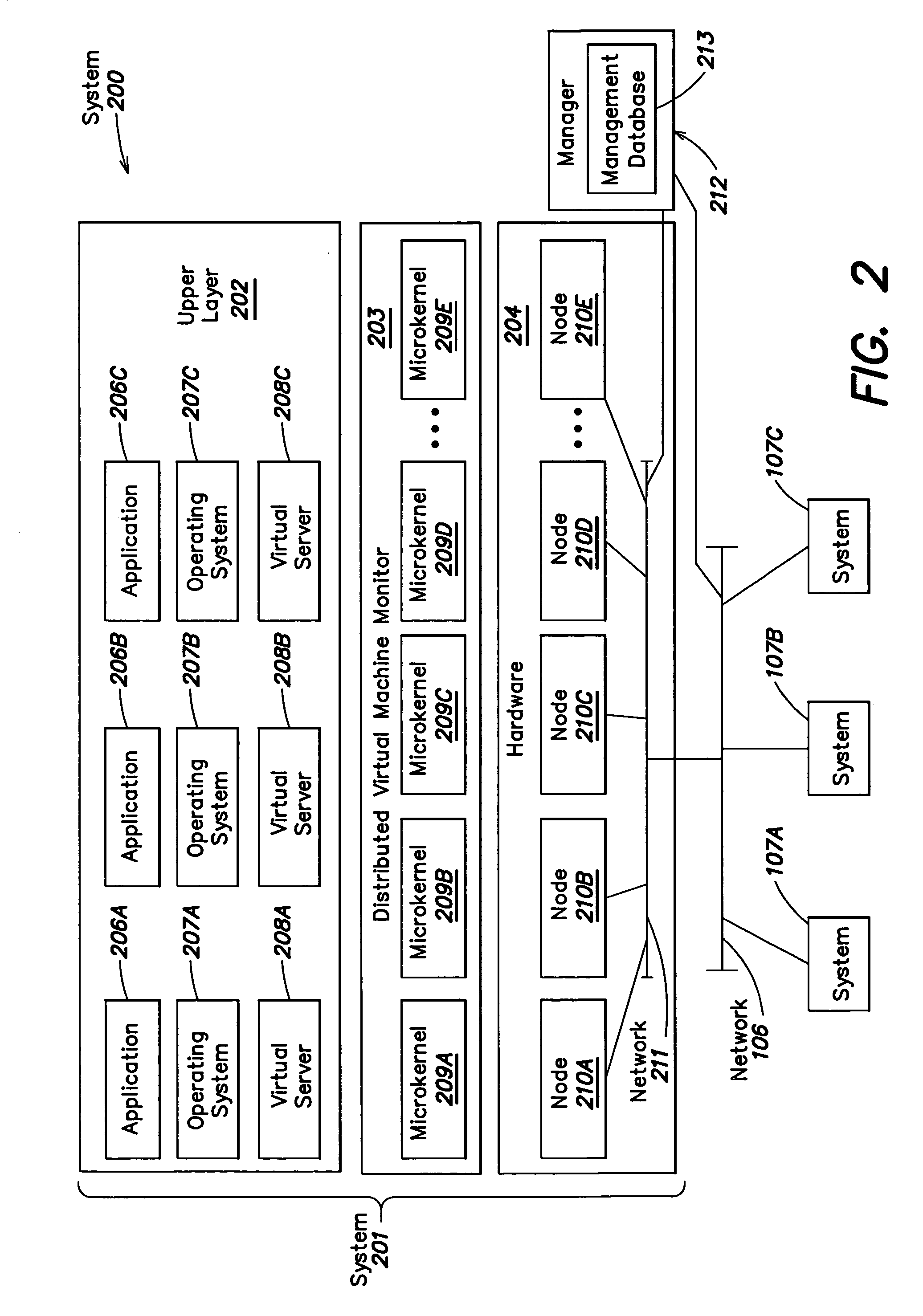

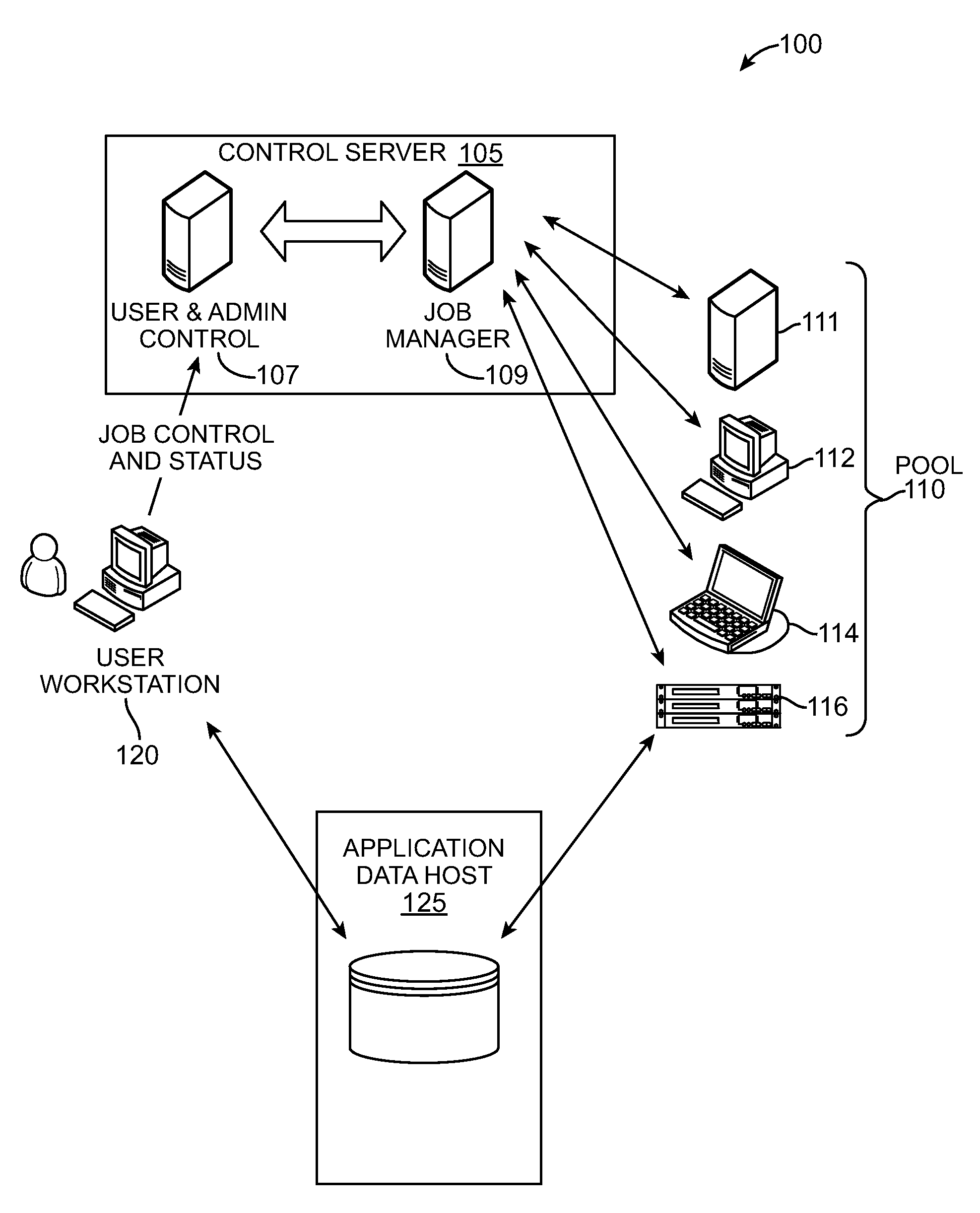

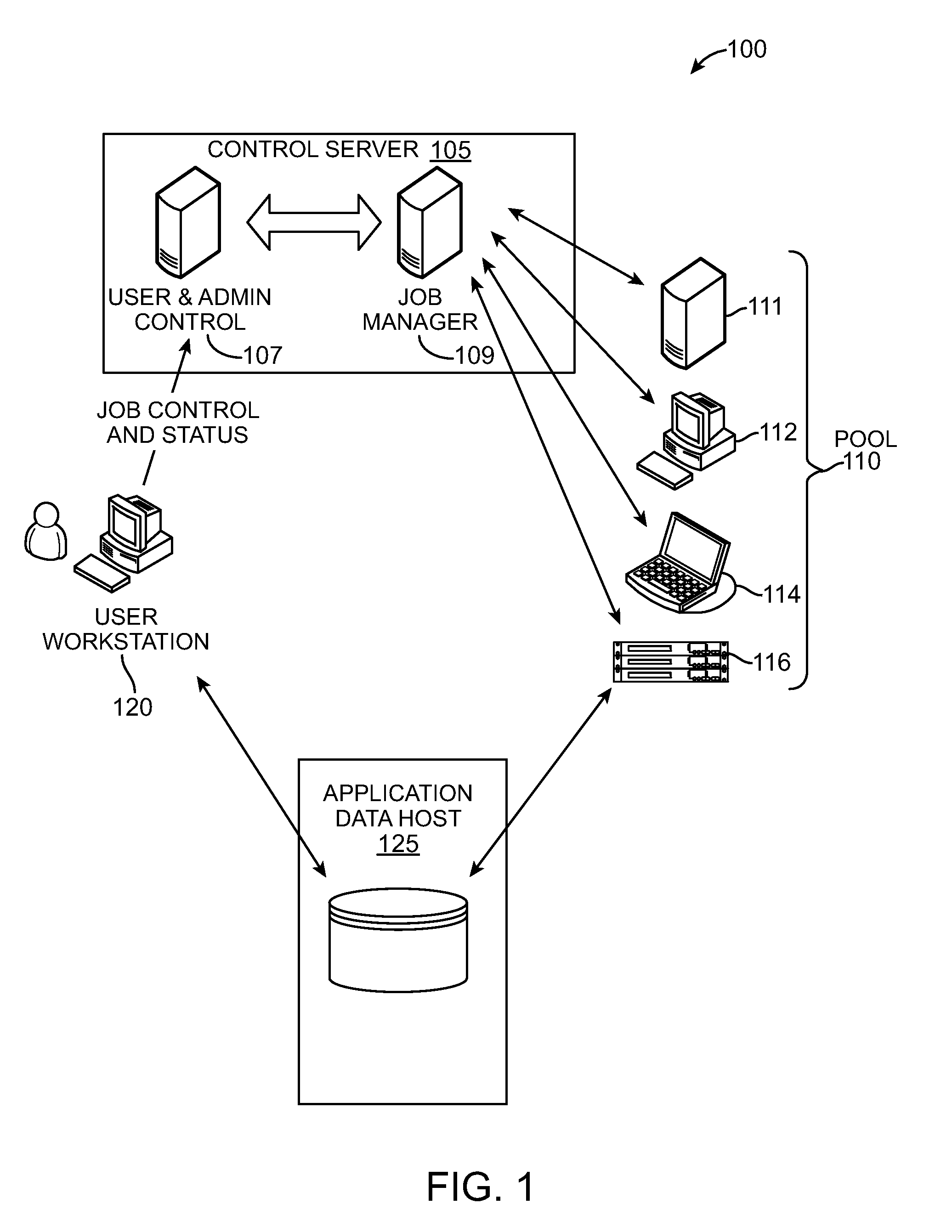

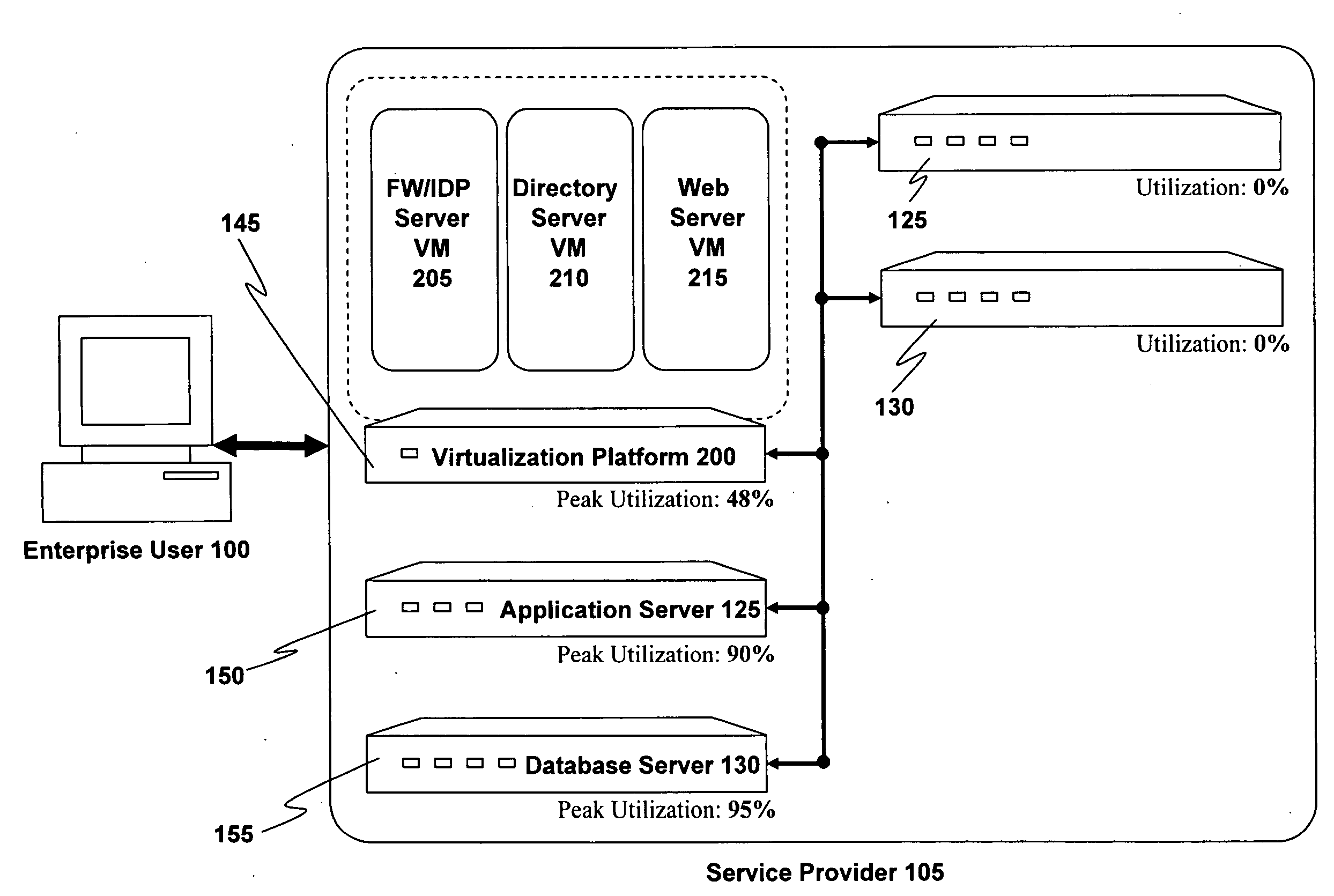

System and method for managing virtual servers

Active · US20050120160A1 · Grow and shrink capability · Maximize use · Resource allocation · Memory addressing/allocation/relocation · Operational system · Primitive state

A management capability is provided for a virtual computing platform. In one example, this platform allows interconnected physical resources such as processors, memory, network interfaces and storage interfaces to be abstracted and mapped to virtual resources (e.g., virtual mainframes, virtual partitions). Virtual resources contained in a virtual partition can be assembled into virtual servers that execute a guest operating system (e.g., Linux). In one example, the abstraction is unique in that any resource is available to any virtual server regardless of the physical boundaries that separate the resources. For example, any number of physical processors or any amount of physical memory can be used by a virtual server even if these resources span different nodes. A virtual computing platform is provided that allows for the creation, deletion, modification, control (e.g., start, stop, suspend, resume) and status (i.e., events) of the virtual servers which execute on the virtual computing platform, and the management capability provides controls for these functions. In a particular example, such a platform allows the number and type of virtual resources consumed by a virtual server to be scaled up or down while the virtual server is running. For instance, an administrator may scale a virtual server manually or may define one or more policies that automatically scale a virtual server. Further, using the management API, a virtual server can monitor itself and can scale itself up or down depending on its need for processing, memory and I/O resources. For example, a virtual server may monitor its CPU utilization and invoke controls through the management API to allocate a new processor for itself when its utilization exceeds a specific threshold. Conversely, a virtual server may scale down its processor count when its utilization falls. Policies can be used to execute one or more management controls.
More specifically, a management capability is provided that allows policies to be defined using management objects' properties, events and/or method results. A management policy may also incorporate external data (e.g., an external event) in its definition. A policy may be triggered, causing the management server or other computing entity to execute an action. An action may utilize one or more management controls. In addition, an action may access external capabilities such as sending a notification e-mail or sending a text message to a telephone paging system. Further, management capability controls may be executed using a discrete transaction referred to as a “job.” A series of management controls may be assembled into a job using one or more management interfaces. Errors that occur when a job is executed may cause the job to be rolled back, allowing affected virtual servers to return to their original state.
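
The self-scaling behavior described in this abstract (add a processor when CPU utilization exceeds a threshold, remove one when it falls) can be illustrated with a minimal sketch. The `VirtualServer` class, the `scale_decision` function, the processor limits and the 80% / 30% thresholds are all hypothetical names and values chosen for illustration; the abstract does not specify them, and this is not the patent's management API.

```python
from dataclasses import dataclass


@dataclass
class VirtualServer:
    """Hypothetical stand-in for a virtual server's processor allocation."""
    processors: int
    min_processors: int = 1
    max_processors: int = 8


def scale_decision(server: VirtualServer, cpu_utilization: float,
                   high: float = 0.80, low: float = 0.30) -> int:
    """Return the processor count the server should request next.

    cpu_utilization is a fraction in [0, 1]; high and low are assumed
    thresholds, not values taken from the patent.
    """
    if cpu_utilization > high and server.processors < server.max_processors:
        return server.processors + 1   # scale up by one processor
    if cpu_utilization < low and server.processors > server.min_processors:
        return server.processors - 1   # scale down by one processor
    return server.processors           # inside the band: no change


vs = VirtualServer(processors=2)
print(scale_decision(vs, 0.92))  # 3
print(scale_decision(vs, 0.10))  # 1
print(scale_decision(vs, 0.50))  # 2
```

Keeping a gap between the two thresholds is a common way to avoid oscillating between processor counts when utilization hovers near a single cut-off.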

Owner:ORACLE INT CORP

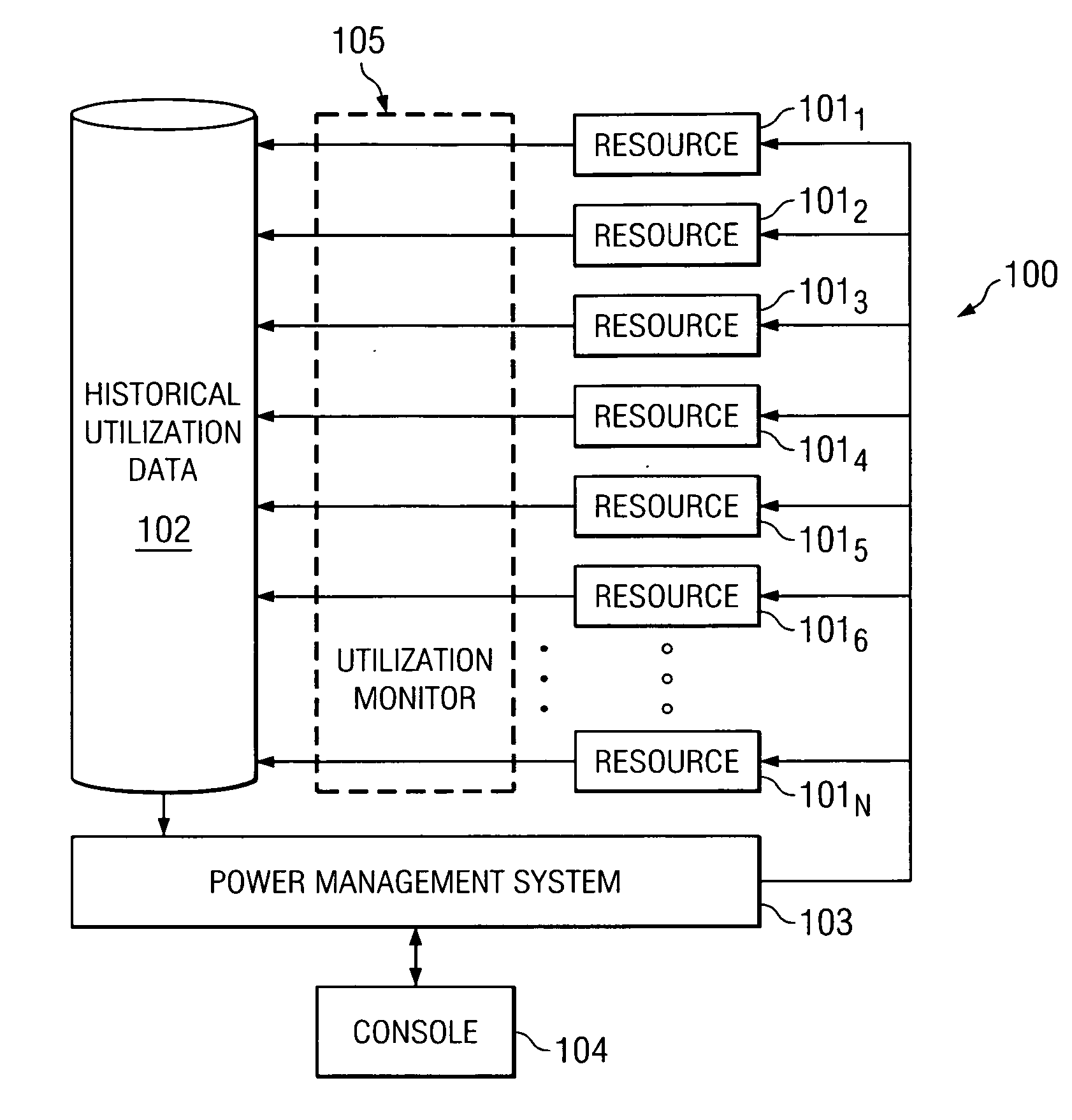

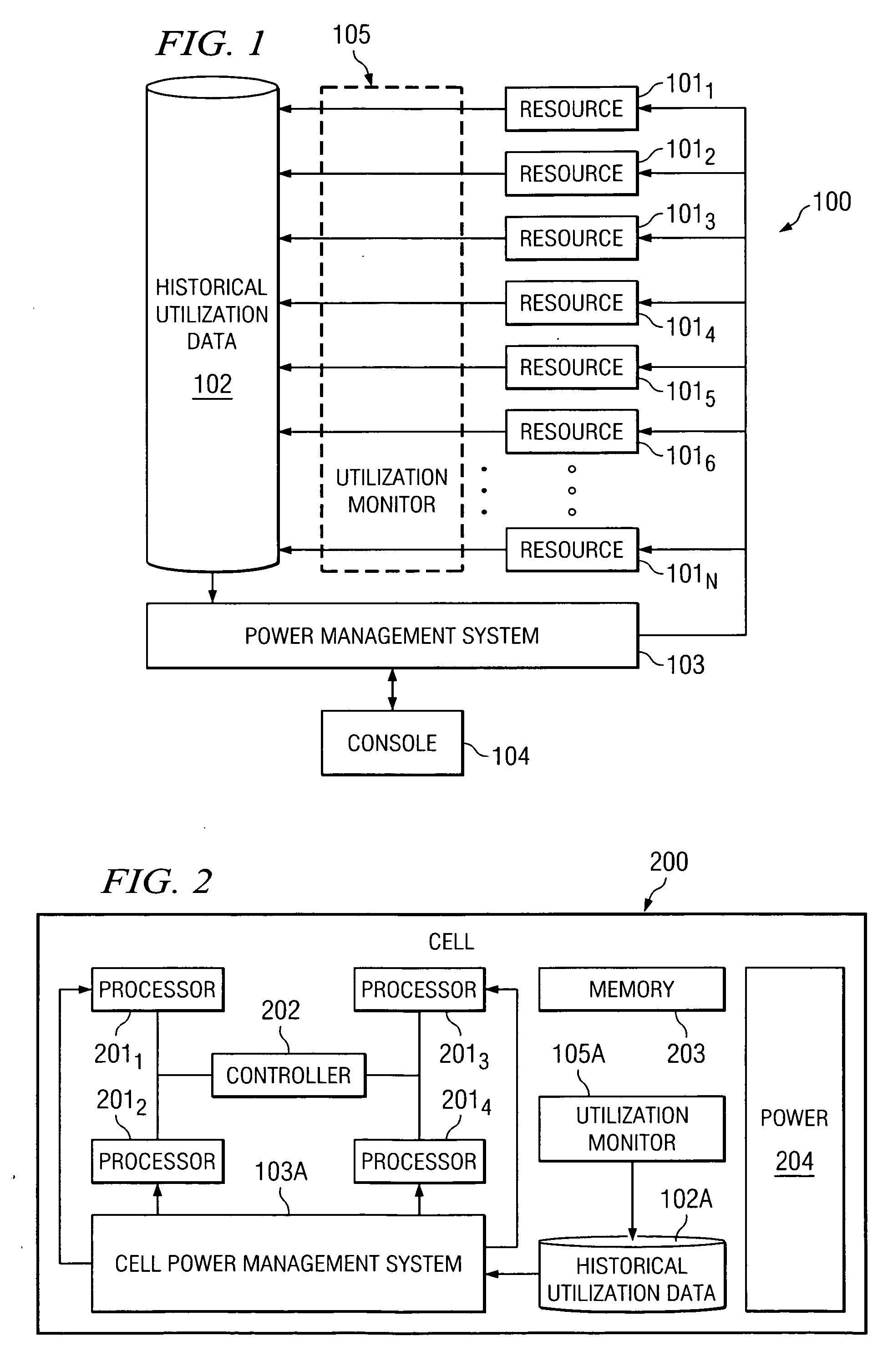

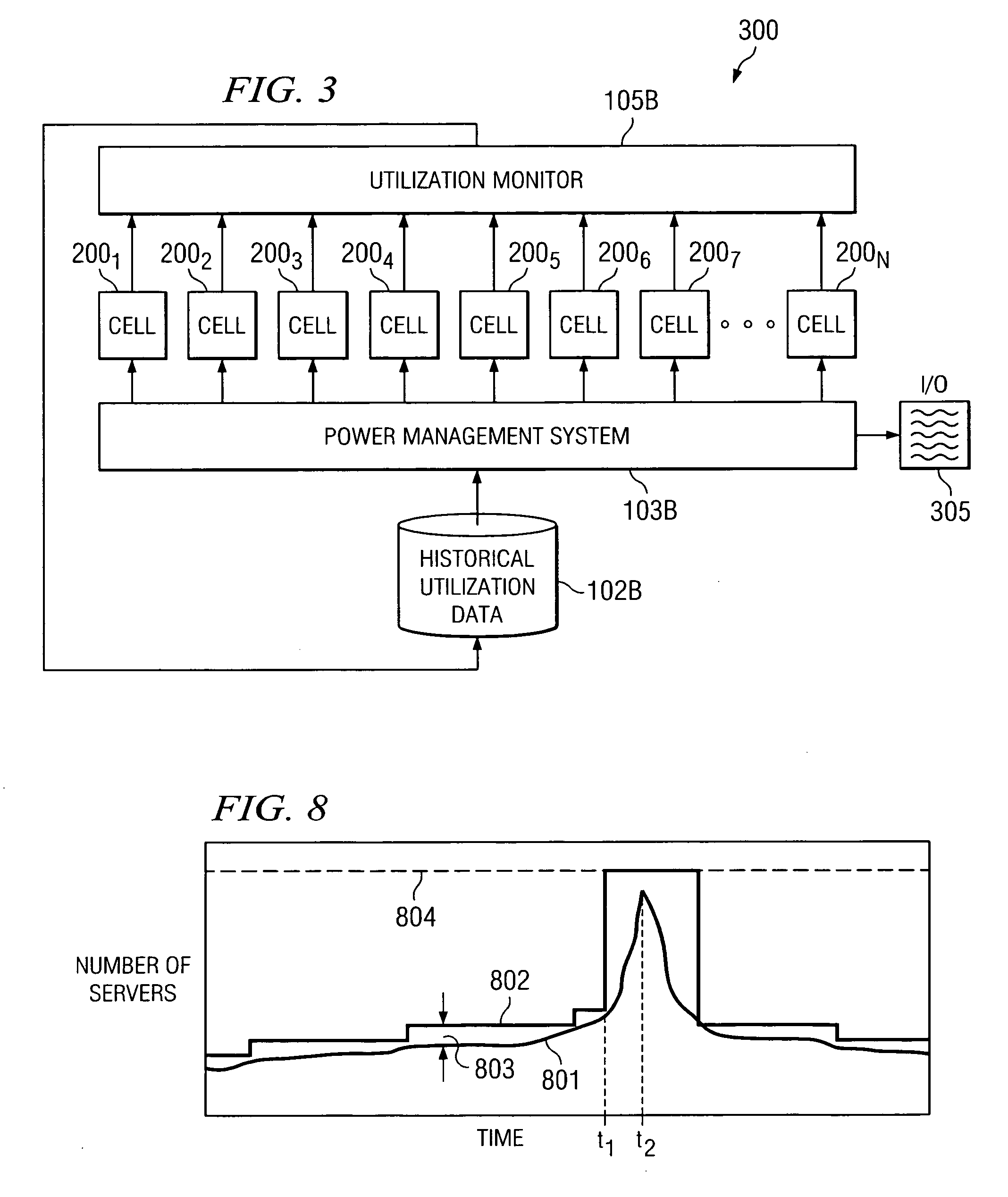

System and method for controlling power to resources based on historical utilization data

Active · US20060184287A1 · Energy efficient ICT · Mechanical power/torque control · Resource based · System configuration

A method comprises collecting utilization data for a resource, and predicting by a power management system, based on the collected utilization data, future utilization of the resource. The method further comprises controlling, by the power management system, power to the resource, based at least in part on the predicted future utilization of the resource. In one embodiment, the utilization data is collected for a plurality of resources that are operable to perform tasks, and the method further comprises determining, by the power management system, how many of the resources are needed to provide a desired capacity for servicing the predicted future utilization of the resources for performing the tasks. The method further comprises configuring, by the power management system, ones of the resources exceeding the determined number of resources needed to provide the desired capacity in a reduced power-consumption mode.
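
As a rough illustration of the flow in this abstract (collect utilization data, predict future utilization, work out how many resources are needed, and put the rest in a reduced power-consumption mode), here is a minimal sketch. The moving-average predictor, the 20% headroom and all function names are assumptions made for the example; the abstract does not say how the prediction is made.

```python
import math


def predict_utilization(history: list[float], window: int = 3) -> float:
    """Predict the next load as the mean of the last `window` samples (assumed method)."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def resources_needed(predicted_load: float, capacity_per_resource: float,
                     headroom: float = 0.2) -> int:
    """Number of resources needed to serve the predicted load plus assumed headroom."""
    return math.ceil(predicted_load * (1 + headroom) / capacity_per_resource)


def plan_power(resource_ids: list[str], history: list[float],
               capacity_per_resource: float) -> dict[str, list[str]]:
    """Split resources into those kept active and those put in reduced-power mode."""
    needed = resources_needed(predict_utilization(history), capacity_per_resource)
    needed = min(needed, len(resource_ids))
    return {"active": resource_ids[:needed],
            "reduced_power": resource_ids[needed:]}


servers = ["s1", "s2", "s3", "s4", "s5"]
load_history = [100.0, 120.0, 140.0]   # e.g. requests per second (made-up data)
print(plan_power(servers, load_history, capacity_per_resource=50.0))
```

With the made-up numbers above, the predicted load is 120 requests per second, so three of the five servers stay active and two are placed in reduced-power mode.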

Owner:MOSAID TECH

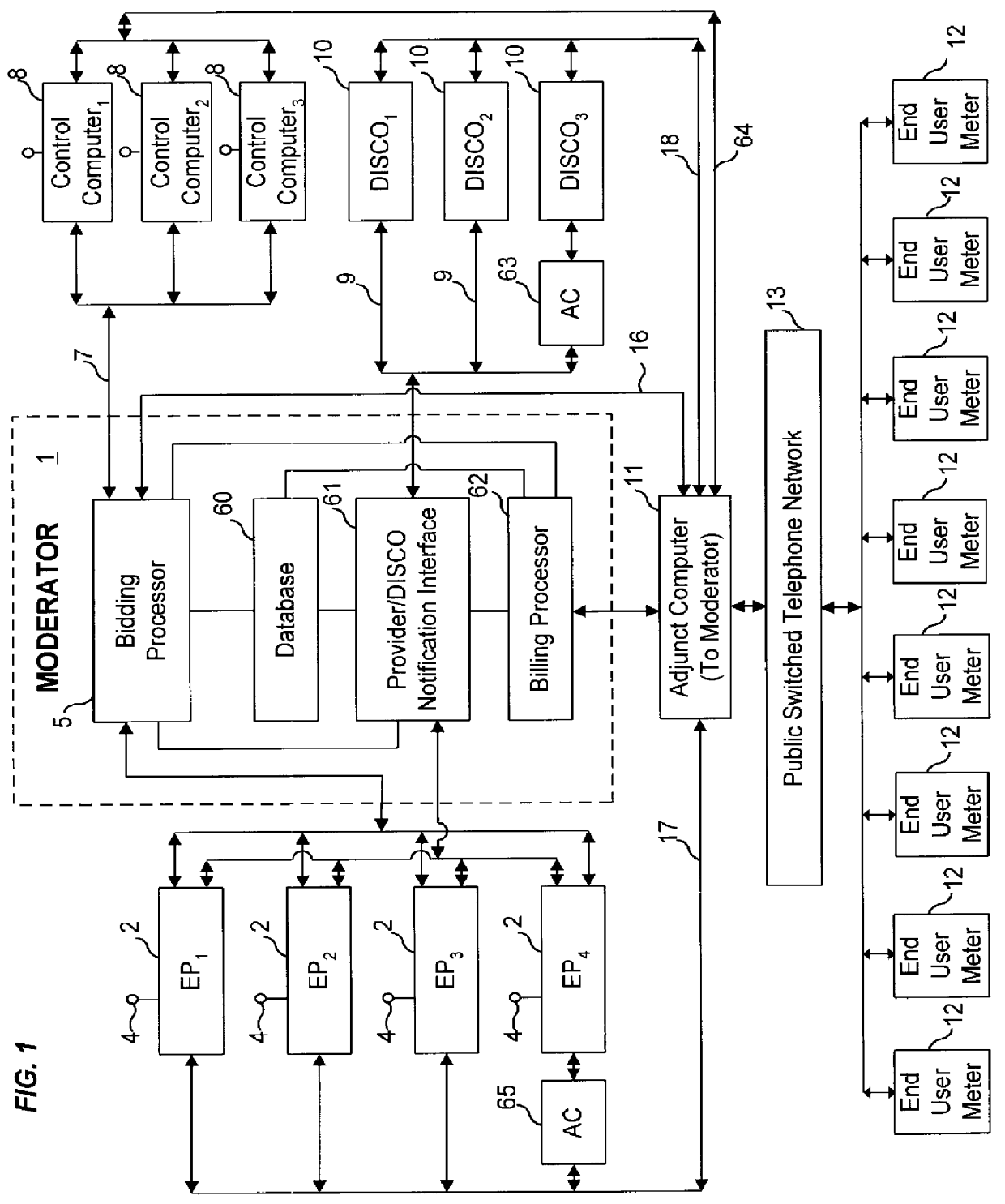

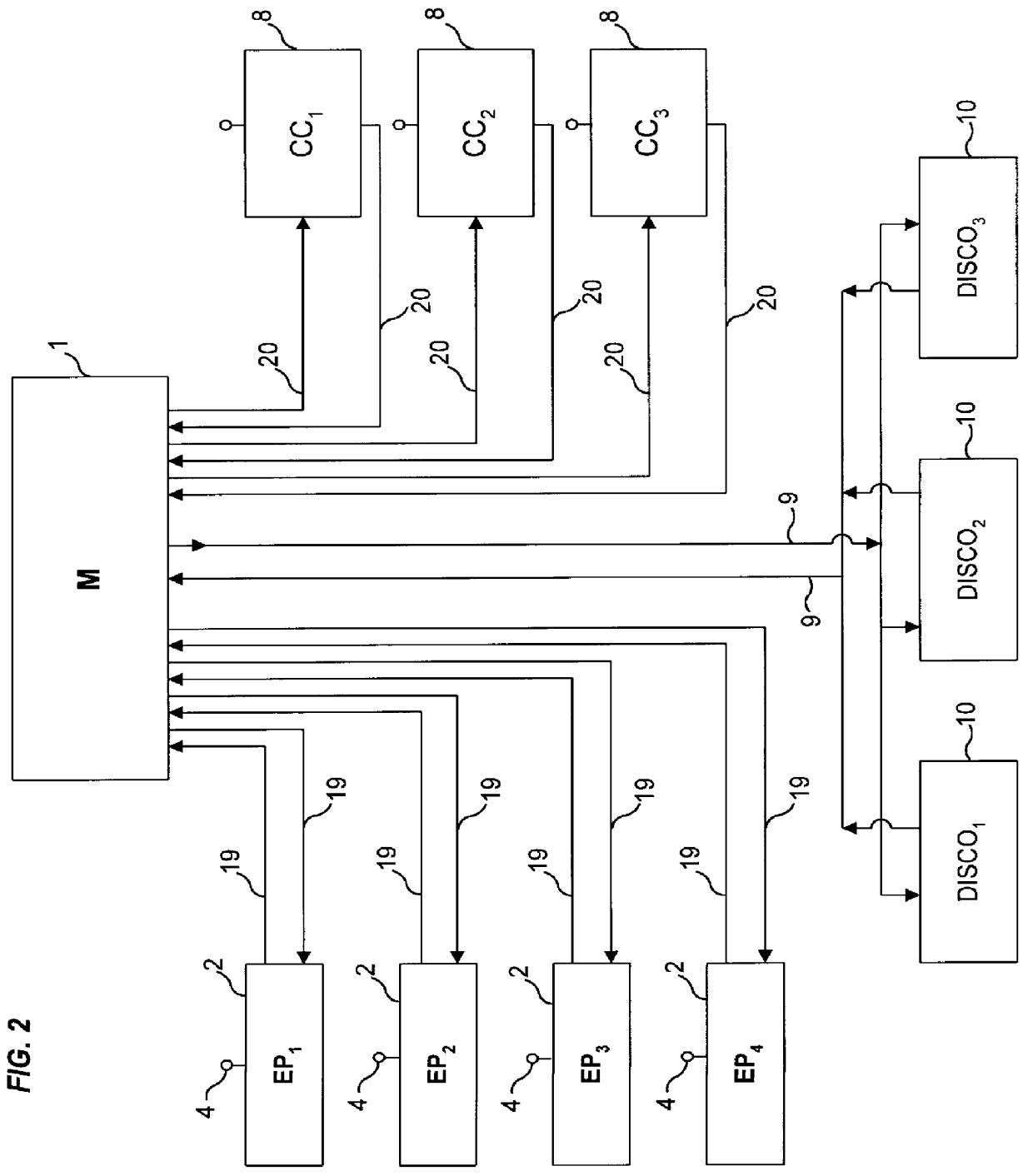

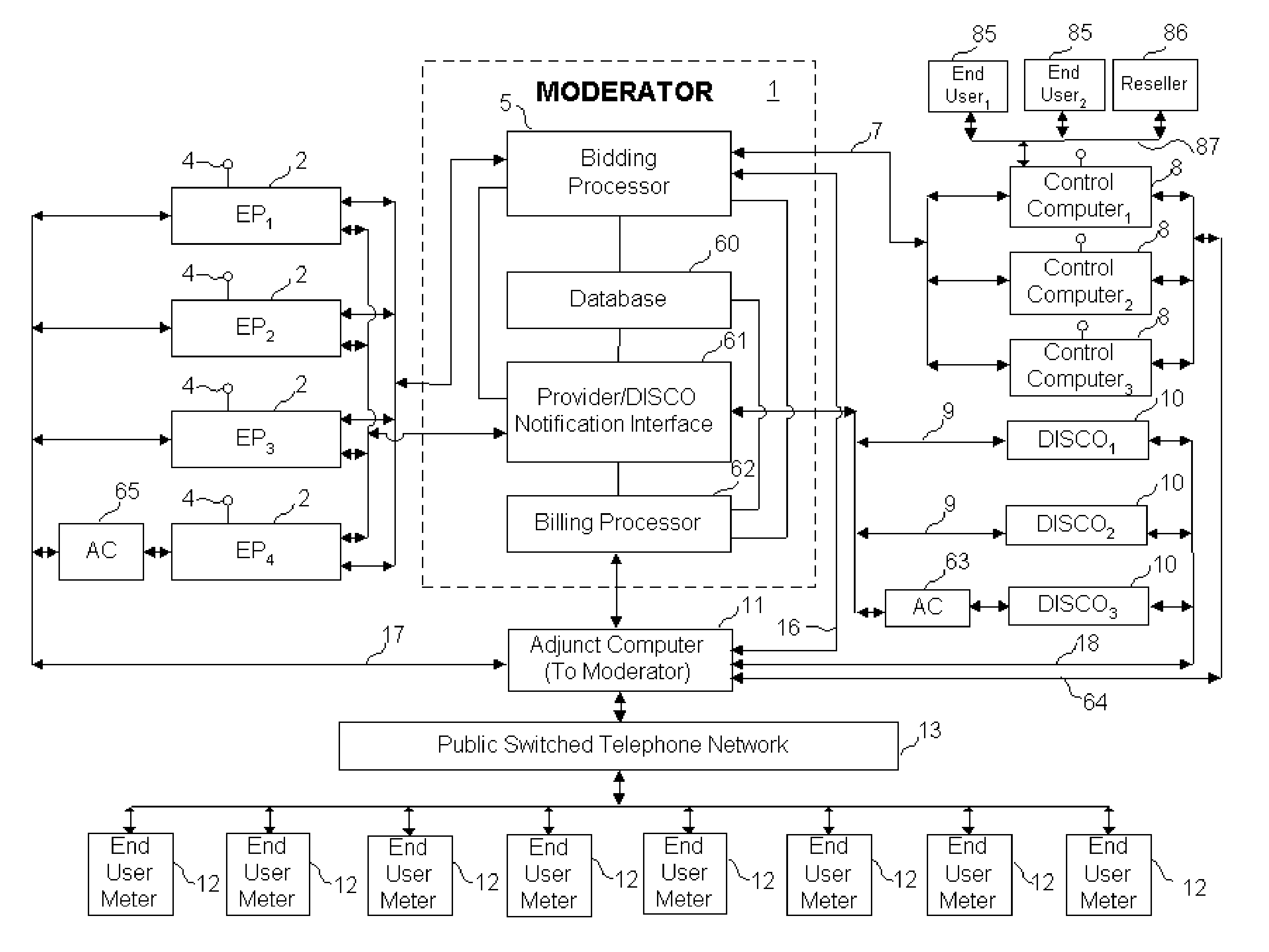

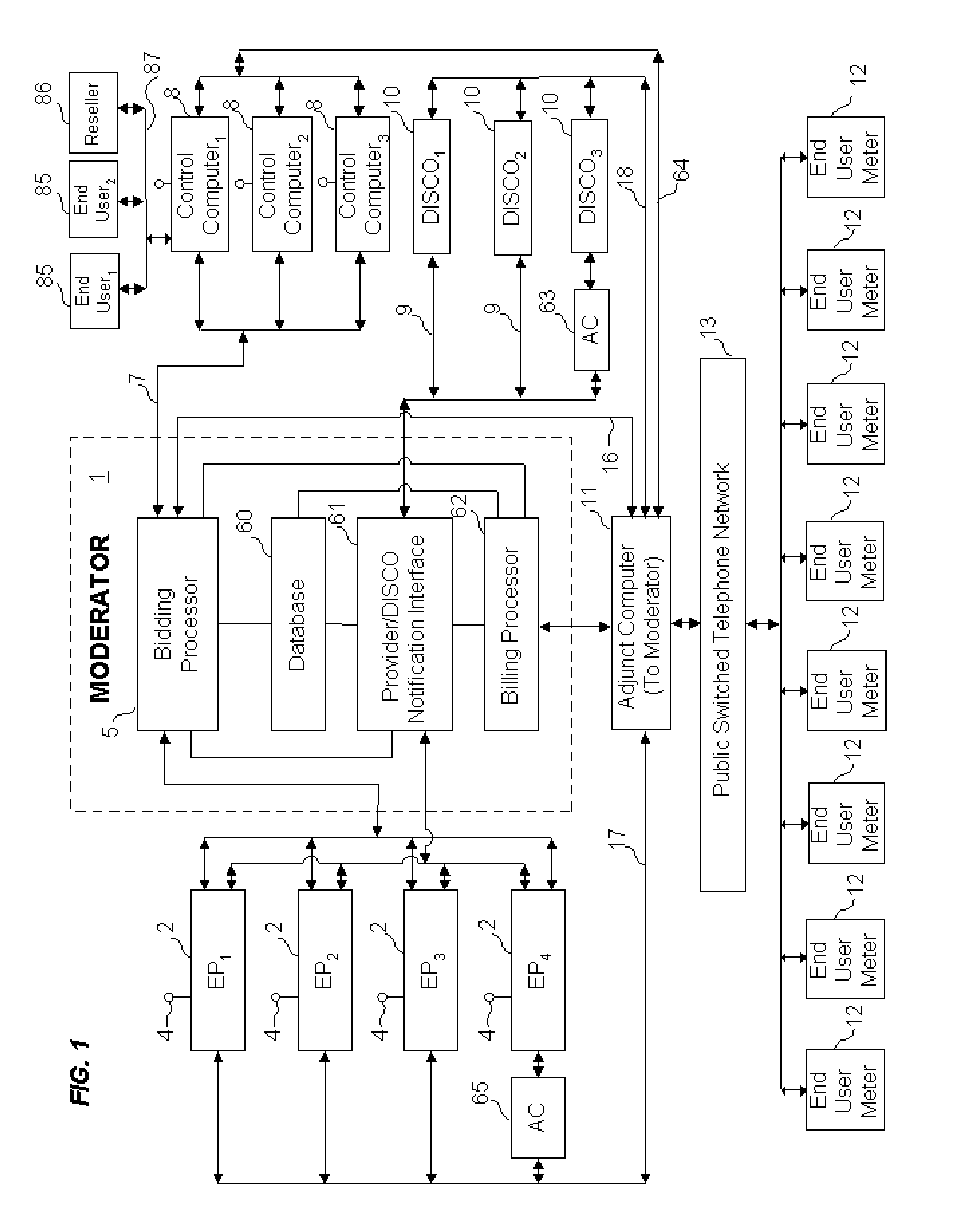

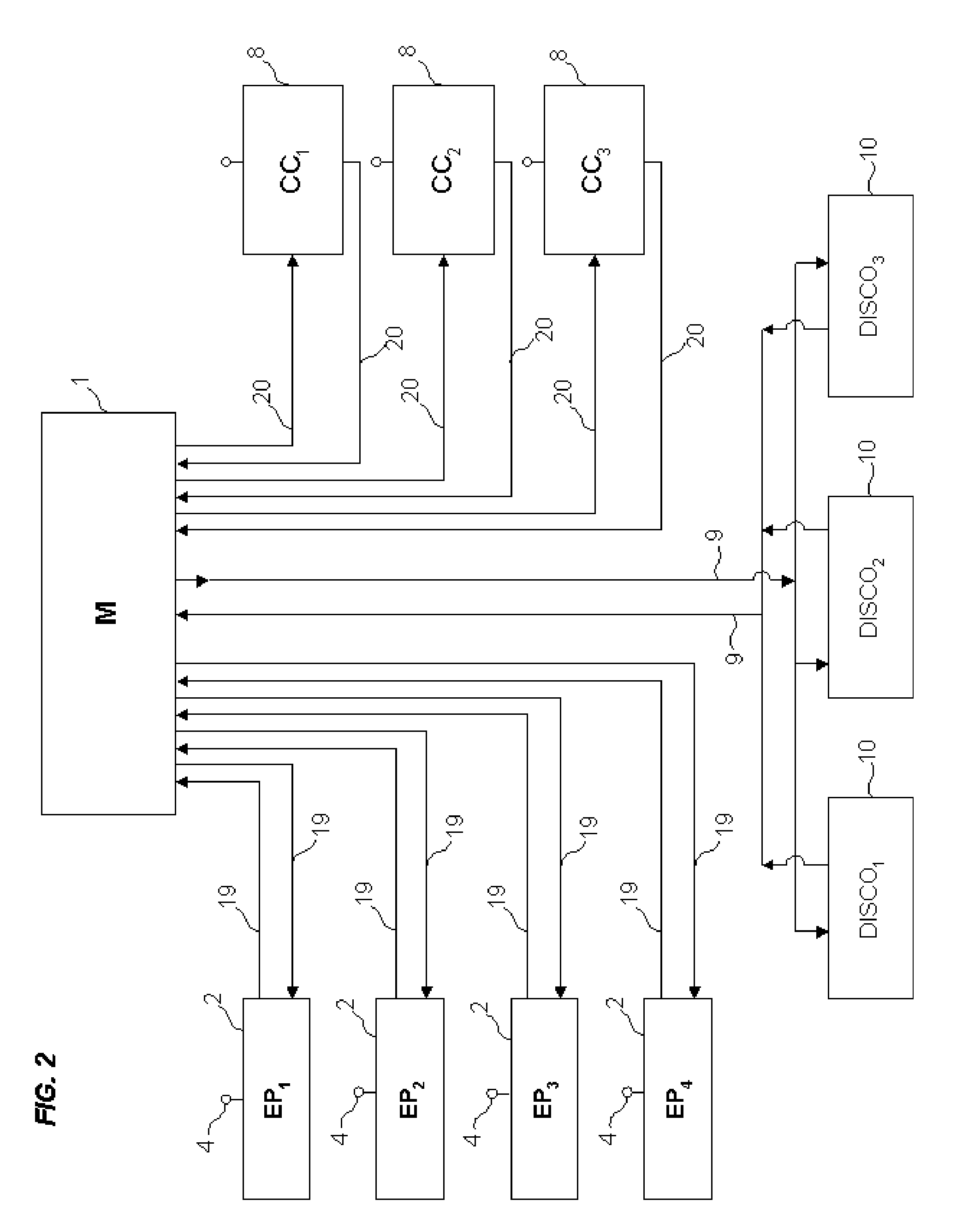

Bidding for energy supply

An auction service is provided that stimulates competition between energy suppliers (i.e., electric power or natural gas). A bidding moderator (Moderator) receives bids from the competing suppliers of the rate each is willing to charge to particular end users for estimated quantities of electric power or gas supply (separate auctions). Each supplier receives competing bids from the Moderator and has the opportunity to adjust its own bids down or up, depending on whether it wants to encourage or discourage additional energy delivery commitments in a particular geographic area or to a particular customer group. Each supplier's bids can also be changed to reflect each supplier's capacity utilization. Appropriate billing arrangements are also disclosed.

Owner:GEOPHONIC NETWORKS

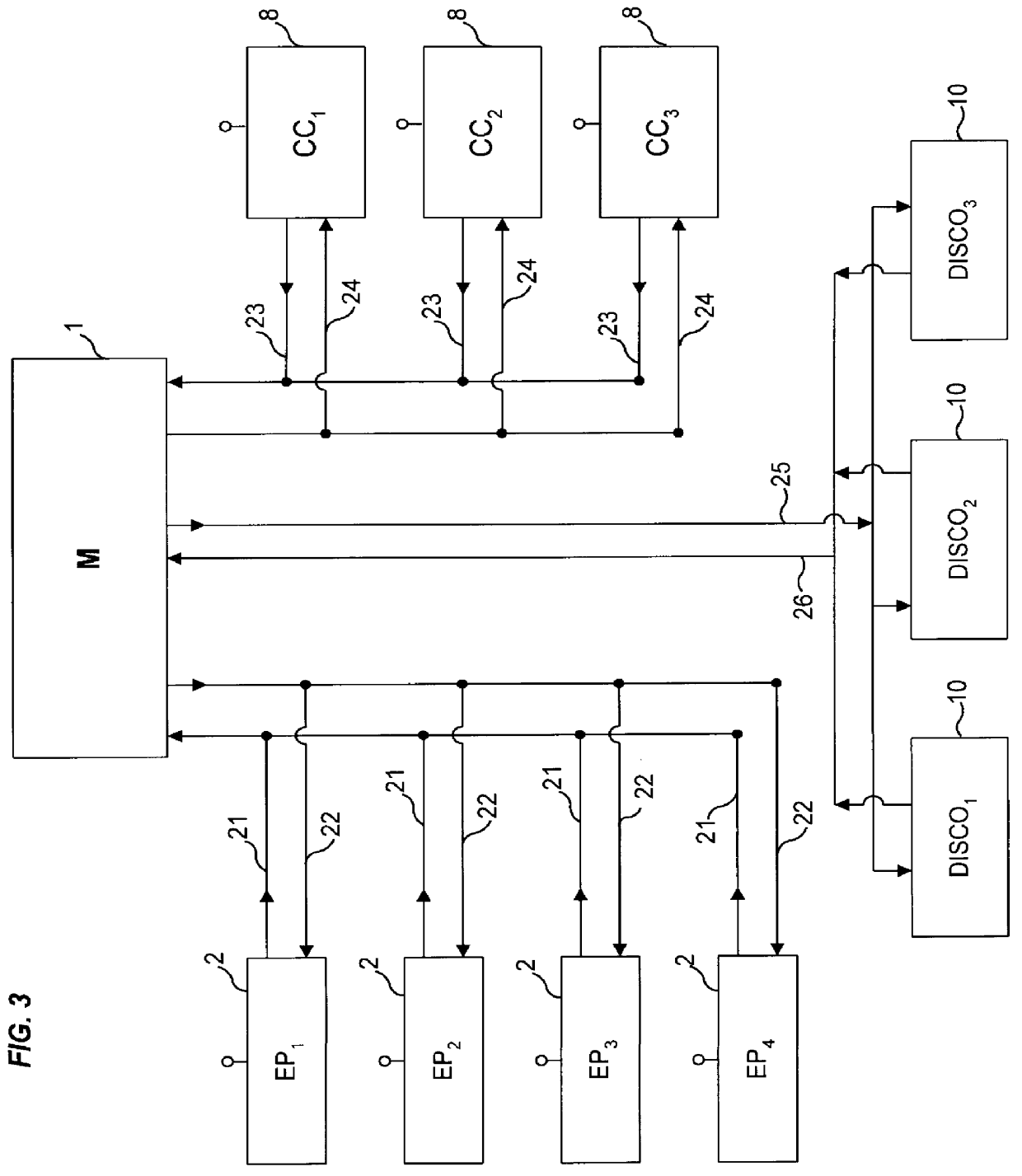

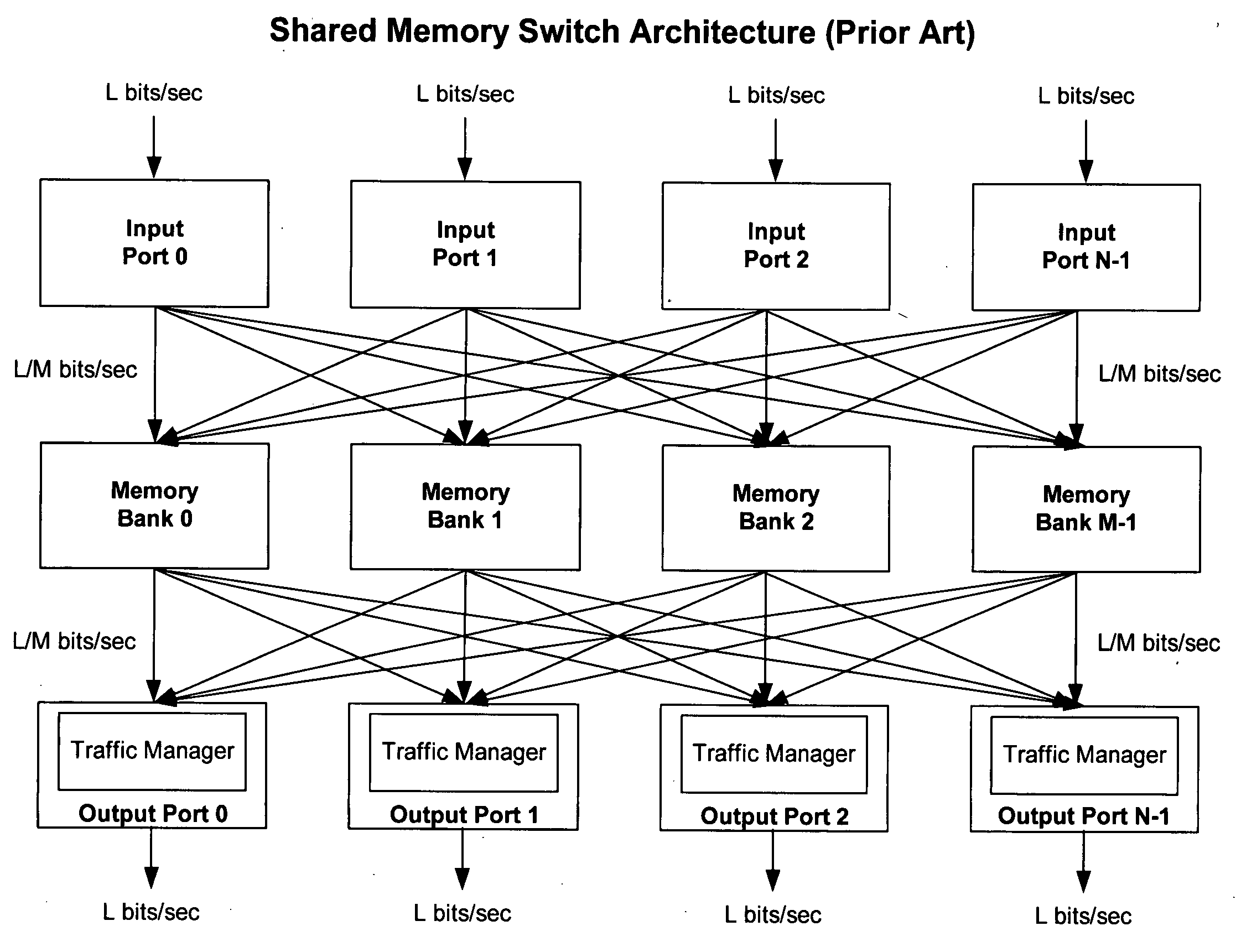

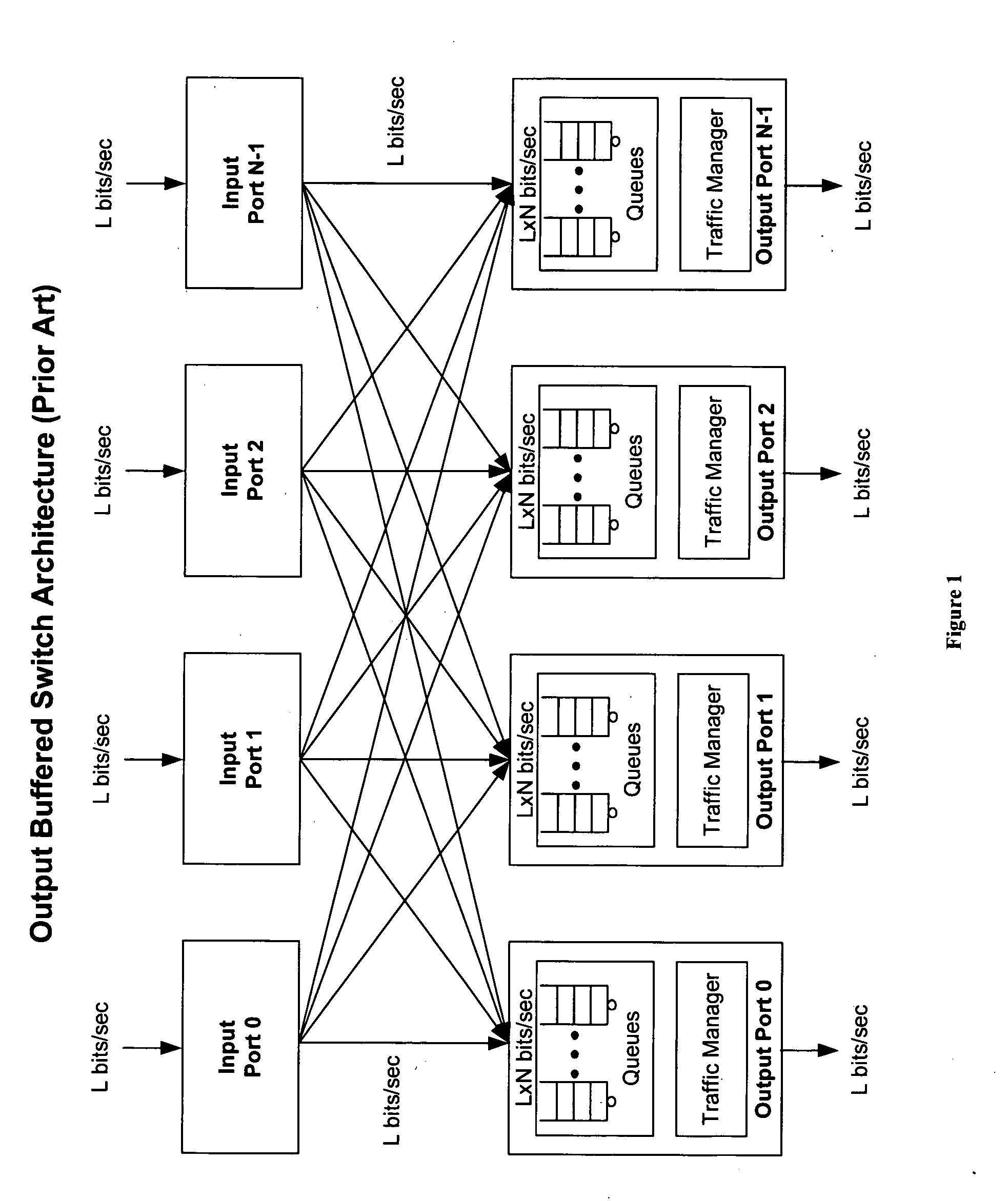

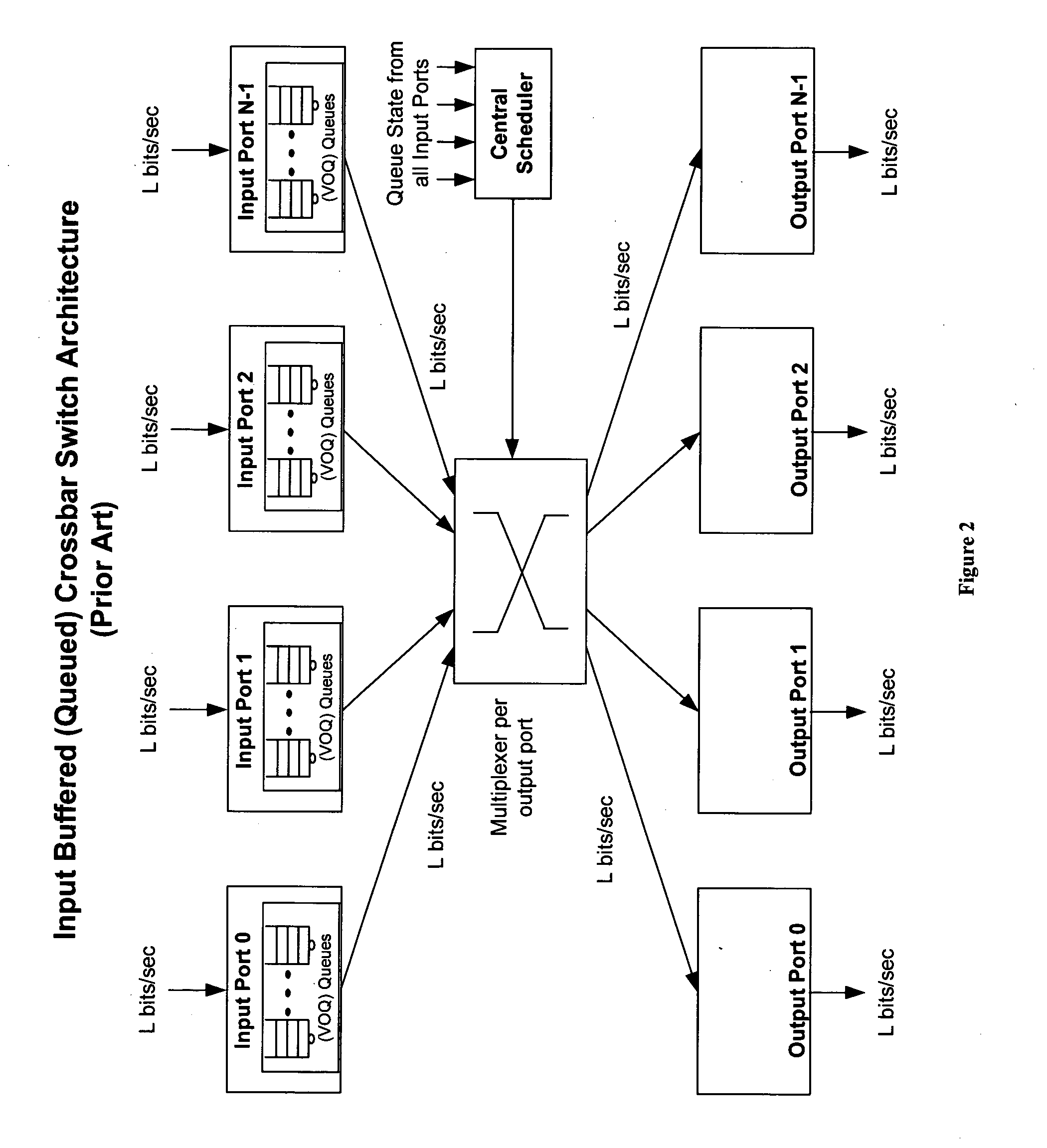

Method of and system for physically distributed, logically shared, and data slice-synchronized shared memory switching

An improved data networking technique and apparatus uses a novel physically distributed but logically shared and data-slice-synchronized shared memory switching datapath architecture, integrated with a novel distributed data control path architecture, to provide ideal output-buffered switching of data in networking systems such as routers and switches. The design supports increasing port densities and line rates with maximized network utilization and with per-flow bit-rate, latency and jitter guarantees, while maintaining optimal throughput and quality of service under all data traffic scenarios. It scales in the number of data queues, ports and line rates, particularly for requirements ranging from network edge routers to the core of the network. It thereby eliminates the need for complicated centralized control that gathers and processes system-wide information for egress traffic management functions, the need for a centralized scheduler, and the need for buffering other than in the actual shared memory itself, all with complete non-blocking data switching between ingress and egress ports under all circumstances and scenarios.

Owner:QOS LOGIX

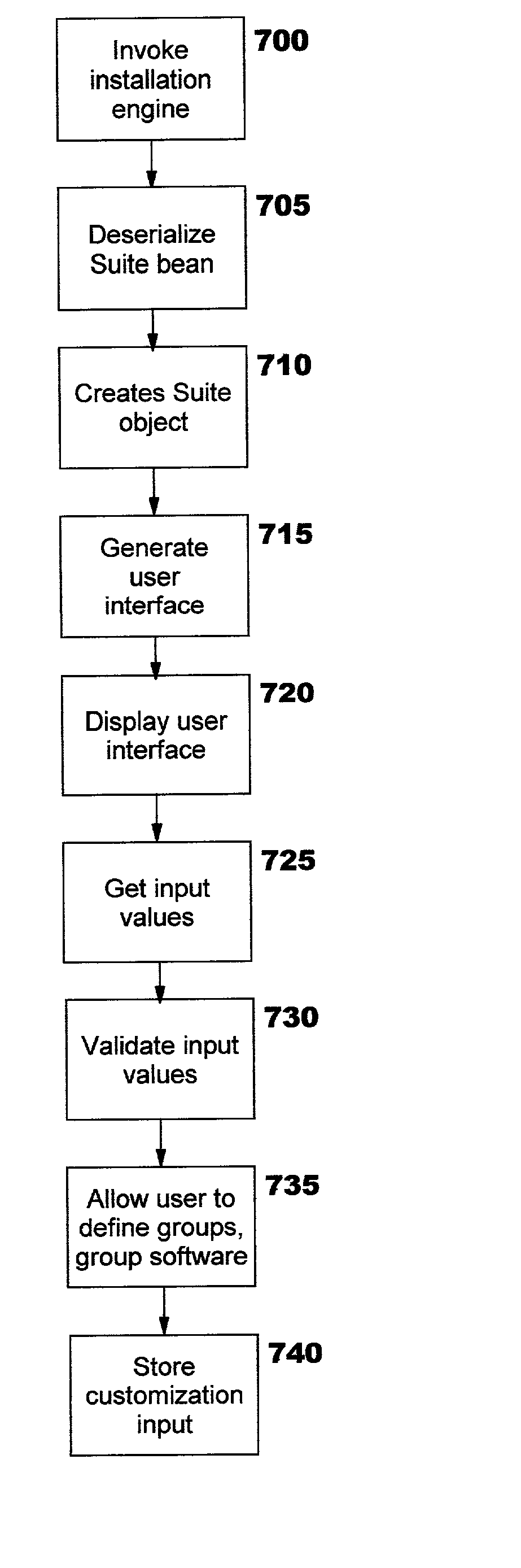

Efficient installation of software packages

Inactive · US20020188941A1 · Manual process · Program loading/initiating · Memory systems · Resource utilization · Software engineering

Methods, systems, and computer program products for improving installation of software packages using an incremental conditional installation process (and optionally, caching of installation components). An object model is disclosed which enables specification of the conditional installation information as one or more elements of the model (and therefore of an object, document, etc., which is created according to the model). Conditional installation information may be defined at a suite level and/or at a component level. The identified checking process then executes to determine whether the corresponding suite or component should be installed. One or more components may be cached, if desired. Resource utilization is improved using the conditional installation and optional caching techniques, enabling reductions in disk space usage, CPU consumption, and/or networking bandwidth consumption.

Owner:IBM CORP

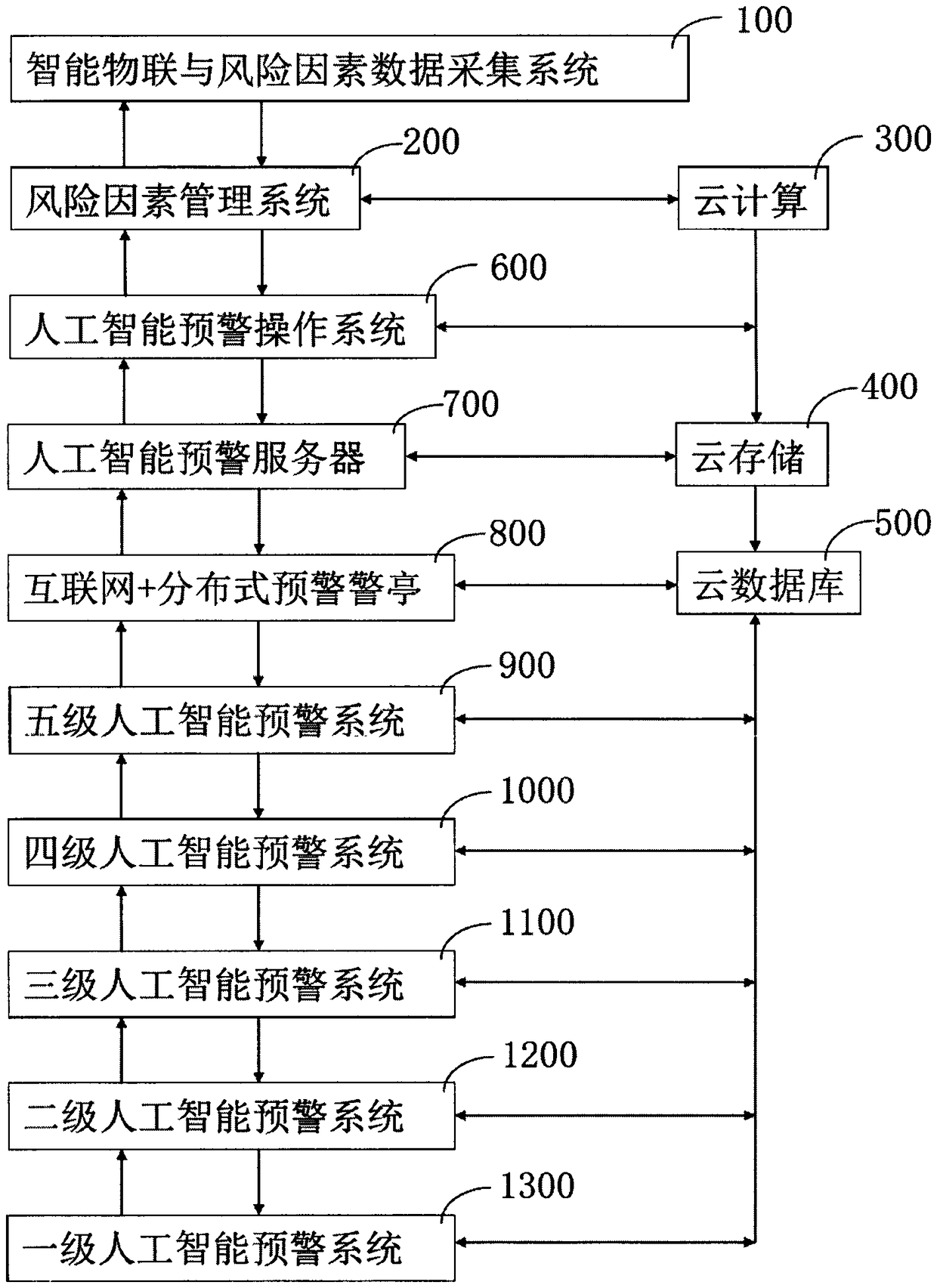

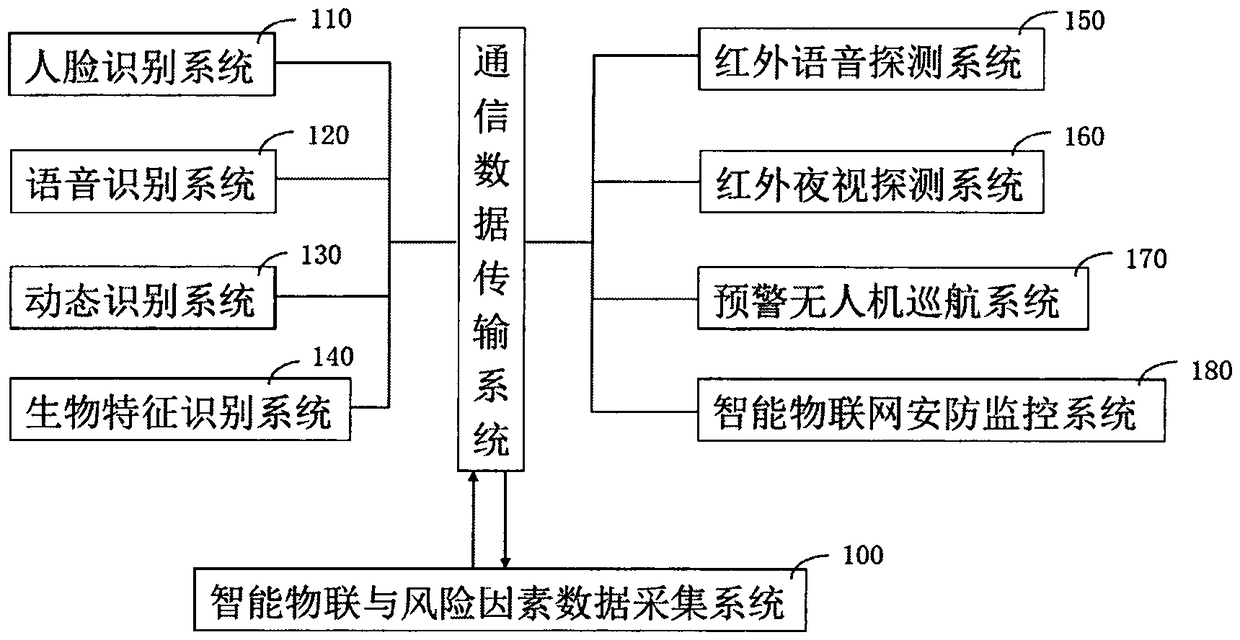

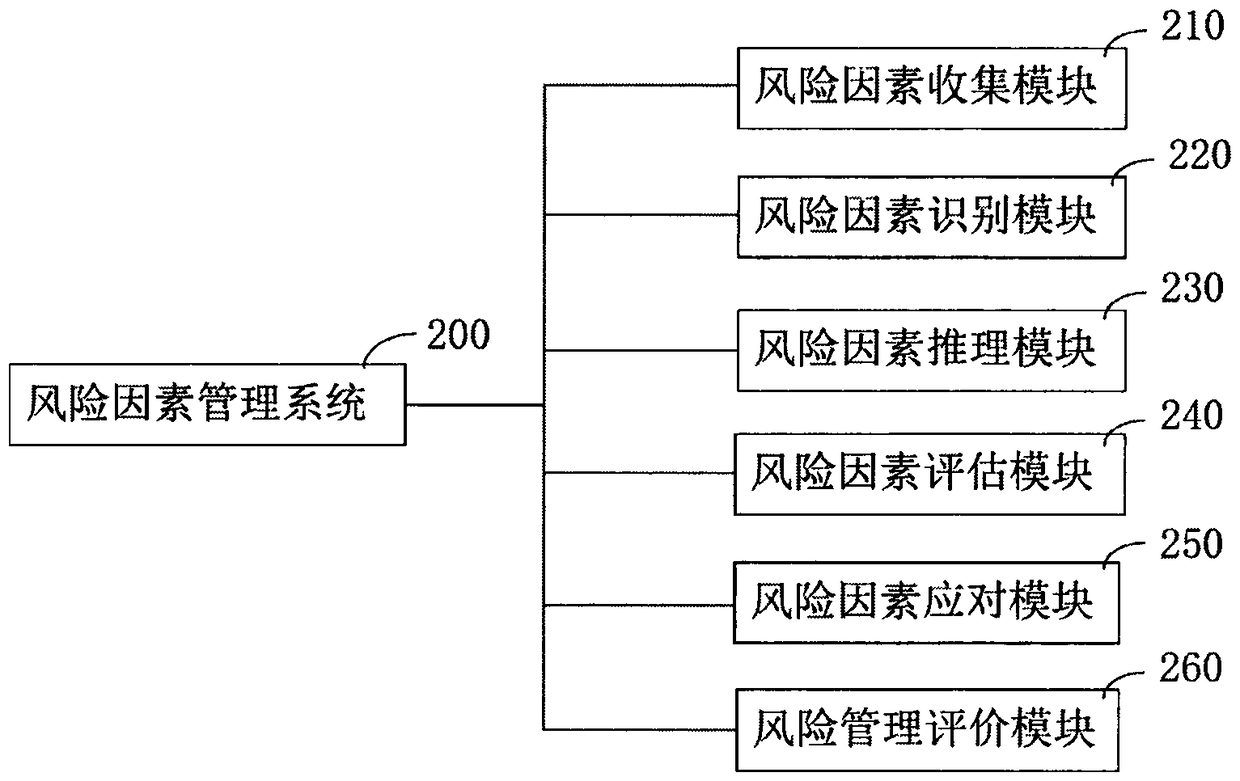

Artificial intelligence early warning system

Active · CN109447048A · Prevent overpass · Semantic analysis · Other databases indexing · Three level · Information resource

The invention relates to an artificial intelligence early warning system. The system comprises an intelligent Internet of Things and risk factor data acquisition system (100), a risk factor management system (200), a cloud computing device (300), a cloud storage device (400), a cloud database (500), an artificial intelligence early warning operation system (600), an artificial intelligence early warning server (700), an internet + distributed early warning police booth (800), a five-level artificial intelligence early warning system (900), a four-level artificial intelligence early warning system (1000), a three-level artificial intelligence early warning system (1100), a two-level artificial intelligence early warning system (1200) and a one-level artificial intelligence early warning system (1300). The artificial intelligence early warning system of the present invention collects, compares, analyzes, deduces and evaluates the risk factors, and carries out cloud computing, cloud storage, graded alarming, and prevention and control, so that all-weather 24-hour monitoring of the monitoring points around the police booth is achieved, users can share information, the utilization rate of information resources is increased, and the safety guarantee for maintaining the stability of the borderlands is strengthened.

Owner:苏州闪驰数控系统集成有限公司

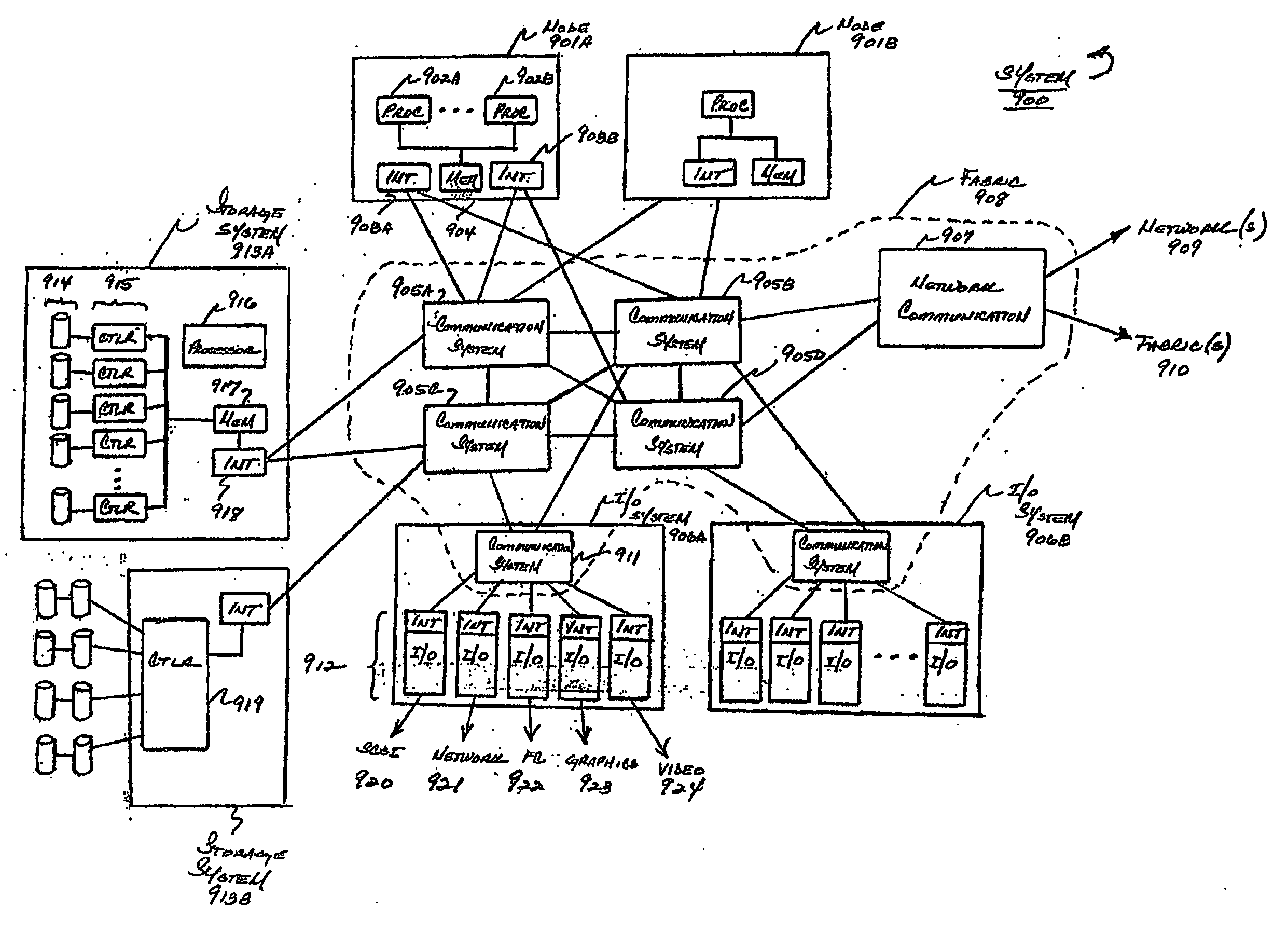

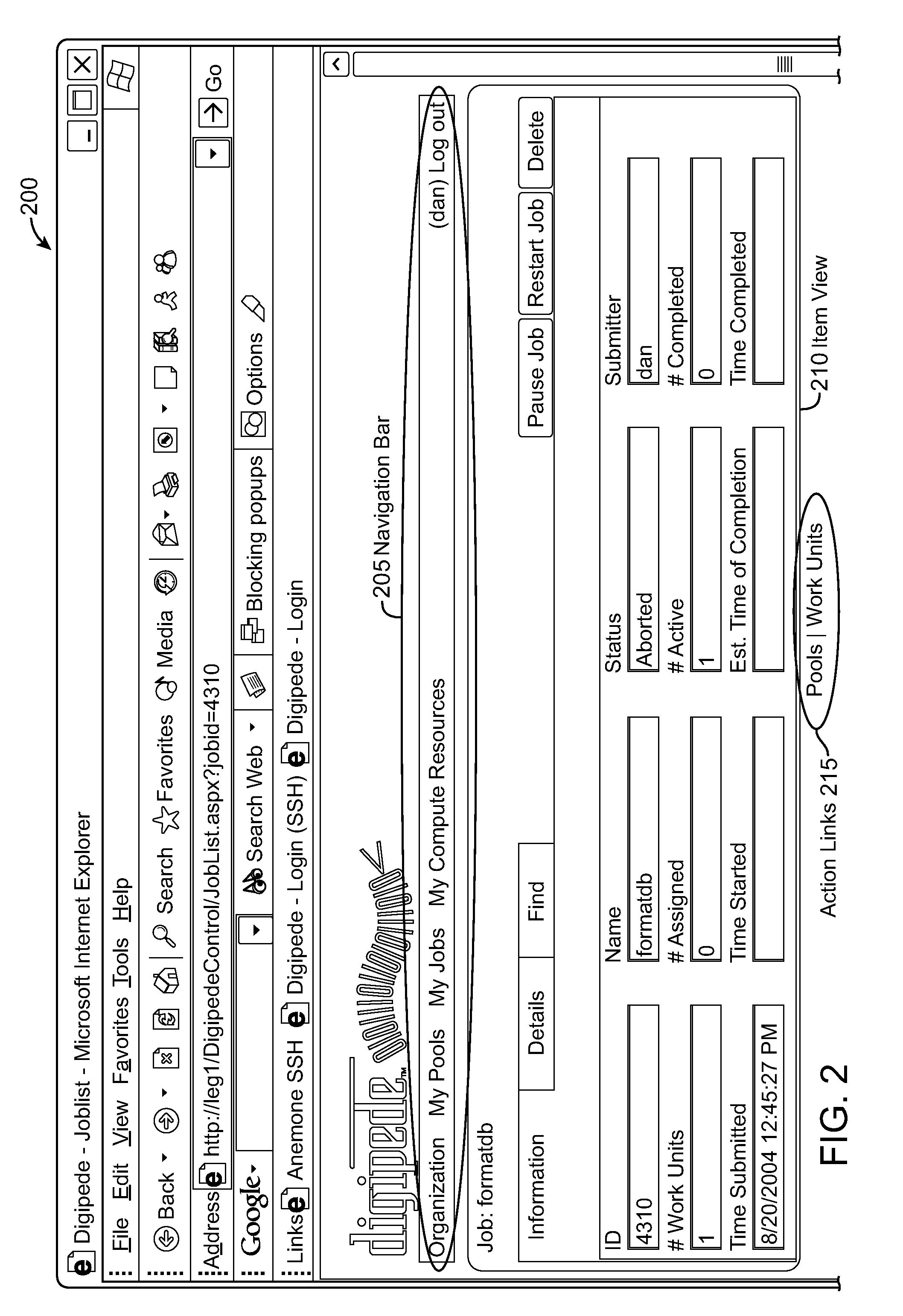

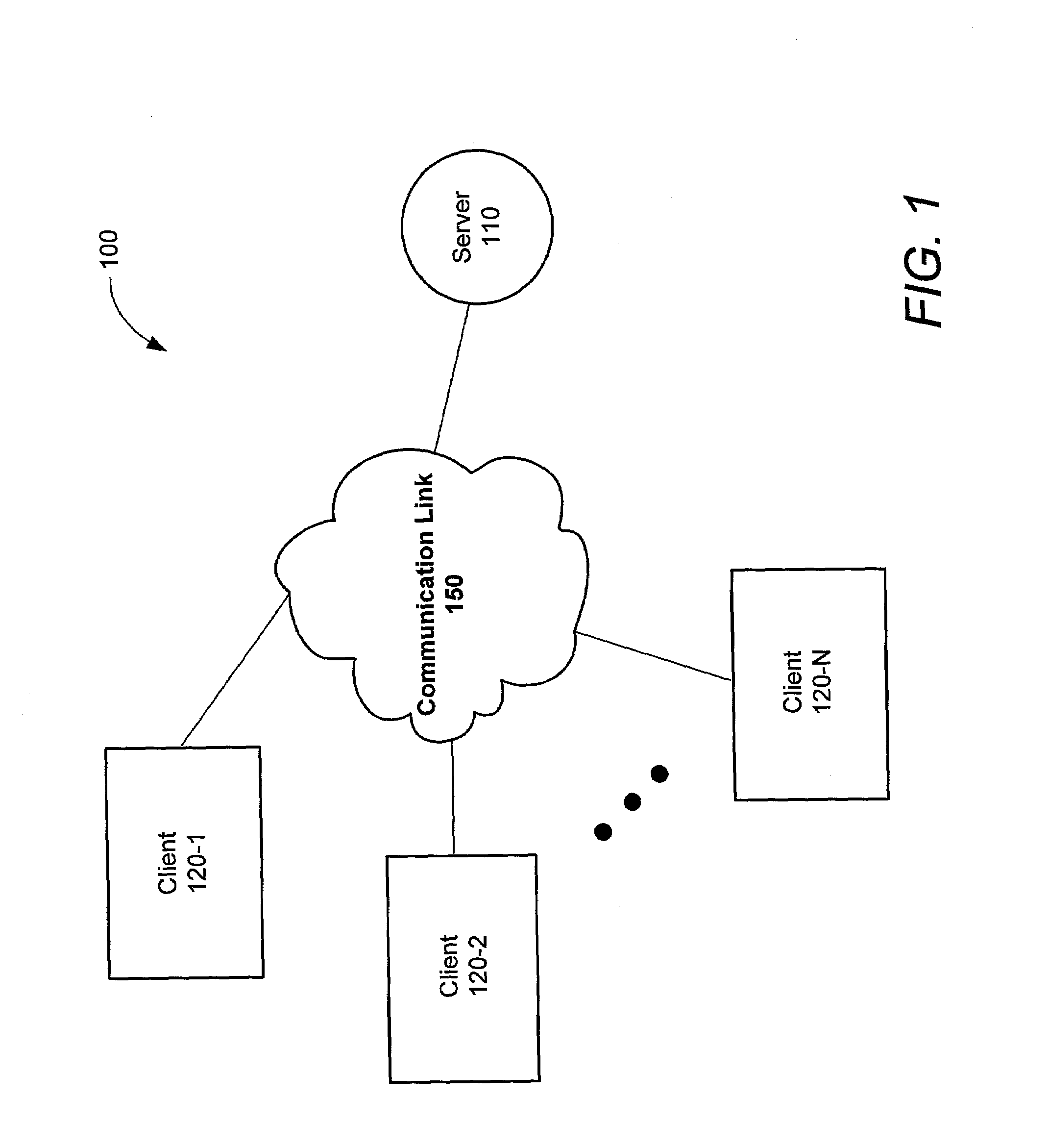

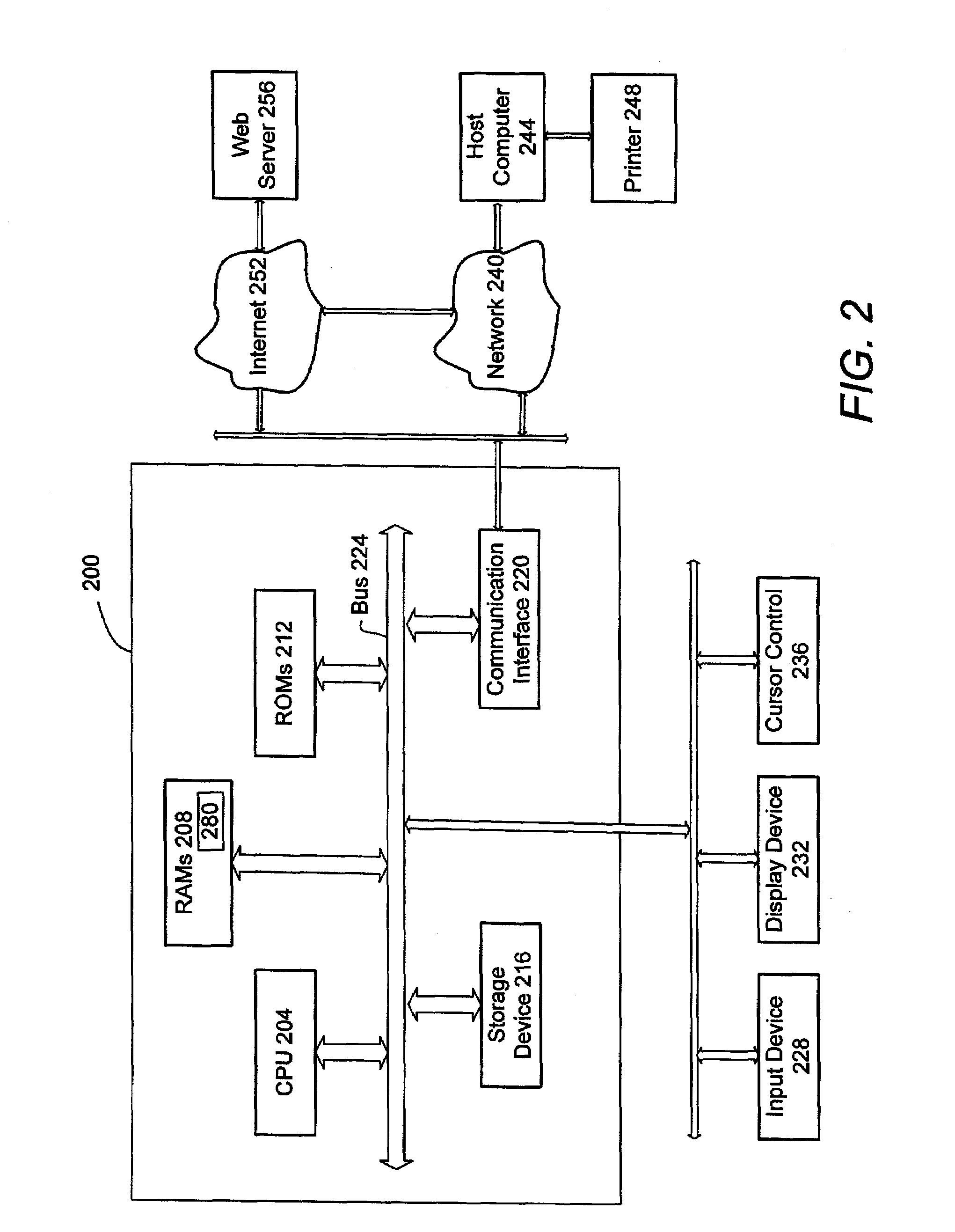

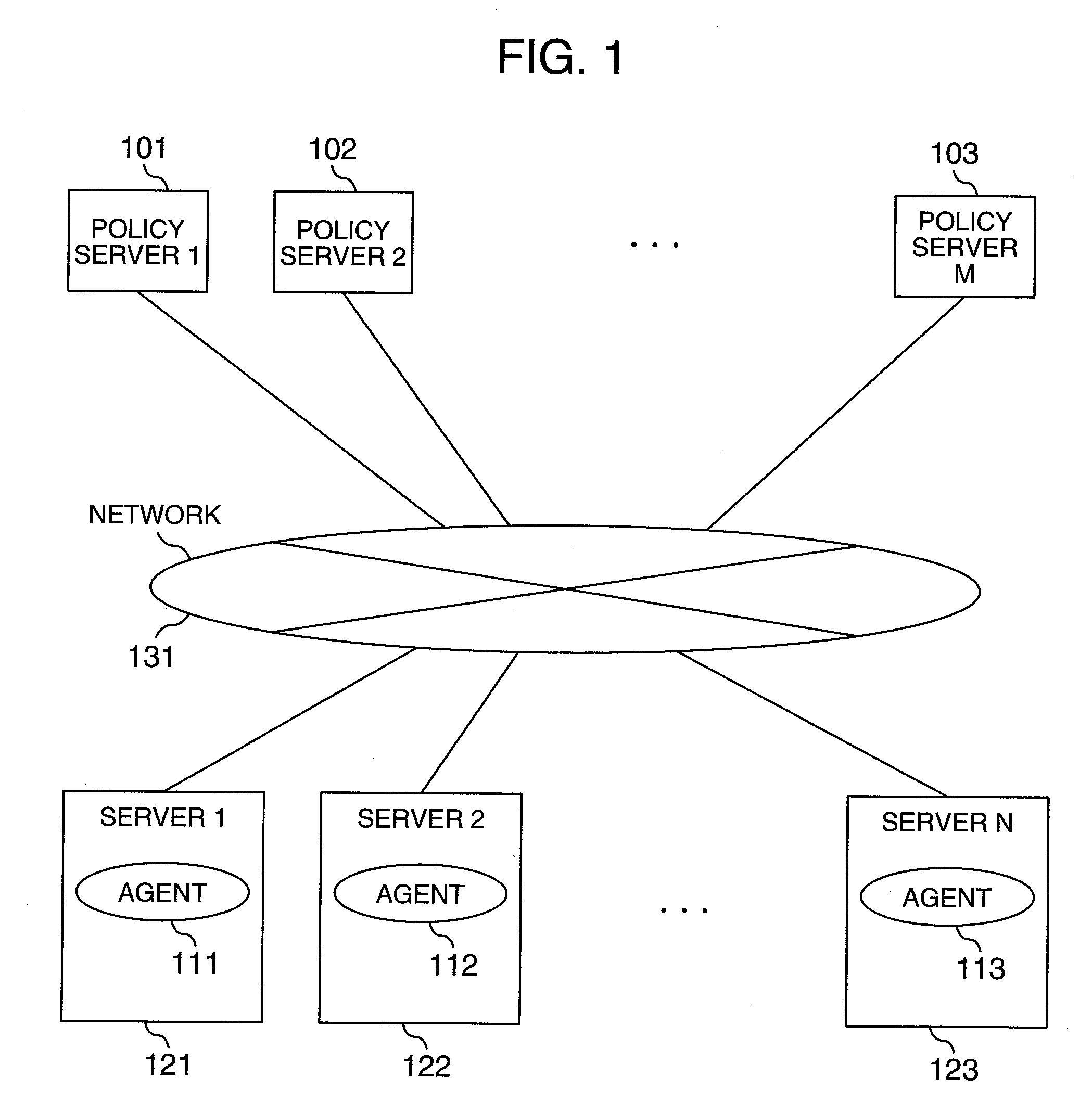

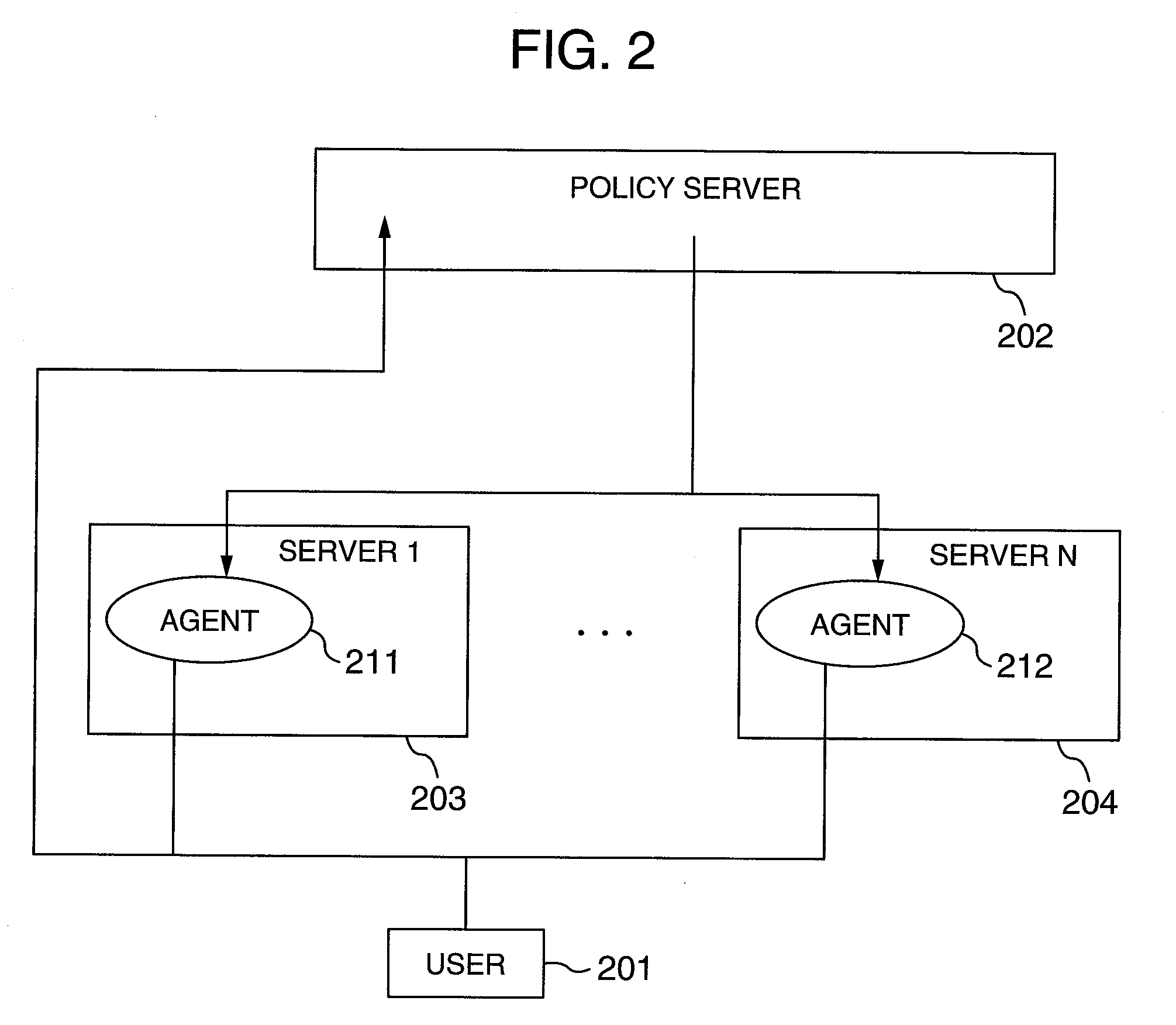

Multicore Distributed Processing System

Active · US20090049443A1 · Improve application performance · Faster and relatively lightweight · General purpose stored program computer · Multiprogramming arrangements · Multi processor · Work unit

A distributed processing system delegates the allocation and control of computing work units to agent applications running on computing resources including multi-processor and multi-core systems. The distributed processing system includes at least one agent associated with at least one computing resource. The distributed processing system creates work units corresponding with execution phases of applications. Work units can be associated with concurrency data that specifies how applications are executed on multiple processors and/or processor cores. The agent collects information about its associated computing resources and requests work units from the server using this information and the concurrency data. An agent can monitor the performance of executing work units to better select subsequent work units. The distributed processing system may also be implemented within a single computing resource to improve processor core utilization of applications. Additional computing resources can augment the single computing resource and execute pending work units at any time.

Owner:DIGIPEDE TECH LLC

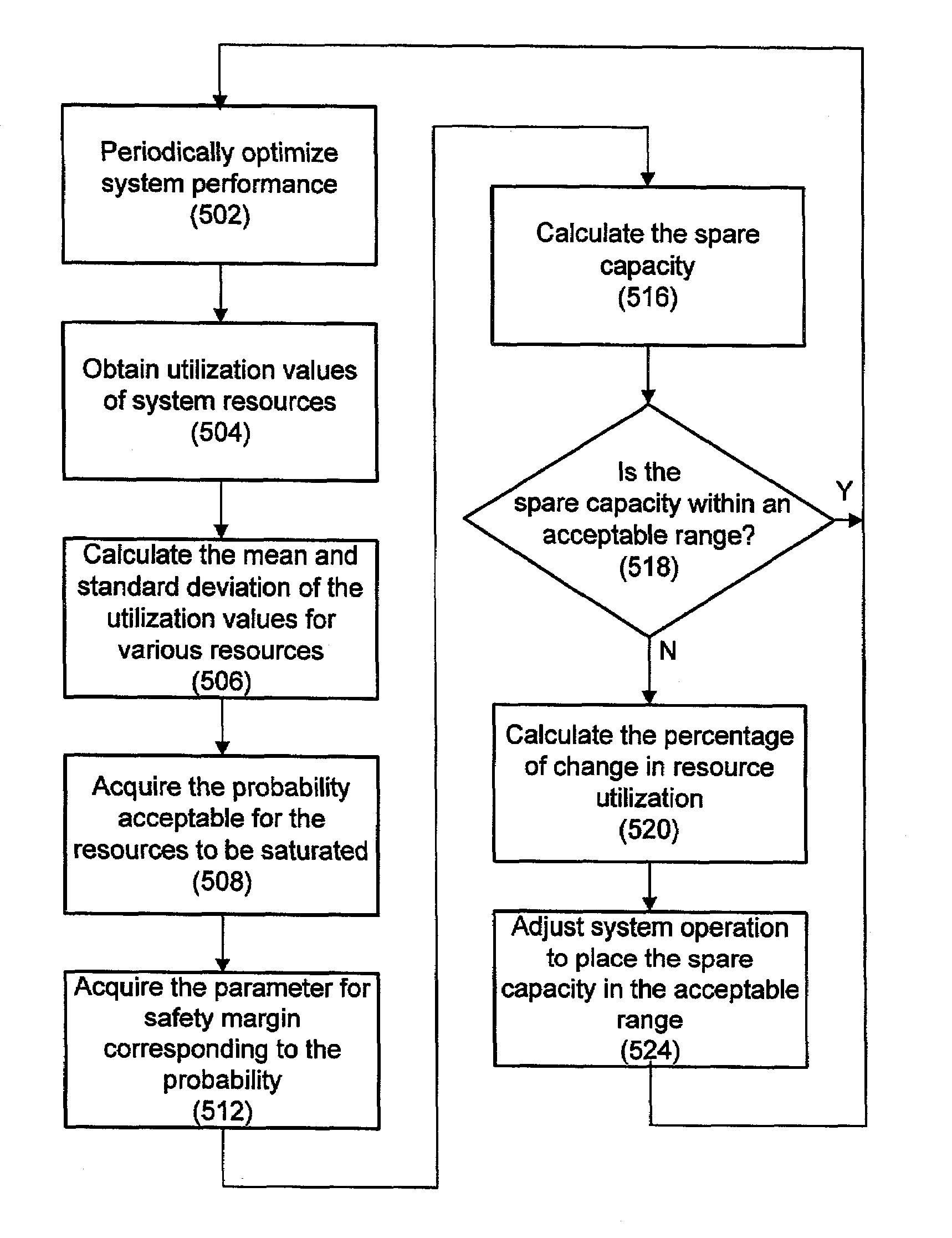

Managing power consumption based on utilization statistics

Inactive · US6996728B2 · Save power · Energy efficient ICT · Volume/mass flow measurement · Service-level agreement · Resource depletion

The present invention, in various embodiments, provides techniques for managing system power. In one embodiment, system compute loads and/or system resources invoked by services running on the system consume power. To better manage power consumption, the spare capacity of a system resource is periodically measured, and if this spare capacity is outside a predefined range, then the resource operation is adjusted, e.g., the CPU speed is increased or decreased, so that the spare capacity is within the range. Further, the spare capacity is kept as close to zero as practical, and this spare capacity is determined based on the statistical distribution of a number of utilization values of the resources, which are also taken periodically. The spare capacity is also calculated based on considerations of the probability that the system resources are saturated. In one embodiment, to maintain the services required by a Service Level Agreement (SLA), a correlation between an SLA parameter and a resource utilization is determined. In addition to other factors and the correlation of the parameters, the spare capacity of the resource utilization is adjusted based on the spare capacity of the SLA parameter. Various embodiments include optimizing system performance before calculating system spare capacity, saving power for system groups or clusters, and saving power for special conditions such as brown-out, high temperature, etc.
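
One way to picture the spare-capacity loop in this abstract is the sketch below. It estimates spare capacity from the distribution of recent utilization samples as `1 - (mean + k * standard deviation)` and nudges a normalized CPU speed when the result leaves a target range. That formula, the factor `k`, the target range and the step size are assumptions made for illustration; the abstract only says the spare capacity is derived from the statistical distribution of utilization values and kept as close to zero as practical.

```python
import statistics


def spare_capacity(samples: list[float], k: float = 2.0) -> float:
    """Spare capacity as 1 minus (mean + k * std) of utilization samples in [0, 1]."""
    mean = statistics.fmean(samples)
    std = statistics.pstdev(samples)
    return 1.0 - (mean + k * std)


def adjust_speed(speed: float, samples: list[float],
                 target: tuple[float, float] = (0.0, 0.10),
                 step: float = 0.1, min_speed: float = 0.2) -> float:
    """Return a new normalized CPU speed (1.0 = full speed)."""
    low, high = target
    spare = spare_capacity(samples)
    if spare < low:                      # risk of saturation: speed up
        return min(1.0, speed + step)
    if spare > high:                     # too much idle capacity: slow down
        return max(min_speed, speed - step)
    return speed                         # spare capacity already in range


print(adjust_speed(1.0, [0.5, 0.5, 0.5, 0.5]))   # lightly loaded: speed is reduced
print(adjust_speed(0.5, [0.9, 1.0, 0.9, 1.0]))   # near saturation: speed is raised
```

The sketch treats utilization samples as comparable across speed changes, which a real controller would need to correct for.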

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

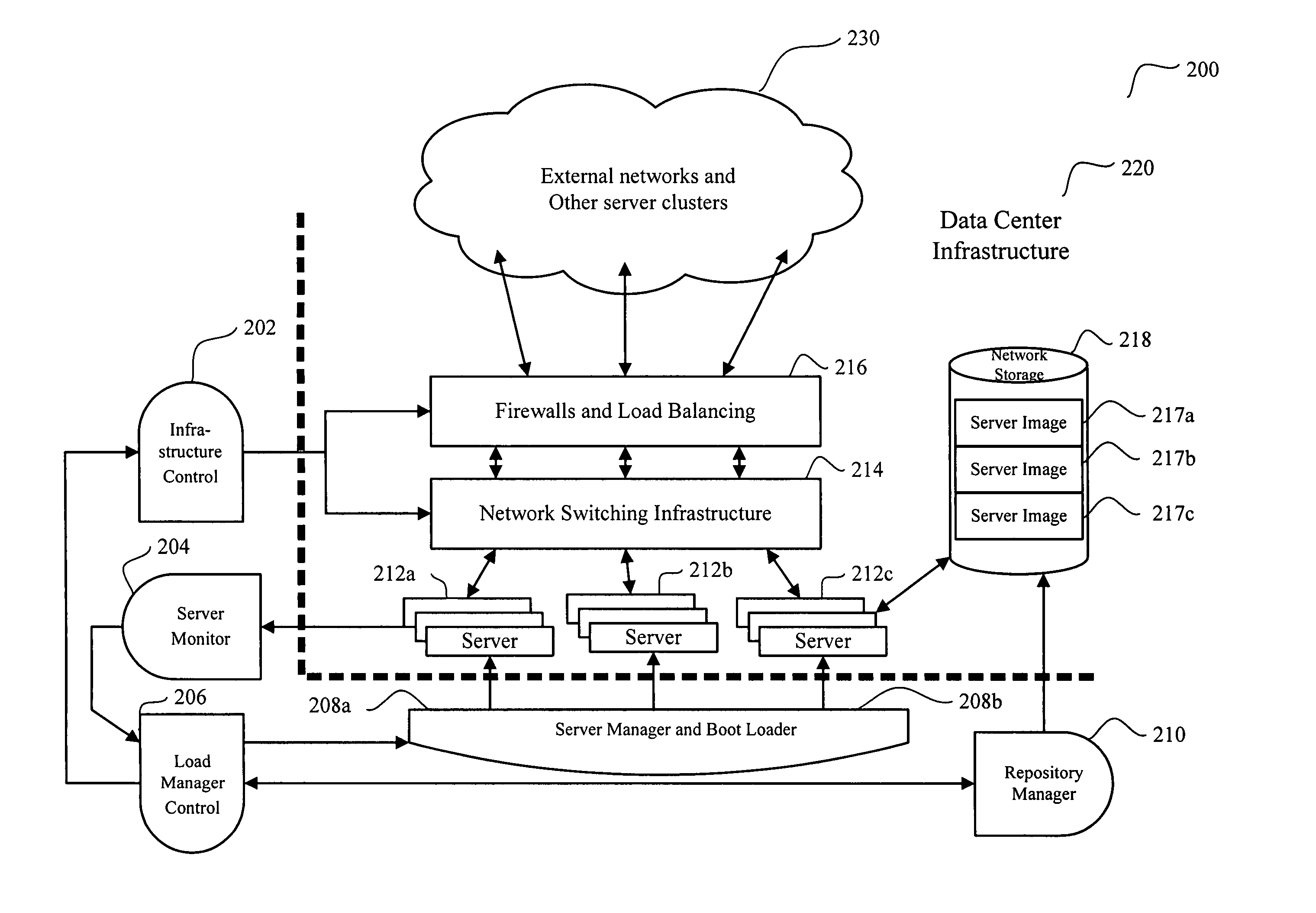

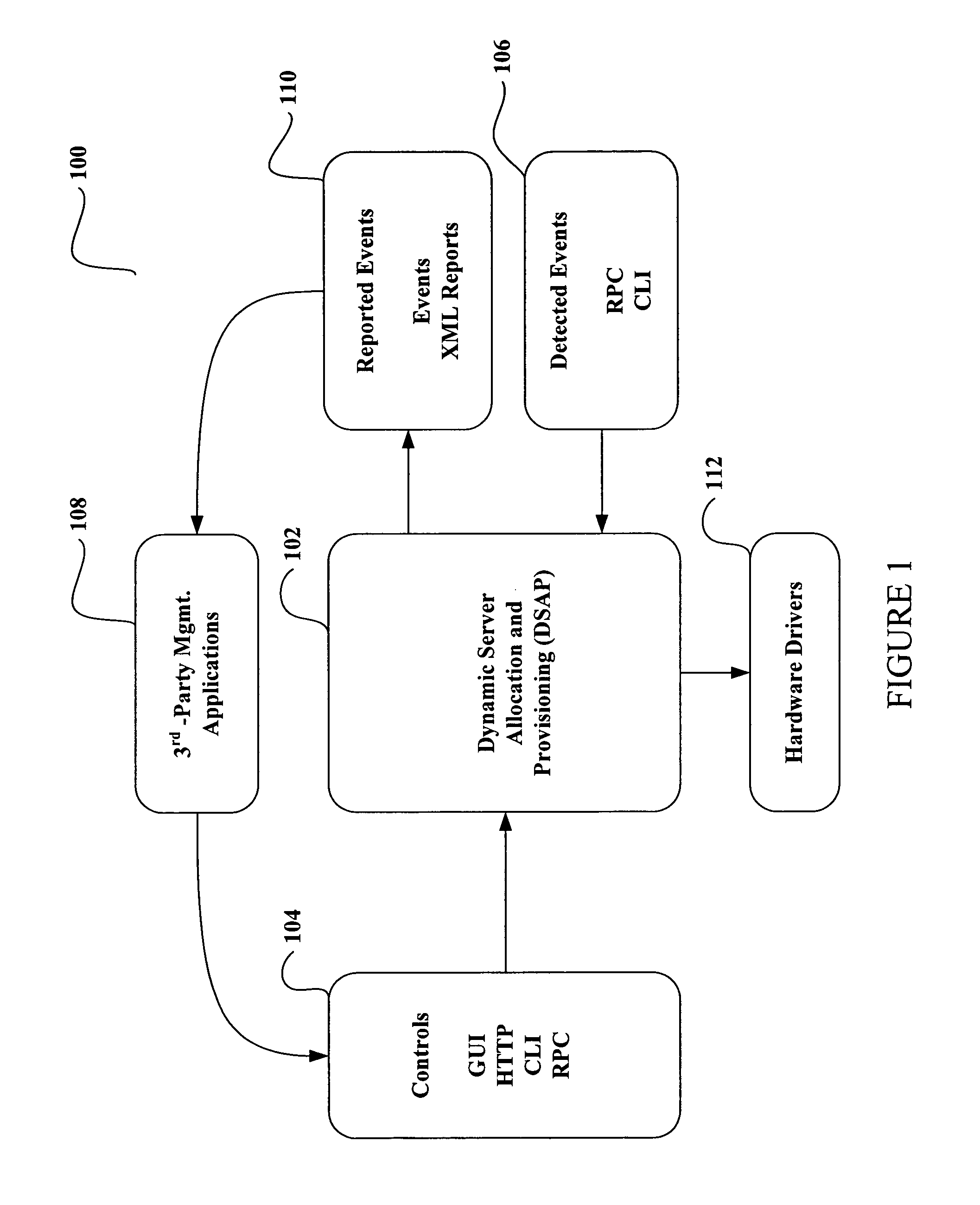

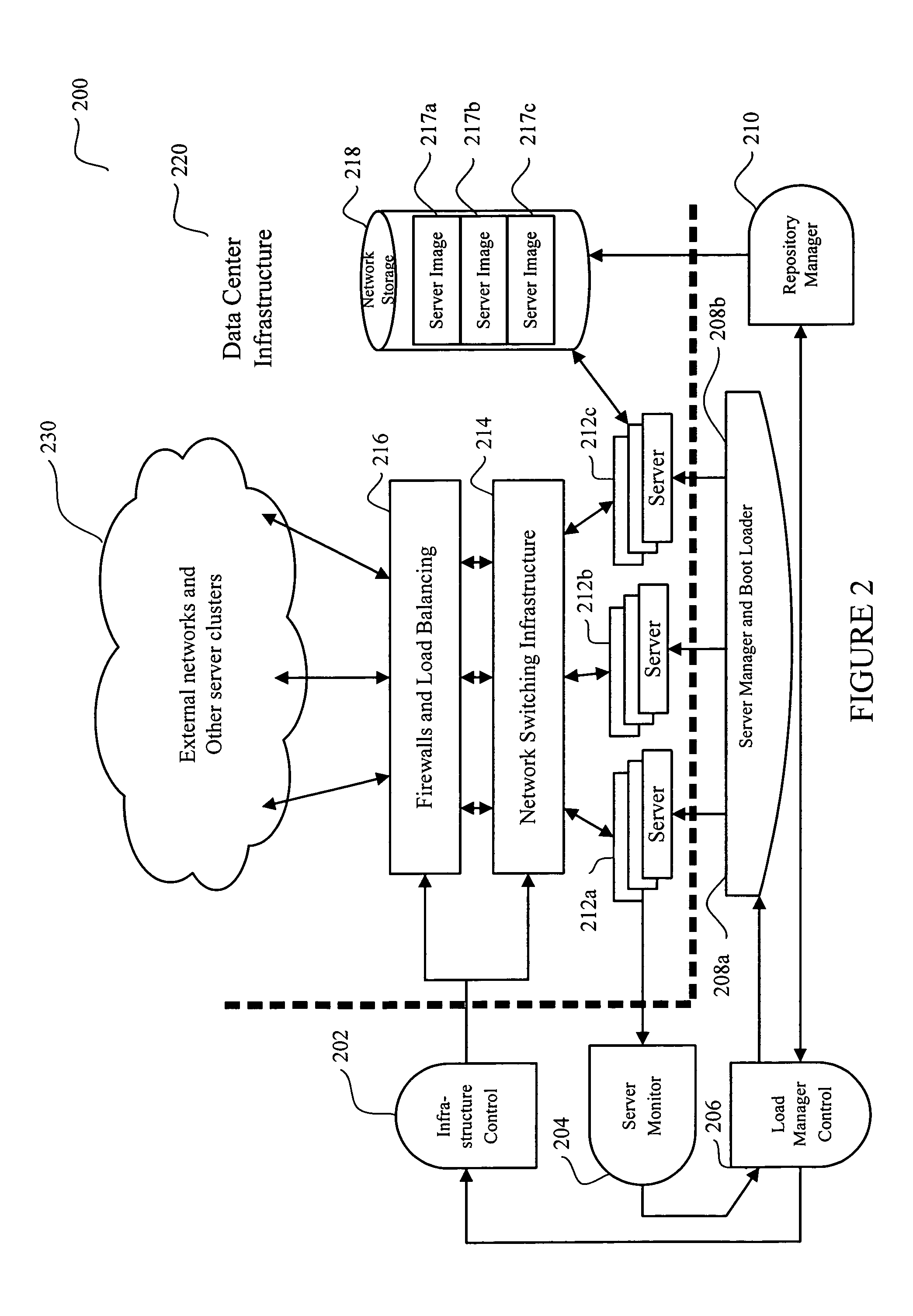

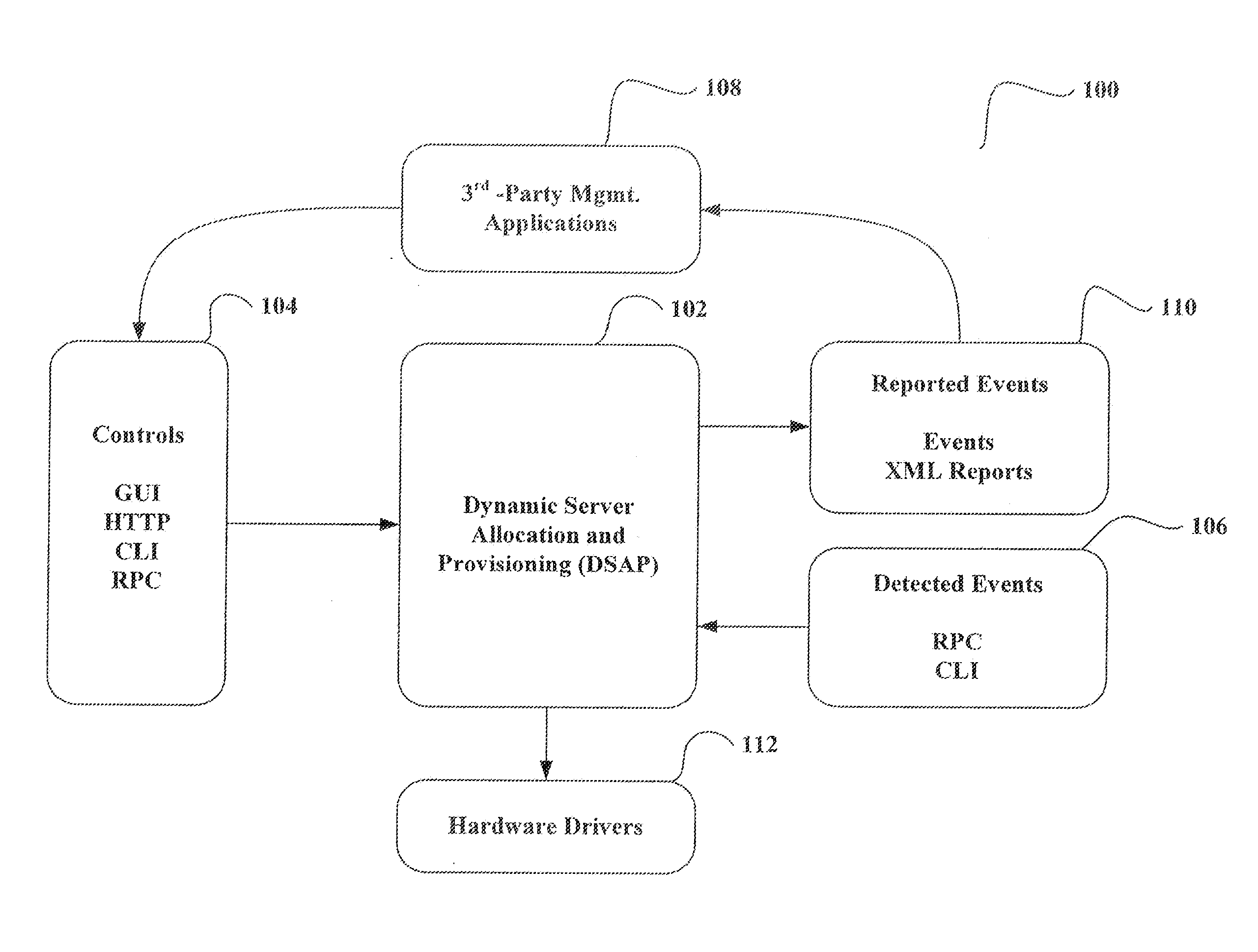

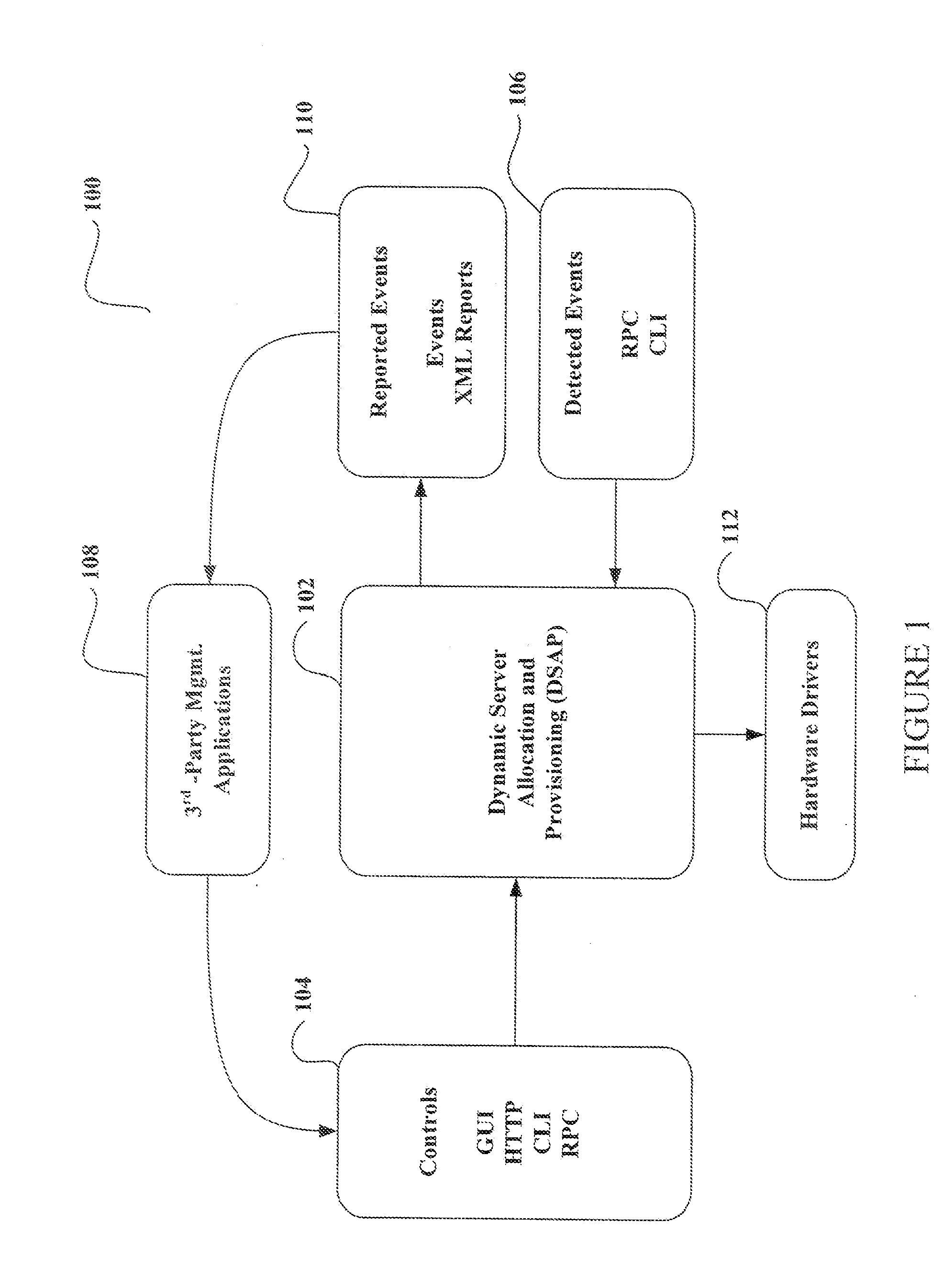

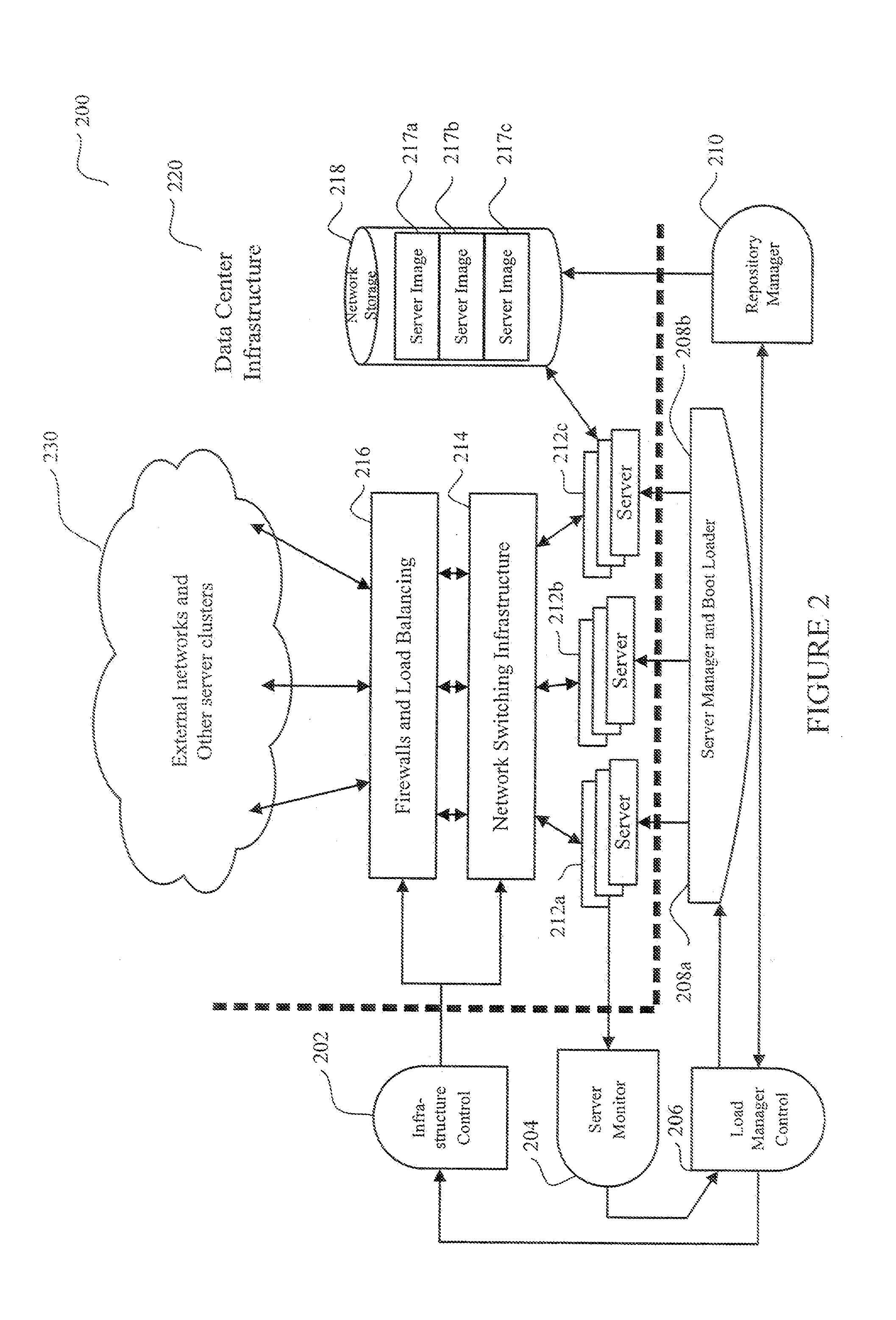

System and method for dynamic server allocation and provisioning

Active · US7213065B2 · Lower cost of capital · Quality improvement · Resource allocation · Digital computer details · Management tool · Virtual LAN

A management tool that streamlines the server allocation and provisioning processes within a data center is provided. The system, method, and computer program product divide the server provisioning and allocation into two separate tasks. Provisioning a server is accomplished by generating a fully configured, bootable system image, complete with network address assignments, virtual LAN (VLAN) configuration, load balancing configuration, and the like. System images are stored in a storage repository and are accessible to more than one server. Allocation is accomplished using a switching mechanism which matches each server with an appropriate system image based upon current configuration or requirements of the data center. Thus, real-time provisioning and allocation of servers in the form of automated responses to changing conditions within the data center is possible. The ability to instantly re-provision servers, safely and securely switch under-utilized server capacity to more productive tasks, and improve server utilization is also provided.

Owner:RACEMI

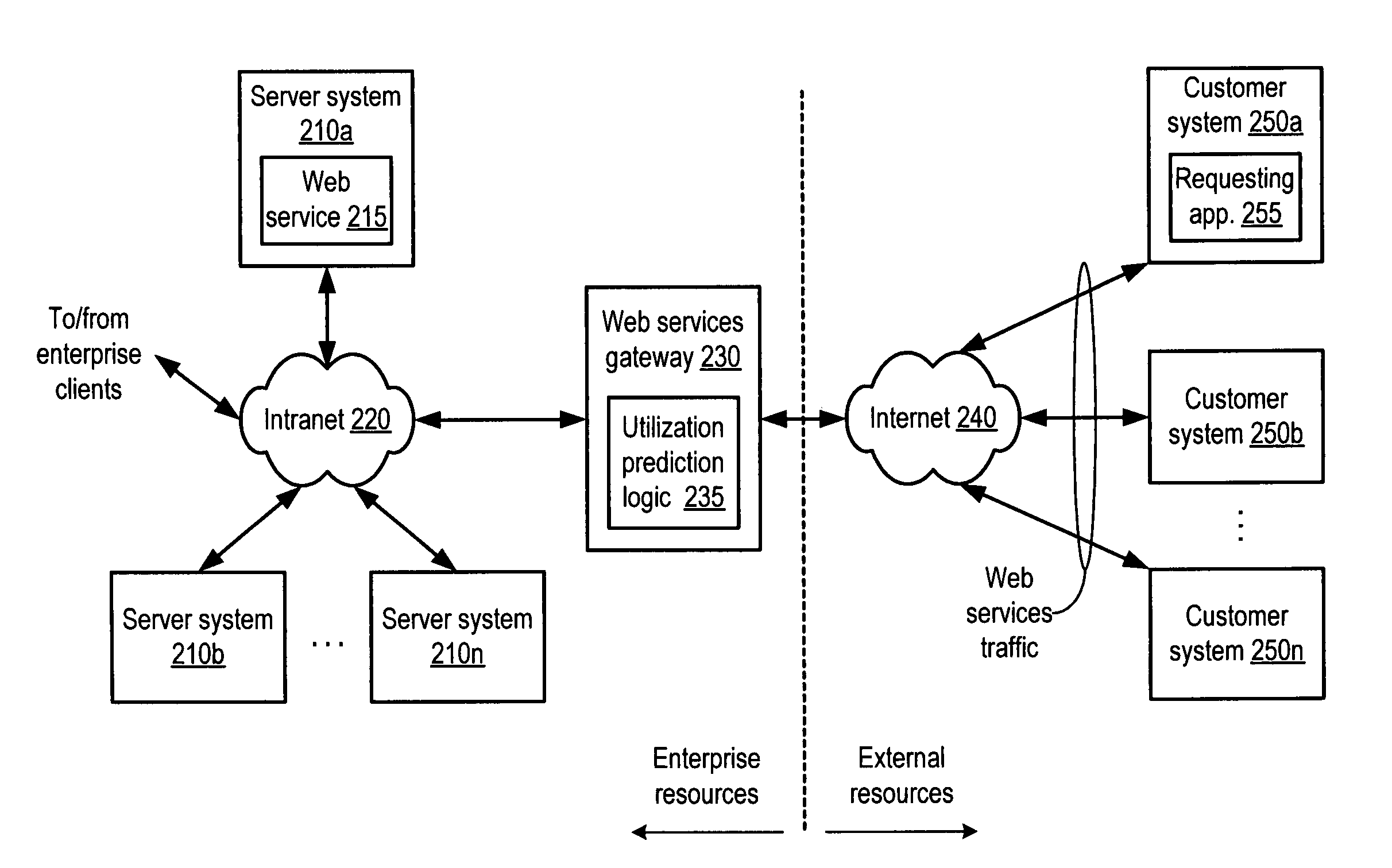

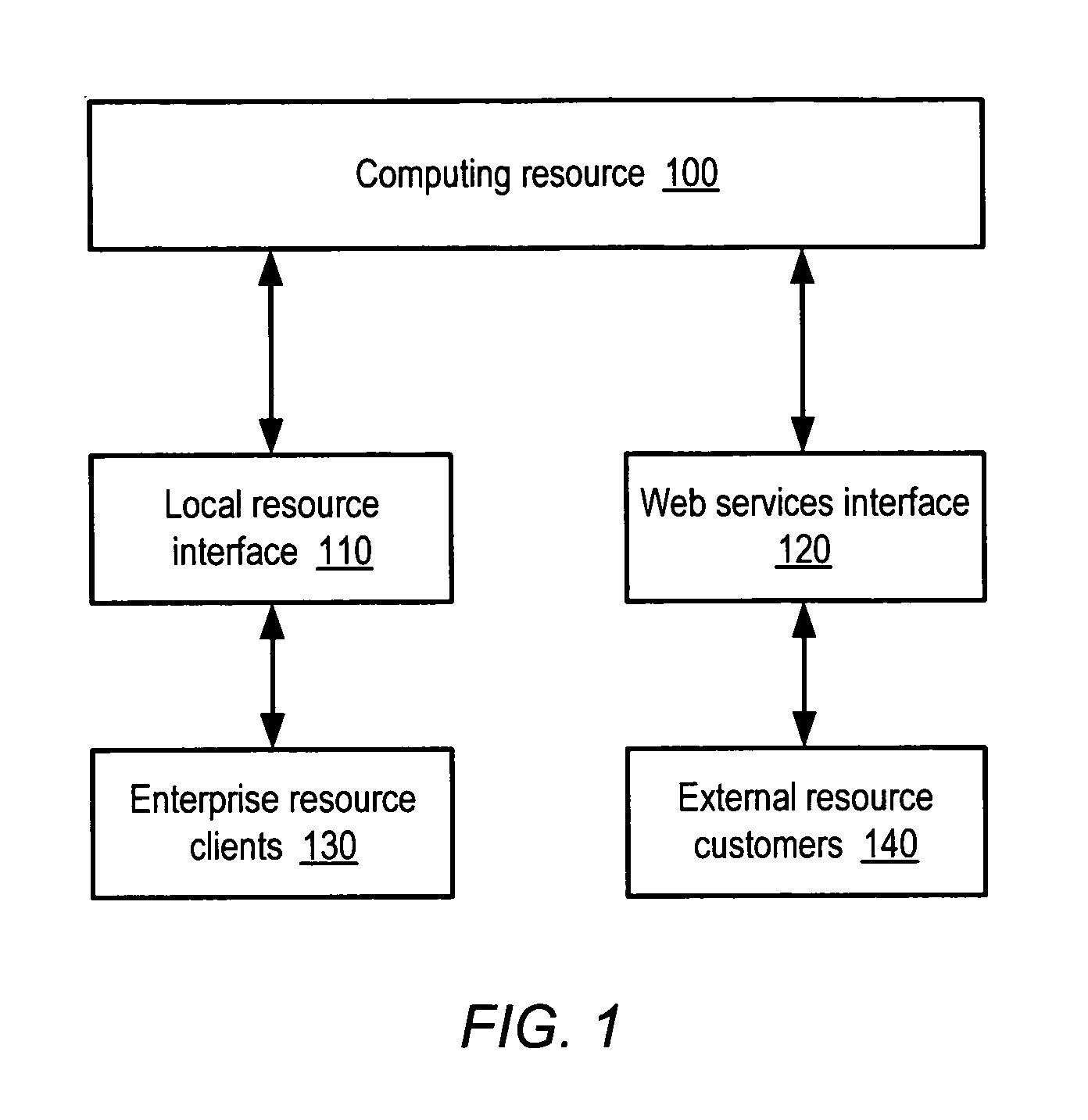

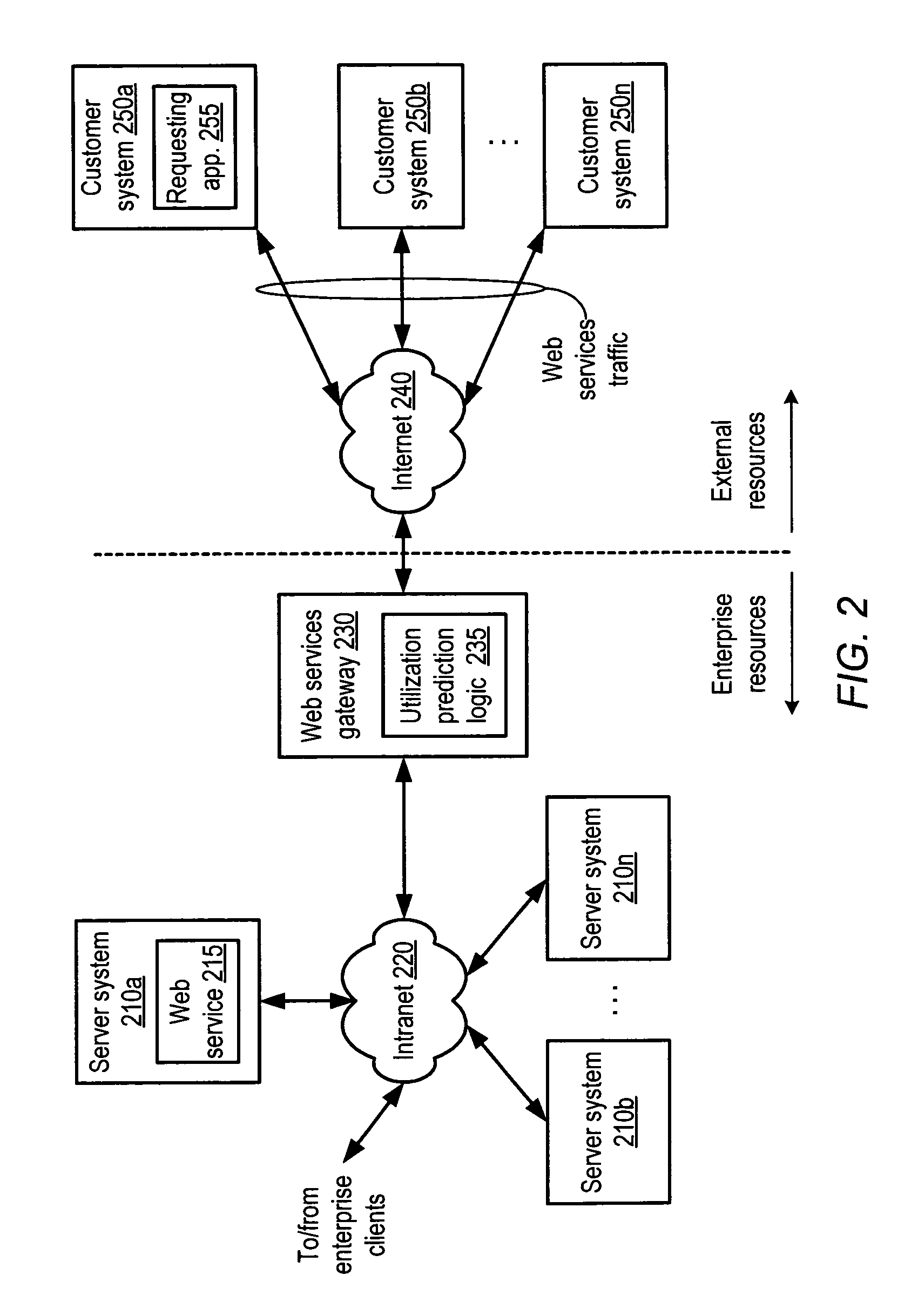

Method and system for dynamic pricing of web services utilization

A method and system for dynamic pricing of web services utilization. According to one embodiment, a method may include dynamically predicting utilization of a web services computing resource that is expected to occur during a given interval of time, and dependent upon the dynamically predicted utilization, setting a price associated with utilization of the web services computing resource occurring during the given interval of time. The method may further include providing the price to a customer.
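
To make the idea concrete, the sketch below predicts utilization for the next interval and sets a price that depends on the prediction. The exponential smoothing predictor, the linear pricing rule and every parameter value (`alpha`, `base_price`, `target`, `sensitivity`, `floor`) are assumptions for illustration only; the abstract does not describe a specific predictor or pricing formula.

```python
def predict_utilization(history: list[float], alpha: float = 0.5) -> float:
    """Exponentially smoothed estimate of the next interval's utilization."""
    estimate = history[0]
    for observed in history[1:]:
        estimate = alpha * observed + (1 - alpha) * estimate
    return estimate


def price_for_interval(predicted: float, base_price: float = 0.10,
                       target: float = 0.5, sensitivity: float = 1.0,
                       floor: float = 0.05) -> float:
    """Raise the price above base when predicted utilization exceeds the target,
    lower it when utilization is expected to be slack, never below the floor."""
    price = base_price * (1 + sensitivity * (predicted - target))
    return max(floor, price)


history = [0.4, 0.6, 0.8]               # made-up utilization fractions
predicted = predict_utilization(history)
print(round(predicted, 3), round(price_for_interval(predicted), 4))
```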

Owner:AMAZON TECH INC

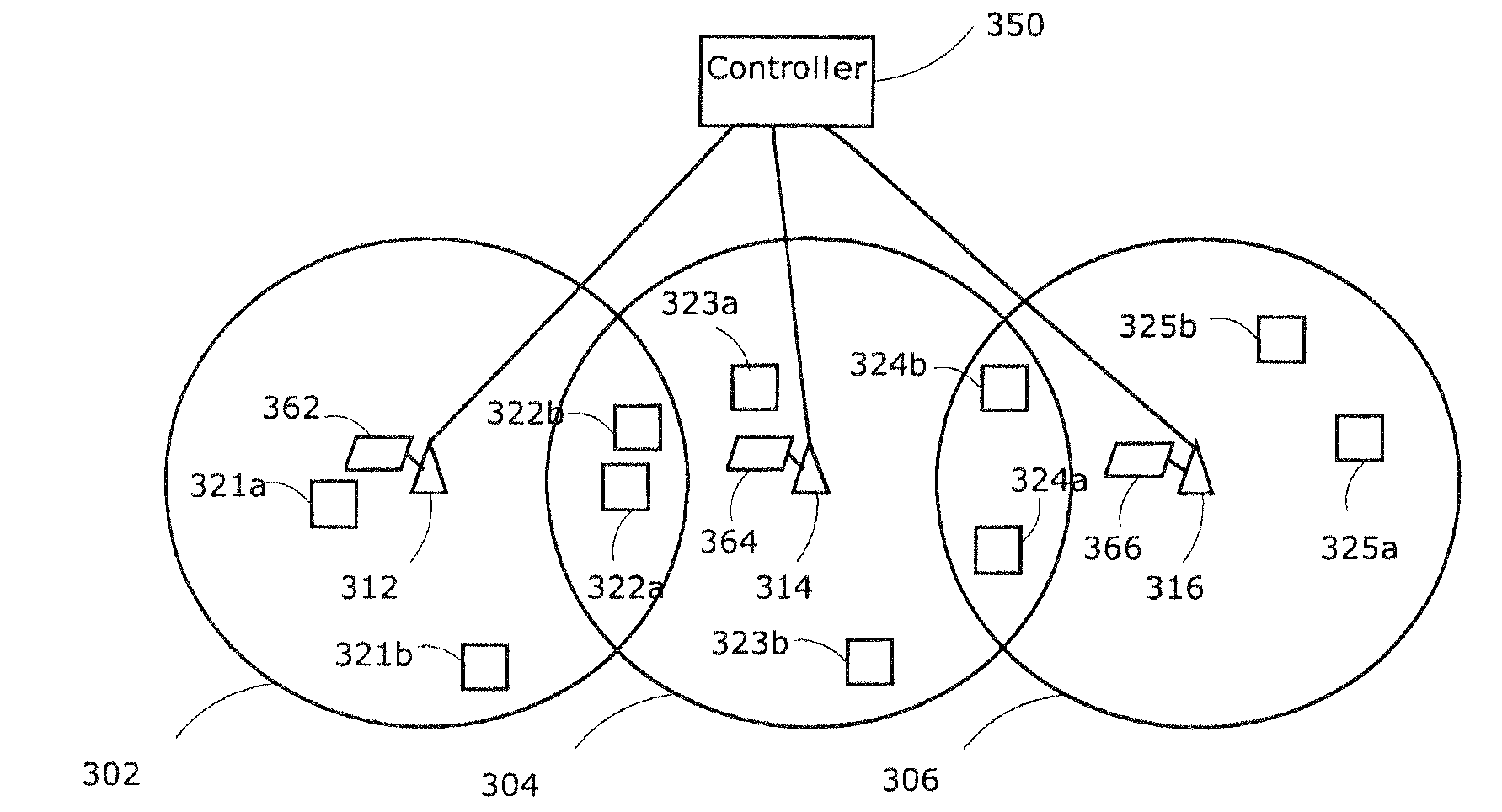

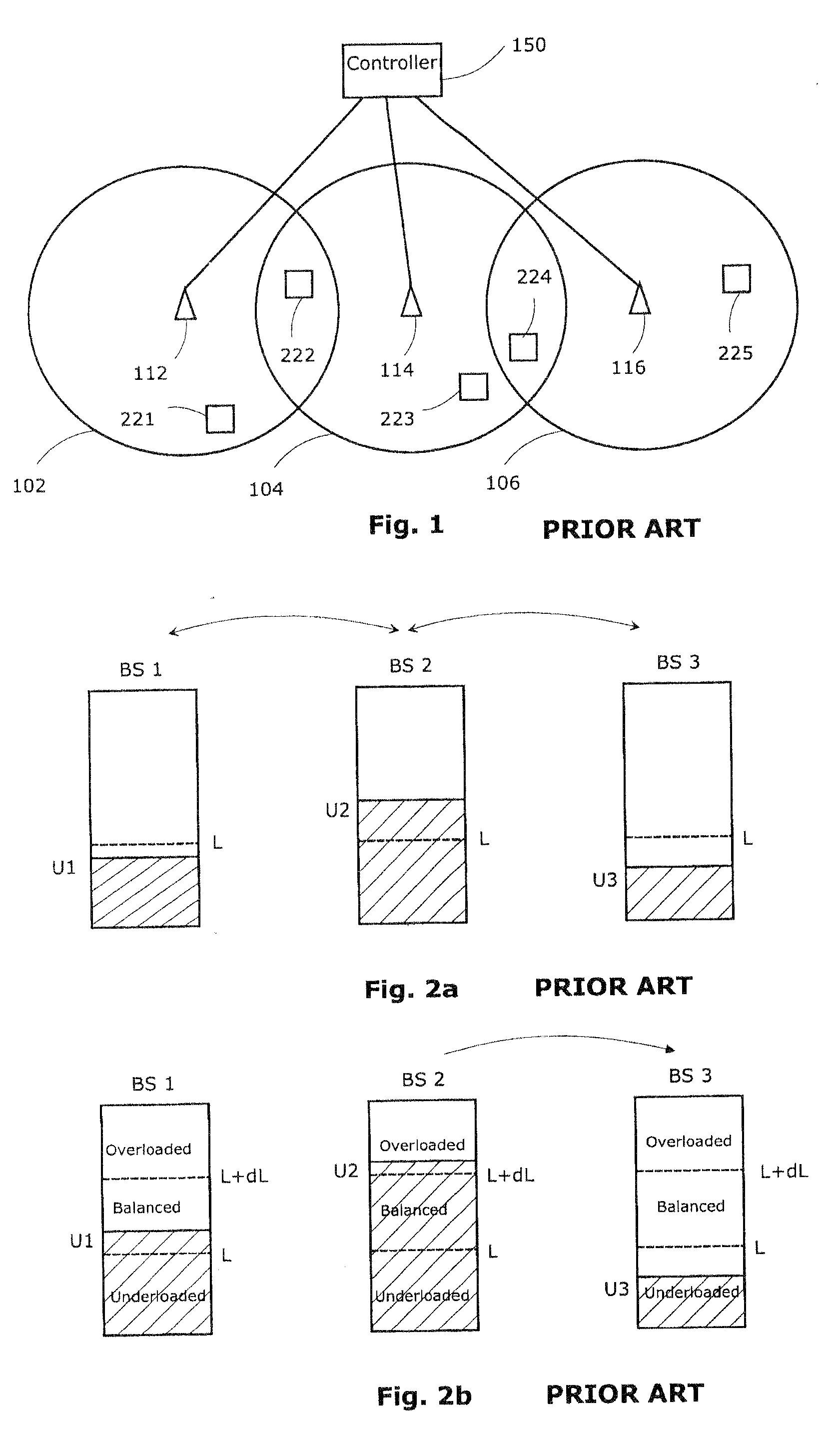

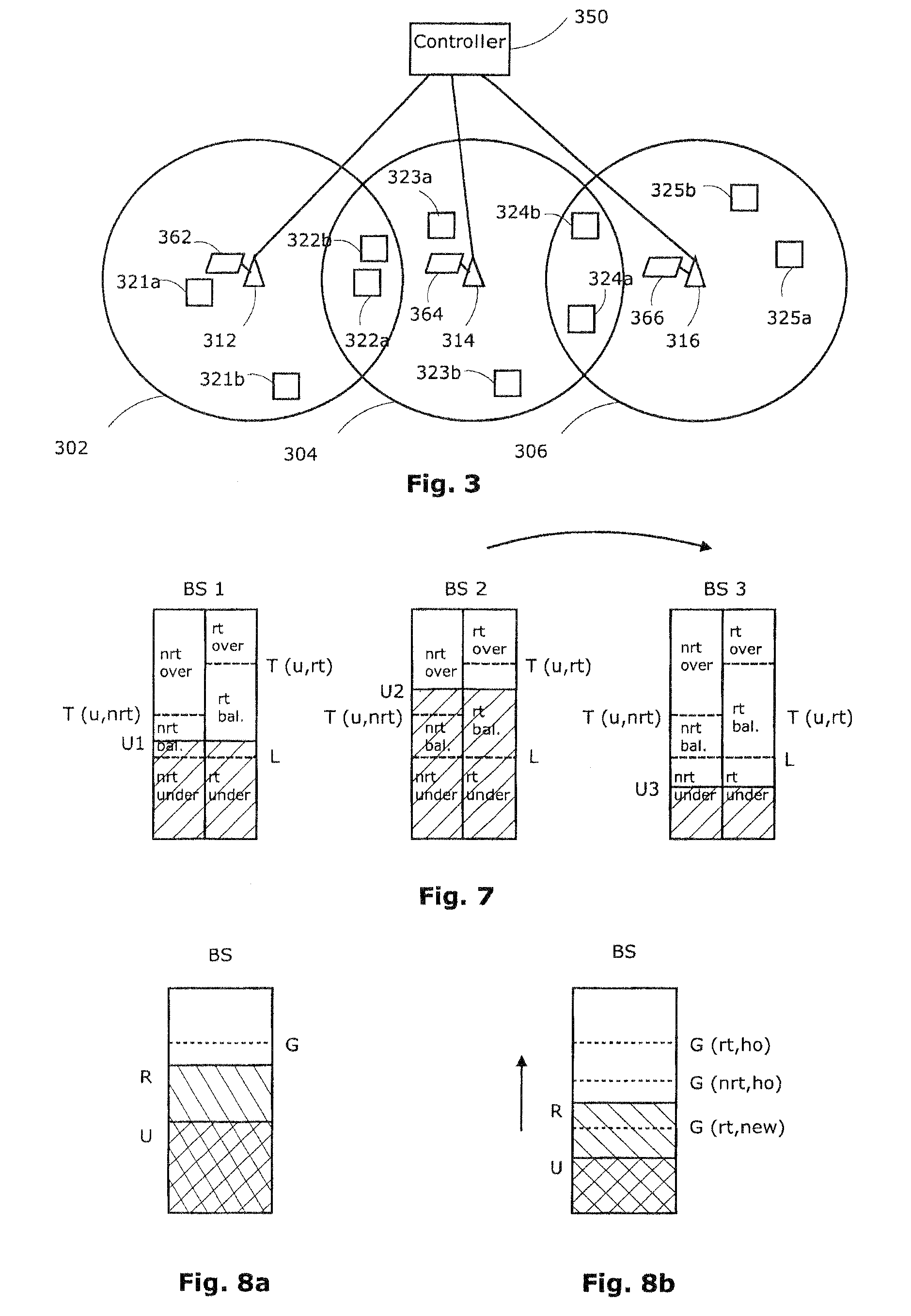

Load balancing in mobile environment

Inactive · US20090163223A1 · Unbalanced load · Improve QoS · Radio/inductive link selection arrangements · Wireless communication · Resource utilization · Handover

In next-generation wireless networks such as Mobile WiMAX, traffic prioritization is used to provide differentiated quality of service (QoS). Unnecessary ping-pong handovers that result from premature reaction to fluctuating radio resources pose a great threat to the QoS of delay-sensitive connections such as VoIP, which are sensitive to scanning and require heavy handover mechanisms. Traffic-class-specific variables are defined to tolerate imbalance in the radio system in order to avoid making the system slow to react to traffic variations and decreasing system-wide resource utilization. By setting thresholds that trigger load balancing gradually in a fluctuating environment, the delay-sensitive connections avoid unnecessary handovers and the delay-tolerant connections have a chance to react to the load increase and get higher bandwidth from a less congested BS. A framework for the resolution of static user terminals in the overlapping area within adjacent cells will be described.

Owner:ELEKTROBIT WIRELESS COMM LTD

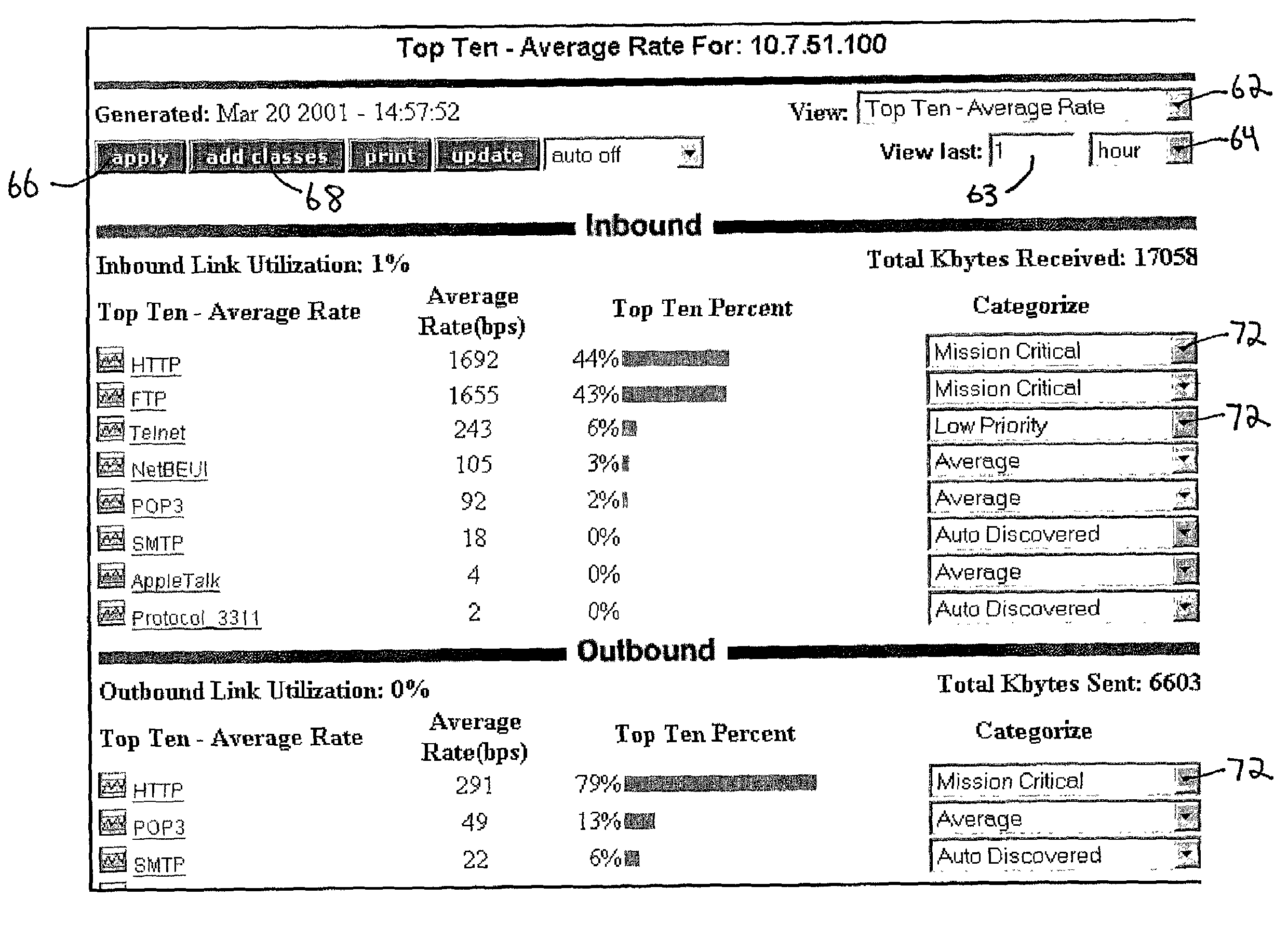

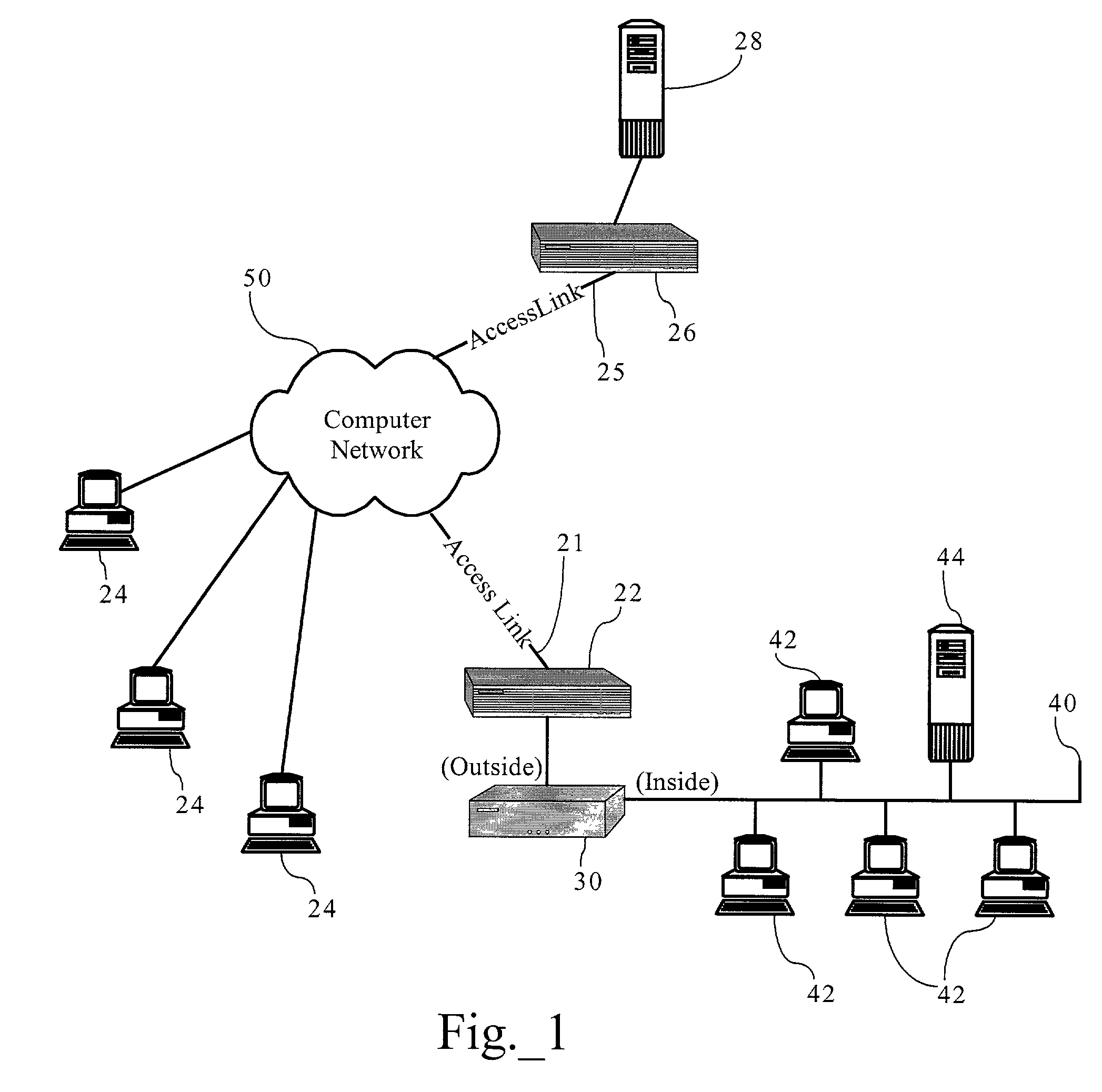

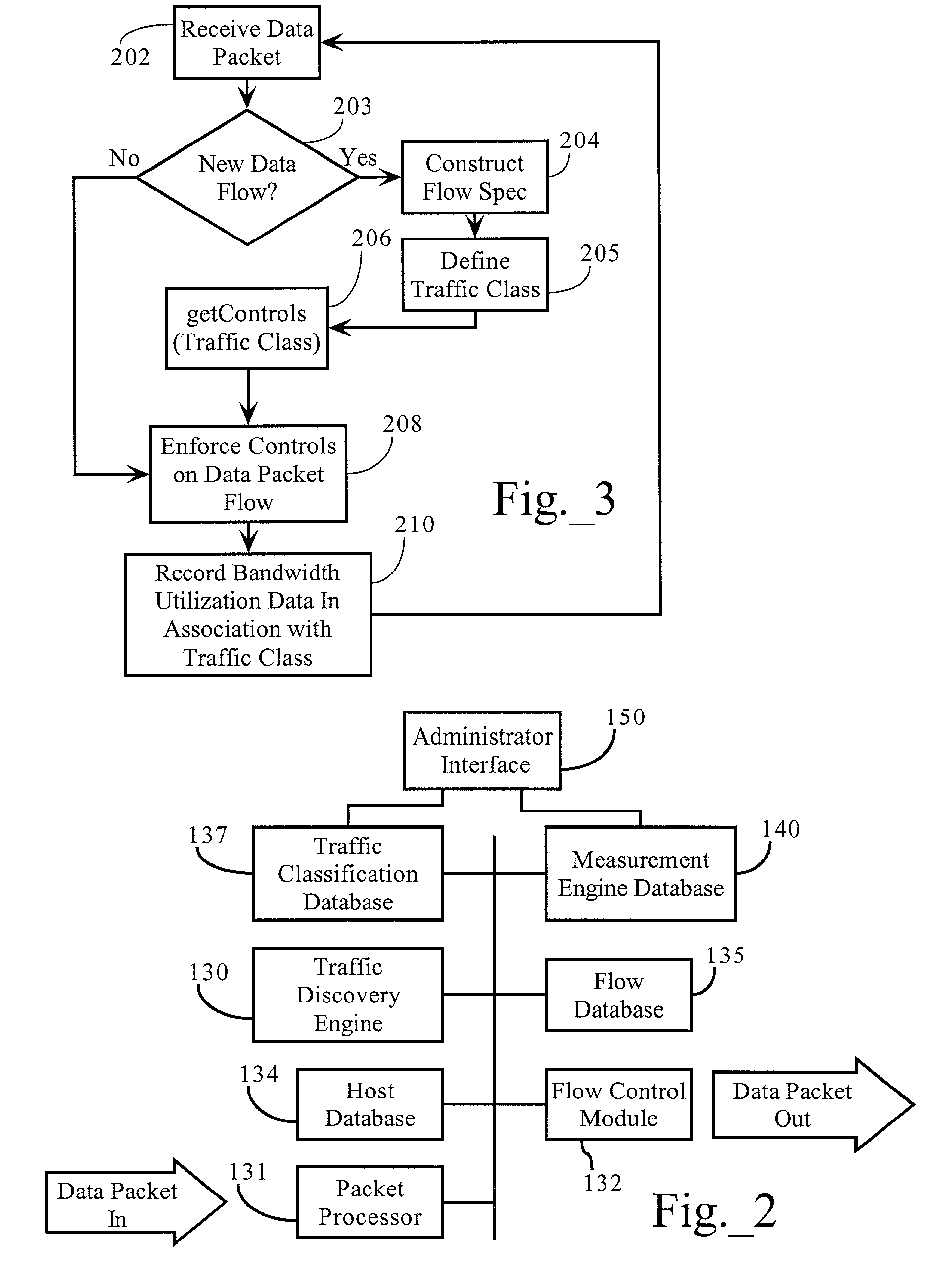

Interface facilitating configuration of network resource utilization

Active · US7203169B1 · Optimization · Quickly easily configure · Error prevention · Frequency-division multiplex details · Resource utilization · Parameter control

Methods, apparatuses and systems facilitating the configuration of parameters controlling utilization of a network resource. In one embodiment, the present invention allows a network administrator to quickly and easily configure effective bandwidth utilization controls and observe the results of applying them. According to one embodiment, a network administrator is presented with an interface displaying the most significant traffic types with respect to a bandwidth utilization or other network statistic and allowing for the association of bandwidth utilization controls to these traffic types.

Owner:CA TECH INC

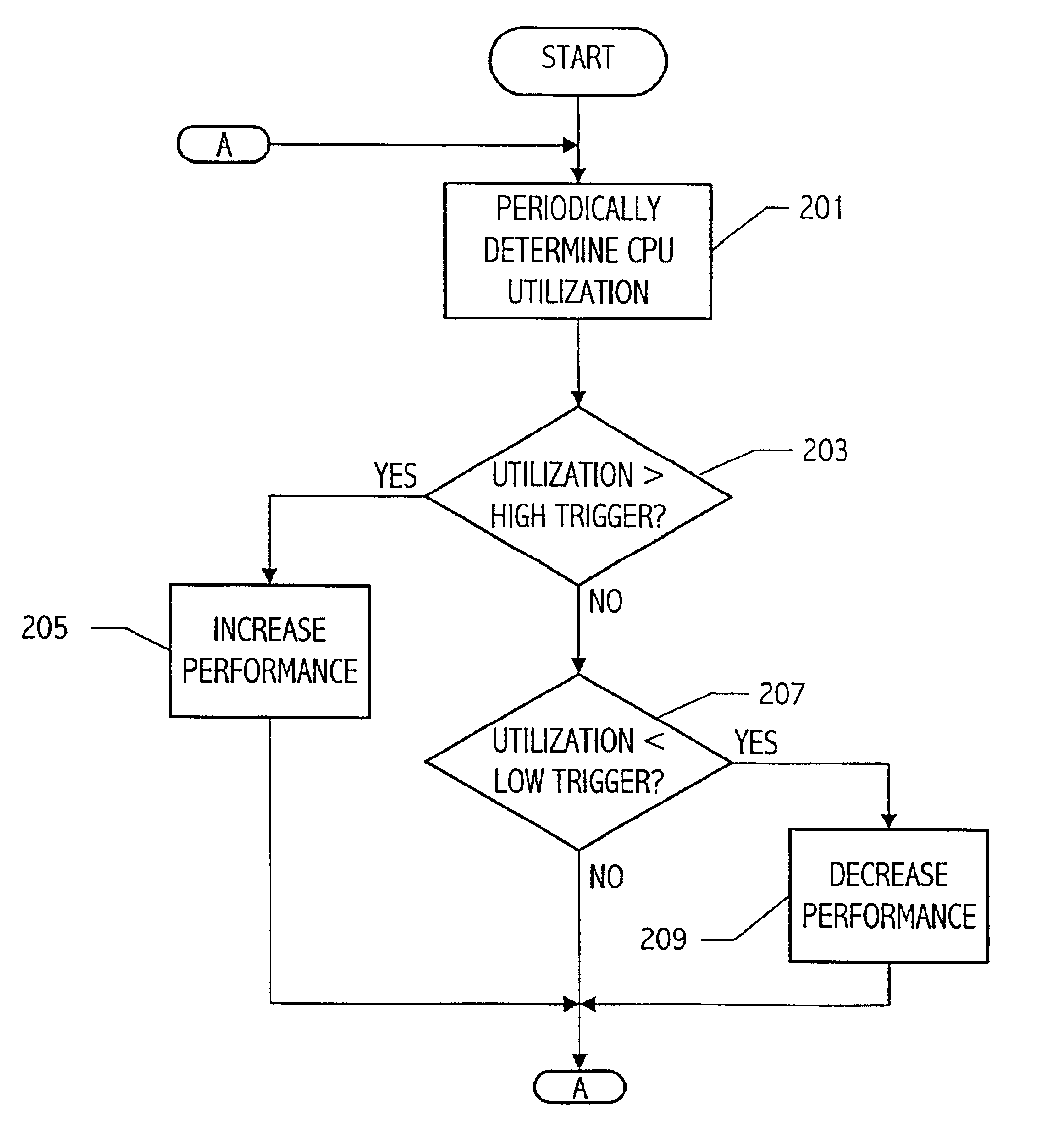

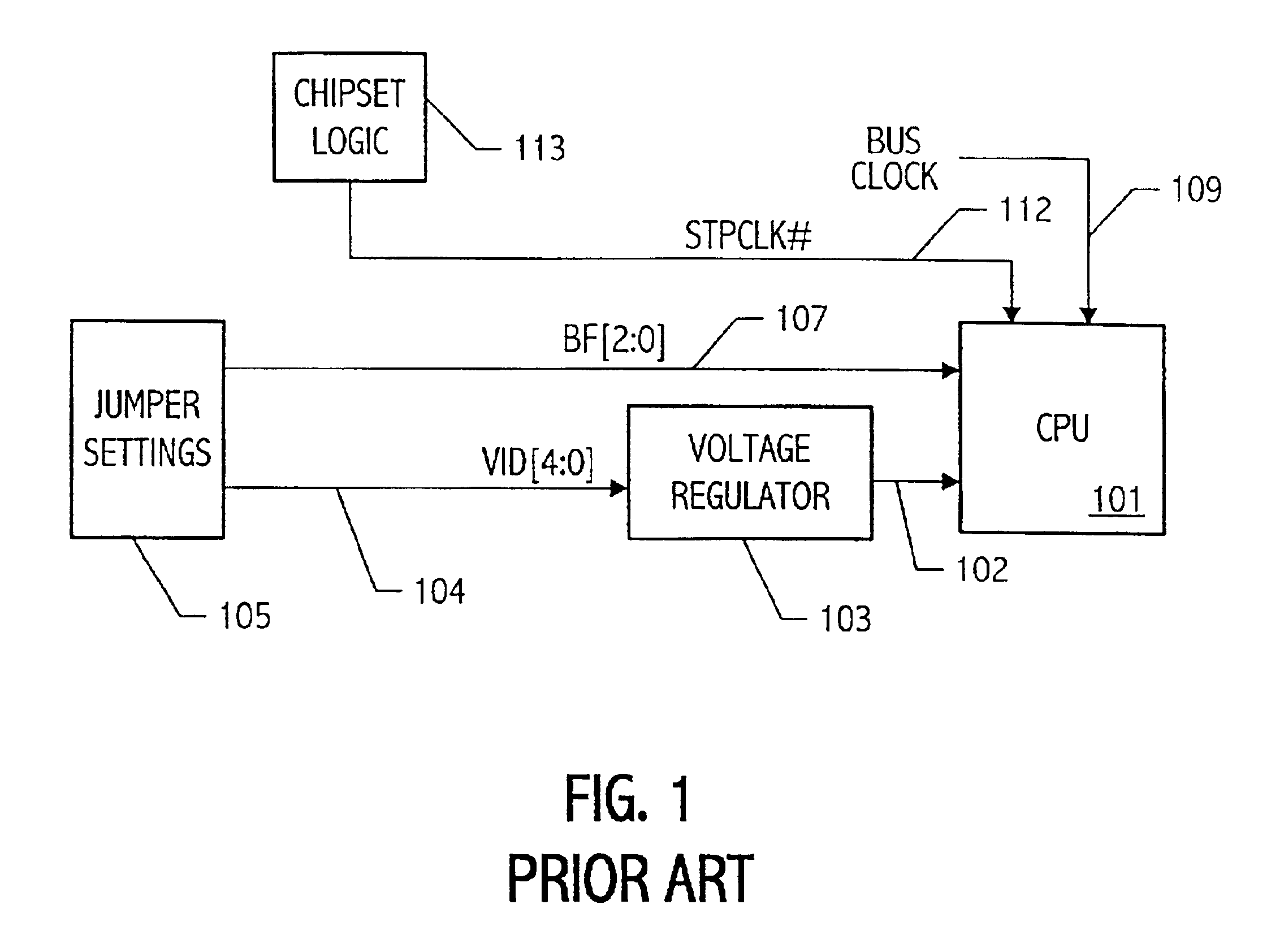

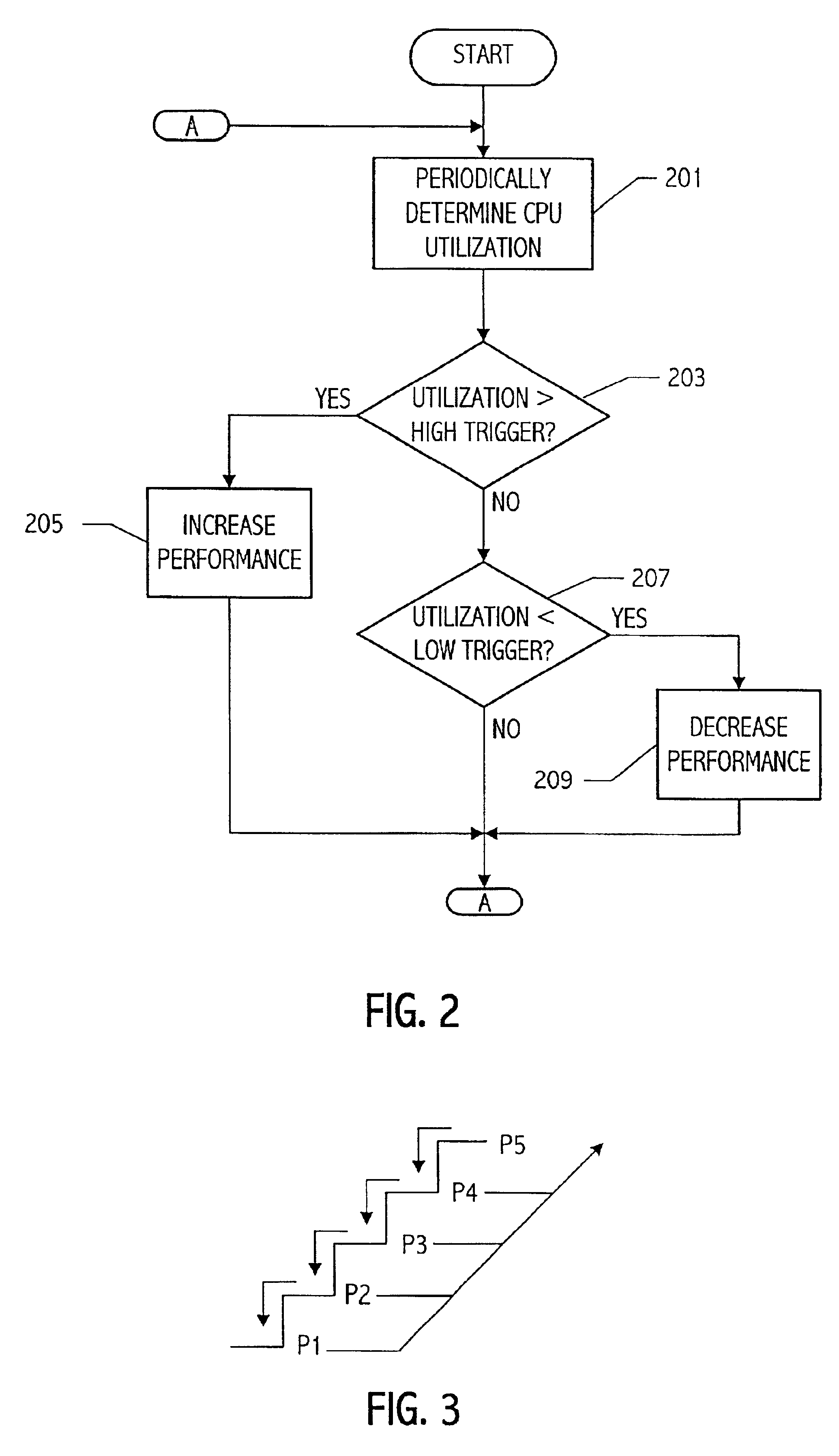

CPU utilization measurement techniques for use in power management

Inactive · US6845456B1 · Eliminate use · Energy efficient ICT · Volume/mass flow measurement · Utilization rate · Power management

A computer system that has multiple performance states periodically obtains utilization information for a plurality of tasks operating on the processor and determines processor utilization according to the utilization information for the plurality of tasks. The system compares the processor utilization to at least one threshold and selectively adjusts a current processor performance state to another performance state according to the comparison.
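
The control loop in this abstract (aggregate per-task utilization into a processor utilization figure, compare it to thresholds, then move to another performance state) might be sketched as follows. The list of performance states, the threshold values and the function names are hypothetical; the abstract does not give concrete numbers.

```python
# Hypothetical performance states, slowest to fastest (MHz values are made up).
P_STATES = [800, 1600, 2400]


def processor_utilization(task_busy_ms: dict[str, float], interval_ms: float) -> float:
    """Sum per-task busy time over the sampling interval, capped at 100%."""
    return min(1.0, sum(task_busy_ms.values()) / interval_ms)


def next_state(current: int, utilization: float,
               up: float = 0.80, down: float = 0.40) -> int:
    """Pick the next index into P_STATES from the utilization comparison."""
    if utilization > up:
        return min(current + 1, len(P_STATES) - 1)   # step to a faster state
    if utilization < down:
        return max(current - 1, 0)                   # step to a slower state
    return current


busy = {"editor": 30.0, "compiler": 60.0}            # ms busy in a 100 ms window
util = processor_utilization(busy, interval_ms=100.0)
print(util, P_STATES[next_state(1, util)])
```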

Owner:GLOBALFOUNDRIES INC

Virtual machine utility computing method and system

Active · US20100199285A1 · Realize automatic adjustment · Complete banking machines · Resource allocation · Utility computing · Resource utilization

An analytics engine receives real-time statistics from a set of virtual machines supporting a line of business (LOB) application. The statistics relate to computing resource utilization and are used by the analytics engine to generate a prediction of demand for the LOB application in order to dynamically control the provisioning of virtual machines to support the LOB application.

Owner:VMWARE INC

Nano-Chinese medicinal biological product and its preparation

A nano-scale biological product made from Chinese medicinal materials, offering a higher biological utilization rate and better targeting, fewer toxic side effects and an improved curative effect, is prepared by producing nanoparticles of the Chinese medicinal materials and then preparing the nano-scale (50-80 nm) biological product.

Owner:陈永丽

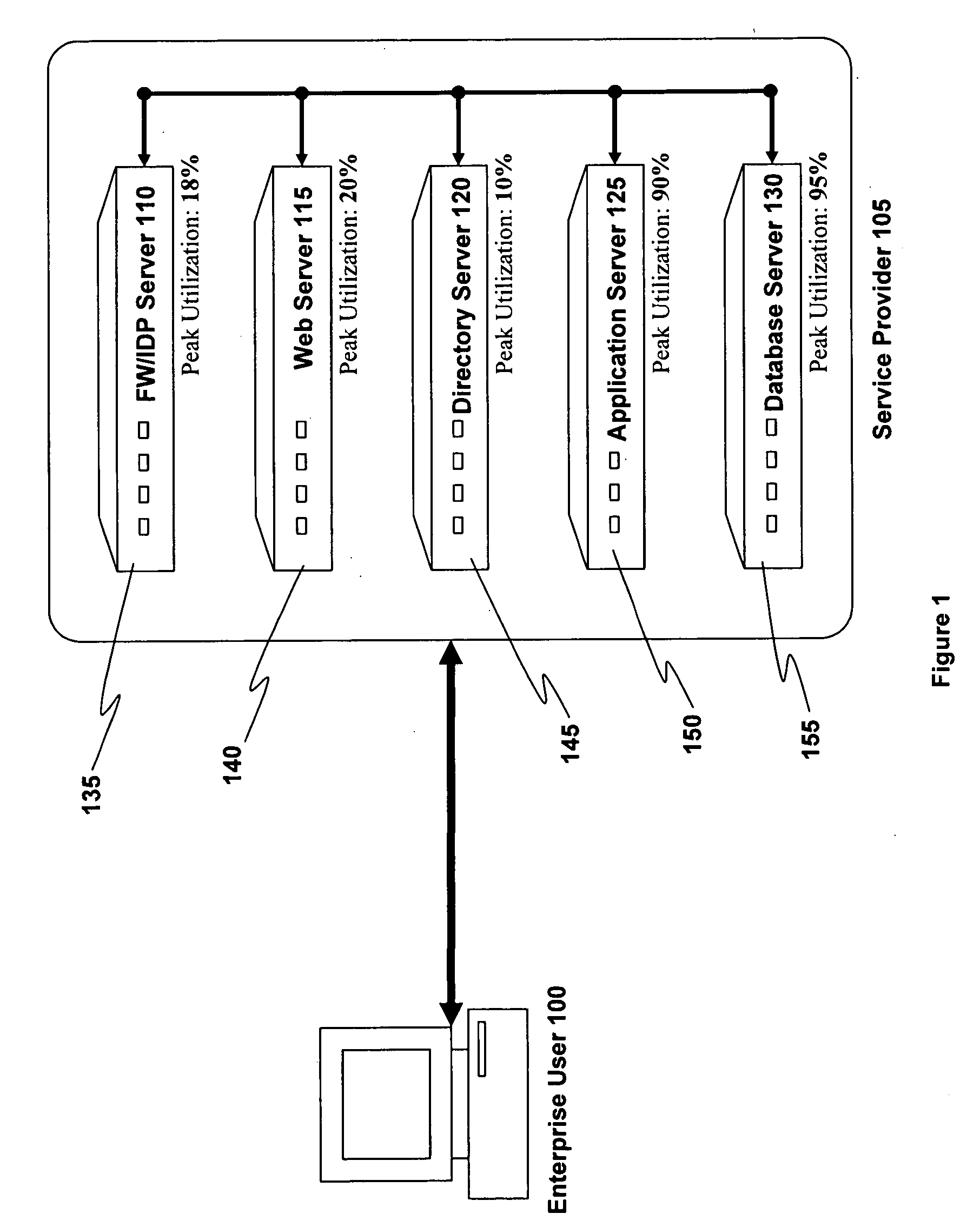

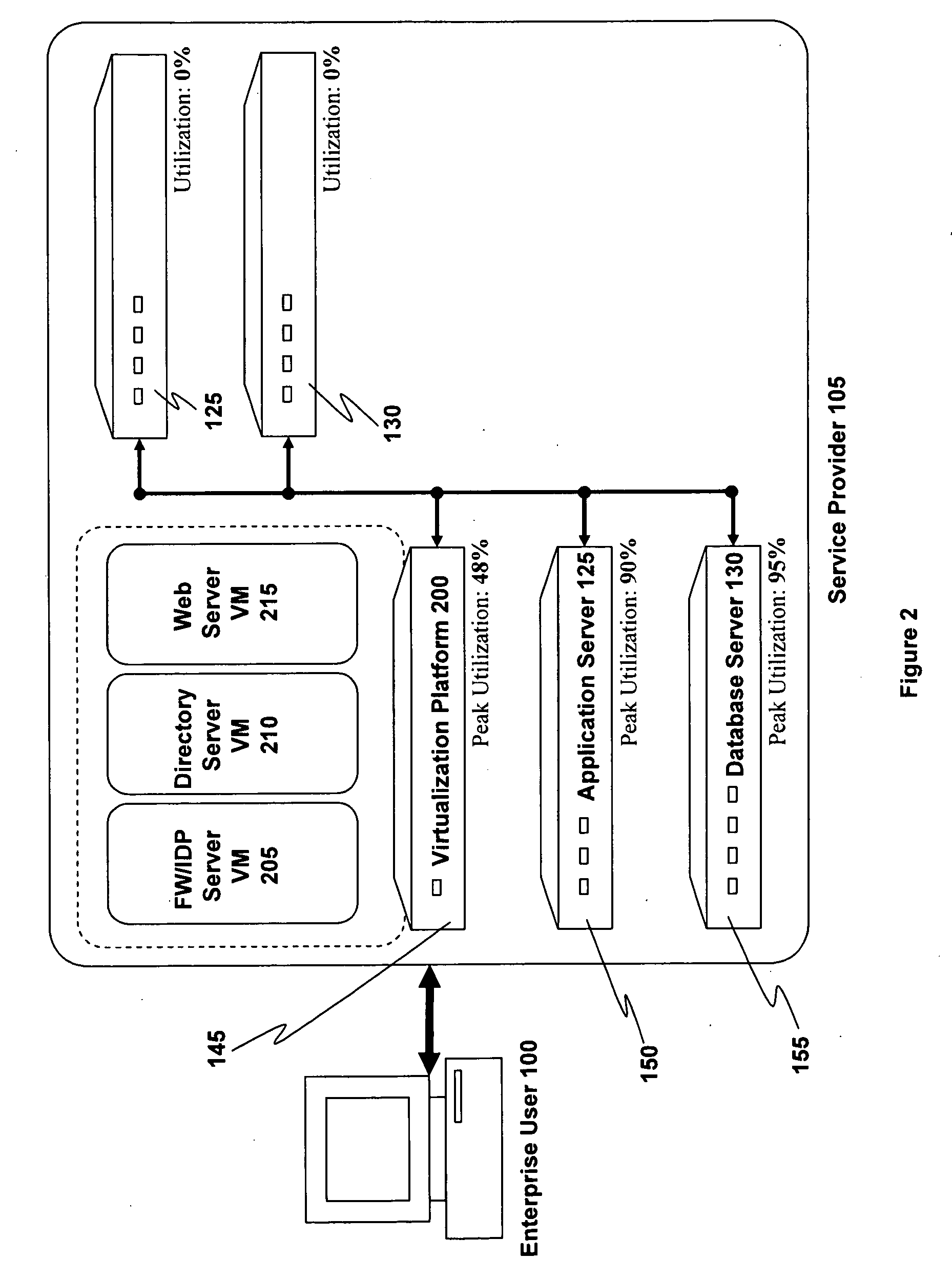

System and method for dynamic server allocation and provisioning

Inactive · US20070250608A1 · Lower cost of capital · Quality improvement · Resource allocation · Digital computer details · Load Shedding · Management tool

A management tool that streamlines the server allocation and provisioning processes within a data center is provided. The system, method, and computer program product divide the server provisioning and allocation into two separate tasks. Provisioning a server is accomplished by generating a fully configured, bootable system image, complete with network address assignments, virtual LAN (VLAN) configuration, load balancing configuration, and the like. System images are stored in a storage repository and are accessible to more than one server. Allocation is accomplished using a switching mechanism which matches each server with an appropriate system image based upon current configuration or requirements of the data center. Thus, real-time provisioning and allocation of servers in the form of automated responses to changing conditions within the data center is possible. The ability to instantly re-provision servers, safely and securely switch under-utilized server capacity to more productive tasks, and improve server utilization is also provided.

Owner:RACEMI

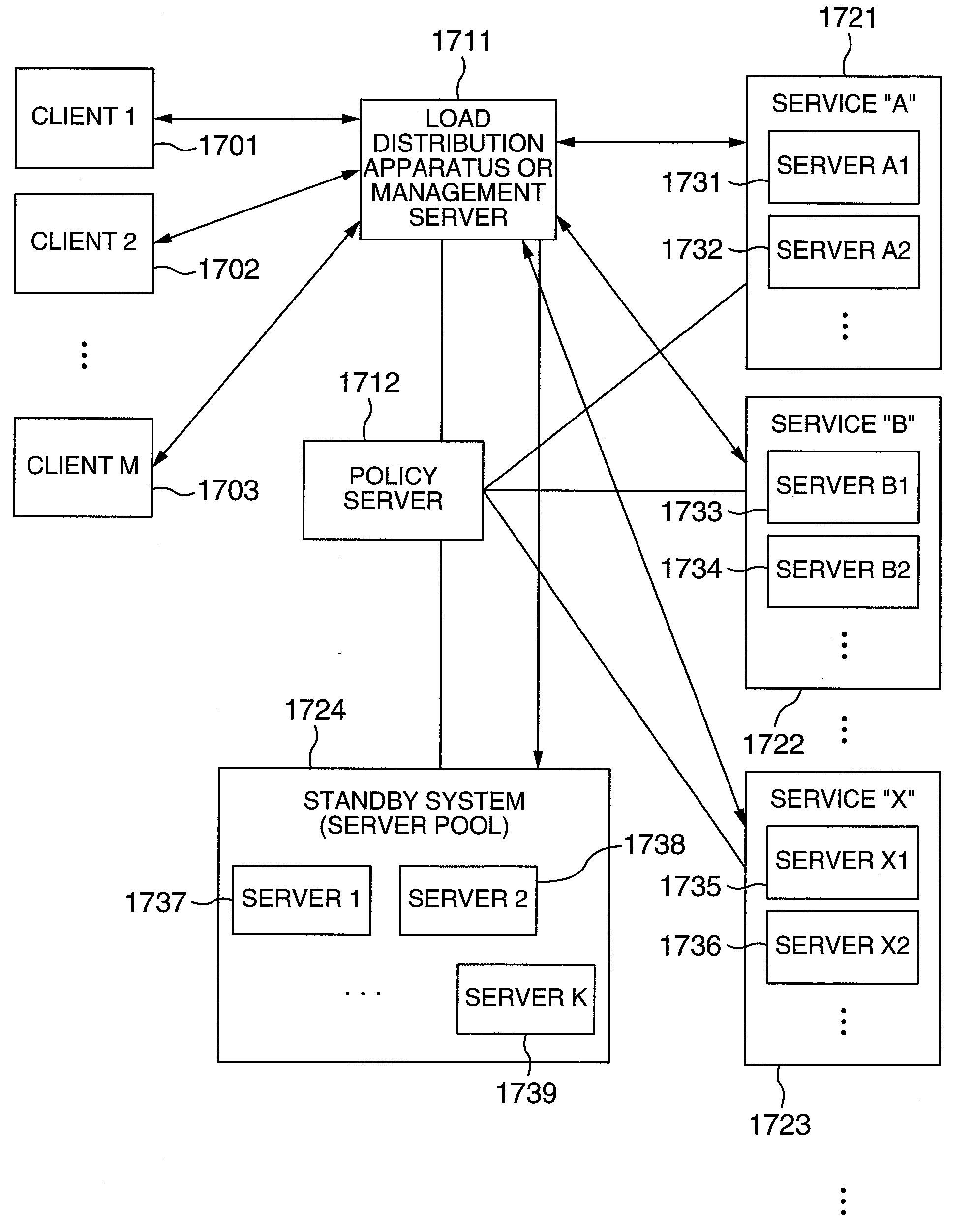

Computer resource distribution method based on prediction

Inactive · US20090113056A1 · Reduce in quantity · Reduce maintenance costs · Resource allocation · Digital computer details · Computer resources · Distribution method

Owner:HITACHI LTD

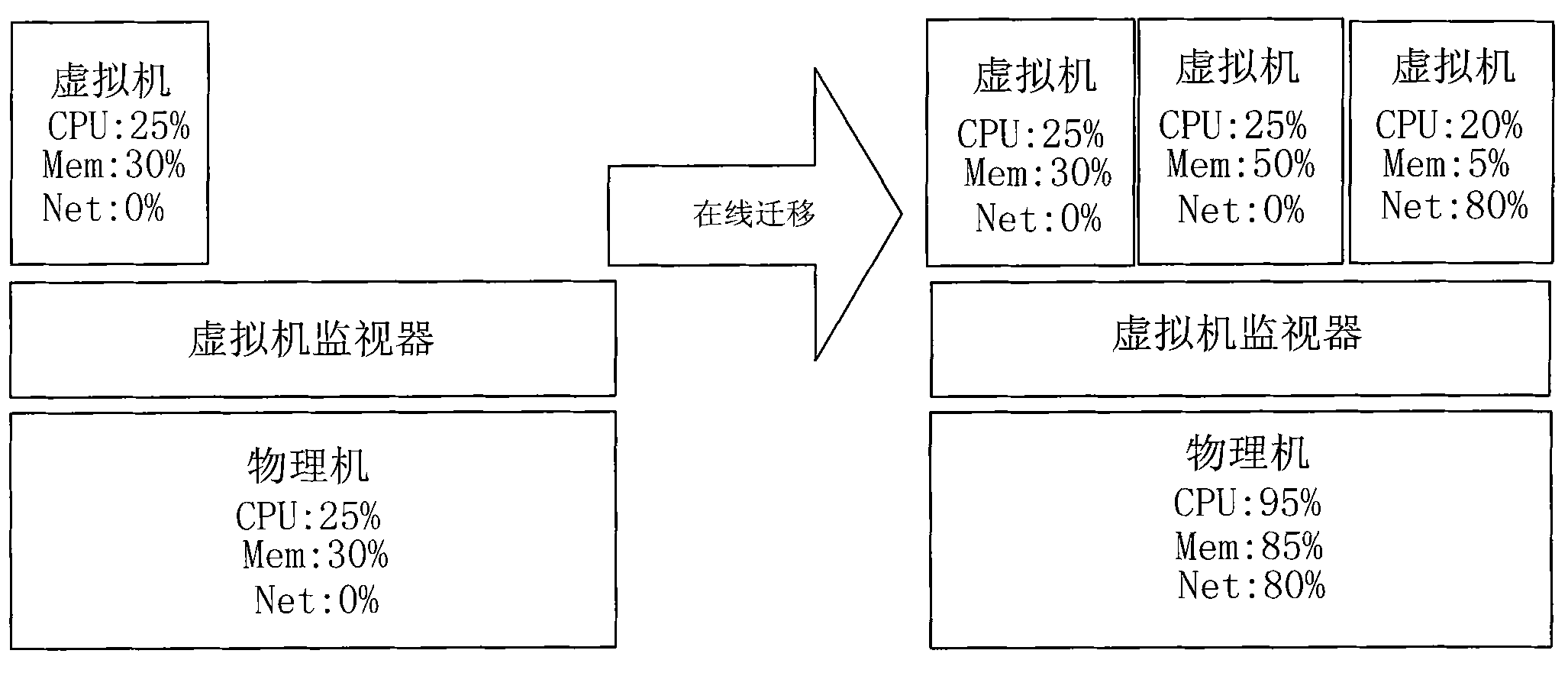

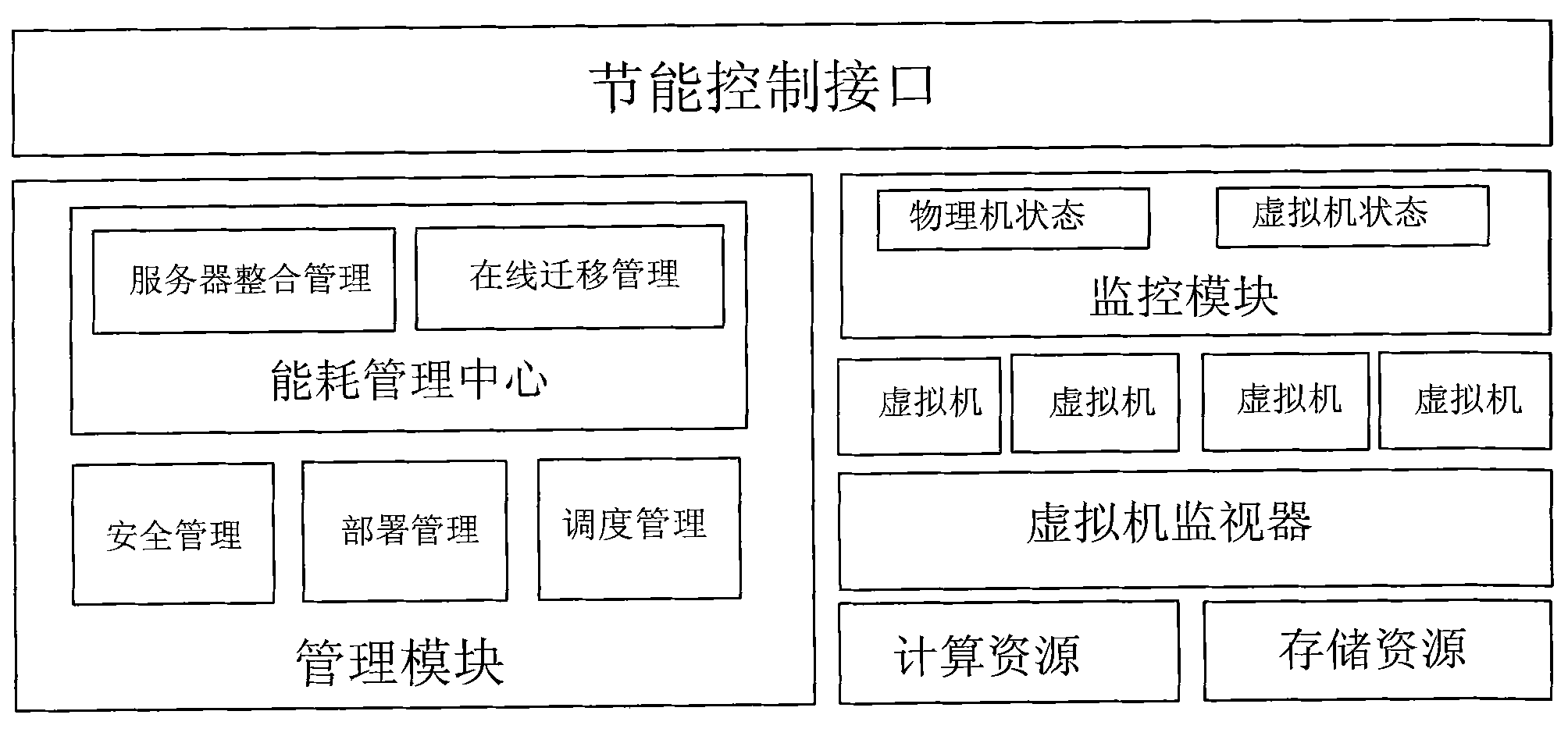

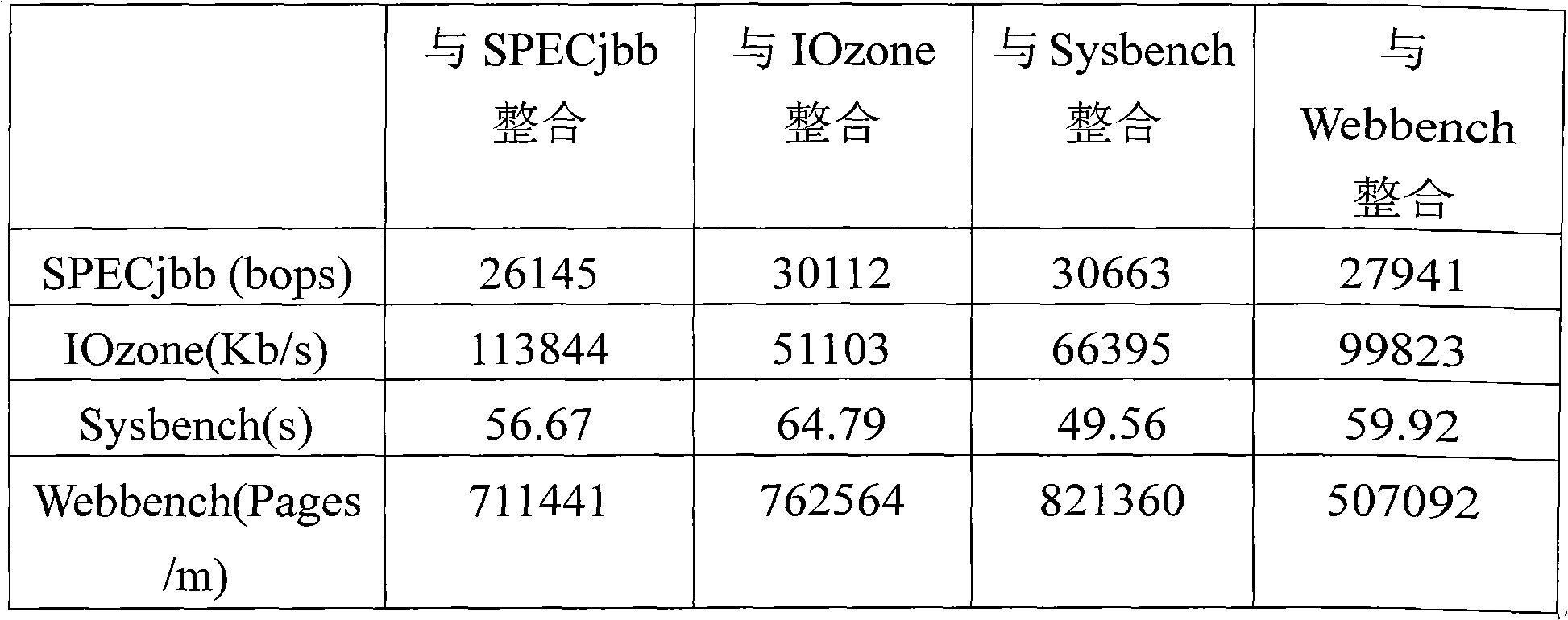

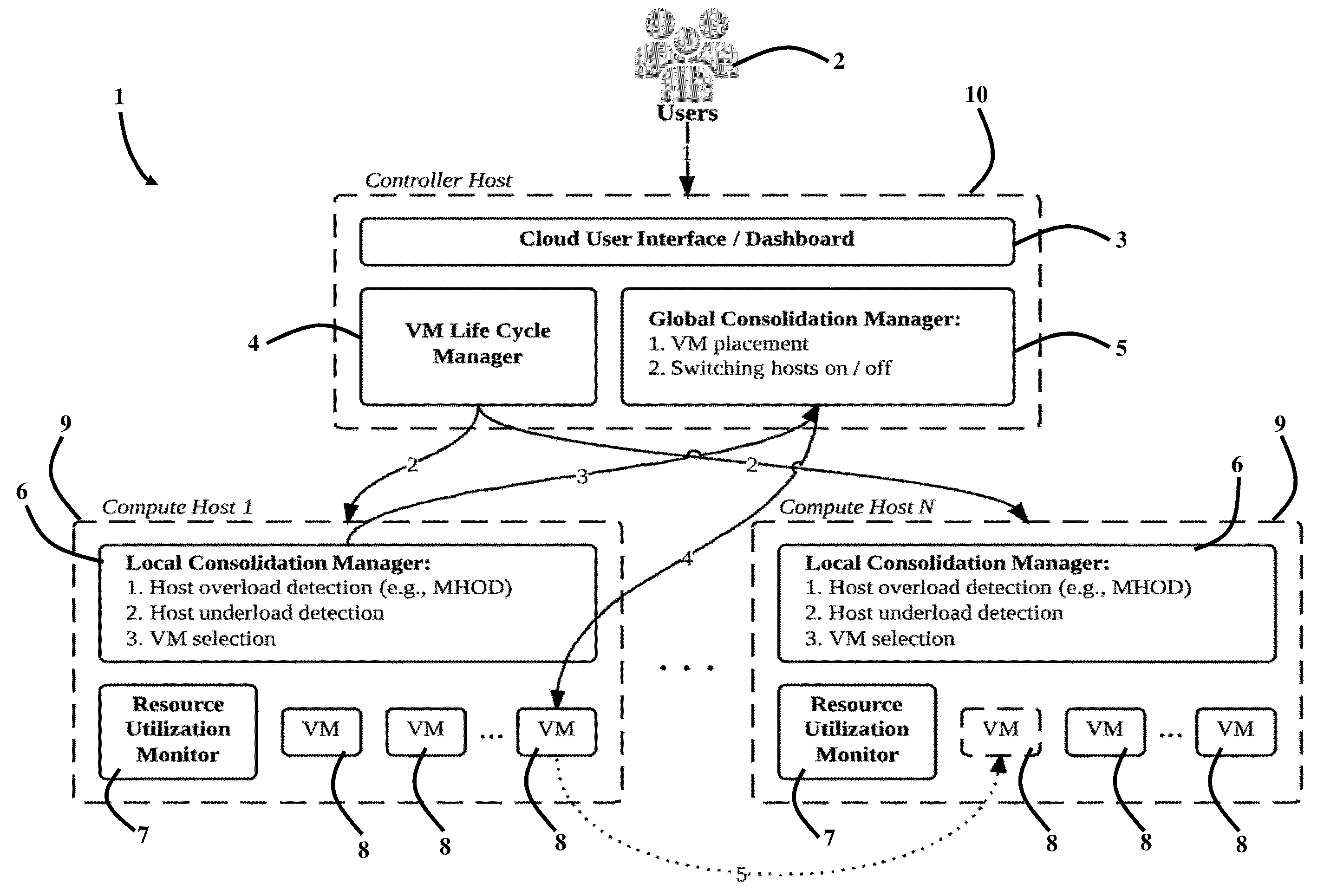

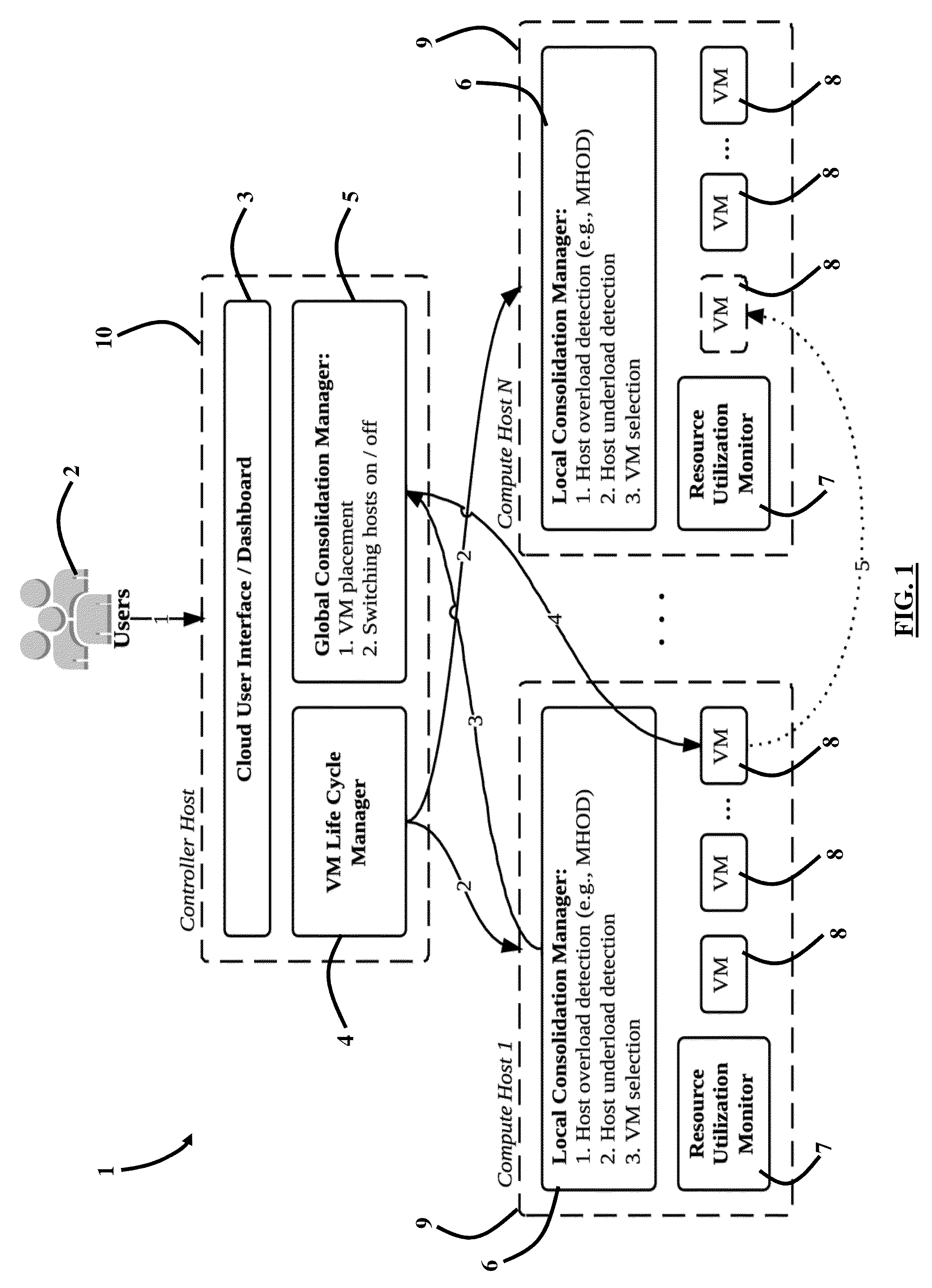

Energy-saving method of cloud data center based on virtual machine migration and load perception integration

Active · CN102096461A · Good technical effect · Automatic Dynamic Reintegration · Energy efficient ICT · Multiprogramming arrangements · Virtualization · Computer architecture

The invention relates to system-level virtualization technology and energy-saving technology in the field of computer system architecture, and discloses an energy-saving method for a cloud data center based on virtual machine migration and load-aware integration. The method comprises the steps of: monitoring the resource utilization rate of the physical machines and virtual machines and the current resource usage of each physical server; dynamically completing the migration and reintegration of virtual machine loads in the cloud data center under the unified coordination and control of a load-aware optimized integration strategy management module and a virtual machine online migration control module; and turning off the physical servers that run without load, so that the overall utilization of server resources is improved and energy is saved. The energy-saving method of the cloud data center based on virtual machine online migration and load-aware integration is thereby effectively realized, the number of physical servers actually required by the cloud data center is reduced, and green energy saving is achieved.

Owner:ZHEJIANG UNIV

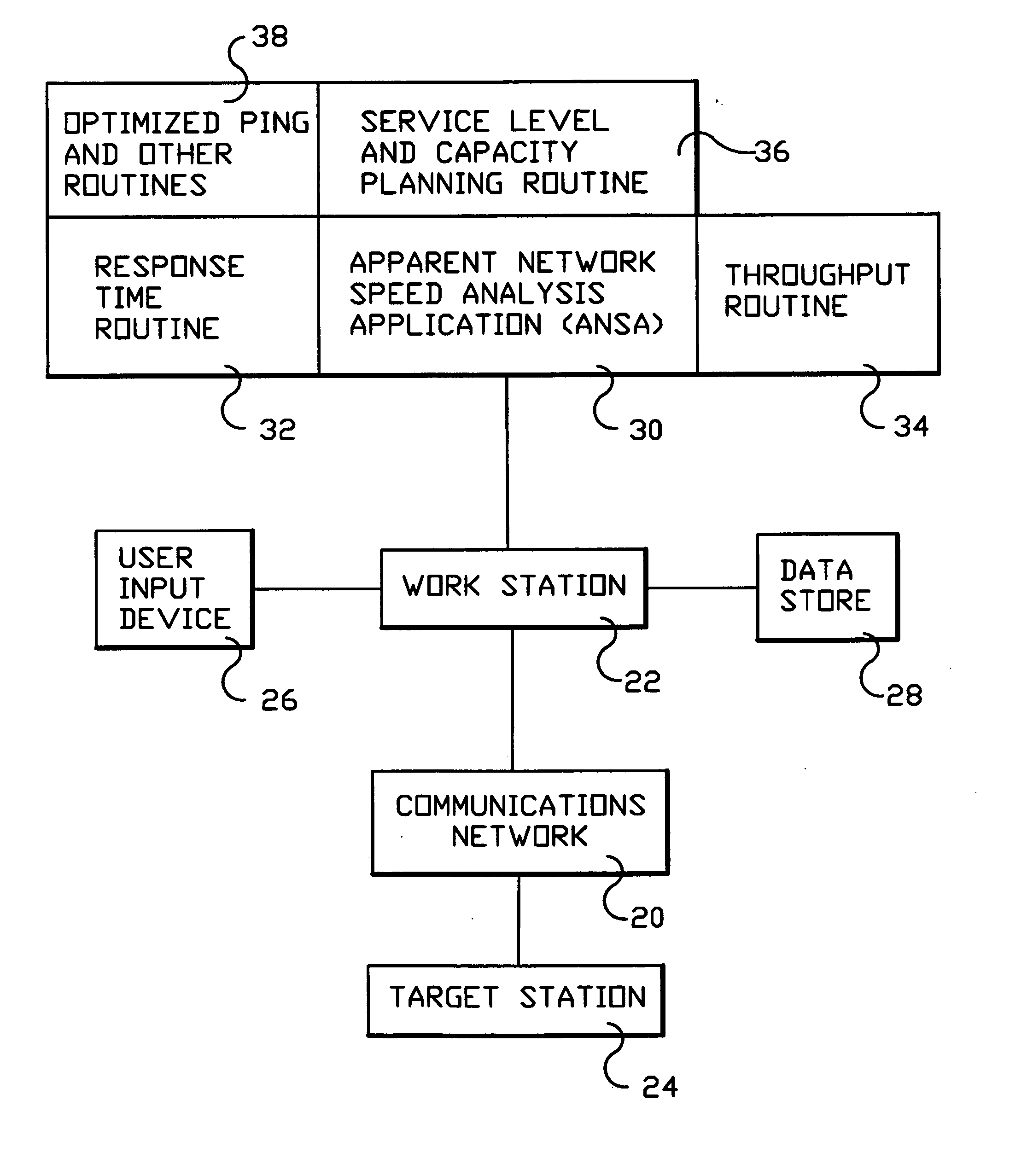

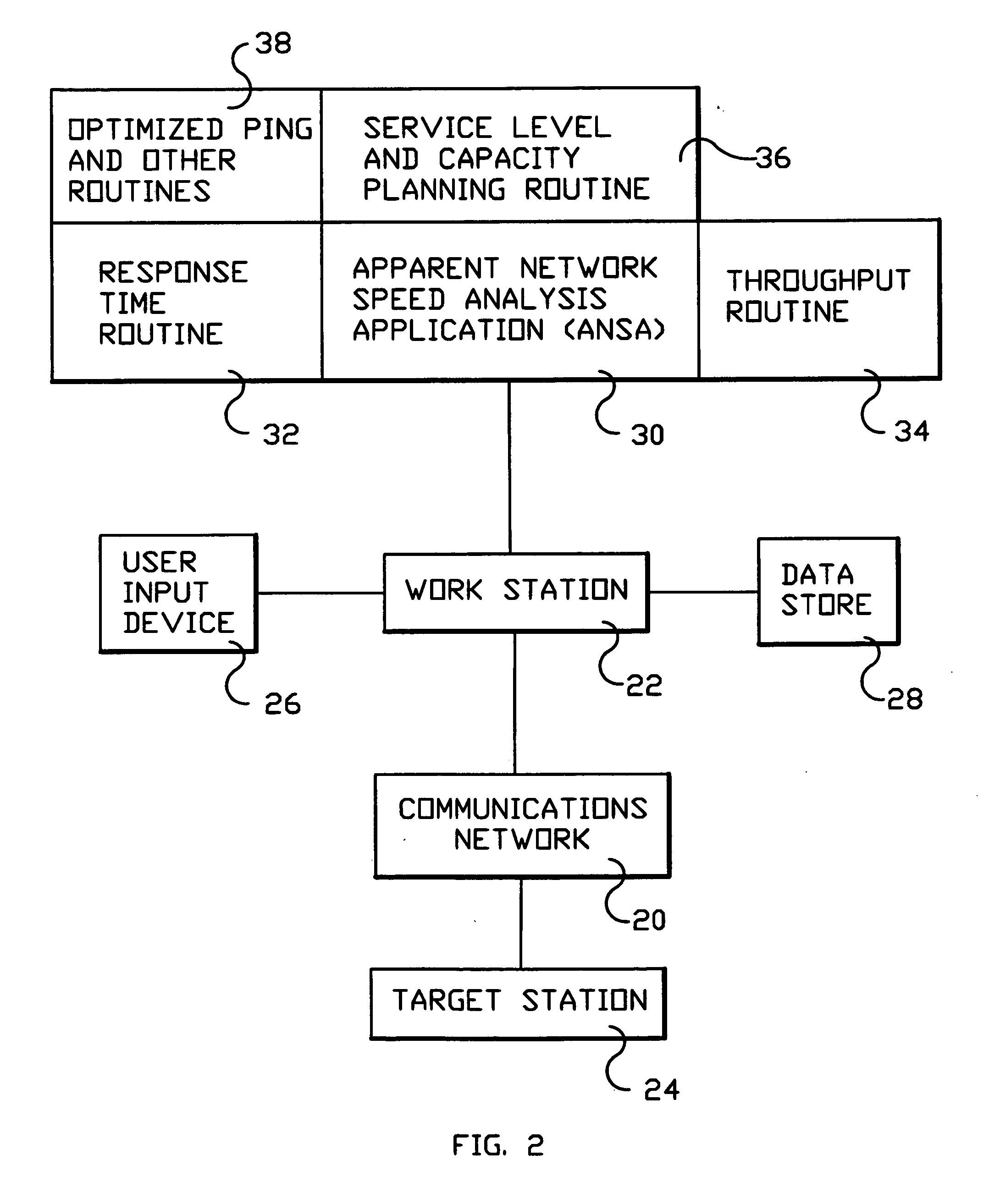

System and method for monitoring performance, analyzing capacity and utilization, and planning capacity for networks and intelligent, network connected processes

Inactive · US20050018611A1 · Error prevention · Frequency-division multiplex details · Total response · Test sample

Analysis of networks and testing and analyzing intelligent, network connected devices. An instantaneous network utilization value is assigned for the worst surviving ping instance of between 90% and 99% (determined proportionately from the ratio of dropped test samples to surviving test samples), and then used to solve for average network message size and average utilization of the network. A plurality of transactions of different types are transmitted across the network to intelligent end systems and the results mathematically evaluated to determine the portion of the total response time contributed by the network and by the end processors; the utilization of the end processor processing subsystems and of the end processor I/O subsystems; the utilization of the end system as a whole; and the utilization of the network and end processors considered as a unitary entity. Steps include determining utilization of the network when test packets are dropped by the network; utilization of intelligent processors and other devices attached to the network when test transactions are dropped, and when not dropped; and response time for remote processes at both the network and processor level.

Owner:IBM CORP

System, Method and Computer Program Product for Energy-Efficient and Service Level Agreement (SLA)-Based Management of Data Centers for Cloud Computing

Active · US20150039764A1 · Increase profit · Reduce energy consumption · Digital computer details · Program control · Service-level agreement · Level of service

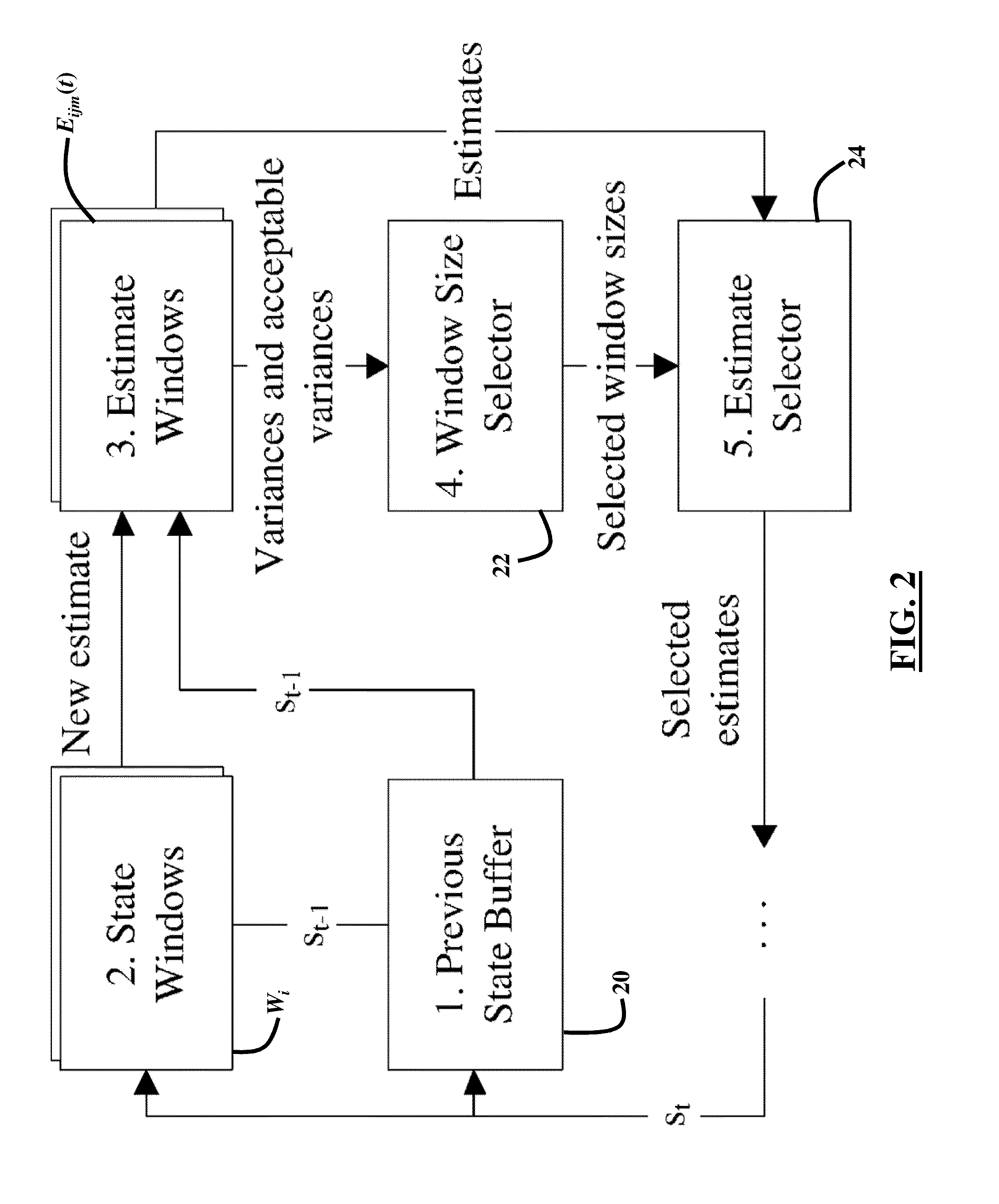

Improving the utilization of physical resources and reducing energy consumption in a cloud data center includes providing a plurality of virtual machines in the cloud data center; periodically reallocating resources of the plurality of virtual machines according to a current resource demand of the plurality of virtual machines in order to minimize a number of active physical servers required to handle a workload of the physical servers; maximizing a mean inter-migration time between virtual machine migrations under the quality of service requirement based on a Markov chain model; and using a multisize sliding window workload estimation process for a non-stationary workload to maximize the mean inter-migration time.

Owner:MANJRASOFT PTY LTD

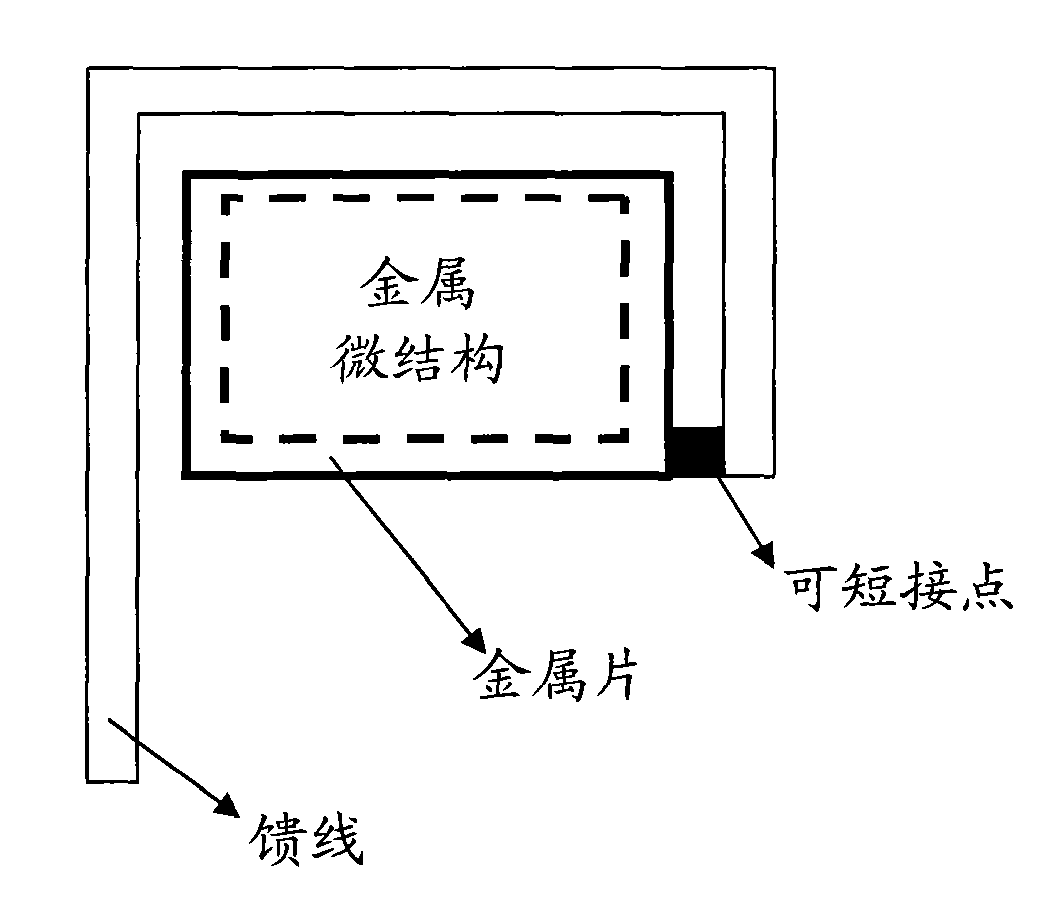

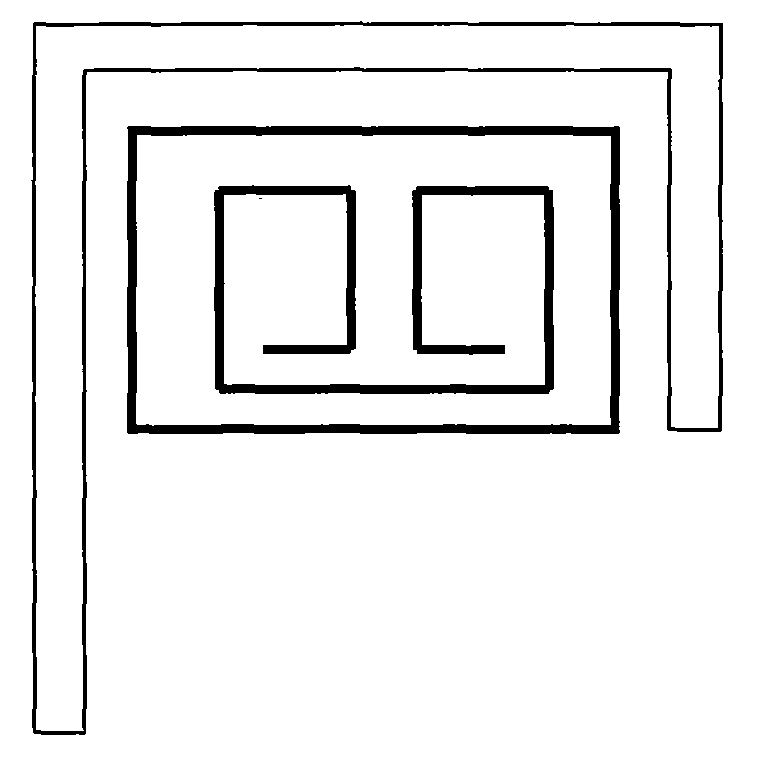

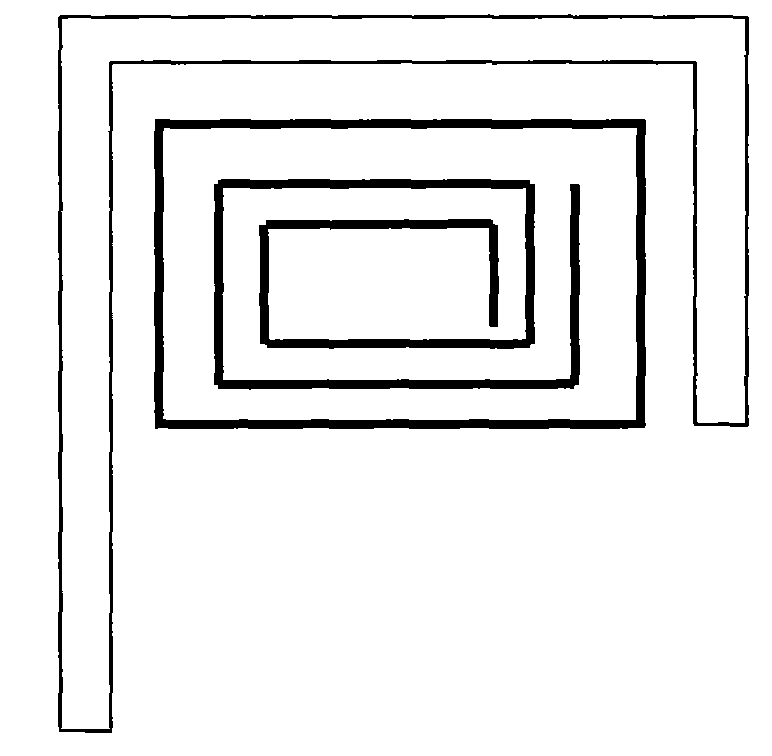

Monopole radio frequency antenna

Inactive · CN101667680A · Improving Impedance Matching · Reduce the impact · Elongated active element feed · Coupling · Metal sheet

The invention discloses a monopole radio frequency antenna comprising a metal sheet, a feeder line and a medium for the placement of the metal sheet and the feeder line. The feeder line is fed into the metal sheet through a coupling mode. The monopole radio frequency antenna has the advantages of small size, simple machining, low cost, high utilization rate of an antenna radiation area, easy matching design of a multimode antenna, high interference resisting capability and the like.

Owner:深圳大鹏光启科技有限公司

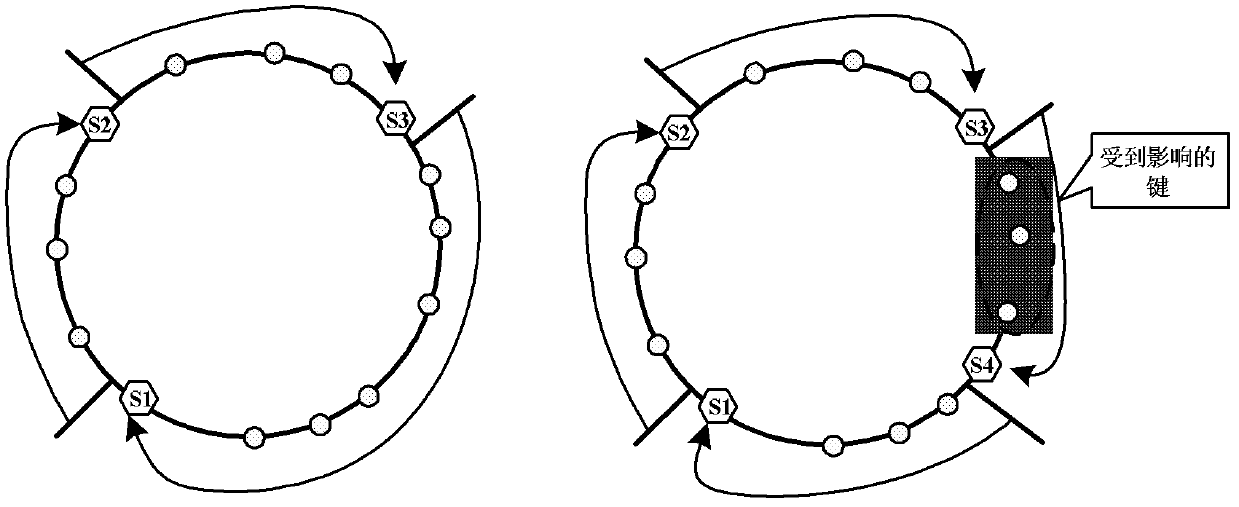

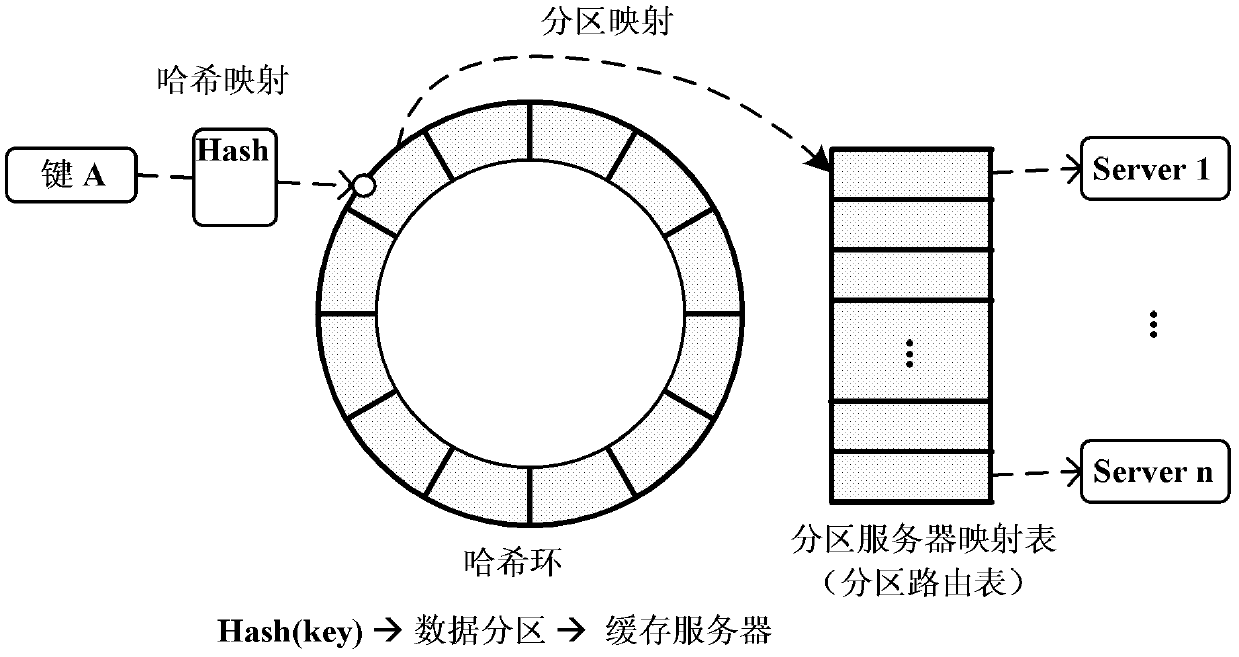

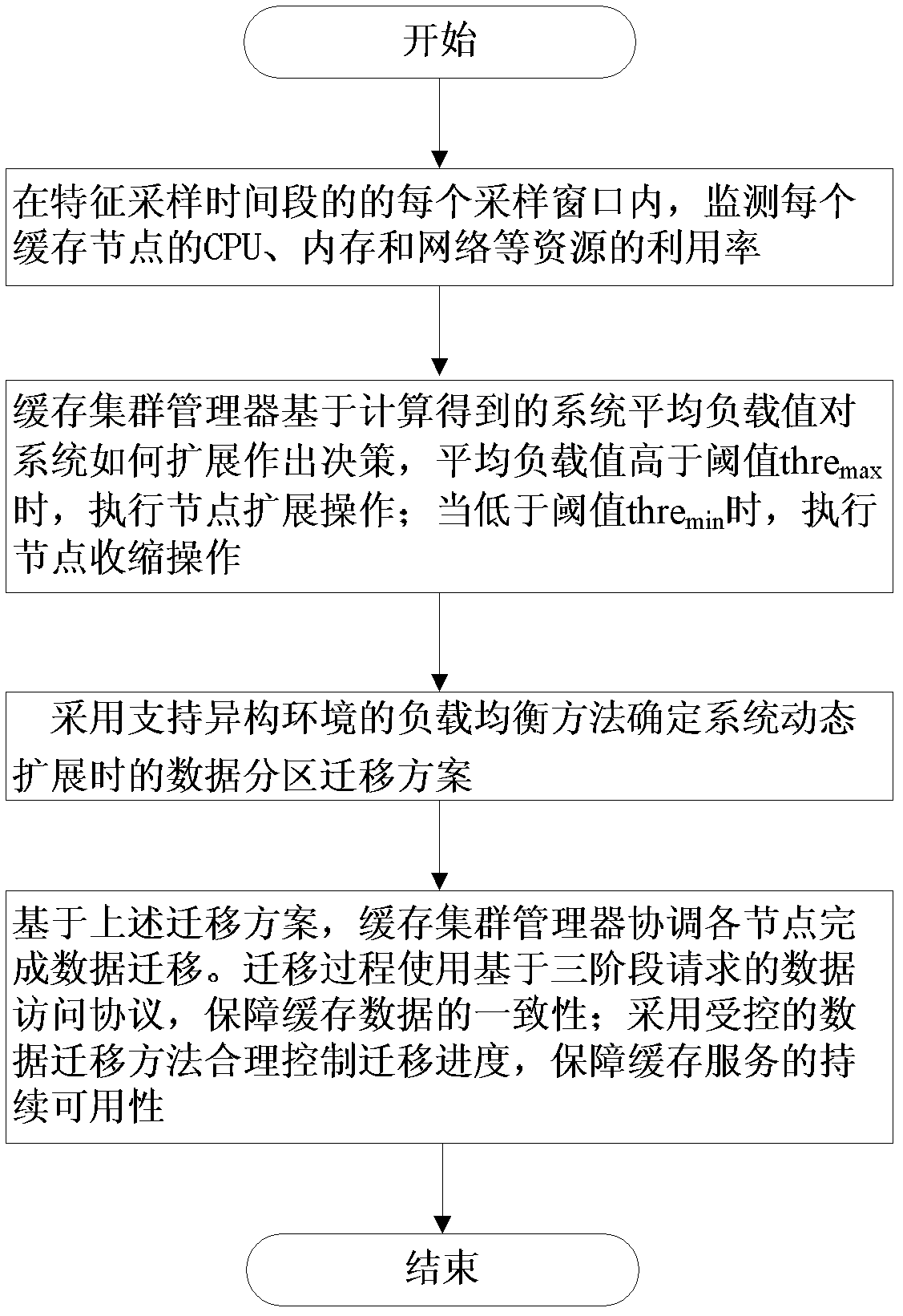

Distributed type dynamic cache expanding method and system supporting load balancing

Inactive · CN102244685A · Reduce overhead · Improve performance · Data switching networks · Traffic capacity · Cache server

The invention discloses a distributed dynamic cache expanding method and system supporting load balancing, which belong to the technical field of software. The method comprises the steps of: 1) each cache server monitoring its own resource utilization rate at regular intervals; 2) each cache server calculating its own weighted load value Li from the currently monitored resource utilization rate and sending Li to a cache clustering manager; 3) the cache clustering manager calculating the current average load value of the distributed cache system from the weighted load values Li, executing an expansion operation when the current average load value is higher than a threshold thremax, and executing a shrink operation when the current average load value is lower than a set threshold thremin. The system comprises the cache servers, a cache client side and the cache clustering manager, wherein the cache servers are connected with the cache client side and the cache clustering manager through the network. The invention ensures the uniform distribution of network traffic among the cache nodes, optimizes the utilization rate of system resources, and solves the problems of ensuring data consistency and continuous availability of services.
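
The three steps in this abstract can be sketched in a few lines. The resource names, the weights used for the weighted load value Li, and the numeric values of `thremax` and `thremin` are assumptions for illustration; the abstract names the thresholds but does not give the weighting formula or any values.

```python
# Assumed weights for combining per-resource utilization into a load value Li.
WEIGHTS = {"cpu": 0.5, "mem": 0.3, "net": 0.2}


def weighted_load(utilization: dict[str, float]) -> float:
    """Step 2: a cache server's weighted load value Li (utilizations in [0, 1])."""
    return sum(WEIGHTS[name] * utilization[name] for name in WEIGHTS)


def cluster_action(loads: list[float], thremax: float = 0.8,
                   thremin: float = 0.3) -> str:
    """Step 3: the manager compares the average load with both thresholds."""
    average = sum(loads) / len(loads)
    if average > thremax:
        return "expand"    # add cache servers
    if average < thremin:
        return "shrink"    # remove cache servers
    return "hold"


reports = [
    {"cpu": 0.9, "mem": 0.8, "net": 0.7},   # made-up monitoring samples (step 1)
    {"cpu": 0.9, "mem": 0.9, "net": 0.9},
]
loads = [weighted_load(r) for r in reports]
print(cluster_action(loads))   # expand
```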

Owner:济南君安泰投资集团有限公司

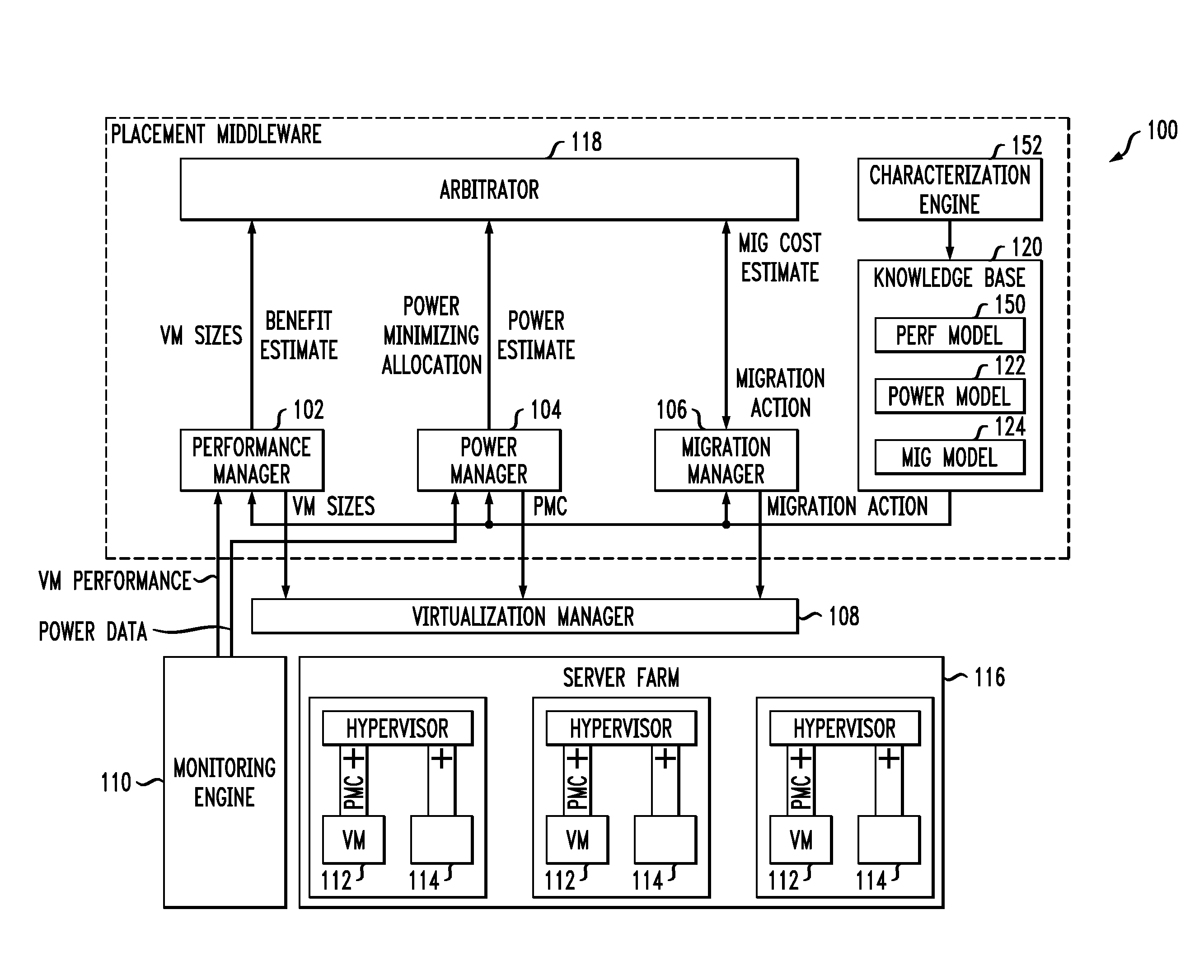

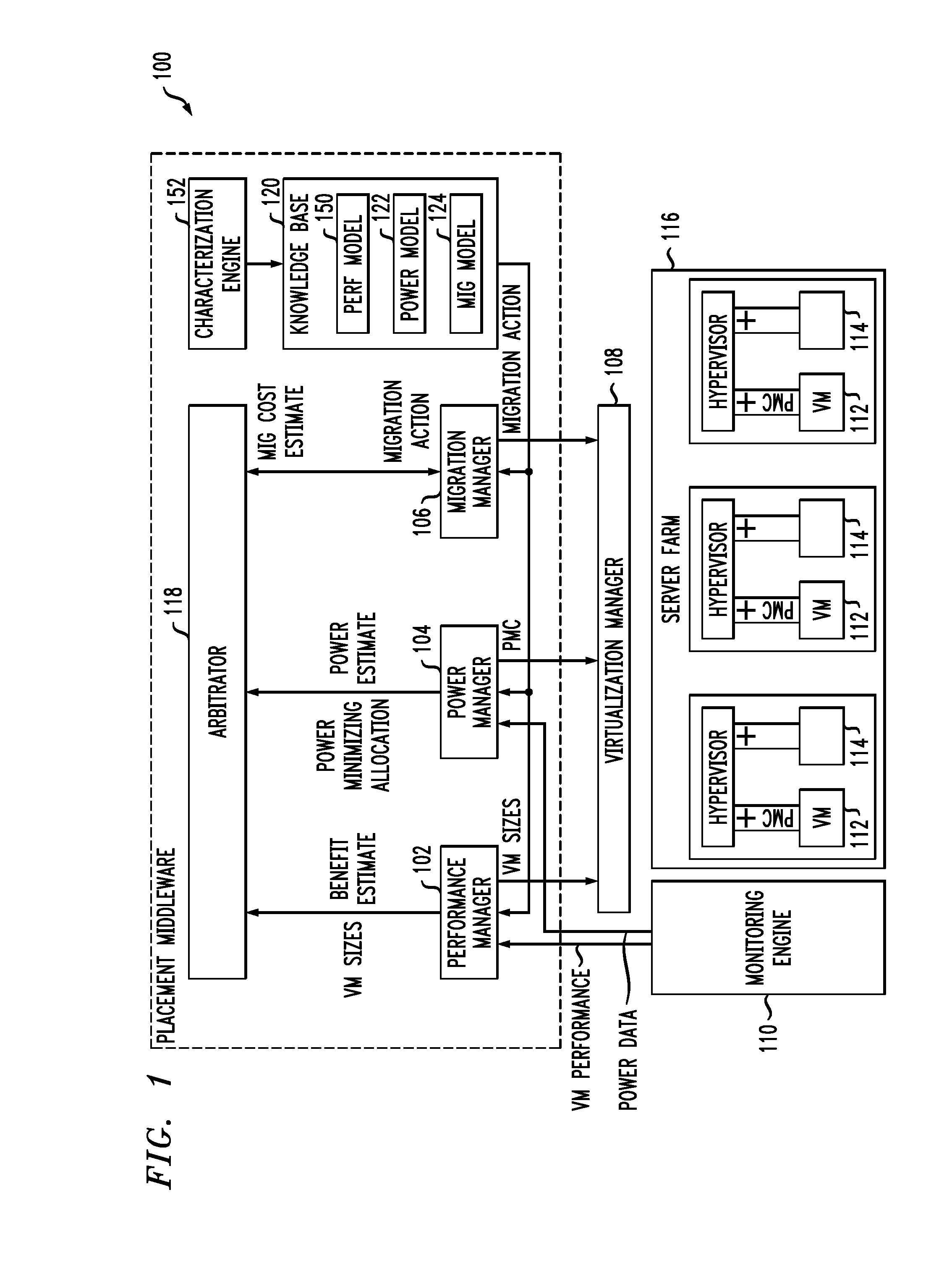

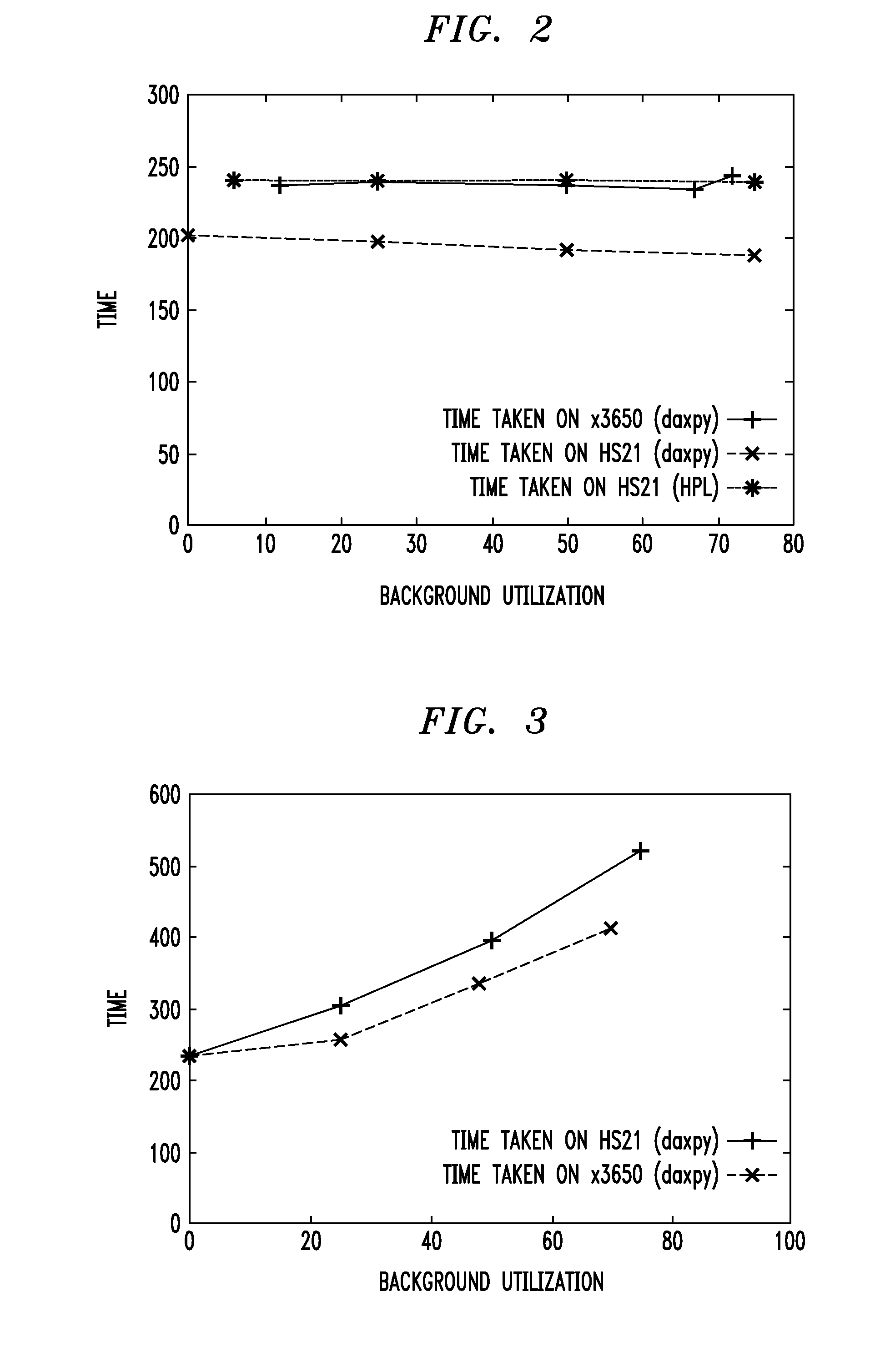

Techniques for placing applications in heterogeneous virtualized systems while minimizing power and migration cost

Active · US20100180275A1 · Minimizing migration · Minimize power · Volume/mass flow measurement · Power supply for data processing · Virtualization · N application

N applications are placed on M virtualized servers having power management capability. A time horizon is divided into a plurality of time windows, and, for each given one of the windows, a placement of the N applications is computed, taking into account power cost, migration cost, and performance benefit. The migration cost refers to cost to migrate from a first virtualized server to a second virtualized server for the given one of the windows. The N applications are placed onto the M virtualized servers, for each of the plurality of time windows, in accordance with the placement computed in the computing step for each of the windows. In an alternative aspect, power cost and performance benefit, but not migration cost, are taken into account; there are a plurality of virtual machines; and the computing step includes, for each of the windows, determining a target utilization for each of the servers based on a power model for each given one of the servers; picking a given one of the servers with a least power increase per unit increase in capacity, until capacity has been allocated to fit all the virtual machines; and employing a first fit decreasing bin packing technique to compute placement of the applications on the virtualized servers.
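
The abstract's alternative aspect ends with a first fit decreasing bin packing step. A minimal sketch of that heuristic alone is shown below; it takes per-server capacities as given (in the abstract these would follow from each server's target utilization) and does not model power cost or migration cost. The function name and the integer percentage units are assumptions for the example.

```python
def first_fit_decreasing(demands: dict[str, int],
                         capacities: list[int]) -> dict[str, int]:
    """Place each item on the first server with enough remaining capacity,
    considering items from largest to smallest demand.

    Returns a mapping of item name to server index.
    """
    remaining = list(capacities)
    placement: dict[str, int] = {}
    for name, demand in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        for index, free in enumerate(remaining):
            if demand <= free:
                remaining[index] -= demand
                placement[name] = index
                break
        else:
            raise ValueError(f"no server can host {name} (demand {demand})")
    return placement


# Demands and capacities as percentages of one server (made-up numbers).
vms = {"vm1": 50, "vm2": 70, "vm3": 20, "vm4": 40}
print(first_fit_decreasing(vms, capacities=[100, 100]))
# {'vm2': 0, 'vm1': 1, 'vm4': 1, 'vm3': 0}
```

Sorting by decreasing demand places the hardest-to-fit items first, which typically needs fewer servers than taking items in arrival order.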

Owner:IBM CORP

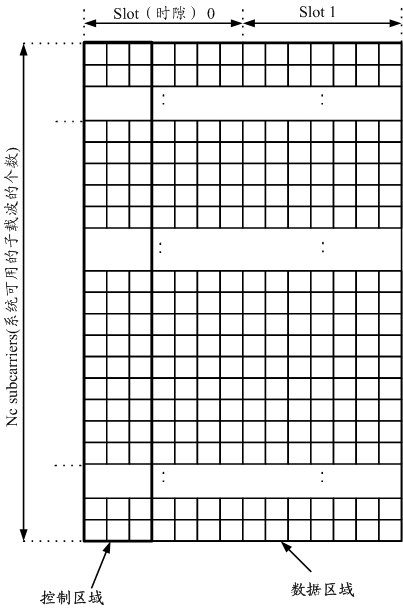

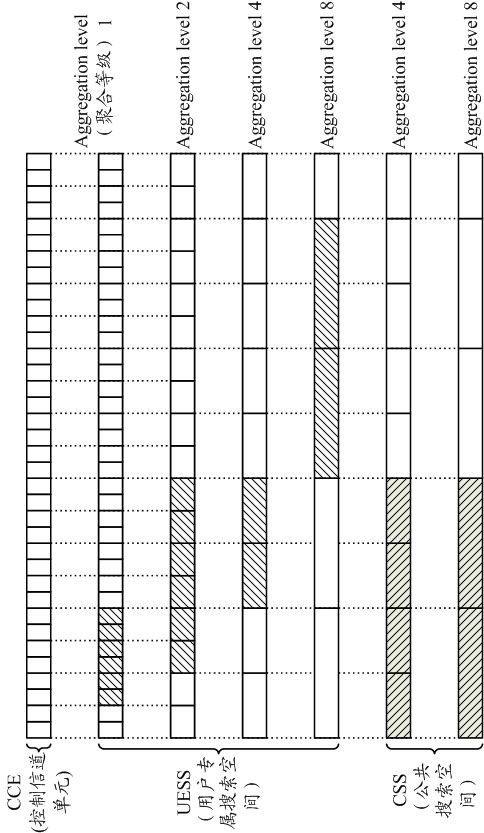

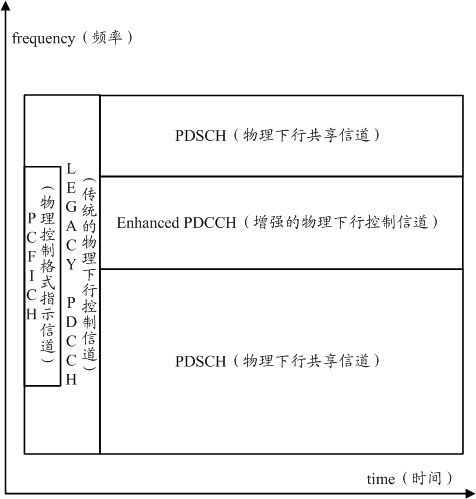

Method for receiving and transmitting information on physical downlink control channel and equipment thereof

Inactive · CN102170703A · Improve resource utilization · Increase capacity · Wireless communication · Control channel · User equipment

The invention discloses a method for receiving and transmitting information on a physical downlink control channel, and equipment thereof. The method comprises the following steps: a base station indicates the resource position of an enhanced physical downlink control channel cluster to user equipment; the base station transmits scheduling information to the user equipment at the resource position of the enhanced physical downlink control channel cluster; the user equipment determines the resource position of the enhanced physical downlink control channel cluster according to the indication of the base station; and the user equipment carries out blind detection at the resource position of the enhanced physical downlink control channel cluster to receive the scheduling information transmitted to it by the base station. The invention can effectively increase the capacity of the physical downlink control channel and, at the same time, effectively improve the resource utilization rate of the enhanced physical downlink control channel.

Owner:CHINA ACAD OF TELECOMM TECH

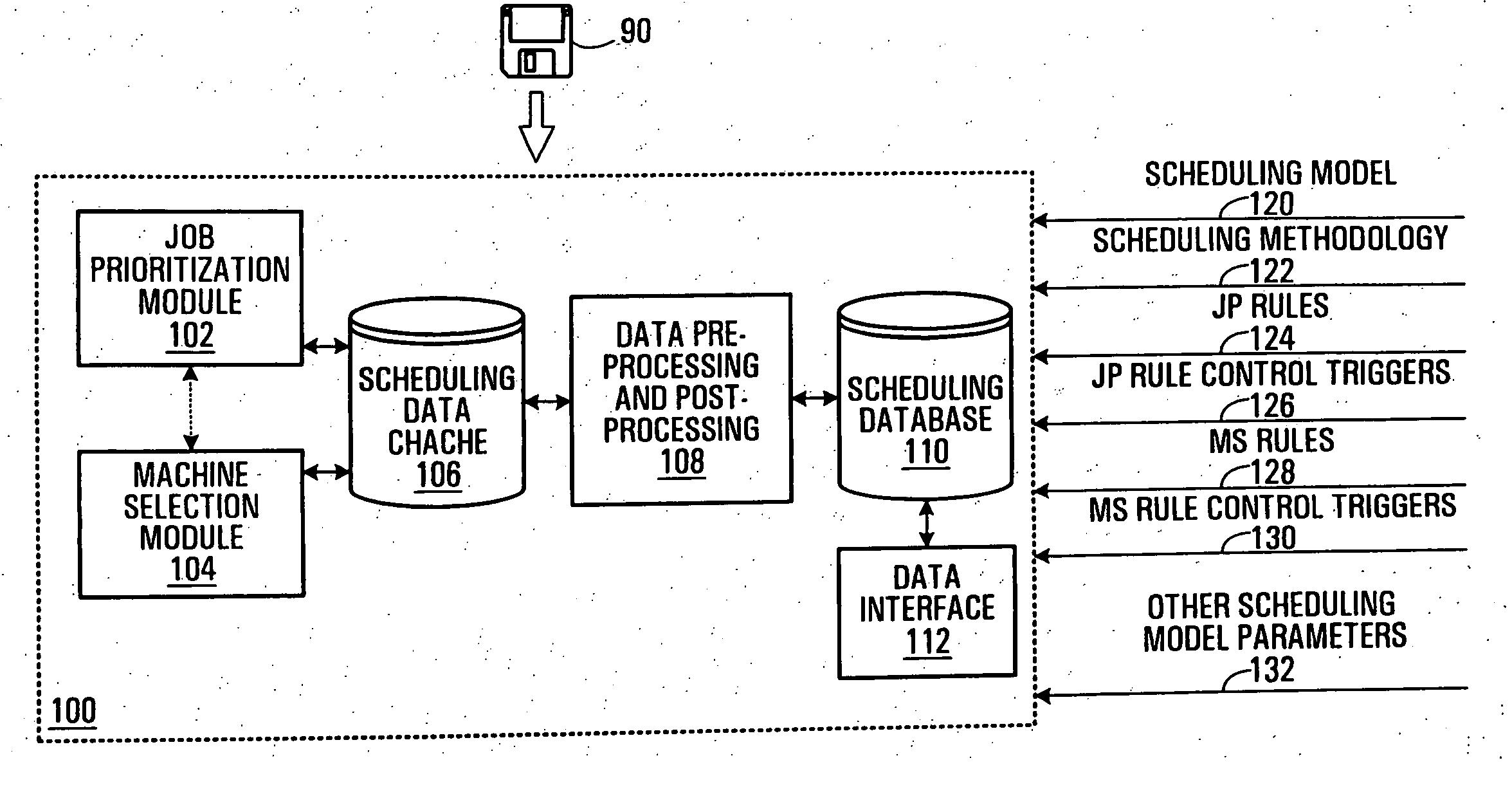

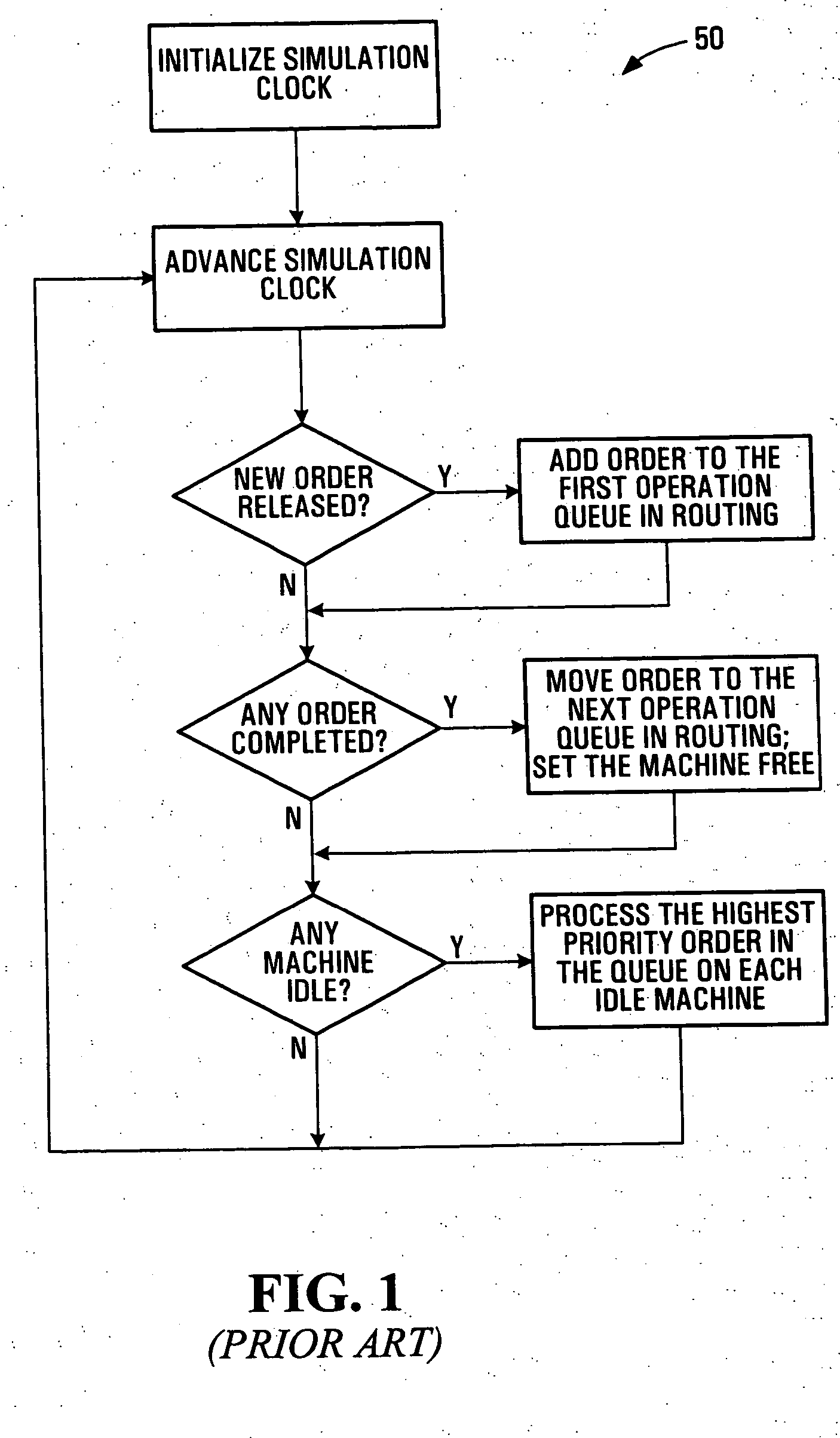

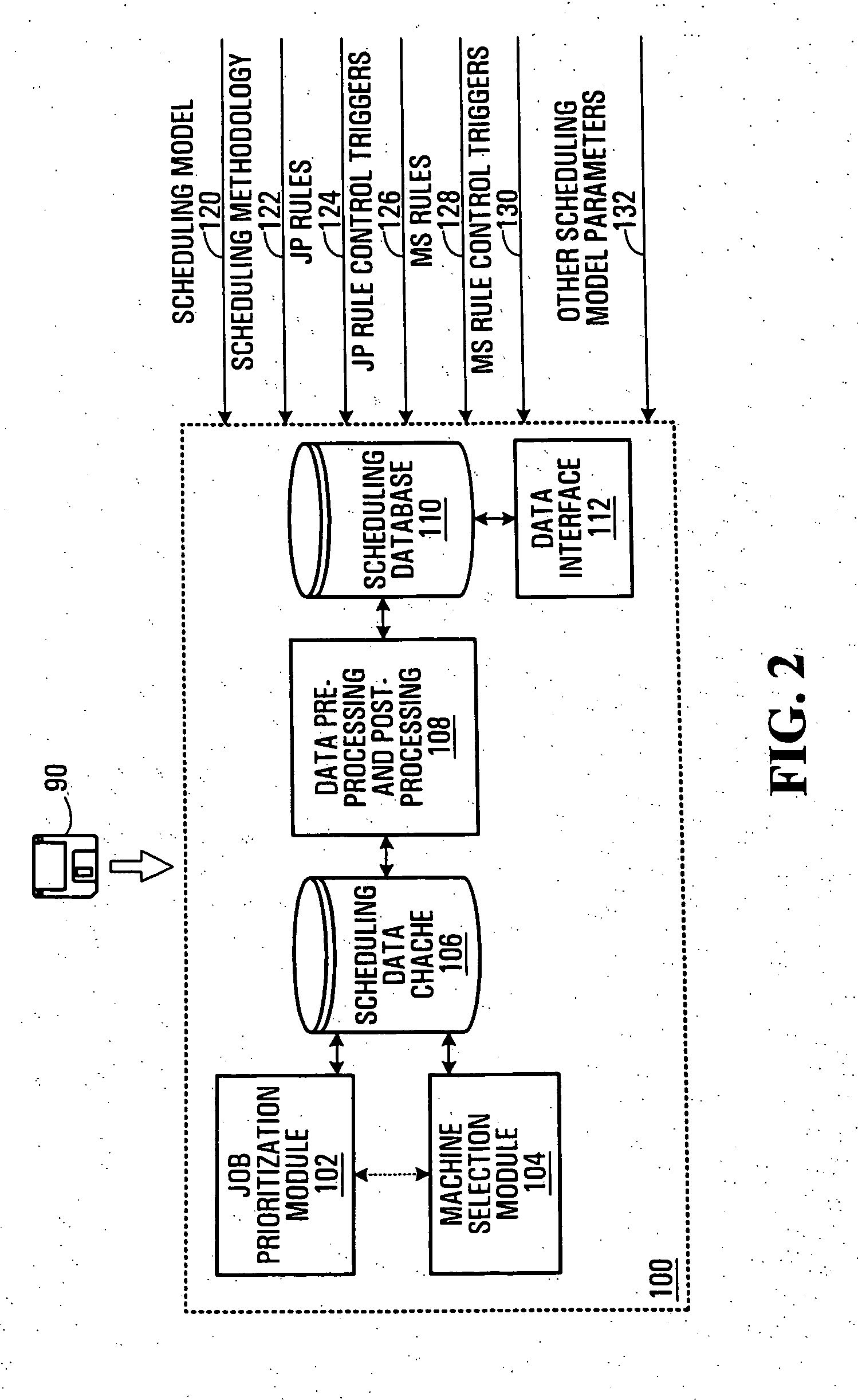

Finite capacity scheduling using job prioritization and machine selection

Inactive · US20050154625A1 · Improve machine utilization · Resources · Special data processing applications · Machine selection · Machine utilization

In a method, device, and computer-readable medium for finite capacity scheduling, heuristic rules are applied in two integrated stages: Job Prioritization and Machine Selection. During Job Prioritization (“JP”), jobs are prioritized based on a set of JP rules which are machine independent. During Machine Selection (“MS”), jobs are scheduled for execution at machines that are deemed to be best suited based on a set of MS rules. The two-stage approach allows scheduling goals to be achieved for performance measures relating to both jobs and machines. For example, machine utilization may be improved while product cycle time objectives are still met. Two user-configurable options, namely scheduling model (job shop or flow shop) and scheduling methodology (forward, backward, or bottleneck), govern the scheduling process. A memory may store a three-dimensional linked list data structure for use in scheduling work orders for execution at machines assigned to work centers.
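
The two-stage structure described here (machine-independent Job Prioritization, then Machine Selection) can be shown with a small forward-scheduling sketch. The specific rules used, earliest due date for prioritization and earliest-available machine for selection, are assumptions picked for the example; the abstract only says that sets of JP and MS heuristic rules are applied.

```python
def schedule(jobs: list[tuple[str, int, int]],
             machines: list[str]) -> list[tuple[str, str, int, int]]:
    """Two-stage forward scheduling.

    jobs: (name, duration, due_date) tuples.
    Returns (job, machine, start, end) tuples in scheduling order.
    """
    free_at = {machine: 0 for machine in machines}
    plan = []
    # Stage 1, Job Prioritization: earliest due date first (assumed JP rule).
    for name, duration, _due in sorted(jobs, key=lambda job: job[2]):
        # Stage 2, Machine Selection: machine that frees up first (assumed MS rule).
        machine = min(free_at, key=free_at.get)
        start = free_at[machine]
        free_at[machine] = start + duration
        plan.append((name, machine, start, start + duration))
    return plan


jobs = [("A", 4, 10), ("B", 2, 5), ("C", 3, 7)]
for row in schedule(jobs, ["M1", "M2"]):
    print(row)
```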

Owner:AGENCY FOR SCI TECH & RES

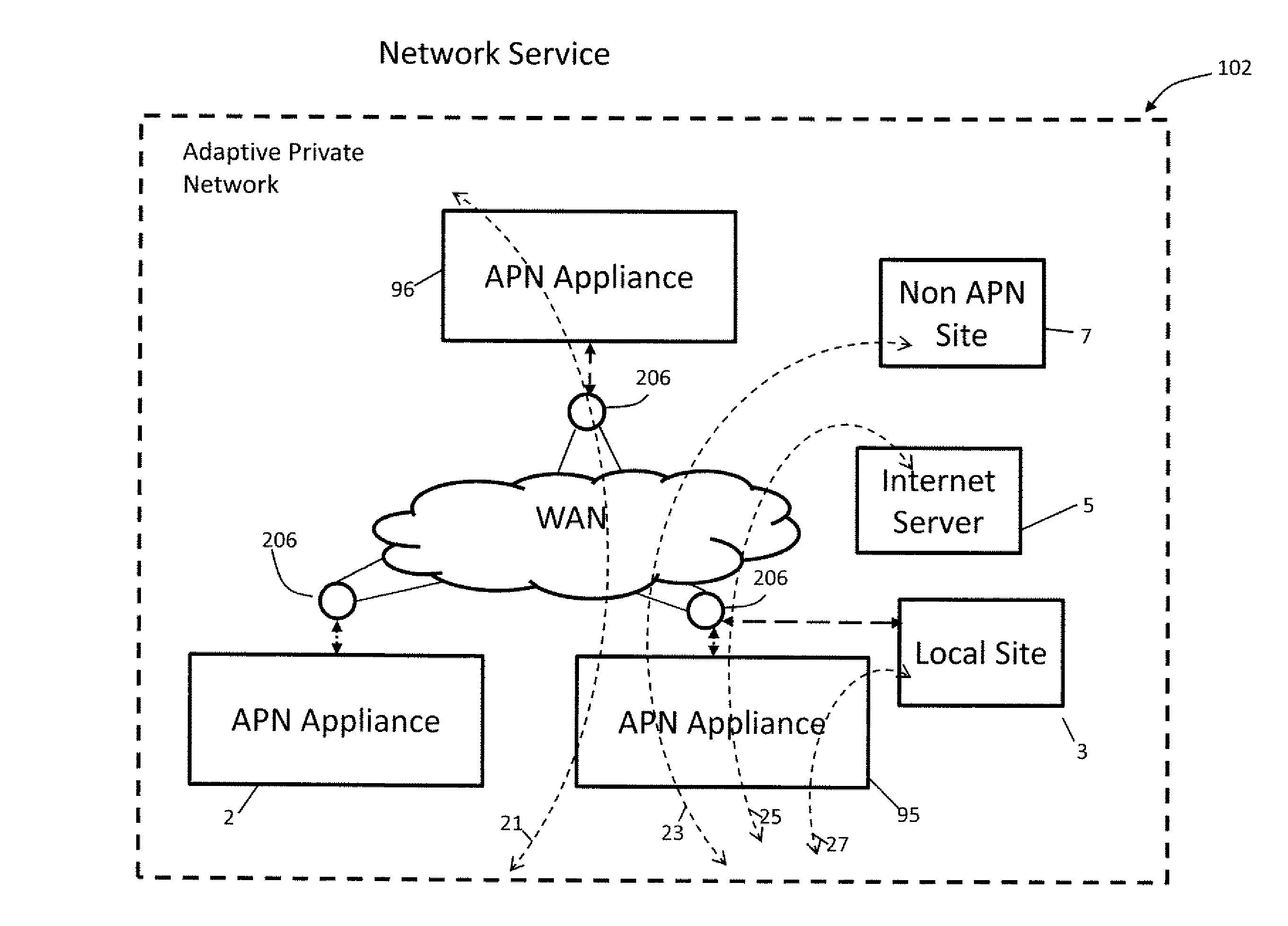

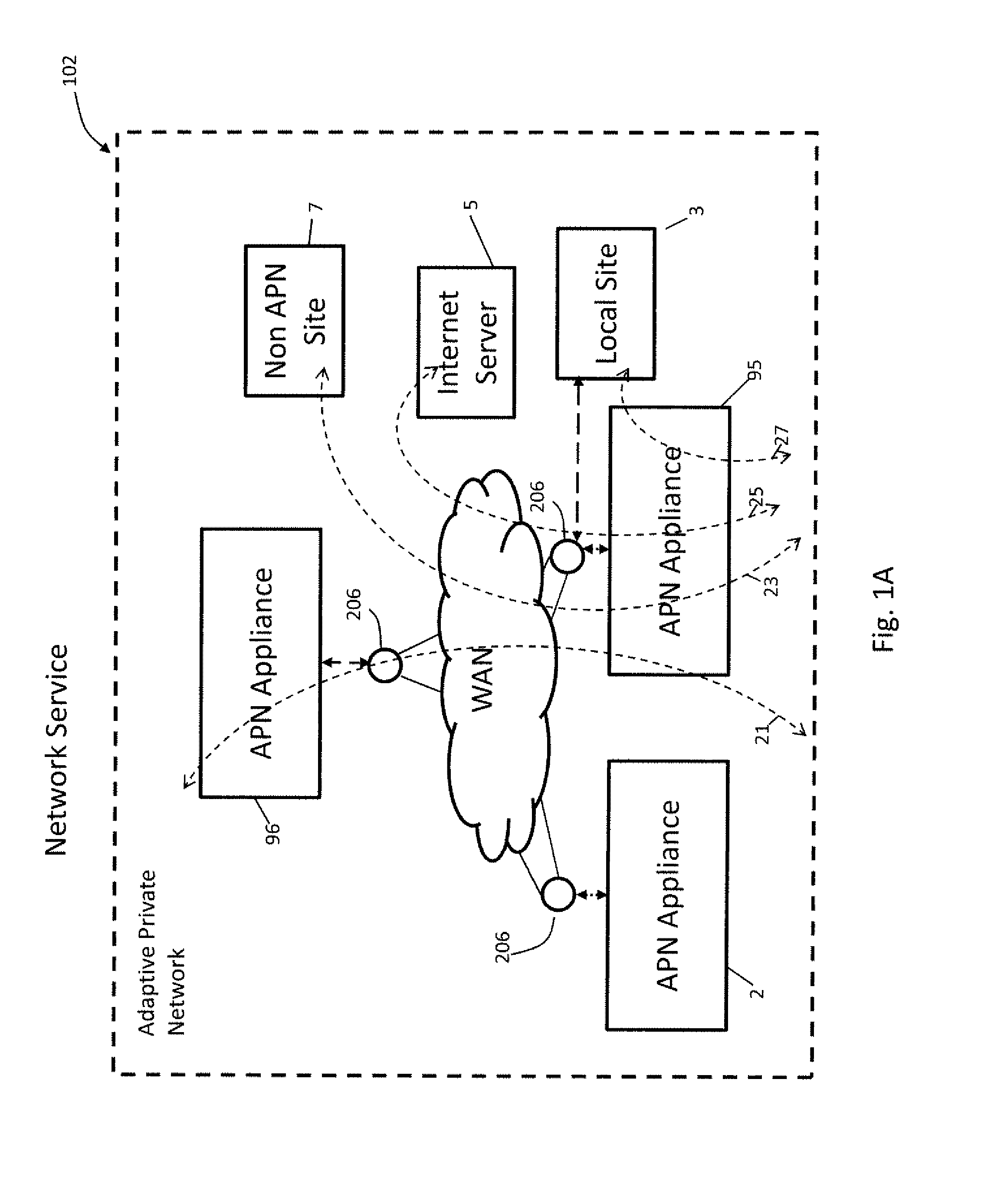

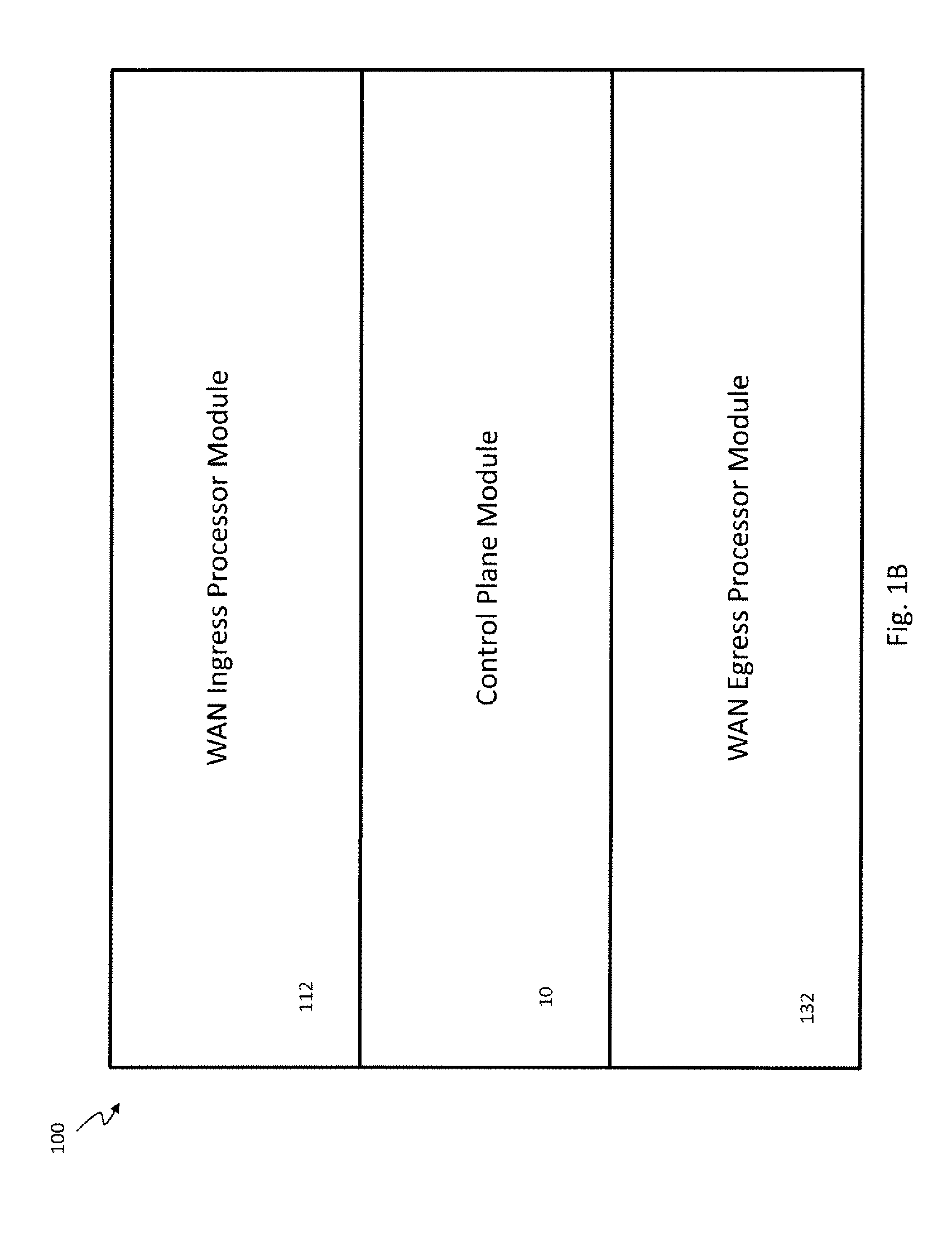

Flow-Based Adaptive Private Network with Multiple WAN-Paths

Active; US20090310485A1; Improve performance and reliability and predictability; Error prevention; Transmission systems; Private network; Byte

Systems and techniques are described which improve the performance, reliability, and predictability of networks without requiring costly hardware upgrades or replacement of existing network equipment. An adaptive communication controller provides WAN performance and utilization measurements to another network node over multiple parallel communication paths across disparate asymmetric networks whose behavior varies frequently over time. An egress processor module receives communication path quality reports and tagged path packet data and generates accurate arrival times, send times, sequence numbers and unutilized byte counts for the tagged packets. A control module generates path quality reports describing the performance of the multiple parallel communication paths based on the received information, and generates heartbeat packets for transmission on those paths if no other tagged data has been received in a predetermined period of time, so that performance is continually monitored. An ingress processor module transmits the generated path quality reports and heartbeat packets.

Owner:TALARI NETWORKS
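
The monitoring loop described in the abstract above (track tagged packets per path, summarize path quality, and send a heartbeat when a path has been idle too long) can be sketched roughly as follows. The class and method names, the report fields, and the loss estimate based on sequence-number gaps are all hypothetical; the patent's actual modules and report format are not given in this listing.

```python
from dataclasses import dataclass, field


@dataclass
class PathState:
    last_rx: float = 0.0                        # arrival time of last tagged packet
    delays: list = field(default_factory=list)  # arrival time minus send time
    seqs: set = field(default_factory=set)      # sequence numbers seen so far


class PathMonitor:
    """Hypothetical per-path bookkeeping for several parallel WAN paths."""

    def __init__(self, paths, idle_limit):
        self.idle_limit = idle_limit  # the "predetermined period" (assumed units: seconds)
        self.paths = {p: PathState() for p in paths}

    def on_tagged_packet(self, path, seq, send_time, arrival_time):
        st = self.paths[path]
        st.last_rx = arrival_time
        st.delays.append(arrival_time - send_time)
        st.seqs.add(seq)

    def quality_report(self, path):
        st = self.paths[path]
        if not st.seqs:
            return {"received": 0, "lost": 0, "mean_delay": None}
        # Assumed loss estimate: gaps in the observed sequence-number range.
        expected = max(st.seqs) - min(st.seqs) + 1
        return {
            "received": len(st.seqs),
            "lost": expected - len(st.seqs),
            "mean_delay": sum(st.delays) / len(st.delays),
        }

    def heartbeat_due(self, now):
        # Paths with no tagged data within idle_limit need a heartbeat packet.
        return [p for p, st in self.paths.items()
                if now - st.last_rx >= self.idle_limit]


monitor = PathMonitor(["a", "b"], idle_limit=1.0)
monitor.on_tagged_packet("a", seq=1, send_time=0.0, arrival_time=0.25)
monitor.on_tagged_packet("a", seq=3, send_time=0.5, arrival_time=0.75)
print(monitor.quality_report("a"), monitor.heartbeat_due(now=1.5))
```

The heartbeat check keeps idle paths measurable: without it, a path carrying no traffic would produce no arrival times from which to judge its quality.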

Bidding for Energy Supply

An auction service is provided that stimulates competition between energy suppliers (i.e., electric power or natural gas). A bidding moderator (Moderator) receives offers from competing suppliers specifying the economic terms each is willing to offer to end users for estimated quantities of electric power or gas supply (separate auctions). Each supplier receives feedback from the Moderator based on competitors' offers and has the opportunity to adjust its own offers down or up, depending on whether it wants to encourage or discourage additional energy delivery commitments in a particular geographic area or to a particular customer group. Each supplier's offers can also be changed to reflect that supplier's capacity utilization. The Moderator selects at least two suppliers to provide energy to each end user, with each supplier providing a portion of the energy to be used by each end user at an end-user facility during a specific future time interval.

Owner:GEOPHONIC NETWORKS
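
The Moderator's selection step described above (at least two suppliers per end user, each covering a portion of the energy) can be shown with a small sketch. The abstract does not say how suppliers are ranked or how the energy is divided, so the lowest-price ranking, the equal split, and the function name `select_suppliers` are assumptions for illustration only.

```python
def select_suppliers(offers, demand_kwh, k=2):
    """Pick the k cheapest offers and split the demand among them.

    offers: dict mapping supplier name to offered price per kWh.
    Returns a dict mapping each chosen supplier to its share in kWh.
    """
    if k < 2:
        raise ValueError("the abstract calls for at least two suppliers")
    if len(offers) < k:
        raise ValueError("not enough offers to select from")
    # Assumed ranking rule: lowest price first, supplier name breaks ties.
    chosen = sorted(offers.items(), key=lambda kv: (kv[1], kv[0]))[:k]
    share = demand_kwh / k  # assumed equal split among the chosen suppliers
    return {name: share for name, _ in chosen}


allocation = select_suppliers({"S1": 0.12, "S2": 0.10, "S3": 0.11}, demand_kwh=1000)
print(allocation)
```

A supplier that wants fewer delivery commitments can raise its offer, which in this sketch simply moves it down the ranking.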

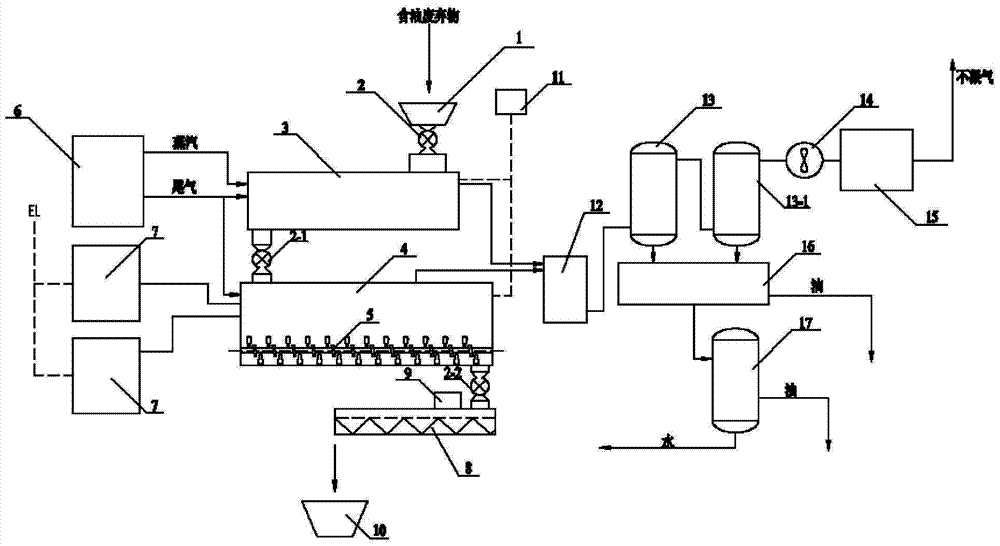

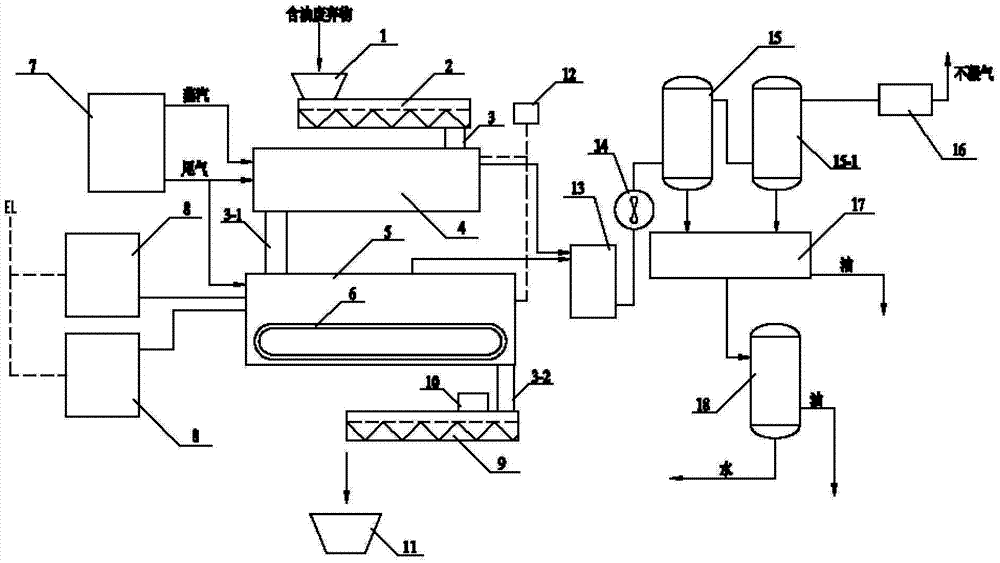

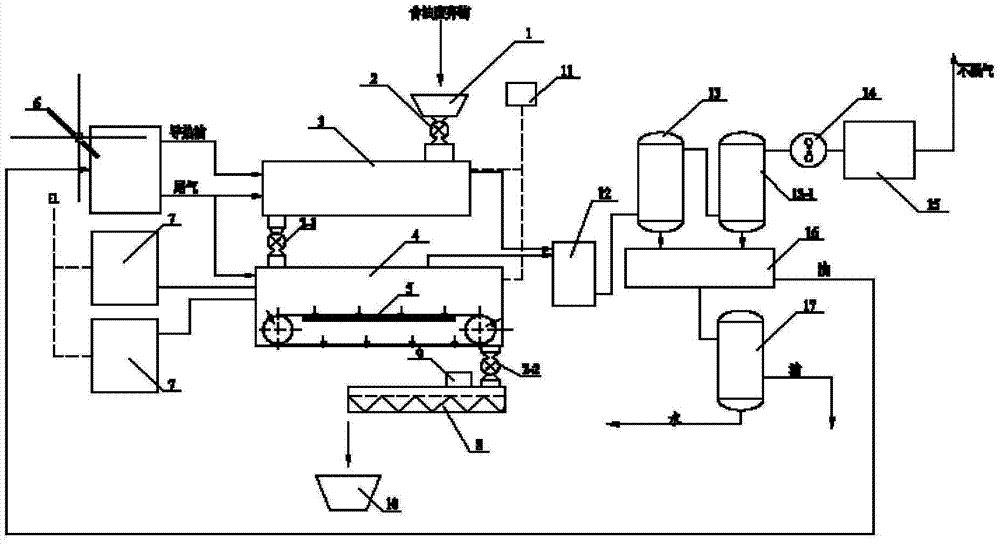

Industrial treatment method and industrial treatment device for oil field waste

Active; CN103923670A; Low boiling point; Increase gasification rate; Electrical coke oven heating; Liquid hydrocarbon mixture production; Microwave pyrolysis; Environmental engineering

The invention provides an industrial treatment method for oil field waste. The method comprises the following steps: sampling and analyzing the oil field waste; preheating it to 80-300 °C using high-temperature steam or conduction oil; carrying out microwave pyrolysis treatment with the pressure controlled at -5000 to -100 Pa, thereby obtaining solid treatment substances and gas; condensing, separating and purifying the gas; and finally recovering water, oil and non-condensable gas. An industrial treatment device for oil field waste comprises a feed hopper, a dryer, a microwave heating cavity, a microwave generator, a heating device and a condensation separation purification device. The feed hopper is connected to the dryer, which is connected to the microwave heating cavity; a steam or conduction oil outlet of the heating device is connected to a steam or conduction oil outlet of the dryer; gas outlets of the dryer and the microwave heating cavity are connected to the condensation separation purification device; and the microwave generator is connected to the microwave heating cavity. The method and the device provide good treatment results, a high utilization rate of energy sources and good economic efficiency.

Owner:RUIJIE ENVIRONMENTAL PROTECTION TECH CO LTD

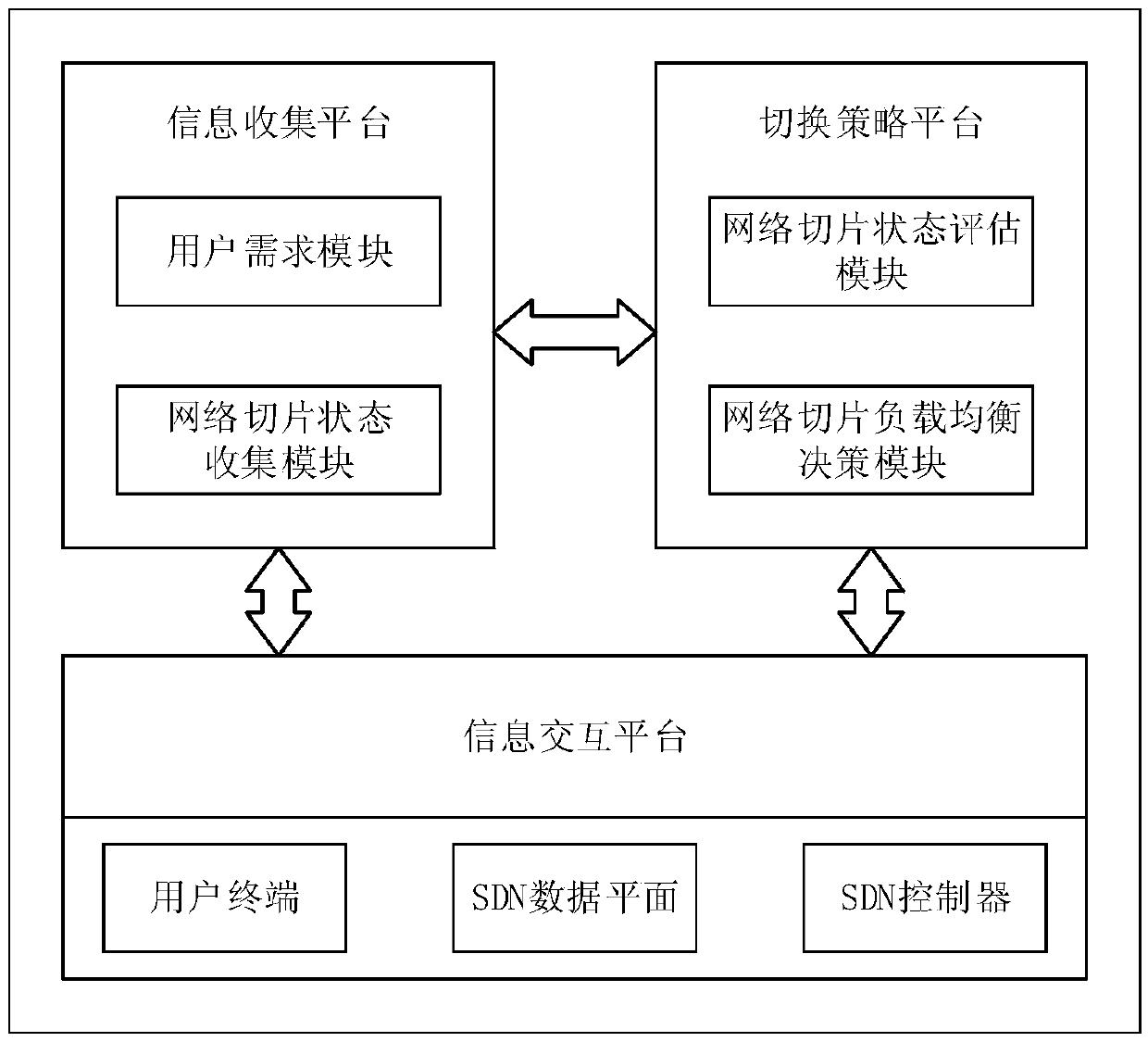

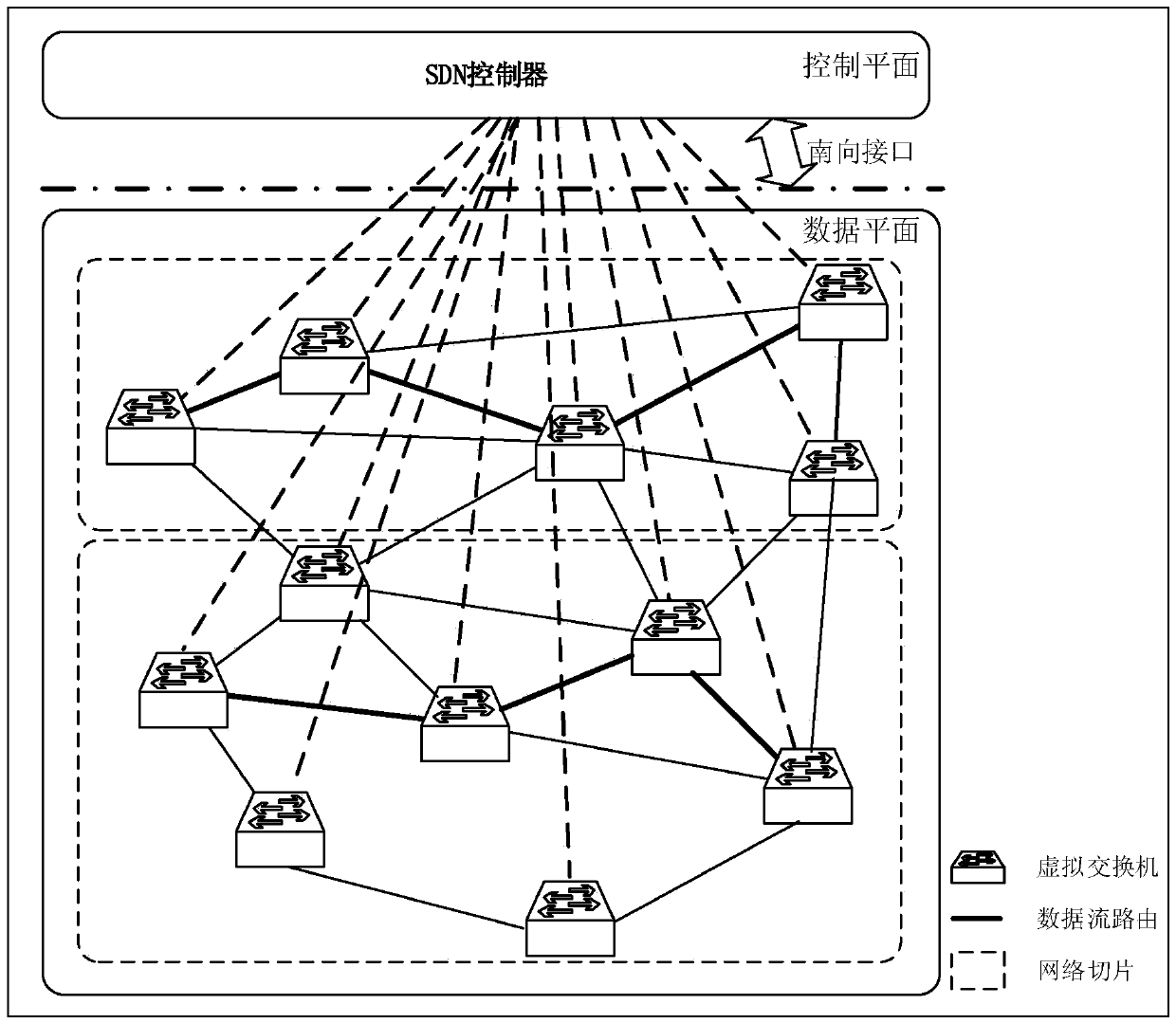

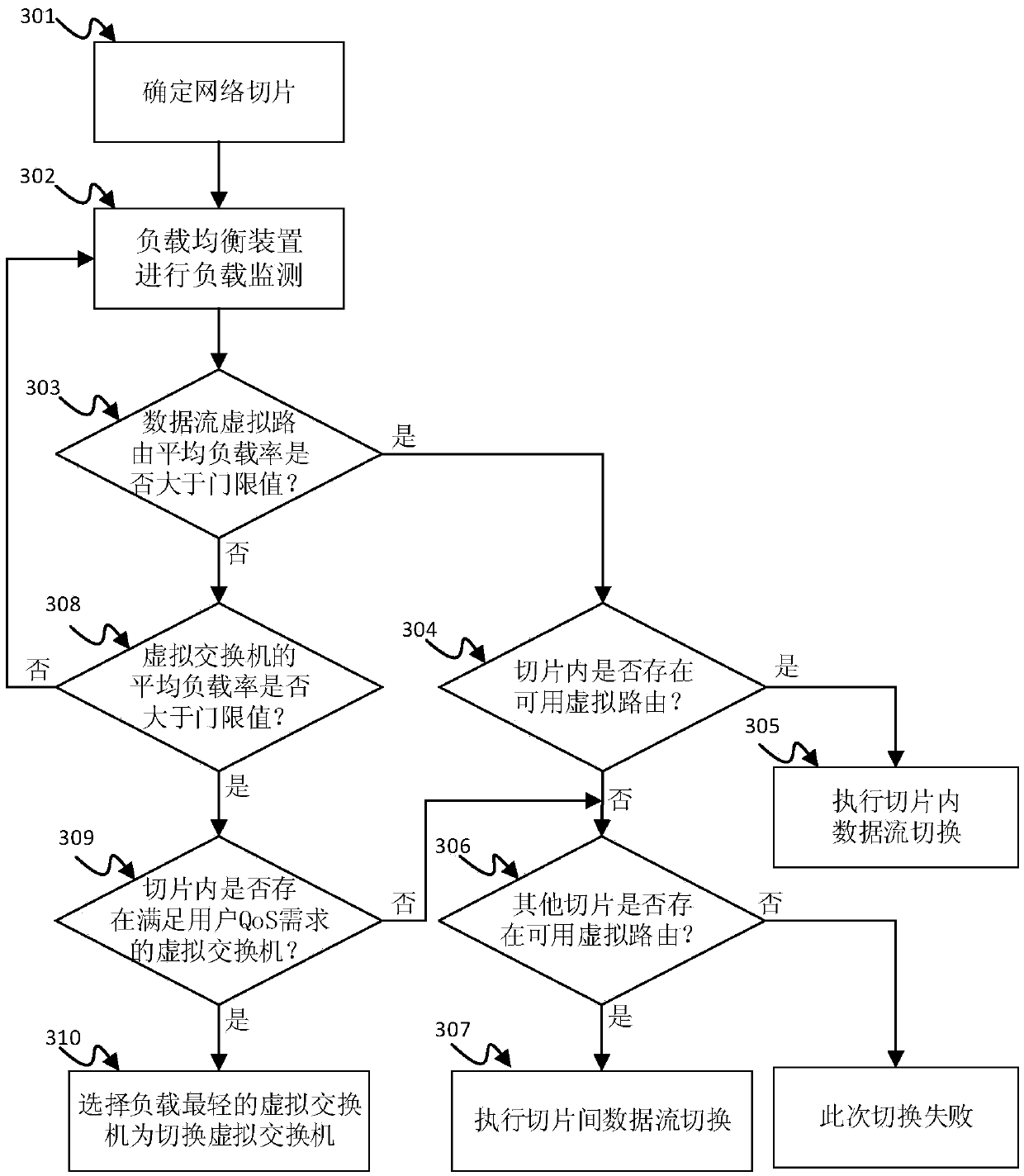

Software defined networking load balancing device and method

ActiveCN105516312AImprove QoSBoost QoS BoostNetwork topologiesTransmissionData streamAssessment data

Owner:重庆邮莱特科技有限公司

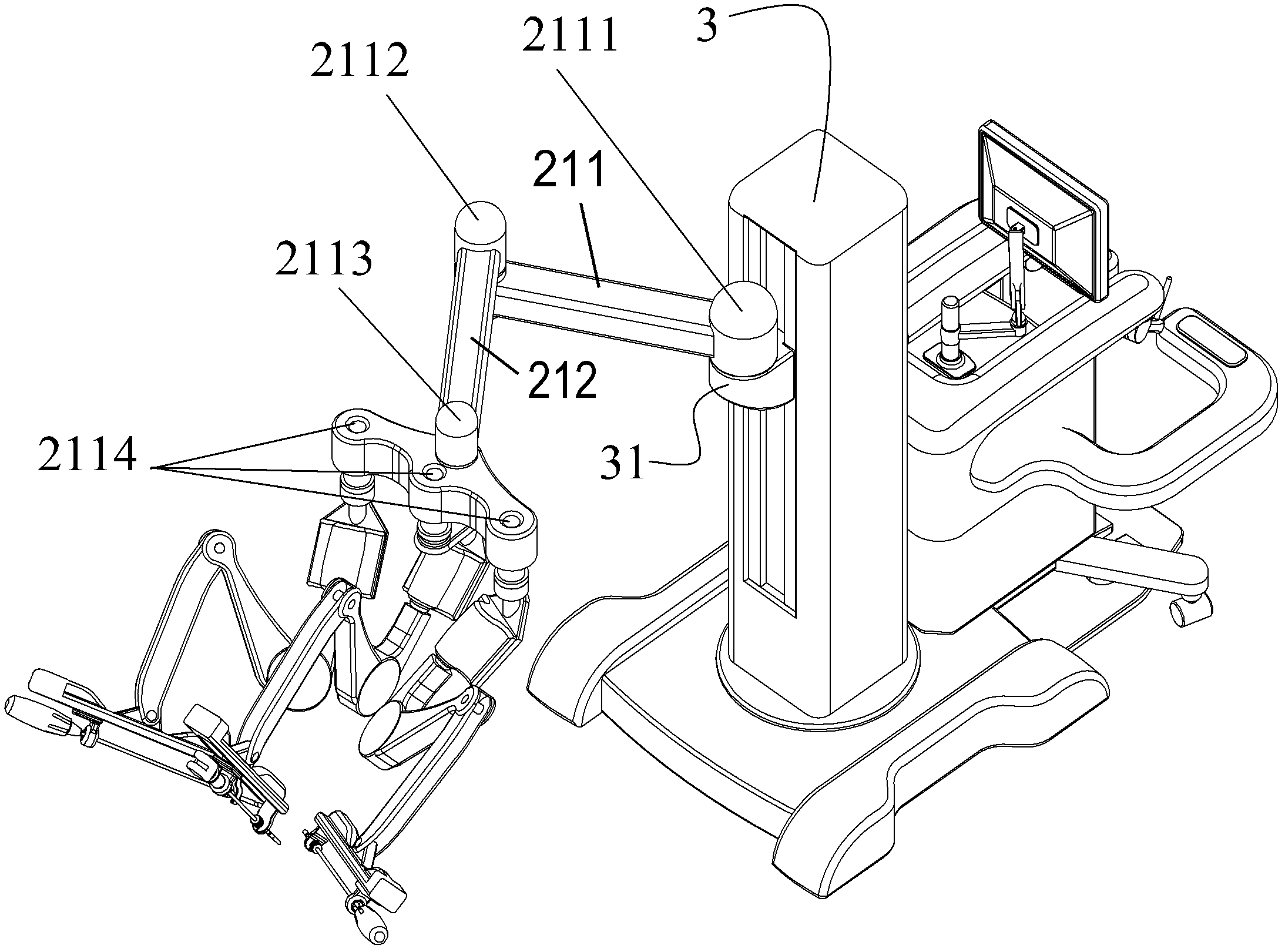

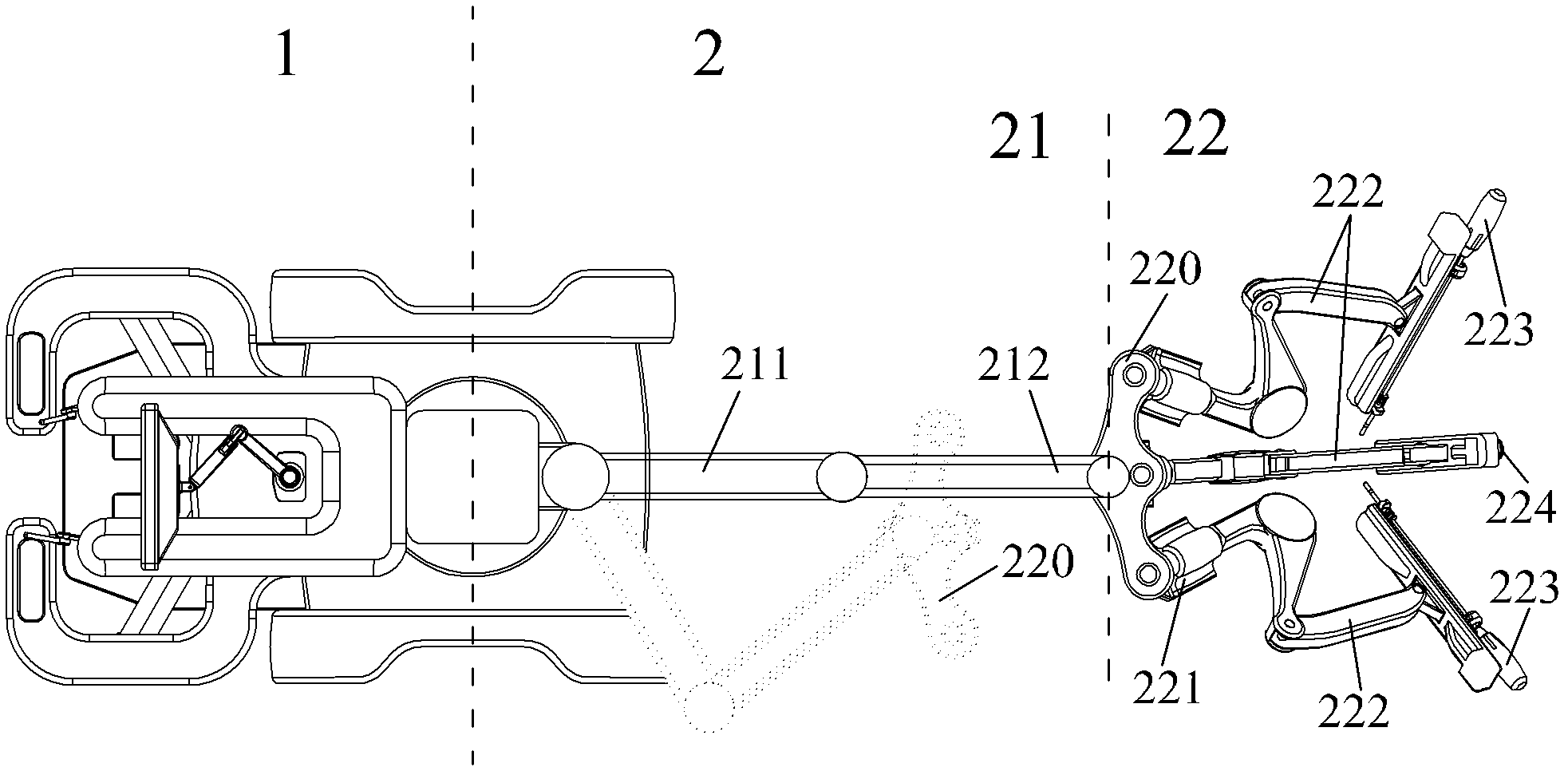

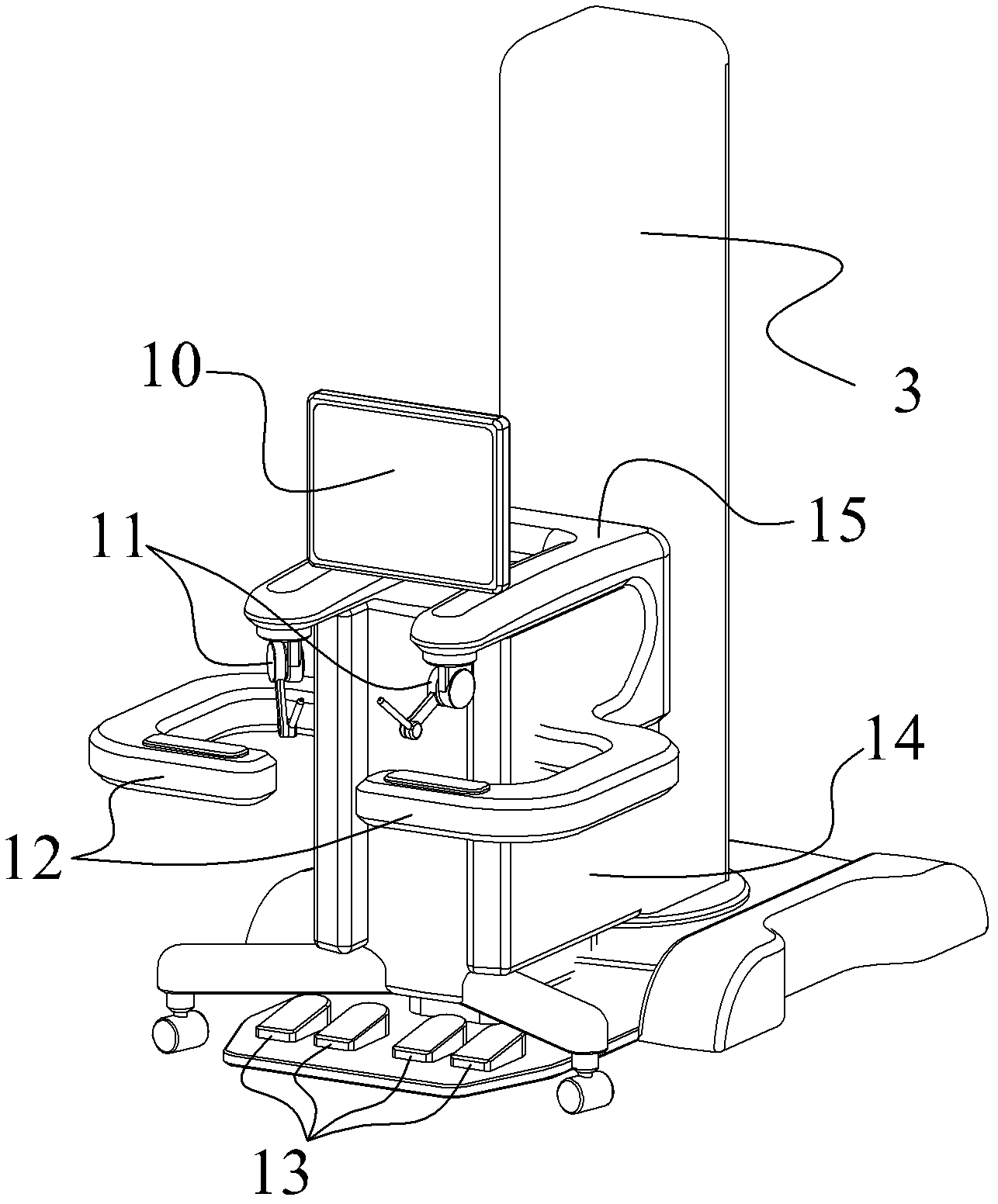

Arrangement structure for mechanical arm of minimally invasive surgery robot

Inactive; CN102973317A; Easy transfer; Reduce in quantity; Diagnostics; Surgical robots; Less invasive surgery; Control theory

An arrangement structure for a mechanical arm of a minimally invasive surgery robot comprises a main operation end part, an auxiliary operation end part, a driven adjusting arm and a driving operation arm combination. The main operation end part and the auxiliary operation end part are connected front to back through a vertical column to form a whole, and a vertically sliding block is arranged on the front end face of the vertical column. The driven adjusting arm comprises a first connection rod and a second connection rod. The driving operation arm combination comprises a driving arm support platform, at least three driving arm seats and at least three driving operation arms, and at least three identical driving arms are each rotatably connected to one of the driving arm seats. Because the main operation end is integrated with the driven operating end, the robot can be moved conveniently. The arrangement structure supports and adjusts a plurality of driving operation arms, reduces the total volume of the robot, and improves the utilization rate of space in the operating room. It saves operating room space, can be moved quickly, and does not need a dedicated operating room.

Owner:周宁新 +1


© 2025 PatSnap. All rights reserved.