Distributed dynamic cache expansion method and system supporting load balancing

A distributed cache and load balancing technology, applied in transmission systems, digital transmission systems, electrical components, etc. It addresses problems such as data migration overhead, and achieves reduced network overhead, improved performance, and shorter response times.

Embodiment Construction

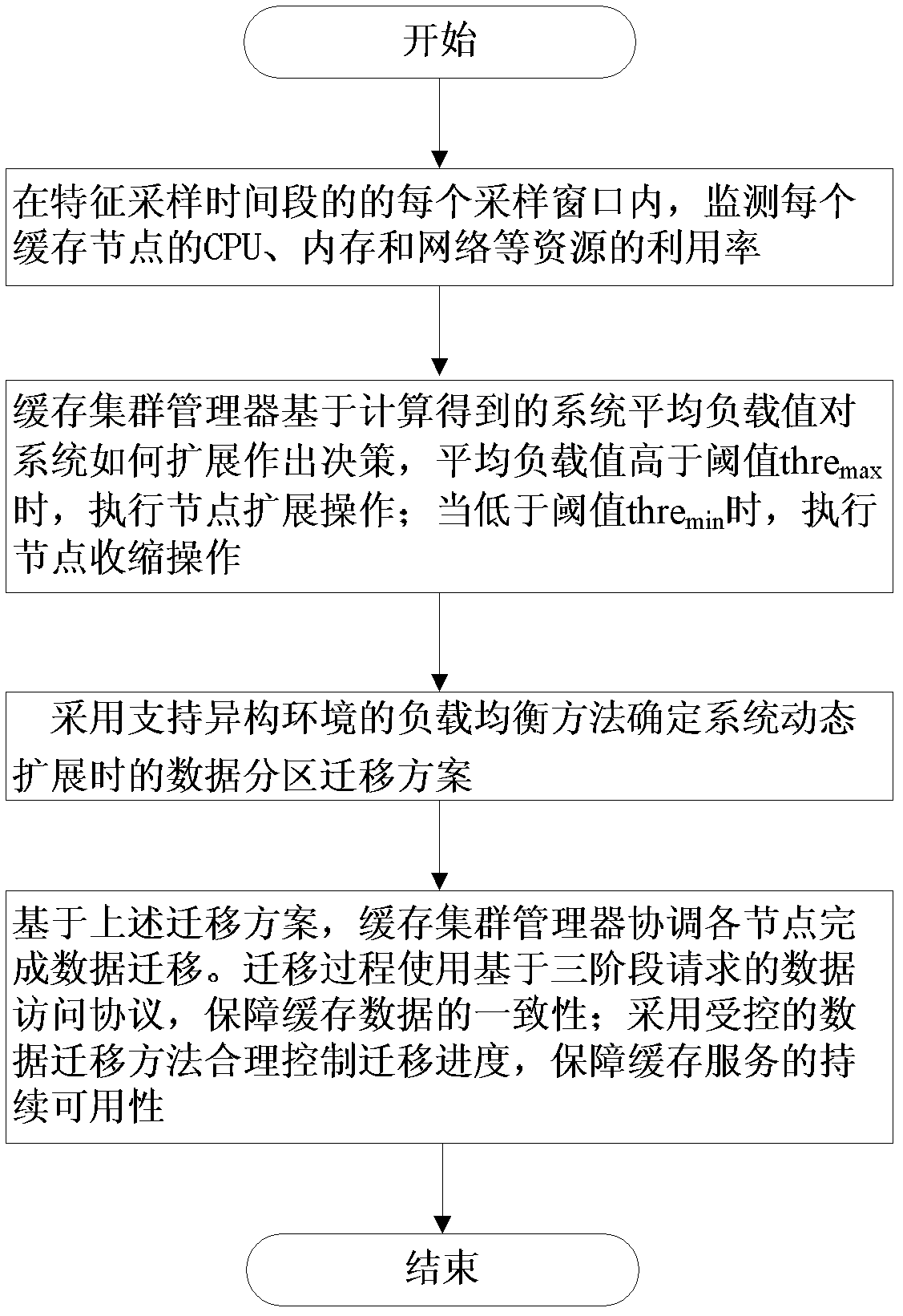

[0052] The present invention will be further described below in conjunction with specific embodiments and accompanying drawings.

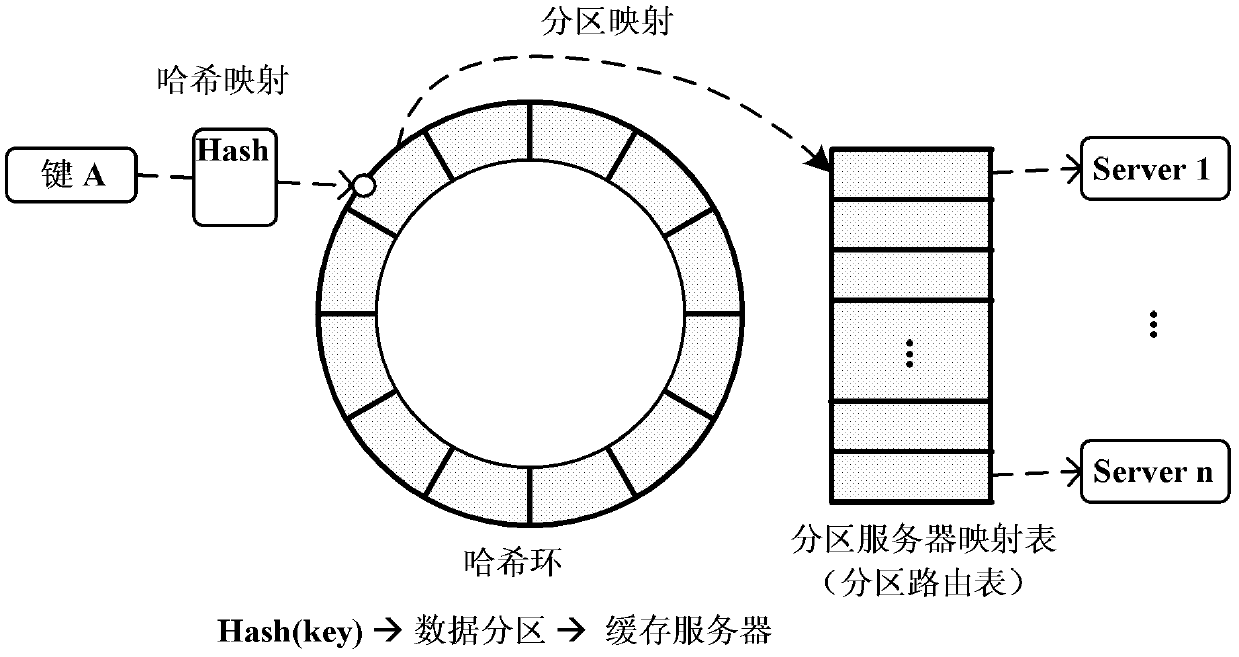

[0053] The distributed cache system consists of three parts: the cache server (Cache Server), the cache client (Cache Client), and the cache cluster manager (Cache Admin), all connected over the network. Each cache server runs independently and is monitored and managed centrally by the cache cluster manager through a management agent. The management agent resides on the same physical node as its cache server and is responsible for generating the JMX management MBeans. On receiving a control command from the cache cluster manager, the management agent adapts the command and directs the cache service process to perform the corresponding operation.
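The role of the management agent described above can be illustrated with a minimal Java sketch. It is not the patented implementation: the class `ManagementAgentSketch`, the interface `CacheServiceControl`, the `START`/`STOP` command strings, and the `cache.sketch` JMX domain are all assumptions made for illustration. Only the standard `javax.management` API is used. The agent registers an MBean for a stand-in cache service and adapts a textual command from the manager into an MBean operation.

```java
import java.lang.management.ManagementFactory;
import javax.management.MBeanServer;
import javax.management.ObjectName;
import javax.management.StandardMBean;

class ManagementAgentSketch {
    /** Hypothetical management interface for one cache service process. */
    public interface CacheServiceControl {
        boolean isRunning();
        void start();
        void stop();
    }

    /** Stand-in for the real cache service process; it only tracks a flag. */
    static final class CacheService implements CacheServiceControl {
        private volatile boolean running;
        @Override public boolean isRunning() { return running; }
        @Override public void start() { running = true; }
        @Override public void stop() { running = false; }
    }

    private final MBeanServer server = ManagementFactory.getPlatformMBeanServer();
    private final ObjectName name;

    /** Registers an MBean for the service; nodeId is assumed to be a plain token. */
    ManagementAgentSketch(String nodeId, CacheServiceControl service) throws Exception {
        name = new ObjectName("cache.sketch:type=CacheService,node=" + nodeId);
        server.registerMBean(new StandardMBean(service, CacheServiceControl.class), name);
    }

    /** Adapts a textual command (assumed format) into an MBean operation. */
    void handle(String command) throws Exception {
        switch (command) {
            case "START":
                server.invoke(name, "start", new Object[0], new String[0]);
                break;
            case "STOP":
                server.invoke(name, "stop", new Object[0], new String[0]);
                break;
            default:
                throw new IllegalArgumentException("Unknown command: " + command);
        }
    }

    /** Reads the "Running" attribute, which JMX derives from isRunning(). */
    boolean running() throws Exception {
        return (Boolean) server.getAttribute(name, "Running");
    }

    /** Unregisters the MBean so the object name can be reused. */
    void close() throws Exception {
        server.unregisterMBean(name);
    }

    public static void main(String[] args) throws Exception {
        ManagementAgentSketch agent = new ManagementAgentSketch("demo", new CacheService());
        agent.handle("START");
        System.out.println("running after START: " + agent.running());
        agent.close();
    }
}
```

In a real deployment the cache cluster manager would reach the MBean through a remote JMX connector rather than calling `handle` in-process. The sketch keeps everything in one JVM so that the adaptation step stays visible.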

[0054] In the cache cluster manager, the topology monitor uses JGroups-based multicast to monitor topology changes among the server nodes, and obtains the performance...
