
852 results for "Response time" patented technology


In technology, response time is the time a system or functional unit takes to react to a given input.

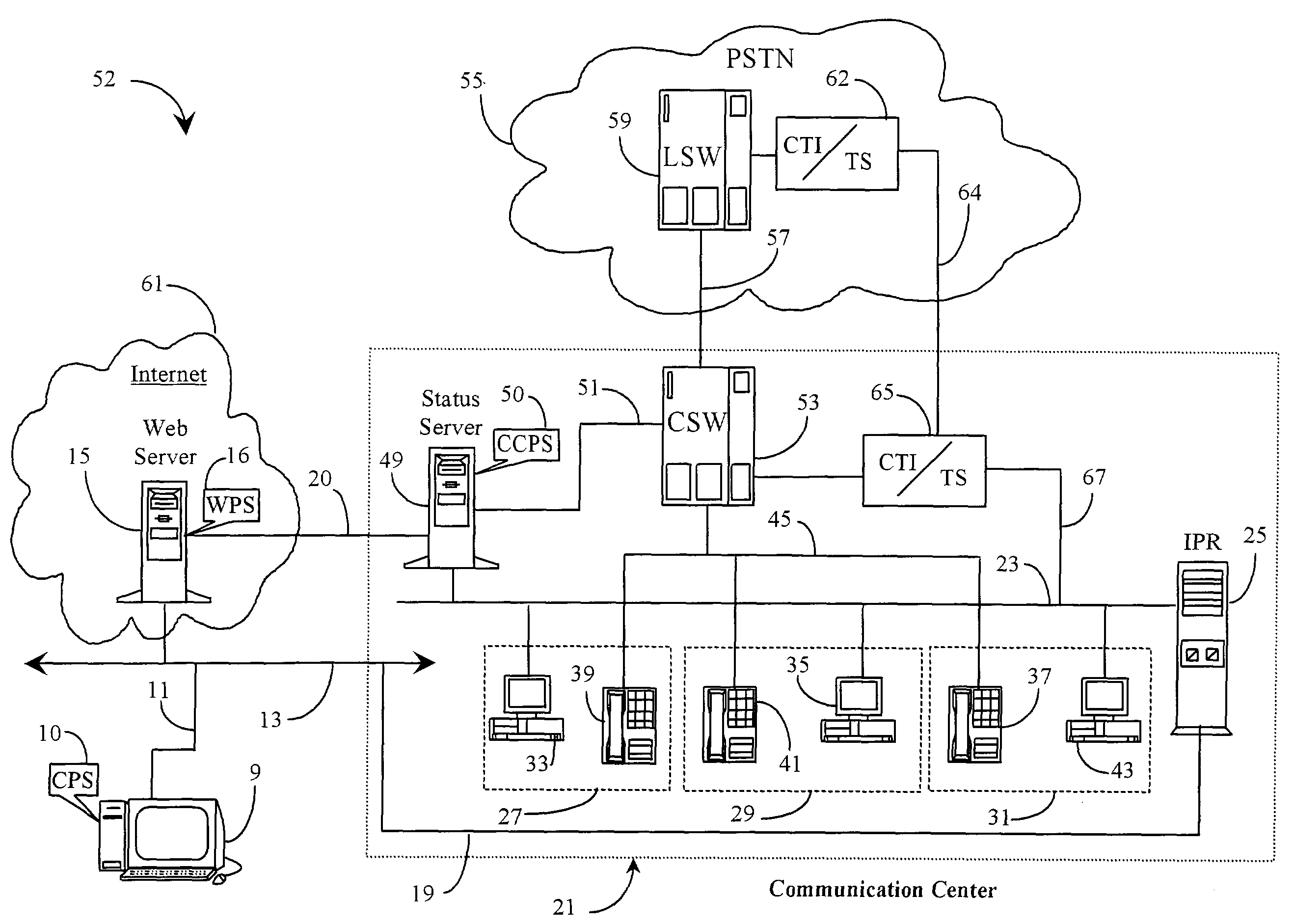

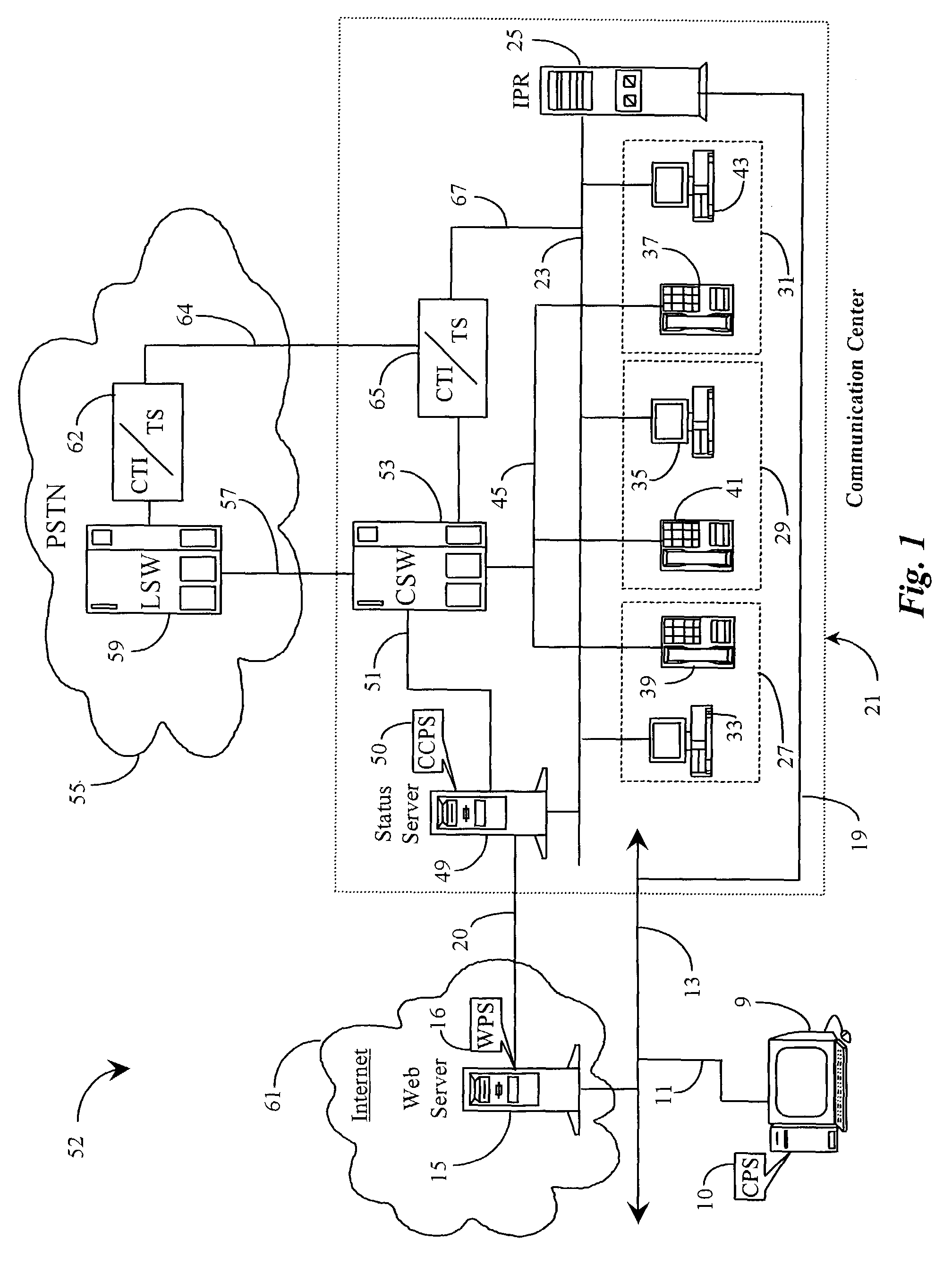

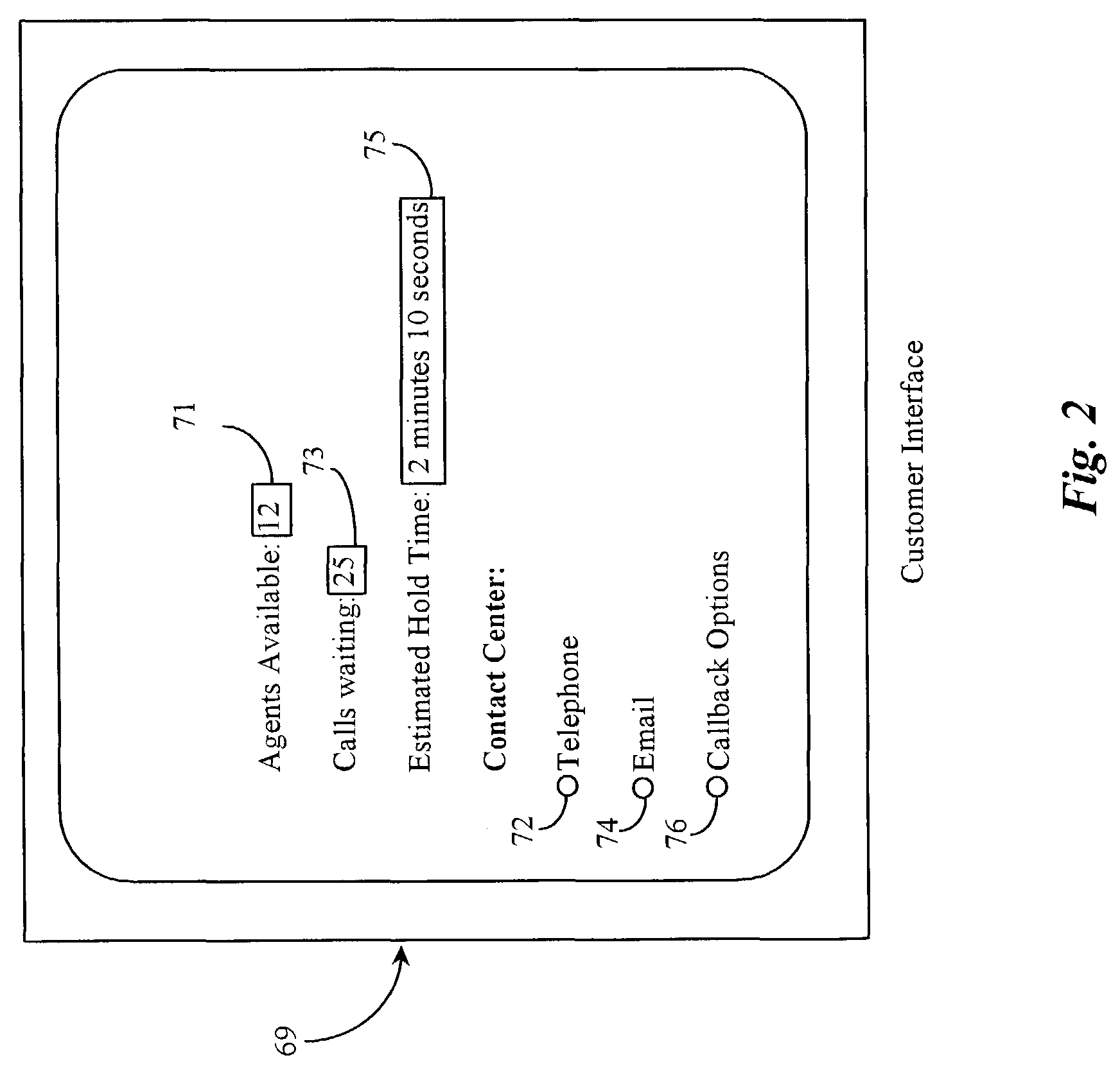

Method and apparatus for optimizing response time to events in queue

Active | US7929562B2 | Short response time, Quick cure, Error prevention, Frequency-division multiplex details, Application software, Process time

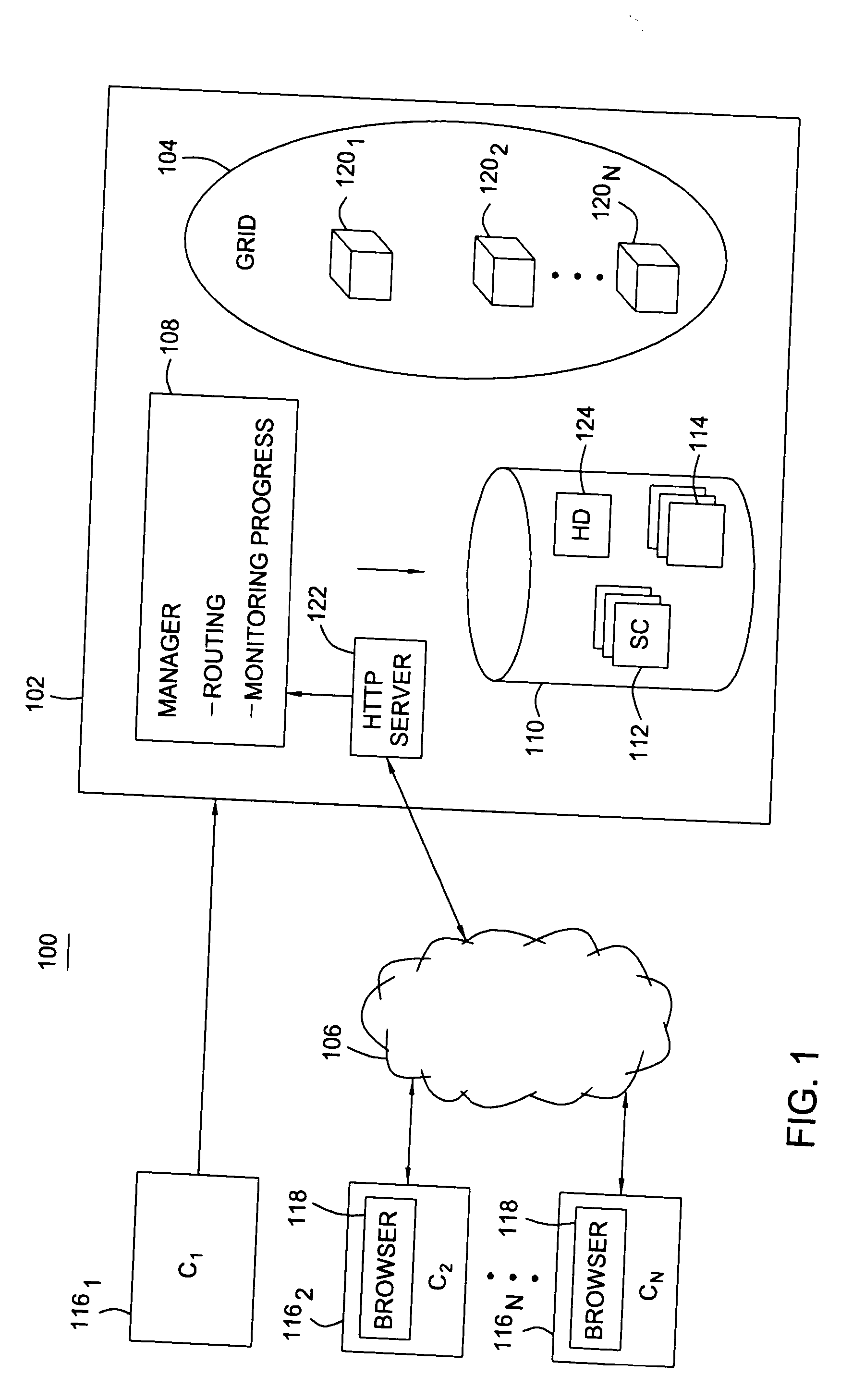

A system for optimizing response time to events (or representations of them) waiting in a queue has a first server with access to the queue, a software application running on the first server, and a second server, accessible from the first, that holds the rules governing the optimization. In a preferred embodiment, the software application at least periodically accesses the queue, parses certain events or tokens in it, and compares the parsed results against rules retrieved from the second server to determine a measure of disposal time for each parsed event. If the determined measure is sufficiently low for one or more of the parsed events, those events are modified to reflect a higher priority than originally assigned, so they are handled sooner and their removal relieves the load on the queue system.

Owner:GENESYS TELECOMMUNICATIONS LABORATORIES INC
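The reprioritization step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Genesys's implementation: the `DISPOSAL_RULES` table stands in for the rules held on the second server, and the event kinds, the 60-second threshold, and the "larger number = served sooner" convention are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Event:
    event_id: str
    kind: str
    priority: int  # assumed convention: larger value = served sooner

# Hypothetical rule set standing in for the "second server": estimated
# disposal (handling) time in seconds for each kind of event.
DISPOSAL_RULES = {"password_reset": 20.0, "billing_dispute": 600.0, "address_change": 45.0}

def reprioritize(queue, rules, threshold_s=60.0, boost=1):
    """Raise the priority of queued events whose estimated disposal time is low.

    Events whose kind has no rule are left unchanged. Returns the ids of the
    boosted events and reorders `queue` in place.
    """
    boosted = []
    for event in queue:
        estimate = rules.get(event.kind)
        if estimate is not None and estimate <= threshold_s:
            event.priority += boost
            boosted.append(event.event_id)
    # Highest priority first; Python's sort is stable, so the original
    # queue order is kept among events with equal priority.
    queue.sort(key=lambda e: -e.priority)
    return boosted
```

With three equal-priority events of kinds `billing_dispute`, `password_reset`, and `address_change`, the two quick kinds are boosted and move ahead of the dispute, which is the "relief to the queue" effect the abstract describes.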

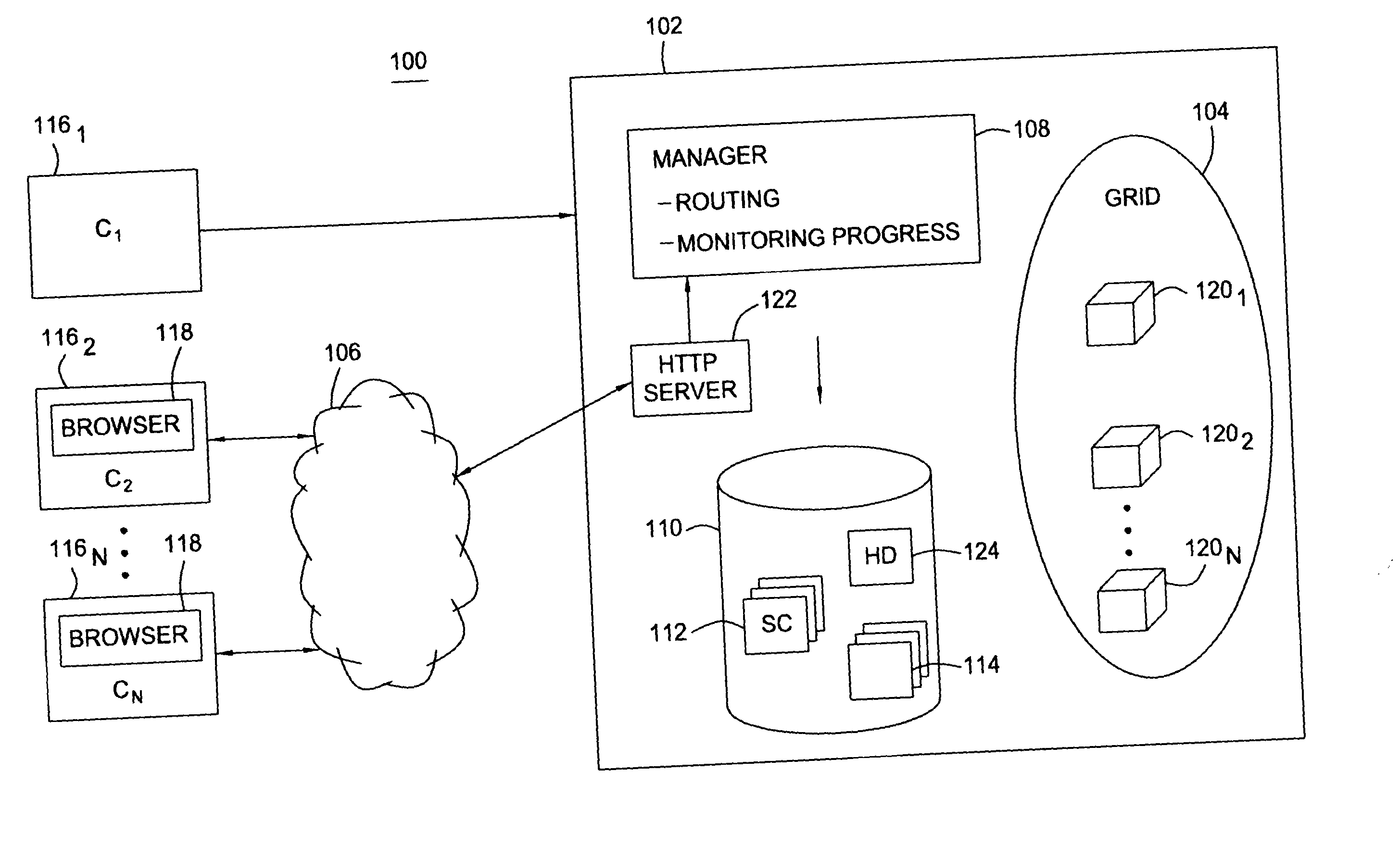

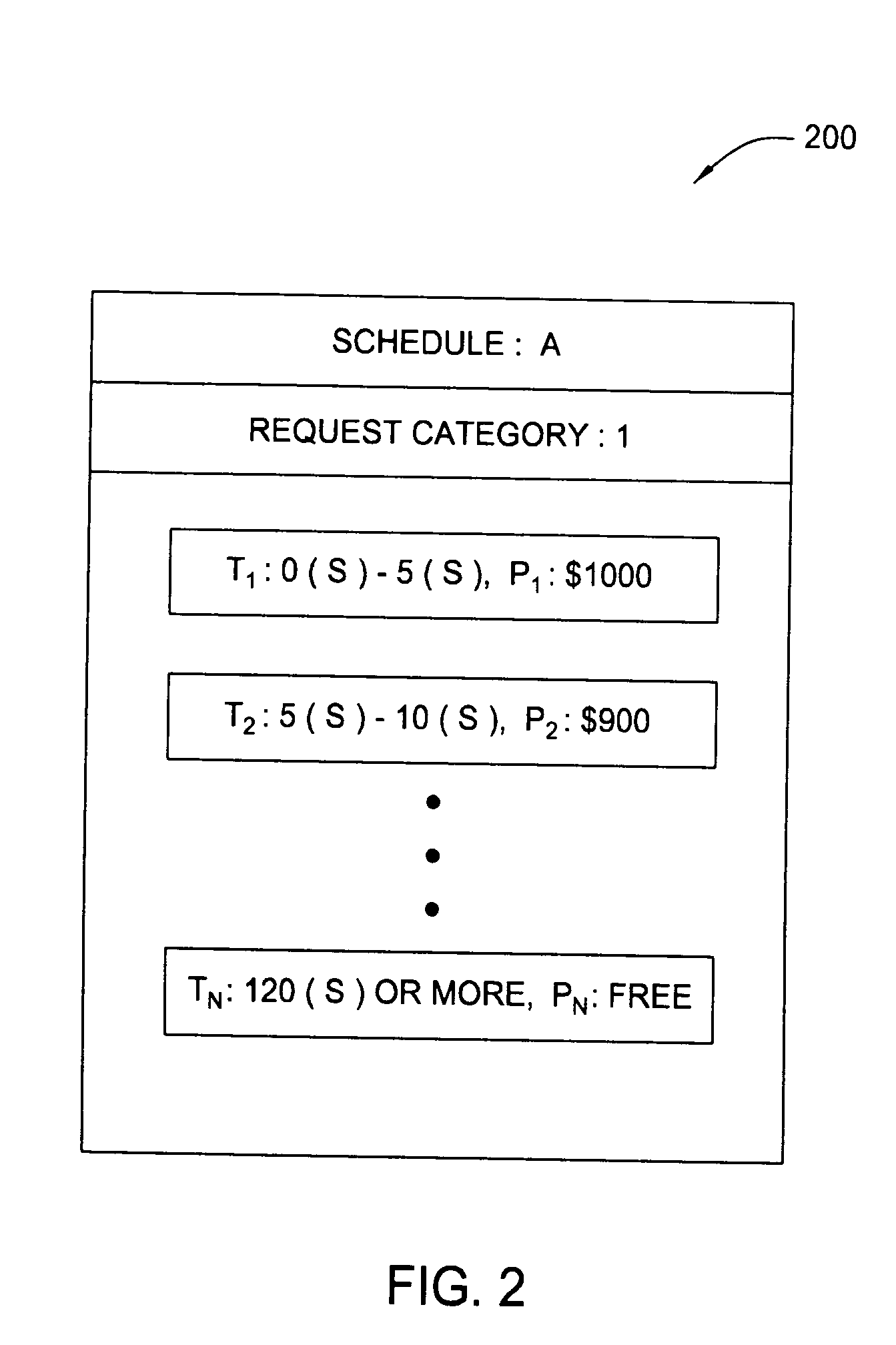

Power on demand tiered response time pricing

Methods, articles of manufacture, and systems for determining the fee to be charged for requests processed in a grid computing environment. In one embodiment, the fee may be determined based on the time it takes to process a request and/or on pricing schedules that may vary according to a variety of pricing criteria. In another embodiment, a completion time criterion may be specified that defines the maximum acceptable time to complete a request. If the request is performed in less than the specified maximum acceptable time, the return of the results may be delayed to avoid providing services valued in excess of what the customer has paid for.

Owner:EBAY INC
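A small Python sketch of the two embodiments, assuming a simple tiered per-second schedule. The tier bounds and rates below are invented for illustration; the abstract does not specify any schedule.

```python
import bisect

# Hypothetical tier schedule: upper bounds on processing seconds, and the
# price per second for each tier. Requests longer than the last bound are
# billed at the last tier's rate.
TIER_BOUNDS = [60.0, 600.0]
TIER_RATES = [0.010, 0.008, 0.005]

def fee(processing_s):
    """Fee for one request, using the rate of the tier its processing time falls into."""
    tier = bisect.bisect_left(TIER_BOUNDS, processing_s)
    return round(processing_s * TIER_RATES[tier], 6)

def release_delay(processing_s, max_acceptable_s):
    """Seconds to hold finished results so delivery is not faster than the
    completion time the customer paid for (zero if the request ran long)."""
    return max(0.0, max_acceptable_s - processing_s)
```

For example, a 40-second job sold with a 120-second completion criterion would have its results held for 80 seconds before being returned.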

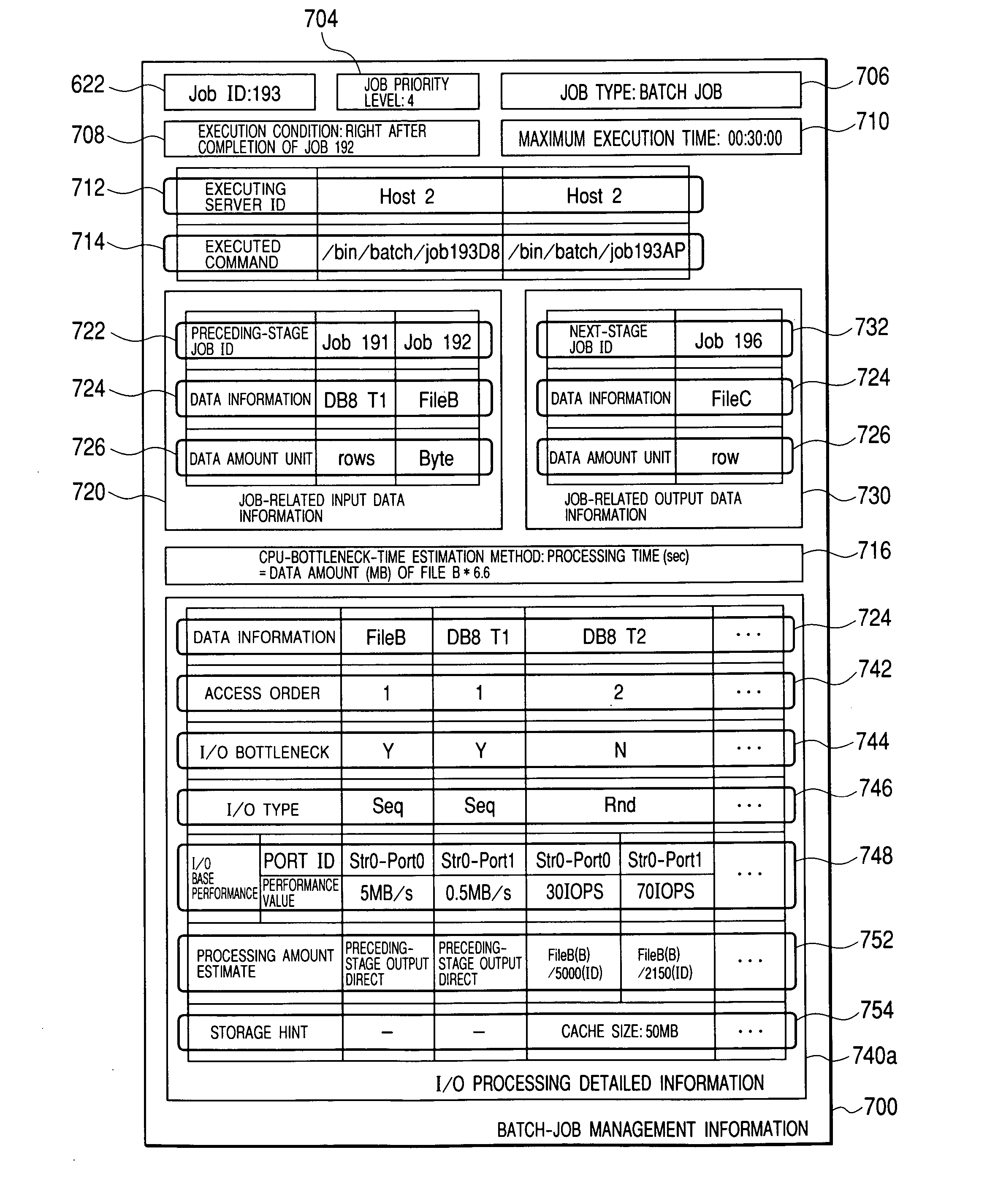

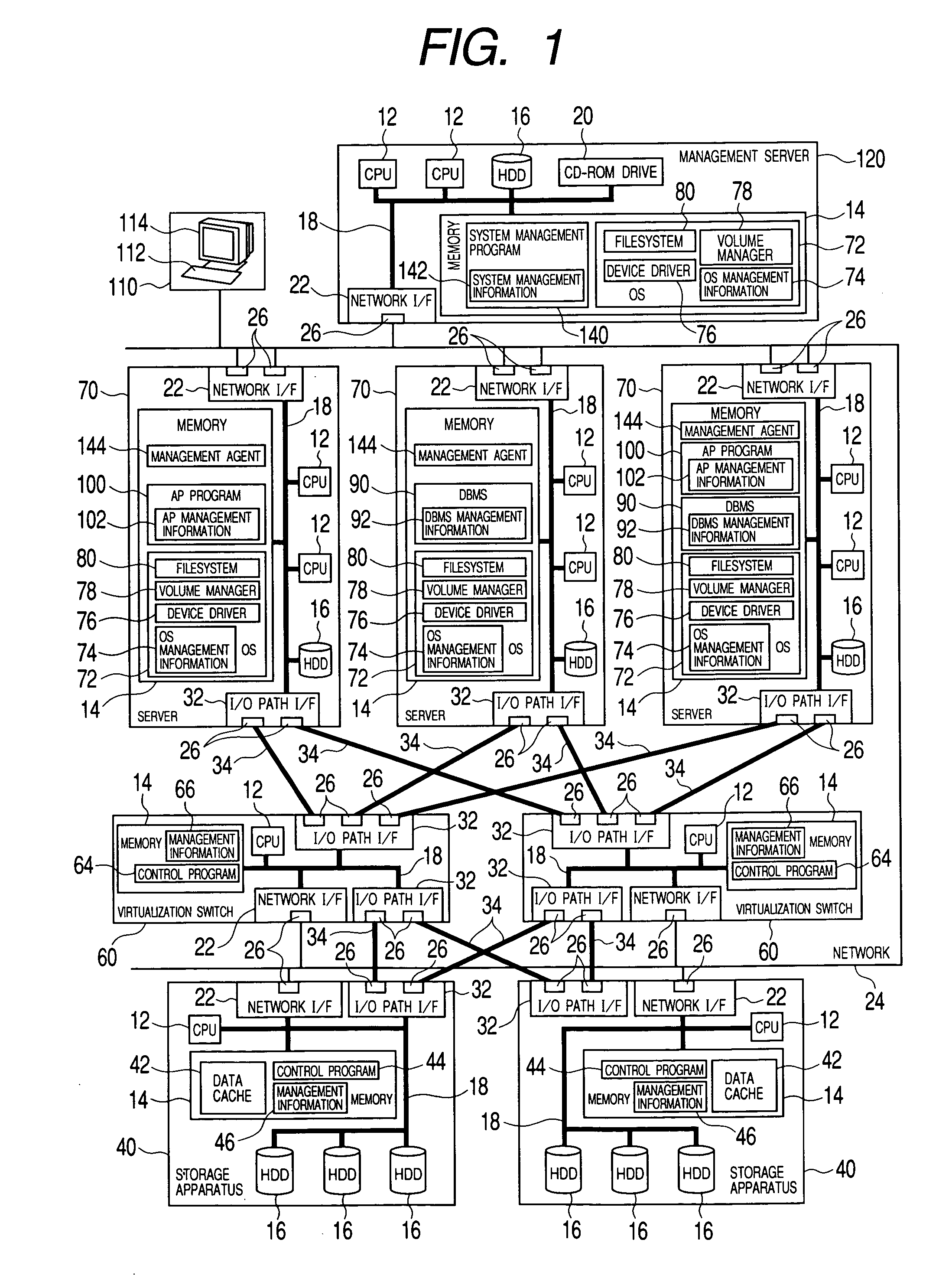

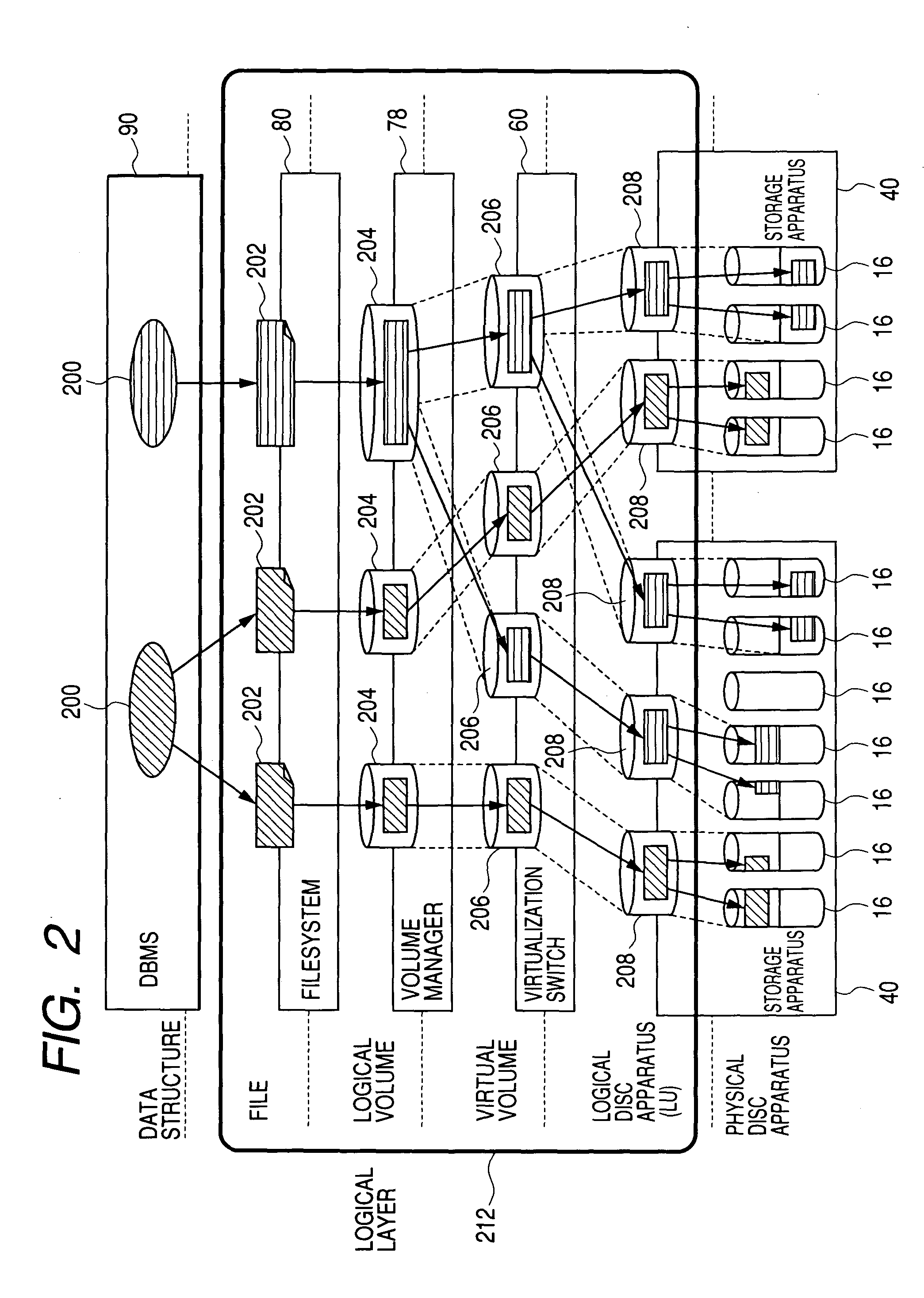

Computer system for managing performances of storage apparatus and performance management method of the computer system

Inactive | US20040193827A1 | Low cost, Input/output to record carriers, Digital data information retrieval, System element, System monitor

In a computer system running a DBMS, the performance of a storage apparatus is managed using performance indicators provided by user jobs, which simplifies performance management. To this end, a management server in the computer system monitors the operating state of each system element, the response time of each job, and other information. The management server uses information given in advance about a process, such as its performance requirement, together with the collected monitoring information, to issue commands that change the allocation of processing capacity to ports, the allocation of cache areas to data, the logical configuration of disk drives, and other parameters, either to execute a new process or when a judgment based on the monitored information indicates that tuning is necessary. For a batch job, the management server is given a method for estimating processing time and issues setting-modification commands based on the estimated processing time. For an online job, on the other hand, it issues a command to modify the settings of a heavily loaded component when the response time and/or throughput of a process does not meet its performance requirement.

Owner:HITACHI LTD

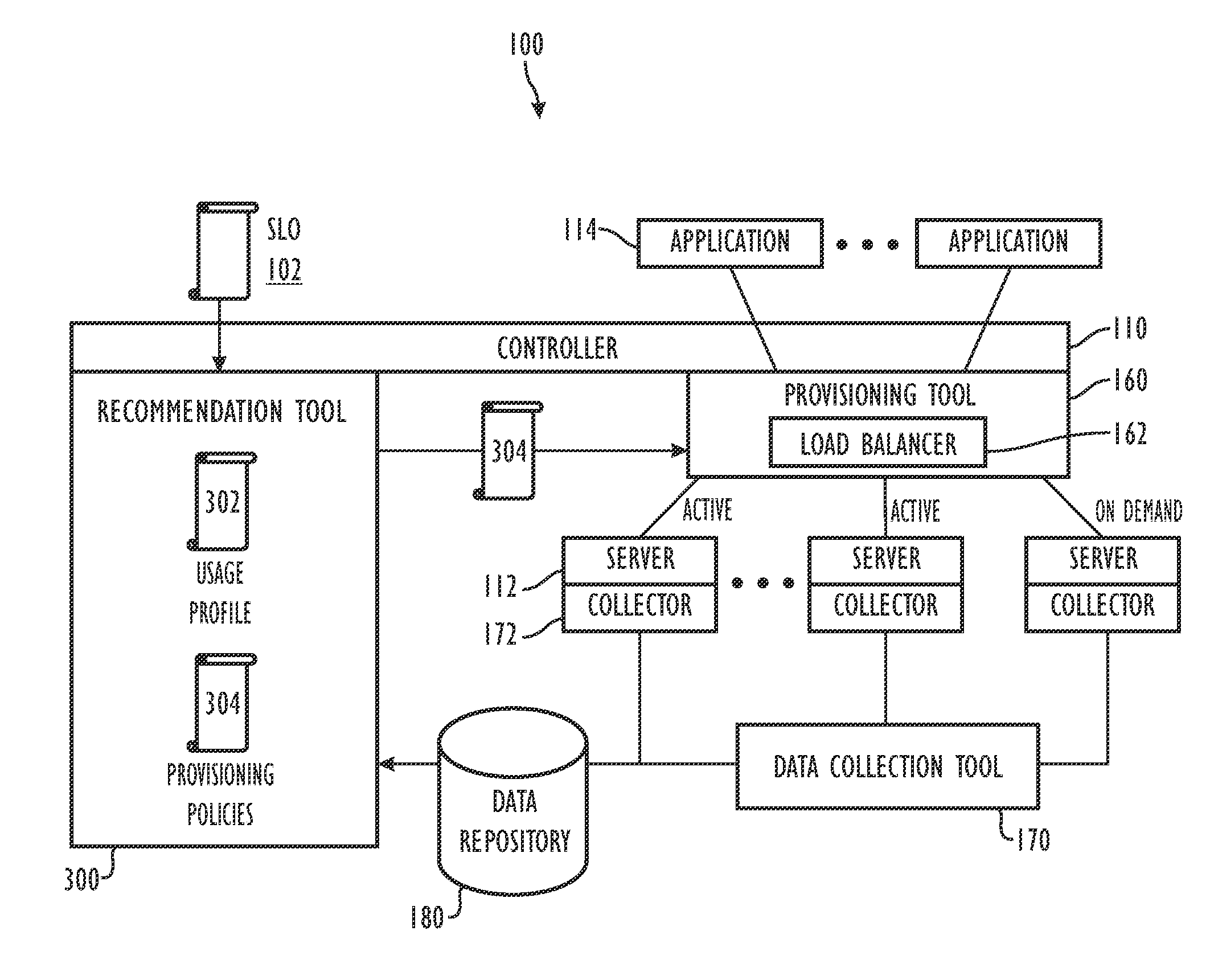

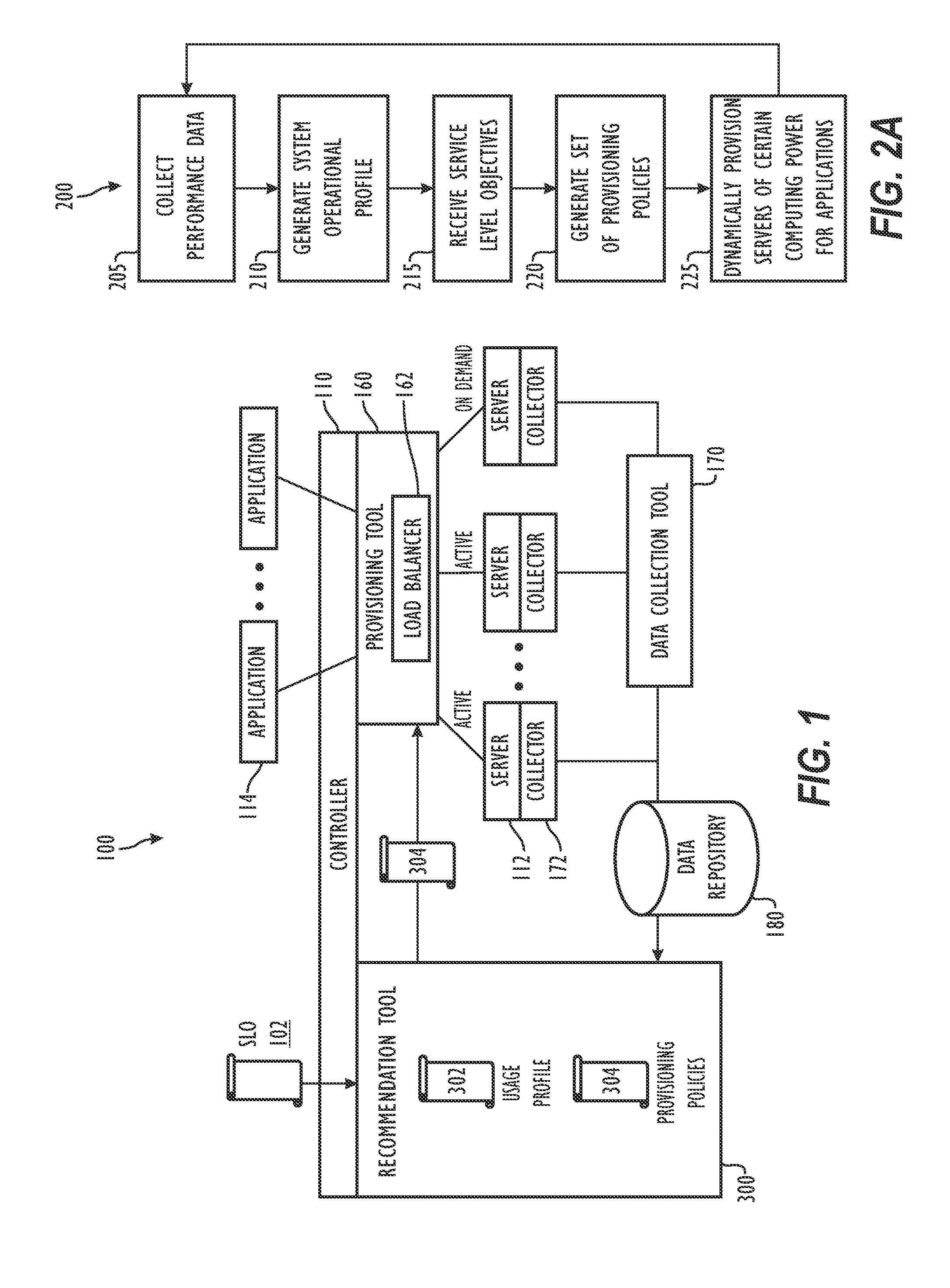

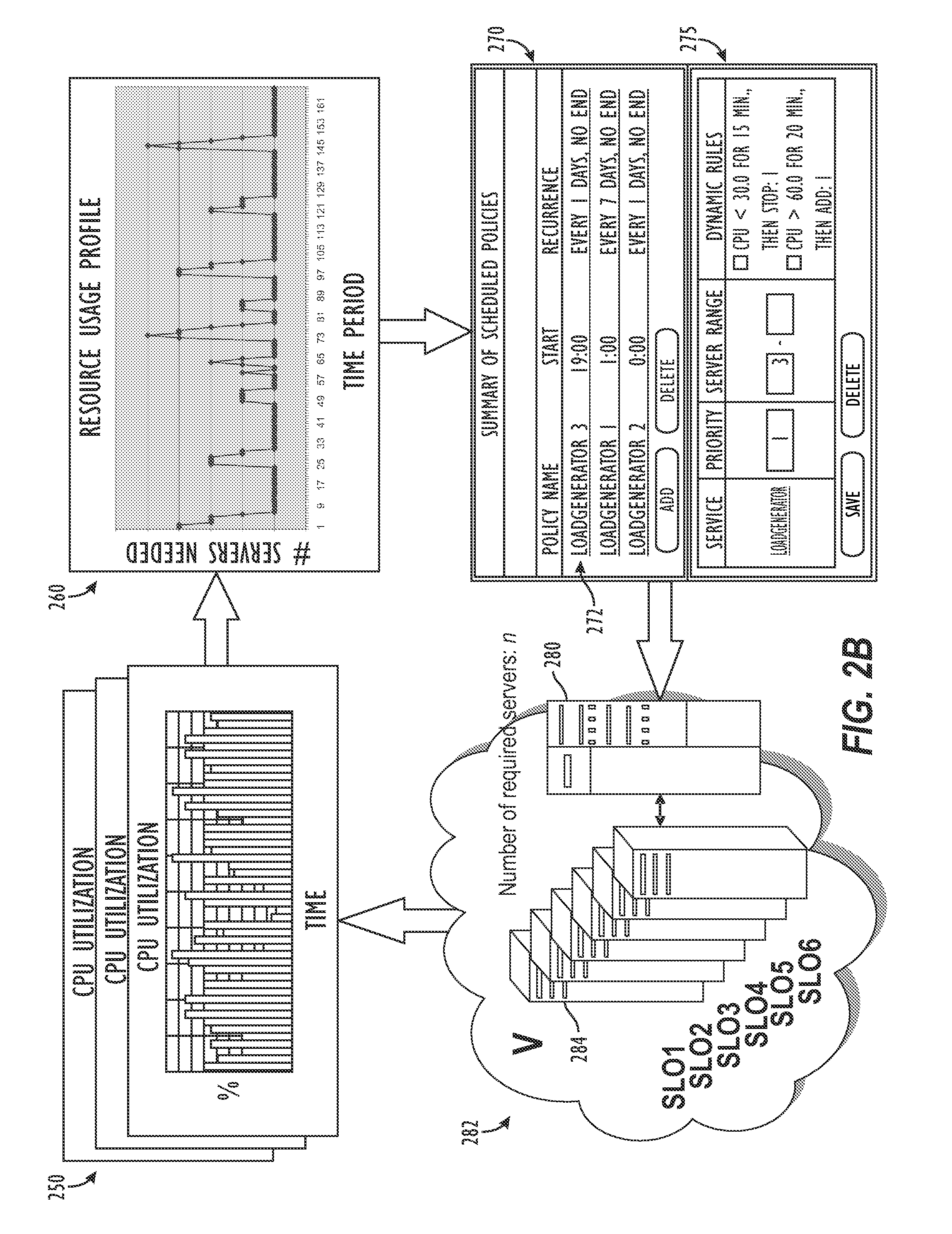

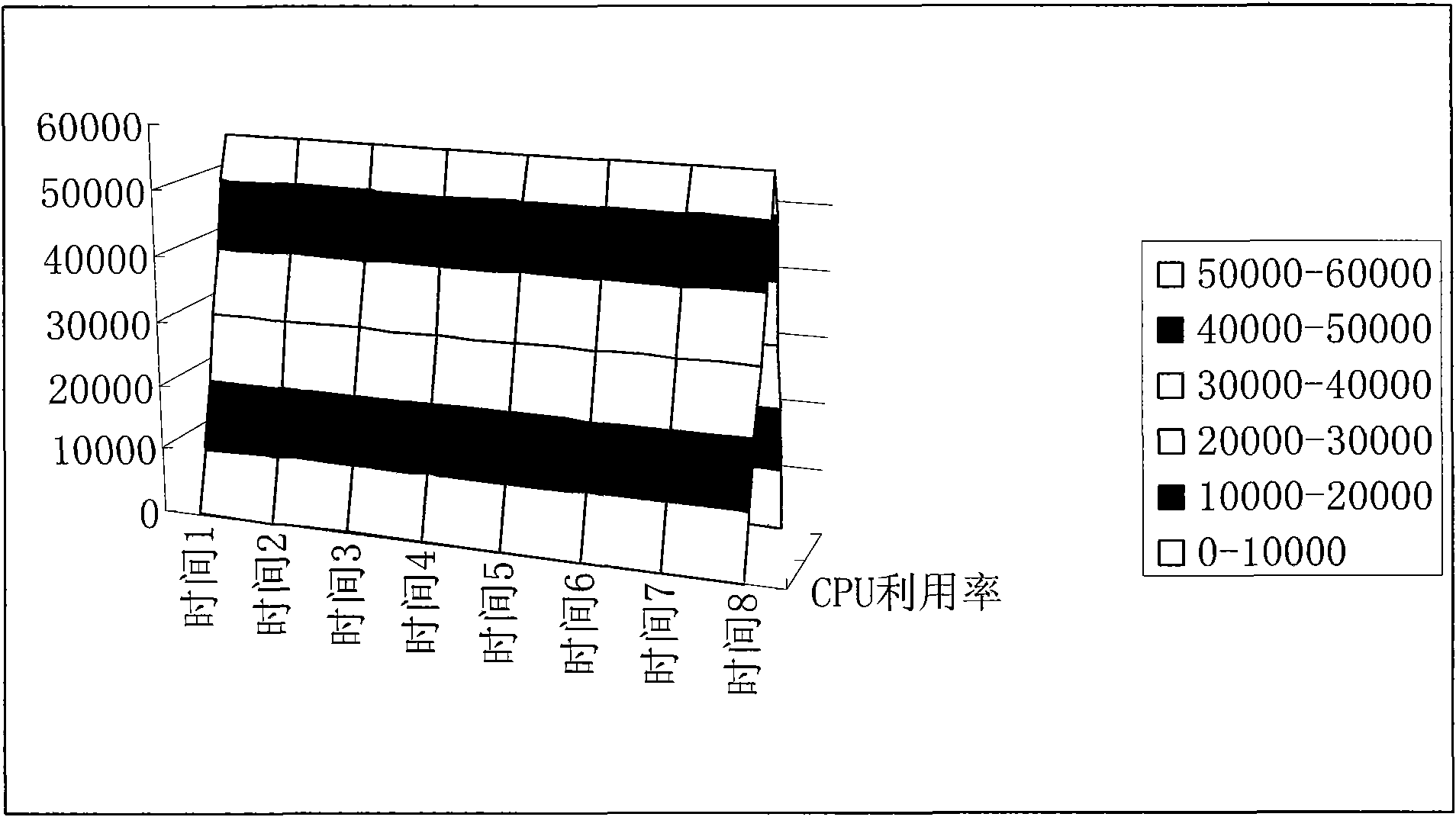

Automated Capacity Provisioning Method Using Historical Performance Data

Active | US20080059972A1 | Multiprogramming arrangements, Transmission, Capacity provisioning, Computerized system

An automated system obtains performance data of a computer system having partitioned servers. The performance data includes a performance rating and the current measured utilization of each server, the actual workload (e.g. transaction arrival rate), and the actual service levels (e.g. response time or transaction processing rate). From the data, the automated system normalizes a utilization value for each server over time and generates a weighted average for each, as well as expected service levels for various times and workloads. The automated system receives a service level objective (SLO) for each server and future time and automatically determines a policy based on the weighted-average normalized utilization values, past performance information, and the received SLOs. The policy can include rules for provisioning the servers required to meet the SLOs, a throughput for each server, and a potential service level for each server. Based on the generated policy, the system automatically provisions operation of the servers across partitions.

Owner:BMC SOFTWARE

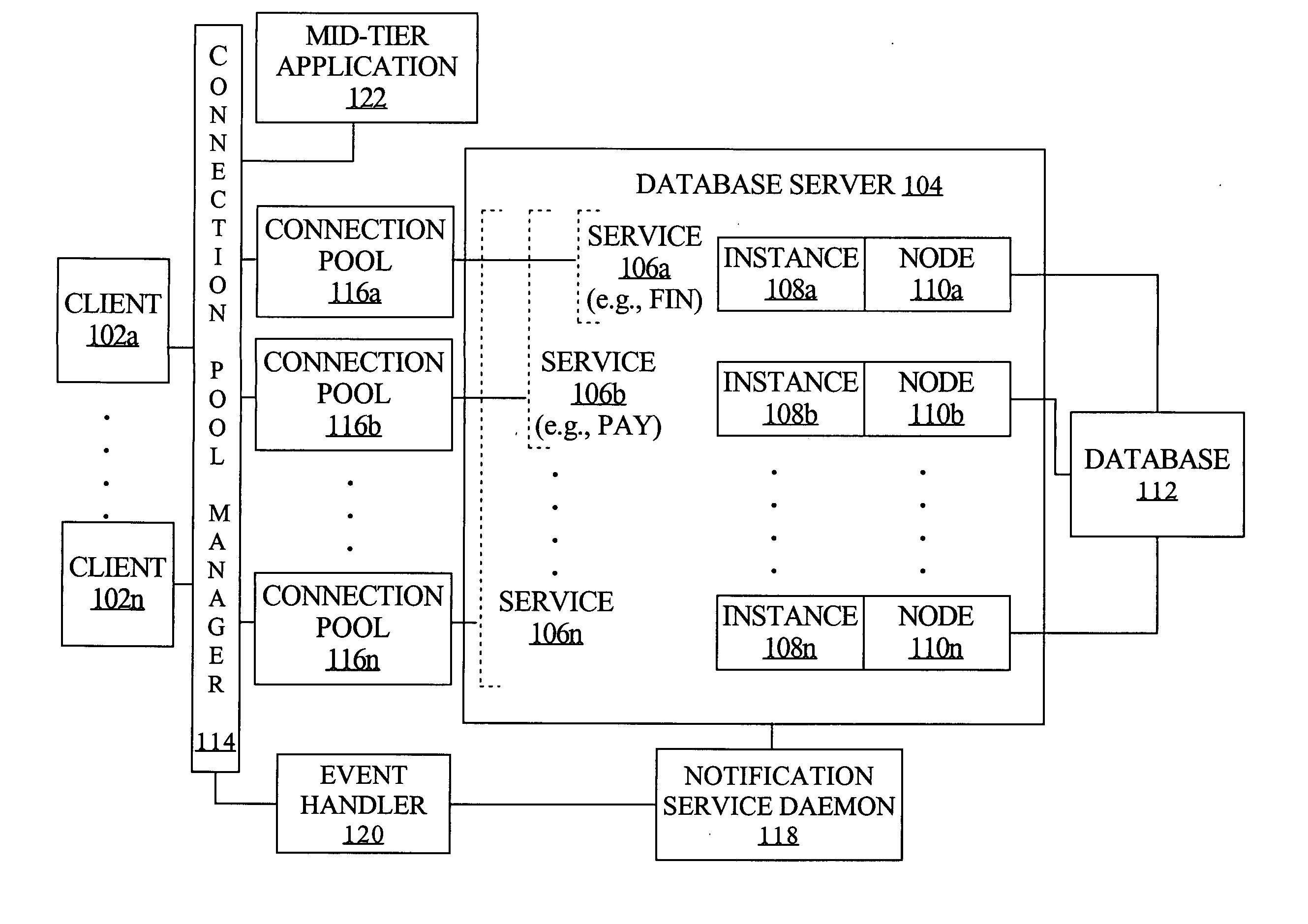

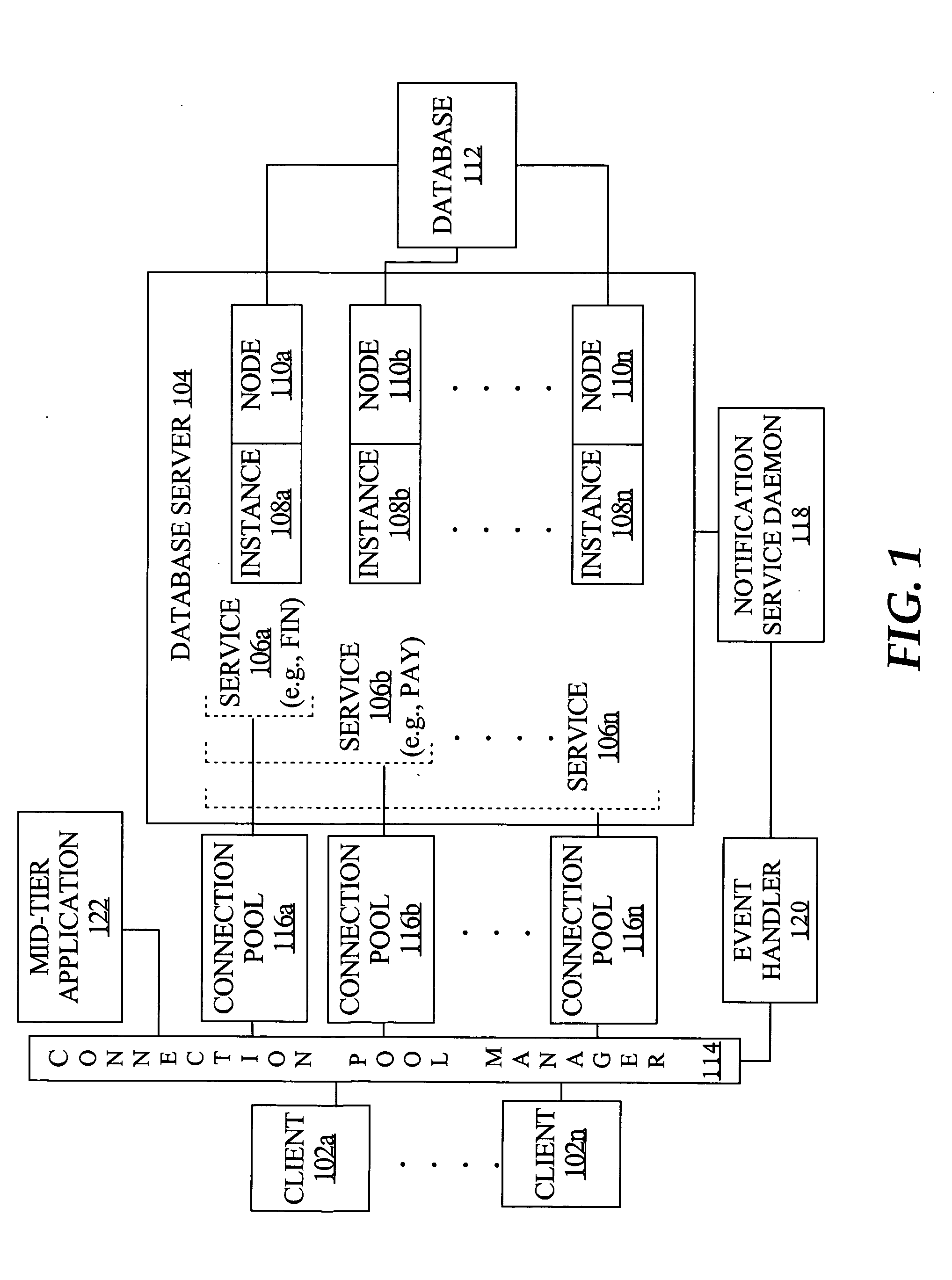

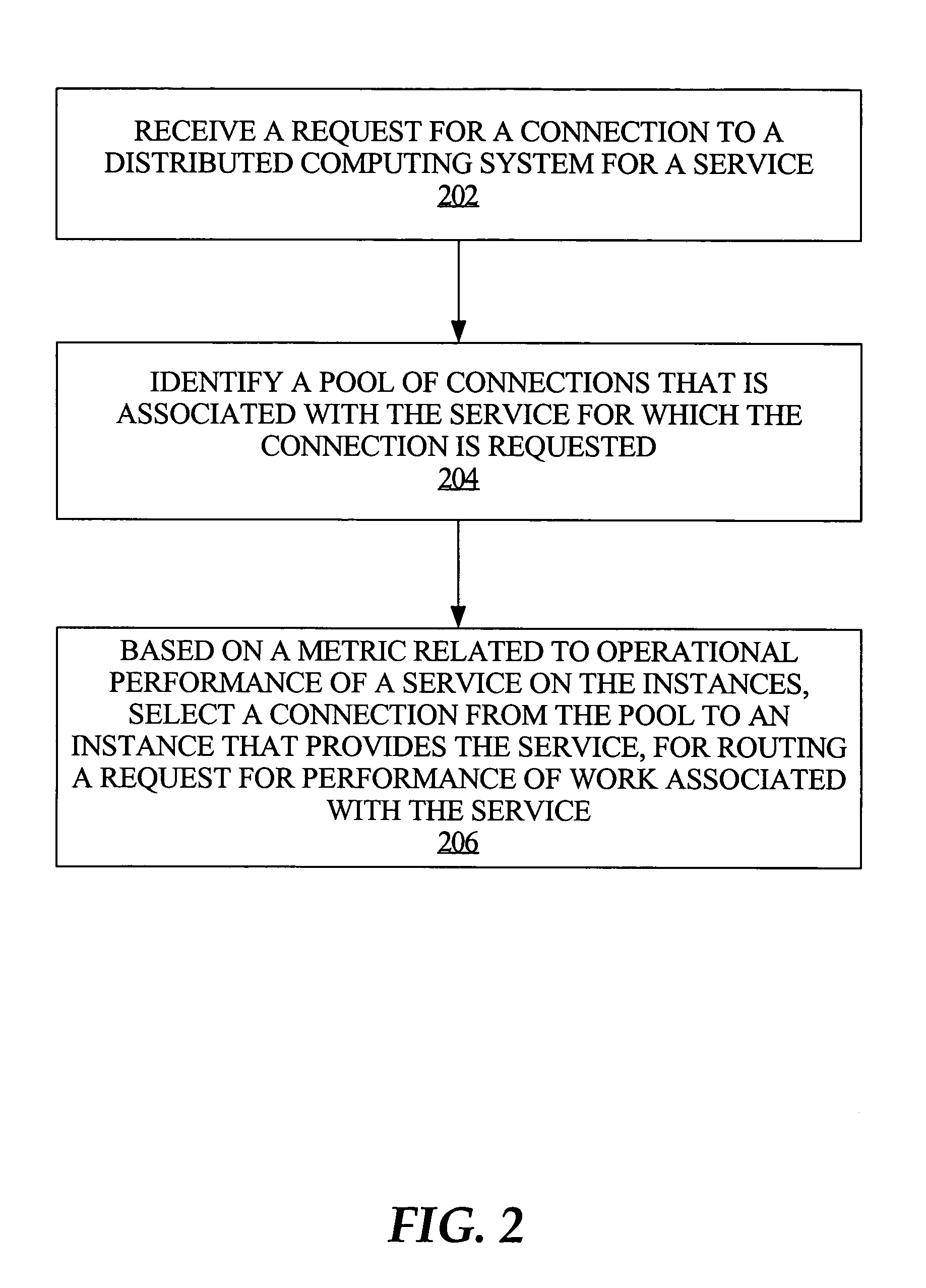

Connection pool use of runtime load balancing service performance advisories

Runtime connection load balancing of work across connections to a clustered computing system involves the routing of requests for a service, based on the current operational performance of each of the instances that offer the service. A connection is selected from an identified connection pool, to connect to an instance that provides the service for routing a work request. The operational performance of the instances may be represented by performance information that characterizes the response time and / or the throughput of the service that is provided by a particular instance on a respective node of the system, and is relative to other instances that offer the same service.

Owner:ORACLE INT CORP
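A minimal Python sketch of routing by relative response time, assuming the advisory reduces to weights proportional to 1 / response time. That weighting rule, the `pools` structure (instance name to a list of idle connection handles), and the function names are hypothetical; this is not Oracle's advisory format or API.

```python
import random

def advisory_weights(response_ms):
    """Turn per-instance mean response times into relative routing weights.

    Assumption: weight is proportional to 1 / response time, normalized so
    the weights sum to 1. A real advisory could also fold in throughput.
    """
    inverse = {name: 1.0 / ms for name, ms in response_ms.items()}
    total = sum(inverse.values())
    return {name: value / total for name, value in inverse.items()}

def pick_connection(pools, weights, rng=random):
    """Choose an instance at random in proportion to its weight, then take
    an idle connection from that instance's pool."""
    names = list(weights)
    chosen = rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
    return chosen, pools[chosen].pop()
```

An instance answering in 10 ms against a peer at 30 ms would receive three quarters of new work under this rule, so faster instances attract proportionally more requests while slower ones still get some traffic.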

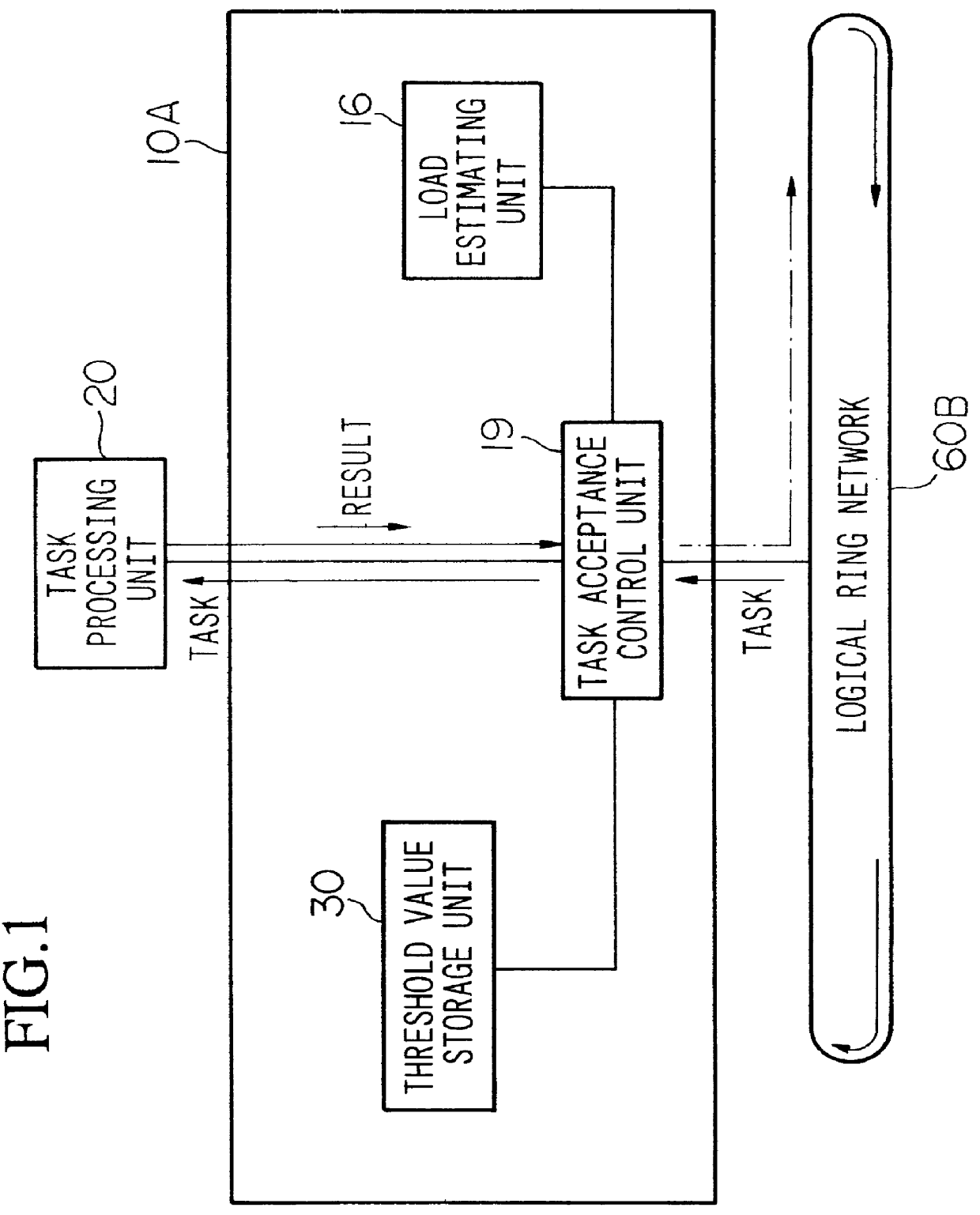

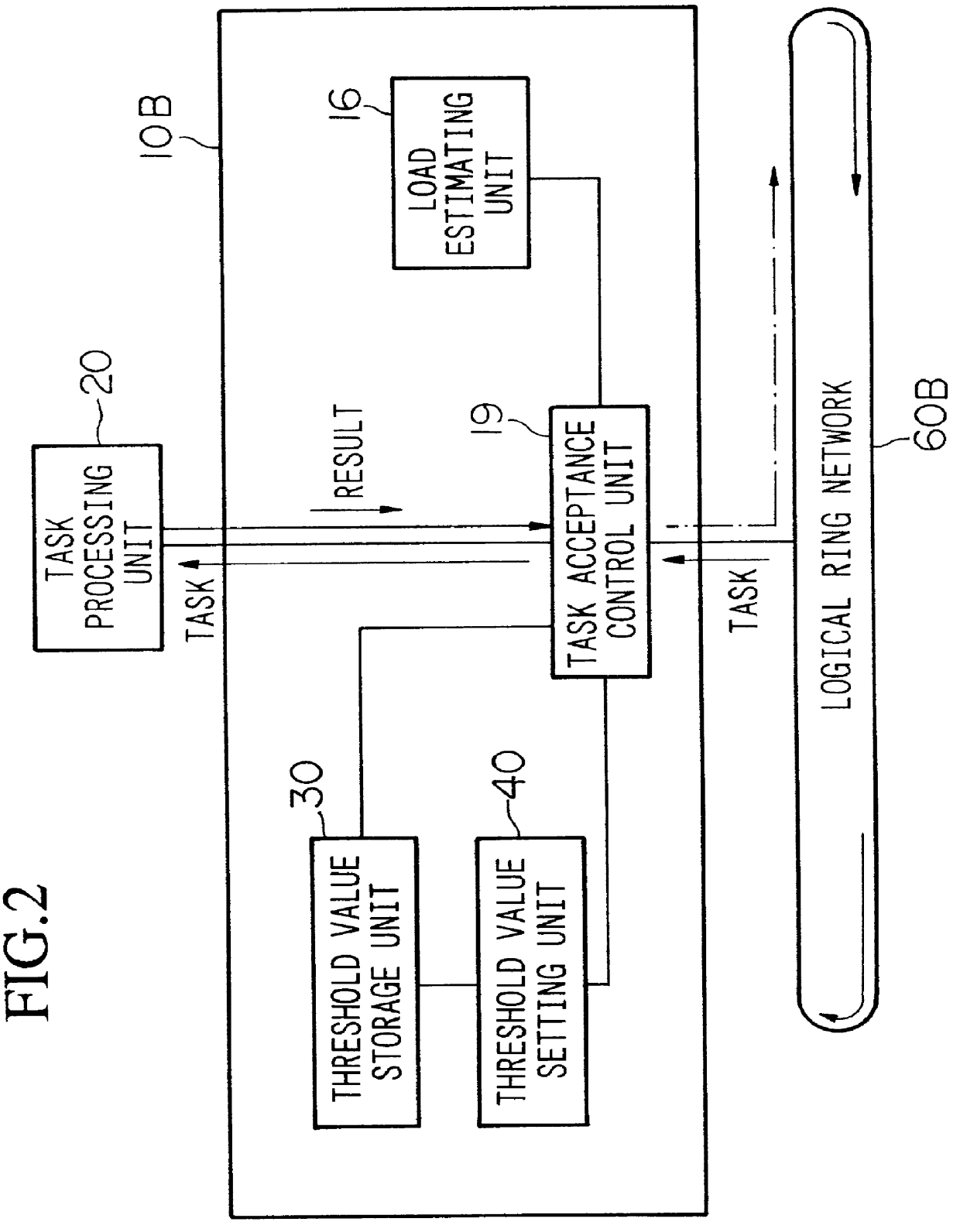

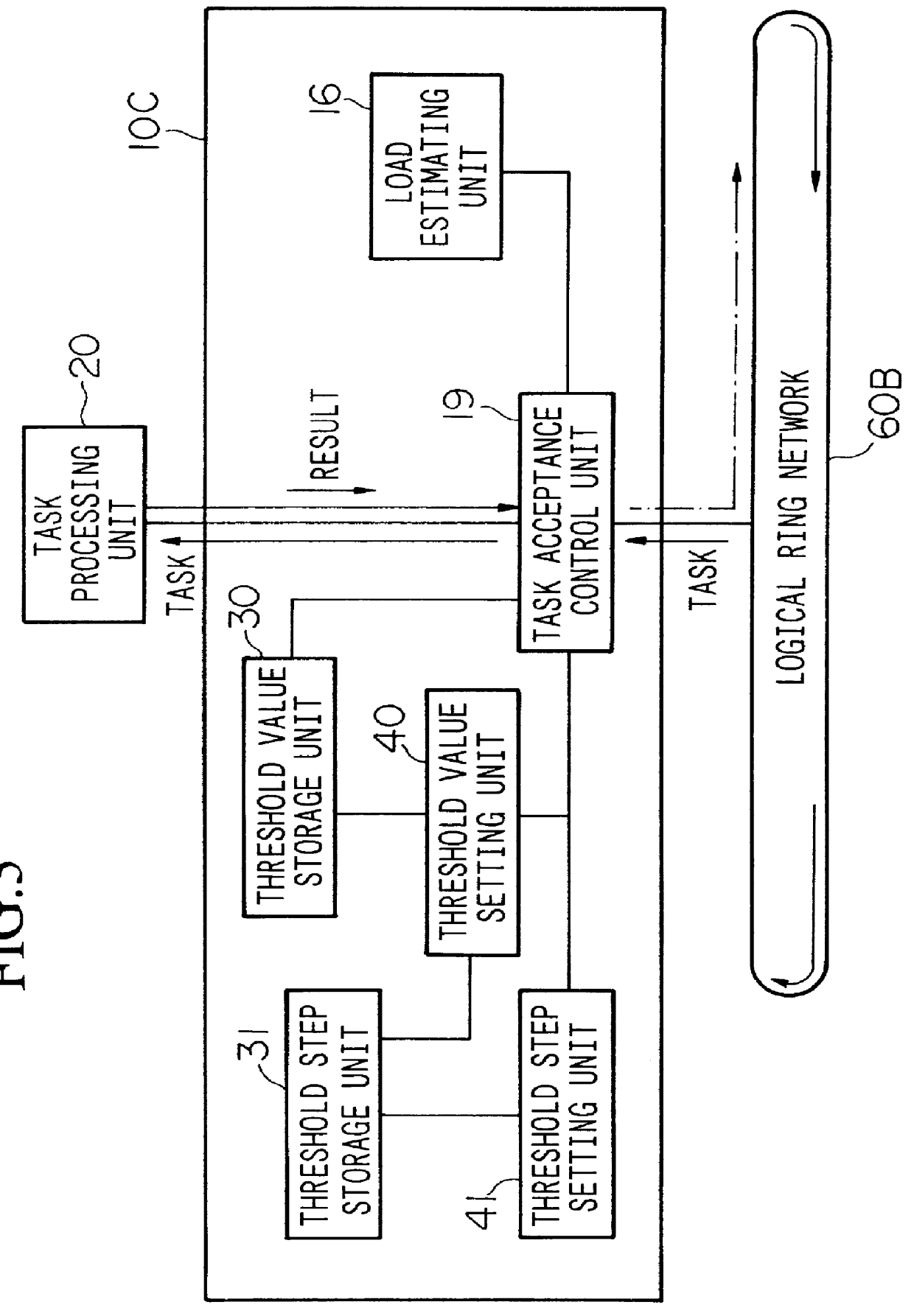

Non-uniform system load balance method and apparatus for updating threshold of tasks according to estimated load fluctuation

Inactive | US6026425A | Minimize response time, Guaranteed effective use, Resource allocation, Multiple digital computer combinations, Uniform system, Ring network

A load balancing method and apparatus are provided that balance load across the entire system with little load-balancing overhead and shorten the mean response time of the whole system. The method comprises the steps of (i) estimating the load of the present node from the number of tasks being processed or waiting to be processed, giving an estimated load value for the node; (ii) accepting a task circulating on a logical ring network and, each time a task is accepted, comparing the estimated load value with a threshold, set on the number of tasks in the node, that limits the node's load; and (iii) judging, from the result of the comparison, whether to hand the accepted task over to a task processing unit or to send it back onto the logical ring network without handing it over. The threshold may be changed in accordance with the change in the number of tasks passing through the logical ring network during a fixed period, or when a round-trip task is detected.

Owner:NIPPON TELEGRAPH & TELEPHONE CORP
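Steps (i) to (iii) can be sketched as follows. This is a simplified illustration, not NTT's apparatus: the threshold update is reduced to "raise every node's threshold by one when a task completes a round trip unaccepted", a hypothetical stand-in for the rule described in the abstract.

```python
from collections import deque

class RingNode:
    """One node on a logical ring that accepts or forwards circulating tasks."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.local = deque()  # tasks being processed or waiting here

    def estimated_load(self):
        # Step (i): the load estimate is the number of local tasks.
        return len(self.local)

    def offer(self, task):
        """Steps (ii)-(iii): accept if under threshold, else decline (forward)."""
        if self.estimated_load() < self.threshold:
            self.local.append(task)
            return True
        return False

def circulate(nodes, task, start=0):
    """Pass a task around the ring until some node accepts it.

    If it comes back to the start node unaccepted (a round trip), every
    threshold is raised by one before the task circulates again.
    Returns the index of the accepting node.
    """
    i = start
    while True:
        for _ in range(len(nodes)):
            if nodes[i].offer(task):
                return i
            i = (i + 1) % len(nodes)
        for node in nodes:  # round trip detected
            node.threshold += 1
```

With two nodes whose threshold is 1, the first task stays at node 0, the second is forwarded to node 1, and the third completes a round trip, which raises both thresholds to 2 so node 0 can take it.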

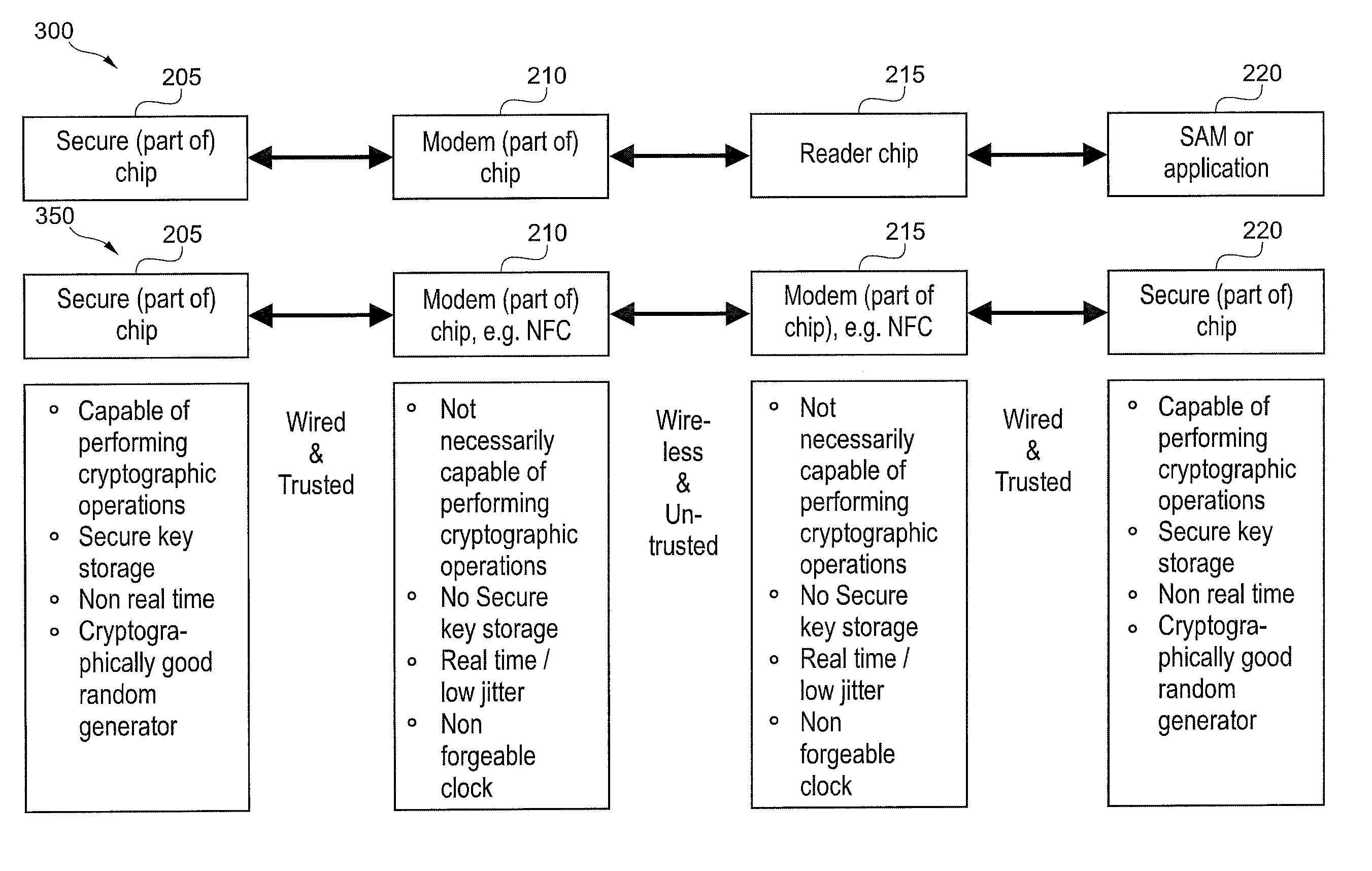

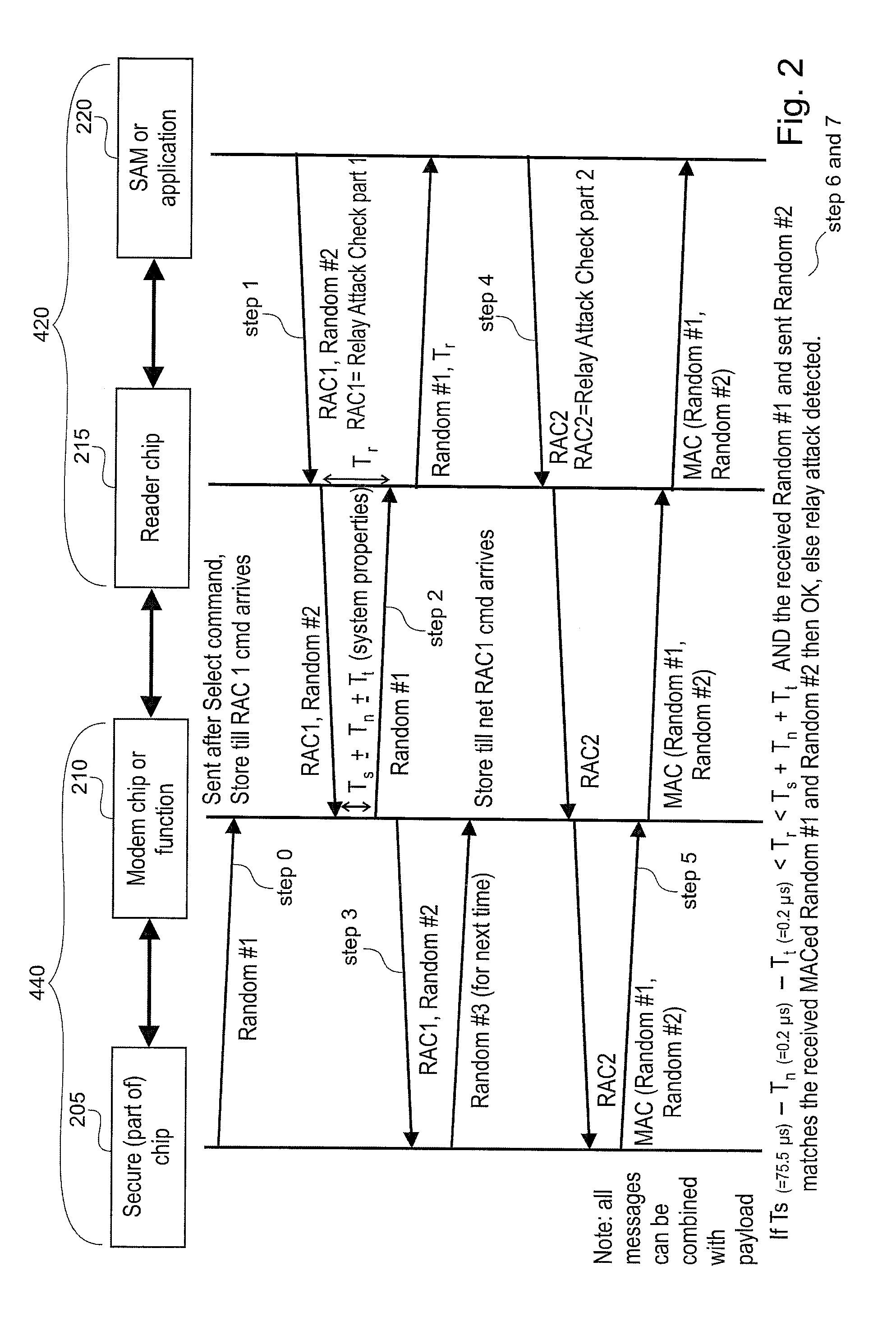

Decoupling of measuring the response time of a transponder and its authentication

Active | US20110078549A1 | Short response time, Improve reliability, Digital data processing details, User identity/authority verification, Engineering, Authentication

Reader (420) for determining the validity of a connection to a transponder (440), designed to measure a response time of a transponder (440) and to authenticate the transponder (440) in two separate steps. Transponder (440) for determining the validity of a connection to a reader (420), wherein the transponder (440) is designed to provide information for response time measurement to said reader (420) and to provide information for authentication to said reader (420) in two separate steps, wherein at least a part of data used for the authentication is included in a communication message transmitted between the reader (420) and the transponder (440) during the measuring of the response time.

Owner:NXP BV

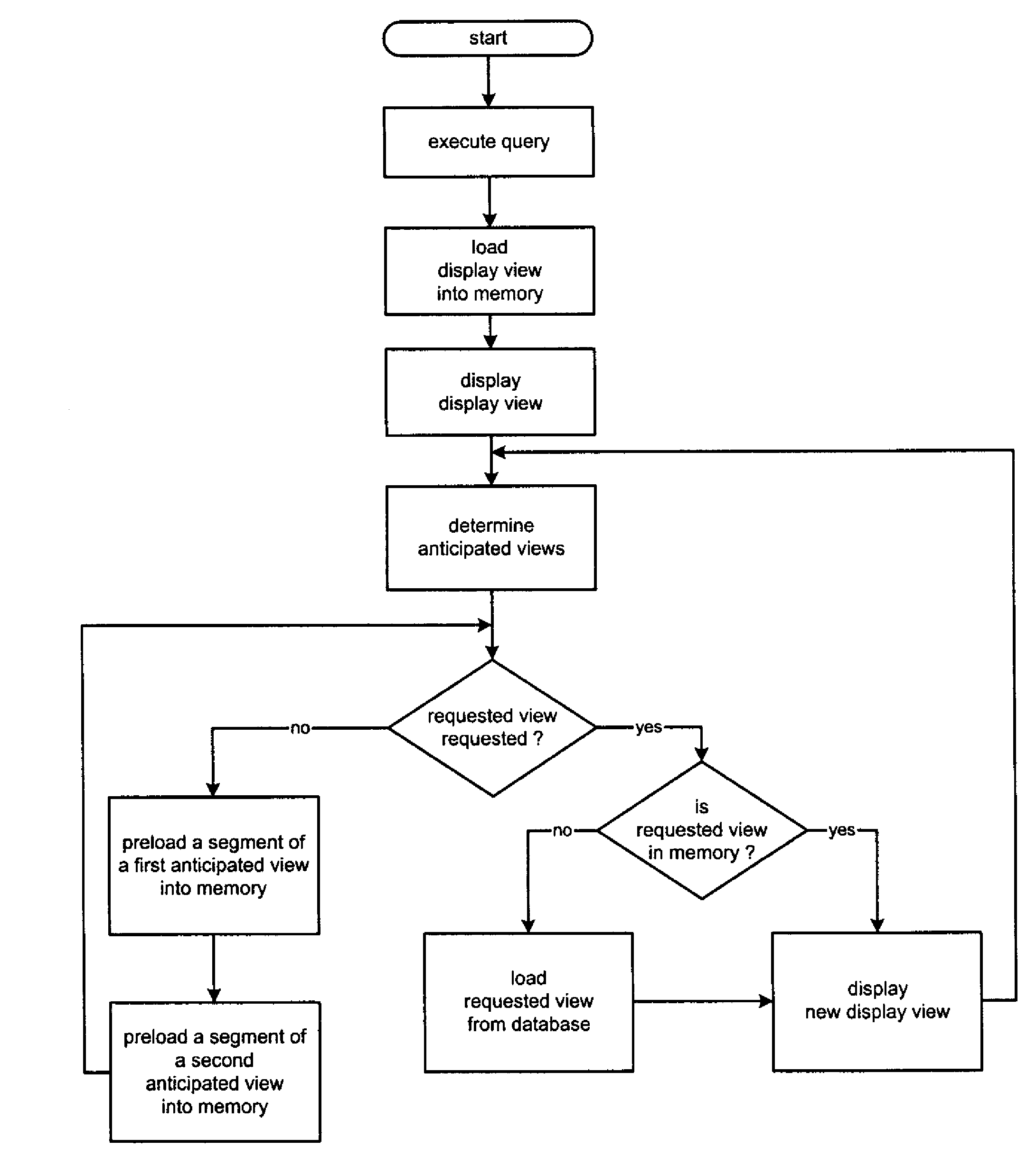

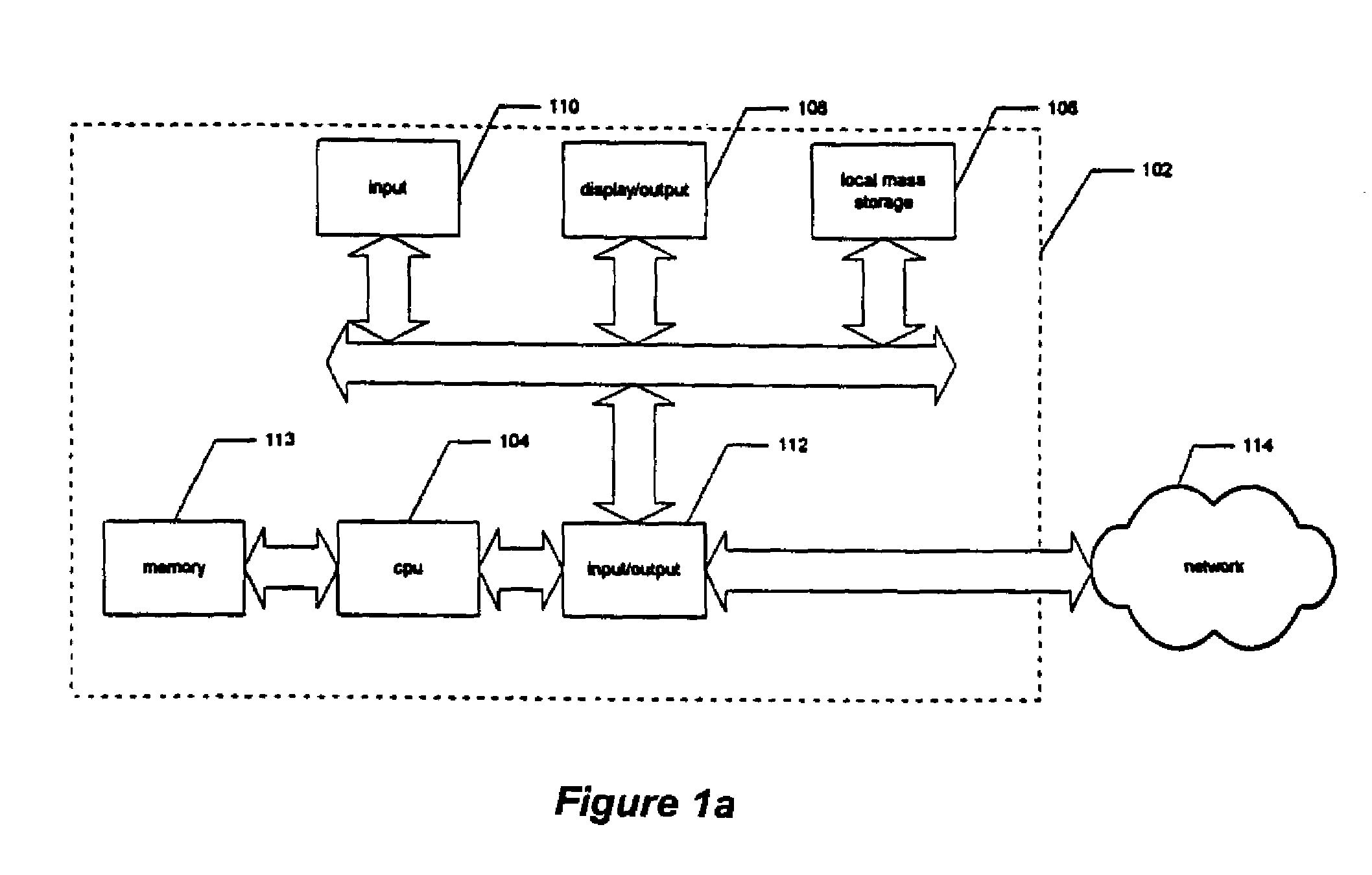

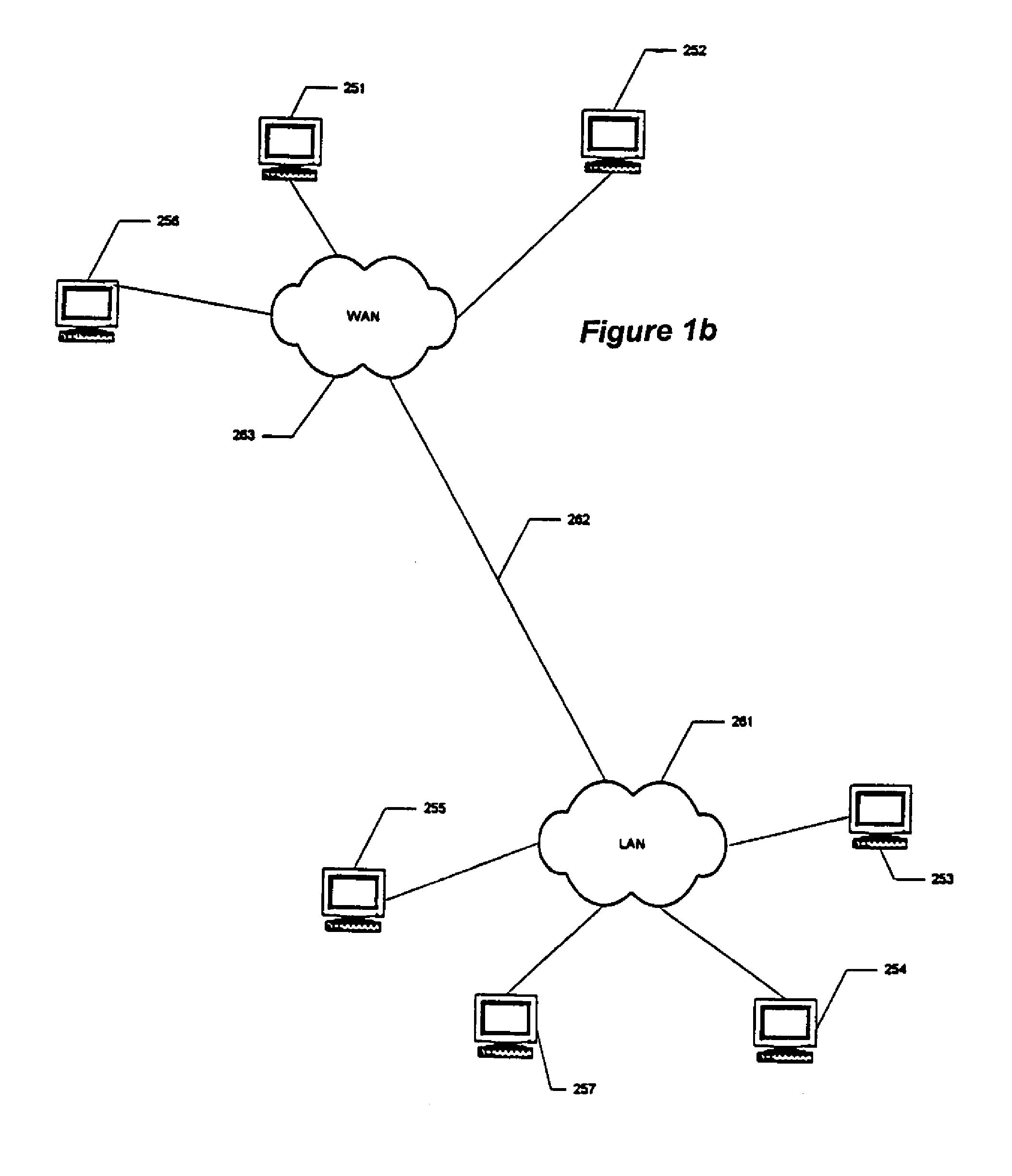

System and method for information retrieval employing a preloading procedure

Inactive | US7467137B1 | Quicker response time, Accurate prediction information, Data processing applications, Digital data information retrieval, Document retrieval, Display device

A document retrieval system having improved response time. During the time the user spends viewing the displayed information, other information that the user is likely to read or study later is preloaded into memory. If the user later requests the preloaded information, it can be written to the display very quickly. As a result, the user's request to view new information can be serviced quickly.

Owner:GOOGLE LLC
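The preloading idea fits in a small Python class. This is a sketch under assumptions: `fetch` and `predict_next` are hypothetical callables supplied by the caller, and the preload runs synchronously here, whereas the described system loads during the time the user spends reading.

```python
class PreloadingViewer:
    """Serve documents, preloading the ones the user is likely to open next."""

    def __init__(self, fetch, predict_next):
        self.fetch = fetch                # slow retrieval (disk, network, ...)
        self.predict_next = predict_next  # hypothetical: doc id -> likely next ids
        self.preloaded = {}
        self.slow_fetches = 0             # on-demand fetches the user waited for

    def view(self, doc_id):
        if doc_id in self.preloaded:
            page = self.preloaded.pop(doc_id)  # fast path: already in memory
        else:
            page = self.fetch(doc_id)
            self.slow_fetches += 1
        # While the user reads `page`, preload what they will probably read next.
        for nxt in self.predict_next(doc_id):
            if nxt not in self.preloaded:
                self.preloaded[nxt] = self.fetch(nxt)
        return page
```

With a "next page" predictor, only the first view costs an on-demand fetch; each later page is already in memory when requested.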

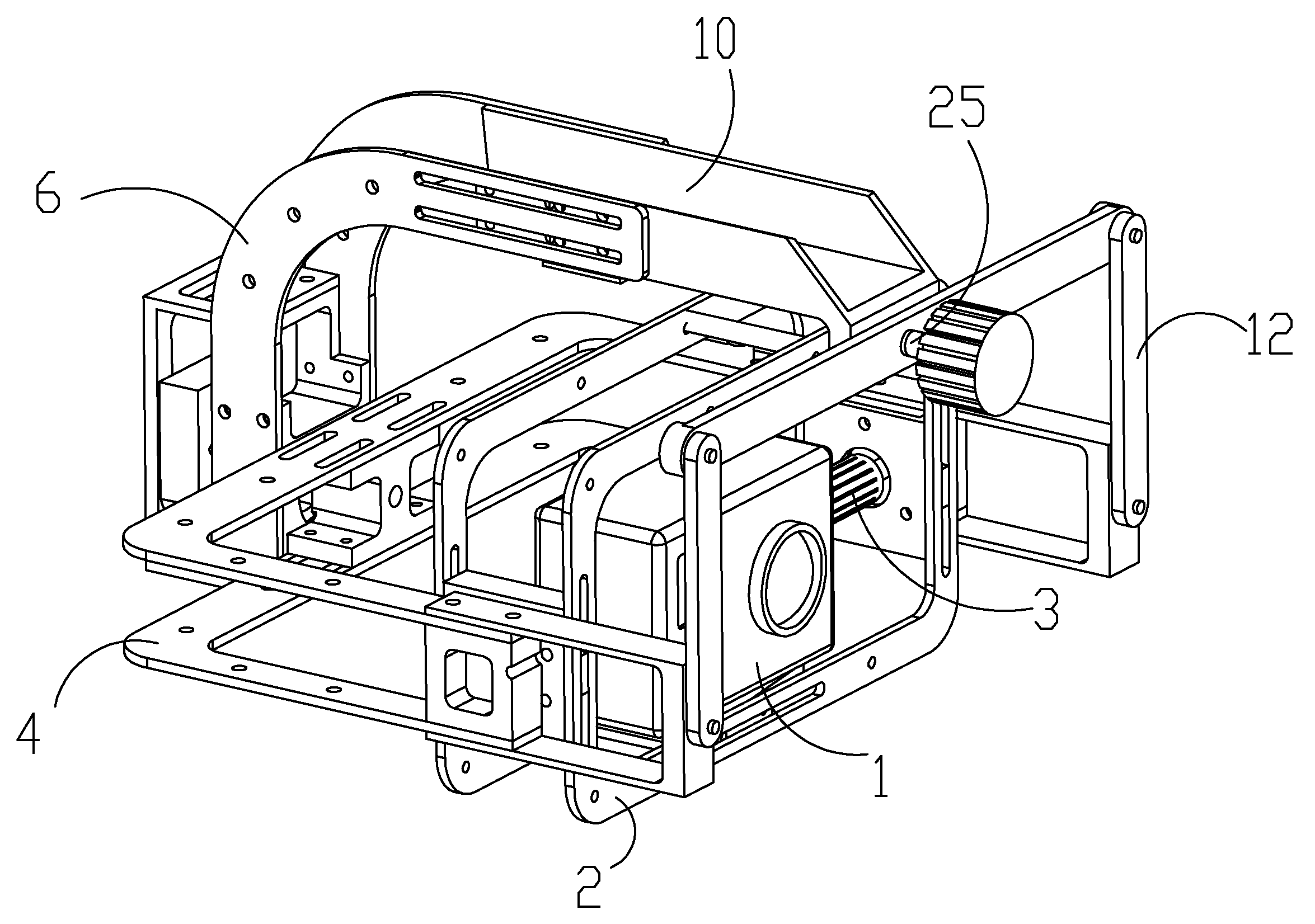

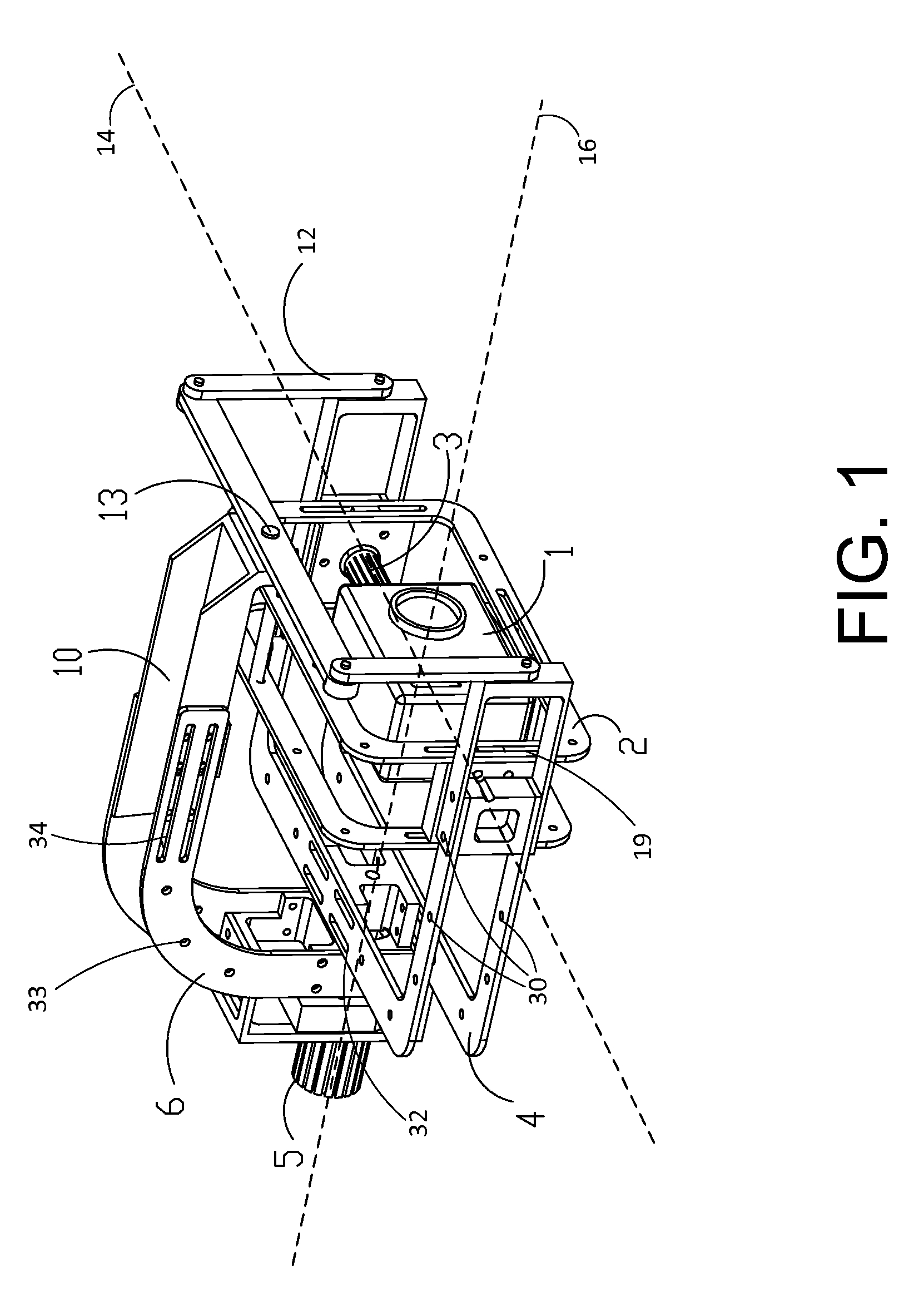

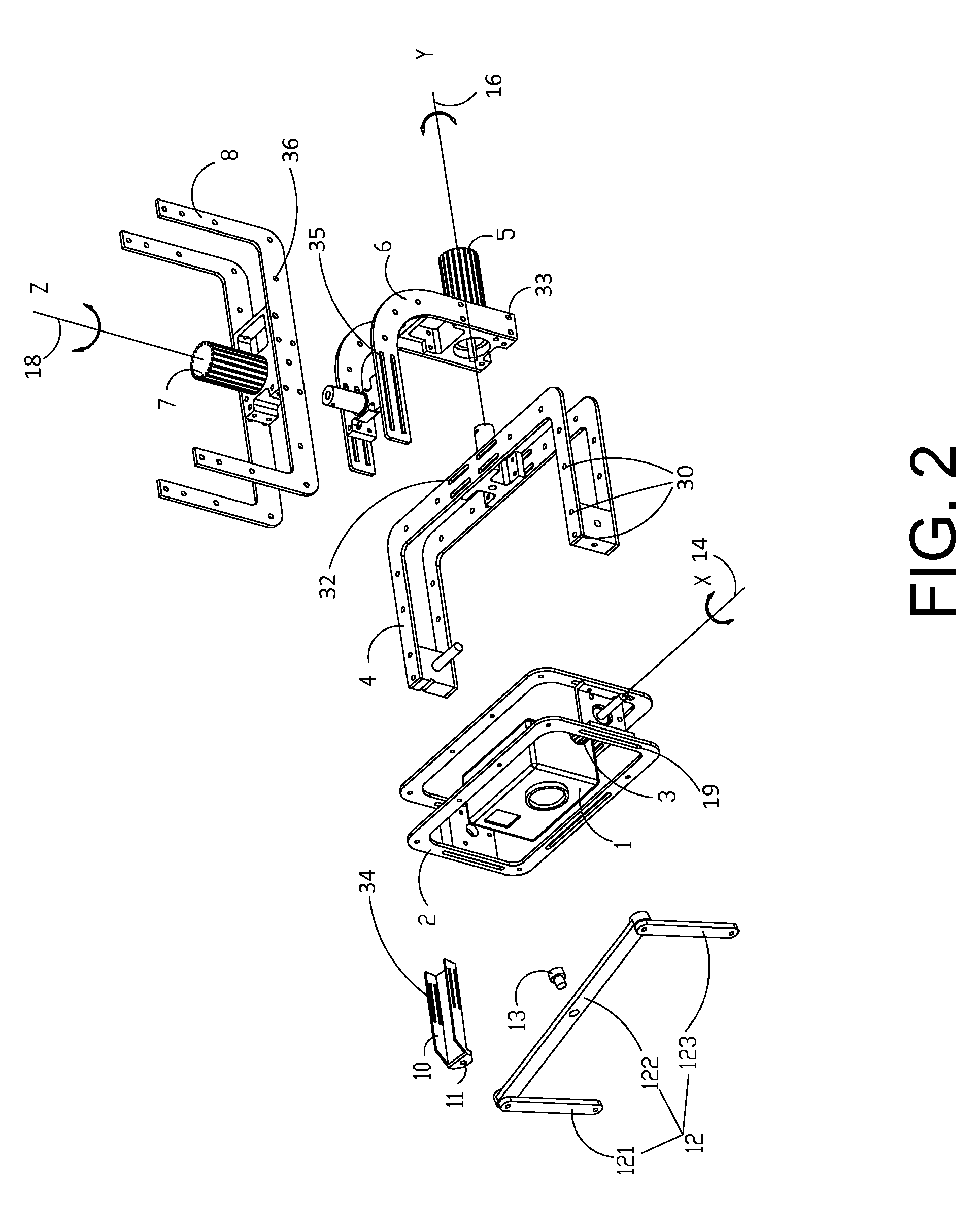

Stabilizing platform

Inactive | US8938160B2 | Rapid response to adjustment, Quality improvement, Aircraft components, Television system details, Medicine, Simulation

Owner:SZ DJI OSMO TECH CO LTD

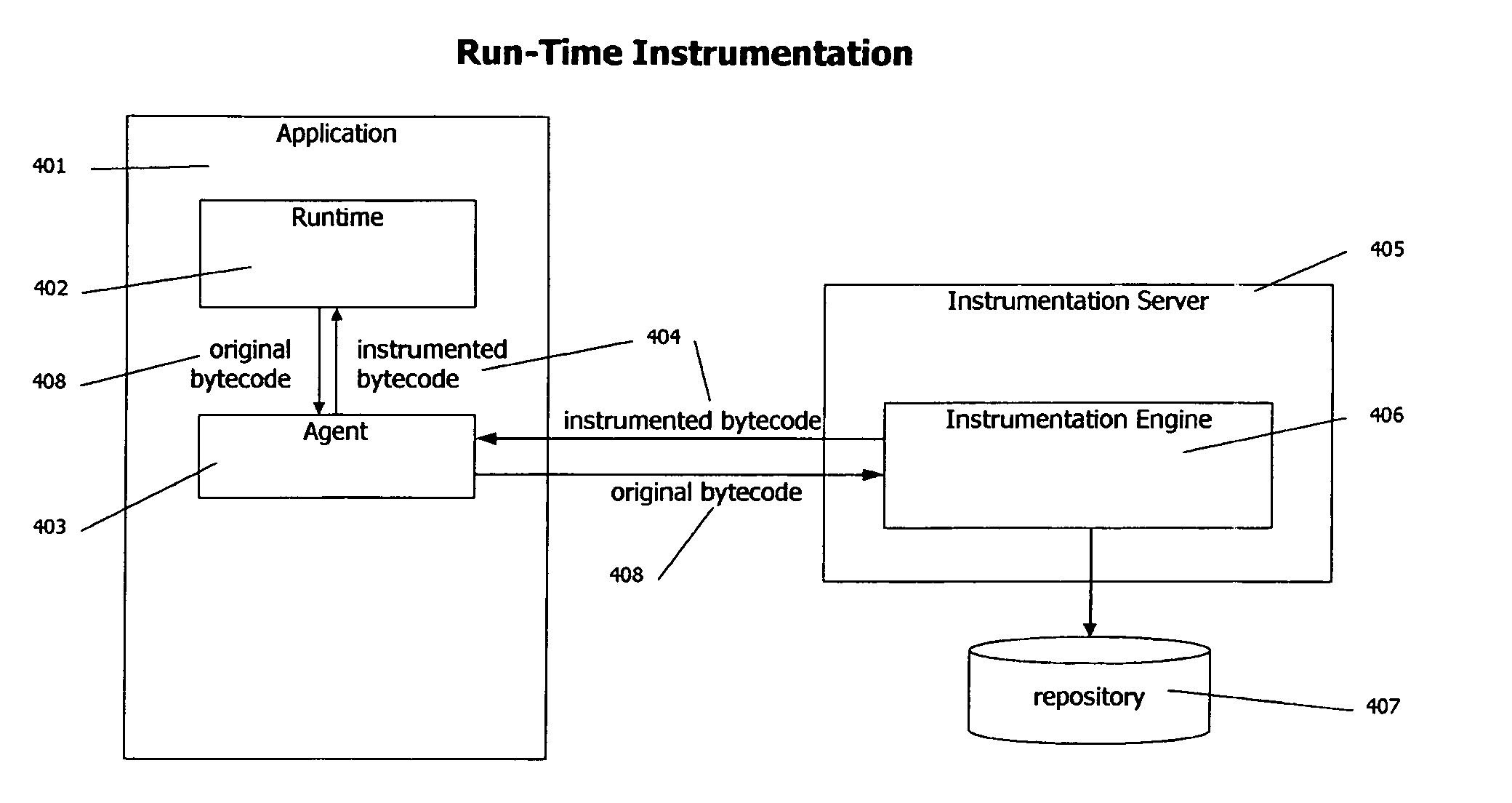

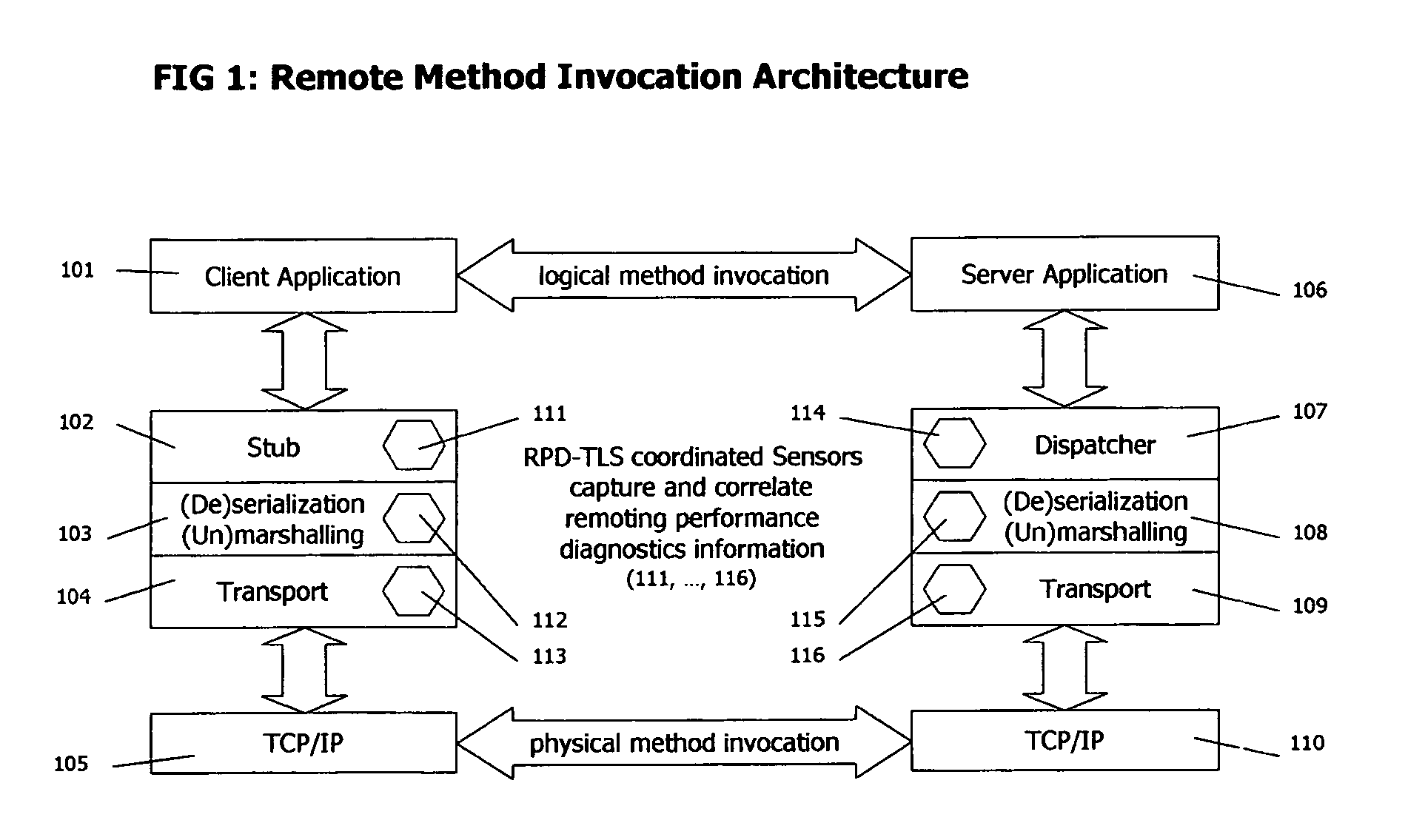

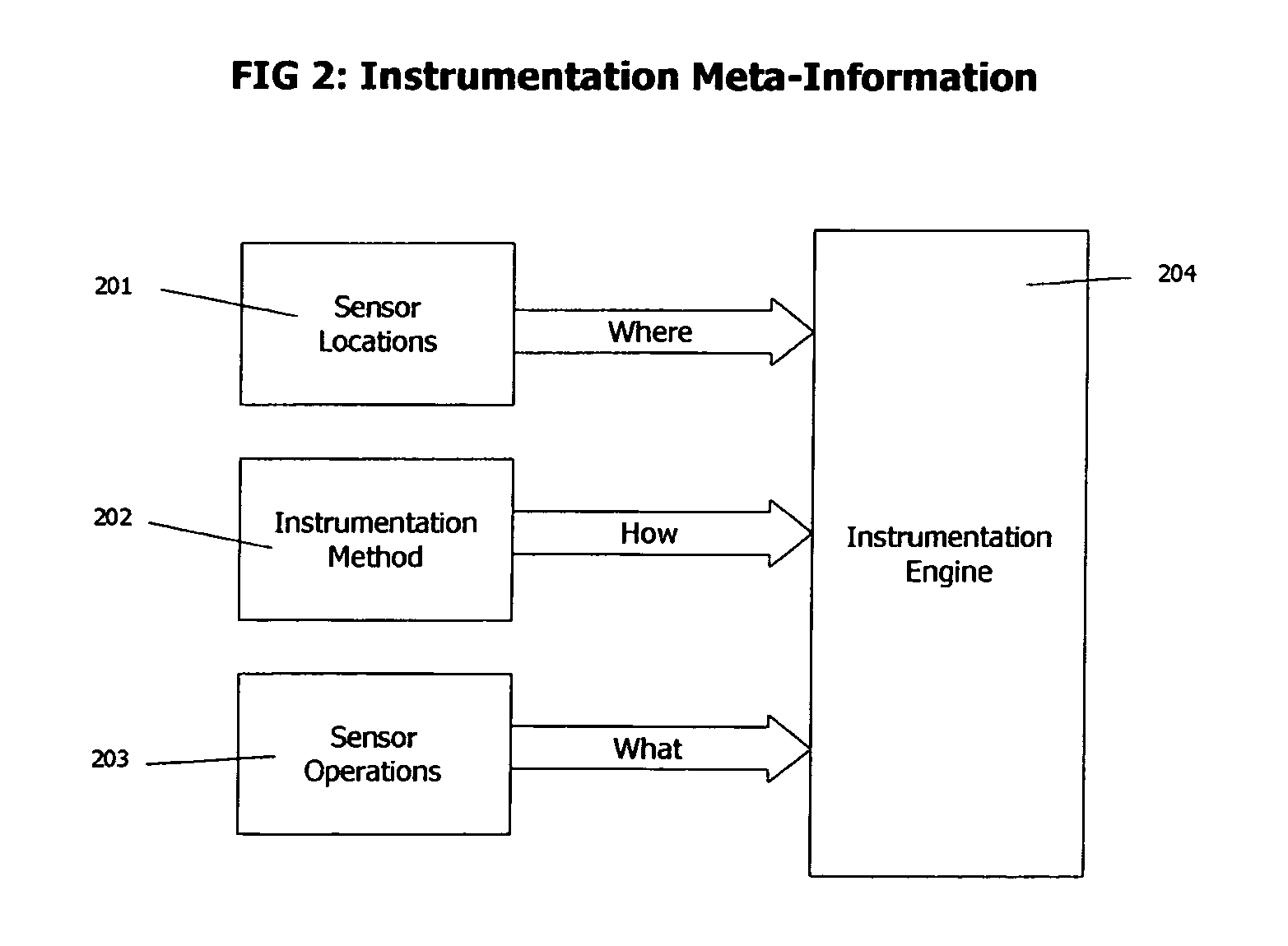

Method and system for automated analysis of the performance of remote method invocations in multi-tier applications using bytecode instrumentation

Active | US20070169055A1 | Minimal disruption, Error detection/correction, Specific program execution arrangements, Serialization, Client-side

Provided is a method and system for monitoring and diagnosing the performance of remote method invocations using bytecode instrumentation in distributed multi-tier applications. The provided method and system involves automated instrumentation of client application bytecode and server application bytecode with sensors for measuring performance of remote method invocations and operations performed during remote method invocations. Performance information is captured for each remote method invocation separately, allowing performance diagnosis of multithreaded execution of remote method invocations, so that throughput and response time information are accurate even when other threads perform remote method invocations concurrently. This makes the present invention suitable for performance diagnosis of remote method invocations on systems under load, such as found in production and load-testing environments. The captured performance metrics include throughput and response time information of remote method invocation, object serialization, and transport. The performance metrics are captured per remote method invocation. The above performance metrics enable developers and administrators to optimize their programming code for performance. Performance metrics are preferably further sent to a processing unit for storage, analysis, and correlation.

Owner:DYNATRACE

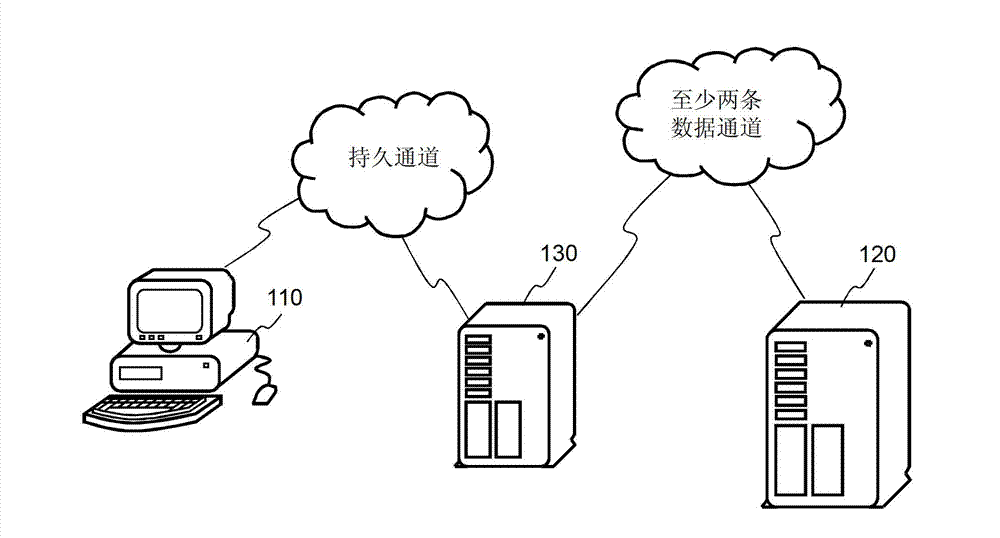

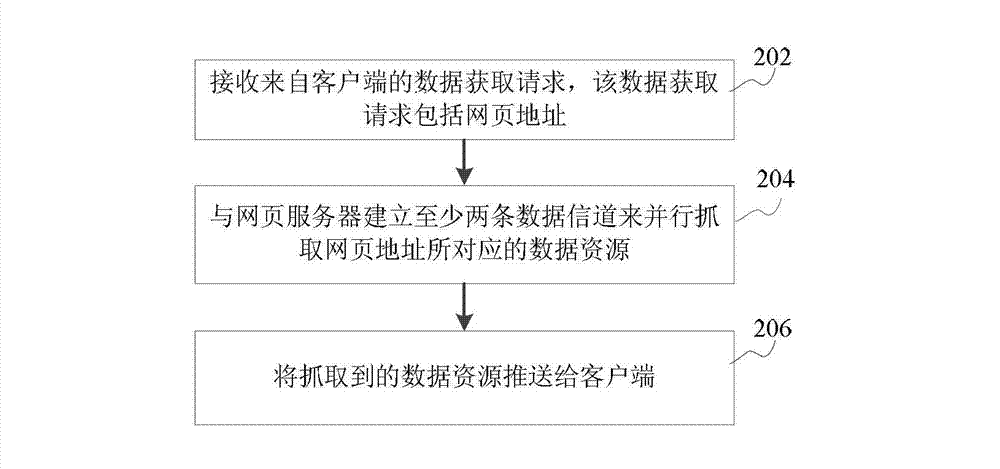

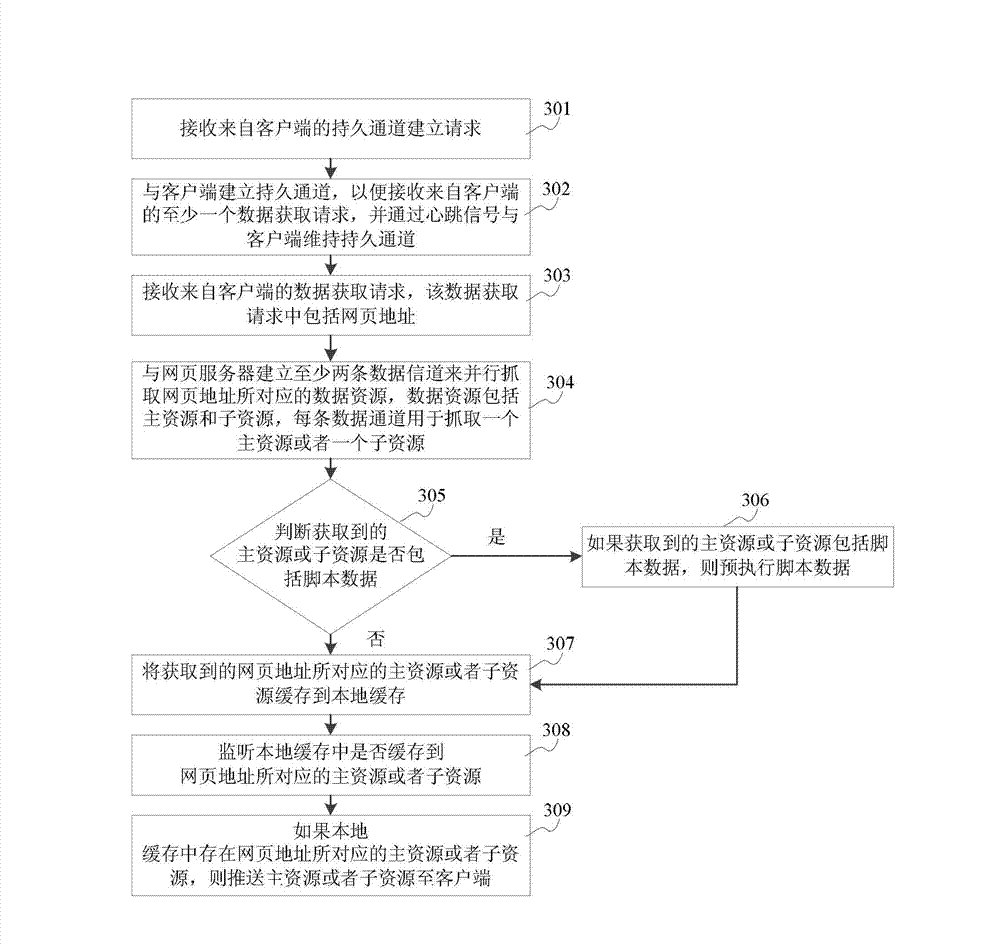

Data acquisition method, system and equipment

The invention discloses a data acquisition method, system, and device in the field of computer networks. The method comprises: receiving from a client a data acquisition request that contains a webpage address; establishing at least two data channels with the web server and fetching the data resources corresponding to the webpage address in parallel; and sending the fetched data resources to the client. Because a proxy server establishes multiple data channels, fetches the webpage's data resources, and actively pushes them to the client, the problems of wasted traffic and relatively long response times when the client acquires network data are solved, and the client can quickly obtain all of a webpage's data resources for display with a single data acquisition request.

Owner:HUAWEI TECH CO LTD

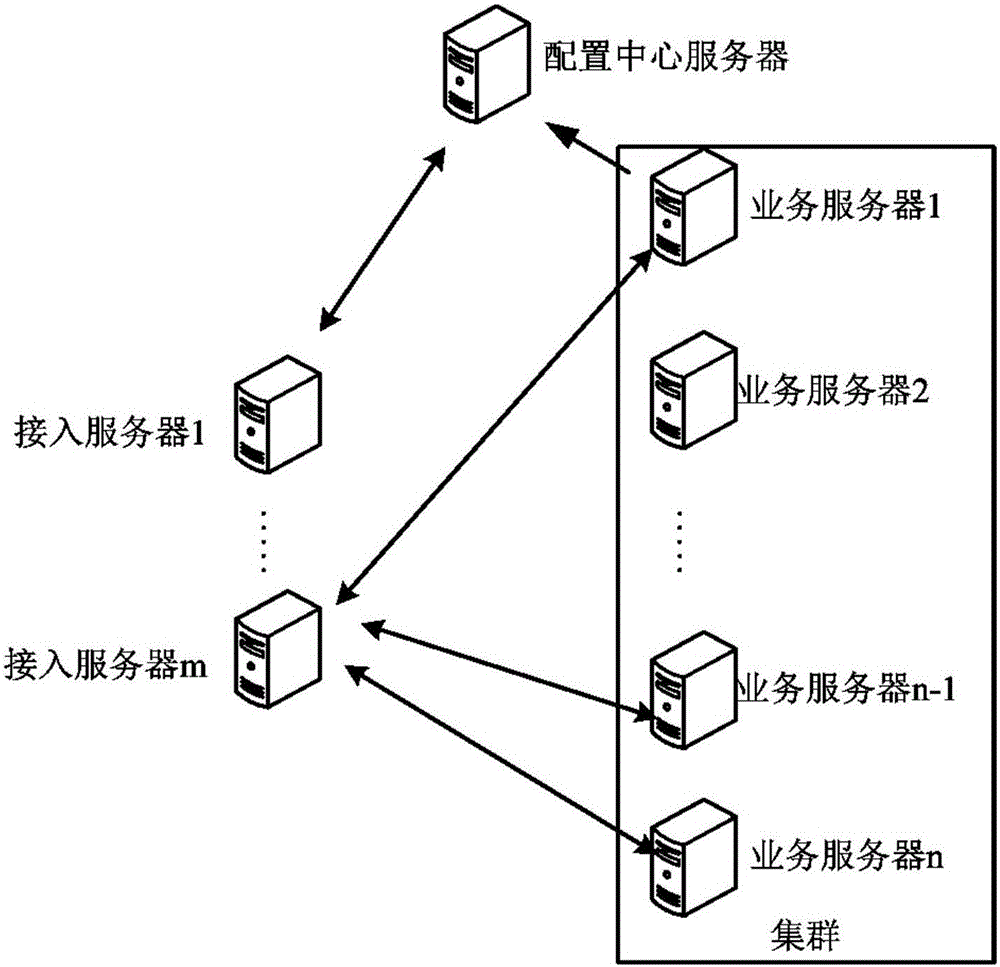

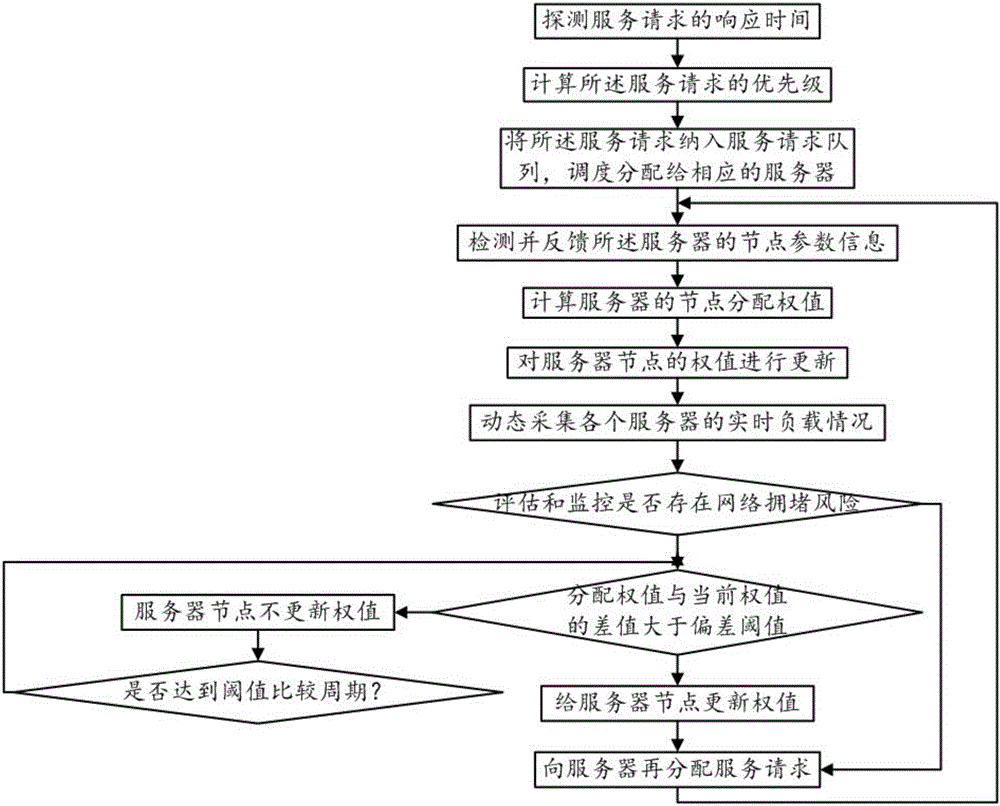

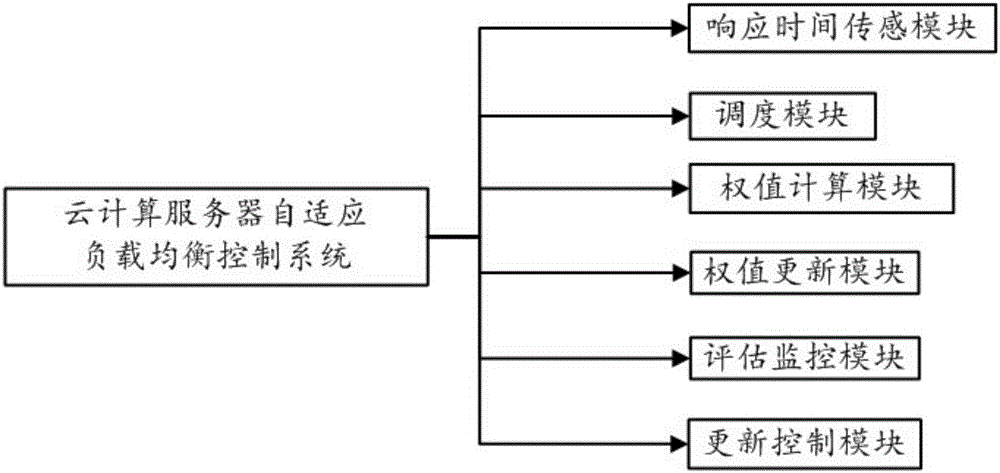

Method and system for controlling adaptive load-balancing of cloud computing server

Inactive | CN105007312A | Load balancing, Efficiently allocate system resources, Data switching networks, Application server, Control system

The invention relates to the computer field and discloses a method for controlling adaptive load balancing of cloud computing servers. The method comprises: detecting the response time of a service request, calculating the request's priority, adding it to a service request queue, and dispatching it to a corresponding server; detecting and feeding back node parameter information of each server, calculating the server's node distribution weight, and updating that weight; and dynamically collecting the real-time load of each server, re-analyzing and recalculating the performance of each server in the cluster, evaluating and monitoring whether there is a risk of network congestion, and redistributing the service requests in the request queue to servers according to the result of that evaluation. The invention further discloses a system for controlling adaptive load balancing of cloud computing servers. The method and system can balance the load of each application server to the greatest extent and thus allocate system resources effectively.

Owner:叶秀兰

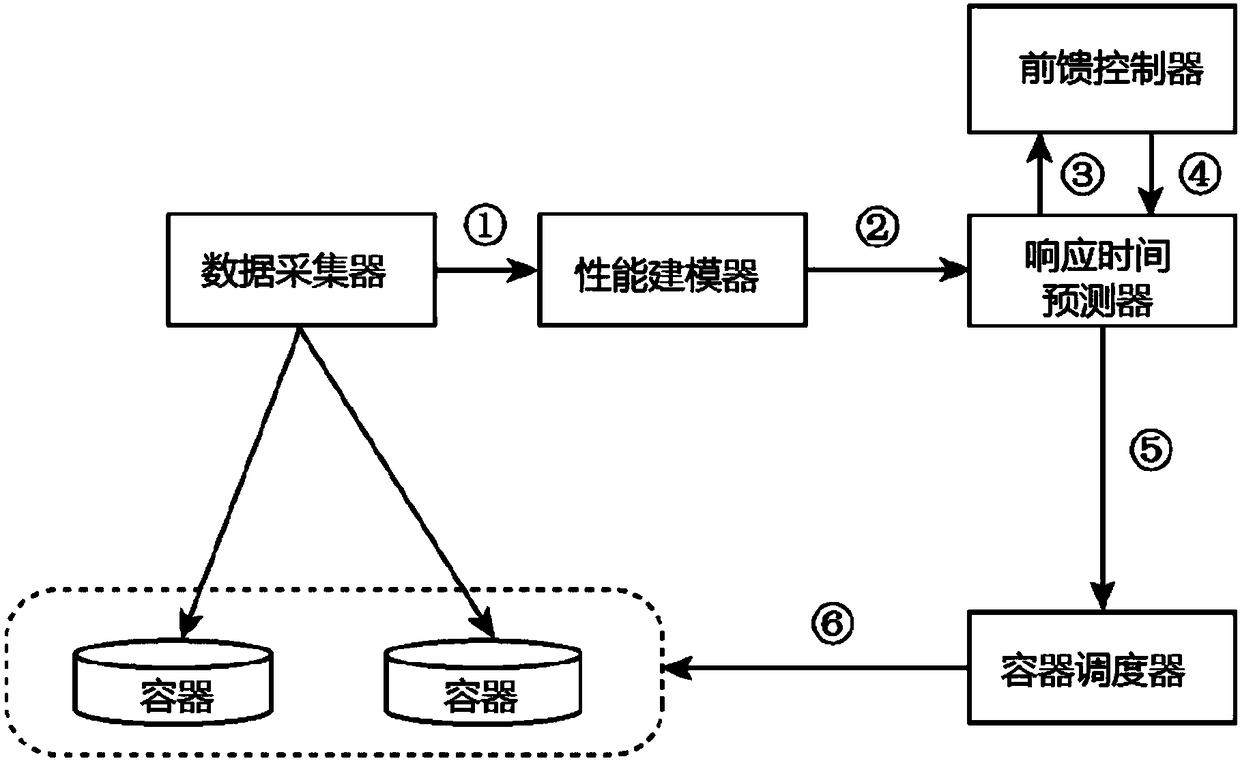

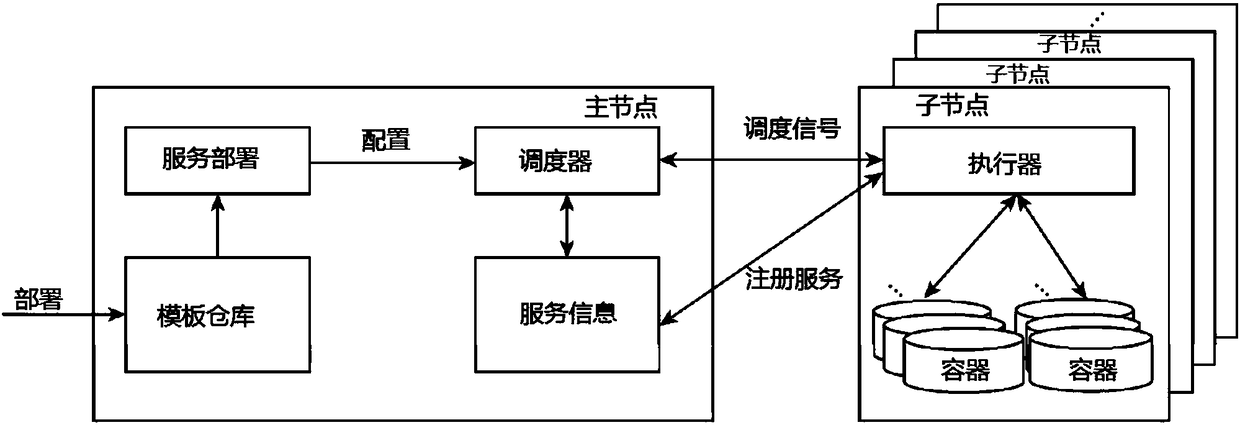

Docker self-adaptive scheduling system with task sensing function

Inactive | CN108228347A | Improve effectiveness, Improve forecast accuracy, Program initiation/switching, Resource allocation, Quality of service, Data acquisition

Disclosed is a Docker self-adaptive scheduling system with task awareness. In the scheduling method, a data collector collects the load of each container and the CPU and memory usage rates; based on queueing theory and using the resource usage rate as a benchmark, a performance modeler builds an application performance model that characterizes the relationship between load and response time; a response time predictor estimates the parameters of the performance model at run time with a Kalman filter, the estimation being performed under a convergence condition on the expected error between predicted and measured response times; a feed-forward controller analyzes the residual mean and variance through a fuzzy controller to obtain a feed-forward adjustment for the Kalman filter's control parameters; and a container scheduler judges whether the predicted response time violates the application's quality of service and, if so, schedules according to a scheduling algorithm by scaling containers out or in or migrating them.

Owner:SHANGHAI DIANJI UNIV
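One way to realize "queueing model plus Kalman filter" is sketched below. The modeling choices are assumptions, not the patented system: the mean service time S is treated as a random walk and observed through the utilization law U = λ·S (which is linear in S, so a scalar Kalman filter applies), and response time is then predicted with the M/M/1 formula R = S / (1 − λS). The fuzzy feed-forward controller is omitted, and the noise parameters `q` and `r` are illustrative.

```python
class ServiceTimeKalman:
    """Scalar Kalman filter for the mean service time S (seconds per request).

    Assumed model: S follows a random walk, and measured CPU utilization
    obeys the utilization law U = lam * S, where lam is the arrival rate.
    """

    def __init__(self, s0=0.01, p0=1.0, q=1e-6, r=1e-3):
        self.s, self.p, self.q, self.r = s0, p0, q, r

    def update(self, lam, utilization):
        self.p += self.q                         # predict: random walk adds process noise
        h = lam                                  # measurement matrix H
        k = self.p * h / (h * self.p * h + self.r)  # Kalman gain
        self.s += k * (utilization - h * self.s)    # correct with the innovation
        self.p *= (1 - k * h)                    # posterior variance
        return self.s

    def predict_response(self, lam):
        """M/M/1 mean response time S / (1 - lam*S); infinite if saturated."""
        rho = lam * self.s
        return float("inf") if rho >= 1 else self.s / (1 - rho)

def needs_scale_out(kf, lam_forecast, slo_s):
    """True if the predicted response time at the forecast load violates the SLO."""
    return kf.predict_response(lam_forecast) > slo_s
```

Fed repeated observations of 40 % utilization at 20 requests/s, the estimate converges to S ≈ 0.02 s; at a forecast load of 40 requests/s the predicted response time (about 0.1 s) would exceed a 50 ms objective and trigger scaling.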

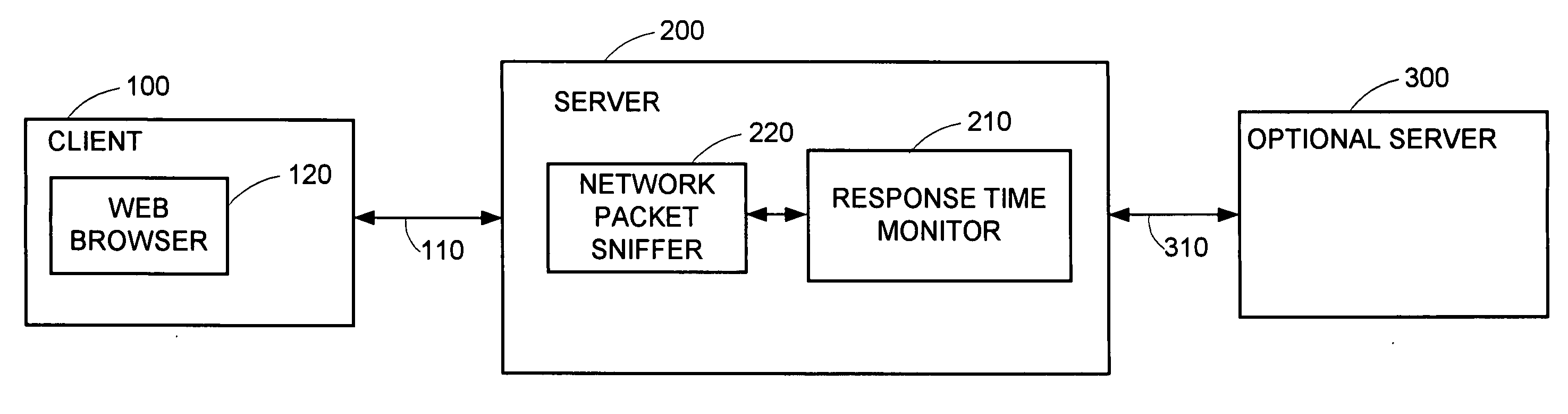

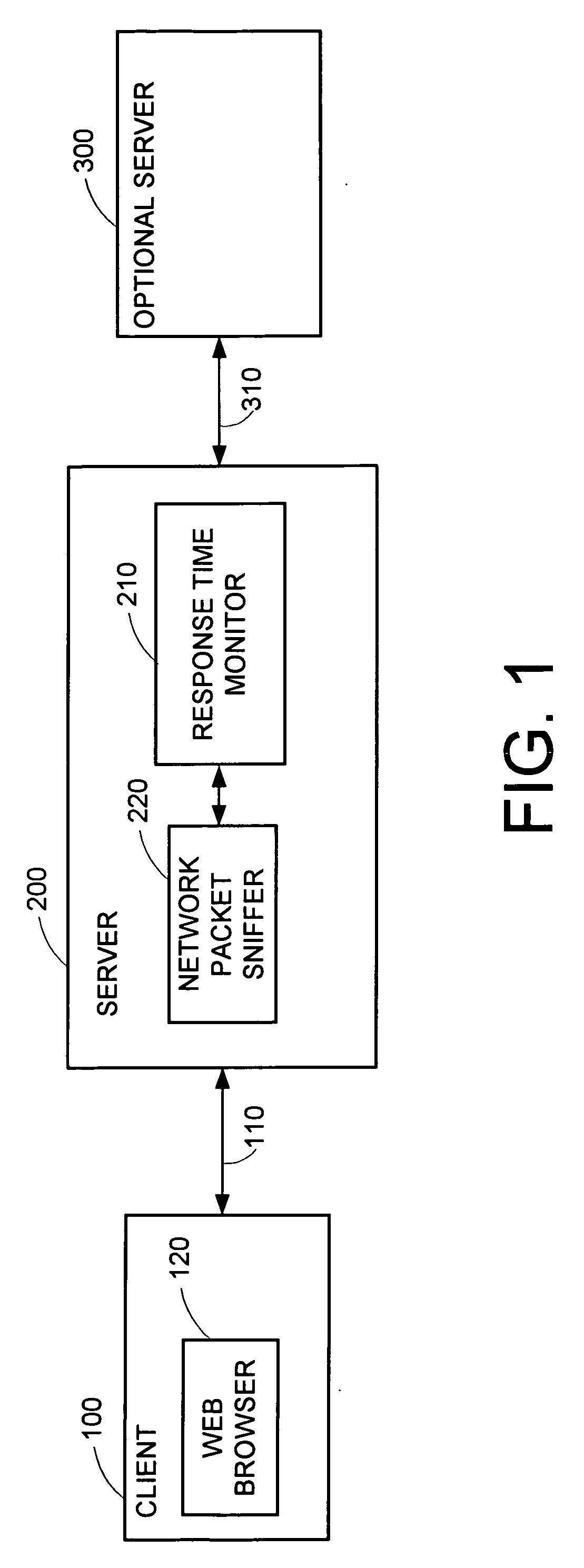

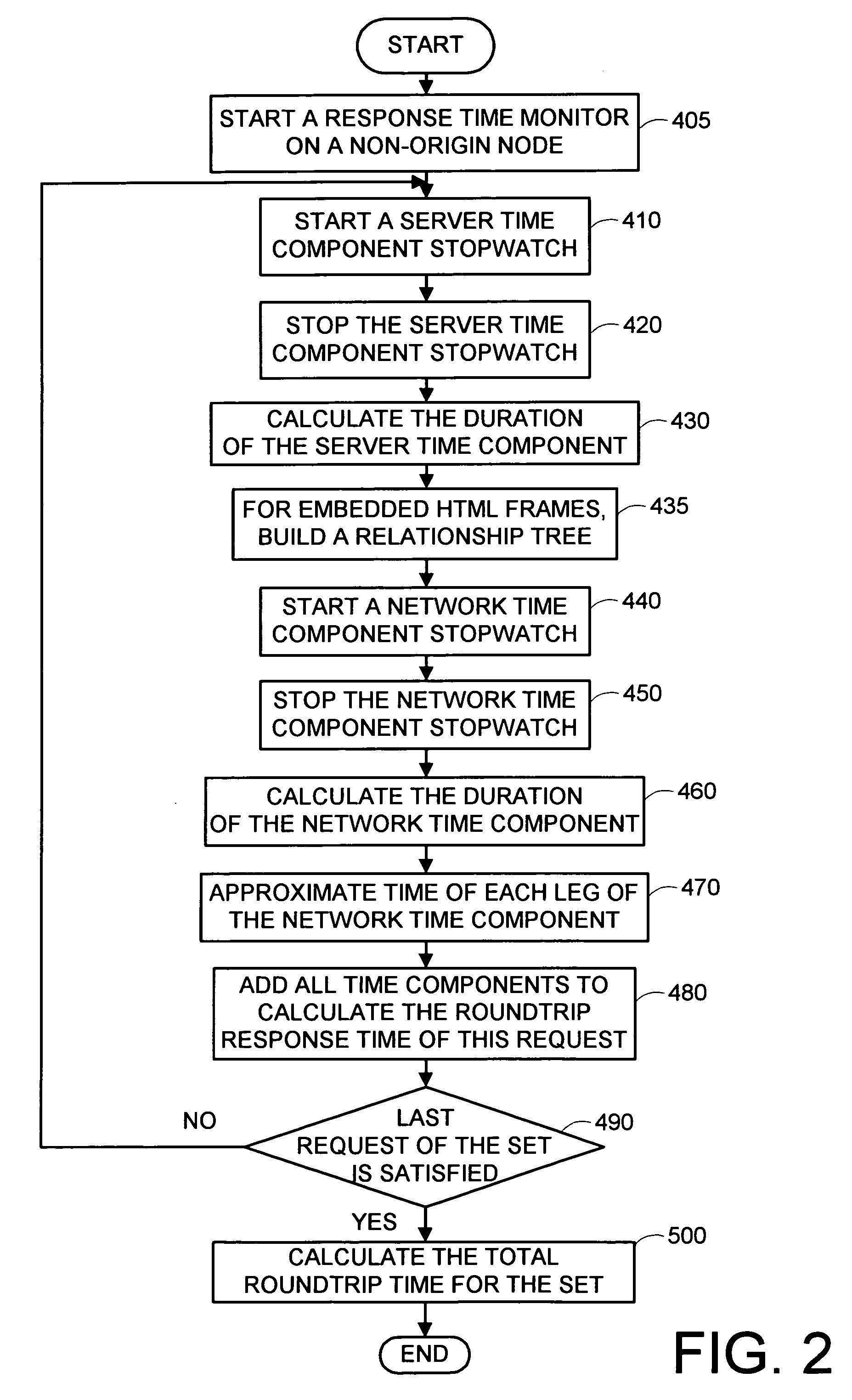

System and method utilizing a single agent on a non-origin node for measuring the roundtrip response time of web pages with embedded HTML frames over a public or private network

A computer-based system and method utilizing a single response time monitor, located on a non-origin node of a public or private network, for measuring the total roundtrip response time of a set of requests resulting from an original request that has embedded HTML frames. The response time monitor builds a relationship tree for the original request and its embedded HTML frames. For each request in the set of requests resulting from the original request, including the requests for the embedded HTML frames, the method uses the response time monitor to detect the start time and end time of each component of the request's roundtrip response time in order to calculate each component's duration. The response time monitor then calculates the total roundtrip response time by adding together the durations of all components of the original request and of all its additional requests that have the same network address and port number.

Owner:IBM CORP
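The final summation step can be sketched in Python. The `Component` record, the tree-as-dictionary representation (request id to child request ids), and the sample timings are assumptions for illustration, not IBM's data structures.

```python
from dataclasses import dataclass

@dataclass
class Component:
    """One timed piece (e.g. DNS lookup, connect, transfer) of one request."""
    request_id: str
    address: str
    port: int
    start: float  # seconds
    end: float

def request_tree_ids(tree, root):
    """Ids of the original request plus every embedded-frame request below it."""
    ids, stack = set(), [root]
    while stack:
        rid = stack.pop()
        ids.add(rid)
        stack.extend(tree.get(rid, []))
    return ids

def total_roundtrip(components, tree, root, address, port):
    """Add up the durations of all components in the request tree that share
    the original request's network address and port."""
    ids = request_tree_ids(tree, root)
    return sum(c.end - c.start for c in components
               if c.request_id in ids and (c.address, c.port) == (address, port))
```

In a page with two frames where one frame is served from a different port, only the page's own components and the same-endpoint frame contribute to the total; requests outside the tree are ignored.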

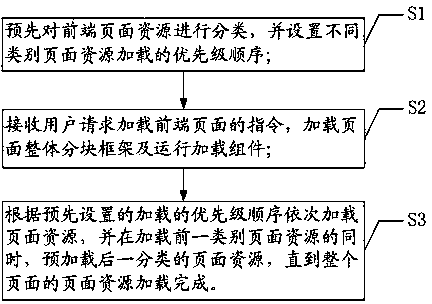

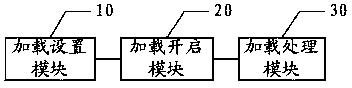

Front-end page loading method and system

Active | CN104166569A | Short response time, Easy access, Program loading/initiating, Database, Computer engineering

The invention provides a front-end page loading method and system. Page resources are first classified, and a loading priority order is set for the resource categories. While one category of page resources is being loaded, the next category is fetched in the background in advance, so that when the previous category finishes loading, the next category has already been loaded completely or in part. This reduces the response time of the front-end page during loading, and the loading priority order can be set according to users' needs, which makes page visits more convenient for users.

Owner:BEIJING CLOUD SPOWER EDUCATION & TECH

Multistage intelligent database search method

Inactive | US7107263B2 | Handles typographic errors efficiently, Enhanced multistage search, Data processing applications, Text database indexing, Document preparation, Intelligent database

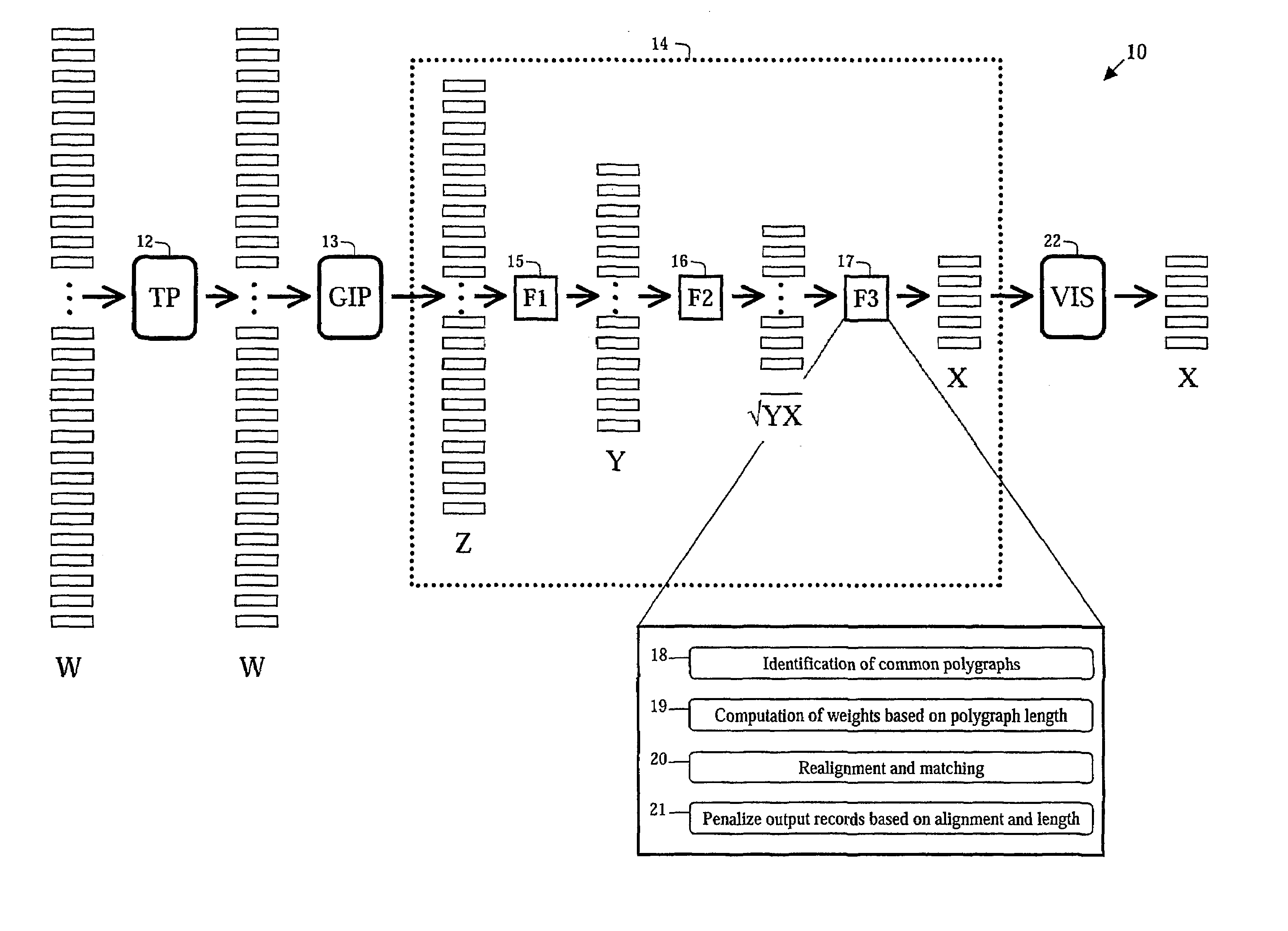

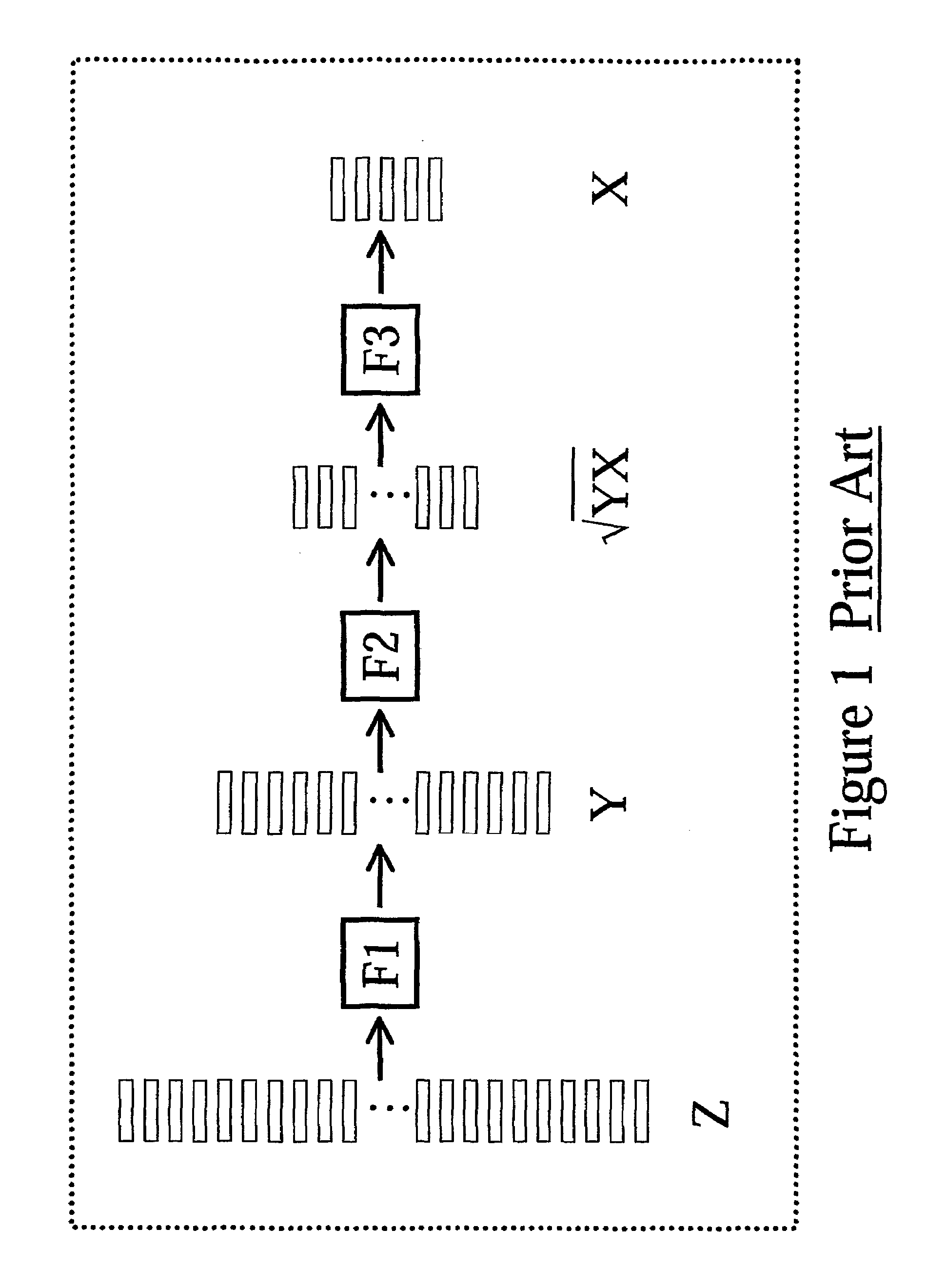

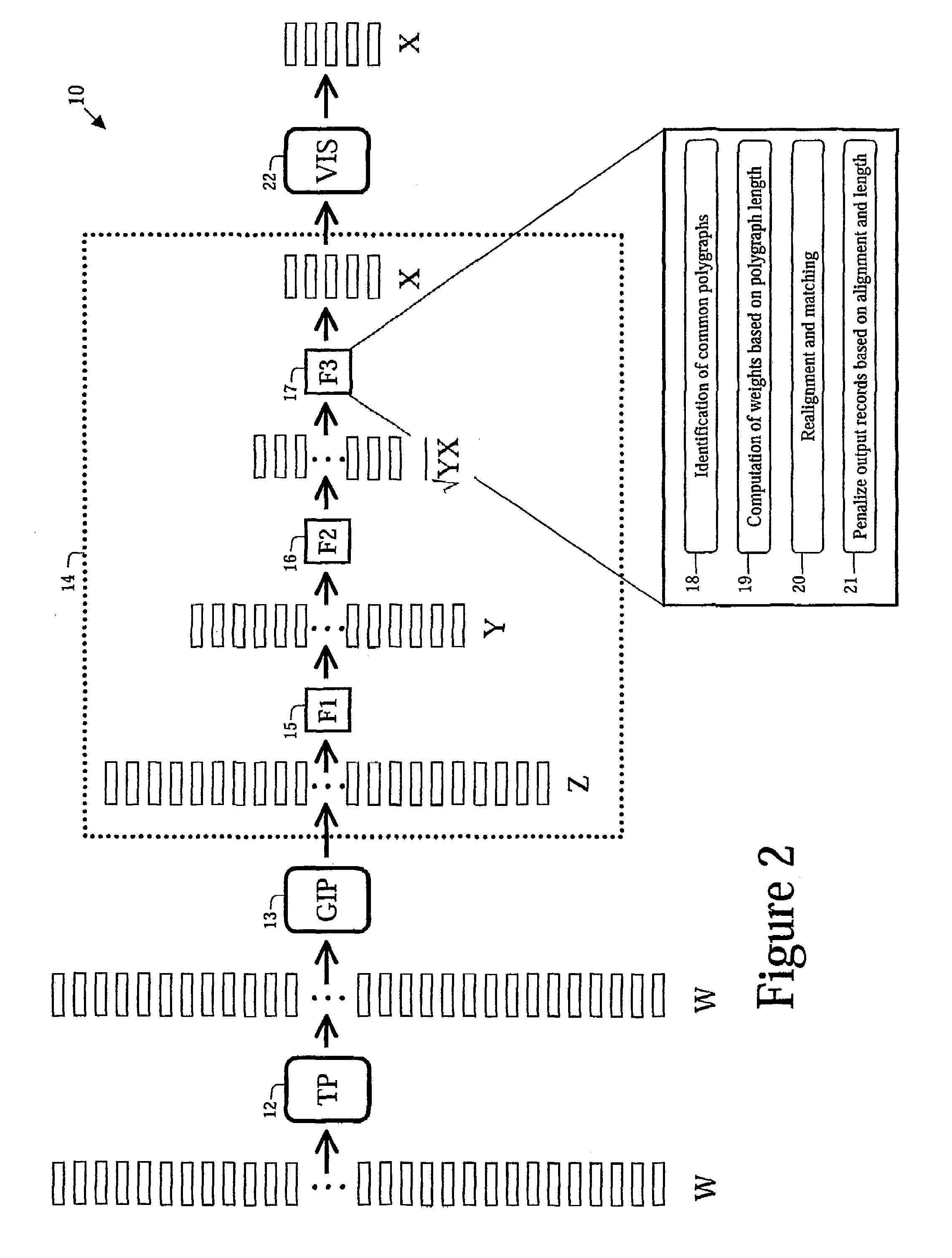

An improved multistage intelligent database search method includes (1) a prefilter that uses a precomputed index to compute a list of most “promising” records that serves as input to the original multistage search method, resulting in dramatically faster response time; (2) a revised polygraph weighting scheme correcting an erroneous weighting scheme in the original method; (3) a method for providing visualization of character matching strength to users using the bipartite graphs computed by the multistage method; (4) a technique for complementing direct search of textual data with search of a phonetic version of the same data, in such a way that the results can be combined; and (5) several smaller improvements that further refine search quality, deal more effectively with multilingual data and Asian character sets, and make the multistage method a practical and more efficient technique for searching document repositories.

Owner:CLOUD SOFTWARE GRP INC

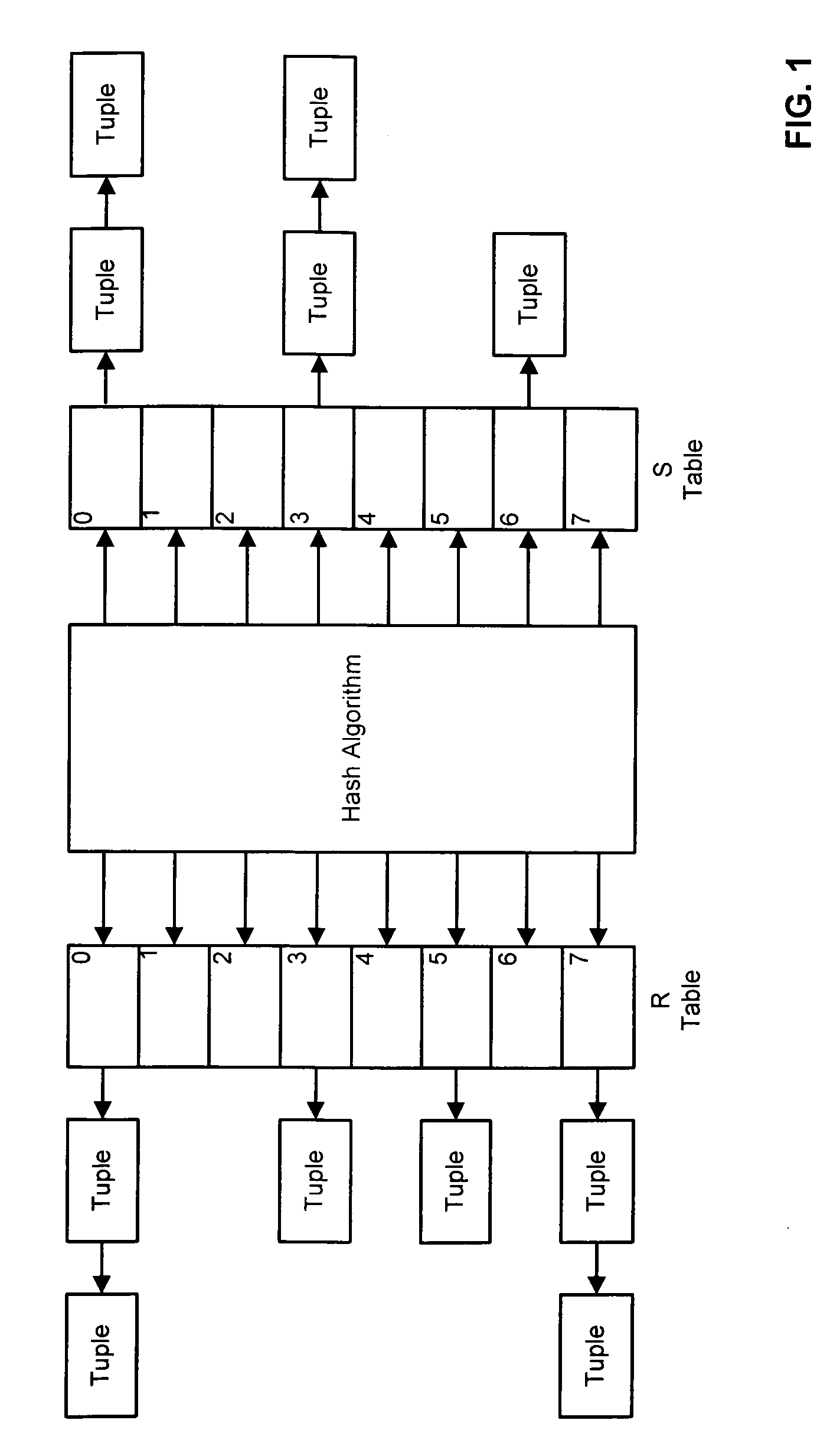

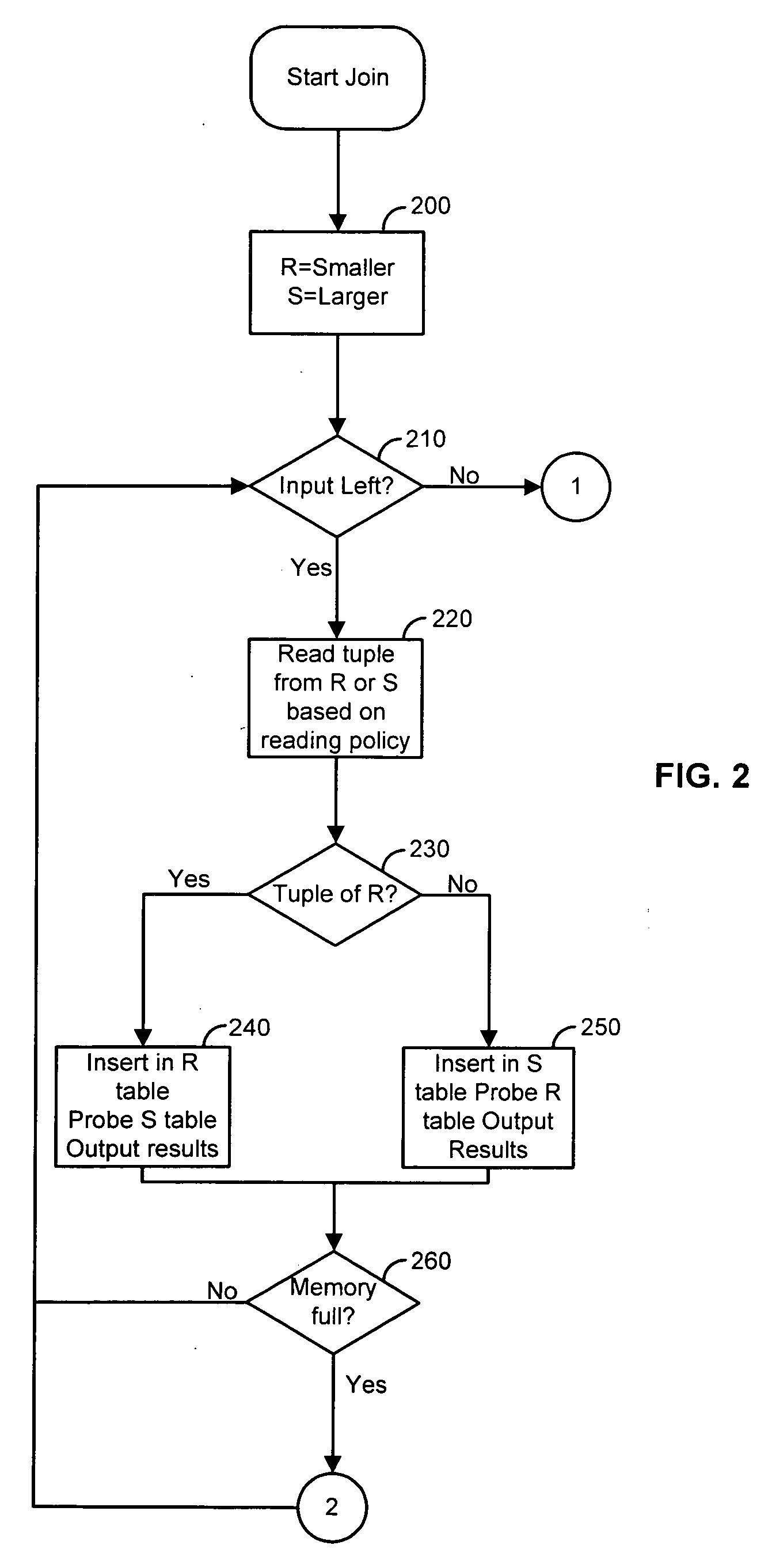

Early hash join

Inactive | US20060288030A1 | Fast response time, Rapid production, Digital data information retrieval, Digital data processing details, Hash join, Parallel computing

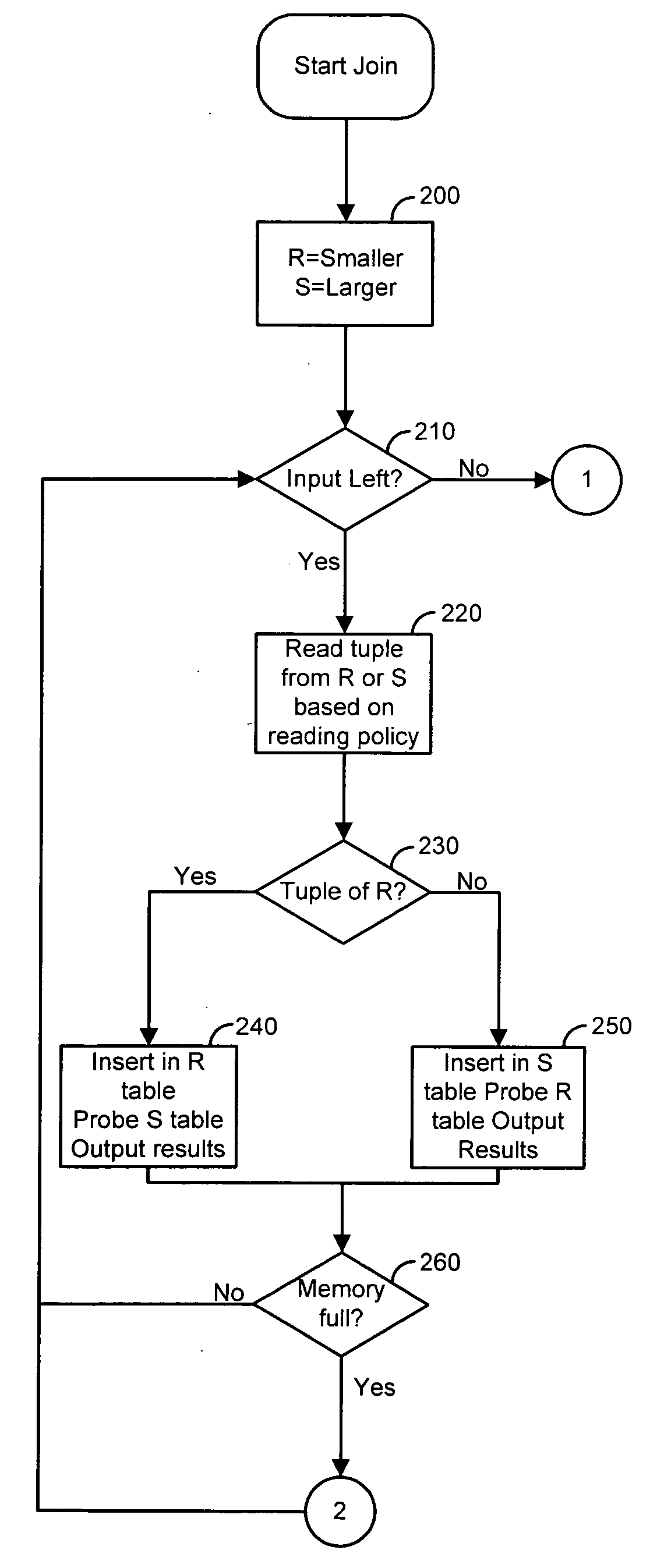

Minimizing both the response time to produce the first few thousand results and the overall execution time is important for interactive querying. Current join algorithms either minimize execution time at the expense of response time, or minimize response time by producing results early without optimizing total time. Disclosed herein is a hash-based join algorithm, called early hash join, that can be dynamically configured at any point during join processing to trade off faster production of results against overall execution time. How the inputs are read has a major effect on these two factors, and formulas are provided that allow an optimizer to calculate the expected rate of join output and the number of I/O operations performed under different input-reading strategies.

Owner:IOWA RES FOUND UNIV OF
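For readers unfamiliar with non-blocking joins, here is a Python sketch of the classic symmetric (pipelined) hash join, a related technique that emits matches as soon as both sides of a pair have arrived. It is not the patented early hash join: memory overflow handling and the dynamic reconfiguration are omitted, and the `left_batch`/`right_batch` parameters are a simple, hypothetical way to vary how the inputs are read.

```python
from collections import defaultdict
from itertools import islice

def symmetric_hash_join(left, right, key_left, key_right, left_batch=1, right_batch=1):
    """Yield (left_row, right_row) pairs as soon as they can be produced.

    Reads `left_batch` rows from `left`, then `right_batch` rows from `right`,
    and repeats. Each row is stored in its own side's hash table and probed
    against the other side's table, so every matching pair is emitted exactly
    once, when the later of its two rows arrives.
    """
    tables = (defaultdict(list), defaultdict(list))
    sources = (iter(left), iter(right))
    keys = (key_left, key_right)
    batches = (left_batch, right_batch)
    done = [False, False]
    while not all(done):
        for side in (0, 1):
            if done[side]:
                continue
            chunk = list(islice(sources[side], batches[side]))
            if not chunk:
                done[side] = True
                continue
            other = 1 - side
            for row in chunk:
                k = keys[side](row)
                tables[side][k].append(row)
                for match in tables[other].get(k, ()):
                    yield (row, match) if side == 0 else (match, row)
```

With alternating single-row reads, the first result appears after only two input rows; reading the left input in large batches delays the first result but changes the access pattern, which is the kind of trade-off the abstract's formulas quantify.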

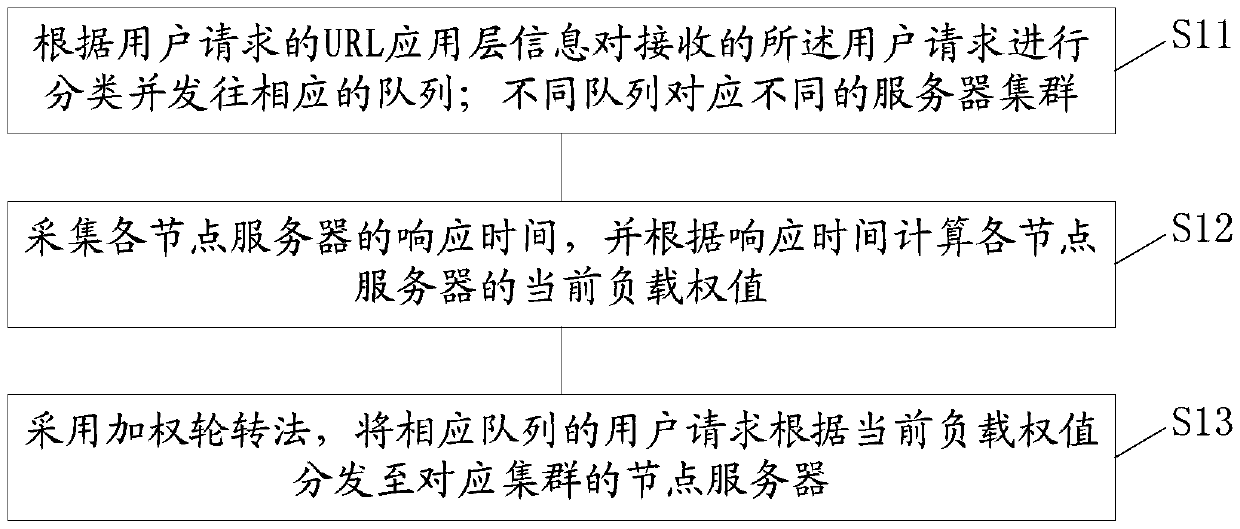

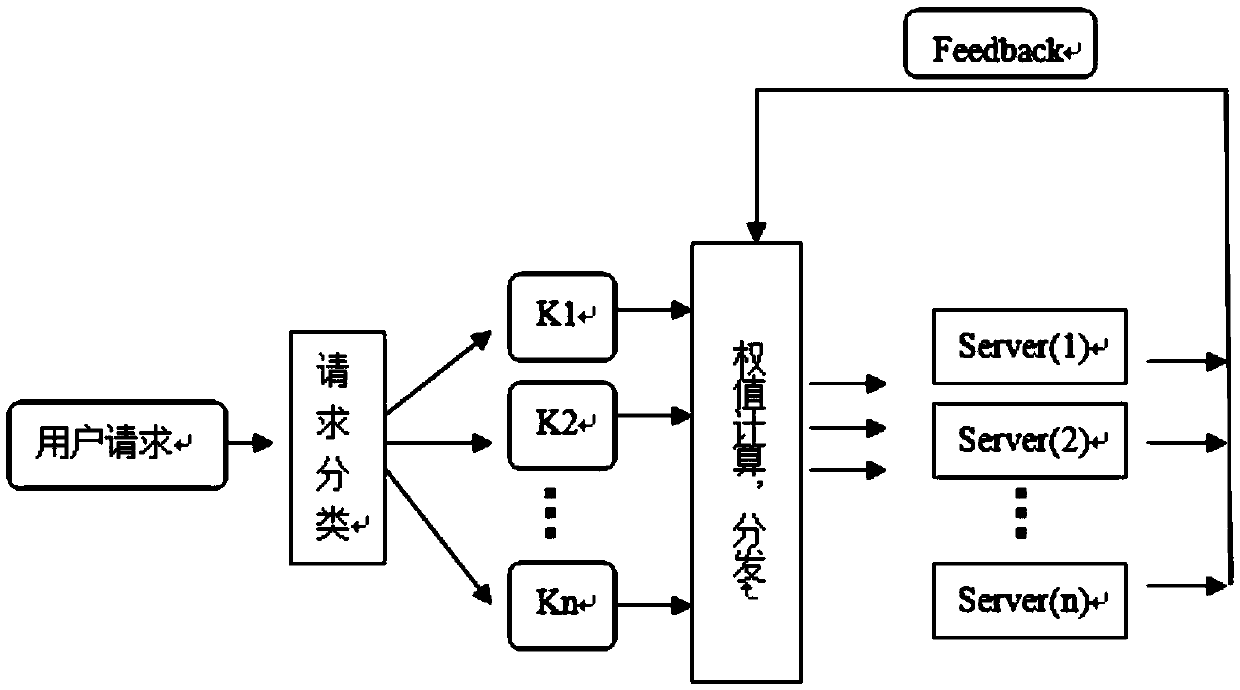

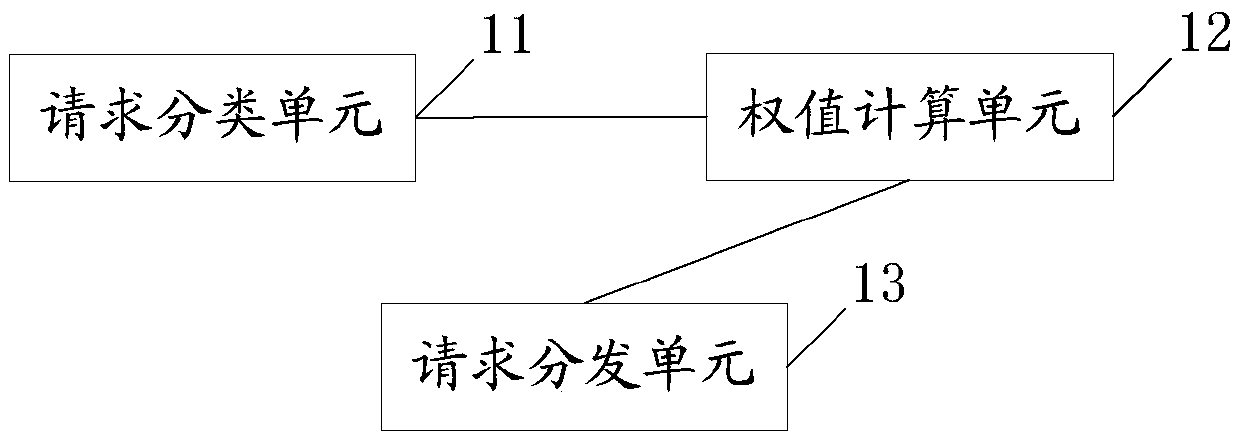

Method and device for load balance of computer

Inactive | CN105516360A | Improve performance, Overcoming the disadvantages of static load balancing, Transmission, Engineering, Uniform resource locator

The invention provides a method and device for dynamic load balancing of servers. The method comprises: classifying received user requests according to the URL (uniform resource locator) application-layer information they carry and sending each to a corresponding queue, with different queues corresponding to different server clusters; collecting the response time of each node server and calculating its current load weight from that response time; and distributing the user requests in each queue to the node servers of the corresponding cluster by weighted round-robin according to the current load weights. The method overcomes the drawbacks of static load balancing: requests are distributed according to each node server's real-time load, identical requests are routed to the same servers based on request content, the capabilities of the various servers are fully exploited, and the load is balanced as far as possible.

Owner:SUZHOU PARKTECH IOT CO LTD
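The weighting and distribution steps can be sketched in Python. Two choices here are assumptions: the weight rule (an integer inversely proportional to response time) is hypothetical, and the scheduler is the "smooth" weighted round-robin variant popularized by nginx, used as one concrete weighted round-robin. URL-based classification into per-cluster queues is omitted.

```python
def weights_from_response_times(response_ms, scale=1000):
    """Hypothetical weighting rule: integer weight inversely proportional
    to each node's measured response time (minimum weight 1)."""
    return {node: max(1, round(scale / ms)) for node, ms in response_ms.items()}

class SmoothWeightedRoundRobin:
    """Smooth weighted round-robin: over any run of sum(weights) picks, each
    node is chosen exactly as many times as its weight, evenly interleaved."""

    def __init__(self, weights):
        self.weights = dict(weights)
        self.current = {node: 0 for node in weights}

    def pick(self):
        total = sum(self.weights.values())
        for node, w in self.weights.items():
            self.current[node] += w
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= total
        return chosen

    def update(self, weights):
        """Install freshly computed load weights (e.g. after new response-time
        samples), dropping nodes that are no longer present."""
        self.weights = dict(weights)
        self.current = {node: self.current.get(node, 0) for node in weights}
```

Nodes answering in 100, 200, and 500 ms get weights 10, 5, and 2, so in every 17 requests the fastest node receives 10; recomputing the weights from fresh samples and calling `update` makes the distribution track real-time load.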

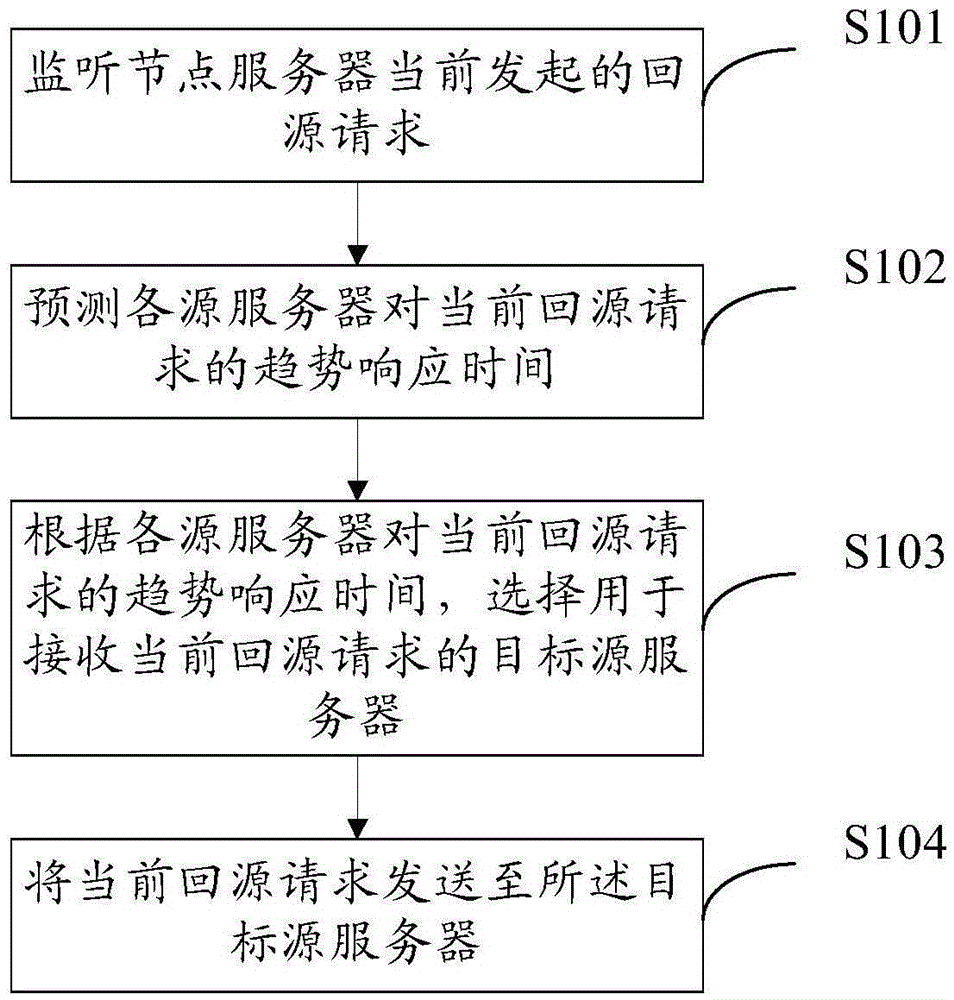

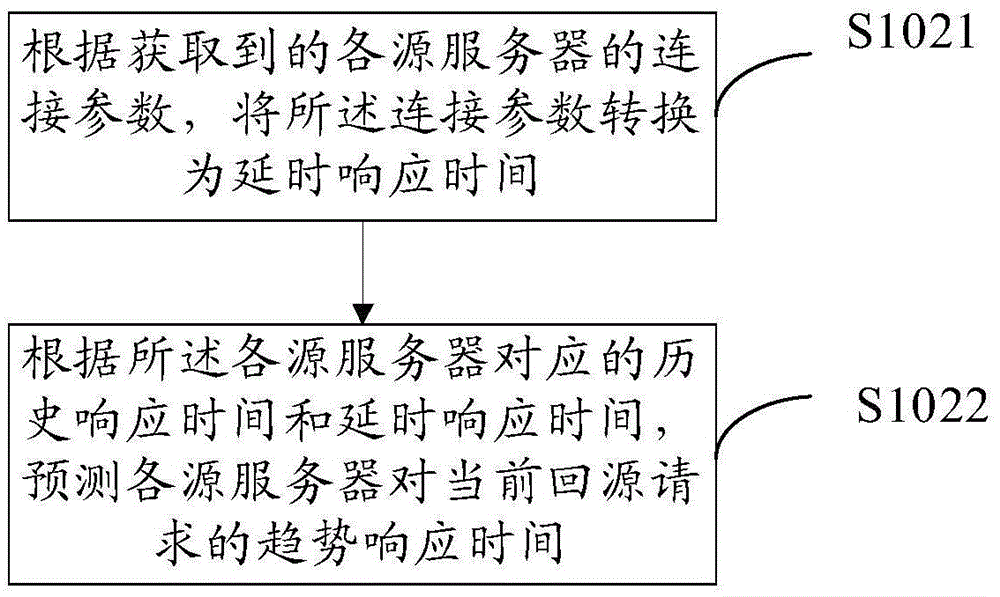

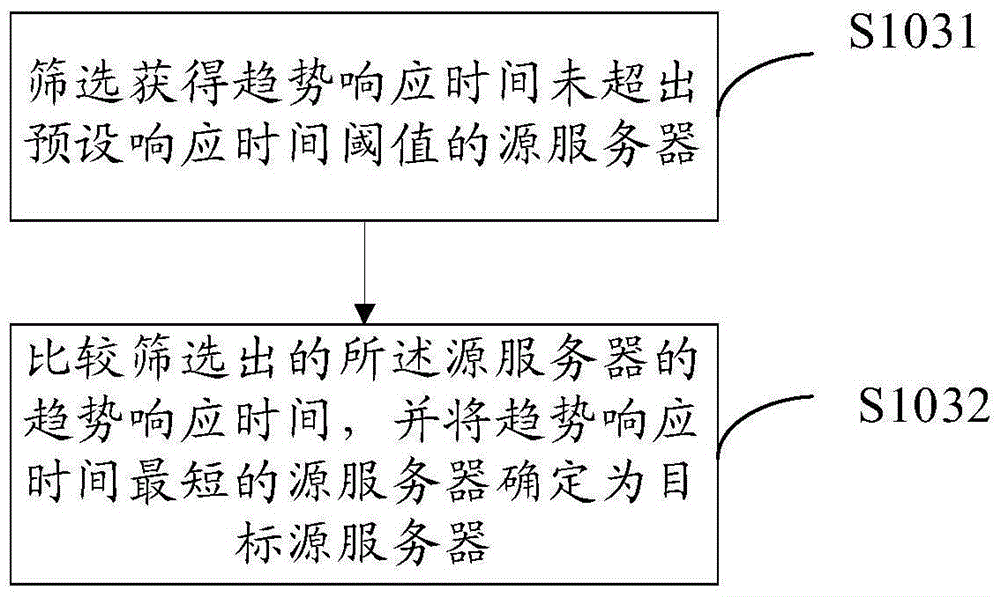

Back source request processing method and device

The invention provides a back-to-source request processing method and device. The method comprises: monitoring the back-to-source request currently initiated by a node server; predicting, from the historical response times with which each source server answered earlier back-to-source requests (as recorded by the node server), the trend response time of each source server for the current request; selecting, according to these trend response times, a target source server to receive the current request; and sending the current request to that target source server. Because each source server's current processing capability is taken fully into account when selecting the server, the node server's back-to-source response speed and the user's experience are ensured.

Owner:LETV CLOUD COMPUTING CO LTD
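The abstract does not say how the trend response time is predicted. As one plausible stand-in, the Python sketch below uses an exponentially weighted moving average (EWMA) over each source server's history and picks the lowest prediction; the EWMA, the `alpha` value, and the function names are assumptions.

```python
def trend_response_time(history_ms, alpha=0.5):
    """EWMA of past response times (oldest first); recent samples weigh more.

    Assumed stand-in for the unspecified trend predictor. `history_ms`
    must contain at least one sample.
    """
    estimate = history_ms[0]
    for sample in history_ms[1:]:
        estimate = alpha * sample + (1 - alpha) * estimate
    return estimate

def choose_origin(history_by_origin, alpha=0.5):
    """Pick the source server with the lowest predicted response time."""
    return min(history_by_origin,
               key=lambda o: trend_response_time(history_by_origin[o], alpha))
```

The trend matters: a server with history 50, 60, 120 ms has the lower mean, but its predicted 87.5 ms loses to a steadily improving server (100, 90, 70 ms, predicted 82.5 ms).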

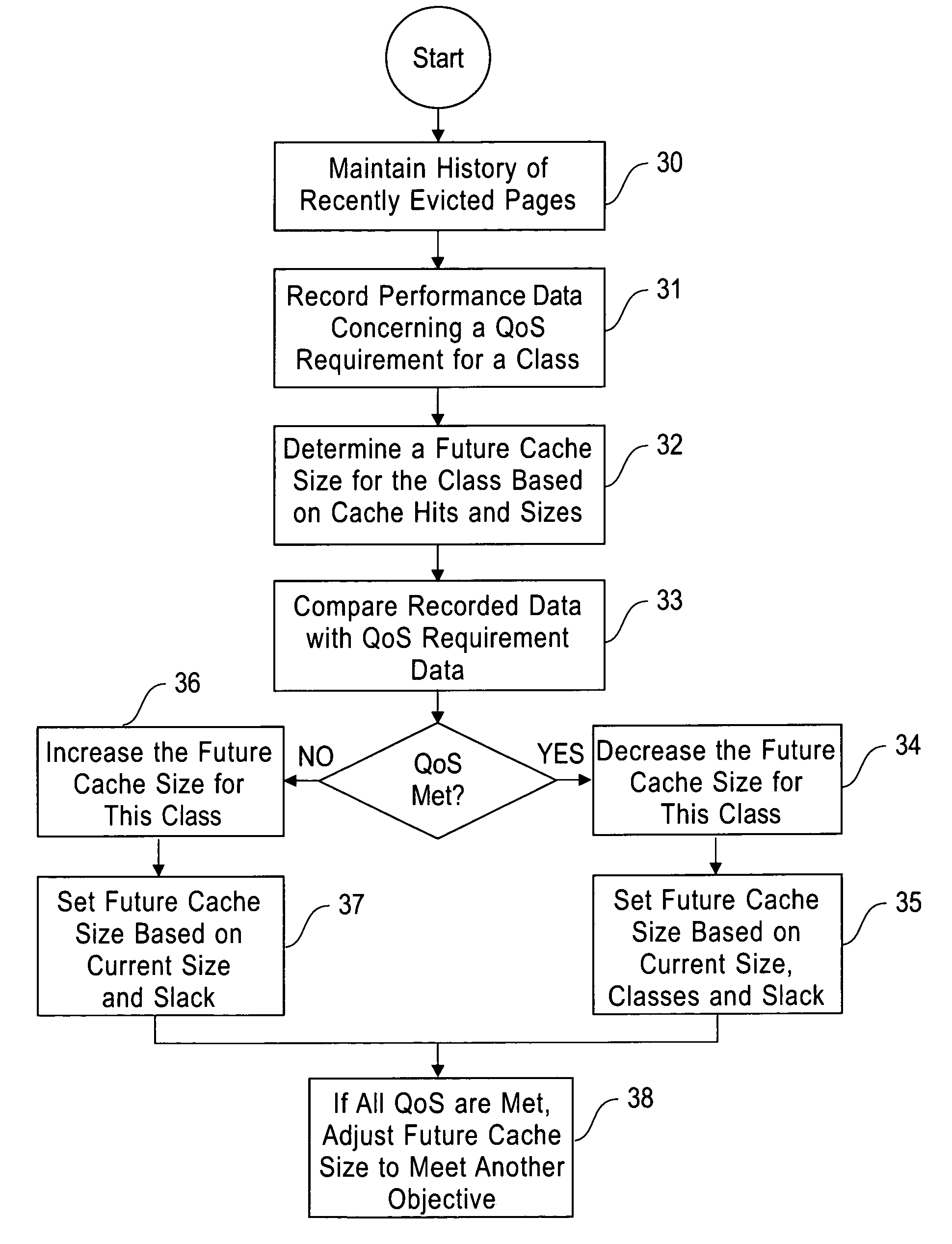

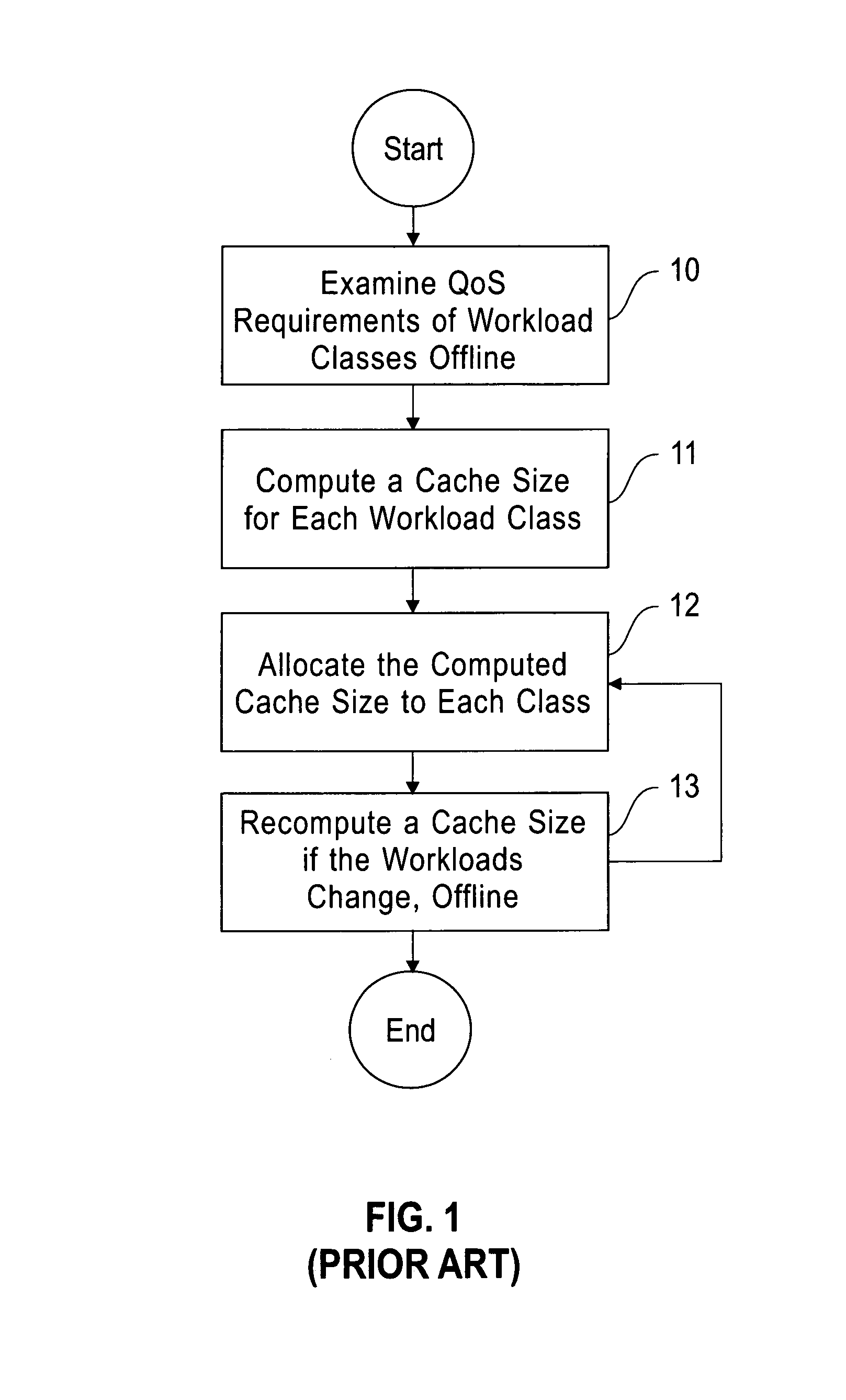

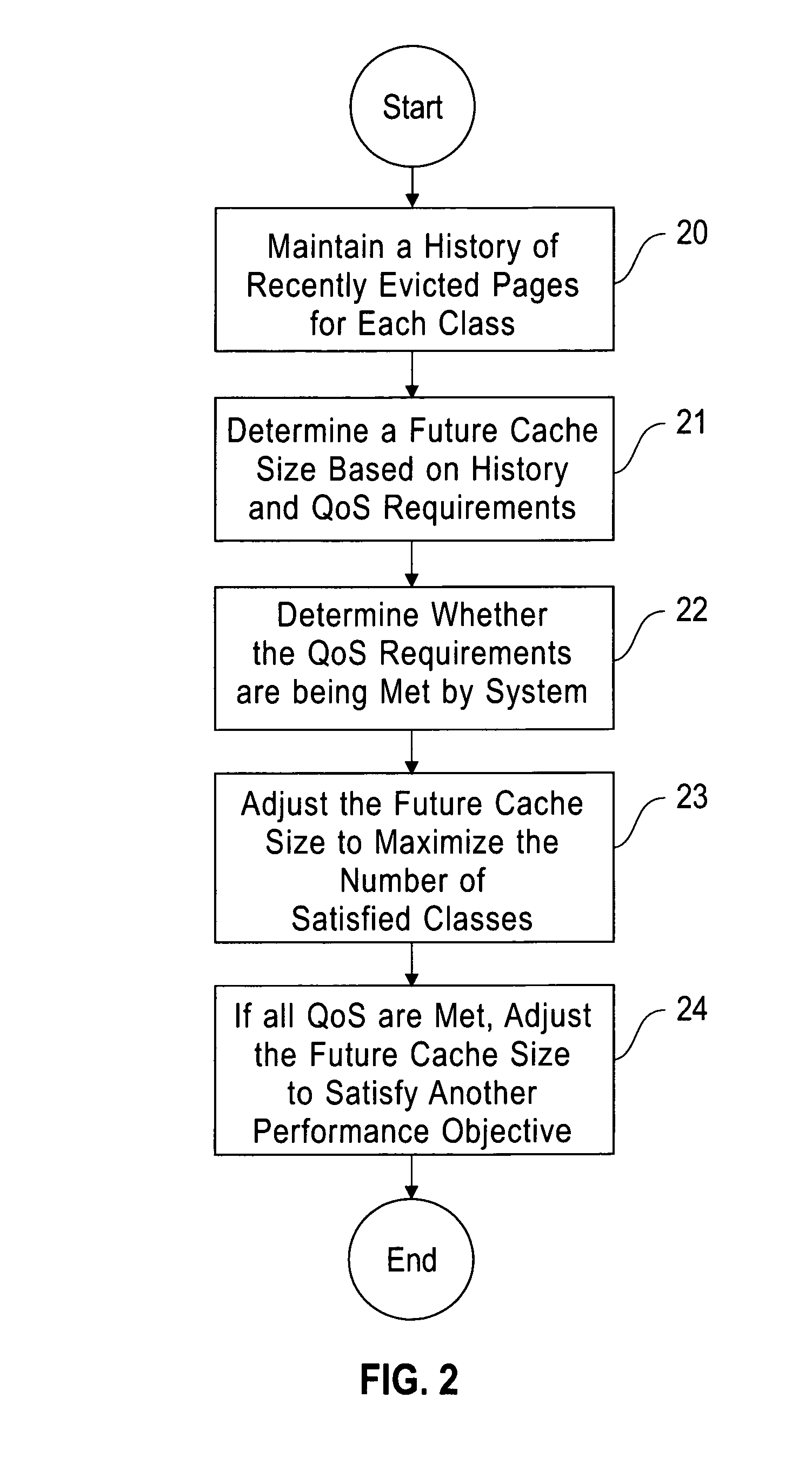

System and method for dynamically allocating cache space among different workload classes that can have different quality of service (QoS) requirements where the system and method may maintain a history of recently evicted pages for each class and may determine a future cache size for the class based on the history and the QoS requirements

Active | US7107403B2 | Maximizing number, Memory architecture accessing/allocation, Resource allocation, Quality of service, Parallel computing

A method and system for dynamically allocating cache space in a storage system among multiple workload classes each having a unique set of quality-of-service (QoS) requirements. The invention dynamically adapts the space allocated to each class depending upon the observed response time for each class and the observed temporal locality in each class. The dynamic allocation is achieved by maintaining a history of recently evicted pages for each class, determining a future cache size for the class based on the history and the QoS requirements where the future cache size might be different than a current cache size for the class, determining whether the QoS requirements for the class are being met, and adjusting the future cache size to maximize the number of classes in which the QoS requirements are met. The future cache sizes are increased for the classes whose QoS requirements are not met while they are decreased for those whose QoS requirements are met.

Owner:IBM CORP
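A single rebalancing round can be sketched in Python. This is an illustrative policy under assumptions, not IBM's algorithm: classes meeting their response-time target give up a fixed `step` of pages (never dropping below `min_size`), and the freed pages go to classes missing their target in proportion to their ghost hits (hits on recently evicted pages, i.e. hits a larger cache would have served). Total capacity is conserved.

```python
def rebalance(sizes, observed_ms, target_ms, ghost_hits, step=64, min_size=64):
    """One rebalancing round for per-class cache sizes (in pages)."""
    new = dict(sizes)
    missing = [c for c in sizes if observed_ms[c] > target_ms[c]]
    meeting = [c for c in sizes if observed_ms[c] <= target_ms[c]]
    if not missing:
        return new
    # Shrink classes whose QoS is met.
    freed = 0
    for c in meeting:
        give = min(step, new[c] - min_size)
        if give > 0:
            new[c] -= give
            freed += give
    # Grow classes whose QoS is not met, weighted by ghost hits.
    total_ghost = sum(ghost_hits[c] for c in missing)
    given = 0
    for c in missing:
        if total_ghost:
            portion = freed * ghost_hits[c] // total_ghost
        else:
            portion = freed // len(missing)
        new[c] += portion
        given += portion
    # Integer rounding leftovers go to the class with the most ghost hits.
    new[max(missing, key=lambda c: ghost_hits[c])] += freed - given
    return new
```

For example, if an OLTP class and a web class miss their targets while a batch class meets its own, the batch class gives up 64 pages, split 48/16 between OLTP and web according to their 3:1 ratio of ghost hits.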

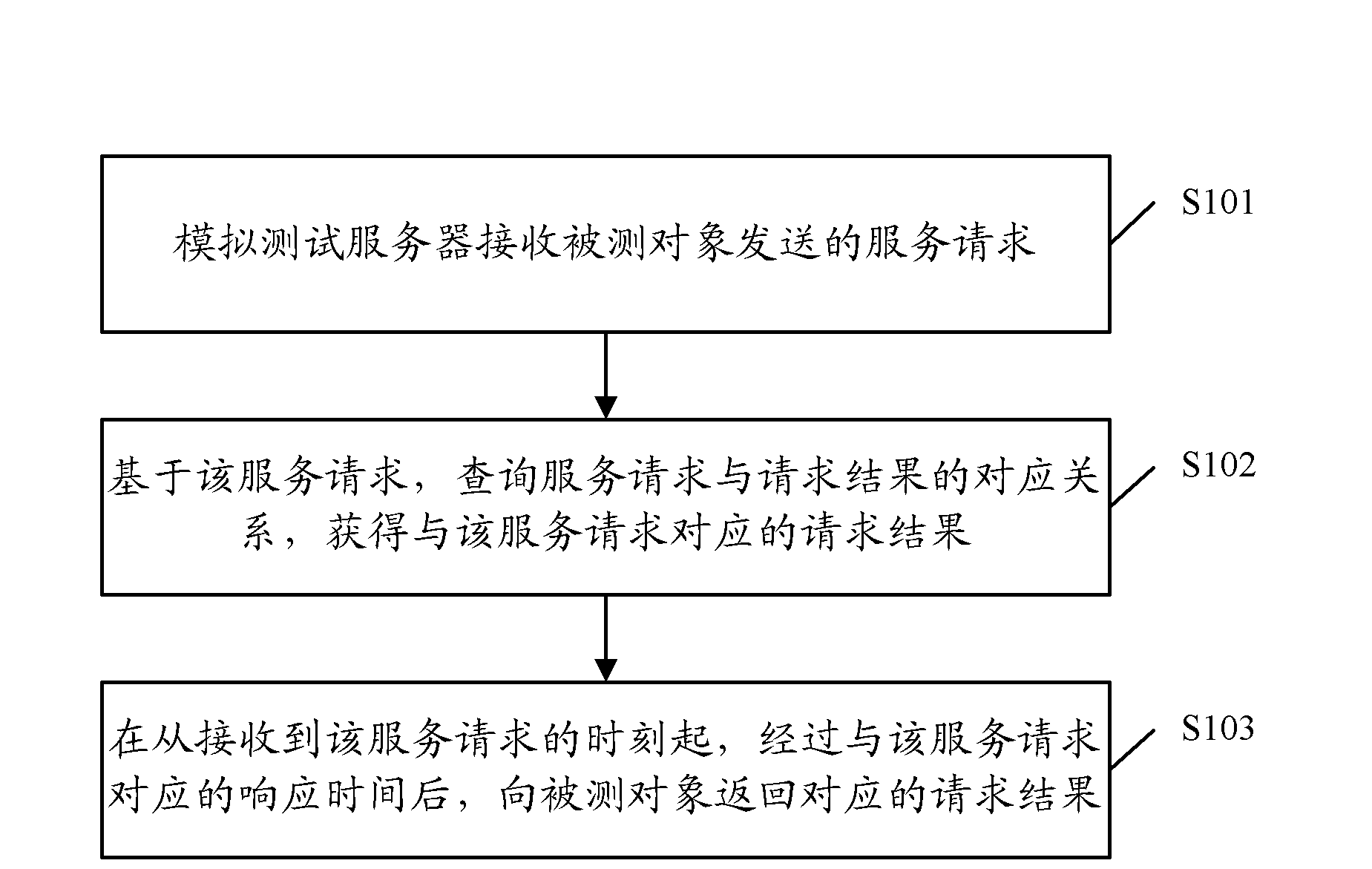

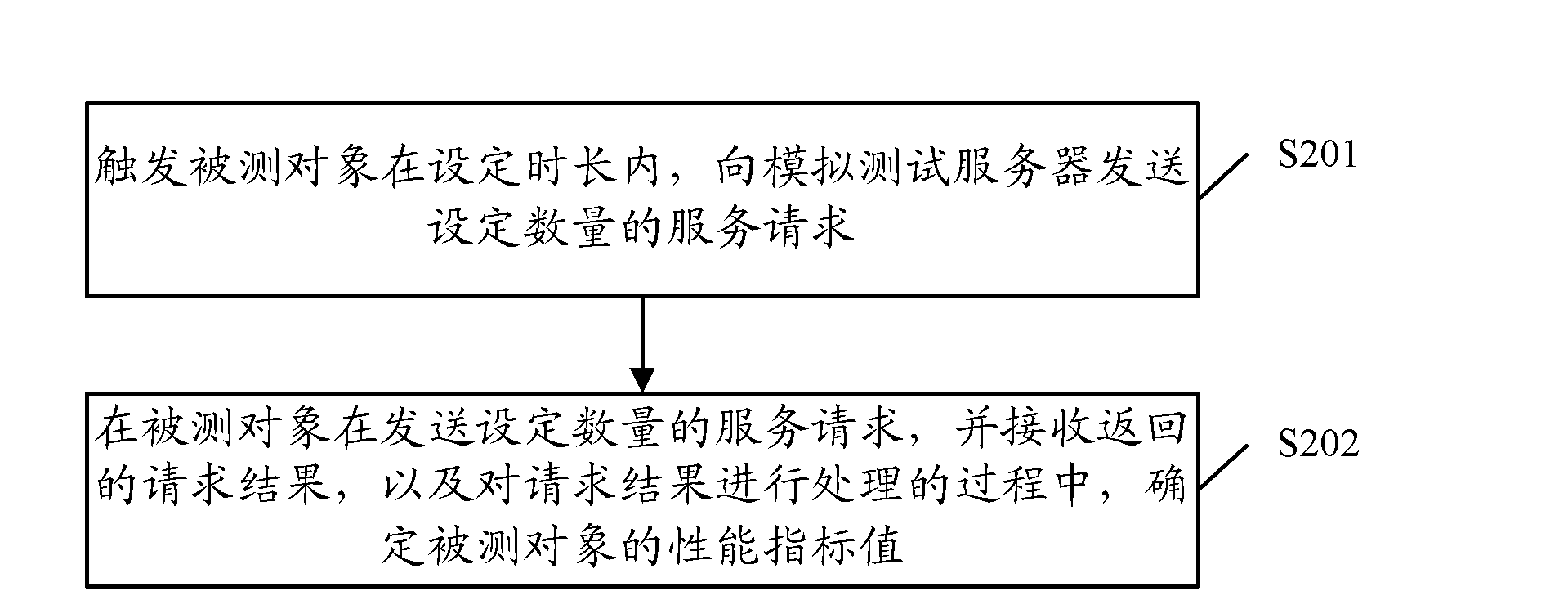

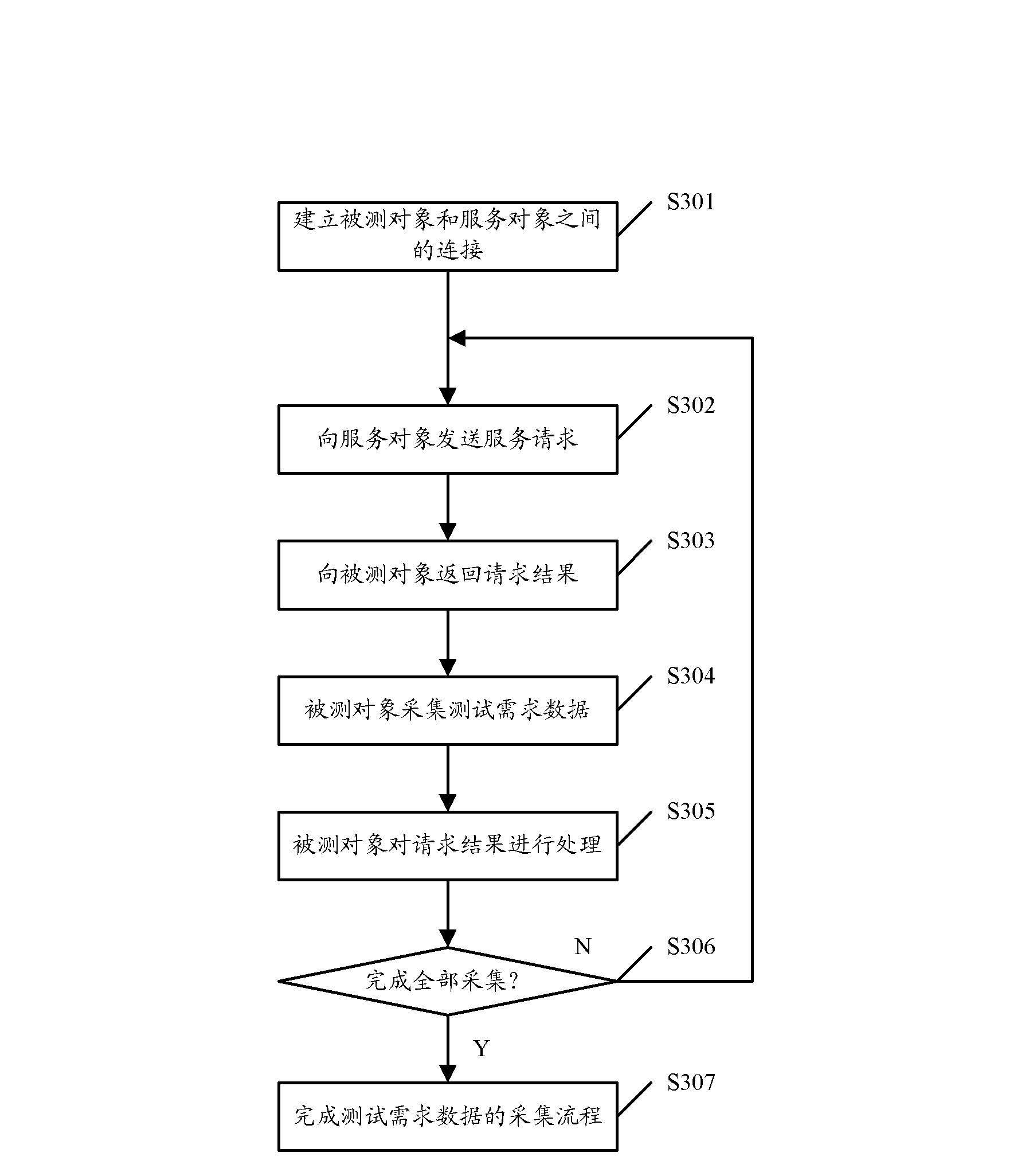

Service request processing method, simulation performance test method and simulation performance test device

Active | CN103150238A | Simulation is accurate, Improve accuracy, Functional testing, Test object, Computer science

The invention discloses a service request processing method, a simulated performance test method, and a simulated performance test device. The service request processing method comprises: receiving, at a simulation test server, a service request sent by the object under test; looking up, based on the service request, a stored correspondence between service requests and request results to obtain the corresponding request result, namely the result that the real service object returned to the object under test when the object under test sent it that service request; and returning that result to the object under test once the corresponding response time has elapsed since the request was received, where the response time is the time the real service object took to return the result to the object under test. This scheme improves the accuracy of performance testing.

Owner:ALIBABA GRP HLDG LTD

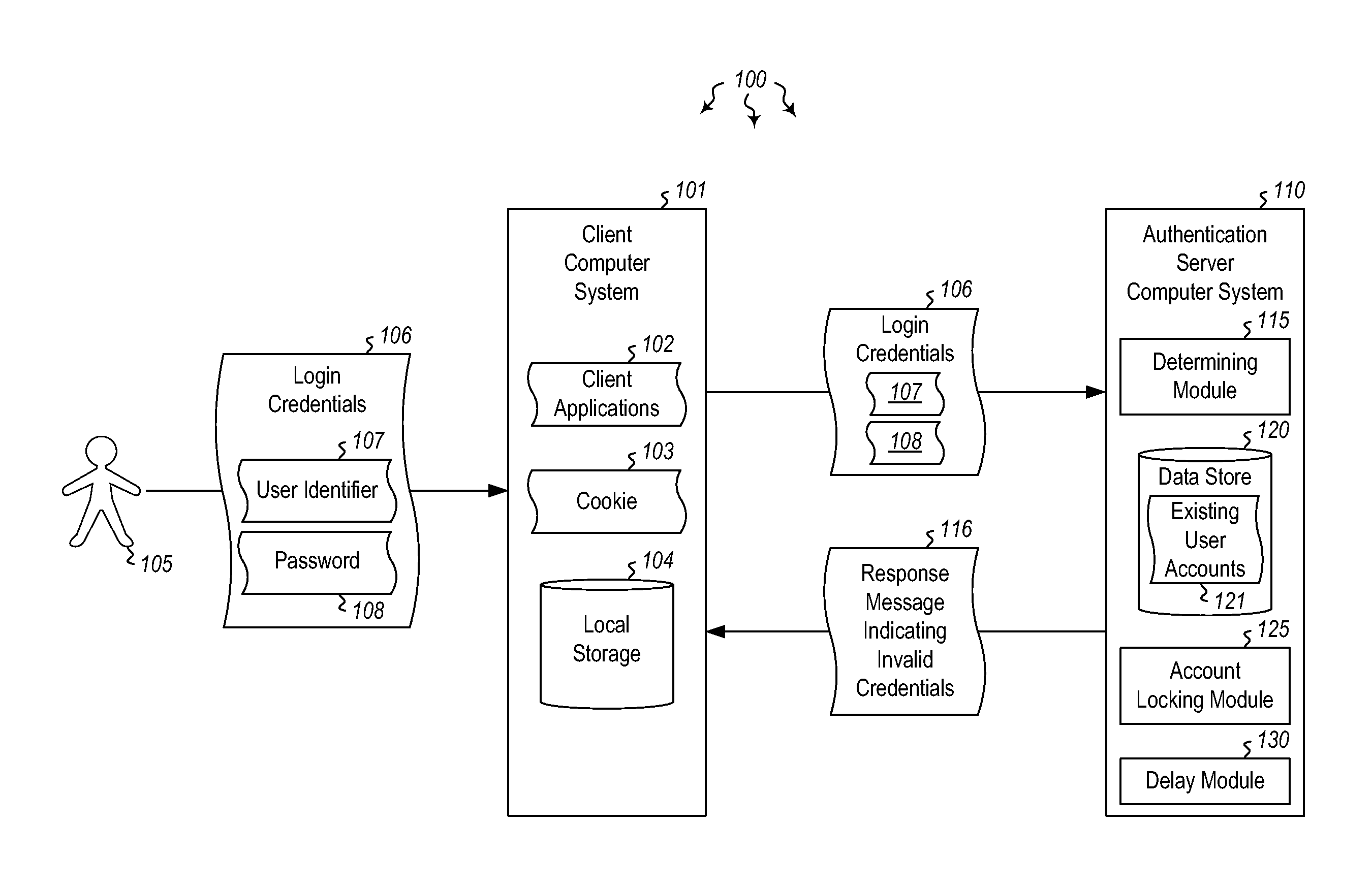

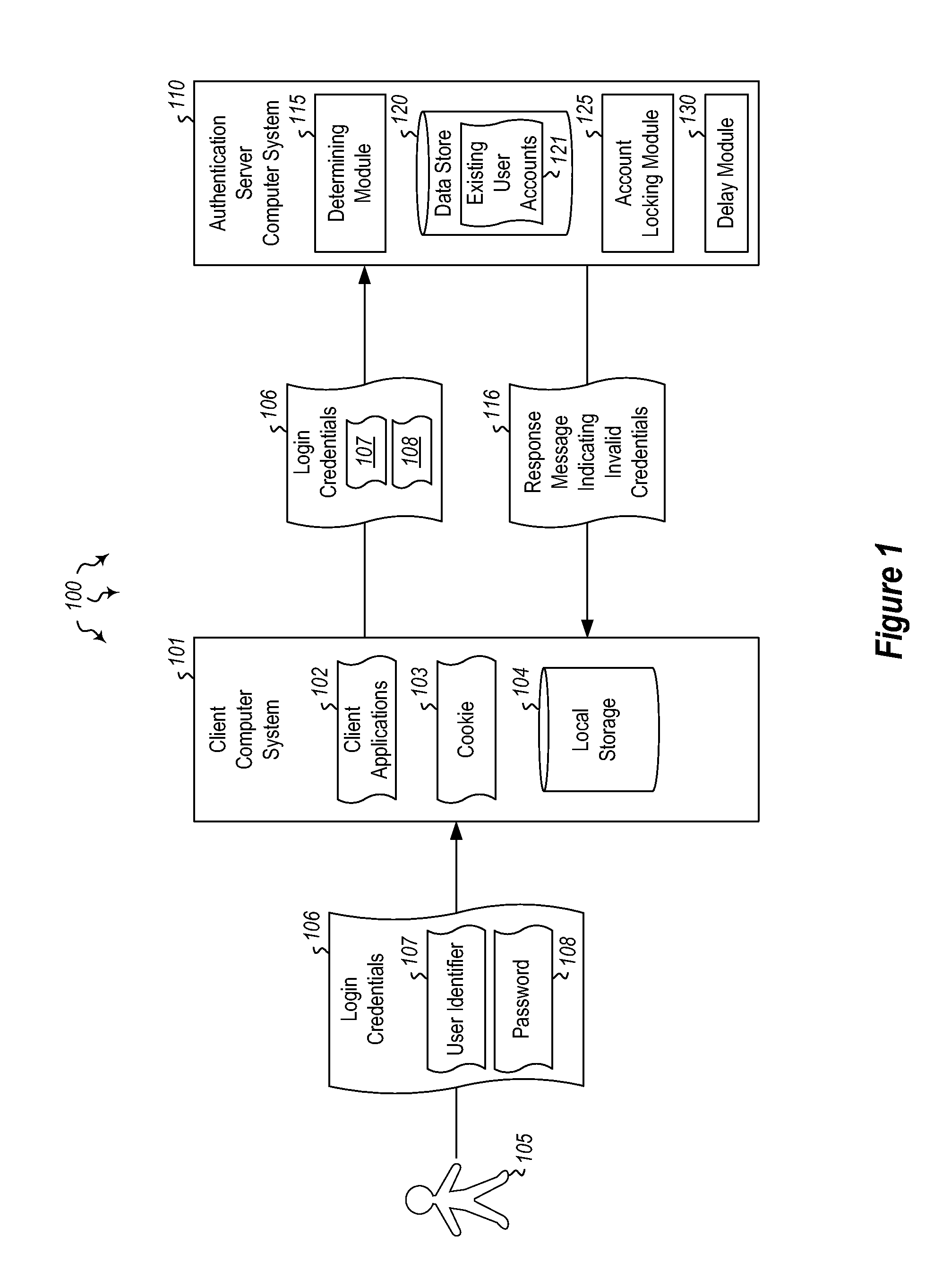

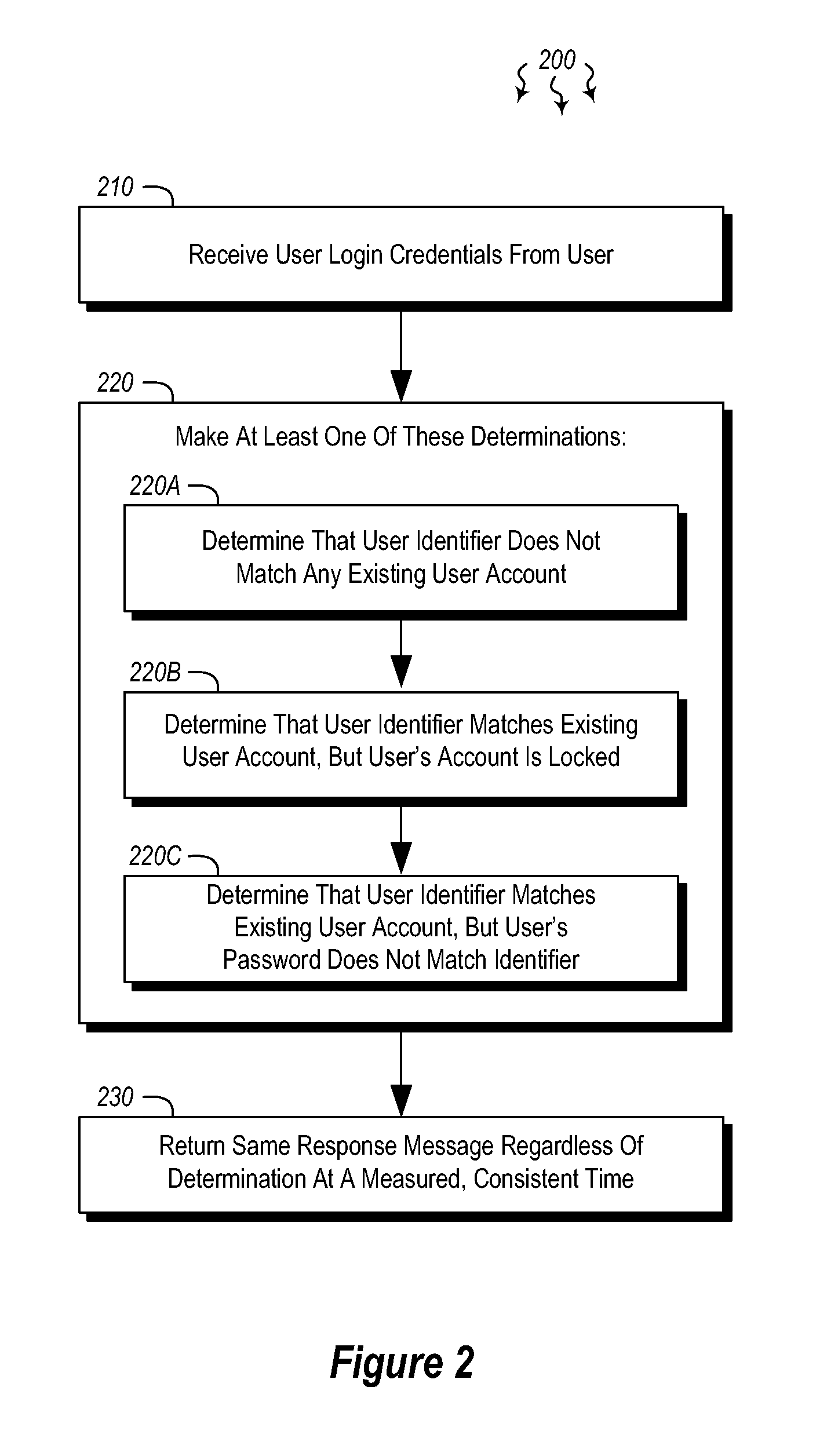

Efficiently throttling user authentication

Active | US20130198819A1 | Digital data processing details, Multiple digital computer combinations, Password, Internet privacy

In an embodiment, an administrative computer system receives user login credentials from a user and makes at least one of the following determinations: that the user identifier does not match any existing user account, that the user identifier matches at least one existing user account, but that the user's account is in a locked state, or that the user identifier matches at least one existing user account, but the user's password does not match the user identifier. The administrative computer system then returns to the user the same response message regardless of which determination is made. The response indicates that the user's login credentials are invalid. The response also prevents the user from determining which of the credentials was invalid, as the response message is the same for each determination and is sent to the user after a measured response time that is the same for each determination.

Owner:MICROSOFT TECH LICENSING LLC
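The key property (same message and same response time for every failure cause) can be sketched in Python. The `accounts` layout, the message text, and the padding-by-sleep approach are illustrative assumptions rather than Microsoft's implementation; a real system would compare salted password hashes, not plaintext.

```python
import hmac
import time

GENERIC_FAILURE = "Invalid user name or password."

def authenticate(accounts, user, password, response_time_s=0.2):
    """Return (ok, message) after a fixed wall-clock delay, whatever the failure cause.

    `accounts` maps user -> {"password": str, "locked": bool}.
    """
    started = time.monotonic()
    account = accounts.get(user)
    # Compare against a dummy value for unknown users so similar work is done.
    stored = account["password"] if account else "\x00" * len(password)
    password_ok = hmac.compare_digest(stored.encode(), password.encode())
    ok = account is not None and not account["locked"] and password_ok
    message = "Welcome." if ok else GENERIC_FAILURE
    # Pad the elapsed time up to the fixed response time.
    remaining = response_time_s - (time.monotonic() - started)
    if remaining > 0:
        time.sleep(remaining)
    return ok, message
```

An unknown user, a locked account, and a wrong password all produce the identical message after the same delay, so neither the text nor the timing reveals which credential was wrong.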

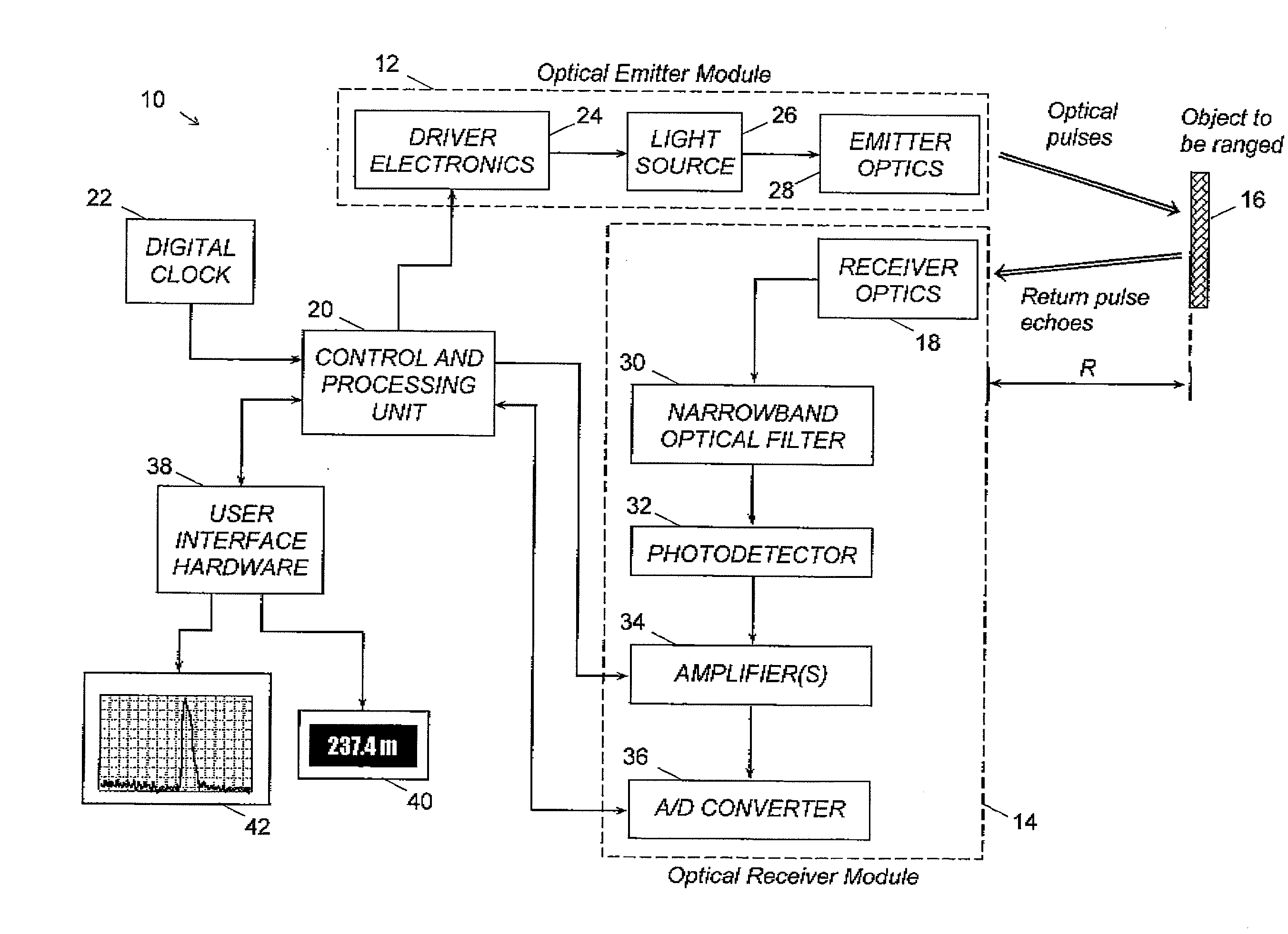

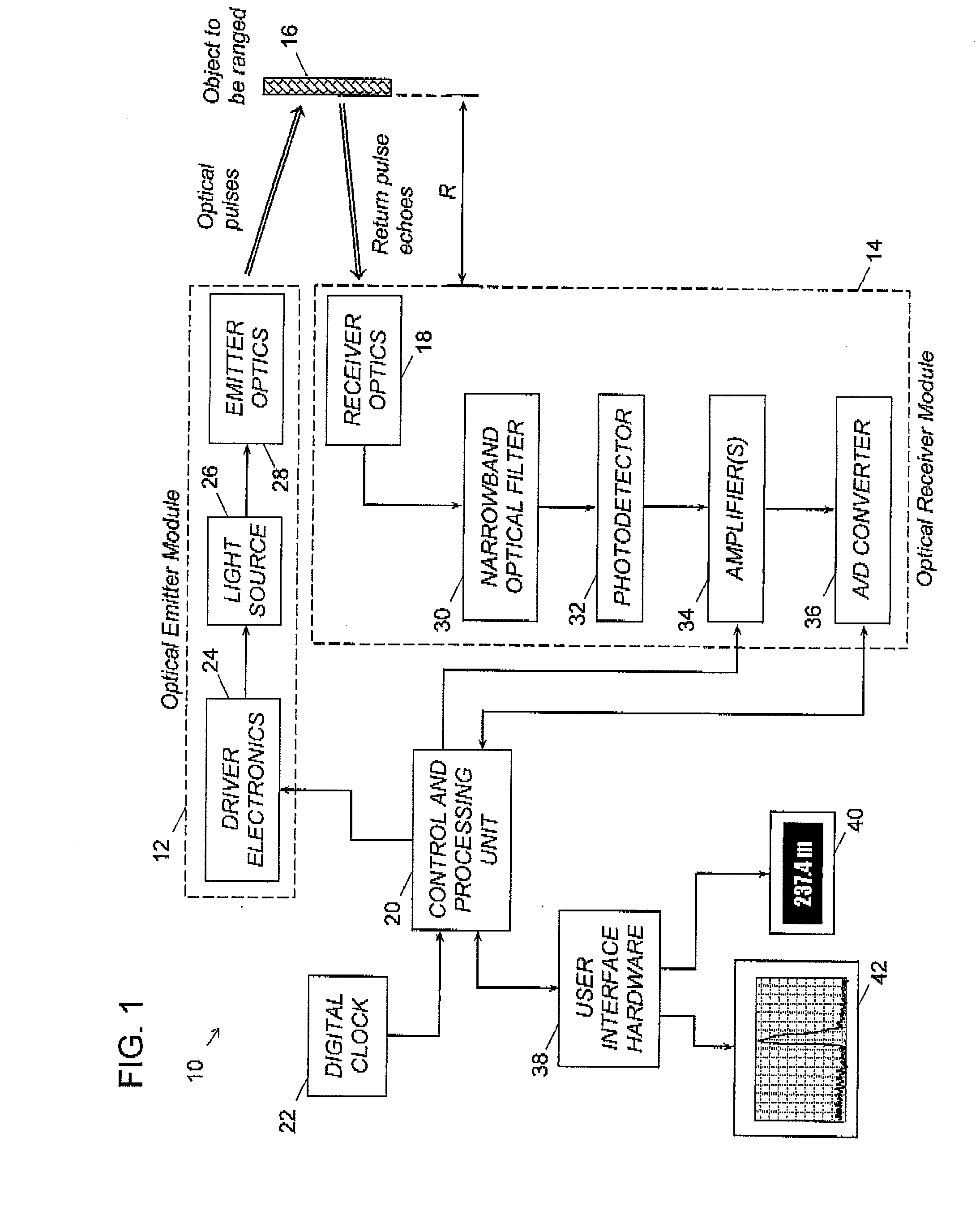

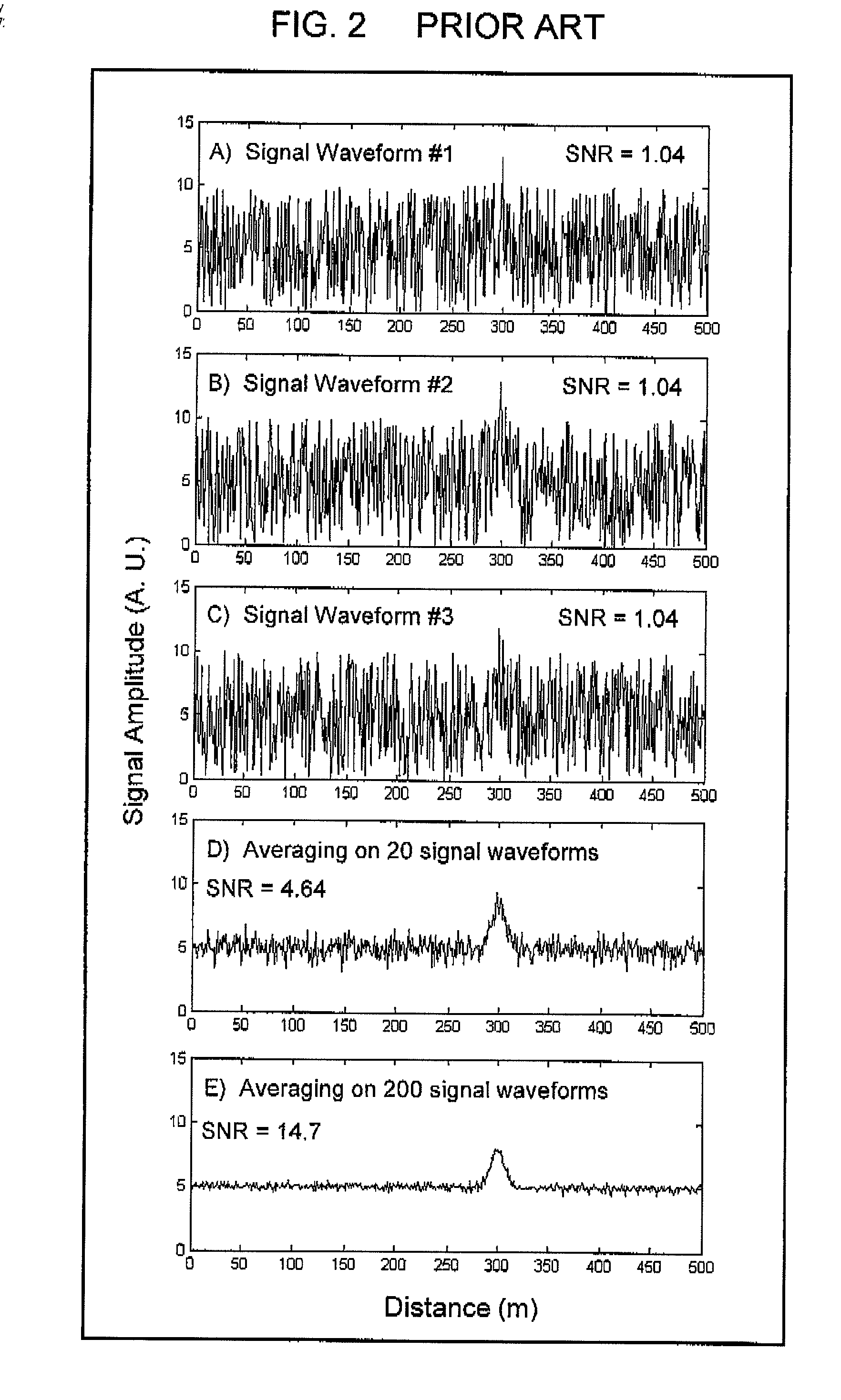

Digital signal processing in optical systems used for ranging applications

Active | US20090119044A1 | Easy to handle, Noise figure or signal-to-noise ratio measurement, Investigating moving sheets, Digital signal processing, Optic system

Methods and apparatuses for reducing the response time along with increasing the probability of ranging of optical rangefinders that digitize the signal waveforms obtained from the pulse echoes returned from various types of objects to be ranged, the pulse echoes being too weak to allow successful ranging from a single waveform or the objects being possibly in motion during the capture of the pulse echoes. In a first embodiment of the invention, the response time at close range of a digital optical rangefinder is reduced by using a signal averaging process wherein the number of data to be averaged varies with the distance according to a predetermined function. In a second embodiment of the invention, the probability of ranging objects in motion along the line of sight of a digital optical rangefinder is increased and the object velocity measured by performing a range shift of each acquired signal waveform prior to averaging. In a third embodiment of the invention, the signal waveforms acquired in the line of sight of a digital optical rangefinder are scanned over a predetermined zone and range shifted and averaged to allow for early detection and ranging of objects that enter in the zone.

Owner:LEDDARTECH INC
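The first embodiment (averaging count that varies with distance) can be sketched in Python. The "predetermined function" below, with averages growing with the square of distance up to a cap, is a hypothetical choice, as are the 100 m maximum range and the 1 to 64 average limits. Range shifting for moving objects (the second embodiment) is not shown.

```python
import math

def averages_needed(distance_m, max_range_m=100.0, n_min=1, n_max=64):
    """Hypothetical predetermined function: averages grow with distance squared."""
    n = math.ceil(n_max * (distance_m / max_range_m) ** 2)
    return max(n_min, min(n_max, n))

def progressive_average(waveforms, bin_m):
    """Average each range bin over only as many waveforms as its distance requires.

    `waveforms` is a list of equal-length sample lists, one per laser pulse;
    bin i corresponds to distance i * bin_m. Returns the averaged waveform and
    the number of pulses used per bin. Near bins need few pulses, so close
    objects can be reported sooner.
    """
    n_bins = len(waveforms[0])
    averaged, used = [], []
    for i in range(n_bins):
        n = min(averages_needed(i * bin_m), len(waveforms))
        averaged.append(sum(w[i] for w in waveforms[:n]) / n)
        used.append(n)
    return averaged, used
```

Under this function a bin at 50 m needs 16 pulses while the nearest bin needs only one, which is where the shorter close-range response time comes from.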

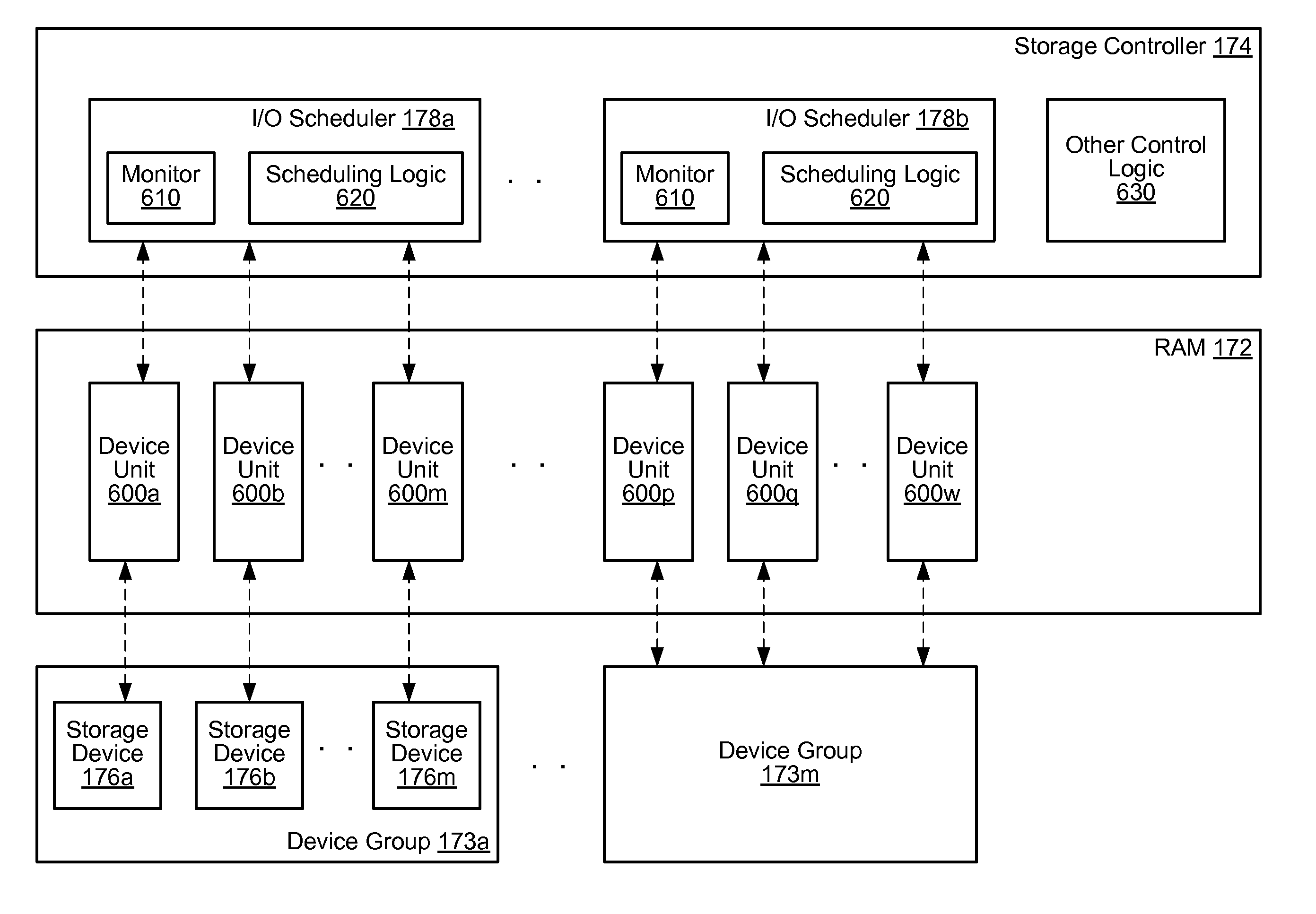

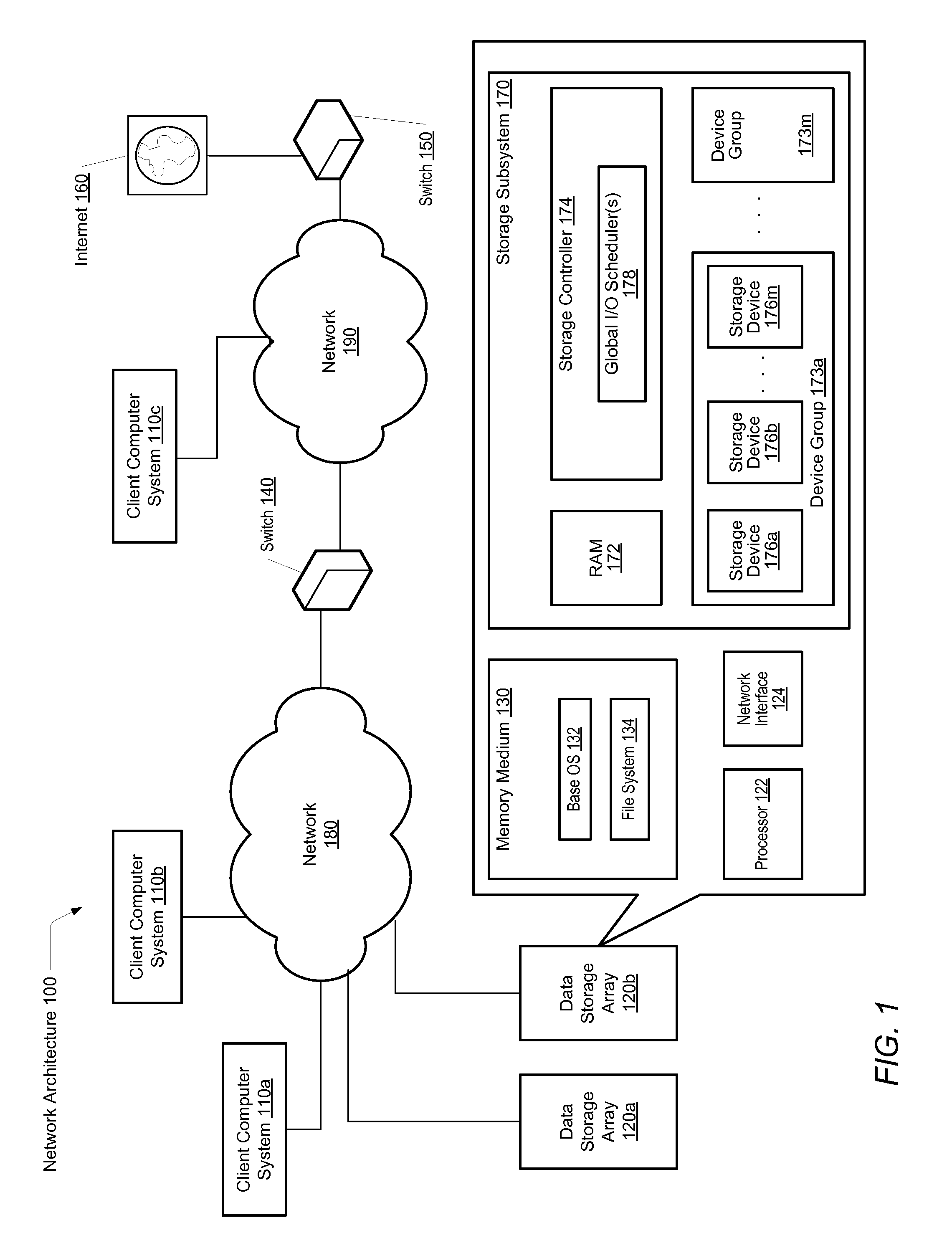

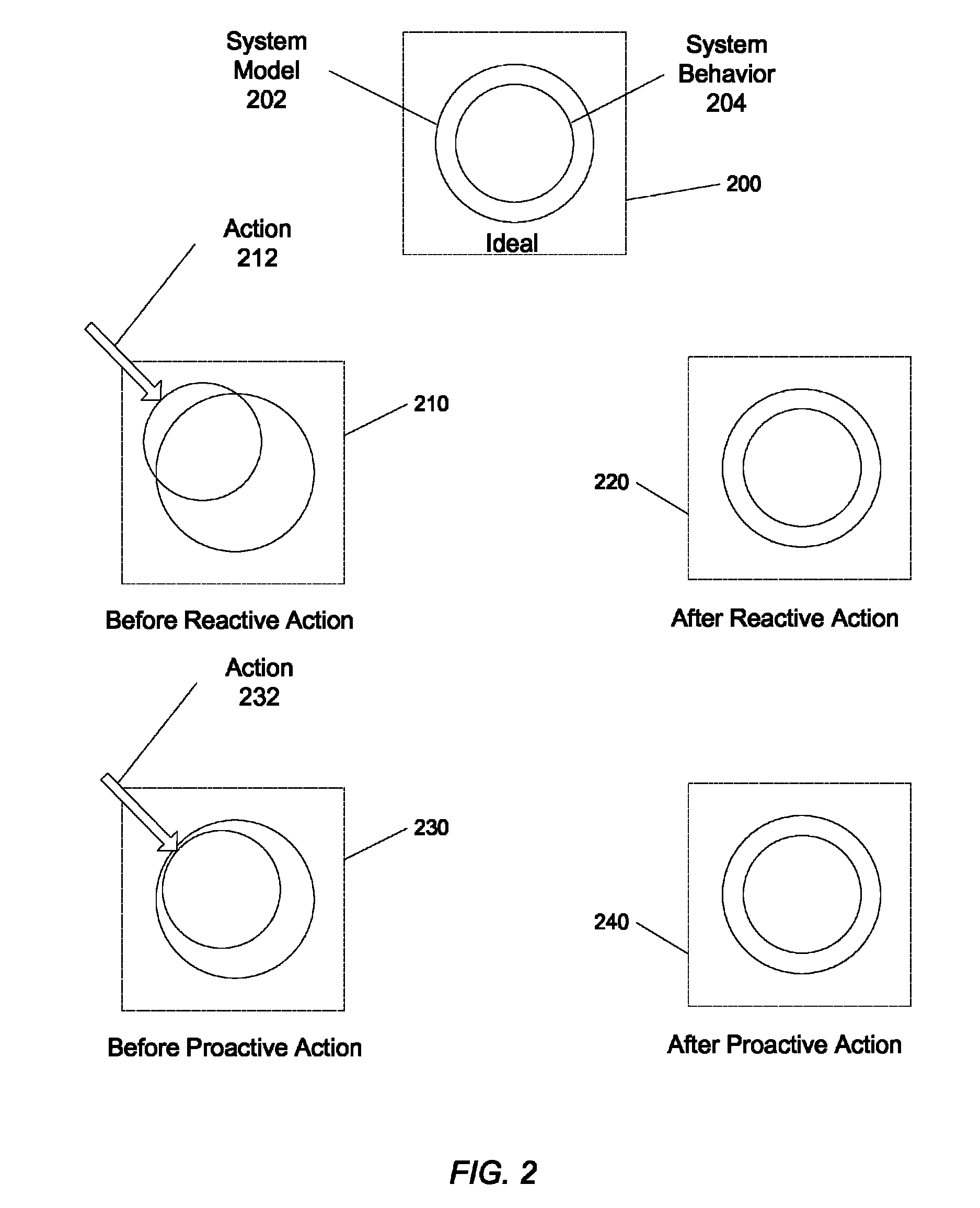

Scheduling of I/O in an SSD environment

Active | US8589655B2 | Reduce generation, Reduce the possibility, Memory architecture accessing/allocation, Input/output to record carriers, Solid-state storage, Control store

A system and method for effectively scheduling read and write operations among a plurality of solid-state storage devices. A computer system comprises client computers and data storage arrays coupled to one another via a network. A data storage array utilizes solid-state drives and Flash memory cells for data storage. A storage controller within a data storage array comprises an I / O scheduler. The characteristics of corresponding storage devices are used to schedule I / O requests to the storage devices in order to maintain relatively consistent response times at predicted times. In order to reduce a likelihood of unscheduled behaviors of the storage devices, the storage controller is configured to schedule proactive operations on the storage devices that will reduce a number of occurrences of unscheduled behaviors.

Owner:PURE STORAGE

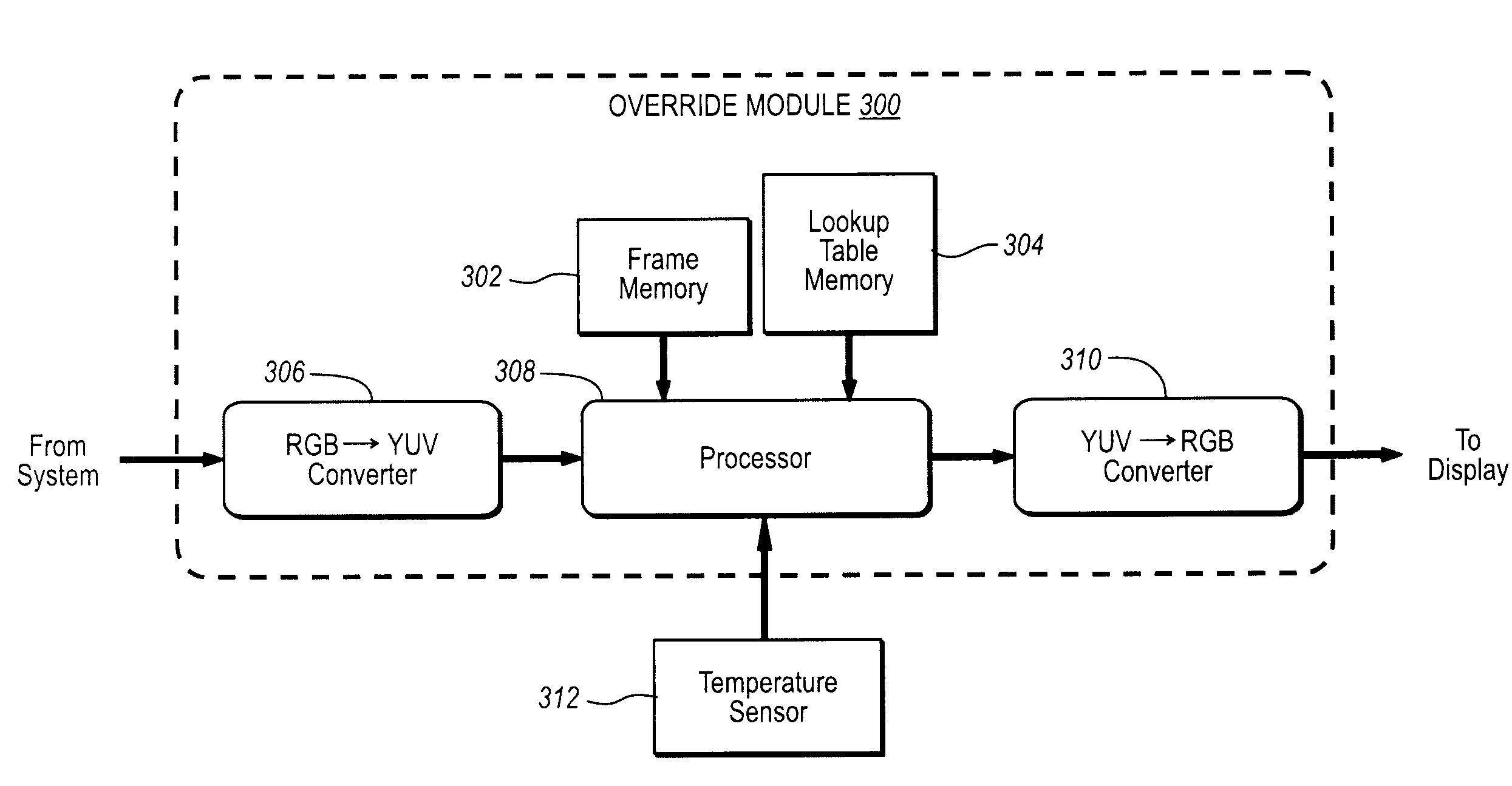

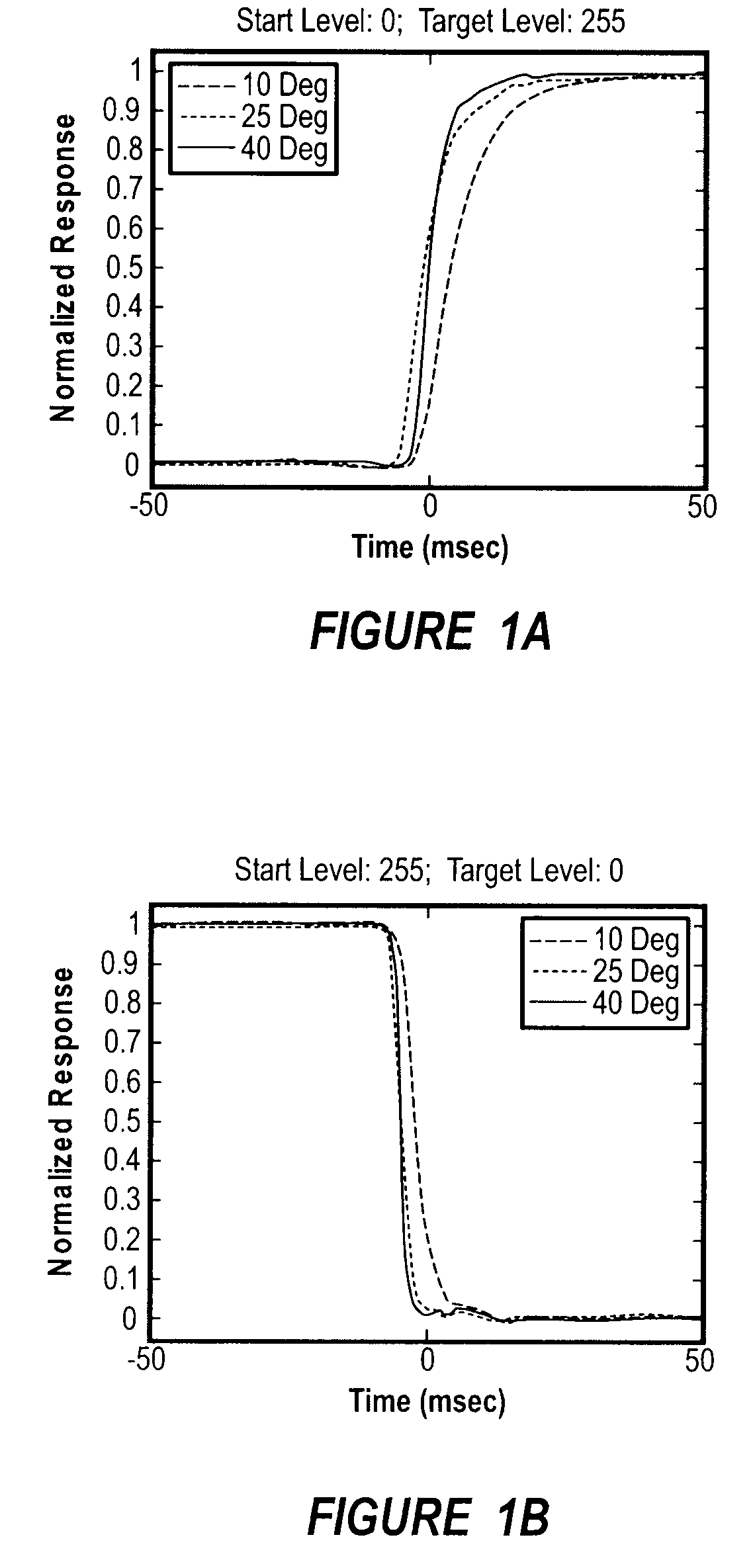

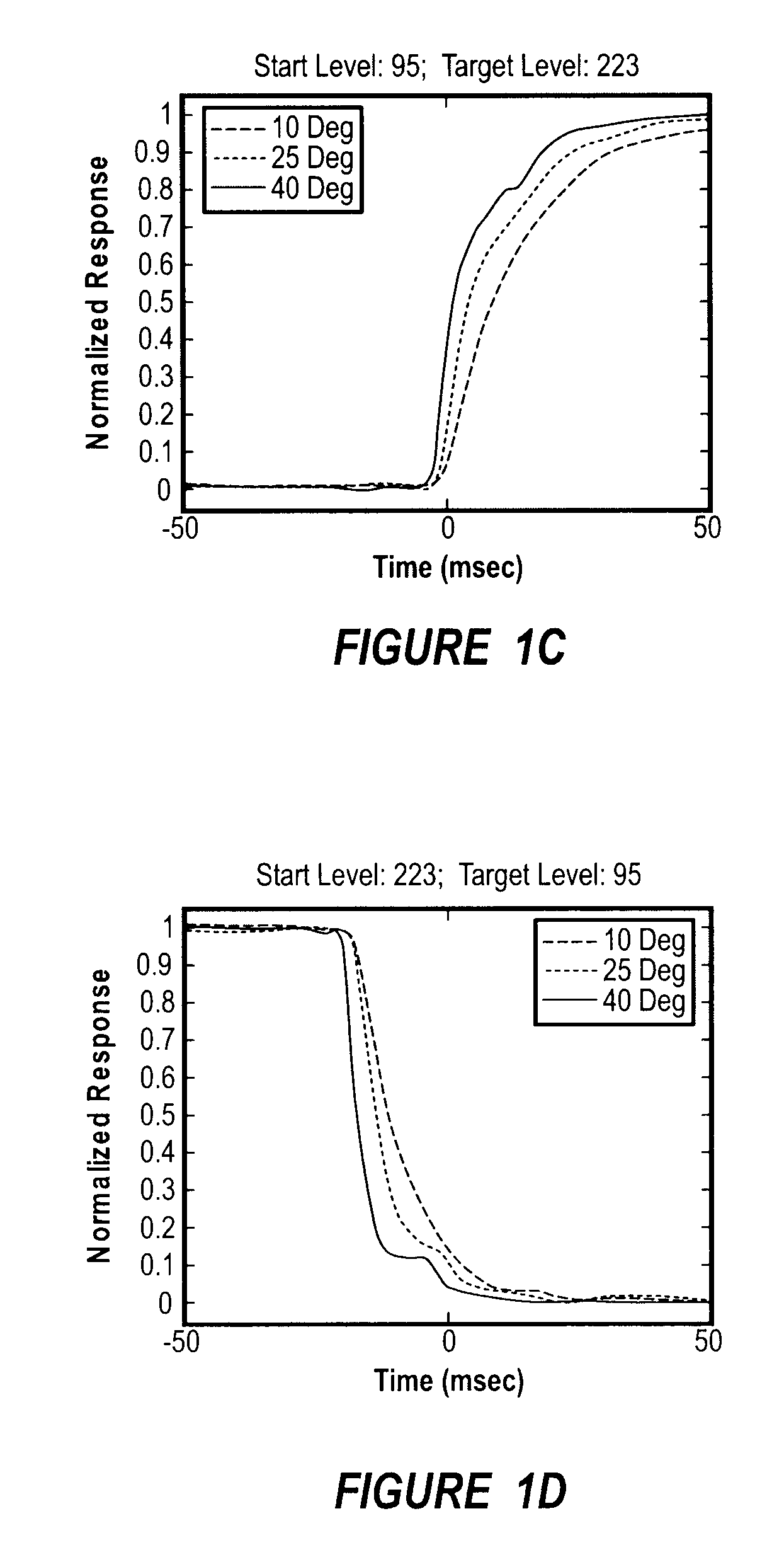

Temperature Adaptive Overdrive Method, System And Apparatus

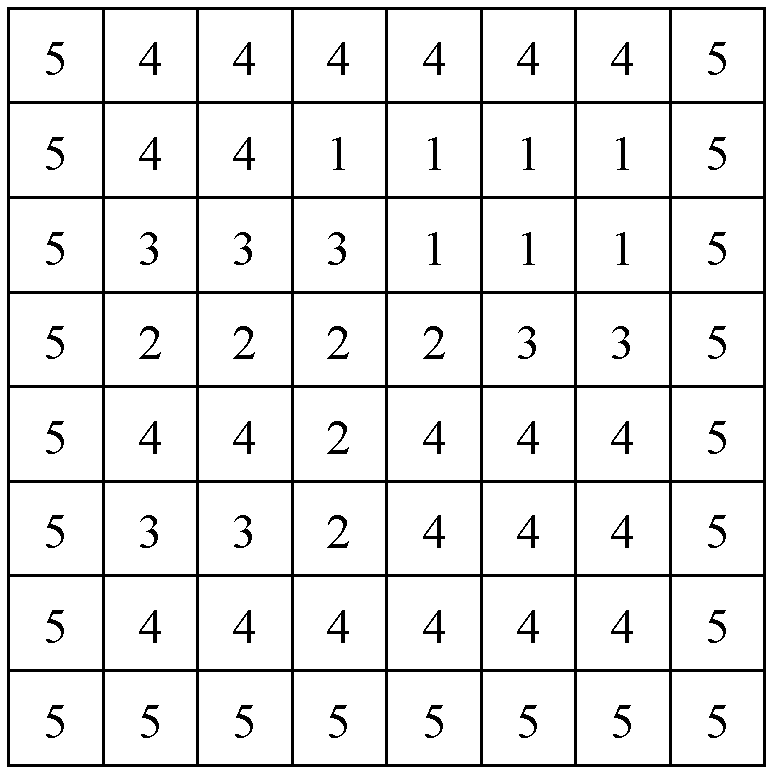

Active | US20080231624A1 | Reduce need, Low cost, Cathode-ray tube indicators, Input/output processes for data processing, Liquid crystalline, Gray level

A method and system for calculating an overdrive parameter for the liquid crystal in an LCD device to compensate for temperature variations. An example system includes a temperature sensor that measures the ambient temperature near the liquid crystal and a memory that stores a lookup table containing a plurality of overdrive parameters. Each overdrive parameter corresponds to a graylevel transition between a previous frame and a current frame and represents the level at which the liquid crystal is driven to achieve a desired response time for that transition at a reference temperature. A processor extracts the appropriate overdrive parameter from the lookup table and calculates an adapted overdrive parameter that adjusts for the difference between the measured ambient temperature and the reference temperature.

Owner:138 EAST LCD ADVANCEMENTS LTD
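A Python sketch of the lookup-then-adapt flow. The table entries, the 25 °C reference, and the linear adaptation model (scale the overshoot beyond the target by a factor depending on the temperature difference) are all hypothetical; the abstract does not state how the adapted parameter is computed. Interpolation between sparse table entries is also omitted.

```python
REF_TEMP_C = 25.0

# Hypothetical lookup table at the reference temperature:
# (previous graylevel, target graylevel) -> drive level.
OVERDRIVE_LUT = {
    (0, 128): 160,
    (128, 255): 255,
    (255, 128): 96,
    (128, 0): 0,
}

def adapted_overdrive(prev, target, temp_c, k_per_c=0.02, lut=OVERDRIVE_LUT):
    """Scale the table's overshoot for the measured ambient temperature.

    Assumed model: liquid crystal responds more slowly when cold, so the
    overshoot (drive - target) grows by k_per_c per degree below the
    reference and shrinks above it. The result is clamped to 0..255.
    """
    base = lut.get((prev, target), target)  # no entry: drive straight to target
    factor = max(0.0, 1.0 + k_per_c * (REF_TEMP_C - temp_c))
    drive = target + (base - target) * factor
    return int(round(min(255.0, max(0.0, drive))))
```

For the 0 to 128 transition, the table value 160 is used unchanged at 25 °C, raised to 166 at 15 °C, and lowered to 154 at 35 °C.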

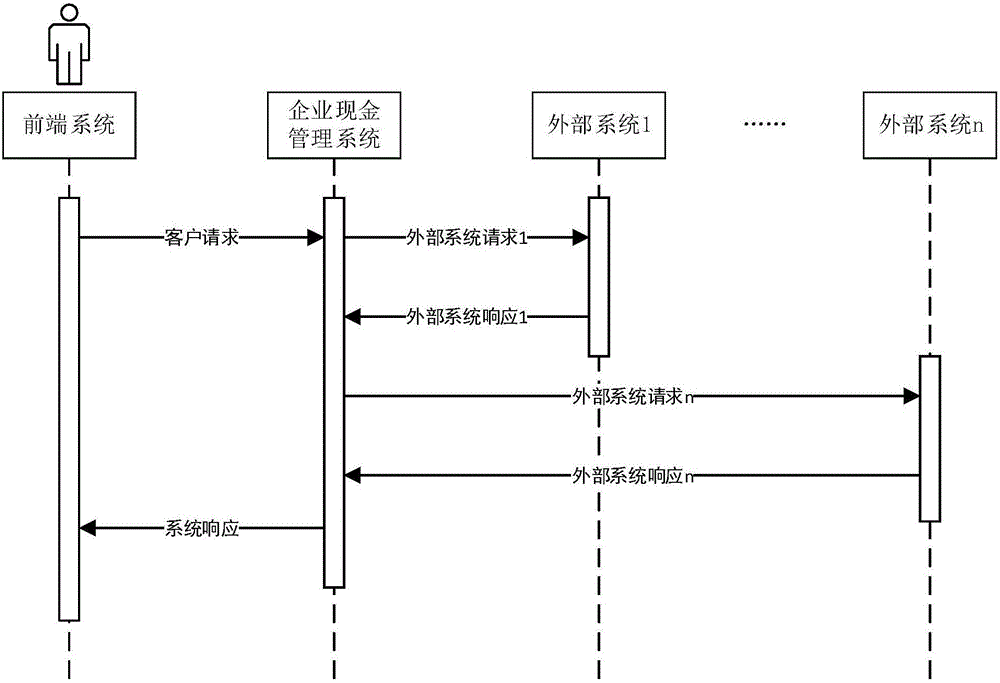

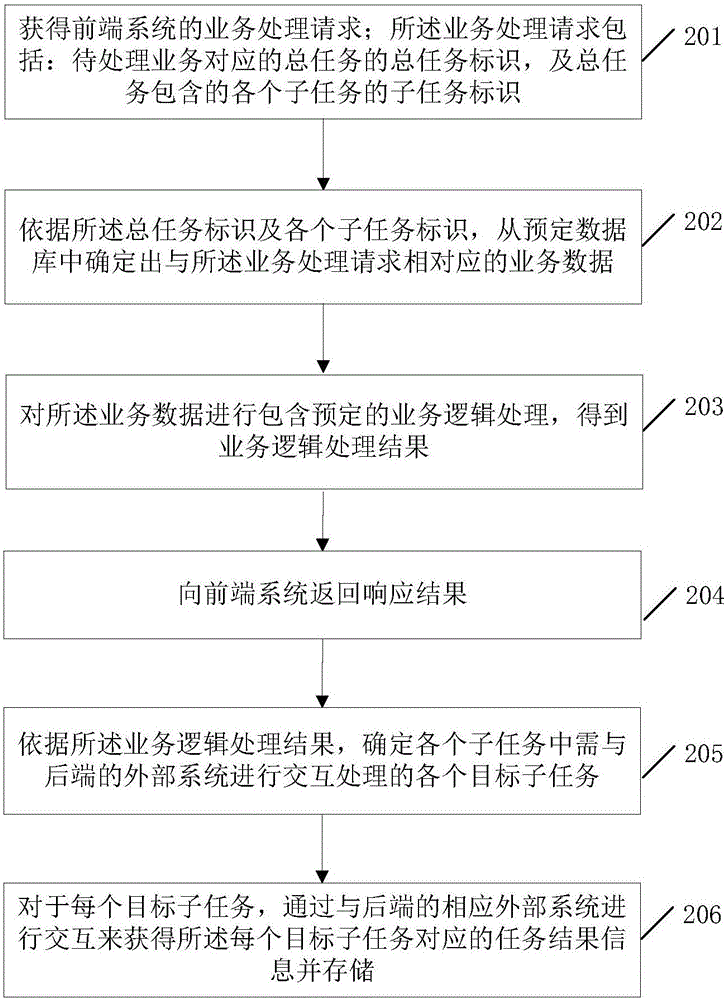

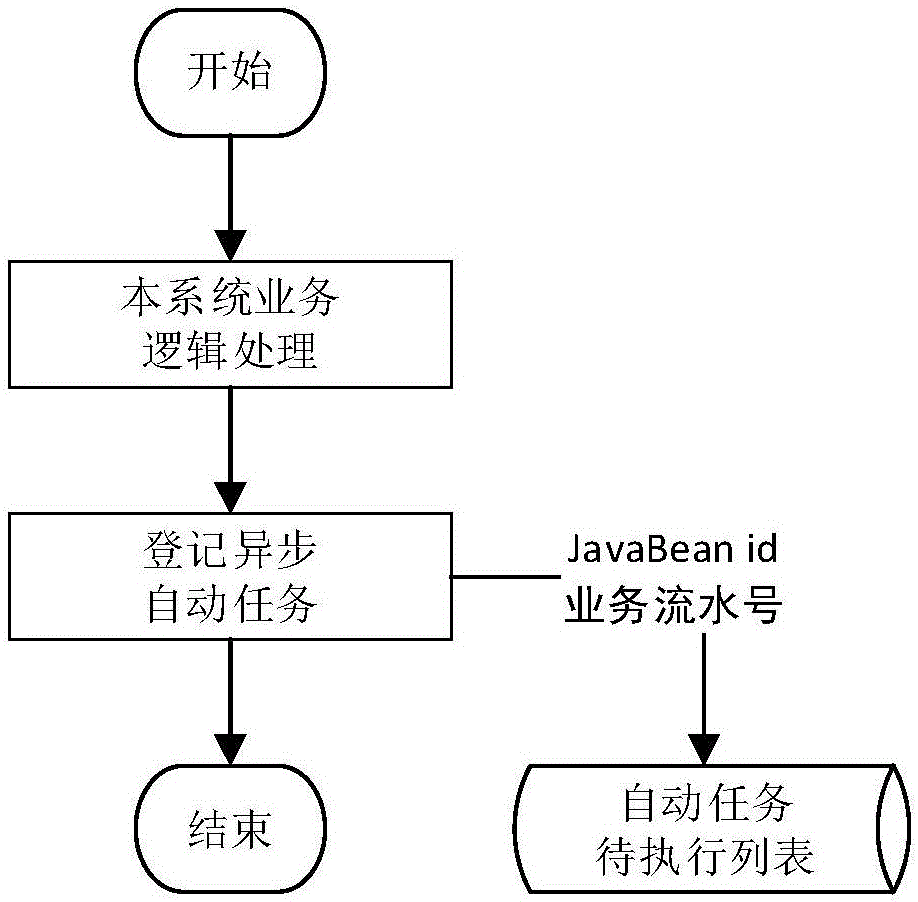

Business processing method and device

Active | CN106603708A | Reduce response latency, Fix response time issues, Finance, Transmission, Linear correlation, End system

The present invention discloses a business processing method and device. After a business processing request from a front-end system is received and the part that must be handled in real time has been processed, a response (for example, a notice that the request has been submitted) is returned to the front-end system. The part of the request that requires interaction with an external system is then handled asynchronously, so the response to the front-end system no longer depends on that interaction being executed in real time. By moving the point at which the front-end system receives its response earlier, the method and device remove the linear dependence of response time on business volume, are not constrained by the response speed, fault state, or communication state of cross-system calls, and effectively reduce the front-end system's waiting time for a response.

Owner:CHINA CONSTRUCTION BANK

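The pattern described above (reply first, interact with the external system afterwards) can be sketched with a work queue and a background thread. This is a minimal illustration, not the patented implementation: `BusinessProcessor`, the `"id"` check standing in for the real-time processing, and the `external_call` callback are all hypothetical.

```python
import queue
import threading


class BusinessProcessor:
    """Replies to the front-end system at once and runs external-system
    interactions asynchronously on a background worker thread."""

    def __init__(self, external_call):
        self._external_call = external_call  # hypothetical cross-system call
        self._tasks = queue.Queue()
        self.failures = []  # (request, exception) pairs kept for later retry
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def handle(self, request: dict) -> dict:
        # Real-time part: only the local checks the response depends on.
        if "id" not in request:
            return {"status": "rejected"}
        # Cross-system part: queued, so the reply does not wait for it.
        self._tasks.put(request)
        return {"status": "submitted", "id": request["id"]}

    def _run(self):
        while True:
            request = self._tasks.get()
            try:
                if request is None:  # shutdown sentinel
                    return
                self._external_call(request)
            except Exception as exc:  # keep the worker alive after a failed call
                self.failures.append((request, exc))
            finally:
                self._tasks.task_done()

    def close(self):
        # Drain the queued external work, then stop the worker thread.
        self._tasks.put(None)
        self._worker.join()
```

Because `handle` only enqueues the cross-system work, its latency stays roughly constant however slow or unavailable the external system is; a production system would also need durable queuing and retry, which this sketch leaves out.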
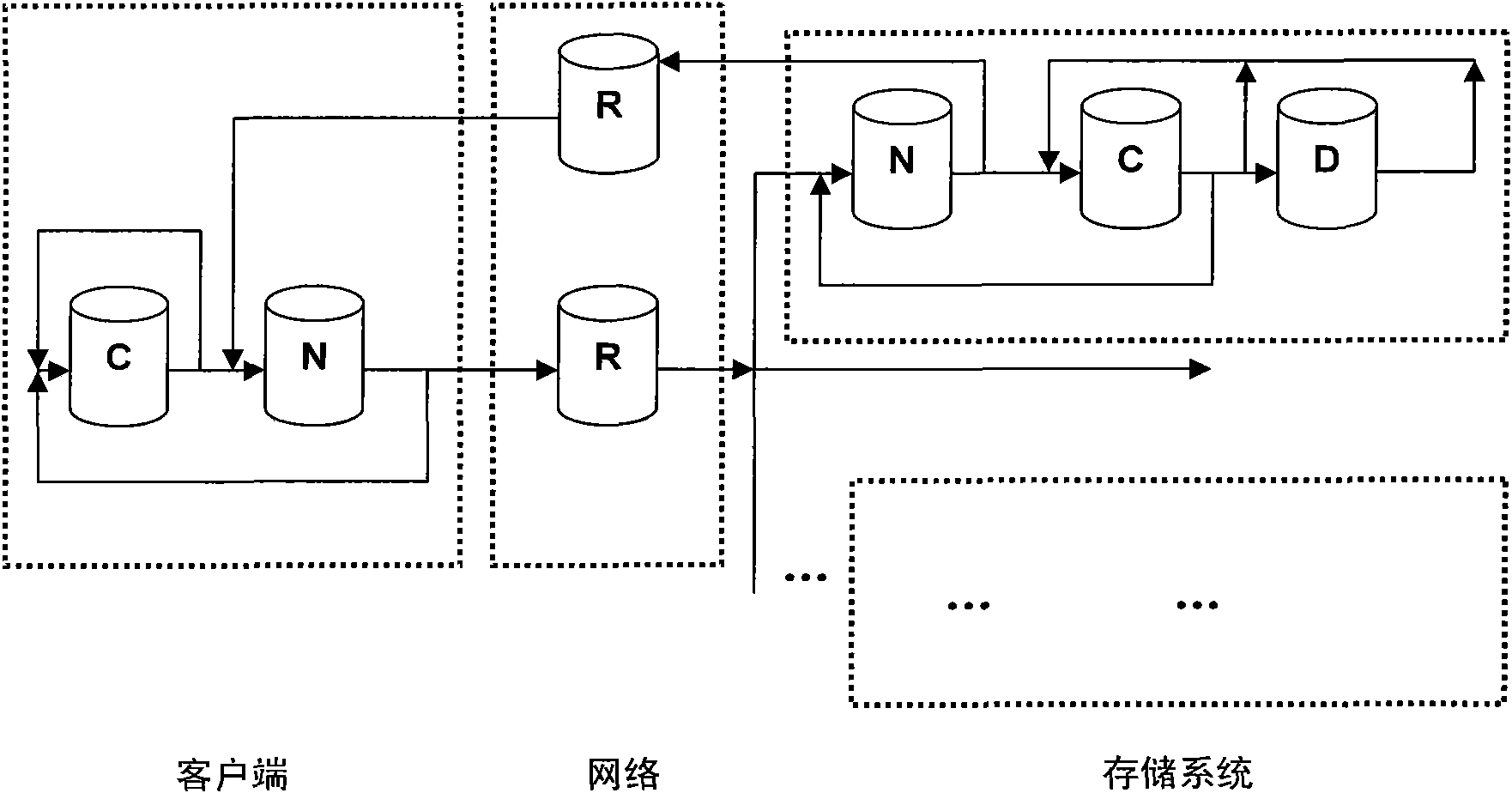

Method for optimizing system performance by dynamically tracking IO processing path of storage system

Active | CN101616174A | Short response time | Fulfil requirements | Data switching networks | Electric digital data processing | System capacity | Fully developed

The invention provides a method for optimizing system performance by dynamically tracking the IO processing path of a storage system. The method comprises the following steps: a server sends read and write requests to the storage system over a network; each IO request is tracked in every device it passes through; an IO request inspection mechanism is introduced into the storage system's request-processing flow; an IO processing identifier is set for each processing module; the response time of each processing node is computed; the total processing time of the whole IO request is computed using a formula and recorded in a log; faults are located accurately from the IO processing times shown in the log and then corrected; system capacity is fully utilized by changing the system resources allocated to each user and service program; and user requirements are met with as few resources as possible, so that a variety of users can be served.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

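The per-node timing and logging steps above can be sketched as a small tracer. The abstract does not state the formula for the total processing time, so this sketch assumes it is the sum of the per-node times; `IOTracer`, its methods, and the module names in the usage are hypothetical, and Python's standard `logging` module stands in for the storage system's log.

```python
import logging
import time
from collections import defaultdict
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("io_trace")


class IOTracer:
    """Records how long each processing module spends on each IO request."""

    def __init__(self):
        # request_id -> list of (module_id, enter_time, exit_time)
        self._spans = defaultdict(list)

    def record(self, request_id, module_id, enter_time, exit_time):
        self._spans[request_id].append((module_id, enter_time, exit_time))

    @contextmanager
    def span(self, request_id, module_id):
        """Time a block of code as one processing node of the request."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.record(request_id, module_id, start, time.perf_counter())

    def node_times(self, request_id):
        # Per-node response time; repeated visits to a module are accumulated.
        times = {}
        for module_id, enter_time, exit_time in self._spans[request_id]:
            times[module_id] = times.get(module_id, 0) + (exit_time - enter_time)
        return times

    def total_time(self, request_id):
        # Assumption: the total is the sum of the per-node times.
        return sum(self.node_times(request_id).values())

    def report(self, request_id):
        """Log the breakdown and return the slowest node (the likely bottleneck)."""
        times = self.node_times(request_id)
        slowest = max(times, key=times.get)
        log.info("io=%s total=%s nodes=%s slowest=%s",
                 request_id, self.total_time(request_id), times, slowest)
        return slowest
```

For example, recording `network` from 0 to 400 µs, `cache` from 400 to 500 µs, and `disk` from 500 to 3500 µs gives a total of 3500 µs and flags `disk` as the node to investigate.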
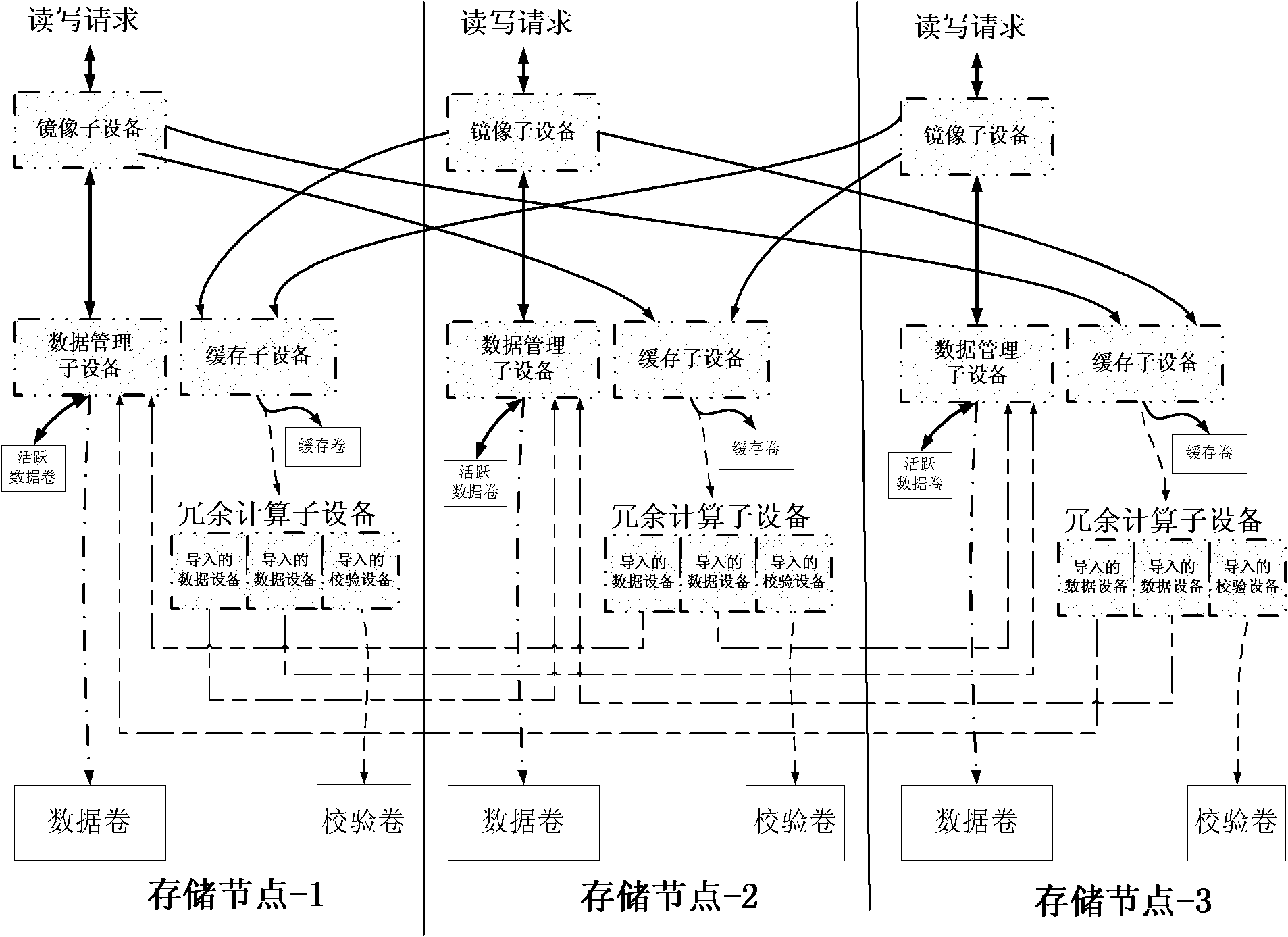

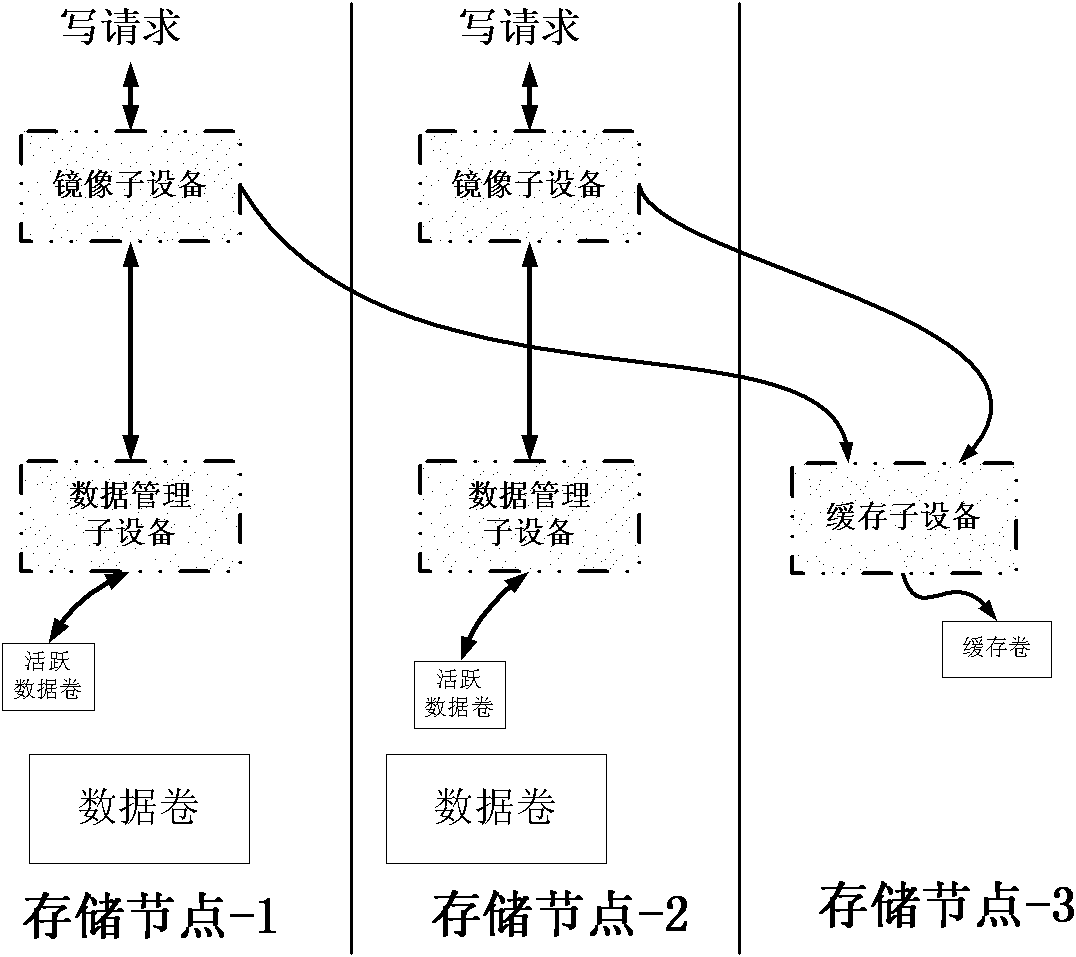

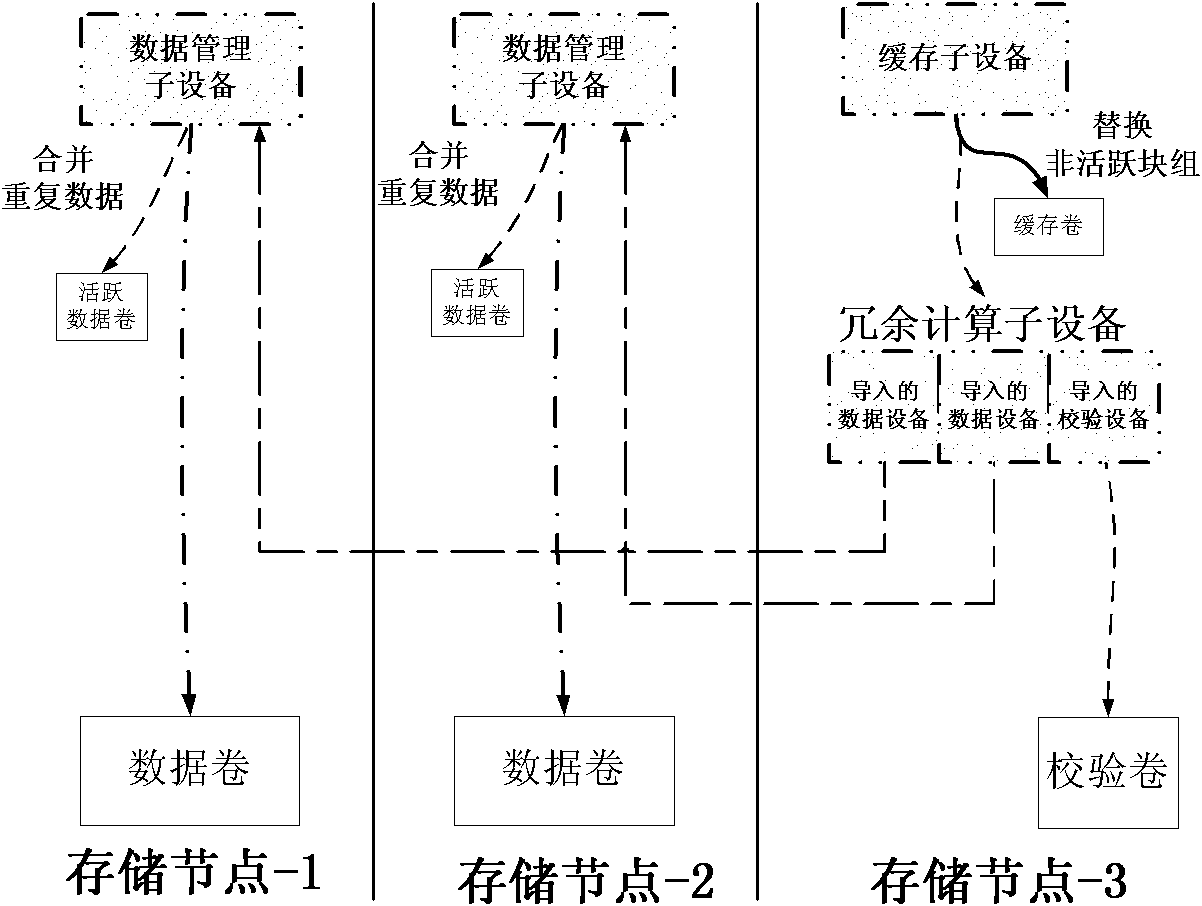

Network RAID (redundant array of independent disks) system

Active | CN102053802A | Short response time | Improve read and write performance | Input/output to record carriers | Disk array | Active data

The invention provides a network RAID (redundant array of independent disks) system comprising at least three storage nodes, each of which has at least one associated storage node. Each storage node comprises: a data volume for storing application data; an active data volume for storing part of the application data; a mirror image subset for receiving application read-write requests; a data management subset for managing the data relationship between the data volume and the active data volume; a redundancy calculating subset for calculating the redundancy of a verification block; a verification volume for storing the verification block data; a caching volume for storing recently modified active write data; and a caching subset for receiving active-data write requests and swapping non-active data out to the redundancy calculating subset. The invention also provides a data processing method for the network RAID system. Its advantages are a short response time for read requests, good read-write performance, and higher storage reliability.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

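The abstract does not say which redundancy code the redundancy calculating subset uses for the verification block. As an illustration only, the sketch below assumes single XOR parity as in RAID 5, a common choice but a swapped-in technique here; all function names are hypothetical.

```python
def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    length = len(blocks[0])
    if any(len(block) != length for block in blocks):
        raise ValueError("all blocks must have the same length")
    out = bytearray(length)
    for block in blocks:
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


def compute_parity(data_blocks):
    """Verification (parity) block for one stripe of data blocks."""
    return xor_blocks(data_blocks)


def rebuild_block(surviving_blocks, parity):
    """Recover the single missing data block of a stripe."""
    return xor_blocks(list(surviving_blocks) + [parity])


def update_parity(old_parity, old_block, new_block):
    """Small-write update: new parity = old parity XOR old data XOR new data."""
    return xor_blocks([old_parity, old_block, new_block])
```

Under this assumption, `update_parity` shows the read-modify-write work that every small write to a stripe incurs, which is the kind of cost a cache for frequently modified (active) data can defer.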
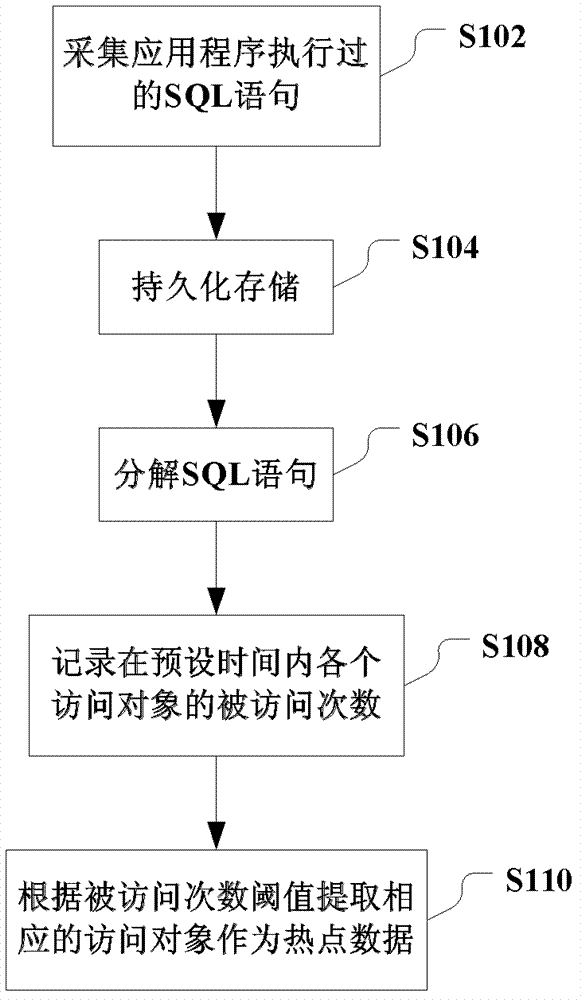

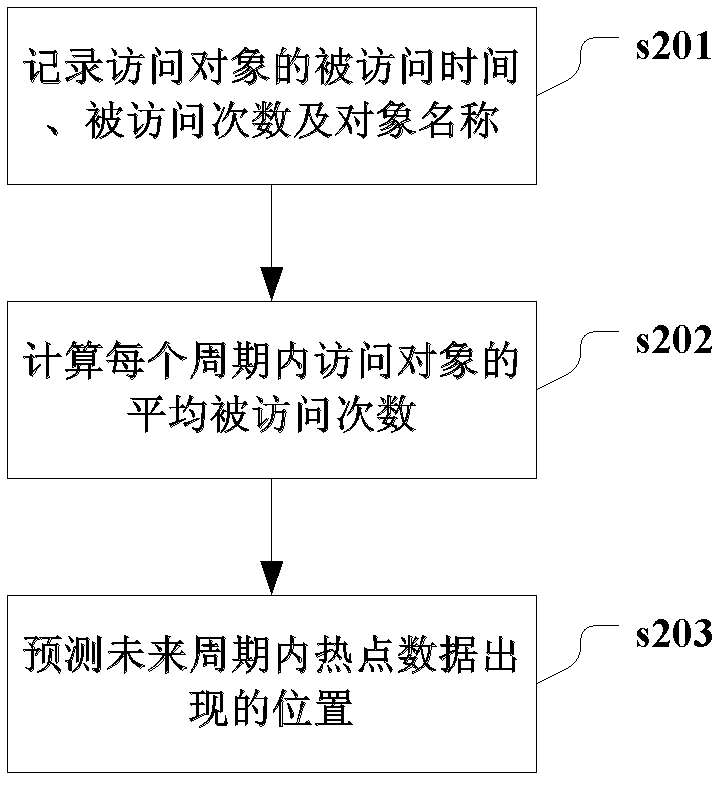

Method and system for managing data, and data analyzing device

Active | CN103092867A | Precise management | Reduce demand | Special data processing applications | Access time | Data profiling

The invention discloses a method and a system for managing data, and a data analyzing device. The method includes: collecting the structured query language (SQL) statements executed by an application program and storing them persistently; decomposing the SQL statements to obtain the objects they access; recording how many times each access object is accessed within a preset period; and extracting the corresponding access objects as hot-spot data according to an access-count threshold. Because the hot-spot data is identified by analyzing the SQL statements that the application actually executes, the usage of logical-layer objects (such as tables and indexes) is reflected accurately at the data application layer. The hot-spot data can then be managed precisely, which reduces system processing response time, improves system processing response speed, reduces the demand for high-end storage devices, and lowers the cost of managing mass data.

Owner:CHINA MOBILE COMMUNICATIONS GROUP GANSU CO LTD (中国移动通信集团甘肃有限公司)

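The counting and threshold steps described above can be sketched as follows. This is a minimal illustration under stated assumptions: object names are pulled out with a naive regular expression rather than a real SQL parser, each object counts once per statement, and "hot" means an access count at or above the threshold. `access_objects` and `hot_objects` are hypothetical names.

```python
import re
from collections import Counter

# Naive pattern for object names after FROM, JOIN, UPDATE or INTO. It misses
# comma-separated FROM lists and other cases a real SQL parser would handle.
OBJECT_PATTERN = re.compile(r"\b(?:FROM|JOIN|UPDATE|INTO)\s+([A-Za-z_][\w.]*)", re.IGNORECASE)


def access_objects(sql: str) -> set:
    """Tables referenced by one SQL statement (each counted once per statement)."""
    return {name.lower() for name in OBJECT_PATTERN.findall(sql)}


def hot_objects(statement_log, window_start, window_end, threshold):
    """statement_log: iterable of (timestamp, sql) pairs collected from the application."""
    counts = Counter()
    for timestamp, sql in statement_log:
        if window_start <= timestamp < window_end:
            counts.update(access_objects(sql))
    # Objects accessed at least `threshold` times in the window are treated as hot.
    return {name: n for name, n in counts.items() if n >= threshold}
```

With a log in which three statements touch `orders` inside the window and `customers` and `audit` are touched once each, a threshold of 2 reports only `orders` as hot-spot data.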
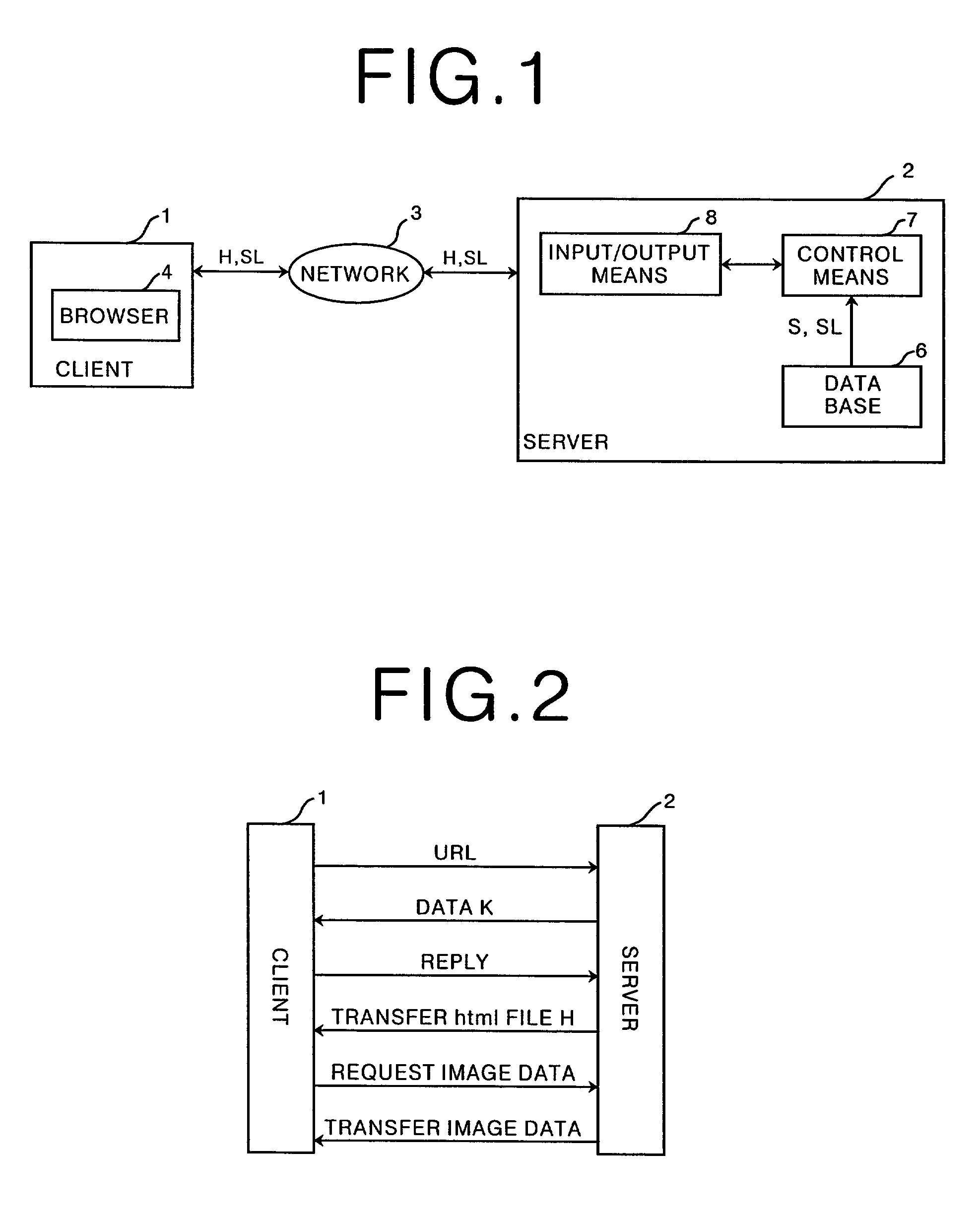

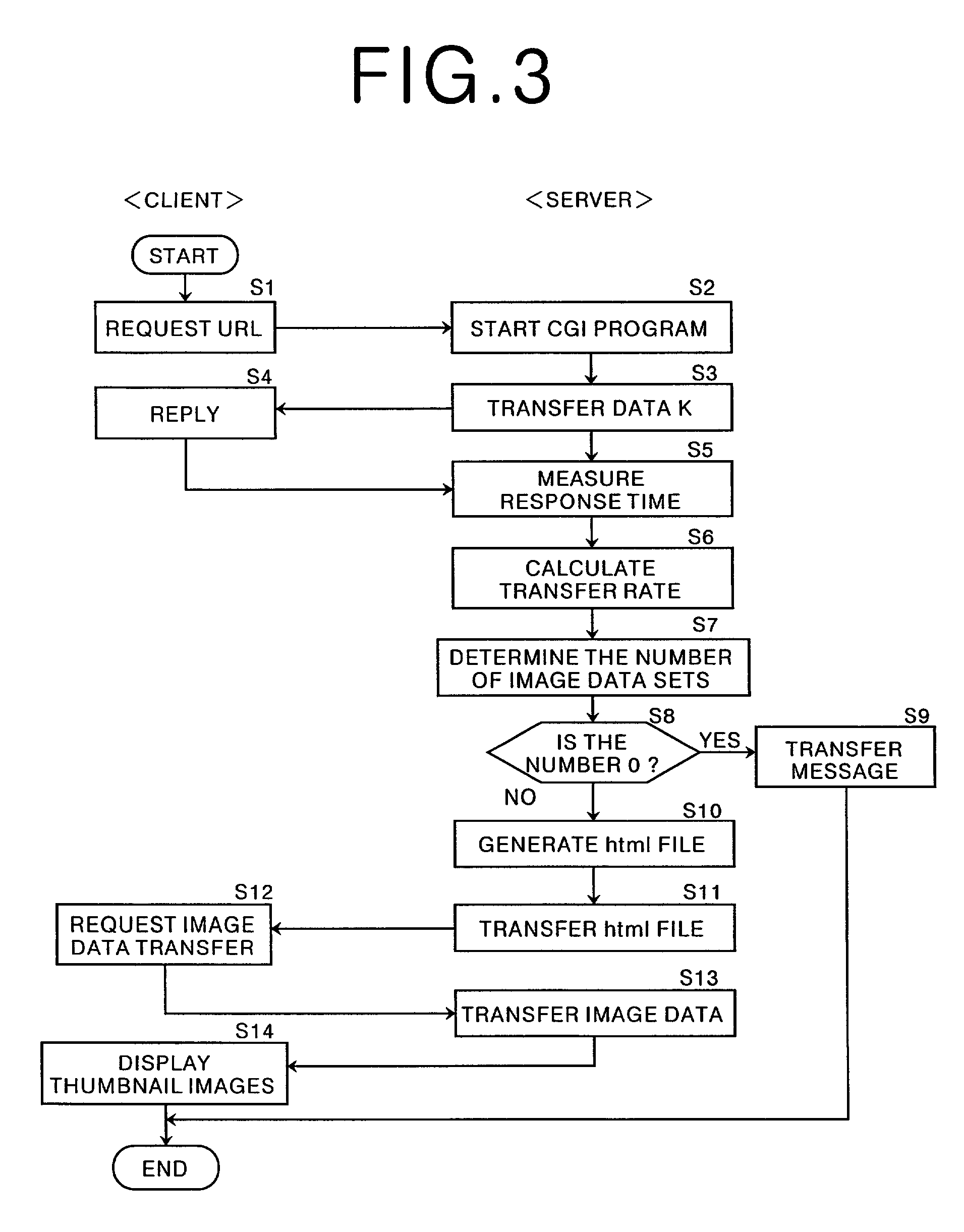

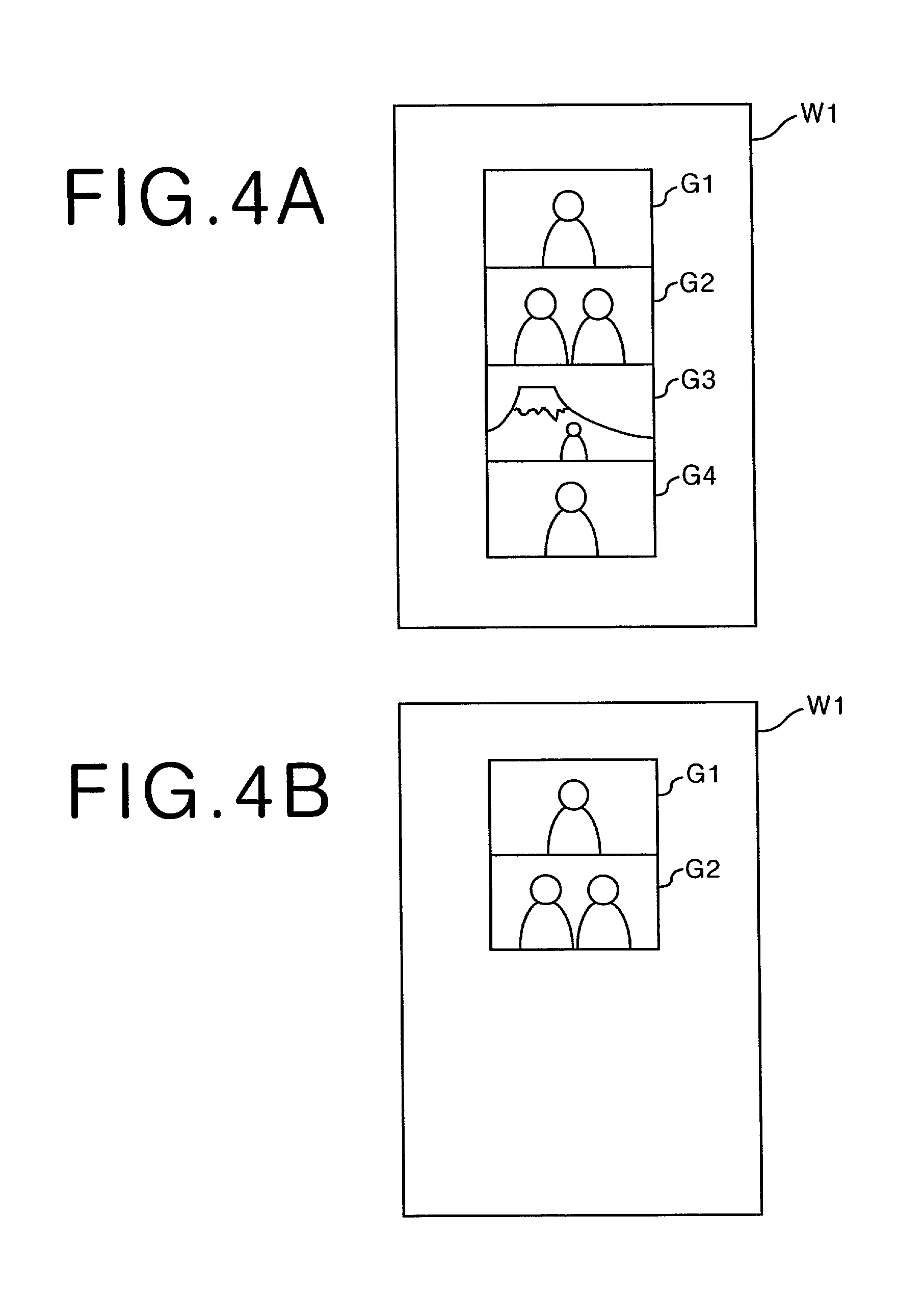

Method, apparatus, and recording medium for controlling image data transfer

Inactive | US20010023438A1 | Multiple digital computer combinations | Two-way working systems | Data set | Data transport

When a plurality of image data sets, such as thumbnail images, are transferred, the stress of a user waiting for the transfer can be reduced. When a client requests from a server a URL for displaying thumbnail images, the server starts a CGI program and sends data K, used for measuring a response time, to the client. The client replies on receiving the data, and the server takes the measured response time as an indicator of the network transfer rate. Based on a preset permitted transfer time and the response time, the server determines the number of image data sets to send and transfers to the client an HTML file for displaying that number of images. The client then requests the selected image data sets from the server, and the server transfers them to the client. In this manner, the client can display the thumbnail images.

Owner:FUJIFILM CORP

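The abstract does not specify how the number of images is derived from the response time. The sketch below assumes that the whole response time to the probe data K is transfer time, that the average thumbnail size is known, and that the count is the number of whole thumbnails that fit in the permitted time (at least one). The function names are hypothetical, and the HTML is built in Python rather than by the patent's CGI program.

```python
import html
import math


def images_to_send(probe_bytes, probe_response_s, avg_image_bytes, permitted_s, available):
    """How many thumbnails fit in the permitted transfer time (at least one, if any exist)."""
    if probe_bytes <= 0 or probe_response_s <= 0 or avg_image_bytes <= 0:
        raise ValueError("probe size, response time and image size must be positive")
    rate = probe_bytes / probe_response_s        # estimated bytes per second
    per_image_s = avg_image_bytes / rate         # estimated seconds per thumbnail
    fit = math.floor(permitted_s / per_image_s)
    return min(available, max(1, fit))


def thumbnail_page(image_urls, count):
    """HTML page that displays the first `count` thumbnails."""
    tags = "\n".join(f'<img src="{html.escape(url)}">' for url in image_urls[:count])
    return f"<html><body>\n{tags}\n</body></html>"
```

For instance, an 8192-byte probe answered in 0.5 s suggests about 16 KiB/s, so with 4096-byte thumbnails and a 2-second budget the server would list 8 images; on a link eight times slower it would still send one.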

© 2025 PatSnap. All rights reserved.