22057 results about "Throughput" patented technology

In general terms, throughput is the rate of production or the rate at which something is processed. When used in the context of communication networks, such as Ethernet or packet radio, throughput or network throughput is the rate of successful message delivery over a communication channel. The data these messages belong to may be delivered over a physical or logical link, or it can pass through a certain network node. Throughput is usually measured in bits per second (bit/s or bps), and sometimes in data packets per second (p/s or pps) or data packets per time slot.
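
As a small illustration of the two units mentioned above, the following sketch computes throughput in bits per second and in packets per second. The function names and the sample figures are made up for this example and are not taken from any of the listed patents.

```python
def throughput_bps(delivered_bits: int, seconds: float) -> float:
    """Successfully delivered bits per second (bit/s)."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return delivered_bits / seconds


def throughput_pps(delivered_packets: int, seconds: float) -> float:
    """Successfully delivered packets per second (p/s)."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return delivered_packets / seconds


# Hypothetical measurement: 1,500 packets of 1,000 bytes each,
# all delivered successfully within 2 seconds.
packets = 1500
packet_bytes = 1000
elapsed = 2.0

bps = throughput_bps(packets * packet_bytes * 8, elapsed)  # 6,000,000 bit/s
pps = throughput_pps(packets, elapsed)                     # 750 p/s
```

Only successfully delivered data counts toward either figure; retransmitted or lost packets would be excluded from the numerator.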

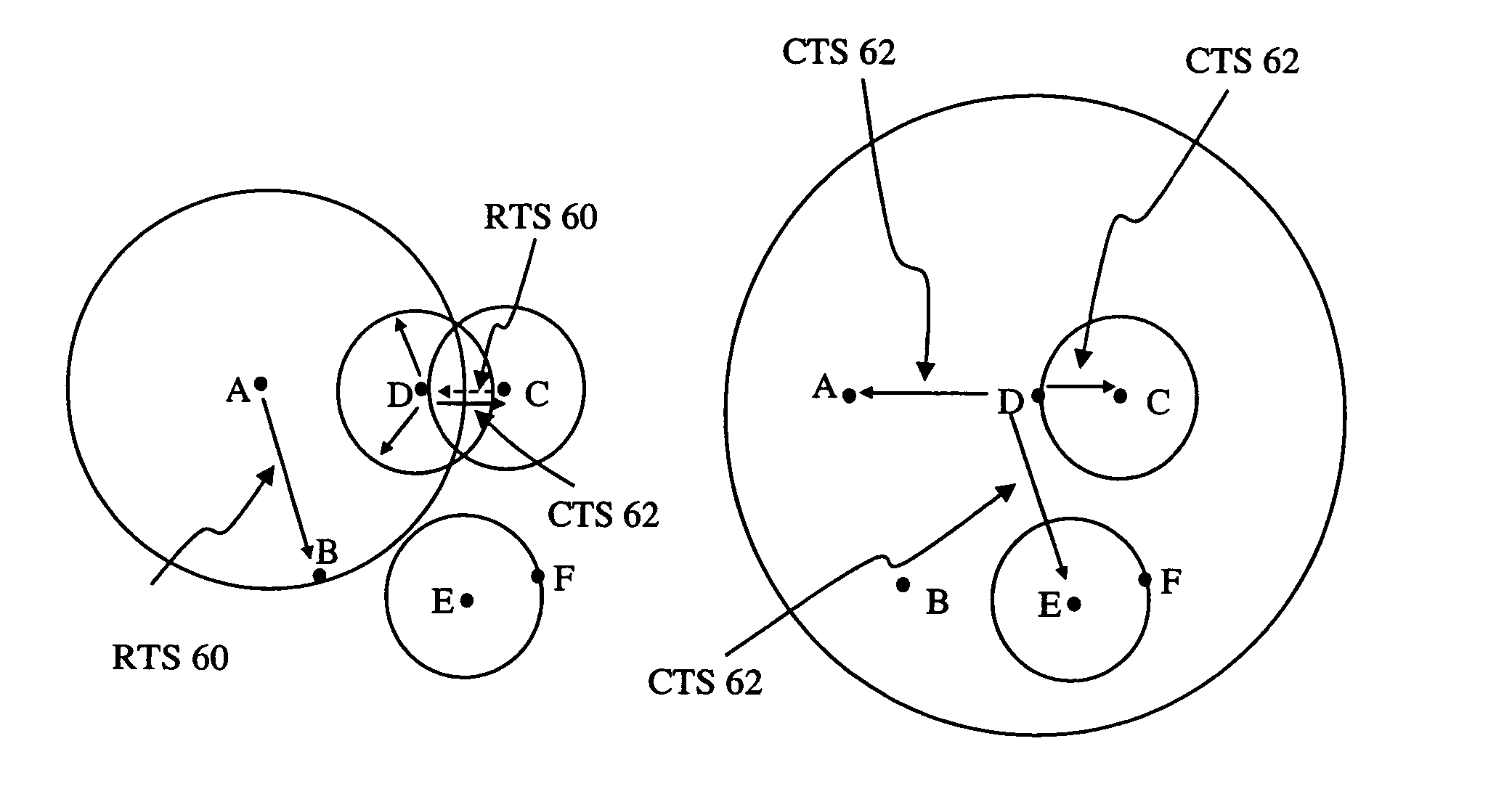

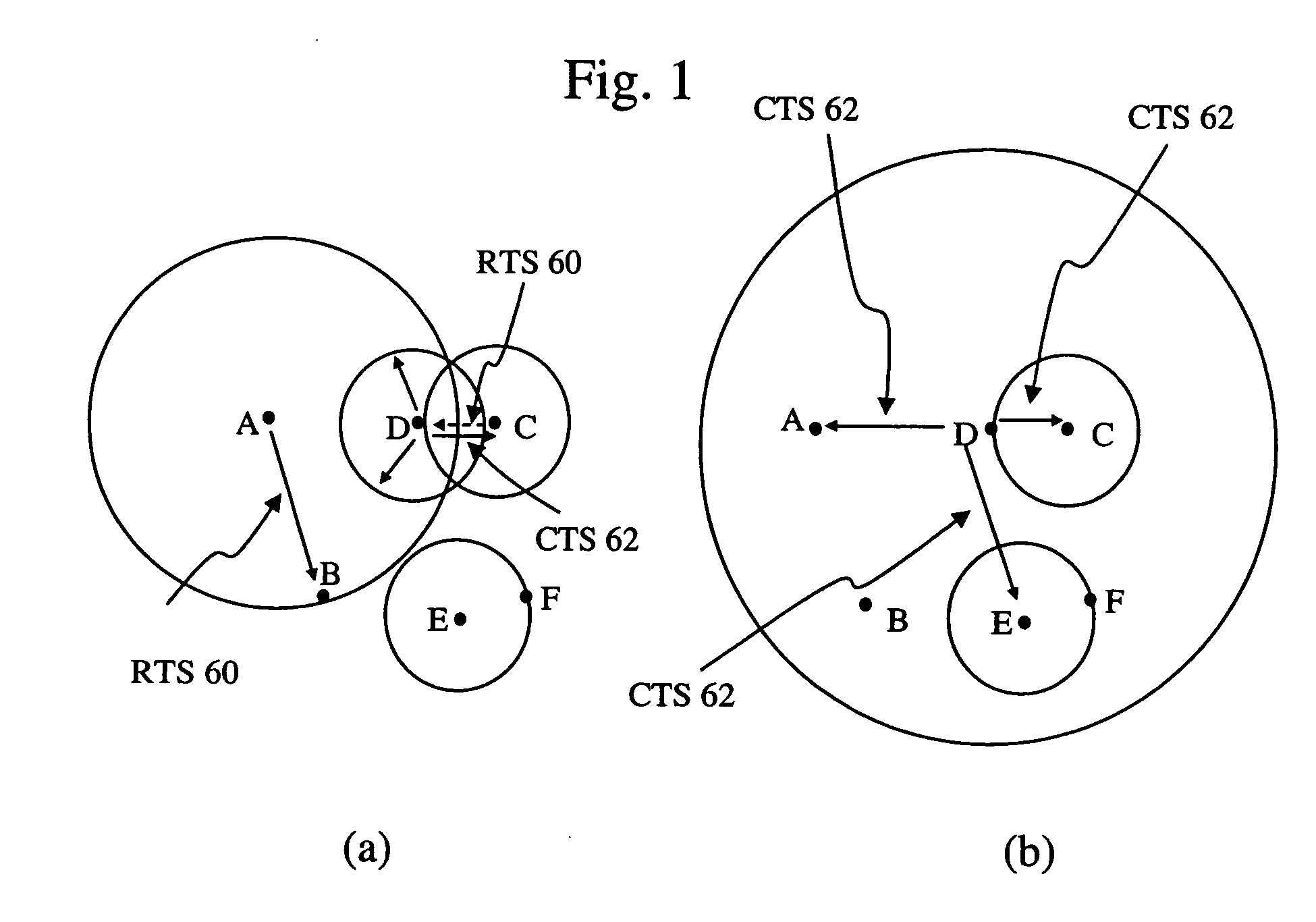

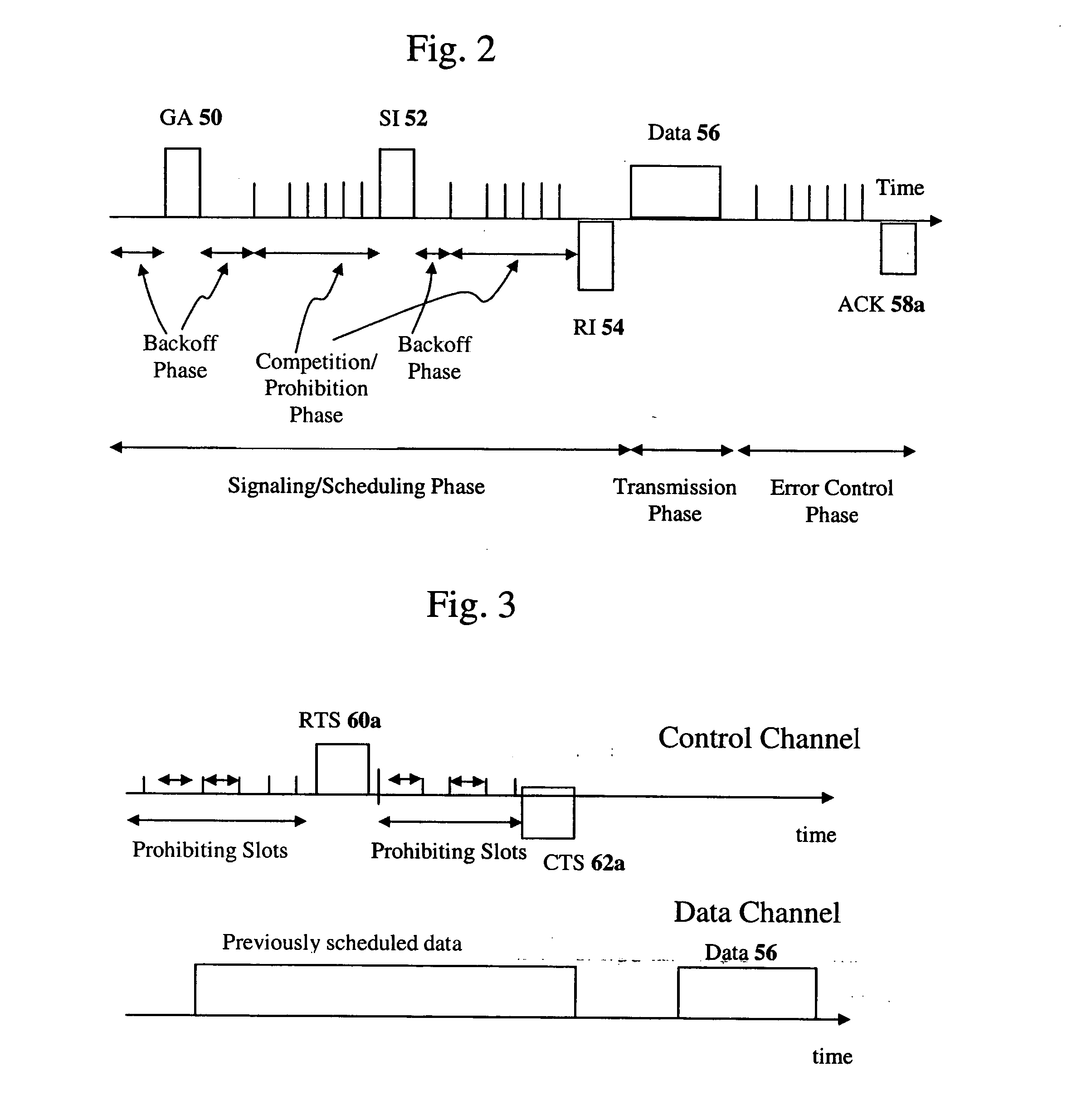

Method of interference management for interference/collision avoidance and spatial reuse enhancement

Inactive | US20050058151A1 | Improve rendering capabilities | Improve channel utilization | Energy efficient ICT | Power management | Differentiated services | Differentiated service

A method called evolvable interference management (EIM) is disclosed for avoiding interference and collisions and for increasing network throughput and energy efficiency in wireless networks. EIM employs sensitive CSMA/CA, patching approaches, interference engineering, differentiated multichannel, detached dialogues, and/or spread spectrum techniques to solve the interference and QoS problems. EIM-based protocols can considerably increase network throughput and QoS differentiation capability as compared to IEEE 802.11e in multihop networking environments. Due to the improvements achievable by EIM, the techniques and mechanisms presented in this application may be applied to obtain an extension to IEEE 802.11 to better support differentiated service and power control in ad hoc networks and multihop wireless LANs. New protocols may also be designed based on EIM.

Owner:YEH CHIHSIANG

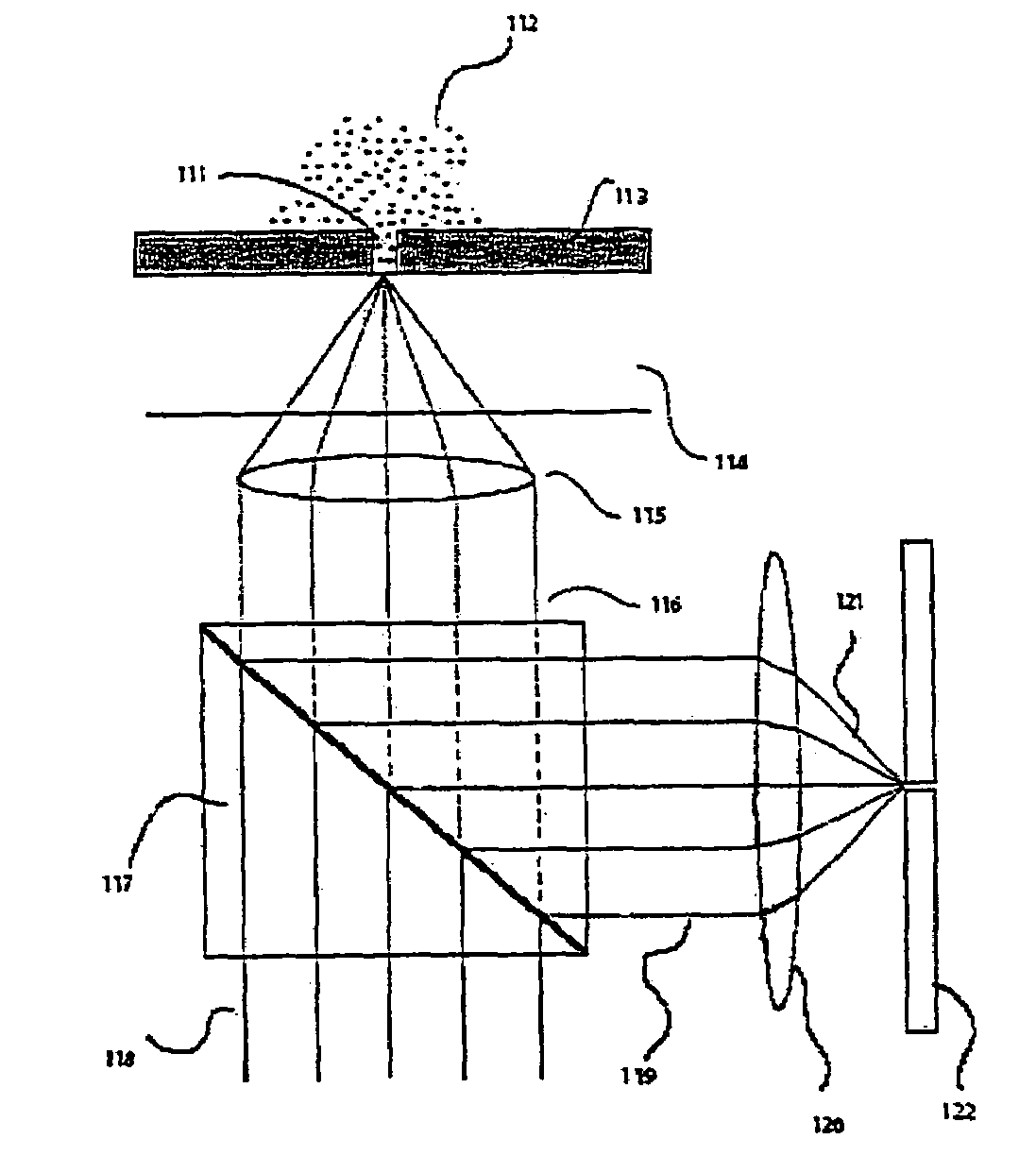

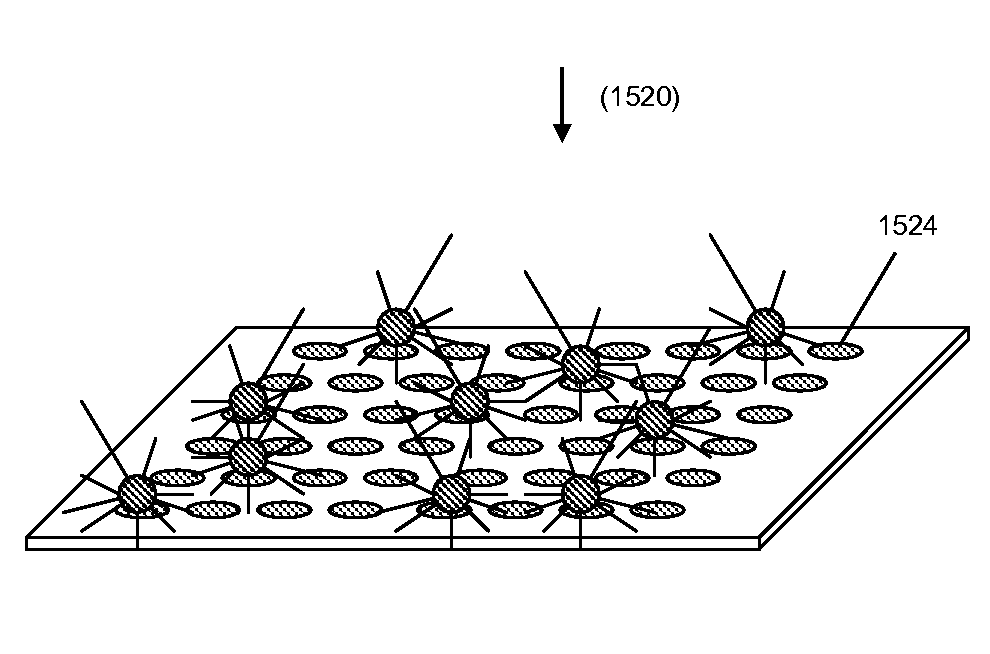

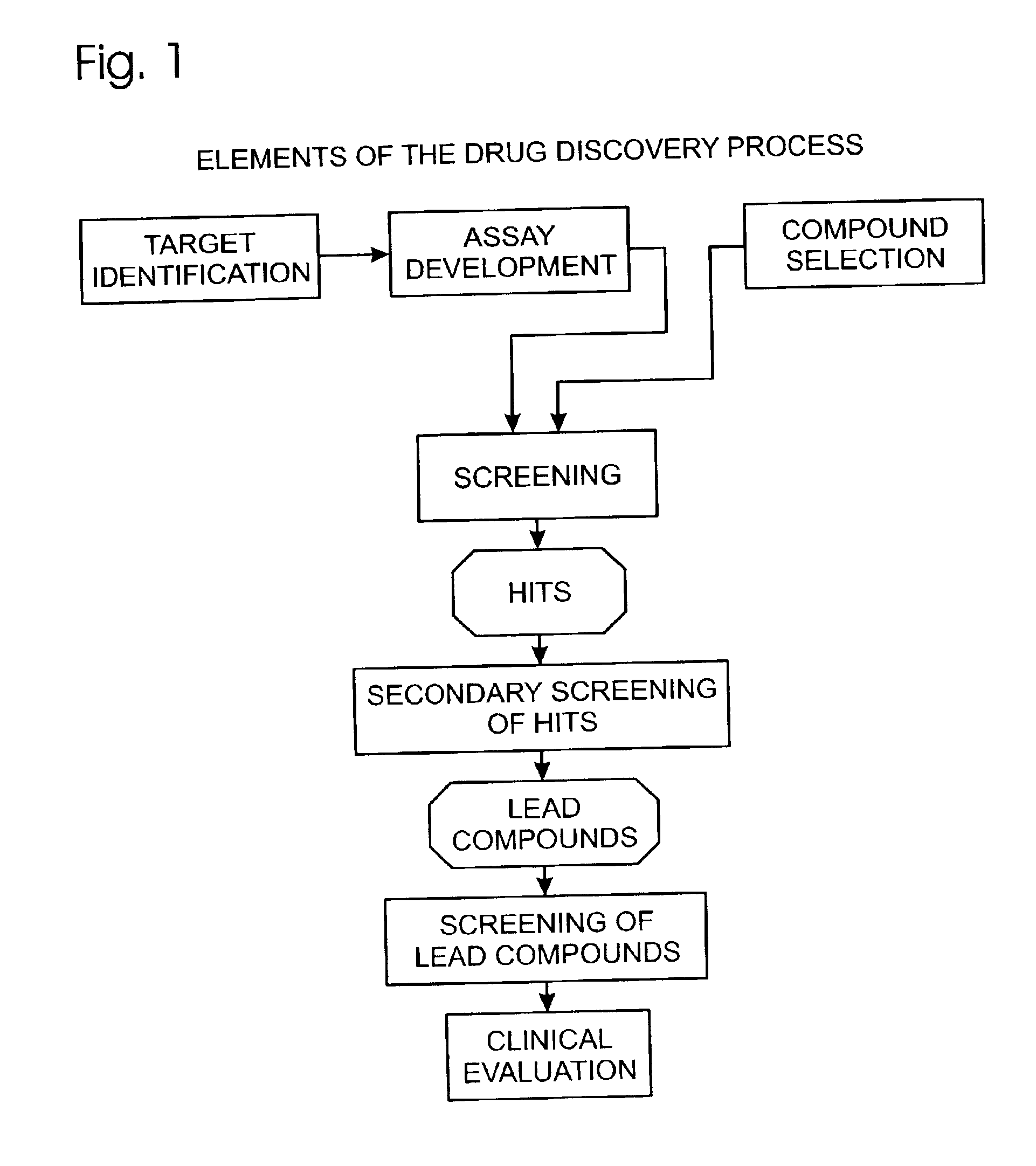

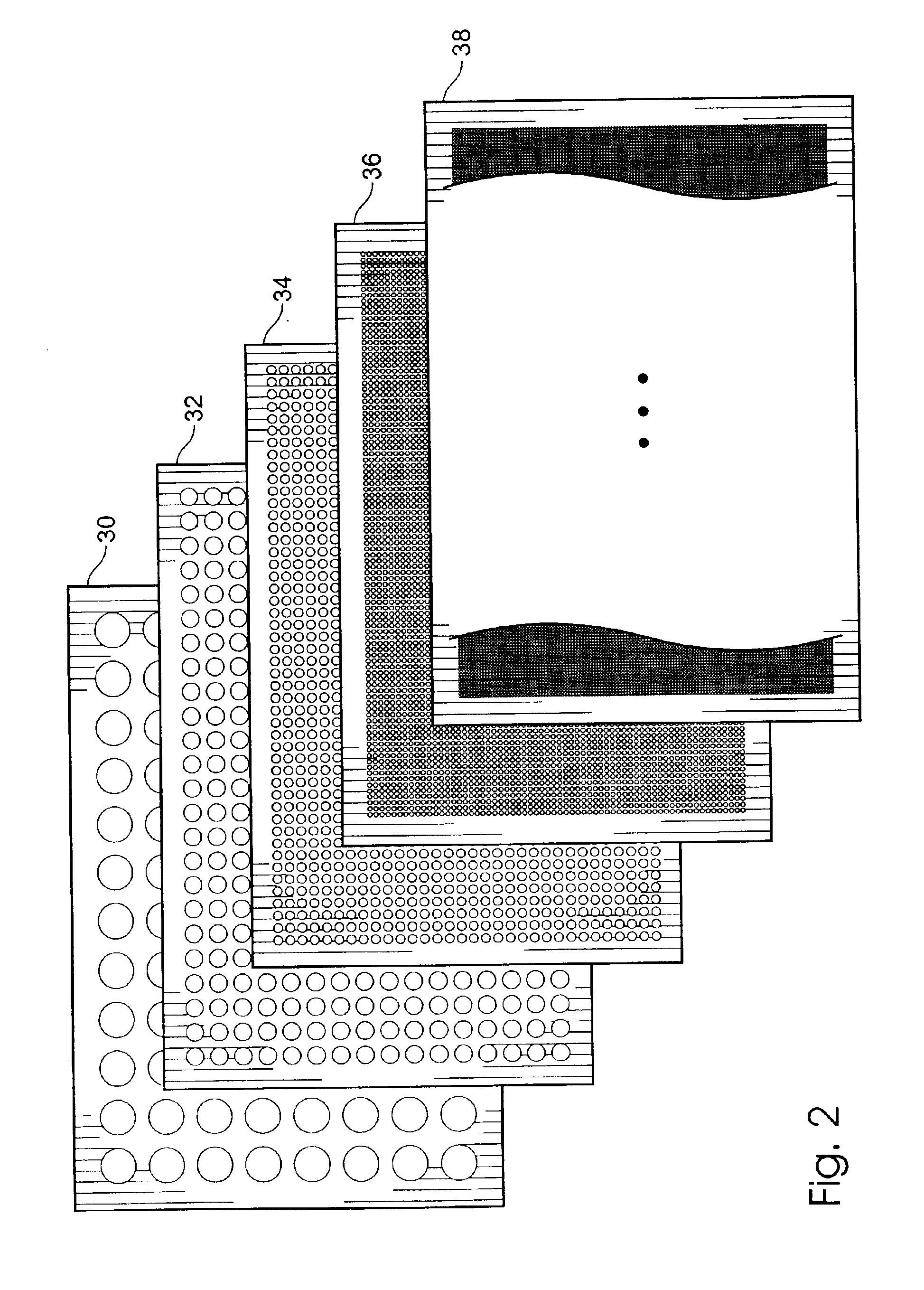

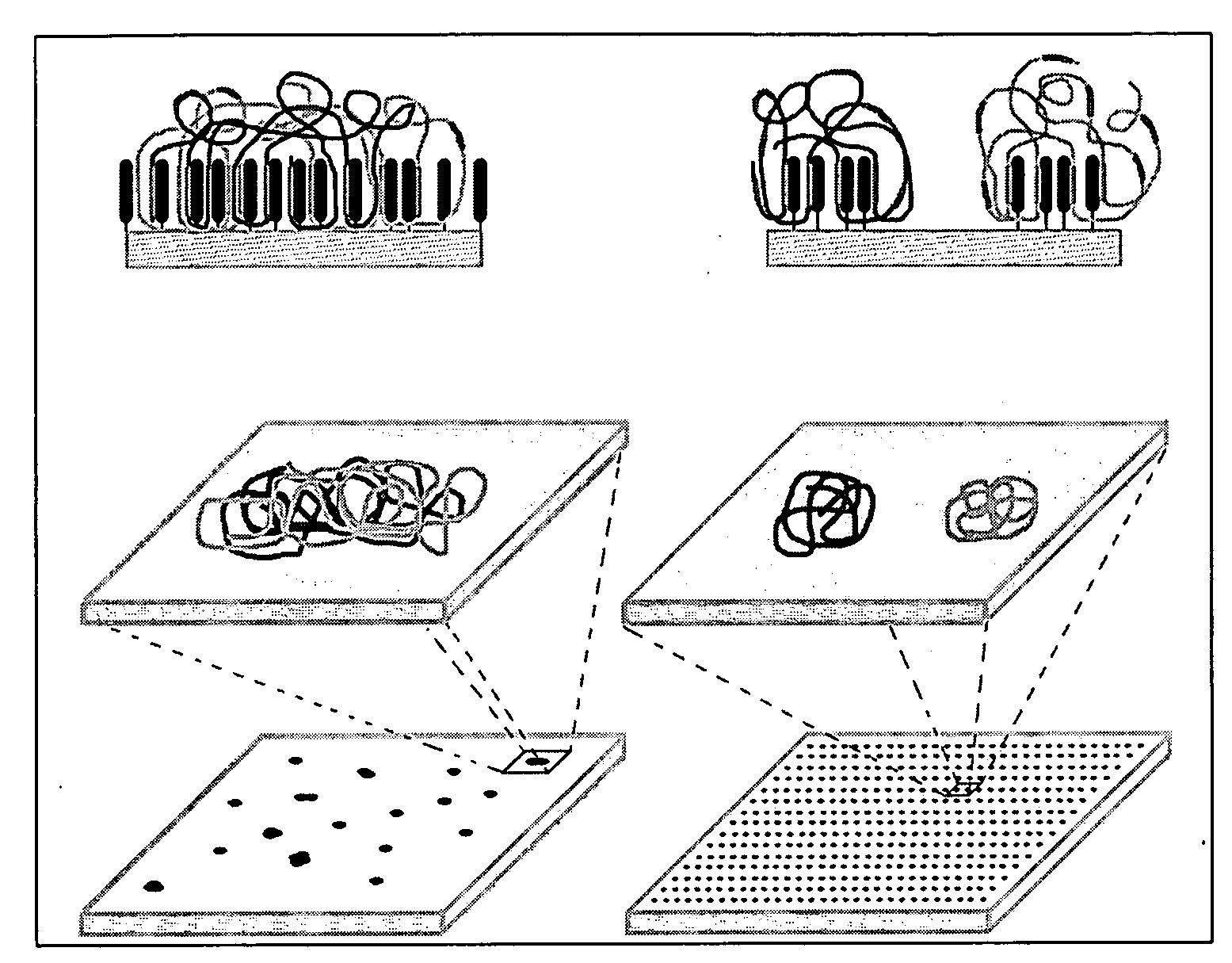

Miniaturized cell array methods and apparatus for cell-based screening

Inactive | US6103479A | Improve throughput | Increase content | Bioreactor/fermenter combinations | Material nanotechnology | Temporal information | High-Throughput Screening Methods

The present invention discloses devices and methods of performing high throughput screening of the physiological response of cells to biologically active compounds and methods of combining high-throughput with high-content spatial information at the cellular and subcellular level as well as temporal information about changes in physiological, biochemical and molecular activities. The present invention allows multiple types of cell interactions to be studied simultaneously by combining multicolor luminescence reading, microfluidic delivery, and environmental control of living cells in non-uniform micro-patterned arrays.

Owner:CELLOMICS

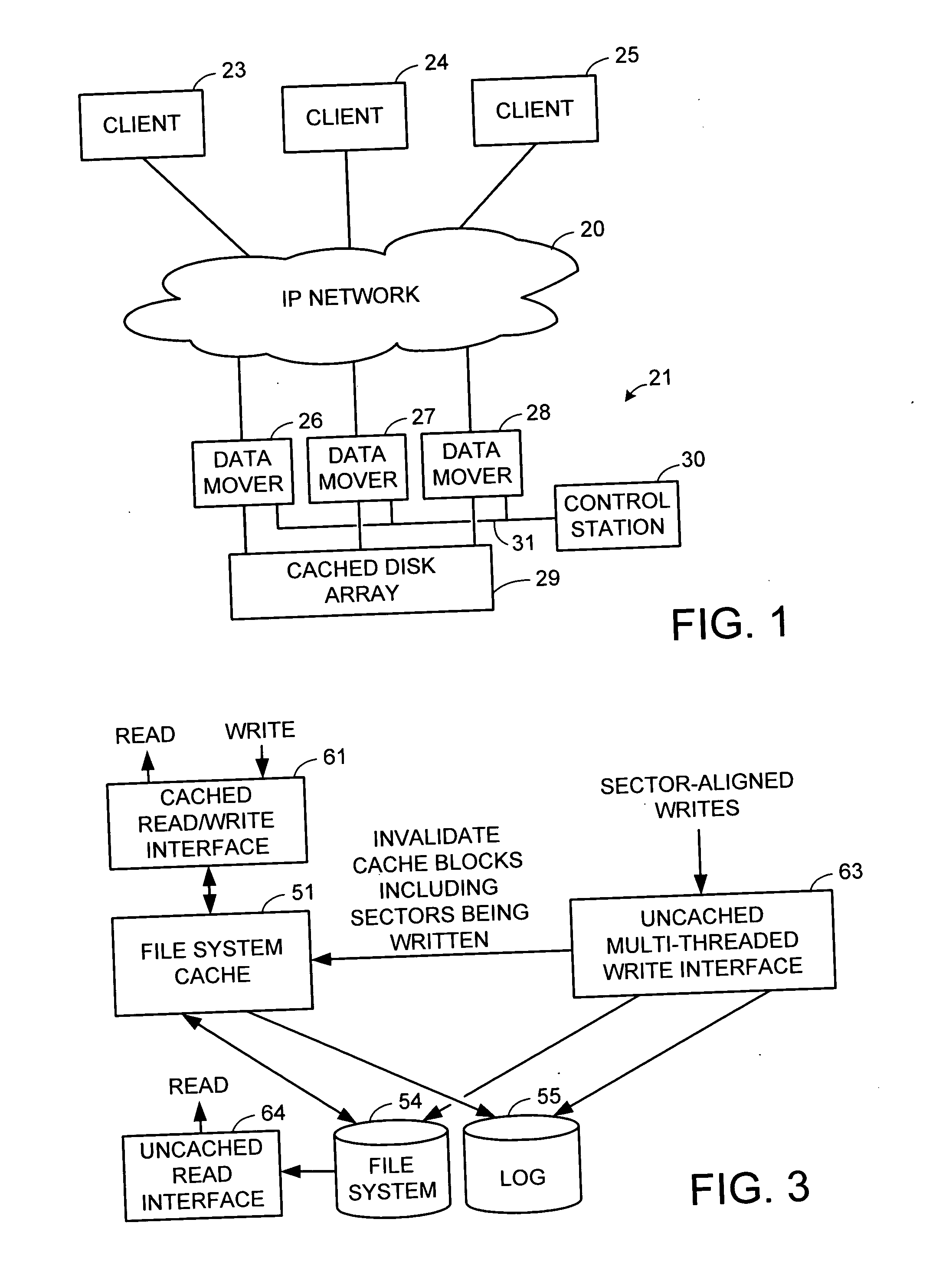

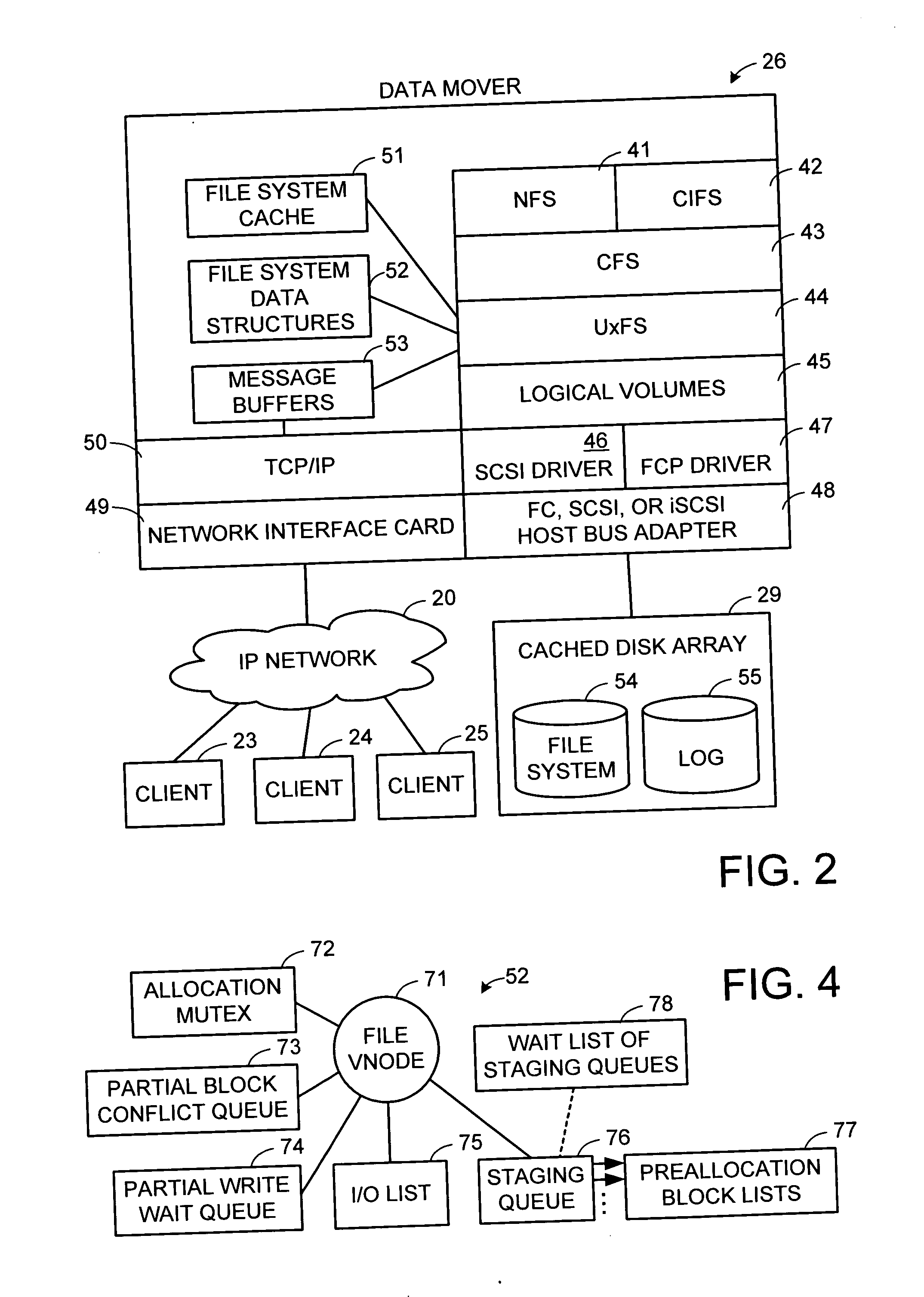

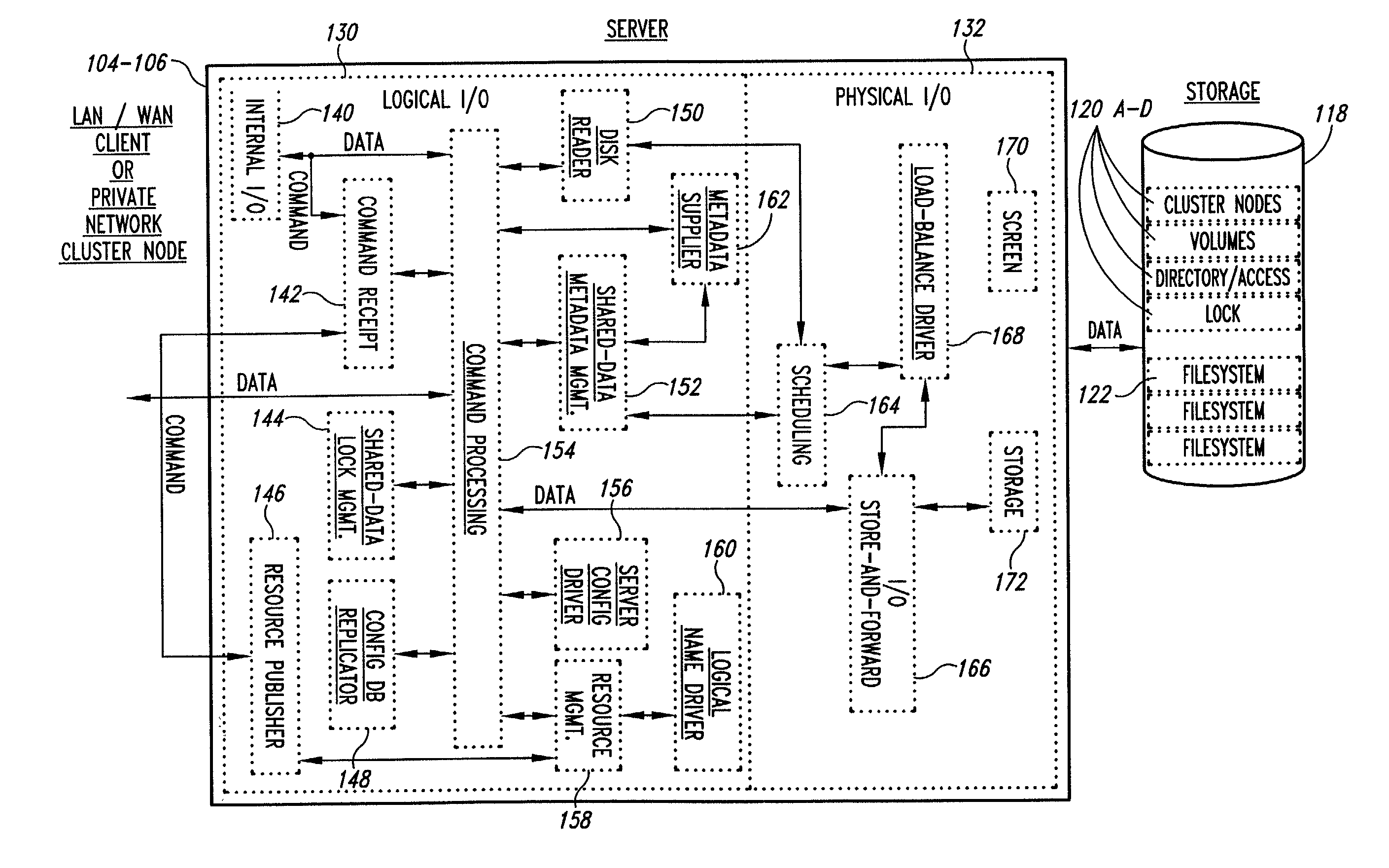

Multi-threaded write interface and methods for increasing the single file read and write throughput of a file server

Active | US20050066095A1 | Digital data information retrieval | Digital data processing details | Data integrity | File allocation

A write interface in a file server provides permission management for concurrent access to data blocks of a file, ensures correct use and update of indirect blocks in a tree of the file, preallocates file blocks when the file is extended, solves access conflicts for concurrent reads and writes to the same block, and permits the use of pipelined processors. For example, a write operation includes obtaining a per file allocation mutex (mutually exclusive lock), preallocating a metadata block, releasing the allocation mutex, issuing an asynchronous write request for writing to the file, waiting for the asynchronous write request to complete, obtaining the allocation mutex, committing the preallocated metadata block, and releasing the allocation mutex. Since no locks are held during the writing of data to the on-disk storage and this data write takes the majority of the time, the method enhances concurrency while maintaining data integrity.

Owner:EMC IP HLDG CO LLC
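
The write sequence described in the abstract above (take the allocation mutex, preallocate, release, write without holding a lock, retake the mutex, commit, release) can be sketched roughly as follows. This is a toy in-memory model and not the patented implementation: the class `FileWriter`, its fields, and the use of a thread as the "asynchronous write" are all assumptions made for illustration.

```python
import threading


class FileWriter:
    """Toy stand-in for one file with preallocated metadata blocks."""

    def __init__(self) -> None:
        self.allocation_mutex = threading.Lock()  # per-file allocation mutex
        self.next_block = 0        # next metadata block number to hand out
        self.preallocated = set()  # blocks reserved but not yet committed
        self.committed = {}        # block number -> written data

    def write(self, data: bytes) -> int:
        # 1. Hold the mutex only long enough to preallocate a block.
        with self.allocation_mutex:
            block = self.next_block
            self.next_block += 1
            self.preallocated.add(block)

        # 2. Issue the data write asynchronously and wait for it.
        #    No lock is held during this (normally slow) step.
        result = {}
        worker = threading.Thread(target=self._write_to_disk, args=(data, result))
        worker.start()
        worker.join()

        # 3. Retake the mutex to commit the preallocated block.
        with self.allocation_mutex:
            self.preallocated.discard(block)
            self.committed[block] = result["data"]
        return block

    @staticmethod
    def _write_to_disk(data: bytes, result: dict) -> None:
        # Placeholder for the on-disk write; here it just copies the bytes.
        result["data"] = bytes(data)


# Four concurrent writers against the same file.
writer = FileWriter()
threads = [threading.Thread(target=writer.write, args=(b"x" * n,)) for n in range(1, 5)]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Because the mutex guards only the short preallocate and commit steps, the four data writes in this sketch are free to overlap, which is the concurrency benefit the abstract points to.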

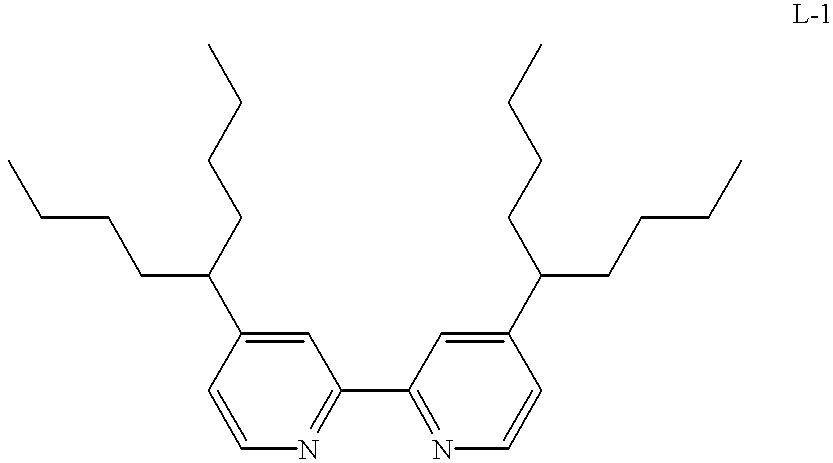

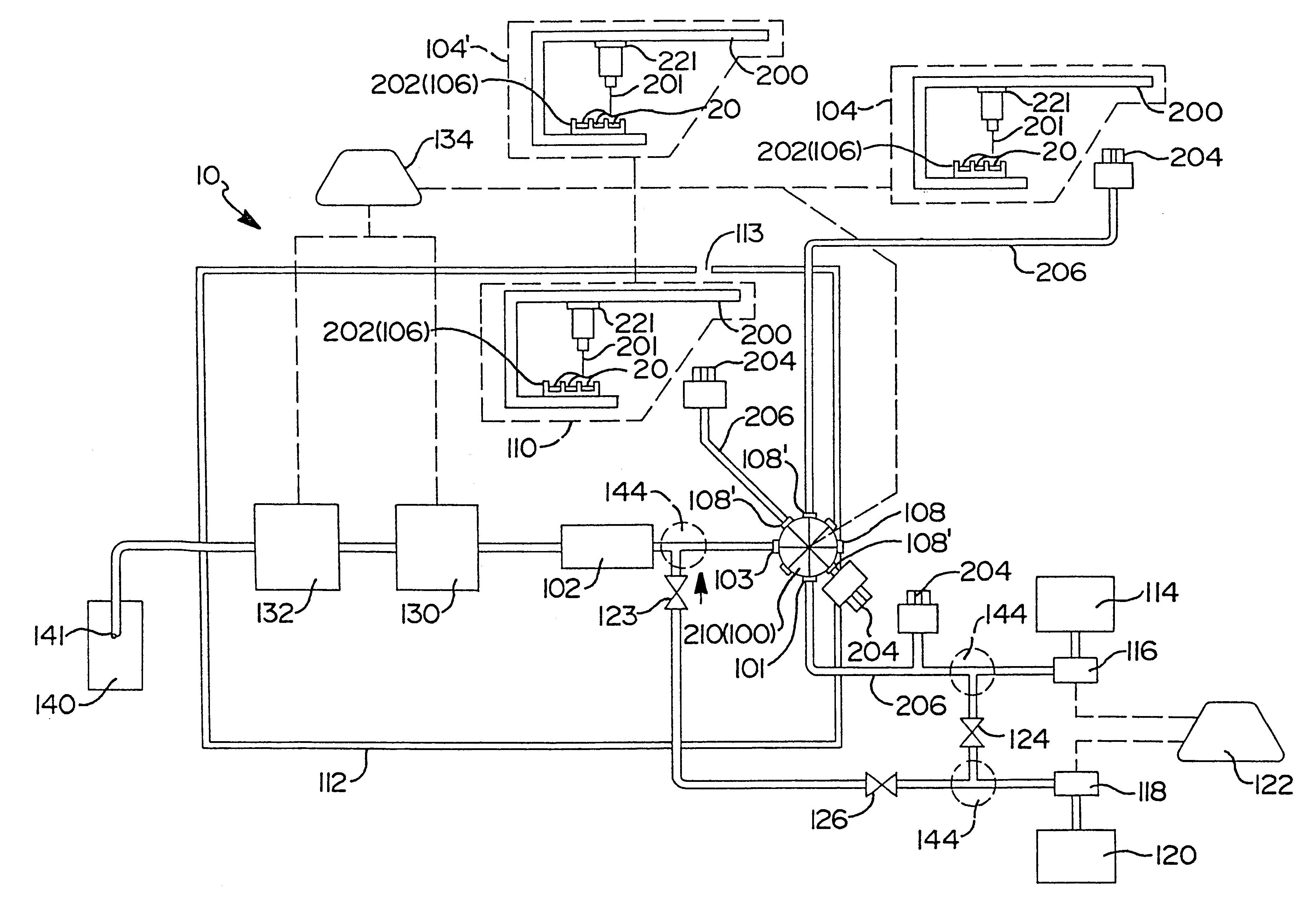

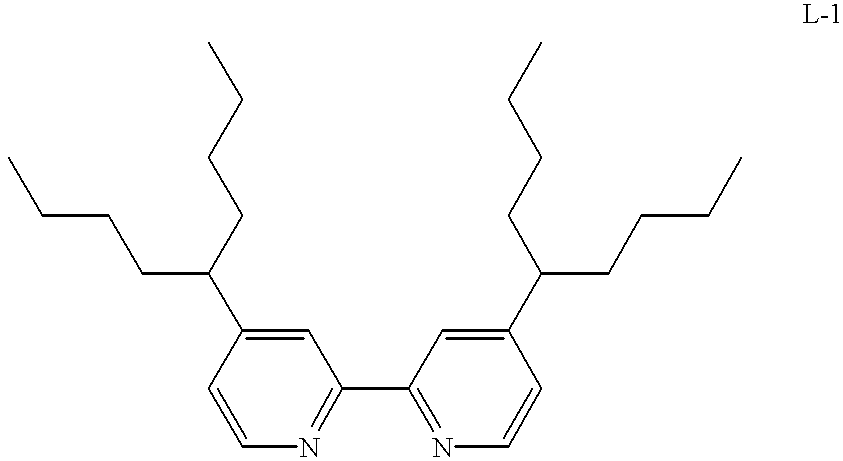

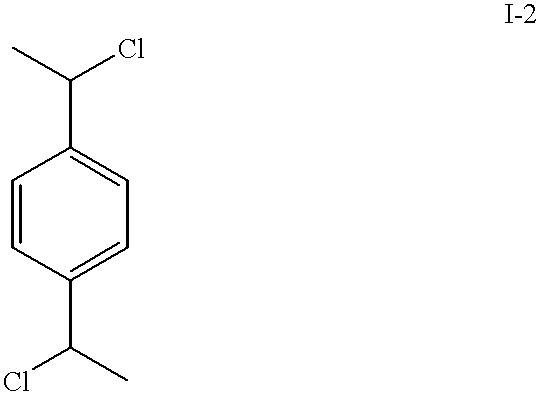

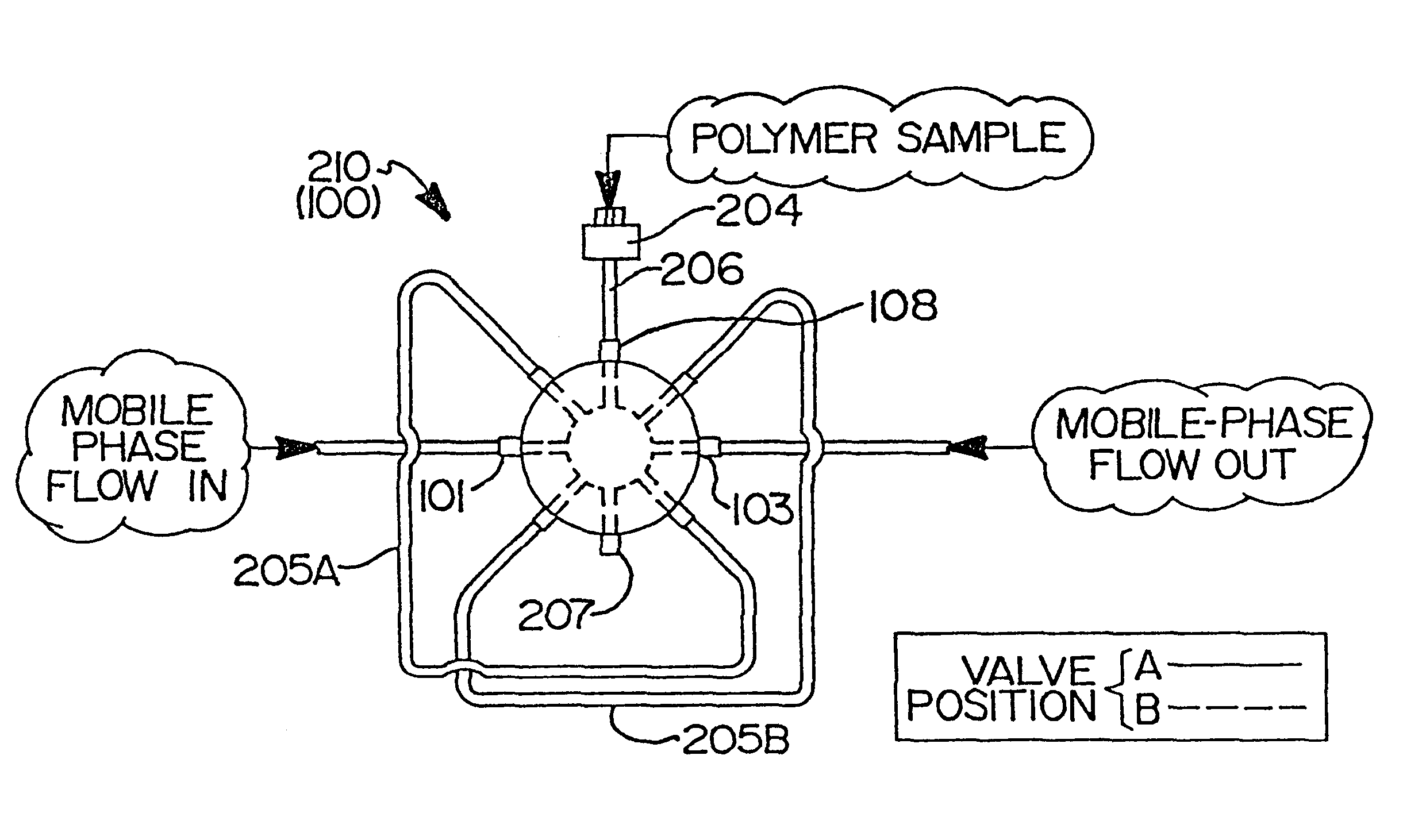

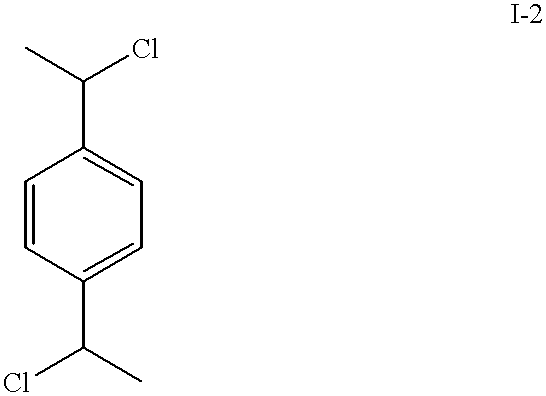

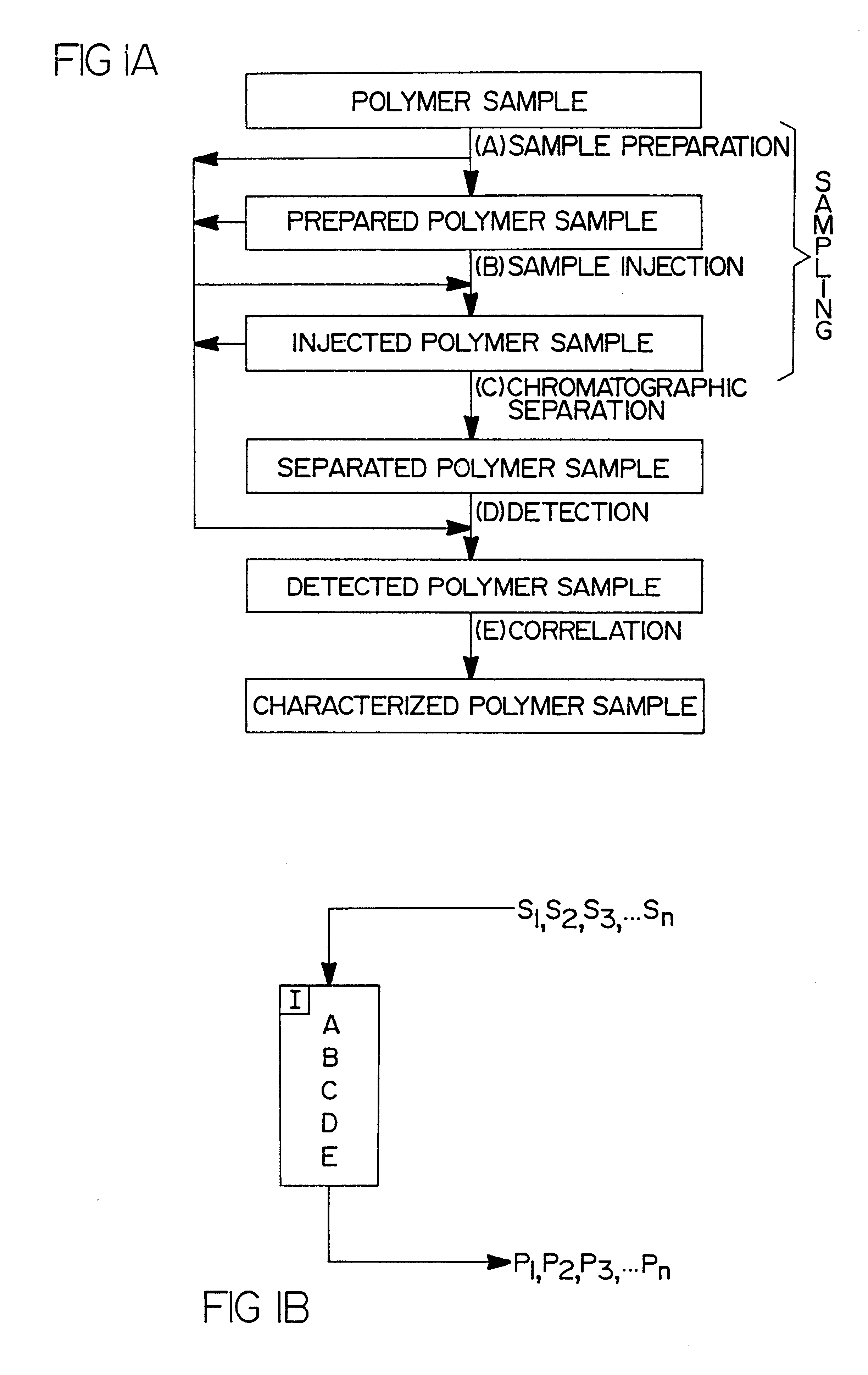

Flow-injection analysis and variable-flow light-scattering methods and apparatus for characterizing polymers

Inactive | US6175409B1 | Avoid backlog | Improve throughput | Sequential/parallel process reactions | Sampling | Flow injection analysis | Polymer

Rapid characterization and screening of polymer samples to determine average molecular weight, molecular weight distribution and other properties is disclosed. Rapid flow characterization systems and methods, including liquid chromatography and flow-injection analysis systems and methods are preferably employed. High throughput, automated sampling systems and methods, high-temperature characterization systems and methods, and rapid, indirect calibration compositions and methods are also disclosed. The described methods, systems, and devices have primary applications in combinatorial polymer research and in industrial process control.

Owner:INTERMOLECULAR

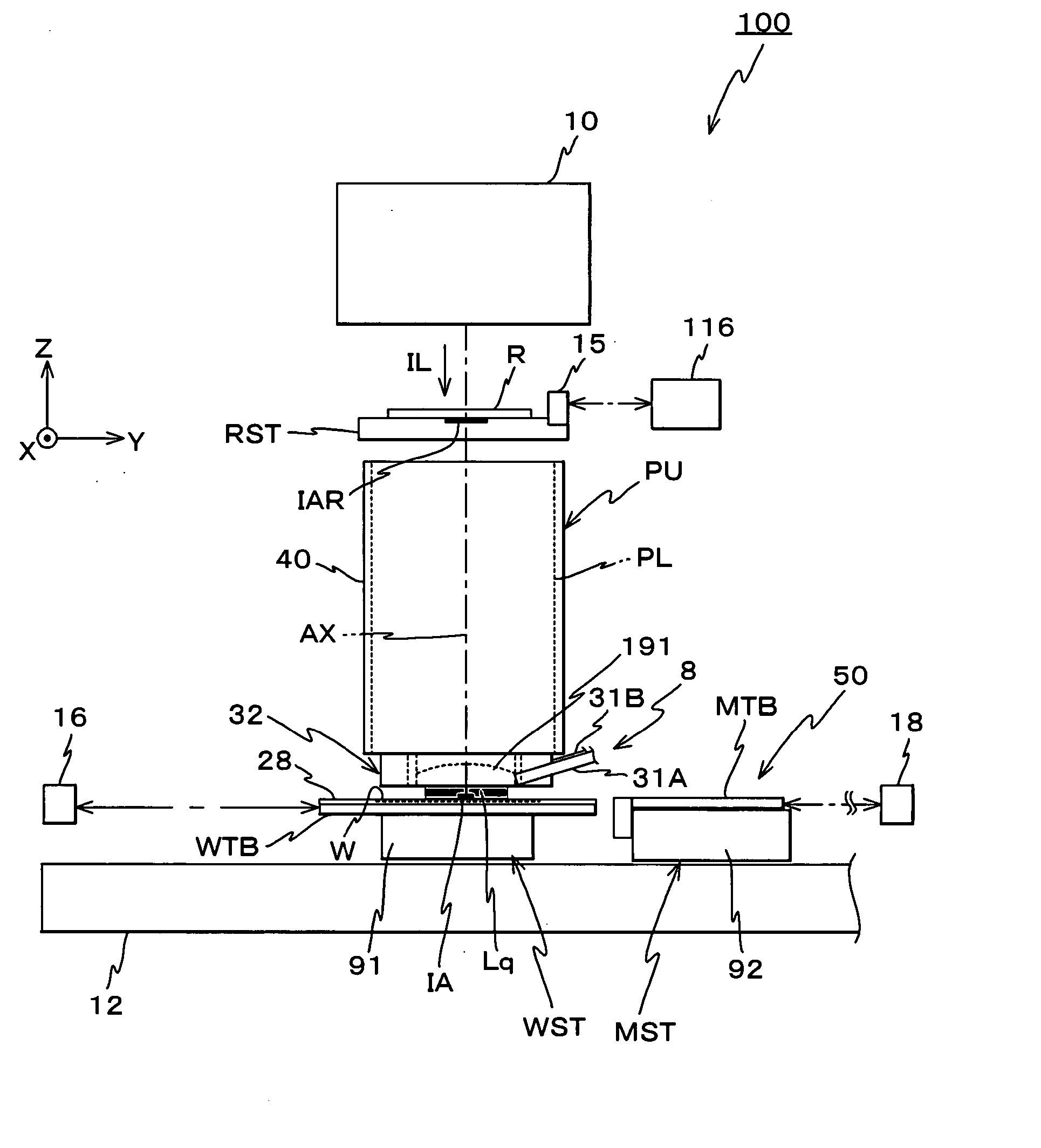

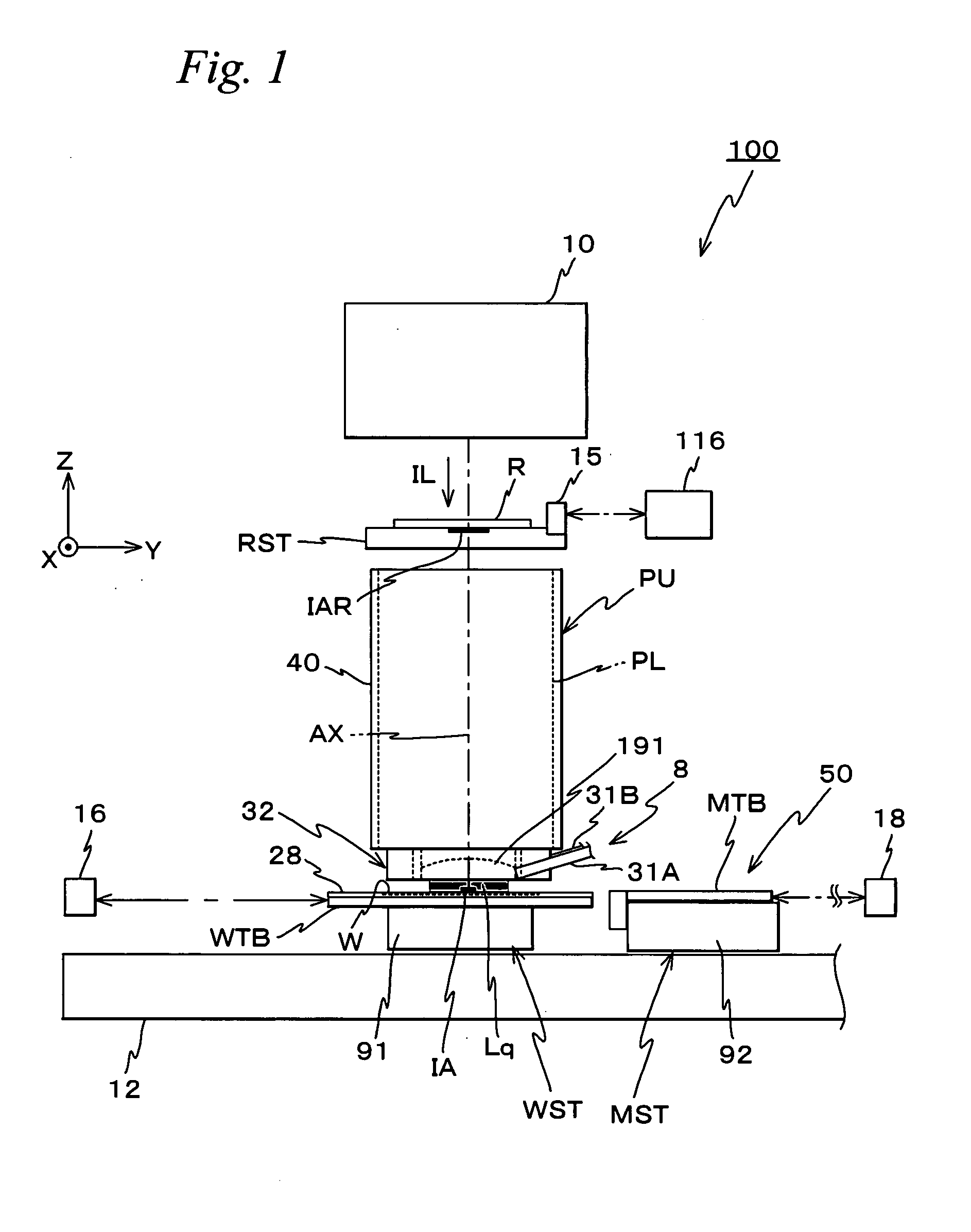

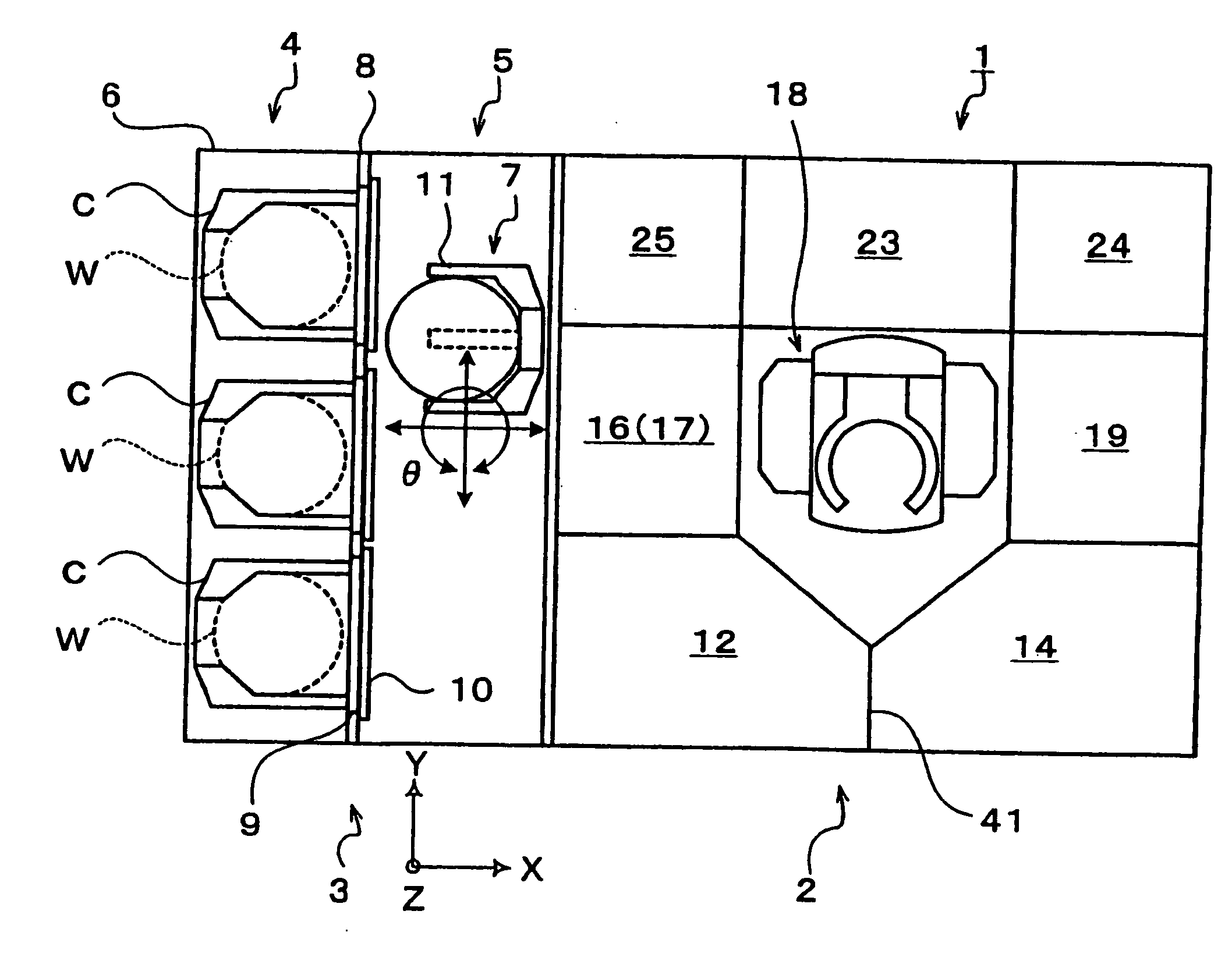

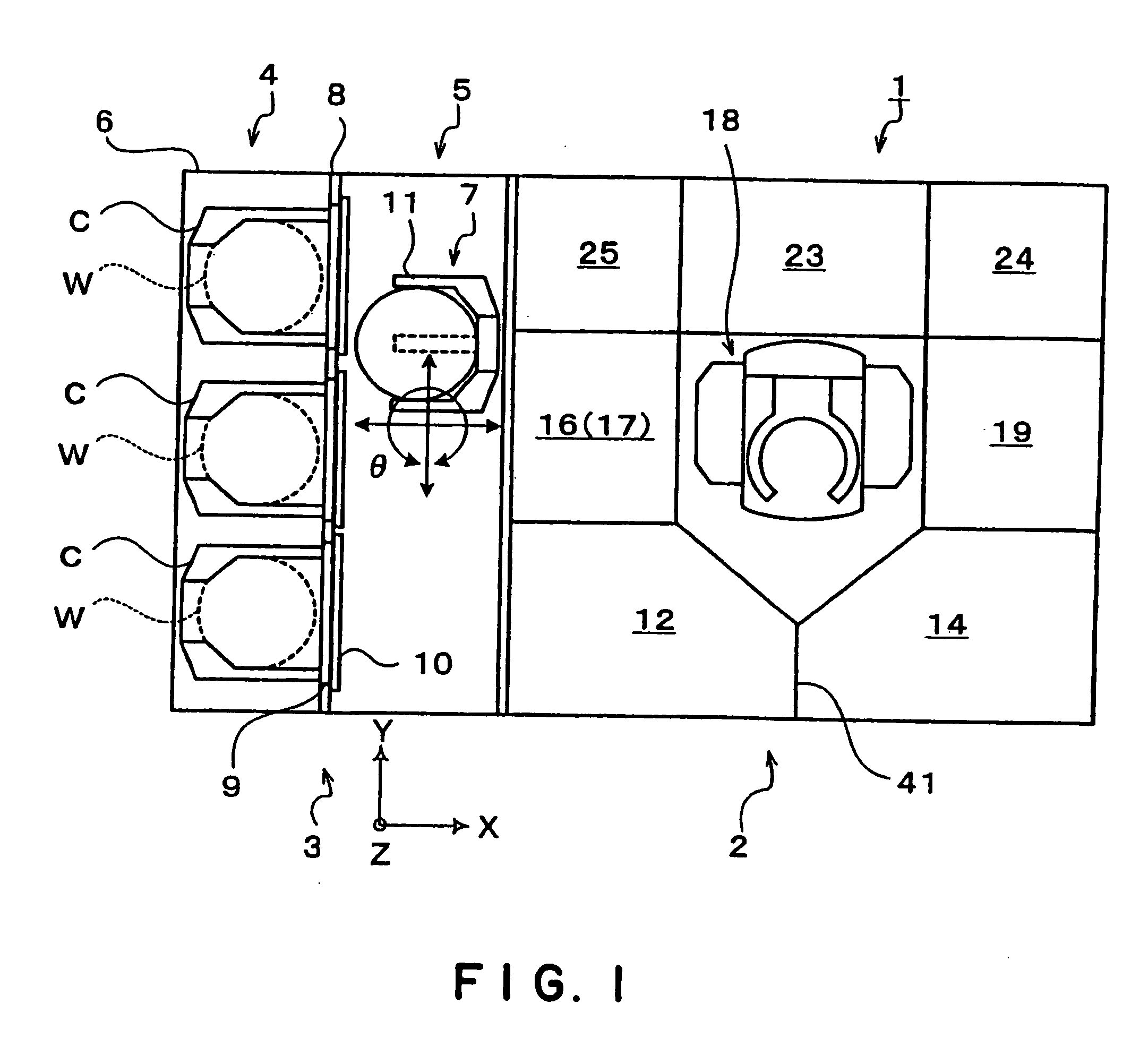

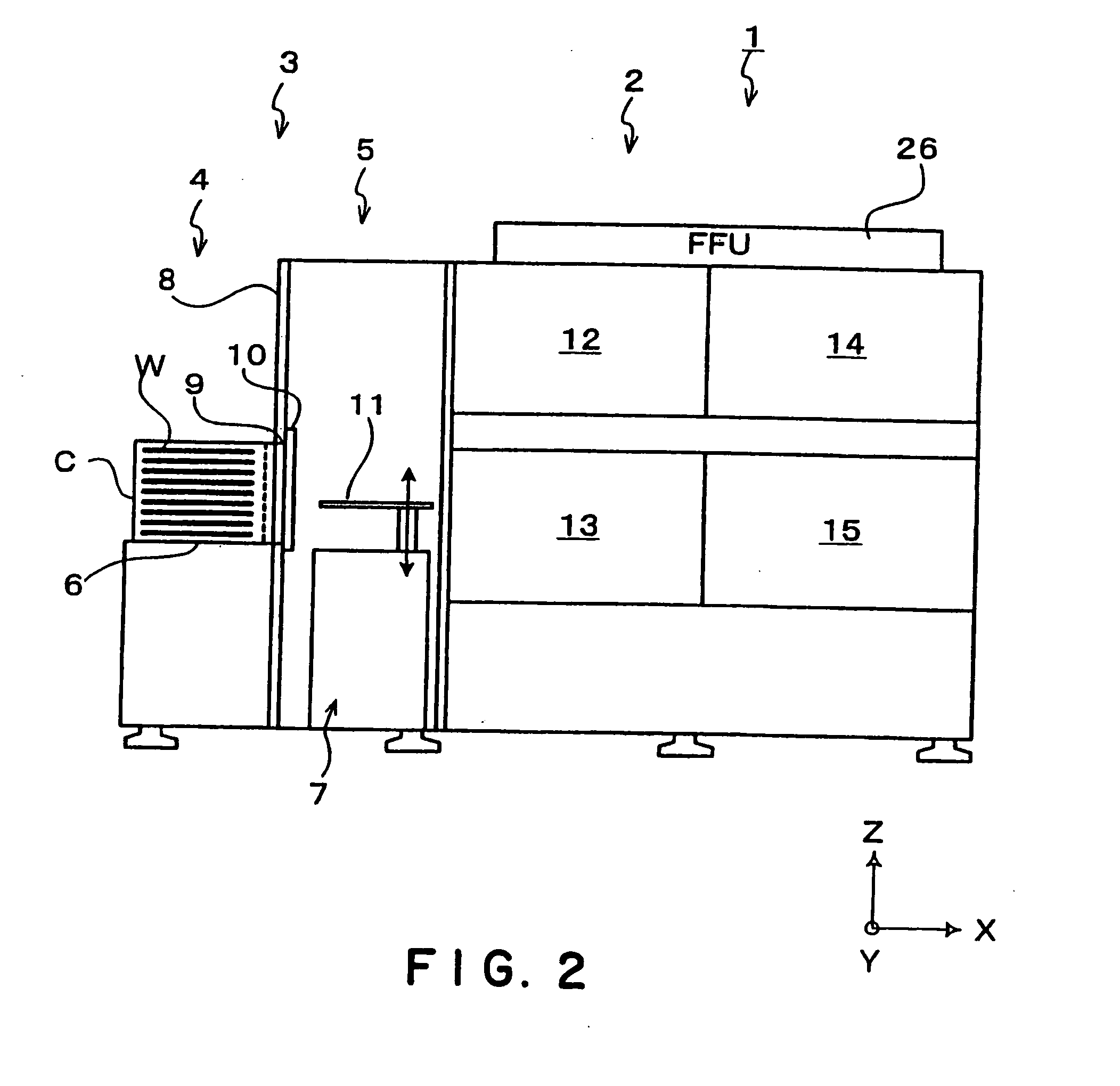

Pattern forming apparatus, mark detecting apparatus, exposure apparatus, pattern forming method, exposure method, and device manufacturing method

Active | US20080088843A1 | Good precision | Improve accuracy | Photomechanical apparatus | Semiconductor/solid-state device manufacturing | Engineering | Throughput

While a wafer stage linearly moves in a Y-axis direction, a multipoint AF system detects surface position information of the wafer surface at a plurality of detection points that are set at a predetermined distance in an X-axis direction and also a plurality of alignment systems that are arrayed in a line along the X-axis direction detect each of marks at positions different from one another on the wafer. That is, detection of surface position information of the wafer surface at a plurality of detection points and detection of the marks at positions different from one another on the wafer are finished, only by the wafer stage (wafer) linearly passing through the array of the plurality of detection points of the multipoint AF system and the plurality of alignment systems, and therefore, the throughput can be improved, compared with the case where a detection operation of the marks and a detection operation of the surface position information (focus information) are independently performed.

Owner:NIKON CORP

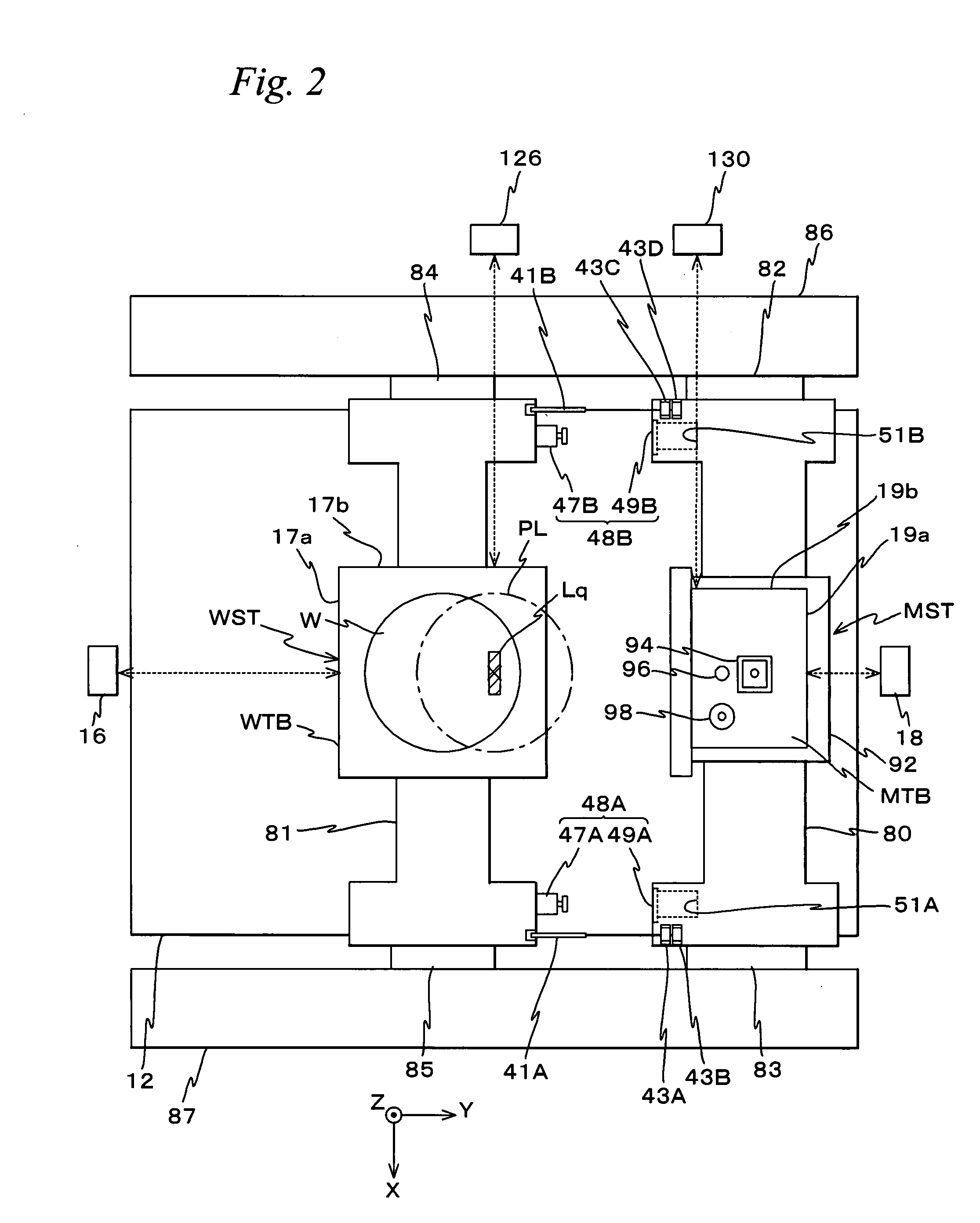

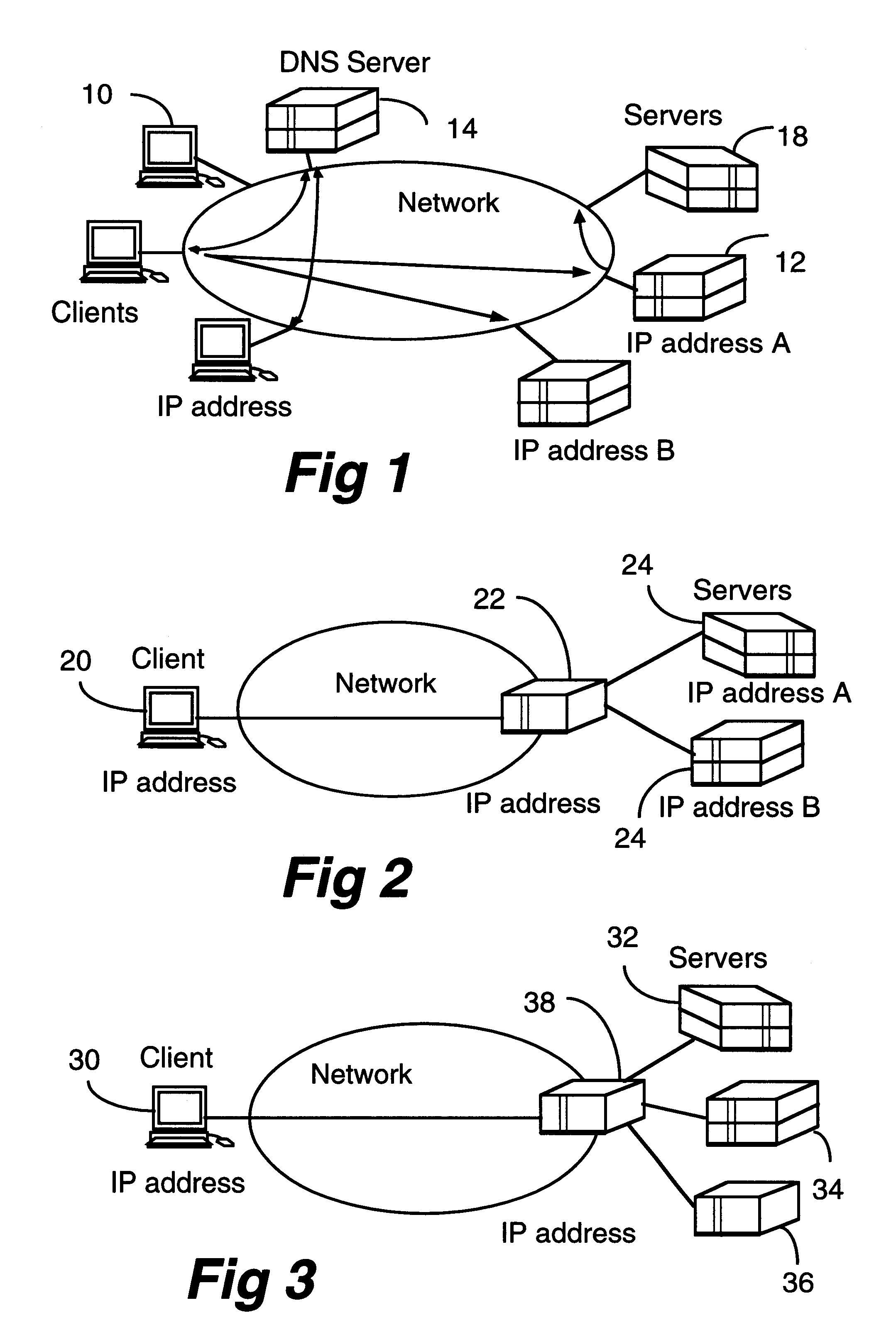

Scaleable web server and method of efficiently managing multiple servers

Inactive | US6330602B1 | Improve performance | Efficient management | Data switching by path configuration | Multiple digital computer combinations | Information resource | Web service

A client-server architecture includes a plurality of clients and a plurality of servers. Information resources are replicated among the servers. According to one aspect, the invention includes an intermediary device called a "depot" sitting transparently between a client and a pool of servers which have the replicated information resources. The depot dynamically distributes multiple sessions contained in a client request among the servers. This architecture realizes a good granular scaleability of servers, and improved server throughput with a good response time. Multiple depots also realize robustness.

Owner:AVAYA INC

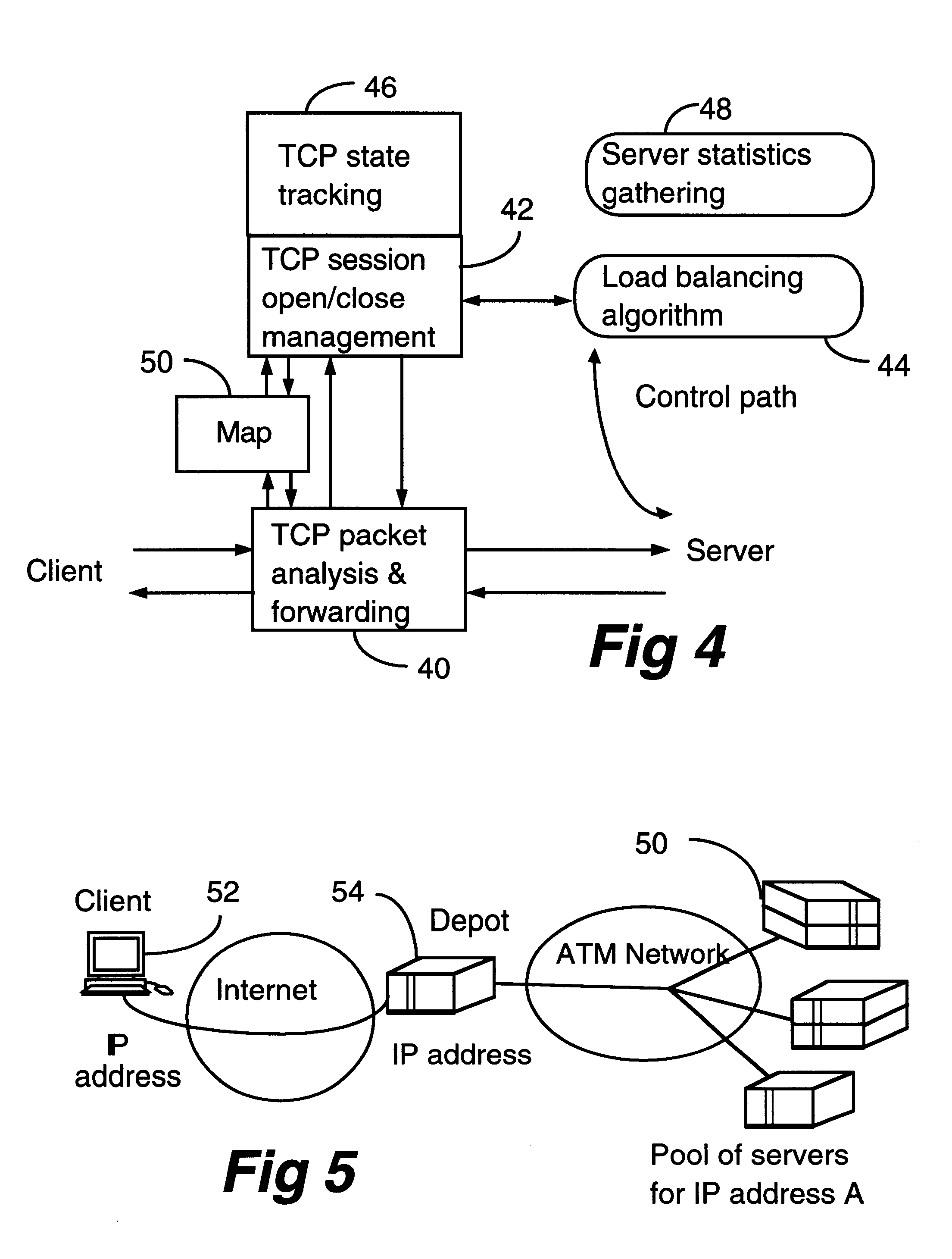

Apparatus and method for analysis of molecules

Active | US7302146B2 | Improve accuracy | Easy to implement | Material nanotechnology | Cladded optical fibre | Molecular analysis | Chemical reaction

Owner:PACIFIC BIOSCIENCES

Method and system for automated optimization of antenna positioning in 3-D

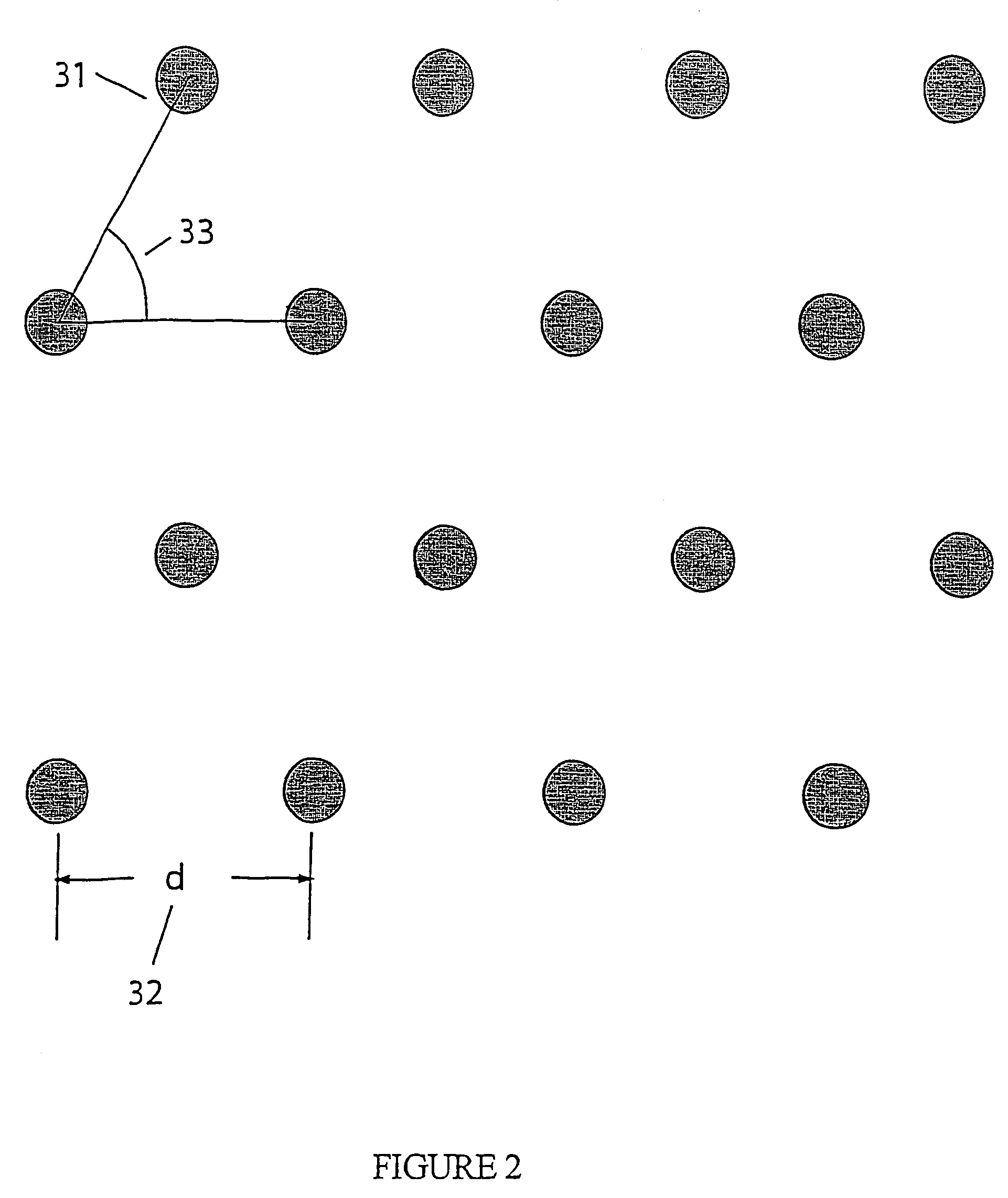

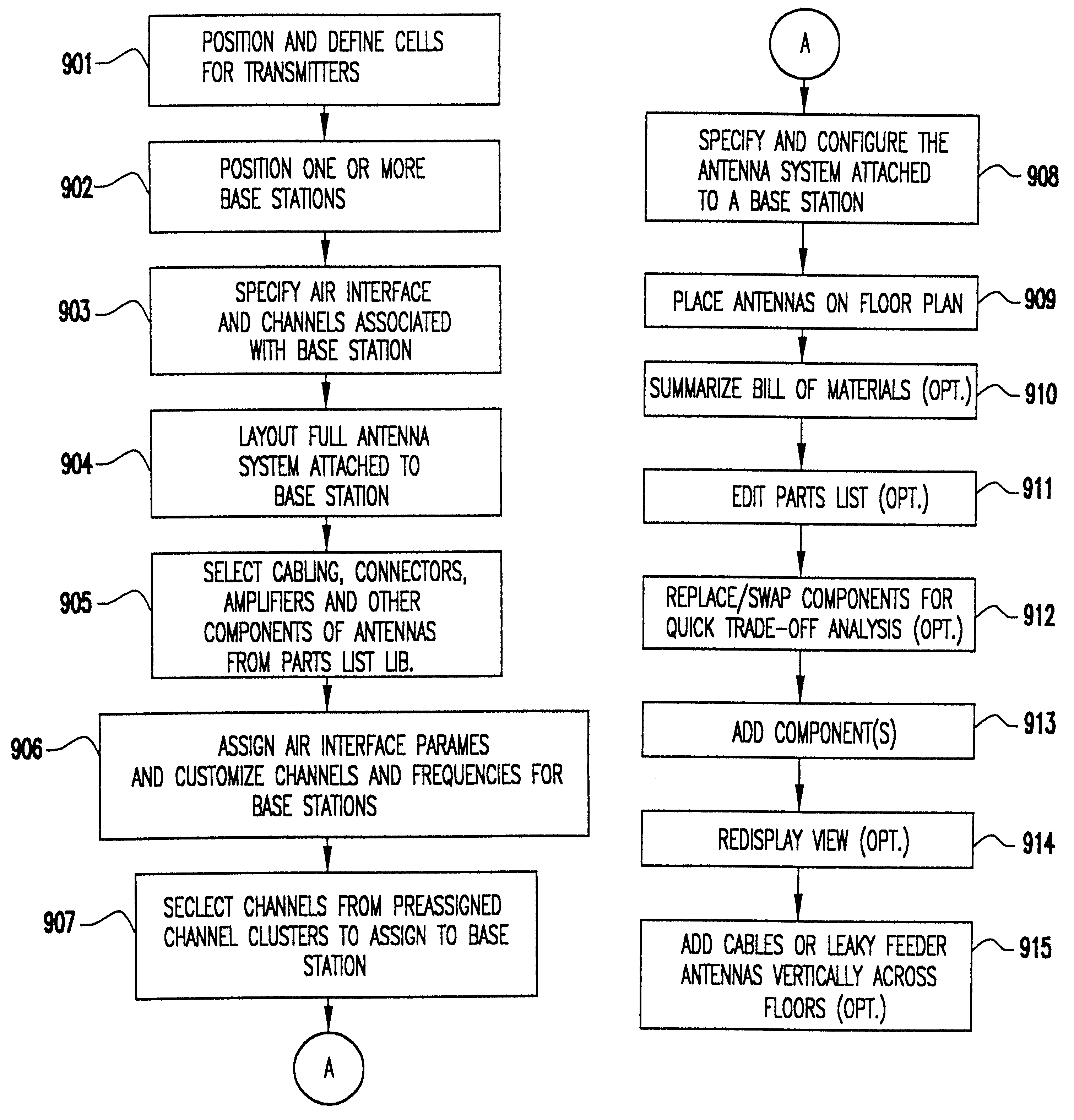

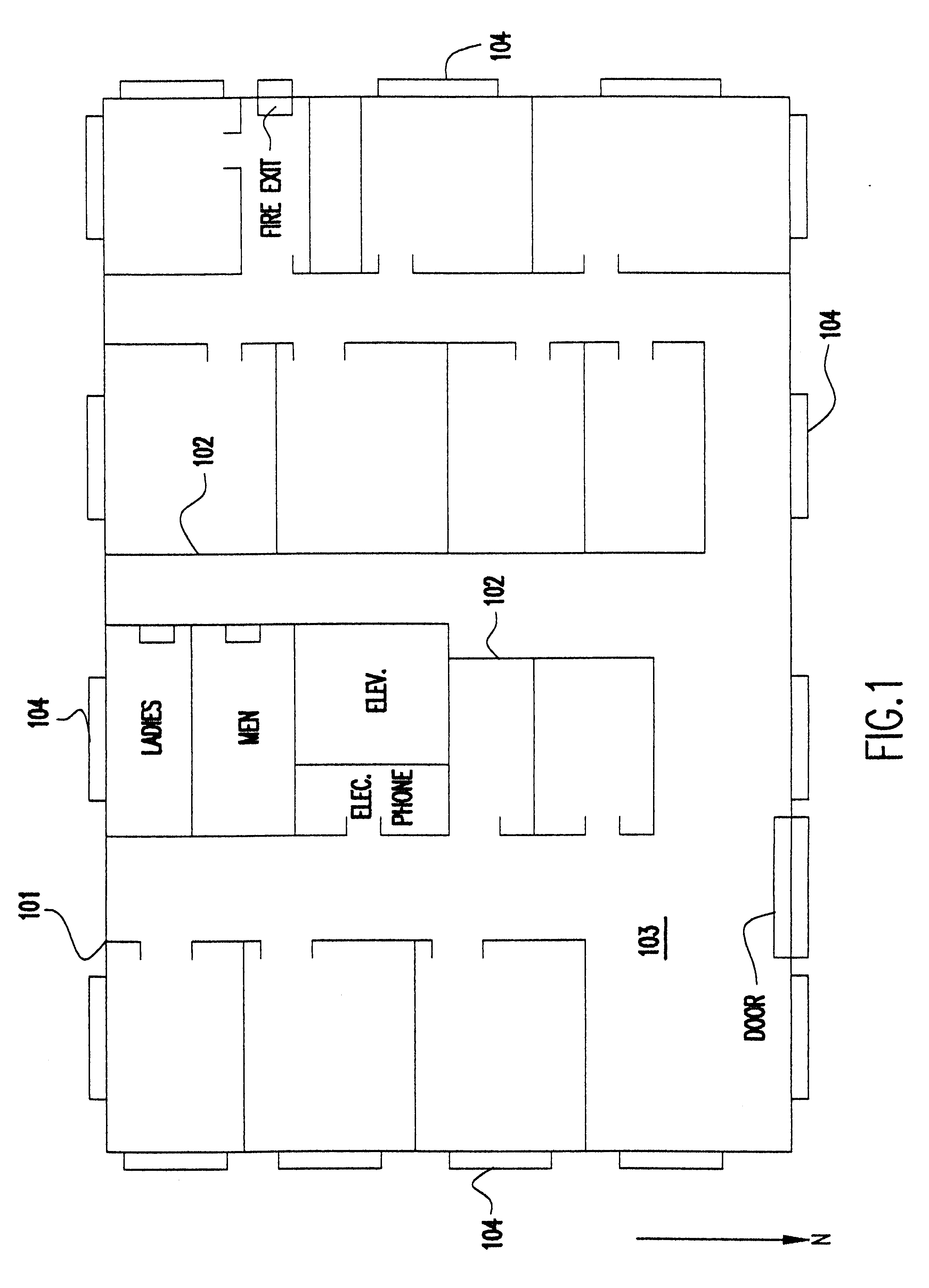

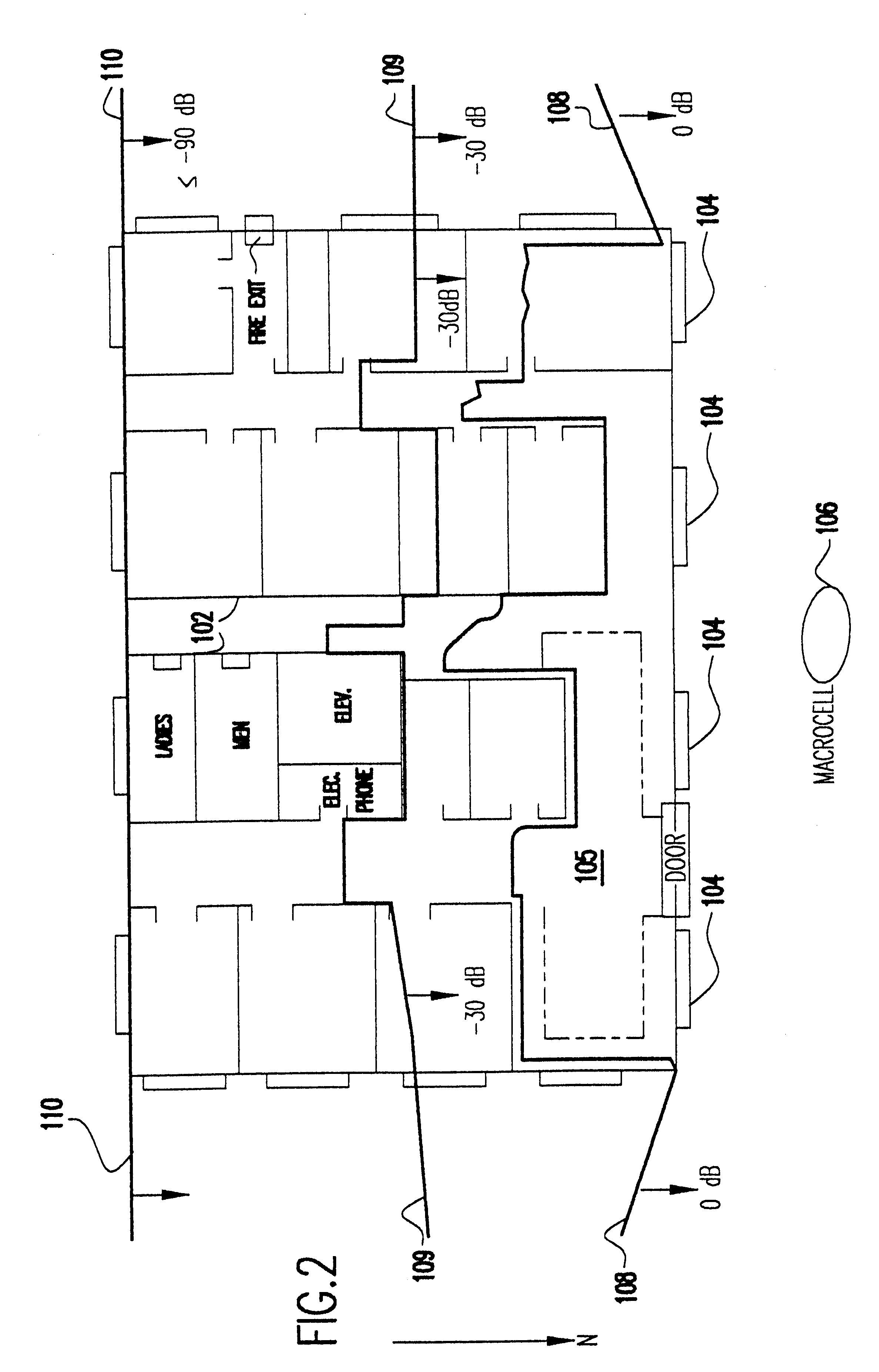

Inactive | US6317599B1 | Quick fix | Significant value | Machines/engines | Computation using non-denominational number representation | Engineering | Radio frequency

A method for engineering management and planning for the design of a wireless communications network in three dimensions (3-D) combines computerized organization, database fusion, and radio frequency (RF) site-specific planning models. The method enables a designer to keep track of wireless system performance throughout the process of pre-bid design, installation, and maintenance of a wireless system. Using a database of information that defines the desired environment, predictions of antenna coverage, system coverage and interference, and other wireless system performance criteria, such as frame error rate and network throughput, can be made. Watch points are created to ensure, in real time, that any modifications to the design of the wireless system do not degrade the performance of the system with respect to the watch point locations.

Owner:EXTREME NETWORKS INC

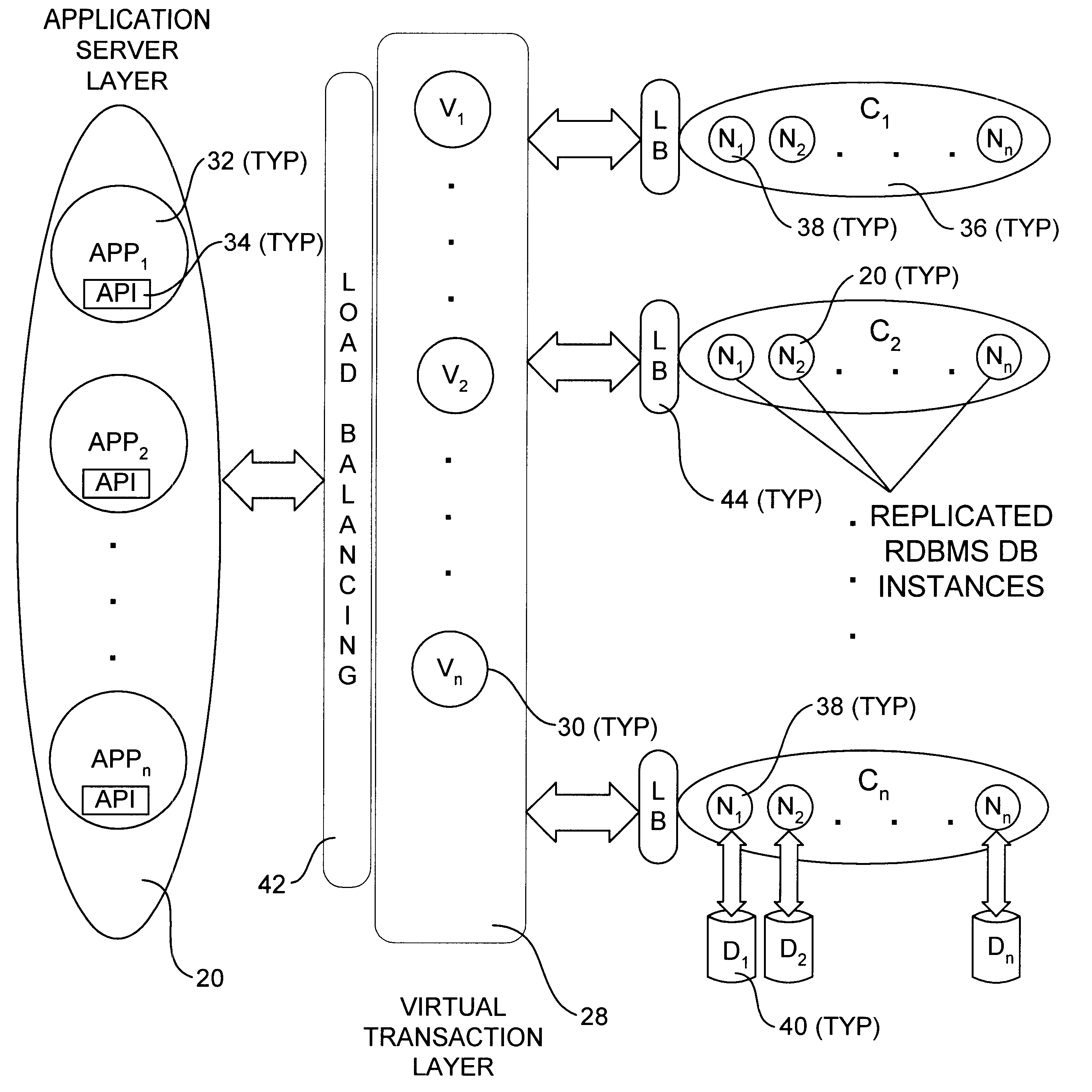

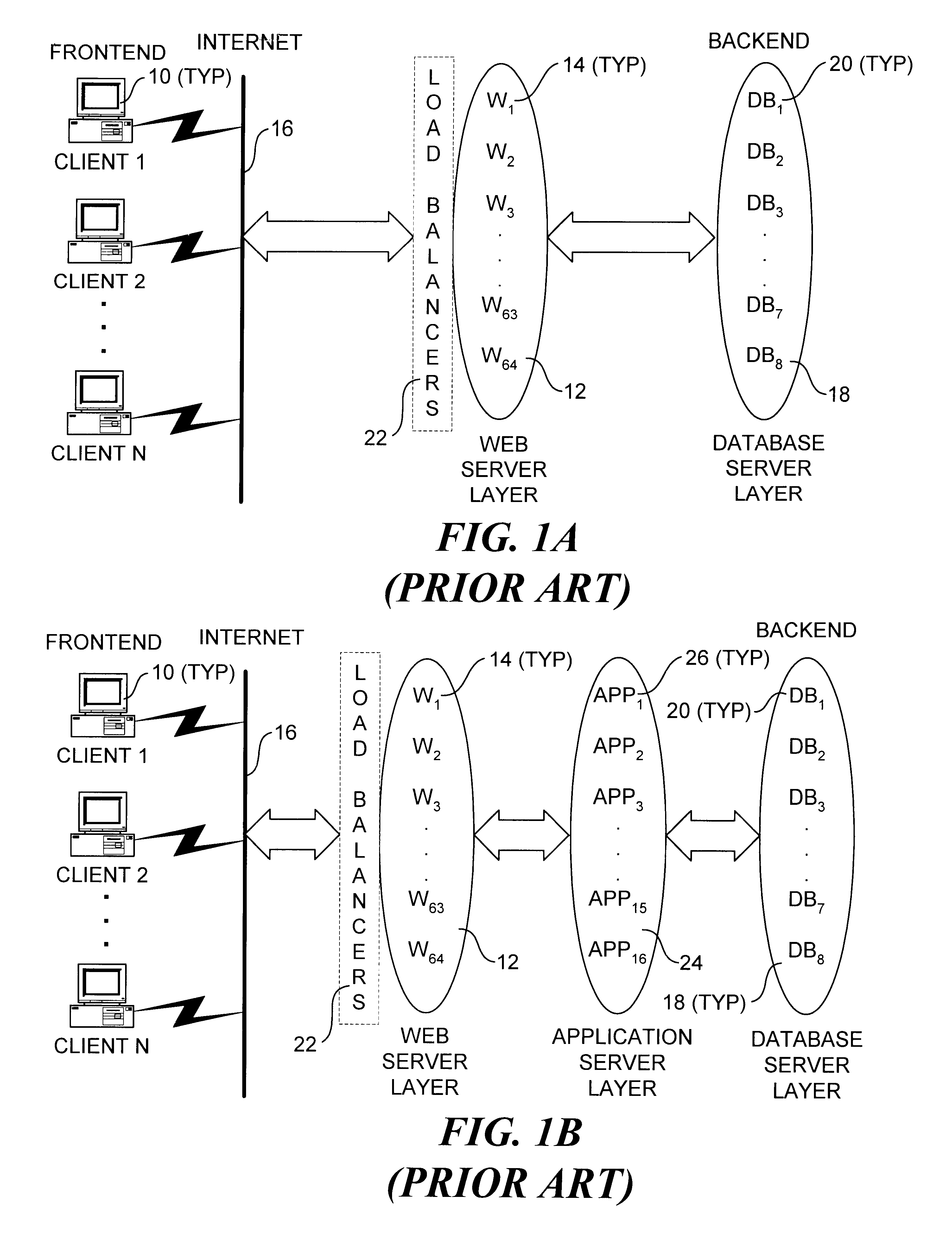

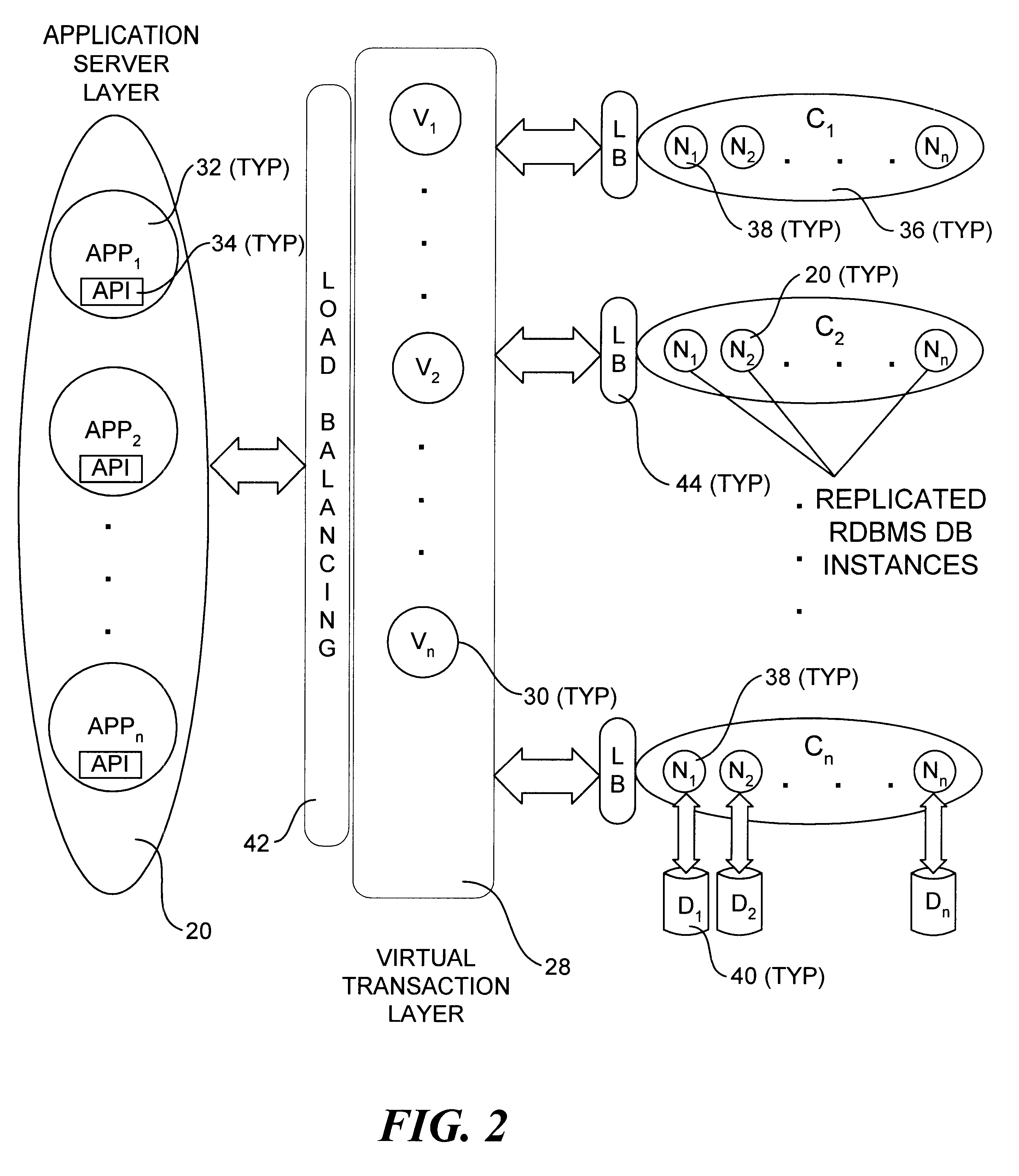

Internet database system

Inactive | US6523036B1 | Data processing applications | Digital data processing details | Application server | Hash function

An incrementally-scalable database system and method. The system architecture enables database servers to be scaled by adding resources, such as additional servers, without requiring that the system be taken offline. Such scaling includes both adding one or more computer servers to a given server cluster, which enables an increase in database read transaction throughput, and adding one or more server clusters to the system configuration, which provides for increased read and write transaction throughput. The system also provides for load balancing read transactions across each server cluster, and load balancing write transactions across a plurality of server clusters. The system architecture includes an application server layer including one or more computers on which an application program(s) is running, a database server layer comprising two or more server clusters that each include two or more computer servers with replicated data, and an intermediate "virtual transaction" layer that includes at least two computers that facilitate database transactions with one or more databases operating in the database server layer. Data in the database(s) are evenly distributed across the server clusters in fragmented mutually exclusive subsets of data based on a hashing function. An application program interface is provided so as to enable application programs to perform a full range of database transactions without regard for where data is stored, or what database(s) is operating in the database server layer.

Owner:EMC IP HLDG CO LLC
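
The abstract above says data is spread across server clusters in mutually exclusive subsets chosen by a hashing function. A minimal sketch of that idea follows. The abstract does not say which hash or mapping the patent uses, so SHA-256 with a modulo, the key format, and the function names `cluster_for_key` and `partition` are all assumptions for illustration.

```python
import hashlib


def cluster_for_key(key: str, num_clusters: int) -> int:
    """Map a record key to exactly one cluster index (assumed scheme)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_clusters


def partition(keys, num_clusters: int):
    """Split keys into mutually exclusive per-cluster subsets."""
    clusters = [[] for _ in range(num_clusters)]
    for key in keys:
        clusters[cluster_for_key(key, num_clusters)].append(key)
    return clusters


# Hypothetical keys spread over four server clusters.
keys = [f"user-{i}" for i in range(1000)]
clusters = partition(keys, 4)
```

Because the mapping depends only on the key, an application can locate a record's cluster without knowing where it is stored, which matches the location-independent API described in the abstract. Writes to different keys land on different clusters and can proceed in parallel.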

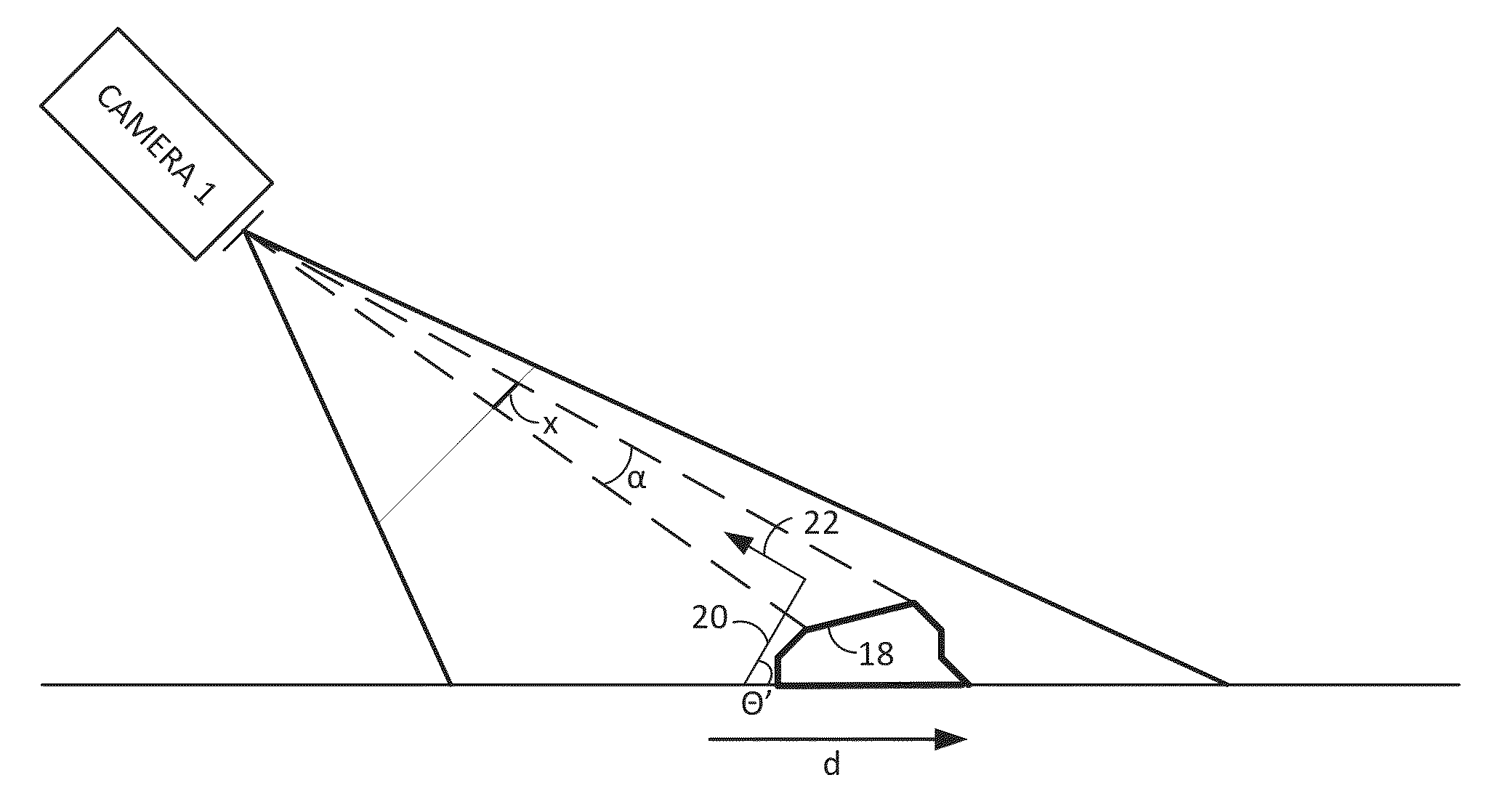

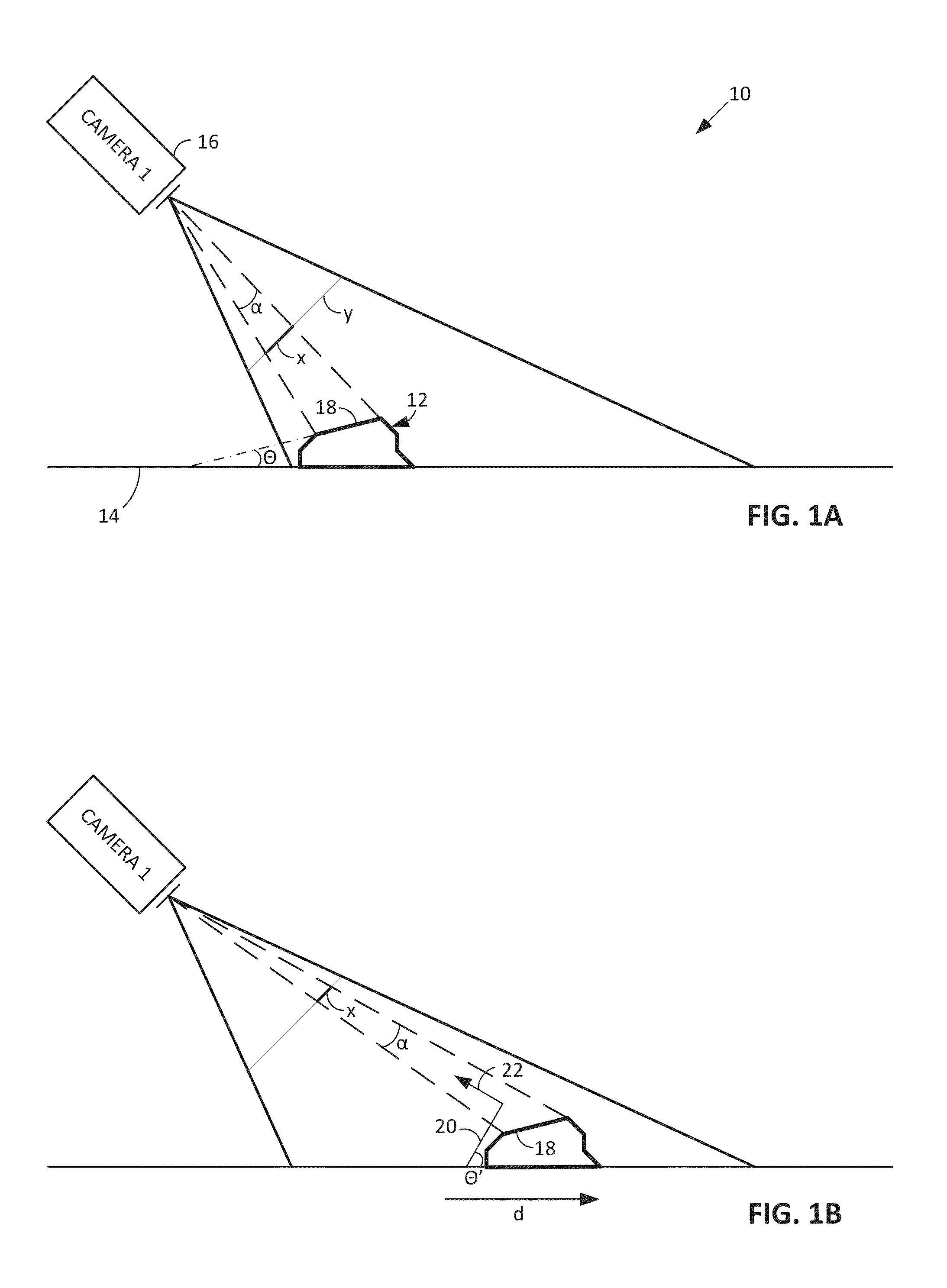

Methods and arrangements for identifying objects

Active | US20130223673A1 | Increase check-out speed | Improve accuracy | Static indicating devices | Cash registers | Pattern recognition | Geometric primitive

In some arrangements, product packaging is digitally watermarked over most of its extent to facilitate high-throughput item identification at retail checkouts. Imagery captured by conventional or plenoptic cameras can be processed (e.g., by GPUs) to derive several different perspective-transformed views—further minimizing the need to manually reposition items for identification. Crinkles and other deformations in product packaging can be optically sensed, allowing such surfaces to be virtually flattened to aid identification. Piles of items can be 3D-modelled and virtually segmented into geometric primitives to aid identification, and to discover locations of obscured items. Other data (e.g., including data from sensors in aisles, shelves and carts, and gaze tracking for clues about visual saliency) can be used in assessing identification hypotheses about an item. A great variety of other features and arrangements are also detailed.

Owner:DIGIMARC CORP

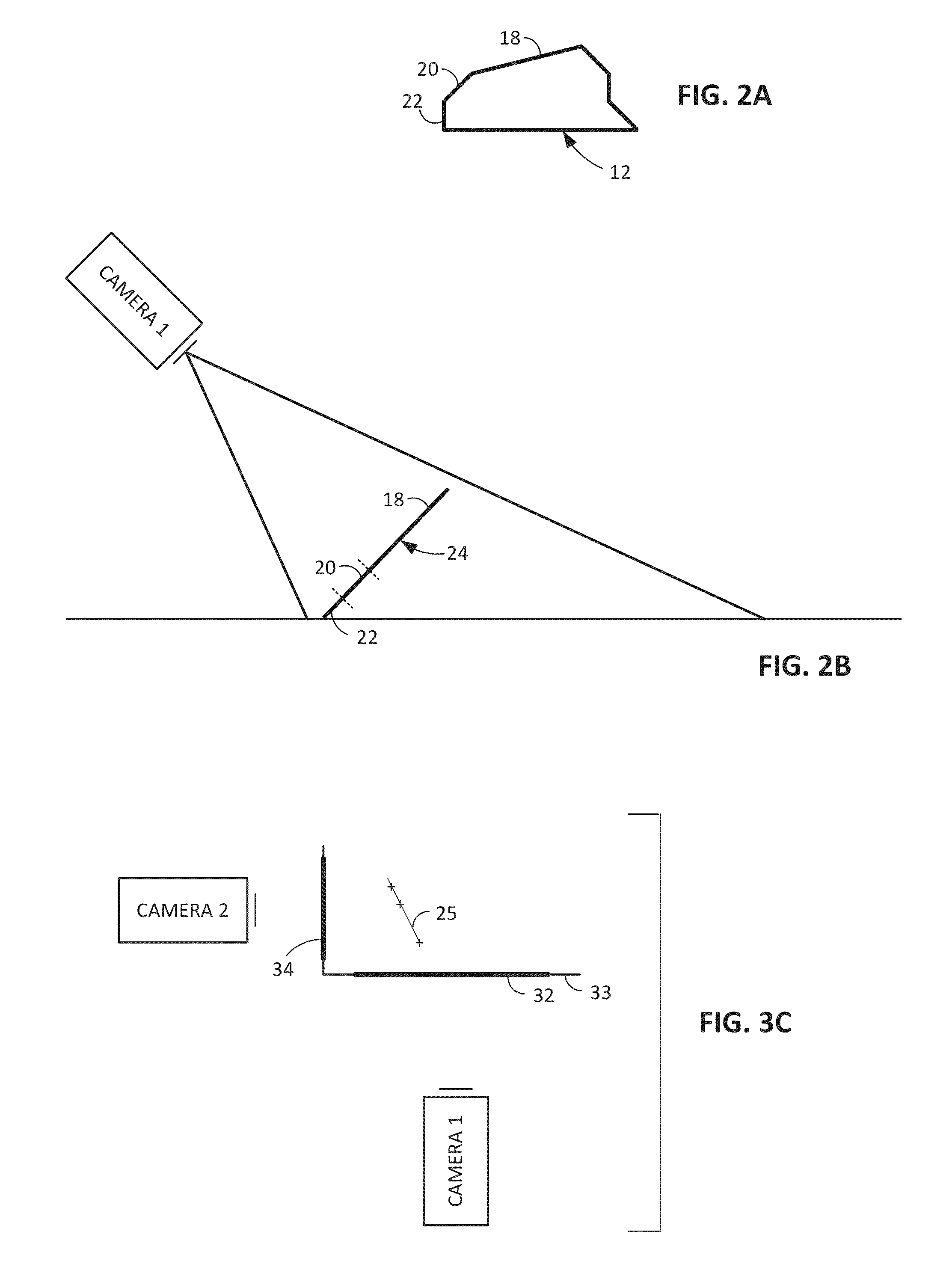

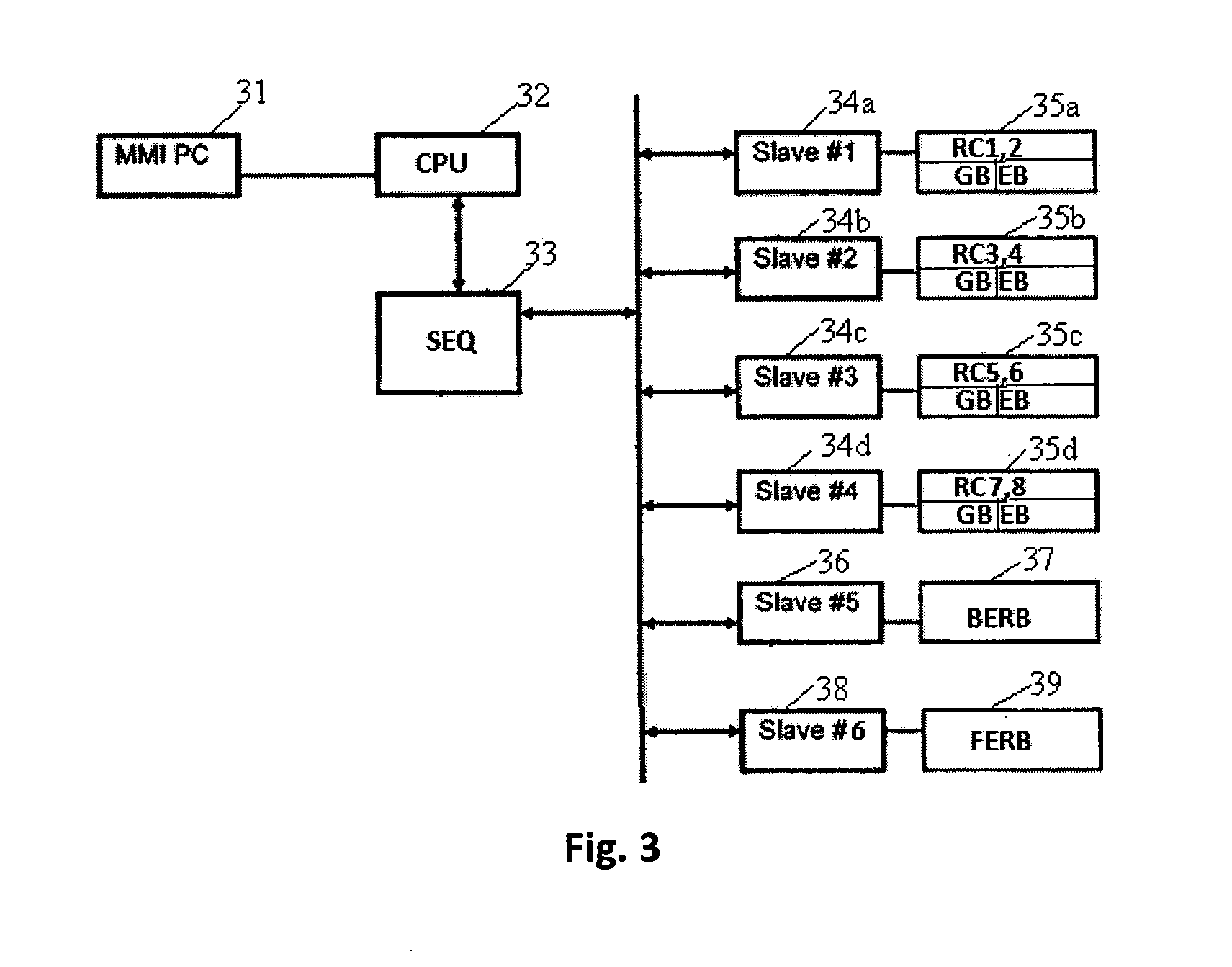

Software sequencer for integrated substrate processing system

Inactive | US20080216077A1 | Programme control | Semiconductor/solid-state device manufacturing | Program planning | Distributed computing

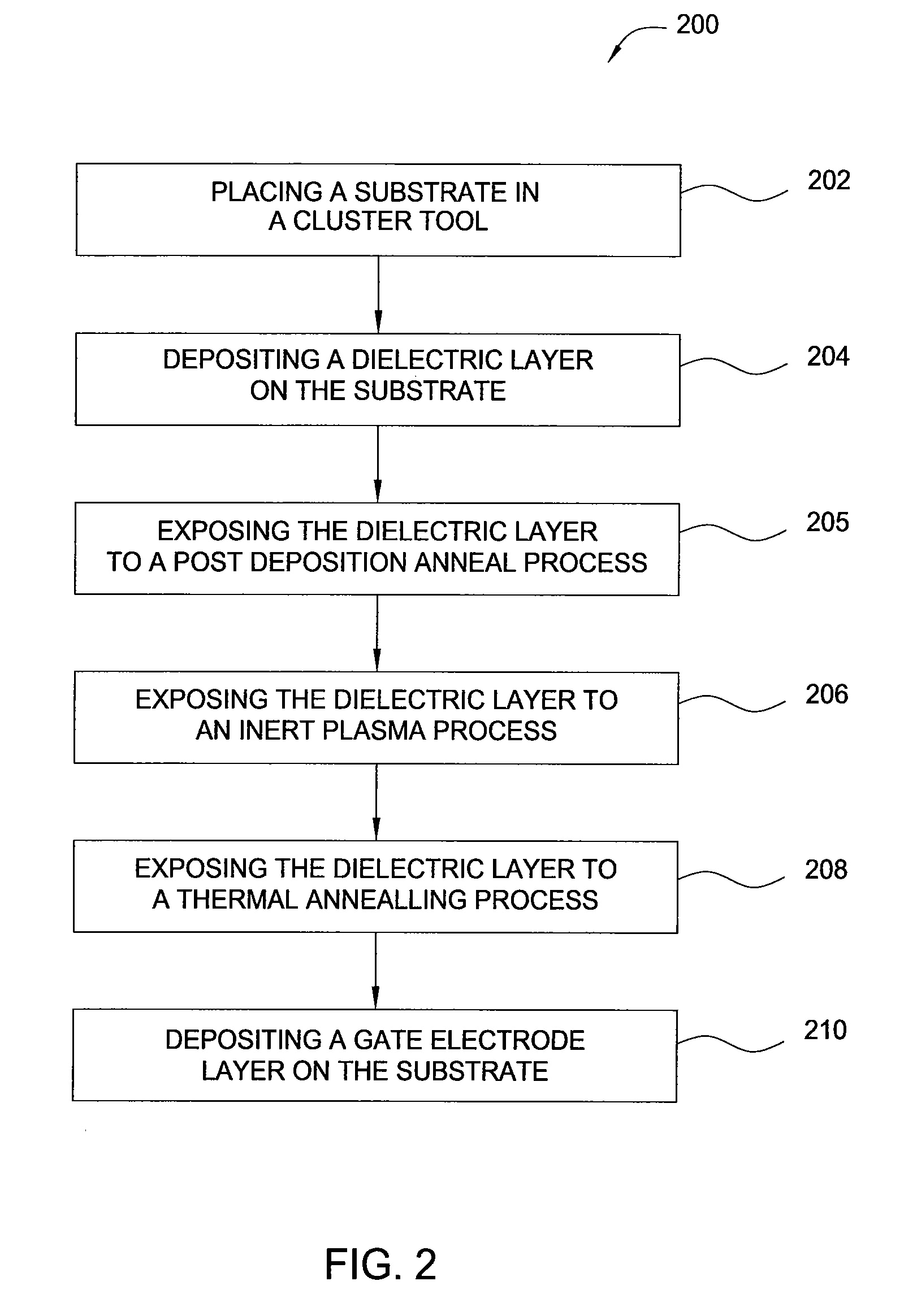

Embodiments of the invention generally provide an apparatus and method for scheduling a process sequence to achieve maximum throughput and process consistency in a cluster tool having a set of constraints. One embodiment of the present invention provides a method for scheduling a process sequence comprising determining an initial individual schedule by assigning resources to perform the process sequence, calculating a fundamental period, detecting resource conflicts in a schedule generated from the individual schedule and the fundamental period, and adjusting the individual schedule to remove the resource conflicts.

Owner:APPLIED MATERIALS INC

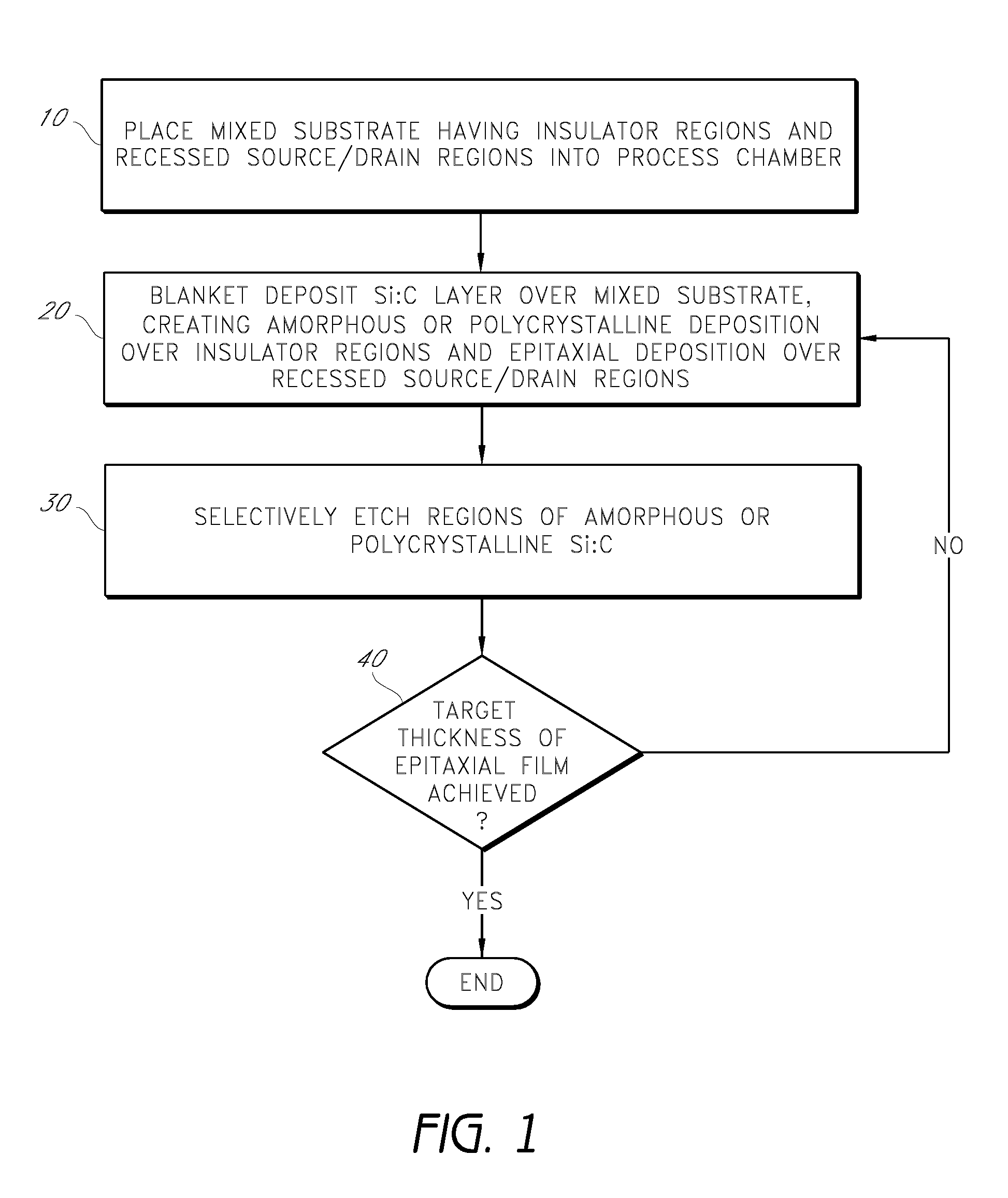

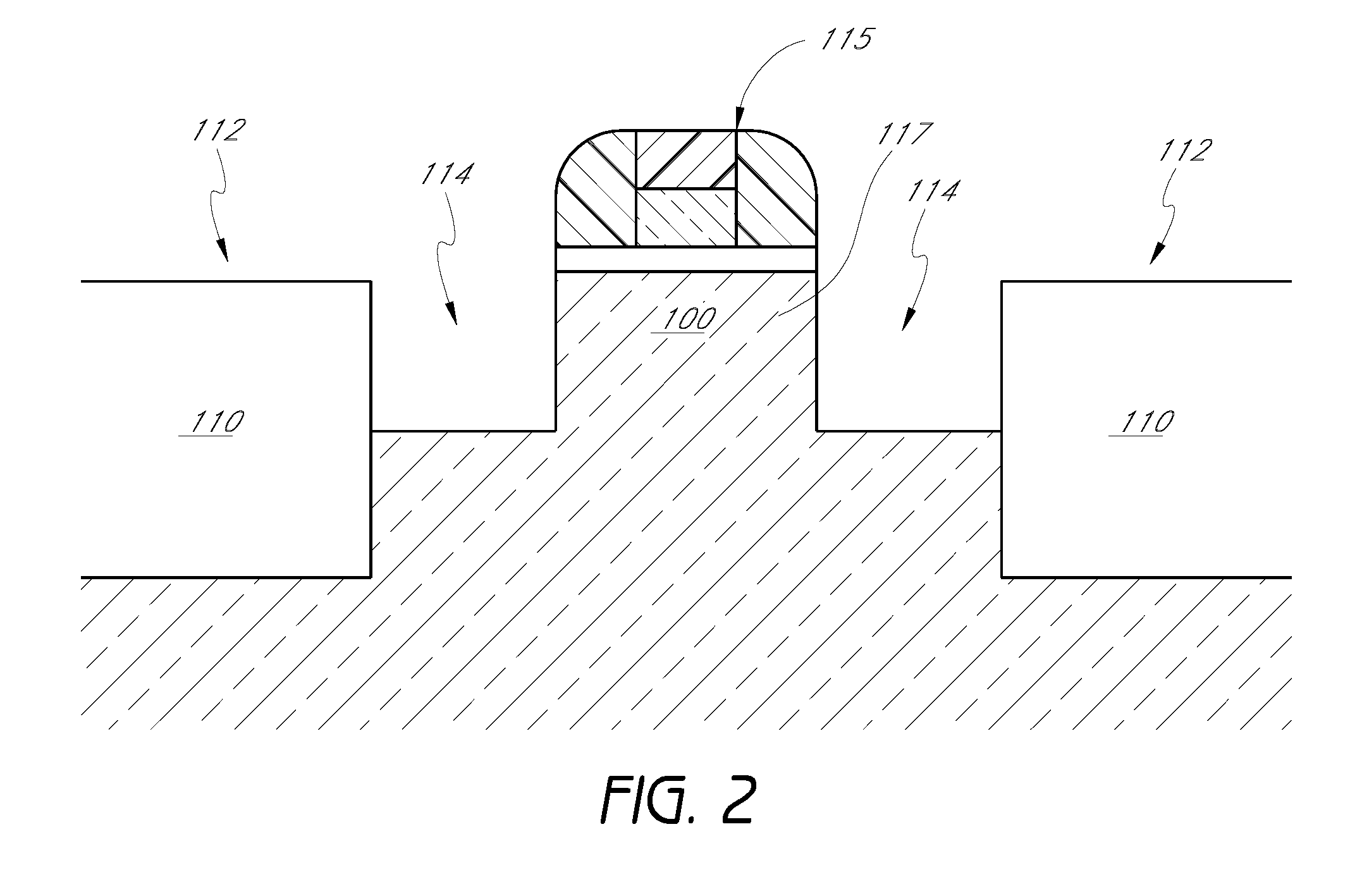

Selective epitaxial formation of semiconductor films

Active | US8278176B2 | Semiconductor/solid-state device manufacturing | Semiconductor devices | Cyclic process | Metallurgy

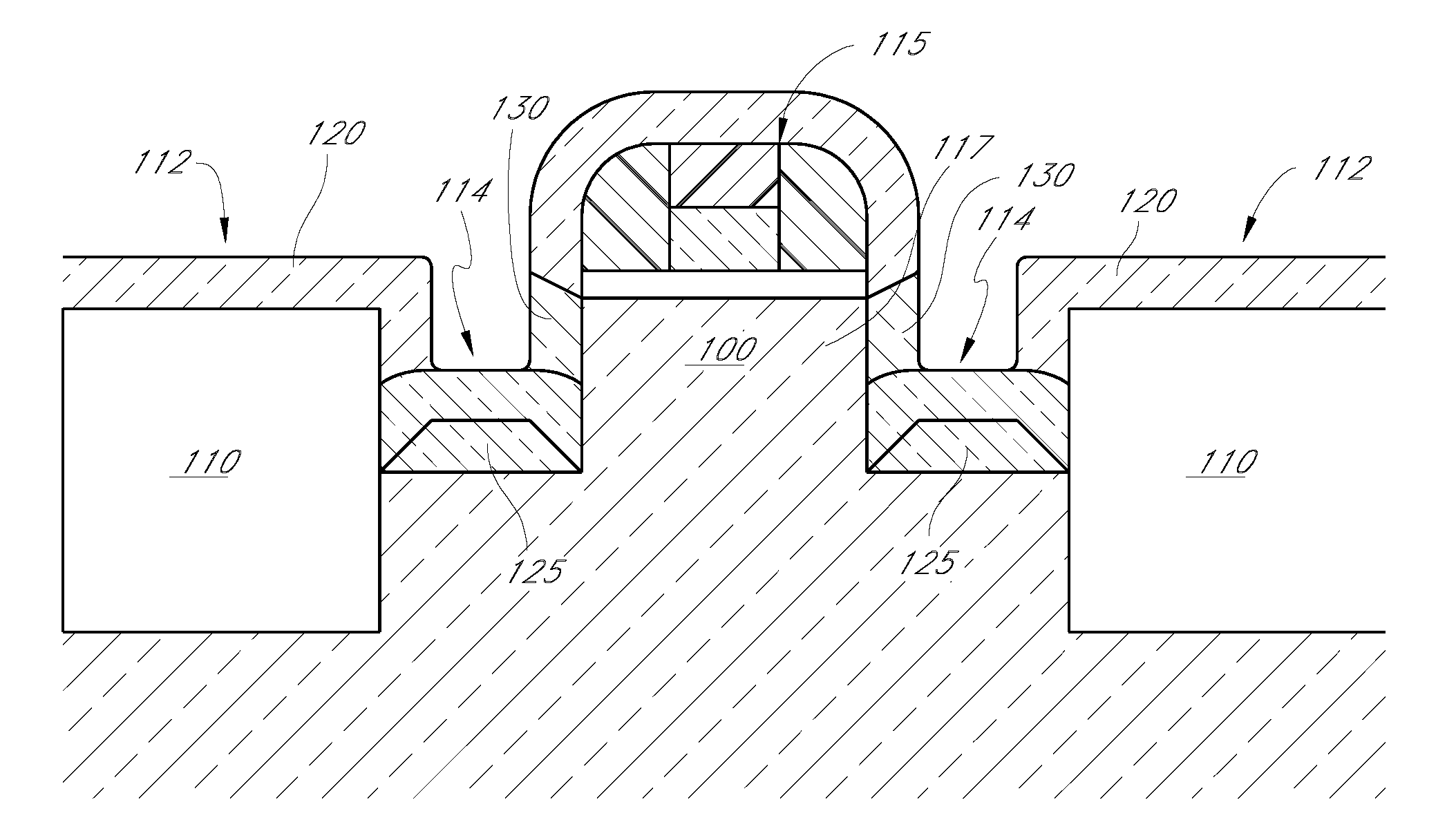

Epitaxial layers are selectively formed in semiconductor windows by a cyclical process of repeated blanket deposition and selective etching. The blanket deposition phases leave non-epitaxial material over insulating regions, such as field oxide, and the selective etch phases preferentially remove non-epitaxial material while deposited epitaxial material builds up cycle-by-cycle. Quality of the epitaxial material improves relative to selective processes where no deposition occurs on insulators. Use of a germanium catalyst during the etch phases of the process aids etch rates and facilitates economical maintenance of isothermal and/or isobaric conditions throughout the cycles. Throughput and quality are improved by use of trisilane, formation of amorphous material over the insulating regions, and minimizing the thickness ratio of amorphous:epitaxial material in each deposition phase.

Owner:ASM IP HLDG BV

High-temperature characterization of polymers

Inactive | US6260407B1 | Avoid backlog | Improve throughput | Sequential/parallel process reactions | Component separation | Elution | Chromatography column

Rapid characterization and screening of polymer samples to determine average molecular weight, molecular weight distribution and other properties is disclosed. Rapid flow characterization systems and methods, including liquid chromatography and flow-injection analysis systems and methods are preferably employed. High throughput, automated sampling systems and methods, high-temperature characterization systems and methods, and rapid, indirect calibration compositions and methods are also disclosed. In preferred high-temperature embodiments, the polymer sample is maintained at a temperature of not less than about 75 °C during sample preparation, loading into a liquid chromatography or flow-injection analysis system, injection into a mobile phase of a liquid chromatography or flow-injection analysis system, and/or elution from the chromatographic column. The described methods, systems, and devices have primary applications in combinatorial polymer research and in industrial process control.

Owner:INTERMOLECULAR

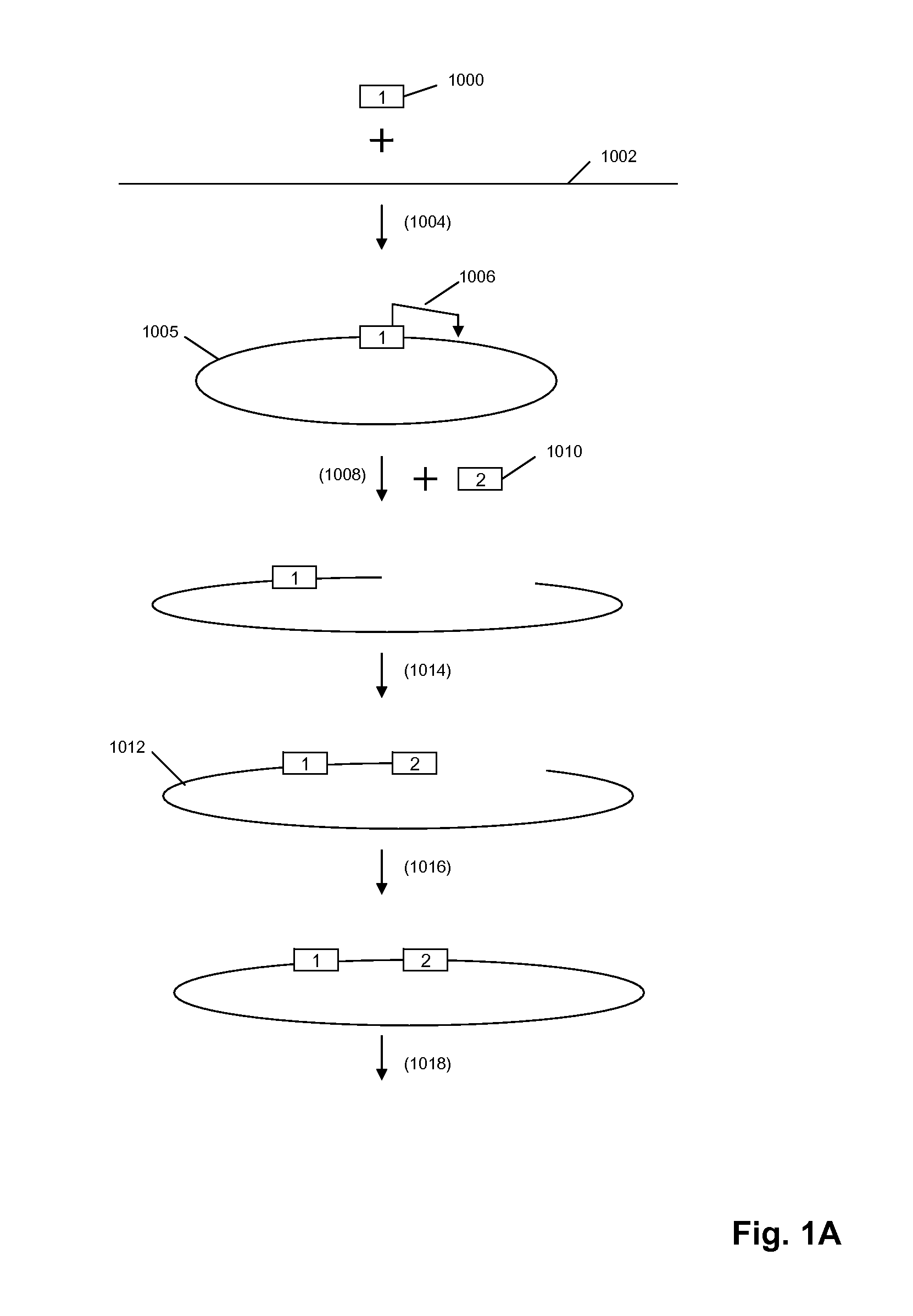

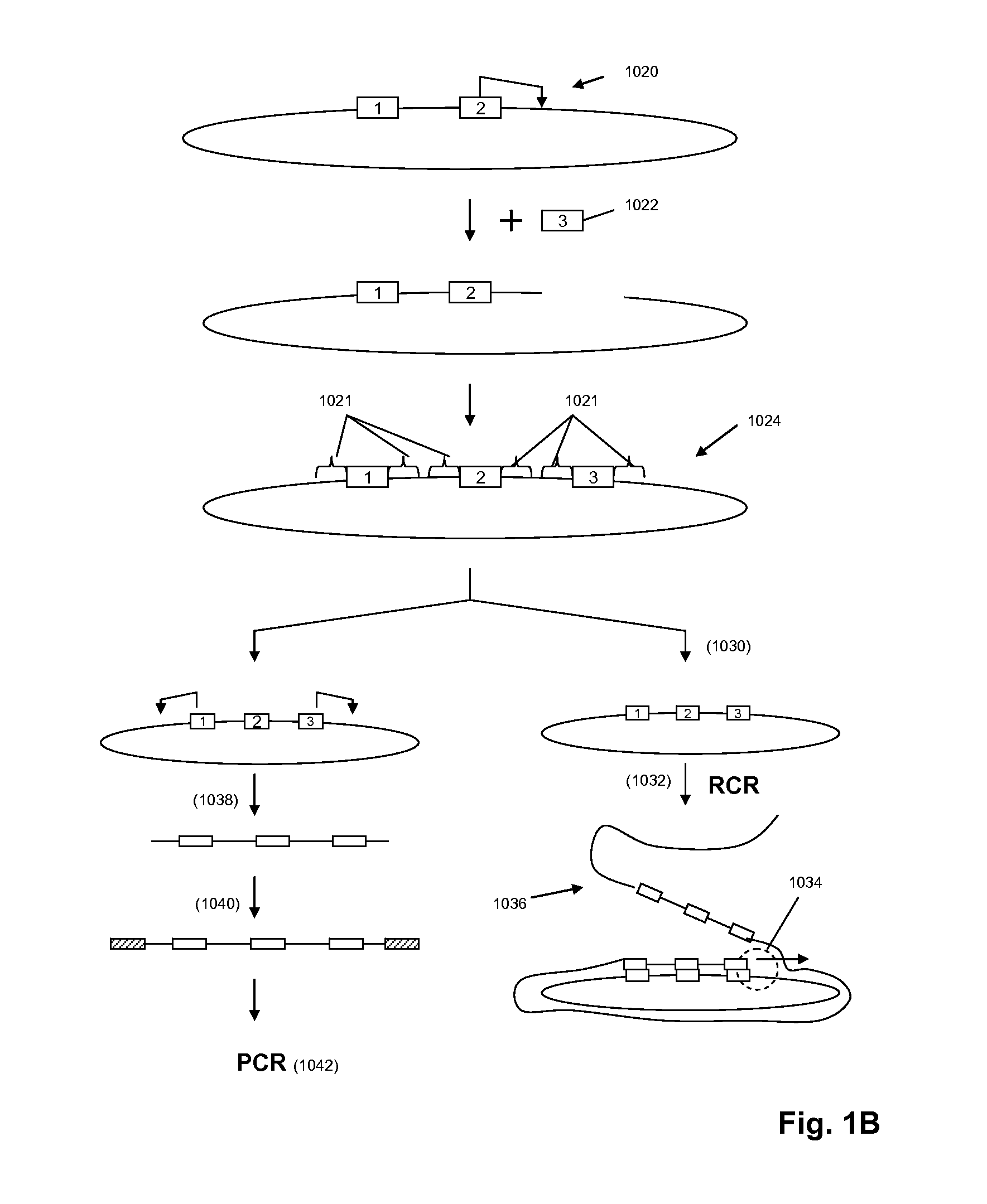

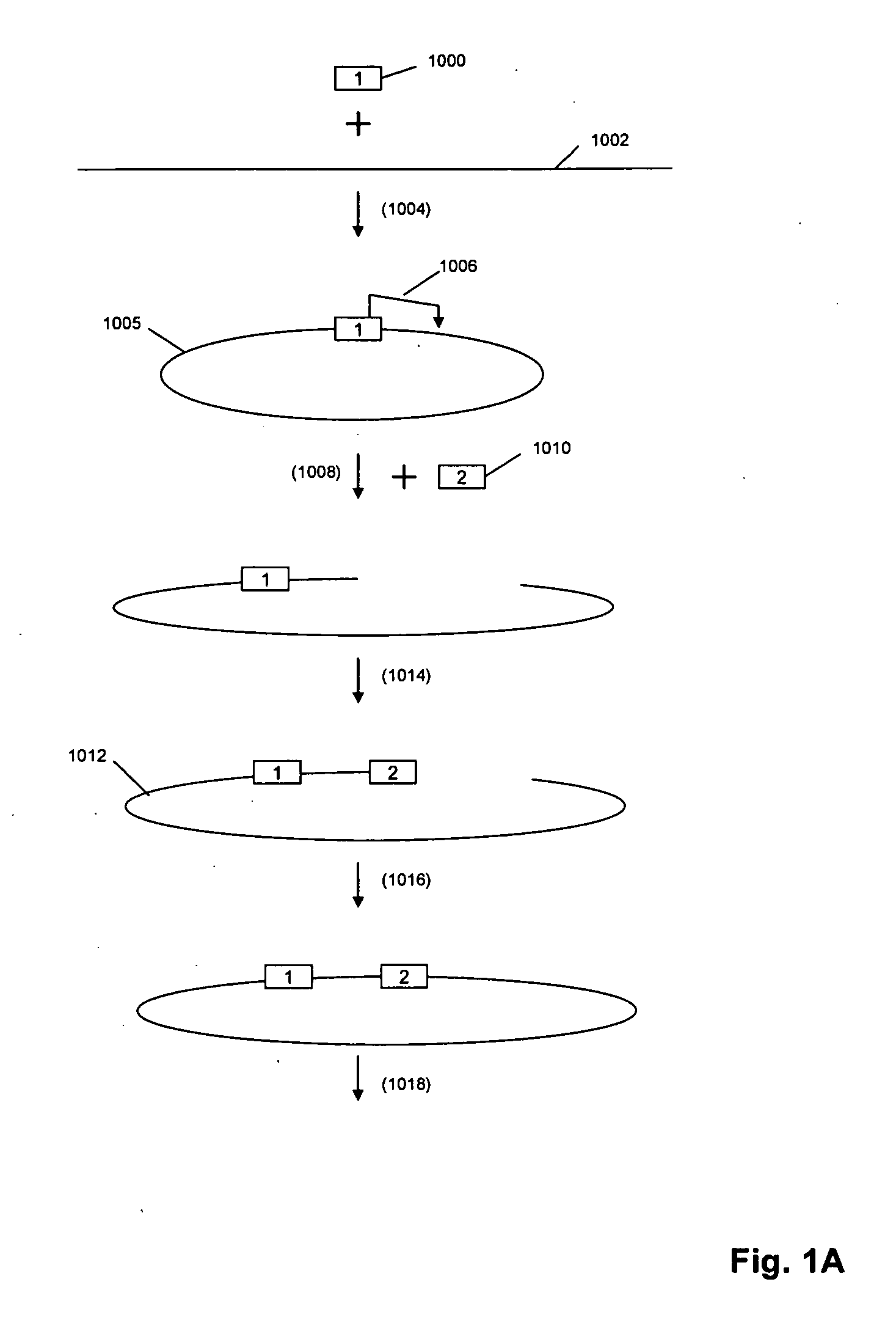

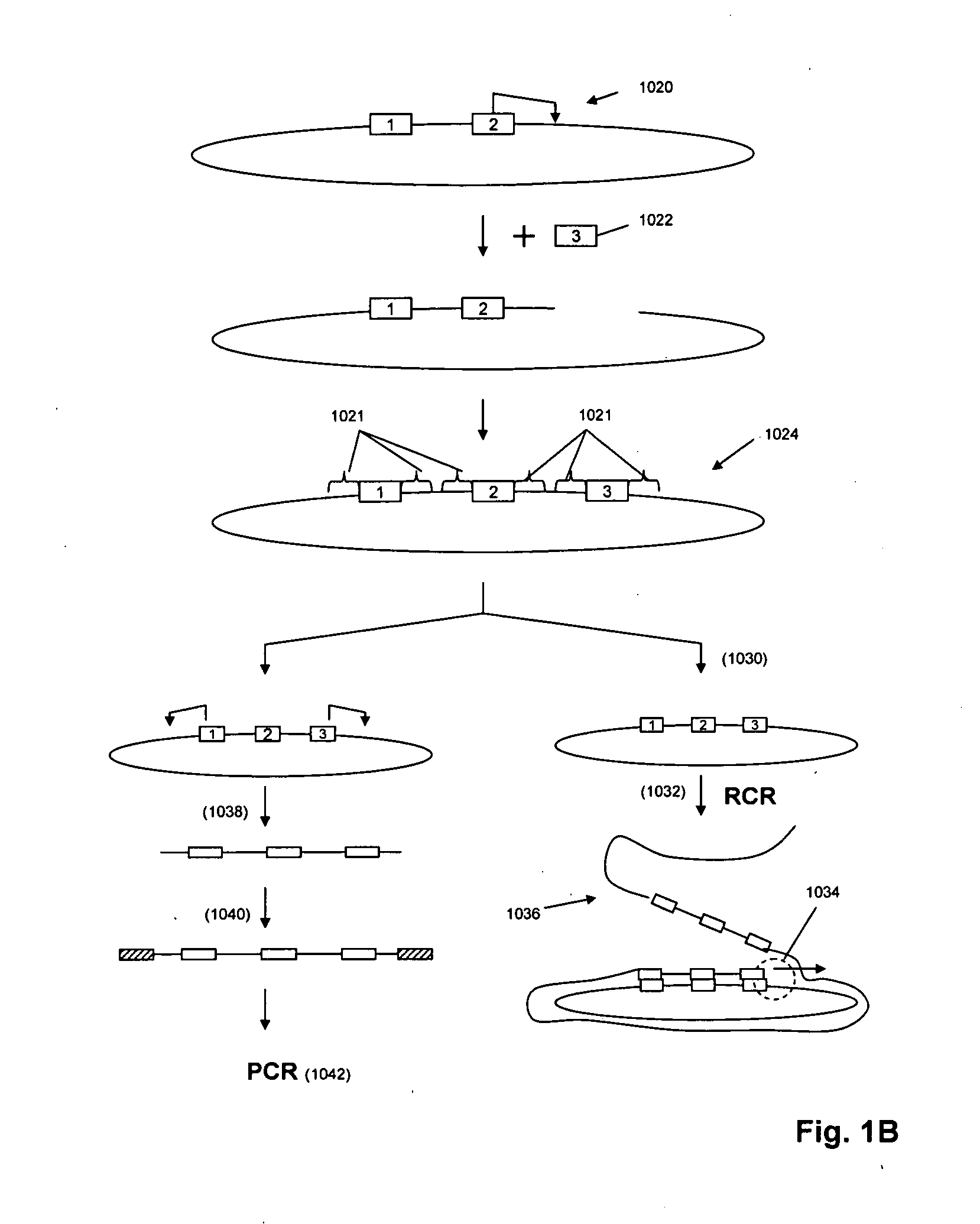

High throughput genome sequencing on DNA arrays

Active | US20090005252A1 | Simple process | Microbiological testing/measurement | Library member identification | Re sequencing | Nucleotide

The present invention is directed to methods and compositions for acquiring nucleotide sequence information of target sequences using adaptors interspersed in target polynucleotides. The sequence information can be new, e.g. sequencing unknown nucleic acids, re-sequencing, or genotyping. The invention preferably includes methods for inserting a plurality of adaptors at spaced locations within a target polynucleotide or a fragment of a polynucleotide. Such adaptors may serve as platforms for interrogating adjacent sequences using various sequencing chemistries, such as those that identify nucleotides by primer extension, probe ligation, and the like. Encompassed in the invention are methods and compositions for the insertion of known adaptor sequences into target sequences, such that there is an interruption of contiguous target sequence with the adaptors. By sequencing both “upstream” and “downstream” of the adaptors, identification of entire target sequences may be accomplished.

Owner:COMPLETE GENOMICS INC

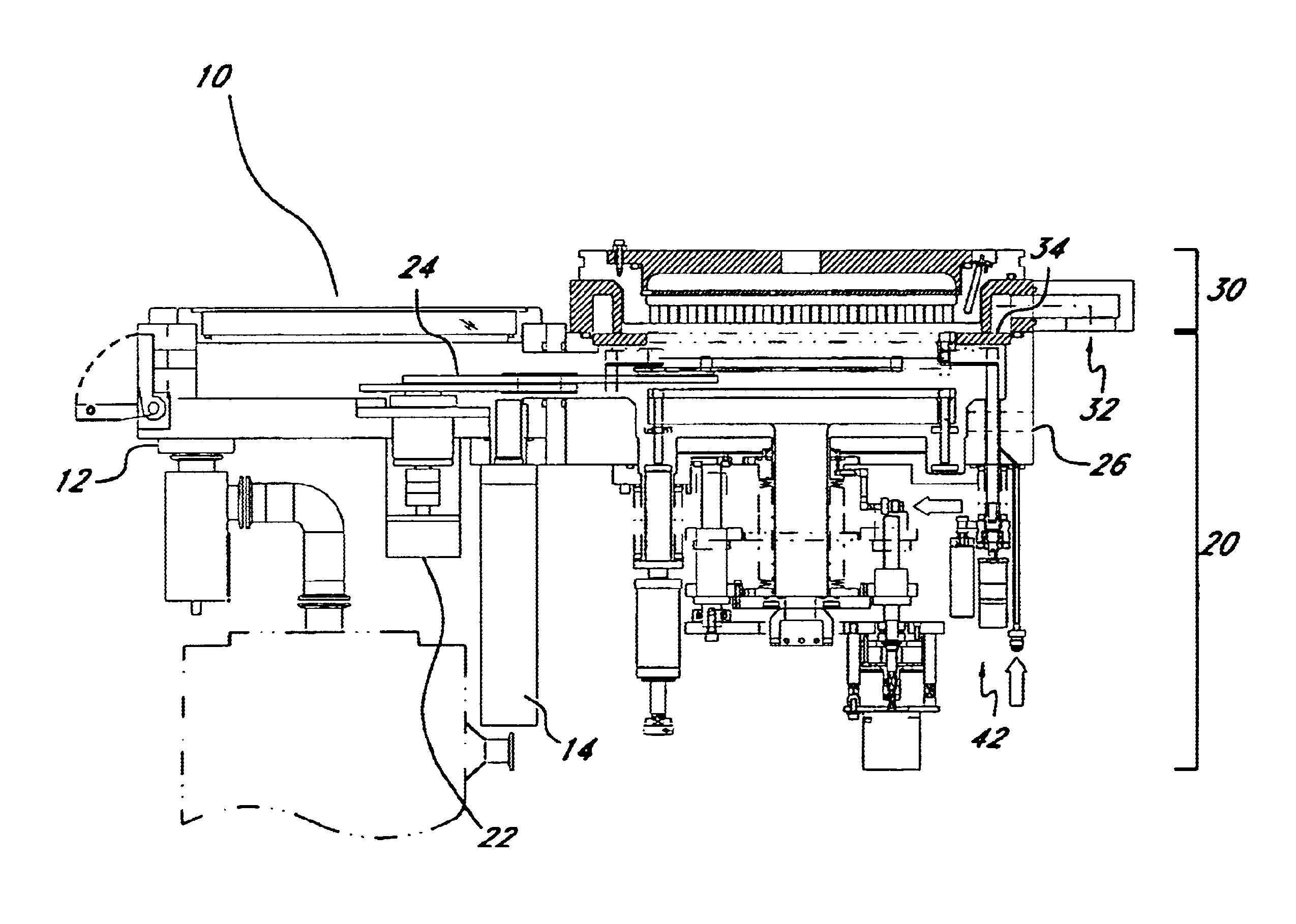

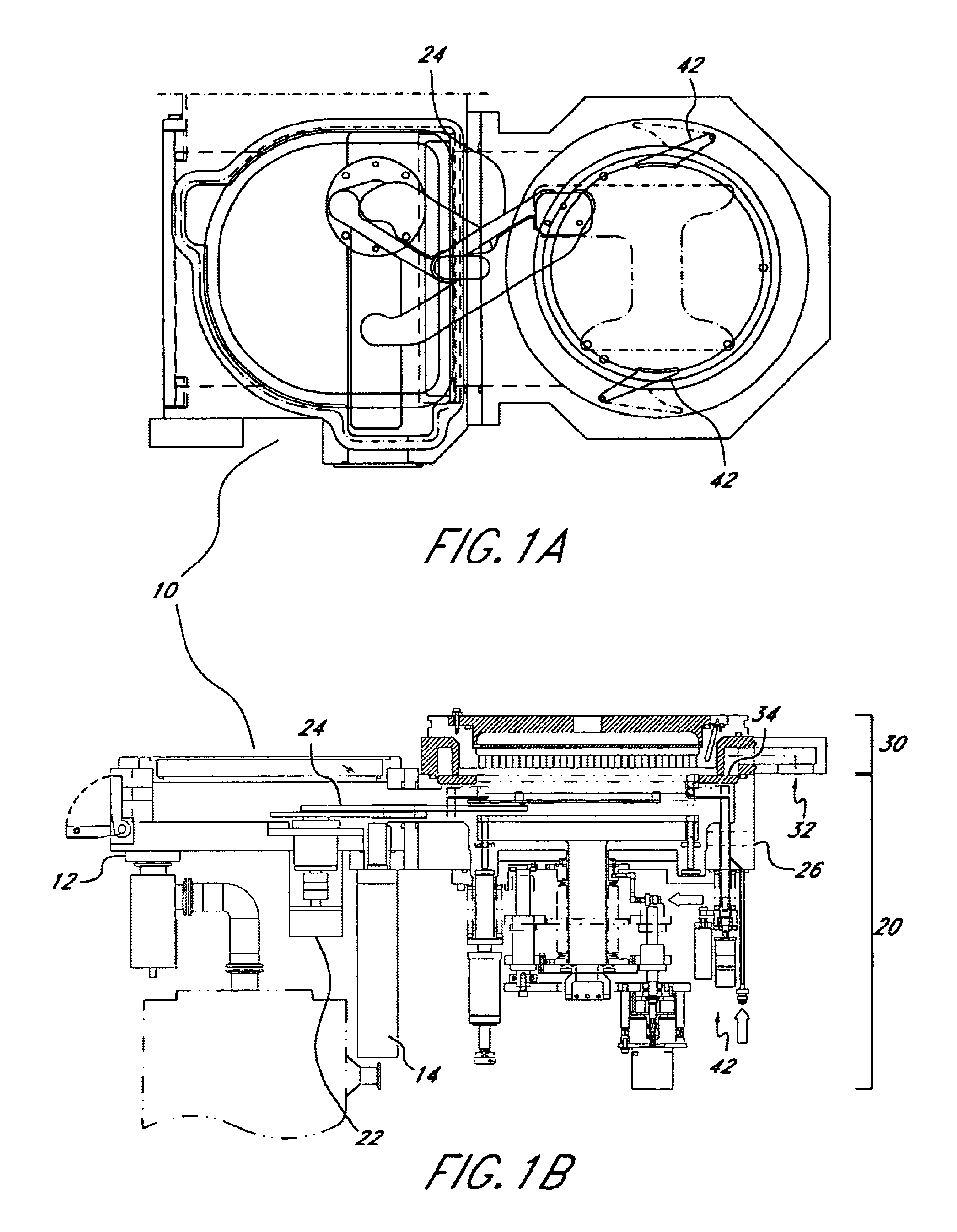

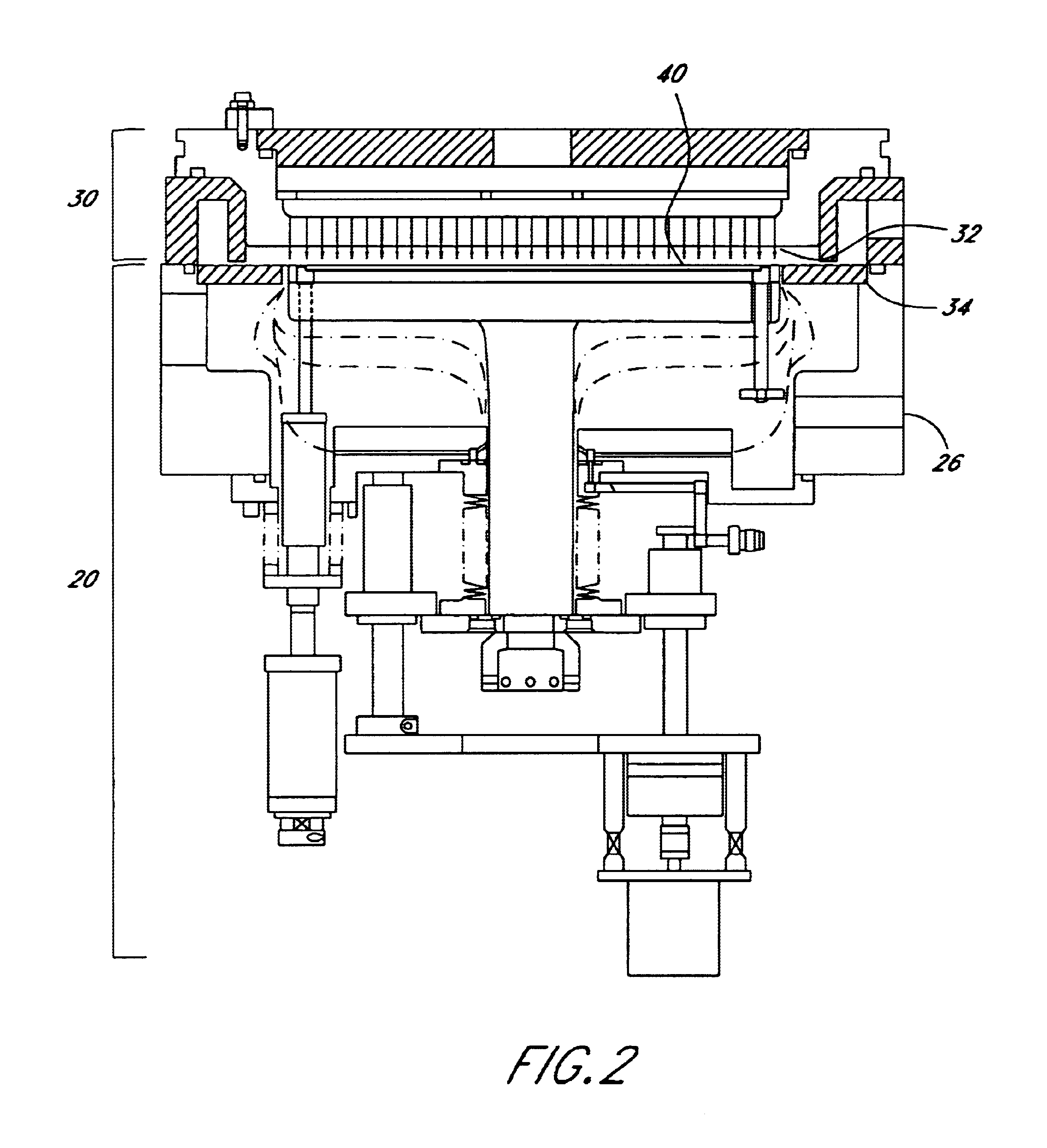

Semiconductor processing apparatus comprising chamber partitioned into reaction and transfer sections

Inactive | US6899507B2 | Reduce adhesion | Improve efficiency | Semiconductor/solid-state device manufacturing | Charge manipulation | Engineering | Semiconductor

Semiconductor processing equipment that has increased efficiency, throughput, and stability, as well as reduced operating cost, footprint, and faceprint is provided. Other than during deposition, the atmospheres of both the reaction chamber and the transfer chamber are evacuated using the transfer chamber exhaust port, which is located below the surface of the semiconductor wafer. This configuration prevents particles generated during wafer transfer or during deposition from adhering to the surface of the semiconductor wafer. Additionally, by introducing a purge gas into the transfer chamber during deposition, and by using an insulation separating plate, the atmospheres of the transfer and reaction chambers can be effectively isolated from each other, thereby preventing deposition on the walls and components of the transfer chamber. Finally, the configuration described herein permits a wafer buffer mechanism to be used with the semiconductor processing equipment, thereby further increasing throughput and efficiency.

Owner:ASM JAPAN

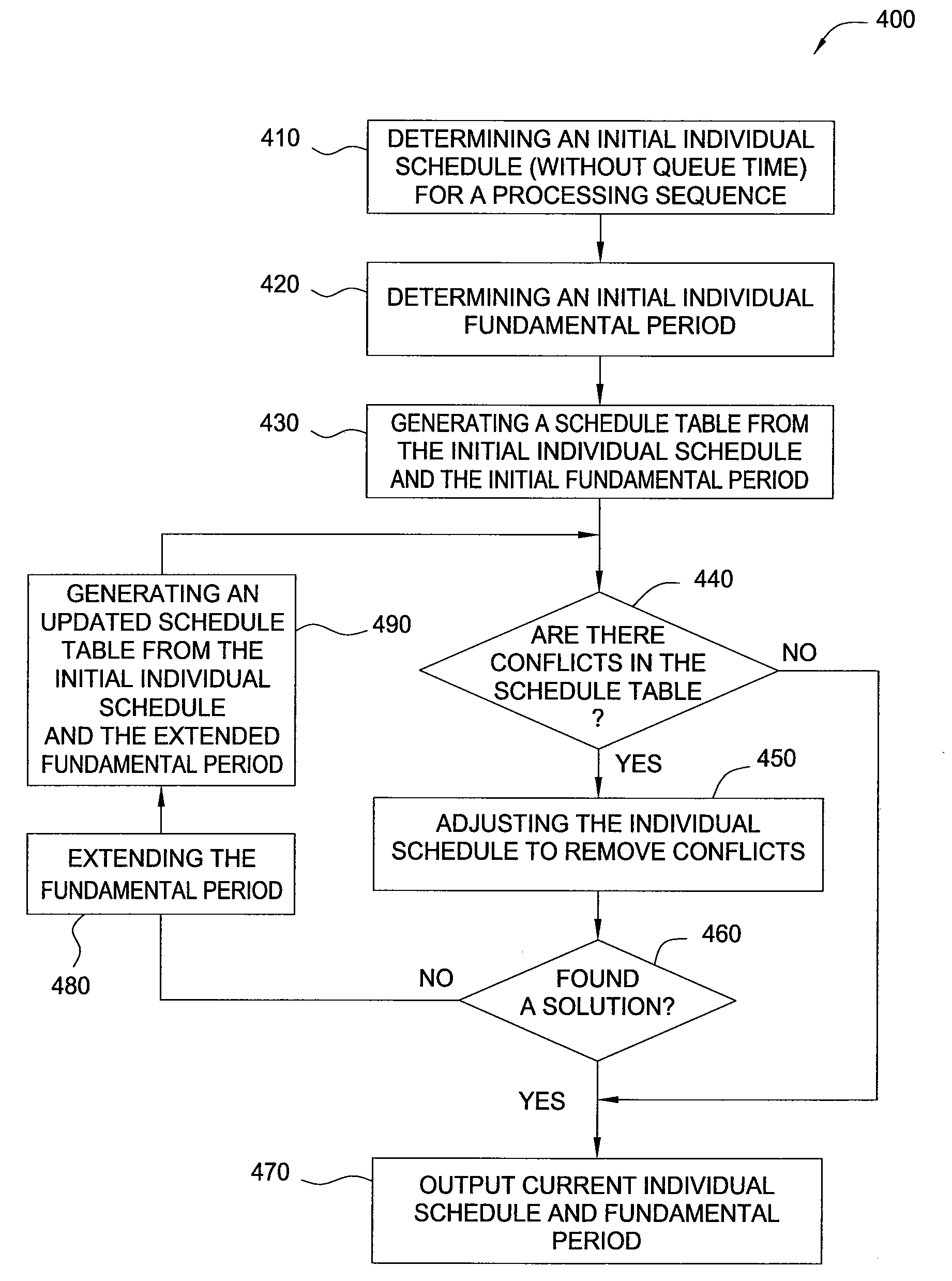

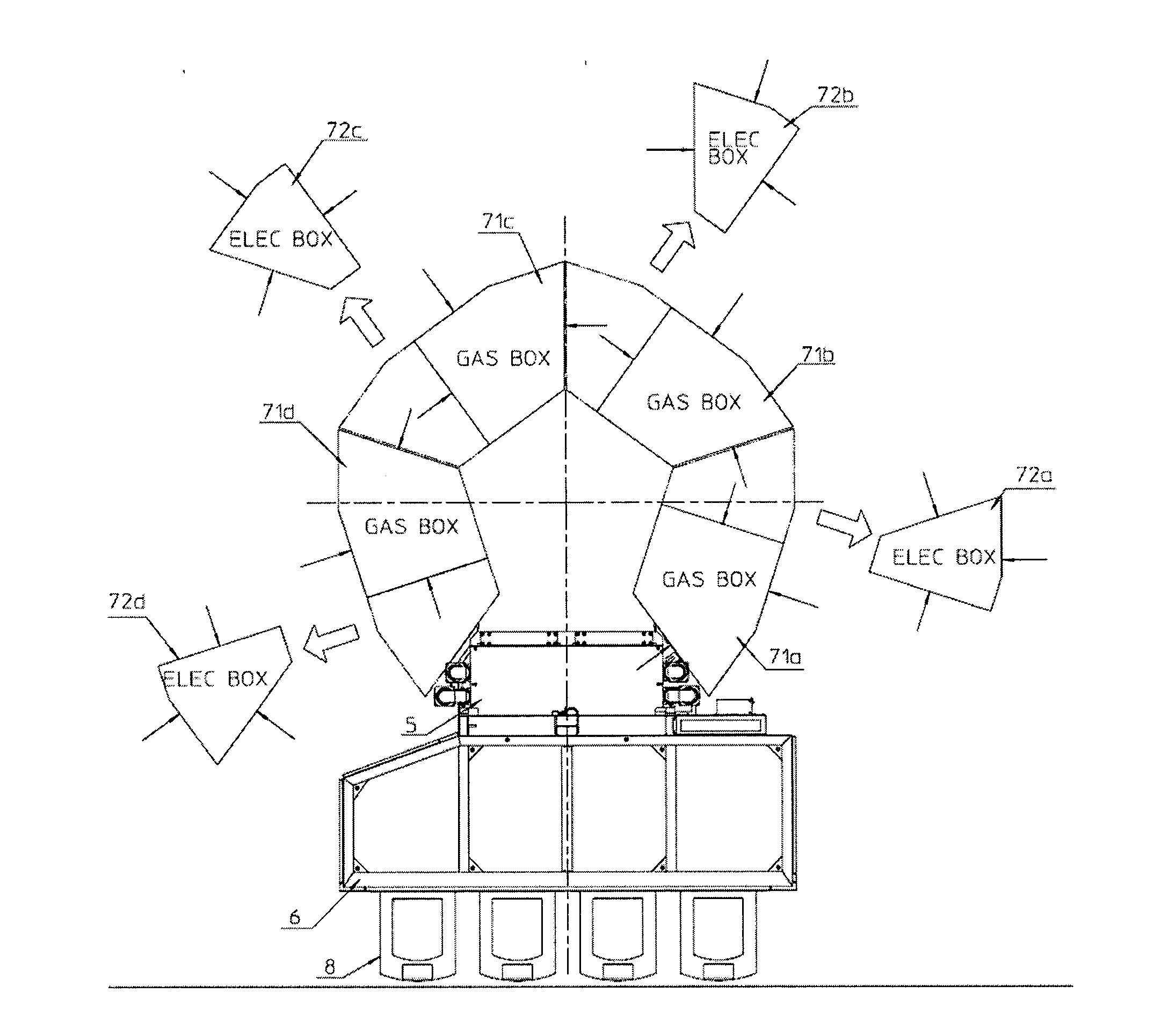

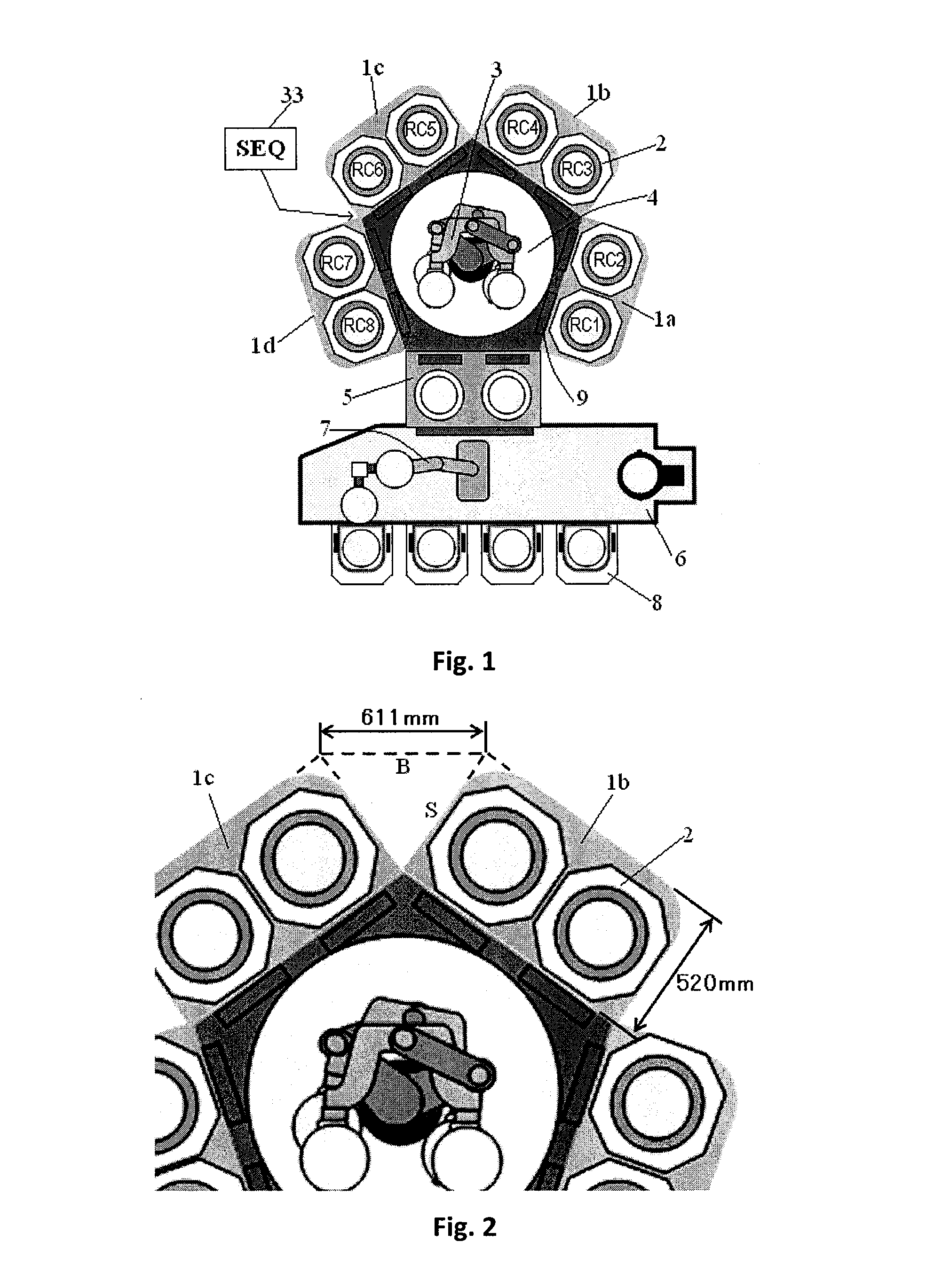

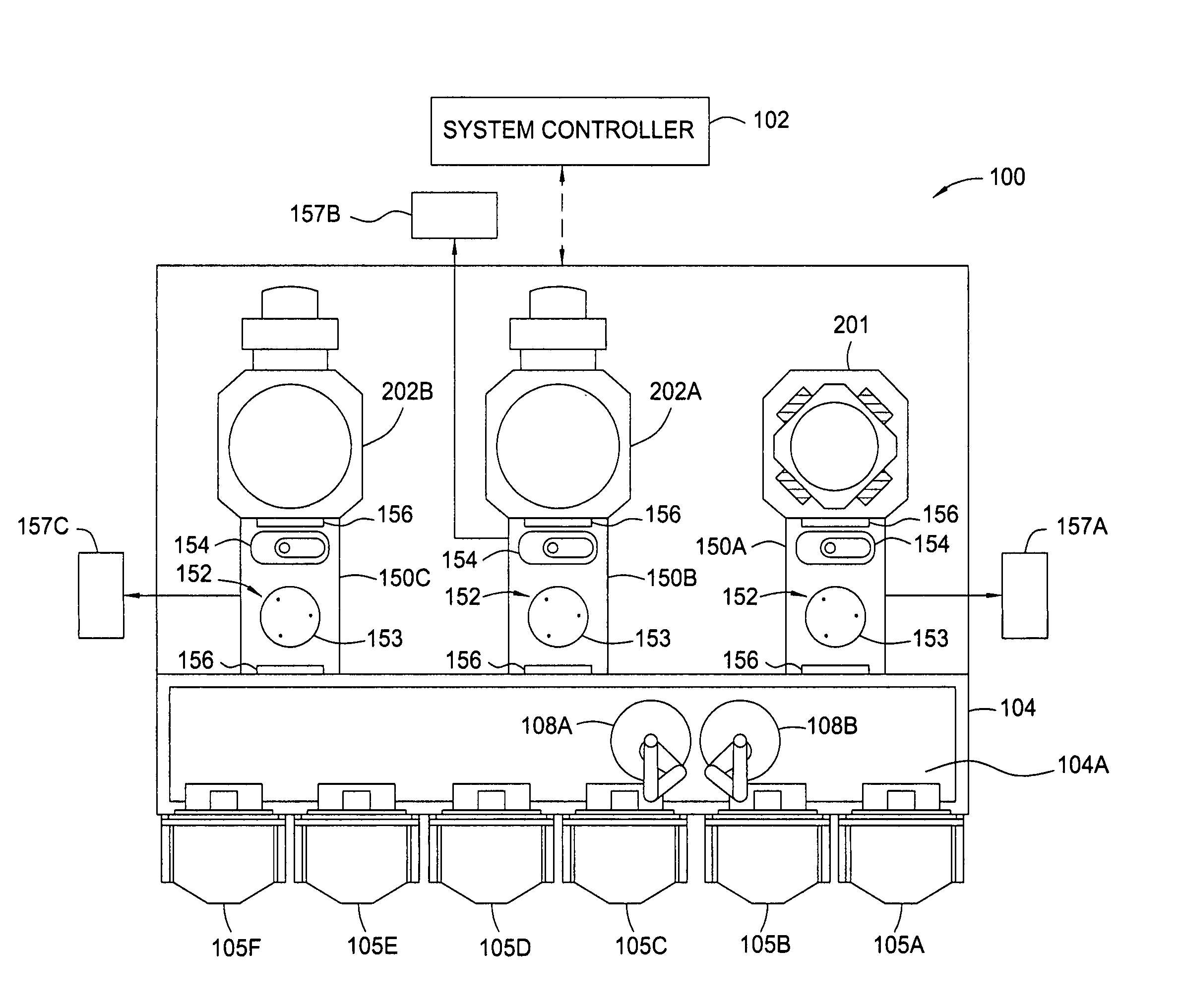

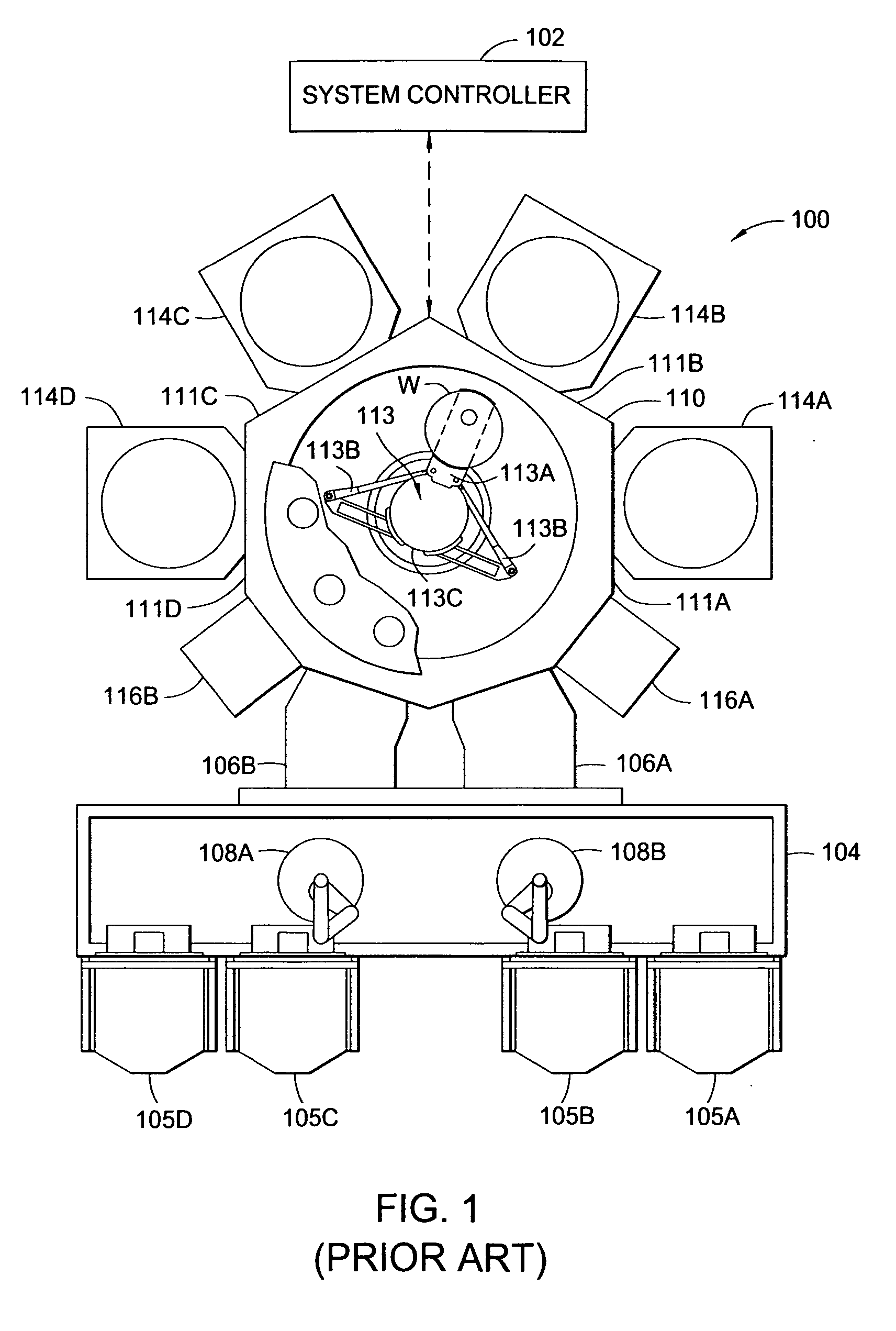

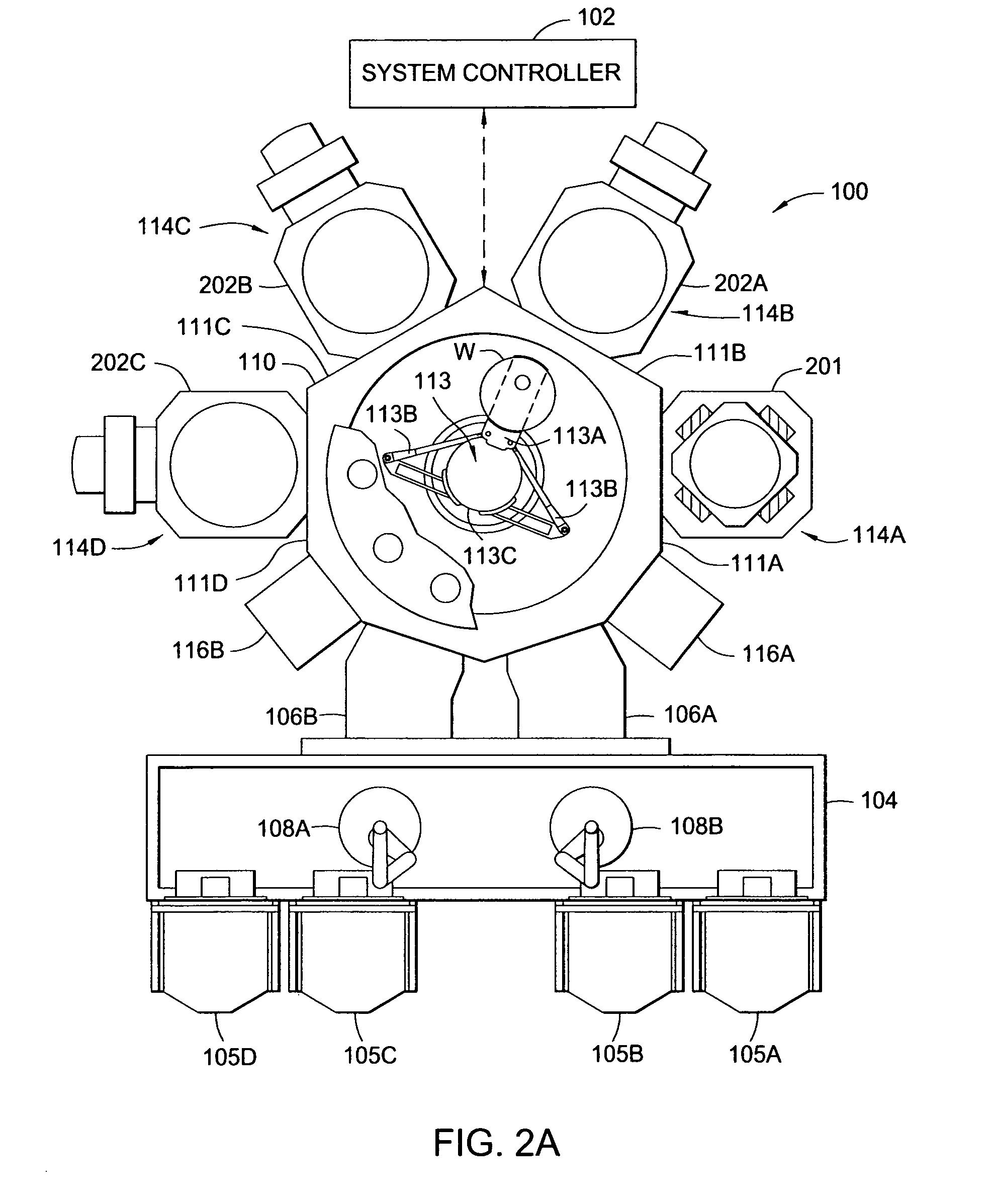

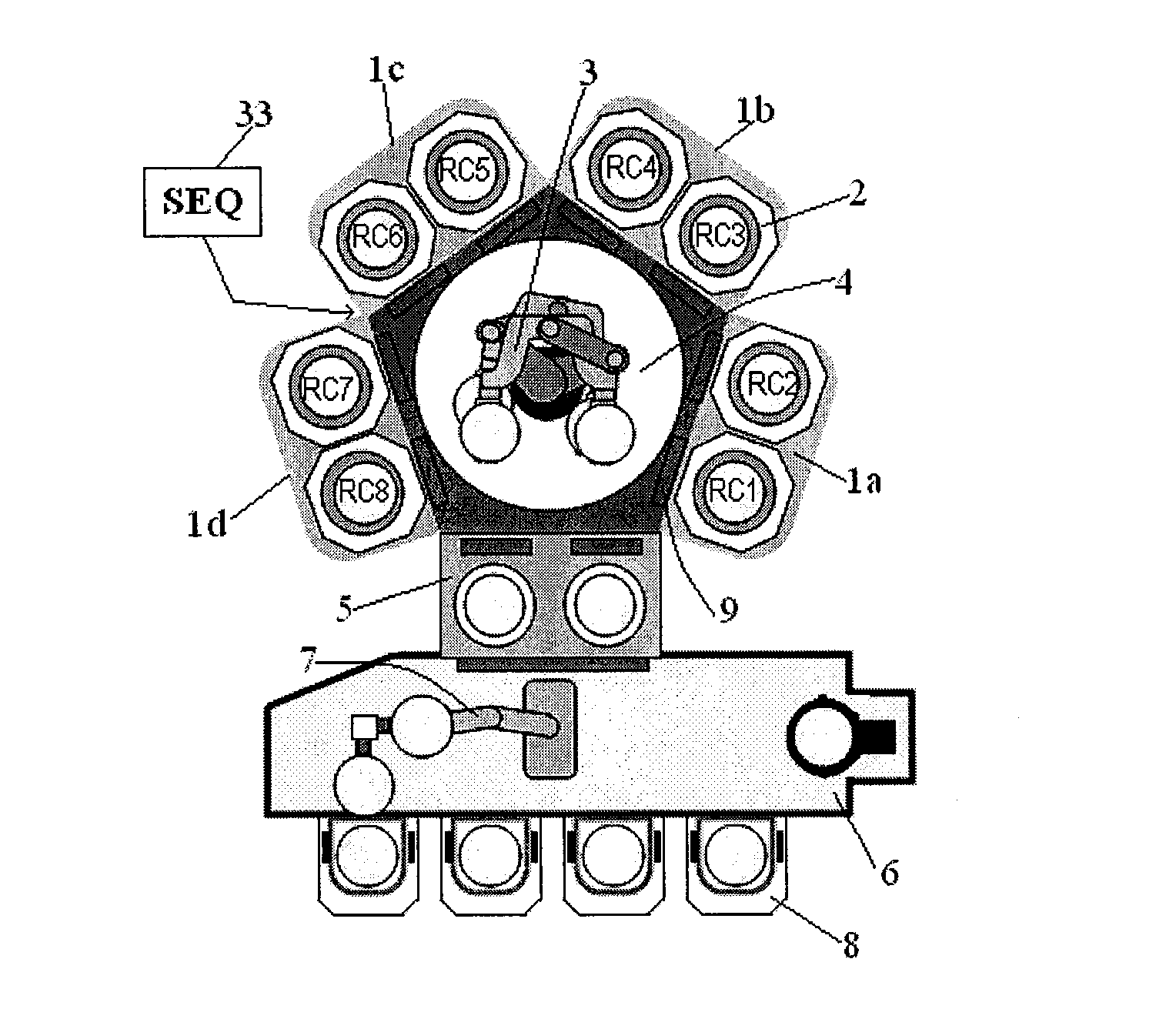

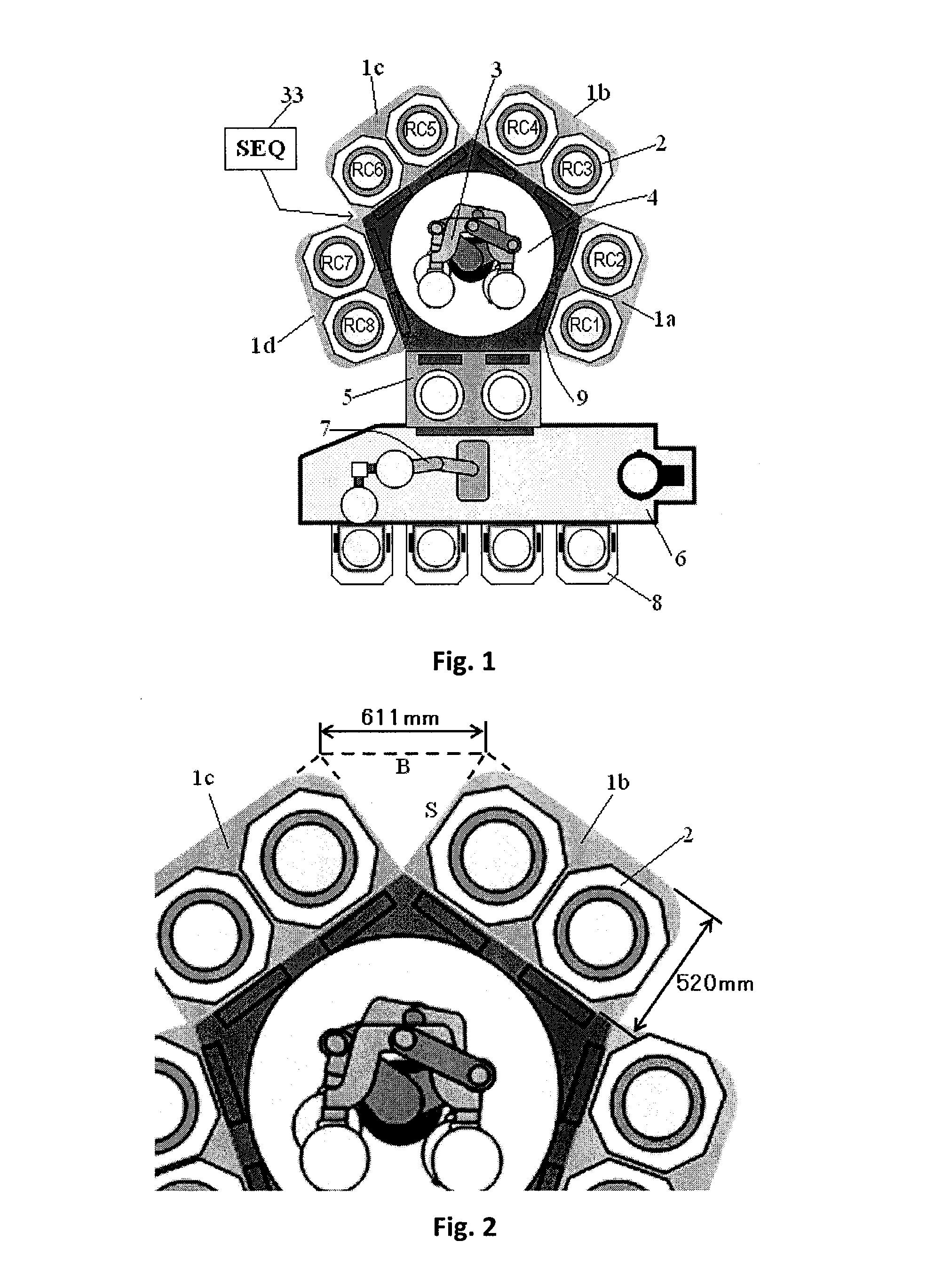

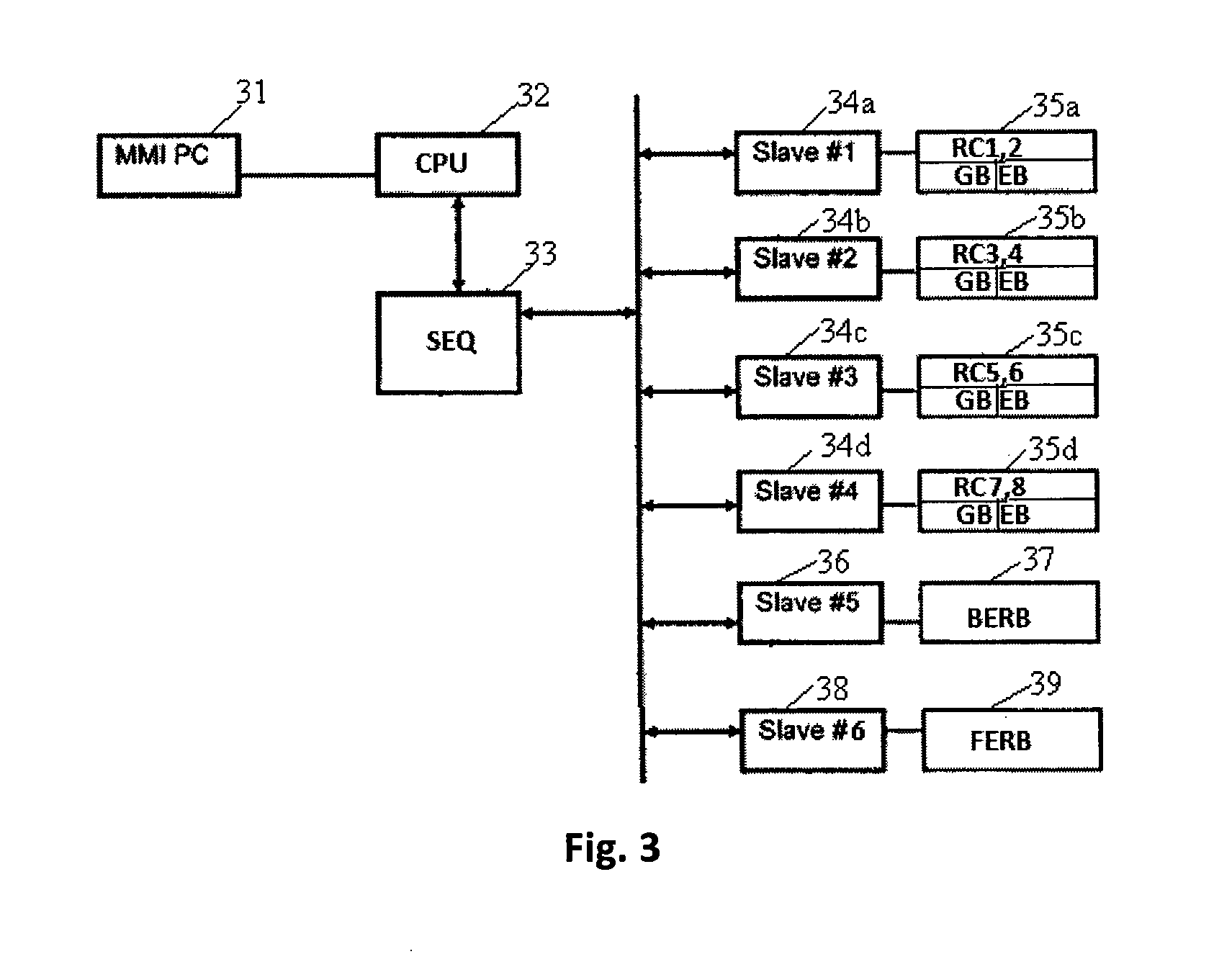

High-Throughput Semiconductor-Processing Apparatus Equipped with Multiple Dual-Chamber Modules

A wafer-processing apparatus includes: eight or ten reactors with identical capacity for processing wafers on the same plane, constituting four or five discrete units, each unit having two reactors arranged side by side with their fronts aligned in a line; a wafer-handling chamber including two wafer-handling robot arms each having at least two end-effectors; a load lock chamber; and a sequencer for performing, using the two wafer-handling robot arms, steps of unloading/loading processed/unprocessed wafers from/to any one of the units, and steps of unloading/loading processed/unprocessed wafers from/to all the other respective units in sequence while the wafers are in the one of the units.

Owner:ASM JAPAN

Substrate processing apparatus using a batch processing chamber

Inactive | US20060156979A1 | Semiconductor/solid-state device manufacturing | Chemical vapor deposition coating | Batch processing | Handling system

Aspects of the invention include a method and apparatus for processing a substrate using a multi-chamber processing system (e.g., a cluster tool) adapted to process substrates in one or more batch and/or single substrate processing chambers to increase the system throughput. In one embodiment, a system is configured to perform a substrate processing sequence that contains batch processing chambers only, or batch and single substrate processing chambers, to optimize throughput and minimize processing defects due to exposure to a contaminating environment. In one embodiment, a batch processing chamber is used to increase the system throughput by performing a process recipe step that is disproportionately long compared to other process recipe steps in the substrate processing sequence that are performed on the cluster tool. In another embodiment, two or more batch chambers are used to process multiple substrates using one or more of the disproportionately long processing steps in a processing sequence. Aspects of the invention also include an apparatus and method for delivering a precursor to a processing chamber so that a repeatable ALD or CVD deposition process can be performed.

Owner:APPLIED MATERIALS INC

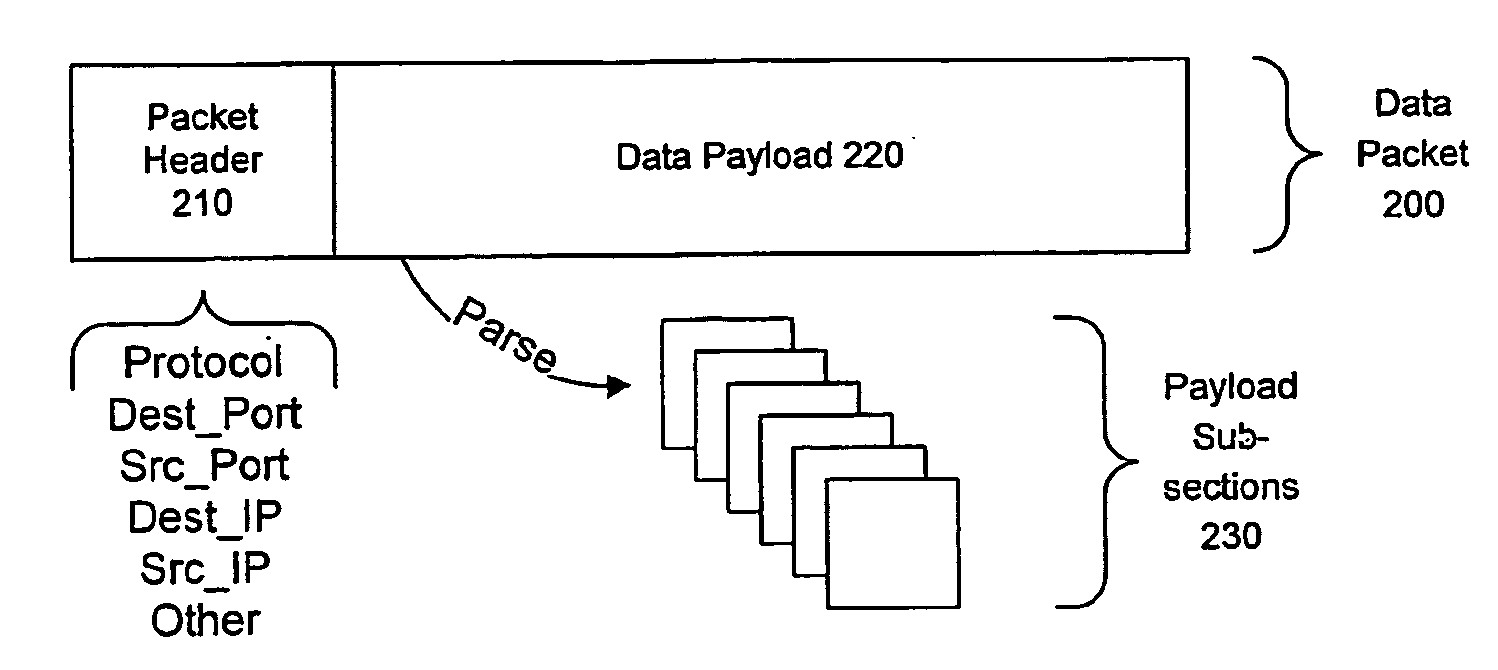

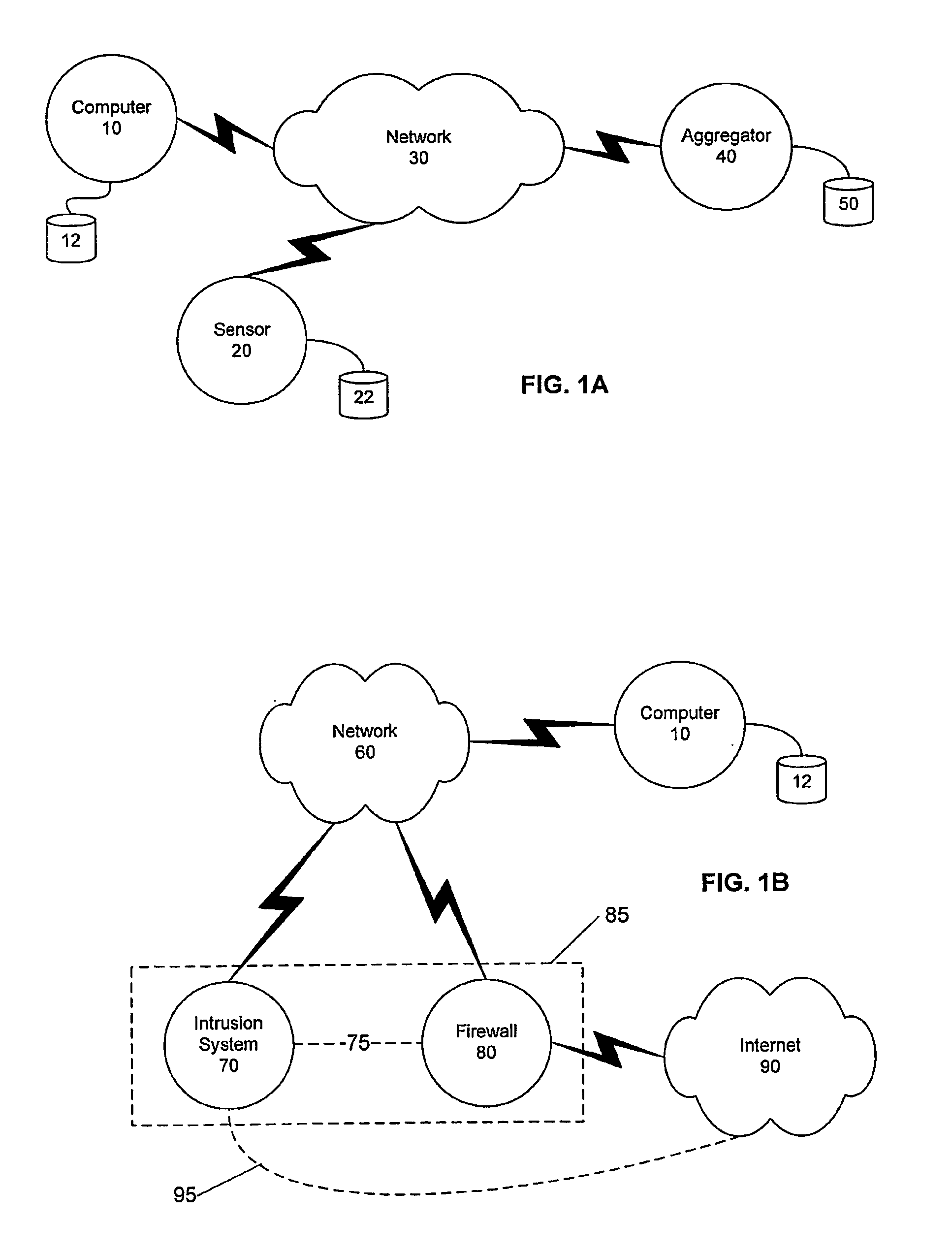

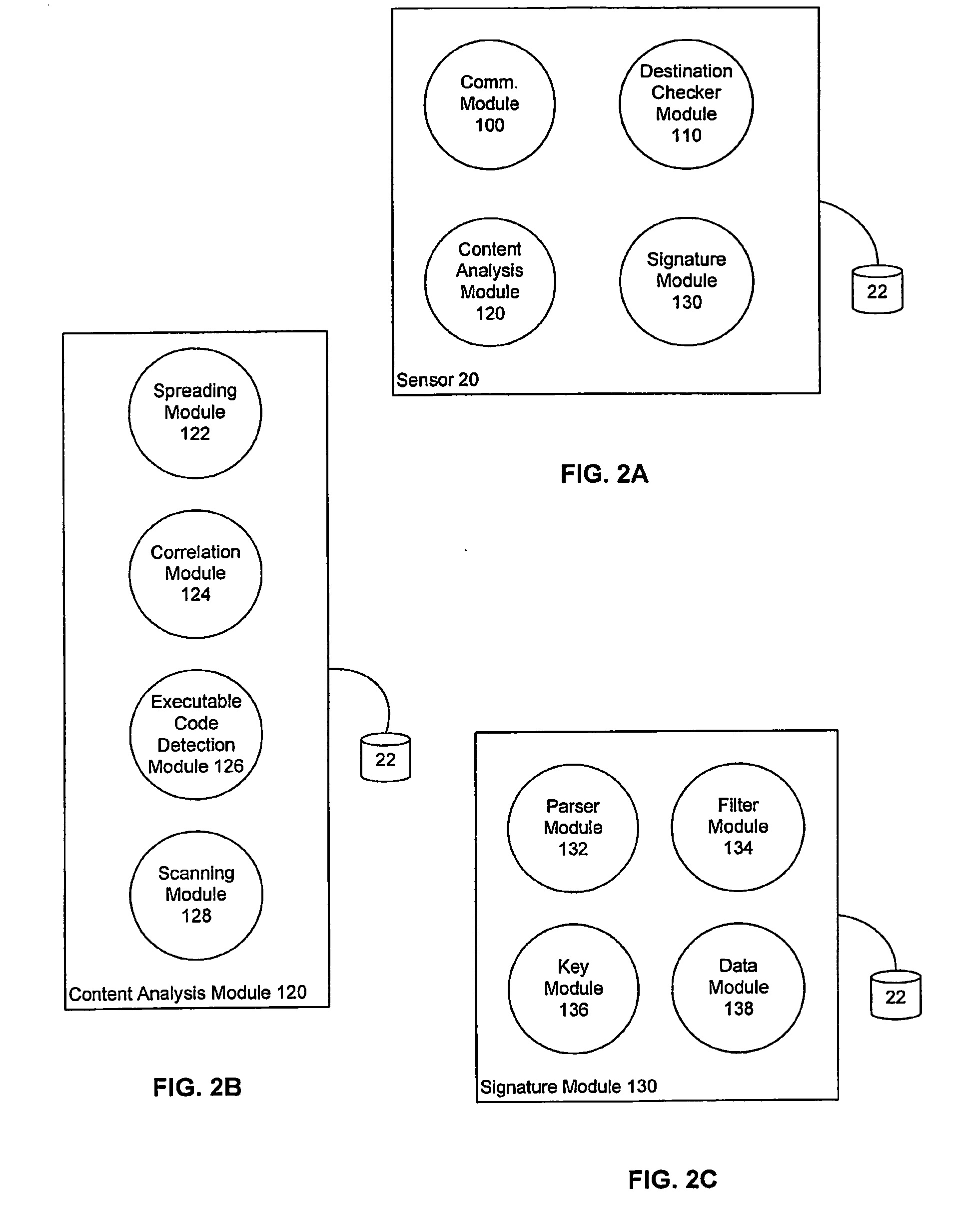

Detecting Public Network Attacks Using Signatures and Fast Content Analysis

Active | US20080307524A1 | Memory loss protection | User identity/authority verification | Computer hardware | Private network

Network worms or viruses are a growing threat to the security of public and private networks and the individual computers that make up those networks. A content sifting method is provided that automatically generates a precise signature for a worm or virus that can then be used to significantly reduce the propagation of the worm elsewhere in the network or eradicate the worm altogether. The content sifting method is complemented by a value sampling method that increases the throughput of network traffic that can be monitored. Together, the methods track the number of times invariant strings appear in packets and the network address dispersion of those packets including variant strings. When an invariant string reaches a particular threshold of appearances and address dispersion, the string is reported as a signature for a suspected worm.

Owner:RGT UNIV OF CALIFORNIA
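
The counting idea in the abstract above, tracking how often a fixed-length string recurs and how widely the packets carrying it are dispersed across addresses, can be sketched as below. This is a simplified illustration and not the patented method: the substring length, the thresholds, the function name `sift`, and the sample packets are all assumptions, and the value sampling step that the abstract mentions is not shown.

```python
from collections import defaultdict


def sift(packets, length=8, min_count=3, min_sources=2, min_dests=2):
    """Report substrings that recur often and across many addresses.

    packets: iterable of (source, destination, payload_bytes) tuples.
    All thresholds are hypothetical defaults chosen for this example.
    """
    counts = defaultdict(int)
    sources = defaultdict(set)
    dests = defaultdict(set)

    for src, dst, payload in packets:
        # Count each distinct substring at most once per packet.
        seen = {payload[i:i + length] for i in range(len(payload) - length + 1)}
        for s in seen:
            counts[s] += 1
            sources[s].add(src)
            dests[s].add(dst)

    return {
        s for s in counts
        if counts[s] >= min_count
        and len(sources[s]) >= min_sources
        and len(dests[s]) >= min_dests
    }


# Made-up traffic: three hosts send the same 8-byte string to three targets.
packets = [
    ("10.0.0.1", "10.0.1.1", b"GET /a WORMBODY tail1"),
    ("10.0.0.2", "10.0.1.2", b"xx WORMBODY-yy"),
    ("10.0.0.3", "10.0.1.3", b"zzzzWORMBODY"),
    ("10.0.0.1", "10.0.1.1", b"ordinary traffic here"),
]
signatures = sift(packets)
```

Keeping a counter for every substring is expensive at line rate, which is presumably why the abstract pairs content sifting with a sampling method. A production design would need bounded-memory data structures rather than the plain dictionaries used here.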

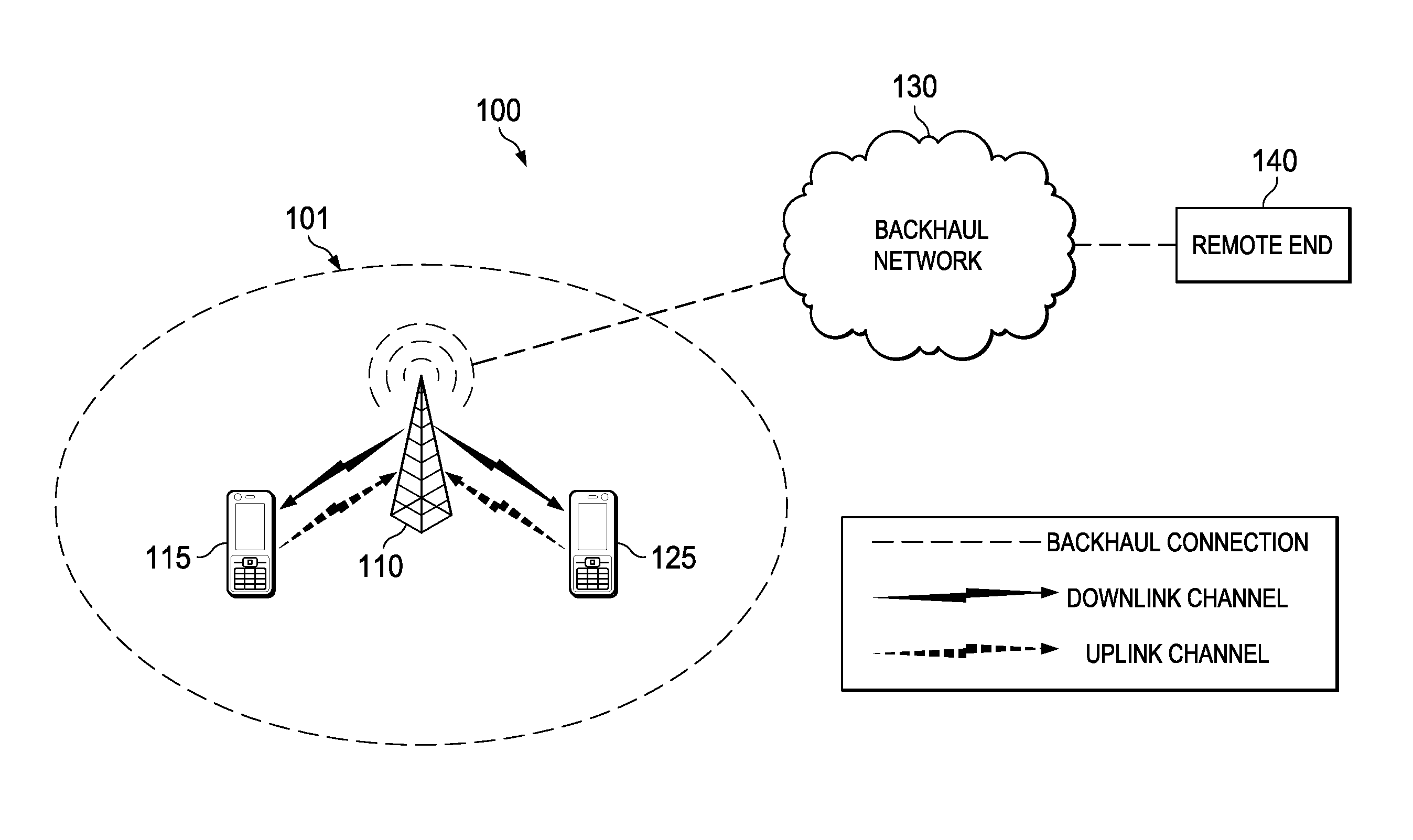

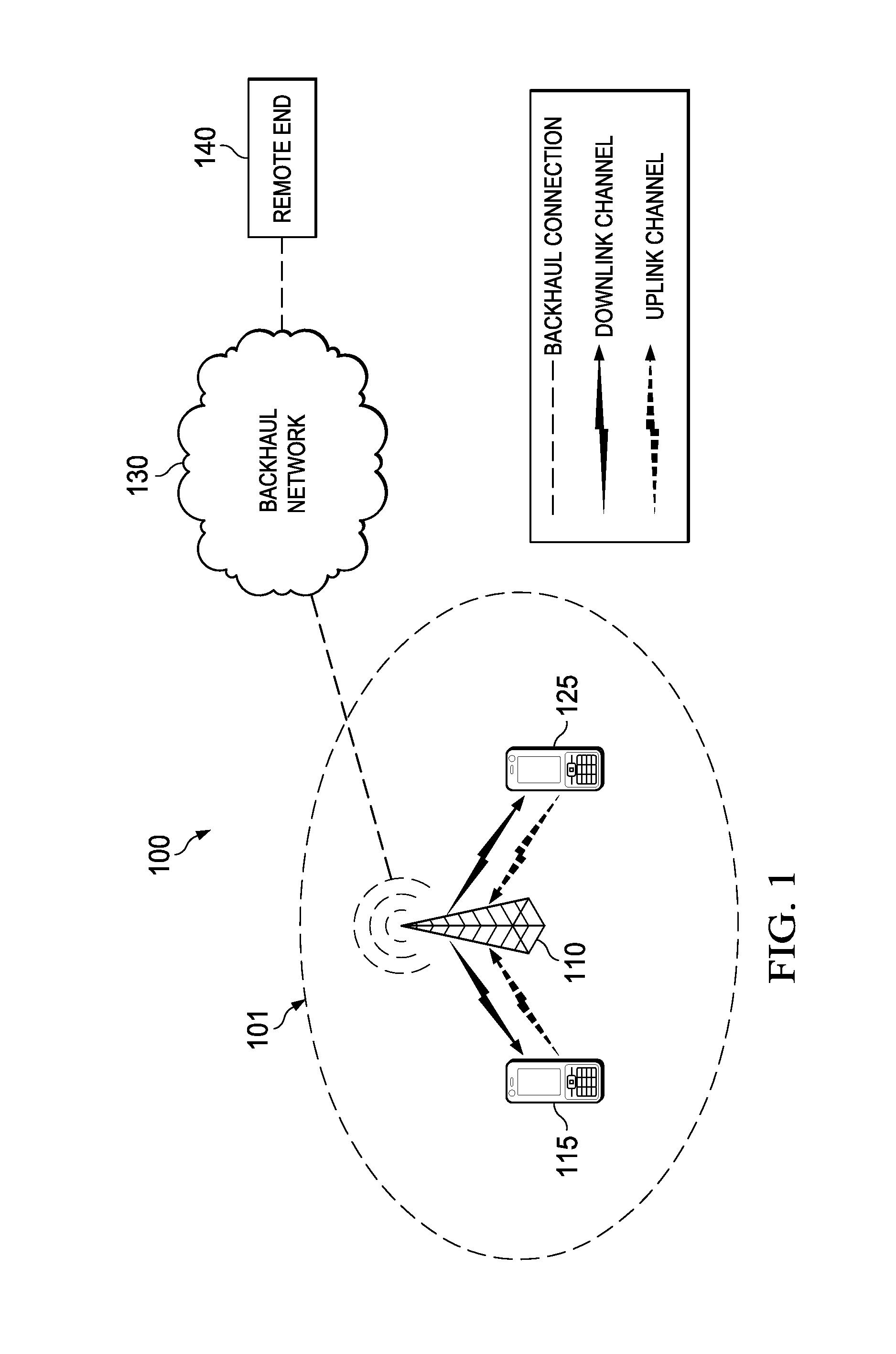

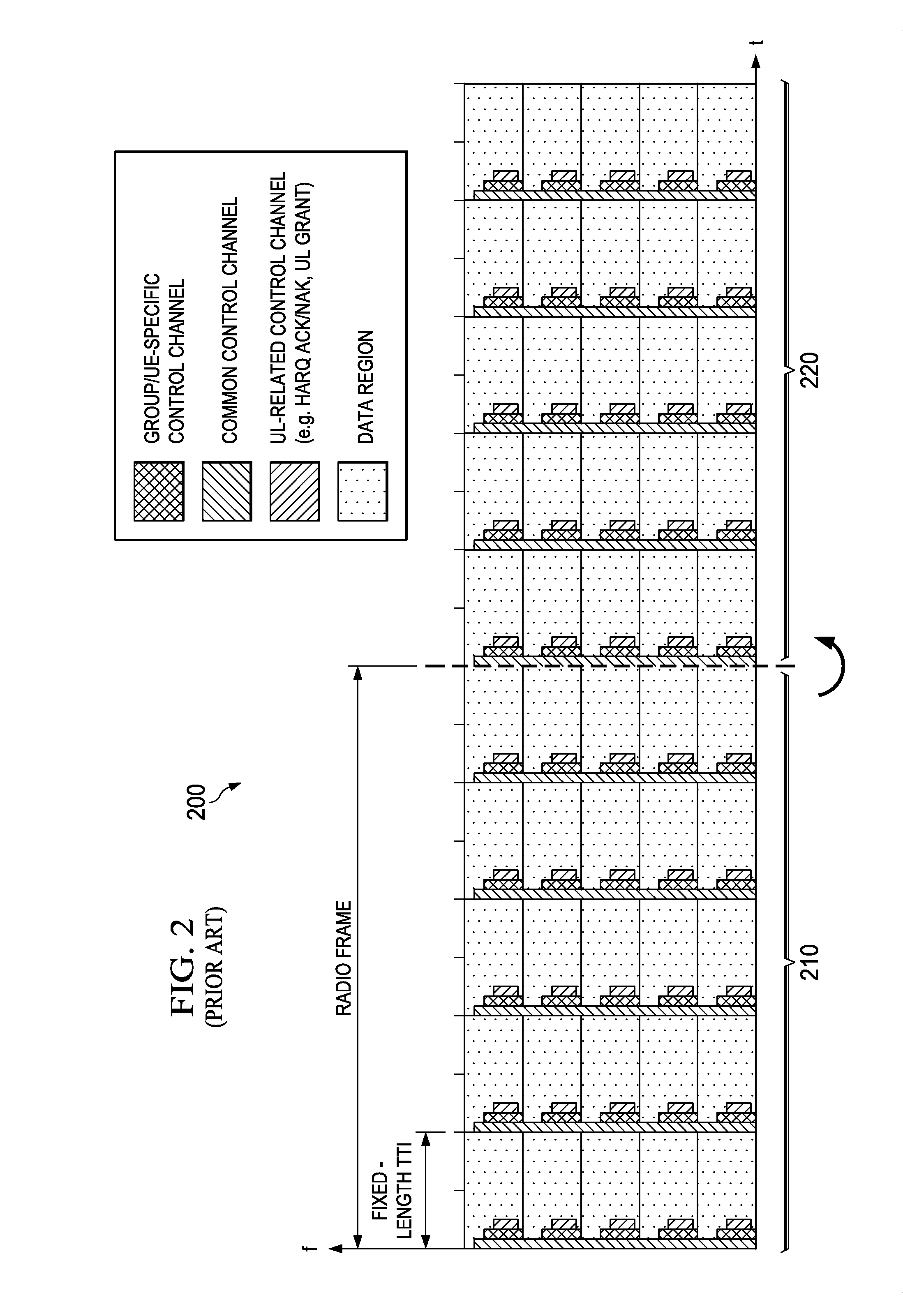

System and Method for Adaptive Transmission Time Interval (TTI) Structure

Active | US20140071954A1 | Length be adapt | Network traffic/resource management | Time-division multiplex | Quality of service | Structure of Management Information

Methods and devices are provided for communicating data in a wireless channel. In one example, a method includes adapting the transmission time interval (TTI) length of a transport container for transmitting data in accordance with one or more criteria. The criteria may include (but are not limited to) a latency requirement of the data, a buffer size associated with the data, and a mobility characteristic of a device that will receive the data. The TTI lengths may be manipulated for a variety of reasons, such as reducing overhead, satisfying quality of service (QoS) requirements, maximizing network throughput, etc. In some embodiments, TTIs having different TTI lengths may be carried in a common radio frame. In other embodiments, the wireless channel may be partitioned into multiple bands, each of which carries (exclusively or otherwise) TTIs having a certain TTI length.

Owner:HUAWEI TECH CO LTD

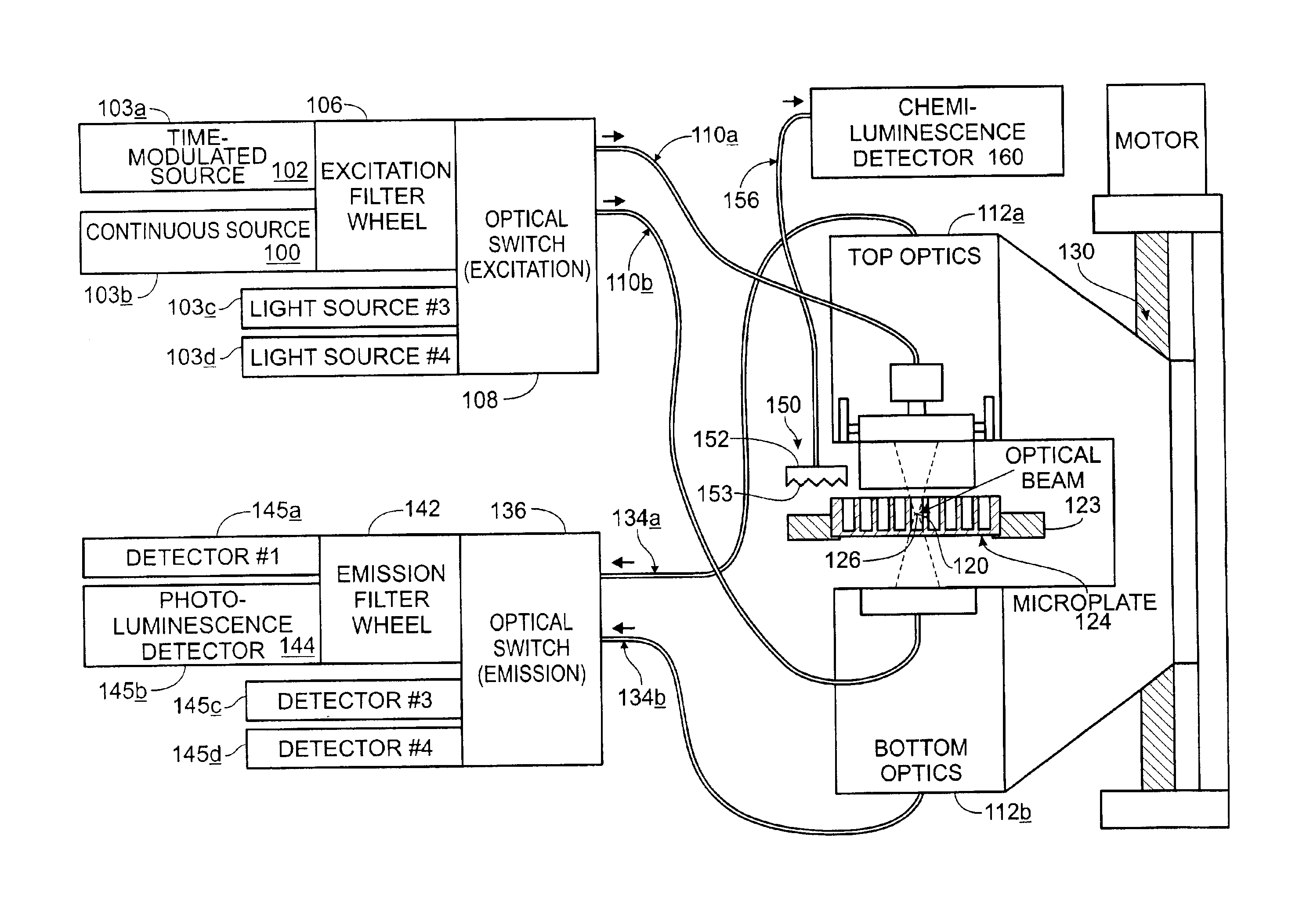

Sample analysis systems

Inactive | US6982431B2 | Investigating moving fluids/granular solids | Scattering properties measurements | Biology | Throughput

Systems, and components thereof, for analyzing samples. These systems include apparatus and methods for generating, transmitting, detecting, and/or analyzing light, including without limitation high-throughput optical screening devices for analyzing samples at one or more assay sites. These systems also include apparatus and methods for supporting samples for analysis, including without limitation multiwell sample holders such as microplates.

Owner:MOLECULAR DEVICES

High throughput genome sequencing on DNA arrays

Active | US20090011943A1 | Microbiological testing/measurement | Library screening | Re sequencing | Nucleotide sequencing

The present invention is directed to methods and compositions for acquiring nucleotide sequence information of target sequences using adaptors interspersed in target polynucleotides. The sequence information can be new, e.g. sequencing unknown nucleic acids, re-sequencing, or genotyping. The invention preferably includes methods for inserting a plurality of adaptors at spaced locations within a target polynucleotide or a fragment of a polynucleotide. Such adaptors may serve as platforms for interrogating adjacent sequences using various sequencing chemistries, such as those that identify nucleotides by primer extension, probe ligation, and the like. Encompassed in the invention are methods and compositions for the insertion of known adaptor sequences into target sequences, such that there is an interruption of contiguous target sequence with the adaptors. By sequencing both “upstream” and “downstream” of the adaptors, identification of entire target sequences may be accomplished.

Owner:COMPLETE GENOMICS INC

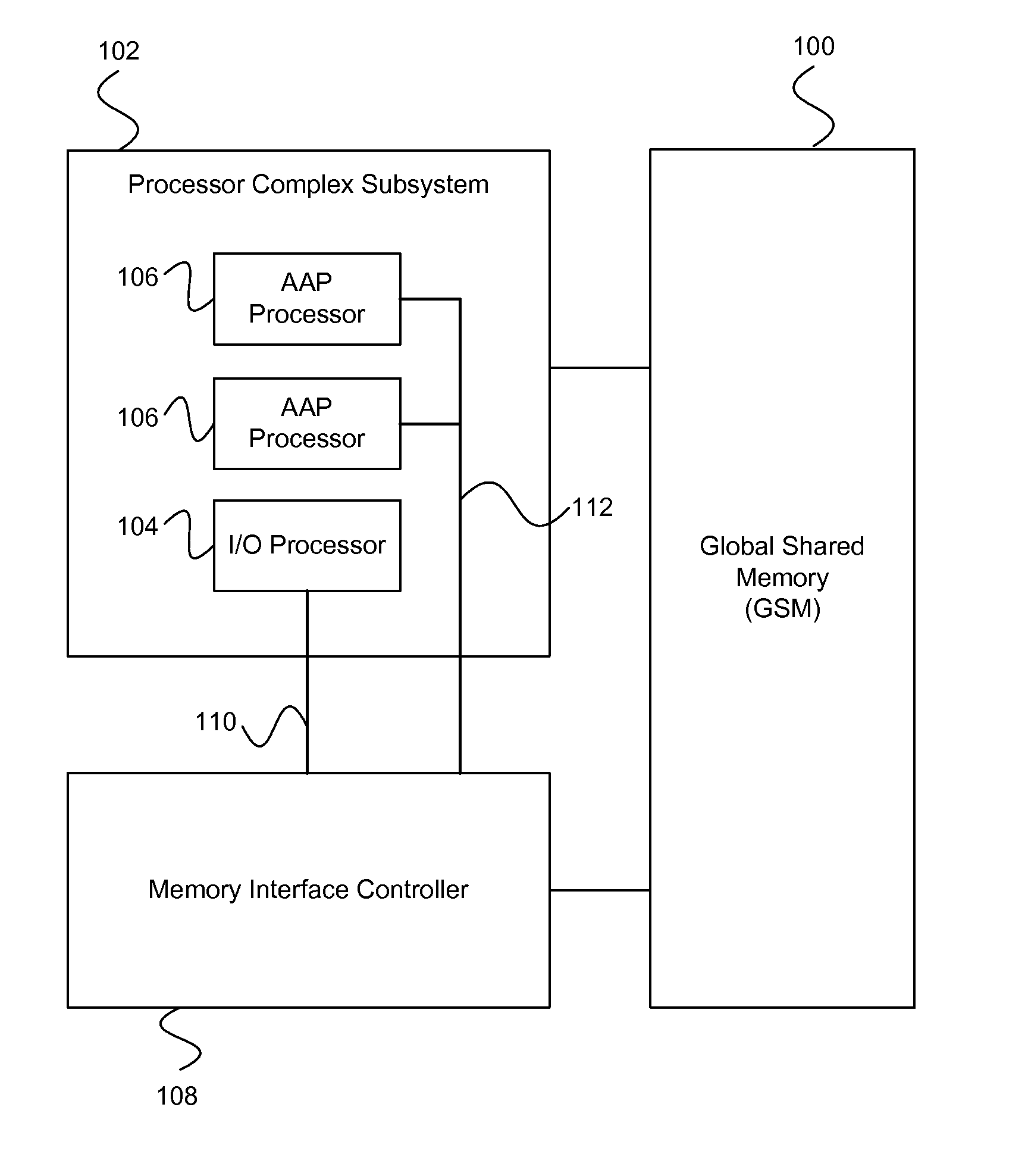

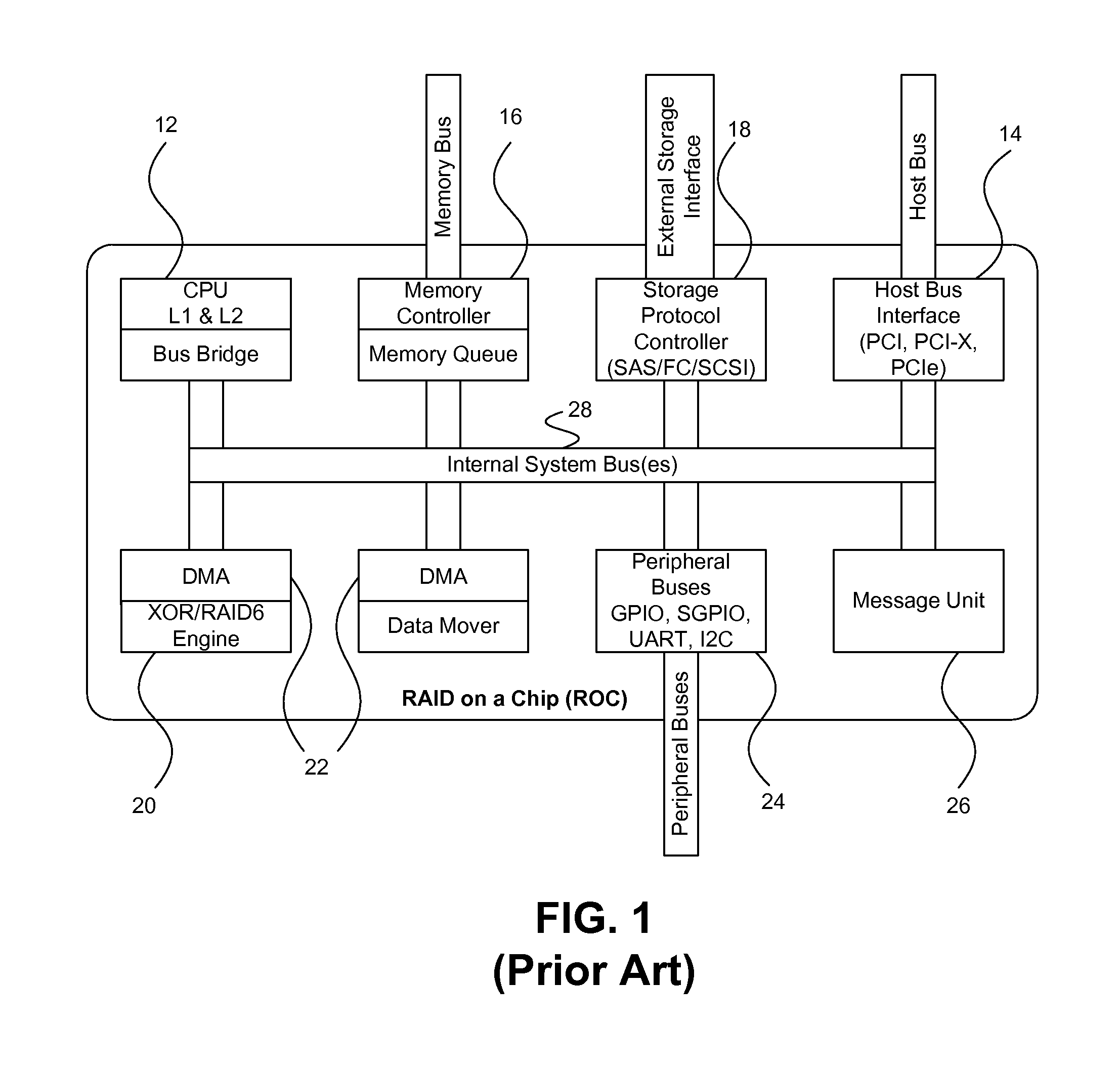

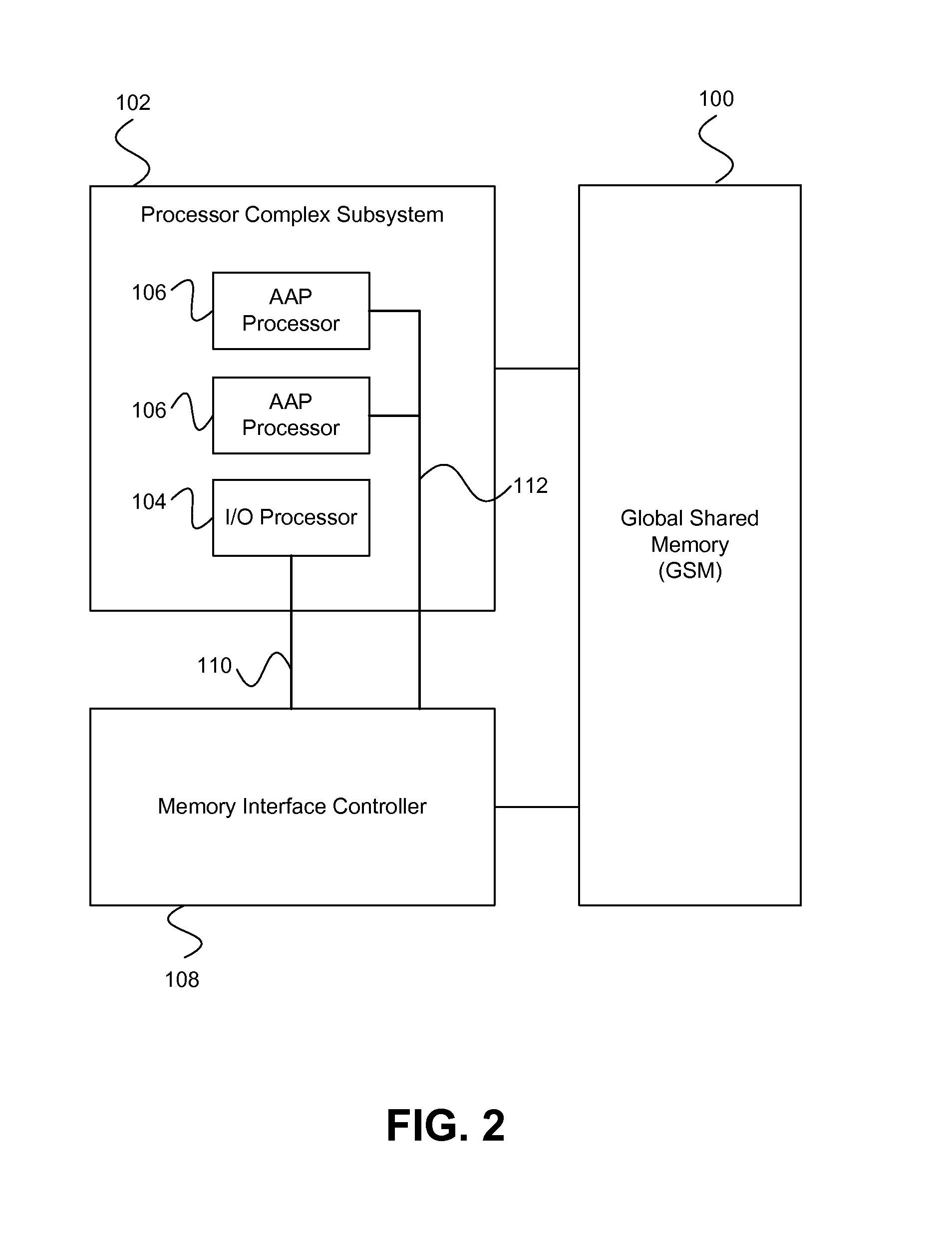

On-chip shared memory based device architecture

Active | US7743191B1 | Reduce disadvantages | Low cost | Redundant array of inexpensive disk systems | Record information storage | Extensibility | RAID

A method and architecture are provided for SOC (System on a Chip) devices for RAID processing, commonly referred to as RAID-on-a-Chip (ROC). The architecture utilizes a shared memory structure as an interconnect mechanism among hardware components, CPUs and software entities. The shared memory structure provides a common scratchpad buffer space for holding data that is processed by the various entities, provides interconnection for process/engine communications, and provides a queue for message passing using a common communication method that is agnostic to whether the engines are implemented in hardware or software. A plurality of hardware engines are supported as masters of the shared memory. The architecture provides superior throughput performance, flexibility in software/hardware co-design, and scalability of both functionality and performance, and supports a very simple abstracted parallel programming model for parallel processing.

Owner:MICROSEMI STORAGE SOLUTIONS

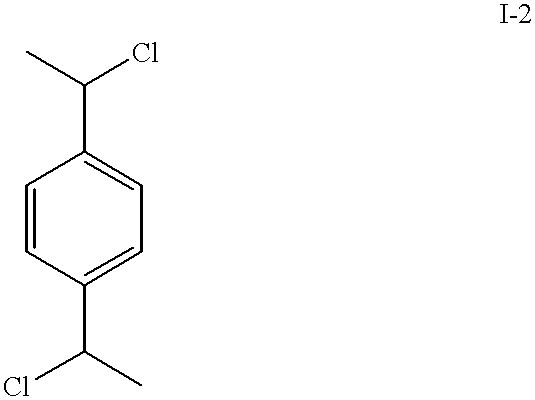

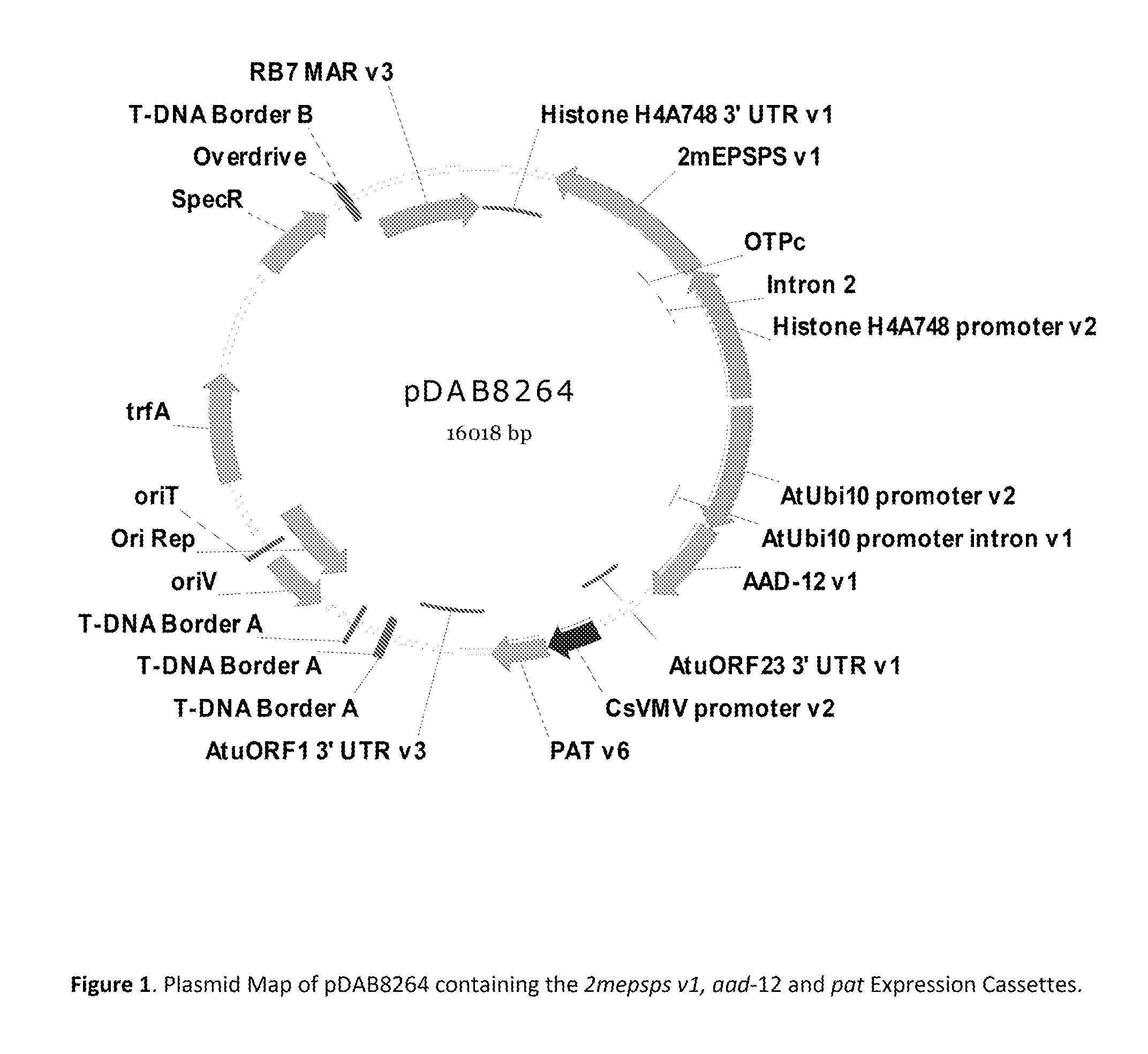

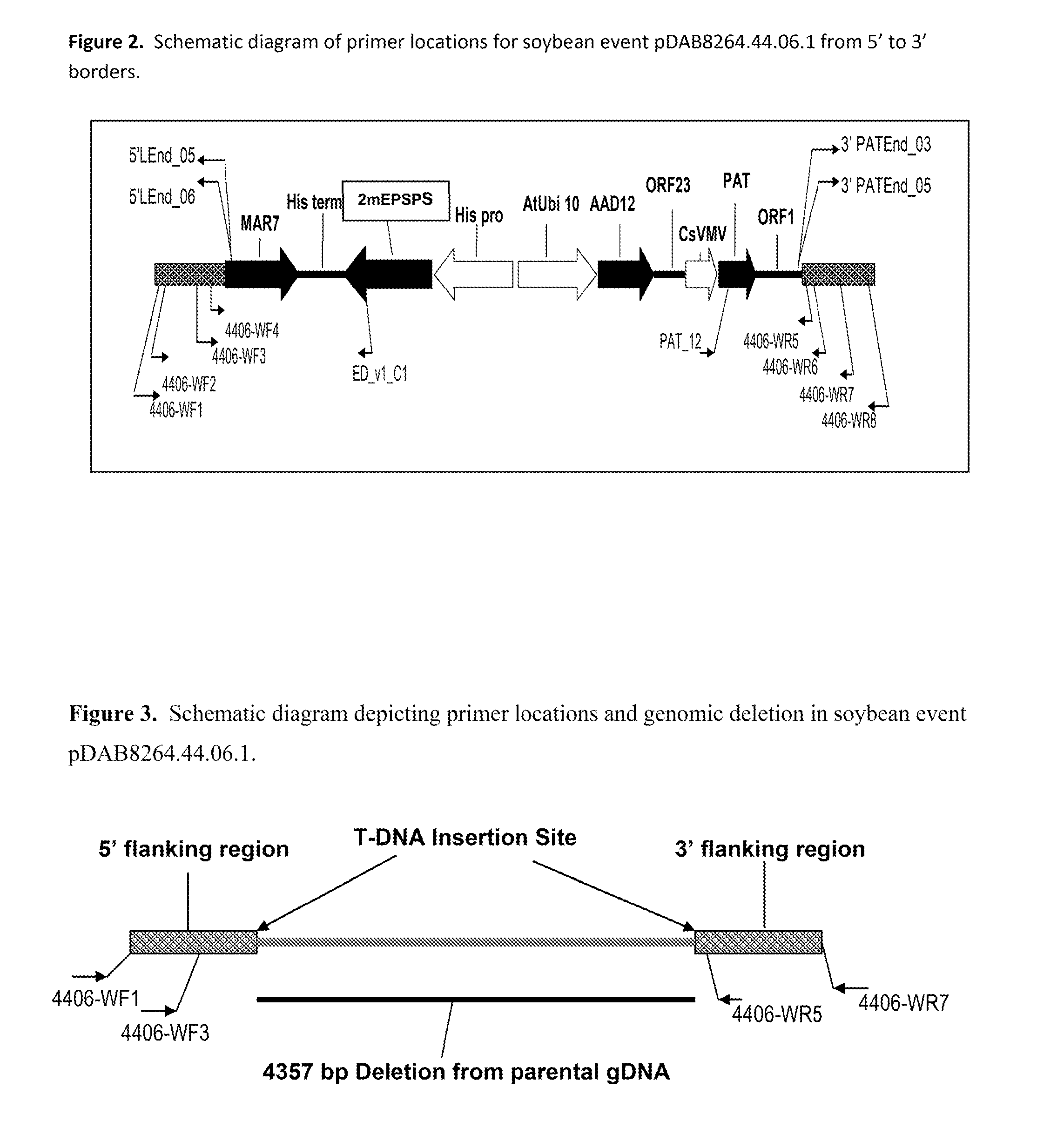

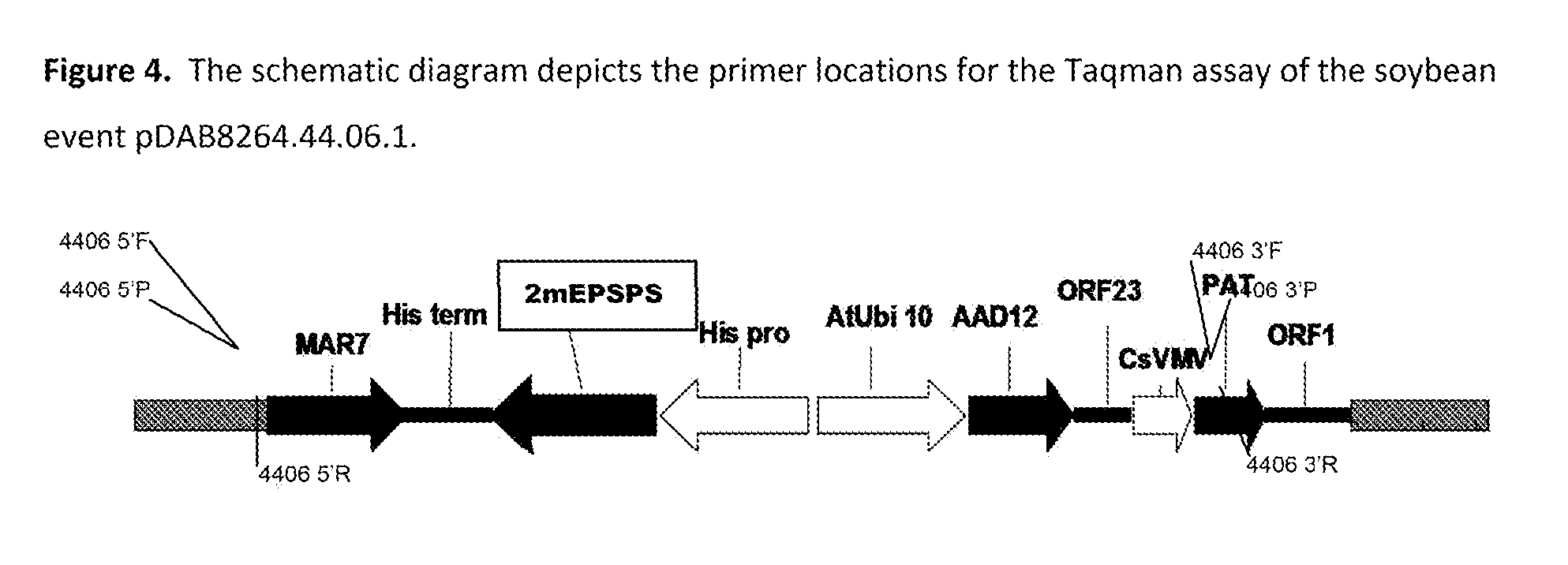

Stacked herbicide tolerance event 8264.44.06.1, related transgenic soybean lines, and detection thereof

Active | US9540655B2 | Preserve usefulness | Increase flexibility | Biocide | Microbiological testing/measurement | PCR assay | Multiple traits

Owner:M S TECH +1

High-throughput semiconductor-processing apparatus equipped with multiple dual-chamber modules

Owner:ASM JAPAN

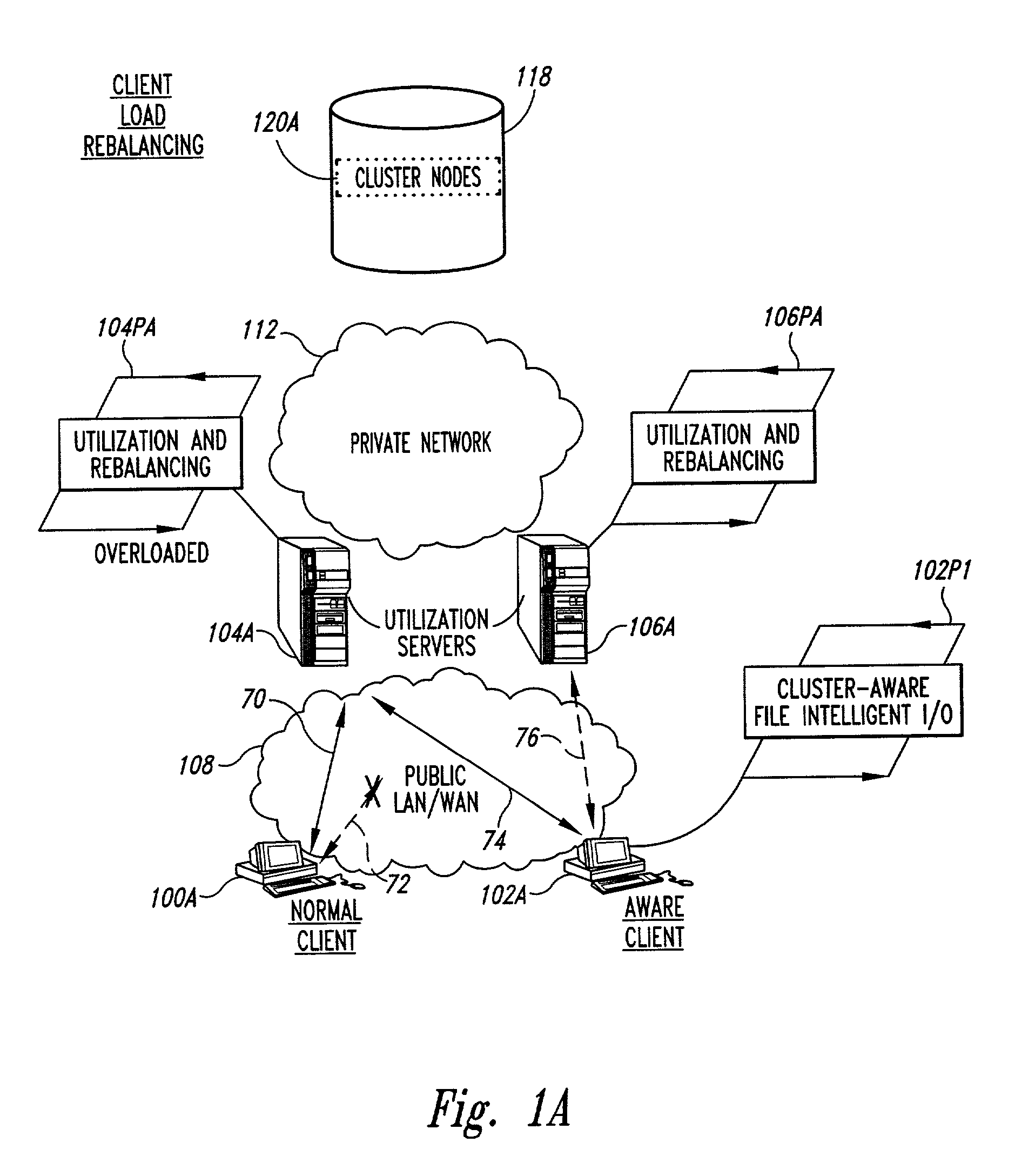

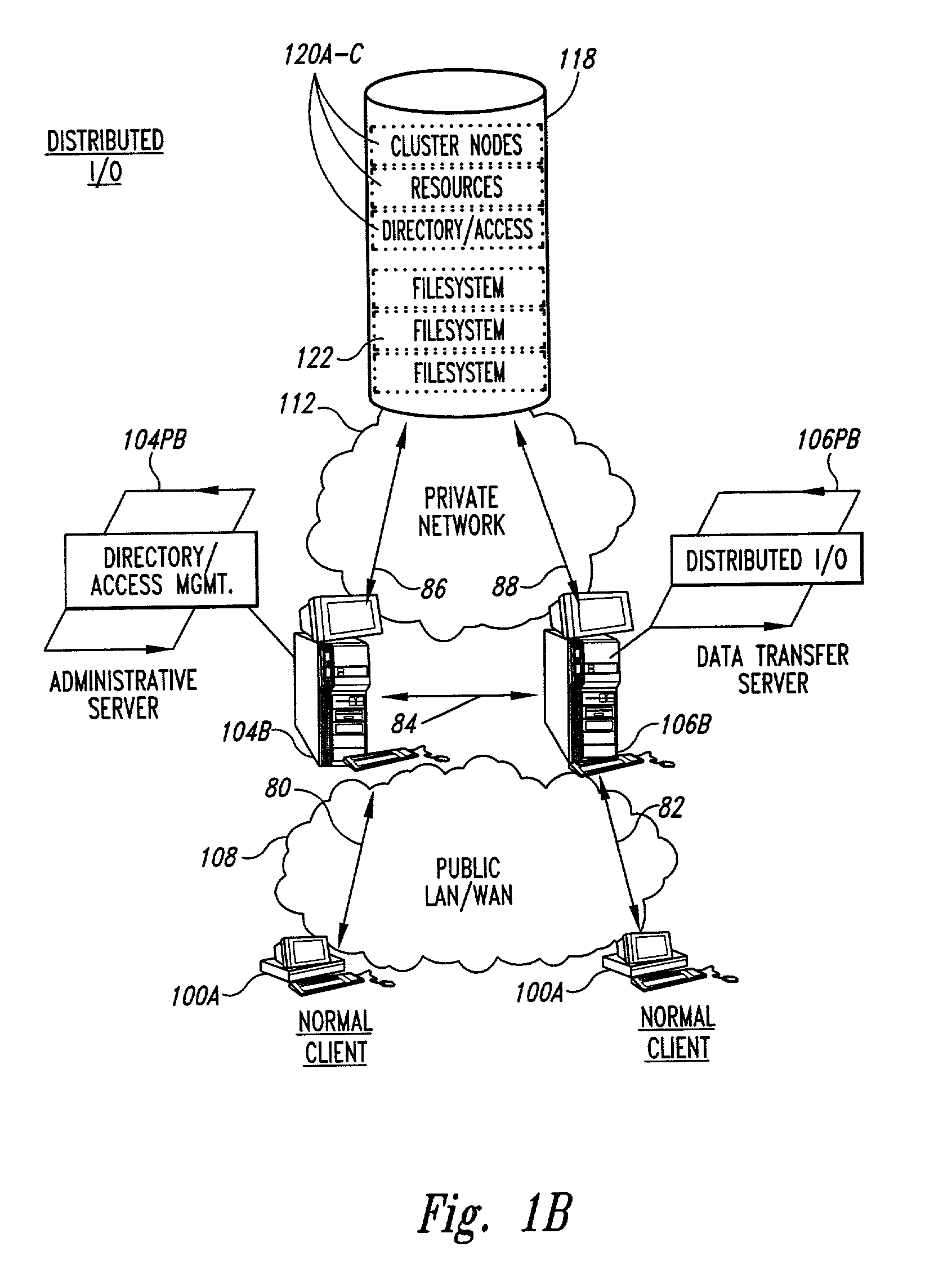

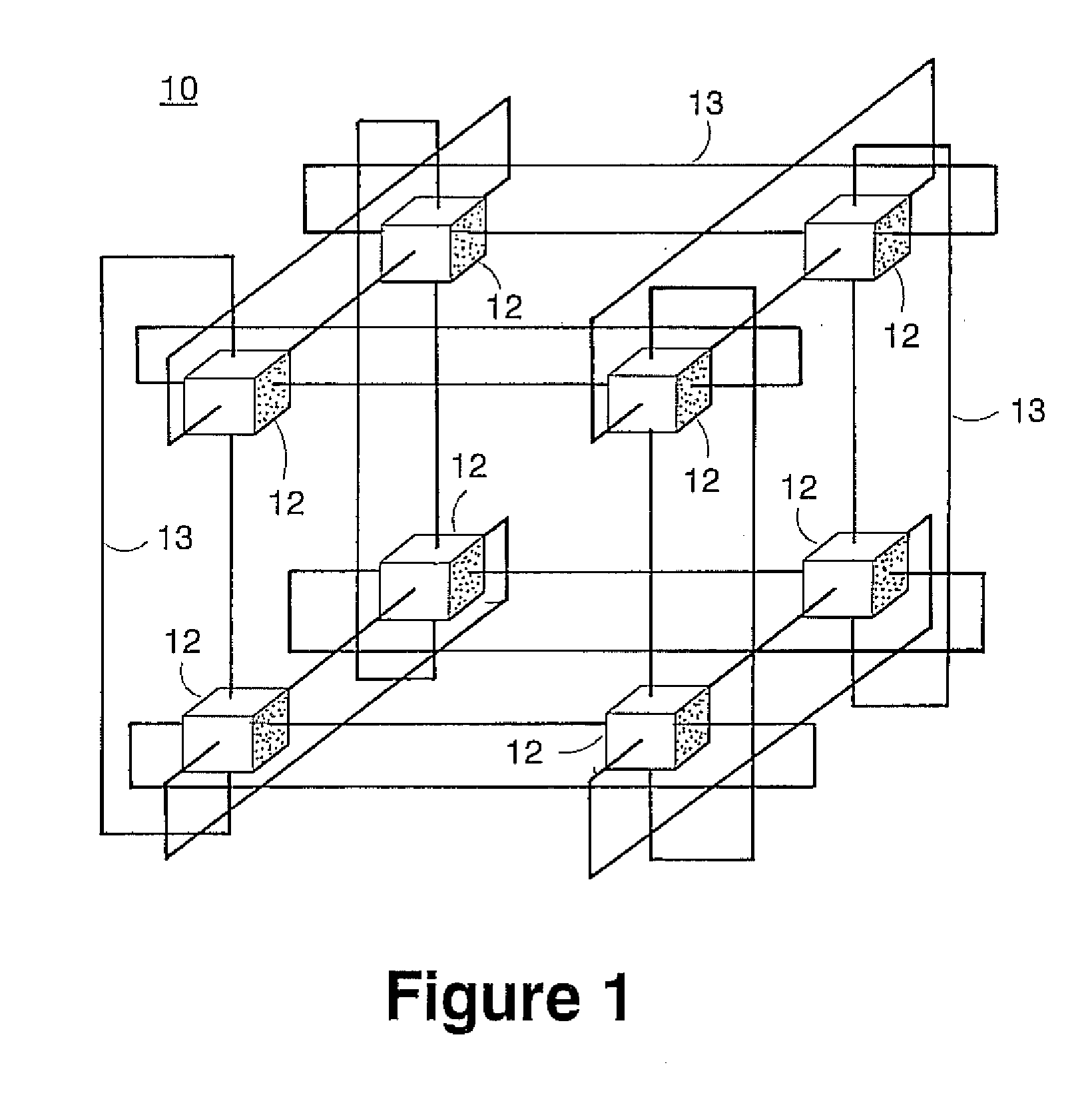

Dynamic load balancing of a network of client and server computer

Inactive | US20030126200A1 | Program synchronisation | Multiple digital computer combinations | Dynamic load balancing | Client-side

Methods for load rebalancing by clients in a network are disclosed. Client load rebalancing allows the clients to optimize throughput between themselves and the resources accessed by the nodes. A network which implements this embodiment of the invention can dynamically rebalance itself to optimize throughput by migrating client I/O requests from overutilized pathways to underutilized pathways. Client load rebalancing refers to the ability of a client enabled with processes in accordance with the current invention to remap a path through a plurality of nodes to a resource. The remapping may take place in response to a redirection command emanating from an overloaded node, e.g., a server. The disclosed embodiments allow more efficient, robust communication between a plurality of clients and a plurality of resources via a plurality of nodes. In an embodiment of the invention a method for load balancing on a network is disclosed. The network includes at least one client node coupled to a plurality of server nodes, and at least one resource coupled to at least a first and a second server node of the plurality of server nodes. The method comprises the acts of: receiving at a first server node among the plurality of server nodes a request for the at least one resource; determining a utilization condition of the first server node; and re-directing subsequent requests for the at least one resource to a second server node among the plurality of server nodes in response to the determining act. In another embodiment of the invention the method comprises the acts of: sending an I/O request from the at least one client to the first server node for the at least one resource; determining an I/O failure of the first server node; and re-directing subsequent requests from the at least one client for the at least one resource to another among the plurality of server nodes in response to the determining act.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
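
The first method in the abstract above (a server receives a request, determines its utilization condition, and redirects subsequent requests to a second server) can be sketched as follows. The classes `Server` and `Client`, the request-count capacity used as the utilization condition, and the least-loaded peer choice are assumptions for illustration. The abstract does not specify how utilization is measured or how the second server is chosen.

```python
class Server:
    def __init__(self, name: str, capacity: int) -> None:
        self.name = name
        self.capacity = capacity  # hypothetical utilization threshold
        self.handled = 0

    def handle(self, resource: str, peers):
        """Serve the request and, if overloaded, name a peer for later requests."""
        self.handled += 1
        redirect = None
        if self.handled >= self.capacity:
            # Utilization condition met: point the client at the
            # least-loaded other server for its subsequent requests.
            candidates = [p for p in peers if p is not self]
            if candidates:
                redirect = min(candidates, key=lambda p: p.handled)
        return f"{resource}@{self.name}", redirect


class Client:
    def __init__(self, servers) -> None:
        self.servers = servers
        self.current = servers[0]  # current path to the resource

    def request(self, resource: str) -> str:
        reply, redirect = self.current.handle(resource, self.servers)
        if redirect is not None:
            self.current = redirect  # remap the path for subsequent requests
        return reply


# Two servers front the same resource; the first one saturates quickly.
a = Server("a", capacity=2)
b = Server("b", capacity=100)
client = Client([a, b])
replies = [client.request("disk") for _ in range(5)]
# replies == ["disk@a", "disk@a", "disk@b", "disk@b", "disk@b"]
```

The remapping lives in the client, so the overloaded server only has to emit a redirection hint. That is the division of labor the abstract describes as client load rebalancing.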

Indirect calibration of polymer characterization systems

Inactive | US6294388B1 | Avoid backlog | Improve throughput | Ion-exchange process apparatus | Sampling | Polymer characterization | Flow injection analysis

Rapid characterization and screening of polymer samples to determine average molecular weight, molecular weight distribution and other properties is disclosed. Rapid flow characterization systems and methods, including liquid chromatography and flow-injection analysis systems and methods are preferably employed. High throughput, automated sampling systems and methods, high-temperature characterization systems and methods, and rapid, indirect calibration compositions and methods are also disclosed. The described methods, systems, and devices have primary applications in combinatorial polymer research and in industrial process control.

Owner:INTERMOLECULAR

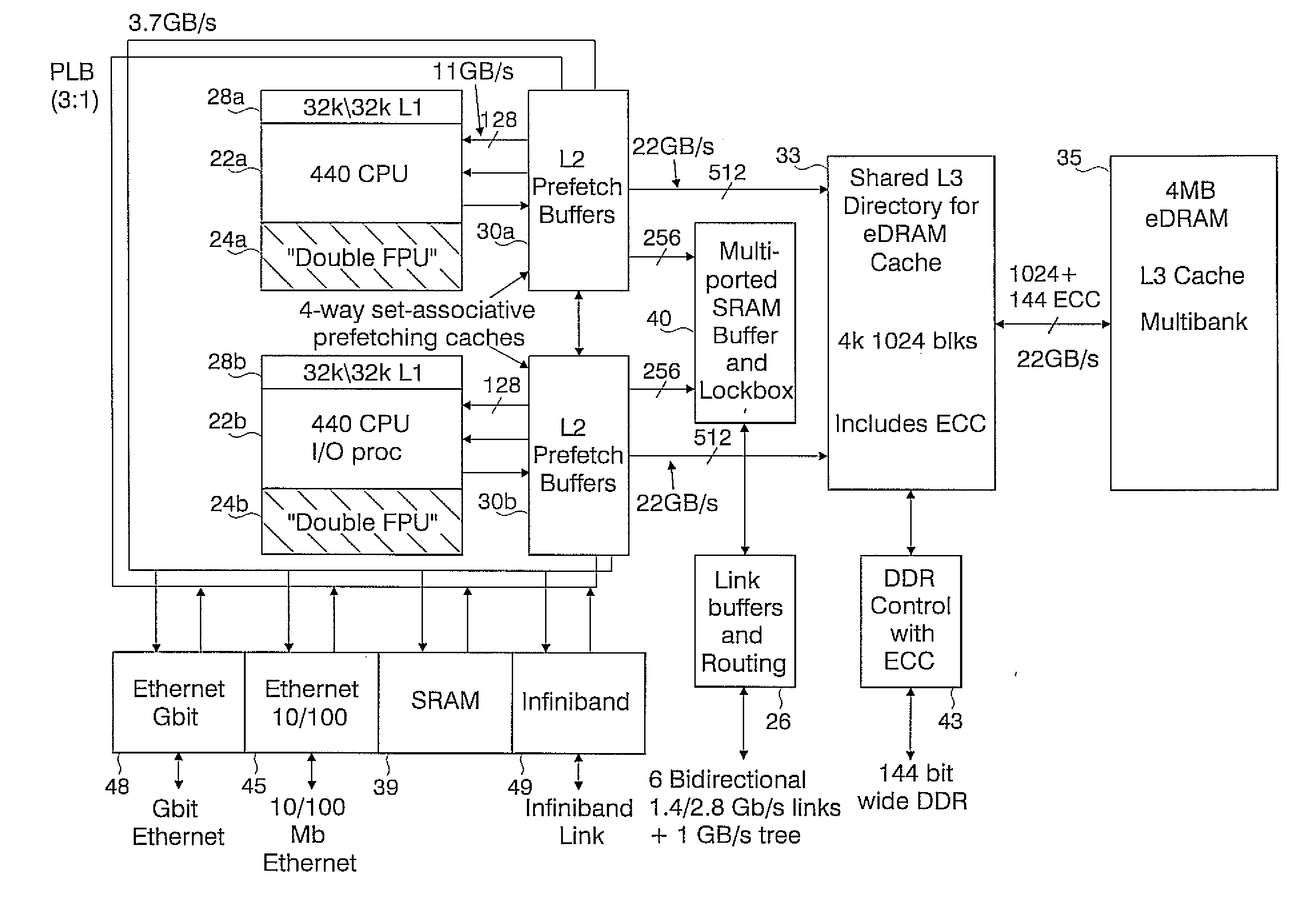

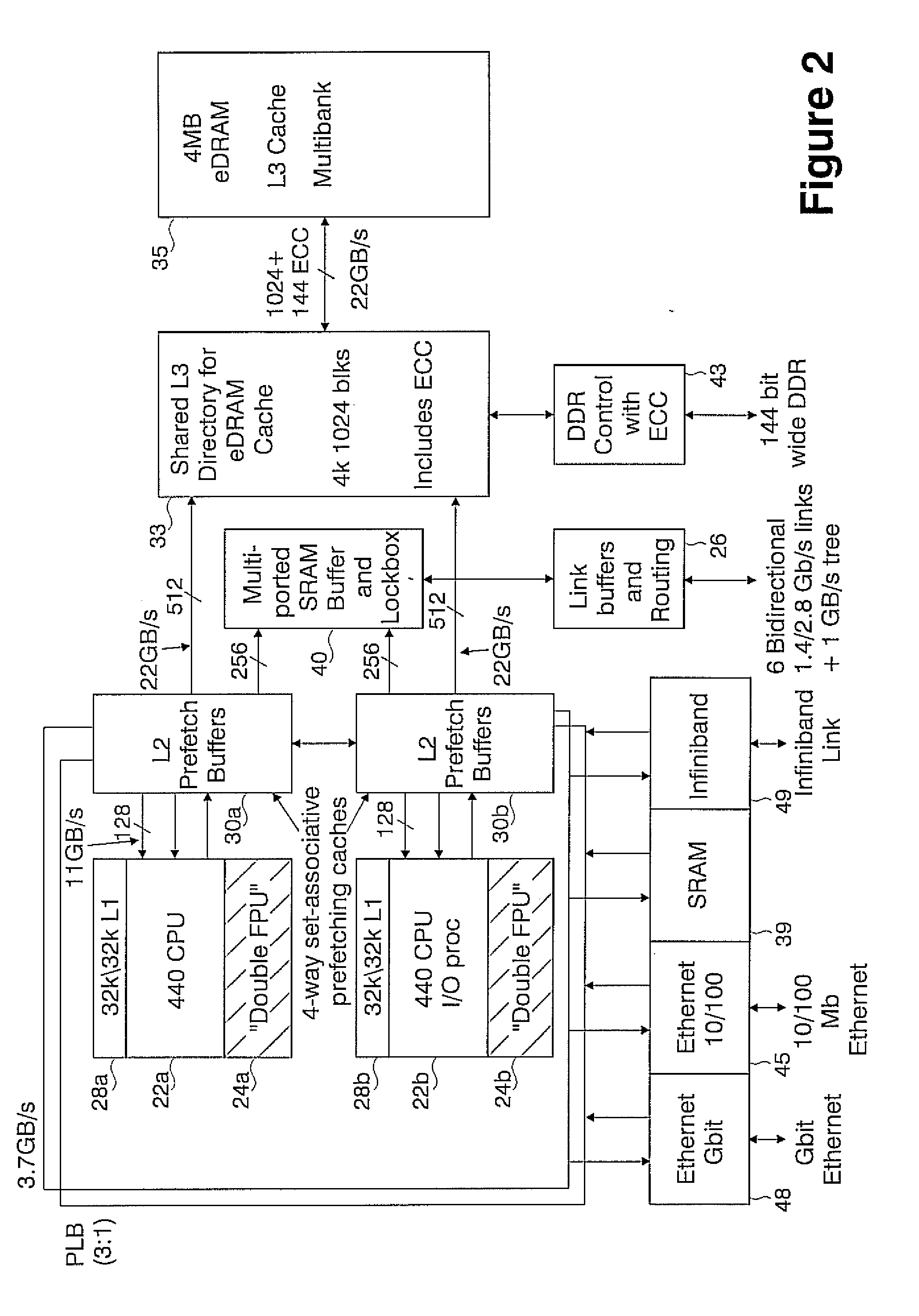

Novel massively parallel supercomputer

Inactive | US20090259713A1 | Low cost | Reduced footprint | Error prevention | Program synchronisation | Supercomputer | Packet communication

Owner:INT BUSINESS MASCH CORP

Substrate processing apparatus

Inactive | US20080210278A1 | Stable processing | Improve throughput | Pretreated surfaces | Semiconductor/solid-state device manufacturing | Resist | Low speed

A substrate processing apparatus is provided. The apparatus includes a plurality of fluid suppliers 61, 62, 63 for supplying different processing fluids. In processing a wafer W, the substrate processing apparatus moves the fluid suppliers 61, 62, 63 relatively along the peripheral part of the wafer W. The fluid suppliers 61, 62, 63 are arranged in a direction extending from the circumference of the wafer W to its inside. With this arrangement, the apparatus is capable of stable processing of the wafer W even when the wafer W is rotated at a low speed. Further, it is possible to improve the throughput of the apparatus in resist processing.

Owner:TOKYO ELECTRON LTD

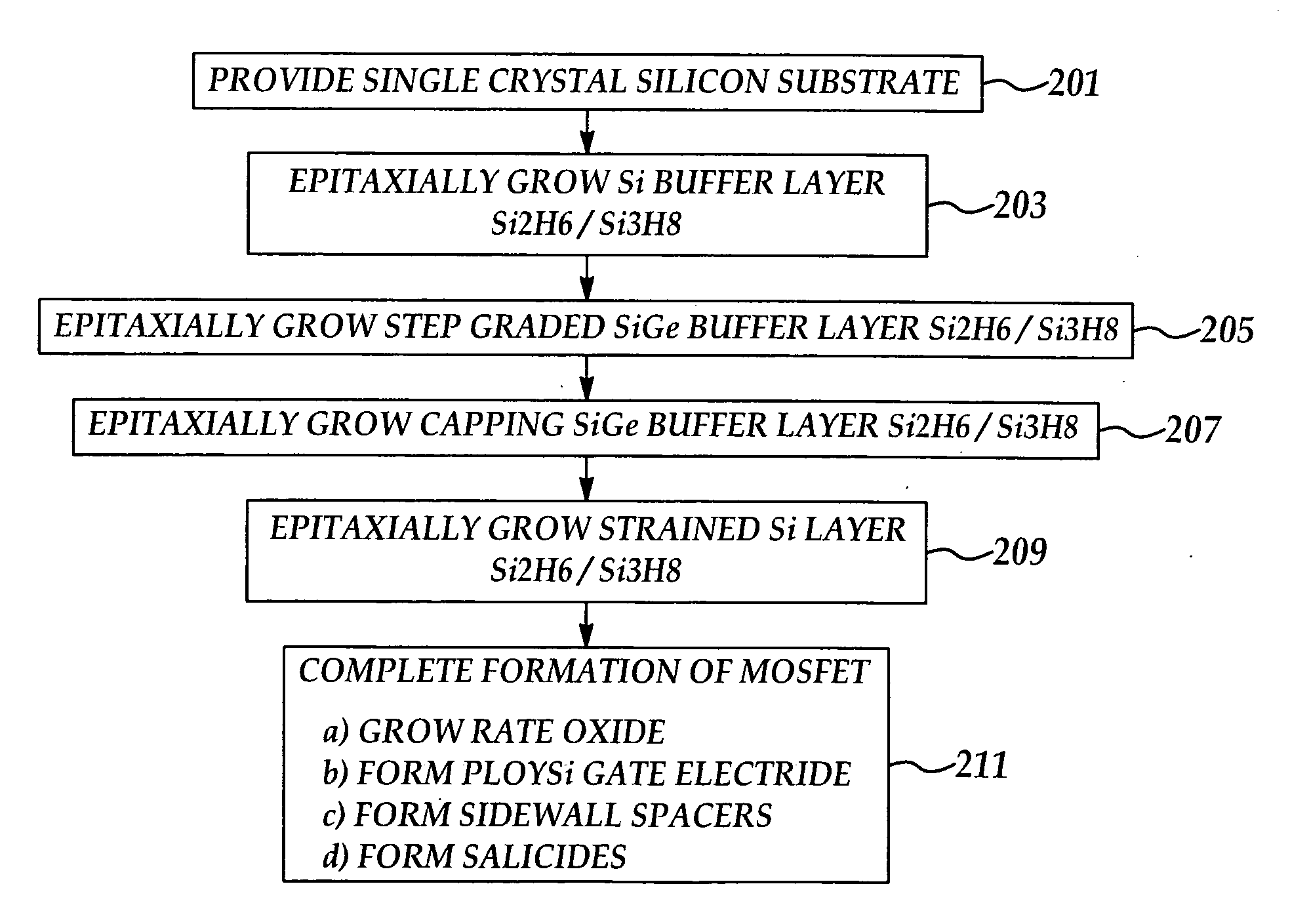

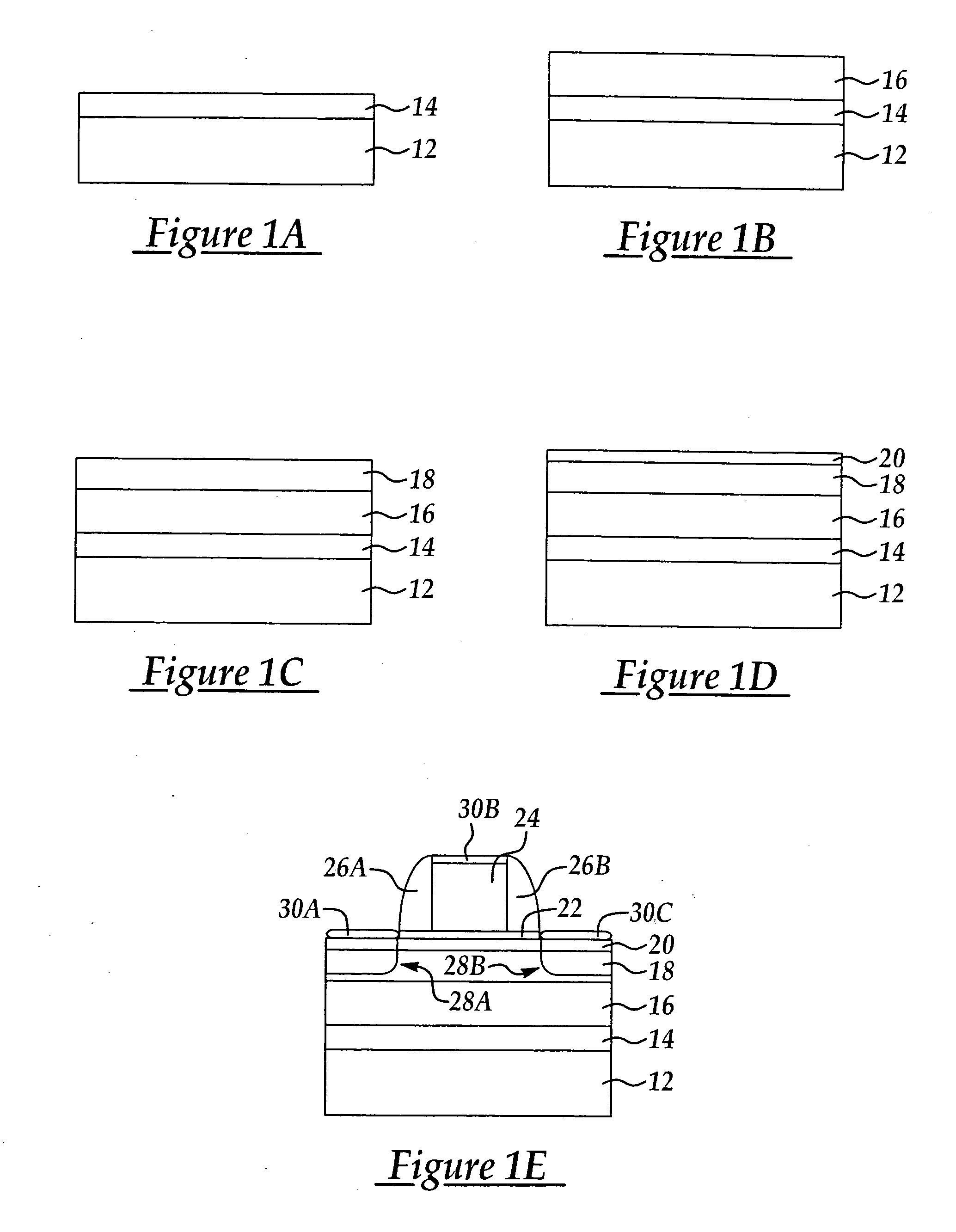

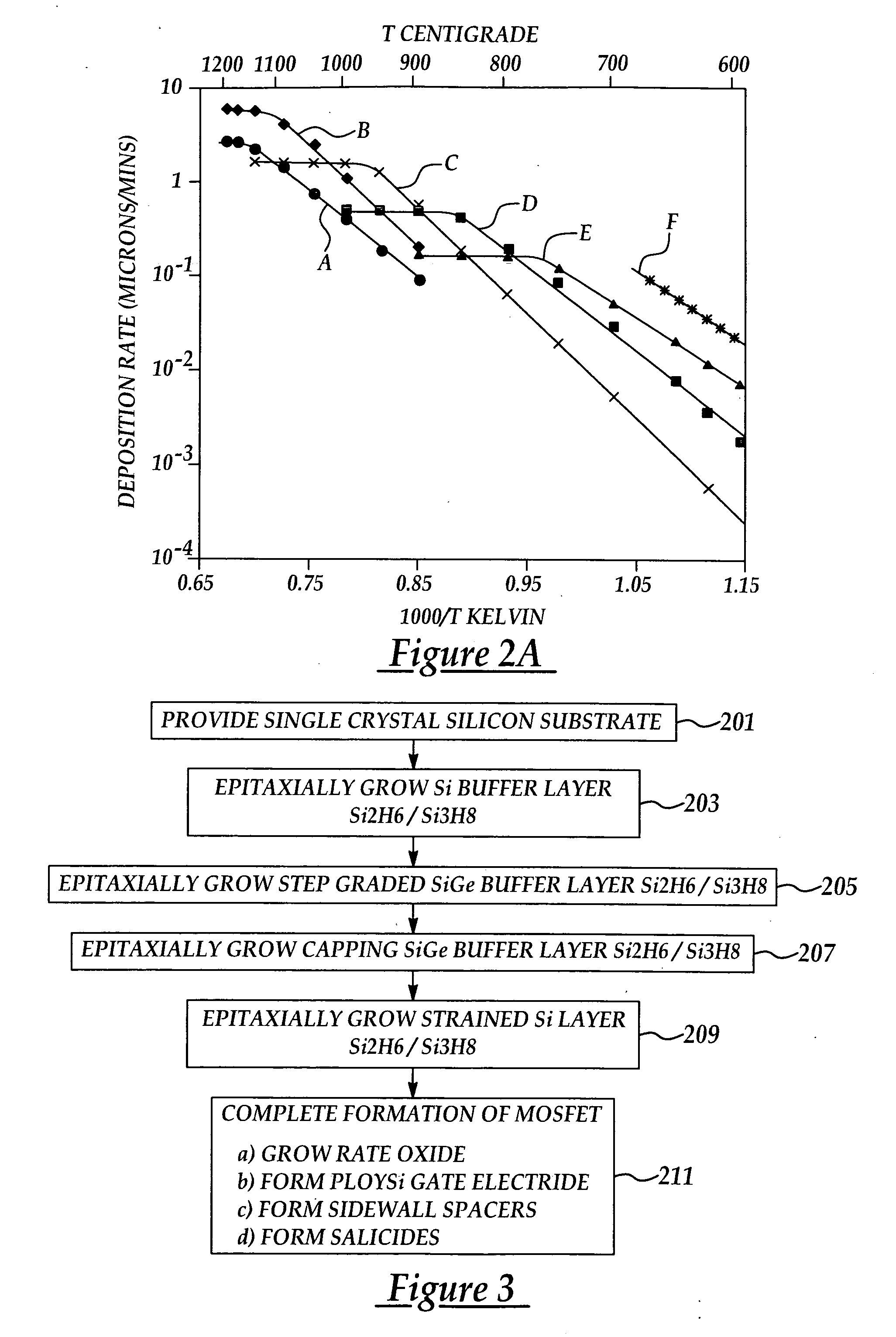

Method for producing high throughput strained-Si channel MOSFETs

Inactive | US20050245058A1 | Improve throughput | Reduce defect density | Polycrystalline material growth | From solid state | Silanes | Materials science

A method for forming a strained silicon layer device with improved wafer throughput and low defect density including providing a silicon substrate; epitaxially growing a first silicon layer using at least one deposition precursor selected from the group consisting of disilane and trisilane; epitaxially growing a step-grade SiGe buffer layer over and contacting the first silicon layer using at least one deposition precursor selected from the group consisting of disilane and trisilane; epitaxially growing a SiGe capping layer over and contacting the step-grade SiGe buffer layer using at least one deposition precursor selected from the group consisting of disilane and trisilane; and, epitaxially growing a second silicon layer using at least one deposition precursor selected from the group consisting of disilane and silane.

Owner:TAIWAN SEMICON MFG CO LTD

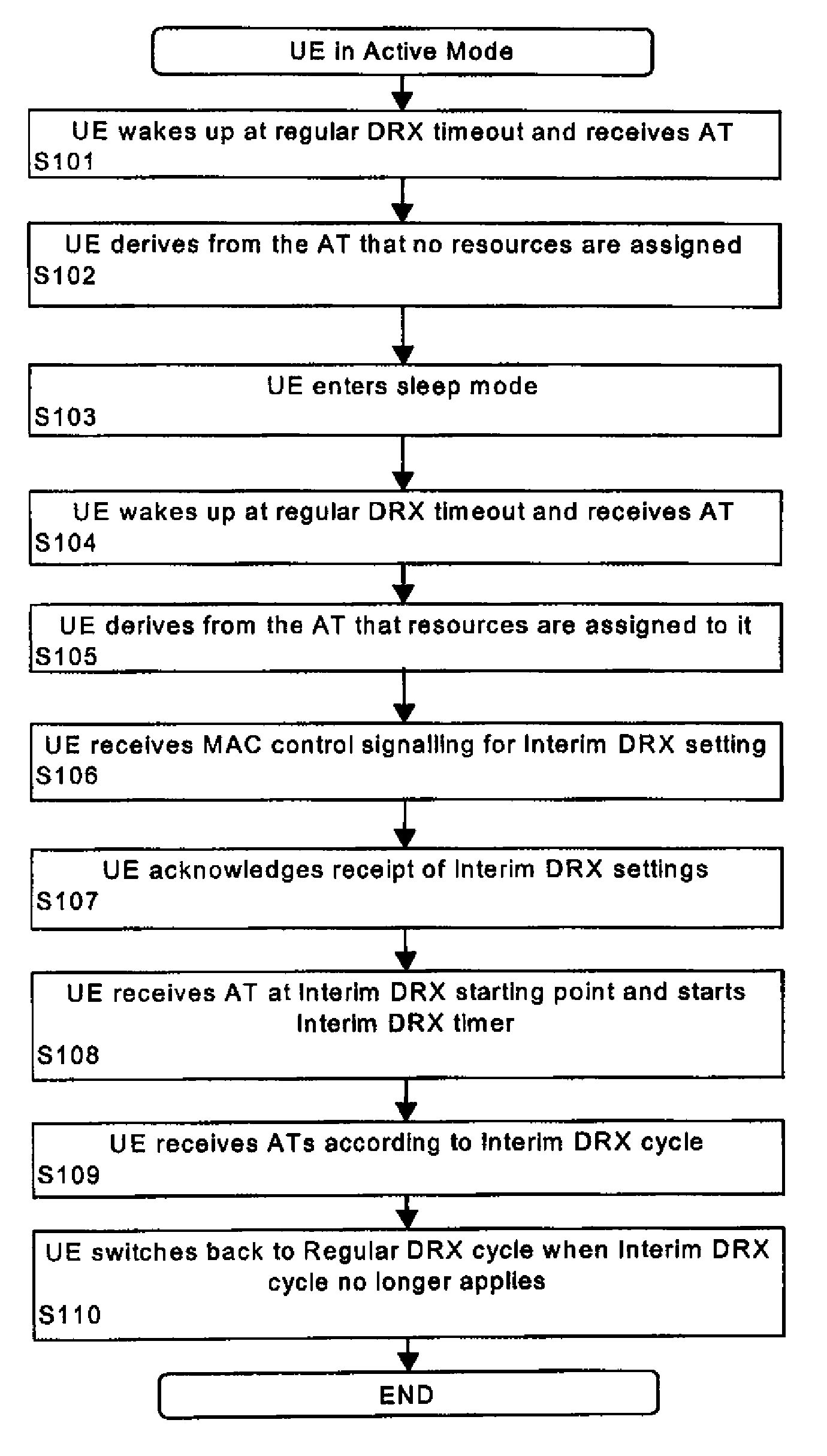

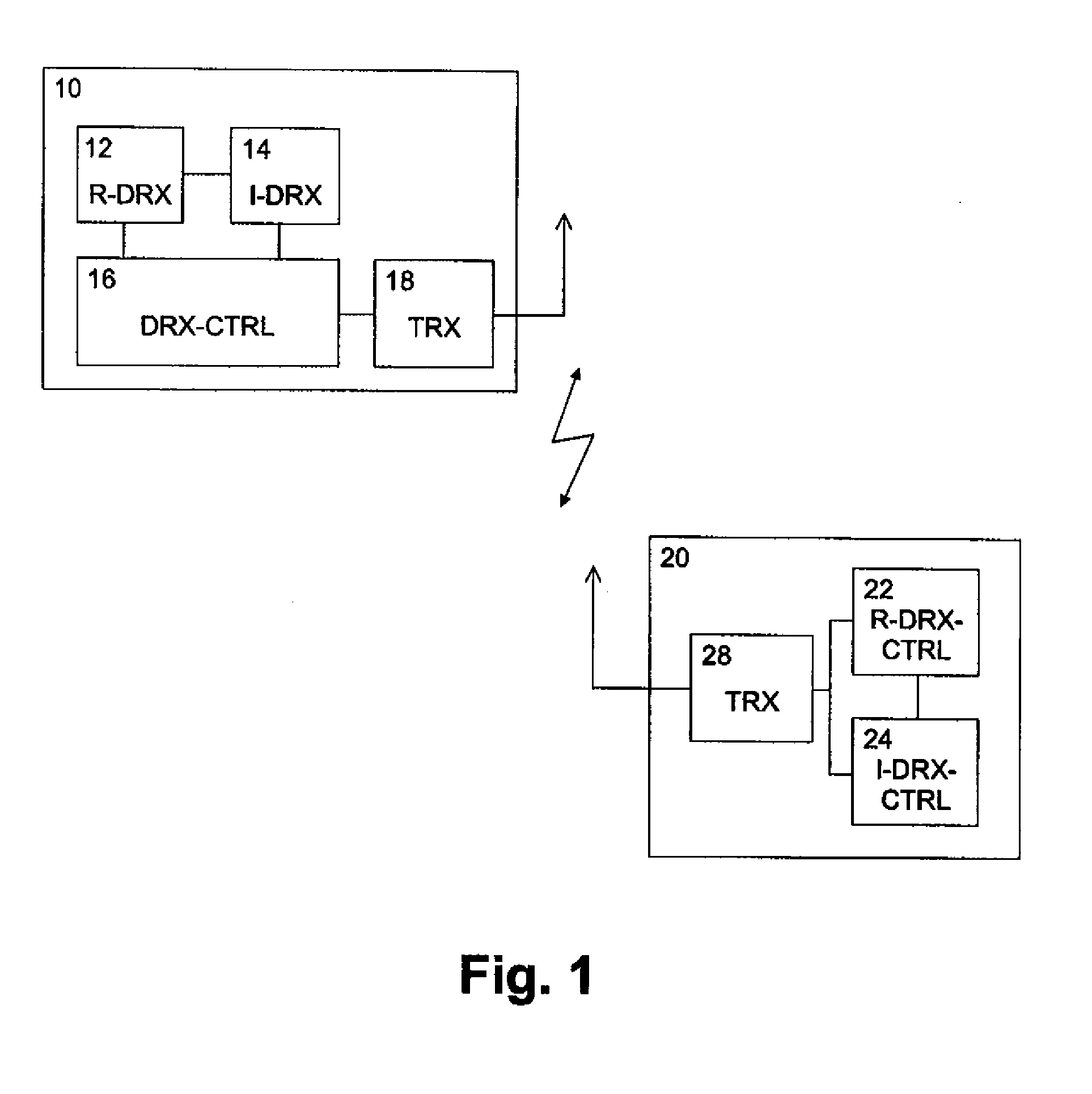

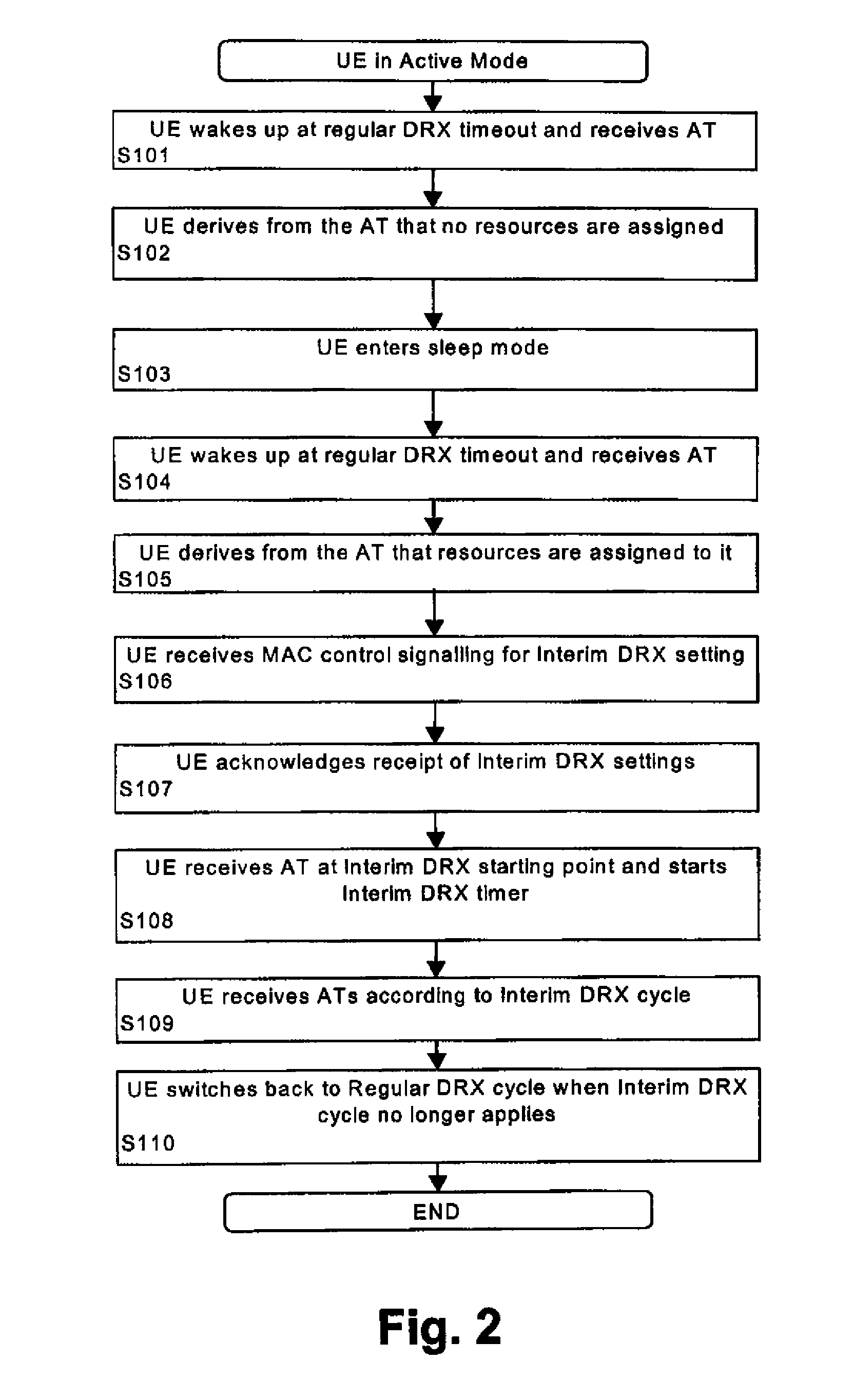

Method and system for providing interim discontinuous reception/transmission

Active | US20070291728A1 | Flexible DRX/DTX | Easy to adjust | Power management | Energy efficient ICT | Computer hardware | Control layer

A method, terminal device, network element, system and computer program product for controlling discontinuous reception or transmission at a terminal device of a communication network are disclosed. A regular discontinuous reception or transmission cycle of a regular discontinuous reception or transmission scheme is set by using a first control layer, and in addition thereto a shorter temporary discontinuous reception or transmission cycle of an interim discontinuous reception or transmission scheme can be set by using a second control layer. This arrangement provides long discontinuous reception or transmission cycles for power consumption improvements while at the same time ensuring that the network can easily and flexibly shorten these cycles for increased data throughput, if needed.

Owner:NOKIA TECHNOLOGIES OY
