315 results about "Supercomputer" patented technology

A supercomputer is a computer with a high level of performance compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, there have been supercomputers that can perform over a hundred quadrillion FLOPS. Since November 2017, all of the world's fastest 500 supercomputers have run Linux-based operating systems. Additional research is being conducted in China, the United States, the European Union, Taiwan and Japan to build even faster, more powerful and technologically superior exascale supercomputers.

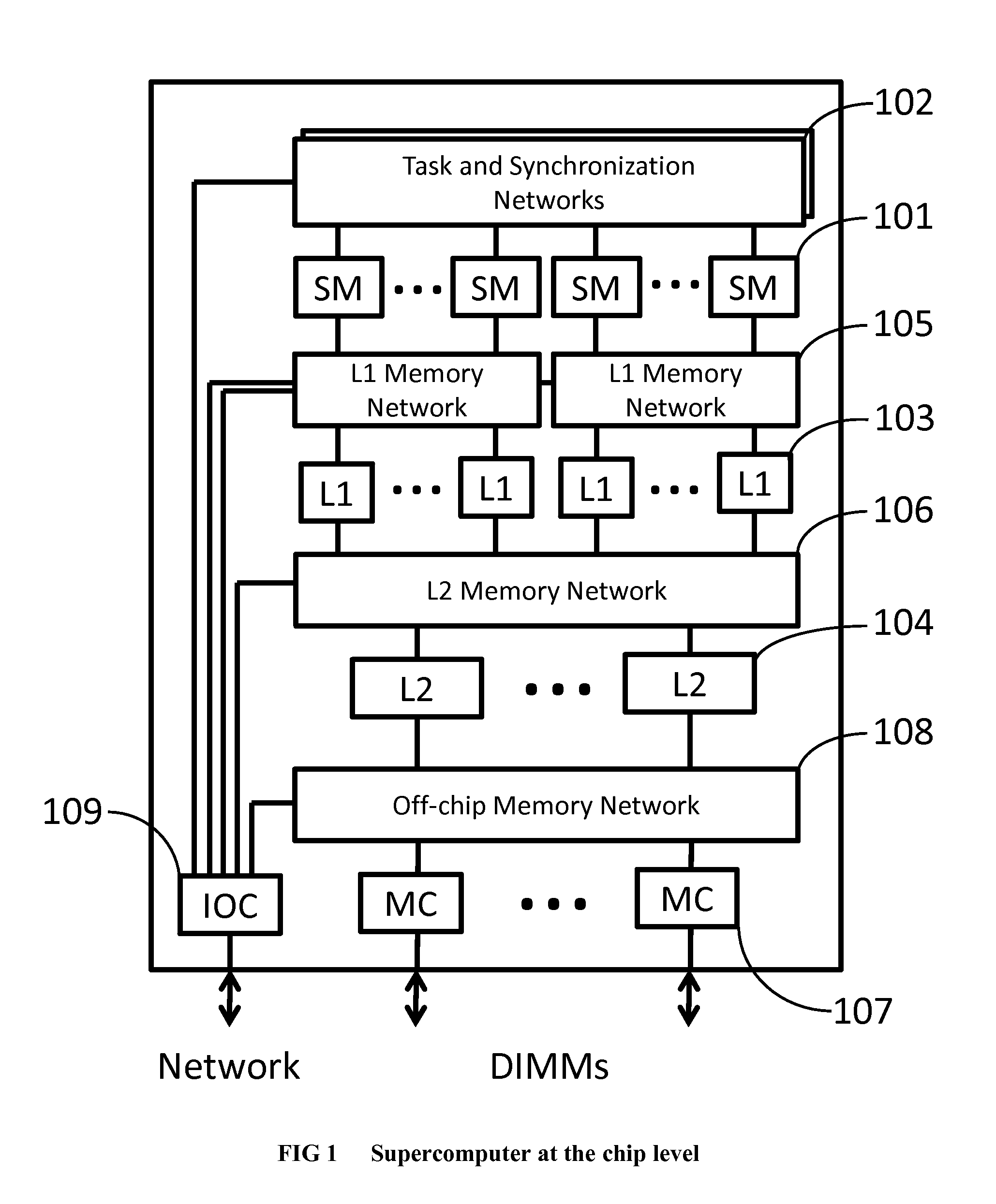

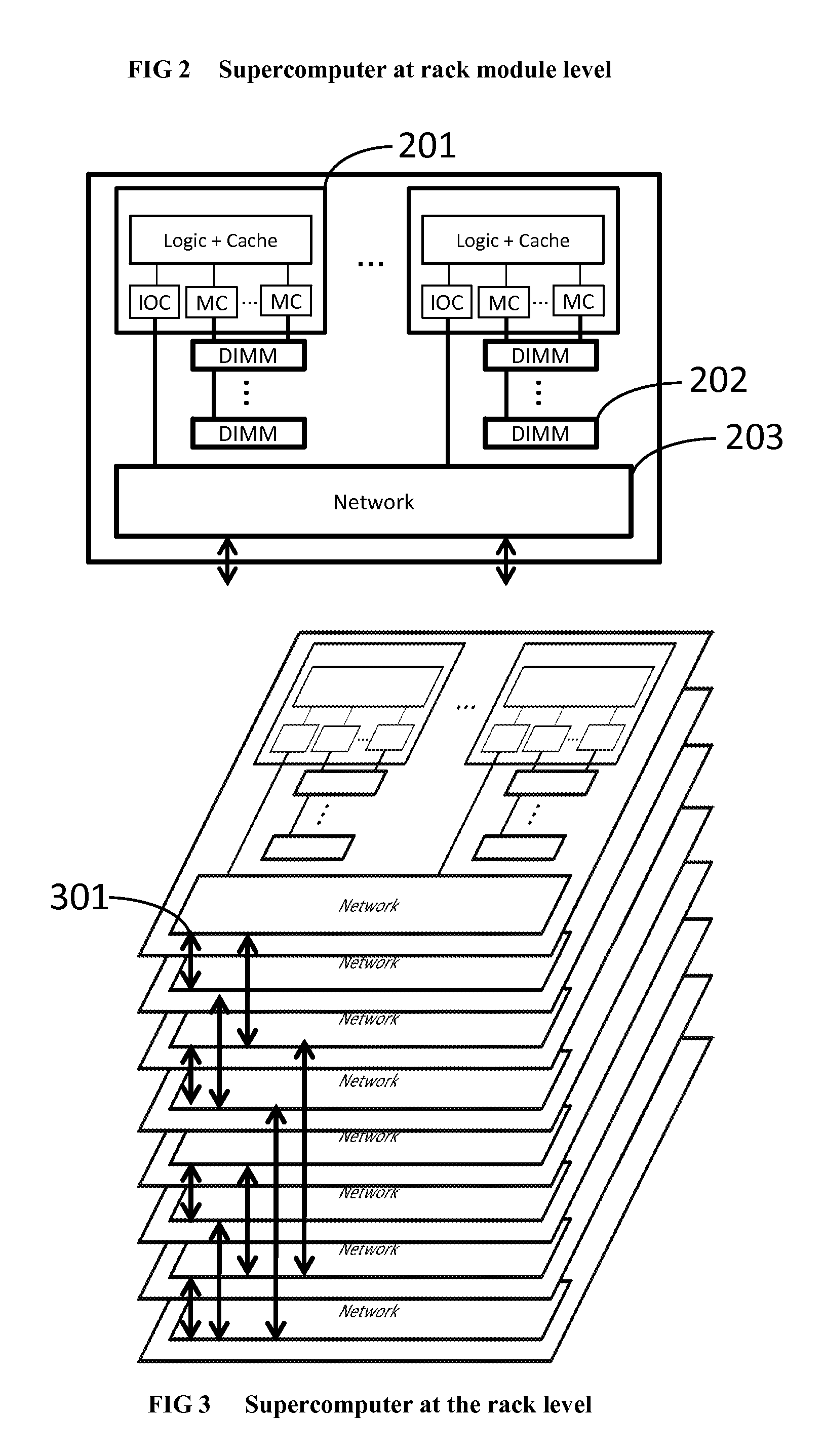

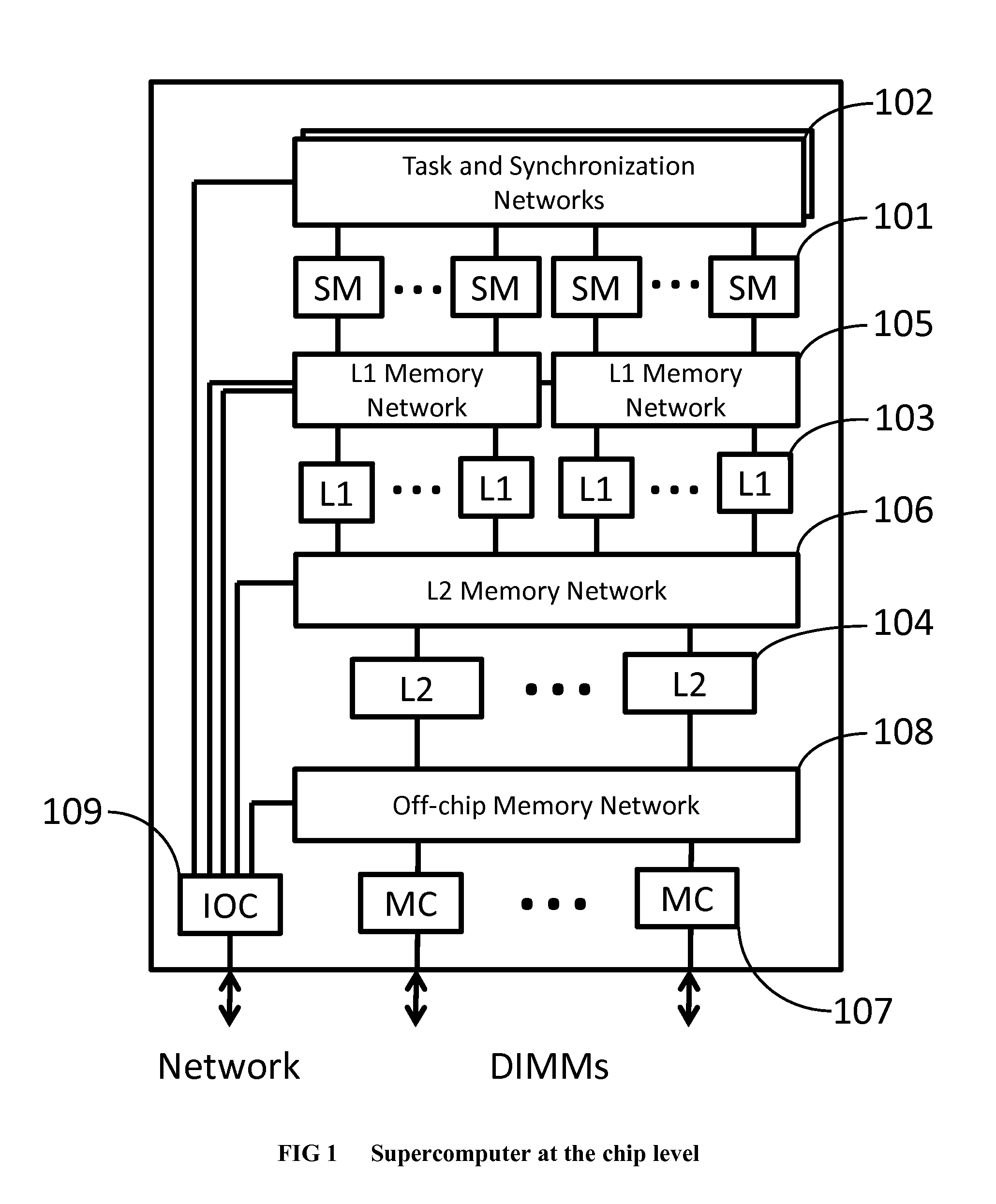

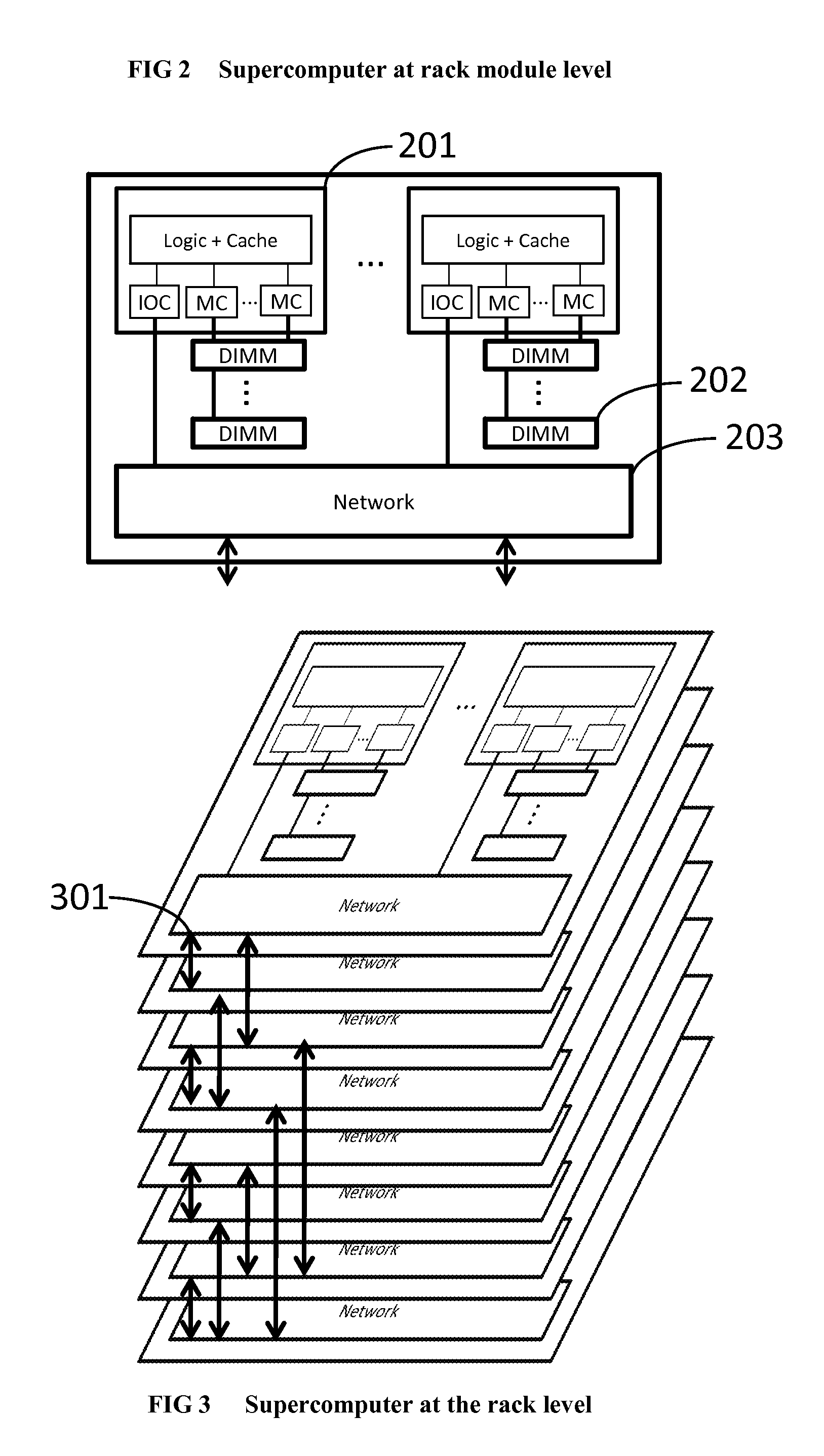

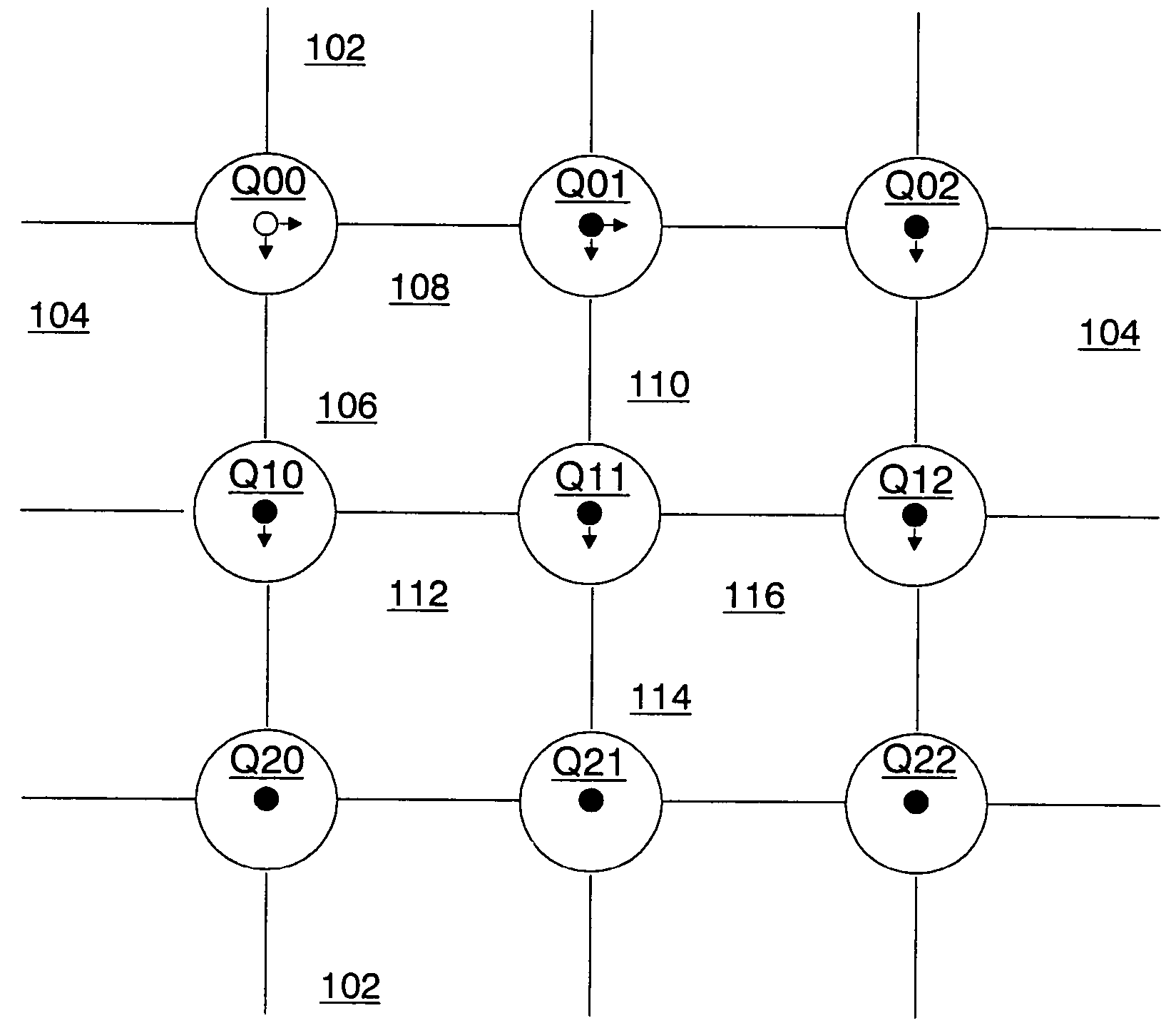

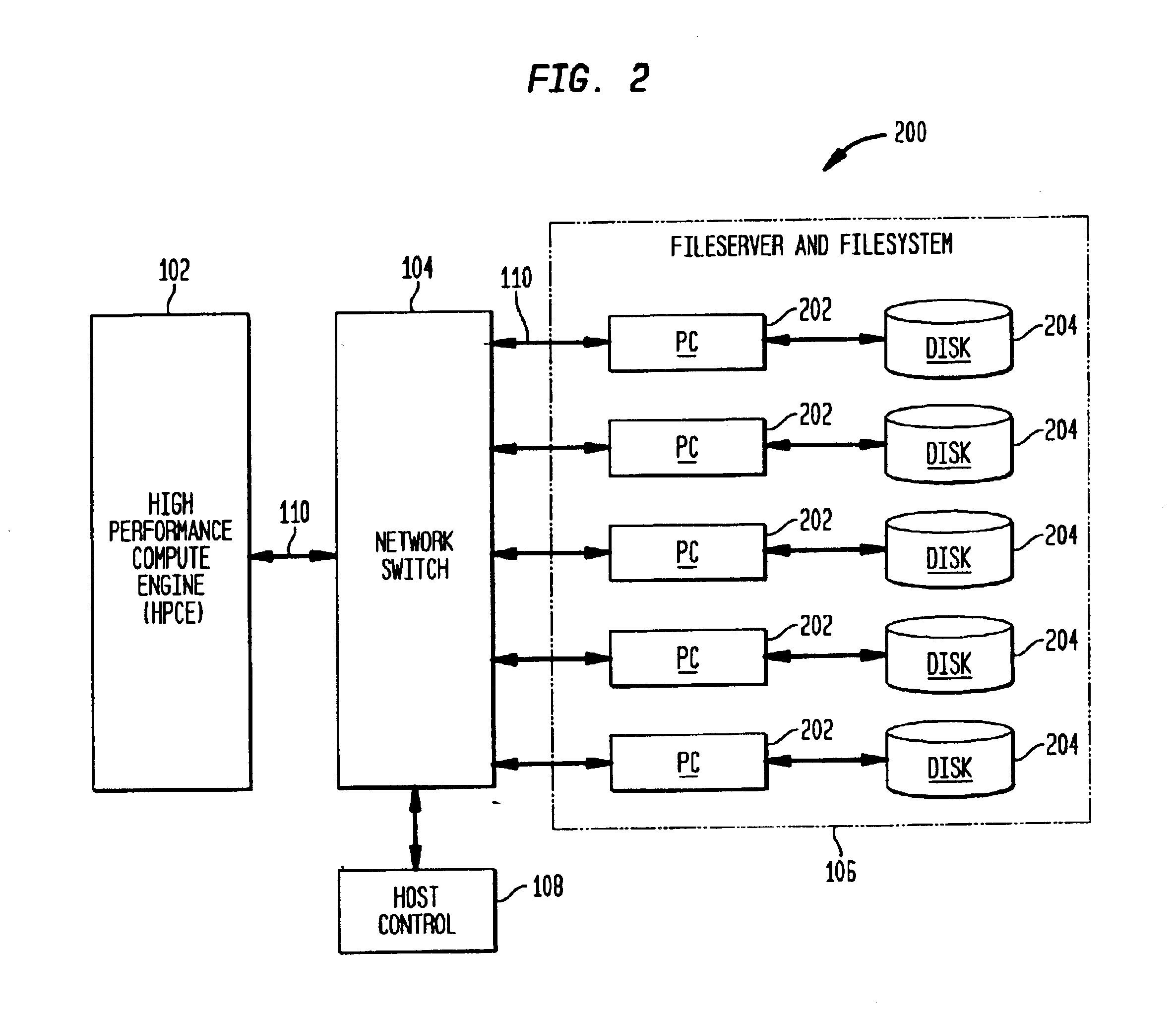

Novel massively parallel supercomputer

Inactive · US20090259713A1 · Low cost · Reduced footprint · Error prevention · Program synchronisation · Supercomputer · Packet communication

Owner:INT BUSINESS MASCH CORP

Method and system for converting a single-threaded software program into an application-specific supercomputer

Active · US20130125097A1 · Improve efficiency · Low overhead implementation · Memory architecture accessing/allocation · Transformation of program code · Supercomputer · Computer architecture

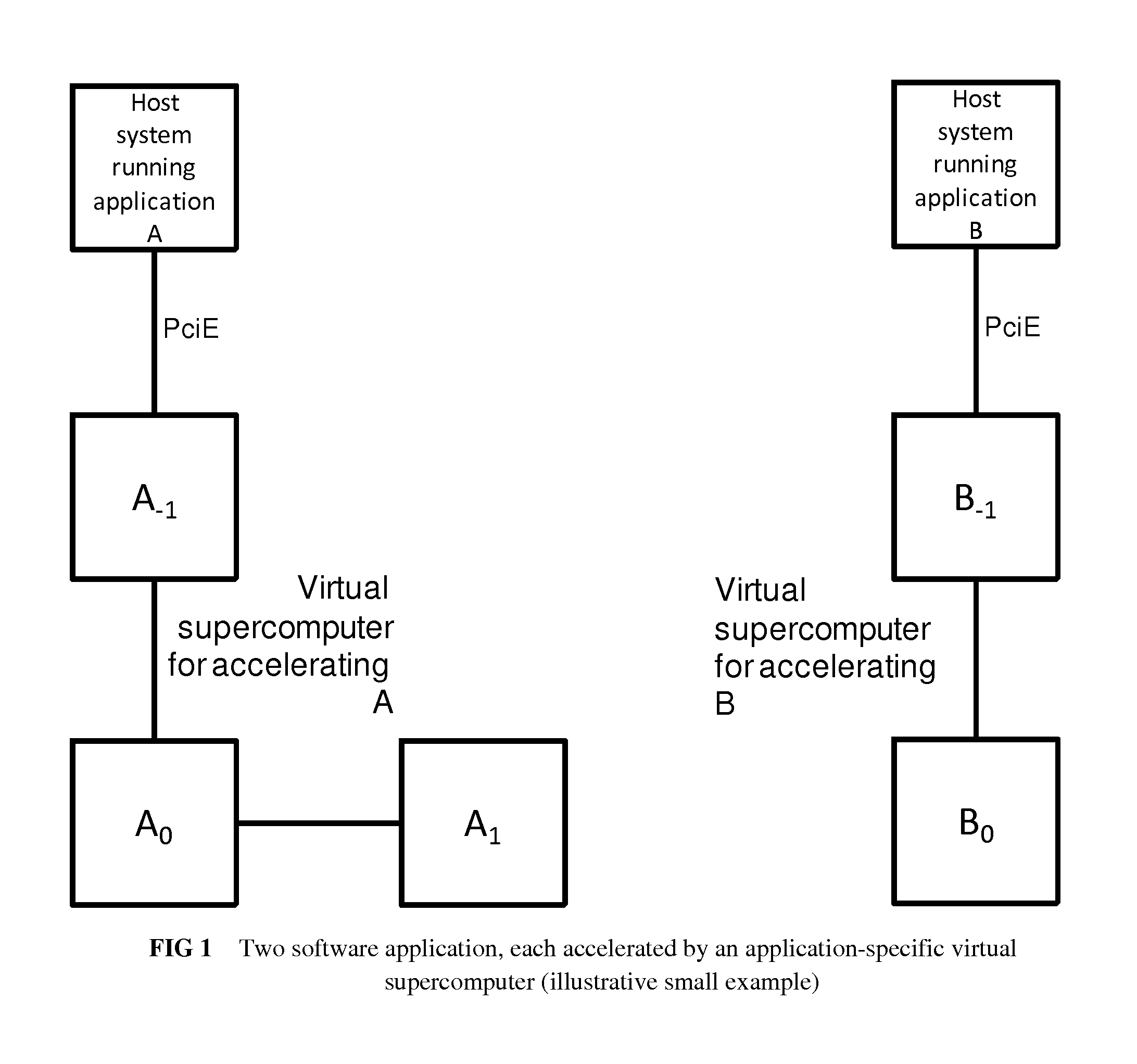

The invention comprises (i) a compilation method for automatically converting a single-threaded software program into an application-specific supercomputer, and (ii) the supercomputer system structure generated as a result of applying this method. The compilation method comprises: (a) Converting an arbitrary code fragment from the application into customized hardware whose execution is functionally equivalent to the software execution of the code fragment; and (b) Generating interfaces on the hardware and software parts of the application, which (i) Perform a software-to-hardware program state transfer at the entries of the code fragment; (ii) Perform a hardware-to-software program state transfer at the exits of the code fragment; and (iii) Maintain memory coherence between the software and hardware memories. If the resulting hardware design is large, it is divided into partitions such that each partition can fit into a single chip. Then, a single union chip is created which can realize any of the partitions.

Owner:GLOBAL SUPERCOMPUTING CORP

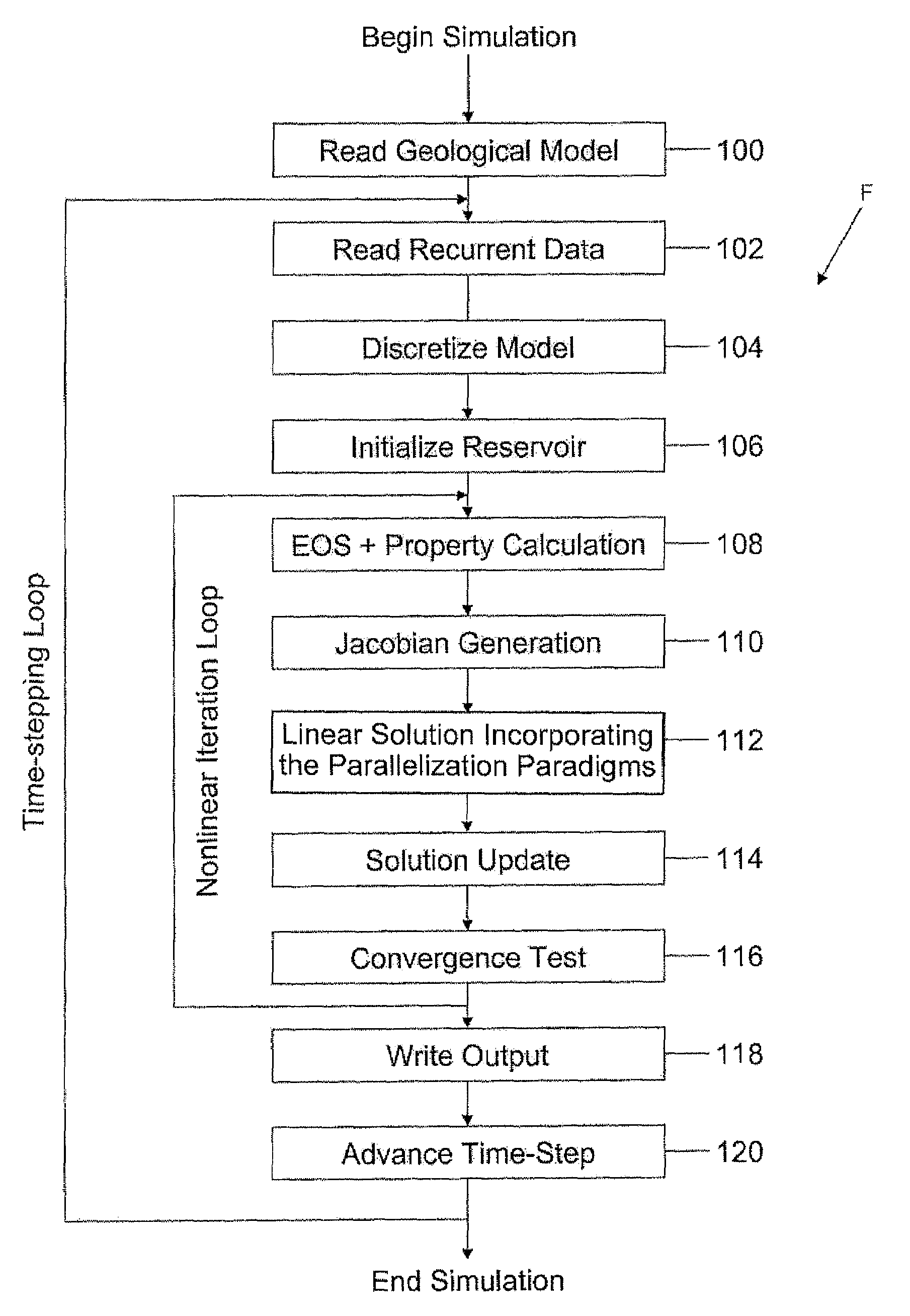

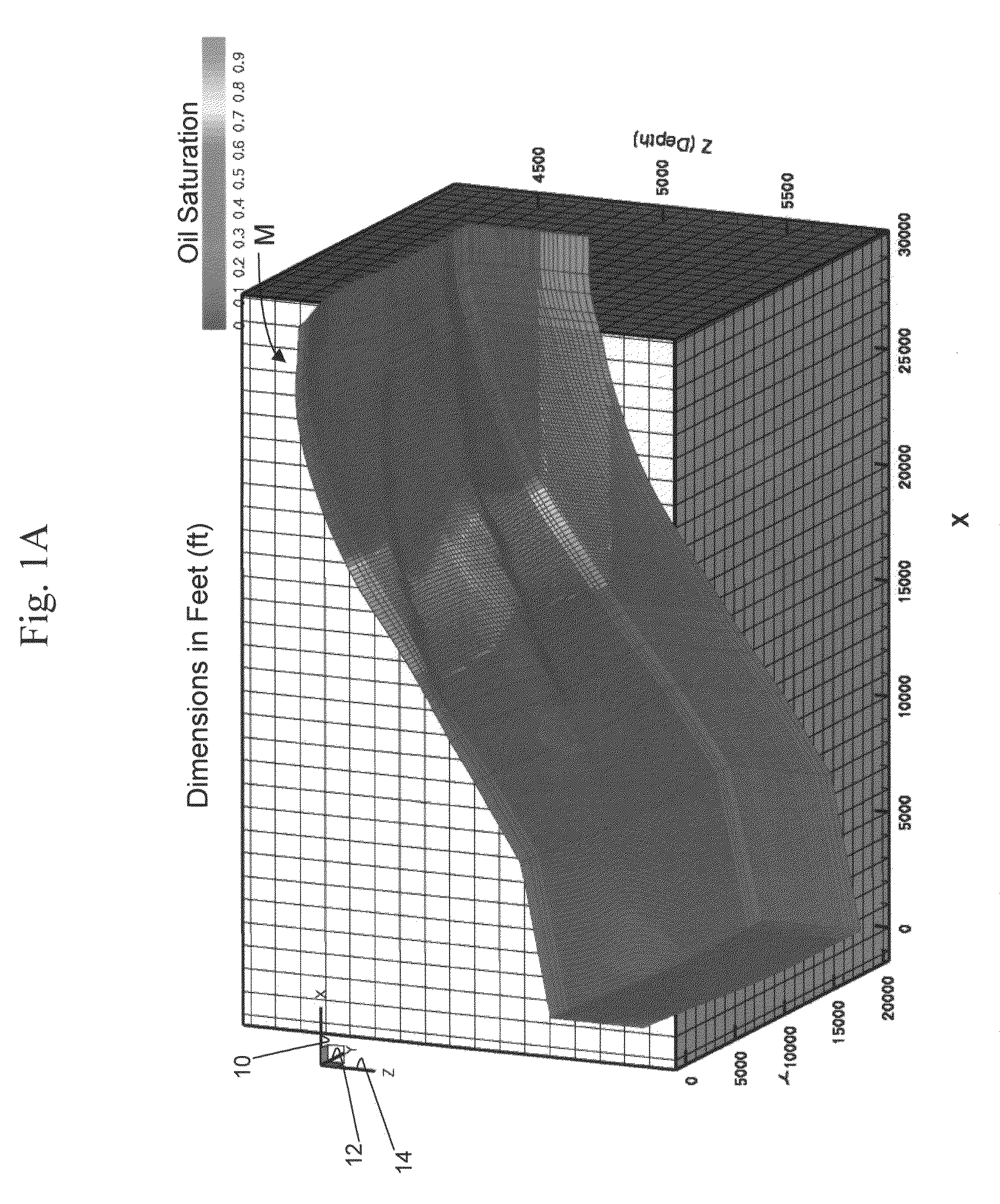

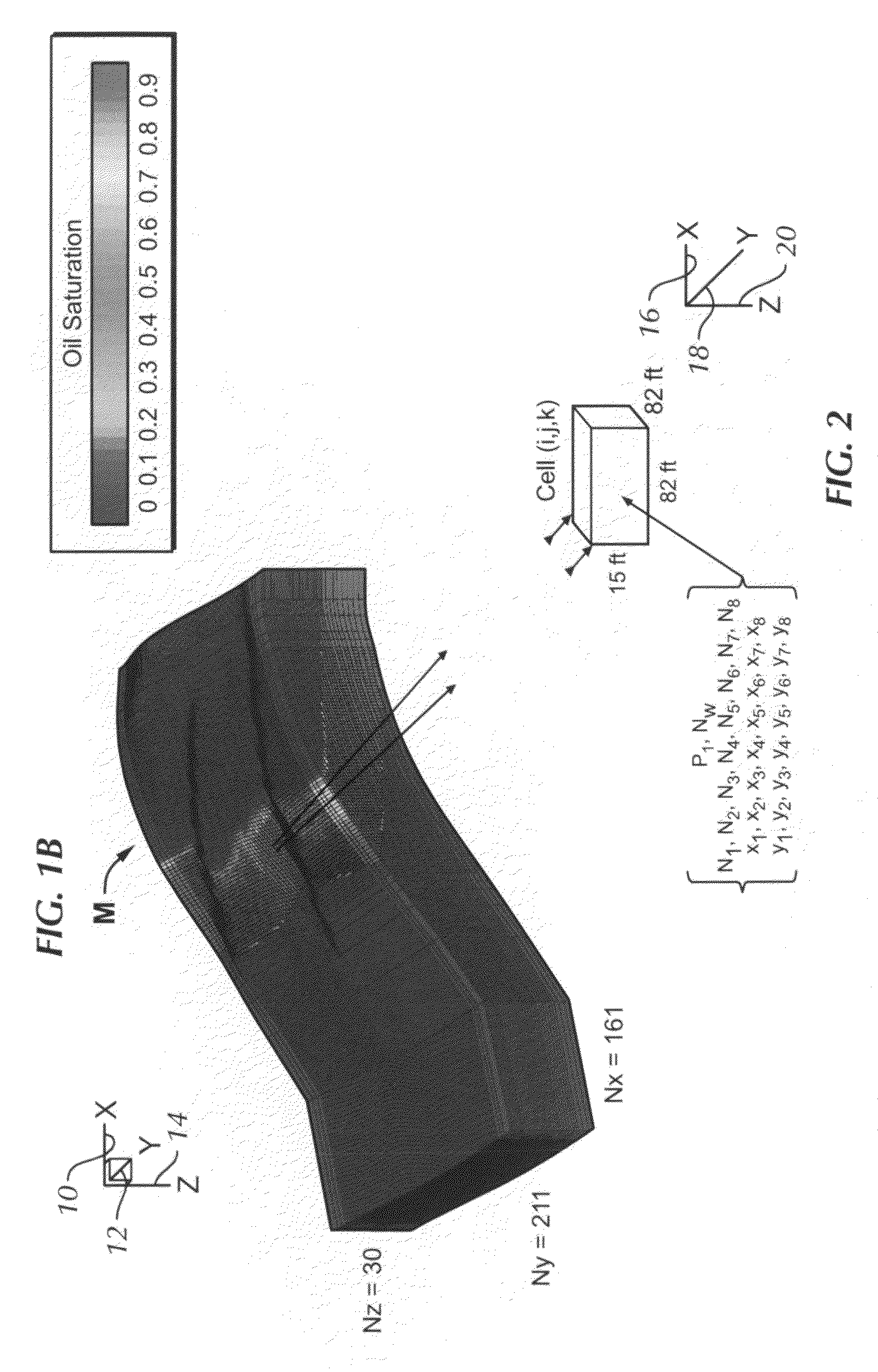

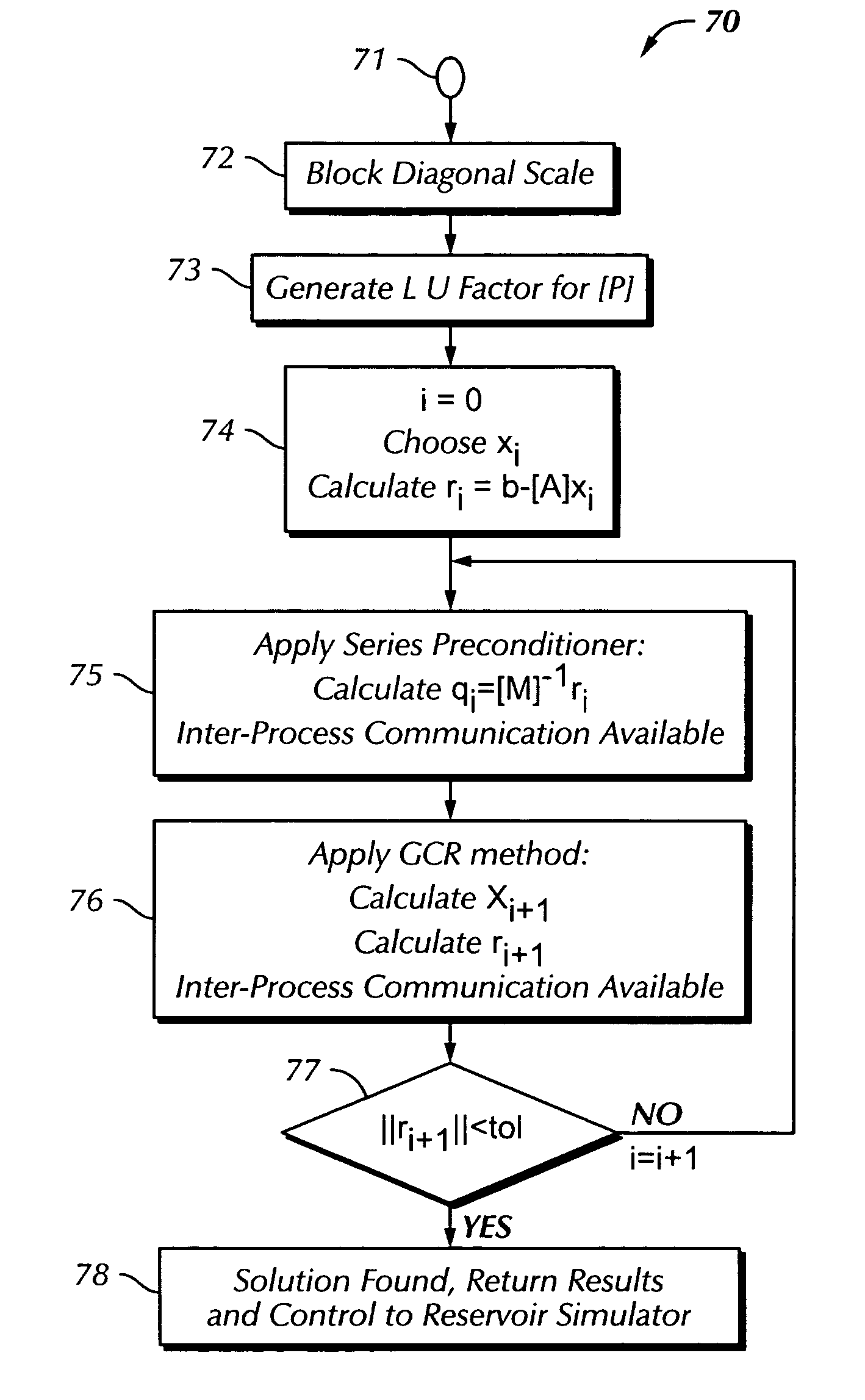

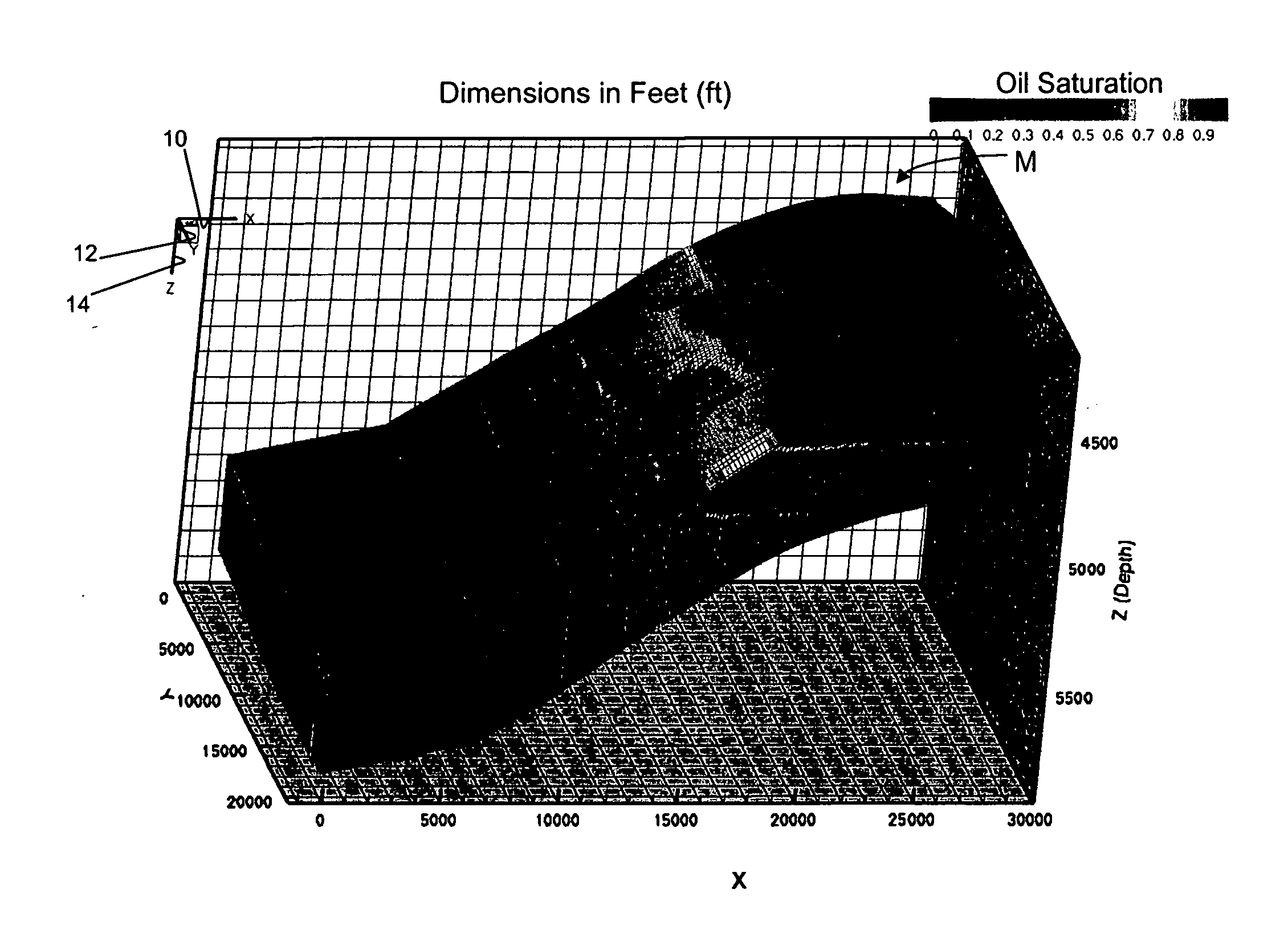

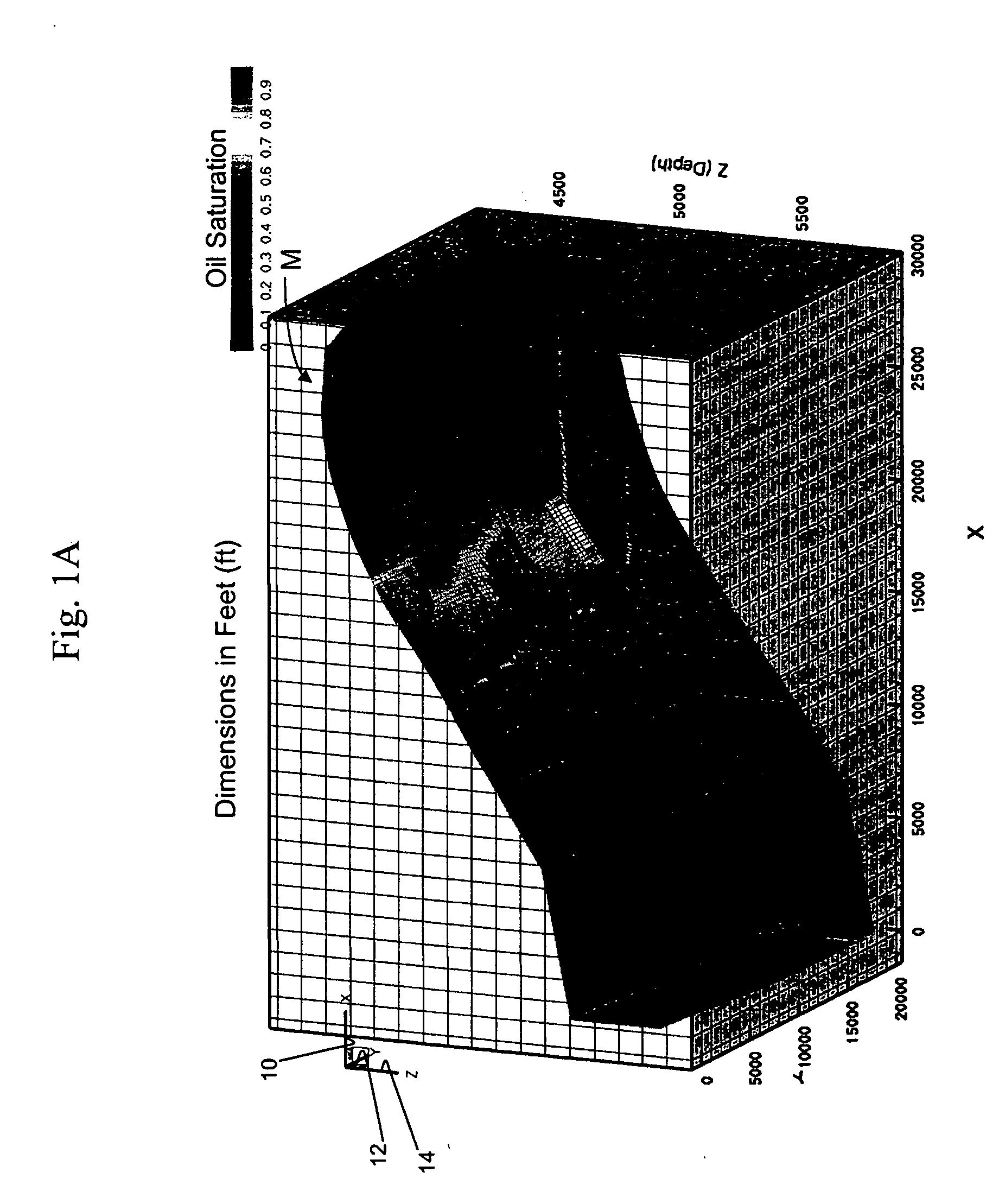

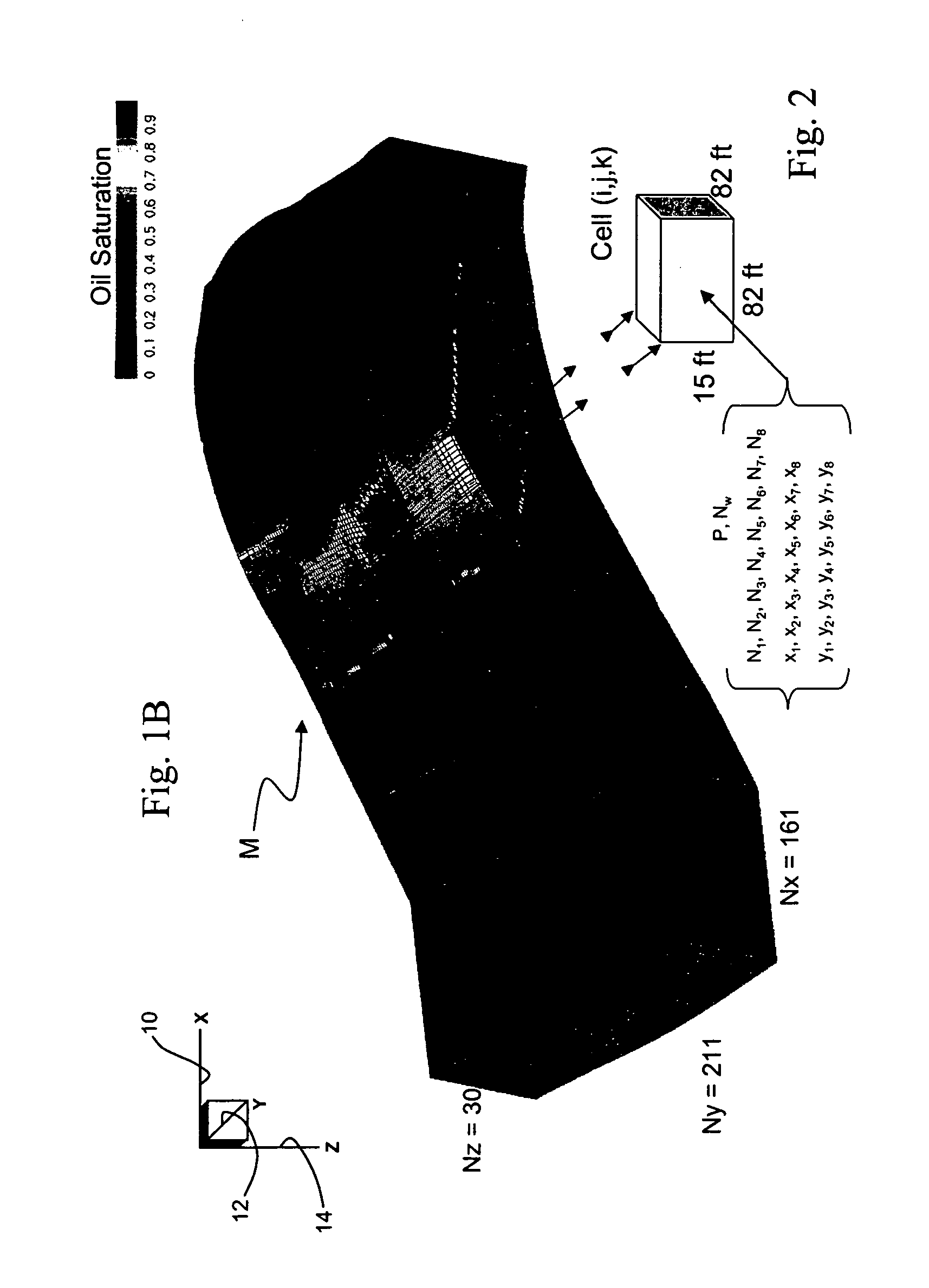

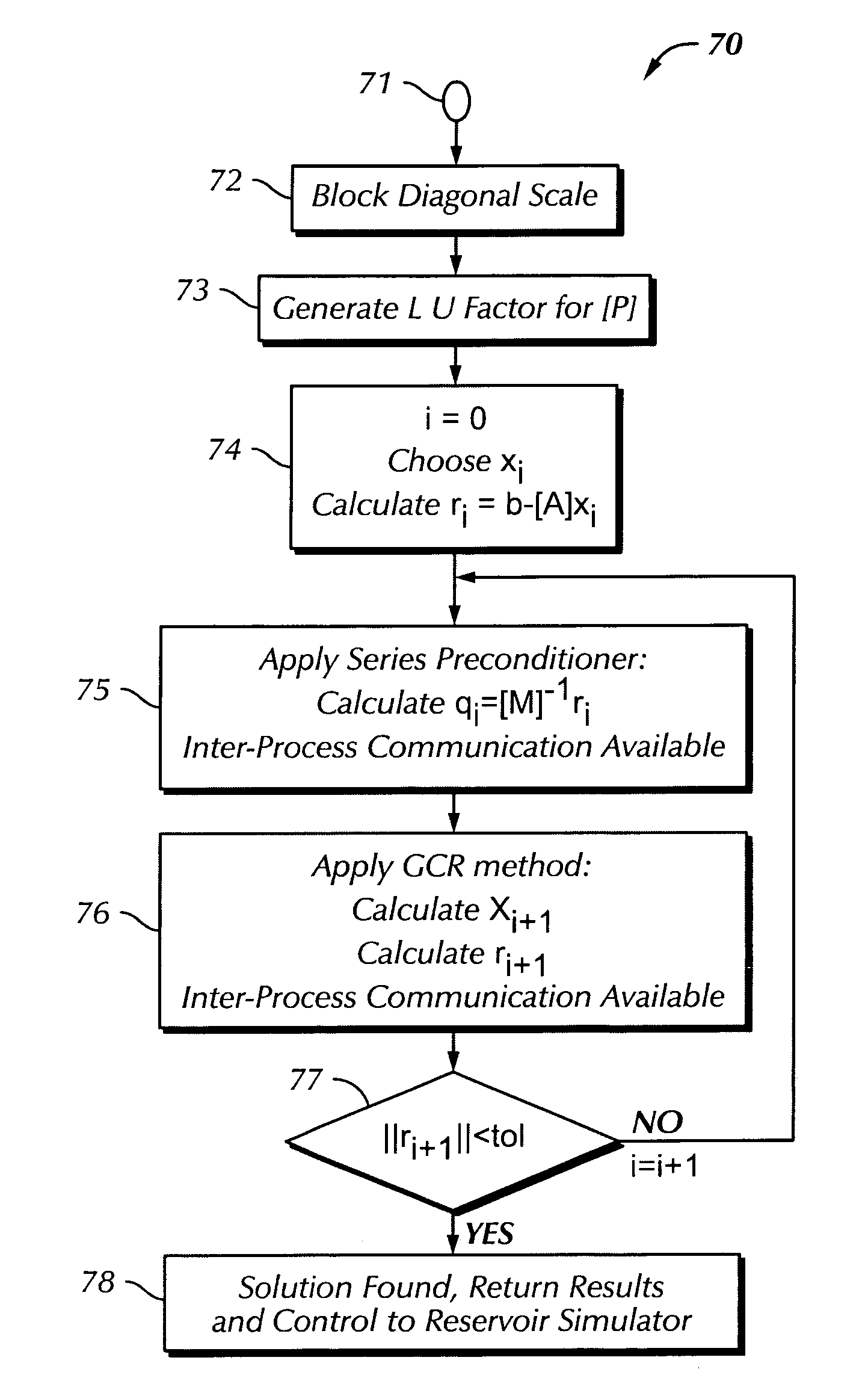

Highly-parallel, implicit compositional reservoir simulator for multi-million-cell models

Active · US7526418B2 · Reduce processing time · Electric/magnetic detection for well-logging · Permeability/surface area analysis · Supercomputer · Distributed memory

A fully-parallelized, highly-efficient compositional implicit hydrocarbon reservoir simulator is provided. The simulator is capable of solving giant reservoir models, of the type frequently encountered in the Middle East and elsewhere in the world, with fast turnaround time. The simulator may be implemented in a variety of computer platforms ranging from shared-memory and distributed-memory supercomputers to commercial and self-made clusters of personal computers. The performance capabilities enable analysis of reservoir models in full detail, using both fine geological characterization and detailed individual definition of the hydrocarbon components present in the reservoir fluids.

Owner:SAUDI ARABIAN OIL CO

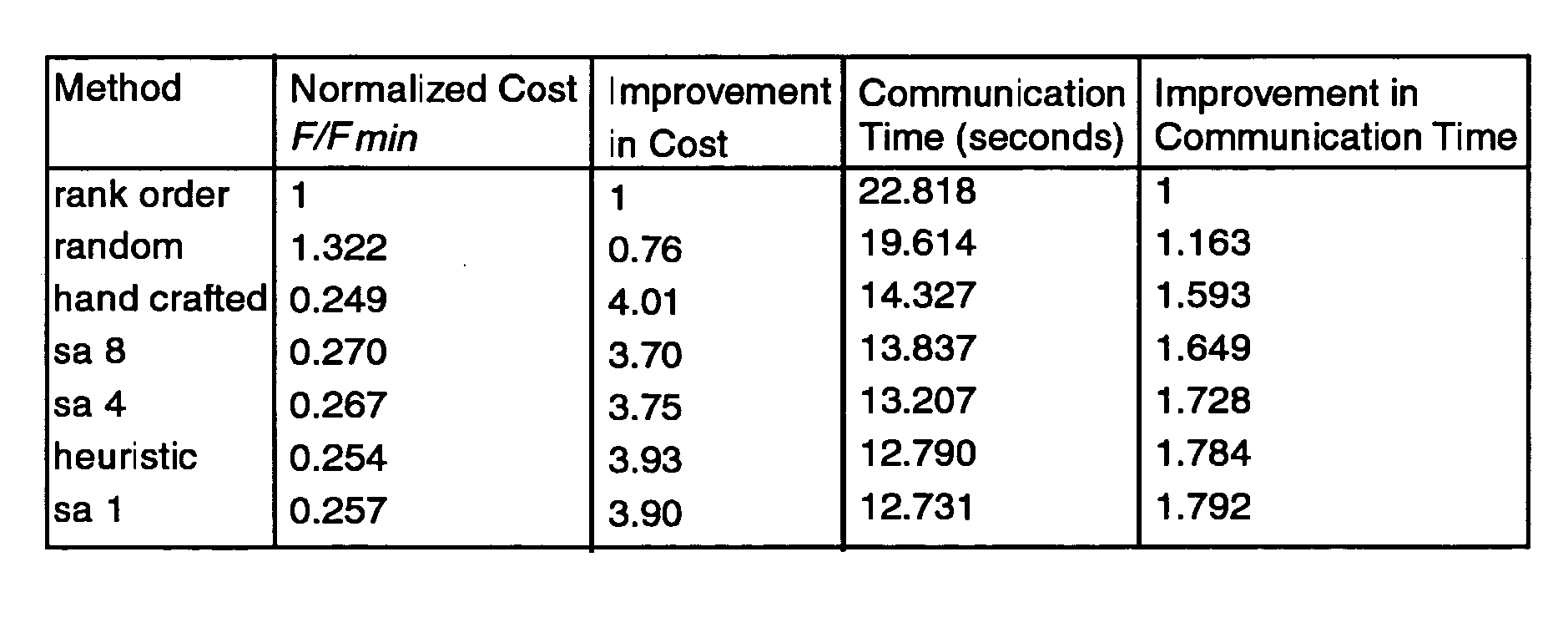

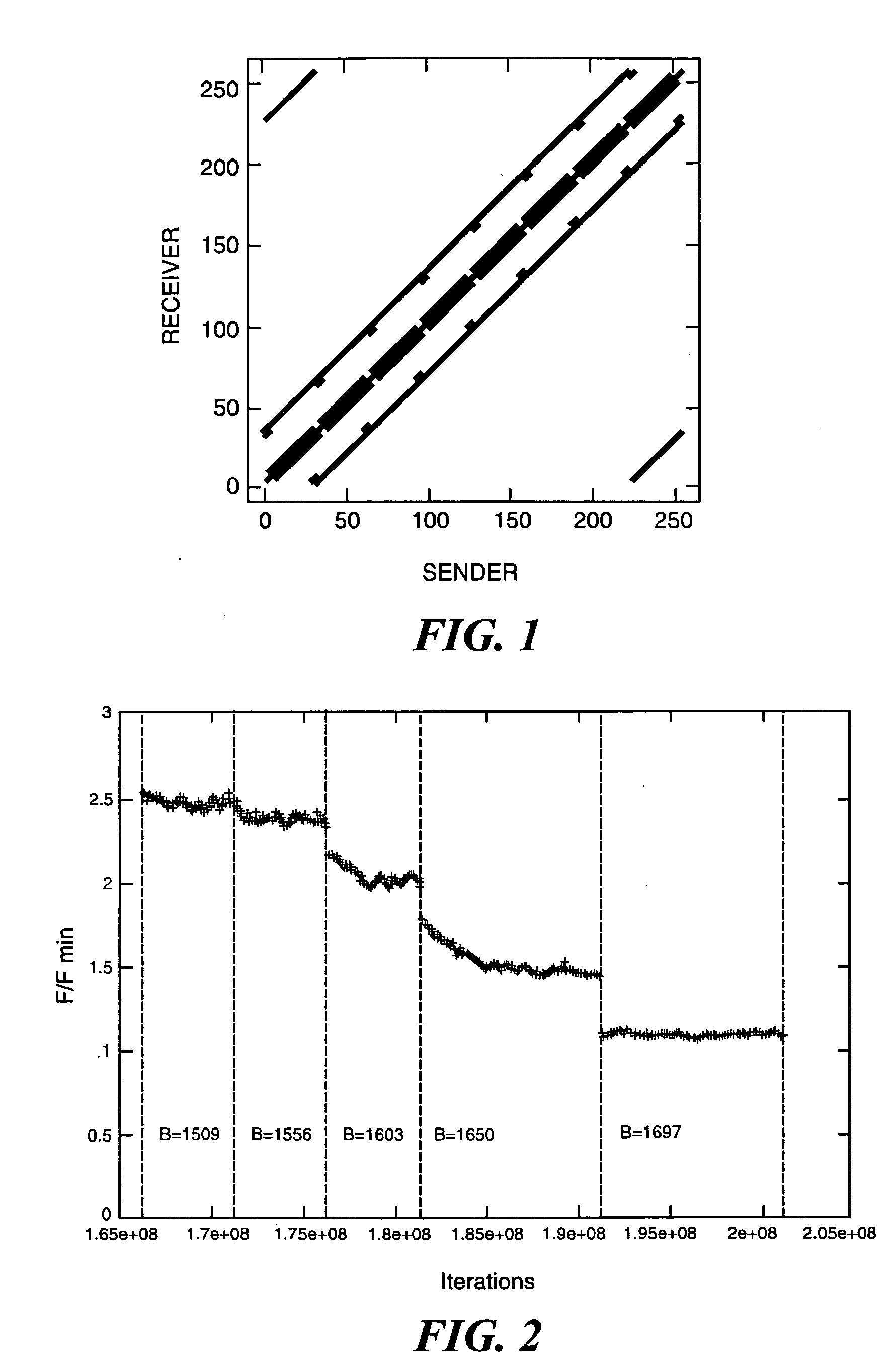

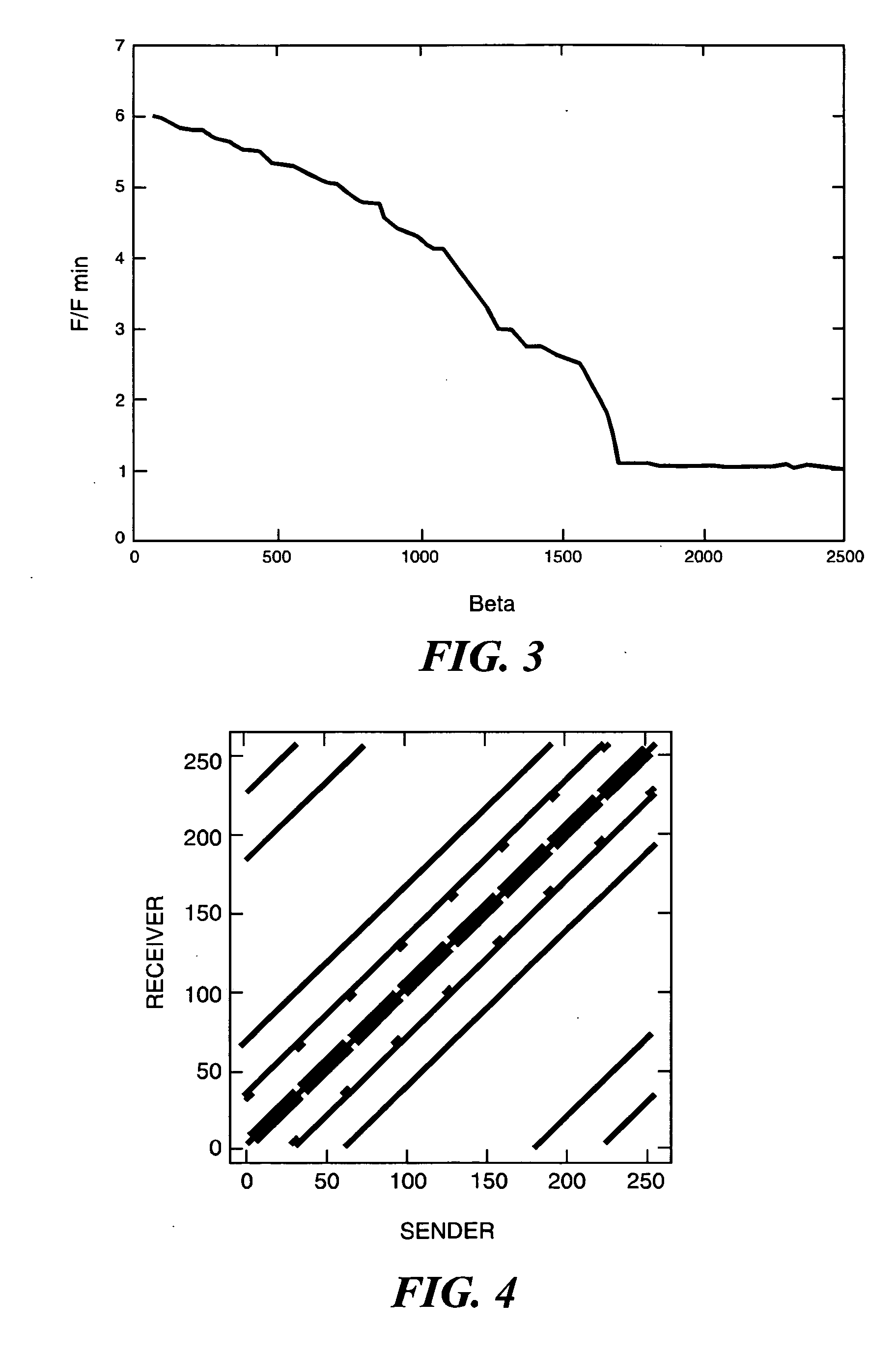

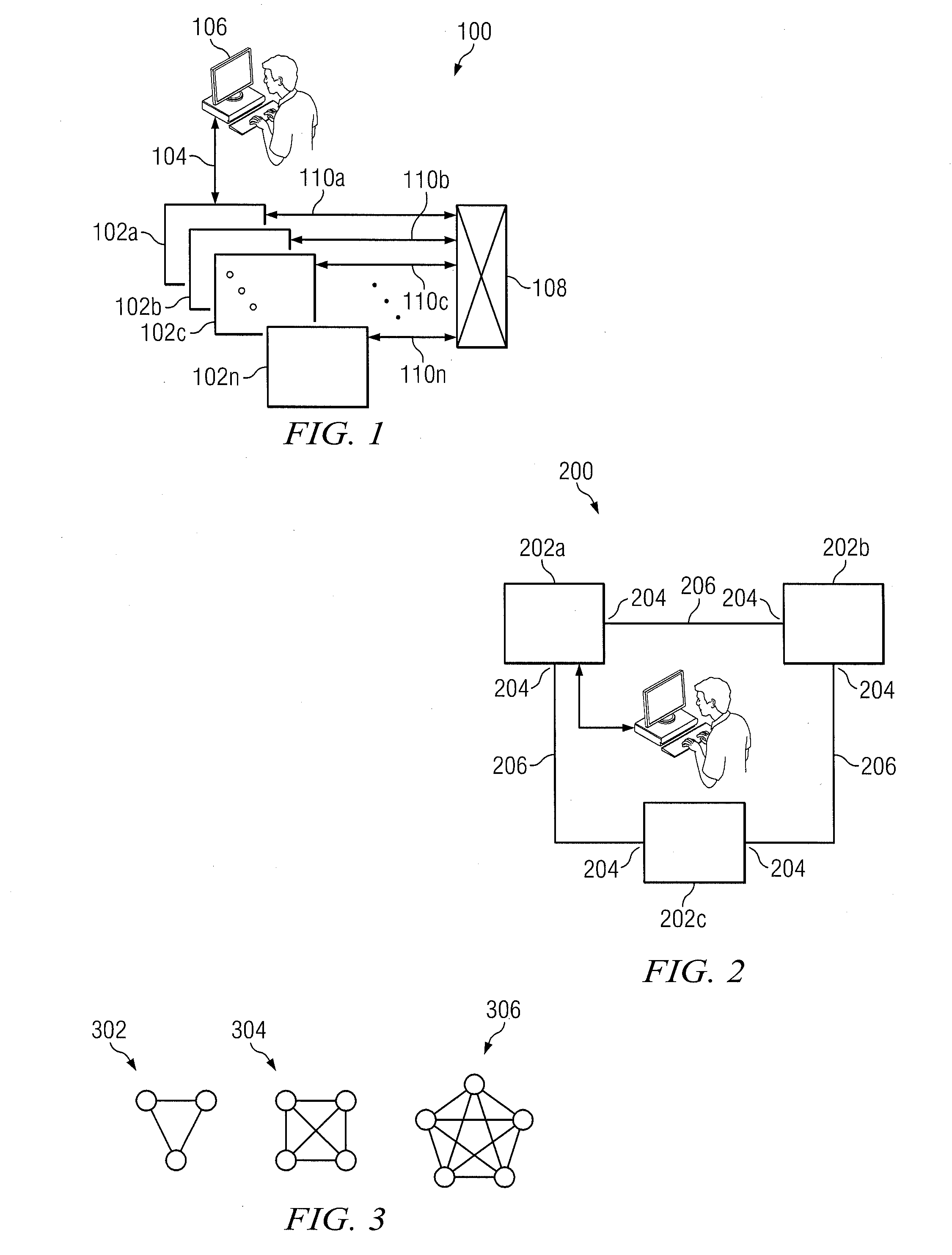

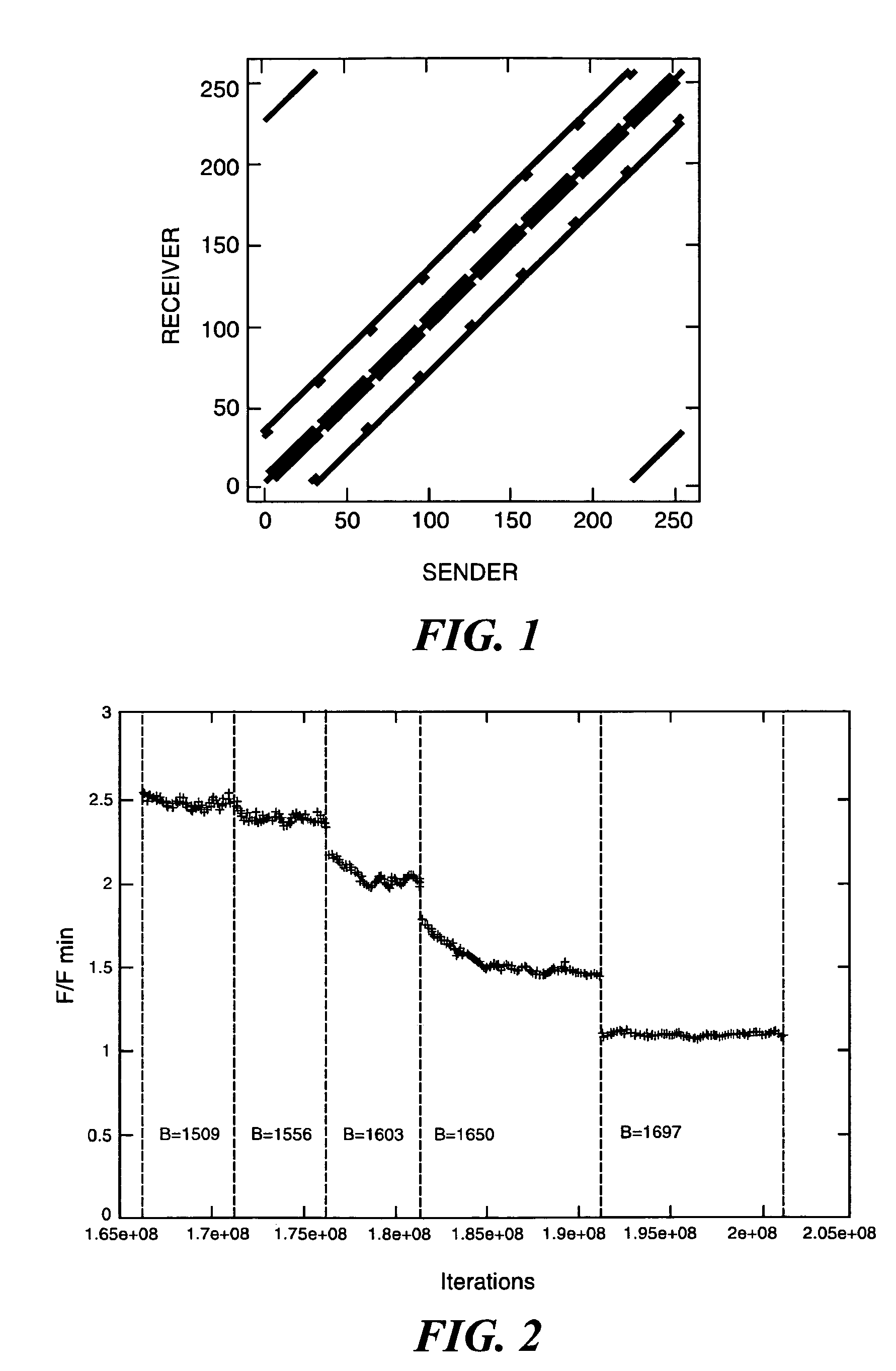

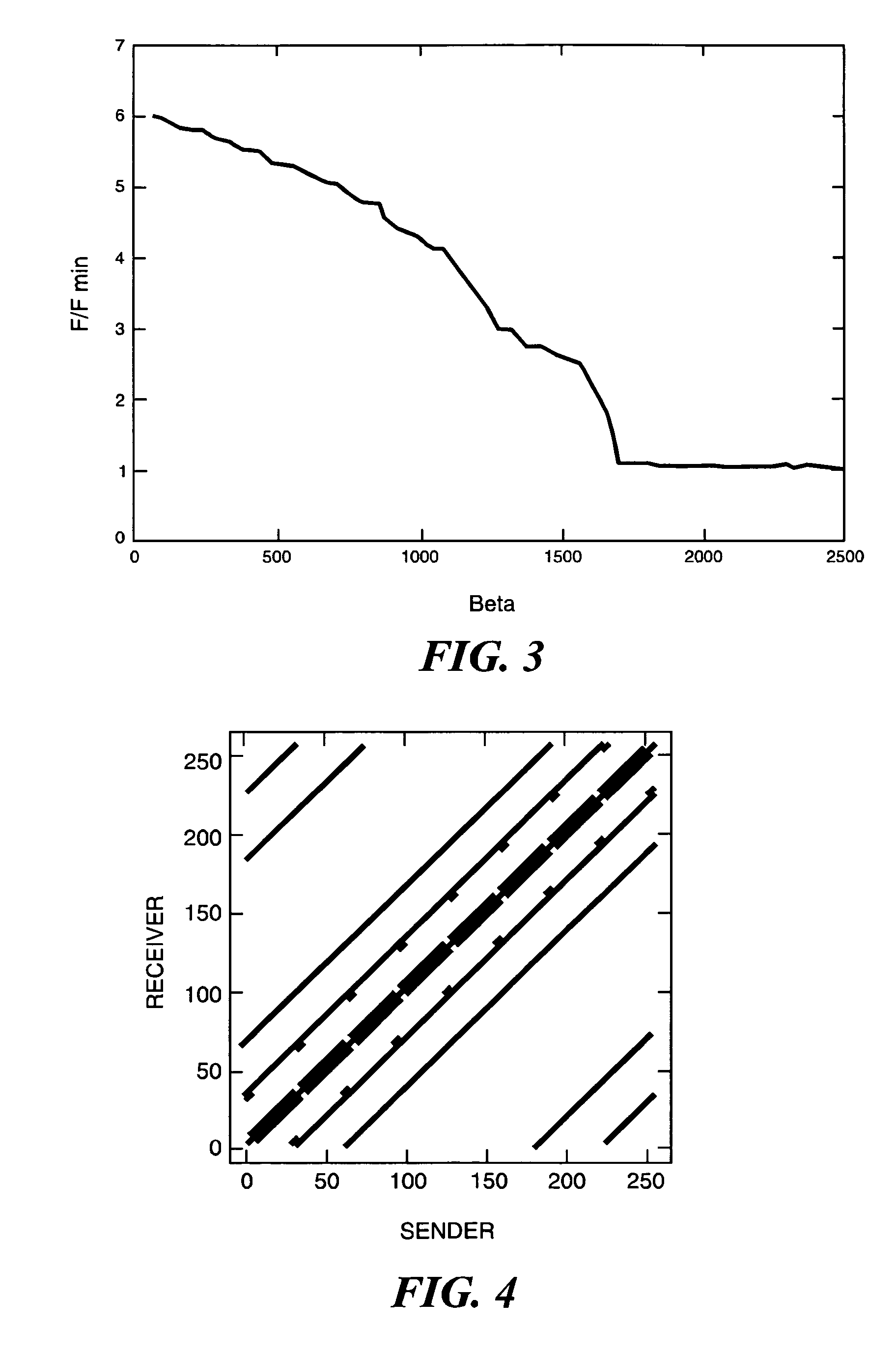

Optimizing layout of an application on a massively parallel supercomputer

Inactive · US20060101104A1 · Shorten the time · Minimize cost function · Digital data processing details · Digital computer details · Markov chain · Supercomputer

A general computer-implemented method and apparatus to optimize problem layout on a massively parallel supercomputer is described. The method takes as input the communication matrix of an arbitrary problem in the form of an array whose entries C(i, j) are the amount of data communicated from domain i to domain j. Given C(i, j), a heuristic map is first implemented which attempts sequentially to map a domain and its communications neighbors either to the same supercomputer node or to near-neighbor nodes on the supercomputer torus while keeping the number of domains mapped to a supercomputer node constant (as much as possible). Next, a Markov chain of maps is generated from the initial map using Monte Carlo simulation with free energy (cost function) $F = \sum_{i,j} C(i,j)\,H(i,j)$, where H(i, j) is the smallest number of hops on the supercomputer torus between domain i and domain j. On the cases tested, the method was found to produce good mappings and has the potential to be used as a general layout optimization tool for parallel codes. At the moment, the serial code implemented to test the method is unoptimized, so the computation time to find the optimum map can be several hours on a typical PC. For production implementation, good parallel code for our algorithm would be required, which could itself be implemented on a supercomputer.

Owner:IBM CORP
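
The cost function in the layout-optimization abstract above can be illustrated with a small sketch. It is not the patented implementation: the function names, the swap move, the fixed temperature and the Metropolis acceptance rule are all assumptions chosen for illustration. Swapping the nodes of two domains keeps the number of domains per node constant, in the spirit of the constraint the abstract mentions.

```python
import math
import random

def torus_hops(a, b, dims):
    """Smallest number of hops between node coordinates a and b on a torus."""
    return sum(min(abs(x - y), d - abs(x - y)) for x, y, d in zip(a, b, dims))

def layout_cost(C, placement, dims):
    """F = sum over i, j of C[i][j] * H(i, j) for a domain-to-node placement."""
    n = len(C)
    return sum(C[i][j] * torus_hops(placement[i], placement[j], dims)
               for i in range(n) for j in range(n))

def anneal(C, placement, dims, steps=2000, temperature=1.0, seed=0):
    """Hypothetical Metropolis search: swap two domains, accept with exp(-dF / T)."""
    rng = random.Random(seed)
    current = list(placement)
    cost = layout_cost(C, current, dims)
    best, best_cost = list(current), cost
    for _ in range(steps):
        i, j = rng.sample(range(len(current)), 2)
        current[i], current[j] = current[j], current[i]
        new_cost = layout_cost(C, current, dims)
        delta = new_cost - cost
        if delta <= 0 or rng.random() < math.exp(-delta / temperature):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = list(current), cost
        else:
            # Rejected move: undo the swap.
            current[i], current[j] = current[j], current[i]
    return best, best_cost
```

Recomputing the full cost after every swap is quadratic in the number of domains; a production version would update only the terms that involve the two swapped domains.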

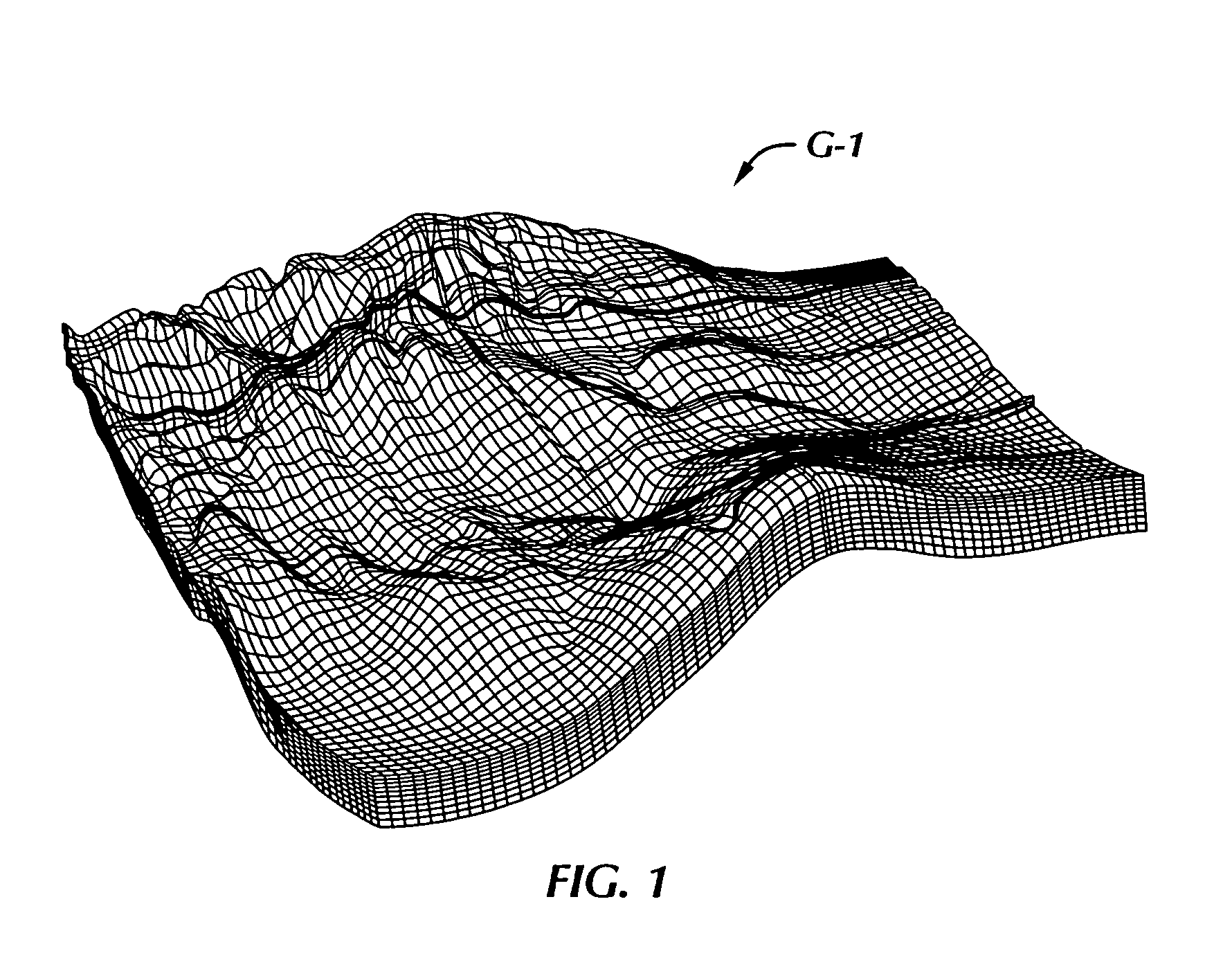

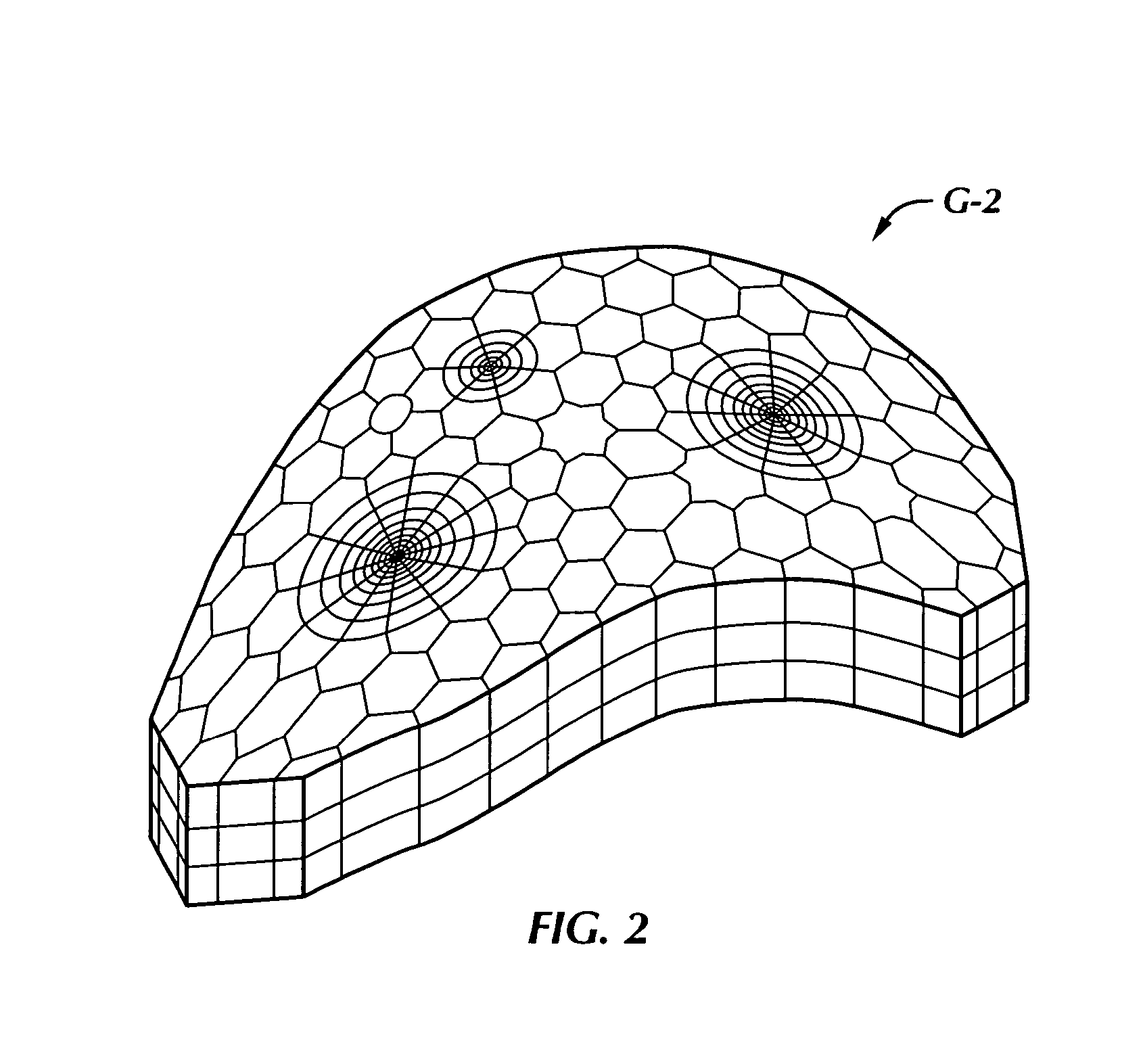

Solution method and apparatus for large-scale simulation of layered formations

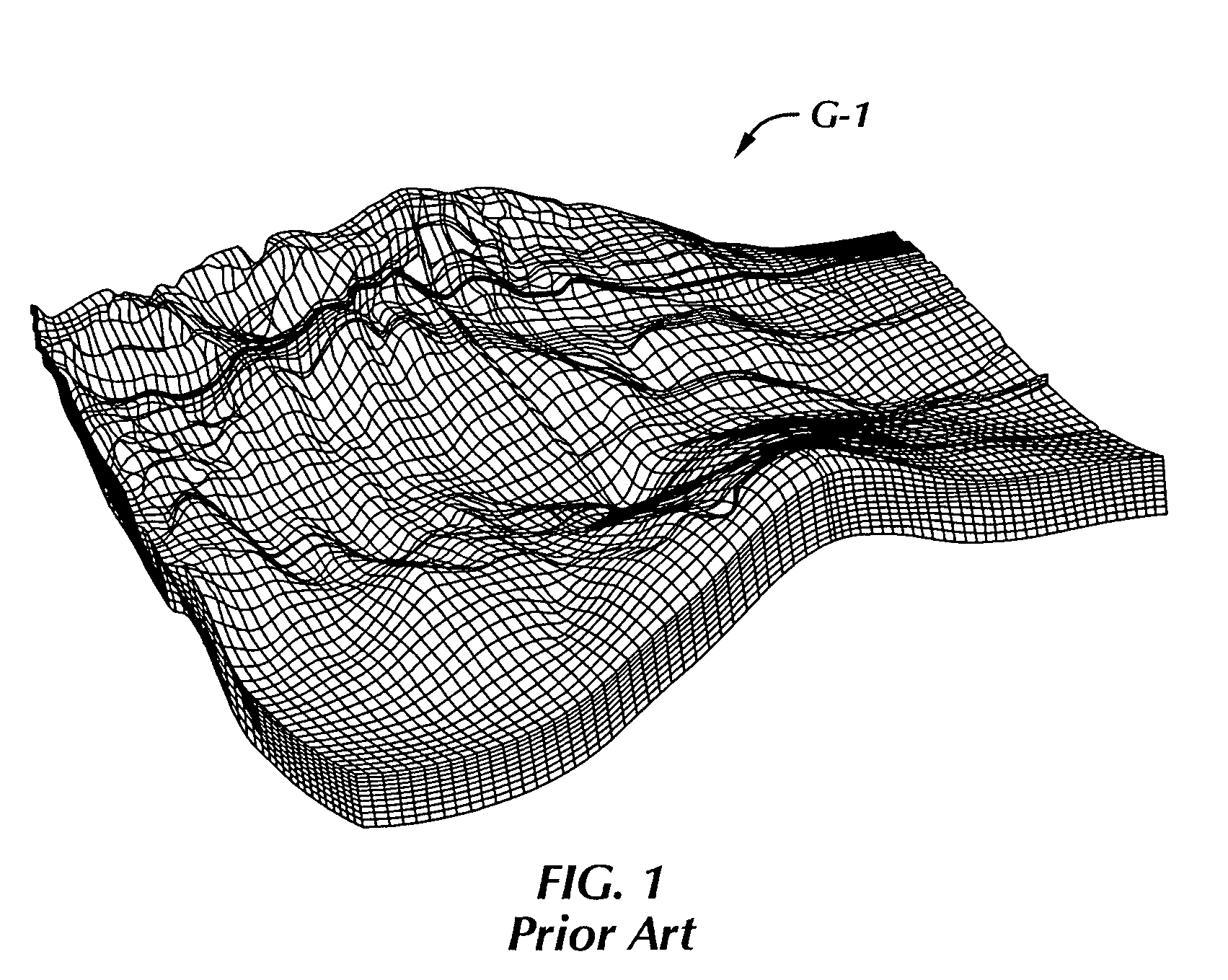

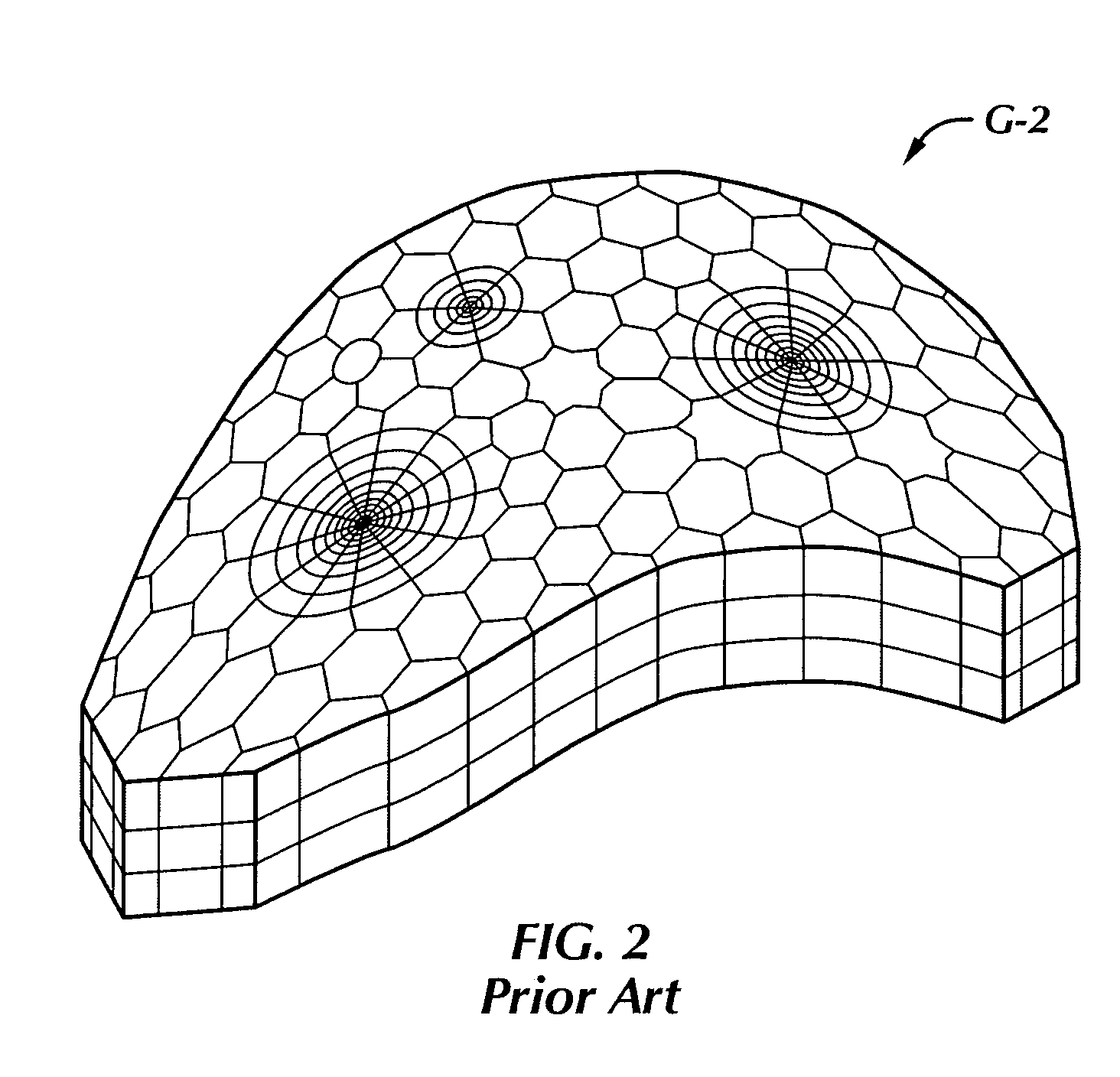

Active · US20060235667A1 · Design optimisation/simulation · Special data processing applications · Supercomputer · Angular point

A targeted heterogeneous medium in the form of an underground layered formation is gridded into a layered structured grid or a layered semi-unstructured grid. The structured grid can be of the irregular corner-point-geometry grid type or the simple Cartesian grid type. The semi-unstructured grid is areally unstructured, formed by arbitrarily connected control-volumes derived from the dual grid of a suitable triangulation; but the connectivity pattern does not change from layer to layer. Problems with determining fluid movement and other state changes in the formation are solved by exploiting the layered structure of the medium. The techniques are particularly suited for large-scale simulation by parallel processing on a supercomputer with multiple central processing units (CPU's).

Owner:SAUDI ARABIAN OIL CO

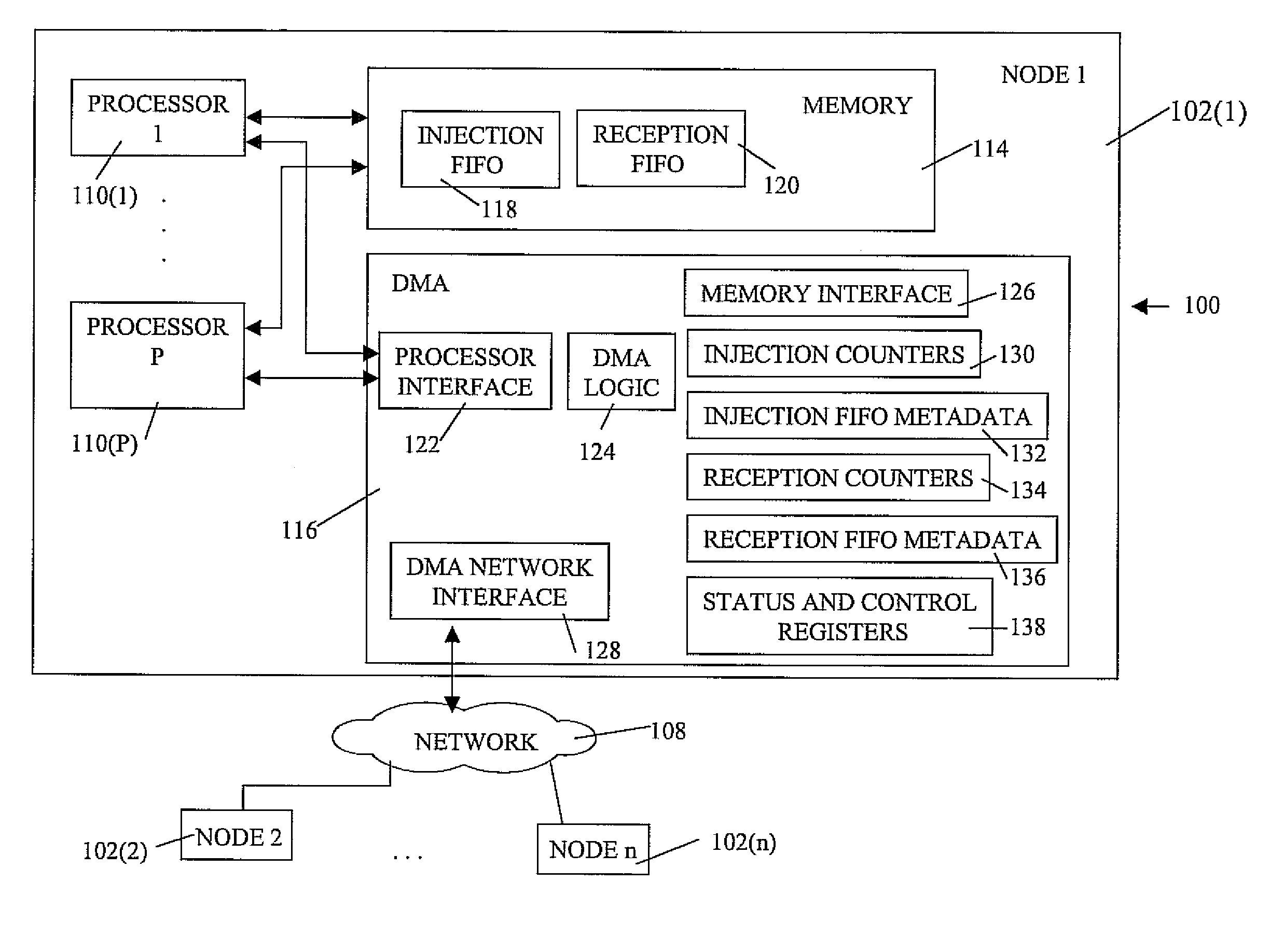

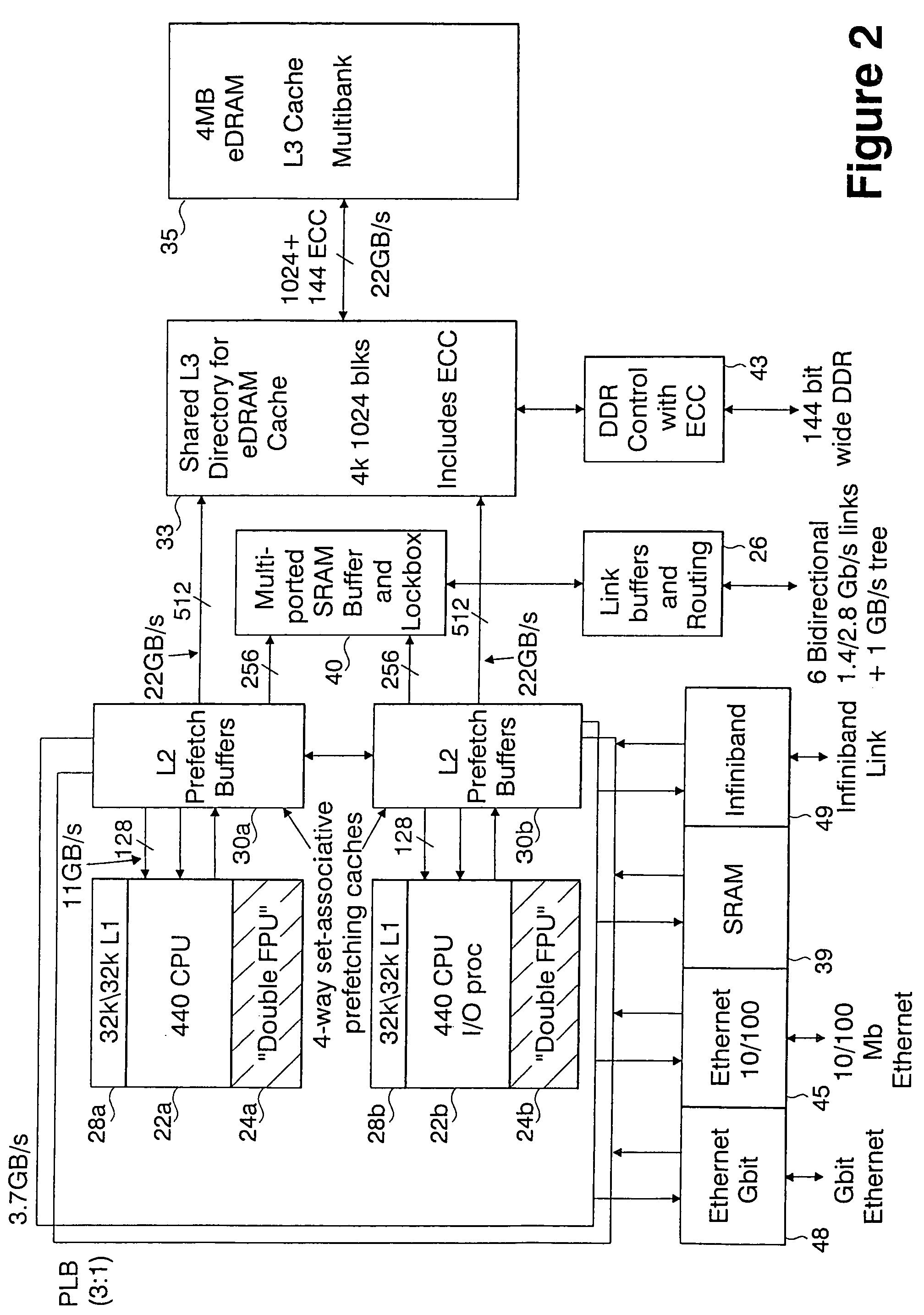

Ultrascalable petaflop parallel supercomputer

Inactive · US7761687B2 · Maximize throughput · Delay minimization · General purpose stored program computer · Electric digital data processing · Supercomputer · Packet communication

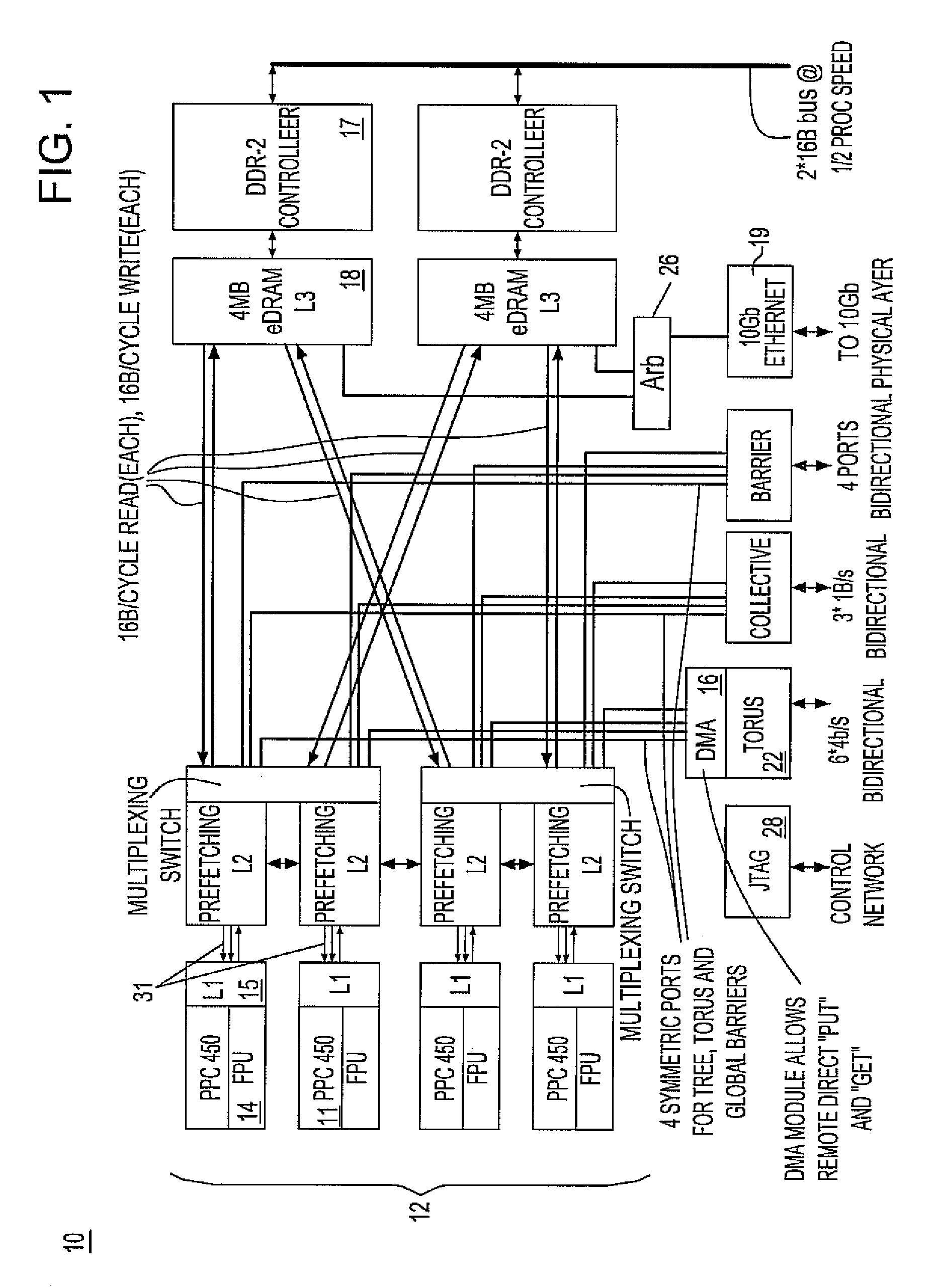

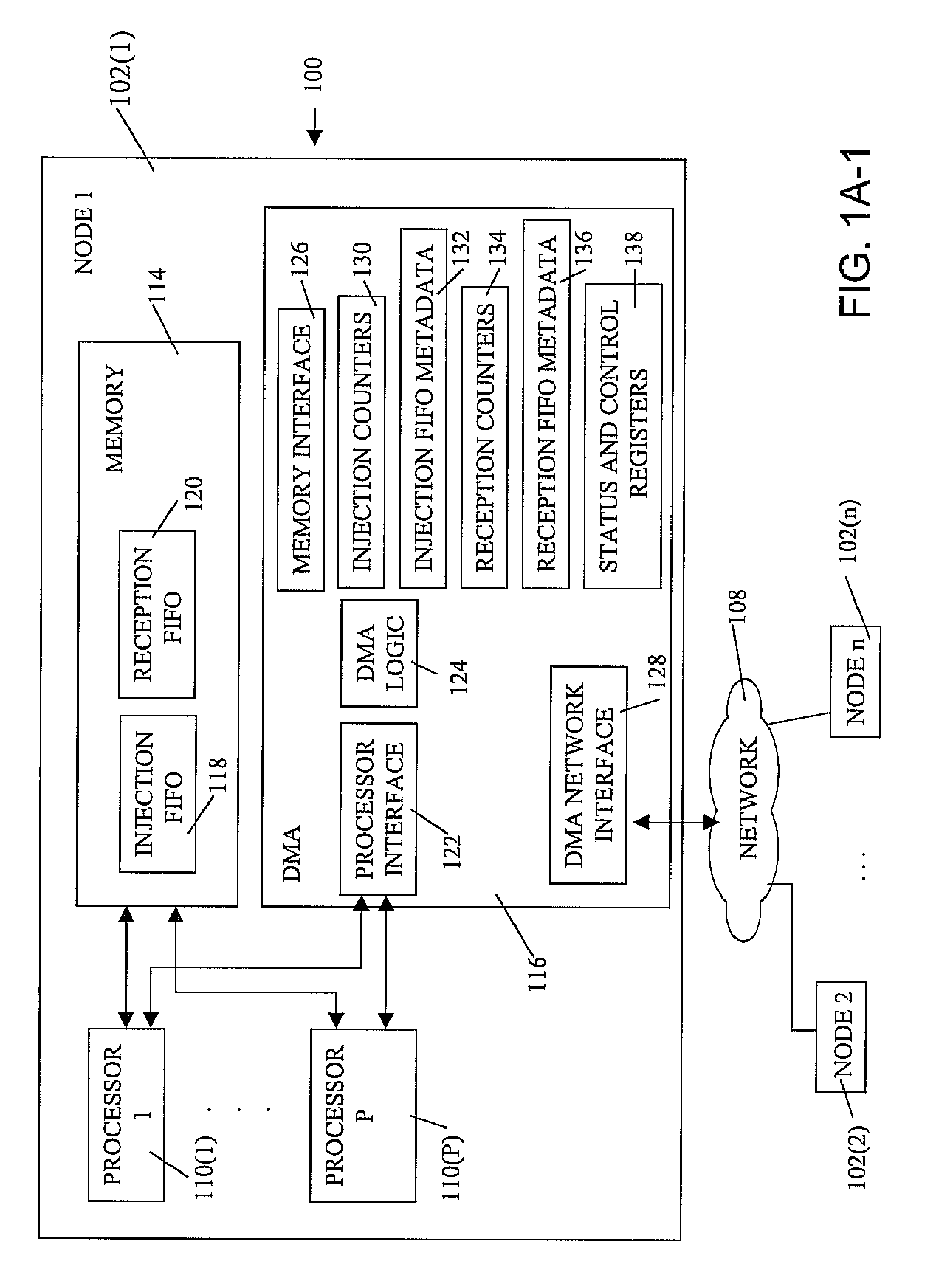

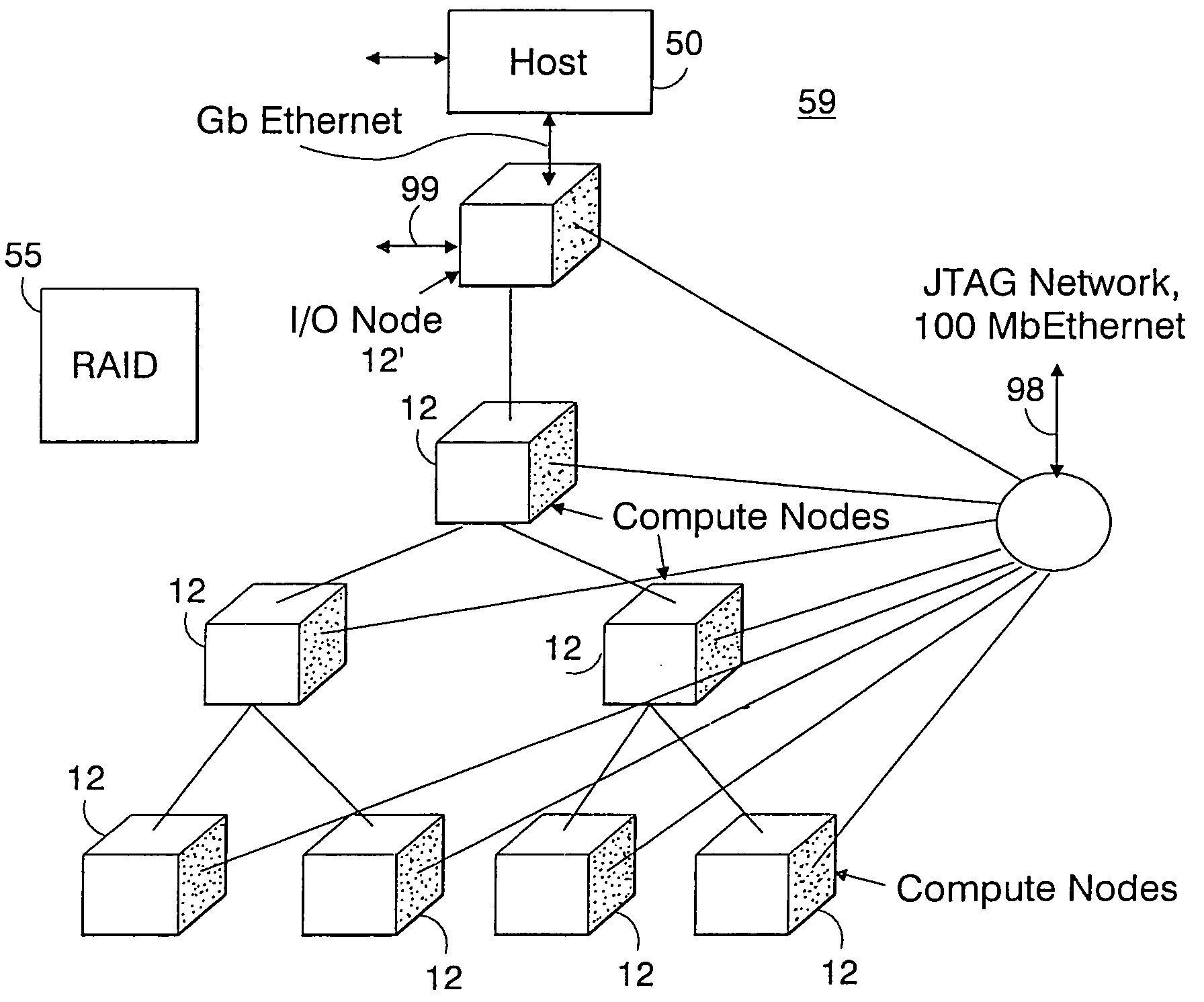

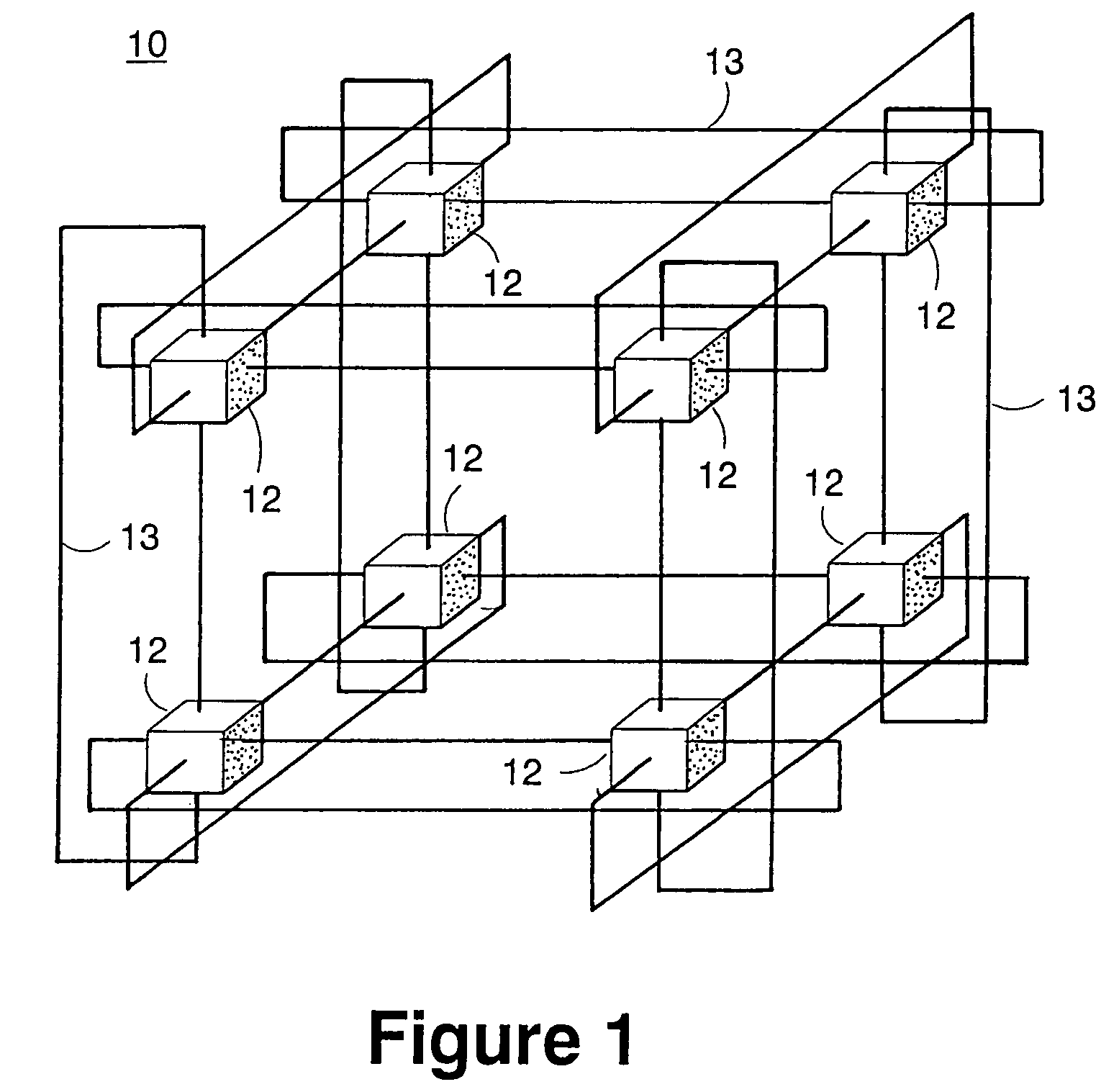

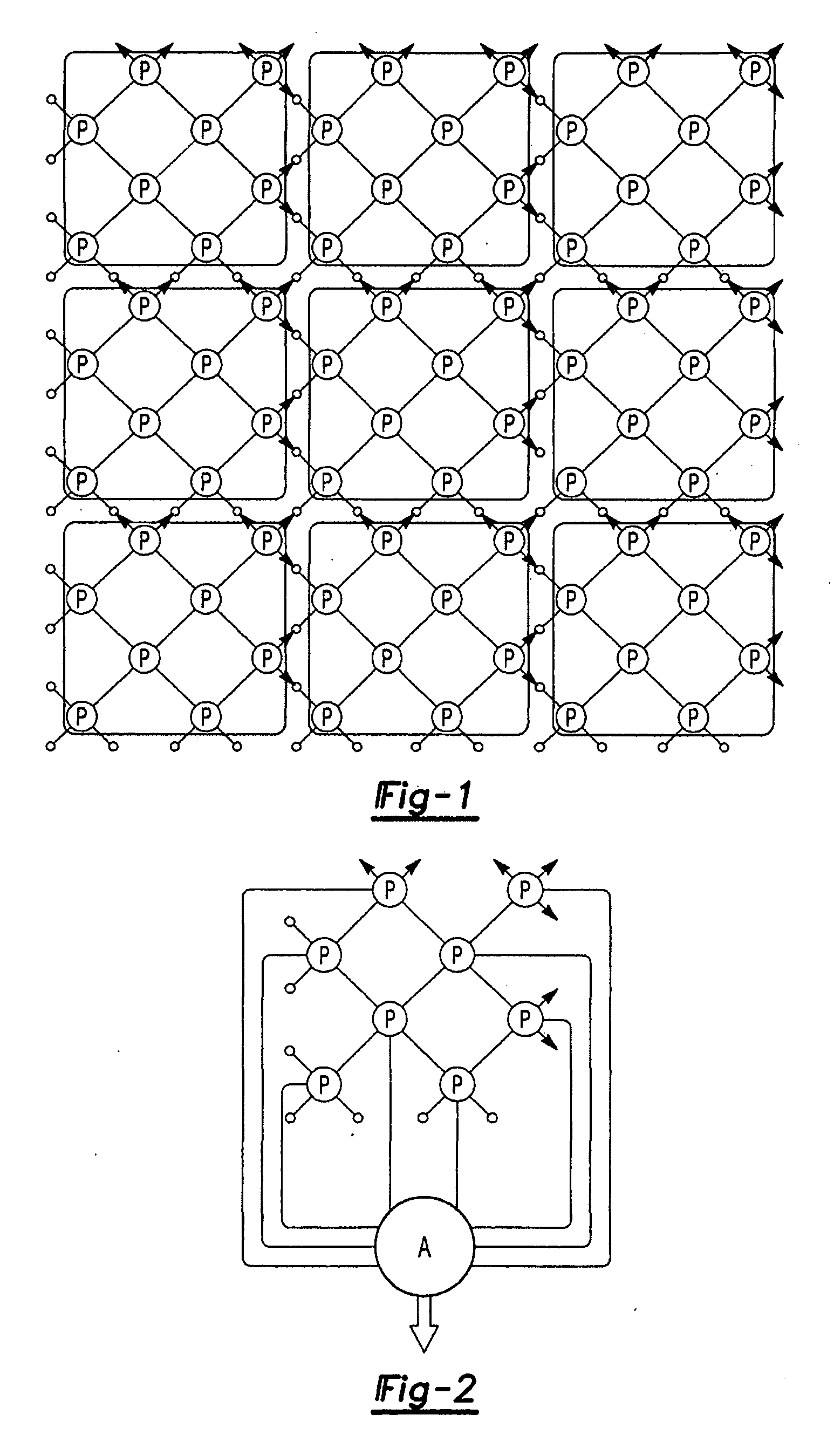

A massively parallel supercomputer of petaOPS-scale includes node architectures based upon System-On-a-Chip technology, where each processing node comprises a single Application Specific Integrated Circuit (ASIC) having up to four processing elements. The ASIC nodes are interconnected by multiple independent networks that optimally maximize the throughput of packet communications between nodes with minimal latency. The multiple networks may include three high-speed networks for parallel algorithm message passing including a Torus, collective network, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. The use of a DMA engine is provided to facilitate message passing among the nodes without the expenditure of processing resources at the node.

Owner:INT BUSINESS MASCH CORP

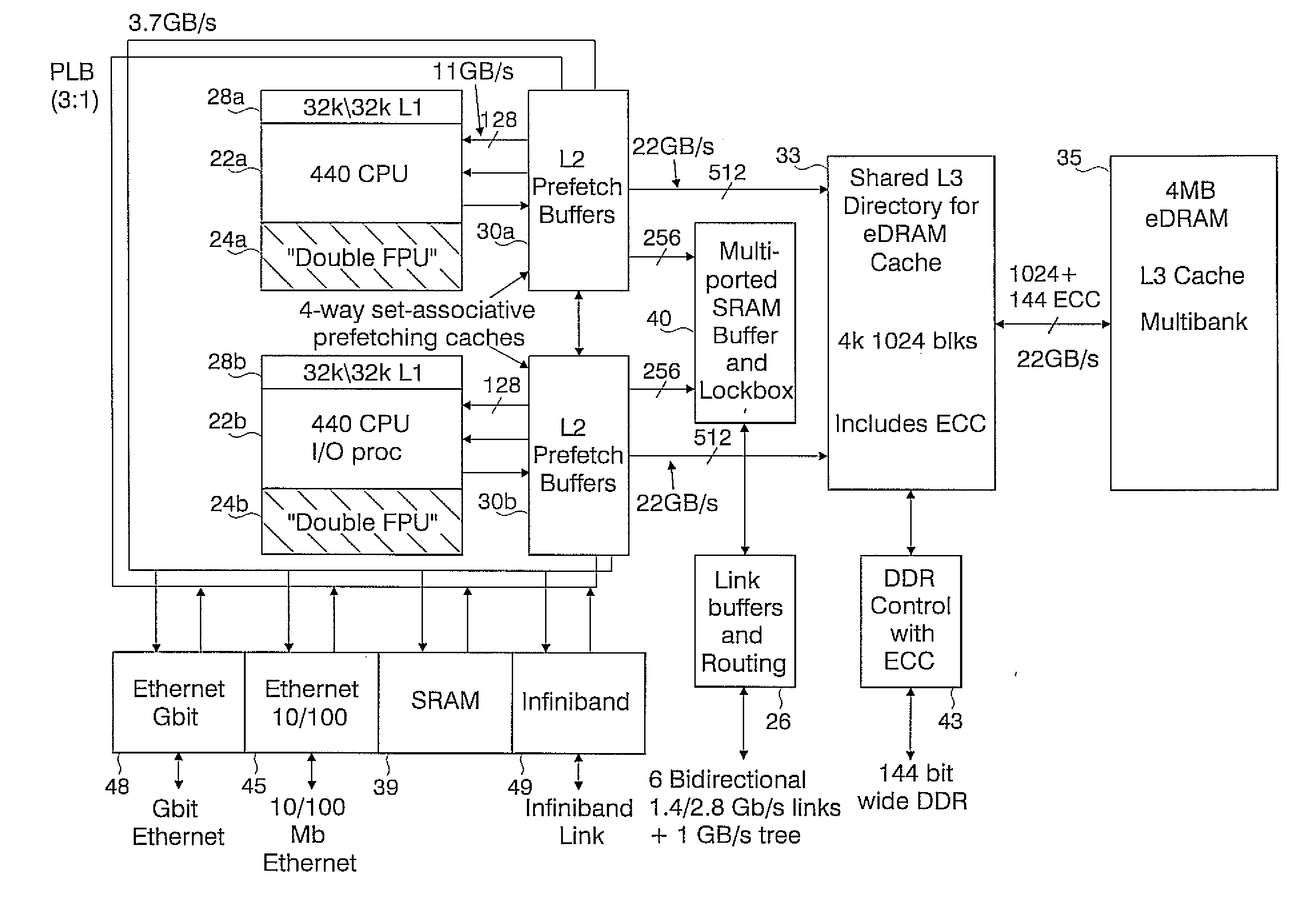

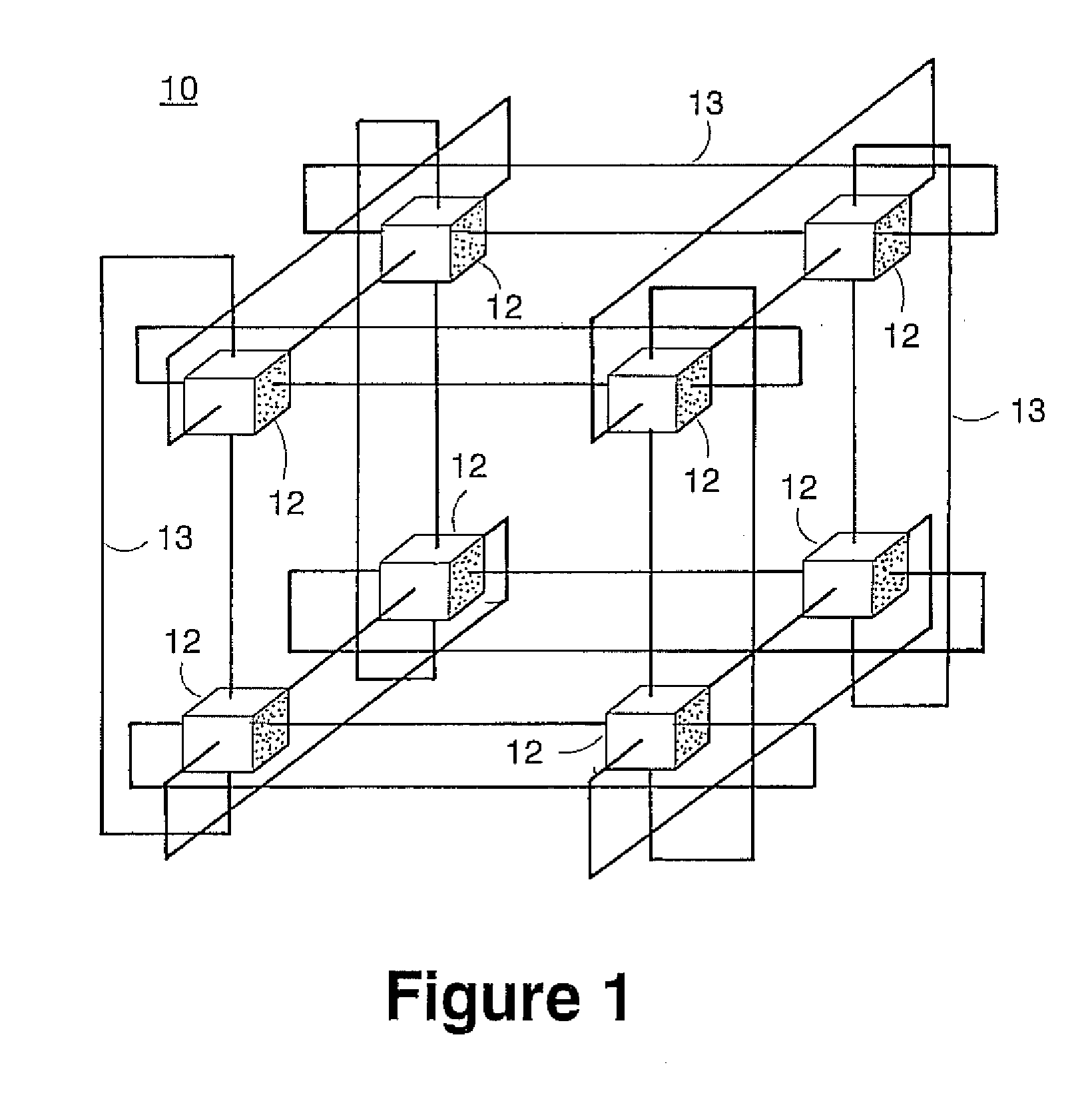

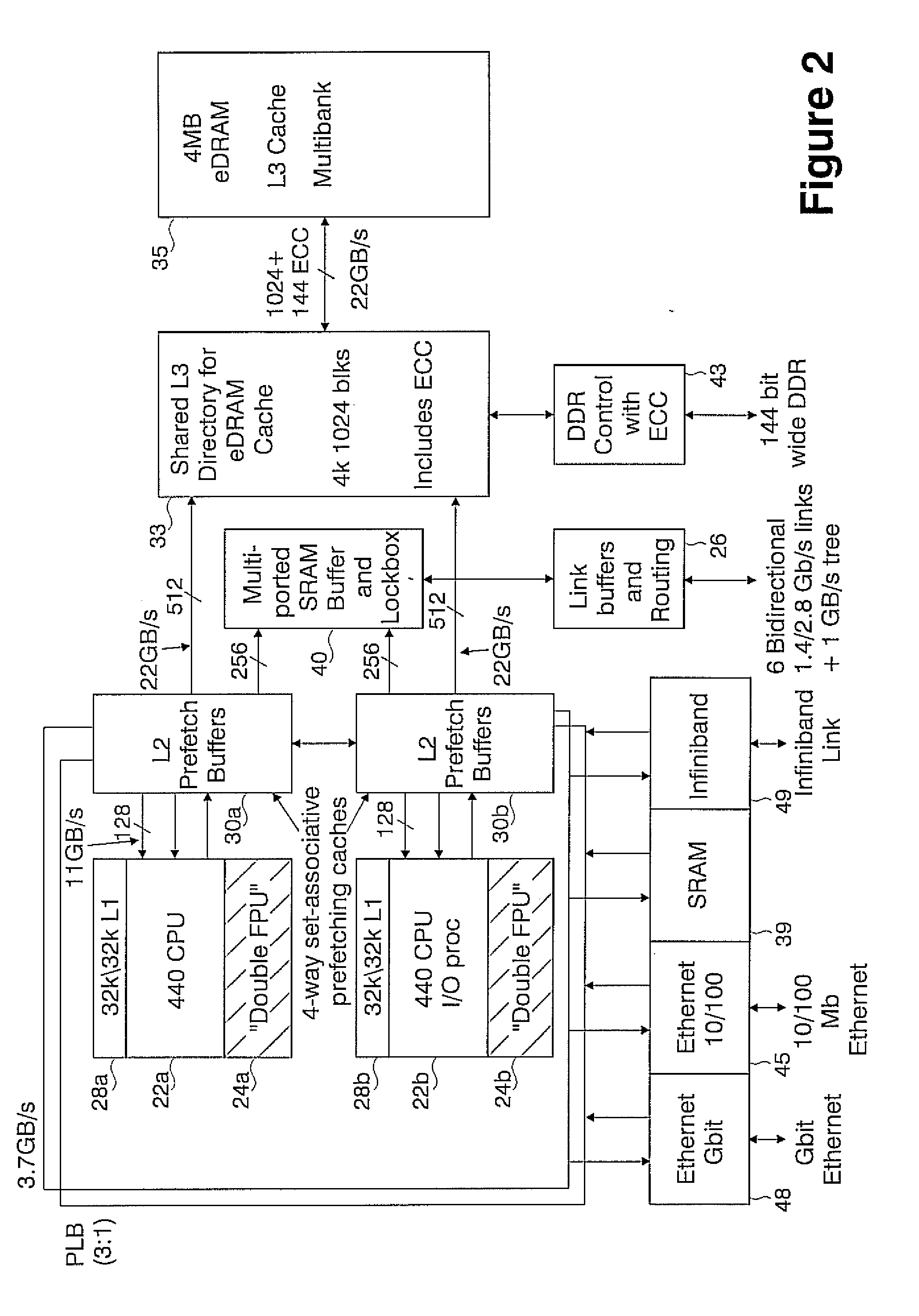

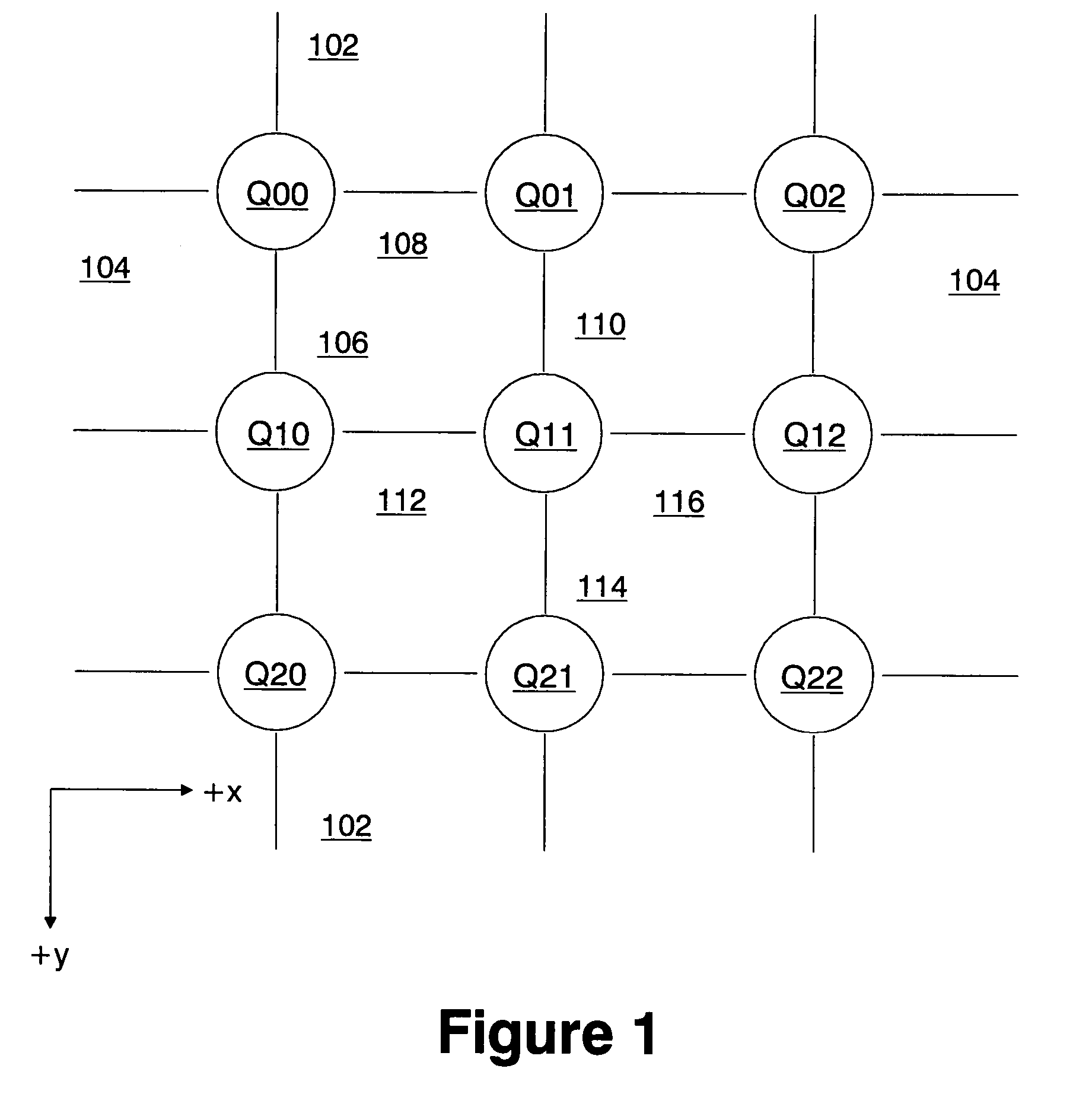

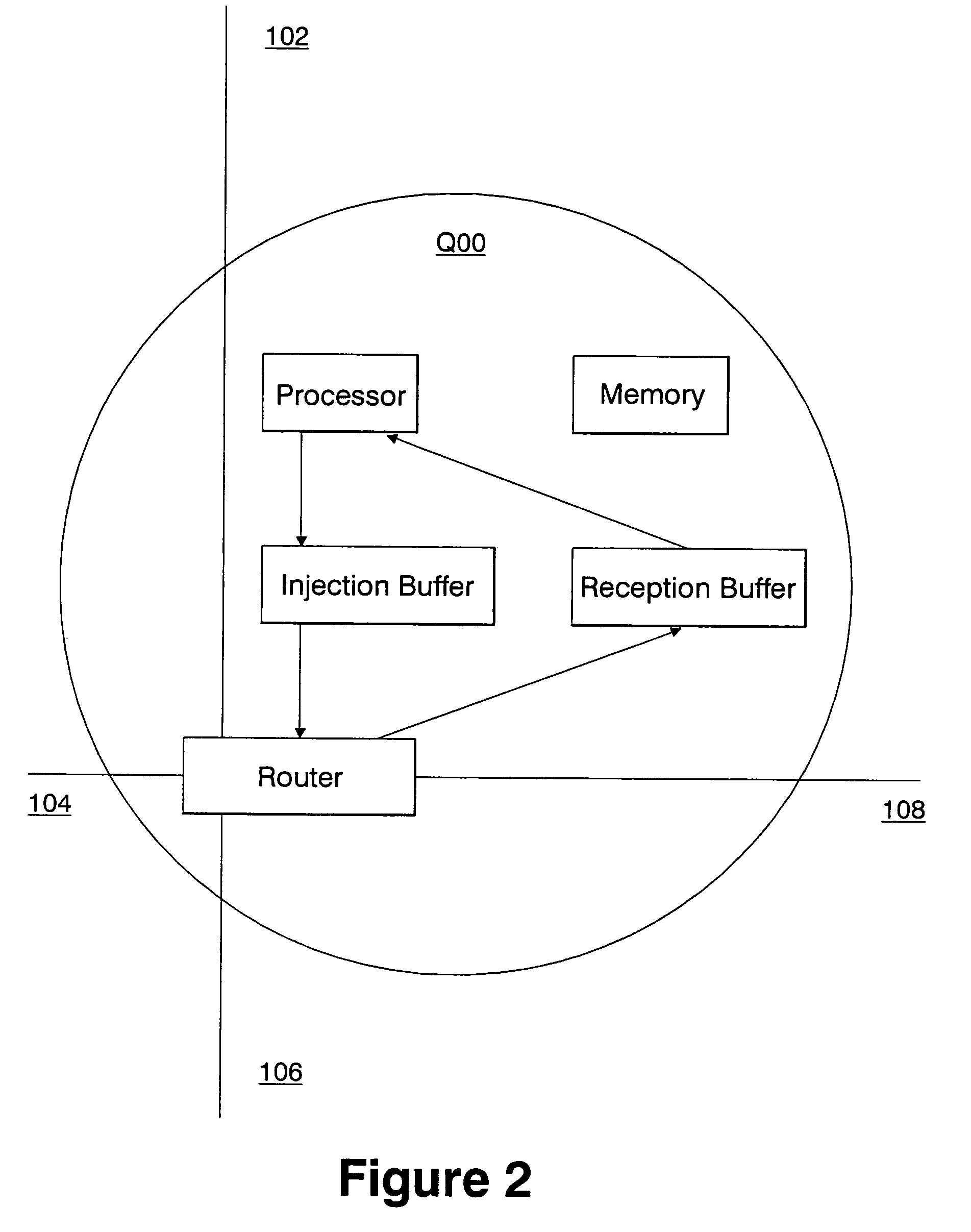

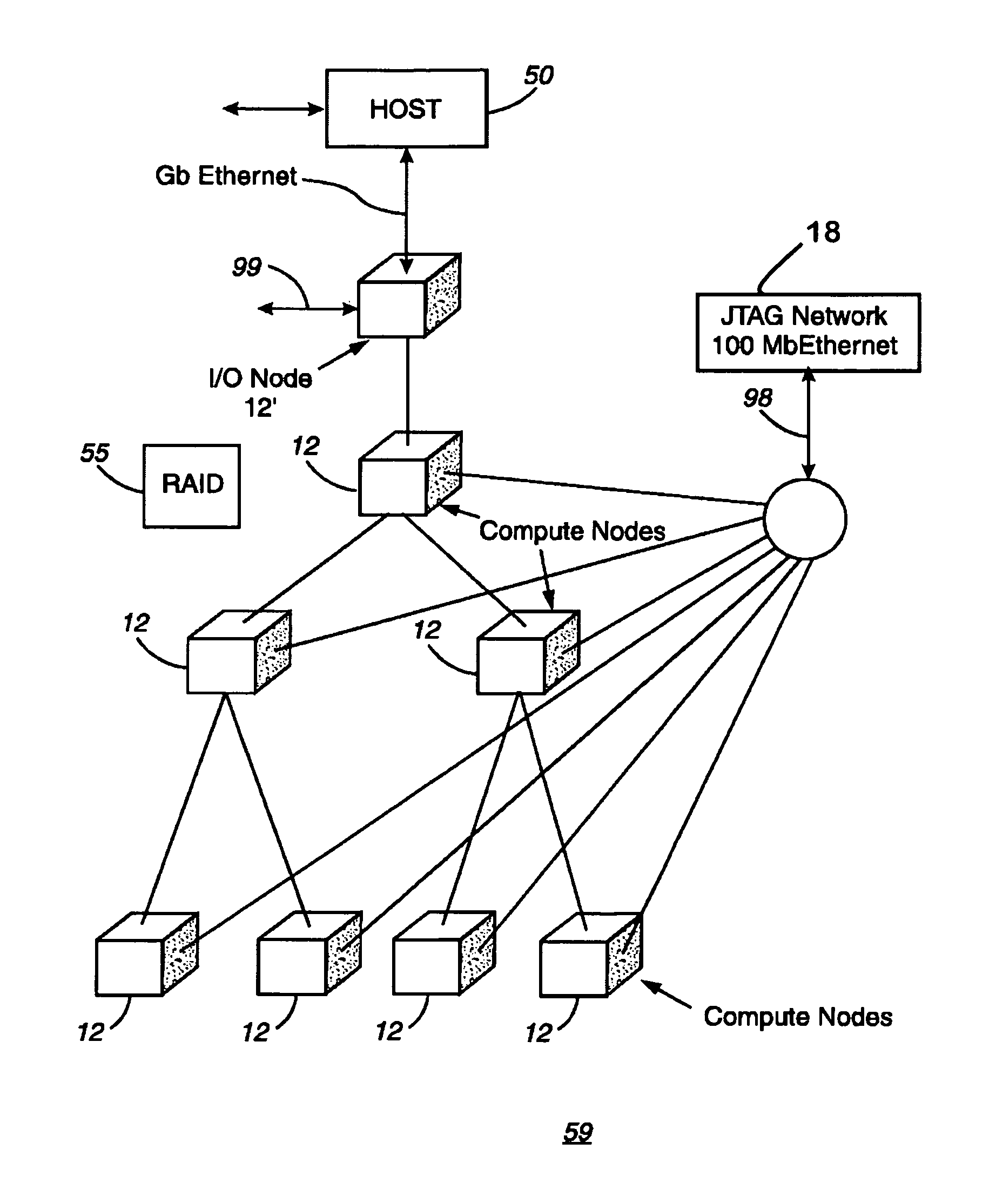

Massively parallel supercomputer

Inactive · US7555566B2 · Massive level of scalability · Unprecedented level of scalability · Error prevention · Program synchronisation · Packet communication · Supercomputer

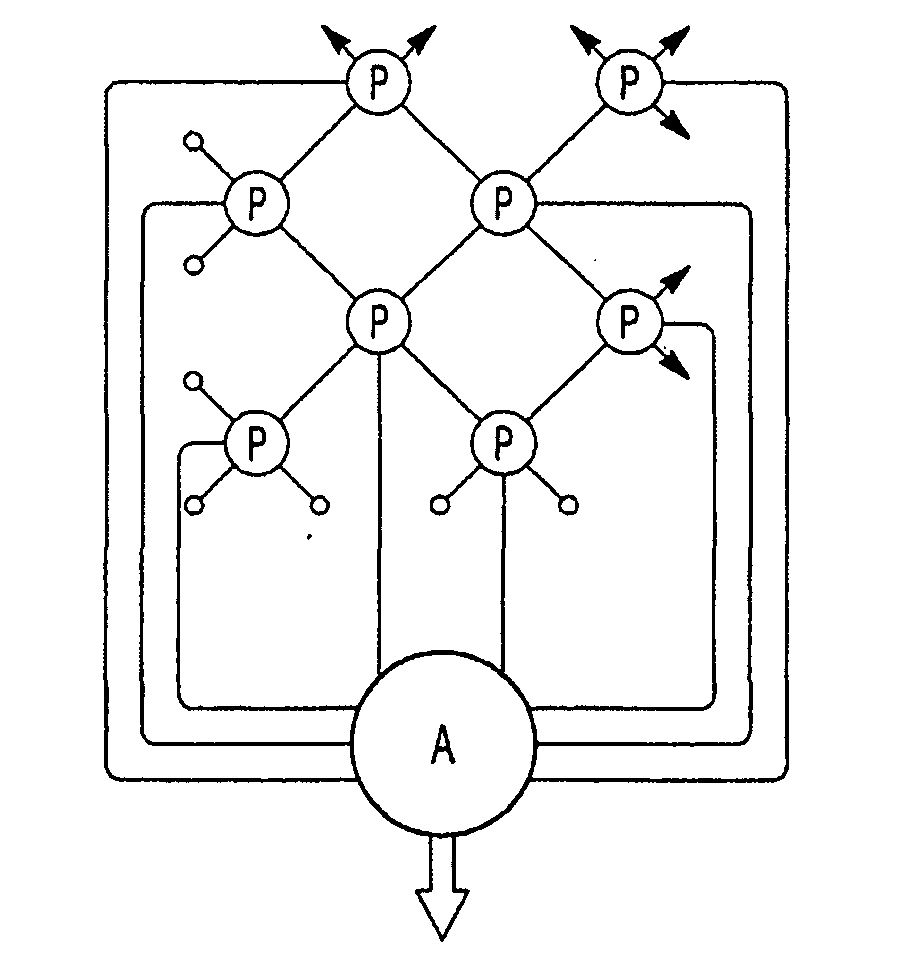

A novel massively parallel supercomputer of hundreds of teraOPS-scale includes node architectures based upon System-On-a-Chip technology, i.e., each processing node comprises a single Application Specific Integrated Circuit (ASIC). Within each ASIC node is a plurality of processing elements, each of which consists of a central processing unit (CPU) and a plurality of floating point processors to enable optimal balance of computational performance, packaging density, low cost, and power and cooling requirements. The plurality of processors within a single node may be used individually or simultaneously to work on any combination of computation or communication as required by the particular algorithm being solved or executed at any point in time. The system-on-a-chip ASIC nodes are interconnected by multiple independent networks that optimally maximize packet communications throughput and minimize latency. In the preferred embodiment, the multiple networks include three high-speed networks for parallel algorithm message passing including a Torus, Global Tree, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. For particular classes of parallel algorithms, or parts of parallel calculations, this architecture exhibits exceptional computational performance, and may be enabled to perform calculations for new classes of parallel algorithms. Additional networks are provided for external connectivity and used for Input / Output, System Management and Configuration, and Debug and Monitoring functions. Special node packaging techniques implementing midplane and other hardware devices facilitate partitioning of the supercomputer in multiple networks for optimizing supercomputing resources.

Owner:IBM CORP

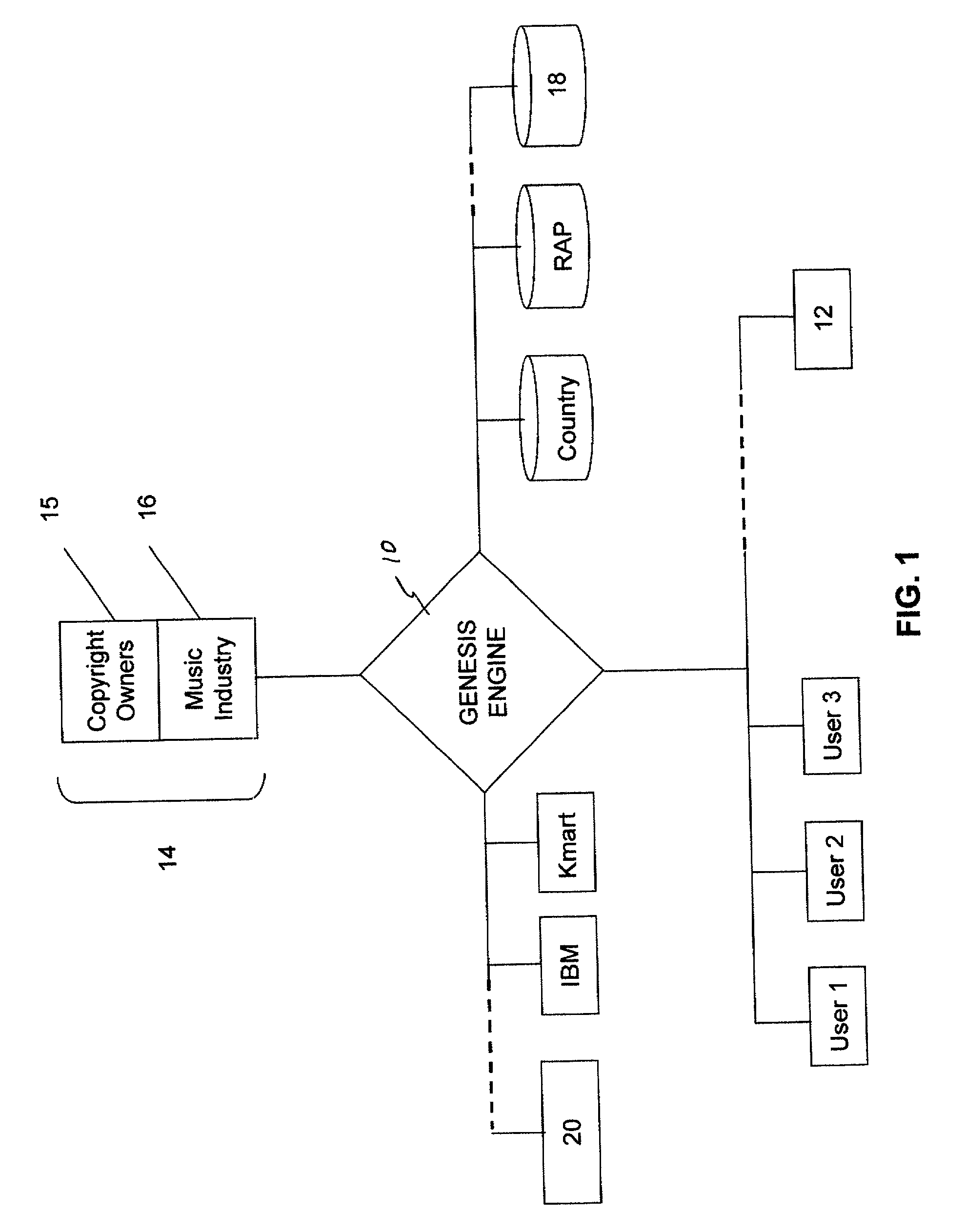

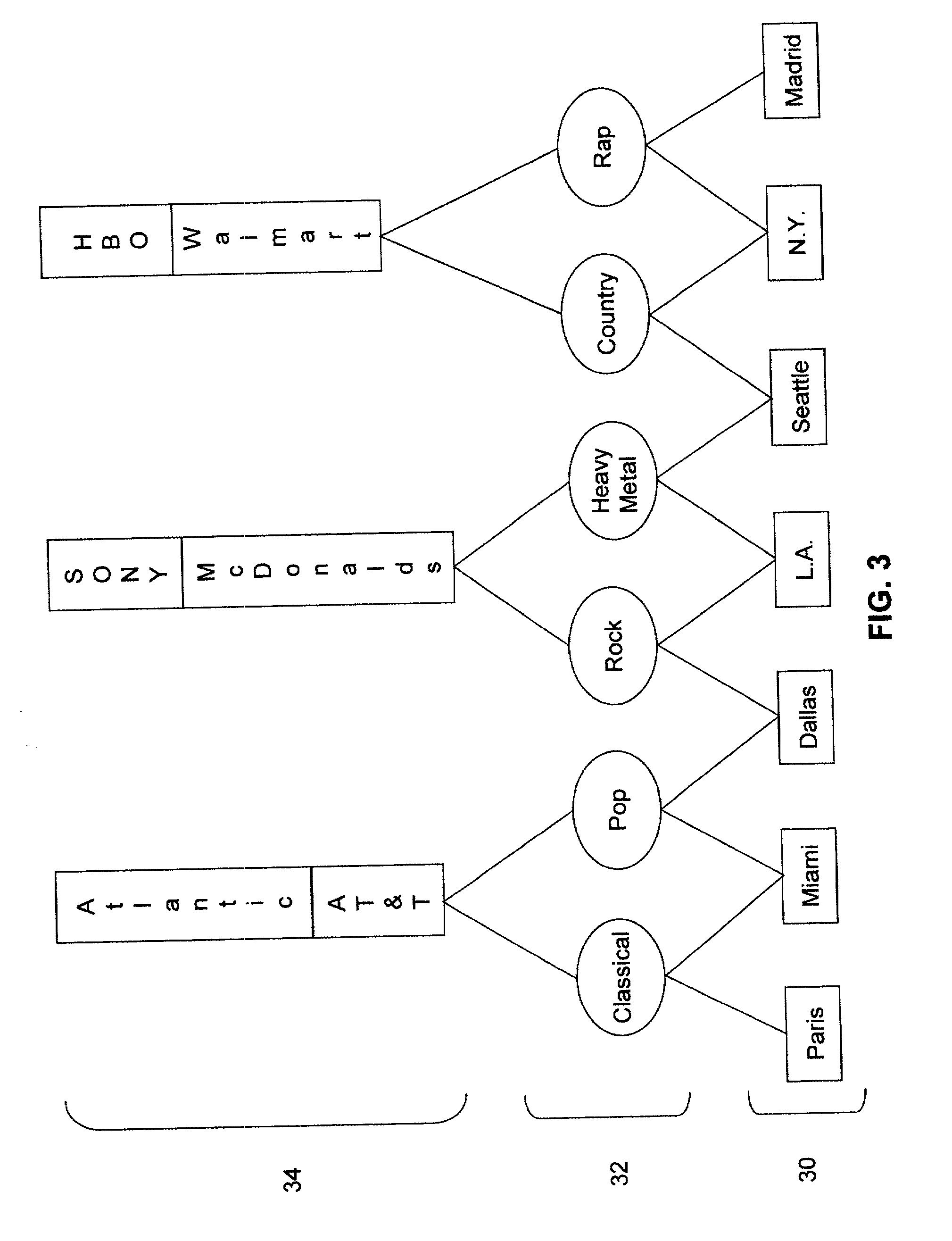

Method of and apparatus for delivery of proprietary audio and visual works to purchaser electronic devices

Inactive · US20010037304A1 · Computer security arrangements · Buying/selling/leasing transactions · Supercomputer · Data warehouse

A method of delivering audio and audio visual works to users of computer terminals includes the steps of: providing a data warehouse of digitized works; providing program means for end user computers to access, select and play at least one of the works; providing means for controlling end user access to the works and for collecting payment for playing at least one of the works; and diverting a portion of the payment for playing the at least one work to the holder of a copyright to the at least one work. The method preferably includes the additional steps of encrypting the works; and providing the end user with program means for deciphering the works. The method still further preferably includes the additional steps of delivering advertising matter to the end user with each work the end user selects and plays; keeping a record of the particular works each end user selects and plays; and customizing advertising delivered to the end user to fit within any pattern of work selection by the particular end user. An apparatus for performing the method is also provided, including a computer hive made up of several inter-linked computers having specialized functions, the computers operating in unison to build a supercomputer that has shared disk space and memory, in which each node belongs to the collective and possesses its own business rules and membership to an organization managerial hierarchy.

Owner:PAIZ RICHARD S

Highly-parallel, implicit compositional reservoir simulator for multi-million-cell models

Active · US20060036418A1 · Reducing computer processing time · Reduce processing time · Electric/magnetic detection for well-logging · Permeability/surface area analysis · Supercomputer · Distributed memory

A fully-parallelized, highly-efficient compositional implicit hydrocarbon reservoir simulator is provided. The simulator is capable of solving giant reservoir models, of the type frequently encountered in the Middle East and elsewhere in the world, with fast turnaround time. The simulator may be implemented in a variety of computer platforms ranging from shared-memory and distributed-memory supercomputers to commercial and self-made clusters of personal computers. The performance capabilities enable analysis of reservoir models in full detail, using both fine geological characterization and detailed individual definition of the hydrocarbon components present in the reservoir fluids.

Owner:SAUDI ARABIAN OIL CO

Solution method and apparatus for large-scale simulation of layered formations

Active · US7596480B2 · Design optimisation/simulation · Special data processing applications · Supercomputer · Types of mesh

A targeted heterogeneous medium in the form of an underground layered formation is gridded into a layered structured grid or a layered semi-unstructured grid. The structured grid can be of the irregular corner-point-geometry grid type or the simple Cartesian grid type. The semi-unstructured grid is areally unstructured, formed by arbitrarily connected control-volumes derived from the dual grid of a suitable triangulation; but the connectivity pattern does not change from layer to layer. Problems with determining fluid movement and other state changes in the formation are solved by exploiting the layered structure of the medium. The techniques are particularly suited for large-scale simulation by parallel processing on a supercomputer with multiple central processing units (CPU's).

Owner:SAUDI ARABIAN OIL CO

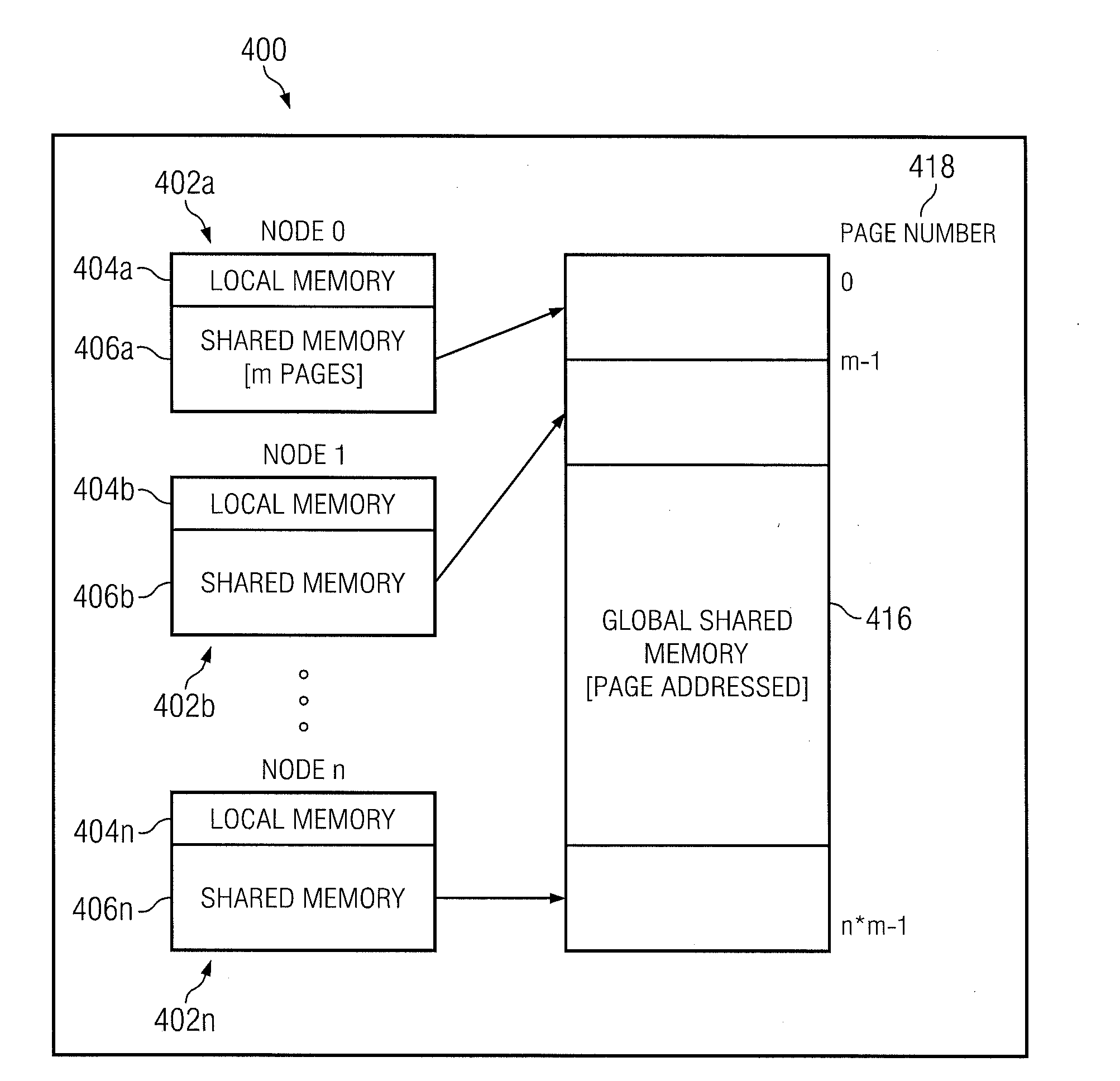

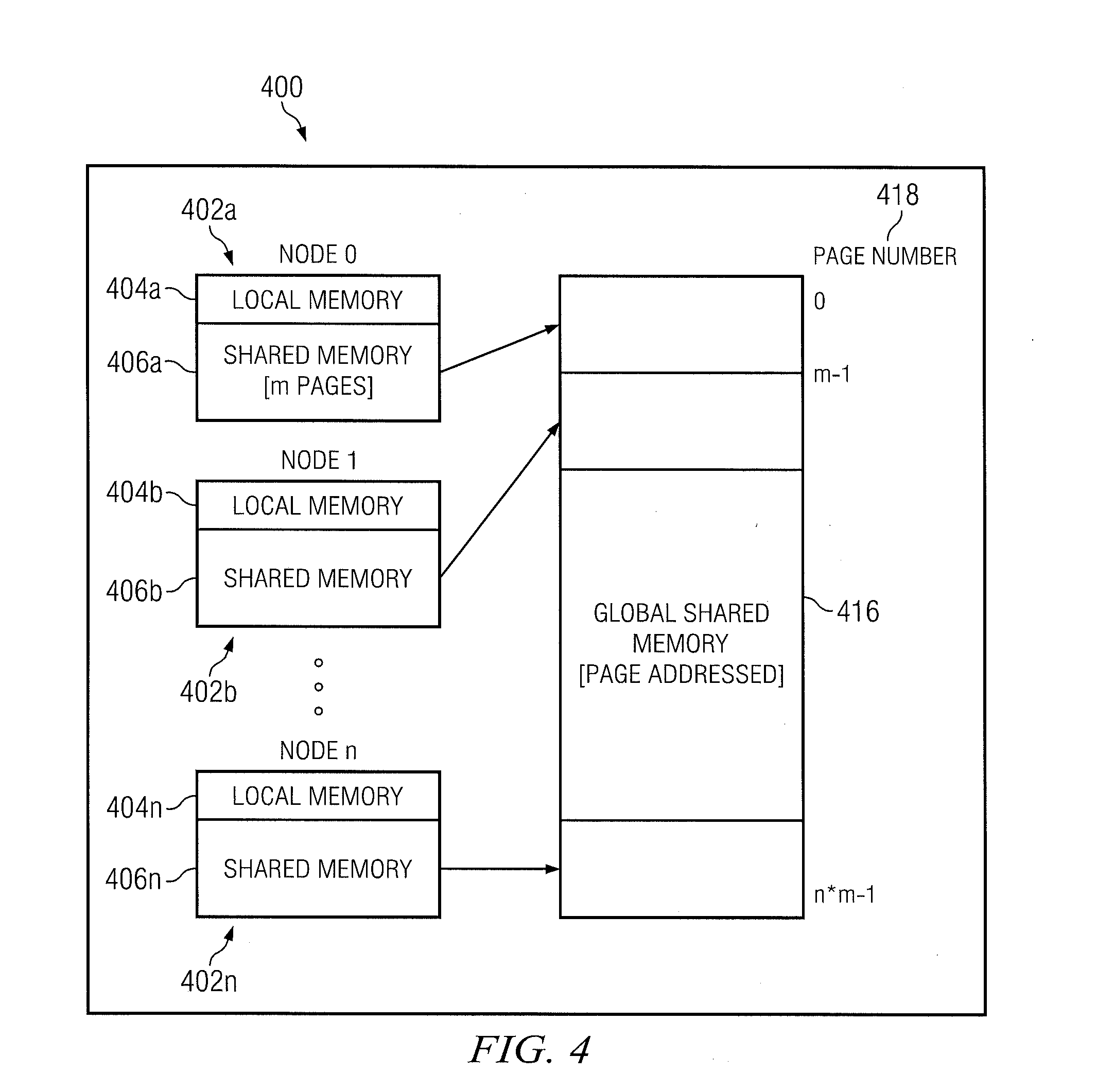

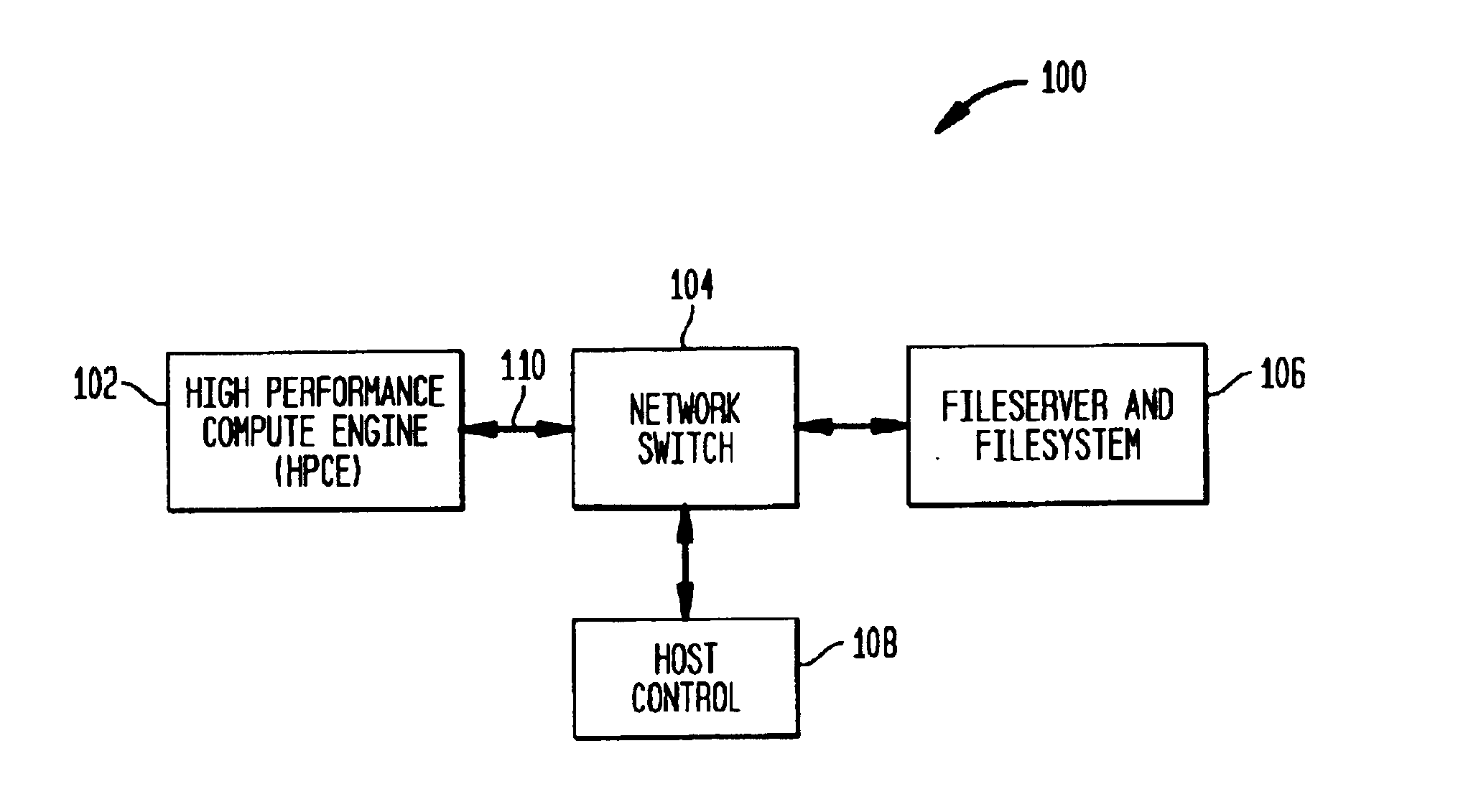

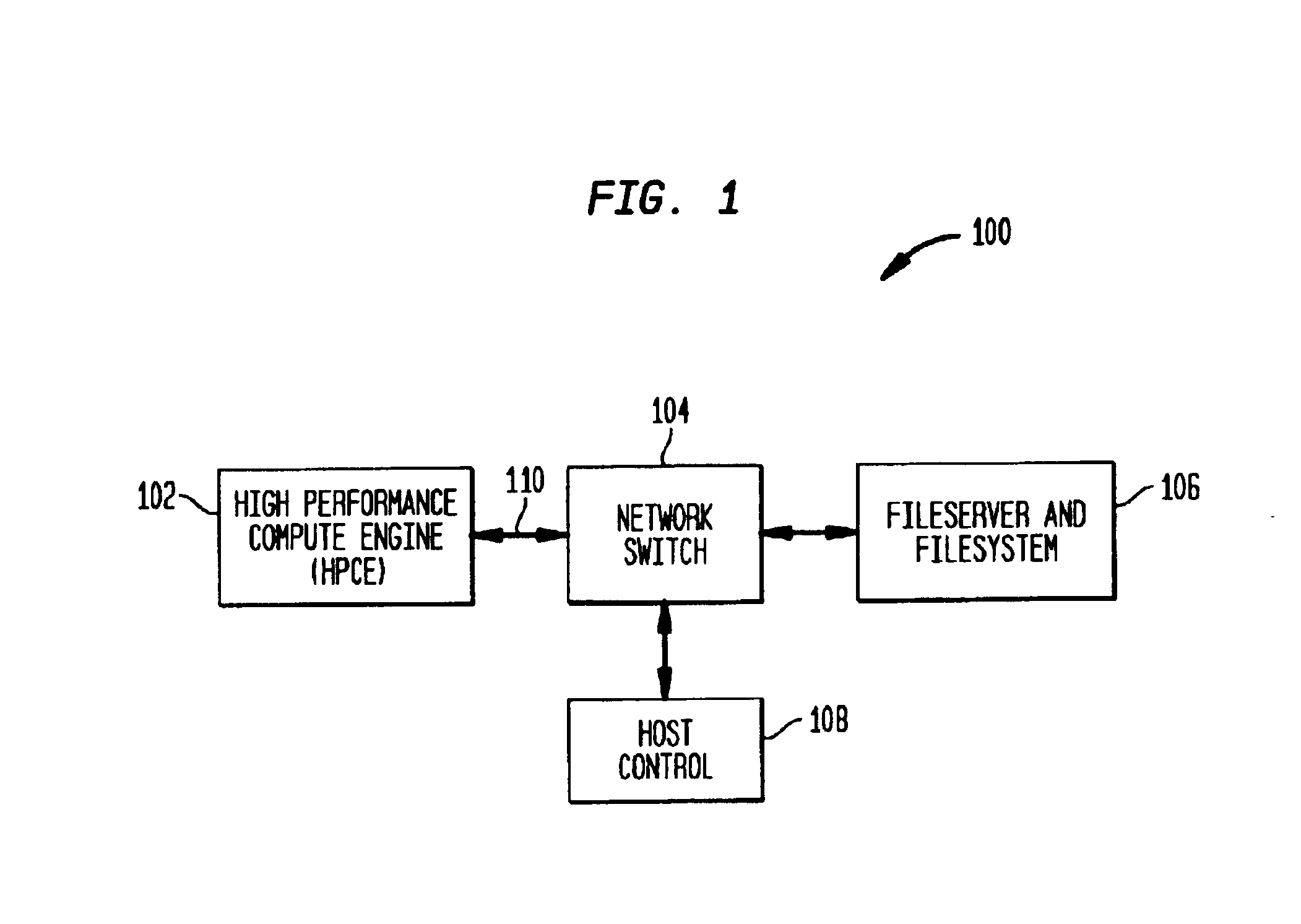

Distributed symmetric multiprocessing computing architecture

Active · US20110125974A1 · Shorten the progress · Memory addressing/allocation/relocation · Digital computer details · Supercomputer · Global address space

Example embodiments of the present invention include systems and methods for implementing a scalable symmetric multiprocessing (shared memory) computer architecture using a network of homogeneous multi-core servers. The level of processor and memory performance achieved is suitable for running applications that currently require cache coherent shared memory mainframes and supercomputers. The architecture combines new operating system extensions with a high-speed network that supports remote direct memory access to achieve an effective global distributed shared memory. A distributed thread model allows a process running in a head node to fork threads in other (worker) nodes that run in the same global address space. Thread synchronization is supported by a distributed mutex implementation. A transactional memory model allows a multi-threaded program to maintain global memory page consistency across the distributed architecture. A distributed file access implementation supports non-contentious file I / O for threads. These and other functions provide a symmetric multiprocessing programming model consistent with standards such as Portable Operating System Interface for Unix (POSIX).

Owner:ANDERSON RICHARD S

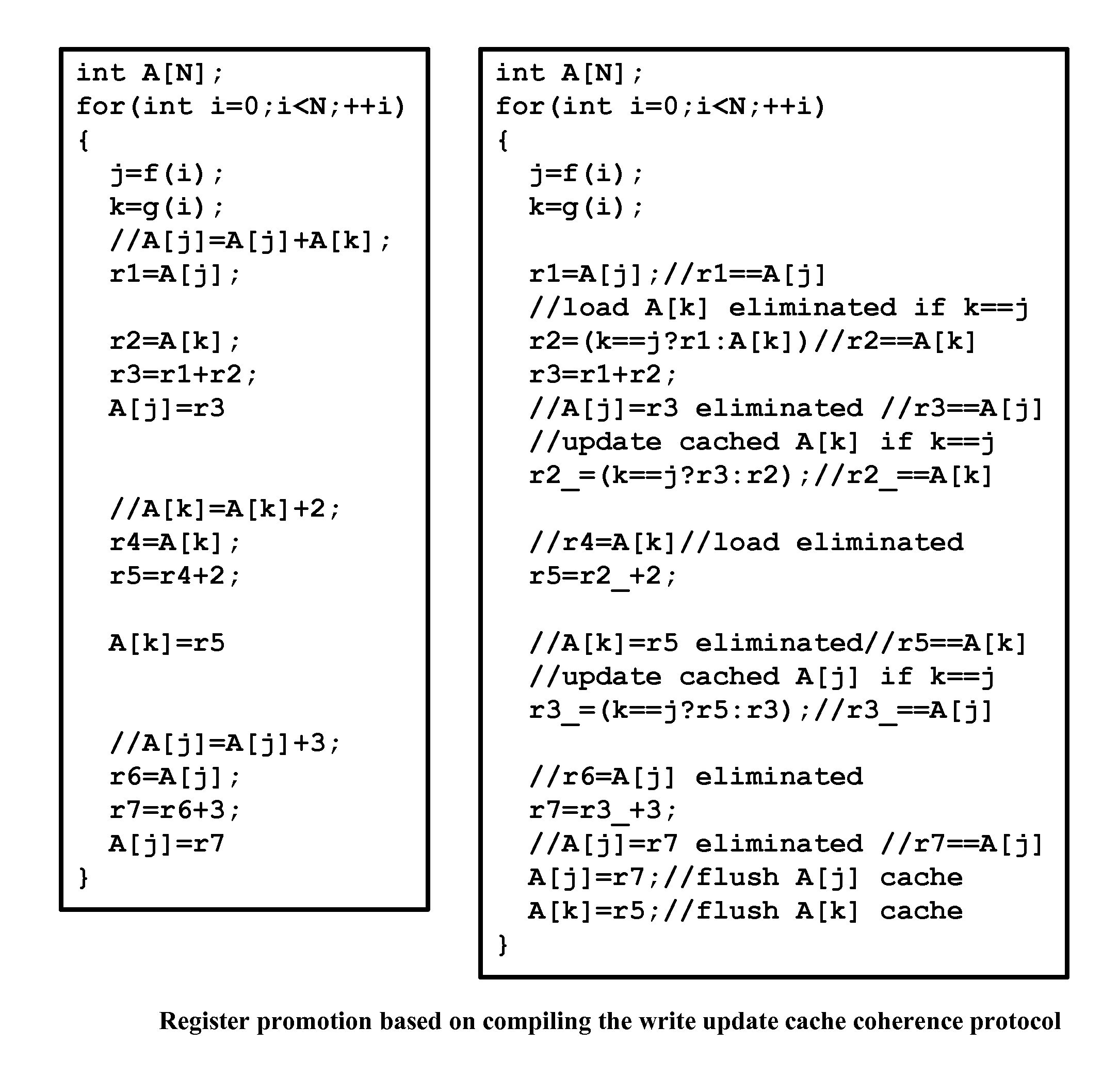

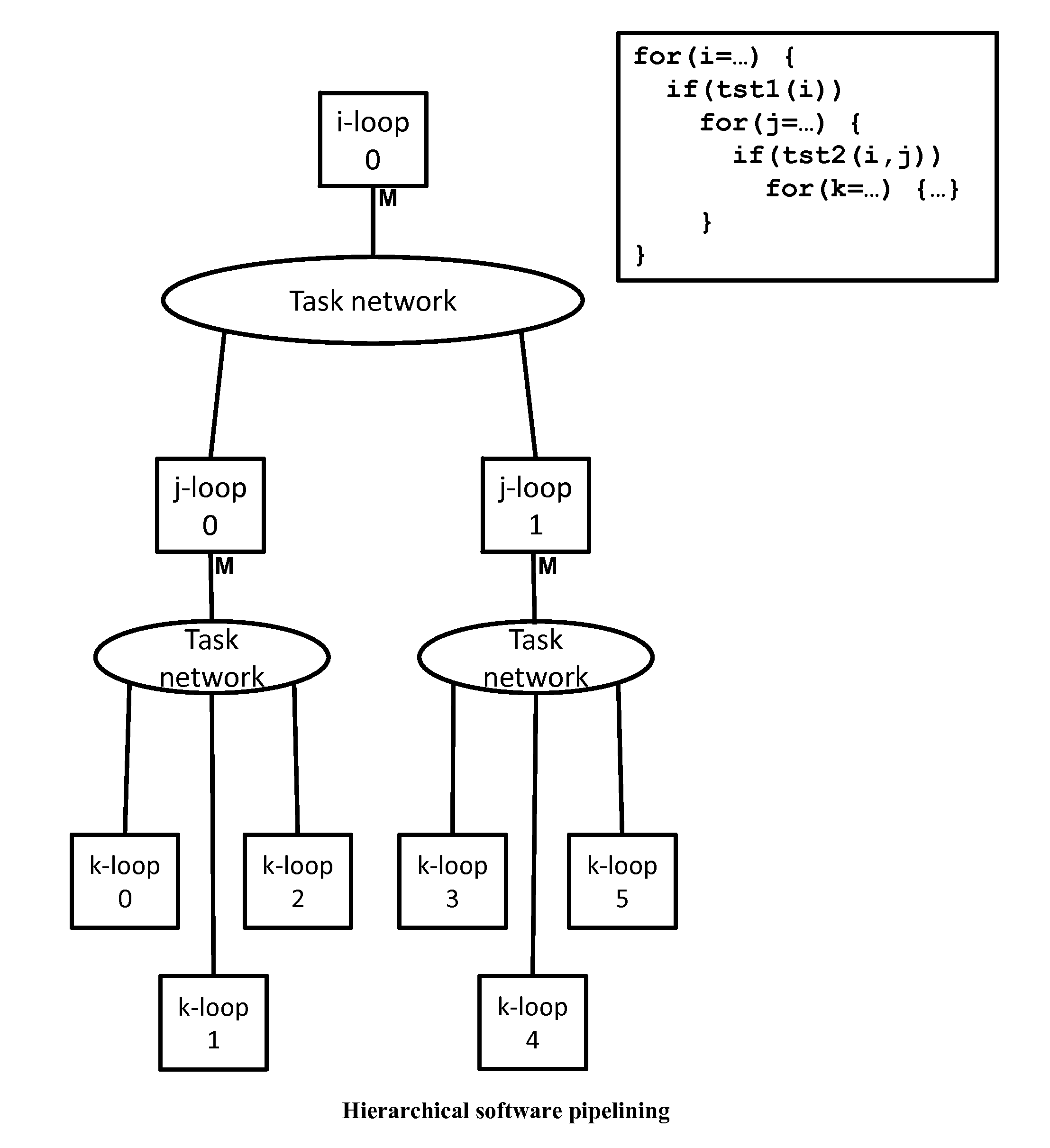

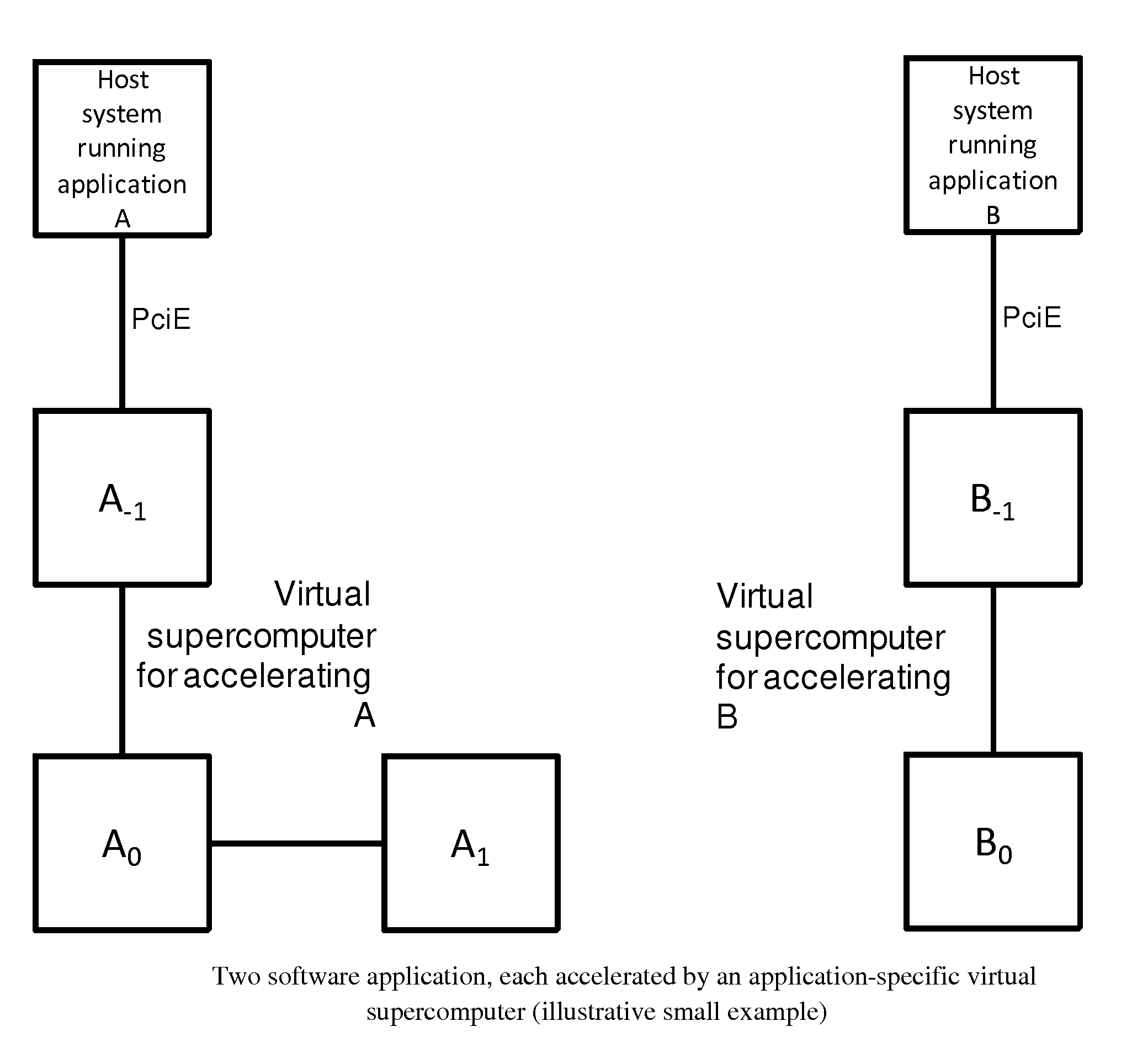

Method and system for converting a single-threaded software program into an application-specific supercomputer

Active · US8966457B2 · Reduce expenses · Reduce overhead · Memory architecture accessing/allocation · Transformation of program code · Supercomputer · Computer architecture

The invention comprises (i) a compilation method for automatically converting a single-threaded software program into an application-specific supercomputer, and (ii) the supercomputer system structure generated as a result of applying this method. The compilation method comprises: (a) Converting an arbitrary code fragment from the application into customized hardware whose execution is functionally equivalent to the software execution of the code fragment; and (b) Generating interfaces on the hardware and software parts of the application, which (i) Perform a software-to-hardware program state transfer at the entries of the code fragment; (ii) Perform a hardware-to-software program state transfer at the exits of the code fragment; and (iii) Maintain memory coherence between the software and hardware memories. If the resulting hardware design is large, it is divided into partitions such that each partition can fit into a single chip. Then, a single union chip is created which can realize any of the partitions.

Owner:GLOBAL SUPERCOMPUTING CORP

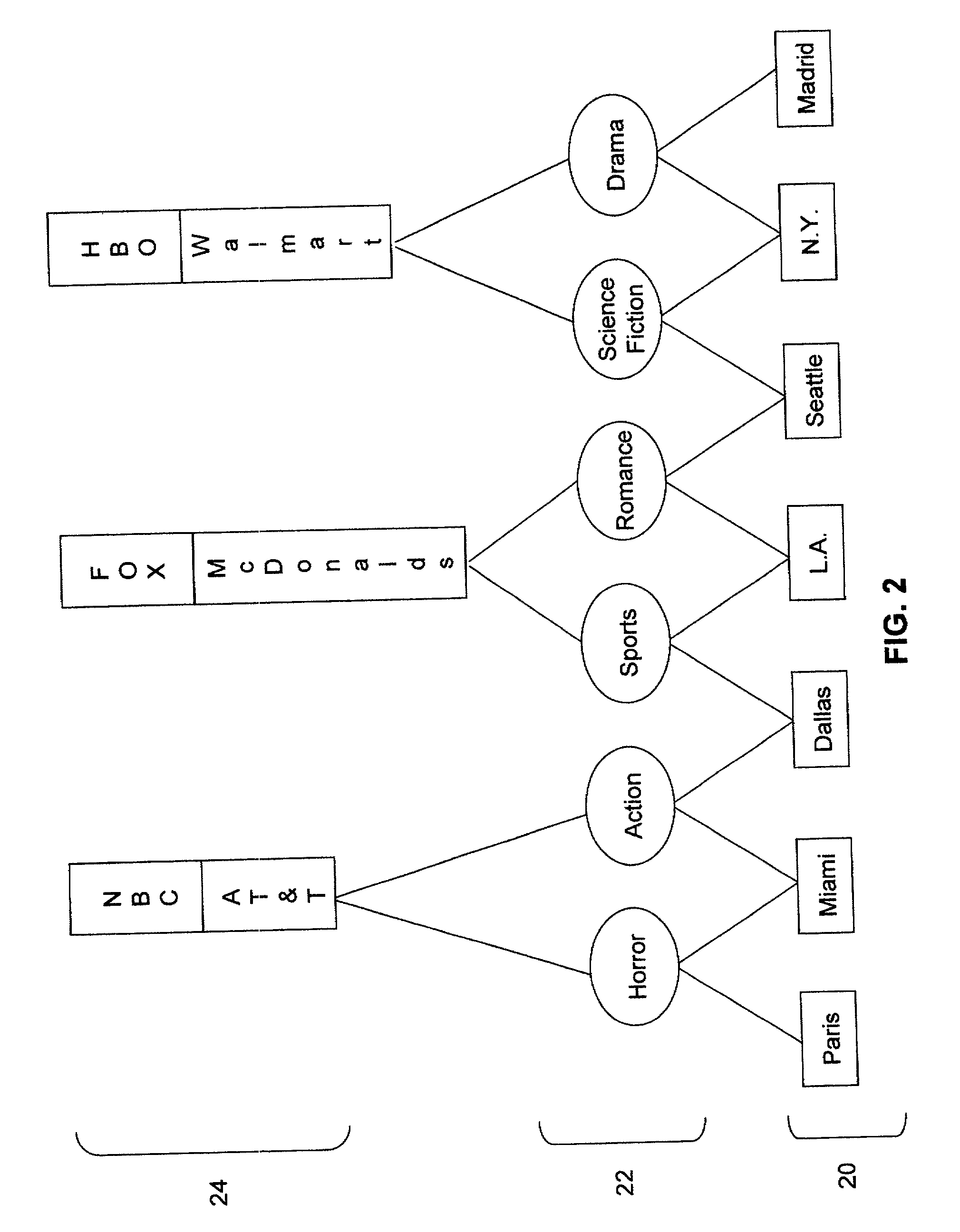

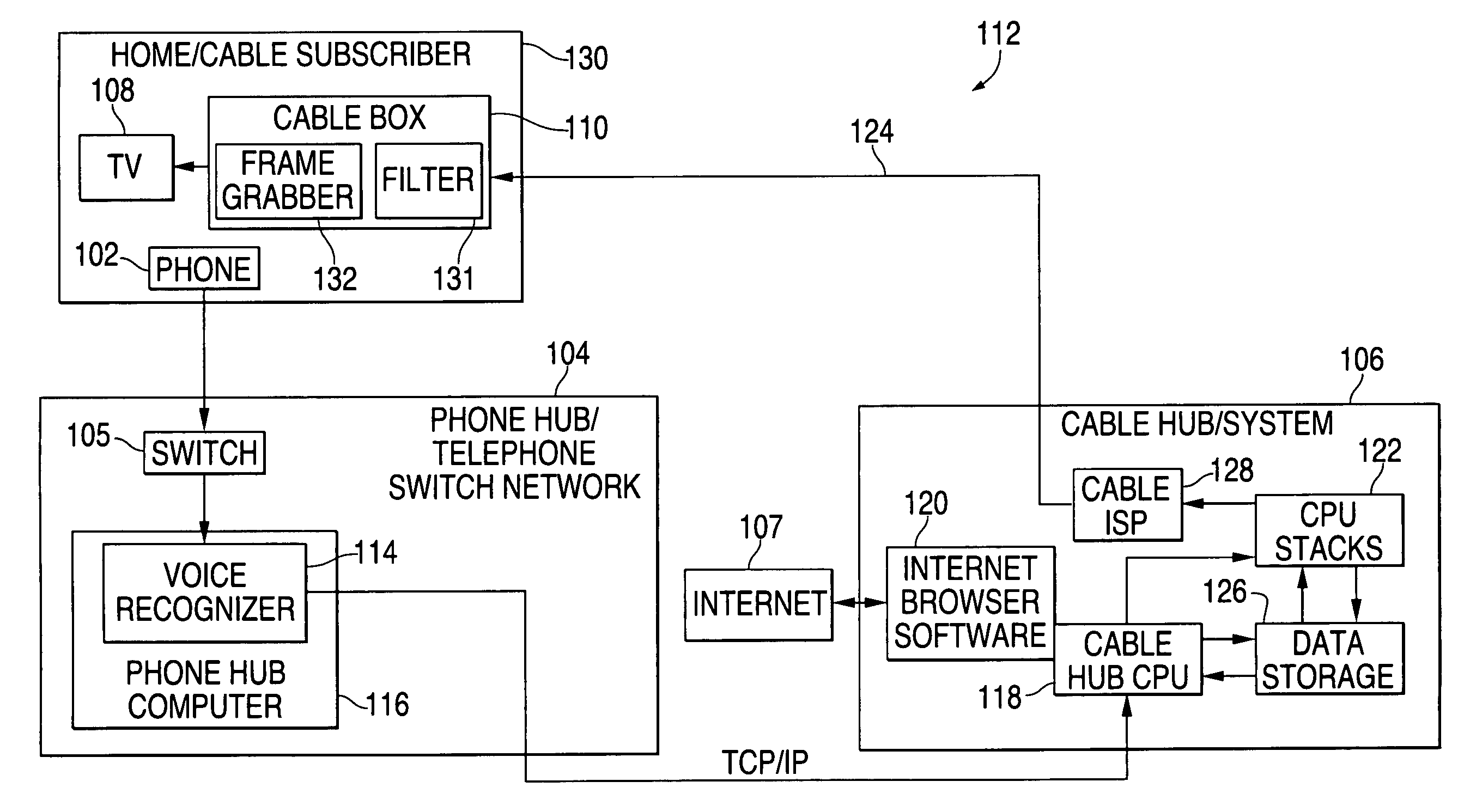

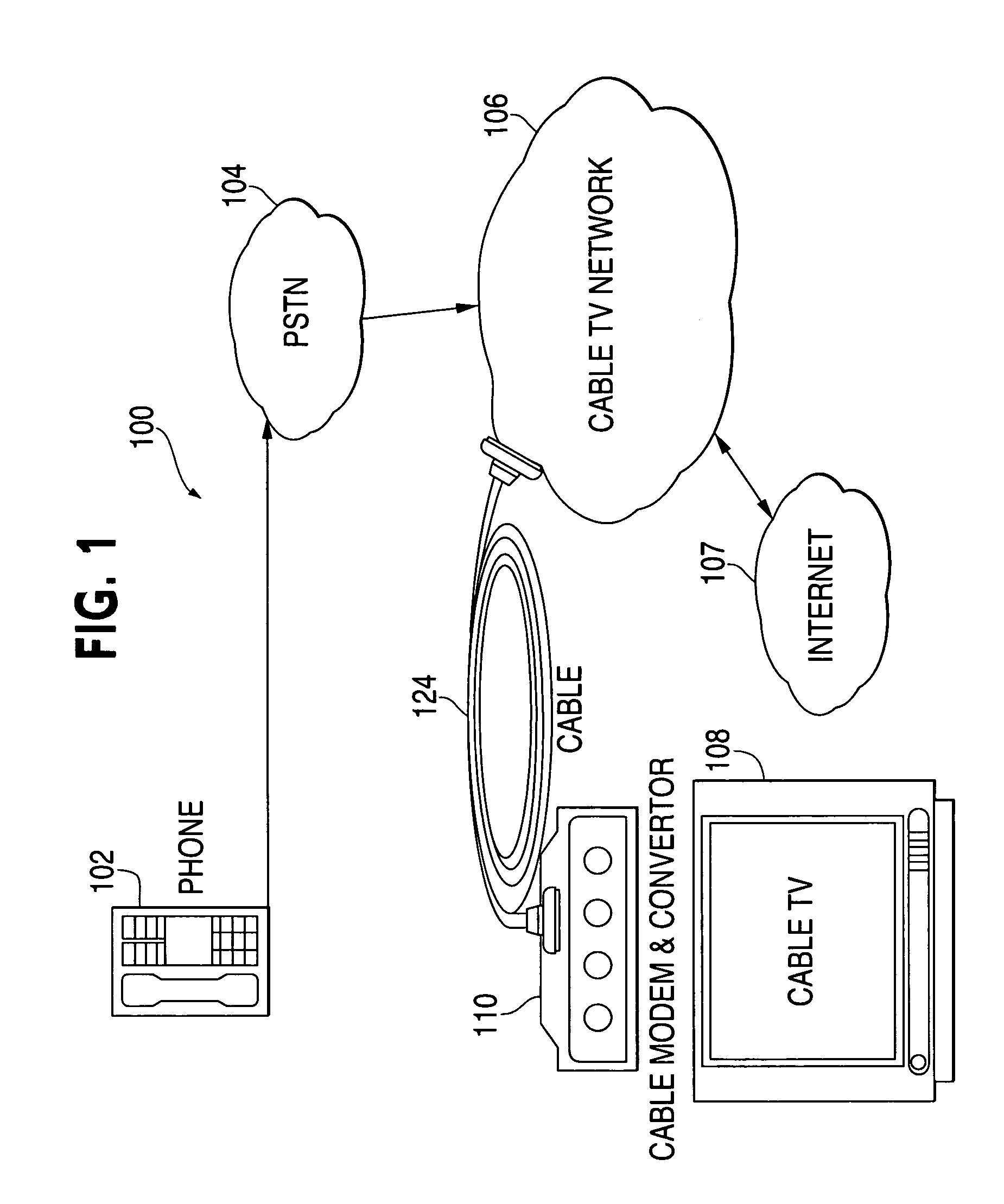

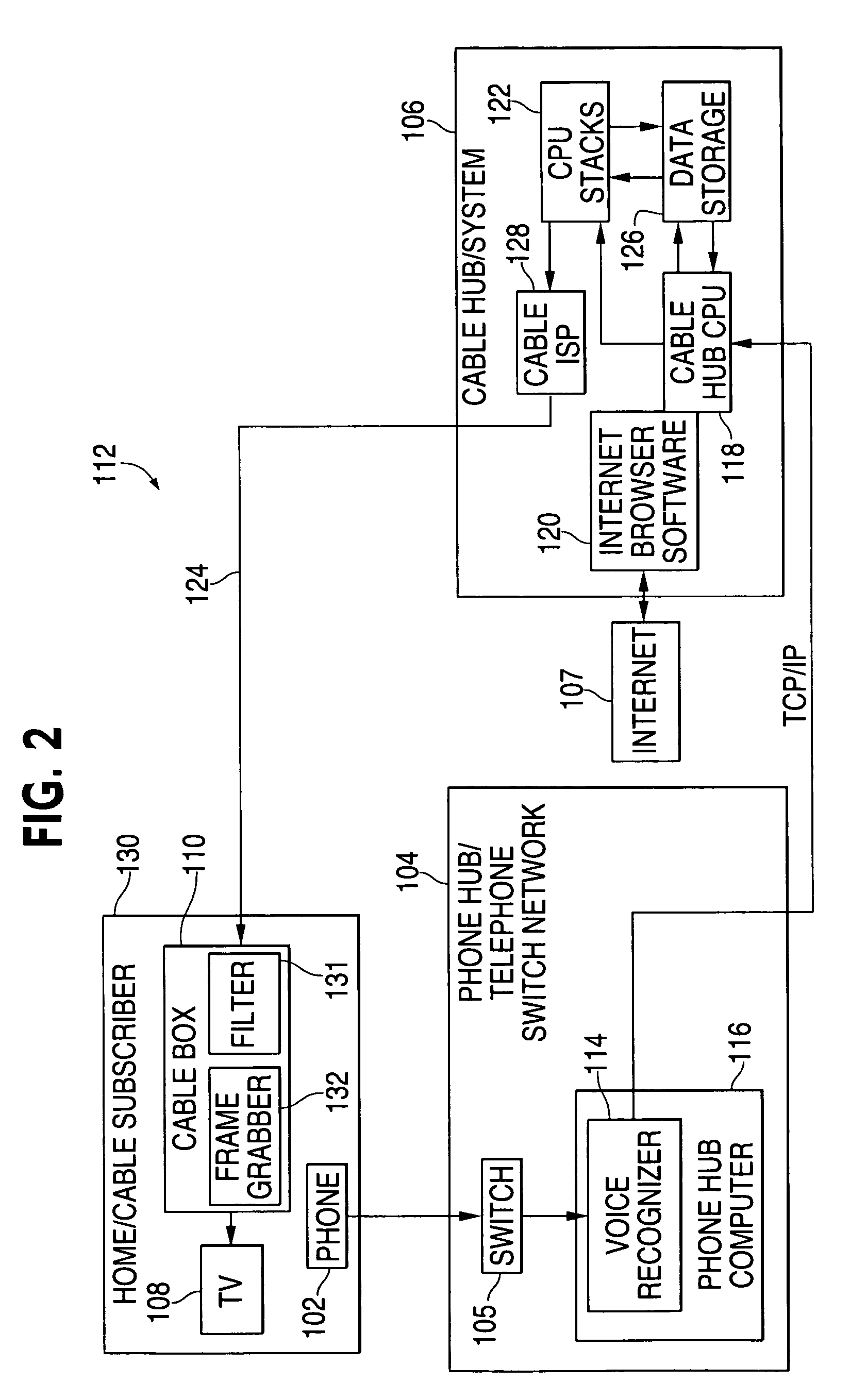

Method and apparatus for internet TV

Inactive · US6978475B1 · User-friendly interface · Automatic exchanges · Two-way working systems · Computer hardware · Supercomputer

A telephone interface and voice recognition driven Internet browser system and method for accessing / browsing the Internet on a cable or satellite television includes a phone for receiving a voice signal from a user. The voice signal controls a telephone interface which displays Internet contents on the television via a cable or satellite television channel. The system also includes a voice recognizer, preferably operated on a supercomputer, for recognizing / interpreting / analyzing the voice signal and generating command signals to access / browse the Internet. The voice recognizer is capable of recognizing / interpreting / analyzing voice signals transmitted from a plurality of users in real time. The system further includes a stack of computers and an Internet browser. Each of the stack of computers is capable of accessing / browsing the Internet and retrieving / organizing requested Internet contents via the Internet browser. The requested Internet contents are sent to the user via a cable or satellite television channel.

Owner:CEDAR LANE TECH INC

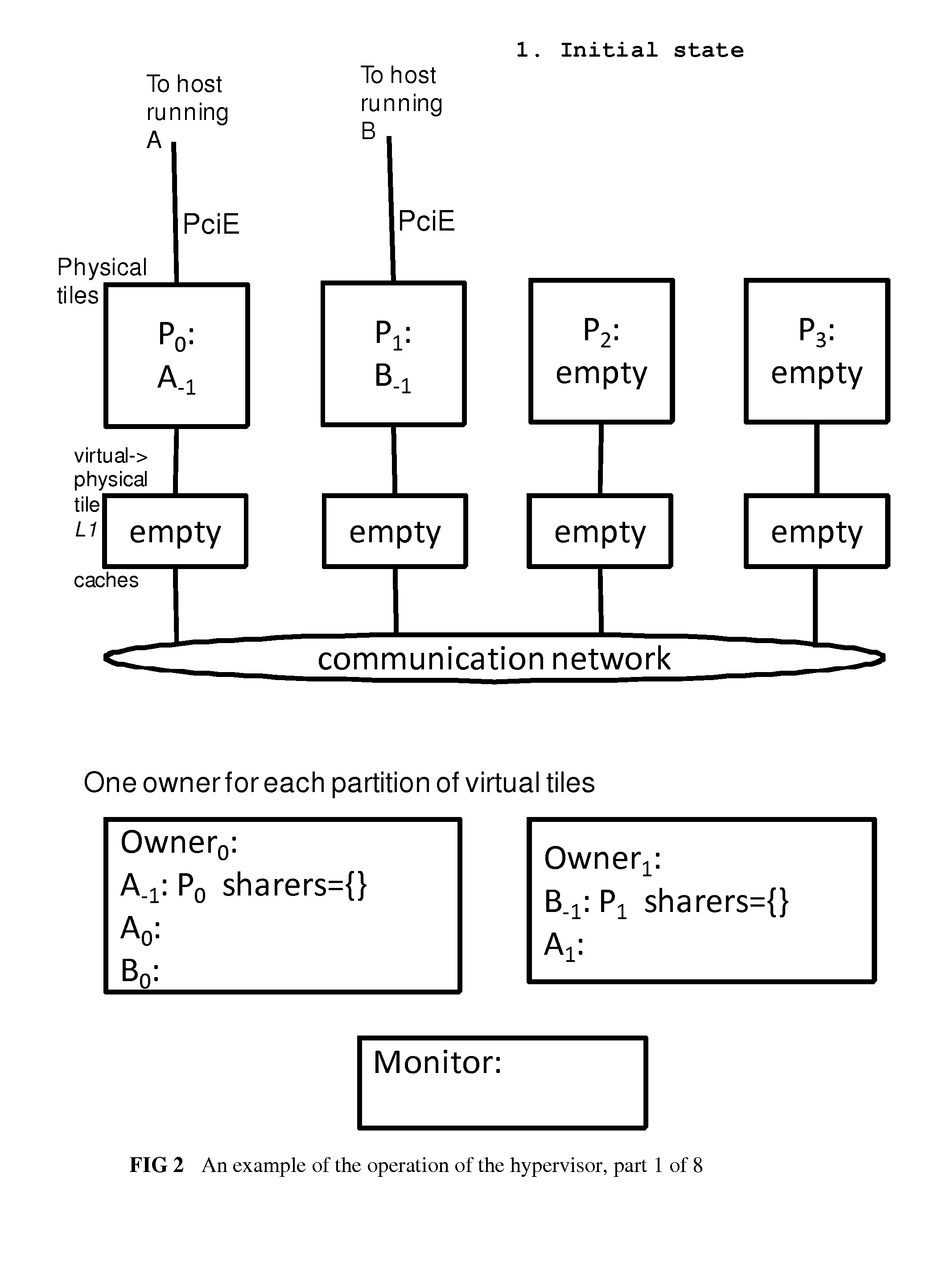

Parallel hardware hypervisor for virtualizing application-specific supercomputers

A parallel hypervisor system for virtualizing application-specific supercomputers is disclosed. The hypervisor system comprises (a) at least one software-virtual hardware pair consisting of a software application, and an application-specific virtual supercomputer for accelerating the said software application, wherein (i) The virtual supercomputer contains one or more virtual tiles; and (ii) The software application and the virtual tiles communicate among themselves with messages; (b) One or more reconfigurable physical tiles, wherein each virtual tile of each supercomputer can be implemented on at least one physical tile, by configuring the physical tile to perform the virtual tile's function; and (c) A scheduler implemented substantially in hardware, for parallel pre-emptive scheduling of the virtual tiles on the physical tiles.

Owner:GLOBAL SUPERCOMPUTING CORP

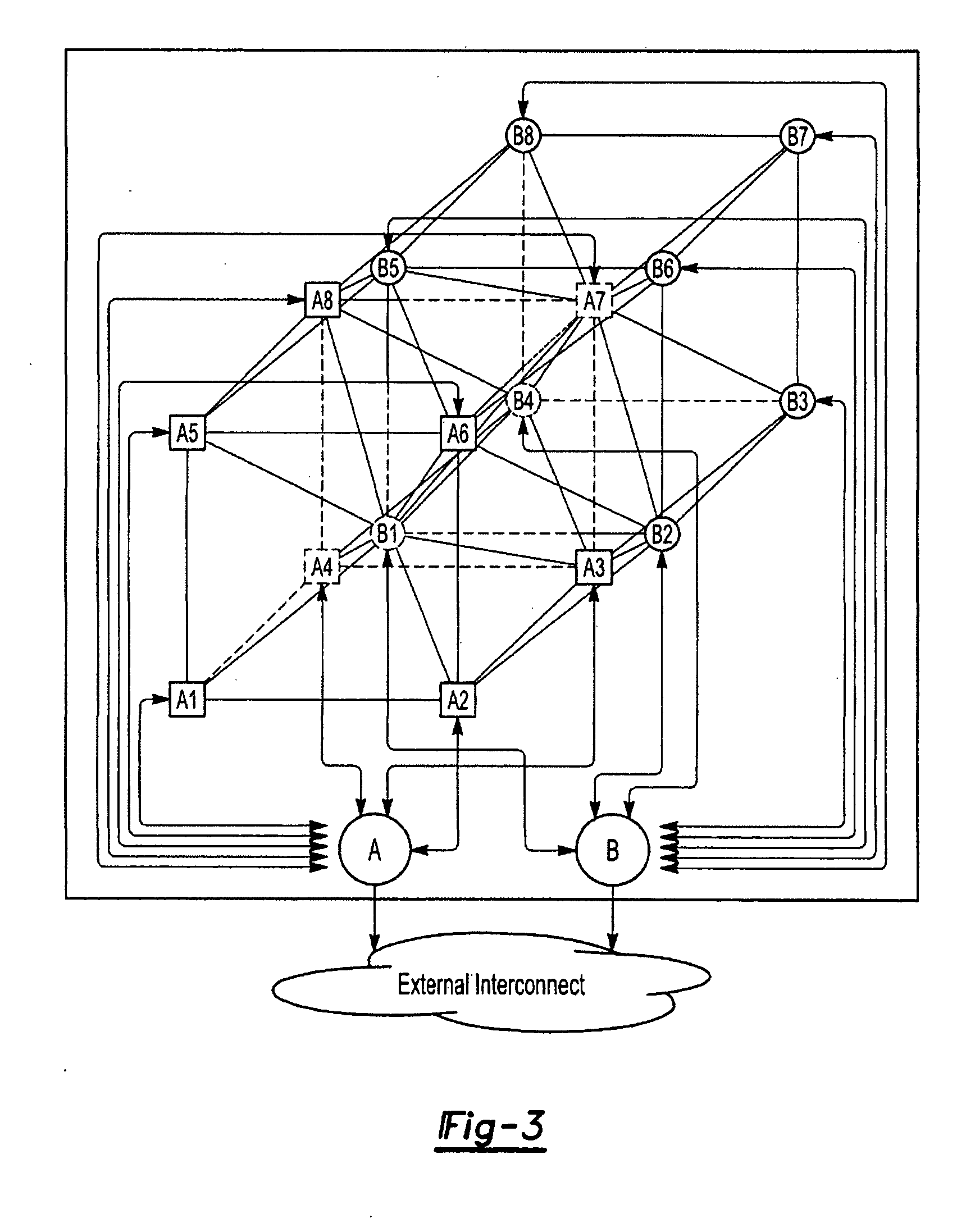

Ultra-scalable supercomputer based on mpu architecture

Inactive · US20090094436A1 · High-performance and sustained computing resource · High-performance and sustained computing resources · Program control using stored programs · Data switching by path configuration · Supercomputer · Traffic capacity

The invention provides an ultra-scalable supercomputer based on MPU architecture that achieves well-balanced performance in the hundreds of TFLOPS or PFLOPS range in applications. The supercomputer system design includes the interconnect topology and its corresponding routing strategies, the communication subsystem design and implementation, and the software and hardware schematic implementations. The supercomputer comprises a plurality of processing nodes powering the parallel processing and Axon nodes connecting computing nodes while implementing the external interconnections. The interconnect topology can be based on MPU architecture, and the communication routing logic required by the switching logic is implemented in FPGA chips; modular designs for accelerating particular application traffic patterns and reducing communication overhead can be deployed as well.

Owner:SHANGHAI REDNEURONS

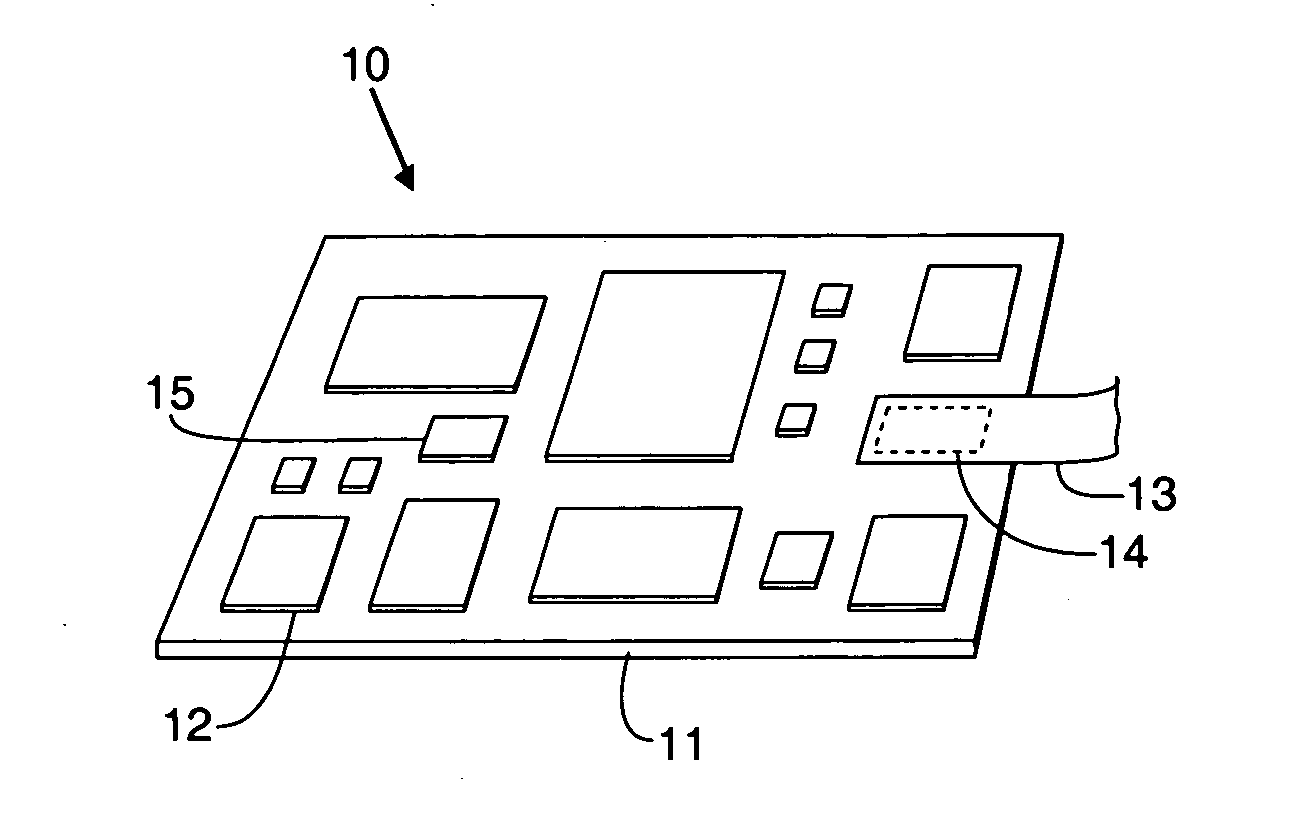

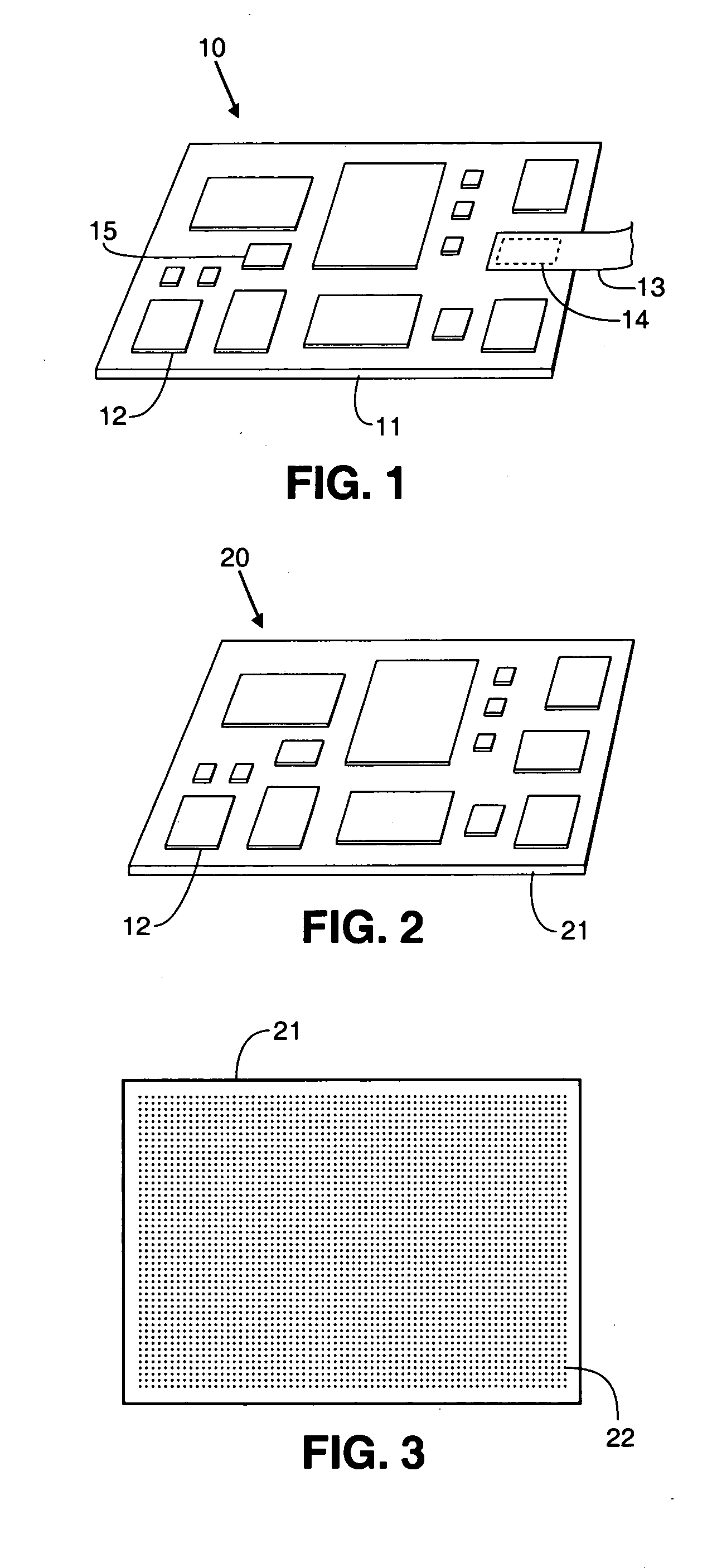

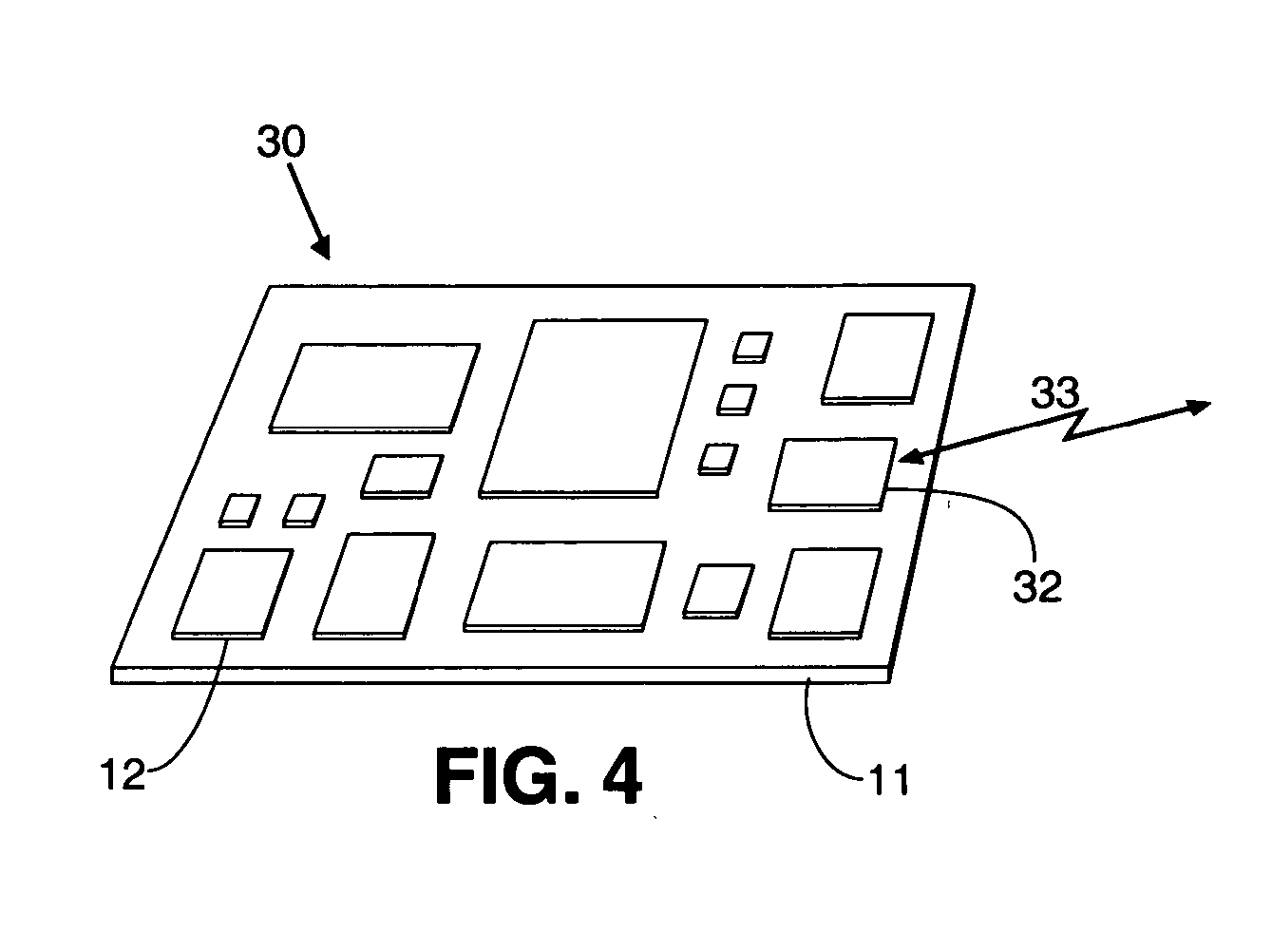

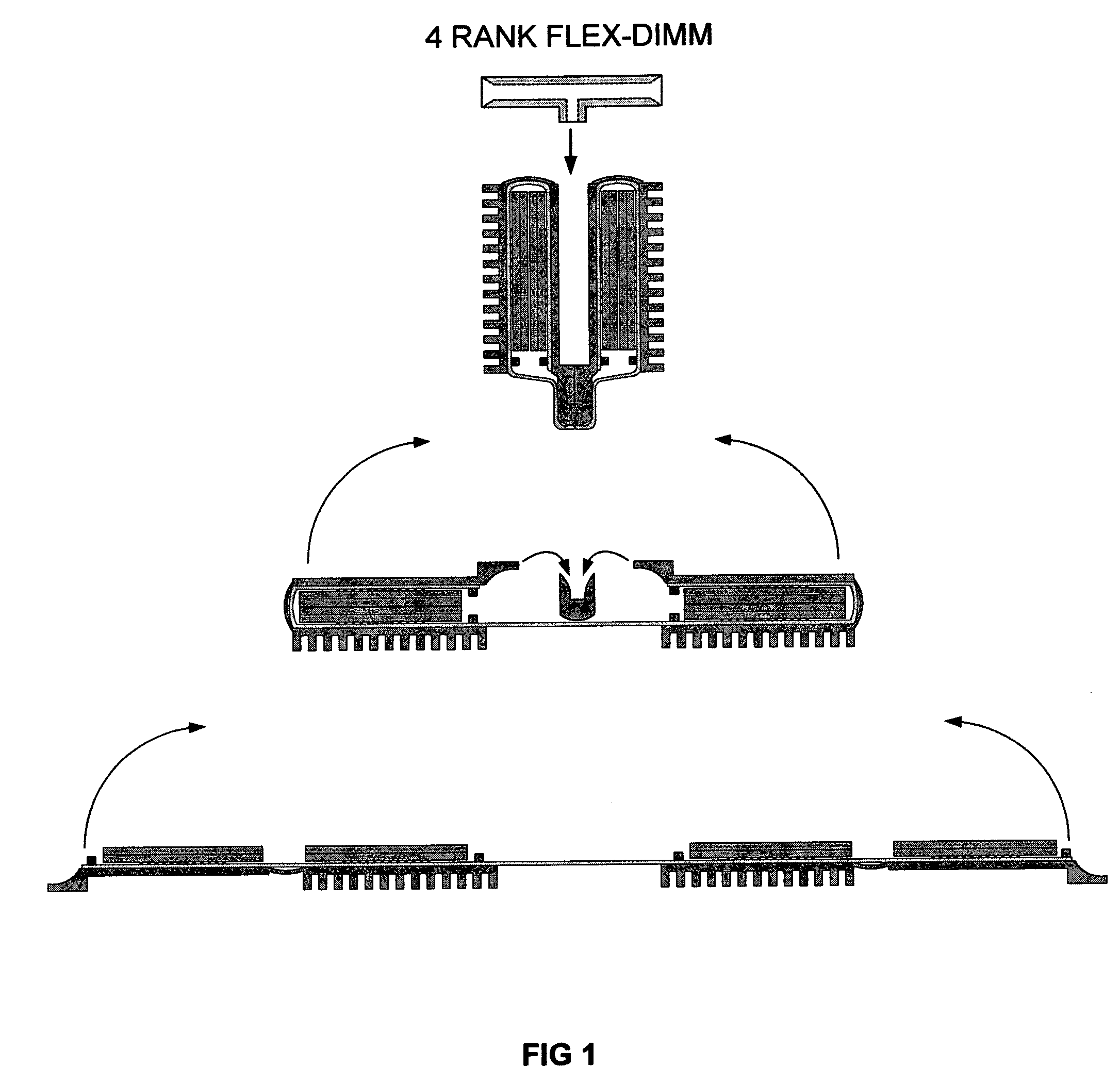

Copper-faced modules, imprinted copper circuits, and their application to supercomputers

Inactive · US20050040513A1 · Avoid mechanical failure · Reduce thermal strain · Insulating substrate metal adhesion improvement · Semiconductor/solid-state device details · Supercomputer · Electronic systems

A method for fabricating copper-faced electronic modules is described. These modules are mechanically robust, thermally accessible for cooling purposes, and capable of supporting high power circuits, including operation at 10 GHz and above. An imprinting method is described for patterning the copper layers of the interconnection circuit, including a variation of the imprinting method to create a special assembly layer having wells filled with solder. The flip chip assembly method comprising stud bumps inserted into wells enables unlimited rework of defective chips. The methods can be applied to multi chip modules that may be connected to other electronic systems or subsystems using feeds through the copper substrate, using a new type of module access cable, or by wireless means. The top copper plate can be replaced with a chamber containing circulating cooling fluid for aggressive cooling that may be required for servers and supercomputers. Application of these methods to create a liquid cooled supercomputer is described.

Owner:SALMON TECH

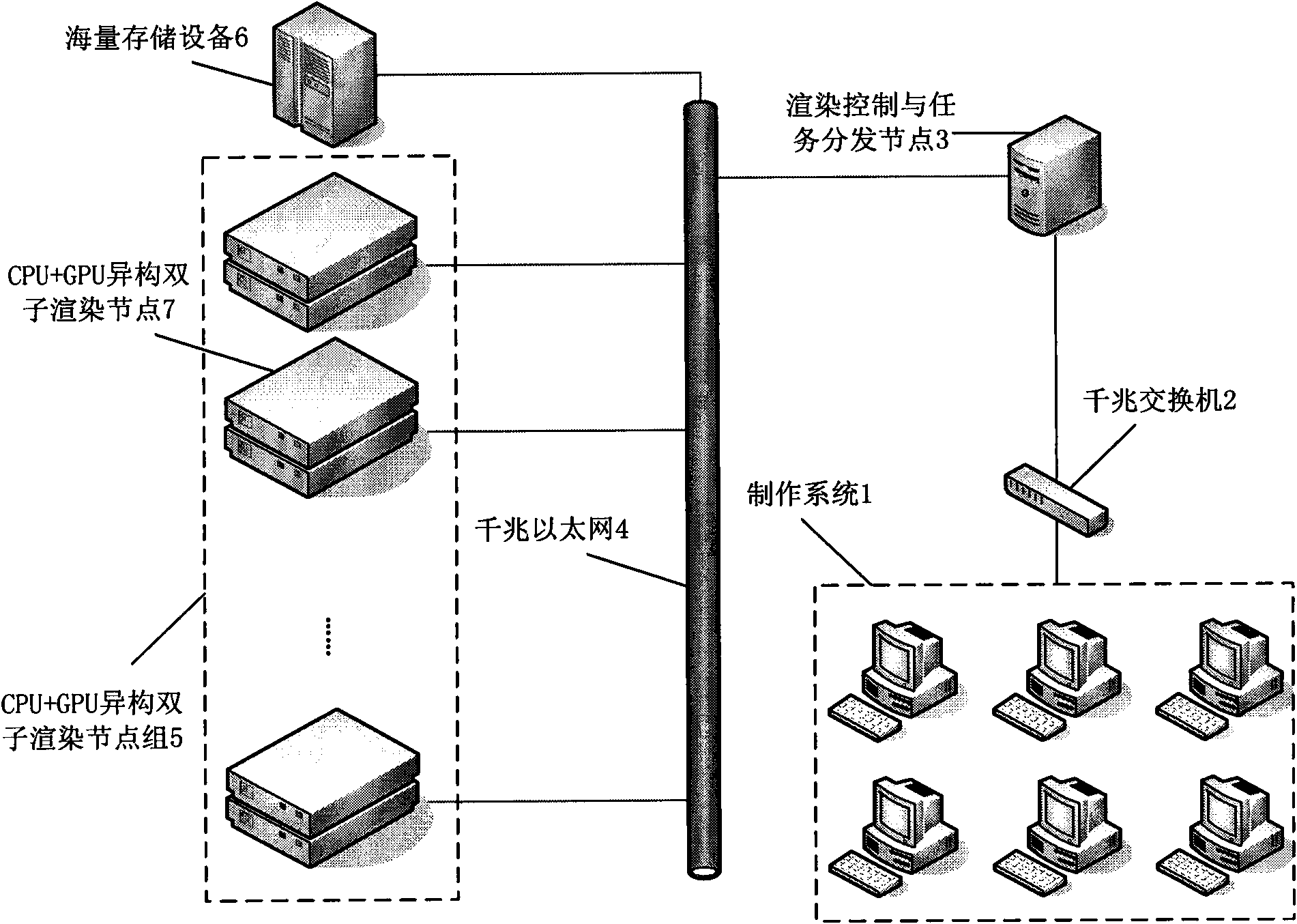

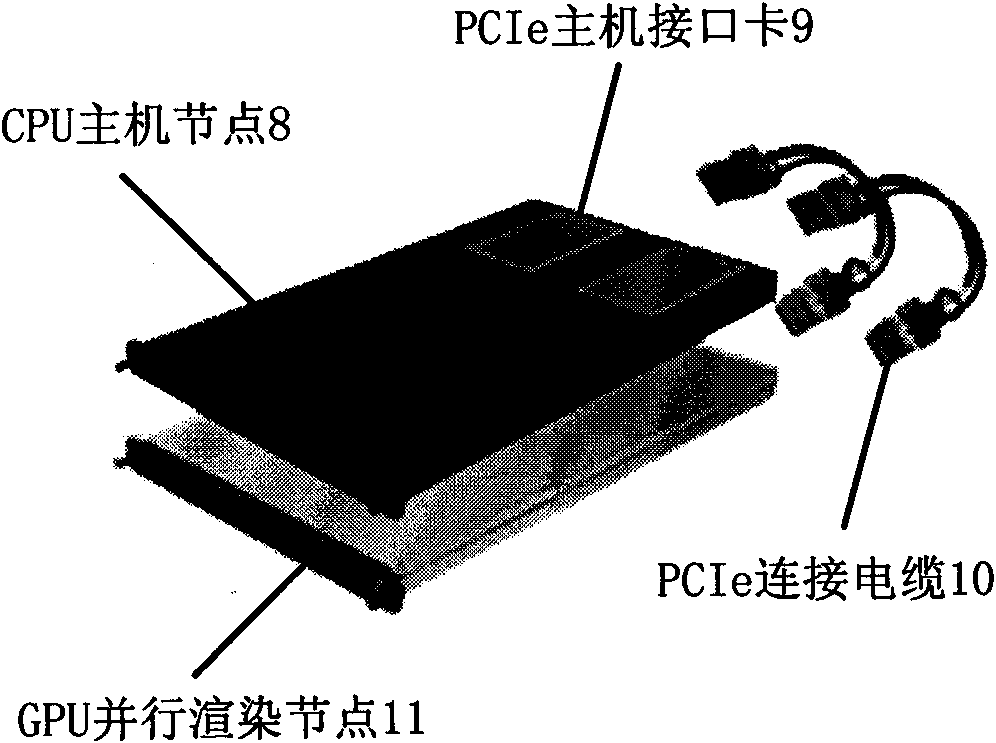

Render farm based on CPU cluster

Inactive · CN101587583A · Improve performance · High energy consumption · Processor architectures/configuration · Data switching networks · Supercomputer · Video image

The present invention discloses a render farm based on a CPU cluster. A distributed parallel cluster rendering system is constructed with high-efficiency, low-energy-consumption CPUs so that its computing power reaches and even exceeds the computing performance of a supercomputer. The invention solves the batch rendering problem in the digital innovation production process. Using the render farm, the production of three-dimensional cartoons, video special effects, architectural designs, etc. can be completed with high efficiency. The render farm further has the advantages of increasing the rendering speed by more than 40 times, reducing the investment cost of building the render farm by 20%-70%, and reducing the energy consumption of the production process by 60%-80%.

Owner:CHANGCHUN UNIV OF SCI & TECH

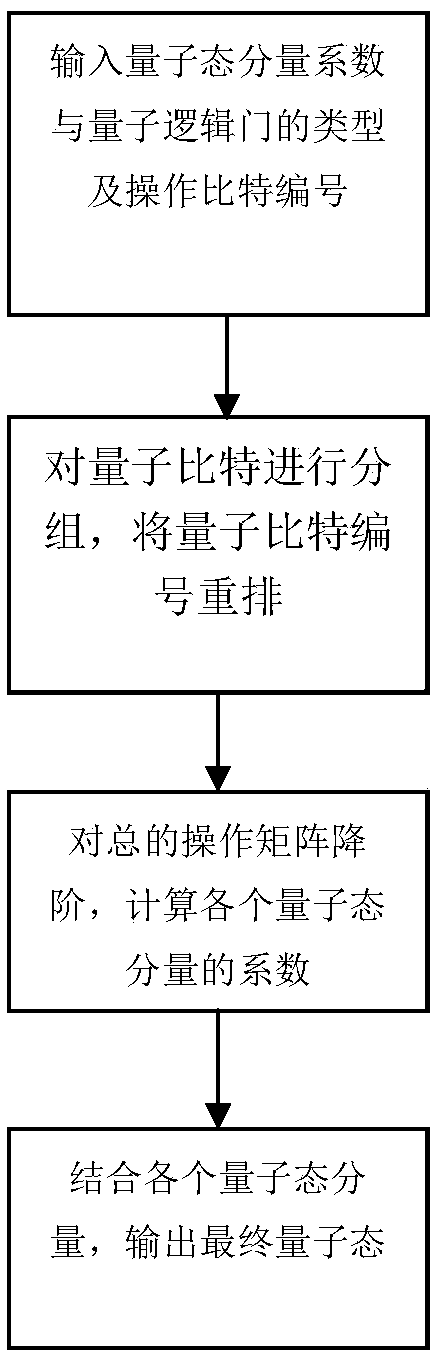

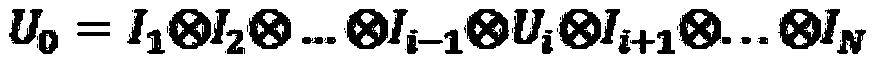

Low-complexity quantum circuit simulation system

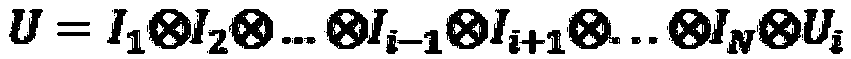

Active · CN108154240A · Scalable · Avoid the disadvantage of too many · Quantum computers · Supercomputer · Quantum logic

The invention discloses a low-complexity quantum circuit simulation system, and belongs to the field of quantum computing. The low-complexity quantum circuit simulation system overcomes the technical problems in the prior art that the quantum circuit simulation storage space is too large and the calculation time is too long. The system comprises an input module, a storage module and an output module. The storage module processes data as follows: (1) a mathematical model is established to represent the operation of a quantum state and quantum logic gate; (2) quantum bits are grouped and the serial numbers of the quantum bits are rearranged; (3) degree reduction is conducted on a total operation matrix U0, and an output state is calculated. The system operates directly on the matrix elements of a quantum state vector by using an implicit inherent law of the quantum logic gate. The method is also scalable: in the future, a supercomputer can be used to simulate a quantum computer with more bits.

Owner:ORIGIN QUANTUM COMPUTING TECH (HEFEI) CO LTD

Class network routing

Inactive · US7587516B2 · Optimize network · Effective support · Error prevention · Efficient regulation technologies · Supercomputer · Distributed memory

Class network routing is implemented in a network such as a computer network comprising a plurality of parallel compute processors at nodes thereof. Class network routing allows a compute processor to broadcast a message to a range (one or more) of other compute processors in the computer network, such as processors in a column or a row. Normally this type of operation requires a separate message to be sent to each processor. With class network routing pursuant to the invention, a single message is sufficient, which generally reduces the total number of messages in the network as well as the latency to do a broadcast. Class network routing is also applied to dense matrix inversion algorithms on distributed memory parallel supercomputers with hardware class function (multicast) capability. This is achieved by exploiting the fact that the communication patterns of dense matrix inversion can be served by hardware class functions, which results in faster execution times.

Owner:IBM CORP

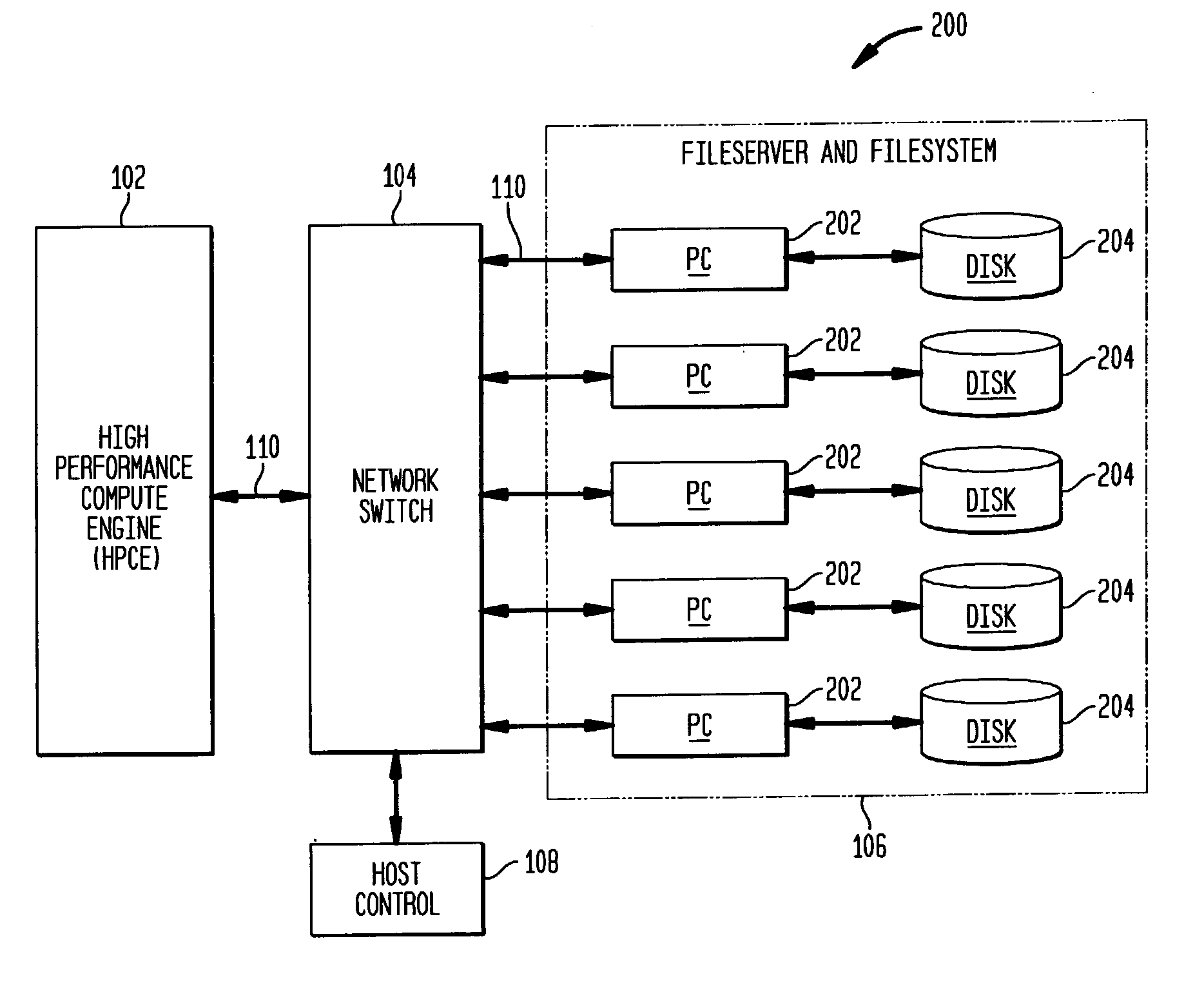

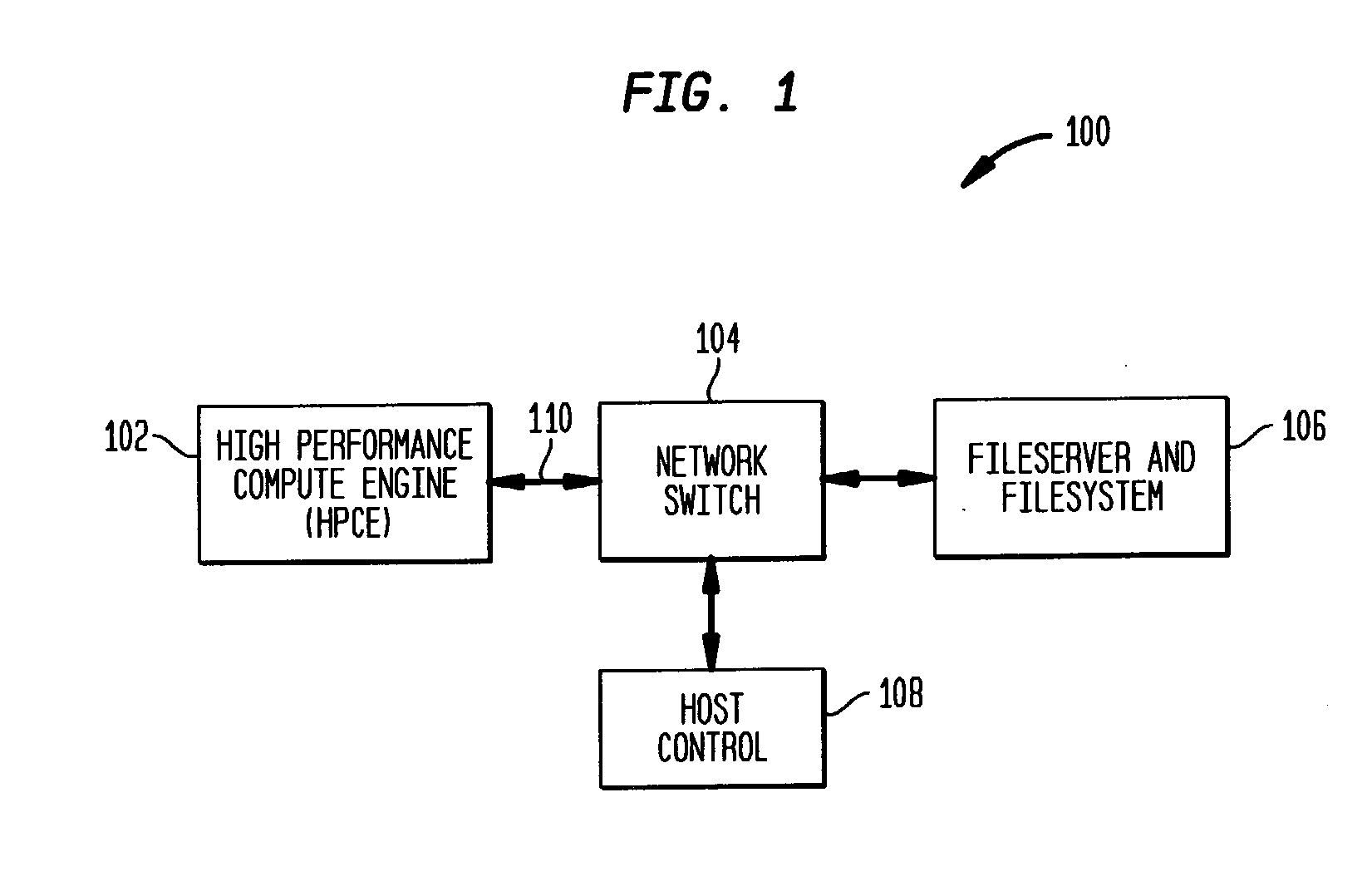

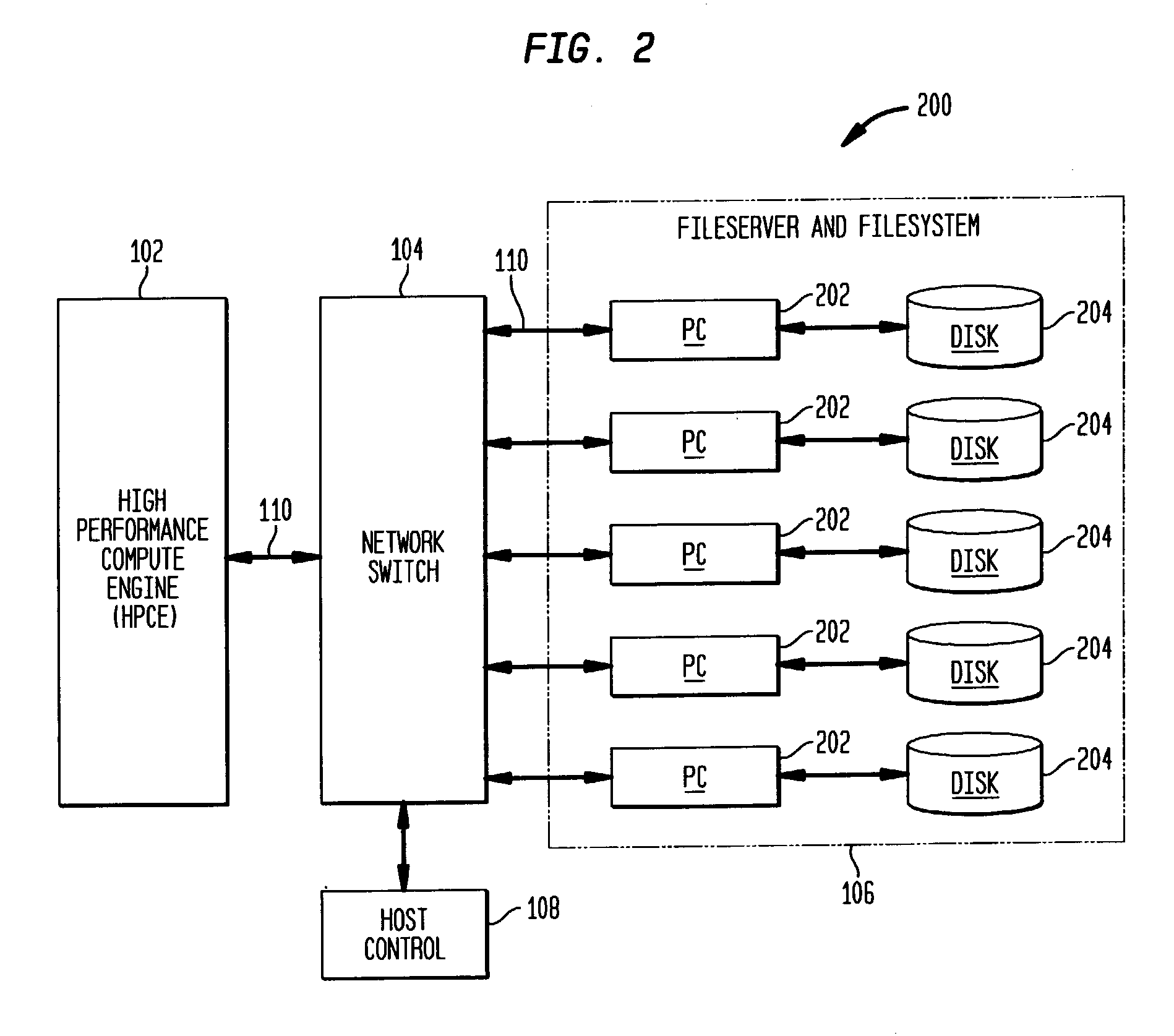

Checkpointing filesystem

The present invention is directed to a checkpointing filesystem of a distributed-memory parallel supercomputer comprising a node that accesses user data on the filesystem, the filesystem comprising an interface that is associated with a disk for storing the user data. The checkpointing filesystem provides for taking a checkpoint of the filesystem and rolling back to a previously taken checkpoint, as well as for writing user data to and deleting user data from the checkpointing filesystem. The checkpointing filesystem provides a recently written file allocation table (WFAT) for maintaining information regarding the user data written since a previously taken checkpoint and a recently deleted file allocation table (DFAT) for maintaining information regarding user data deleted since the previously taken checkpoint, both of which are utilized by the checkpointing filesystem to take a checkpoint of the filesystem and roll back the filesystem to a previously taken checkpoint, as well as to write user data to and delete user data from the checkpointing filesystem.

Owner:IBM CORP
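
As a rough illustration of how two change tables can support checkpoint and rollback as in the abstract above, here is a toy in-memory sketch. The class name, the dictionary-based storage and the choice to treat an overwrite as a delete of the old version are assumptions of this sketch; the abstract does not describe the actual on-disk structures.

```python
class CheckpointingFS:
    """Toy model: files map name -> bytes; two tables track changes since the last checkpoint."""

    def __init__(self):
        self.files = {}    # live user data
        self.wfat = set()  # names written since the last checkpoint
        self.dfat = {}     # name -> checkpointed contents removed or overwritten since then

    def _preserve(self, name):
        # A file that exists and is not in the WFAT is still the checkpointed version.
        if name in self.files and name not in self.wfat:
            self.dfat[name] = self.files[name]

    def write(self, name, data):
        self._preserve(name)
        self.files[name] = data
        self.wfat.add(name)

    def delete(self, name):
        if name not in self.files:
            return
        self._preserve(name)
        del self.files[name]
        self.wfat.discard(name)

    def checkpoint(self):
        # The current state becomes the checkpoint, so both tables start empty again.
        self.wfat.clear()
        self.dfat.clear()

    def rollback(self):
        # Drop everything written since the checkpoint, then restore what was removed.
        for name in self.wfat:
            self.files.pop(name, None)
        self.files.update(self.dfat)
        self.checkpoint()
```

Because only changes since the last checkpoint are tracked, taking a checkpoint in this model costs no copying at all; the old contents are set aside lazily, the first time a checkpointed file is overwritten or deleted.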

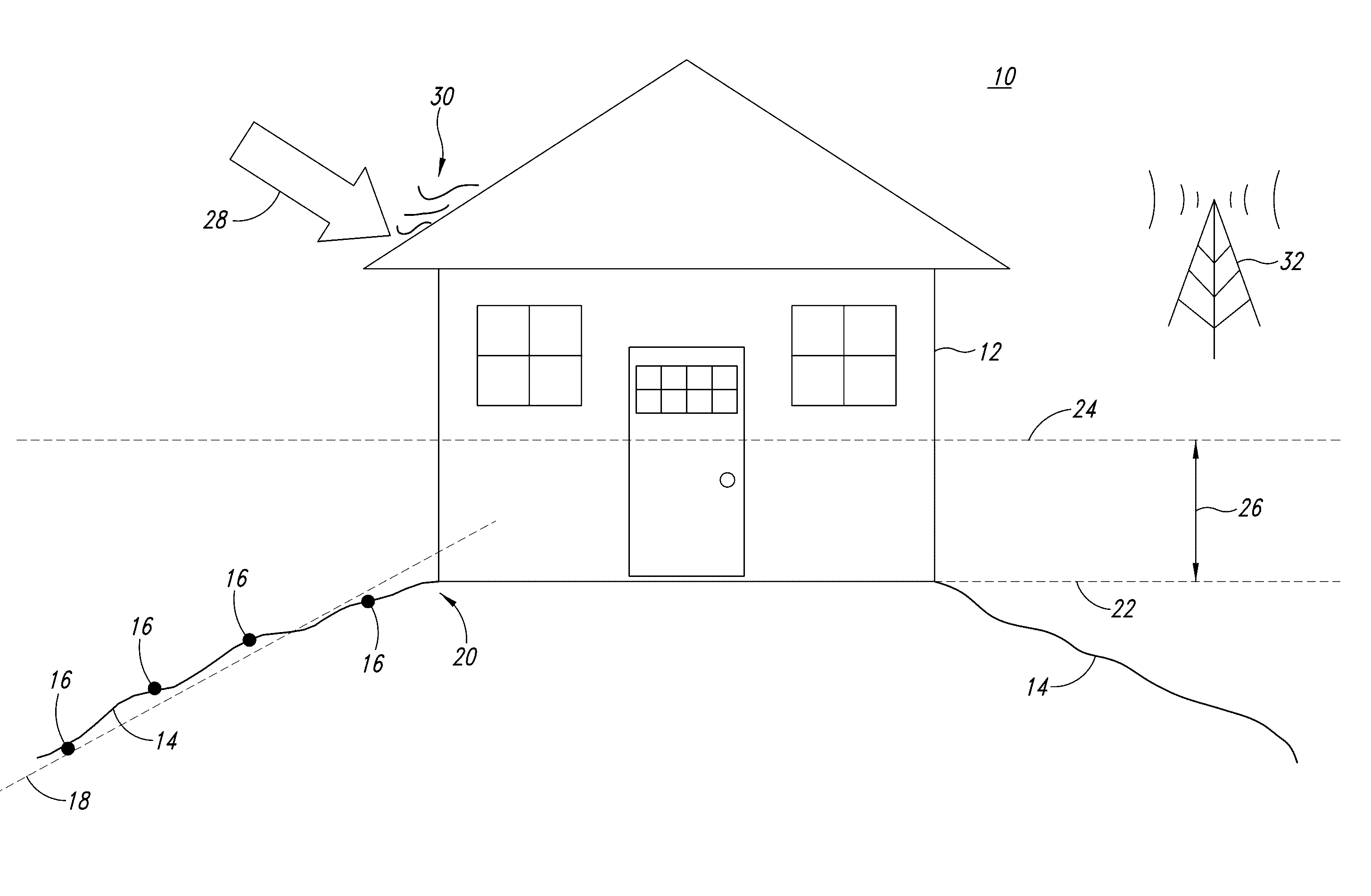

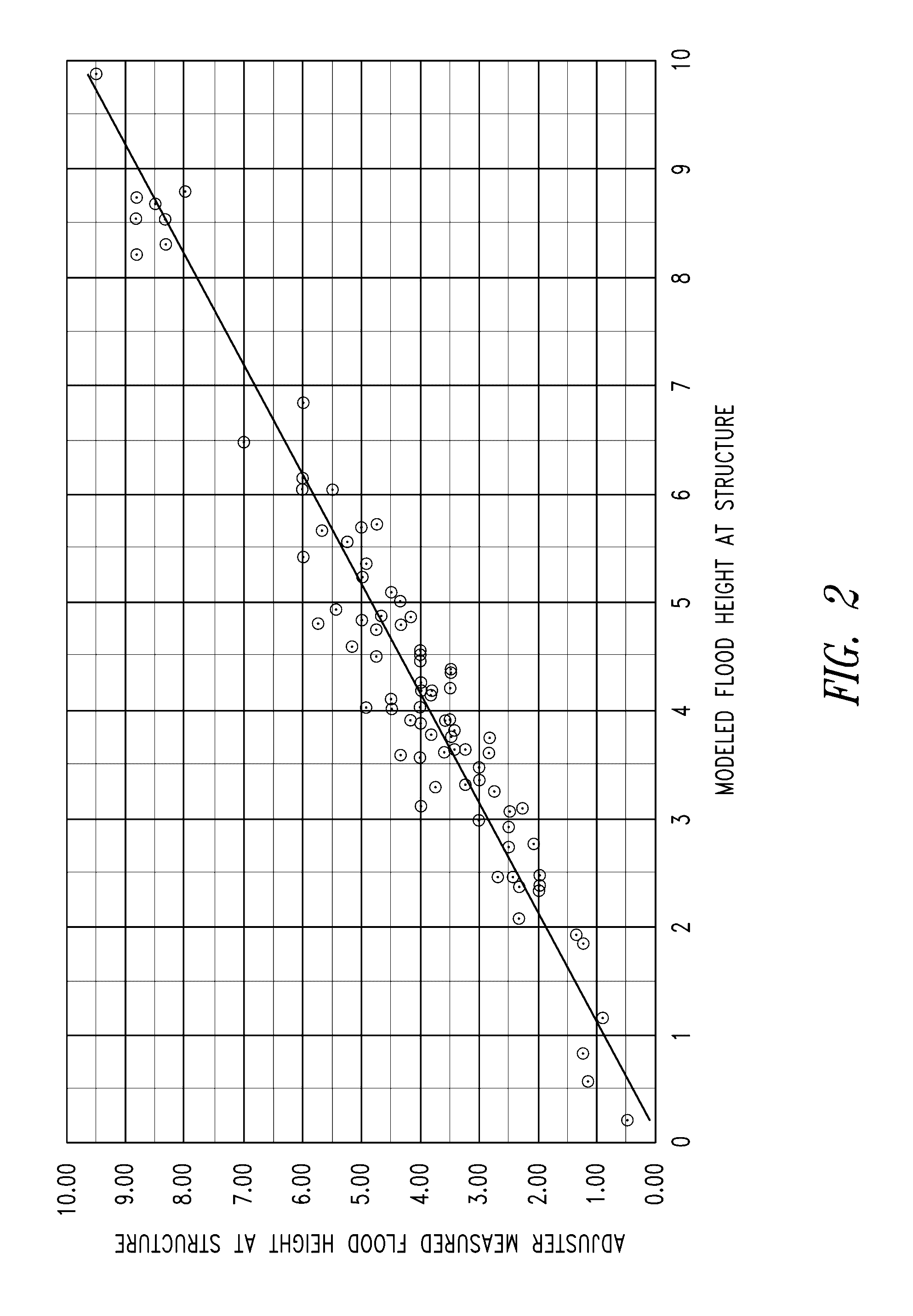

Large scale analysis of catastrophic weather damage

Estimating storm damage on a large scale includes collecting geospatial data from a plurality of sensors disparately situated in a defined geographic area; collecting the geospatial data occurs before and during a determined or simulated significant weather event. Geospatial property attribute information for each of a plurality of real property structures within the defined geographic area is also provided. A supercomputer estimates a magnitude and duration of significant weather event forces at points associated with each of the plurality of real property structures according to a significant weather event model in order to produce at least one model output data set. The model output data set is applied to the geospatial property attribute information and, based on the application of model output data, damage to the plurality of real property structures is automatically estimated.

Owner:QRISQ ANALYTICS LLC

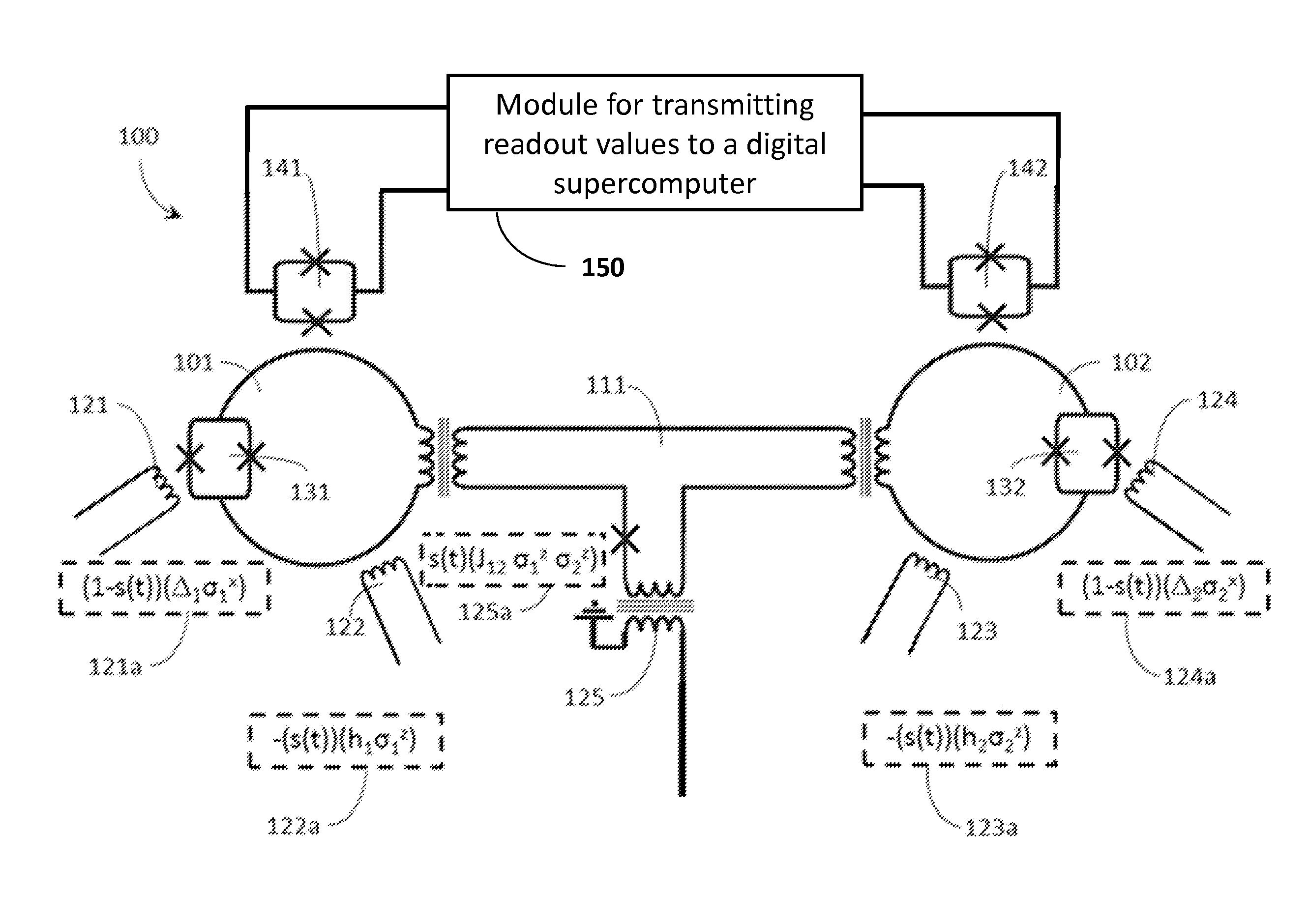

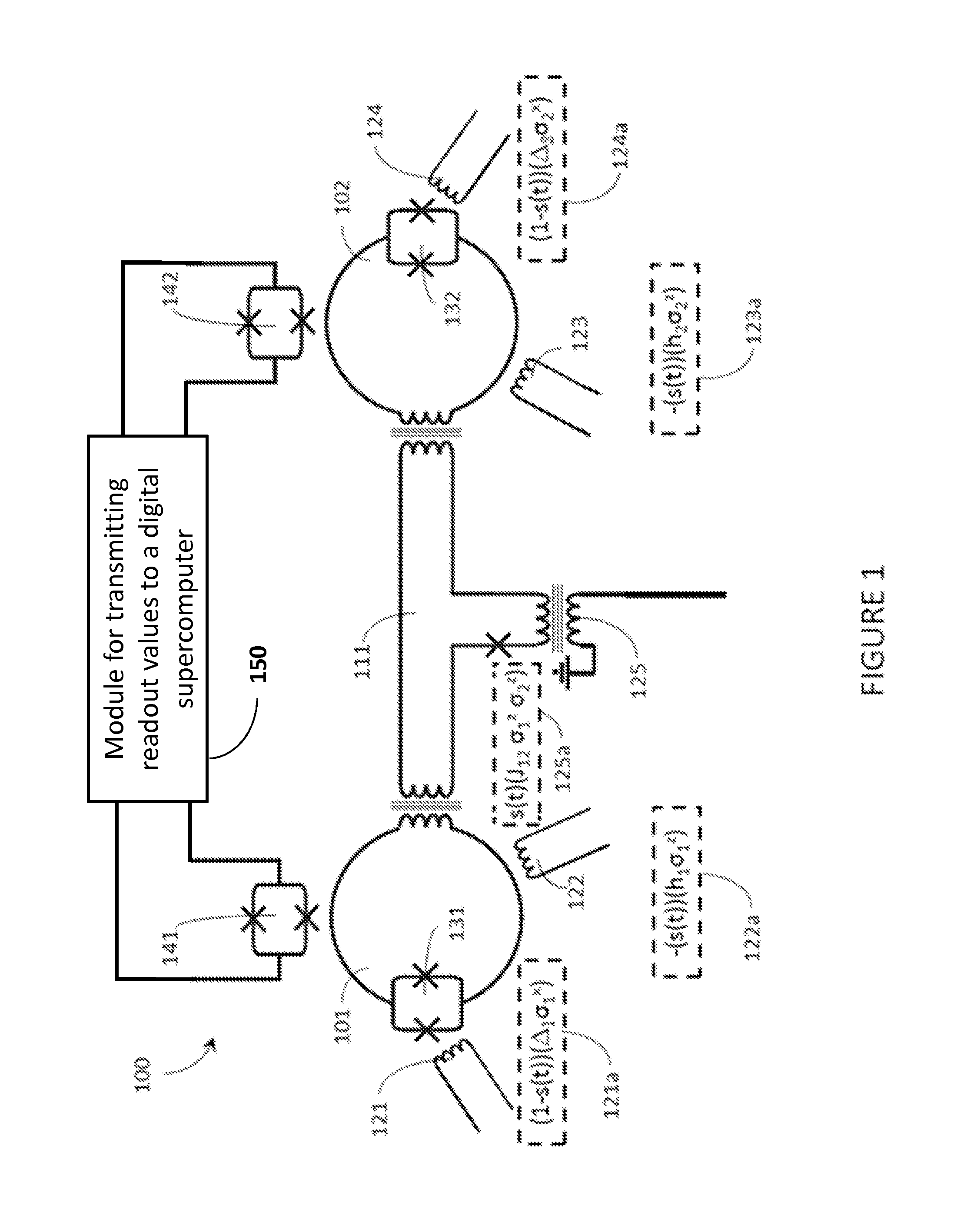

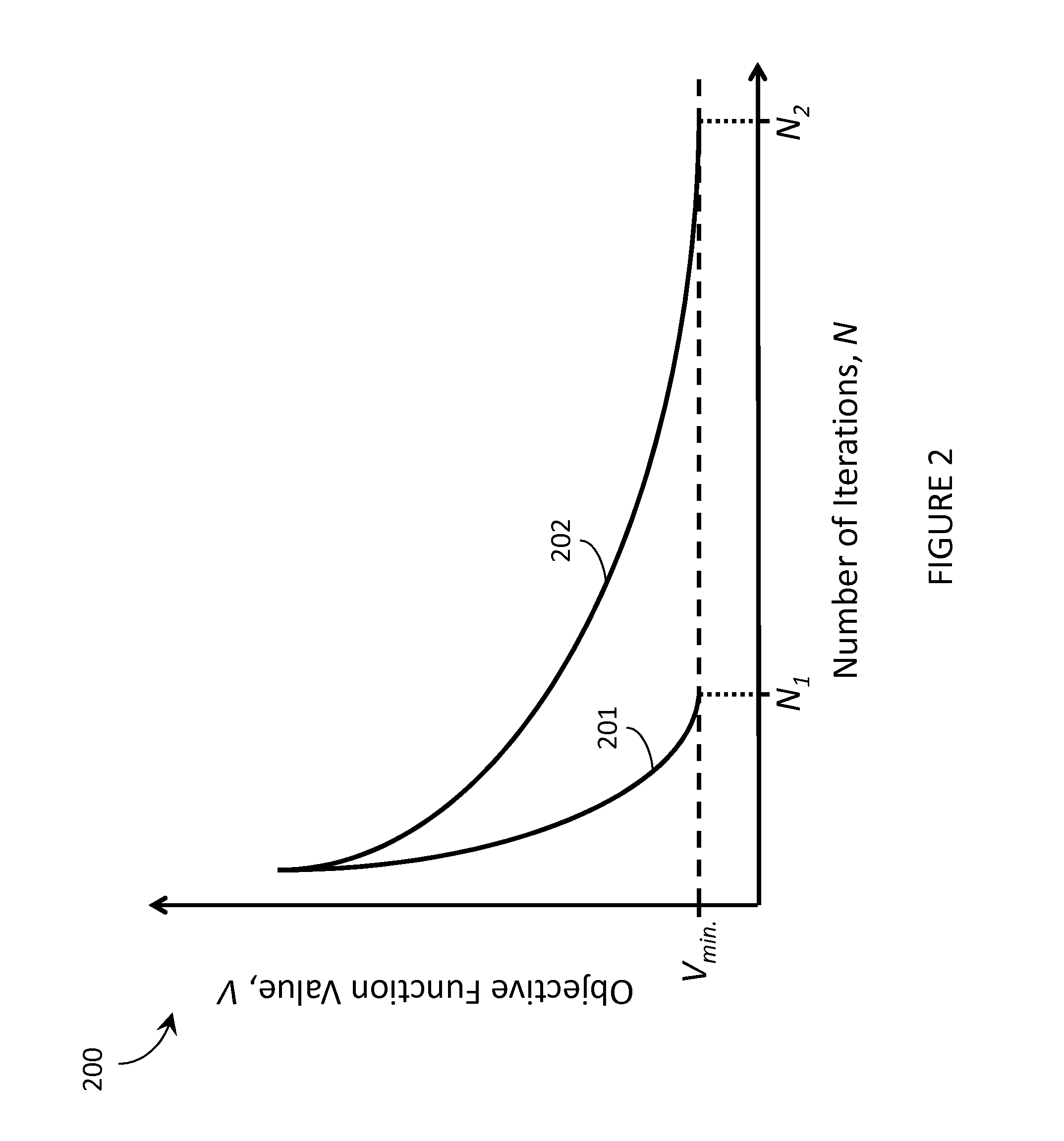

Systems and methods for interacting with a quantum computing system

Systems and methods that employ interactions between quantum computing systems and digital computing systems are described. For an iterative method, a quantum computing system may be designed, operated, and / or adapted to provide a rate of convergence that is greater than the rate of convergence of a digital supercomputer. When the digital supercomputer is iteratively used to evaluate an objective function at a cost incurred of C per iteration, the quantum computing system may be used to provide the input parameter(s) to the objective function and quickly converge on the input parameter(s) that optimize the objective function. Thus, a quantum computing system may be used to minimize the total cost incurred CT for consumption of digital supercomputer resources when a digital supercomputer is iteratively employed to evaluate an objective function.

Owner:D WAVE SYSTEMS INC

Optimizing layout of an application on a massively parallel supercomputer

Inactive · US8117288B2 · Minimize time · Shorten the time · Digital data processing details · Digital computer details · Markov chain · Supercomputer

A general computer-implemented method and apparatus to optimize problem layout on a massively parallel supercomputer is described. The method takes as input the communication matrix of an arbitrary problem in the form of an array whose entries C(i, j) are the amount of data communicated from domain i to domain j. Given C(i, j), a heuristic map is first implemented which attempts sequentially to map a domain and its communications neighbors either to the same supercomputer node or to near-neighbor nodes on the supercomputer torus while keeping the number of domains mapped to a supercomputer node constant (as much as possible). Next, a Markov chain of maps is generated from the initial map using Monte Carlo simulation with free energy (cost function) $F = \sum_{i,j} C(i,j)\,H(i,j)$, where H(i, j) is the smallest number of hops on the supercomputer torus between domain i and domain j. On the cases tested, the method was found to produce good mappings and has the potential to be used as a general layout optimization tool for parallel codes. At the moment, the serial code implemented to test the method is unoptimized, so the computation time to find the optimum map can be several hours on a typical PC. For production implementation, good parallel code for our algorithm would be required, which could itself be implemented on a supercomputer.

Owner:IBM CORP

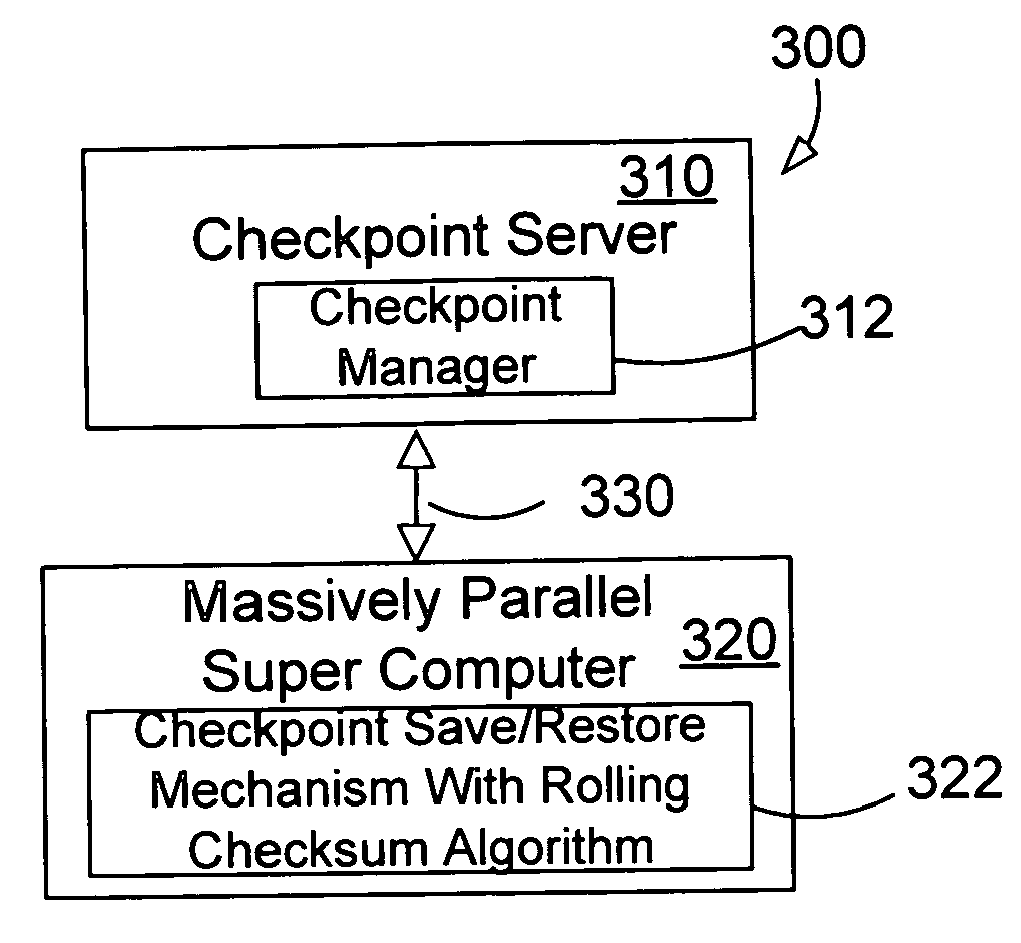

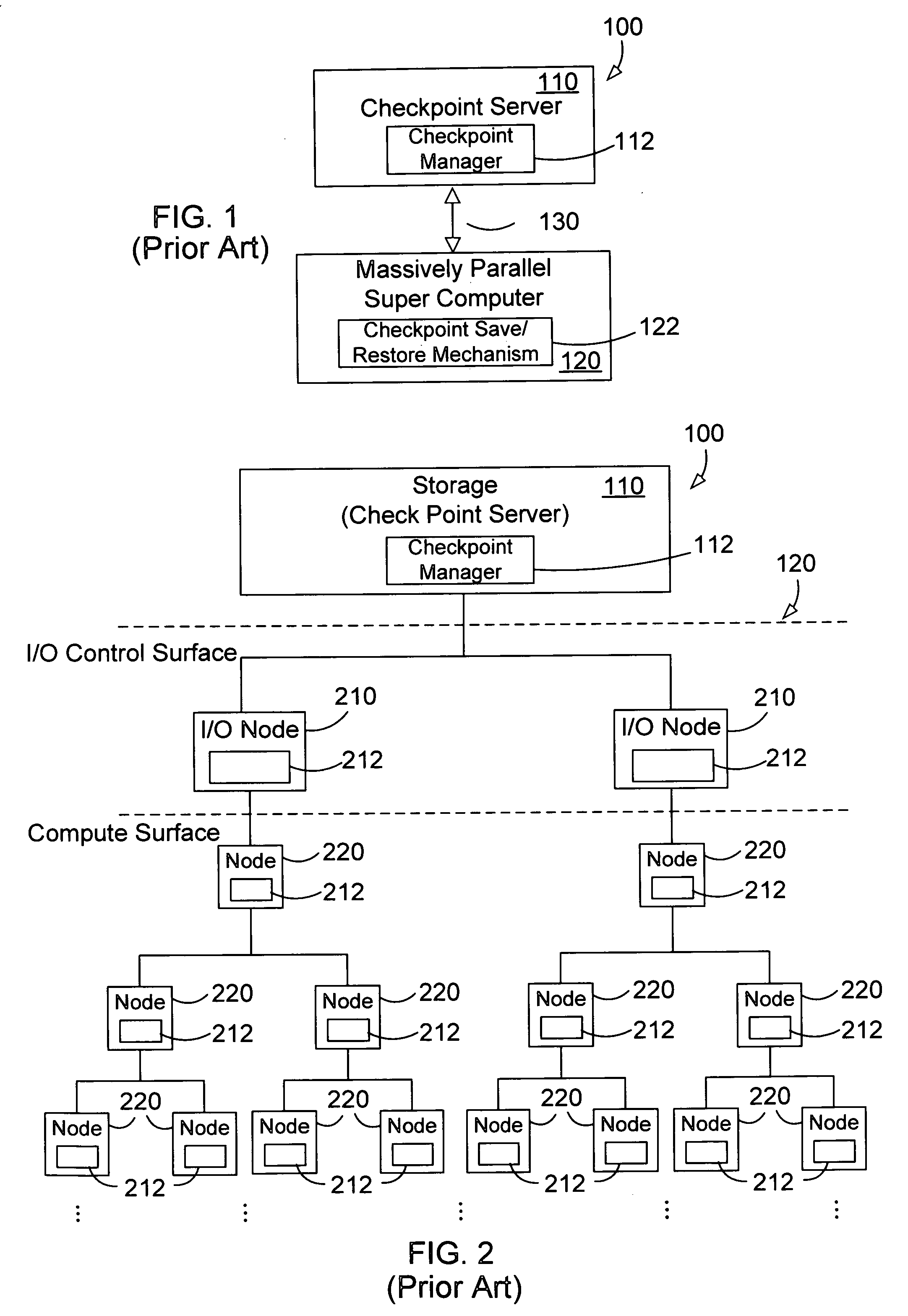

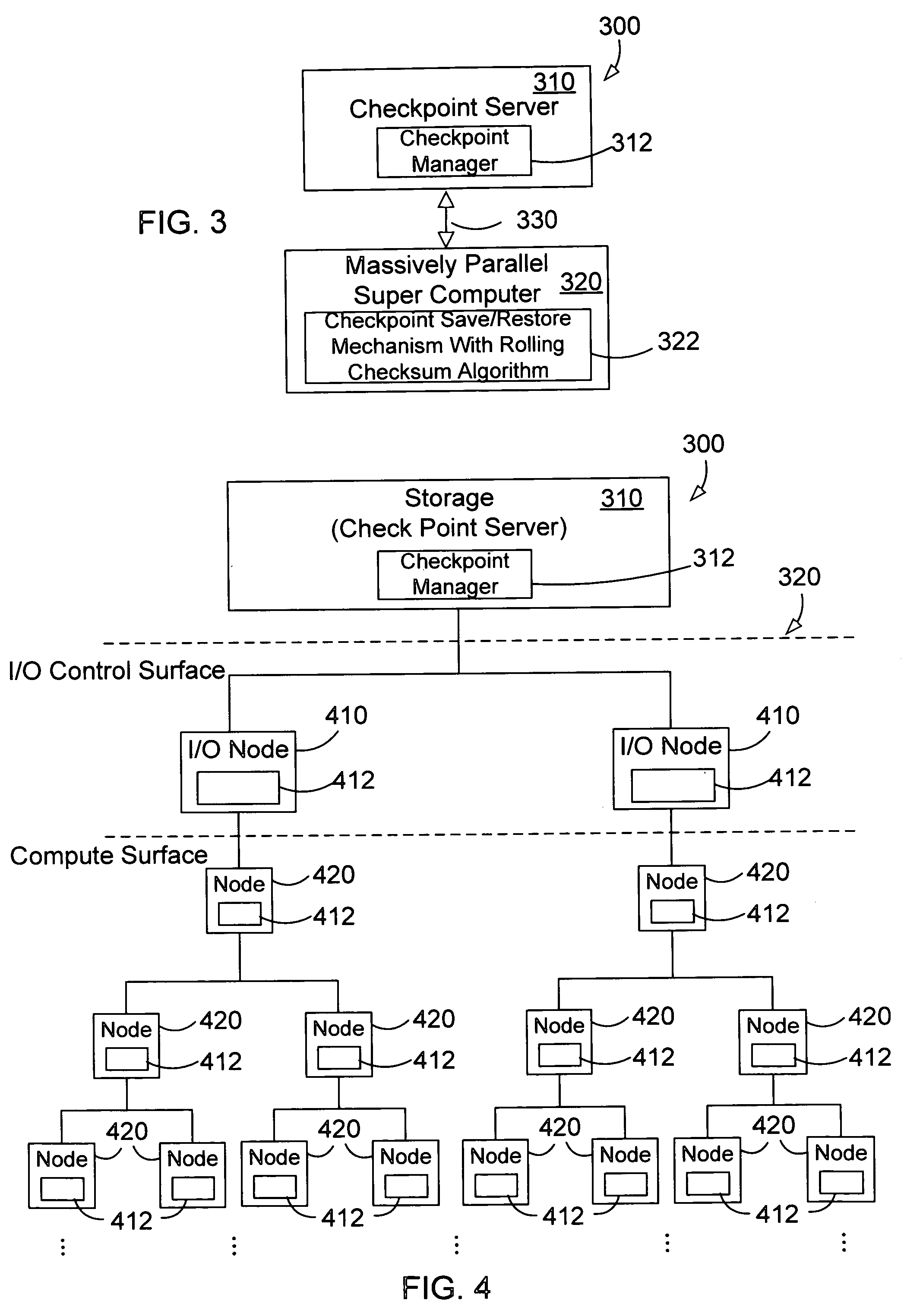

Method and apparatus for template based parallel checkpointing

Inactive · US20060236152A1 · Reduce the amount required · Improve efficiency · Error detection/correction · Data compression · Parallel computing

A method and apparatus are described for a template-based parallel checkpoint save for a massively parallel supercomputer system using a parallel checksum algorithm such as rsync. In preferred embodiments, the checkpoint data for each node is compared to a template checkpoint file that resides in the storage and that was previously produced. Embodiments herein greatly decrease the amount of data that must be transmitted and stored for faster checkpointing and increased efficiency of the computer system. Embodiments are directed to a parallel computer system with nodes arranged in a cluster with a high speed interconnect that can perform broadcast communication. The checkpoint contains a set of actual small data blocks with their corresponding checksums from all nodes in the system. The data blocks may be compressed using conventional non-lossy data compression algorithms to further reduce the overall checkpoint size.

Owner:IBM CORP
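
The idea of storing only the blocks that differ from a template, as in the abstract above, can be sketched as follows. This is a simplified illustration, not the patented method: it compares fixed-position blocks with SHA-256 digests rather than using rsync's rolling-checksum matching, and the block size, function names and use of `zlib` are assumptions.

```python
import hashlib
import zlib

BLOCK = 4  # bytes per block; deliberately tiny for illustration (assumption)

def blocks(data, size=BLOCK):
    """Split data into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + size] for i in range(0, len(data), size)]

def checksums(data):
    """Per-block digests of a template checkpoint."""
    return [hashlib.sha256(b).hexdigest() for b in blocks(data)]

def delta_checkpoint(node_state, template_sums):
    """Keep only blocks whose digest differs from the template, losslessly compressed."""
    delta = {}
    for index, block in enumerate(blocks(node_state)):
        digest = hashlib.sha256(block).hexdigest()
        if index >= len(template_sums) or template_sums[index] != digest:
            delta[index] = zlib.compress(block)
    return delta, len(node_state)

def restore(template, delta, length):
    """Rebuild a node's state from the template plus its stored delta blocks."""
    count = -(-length // BLOCK)  # ceiling division: blocks in the saved state
    parts = blocks(template)[:count]
    parts += [b""] * (count - len(parts))
    for index, packed in delta.items():
        parts[index] = zlib.decompress(packed)
    return b"".join(parts)[:length]
```

A node whose state matches the template exactly produces an empty delta, which is where the savings come from when many nodes hold nearly identical data.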

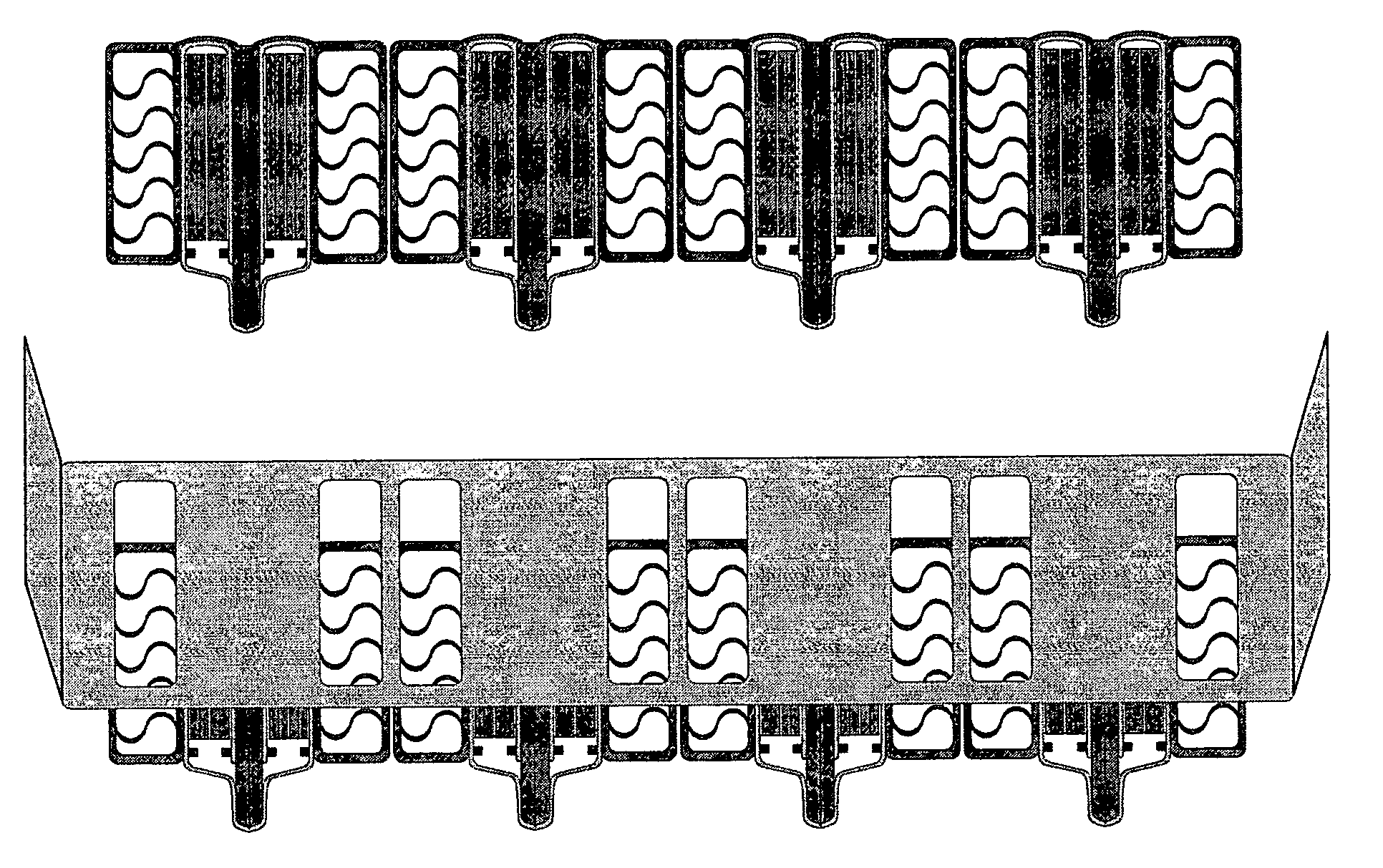

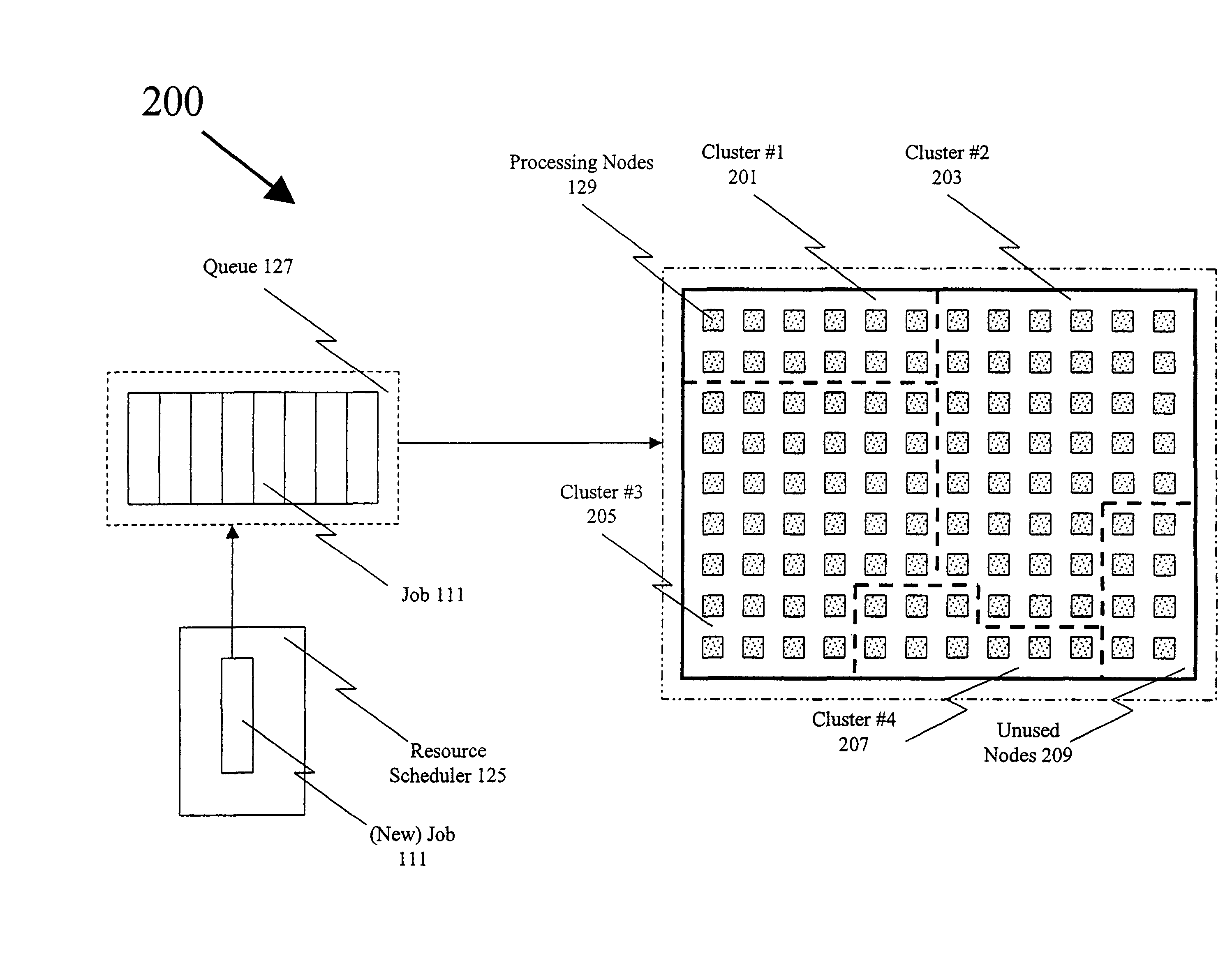

Thermal management system for computers

Inactive · US20080192428A1 · Digital data processing details · Printed circuit aspects · Supercomputer · Thermal management system

The invention involves systems to channel the air available for cooling inside the chassis of the computing device to force the air into selected channels in the memory bank (i.e., between the modules rather than around the modules or some other path of least resistance). This tunneled cooling (or collimated cooling) is made possible by using a set of baffles (or apertures) placed upstream of and between the cooling air supply and the memory bank area to force air to go only through the rectangular space available between adjacent modules in the memory bank of the high speed computing machines (super computers or blade servers in these cases). In some instances, it may be desirable to include both a blower fan, for forcing cool air through the baffles and through the heat exchanger(s) aligned with openings in the baffles, and a suction fan to draw or pull air as it exits the rear of the blade server chassis. The invention includes a high performance heat exchanger to be thermally coupled to the memory chips and either placed between adjacent modules (in the memory bank) or integrated within a cross sectional area of the module that is in the cooling air path; whereby, the heat from a given module is transferred laterally to a heat sink that, in turn, transfers the heat to the heat exchanger which in turn is placed in the path of cool air. However in this case, the cool air has no other alternative path but to pass through the high performance heat exchanger. The efficiency of heat exhaust is thereby maximized. Lateral heat conduction and removal is a preferred method for module cooling in order to minimize the total module height of vertically mounted Very Low Profile (VLP) memory modules. The invention is applicable to a wide range of modules; however, it is particularly suitable for a set of memory modules with unique packaging techniques that further enhance the heat exhaust.

Owner:MICROELECTRONICS ASSEMBLY TECH

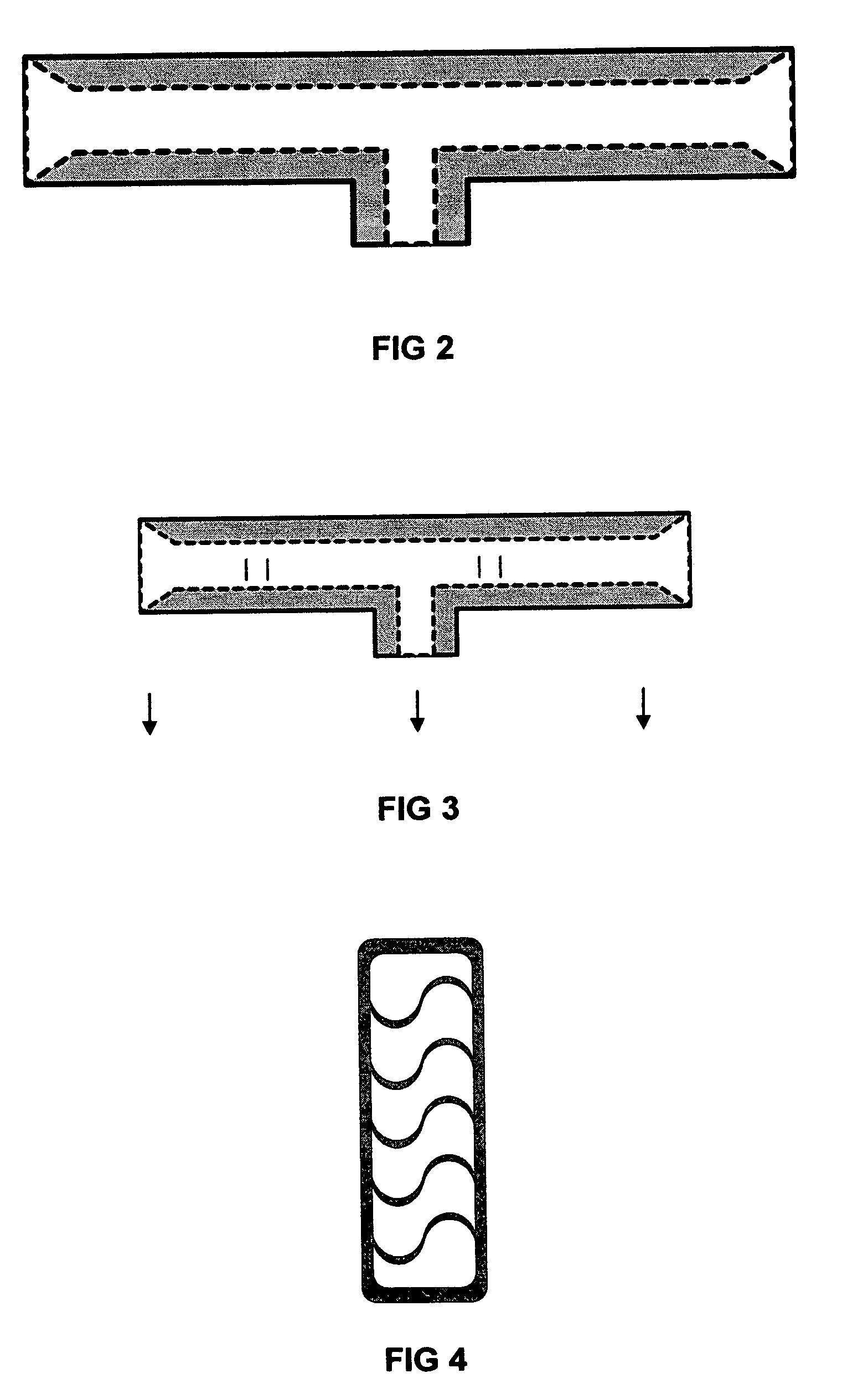

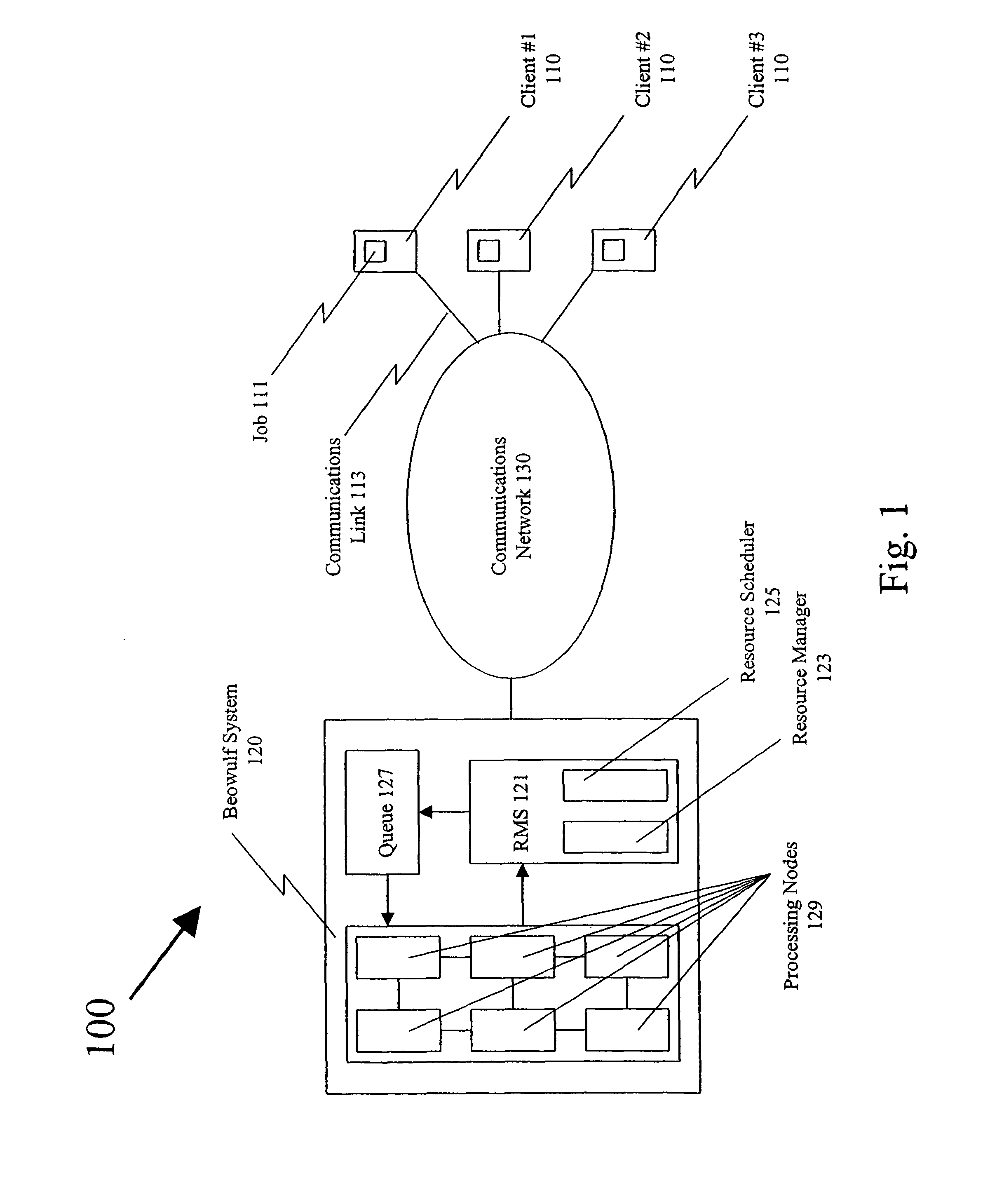

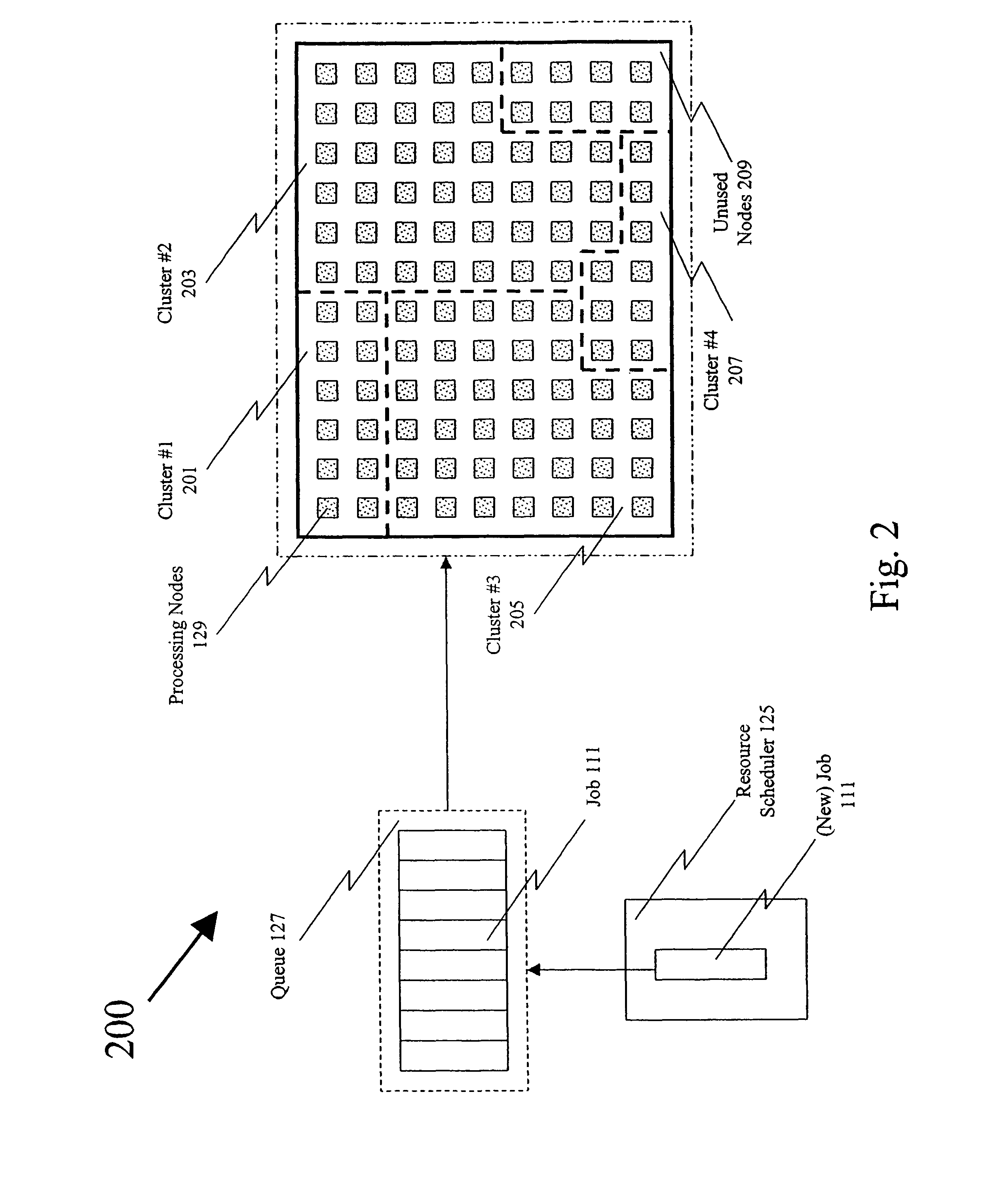

Dynamic job processing based on estimated completion time and specified tolerance time

InactiveUS7937705B1Resource allocationMultiple digital computer combinationsSupercomputerCompletion time

The invention provides a system and method for managing clusters of parallel processors for use by groups and individuals requiring supercomputer-level computational power. A Beowulf cluster provides supercomputer-level processing power. Unlike a traditional Beowulf cluster, however, cluster size is not singular or static. As jobs are received from users or customers, a Resource Management System (RMS) dynamically configures and reconfigures the available nodes in the system into clusters of the appropriate sizes to process the jobs. Depending on the overall size of the system, many users may have simultaneous access to supercomputer-level computational processing. Users are preferably billed based on the time for completion, with faster times commanding higher fees.
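The dynamic sizing described above can be illustrated with a minimal sketch. It is not the patented method: the perfect-scaling time estimate, the greedy tightest-tolerance-first ordering, and all names (`Job`, `estimated_hours`, `smallest_cluster`, `configure_clusters`) are assumptions introduced here for illustration only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Job:
    name: str
    work_node_hours: float  # hypothetical: total work, assumed to scale perfectly across nodes
    tolerance_hours: float  # longest completion time the user will accept


def estimated_hours(job: Job, nodes: int) -> float:
    """Idealized estimate: the work divides evenly across nodes (an assumption)."""
    return job.work_node_hours / nodes


def smallest_cluster(job: Job, free_nodes: int) -> Optional[int]:
    """Return the fewest nodes that finish within the tolerance, or None if impossible."""
    for n in range(1, free_nodes + 1):
        if estimated_hours(job, n) <= job.tolerance_hours:
            return n
    return None


def configure_clusters(jobs: list[Job], total_nodes: int) -> dict[str, int]:
    """Greedily carve the node pool into per-job clusters, tightest tolerance first."""
    free = total_nodes
    allocation: dict[str, int] = {}
    for job in sorted(jobs, key=lambda j: j.tolerance_hours):
        size = smallest_cluster(job, free)
        if size is not None:  # jobs that cannot meet their tolerance are left unscheduled
            allocation[job.name] = size
            free -= size
    return allocation


jobs = [Job("render", 64.0, 4.0), Job("fit", 10.0, 5.0), Job("scan", 200.0, 2.0)]
allocation = configure_clusters(jobs, total_nodes=32)
print(allocation)
```

In this sketch a job whose tolerance cannot be met with the free nodes is simply skipped; a real resource manager would presumably queue it and reconfigure clusters as nodes are released.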

Owner:CALLIDUS SOFTWARE

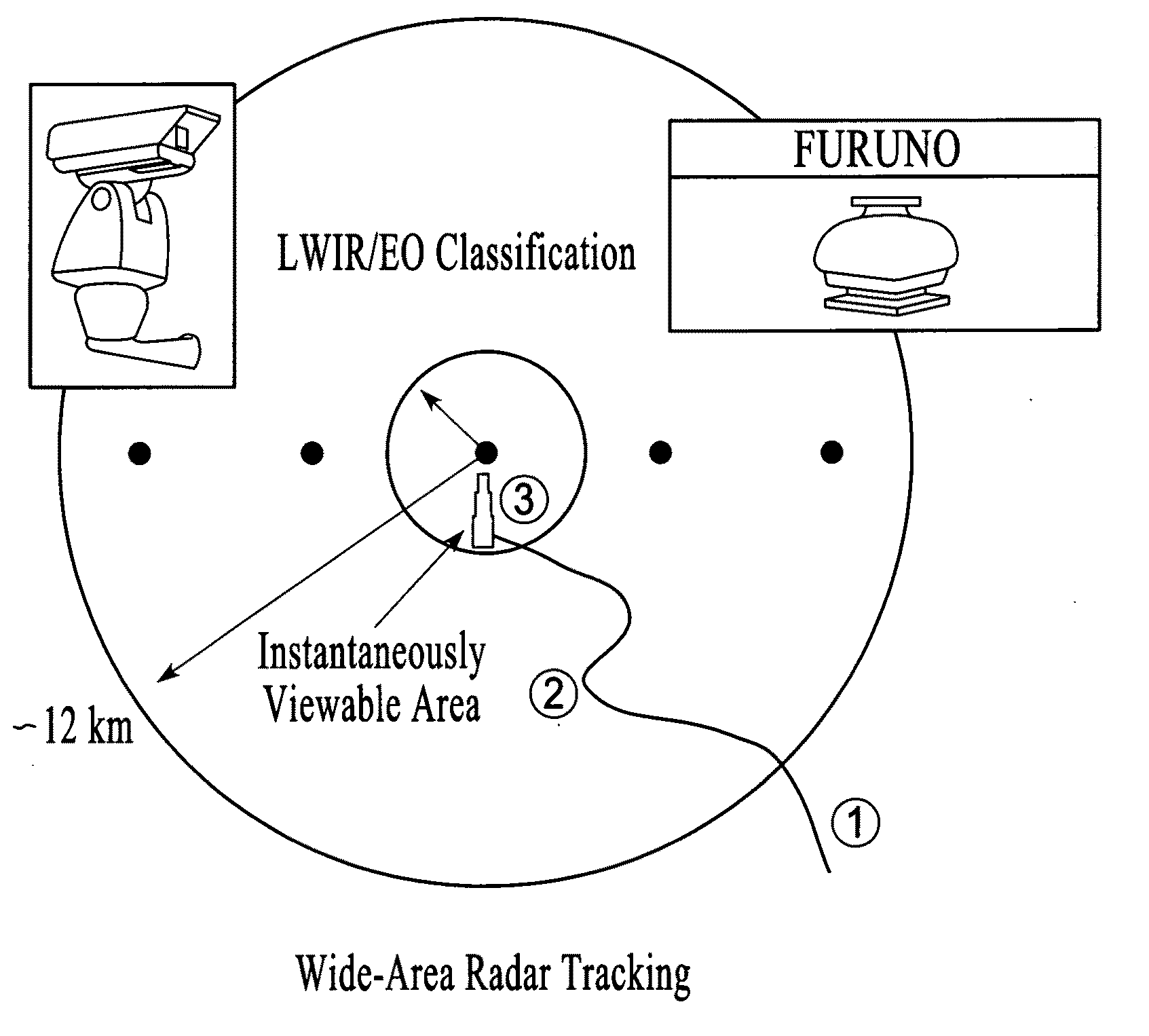

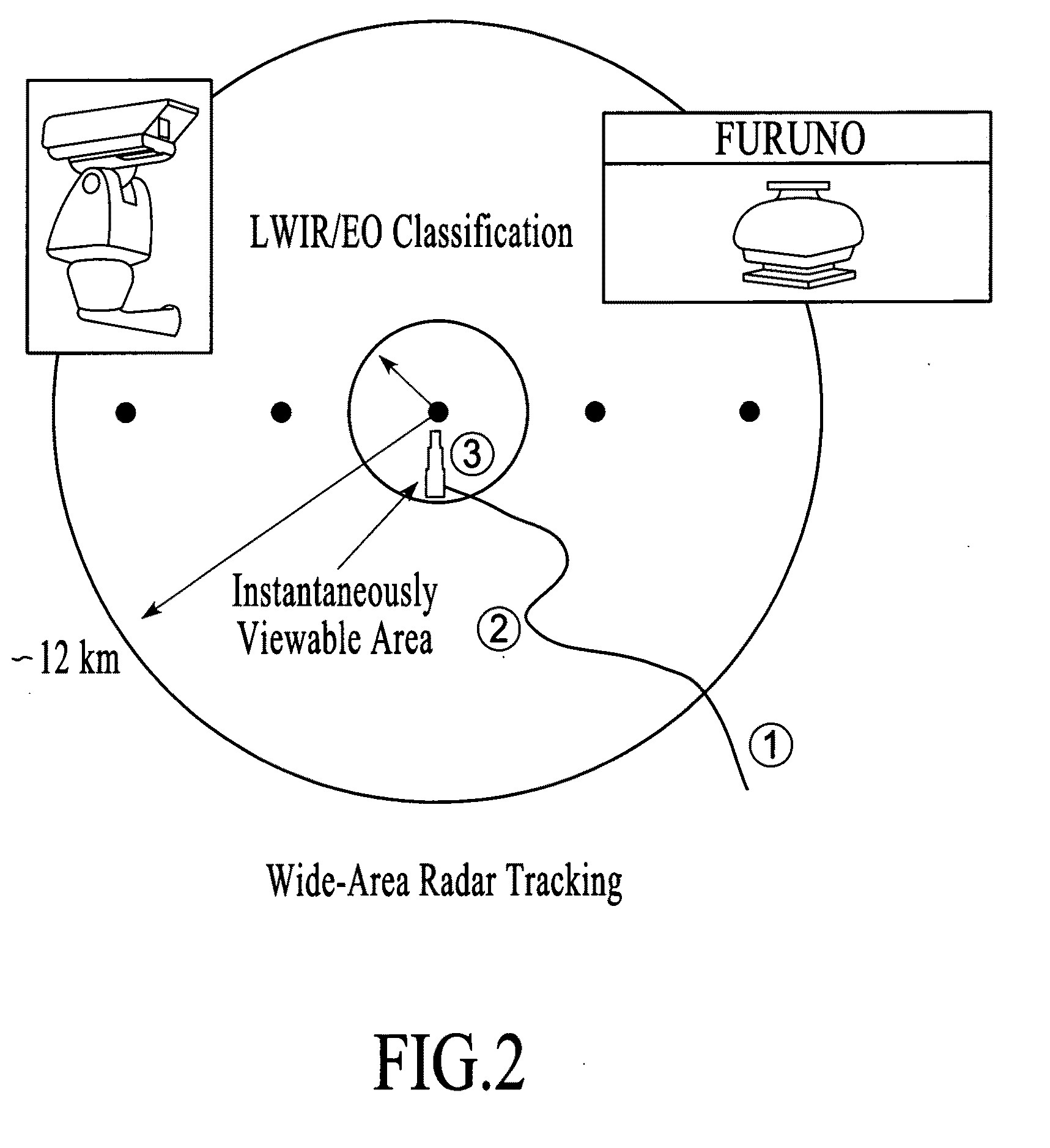

Method for surveillance to detect a land target

A land-based Smart-Sensor System and several system architectures for detecting, tracking, and classifying people and vehicles automatically and in real time for border, property, and facility security surveillance are described. The preferred embodiment of the proposed Smart-Sensor System comprises (1) a low-cost, non-coherent radar, whose function is to detect and track people, singly or in groups, and various means of transportation, which may include vehicles, animals, or aircraft, singly or in groups, and to cue (2) an optical sensor such as a long-wave infrared (LWIR) sensor, whose function is to classify the identified targets and produce movie clips for operator validation and use, and (3) an IBM CELL supercomputer to process the collected data in real time. The Smart-Sensor System can be implemented in a tower-based, mobile-based, or combined system architecture. The radar can also be operated as a stand-alone system.

Owner:VISTA RES INC

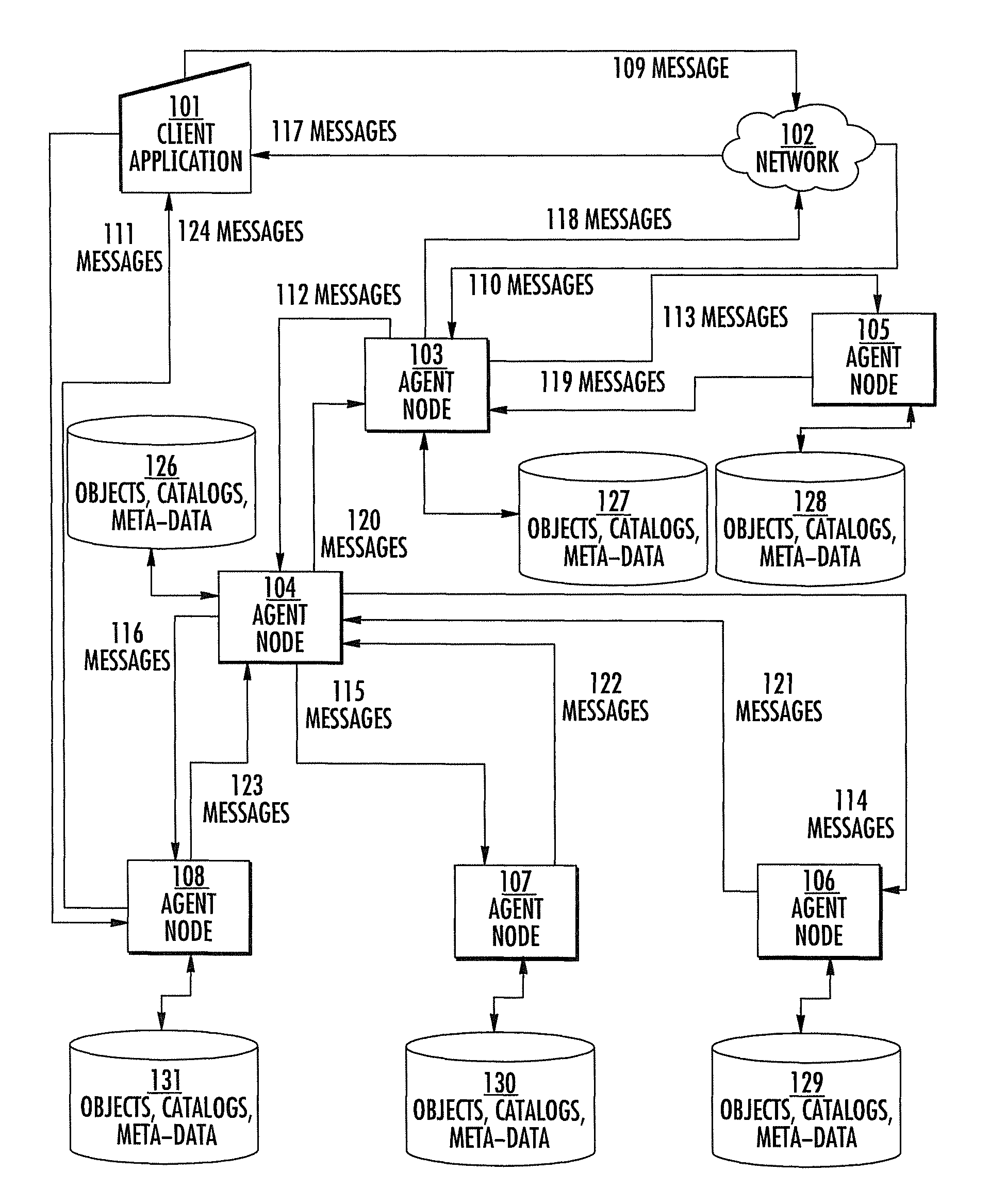

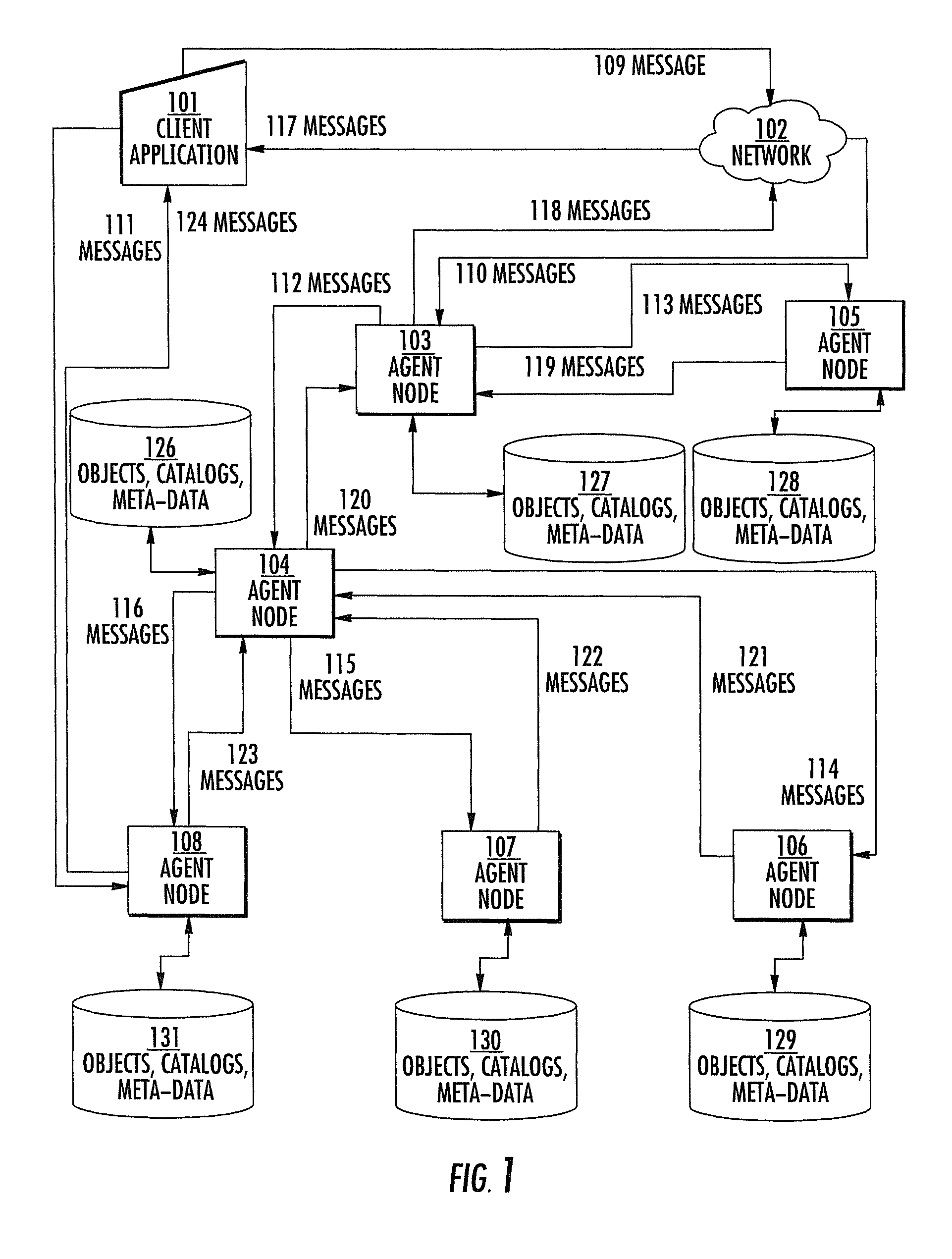

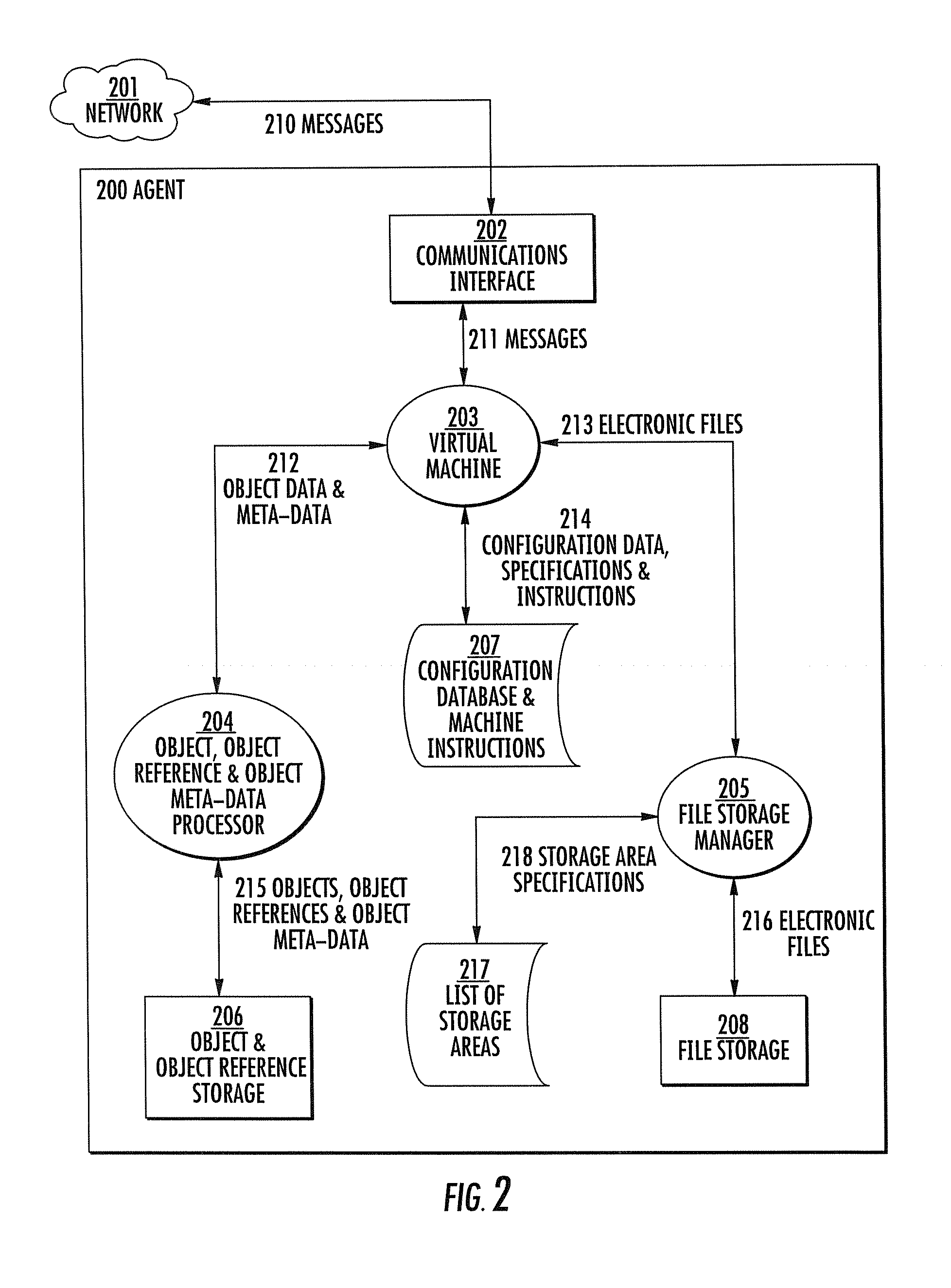

Storing and retrieving objects on a computer network in a distributed database

Further preferred embodiments of the present invention include methods directed to (a) Active Data Structures, (b) Mobile Devices, (c) Ad-Hoc Device Collections, and (d) Concurrent Massively Parallel Supercomputers. Described therein is a distributed, object-oriented database engine that uses independent, intelligent processing nodes as a cooperative, massively parallel system with redundancy and fault tolerance. Instead of using traditional methods of parallelism as found in most distributed databases, the invention uses a messaging system and a series of message processing nodes to determine where attributes and data files associated with objects are stored. The architecture is loosely coupled, with each node independently determining whether it manages or routes storage and retrieval requests.
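The idea of each node deciding on its own whether to manage or route a request can be sketched as follows. This is only an illustration under stated assumptions: the hash-modulo ownership rule, the ring of neighbors, and the names `Node`, `owns`, `handle`, and `build_ring` are hypothetical and not taken from the patent, and redundancy and fault tolerance are omitted.

```python
import hashlib
from typing import Optional


class Node:
    """A message-processing node that decides locally whether to store or forward."""

    def __init__(self, node_id: int, ring_size: int):
        self.node_id = node_id
        self.ring_size = ring_size
        self.store: dict[str, bytes] = {}
        self.next_node: Optional["Node"] = None  # loosely coupled: knows one neighbor only

    def owns(self, key: str) -> bool:
        # Hypothetical ownership rule: hash of the object key modulo the node count.
        digest = hashlib.sha256(key.encode()).digest()
        return int.from_bytes(digest[:4], "big") % self.ring_size == self.node_id

    def handle(self, op: str, key: str, value: Optional[bytes] = None, hops: int = 0):
        """Process a 'put' or 'get' message and return (handling node id, stored value)."""
        if self.owns(key):
            if op == "put":
                self.store[key] = value
            return self.node_id, self.store.get(key)
        if hops >= self.ring_size:
            raise RuntimeError("no node accepted the request")
        # Not ours: route the message onward instead of managing it.
        return self.next_node.handle(op, key, value, hops + 1)


def build_ring(n: int) -> list[Node]:
    """Create n nodes and link each one to its successor."""
    nodes = [Node(i, n) for i in range(n)]
    for i, node in enumerate(nodes):
        node.next_node = nodes[(i + 1) % n]
    return nodes


nodes = build_ring(4)
owner_on_put, _ = nodes[0].handle("put", "object:42/name", b"turbine")
owner_on_get, value = nodes[2].handle("get", "object:42/name")
print(owner_on_put == owner_on_get, value)
```

Requests can enter at any node and still reach the same owner, because every node applies the same local rule without consulting a central directory.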

Owner:POINTOFDATA CORP

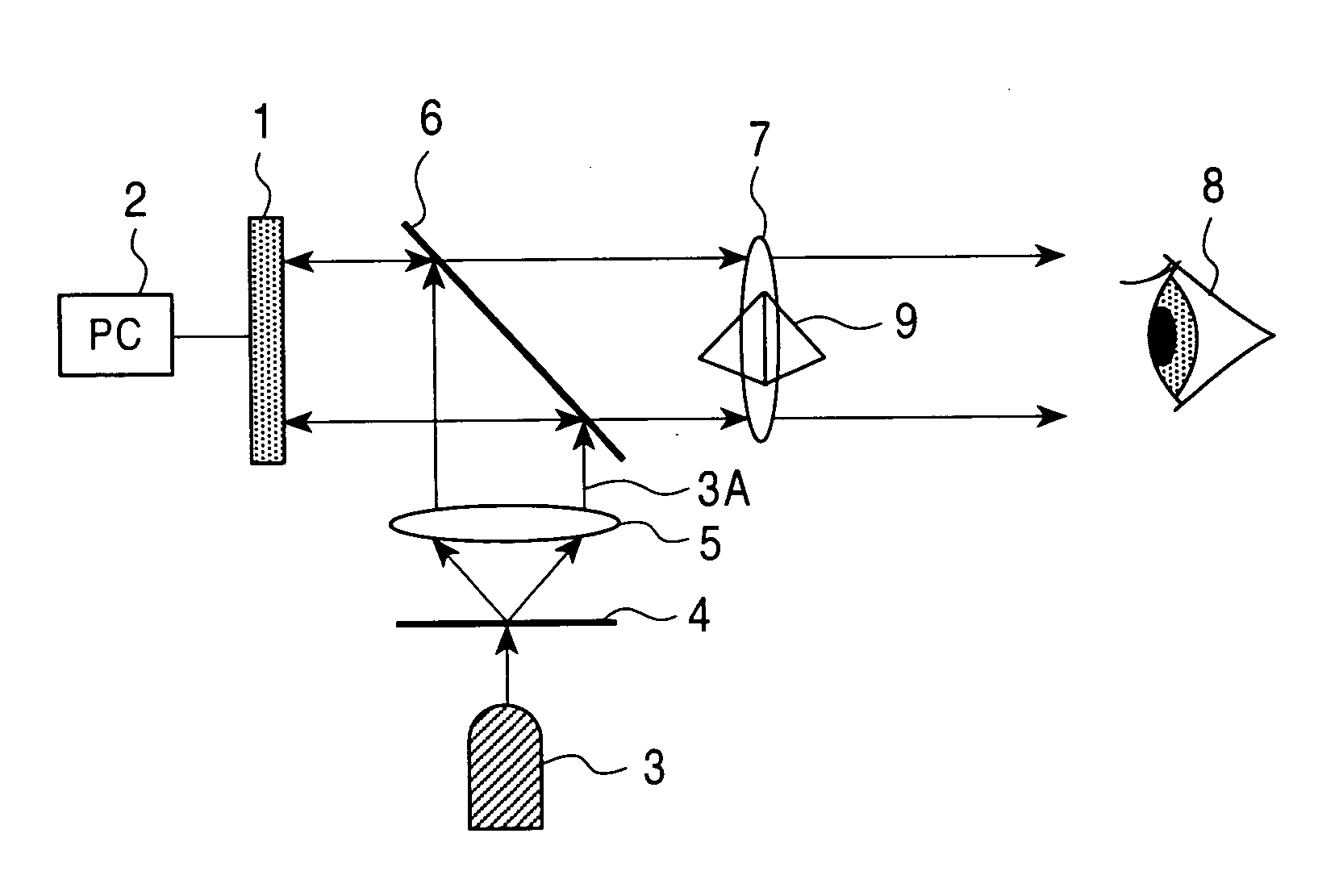

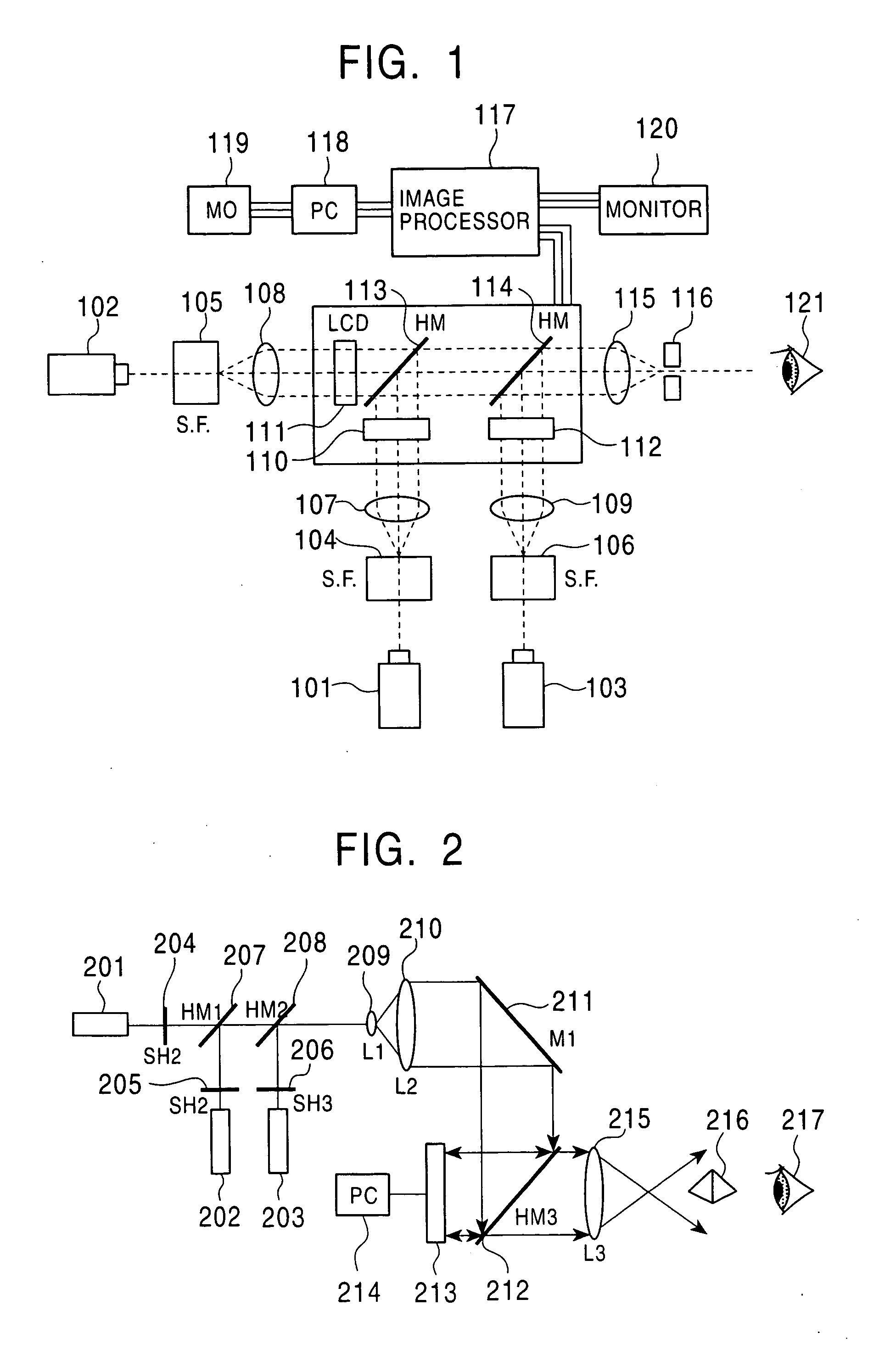

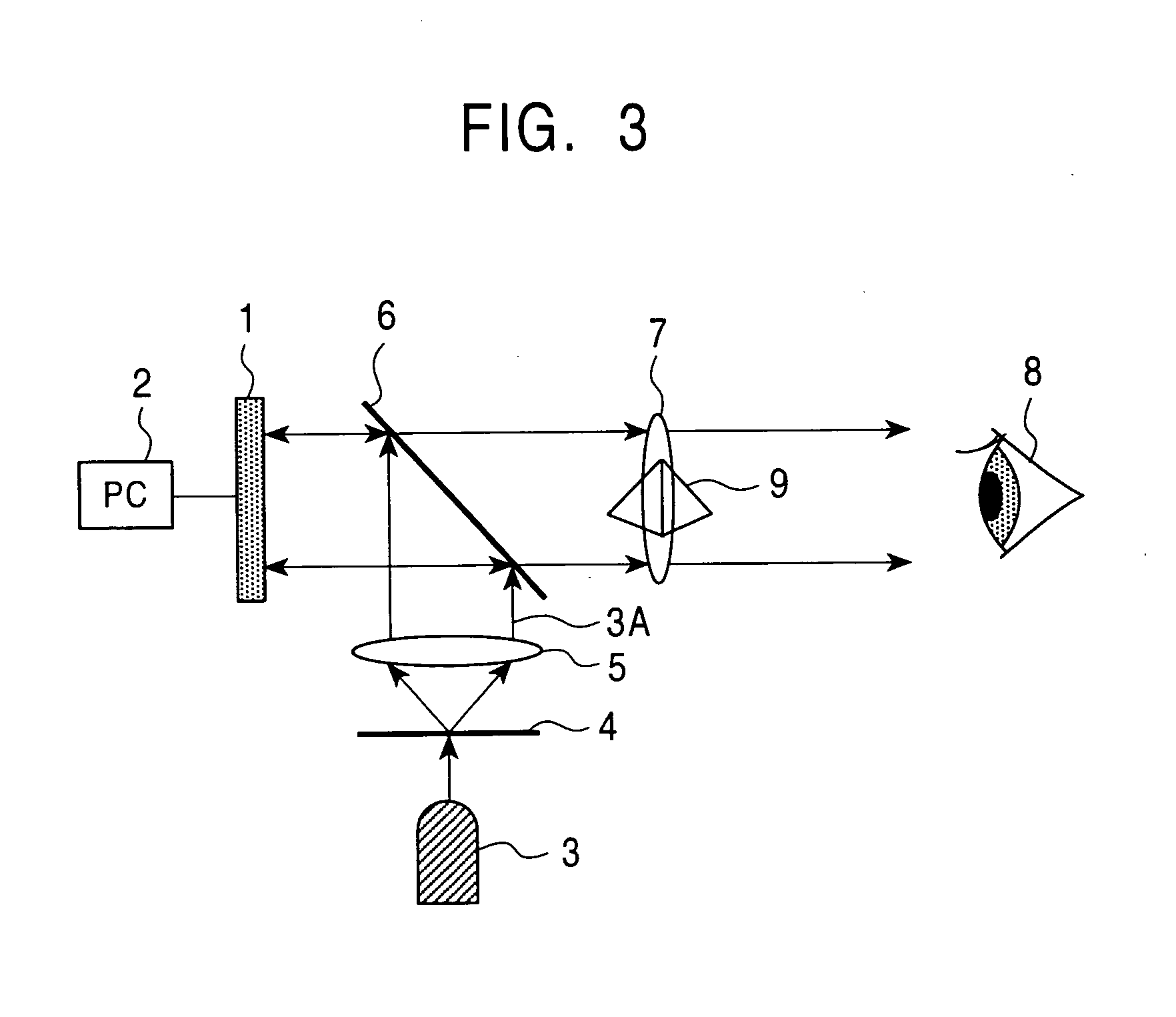

Moving image holography reproducing device and color moving image holography reproducing device

InactiveUS20050041271A1Easy to handleSimply usedHolographic light sources/light beam propertiesActive addressable light modulatorSupercomputerLiquid-crystal display

A moving-image holographic reproducing device is provided that includes a reflective liquid crystal display and a light-emitting diode functioning as a light source, and that is capable of reproducing a high-resolution image in a simple way. Also provided is a color moving-image holographic reproducing device that is capable of reproducing a color holographic image with a significantly simplified structure, using a single-plate hologram without the need for time-multiplexing. The moving-image holographic reproducing device includes a computer for creating a hologram from three-dimensional coordinate data, a reflective liquid crystal display for displaying the hologram, a half mirror for projecting the displayed hologram, and a light-emitting diode, wherein a reconstructed three-dimensional image is displayed by illuminating the half mirror with light emitted from the light-emitting diode. Manipulating a sufficiently large three-dimensional holographic image in real time requires computational power one thousand to ten thousand times higher than that of current supercomputers. According to the present invention, such an amount of information can be processed at high speed by dedicated hardware in a highly parallel and distributed manner.

Owner:JAPAN SCI & TECH CORP

Checkpointing filesystem

InactiveUS6895416B2Fast executionError preventionProgram synchronisationSupercomputerDistributed memory

The present invention is directed to a checkpointing filesystem of a distributed-memory parallel supercomputer comprising a node that accesses user data on the filesystem, the filesystem comprising an interface that is associated with a disk for storing the user data. The checkpointing filesystem provides for taking a checkpoint of the filesystem and rolling back to a previously taken checkpoint, as well as for writing user data to and deleting user data from the checkpointing filesystem. The checkpointing filesystem provides a recently written file allocation table (WFAT) for maintaining information regarding the user data written since a previously taken checkpoint and a recently deleted file allocation table (DFAT) for maintaining information regarding user data deleted since the previously taken checkpoint. Both tables are used by the checkpointing filesystem to take a checkpoint of the filesystem and to roll the filesystem back to a previously taken checkpoint, as well as to write user data to and delete user data from the checkpointing filesystem.
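How two such tables could support checkpoint and rollback can be sketched with an in-memory toy. The abstract does not describe the tables' layout, so everything below is an assumption made for illustration: the class name `CheckpointingFS`, the use of a dictionary for the WFAT and a set for the DFAT, and the restriction to a single checkpoint level.

```python
class CheckpointingFS:
    """Toy in-memory filesystem that tracks changes since the last checkpoint."""

    def __init__(self):
        self.committed: dict[str, bytes] = {}  # state as of the last checkpoint
        self.wfat: dict[str, bytes] = {}       # assumed WFAT: data written since then
        self.dfat: set[str] = set()            # assumed DFAT: names deleted since then

    def exists(self, name: str) -> bool:
        return name in self.wfat or (name in self.committed and name not in self.dfat)

    def write(self, name: str, data: bytes) -> None:
        self.wfat[name] = data
        self.dfat.discard(name)  # a rewrite cancels a pending delete

    def delete(self, name: str) -> None:
        if not self.exists(name):
            raise FileNotFoundError(name)
        self.wfat.pop(name, None)
        if name in self.committed:
            self.dfat.add(name)  # committed data is only marked, not destroyed

    def read(self, name: str) -> bytes:
        if not self.exists(name):
            raise FileNotFoundError(name)
        return self.wfat[name] if name in self.wfat else self.committed[name]

    def checkpoint(self) -> None:
        """Fold pending writes and deletes into the committed state."""
        self.committed.update(self.wfat)
        for name in self.dfat:
            self.committed.pop(name, None)
        self.wfat.clear()
        self.dfat.clear()

    def rollback(self) -> None:
        """Discard everything recorded since the last checkpoint."""
        self.wfat.clear()
        self.dfat.clear()


fs = CheckpointingFS()
fs.write("a.dat", b"v1")
fs.checkpoint()
fs.write("b.dat", b"tmp")
fs.delete("a.dat")
fs.rollback()
print(fs.read("a.dat"), fs.exists("b.dat"))
```

Because writes and deletes only touch the two tables until a checkpoint is taken, rollback in this sketch amounts to clearing them, which is one plausible reason for keeping such tables separate from the committed data.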

Owner:INT BUSINESS MASCH CORP

