2242 results about "Floating point" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

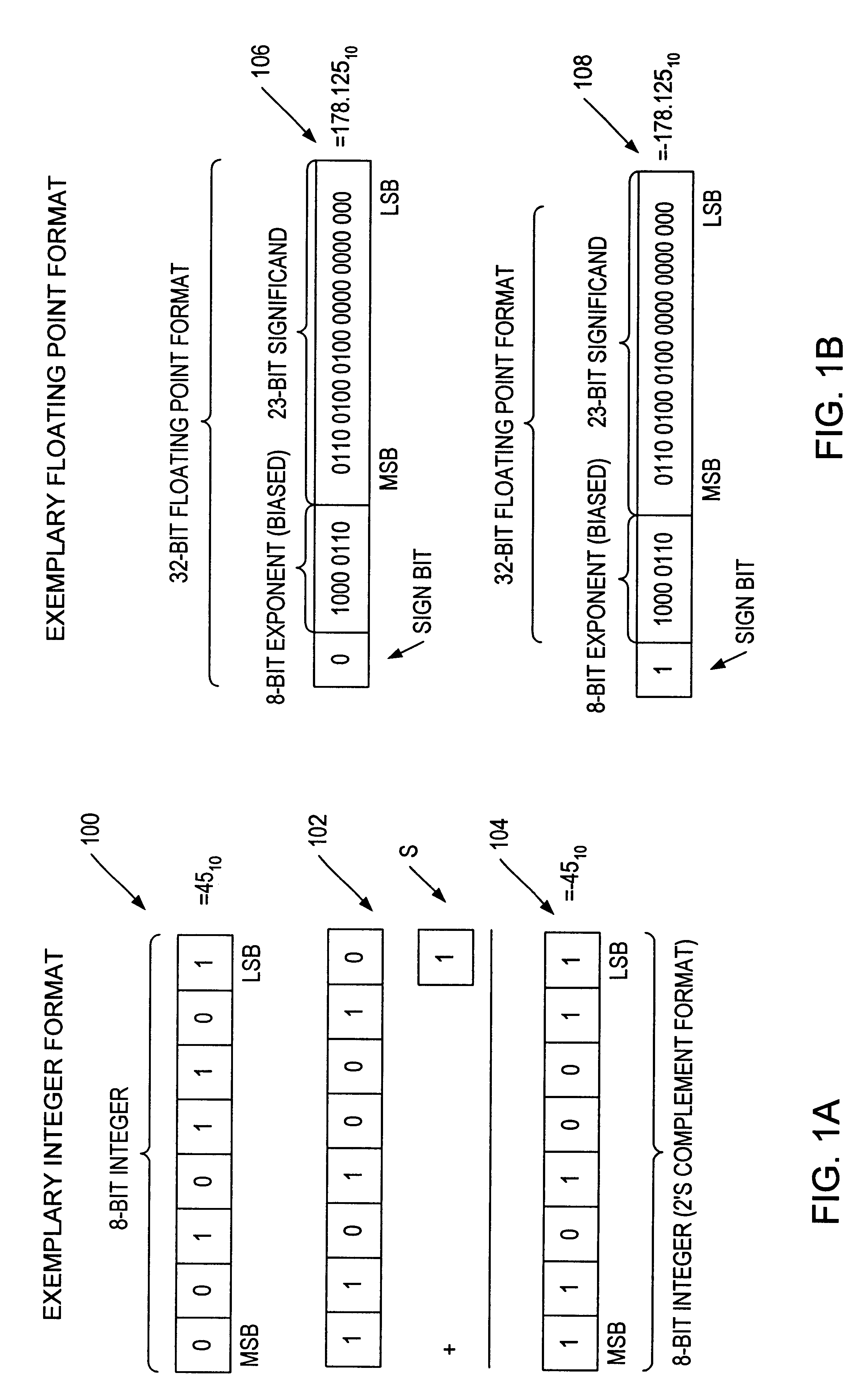

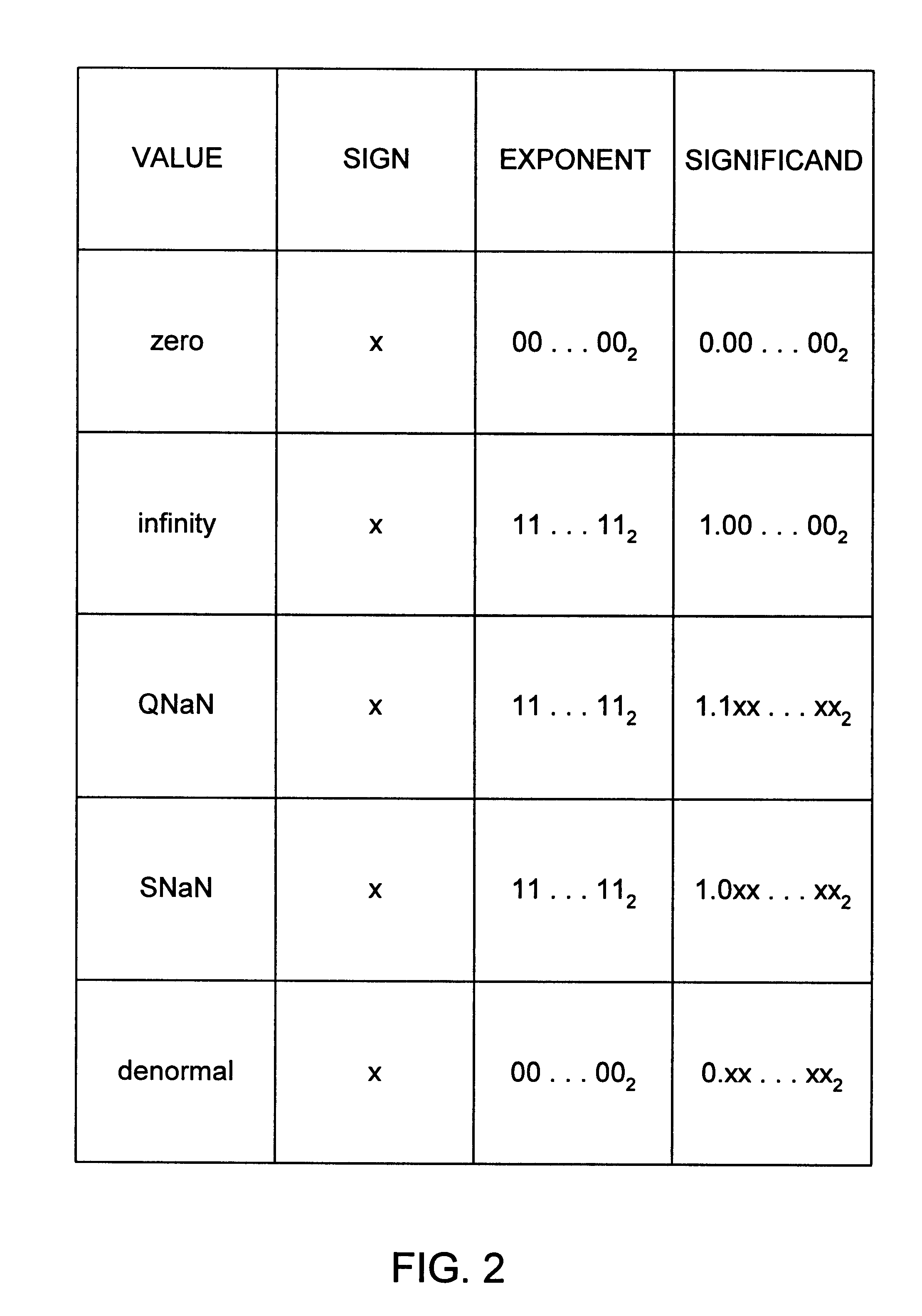

In computing, floating-point arithmetic (FP) is arithmetic that represents real numbers approximately, using a formulaic representation that trades precision for range. For this reason, floating-point computation is common in systems that must process both very small and very large real numbers quickly. A number is, in general, represented approximately to a fixed number of significant digits (the significand) and scaled using an exponent in some fixed base; the base for the scaling is normally two, ten, or sixteen. A number that can be represented exactly is of the following form...
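
The significand/exponent split described above can be inspected directly for the base-2 case. The following is a minimal Python sketch, assuming IEEE 754 binary64 doubles (what CPython's `float` uses on mainstream platforms). `decompose` and `recompose` are illustrative helper names, not part of any patent listed here. `math.frexp` returns a significand in [0.5, 1) together with an integer exponent, and `math.ldexp` scales it back.

```python
import math


def decompose(x: float) -> tuple[float, int]:
    """Split x into a significand m and a base-2 exponent e such that x == m * 2**e."""
    # math.frexp returns 0.5 <= |m| < 1 for non-zero finite x, and (0.0, 0) for x == 0.0
    return math.frexp(x)


def recompose(m: float, e: int) -> float:
    """Scale the significand back by 2**e (exact as long as the result is a normal double)."""
    return math.ldexp(m, e)


for value in (6.0, 0.1, -1e-300):
    m, e = decompose(value)
    assert recompose(m, e) == value  # scaling by a power of two loses nothing
    print(f"{value!r} = {m!r} * 2**{e}")

# 0.1 has no finite binary expansion, so the stored double is only the
# nearest representable value; printing extra digits exposes the error.
print(f"{0.1:.20f}")
```

Note that `0.1` decomposes and recomposes exactly, yet the stored value is still only the nearest double to one tenth: the approximation happens when the decimal literal is parsed, not in the decomposition.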

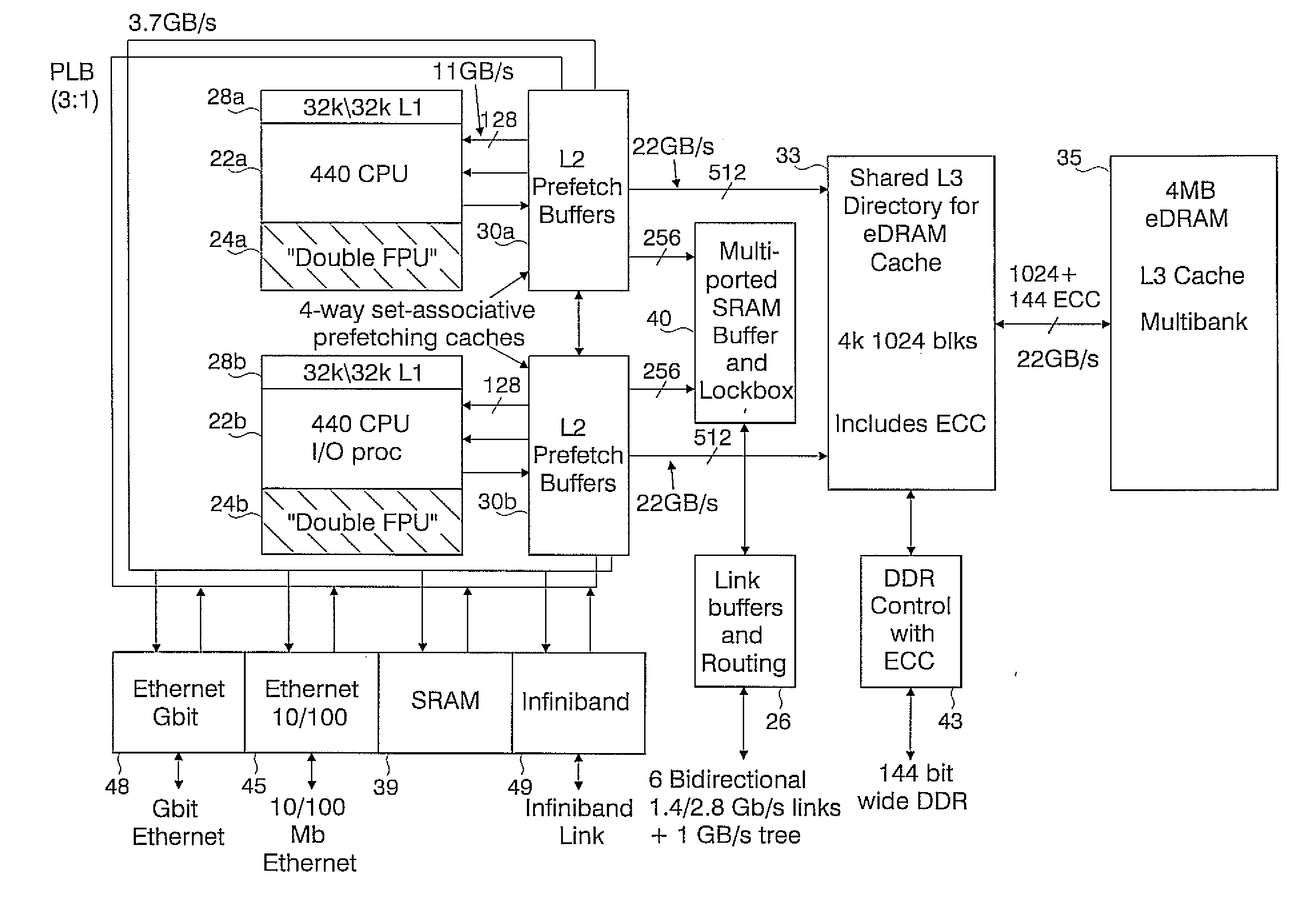

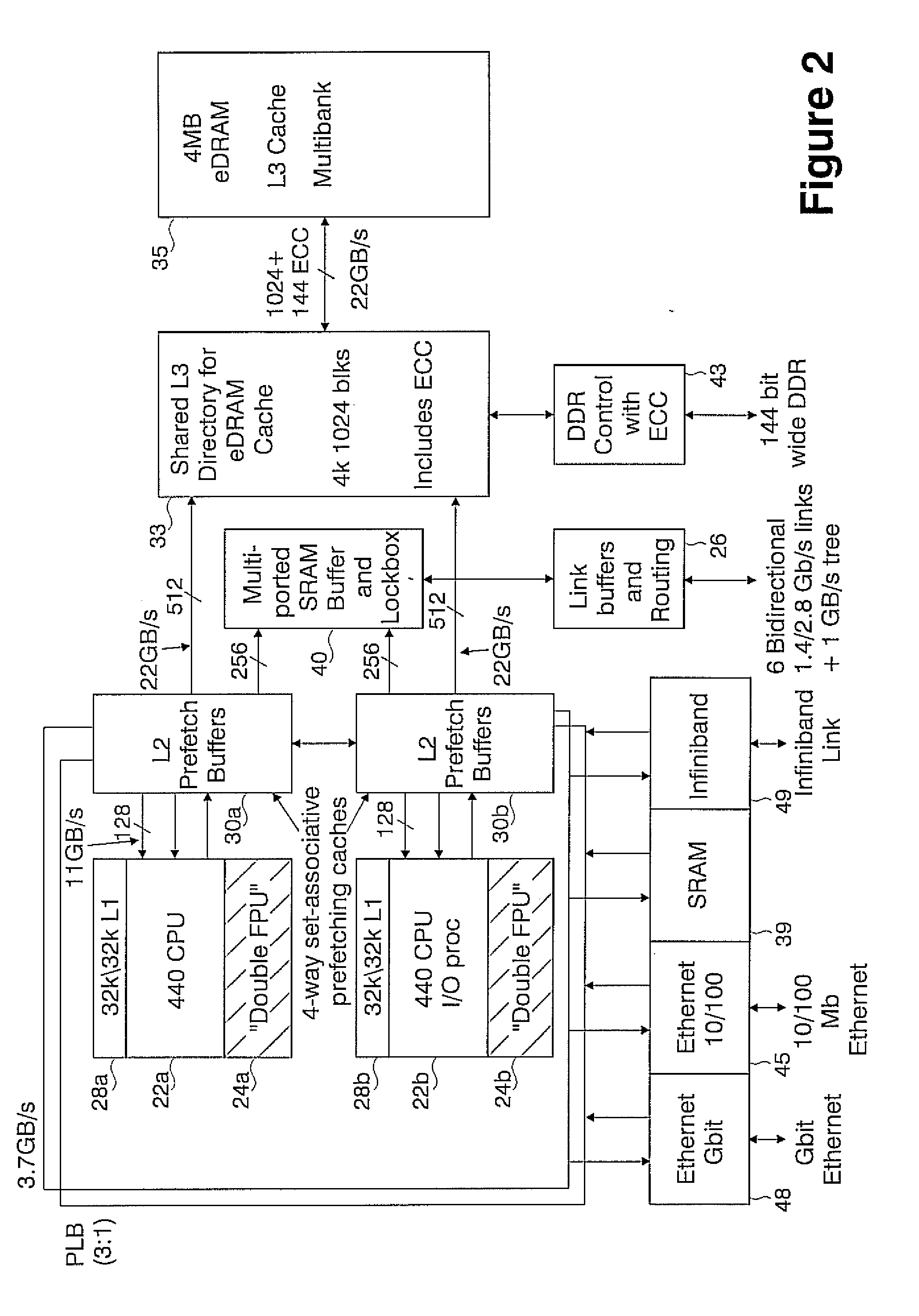

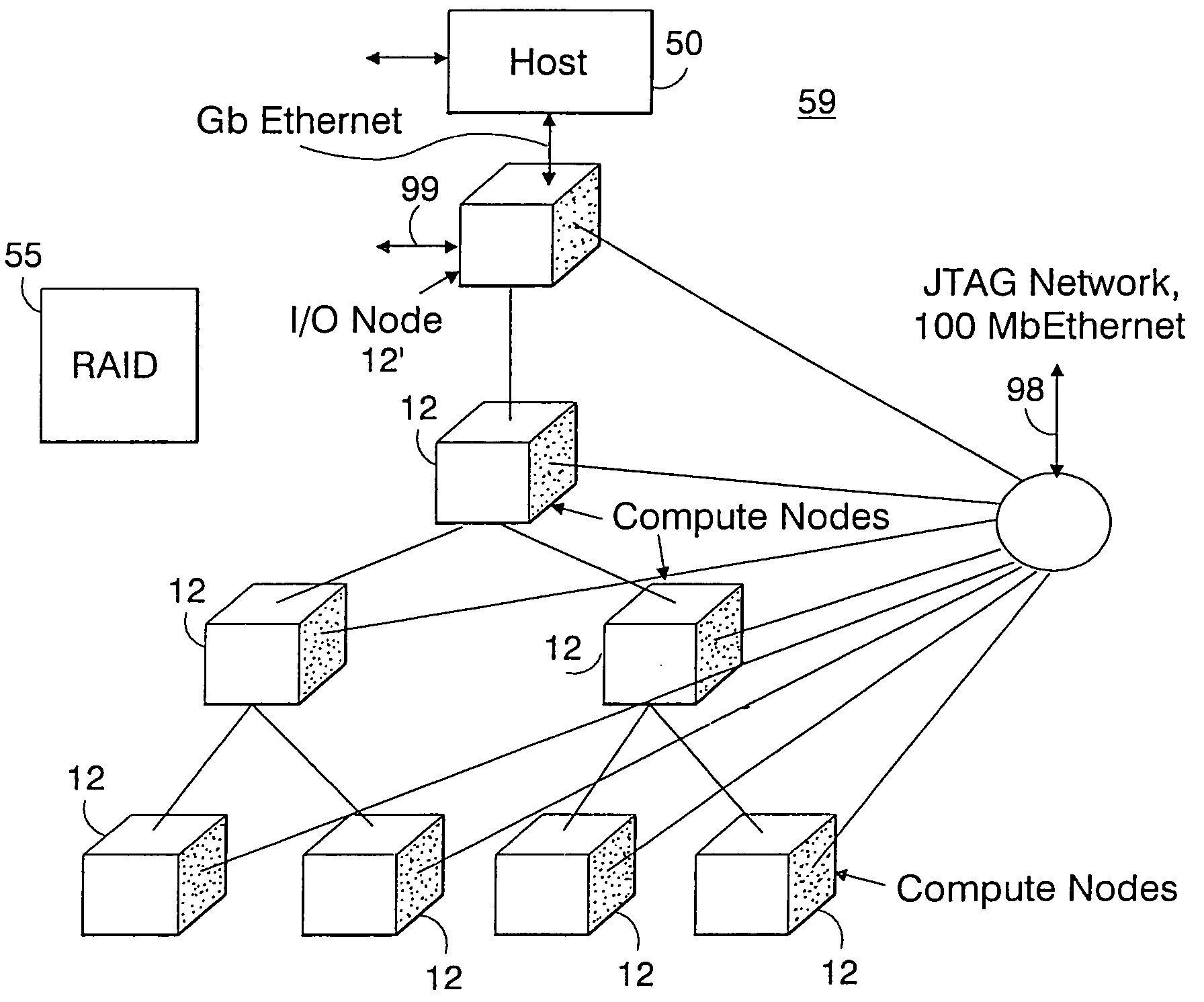

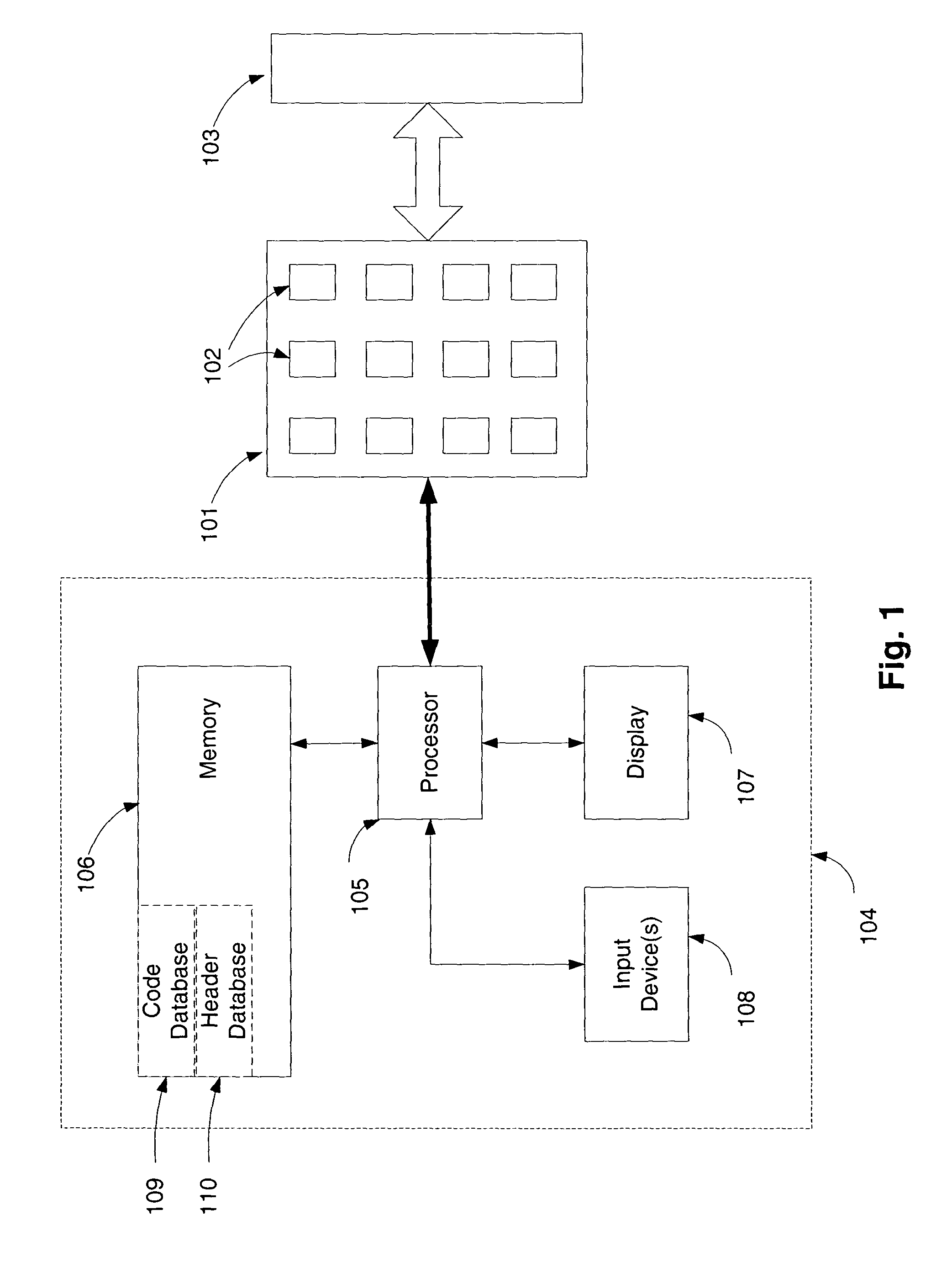

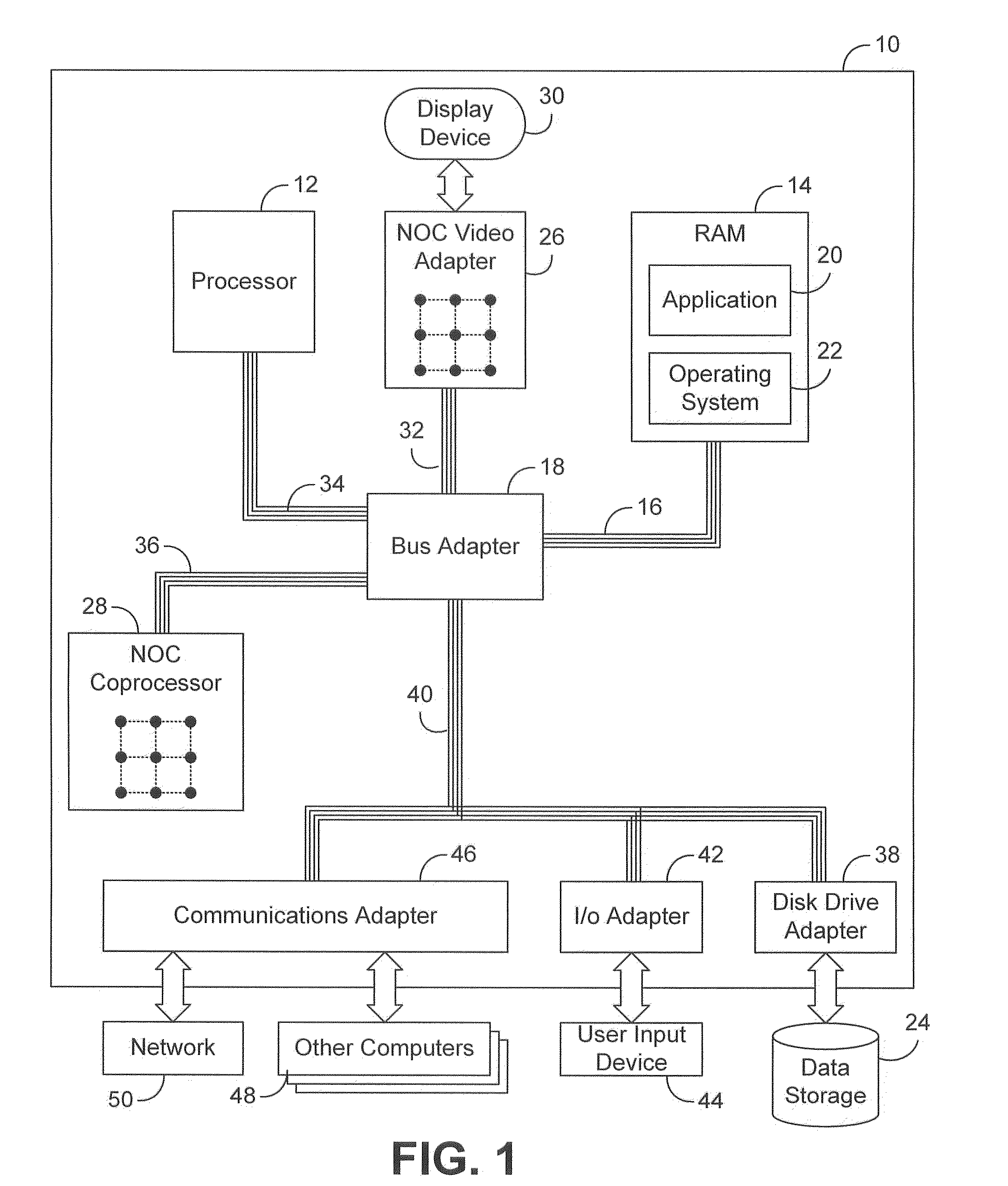

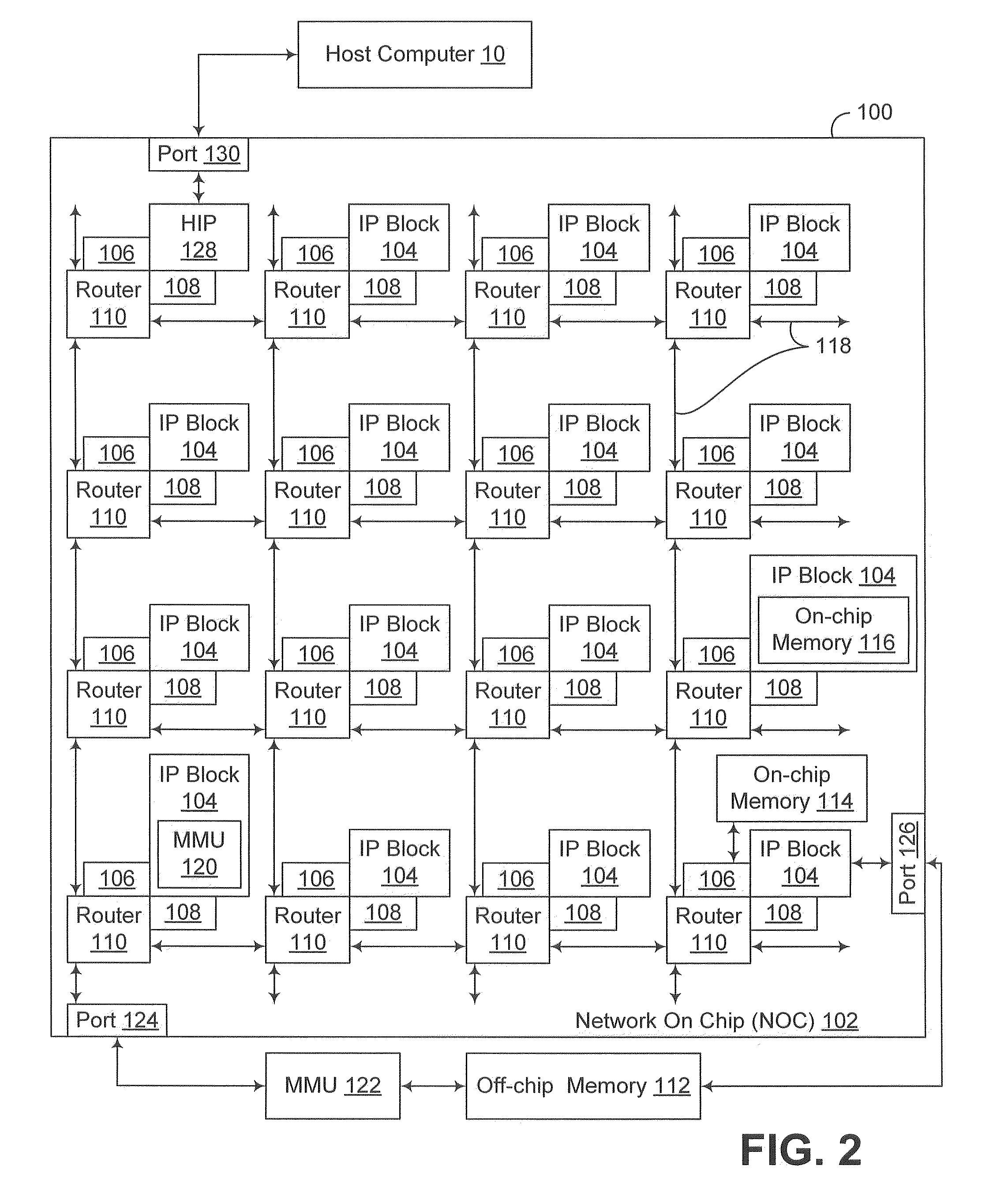

Novel massively parallel supercomputer

Status: Inactive | Publication: US20090259713A1 | Keywords: Low cost, Reduced footprint, Error prevention, Program synchronisation, Supercomputer, Packet communication

Owner:INT BUSINESS MASCH CORP

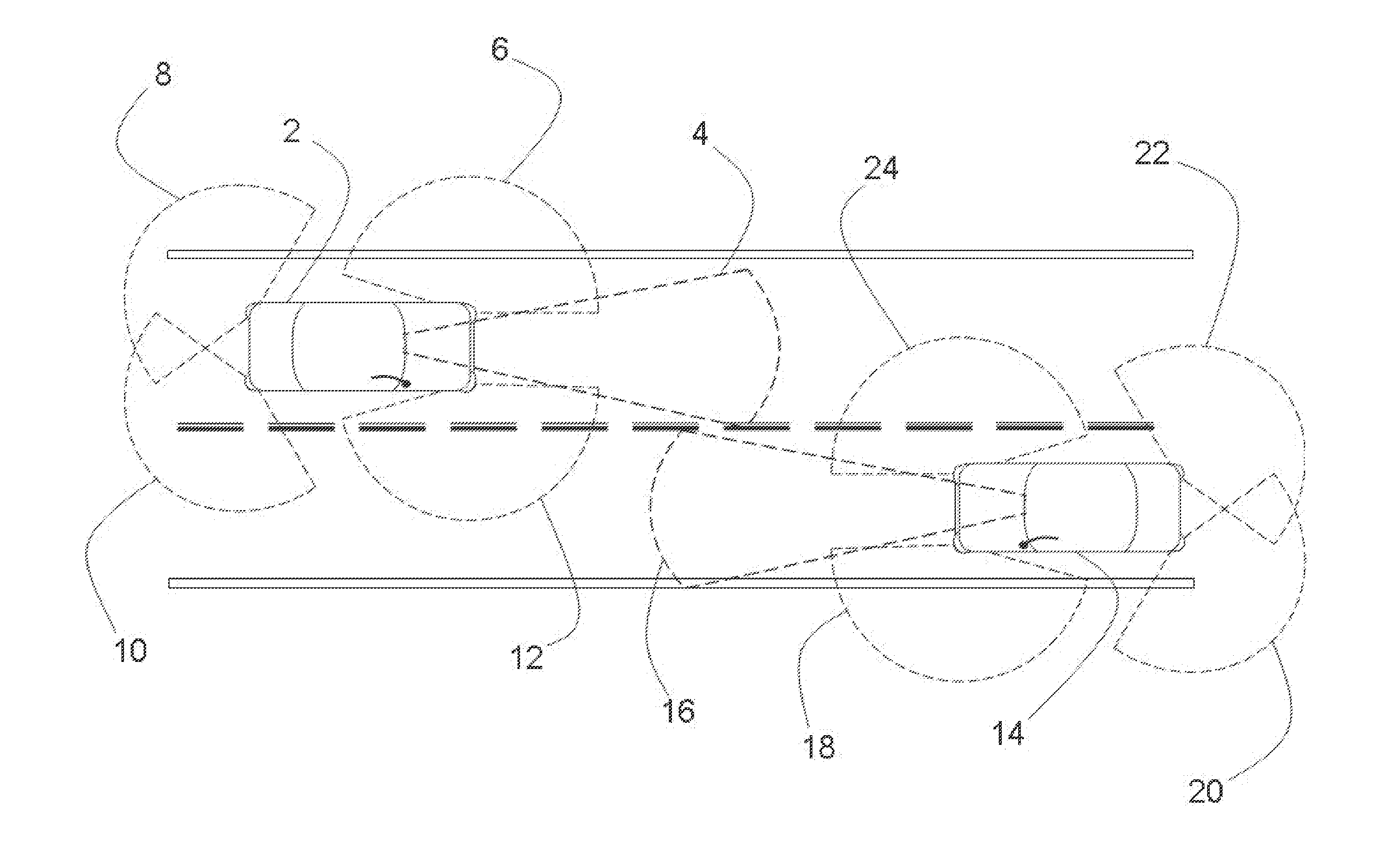

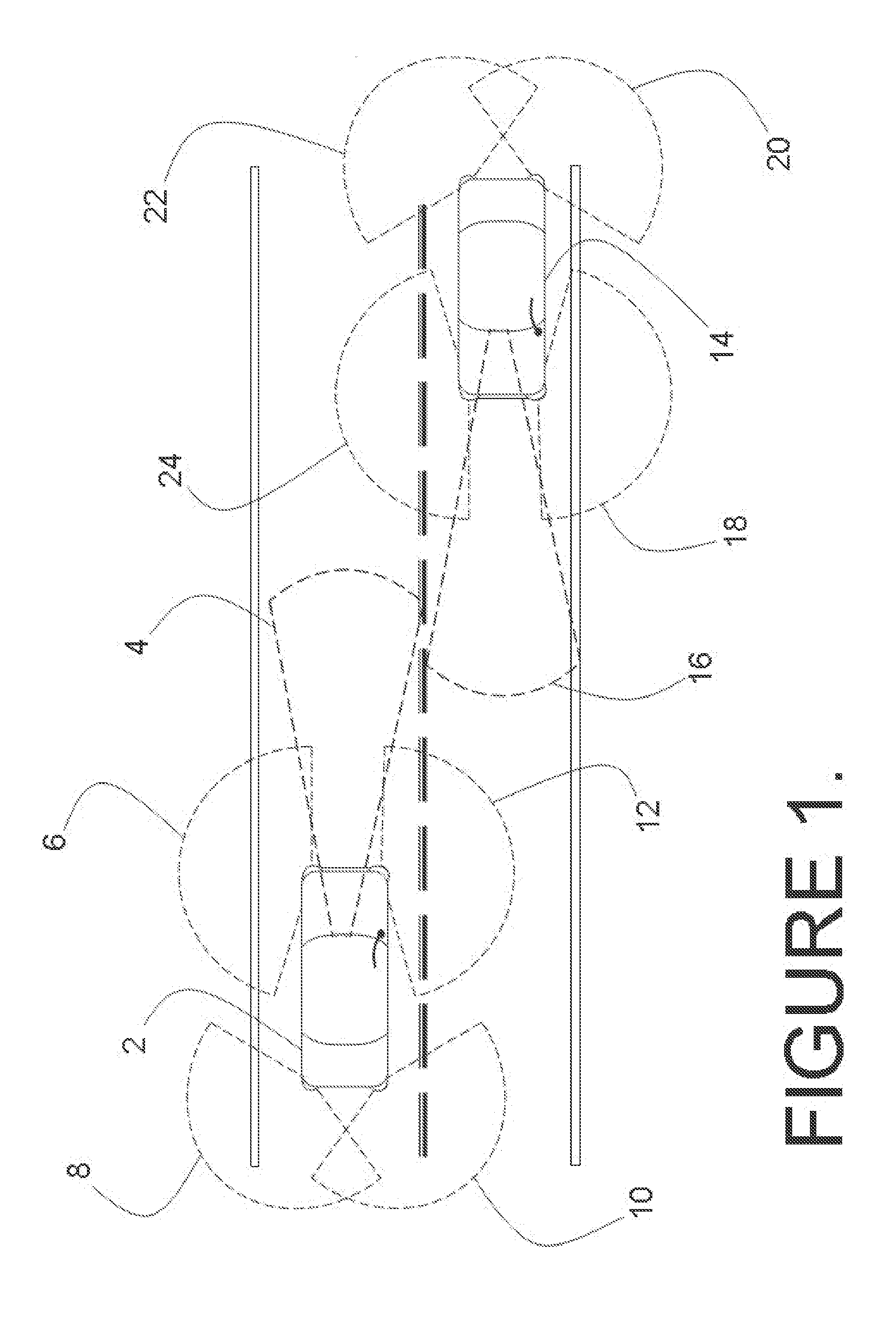

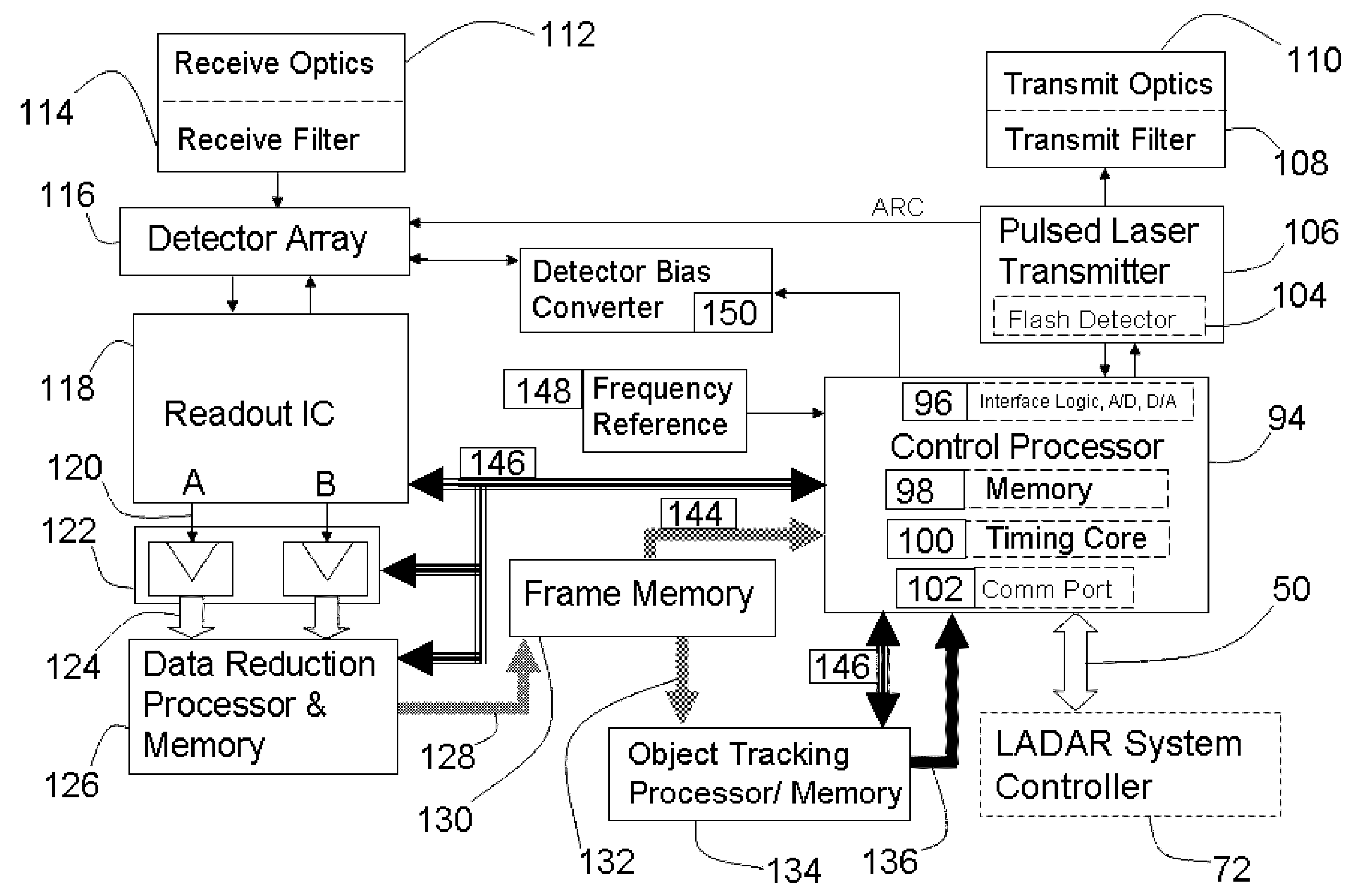

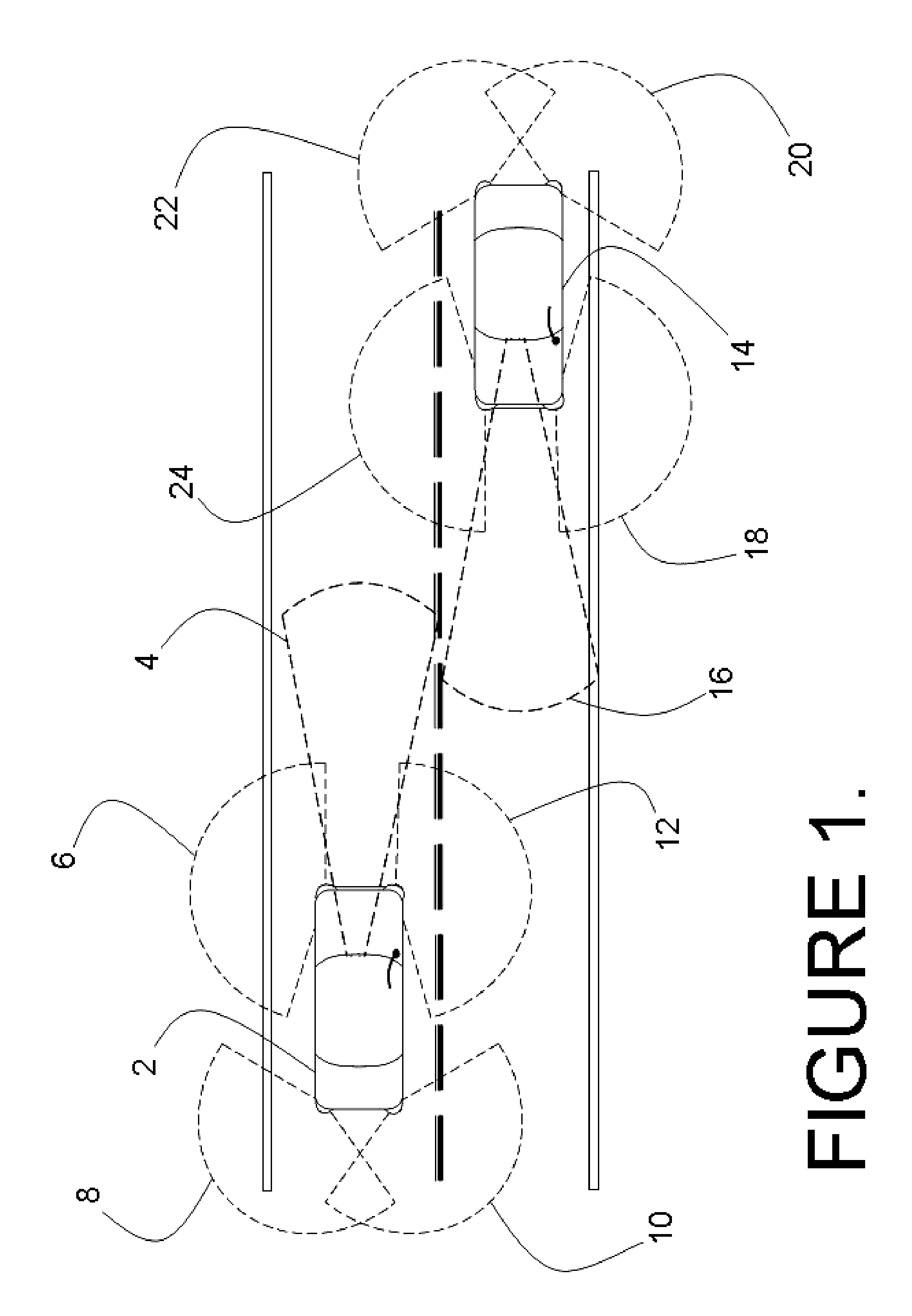

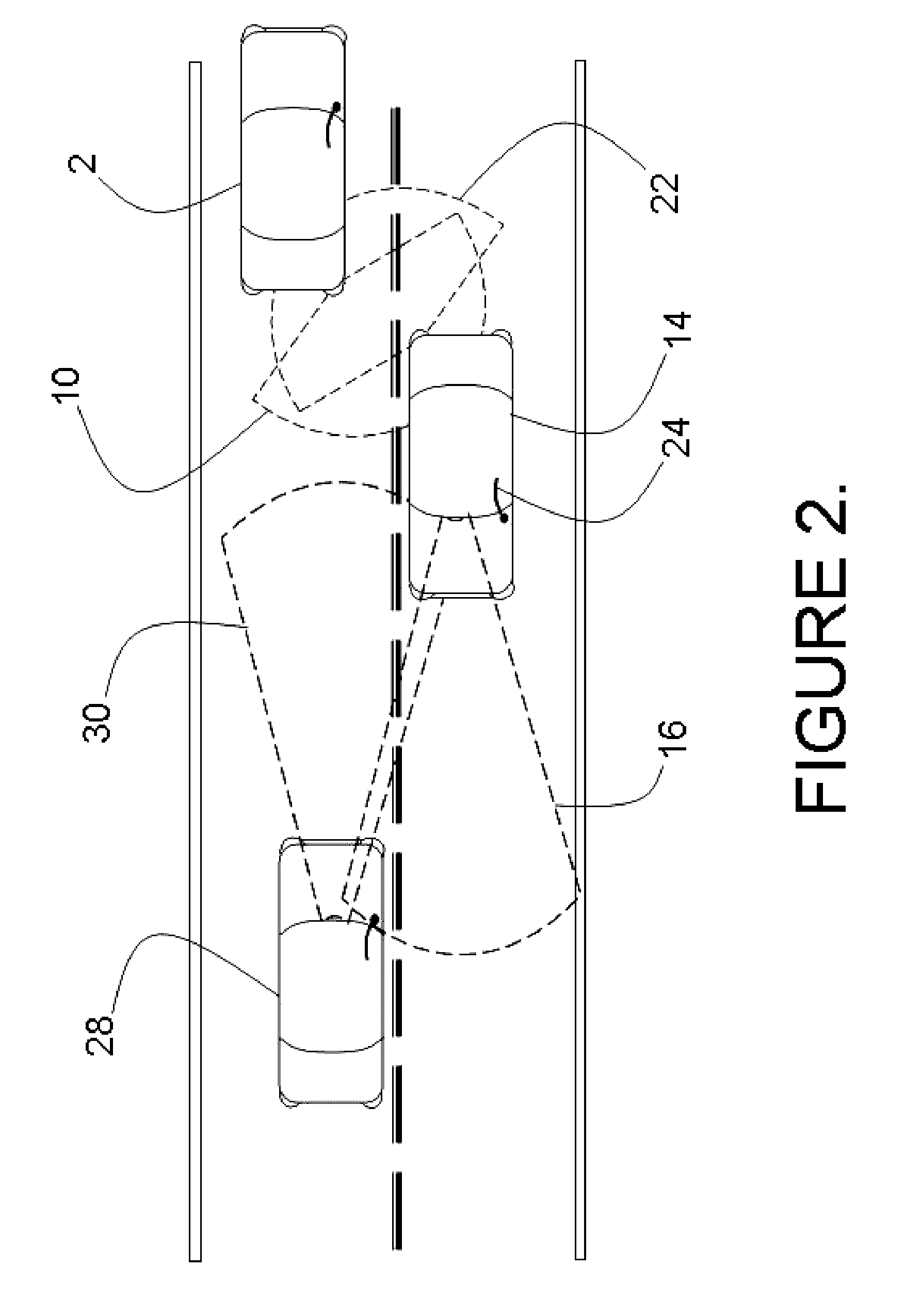

Ladar sensor for a dense environment

Status: Active | Publication: US20160003946A1 | Keywords: Optical rangefinders, Electromagnetic wave reradiation, Discriminator, Floating point

A multi-ladar sensor system is proposed for operating in dense environments where many ladar sensors transmit and receive burst-mode light in the same space, as may be typical of an automotive application. The system uses several techniques to reduce mutual interference between independently operating ladar sensors. In one embodiment, each ladar sensor is assigned a wavelength of operation and an optical receive filter that blocks light transmitted at other wavelengths, an example of wavelength division multiplexing (WDM). Each ladar sensor, or platform, may also be assigned a pulse width selected from a list, and may use a pulse width discriminator circuit to separate pulses of interest from the clutter of other transmitters. Higher-level coding, involving pulse sequences and code sequence correlation, may be implemented as a code division multiplexing (CDM) system. A digital processor optimized to execute mathematical operations is also described; it has a hardware-implemented floating point divider, allowing real-time processing of received ladar pulses and pulse sequences.

Owner:CONTINENTAL AUTONOMOUS MOBILITY US LLC

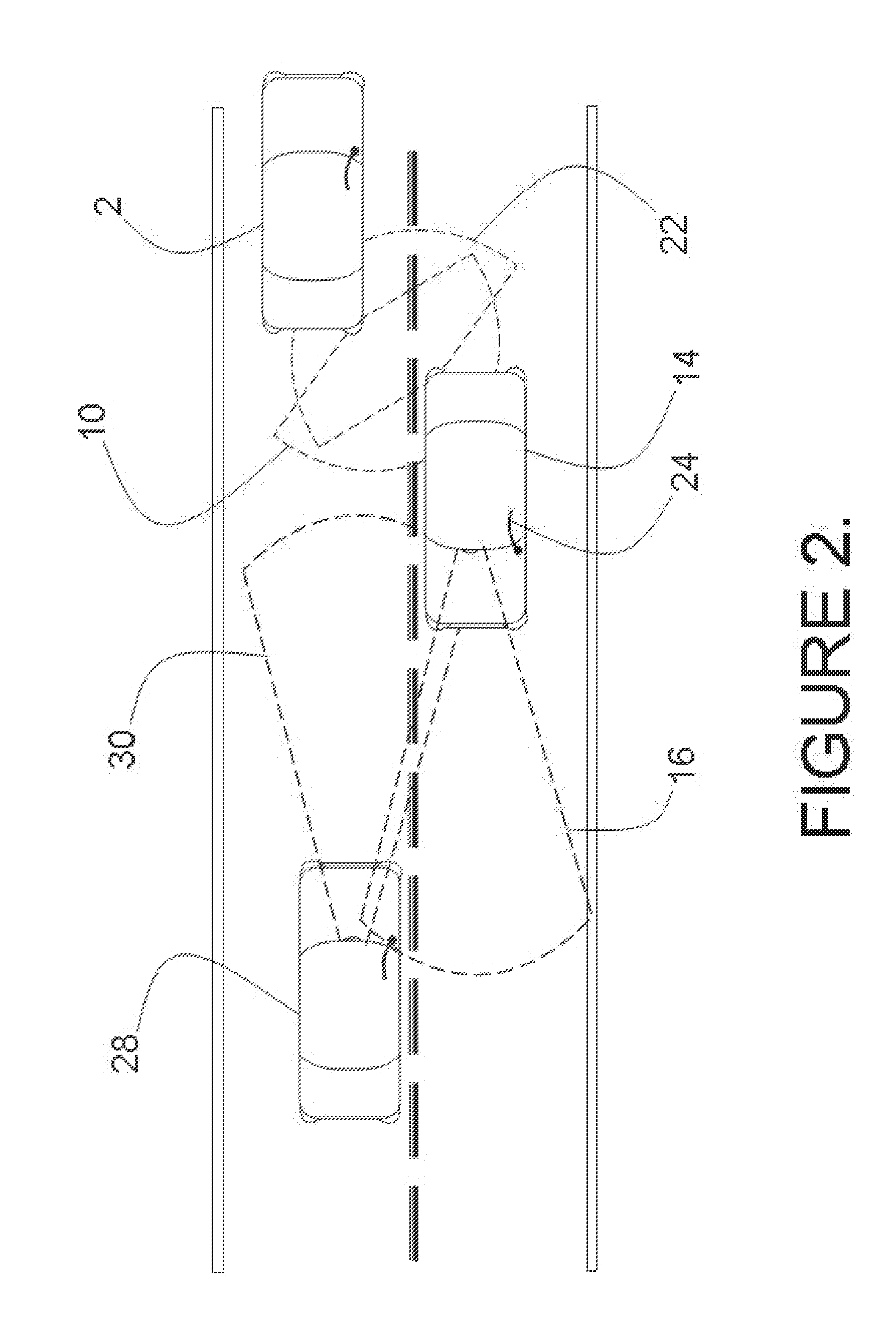

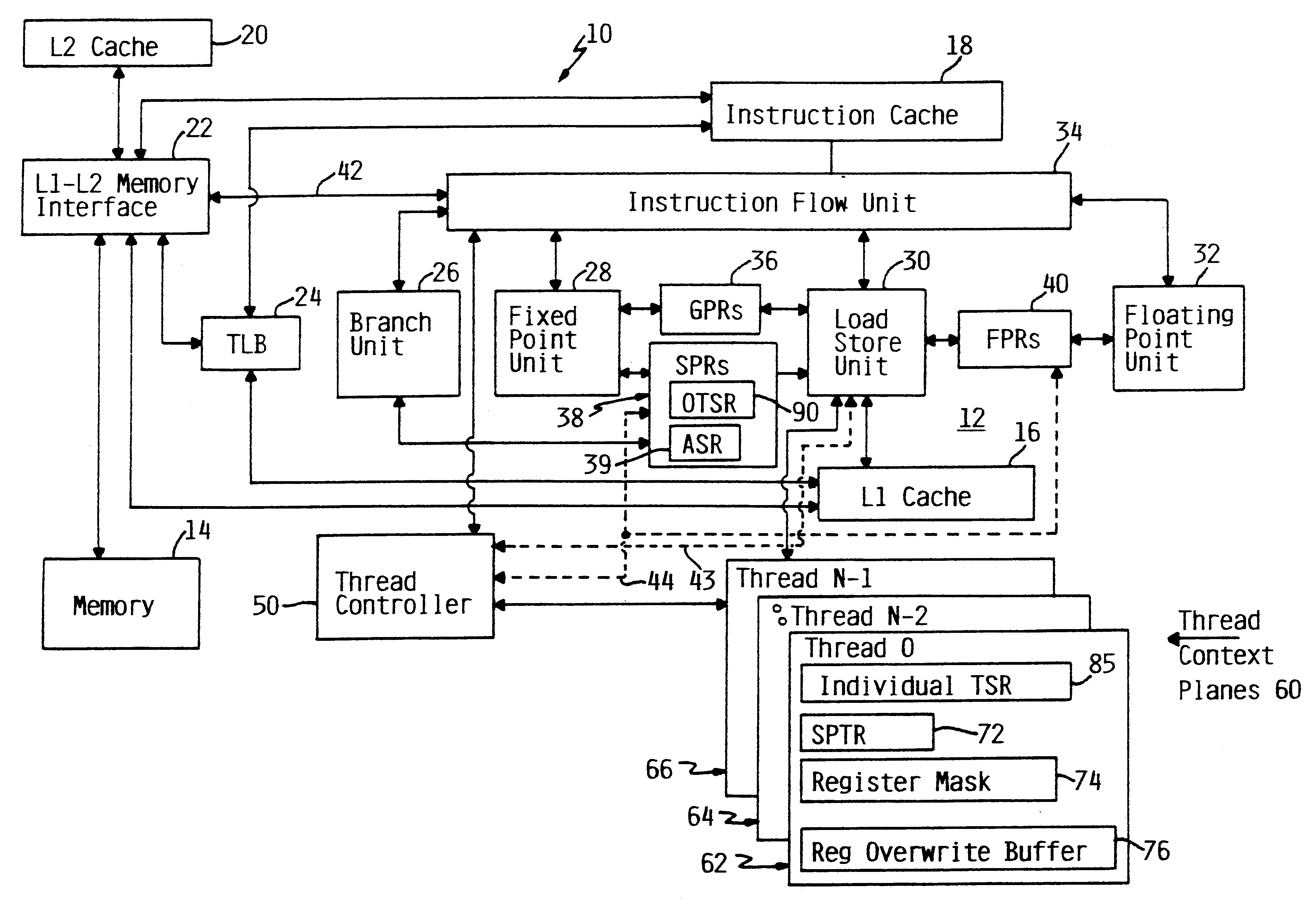

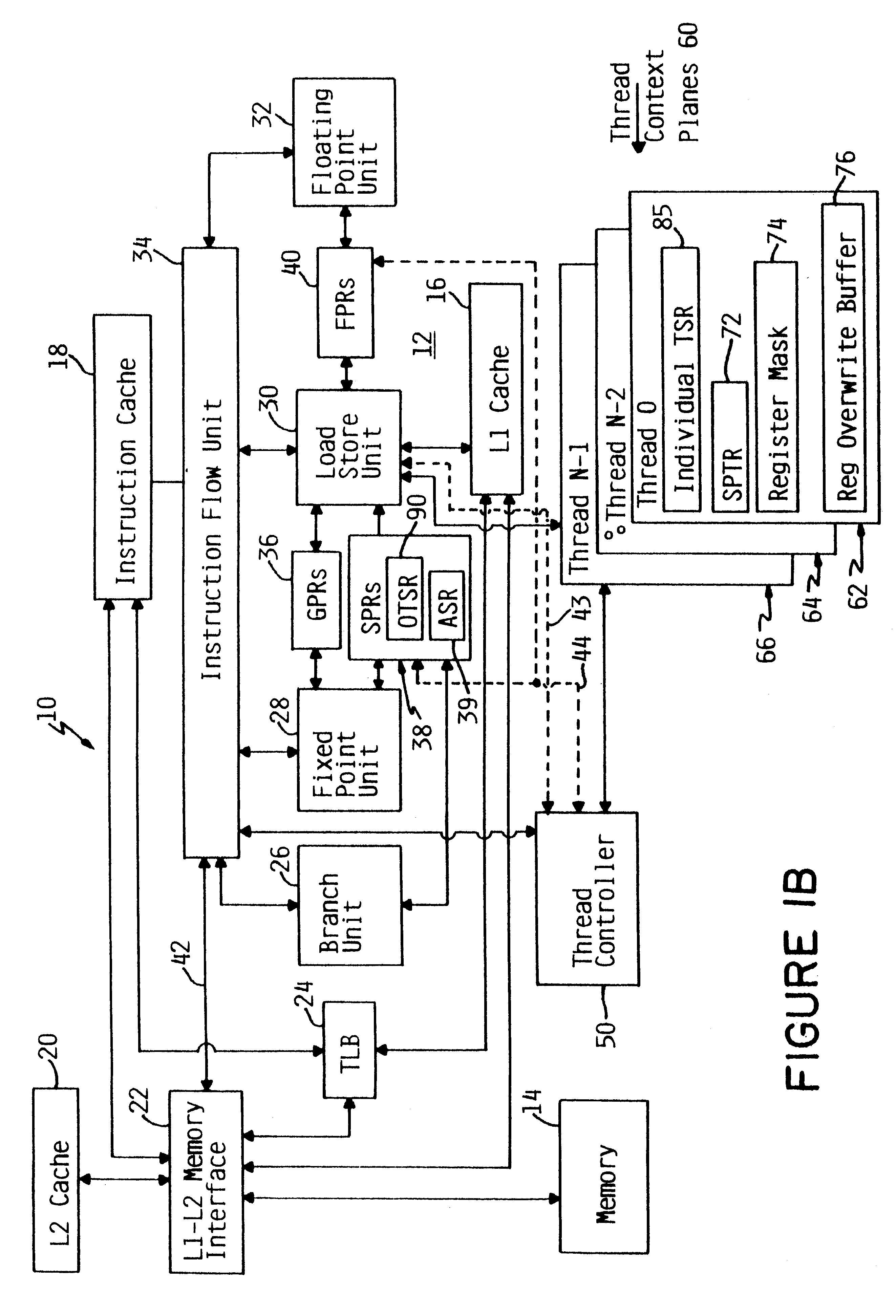

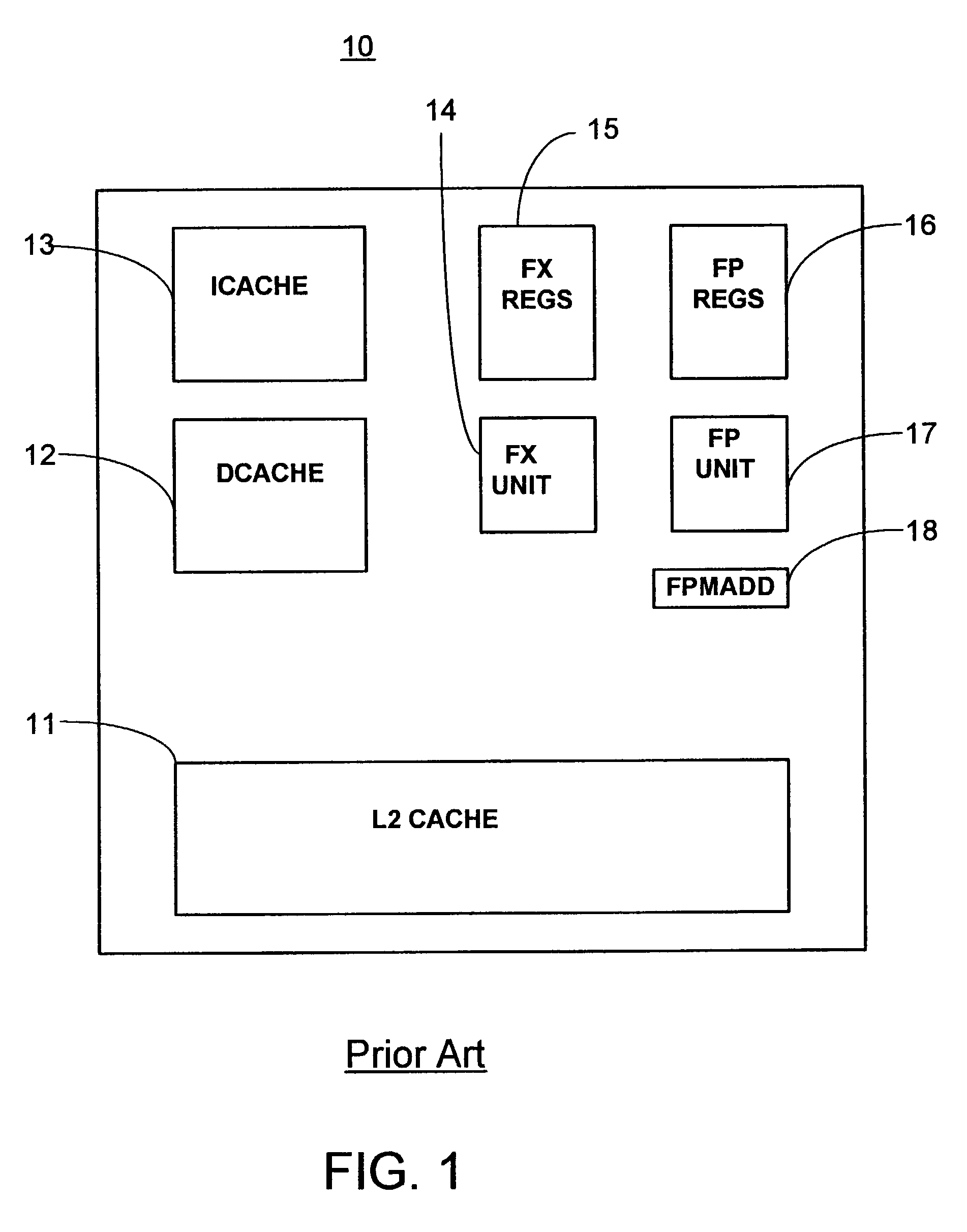

Apparatus and method for retrofitting multi-threaded operations on a computer by partitioning and overlapping registers

Status: Inactive | Publication: US6233599B1 | Keywords: Improve multithreading performance of processor, Improve performance, Resource allocation, Digital computer details, General purpose, Processor register

An apparatus and method for performing multithreaded operations includes partitioning the general purpose and / or floating point processor registers into register subsets, including overlapping register subsets, allocating the register subsets to the threads, and managing the register subsets during thread switching. Register overwrite buffers preserve thread resources in overlapping registers during the thread switching process. Thread resources are loaded into the corresponding register subsets or, when overlapping register subsets are employed, into either the corresponding register subset or the corresponding register overwrite buffer. A thread status register is utilized by a thread controller to keep track of READY / NOT-READY threads, the active thread, and whether single-thread or multithread operations are permitted. Furthermore, the registers in the register subsets include a thread identifier field to identify the corresponding thread. Register masks may also be used to identify which registers belong to the various register subsets.

Owner:IBM CORP

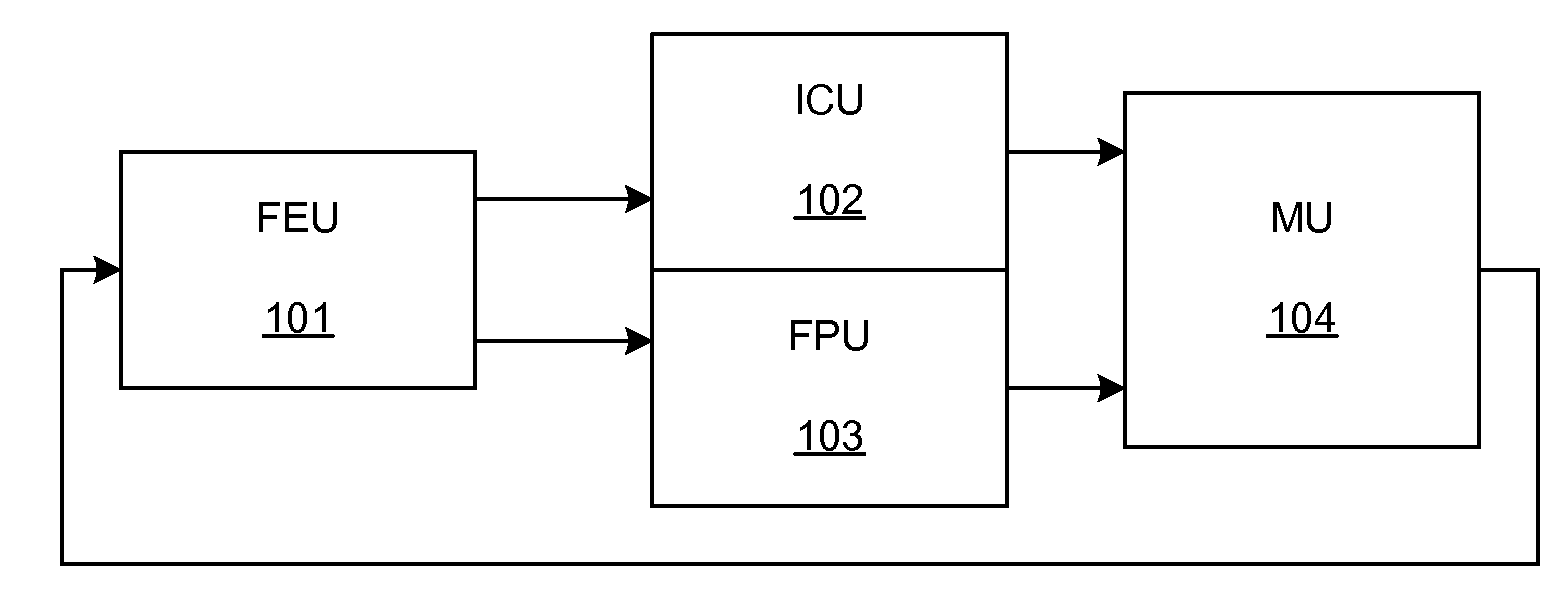

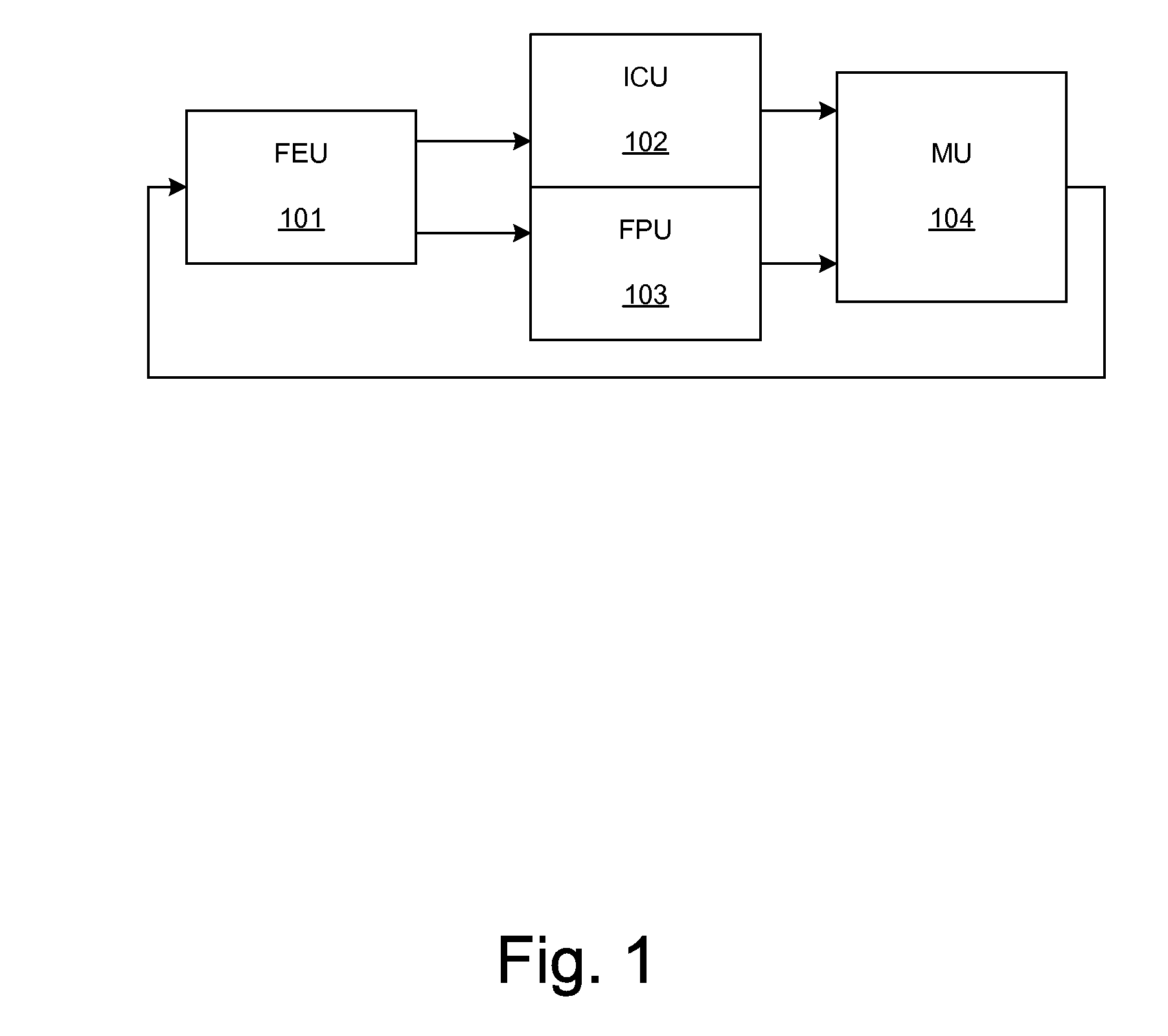

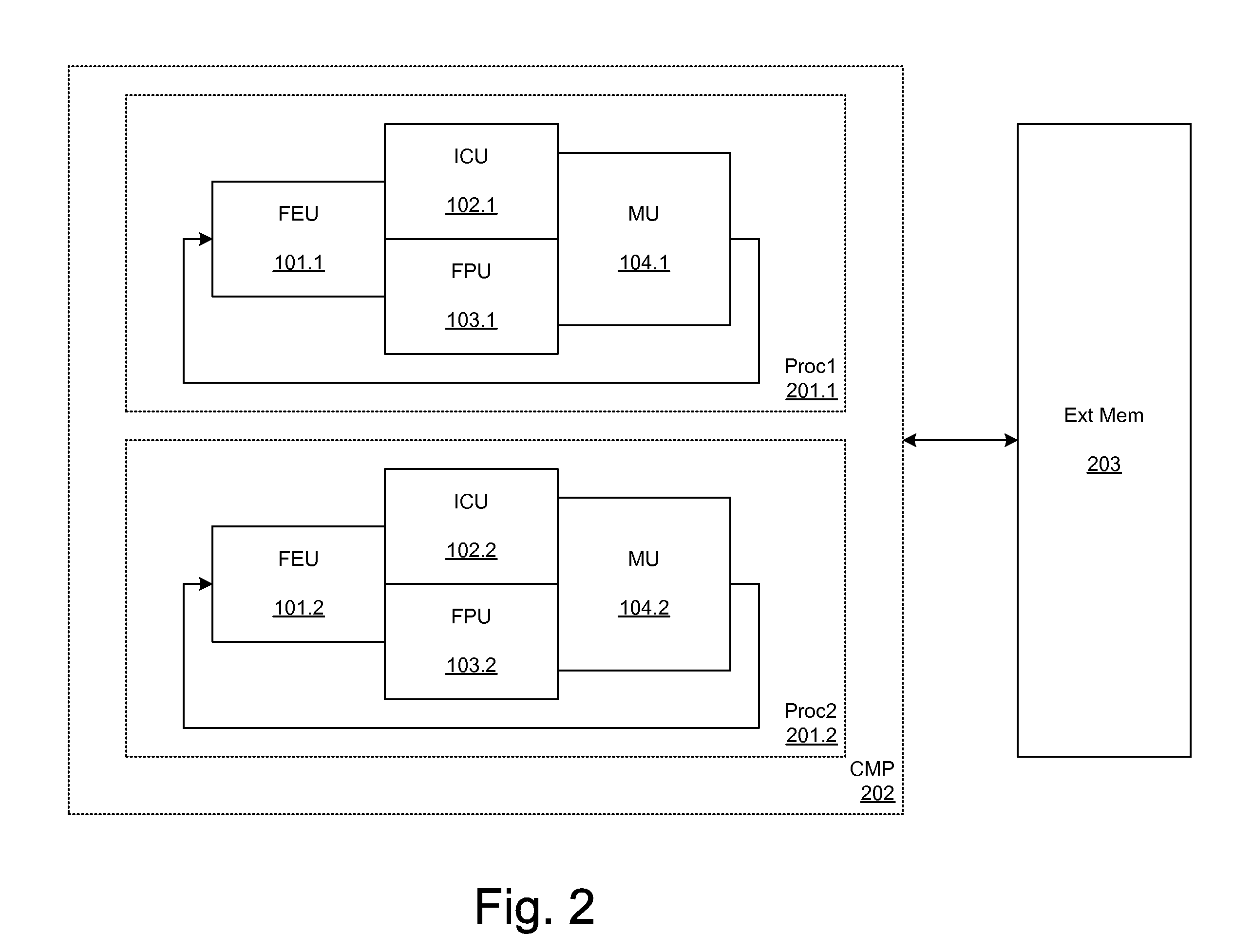

Adaptive computing ensemble microprocessor architecture

Status: Active | Publication: US7389403B1 | Keywords: Improve performance, Improve efficiency, Energy efficient ICT, General purpose stored program computer, Execution control, Power usage

An Adaptive Computing Ensemble (ACE) includes a plurality of flexible computation units as well as an execution controller to allocate the units to Computing Ensembles (CEs) and to assign threads to the CEs. The units may be any combination of ACE-enabled units, including instruction fetch and decode units, integer execution and pipeline control units, floating-point execution units, segmentation units, special-purpose units, reconfigurable units, and memory units. Some of the units may be replicated, e.g. there may be a plurality of integer execution and pipeline control units. Some of the units may be present in a plurality of implementations, varying by performance, power usage, or both. The execution controller dynamically alters the allocation of units to threads in response to changes in observed performance and power-consumption behavior and in requirements. The execution controller also dynamically alters the performance and power characteristics of the ACE-enabled units according to the observed behaviors and requirements.

Owner:ORACLE INT CORP

LADAR sensor for a dense environment

A multi-ladar sensor system is proposed for operating in dense environments where many ladar sensors are transmitting and receiving burst mode light in the same space, as may be typical of an automotive application. The system makes use of several techniques to reduce mutual interference between independently operating ladar sensors. In one embodiment, the individual ladar sensors are each assigned a wavelength of operation, and an optical receive filter for blocking the light transmitted at other wavelengths, an example of wavelength division multiplexing (WDM). Each ladar sensor, or platform, may also be assigned a pulse width selected from a list, and may use a pulse width discriminator circuit to separate pulses of interest from the clutter of other transmitters. Higher level coding, involving pulse sequences and code sequence correlation, may be implemented in a system of code division multiplexing, CDM. A digital processor optimized to execute mathematical operations is described which has a hardware implemented floating point divider, allowing for real time processing of received ladar pulses, and sequences of pulses.

Owner:CONTINENTAL AUTONOMOUS MOBILITY US LLC

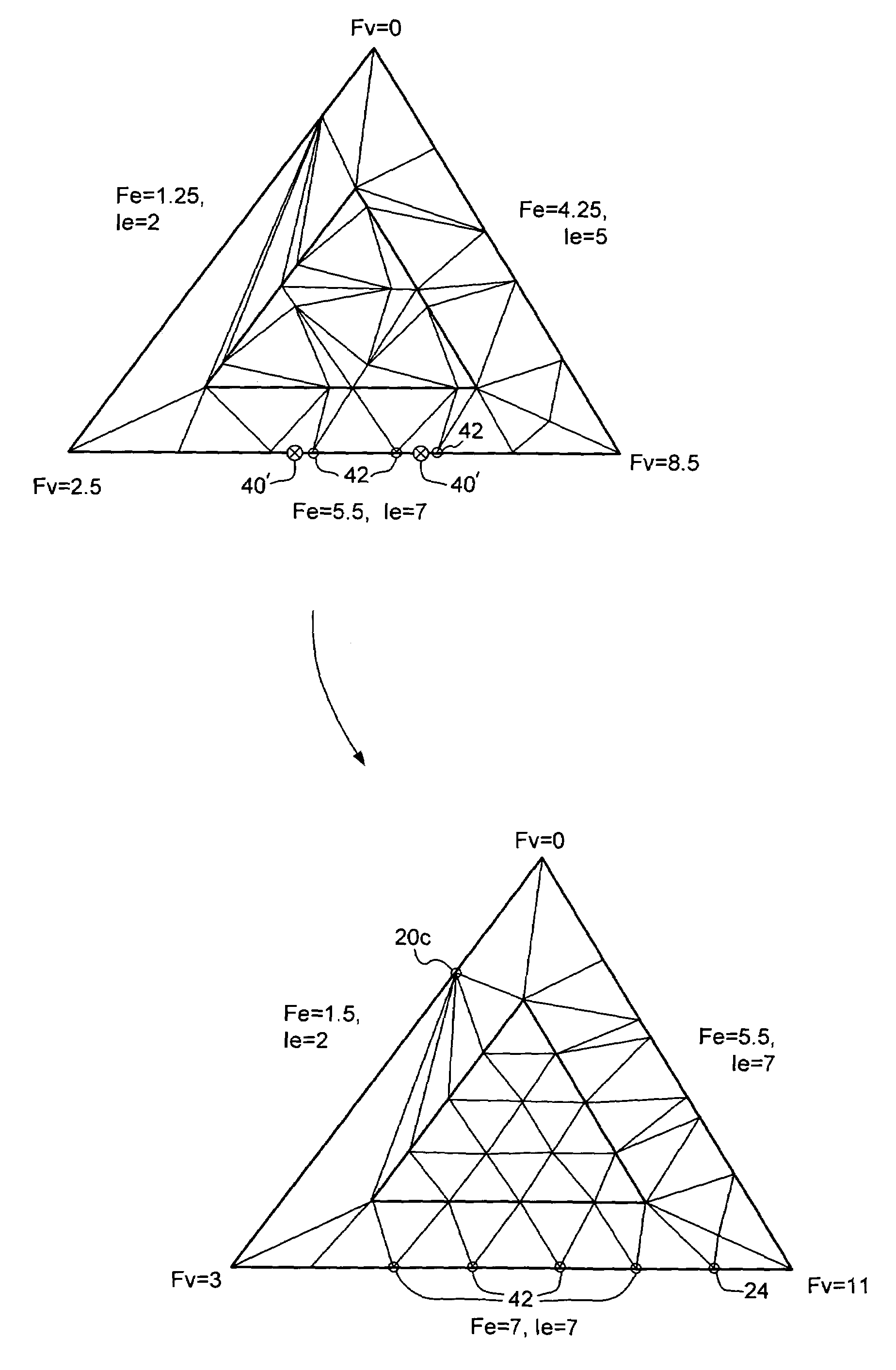

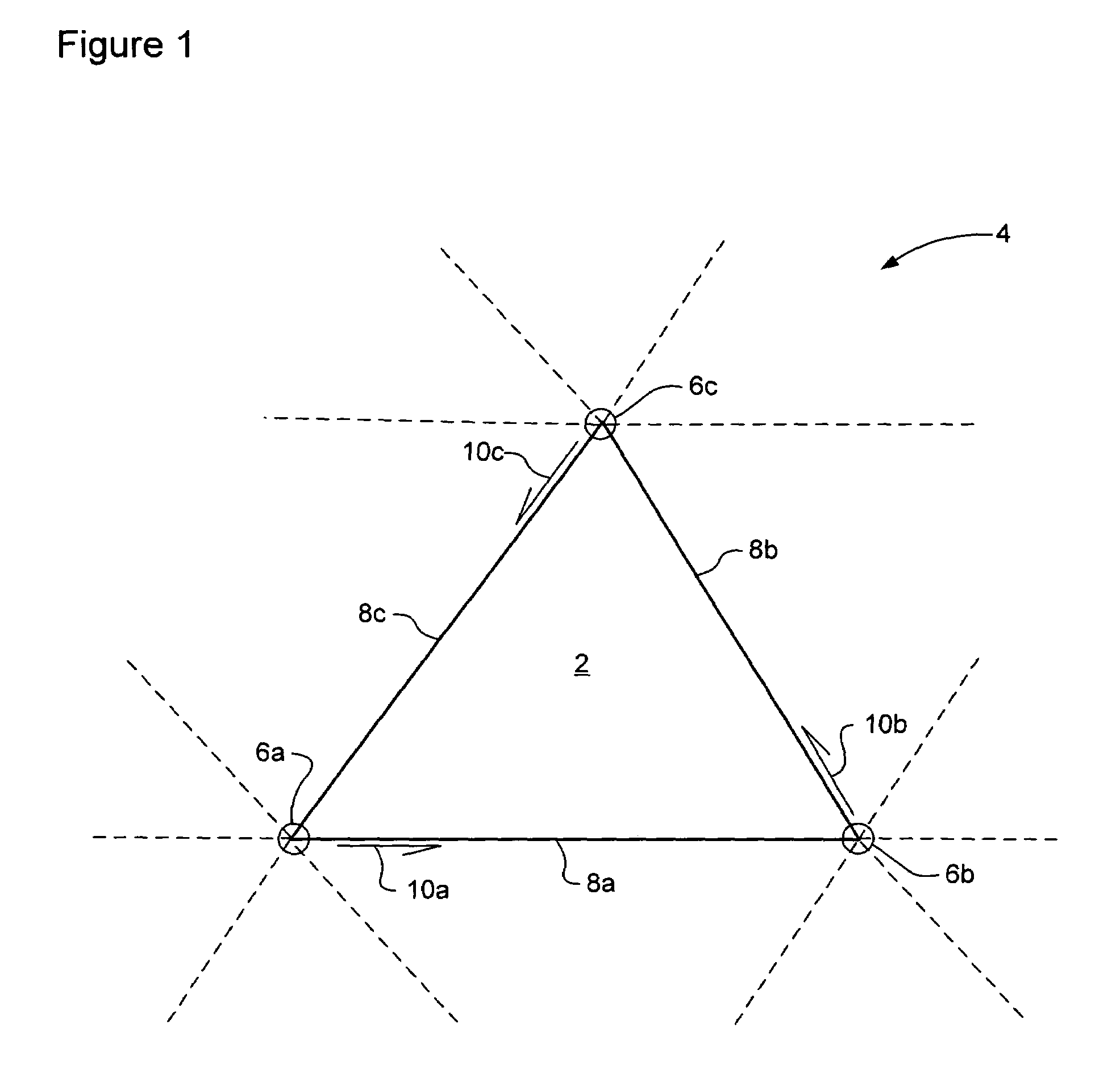

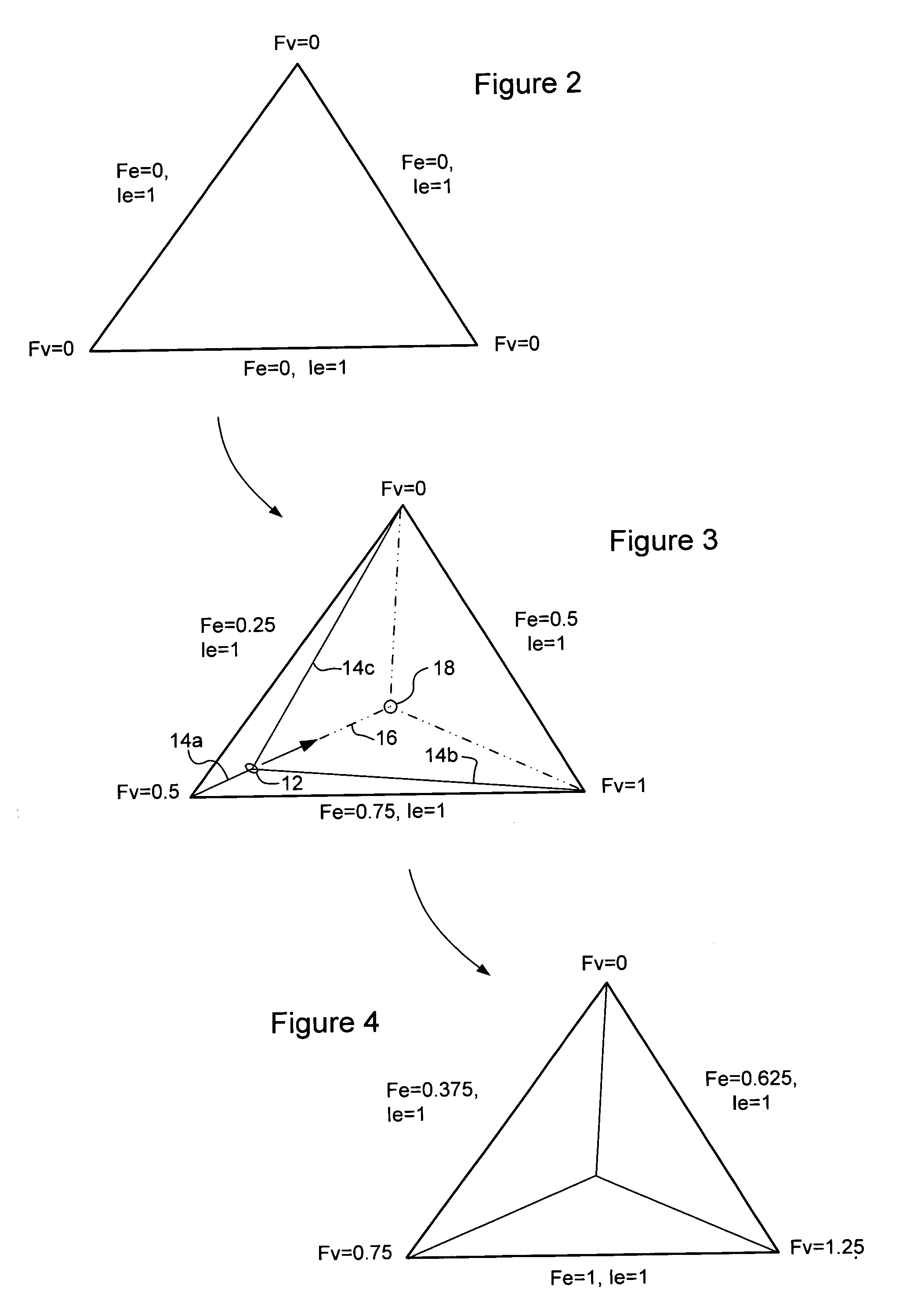

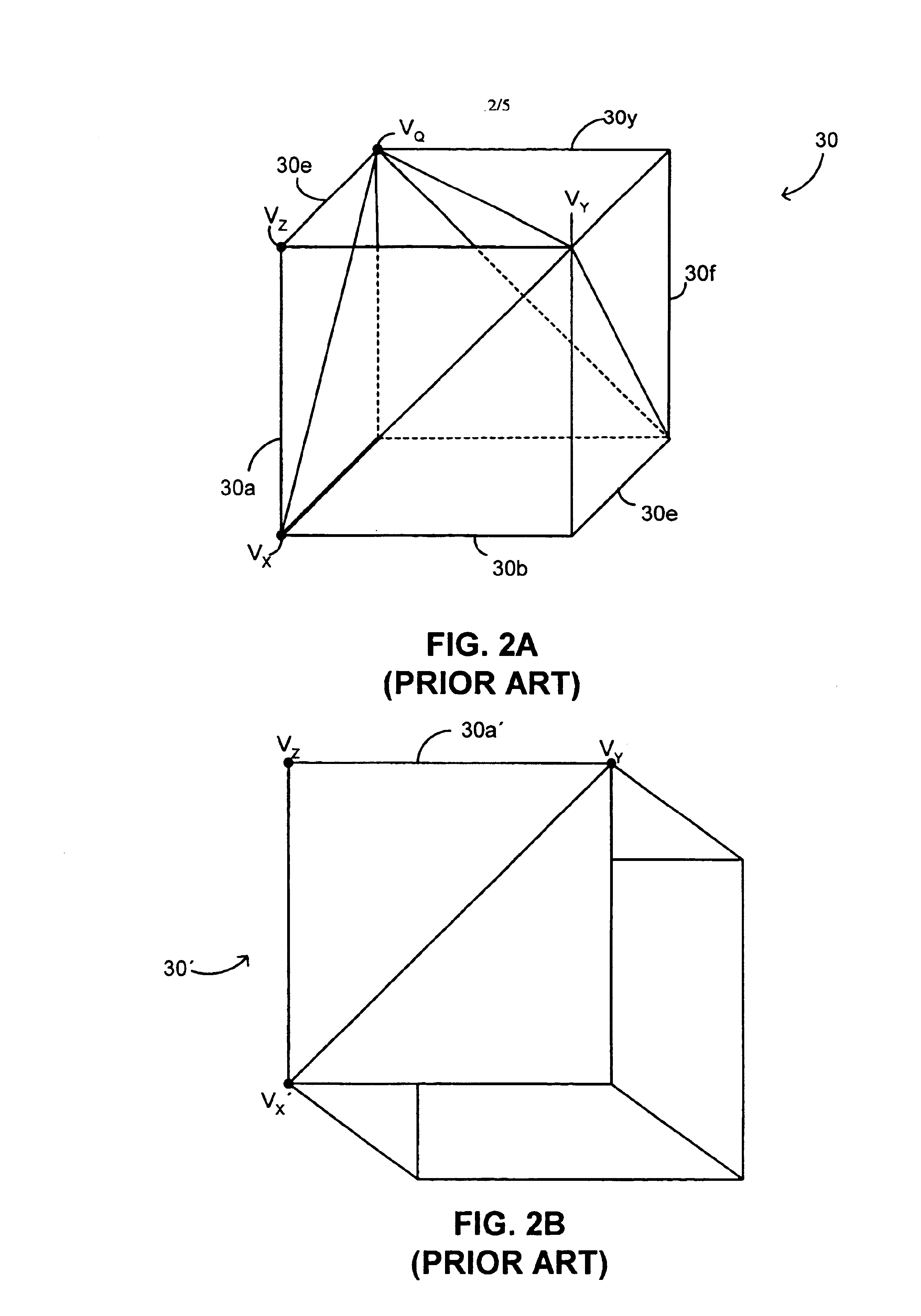

Dynamic tessellation of a base mesh

Status: Inactive | Publication: US6940505B1 | Keywords: Avoid cracking, Efficient of processing resource, Drawing from basic elements, 3D-image rendering, Computational science, Level of detail

A primitive of a base mesh having at least three base vertices is dynamically tessellated to enable smooth changes in detail of an image rendered on a screen. A floating point vertex tessellation value (Fv) is assigned to each base vertex of the base mesh, based on a desired level of detail in the rendered image. For each edge of the primitive, a floating point edge tessellation rate (Fe) is calculated from the vertex tessellation values (Fv) of the two base vertices terminating the edge. The position of at least one child vertex of the primitive is then calculated using the calculated edge tessellation rate (Fe). In this way, child vertices of the primitive can be generated coincident with a parent vertex and then migrate smoothly as the vertex tessellation values of the primitive's base vertices change.

Owner:MATROX
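
The abstract does not give the formula for Fe or for the child-vertex position. The sketch below assumes Fe is the mean of the two endpoint values (any symmetric function of the endpoints keeps a shared edge consistent between neighbouring primitives, which helps avoid cracks) and that a rate between 1 and 2 places one child vertex that slides from the parent vertex toward the edge midpoint. Both choices are hypothetical illustrations, not Matrox's method.

```python
Point = tuple[float, float, float]


def lerp(a: Point, b: Point, t: float) -> Point:
    """Linear interpolation between two 3D points."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def edge_rate(fv_a: float, fv_b: float) -> float:
    # ASSUMPTION: Fe is the mean of the endpoint values. Because it depends only on the
    # two shared vertices, both primitives that share the edge compute the same Fe.
    return 0.5 * (fv_a + fv_b)


def child_vertex(a: Point, b: Point, fe: float) -> Point:
    # ASSUMPTION: Fe = 1 means "no split" (child sits on parent a); Fe = 2 means a full
    # split (child at the midpoint). Values in between slide the child smoothly.
    t = min(max(fe - 1.0, 0.0), 1.0)
    midpoint = lerp(a, b, 0.5)
    return lerp(a, midpoint, t)


a, b = (0.0, 0.0, 0.0), (2.0, 0.0, 0.0)
for fv_b in (1.0, 2.0, 3.0):
    fe = edge_rate(1.0, fv_b)
    print(fe, child_vertex(a, b, fe))
```

Because the child starts exactly on the parent at Fe = 1, raising the level of detail never makes a vertex pop into existence away from the existing surface.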

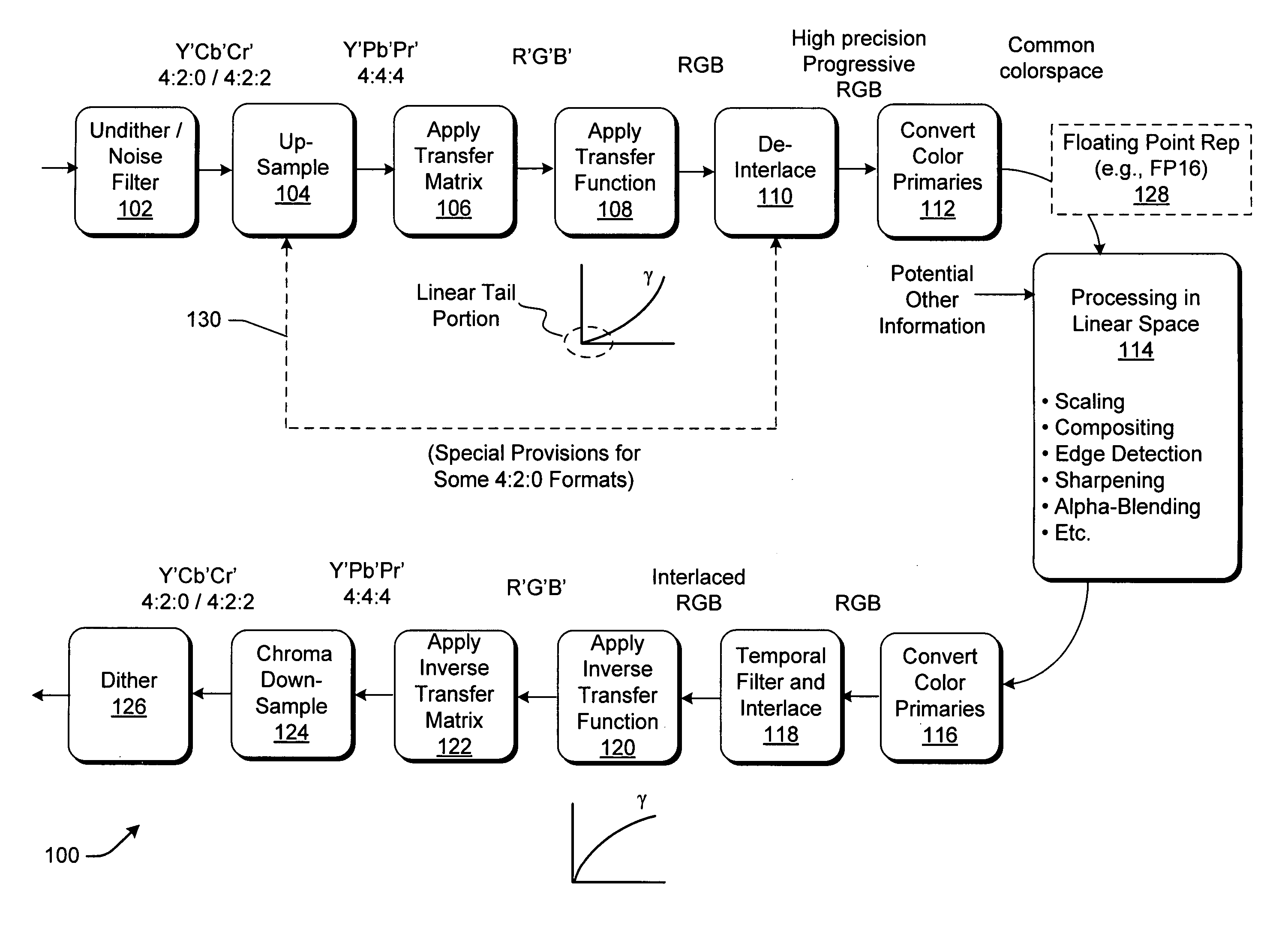

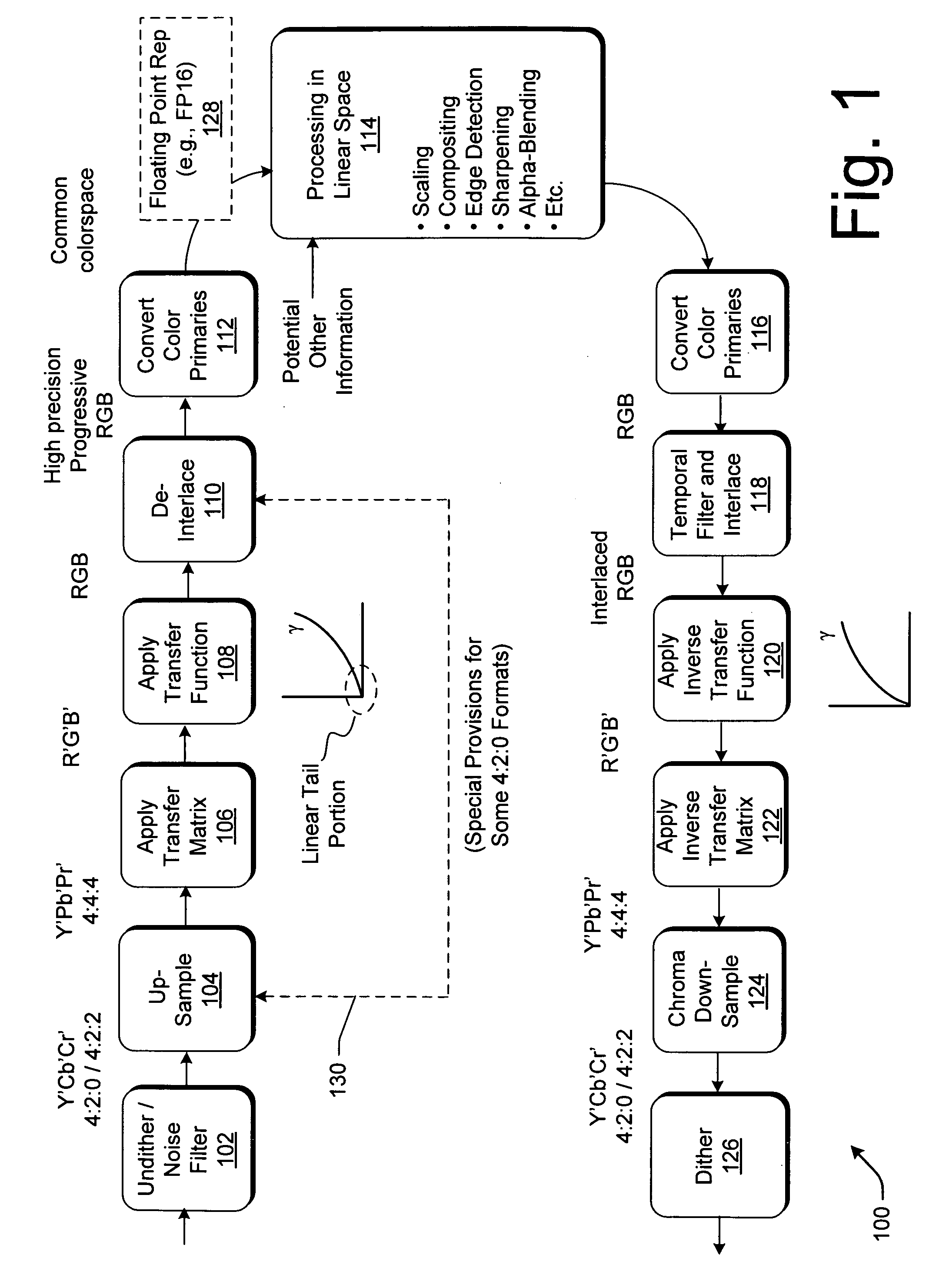

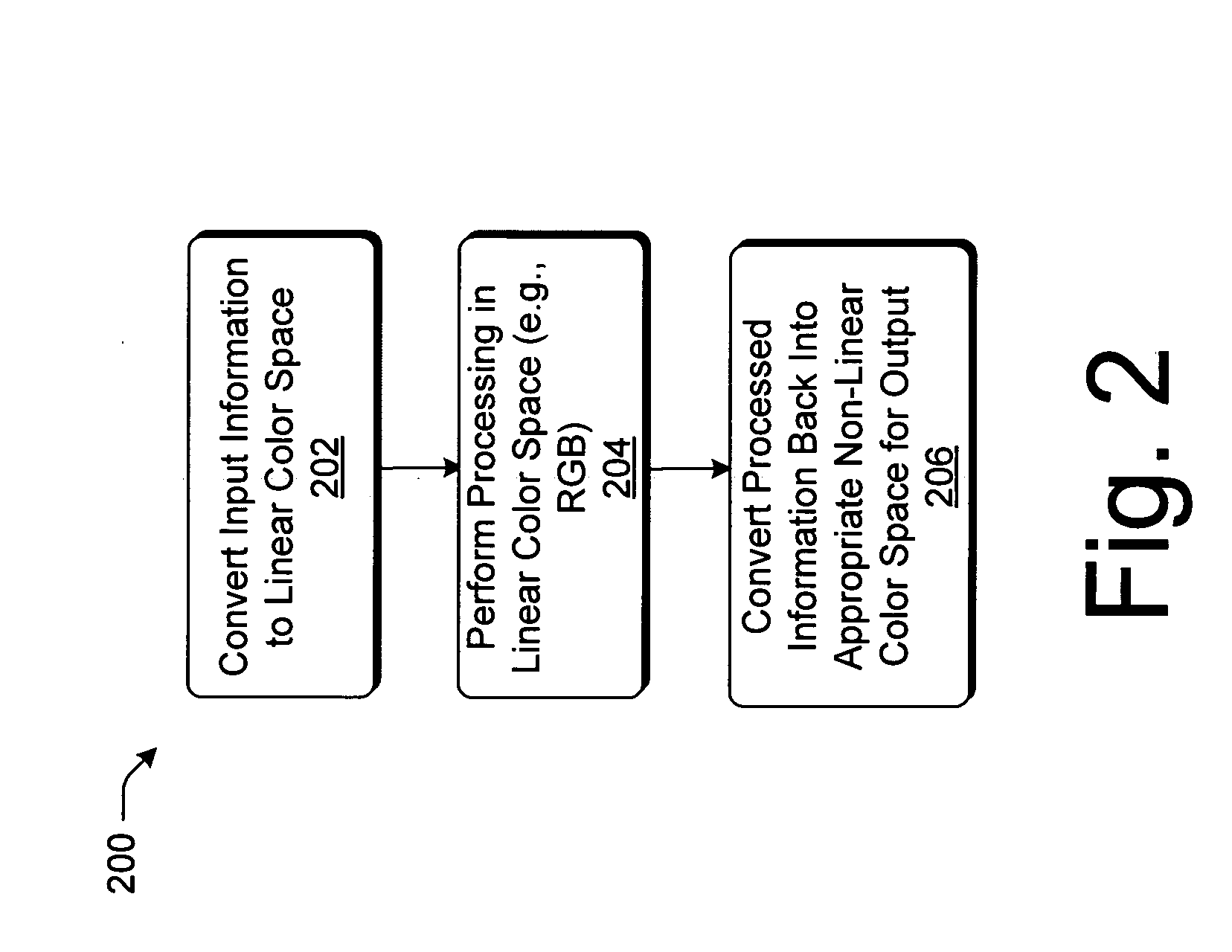

Image processing using linear light values and other image processing improvements

Status: Active | Publication: US20050063586A1 | Keywords: Reduce the amount required, Improve accuracy, Character and pattern recognition, Pictorial communication, Imaging processing, Floating point

Strategies are described for processing image information in a linear form to reduce the amount of artifacts (compared to processing the data in nonlinear form). Exemplary types of processing operations include scaling, compositing, alpha-blending, edge detection, and so forth. In a more specific implementation, strategies are described for processing image information that is: a) linear; b) in the RGB color space; c) high precision (e.g., provided by floating point representation); d) progressive; and e) full channel. Other improvements provide strategies for: a) processing image information in a pseudo-linear space to improve processing speed; b) implementing an improved error dispersion technique; c) dynamically calculating and applying filter kernels; d) producing pipeline code in an optimal manner; and e) implementing various processing tasks using novel pixel shader techniques.

Owner:MICROSOFT TECH LICENSING LLC
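
Why blending in linear light differs from blending encoded values can be shown with the standard sRGB transfer functions (IEC 61966-2-1). This Python sketch is not taken from the patent; treating each value as a single channel in [0, 1] is a simplifying assumption. It averages black and white both ways.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB-encoded channel in [0, 1] to linear light."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v: float) -> float:
    """Encode one linear-light channel in [0, 1] back to sRGB."""
    return 12.92 * v if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055


def blend_nonlinear(a: float, b: float, alpha: float) -> float:
    """Blend the encoded values directly (the approach the abstract argues against)."""
    return (1 - alpha) * a + alpha * b


def blend_linear(a: float, b: float, alpha: float) -> float:
    """Decode to linear light, blend there, then re-encode."""
    lin = (1 - alpha) * srgb_to_linear(a) + alpha * srgb_to_linear(b)
    return linear_to_srgb(lin)


print(blend_nonlinear(0.0, 1.0, 0.5))          # 0.5
print(round(blend_linear(0.0, 1.0, 0.5), 4))   # 0.7354
```

The linear-light result is noticeably brighter: an encoded value of 0.5 corresponds to only about 21% of white's luminance, so averaging encoded values darkens blends and edges.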

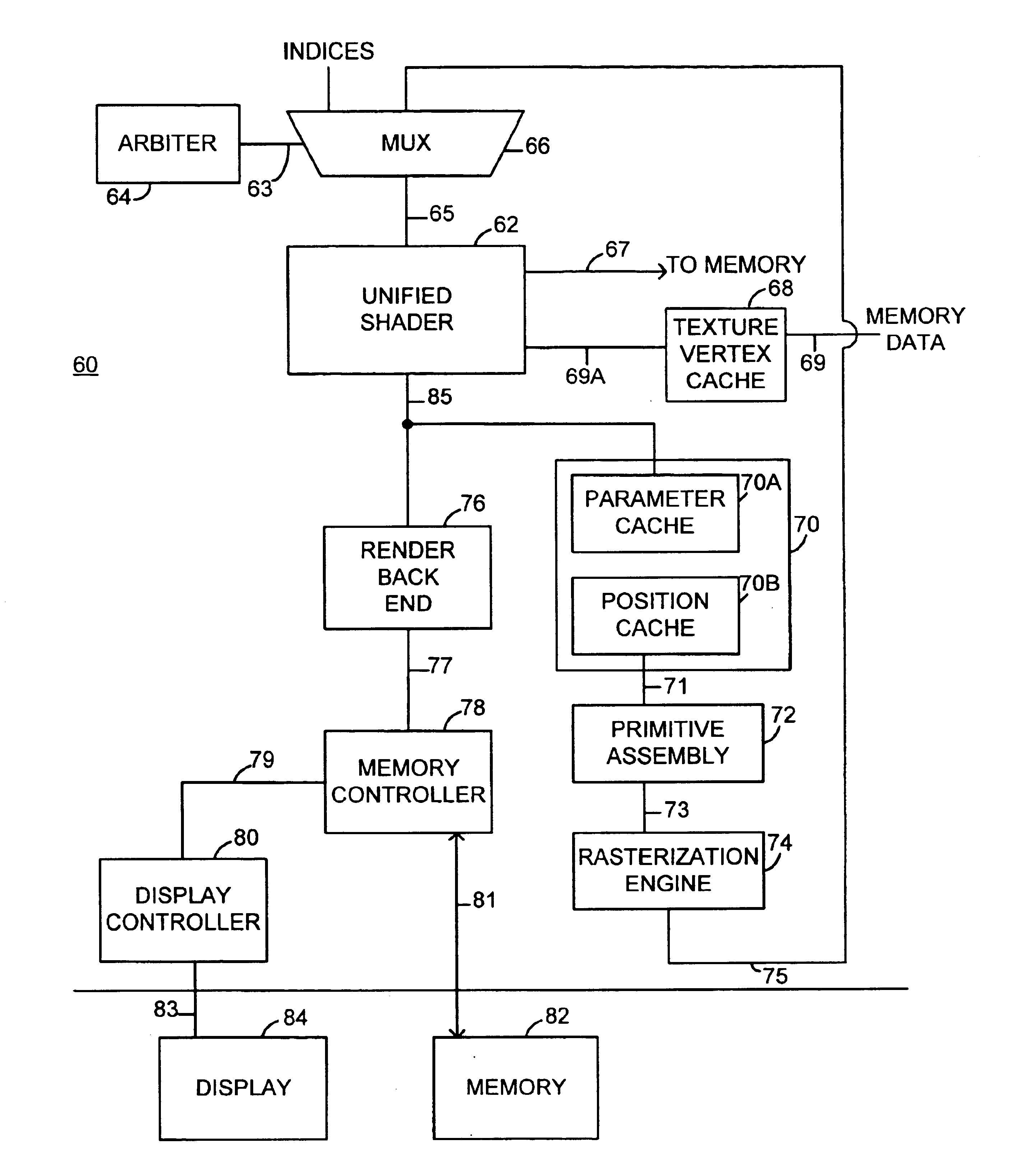

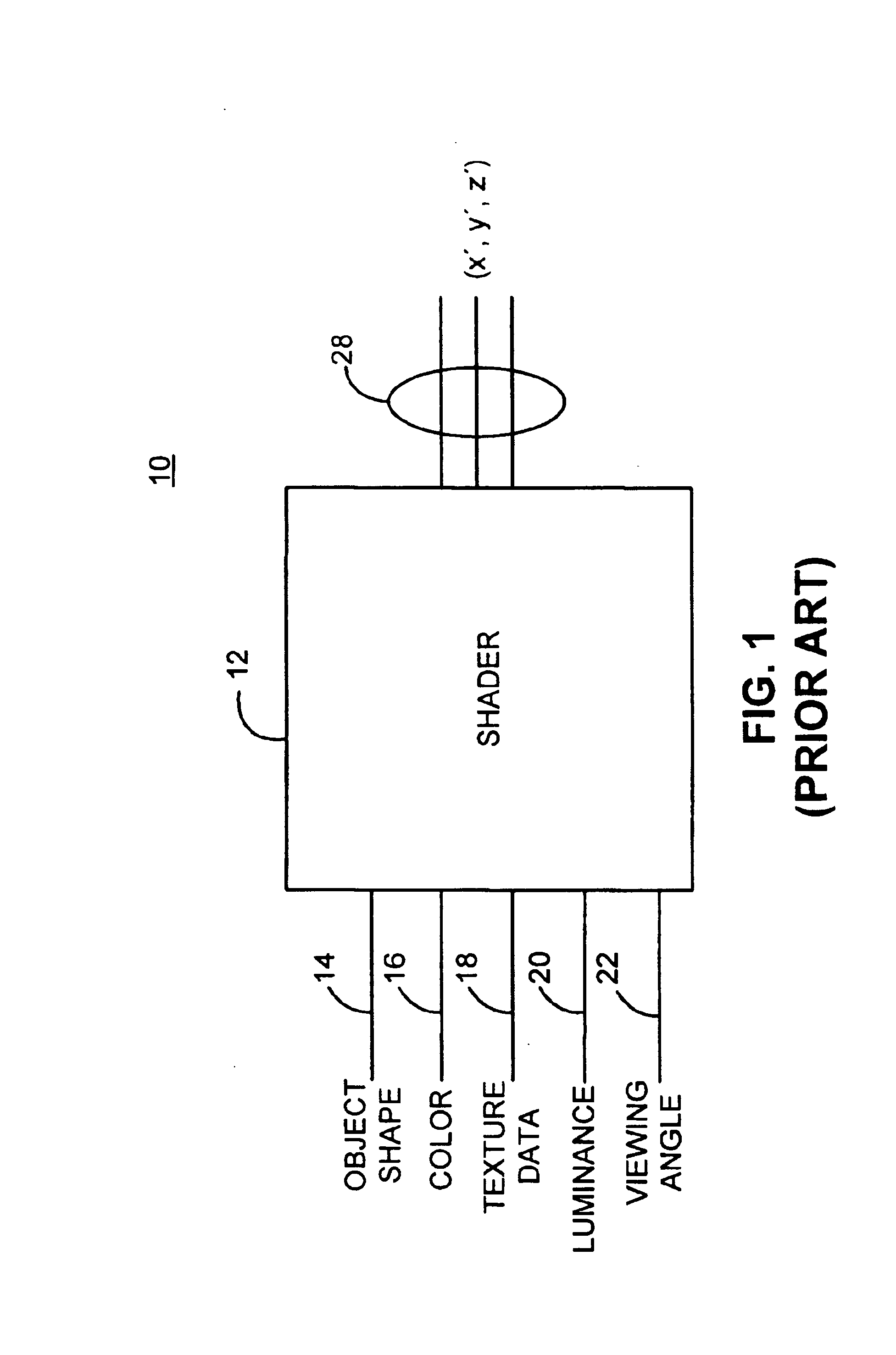

Graphics processing architecture employing a unified shader

Status: Active | Publication: US6897871B1 | Keywords: More computationally efficient, Flexibly, Digital computer details, Cathode-ray tube indicators, Control signal, Logical operations

A graphics processing architecture employing a single (unified) shader is disclosed. The architecture includes an arbiter circuit operative to select one of a plurality of inputs in response to a control signal, and a shader, coupled to the arbiter, operative to process the selected input. The shader includes means for performing both vertex operations and pixel operations, and performs one or the other based on the selected input. The shader includes a register block that stores the selected inputs, a sequencer that maintains vertex manipulation and pixel manipulation instructions, and a processor capable of executing both floating point arithmetic and logical operations on the selected inputs in response to the instructions maintained in the sequencer.

Owner:ATI TECH INC

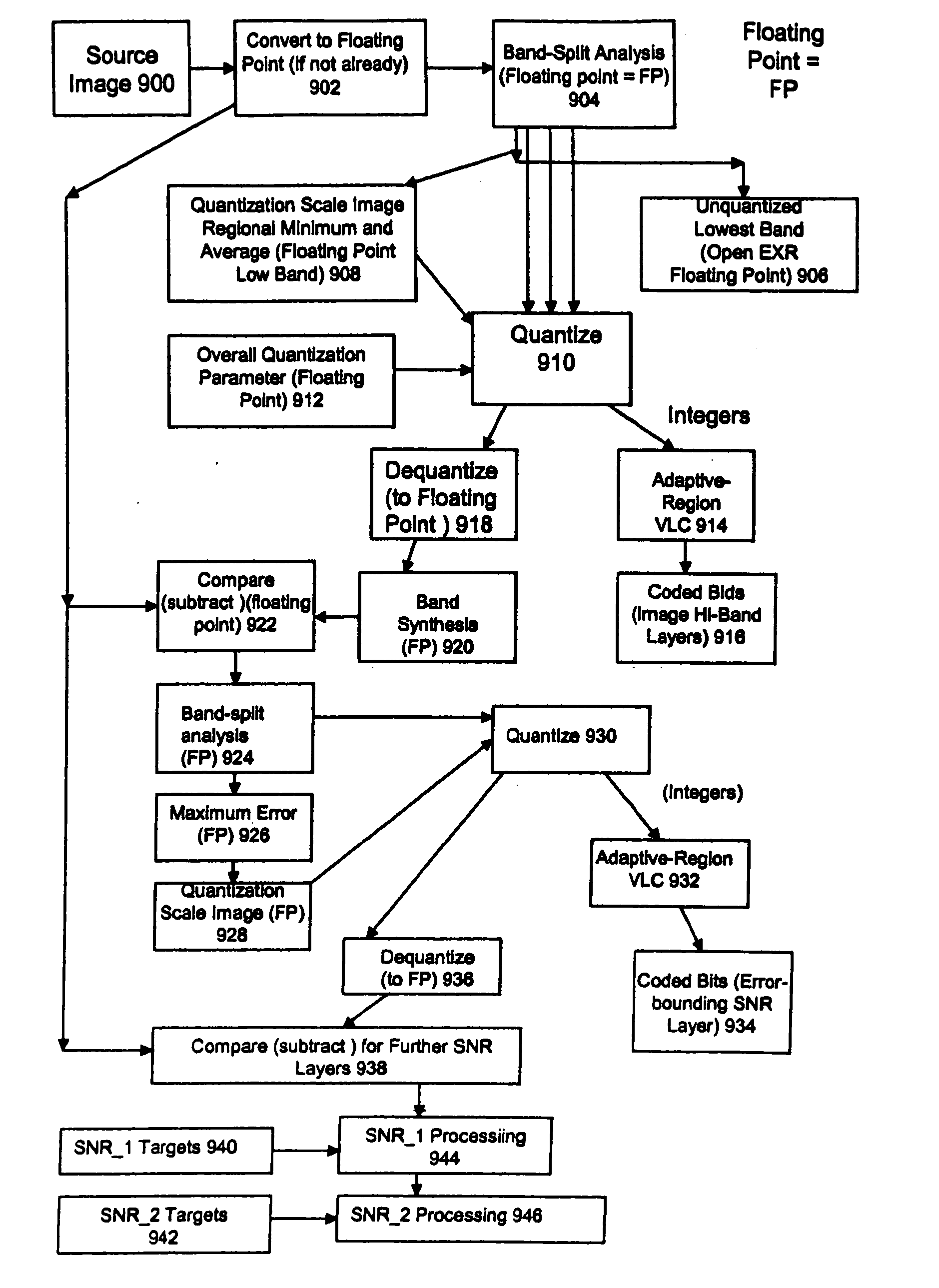

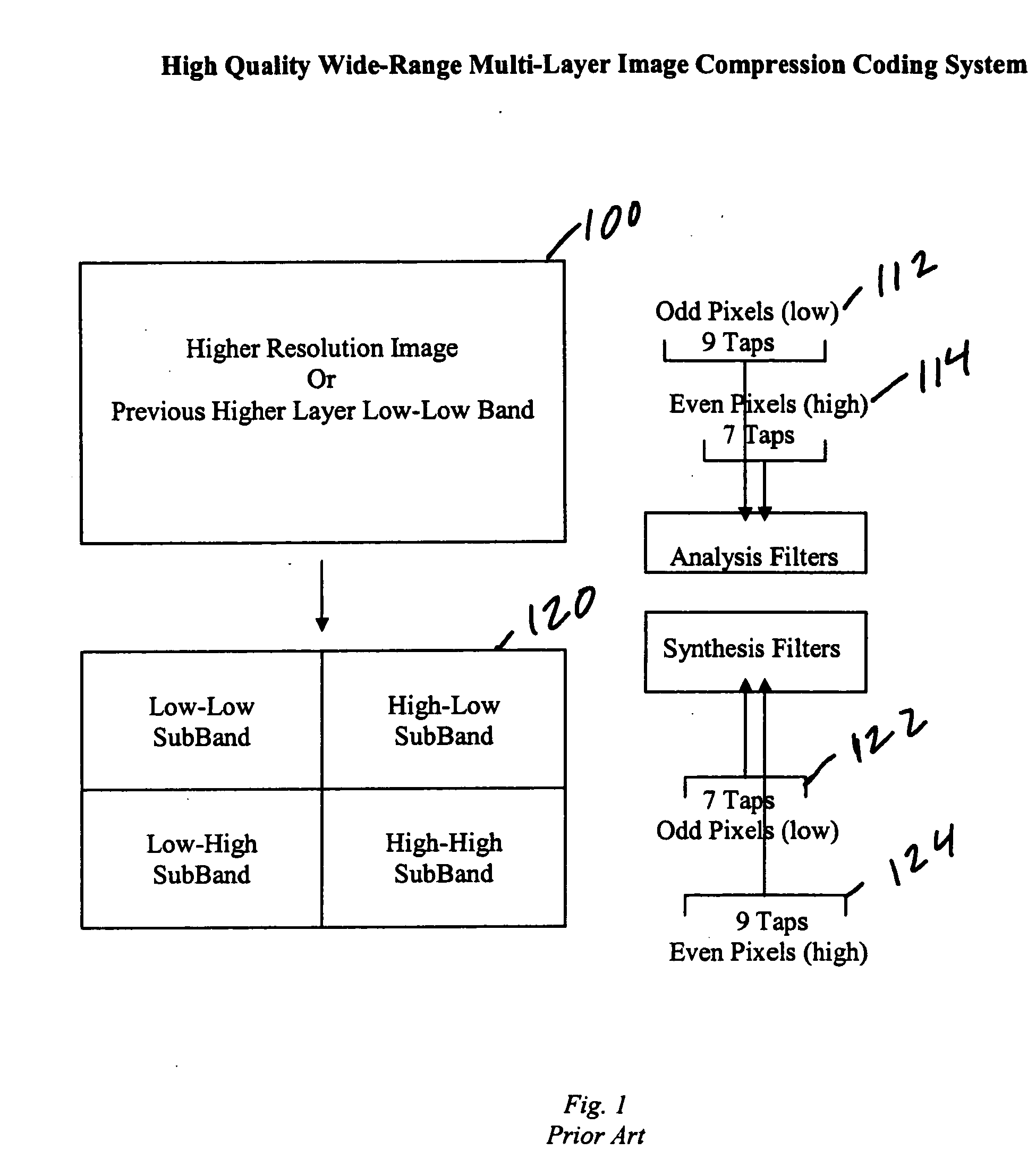

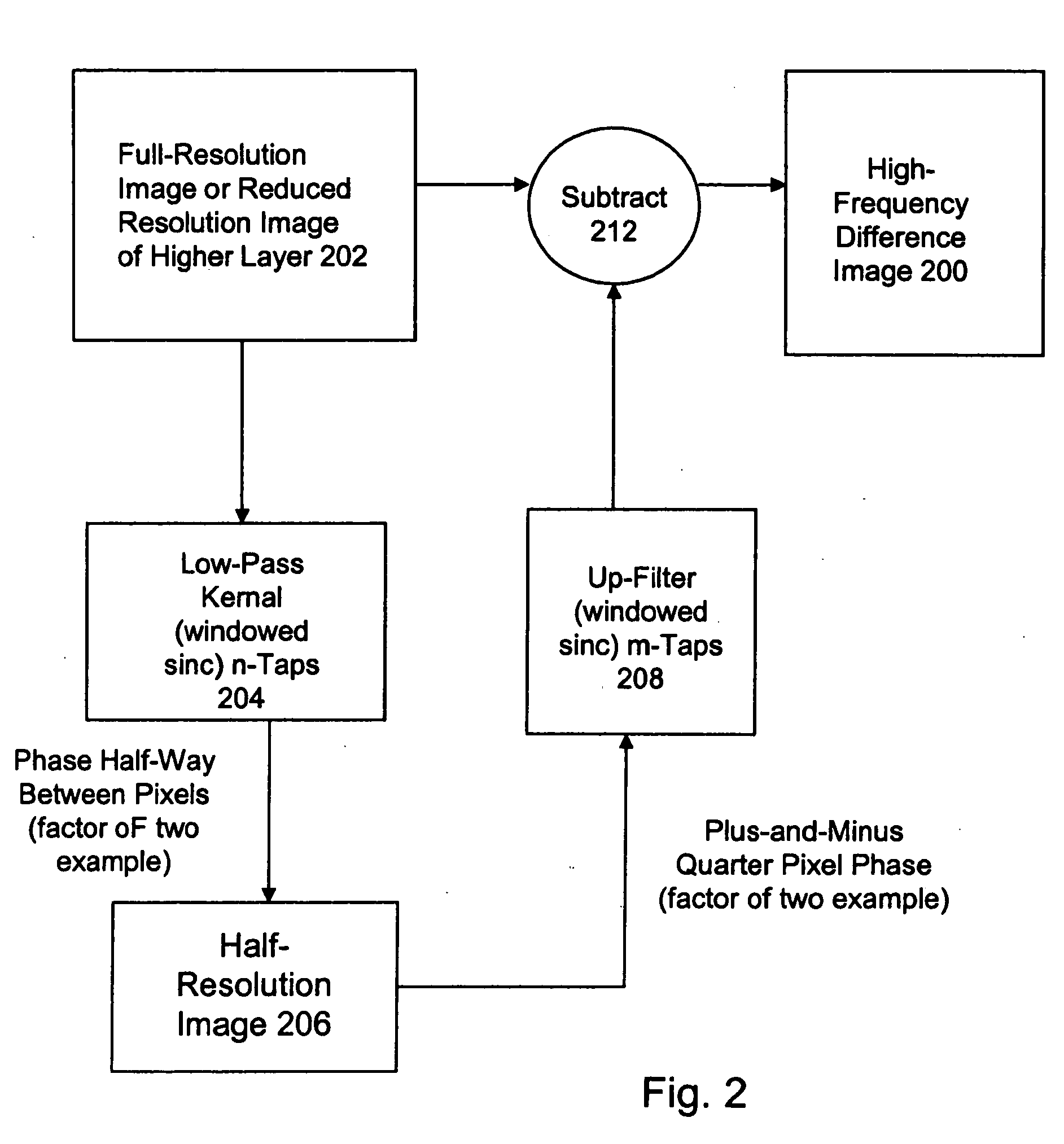

High quality wide-range multi-layer image compression coding system

Status: Active | Publication: US20060071825A1 | Keywords: Reduce sharpness, Reduce details, Code conversion, Character and pattern recognition, Floating point, Image compression

Systems, methods, and computer programs for high quality wide-range multi-layer image compression coding, including consistent ubiquitous use of floating point values in essentially all computations; an adjustable floating-point deadband; use of an optimal band-split filter; use of entire SNR layers at lower resolution levels; targeting of specific SNR layers to specific quality improvements; concentration of coding bits in regions of interest in targeted band-split and SNR layers; use of statically-assigned targets for high-pass and / or for SNR layers; improved SNR by using a lower quantization value for regions of an image showing a higher compression coding error; application of non-linear functions of color when computing difference values when creating an SNR layer; use of finer overall quantization at lower resolution levels with regional quantization scaling; removal of source image noise before motion-compensated compression or film steadying; use of one or more full-range low bands; use of alternate quantization control images for SNR bands and other high resolution enhancing bands; application of lossless variable-length coding using adaptive regions; use of a folder and file structure for layers of bits; and a method of inserting new intra frames by counting the number of bits needed for a motion compensated frame.

Owner:DEMOS GARY
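
One common reading of a floating-point deadband is a quantizer that sends any coefficient whose magnitude falls below an adjustable threshold to zero before uniform quantization. The Python sketch below is a generic deadband quantizer under that assumption; the step size, thresholds, and function names are illustrative and are not values from the patent.

```python
import math


def quantize(x: float, step: float, deadband: float) -> int:
    """Deadband quantizer: |x| below the deadband maps to 0, otherwise round |x| / step."""
    if abs(x) < deadband:
        return 0
    # Quantize the magnitude, then restore the sign of the original coefficient.
    return int(math.copysign(round(abs(x) / step), x))


def dequantize(q: int, step: float) -> float:
    """Reconstruct an approximate coefficient from its quantization index."""
    return q * step


coefficients = [0.7, -1.2, 3.4, 0.2]
for deadband in (0.6, 1.6):
    indices = [quantize(c, 1.0, deadband) for c in coefficients]
    print(deadband, indices, [dequantize(q, 1.0) for q in indices])
```

Widening the deadband zeroes more small coefficients (fewer bits to code) at the cost of fine detail, which is the trade-off an adjustable deadband lets an encoder tune.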

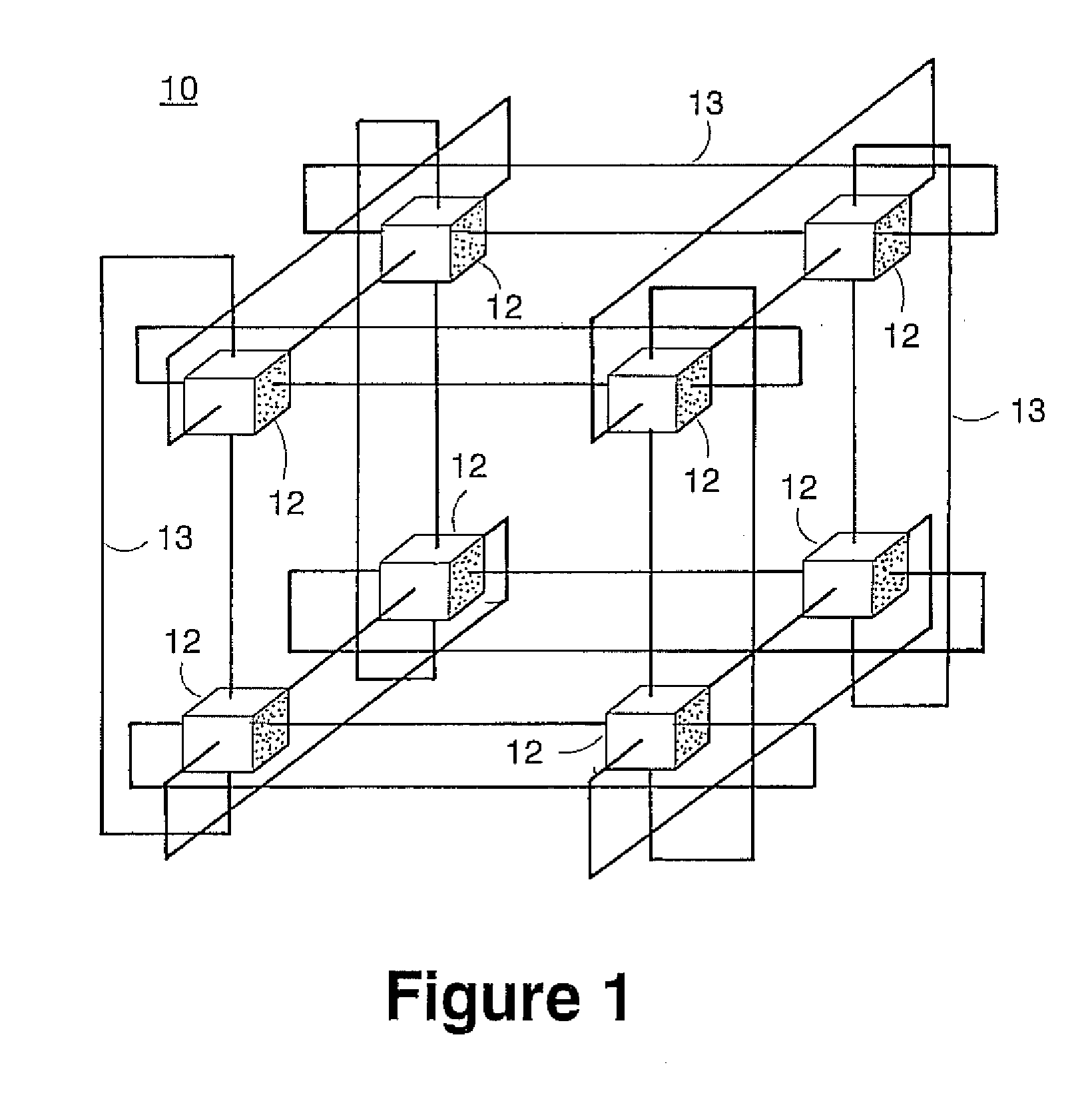

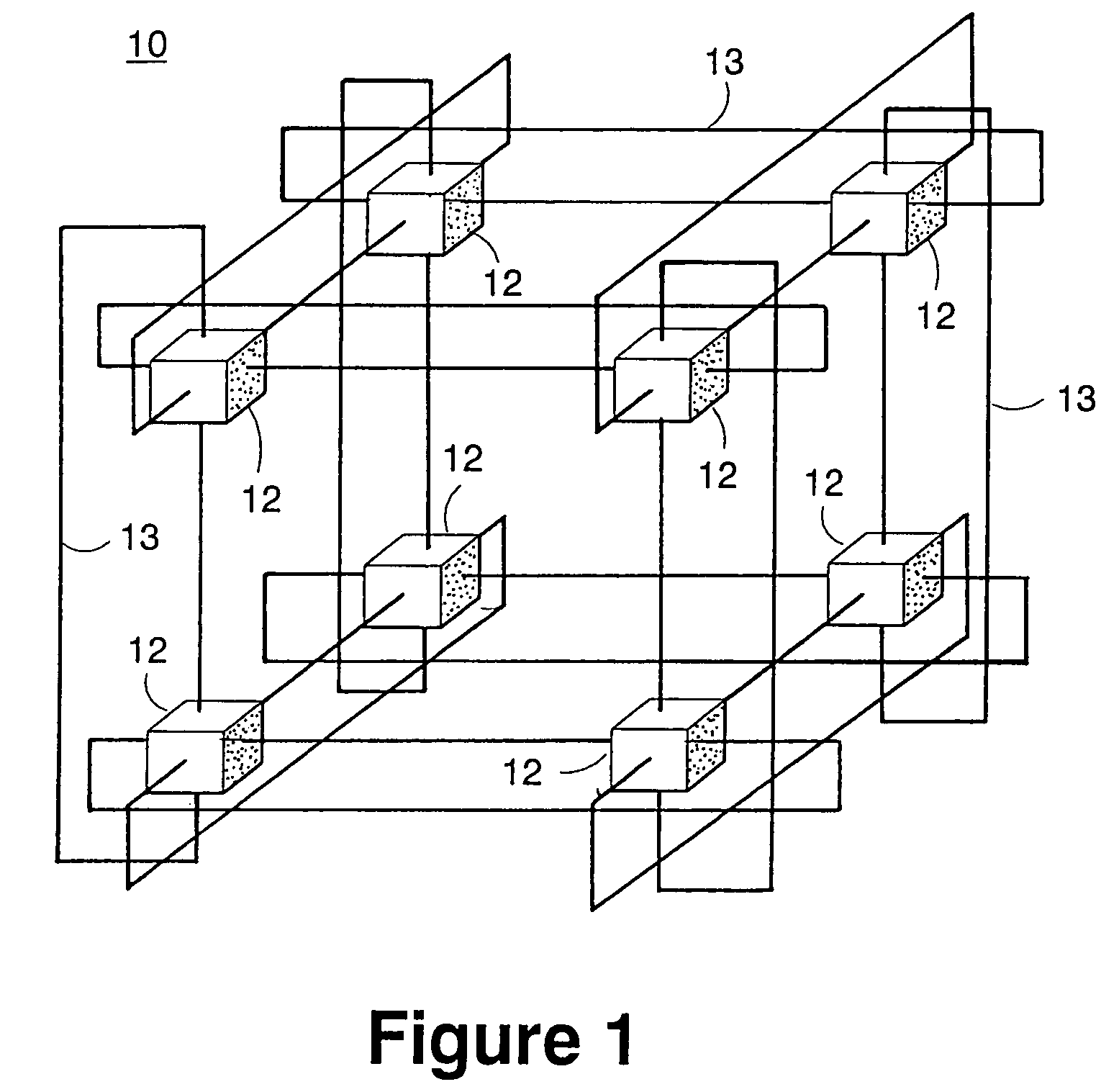

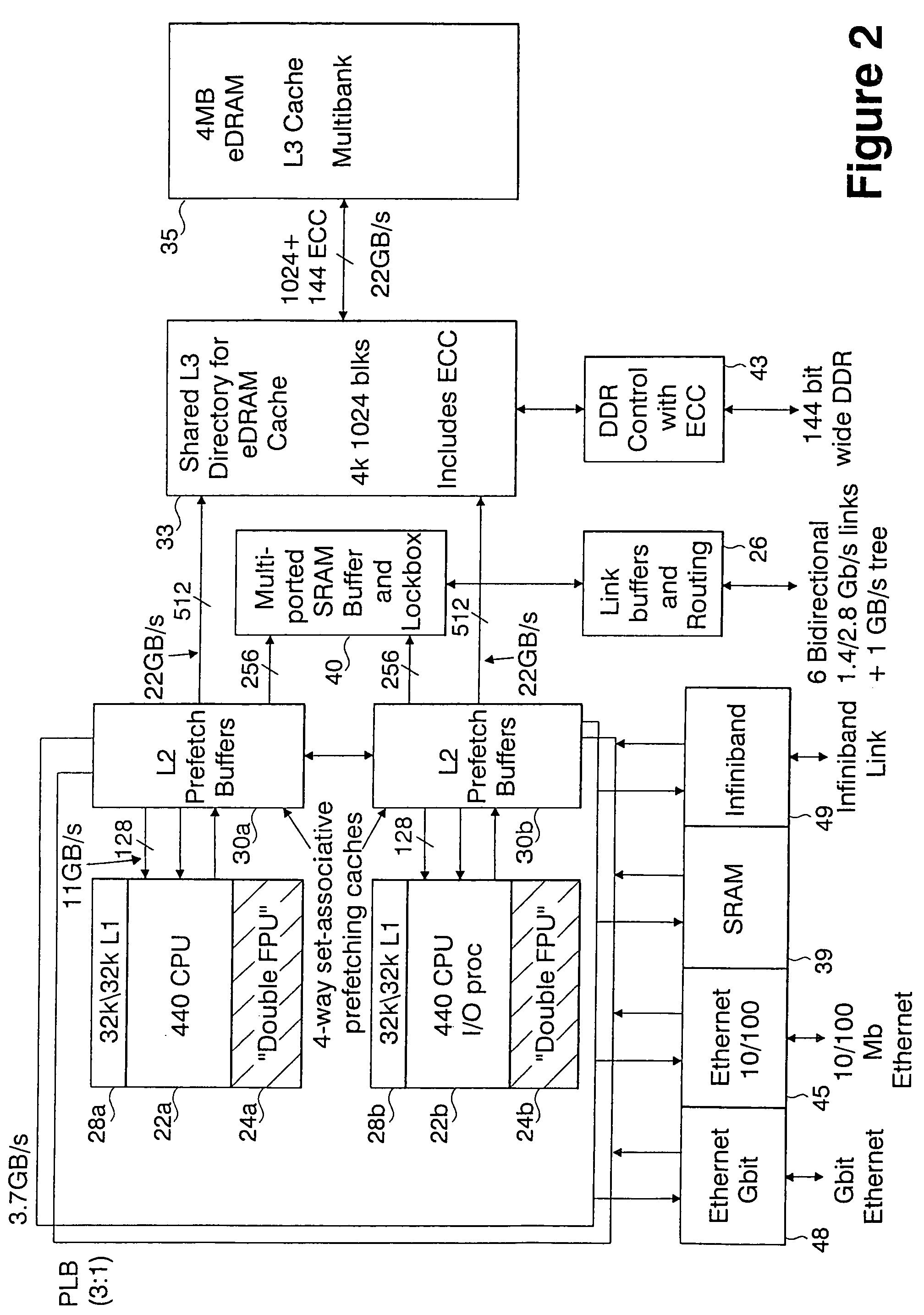

Massively parallel supercomputer

Status: Inactive | Publication: US7555566B2 | Keywords: Massive level of scalability, Unprecedented level of scalability, Error prevention, Program synchronisation, Packet communication, Supercomputer

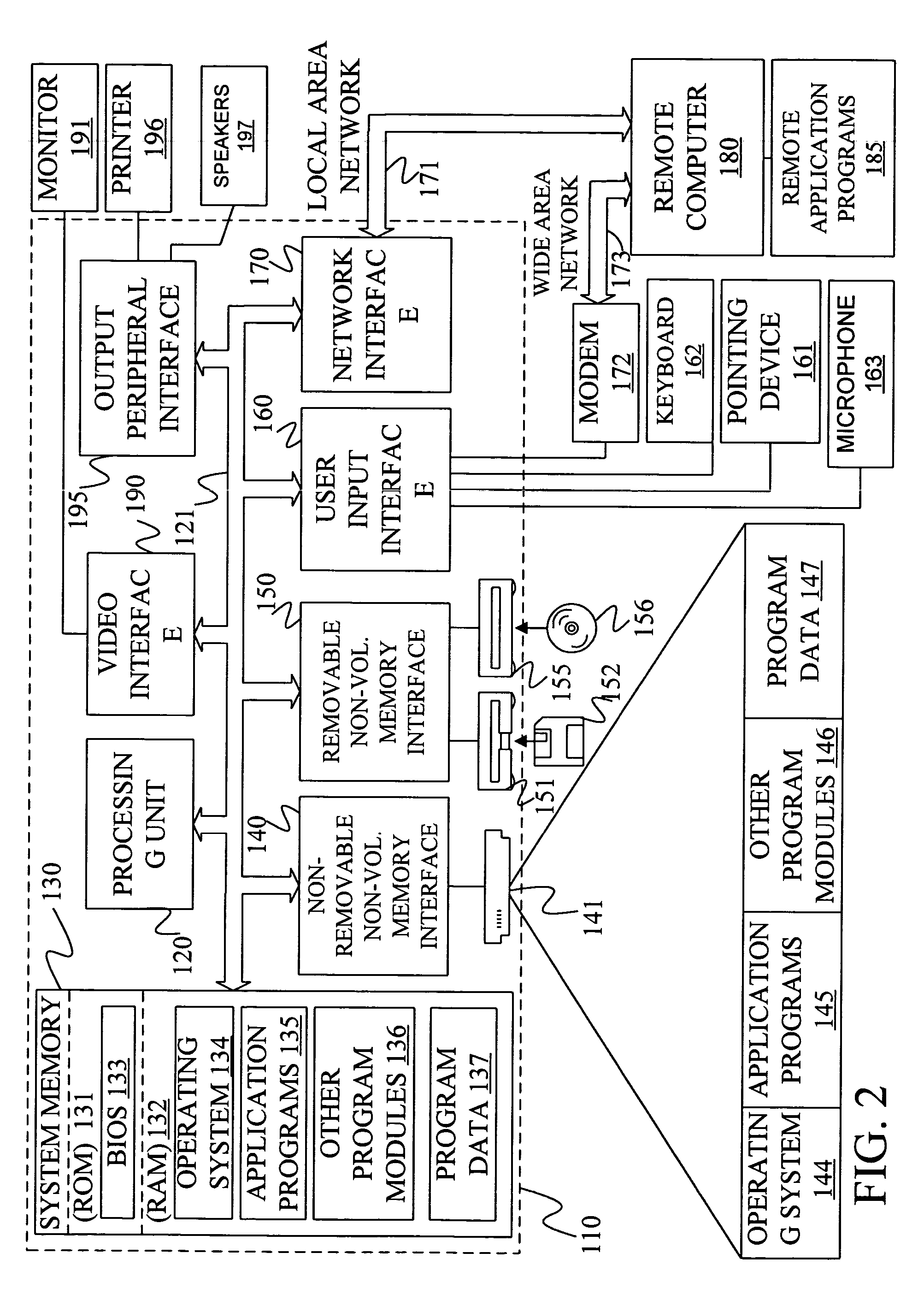

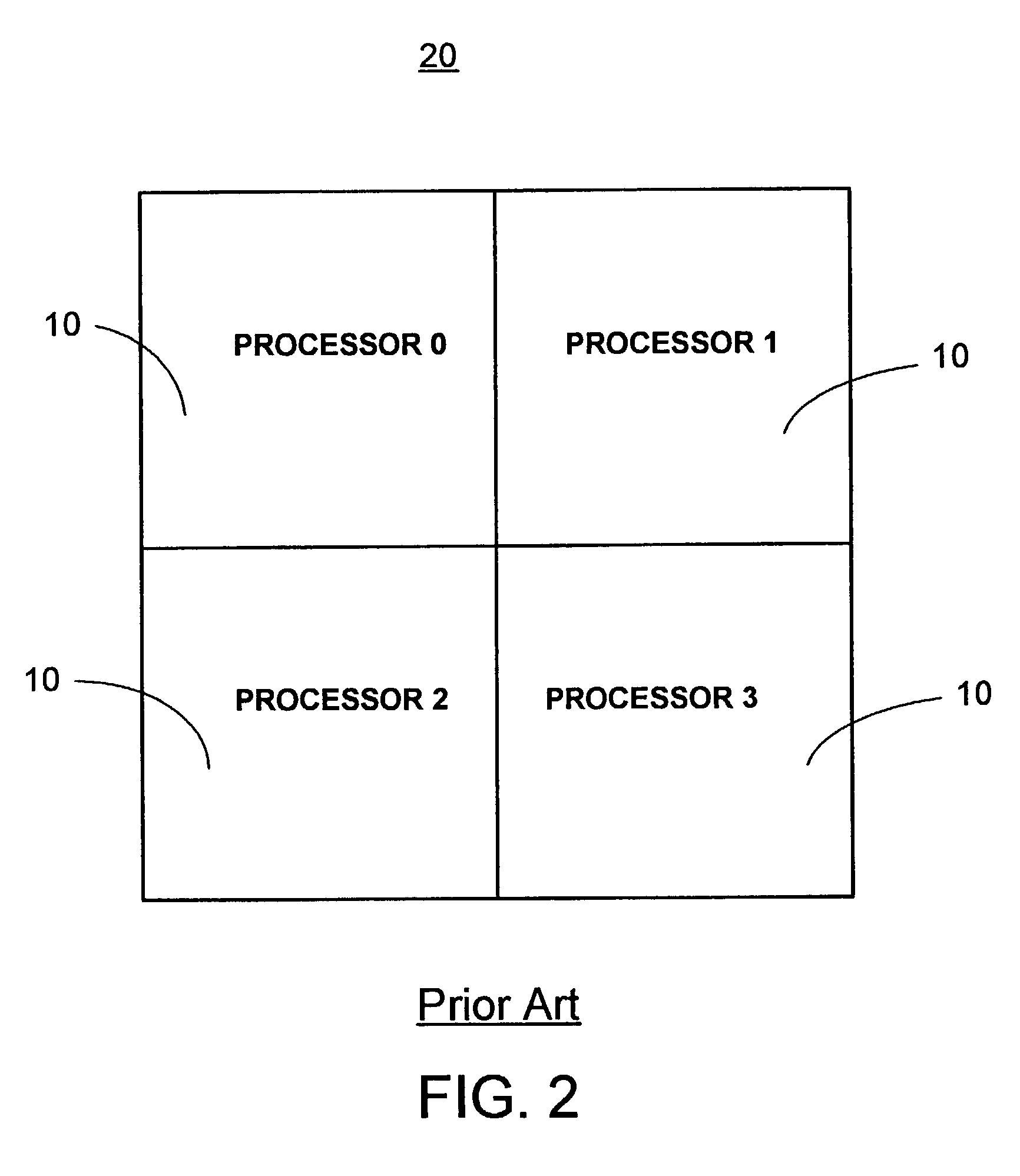

A novel massively parallel supercomputer with performance on the scale of hundreds of teraOPS uses node architectures based on System-On-a-Chip technology, i.e., each processing node comprises a single Application Specific Integrated Circuit (ASIC). Within each ASIC node is a plurality of processing elements, each of which consists of a central processing unit (CPU) and a plurality of floating point processors, to achieve an optimal balance of computational performance, packaging density, low cost, and power and cooling requirements. The plurality of processors within a single node may be used individually or simultaneously to work on any combination of computation or communication as required by the particular algorithm being solved or executed at any point in time. The system-on-a-chip ASIC nodes are interconnected by multiple independent networks that maximize packet communication throughput and minimize latency. In the preferred embodiment, the multiple networks include three high-speed networks for parallel algorithm message passing: a Torus, a Global Tree, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be used collaboratively or independently, according to the needs or phases of an algorithm, to optimize algorithm processing performance. For particular classes of parallel algorithms, or parts of parallel calculations, this architecture exhibits exceptional computational performance, and may be enabled to perform calculations for new classes of parallel algorithms. Additional networks are provided for external connectivity and are used for Input / Output, System Management and Configuration, and Debug and Monitoring functions. Special node packaging techniques implementing midplane and other hardware devices facilitate partitioning of the supercomputer into multiple networks for optimizing supercomputing resources.

Owner:IBM CORP
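
The abstract names a Torus network for message passing but does not state its dimensionality. Assuming a 3D torus (an assumption of this sketch), each node has six nearest neighbours reached over wraparound links, and the minimum hop count along each axis is the shorter of the two directions around that ring. The function names are hypothetical.

```python
Coord = tuple[int, int, int]


def torus_neighbors(coord: Coord, dims: Coord) -> list[Coord]:
    """Six nearest neighbours of a node in a 3D torus; edges wrap around."""
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            n[axis] = (n[axis] + step) % dims[axis]  # wraparound link
            neighbors.append(tuple(n))
    return neighbors


def torus_hops(a: Coord, b: Coord, dims: Coord) -> int:
    """Minimum hop count: per axis, go whichever way around the ring is shorter."""
    return sum(min(abs(x - y), d - abs(x - y)) for x, y, d in zip(a, b, dims))


print(torus_neighbors((0, 0, 0), (4, 4, 4)))
print(torus_hops((0, 0, 0), (3, 0, 0), (4, 4, 4)))  # 1, via the wraparound link
```

The wraparound links are what keep the worst-case hop count at roughly half of each dimension instead of the full dimension of an open mesh.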

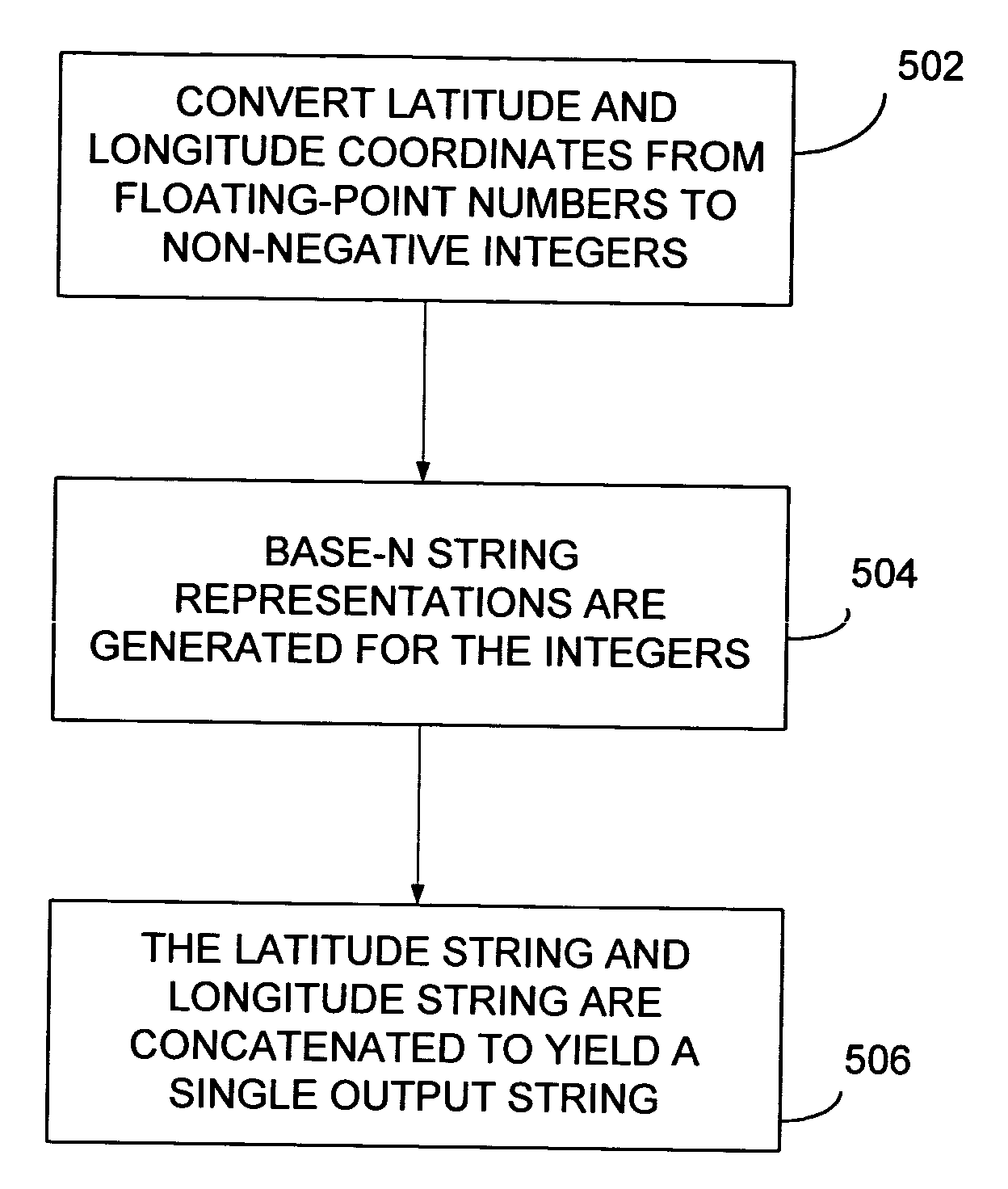

Compact text encoding of latitude/longitude coordinates

Status: Inactive | Publication: US20050023524A1 | Keywords: Data processing applications, Digital data information retrieval, Theoretical computer science, Longitude

Methods are disclosed for encoding latitude / longitude coordinates within a URL in a relatively compact form. The method includes converting latitude and longitude coordinates from floating-point numbers to non-negative integers. Base-N string representations are then generated for the integers (N is the number of characters in an implementation-defined character set). The latitude string and longitude string are concatenated to yield a single output string, which is used as a geographic indicator within a URL.

Owner:MICROSOFT TECH LICENSING LLC
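
The steps in the abstract (scale to non-negative integers, convert to base N, concatenate) can be sketched as follows. Assumptions not in the source: a 62-character alphabet of digits and ASCII letters, five decimal places of precision (`SCALE`), and a fixed width of five characters per coordinate so the concatenated string can be split again when decoding. All names are hypothetical.

```python
import string

# ASSUMPTION: 62-character URL-safe alphabet; the patent leaves the character set open.
ALPHABET = string.digits + string.ascii_uppercase + string.ascii_lowercase
N = len(ALPHABET)
SCALE = 10**5  # ASSUMPTION: 5 decimal places (roughly 1 m)
WIDTH = 5      # 62**5 > 360 * SCALE, so five characters fit either coordinate


def to_base_n(value: int, width: int) -> str:
    """Fixed-width base-N string for a non-negative integer."""
    digits = []
    for _ in range(width):
        value, r = divmod(value, N)
        digits.append(ALPHABET[r])
    if value:
        raise ValueError("value too large for the chosen width")
    return "".join(reversed(digits))


def from_base_n(text: str) -> int:
    value = 0
    for ch in text:
        value = value * N + ALPHABET.index(ch)
    return value


def encode(lat: float, lon: float) -> str:
    # Offset to make both coordinates non-negative, then scale to integers.
    lat_int = round((lat + 90.0) * SCALE)
    lon_int = round((lon + 180.0) * SCALE)
    return to_base_n(lat_int, WIDTH) + to_base_n(lon_int, WIDTH)


def decode(code: str) -> tuple[float, float]:
    lat = from_base_n(code[:WIDTH]) / SCALE - 90.0
    lon = from_base_n(code[WIDTH:]) / SCALE - 180.0
    return lat, lon


token = encode(47.6062, -122.3321)
print(token, decode(token))
```

Ten characters replace the roughly twenty of the decimal form `47.6062,-122.3321`.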

Multimedia adaptive scrambling system (MASS)

Status: Inactive | Publication: US6888943B1 | Keywords: Avoid delay, Record information storage, Electronic switching, Computer hardware, Signal-to-noise ratio (imaging)

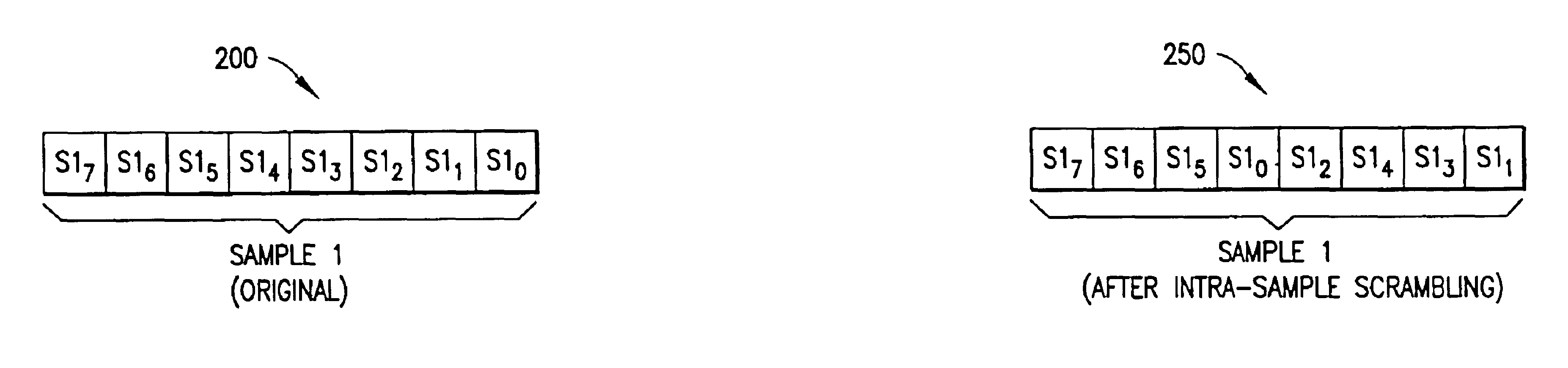

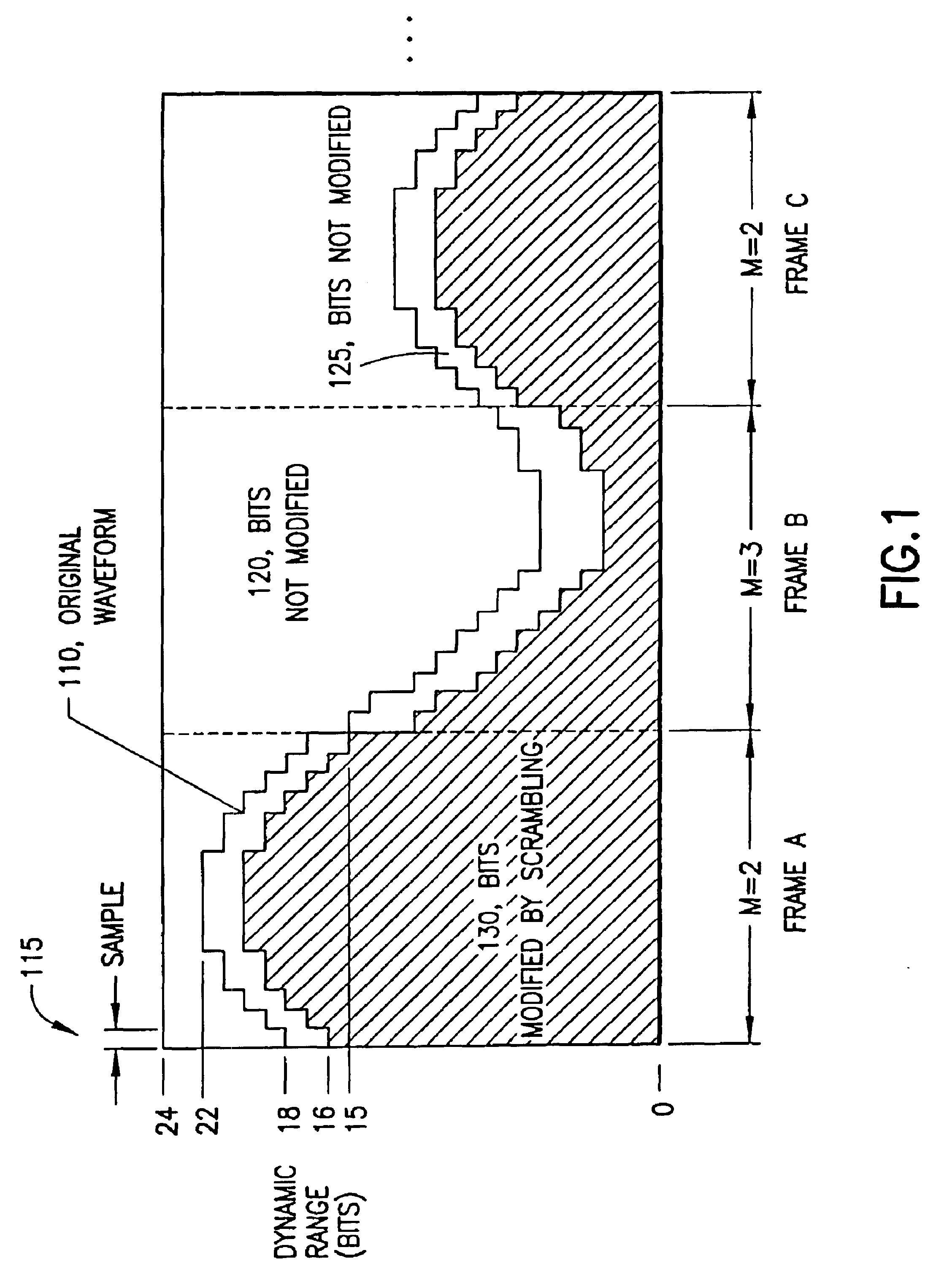

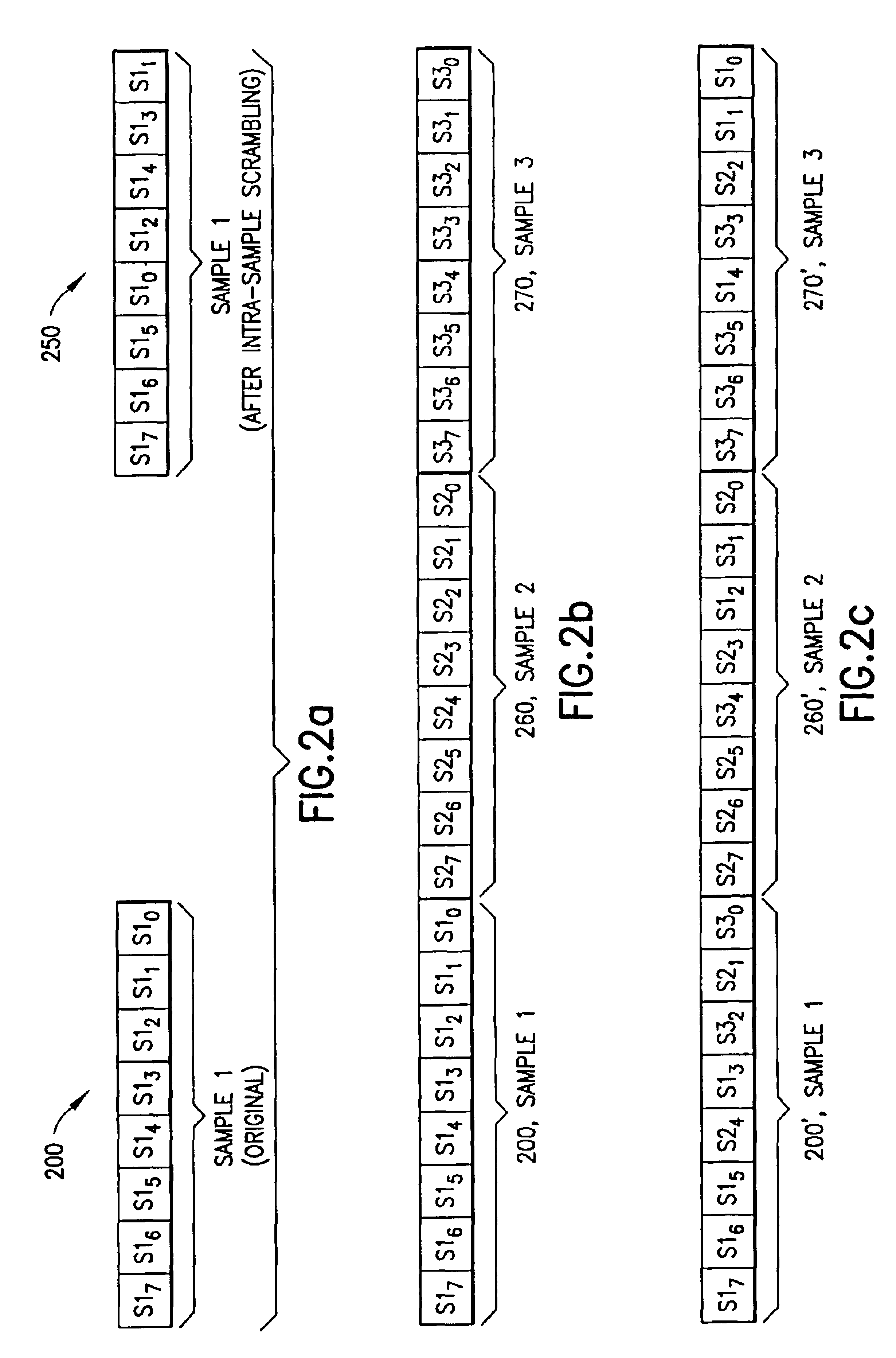

A system (300, 500) for scrambling digital samples (115, 200, 250, 260, 270) of multimedia data, including audio and video data samples, such that the content of the samples is degraded but still recognizable, or otherwise provided at a desired quality level. The samples may be in any conceivable compressed or uncompressed digital format, including Pulse Code Modulation (PCM) samples, samples in floating point representation, samples in companding schemes (e.g., μ-law and A-law), and other compressed bit streams. The quality level may be associated with a particular signal to noise ratio, or quality level that is determined by objective and / or subjective tests, for example. A number of LSBs can be scrambled in successive samples in successive frames (FRAME A, FRAME B, FRAME C). Moreover, the parameters for scrambling may change from frame to frame. Furthermore, all or part of the scrambling key (310) can be embedded (340) in the scrambled data and recovered at a decoder (400, 600) to be used in descrambling. After descrambling, the scramble key is no longer recoverable because the scramble key itself is scrambled by the descrambler.

Owner:VERANCE
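
A minimal sketch of scrambling the least significant bits of PCM samples, assuming 16-bit integer samples and a keystream drawn from Python's `random.Random` seeded with the key. That PRNG is not cryptographically secure and is used only for illustration. The key embedding and per-frame parameter changes described in the abstract are not modeled, and the function names are hypothetical.

```python
import random


def scramble(samples: list[int], key: int, n_lsb: int) -> list[int]:
    """XOR the n_lsb least significant bits of each sample with a key-derived stream."""
    rng = random.Random(key)  # ILLUSTRATION ONLY: not a secure keystream generator
    limit = 1 << n_lsb
    # Only the low bits change, so each sample moves by less than 2**n_lsb:
    # the content is degraded but still recognizable.
    return [s ^ rng.randrange(limit) for s in samples]


# XOR with the same keystream undoes itself.
descramble = scramble

pcm = [1000, -1000, 32767, -32768, 0]
scrambled = scramble(pcm, key=42, n_lsb=4)
print(scrambled)
print(descramble(scrambled, key=42, n_lsb=4))
```

The number of scrambled bits sets the quality level: more LSBs means more added noise and a lower signal-to-noise ratio for anyone without the key.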

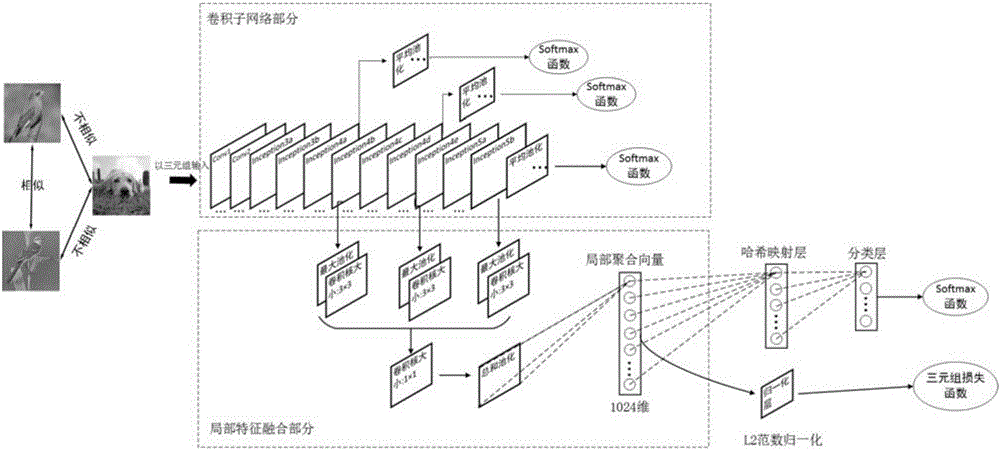

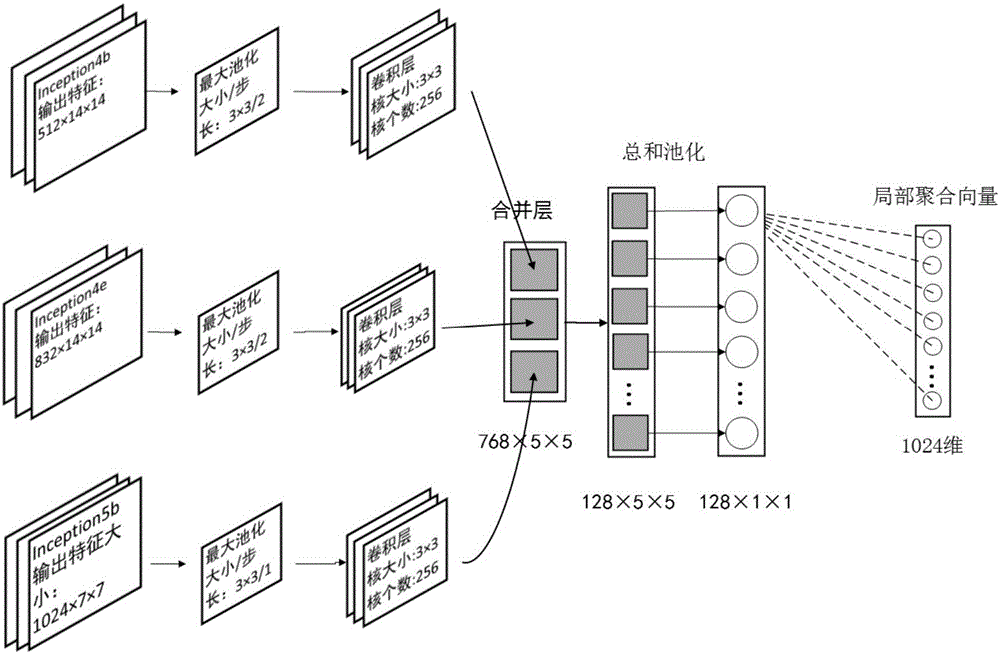

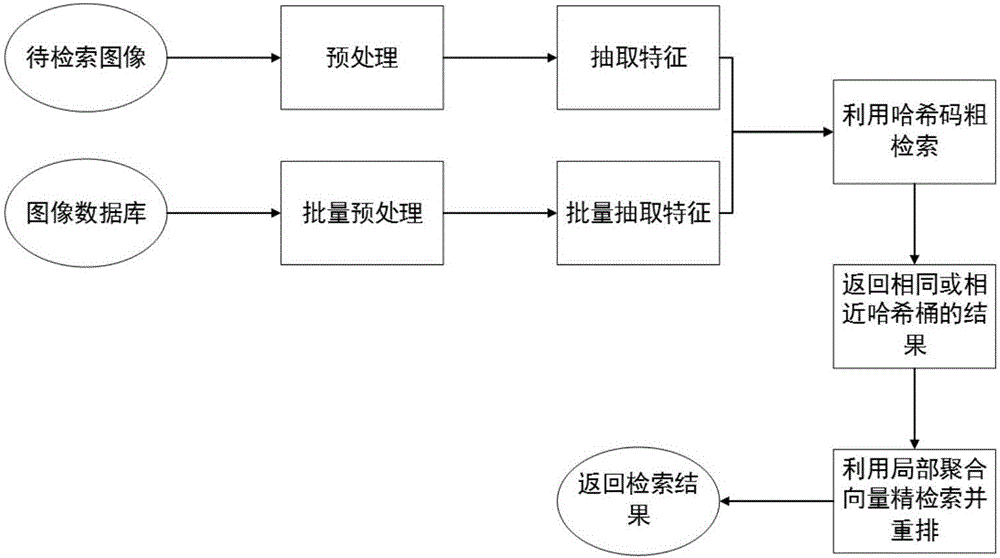

Method for Hash image retrieval based on deep learning and local feature fusion

Status: Active | Publication: CN106682233A | Keywords: Fast and Efficient Image Retrieval Tasks, Character and pattern recognition, Special data processing applications, Floating point, Image retrieval

The invention relates to a method for hash-based image retrieval based on deep learning and local feature fusion. The method comprises: step (1), preprocessing an image; step (2), training a convolutional neural network on images with category labels; step (3), generating hash codes for the images by binarization and extracting 1024-dimensional floating-point local aggregation vectors; step (4), performing coarse retrieval with the hash codes; and step (5), performing fine retrieval with the local aggregation vectors. After the two features are extracted, an approximate nearest neighbor search strategy is used to perform image retrieval, achieving high retrieval accuracy and fast retrieval.

Owner:HUAQIAO UNIVERSITY
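
The coarse-then-fine retrieval in steps (4) and (5) can be sketched as a Hamming-distance shortlist over the binary hash codes, followed by re-ranking the shortlist by Euclidean distance between floating-point feature vectors. In this Python sketch, hash codes are plain integers, vectors are short lists rather than 1024-dimensional, and the `coarse_k`/`fine_k` parameters are illustrative assumptions.

```python
import math


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hash codes."""
    return bin(a ^ b).count("1")


def two_stage_search(query_hash: int, query_vec: list[float],
                     db: list[tuple[str, int, list[float]]],
                     coarse_k: int, fine_k: int) -> list[str]:
    """db holds (item_id, hash_code, float_vector) triples."""
    # Step 4 (coarse): cheap bit operations shortlist the coarse_k nearest hash codes.
    coarse = sorted(db, key=lambda item: hamming(query_hash, item[1]))[:coarse_k]
    # Step 5 (fine): exact float distances re-rank only the shortlist.
    fine = sorted(coarse, key=lambda item: math.dist(query_vec, item[2]))
    return [item[0] for item in fine[:fine_k]]


db = [
    ("a", 0b1010, [0.0, 0.0]),
    ("b", 0b1011, [1.0, 1.0]),
    ("c", 0b0101, [0.1, 0.1]),
    ("d", 0b1010, [0.2, 0.0]),
]
print(two_stage_search(0b1010, [0.15, 0.0], db, coarse_k=3, fine_k=2))
```

Item `c` is never re-ranked even though its float vector is close to the query, because its hash code is far away; that is the approximation accepted in exchange for speed.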

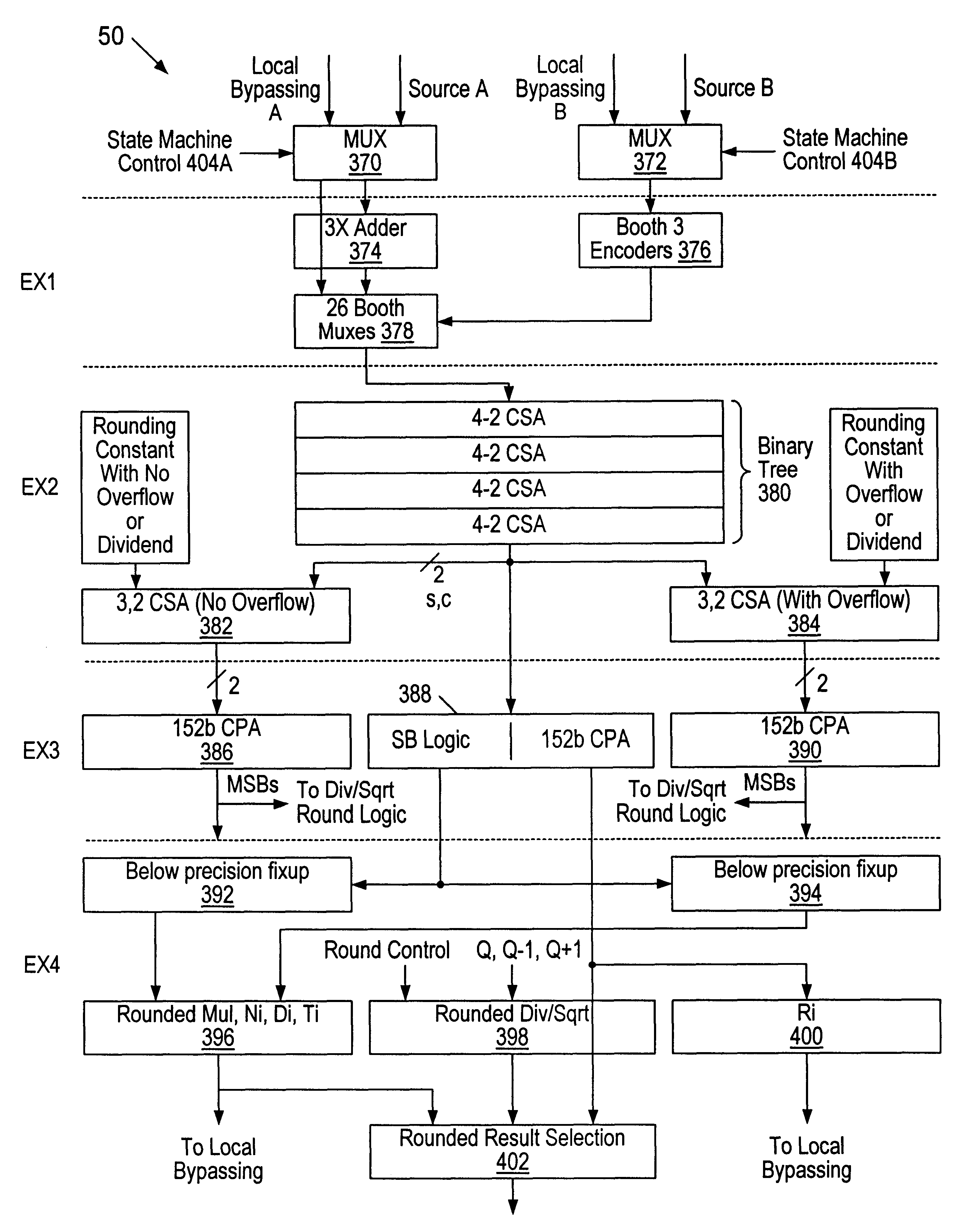

Shared FP and SIMD 3D multiplier

Status: Inactive | Publication: US6490607B1 | Keywords: Computations using contact-making devices, Runtime instruction translation, Computerized system, Theoretical computer science

A multiplier configured to perform multiplication of both scalar floating point values (XxY) and packed floating point values (i.e., X1xY1 and X2xY2). In addition, the multiplier may be configured to calculate XxY-Z. The multiplier comprises selection logic for selecting source operands, a partial product generator, an adder tree, and two or more adders configured to sum the results from the adder tree to achieve a final result. The multiplier may also be configured to perform iterative multiplication operations to implement arithmetic operations such as division and square root. The multiplier may be configured to generate two versions of the final result, one assuming there is an overflow and another assuming there is not. A computer system and method for performing multiplication are also disclosed.

Owner:ADVANCED SILICON TECH
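
Division by iterated multiplication is commonly done with Newton-Raphson refinement of a reciprocal, where each refinement step needs only multiplications and a subtraction (compare the XxY-Z operation in the abstract). The Python sketch below shows the arithmetic only. The linear initial estimate 48/17 - 32/17·m and the fixed four iterations are standard textbook choices assumed here, not details from the patent.

```python
import math


def reciprocal(d: float, iterations: int = 4) -> float:
    """Approximate 1/d using only multiply and subtract in the refinement loop."""
    if d == 0.0:
        raise ZeroDivisionError("reciprocal of zero")
    m, e = math.frexp(abs(d))  # |d| = m * 2**e with 0.5 <= m < 1
    x = 48 / 17 - 32 / 17 * m  # linear initial estimate of 1/m (error at most 1/17)
    for _ in range(iterations):
        # Newton-Raphson step: the relative error is squared each time.
        x = x * (2.0 - m * x)
    return math.copysign(math.ldexp(x, -e), d)


def divide(a: float, d: float) -> float:
    """a / d computed as a times the iteratively refined reciprocal of d."""
    return a * reciprocal(d)


print(divide(1.0, 3.0), 1.0 / 3.0)
print(divide(10.0, -4.0))
```

Since the starting error is at most 1/17 and squares each step, four steps reach roughly 17^-16 (about 2e-20), below double-precision resolution; hardware dividers make the same trade with a table-based seed and fewer steps.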

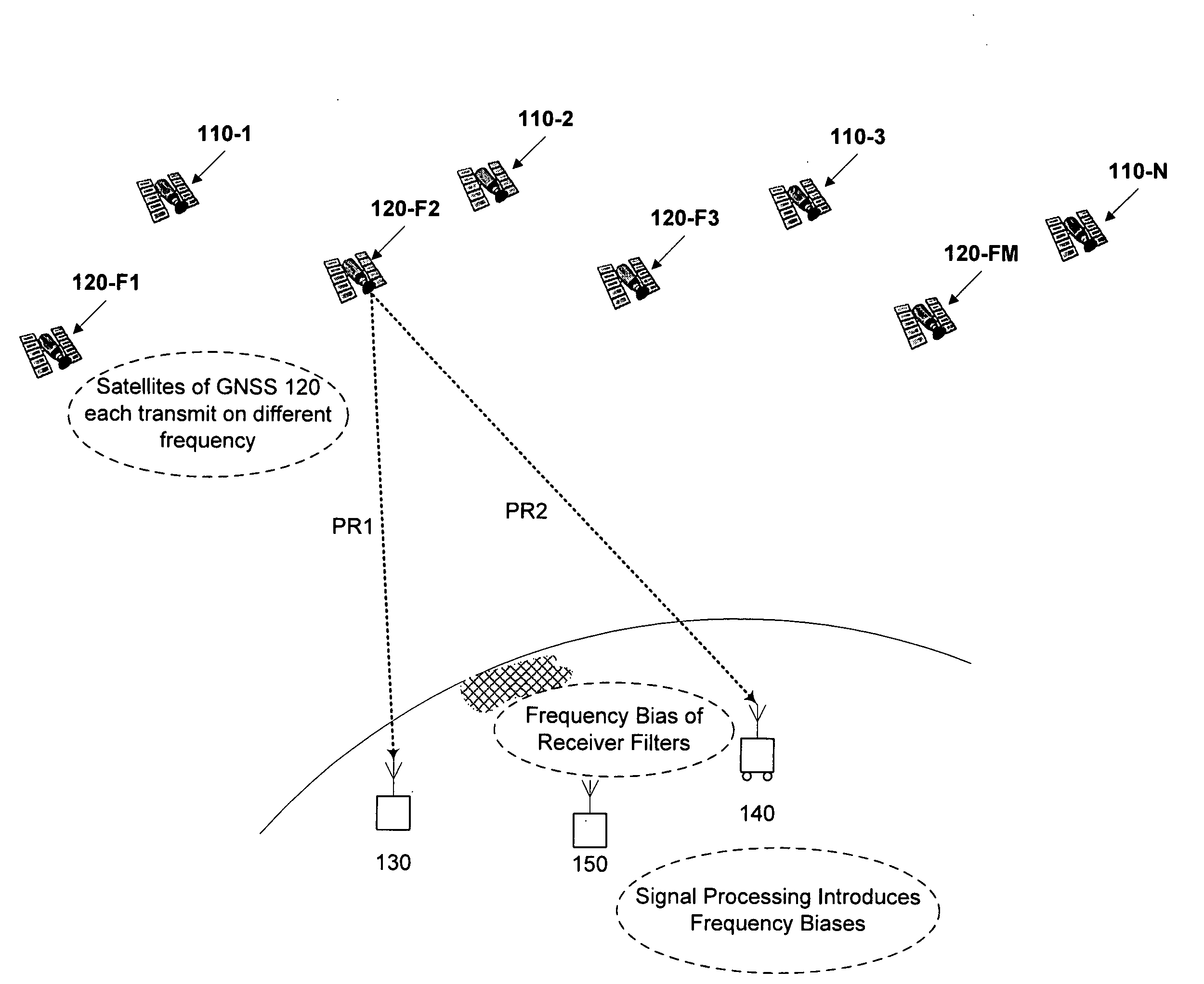

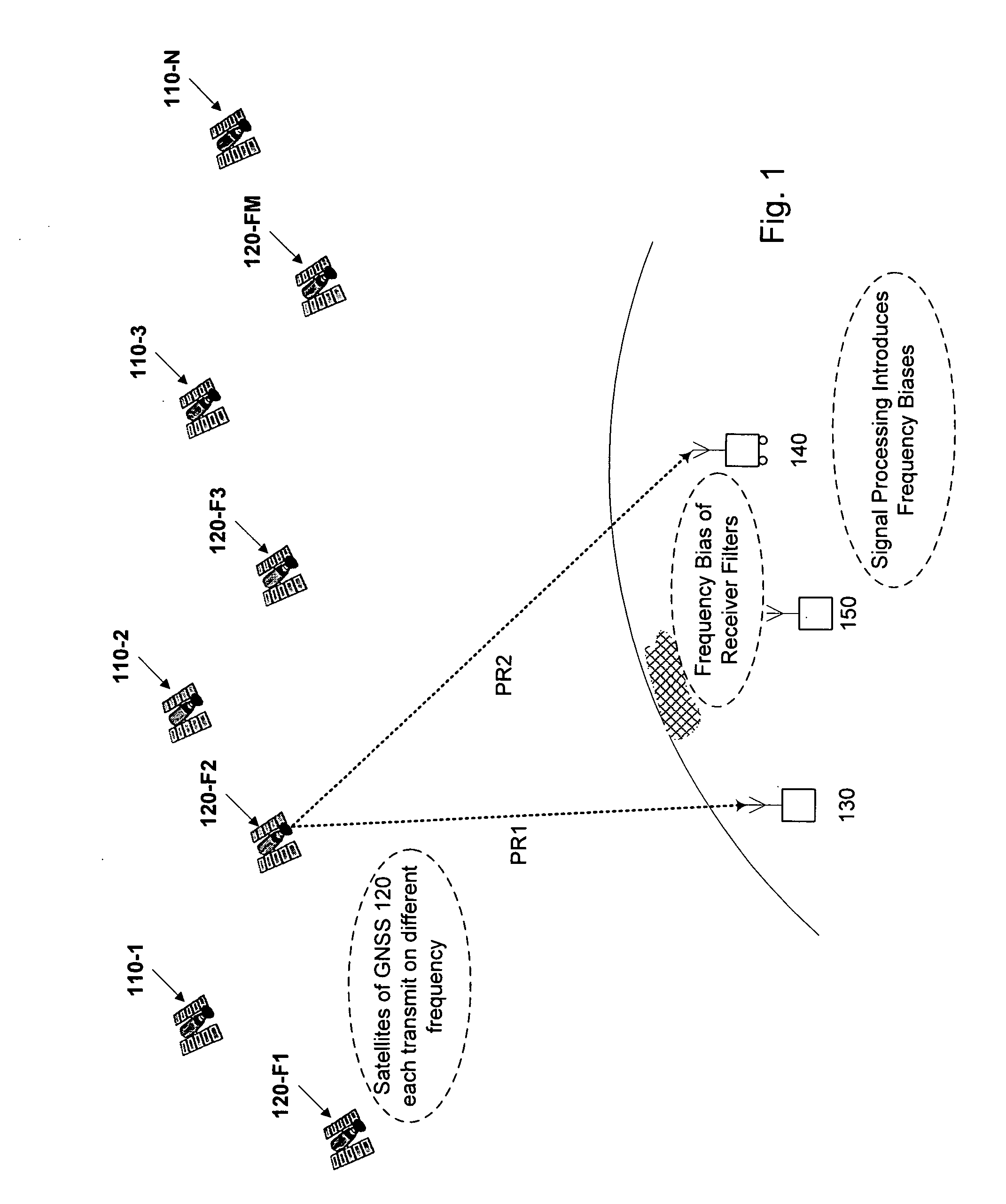

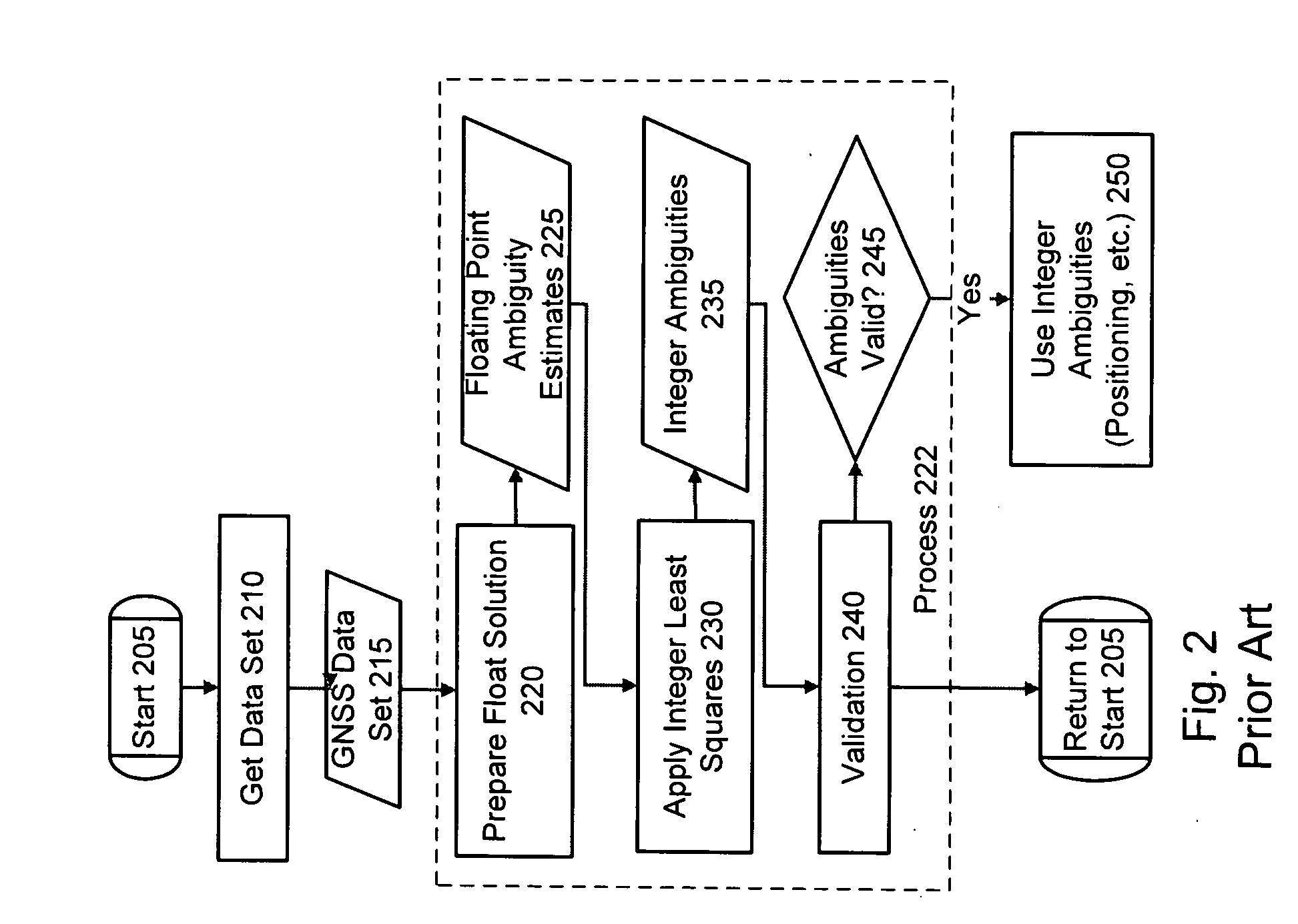

Processing Multi-GNSS data from mixed-type receivers

Computer-implemented methods and apparatus are presented for processing data collected by at least two receivers from multiple satellites of multiple GNSS, where at least one GNSS is FDMA. Data sets are obtained which comprise a first data set from a first receiver and a second data set from a second receiver. The first data set comprises a first FDMA data set and the second data set comprises a second FDMA data set. At least one of a code bias and a phase bias may exist between the first FDMA data set and the second FDMA data set. At least one receiver-type bias is determined, to be applied when the data sets are obtained from receivers of different types. The data sets are processed, based on the at least one receiver-type bias, to estimate carrier floating-point ambiguities. Carrier integer ambiguities are determined from the floating-point ambiguities. The scheme enables GLONASS carrier phase ambiguities to be resolved and used in a combined FDMA / CDMA (e.g., GLONASS / GPS) centimeter-level solution. It is applicable to real-time kinematic (RTK) positioning, high-precision post-processing of positions and network RTK positioning.

Owner:TRIMBLE NAVIGATION LTD

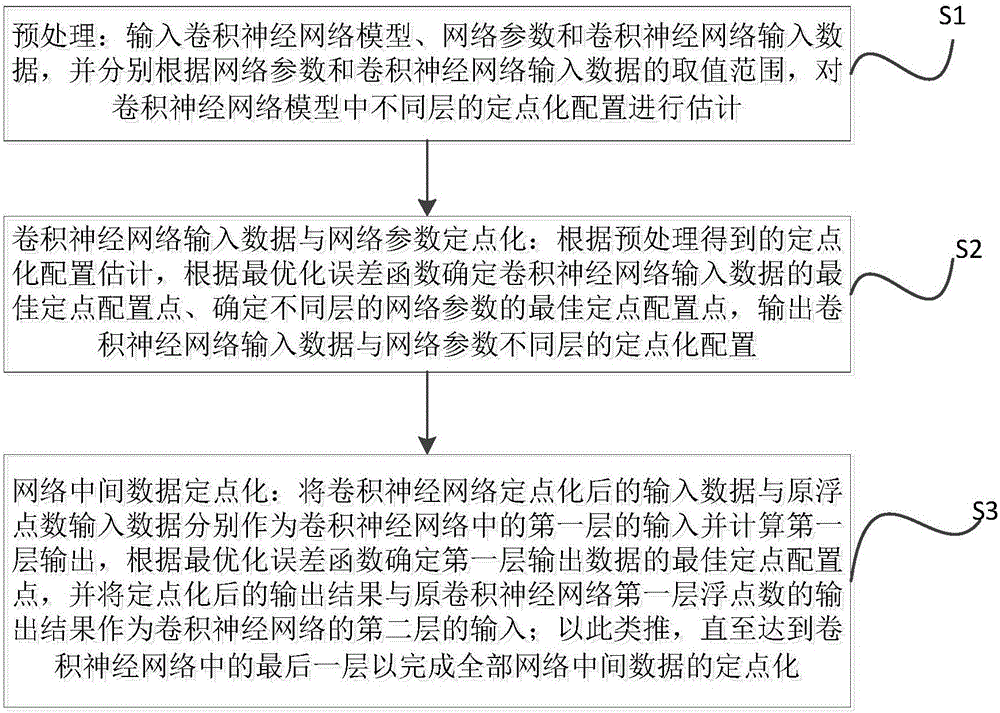

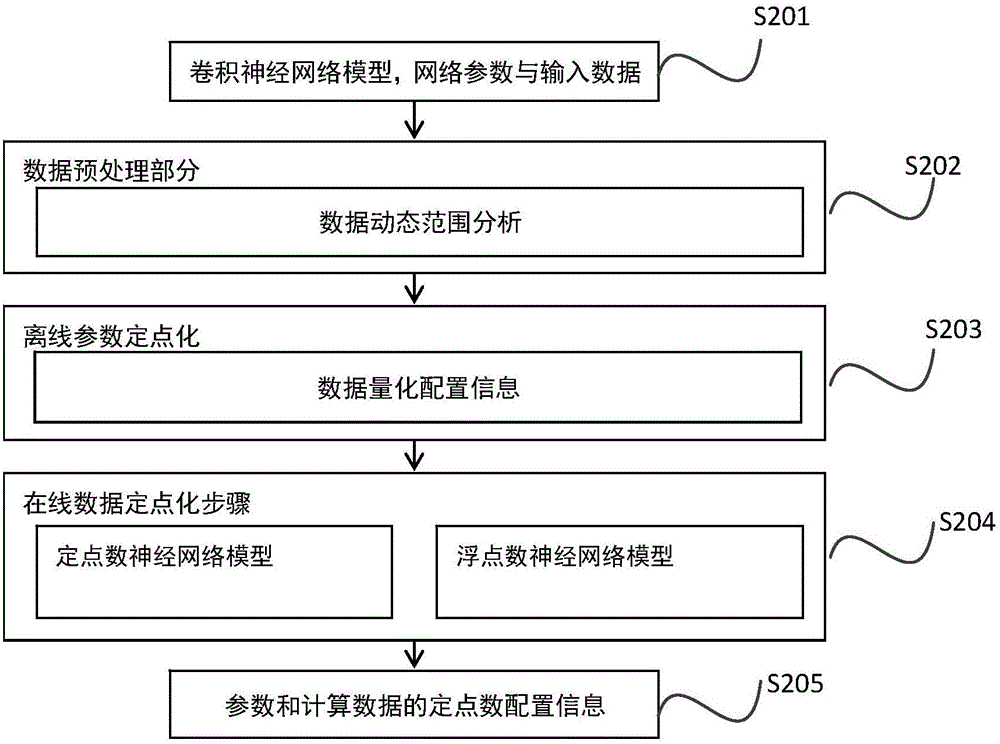

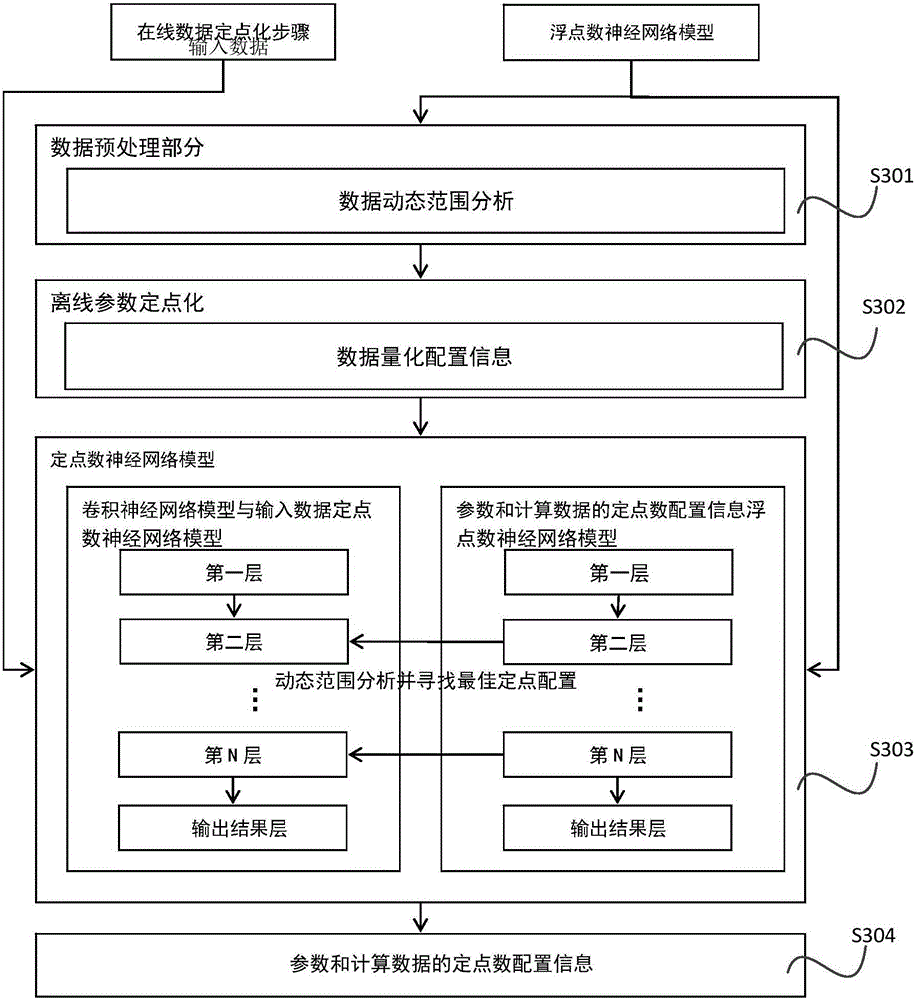

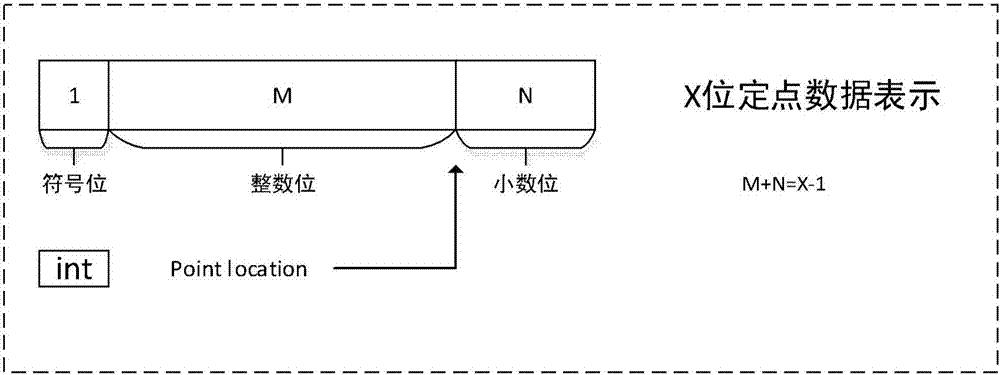

Method and apparatus for fixed-pointing layer-wise variable precision in convolutional neural network

Status: Inactive | Publication: CN105760933A | Keywords: Reduce precision loss, Fast transmission, Neural learning methods, Floating point, Network data

The invention discloses a method and an apparatus for layer-wise variable-precision fixed-point conversion in a convolutional neural network. The method comprises the following steps: estimating a fixed-point configuration for the inputs of each layer of the convolutional neural network model from the input network parameters and the value range of the input data; based on these estimates and an optimal error function, determining and outputting the best fixed-point configuration for the input data and network parameters of each layer; feeding both the fixed-point input data and the original floating-point input data into the first layer of the network, computing the optimal fixed-point configuration for that layer's output data, and then feeding the fixed-point output and the original floating-point output of the first layer into the second layer. The remaining layers are processed in the same manner until the last layer has been converted. The method keeps the precision loss of each converted layer to a minimum, reduces the storage space required for network data, and increases the transmission speed of network parameters.

Owner:BEIJING DEEPHI INTELLIGENT TECH CO LTD
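
The per-layer search can be sketched as choosing, for a given bit width, the fraction length that minimizes quantization error over a layer's values. Squared error, 8-bit signed words with saturation, and the candidate range are assumptions of this Python sketch; the abstract does not specify the "optimal error function". In the layer-by-layer procedure, the same search would be repeated on each layer's inputs, weights, and outputs.

```python
def quantize(x: float, bit_width: int, frac_len: int) -> float:
    """Round x to a signed fixed-point grid with frac_len fraction bits, saturating at the ends."""
    scale = 2.0 ** frac_len
    lo, hi = -(1 << (bit_width - 1)), (1 << (bit_width - 1)) - 1
    return max(lo, min(hi, round(x * scale))) / scale


def best_frac_len(values: list[float], bit_width: int = 8,
                  candidates: range = range(-8, 16)) -> int:
    """Pick the fraction length with the smallest total squared quantization error."""
    def error(fl: int) -> float:
        return sum((v - quantize(v, bit_width, fl)) ** 2 for v in values)
    return min(candidates, key=error)


layer_values = [0.5, -0.75, 0.123, 0.9]  # hypothetical activations of one layer
fl = best_frac_len(layer_values)
print(fl, [quantize(v, 8, fl) for v in layer_values])
```

Too many fraction bits saturate large values; too few waste resolution on small ones. The search balances the two per layer, which is why the precision can vary from layer to layer.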

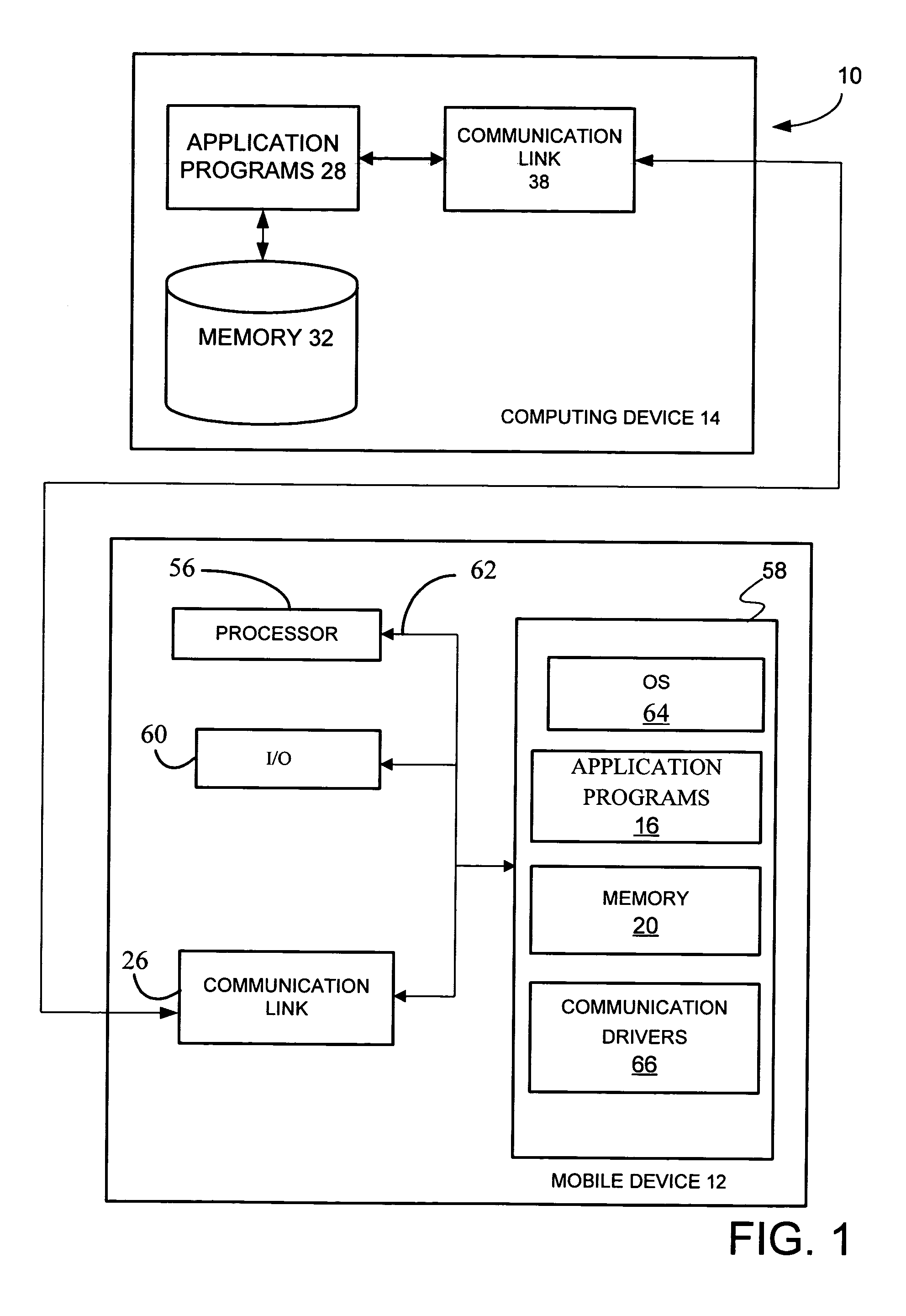

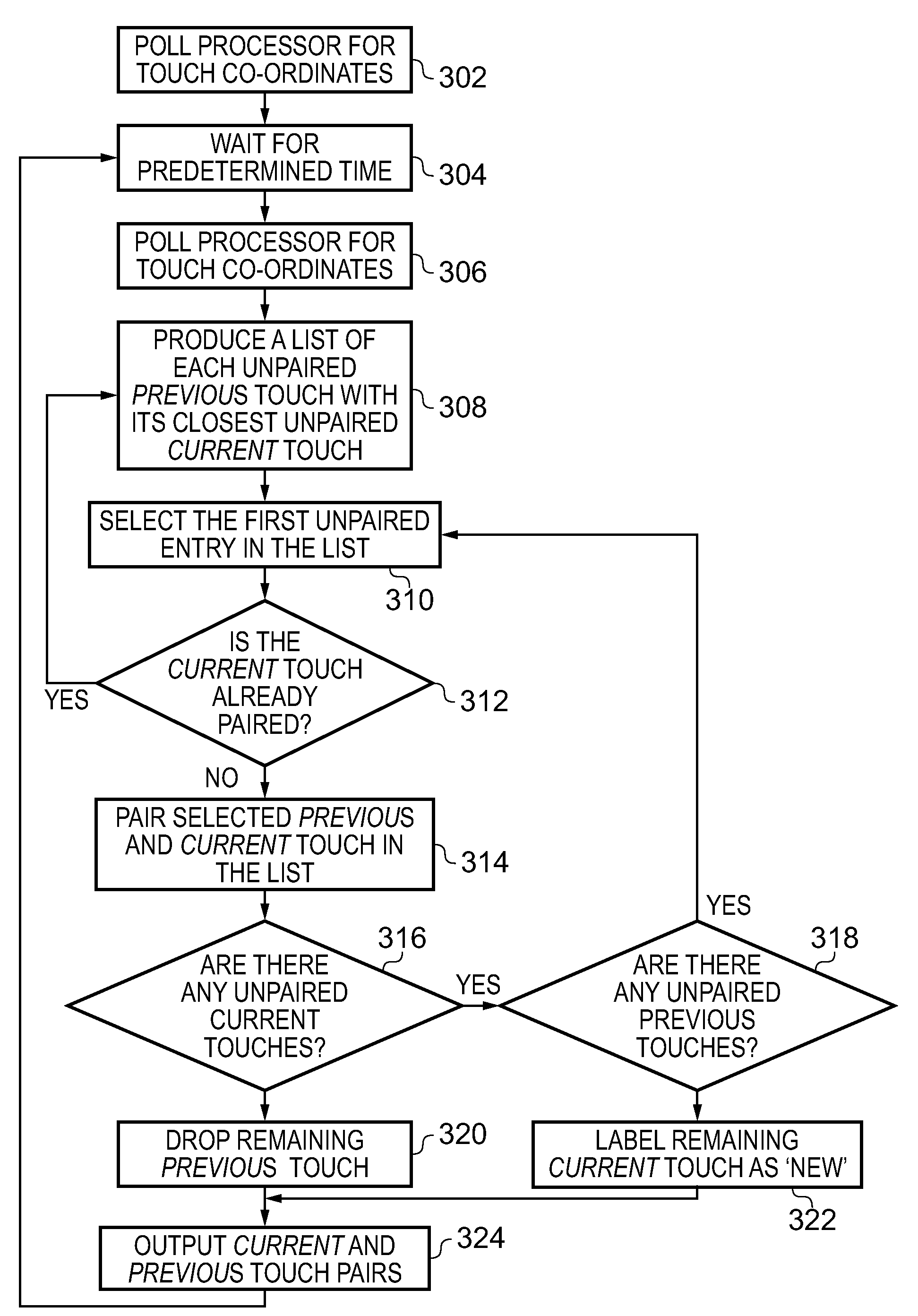

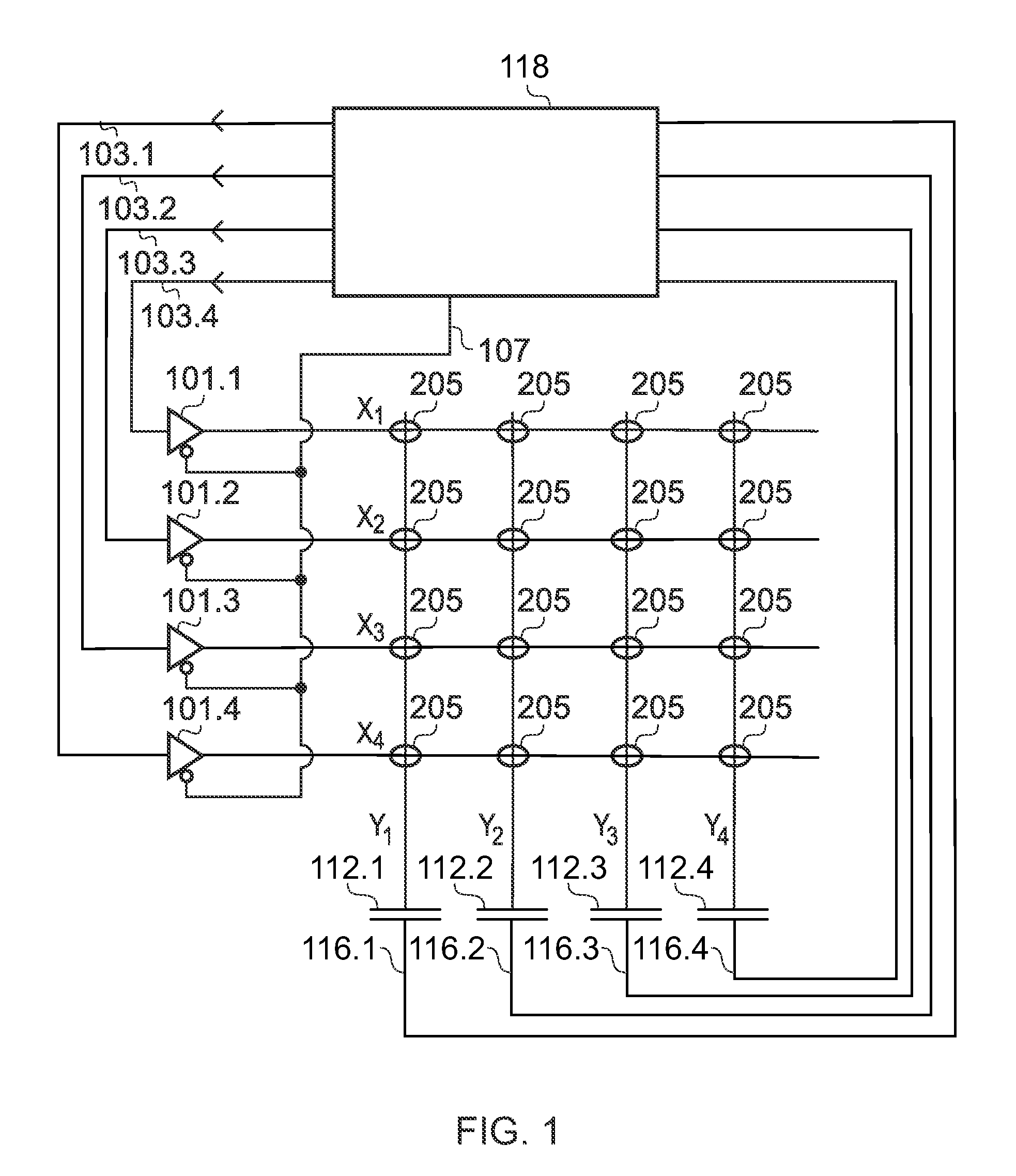

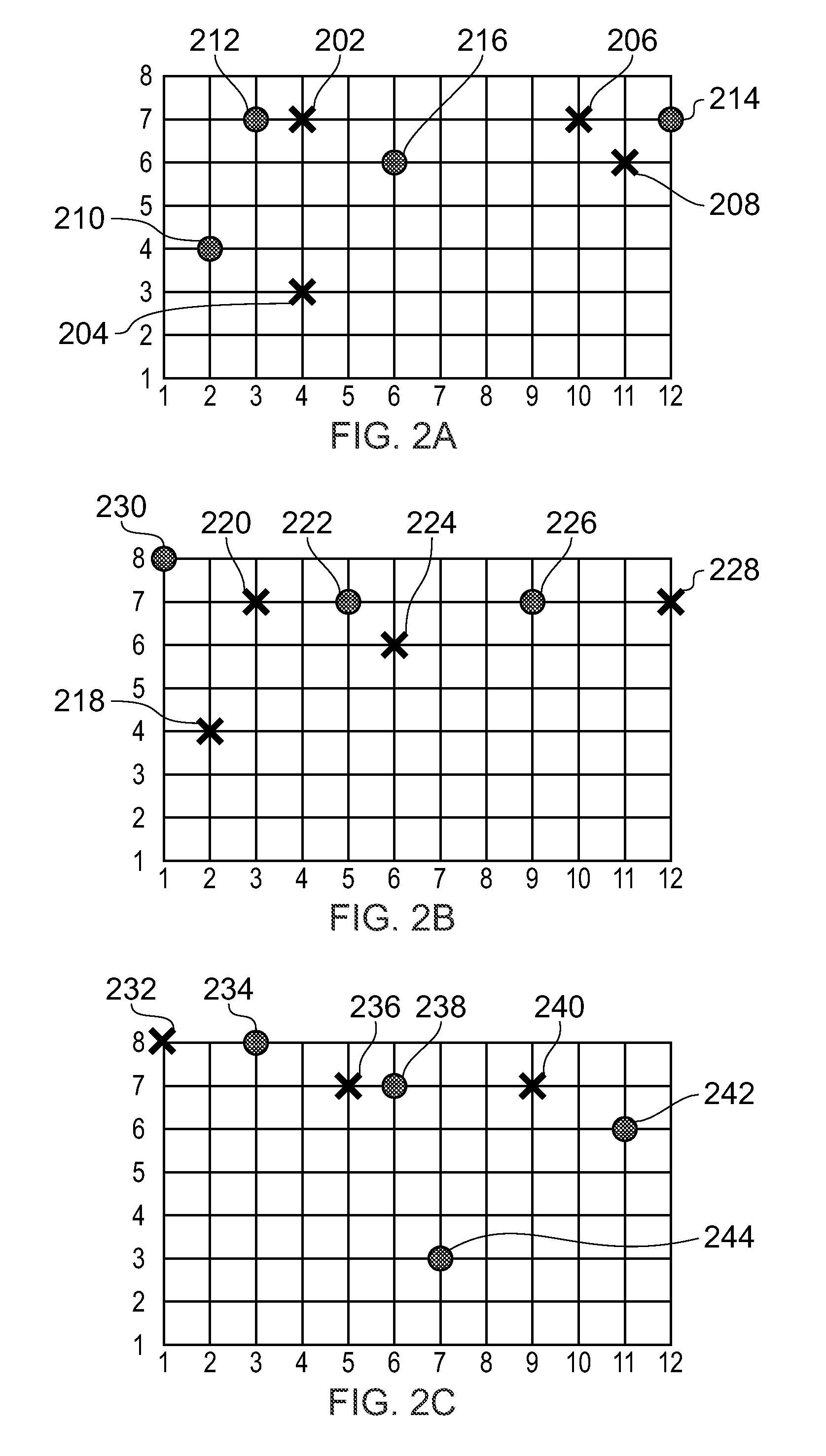

Multi-Touch Tracking

Status: Active | Publication: US20100097342A1 | Keywords: Simple calculation, Less computation time, Input/output processes for data processing, Microcontroller, Data set

A method of tracking multiple touches over time on a touch sensor, for example a capacitive touch screen. The method analyzes first and second touch data sets from adjacent first and second time frames. First, the touch data sets are analyzed to determine, for each touch in the first time frame, the closest touch in the second time frame, and the separation between each such pair of touches is calculated. Then, starting with the pair of touches having the smallest separation, each pair is validated until a pairing is attempted with a second-frame touch that has already been paired. At this point, the as-yet unpaired touches from the first and second touch data sets are re-processed by re-applying the computations to only those touches. This re-processing is iterated until no further pairings need to be made. The method avoids complex algebra and floating point operations, and has little memory requirement. As such, it is ideally suited to implementation on a microcontroller.

Owner:NEODRON LTD
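
The pairing procedure can be sketched in Python using only integer arithmetic: squared distances are compared directly, so no square roots or floating-point operations are needed. Integer coordinate tuples and the returned index mapping are assumptions of this sketch, which follows the steps in the abstract rather than any published firmware.

```python
Touch = tuple[int, int]


def dist2(a: Touch, b: Touch) -> int:
    """Squared distance: preserves ordering without needing a square root."""
    dx, dy = a[0] - b[0], a[1] - b[1]
    return dx * dx + dy * dy


def track(prev: list[Touch], curr: list[Touch]) -> dict[int, int]:
    """Map indices of touches in the first frame to indices in the second frame."""
    pairs: dict[int, int] = {}
    unpaired_prev = set(range(len(prev)))
    unpaired_curr = set(range(len(curr)))
    while unpaired_prev and unpaired_curr:
        # Closest still-unpaired second-frame touch for each unpaired first-frame touch.
        candidates = []
        for i in sorted(unpaired_prev):
            j = min(sorted(unpaired_curr), key=lambda k: dist2(prev[i], curr[k]))
            candidates.append((dist2(prev[i], curr[j]), i, j))
        candidates.sort()  # smallest separation first
        taken: set[int] = set()
        for _, i, j in candidates:
            if j in taken:
                break  # conflict: stop and re-process whatever is still unpaired
            pairs[i] = j
            taken.add(j)
        unpaired_prev.difference_update(pairs)
        unpaired_curr.difference_update(taken)
    return pairs


print(track([(0, 0), (2, 0)], [(1, 0), (10, 0)]))
```

Each pass pairs at least one touch, so the loop always terminates; unmatched touches left over are new or lifted fingers.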

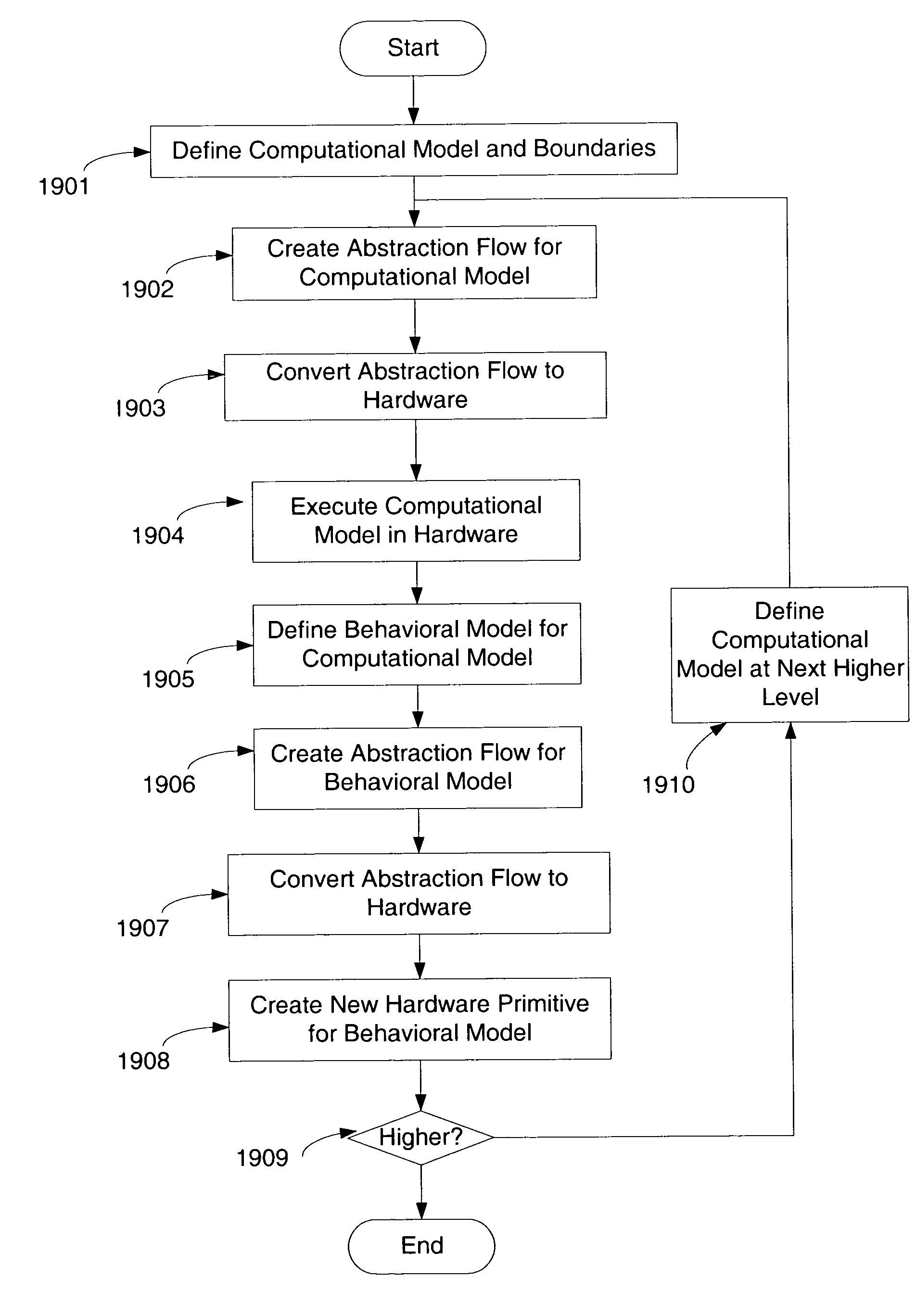

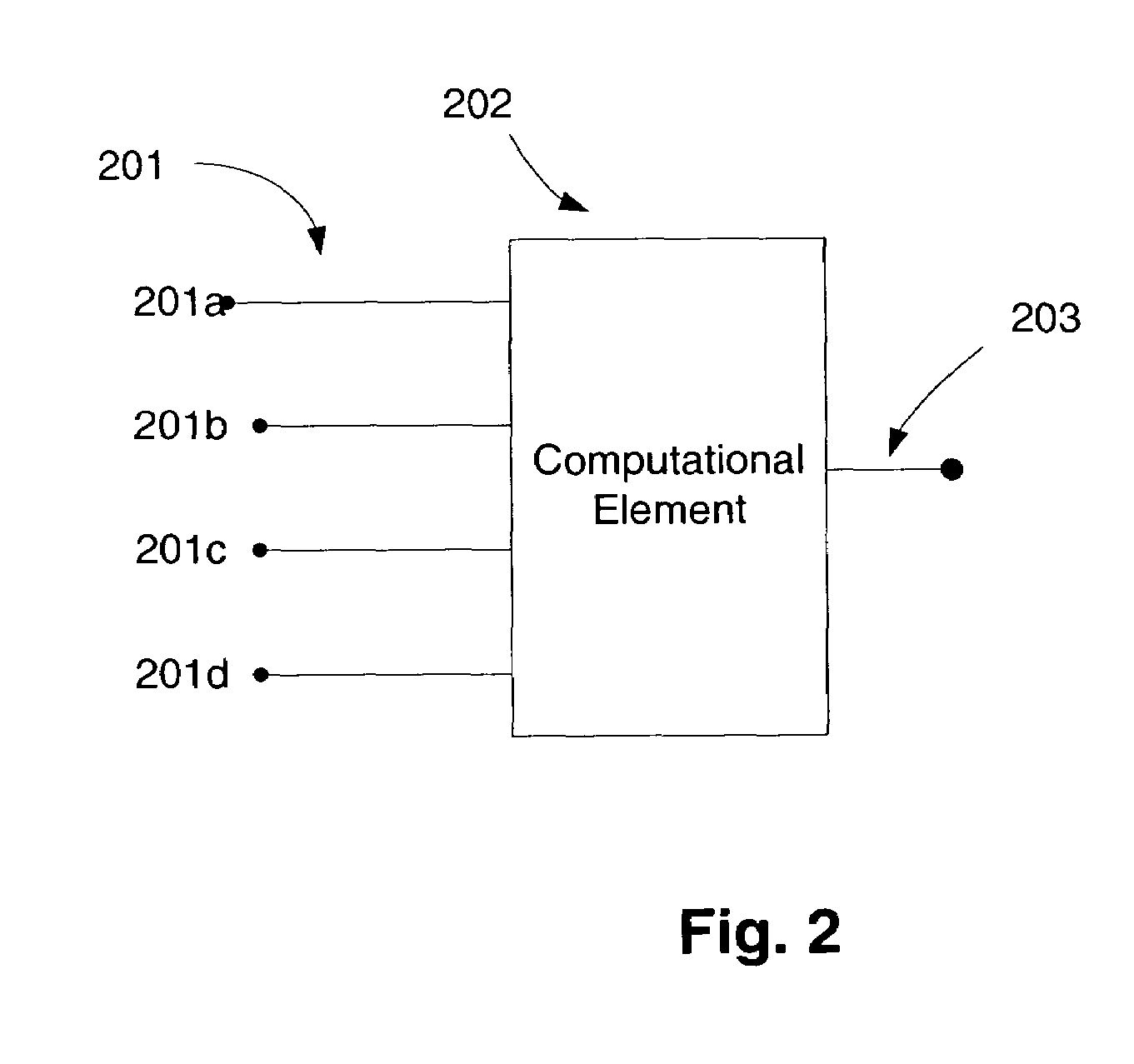

Polymorphic computational system and method in signals intelligence analysis

Status: Active | Publication: US7386833B2 | Keywords: Multiple digital computer combinations, Computer aided design, Logic emulation, Signals intelligence

Configuration software is used for generating hardware-level code and data that may be used with reconfigurable / polymorphic computing platforms, such as logic emulators, and which may be used for conducting signals intelligence analysis, such as encryption / decryption processing, image analysis, etc. A user may use development tools to create visual representations of desired process algorithms, data structures, and interconnections, and the system may generate intermediate data from this visual representation. The intermediate data may be used to consult a database of predefined code segments, and the segments may be assembled to generate a monolithic block of hardware-synthesizable (RTL, VHDL, etc.) code for implementing the user's process in hardware. Efficiencies may be accounted for to minimize circuit components or processing time. Floating point calculations may be supported by a defined data structure that is readily implemented in hardware.

Owner:SIEMENS PROD LIFECYCLE MANAGEMENT SOFTWARE INC

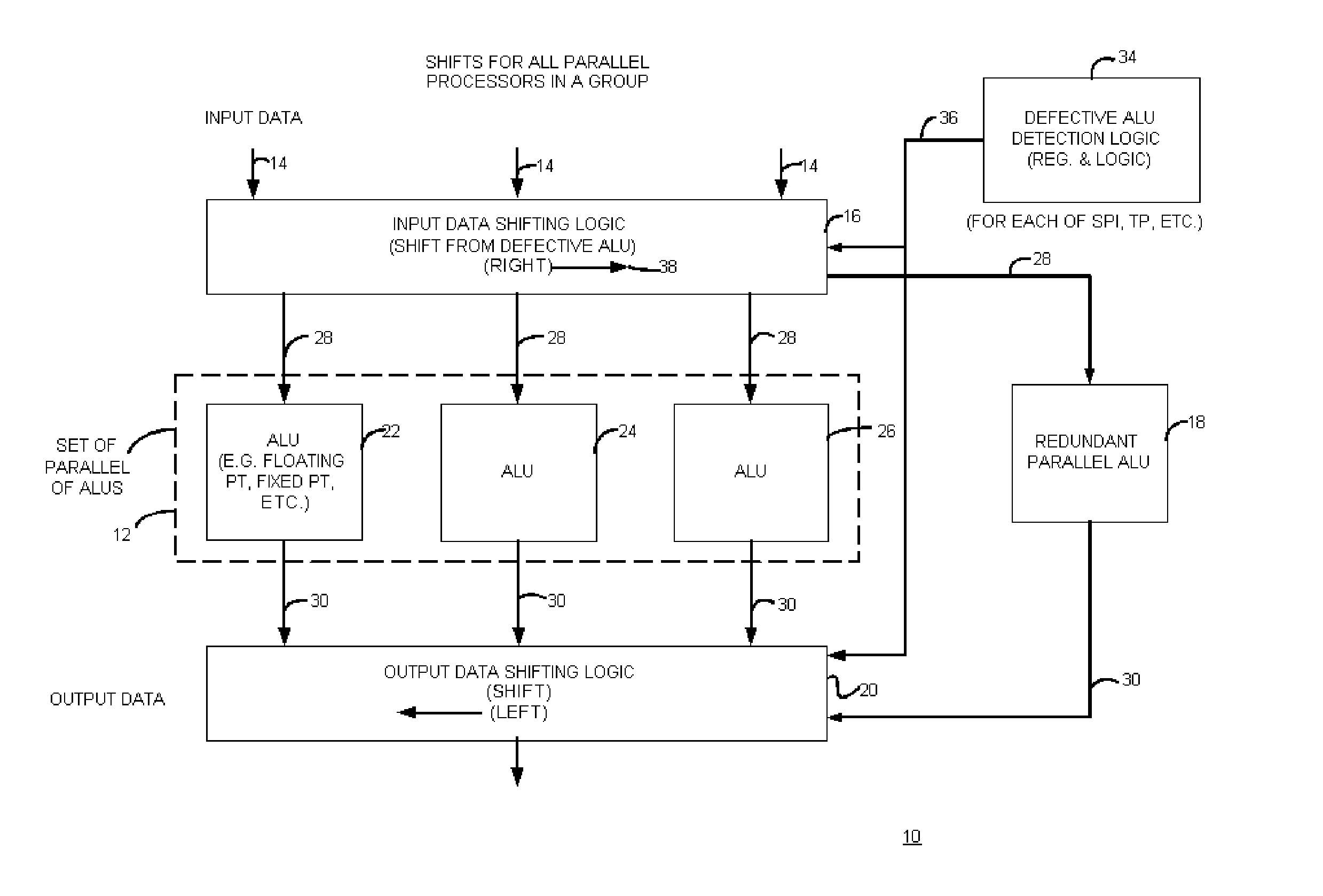

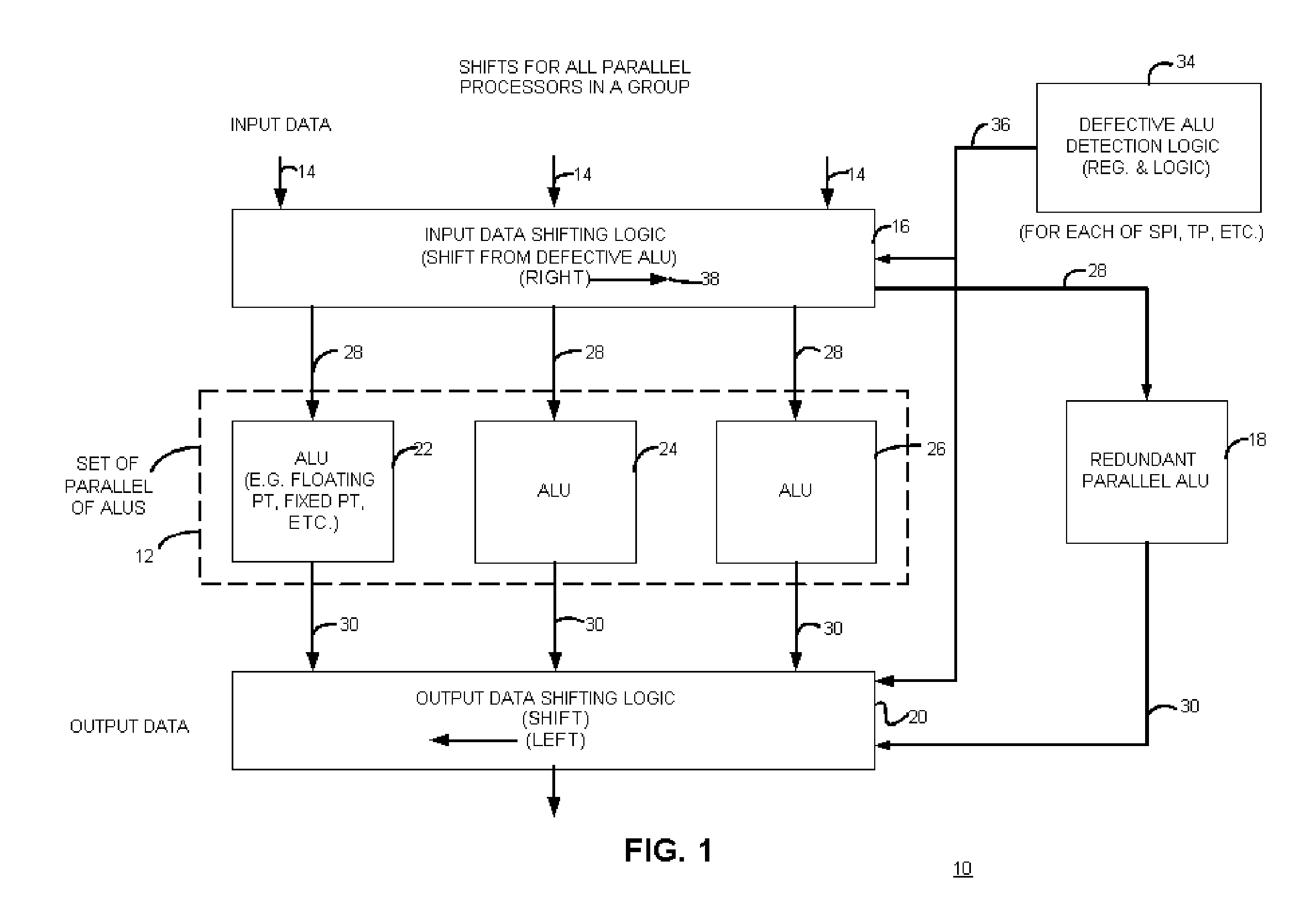

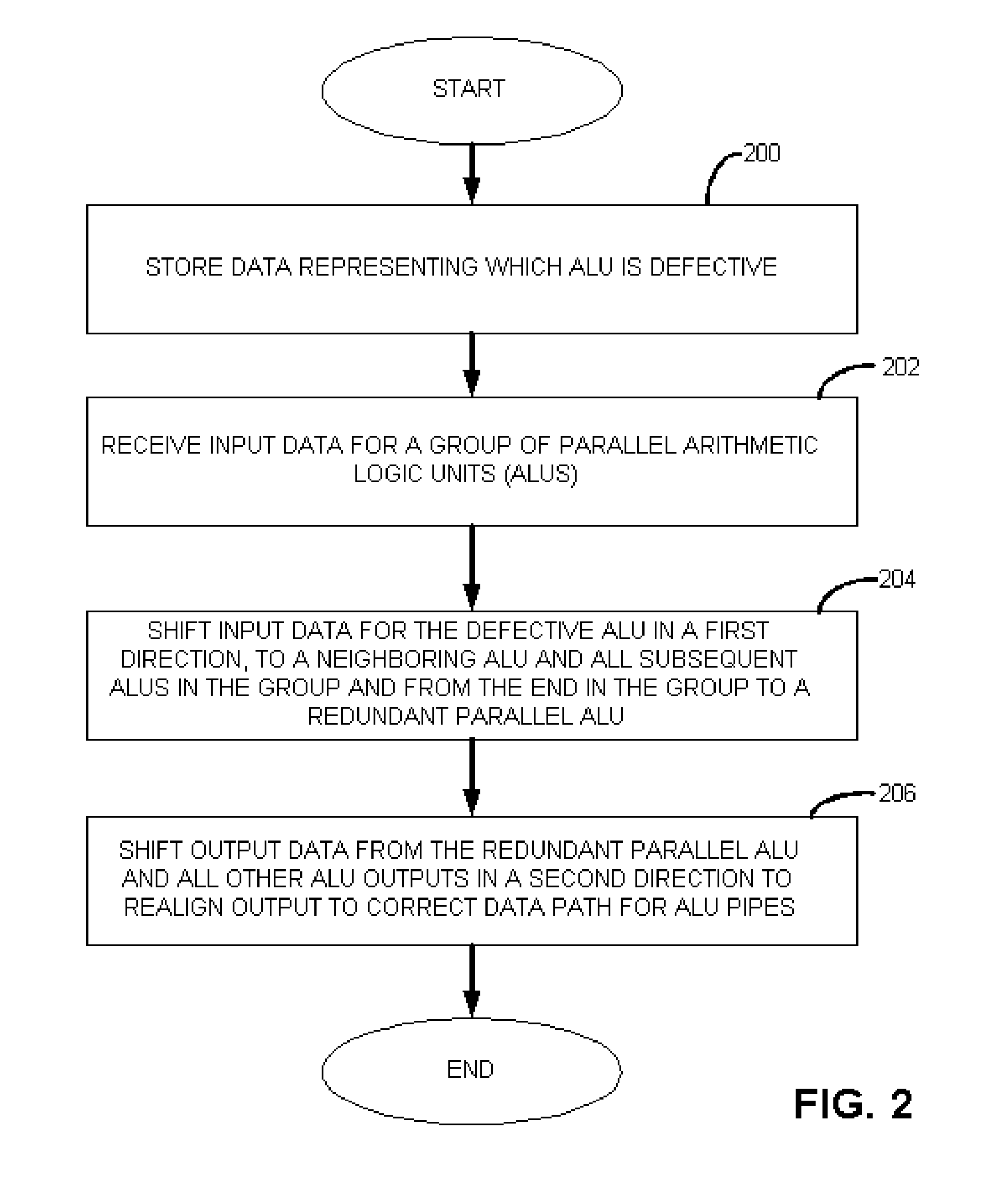

Graphics processing logic with variable arithmetic logic unit control and method therefor

Briefly, graphics data processing logic includes a plurality of parallel arithmetic logic units (ALUs), such as floating point processors or any other suitable logic, that operate as a vector processor on at least one of pixel data and vertex data (or both) and a programmable storage element that contains data representing which of the plurality of arithmetic logic units are not to receive data for processing. The graphics data processing logic also includes parallel ALU data packing logic that is operatively coupled to the plurality of arithmetic logic processing units and to the programmable storage element to pack data only for the plurality of arithmetic logic units identified by the data in the programmable storage element as being enabled.

Owner:ATI TECH INC
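
A sketch of the packing idea, assuming the programmable storage element is a bitmask in which a set bit marks an ALU that must not receive data, and that work items are dealt round-robin to the remaining ALUs. The mask encoding, round-robin order, and function name are hypothetical.

```python
def pack_for_alus(items: list, disabled_mask: int, num_alus: int) -> dict[int, list]:
    """Distribute work items only to ALUs whose bit is clear in disabled_mask."""
    # ASSUMPTION: bit i set means "ALU i receives no data".
    enabled = [i for i in range(num_alus) if ((disabled_mask >> i) & 1) == 0]
    if not enabled:
        raise ValueError("every ALU is disabled")
    lanes: dict[int, list] = {i: [] for i in range(num_alus)}
    for k, item in enumerate(items):
        lanes[enabled[k % len(enabled)]].append(item)  # round-robin over enabled ALUs
    return lanes


# ALU 1 is masked off (for example, defective or powered down).
print(pack_for_alus(list("abcdef"), disabled_mask=0b0010, num_alus=4))
```

Because the mask is programmable, the same vector datapath can keep running with some lanes excluded, instead of sending work to an ALU that cannot process it.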

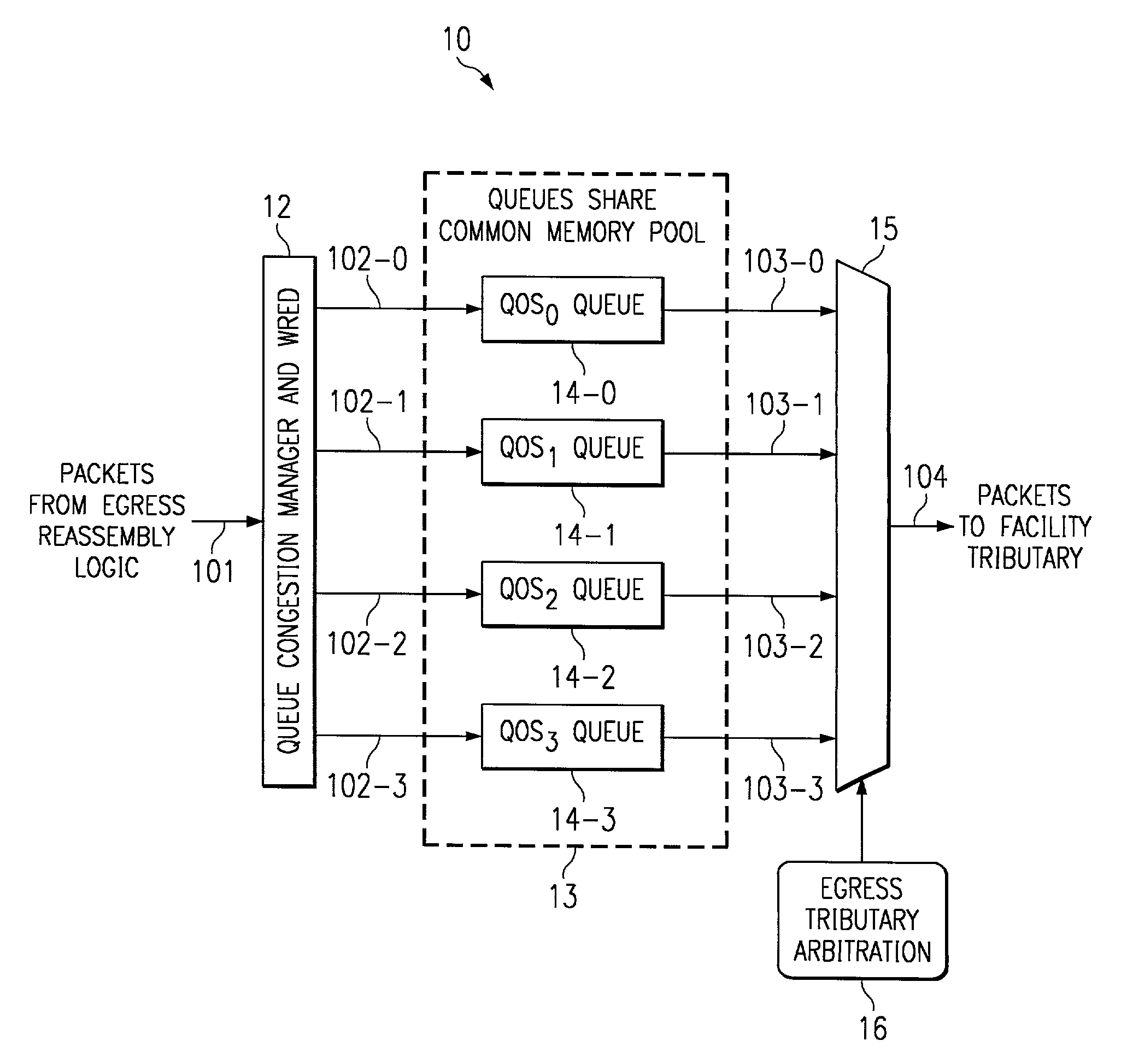

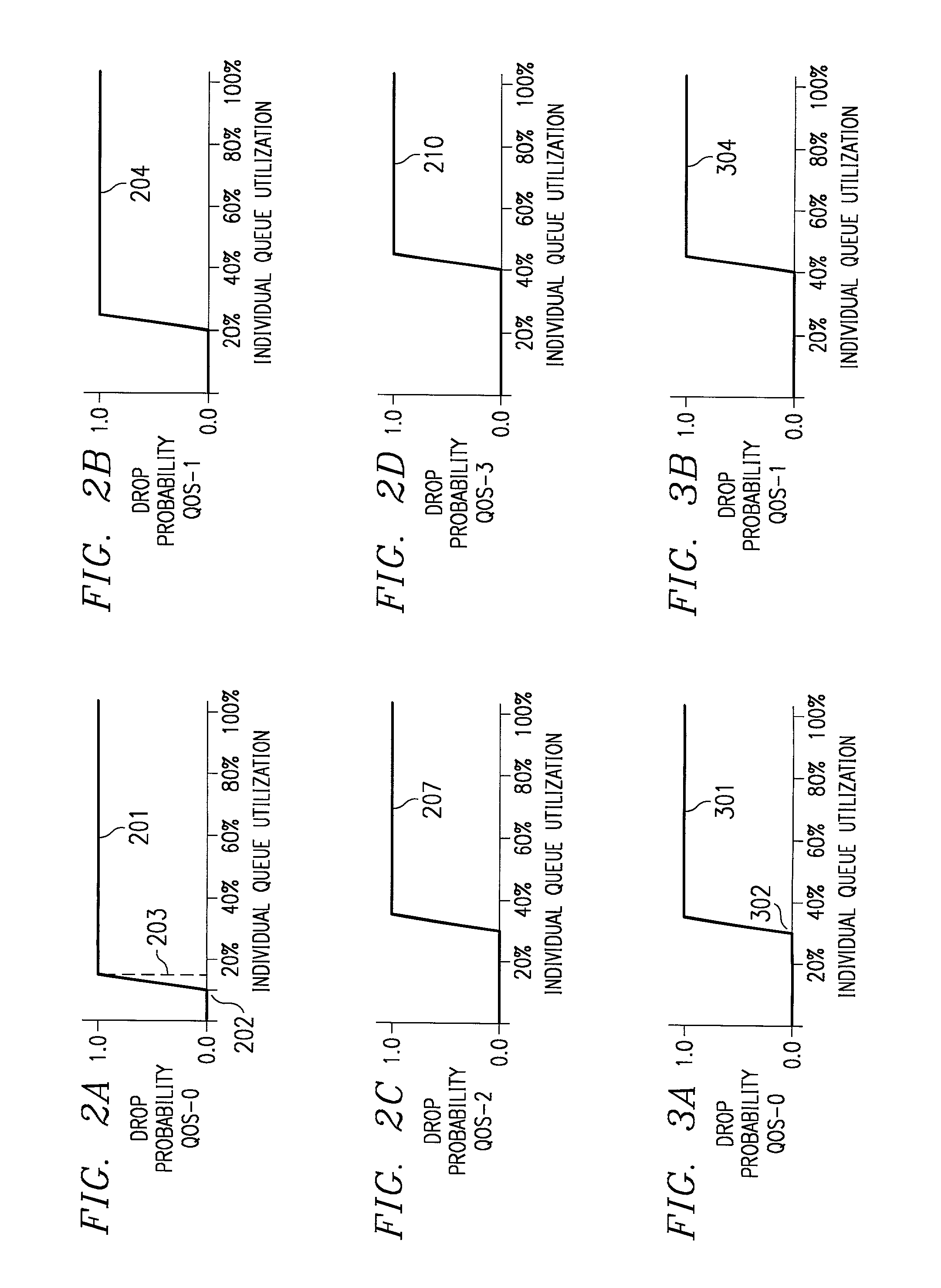

System and method for router queue and congestion management

In a multi-QOS level queuing structure, packet payload pointers are stored in multiple queues and packet payloads in a common memory pool. Algorithms control the drop probability of packets entering the queuing structure. Instantaneous drop probabilities are obtained by comparing measured instantaneous queue size with calculated minimum and maximum queue sizes. Non-utilized common memory space is allocated simultaneously to all queues. Time averaged drop probabilities follow a traditional Weighted Random Early Discard mechanism. Algorithms are adapted to a multi-level QOS structure, floating point format, and hardware implementation. Packet flow from a router egress queuing structure into a single egress port tributary is controlled by an arbitration algorithm using a rate metering mechanism. The queuing structure is replicated for each egress tributary in the router system.

Owner:AVAGO TECH INT SALES PTE LTD
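
The drop probability described above follows the usual RED-style ramp between a minimum and a maximum queue size; the time-averaged variant feeds an exponentially weighted moving average of the queue size into the same ramp. A Python sketch, with the per-class threshold table and the averaging weight as illustrative assumptions rather than values from the patent:

```python
# HYPOTHETICAL per-QoS thresholds: (min_queue, max_queue, max_drop_probability)
THRESHOLDS = {"gold": (60, 120, 0.02), "bronze": (20, 80, 0.10)}


def drop_probability(queue_size: float, min_th: float, max_th: float, max_p: float) -> float:
    """Zero below min_th, certain drop at or above max_th, linear ramp in between."""
    if queue_size < min_th:
        return 0.0
    if queue_size >= max_th:
        return 1.0
    return max_p * (queue_size - min_th) / (max_th - min_th)


def ewma(avg: float, sample: float, weight: float = 0.002) -> float:
    """Time-averaged queue size, as used by the traditional weighted RED mechanism."""
    return (1 - weight) * avg + weight * sample


for qos, (lo, hi, p) in THRESHOLDS.items():
    print(qos, drop_probability(50, lo, hi, p))
```

Giving higher QoS classes larger thresholds and a smaller maximum probability means their packets are discarded later and less often as the shared buffer fills.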

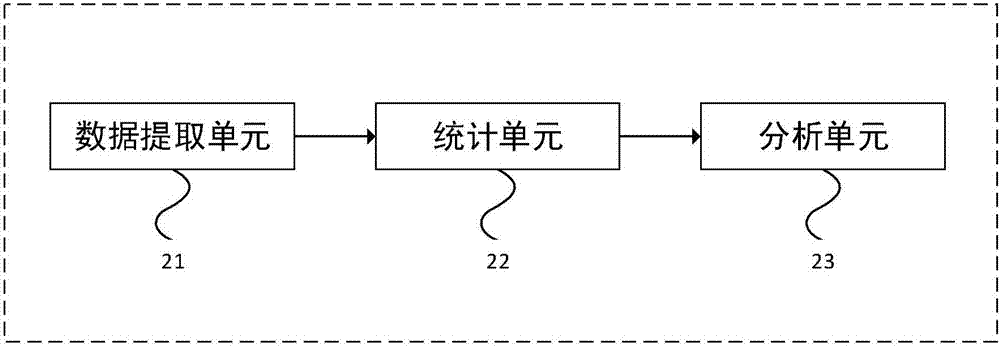

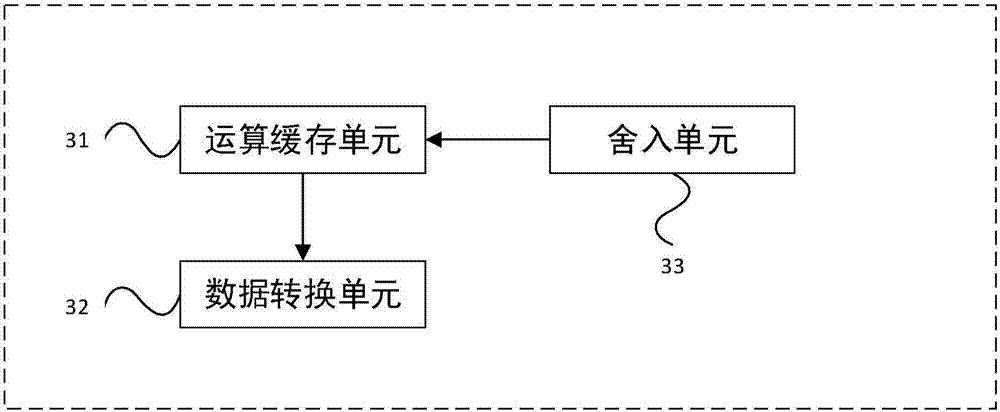

Device and method for executing forward operation of artificial neural network

Status: Pending | Publication: CN107330515A | Keywords: Small area overhead, Reduce area overhead and optimize hardware area power consumption, Digital data processing details, Code conversion, Data operations, Computer module

The invention provides a device and a method for executing a forward operation of an artificial neural network. The device comprises: a floating point data statistics module, which performs statistical analysis on the various types of required data to obtain the point location of the fixed point data; a data conversion unit, which converts data from a long-bit floating point data type to a short-bit fixed point data type according to the point location of the fixed point data; and a fixed point data operation module, which performs the artificial neural network forward operation on the short-bit fixed point data. By representing the data in the forward operation of the multilayer artificial neural network with short-bit fixed point numbers and using the corresponding fixed point data operation module, the device performs the forward operation of the artificial neural network on short-bit fixed point data, greatly improving the performance-to-power ratio of the hardware.

Owner:CAMBRICON TECH CO LTD

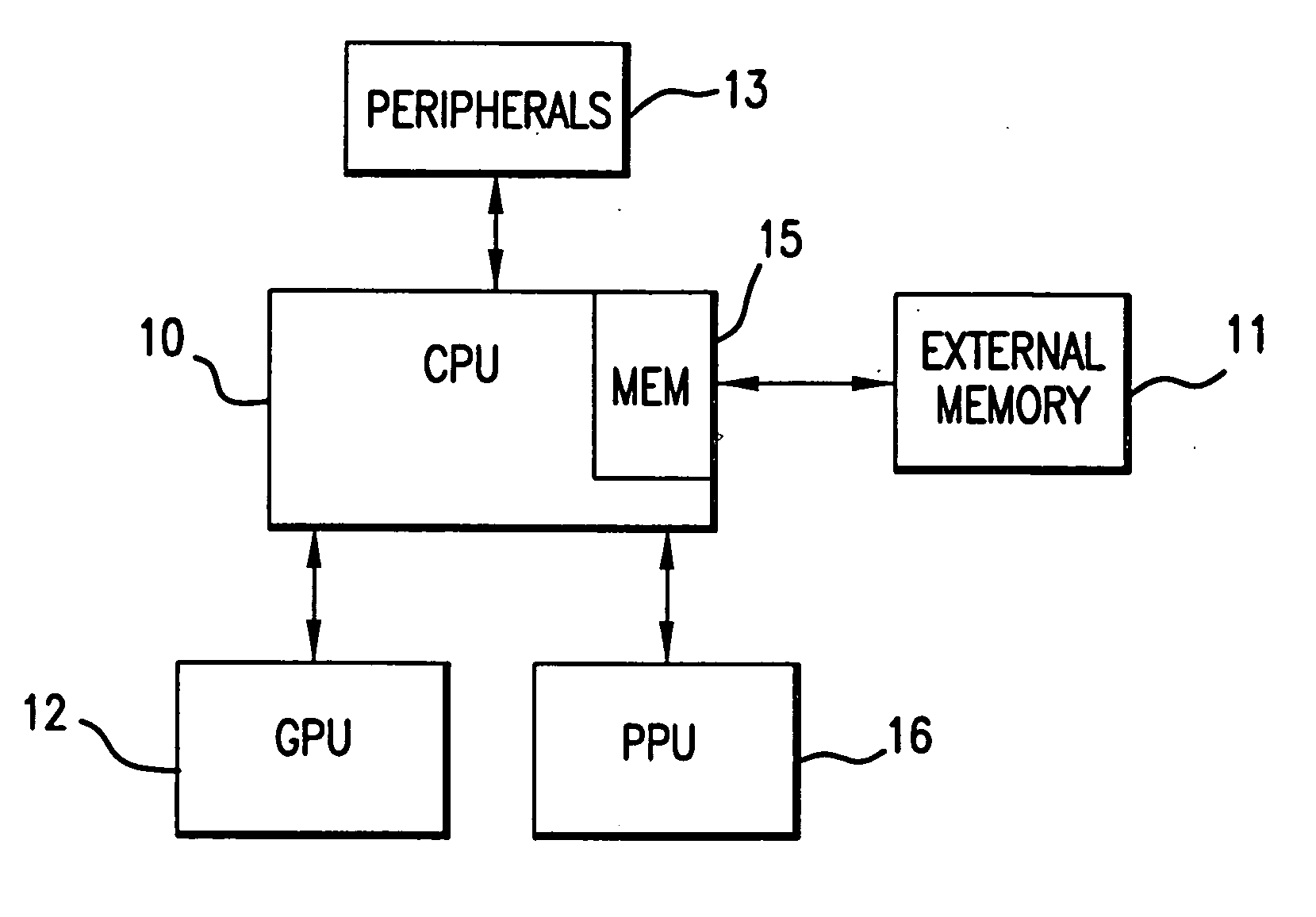

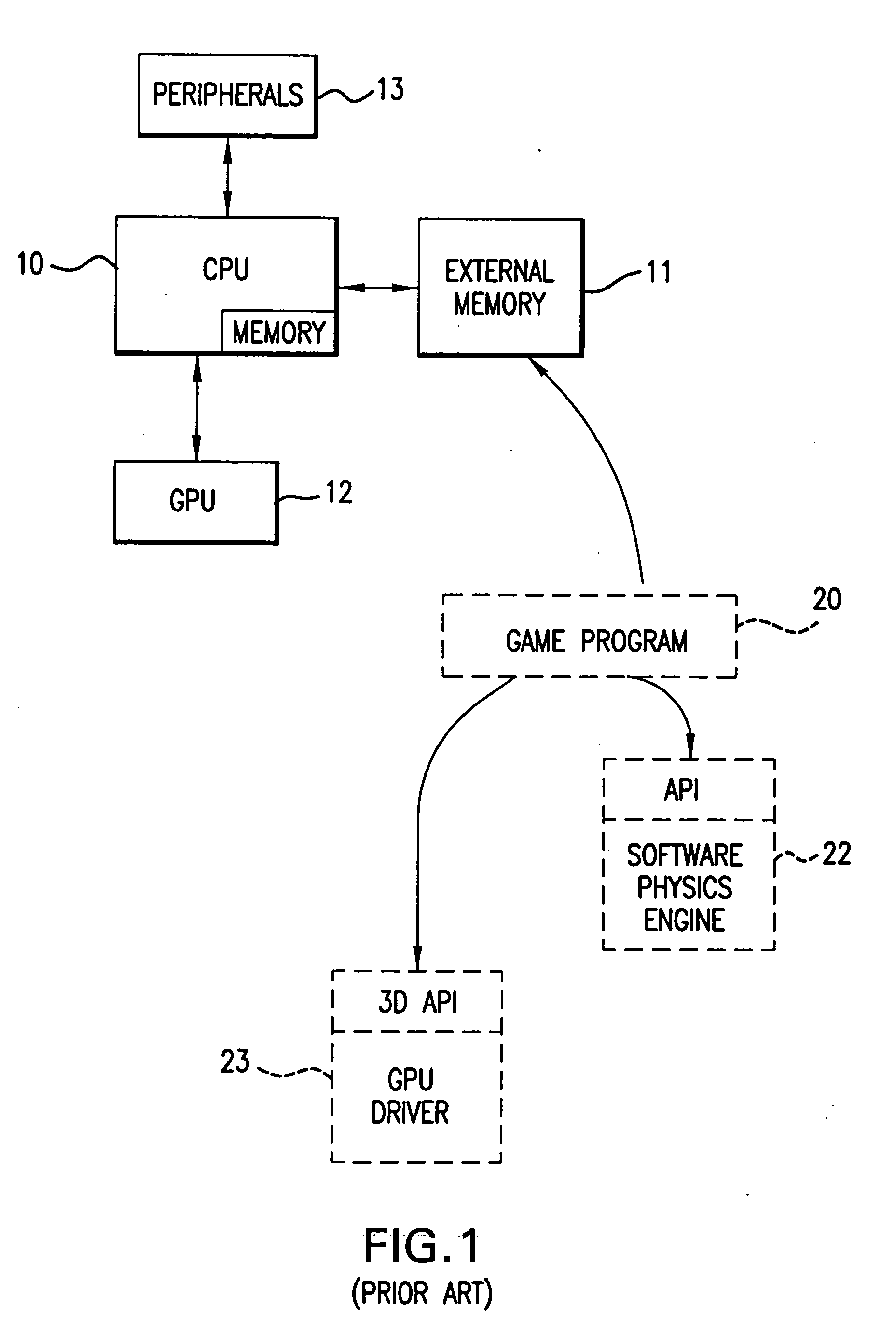

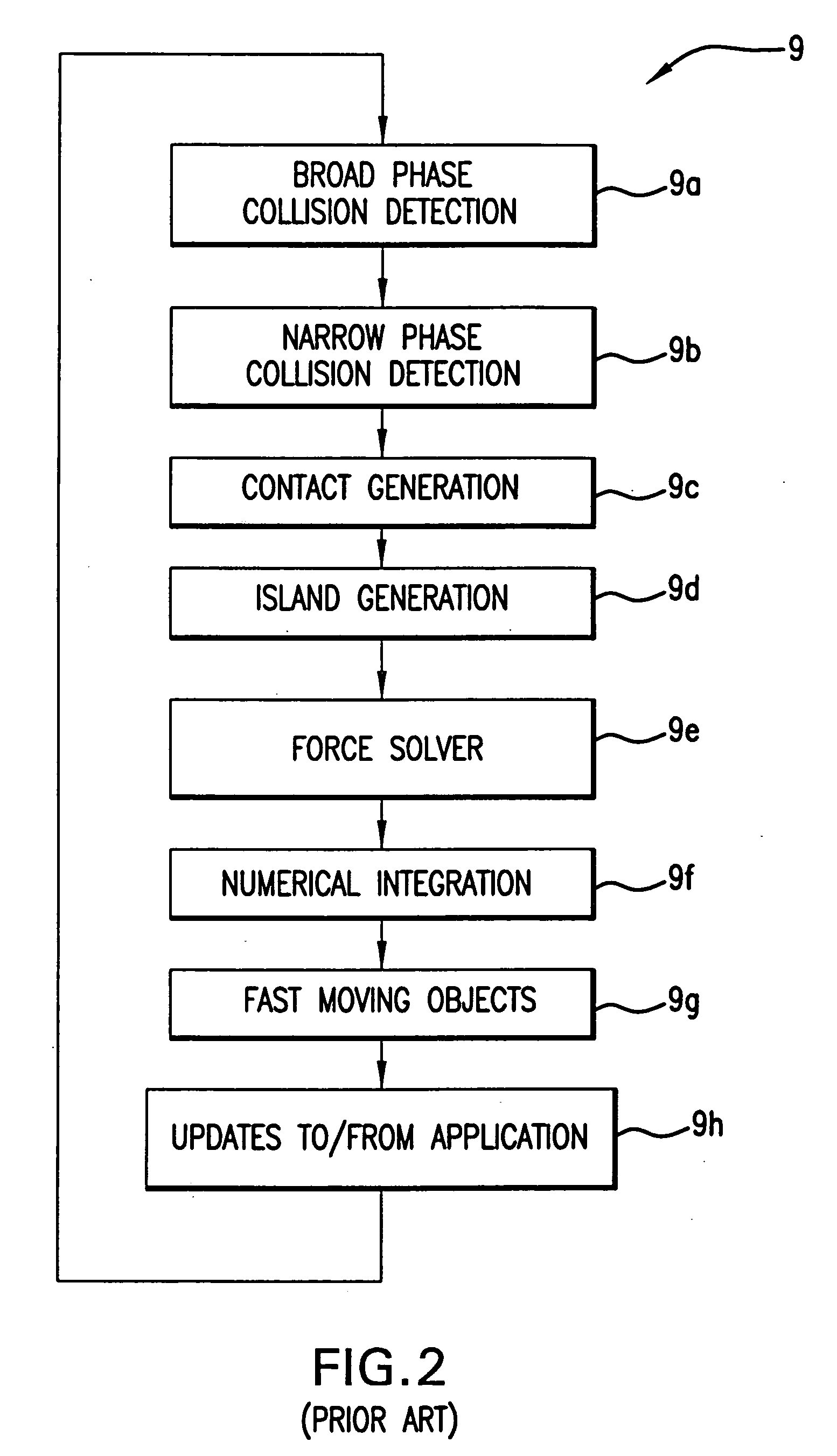

System incorporating physics processing unit

Status: Inactive | Publication: US20050086040A1 | Keywords: Efficient communication, Effective calculation, Processor architectures/configuration, Program control, Animation, Physics processing unit

A system, such as a PC, incorporating a dedicated physics processing unit adapted to generate physics data for use within a physics simulation or game animation. The hardware-based physics processing unit is characterized by a unique architecture designed to efficiently calculate physics data, including multiple, parallel floating point operations.

Owner:NVIDIA CORP

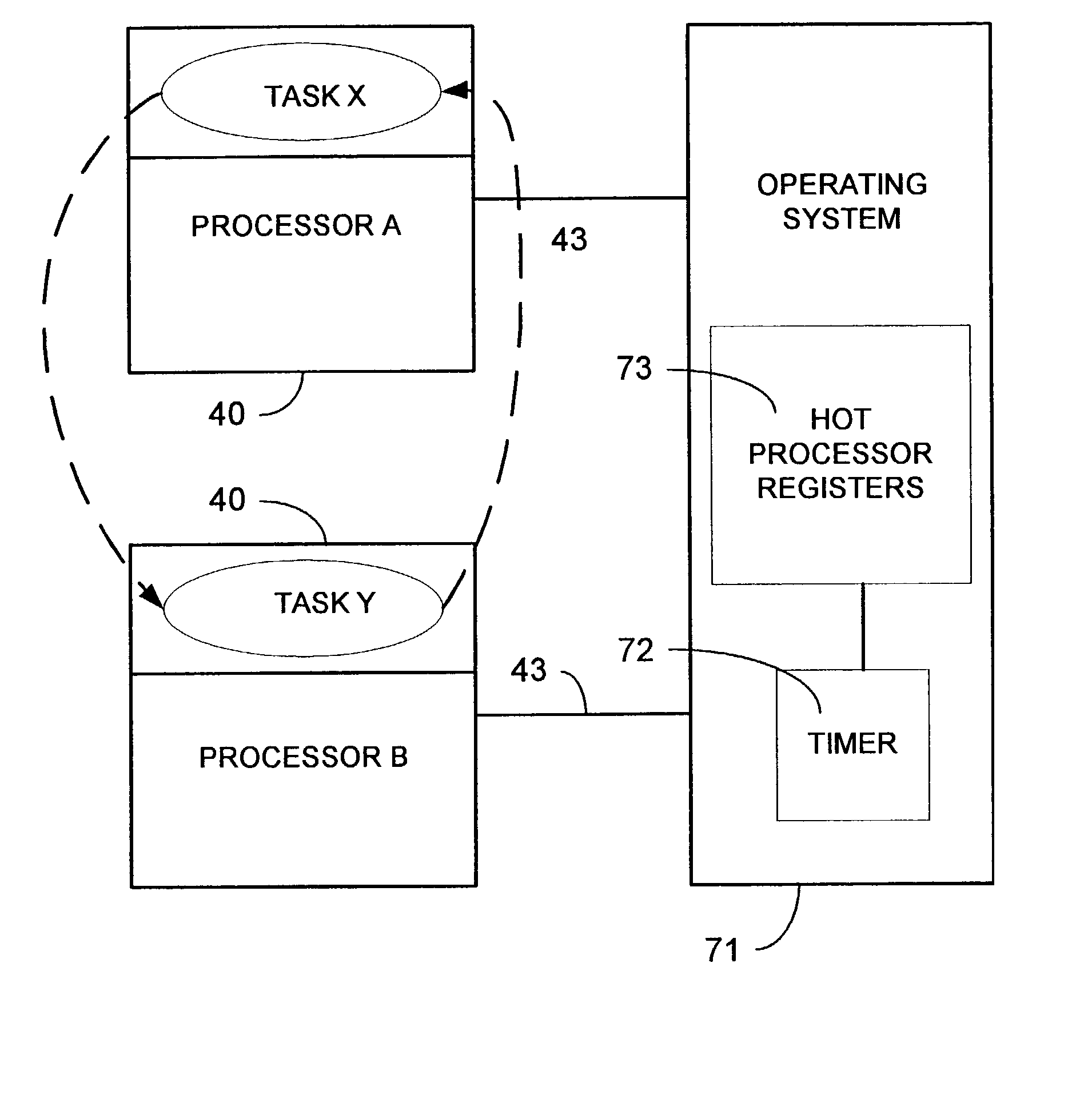

Method and apparatus to eliminate processor core hot spots

Methods and apparatus are provided for eliminating hot spots on processor chips in a symmetric multiprocessor (SMP) computer system. Some operations, in particular, floating point multiply / add, repetitively utilize portions of a processor chip to the point that the average power of the affected portions exceeds cooling capabilities. The localized temperature of the affected portions can then exceed design limits. The current invention determines when a hot spot occurs and task swaps the task to another processor prior to the localized temperature becoming too hot. Moving of tasks to processors that have data affinity with the processor reporting a hot spot is considered. Further considerations include prioritizing unused processors and those processors that have not recently reported a hot spot.

Owner:IBM CORP

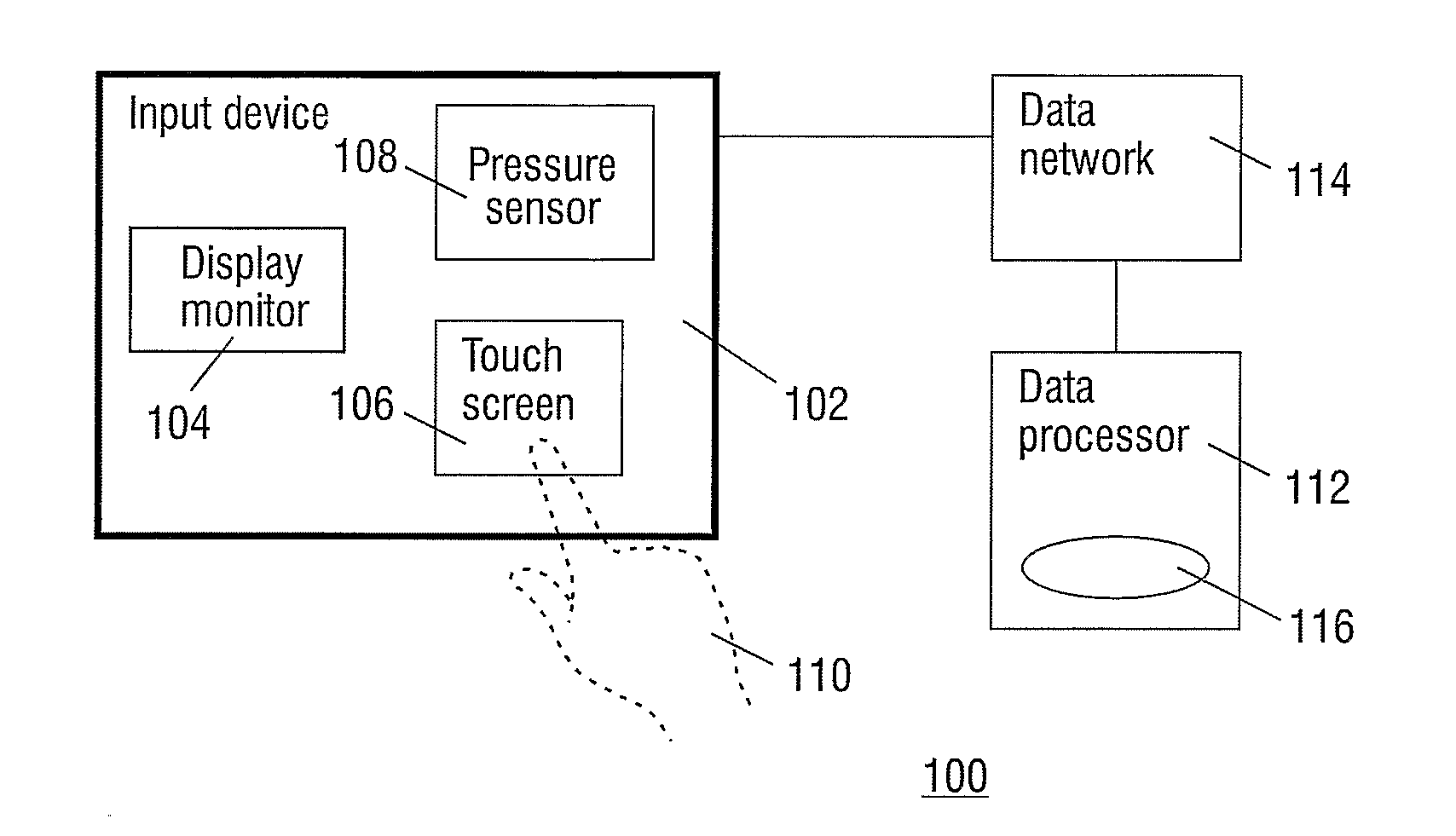

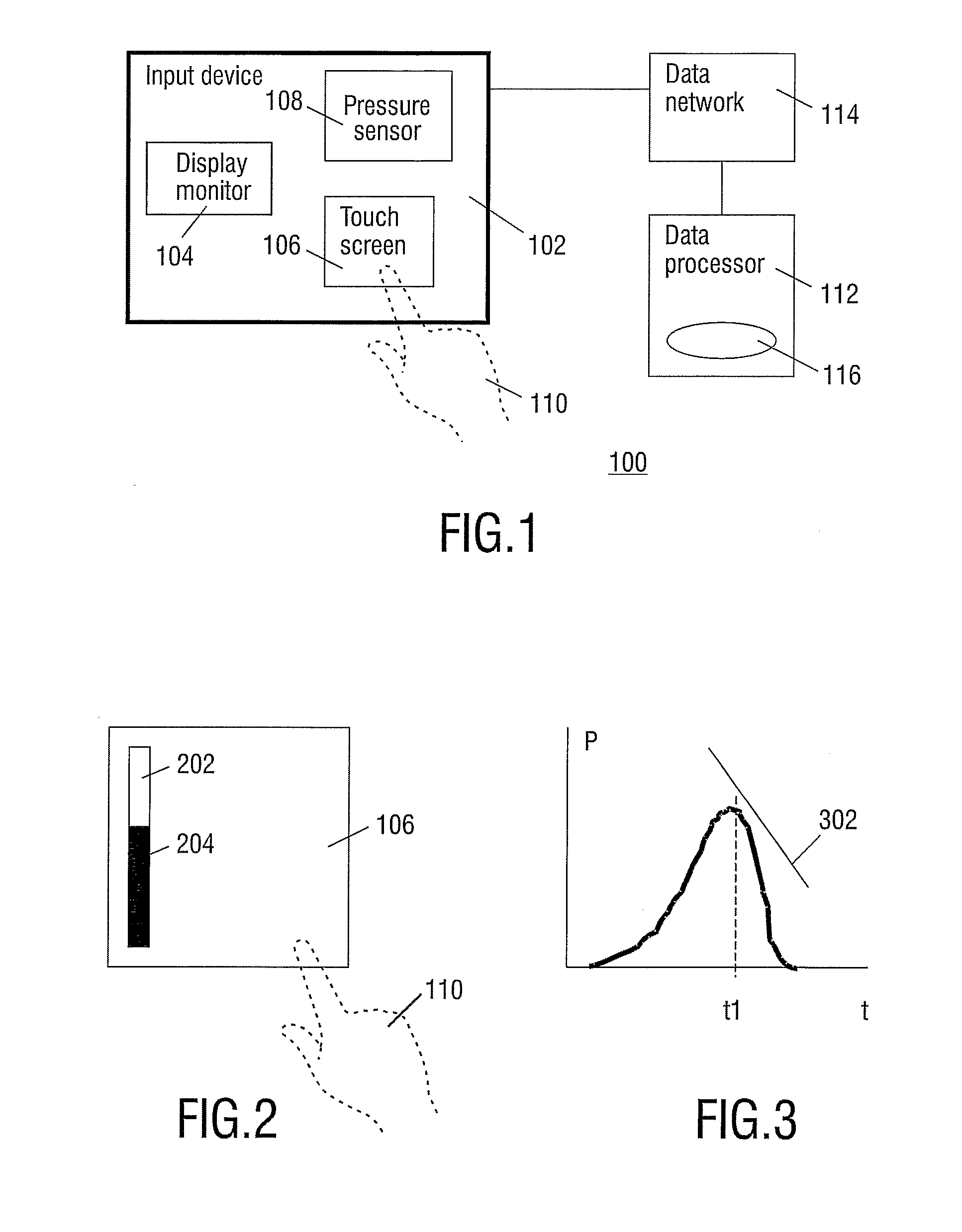

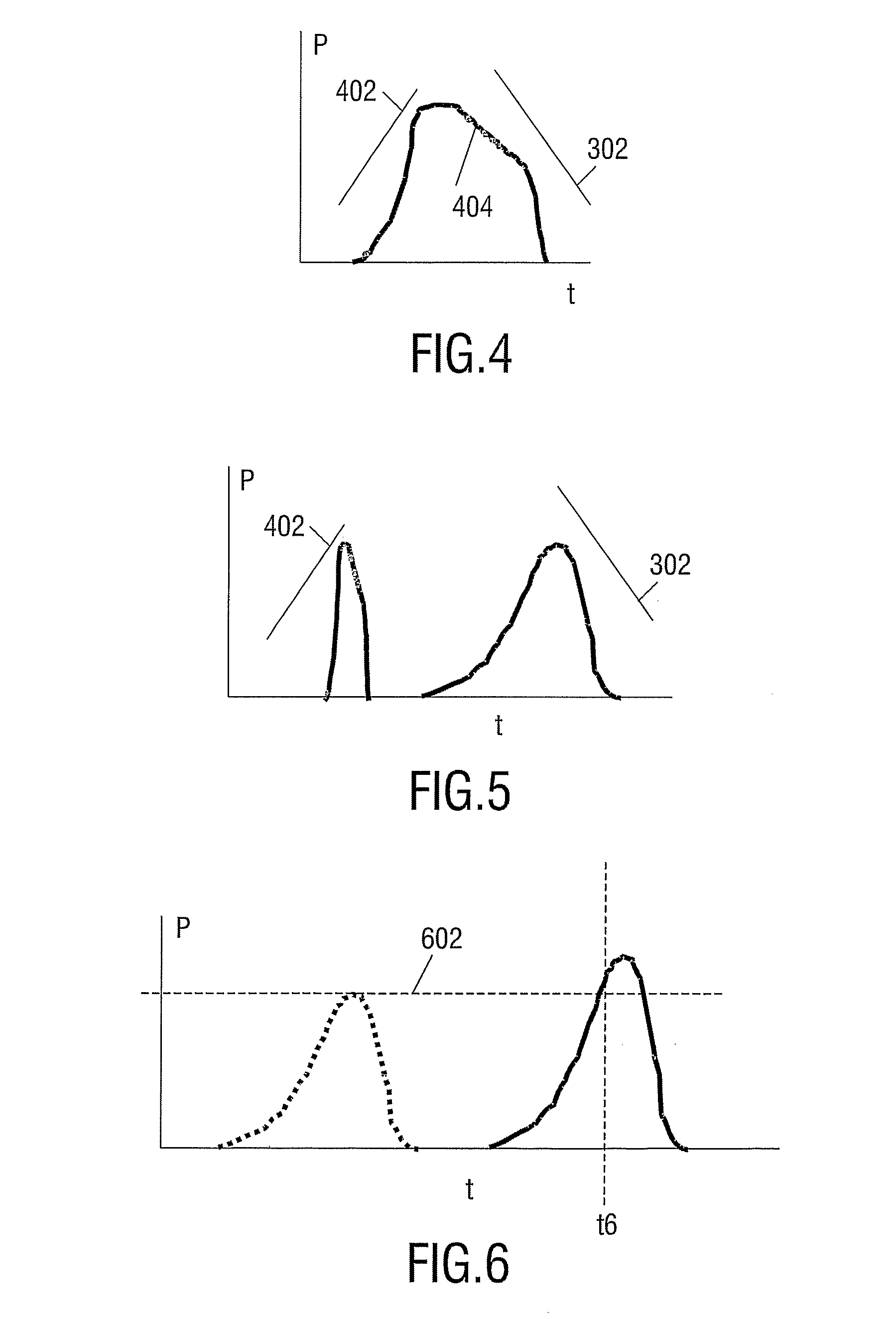

Touch Screen Slider for Setting Floating Point Value

Status: Inactive | Publication: US20080105470A1 | Keywords: Easy to use, Transmission systems, Graph reading, Data processing system, Floating point

A data processing system comprises a pressure-sensitive input device for assigning a floating-point value to a parameter under control of a pressure applied to the device. The system is operative to detect a rate of change of the pressure to control the assigning, e.g., to validate the current value as being input or to unlock the value as set.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

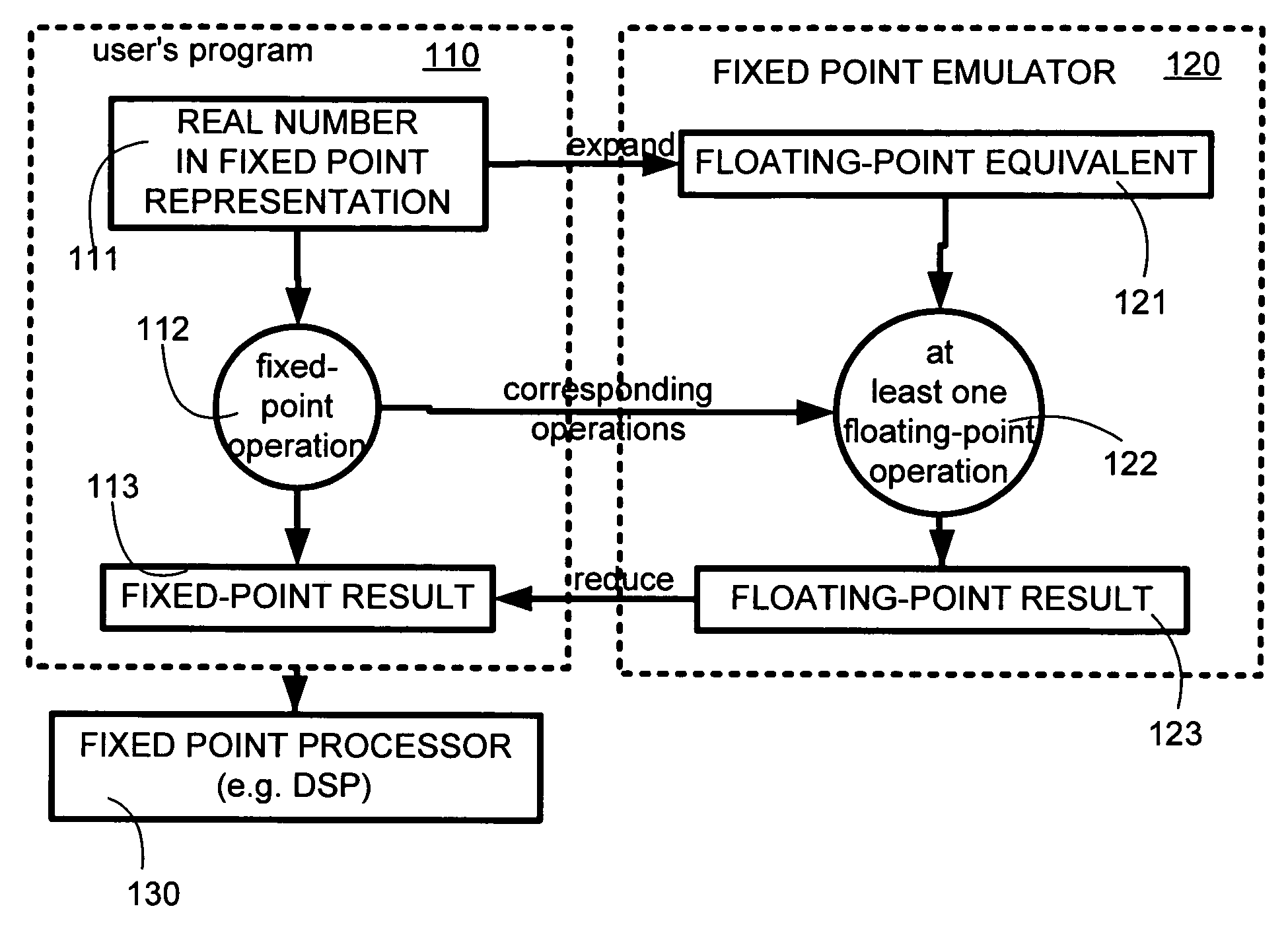

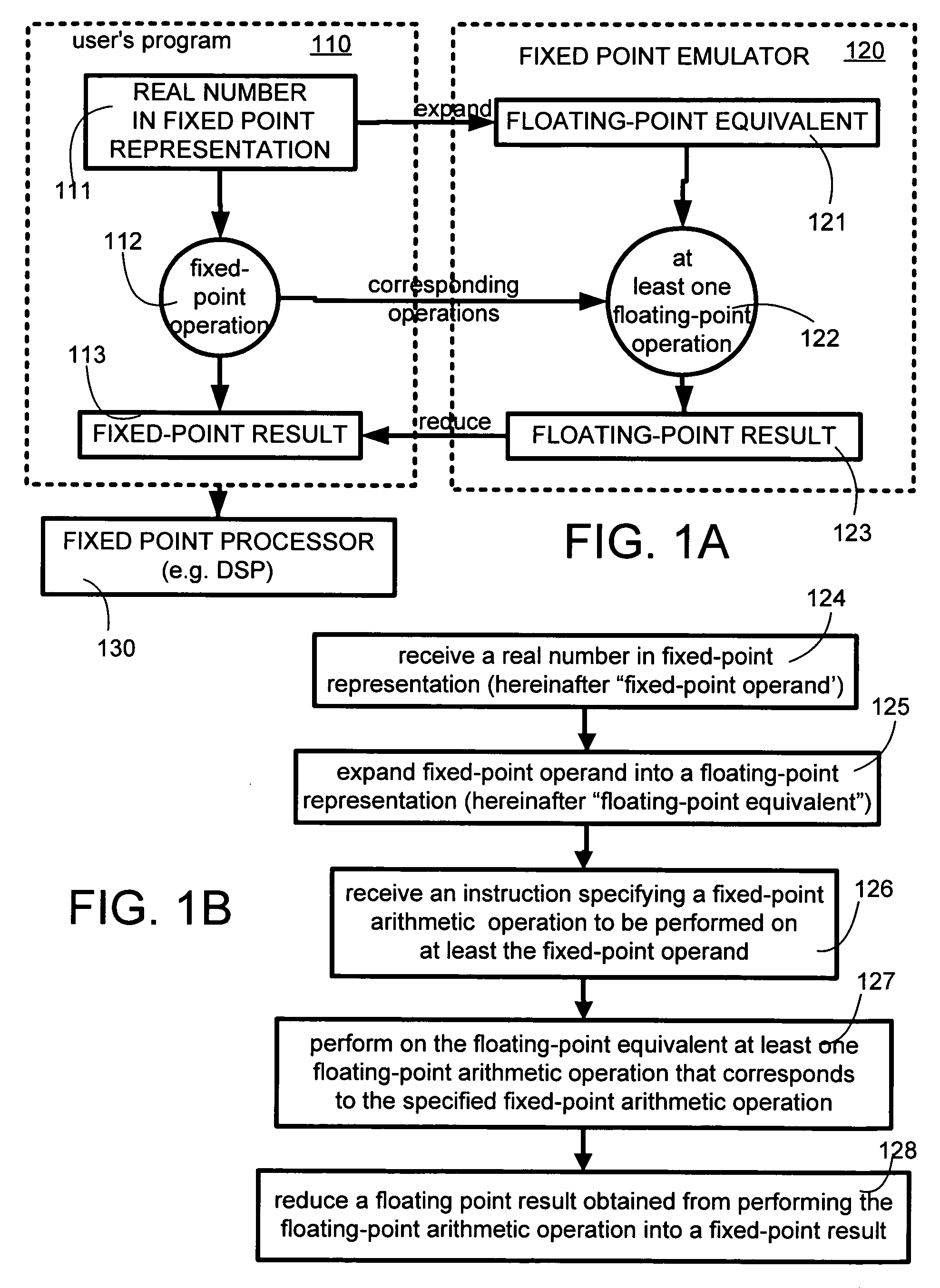

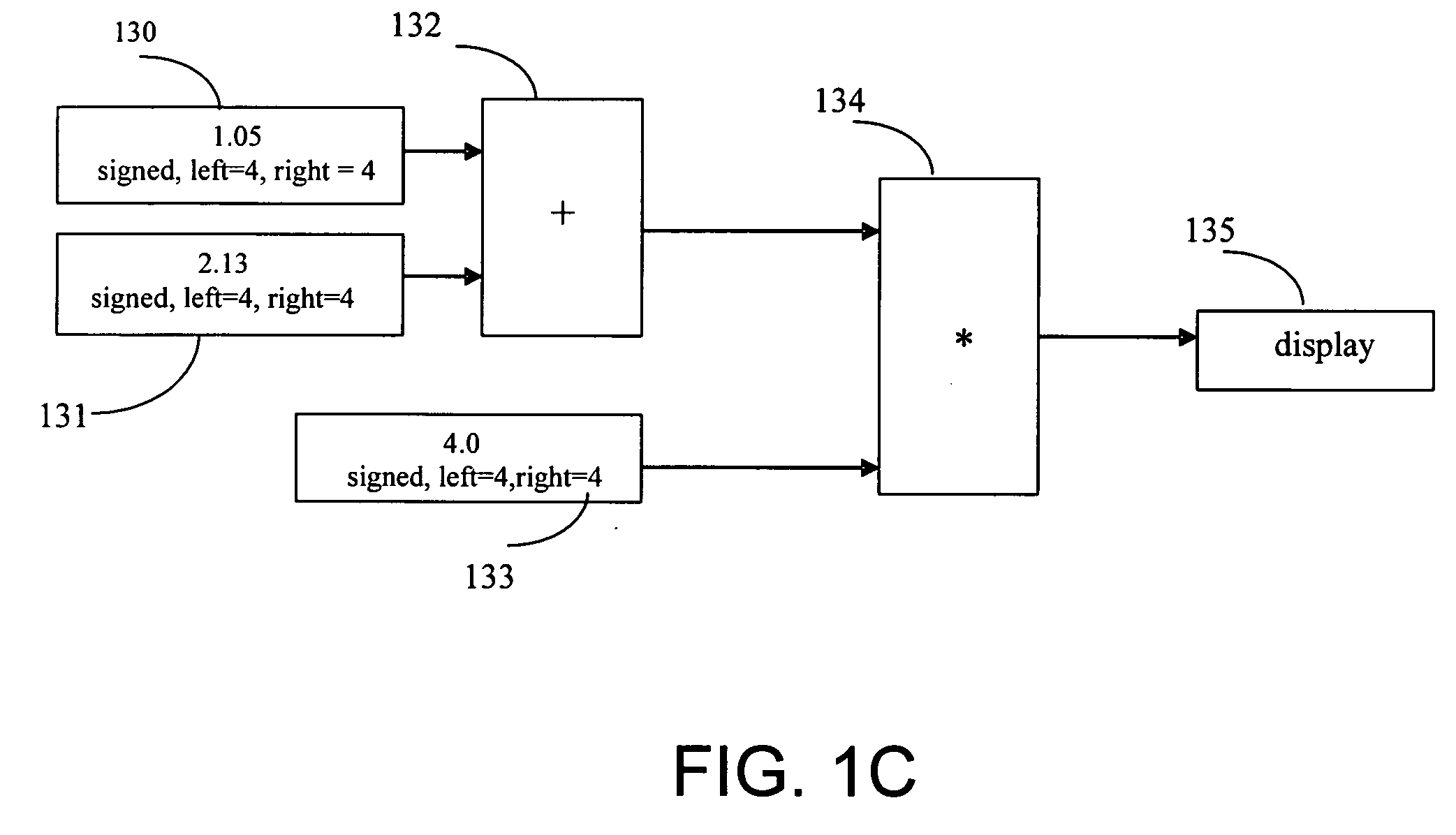

Emulation of a fixed point operation using a corresponding floating point operation

Status: Inactive | Publication: US20050065990A1 | Keywords: Simpler and more readable, Not easy to make mistakes, Software simulation/interpretation/emulation, Memory systems, Operator overloading, Real arithmetic

A computer is programmed to emulate a fixed-point operation that is normally performed on fixed-point operands, by use of a floating-point operation that is normally performed on floating-point operands. Several embodiments of the just-described computer emulate a fixed-point operation by: expanding at least one fixed-point operand into a floating-point representation (also called “floating-point equivalent”), performing, on the floating-point equivalent, a floating-point operation that corresponds to the fixed-point operation, and reducing a floating-point result into a fixed-point result. The just-described fixed-point result may have the same representation as the fixed-point operand(s) and / or any user-specified fixed-point representation, depending on the embodiment. Also depending on the embodiment, the operands and the result may be either real or complex, and may be either scalar or vector. The above-described emulation may be performed either with an interpreter or with a compiler, depending on the embodiment. A conventional interpreter for an object-oriented language (such as MATLAB version 6) may be extended with a toolbox to perform the emulation. Use of type propagation and operator overloading minimizes the number of changes that a user must make to their program, in order to be able to use such emulation.

Owner:AGILITY DESIGN SOLUTIONS
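
The expand, operate, reduce sequence can be sketched for a Q15 fixed-point multiply. Assumptions: 16-bit words with 15 fraction bits, round-half-to-even (Python's `round`), and saturation on overflow; the patent leaves the representation and rounding to the embodiment, and the function names are hypothetical. A double holds the product of two Q15 values exactly, so here the only rounding happens in the reduce step.

```python
def expand(raw: int, frac_bits: int) -> float:
    """Fixed-point integer -> floating-point equivalent (exact for 16-bit words)."""
    return raw / (1 << frac_bits)


def reduce(x: float, frac_bits: int, word_bits: int) -> int:
    """Floating-point result -> fixed-point integer, rounding and saturating."""
    raw = round(x * (1 << frac_bits))
    lo, hi = -(1 << (word_bits - 1)), (1 << (word_bits - 1)) - 1
    return max(lo, min(hi, raw))


def fixed_mul(a_raw: int, b_raw: int, frac_bits: int = 15, word_bits: int = 16) -> int:
    """Emulate a Q15 multiply with a floating-point multiply."""
    product = expand(a_raw, frac_bits) * expand(b_raw, frac_bits)  # floating-point operation
    return reduce(product, frac_bits, word_bits)


HALF, MINUS_ONE = 16384, -32768  # 0.5 and -1.0 in Q15
print(fixed_mul(HALF, HALF))            # 8192, i.e. 0.25
print(fixed_mul(MINUS_ONE, MINUS_ONE))  # 32767: +1.0 is not representable, so it saturates
```

The saturation case is a classic fixed-point corner that the emulation has to reproduce, since the floating-point result 1.0 is perfectly representable in the intermediate format.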

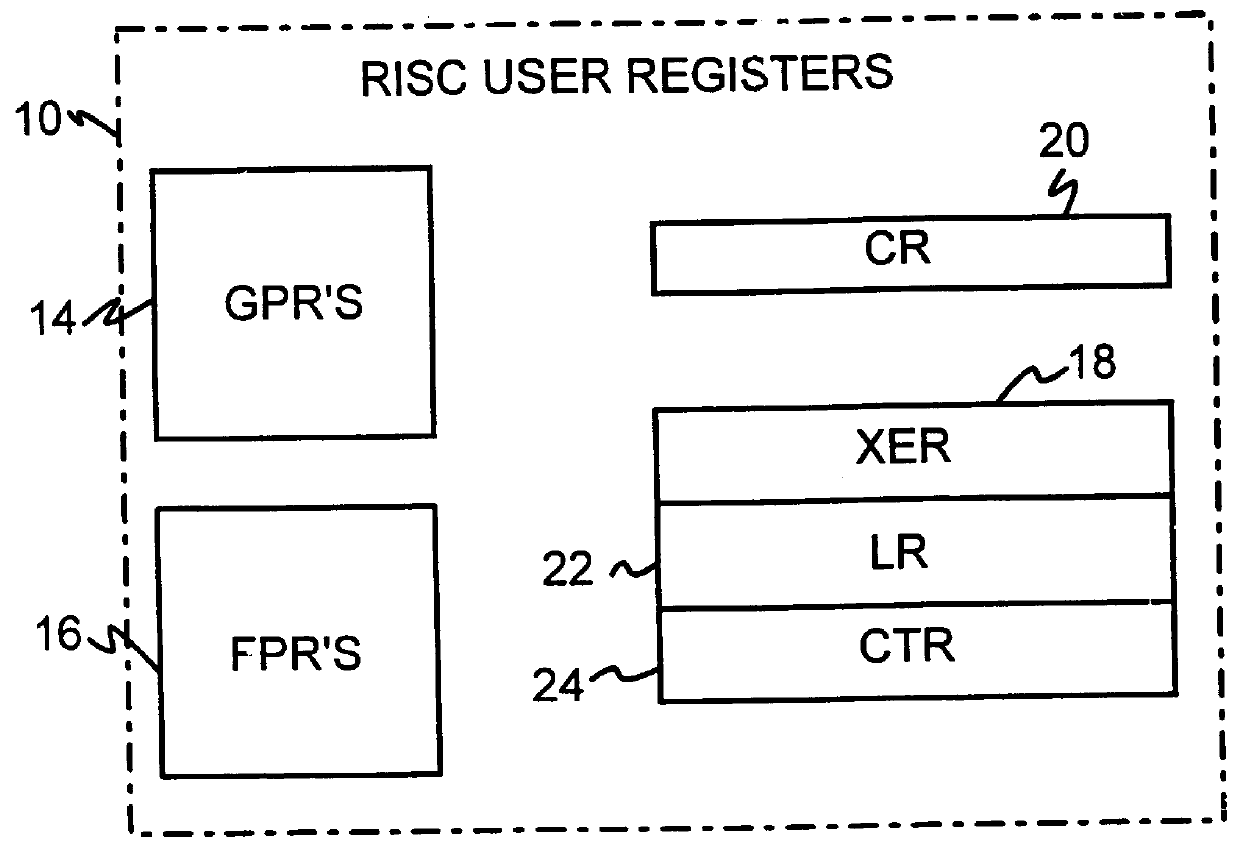

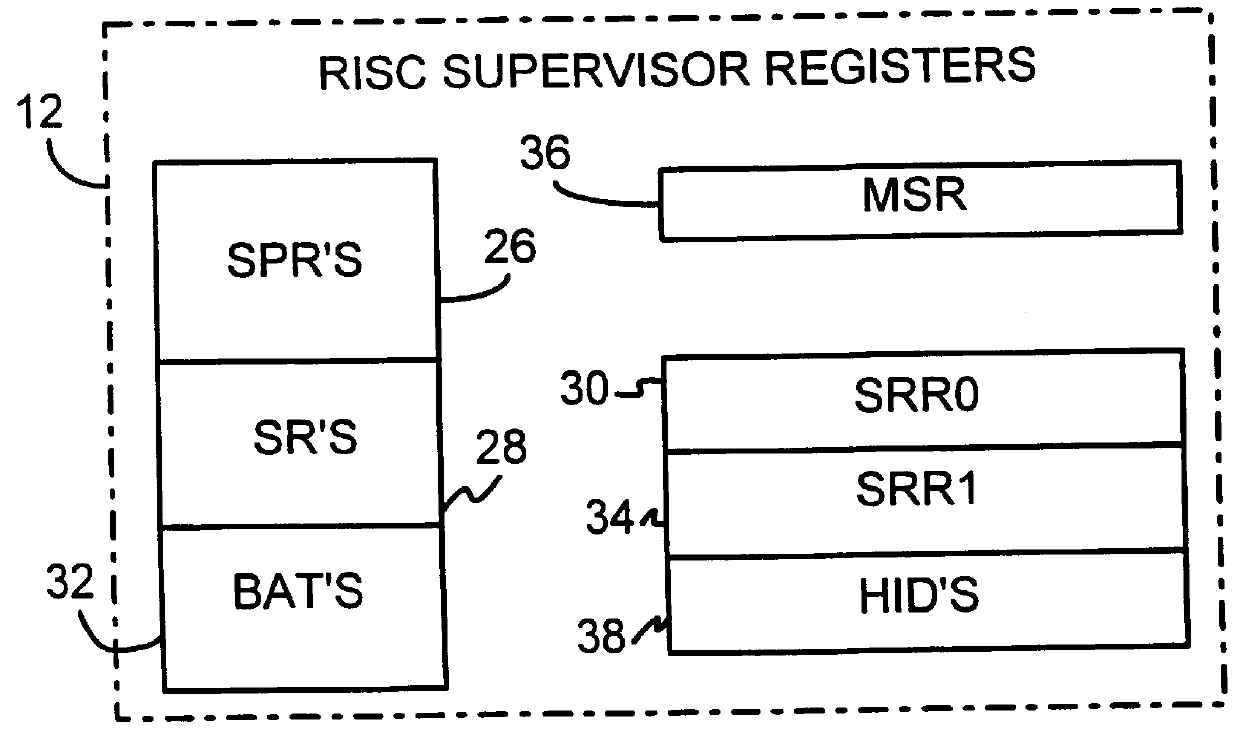

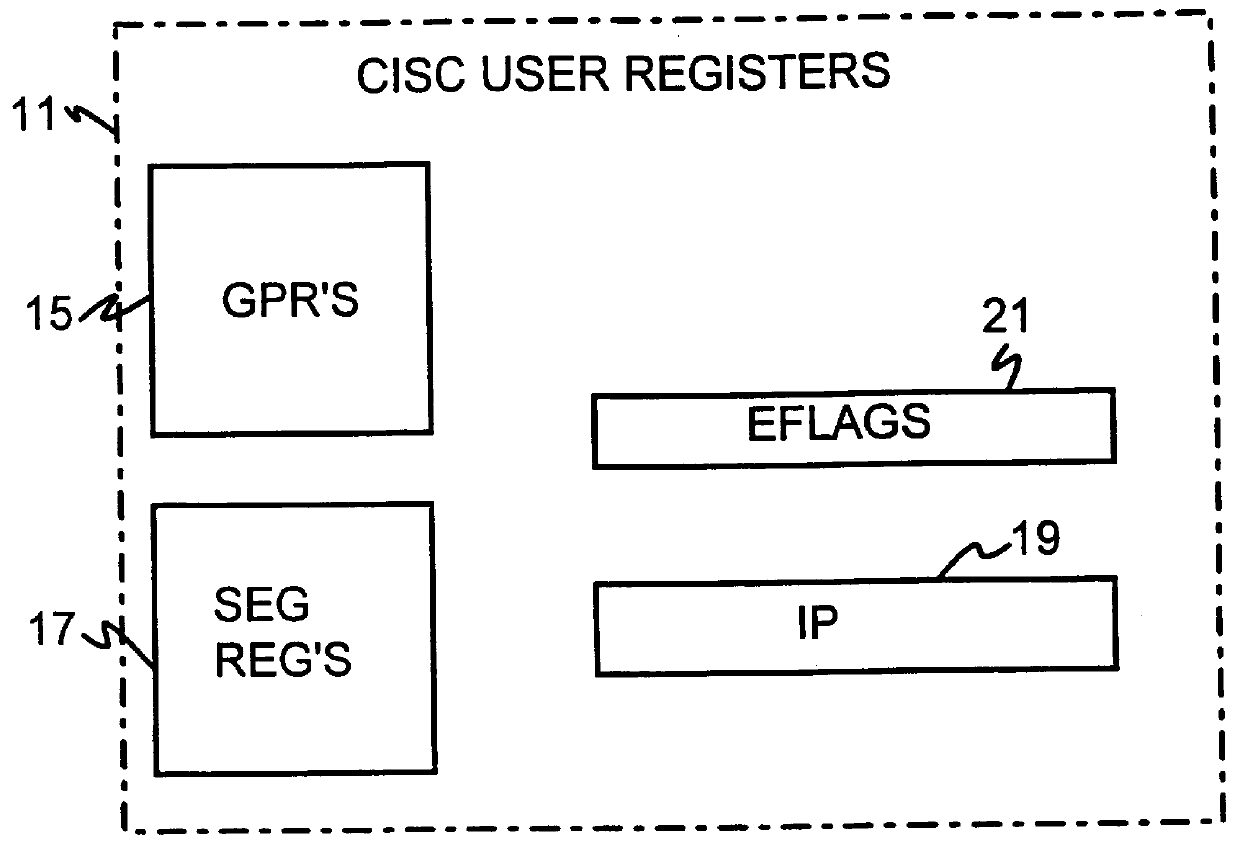

Shared register architecture for a dual-instruction-set CPU to facilitate data exchange between the instruction sets

A dual-instruction-set central processing unit (CPU) is capable of executing instructions from a reduced instruction set computer (RISC) instruction set and from a complex instruction set computer (CISC) instruction set. Data and address information may be transferred from a CISC program to a RISC program running on the CPU by using shared registers. The architecturally-defined registers in the CISC instruction set are merged or folded into some of the architecturally-defined registers in the RISC architecture so that these merged registers are shared by the two instruction sets. In particular, the flags or condition-code registers defined by each architecture are merged together so that CISC instructions and RISC instructions implicitly update the same merged flags register when performing computational instructions. The RISC and CISC registers are folded together so that the CISC flags are at one end of the register while the frequently used RISC flags are at the other end, but RISC instructions can read or write any bit in the merged register. The CISC code segment base address is stored in the RISC branch count register, while the CISC floating point instruction address is stored in the RISC branch link register. The general-purpose registers (GPRs) are also merged together, allowing a CISC program to pass data to a RISC program merely by writing one of its GPRs, switching control to the RISC program, and having the RISC program read the GPR that is merged with, and corresponds to, the CISC GPR written by the CISC program.

Owner: SAMSUNG ELECTRONICS CO LTD

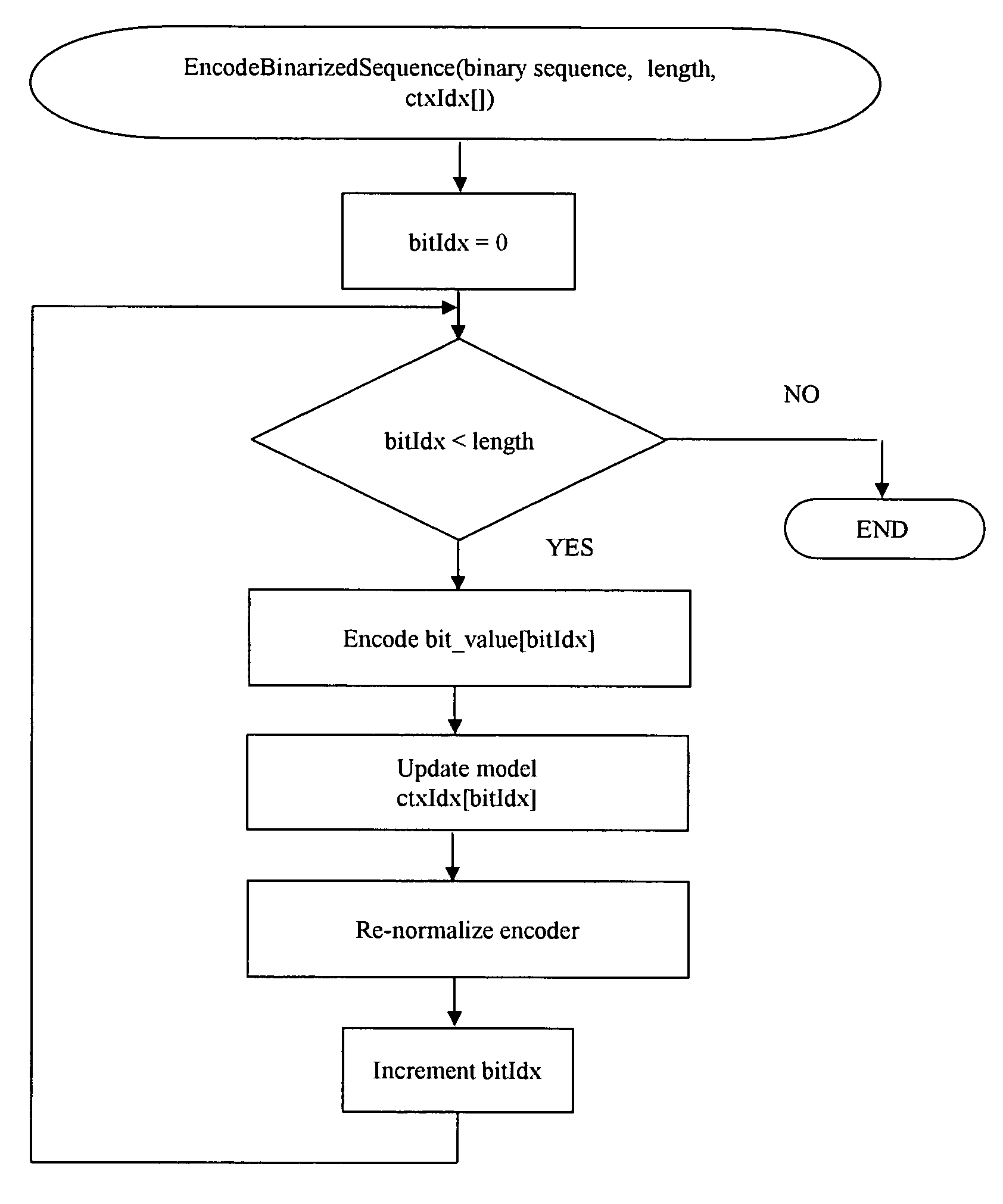

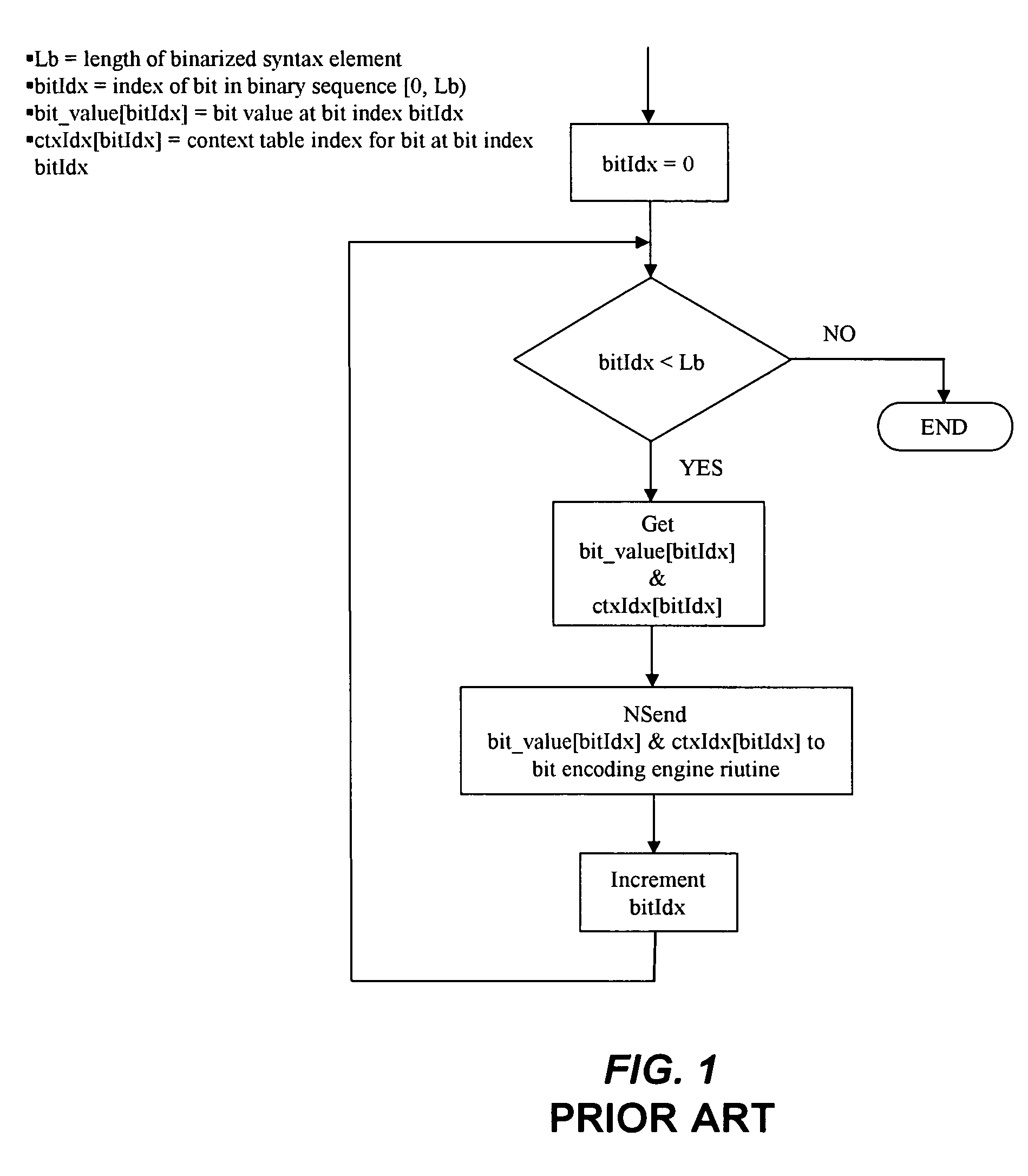

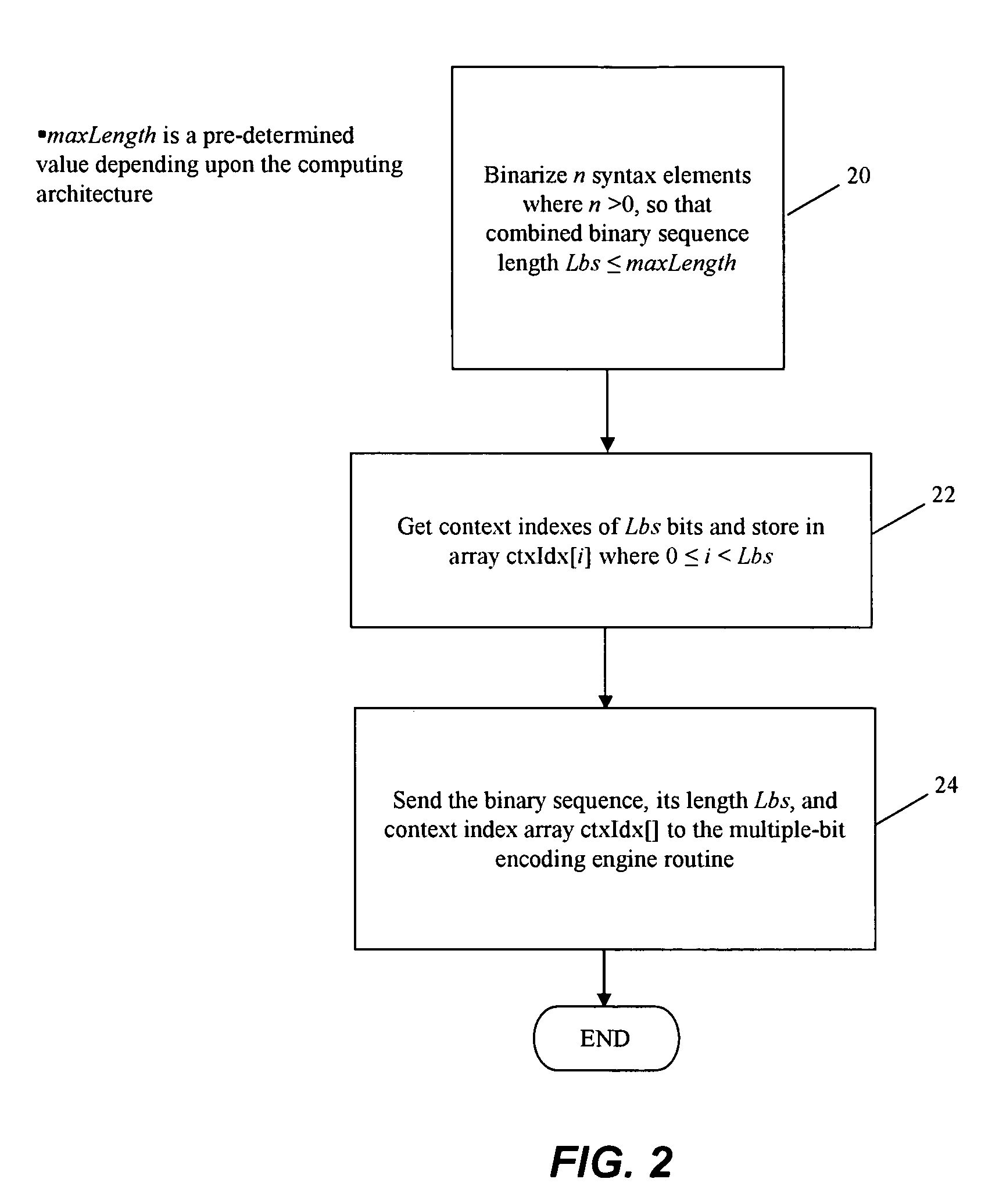

Method and system for fast context based adaptive binary arithmetic coding

Inactive | US7221296B2 | Improve the level of | Reduce overhead | Code conversion | Character and pattern recognition | Parallel computing | Floating point

A method and processor for providing context-based adaptive binary arithmetic coding (CABAC). Binarization is performed on one or more syntax elements to obtain a binary sequence. Data bits of the binary sequence are provided to an arithmetic encoding unit in bulk. Binarization is performed on one or more syntax elements to generate Exp-Golomb code by converting mapped syntax-element values to corresponding floating-point type values. Renormalization in CABAC encoding is restructured into two processing units: an arithmetic encoding unit and a bit writing unit. The bit writing unit is configured to format signal bits into a multiple-bit sequence and to write multiple bits simultaneously during an execution of a bit writing loop.

Owner: STREAMING NETWORKS PVT
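The abstract does not explain how the floating-point conversion is used. One common trick, assumed here, is that converting an integer to floating point exposes the position of its leading 1 bit in the exponent, which is exactly the prefix length of an order-0 Exp-Golomb codeword (the ue(v)/se(v) code of H.264). A minimal sketch; the function names are hypothetical, and bit strings stand in for a real bit writer:

```python
import math


def se_to_code_num(k: int) -> int:
    """Map a signed value onto a non-negative code number, in H.264 se(v) order:
    0, 1, -1, 2, -2, ... -> 0, 1, 2, 3, 4, ..."""
    return 2 * k - 1 if k > 0 else -2 * k


def exp_golomb(code_num: int) -> str:
    """Order-0 Exp-Golomb codeword for code_num, returned as a bit string."""
    if code_num < 0:
        raise ValueError("code_num must be non-negative")
    n = code_num + 1
    # frexp returns (m, e) with n == m * 2**e and 0.5 <= m < 1, so e equals
    # n.bit_length(); valid for n up to 2**53, where the float is exact.
    _, e = math.frexp(float(n))
    # (e - 1) leading zeros, then n written in binary (e bits, starting with 1).
    return "0" * (e - 1) + format(n, "b")
```

For instance, code number 3 becomes `00100` and the signed value -2 (code number 4) becomes `00101`.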

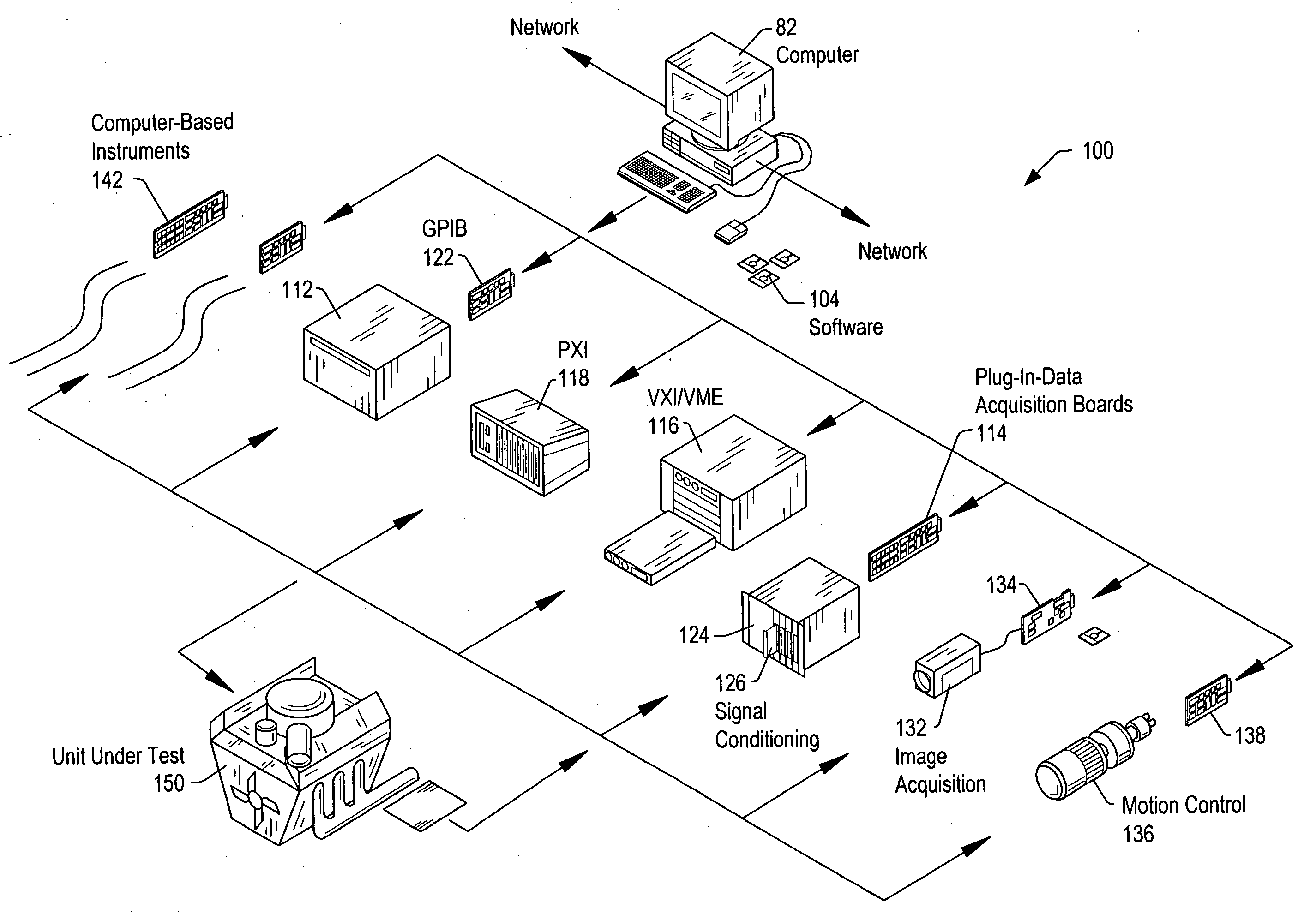

Automatic graph display

System and method for displaying signals. First user input requesting display of a first signal is received, e.g., via a graphical user interface (GUI) included in a signal analysis function development environment, and the first signal is programmatically analyzed in response to the first user input. A display tool operable to display the first signal is programmatically determined based on this analysis, and the first signal is displayed in the display tool. For example, a data type of the first signal is determined (e.g., integer, floating point, Boolean, or user-defined data in the time domain, frequency domain, or spatial domain), and the display tool is programmatically determined from that data type, e.g., via a look-up table, where the display tool comprises an indicator operable to display the signal data. The signal comprises signal data, e.g., signal plot data, in which case the display tool comprises a graph, or tabular data, in which case the display tool comprises a table.

Owner: NATIONAL INSTRUMENTS
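The look-up-table step in the abstract can be sketched as: infer a data type from the signal's samples, then map the type to a display tool. The type-inference rules, the table contents and the tool names (`"graph"`, `"indicator"`, `"table"`) are hypothetical choices for illustration only.

```python
from enum import Enum, auto


class DataType(Enum):
    INTEGER = auto()
    FLOAT = auto()
    BOOLEAN = auto()
    USER_DEFINED = auto()


# Hypothetical look-up table from data type to display tool.
DISPLAY_TOOLS = {
    DataType.INTEGER: "graph",
    DataType.FLOAT: "graph",
    DataType.BOOLEAN: "indicator",
    DataType.USER_DEFINED: "table",
}


def classify(signal: list) -> DataType:
    """Infer a data type from the samples of a one-dimensional signal."""
    # bool is a subclass of int in Python, so it must be tested first.
    if signal and all(isinstance(s, bool) for s in signal):
        return DataType.BOOLEAN
    if signal and all(isinstance(s, int) and not isinstance(s, bool) for s in signal):
        return DataType.INTEGER
    if signal and all(isinstance(s, (int, float)) and not isinstance(s, bool) for s in signal):
        return DataType.FLOAT
    return DataType.USER_DEFINED


def choose_display_tool(signal: list) -> str:
    # Rows of values are treated as tabular data; anything else as plot data.
    if signal and all(isinstance(row, (list, tuple)) for row in signal):
        return "table"
    return DISPLAY_TOOLS[classify(signal)]
```

Keeping the mapping in a table rather than in branching code means a new data type only needs a new table entry.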

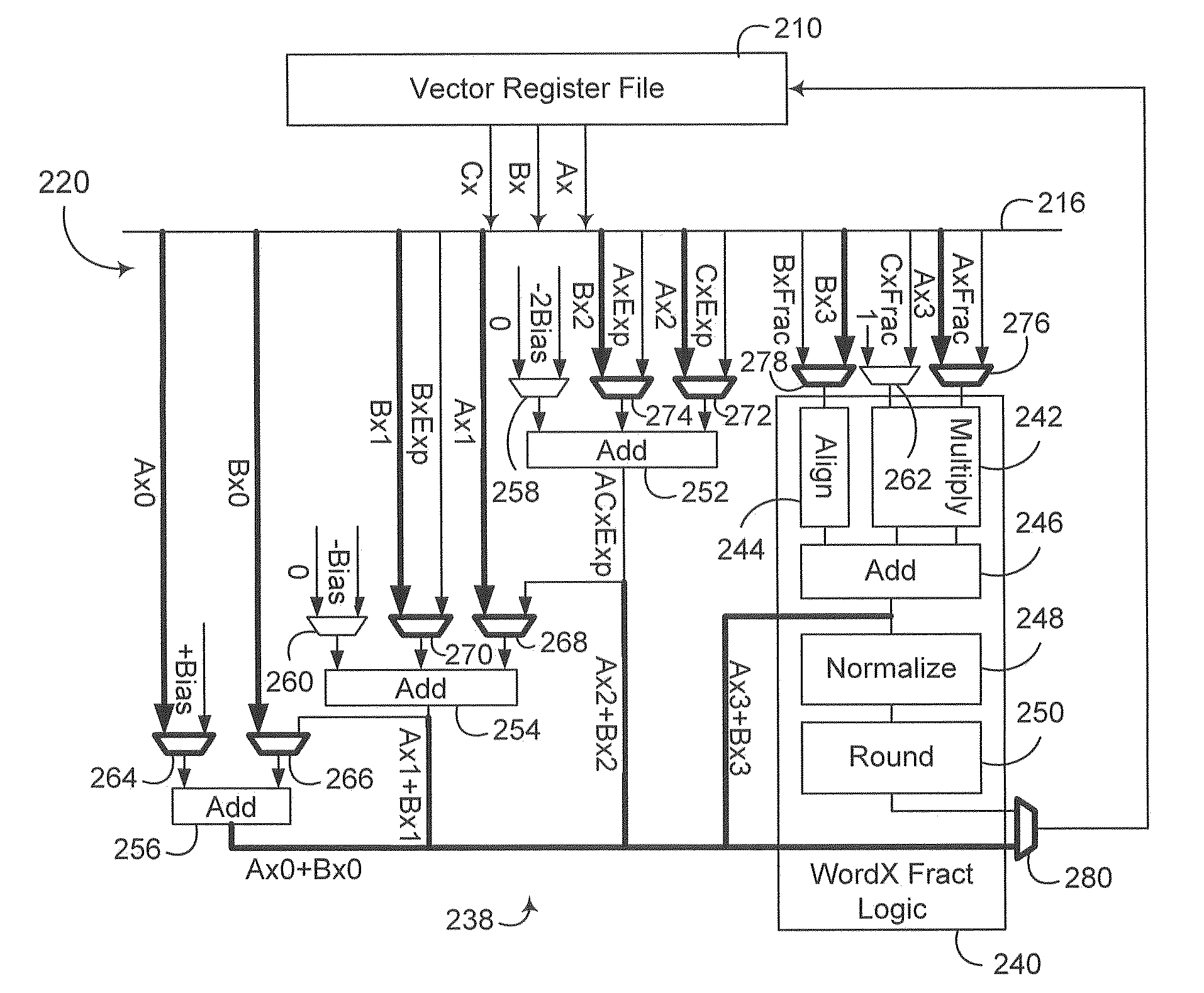

Floating point execution unit with fixed point functionality

Inactive | US20130036296A1 | Easy to operate | Register arrangements | Digital computer details | Parallel computing | Floating point

A floating point execution unit is capable of selectively repurposing one or more adders in an exponent path of the floating point execution unit to perform fixed point addition operations, thereby providing fixed point functionality in the floating point execution unit.

Owner: IBM CORP
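As a rough illustration of repurposing an exponent-path adder, the sketch below routes two kinds of operation through one adder function: the exponent sum of a floating-point multiply, and a plain fixed-point integer addition. The 12-bit adder width, the operation names and the dispatch function are hypothetical; the abstract does not specify widths or control signals.

```python
import struct

ADDER_BITS = 12                 # hypothetical: 11-bit double exponent plus one carry bit
ADDER_MASK = (1 << ADDER_BITS) - 1
BIAS = 1023                     # IEEE 754 binary64 exponent bias


def exponent_adder(a: int, b: int) -> int:
    """The single adder in the exponent path (wraps modulo 2**ADDER_BITS)."""
    return (a + b) & ADDER_MASK


def biased_exponent(x: float) -> int:
    """11-bit biased exponent field of an IEEE 754 double."""
    bits = struct.unpack(">Q", struct.pack(">d", x))[0]
    return (bits >> 52) & 0x7FF


def execute(op: str, a, b):
    """Dispatch one operation through the shared exponent adder."""
    if op == "fmul_exponent":
        # Floating-point mode: add the biased exponents, then remove one bias
        # (done with ordinary arithmetic here for brevity). Significand
        # normalization, which may add 1 to the exponent, is omitted.
        return exponent_adder(biased_exponent(a), biased_exponent(b)) - BIAS
    if op == "fixed_add":
        # Fixed-point mode: the same adder performs an integer addition.
        return exponent_adder(a, b)
    raise ValueError(f"unknown op {op!r}")
```

For 2.0 × 4.0 the exponent path yields 1026, the biased exponent of 8.0, while in fixed-point mode the same adder simply computes 5 + 7 = 12.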

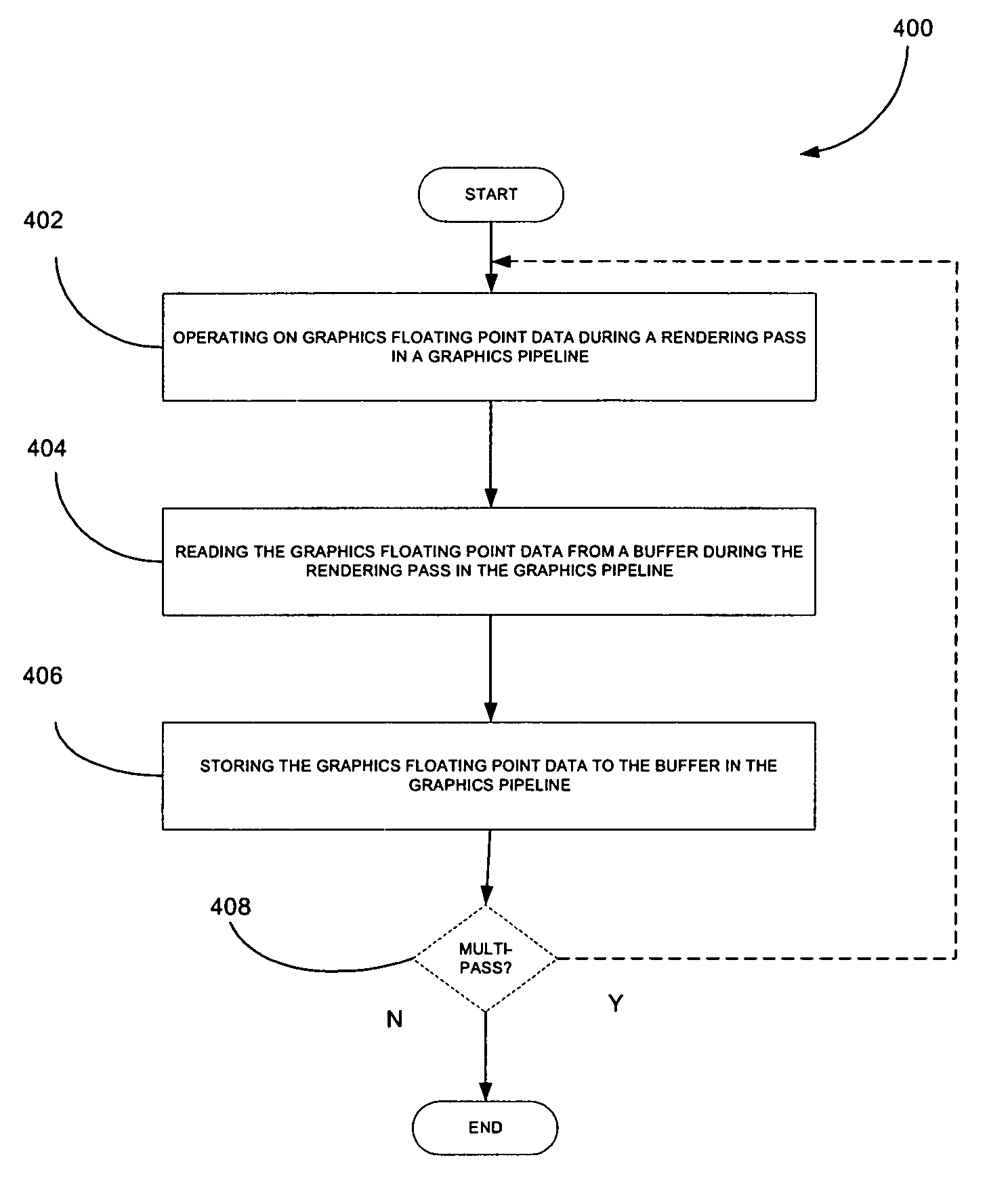

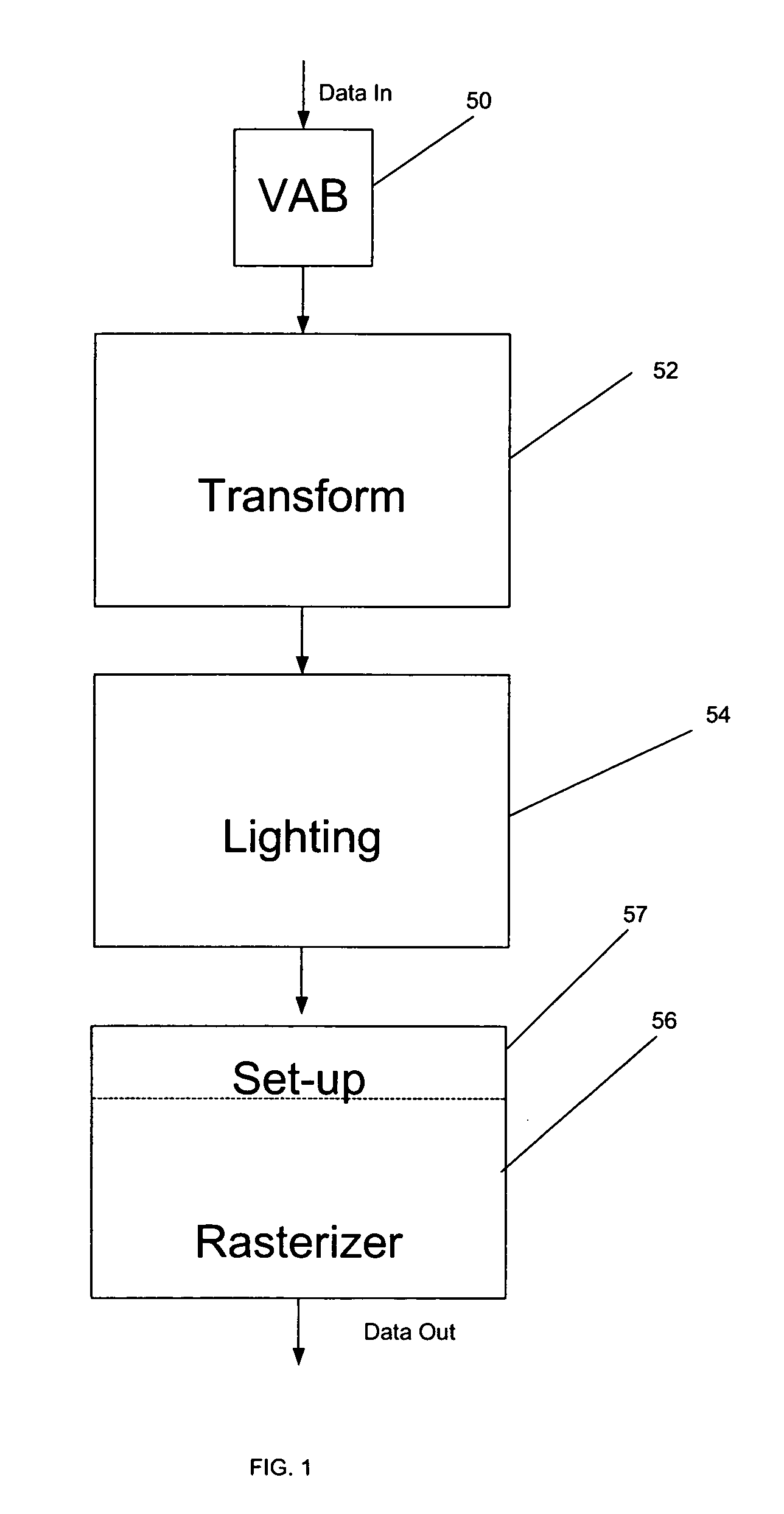

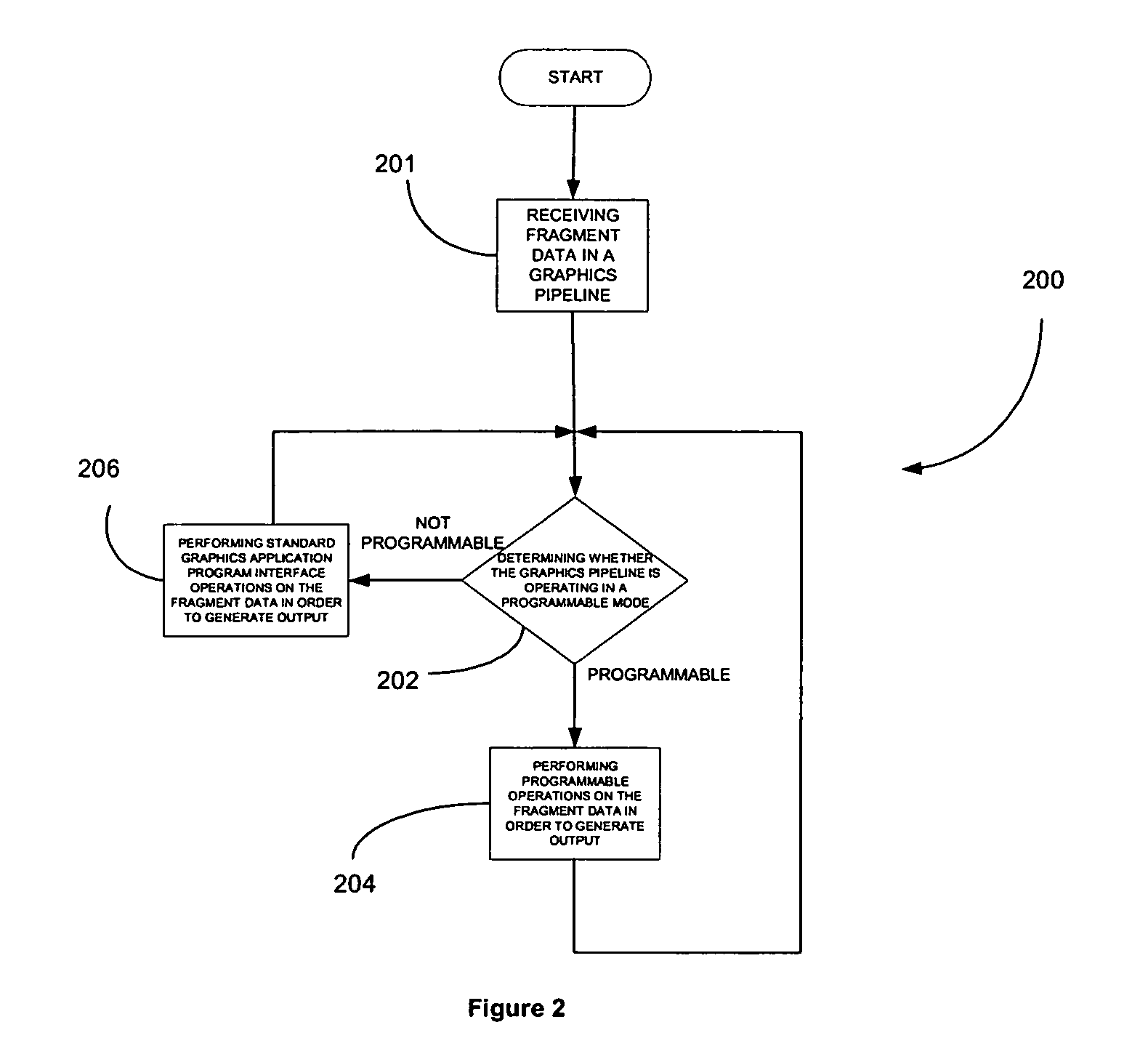

Floating point buffer system and method for use during programmable fragment processing in a graphics pipeline

Inactive | US7009615B1 | Improve precision | Increase range | Image memory management | Cathode-ray tube indicators | Fragment processing | Computational science

A system, method and computer program product are provided for buffering data in a computer graphics pipeline. Initially, graphics floating point data is read from a buffer in a graphics pipeline. Next, the graphics floating point data is operated upon in the graphics pipeline. Further, the graphics floating point data is stored to the buffer in the graphics pipeline.

Owner: NVIDIA CORP
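The read / operate / store cycle in the abstract, and why a floating-point buffer helps with range and precision, can be sketched by running the same additive-blending pass over a 32-bit float buffer and over an 8-bit normalized buffer. The blending operation and the 8-bit comparison are illustrative assumptions, not part of the patent; Python's `array` typecode `"f"` is a C `float`, which is 32-bit on common platforms.

```python
from array import array


def pass_float(buffer: array, contributions: list) -> None:
    """One fragment pass over a float buffer: read, operate, store."""
    for i, c in enumerate(contributions):
        value = buffer[i]       # read floating-point data from the buffer
        value += c              # operate on it (here: additive blending)
        buffer[i] = value       # store it back, rounded to float32


def pass_unorm8(buffer: array, contributions: list) -> None:
    """The same pass over an 8-bit normalized buffer, for comparison."""
    for i, c in enumerate(contributions):
        value = buffer[i] / 255 + c
        # Quantize to 8 bits and clamp to the representable range [0, 1].
        buffer[i] = max(0, min(255, round(value * 255)))
```

After two passes adding 0.75 and 0.001 to two pixels, the float buffer holds 1.5 and about 0.002, while the 8-bit buffer clamps the first pixel at 1.0 (255) and rounds every small increment for the second pixel away to 0.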

© 2025 PatSnap. All rights reserved.