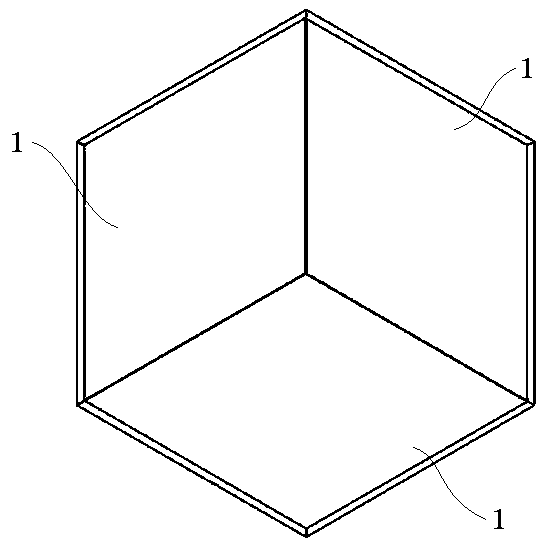

Patents

Literature

230 results about How to "Reduce precision loss" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

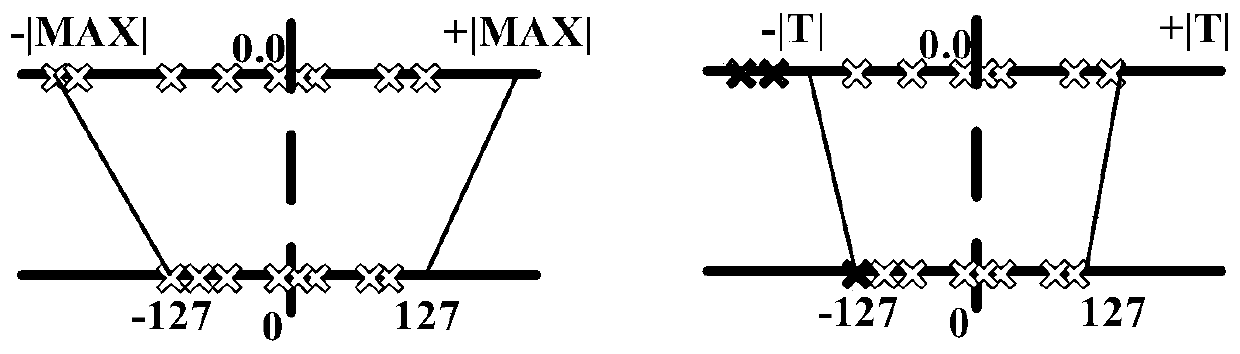

A CNN-based low-precision training and 8-bit integer quantitative reasoning method

Inactive | CN109902745A | Reduce computing time | Improve computing efficiency | Character and pattern recognition | Neural architectures | Algorithm | Floating point

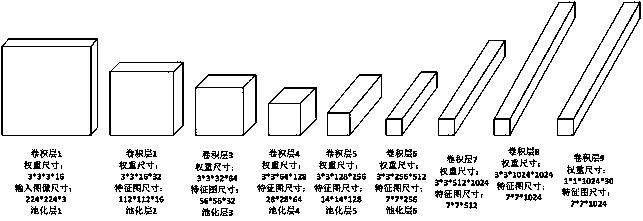

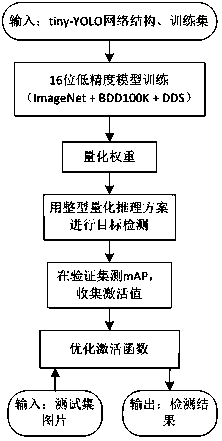

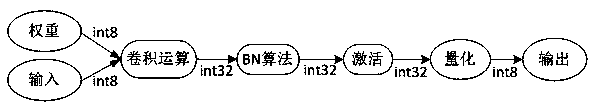

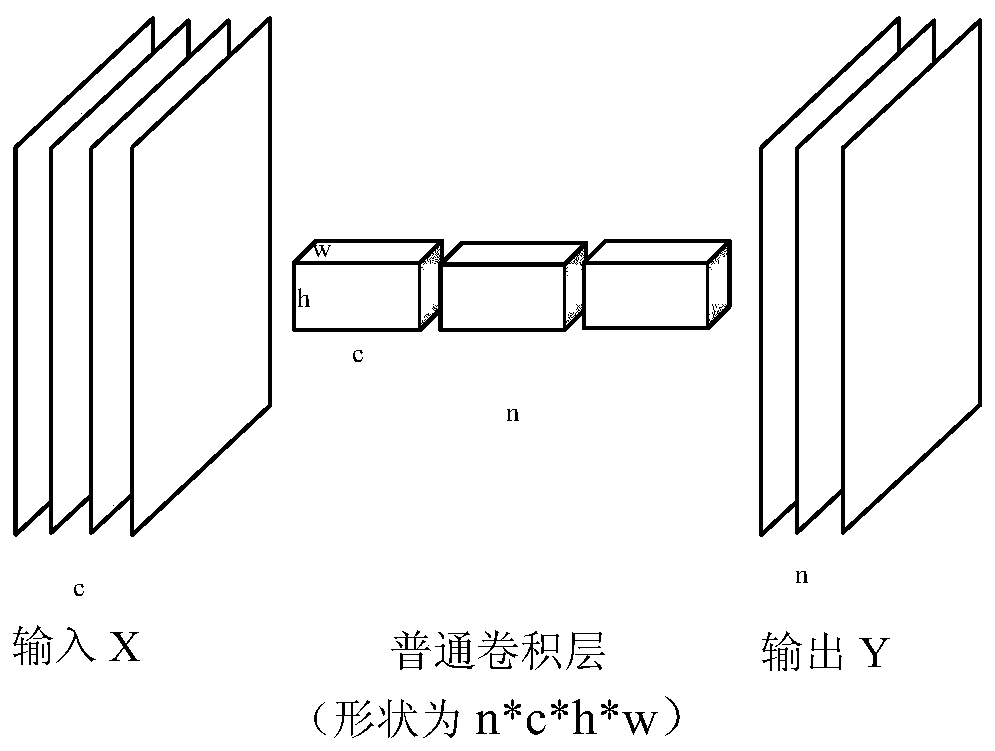

The invention provides a CNN-based low-precision training and 8-bit integer quantized inference method. The method mainly comprises the following steps. (1) Low-precision model training: the model is trained with a 16-bit floating-point low-precision fixed-point algorithm to obtain a model for target detection. (2) Weight quantization: an 8-bit integer quantization scheme is proposed, and the weight parameters of the convolutional neural network are quantized layer by layer from 16-bit floating point to 8-bit integers. (3) 8-bit integer quantized inference: the activation values are quantized into 8-bit integer data, i.e., each layer of the CNN accepts an int8 quantized input and produces an int8 quantized output. Because the weights come from a model trained with the 16-bit floating-point low-precision algorithm rather than a 32-bit floating-point algorithm, 8-bit integer quantized inference can be applied directly to the trained weights; the inference process of the convolutional layers is optimized, and the precision loss caused by low-bit fixed-point quantized inference is effectively reduced.

Owner:成都康乔电子有限责任公司 +1
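
The int8 inference step of CN109902745A (int8 weights, int8 activations, int8 outputs per layer) can be illustrated with a minimal sketch. It assumes a symmetric range of [-127, 127], one scale per layer, and a hypothetical fixed output scale; Python floats stand in for the FP16 weights, and none of the function names come from the patent.

```python
# Minimal sketch: per-layer (per-tensor) symmetric int8 quantization and an
# int8 x int8 dot product with integer accumulation, as one layer would use.
# Assumptions: symmetric range [-127, 127], one scale per layer, and a
# hypothetical fixed output scale; Python floats stand in for FP16 weights.

def layer_scale(values):
    """Scale that maps the largest magnitude in the layer to 127."""
    max_abs = max(abs(v) for v in values)
    return max_abs / 127 if max_abs > 0 else 1.0


def quantize_int8(values, scale):
    """q = clamp(round(x / scale), -127, 127); Python rounds exact halves to even."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def int8_dot(q_w, q_x, w_scale, x_scale, out_scale):
    """Integer dot product, rescaled and requantized to an int8 output."""
    acc = sum(a * b for a, b in zip(q_w, q_x))   # exact integer accumulation (int32 in hardware)
    real = acc * w_scale * x_scale                # back to the real-number domain
    return max(-127, min(127, round(real / out_scale)))


weights = [0.5, -0.25, 0.1]
inputs = [1.0, 2.0, -1.0]
w_s, x_s = layer_scale(weights), layer_scale(inputs)
q_out = int8_dot(quantize_int8(weights, w_s), quantize_int8(inputs, x_s), w_s, x_s, 1 / 127)
print(q_out, q_out / 127)   # int8 output and its dequantized value, near the float result -0.1
```

In a real deployment the rescaling by `w_scale * x_scale / out_scale` is usually folded into a single integer multiplier and shift; the float multiply here just keeps the sketch readable.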

Joint neural network model compression method based on channel pruning and quantitative training

Pending | CN111652366A | Improve acceleration performance | Good pruning effect | Neural architectures | Neural learning methods | Network model | Engineering

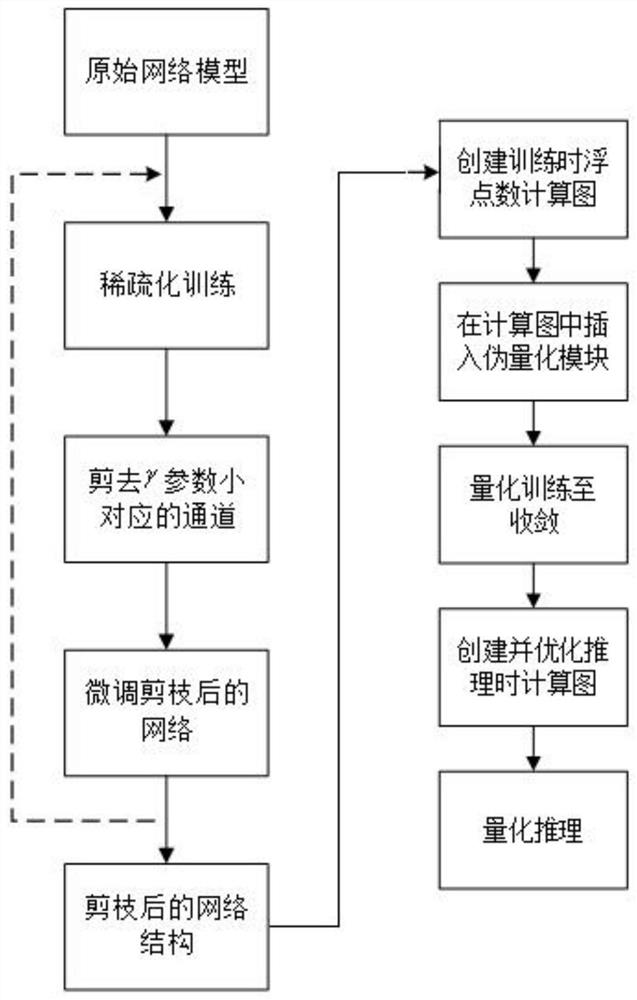

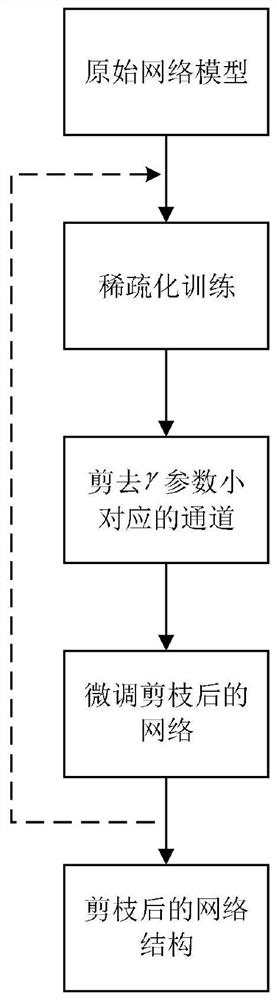

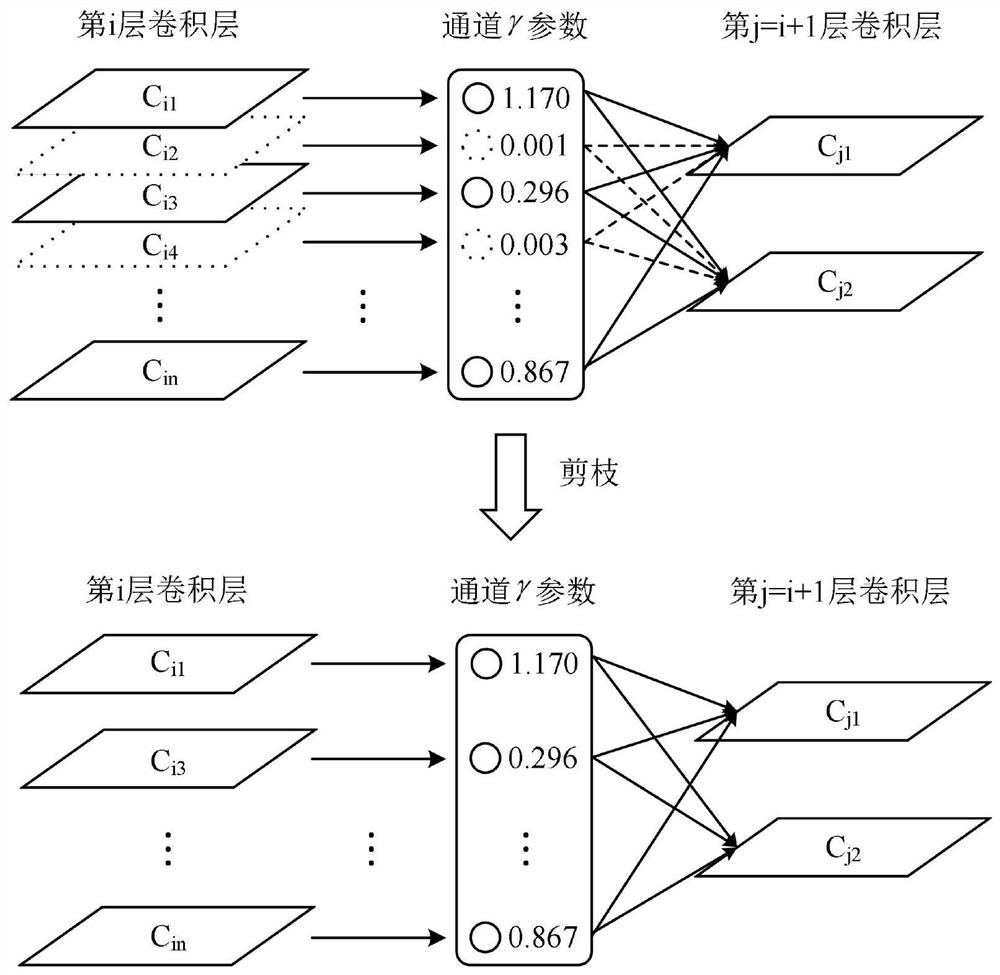

The invention discloses a joint neural network model compression method based on channel pruning and quantization training. The method comprises the following steps: step 1, sparsity training of the model; step 2, pruning the trained model; step 3, fine-tuning the model; step 4, after pruning is finished, quantizing the model by first constructing a conventional floating-point computation graph; step 5, inserting pseudo-quantization modules at the positions of the convolution computations in the graph, one on the convolution weights and one on the activation values, so that both are quantized to 8-bit integers; step 6, training the model with dynamic quantization until convergence; step 7, performing quantized inference; and step 8, obtaining the final pruned and quantized model. By combining pruning and quantization, the method greatly reduces the time and memory consumption of the model while maintaining its accuracy.

Owner:HARBIN INST OF TECH
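
The pseudo-quantization modules in step 5 of CN111652366A can be sketched as a quantize-then-dequantize operation, so that training sees the 8-bit rounding error while still computing in floating point. This is a minimal sketch under stated assumptions: an asymmetric uint8 range, a per-tensor min/max range, and a straight-through estimator for gradients; the patent does not specify these choices.

```python
# Minimal sketch of a pseudo-quantization (fake-quant) step: values are
# quantized to 8-bit integers and immediately dequantized, so training sees
# the rounding error while still computing in floating point.
# Assumptions: asymmetric uint8 range [0, 255], a per-tensor min/max range,
# and a straight-through estimator for the backward pass.

def quant_params(values, num_bits=8):
    """Scale and zero point for an asymmetric range that always contains 0."""
    qmin, qmax = 0, 2 ** num_bits - 1
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0       # avoid a zero scale for all-zero input
    zero_point = round(qmin - lo / scale)
    return scale, zero_point, qmin, qmax


def fake_quant(values, num_bits=8):
    """Forward pass: quantize to integers, then map back to floats."""
    scale, zp, qmin, qmax = quant_params(values, num_bits)
    out = []
    for v in values:
        q = max(qmin, min(qmax, round(v / scale) + zp))   # 8-bit integer code
        out.append((q - zp) * scale)                      # dequantized value
    return out


def fake_quant_grad(values, upstream, lo, hi):
    """Backward pass (straight-through estimator): pass gradients inside [lo, hi]."""
    return [g if lo <= v <= hi else 0.0 for v, g in zip(values, upstream)]


w = [-1.0, -0.3, 0.0, 0.42, 1.5]
print(fake_quant(w))   # each value moves by at most half a quantization step
```

Keeping 0 inside the range guarantees that zero (for example, padding or pruned channels) is represented exactly after quantization.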

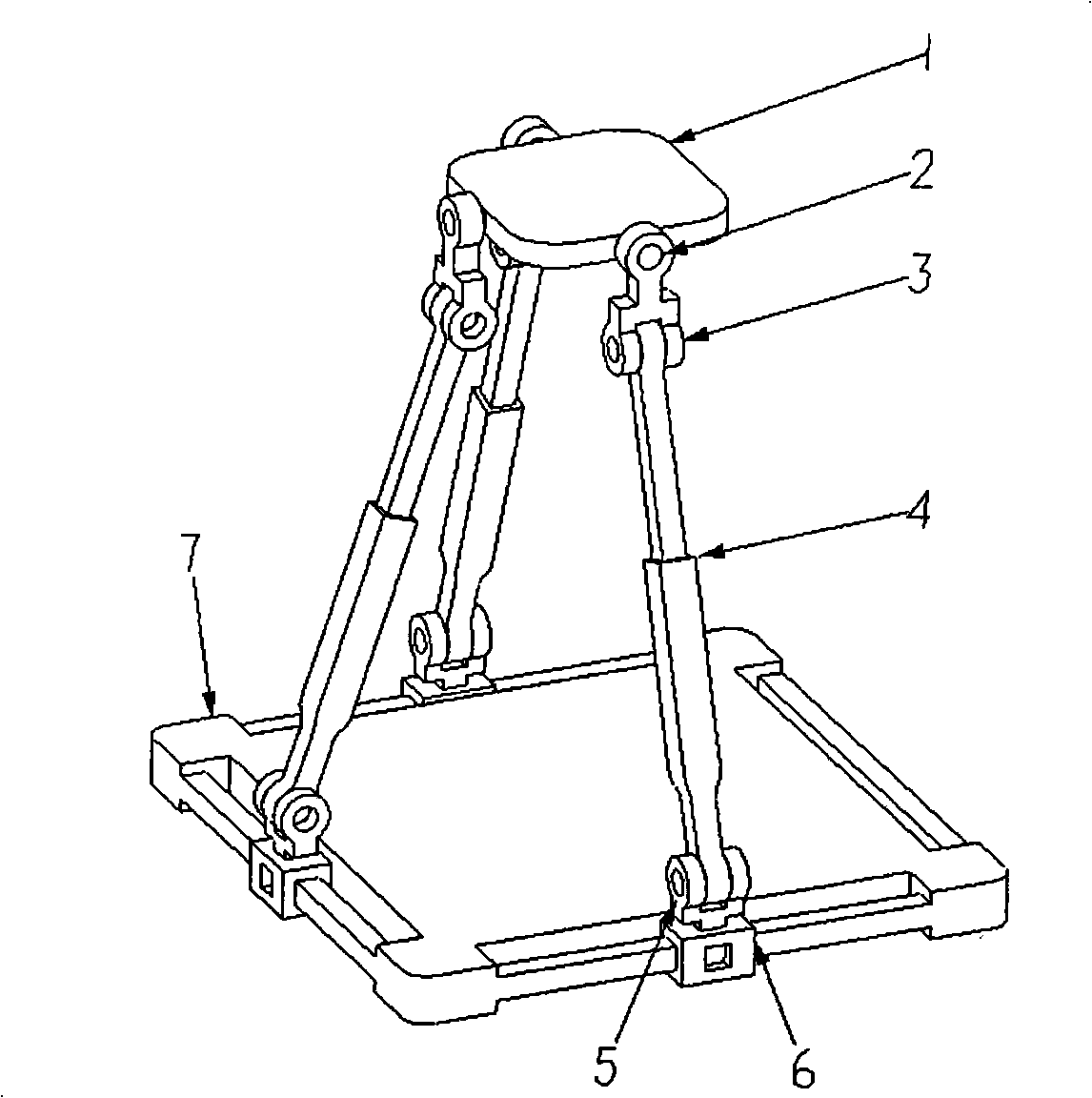

Fork four-freedom parallel connection robot mechanism

Inactive | CN101259617A | Increase stiffness | Simple structure | Programme-controlled manipulator | Single degree of freedom | Kinematic pair

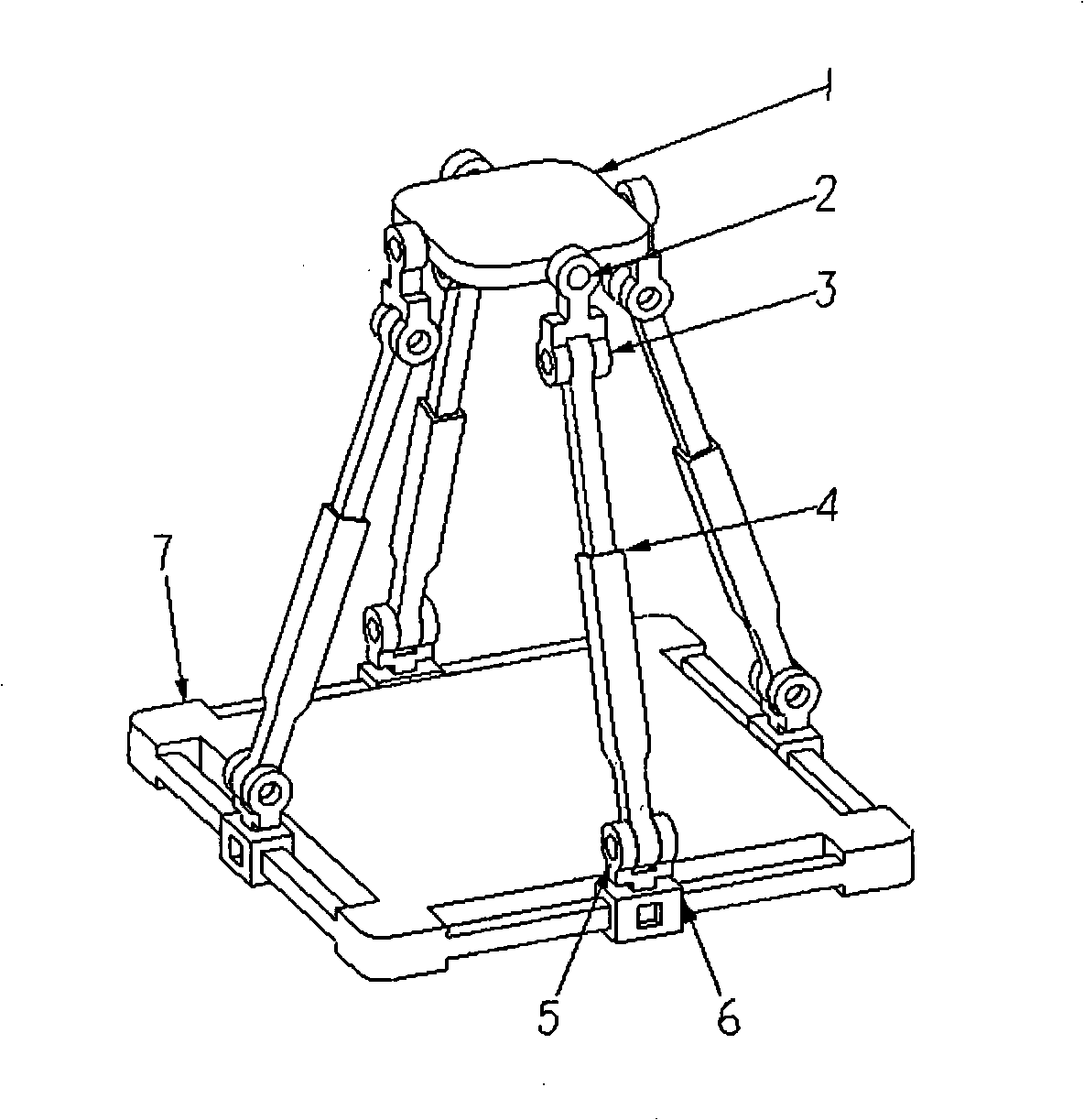

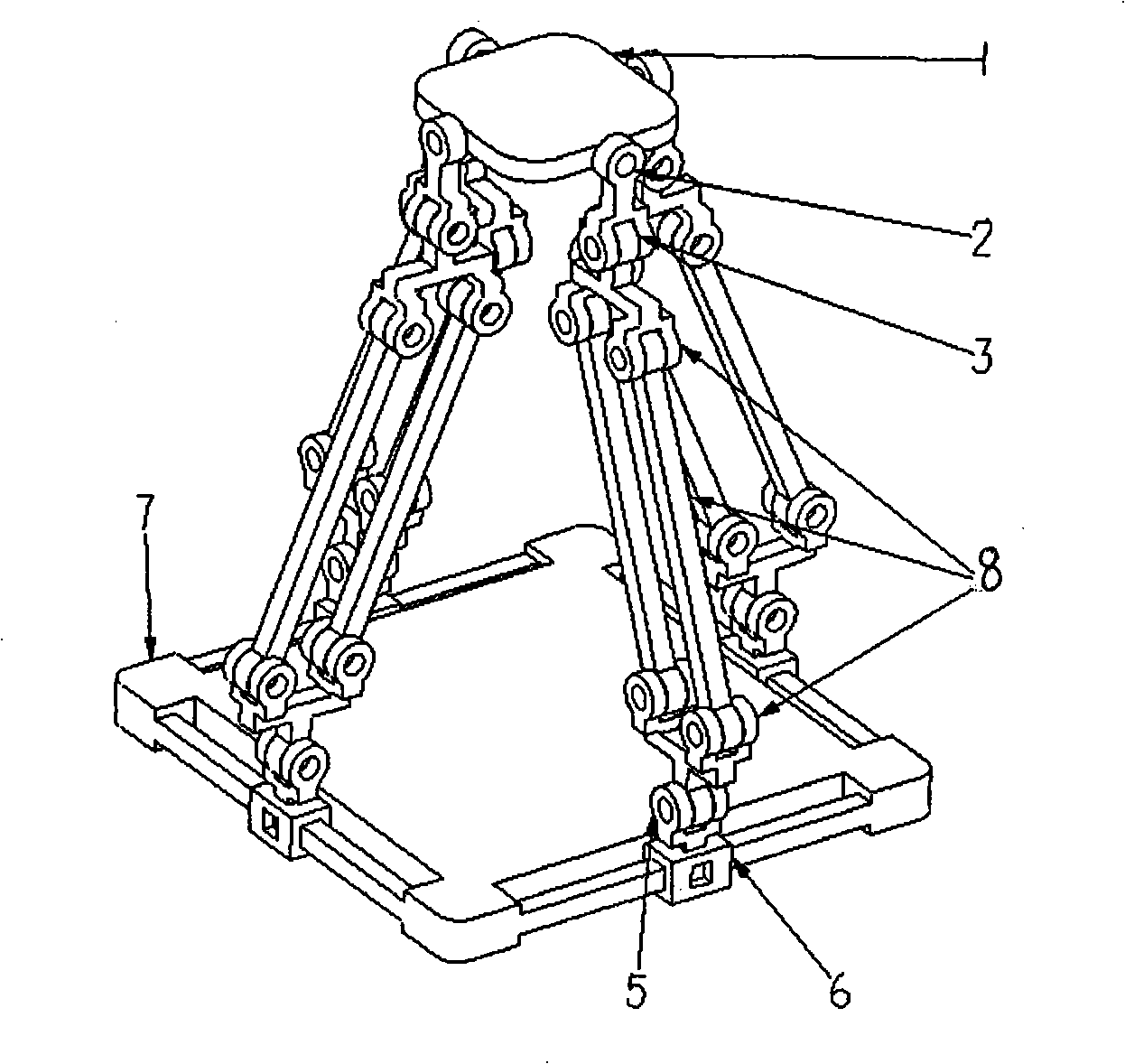

The invention discloses a four-degree-of-freedom parallel robot mechanism with forked branches. It consists of a moving platform, a fixed platform, and 2 to 4 movable branched chains of identical structure that connect the two platforms. From the fixed platform to the moving platform, each branched chain comprises a prismatic (moving) pair, a first revolute pair, a single-degree-of-freedom kinematic pair, a second revolute pair, and a third revolute pair. In the initial configuration the mechanism has three translational and two rotational degrees of freedom: the moving platform can rotate about the axes of the revolute pairs by which two adjacent branches connect to it. Once the moving platform starts to rotate in one direction, the other rotational direction is automatically locked, so after leaving the initial position the mechanism has only three translational degrees of freedom and one rotational degree of freedom. The mechanism can be widely applied to parallel robots, micro-motion worktables, virtual-axis machine tools, and similar equipment, and offers high rigidity, a simple structure, decoupled translation and rotation, easy control, good dynamic performance, and ease of manufacture and assembly.

Owner:ZHEJIANG SCI-TECH UNIV

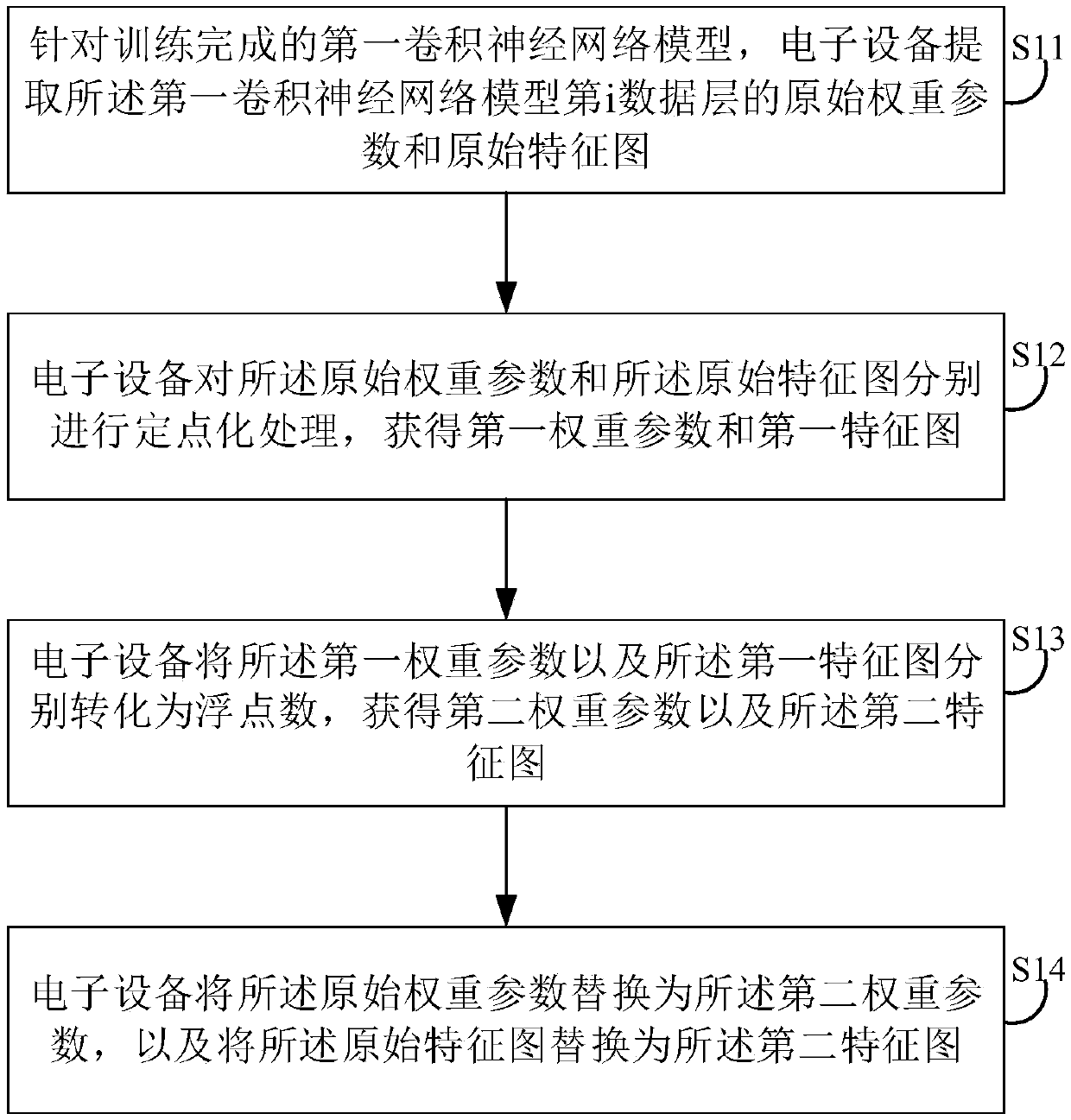

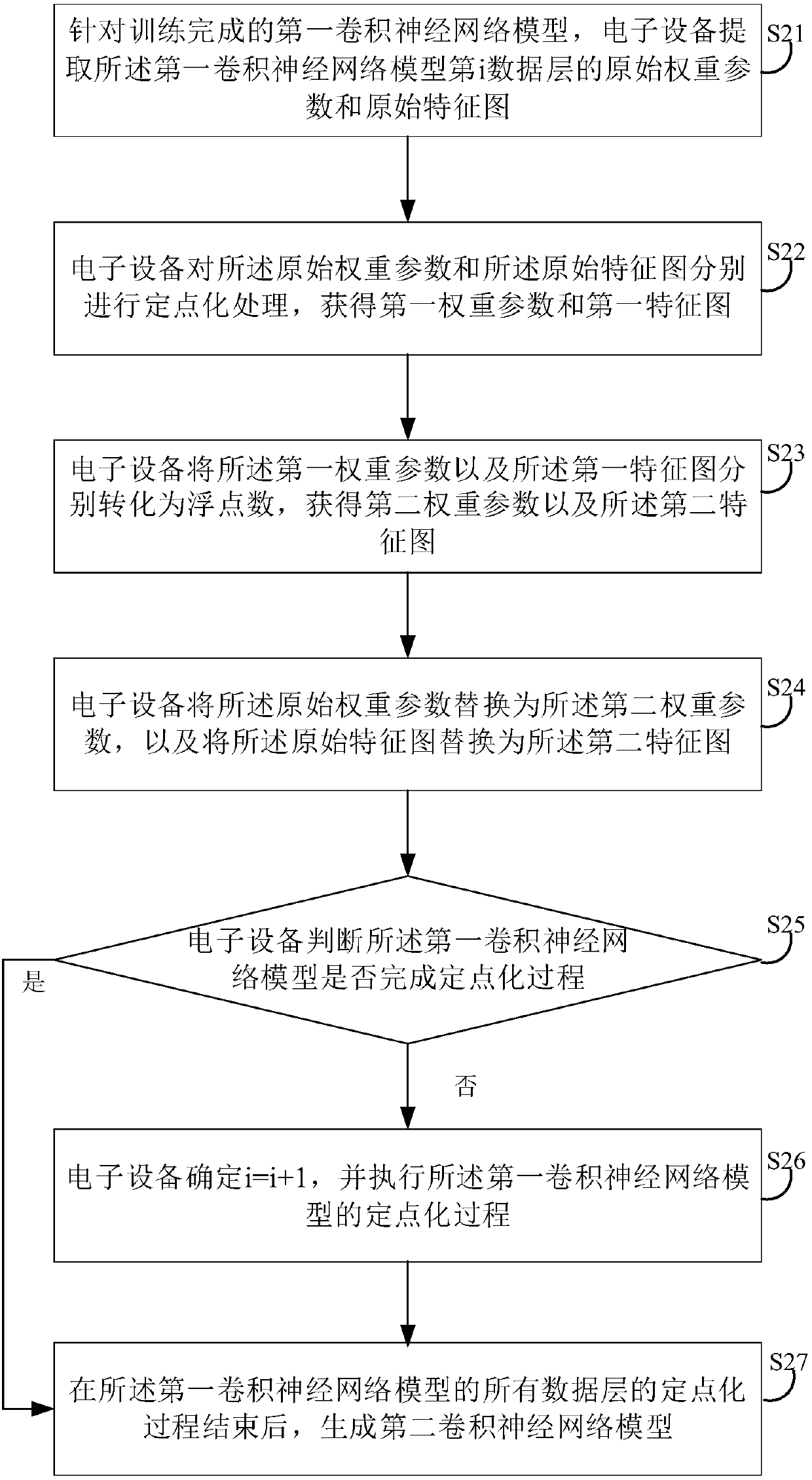

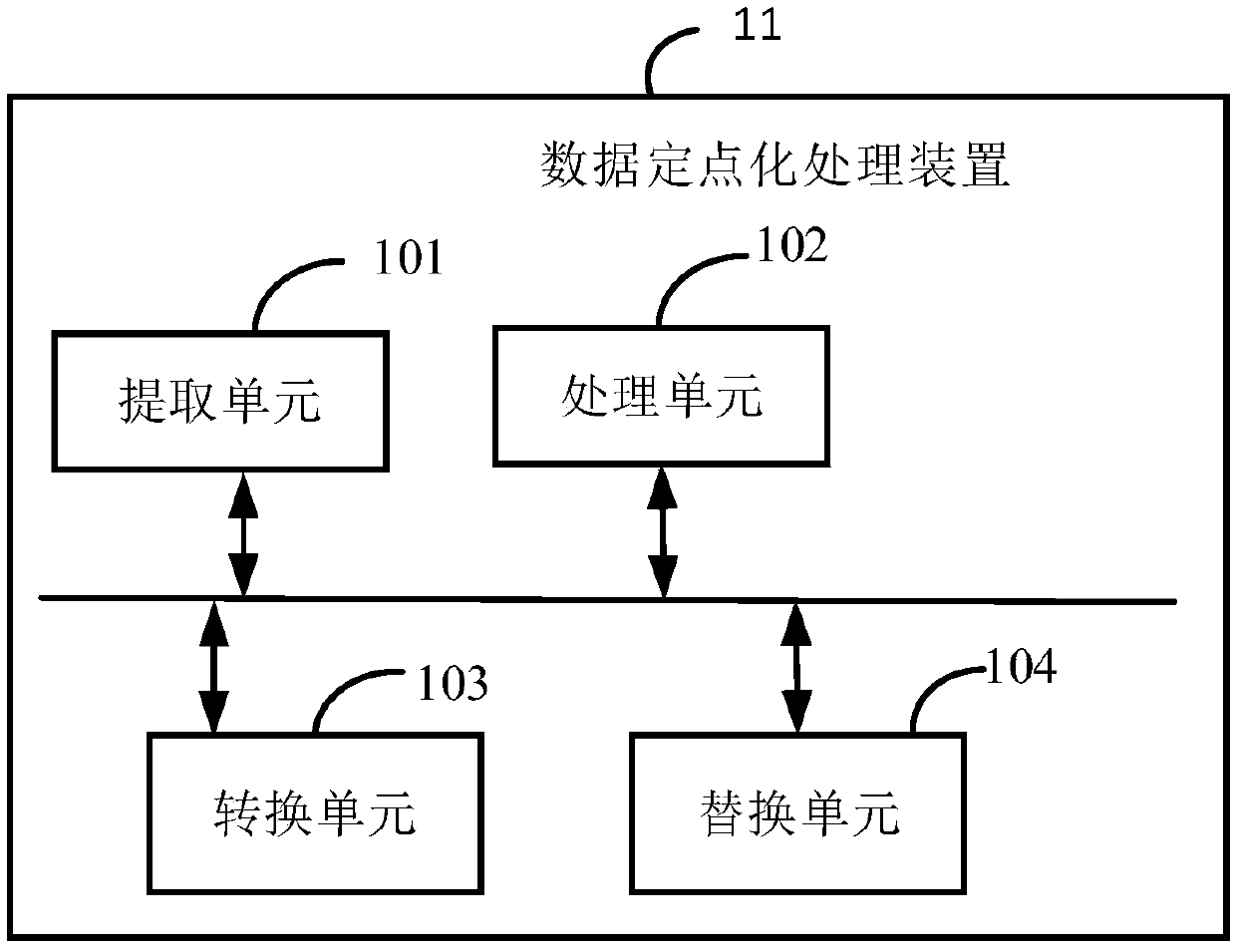

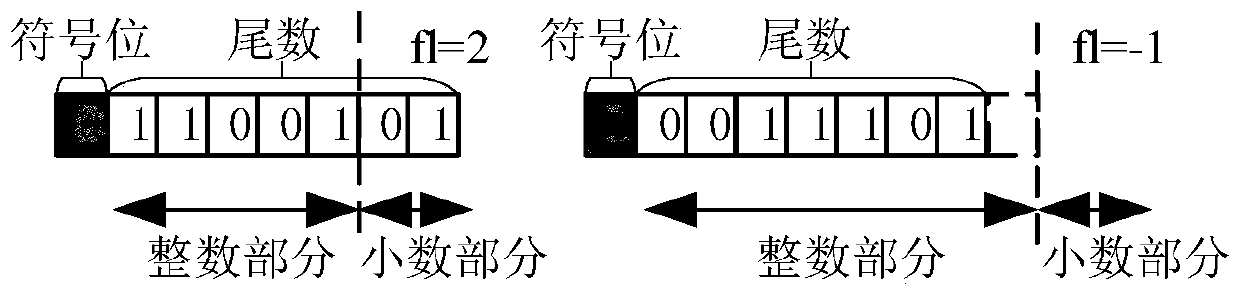

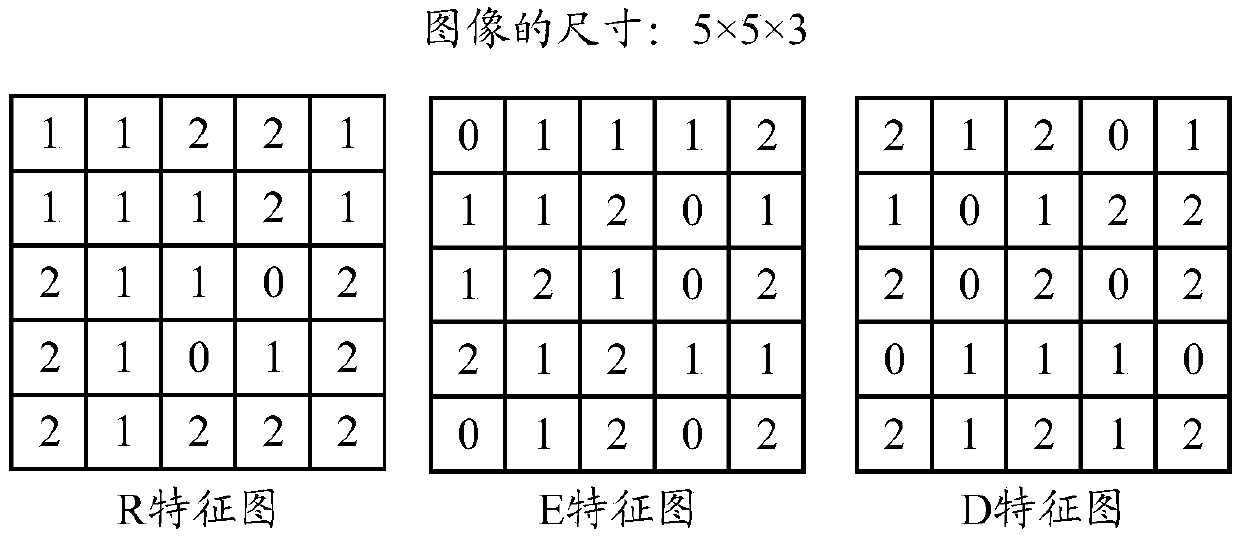

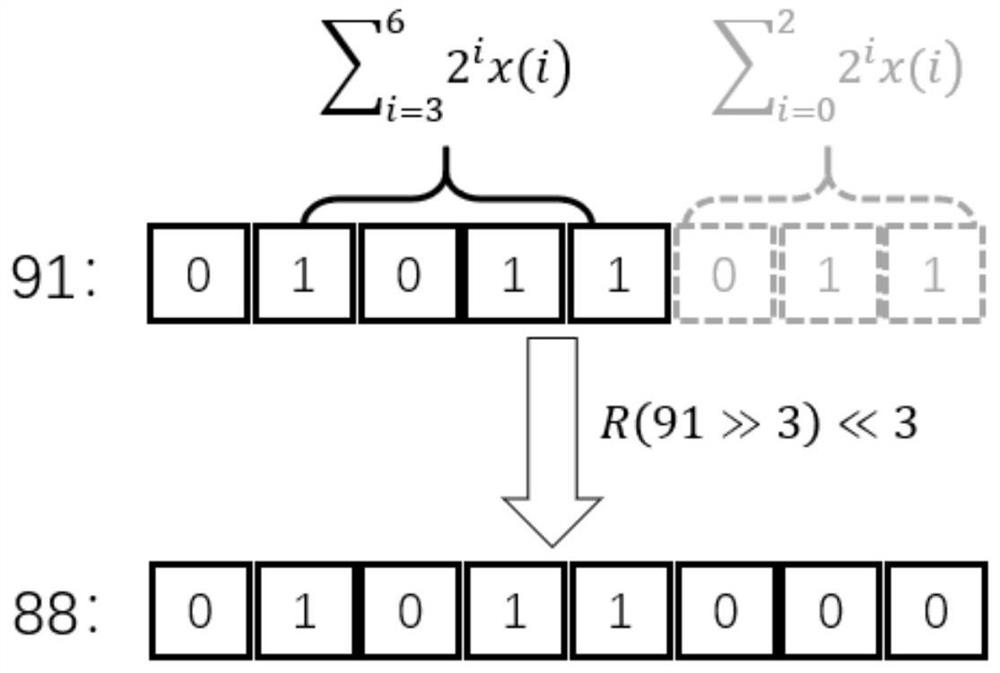

Data fixed-point processing method, device, electronic equipment and computer storage medium

Active | CN108053028A | Reduce precision loss | Neural architectures | Neural learning methods | Floating point | Electric equipment

The invention provides a data fixed-point processing method. The method includes the following steps: for a trained first convolutional neural network model, extracting the original weight parameters and original feature map of the i-th data layer, where i is the layer index and is a positive integer; performing fixed-point processing on the original weight parameters and original feature map to obtain first weight parameters and a first feature map; converting the first weight parameters and first feature map back into floating-point numbers to obtain second weight parameters and a second feature map; and replacing the original weight parameters with the second weight parameters and the original feature map with the second feature map. The method keeps the precision loss relatively small even at a relatively small quantization bit width.

Owner:SHENZHEN LIFEI TECHNOLOGIES CO LTD
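
The fixed-point-then-back-to-float round trip of CN108053028A can be sketched per layer as follows. The rule for choosing the fractional length (enough integer bits for the largest magnitude, the rest fractional) is an assumption for illustration, as are all function names; the patent abstract does not state how the fixed-point format is chosen.

```python
import math

# Minimal sketch of per-layer fixed-point processing: pick a fractional length
# for a signed word of `bit_width` bits, convert to integers, then convert back
# to floats that replace the original parameters. The fractional-length rule
# below is an assumption, not taken from the patent.

def choose_frac_bits(values, bit_width):
    """Fractional bits such that the largest magnitude still fits the signed word."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return bit_width - 1
    int_bits = math.floor(math.log2(max_abs)) + 1   # bits left of the binary point (may be <= 0)
    return bit_width - 1 - int_bits


def to_fixed(values, bit_width, frac_bits):
    """Round to the nearest multiple of 2**-frac_bits and saturate to the word range."""
    lo, hi = -(1 << (bit_width - 1)), (1 << (bit_width - 1)) - 1
    return [max(lo, min(hi, round(v * 2.0 ** frac_bits))) for v in values]


def to_float(codes, frac_bits):
    """Convert fixed-point integer codes back to floating point."""
    return [c / 2.0 ** frac_bits for c in codes]


def process_layer(weights, feature_map, bit_width=8):
    """Return the 'second' weights and feature map that replace the originals."""
    result = []
    for tensor in (weights, feature_map):
        f = choose_frac_bits(tensor, bit_width)
        result.append(to_float(to_fixed(tensor, bit_width, f), f))
    return result


w2, fmap2 = process_layer([0.9, -0.3, 0.05], [3.2, 0.0, 1.7])
print(w2, fmap2)
```

Because the converted-back values are ordinary floats, the rest of the floating-point pipeline runs unchanged while already reflecting the fixed-point rounding error.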

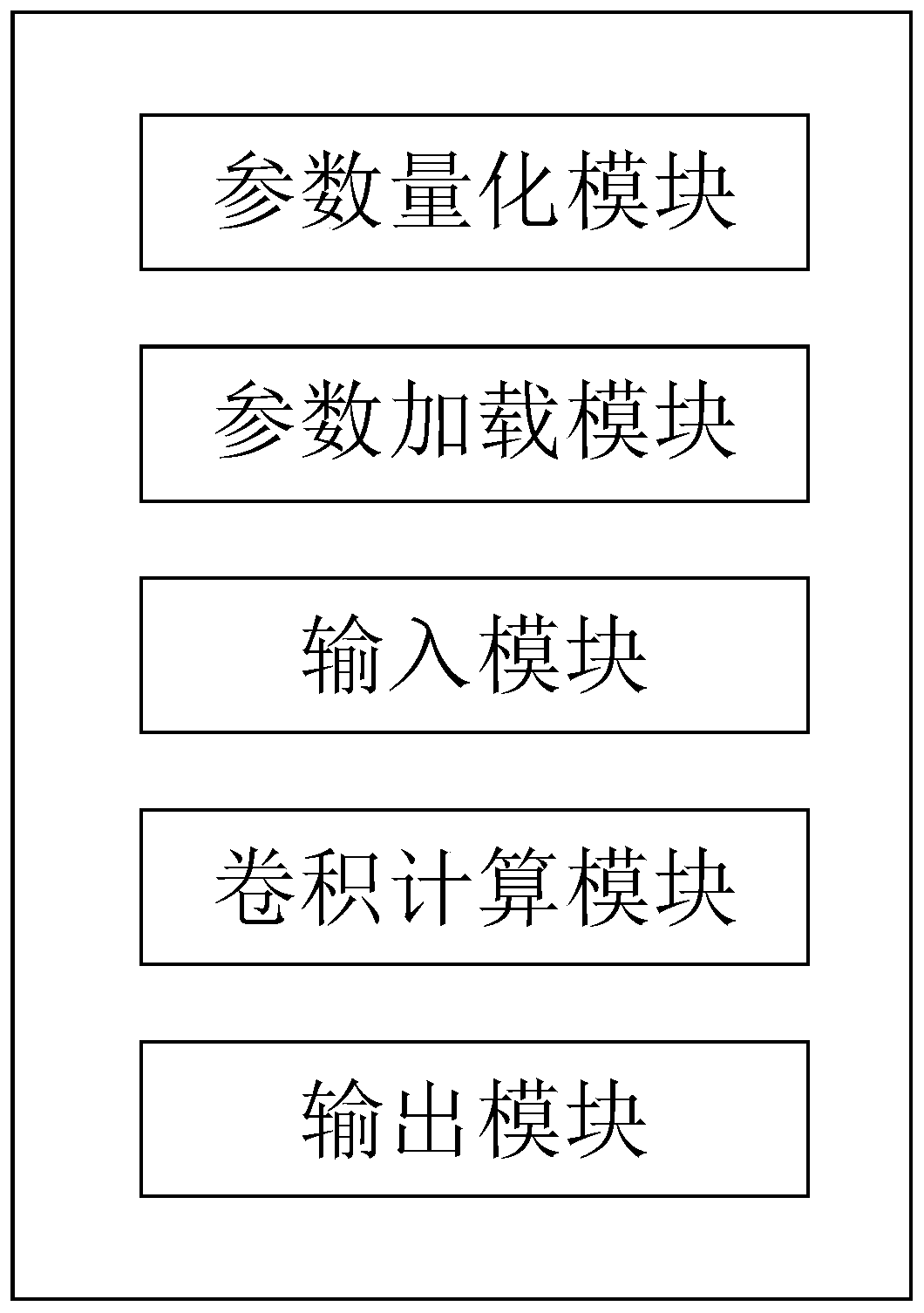

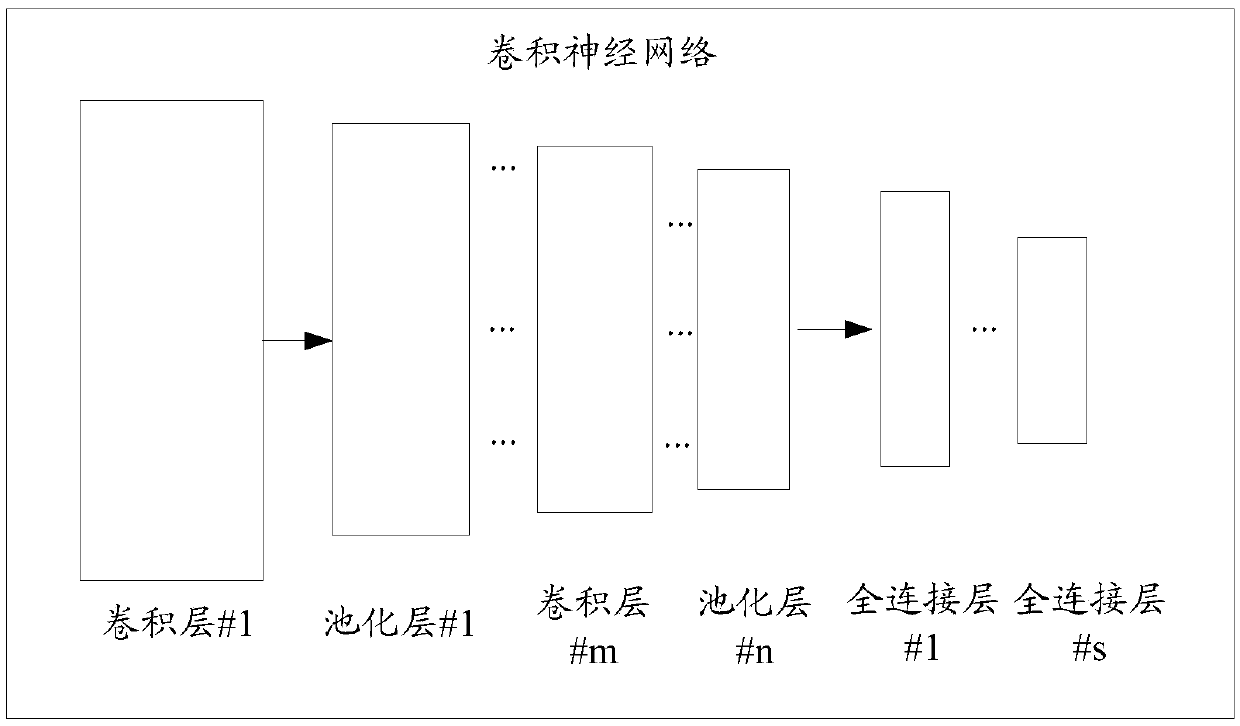

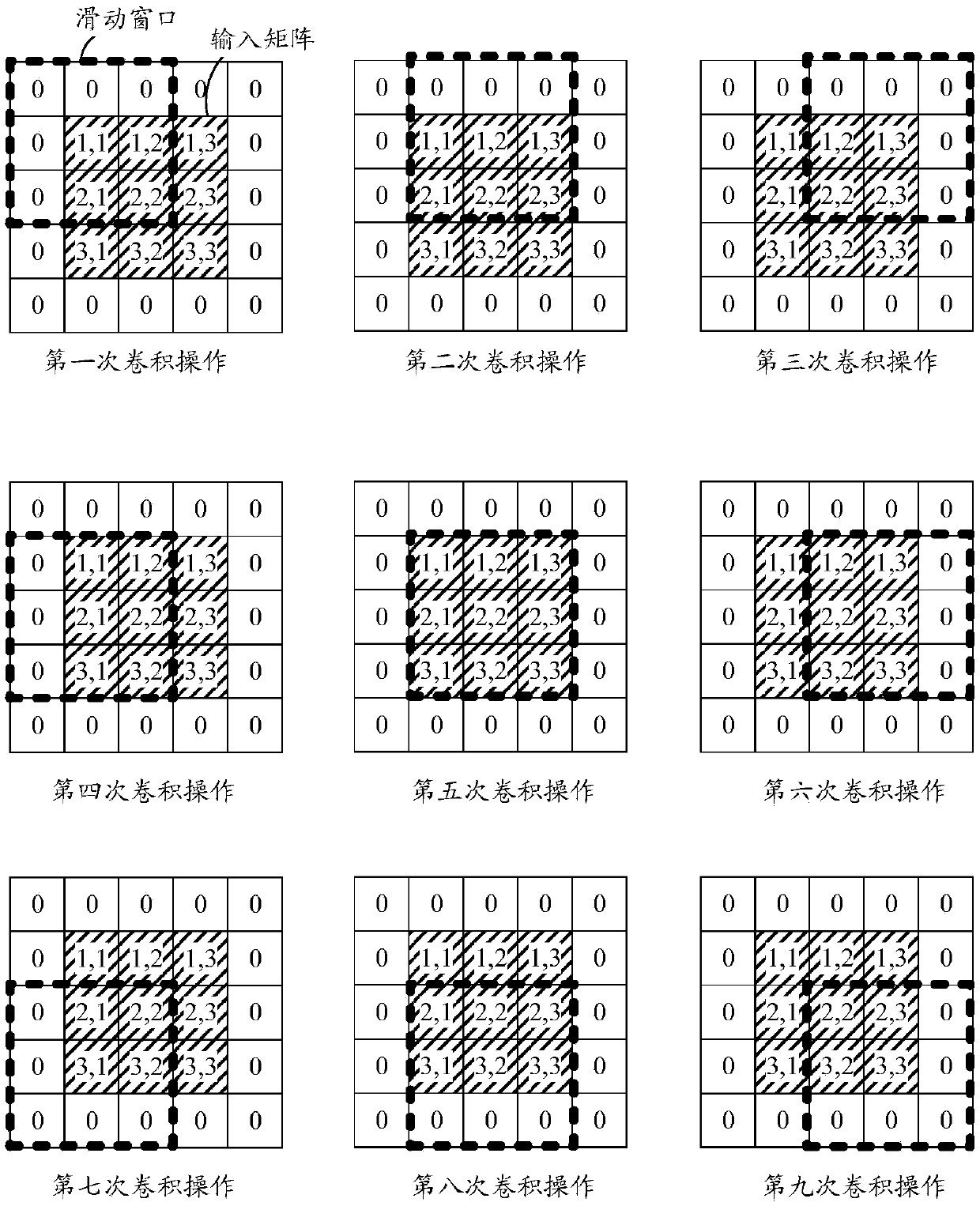

FPGA-based accelerated convolution calculation system and convolutional neural network

Active | CN110880038A | Reduce storage | Save storage space | Neural architectures | Physical realisation | Algorithm | Theoretical computer science

The invention belongs to the field of deep learning and specifically relates to an FPGA-based accelerated convolution calculation system and a convolutional neural network, aiming to solve problems in the prior art. The system comprises: a parameter quantization module that stores the fixed-point weight parameters, scales, and offsets of each convolution layer; a parameter loading module that loads the fixed-point CNN model parameter file into the FPGA; an input module that obtains the low-bit data produced by fixed-point processing of the input data; a convolution calculation module that splits the feature map matrix of the input data into several small matrices, loads them into the FPGA one after another, and performs the convolution in batches according to the number of convolution kernels; and an output module that combines the convolution results of the small matrices into the input image of the next layer. The system reduces the storage required by the network model and accelerates convolution on the FPGA while keeping the precision loss of the network model very small.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
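
The split-convolve-combine flow of CN110880038A's convolution and output modules can be sketched in plain Python. Tile height, kernel batch size, and function names are hypothetical; the sketch only shows why each small matrix needs a few overlapping "halo" rows so that the stitched result equals the untiled convolution, and it models none of the FPGA details.

```python
# Minimal sketch of tiled convolution: the input is cut into horizontal bands,
# each band carries kh-1 extra "halo" rows so its outputs are complete, the
# bands are convolved one at a time (as if loaded into on-chip memory), and
# the partial outputs are concatenated. Kernels are processed in batches.
# Tile height and batch size are hypothetical parameters; no FPGA details
# (buffers, fixed-point arithmetic, parallelism) are modelled.

def conv2d_valid(x, k):
    """'Valid' 2-D cross-correlation, the operation CNN convolution layers compute."""
    kh, kw = len(k), len(k[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]


def conv2d_tiled(x, k, tile_rows):
    """Same result as conv2d_valid, computed band by band."""
    kh = len(k)
    out_rows = len(x) - kh + 1
    result = []
    for start in range(0, out_rows, tile_rows):
        stop = min(start + tile_rows, out_rows)
        band = x[start:stop + kh - 1]          # tile plus kh-1 halo rows
        result.extend(conv2d_valid(band, k))   # output rows start..stop-1
    return result


def conv_layer_tiled(x, kernels, tile_rows, kernels_per_batch):
    """One output map per kernel, processing `kernels_per_batch` kernels at a time."""
    outputs = []
    for b in range(0, len(kernels), kernels_per_batch):
        for k in kernels[b:b + kernels_per_batch]:
            outputs.append(conv2d_tiled(x, k, tile_rows))
    return outputs


image = [[i * 5 + j for j in range(5)] for i in range(6)]
kernels = [[[1, 0], [0, -1]], [[1, 1], [1, 1]], [[0, 1], [1, 0]]]
maps = conv_layer_tiled(image, kernels, tile_rows=2, kernels_per_batch=2)
print(maps[0])
```

The halo of `kh - 1` rows is the price of tiling: neighbouring bands re-read a few input rows, in exchange for never holding the whole feature map on chip.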

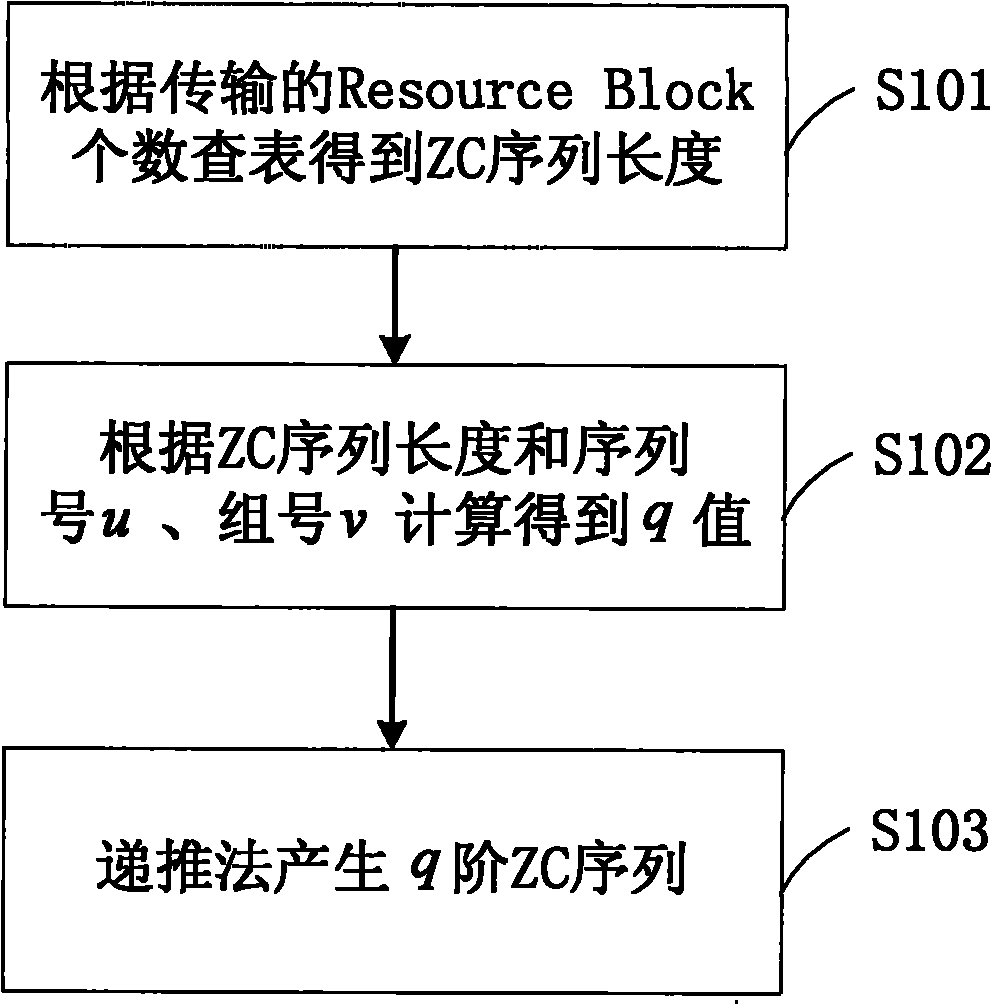

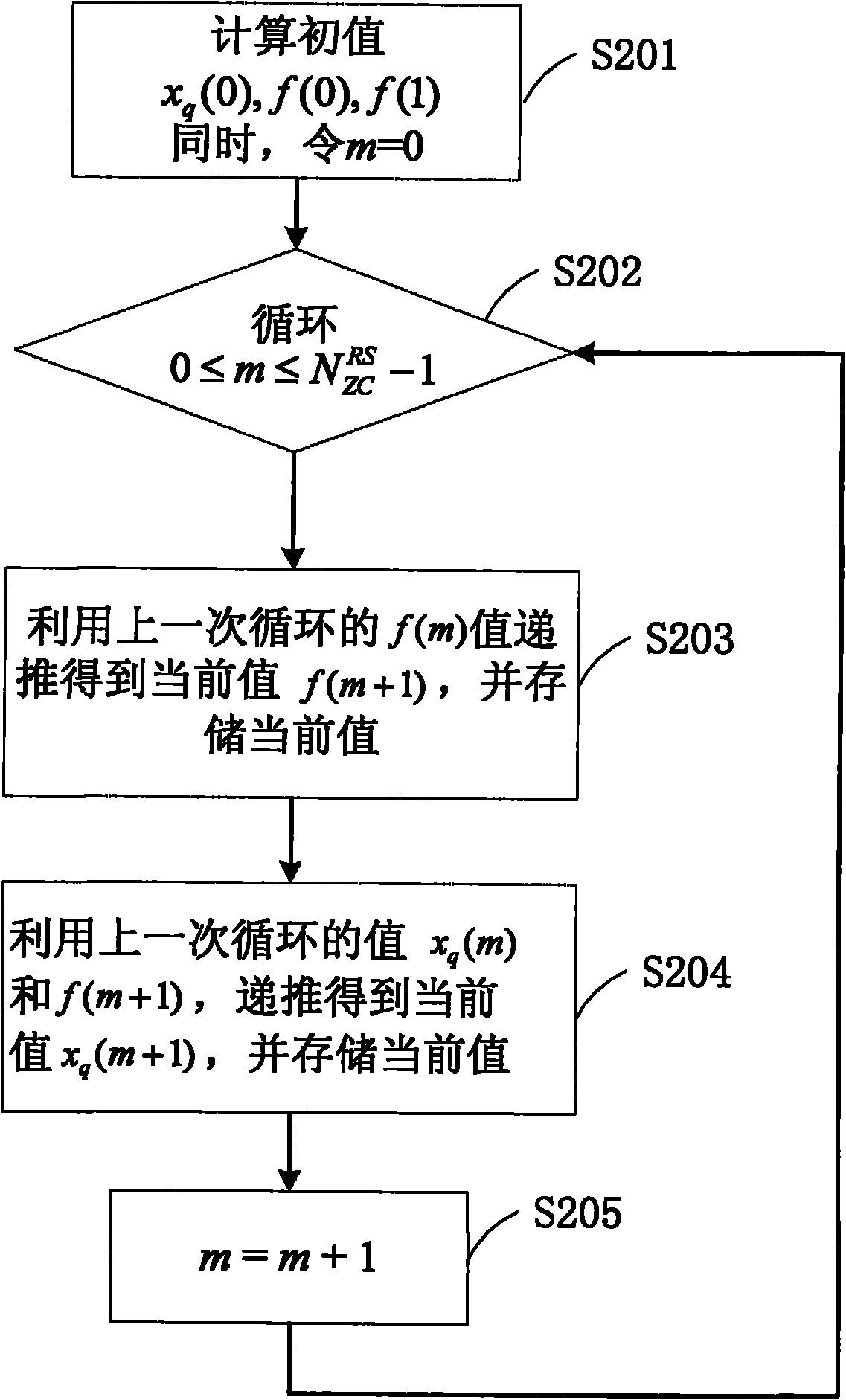

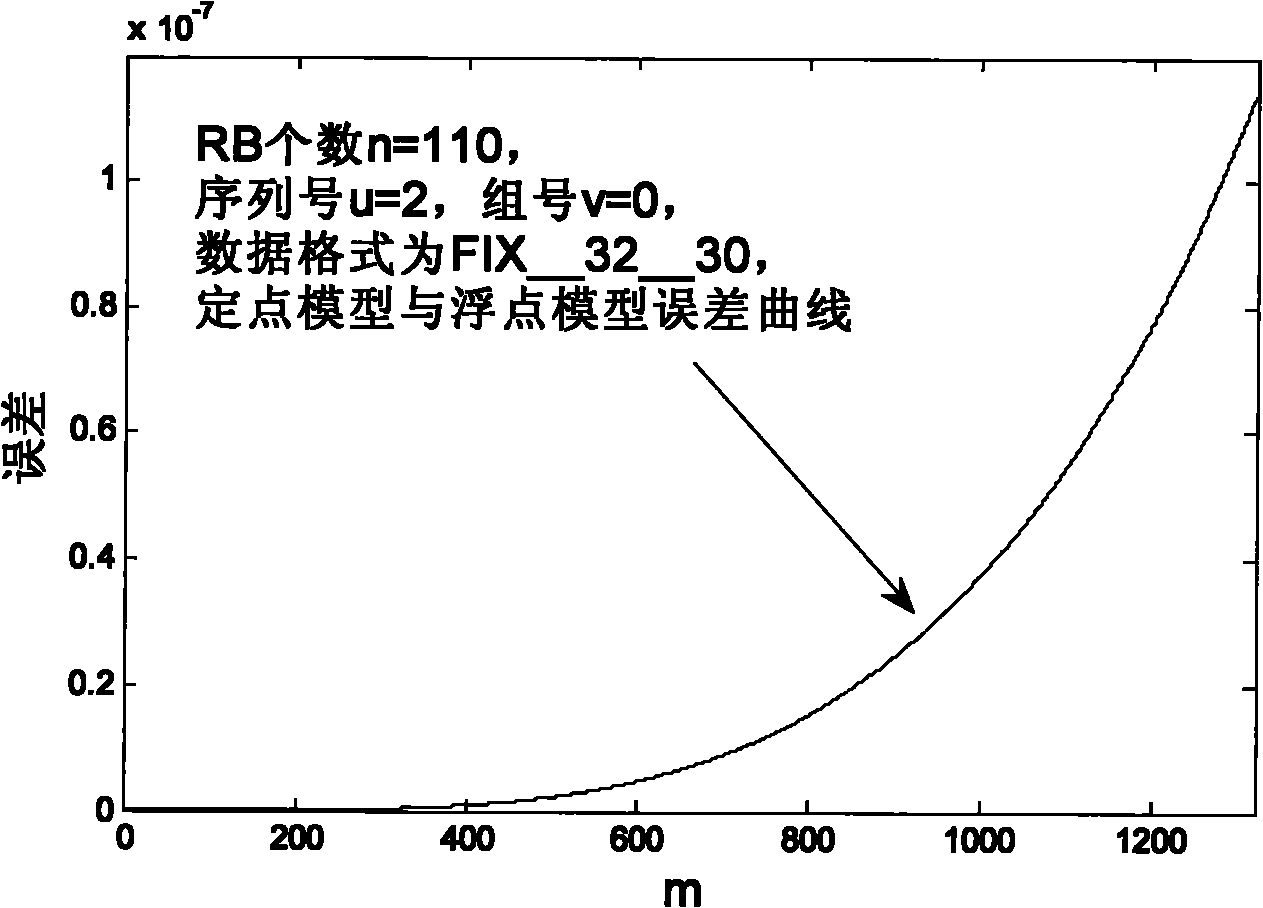

Generation method of LTE (Long Term Evolution) system upstream reference signal q-step ZC (Zadoff-Chu) sequence and system thereof

Inactive | CN101917356A | Easy to implement | Save resources | Multi-frequency code systems | Transmitter/receiver shaping networks | Problem of time | Resource block

The invention relates to a method and system for generating the q-th root ZC (Zadoff-Chu) sequence used for the uplink reference signal of an LTE (Long Term Evolution) system. The method comprises: first, obtaining the ZC sequence length from the number of resource blocks; second, calculating the value q from the ZC sequence length, the sequence number, and the group number; and third, generating the q-th root ZC sequence recursively. A lookup table of ZC sequence lengths avoids the long computation time needed to search for the largest prime number. Because the sequence is generated recursively by exploiting its phase characteristics, the large-magnitude, precision-losing multiplications and divisions of computing the phases directly are avoided, which matters for practical fixed-point implementation; the recursion also avoids the long computation time of evaluating trigonometric functions many times when the sequence is generated directly from its defining formula.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
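
The three steps of CN101917356A (length lookup, computing q, recursive generation) can be sketched as follows. The q formula and the defining sequence x_q(m) = exp(-j·π·q·m(m+1)/N_ZC) follow our reading of 3GPP TS 36.211 and should be checked against the specification. The recursion shown here, which keeps the phase as an integer index modulo 2·N_ZC so that each sample needs only additions, is one way to exploit the phase structure and is not necessarily the patent's exact recursion.

```python
import cmath
import math

# Minimal sketch (assumptions noted): the q formula and the defining sequence
# x_q(m) = exp(-j*pi*q*m*(m+1)/N_ZC) follow our reading of 3GPP TS 36.211;
# the recursion keeps the phase as an integer index modulo 2*N_ZC, so only
# additions are needed per sample. The phase table stands in for a ROM.

def largest_prime_below(m):
    """Largest prime strictly less than m (what the lookup table precomputes)."""
    def is_prime(n):
        return n >= 2 and all(n % d for d in range(2, math.isqrt(n) + 1))
    n = m - 1
    while not is_prime(n):
        n -= 1
    return n


# One-time lookup table: ZC length for n_rb resource blocks of 12 subcarriers.
ZC_LENGTH_TABLE = {n_rb: largest_prime_below(12 * n_rb) for n_rb in range(3, 11)}


def zc_root(n_zc, u, v):
    """Root index q from sequence-group number u and base-sequence number v."""
    q_bar = n_zc * (u + 1) / 31
    return math.floor(q_bar + 0.5) + v * (-1) ** math.floor(2 * q_bar)


def zc_direct(q, n_zc):
    """Direct evaluation: one complex exponential per sample."""
    return [cmath.exp(-1j * math.pi * q * m * (m + 1) / n_zc) for m in range(n_zc)]


def zc_recursive(q, n_zc):
    """Recursive generation: phase index p(m) = q*m*(m+1) mod 2*N_ZC, updated by additions."""
    two_n = 2 * n_zc
    rom = [cmath.exp(-1j * math.pi * k / n_zc) for k in range(two_n)]   # phase table
    phase, step = 0, (2 * q) % two_n        # p(0) = 0; p(1) - p(0) = 2q
    seq = []
    for _ in range(n_zc):
        seq.append(rom[phase])
        phase = (phase + step) % two_n      # p(m+1) = p(m) + 2q(m+1)
        step = (step + 2 * q) % two_n       # the increment itself grows by 2q
    return seq


n_zc = ZC_LENGTH_TABLE[4]
q = zc_root(n_zc, 5, 0)
print(n_zc, q, zc_recursive(q, n_zc)[:3])
```

Working with an integer phase index keeps the recursion exact: no rounding error accumulates from sample to sample, which is the property that makes a fixed-point implementation practical.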

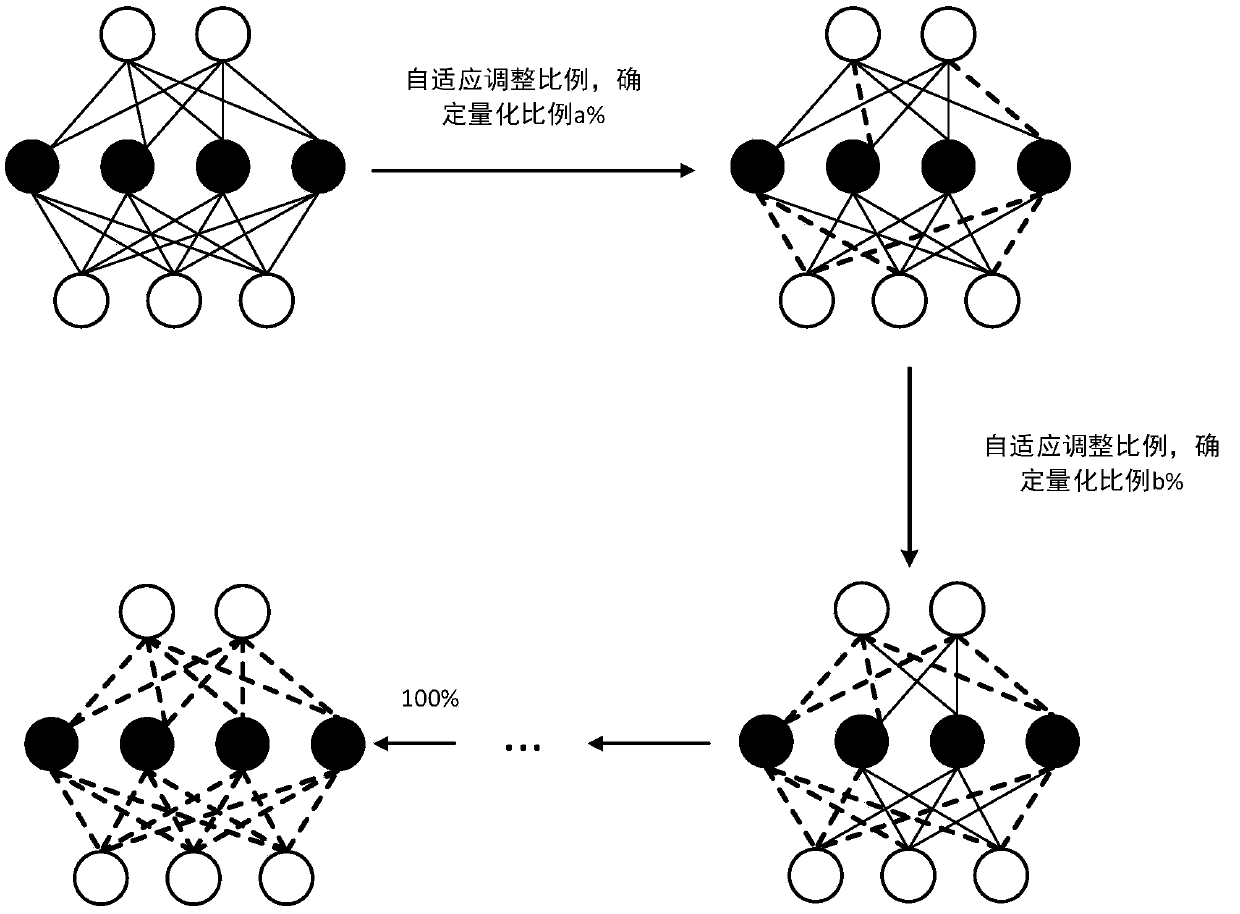

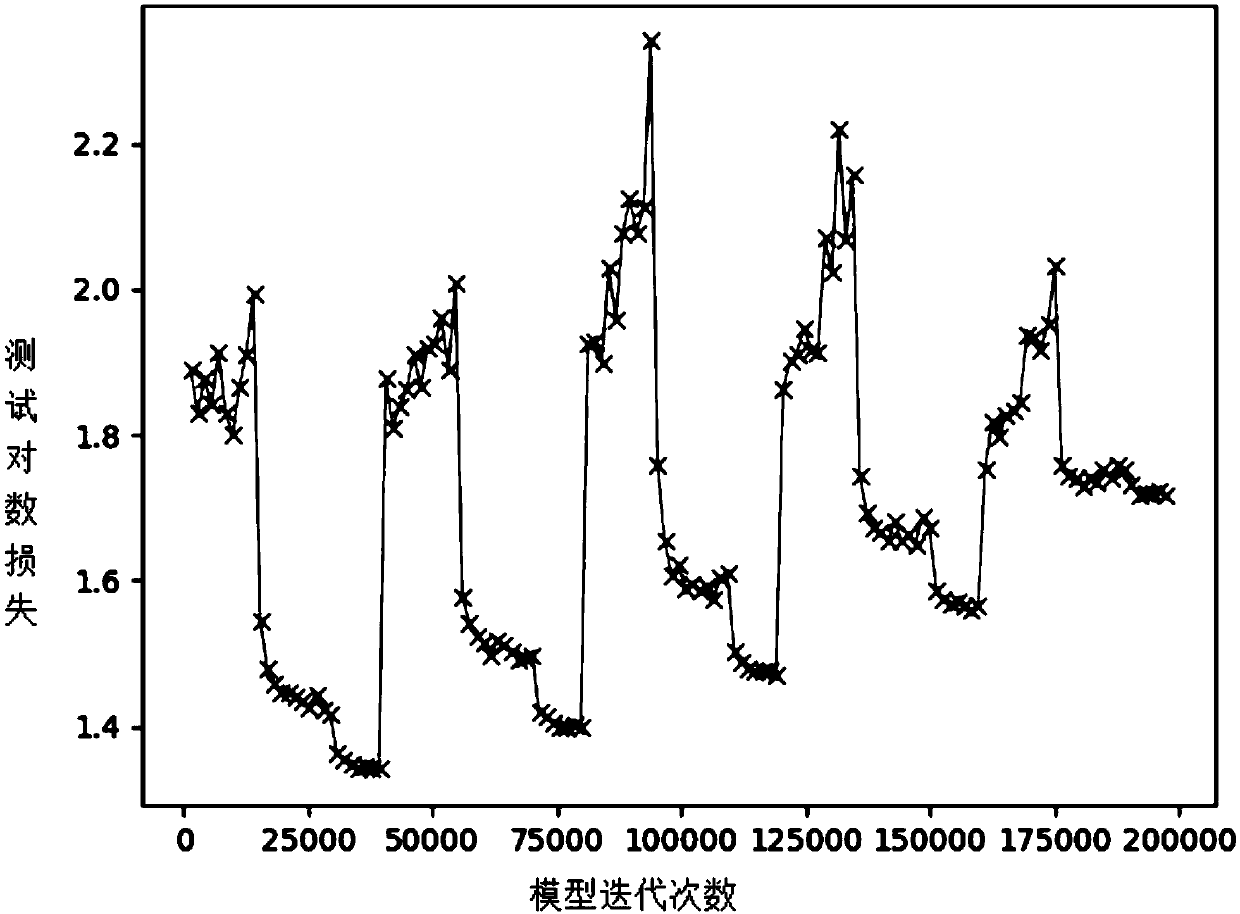

Adaptive iterative convolution neural network model compression method

An adaptive iterative convolutional neural network model compression method includes: preprocessing the training data; training the convolutional neural network with the training data and selecting the best model as the model to be compressed; compressing that model with the adaptive iterative compression method; evaluating the compressed model; and selecting the best result as the final compressed model. Its advantages are self-adaptive adjustment of the quantization ratio, fewer parameters, and adaptive iterative compression that improves the accuracy of the compressed model. The invention supports compression of common convolutional neural network models to a specified number of bits as required, so it can compress such models efficiently and deploy them on mobile devices.

Owner:SOUTH CHINA UNIV OF TECH

Image processing method and device based on convolutional neural network model

Active | CN110363279A | Reduce mistakes | High precision | Geometric image transformation | Neural architectures | Imaging processing | Algorithm

The invention provides an image processing method and device based on a convolutional neural network model. The method comprises: obtaining a first weight parameter set corresponding to a neural network layer, where the set contains N1 first weight parameters and N1 is an integer greater than or equal to 1; dividing each of the N1 first weight parameters by a first value m to obtain N1 second weight parameters, where m is at least |Wmax| and at most 2|Wmax|, and Wmax is the weight parameter with the largest absolute value in the first weight parameter set; quantizing each of the N1 second weight parameters into a sum of at least two powers of 2 of the form 2^Q, where Q is an integer less than or equal to 0, to obtain N1 third weight parameters; obtaining an image to be processed; and processing that image according to the N1 third weight parameters to obtain an output image. The invention reduces the error introduced by weight quantization and therefore the precision loss.

Owner:HUAWEI TECH CO LTD
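
The normalize-then-quantize step of CN110363279A can be sketched as follows. This sketch makes three assumptions: m is taken as |Wmax| (the smallest value the claimed range allows), each third weight uses exactly two power-of-two terms with a hypothetical lower exponent limit `q_min`, and the nearest candidate is found by exhaustive search.

```python
import itertools
import math

# Minimal sketch of the power-of-two weight quantization. Assumptions: m is
# taken as |Wmax| (the smallest value the claimed range allows), each third
# weight is exactly two power-of-two terms with q_min <= Q <= 0, and the
# nearest candidate is found by exhaustive search.

def pow2_pair_values(q_min=-7):
    """All magnitudes 2**a + 2**b with q_min <= a, b <= 0."""
    exps = range(q_min, 1)
    return sorted({2.0 ** a + 2.0 ** b
                   for a, b in itertools.combinations_with_replacement(exps, 2)})


def quantize_layer(weights, q_min=-7):
    m = max(abs(w) for w in weights)           # first value m (assumed equal to |Wmax|)
    candidates = pow2_pair_values(q_min)
    third = []
    for w in weights:
        x = w / m                              # second weight parameter, in [-1, 1]
        mag = min(candidates, key=lambda c: abs(abs(x) - c))
        third.append(math.copysign(mag, x))    # third weight: +/-(2**a + 2**b)
    return m, third


m, q_weights = quantize_layer([0.8, -0.4, 0.1, -0.75])
print(m, q_weights)
```

Each third weight has at most two set bits, so multiplying an activation by it takes two shifts and one add, and the common factor m can be applied once per output. Because every value must be a sum of two terms, an exactly-zero weight maps to the smallest candidate (2^-6 here); handling zeros separately would be a further design choice.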

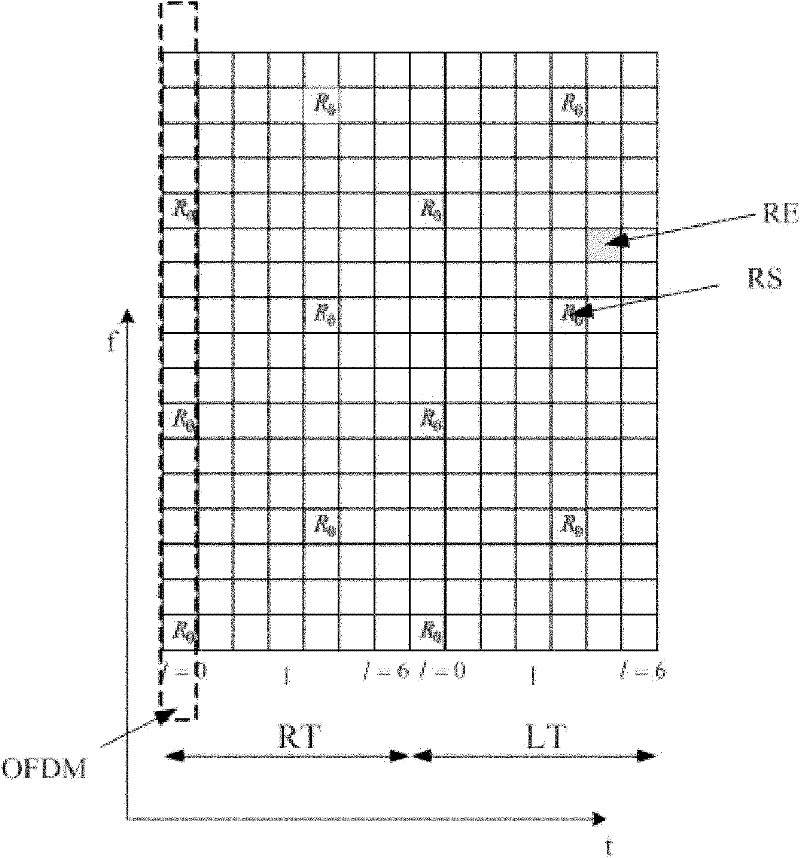

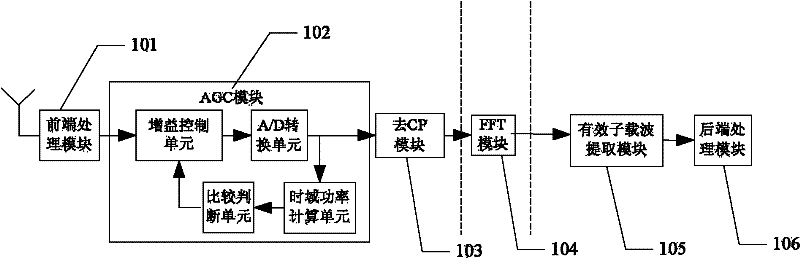

Automatic gain control method and device

Active | CN102546505A | Reduce precision loss | Power management | Multi-frequency code systems | Time domain | Carrier signal

The invention discloses an automatic gain control method and device. The method comprises: receiving an OFDM (orthogonal frequency division multiplexing) time-domain signal, converting it to baseband for processing, and transforming it to the frequency domain to obtain the corresponding OFDM frequency-domain signal; extracting the effective subcarriers of the frequency-domain signal; computing the ratio of the received power on the virtual-subcarrier part to the received power on the effective-subcarrier part; and shifting the data on the effective subcarriers left when the power ratio is greater than a preset threshold, while leaving it unshifted otherwise. The method and device provide automatic gain control when interference on the virtual subcarriers is small, and amplify the data on the effective subcarriers when that interference is large. This reduces the precision loss of subsequent processing, avoids degrading subsequent demodulation and the overall performance of the receiver, and gives a better automatic gain control effect.

Owner:DATANG MOBILE COMM EQUIP CO LTD
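
The decision rule of CN102546505A (power ratio of virtual to effective subcarriers, then a conditional left shift) can be sketched as below. Assumptions: the input is already at baseband, the effective-subcarrier indices are known, the powers are per-subcarrier averages, the fixed-point data are integer I/Q pairs, and `shift_bits` is a hypothetical parameter. A plain DFT stands in for the receiver's FFT.

```python
import cmath

# Minimal sketch of the decision rule: compare average power on the virtual
# (unused) subcarriers with average power on the effective subcarriers and,
# if the ratio exceeds a threshold, left-shift the fixed-point data on the
# effective subcarriers. Assumptions: baseband input, known effective-
# subcarrier indices, per-subcarrier average powers, integer I/Q data, and a
# hypothetical shift amount `shift_bits`.

def dft(samples):
    """Plain O(N^2) DFT, standing in for the receiver's FFT."""
    n = len(samples)
    return [sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def agc_shift(freq_bins, effective_idx, threshold, shift_bits=2):
    eff = set(effective_idx)
    virt = [k for k in range(len(freq_bins)) if k not in eff]
    p_eff = sum(abs(freq_bins[k]) ** 2 for k in eff) / len(eff)
    p_virt = sum(abs(freq_bins[k]) ** 2 for k in virt) / len(virt)
    ratio = p_virt / p_eff if p_eff > 0 else float("inf")
    # Integer I/Q samples on the effective subcarriers (fixed-point view).
    data = [(round(freq_bins[k].real), round(freq_bins[k].imag)) for k in effective_idx]
    if ratio > threshold:
        # Strong energy on the virtual subcarriers: amplify the wanted data by 2**shift_bits.
        data = [(i << shift_bits, q << shift_bits) for i, q in data]
    return ratio, data


# Interference on virtual subcarriers 0, 5, 6, 7 is 4x the effective-subcarrier power.
bins = [20 + 0j, 10 + 0j, 10 + 0j, 10 + 0j, 10 + 0j, 20 + 0j, 20 + 0j, 20 + 0j]
ratio, data = agc_shift(bins, [1, 2, 3, 4], threshold=1.0)
print(ratio, data)
```

The intuition is that a time-domain AGC scales the whole signal by its total power; when most of that power sits on the virtual subcarriers, the wanted data end up small in fixed point, and a left shift restores their dynamic range before demodulation.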

Self-lubricating aluminum-based composite material and method for preparing same

Inactive | CN101328553A | Control volume fraction | Reduce volume fraction | Frictional coefficient | Boron nitride

The invention provides a self-lubricating aluminum-based composite material and a method for preparing it. It addresses the problems of existing self-lubricating aluminum-based composites: demanding equipment requirements, high cost, poor material performance, and difficult secondary forming and machining. The material is prepared from titanium diboride reinforcing particles, aluminum or aluminum alloy particles, boron nitride particles, and a matrix aluminum alloy. The preparation method comprises the following steps: 1. weighing the raw materials according to their volume ratio; 2. mixing all materials except the matrix aluminum alloy and pre-forming them into a preform, which is placed in a mould and heated; 3. heating the aluminum alloy until it melts; 4. casting the molten aluminum alloy into the mould for pressure infiltration; and 5. cooling and demoulding to obtain the composite material. The material has good strength and elastic modulus, a low friction coefficient, high corrosion resistance, and good plasticity, and it can be hot-formed. The preparation method is simple, easy to operate, and easy to control.

Owner:HARBIN INST OF TECH

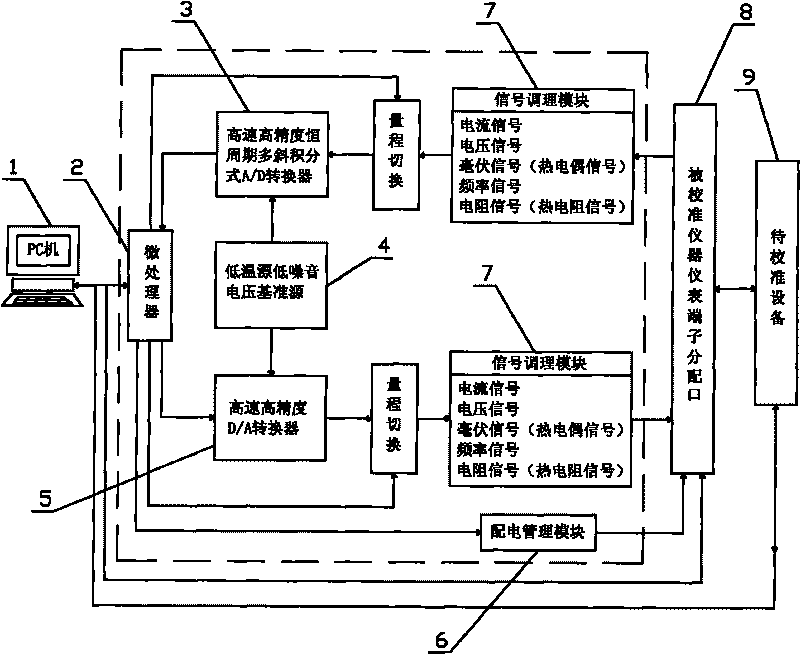

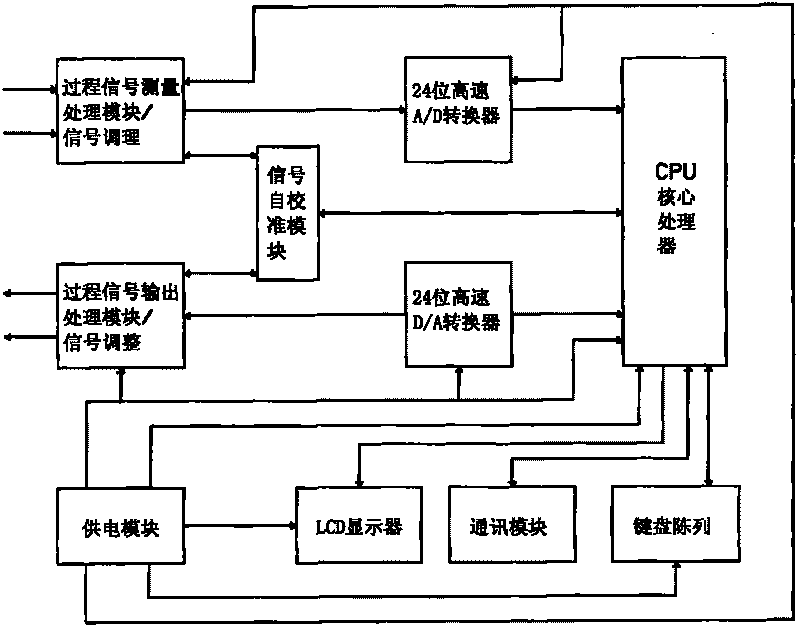

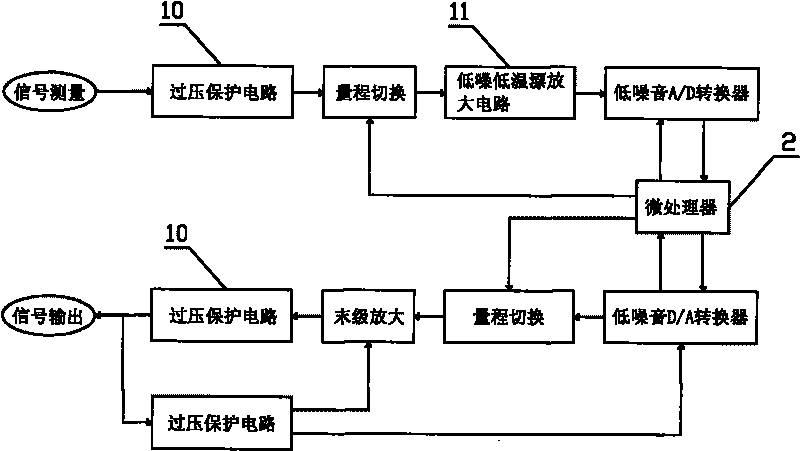

High-speed high-precision process parameter online calibration tester

Inactive | CN101738947A | Fast conversion | Reduce precision loss | Programme control in sequence/logic controllers | Low noise | Measuring instrument

The invention relates to a measuring instrument for automation meters and discloses a high-speed, high-precision online calibration tester for process parameters. The tester comprises a microprocessor, connected to a PC for data communication, and a measuring module, an output module, a power distribution management module, and a signal conditioning module connected to the microprocessor. The measuring module includes a high-speed, high-precision constant-cycle multi-slope integrating A/D converter, and the output module includes a high-speed, high-precision A/D converter; a low-temperature-drift, low-noise voltage reference is connected between the two converters. The tester is mainly used to measure industrial automatic control systems (DCS and PLC) and other automation meters and to perform automatic high-precision calibration tests on their output signals. It can be used in product research and development and in quality inspection and control on production lines, and is widely applicable to the maintenance and calibration of apparatus and instruments in production processes in the electric power, petrochemical, metallurgical, and other industries.

Owner:杭州精久科技有限公司

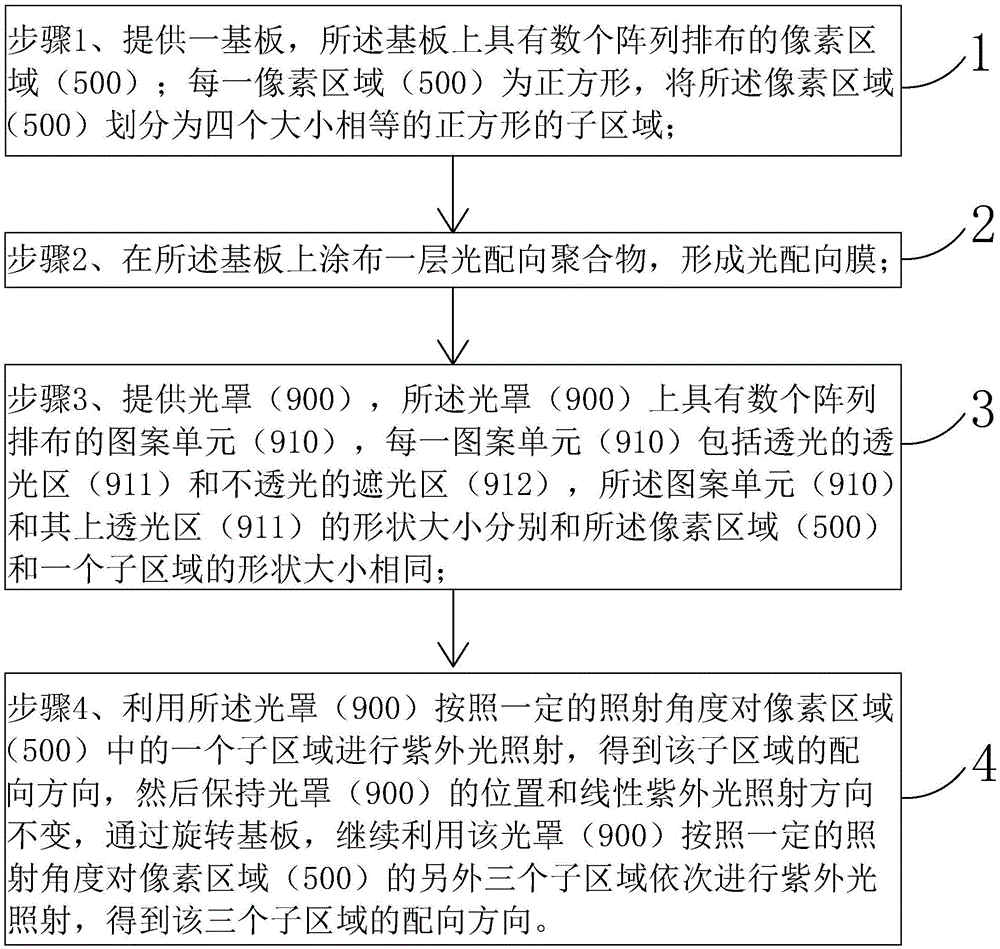

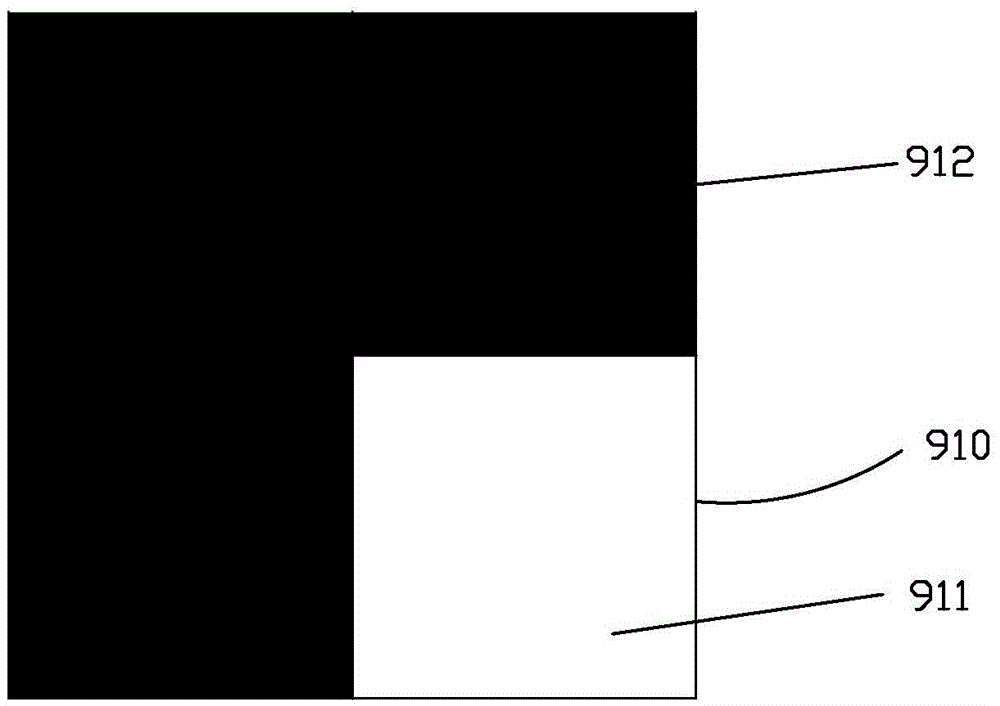

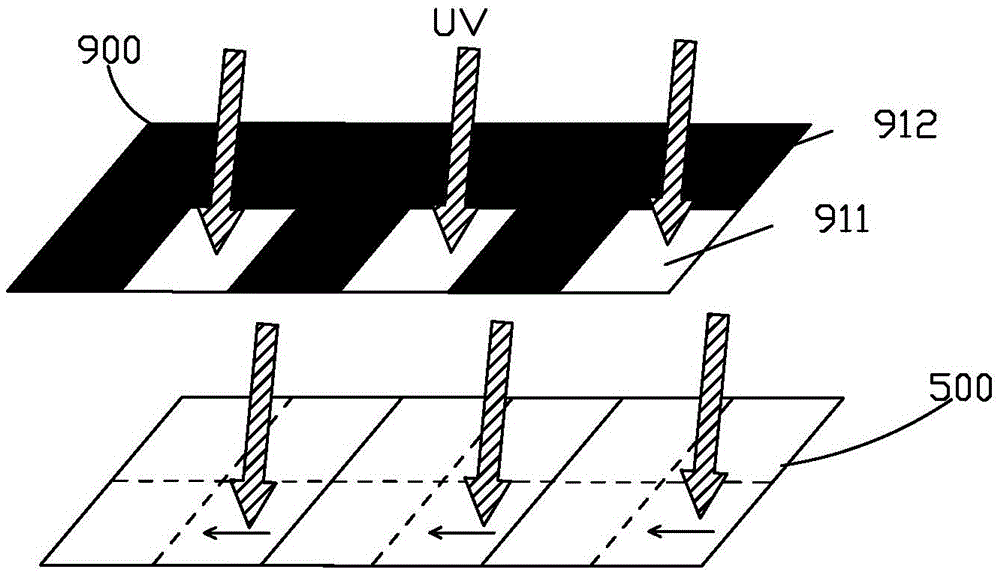

Vertical photo-alignment method and liquid crystal display panel manufacturing method

Active | CN105487301A | Increase contrast | Improve resolution | Non-linear optics | Liquid-crystal display | Ultraviolet lights

The invention provides a vertical photo-alignment method and a method for manufacturing a liquid crystal display panel. In the photo-alignment method, each pixel area is square and divided into four sub-areas of equal size. Each pattern unit of the photomask that corresponds to a pixel area comprises a light-transmitting area and a shading area, and the light-transmitting area has the same shape and size as one sub-area of the pixel area. First, the photomask is used to irradiate one sub-area of each pixel area with ultraviolet light at a given irradiation angle, which sets the photo-alignment direction of that sub-area. Then, with the photomask position and the direction of the linear ultraviolet light held fixed, the substrate is rotated and the other three sub-areas are irradiated in turn, so that the four sub-areas of each pixel area have four different photo-alignment directions. The methods offer high photo-alignment precision, simplicity, and low cost.

Owner:TCL CHINA STAR OPTOELECTRONICS TECH CO LTD

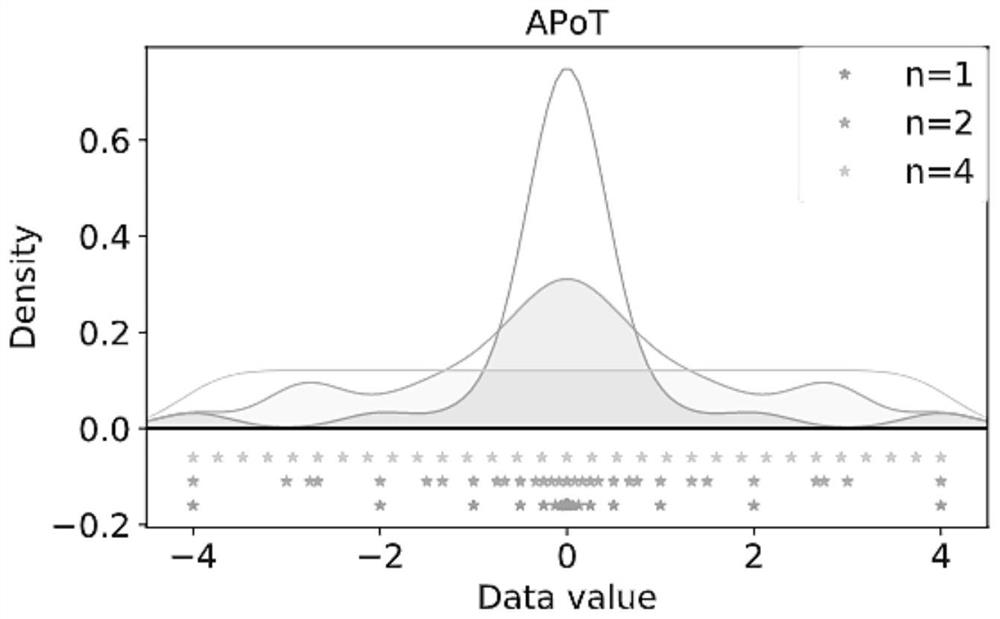

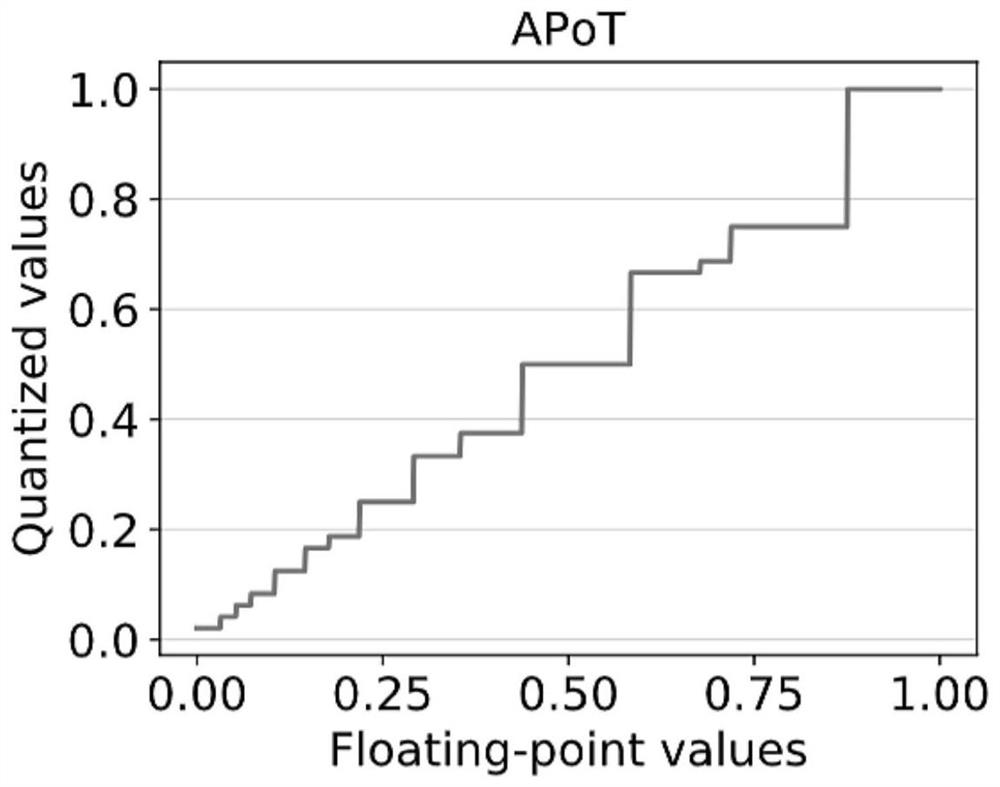

Deep neural network quantification method based on elastic significant bits

Active | CN111768002A | Reduce precision loss | Improve efficiency | Physical realisation | Neural learning methods | Algorithm | Original data

The invention provides a deep neural network quantization method based on elastic significant bits. A fixed-point or floating-point number is quantized into a value with an elastic number of significant bits, the redundant mantissa bits are discarded, and the difference between the distribution of the quantized values and that of the original data is evaluated quantitatively in a computationally feasible way. By varying the number of significant bits, the distribution of the quantized values can cover a range of bell-shaped distributions, from long-tailed to uniform, to match the weight and activation distributions of DNNs, which keeps the precision loss low. Multiplication can be implemented in hardware as a few shift-and-add operations, which improves the overall efficiency of the quantized model. The distribution-difference function quantitatively estimates the quantization loss of different quantization schemes, so the best scheme can be selected under different conditions, achieving low quantization loss and improving the precision of the quantized model.

Owner:NANKAI UNIV
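
The core idea of CN111768002A, keeping only a few leading significant bits so that a multiply becomes a few shift-and-add steps, can be sketched as follows. The round-to-nearest rule and the plain mean squared error used to compare settings are assumptions; the patent's own distribution-difference function is not given in the abstract.

```python
import math

# Minimal sketch of "elastic significant bits": keep only the leading
# `sig_bits` binary digits of a value (rounding to nearest) and drop the rest
# of the mantissa. A product with such a value then needs at most `sig_bits`
# shift-and-add steps. The rounding rule and the MSE used to compare
# settings are assumptions, not the patent's exact definitions.

def esb_quantize(x, sig_bits):
    if x == 0:
        return 0.0
    e = math.floor(math.log2(abs(x)))        # exponent of the leading 1 bit
    step = 2.0 ** (e - sig_bits + 1)         # weight of the last kept bit
    return math.copysign(round(abs(x) / step) * step, x)


def pow2_terms(r):
    """Exponents of the set bits of a non-negative dyadic value r."""
    exps = []
    while r > 0:
        e = math.floor(math.log2(r))
        exps.append(e)
        r -= 2.0 ** e
    return exps


def shift_add_multiply(a, q):
    """a * q using one scaling by 2**e (a shift in hardware) per set bit of q."""
    sign = -1 if q < 0 else 1
    return sign * sum(math.ldexp(a, e) for e in pow2_terms(abs(q)))


def mse(values, sig_bits):
    """Simple stand-in for a distribution-difference measure."""
    return sum((v - esb_quantize(v, sig_bits)) ** 2 for v in values) / len(values)


q = esb_quantize(0.8125, 2)                  # 0.8125 = 0b0.1101 -> 0b0.11 = 0.75
print(q, pow2_terms(q), shift_add_multiply(3, q))
```

Fewer significant bits concentrate the representable values near powers of two (suiting long-tailed distributions), while more bits spread them toward uniform spacing; comparing an error measure across settings is how one would pick among them.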

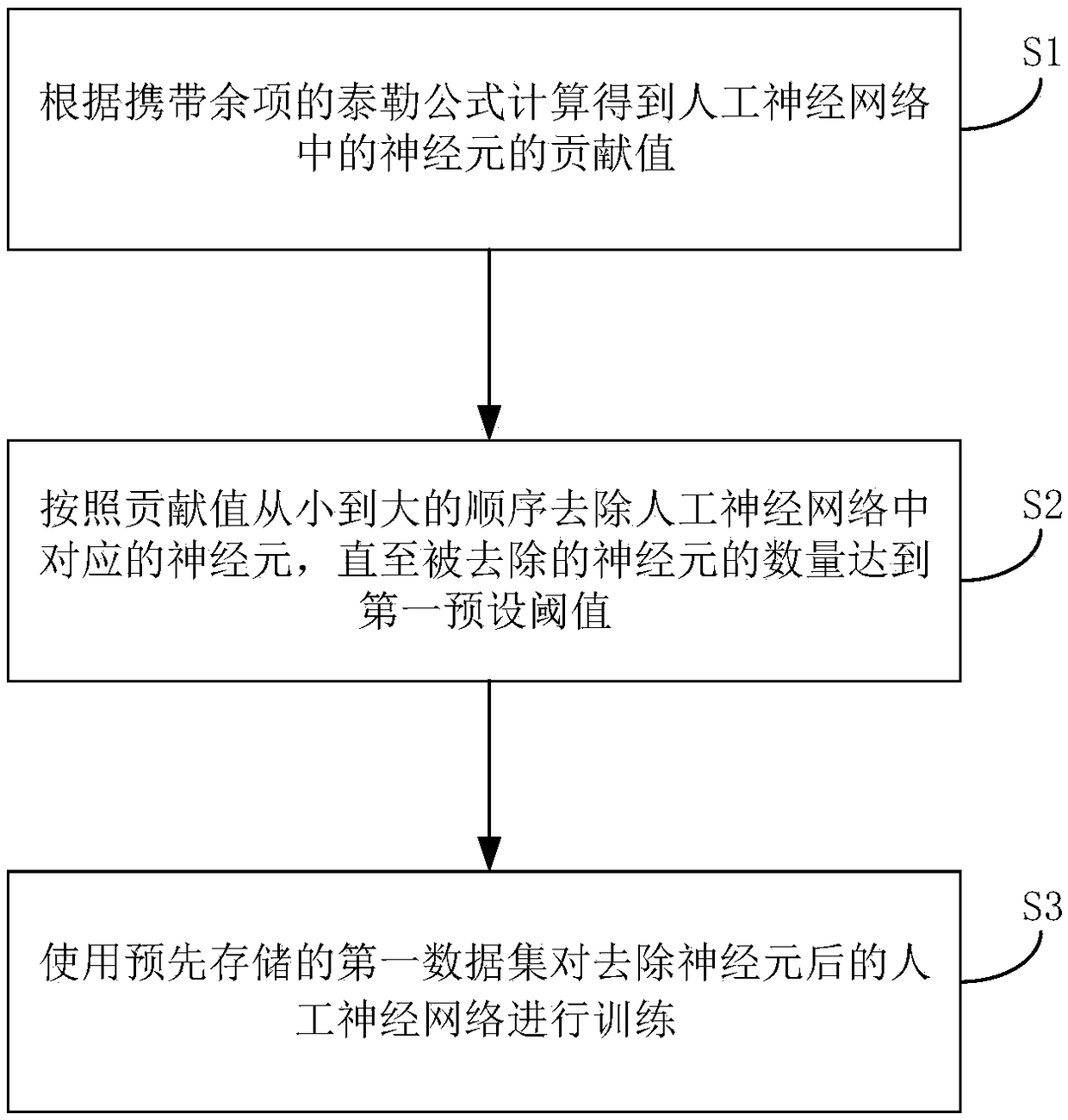

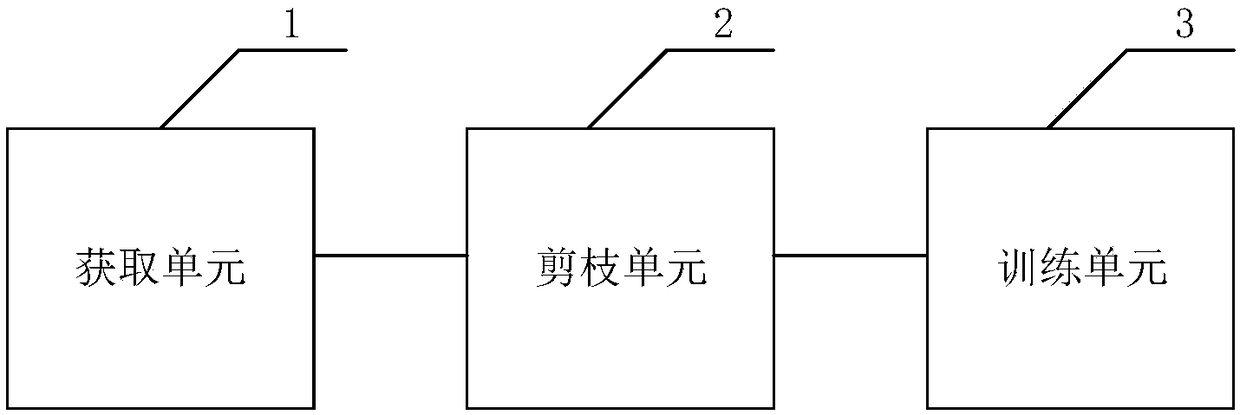

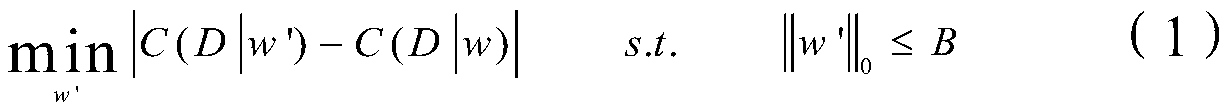

Pruning method, device and apparatus of artificial neural network and readable storage medium

Inactive | CN108154232A | Processing speed | Reduce precision loss | Physical realisation | Neural learning methods | Data set | Algorithm

The invention discloses a pruning method, device, and apparatus for an artificial neural network, and a readable storage medium. The pruning method comprises: computing contribution values of the neurons in the artificial neural network with Taylor's formula including the remainder term; removing neurons from the network in order of their contribution values until the number of removed neurons reaches a first preset threshold; and retraining the pruned network on a pre-stored first dataset. Contribution values computed with Taylor's formula including the remainder are closer to the true contributions than those computed with the remainder-free Taylor formula of the prior art, so pruning by these values yields a more precise network with reduced precision loss.

Owner:厦门熵基科技有限公司
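
The difference between first-order and remainder-carrying Taylor contributions in CN108154232A can be illustrated on a toy loss. This sketch assumes that "Taylor's formula with the remainder" means keeping the second-order term with a diagonal Hessian; the patent abstract does not define the remainder, and the retraining step is omitted.

```python
# Minimal sketch of Taylor-based neuron contributions. Assumption: "Taylor's
# formula with the remainder" is modelled here as keeping the second-order
# term with a diagonal Hessian, so the estimated change in loss when a
# neuron's activation a is set to 0 is  L(0) - L(a) ~ -g*a + 0.5*h*a**2.

def taylor_contributions(acts, grads, hess_diag, second_order=True):
    contribs = []
    for a, g, h in zip(acts, grads, hess_diag):
        delta = -g * a                       # first-order term
        if second_order:
            delta += 0.5 * h * a * a         # second-order (remainder) term
        contribs.append(abs(delta))
    return contribs


def neurons_to_prune(contribs, max_removed):
    """Indices of the `max_removed` neurons with the smallest contributions."""
    order = sorted(range(len(contribs)), key=lambda i: contribs[i])
    return sorted(order[:max_removed])


# Toy loss L(a) = sum((a_i - t_i)**2): g_i = 2*(a_i - t_i), h_i = 2, and the
# second-order expansion is exact, so the example can be checked by hand.
acts, targets = [1.0, 0.5, 2.0], [1.0, 0.0, 1.5]
grads = [2 * (a - t) for a, t in zip(acts, targets)]
hess = [2.0] * len(acts)
with_rem = taylor_contributions(acts, grads, hess)
first_only = taylor_contributions(acts, grads, hess, second_order=False)
print(neurons_to_prune(with_rem, 1), neurons_to_prune(first_only, 1))
```

In this toy case neuron 0 has zero gradient, so the first-order criterion rates it as free to remove, even though removing it raises the loss by 1; the second-order term exposes that cost and the pruning choice moves to neuron 1.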

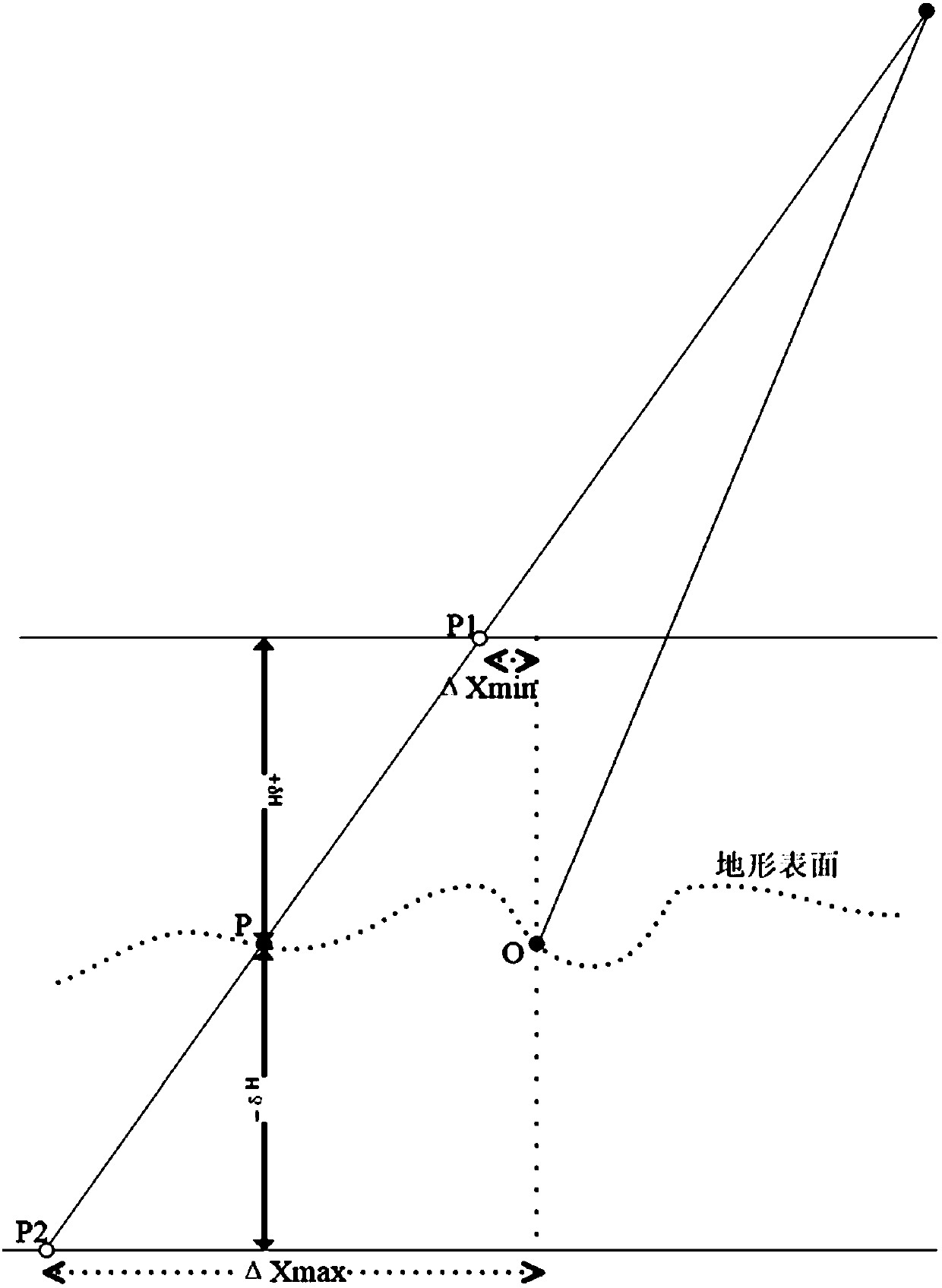

Target positioning method and system under large-tilt remote observation environment

Active | CN107656286A | Reduce precision loss | Improve applicability | Photogrammetry/videogrammetry | Electromagnetic wave reradiation | Laser ranging | Virtual control

The invention provides a target positioning method and system for large-tilt, long-range observation, which fuse video images with laser ranging information. The method comprises: preparing the data; coarsely positioning the target with the laser ranging information as a constraint, determining the coordinate extrapolation tolerance range of ground feature points, and correcting their initial coordinates; performing GPS-aided bundle block adjustment with virtual control point constraints, taking the target point as a virtual control point; and solving forward-intersection target positioning by nonlinear iteration, with the coarse positioning result as the initial value and the acquired image positions and attitudes as known conditions, repeating these steps to improve positioning accuracy. The method combines the strengths of optical sensors and laser devices in information acquisition, meets the requirements of both single-target laser positioning and multi-target positioning from optical video images, and greatly improves target positioning accuracy.

Owner:WUHAN UNIV

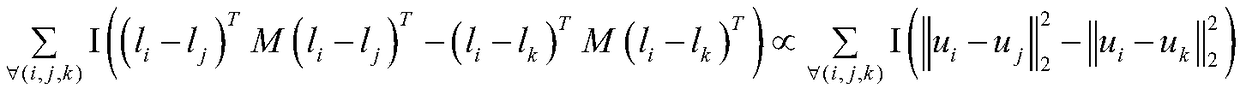

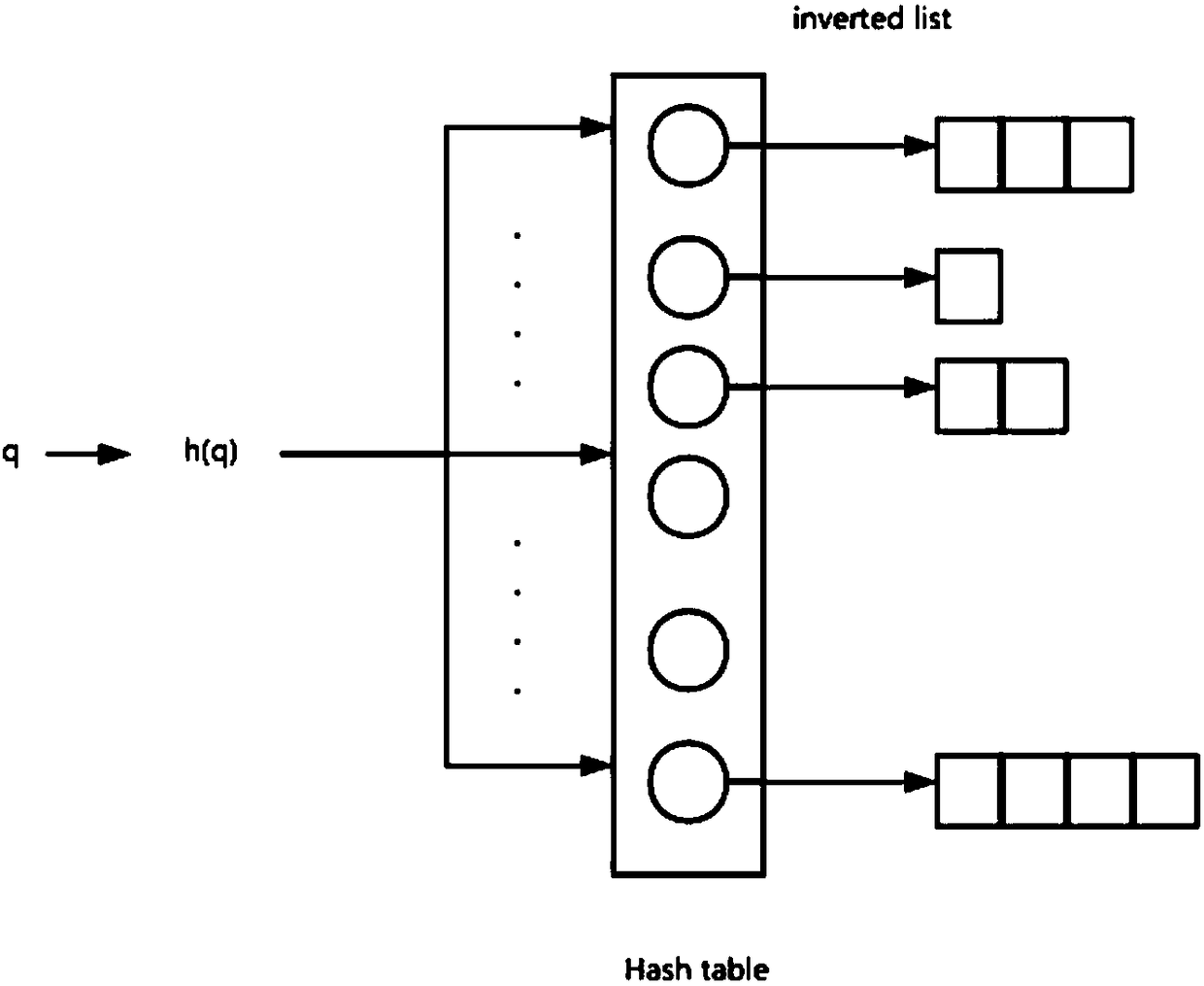

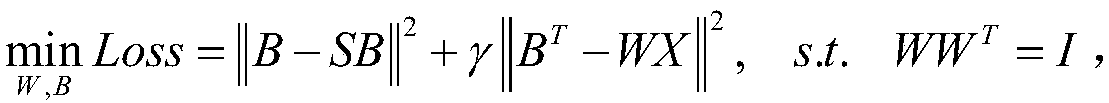

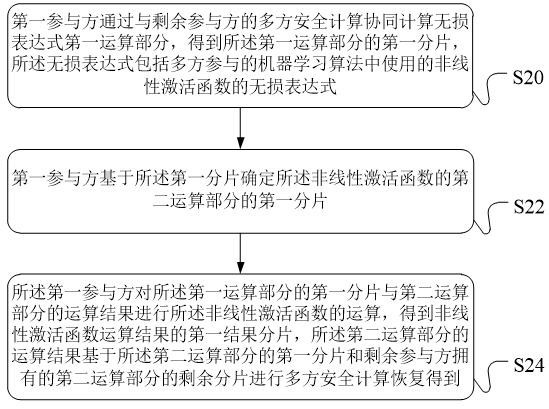

Sequence constrained hashing algorithm in image retrieval

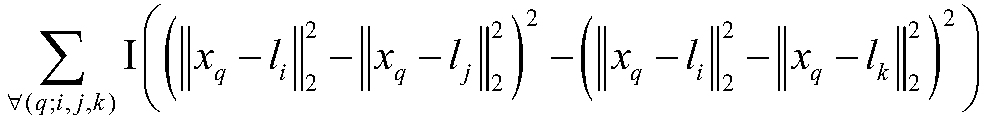

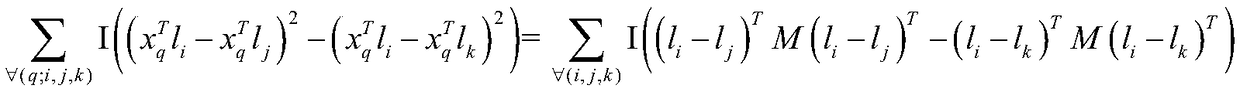

Inactive | CN109145143A | Adapt to flow pattern distribution | Reduce precision loss | Character and pattern recognition | Metadata still image retrieval | Algorithm | Hamming space

A sequence-constrained hashing algorithm for image retrieval addresses two problems. First, when the model is trained, relaxing the original problem usually causes considerable precision loss, because the model is typically learned and optimized in real-valued space. Second, previous hashing algorithms preserve only the point-to-point relationships of the original data in Hamming space and ignore the nature of the retrieval task, namely ranking. To handle large-scale image search, obtain more accurate ranking results with binary codes, and improve the usability of the model, the algorithm can handle image search in different feature metric spaces.

Owner:XIAMEN UNIV

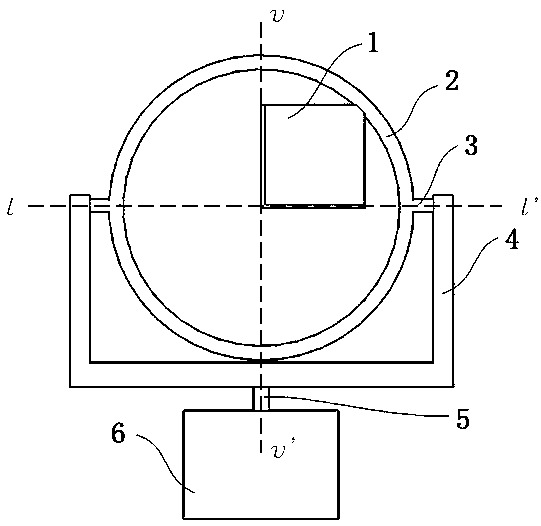

Novel target used for point cloud registration, and extraction algorithm thereof

Pending | CN109323656A | Flexible and simple layout | Improve registration accuracy | Image enhancement | Image analysis | Laser scanning | Exceptional point

The invention discloses a novel target for point cloud registration and an extraction algorithm for it. The target comprises at least three reflection boards that intersect at a fixed point; the boards are fixed in a bracket whose two sides are rotatably connected, through a bracket spindle, to the two ends of a bracket support seat, and the support seat is connected to a base through a connecting column. The intersection of the central axis (vv') of the connecting column with the central axis (ll') of the bracket spindle coincides with the fixed intersection point of the boards. The extraction algorithm fits planes to the point cloud data, removes exceptional points with excessive deviation, and then combines the plane equations of the boards to solve for the fixed intersection point. The target can be laid out flexibly and simply, suits observation from any direction, can be placed on the ground and other planes, and provides a large scanning range. Even when the target is far from the laser scanner, the accuracy loss is small, which helps improve the registration accuracy of the whole survey area.

Owner:SHANGHAI GEOTECHN INVESTIGATIONS & DESIGN INST
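
The extraction algorithm of CN109323656A (plane fitting, removing exceptional points, intersecting the plane equations) can be sketched in pure Python. Assumptions: total-least-squares plane fitting via the smallest covariance eigenvector (found by power iteration), removal of the single worst point until every deviation is within a fixed tolerance `max_dev`, and exactly three boards. None of these details come from the patent.

```python
import math

# Minimal sketch of the extraction steps: fit a plane to each board's points,
# drop the point with the largest deviation until all deviations are within a
# tolerance, then intersect three plane equations. Assumptions: total-least-
# squares fitting, a fixed tolerance `max_dev`, and exactly three boards.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def fit_plane(points, iters=200):
    """Plane n.p = d whose normal n is the covariance eigenvector with the smallest eigenvalue."""
    c = [sum(p[i] for p in points) / len(points) for i in range(3)]
    cov = [[sum((p[i] - c[i]) * (p[j] - c[j]) for p in points) for j in range(3)] for i in range(3)]
    shift = cov[0][0] + cov[1][1] + cov[2][2]            # >= largest eigenvalue of cov
    m = [[(shift if i == j else 0.0) - cov[i][j] for j in range(3)] for i in range(3)]
    best = None
    for start in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
        v = start
        for _ in range(iters):                           # power iteration on shift*I - cov
            w = [dot(row, v) for row in m]
            norm = math.sqrt(dot(w, w))
            v = [x / norm for x in w]
        spread = dot(v, [dot(row, v) for row in cov])    # v^T cov v: spread along v
        if best is None or spread < best[0]:
            best = (spread, v)
    n = best[1]
    return n, dot(n, c)


def fit_plane_robust(points, max_dev):
    """Refit after discarding the worst exceptional point until all deviations are small."""
    pts = list(points)
    while True:
        n, d = fit_plane(pts)
        dev = [abs(dot(n, p) - d) for p in pts]
        worst = max(range(len(pts)), key=dev.__getitem__)
        if dev[worst] <= max_dev or len(pts) <= 3:
            return n, d
        pts.pop(worst)


def det3(a):
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def intersect_planes(planes):
    """Solve n_k . p = d_k for k = 1..3 with Cramer's rule."""
    a = [n for n, _ in planes]
    b = [d for _, d in planes]
    det = det3(a)
    return [det3([[b[r] if c == col else a[r][c] for c in range(3)] for r in range(3)]) / det
            for col in range(3)]


grid = [(u, v) for u in range(4) for v in range(4)]
board_x = [(1.0, u, v) for u, v in grid] + [(1.8, 1.0, 1.0)]   # one exceptional point
board_y = [(u, 2.0, v) for u, v in grid]
board_z = [(u, v, 3.0) for u, v in grid]
planes = [fit_plane_robust(b, max_dev=0.05) for b in (board_x, board_y, board_z)]
corner = intersect_planes(planes)
print(corner)   # close to the boards' common point (1, 2, 3)
```

Removing one worst point per refit, rather than thresholding against the first fit, keeps a single large outlier from tilting the initial plane enough to reject good points.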

Neural network generation and image processing method and device, platform and electronic equipment

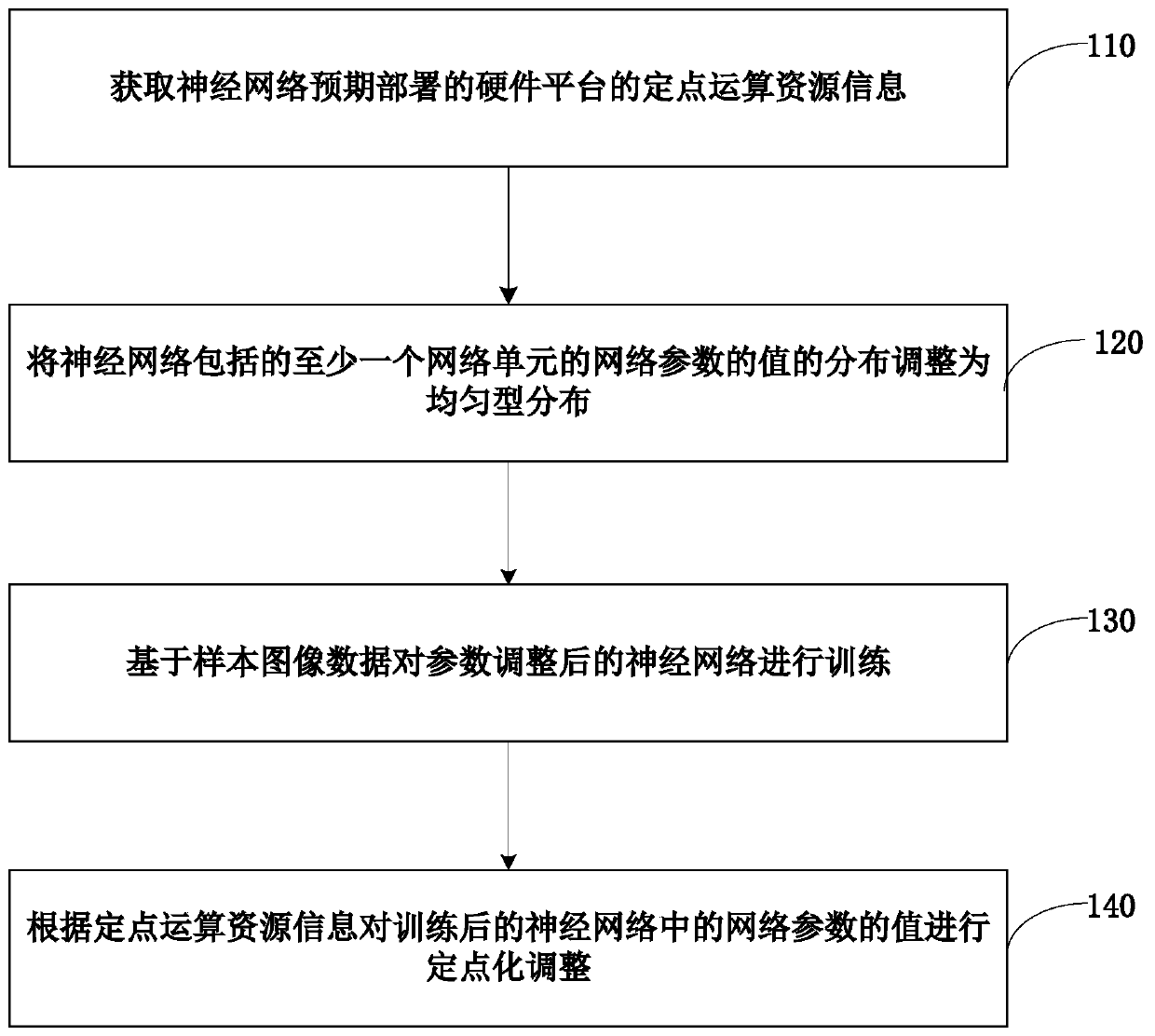

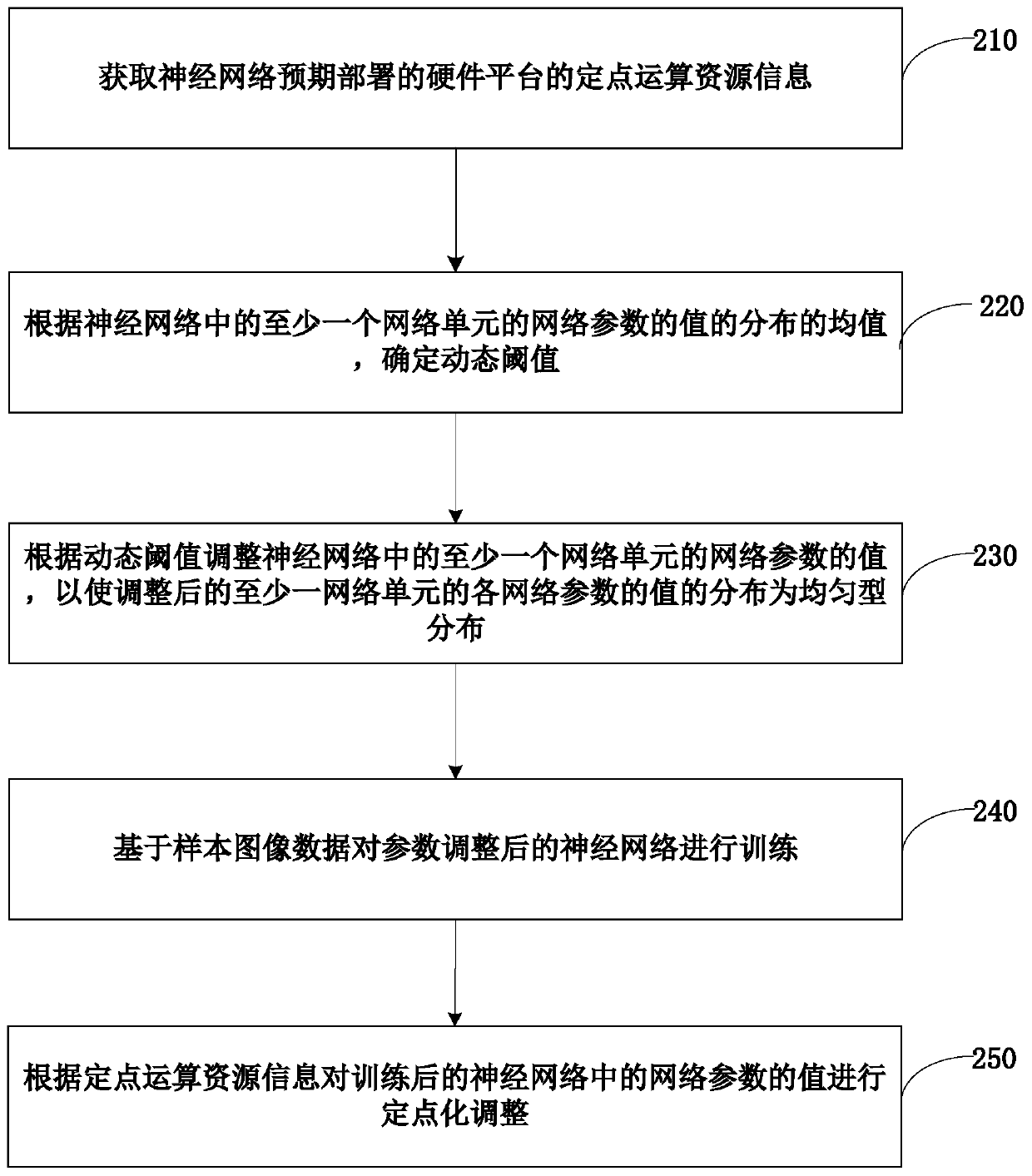

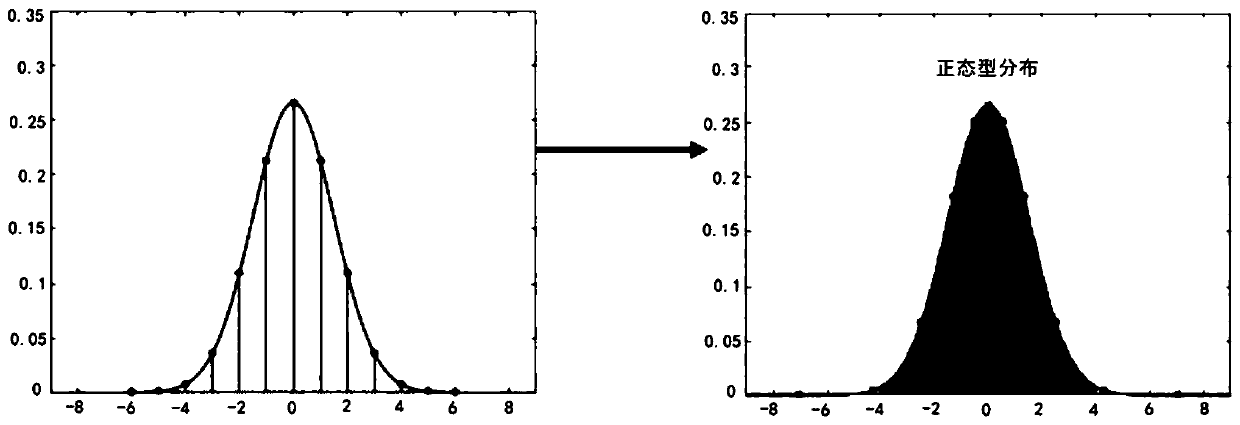

Active | CN109800865A | Reduce precision loss | Improve processing efficiency | Character and pattern recognition | Neural architectures | Imaging processing | Resource information

The embodiments of the invention disclose a neural network generation and image processing method and device, a platform, and electronic equipment. The method comprises: obtaining the fixed-point operation resource information of the hardware platform on which the neural network is to be deployed; adjusting the distribution of the network parameter values of at least one network unit of the neural network to a uniform distribution; training the adjusted neural network on sample image data; and performing fixed-point adjustment on the parameter values of the trained network according to the fixed-point operation resource information. Because uniformly distributed parameter values are better suited to fixed-point adjustment, the precision loss of converting floating-point numbers to fixed-point numbers is reduced and the precision of the fixed-point network is improved; and because the adjustment is based on the platform's fixed-point operation resource information, the adjusted network can run on platforms with limited hardware resources.

Owner:BEIJING SENSETIME TECH DEV CO LTD

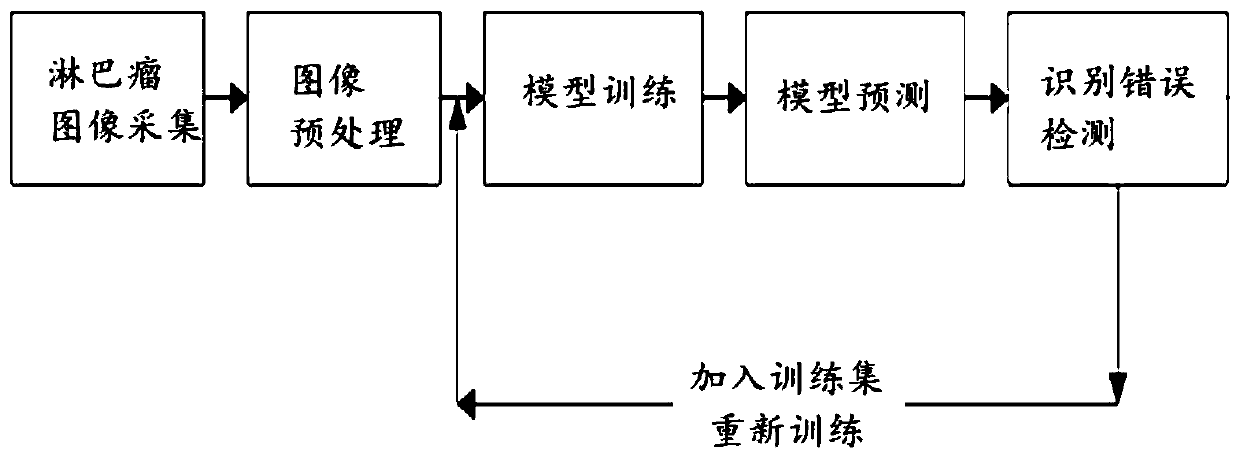

Enteroscopy lymphoma auxiliary diagnosis system based on deep learning

Inactive | CN111091559A | Reduce workload | Improve recognition accuracy | Image enhancement | Image analysis | Circumscribed lesion | Classification result

The invention discloses an enteroscopy lymphoma auxiliary diagnosis system based on deep learning. The system comprises: an image collection module, connected to the endoscope host through a capture card, that obtains each frame of image collected by the host; a training set making module that selects single-frame images with lymphoma lesions as training samples, labels the lesion areas, and generates labeling text corresponding to the labeled positions; and an auxiliary diagnosis module that builds an auxiliary diagnosis model, trains and optimizes it on the training set, and, given an image to be examined, outputs a classification of whether lymphoma lesions are present. Because lymphoma lesion areas under the enteroscope are recognized automatically by a neural network algorithm, the doctor only needs to re-examine the images flagged as containing lesions, which greatly reduces the image review workload.

Owner:SHANDONG UNIV QILU HOSPITAL +1

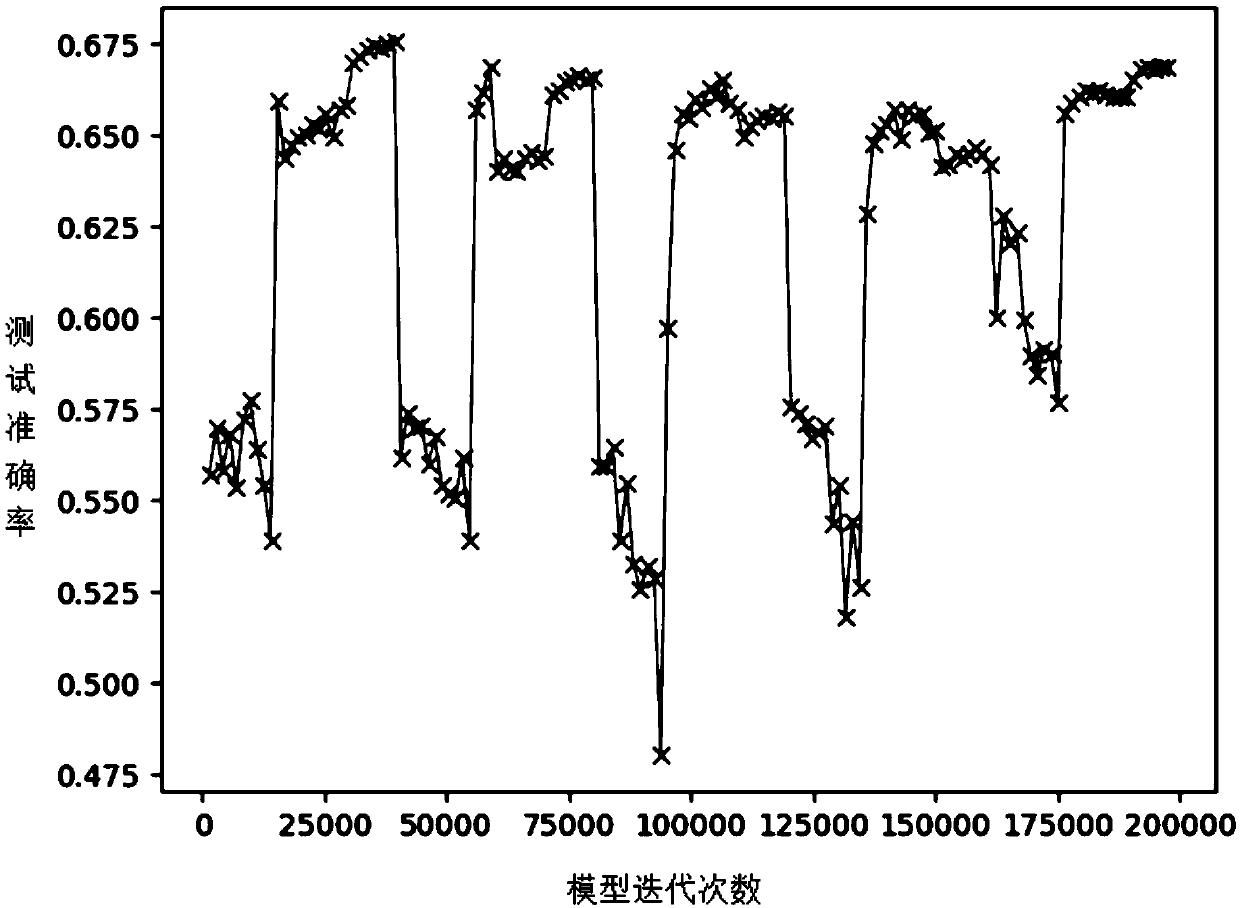

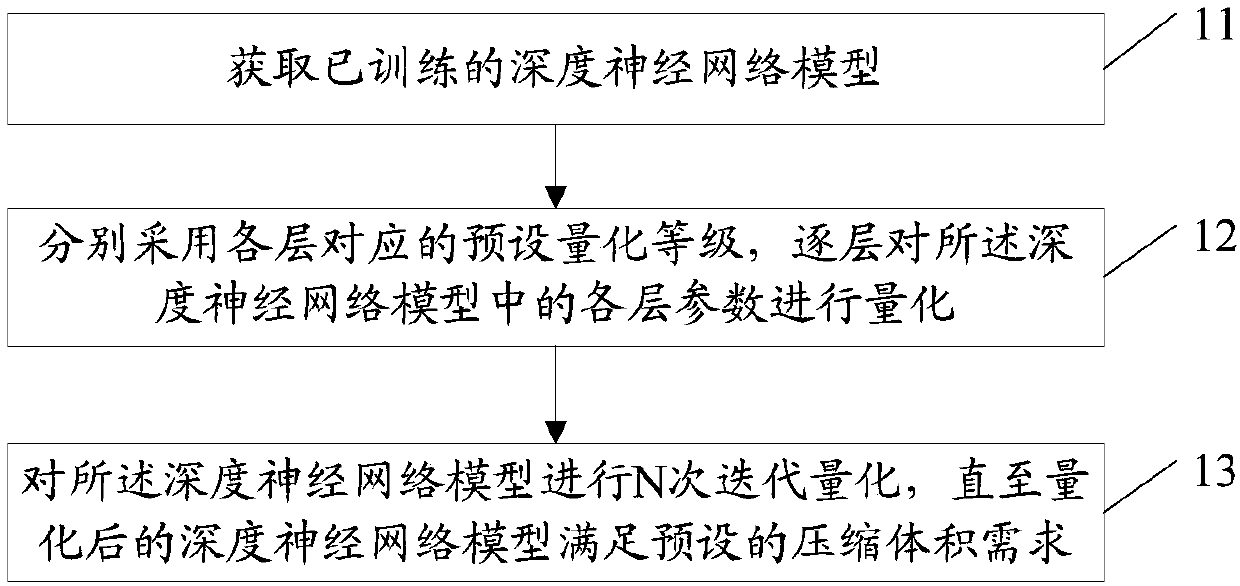

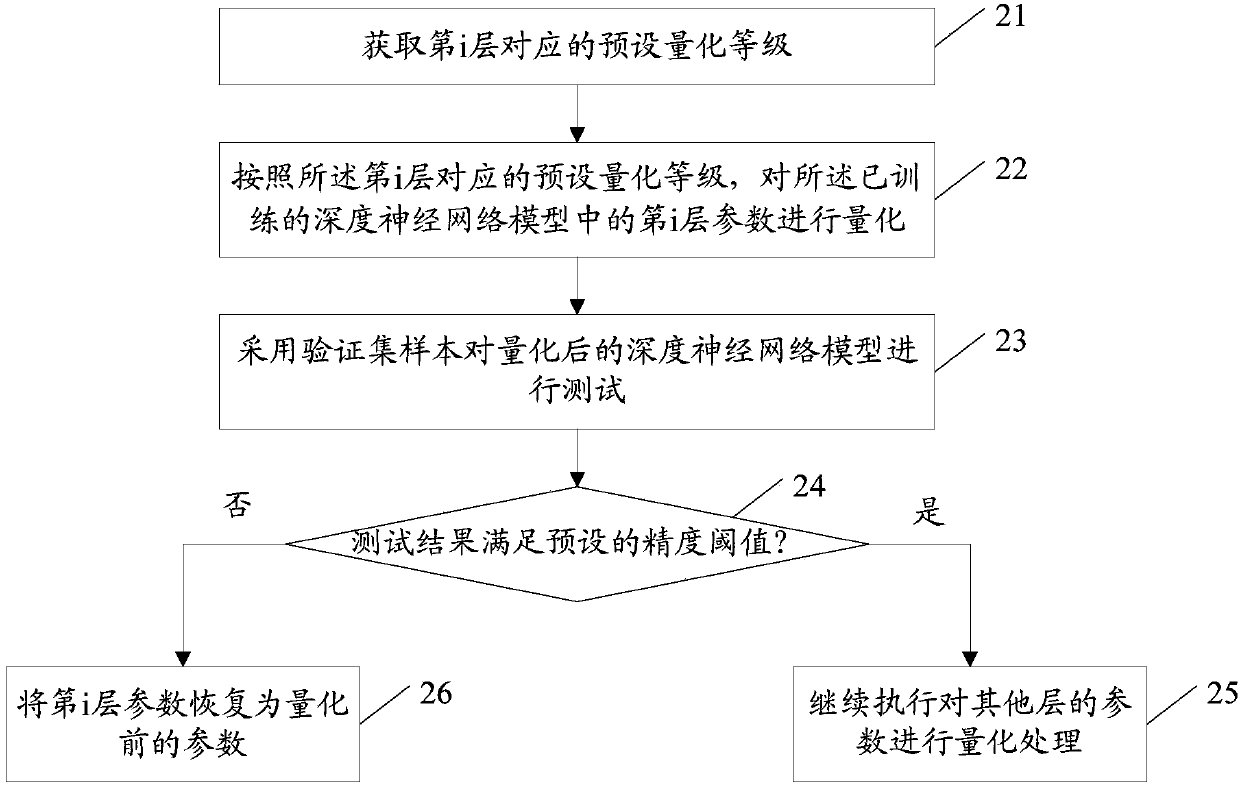

Deep neural network model-based compression method and apparatus, terminal and storage medium

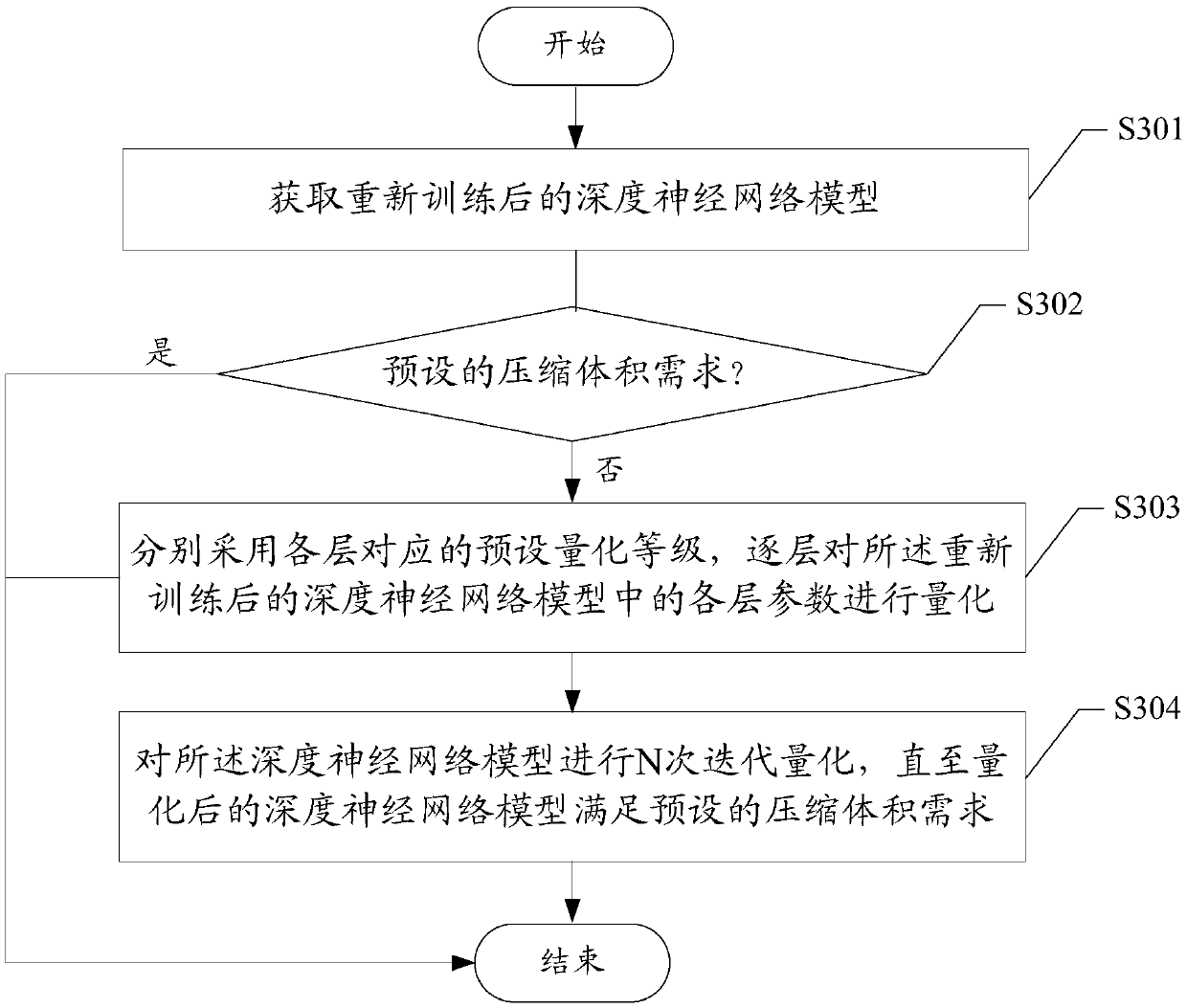

Inactive | CN108734268A | Reduce in quantity | Reduce precision loss | Neural architectures | Algorithm | Theoretical computer science

The invention discloses a compression method and apparatus for deep neural network models, a terminal, and a storage medium. The method comprises: acquiring a trained deep neural network model; and, using the preset quantization levels of each layer, iteratively quantizing the parameters of the model layer by layer until the quantized model meets a preset compression volume requirement. The parameters of the i-th layer are processed as follows: quantize them according to the preset quantization level of the i-th layer; test the quantized model on validation samples; if the test shows that the precision after quantization does not meet a preset precision threshold, restore the i-th layer's parameters to their values before quantization; and if the threshold is met, continue quantizing the parameters of the other layers. The scheme balances the precision and effectiveness of the deep neural network model during compression.

Owner:SPREADTRUM COMM (SHANGHAI) CO LTD
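
The quantize-test-rollback loop of CN108734268A can be sketched as follows. The model representation, the uniform quantizer, the bit-count size model, and the `evaluate` callback are all hypothetical stand-ins; a real implementation would run the validation set through the network.

```python
import copy
import math

# Minimal sketch of layer-by-layer quantization with rollback. Assumptions:
# a model is a dict {layer_name: list of floats}; each layer has a preset
# number of quantization levels; `evaluate` is a hypothetical callback that
# returns validation accuracy; size is counted in bits, 32 per unquantized
# parameter and ceil(log2(levels)) per quantized one.

def quantize_uniform(params, levels):
    """Snap each parameter to one of `levels` evenly spaced values in [min, max]."""
    lo, hi = min(params), max(params)
    if hi == lo:
        return list(params)
    step = (hi - lo) / (levels - 1)
    return [lo + round((p - lo) / step) * step for p in params]


def compress_layerwise(model, levels, evaluate, acc_threshold, size_target_bits):
    model = copy.deepcopy(model)
    bits = {name: 32 for name in model}

    def size():
        return sum(len(model[n]) * bits[n] for n in model)

    for name in model:                                   # layer by layer, in dict order
        if size() <= size_target_bits:                   # compression demand already met
            break
        original = model[name]
        model[name] = quantize_uniform(original, levels[name])
        if evaluate(model) < acc_threshold:
            model[name] = original                       # accuracy too low: restore this layer
        else:
            bits[name] = math.ceil(math.log2(levels[name]))
    return model, size() <= size_target_bits


model = {"conv1": [0.0, 0.35, 1.0, 0.6], "fc": [1.0, -1.0, 0.2, 0.0]}
levels = {"conv1": 2, "fc": 2}
evaluate = lambda m: 0.95 if 0.2 in m["fc"] else 0.5    # hypothetical: "fc" is sensitive
result, met = compress_layerwise(model, levels, evaluate, 0.9, 50)
print(result, met)   # conv1 quantized, fc rolled back, target not reached
```

The returned flag reports whether the size target was reached; the patent describes iterating until it is, which in this sketch would mean another pass with coarser levels or a different layer order.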

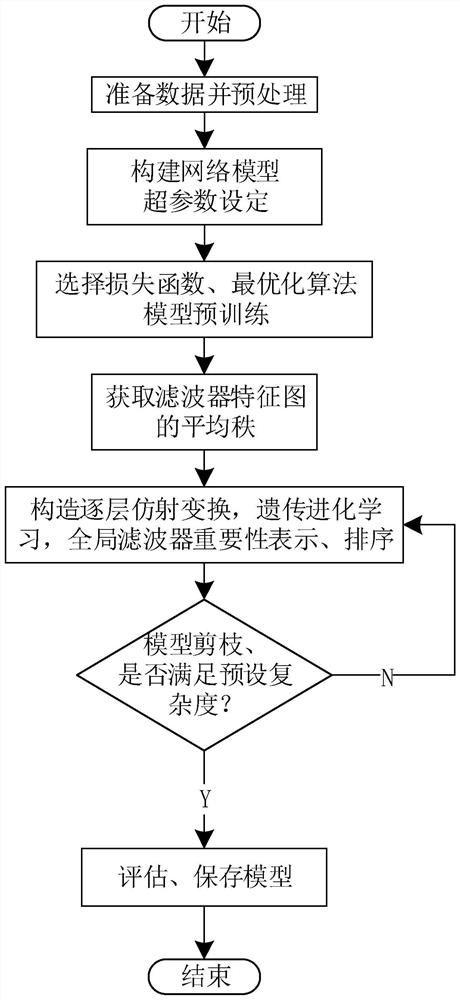

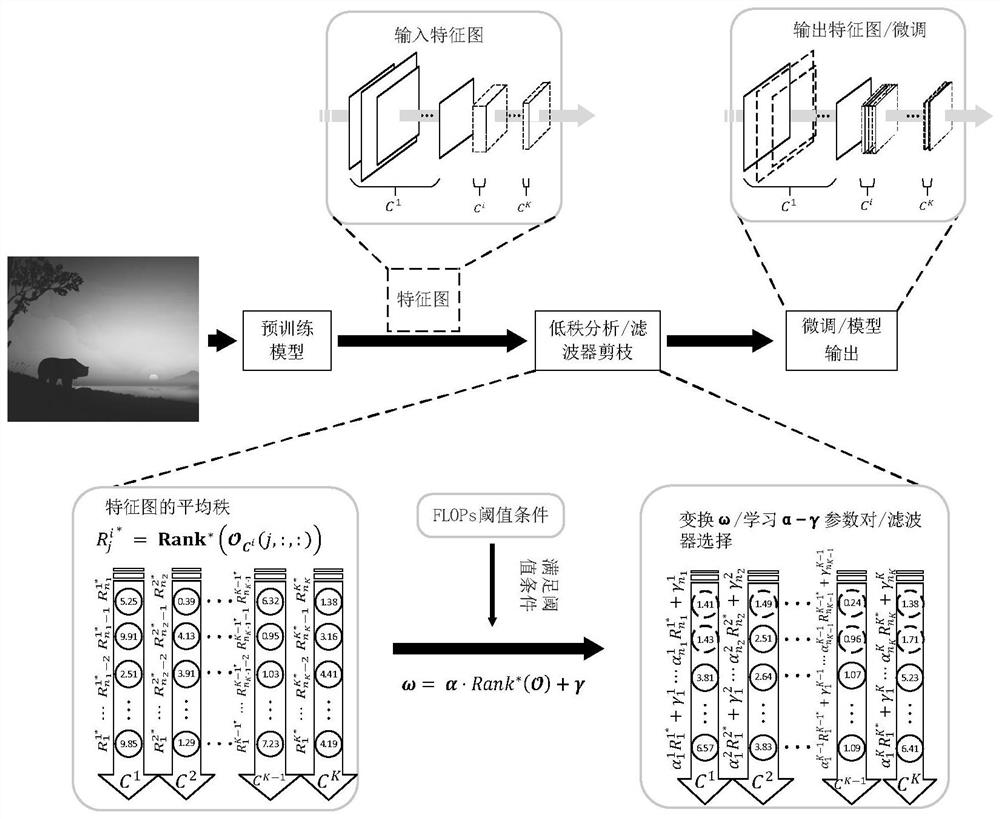

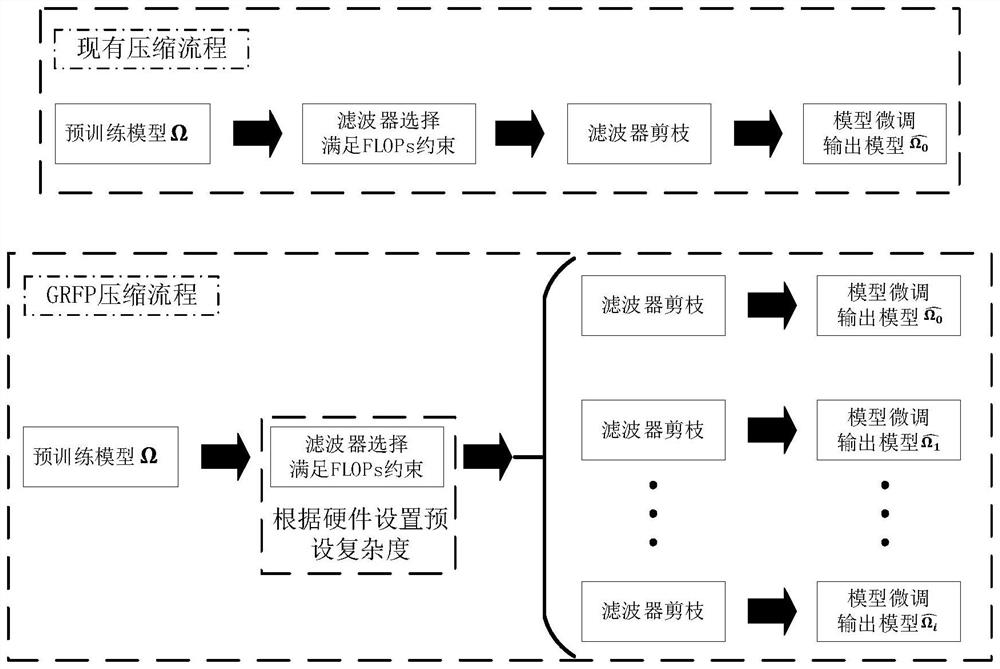

Global rank perception neural network model compression method based on filter feature map

Pending | CN114037844A | Solve the ill-chosen problem | Solve efficiency problems | Character and pattern recognition | Neural architectures | Adaptive learning | Algorithm

The invention discloses a global rank-aware neural network model compression method based on filter feature maps, which addresses the high labor cost, low pruning efficiency, and poor stability of filter-pruning compression methods. The method comprises: obtaining and preprocessing image data; constructing a general convolutional neural network and setting its hyper-parameters; selecting a loss function and an optimization algorithm; training and saving the pre-trained model; obtaining the average rank of the feature maps; adaptively learning a parameter matrix; representing and ranking the importance of the filters; pruning and saving the pre-trained model; and fine-tuning the network model. The method learns once and prunes many times, ranks the filters globally, and prunes them uniformly, so the ill-chosen selection problem does not arise. It gives better pruning effect and stability, prunes large networks of differing complexity efficiently, and adapts well to different edge devices. It is intended for edge devices with limited computing and storage resources.

Owner:XIDIAN UNIV

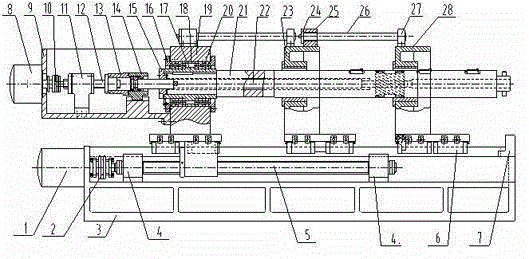

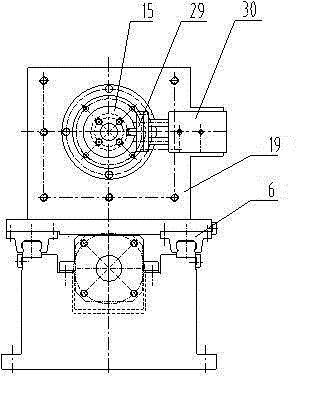

Boring rod inserting device for combined machine tool

Active · CN103909292A · Reduce precision loss · Avoid wear and tear · Feeding apparatus · Boring/drilling components · Control engineering · Structural engineering

Owner:东台市富城资产经营有限公司

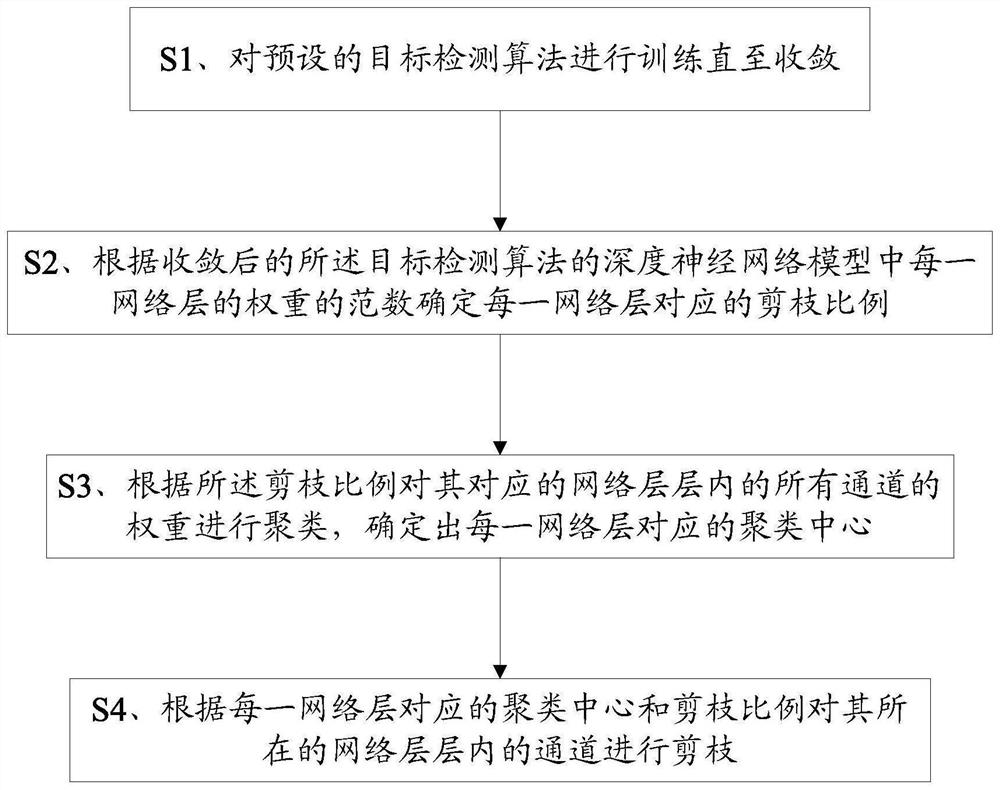

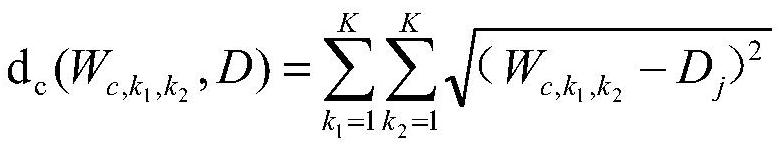

Pruning method applied to target detection and terminal

Active · CN111612144A · Achieve compression · Guaranteed compression · Character and pattern recognition · Neural architectures · Pattern recognition · Algorithm

The invention discloses a pruning method for target detection, and a terminal. The method comprises: training a preset target detection algorithm until convergence; determining a pruning proportion for each network layer according to the norm of that layer's weights in the converged deep neural network model of the target detection algorithm; clustering the weights of all channels in each layer according to its pruning proportion to determine the cluster centers of that layer; pruning channels in each layer according to its cluster centers and pruning proportion, so that every layer of the model is channel-pruned by norm-based weight clustering; and deleting the redundant channels to compress the deep neural network model. The pruning process is therefore simple and fast, has few dependencies, and does not rely on any particular parameter or specific layer, while reducing precision loss and still guaranteeing compression.

Owner:SANLI VIDEO FREQUENCY SCI & TECH SHENZHEN

Efficient image retrieval method based on discrete local linear embedding hashing

Inactive · CN108182256A · Adapt to flow pattern distribution · Reduce precision loss · Character and pattern recognition · Special data processing applications · Principal component analysis · Image retrieval

The invention discloses an efficient image retrieval method based on discrete local linear embedding hashing, in the field of image retrieval. From the images in an image library, a subset is randomly selected as a training set and the corresponding image features are extracted. Principal component analysis reduces the dimensionality of the original image features to the length of the hash codes. A similarity relation matrix over the training samples and the optimal reconstruction weights of each data point are then constructed, and the corresponding hash function is learned through iterative optimization. The learned hash function is output and used to compute the hash codes of the whole image library. For a query image, its features are extracted first and then hash-coded with the same trained hash function; the Hamming distances between the query's hash code and the codes of the library images are computed, these distances measure the similarity between the query and each image to be retrieved, and the most similar images are returned.
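
The retrieval stage, hashing features to binary codes and ranking the library by Hamming distance, can be sketched as follows. The sign-of-projection `encode` function with fixed `projections` is a placeholder assumption standing in for the hash function the method learns through iterative optimization; codes are stored as Python integers, and all names are hypothetical.

```python
from typing import List, Tuple


def encode(feature: List[float], projections: List[List[float]]) -> int:
    """Placeholder hash: one bit per projection, set when the projection is non-negative."""
    code = 0
    for w in projections:
        bit = 1 if sum(f * p for f, p in zip(feature, w)) >= 0 else 0
        code = (code << 1) | bit
    return code


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hash codes."""
    return bin(a ^ b).count("1")


def retrieve(query_code: int, db_codes: List[int], top_k: int) -> List[Tuple[int, int]]:
    """Return (library index, Hamming distance) for the top_k closest codes."""
    ranked = sorted((hamming(query_code, c), i) for i, c in enumerate(db_codes))
    return [(i, d) for d, i in ranked[:top_k]]


# Usage: rank three 4-bit library codes against a query code.
print(retrieve(0b1010, [0b1010, 0b0101, 0b1011], top_k=2))
```

Comparing codes with XOR and a bit count is what makes hashing-based retrieval cheap: the similarity check needs no floating-point arithmetic on the original features.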

Owner:XIAMEN UNIV

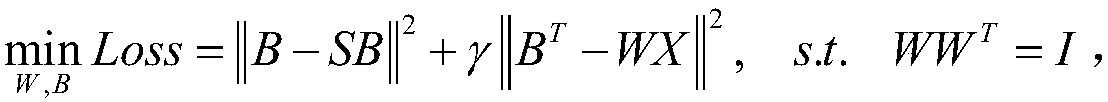

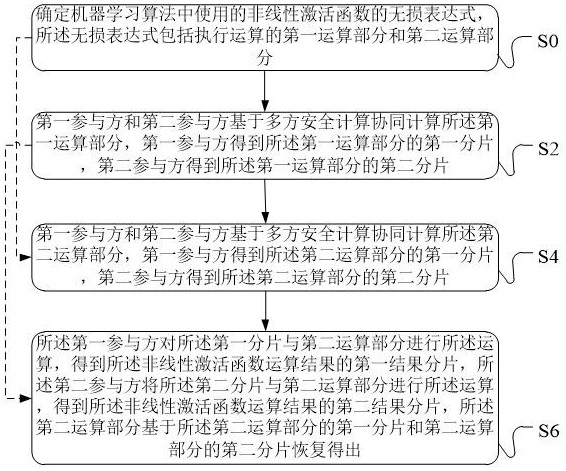

Privacy protection data processing method, device and equipment and machine learning system

Active · CN112000990A · Improve data processing efficiency · Improve processing efficiency · Digital data protection · Machine learning · Activation function · Algorithm

The invention provides a privacy-preserving data processing method, apparatus, and device, and a machine learning system. In one embodiment, when the output of a nonlinear activation function used in a machine learning algorithm must be obtained in a data-sharing scenario involving multiple parties, a lossless expression of the activation function is used and each of its sub-terms is computed cooperatively with secure multi-party computation. This avoids expanding the sub-terms into a polynomial approximation, reduces computational complexity, and improves the efficiency with which computer equipment evaluates the nonlinear activation function. It also greatly reduces the complexity and precision loss incurred when nonlinear activation functions are approximated with methods such as Taylor expansion.
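
To illustrate the kind of precision loss the abstract attributes to Taylor-expansion approximations, the sketch below compares the logistic sigmoid with its third-order Maclaurin polynomial, 1/2 + x/4 - x^3/48. It is not the patent's lossless expression or its secure multi-party protocol; it only shows that a truncated polynomial is accurate near 0 and degrades quickly as |x| grows.

```python
import math


def sigmoid(x: float) -> float:
    """Exact logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_taylor3(x: float) -> float:
    """Third-order Maclaurin approximation of the sigmoid."""
    return 0.5 + x / 4 - x ** 3 / 48


# Compare the exact value and the polynomial at increasing distances from 0.
for x in (0.0, 1.0, 2.0, 4.0):
    err = abs(sigmoid(x) - sigmoid_taylor3(x))
    print(f"x={x:4.1f}  exact={sigmoid(x):.4f}  taylor={sigmoid_taylor3(x):.4f}  |err|={err:.4f}")
```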

Owner:ALIPAY (HANGZHOU) INFORMATION TECH CO LTD

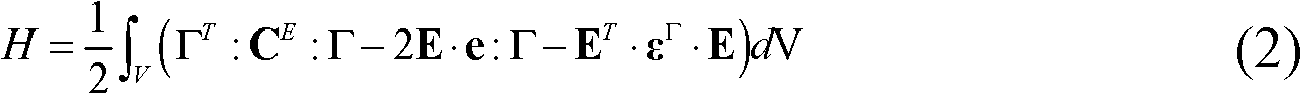

Piezoelectric composite rod machine-electric coupling performance emulation and simulation method

Inactive · CN102096737A · Small amount of calculation · Save 2-3 stage calculations · Special data processing applications · Tensor decomposition · Simulation

The invention relates to the characterization of the mechanical performance of materials and discloses a method for emulating and simulating the electromechanical coupling performance of piezoelectric composite rods. The method comprises: constructing a three-dimensional electromechanical enthalpy equation for the piezoelectric composite rod based on Hamilton's principle and the concept of rotation tensor decomposition; rigorously reducing the original three-dimensional model to a one-dimensional model, using the slenderness ratio of the rod as the small parameter; asymptotically computing the one-dimensional electromechanical enthalpy of the rod; retaining the second-order asymptotically corrected energy terms in the approximate enthalpy expression, so that the resulting one-dimensional electromechanical enthalpy is equivalent to the original three-dimensional one; and converting the approximate enthalpy, through the equilibrium equations, into the form of a generalized Timoshenko model with an additional one-dimensional electrical degree of freedom for convenient engineering application. The method is practical and general, yields stable and reliable results, effectively simulates the electromechanical coupling performance of piezoelectric composite rods, and accurately captures the medium effect and the polarization properties of the piezoelectric material.

Owner:CHONGQING UNIV

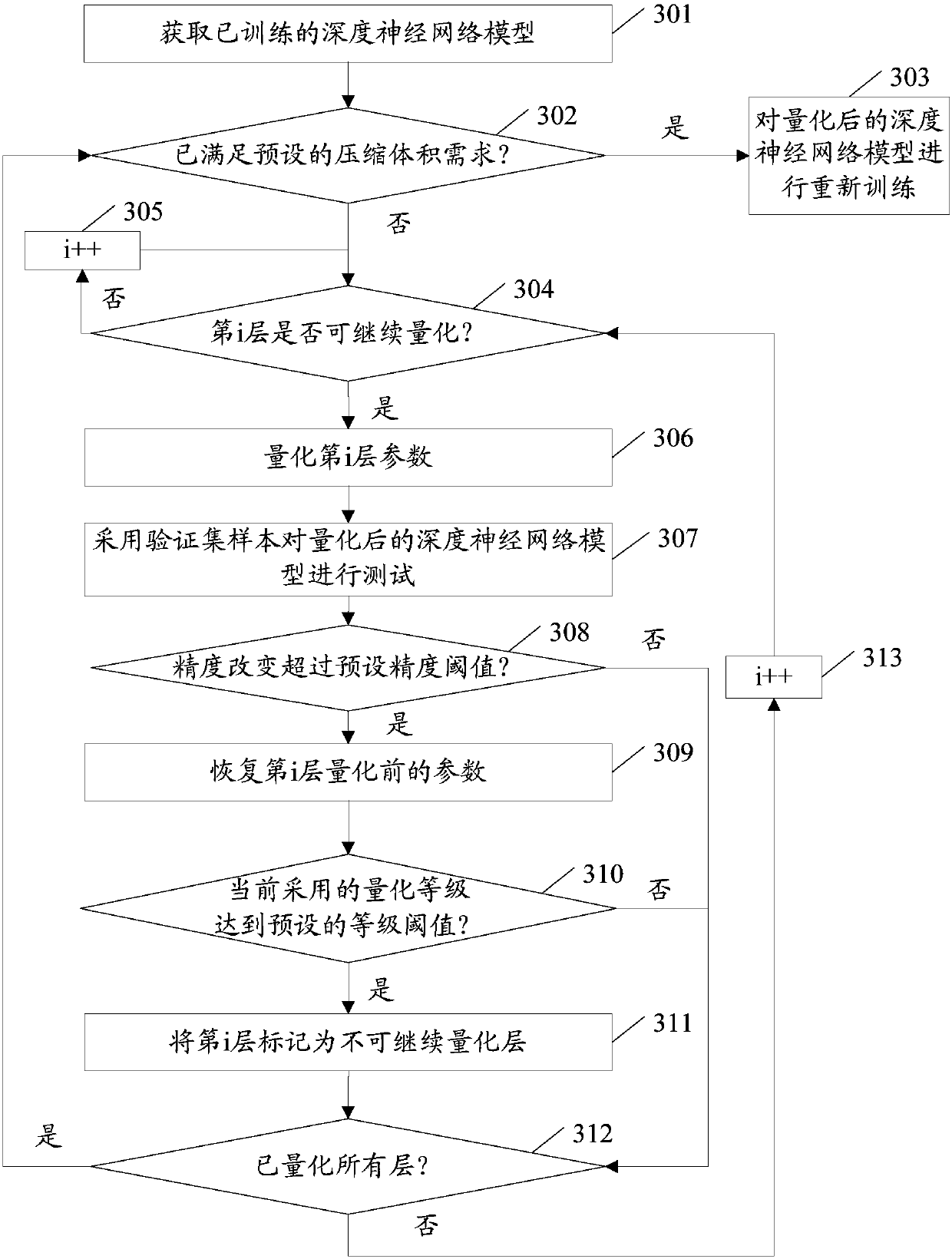

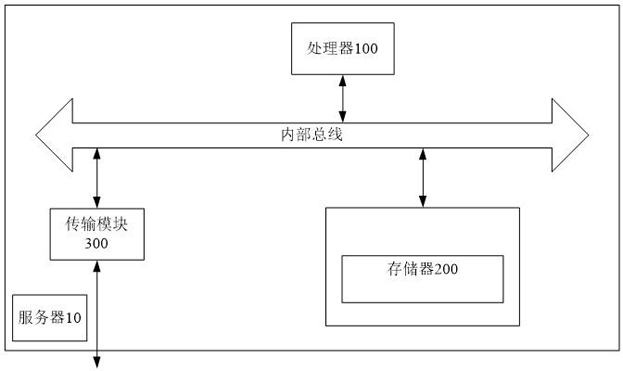

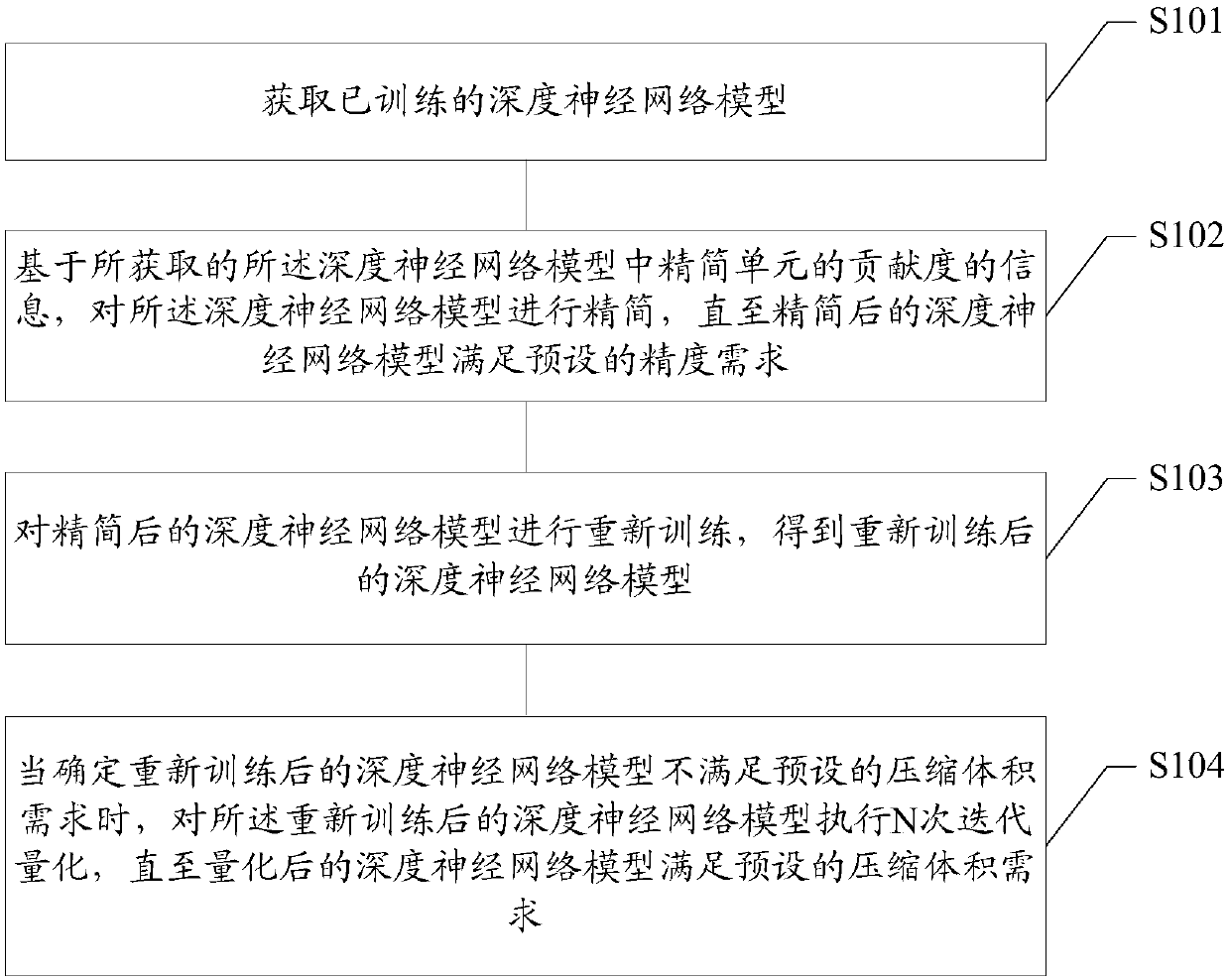

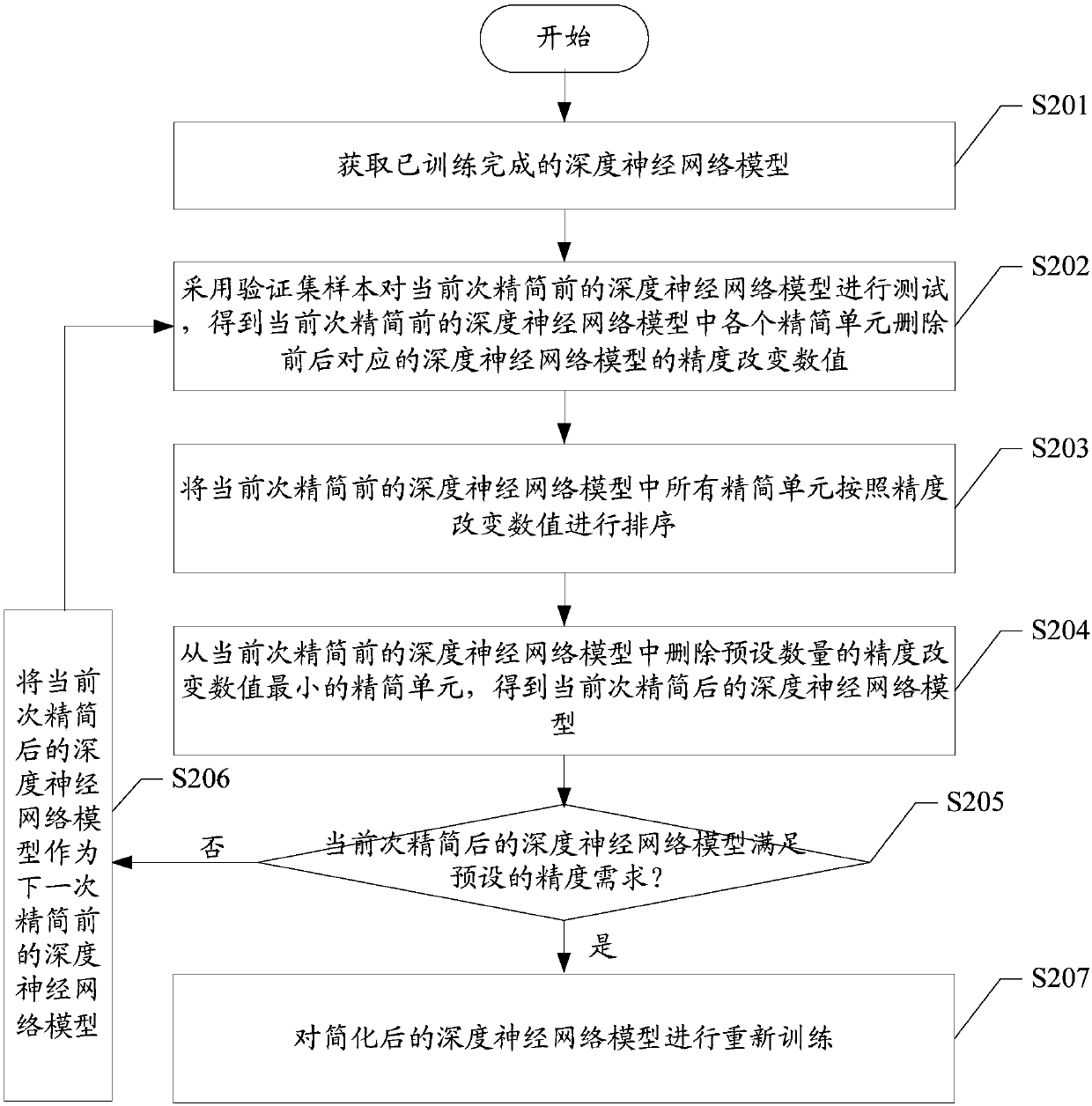

Compression method and apparatus for deep neural network model, terminal and storage medium

Inactive · CN108734266A · High precision · Reduce in quantity · Neural architectures · Computer terminal · Data mining

The invention discloses a compression method and apparatus for a deep neural network model, a terminal, and a storage medium. The method simplifies the deep neural network model based on the obtained contribution degrees of the simplification units in each layer, until the simplified model meets a preset precision requirement; retrains the simplified model; if the retrained model does not meet a preset compression volume requirement, iteratively quantizes the parameters of its layers; and performs N rounds of iterative quantization on the retrained model until the quantized model meets the preset compression volume requirement. With this scheme, both the precision and the effectiveness of the deep neural network model are taken into account during compression.

Owner:SPREADTRUM COMM (SHANGHAI) CO LTD

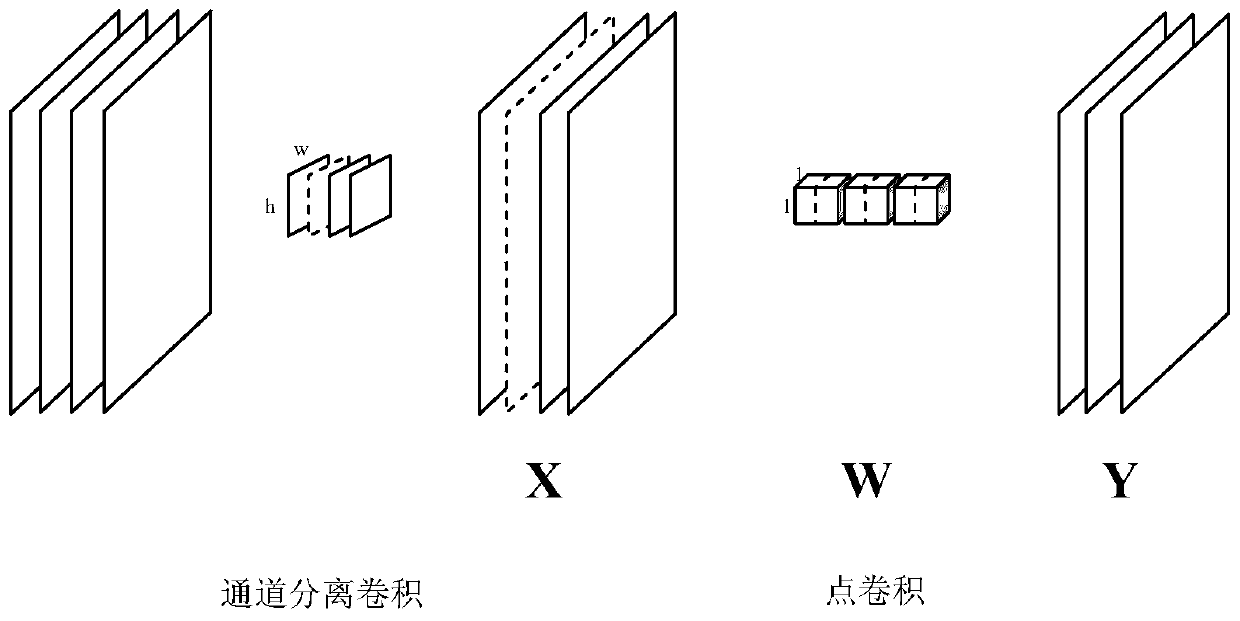

Pruning method for embedded network model

Inactive · CN109754080A · Reduce reconstruction error · Improve practicality · Neural architectures · Neural learning methods · Network model · Computer science

The invention discloses a pruning method for embedded network models, addressing the poor practicability of existing pruning methods. First, a MobileNet SSD network model is built and a forward pass is run to obtain the data needed for the pruning computation. LASSO regression is then used to select the channels that are unimportant to the result of a convolution layer, that is, those with relatively little influence on the summed output. MobileNet factorizes a standard convolution layer into a channel-separated (depthwise) convolution layer and a pointwise convolution layer, and the depthwise layer has as many input channels as output channels. Centered on reducing reconstruction error, the method uses LASSO to pick out unimportant channels in all convolution layers and then trims the channels of every convolution layer according to MobileNet's special structure, completing the compression and acceleration of MobileNet SSD with good practicability.
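
The LASSO channel-selection step can be sketched as coordinate descent on 0.5 * ||Y - sum_i beta_i * X_i||^2 + lam * ||beta||_1, where X_i is channel i's flattened contribution to the layer output and Y is the original output; channels whose coefficient beta_i is driven to zero are pruning candidates. The objective scaling, the value of `lam`, and the function names are assumptions, and the MobileNet-specific handling of depthwise layers is not modeled.

```python
from typing import List


def soft_threshold(z: float, lam: float) -> float:
    """Proximal operator of the L1 penalty."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_channel_select(
    channel_outputs: List[List[float]],  # X_i: flattened contribution of channel i
    target: List[float],                 # Y: flattened original layer output
    lam: float,
    n_iter: int = 100,
) -> List[float]:
    """Coordinate descent for 0.5*||Y - sum_i beta_i X_i||^2 + lam*||beta||_1."""
    n = len(channel_outputs)
    beta = [0.0] * n
    for _ in range(n_iter):
        for j in range(n):
            xj = channel_outputs[j]
            norm_sq = sum(v * v for v in xj)
            if norm_sq == 0.0:
                beta[j] = 0.0  # a channel that contributes nothing is always pruned
                continue
            # Partial residual: target minus the fit of every channel except j.
            residual = [
                y - sum(beta[k] * channel_outputs[k][t] for k in range(n) if k != j)
                for t, y in enumerate(target)
            ]
            rho = sum(a * b for a, b in zip(xj, residual))
            beta[j] = soft_threshold(rho, lam) / norm_sq
    return beta


def kept_channels(beta: List[float]) -> List[int]:
    """Indices of channels with non-zero coefficients (the rest are pruned)."""
    return [i for i, b in enumerate(beta) if b != 0.0]
```

For example, with three orthogonal unit channel outputs and target `[2.0, 1.5, 0.05, 0.0]`, `lam=0.1` zeroes the third coefficient, so only channels 0 and 1 are kept. Raising `lam` prunes more aggressively at the cost of a larger reconstruction error.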

Owner:NORTHWESTERN POLYTECHNICAL UNIV

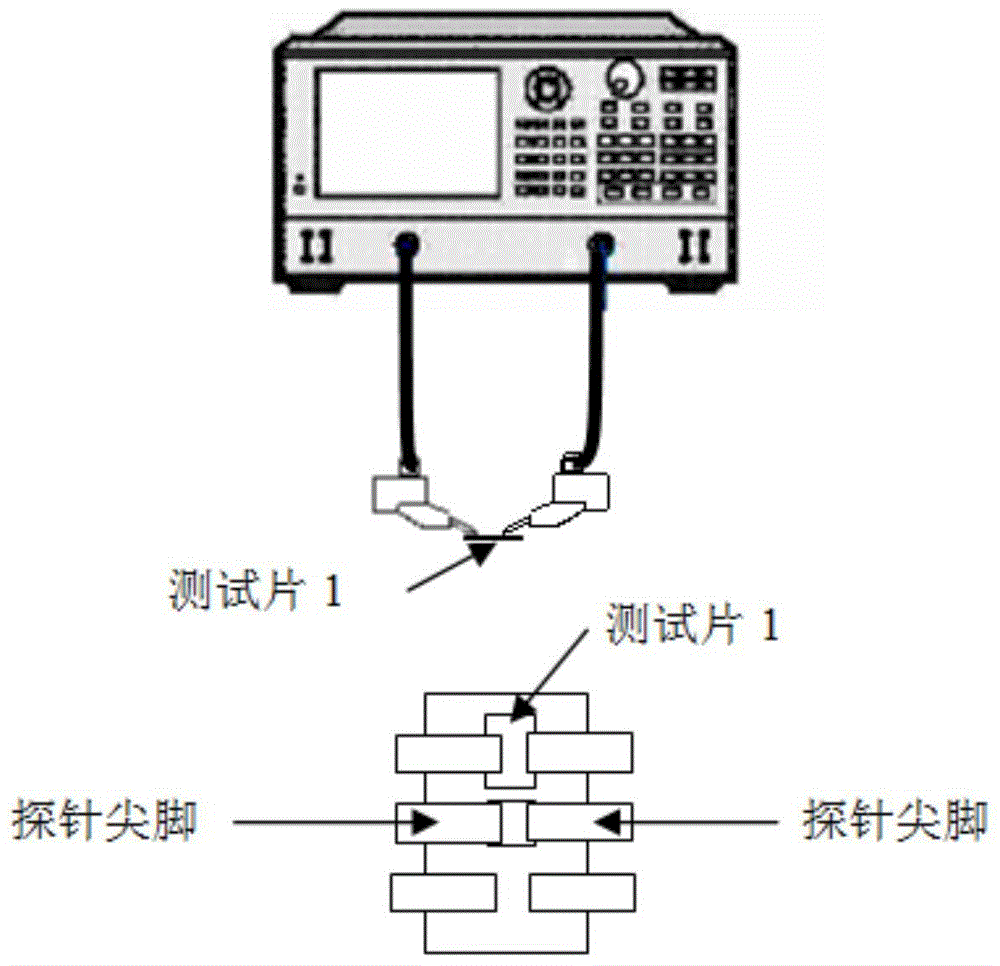

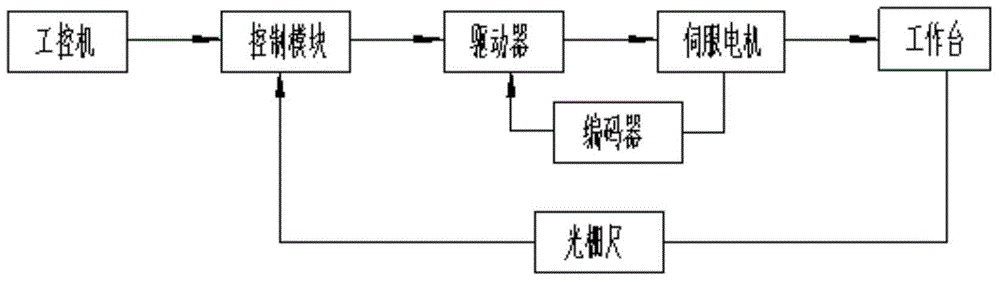

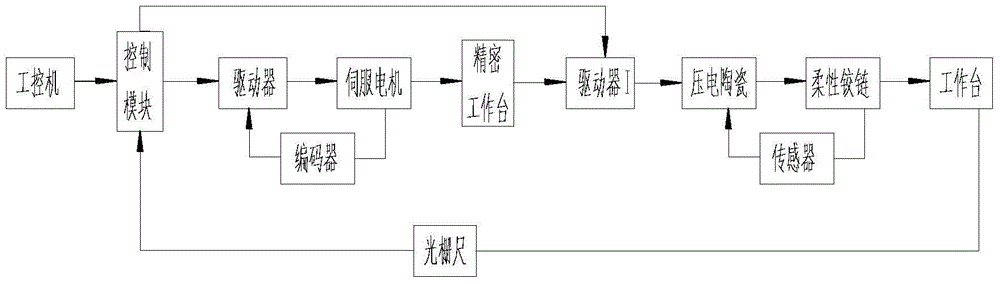

Controllable high-frequency-response probe test moving device for microwave and millimeter wave chips

Active · CN104793121A · Fast test · Shorten the test cycle · Individual semiconductor device testing · Grating · Engineering

The invention discloses a controllable, high-frequency-response probe test moving device for microwave and millimeter-wave chips. The device comprises an industrial personal computer, a control module, a driver, a servo motor, a precision displacement device, and a grating ruler. The precision displacement device comprises a precision workbench, a driver I, piezoelectric ceramics, and a flexible hinge. The industrial personal computer is connected to the control module, the control module to the driver, and the driver to the servo motor; the servo motor is also connected to the driver through an encoder and is connected to the precision workbench. The precision workbench is connected to driver I, driver I to the piezoelectric ceramics, the piezoelectric ceramics to the flexible hinge, and the flexible hinge to the workbench; the workbench is connected to the control module through the grating ruler, and driver I is also connected to the control module. The previous single-stage positioning is replaced by two stages: coarse positioning, in which the motor drives a lead screw, and fine positioning, performed by the precision displacement device. The fine positioning system tests quickly and, without affecting test acceptability, greatly shortens the test cycle, improves efficiency and productivity, and reduces test cost.

Owner:THE 41ST INST OF CHINA ELECTRONICS TECH GRP

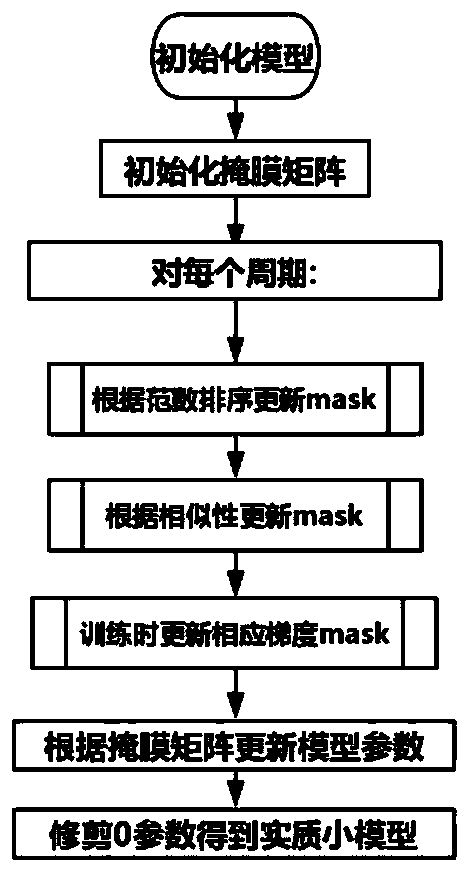

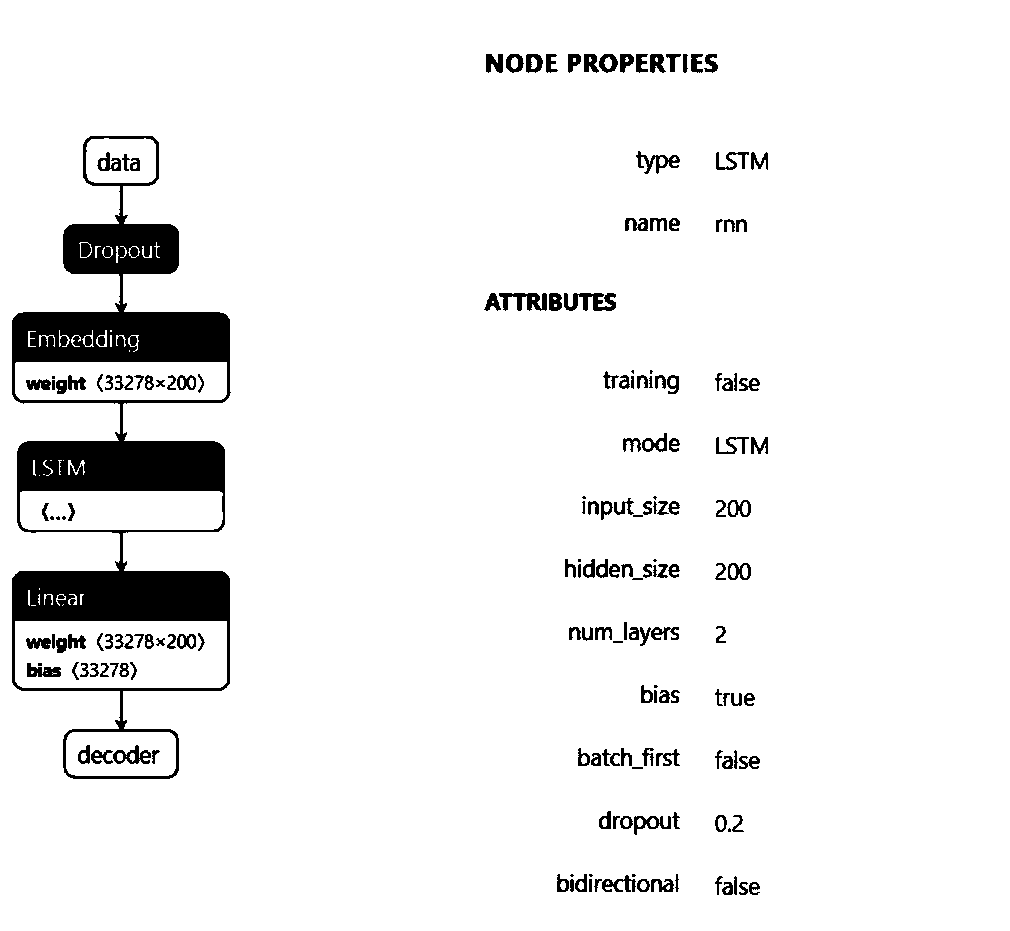

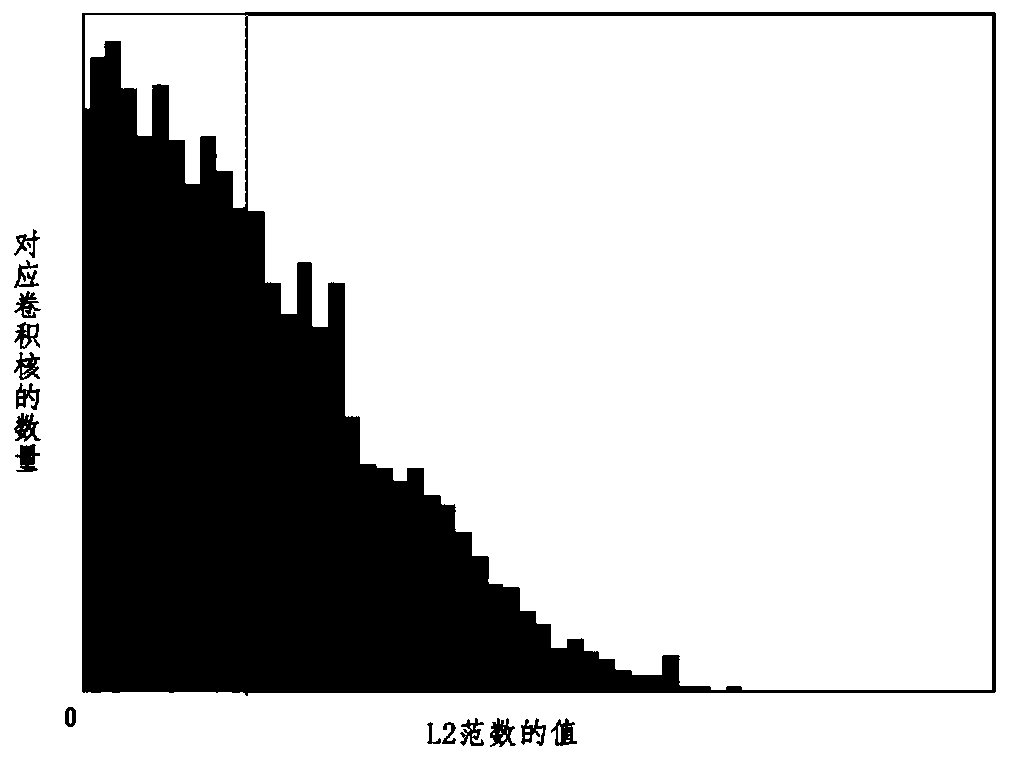

Convolution kernel similarity pruning-based recurrent neural network model compression method

Inactive · CN111126602A · Efficient compression · Reduce precision loss · Neural architectures · Neural learning methods · Pattern recognition · Algorithm

The invention discloses a recurrent neural network model compression method based on convolution kernel similarity pruning, in the technical field of computer electronic information. The method comprises: loading a pre-trained recurrent neural network model into the recurrent neural network to be compressed and training it, obtaining a recurrent neural network model with initialized weight matrices; computing the L2 norm of each convolution kernel in the model, sorting the norms, and pruning the convolution kernels that fall within the norm pruning rate; computing the weight center of the convolution kernels of the pruned model, pruning the convolution kernels that fall within the similarity pruning rate P2, performing gradient updates on the weight matrices corresponding to the convolution kernels, and pruning parameters in the updated weight matrices to obtain the compressed recurrent neural network model. The method effectively compresses large recurrent neural network models while reducing the accuracy loss incurred during pruning.
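
The two pruning stages can be sketched on kernels flattened to vectors: first drop the fraction `p1` with the smallest L2 norms, then compute the weight center (element-wise mean) of the survivors and drop the fraction `p2` closest to it. Treating proximity to the center as redundancy is an assumption about how similarity is measured, the gradient update and parameter pruning of the weight matrices are omitted, and `two_stage_prune` is a hypothetical name.

```python
import math
from typing import List

Kernel = List[float]  # a convolution kernel flattened to a vector


def l2(v: Kernel) -> float:
    """Euclidean (L2) norm of a flattened kernel."""
    return math.sqrt(sum(x * x for x in v))


def two_stage_prune(kernels: List[Kernel], p1: float, p2: float) -> List[int]:
    """Return indices of kernels kept after norm pruning (rate p1)
    and similarity pruning (rate p2)."""
    idx = list(range(len(kernels)))
    # Stage 1: drop the fraction p1 of kernels with the smallest L2 norms.
    idx.sort(key=lambda i: l2(kernels[i]))
    idx = idx[int(len(idx) * p1):]
    if not idx:
        return []
    # Stage 2: weight center = element-wise mean of the remaining kernels.
    dim = len(kernels[idx[0]])
    center = [sum(kernels[i][d] for i in idx) / len(idx) for d in range(dim)]
    # Kernels closest to the center are treated as the most redundant (assumption).
    idx.sort(key=lambda i: l2([a - c for a, c in zip(kernels[i], center)]))
    idx = idx[int(len(idx) * p2):]
    return sorted(idx)
```

Stage 1 removes kernels that contribute little on their own, while stage 2 targets kernels that may be large but are close to the average kernel, so other kernels can plausibly cover their role.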

Owner:ZHEJIANG UNIV

© 2025 PatSnap. All rights reserved.