1551 results about "Activation function" patented technology

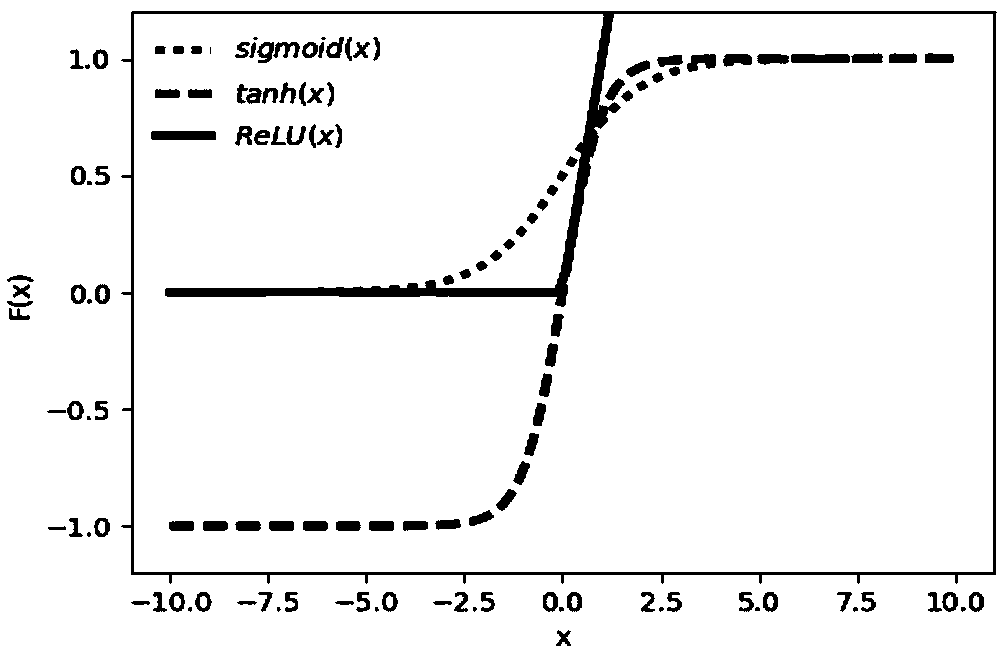

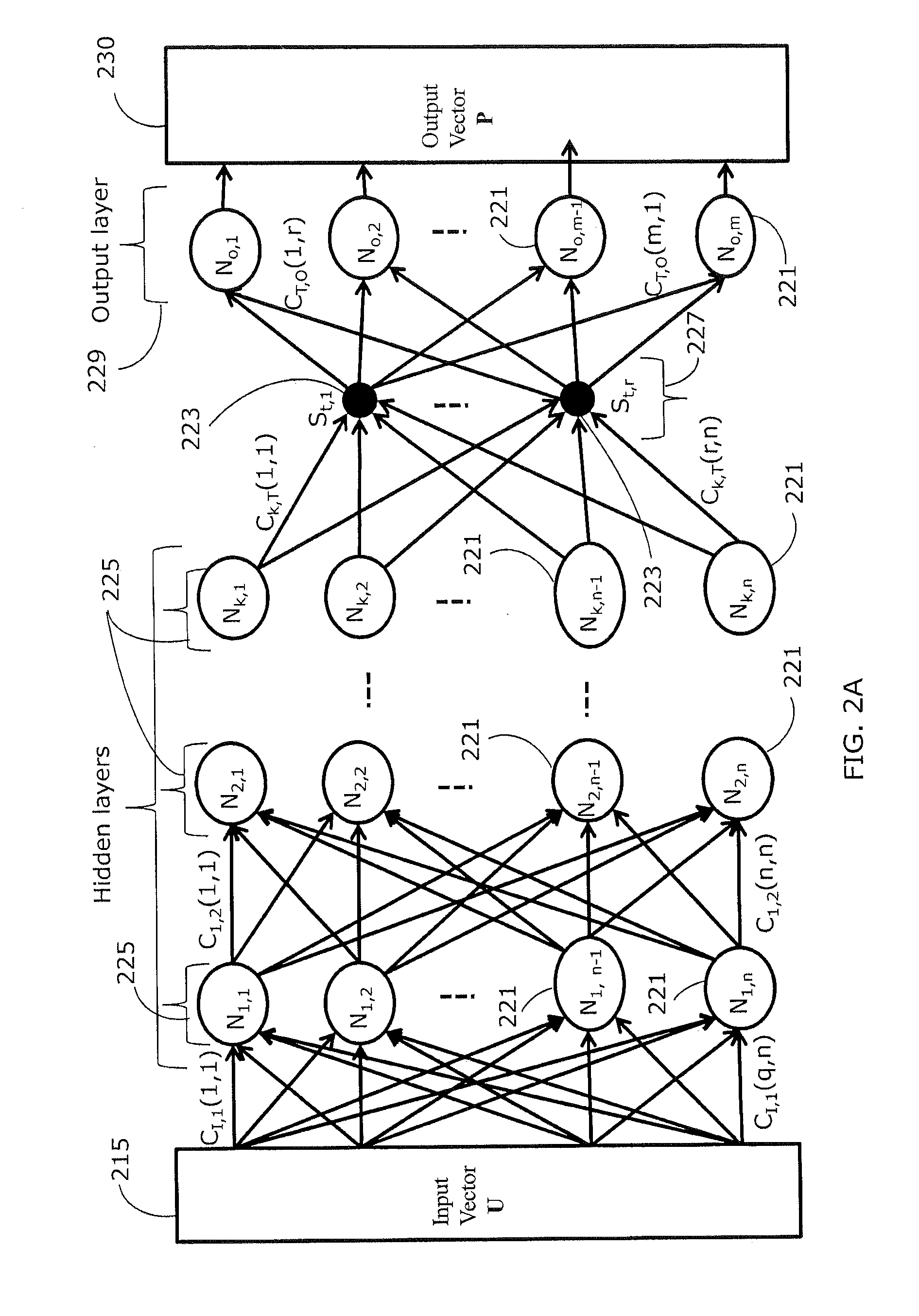

In artificial neural networks, the activation function of a node defines the output of that node given an input or set of inputs. A standard computer chip circuit can be seen as a digital network of activation functions that can be "ON" (1) or "OFF" (0), depending on input. This is similar to the behavior of the linear perceptron in neural networks. However, only nonlinear activation functions allow such networks to compute nontrivial problems using only a small number of nodes. In artificial neural networks, this function is also called the transfer function.
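As a minimal sketch of the definition above, the following Python computes one node's output under three common activation functions. The function names, weights, and inputs are illustrative assumptions, not taken from any of the patents listed here.

```python
import math

def step(z):
    """Binary threshold: 1.0 ("ON") for non-negative input, else 0.0 ("OFF")."""
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    """Smooth nonlinear activation that maps any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Rectified linear unit: passes positive input, clamps negative input to 0."""
    return max(0.0, z)

def node_output(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Illustrative values only: the same weighted sum gives a different
# node output under each activation function.
inputs, weights, bias = [1.0, -2.0], [0.5, 0.25], 0.1
for f in (step, sigmoid, relu):
    print(f.__name__, node_output(inputs, weights, bias, f))
```

Only the last stage differs between the three calls; the weighted sum is identical, which is why the activation function alone determines whether the node behaves linearly or nonlinearly.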

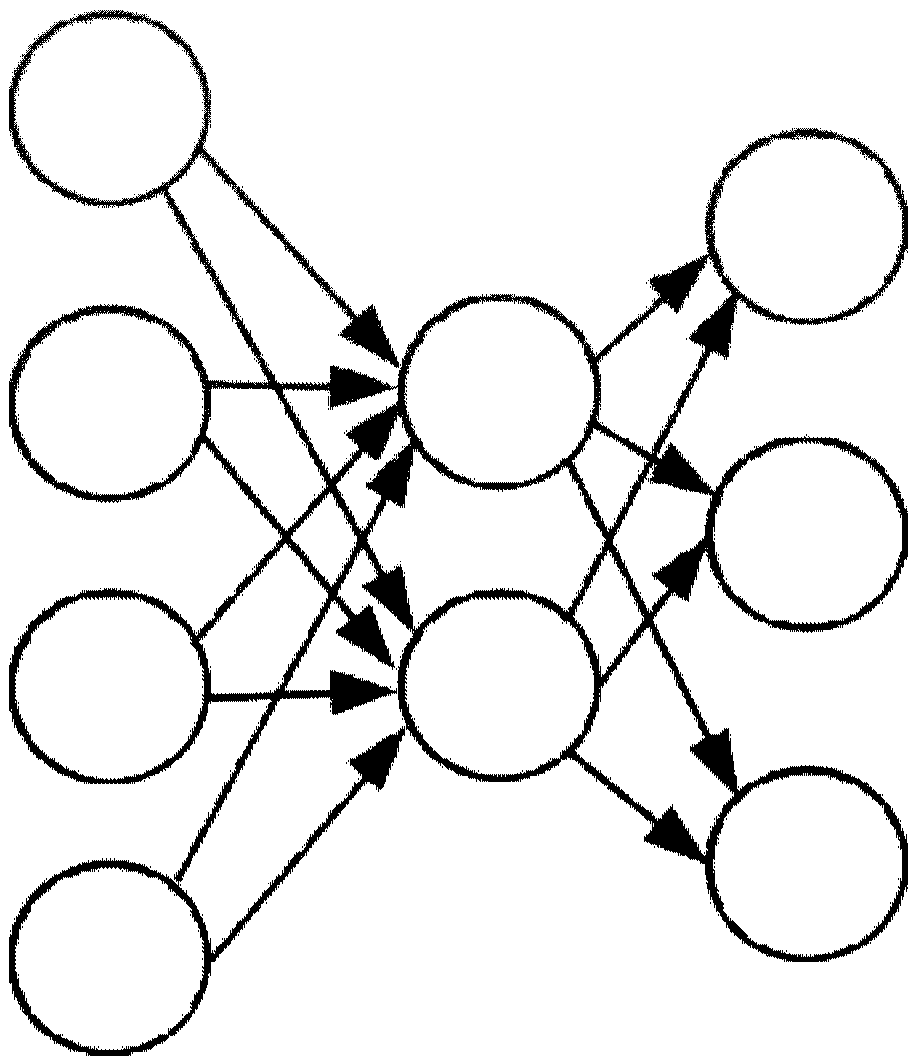

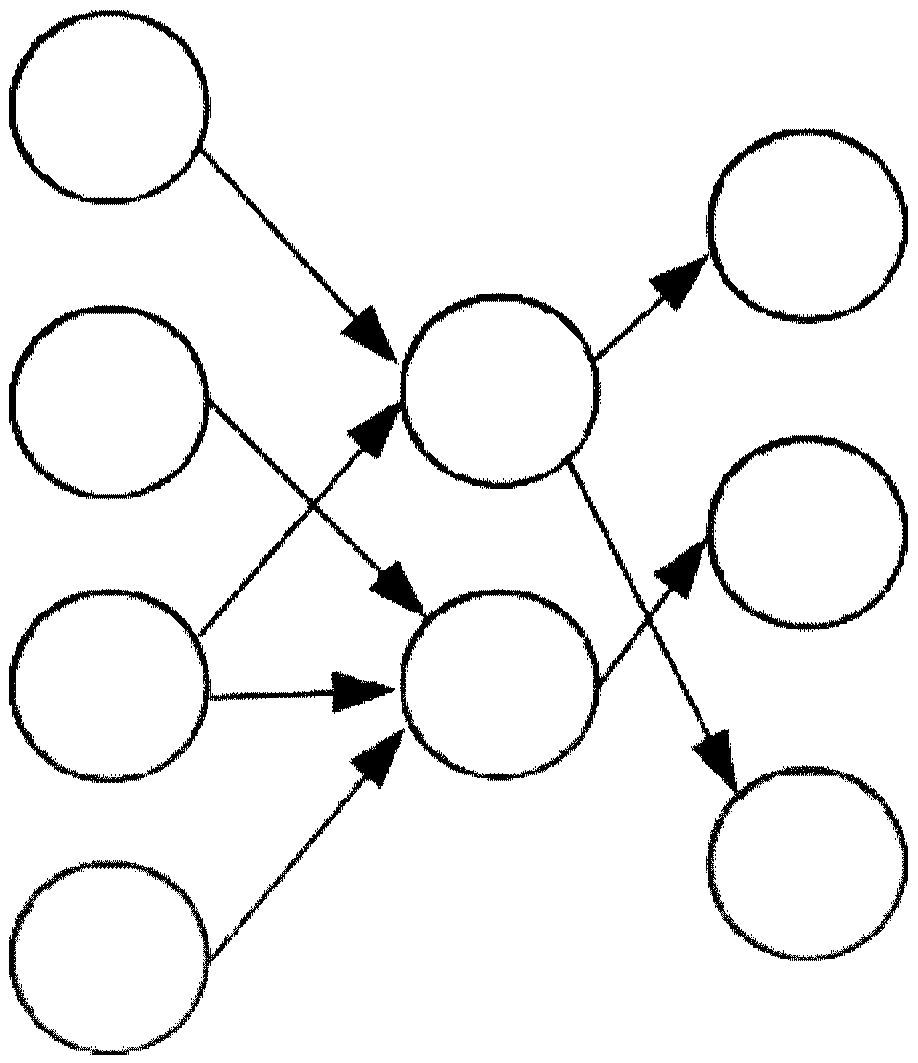

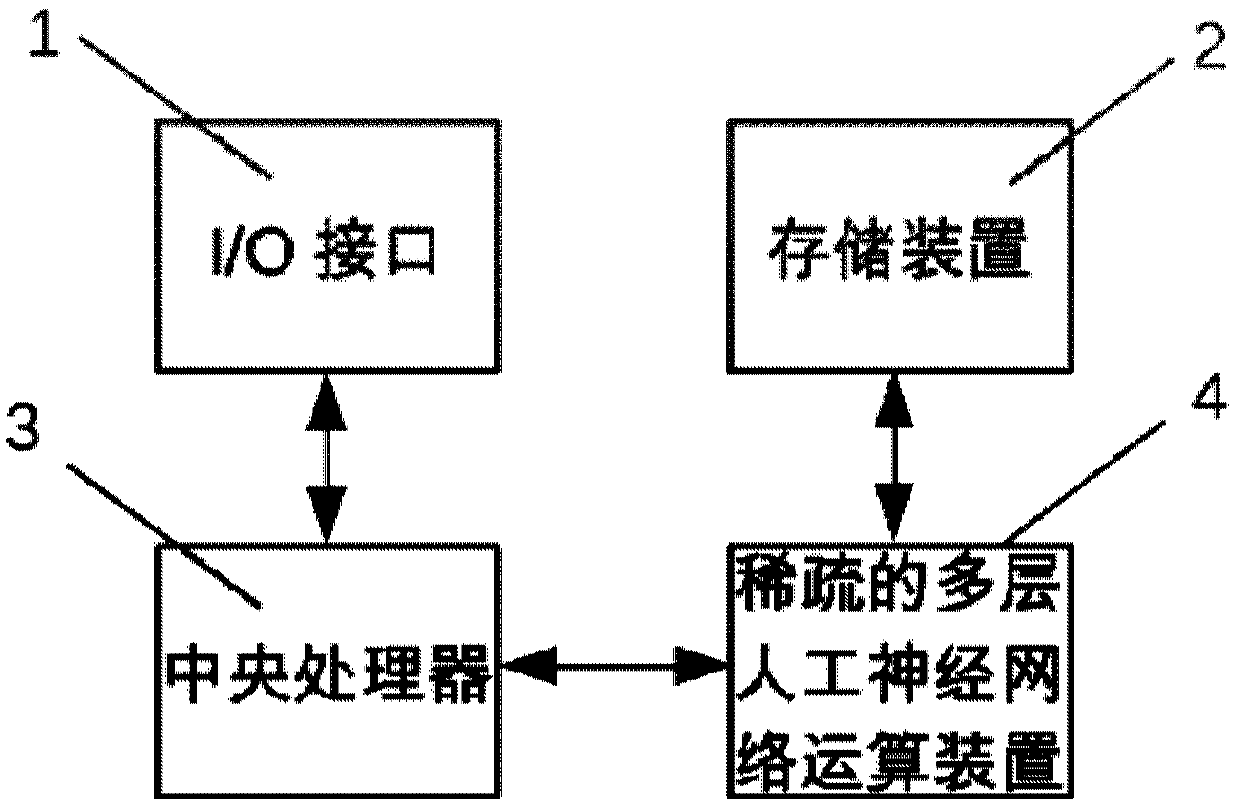

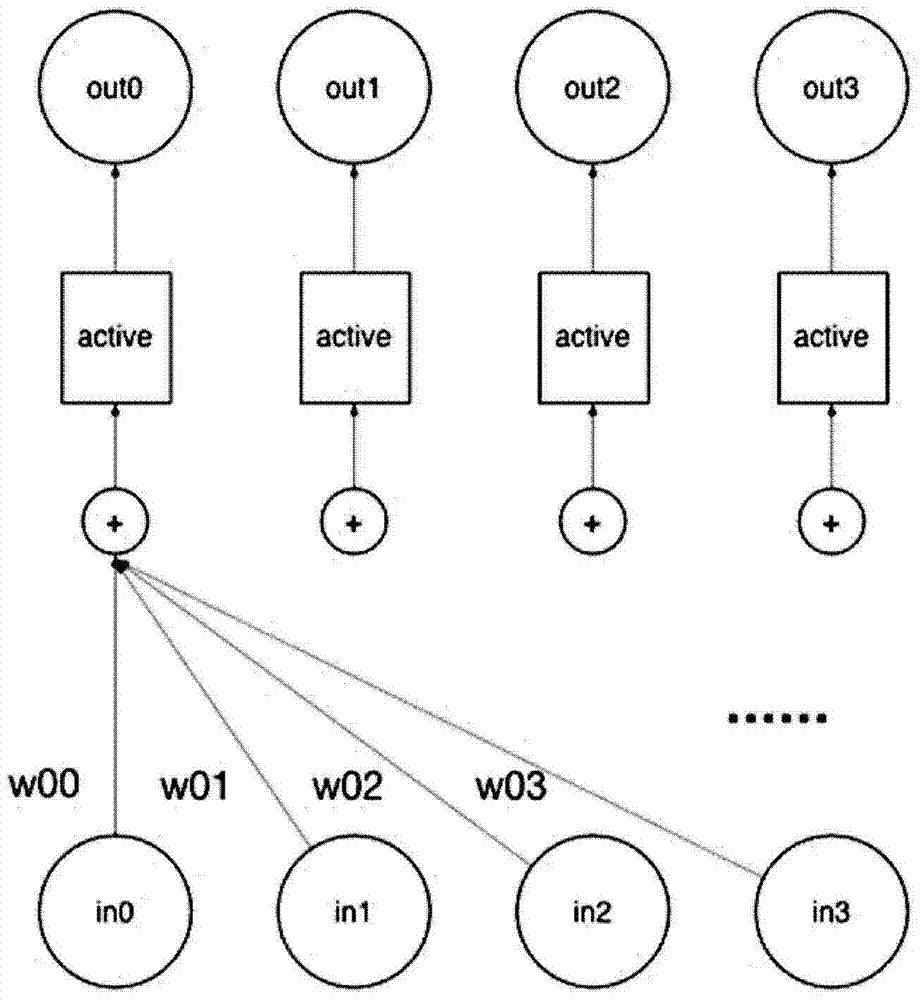

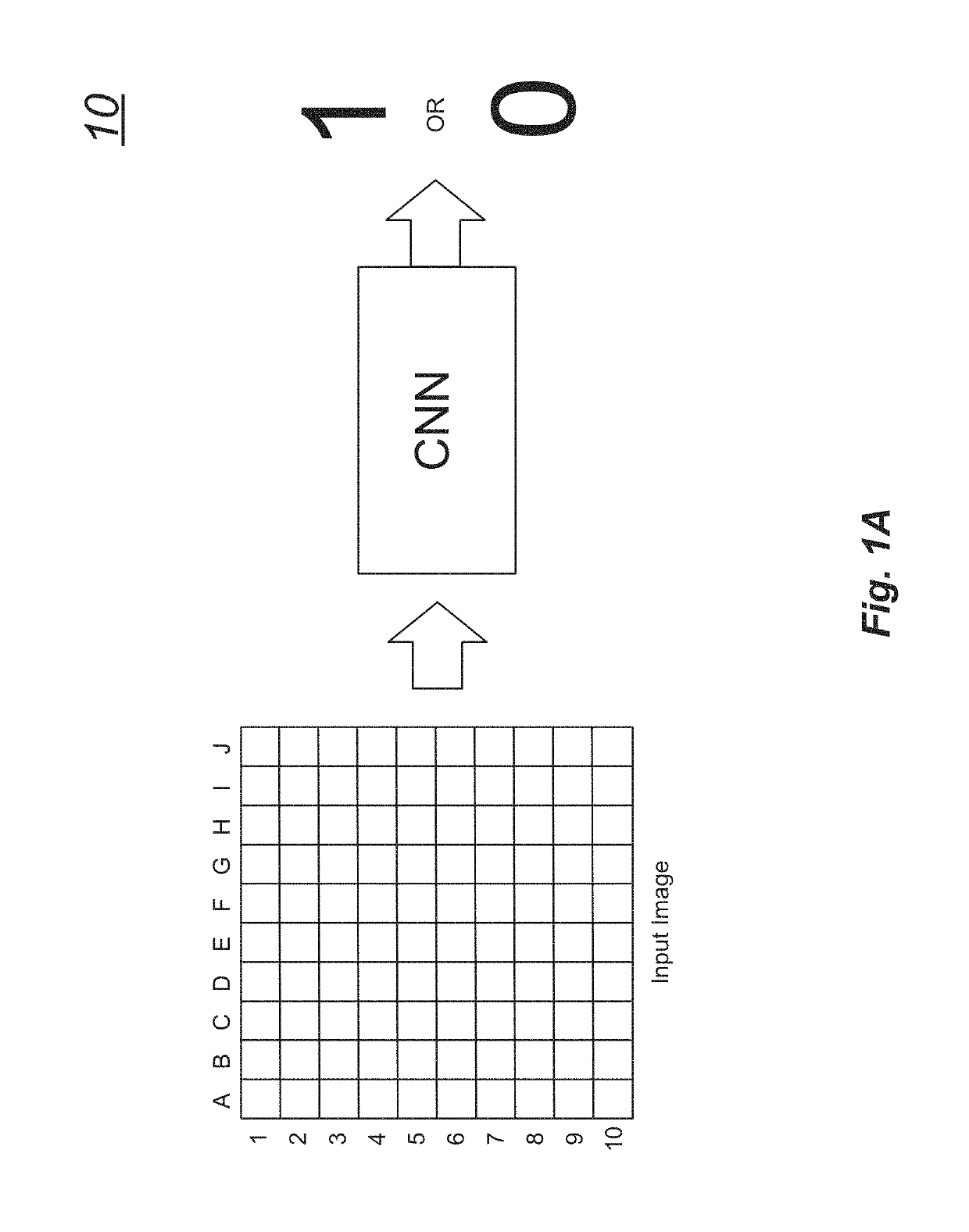

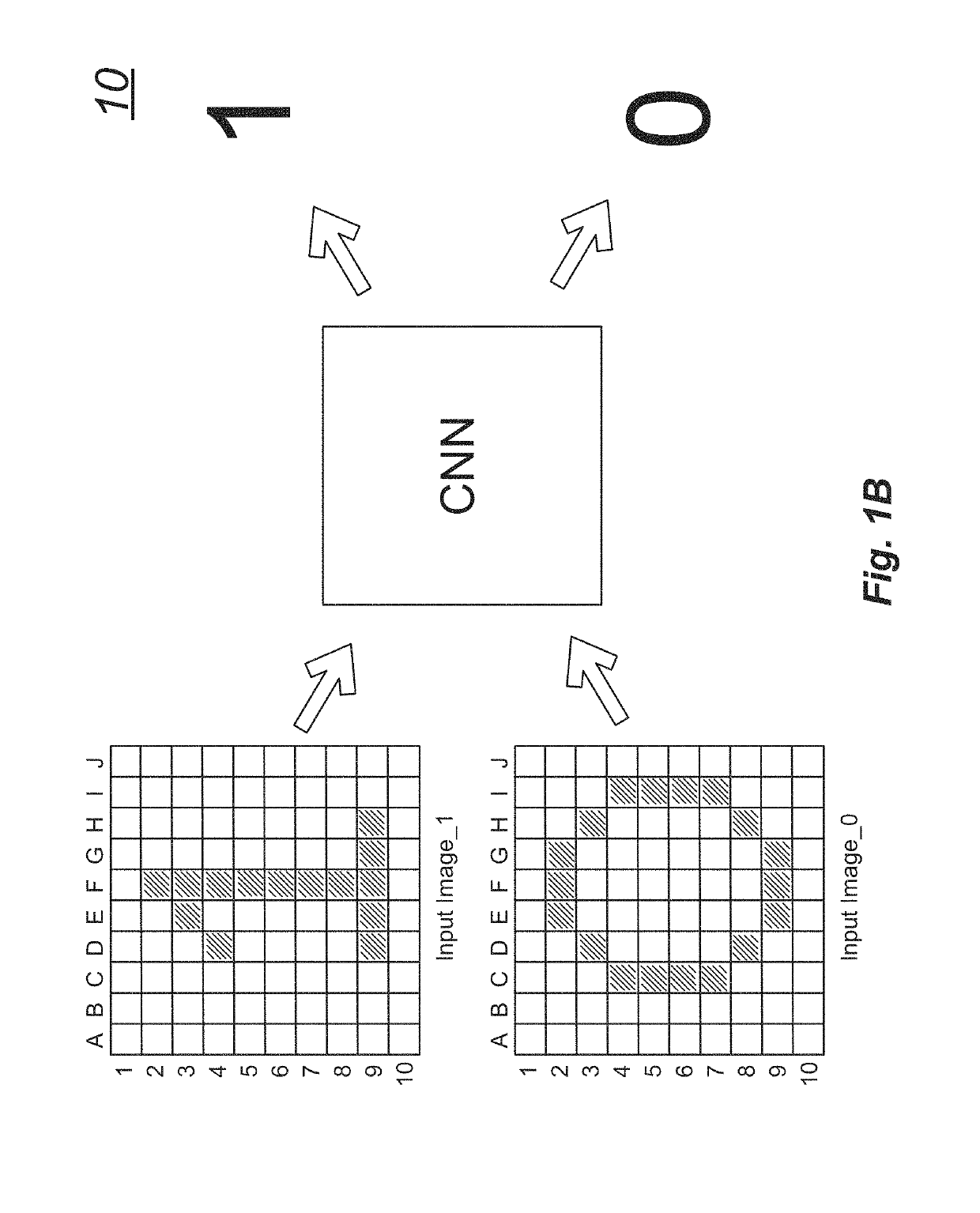

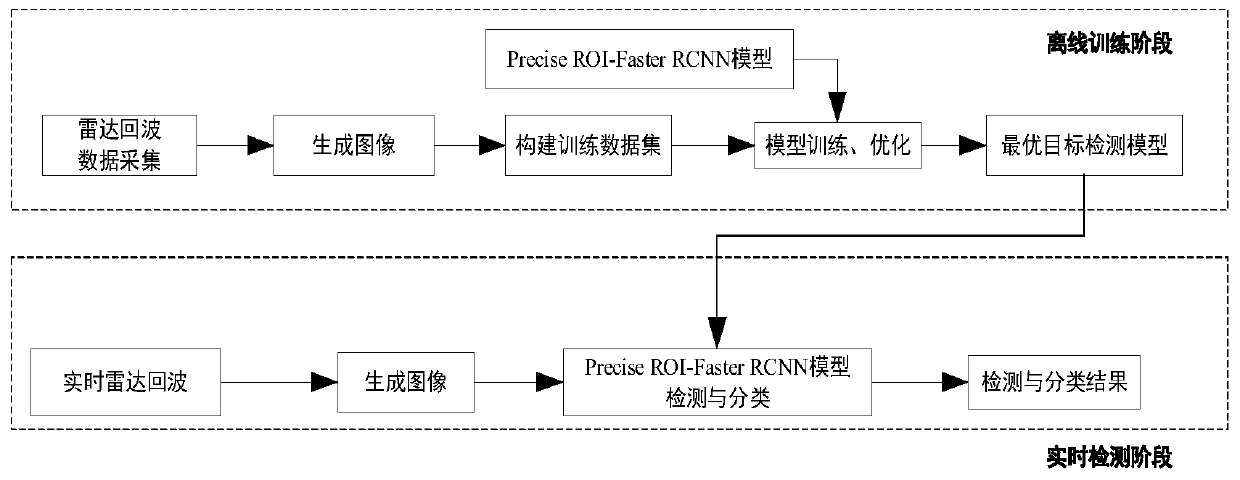

Artificial neural network calculating device and method for sparse connection

Active; CN105512723A; Solve the problem of insufficient computing performance and high front-end decoding overhead; Add support; Memory architecture accessing/allocation; Digital data processing details; Activation function; Memory bandwidth

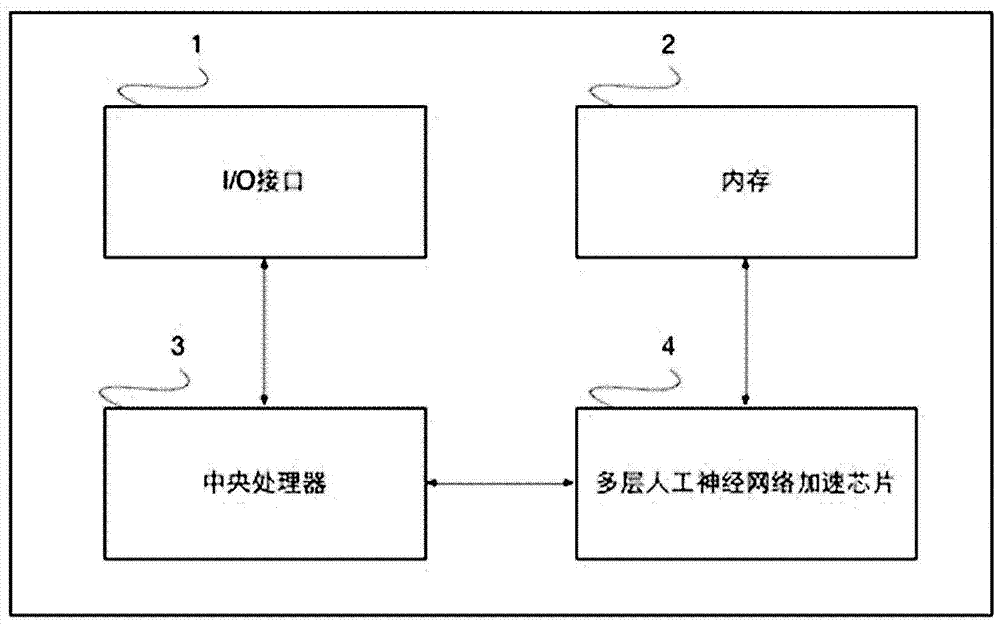

An artificial neural network calculating device for sparse connections comprises a mapping unit that converts input data into a storage format in which input neurons and weights correspond one to one, a storage unit that stores data and instructions, and an operation unit that executes the corresponding operations on the data according to the instructions. The operation unit mainly executes three steps. In the first step, the input neurons are multiplied by the weight data. In the second step, an adder tree operation is executed: the weighted output neurons from the first step are added level by level through an adder tree, or a bias is added to the output neurons to obtain biased output neurons. In the third step, an activation function operation is executed to obtain the final output neurons. The device addresses the insufficient operation performance of CPUs and GPUs and their high front-end decoding overhead, effectively improves support for multi-layer artificial neural network operation algorithms, and avoids the problem of memory bandwidth becoming a bottleneck for the performance of multi-layer artificial neural network operation and its training algorithms.
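The mapping step and the three operation steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented hardware design: the `(input_index, weight)` connection list, the function names, the ReLU activation, and the choice to apply both the adder tree and the bias are all hypothetical.

```python
def map_inputs(inputs, connections):
    """Mapping step: pair each stored weight with the input it connects to."""
    return [(inputs[i], w) for i, w in connections]

def adder_tree(values):
    """Sum values level by level, adding neighbours pairwise like an adder tree."""
    if not values:
        return 0.0
    level = list(values)
    while len(level) > 1:
        nxt = [level[i] + level[i + 1] for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:          # an odd element passes through to the next level
            nxt.append(level[-1])
        level = nxt
    return level[0]

def relu(z):
    return max(0.0, z)

def sparse_neuron(inputs, connections, bias, activation=relu):
    pairs = map_inputs(inputs, connections)
    products = [x * w for x, w in pairs]     # step 1: multiply inputs by weights
    total = adder_tree(products) + bias      # step 2: adder tree, then bias
    return activation(total)                 # step 3: activation function

# Only two of the four inputs are connected, so only two products are formed.
inputs = [1.0, 2.0, 3.0, 4.0]
print(sparse_neuron(inputs, [(1, 1.0), (2, 2.0)], bias=0.25))
```

Storing only the existing connections means the multiply and add stages never touch the pruned weights, which is the point of a sparse-connection format.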

Owner:CAMBRICON TECH CO LTD

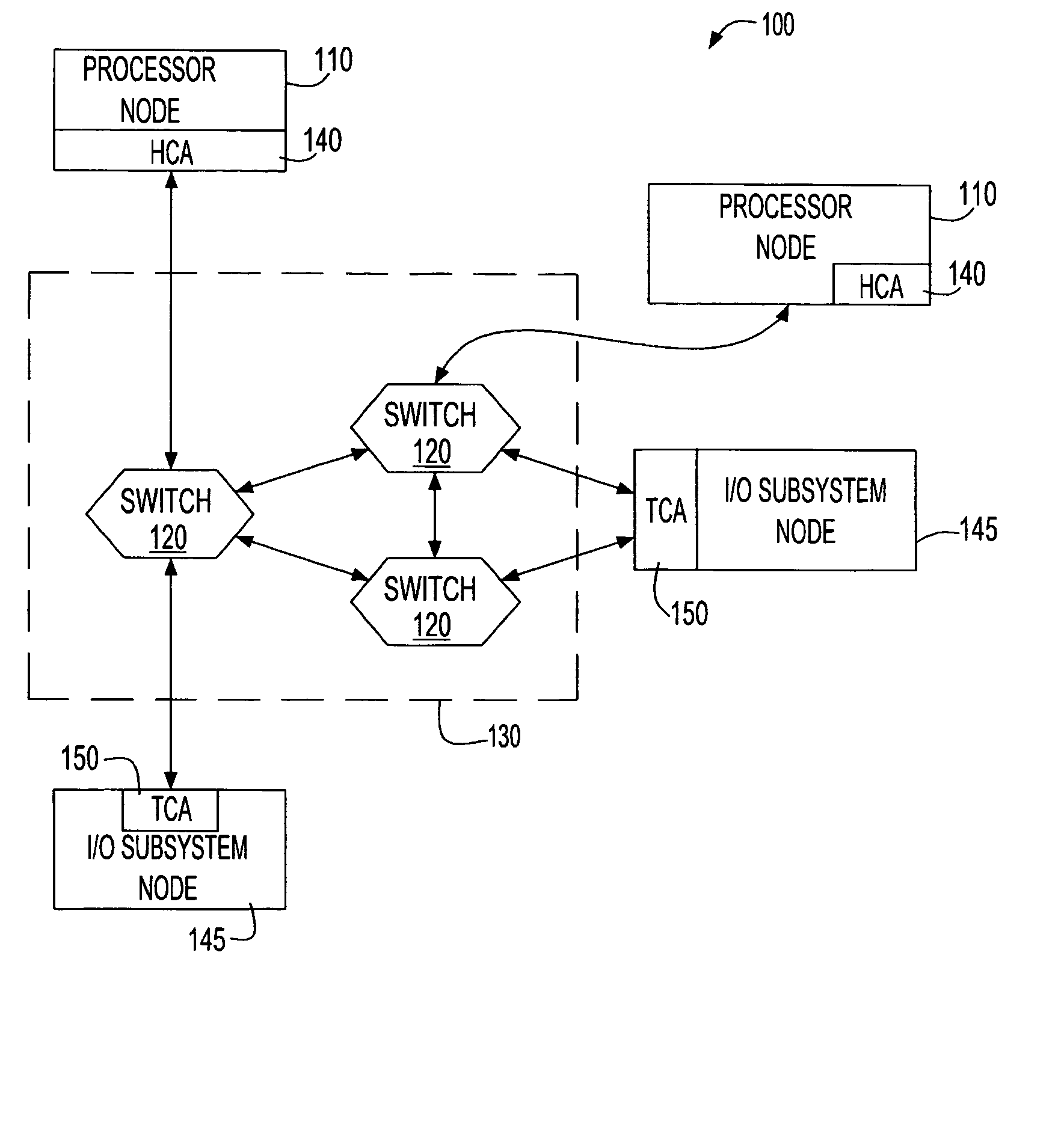

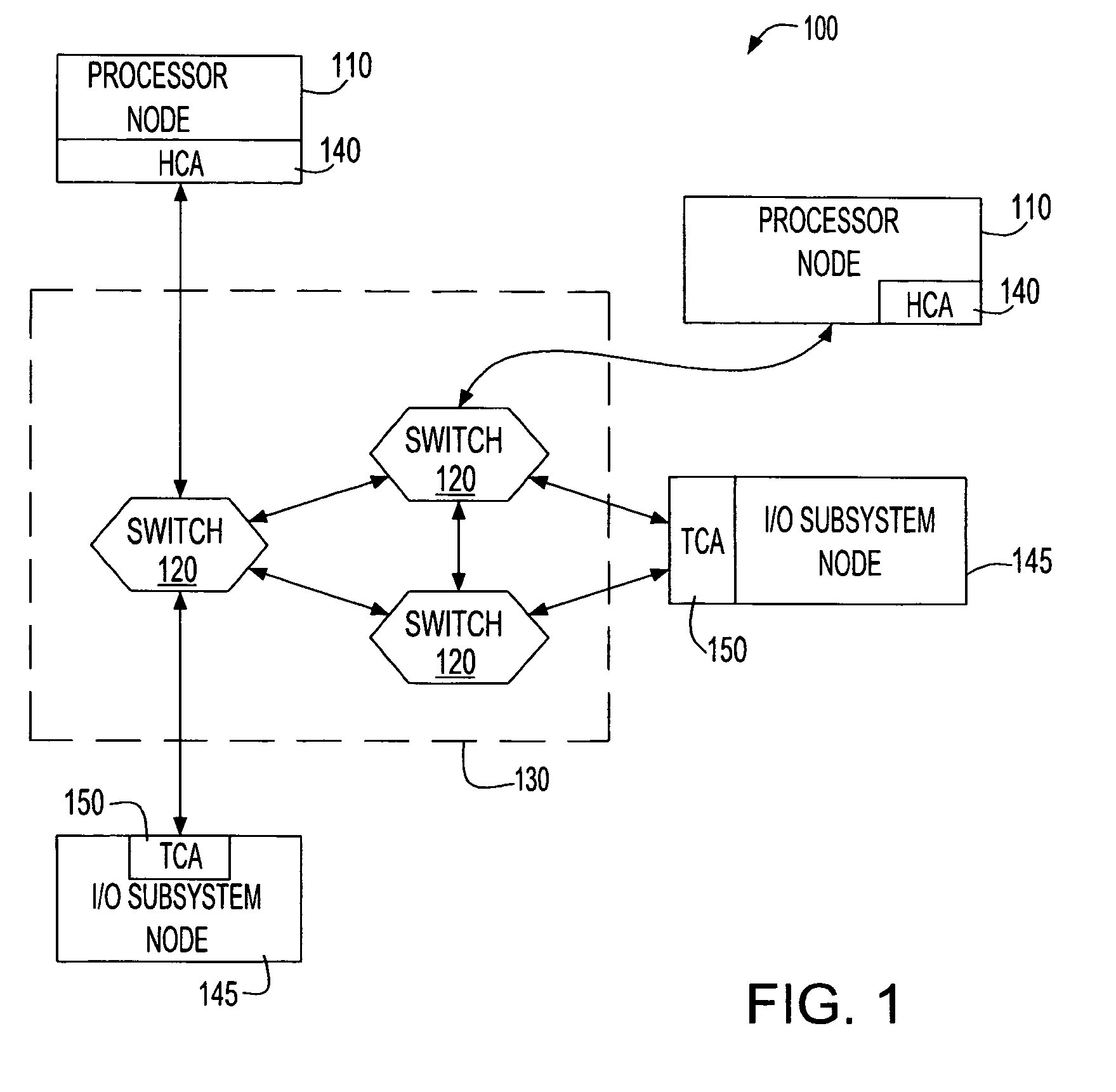

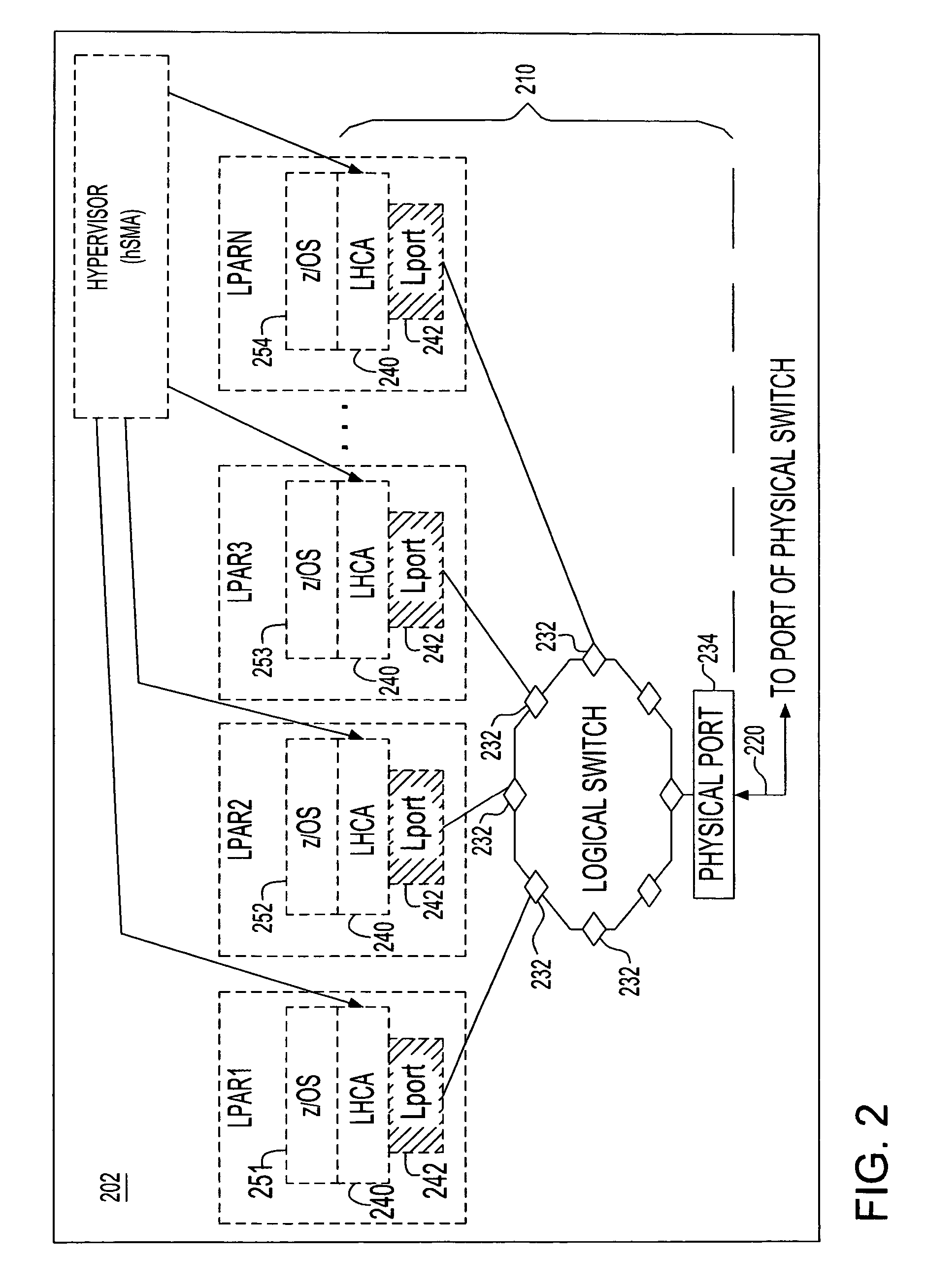

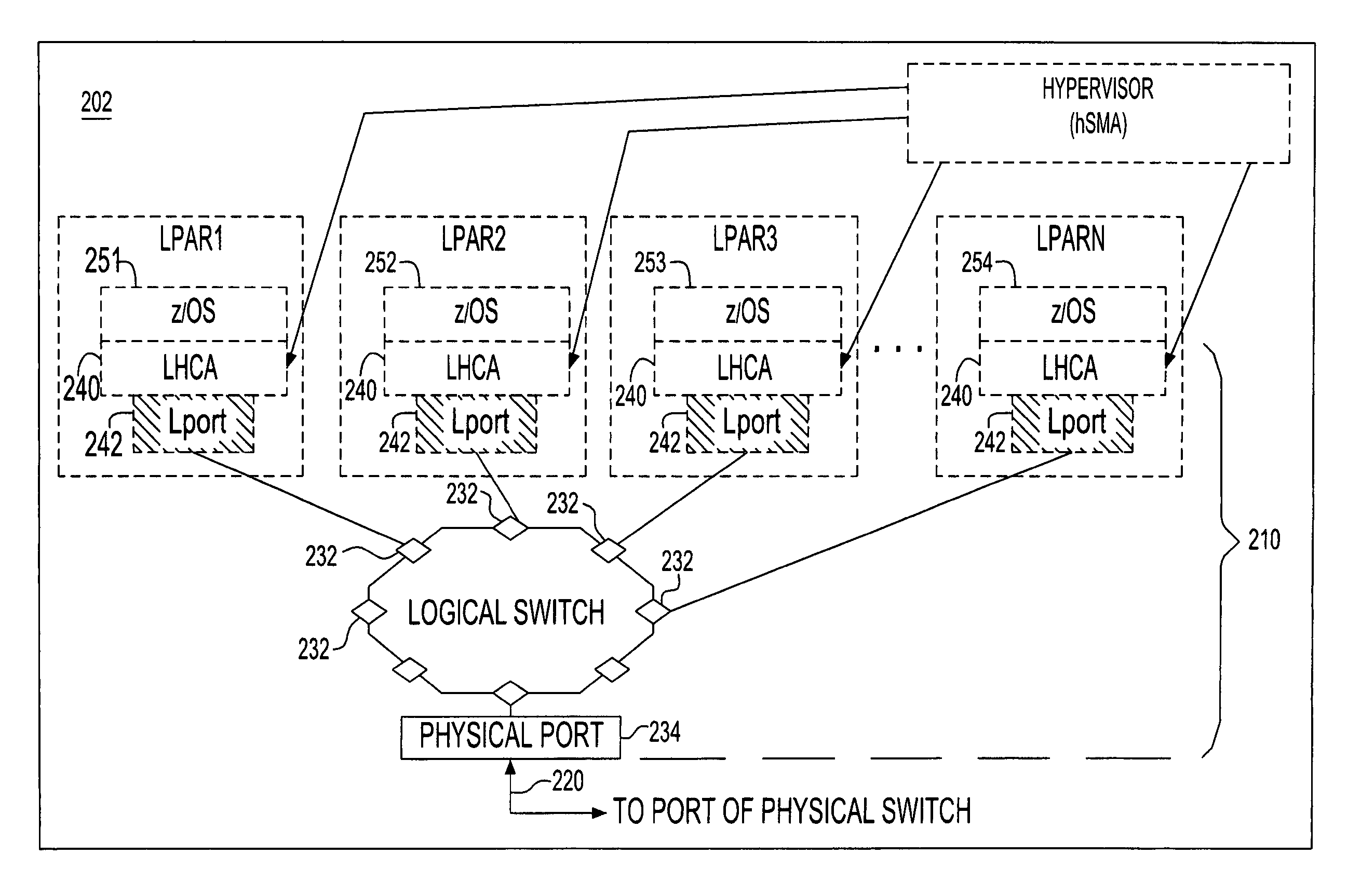

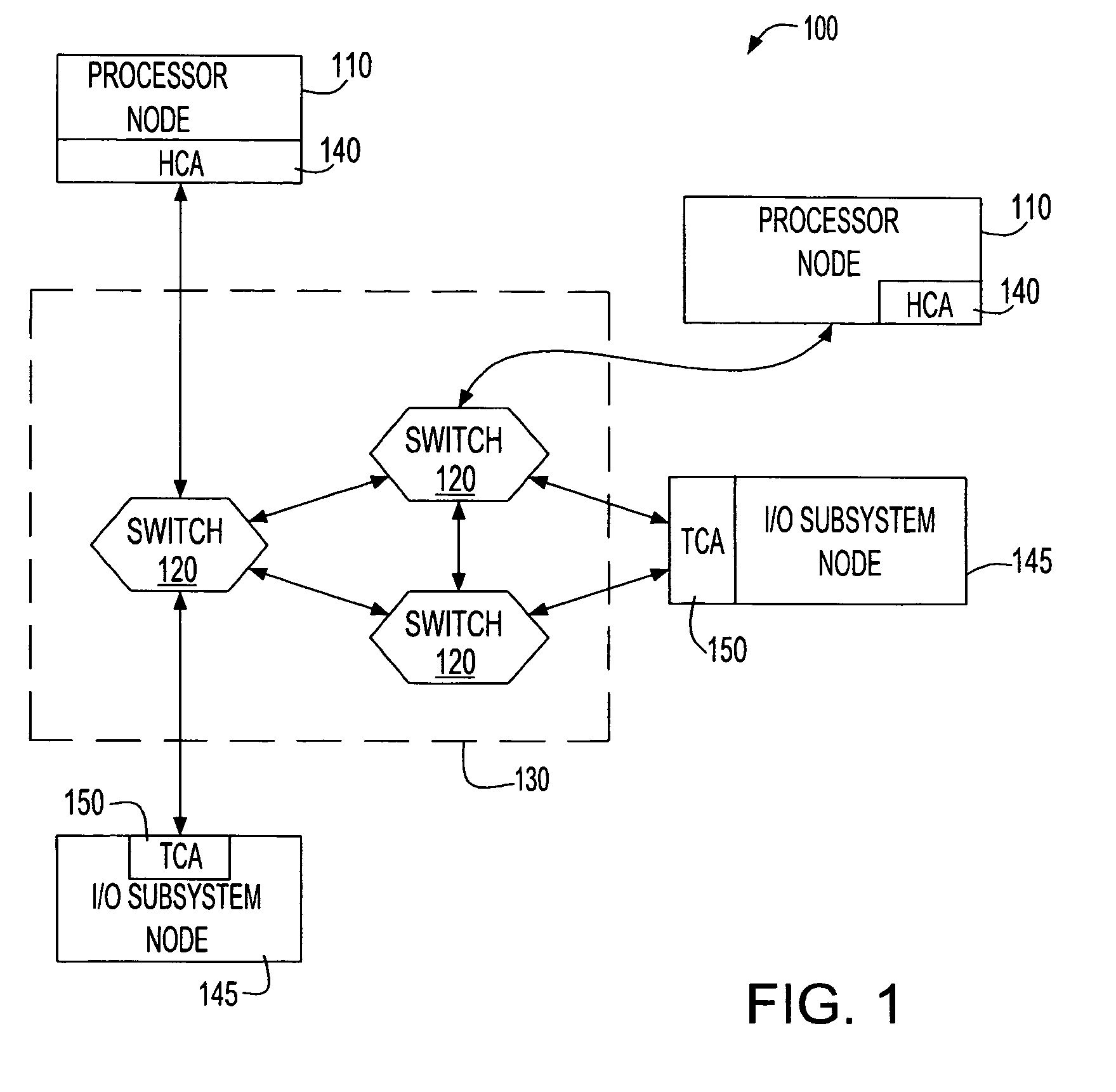

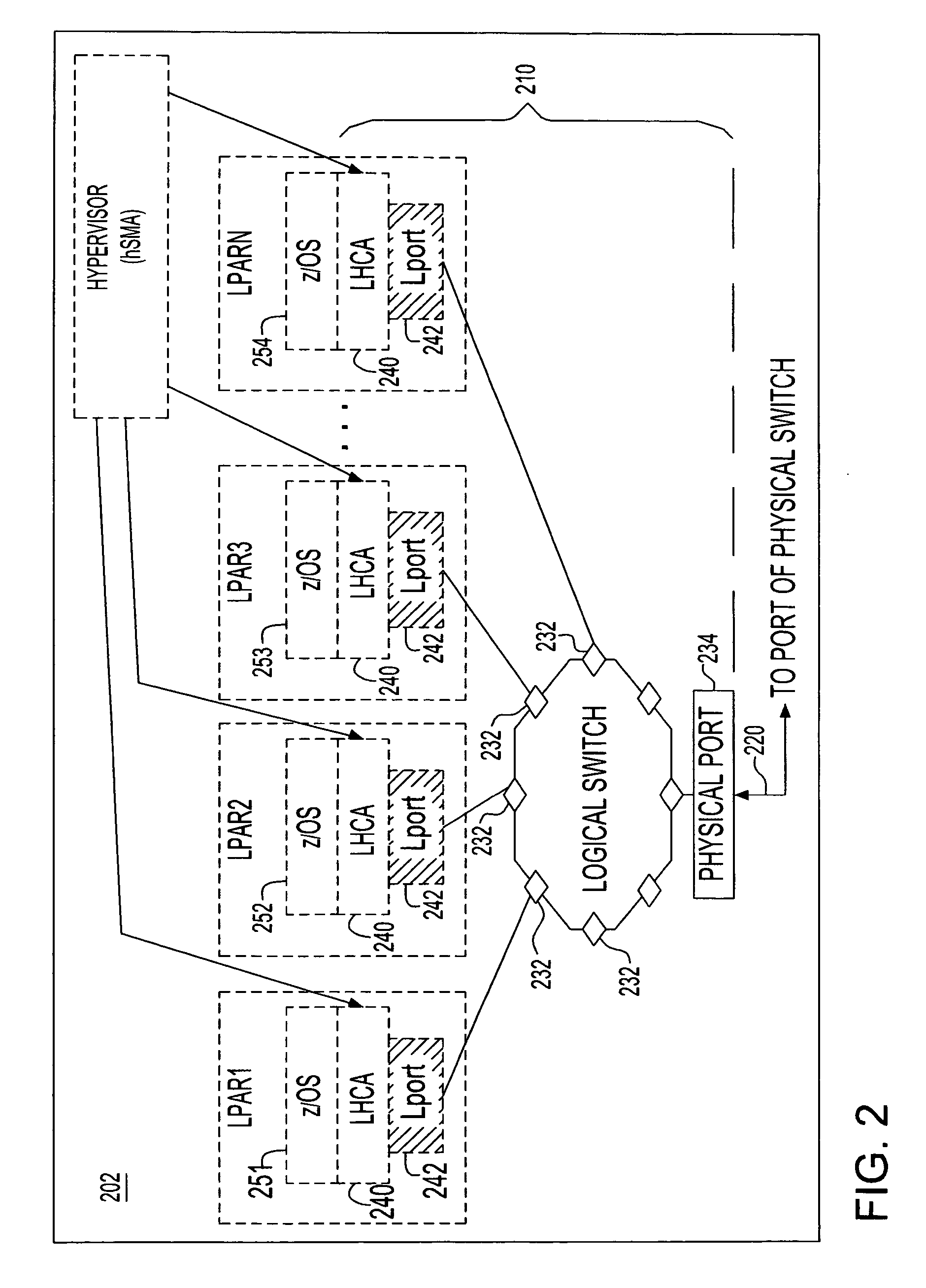

Virtualization of an I/O adapter port using enablement and activation functions

Active; US7200704B2; Multiple digital computer combinations; Data switching networks; Communication interface; Virtualization

A method for configuring a communication port of a communications interface of an information handling system into a plurality of virtual ports. A first command is issued to obtain information indicating a number of images of virtual ports supportable by the communications interface. A second command is then issued requesting the communications interface to virtualize the communication port. In response to the second command, one or more virtual switches are then configured to connect to the communication port, each virtual switch including a plurality of virtual ports, such that the one or more virtual switches are configured in a manner sufficient to support the number of images of virtual ports indicated by the obtained information. Thereafter, upon request via issuance of a third command, a logical link is established between one of the virtual ports of one of the virtual switches and a communicating element of the information handling system.

Owner:IBM CORP

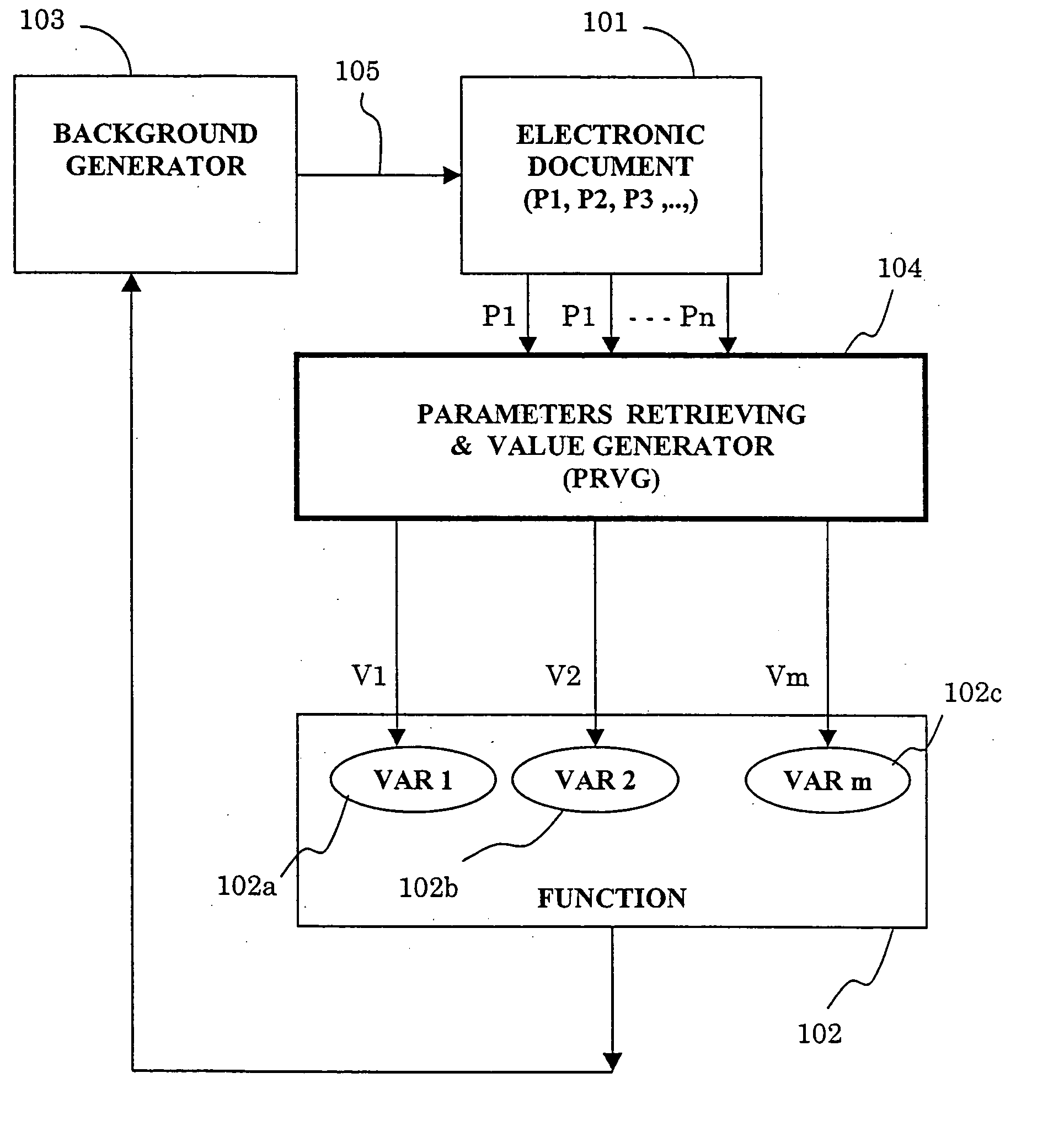

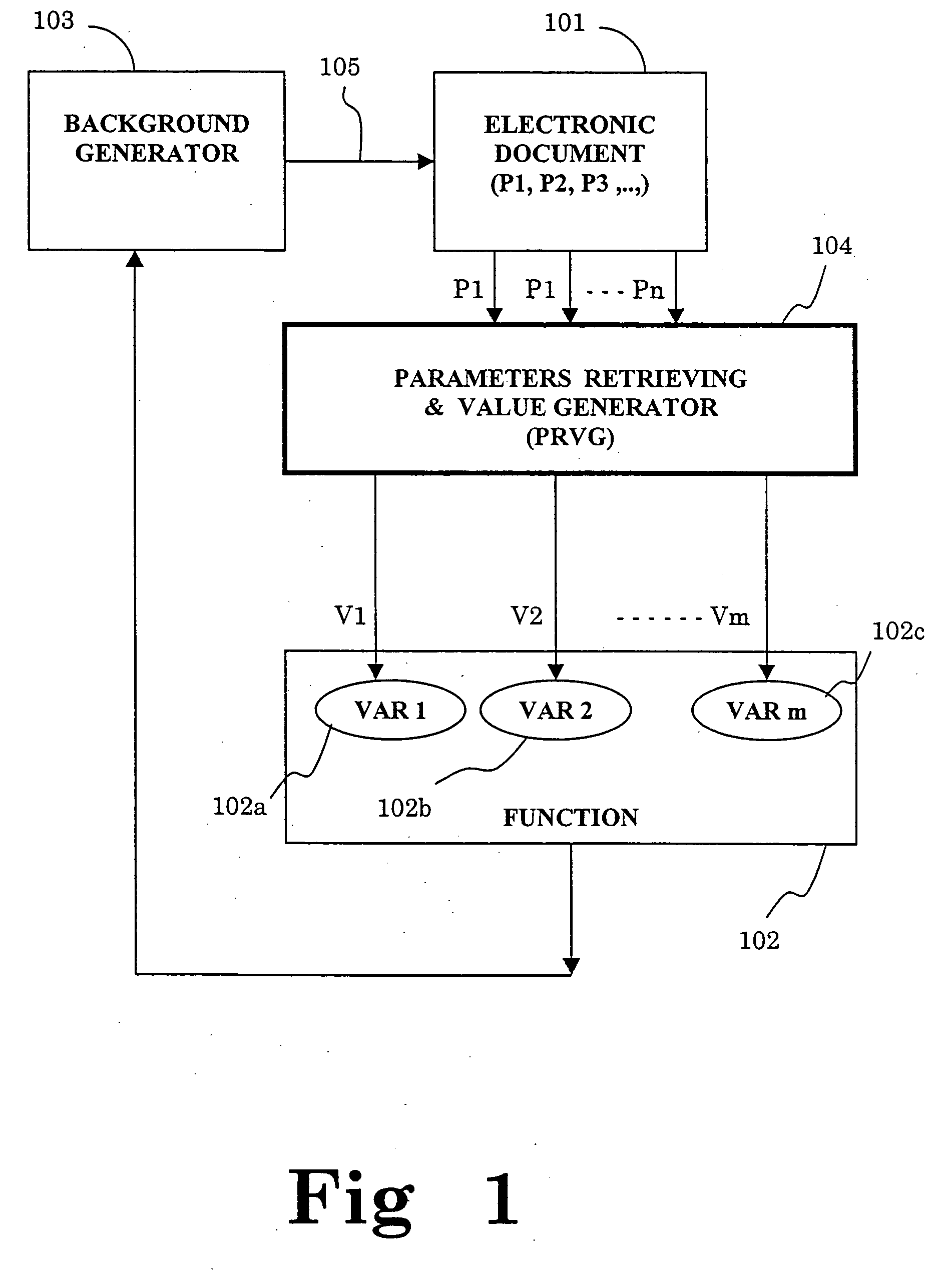

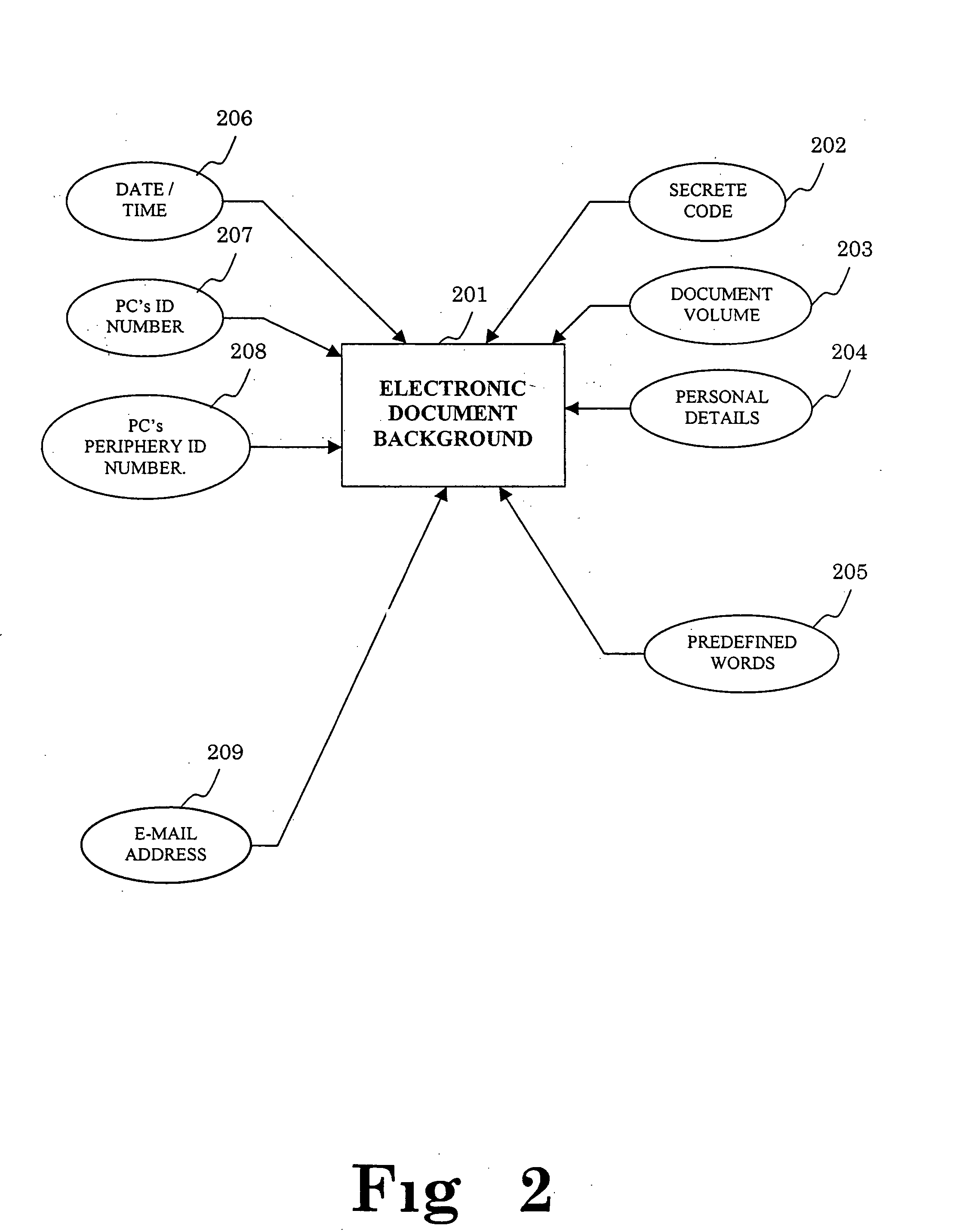

Method and system for assigning a background to a document and document having a background made according to the method and system

Inactive; US20050166144A1; Efficient verification; Easy to identify; Natural language data processing; Special data processing applications; Electronic document; Activation function

This invention concerns a method and system for indicating selected parameters in a document. The method comprises: defining parameters that affect the document; defining a function that takes the defined parameters as variables; providing a background generator that receives the function result as input and outputs a corresponding background; and checking the document, substituting actual values reflecting the parameters for the function variables, and activating the function to provide its result to the background generator, which produces and applies a specific background to the document. The system includes an electronic document with associated parameters; a parameter retriever and value generator that examines the predefined parameters and provides values to the variables of a predefined function; the predefined function, whose output result is provided to a background generator; and the background generator, which applies to the document a specific background corresponding to the function result.

Owner:TELEFON AB LM ERICSSON (PUBL)

Virtualization of an I/O adapter port using enablement and activation functions

Active; US20060230219A1; Sufficient support; Multiple digital computer combinations; Electric digital data processing; Virtualization; Communication interface

A method is provided for configuring a communication port of a communications interface of an information handling system into a plurality of virtual ports. A first command is issued to obtain information indicating a number of images of virtual ports supportable by the communications interface. A second command is then issued requesting the communications interface to virtualize the communication port. In response to the second command, one or more virtual switches are then configured to connect to the communication port, each virtual switch including a plurality of virtual ports, such that the one or more virtual switches are configured in a manner sufficient to support the number of images of virtual ports indicated by the obtained information. Thereafter, upon request via issuance of a third command, a logical link is established between one of the virtual ports of one of the virtual switches and a communicating element of the information handling system.

Owner:IBM CORP

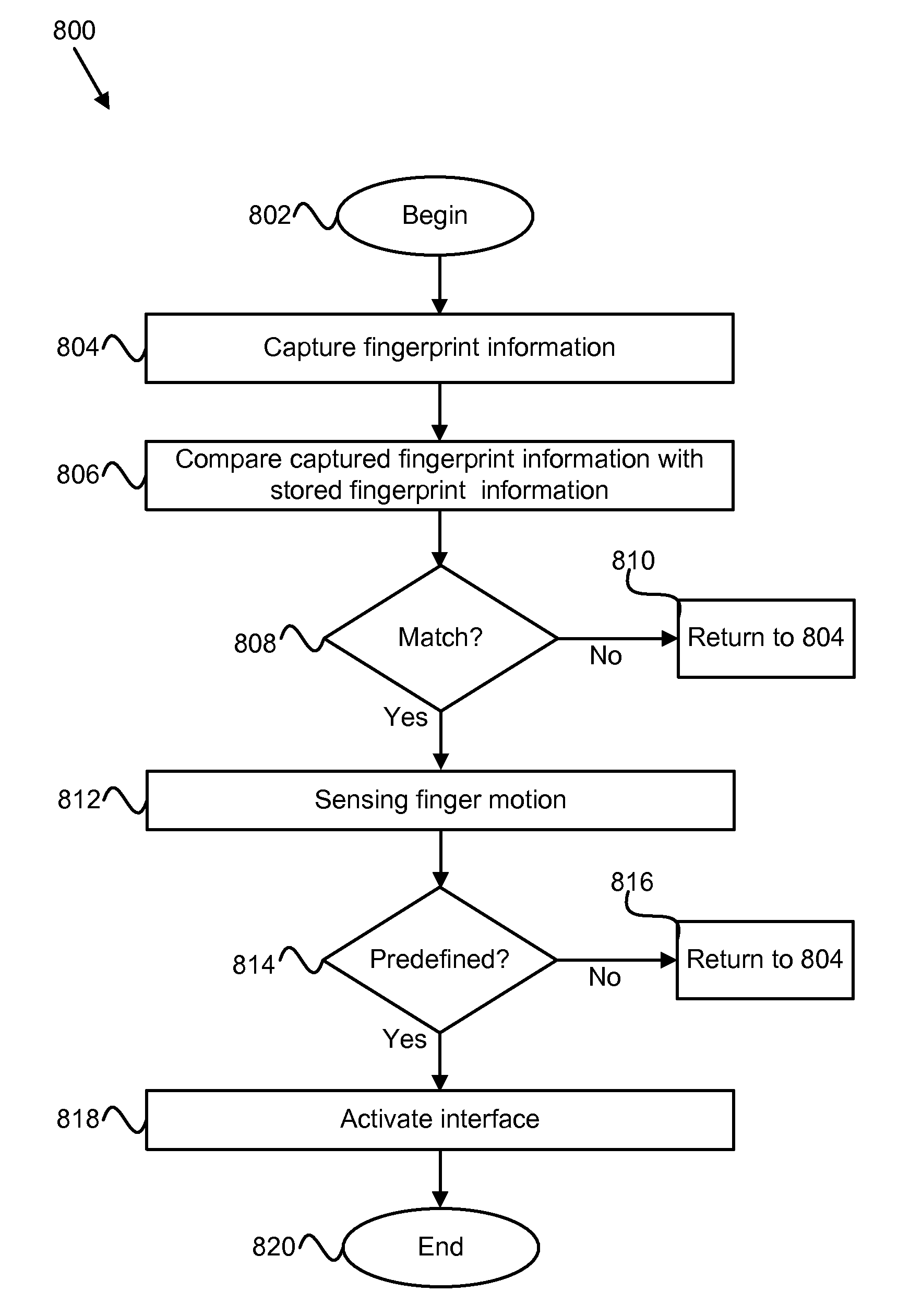

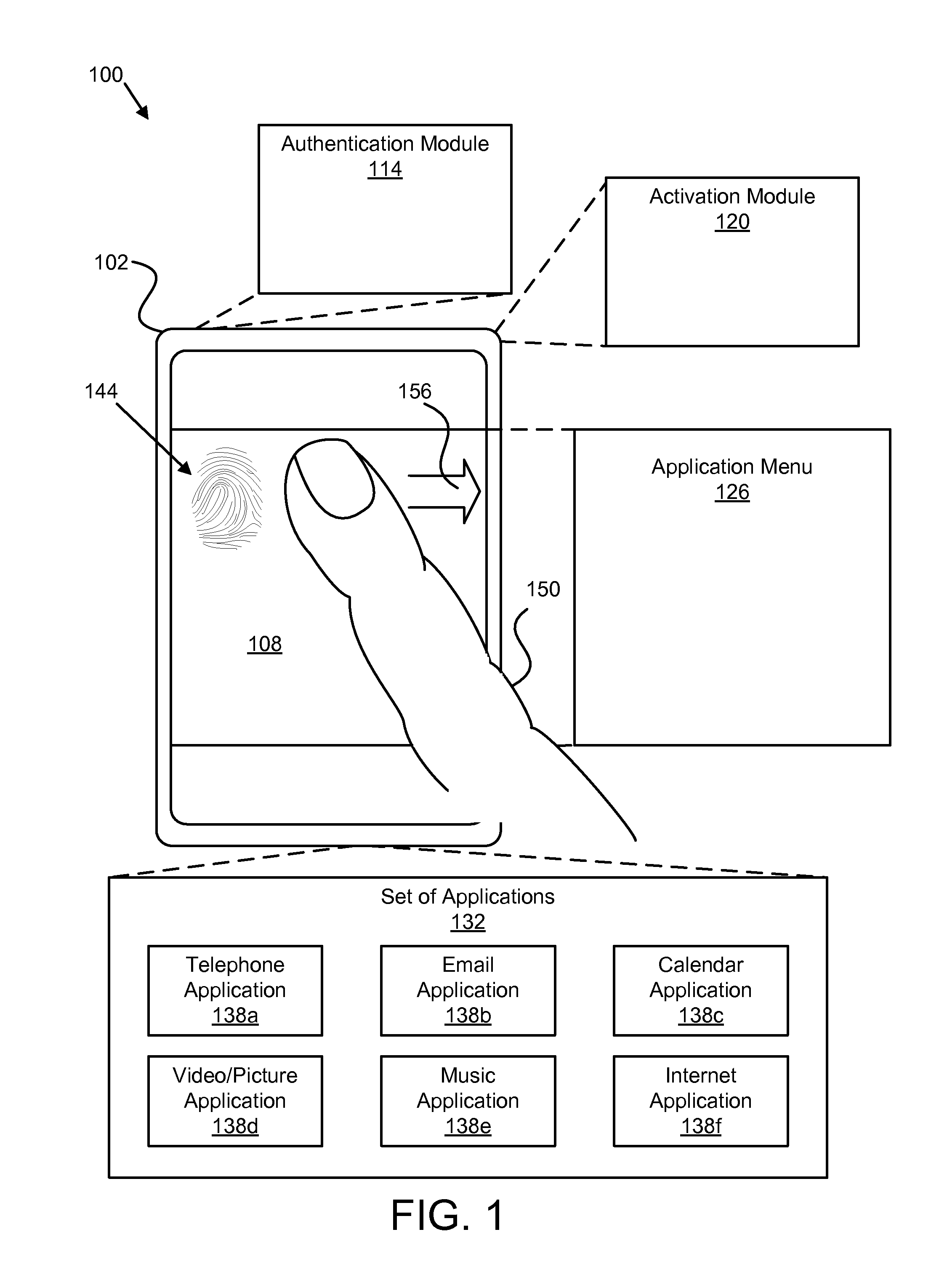

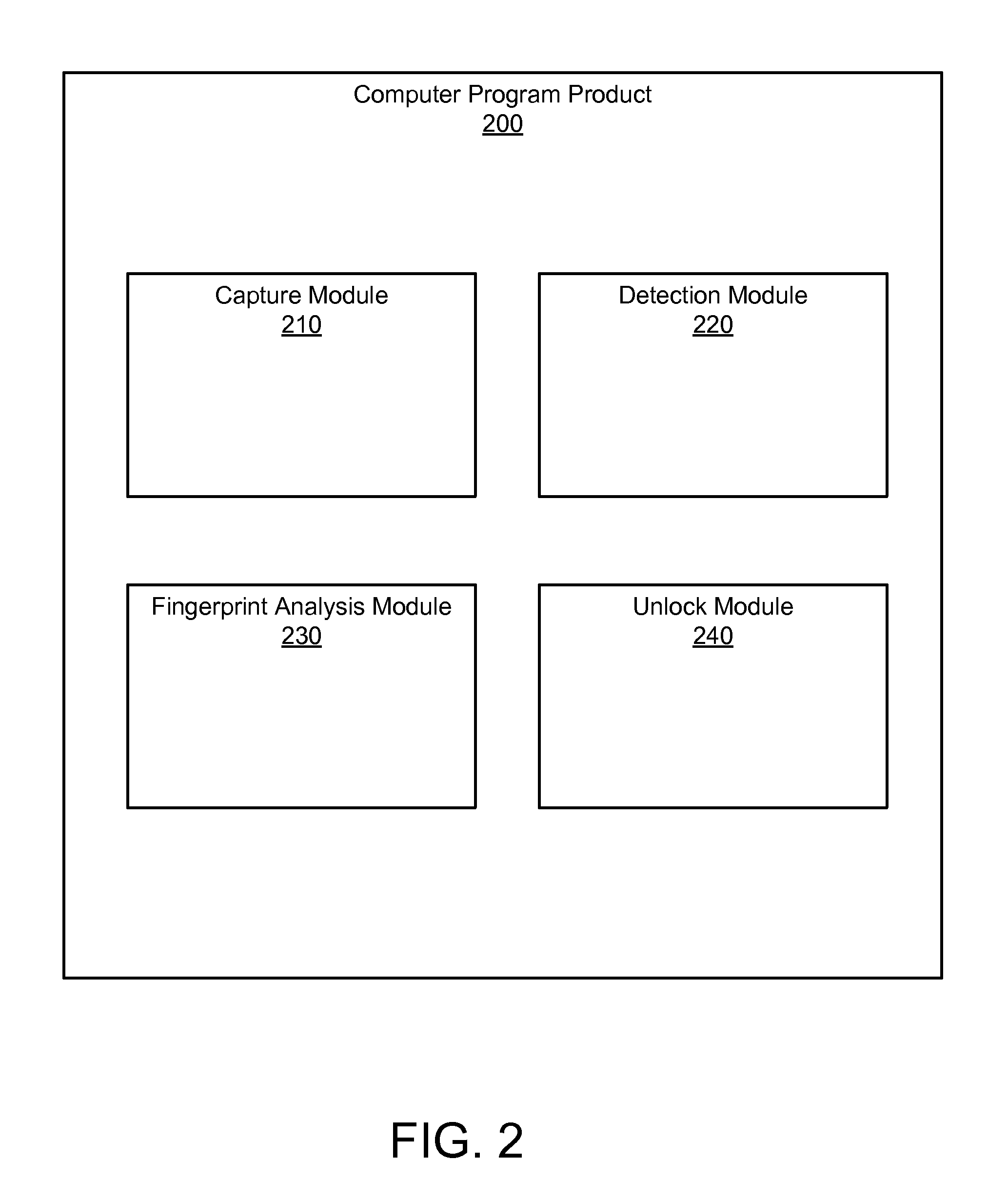

Apparatus, system, and method for providing authentication and activation functions to a computing device

Inactive; US20090224874A1; Electric signal transmission systems; Image analysis; Activation function; Internet privacy

An apparatus, system, and method are disclosed for authenticating and activating access to a computing device that captures fingerprint information from a user finger and compares the captured fingerprint information to stored fingerprint information to determine an authenticating correlation. The apparatus, system, and method also sense finger motion to detect a predefined user finger action that leads to the activation of an interface or an application.

Owner:IBM CORP

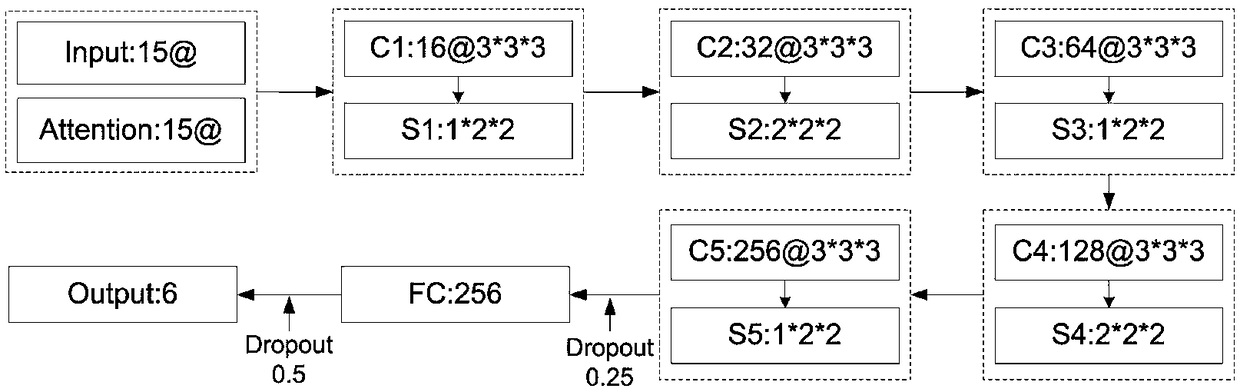

Human behavior recognition method based on attention mechanism and 3D convolutional neural network

Active; CN108830157A; Improve the accuracy of behavior recognition; Efficient use of; Character and pattern recognition; Neural architectures; Human behavior; Activation function

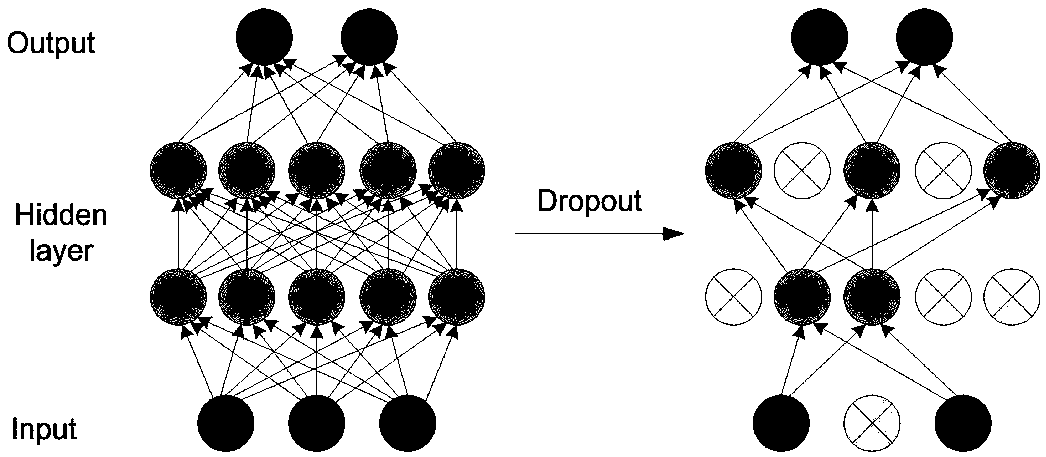

The invention discloses a human behavior recognition method based on an attention mechanism and a 3D convolutional neural network. A 3D CNN model for recognizing human behavior in video is constructed whose input layer has two channels: the original grayscale image and an attention matrix. To introduce the attention mechanism, the distance between two frames is calculated to form the attention matrix; the attention matrix and the original human behavior video sequence are fed as two channels into the 3D CNN, and convolution is performed to extract key features from the visual focus area. The 3D CNN structure is also optimized: Dropout layers are added to the network to randomly freeze some connection weights, and the ReLU activation function is employed to improve network sparsity. This addresses the sharp rise in computing load and the vanishing gradients caused by increased dimensionality and layer count, prevents overfitting on small data sets, improves recognition accuracy, and reduces time cost.

Owner:NORTH CHINA ELECTRIC POWER UNIV (BAODING) +1

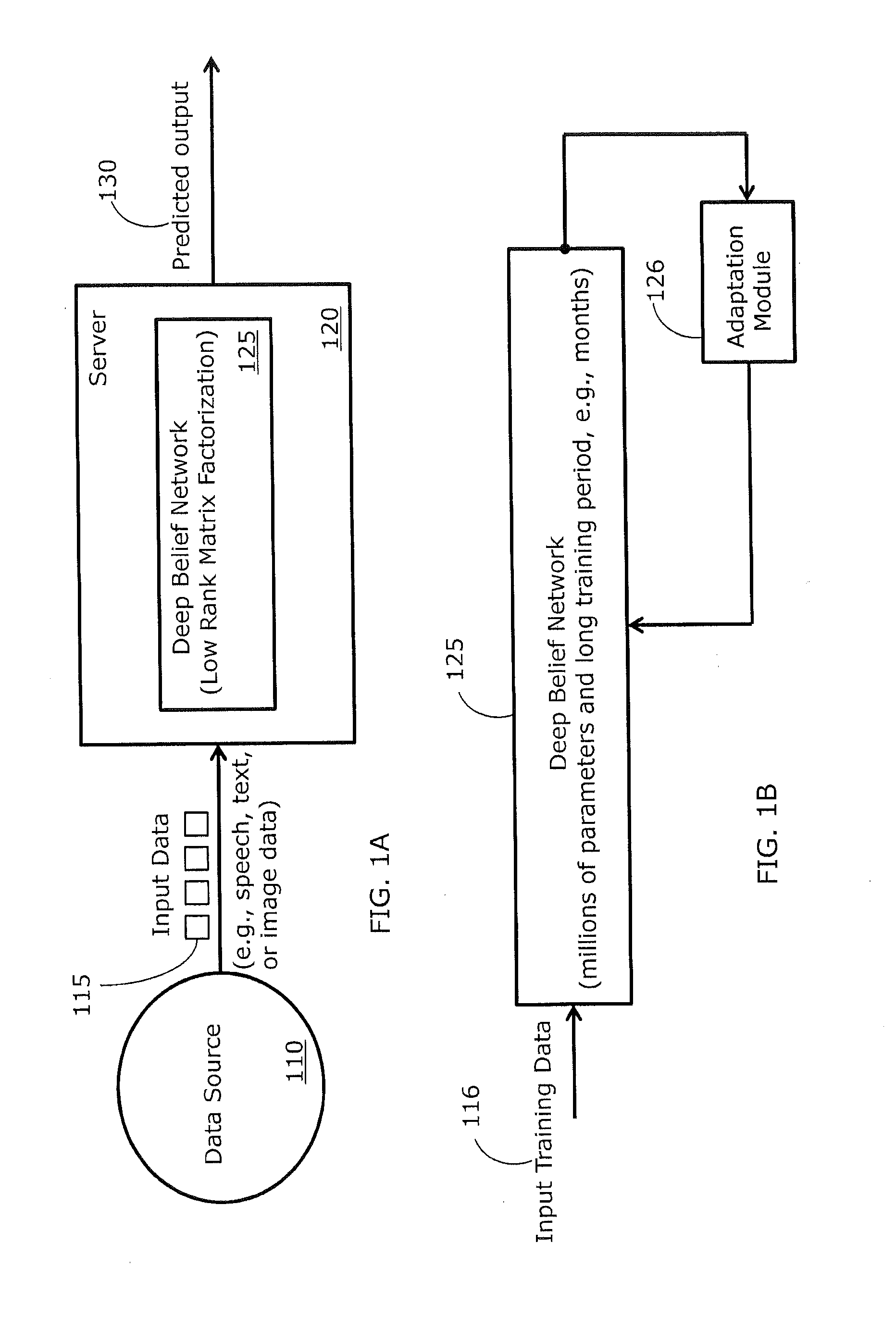

Method and Apparatus of Processing Data Using Deep Belief Networks Employing Low-Rank Matrix Factorization

Inactive; US20140156575A1; Mathematical models; Digital computer details; Deep belief network; Activation function

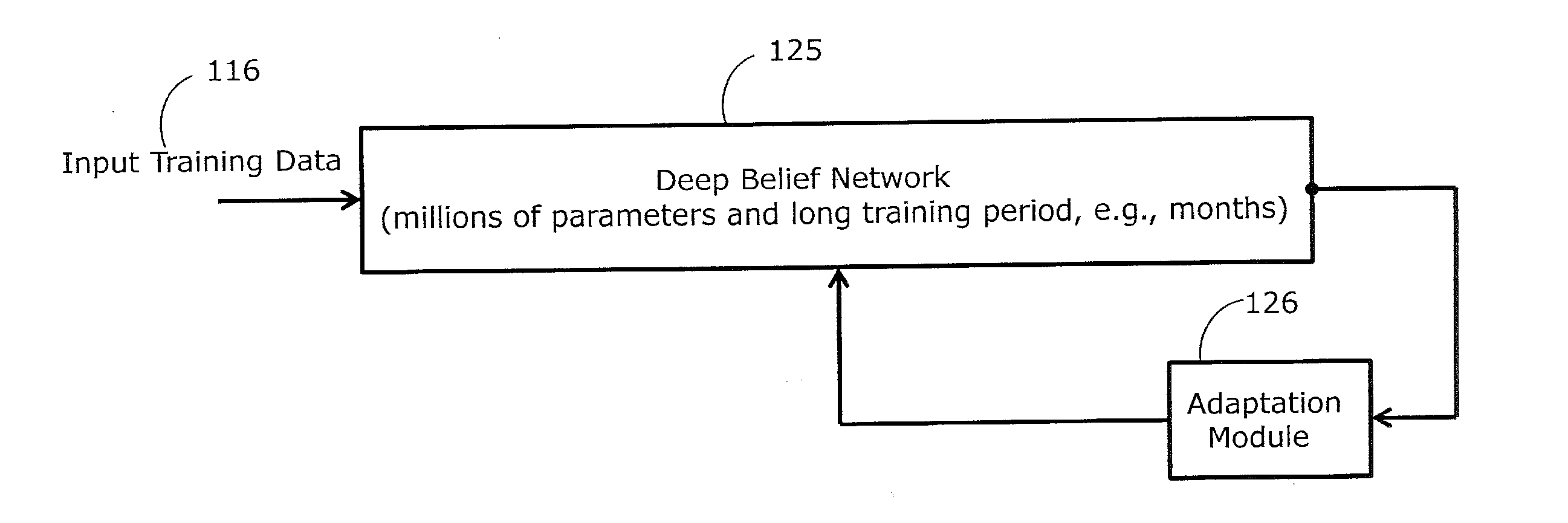

Deep belief networks are usually associated with a large number of parameters and high computational complexity. The large number of parameters results in a long and computationally expensive training phase. According to at least one example embodiment, low-rank matrix factorization is used to approximate at least a first set of parameters, associated with an output layer, with a second and a third set of parameters. The total number of parameters in the second and third sets is smaller than the number of parameters in the first set. The architecture of the resulting artificial neural network, when employing low-rank matrix factorization, may be characterized by a low-rank layer that does not employ activation function(s) and is defined by a relatively small number of nodes and the second set of parameters. By using low-rank matrix factorization, training is faster, leading to rapid deployment of the respective system.
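The parameter saving can be illustrated with a short Python sketch. It assumes the output layer is a single weight matrix of shape `n_out x n_hidden` replaced by two factors of rank `rank`; the layer sizes, the rank, and all function names are hypothetical and chosen only for the example.

```python
def full_params(n_hidden, n_out):
    """Parameters in one dense output-layer weight matrix."""
    return n_hidden * n_out

def low_rank_params(n_hidden, n_out, rank):
    """Parameters in two factors: (rank x n_hidden) plus (n_out x rank)."""
    return rank * (n_hidden + n_out)

def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def low_rank_output(factor_a, factor_b, hidden):
    """Linear low-rank layer: no activation function between the two factors."""
    return matvec(factor_b, matvec(factor_a, hidden))

# Hypothetical sizes: 1024 hidden units, 4096 outputs, rank 128.
print(full_params(1024, 4096))            # dense layer
print(low_rank_params(1024, 4096, 128))   # factorized layer

# Tiny worked example: a rank-1 layer mapping 3 hidden units to 2 outputs.
A = [[1.0, 0.0, 1.0]]      # 1 x 3
B = [[2.0], [3.0]]         # 2 x 1
print(low_rank_output(A, B, [1.0, 2.0, 3.0]))
```

The factorized form is smaller whenever `rank < n_hidden * n_out / (n_hidden + n_out)`; above that rank the two factors hold more parameters than the original matrix.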

Owner:NUANCE COMM INC

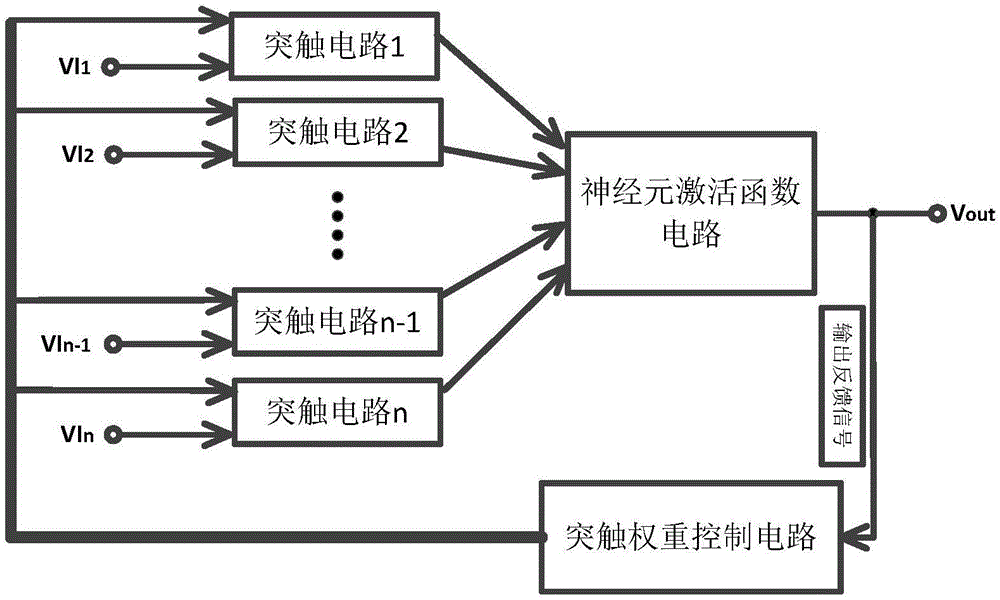

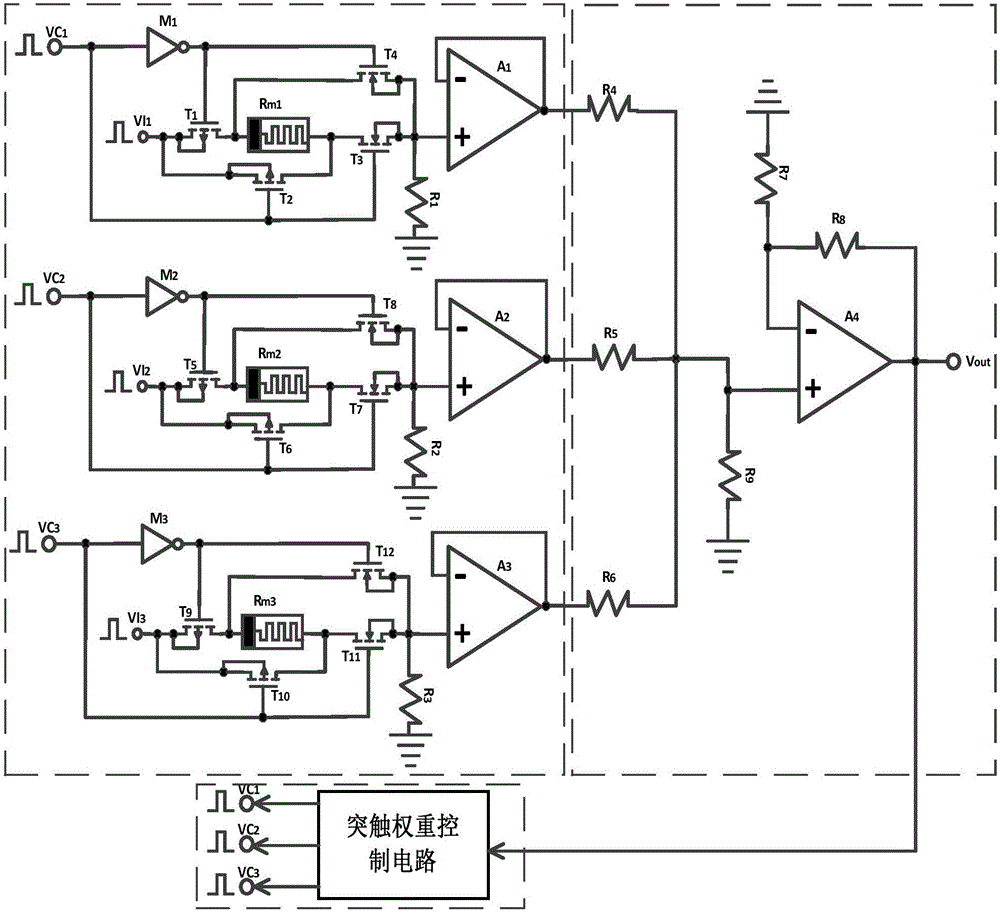

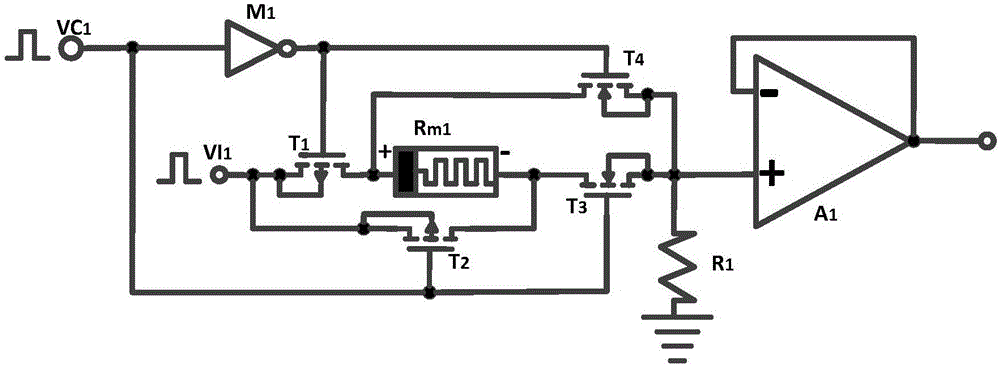

Memristor-based neuron circuit

Active; CN106815636A; Good effect; Easy composition; Neural architectures; Physical realisation; Activation function; Nerve network

The invention discloses a memristor-based neuron circuit comprising a synaptic circuit, a neuron activation function circuit, and a synaptic weight control circuit. In the synaptic circuit, the resistance of a memristor is changed under the control of four MOS transistors to simulate the change of a synaptic weight in a neural network. The synaptic circuit can be connected directly to digital logic levels, allowing convenient real-time adjustment of the synaptic weight. Because the output voltage of an operational amplifier is limited by its power supply voltage, the neuron activation function can be realized as a saturated linear function. The synaptic weight control circuit can use an existing CMOS microcontroller, which can be loaded with a neural network algorithm to change the synaptic weights and realize the corresponding functions. A plurality of such neuron circuits can be connected into a large-scale neural network for complex functions such as pattern recognition, signal processing, associative memory, and nonlinear mapping.
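A saturated linear activation of the kind described can be modelled in a few lines of Python. This is a behavioural sketch only: the gain of 10 and the ±5 V supply rails are assumed values for illustration and do not come from the patent.

```python
def saturated_linear(v_in, gain=10.0, v_min=-5.0, v_max=5.0):
    """Amplify linearly, then clip at the supply rails (assumed to be +/-5 V)."""
    return min(max(gain * v_in, v_min), v_max)

# Inside the linear region the output tracks the input; outside it saturates.
for v in (-1.0, -0.2, 0.0, 0.2, 1.0):
    print(v, saturated_linear(v))
```

The clipping gives the neuron a bounded, piecewise-linear response without any dedicated nonlinear component, which is why an amplifier's supply limit can stand in for the activation function.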

Owner:HUAZHONG UNIV OF SCI & TECH

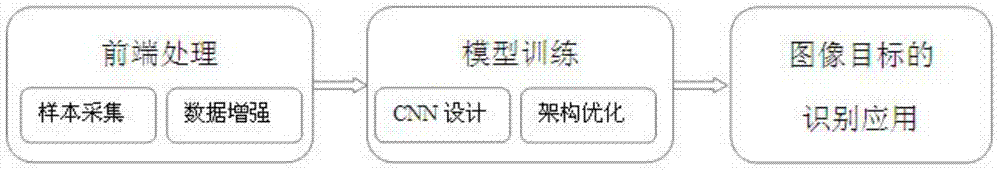

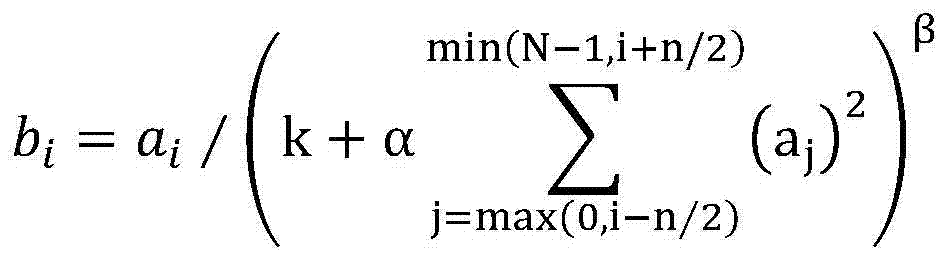

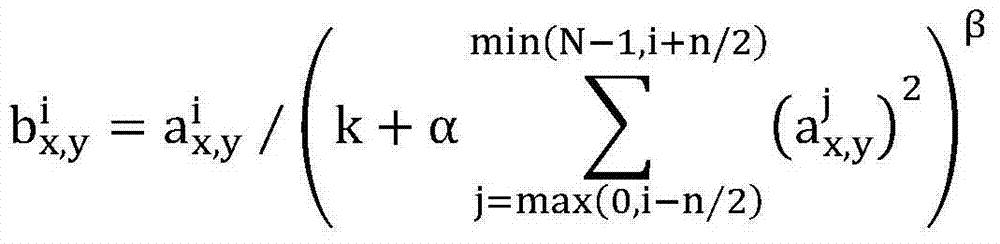

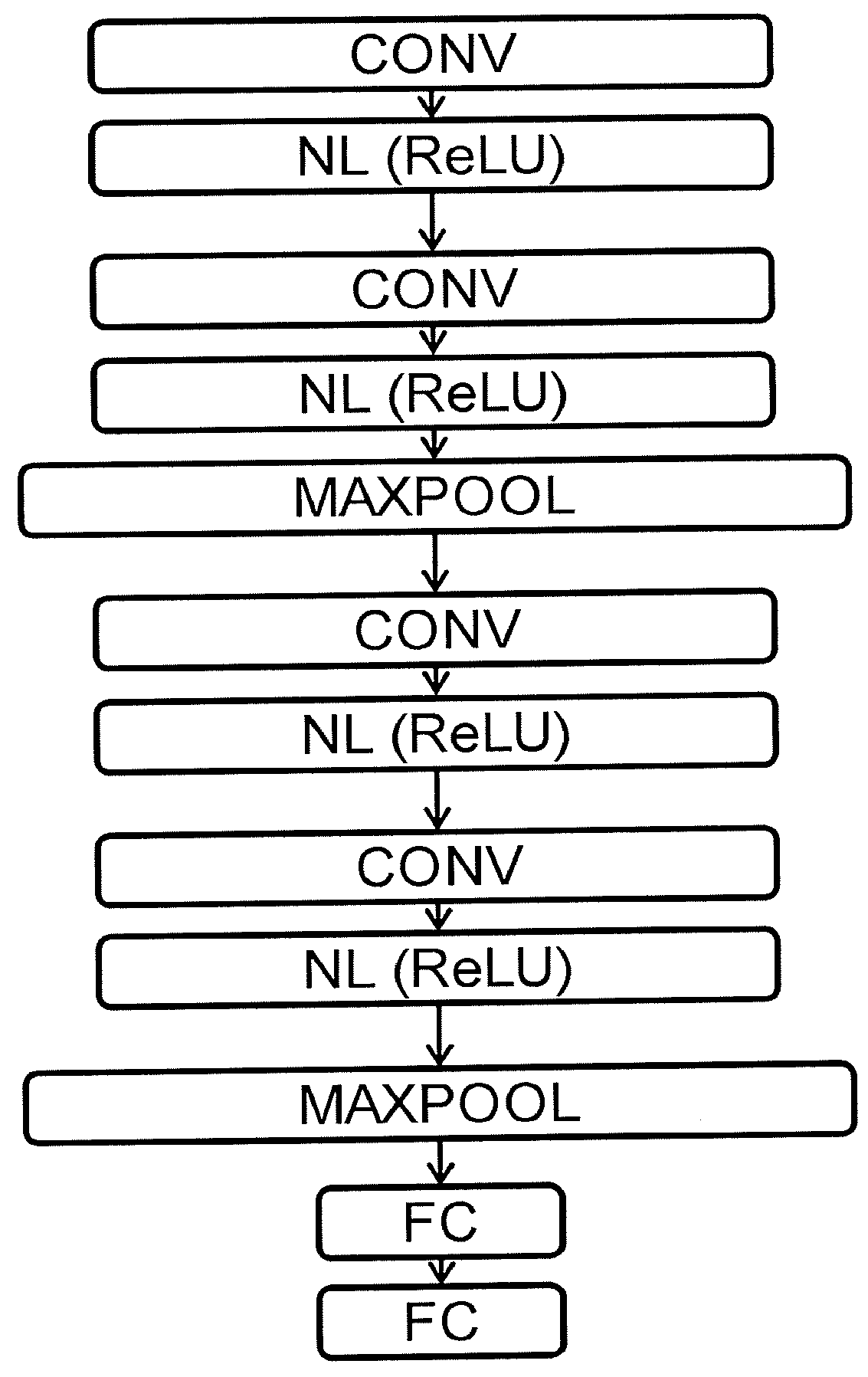

Image target recognition method based on optimized convolution architecture

Inactive; CN104517122A; Expand the training sample set; Reduce overfitting; Character and pattern recognition; Neural learning methods; Pattern recognition; Activation function

The invention discloses an image target recognition method based on an optimized convolution architecture. The method includes collecting and augmenting input images to form samples; training on the samples with the optimized convolution architecture; and classifying image targets with the trained architecture. The optimizations of the convolution architecture include the ReLU activation function, local response normalization, overlapping pooling of convolution regions, the Dropout technique for neuron connections, and heuristic learning. Compared with the prior art, the method can expand the set of labeled samples, supports classification of many object categories, achieves fast training convergence and a high recognition rate, and is more robust.

Owner:ZHEJIANG UNIV

Artificial neural network compression coding device and artificial neural network compression coding method

Active; CN106991477A; Reduce model size; Fast data processing; Digital data processing details; Program control; Activation function; Nerve network

An artificial neural network compression coding device comprises a memory interface unit, an instruction buffer memory, a controller unit, and an operation unit, wherein the operation unit performs the corresponding operations on data from the memory interface unit according to the instructions of the controller unit. The operation unit mainly performs three steps: 1, multiplying the input neurons by the weight data; 2, performing an adder tree operation that adds the weighted output neurons from the first step through an adder tree, or adding a bias to the output neurons to obtain biased output neurons; and 3, executing an activation function operation to obtain the final output neurons. The invention further provides an artificial neural network compression coding method. The device and method effectively reduce the size of an artificial neural network model, improve its data processing speed, reduce power consumption, and improve resource utilization.

Owner:CAMBRICON TECH CO LTD

A method for identifying fruit tree diseases and pests under complex background based on cavity convolution

Inactive; CN109344883A; Improve recognition accuracy; Improve robustness; Character and pattern recognition; Neural architectures; Data set; Activation function

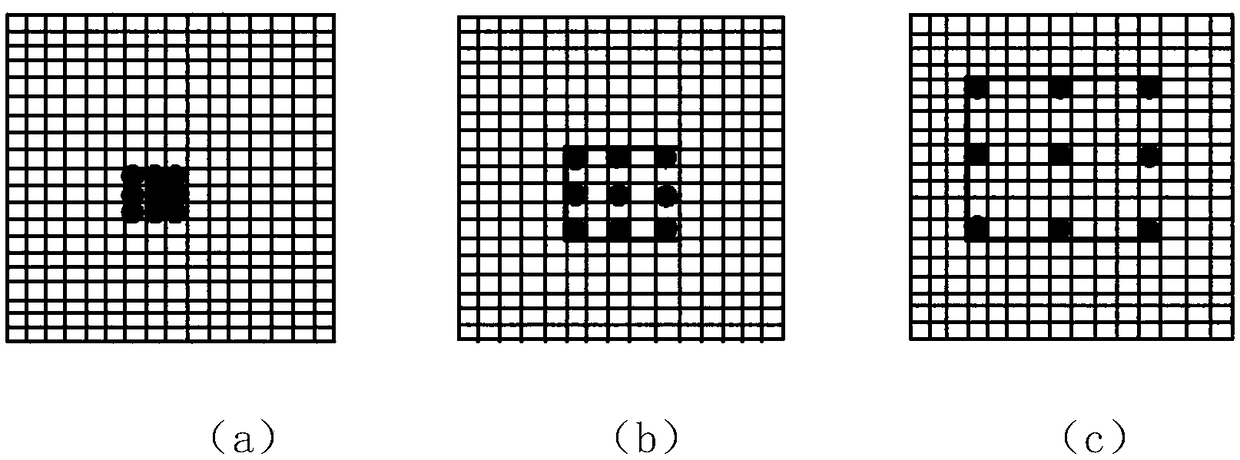

A method for identifying diseases and pests of fruit trees under complex backgrounds based on cavity (dilated) convolution is disclosed. First, an original training set with sample labels is obtained by collecting images of diseases and insect pests on fruit tree leaves. An expanded training sample set is then obtained by data augmentation; the average value is computed, all the original training set images are averaged, and the images are randomly shuffled to form a training data set. A cavity convolutional neural network model is then established and trained for disease classification on the training data set. The feature map of the input image is extracted by the cavity convolution layer, and a nonlinear feature map is obtained by applying a nonlinear activation function. A pooling layer is used to reduce the convolution layer weight parameters. Multi-scale convolution kernels capture different features of the input image, and finally a Softmax classifier classifies the disease type of the input image. The invention shortens model training time, accelerates recognition, and improves recognition accuracy.

Owner:XIJING UNIV

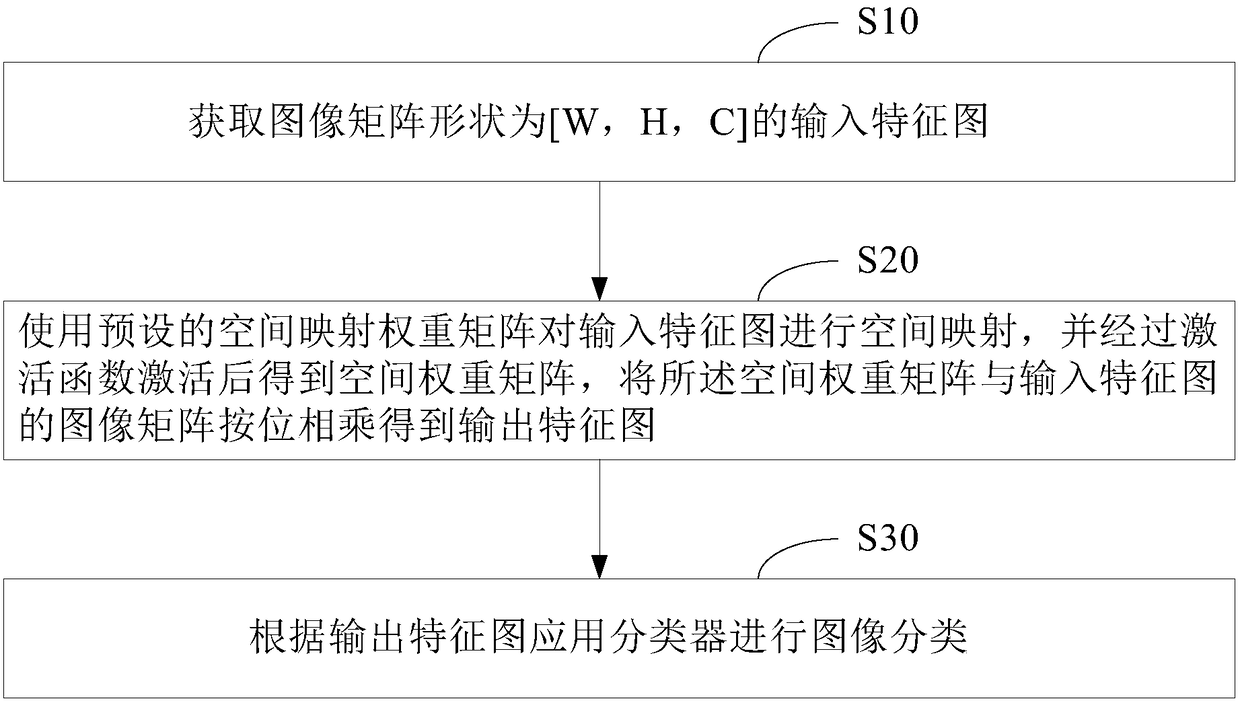

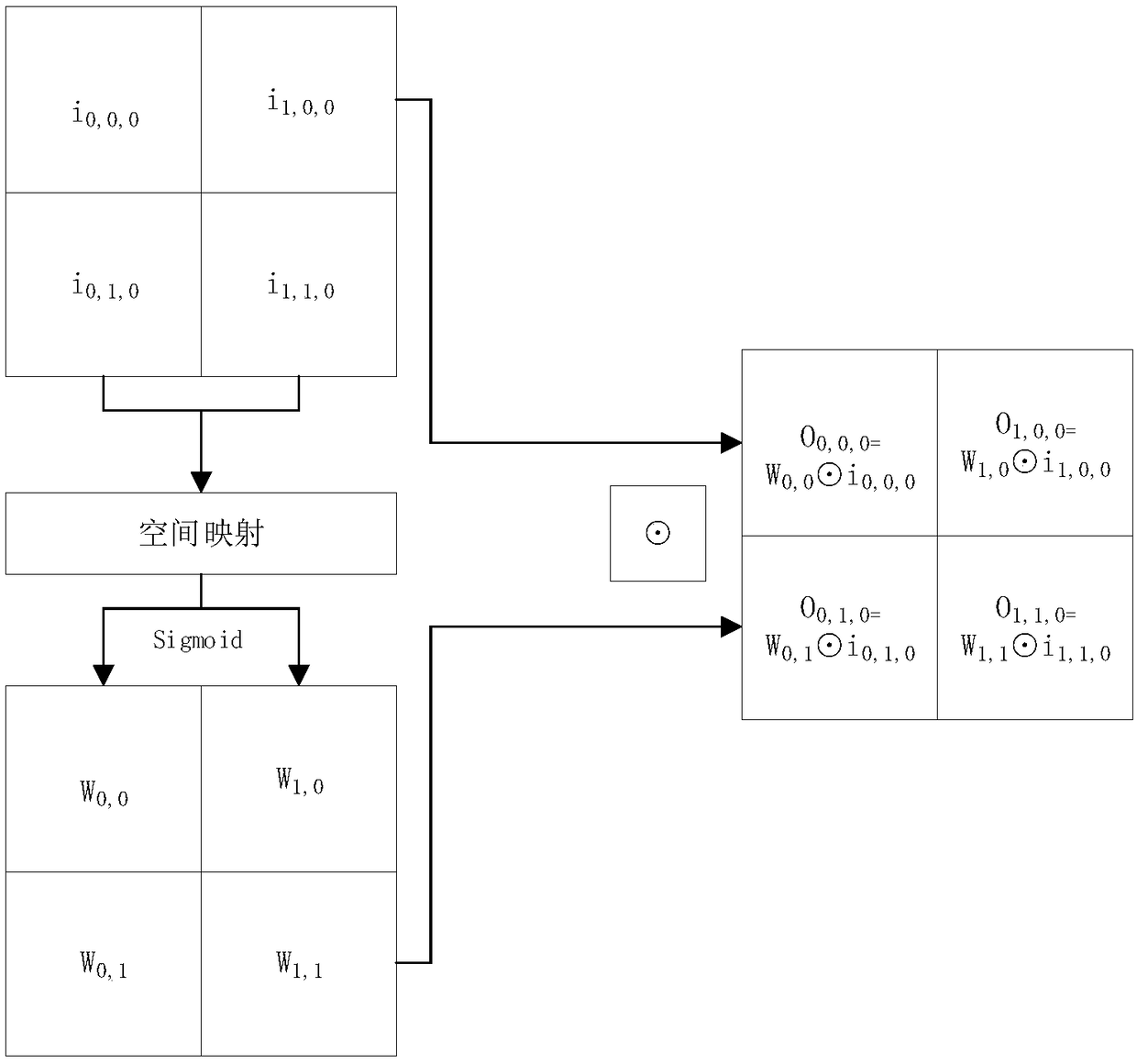

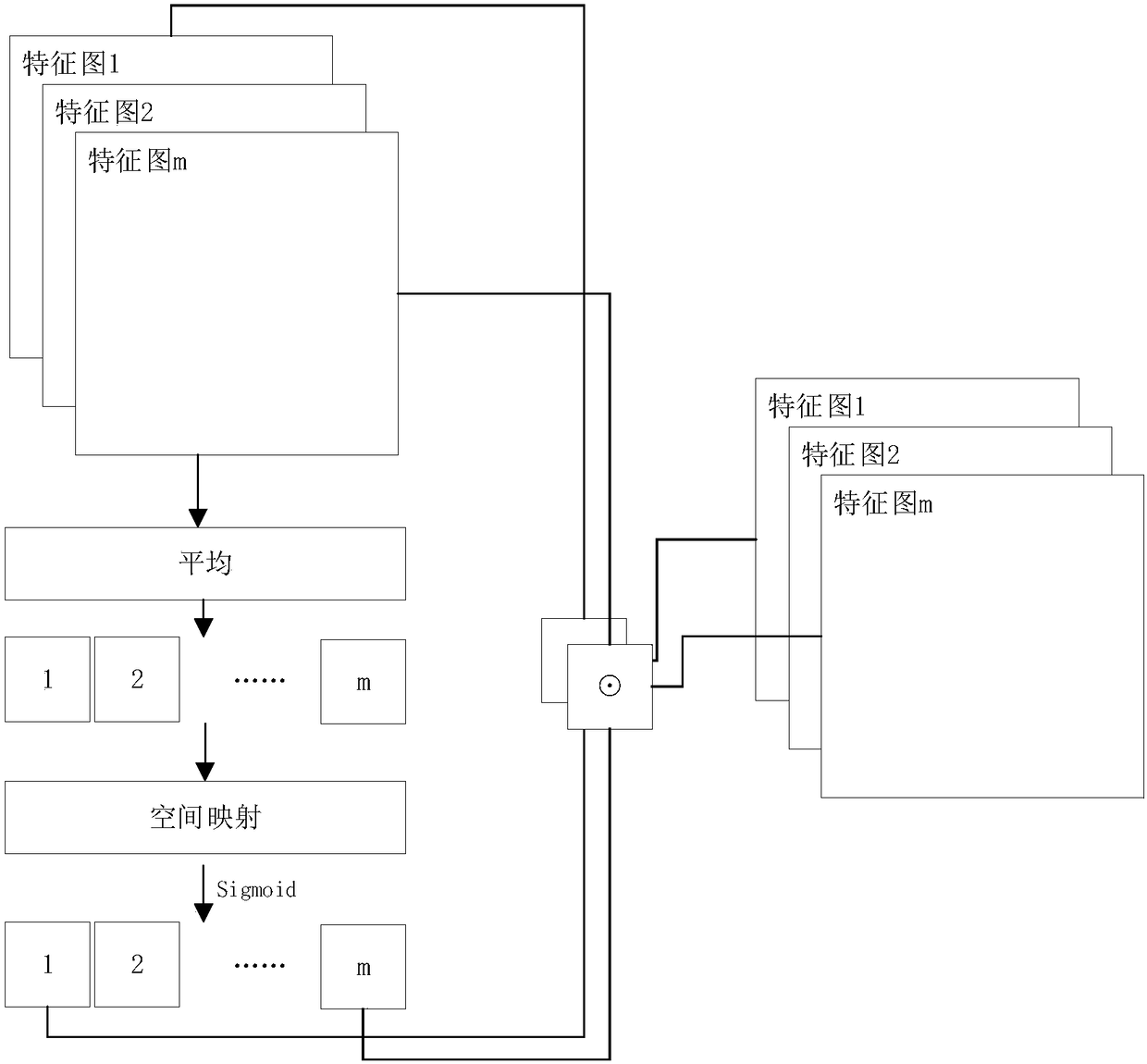

Attention model-based image identification method and system

Inactive; CN108364023A; Improve the extraction effect; Improve targeting; Character and pattern recognition; Neural architectures; Attention model; Feature extraction

The invention provides an attention model-based image identification method and system. The method first obtains an input feature map whose image matrix has the shape [W,H,C], where W is the width, H is the height, and C is the number of channels. It then performs a spatial mapping on the input feature map using a preset spatial mapping weight matrix, applies an activation function to obtain a spatial weight matrix, and multiplies the spatial weight matrix element-wise with the image matrix of the input feature map to obtain an output feature map. The preset spatial mapping weight matrix is either a spatial attention matrix [C,1], whose attention depends on the image width and height, in which case the spatial weight matrix has the shape [W,H,1]; or a channel attention matrix [C,C], whose attention depends on the number of image channels, in which case the spatial weight matrix has the shape [1,1,C]. The method makes feature extraction more targeted and thereby strengthens the extraction of local image features.
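The spatial-attention variant, with a [C,1] mapping matrix and a [W,H,1] weight matrix, can be sketched in plain Python. The abstract does not name the activation function, so the sigmoid used here is an assumption, as are the function names and sample values; the channel-attention variant is not shown.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def spatial_attention(feature, mapping):
    """feature: nested list of shape [W][H][C]; mapping: list of C weights ([C,1]).

    Each position's C channel values are projected to one score, passed
    through the activation to give a [W,H,1] weight, and that weight then
    scales every channel at the same position.
    """
    output = []
    for column in feature:
        out_column = []
        for pixel in column:
            weight = sigmoid(sum(m * v for m, v in zip(mapping, pixel)))
            out_column.append([weight * v for v in pixel])
        output.append(out_column)
    return output

# A 2 x 2 feature map with 3 channels (illustrative values).
feature = [[[1.0, 2.0, 3.0], [0.0, 0.0, 0.0]],
           [[4.0, 5.0, 6.0], [1.0, 1.0, 1.0]]]
result = spatial_attention(feature, [0.0, 0.0, 0.0])
print(result[0][0])   # an all-zero mapping weights every position by 0.5
```

The output keeps the [W,H,C] shape of the input; only the relative emphasis of each spatial position changes.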

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

Acceleration unit for a deep learning engine

Active; US20190266479A1; Volume/mass flow measurement; Character and pattern recognition; Streaming data; Activation function

Embodiments of a device include an integrated circuit, a reconfigurable stream switch formed in the integrated circuit along with a plurality of convolution accelerators and an arithmetic unit coupled to the reconfigurable stream switch. The arithmetic unit has at least one input and at least one output. The at least one input is arranged to receive streaming data passed through the reconfigurable stream switch, and the at least one output is arranged to stream resultant data through the reconfigurable stream switch. The arithmetic unit also has a plurality of data paths. At least one of the plurality of data paths is solely dedicated to performance of operations that accelerate an activation function represented in the form of a piece-wise second order polynomial approximation.
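To show what a piecewise second-order polynomial approximation of an activation function looks like, the Python sketch below fits one quadratic per segment to `tanh`. Everything specific here is an assumption made for illustration: the choice of `tanh`, the range [-4, 4], the 16 equal segments, and fitting by interpolation through three points. The patent abstract does not state how its coefficients are obtained.

```python
import math

def fit_quadratic(x0, x1, x2, f):
    """Coefficients (a, b, c) of the quadratic through f at x0, x1, x2."""
    y0, y1, y2 = f(x0), f(x1), f(x2)
    d01 = (y1 - y0) / (x1 - x0)
    d12 = (y2 - y1) / (x2 - x1)
    a = (d12 - d01) / (x2 - x0)
    b = d01 - a * (x0 + x1)
    c = y0 - d01 * x0 + a * x0 * x1
    return a, b, c

def build_table(f, lo, hi, segments):
    """One (a, b, c) triple per equal-width segment of [lo, hi]."""
    width = (hi - lo) / segments
    table = []
    for k in range(segments):
        left = lo + k * width
        table.append(fit_quadratic(left, left + width / 2, left + width, f))
    return table, width

LO, HI, SEGMENTS = -4.0, 4.0, 16          # assumed range and resolution
TABLE, WIDTH = build_table(math.tanh, LO, HI, SEGMENTS)

def tanh_approx(x):
    """Look up the segment, then evaluate a*x^2 + b*x + c in Horner form."""
    if x <= LO:
        return -1.0
    if x >= HI:
        return 1.0
    k = min(int((x - LO) / WIDTH), SEGMENTS - 1)
    a, b, c = TABLE[k]
    return (a * x + b) * x + c

max_err = max(abs(tanh_approx(i / 100) - math.tanh(i / 100))
              for i in range(-600, 601))
print(max_err)
```

Each evaluation costs one table lookup, two multiplications, and two additions, which is the kind of fixed, small operation count that suits a dedicated data path.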

Owner:STMICROELECTRONICS INT NV +1

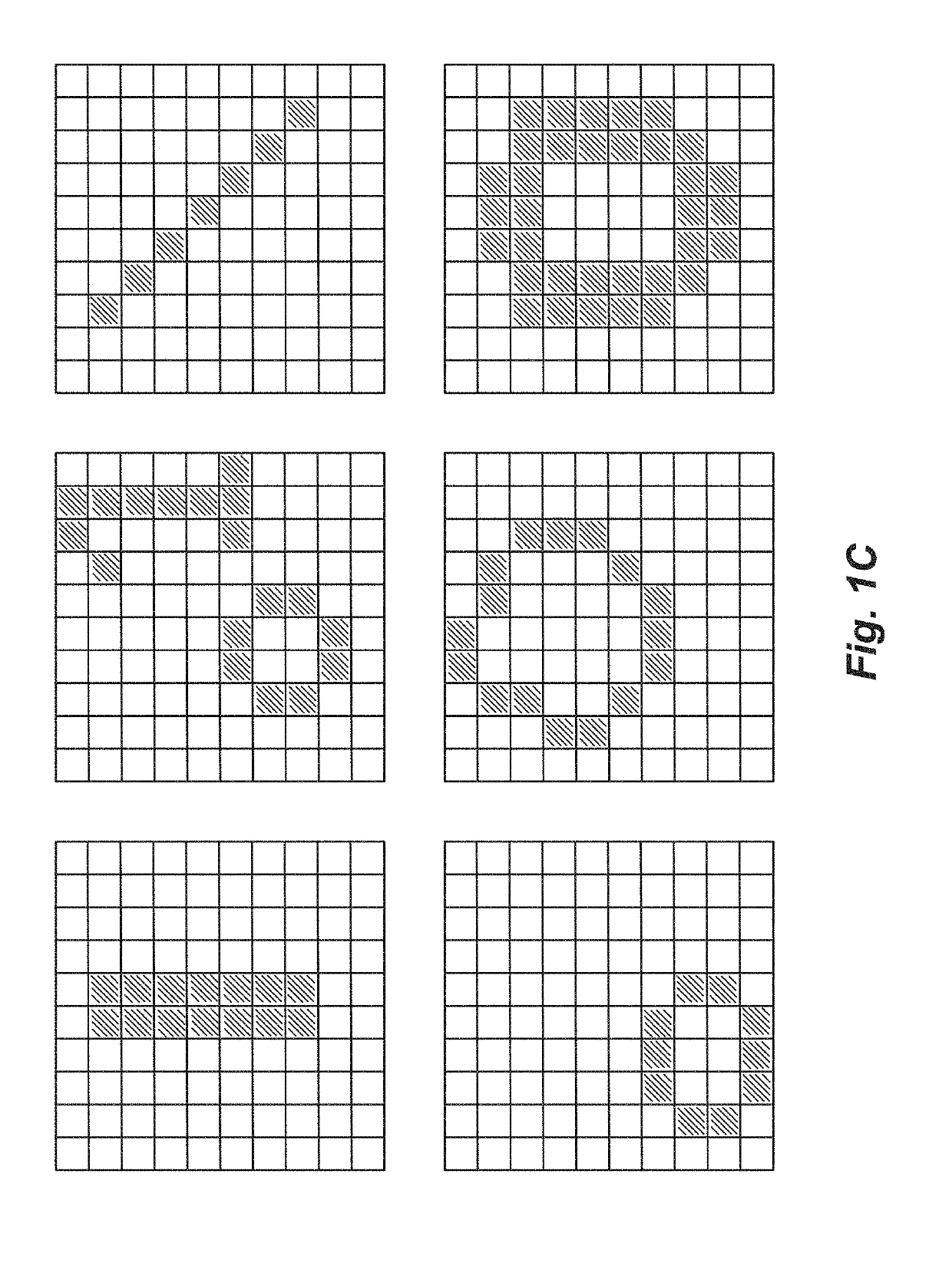

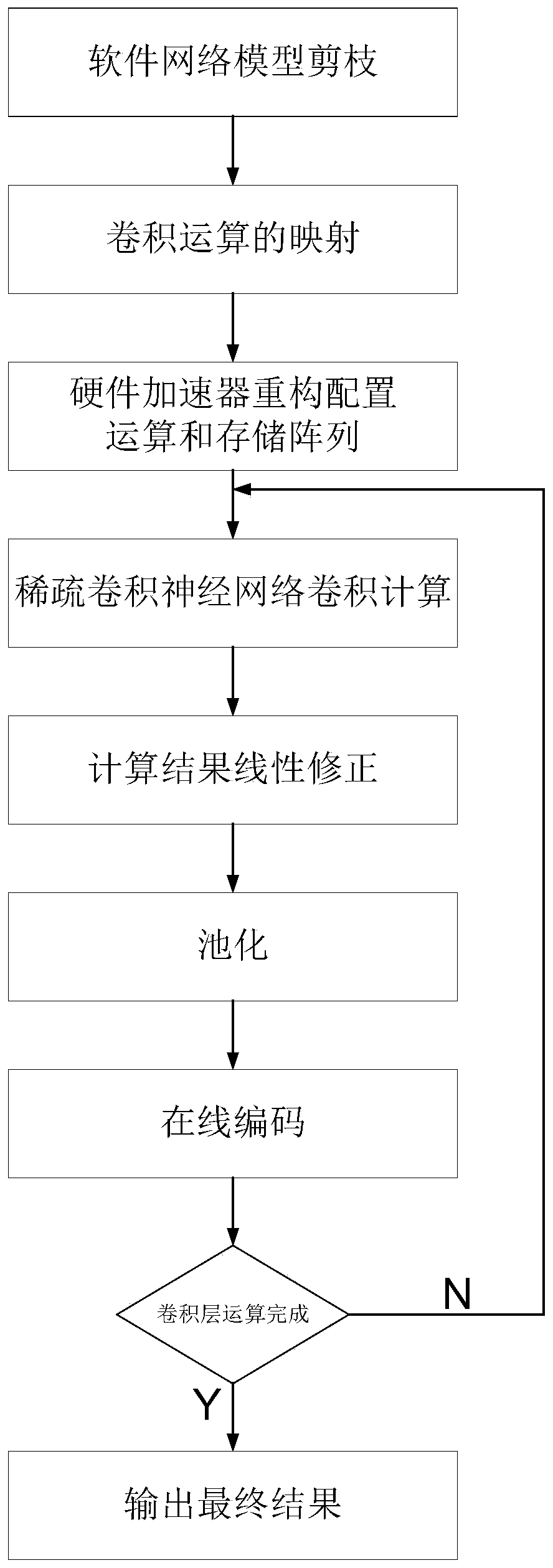

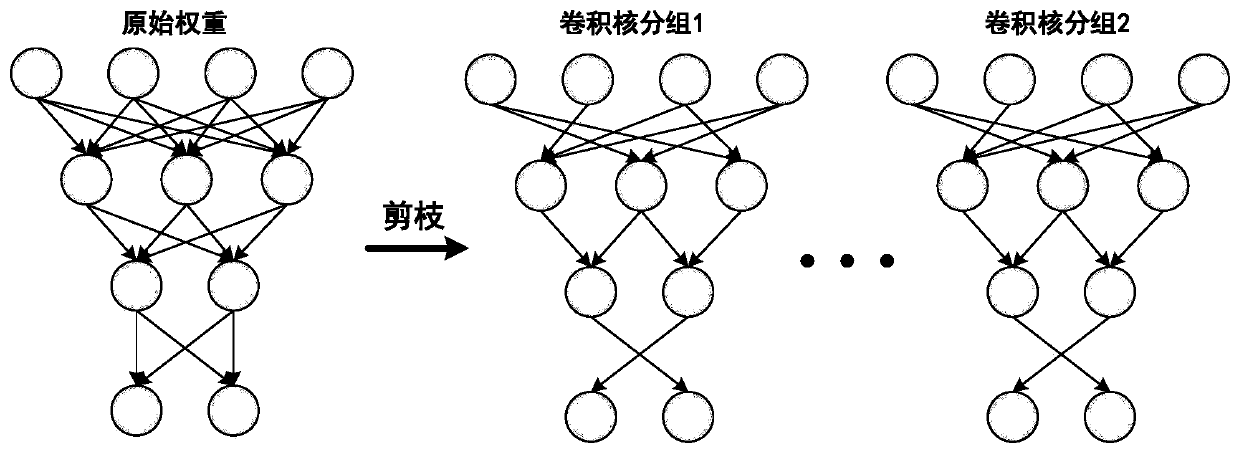

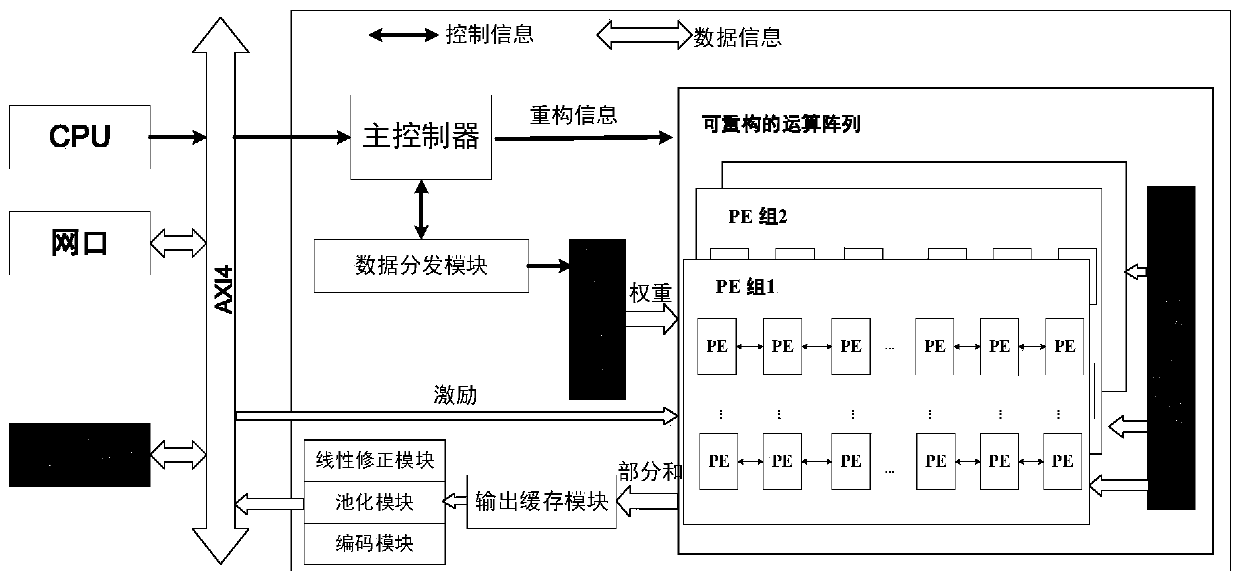

Load-balanced sparse convolutional neural network accelerator and acceleration method thereof

Pending; CN109993297A; Balanced operation; Meet the needs of low power consumption and high energy efficiency ratio; Neural architectures; Physical realisation; Parallel scheduling; Activation function

The invention discloses a load-balanced sparse convolutional neural network accelerator and an acceleration method thereof. The accelerator comprises a main controller, a data distribution module, a convolution operation calculation array, an output result caching module, a linear activation function unit, a pooling unit, an online coding unit and an off-chip dynamic memory. According to the scheme provided by the invention, the high-efficiency operation of the convolution operation calculation array can be realized under the condition of few storage resources, the high multiplexing rate of input excitation and weight data is ensured, and the load balance and high utilization rate of the calculation array are ensured; meanwhile, the calculation array supports convolution operations of different sizes and different scales and parallel scheduling of two layers between rows and columns and between different feature maps through a static configuration mode, and the method has very good applicability and expansibility.

Owner:南京吉相传感成像技术研究院有限公司

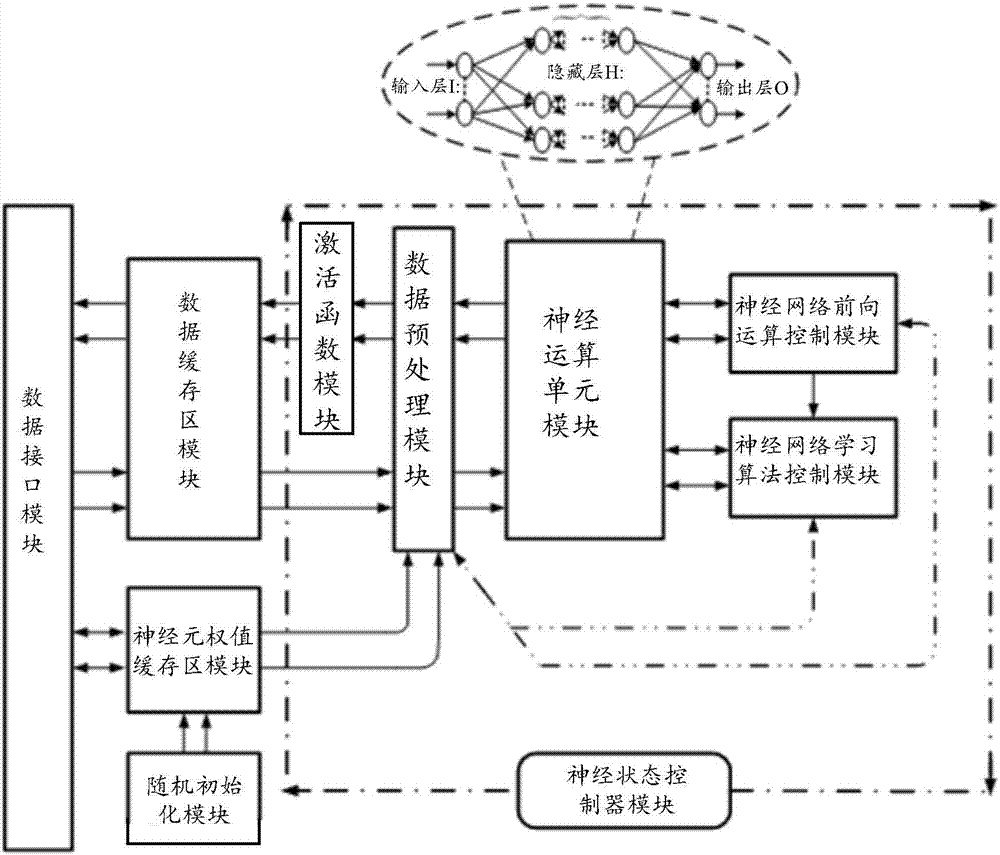

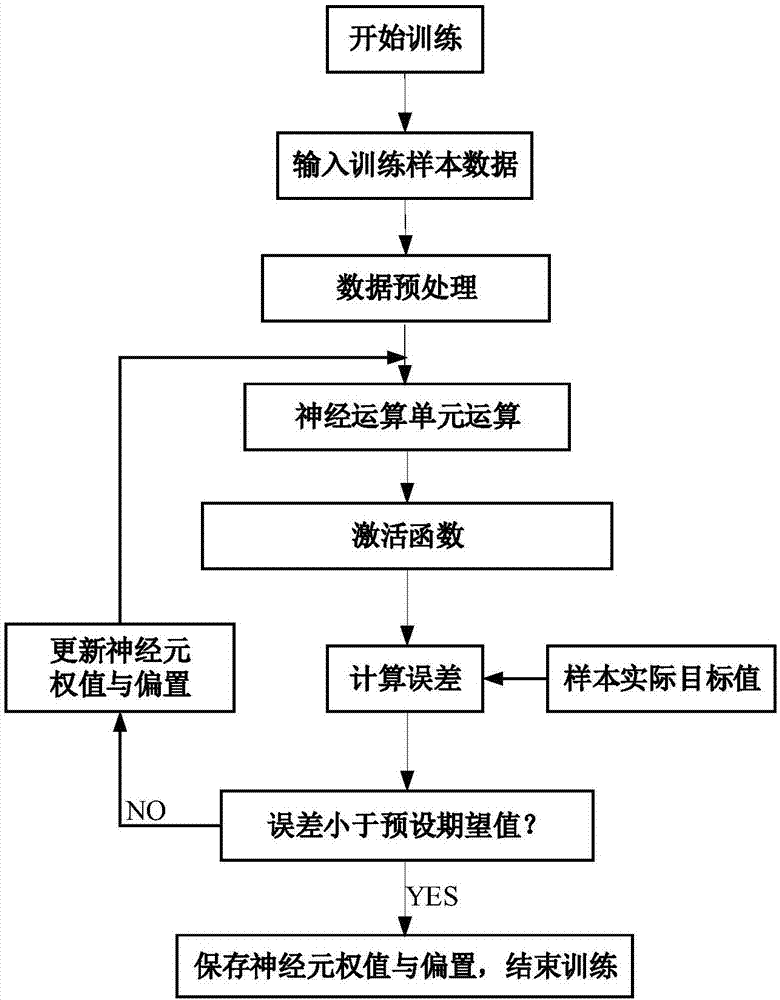

On-chip learning neural network processor

Inactive; CN107480782A; Reduce area; Improve throughput; Physical realisation; Activation function; Nerve network

The invention discloses an on-chip learning neural network processor comprising a data interface module, a data preprocessing module, a data cache region module, a neuron weight cache region module, a random initialization module, a neural computing unit module, a neural network forward computing control module, an activation function module, a neural state controller module, and a neural network learning algorithm control module. The neural state controller module coordinates all the unit modules to perform neural network learning and inference. The neural computing unit module uses general-purpose hardware-accelerated computing so that the type and scale of the neural network computation are programmable. Pipelining is added to the design, which greatly increases data throughput and computing speed; the design focuses on optimizing the multiply-add units of the neural computing unit, which greatly reduces hardware area. The neural network learning algorithm is mapped to hardware so that the processor can perform on-chip learning and offline inference.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

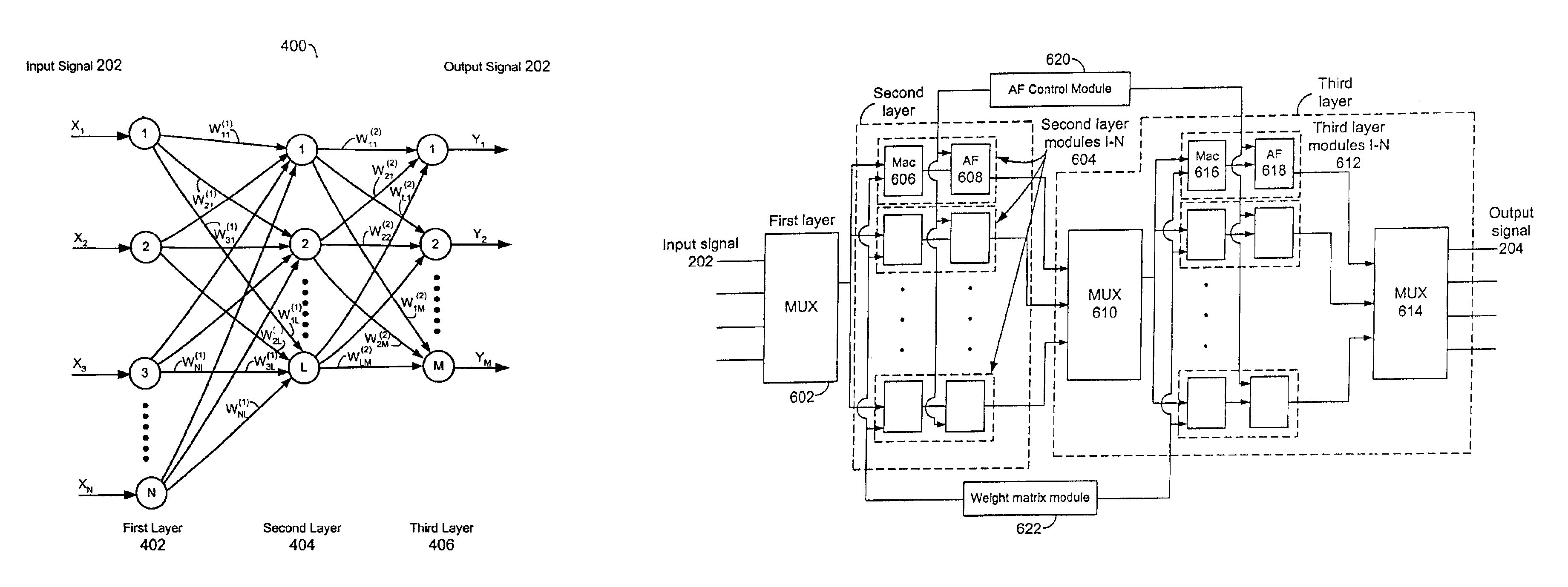

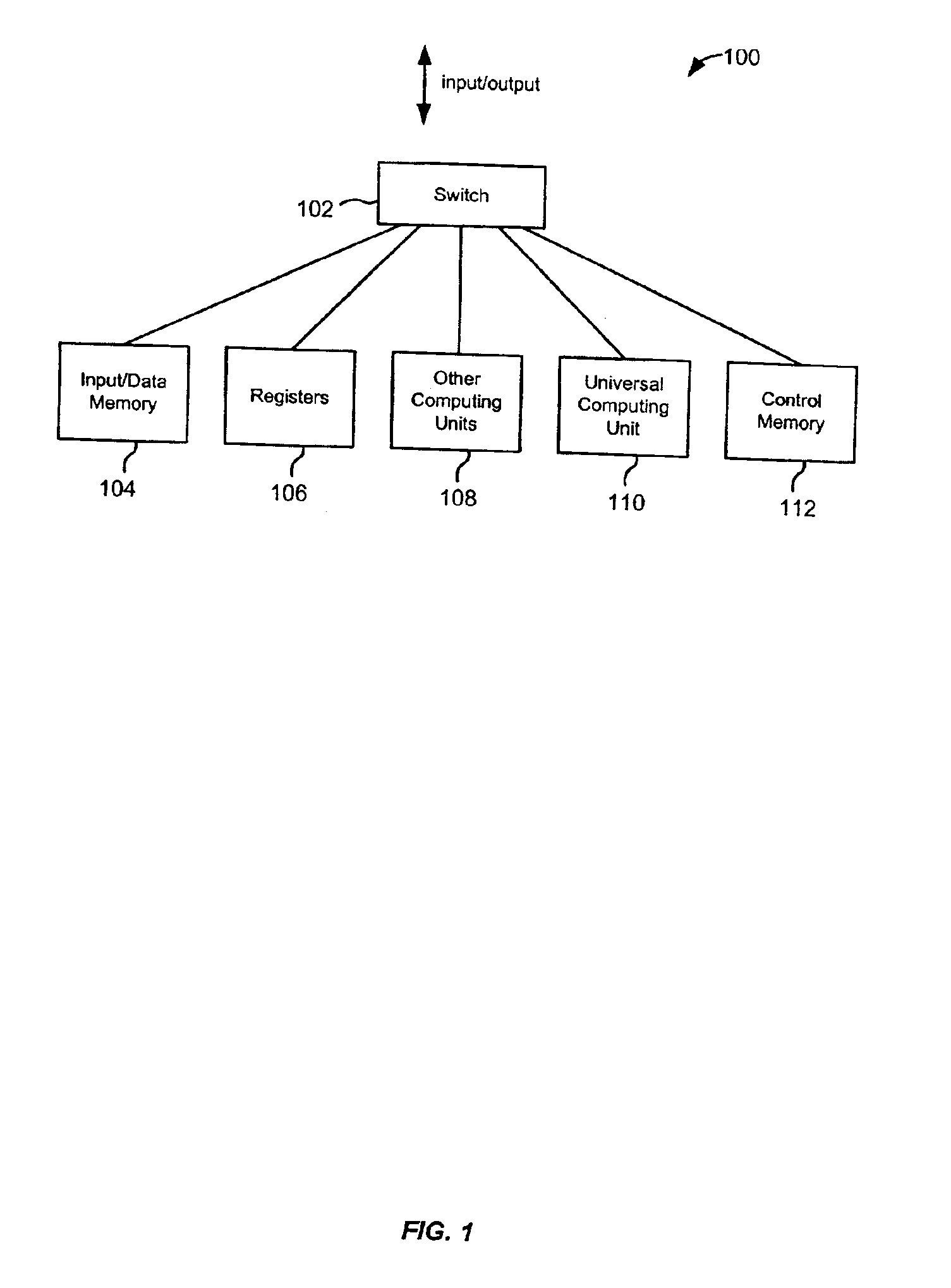

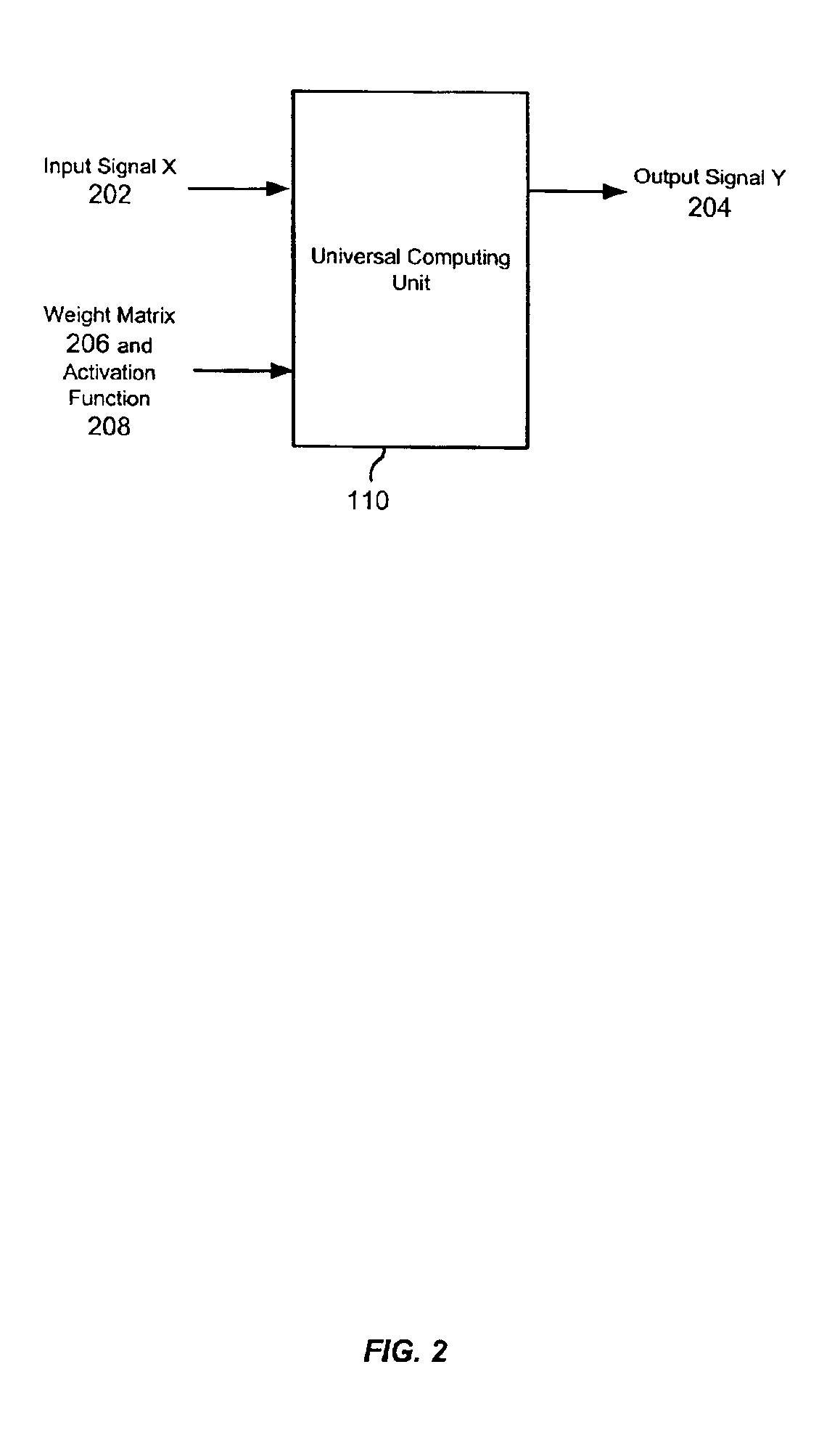

IC for universal computing with near zero programming complexity

A computing machine capable of performing multiple operations using a universal computing unit is provided. The universal computing unit maps an input signal to an output signal. The mapping is initiated using an instruction that includes the input signal, a weight matrix, and an activation function. Using the instruction, the universal computing unit may perform multiple operations using the same hardware configuration. The computation that is performed by the universal computing unit is determined by the weight matrix and activation function used. Accordingly, the universal computing unit does not require any programming to perform a type of computing operation because the type of operation is determined by the parameters of the instruction, specifically, the weight matrix and the activation function.
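The idea that the operation is selected by the instruction's parameters rather than by programming can be sketched in Python. This is a hypothetical model, not NVIDIA's design: the `execute` function, the tuple layout of the instruction, and the trick of appending a constant 1 to the input to carry a bias are all assumptions.

```python
def step(z):
    return 1 if z >= 0 else 0

def identity(z):
    return z

def execute(instruction):
    """Hypothetical universal unit: output = activation(weight_matrix * input)."""
    x, weights, activation = instruction
    return [activation(sum(w * xi for w, xi in zip(row, x))) for row in weights]

# The same unit performs different operations depending only on the
# weight matrix and activation function carried by the instruction.
AND_W = [[1.0, 1.0, -1.5]]
OR_W = [[1.0, 1.0, -0.5]]
SUM_W = [[1.0, 1.0, 0.0]]

for a in (0, 1):
    for b in (0, 1):
        x = [a, b, 1]   # trailing 1 is a constant bias input (an assumption)
        print(a, b,
              execute((x, AND_W, step)),
              execute((x, OR_W, step)),
              execute((x, SUM_W, identity)))
```

No branch in `execute` depends on which operation is wanted; logical AND, logical OR, and addition differ only in the data passed in.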

Owner:NVIDIA CORP

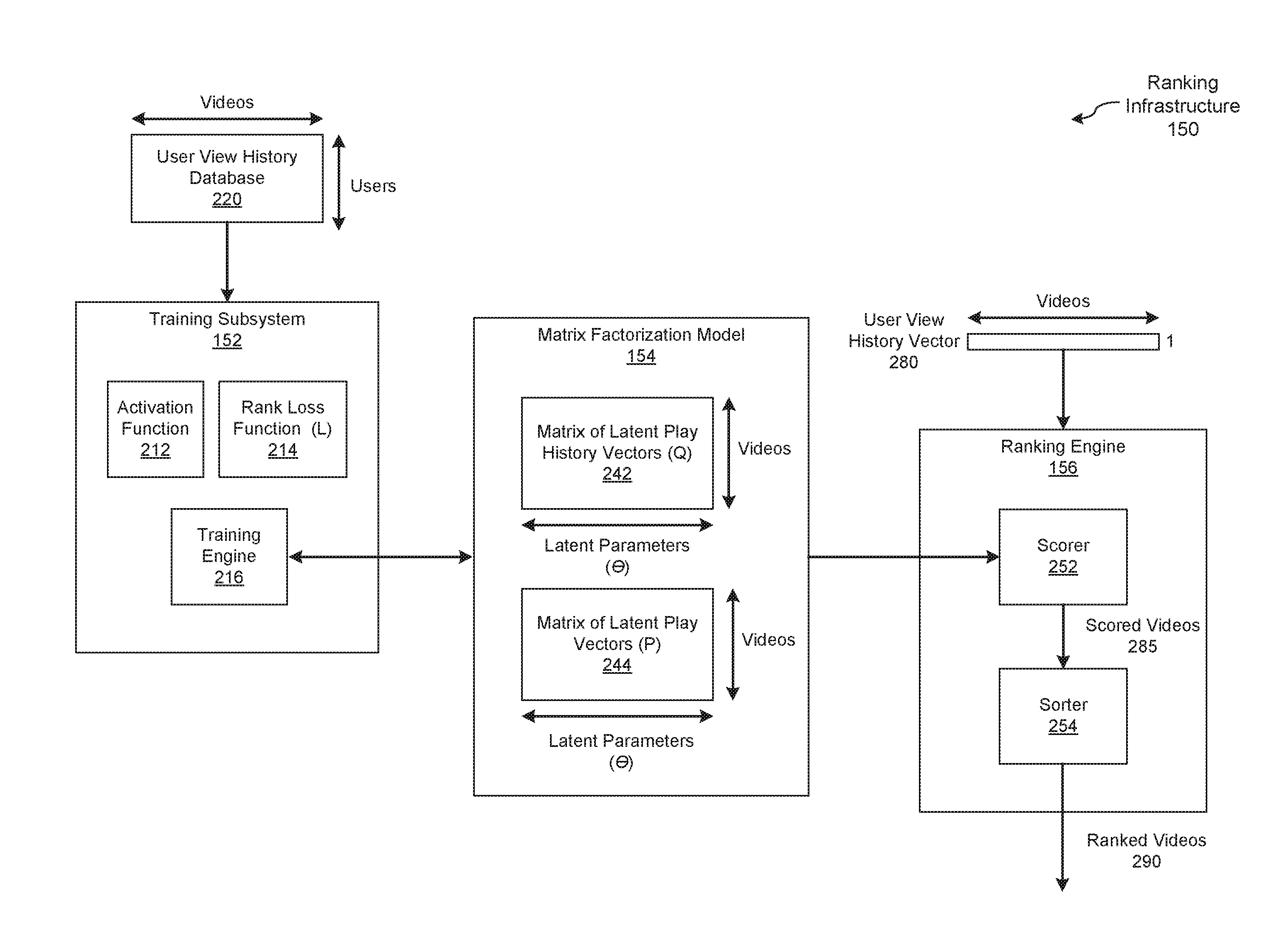

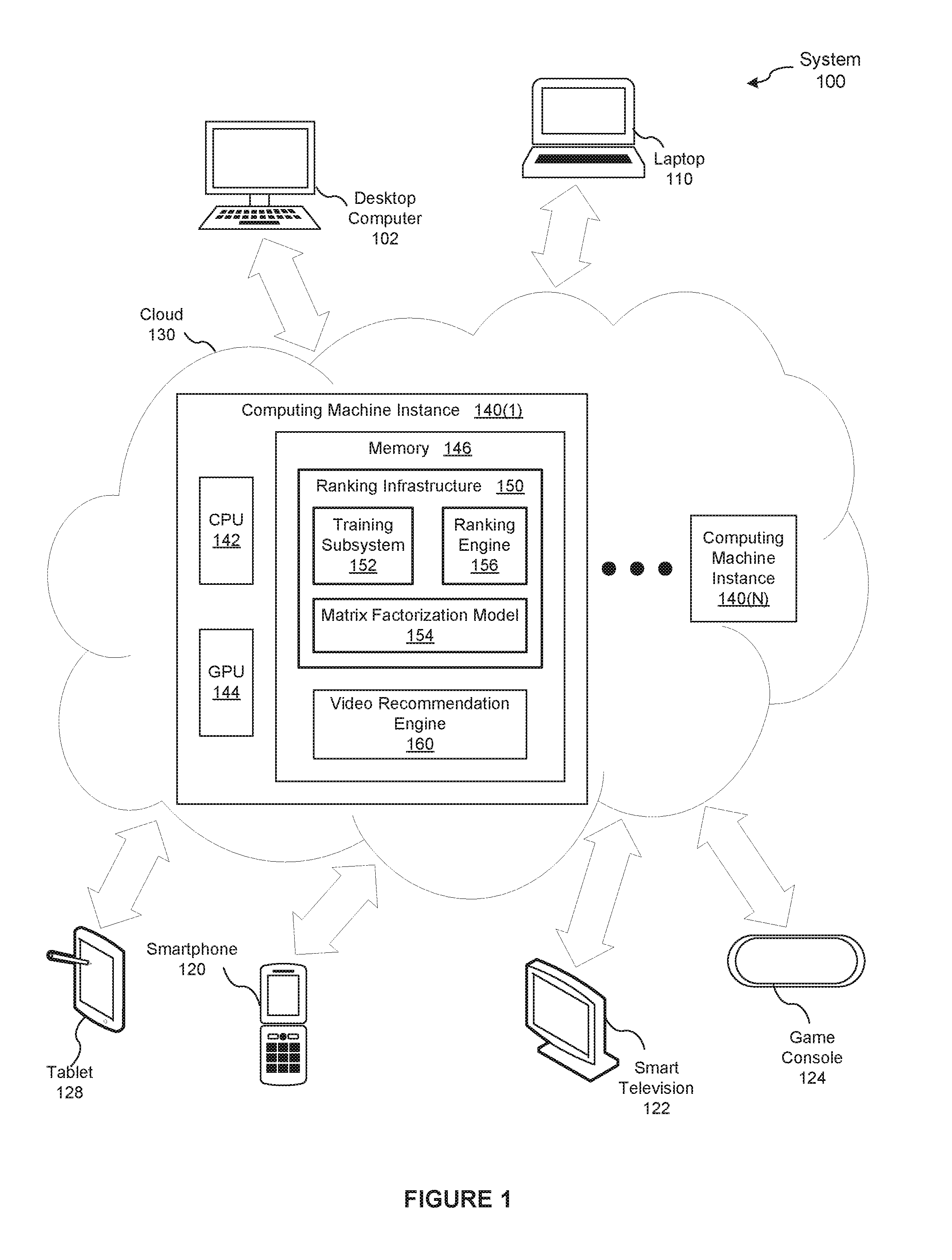

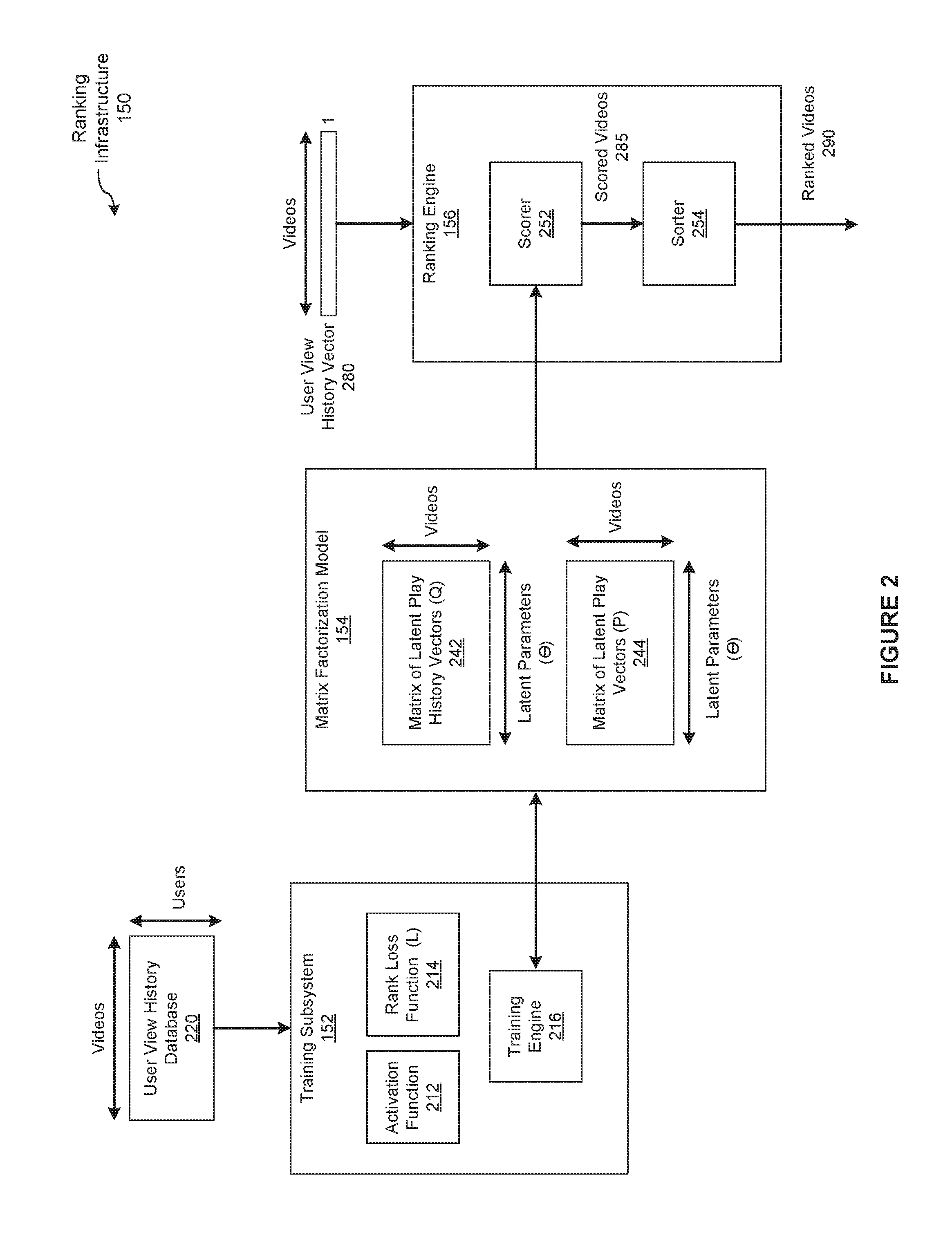

Gaussian ranking using matrix factorization

Active; US20170024391A1; Efficiently and accurately train; Mathematical models; Digital data information retrieval; Matrix decomposition; Activation function

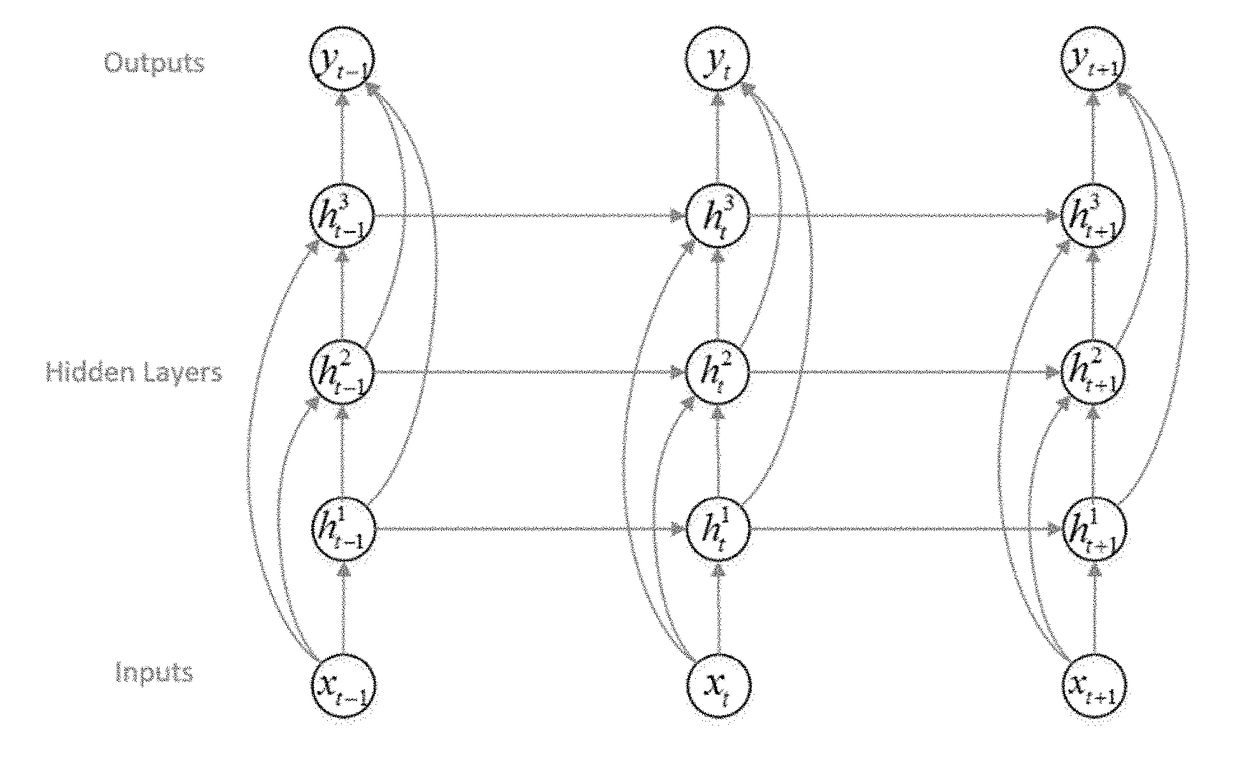

In one embodiment of the present invention, a training engine teaches a matrix factorization model to rank items for users based on implicit feedback data and a rank loss function. In operation, the training engine approximates a distribution of scores to corresponding ranks as an approximately Gaussian distribution. Based on this distribution, the training engine selects an activation function that smoothly maps between scores and ranks. To train the matrix factorization model, the training engine directly optimizes the rank loss function based on the activation function and implicit feedback data. By contrast, conventional training engines that optimize approximations of the rank loss function are typically less efficient and produce less accurate ranking models.

Owner:NETFLIX

Hardware accelerator for compressed rnn on FPGA

Active; US20180046897A1; Digital data processing details; Neural architectures; Computer hardware; Activation function

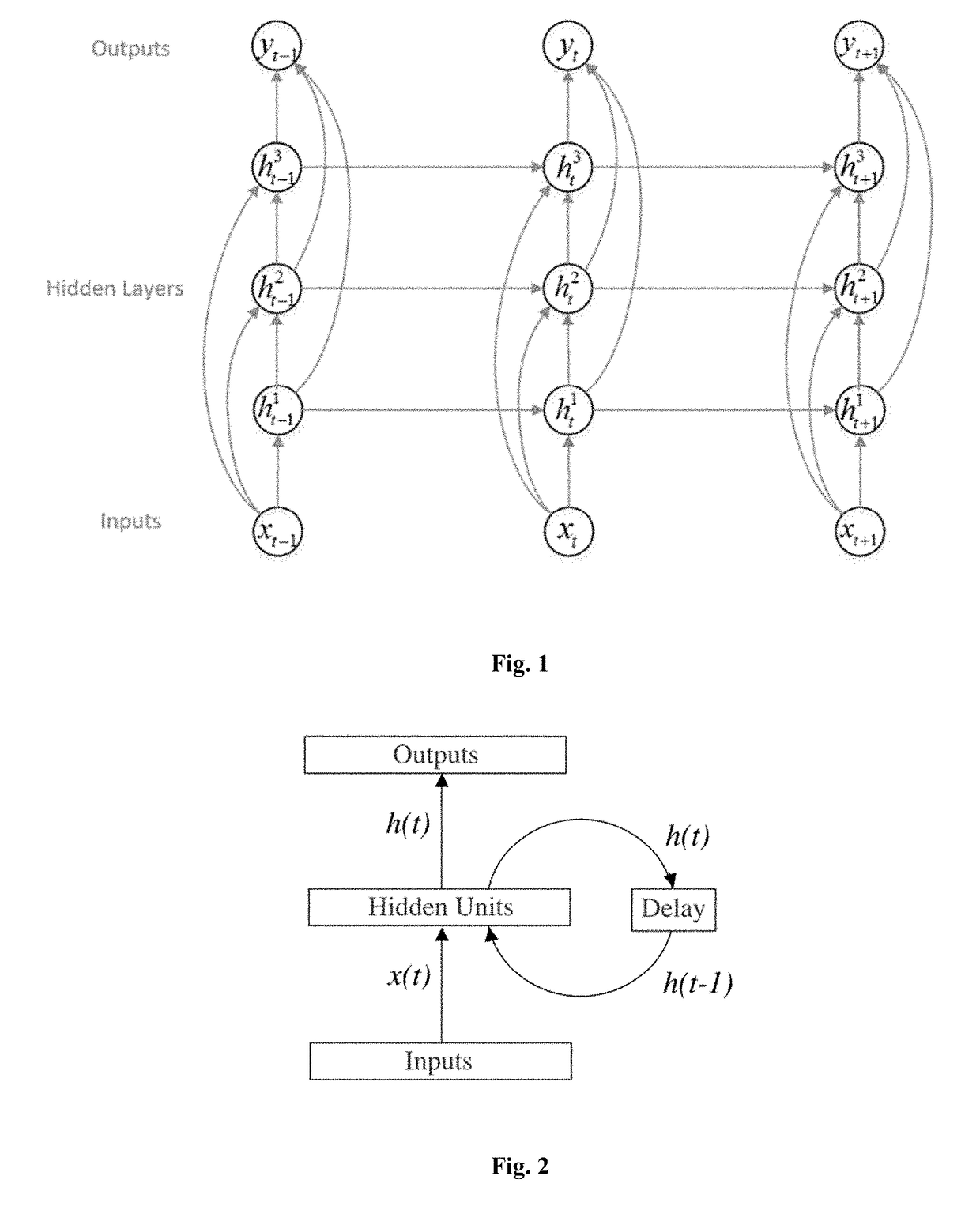

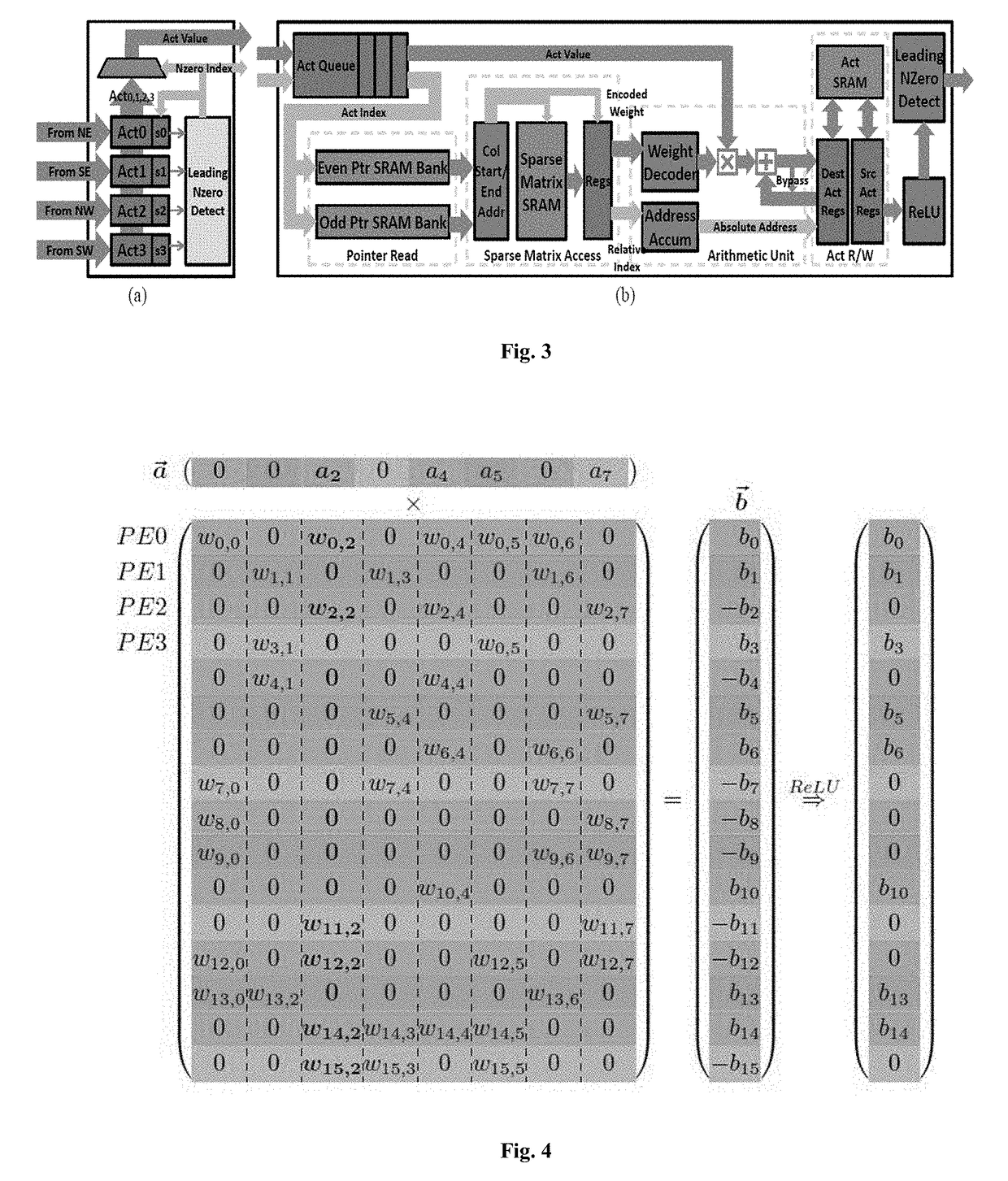

The present invention relates to recurrent neural networks. In particular, it relates to how to implement and accelerate a recurrent neural network on an embedded FPGA. Specifically, it proposes an overall processing design covering matrix decoding, matrix-vector multiplication, vector accumulation, and the activation function. In another aspect, the present invention proposes an overall hardware design to implement and accelerate the above process.

Owner:XILINX INC

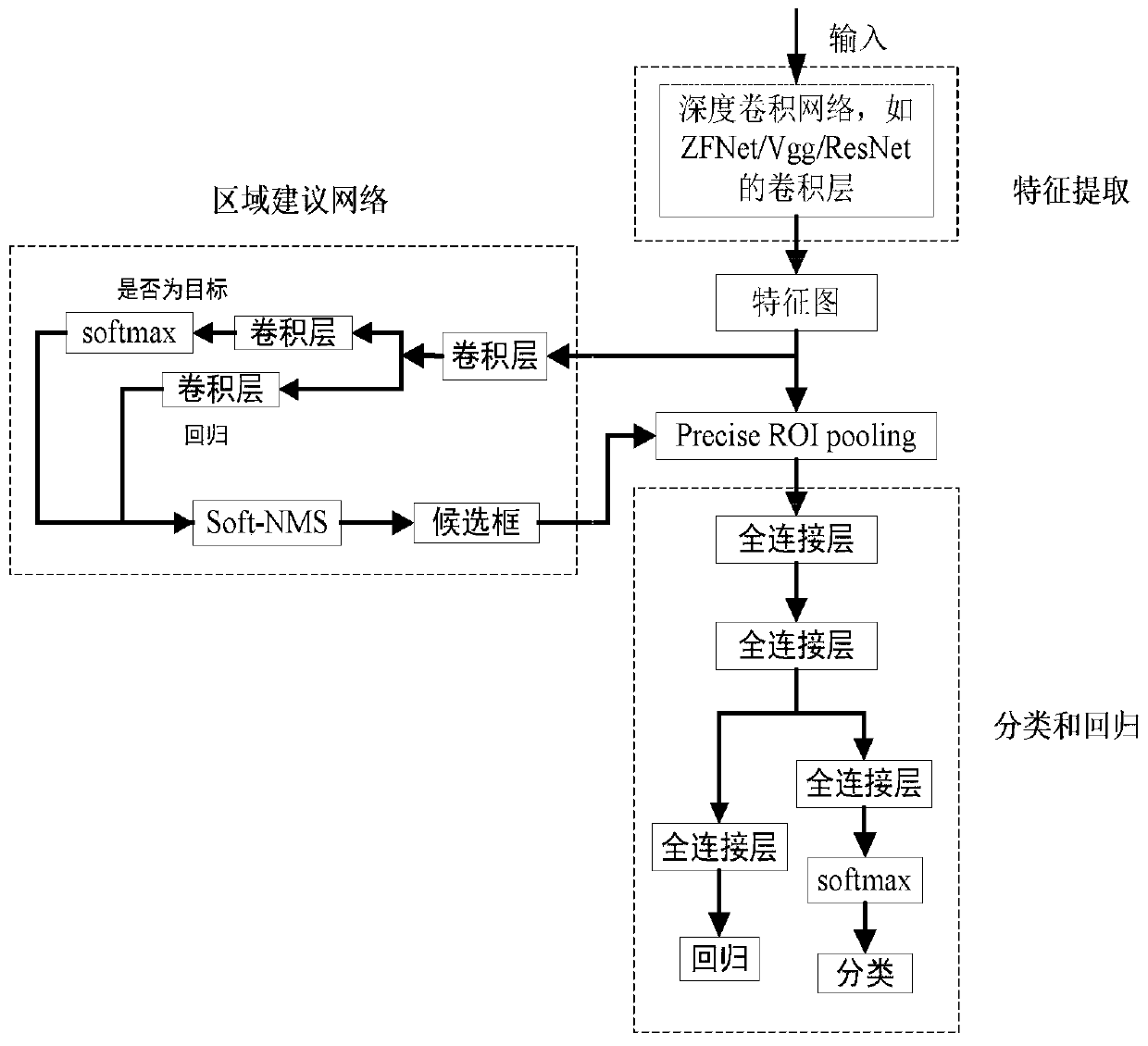

Radar target image detection method based on Precise ROI-Faster R-CNN

Active; CN110210463A; Intelligent processing; Improve detection accuracy; Wave based measurement systems; Internal combustion piston engines; Data set; Activation function

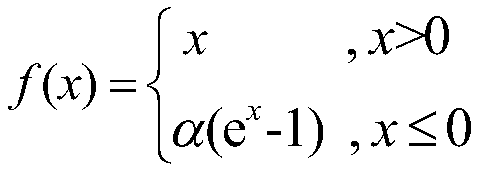

The present invention relates to a radar target image detection method based on Precise ROI-Faster R-CNN and belongs to the technical field of radar signal processing. First, the radar converts echo data into images and a training data set is constructed. Then a Precise ROI-Faster R-CNN target detection model is established, comprising a shared convolutional neural network, a region proposal network, and a classification and regression network, and adopting the ELU activation function, the Precise ROI Pooling method, and the Soft-NMS method. The training data set is input to carry out iterative optimization training and obtain the optimal model parameters. Finally, images generated from real-time radar target echoes are input into the trained detection model for testing, completing integrated target detection and classification. The method can intelligently learn and extract radar echo image features, is suitable for detecting and classifying different types of targets in a complex environment, and reduces processing time and hardware cost.

Owner:NAVAL AVIATION UNIV

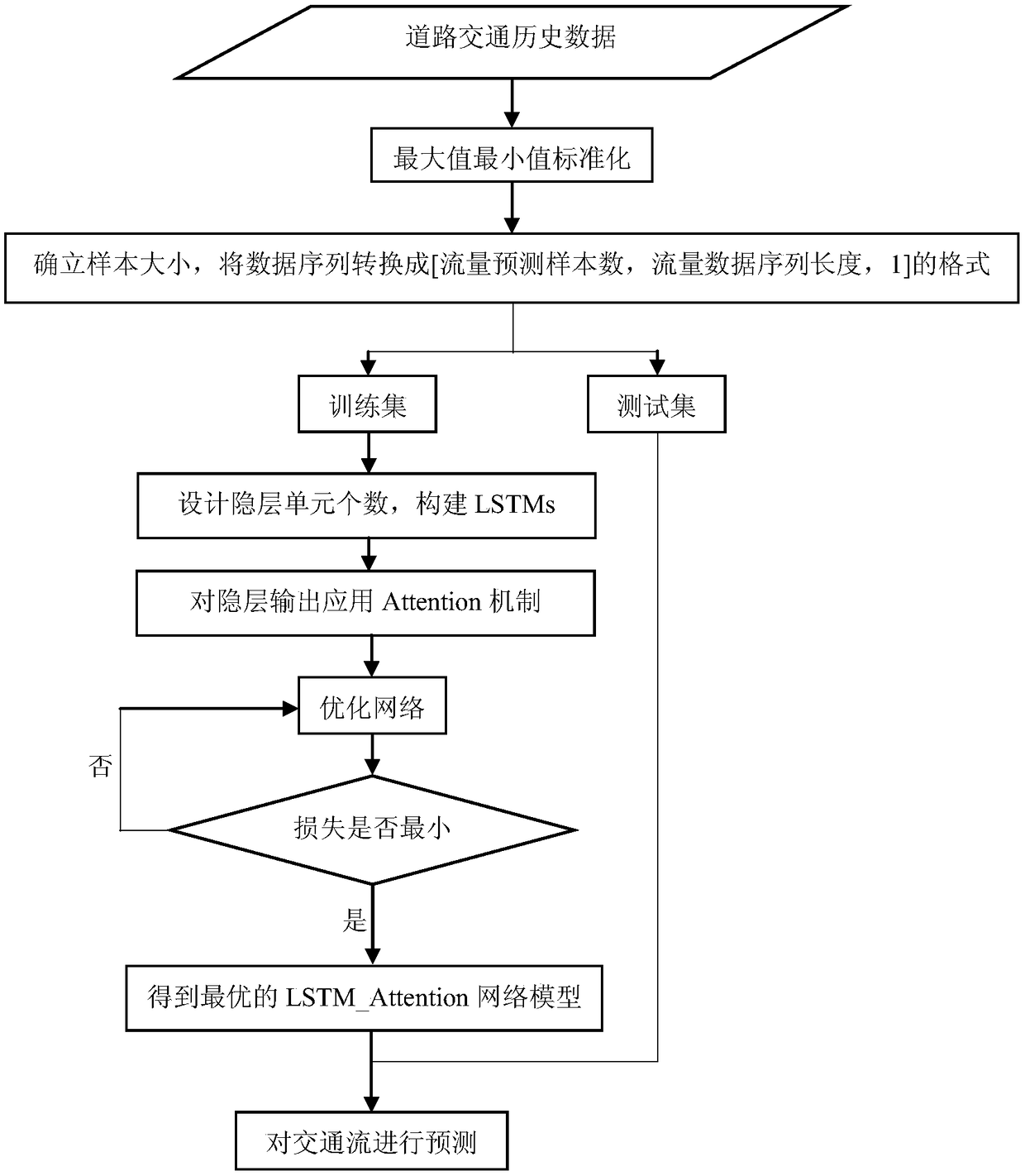

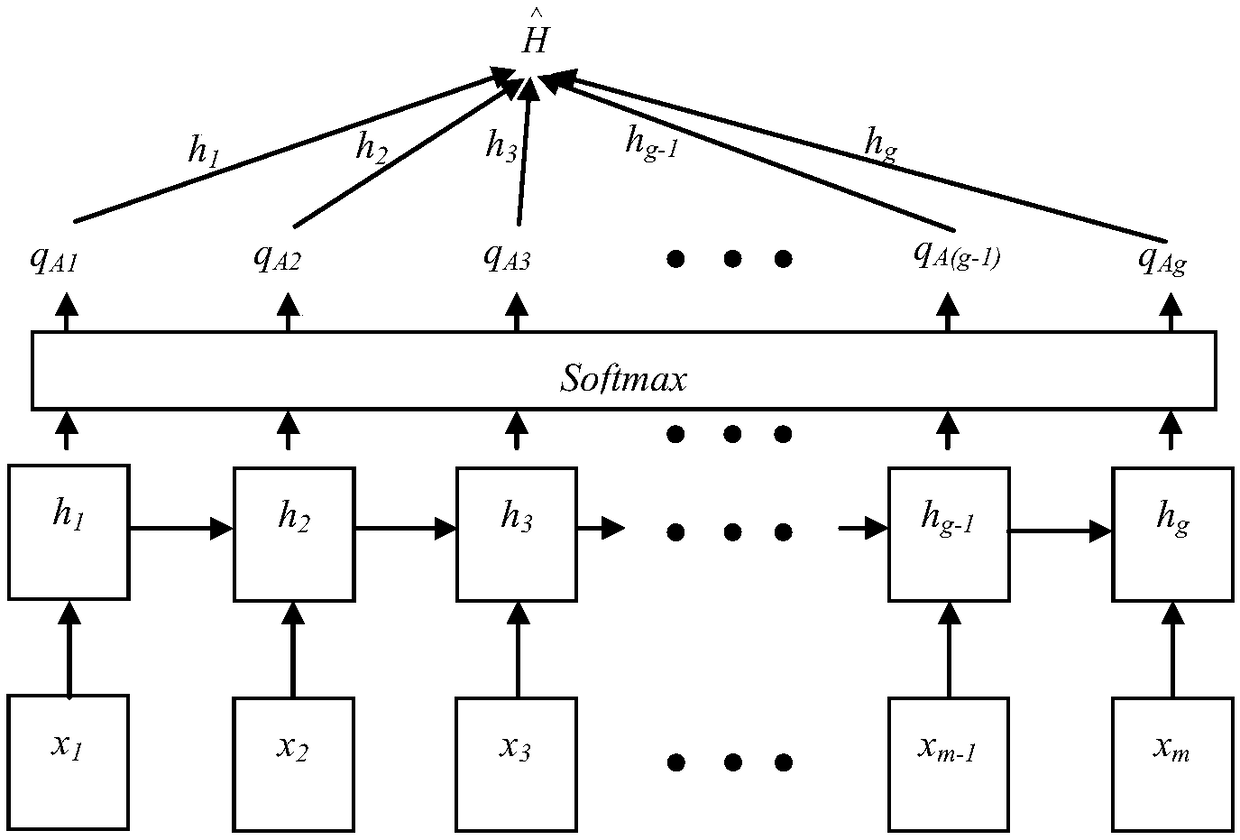

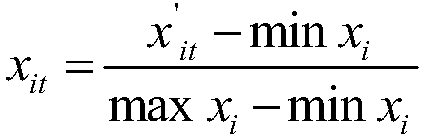

A traffic flow forecasting method based on LSTM_Attention network

Inactive; CN109242140A; Integrity guaranteed; Forecasting; Neural architectures; Activation function; Network model

A traffic flow prediction method based on an LSTM_Attention network comprises the following steps: (1) acquiring historical road traffic data, dividing the data into a training set and a test set, and preprocessing the data; (2) constructing a one-layer LSTM network whose number of hidden units is set according to the sequence length of the samples in the training set, adding a fully connected layer with a Softmax activation function, and finally adding a logistic regression layer as the prediction layer; the training set is input into the network to obtain predicted values, the predicted and real values are input into the loss function, and the network model and its internal parameters are optimized by backpropagation; (3) inputting the test set into the trained LSTM_Attention network to obtain the predicted data. The invention accurately predicts future road traffic flow data.

Owner:ZHEJIANG UNIV OF TECH

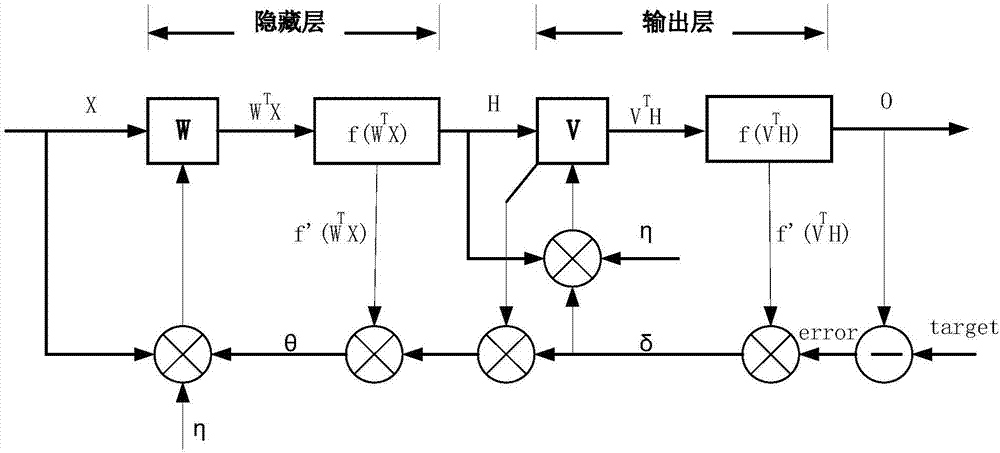

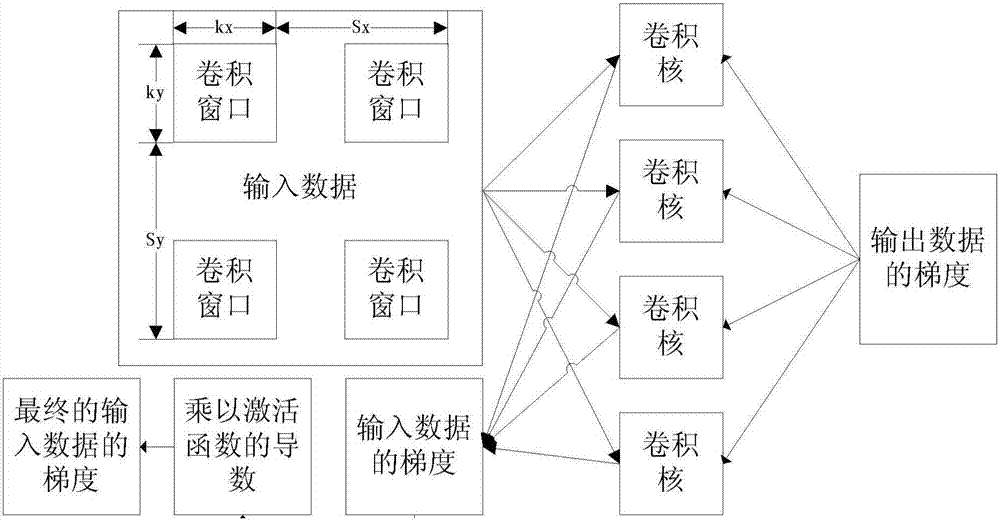

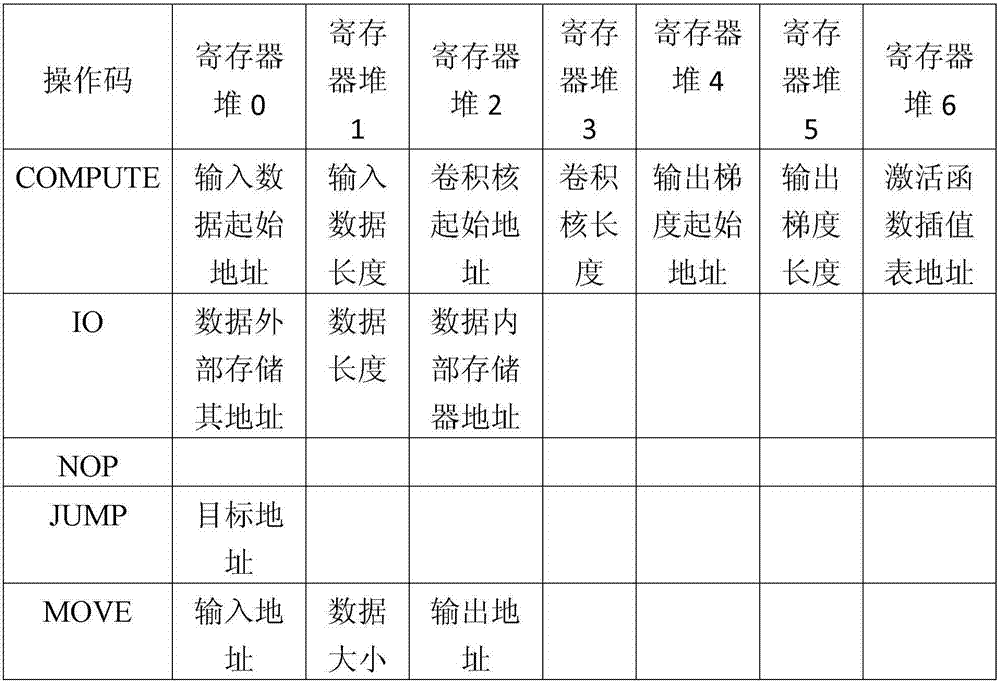

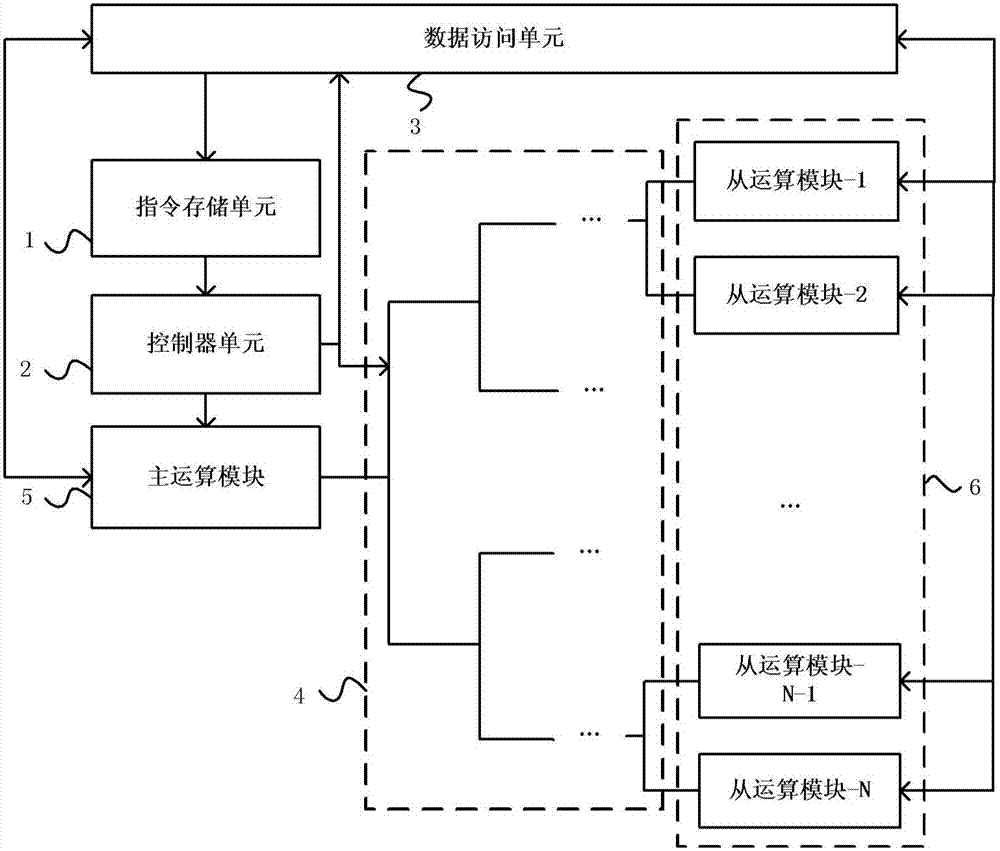

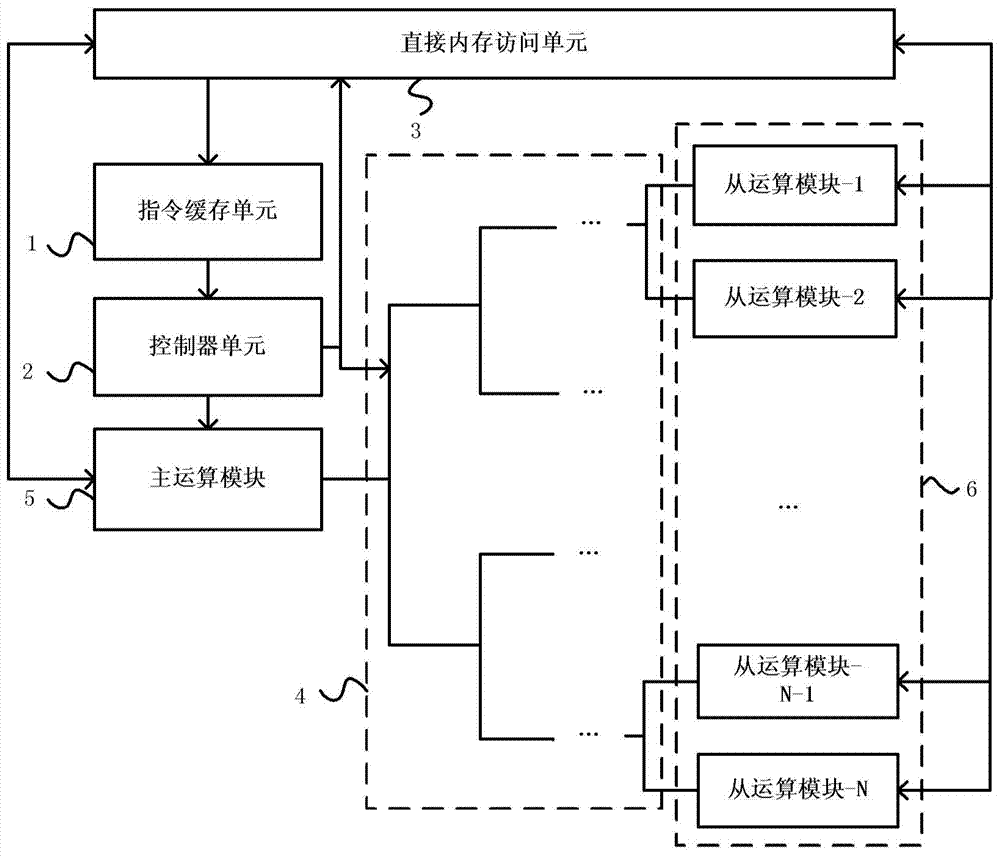

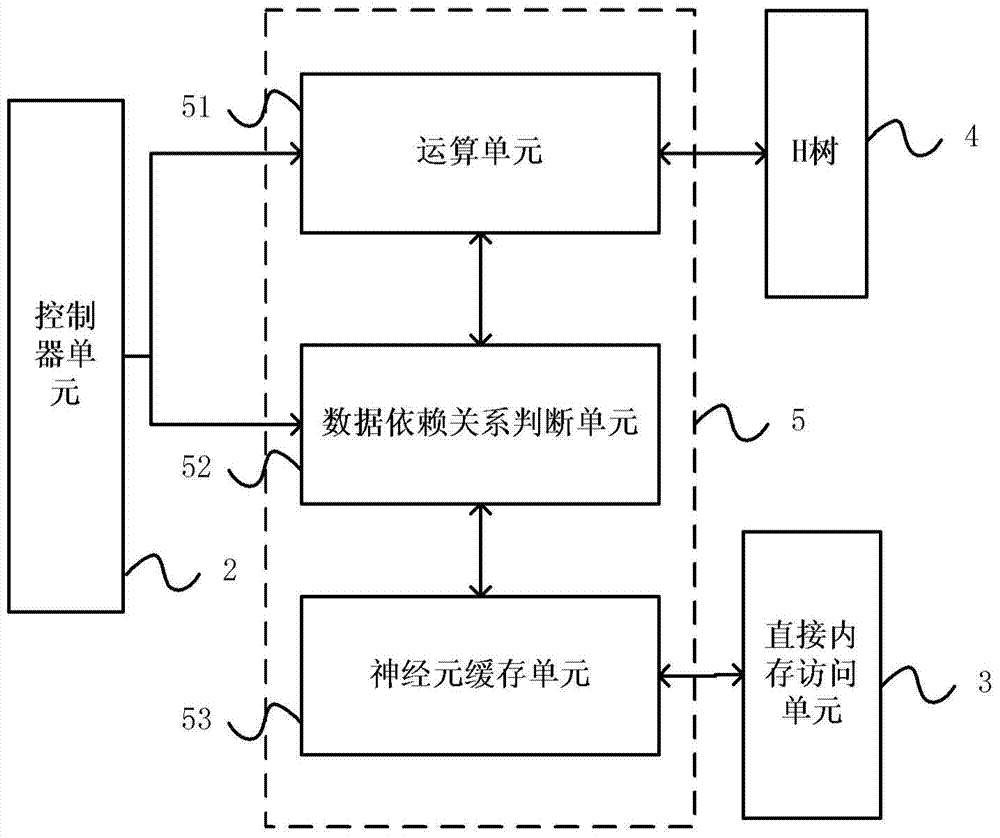

Apparatus and method for performing convolutional neural network training

Active; CN107341547A; Simple format; Flexible vector length; Digital data processing details; Digital computer details; Activation function; Algorithm

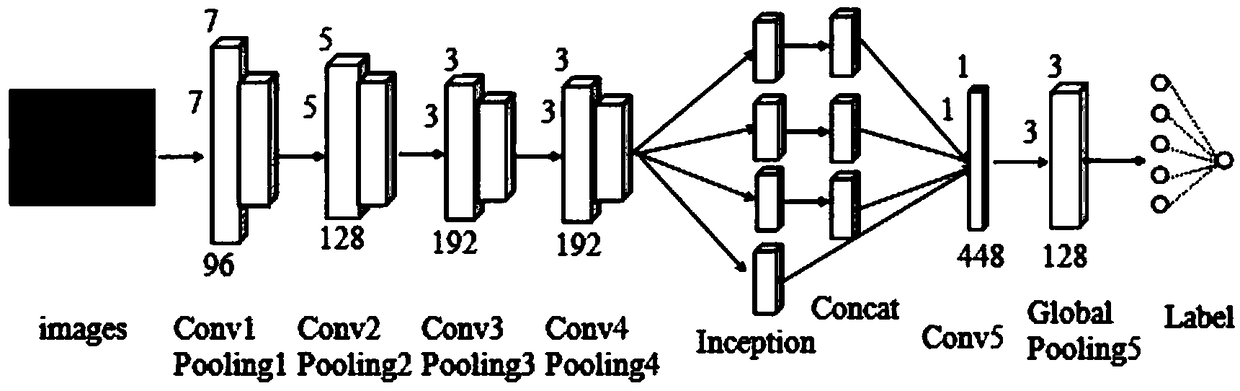

The present invention provides an apparatus and a method for performing backward training of a convolutional neural network. The apparatus comprises an instruction storage unit, a controller unit, a data access unit, an interconnection module, a main computing module, and a plurality of slave computing modules. For each layer, the method selects data from the input neuron vector according to the convolution window; takes the selected data from the previous layer and the data gradient from the subsequent layer as inputs of the apparatus's computing unit; calculates and updates the convolution kernel; and, from the convolution kernel, the data gradient, and the derivative of the activation function, calculates the data gradient output by the apparatus and stores it to memory so that it can be passed to the previous layer for backpropagation. Data and weight parameters involved in the calculation are temporarily stored in the high-speed cache memory, so that backward training of convolutional neural networks is supported more flexibly and effectively and the execution performance of applications with many memory accesses is improved.

Owner:CAMBRICON TECH CO LTD

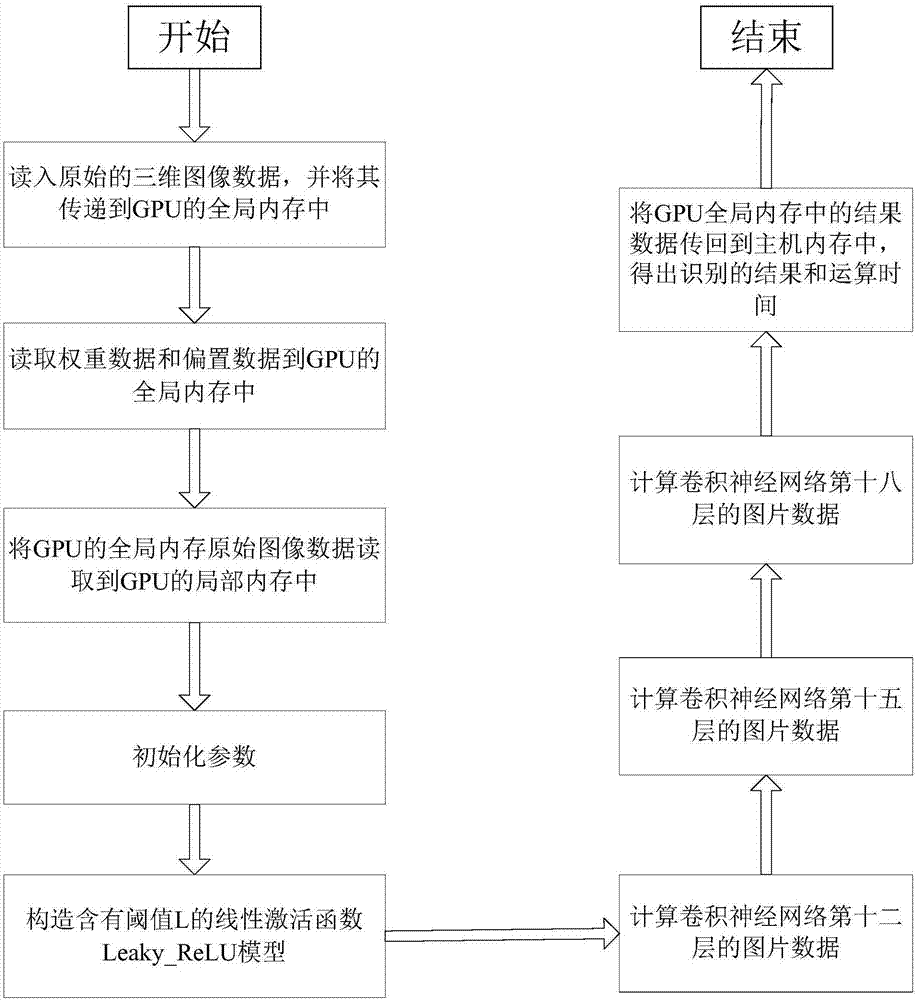

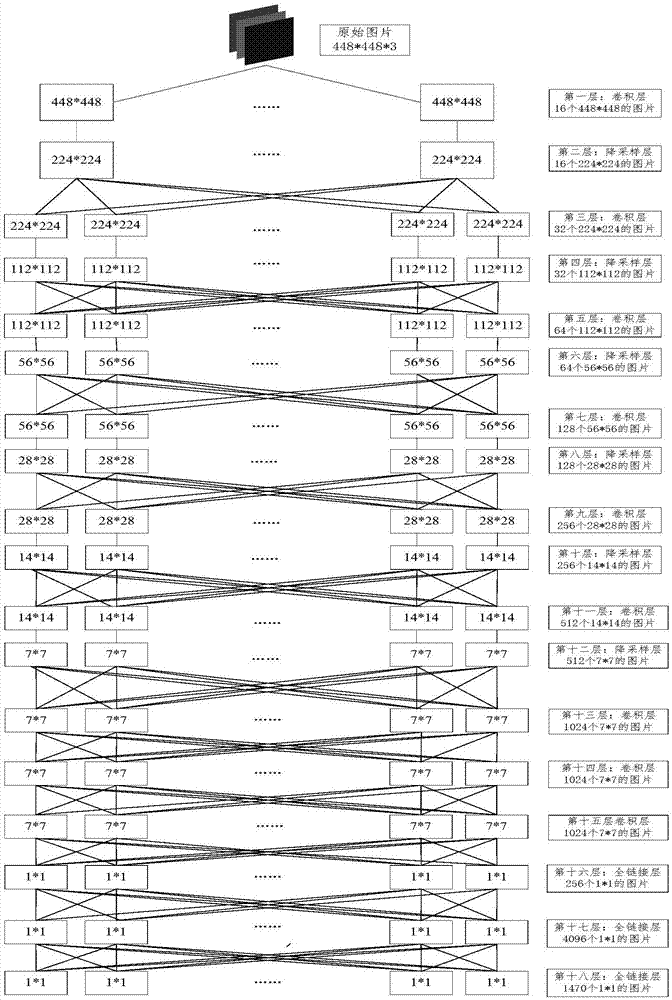

Convolutional neural network acceleration method based on OpenCL standard

Active; CN107341127A; No change in accuracy capability; Calculation speed; Multiple digital computer combinations; Neural architectures; Activation function; Host memory

The invention proposes a convolutional neural network acceleration method based on the OpenCL standard, mainly to address the low efficiency of existing CPUs in processing convolutional neural networks. The method comprises the following steps: 1, reading original three-dimensional image data and transmitting it to the global memory of a GPU; 2, reading weights and offset data into the global memory of the GPU; 3, reading the original image data from the global memory of the GPU into the local memory of the GPU; 4, initializing the parameters and constructing a linear activation function Leaky-ReLU; 5, calculating the picture data of the twelfth layer of the convolutional neural network; 6, calculating the picture data of the fifteenth layer of the convolutional neural network; and 7, calculating the picture data of the eighteenth layer of the convolutional neural network, storing the picture data in the GPU, transmitting it to host memory, and reporting the operation time. The method improves the operation speed of the convolutional neural network and can be applied to object detection in computer vision.

Owner:XIDIAN UNIV

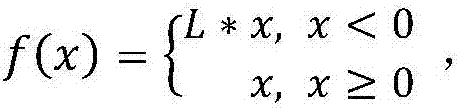

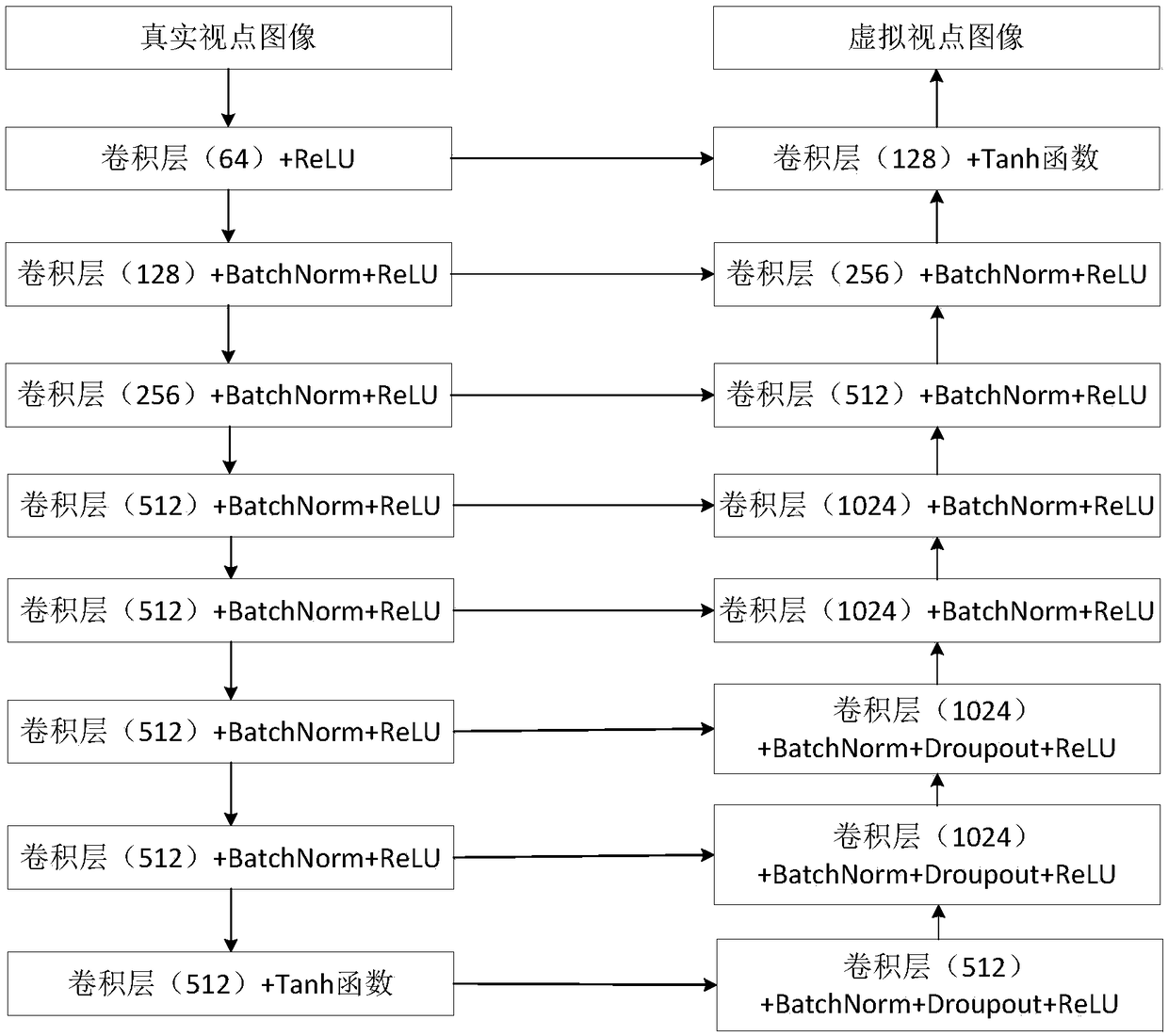

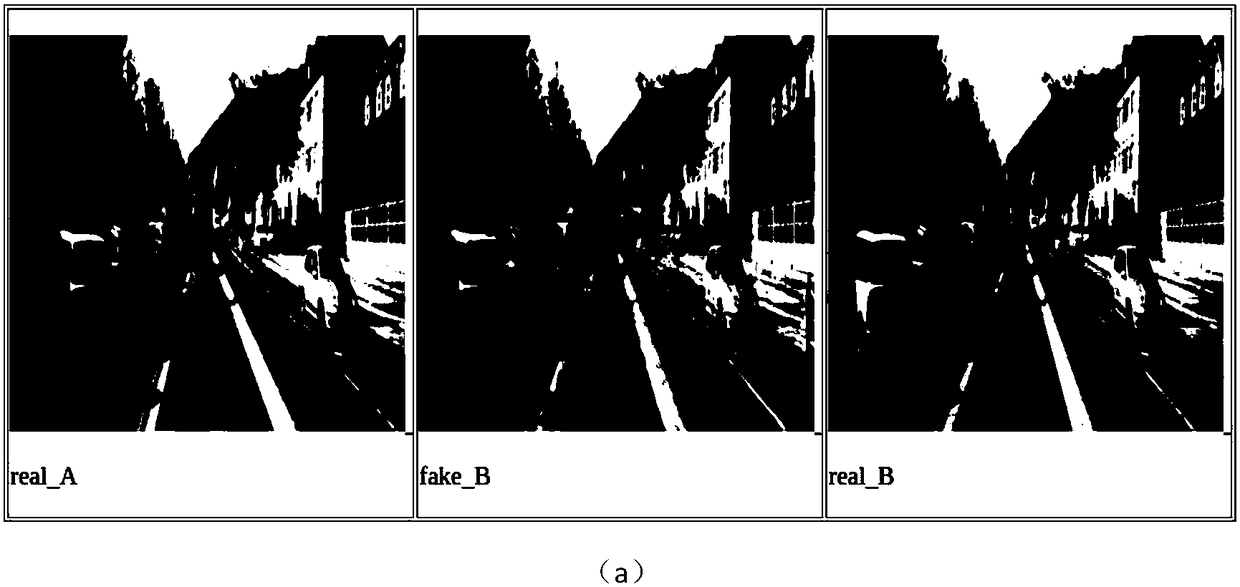

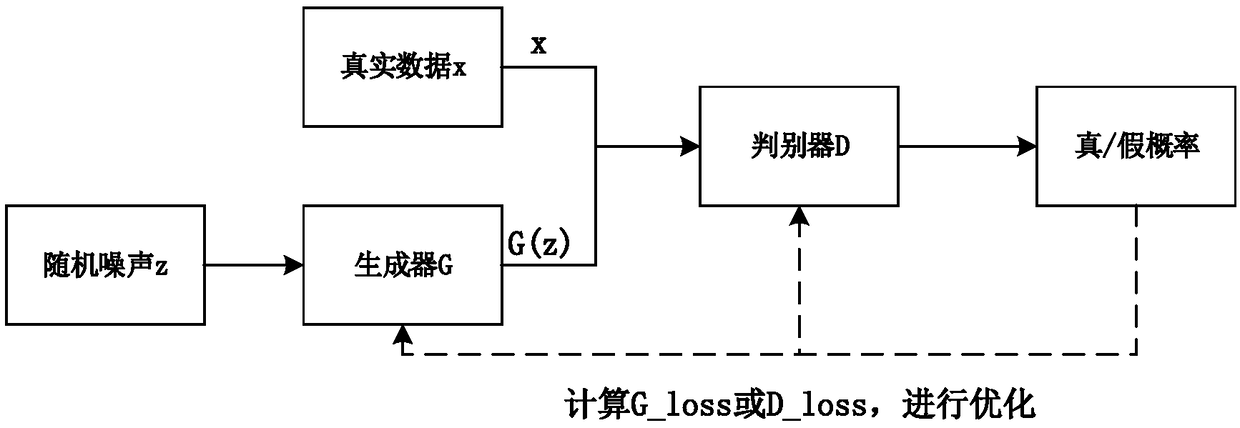

Virtual viewpoint image generation method based on generative adversarial network

The invention relates to a virtual viewpoint image generation method based on a generative adversarial network (GAN). The method comprises the following steps: making a data set by obtaining the image pairs needed to train the GAN; constructing a model in which the generator and the discriminator both use convolutional layers followed by batch normalization (BatchNorm) layers and ReLU nonlinear activation functions, all convolutional layers use a 4*4 kernel with a stride of 2, the length and width of the feature map are both halved during downsampling and doubled during upsampling, the Dropout layer uses a Dropout rate of 50%, and the ReLU activation function is LeakyReLU; defining the loss; and training and testing the model.

Owner:TIANJIN UNIV

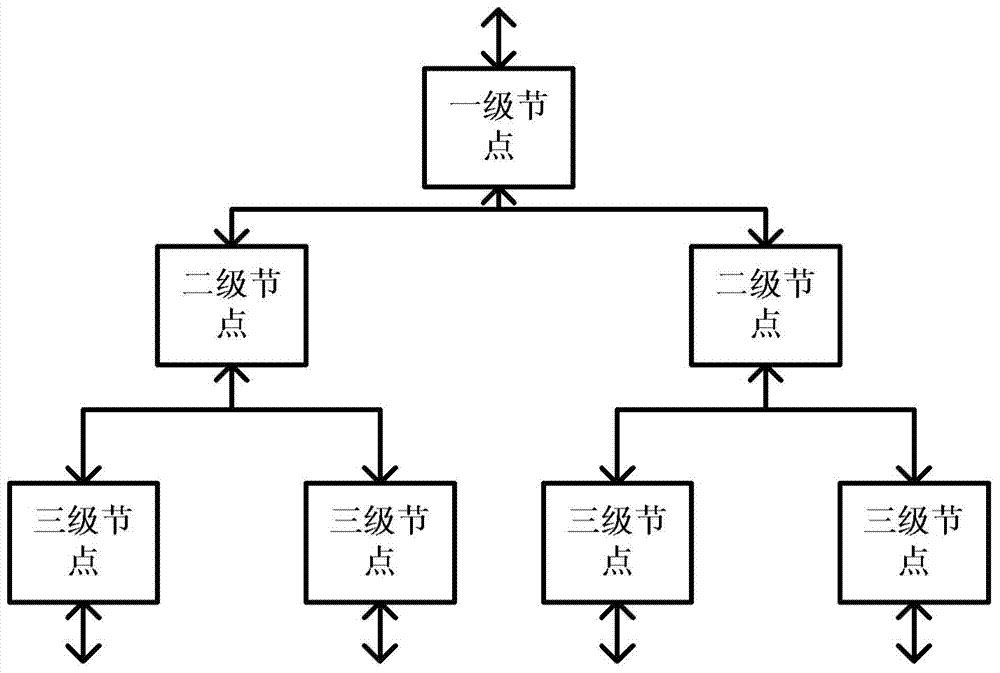

Device and method for executing reverse training of artificial neural network

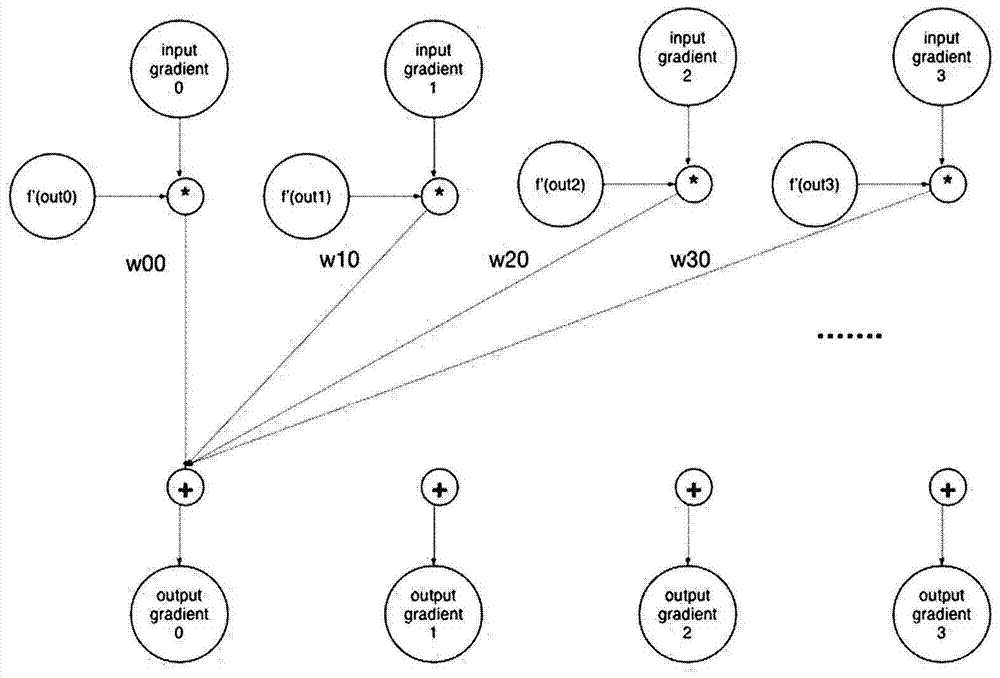

The invention provides a device for executing reverse training of an artificial neural network. The device comprises an instruction buffer memory unit, a controller unit, a direct memory access unit, an H-tree module, a main operation module, and a plurality of auxiliary operation modules. The device can realize reverse training of a multilayer artificial neural network. For each layer, a weighted summation is performed on the input gradient vector to calculate the output gradient vector of the layer. The input gradient vector of the next layer is obtained by multiplying the output gradient vector by the derivative value of the activation function from the forward operation. The gradient of the weights of this layer is obtained by multiplying the input gradient vector by the input neurons from the forward operation. The weights of this layer can then be updated according to this gradient.
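The per-layer computation described above can be written out as a short Python sketch. It models only the arithmetic, not the device's modules; the sigmoid activation, the plain gradient-descent update with learning rate `lr`, and all names and values are assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def backward_layer(weights, x, in_grad, z_prev, lr):
    """One layer of reverse training.

    weights: n_out x n_in matrix; x: this layer's input neurons from the
    forward pass; in_grad: input gradient vector (length n_out);
    z_prev: pre-activation values that produced x in the forward pass.
    """
    n_out, n_in = len(weights), len(weights[0])
    # Weighted summation of the input gradient gives the output gradient.
    out_grad = [sum(weights[j][i] * in_grad[j] for j in range(n_out))
                for i in range(n_in)]
    # Multiply by the activation derivative to get the next layer's input gradient.
    next_in_grad = [g * sigmoid_prime(z) for g, z in zip(out_grad, z_prev)]
    # Weight gradient: input gradient times the forward-pass input neurons.
    d_weights = [[in_grad[j] * x[i] for i in range(n_in)] for j in range(n_out)]
    # Update the weights from their gradient (plain gradient descent, assumed).
    new_weights = [[weights[j][i] - lr * d_weights[j][i] for i in range(n_in)]
                   for j in range(n_out)]
    return new_weights, next_in_grad, d_weights

z_prev = [0.2, -0.4]
x = [sigmoid(z) for z in z_prev]
W = [[0.5, -0.3], [0.1, 0.8]]
new_W, next_grad, dW = backward_layer(W, x, [0.1, -0.2], z_prev, lr=0.1)
print(next_grad)
```

The output gradient is computed from the weights before they are updated, so the gradient passed to the next layer is consistent with the forward pass that produced it.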

Owner:CAMBRICON TECH CO LTD
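
The per-layer computation described in the abstract above can be sketched in plain Python as follows. This is an illustrative software sketch only, not the patented hardware design; the function name `backward_layer`, the plain gradient-descent update, and the learning rate `lr` are assumptions, since the abstract only says the weight is updated "according to the gradient".

```python
def backward_layer(weights, inputs, grad_in, act_deriv_prev, lr=0.1):
    """One backward step for a fully connected layer.

    weights        -- matrix as a list of rows, shape (n_out, n_in)
    inputs         -- input neurons saved from the forward operation, length n_in
    grad_in        -- input gradient vector of this layer, length n_out
    act_deriv_prev -- activation derivative values saved from the forward
                      operation for the next layer to be processed, length n_in
    lr             -- learning rate (hypothetical; not given in the abstract)
    """
    n_out, n_in = len(weights), len(inputs)

    # Output gradient vector: weighted summation of the input gradient vector.
    grad_out = [
        sum(weights[j][i] * grad_in[j] for j in range(n_out)) for i in range(n_in)
    ]

    # Input gradient vector of the next layer: output gradient multiplied
    # elementwise by the activation derivative from the forward operation.
    next_grad_in = [g * d for g, d in zip(grad_out, act_deriv_prev)]

    # Weight gradient: input gradient vector times the input neurons.
    grad_w = [[grad_in[j] * inputs[i] for i in range(n_in)] for j in range(n_out)]

    # Weight update (plain gradient descent, an assumption).
    new_weights = [
        [weights[j][i] - lr * grad_w[j][i] for i in range(n_in)]
        for j in range(n_out)
    ]
    return new_weights, next_grad_in
```

Calling the function once per layer, from the last layer to the first, and feeding each `next_grad_in` into the following call gives the multilayer reverse pass the abstract describes.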

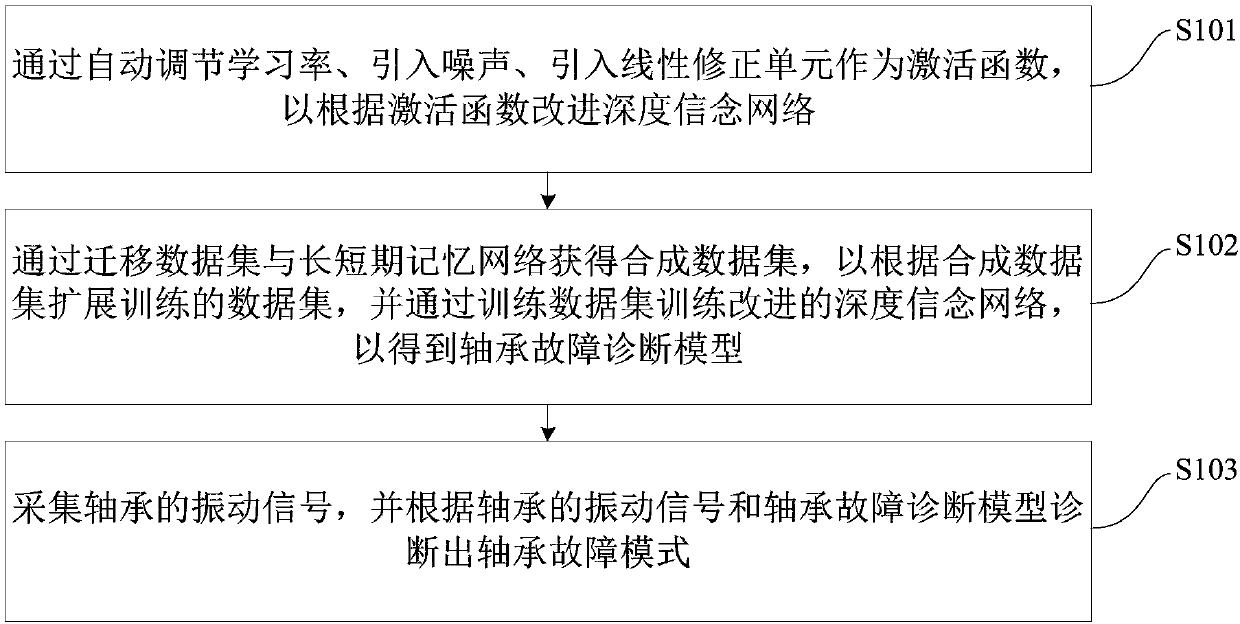

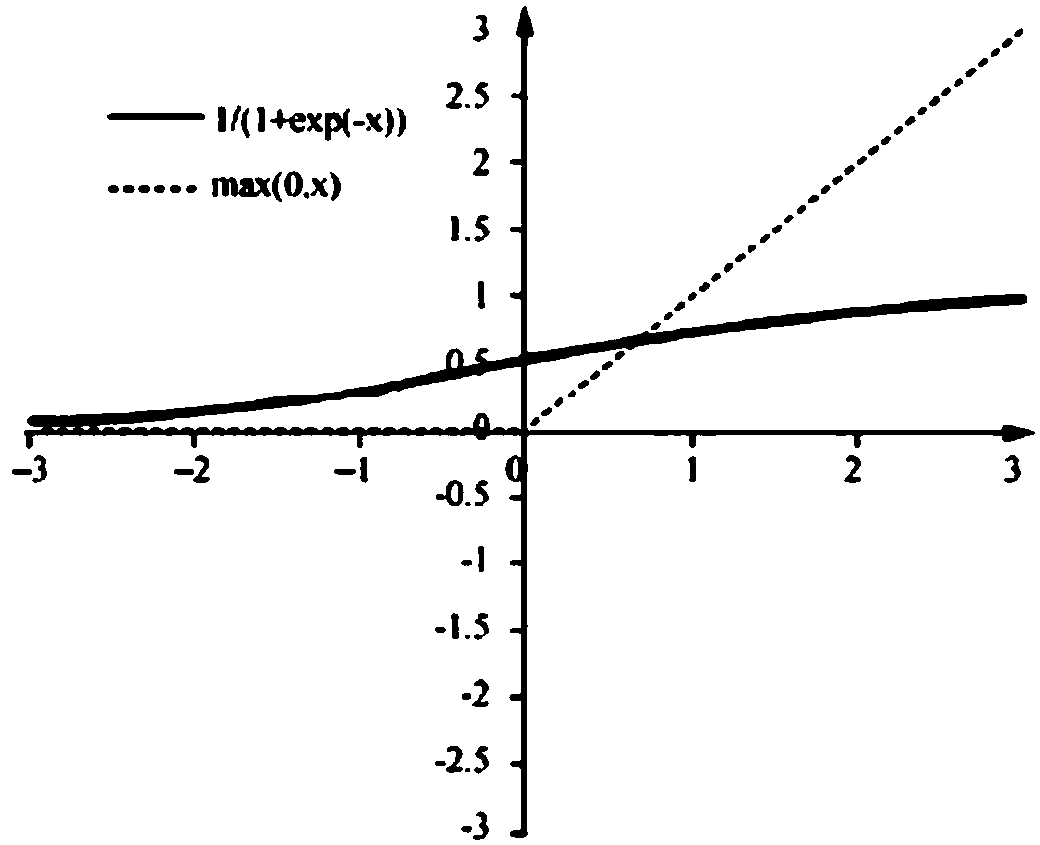

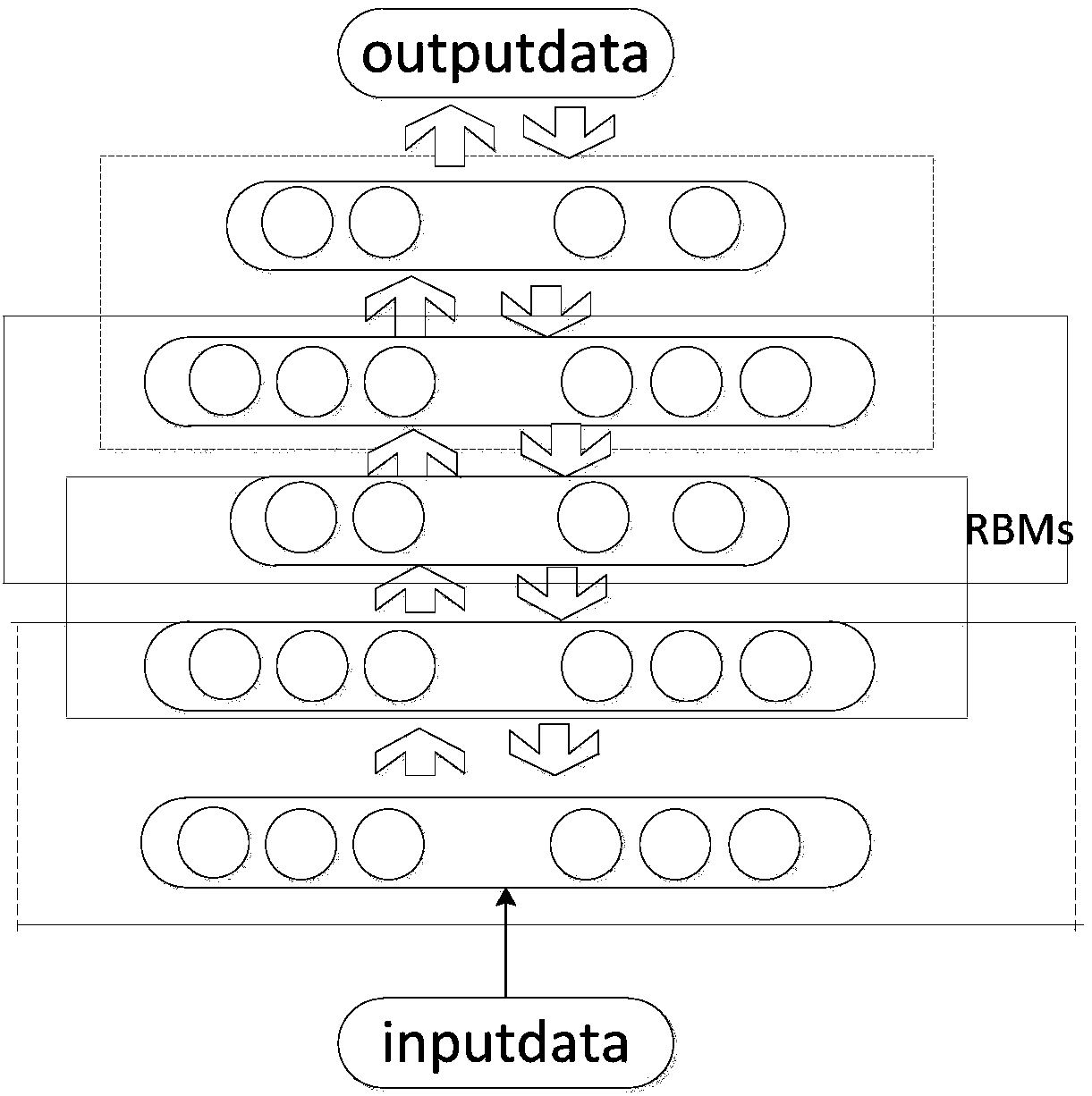

Bearing fault mode diagnosis method and system based on deep learning

Inactive | CN108304927A | Solve problems | Accurate Fault Diagnosis Accuracy | Machine bearings testing | Neural architectures | Deep belief network | Activation function

The invention discloses a bearing fault mode diagnosis method and system based on deep learning. The method comprises: improving a deep belief network by adjusting the learning rate automatically, introducing noise, and introducing a rectified linear unit (ReLU) as the activation function; transferring a data set and a long short-term memory (LSTM) network to obtain a synthetic data set, extending the training data set with the synthetic data set, and training the improved deep belief network on the extended training data set to obtain a bearing fault diagnosis model; and collecting a vibration signal of the bearing and diagnosing the bearing failure mode based on the vibration signal and the bearing fault diagnosis model. By combining semi-supervised learning with a transfer learning algorithm, the diagnostic accuracy is improved even when the available data are insufficient.

Owner:TSINGHUA UNIV

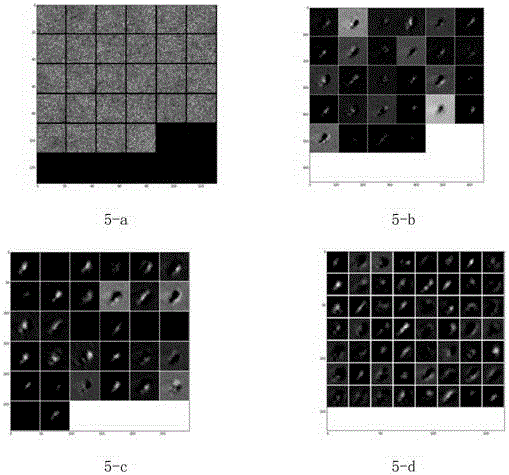

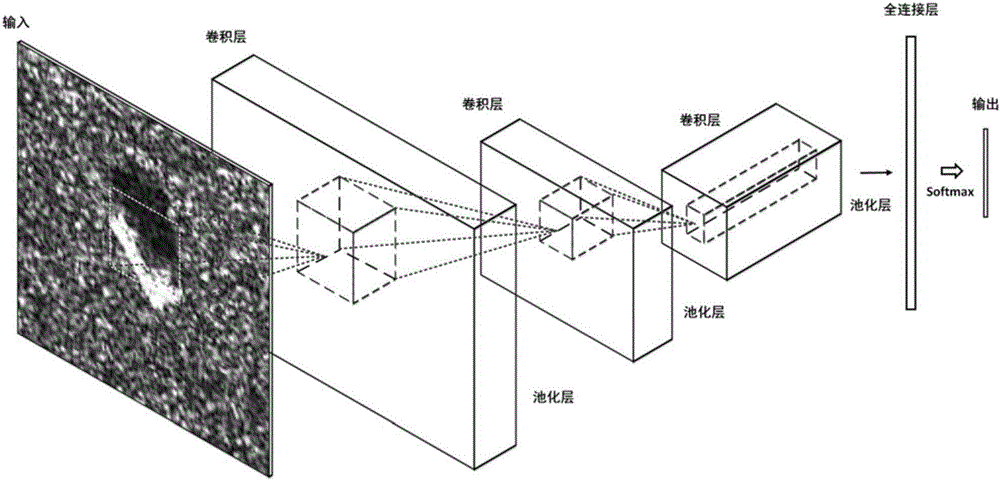

Synthetic aperture radar image target identification method based on depth model

Active | CN106407986A | Reduce overhead | Target recognition facilitates | Character and pattern recognition | Feature extraction | Level structure

The invention discloses a synthetic aperture radar (SAR) image target identification method based on a deep model. The method comprises the steps of: image cropping; design of the deep model's hierarchical structure, design of the feature extraction filters, control of the number of parameters and prevention of overfitting, activation functions and non-linearity enhancement, identification and classification, and autonomous parameter correction and update; deep model training; and target identification. The advantages of the method are that the filter parameters are updated iteratively and autonomously during training, which greatly reduces the cost of feature selection and extraction; moreover, the deep model extracts features of the target at different levels, and because these features are obtained through close fitting and training, they represent the target well, so the target identification accuracy for SAR images is improved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

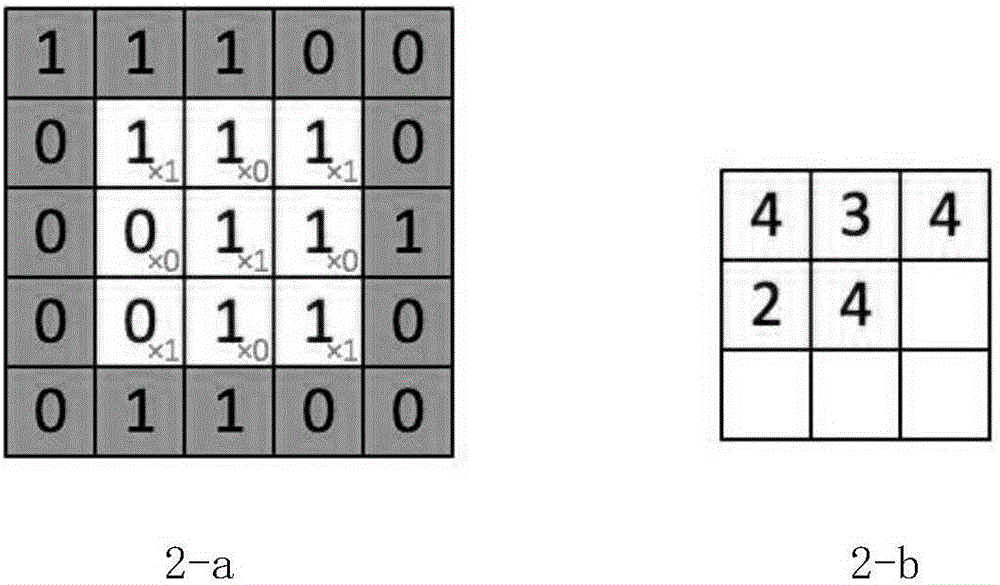

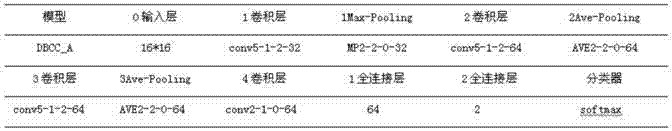

CNN-deep-learning-based DBCC classification model and constructing method thereof

Inactive | CN106910185A | Accurate calculation | Improve recognition accuracy | Image enhancement | Image analysis | Learning based | Activation function

The invention discloses a CNN-deep-learning-based DBCC classification model and a method for constructing it. The DBCC classification model consists of four convolution layers, three pooling layers and two fully connected layers. The model uses the softmax loss function as its loss function; a ReLU activation function is added after each of the first convolution layer, the fourth convolution layer, the second pooling layer, the third pooling layer and the first fully connected layer; a local response normalization (LRN) layer is added after the first convolution layer, and a dropout layer is added after the first fully connected layer. The DBCC classification model is built on a convolutional neural network (CNN) and increases the depth of the network by using more convolution kernels in each convolution layer, adding the LRN layer and using dropout, so that the model achieves higher identification accuracy on small pictures with a resolution of 16 * 16 pixels.

Owner:SHAANXI NORMAL UNIV

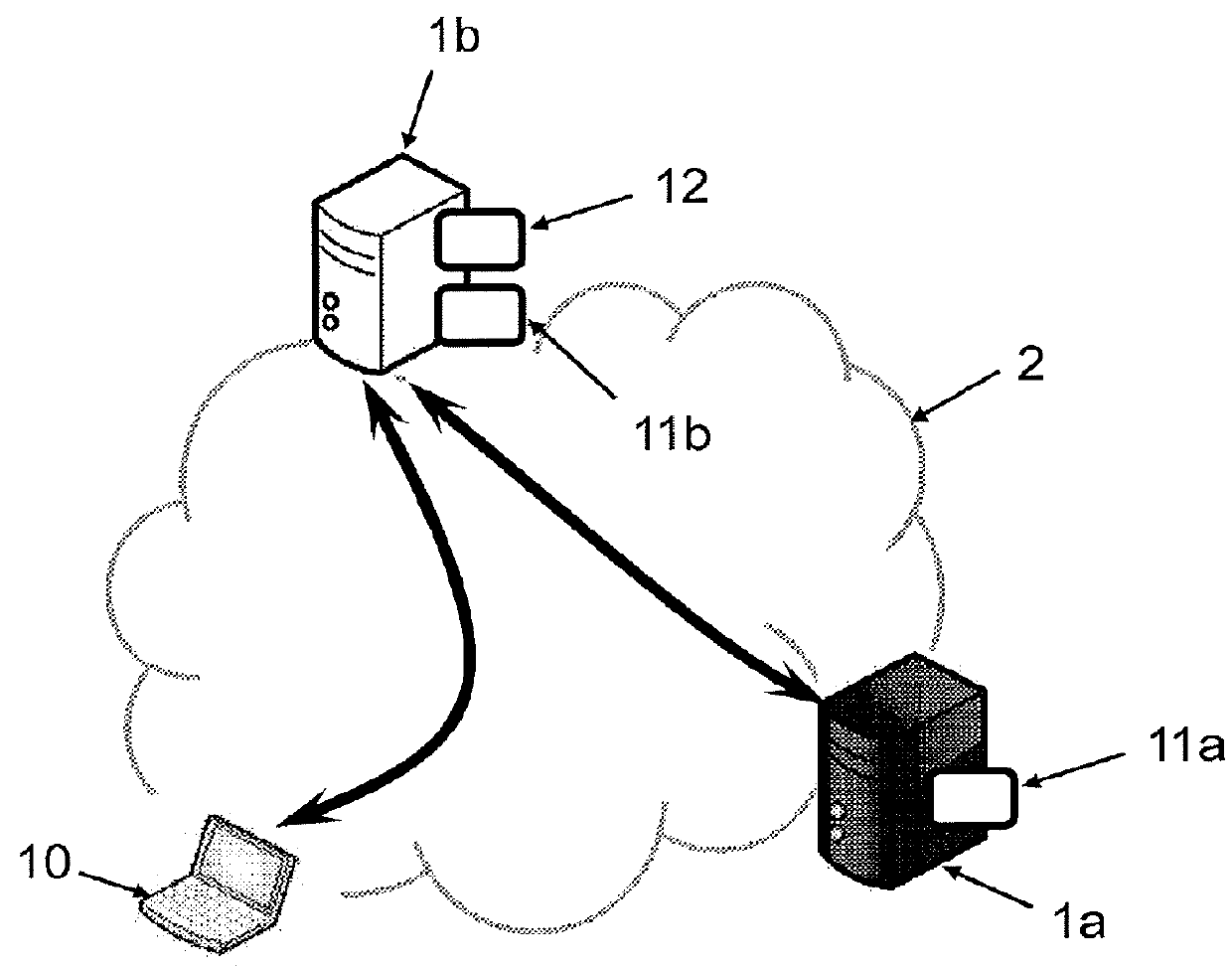

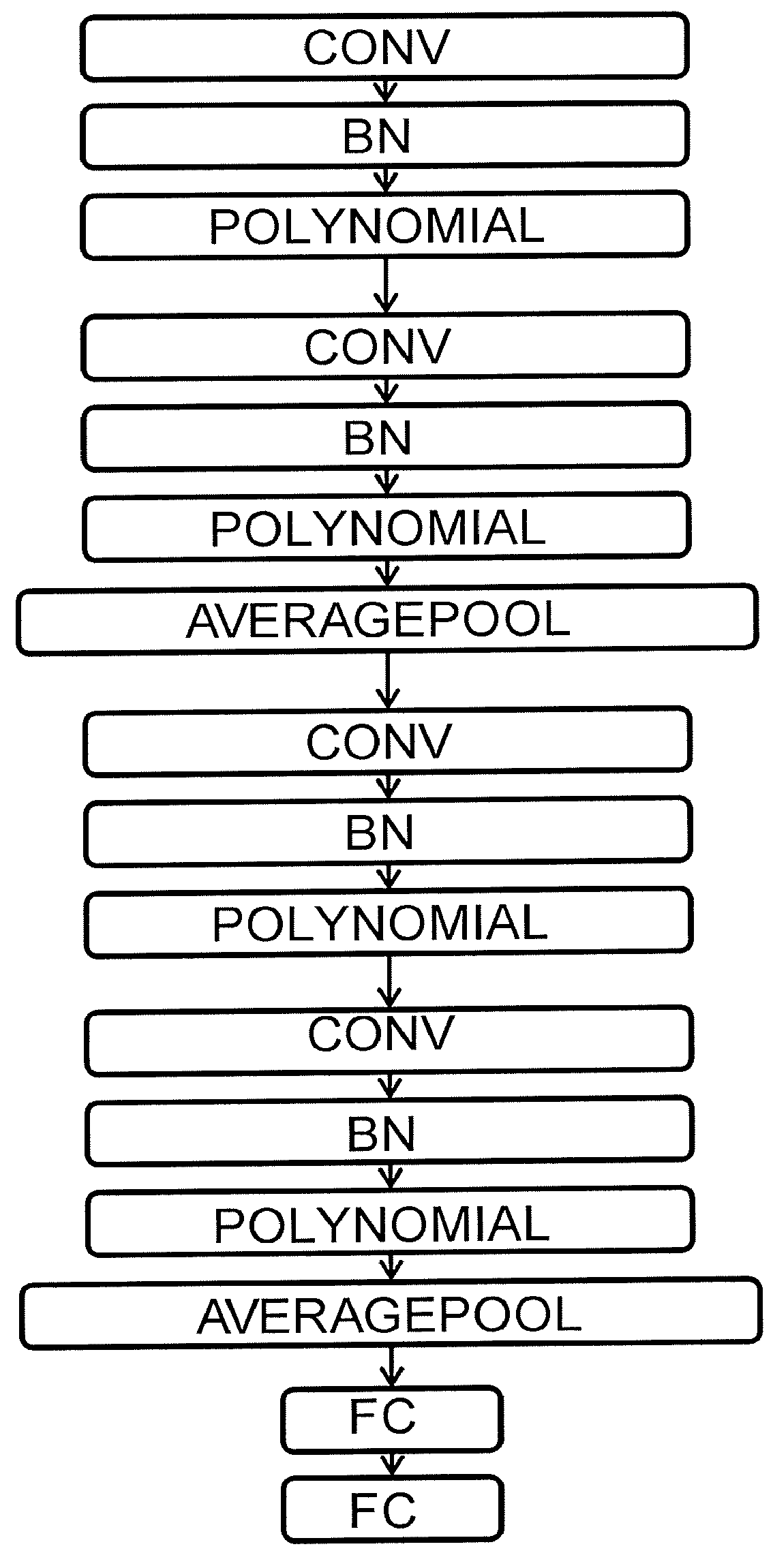

Methods for secure learning of parameters of a convolution neural network, and for secure input data classification

Active | US20180096248A1 | Extended processing time | Benefit is lost | Neural architectures | Communication with homomorphic encryption | Activation function | Learning data

A method for secure learning of parameters of a convolution neural network (CNN) for data classification includes the implementation, by data processing of a first server, of steps including: receiving from a second server a base of already classified learning data, the learning data being homomorphically encrypted; learning in the encrypted domain, from the learning database, the parameters of a reference CNN that includes a non-linear layer (POLYNOMIAL) operating a polynomial function of degree at least two that approximates an activation function, and a batch normalization layer before each non-linear layer (POLYNOMIAL); and transmitting the learnt parameters to the second server, for decryption and use for classification.

Owner:IDEMIA IDENTITY & SECURITY FRANCE
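
The idea of replacing an activation function with a low-degree polynomial, so that only additions and multiplications are needed, can be illustrated with a small plaintext sketch. Nothing here is encrypted, and the abstract does not say which activation is approximated or how the coefficients are obtained; the choice of ReLU, the least-squares fit on the interval [-1, 1], and all function names are assumptions made for illustration.

```python
def relu(x: float) -> float:
    """Target activation (assumed here; the abstract does not name one)."""
    return x if x > 0 else 0.0


def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations.

    Returns coefficients ordered from the constant term upward.
    """
    n = degree + 1
    # Normal equations A c = b with A[i][j] = sum x^(i+j), b[i] = sum y * x^i.
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]

    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]

    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(a[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (b[i] - tail) / a[i][i]
    return coeffs


def poly_eval(coeffs, x):
    """Horner evaluation: uses only additions and multiplications."""
    acc = 0.0
    for c in reversed(coeffs):
        acc = acc * x + c
    return acc
```

For example, `fit_polynomial(xs, [relu(x) for x in xs], 2)` on a grid `xs` over [-1, 1] yields three coefficients that `poly_eval` can apply in place of ReLU. The approximation only holds near the fitted interval, which is consistent with the abstract placing a batch normalization layer before each polynomial layer to keep inputs in a bounded range.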

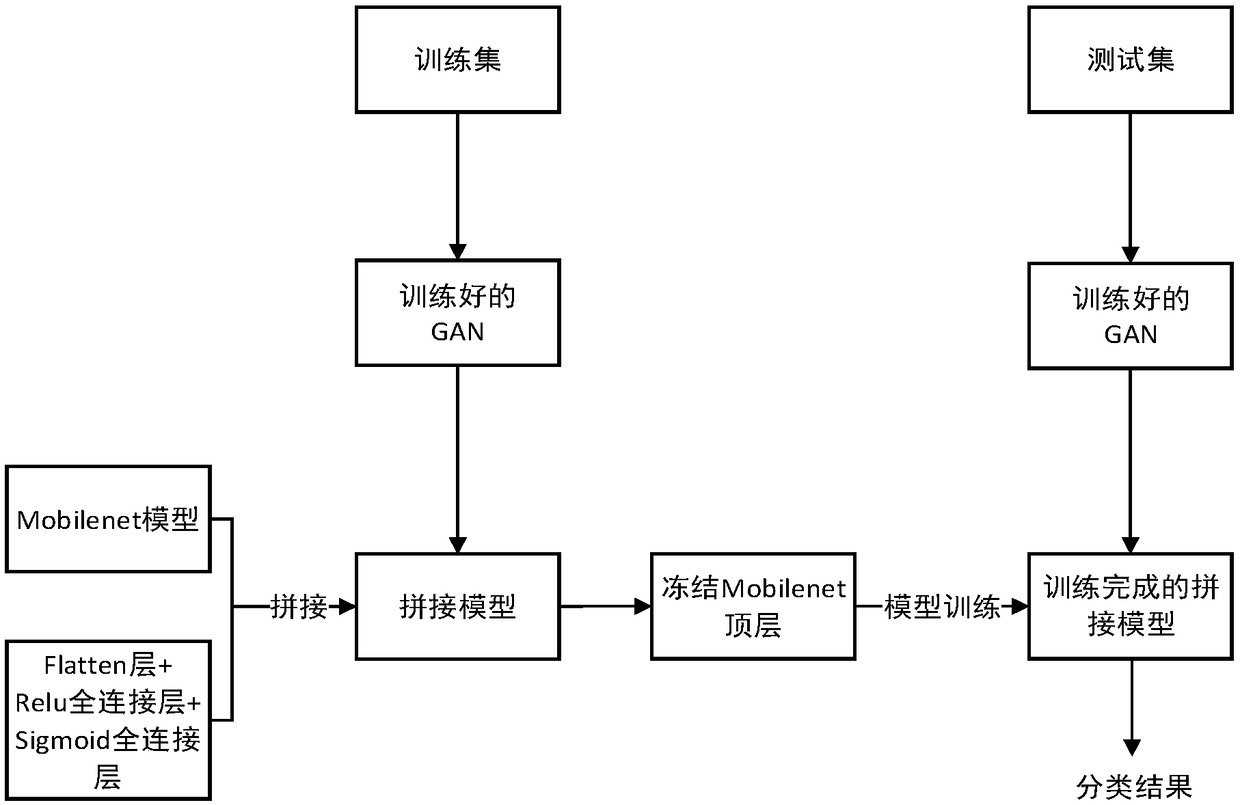

A dam image crack detection method based on transfer learning

Active | CN109345507A | Prevent overfitting | Reduce redundant expression | Image enhancement | Image analysis | Activation function | Imaging Feature

The invention discloses a dam image crack detection method based on transfer learning. The method comprises the following steps: collecting dam crack images and preprocessing the image data set, using a generative adversarial network (GAN) to augment the data set; using a pre-trained MobileNet model, without its top layer and fully connected layer, to extract image features, appending a Flatten layer after MobileNet, appending a fully connected layer whose activation function is ReLU after the Flatten layer, and appending a fully connected layer whose activation function is Sigmoid as the output layer; freezing the first K depthwise separable convolution structures in MobileNet and fixing the corresponding weights of these K structures; training the model, updating only the weights of the unfrozen network layers during training; and using the trained model to detect dam cracks in an image. The method solves the over-fitting problem that arises with a small data set, and improves prediction performance and running speed through transfer learning.

Owner:HOHAI UNIV

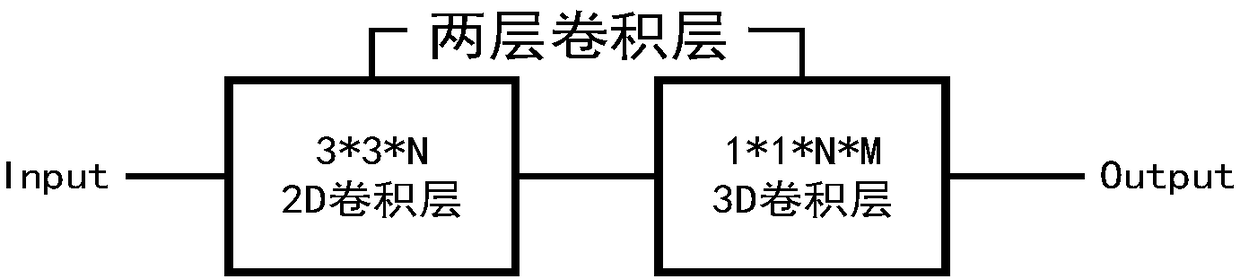

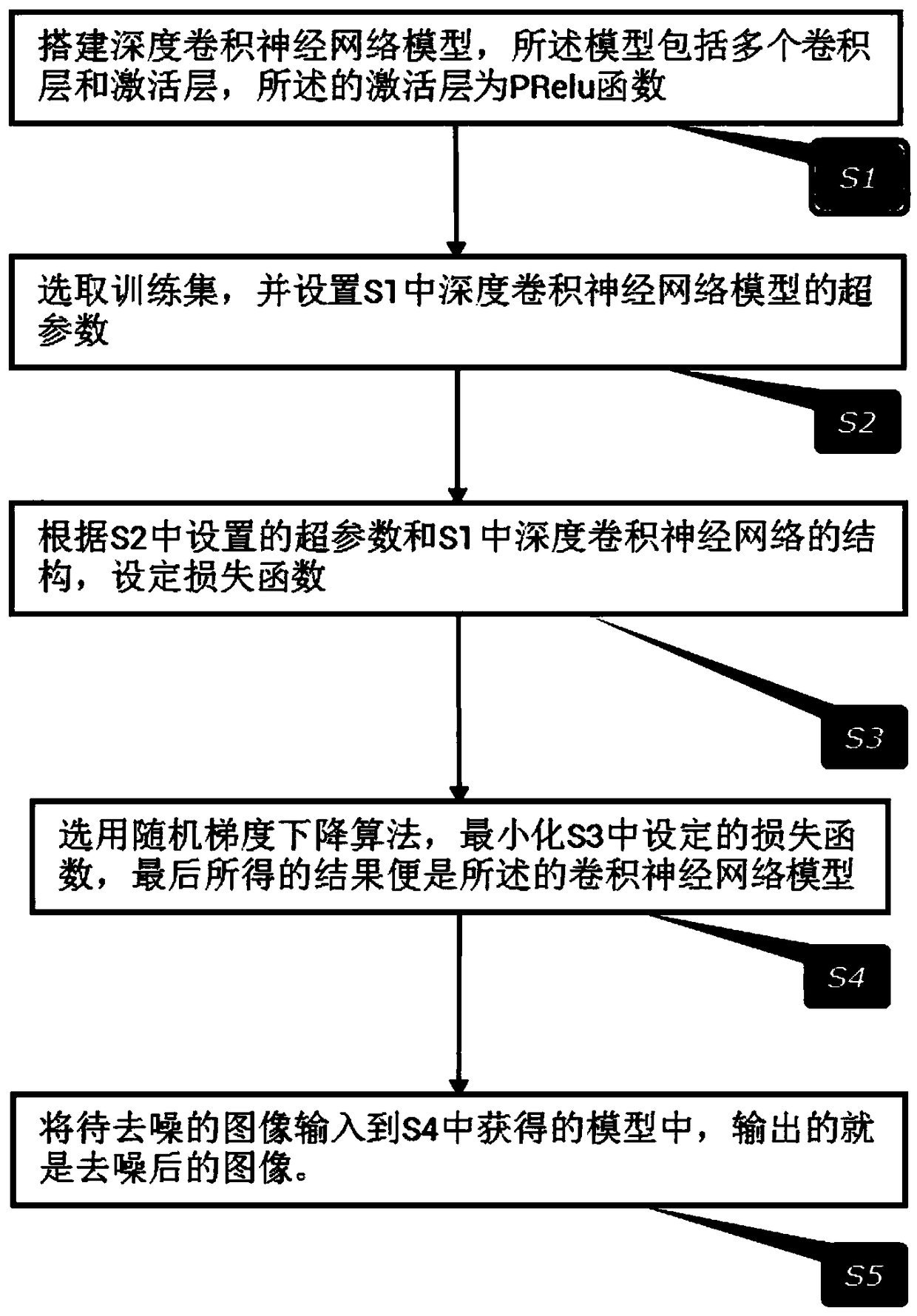

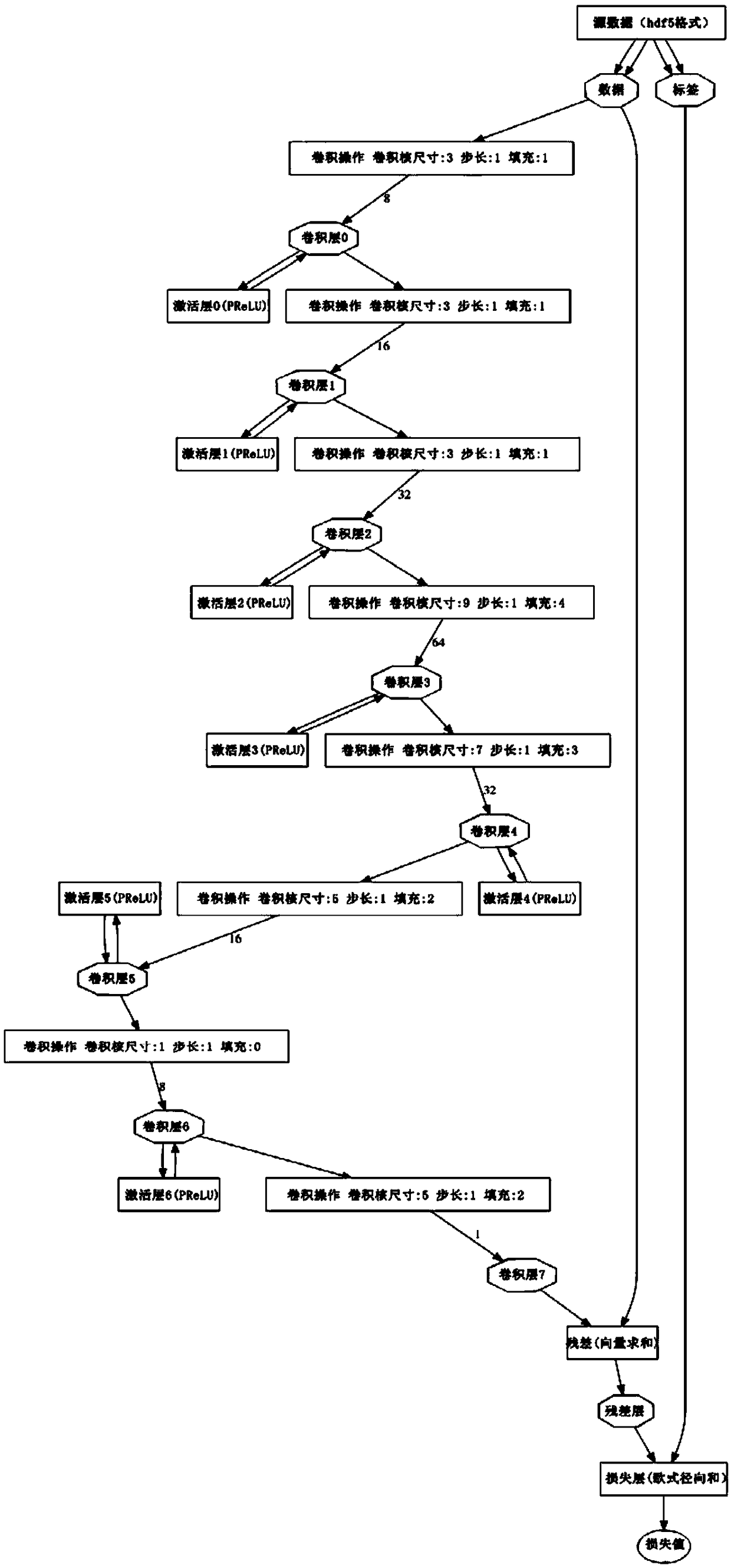

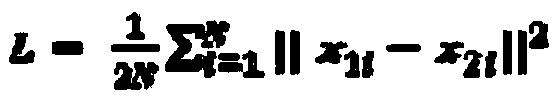

Depth residual convolution neural network image denoising method based on PReLU

Inactive | CN109118435A | Optimization | Avoid computational overhead | Image enhancement | Image analysis | Denoising algorithm | Activation function

The invention relates to a deep residual convolutional neural network image denoising method based on PReLU. Building on deep convolutional neural networks, and using Gaussian noise to simulate the task of denoising images with unknown real noise, the invention proposes a deep convolutional neural network for image denoising that uses the PReLU activation function instead of the Sigmoid and ReLU functions, adds residual learning and reduces mapping complexity, and adopts optimized network training techniques and network parameter settings to improve the denoising ability of the network. Compared with other existing denoising algorithms, the invention performs very well in various Gaussian noise environments with mixed standard deviations, and the detailed information in the image is well preserved while the noise is eliminated.

Owner:GUANGDONG UNIV OF TECH
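
For readers unfamiliar with PReLU, the activation named in the abstract above, here is a minimal scalar sketch. PReLU behaves like ReLU for positive inputs and applies a learnable slope to negative inputs. The initial slope of 0.25, the plain gradient-descent update, and the function names are assumptions for illustration and are not taken from the abstract.

```python
def prelu(x: float, a: float) -> float:
    """PReLU: x for positive inputs, a * x otherwise, where a is learnable."""
    return x if x > 0 else a * x


def prelu_grad_slope(x: float) -> float:
    """Derivative of prelu(x, a) with respect to the slope a."""
    return 0.0 if x > 0 else x


def update_slope(a: float, xs, upstream_grads, lr: float = 0.01) -> float:
    """One gradient-descent step on the slope (update rule is an assumption).

    xs             -- pre-activation inputs seen in the forward pass
    upstream_grads -- loss gradients with respect to the PReLU outputs
    """
    grad_a = sum(g * prelu_grad_slope(x) for x, g in zip(xs, upstream_grads))
    return a - lr * grad_a


# Hypothetical starting value for the learnable slope.
INITIAL_SLOPE = 0.25
```

With a slope of 0 this reduces to ReLU and with a fixed small slope it reduces to LeakyReLU; the difference in PReLU is that the slope is trained together with the other network weights.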

© 2025 PatSnap. All rights reserved.