1559 results about "Network processor" patented technology

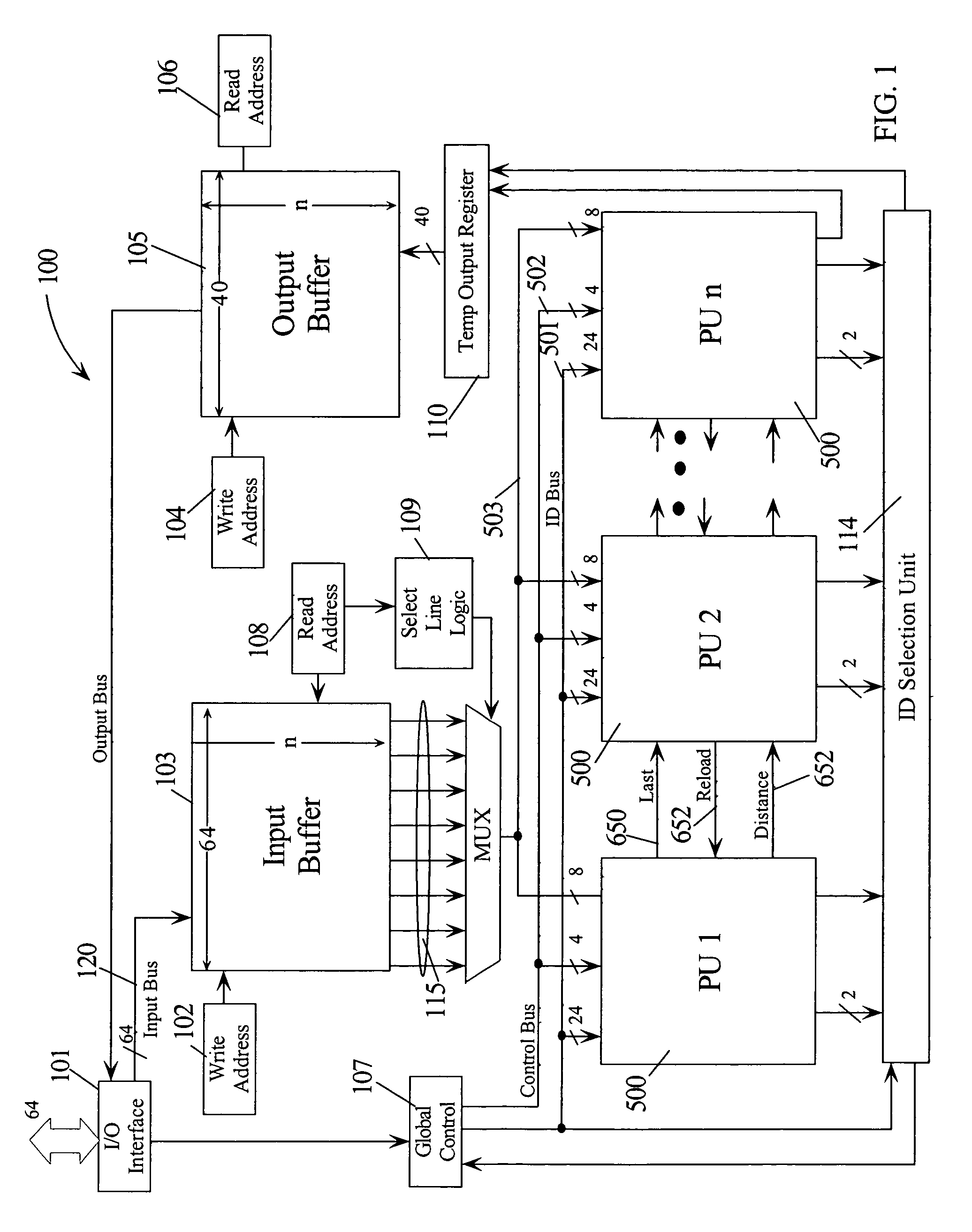

A network processor is an integrated circuit with a feature set specifically targeted at the networking application domain. Network processors are typically software-programmable devices and have generic characteristics similar to the general-purpose central processing units commonly used in many different types of equipment and products.

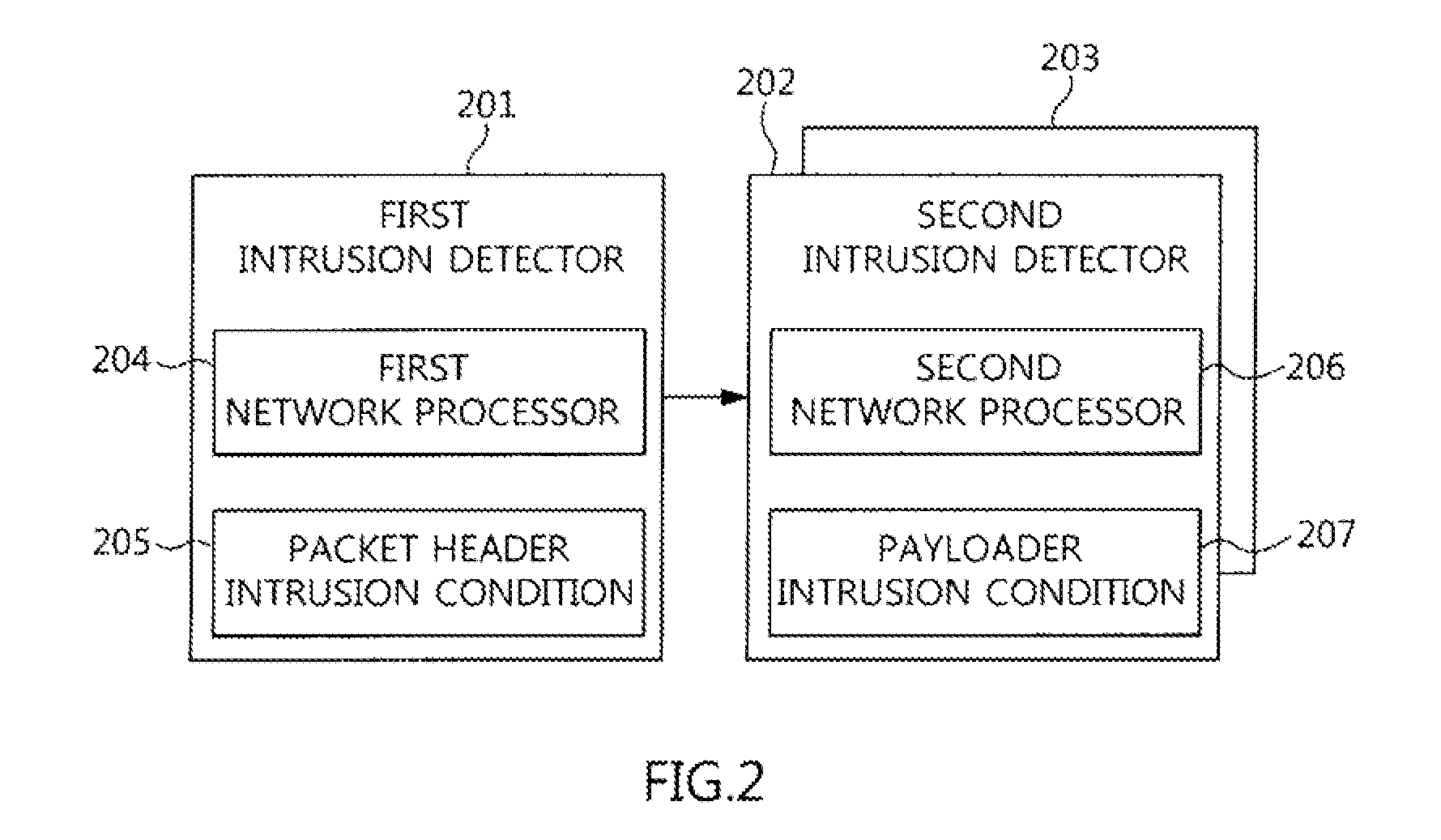

Processing data flows with a data flow processor

Inactive | US20110238855A1 | Increased complexity | Avoid problems | Multiple digital computer combinations | Platform integrity maintenance | Data control | Configfs

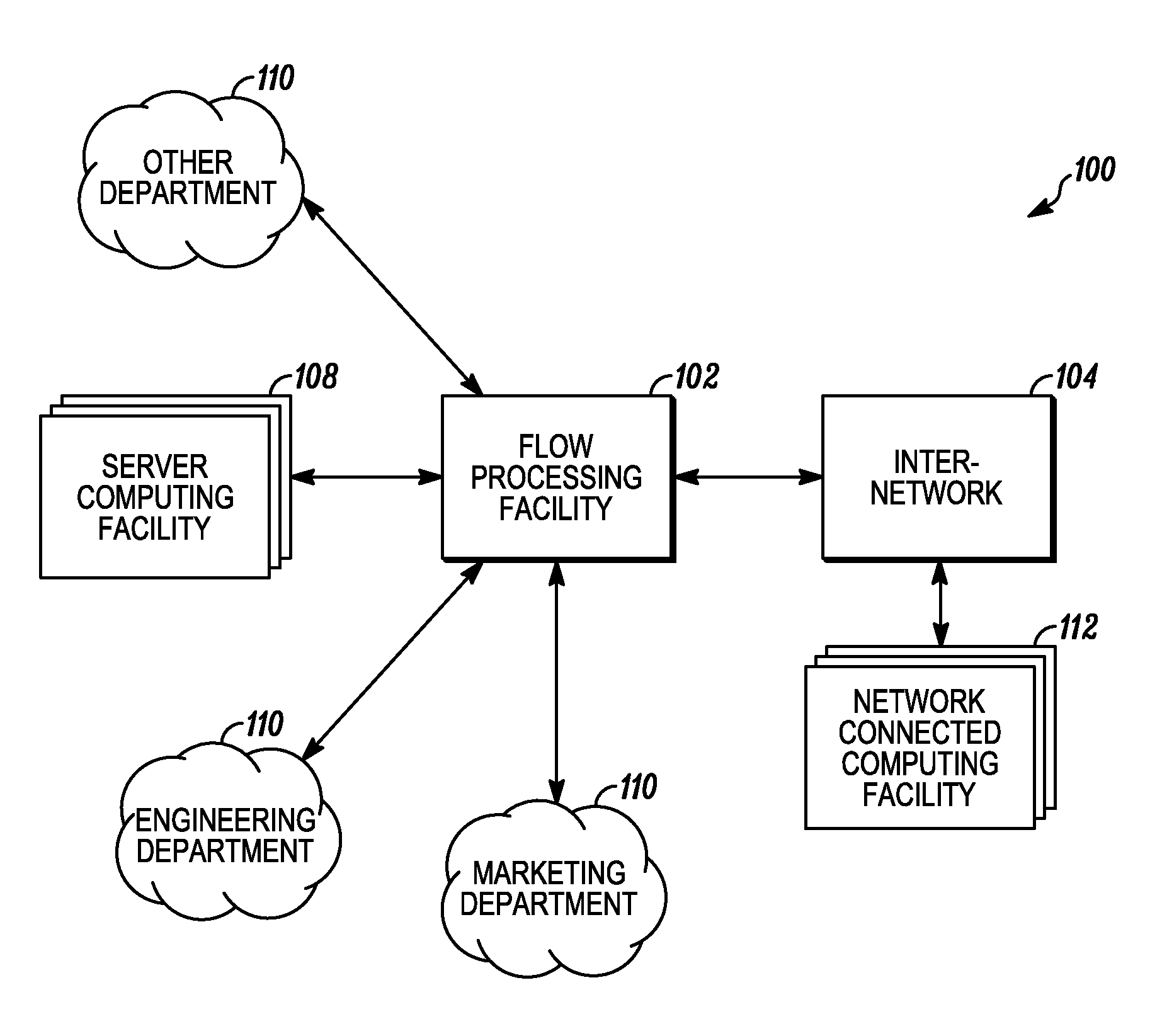

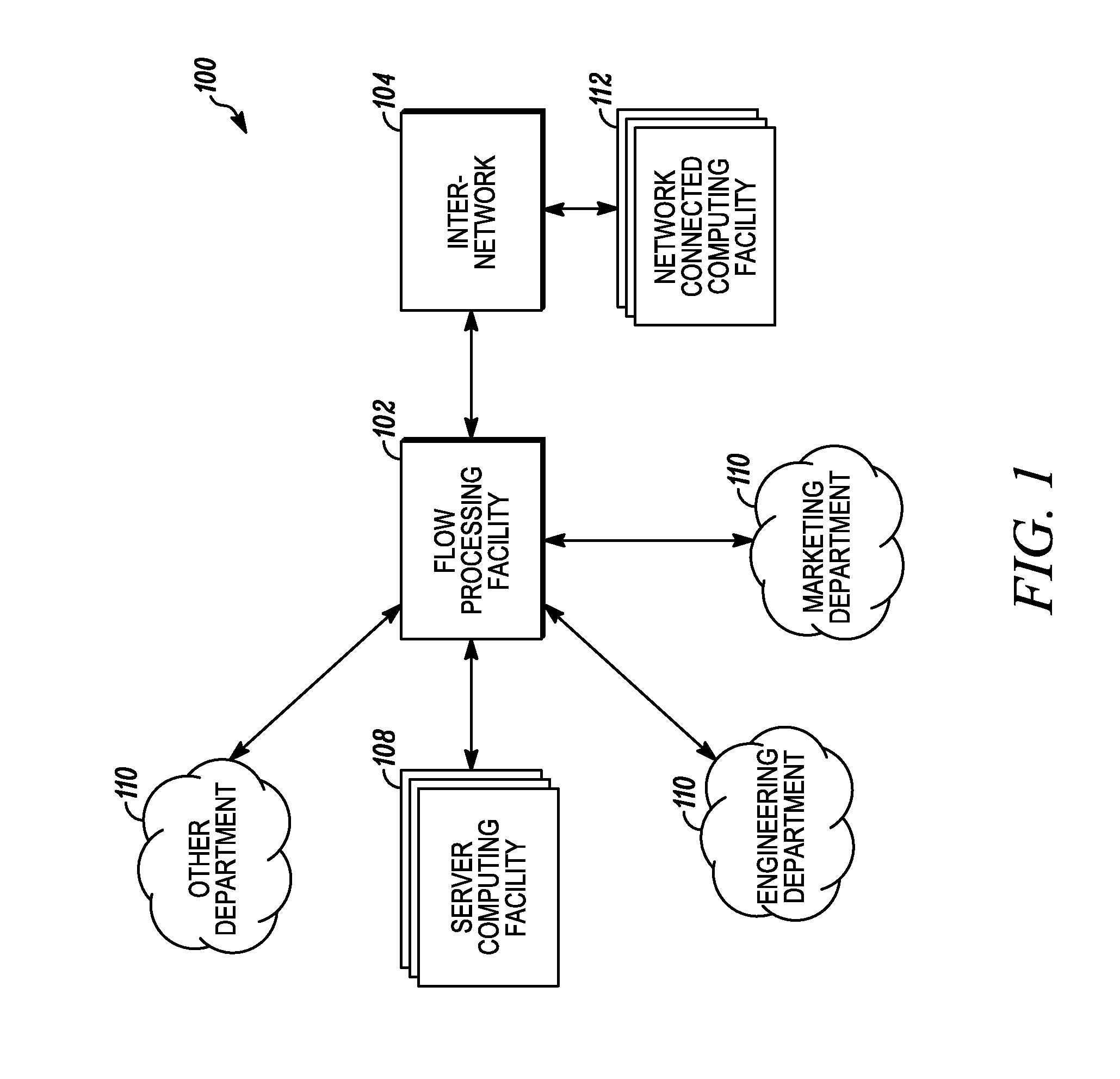

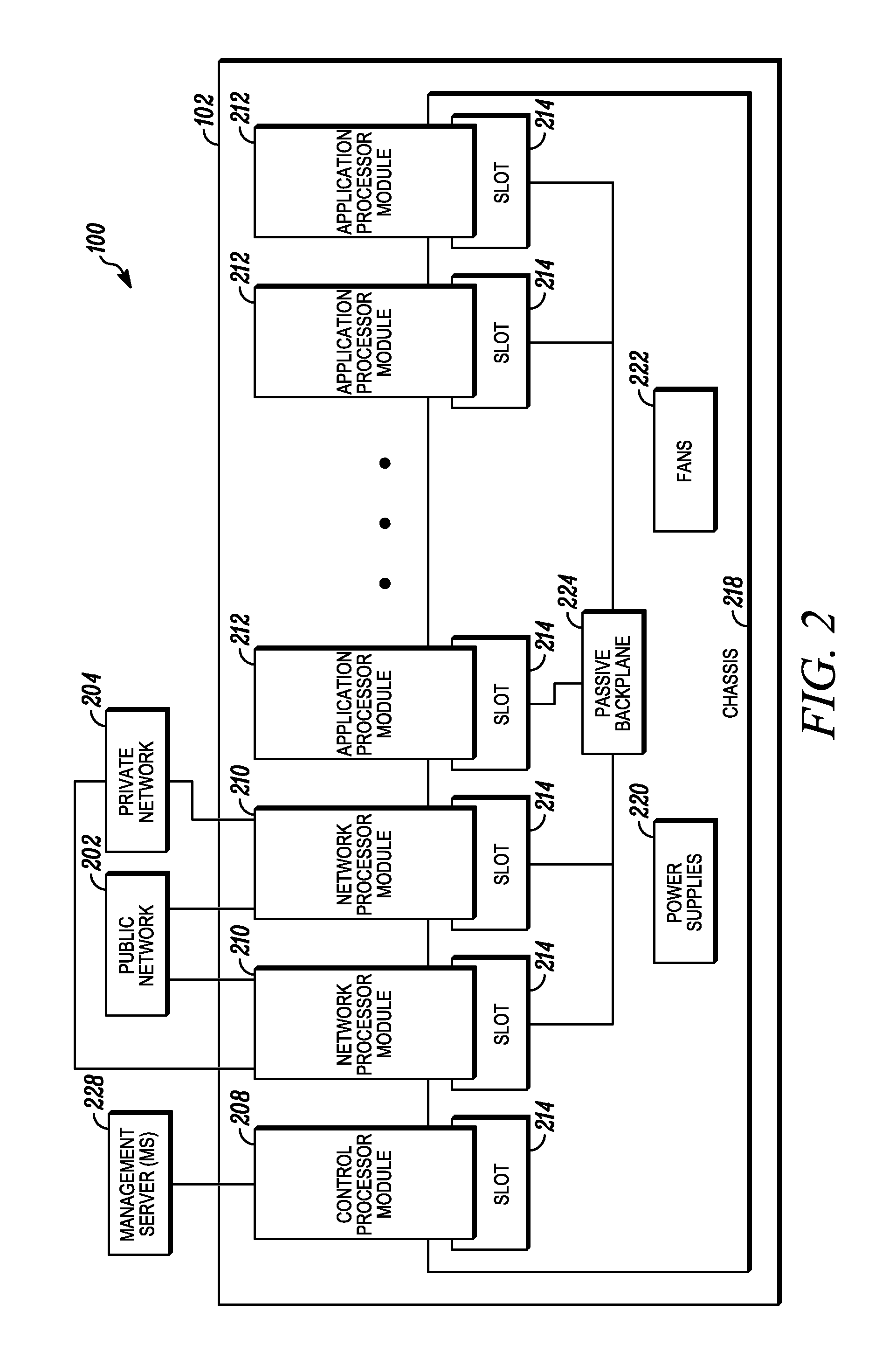

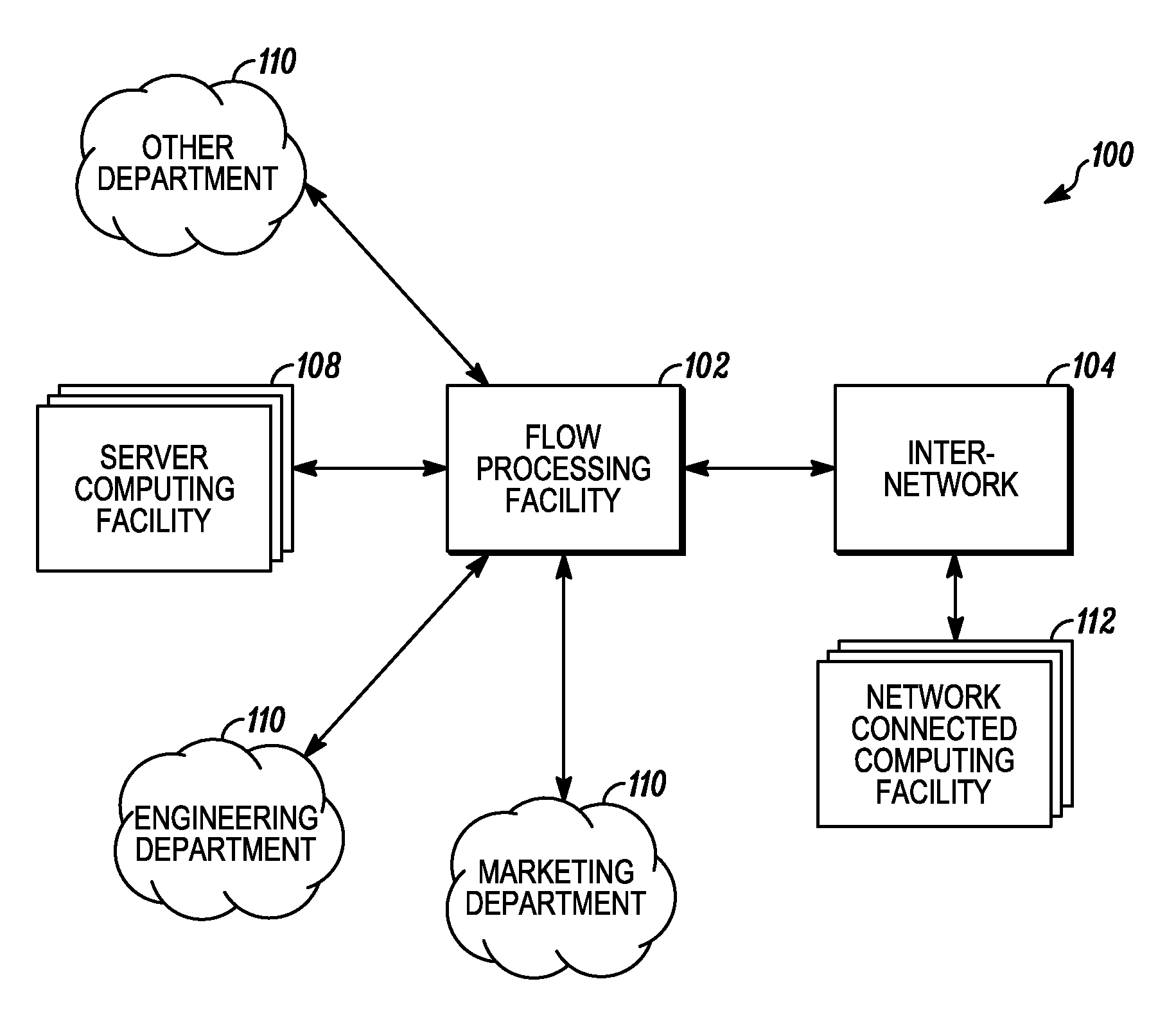

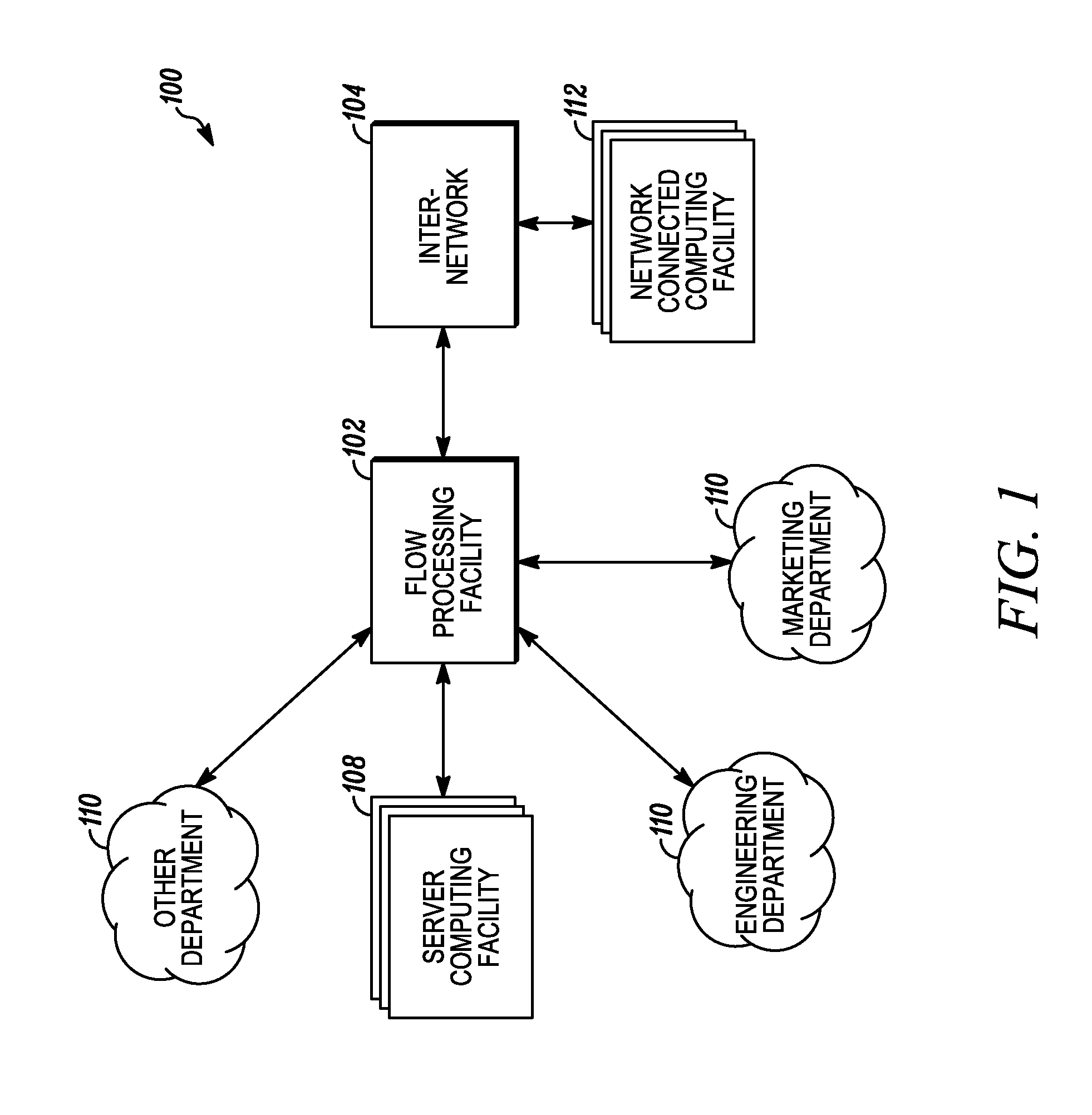

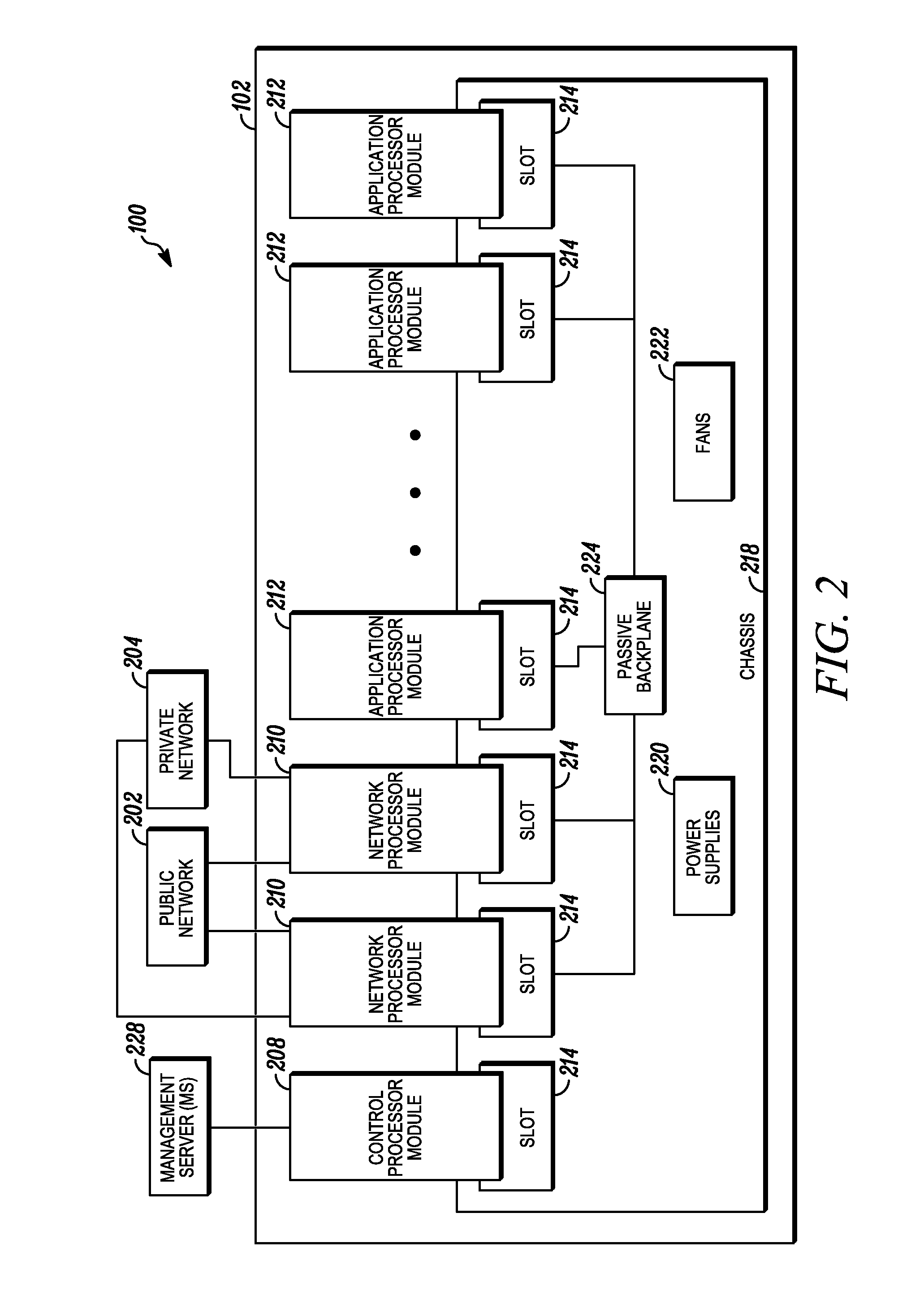

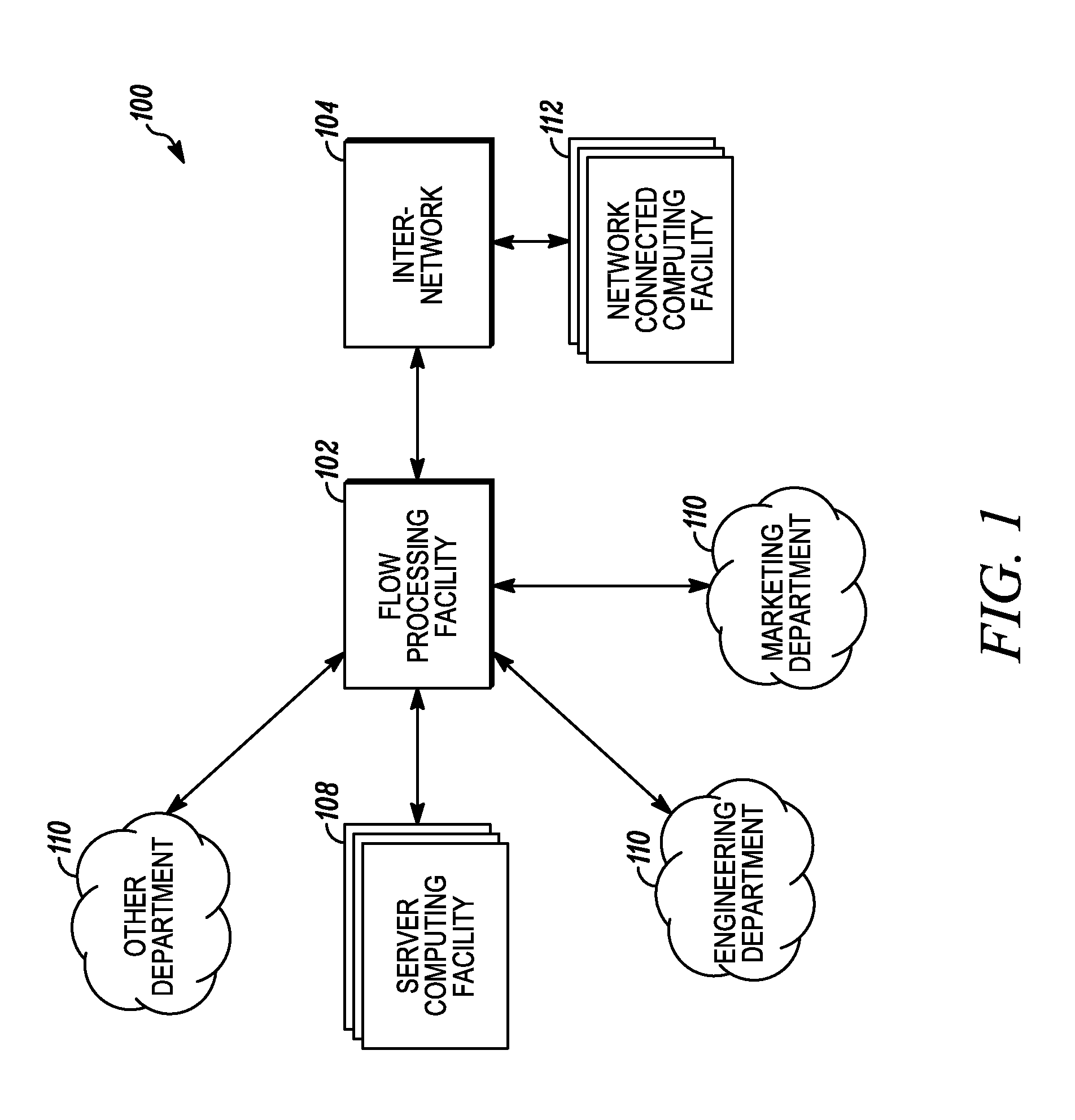

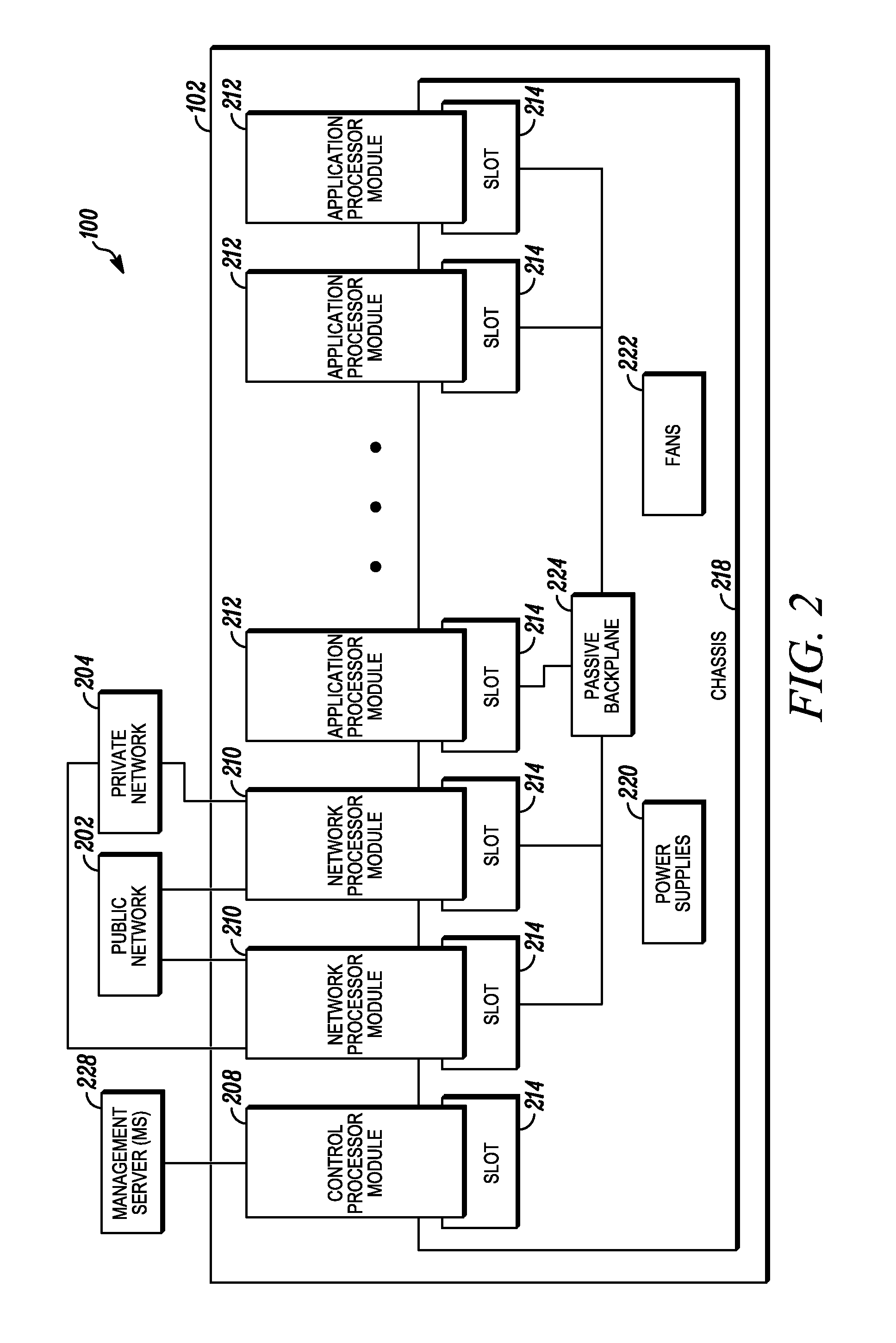

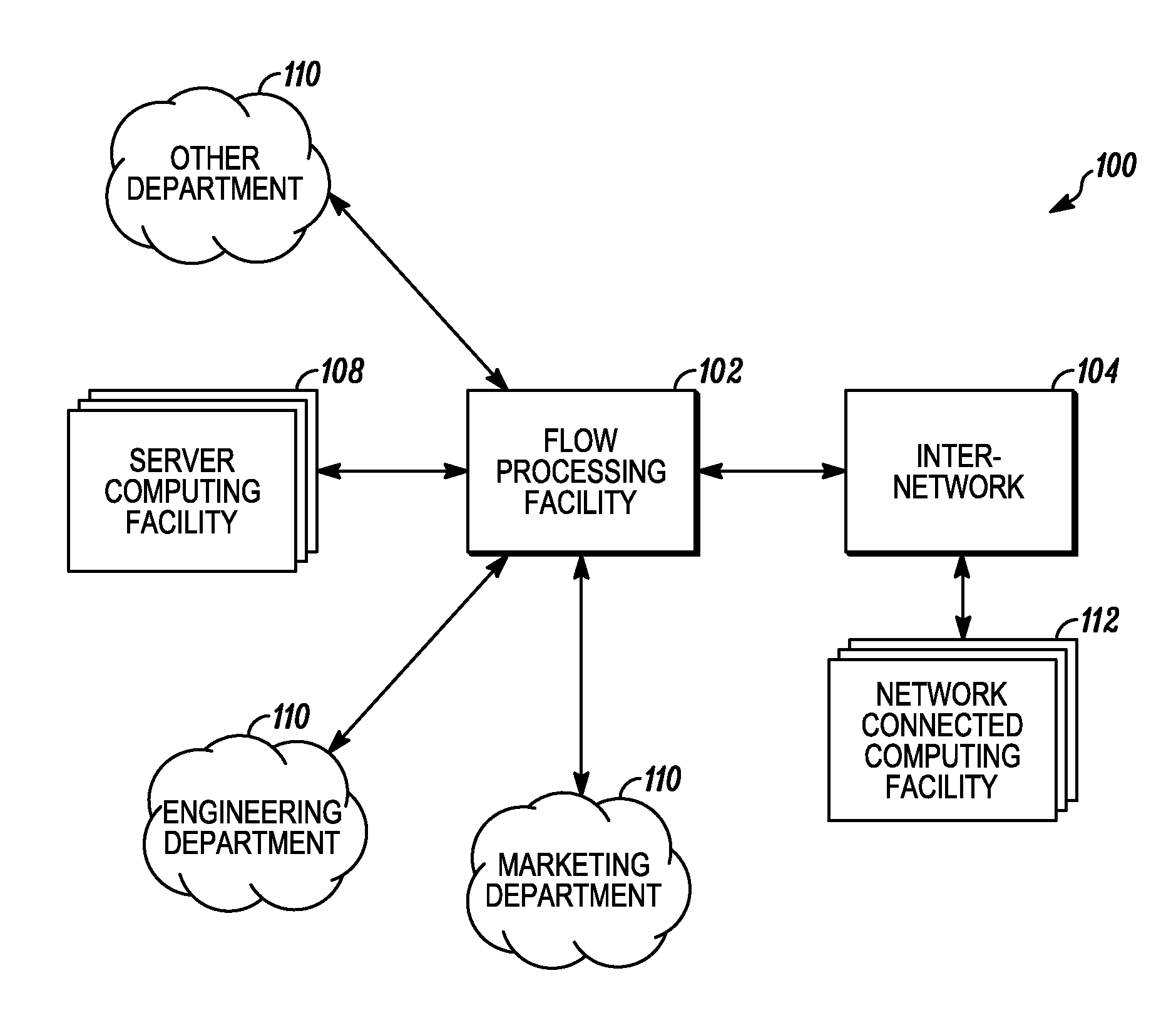

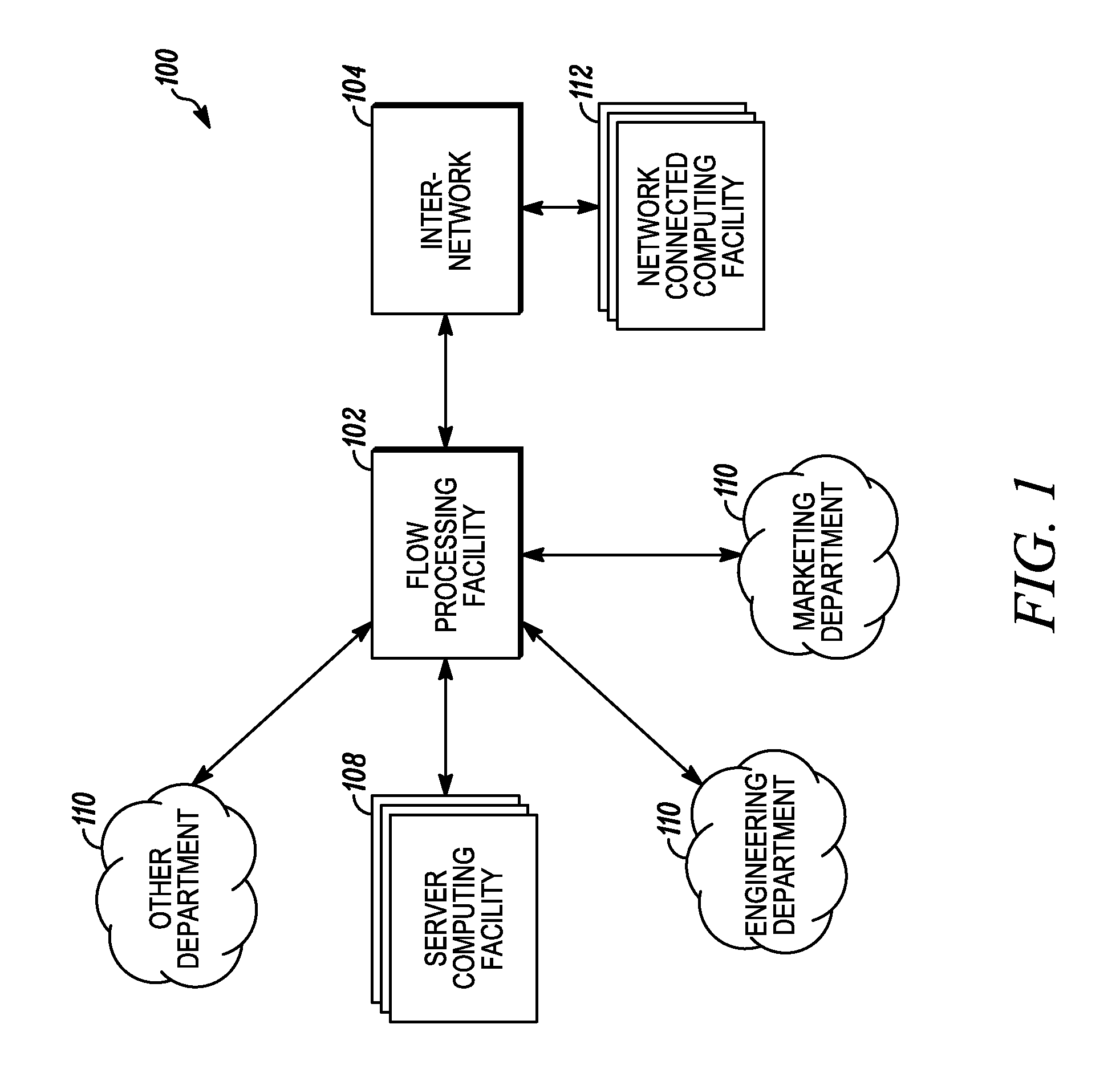

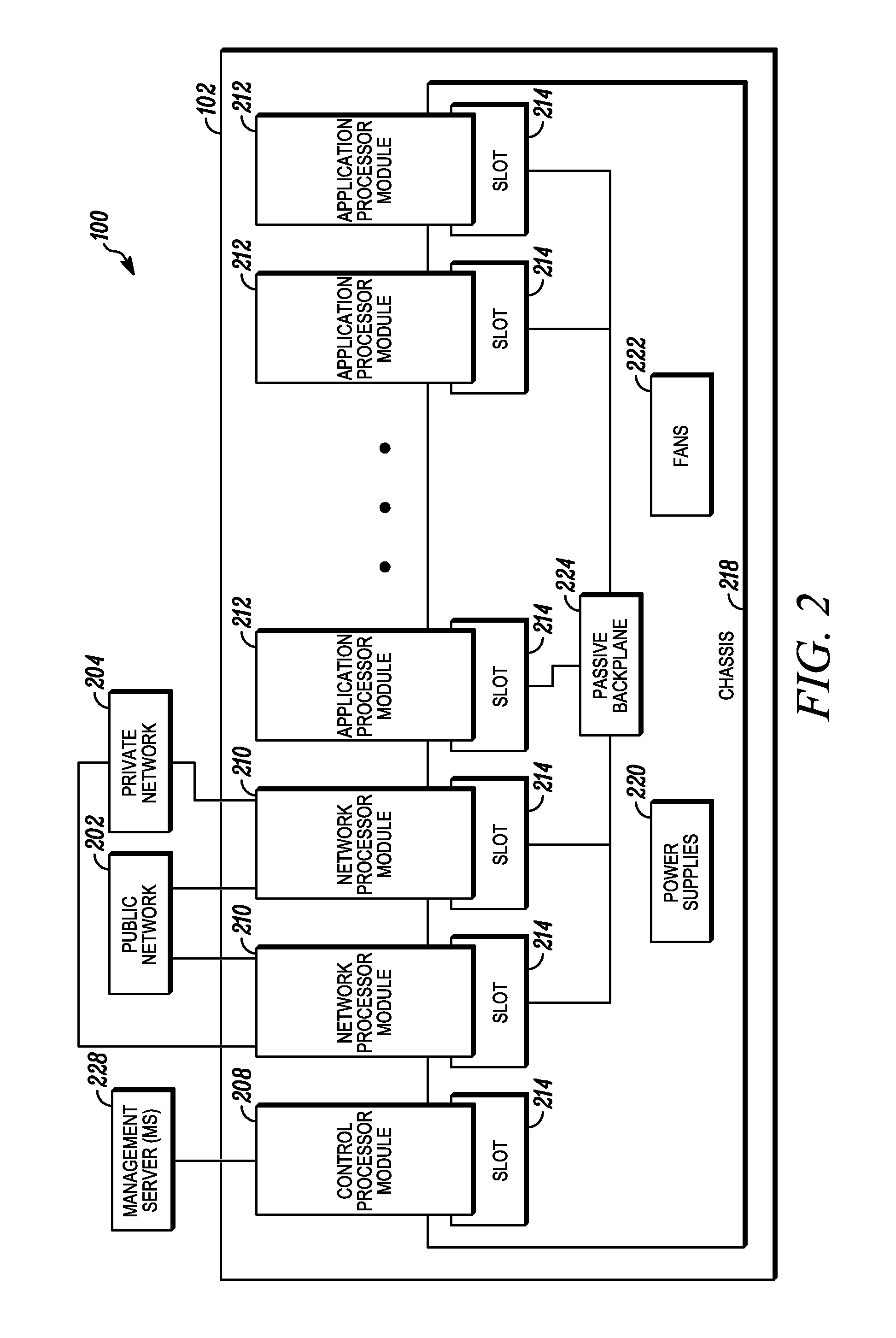

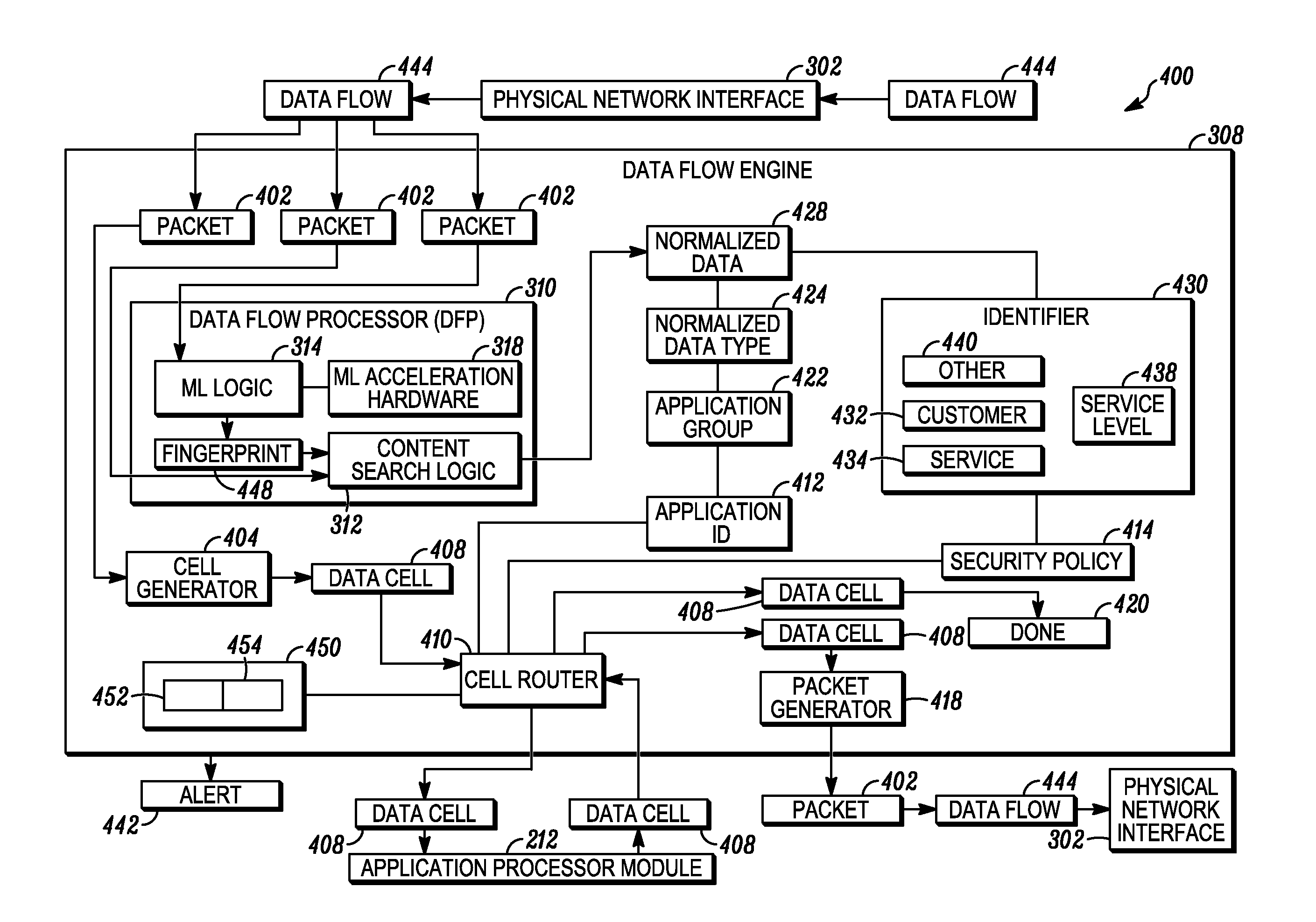

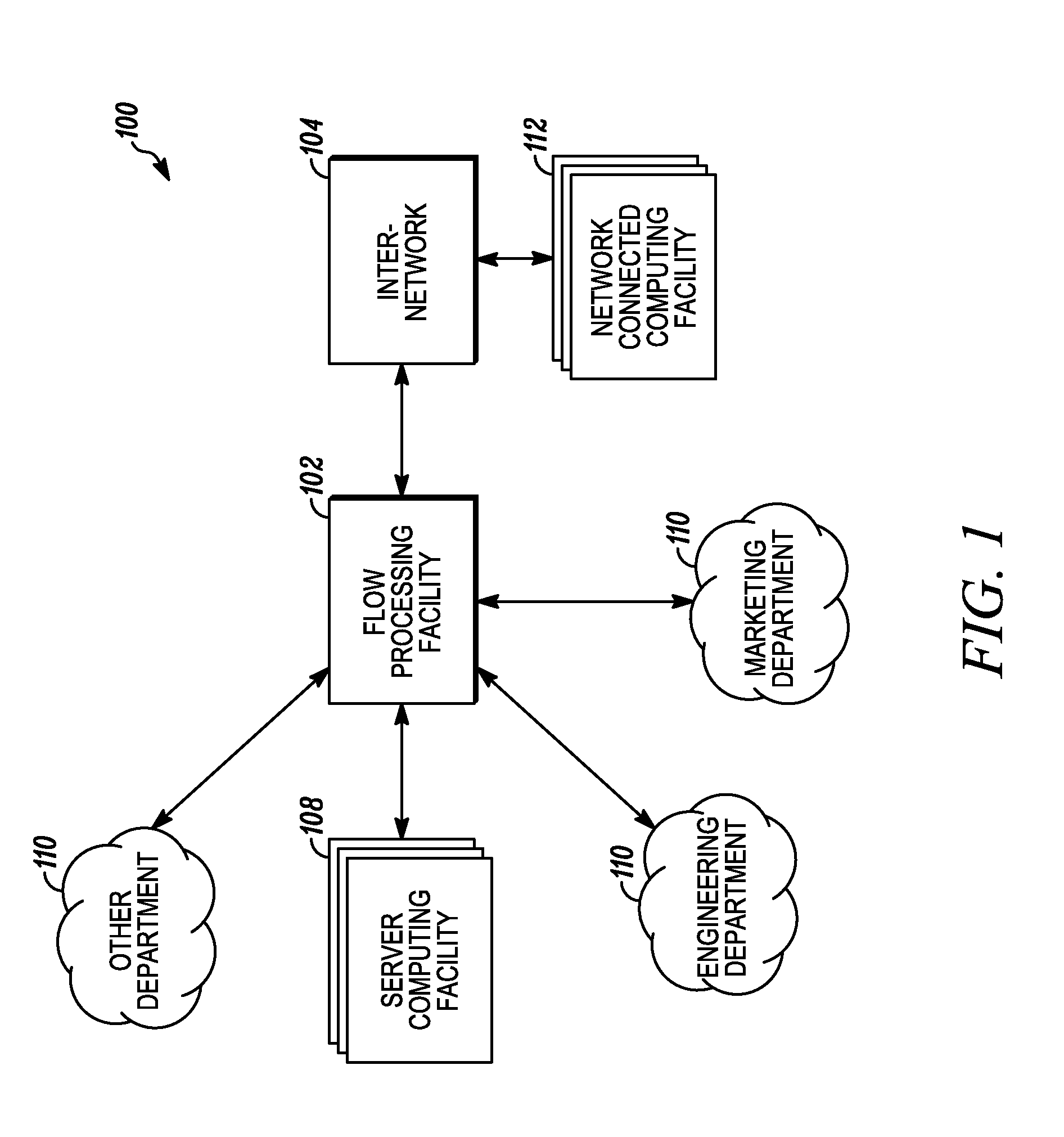

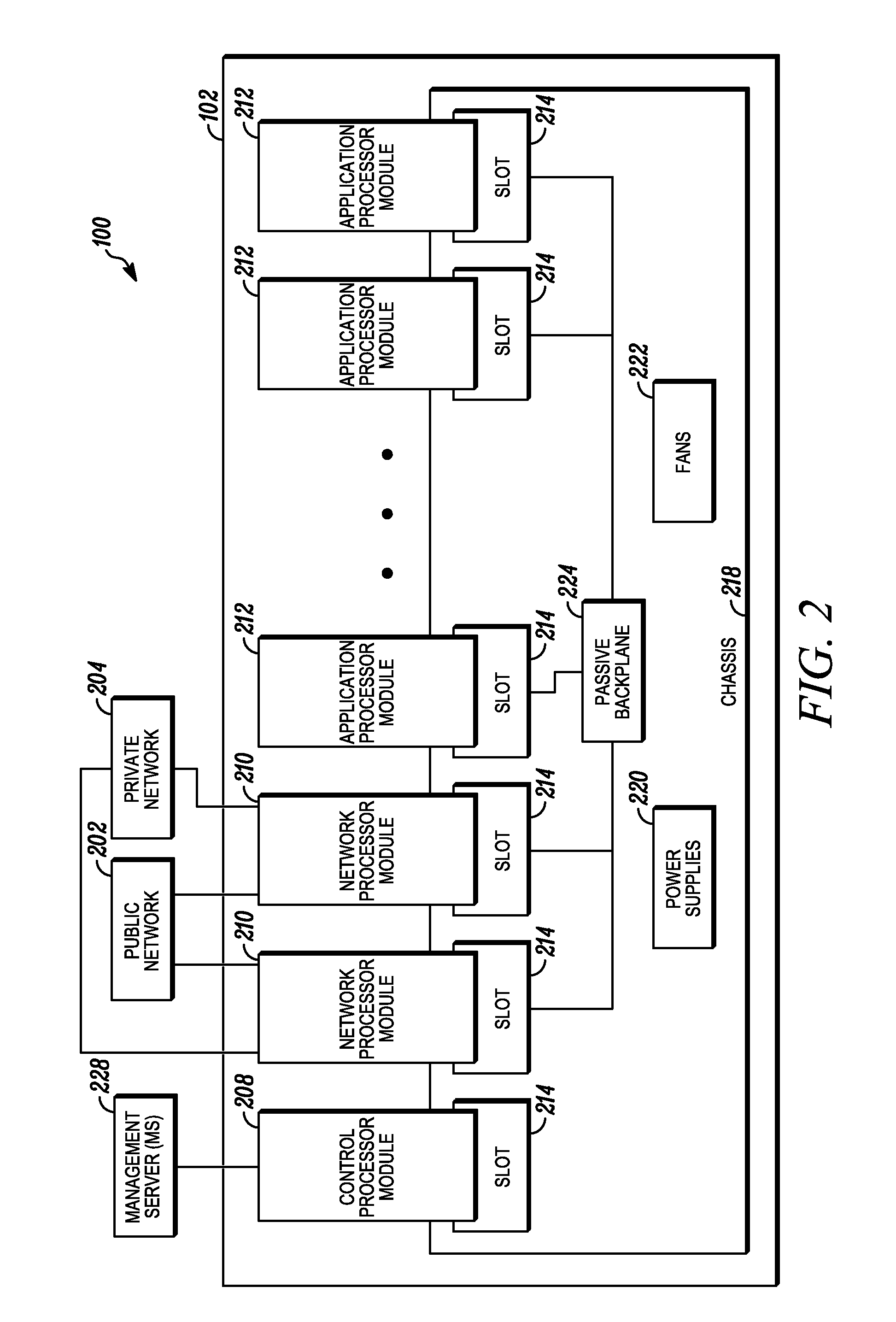

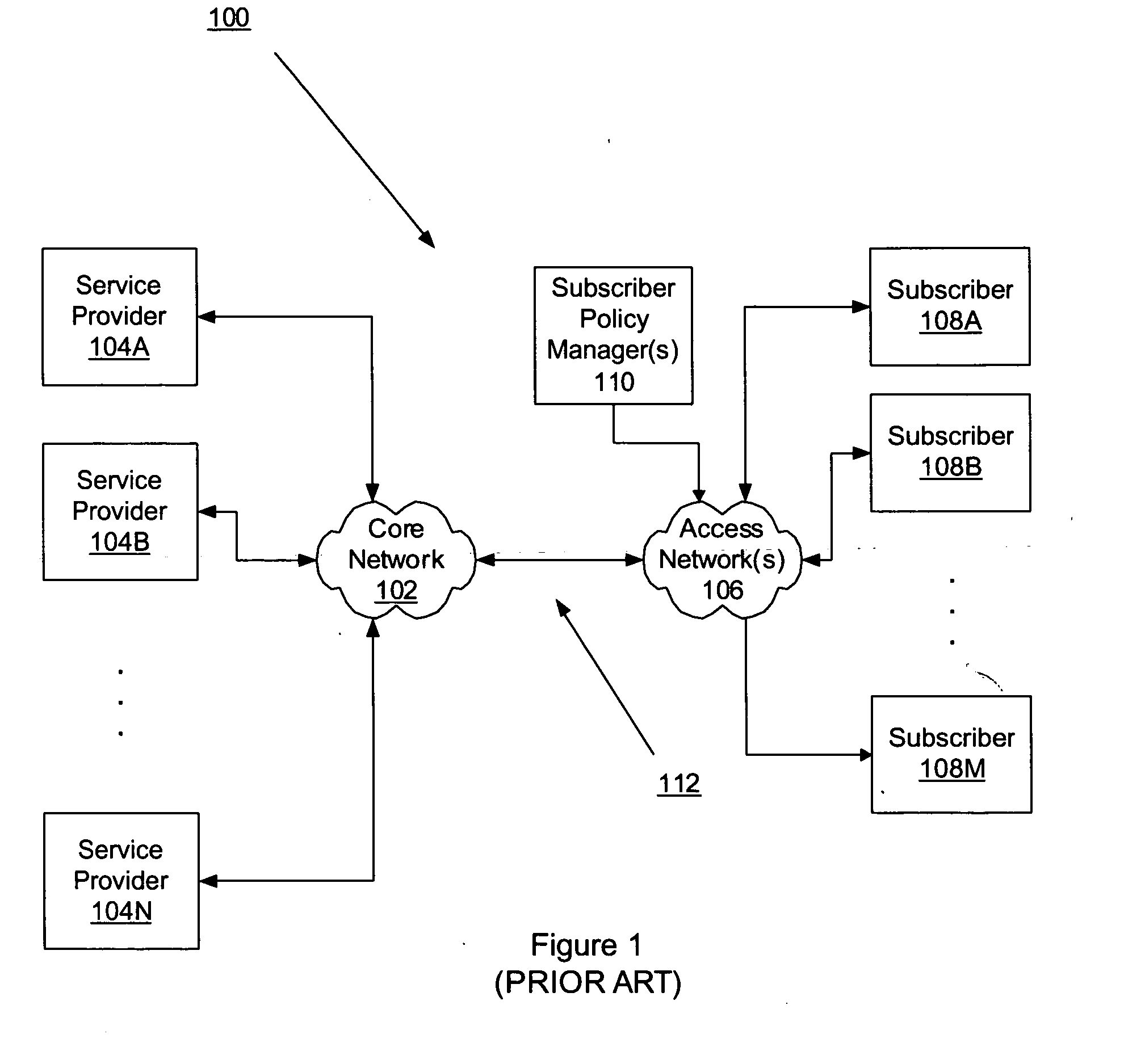

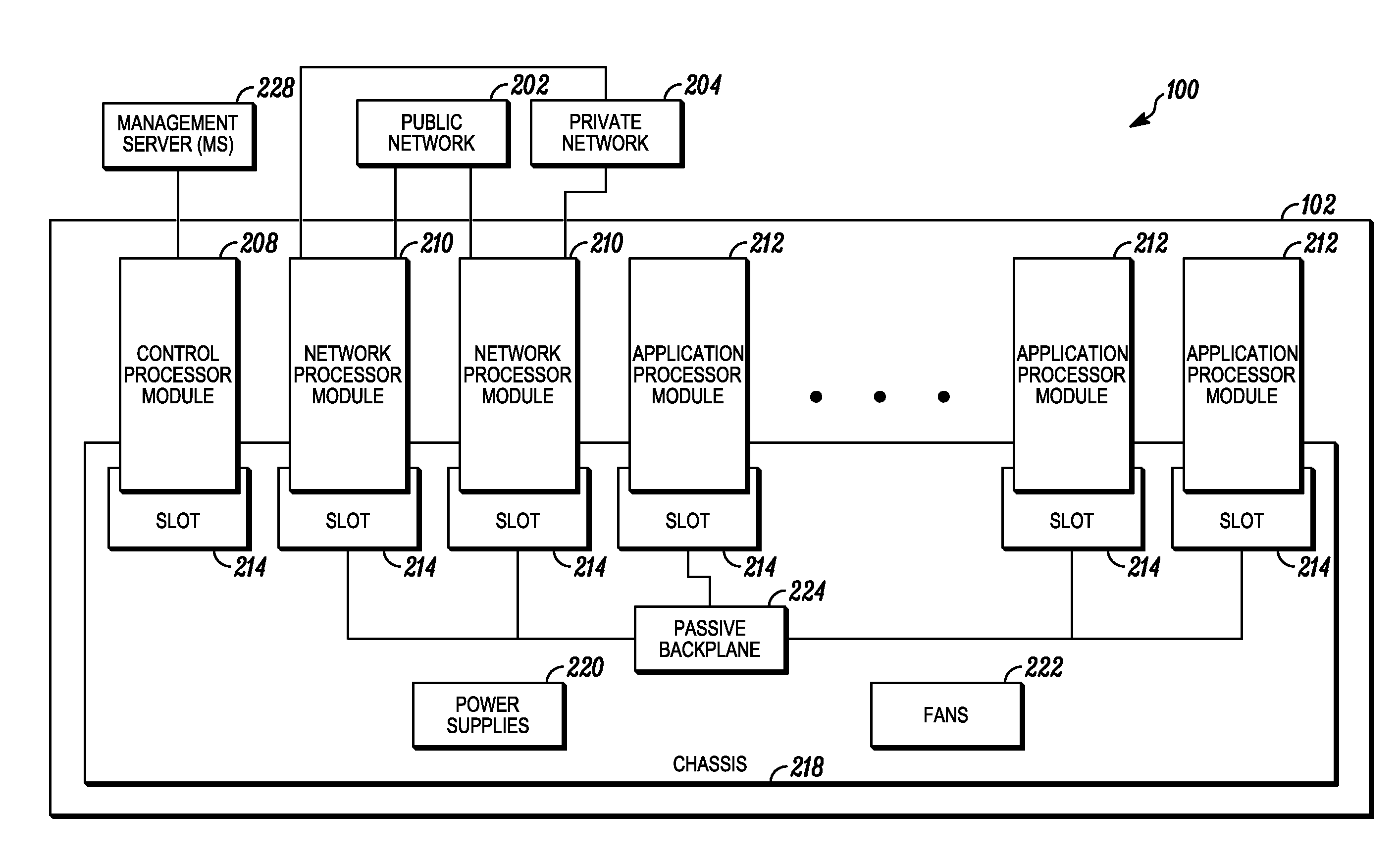

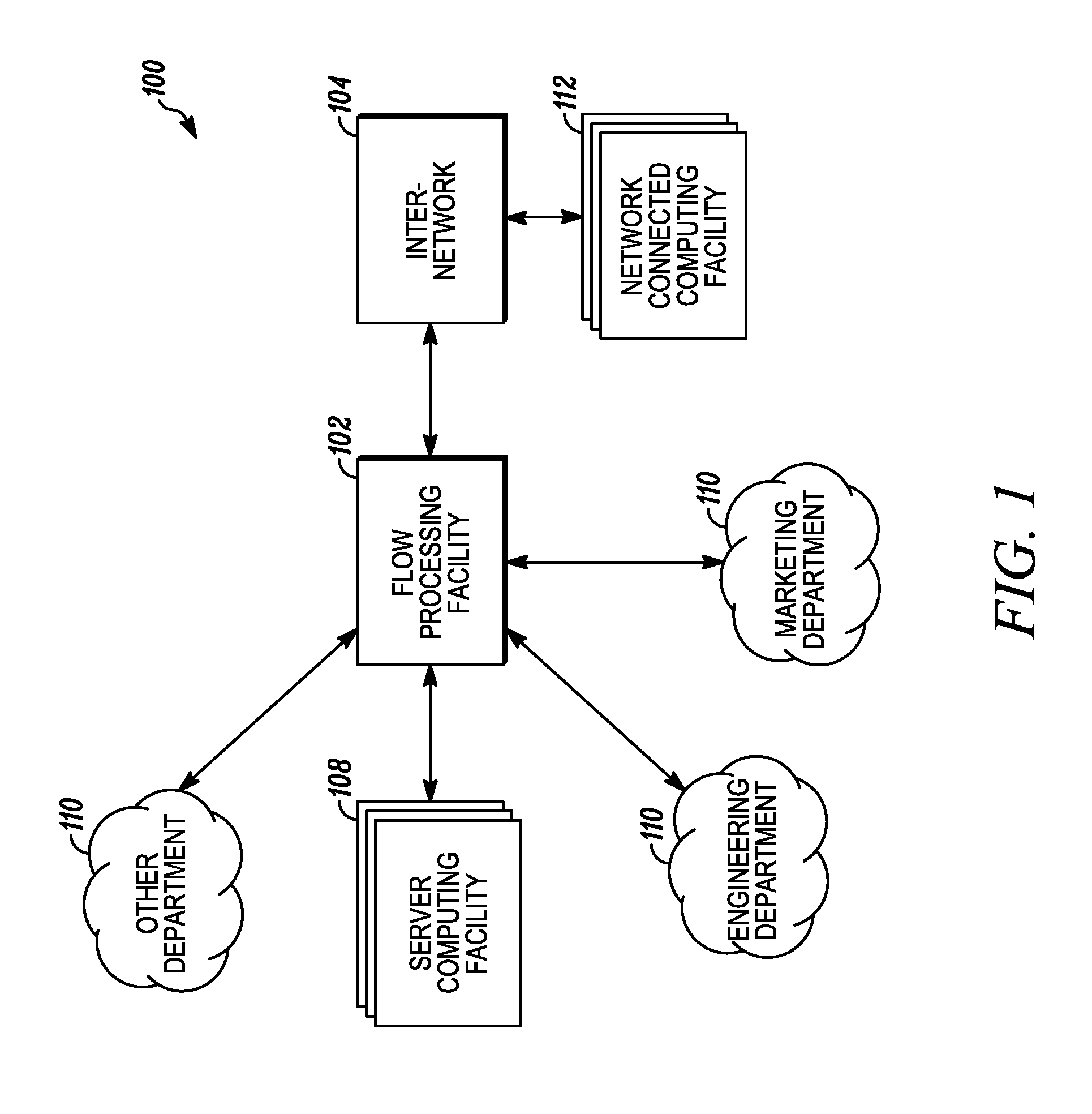

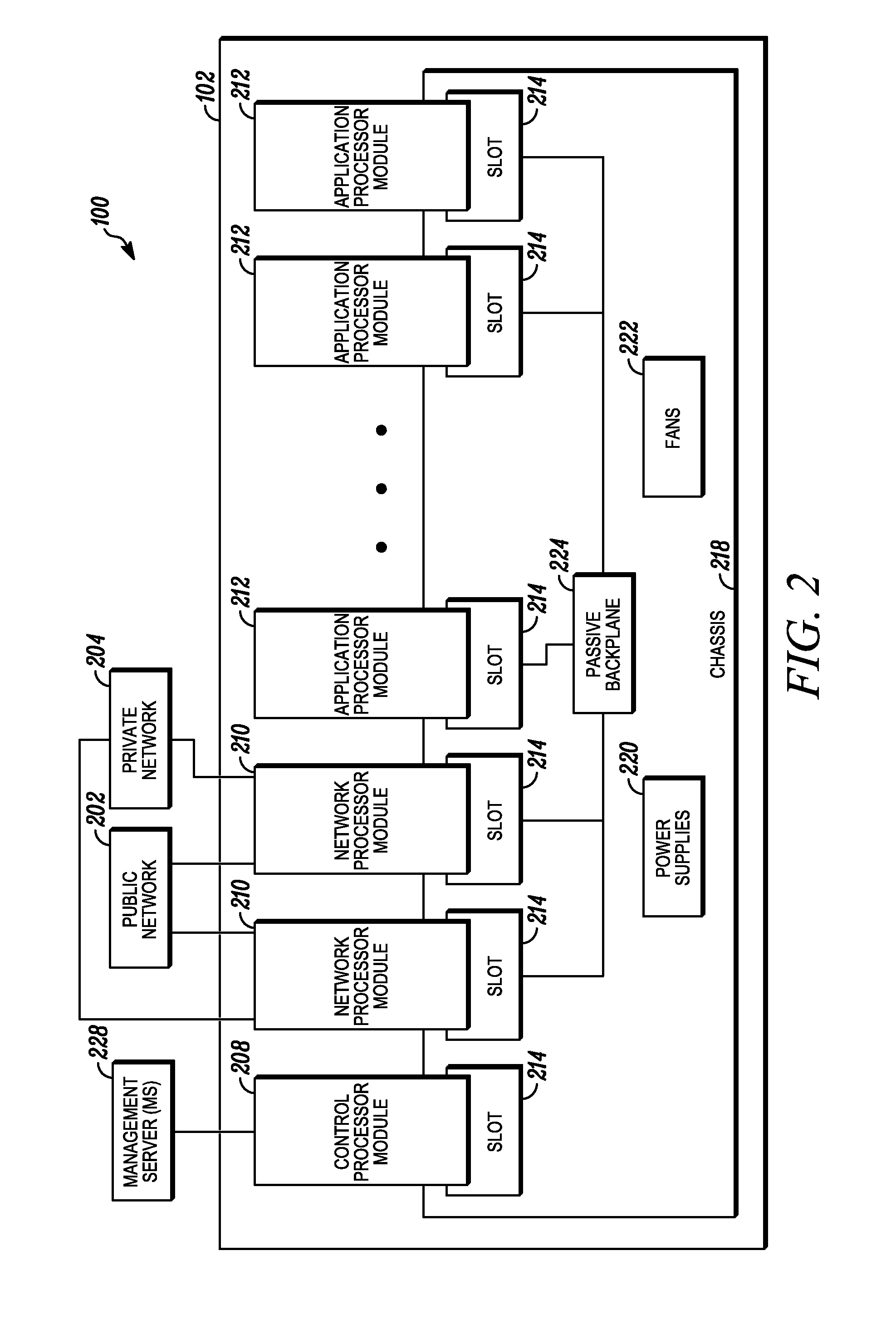

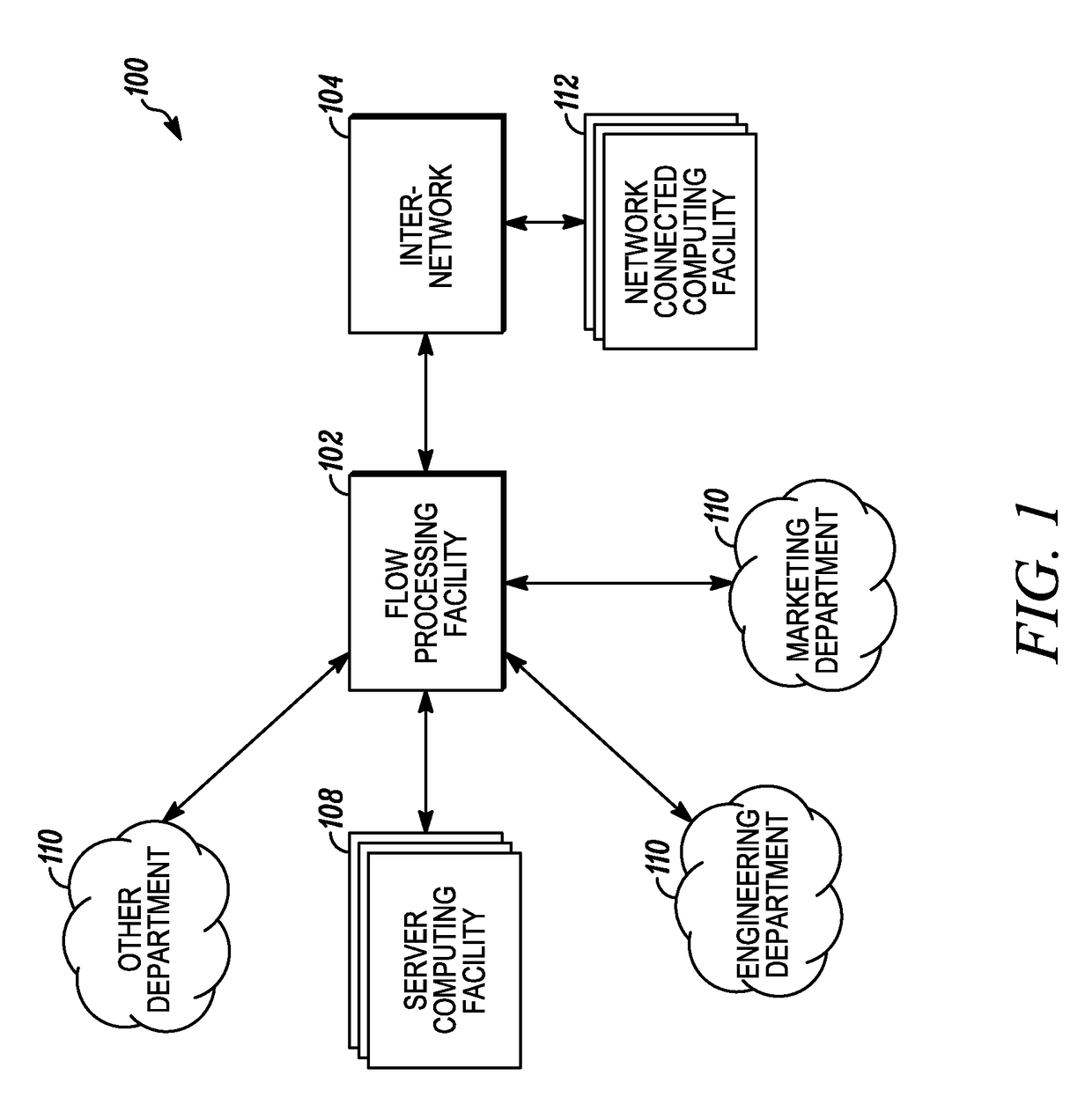

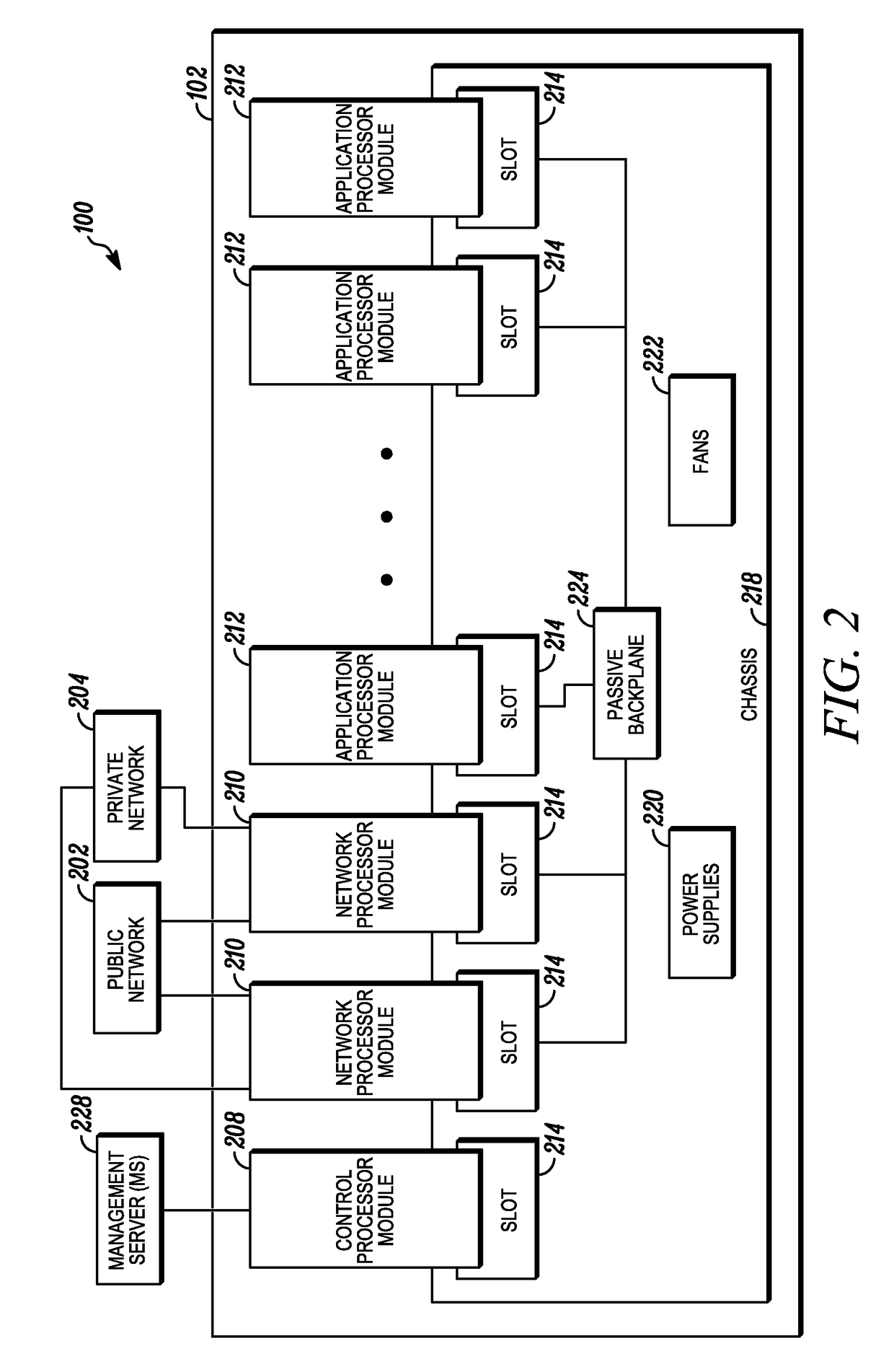

An apparatus and method to distribute applications and services in and throughout a network and to secure the network includes the functionality of a switch with the ability to apply applications and services to received data according to respective subscriber profiles. Front-end processors, or Network Processor Modules (NPMs), receive and recognize data flows from subscribers, extract profile information for the respective subscribers, utilize flow scheduling techniques to forward the data to applications processors, or Flow Processor Modules (FPMs). The FPMs utilize resident applications to process data received from the NPMs. A Control Processor Module (CPM) facilitates applications processing and maintains connections to the NPMs, FPMs, local and remote storage devices, and a Management Server (MS) module that can monitor the health and maintenance of the various modules.

Owner:BLUE COAT SYSTEMS

Radio frequency identification (RFID) based sensor networks

Active | US20050088299A1 | Low production cost | Low cost | Electric signal transmission systems | Alarms | Communication interface | Network architecture

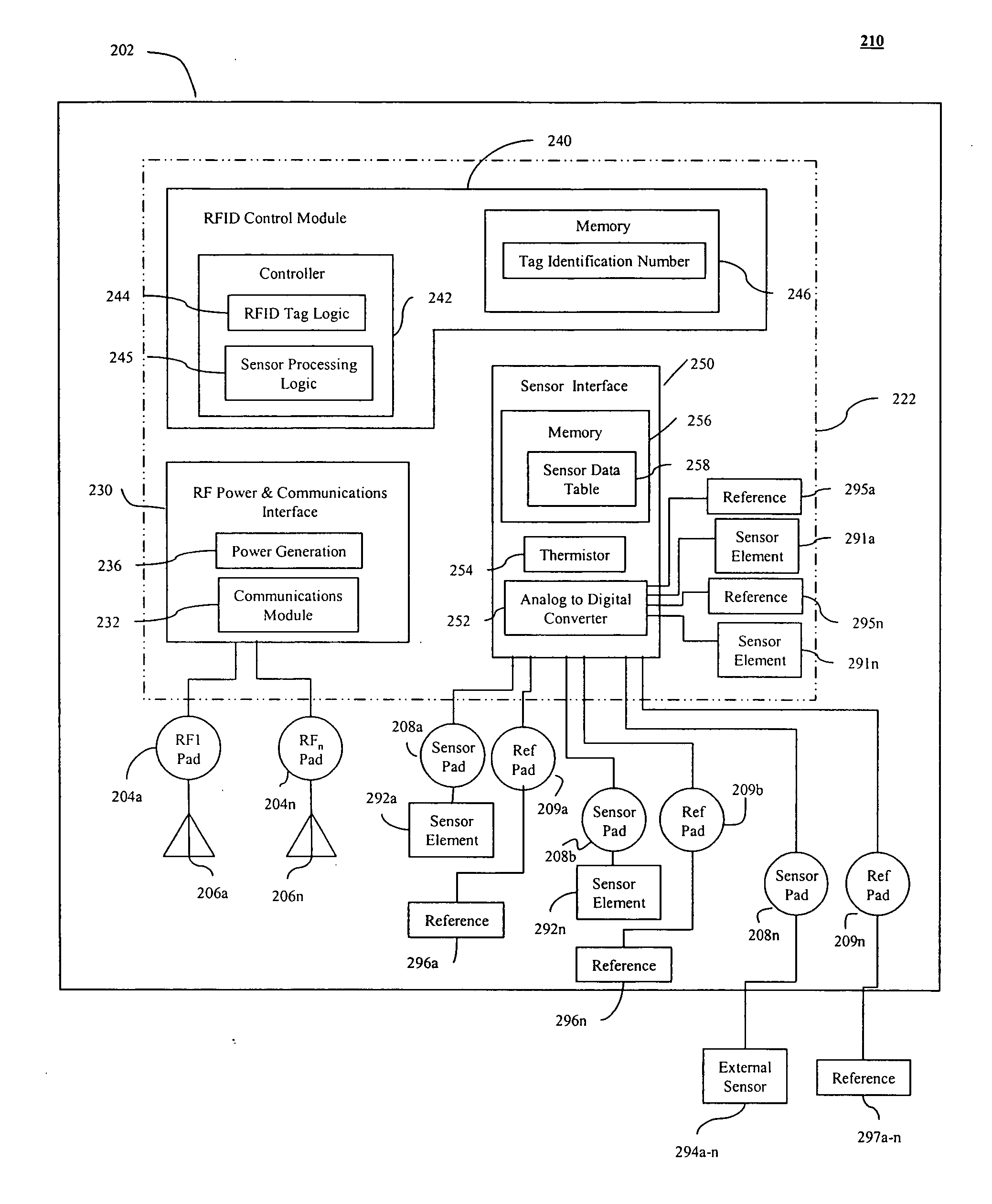

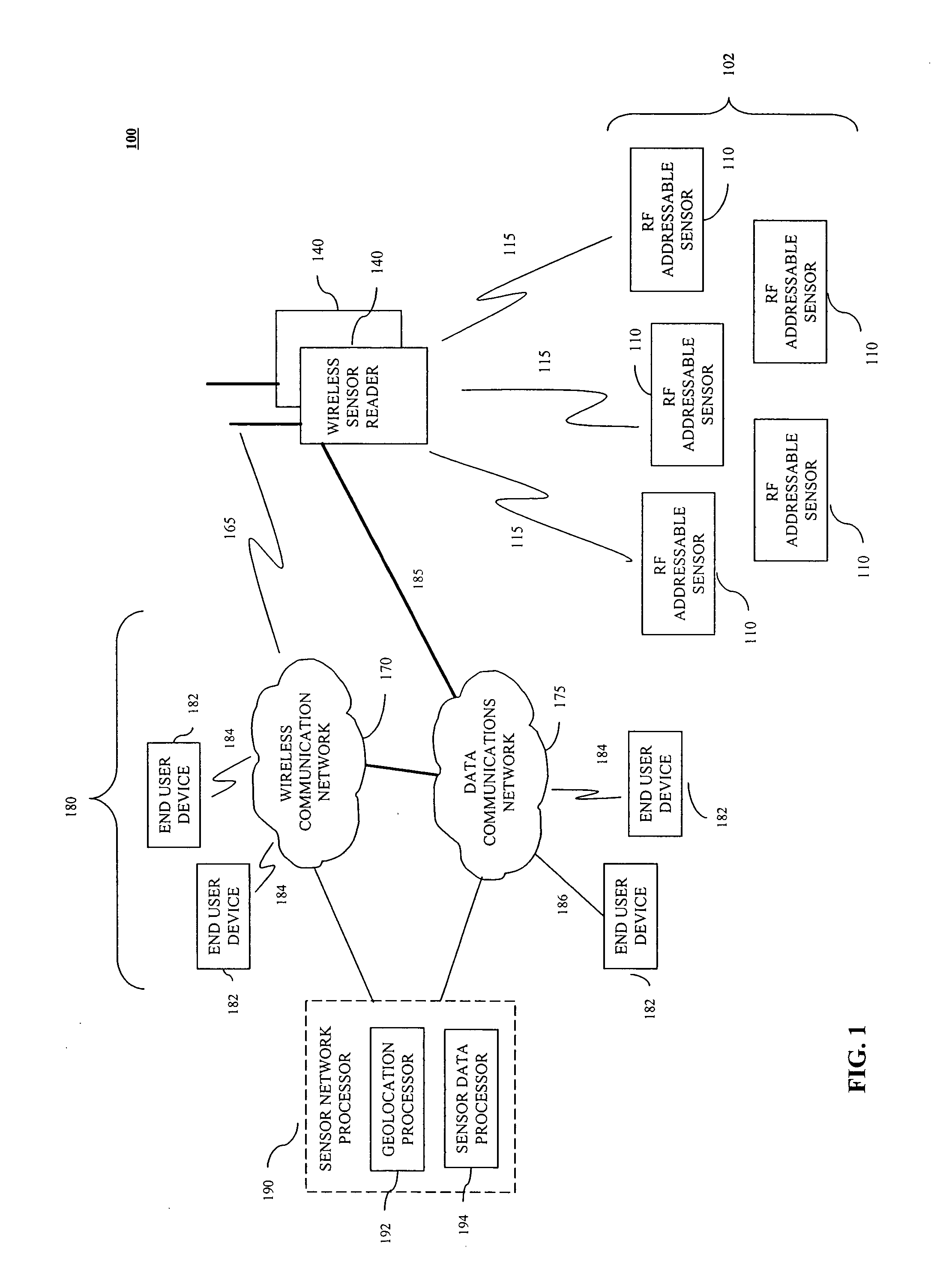

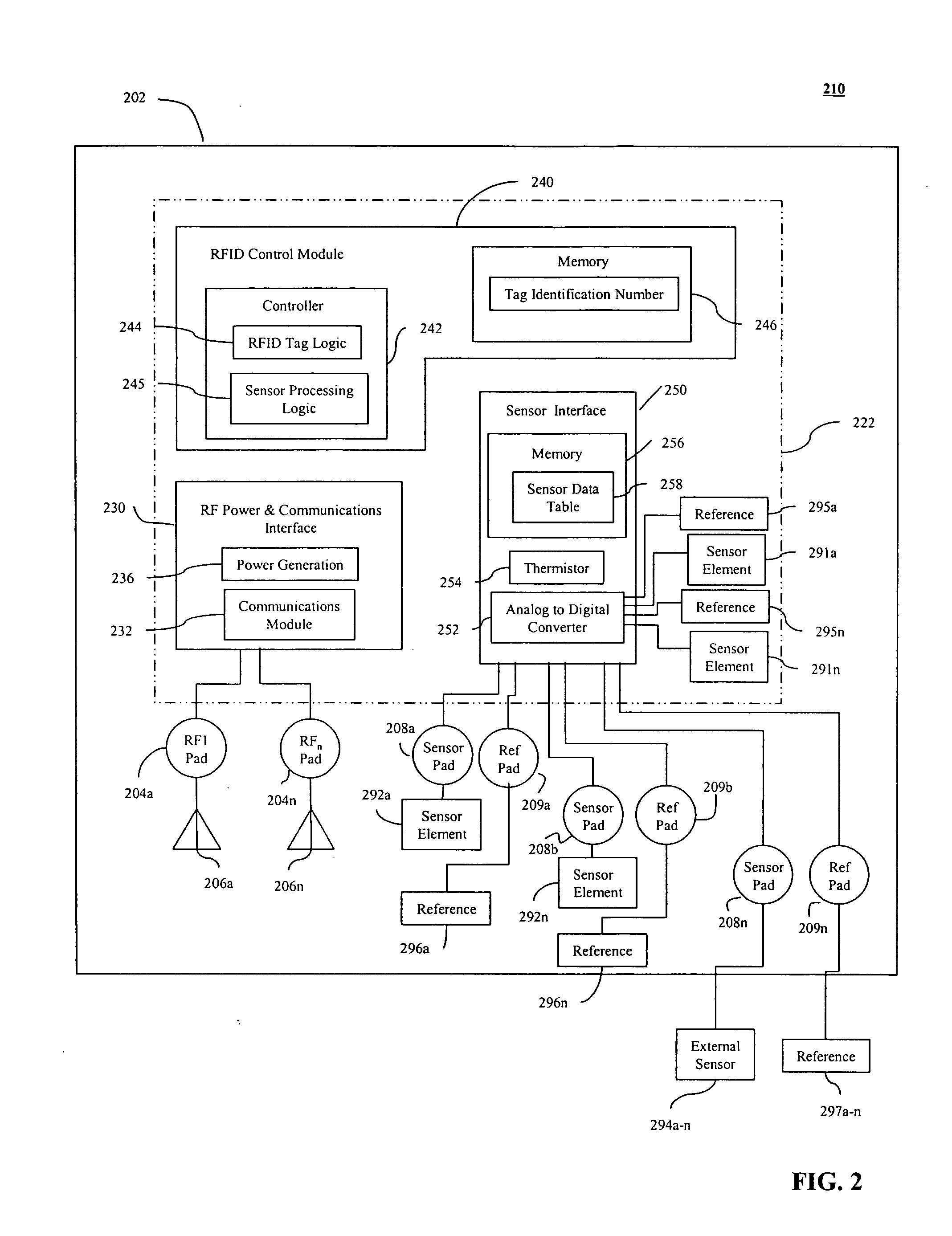

An RF addressable sensor network architecture is provided. The RF addressable sensor network includes one or more RF addressable sensors, one or more wireless sensor readers coupled to a communications network, and one or more end user devices coupled to the communications network. The RF addressable sensor network may also include a sensor network processor. An RF addressable sensor includes one or more sensor elements, one or more antennas for communicating with the wireless sensor reader, an RF power and communications interface, an RFID control module, and a sensor interface. The wireless sensor reader includes one or more antennas, a user interface, a controller, a network communications module, and an RF addressable sensor logic module.

Owner:ALTIVERA +1

Securing a network with data flow processing

Inactive | US20110214157A1 | Easy to detect | Preventing data flow | Platform integrity maintenance | Transmission | Data stream processing | Application software

An apparatus and method to distribute applications and services in and throughout a network and to secure the network includes the functionality of a switch with the ability to apply applications and services to received data according to respective subscriber profiles. Front-end processors, or Network Processor Modules (NPMs), receive and recognize data flows from subscribers, extract profile information for the respective subscribers, utilize flow scheduling techniques to forward the data to applications processors, or Flow Processor Modules (FPMs). The FPMs utilize resident applications to process data received from the NPMs. A Control Processor Module (CPM) facilitates applications processing and maintains connections to the NPMs, FPMs, local and remote storage devices, and a Management Server (MS) module that can monitor the health and maintenance of the various modules.

Owner:CA TECH INC

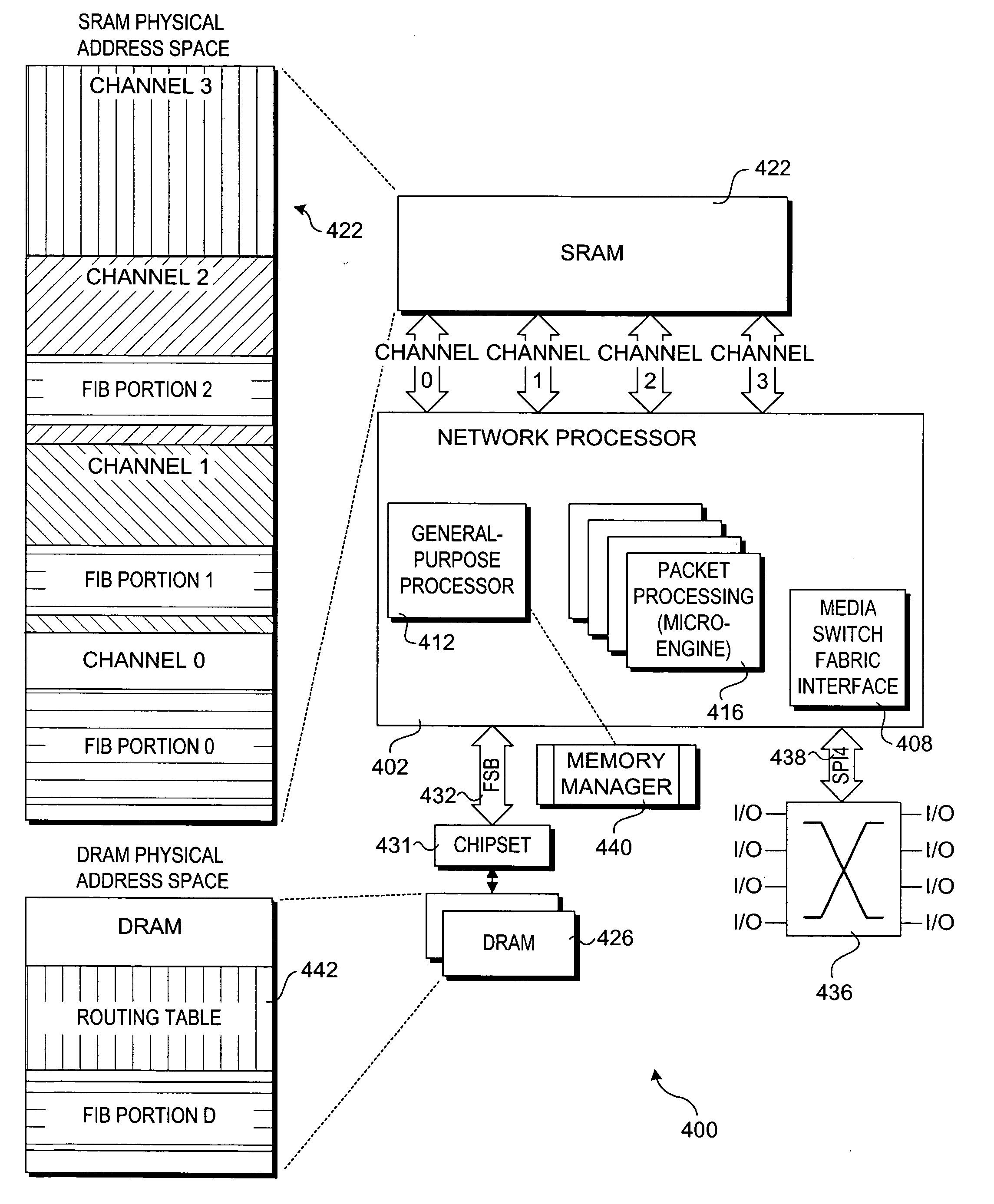

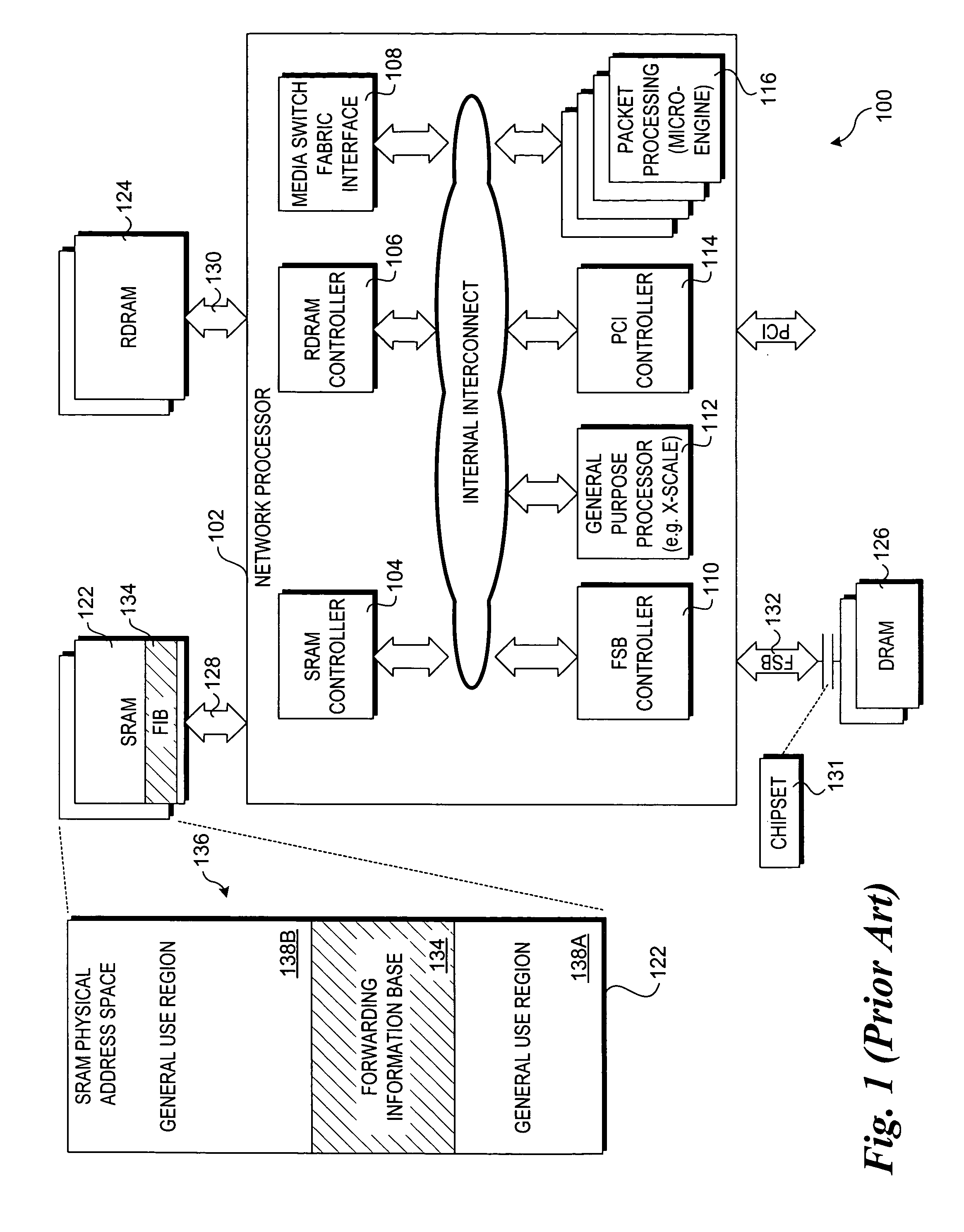

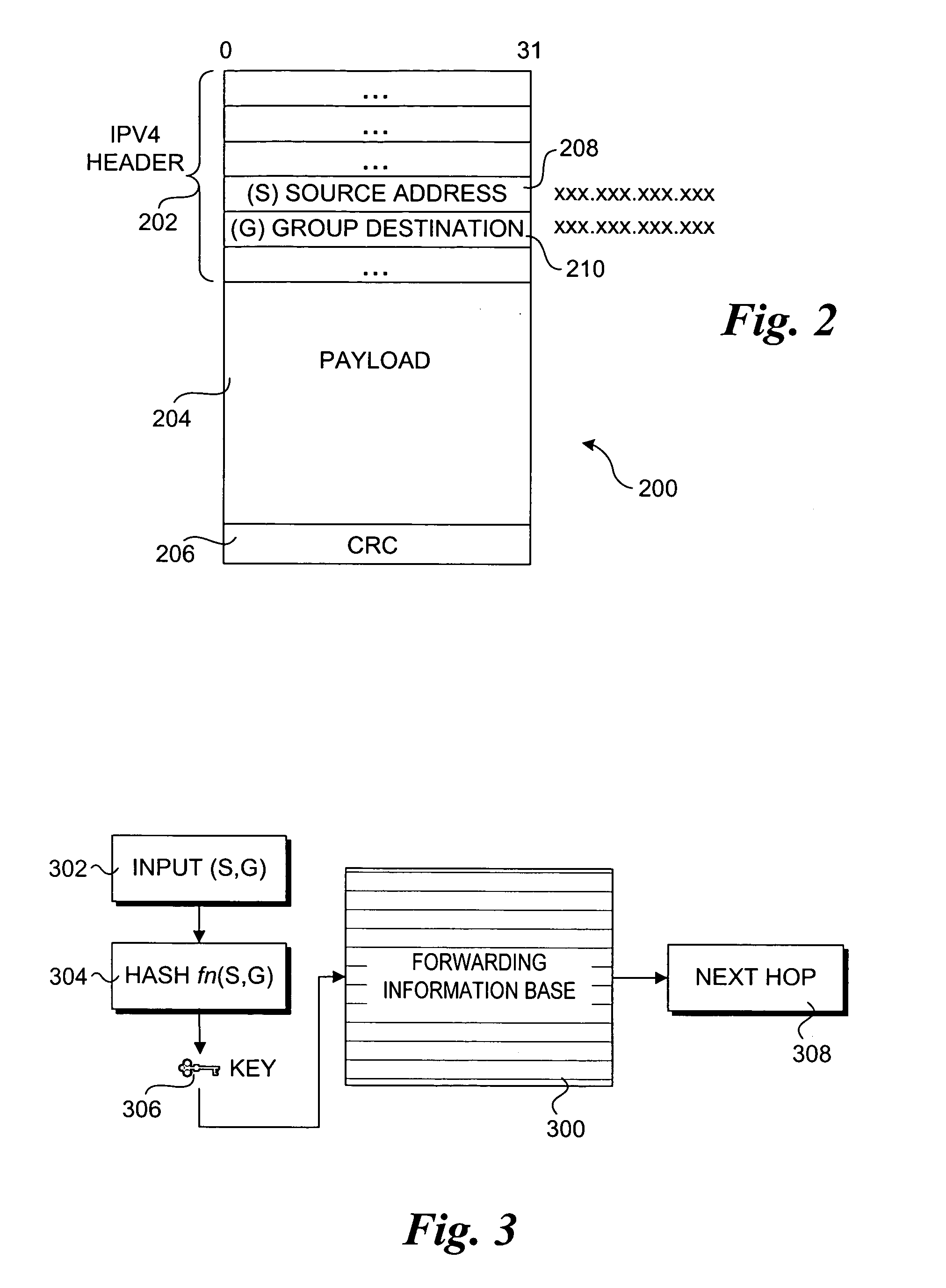

Method to improve forwarding information base lookup performance

Inactive | US20050259672A1 | Multiprogramming arrangements | Data switching by path configuration | Information repository | Depth level

A method and apparatus for improving forwarding information base (FIB) lookup performance. An FIB is partitioned into multiple portions that are distributed across segments of a multi-channel SRAM store to form a distributed FIB that is accessible to a network processor. Primary entries corresponding to a linked list of FIB entries are stored in a designated FIB portion. Secondary FIB entries are stored in other FIB portions (a portion of the secondary FIB entries may also be stored in the designated primary entry portion), enabling multiple FIB entries to be concurrently accessed via respective channels. A portion of the secondary FIB entries may also be stored in a secondary (e.g., DRAM) store. A depth level threshold is set to limit the number of accesses to a linked list of FIB entries by a network processor micro-engine thread, wherein an access depth that would exceed the threshold generates an exception that is handled by a separate execution thread to maintain line-rate throughput.

Owner:INTEL CORP
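
The depth-threshold idea in the abstract above can be illustrated with a minimal Python sketch. It is not the patented implementation: the names `FibEntry`, `fib_lookup`, and `DepthExceeded` are hypothetical, the multi-channel SRAM layout is not modeled, and an exact-match key stands in for a real prefix lookup.

```python
from dataclasses import dataclass
from typing import Optional


class DepthExceeded(Exception):
    """Signals that a lookup should be handed to a separate slow-path thread."""


@dataclass
class FibEntry:
    key: str                            # exact-match key (hypothetical simplification)
    next_hop: str
    next: Optional["FibEntry"] = None   # next entry in the linked list


def fib_lookup(head: Optional[FibEntry], key: str, depth_threshold: int) -> Optional[str]:
    """Walk the linked list, counting one access per entry visited."""
    depth = 0
    entry = head
    while entry is not None:
        depth += 1
        if depth > depth_threshold:
            # This access would exceed the threshold: raise instead of
            # stalling the fast path.
            raise DepthExceeded(key)
        if entry.key == key:
            return entry.next_hop
        entry = entry.next
    return None  # no matching entry
```

With a three-entry list and a threshold of 2, lookups for the first two entries return on the fast path, while a lookup for the third raises `DepthExceeded` so that a caller could defer it to another thread.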

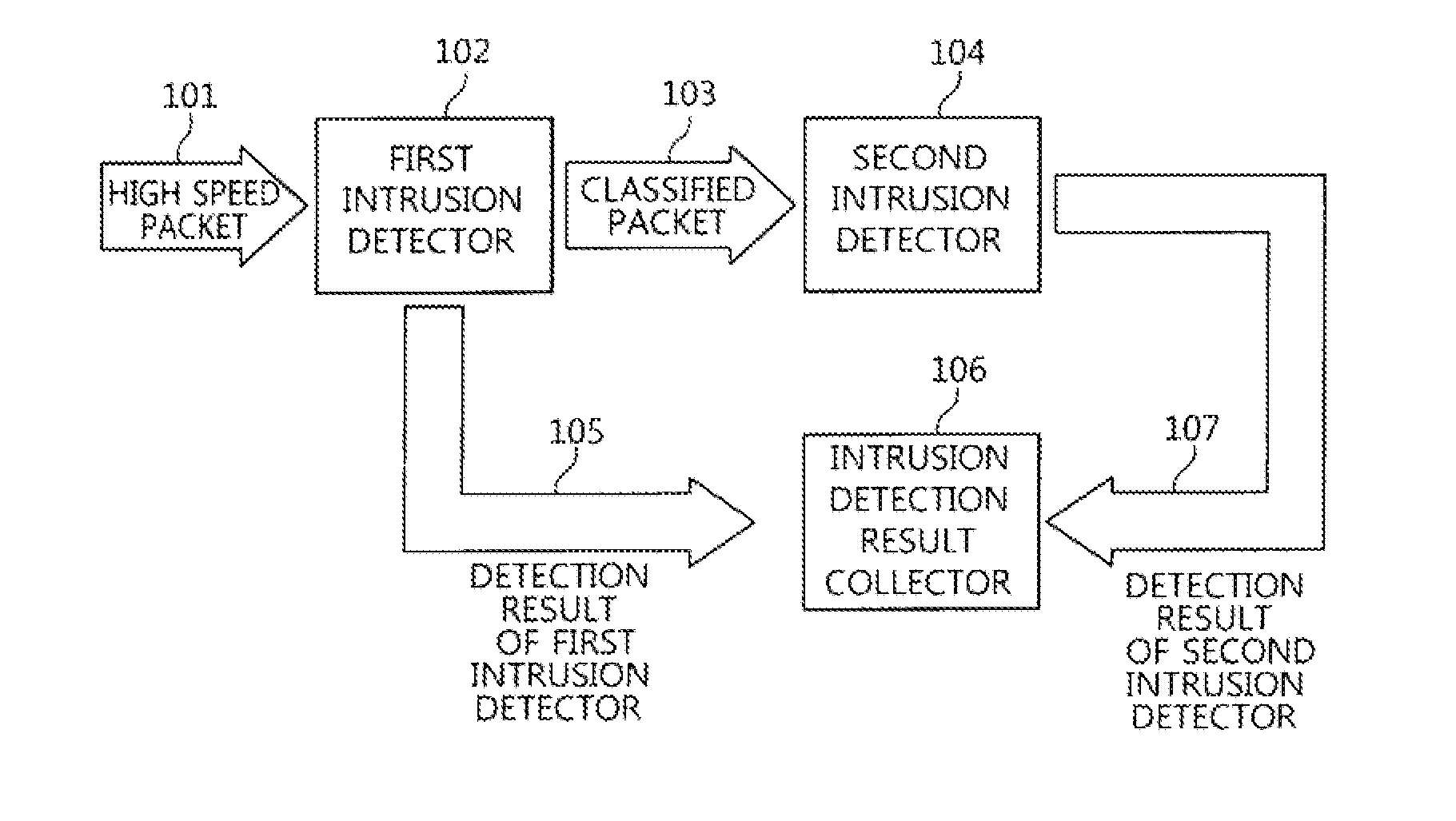

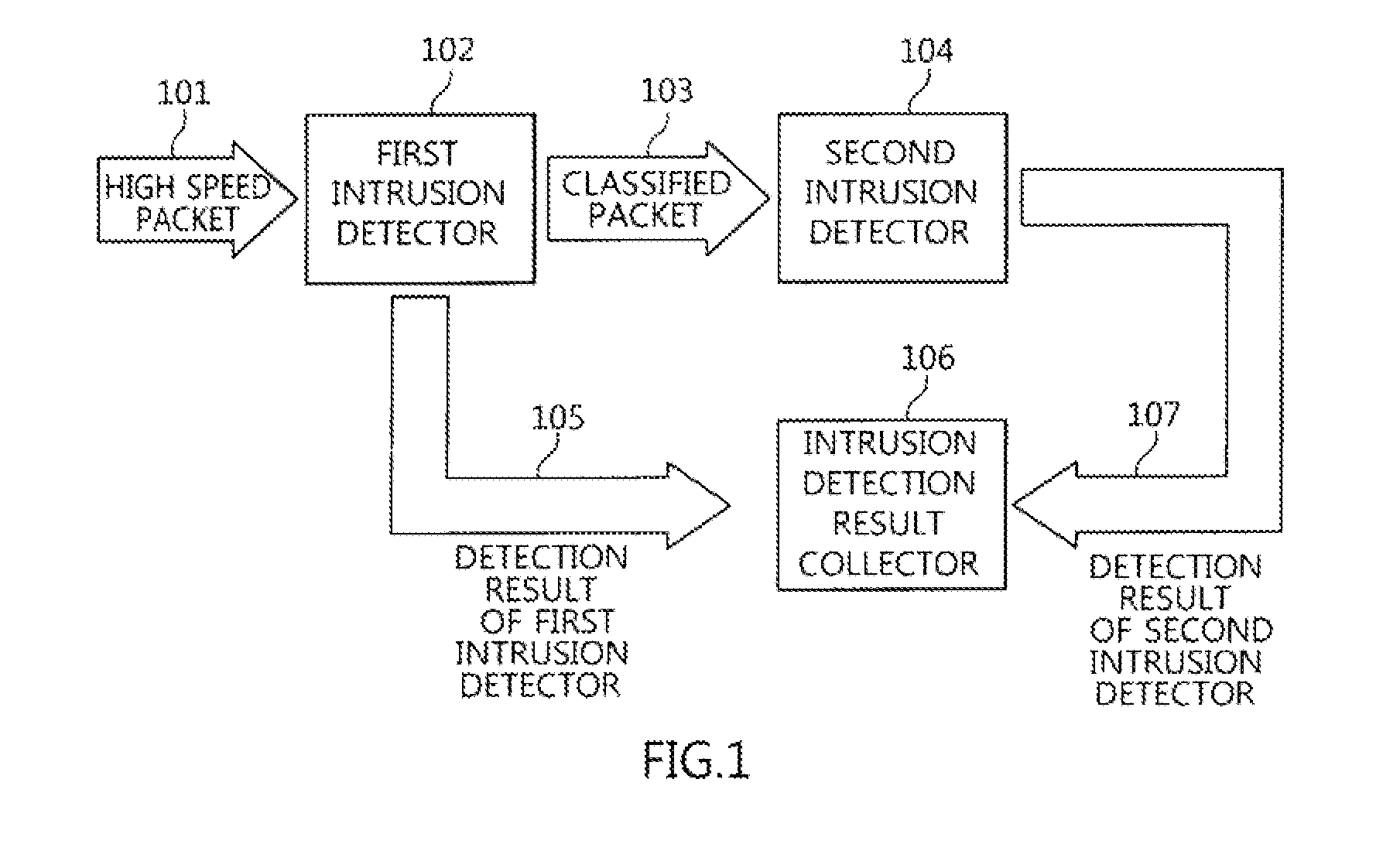

Two-stage intrusion detection system for high-speed packet processing using network processor and method thereof

Active | US20130160122A1 | Memory loss protection | Data taking prevention | Network processor | Distributed computing

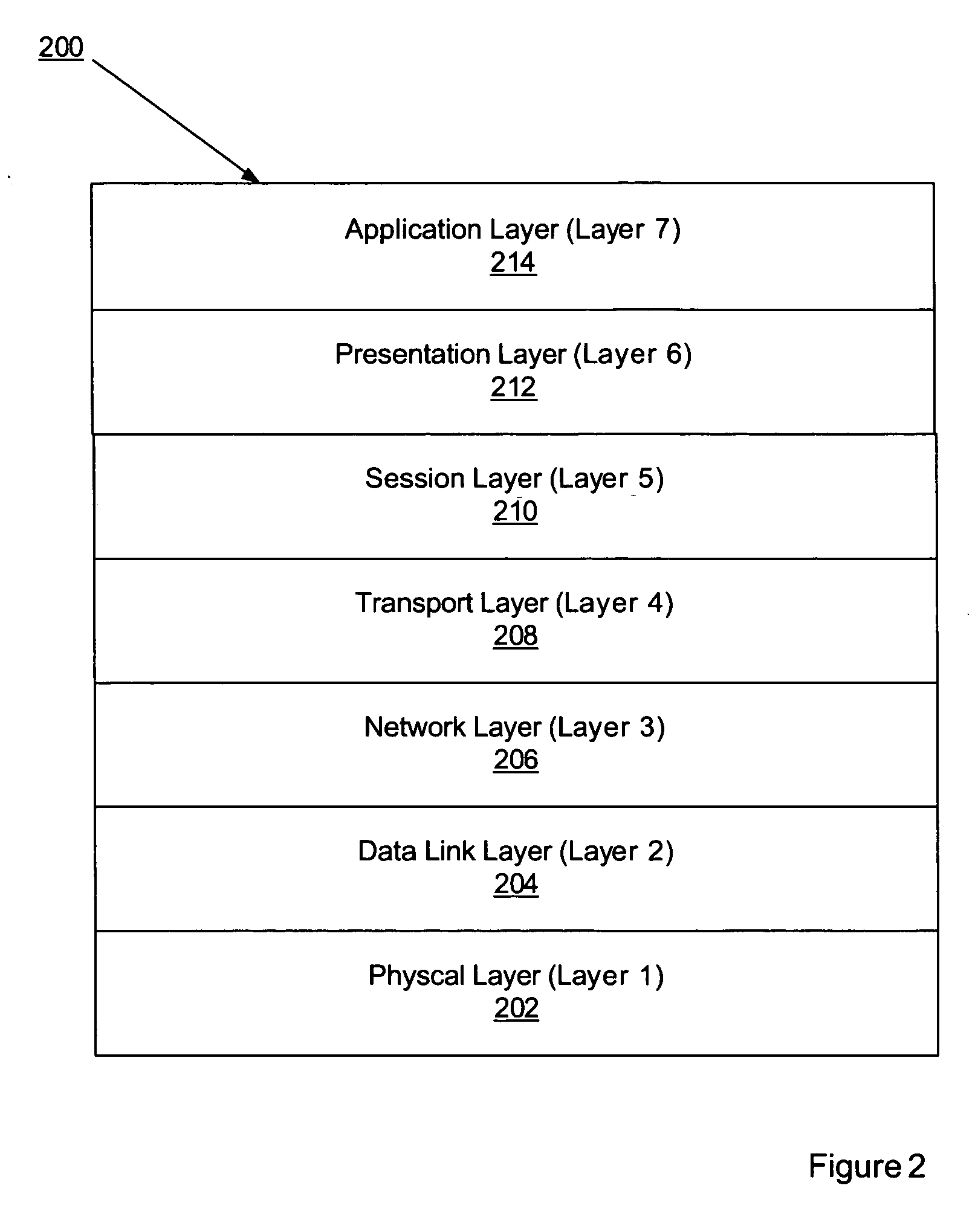

A system and method for detecting network intrusion by using a network processor are provided. The intrusion detection system includes: a first intrusion detector, configured to use a first network processor to perform intrusion detection on layer 3 and layer 4 of a protocol field among information included in a packet header of a packet transmitted to the intrusion detection system, and when no intrusion is detected, classify the packets according to stream and transmit the classified packets to a second intrusion detector; and a second intrusion detector, configured to use a second network processor to perform intrusion detection through deep packet inspection (DPI) for the packet payload of the packets transmitted from the first intrusion detector. Thereby, intrusion detection for high-speed packets can be performed in a network environment.

Owner:ELECTRONICS & TELECOMM RES INST
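
The two-stage flow described in the abstract above (header checks first, then payload inspection on packets grouped by stream) might be sketched as follows. This is only an illustration under stated assumptions: the rule set `BLOCKED_PORTS`, the signature list `SIGNATURES`, the packet dictionary layout, and all function names are hypothetical, and both stages run in one process rather than on two network processors.

```python
from collections import defaultdict

BLOCKED_PORTS = {23}              # hypothetical layer-4 rule
SIGNATURES = [b"/etc/passwd"]     # hypothetical payload signature


def stage1(packet):
    """Header-only check. Returns a flow key, or None if an intrusion is detected."""
    if packet["dst_port"] in BLOCKED_PORTS:
        return None
    return (packet["src_ip"], packet["dst_ip"],
            packet["src_port"], packet["dst_port"], packet["proto"])


def stage2(payload):
    """Payload check, standing in for deep packet inspection."""
    return any(sig in payload for sig in SIGNATURES)


def inspect(packets):
    """Return sorted (packet_index, stage_name) alerts."""
    alerts = []
    flows = defaultdict(list)          # flow key -> packets that passed stage 1
    for index, packet in enumerate(packets):
        key = stage1(packet)
        if key is None:
            alerts.append((index, "header"))
        else:
            flows[key].append((index, packet))
    for items in flows.values():       # stage 2 sees packets classified by stream
        for index, packet in items:
            if stage2(packet["payload"]):
                alerts.append((index, "payload"))
    return sorted(alerts)
```

Only packets that pass the cheap header check reach the more expensive payload scan, which is the point of splitting the work into two stages.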

Processing data flows with a data flow processor

Inactive | US20110213869A1 | Easy to detect | Preventing data flow | Digital computer details | Platform integrity maintenance | Data stream processing | Application software

An apparatus and method to distribute applications and services in and throughout a network and to secure the network includes the functionality of a switch with the ability to apply applications and services to received data according to respective subscriber profiles. Front-end processors, or Network Processor Modules (NPMs), receive and recognize data flows from subscribers, extract profile information for the respective subscribers, utilize flow scheduling techniques to forward the data to applications processors, or Flow Processor Modules (FPMs). The FPMs utilize resident applications to process data received from the NPMs. A Control Processor Module (CPM) facilitates applications processing and maintains connections to the NPMs, FPMs, local and remote storage devices, and a Management Server (MS) module that can monitor the health and maintenance of the various modules.

Owner:BLUE COAT SYSTEMS

Database security via data flow processing

Inactive | US20110219035A1 | Easy to detect | Preventing data flow | Digital data information retrieval | Digital data processing details | Computer module | Data stream processing

An apparatus and method to distribute applications and services in and throughout a network and to secure the network includes the functionality of a switch with the ability to apply applications and services to received data according to respective subscriber profiles. Front-end processors, or Network Processor Modules (NPMs), receive and recognize data flows from subscribers, extract profile information for the respective subscribers, utilize flow scheduling techniques to forward the data to applications processors, or Flow Processor Modules (FPMs). The FPMs utilize resident applications to process data received from the NPMs. A Control Processor Module (CPM) facilitates applications processing and maintains connections to the NPMs, FPMs, local and remote storage devices, and a Management Server (MS) module that can monitor the health and maintenance of the various modules.

Owner:BLUE COAT SYSTEMS

Processing data flows with a data flow processor

Active | US20110231510A1 | Easy to detect | Preventing data flow | Digital computer details | Platform integrity maintenance | Data stream processing | Computer module

An apparatus and method to distribute applications and services in and throughout a network and to secure the network includes the functionality of a switch with the ability to apply applications and services to received data according to respective subscriber profiles. Front-end processors, or Network Processor Modules (NPMs), receive and recognize data flows from subscribers, extract profile information for the respective subscribers, utilize flow scheduling techniques to forward the data to applications processors, or Flow Processor Modules (FPMs). The FPMs utilize resident applications to process data received from the NPMs. A Control Processor Module (CPM) facilitates applications processing and maintains connections to the NPMs, FPMs, local and remote storage devices, and a Management Server (MS) module that can monitor the health and maintenance of the various modules.

Owner:CA TECH INC

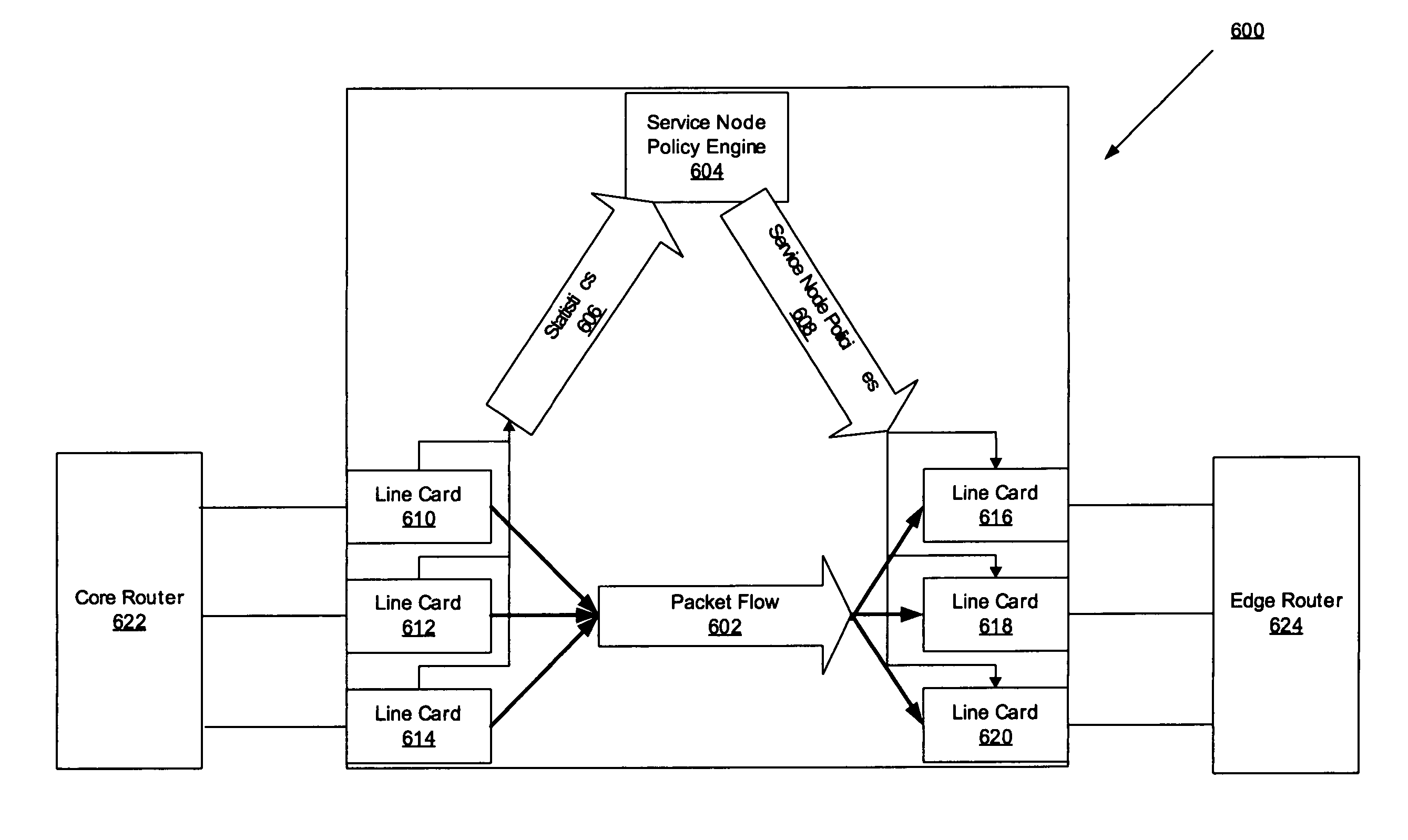

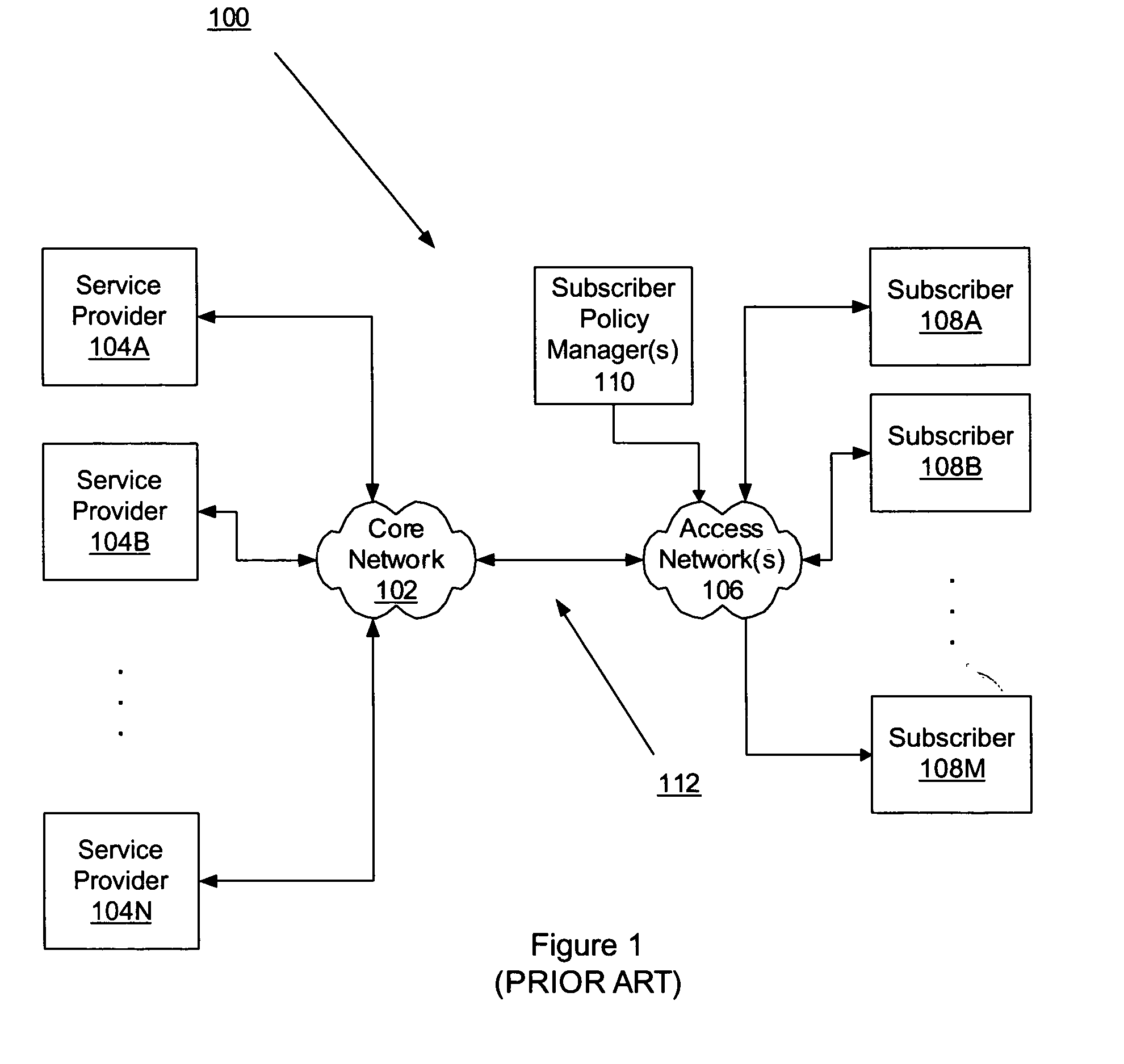

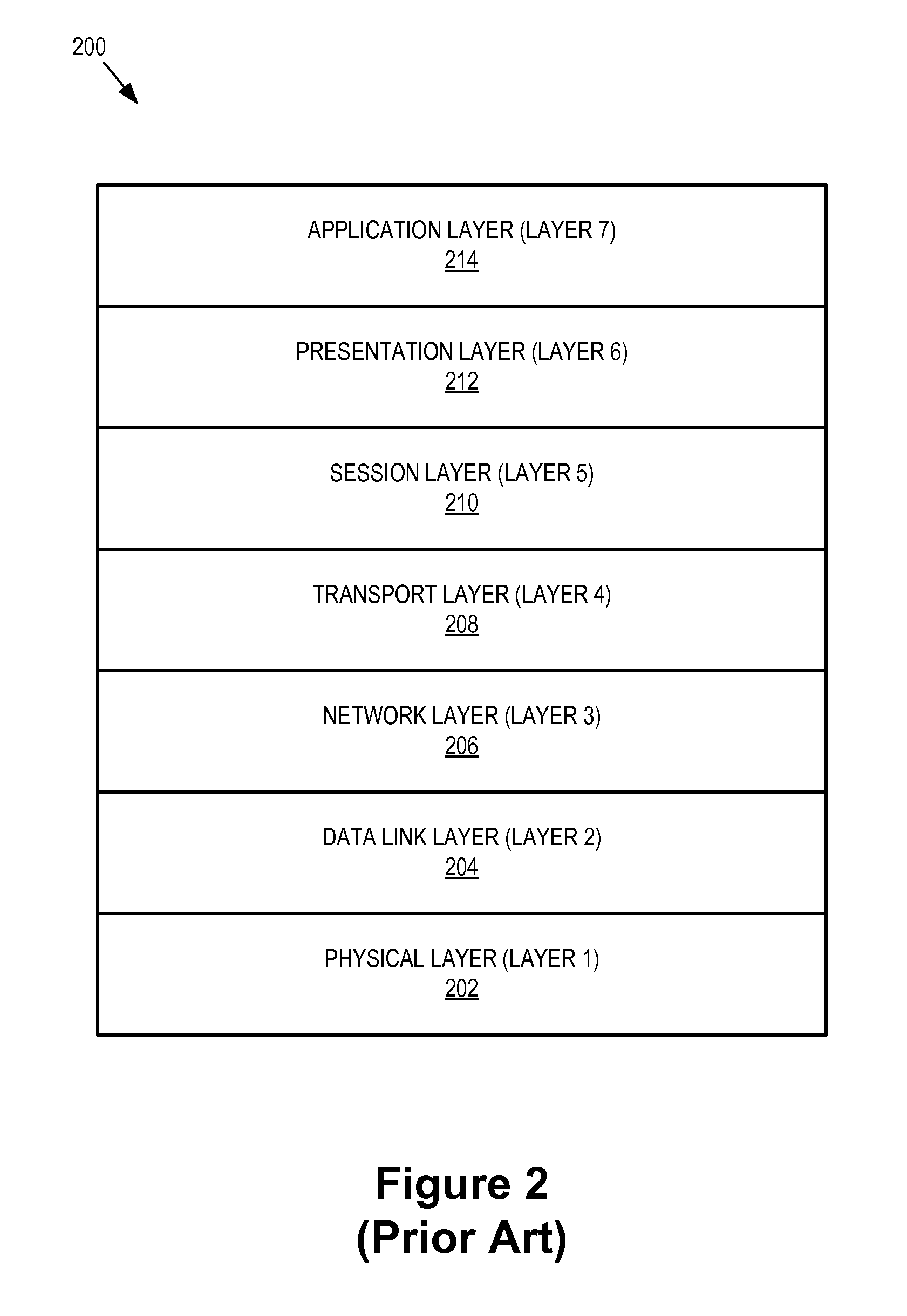

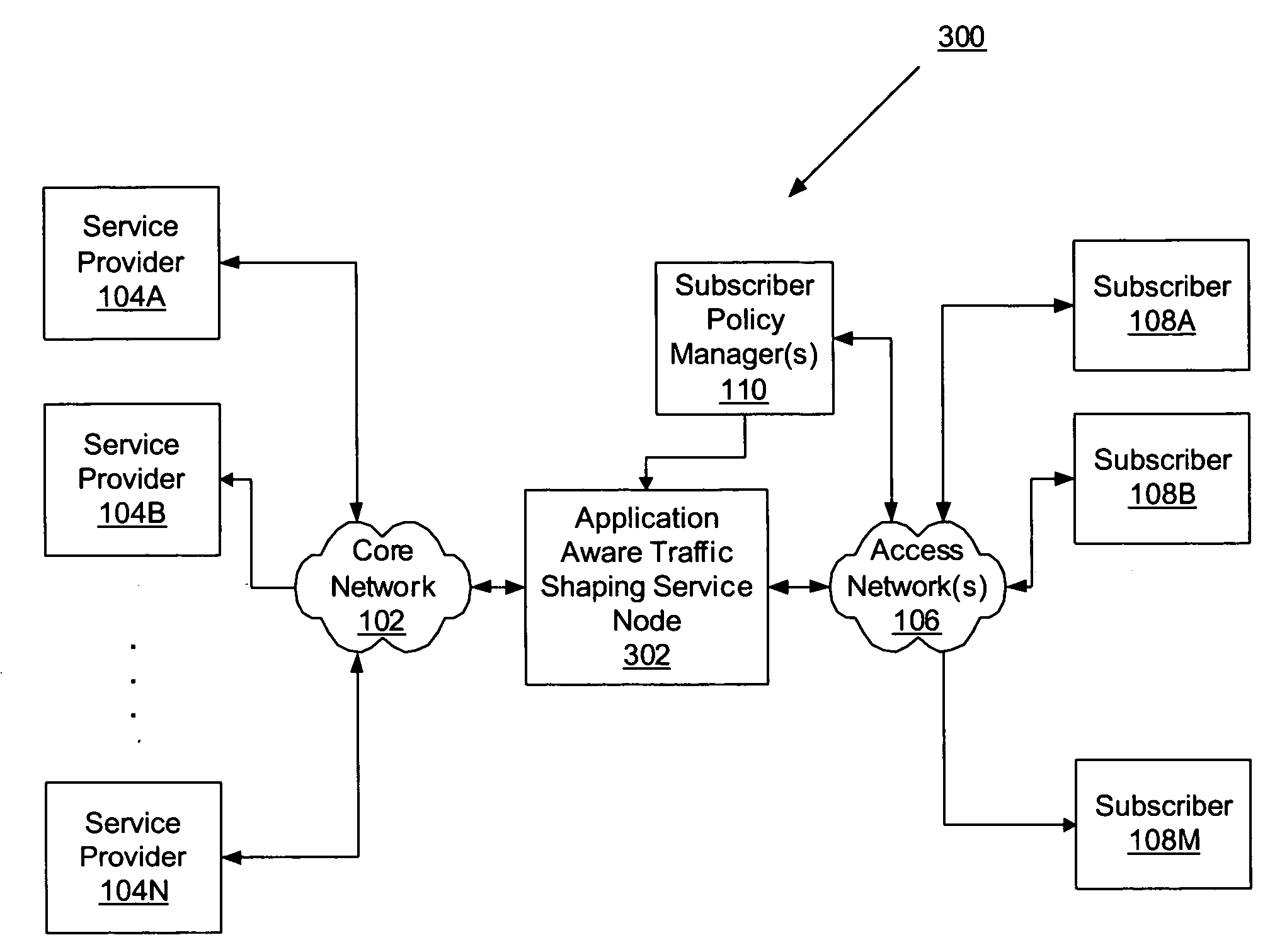

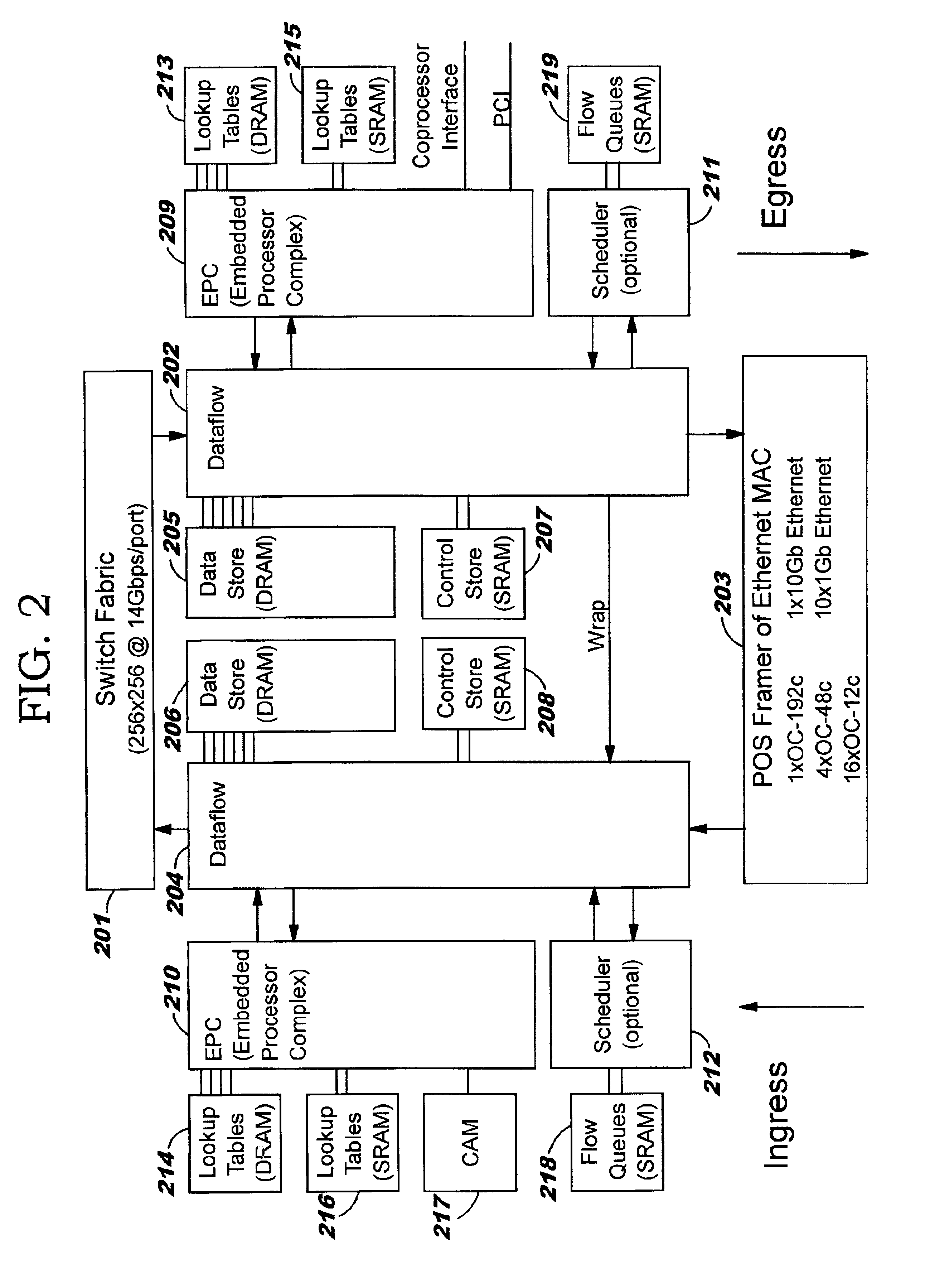

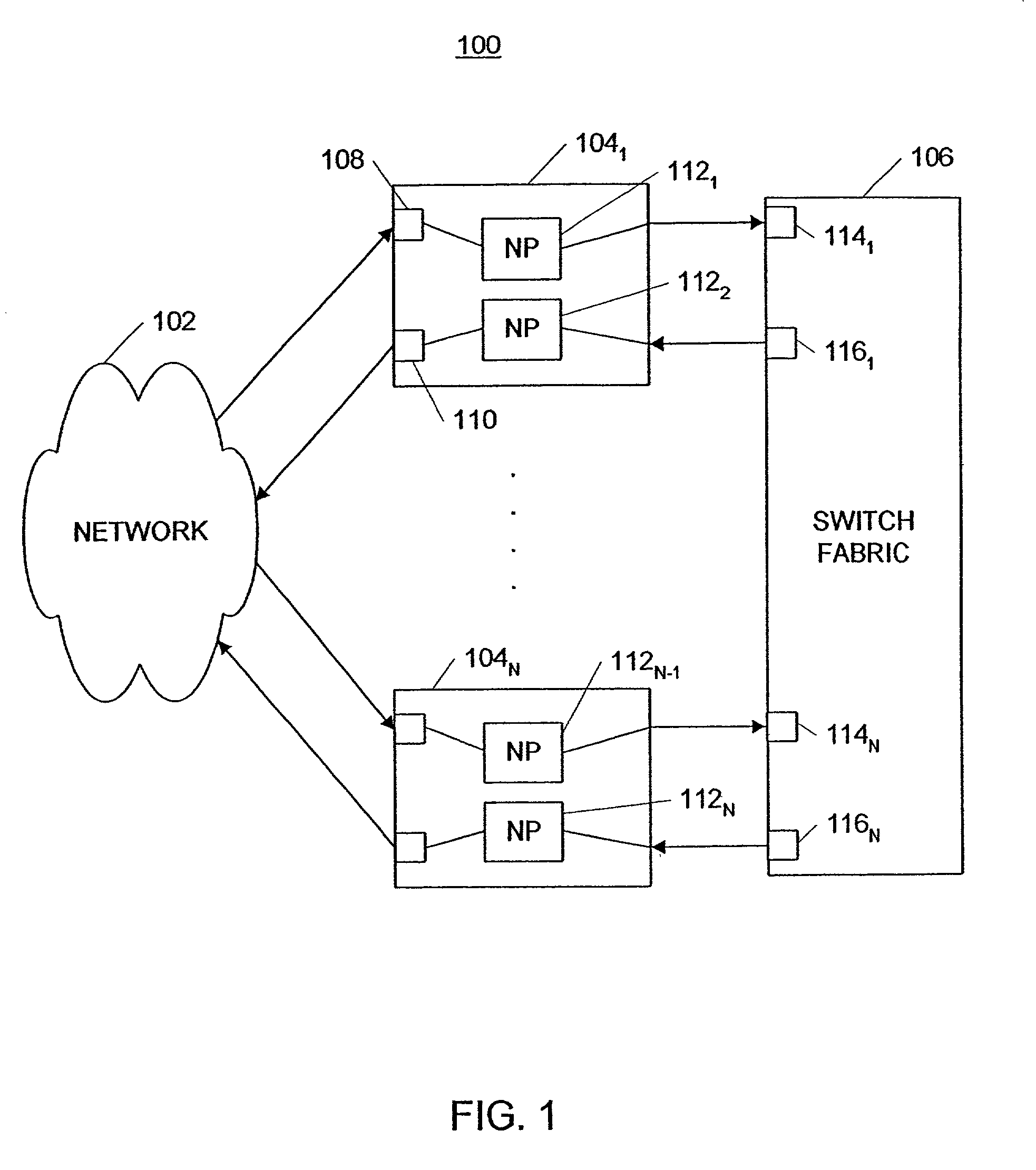

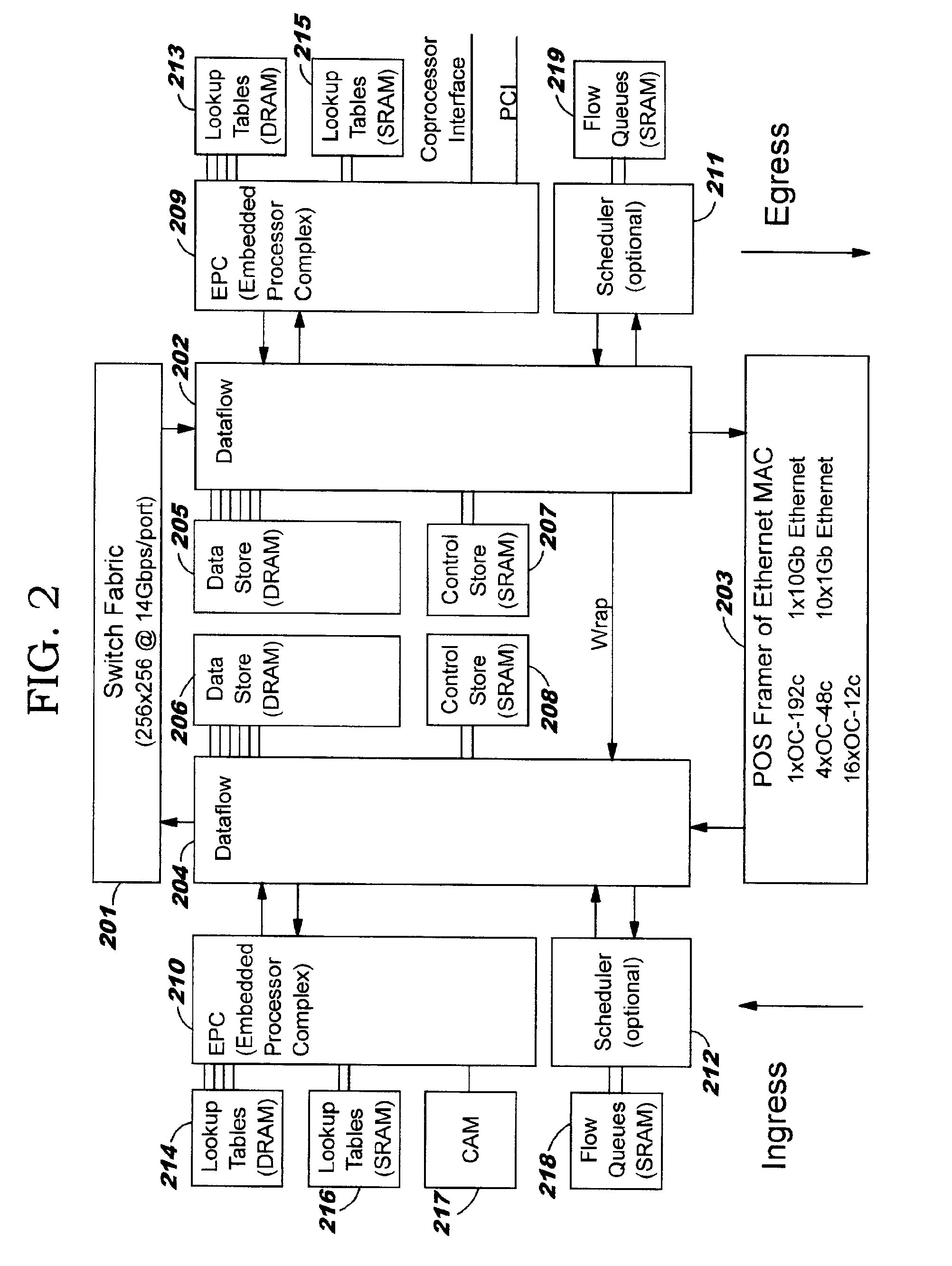

Network element architecture for deep packet inspection

A method and apparatus for an application aware traffic shaping service node architecture is described. In one embodiment of the invention, the service node architecture includes a set of one or more line cards, a set of one or more processor cards and a full mesh communication infrastructure coupling the sets of line and processor cards. Each link coupling the sets of line and processor cards is of equal capacity. A line card includes a physical interface and a set of one or more policy network processors, with the network processors performing deep packet inspection on incoming traffic and shaping outgoing traffic. Processor cards include a set of one or more policy generating processors. According to another embodiment of the invention, the service node generates a set of statistics based on the incoming traffic and continually updates, in real-time, traffic shaping policies based on the set of statistics.

Owner:TELLABS COMM CANADA

Network element architecture for deep packet inspection

Active | US20060233101A1 | Equal capacity | Error prevention | Frequency-division multiplex details | Traffic capacity | Network architecture

A method and apparatus for an application aware traffic shaping service node architecture is described. In one embodiment of the invention, the service node architecture includes a set of one or more line cards, a set of one or more processor cards and a full mesh communication infrastructure coupling the sets of line and processor cards. Each link coupling the sets of line and processor cards is of equal capacity. A line card includes a physical interface and a set of one or more policy network processors, with the network processors performing deep packet inspection on incoming traffic and shaping outgoing traffic. Processor cards include a set of one or more policy generating processors. According to another embodiment of the invention, the service node generates a set of statistics based on the incoming traffic and continually updates, in real-time, traffic shaping policies based on the set of statistics.

Owner:TELLABS COMM CANADA

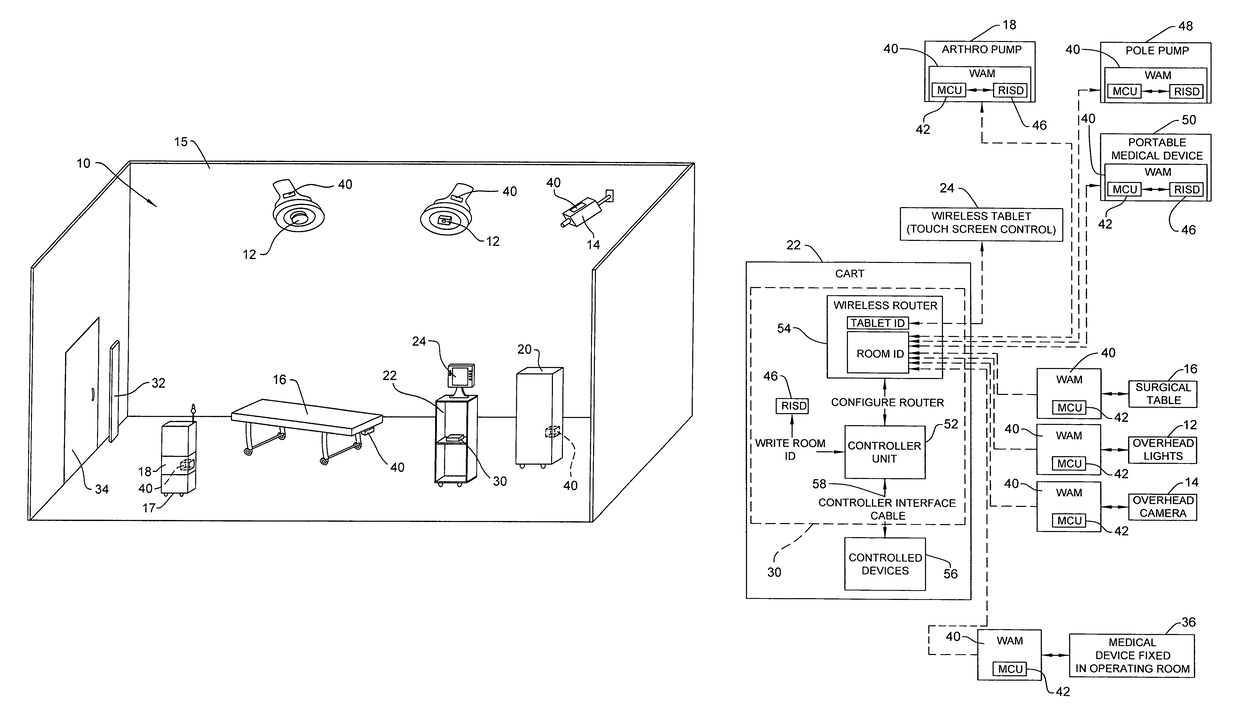

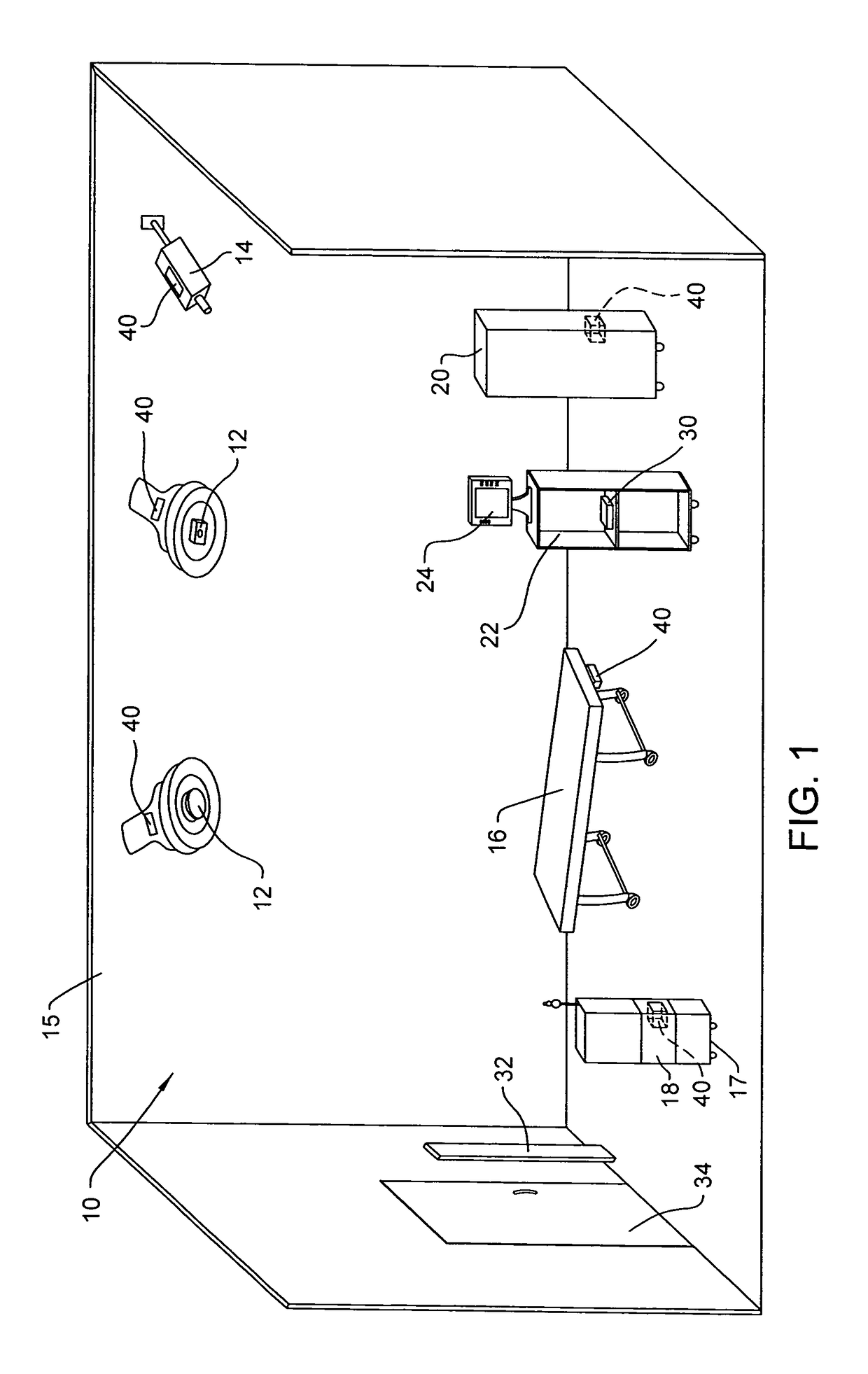

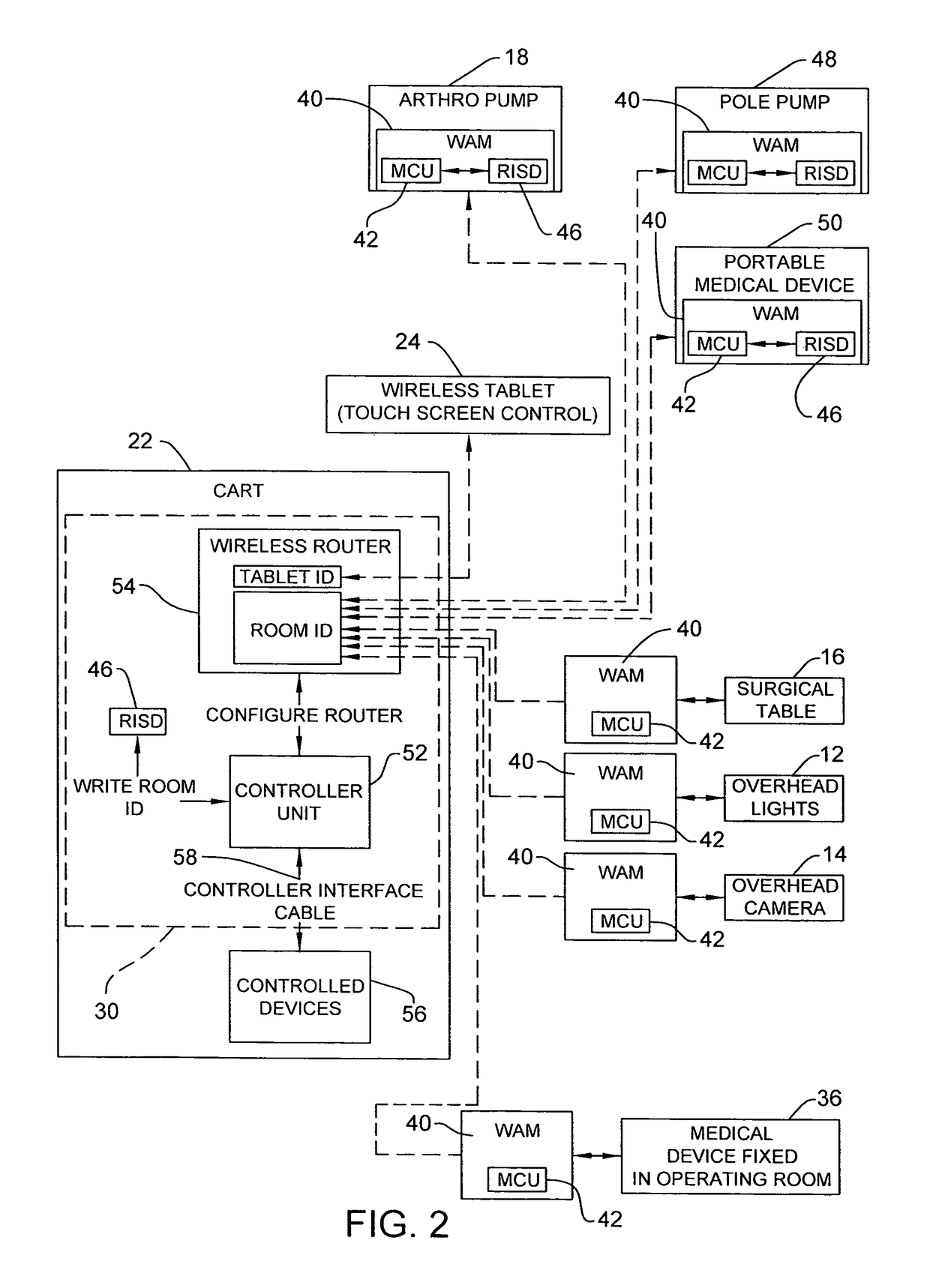

Wireless medical room control arrangement for control of a plurality of medical devices

A wireless medical room control arrangement includes a wireless controller having a wireless router. A room identifier and a device identifier are stored in the controller. A communication interface sends commands to and receives commands from the wireless controller. In response to commands from the interface, the wireless controller sends wireless control signals to operate medical devices in the room. A room monitor adjacent a doorway provides room identifiers to medical devices and wireless controllers entering the room and provides dummy identifiers to medical devices and controllers exiting the room. The room monitors may connect to a global network processor that determines the location of the medical devices in a medical facility.

Owner:STRYKER CORP

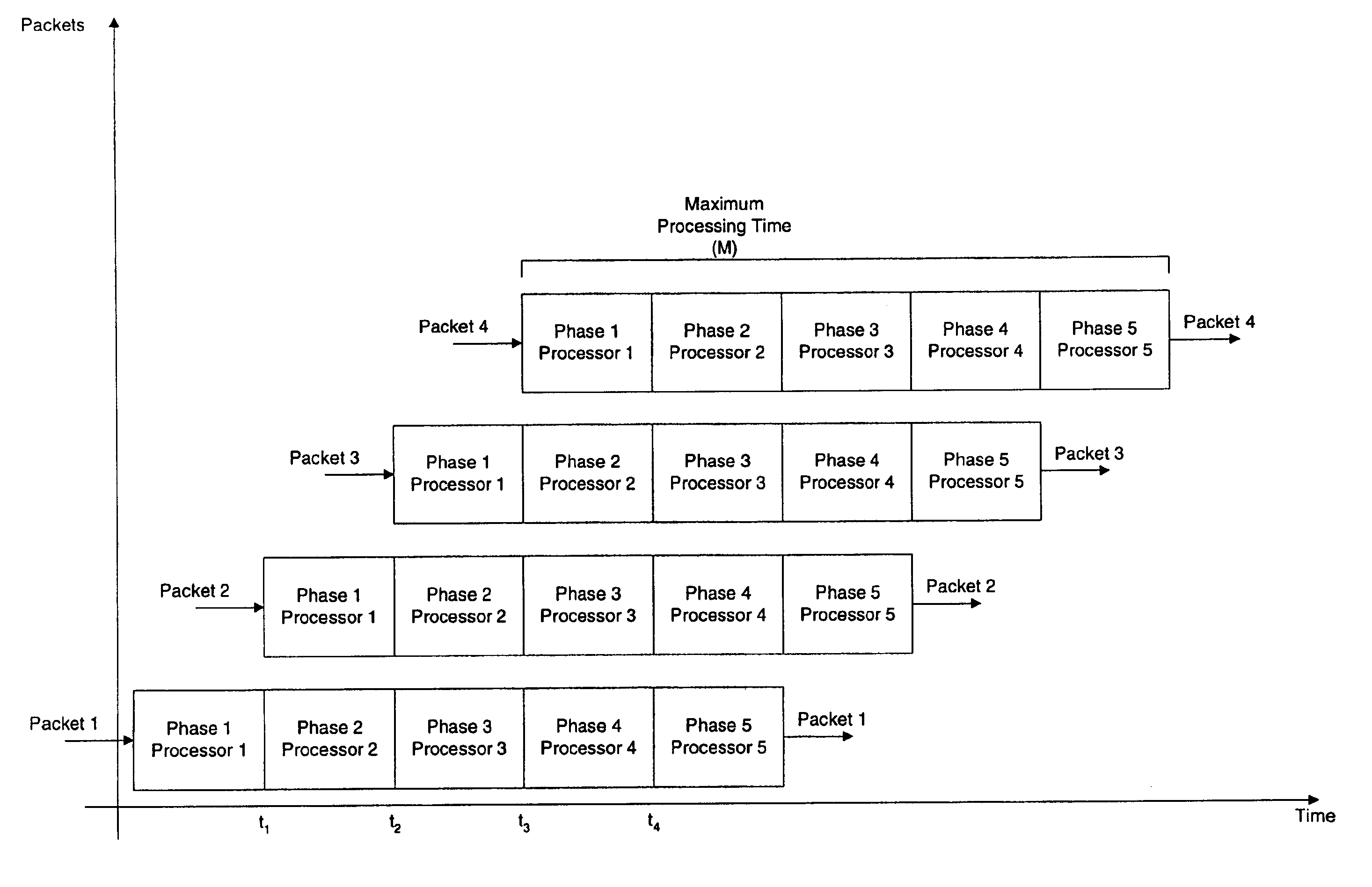

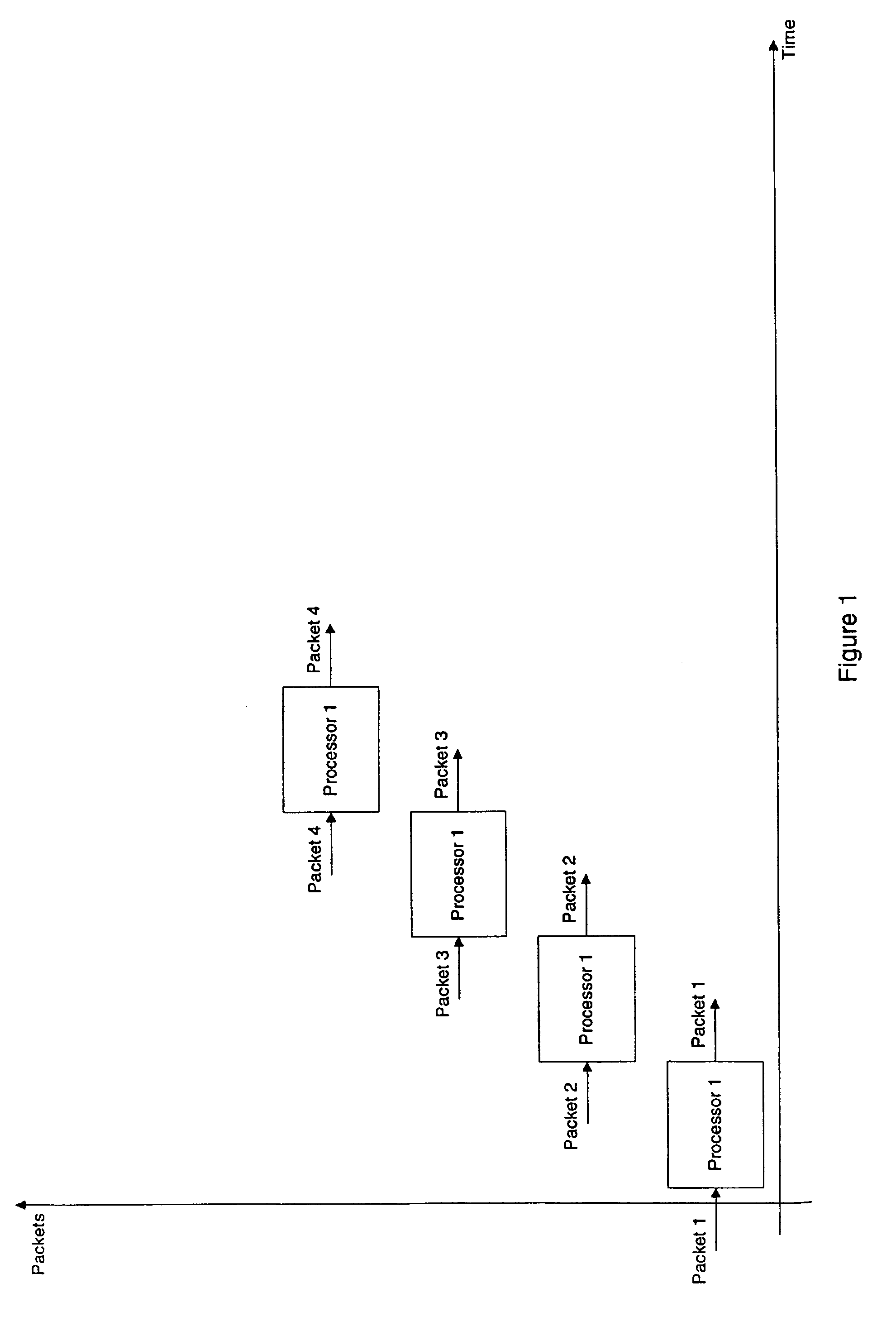

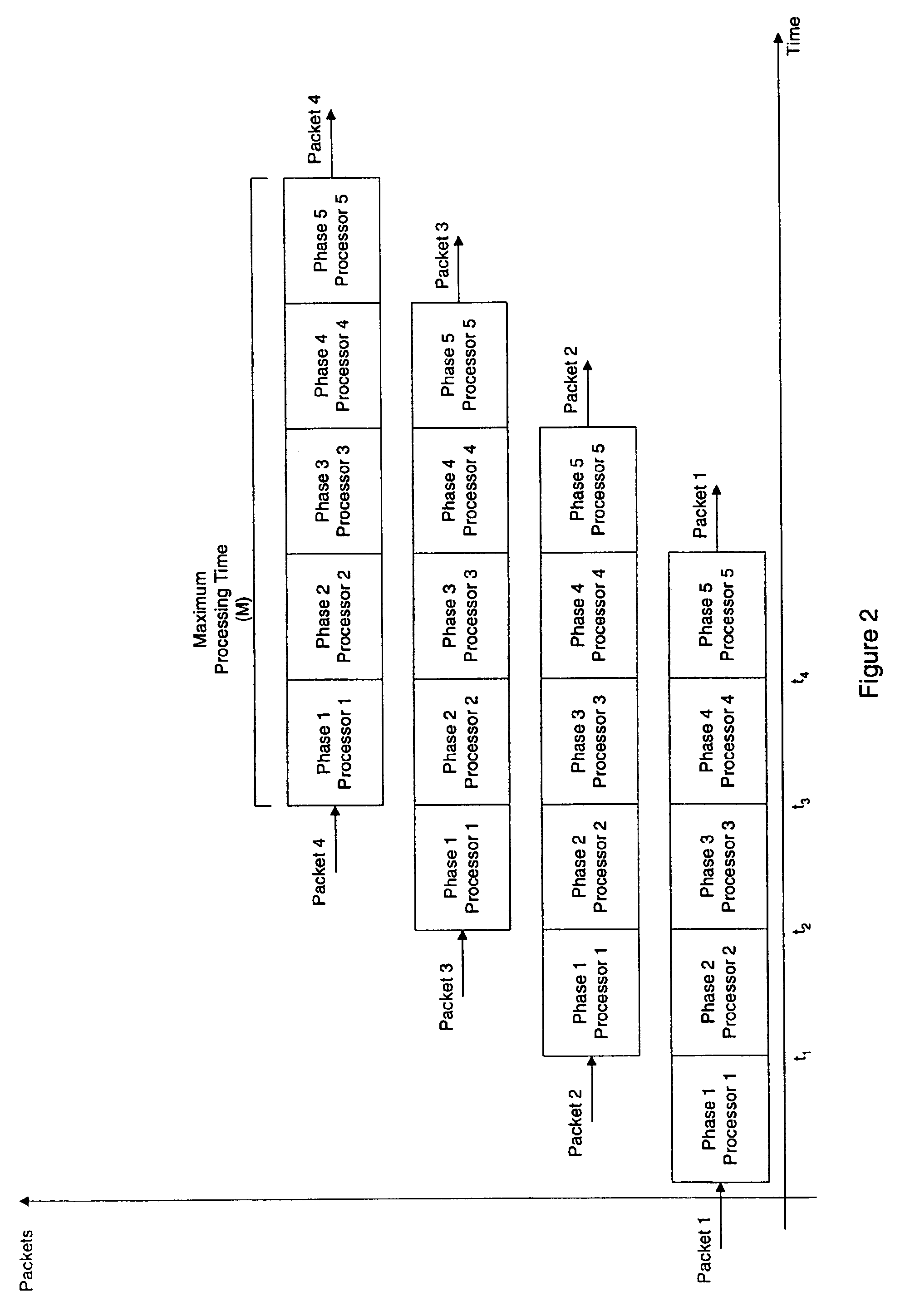

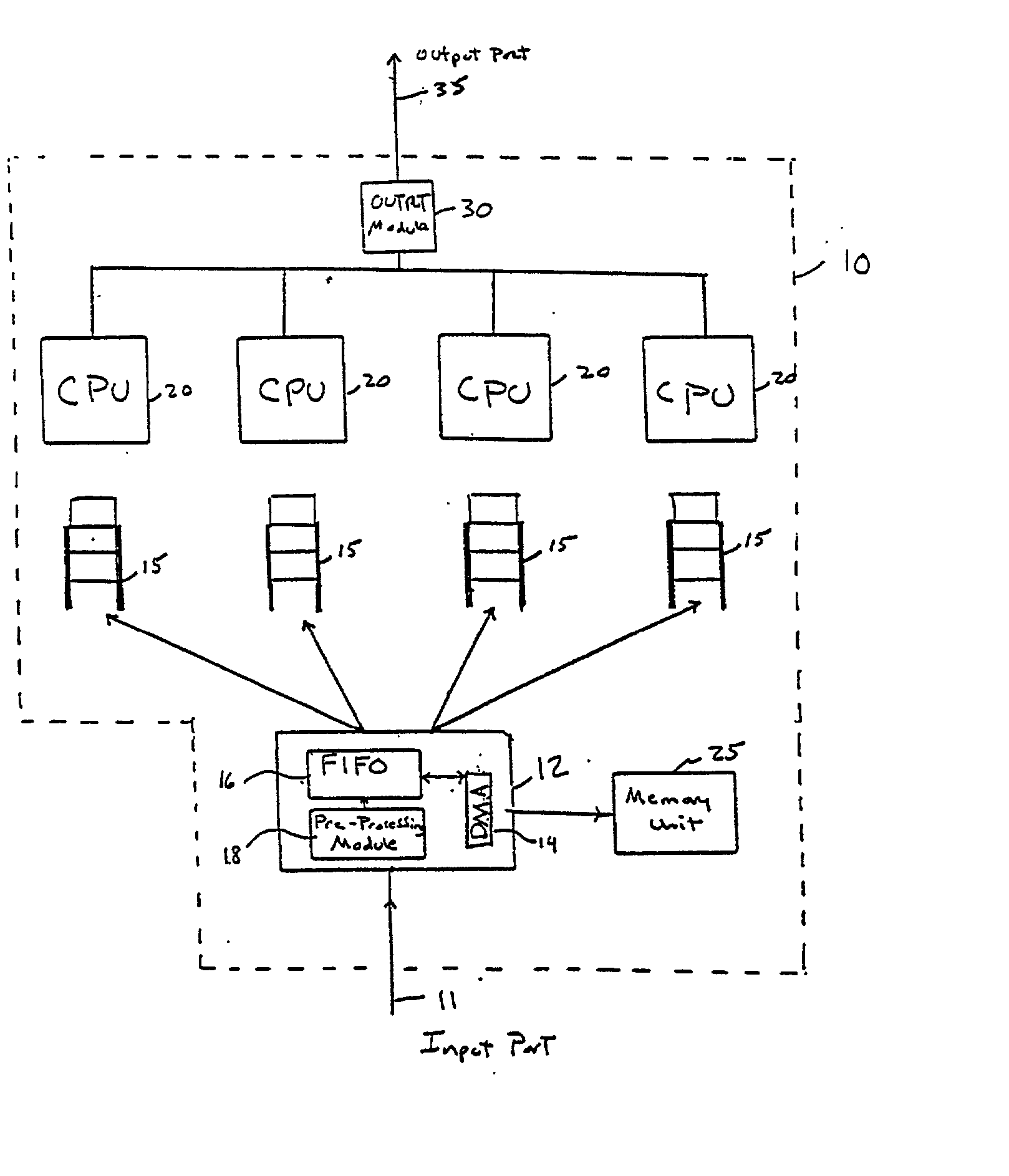

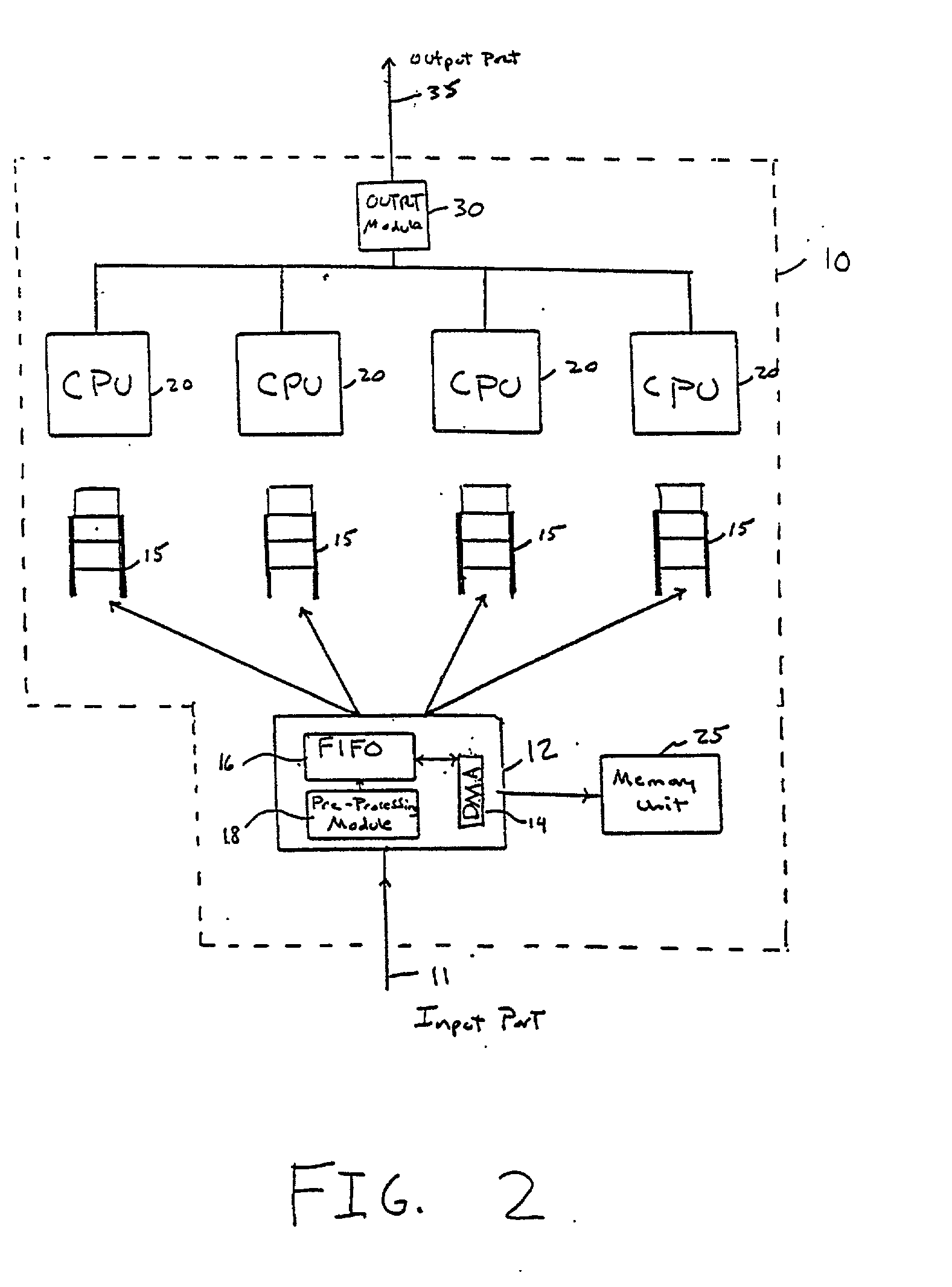

Parallel network processor array

Inactive | US6854117B1 | Account can be blocked | Digital computer details | Multiprogramming arrangements | Multiple context | Parallel processing

A method and system performs parallel processing of asynchronous processes on ordered entities. A system focuses on the average time and variance of the variable time process. Each processor can run multiple contexts. The processing may be divided into a number of stages, each of which can be performed by each of the processors. A system also needs to ensure that the order of the entities is preserved as desired. This order may be maintained by performing some type of pre-processing on the entities to determine their order, and then not starting processing on an entity until the processing of any entity which must precede that entity has been completed. For processing of packets in a network, it may be needed to ensure that packets in the same flow maintain their order after processing. A system also may determine the number of processors that optimally are needed in order to process an incoming stream of entities at a desired speed. This computation may depend on how many different contexts each processor runs. In addition, this computation also may depend on whether there is an input buffer available to store the incoming entities, and the capacity of such an input buffer.

Owner:SABLE NETWORKS +1
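
One way to picture the ordering rule in the abstract above (do not start an entity until the entity that must precede it has finished) is a per-flow gate. The sketch below is an assumption-laden illustration, not the patented design: the class `FlowGate` and its methods are hypothetical, and the only ordering constraint modeled is "packets of the same flow stay in order".

```python
from collections import defaultdict, deque


class FlowGate:
    """Lets at most one entity per flow be in processing at a time."""

    def __init__(self):
        self._waiting = defaultdict(deque)   # flow -> entities not yet started
        self._busy = set()                   # flows with an entity in processing

    def submit(self, flow, entity):
        """Return the entity if it may start now, otherwise queue it and return None."""
        if flow in self._busy:
            self._waiting[flow].append(entity)
            return None
        self._busy.add(flow)
        return entity

    def complete(self, flow):
        """Mark the in-flight entity of `flow` done; return the next one to start, if any."""
        if self._waiting[flow]:
            return self._waiting[flow].popleft()   # flow stays busy
        self._busy.discard(flow)
        return None
```

Entities from different flows can run on different processors concurrently, while entities of one flow are released strictly in arrival order.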

Processing data flows with a data flow processor

Inactive | US20110231564A1 | Easy to detect | Preventing data flow | Multiple digital computer combinations | Platform integrity maintenance | Data stream processing | Computer module

An apparatus and method to distribute applications and services in and throughout a network and to secure the network includes the functionality of a switch with the ability to apply applications and services to received data according to respective subscriber profiles. Front-end processors, or Network Processor Modules (NPMs), receive and recognize data flows from subscribers, extract profile information for the respective subscribers, utilize flow scheduling techniques to forward the data to applications processors, or Flow Processor Modules (FPMs). The FPMs utilize resident applications to process data received from the NPMs. A Control Processor Module (CPM) facilitates applications processing and maintains connections to the NPMs, FPMs, local and remote storage devices, and a Management Server (MS) module that can monitor the health and maintenance of the various modules.

Owner:BLUE COAT SYSTEMS

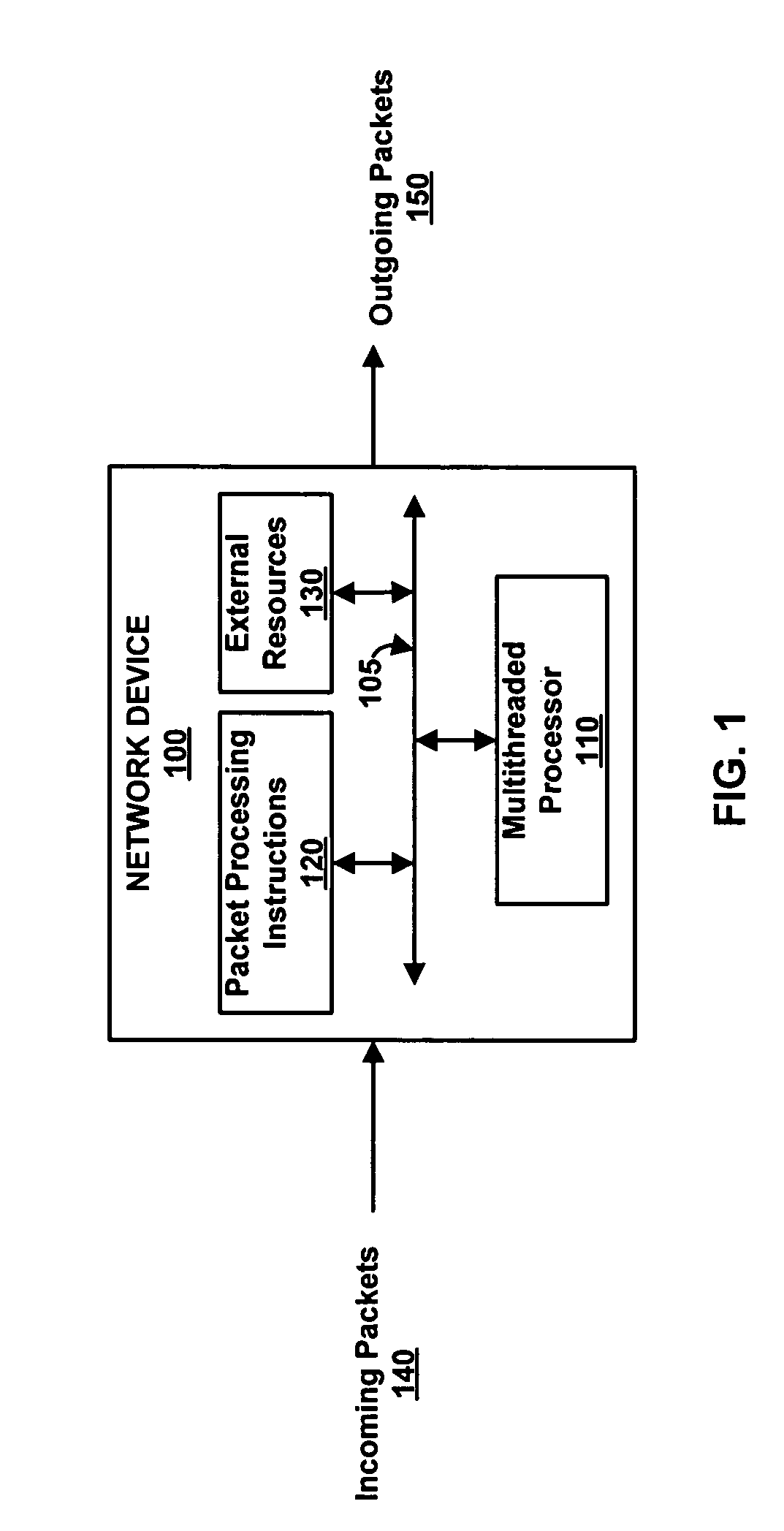

Thread interleaving in a multithreaded embedded processor

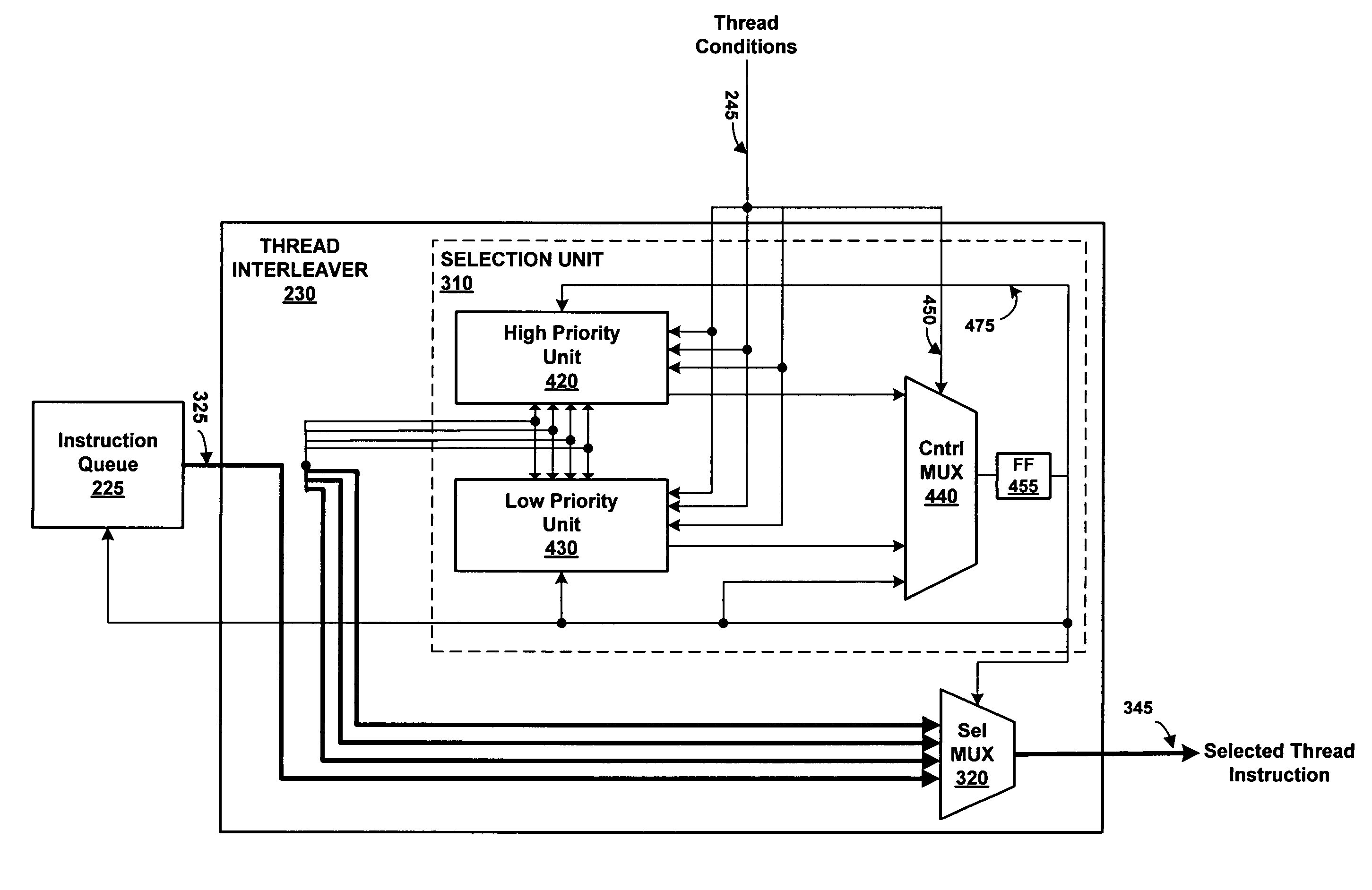

Inactive | US7360064B1 | Improves processor utilization | Improve performance | Digital computer details | Concurrent instruction execution | Time condition | Instruction unit

The present invention provides a network multithreaded processor, such as a network processor, including a thread interleaver that implements fine-grained thread decisions to avoid underutilization of instruction execution resources in spite of large communication latencies. In an upper pipeline, an instruction unit determines an instruction fetch sequence responsive to an instruction queue depth on a per thread basis. In a lower pipeline, a thread interleaver determines a thread interleave sequence responsive to thread conditions including thread latency conditions. The thread interleaver selects threads using a two-level round robin arbitration. Thread latency signals are active responsive to thread latencies such as thread stalls, cache misses, and interlocks. During the subsequent one or more clock cycles, the thread is ineligible for arbitration. In one embodiment, other thread conditions affect selection decisions such as local priority, global stalls, and late stalls.

Owner:CISCO TECH INC

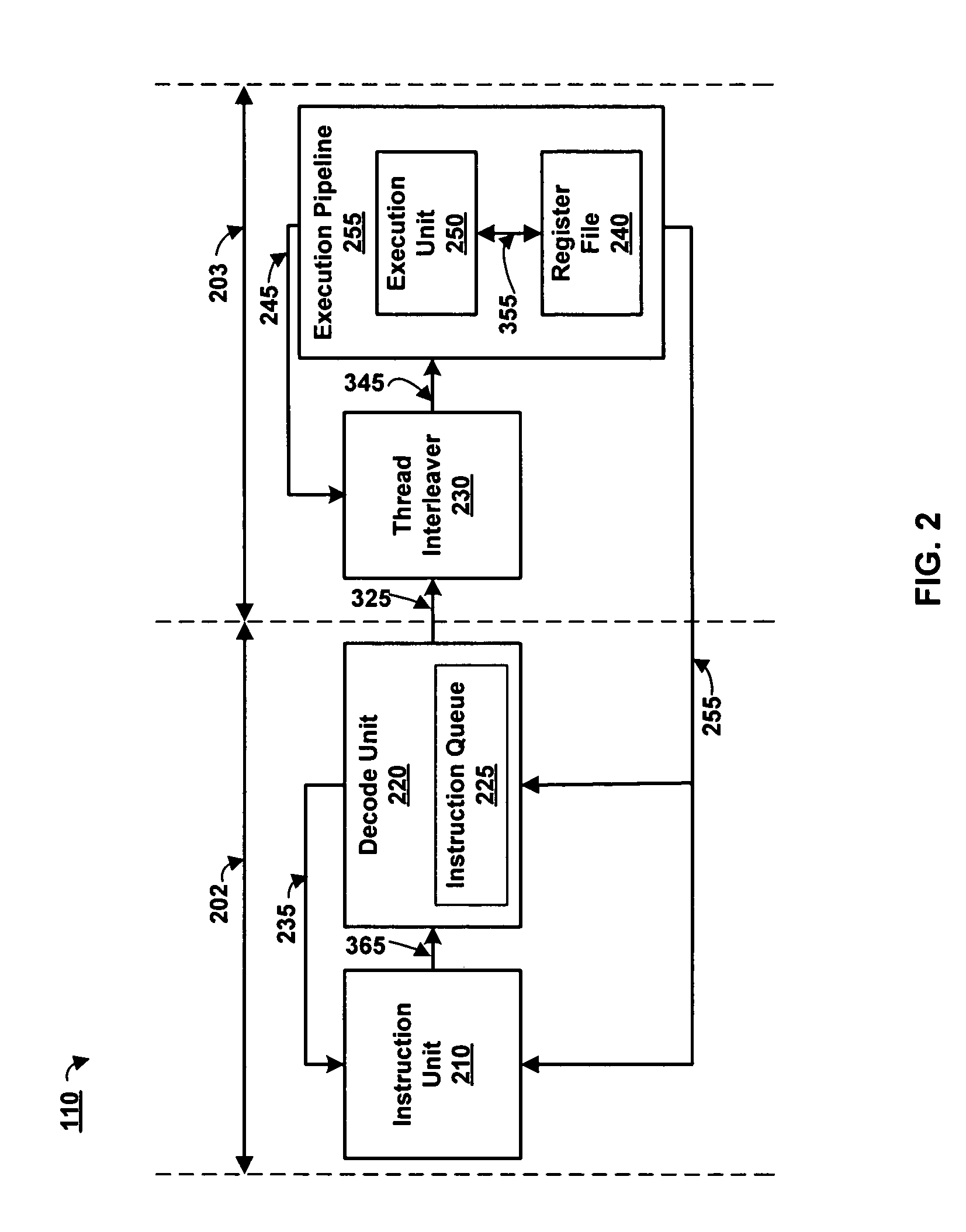

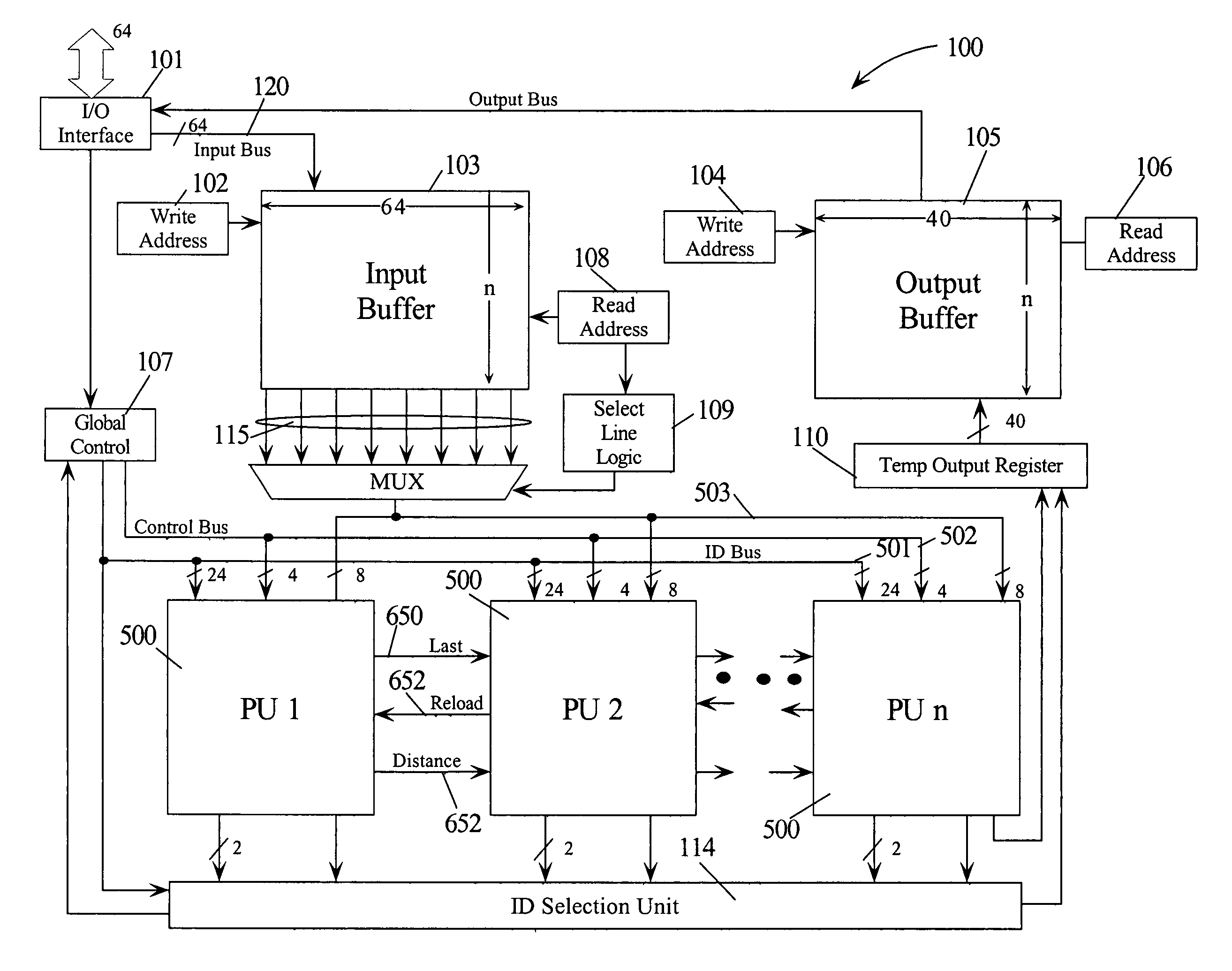

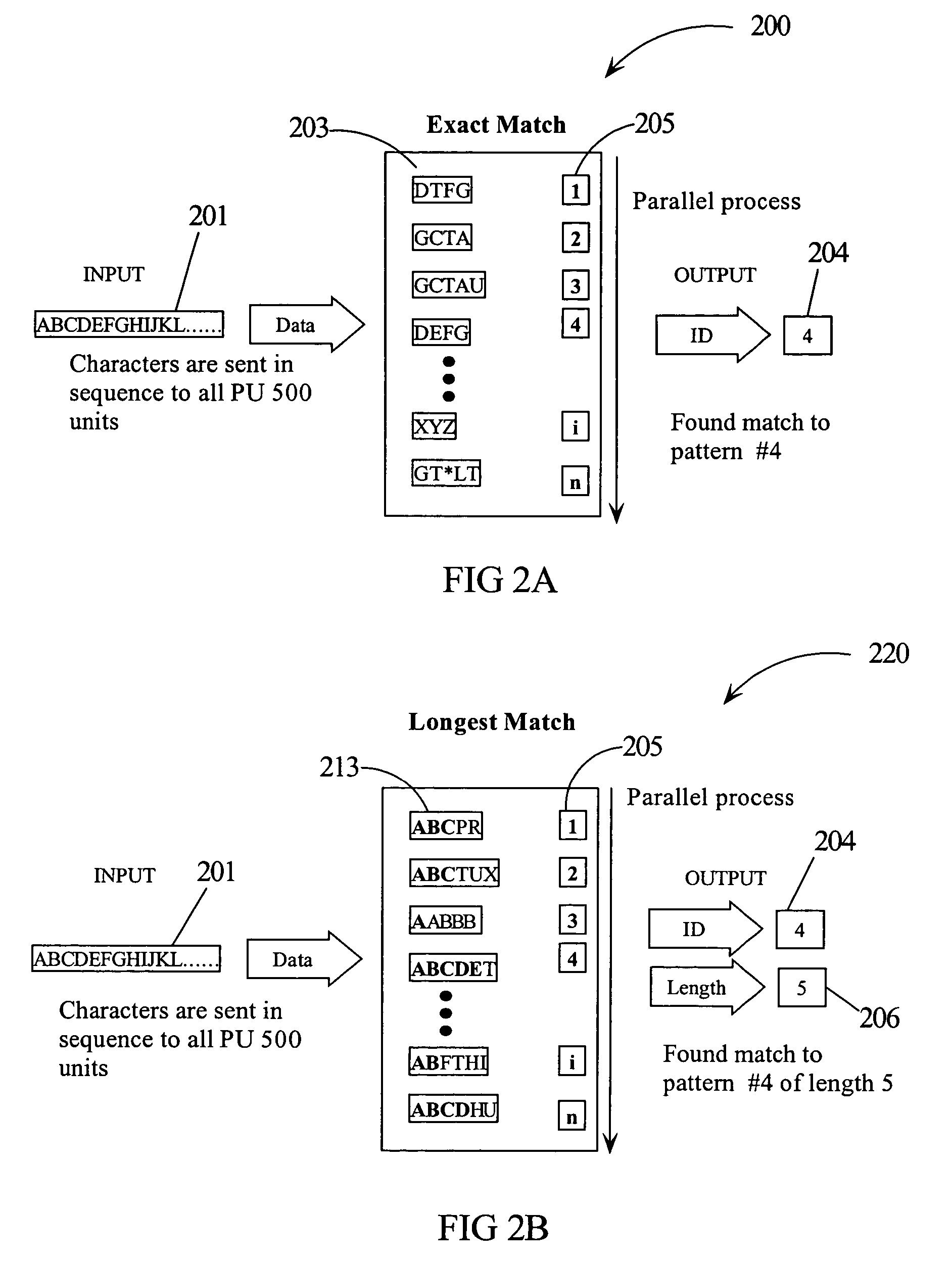

Intrusion detection using a network processor and a parallel pattern detection engine

Inactive | US7487542B2 | Improve scalability | Memory loss protection | Digital data processing details | Data stream | Pattern matching

An intrusion detection system (IDS) comprises a network processor (NP) coupled to a memory unit for storing programs and data. The NP is also coupled to one or more parallel pattern detection engines (PPDE) which provide high speed parallel detection of patterns in an input data stream. Each PPDE comprises many processing units (PUs) each designed to store intrusion signatures as a sequence of data with selected operation codes. The PUs have configuration registers for selecting modes of pattern recognition. Each PU compares a byte at each clock cycle. If a sequence of bytes from the input pattern match a stored pattern, the identification of the PU detecting the pattern is outputted with any applicable comparison data. By storing intrusion signatures in many parallel PUs, the IDS can process network data at the NP processing speed. PUs may be cascaded to increase intrusion coverage or to detect long intrusion signatures.

Owner:TREND MICRO INC

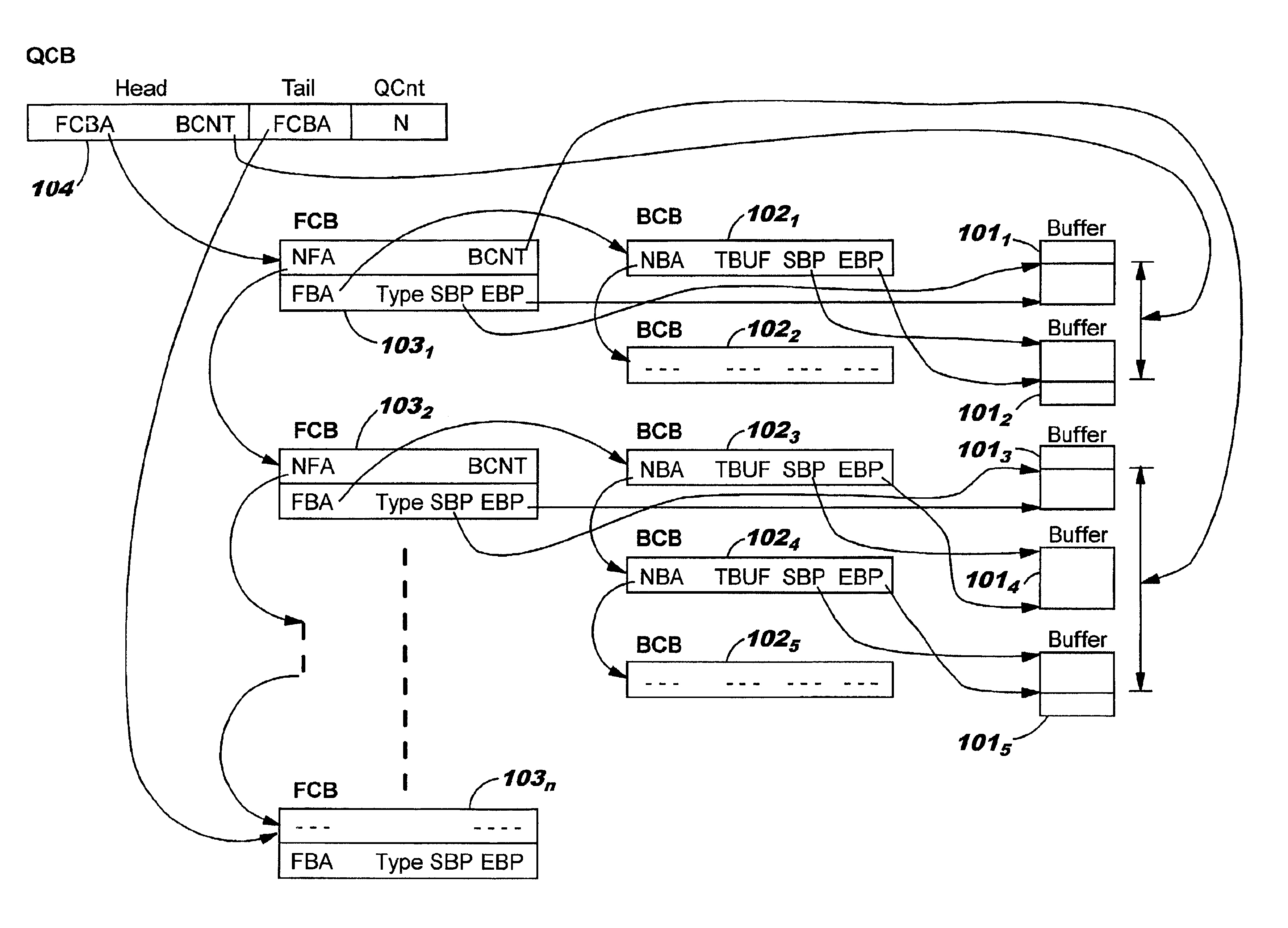

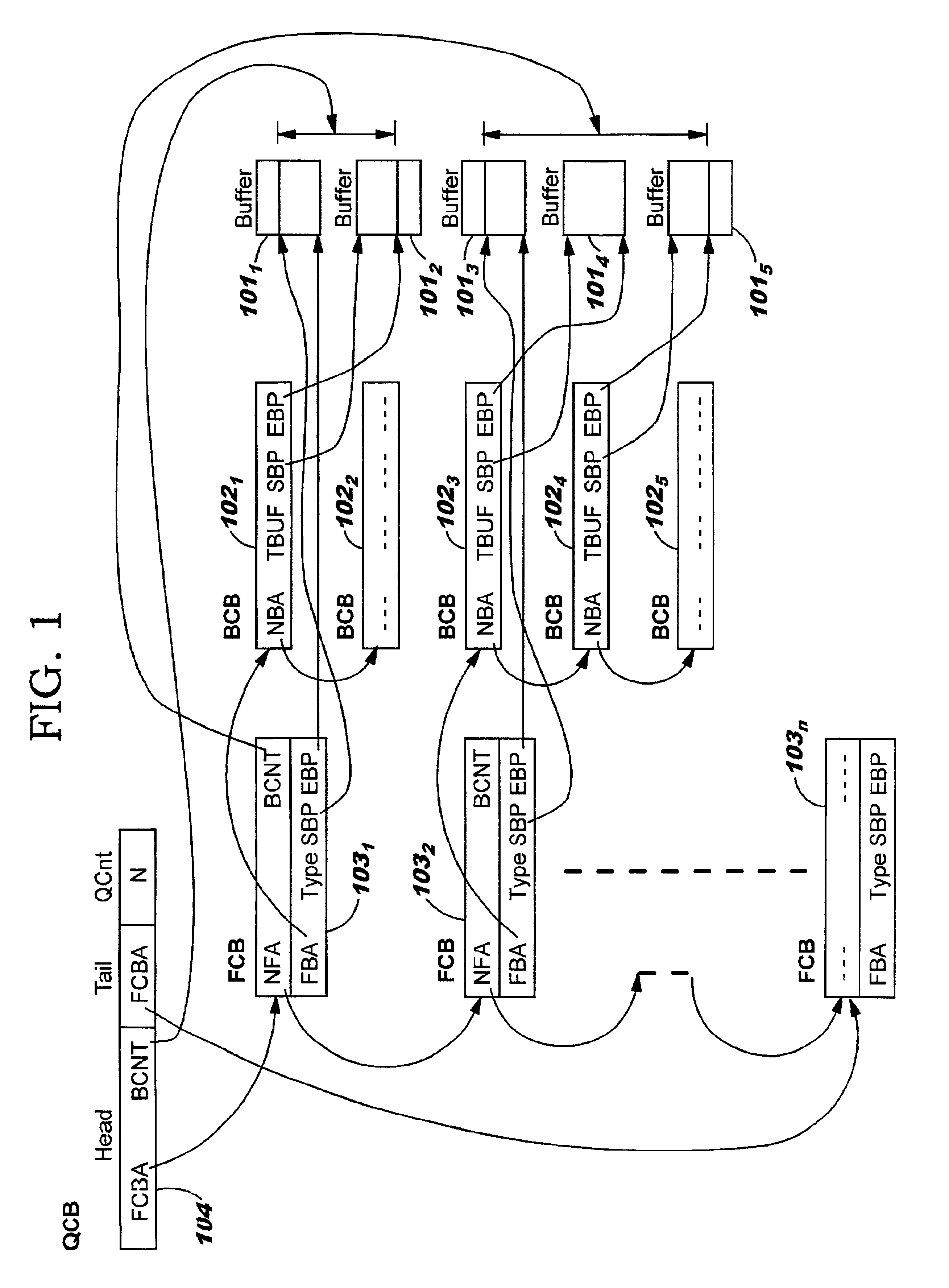

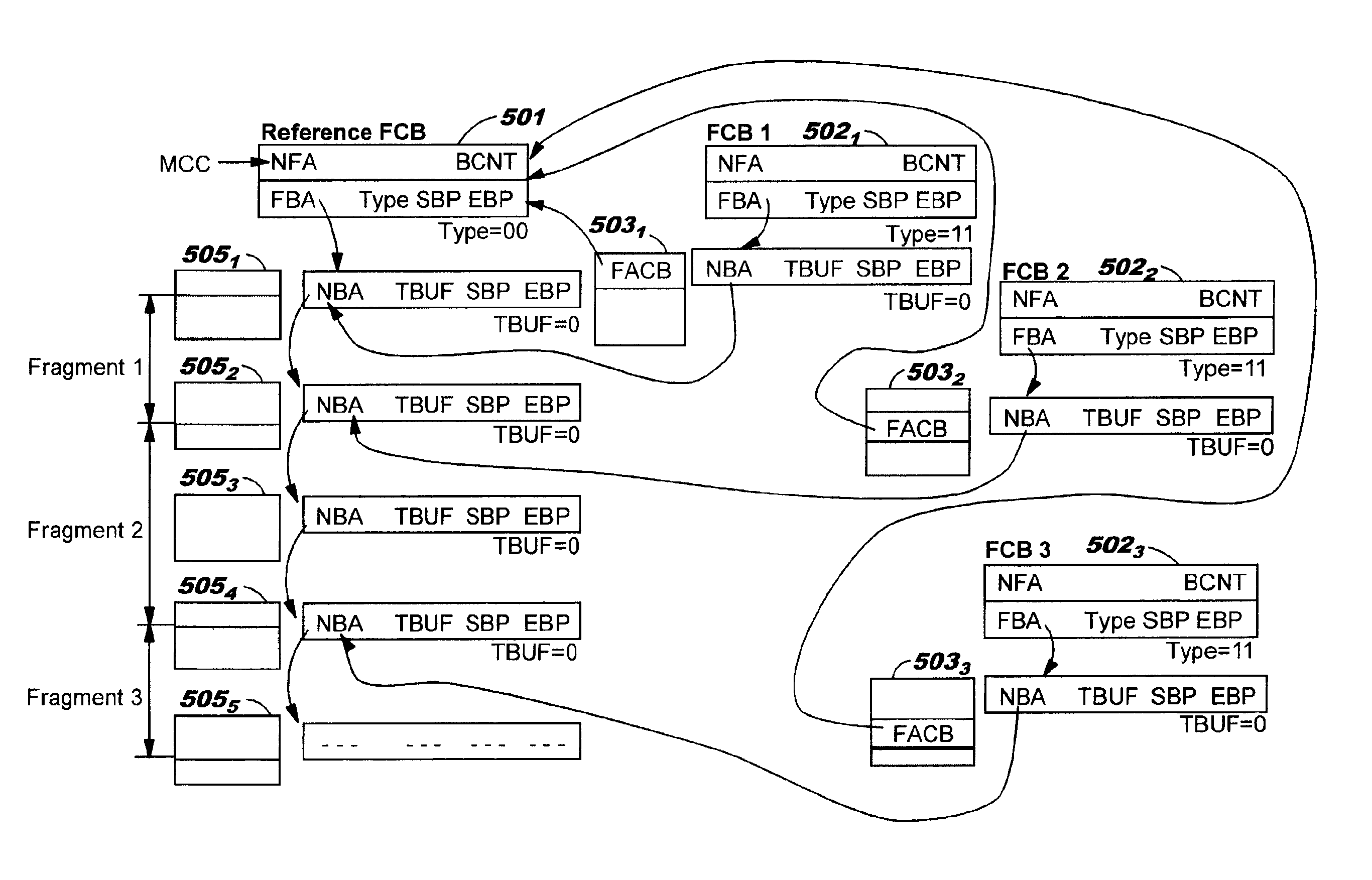

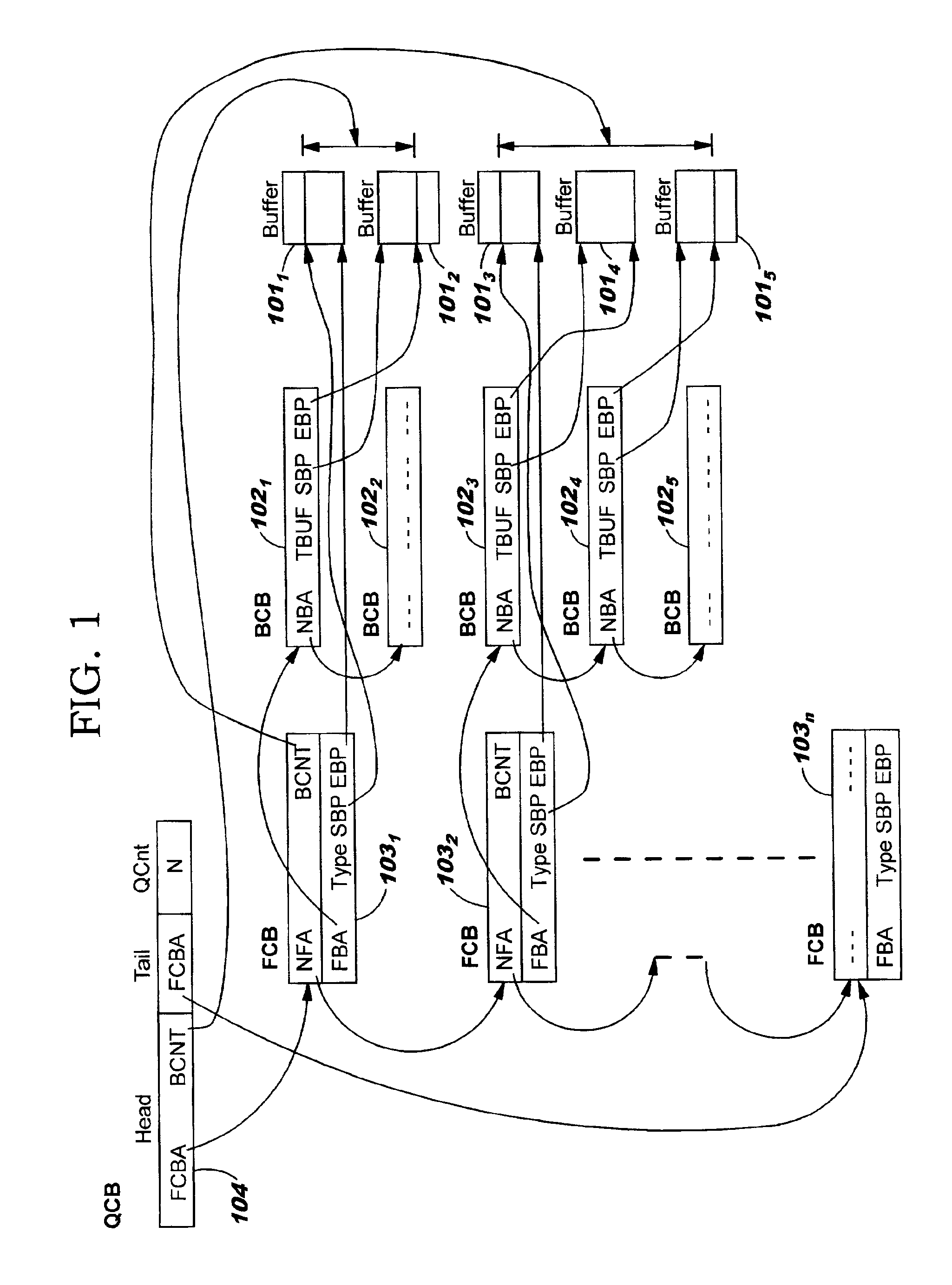

Data structures for efficient processing of multicast transmissions

Inactive | US6836480B2 | Reduce memory requirements | Eliminate need | Special service provision for substation | Time-division multiplex | Array data structure | Transport system

Data structures, a method, and an associated transmission system for multicast transmission on network processors in order both to minimize multicast transmission memory requirements and to account for port performance discrepancies. Frame data for multicast transmission on a network processor is read into buffers to which are associated various control structures and a reference frame. The reference frame and the associated control structures permit multicast targets to be serviced without creating multiple copies of the frame. Furthermore this same reference frame and control structures allow buffers allocated for each multicast target to be returned to the free buffer queue without waiting until all multicast transmissions are complete.

Owner:ACTIVISION PUBLISHING

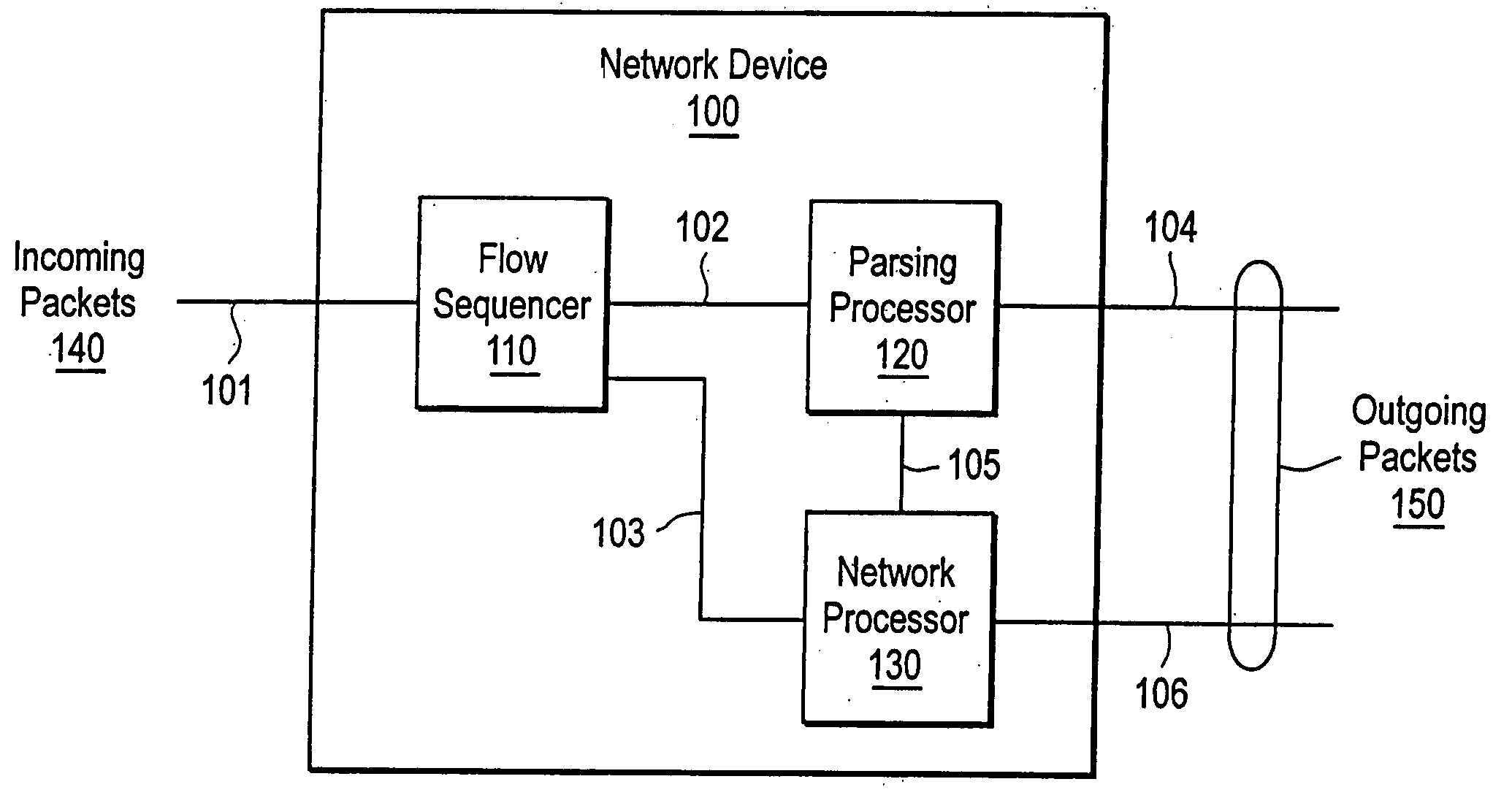

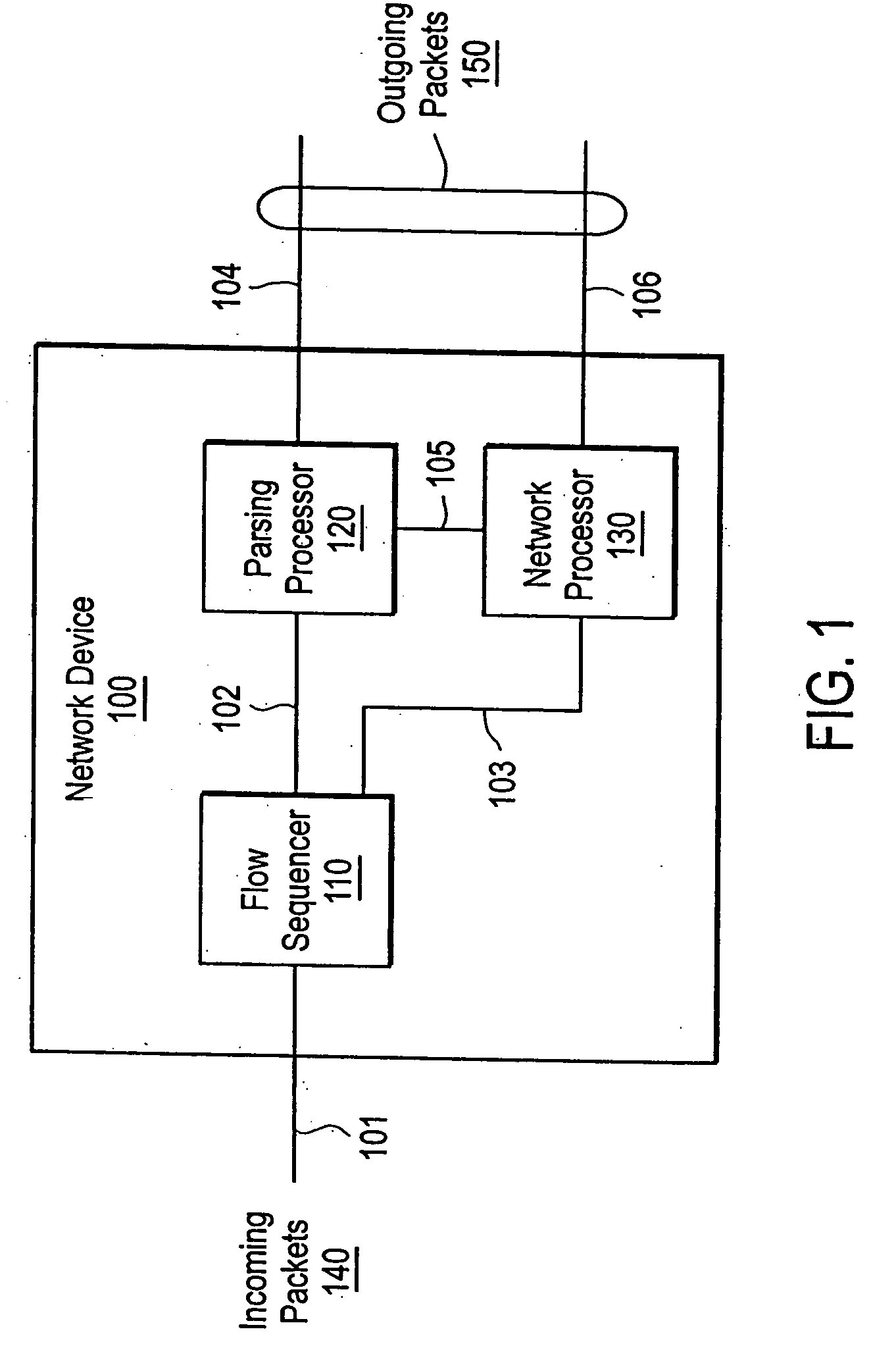

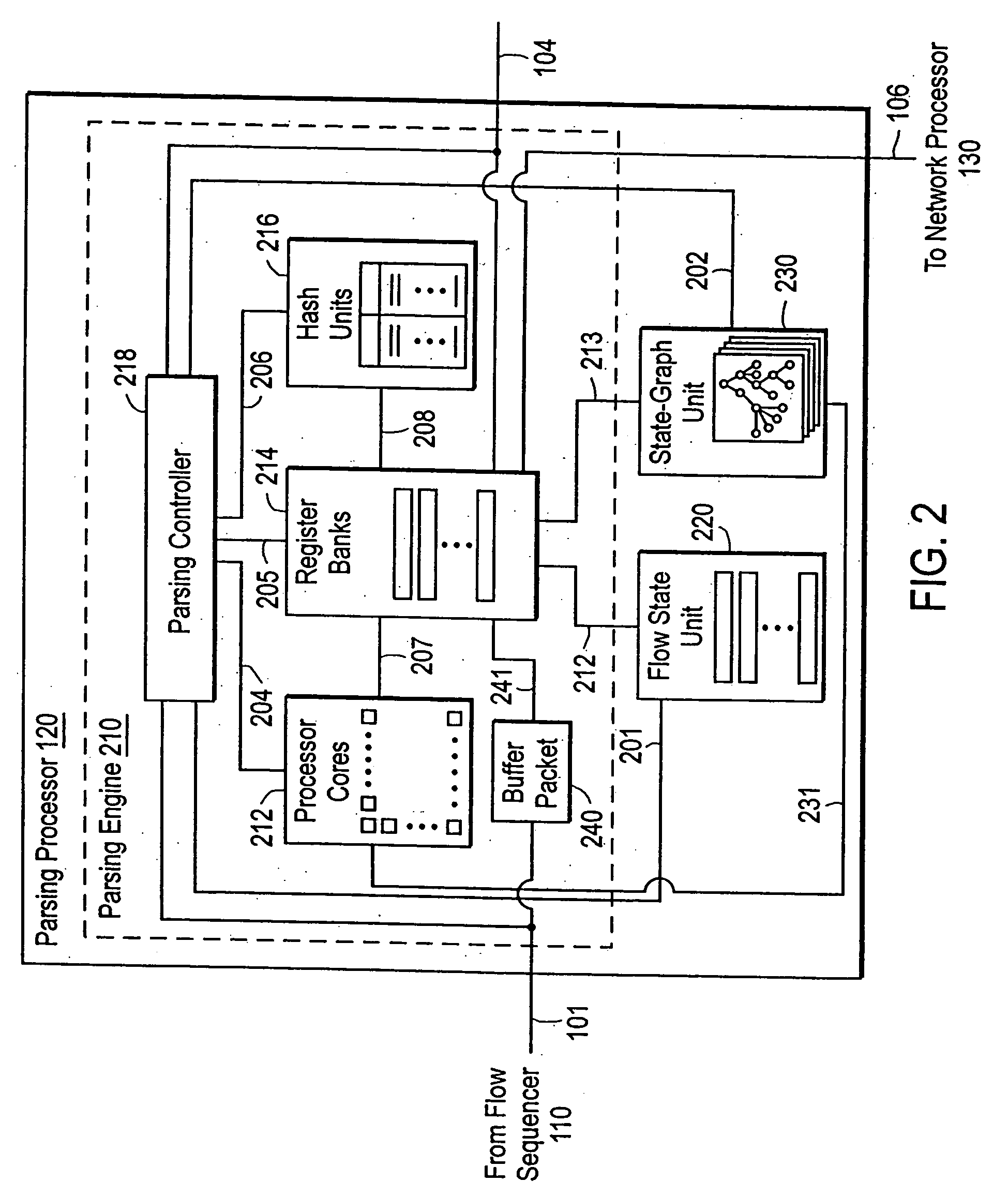

Stateful flow of network packets within a packet parsing processor

Active | US20050238022A1 | Maintain statefulness | Digital data processing details | Data switching by path configuration | Data pack | Network processor

The present invention provides a packet processing device and method. A parsing processor provides instruction-driven content inspection of network packets at 10-Gbps and above with a parsing engine that executes parsing instructions. A flow state unit maintains statefulness of packet flows to allow content inspection across several related network packets. A state-graph unit traces state-graph nodes to keyword indications and/or parsing instructions. The parsing instructions can be derived from a high-level application to emulate user-friendly parsing logic. The parsing processor sends parsed packets to a network processor unit for further processing.

Owner:CISCO TECH INC

Processing data flows with a data flow processor

Active | US9800608B2 | Increased complexity | Avoid problems | Multiple digital computer combinations | Platform integrity maintenance | Data stream processing | Computer module

An apparatus and method to distribute applications and services in and throughout a network and to secure the network includes the functionality of a switch with the ability to apply applications and services to received data according to respective subscriber profiles. Front-end processors, or Network Processor Modules (NPMs), receive and recognize data flows from subscribers, extract profile information for the respective subscribers, utilize flow scheduling techniques to forward the data to applications processors, or Flow Processor Modules (FPMs). The FPMs utilize resident applications to process data received from the NPMs. A Control Processor Module (CPM) facilitates applications processing and maintains connections to the NPMs, FPMs, local and remote storage devices, and a Management Server (MS) module that can monitor the health and maintenance of the various modules.

Owner:CA TECH INC

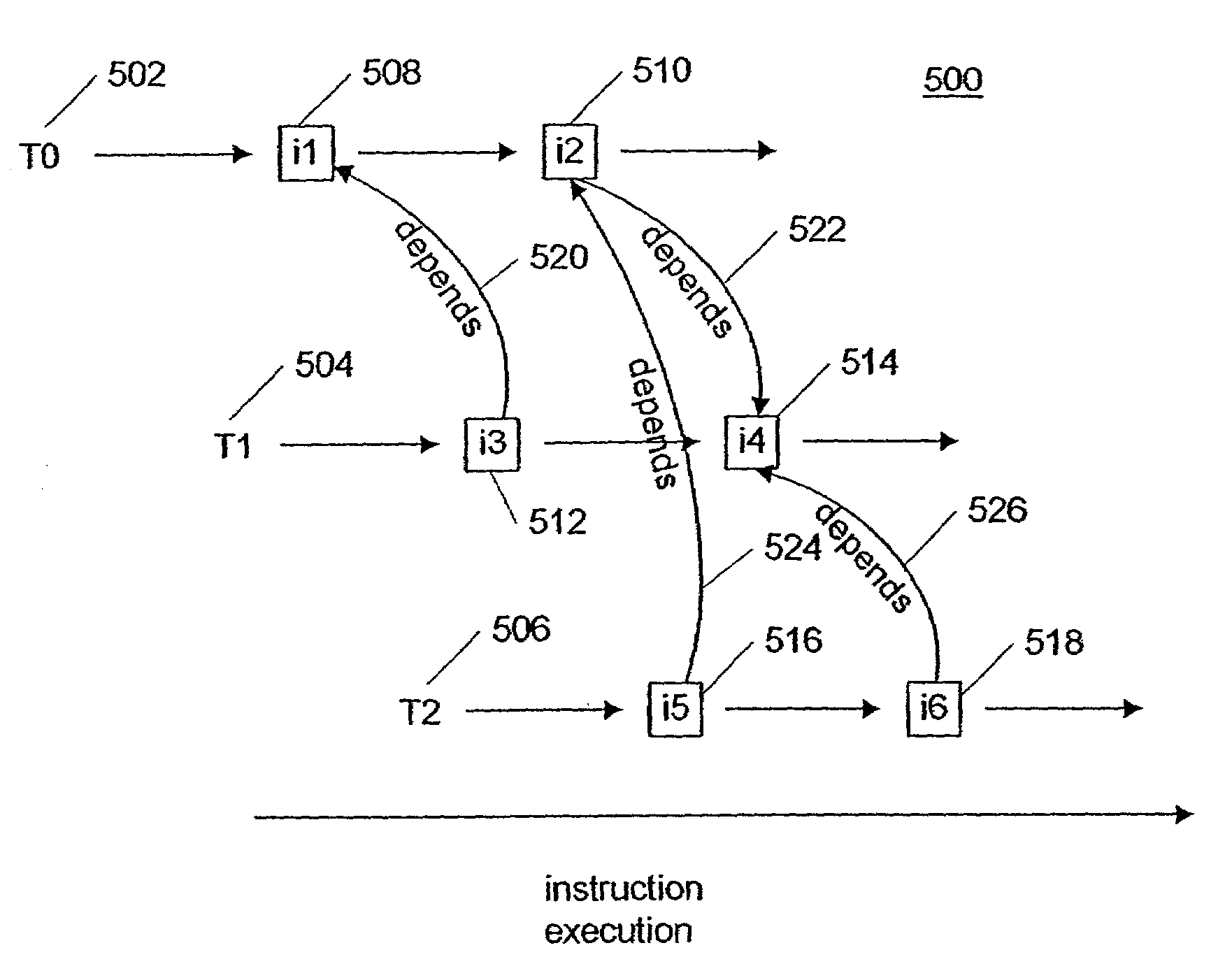

System and method for instruction-level parallelism in a programmable multiple network processor environment

Inactive | US6950927B1 | Digital computer details | Concurrent instruction execution | Instruction unit | Execution unit

A system and method process data elements with instruction-level parallelism. An instruction buffer holds a first instruction and a second instruction, the first instruction being associated with a first thread, and the second instruction being associated with a second thread. A dependency counter counts satisfaction of dependencies of instructions of the second thread on instructions of the first thread. An instruction control unit is coupled to the instruction buffer and the dependency counter, the instruction control unit increments and decrements the dependency counter according to dependency information included in instructions. An execution switch is coupled to the instruction control unit and the instruction buffer, and the execution switch routes instructions to instruction execution units.

Owner:UNITED STATES OF AMERICA +1
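
The dependency counter in the abstract above behaves, roughly, like a counting semaphore between two instruction streams. The sketch below is a loose illustration under assumptions that are not in the source: instructions are `(name, flag)` pairs, a hypothetical `"signal"` flag on a first-thread instruction increments the counter, and a hypothetical `"wait"` flag on a second-thread instruction requires a positive counter and decrements it.

```python
def issue_order(thread1, thread2):
    """Return the order in which instructions issue, one attempt per thread per cycle."""
    counter = 0            # dependencies satisfied by thread 1, not yet consumed
    i = j = 0
    trace = []
    while i < len(thread1) or j < len(thread2):
        progressed = False
        if i < len(thread1):
            name, flag = thread1[i]
            if flag == "signal":
                counter += 1               # a dependency of thread 2 is now satisfied
            trace.append(name)
            i += 1
            progressed = True
        if j < len(thread2):
            name, flag = thread2[j]
            if flag != "wait" or counter > 0:
                if flag == "wait":
                    counter -= 1           # consume one satisfied dependency
                trace.append(name)
                j += 1
                progressed = True
        if not progressed:
            raise RuntimeError("thread 2 waits on a dependency that is never satisfied")
    return trace
```

In this model the second thread stalls only on instructions marked as dependent, so independent instructions from both threads can issue in the same cycle.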

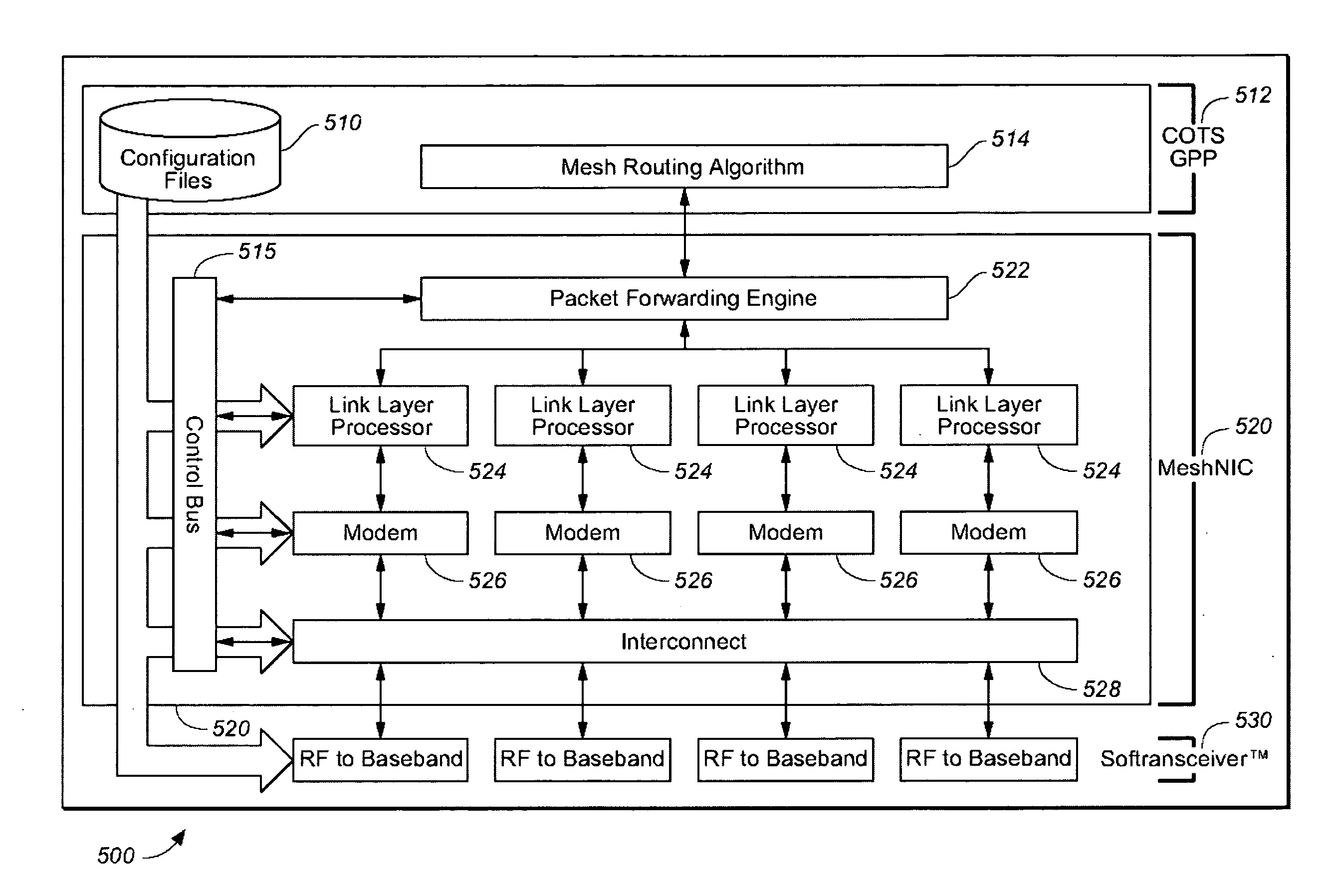

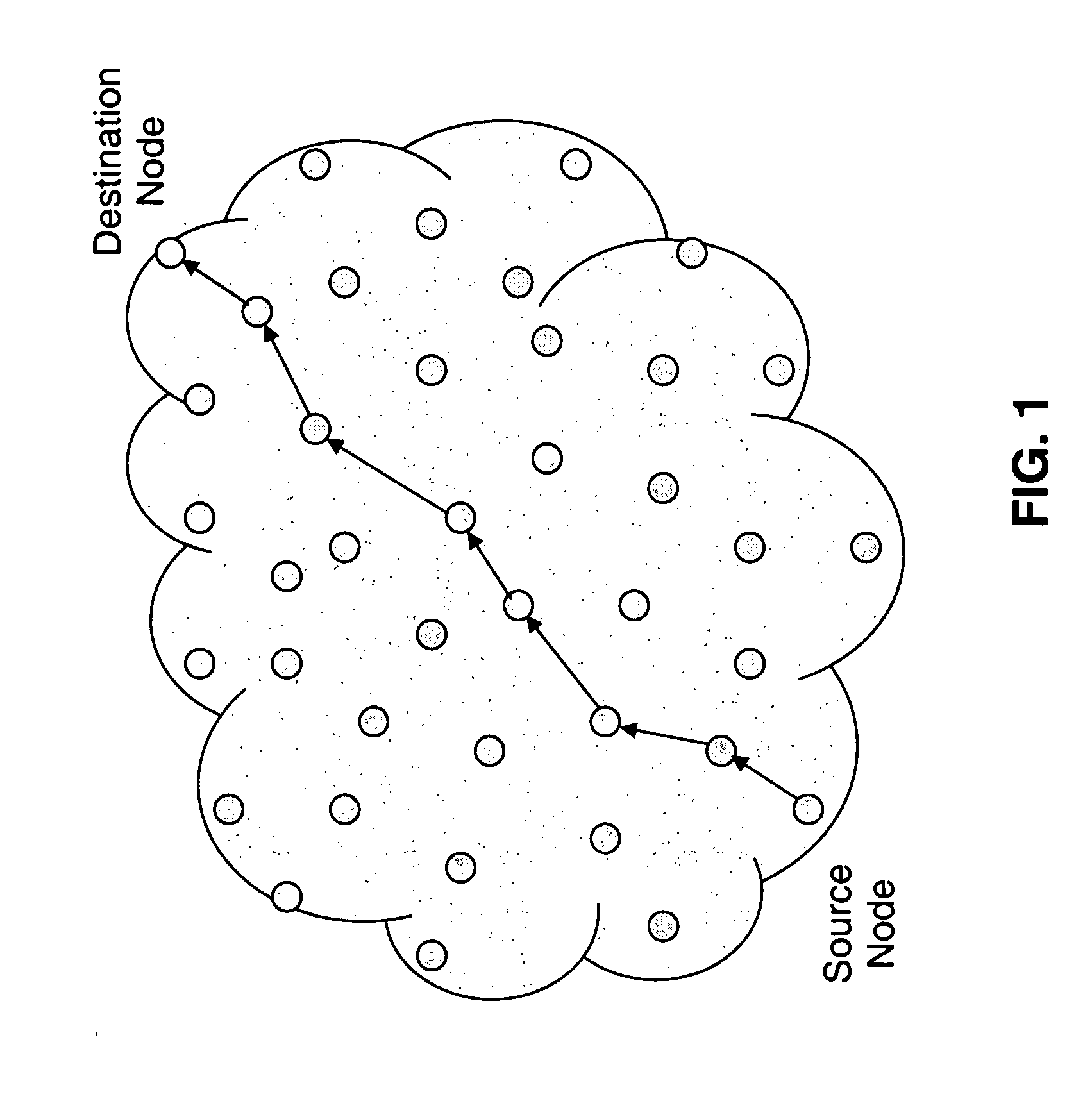

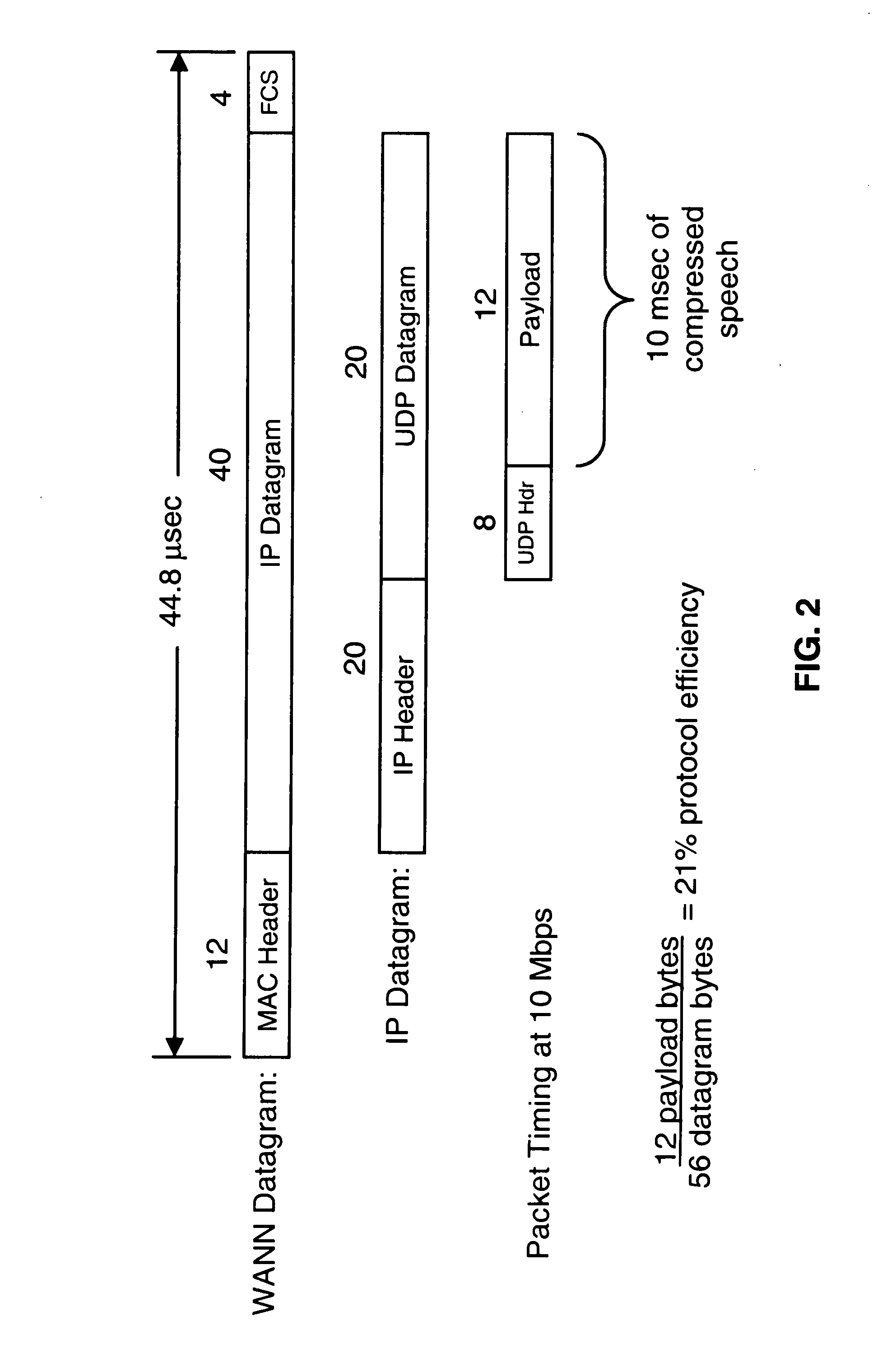

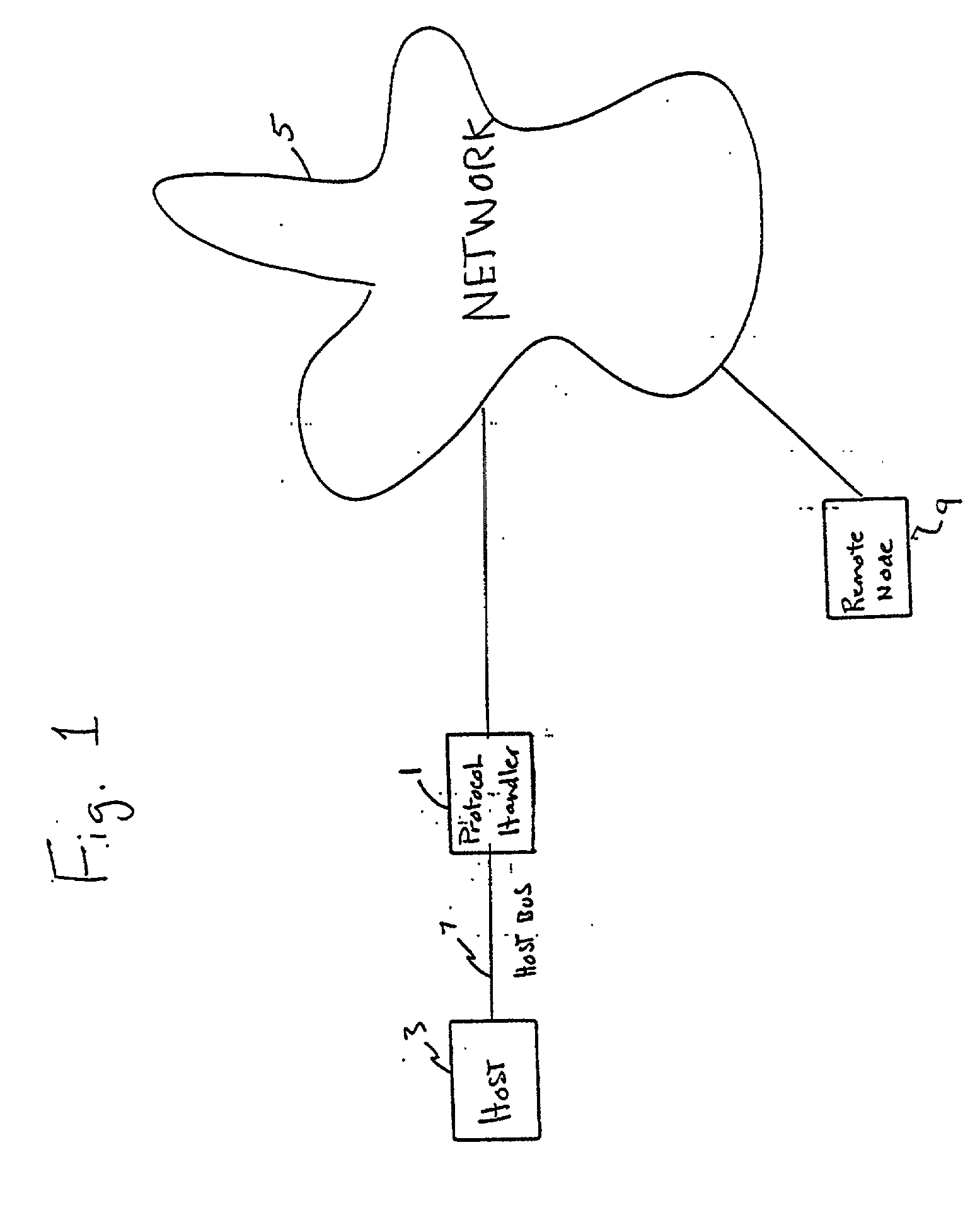

Mobile nodal based communication system, method and apparatus

Active | US20090225751A1 | Consumes power | Sufficiently fast for effective routing | Error prevention | Transmission systems | Communications system | Software-defined radio

An improved micro architectural approach for a network microprocessor has low power consumption, and employs two specialized processing cores, a MAC processing core and a network processor core. Each of these processing cores has facilities designed for a specific set of functions, to handle ISO layer 2 and layer 3 functionality in a packet switched Software Defined Radio mobile network.

Owner:ROCKWELL COLLINS INC

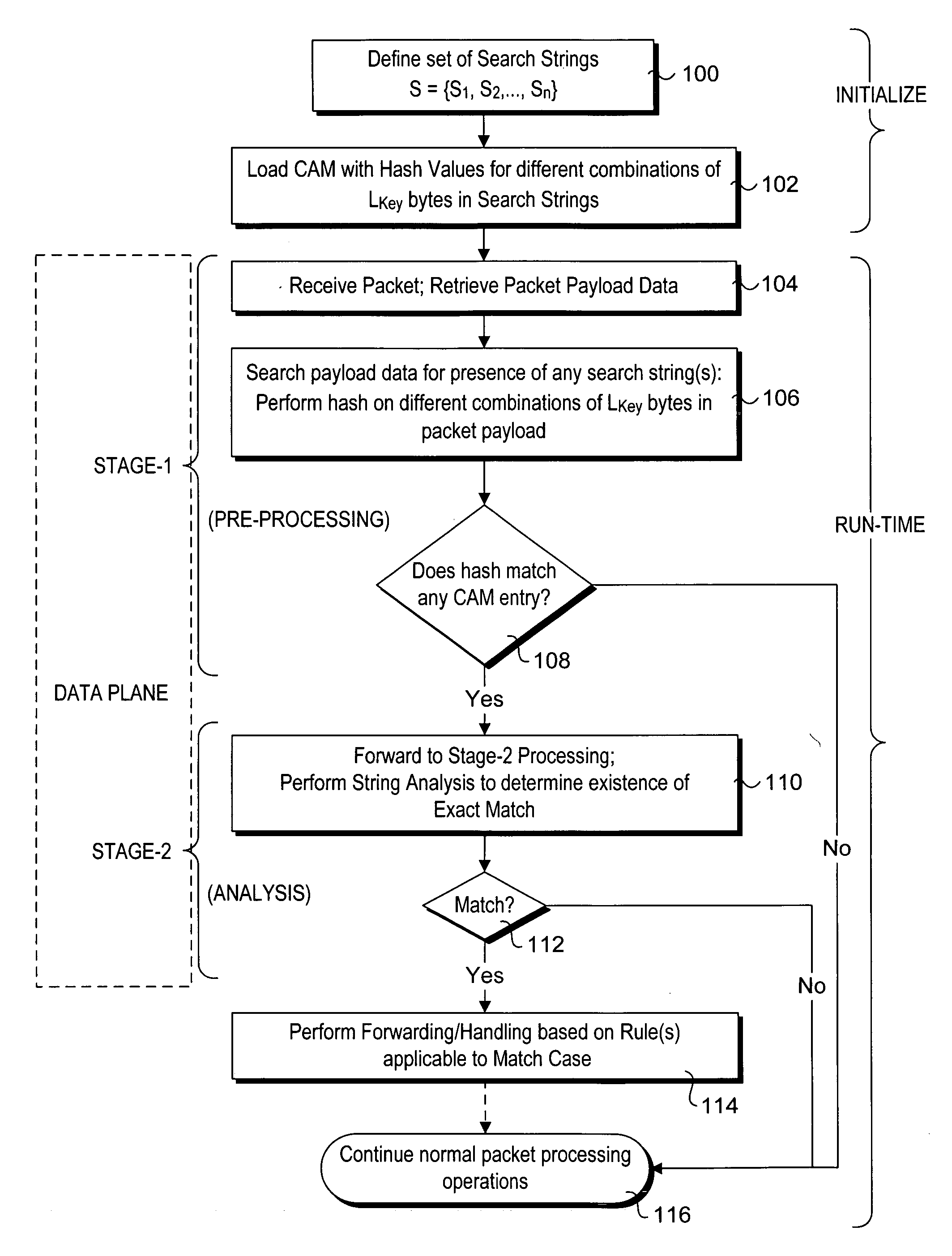

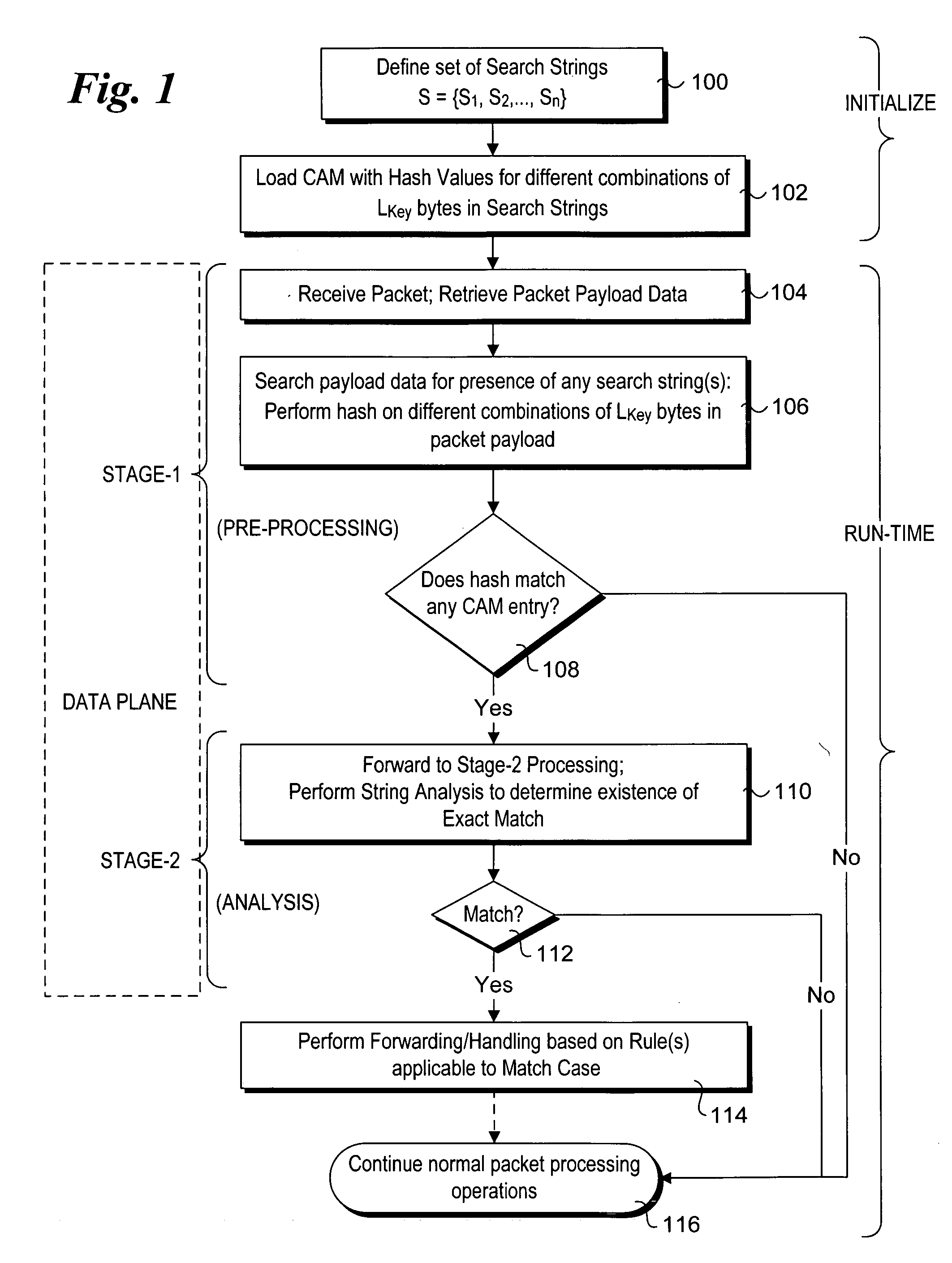

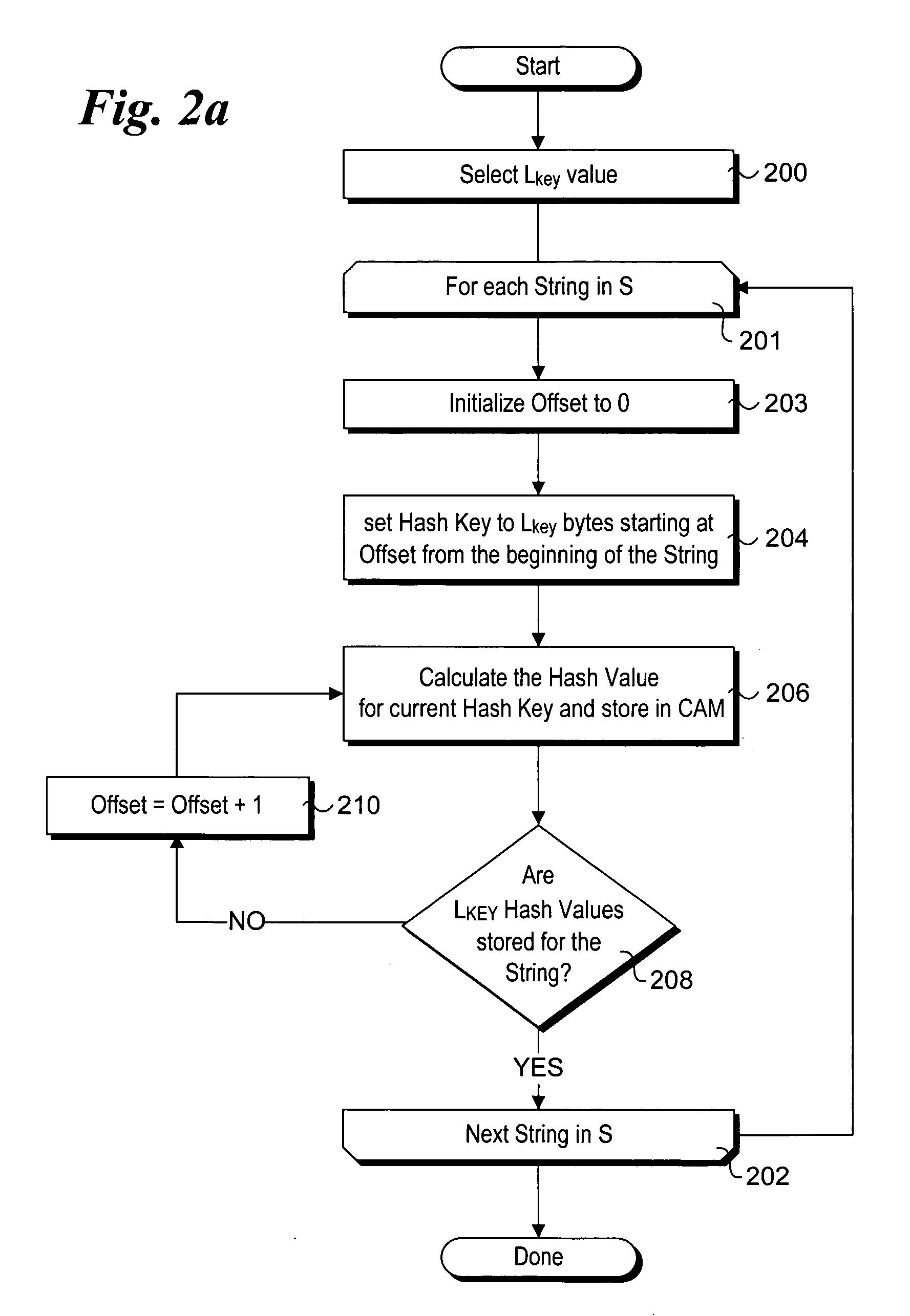

Method to perform exact string match in the data plane of a network processor

Inactive | US20070115986A1 | Digital computer details | Data switching by path configuration | Data pack | Line rate

Methods for performing exact search string matches in the data plane of a network processor. The methods employ a two-stage string search mechanism to identify the existence of a search string from a set S in a packet payload. A first pre-processing stage identifies a potential search string match and a second analysis stage determines whether the first stage match corresponds to an exact string match. The first stage is implemented using hash values derived from at least one of search strings in set S or sub-strings of those search strings. In one embodiment, a plurality of Bloom filters are used to perform the first pre-processing stage, while in other embodiments various CAM-based techniques are used. Various TCAM-based schemes are disclosed for performing the second analysis stage. The methods enable packet payloads to be searched for search strings at line-rate speeds.

Owner:INTEL CORP
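
The two-stage search in the abstract above can be sketched in software with a Bloom filter as the first stage. The following is an illustrative approximation only: the class `BloomFilter` and function `find_matches` are hypothetical names, one filter per search-string length is an assumption of this sketch, and a Python `set` stands in for the TCAM-based second stage.

```python
import hashlib


class BloomFilter:
    def __init__(self, size_bits=1024, num_hashes=3):
        self.size = size_bits
        self.k = num_hashes
        self.bits = bytearray(size_bits)     # one byte per bit, for clarity

    def _positions(self, item: bytes):
        for i in range(self.k):
            digest = hashlib.sha256(bytes([i]) + item).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item: bytes):
        for pos in self._positions(item):
            self.bits[pos] = 1

    def might_contain(self, item: bytes) -> bool:
        return all(self.bits[pos] for pos in self._positions(item))


def find_matches(payload: bytes, search_strings):
    """Return sorted (offset, string) pairs for exact matches in the payload."""
    by_length = {}                            # length -> (Bloom filter, exact set)
    for s in search_strings:
        bloom, exact = by_length.setdefault(len(s), (BloomFilter(), set()))
        bloom.add(s)
        exact.add(s)
    hits = []
    for length, (bloom, exact) in by_length.items():
        for offset in range(len(payload) - length + 1):
            window = payload[offset:offset + length]
            # Stage 1: cheap probabilistic filter (may give false positives).
            # Stage 2: exact check, reached only for potential matches.
            if bloom.might_contain(window) and window in exact:
                hits.append((offset, window))
    return sorted(hits)
```

Because a Bloom filter never yields false negatives, the exact check in stage 2 only has to weed out the occasional false positive, so most payload windows are rejected by the cheaper first stage.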

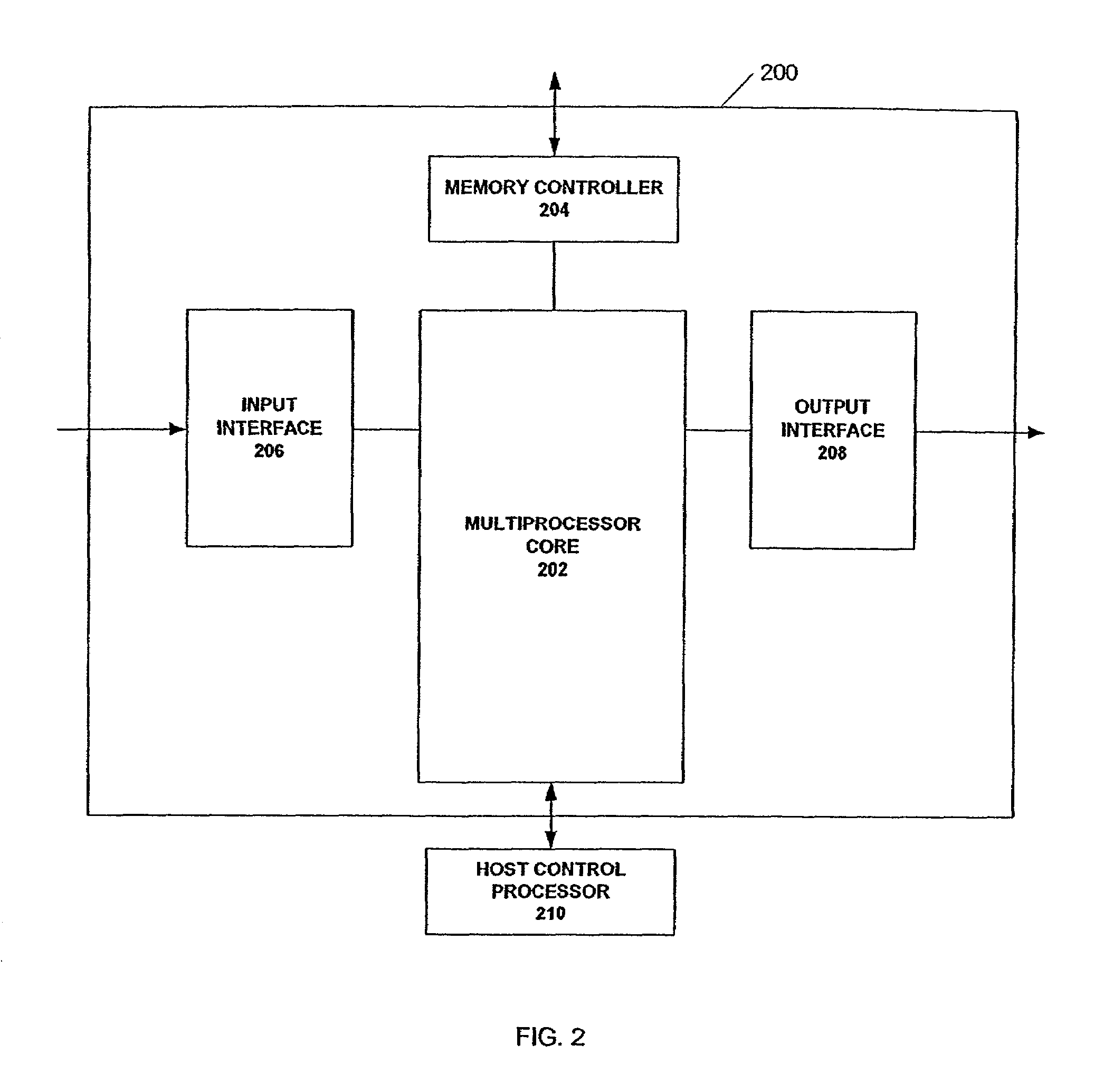

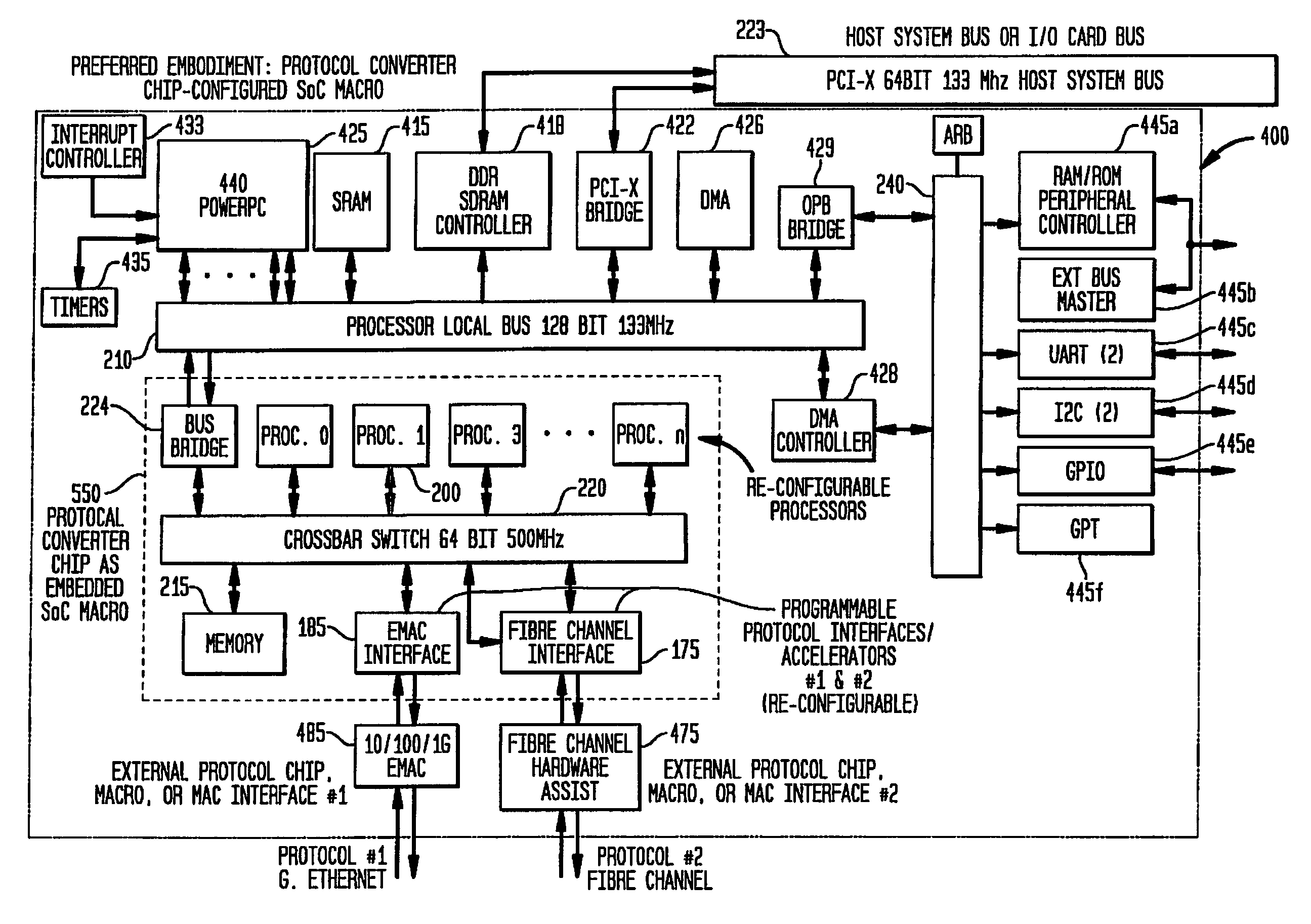

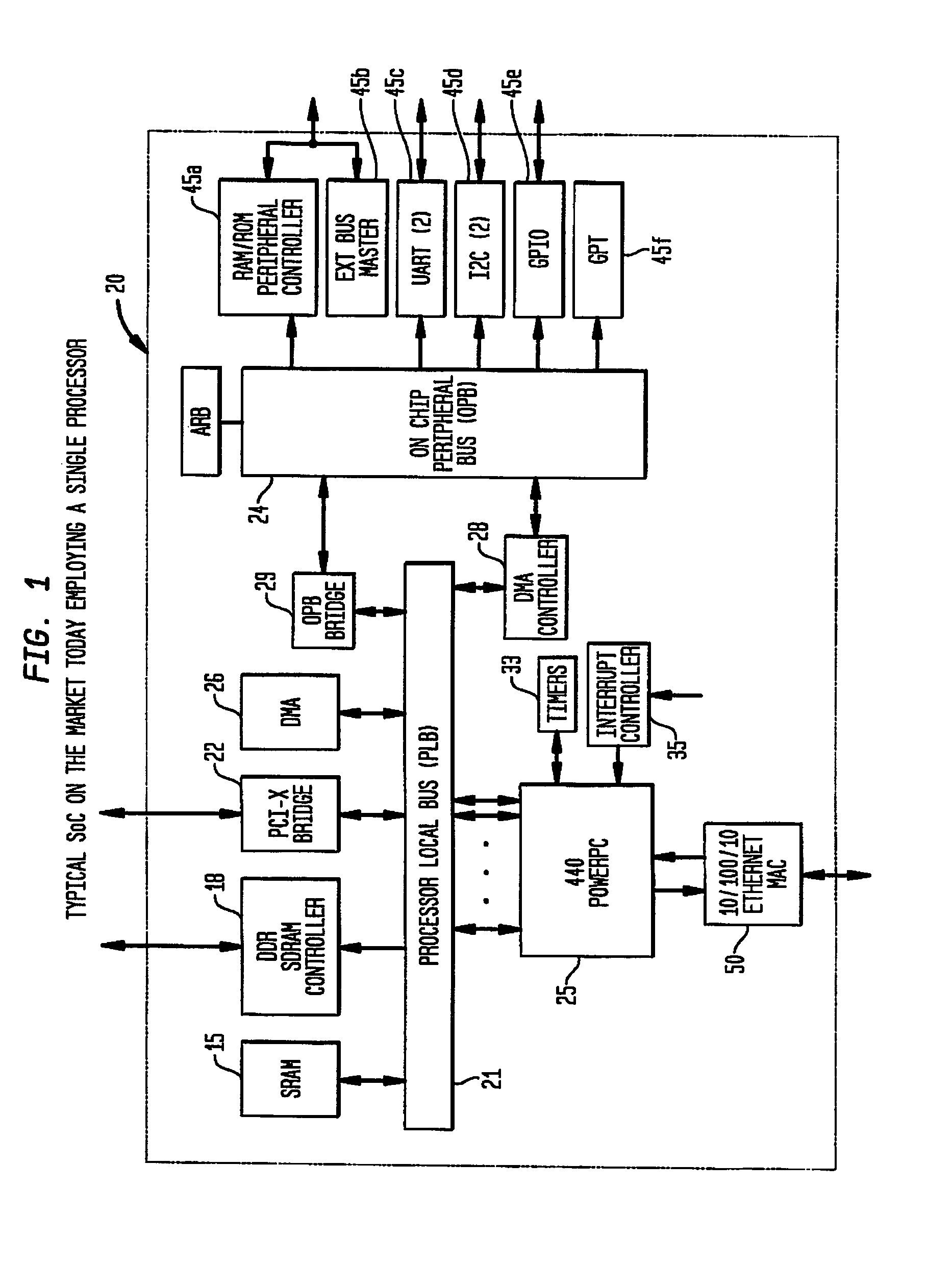

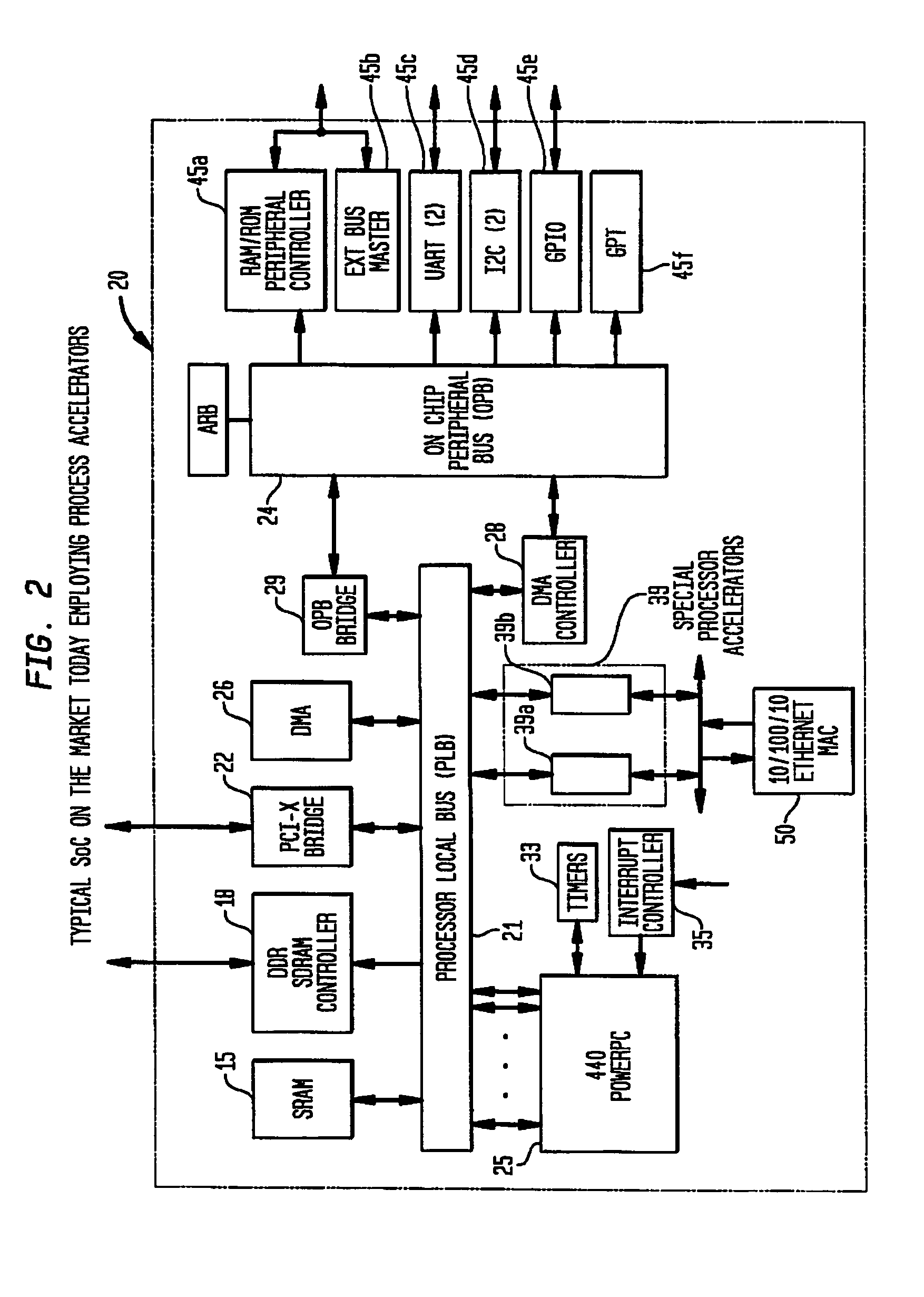

Network processor system on chip with bridge coupling protocol converting multiprocessor macro core local bus to peripheral interfaces coupled system bus

Active | US7412588B2 | Increase the number of | High bandwidth | Multiple digital computer combinations | Concurrent instruction execution | Multi processor | Coupling system

A network processor includes a system-on-chip (SoC) macro core and functions as a single chip protocol converter that receives packets generated according to a first protocol type and processes the packets to implement protocol conversion and generates converted packets of a second protocol type for output thereof, the process of protocol conversion being performed entirely within the SoC macro core. The process of protocol conversion contained within the SoC macro core does not require the processing resources of a host system. The system-on-chip macro core includes a bridge device for coupling a local bus in the protocol converting multiprocessor SoC macro core to peripheral interfaces coupled to a system bus.

Owner:MICROSOFT TECH LICENSING LLC

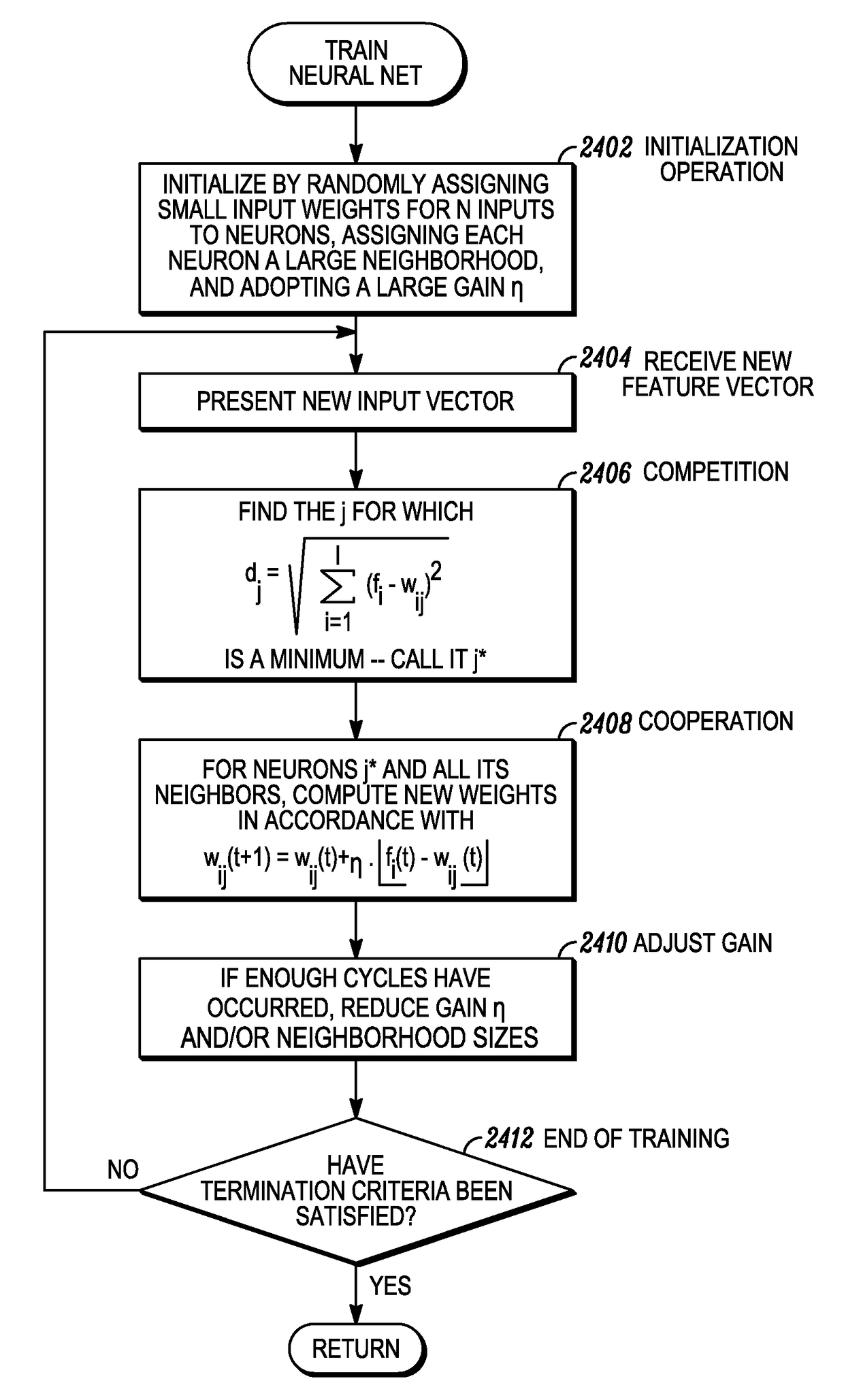

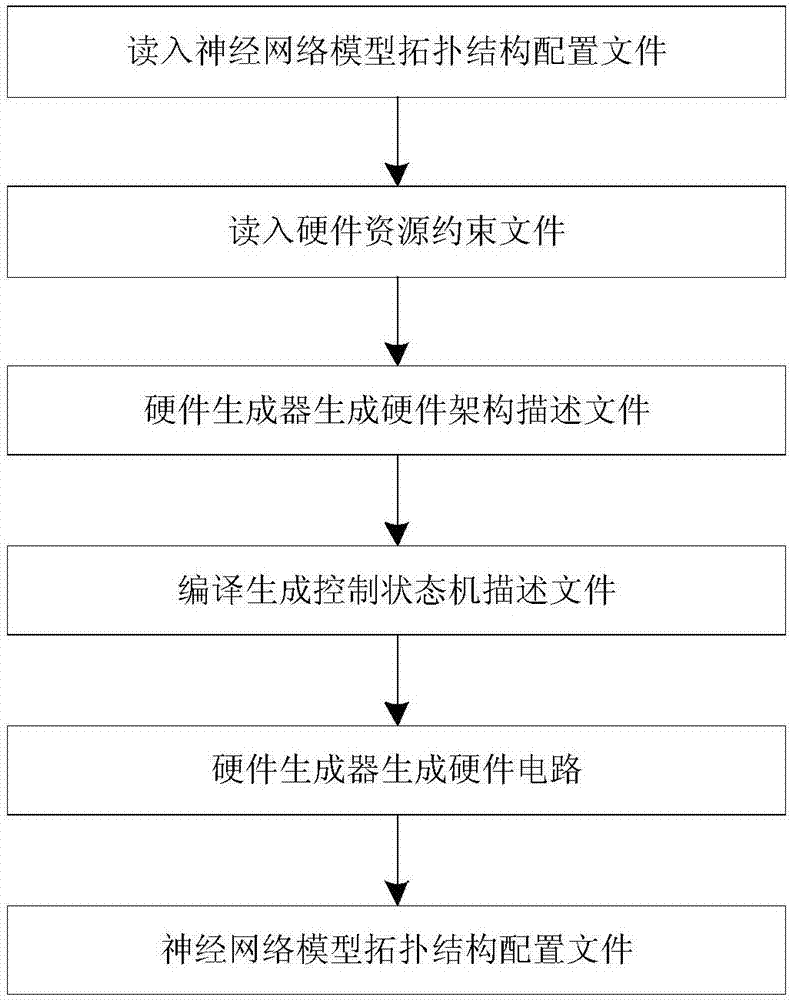

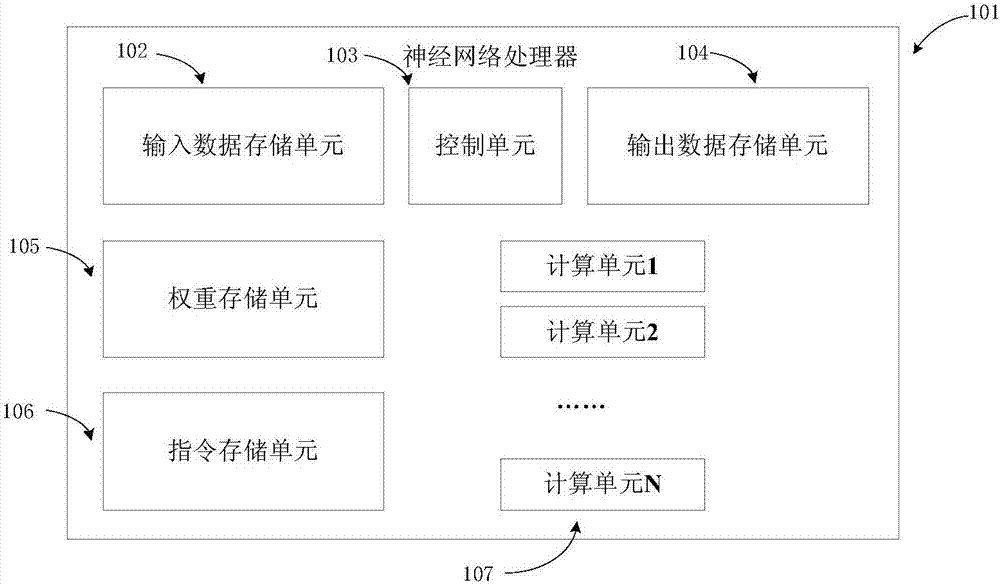

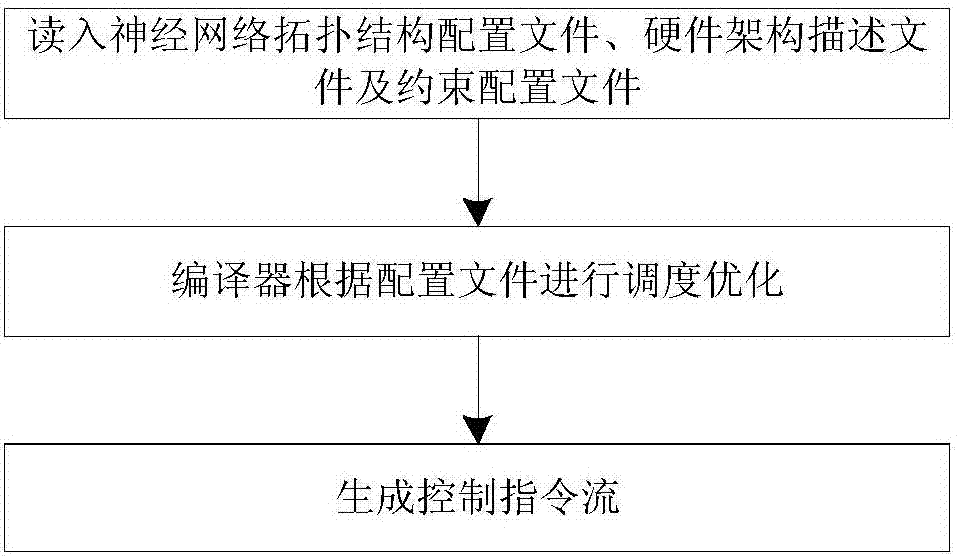

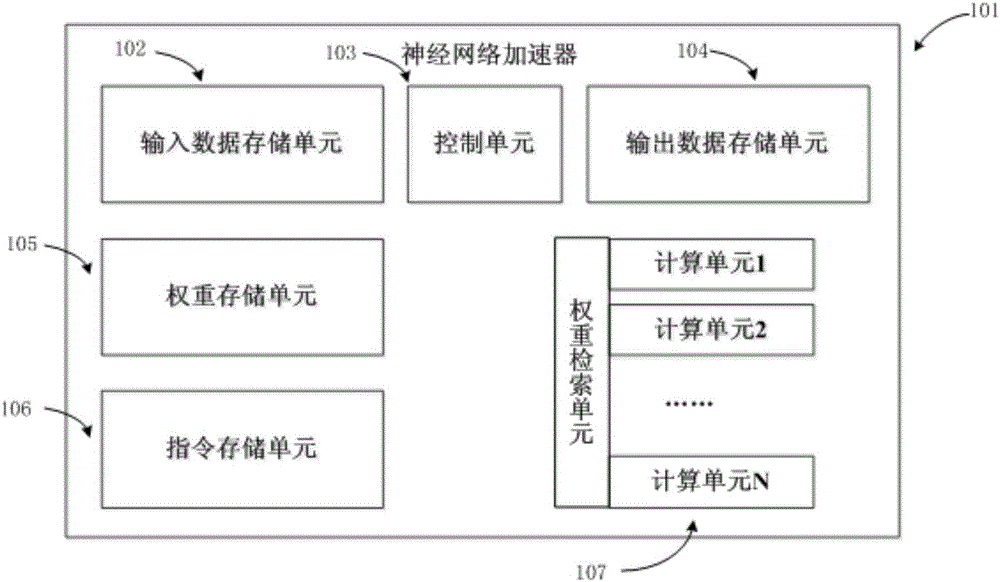

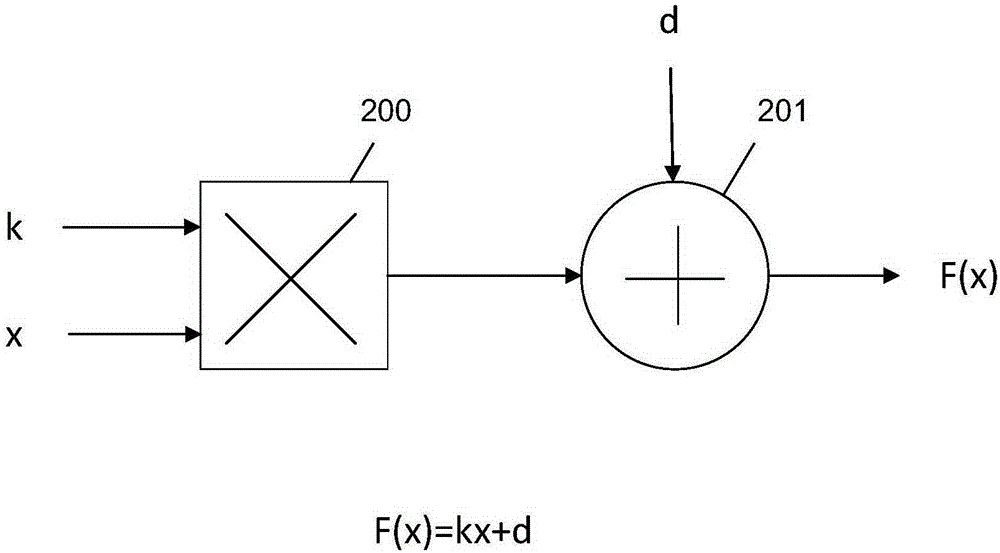

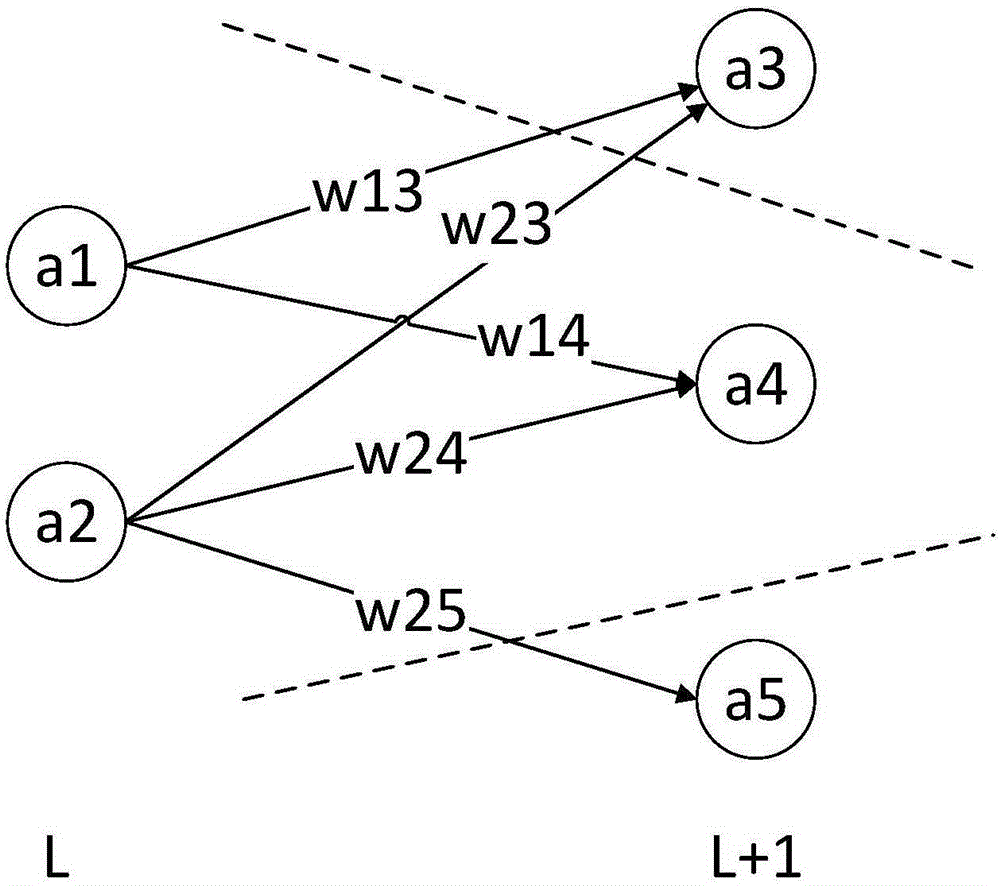

Automated design method, device and optimization method applied for neural network processor

Active | CN107016175A | Biological neural network models | CAD circuit design | Computer architecture | Hardware architecture

The invention discloses an automated design method, device and optimization method applied for a neural network processor. The method comprises the steps that neural network model topological structure configuration files and hardware resource constraint files are obtained, wherein the hardware resource constraint files comprise target circuit area consumption, target circuit power consumption and target circuit working frequency; a neural network processor hardware architecture is generated according to the neural network model topological structure configuration files and the hardware resource constraint files, and hardware architecture description files are generated; according to a neural network model topological structure, the hardware resource constraint files and the hardware architecture description files, modes of data scheduling, storage and calculation are optimized, and corresponding control description files are generated; according to the hardware architecture description files and the control description files, cell libraries that meet the design requirements are found in constructed reusable neural network cell libraries, corresponding control logic and a corresponding hardware circuit description language are generated, and the hardware circuit description language is transformed into a hardware circuit.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

Packet preprocessing interface for multiprocessor network handler

Inactive | US20030067930A1 | Efficient development | Evenly distributed | Data switching by path configuration | Store-and-forward switching systems | Traffic capacity | Hash function

A network handler uses a DMA device to assign packets to network processors in accordance with a mapping function which classifies each packet based on its content, e.g., bits in one or more header fields. Preferably, the mapping function is implemented as a hash function, which uses a predetermined number of bits from the packet as inputs. The result of this function specifies the processor to which the packet is assigned. To make implementation manageable in a high-traffic environment, each processor may be equipped with a queue, which holds pointer information. Such a pointer provides an indication of the area in memory where the incoming packet resides. The network handler is particularly useful in a Fibre Channel environment, where the hash function may be implemented to assign all packets from the same sequence to the same processor, thereby resulting in improved processing efficiency.

Owner:IBM CORP
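
The hash-based assignment described in the abstract above can be sketched in a few lines. This is a minimal illustration, assuming CRC-32 as the hash function and a sequence identifier as the hashed header bits; the names `select_processor` and `dispatch` are hypothetical, and integers stand in for the memory pointers that the per-processor queues would hold.

```python
import zlib
from collections import deque


def select_processor(header_bits: bytes, num_processors: int) -> int:
    """Map selected header bits to a processor index."""
    return zlib.crc32(header_bits) % num_processors


def dispatch(packets, num_processors: int):
    """packets: iterable of (sequence_id, buffer_address) pairs.

    Returns one queue per processor; each queue holds buffer addresses
    (stand-ins for pointers to where the packet resides in memory).
    """
    queues = [deque() for _ in range(num_processors)]
    for sequence_id, buffer_address in packets:
        queues[select_processor(sequence_id, num_processors)].append(buffer_address)
    return queues
```

Because the processor index depends only on the hashed bits, every packet of a given sequence lands in the same queue and keeps its arrival order there.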

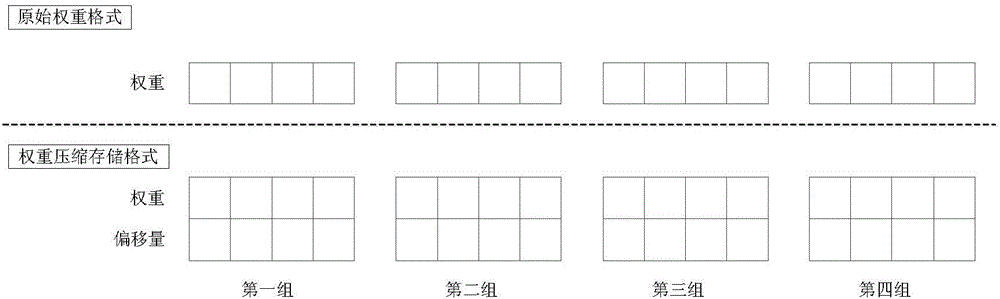

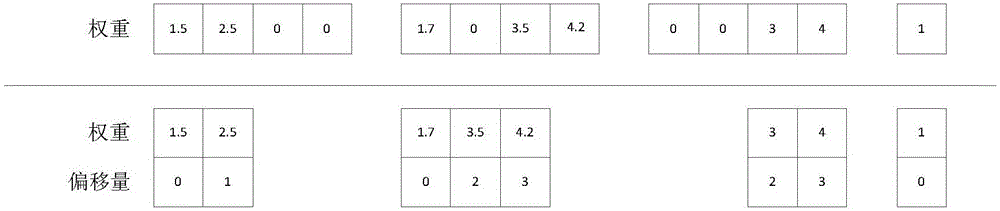

Neural network processor based on weight compression, design method, and chip

Active | CN106529670A | Reduce occupancy | Fast operation | Physical realisation | Image compression | Network processor

Owner:中科时代(深圳)计算机系统有限公司

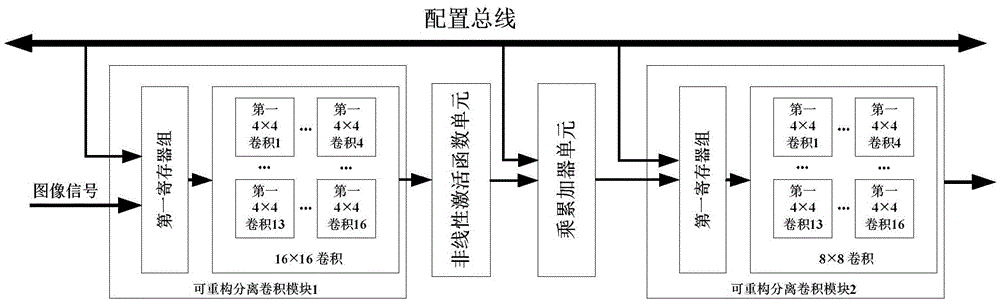

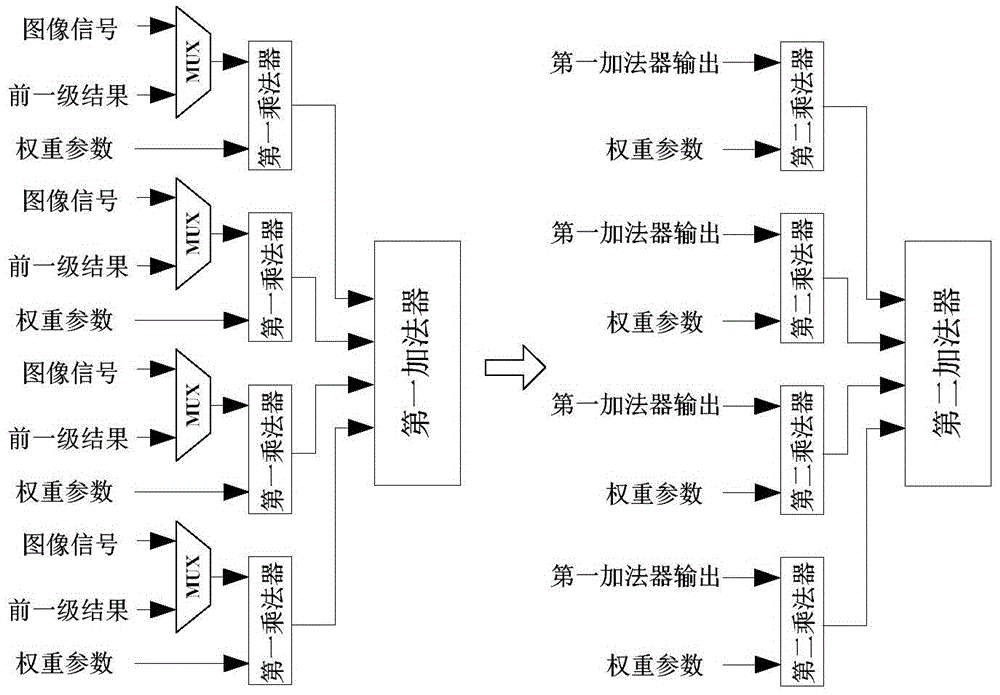

Convolution network arithmetic unit, reconfigurable convolution neural network processor and image de-noising method of reconfigurable convolution neural network processor

Active | CN105681628A | Reduce consumption | Reduce the number of convolutions | Television system details | Color signal processing circuits | Computer architecture | Resource consumption

The invention discloses a convolution network arithmetic unit, a reconfigurable convolution neural network processor and an image de-noising method of the reconfigurable convolution neural network processor. The reconfigurable convolution neural network processor comprises a bus interface, a preprocessing unit, a reconfigurable hardware controller, an SRAM, an SRAM control module, an input caching module, an output caching module, a memory, a data memory controller and the convolution network arithmetic unit. The processor is featured by few resources and rapid speed and can be applicable to common convolution neural network architecture. According to the unit, the processor and the method provided by the invention, convolution neural networks can be realized; the processing speed is rapid; transplanting is liable to be carried out; the resource consumption is little; an image or a video polluted by raindrops and dusts can be recovered; and raindrop and dust removing operations can be taken as preprocessing operations for providing help in follow-up image identification or classification.

Owner:XI AN JIAOTONG UNIV

Data structures for efficient processing of IP fragmentation and reassembly

Inactive | US6937606B2 | Requires minimization | Reduce memory requirements | Time-division multiplex | Data switching by path configuration | Array data structure | IP fragmentation

Data structures, a method, and an associated transmission system for IP fragmentation and IP reassembly on network processors in order to minimize memory allocation requirements. Frame data for IP fragmentation or reassembly on a network processor is read into buffers to which are associated various control structures. The control structures permit IP fragmentation or reassembly to be accomplished without creating multiple copies of the frame or fragments.

Owner:IBM CORP
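
The goal stated in the abstract above, fragmenting without creating copies of the frame data, can be approximated in Python with `memoryview` slices that reference the original buffer. This is a sketch of the general idea only, not the patent's buffer and control structures: `fragment` and `reassemble` are hypothetical names, and each fragment descriptor is simply an `(offset_in_8_byte_units, more_fragments, data_view)` tuple. IP fragment offsets are expressed in 8-byte units, which is why the step size is rounded down to a multiple of 8.

```python
def fragment(payload: bytes, max_fragment_payload: int):
    """Describe fragments as views into `payload` without copying its bytes."""
    if max_fragment_payload < 8:
        raise ValueError("fragment payload must hold at least 8 bytes")
    step = (max_fragment_payload // 8) * 8   # non-final fragments are multiples of 8 bytes
    view = memoryview(payload)
    fragments = []
    for offset in range(0, len(payload), step):
        more_fragments = offset + step < len(payload)
        fragments.append((offset // 8, more_fragments, view[offset:offset + step]))
    return fragments


def reassemble(fragments) -> bytes:
    """Rebuild the payload from fragments that may arrive out of order."""
    ordered = sorted(fragments, key=lambda f: f[0])
    # The join below does copy; it is here only to check the descriptors.
    return b"".join(bytes(chunk) for _, _, chunk in ordered)
```

Fragmenting a 100-byte payload with a 30-byte limit, for example, yields five descriptors with offsets 0, 3, 6, 9, and 12, all pointing into the same underlying buffer.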

Method and system for network processor scheduling based on service levels

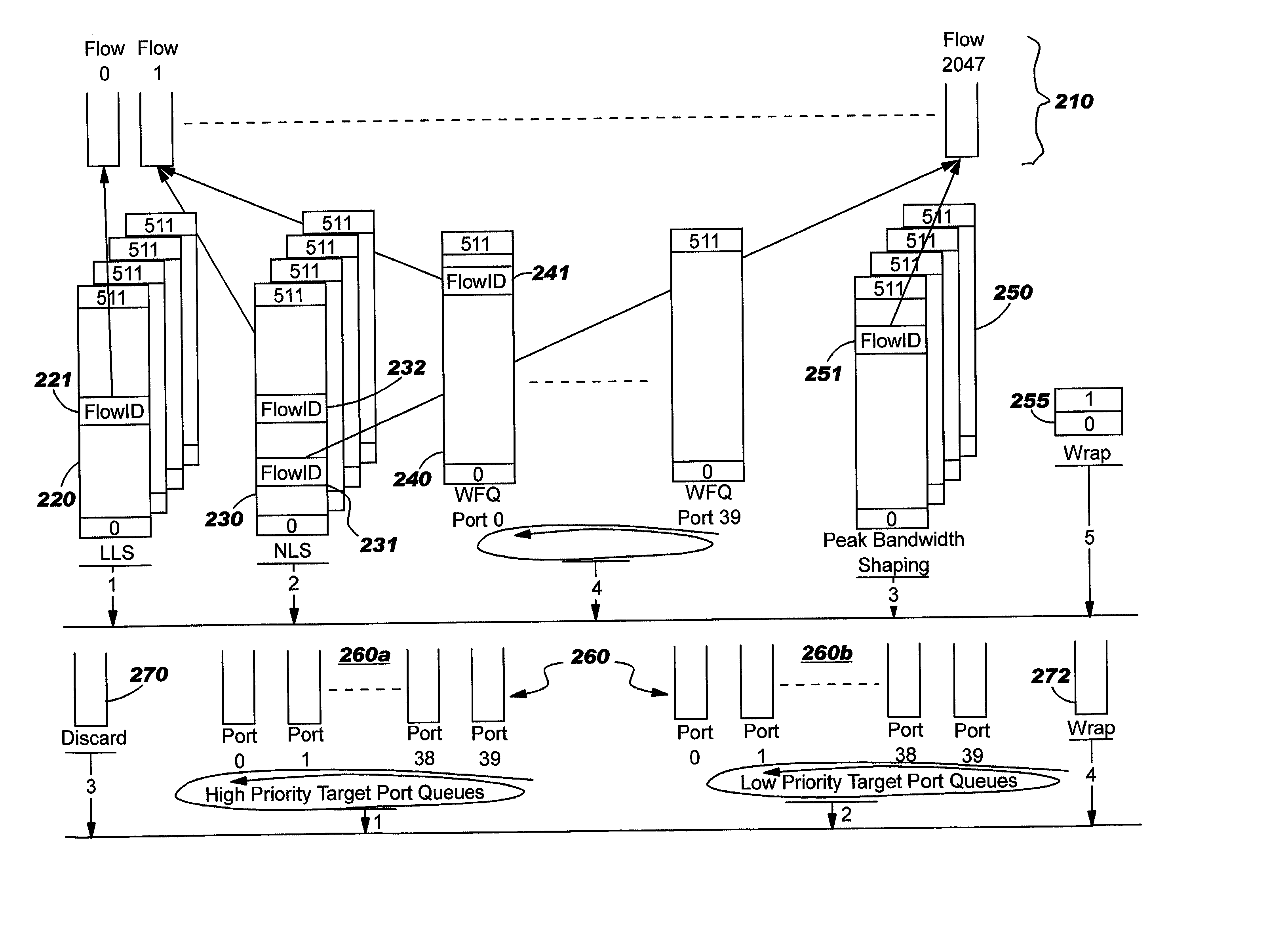

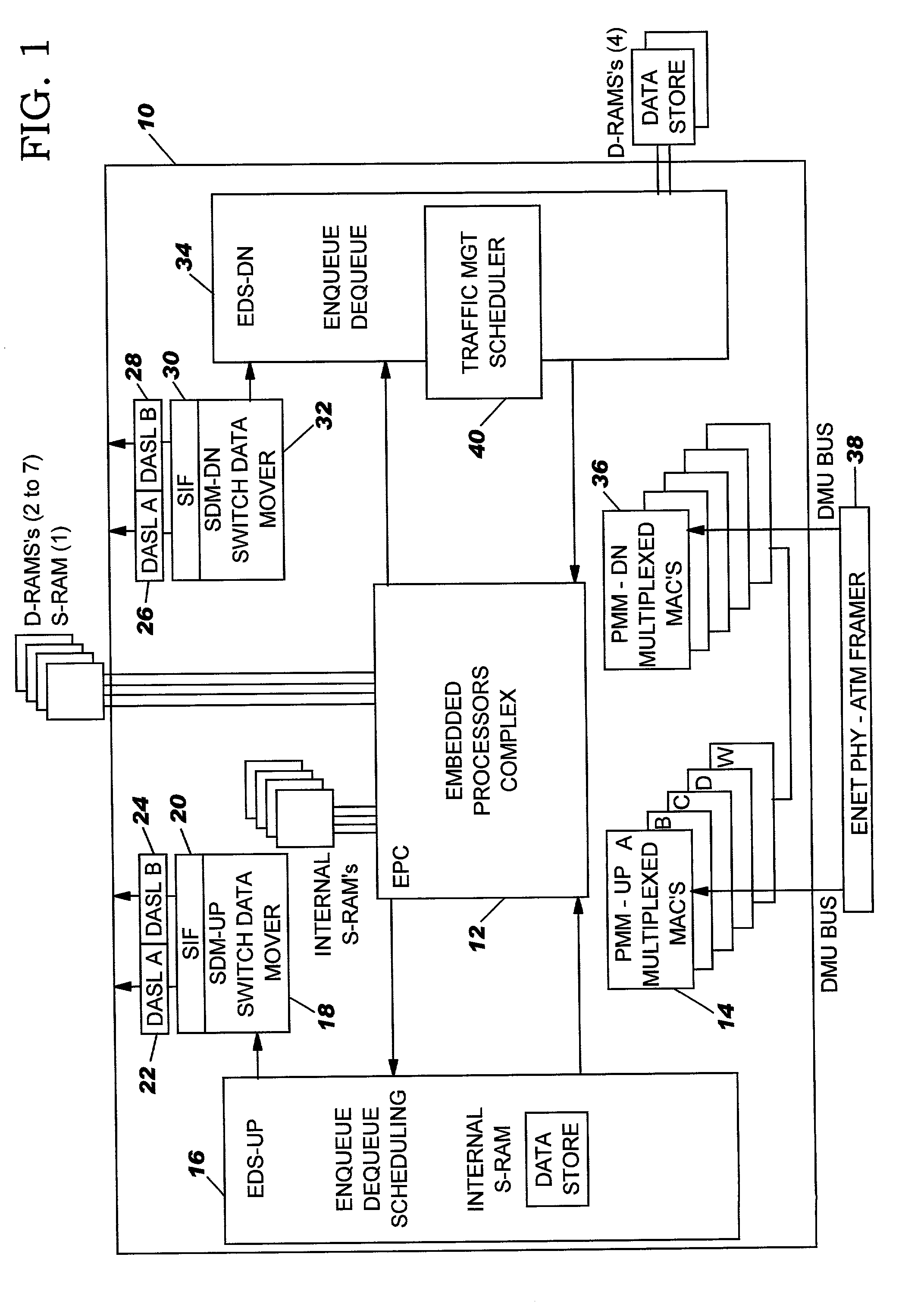

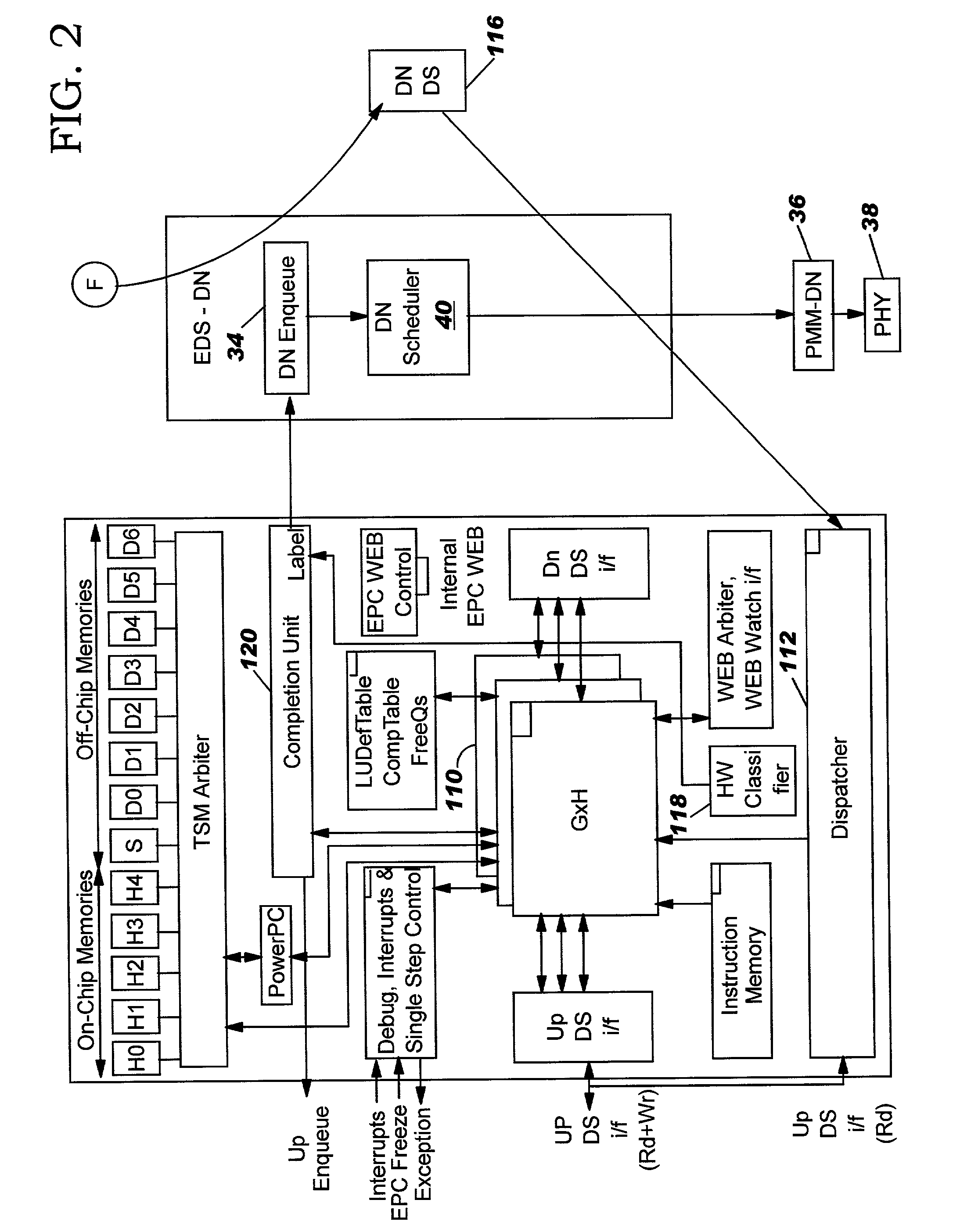

A system and method of moving information units from an output flow control toward a data transmission network in a prioritized sequence which accommodates several different levels of service. The present invention includes a method and system for scheduling the egress of processed information units (or frames) from a network processing unit according to service based on a weighted fair queue, where position in the queue is adjusted after each service based on a weight factor and the length of the frame. A process which provides for interaction between different calendar types is used to provide minimum bandwidth, best effort bandwidth, weighted fair queuing service, best effort peak bandwidth, and maximum burst size specifications. The present invention permits different combinations of service that can be used to create different QoS specifications. The "base" services which are offered to a customer in the example described in this patent application are minimum bandwidth, best effort, peak and maximum burst size (or MBS), which may be combined as desired. For example, a user could specify minimum bandwidth plus best effort additional bandwidth and the system would provide this capability by putting the flow queue in both the NLS and WFQ calendars. The system includes tests when a flow queue is in multiple calendars to determine when it must come out.

Owner:IBM CORP
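
The weighted fair queue step in the abstract above (a flow's position is adjusted after each service by a weight factor and the frame length) can be sketched as follows. This is an illustration under stated assumptions, not the patented scheduler: `WfqCalendar` is a hypothetical name, the calendar is modeled as a heap, and the position is assumed to advance by `frame_length / weight`, so that a larger weight means a larger bandwidth share. The minimum bandwidth, peak, and burst size calendars are not modeled.

```python
import heapq
from collections import deque


class WfqCalendar:
    """Toy weighted fair queue: one calendar position per backlogged flow."""

    def __init__(self):
        self._heap = []    # entries are (position, tie_breaker, flow_id)
        self._flows = {}   # flow_id -> (weight, deque of pending frame lengths)
        self._seq = 0      # tie breaker keeps equal positions in arrival order
        self._now = 0.0    # position of the most recently served flow

    def add_flow(self, flow_id, weight):
        self._flows[flow_id] = (weight, deque())

    def enqueue(self, flow_id, frame_length):
        _, frames = self._flows[flow_id]
        was_idle = not frames
        frames.append(frame_length)
        if was_idle:
            # A flow that becomes backlogged joins at the current position.
            self._push(self._now, flow_id)

    def _push(self, position, flow_id):
        heapq.heappush(self._heap, (position, self._seq, flow_id))
        self._seq += 1

    def dequeue(self):
        """Serve the flow with the smallest position, then move it back."""
        if not self._heap:
            return None
        position, _, flow_id = heapq.heappop(self._heap)
        self._now = position
        weight, frames = self._flows[flow_id]
        frame_length = frames.popleft()
        if frames:
            # Assumed adjustment rule: longer frames and smaller weights
            # push the flow further back in the calendar.
            self._push(position + frame_length / weight, flow_id)
        return flow_id, frame_length


calendar = WfqCalendar()
calendar.add_flow("a", weight=2)
calendar.add_flow("b", weight=1)
for _ in range(4):
    calendar.enqueue("a", 100)
for _ in range(4):
    calendar.enqueue("b", 100)
served = [calendar.dequeue()[0] for _ in range(8)]
```

With equal frame lengths, flow `a` (weight 2) is served about twice as often as flow `b` (weight 1) while both are backlogged, which is the proportional sharing the weight factor is meant to express.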

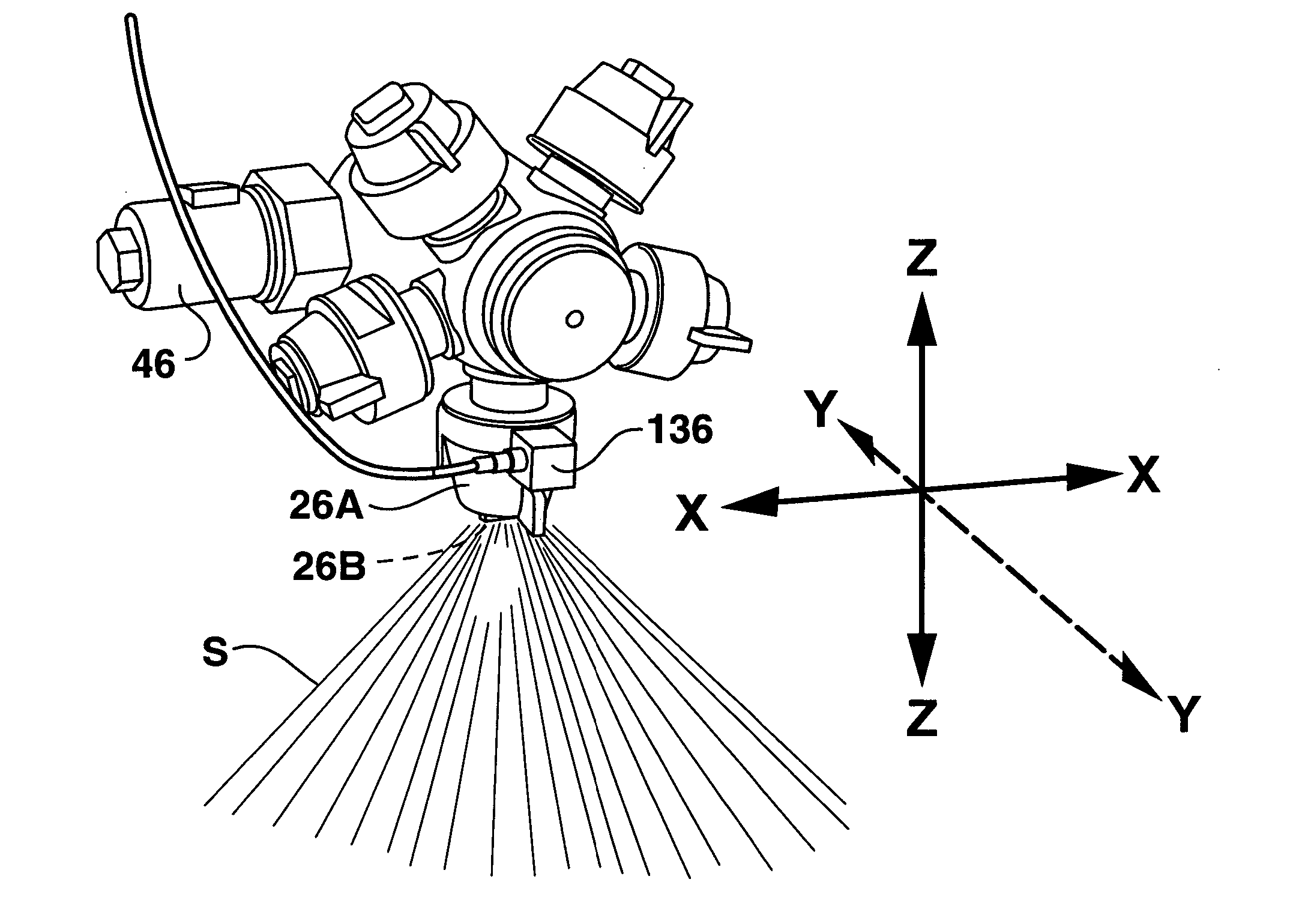

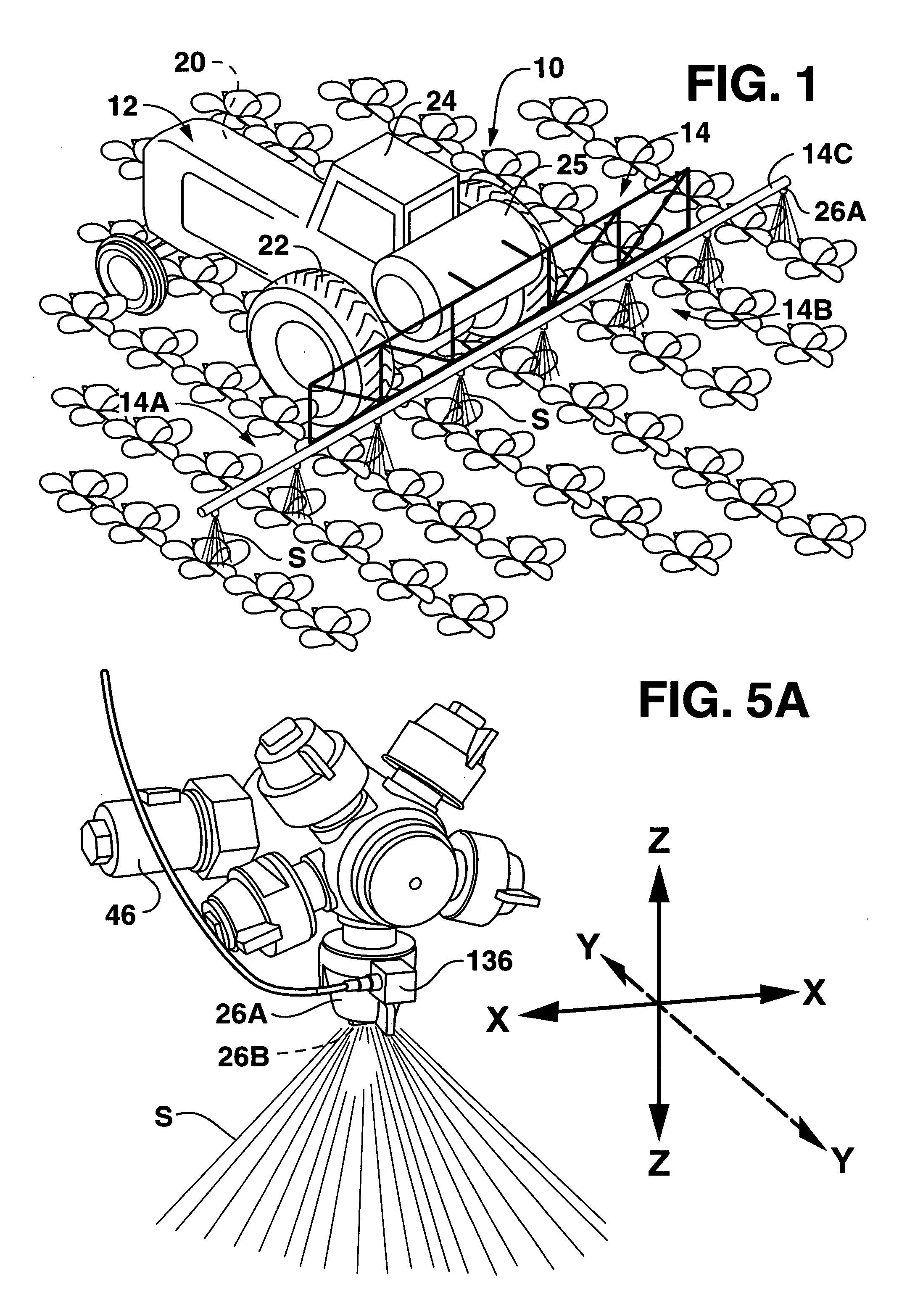

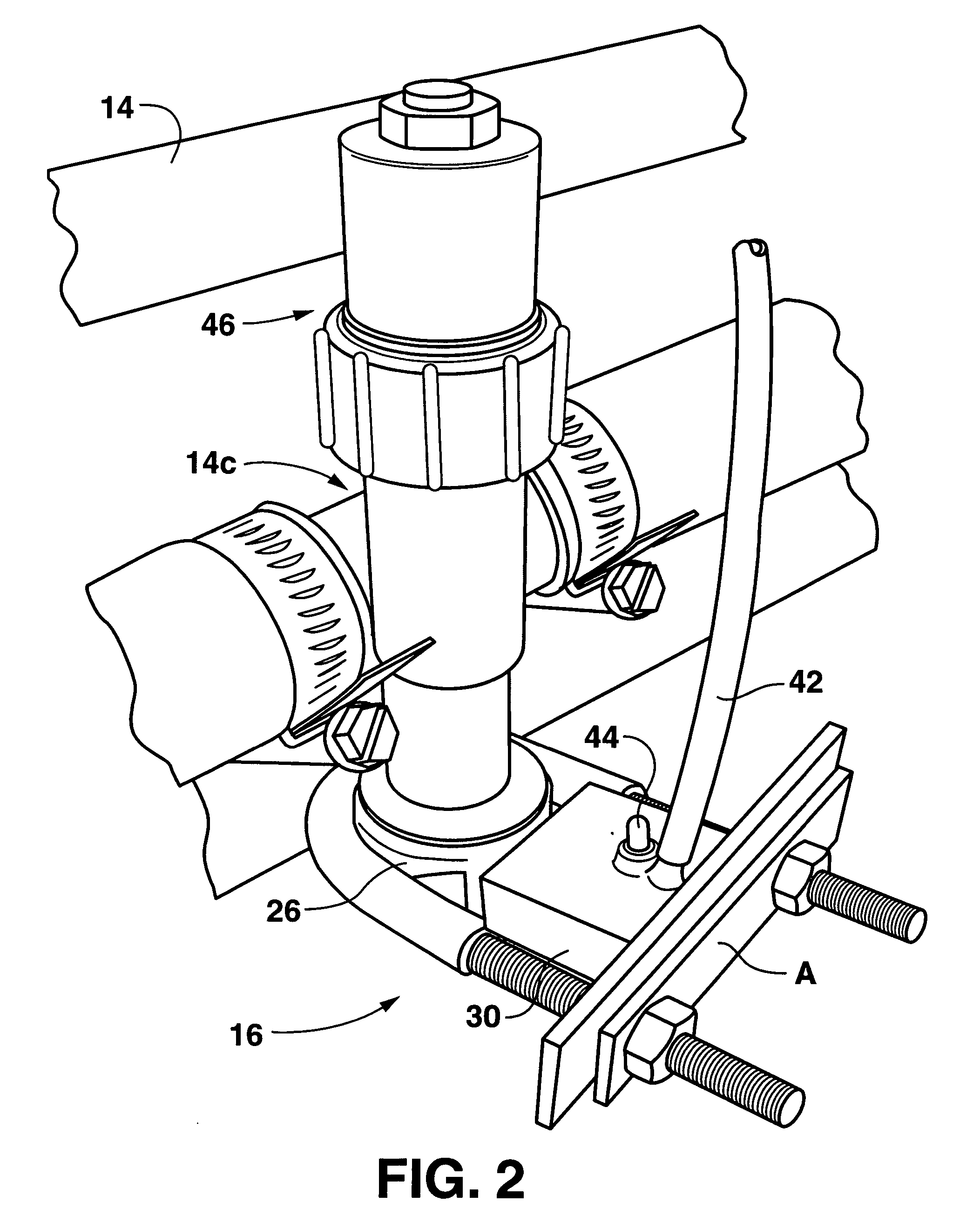

Networked diagnostic and control system for dispensing apparatus

Active | US20060265106A1 | Simple and economical to manufacture and assemble and use | Functional valve types | Temperature control | Control system | Agricultural engineering

A networked delivery system and method for controlling operation of a spraying system. The system includes nozzles that emit an agrochemical according to a predetermined spray pattern and flow rate, and vibration sensors located adjacent to agricultural spray system components, such as spray nozzles, to sense the vibrations of those components. The networked delivery system also includes a control area network with a computer processor in communication with the vibration sensors. The processor conveys information to an operator regarding the agricultural spray system component based on the sensed vibrations. The processor also actuates the agricultural spray system components, such as the spray nozzles, to selectively control each nozzle or a designated group of nozzles.

Owner:CAPSTAN

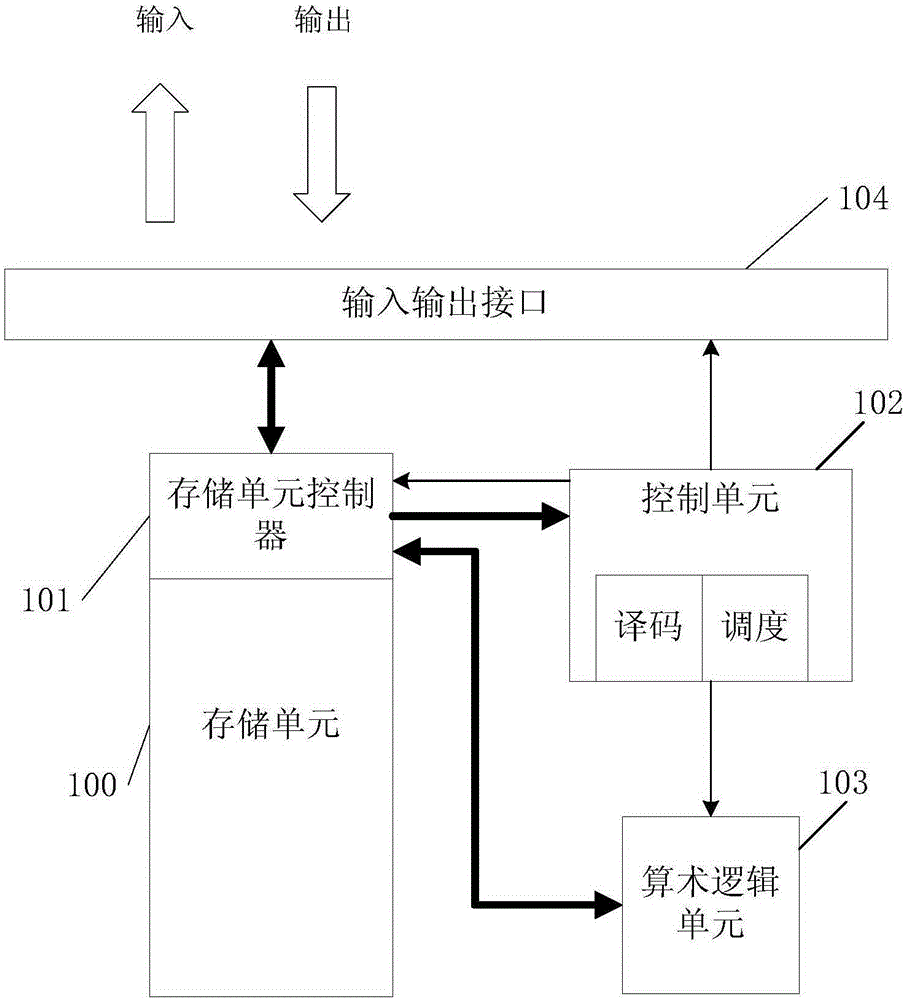

Time-division-multiplexing general neural network processor

Active | CN105184366A | Improve general performance | Solve overhead | Physical realisation | Arithmetic logic unit | Time-division multiplexing

The invention provides a time-division-multiplexing general neural network processor comprising at least one storage unit (100), at least one storage unit controller (101), at least one arithmetic logic unit (103), and a control unit (102). Specifically, the at least one storage unit (100) stores instructions and data. The at least one storage unit controller (101) corresponds to the at least one storage unit (100) and accesses the corresponding storage unit (100). The at least one arithmetic logic unit (103) executes neural network computation. The control unit (102), which is connected with the at least one storage unit controller (101) and the at least one arithmetic logic unit (103), can obtain the instructions stored in the at least one storage unit (100) through the at least one storage unit controller (101) and parse them to control the at least one arithmetic logic unit (103) to execute computation. The resulting general neural network processor is highly general and is suitable for computation of large-scale neural networks.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
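
The division of roles in the abstract above (storage unit, storage unit controller, arithmetic logic unit, control unit) can be sketched in software. This is a toy model under stated assumptions, not the patented hardware: the class names mirror the abstract, but the instruction format (`"MAC"` and `"RELU"` tuples) and the data keys are hypothetical. Time-division multiplexing is modeled simply by reusing one arithmetic logic unit for every neuron in turn.

```python
class StorageUnit:
    """Holds both the instruction list and the named data values."""

    def __init__(self, instructions, data):
        self.instructions = list(instructions)
        self.data = dict(data)


class StorageController:
    """The only component that touches its storage unit directly."""

    def __init__(self, unit):
        self._unit = unit

    def instruction_count(self):
        return len(self._unit.instructions)

    def fetch(self, pc):
        return self._unit.instructions[pc]

    def read(self, key):
        return self._unit.data[key]

    def write(self, key, value):
        self._unit.data[key] = value


class ArithmeticLogicUnit:
    """A single shared unit; it is reused for every neuron, one after another."""

    def mac(self, weights, inputs):
        return sum(w * x for w, x in zip(weights, inputs))

    def relu(self, value):
        return max(0.0, value)


class ControlUnit:
    """Fetches instructions through the controller, parses them, drives the ALU."""

    def __init__(self, controller, alu):
        self._controller = controller
        self._alu = alu

    def run(self):
        for pc in range(self._controller.instruction_count()):
            op, *args = self._controller.fetch(pc)
            if op == "MAC":      # hypothetical format: ("MAC", weights, inputs, out)
                w_key, x_key, out_key = args
                result = self._alu.mac(self._controller.read(w_key),
                                       self._controller.read(x_key))
            elif op == "RELU":   # hypothetical format: ("RELU", source, out)
                src_key, out_key = args
                result = self._alu.relu(self._controller.read(src_key))
            else:
                raise ValueError(f"unknown instruction {op!r}")
            self._controller.write(out_key, result)


program = [
    ("MAC", "w0", "x", "z0"), ("RELU", "z0", "y0"),
    ("MAC", "w1", "x", "z1"), ("RELU", "z1", "y1"),
]
storage = StorageUnit(program, {"x": [1.0, 2.0], "w0": [0.5, -1.0], "w1": [1.0, 1.0]})
controller = StorageController(storage)
ControlUnit(controller, ArithmeticLogicUnit()).run()
outputs = (controller.read("y0"), controller.read("y1"))
```

Because the network is expressed as instructions in storage rather than as fixed wiring, a larger layer only needs a longer program, which is one way to read the abstract's claim of suitability for large-scale networks.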

