Patented technology related to "Hardware architecture" (876 results)

In engineering, hardware architecture refers to the identification of a system's physical components and their interrelationships. This description, often called a hardware design model, allows hardware designers to understand how their components fit into a system architecture and provides software component designers with the information they need for software development and integration. A clear definition of a hardware architecture allows the traditional engineering disciplines (e.g., electrical and mechanical engineering) to work together more effectively to develop and manufacture new machines, devices and components.

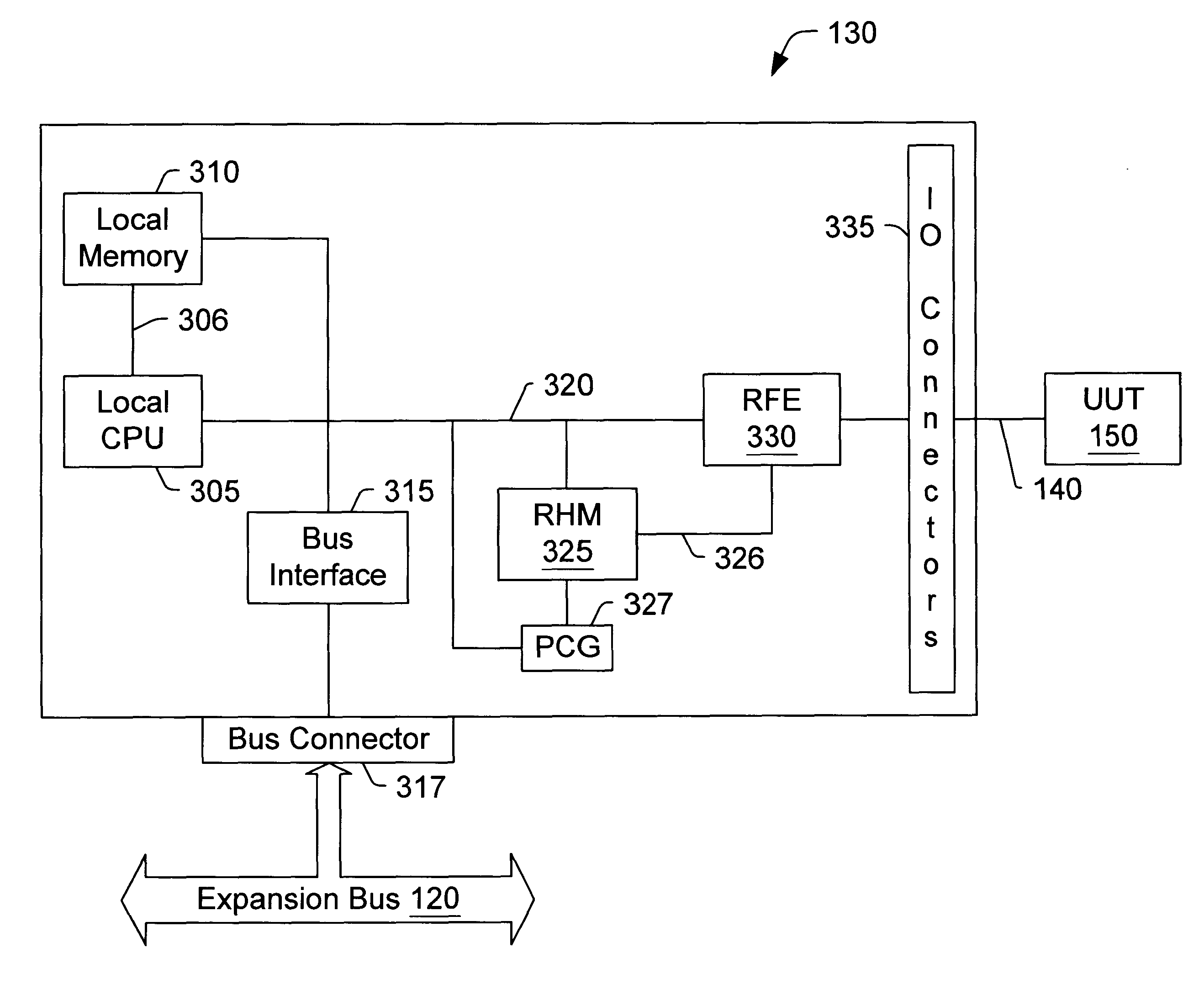

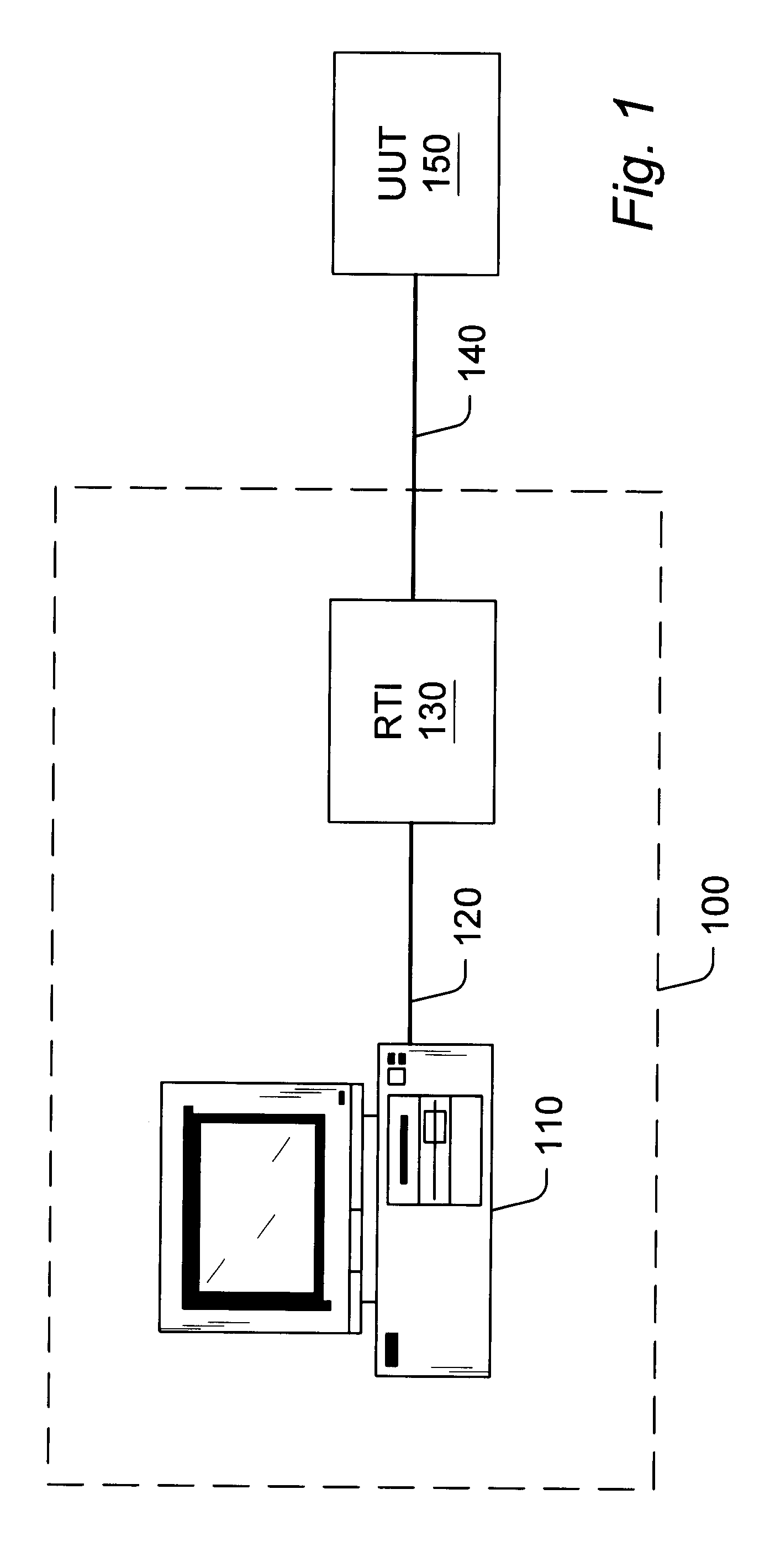

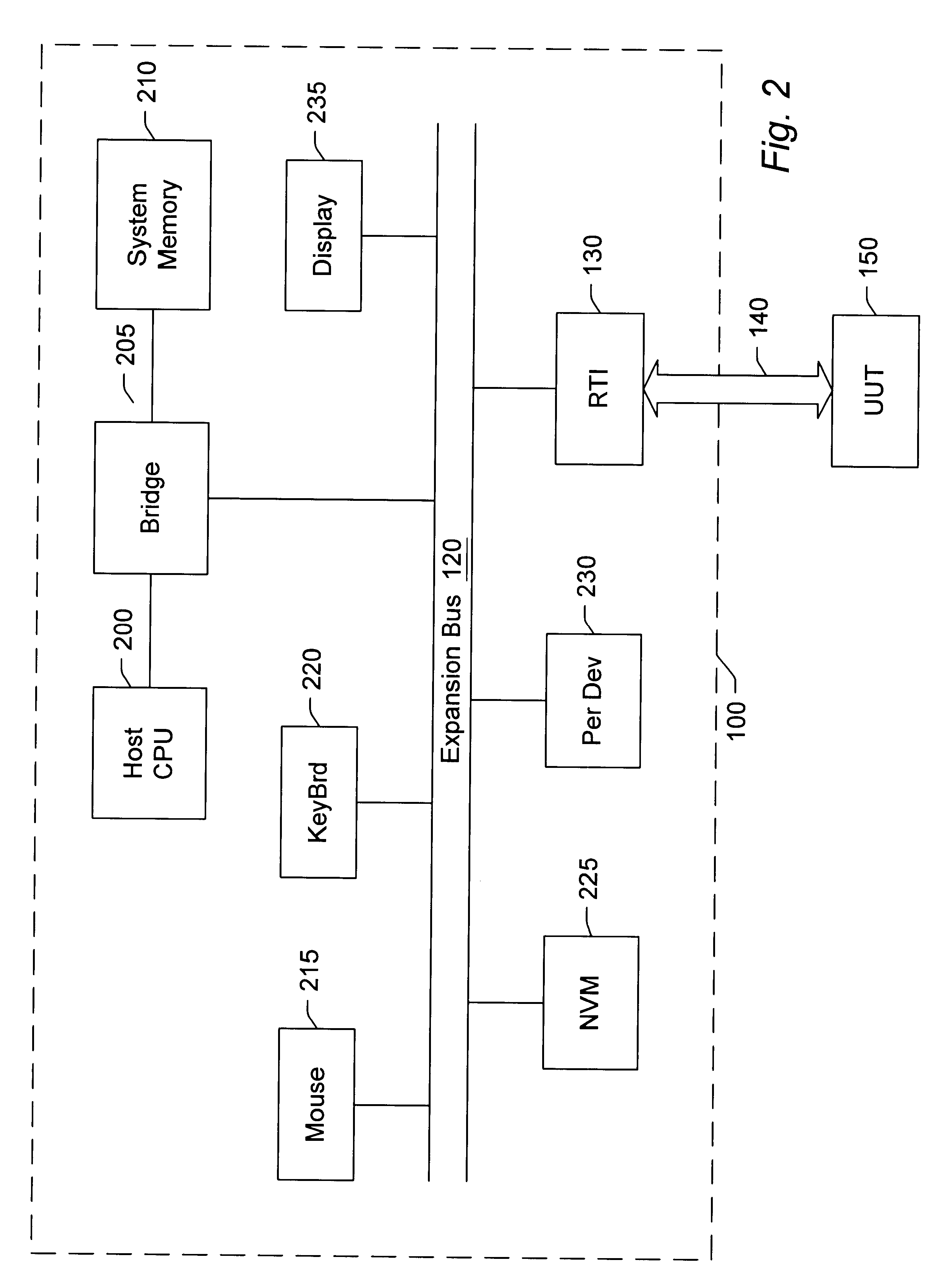

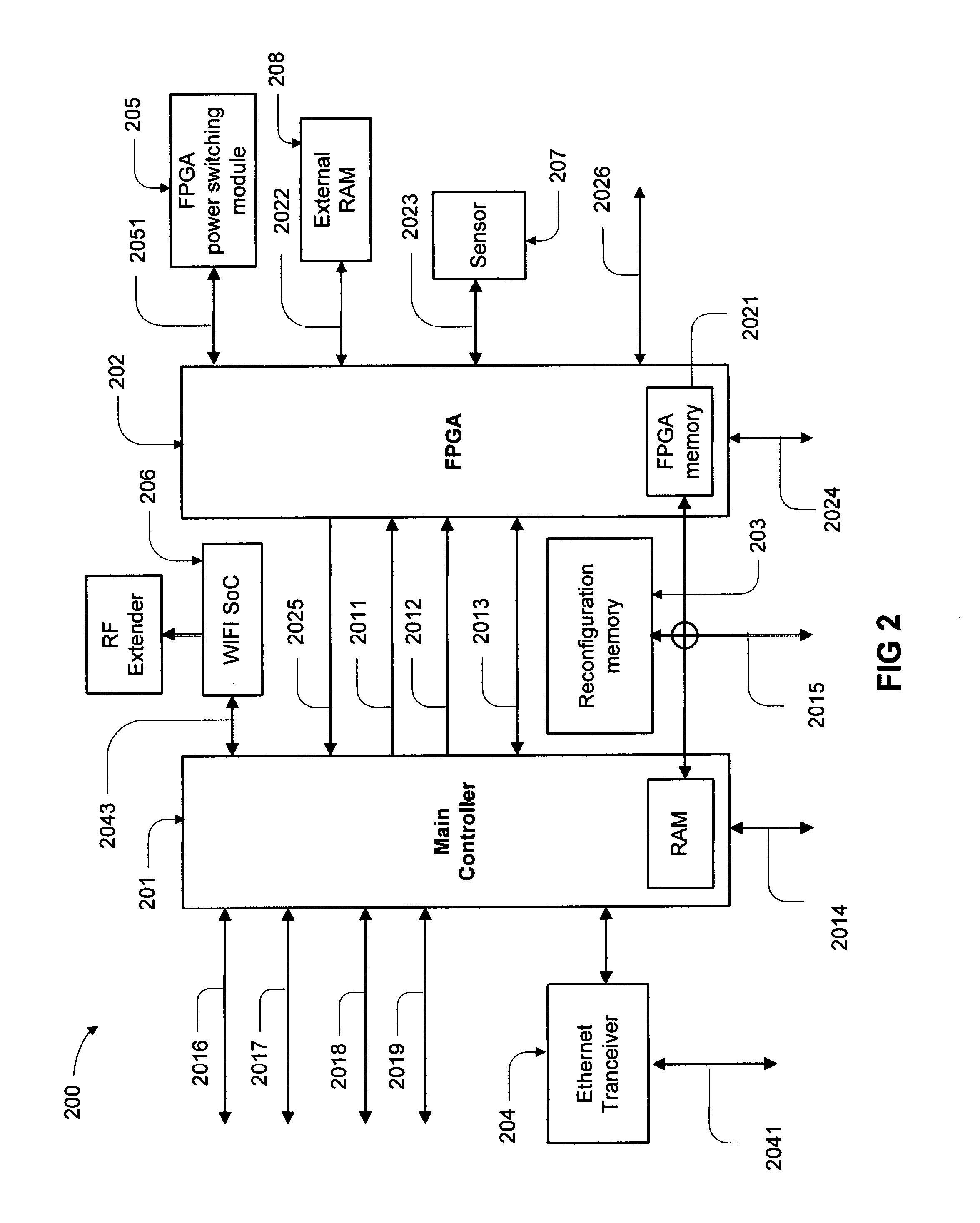

Reconfigurable test system

A reconfigurable test system includes a host computer coupled to a reconfigurable test instrument. The reconfigurable test instrument includes reconfigurable hardware (a reconfigurable hardware module with one or more programmable elements, such as Field Programmable Gate Arrays, for realizing an arbitrary hardware architecture, plus a reconfigurable front end with programmable transceivers for interfacing with any desired physical medium) and, optionally, an embedded processor. A user specifies system features with a software configuration utility, which directs a component selector to select a set of software modules and hardware configuration files from a series of libraries. The modules are embedded in a host software driver or downloaded for execution on the embedded CPU. The configuration files are downloaded to the reconfigurable hardware. The entire selection process is performed in real time and can be changed whenever the user deems necessary. Alternatively, the user may create a graphical program in a graphical programming environment and compile it into software modules and configuration files for host execution, embedded processor execution, or programming the reconfigurable hardware.

Owner:NATIONAL INSTRUMENTS
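
The component-selection step can be sketched as a lookup from requested features into two libraries. This is a minimal Python illustration, not National Instruments' implementation: the feature names, module names, `.bit` file names and the rule that modules go to the embedded CPU whenever one exists are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical component libraries: feature name -> software module / FPGA configuration file.
SOFTWARE_LIBRARY = {"dmm": "dmm_driver", "scope": "scope_driver", "uart": "uart_driver"}
HARDWARE_LIBRARY = {"dmm": "dmm_frontend.bit", "scope": "scope_capture.bit", "uart": "uart_core.bit"}


@dataclass
class Selection:
    host_modules: list = field(default_factory=list)      # embedded in the host driver
    embedded_modules: list = field(default_factory=list)  # downloaded to the embedded CPU
    config_files: list = field(default_factory=list)      # downloaded to the reconfigurable hardware


def select_components(features, has_embedded_cpu=False):
    """Map user-requested features to software modules and hardware configuration files."""
    selection = Selection()
    for feature in features:
        if feature not in SOFTWARE_LIBRARY or feature not in HARDWARE_LIBRARY:
            raise KeyError(f"no library components for feature {feature!r}")
        # Assumption: modules go to the embedded CPU whenever one is present.
        modules = selection.embedded_modules if has_embedded_cpu else selection.host_modules
        modules.append(SOFTWARE_LIBRARY[feature])
        selection.config_files.append(HARDWARE_LIBRARY[feature])
    return selection
```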

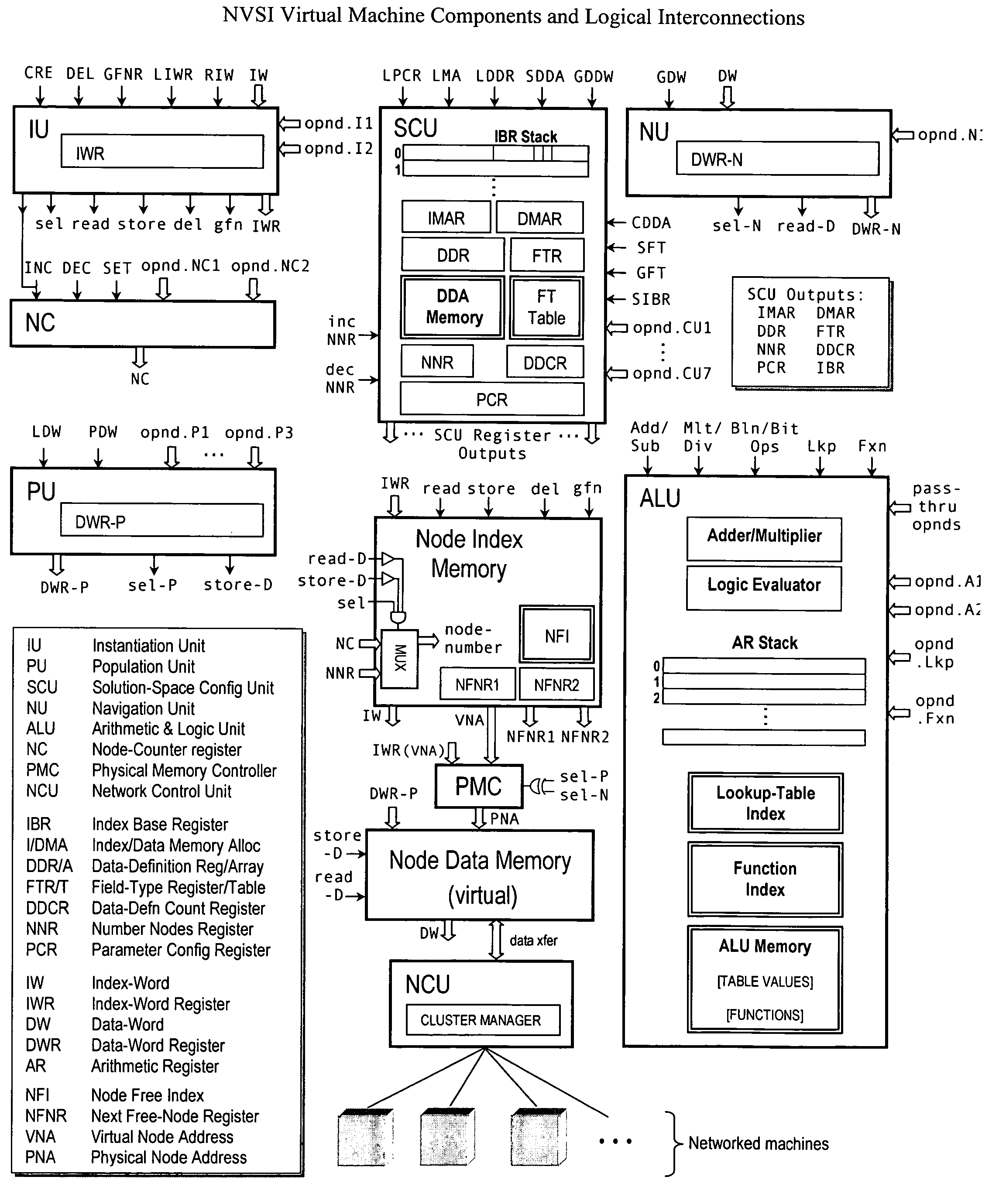

Virtual supercomputer

Status: Active | Publication: US7774191B2 | Tags: Improve efficiency; Finance; Resource allocation; Information processing; Operational system

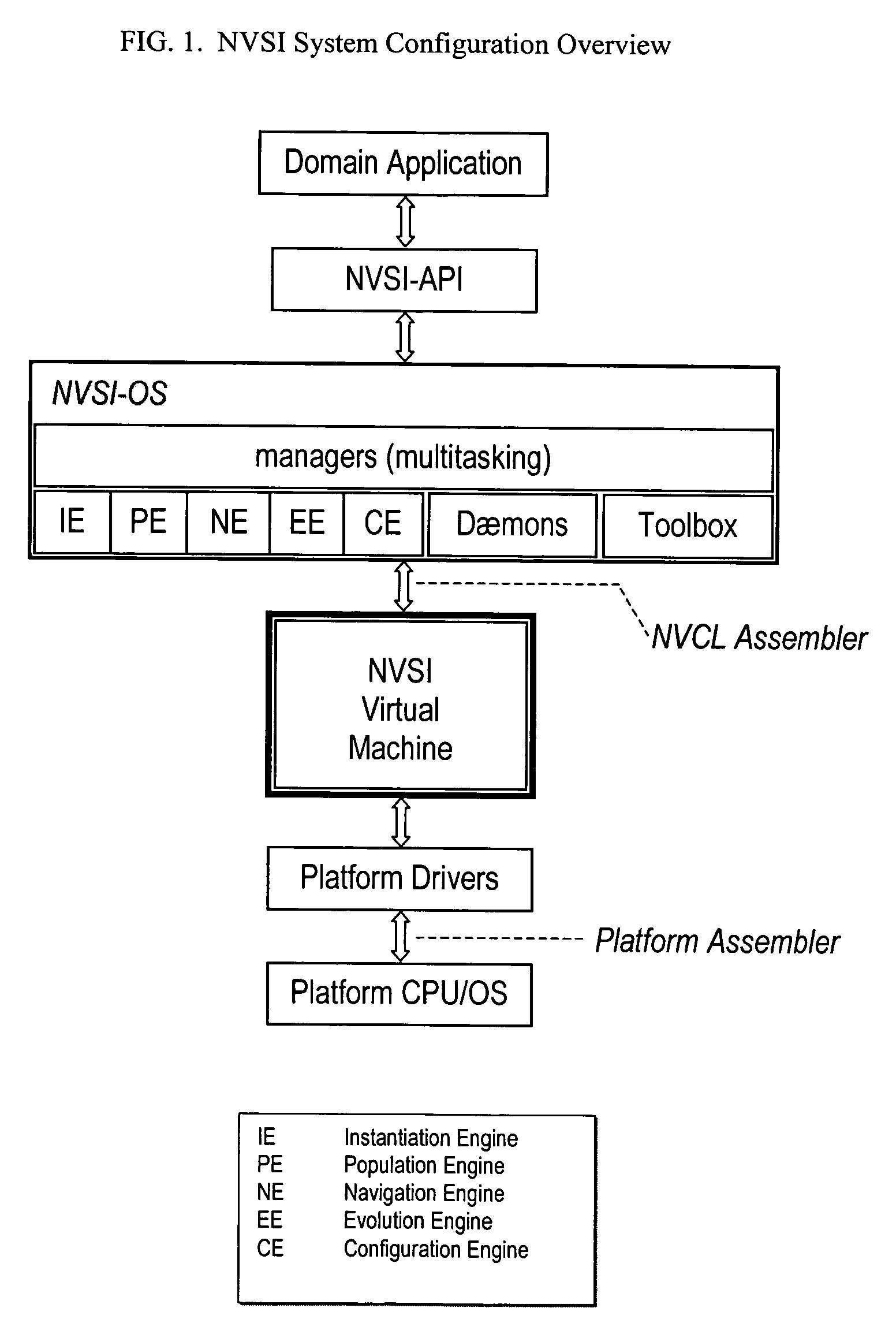

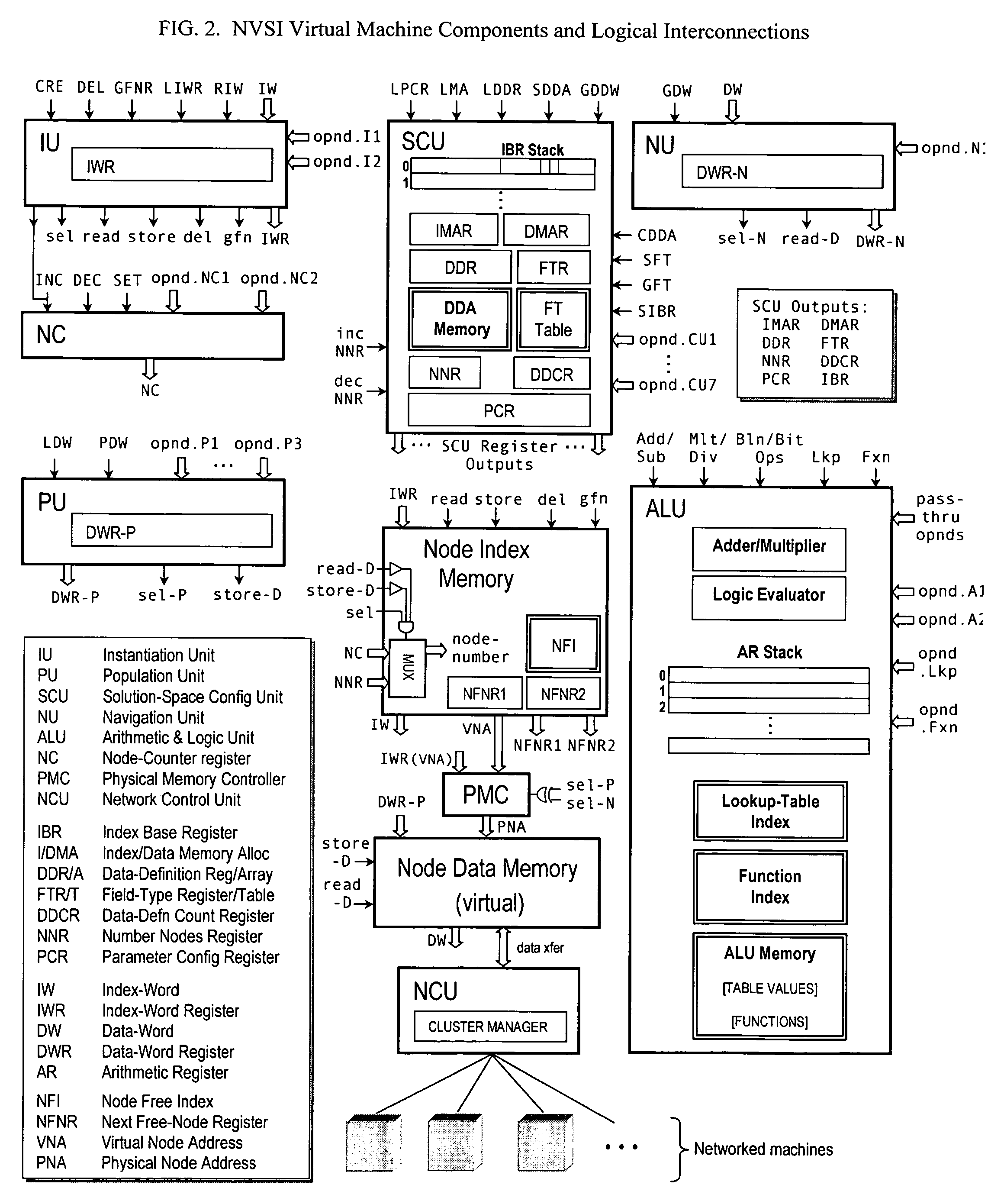

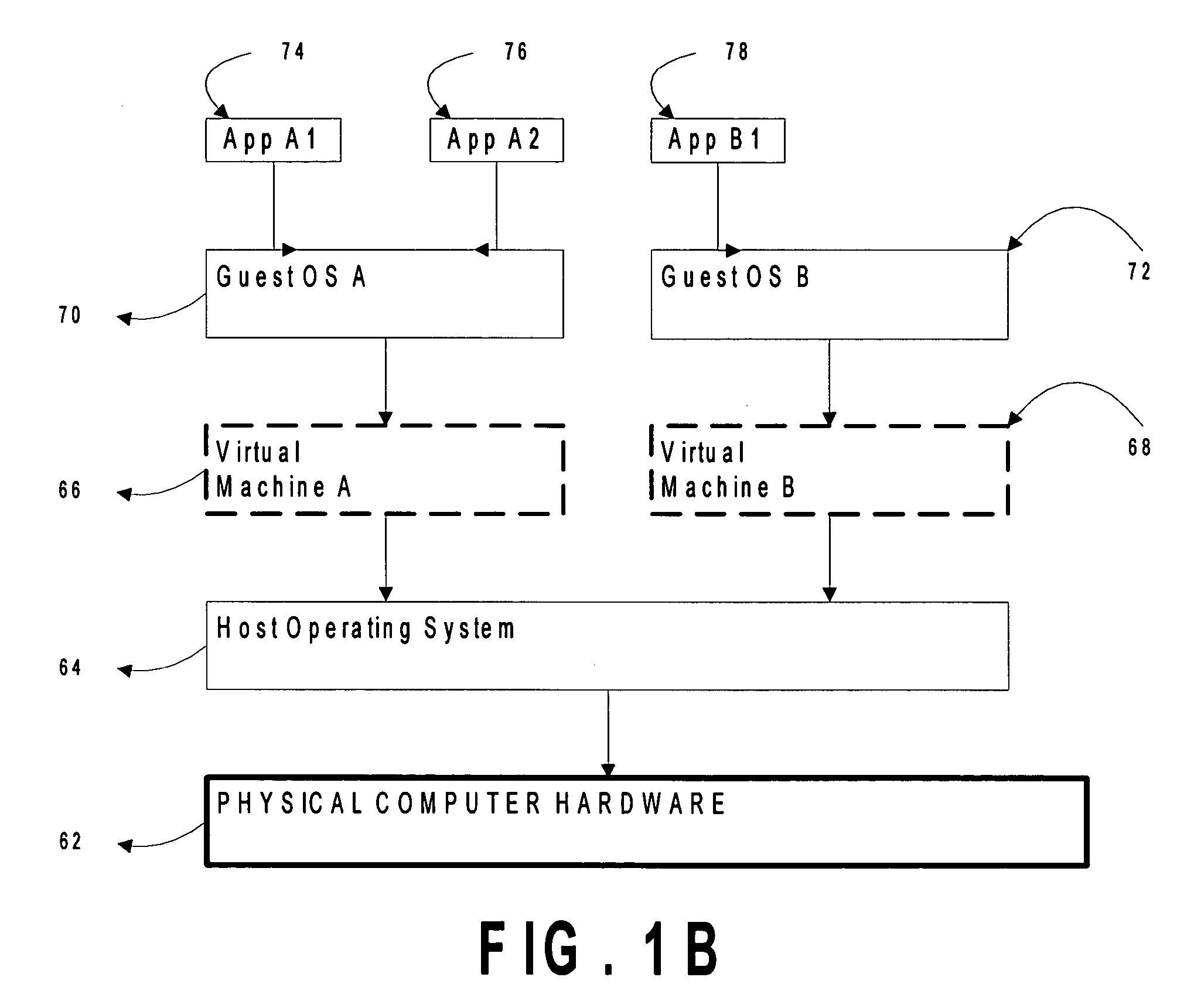

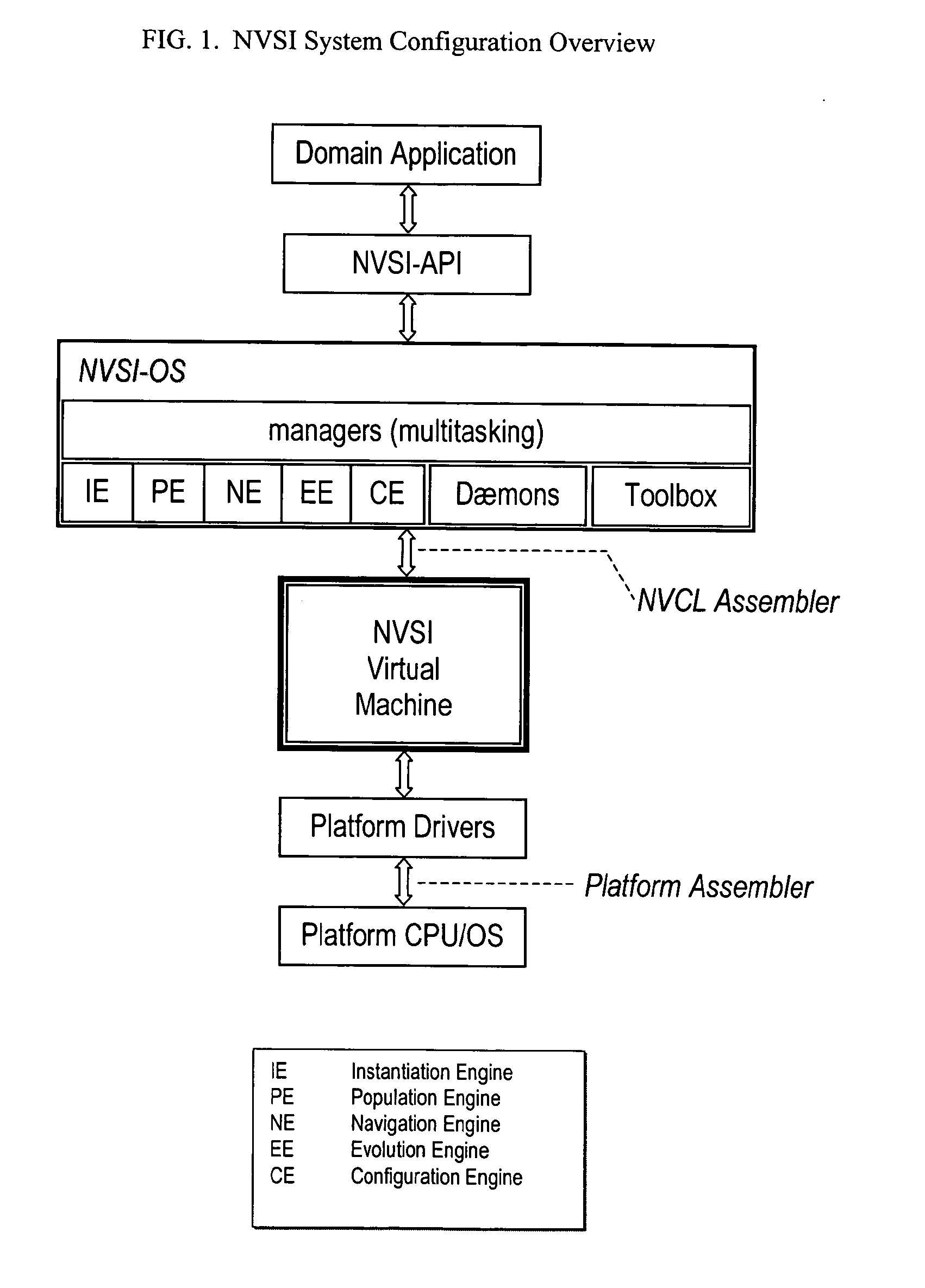

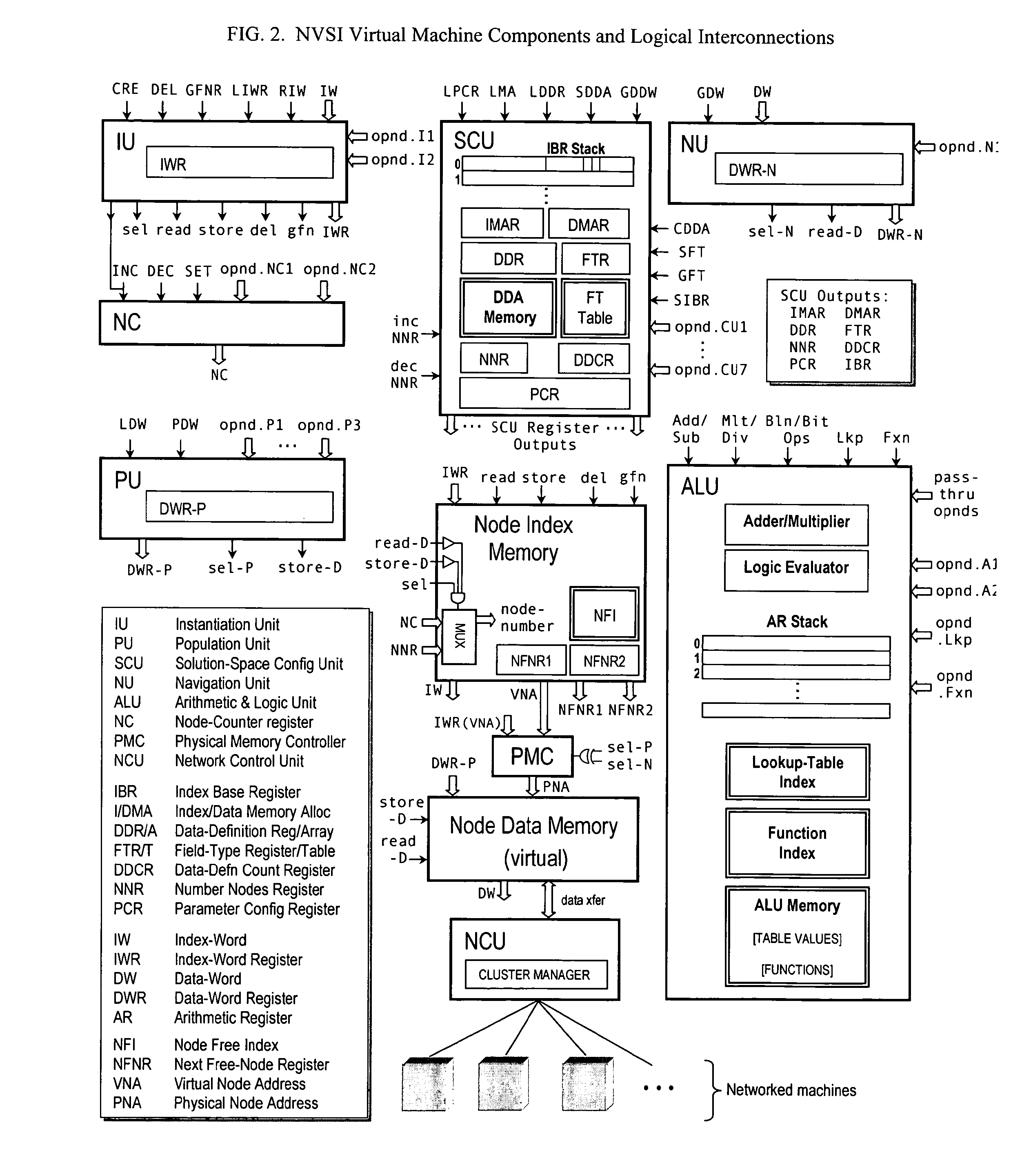

The virtual supercomputer is an apparatus, system and method for generating information processing solutions to complex and/or high-demand/high-performance computing problems, without the need for costly, dedicated hardware supercomputers, and in a manner far more efficient than simple grid or multiprocessor network approaches. The virtual supercomputer consists of a reconfigurable virtual hardware processor, an associated operating system, and a set of operations and procedures that allow the architecture of the system to be easily tailored and adapted to specific problems or classes of problems in a way that such tailored solutions will perform on a variety of hardware architectures, while retaining the benefits of a tailored solution that is designed to exploit the specific and often changing information processing features and demands of the problem at hand.

Owner:VERISCAPE

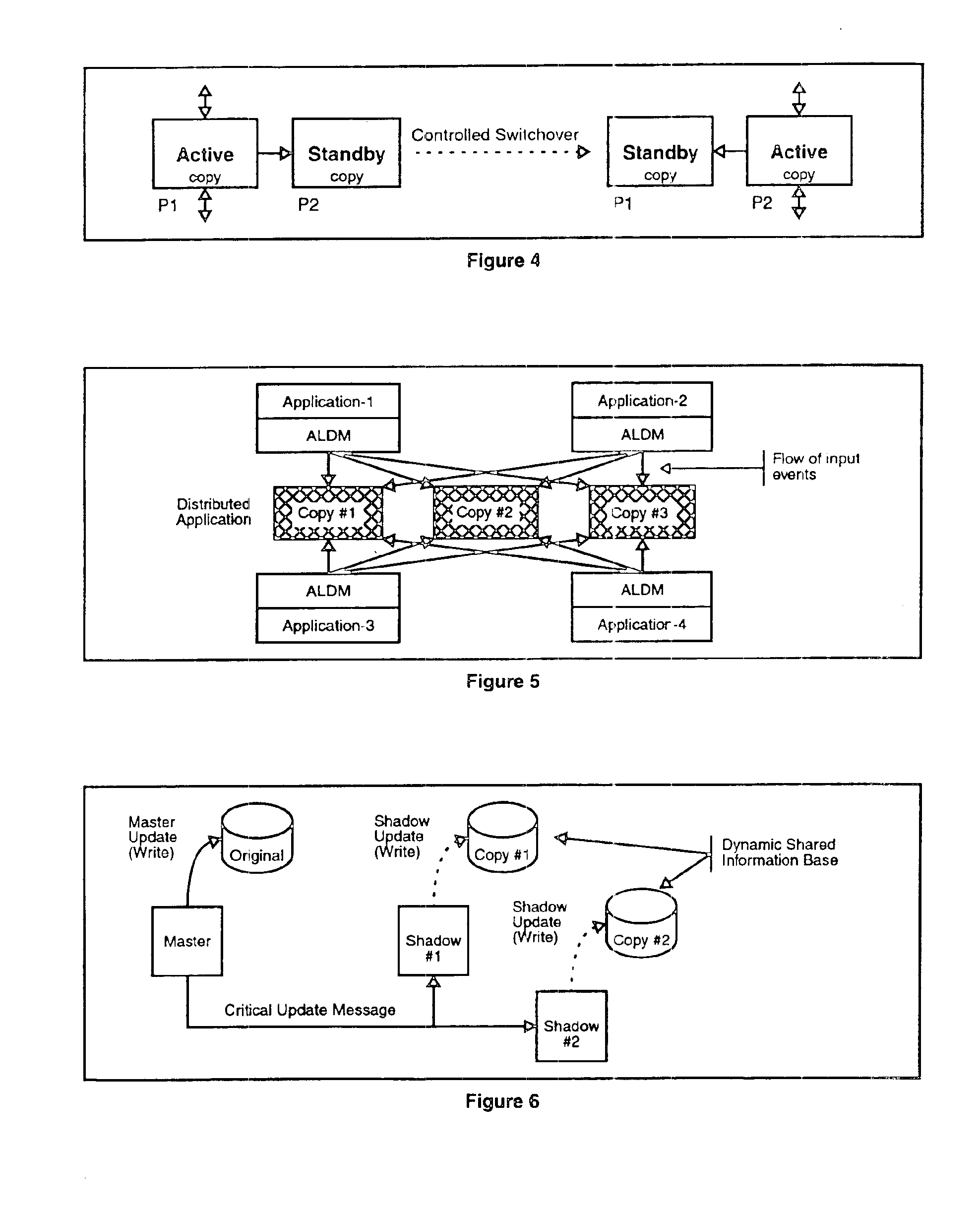

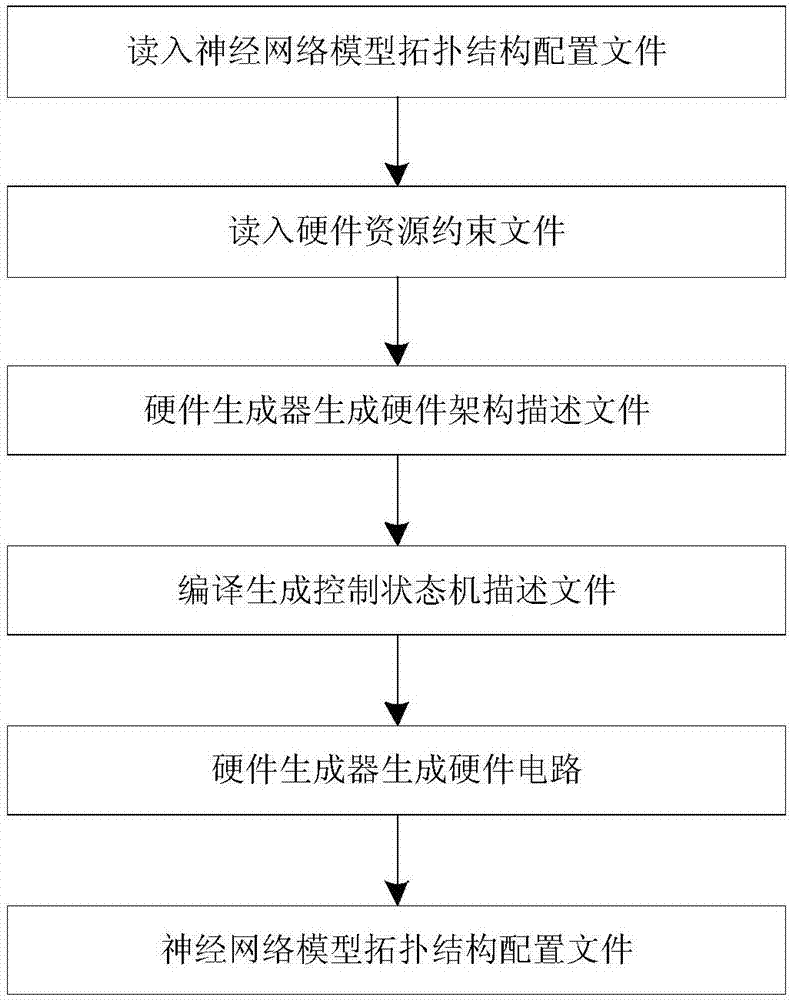

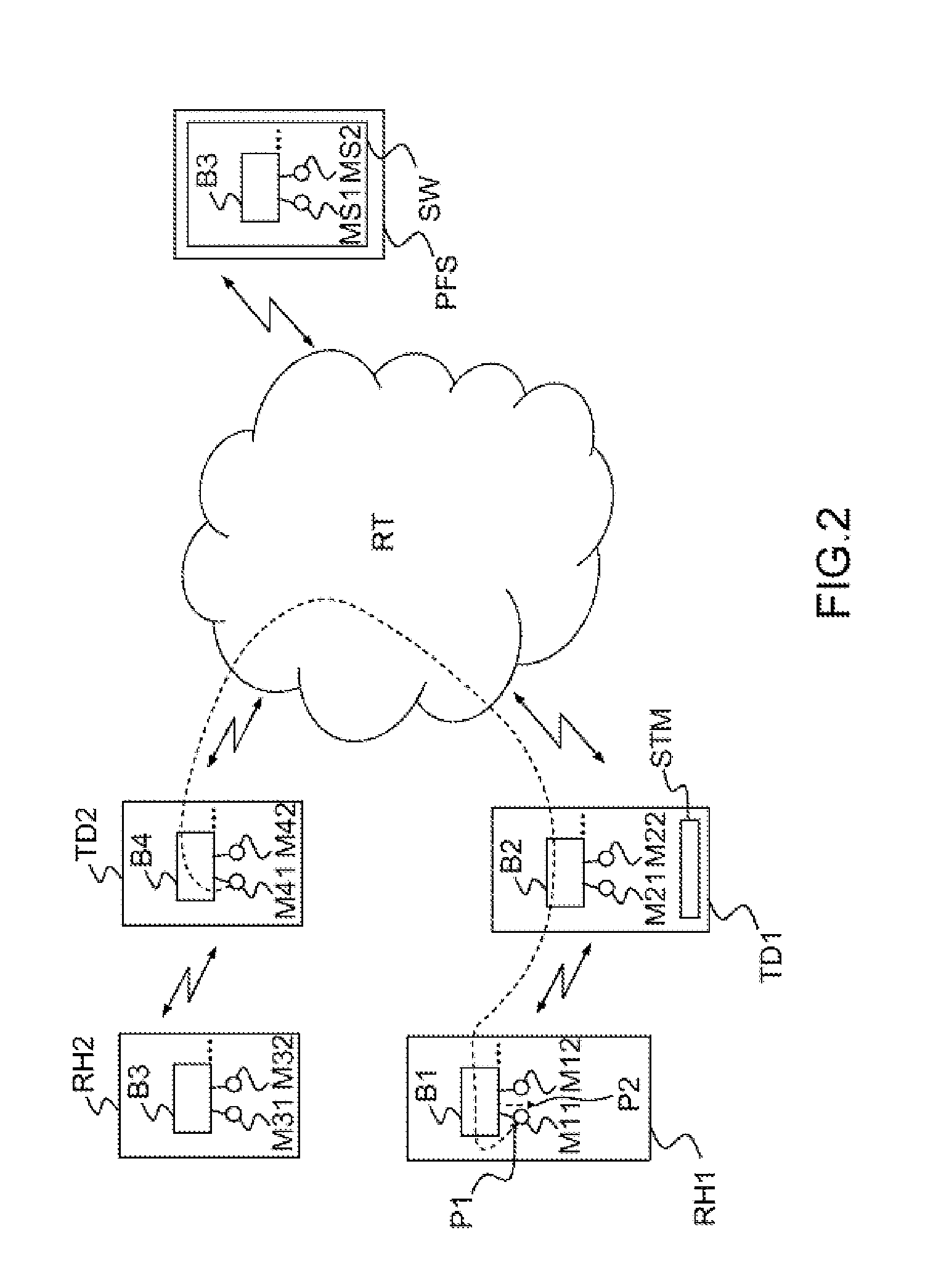

Apparatus and method for building distributed fault-tolerant/high-availability computed applications

Status: Inactive | Publication: US6865591B1 | Tags: Multiple failure; Optimal hardware utilization; Multiprogramming arrangements; Transmission; Operational system; System maintenance

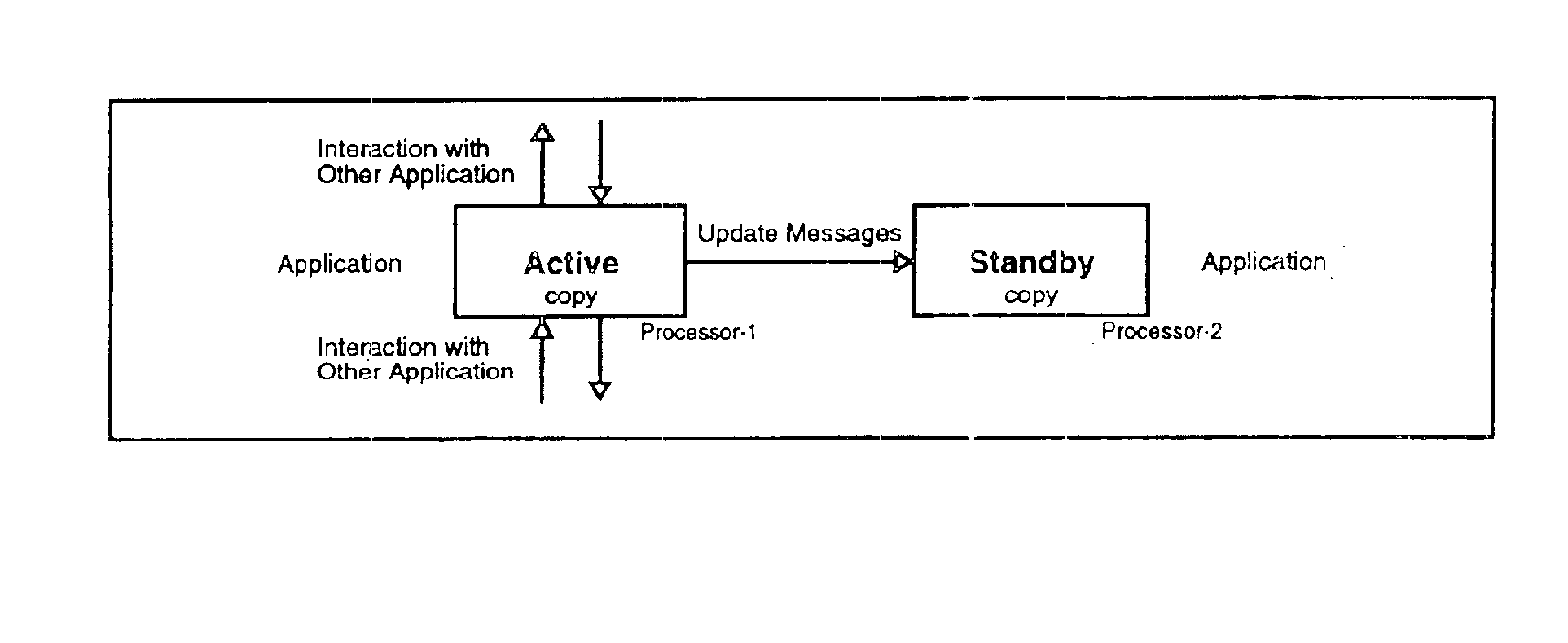

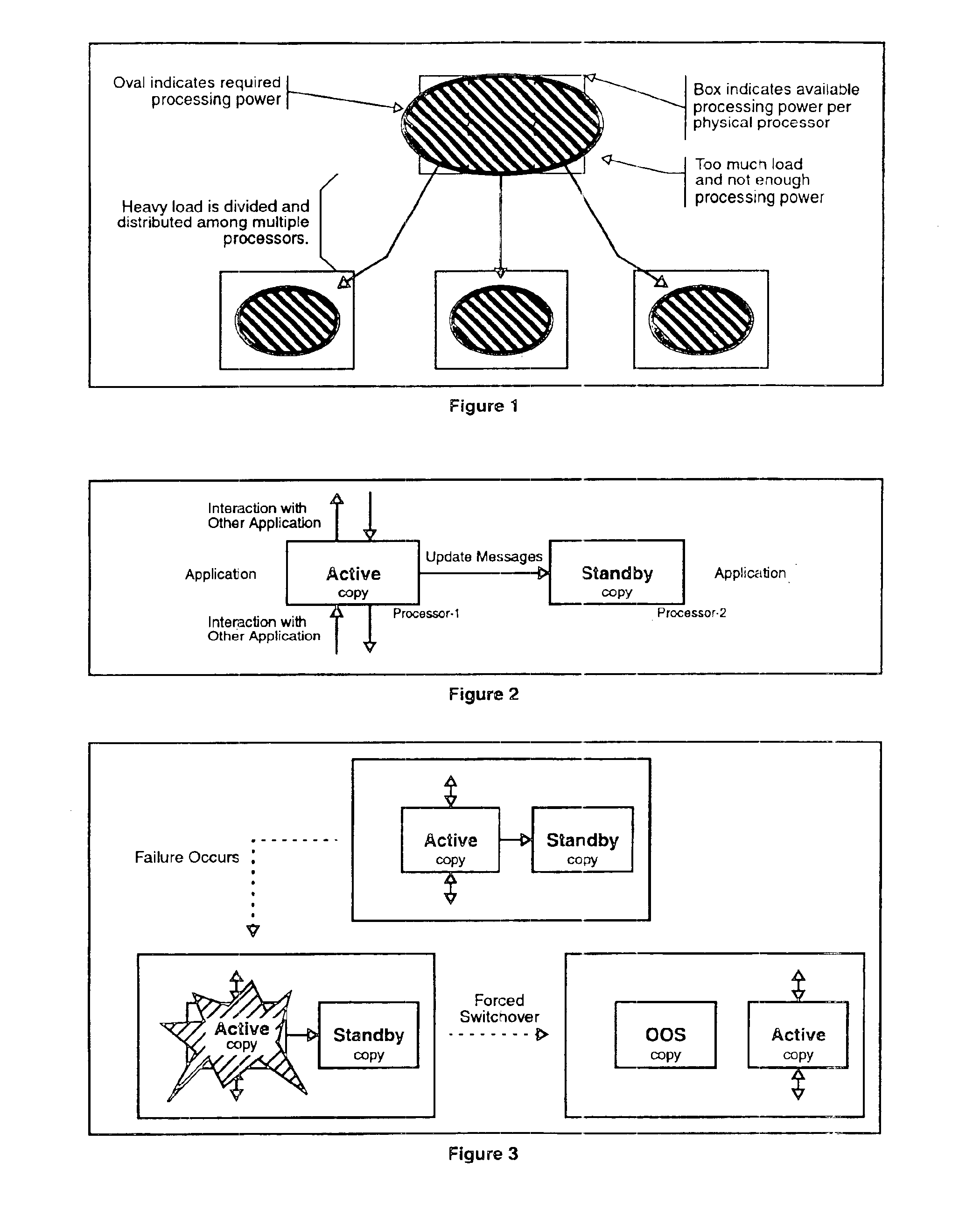

Software architecture for developing distributed fault-tolerant systems independent of the underlying hardware architecture and operating system. Systems built using the architecture components are scalable and allow a set of computer applications to operate in fault-tolerant/high-availability mode, distributed processing mode, or many possible combinations of distributed and fault-tolerant modes in the same system without any modification to the architecture components. The software architecture defines modular system components that address problems in present systems. The architecture uses a System Controller, which controls system activation, initial load distribution, fault recovery, load redistribution, and system topology, and implements system maintenance procedures. An Application Distributed Fault-Tolerant/High-Availability Support Module (ADSM) enables an application to operate in various distributed fault-tolerant modes. The System Controller uses ADSM's well-defined API to control the state of the application in these modes. The Router architecture component provides transparent communication between applications during fault recovery and topology changes. An Application Load Distribution Module (ALDM) component distributes incoming external events to the distributed application. The architecture allows for a Load Manager, which monitors the load on the various copies of the application and maximizes hardware usage by providing dynamic load balancing. The architecture also allows for a Fault Manager, which performs fault detection, fault location, and fault isolation, and uses the System Controller's API to initiate fault recovery. These architecture components can be used to achieve a variety of distributed-processing, high-availability system configurations, reducing cost and development time.

Owner:TRILLIUM DIGITAL SYST
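
As a rough illustration of how an ALDM-style distributor and a Load Manager might cooperate, the sketch below sends each incoming event to the least-loaded application copy and, on a fault, redistributes the failed copy's outstanding events to the survivors. The class and method names and the least-loaded policy are assumptions; the abstract does not specify a balancing algorithm.

```python
class LoadDistributor:
    """Toy stand-in for the ALDM/Load Manager pair: send each event to the least-loaded copy."""

    def __init__(self, copies):
        self.load = {copy: 0 for copy in copies}  # outstanding events per application copy

    def dispatch(self, event=None):
        if not self.load:
            raise RuntimeError("no application copies available")
        # Least-loaded copy wins; ties are broken by name so the result is deterministic.
        target = min(self.load, key=lambda copy: (self.load[copy], copy))
        self.load[target] += 1
        return target

    def complete(self, copy):
        """Record that a copy finished one event."""
        self.load[copy] -= 1

    def fail(self, copy):
        """Fault recovery: drop a failed copy and redistribute its outstanding events."""
        pending = self.load.pop(copy)
        return [self.dispatch() for _ in range(pending)]
```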

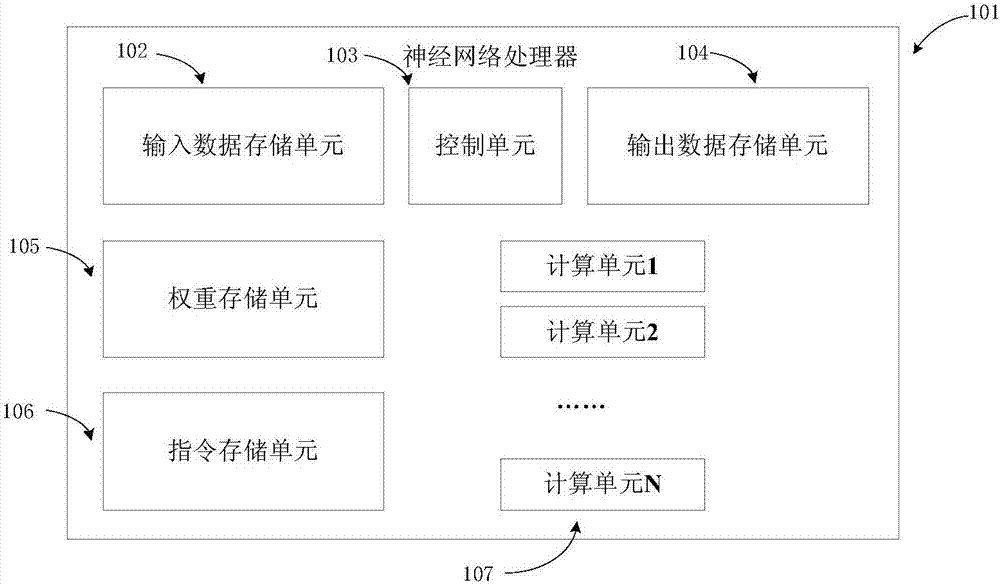

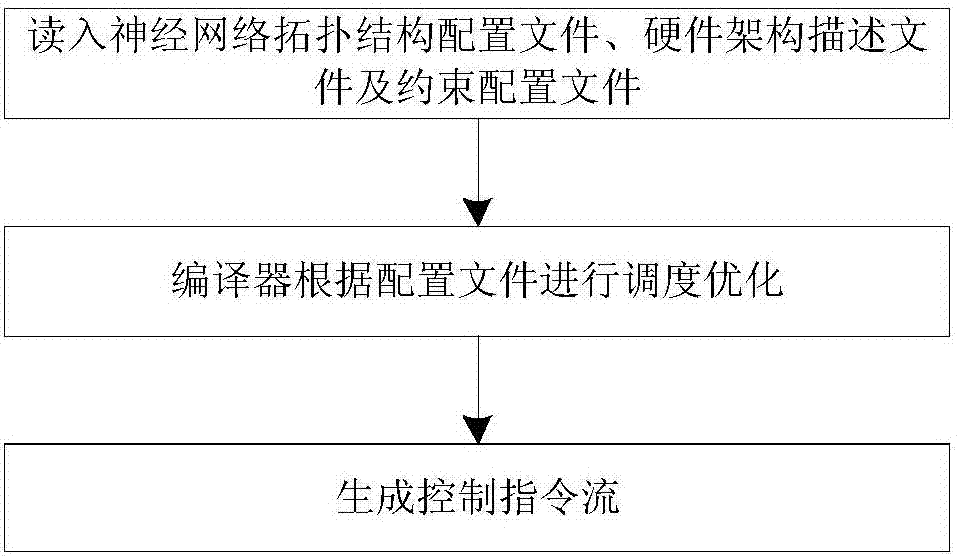

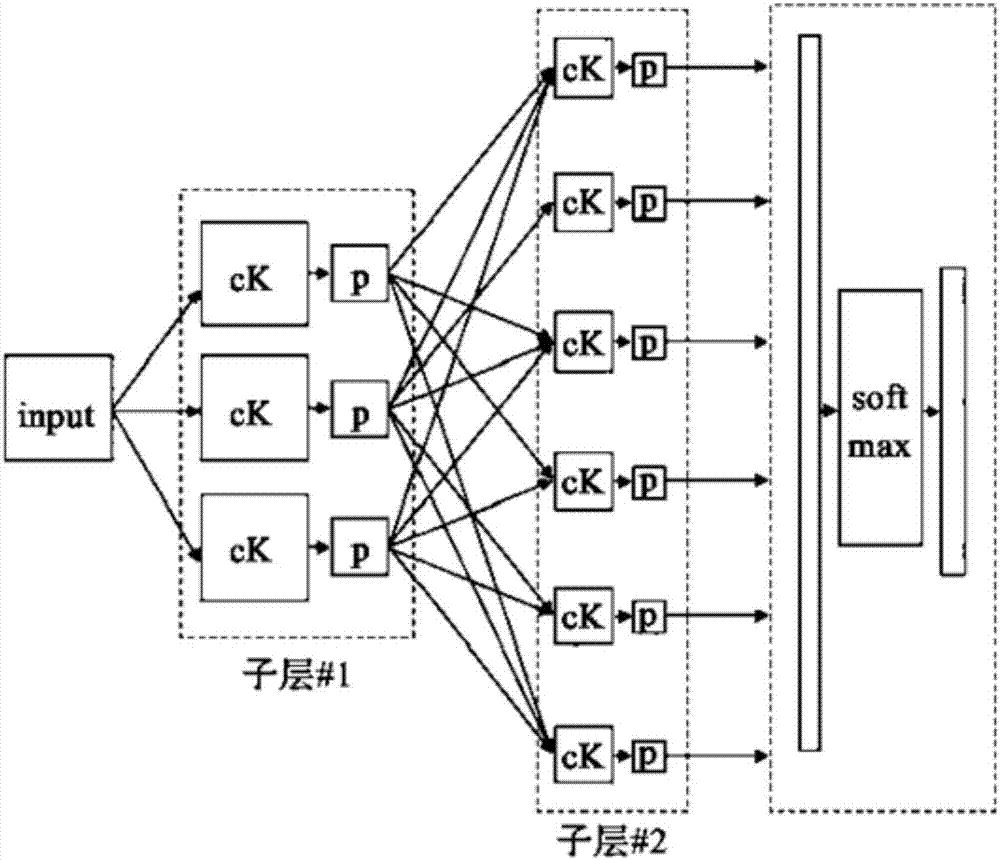

Automated design method, device and optimization method applied for neural network processor

Status: Active | Publication: CN107016175A | Tags: Biological neural network models; CAD circuit design; Computer architecture; Hardware architecture

The invention discloses an automated design method, device and optimization method for a neural network processor. The method comprises the following steps: neural network model topology configuration files and hardware resource constraint files are obtained, wherein the hardware resource constraint files specify the target circuit area, target circuit power consumption and target circuit operating frequency; a neural network processor hardware architecture is generated according to the topology configuration files and the hardware resource constraint files, and hardware architecture description files are generated; according to the neural network model topology, the hardware resource constraint files and the hardware architecture description files, the data scheduling, storage and calculation modes are optimized, and corresponding control description files are generated; and according to the hardware architecture description files and the control description files, cells that meet the design requirements are found in the constructed reusable neural network cell libraries, the corresponding control logic and hardware description language code are generated, and the hardware description language code is transformed into a hardware circuit.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

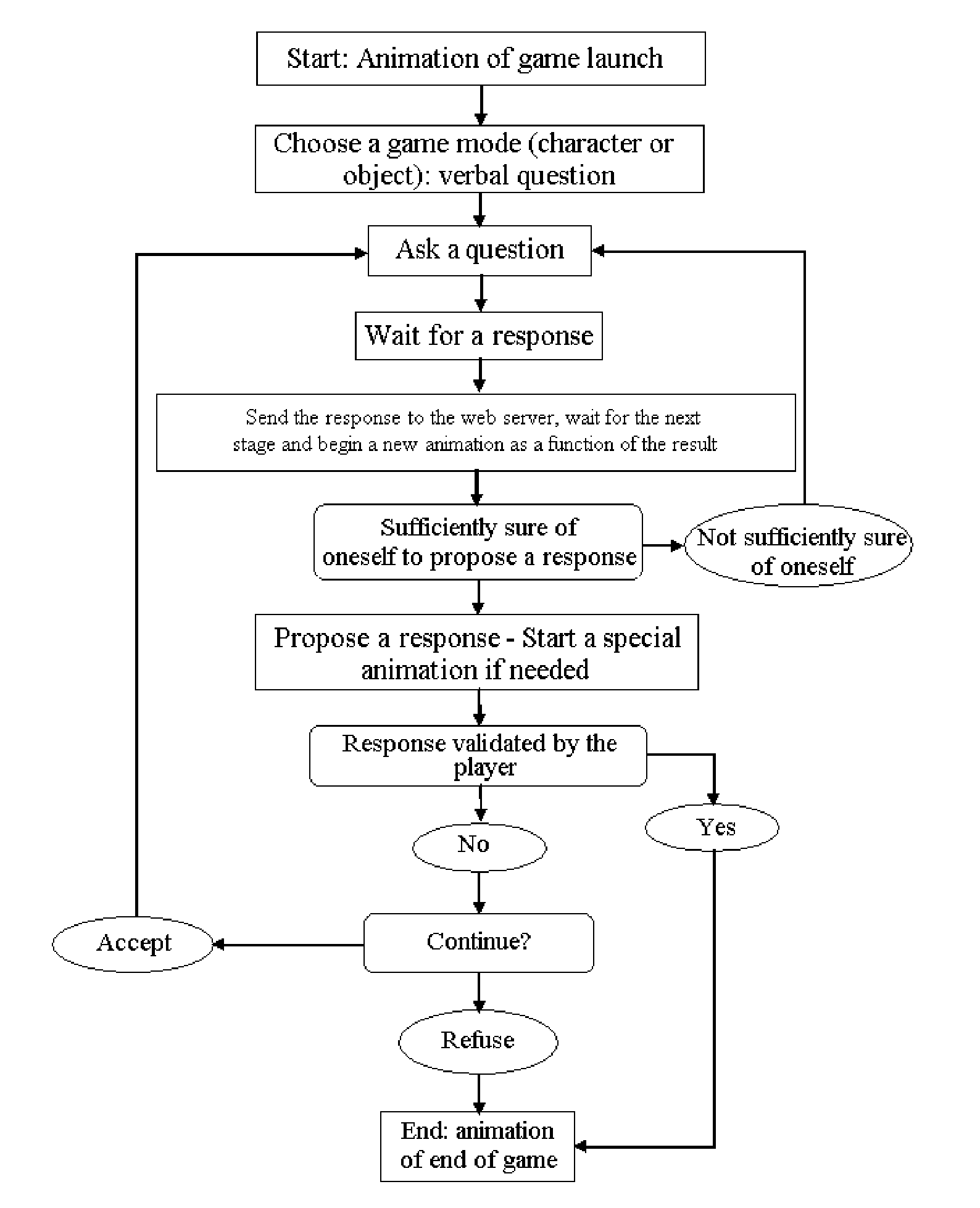

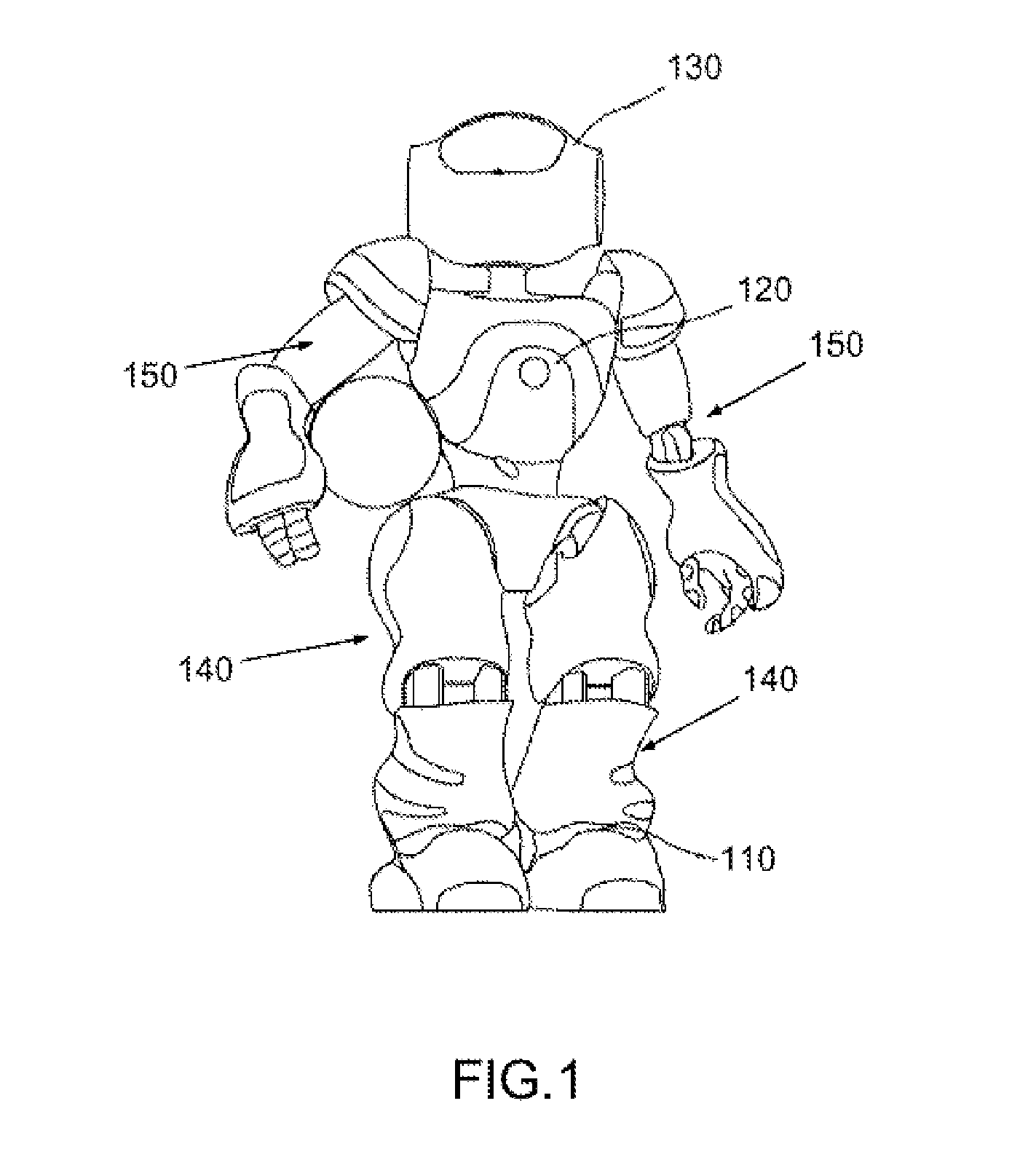

Humanoid game-playing robot, method and system for using said robot

Status: Inactive | Publication: US20130103196A1 | Tags: Programme-controlled manipulator; Indoor games; Humanoid robot nao; Hardware architecture

The invention relates to a game-playing humanoid robot, and to a method and computer programs associated with it. The prior art does not disclose any humanoid robot able to move on its lower limbs, perform gestures, emit visual and/or audible signs, and receive and interpret such signs so as to deduce from them appropriate behaviors for participating in a game in time as compere, questioner, questioned party, investigator or mobile stake for the game. The hardware architectures, internal software and programming software of the robot of the invention make it possible to carry out these functions and to create new game experiences in which the boundaries between the virtual world and the real world are shifted once again.

Owner:SOFTBANK ROBOTICS EURO

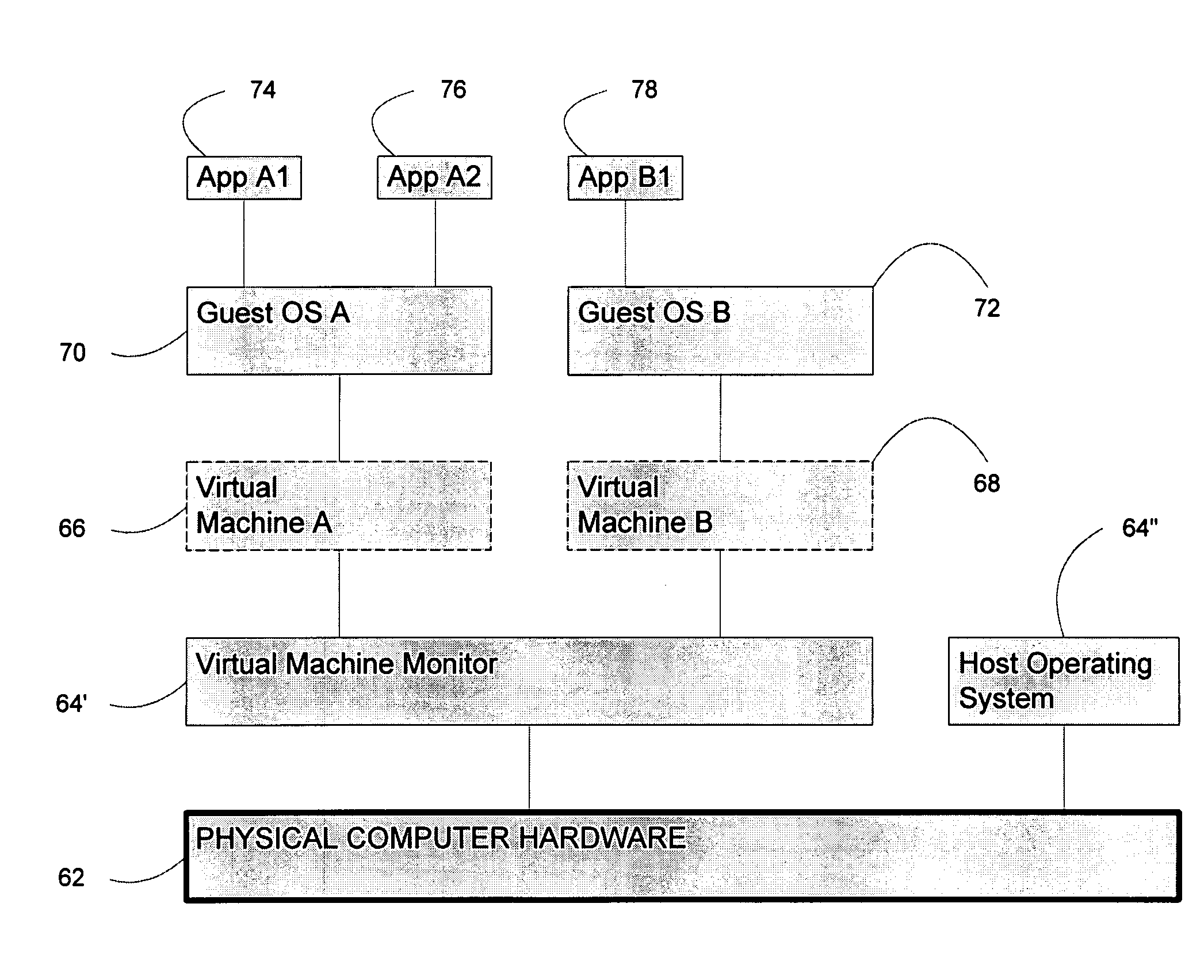

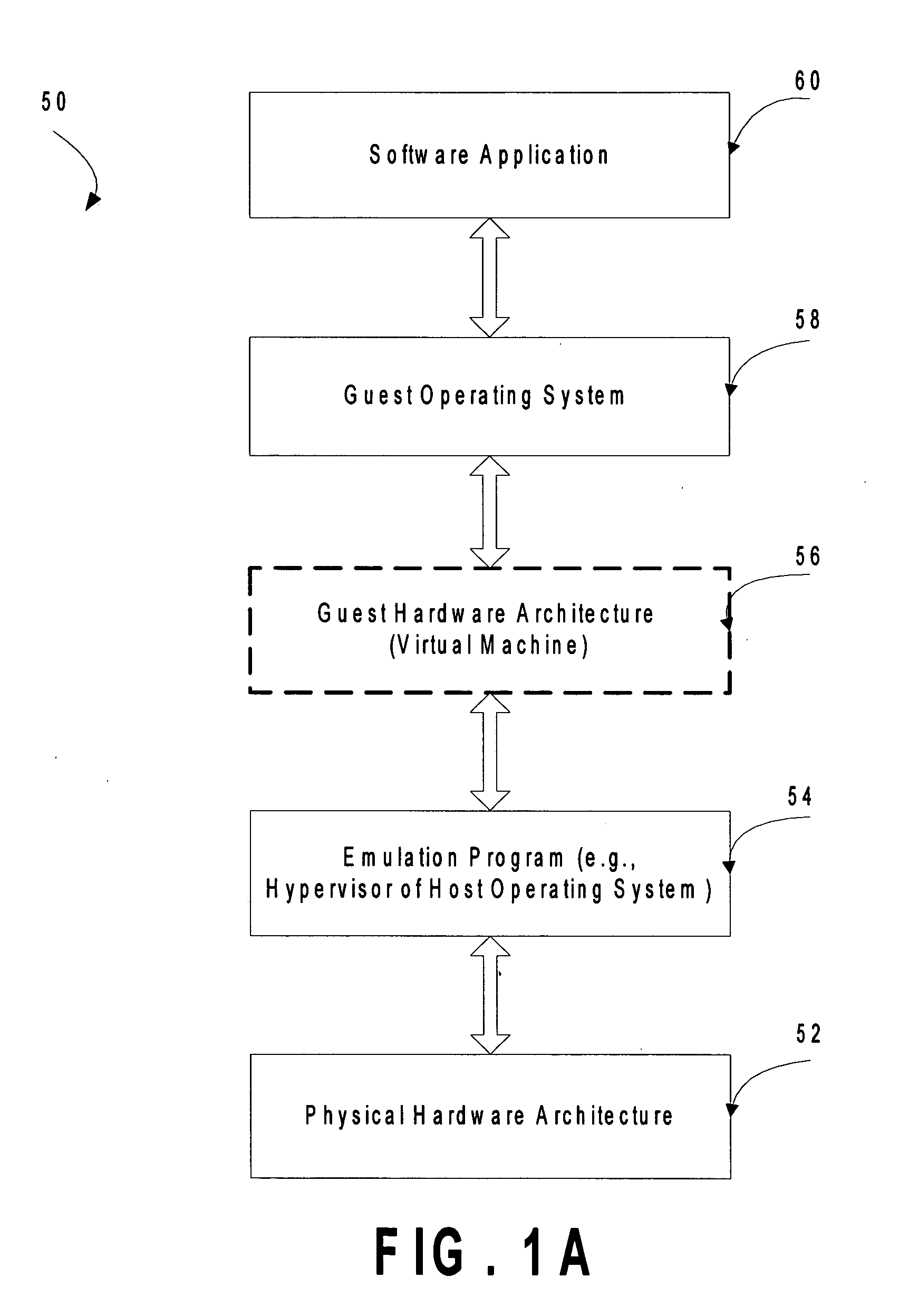

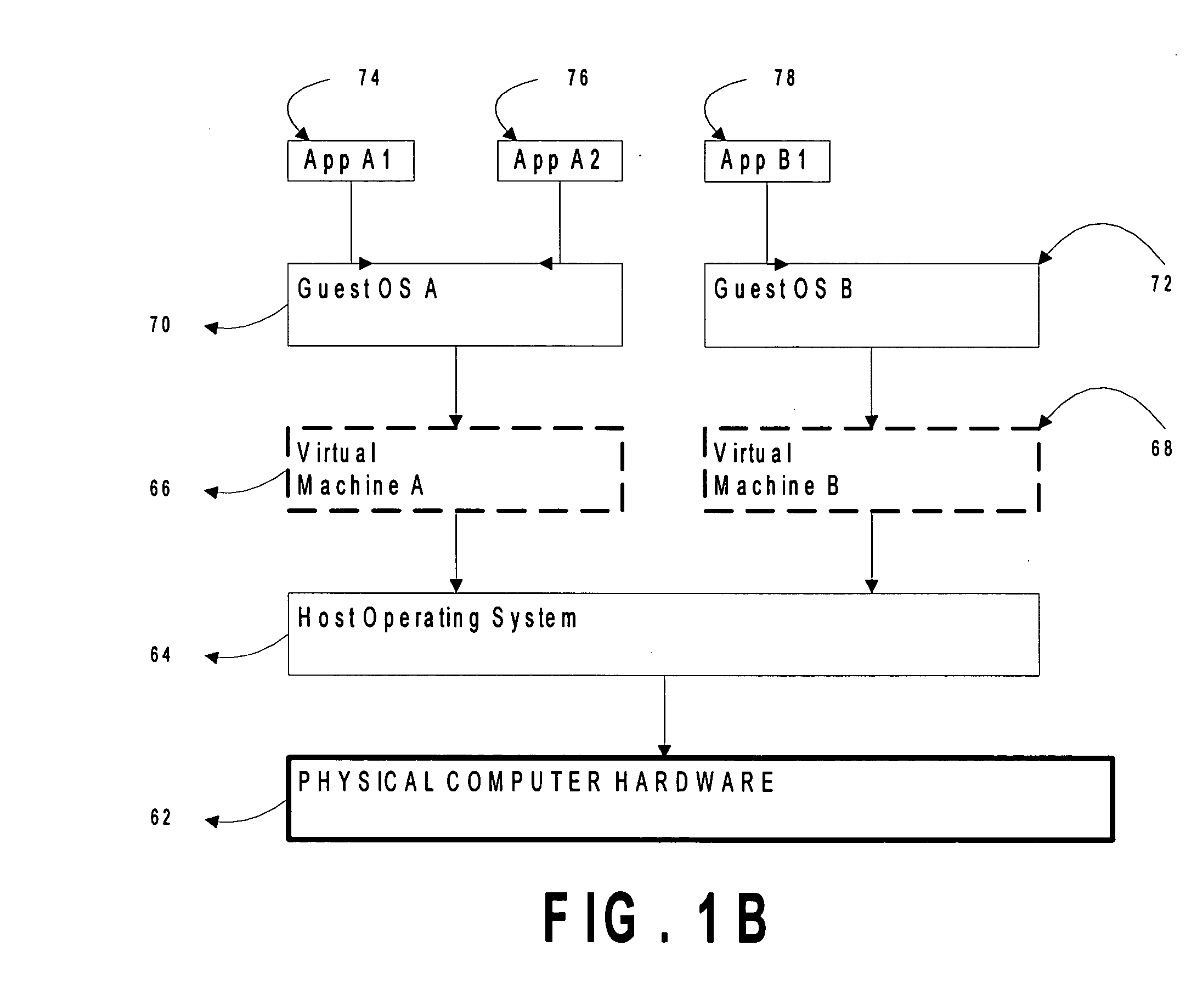

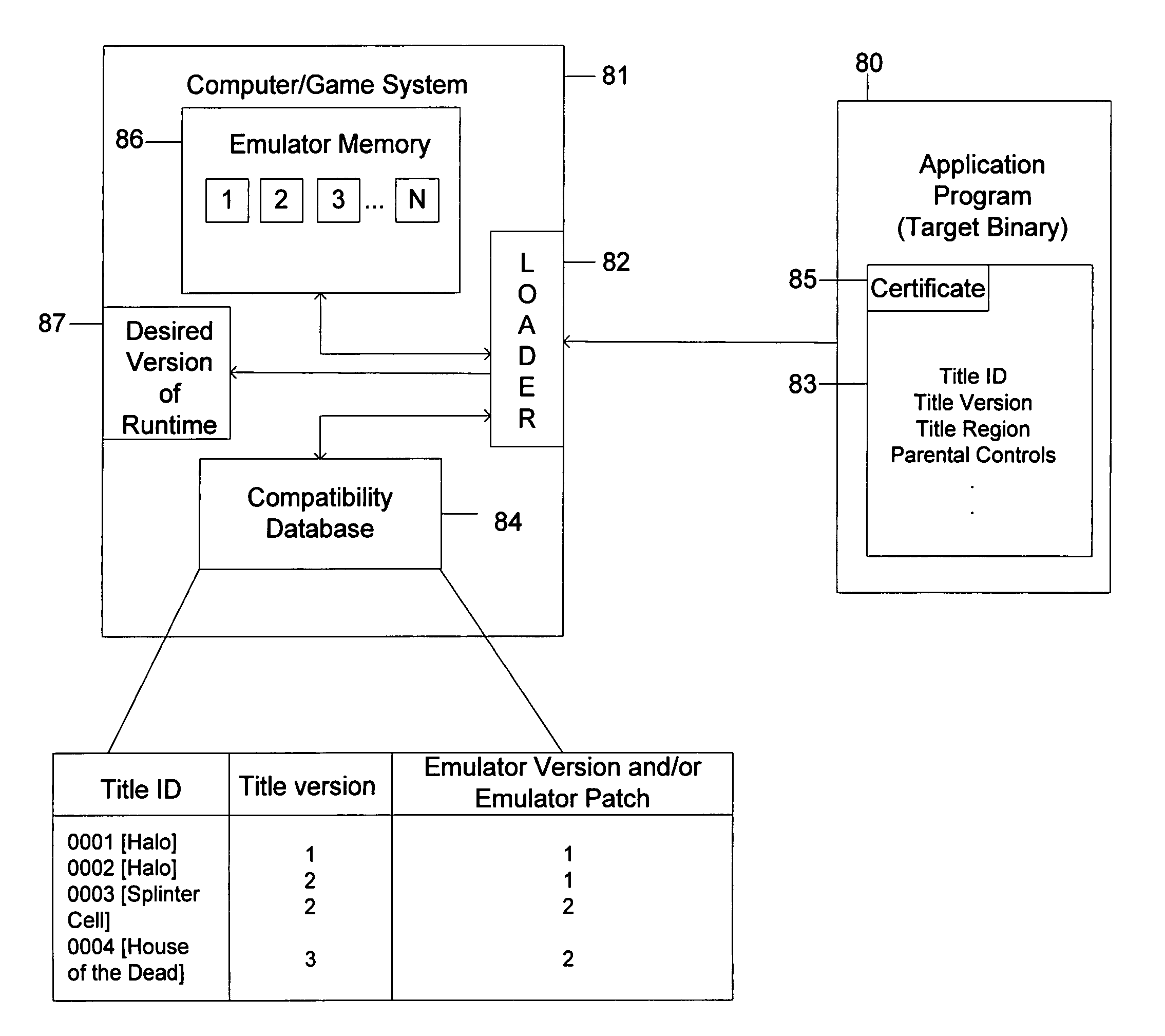

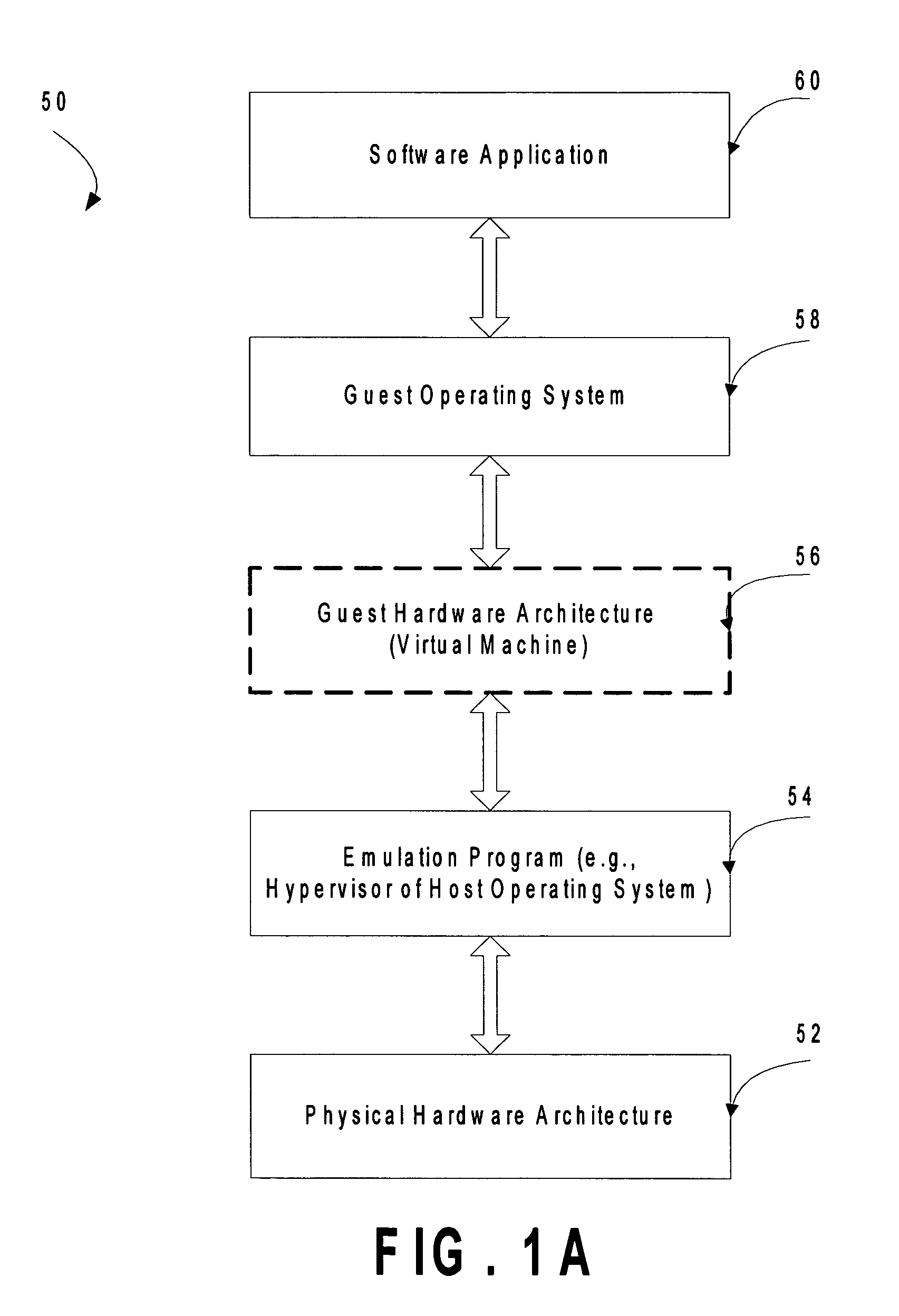

System and method for distributing updates to runtime systems without destabilizing compatibility

Status: Active | Publication: US20060281556A1 | Tags: Reduce storage space; Video games; Special data processing applications; Operational system; Software bug

Multiple versions of a runtime system, such as a software emulation application that emulates a legacy hardware architecture, are allowed to co-exist in the memory of a new hardware architecture. The operating system software of the new hardware architecture reads configuration data from a database or table to decide which version of the runtime system is desirable for an application program or game that is being loaded or is currently running, and, if a match is found, only that runtime system is invoked. To reduce storage footprint, the different versions of the runtime system may be stored using “differential patching” techniques. In this configuration, the operating system will always launch the same basic runtime system binary, but it will select a different differential patch to apply at run-time based on the title as determined during the database lookup. In this fashion, future changes to the runtime system to correct software bugs, incompatibility issues, and the like only need to be tested for the relevant target application programs or games.

Owner:MICROSOFT TECH LICENSING LLC
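
The lookup-then-patch flow can be sketched in a few lines. In this hypothetical Python version, the title IDs, patch names and the (offset, bytes) patch format are invented for illustration, and falling back to the unpatched base runtime for unknown titles is an assumption rather than something the abstract states.

```python
# Hypothetical compatibility database: title id -> differential patch name.
COMPATIBILITY_TABLE = {"TITLE_A": "patch_v2", "TITLE_B": "patch_v3"}

# Hypothetical patches: each is a list of (byte offset, replacement bytes).
PATCHES = {
    "patch_v2": [(4, b"\x02")],
    "patch_v3": [(4, b"\x03"), (6, b"\xff\xff")],
}


def apply_patch(base, patch):
    """Return a copy of the base runtime binary with the patch bytes written in place."""
    image = bytearray(base)
    for offset, data in patch:
        if offset + len(data) > len(image):
            raise ValueError("patch extends past the end of the base runtime")
        image[offset:offset + len(data)] = data
    return bytes(image)


def runtime_for_title(title_id, base):
    """Always start from the same base runtime; pick the patch from the database lookup."""
    patch_name = COMPATIBILITY_TABLE.get(title_id)
    if patch_name is None:
        return base  # assumption: titles with no entry run the unpatched base runtime
    return apply_patch(base, PATCHES[patch_name])
```

Keeping one base binary and small per-title patches is what lets a fix be validated only against the titles whose table entry points at the changed patch.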

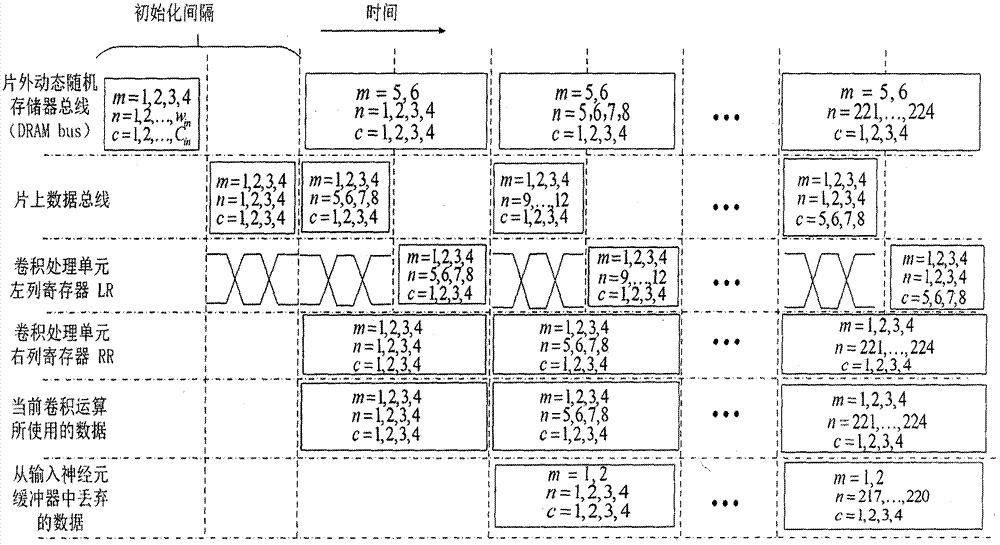

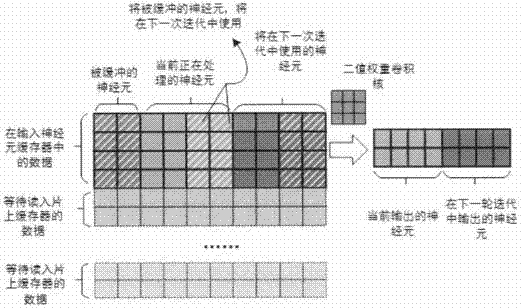

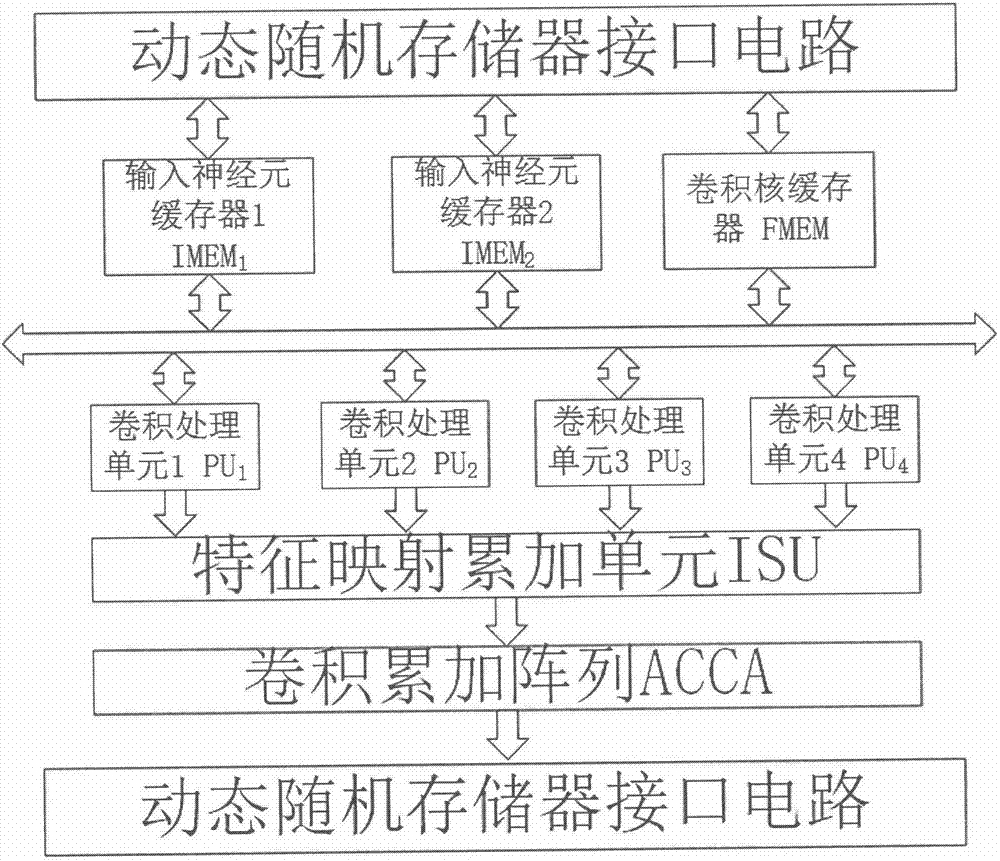

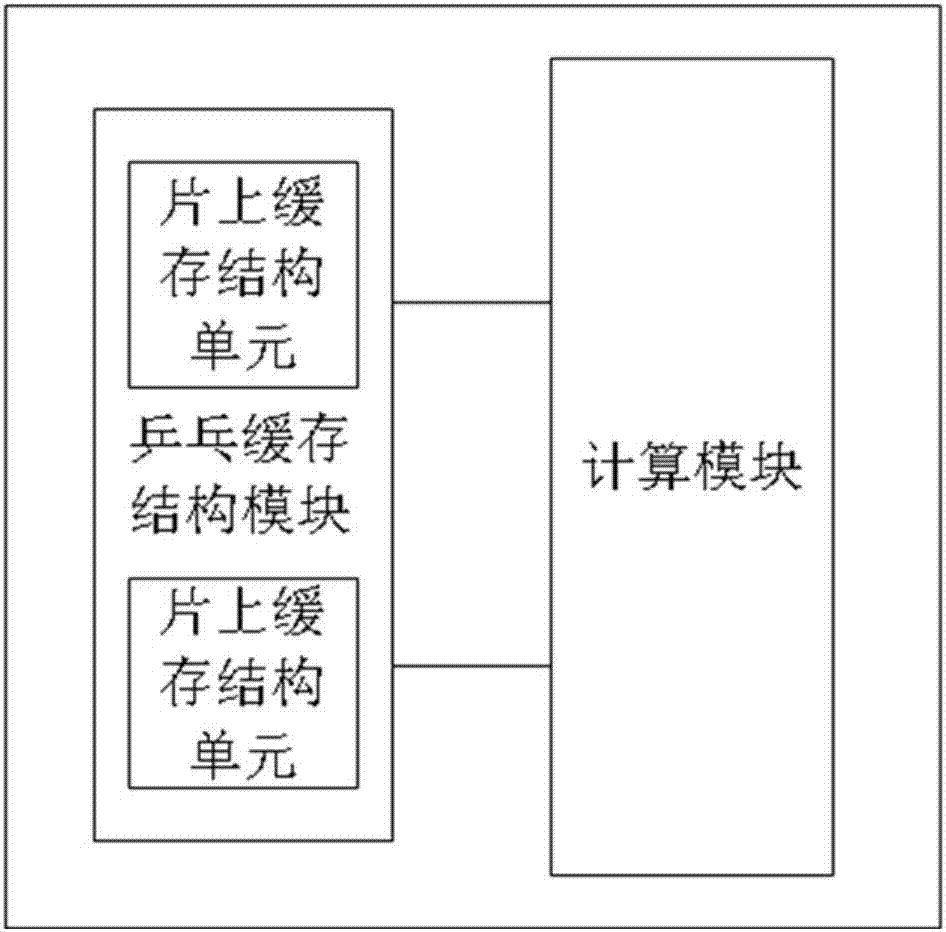

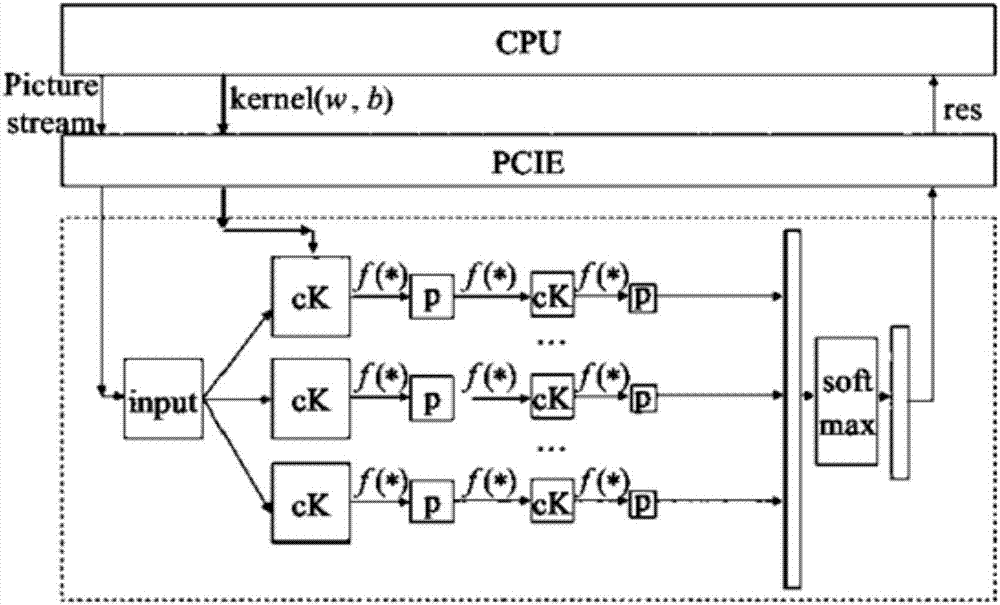

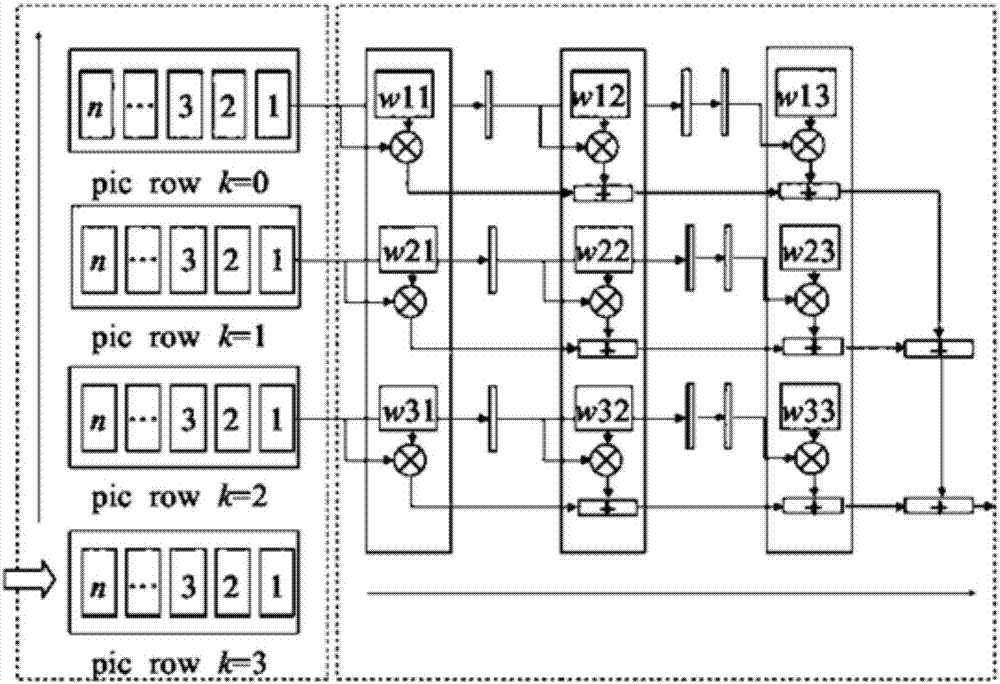

Hardware architecture of binary weight convolution neural network accelerator and calculation process thereof

Status: Active | Publication: CN106875011A | Tags: Reduce access; Reduce power consumption; Physical realisation; Static random-access memory; Memory interface

The invention discloses the hardware architecture of a binary-weight convolutional neural network accelerator and its calculation process. The hardware architecture comprises three dual-port on-chip static random access memories used to buffer the input neurons and the binary weights of a convolution layer; four convolution processing units that control the computing elements to perform the main convolution operations according to the calculation process; a feature map accumulation unit; and a convolutional accumulation array. The feature map accumulation unit and the convolutional accumulation array further process the results of the convolution processing units to obtain the final, correct output neuron values. The entire design exchanges data with an off-chip memory via a dynamic random access memory interface. In addition to the hardware architecture, the invention provides a detailed calculation process that optimizes the hardware architecture and uses four rows of the input feature map as one complete calculation unit. According to the invention, input data are reused to the greatest extent, off-chip memory accesses are minimized, the power consumption of deep binary convolutional neural network computation is effectively reduced, and deep networks are supported; the scheme is therefore suitable for embedded vision systems.

Owner:南京风兴科技有限公司
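
The main saving that binary weights give is that each multiply-accumulate reduces to an add or a subtract. A minimal sketch of that idea for a single-channel "valid" convolution follows; it does not model the patent's SRAM buffering, four processing units or four-row tiling, and the function name is hypothetical.

```python
def binary_conv2d(feature_map, weights):
    """'Valid' 2-D convolution (CNN-style, no kernel flip) with every weight in {+1, -1}.

    Because each weight is +1 or -1, every multiply becomes an add or a subtract.
    """
    if any(wt not in (1, -1) for row in weights for wt in row):
        raise ValueError("binary weights must be +1 or -1")
    kh, kw = len(weights), len(weights[0])
    fh, fw = len(feature_map), len(feature_map[0])
    out = []
    for i in range(fh - kh + 1):
        out_row = []
        for j in range(fw - kw + 1):
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    x = feature_map[i + di][j + dj]
                    # Add for a +1 weight, subtract for a -1 weight; no multiplier needed.
                    acc = acc + x if weights[di][dj] == 1 else acc - x
            out_row.append(acc)
        out.append(out_row)
    return out
```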

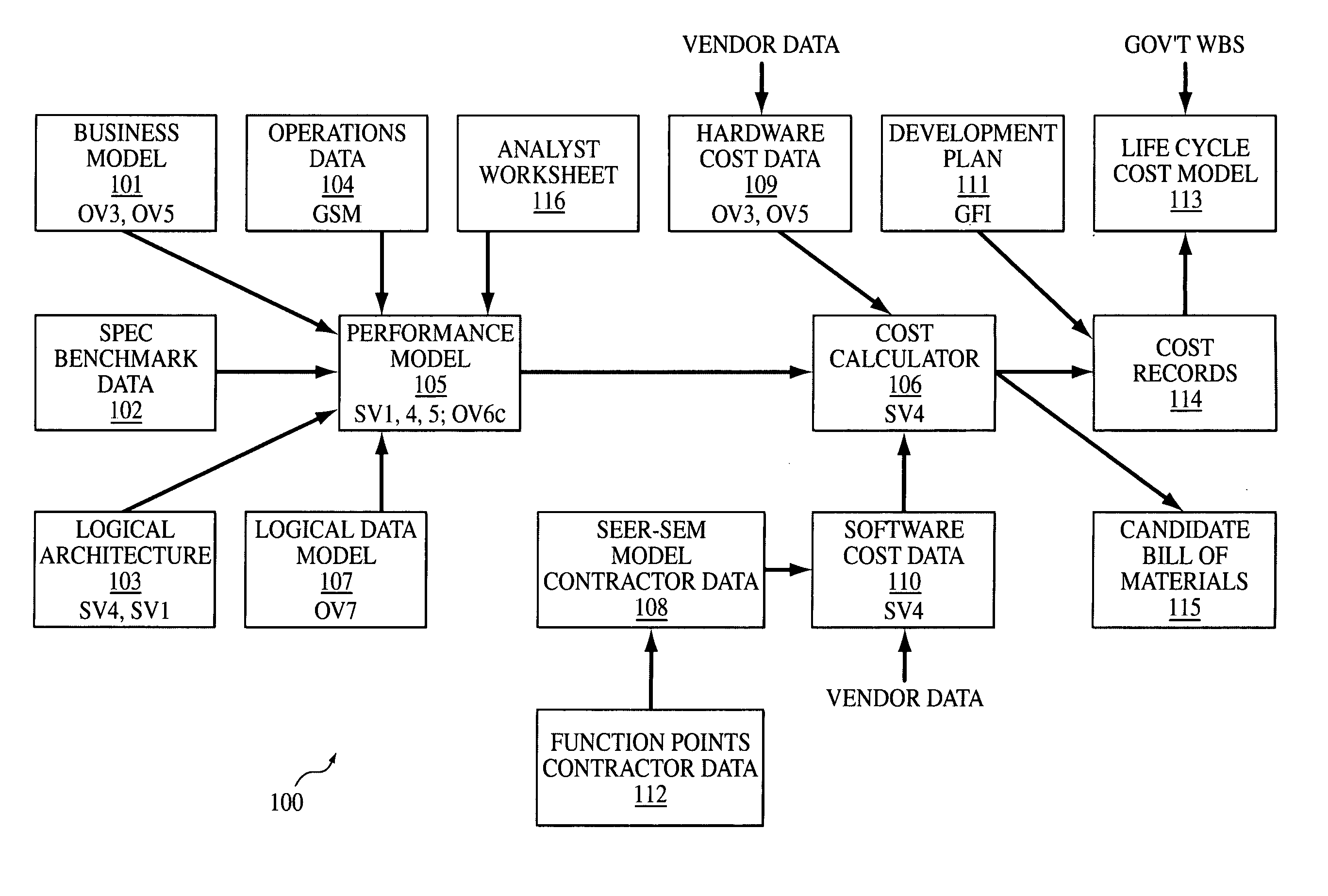

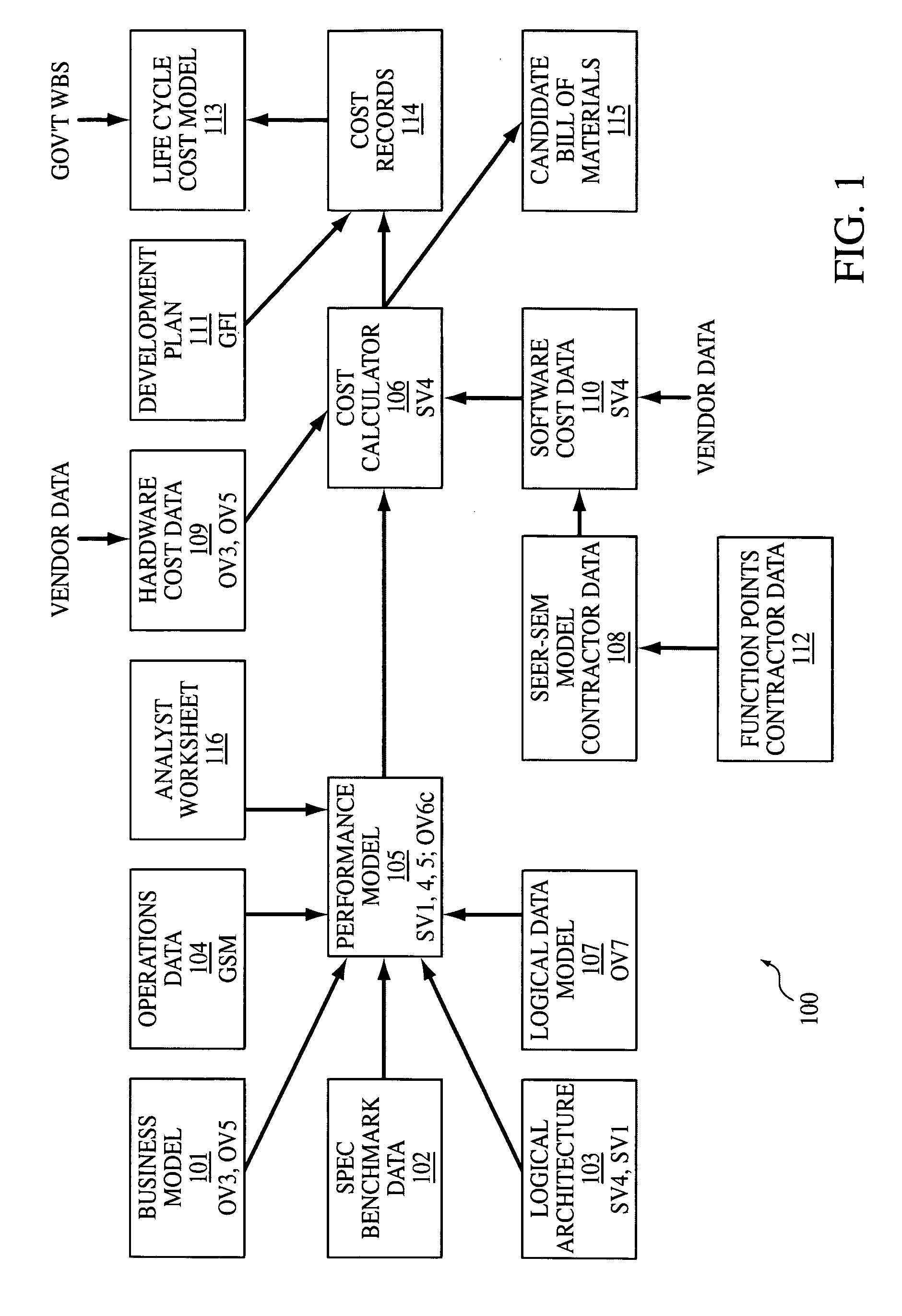

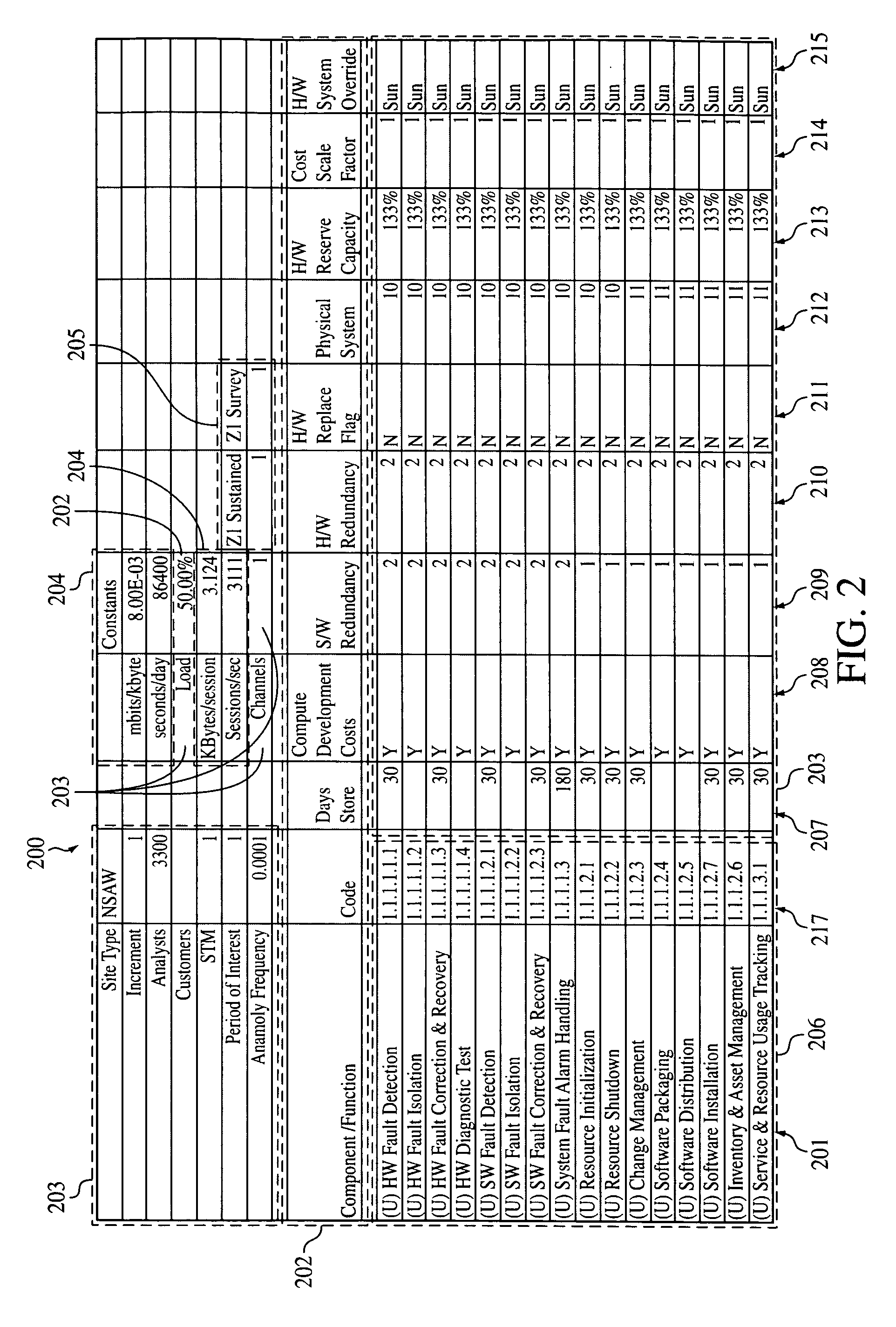

Performance and cost analysis system and method

A performance and cost analysis system and method comprises an output system; a calculation system operatively inputting data to the output system, wherein the calculation system is adapted to process system engineering and cost parameters of a system under analysis, make changes to the system engineering and cost parameters of the system under analysis, and calculate effects of factors influencing the system under analysis based on the changes made to the system engineering and cost parameters; an input model system operatively inputting data to the calculation system; and an input data system operatively inputting data to the calculation system and the output system, wherein the output system is adapted to identify changes to the system under analysis based on the calculated effects, and calculate a life cycle cost of the system under analysis based on the identified changes. Preferably, the system under analysis comprises hardware architecture components.

Owner:PIGGOTT BRYAN N +1

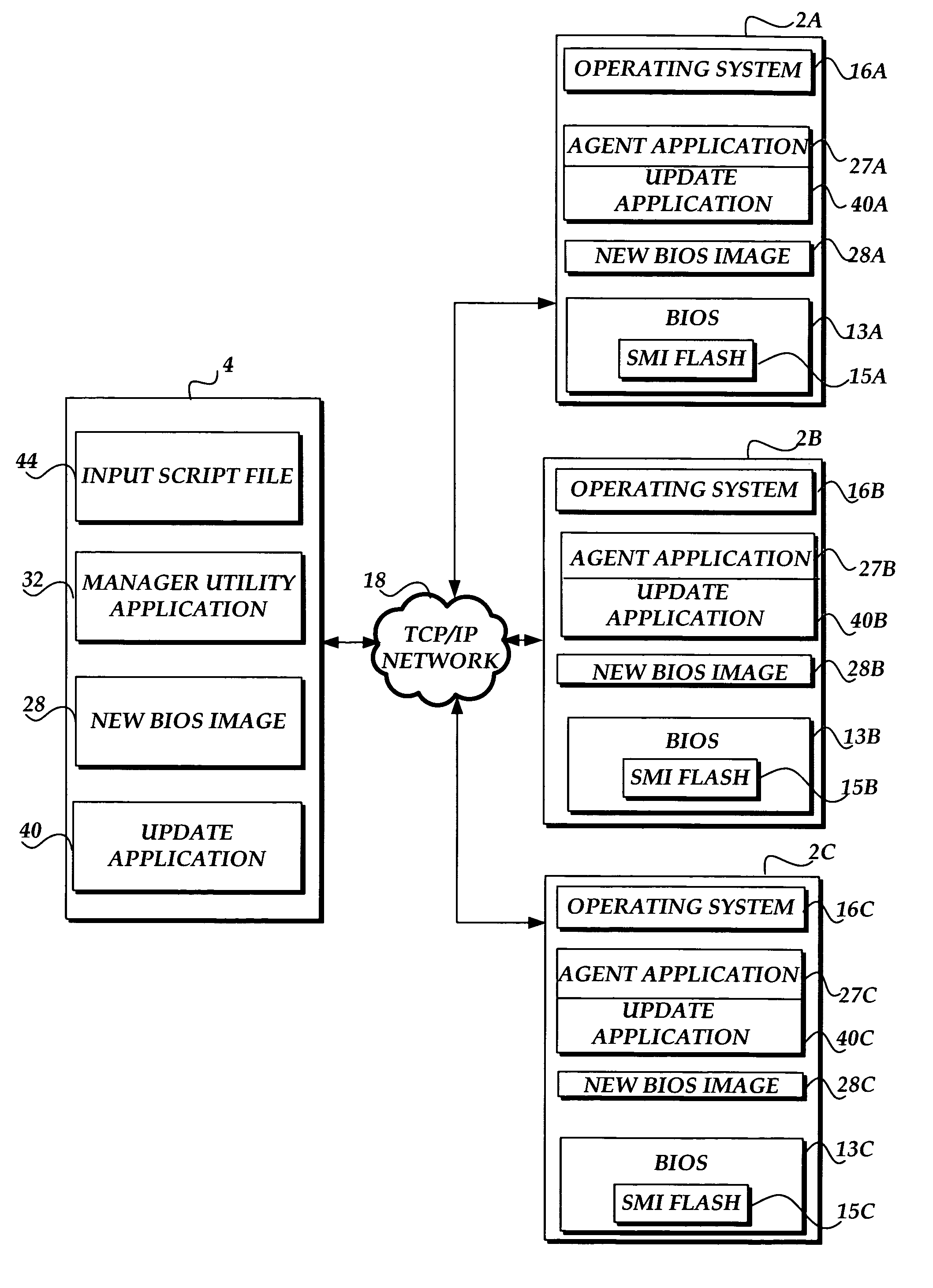

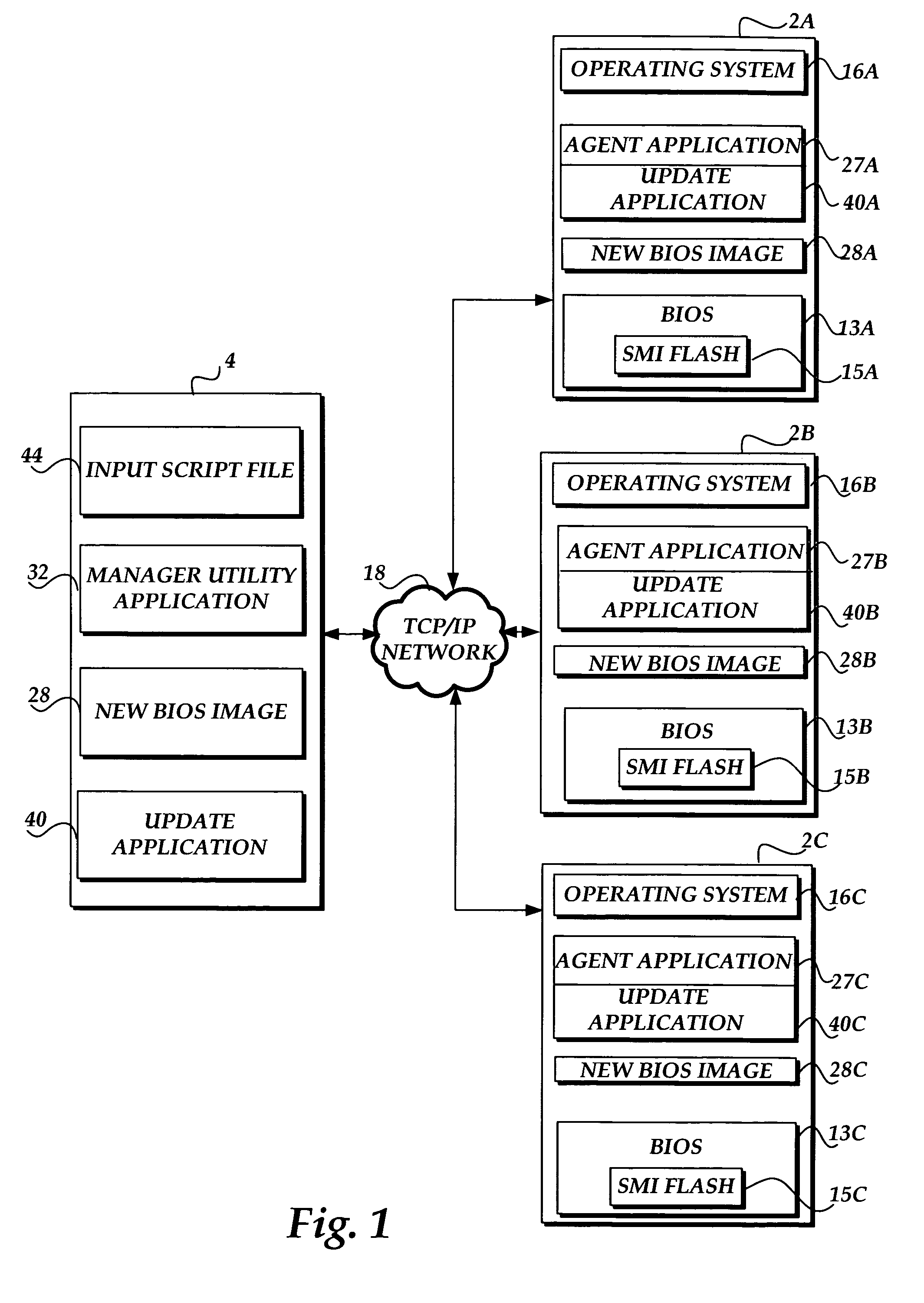

Methods and systems for remotely updating the firmware of multiple computers over a distributed network

These systems and methods make use of operating system dependent applications that allow remote updates to firmware stored on network attached remote computers. Updates may be made to any computer in the network despite the diverse hardware architectures and operating systems running on those computers. A command to update the firmware on the remote computers is initiated from a manager utility application operating on a network attached manager computer. The command is then received by an agent application operating on each remote computer. Each agent application monitors command activity via a communication port. Upon determining that the command is valid, each agent application receives an update application and a new firmware file from a network attached computer or data storage, and uses them to update the firmware of its network attached computer.

Owner:AMERICAN MEGATRENDS

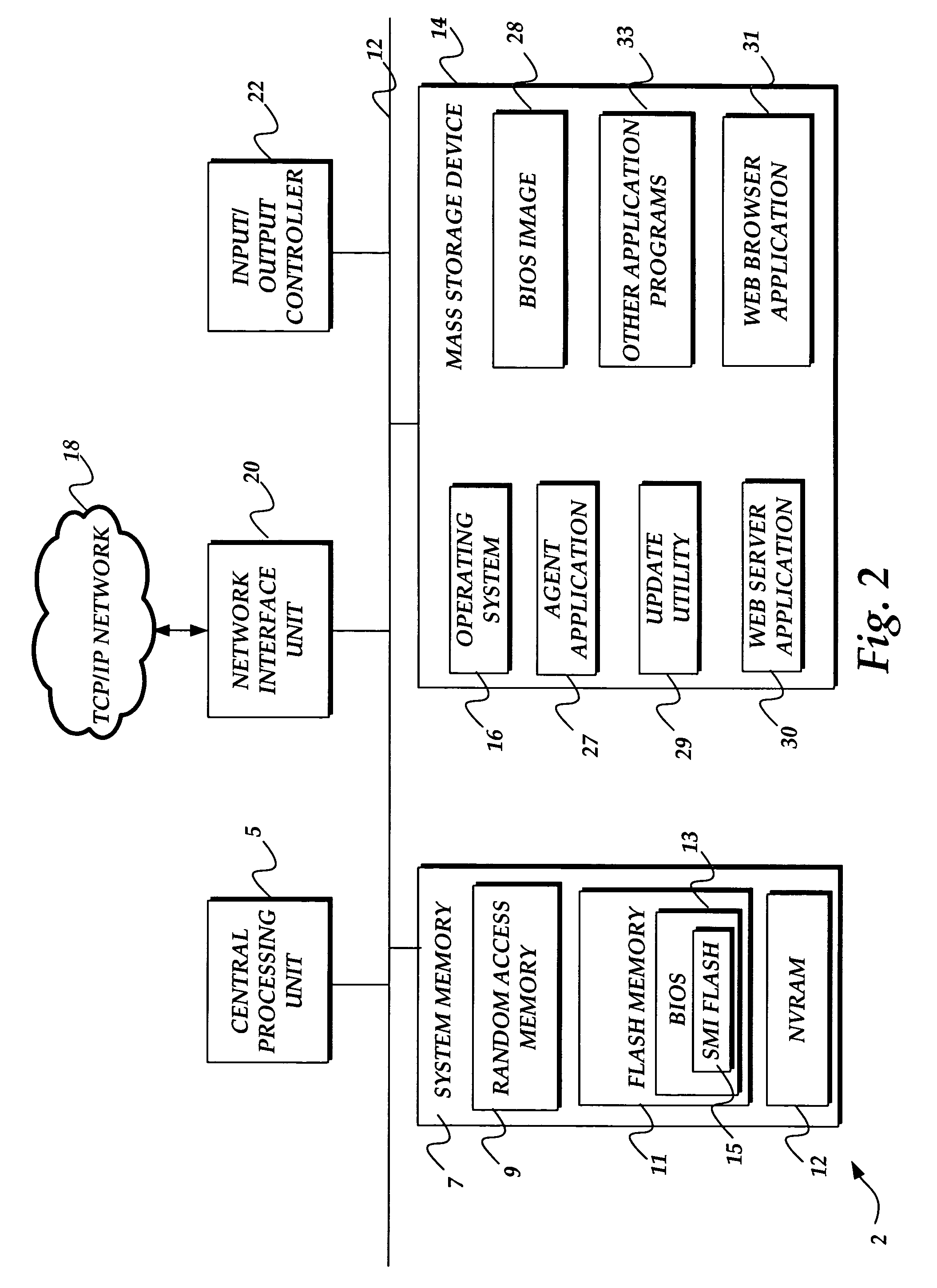

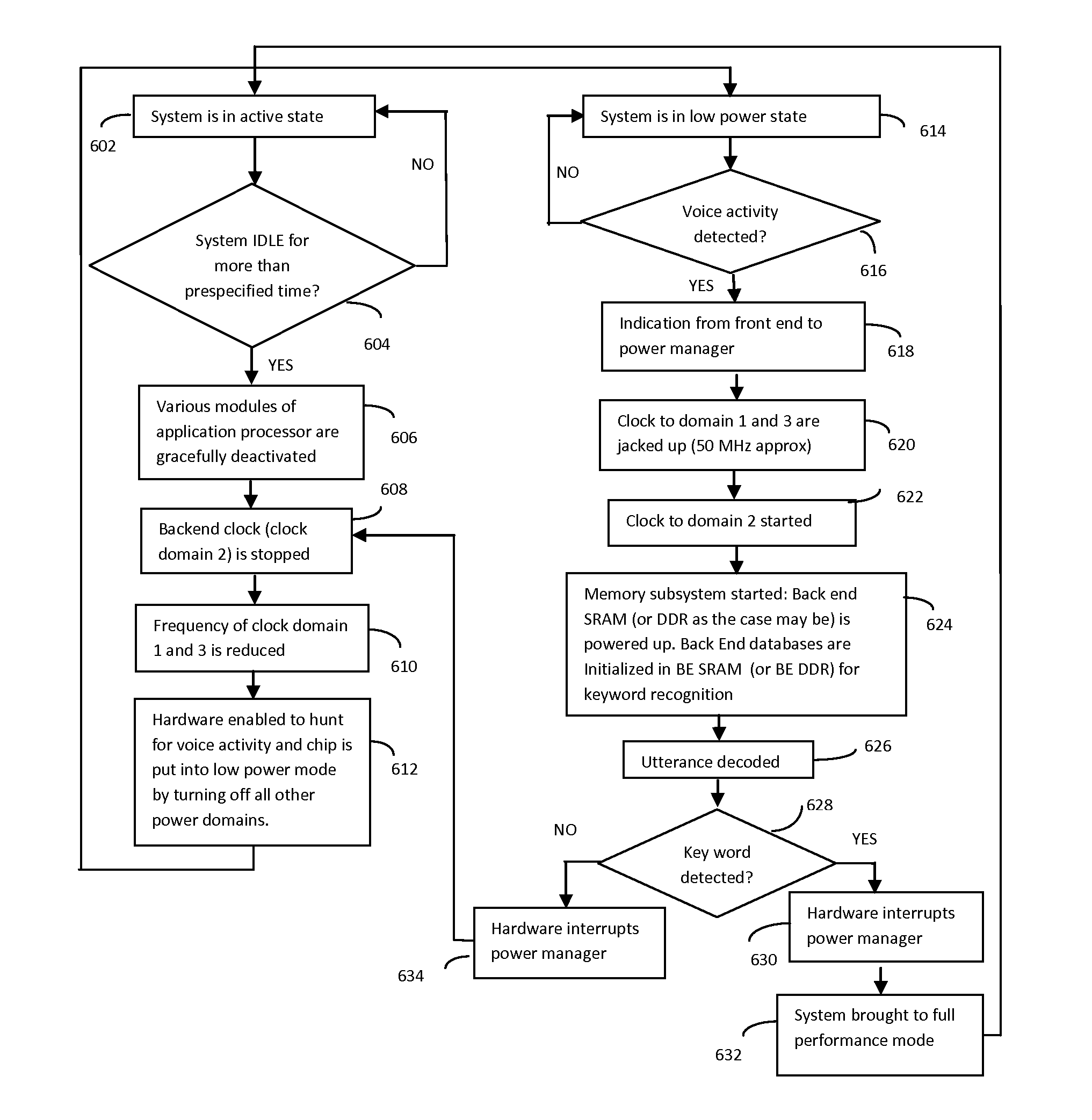

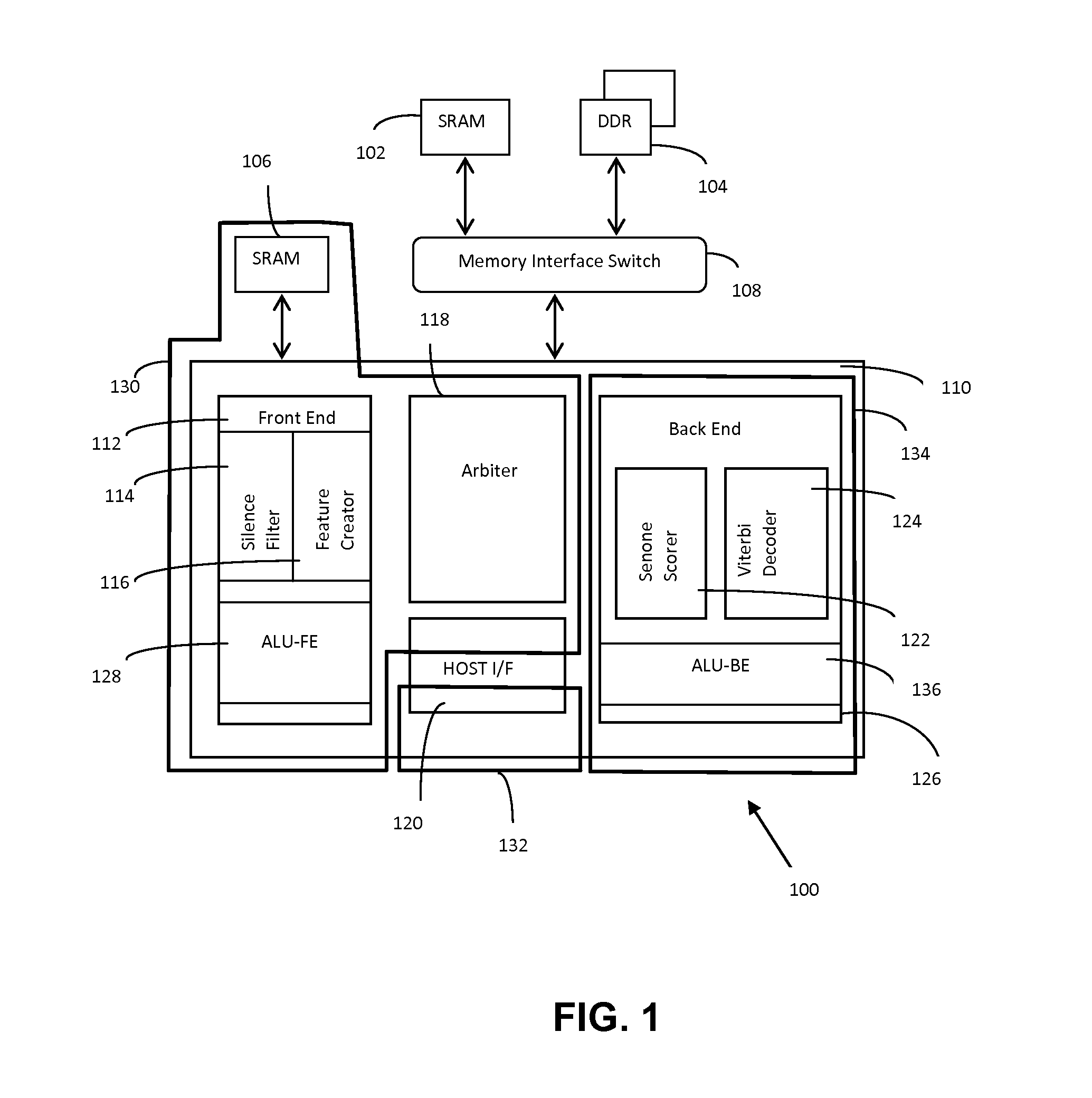

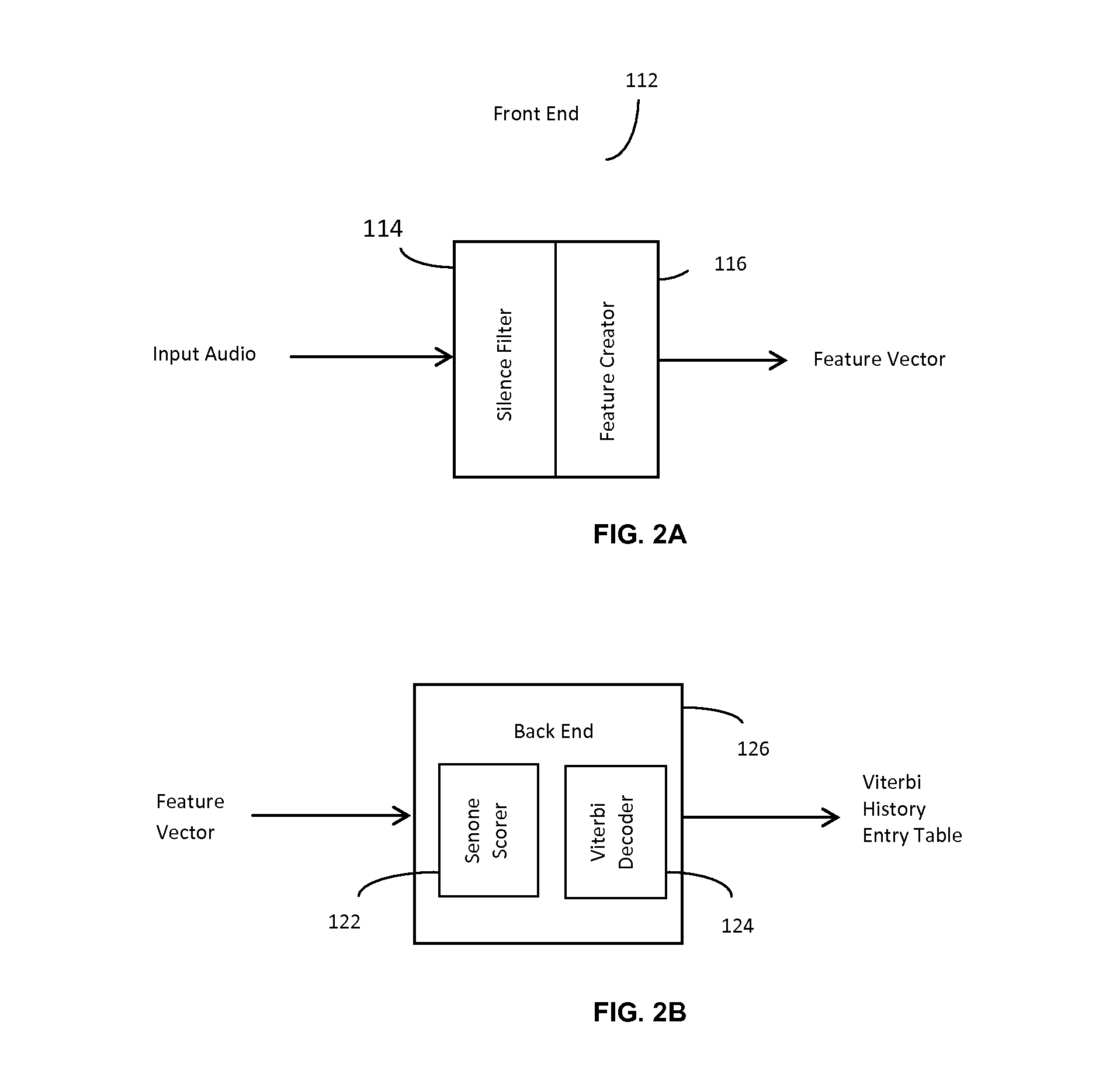

Low Power Mechanism for Keyword Based Hands-Free Wake Up in Always ON-Domain

Status: Inactive | Publication: US20140122078A1 | Tags: Power supply for data processing; Speech recognition; Hardware architecture; Hands free

A low-power, keyword-based speech recognition hardware architecture for hands-free wake-up of devices is provided. Because of its low power consumption, the system can be used in the always-ON domain to detect voice activity. If no activity is detected for a pre-specified time, the system enters a deep low-power state by deactivating all processes that are not required. When valid voice activity is detected, the system searches for the spoken keyword: if a valid keyword is detected, all application processes are activated and the system enters full functional mode; if the voice activity does not contain a valid keyword present in the database, the system returns to the deep low-power state.

Owner:3ILOGIC DESIGNS
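
The power-state behaviour described above is essentially a small state machine. The sketch below assumes a hypothetical `WakeController` whose keyword spotter is reduced to a string-membership test that completes instantly, so the keyword-search state is entered and left within a single `on_voice` call.

```python
from enum import Enum, auto


class PowerState(Enum):
    DEEP_LOW_POWER = auto()  # only voice-activity detection is powered
    KEYWORD_SEARCH = auto()  # keyword spotter running, application processes still off
    FULL_FUNCTION = auto()   # all application processes active


class WakeController:
    def __init__(self, keywords, idle_timeout_s):
        self.keywords = set(keywords)
        self.idle_timeout_s = idle_timeout_s
        self.idle_s = 0.0
        self.state = PowerState.FULL_FUNCTION

    def on_silence(self, seconds):
        """No voice activity for `seconds`; drop to deep low power after the timeout."""
        if self.state is PowerState.FULL_FUNCTION:
            self.idle_s += seconds
            if self.idle_s >= self.idle_timeout_s:
                self.state = PowerState.DEEP_LOW_POWER

    def on_voice(self, utterance):
        """Valid voice activity detected; `utterance` stands in for the keyword spotter's input."""
        if self.state is PowerState.FULL_FUNCTION:
            self.idle_s = 0.0  # activity while awake just resets the idle timer
            return
        self.state = PowerState.KEYWORD_SEARCH
        if utterance in self.keywords:
            self.state = PowerState.FULL_FUNCTION
            self.idle_s = 0.0
        else:
            self.state = PowerState.DEEP_LOW_POWER
```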

Systems and methods for supporting multiple gaming console emulation environments

Status: Active | Publication: US7685593B2 | Tags: Reduce storage space; Digital computer details; Video games; Operational system; Software emulation

Owner:MICROSOFT TECH LICENSING LLC

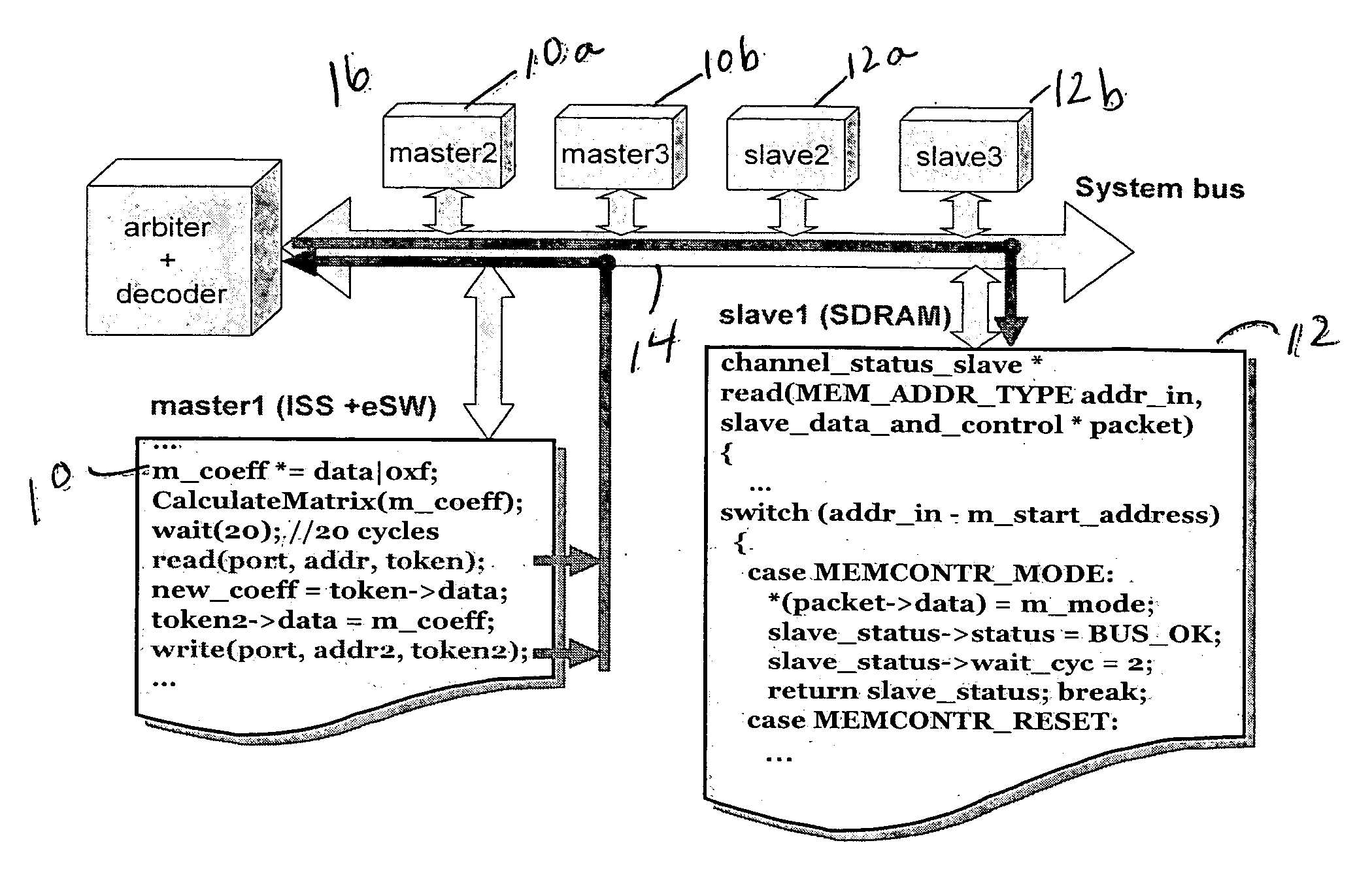

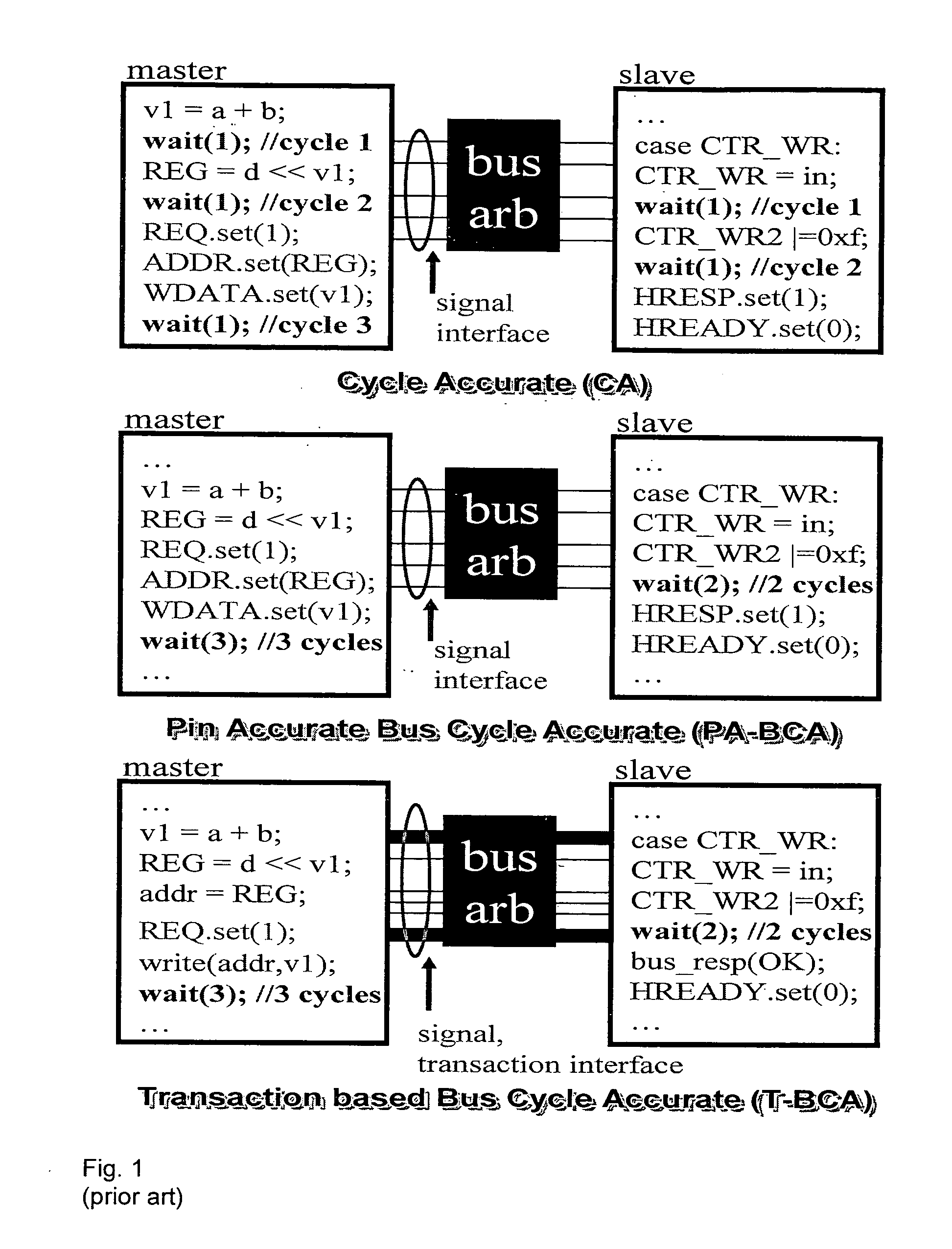

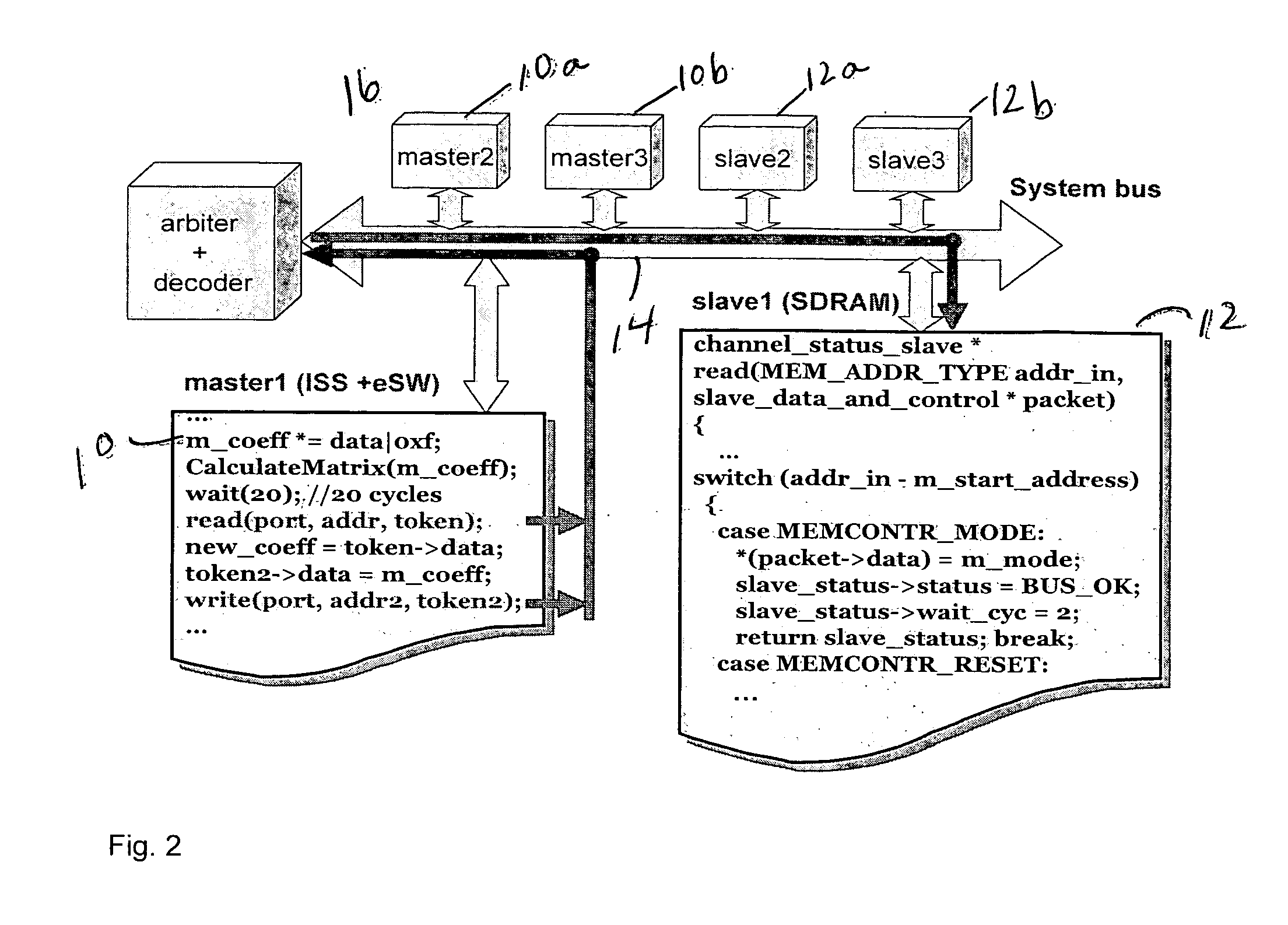

Method for the fast exploration of bus-based communication architectures at the cycle-count-accurate-at-transaction-boundaries (CCATB) abstraction

Status: Active | Publication: US20060282233A1 | Tags: Speed up system prototyping; Maintaining cycle count accuracy; Analogue computers for electric apparatus; CAD circuit design; Computer hardware; Hardware architecture

A computer system simulation method starts with algorithmically implementing a specification model independently of hardware architecture. High-level functional blocks representing hardware components are connected together using a bus-architecture-independent generic channel. The generic channel is annotated with timing and protocol details to define an interface between the bus-architecture-independent generic channel and the functional blocks representing hardware components. The interface is refined to obtain a CCATB for communication space. The read() and write() interface calls are decomposed into several method calls, which correspond to bus pins, to obtain observable cycle accuracy for system debugging and validation and to obtain a cycle-accurate model. The method calls are replaced by signals, and the functional blocks representing hardware components are further refined to obtain pin/cycle-accurate models, which can be manually or automatically mapped to RTL or used to co-simulate with existing RTL components.

Owner:RGT UNIV OF CALIFORNIA

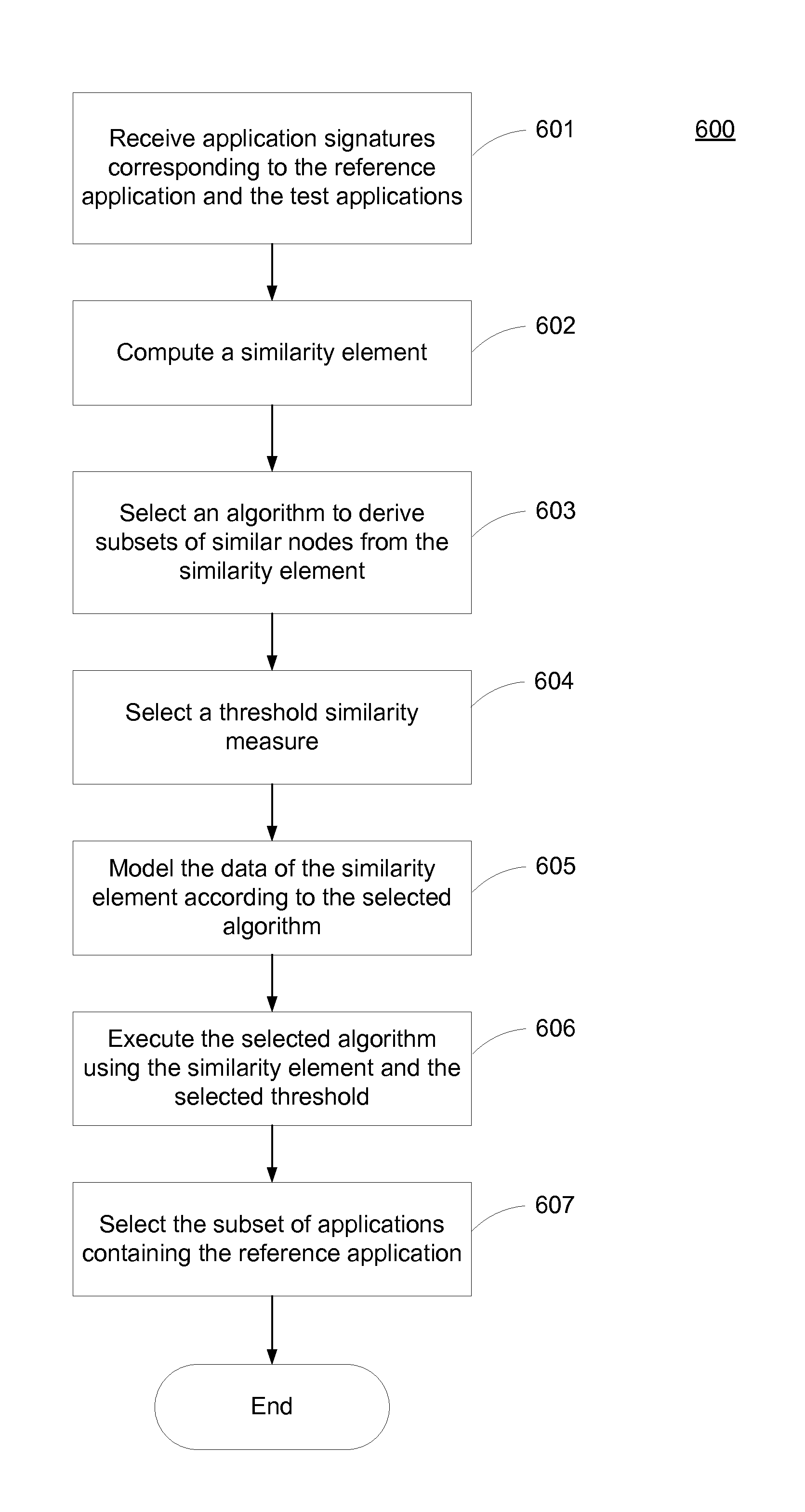

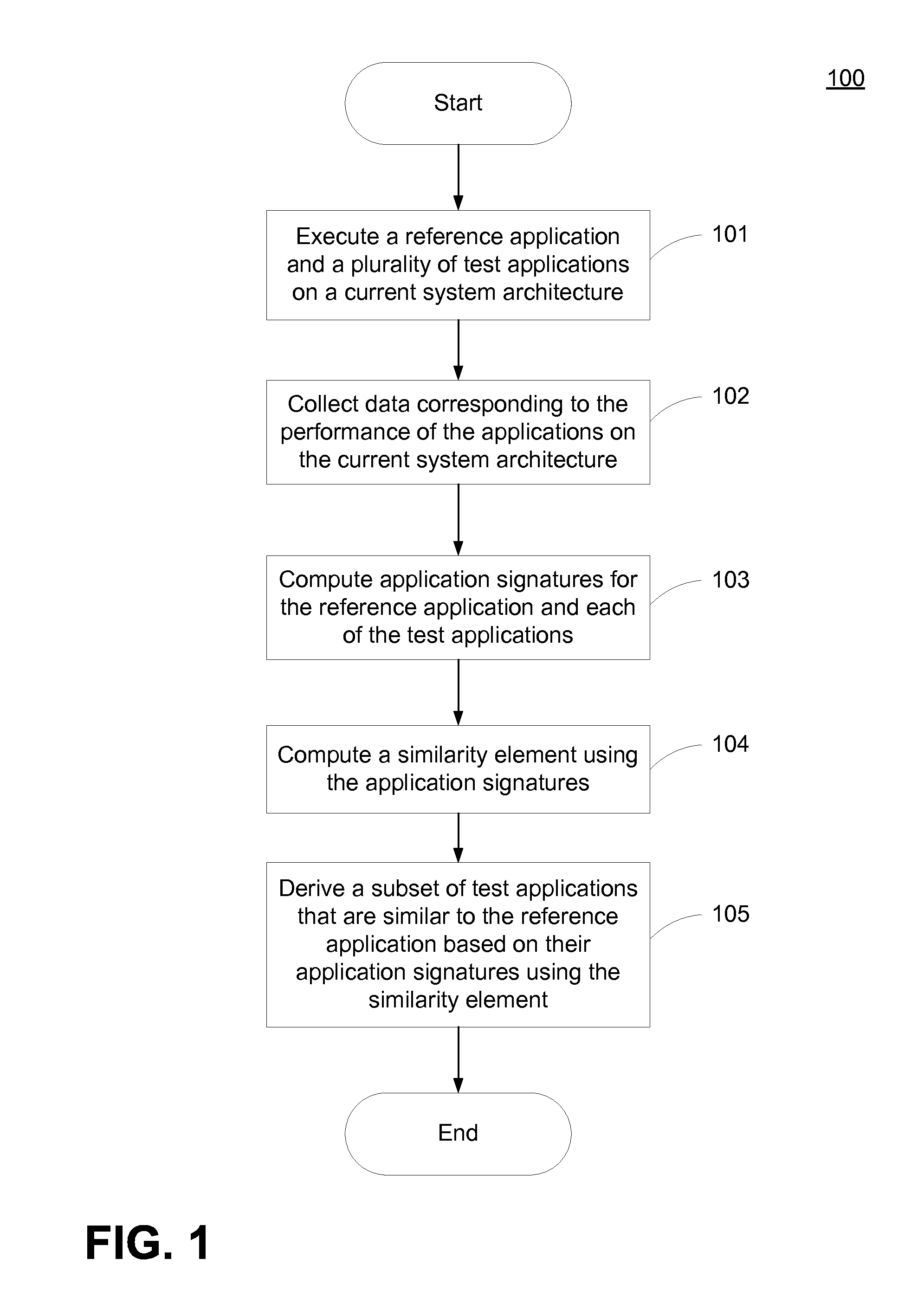

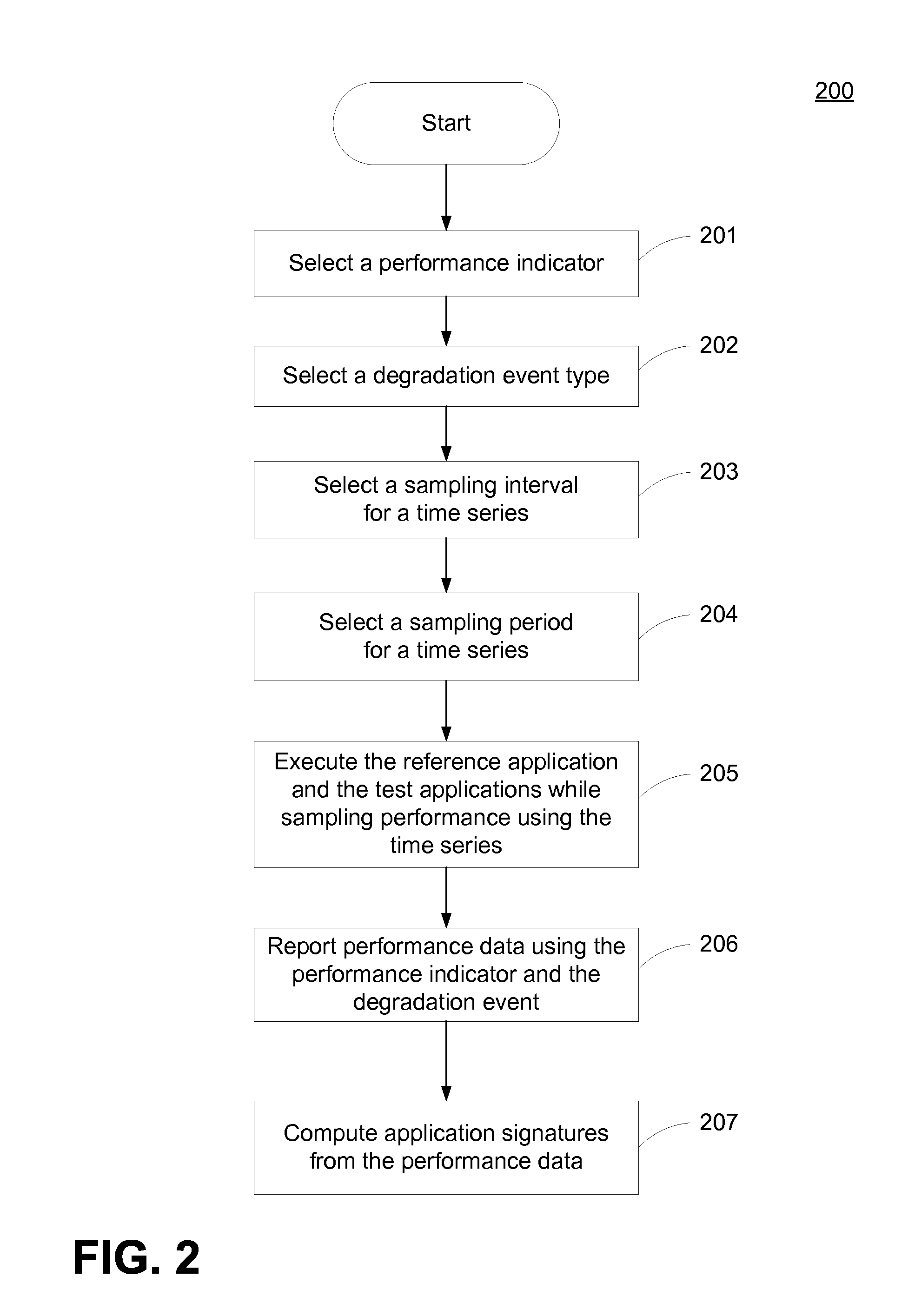

Method and System for Predicting Performance of Software Applications on Prospective Hardware Architecture

Status: Inactive | Publication: US20120197626A1 | Tags: Error detection/correction; Specific program execution arrangements; Hardware architecture; Parallel computing

A system and method for identifying optimal system architectures for a reference application are provided. The system and method comprise executing a reference application and a plurality of test applications on a current system architecture and sampling performance data for each application. The performance data is used to compute an application signature for each application. A similarity element, which indicates the similarity between each application and every other application, is derived from the application signatures. Using a similarity threshold and an algorithm, a subset of test applications that are similar to the reference application is derived.

Owner:R2 SOLUTIONS
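
One plausible reading of the signature-and-threshold step is sketched below. The abstract does not define the signature or the similarity measure, so averaging each sampled metric, normalizing to unit length and comparing with cosine similarity are assumptions, as are the function names and the example metrics.

```python
import math


def signature(samples):
    """Mean of each sampled metric, scaled to unit length (hypothetical signature definition)."""
    n = len(samples)
    means = [sum(column) / n for column in zip(*samples)]
    norm = math.sqrt(sum(m * m for m in means))
    return [m / norm for m in means] if norm else means


def similarity(sig_a, sig_b):
    """Cosine similarity; signatures are already unit vectors, so a dot product suffices."""
    return sum(a * b for a, b in zip(sig_a, sig_b))


def similar_tests(reference_samples, test_samples, threshold):
    """Names of test applications whose signature similarity to the reference meets `threshold`."""
    ref = signature(reference_samples)
    return sorted(
        name for name, samples in test_samples.items()
        if similarity(ref, signature(samples)) >= threshold
    )
```

Each sample row might hold, for example, instructions per cycle and cache misses per thousand instructions; the choice of metrics is left open here.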

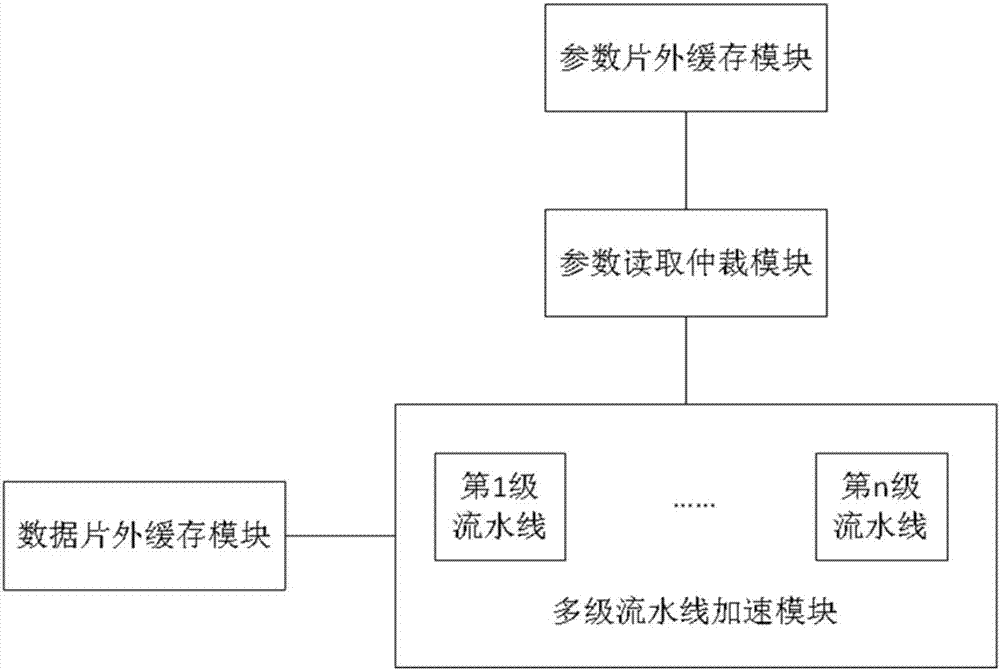

Hardware structure for realizing forward calculation of convolutional neural network

Status: Inactive | Publication: CN107066239A | Tags: Reduce cache requirements; Improve parallelism; Concurrent instruction execution; Physical realisation; Hardware structure; Hardware architecture

The present application discloses a hardware structure for realizing the forward calculation of a convolutional neural network. The hardware structure comprises: a data off-chip caching module, used to cache the parameter data of each externally input picture to be processed until it is read by a multi-level pipeline acceleration module; the multi-level pipeline acceleration module, connected to the data off-chip caching module and used to read parameters from it so as to perform the core calculation of the convolutional neural network; a parameter reading arbitration module, connected to the multi-level pipeline acceleration module and used to handle the multiple parameter reading requests issued by the multi-level pipeline acceleration module, so that it obtains the parameters it requires; and a parameter off-chip caching module, connected to the parameter reading arbitration module and used to store the parameters required for the forward calculation of the convolutional neural network. The present application implements the algorithm with a parallel, pipelined hardware architecture, achieving higher resource utilization and higher performance.

Owner:智擎信息系统(上海)有限公司
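
The abstract does not say how the parameter reading arbitration module chooses among simultaneous requests. A round-robin arbiter is one common choice, and the hypothetical sketch below shows it with one request line per pipeline stage.

```python
class RoundRobinArbiter:
    """Grant one requester per cycle, rotating priority after each grant."""

    def __init__(self, n_requesters):
        self.n = n_requesters
        self.last = n_requesters - 1  # so requester 0 has priority on the first cycle

    def grant(self, requests):
        """`requests` holds one bool per pipeline stage; returns the granted index or None."""
        for offset in range(1, self.n + 1):
            idx = (self.last + offset) % self.n
            if requests[idx]:
                self.last = idx  # the granted stage gets lowest priority next cycle
                return idx
        return None
```

Rotating priority keeps a busy early stage from starving later stages of parameters.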

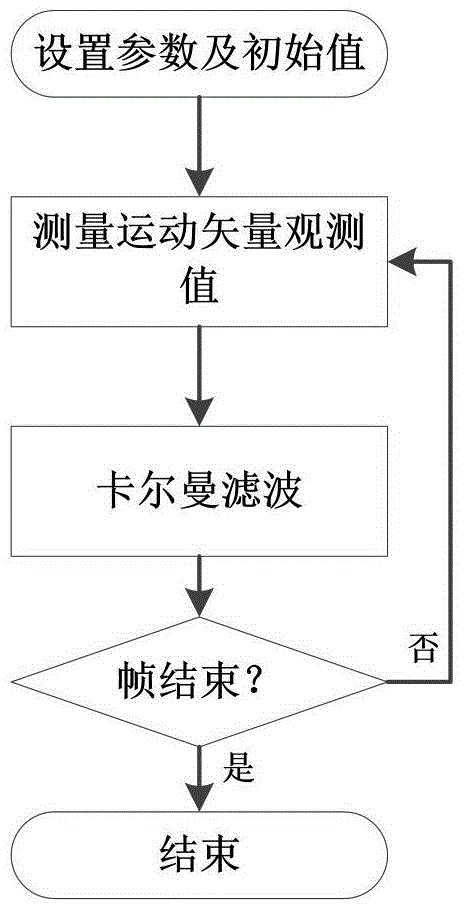

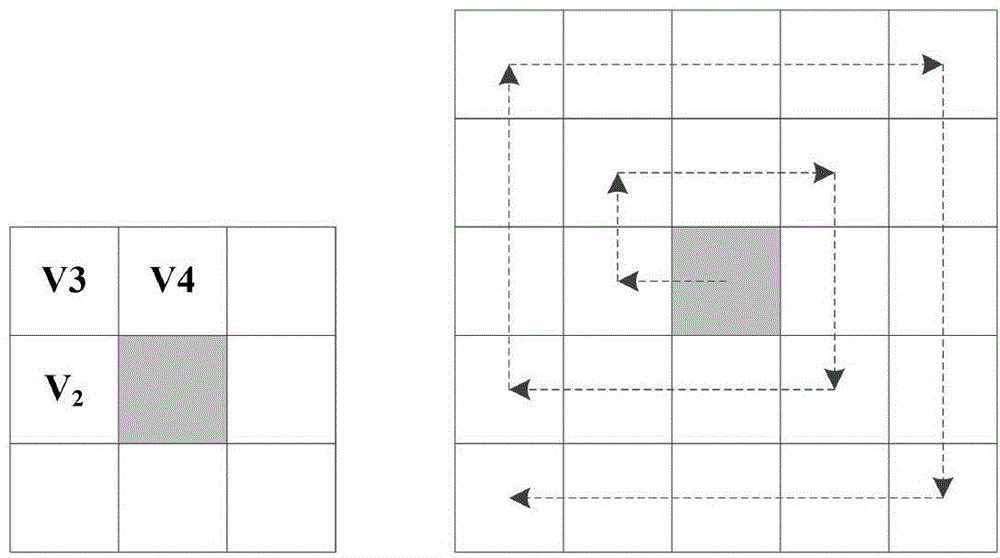

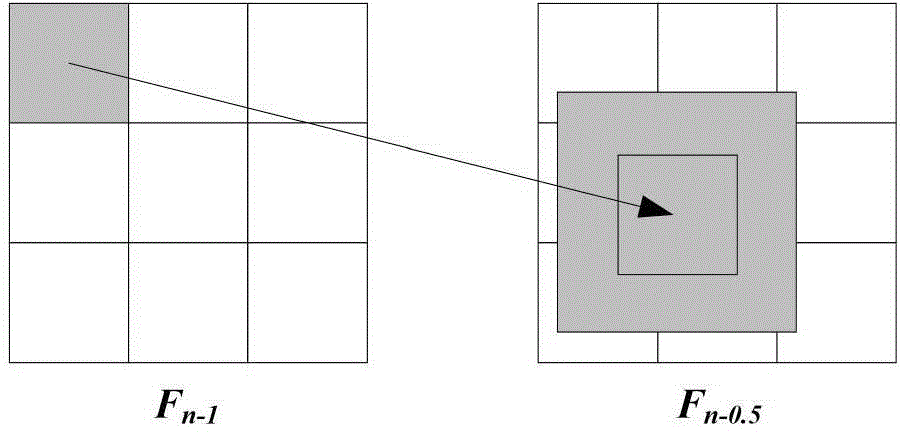

Frame rate up-conversion motion estimation method and frame rate up-conversion motion estimation system based on Kalman filtering

Status: Active | Publication: CN104915966A | Tags: Guaranteed difference; Small amount of calculation; Image enhancement; Image analysis; Hardware architecture; Motion vector

The invention discloses a frame rate up-conversion motion estimation method and system based on Kalman filtering. The method comprises the following steps: first, the parameters and initial state of a Kalman filtering model are set so that the model matches the actual system; then, the observed value of a motion vector is obtained through a strategy in which unidirectional motion estimation is carried out first and the resulting unidirectional motion vector is then mapped to the interpolated frame; and finally, the observation vector is updated with a time-variant-gain Kalman filtering method to obtain a more accurate motion vector. Based on this method, a frame rate up-conversion motion estimation hardware architecture based on Kalman filtering is also proposed. High utilization and high throughput are achieved through an alternating block scanning order and two parallel data channels.

Owner:SHANGHAI JIAO TONG UNIV
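
A time-variant-gain Kalman update for a motion vector can be sketched as follows. This is a simplified assumption, not the patented model: each vector component is treated as a random walk sharing one error variance, and the noise variances `q` and `r` are illustrative parameters.

```python
from dataclasses import dataclass


@dataclass
class MotionVectorKalman:
    q: float                 # process-noise variance (assumed)
    r: float                 # observation-noise variance (assumed)
    p: float = 1.0           # current error variance
    x: tuple = (0.0, 0.0)    # current motion-vector estimate (dx, dy)

    def update(self, observed):
        """Fuse one observed motion vector (e.g. from unidirectional ME) into the estimate."""
        p_pred = self.p + self.q            # random-walk prediction: estimate kept, uncertainty grows
        k = p_pred / (p_pred + self.r)      # gain recomputed every step, hence time-variant
        self.x = tuple(xi + k * (zi - xi) for xi, zi in zip(self.x, observed))
        self.p = (1.0 - k) * p_pred
        return self.x
```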

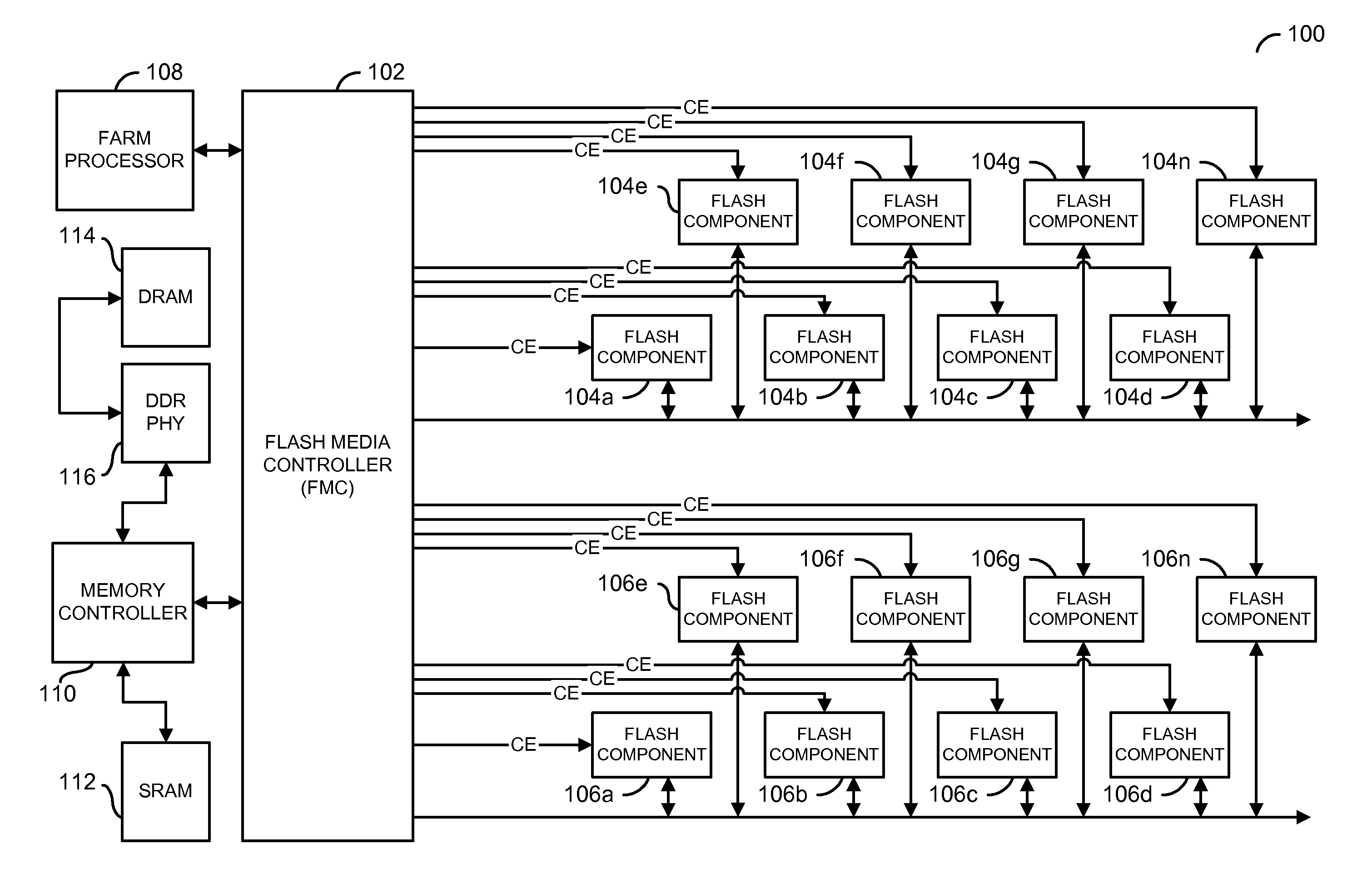

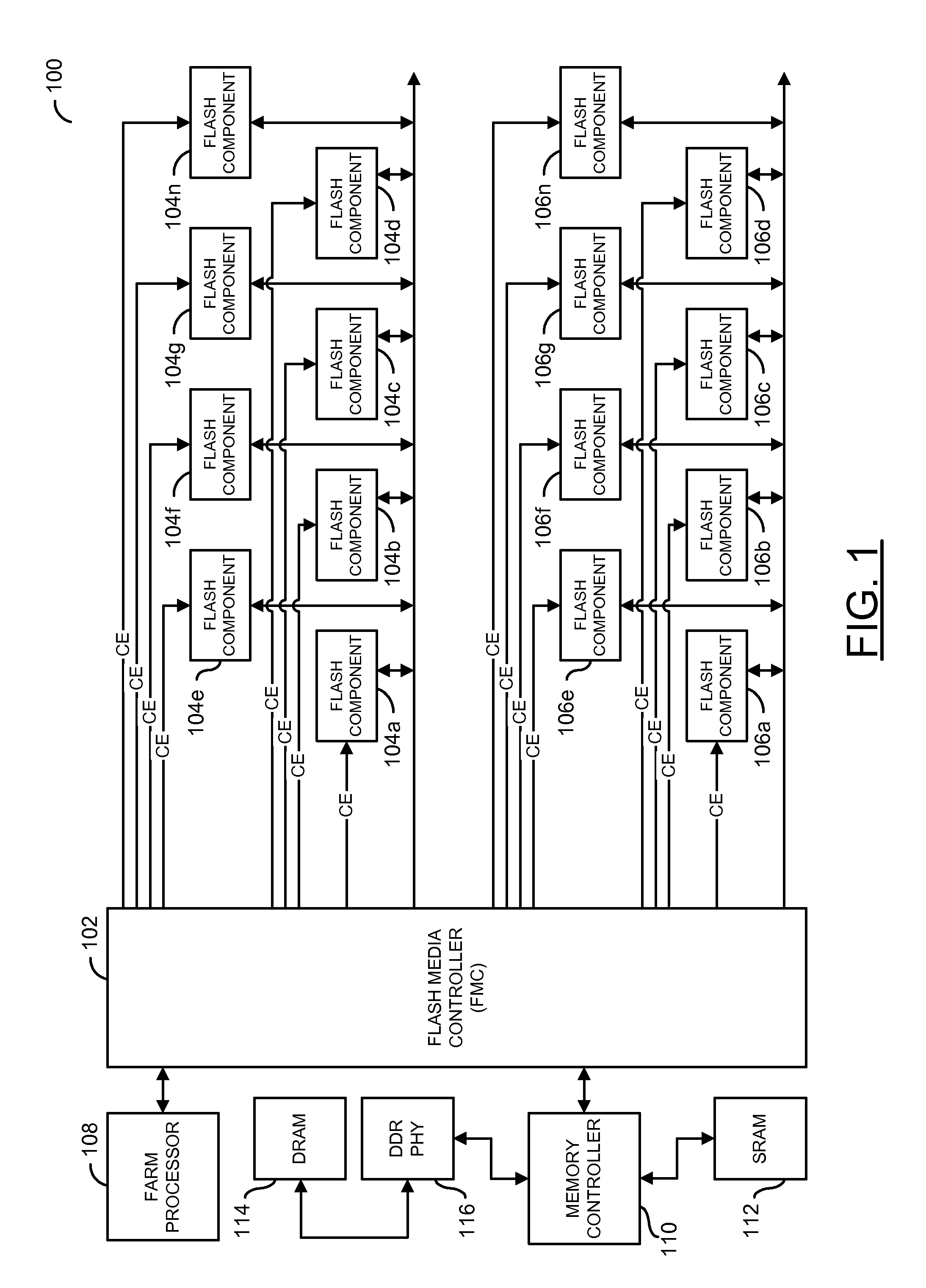

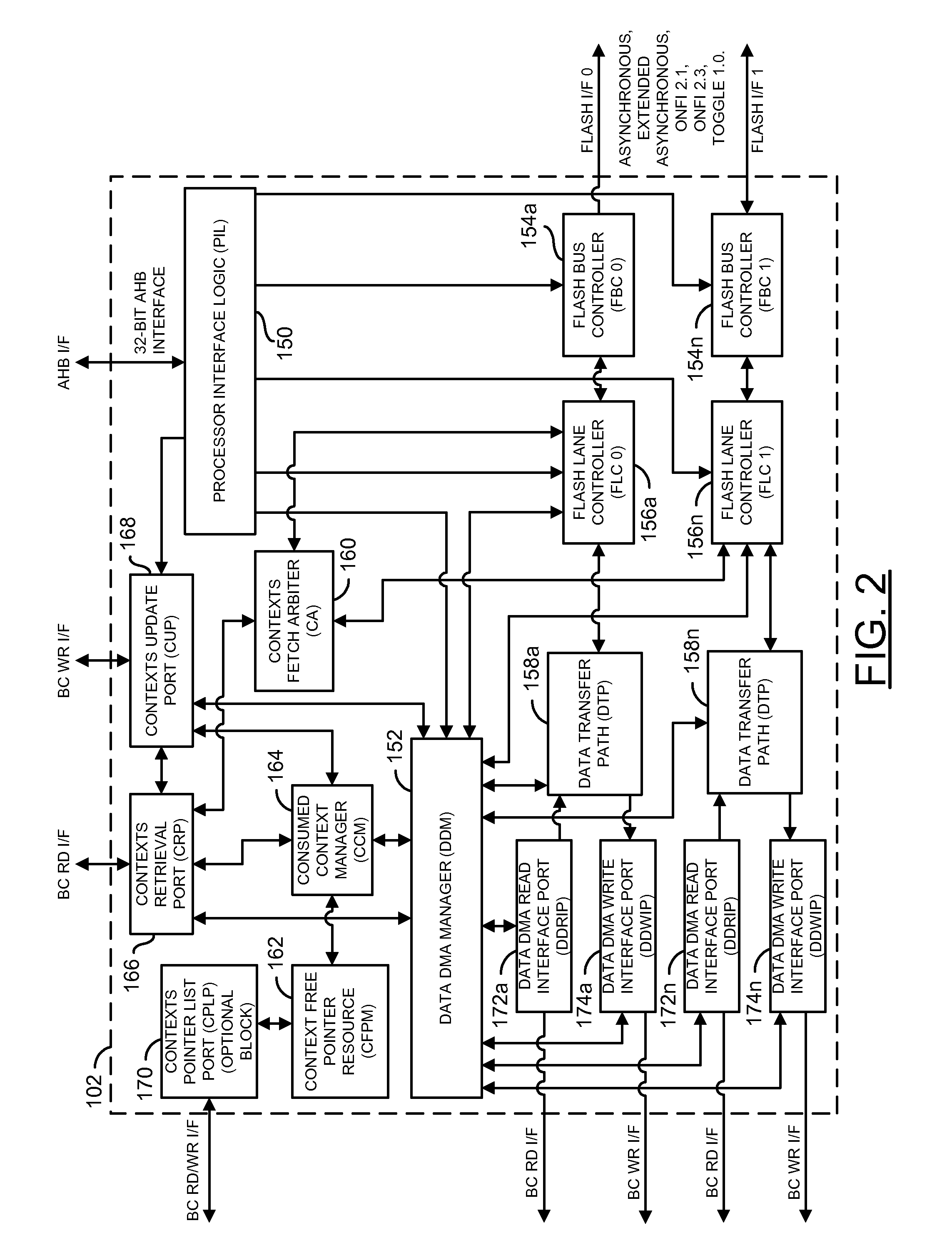

Flash controller hardware architecture for flash devices

A flash media controller including one or more dedicated data transfer paths, one or more flash lane controllers, and one or more flash bus controllers. The one or more flash lane controllers are generally coupled to the one or more dedicated data transfer paths. The one or more flash bus controllers are generally coupled to the one or more flash lane controllers.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

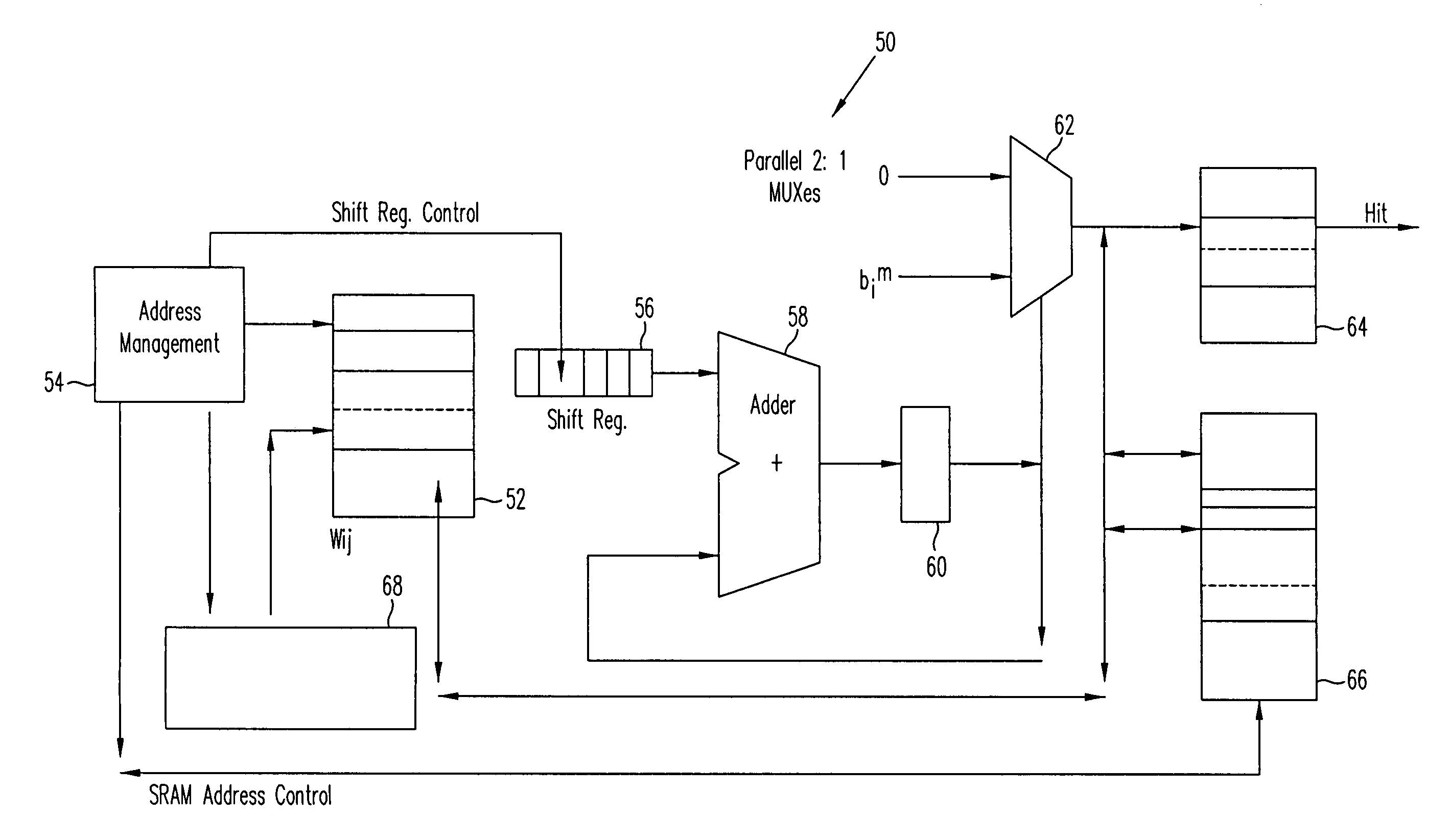

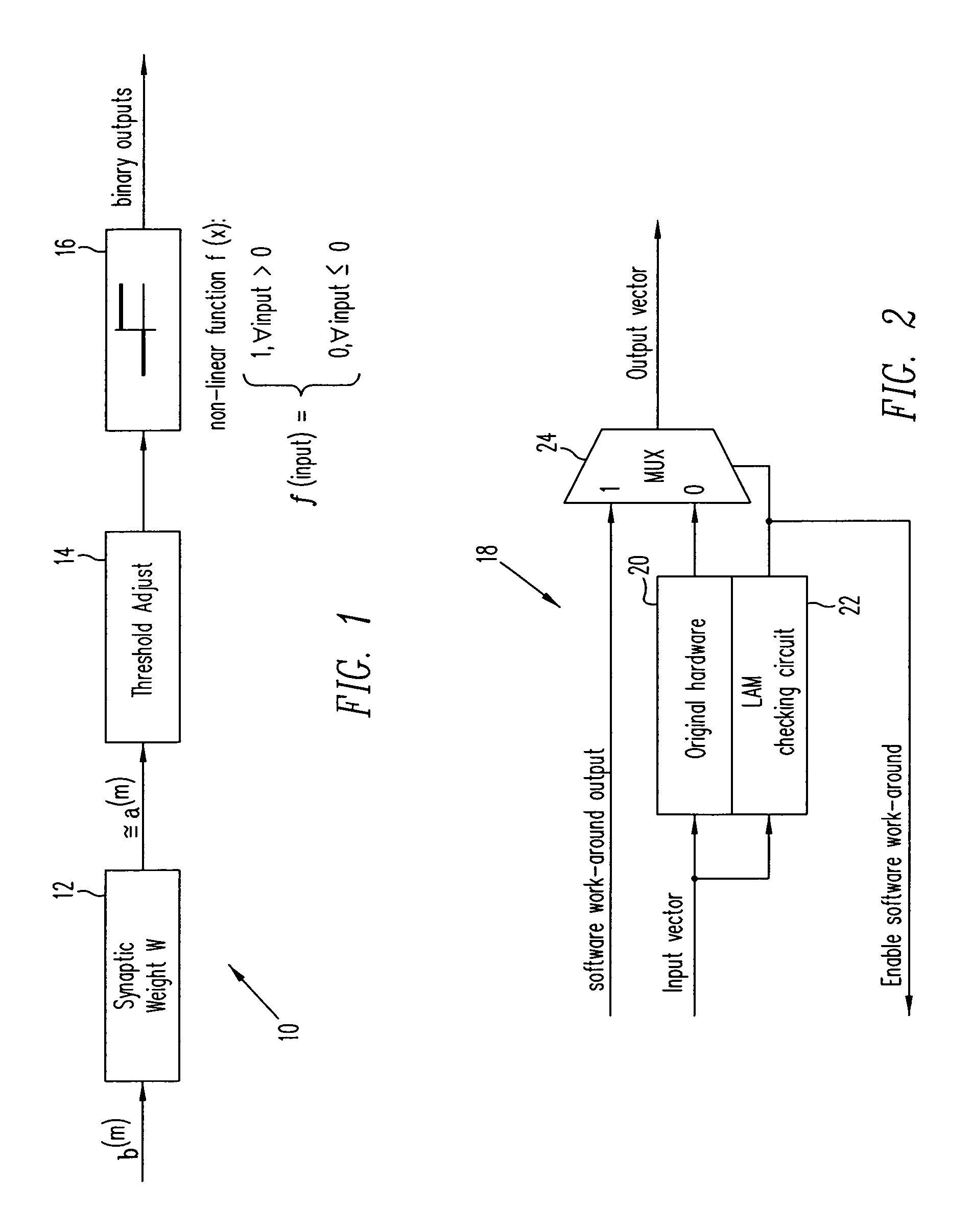

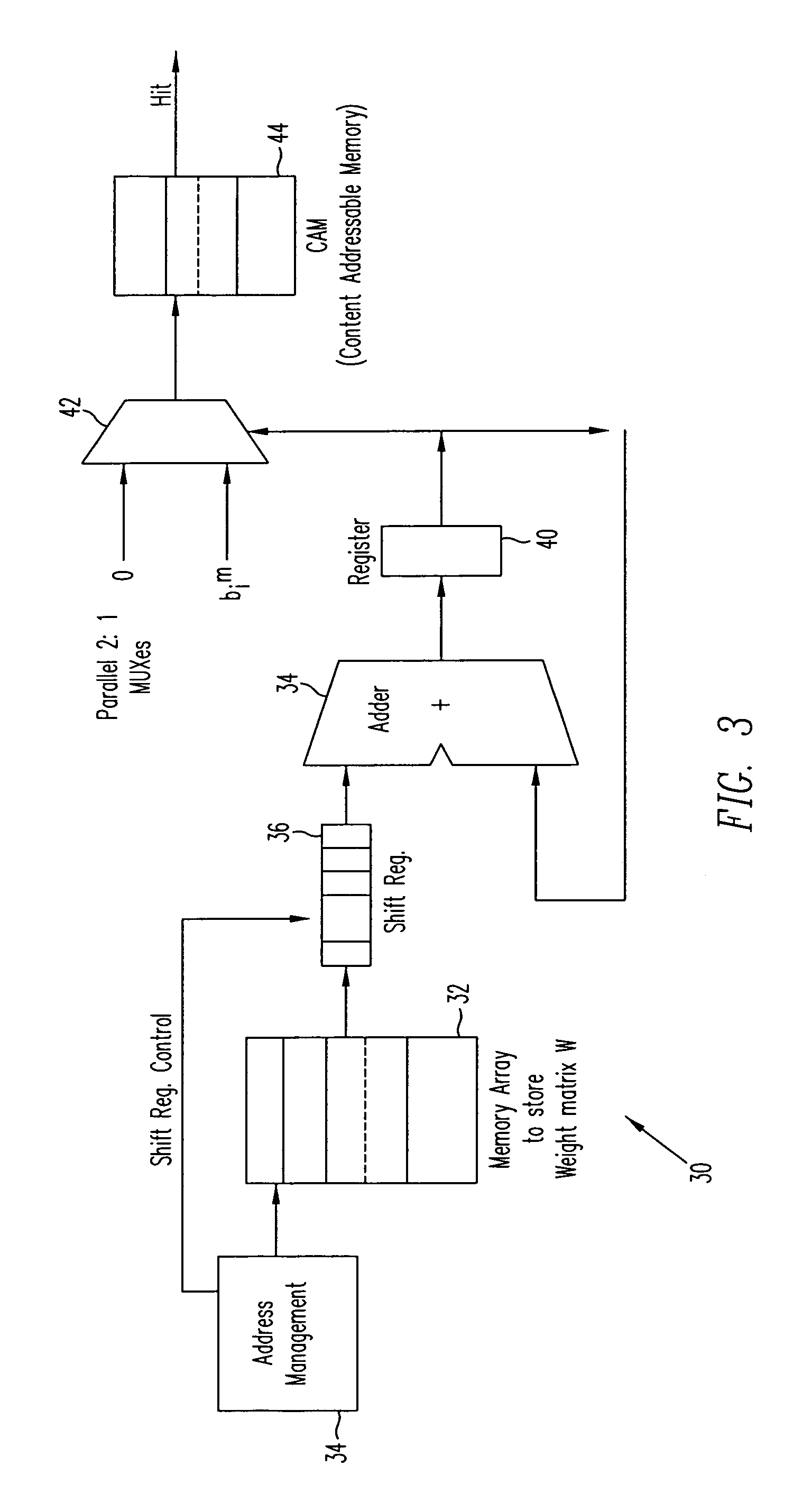

Linear associative memory-based hardware architecture for fault tolerant ASIC/FPGA work-around

A programmable logic unit (e.g., an ASIC or FPGA) has a feedforward linear associative memory (LAM) neural network checking circuit that classifies input vectors to a faulty hardware block as either good or not good. When a new input vector is classified as not good, the checking circuit blocks the corresponding output vector of the faulty hardware block, enables a software work-around for the new input vector, and accepts the software work-around input as the output vector of the programmable logic circuit. The checking circuit has a weight matrix whose elements are based on a set of known bad input vectors for the faulty hardware block, and it may update the weight matrix online using one or more additional bad input vectors. A discrete Hopfield algorithm is used to calculate the weight matrix W. The checking circuit calculates an output vector a(m) by multiplying the weight matrix W by the new input vector b(m), that is, a(m) = W b(m), adjusts the elements of a(m) by respective thresholds, and processes the elements using a plurality of non-linear units that output 1 when a given adjusted element is positive and 0 when it is not. If the vector constructed from the outputs of these non-linear units matches an entry in a content-addressable memory (CAM) storing the set of known bad vectors (a CAM hit), the new input vector is classified as not good.

Owner:CISCO TECH INC
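
The checking path can be sketched in Python, assuming the textbook discrete Hopfield rule (sum of outer products of bipolar bad vectors, zero diagonal), zero thresholds by default, and a Python set standing in for the CAM. These choices and the class name are assumptions; the abstract only names the Hopfield algorithm and the a(m) = W b(m) step.

```python
def to_bipolar(bits):
    """Map 0/1 bits to -1/+1, as the Hopfield rule expects."""
    return [1 if b else -1 for b in bits]


class LamChecker:
    def __init__(self, n, thresholds=None):
        self.n = n
        self.w = [[0] * n for _ in range(n)]     # weight matrix W
        self.thresholds = thresholds or [0] * n  # per-element thresholds
        self.cam = set()                         # stands in for the CAM of known-bad vectors

    def add_bad_vector(self, bits):
        """Online update: add the outer product of a new bad vector (zero diagonal)."""
        s = to_bipolar(bits)
        for i in range(self.n):
            for j in range(self.n):
                if i != j:
                    self.w[i][j] += s[i] * s[j]
        self.cam.add(tuple(bits))

    def is_good(self, bits):
        b = to_bipolar(bits)
        a = [sum(self.w[i][j] * b[j] for j in range(self.n)) for i in range(self.n)]  # a = W b
        # Non-linear units: 1 if the threshold-adjusted element is positive, else 0.
        out = tuple(1 if a[i] - self.thresholds[i] > 0 else 0 for i in range(self.n))
        return out not in self.cam  # a CAM hit means "not good"
```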

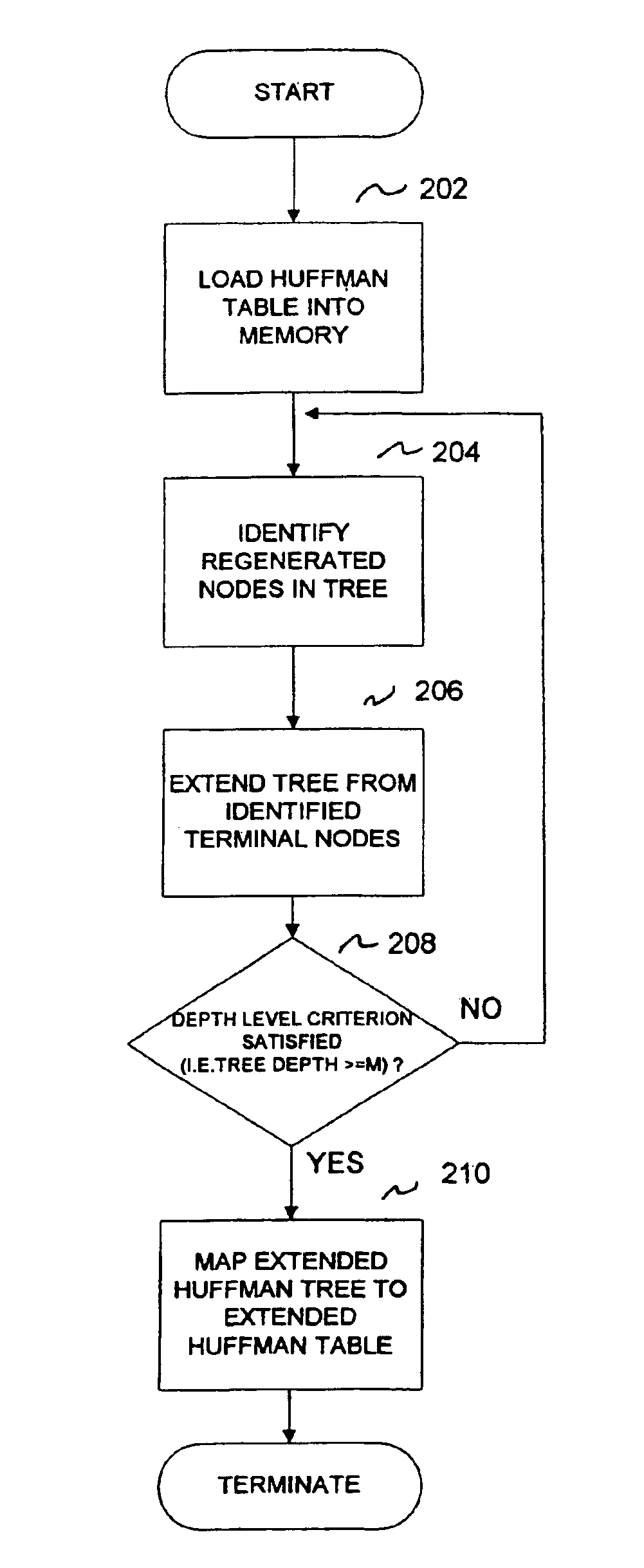

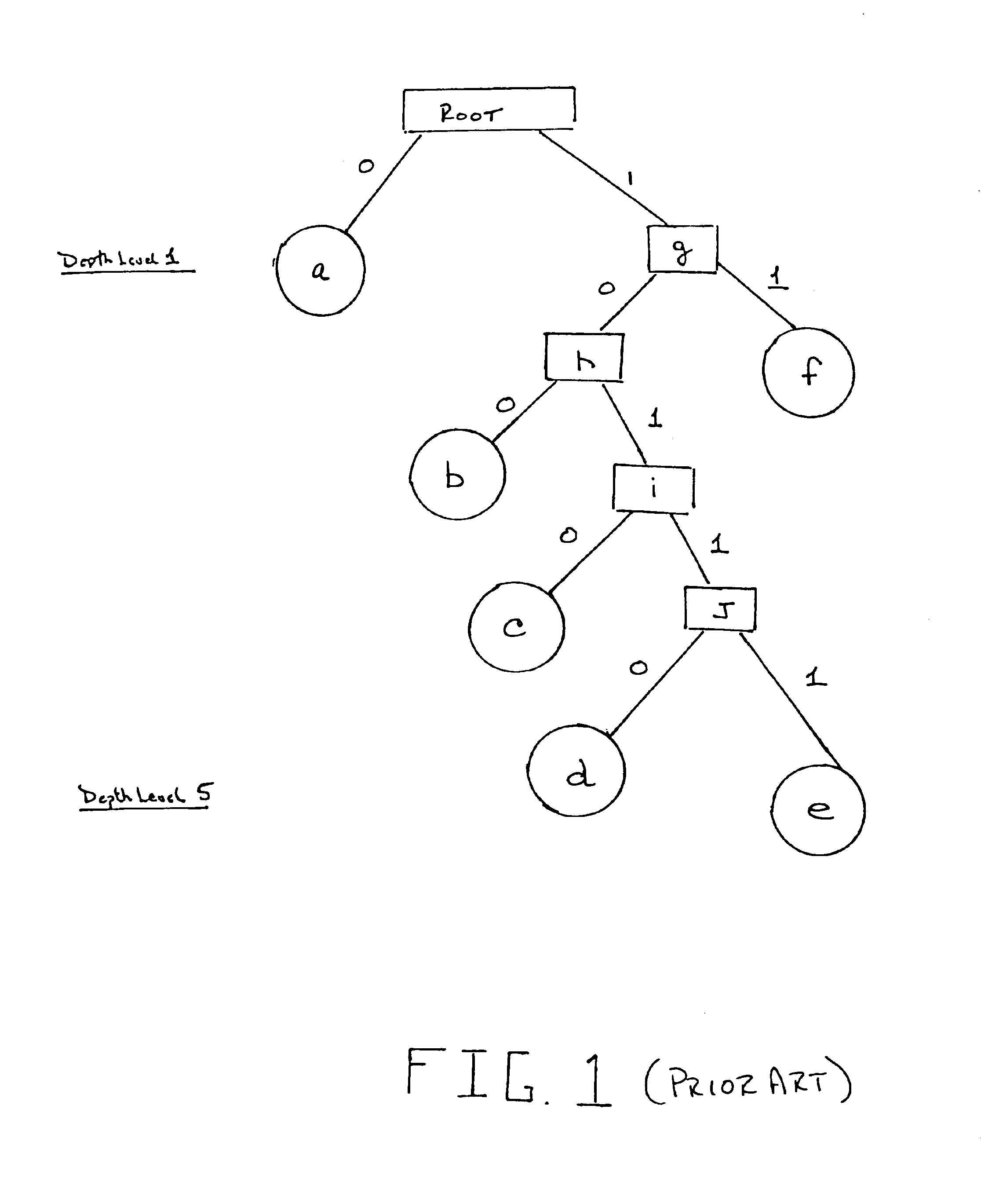

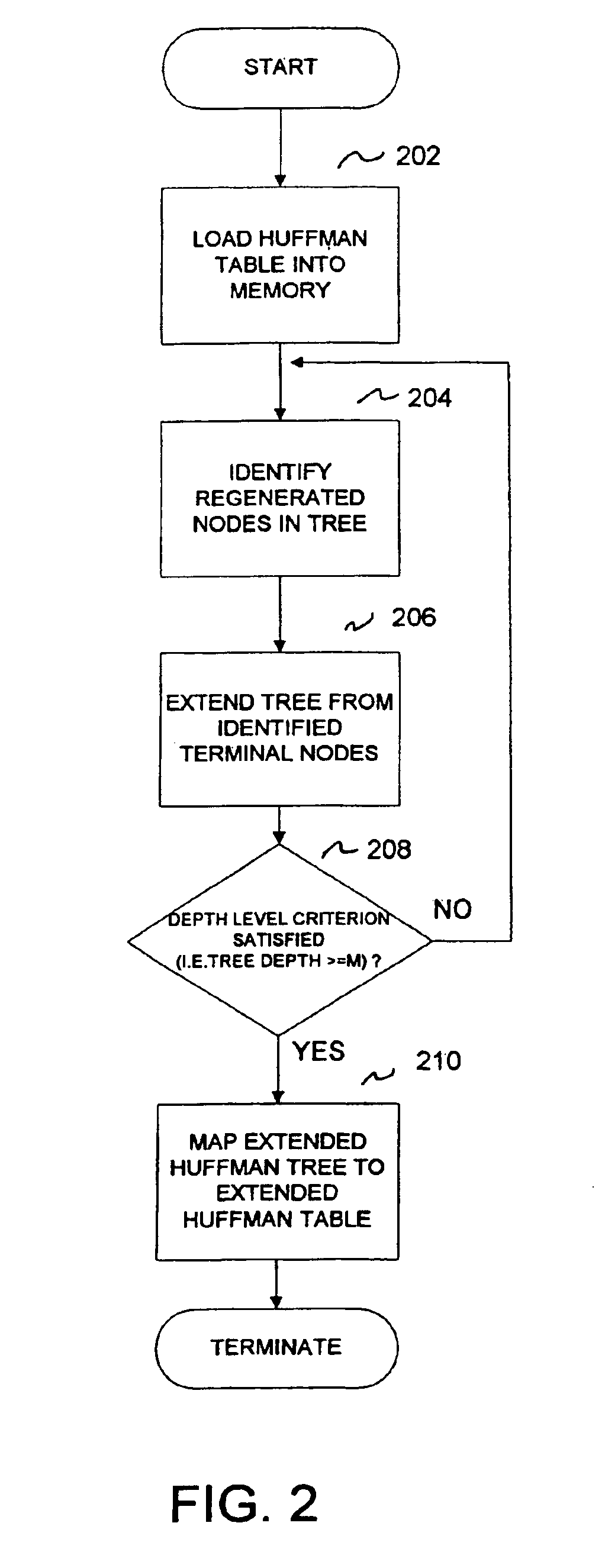

Adaptive variable length decoding method

Status: Inactive | Publication: US7043088B2 | Tags: Guaranteed rate performance; Code conversion; Character and pattern recognition; Decoding methods; Hardware architecture

A method is disclosed for decoding multiple coded symbols from a coded input symbol stream in a single clock cycle. In a first embodiment, the method constructs an original Huffman look-up table by extending the associated Huffman tree so that multiple symbols are decoded in one clock cycle; in an alternate embodiment, it decodes multiple DCT coefficient symbols. An advantage of the method is that the depth of the new Huffman tree is adjustable, making the method easily adaptable to various hardware architectures. A further advantage is that decoding speed is significantly increased while the size of the lookup table increases only nominally.

Owner:WSOU INVESTMENTS LLC
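
The general multi-symbol table idea (not the patent's specific extended-tree construction) can be sketched as follows: each k-bit window maps to every complete codeword it contains plus the number of bits they consume, so one lookup can emit several symbols. The sketch assumes a complete prefix code whose longest codeword fits in the window; the function names are hypothetical.

```python
from itertools import product


def build_table(code, k):
    """For every k-bit window, list the whole codewords it contains and the bits they use."""
    decode = {bits: sym for sym, bits in code.items()}
    if max(len(bits) for bits in code.values()) > k:
        raise ValueError("window must be at least as long as the longest codeword")
    table = {}
    for pattern in product("01", repeat=k):
        window = "".join(pattern)
        symbols, used, current = [], 0, ""
        for i, bit in enumerate(window):
            current += bit
            if current in decode:  # prefix-free code, so the first match is the codeword
                symbols.append(decode[current])
                used, current = i + 1, ""
        table[window] = (tuple(symbols), used)
    return table


def decode_stream(bits, code, k):
    """Decode a bit string, emitting possibly several symbols per table lookup."""
    table = build_table(code, k)
    decode = {b: s for s, b in code.items()}
    out, i = [], 0
    while len(bits) - i >= k:
        symbols, used = table[bits[i:i + k]]
        if used == 0:
            raise ValueError("invalid codeword in stream")
        out.extend(symbols)
        i += used
    current = ""
    for bit in bits[i:]:  # short tail: fall back to bit-serial decoding
        current += bit
        if current in decode:
            out.append(decode[current])
            current = ""
    if current:
        raise ValueError("bit stream ends inside a codeword")
    return out
```

A larger `k` lets each lookup emit more symbols at the cost of a table with 2^k entries, which mirrors the depth-versus-size trade-off the abstract mentions.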

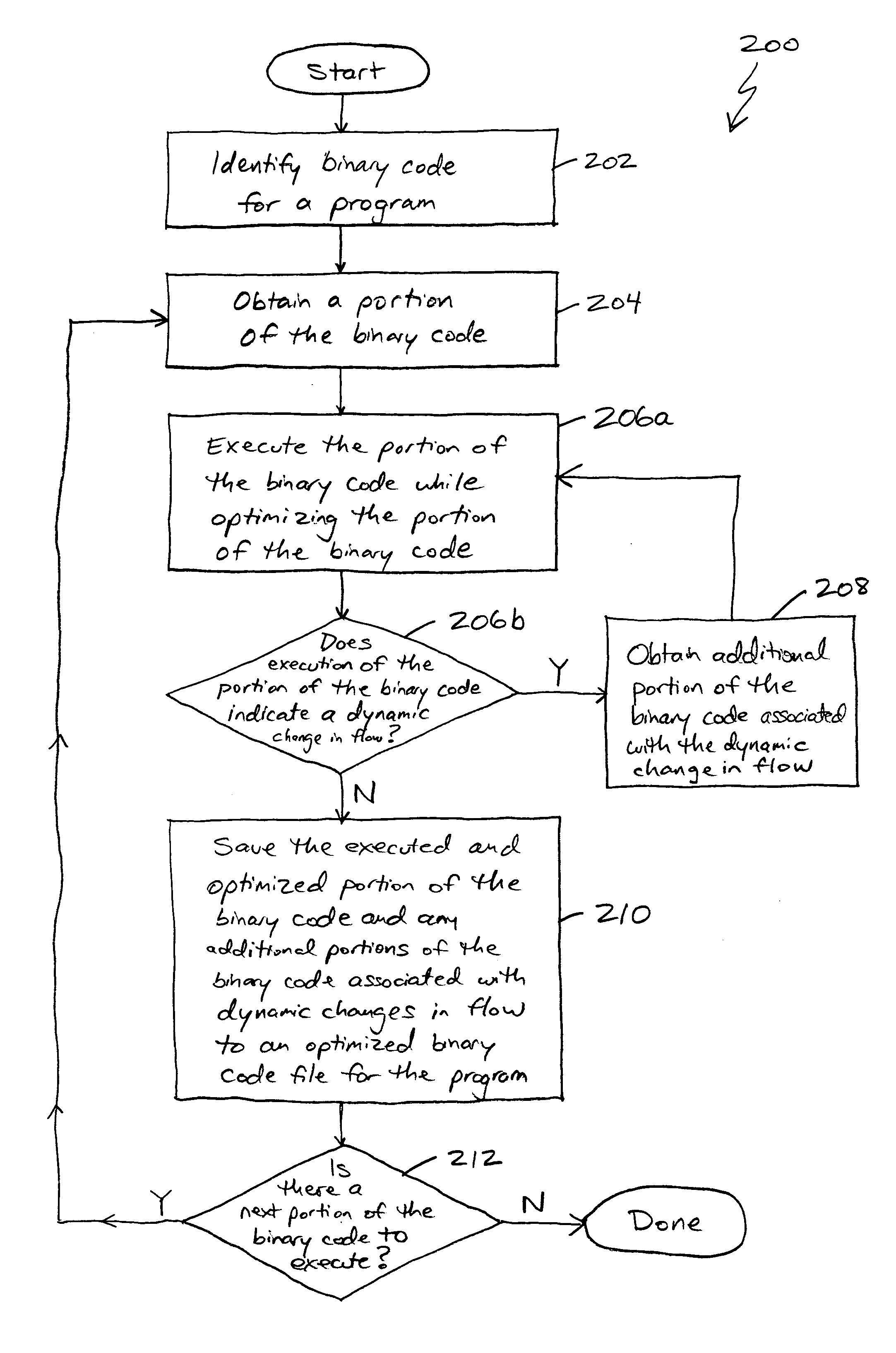

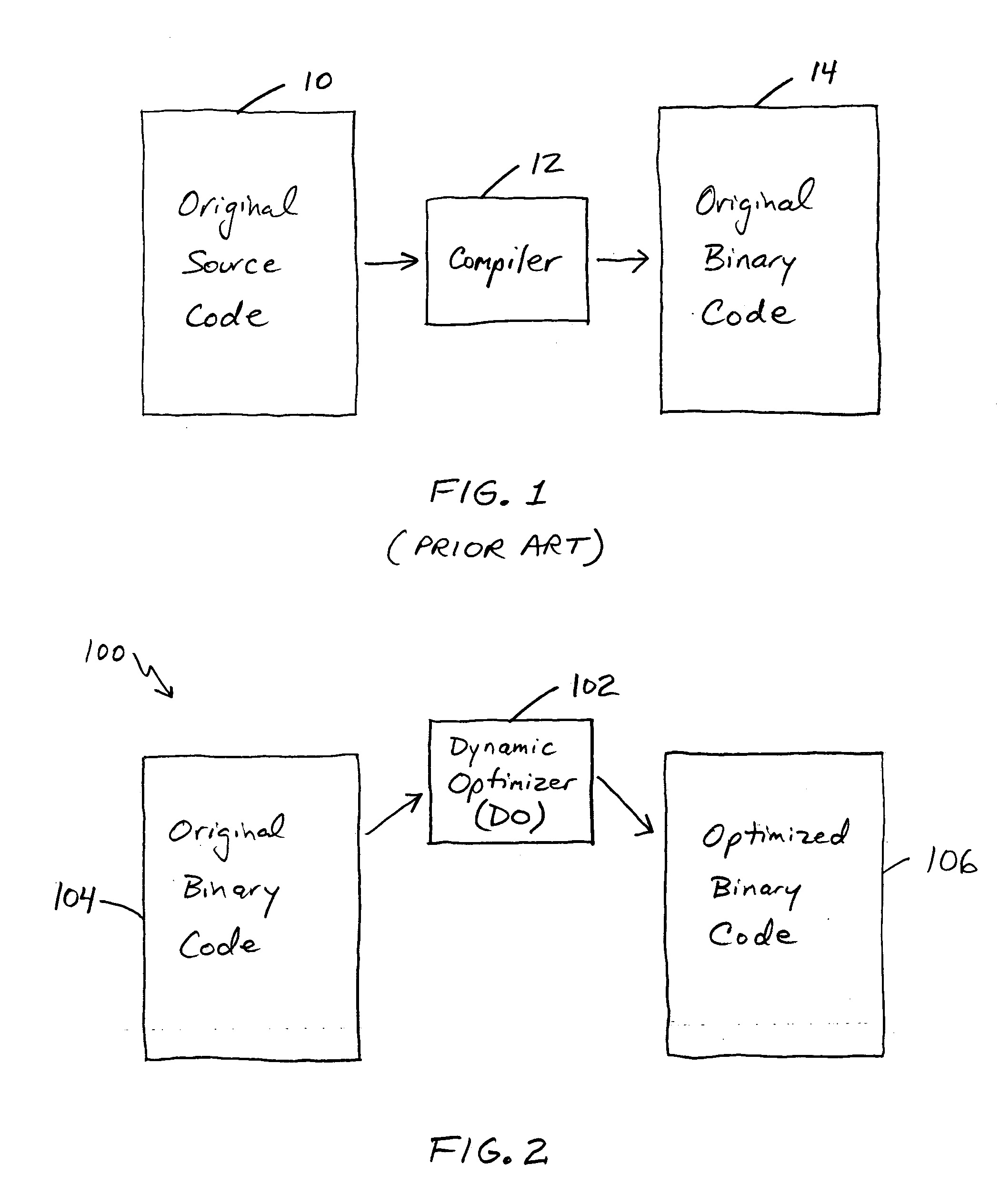

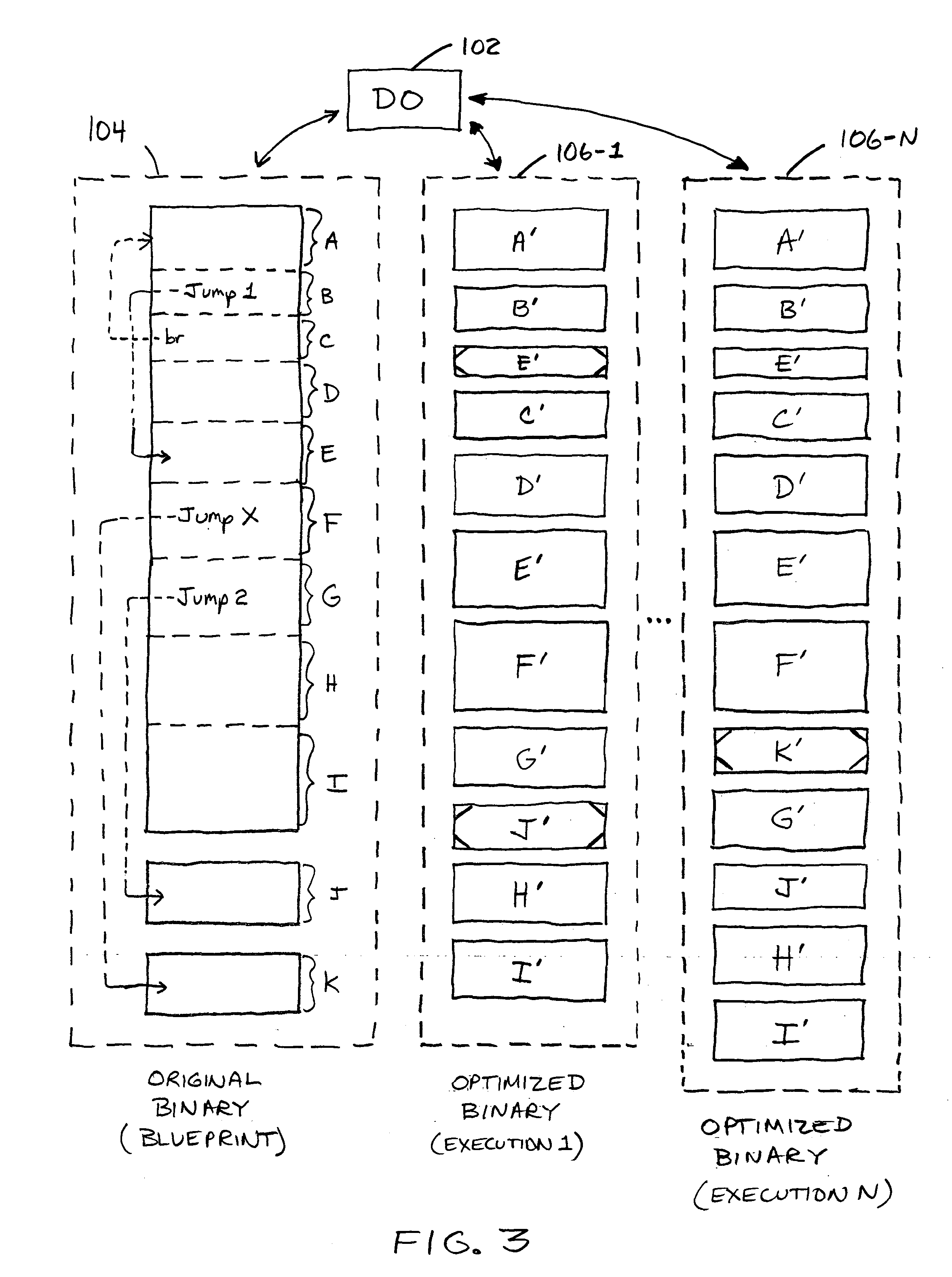

Method for dynamic recompilation of a program

In a method for dynamic recompilation of a program, binary code for a program is identified, a portion of the binary code is obtained, and the obtained portion of the binary code is executed while being optimized for, e.g., use with a new hardware architecture. During execution, dynamic changes in flow are identified to enable additional portions of the binary code to be obtained and executed. The executed and optimized portion of the binary code and any additional portions of the binary code are saved to an optimized binary code file for the program. The obtaining and executing of portions of the binary code is continued until all portions of the binary code have been saved to the optimized binary code file for the program. Thereafter, when the program is called, the optimized binary code file for the program can be executed.

Owner:SUN MICROSYSTEMS INC

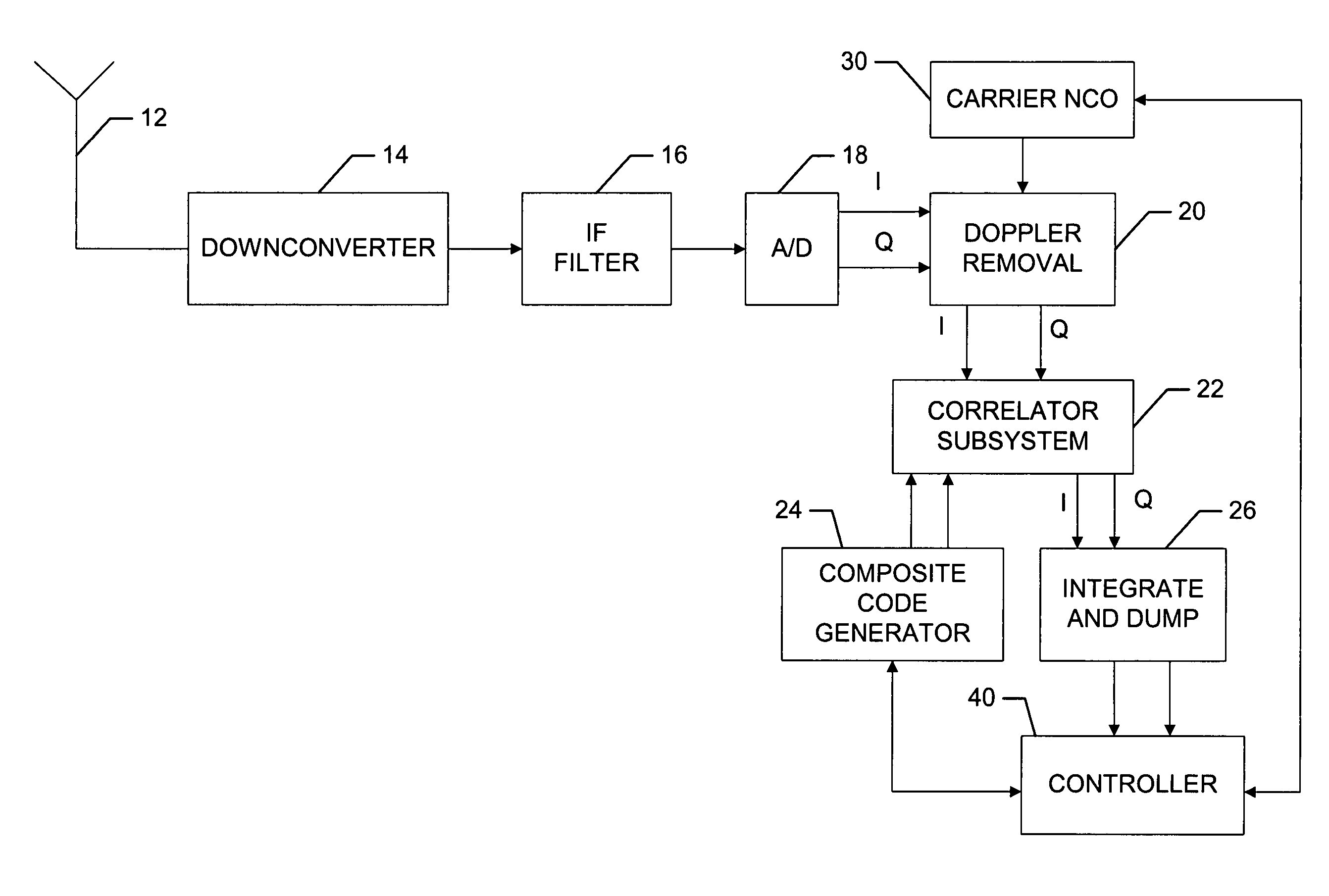

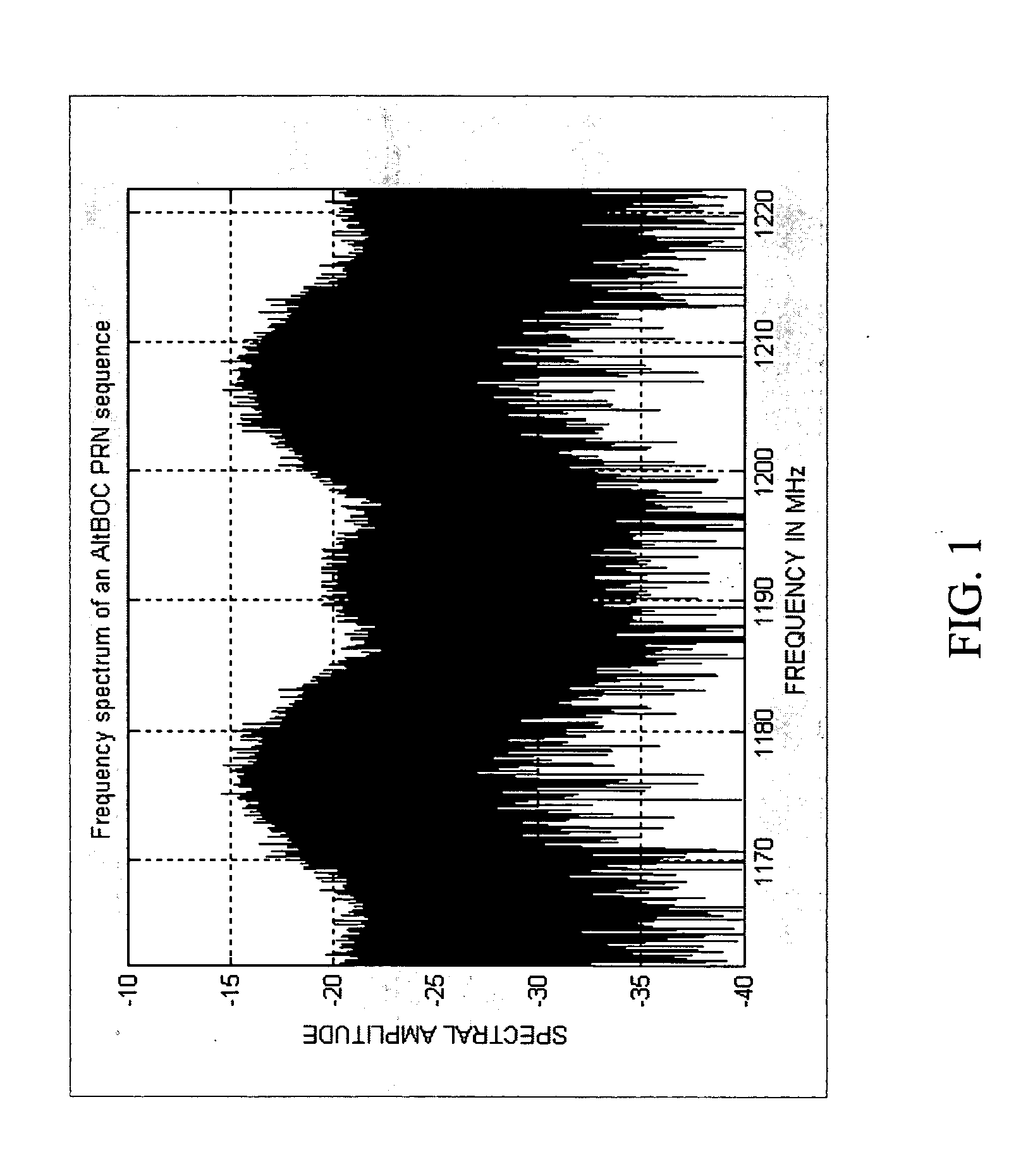

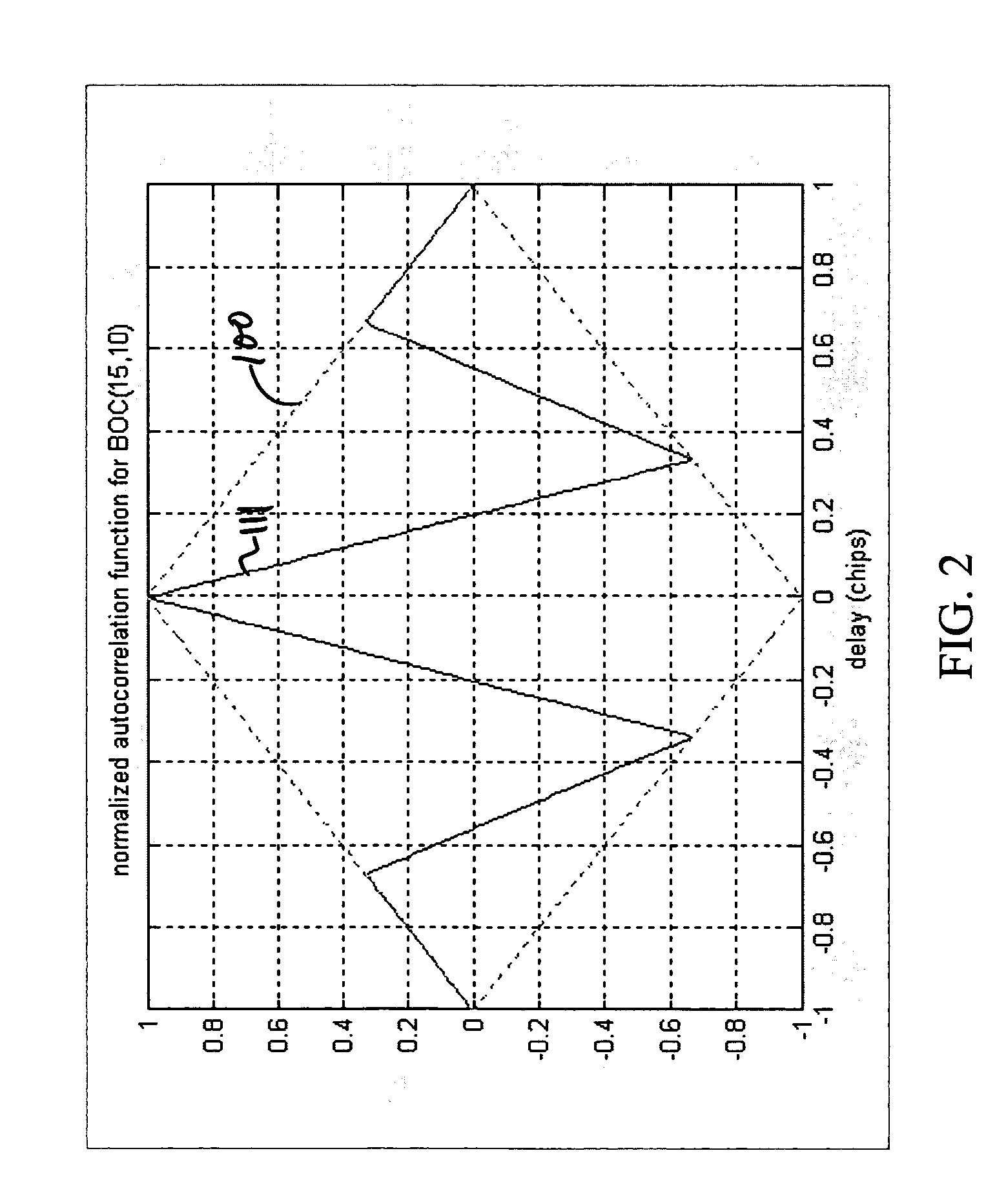

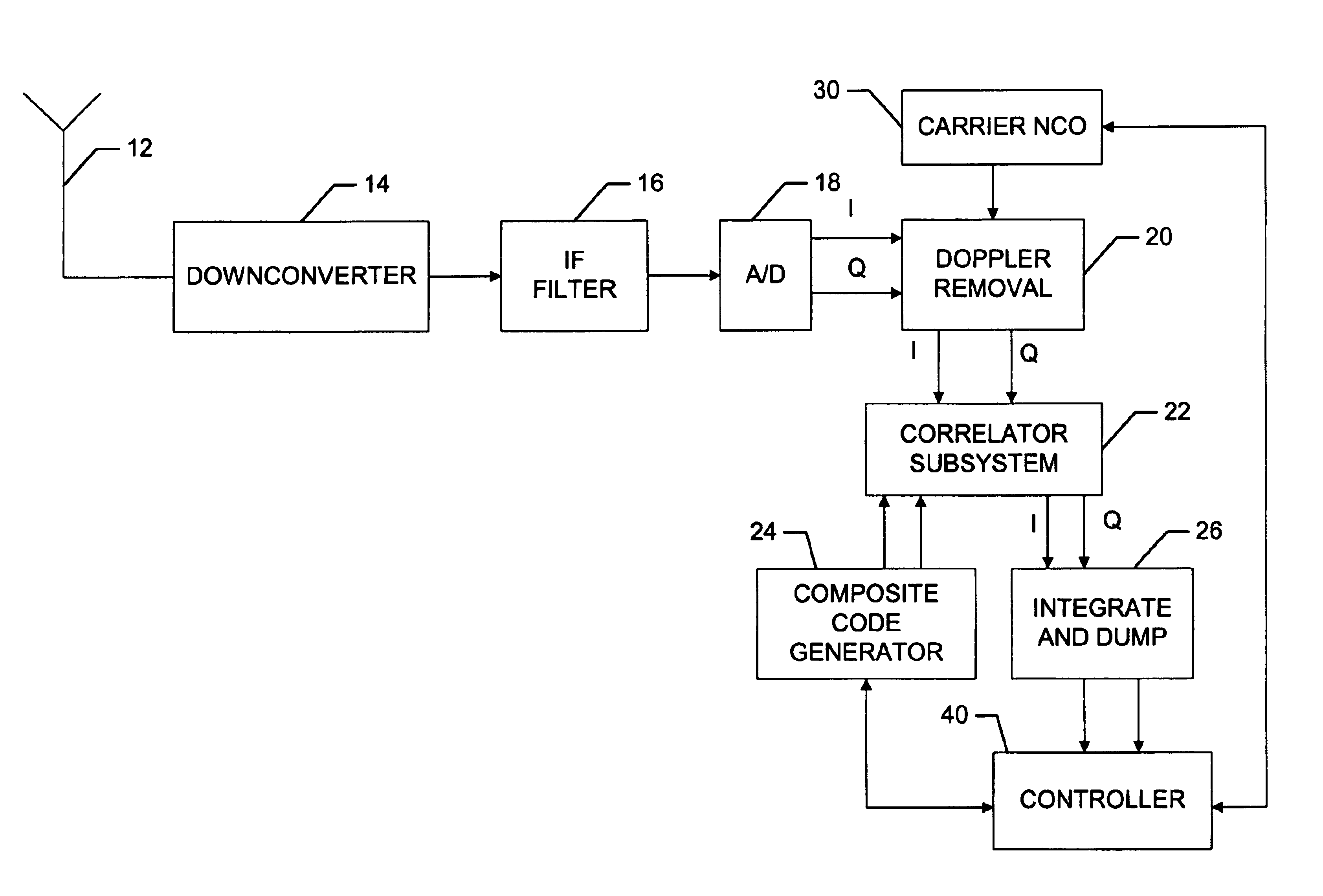

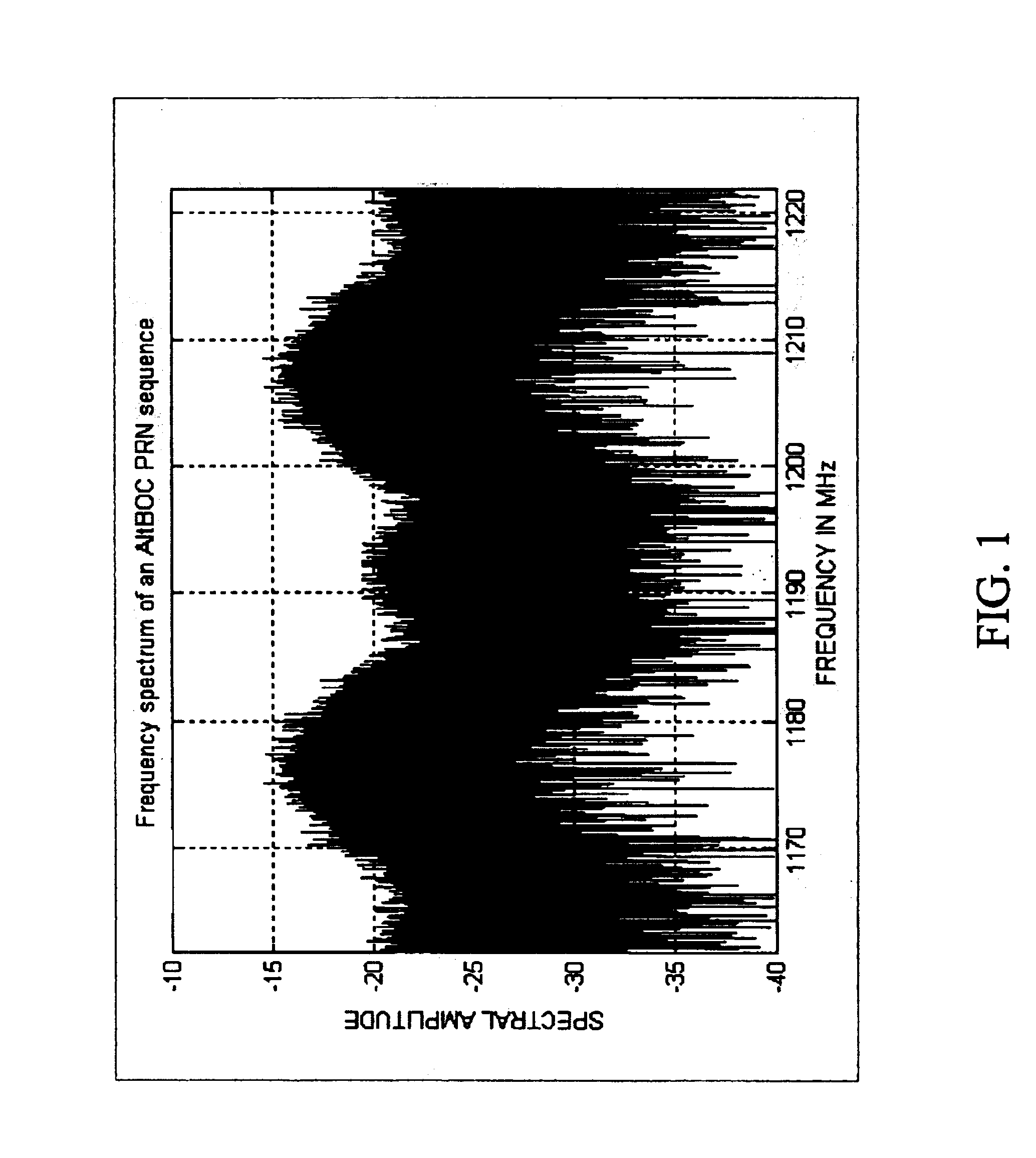

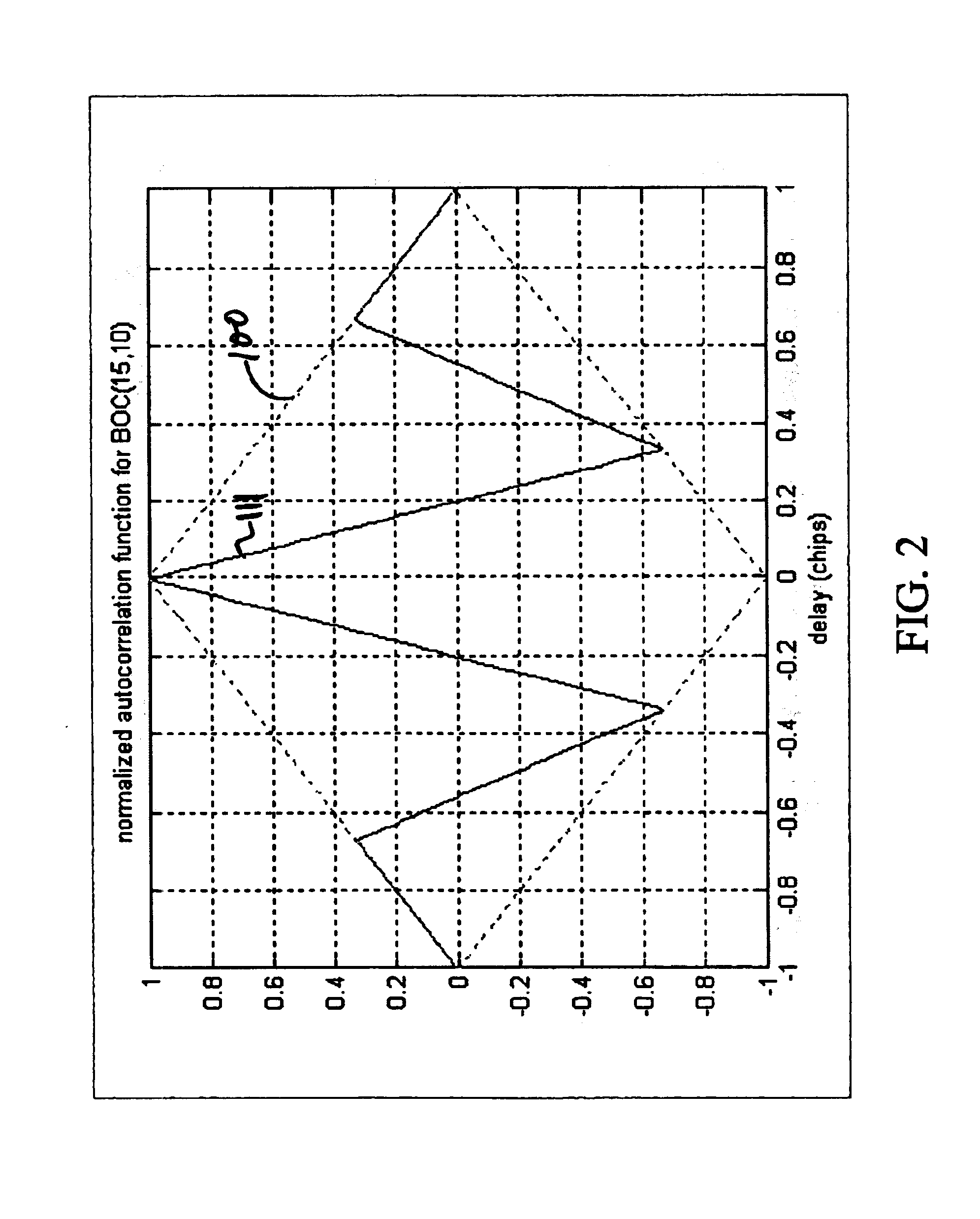

Hardware architecture for processing Galileo alternate binary offset carrier (AltBOC) signals

Status: Inactive | Publication: US20050012664A1 | Tags: Polarisation/directional diversity; Beacon systems using radio waves; Carrier signal; Numerically controlled oscillator

A GNSS receiver tracks the AltBOC (15,10), or composite E5a and E5b, codes using hardware that locally generates the complex composite signal by combining separately generated real and imaginary components of the complex signal. To track the dataless composite pilot code signals on the quadrature channel of the AltBOC signal, the receiver operates PRN code generators that produce replica E5a and E5b PRN codes and square wave generators that generate the real and imaginary components of the upper and lower subcarriers, and combines these signals to produce a locally generated complex composite code. The receiver removes the complex composite code from the received signal by multiplying the received signal, which has been downconverted to baseband I and Q signal components, by the locally generated complex composite code. The receiver then uses the results, which are correlated I and Q prompt signal values, to estimate the center frequency carrier phase angle tracking error. The error signal is used to control a numerically controlled oscillator that operates in a conventional manner to correct the phase angle of the locally generated center frequency carrier. The receiver also uses early and late versions of the locally generated complex composite pilot code in a delay lock loop (DLL), and aligns the locally generated composite pilot code with the received composite pilot code by minimizing the corresponding DLL error signal. Once the receiver is tracking the composite pilot code, it determines its pseudorange and global position in a conventional manner. The receiver also uses a separate set of correlators to align locally generated versions of the in-phase composite PRN codes with the in-phase channel codes in the received signal and thereafter to recover the data modulated on them.

Owner:EUROPEAN SPACE AGENCY
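
The carrier-phase part of that loop can be sketched with complex arithmetic. This is a simplified illustration, not the ESA receiver: the four-value local code is a placeholder rather than a real AltBOC composite code, the correlation multiplies by the conjugate of the local code (the usual way to remove a complex replica), and the first-order loop gain is an assumption.

```python
import cmath
import math


def prompt_correlation(baseband, local_code):
    """Correlate complex baseband samples (I + jQ) with the local complex composite code."""
    # Multiplying by the conjugate removes the code's own phase, leaving the carrier error.
    return sum(r * c.conjugate() for r, c in zip(baseband, local_code))


def carrier_phase_error(prompt):
    """Four-quadrant phase discriminator; usable because the pilot carries no data bits."""
    return math.atan2(prompt.imag, prompt.real)


def track_carrier(blocks, local_code, gain=0.5):
    """First-order loop: an NCO phase estimate corrected by the discriminator output."""
    nco_phase = 0.0
    for block in blocks:
        wiped = [s * cmath.exp(-1j * nco_phase) for s in block]  # carrier wipe-off
        nco_phase += gain * carrier_phase_error(prompt_correlation(wiped, local_code))
    return nco_phase
```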

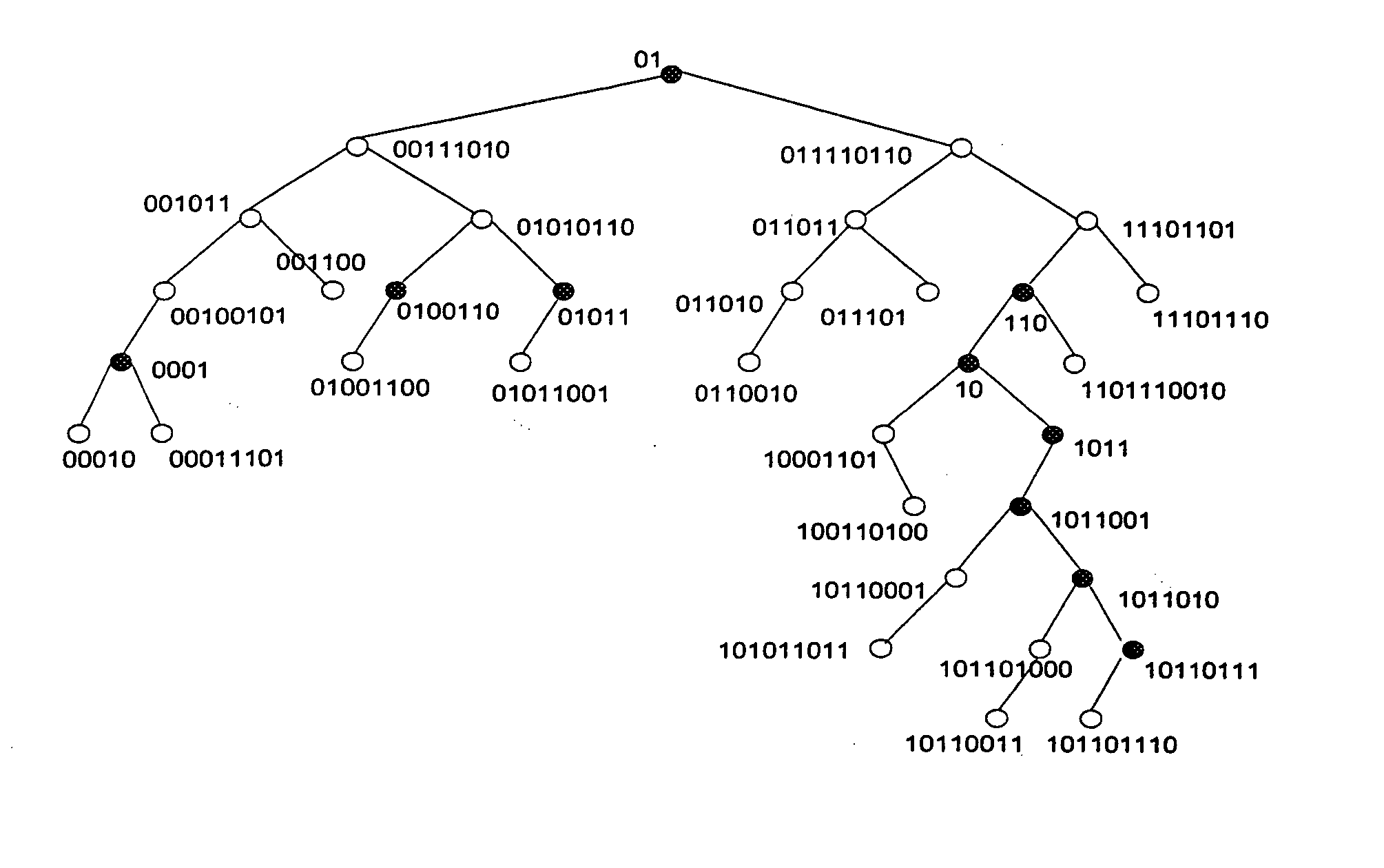

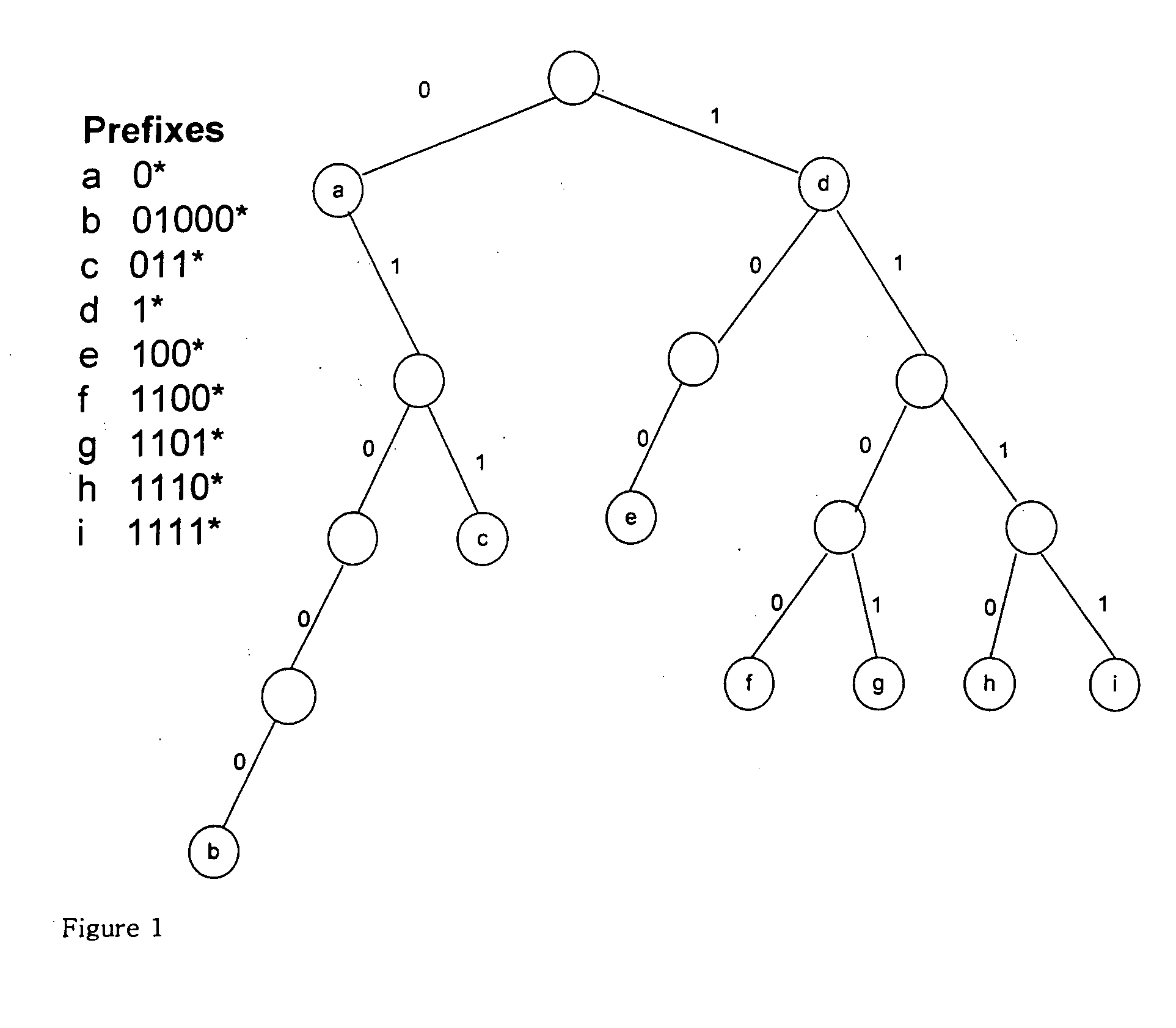

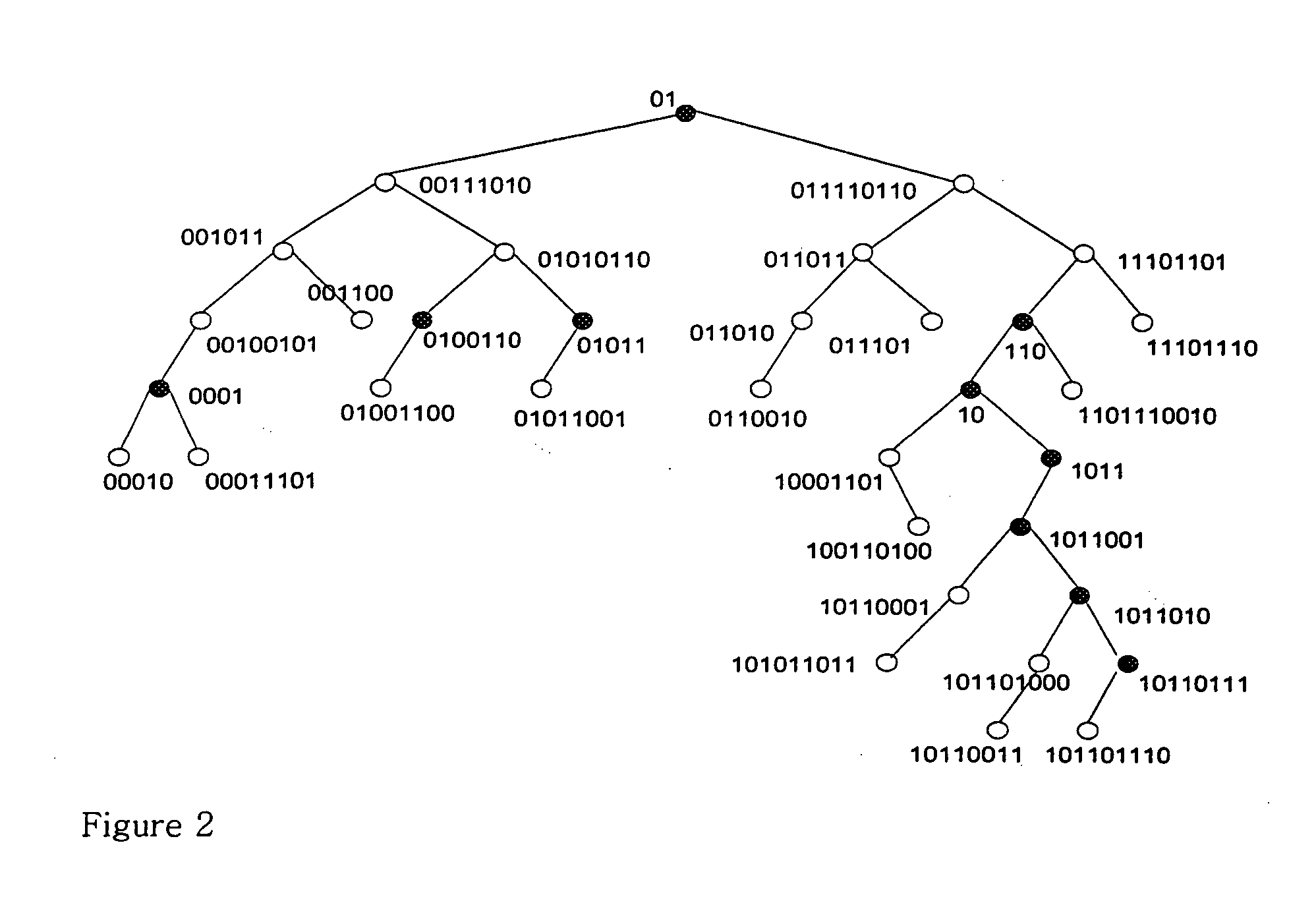

IP address lookup method using pipeline binary tree, hardware architecture, and recording medium

Status: Inactive | Publication: US20050083937A1 | Tags: Data switching by path configuration; Multiple digital computer combinations; Hardware architecture; Binary tree

The present invention relates to an IP address lookup method, a hardware architecture, and a recording medium. The lookup method searches for the longest matching prefix by constructing a binary tree having enclosure prefixes as root nodes, extracting the sub-trees of enclosures from the main tree as individual trees, and comparing an input address with the prefixes in the nodes of the main tree and of the sub-trees using a pipelining scheme. According to the present invention, memory can be used efficiently by exploiting two properties: a binary prefix tree has no empty nodes, and a binary search process can be implemented with pipelines. In addition, the invention provides a practical architecture that performs an address lookup with at most one content addressable memory access and one memory access.

Owner:LIM HYESOOK
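
For comparison, plain longest-prefix matching can be sketched with a binary trie. This is a baseline for illustration, not the patented enclosure-tree pipeline; it handles IPv4 only and the class name is hypothetical.

```python
import ipaddress


class PrefixTrie:
    def __init__(self):
        self.root = {}  # child keys "0"/"1"; "hop" holds the next hop of a stored prefix

    def insert(self, prefix, next_hop):
        net = ipaddress.IPv4Network(prefix)
        bits = format(int(net.network_address), "032b")[: net.prefixlen]
        node = self.root
        for bit in bits:
            node = node.setdefault(bit, {})
        node["hop"] = next_hop

    def lookup(self, address):
        """Walk the address bits, remembering the deepest prefix that matched."""
        bits = format(int(ipaddress.IPv4Address(address)), "032b")
        node, best = self.root, self.root.get("hop")
        for bit in bits:
            node = node.get(bit)
            if node is None:
                break
            best = node.get("hop", best)
        return best
```

A trie like this may need up to 32 memory accesses per lookup, which is the cost the patented pipelined binary-search structure aims to cut.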

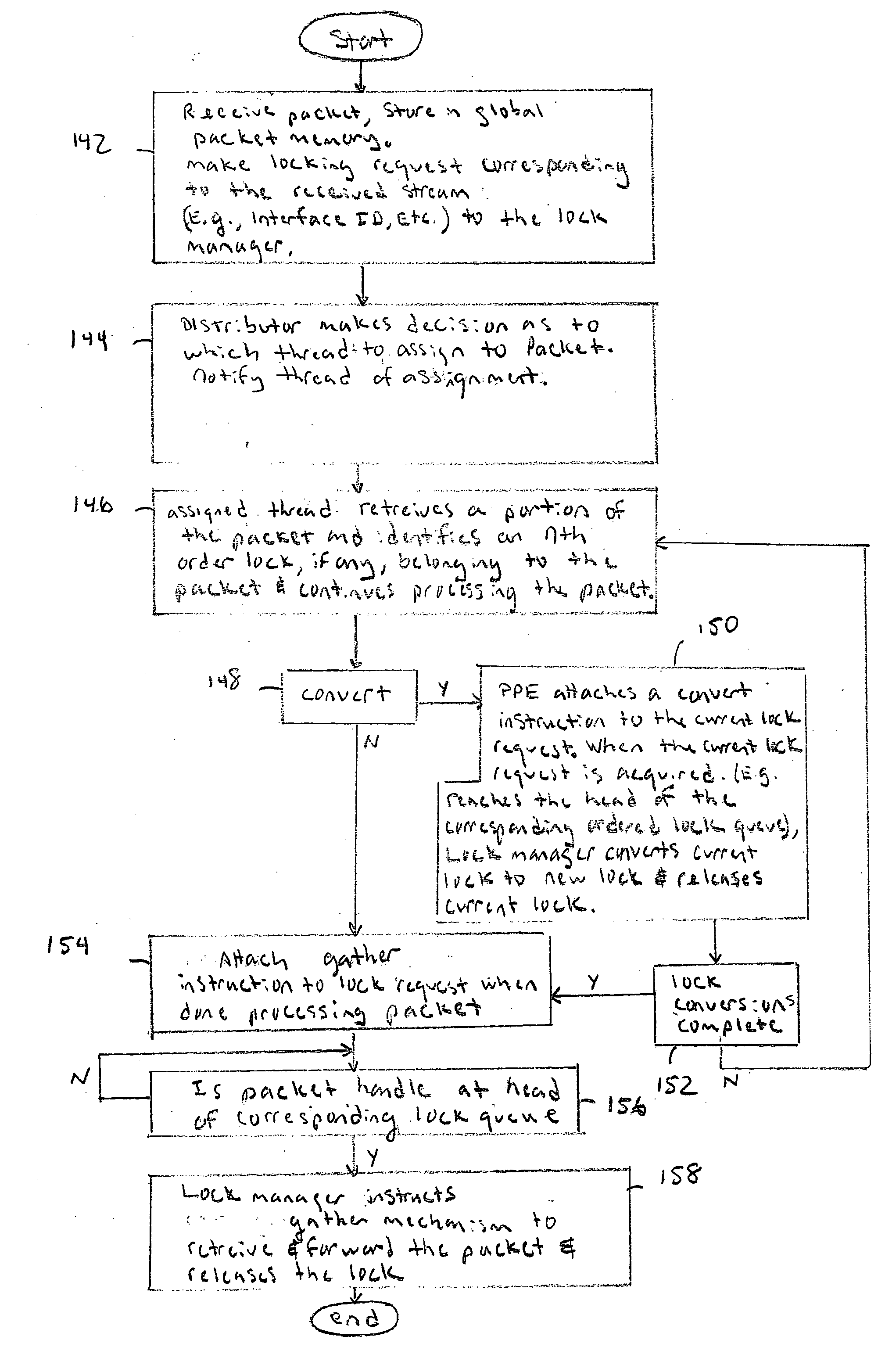

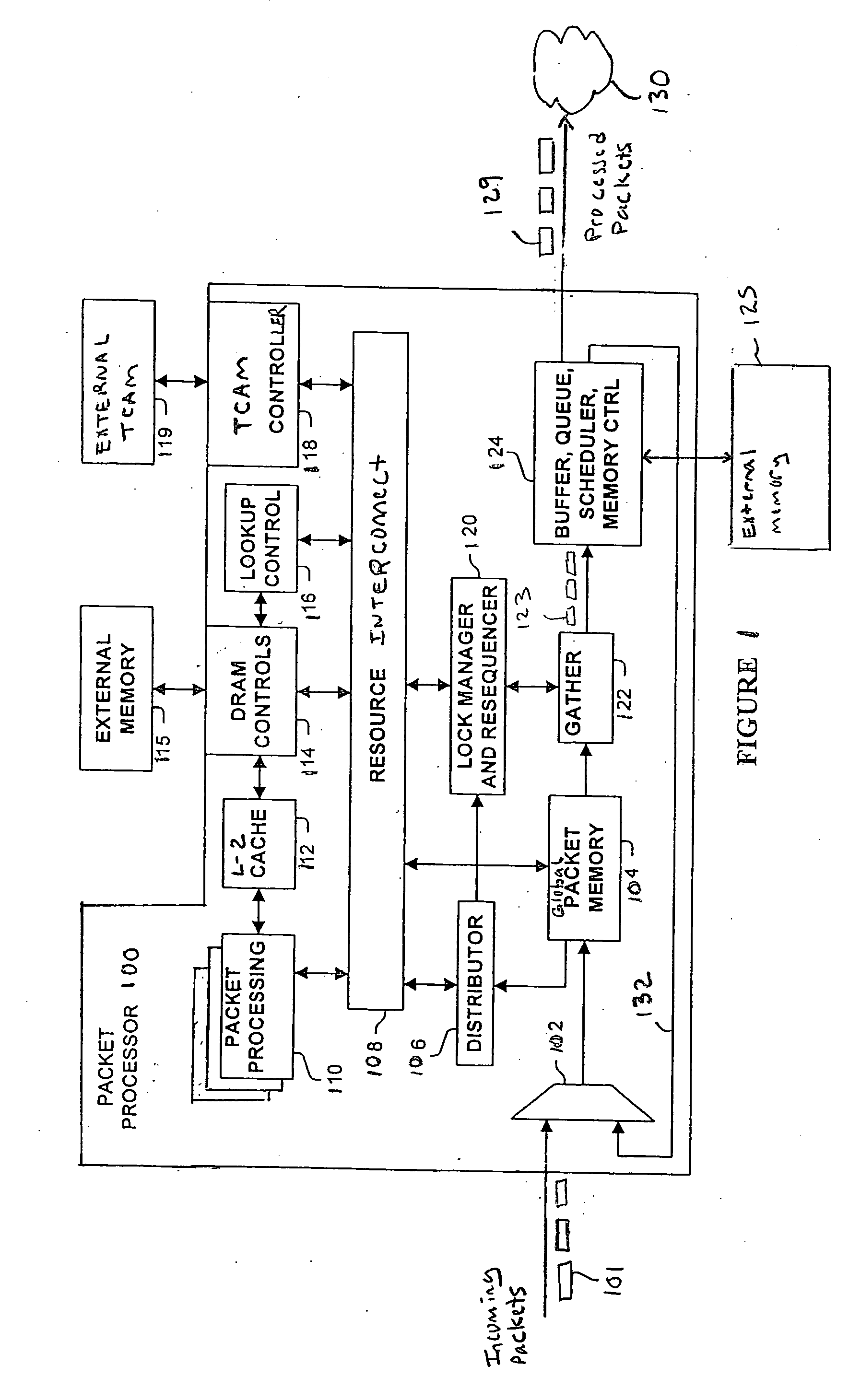

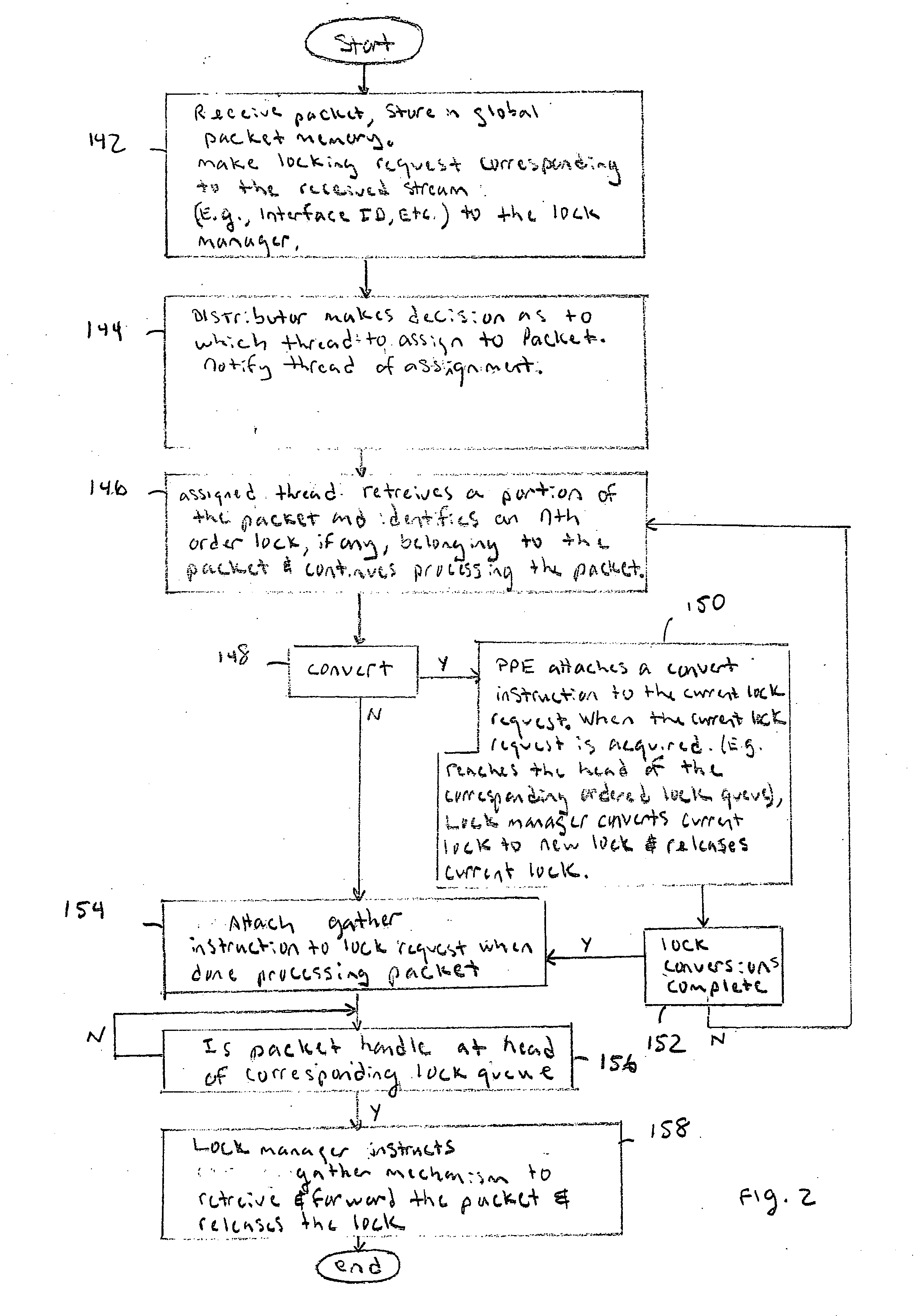

Multi-threaded packeting processing architecture

Status: Inactive | Publication: US20060179156A1 | Tags: Quicker and high quality porting; Increase data rate; Digital computer details; Data switching by path configuration; Data pack; Hardware architecture

A network processor has numerous novel features including a multi-threaded processor array, a multi-pass processing model, and Global Packet Memory (GPM) with hardware managed packet storage. These unique features allow the network processor to perform high-touch packet processing at high data rates. The packet processor can also be coded using a stack-based high-level programming language, such as C or C++. This allows quicker and higher quality porting of software features into the network processor. Processor performance also does not severely drop off when additional processing features are added. For example, packets can be more intelligently processed by assigning processing elements to different bounded duration arrival processing tasks and variable duration main processing tasks. A recirculation path moves packets between the different arrival and main processing tasks. Other novel hardware features include a hardware architecture that efficiently intermixes co-processor operations with multi-threaded processing operations and improved cache affinity.

Owner:CISCO TECH INC

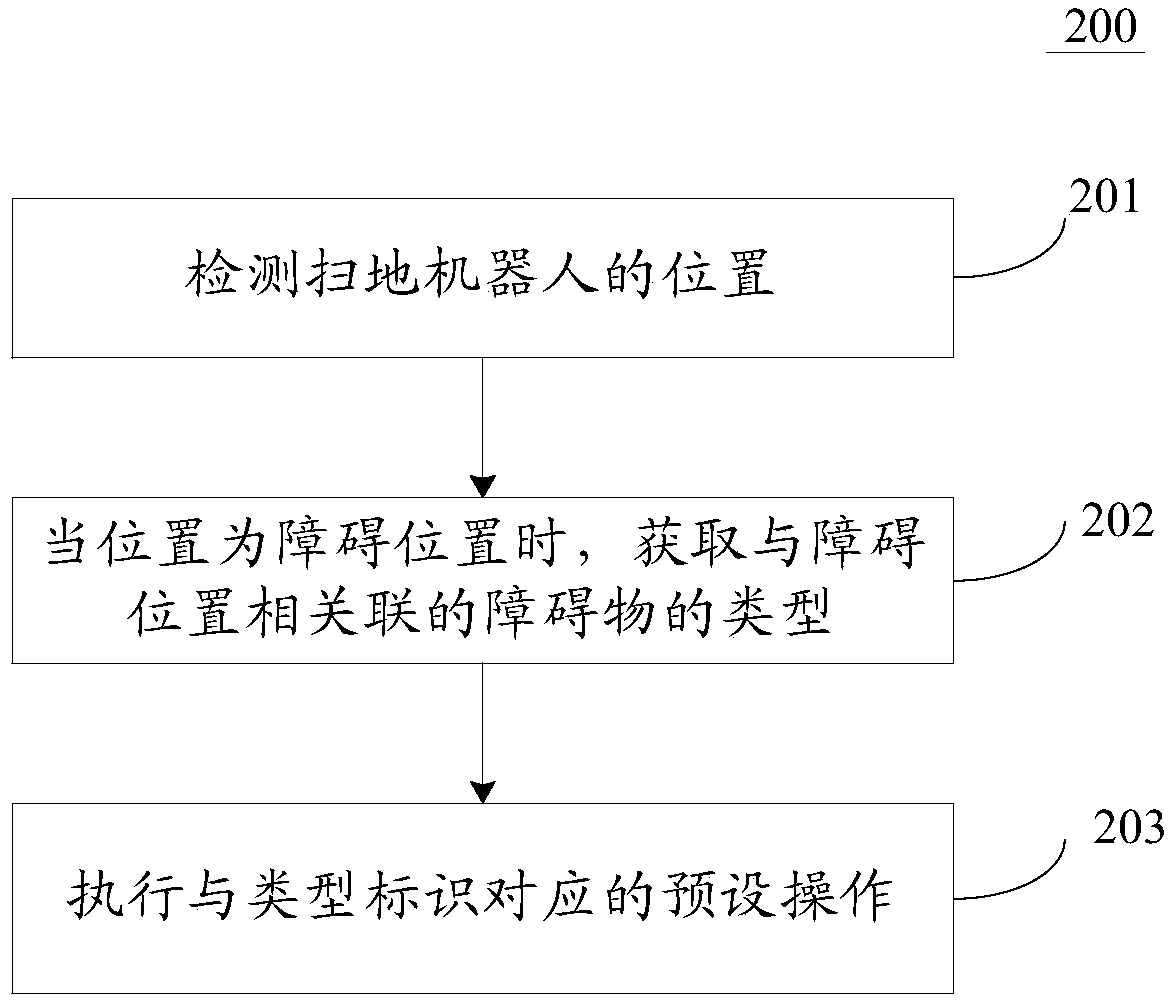

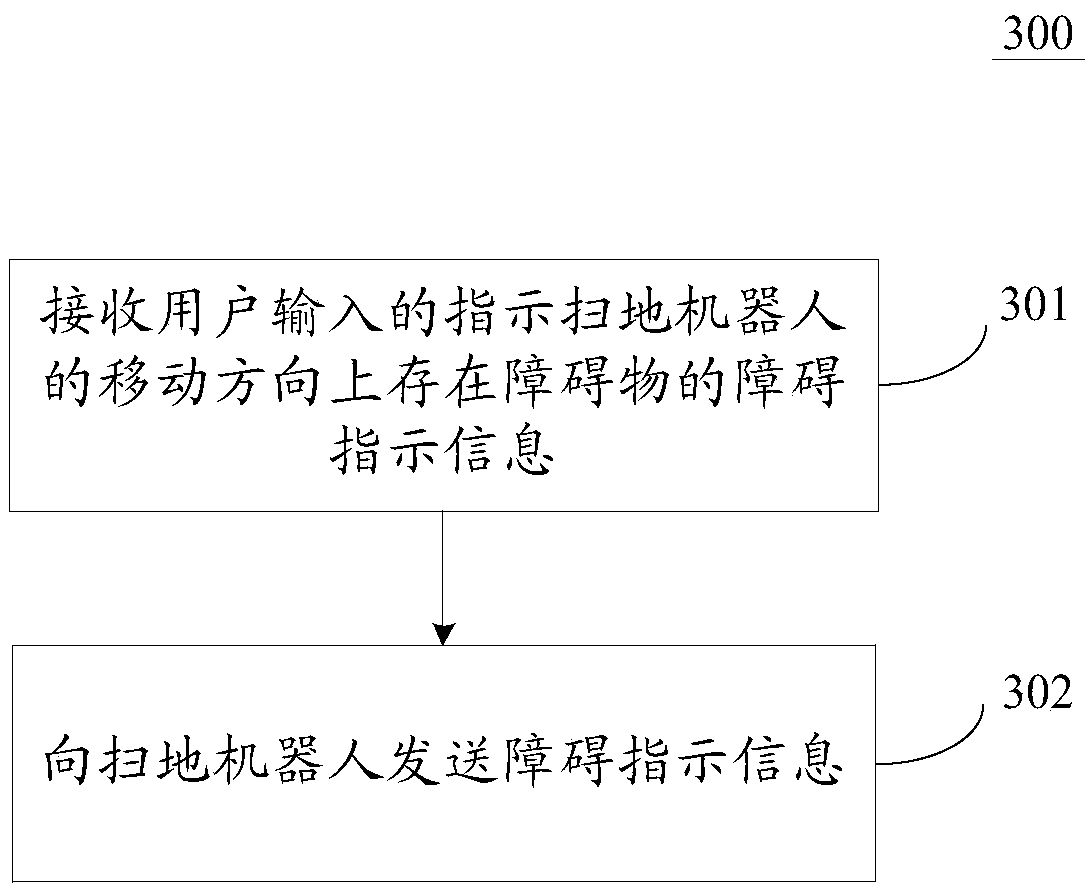

Robot cleaner, control method applied to same and terminal

Status: Active | Publication: CN105511478A | Tags: Improve convenience; Reduce steps; Position/course control in two dimensions; Vehicles; Hardware architecture; Working environment

The invention discloses a robot cleaner, a control method applied to it, and a terminal. The method includes: detecting the position of the robot cleaner; when the position is an obstacle position, acquiring the type identifier of the obstacle at that position; and executing preset operations corresponding to that type identifier. The advantage of the robot cleaner, control method and terminal is that, without modifying the hardware architecture of the robot cleaner, the types of obstacles in its working environment can be determined from obstacle indication information received from the terminal, so that the robot cleaner automatically avoids obstacles during operation. This makes the robot cleaner more convenient to use while reducing the operations the user must perform.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

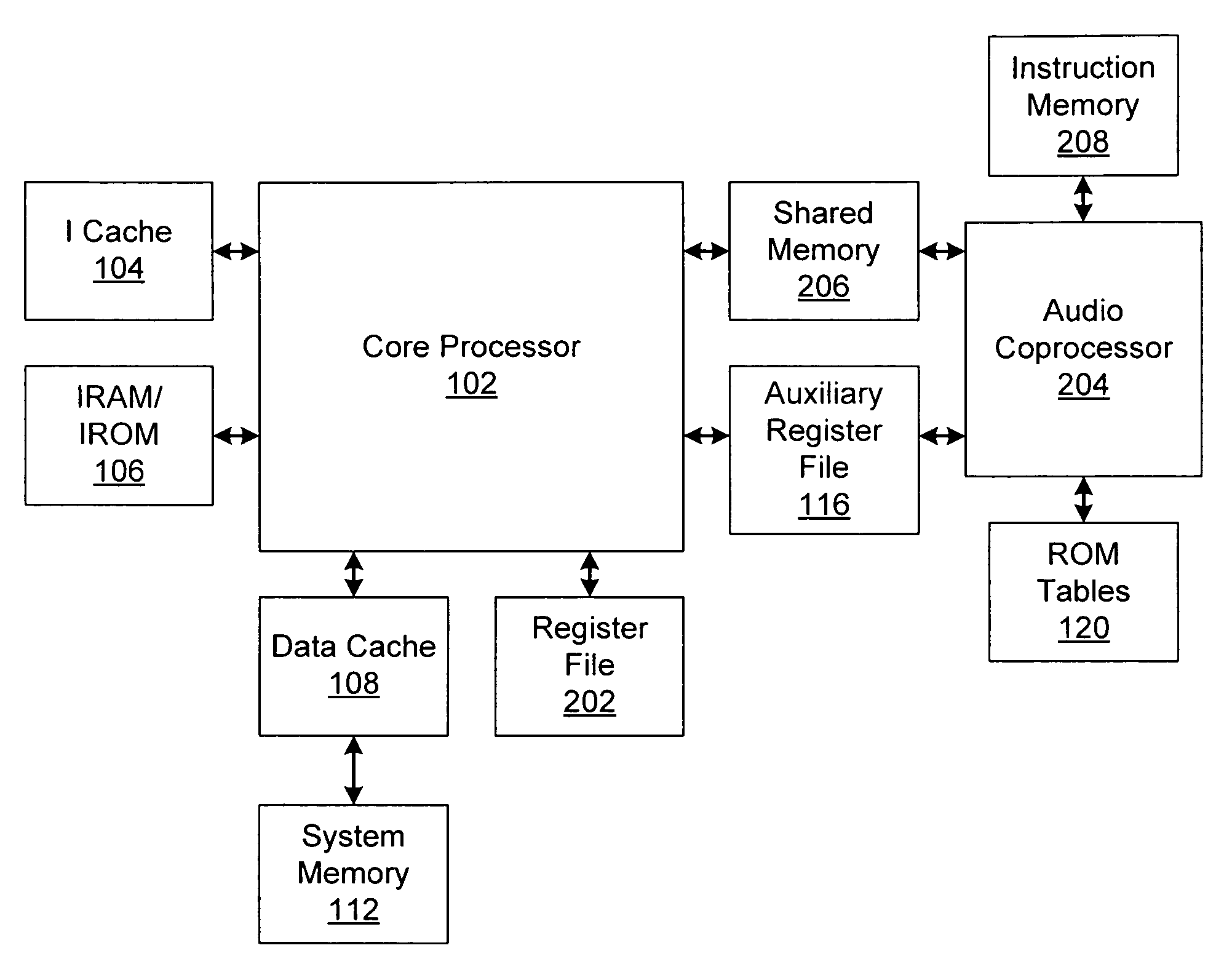

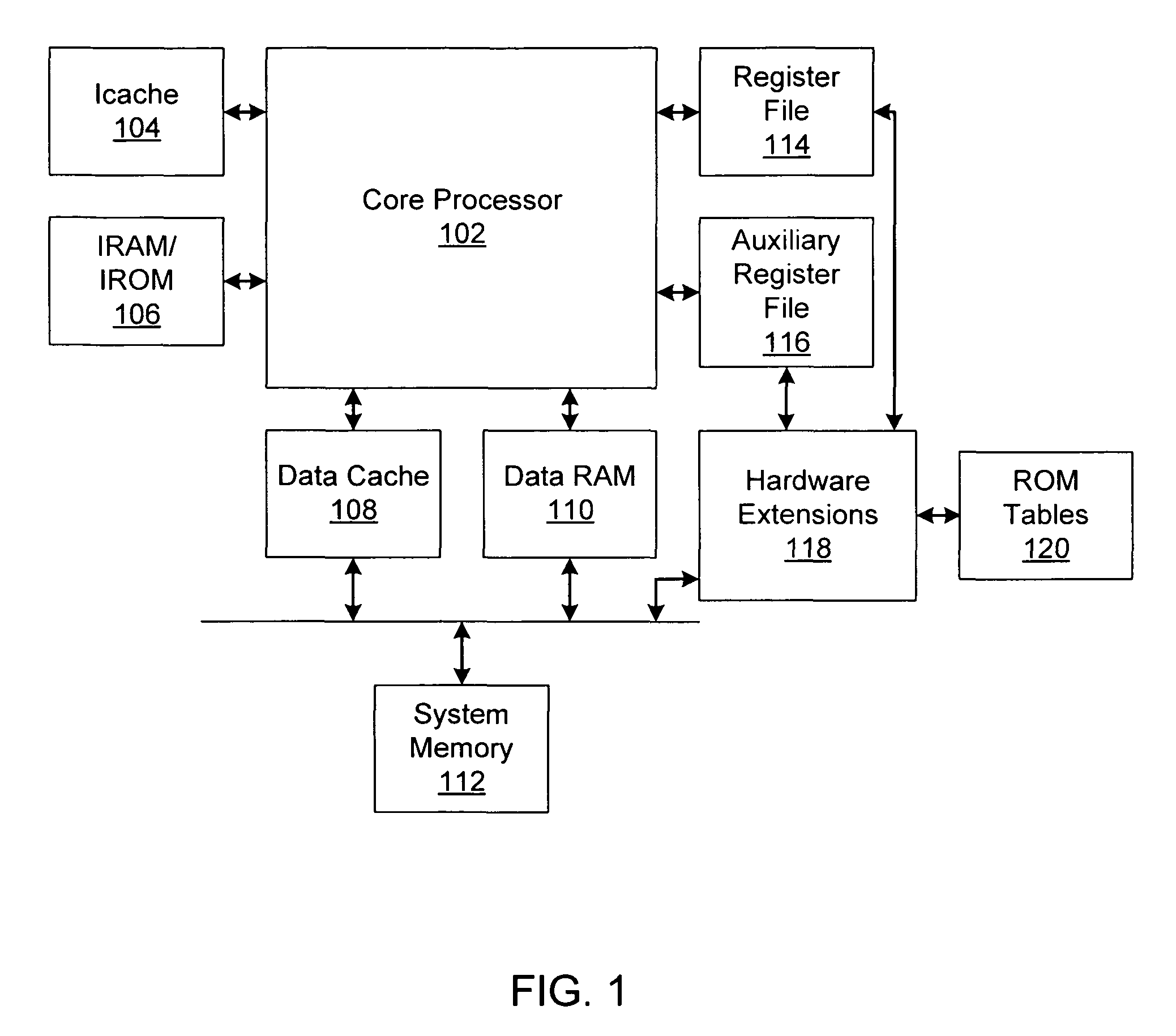

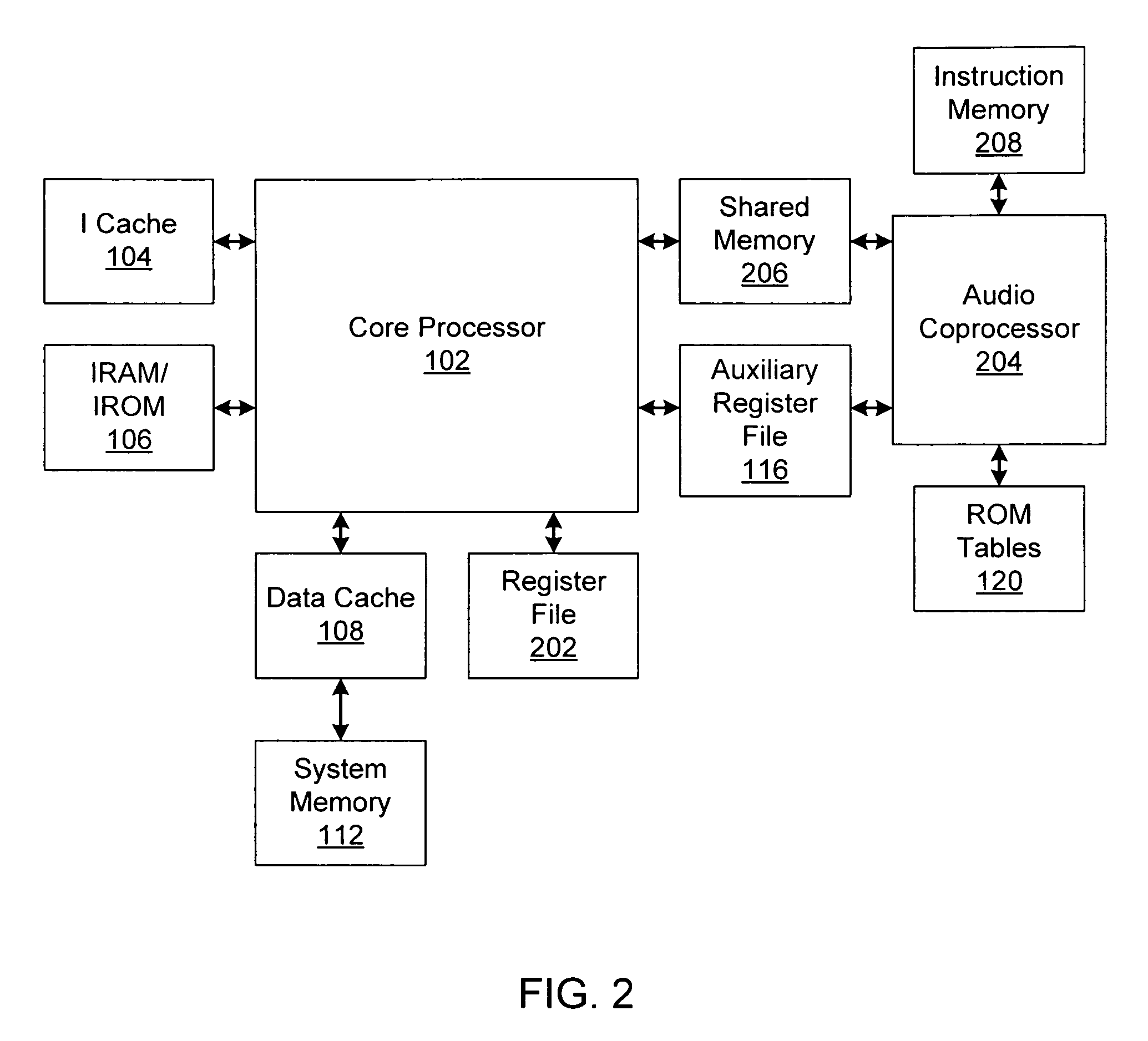

Processor extensions for accelerating spectral band replication

Owner:INTEL CORP

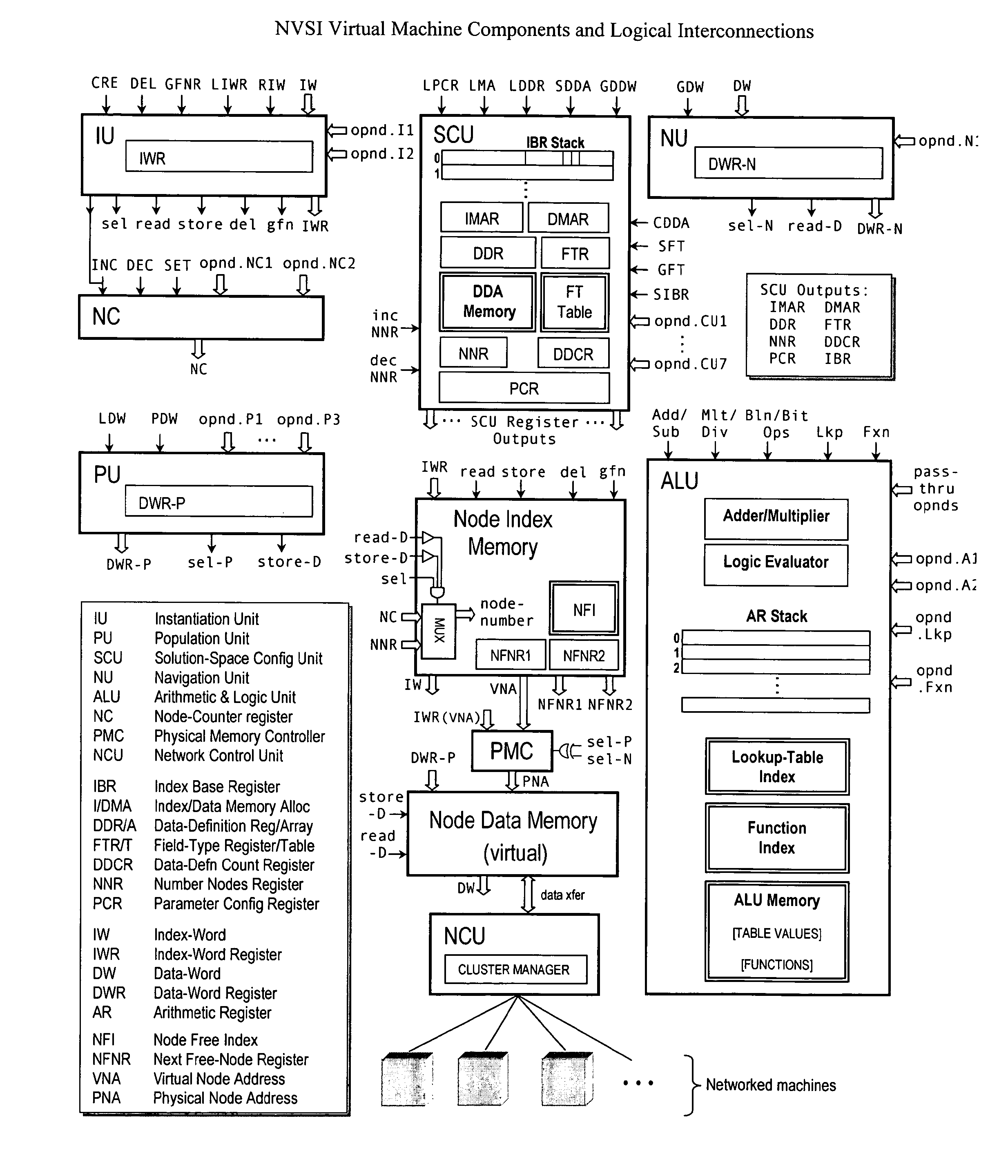

Virtual Supercomputer

Status: Active | Publication: US20110004566A1 | Tags: Improve efficiency; Finance; Resource allocation; Information processing; Operational system

The virtual supercomputer is an apparatus, system and method for generating information processing solutions to complex and/or high-demand/high-performance computing problems, without the need for costly, dedicated hardware supercomputers, and in a manner far more efficient than simple grid or multiprocessor network approaches. The virtual supercomputer consists of a reconfigurable virtual hardware processor, an associated operating system, and a set of operations and procedures that allow the architecture of the system to be easily tailored and adapted to specific problems or classes of problems in a way that such tailored solutions will perform on a variety of hardware architectures, while retaining the benefits of a tailored solution that is designed to exploit the specific and often changing information processing features and demands of the problem at hand.

Owner:VERISCAPE

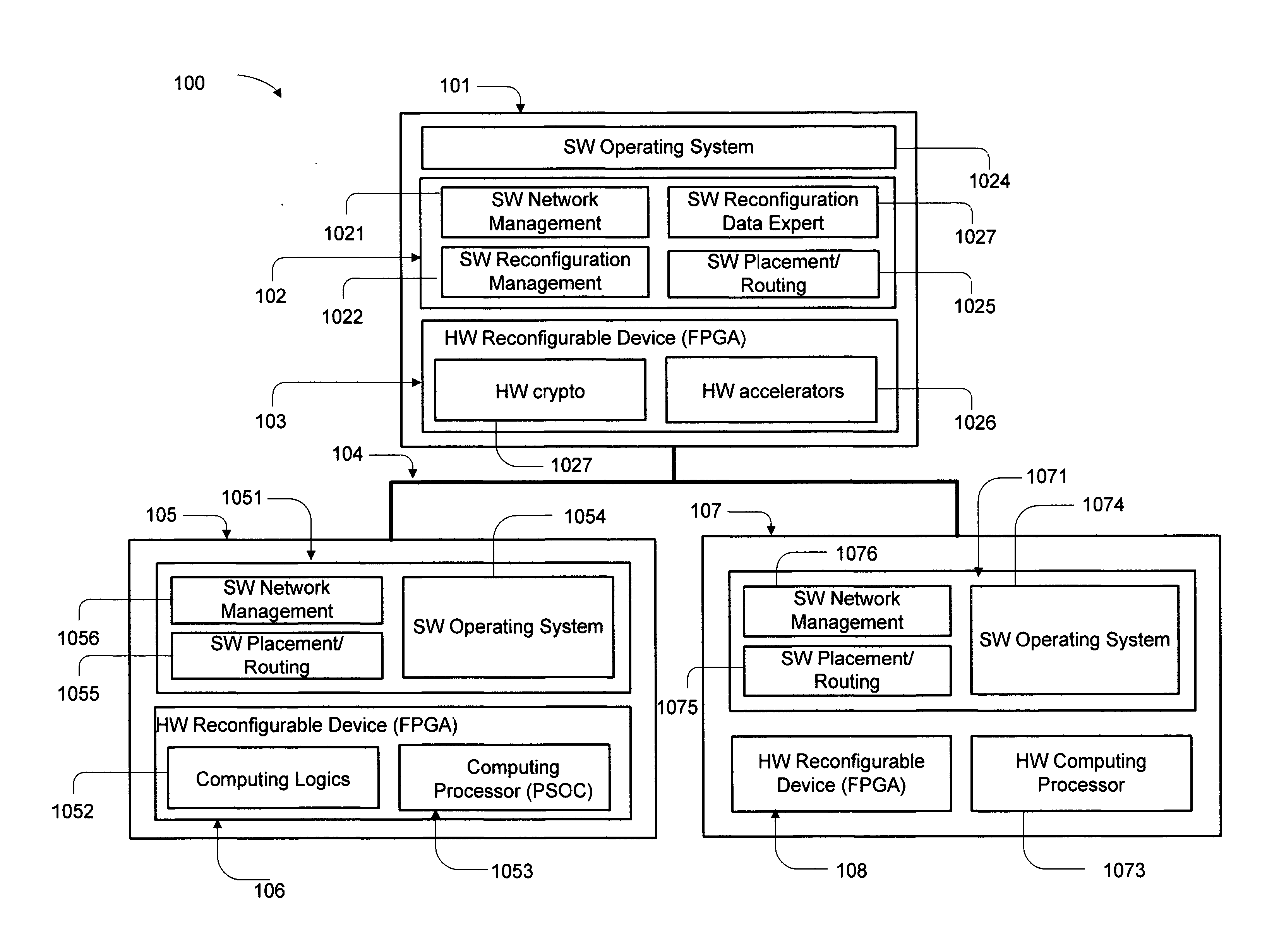

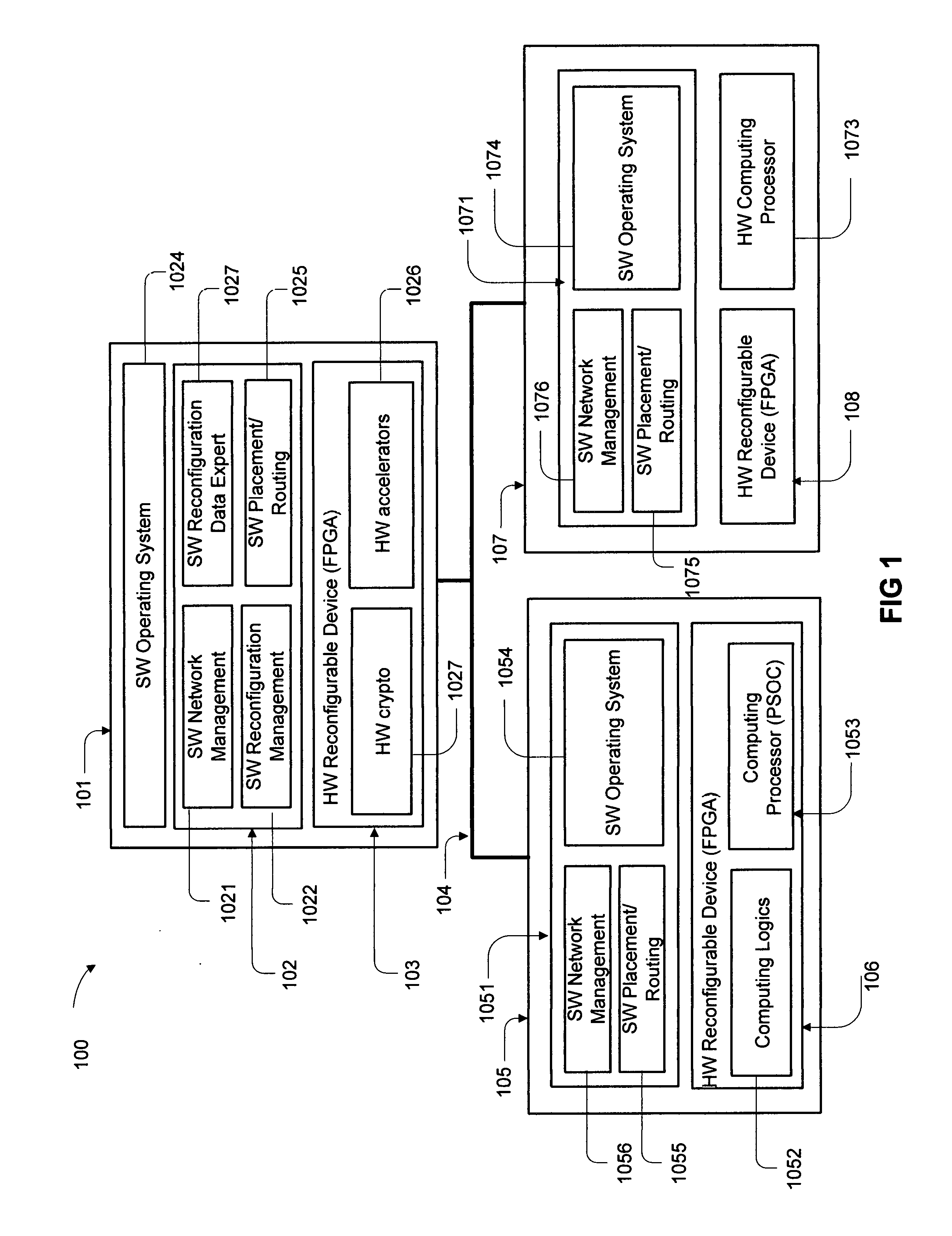

Embedded systems of internet-of-things incorporating a cloud computing service of FPGA reconfiguration

Inactive | US20160321081A1 | Improve performance | Memory architecture accessing/allocation | Data resetting | Hardware architecture | Secure by design

IoT embedded systems and cloud computing infrastructure may merge processor soft cores with programmable logic (FPGAs) to yield a heterogeneous hardware-software processing ecosystem, through which the embedded systems and infrastructure customize and adapt their computational power to the specific application in use. Embedded systems and cloud computing infrastructure with dynamic reconfiguration will bring neuroplasticity to the interconnected space of IoT embedded systems, enabling high-performance, customized, secure-by-design cloud computing services. An IaaS cloud server evaluates the performance and efficiency of embedded systems according to their hardware architectures, so that the computing service allows one reconfigurable system to download its hardware architecture file, or bitstream, into other embedded systems via the cloud server.

Owner:KIM HYEUNG YUN +1

Hardware architecture for processing Galileo alternate binary offset carrier (AltBOC) signals

Inactive | US6922167B2 | Beacon systems using radio waves | Polarisation/directional diversity | Numerically controlled oscillator | Carrier signal

A GNSS receiver tracks the AltBOC(15,10), or composite E5a and E5b, codes using hardware that locally generates the complex composite signal by combining separately generated real and imaginary components of the complex signal. To track the dataless composite pilot code signals on the quadrature channel of the AltBOC signal, the receiver operates PRN code generators that produce replica E5a and E5b PRN codes and square wave generators that generate the real and imaginary components of the upper and lower subcarriers, and combines these signals to produce a locally generated complex composite code. The receiver removes the complex composite code from the received signal by multiplying the received signal, which has been downconverted to baseband I and Q signal components, by the locally generated complex composite code. The receiver then uses the results, which are correlated I and Q prompt signal values, to estimate the center frequency carrier phase angle tracking error. The error signal controls a numerically controlled oscillator, which operates in a conventional manner to correct the phase angle of the locally generated center frequency carrier. The receiver also uses early and late versions of the locally generated complex composite pilot code in a delay-locked loop (DLL), and aligns the locally generated composite pilot code with the received composite pilot code by minimizing the corresponding DLL error signal. Once the receiver is tracking the composite pilot code, it determines its pseudorange and global position in a conventional manner. The receiver also uses a separate set of correlators to align locally generated versions of the in-phase composite PRN codes with the in-phase channel codes in the received signal, and thereafter recovers the data modulated on them.

Owner:EUROPEAN SPACE AGENCY
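The core of the AltBOC abstract above (building a complex composite code from separately generated real and imaginary parts, wiping it off the baseband I/Q signal, and reading a carrier phase error from the prompt correlation) can be illustrated with a simplified Python sketch. The assumptions are ours, not the patent's: random ±1 stand-ins for the E5a and E5b pilot PRN codes; 12 samples per chip with an 8-sample square-wave subcarrier period, giving the 1.5 subcarrier cycles per chip of AltBOC(15,10); the E5a code on the lower subcarrier (cos − j·sin) and the E5b code on the upper subcarrier (cos + j·sin); wipe-off by the complex conjugate of the local code; and an atan2 phase discriminator. Real AltBOC constant-envelope modulation includes further product terms that this sketch omits, and all function names are hypothetical.

```python
import cmath
import math
import random

SAMPLES_PER_CHIP = 12   # assumed sampling rate: 12 samples per code chip
SUBCARRIER_PERIOD = 8   # samples per subcarrier cycle -> 1.5 cycles per chip


def square_subcarriers(n):
    """Square-wave (+/-1) approximations of cos and sin at sample n."""
    phase = 2 * math.pi * (n + 0.5) / SUBCARRIER_PERIOD  # sample centre avoids zeros
    cos_sq = 1 if math.cos(phase) >= 0 else -1
    sin_sq = 1 if math.sin(phase) >= 0 else -1
    return cos_sq, sin_sq


def composite_code(prn_a, prn_b):
    """Simplified composite: ca*(cos - j*sin) + cb*(cos + j*sin),
    built from separately generated real and imaginary components."""
    samples = []
    for n in range(len(prn_a) * SAMPLES_PER_CHIP):
        chip = n // SAMPLES_PER_CHIP
        ca, cb = prn_a[chip], prn_b[chip]
        cos_sq, sin_sq = square_subcarriers(n)
        real = (ca + cb) * cos_sq   # real component
        imag = (cb - ca) * sin_sq   # imaginary component
        samples.append(complex(real, imag))
    return samples


def prompt_correlation(received, local):
    """Wipe off the local code (complex conjugate) and accumulate I + jQ."""
    return sum(r * l.conjugate() for r, l in zip(received, local))


def phase_error(prompt):
    """atan2 carrier-phase discriminator on the prompt I and Q values."""
    return math.atan2(prompt.imag, prompt.real)


if __name__ == "__main__":
    rng = random.Random(1)
    prn_a = [rng.choice((-1, 1)) for _ in range(64)]
    prn_b = [rng.choice((-1, 1)) for _ in range(64)]
    local = composite_code(prn_a, prn_b)
    received = [s * cmath.exp(1j * 0.3) for s in local]  # 0.3 rad phase offset
    print(round(phase_error(prompt_correlation(received, local)), 6))  # 0.3
```

With a noiseless received copy rotated by a carrier phase offset θ, every sample of the composite has magnitude 2, so the prompt value is 4N·e^{jθ} for N samples and the discriminator returns θ. Early and late DLL correlators could reuse `prompt_correlation` with the local code shifted by a fraction of a chip.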

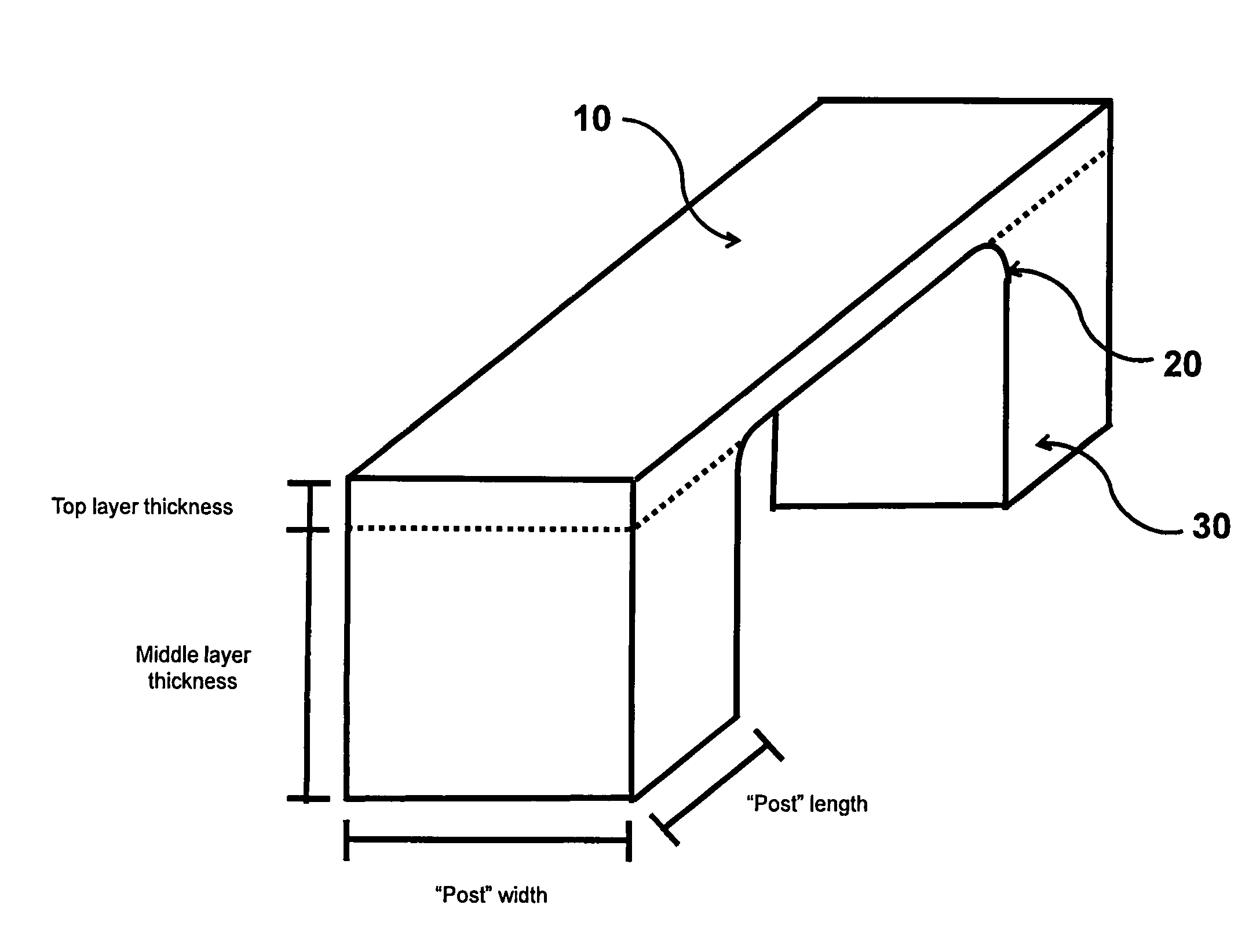

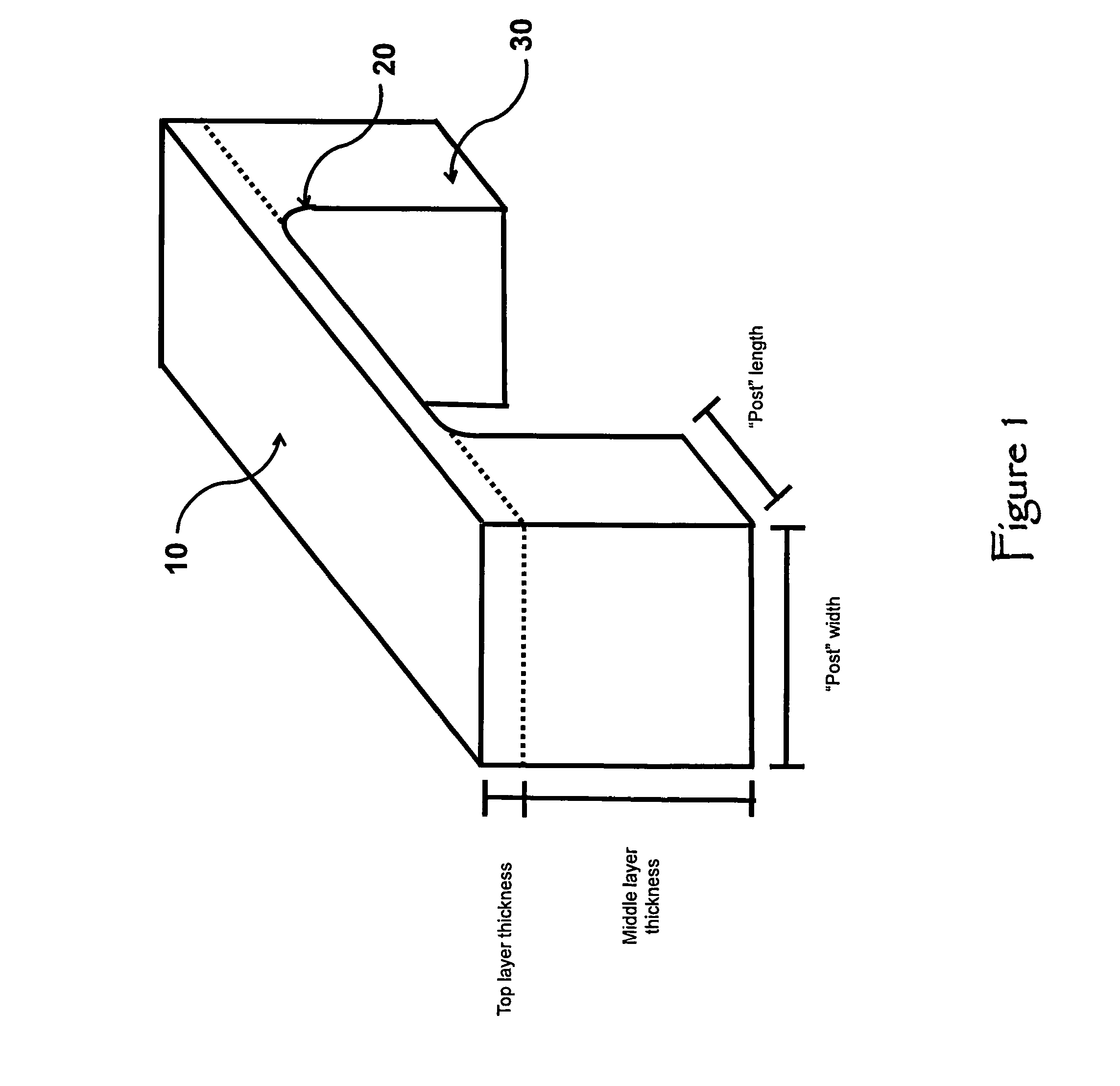

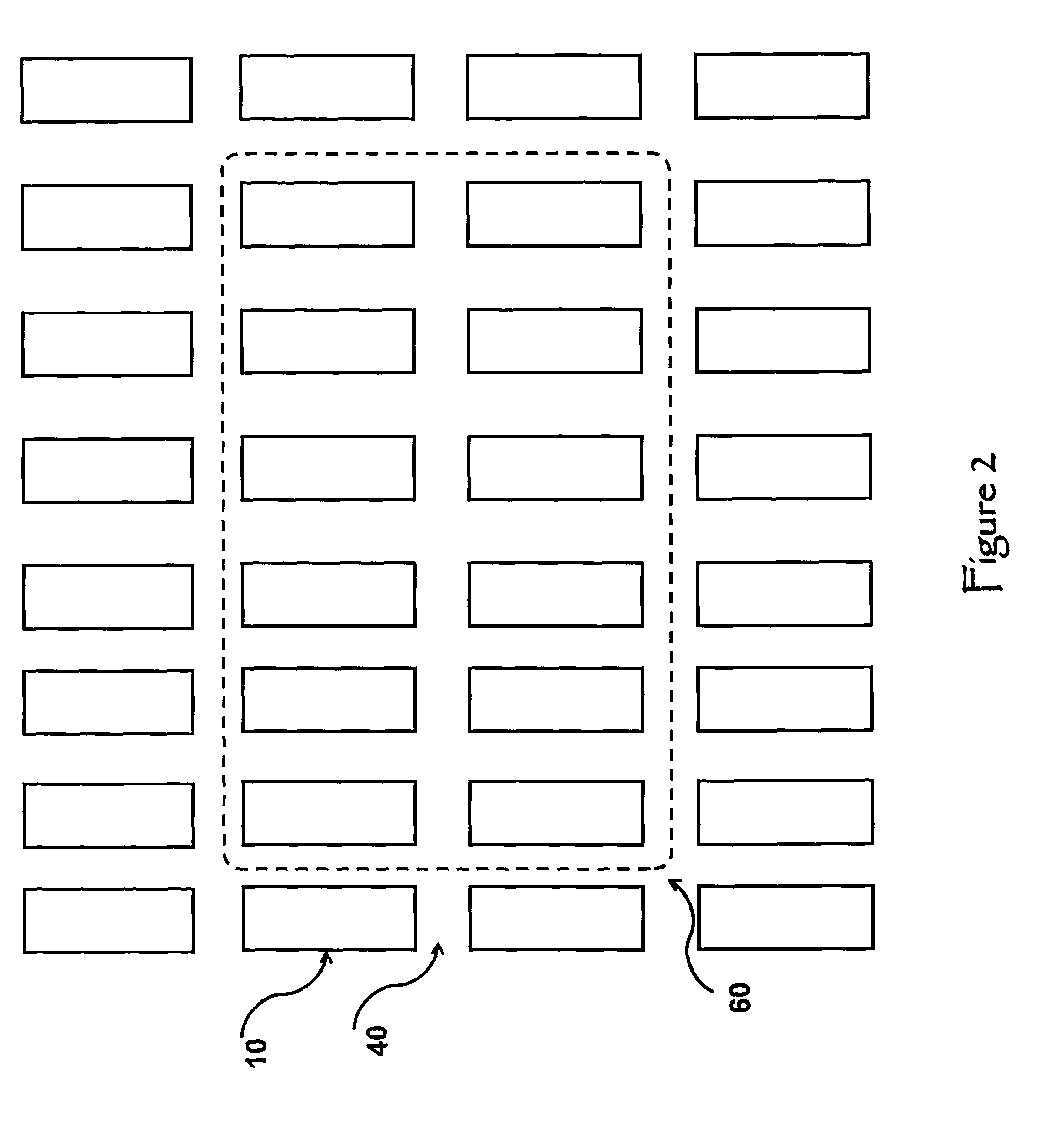

Number resolving superconducting nanowire photon detector via a multi-layer hardware architecture

Active | US8565844B2 | Highly efficient number resolving capability | Increase the number of | Radiation pyrometry | Superconductor details | Nanowire | Hardware architecture

A superconducting nanowire photon detector apparatus comprising detection, insulating, and substrate layers. The insulating layer provides electrical isolation of a plurality of individual detector elements from the interconnection network fabricated on the substrate layer except where electrical interconnection between the inputs and outputs of each detector element and the interconnection network is intended.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SEC OF THE AIR FORCE

FPGA parallel acceleration method based on convolution neural network (CNN)

The invention discloses an FPGA parallel acceleration method based on a convolutional neural network (CNN). The method comprises the following steps: (1) building a CNN model; (2) configuring a hardware architecture; and (3) configuring a convolution operation unit. The method implements the CNN on an FPGA using an optimized hardware acceleration scheme. On the MNIST handwritten-digit dataset, it computes the CNN nearly five times faster than a GPU and achieves a 10-fold speedup over a 12-core CPU, while consuming one third of the CPU's power.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT
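Step (3) of the CNN abstract above, configuring a convolution operation unit, is typically where an FPGA design gets its parallelism. The Python sketch below models one common structure as an assumption, since the abstract does not describe the unit's internals: for each output pixel, all K×K multiplications are issued together, as parallel hardware multipliers would be, and their products are summed by a pairwise adder tree. Python evaluates these sequentially, so the sketch shows the dataflow rather than the speedup. The function names are hypothetical, and, as in most CNN frameworks, the "convolution" is a cross-correlation without kernel flipping.

```python
def adder_tree(values):
    """Pairwise reduction, mirroring a pipelined adder tree (log2(n) levels)."""
    level = list(values)
    while len(level) > 1:
        if len(level) % 2:
            level.append(0)  # pad odd levels, like an unused adder input
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
    return level[0] if level else 0


def conv_unit(window, kernel):
    """One convolution operation: all K*K products, then an adder tree."""
    products = [w * k
                for row_w, row_k in zip(window, kernel)
                for w, k in zip(row_w, row_k)]
    return adder_tree(products)


def conv2d_valid(image, kernel):
    """Slide the convolution unit over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            window = [image[r + i][c:c + kw] for i in range(kh)]
            row.append(conv_unit(window, kernel))
        out.append(row)
    return out


if __name__ == "__main__":
    image = [[4 * r + c for c in range(4)] for r in range(4)]
    kernel = [[1, 0], [0, -1]]
    print(conv2d_valid(image, kernel))  # [[-5, -5, -5], [-5, -5, -5], [-5, -5, -5]]
```

In hardware, several such units would usually run side by side on different output pixels or feature maps; that replication, rather than any single unit, is the likely source of the speedups the abstract reports.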

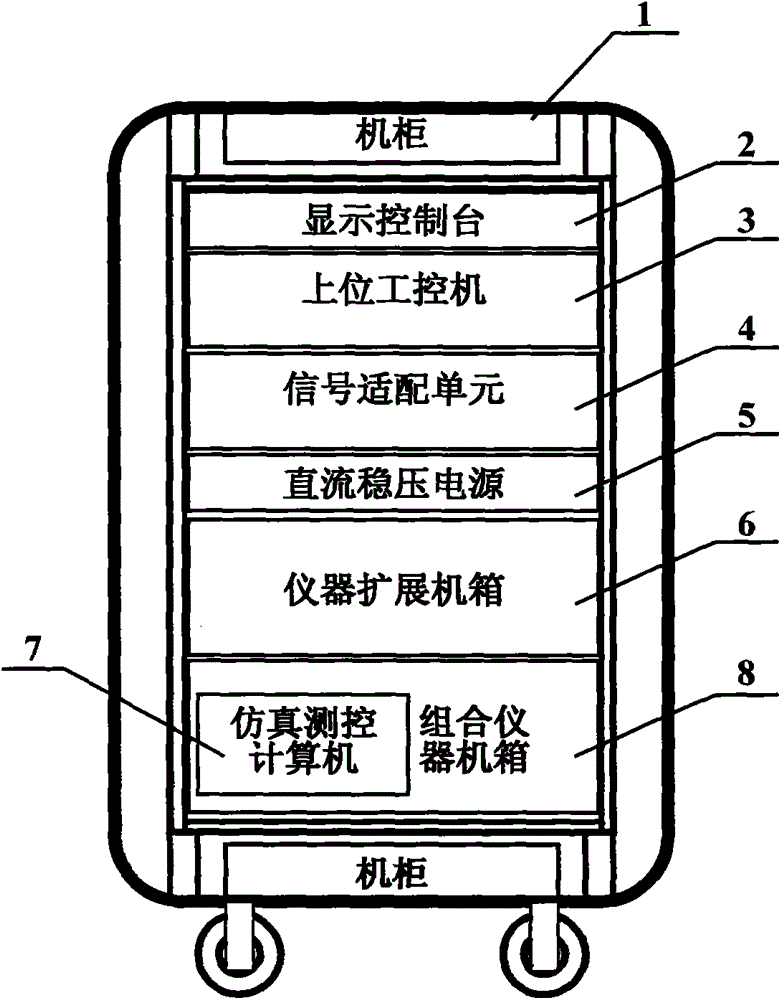

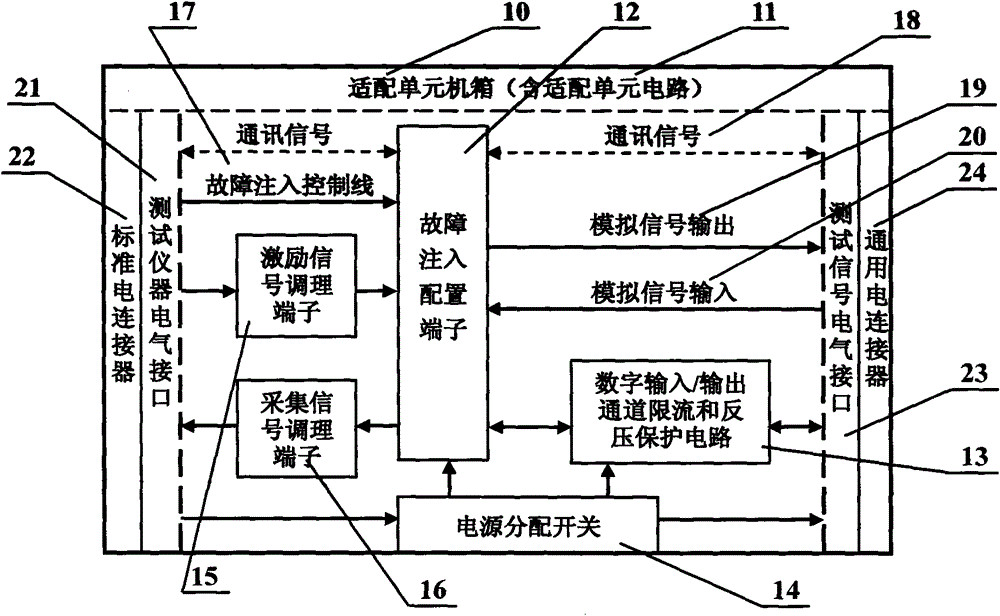

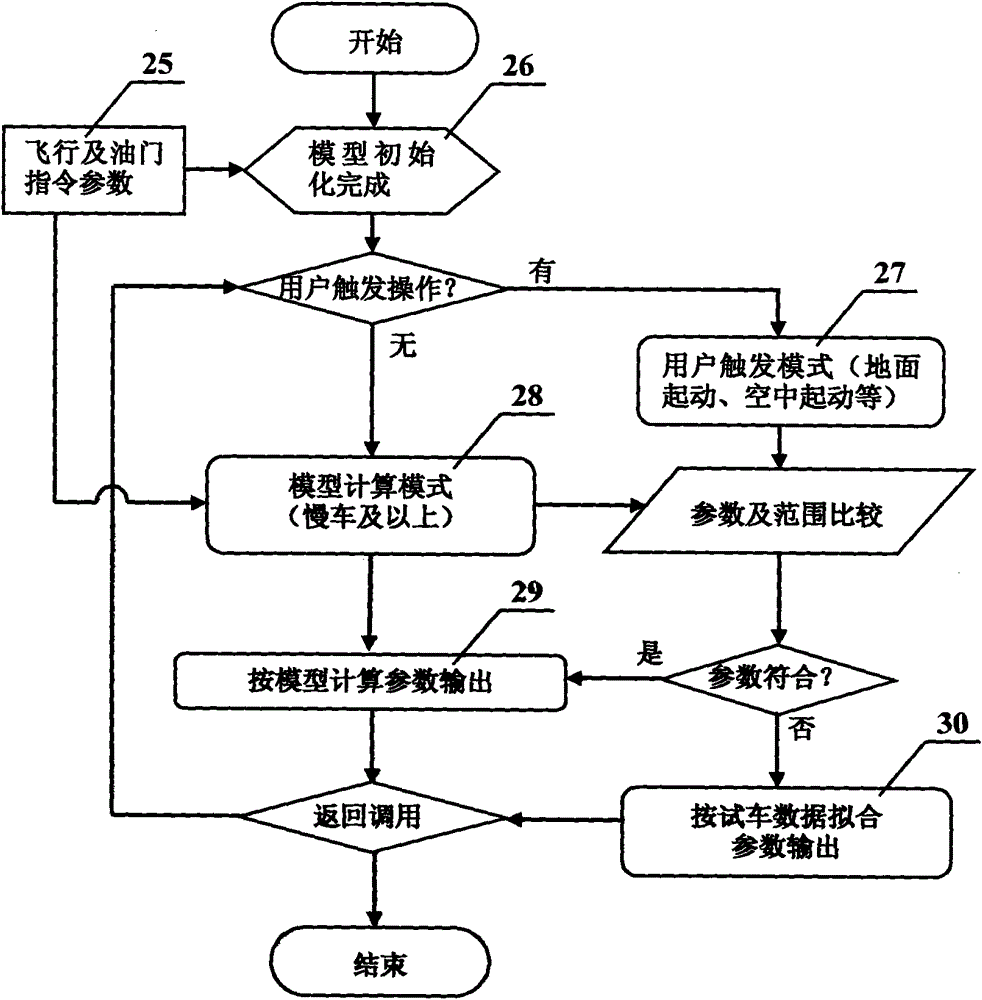

Universal simulator for aircraft engines

Inactive | CN104699068A | Improve compatibility | Improve scalability | Electric testing/monitoring | Aviation | Mathematical model

The invention discloses a universal simulator for aircraft engines. The universal simulator comprises a host industrial personal computer, a simulation measurement and control computer, a display control desk, a combination instrument case with a plurality of instrument hardware modules, an instrument extension case, a signal adaptation unit, a direct-current voltage-stabilized source and a dedicated testing cable. The engine mathematical models run on an embedded high-performance real-time operating system in the simulation measurement and control computer, which guarantees the real-time performance and effectiveness of simulation experiments. The testing systems are built on the standard open PXI (PCI extensions for instrumentation, where PCI is peripheral component interconnect) bus architecture, so hardware modules with various mature PXI bus interfaces can be added and configured for a particular testing application; as a result, the simulator is highly automated, extensible and compatible, and easy to maintain. The signal adaptation unit integrates standard, universal electrical connectors, so only the matching testing cables need to be replaced; the simulator can therefore quickly rebuild the electrical connections of the testing system for different engines and meets the platform's universality requirements.

Owner:GUIZHOU AERONAUTICAL ENGINE INST


© 2025 PatSnap. All rights reserved.