610 results about "Performance computing" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

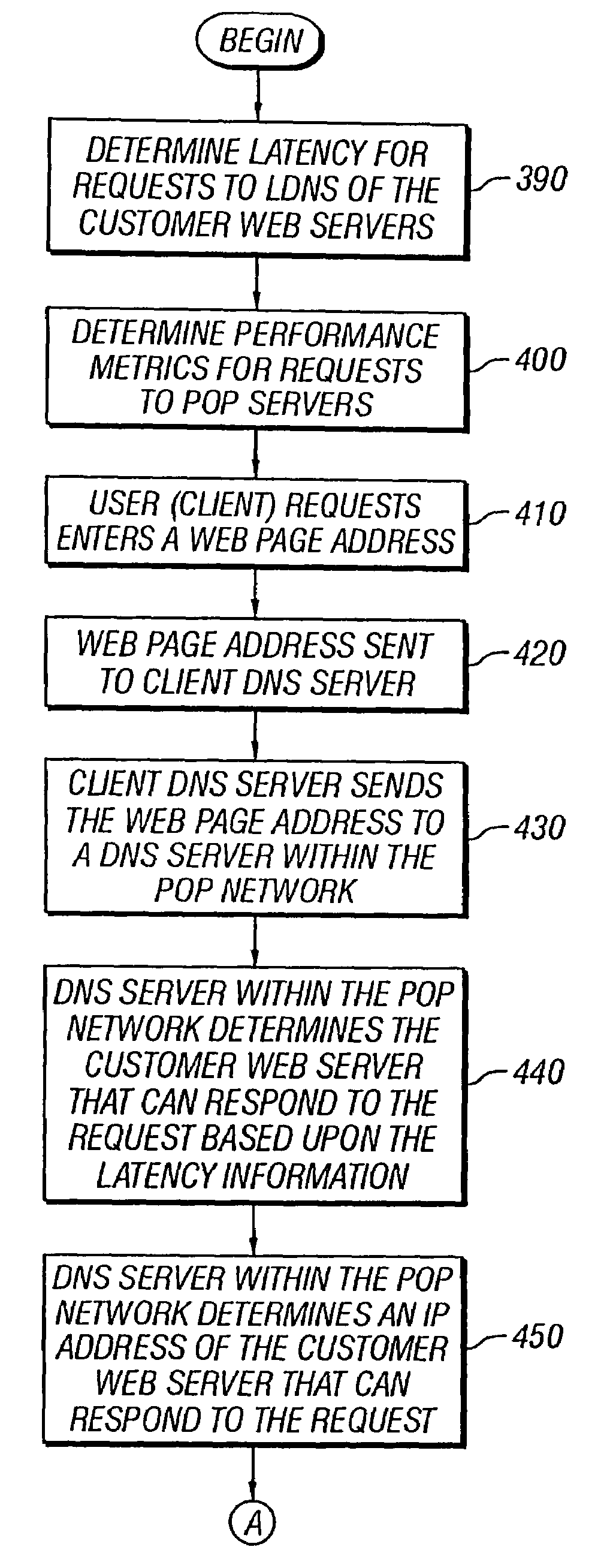

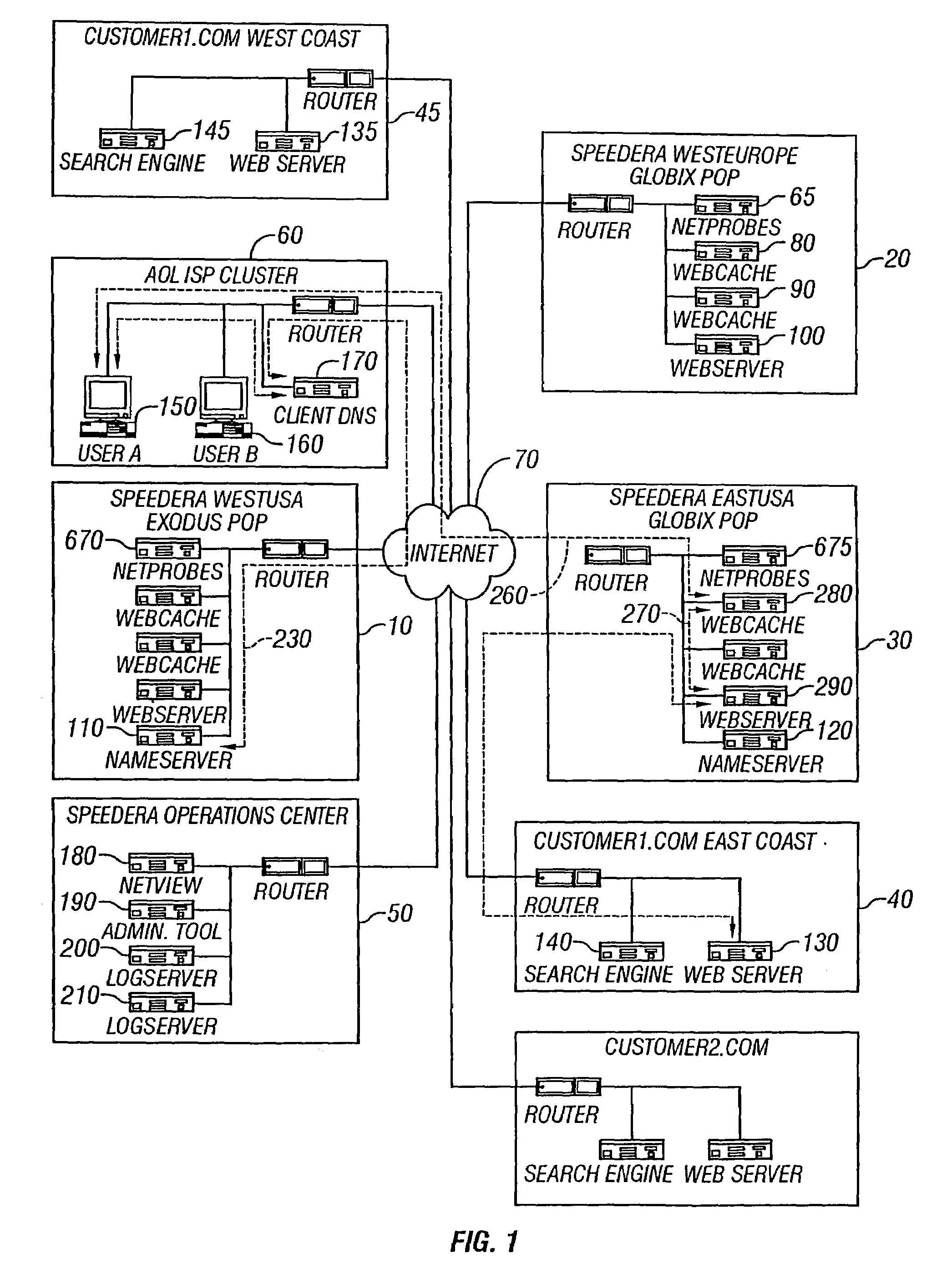

Performance computer network method

Inactive | US7225254B1 | Improve user experience, Reduce download time, Metering/charging/billing arrangements, Digital computer details, Traffic capacity, Web service

A method for a computer network includes sending a first request from a web client for resolving a first web address of a web page to a client DNS server, sending the first request from the client DNS server to a POP DNS server within a POP server network, using a probe server in the POP server network to determine traffic loads of a plurality of customer web servers, each of the customer web servers storing the web page, using the POP DNS server to determine a customer web server from the plurality of customer web servers, the customer web server having a traffic load lower than traffic loads of other customer web servers in the plurality of customer web servers, requesting the web page from the customer web server, the web page including static content represented by an embedded URL, sending the web page from the customer web server to the web client, sending a second request from the web client for resolving the URL to the client DNS server, sending the second request from the client DNS server to the POP DNS server within the POP server network, using the probe server to determine service metrics of a plurality of web caches within the POP server network, using the POP DNS server to determine a web cache from the plurality of web caches, the web cache having service metrics more appropriate for the second request than service metrics of other web caches in the plurality of web caches, requesting the static content from the web cache, sending the static content to the web client, and outputting the static content with the web client.

Owner:AKAMAI TECH INC
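
The two selection steps in this claim (choosing the customer web server with the lowest traffic load, then the web cache whose service metrics best suit the request) can be illustrated with a minimal Python sketch. It assumes the probe server's measurements are already available as plain numbers; the `Server` and `Cache` classes, the metric names, and the cache scoring rule are hypothetical and are not taken from the patent or from Akamai's systems.

```python
from dataclasses import dataclass


@dataclass
class Server:
    address: str
    load: float  # traffic load reported by the probe server (hypothetical units)


@dataclass
class Cache:
    address: str
    latency_ms: float  # hypothetical service metric
    hit_ratio: float   # hypothetical service metric in [0, 1]


def resolve_page(servers: list[Server]) -> str:
    """First lookup: answer with the customer web server carrying the lowest load."""
    return min(servers, key=lambda s: s.load).address


def cache_score(cache: Cache) -> float:
    """Assumed rule (lower is better): latency is inflated when the hit ratio is poor."""
    return cache.latency_ms * (2.0 - cache.hit_ratio)


def resolve_static(caches: list[Cache]) -> str:
    """Second lookup: answer with the web cache that has the best (lowest) score."""
    return min(caches, key=cache_score).address


if __name__ == "__main__":
    # Hypothetical probe results
    servers = [Server("203.0.113.10", 0.72), Server("203.0.113.11", 0.35)]
    caches = [Cache("198.51.100.5", 40.0, 0.90), Cache("198.51.100.6", 25.0, 0.50)]
    print(resolve_page(servers))   # 203.0.113.11
    print(resolve_static(caches))  # 198.51.100.6 (score 37.5 vs. about 44)
```

Replacing `cache_score` is where an operator would encode what "more appropriate for the second request" means; the claim leaves that criterion open.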

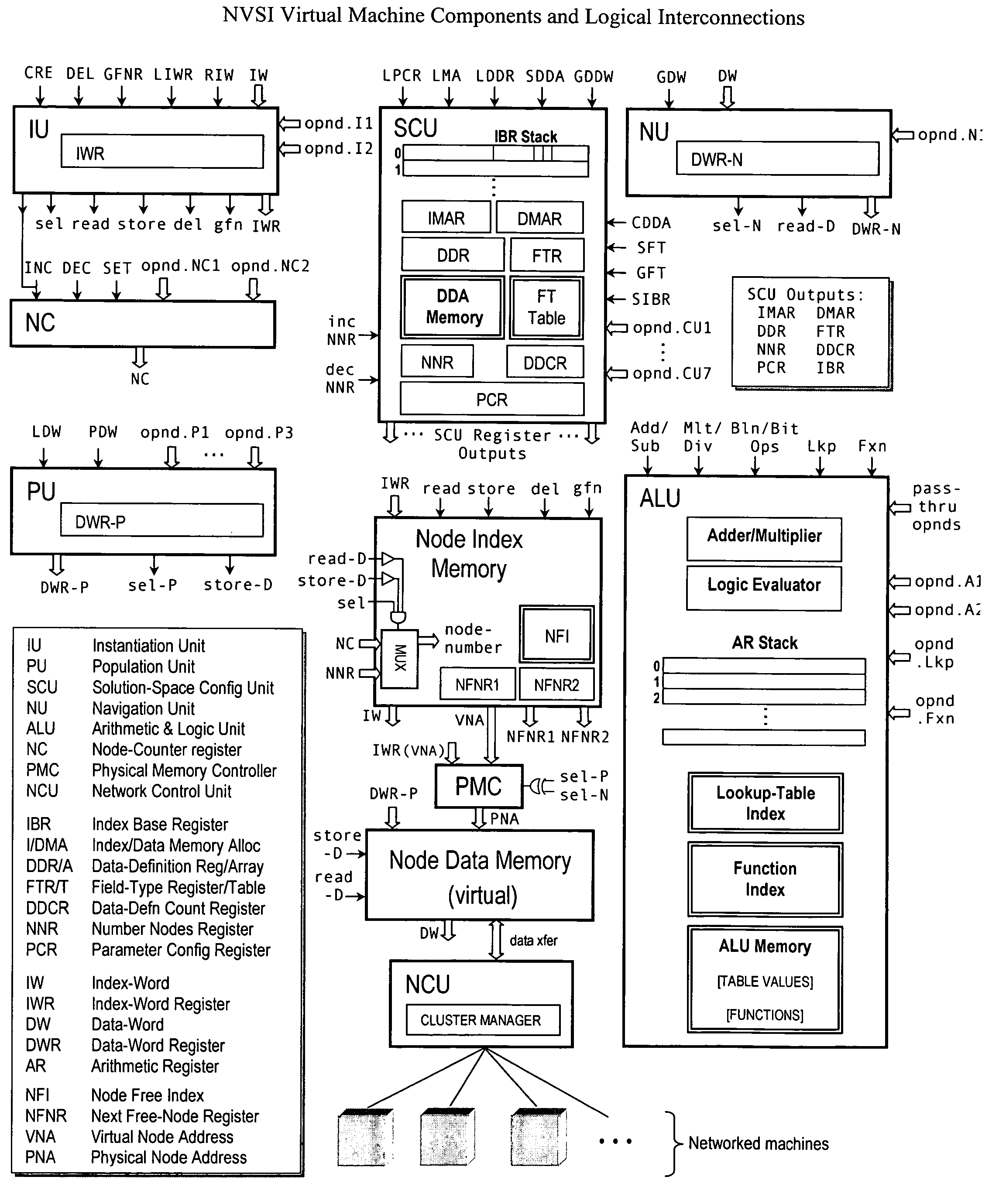

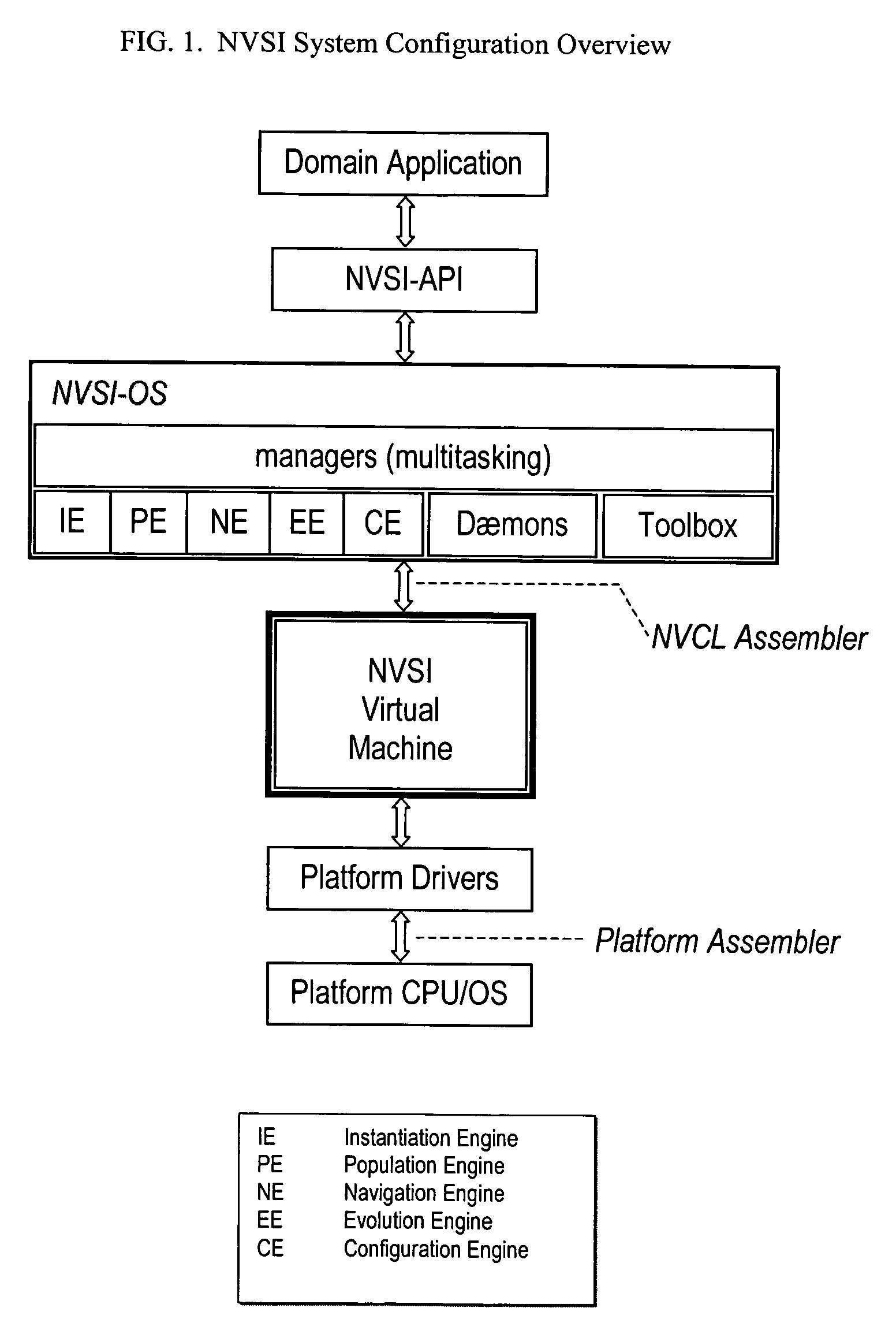

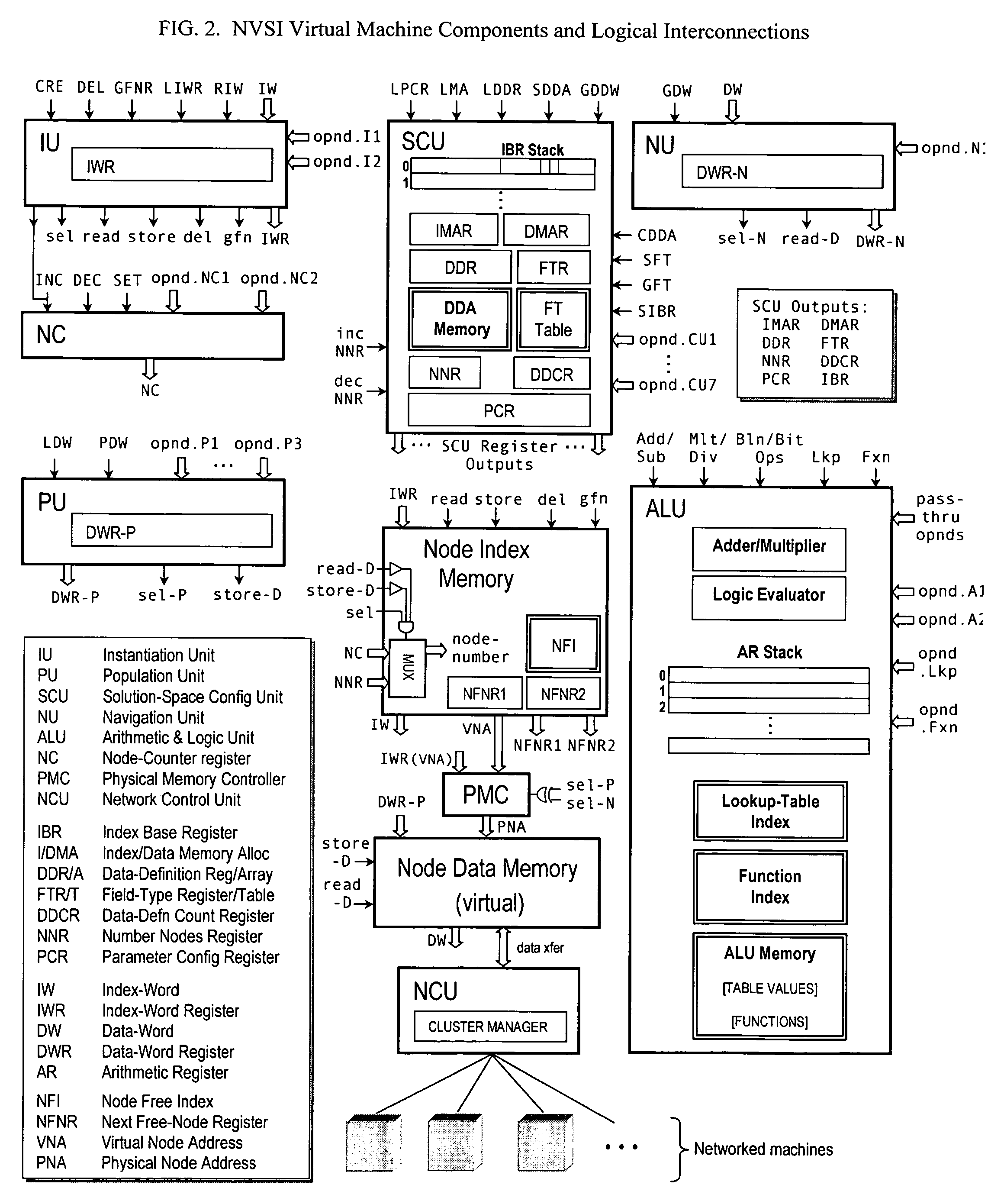

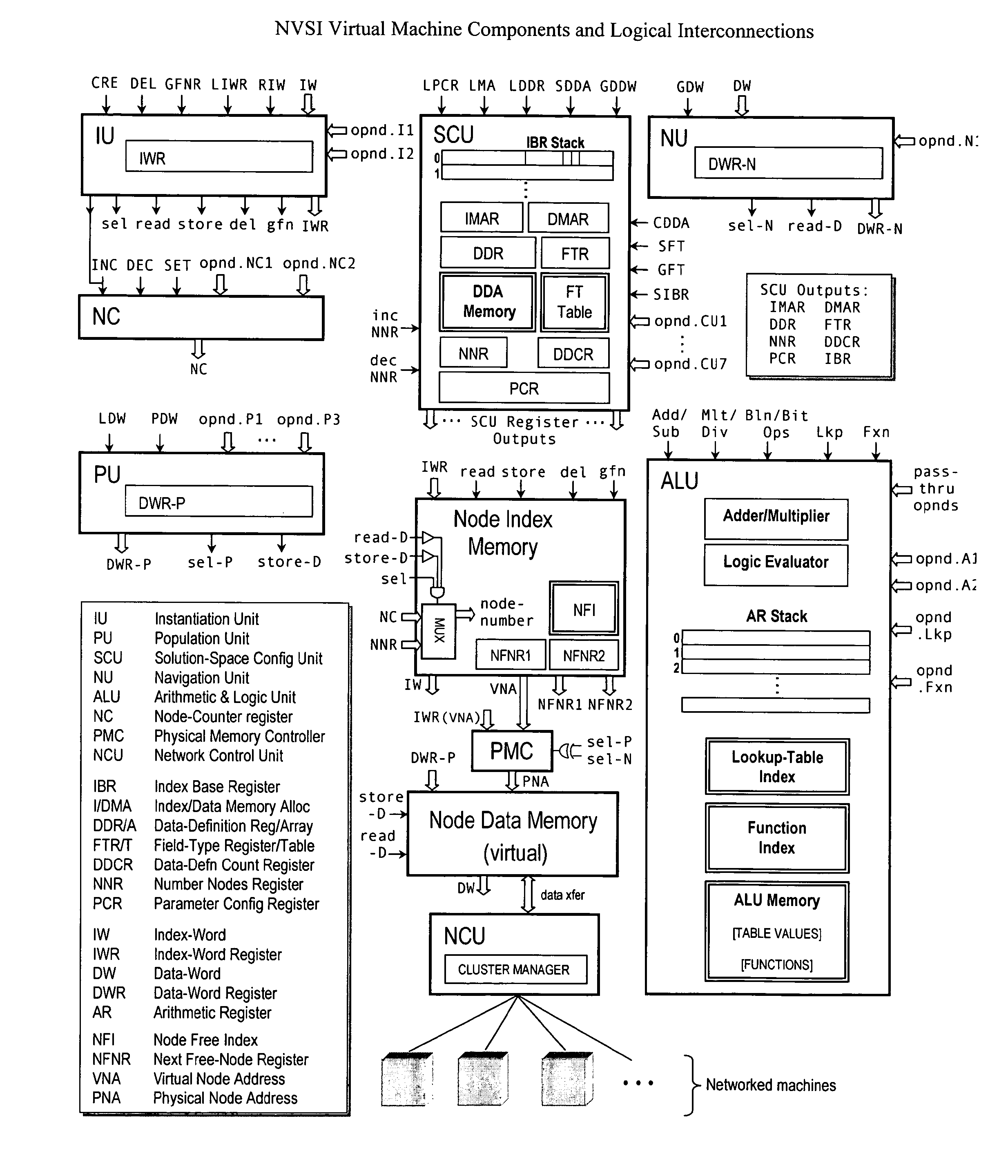

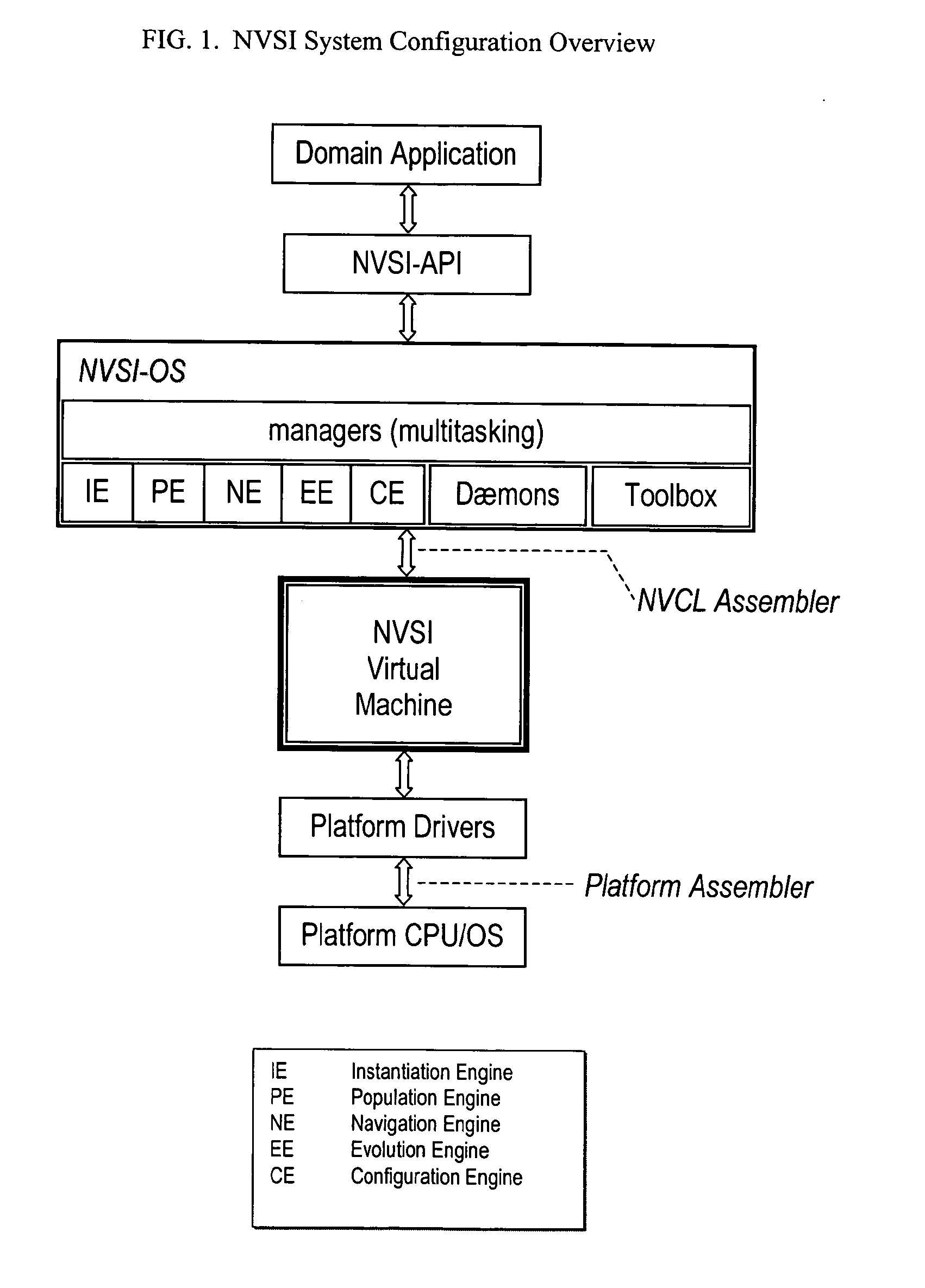

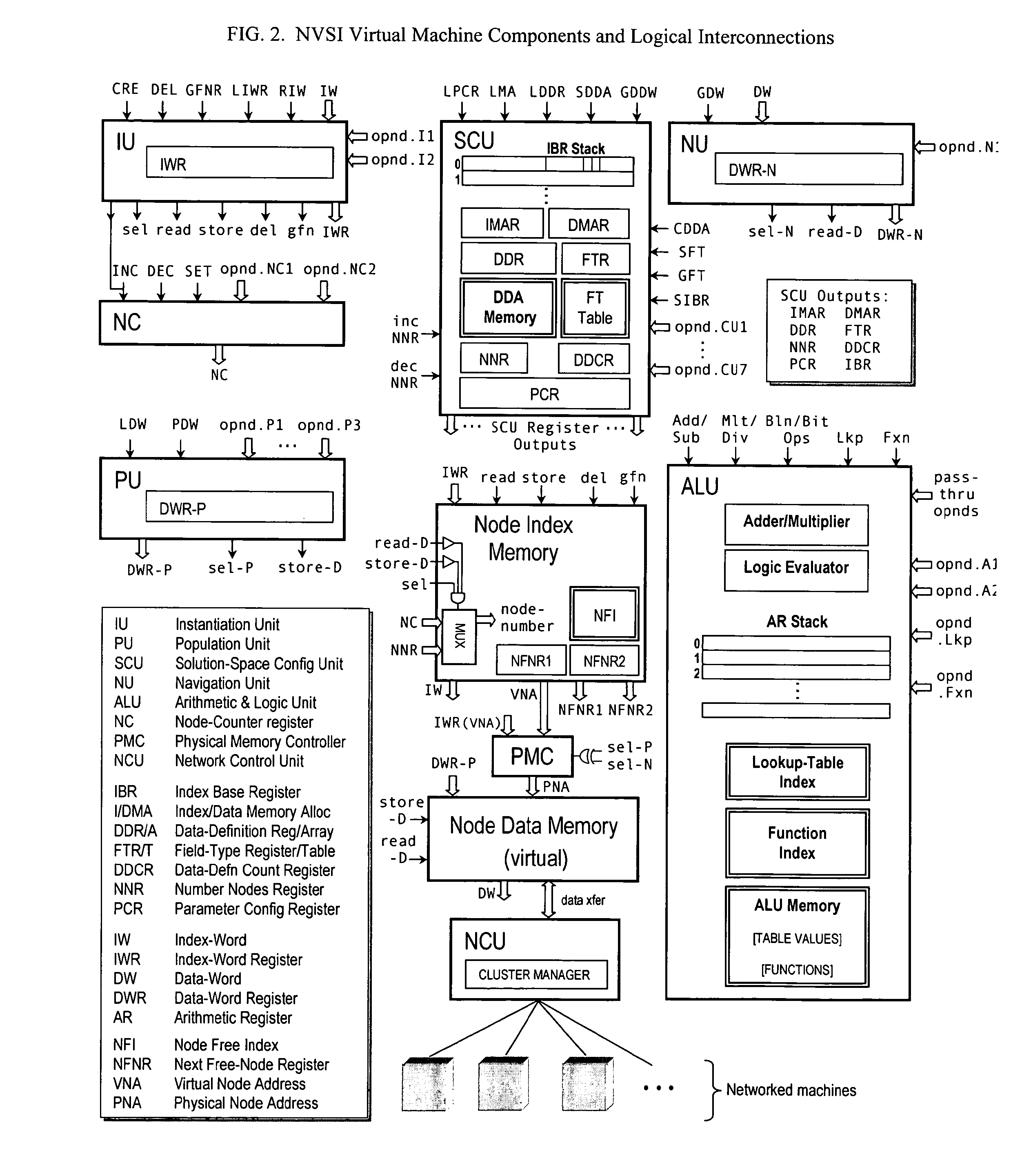

Virtual supercomputer

Active | US7774191B2 | Improve efficiency, Finance, Resource allocation, Information processing, Operational system

The virtual supercomputer is an apparatus, system and method for generating information processing solutions to complex and / or high-demand / high-performance computing problems, without the need for costly, dedicated hardware supercomputers, and in a manner far more efficient than simple grid or multiprocessor network approaches. The virtual supercomputer consists of a reconfigurable virtual hardware processor, an associated operating system, and a set of operations and procedures that allow the architecture of the system to be easily tailored and adapted to specific problems or classes of problems in a way that such tailored solutions will perform on a variety of hardware architectures, while retaining the benefits of a tailored solution that is designed to exploit the specific and often changing information processing features and demands of the problem at hand.

Owner:VERISCAPE

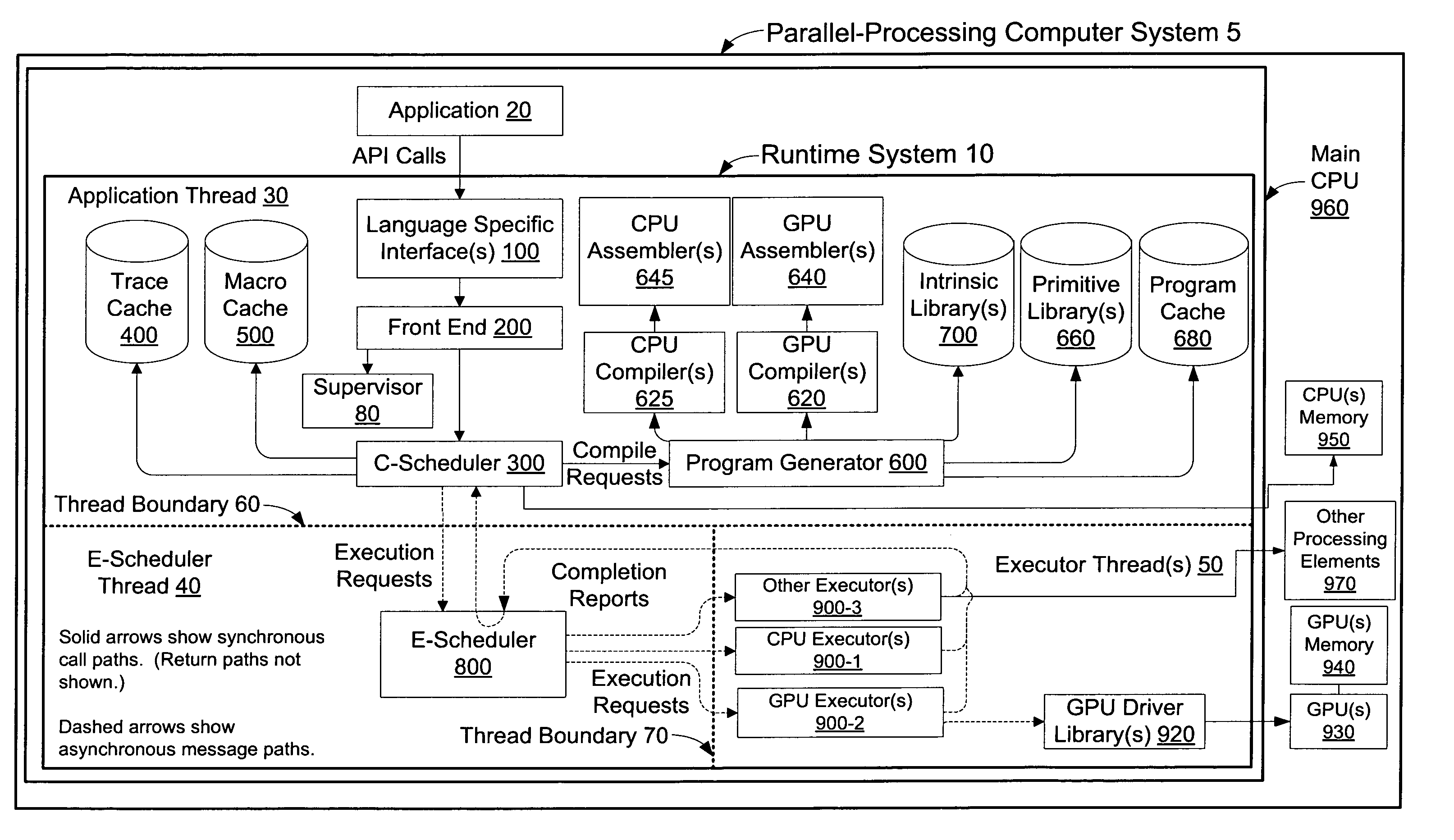

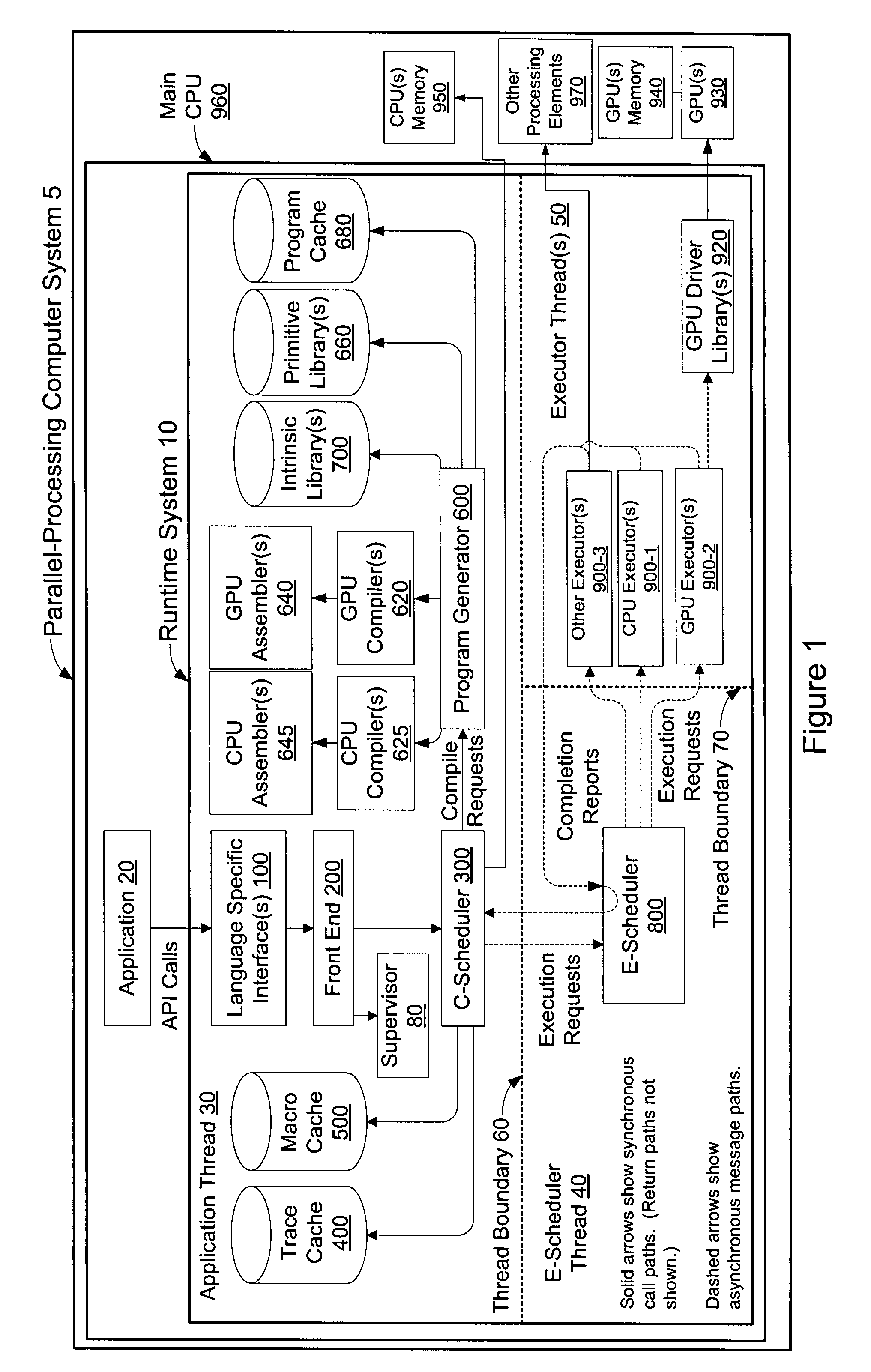

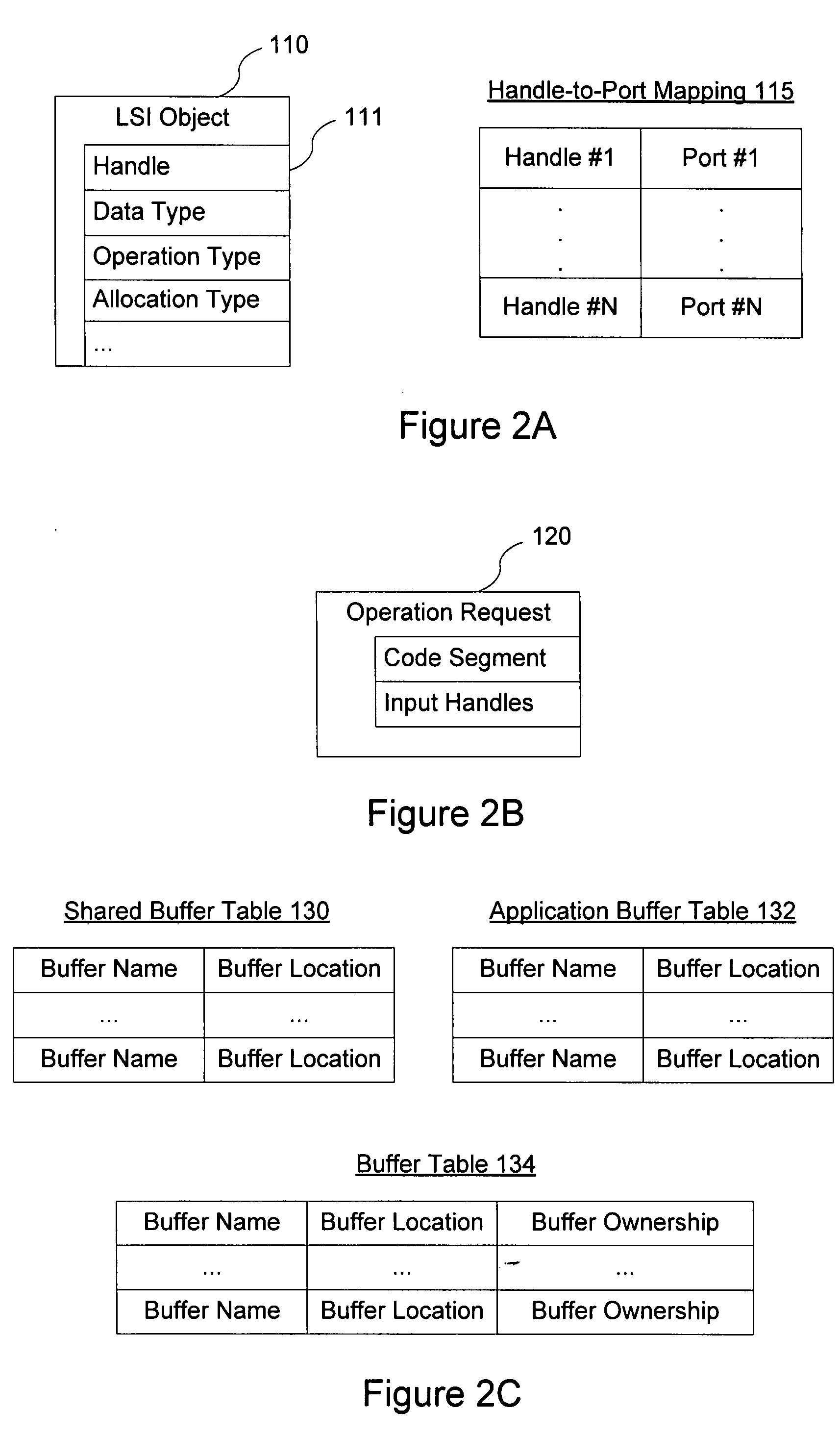

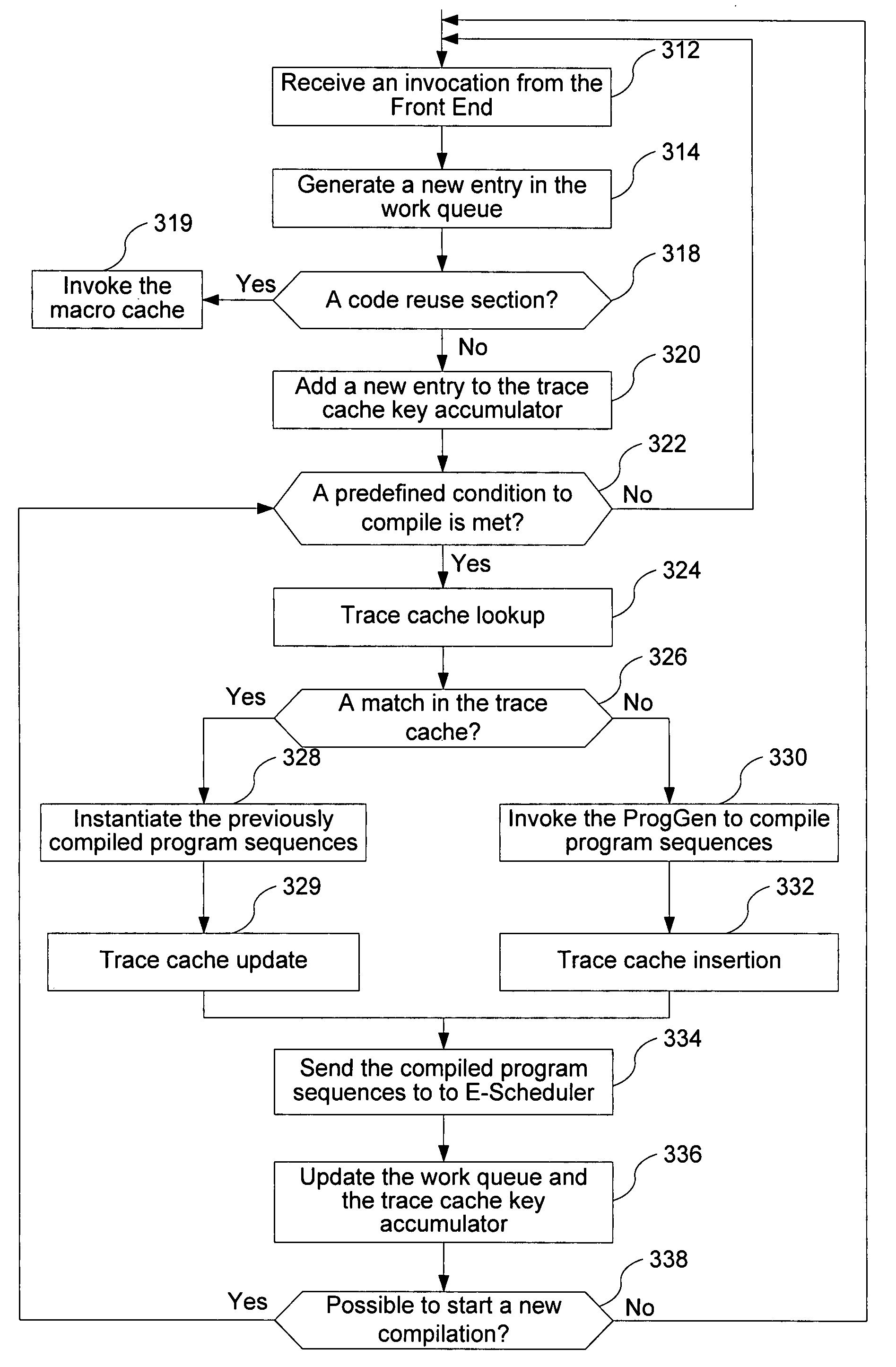

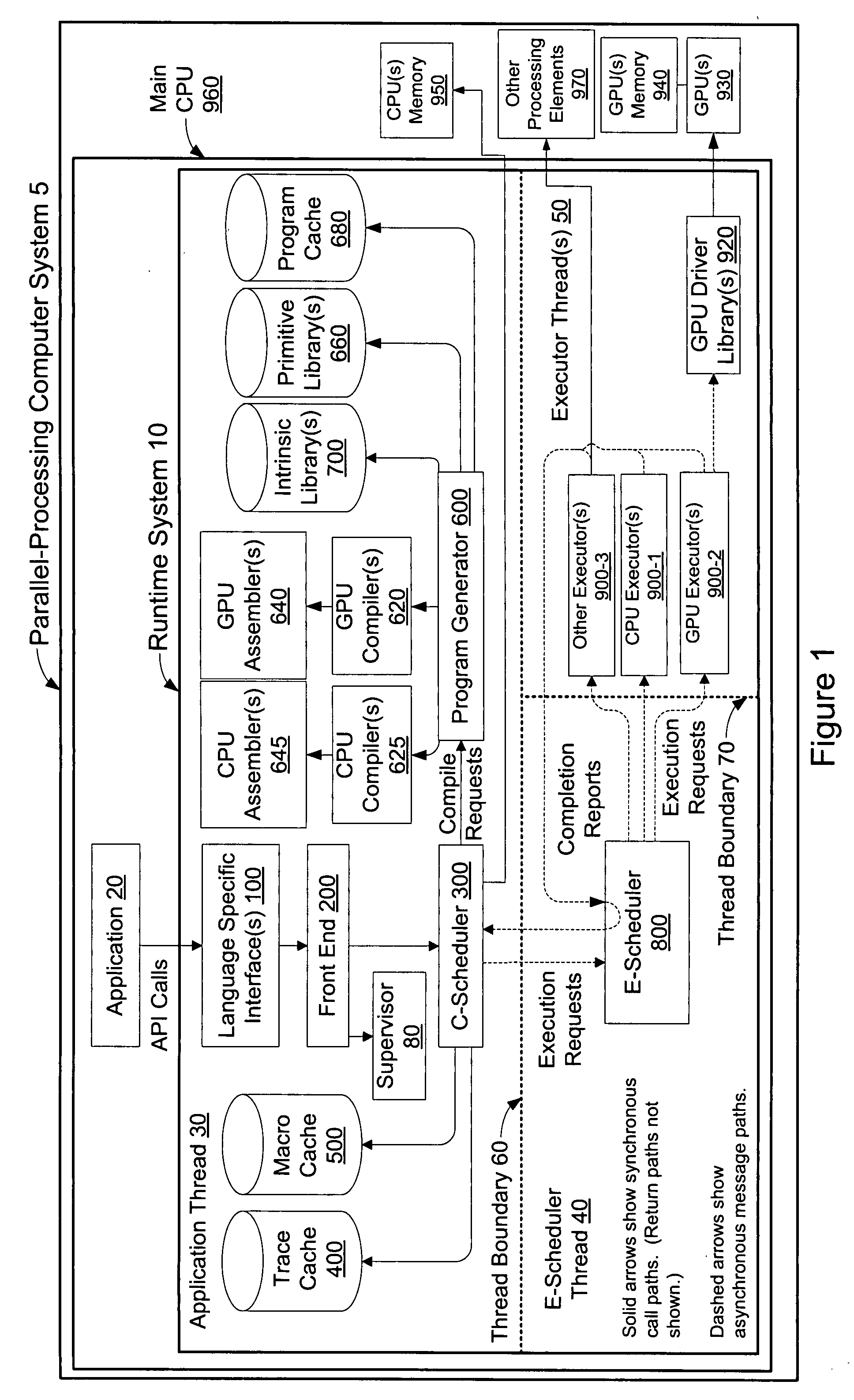

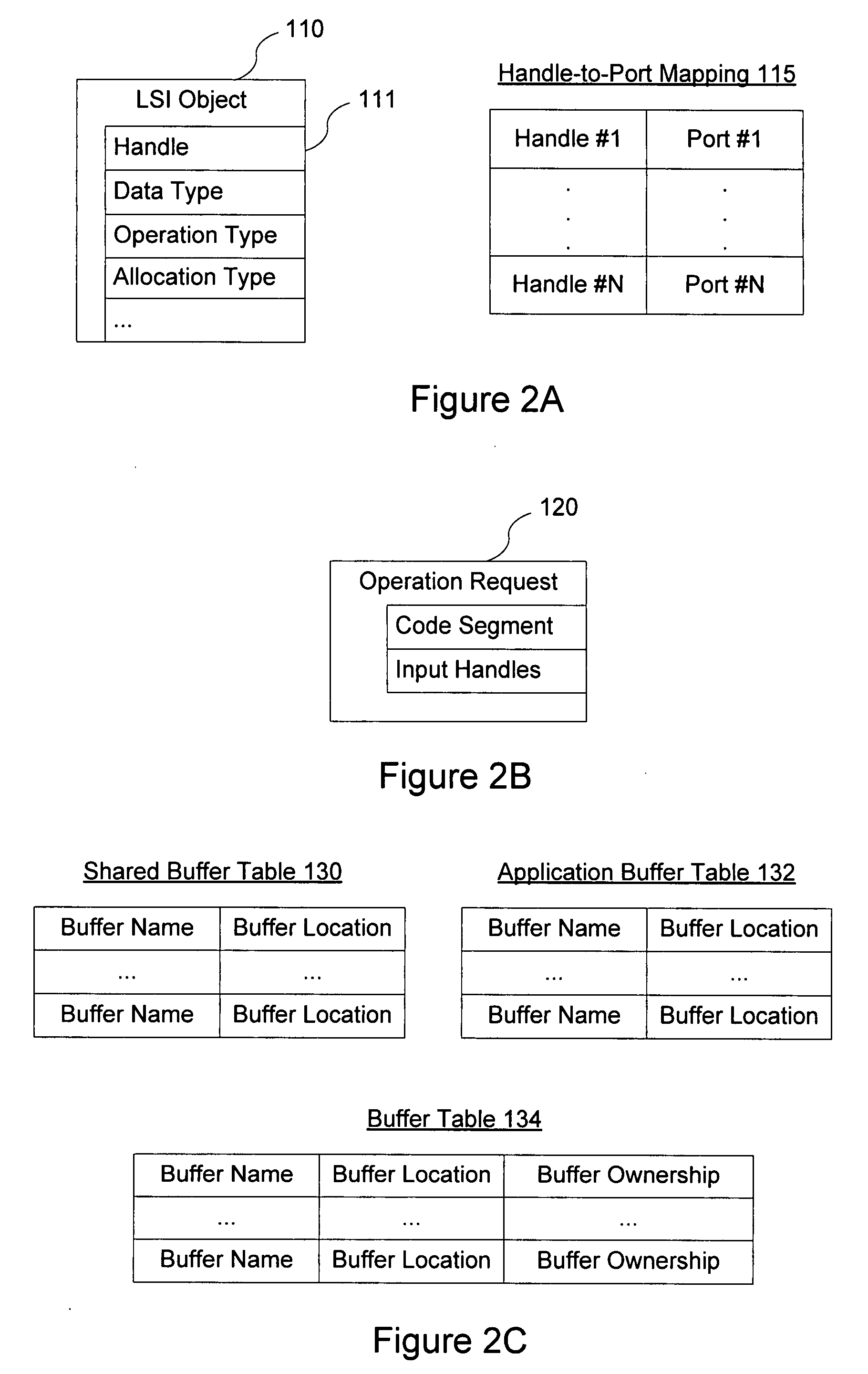

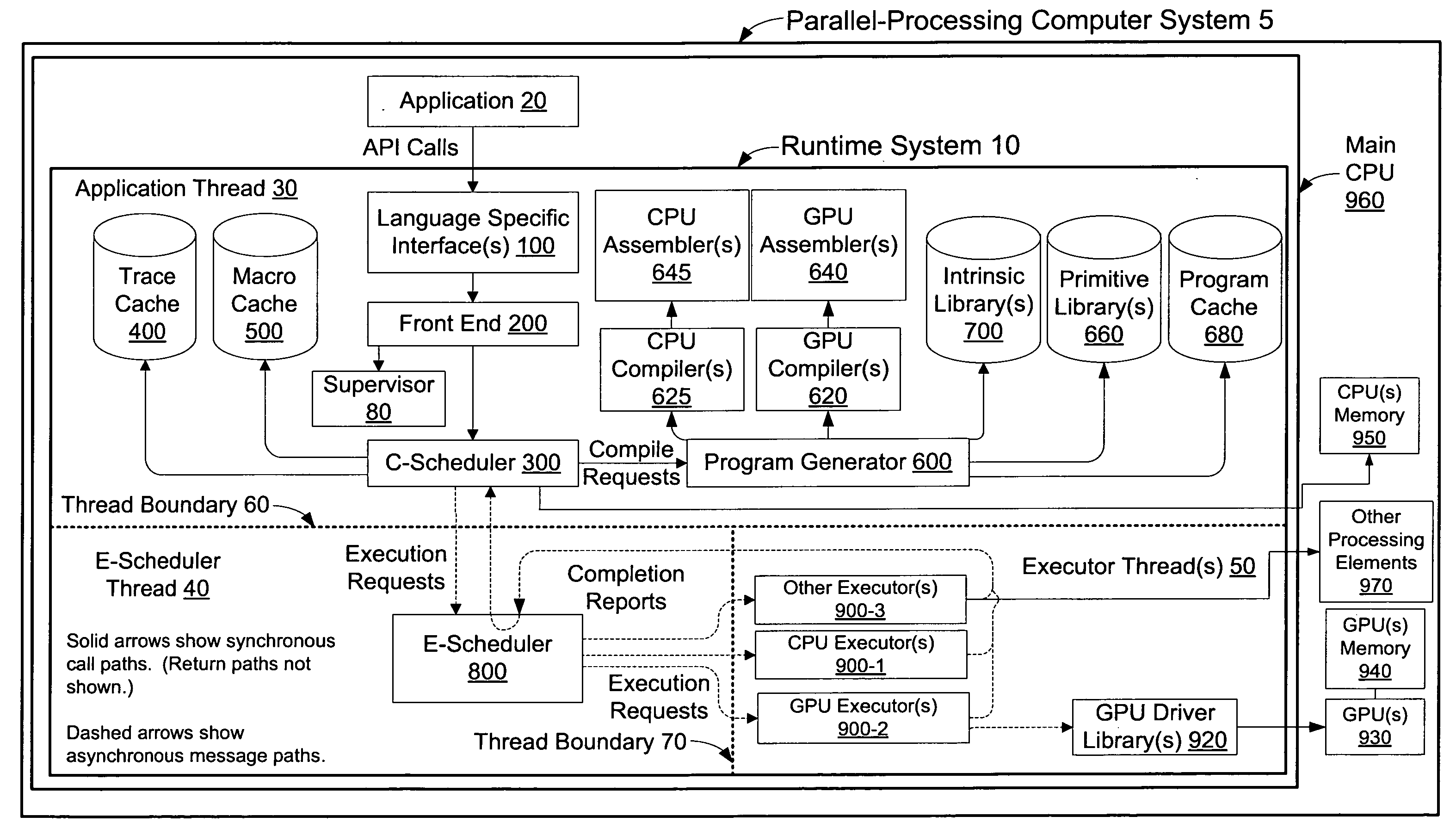

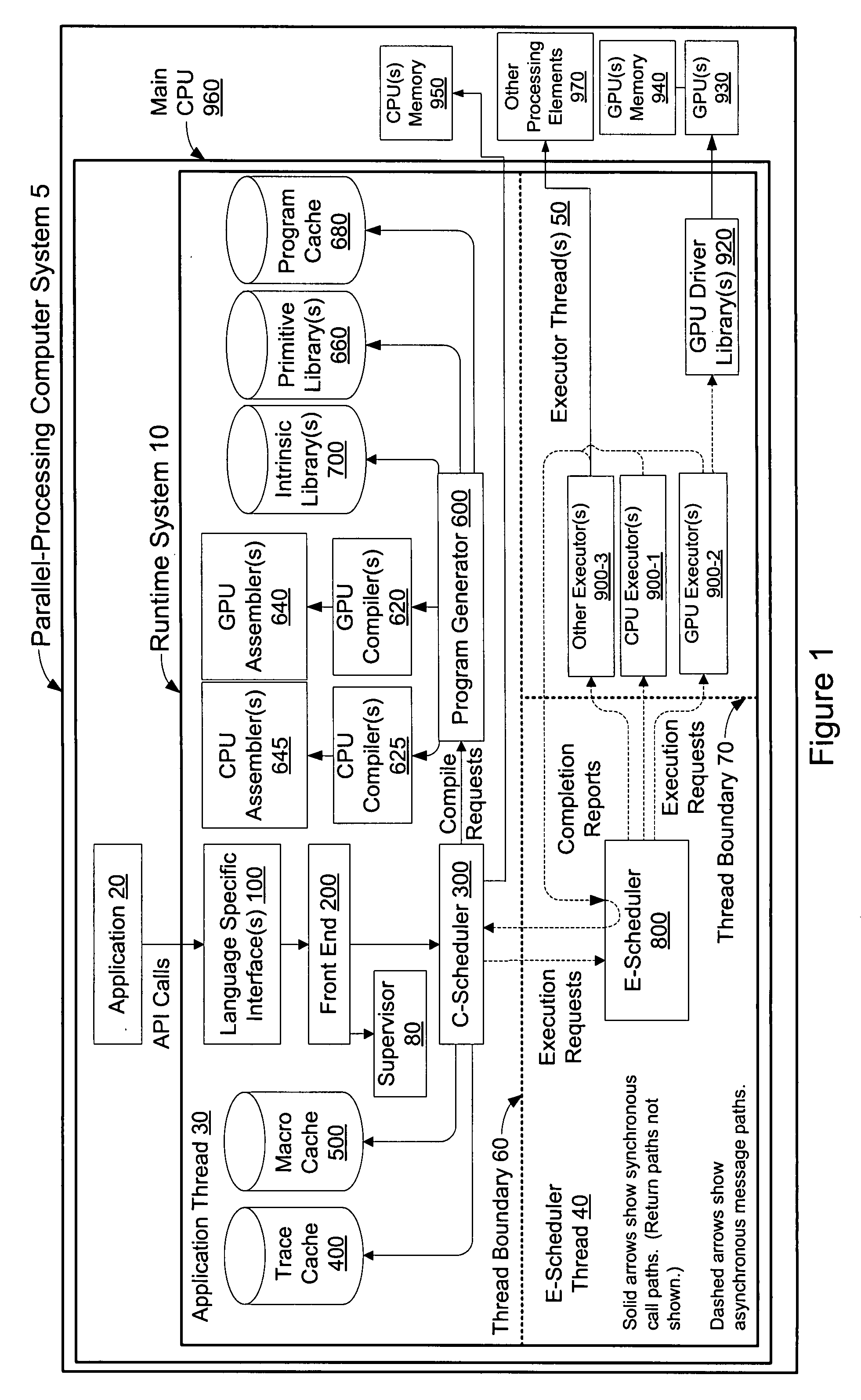

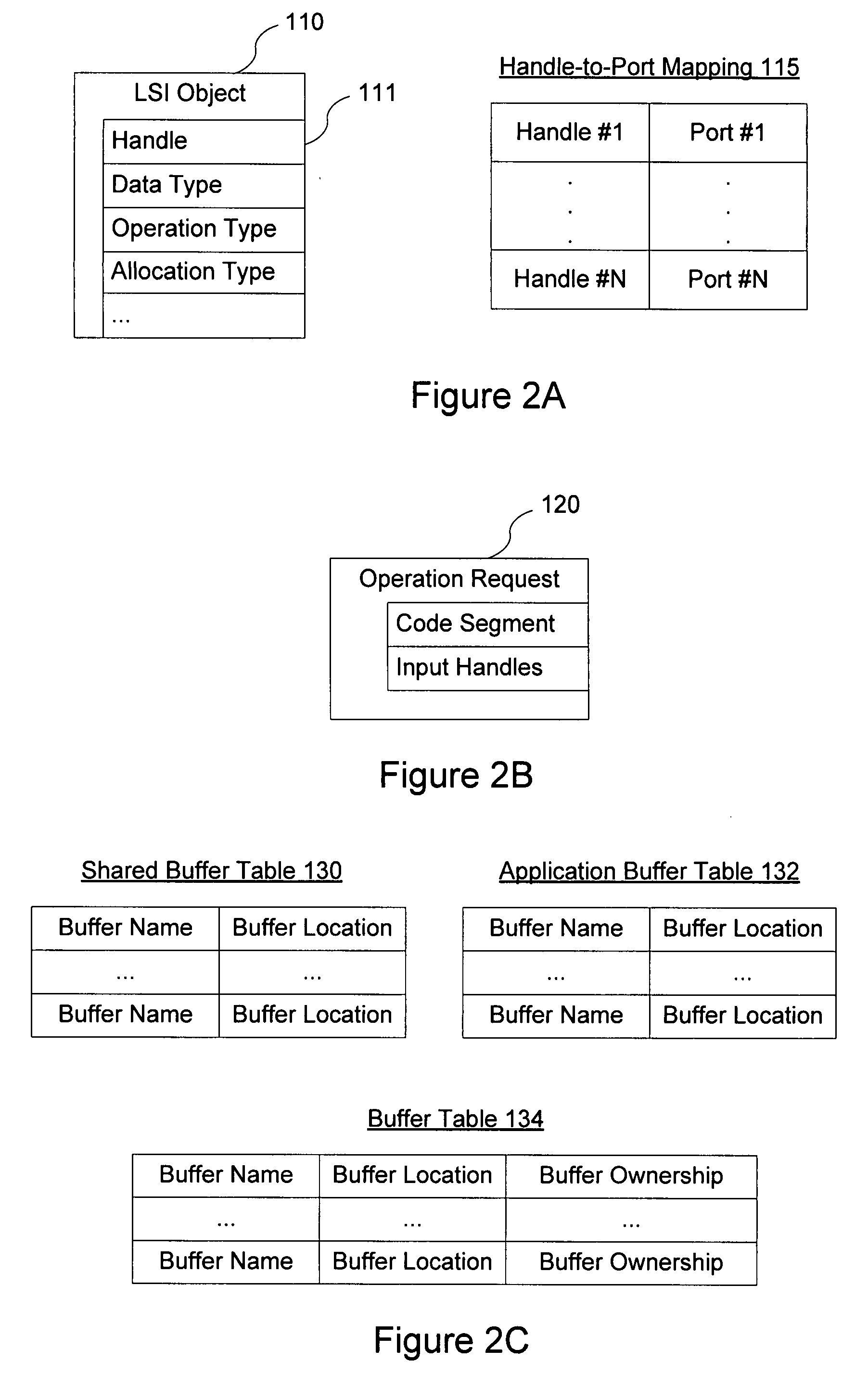

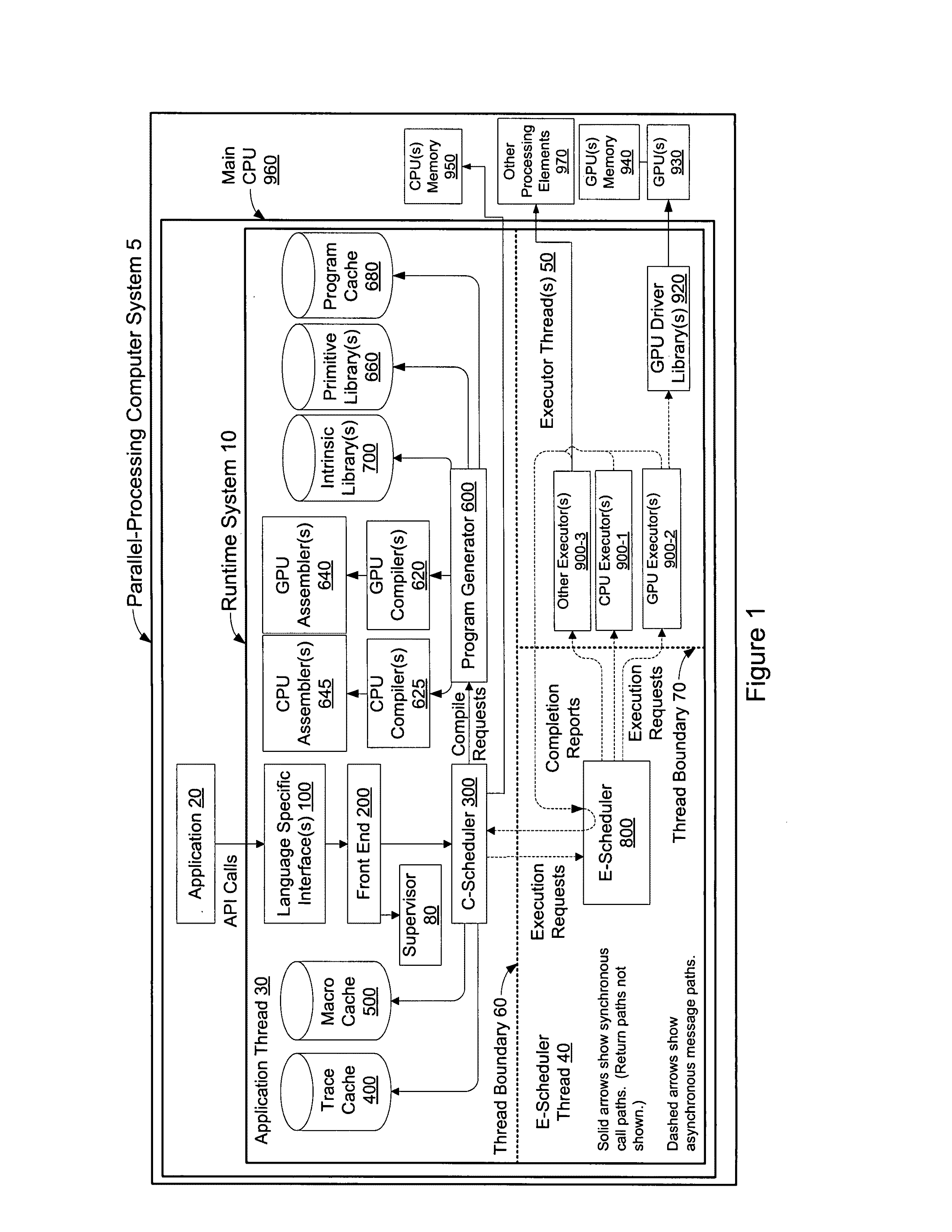

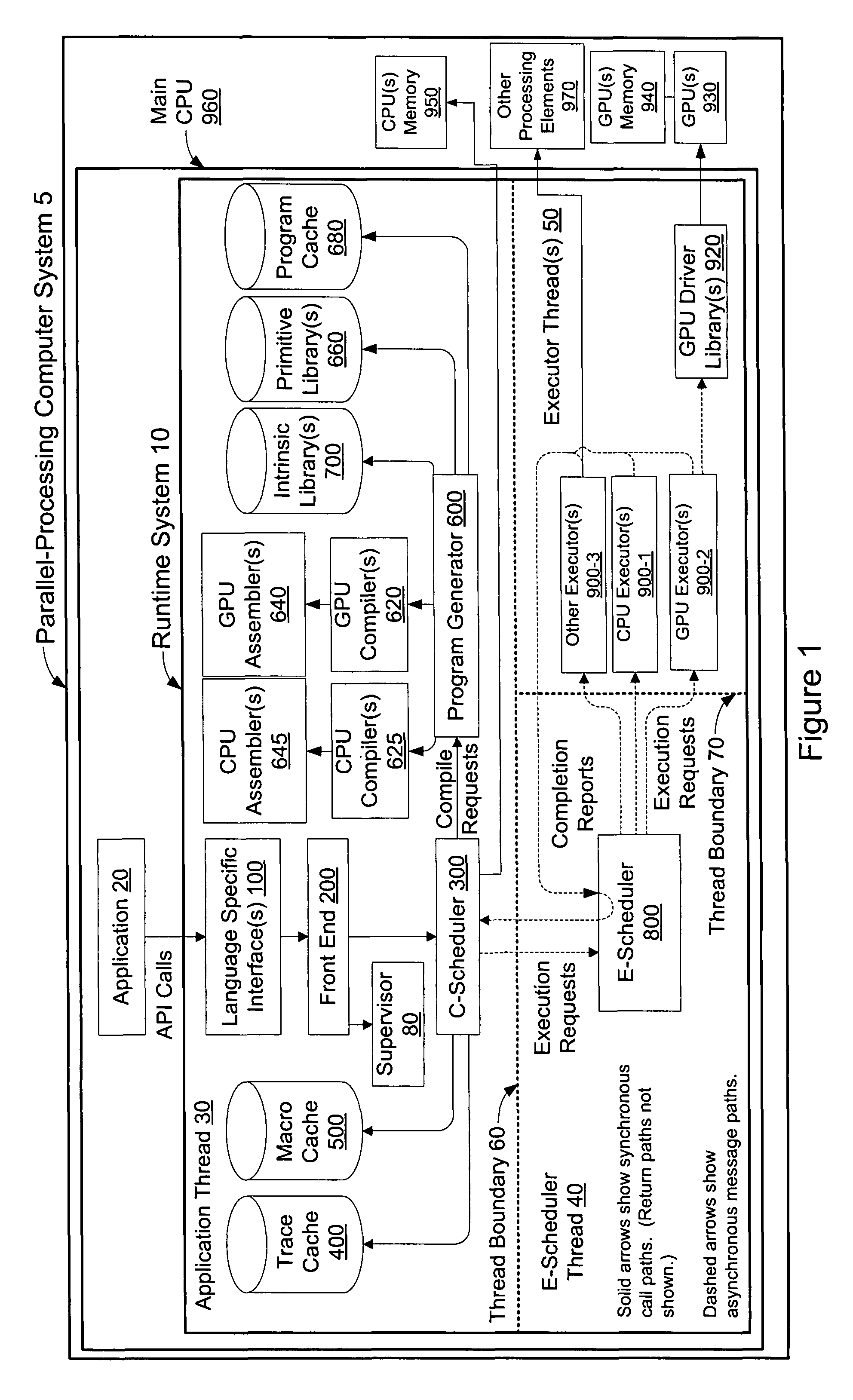

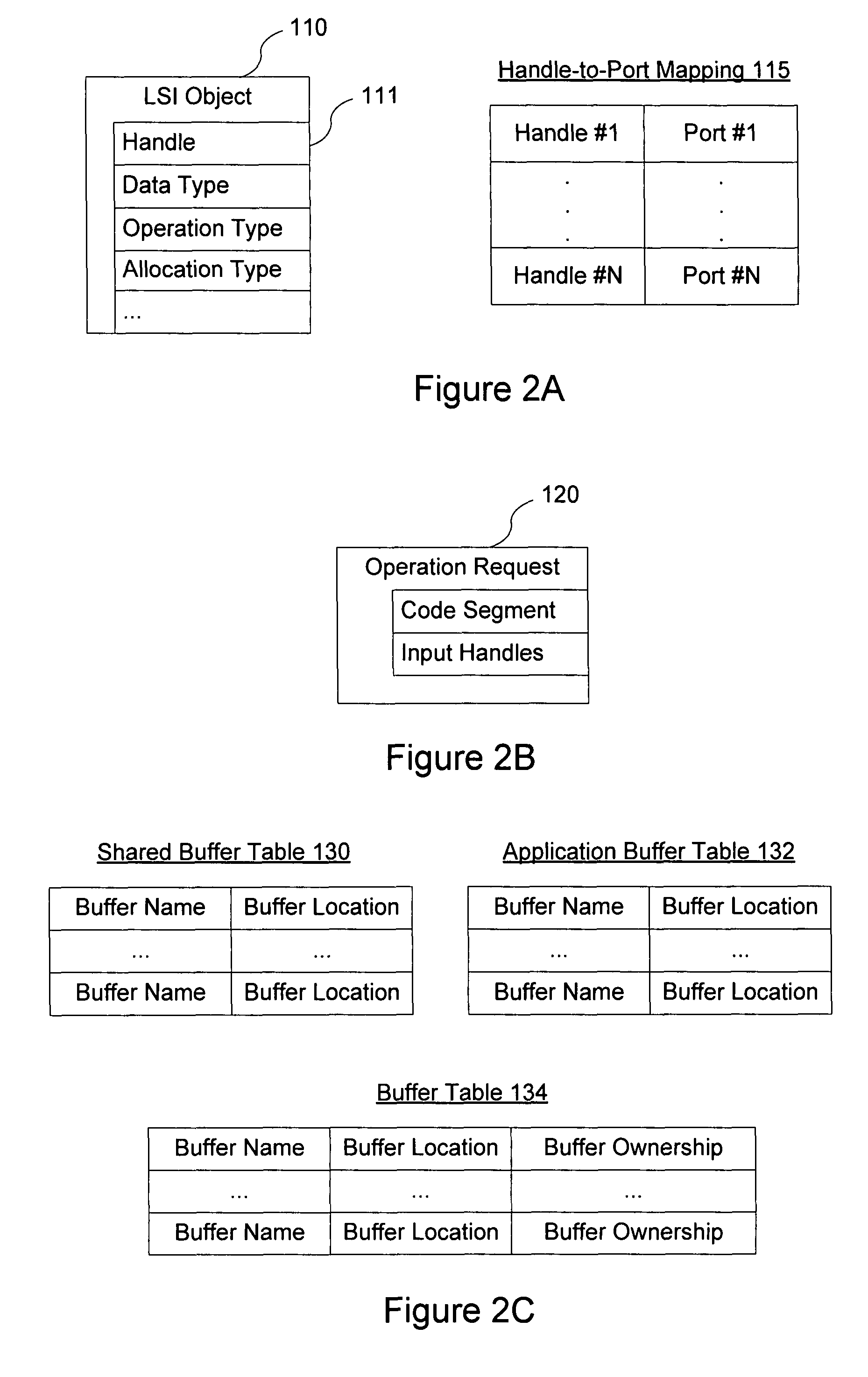

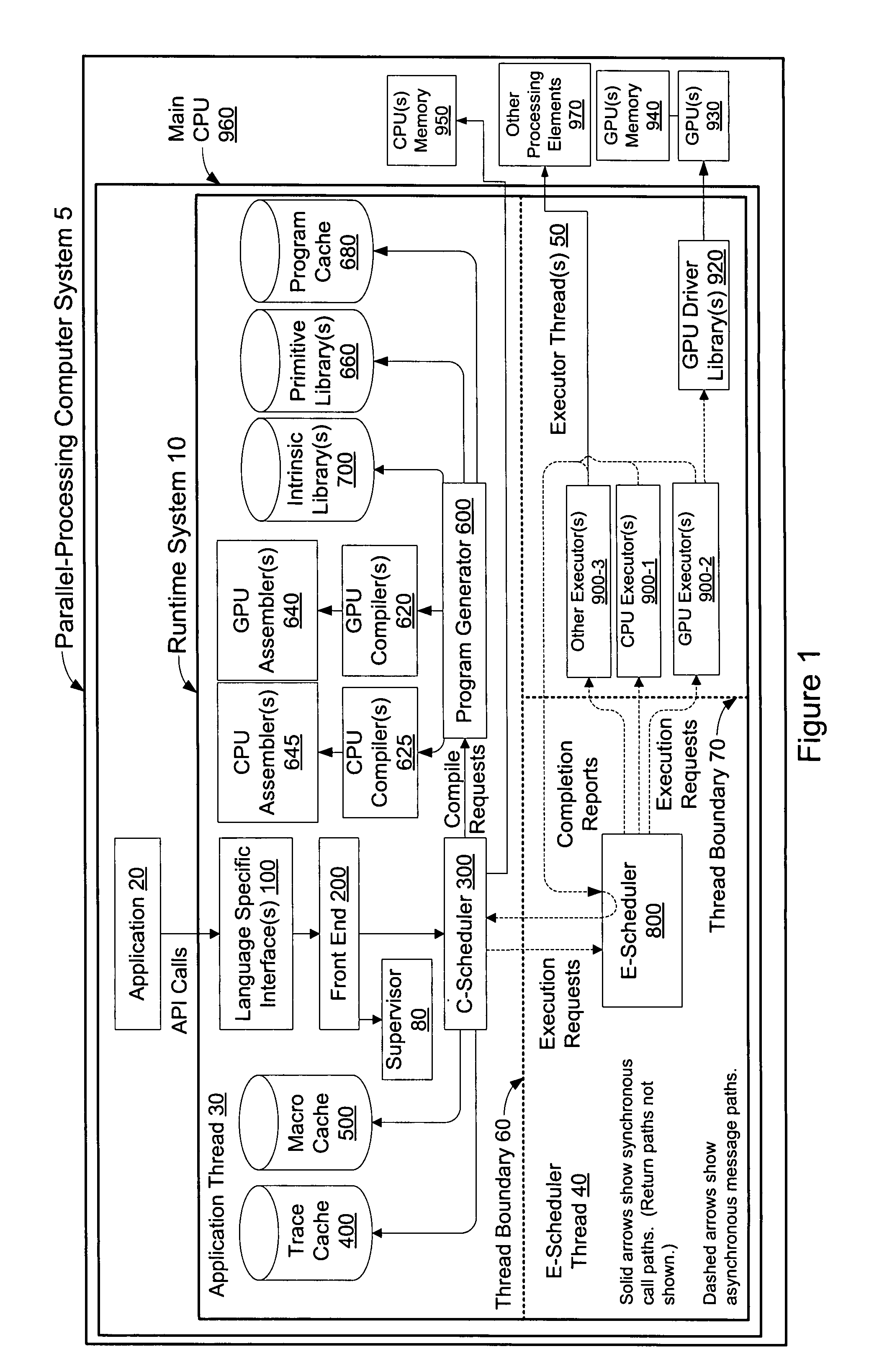

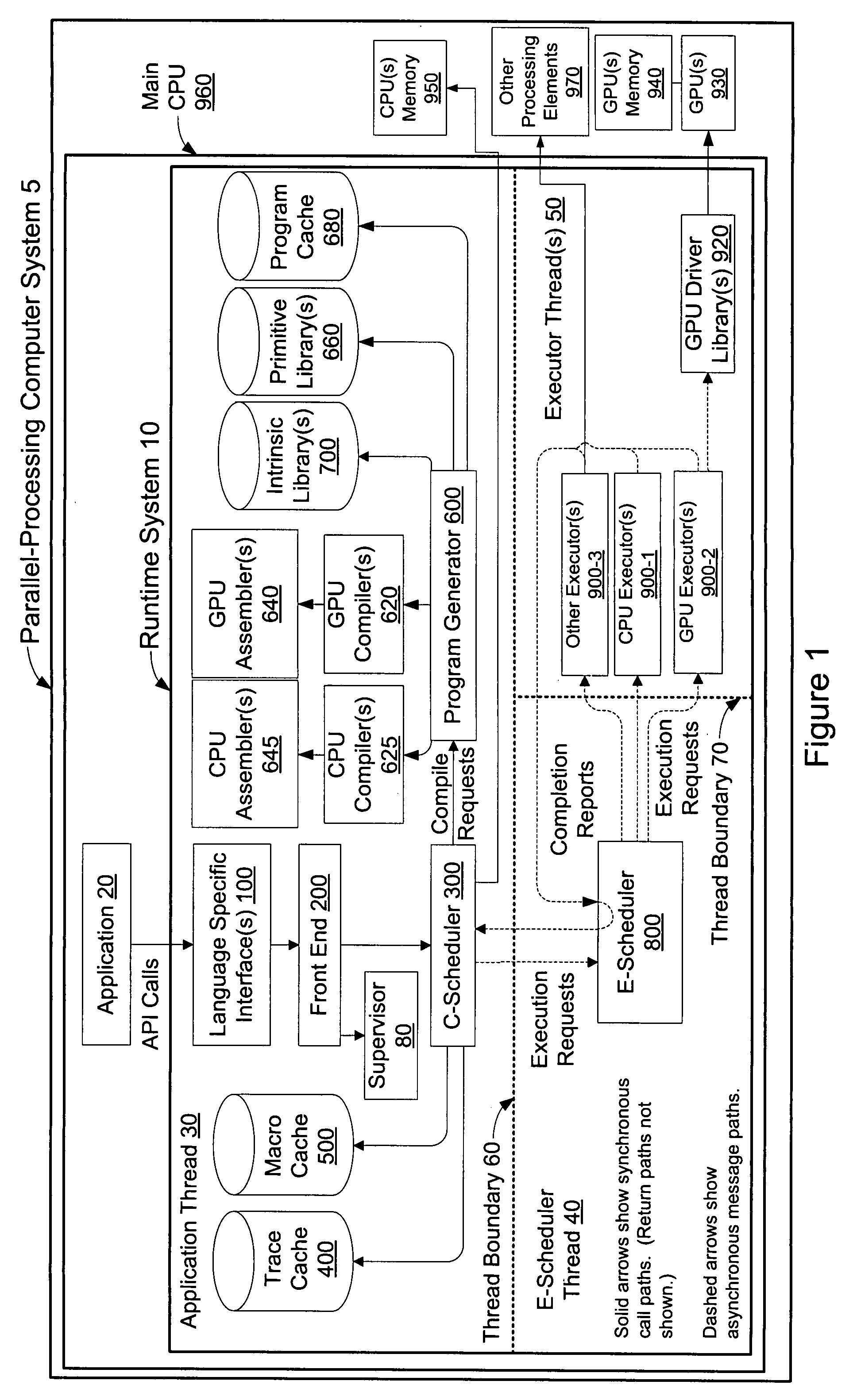

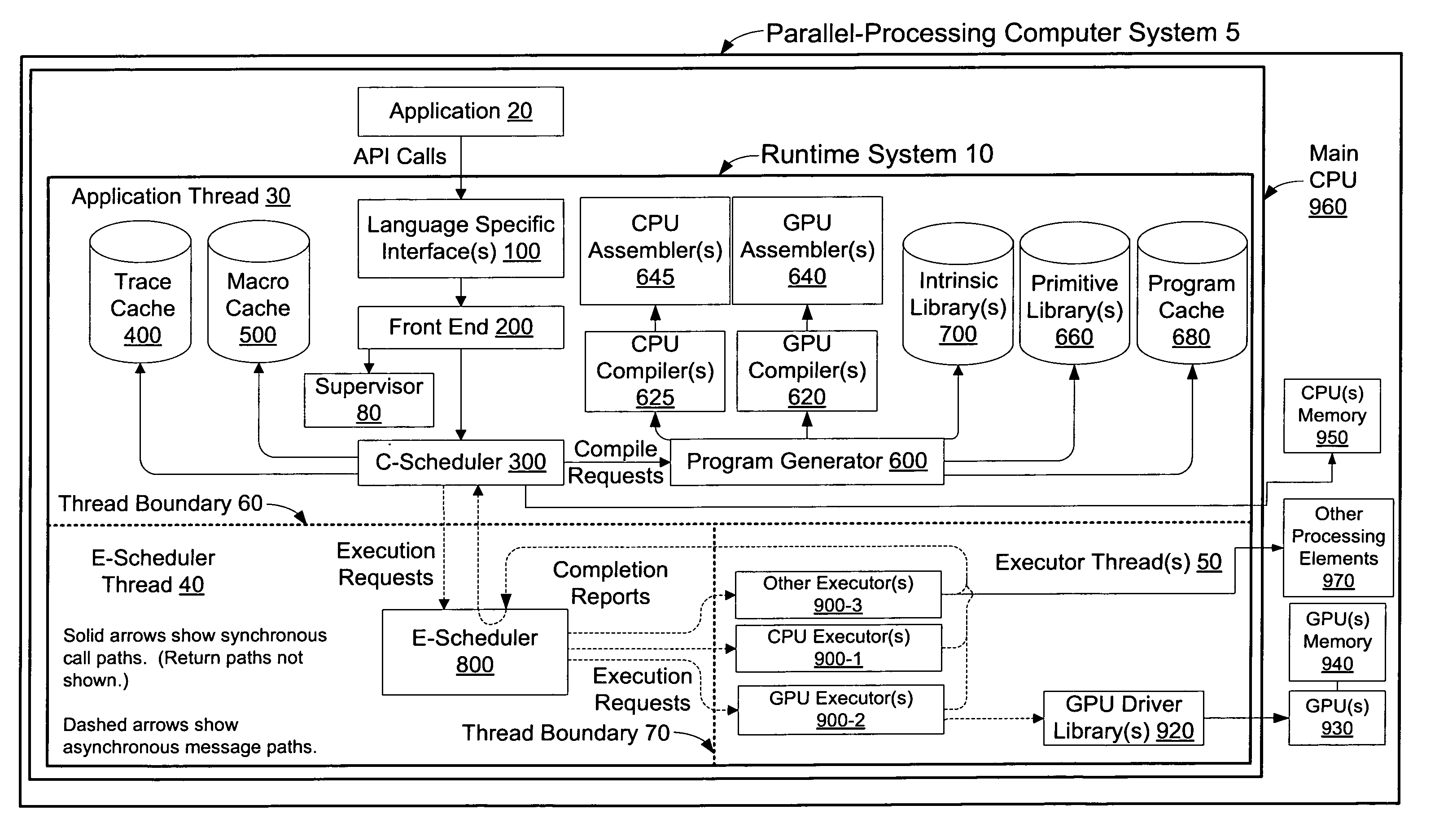

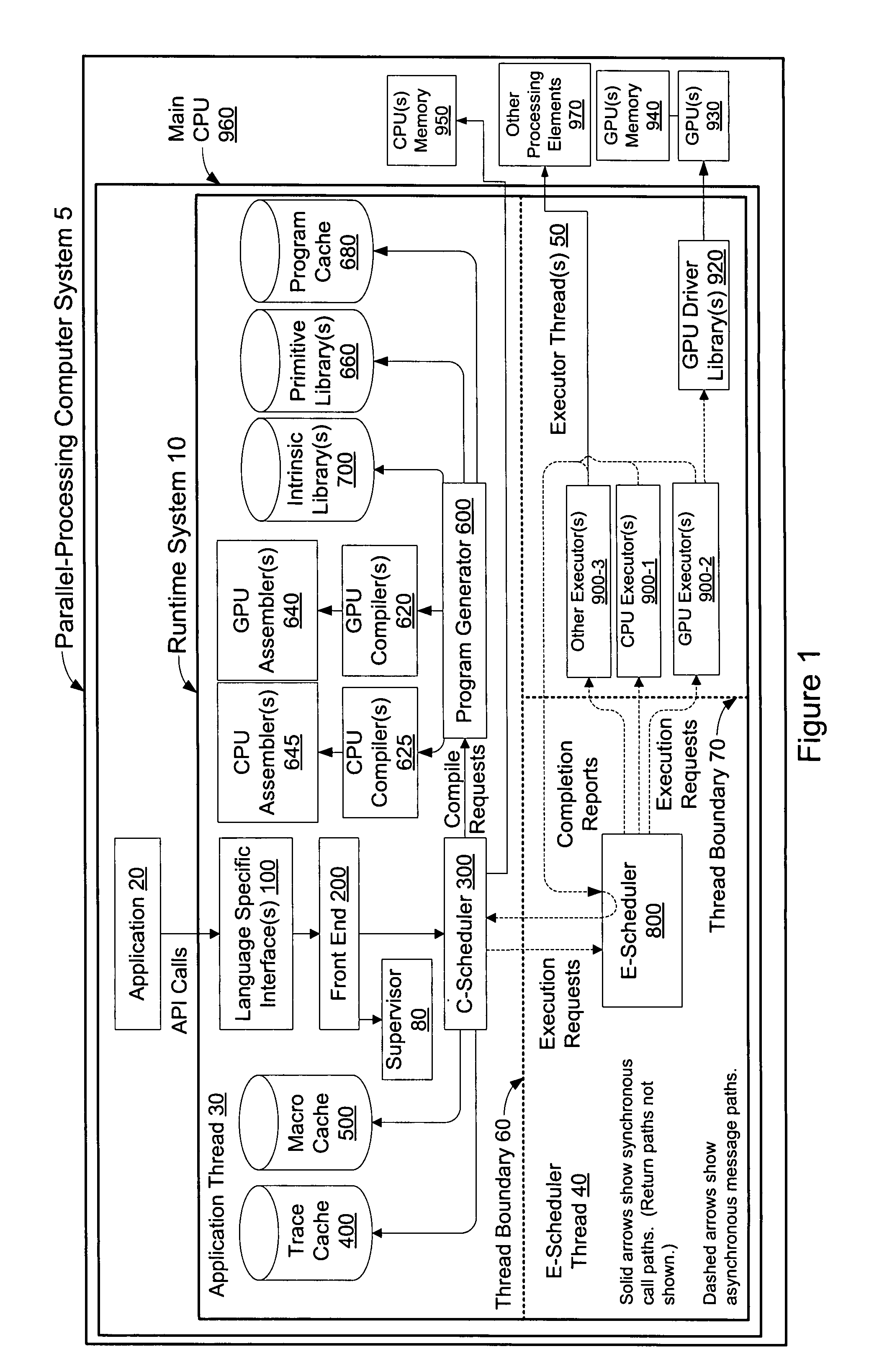

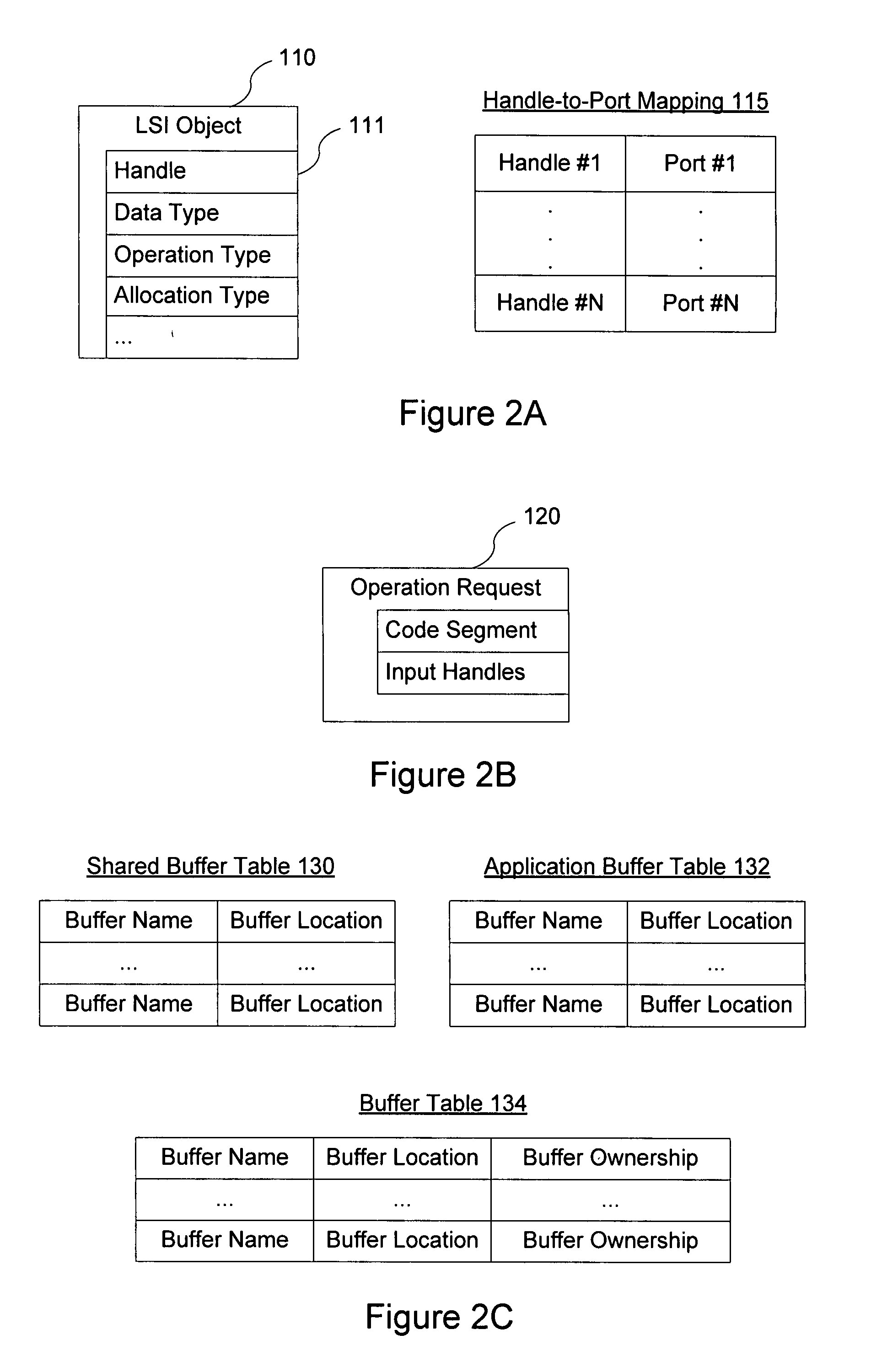

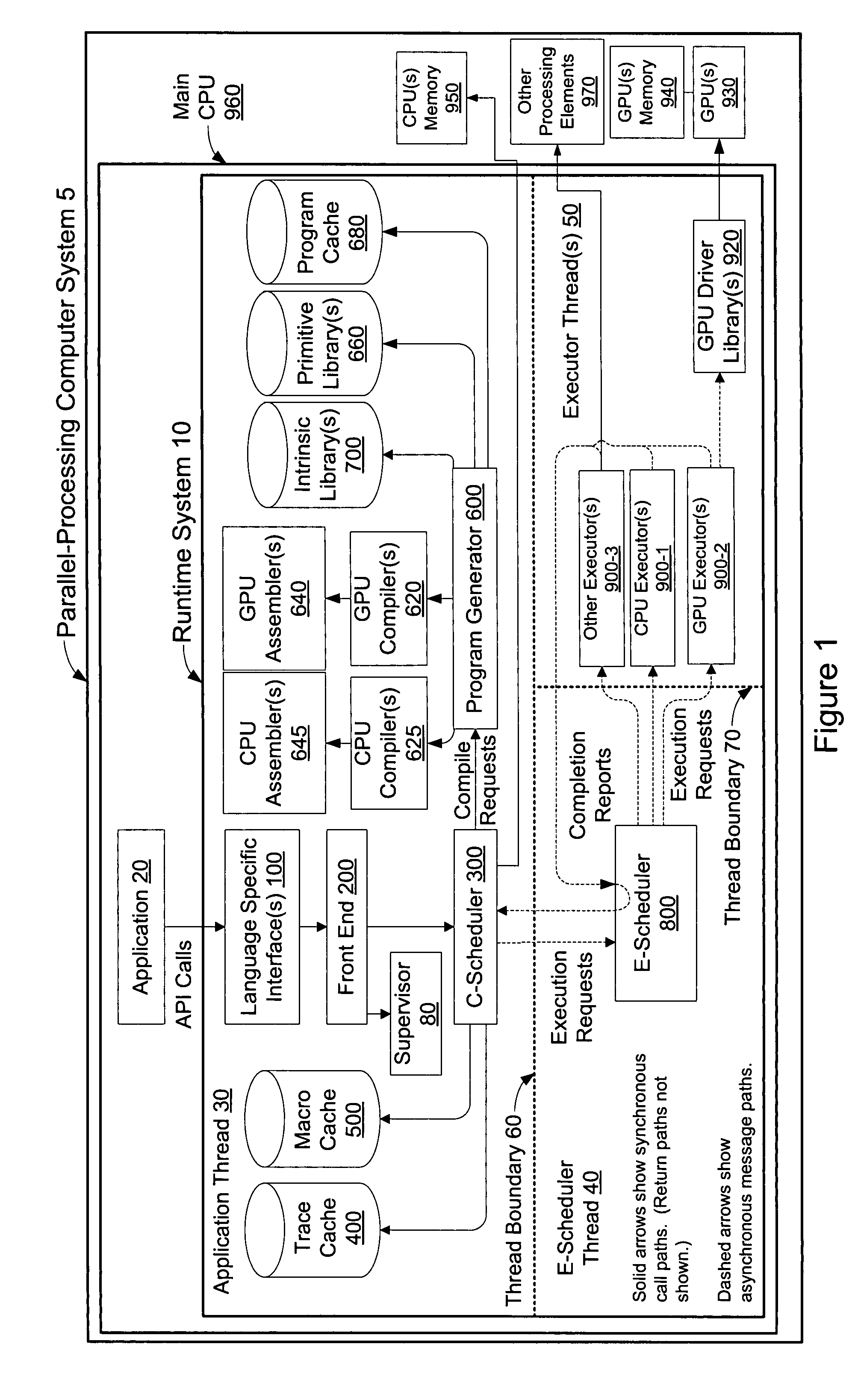

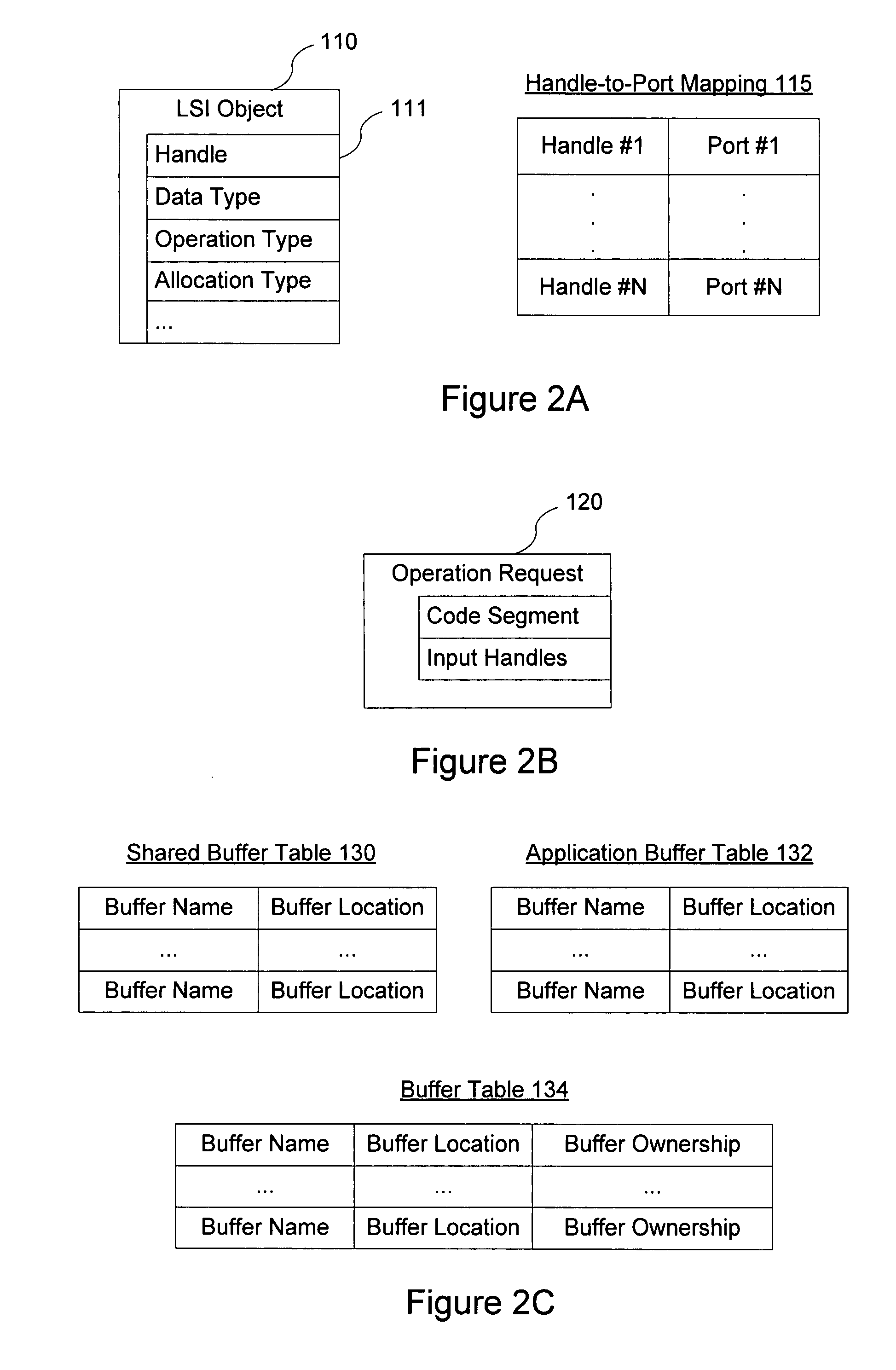

Systems and methods for dynamically choosing a processing element for a compute kernel

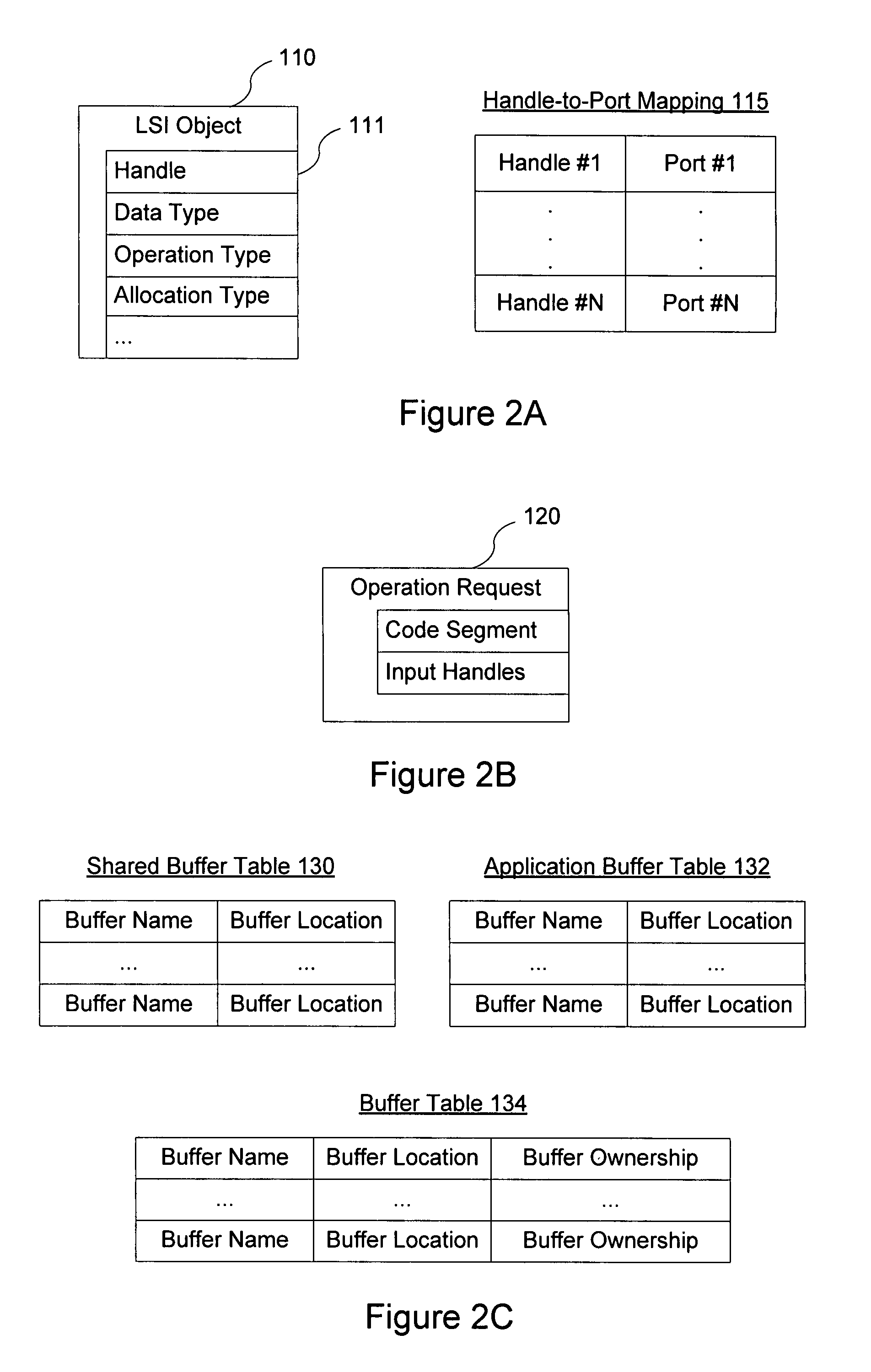

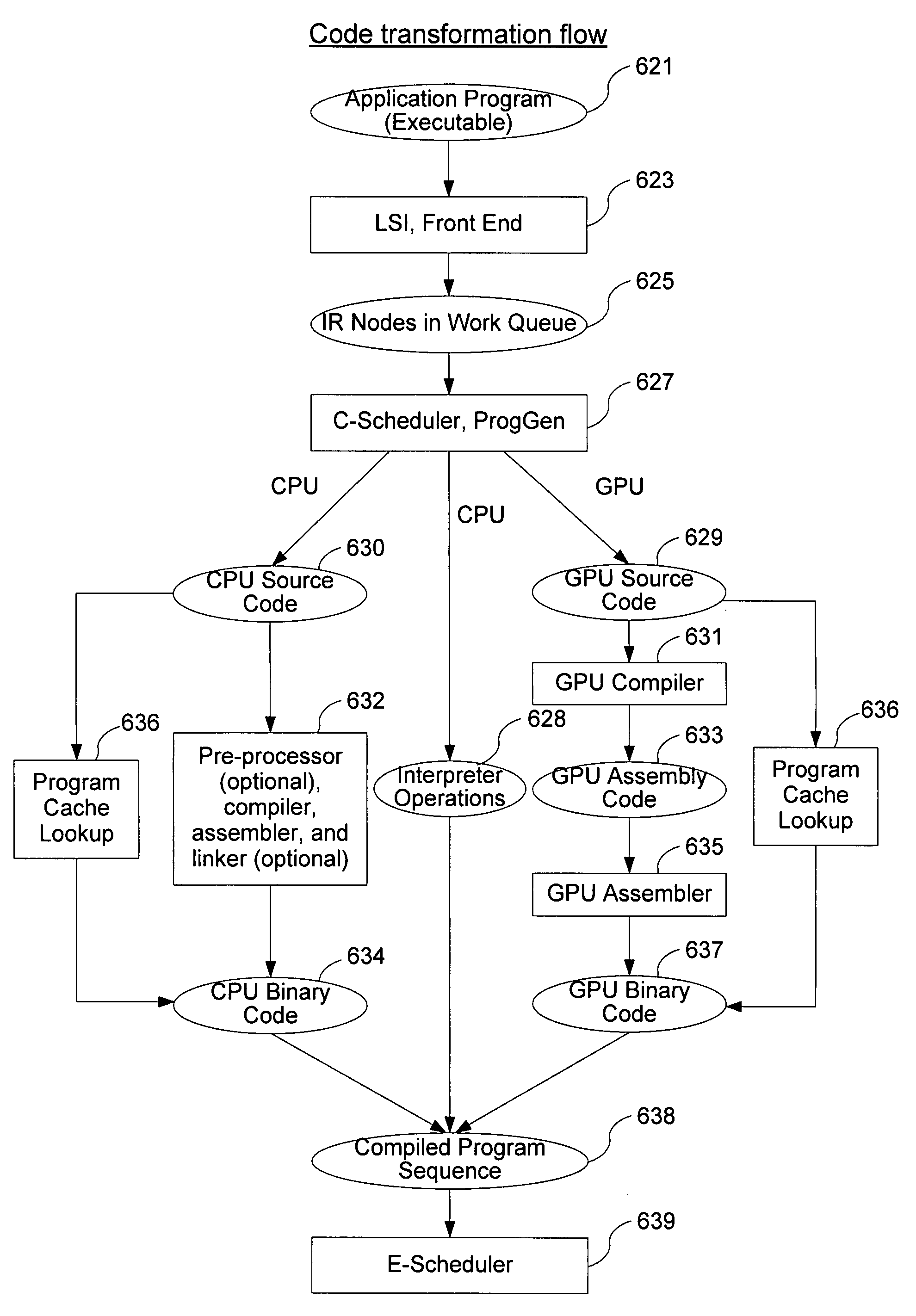

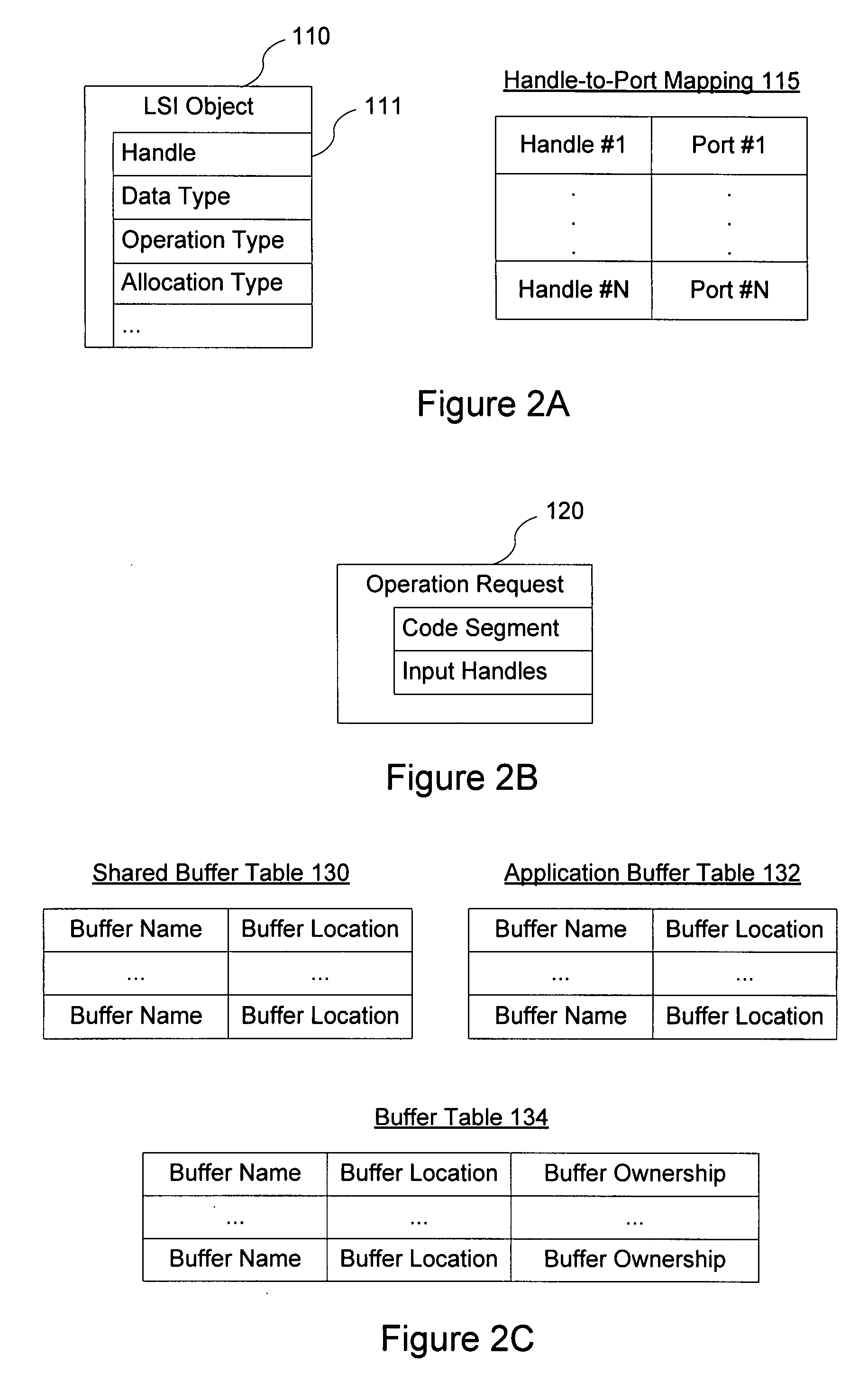

Active | US20070294512A1 | Error detection/correction, Software engineering, Performance computing, Processing element

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

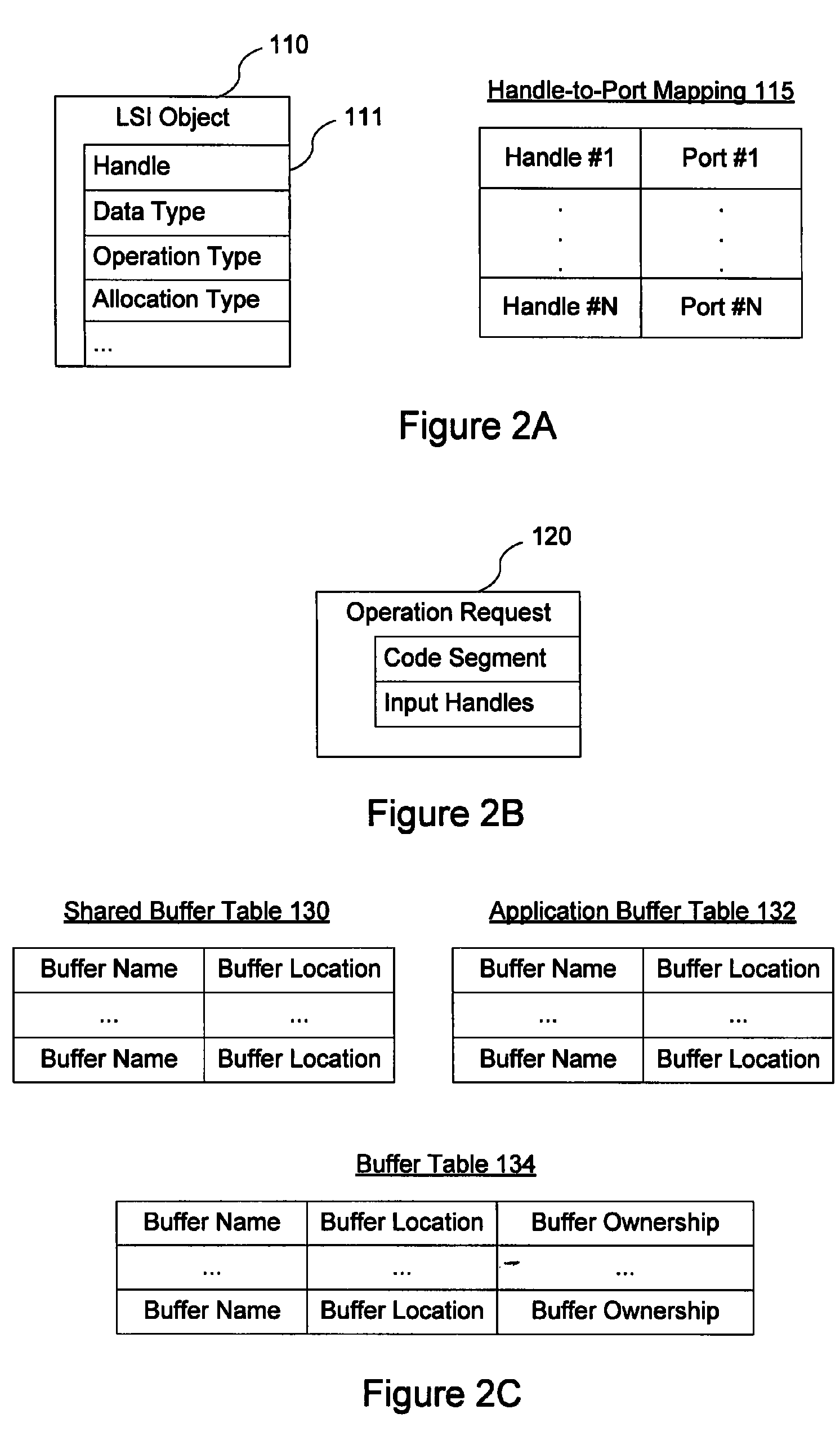

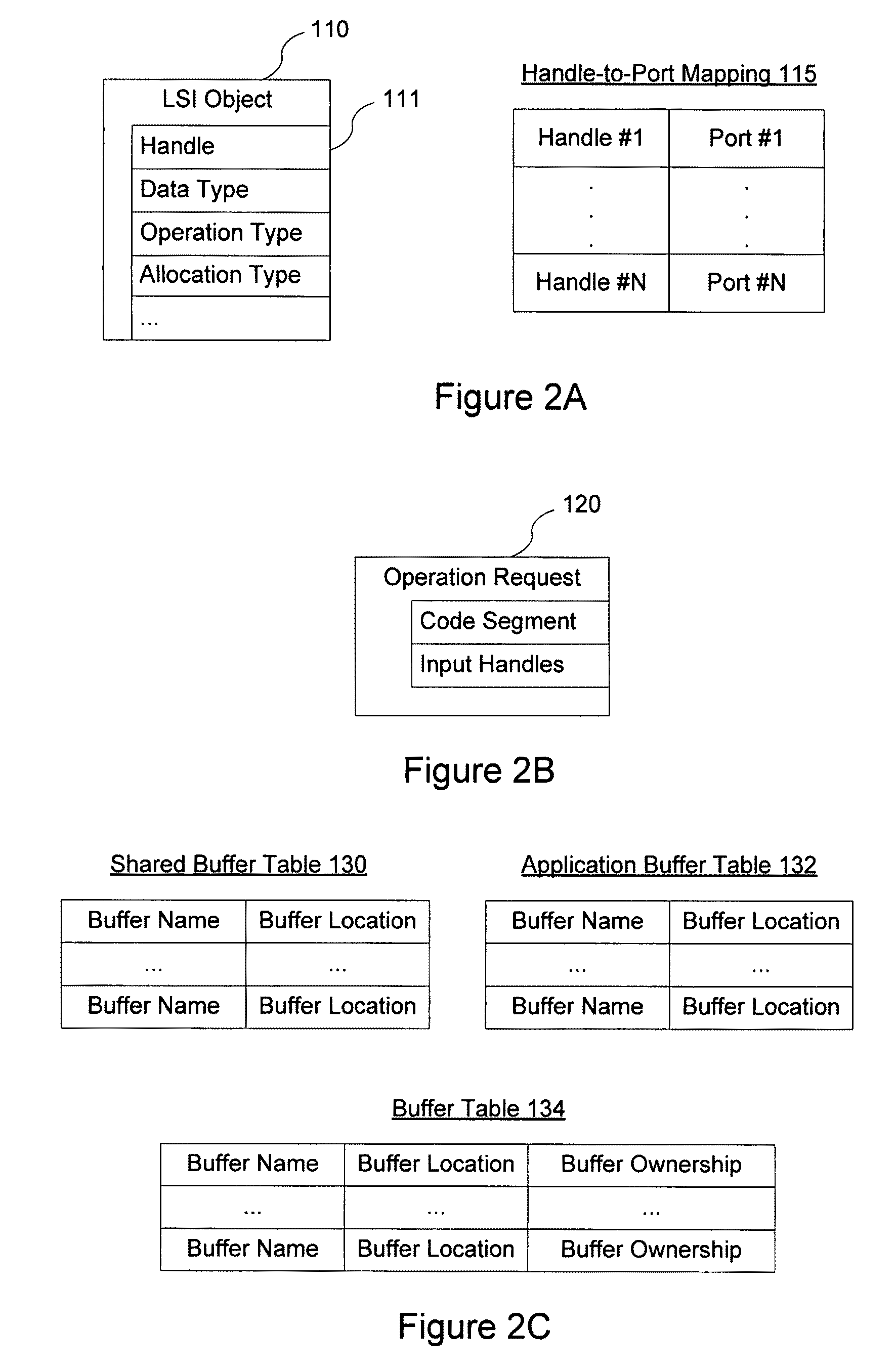

Application program interface of a parallel-processing computer system that supports multiple programming languages

Active | US20070294663A1 | Interprogram communication, Kitchen cabinets, Application programming interface, Performance computing

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

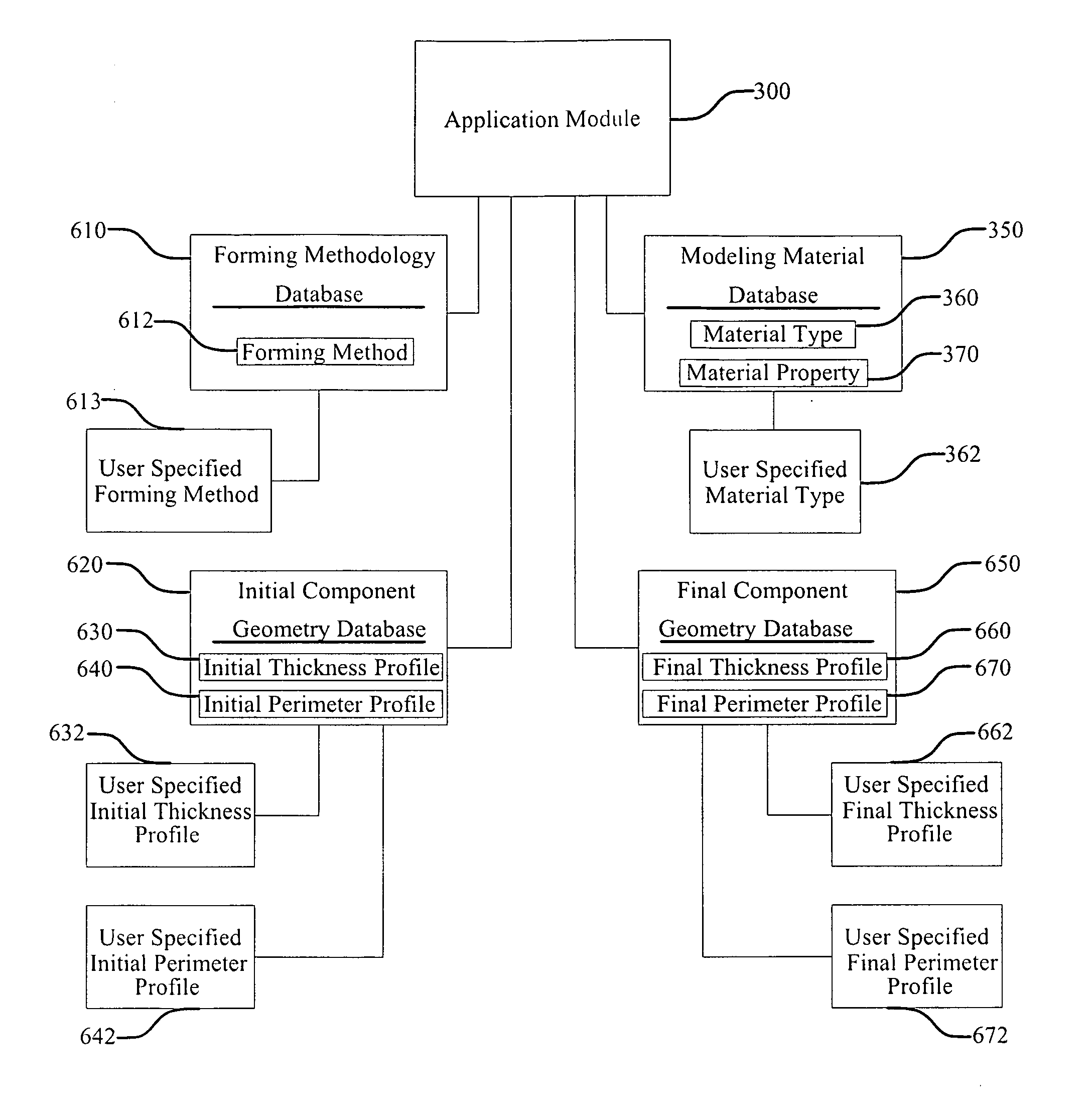

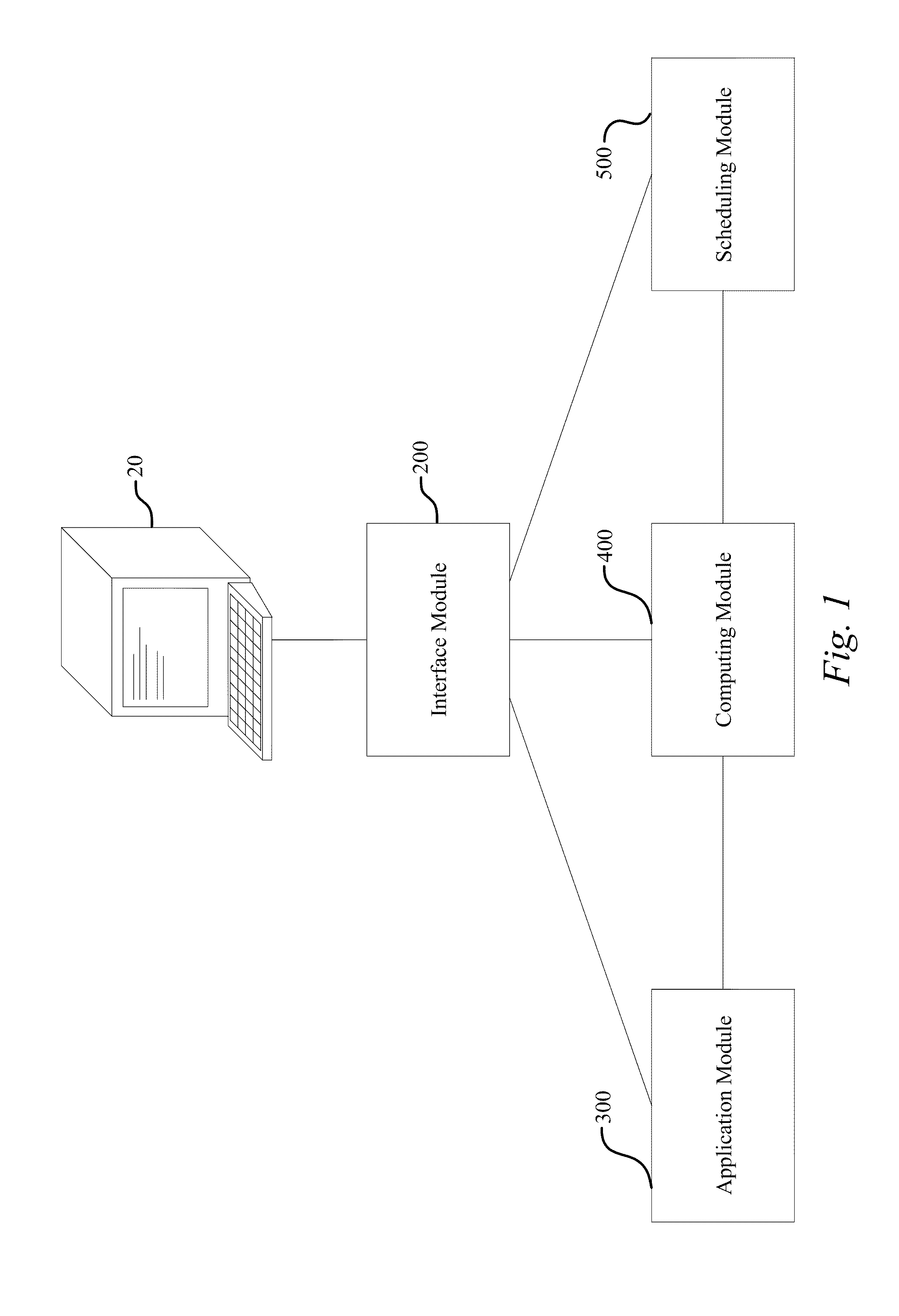

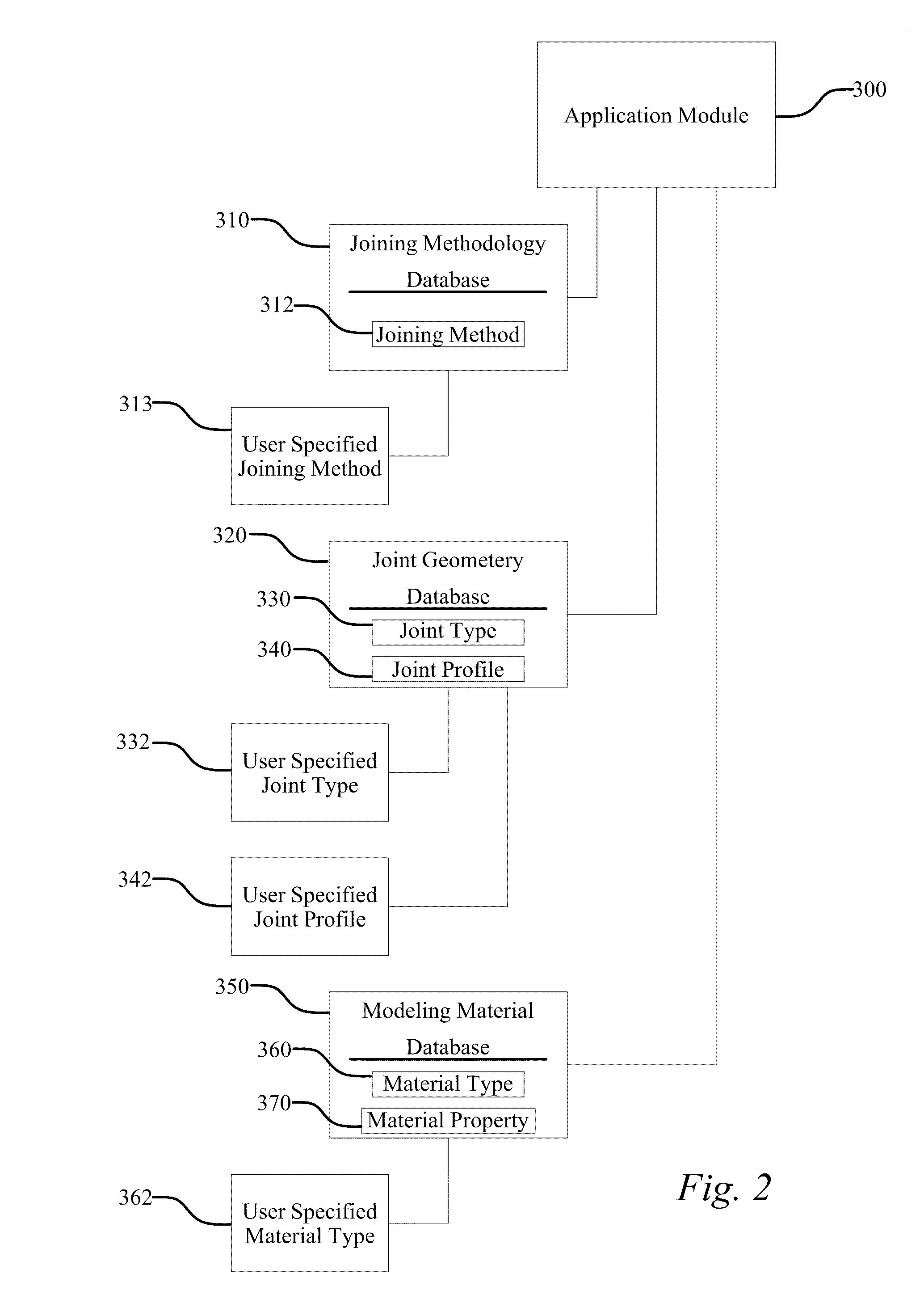

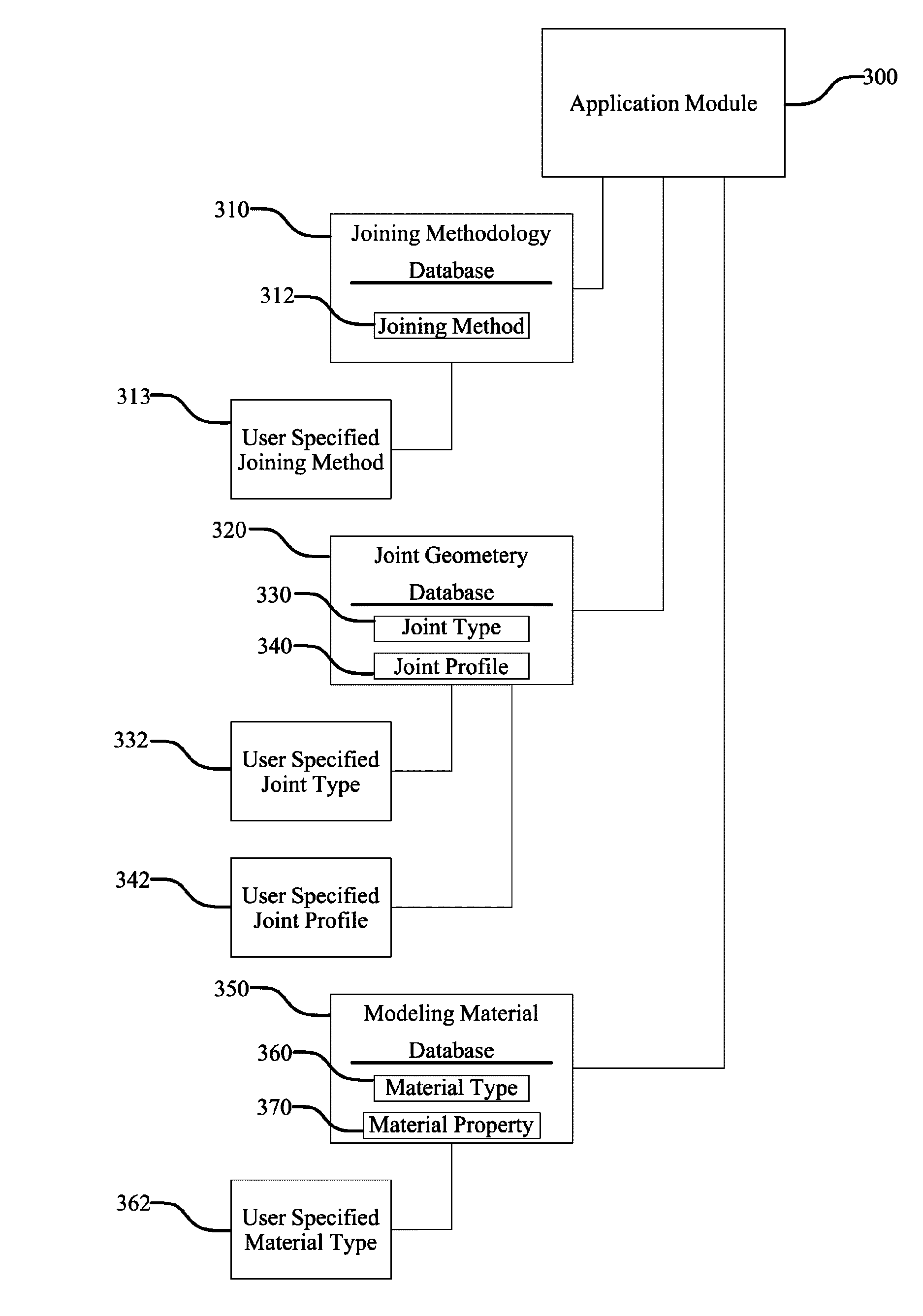

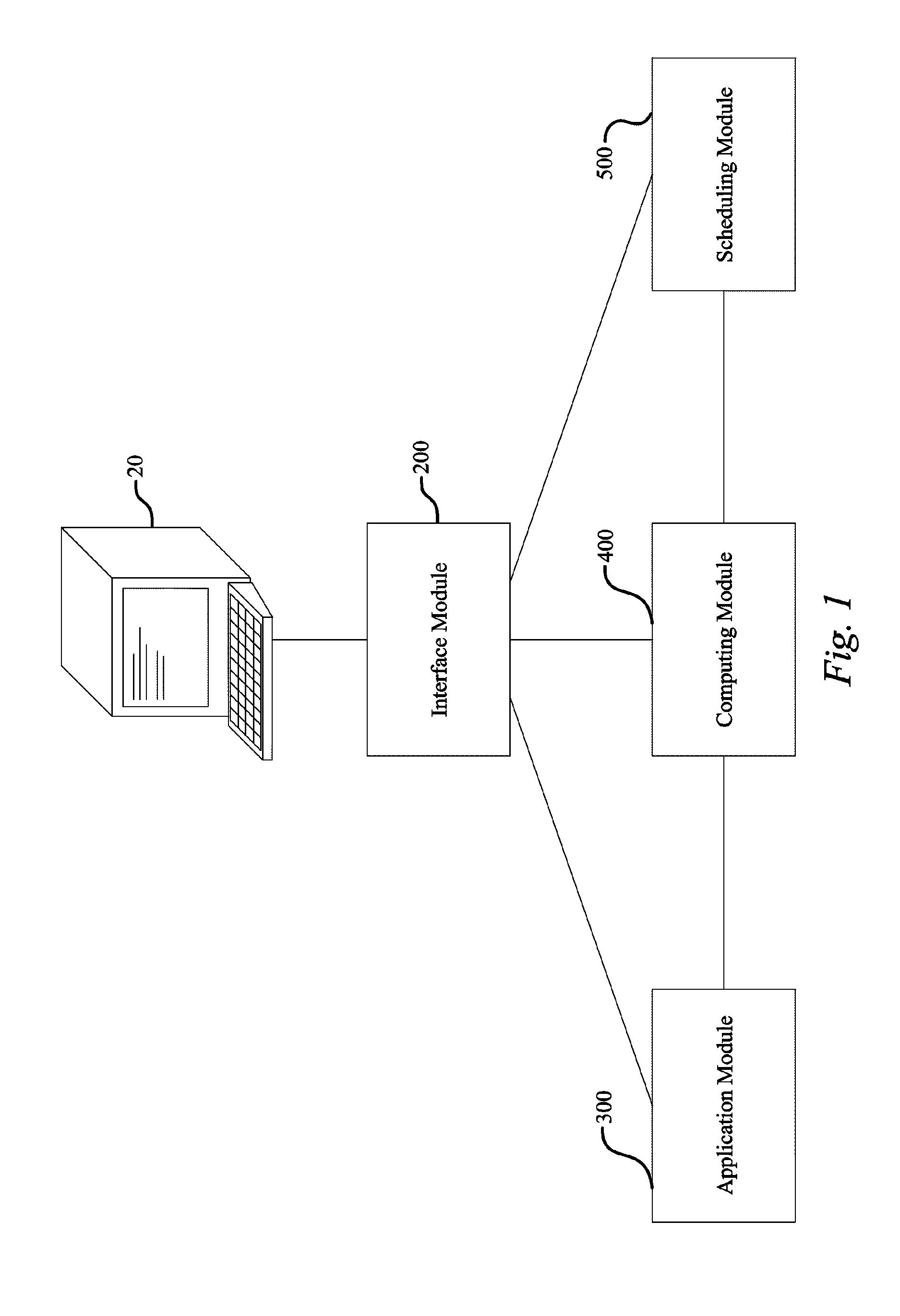

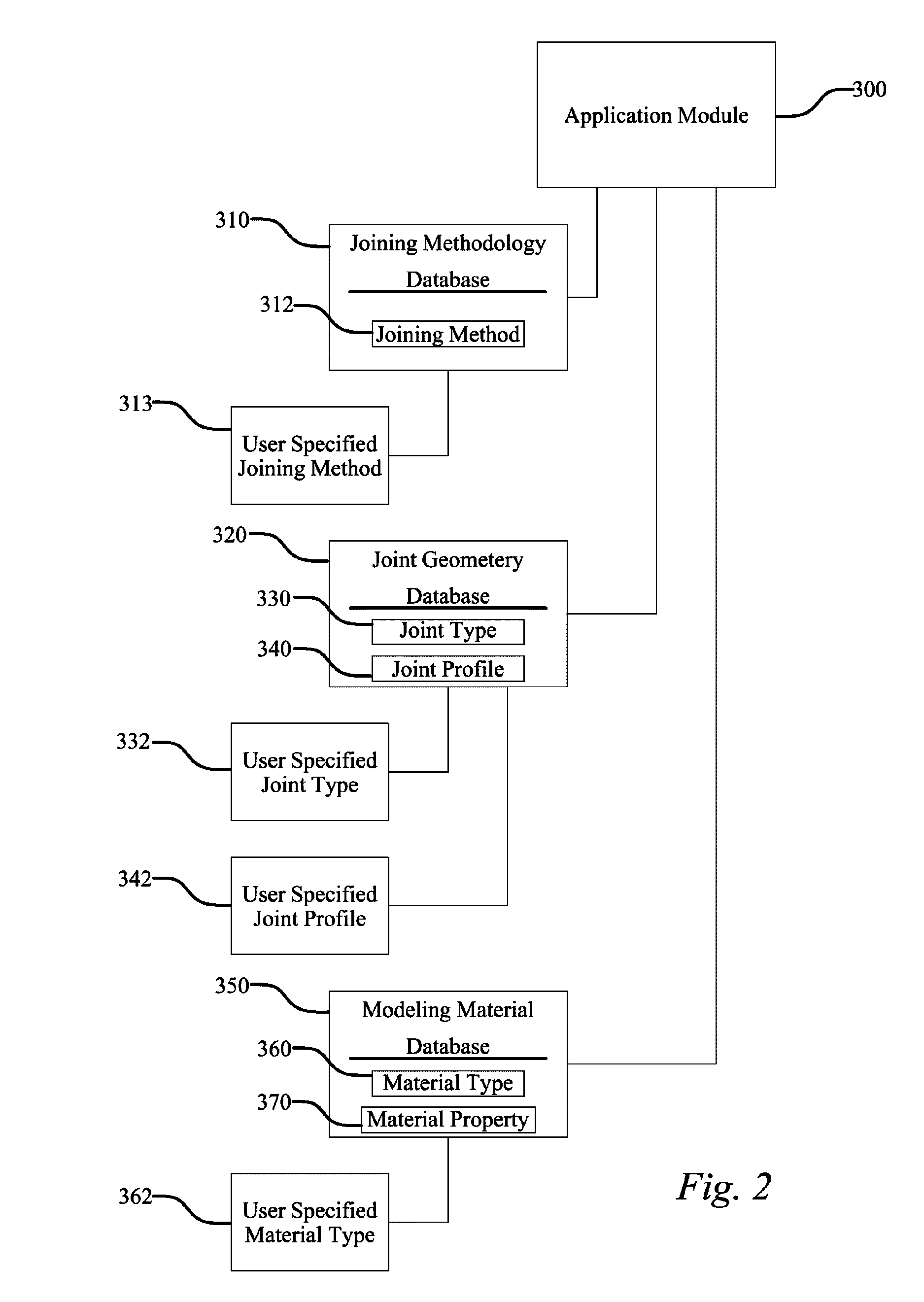

Remote High-Performance Computing Material Joining and Material Forming Modeling System and Method

Inactive | US20100121472A1 | Arc welding apparatus, Analogue computers for control systems, Performance computing, ModelSim

A remote high-performance computing material joining and material forming modeling system (100) enables a remote user (10) using a user input device (20) to use high speed computing capabilities to model materials joining and material forming processes. The modeling system (100) includes an interface module (200), an application module (300), a computing module (400), and a scheduling module (500). The interface module (200) is in operative communication with the user input device (20), as well as the application module (300), the computing module (400), and the scheduling module (500). The application module (300) is in operative communication with the interface module (200) and the computing module (400). The scheduling module (500) is in operative communication with the interface module (200) and the computing module (400). Lastly, the computing module (400) is in operative communication with the interface module (200), the application module (300), and the scheduling module (500).

Owner:EDISON WELDING INSTITUTE INC

Multi-thread runtime system

Active | US20070294696A1 | Error detection/correction, Software engineering, Performance computing, Application software

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

Systems and methods for dynamically choosing a processing element for a compute kernel

Active | US8108844B2 | Error detection/correction, Software engineering, Performance computing, Processing element

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

Multi-thread runtime system

Active | US7814486B2 | Software engineering, Error detection/correction, Performance computing, Application software

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

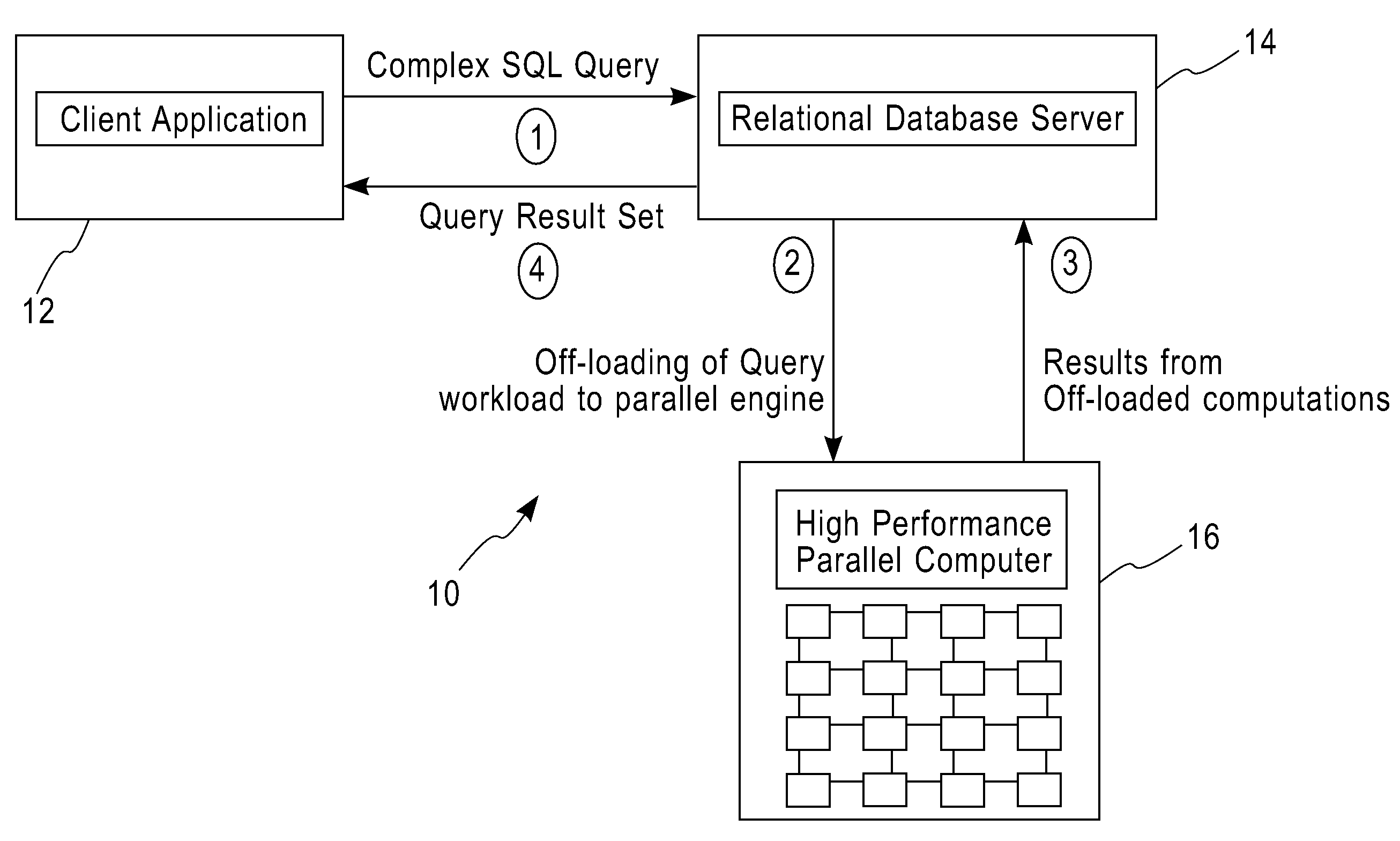

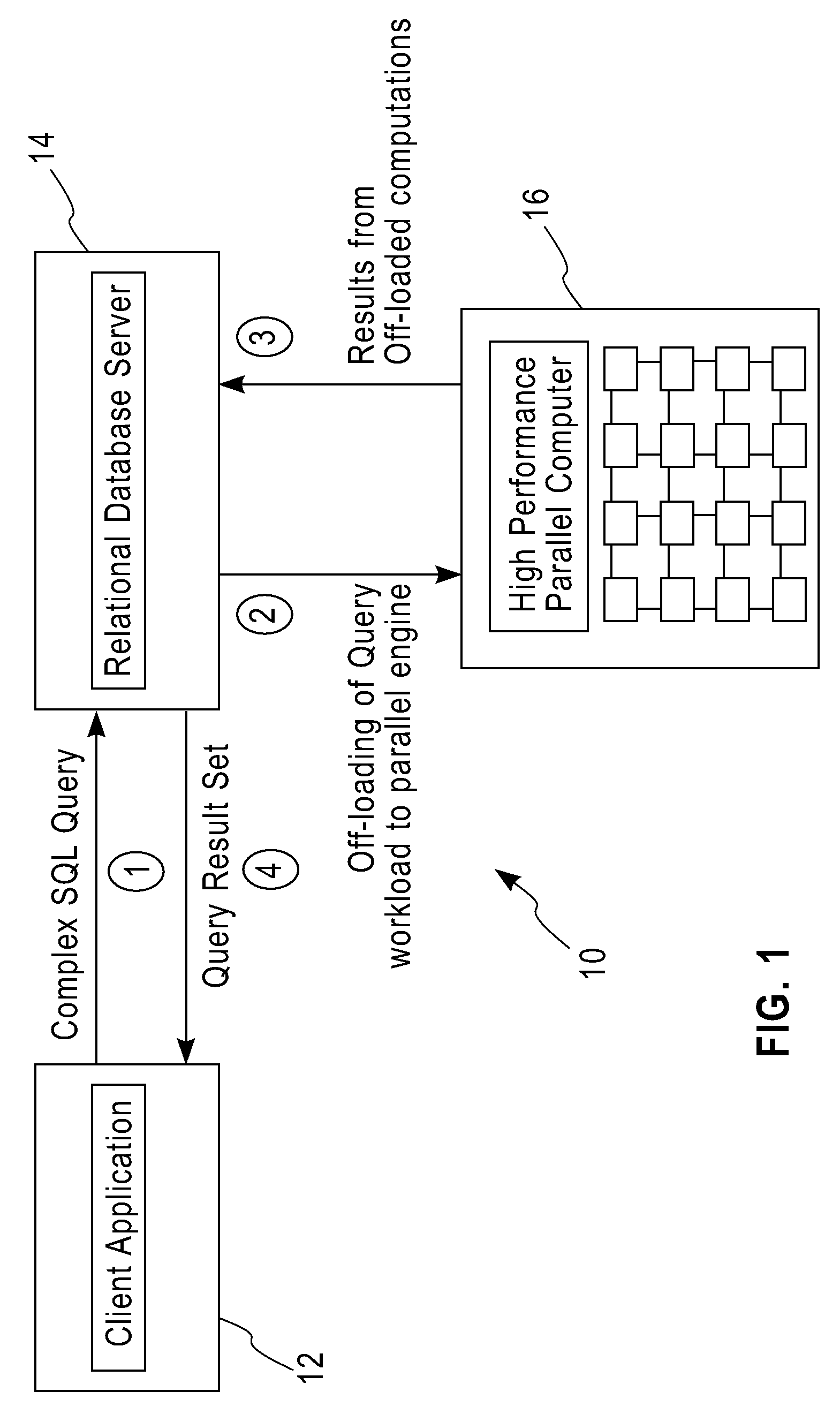

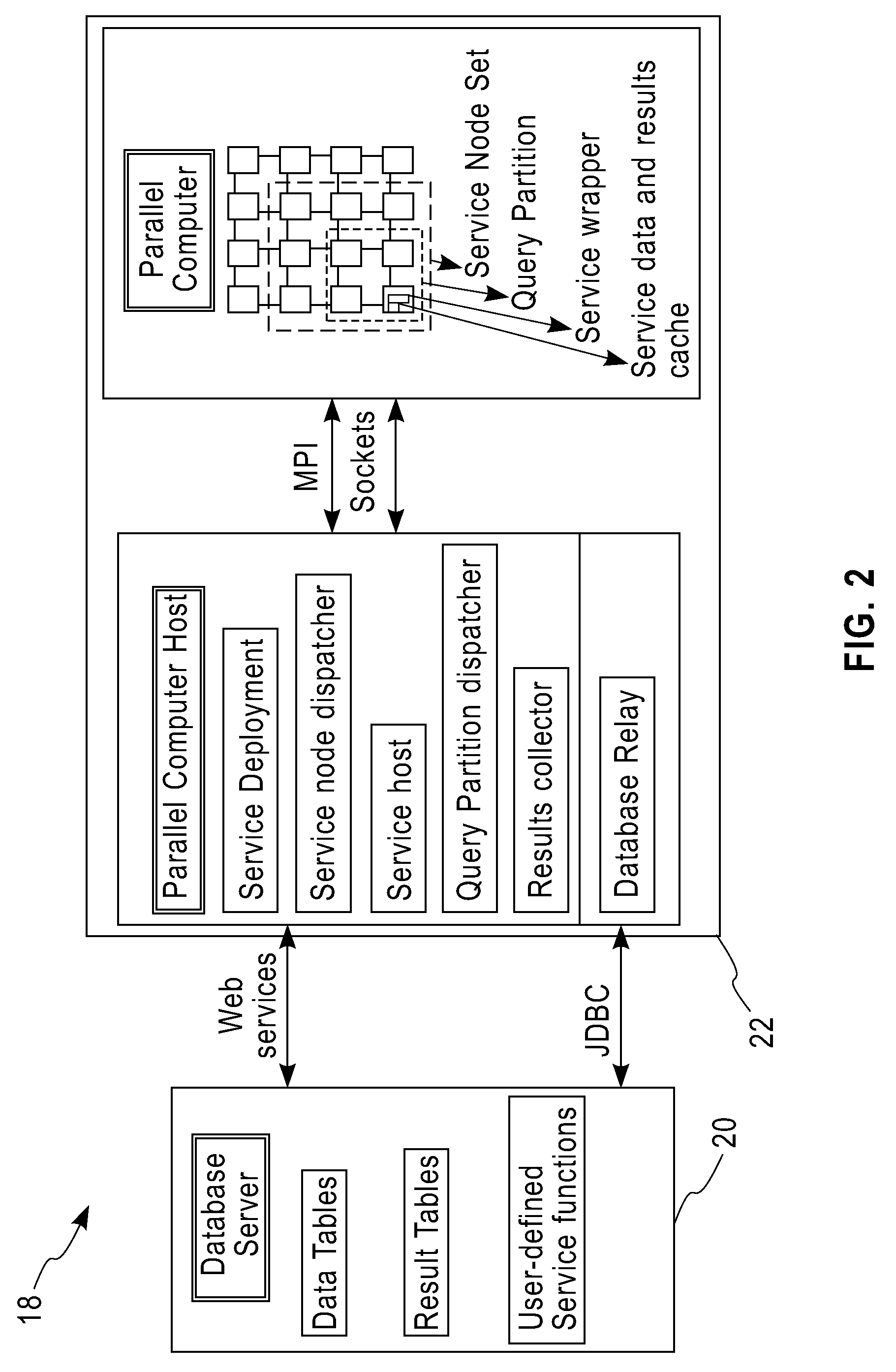

System and method for executing compute-intensive database user-defined programs on an attached high-performance parallel computer

Inactive | US20090077011A1 | Improve query performance, Easy to guarantee, Digital data information retrieval, Digital data processing details, Database query, Data set

The invention pertains to a system and method for dispatching and executing the compute-intensive parts of the workflow for database queries on an attached high-performance, parallel computing platform. The performance overhead of moving the required data and results between the database platform and the high-performance computing platform where the workload is executed is amortized in several ways, for example:

- by exploiting the fine-grained parallelism and superior hardware performance of the parallel computing platform to speed up compute-intensive calculations;
- by using in-memory data structures on the parallel computing platform to cache data sets between a sequence of time-lagged queries on the same data, so that these queries can be processed without further data transfer overhead;
- by replicating data within the parallel computing platform, so that multiple independent queries on the same target data set can be processed simultaneously using independent parallel partitions of the high-performance computing platform.

A specific embodiment of this invention was used to deploy a bioinformatics application involving gene and protein sequence matching with the Smith-Waterman algorithm, on a database system connected via an Ethernet local area network to a parallel supercomputer.

Owner:IBM CORP
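
The bioinformatics embodiment relies on the Smith-Waterman algorithm. As a reminder of what each offloaded query computes, here is a minimal, single-threaded Python sketch of Smith-Waterman local-alignment scoring with a linear gap penalty. The scoring values (match +2, mismatch -1, gap -1) are illustrative assumptions, and the sketch does not model how IBM distributed the work across parallel partitions.

```python
def smith_waterman(a: str, b: str, match: int = 2, mismatch: int = -1, gap: int = -1) -> int:
    """Best local-alignment score of a and b (linear gap penalty; scores are assumed)."""
    # h[i][j] holds the best score of a local alignment ending at a[i-1] and b[j-1]
    h = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    best = 0
    for i in range(1, len(a) + 1):
        for j in range(1, len(b) + 1):
            diag = h[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = h[i - 1][j] + gap    # a[i-1] aligned against a gap
            left = h[i][j - 1] + gap  # b[j-1] aligned against a gap
            # Clamping at zero lets an alignment restart anywhere, which makes it local
            h[i][j] = max(0, diag, up, left)
            best = max(best, h[i][j])
    return best


if __name__ == "__main__":
    print(smith_waterman("TACGTT", "GACGTA"))  # 8: the shared "ACGT" scores 4 x 2
```

Each query against a target sequence fills an independent matrix, which is why many such queries can run side by side on separate partitions.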

Systems and methods for determining compute kernels for an application in a parallel-processing computer system

Active | US8136104B2 | Error detection/correction, Multiprogramming arrangements, Performance computing, Parallel processing

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

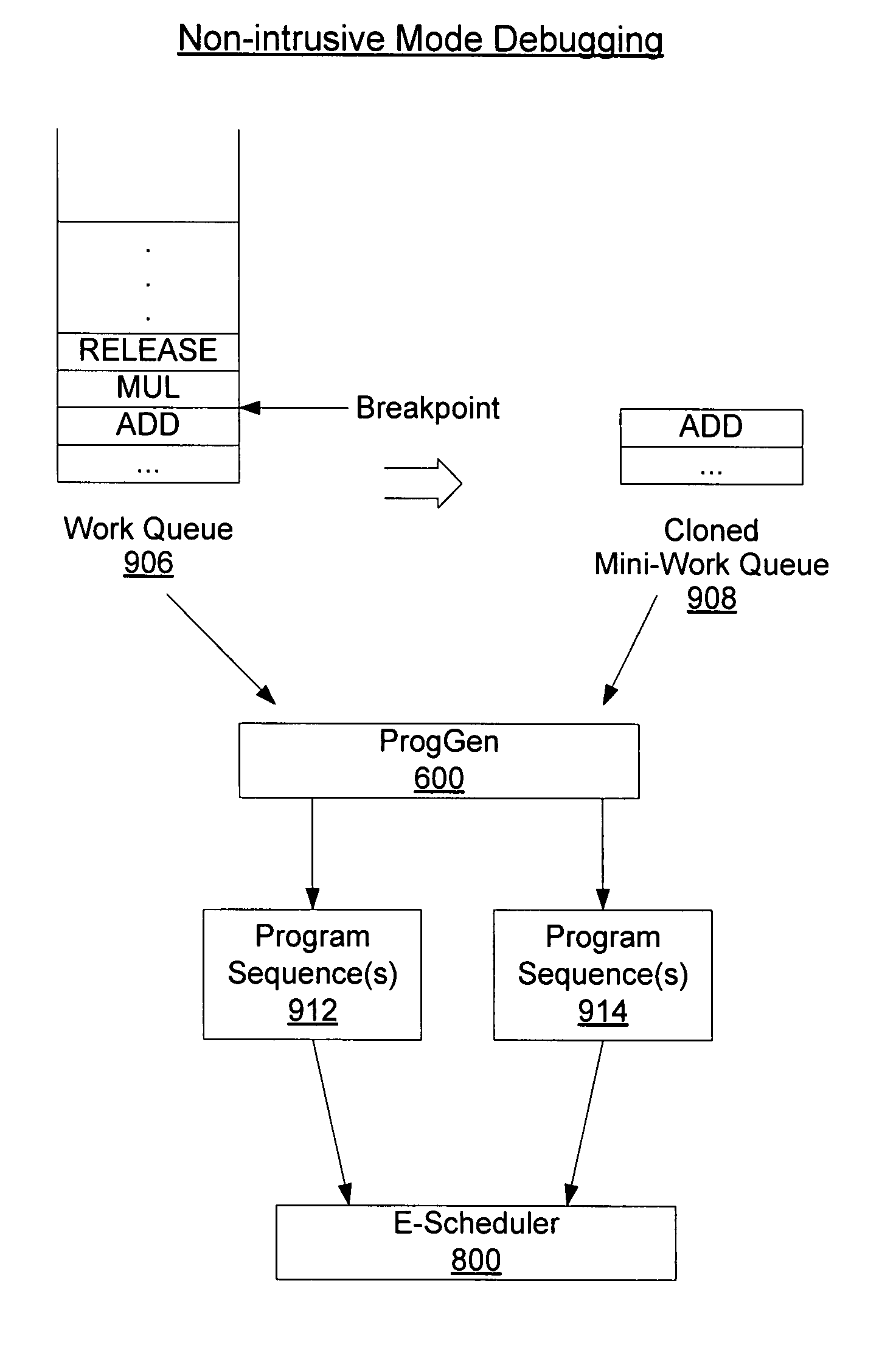

Systems and methods for debugging an application running on a parallel-processing computer system

Active | US8024708B2 | Error detection/correction, Specific program execution arrangements, Performance computing, Application software

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

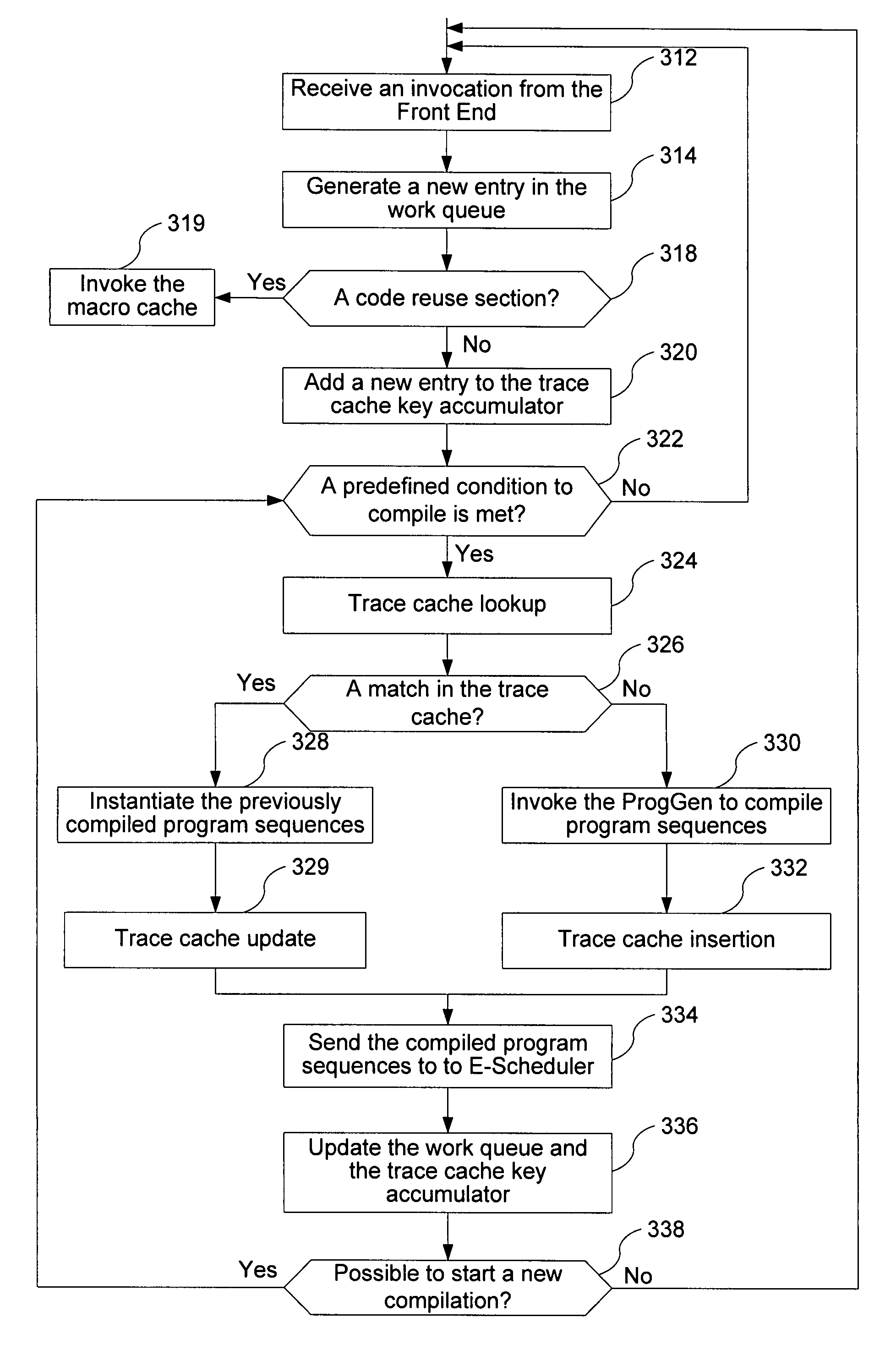

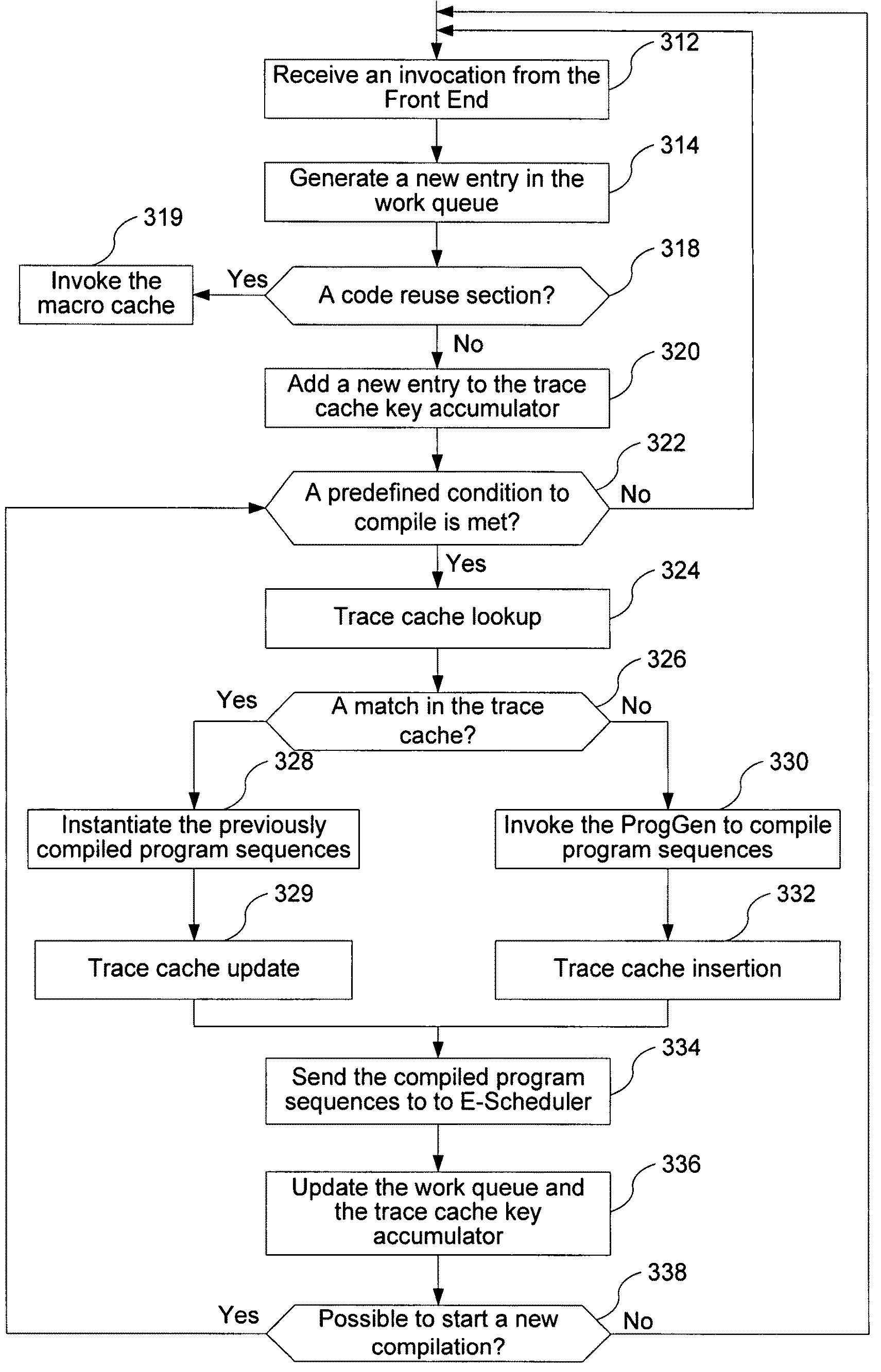

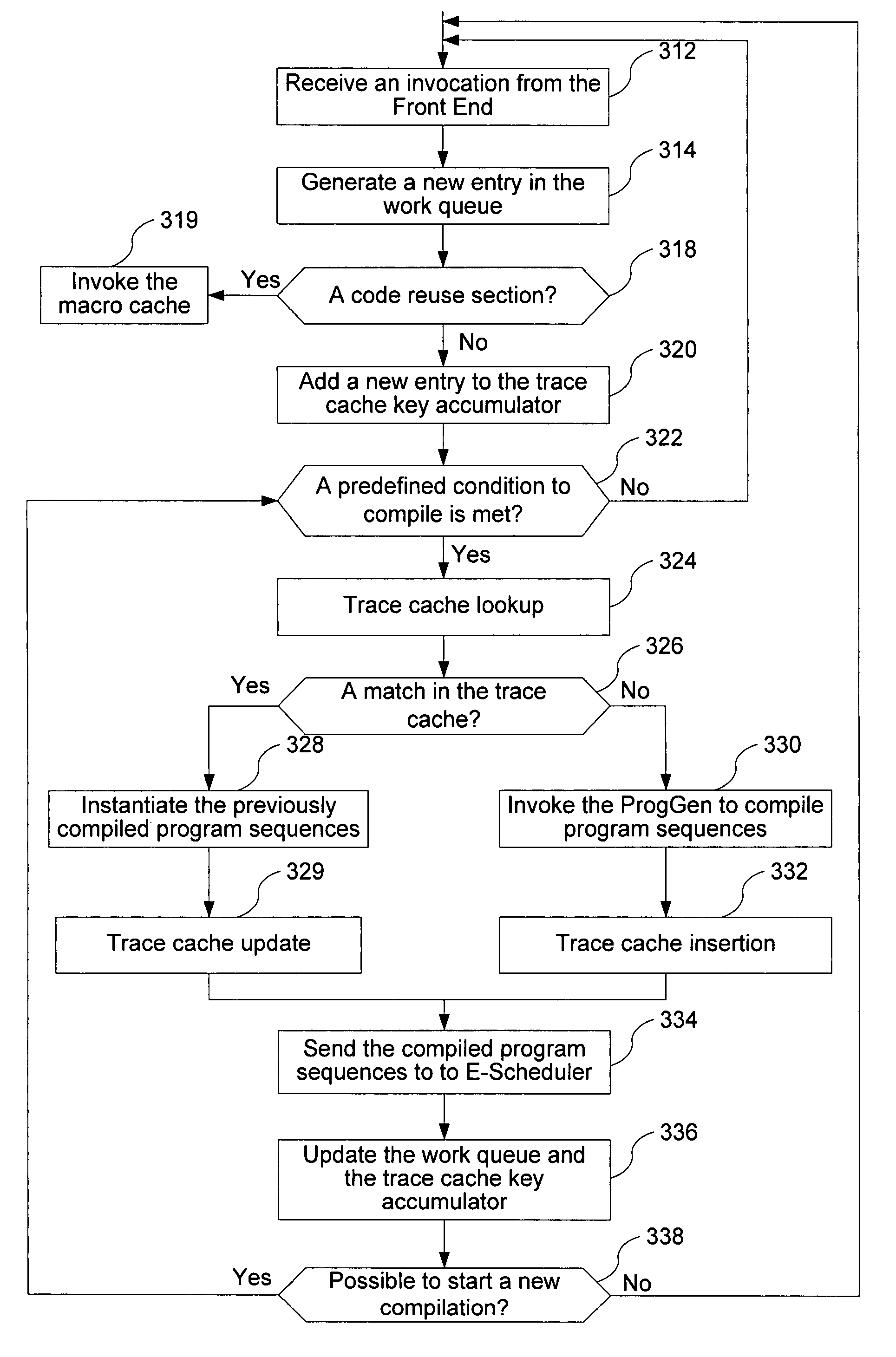

Systems and methods for caching compute kernels for an application running on a parallel-processing computer system

Active | US20070294682A1 | Multiprogramming arrangements, Memory systems, Performance computing, Application software

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

Systems and methods for debugging an application running on a parallel-processing computer system

Active | US20070294671A1 | Error detection/correction, Specific program execution arrangements, Performance computing, Application software

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate / optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

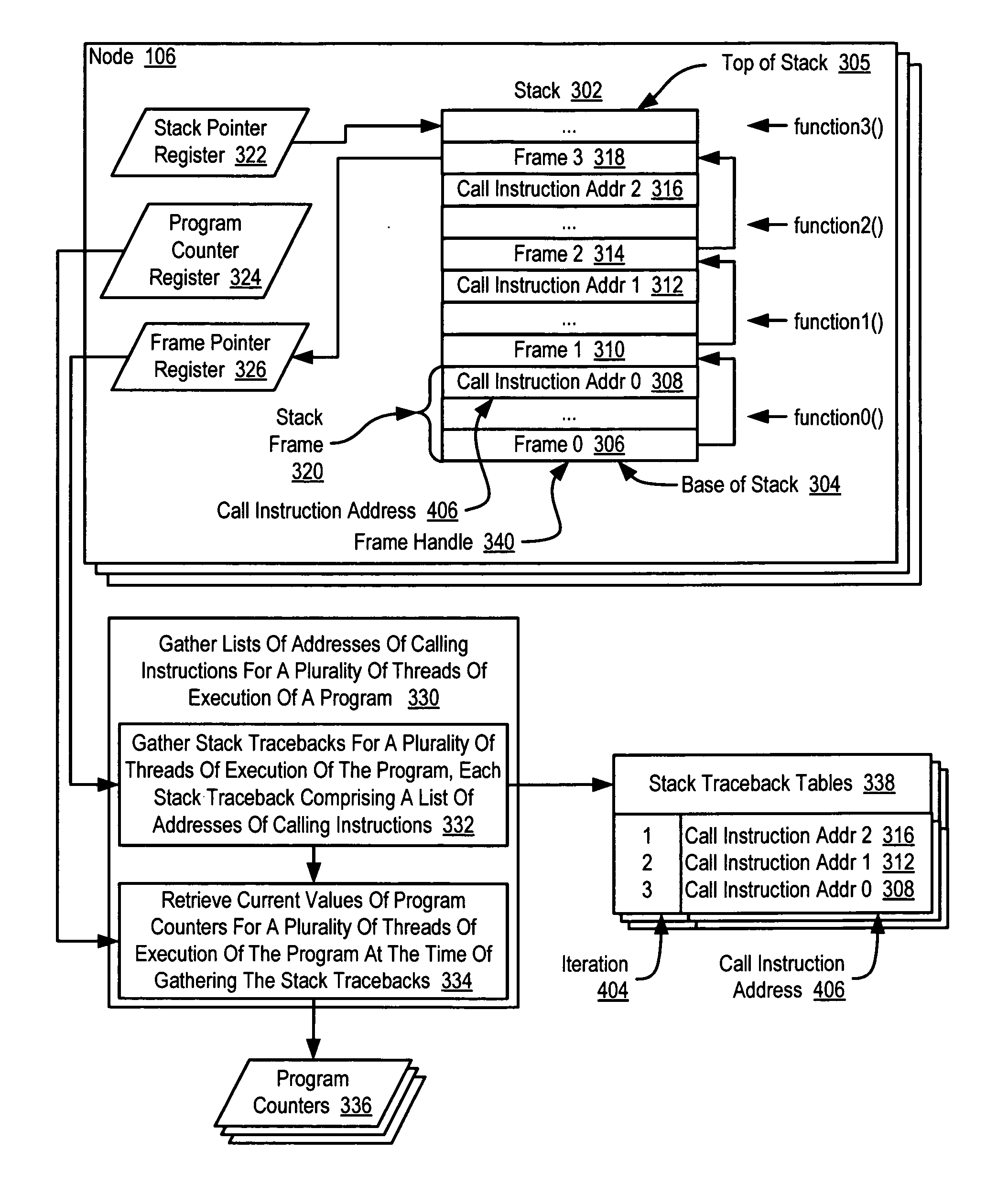

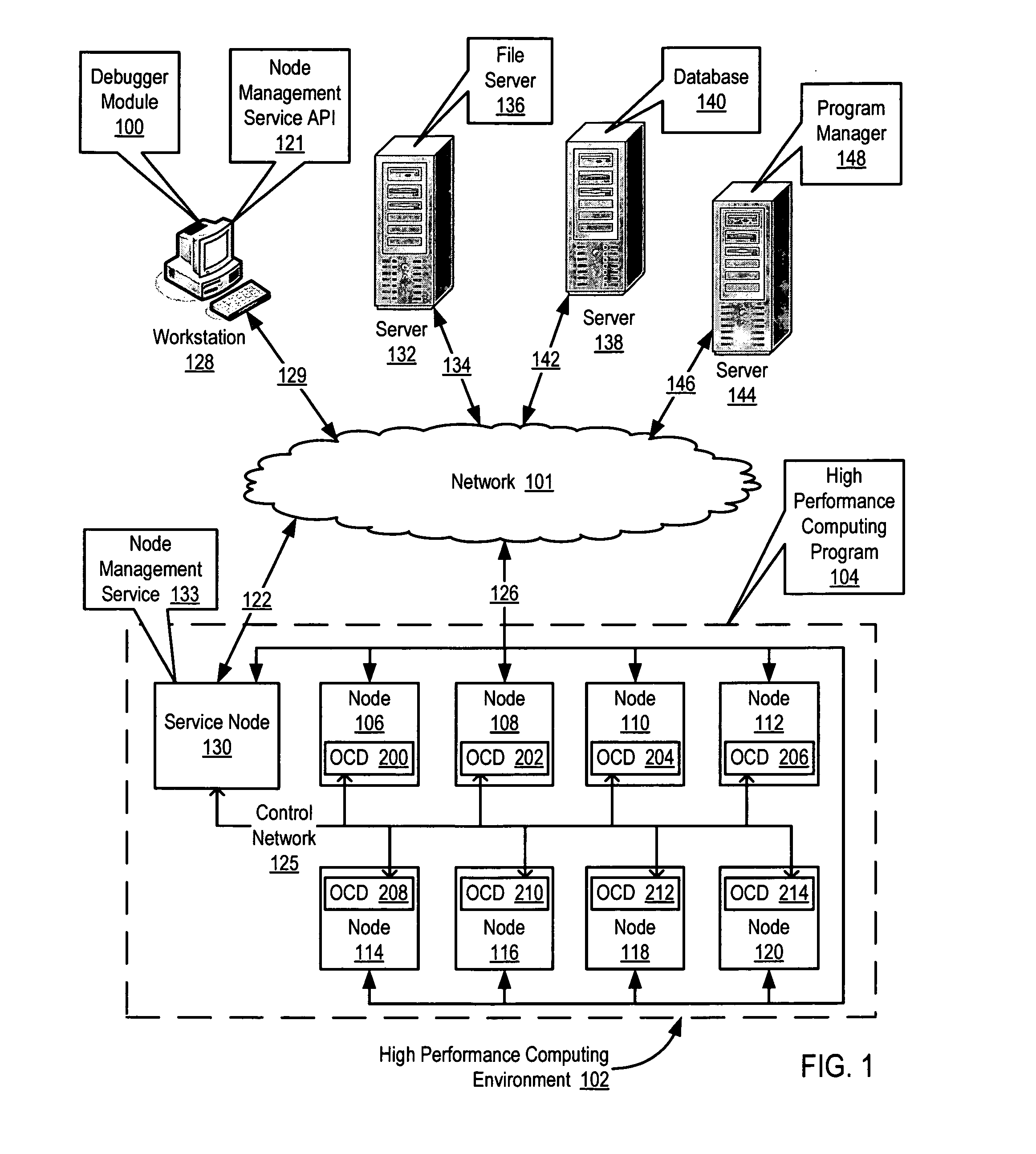

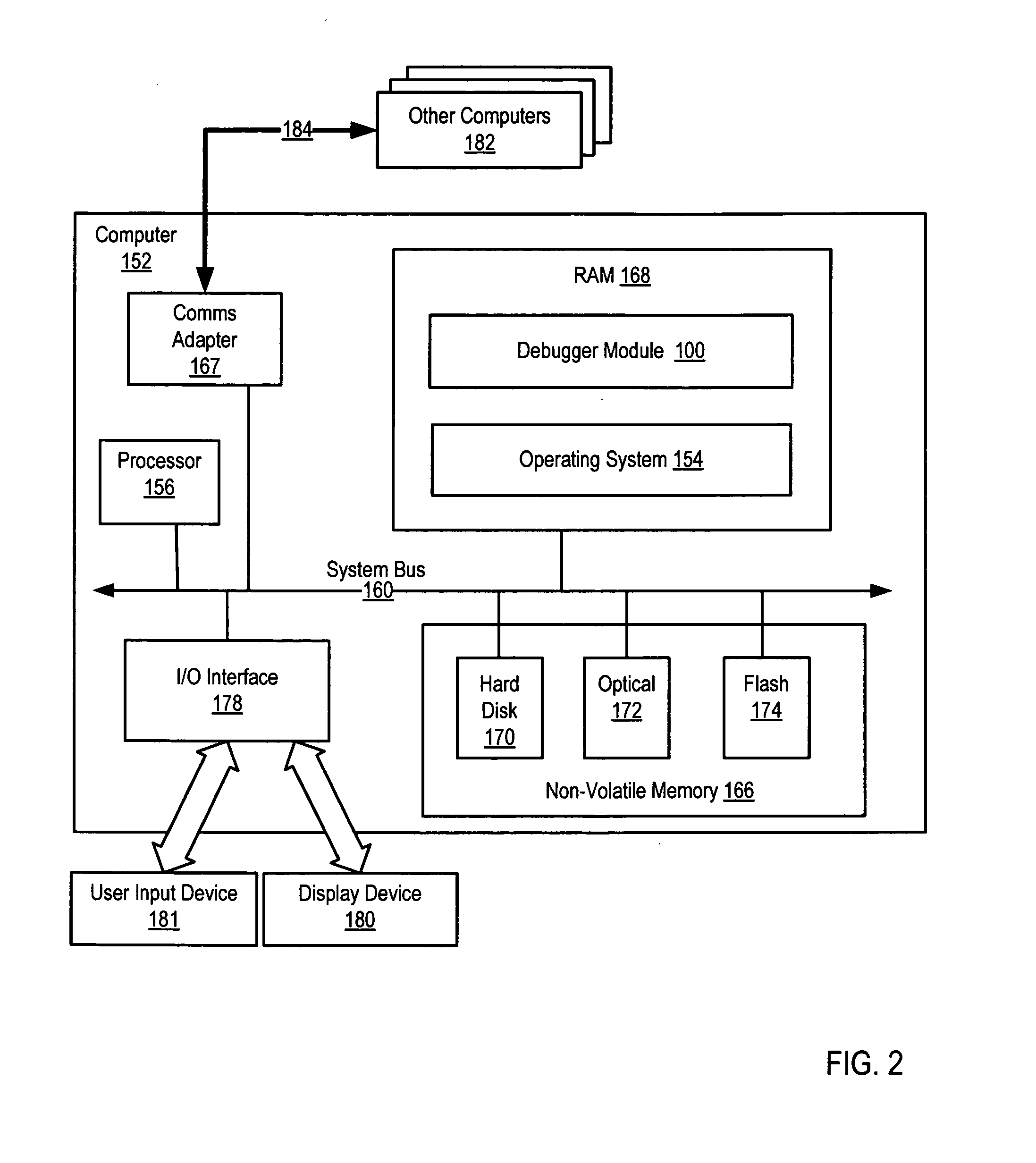

Debugging a high performance computing program

Inactive | US20070234294A1 | Improve performance, Error detection/correction, Specific program execution arrangements, Performance computing, Parallel computing

Methods, apparatus, and computer program products are disclosed for debugging a high performance computing program by gathering lists of addresses of calling instructions for a plurality of threads of execution of the program, assigning the threads to groups in dependence upon the addresses, and displaying the groups to identify defective threads.

Owner:IBM CORP
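
The grouping step can be sketched in Python, assuming a debugger has already gathered, for each thread, its list of calling-instruction addresses (for example from a stack walk). The `group_threads` and `display` helpers, and the idea that small groups are the first place to look for defective threads, are illustrative assumptions rather than the method claimed by IBM.

```python
from collections import defaultdict


def group_threads(call_addresses: dict[int, list[int]]) -> list[tuple[tuple[int, ...], list[int]]]:
    """Group thread IDs whose lists of calling-instruction addresses are identical.

    Returns (addresses, thread_ids) pairs, largest group first; ties keep first-seen order.
    """
    groups: dict[tuple[int, ...], list[int]] = defaultdict(list)
    for tid in sorted(call_addresses):
        groups[tuple(call_addresses[tid])].append(tid)
    # sorted() is stable, even with reverse=True, so equal-sized groups keep their order
    return sorted(groups.items(), key=lambda item: len(item[1]), reverse=True)


def display(groups: list[tuple[tuple[int, ...], list[int]]]) -> None:
    """Print one line per group; outlier (small) groups are assumed to be suspicious."""
    for addresses, tids in groups:
        trace = " -> ".join(hex(a) for a in addresses)
        print(f"{len(tids):3d} thread(s) {tids}: {trace}")


if __name__ == "__main__":
    # Hypothetical snapshot: threads 0, 1 and 3 stopped in the same place, thread 2 did not
    snapshot = {
        0: [0x4005D0, 0x400710],
        1: [0x4005D0, 0x400710],
        2: [0x4005D0, 0x400A48],
        3: [0x4005D0, 0x400710],
    }
    display(group_threads(snapshot))
```

Collapsing thousands of threads into a handful of groups is what makes a display like this usable at HPC scale.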

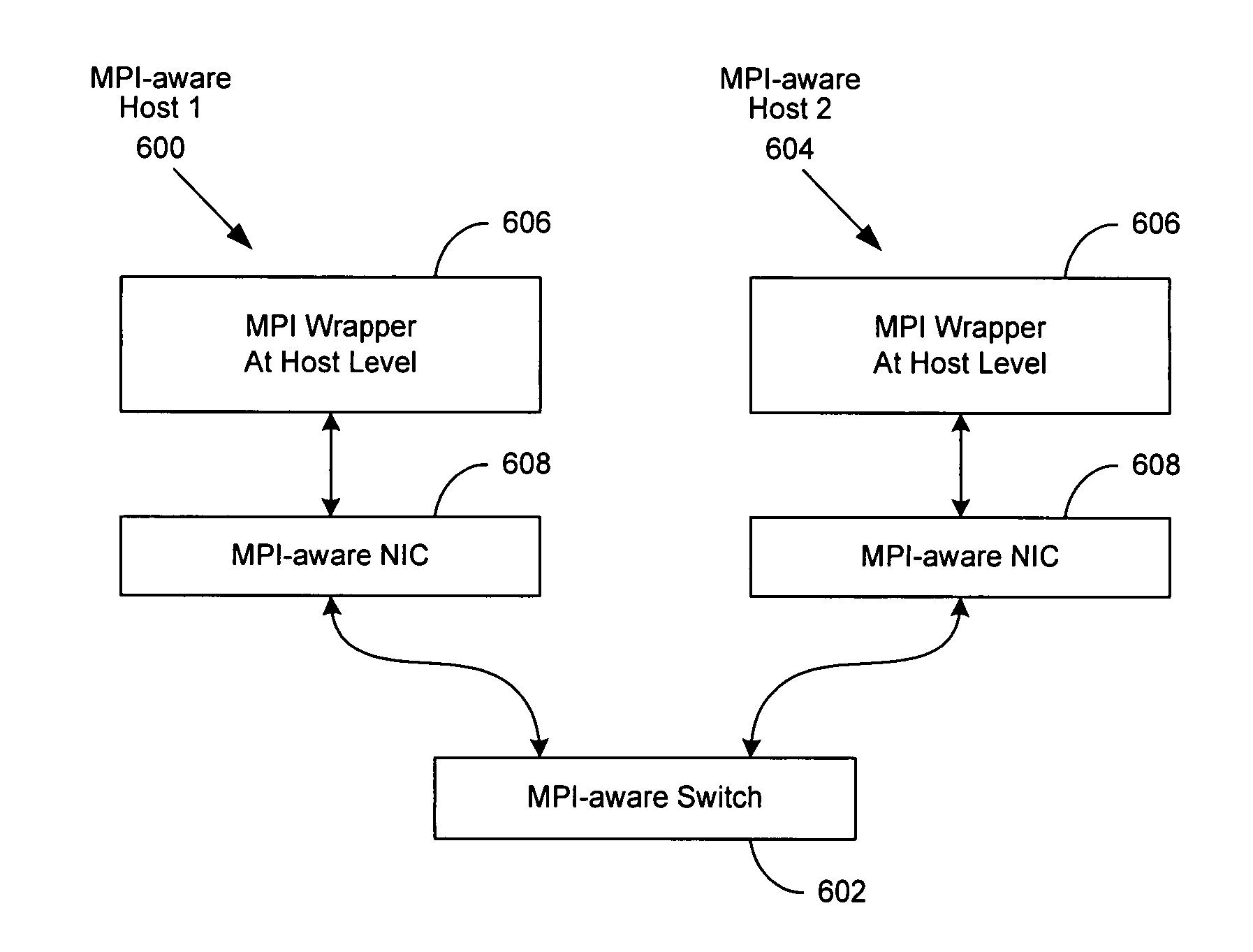

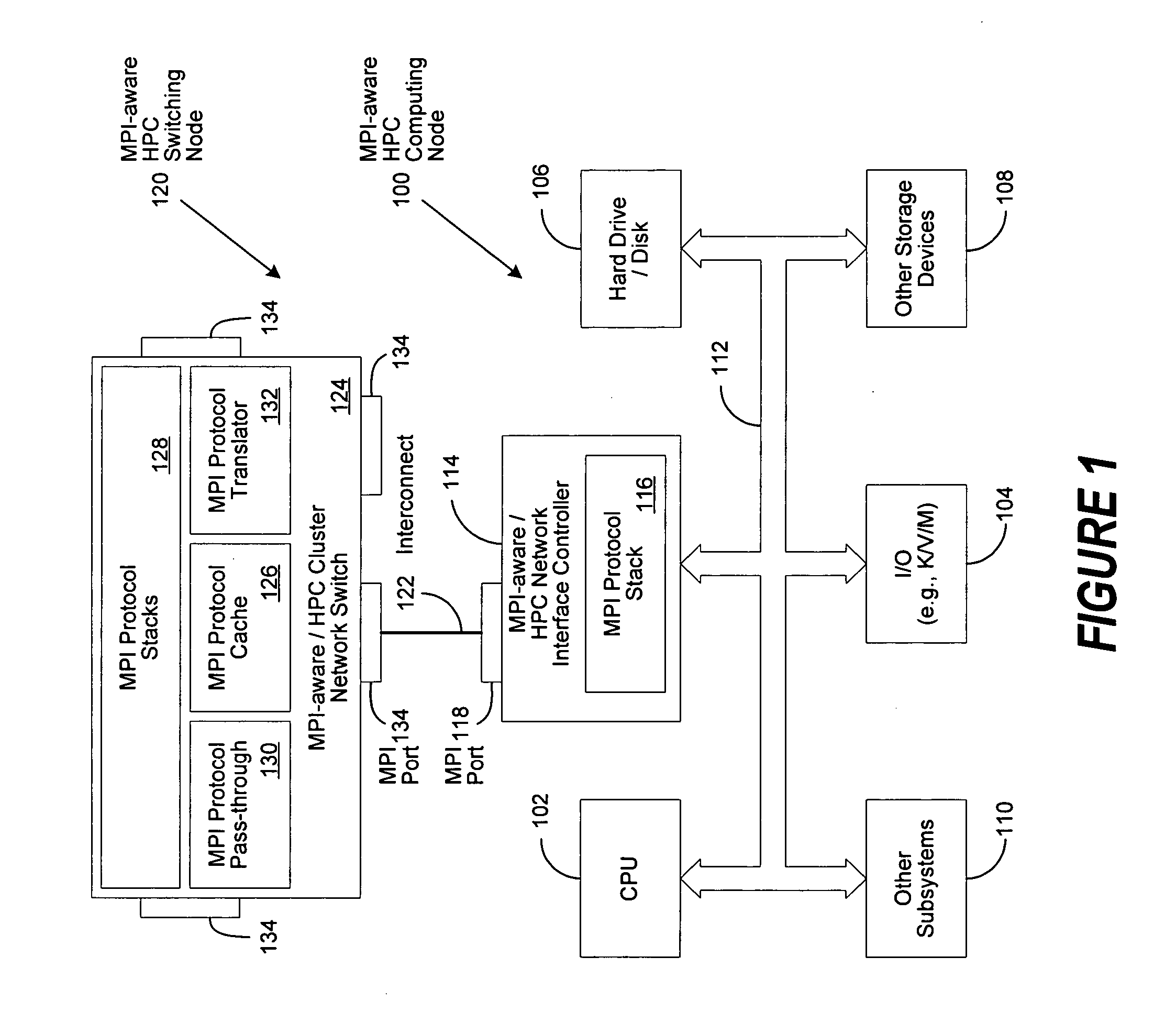

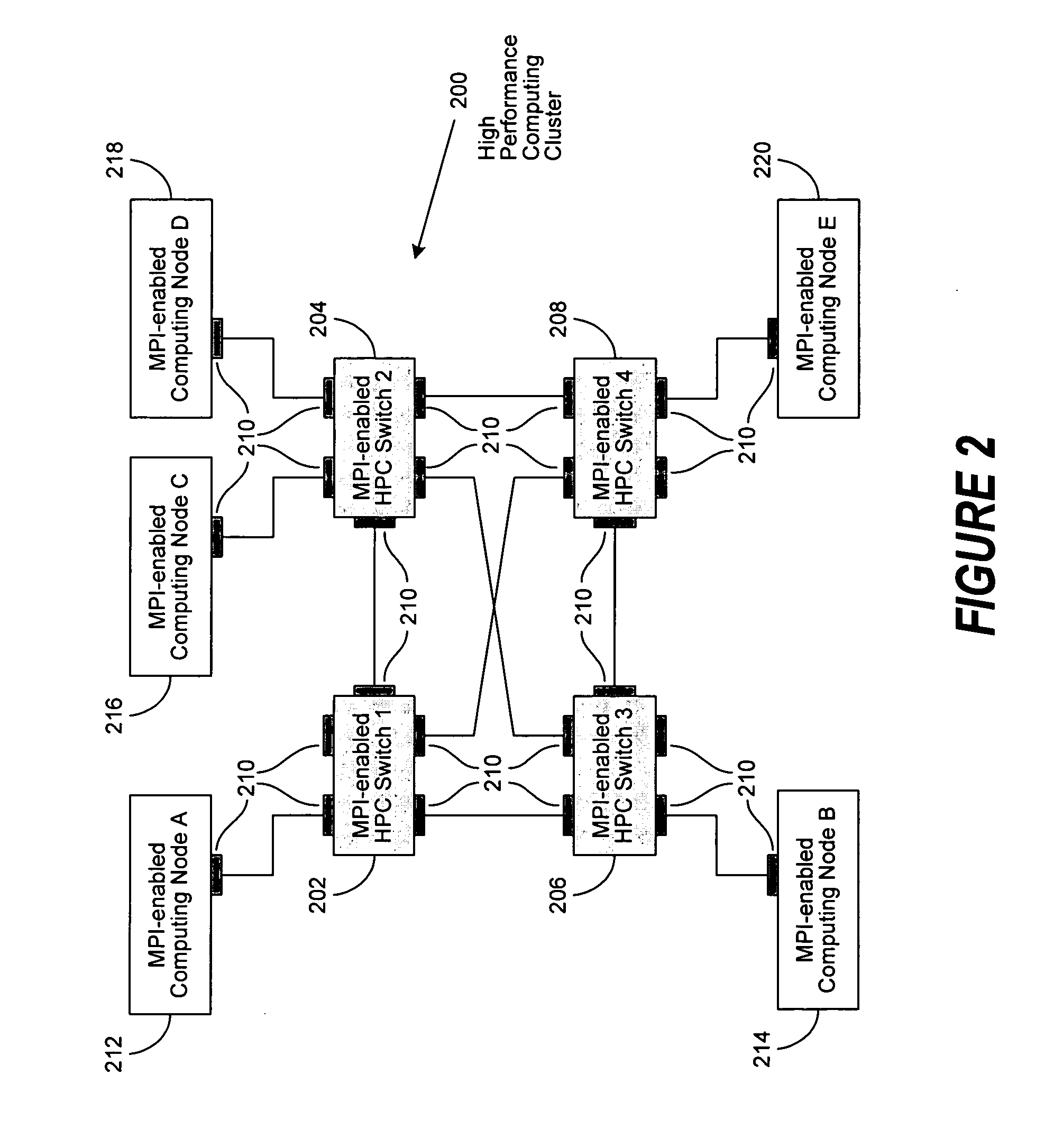

MPI-aware networking infrastructure

Inactive | US20060282838A1 | Reduced message-passing protocol communication overhead, Improve performance, Multiprogramming arrangements, Transmission, Extensibility, Information processing

The present invention provides for reduced message-passing protocol communication overhead between a plurality of high performance computing (HPC) cluster computing nodes. In particular, HPC cluster performance and MPI host-to-host functionality, performance, scalability, security, and reliability can be improved. A first information handling system host and its associated network interface controller (NIC) are enabled with a lightweight implementation of a message-passing protocol that does not require the use of intermediate protocols. A second information handling system host and its associated NIC are enabled with the same lightweight message-passing protocol implementation. A high-speed network switch, likewise enabled with the same lightweight message-passing protocol implementation and interconnected to the first host and the second host (and potentially to a plurality of like hosts and switches), can create an HPC cluster network capable of higher performance and greater scalability.

Owner:DELL PROD LP

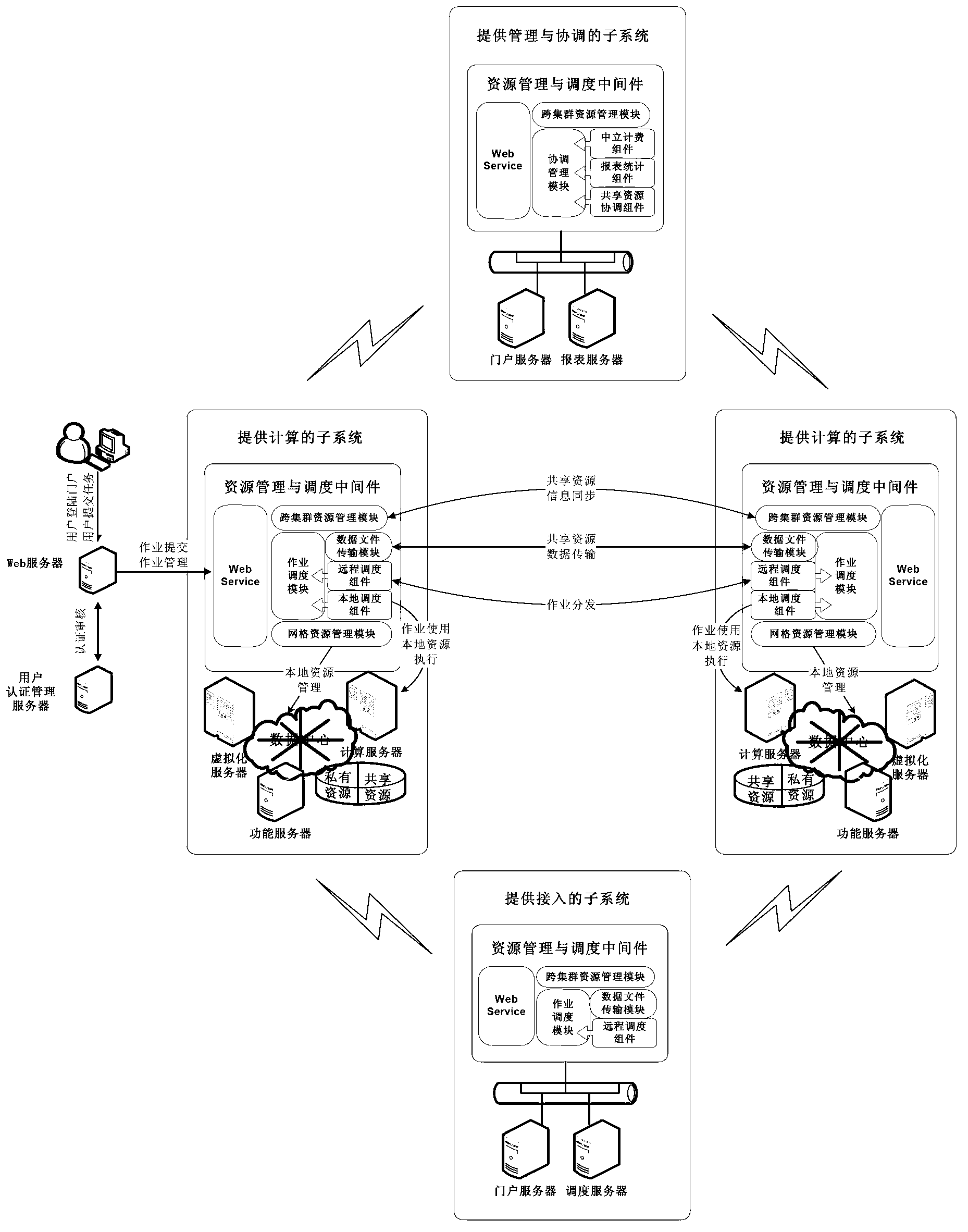

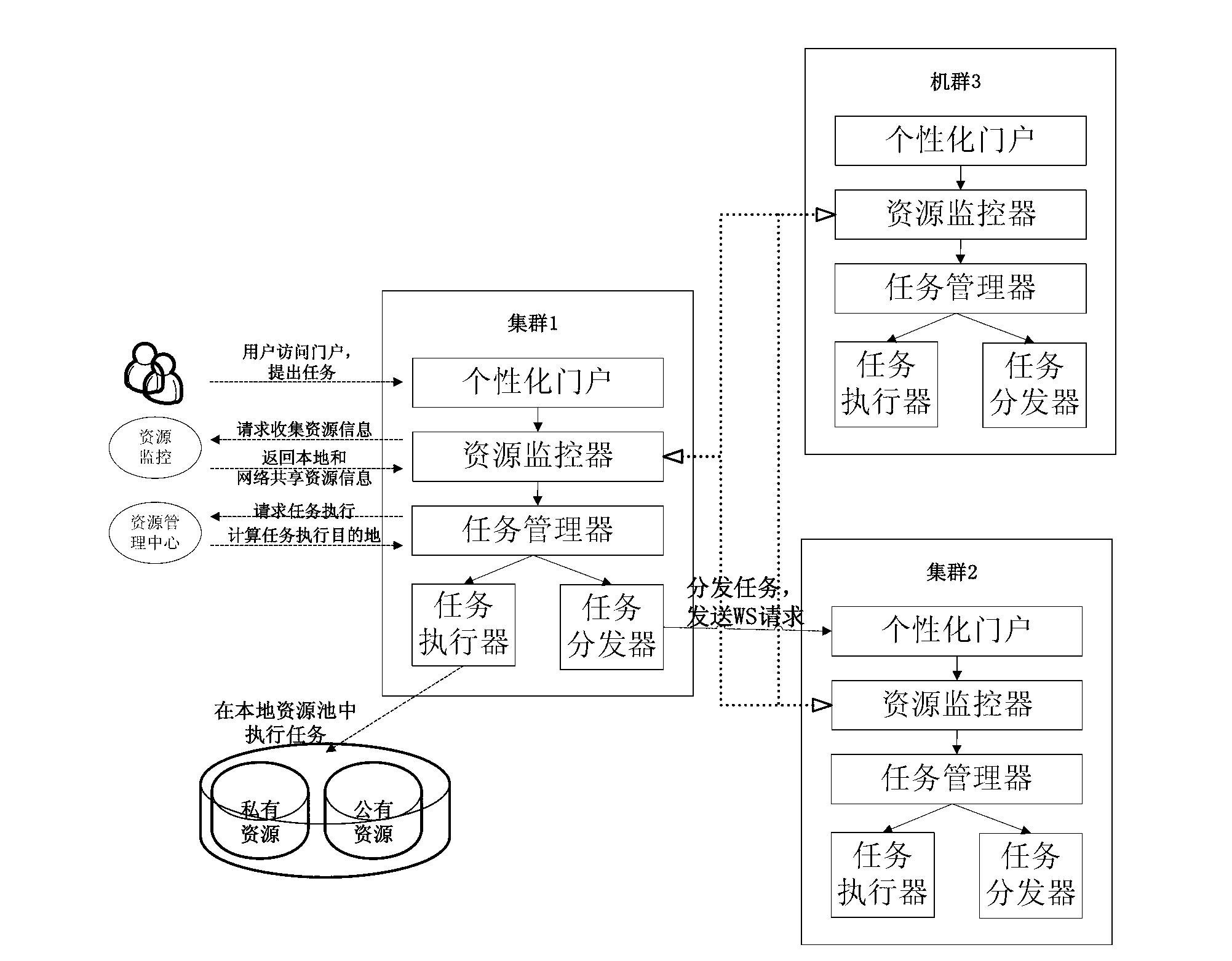

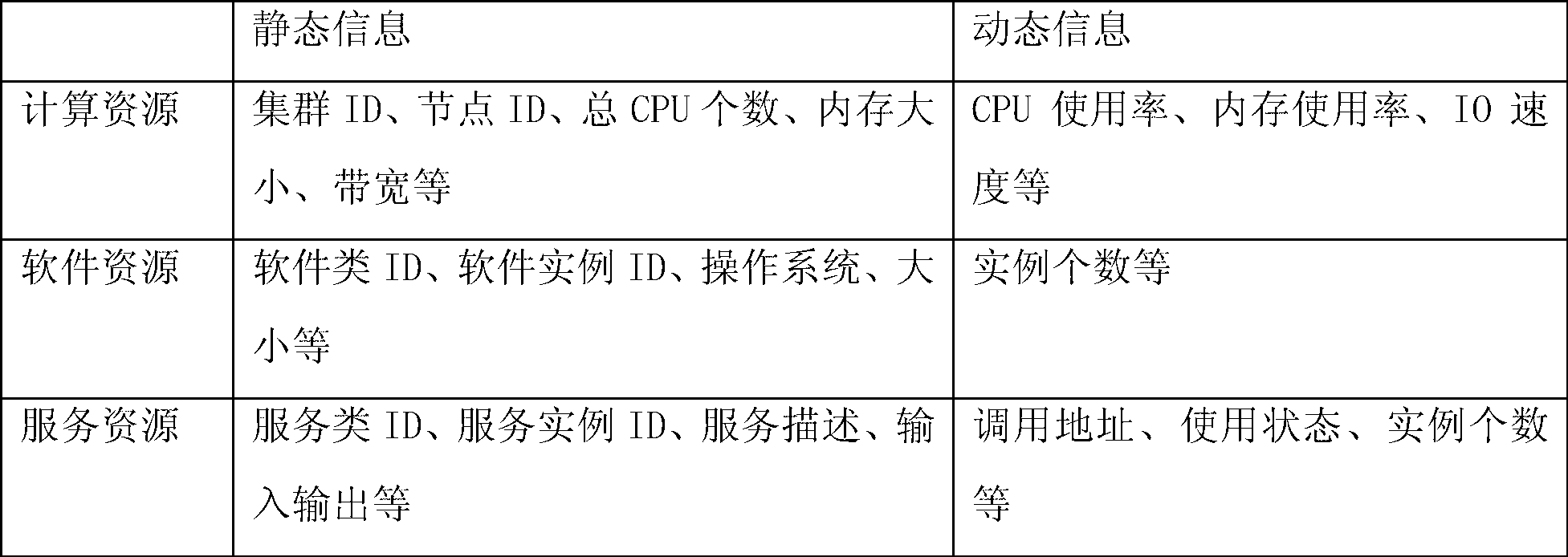

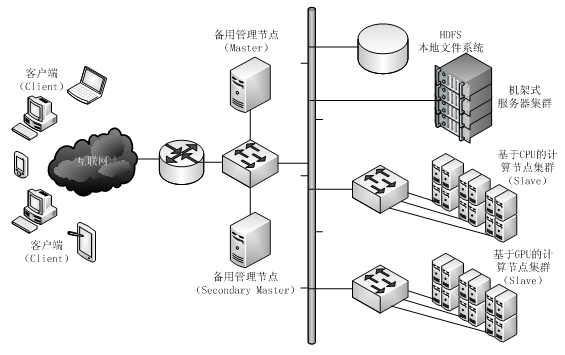

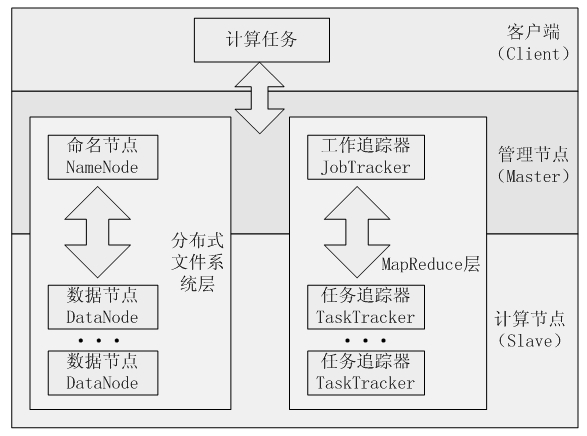

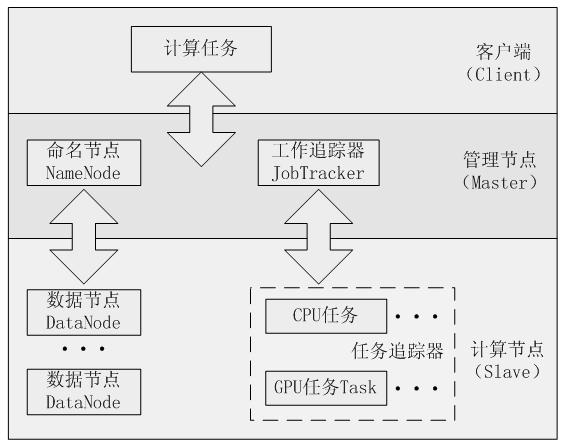

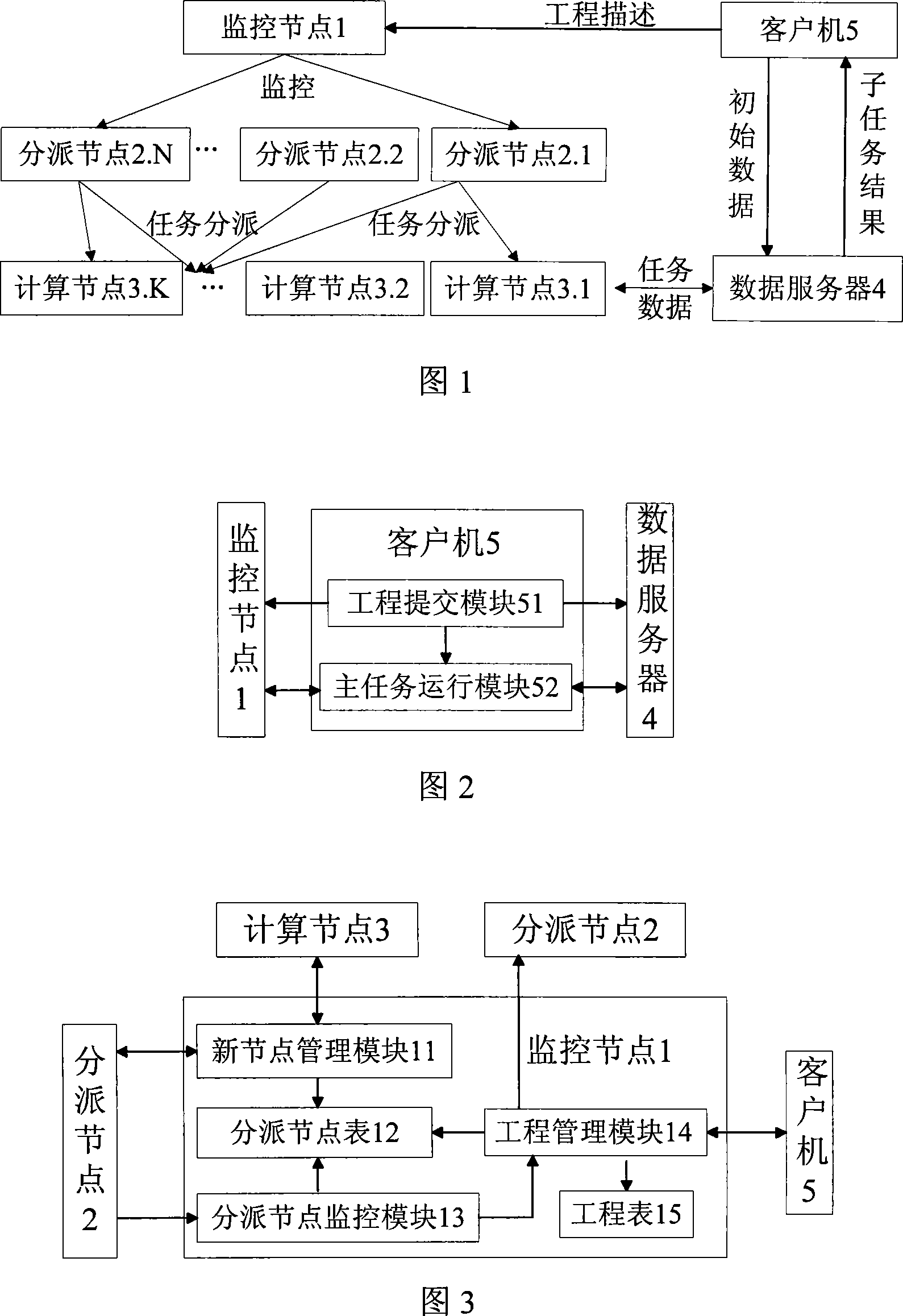

Decentralized cross cluster resource management and task scheduling system and scheduling method

Active | CN103207814A | Improve execution efficiency, Improve experience, Resource allocation, Energy efficient computing, Data center, Performance computing

The invention relates to a decentralized cross-cluster resource management and task scheduling system and a scheduling method. The scheduling system comprises a subsystem providing management and coordination services, a subsystem providing computing services, and a subsystem providing access services. The management and coordination subsystem acquires information from the other subsystems, performs monitoring, reporting, billing, and resource-sharing coordination, and provides decision support for the management and planning of a high-performance computing system. The computing subsystem has a data center of high-performance computing nodes and aggregates local and remote resources, so that jobs are scheduled according to both. The access subsystem provides users with localized job submission and access management services. By integrating the resources of individual clusters, the decentralized cross-cluster resource management and task scheduling method improves job execution efficiency and user experience on the one hand, and on the other hand makes effective, maximal use of existing resources, saving the cost of purchasing hardware to expand computing power.

Owner:BEIJING SIMULATION CENT

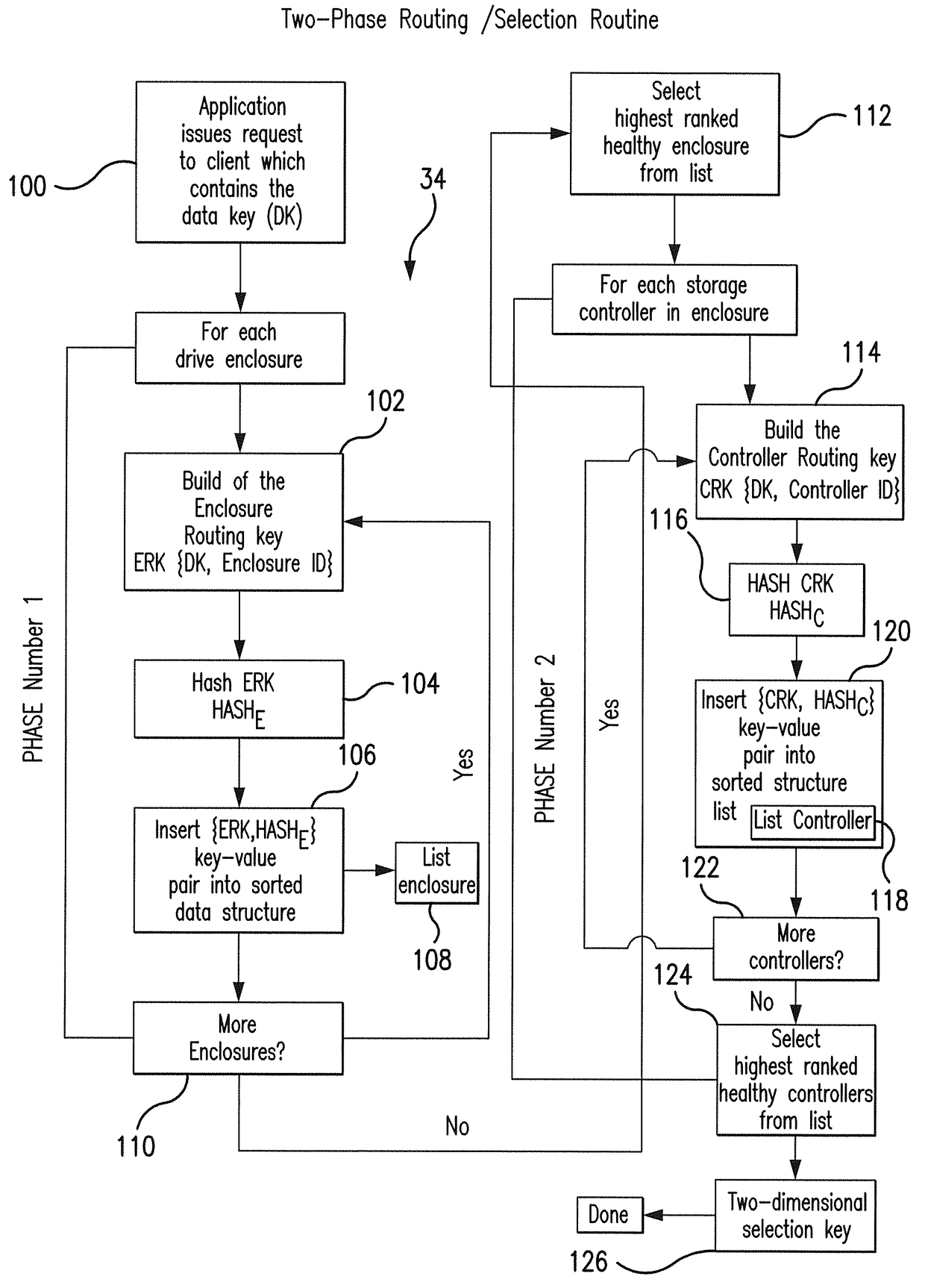

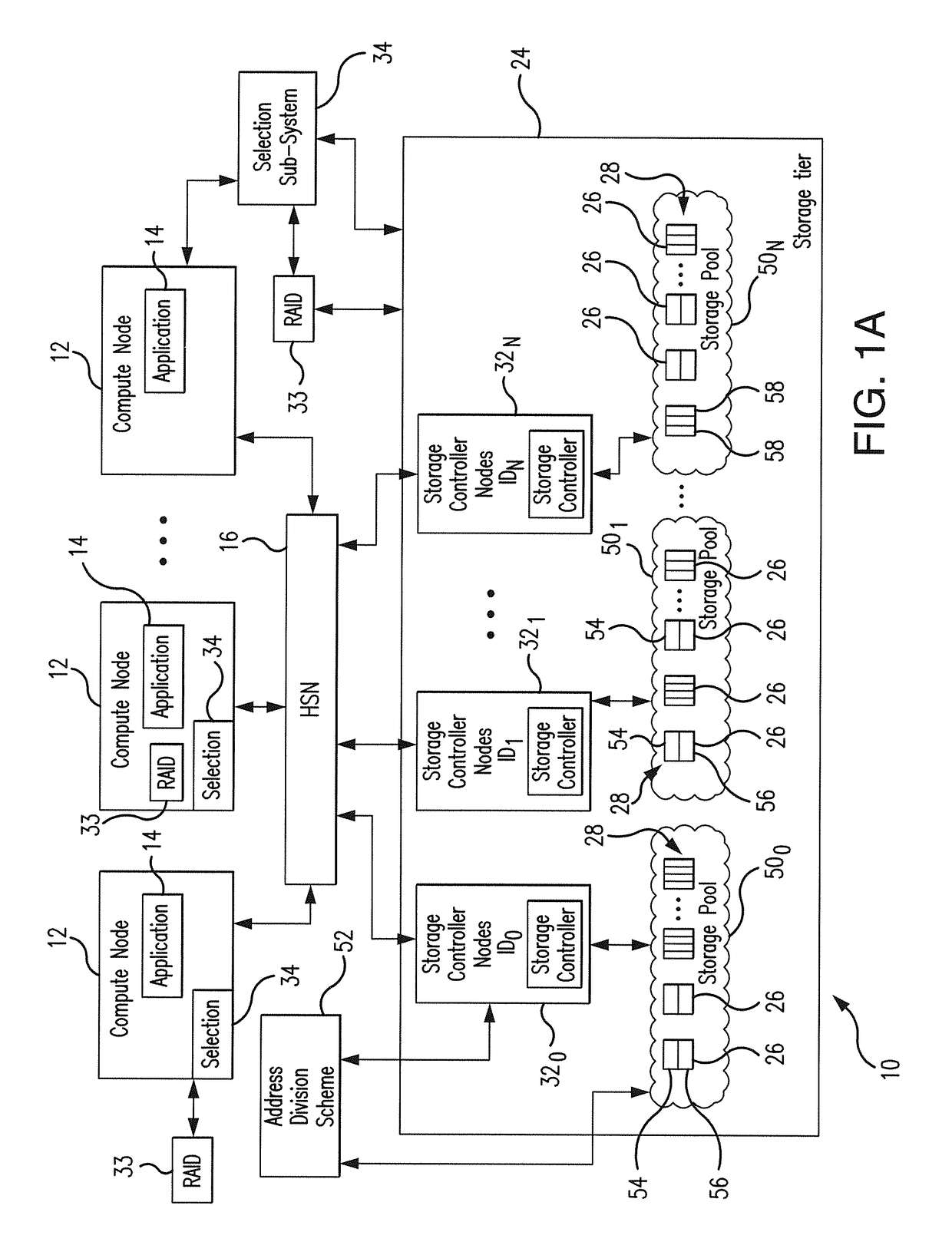

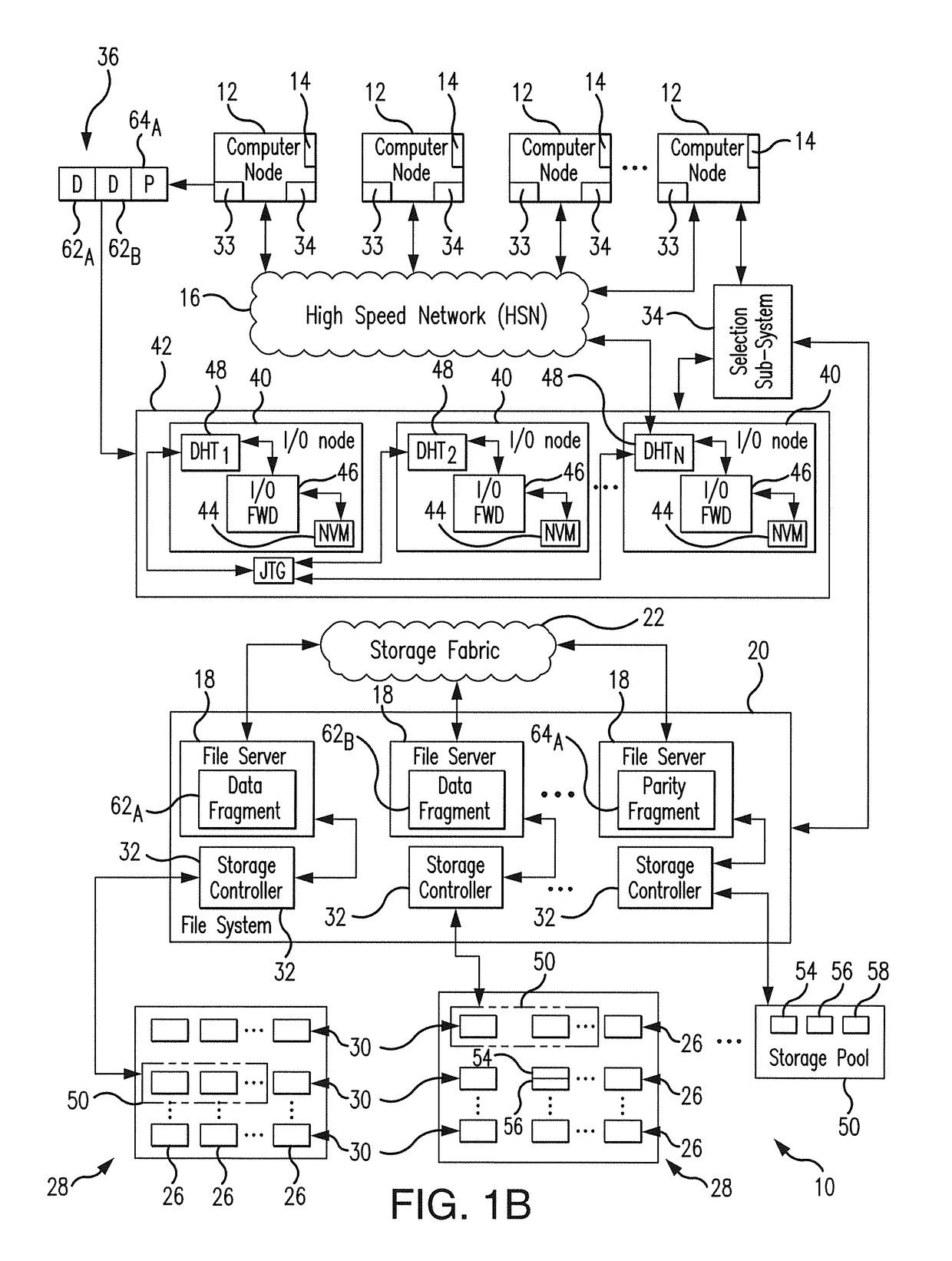

Low latency and reduced overhead data storage system and method for sharing multiple storage devices by high performance computing architectures

Active | US9959062B1 | Improve the level of, Reliable rebuild, Input/output to record carriers, Redundant hardware error correction, Data set, Performance computing

A data migration system supports a low-latency, reduced-overhead data storage protocol for sharing data storage in a non-colliding fashion, without requiring inter-communication and permanent arbitration between data storage controllers to decide on data placement/routing. The multiple data fragments of data sets are prevented from being routed to the same storage devices by a multi-step selection protocol, which selects (in a first phase of the selection routine) the healthy, highest-ranked drive enclosure and then selects (in a second phase) the healthy, highest-ranked data storage controller residing in the selected drive enclosure, for routing data fragments to different storage pools assigned to the selected data storage devices for exclusive “writing” and data modification. The selection protocol also accounts for various failure scenarios while keeping data placement collision-free.

Owner:DATADIRECT NETWORKS
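
The two-phase selection can be illustrated with a minimal Python sketch. It assumes each enclosure and controller carries a static integer rank and a health flag, and that the fragments of one data set must land on distinct controllers. The class names, the ranking scheme, and the fallback to the next enclosure once one is exhausted are hypothetical, not DataDirect Networks' protocol.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Controller:
    name: str
    rank: int            # hypothetical static rank
    healthy: bool = True


@dataclass
class Enclosure:
    name: str
    rank: int            # hypothetical static rank
    healthy: bool = True
    controllers: list[Controller] = field(default_factory=list)


def select_target(enclosures: list[Enclosure], taken: set[str]) -> Optional[tuple[str, str]]:
    """Phase 1: highest-ranked healthy enclosure that still has a usable controller.
    Phase 2: highest-ranked healthy controller in it that holds no fragment yet."""
    for enc in sorted(enclosures, key=lambda e: e.rank, reverse=True):
        if not enc.healthy:
            continue  # failure scenario: skip the whole enclosure
        usable = [c for c in enc.controllers if c.healthy and c.name not in taken]
        if usable:
            best = max(usable, key=lambda c: c.rank)
            return enc.name, best.name
    return None


def place_fragments(enclosures: list[Enclosure], n_fragments: int) -> list[tuple[str, str]]:
    """Route each fragment of one data set to a distinct controller (and its storage pool).

    The result depends only on ranks and health, so every controller computing it
    with the same inputs reaches the same placement without arbitration.
    """
    taken: set[str] = set()
    placement = []
    for _ in range(n_fragments):
        target = select_target(enclosures, taken)
        if target is None:
            raise RuntimeError("not enough healthy controllers for collision-free placement")
        taken.add(target[1])
        placement.append(target)
    return placement


if __name__ == "__main__":
    enclosures = [
        Enclosure("E1", rank=2, controllers=[Controller("C1", 1), Controller("C2", 3, healthy=False)]),
        Enclosure("E2", rank=5, healthy=False, controllers=[Controller("C4", 9)]),
        Enclosure("E3", rank=1, controllers=[Controller("C3", 2)]),
    ]
    print(place_fragments(enclosures, 2))  # [('E1', 'C1'), ('E3', 'C3')]
```

Because the choice depends only on shared, deterministic inputs, each controller can compute the same placement independently, which is the property the abstract emphasizes when it says no inter-controller arbitration is required.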

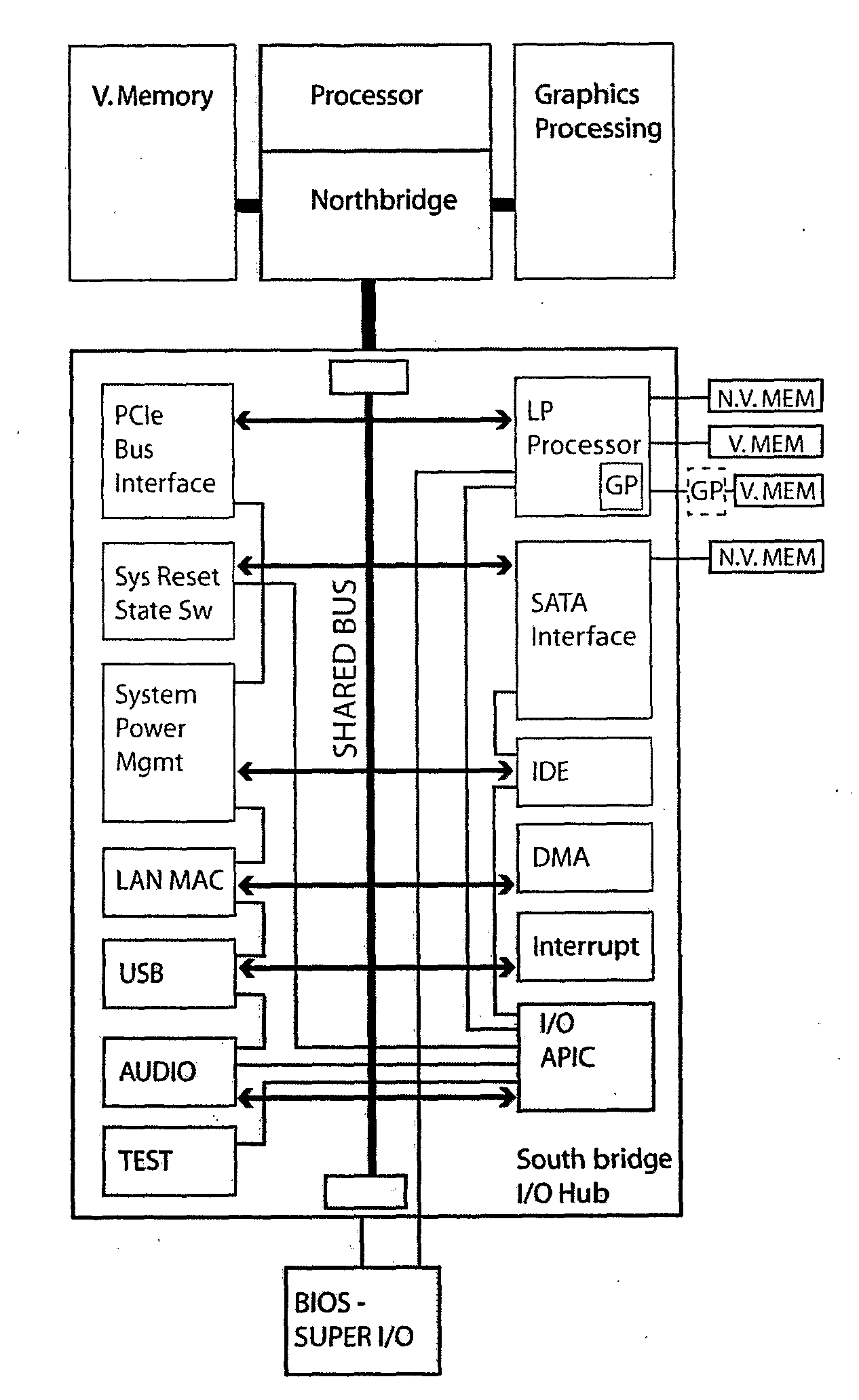

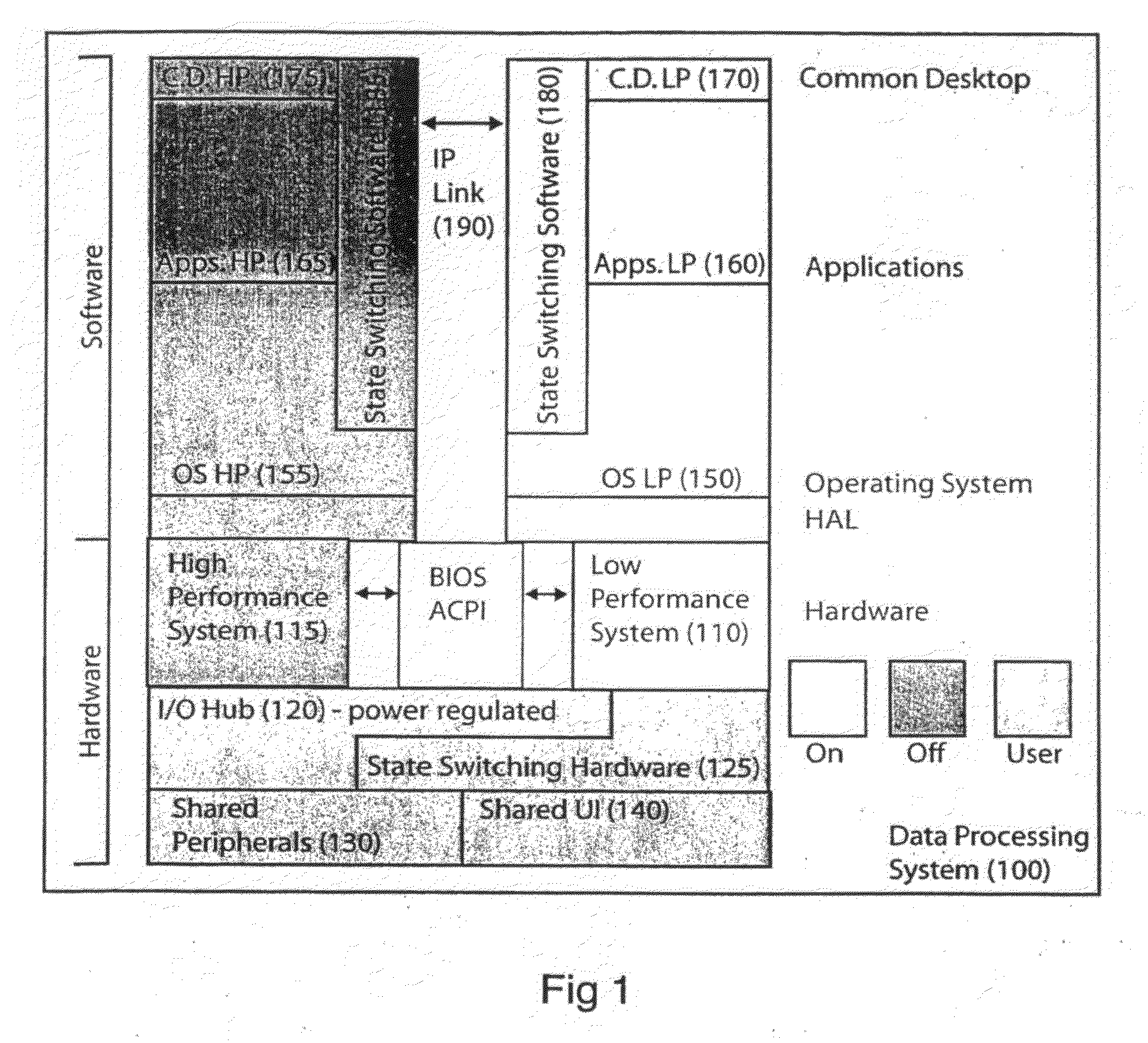

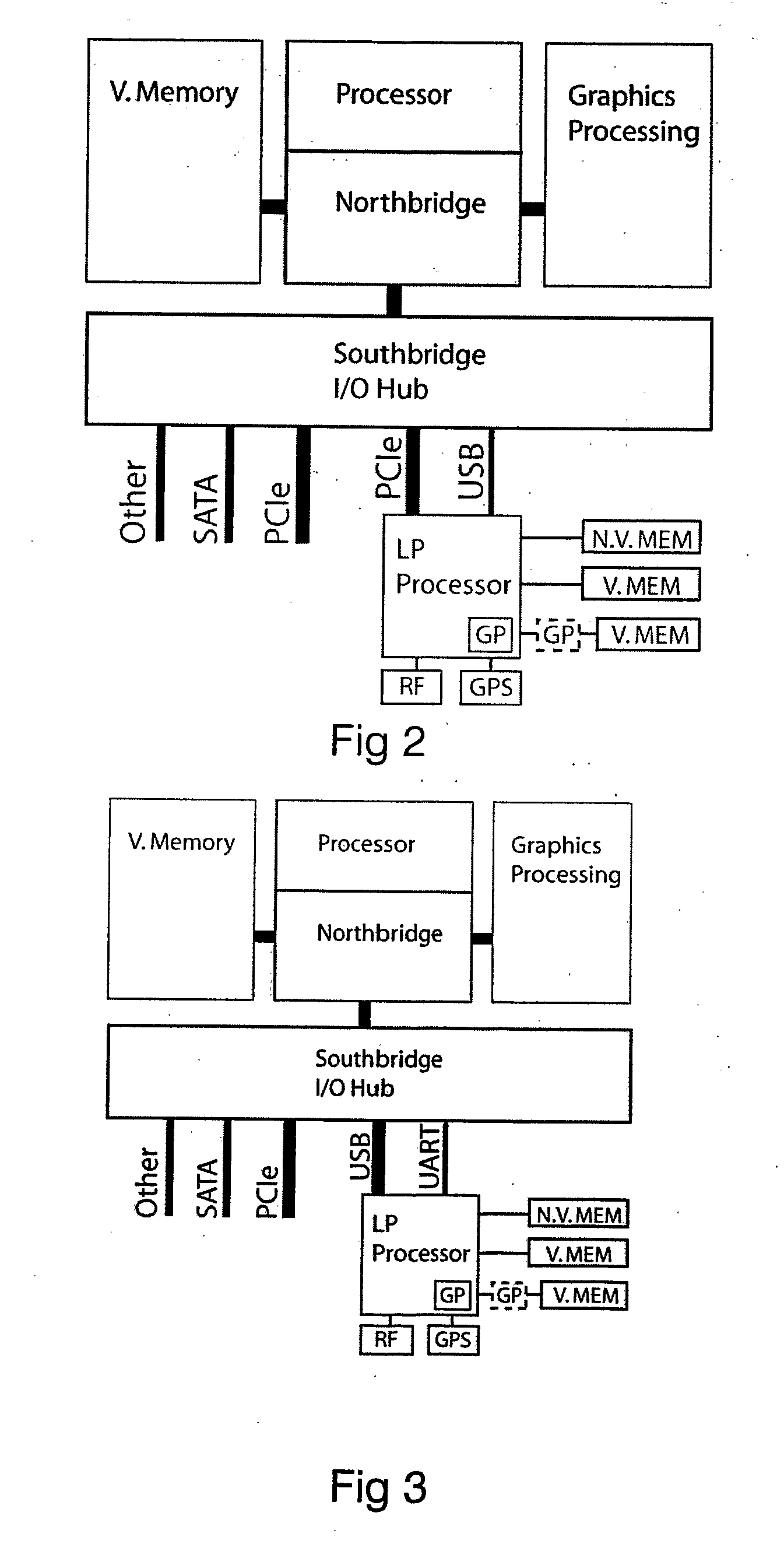

Dual Mode Power-Saving Computing System

Active | US20090193243A1 | Increase power consumption, Reduce power consumption, Energy efficient ICT, Volume/mass flow measurement, Data processing system, Performance computing

The present invention relates to a data processing system comprising both a high-performance computing subsystem with typically high power consumption and a low-performance subsystem requiring less power. The data processing system acts as a single computing device, moving the execution of software from the low-performance subsystem to the high-performance subsystem when high computing power is needed, and back again when low computing performance is sufficient; in the latter case, the high-performance subsystem can be put into a power-saving state. The invention also relates to associated algorithms.

Owner:CUPP COMPUTING
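
The switching behavior can be sketched as a small policy function in Python. The demand metric, the two thresholds, and the use of hysteresis are assumptions made for illustration; the abstract only says execution moves to the high-performance subsystem when more computing power is needed and back when less suffices.

```python
from enum import Enum


class Mode(Enum):
    LOW = "low-performance subsystem"
    HIGH = "high-performance subsystem"


def next_mode(current: Mode, demand: float, up: float = 0.8, down: float = 0.3) -> Mode:
    """Decide which subsystem should run the software next.

    demand: required compute as a fraction of the low-performance subsystem's
    capacity (hypothetical metric). Using two thresholds (hysteresis) is an
    assumption meant to stop execution from bouncing between subsystems.
    """
    if current is Mode.LOW and demand > up:
        return Mode.HIGH  # migrate up; the high-performance subsystem leaves power saving
    if current is Mode.HIGH and demand < down:
        return Mode.LOW   # migrate down; the high-performance subsystem may enter power saving
    return current


def run(trace: list[float], start: Mode = Mode.LOW) -> list[Mode]:
    """Replay a demand trace and record the active subsystem after each sample."""
    mode, history = start, []
    for demand in trace:
        mode = next_mode(mode, demand)
        history.append(mode)
    return history


if __name__ == "__main__":
    trace = [0.2, 0.9, 0.5, 0.2]  # hypothetical demand samples
    for demand, mode in zip(trace, run(trace)):
        print(f"demand={demand:.1f} -> {mode.value}")
```

At demand 0.5 the system stays on the high-performance subsystem rather than switching back immediately; that gap between the two thresholds is the design choice that avoids thrashing.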

MapReduce-based multi-GPU (Graphics Processing Unit) cooperative computing method

Inactive | CN102662639A | Reduce communication, Improve general performance, Concurrent instruction execution, Computational science, Concurrent computation

The invention discloses a MapReduce-based multi-GPU (Graphics Processing Unit) cooperative computing method in the field of computer software applications. Whereas common high-performance GPU computing and MapReduce parallel computing each use a single-layer parallel architecture, this programming model adopts a two-layer GPU and MapReduce parallel architecture. By combining the structural characteristics of a GPU plus CPU (Central Processing Unit) heterogeneous system with the MapReduce programming model used for concurrent computation in cloud computing, it helps developers simplify the programming model and reuse existing concurrent code, thereby reducing programming complexity, providing a degree of system disaster tolerance, and reducing dependence on specific equipment. With the computing method provided by the invention, the GPU plus MapReduce dual concurrency mode can be used on a cloud computing platform or a common distributed computing system to process MapReduce tasks concurrently on multiple GPU cards.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

Systems and methods for compiling an application for a parallel-processing computer system

Owner:GOOGLE LLC

Techniques for dynamically assigning jobs to processors in a cluster based on processor workload

Inactive | US20100153541A1 | Digital computer details, Program control, Performance computing, Parallel computing

A technique for operating a high performance computing (HPC) cluster includes monitoring workloads of multiple processors included in the HPC cluster. The HPC cluster includes multiple nodes that each include two or more of the multiple processors. One or more threads assigned to one or more of the multiple processors are moved to a different one of the multiple processors based on the workloads of the multiple processors.

Owner:IBM CORP
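
One way to picture the monitoring-and-migration loop is a greedy rebalancer in Python. The processor naming, the per-thread load figures, the tolerance, and the rule for choosing which thread to move are hypothetical; the abstract only states that threads are moved to a different processor based on the processors' workloads.

```python
def rebalance(assignment: dict[str, list[str]], thread_load: dict[str, float],
              tolerance: float = 0.25) -> list[tuple[str, str, str]]:
    """Greedily move threads off the busiest processor onto the least busy one.

    assignment maps a processor id (hypothetical form "nodeN/cpuM") to its thread ids
    and is updated in place; thread_load holds each thread's monitored load.
    Returns the moves as (thread, from_processor, to_processor).
    """
    moves = []
    while True:
        load = {p: sum(thread_load[t] for t in ts) for p, ts in assignment.items()}
        busiest = max(load, key=load.get)
        idlest = min(load, key=load.get)
        gap = load[busiest] - load[idlest]
        if gap <= tolerance:
            break
        # Only a thread with 0 < load < gap narrows the imbalance; each such move strictly
        # lowers the sum of squared processor loads, so the loop terminates.
        movable = [t for t in assignment[busiest] if 0 < thread_load[t] < gap]
        if not movable:
            break
        # Assumed rule: prefer the thread whose load is closest to half the gap
        thread = min(movable, key=lambda t: abs(thread_load[t] - gap / 2))
        assignment[busiest].remove(thread)
        assignment[idlest].append(thread)
        moves.append((thread, busiest, idlest))
    return moves


if __name__ == "__main__":
    # Hypothetical cluster: two nodes, one with two processors
    assignment = {"node0/cpu0": ["a", "b", "c"], "node0/cpu1": [], "node1/cpu0": ["d"]}
    loads = {"a": 0.5, "b": 0.4, "c": 0.3, "d": 0.2}
    for move in rebalance(assignment, loads):
        print(move)
    print(assignment)
```

Moves may cross node boundaries, as the abstract allows; a real scheduler would likely weigh the extra cost of migrating a thread to another node.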

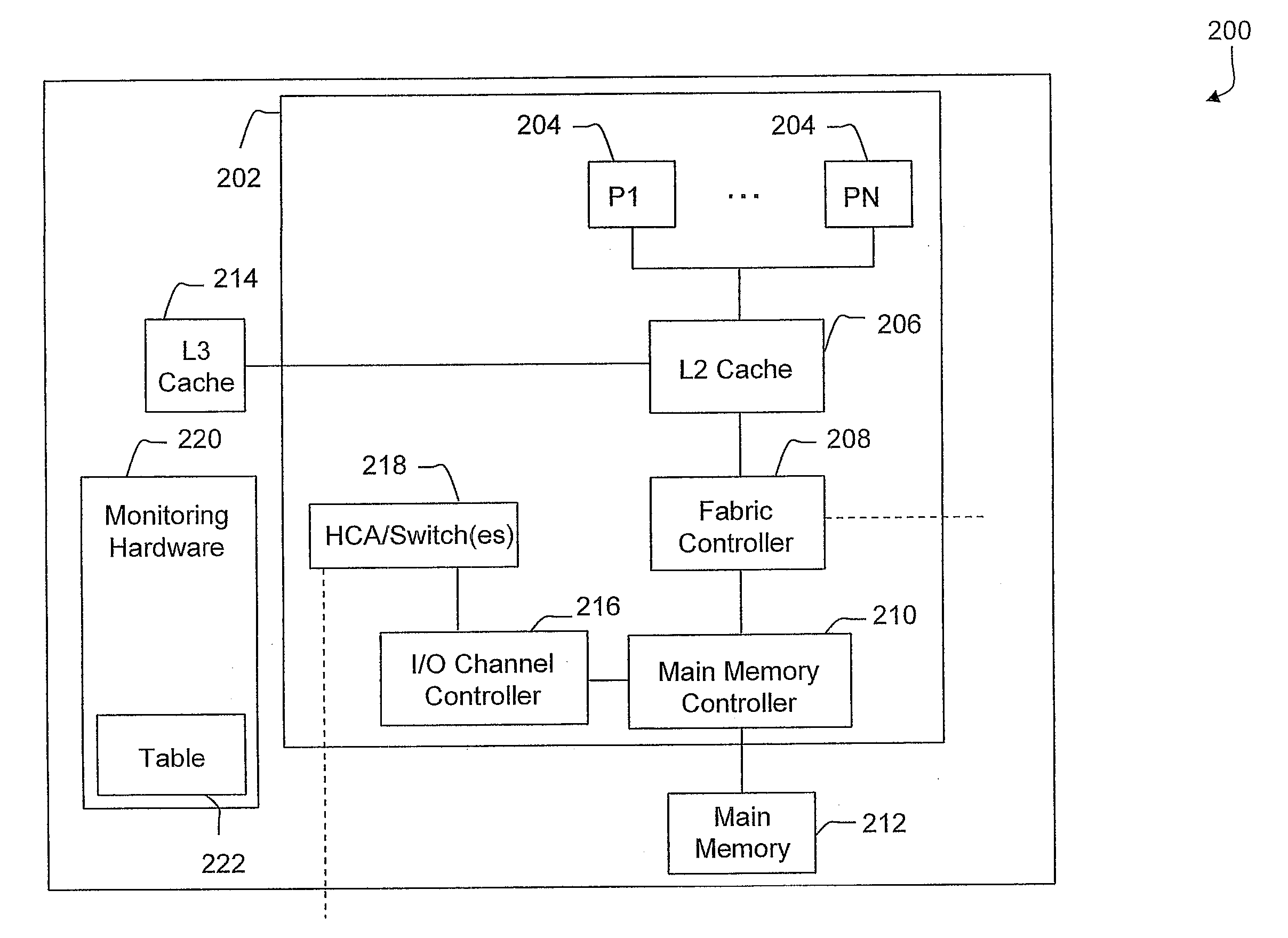

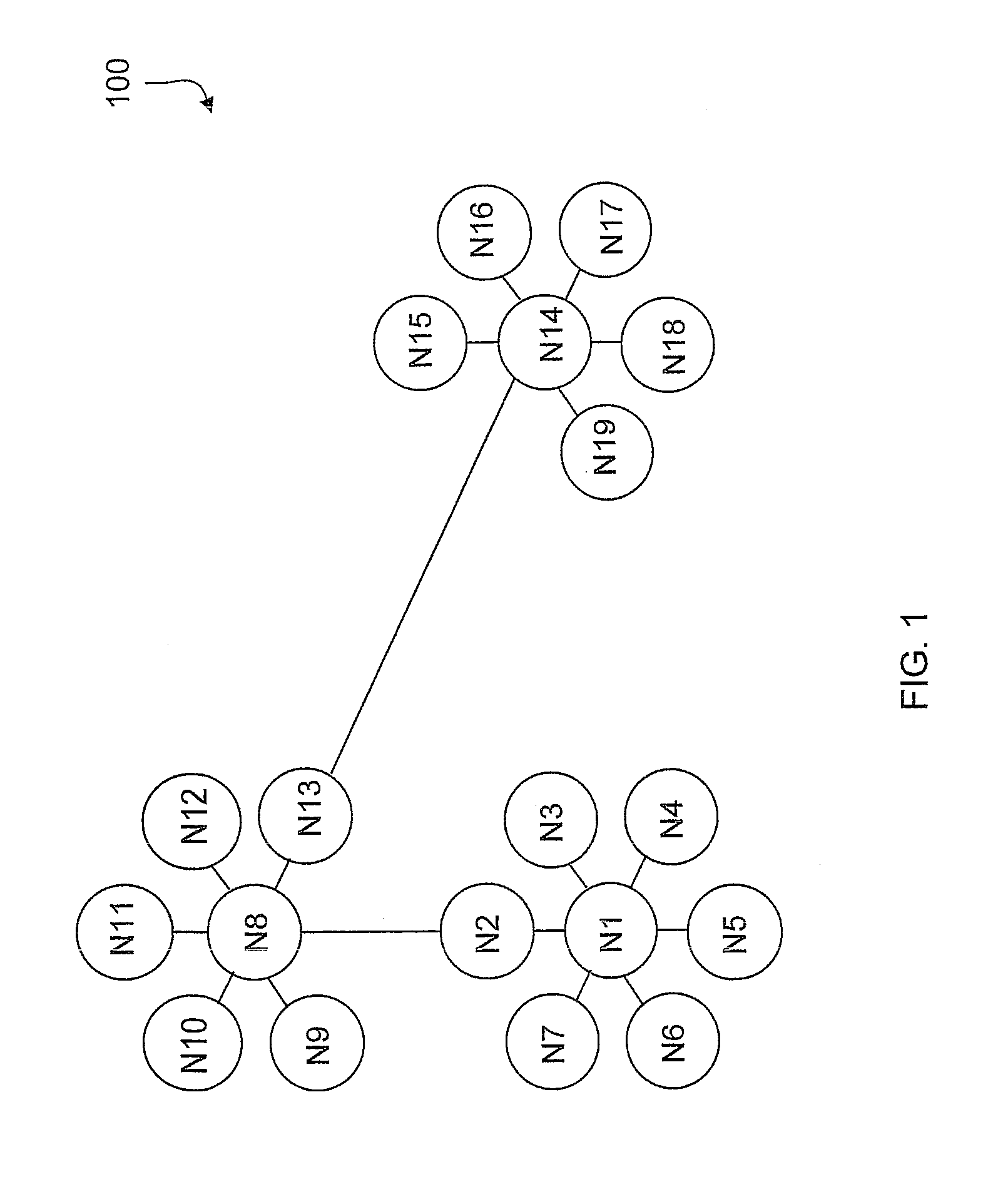

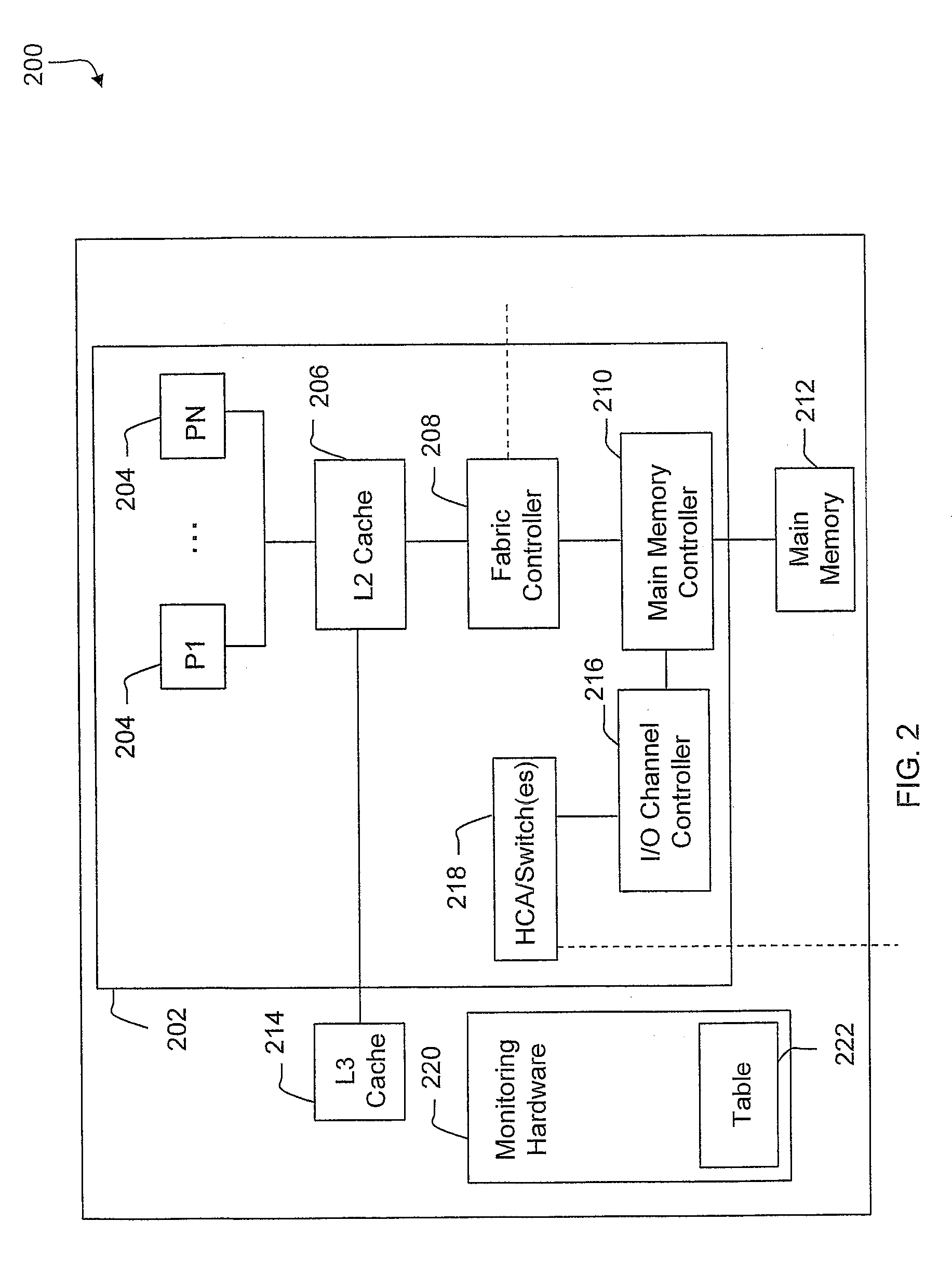

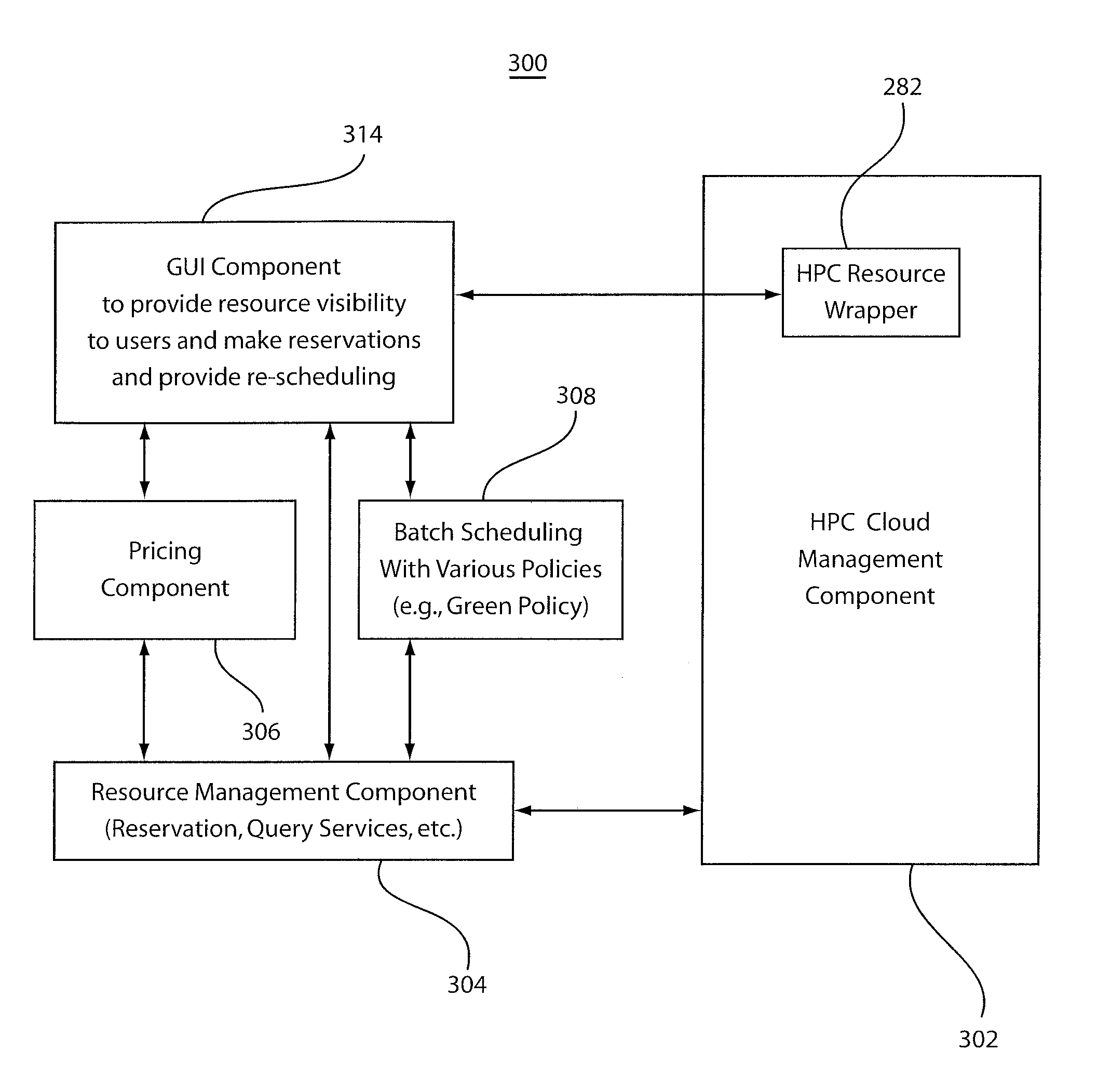

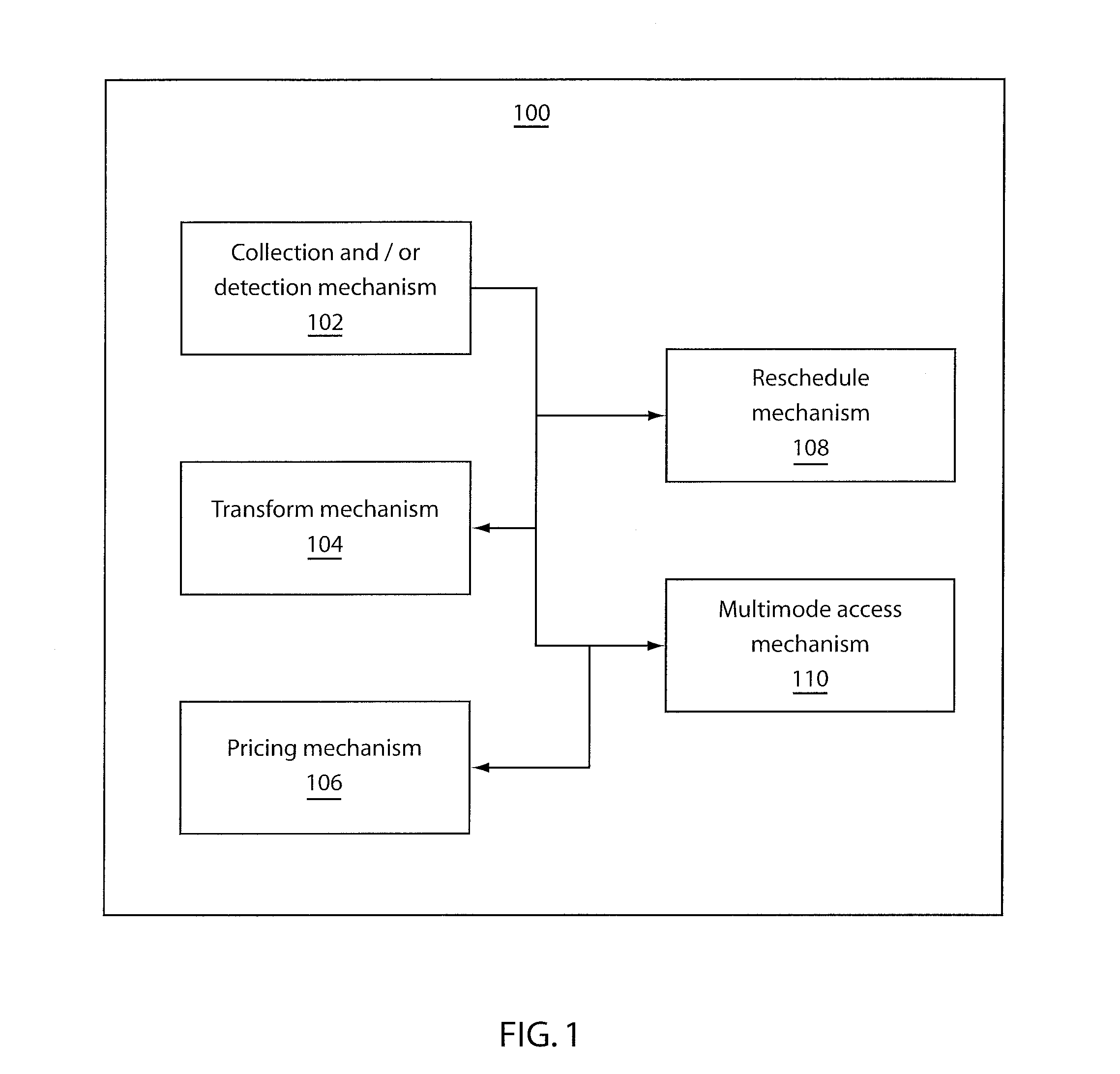

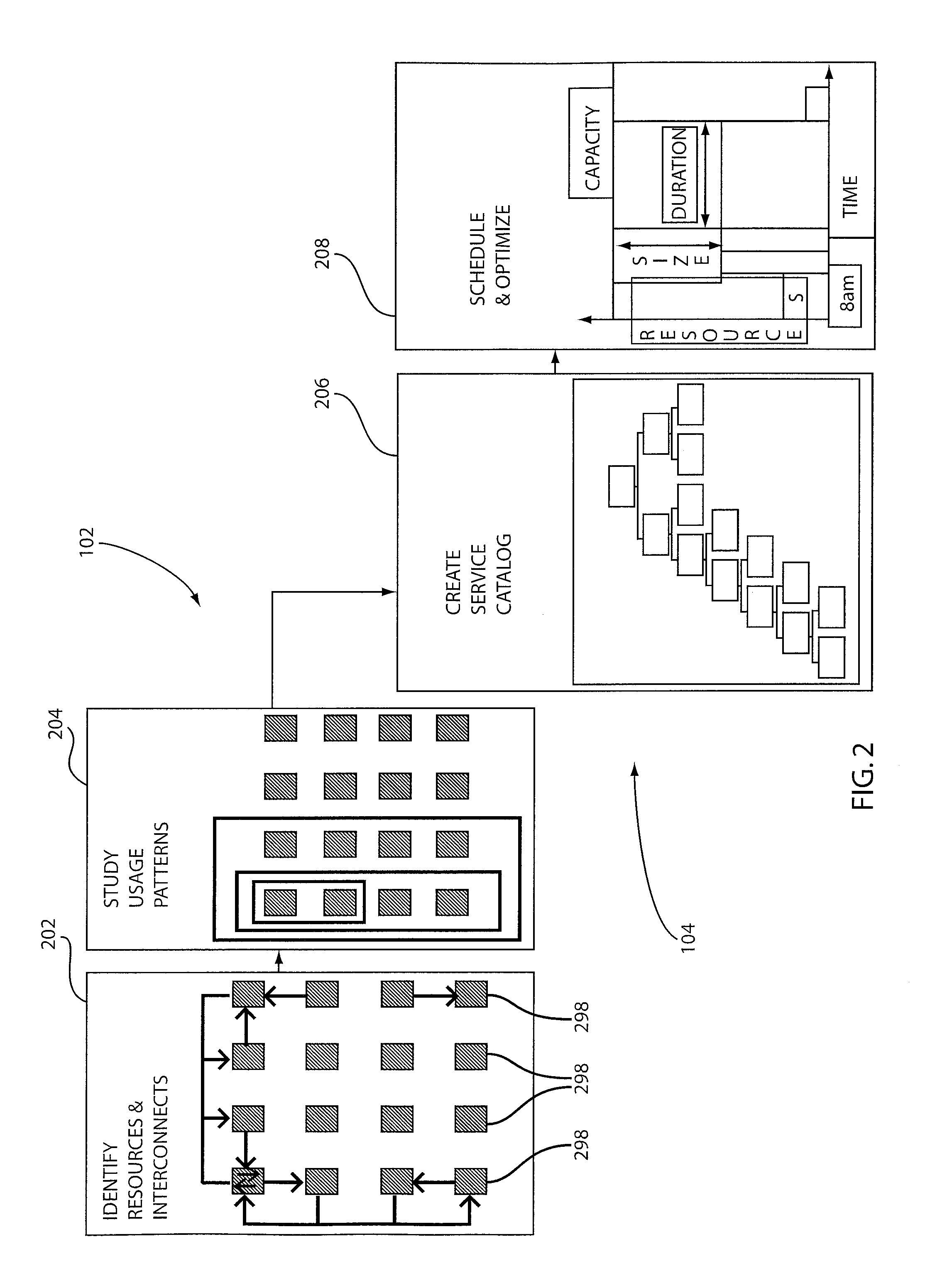

High performance computing as a service

A scheduling system and method for high-performance computing (HPC) applications includes a network management component stored in physical memory and executed by a processor. The management component is configured to transform HPC resources into a schedulable resource catalog by transforming multi-dimensional HPC resources into a one-dimension-versus-time resource catalog with a dependency graph structure between resources, so that HPC resources can be provisioned into a service environment with predictable provisioning using the resource catalog. A graphical user interface component is coupled to the network management component and configured to provide scheduling visibility to entities and to enable a plurality of different communication modes for scheduling and communication between entities.

Owner:IBM CORP

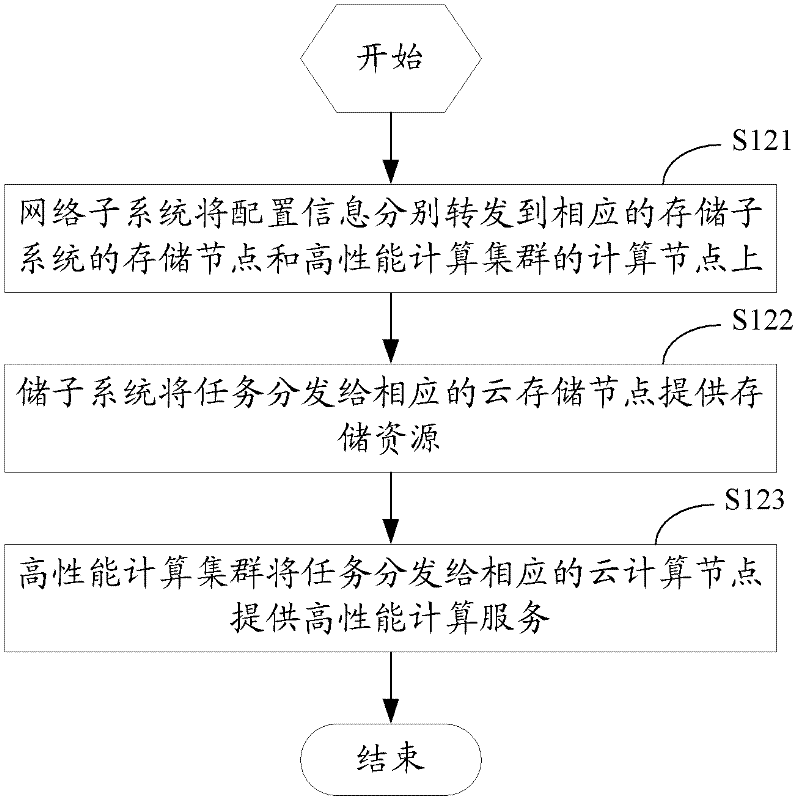

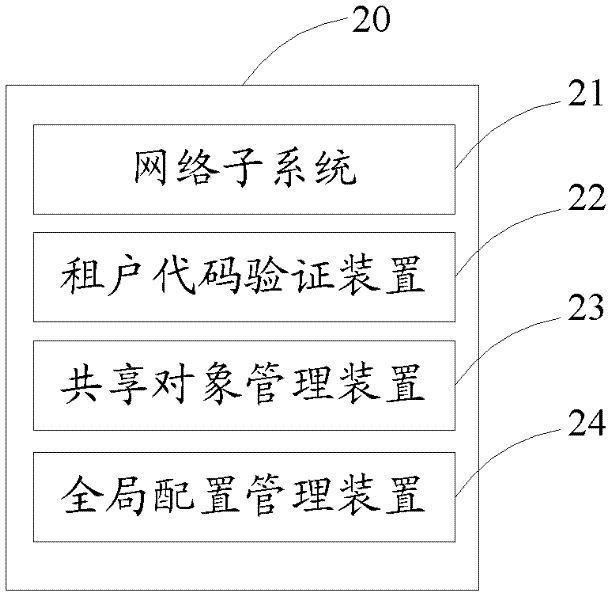

Method, equipment and system for managing shared objects of multiple tenants based on cloud computing

The invention discloses a method, equipment and a system for managing objects shared by multiple tenants based on cloud computing. The system can comprise a tenant code authentication device, an overall configuration management device, a network subsystem, a storage subsystem, a high-performance computing cluster, and a shared object management device. In a multi-tenant cloud computing architecture in which each tenant is exclusively mapped by its tenant code, the method, equipment and system address the problem of how to use resources reasonably and share information among tenants. Managing the objects shared by multiple tenants provides a feasible way to interconnect and exchange information resources, helps integrate existing information resources, and makes reasonable use of the system's computing, storage, bandwidth and other resources.

Owner:National Supercomputing Center in Shenzhen (Shenzhen Cloud Computing Center) [国家超级计算深圳中心(深圳云计算中心)]

Virtual Supercomputer

Active | US20110004566A1 | Improve efficiency, Finance, Resource allocation, Information processing, Operational system

The virtual supercomputer is an apparatus, system and method for generating information processing solutions to complex and / or high-demand / high-performance computing problems, without the need for costly, dedicated hardware supercomputers, and in a manner far more efficient than simple grid or multiprocessor network approaches. The virtual supercomputer consists of a reconfigurable virtual hardware processor, an associated operating system, and a set of operations and procedures that allow the architecture of the system to be easily tailored and adapted to specific problems or classes of problems in a way that such tailored solutions will perform on a variety of hardware architectures, while retaining the benefits of a tailored solution that is designed to exploit the specific and often changing information processing features and demands of the problem at hand.

Owner:VERISCAPE

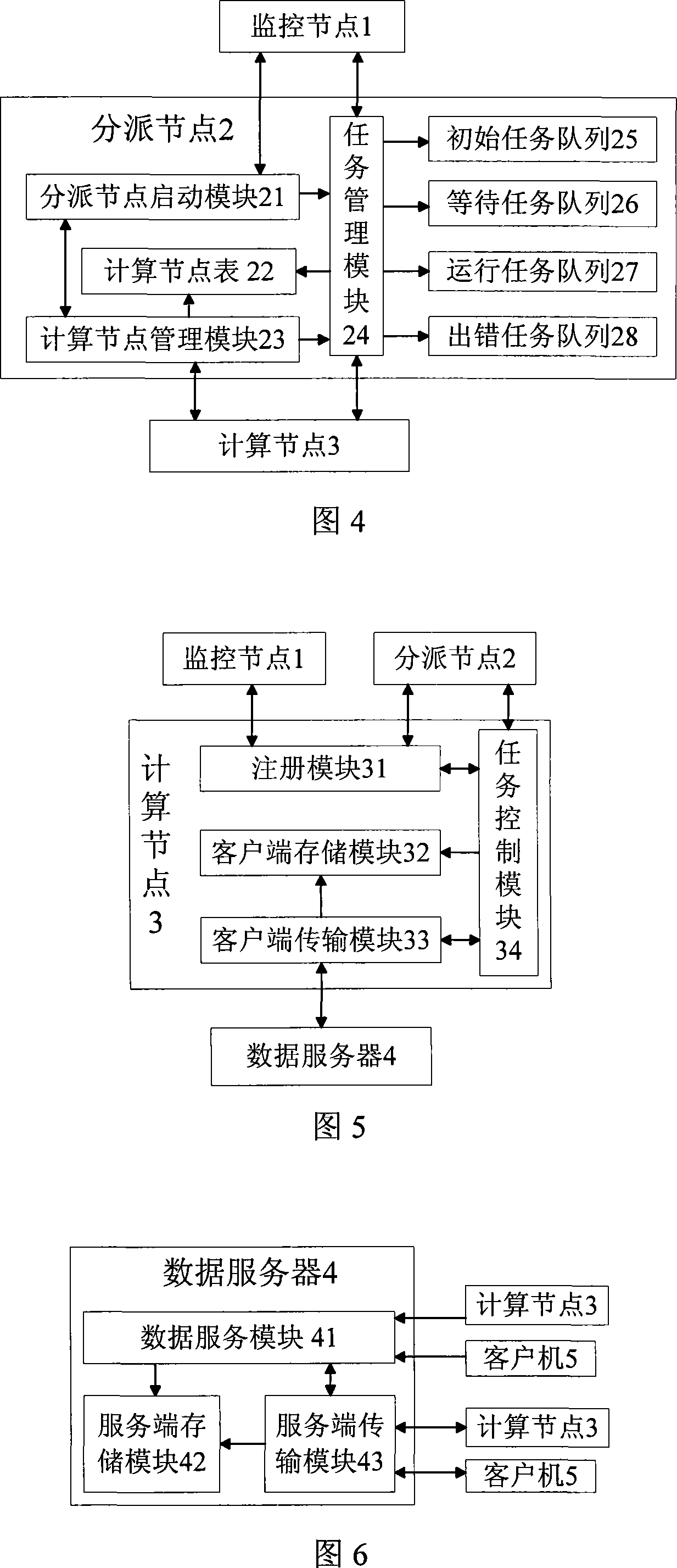

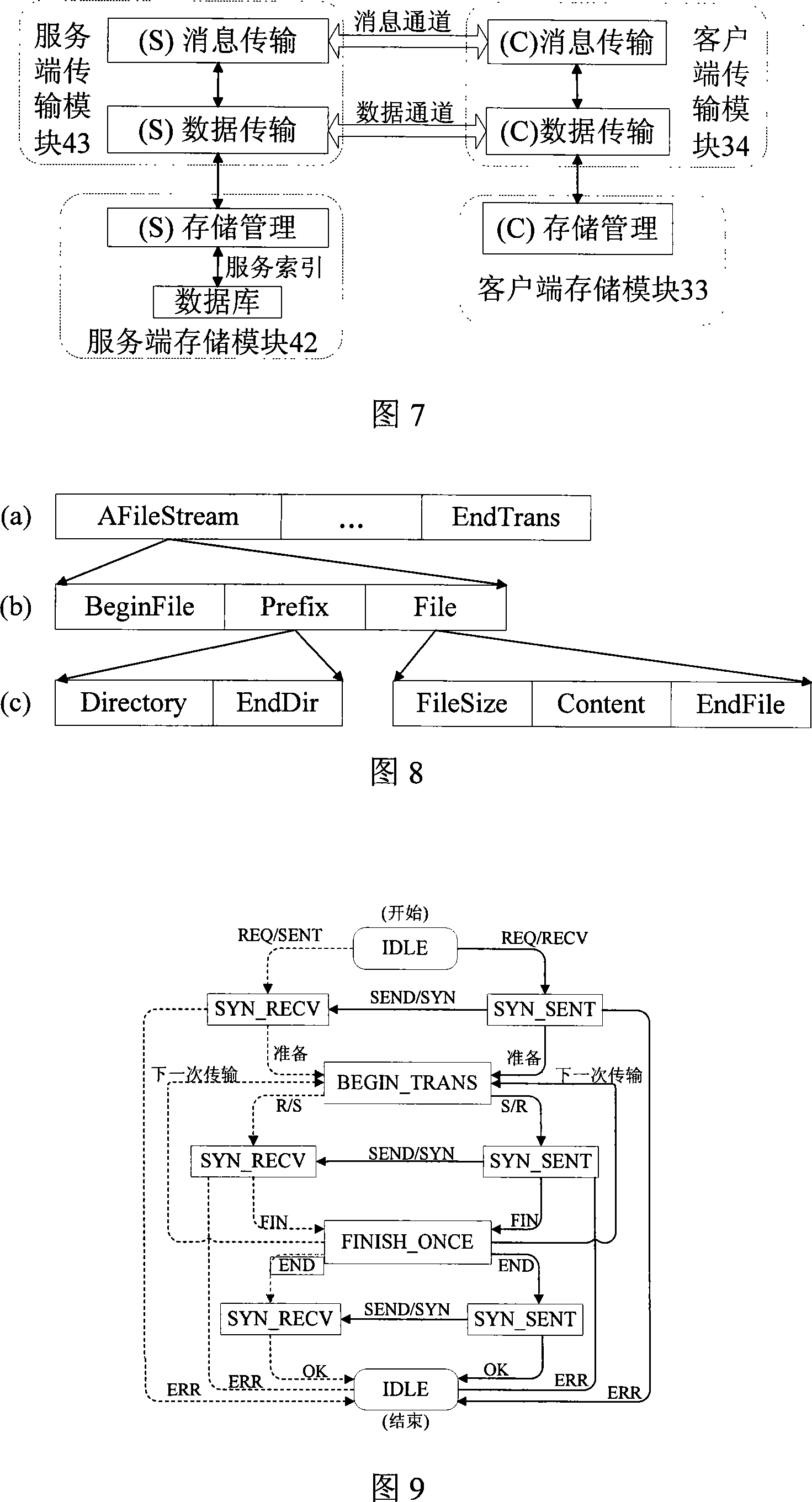

High-performance computing system based on peer-to-peer network

Inactive | CN101072133A | Improve acceleration performance, Improve fault tolerance, Multiprogramming arrangements, Data switching networks, Performance computing, Data interchange

The computer system includes a monitoring node, a dispatch node, computation nodes (CNs), a data server, and a client. The monitoring node receives the applied engineering (AE) description file submitted by the client, and manages and monitors the state of the dispatch node and the completion status of each task cluster. The dispatch node dispatches tasks to each CN under its control and monitors the state of each CN and the completion status of its tasks. Each CN receives and computes the tasks dispatched by the dispatch node, reports task completion status, and exchanges data with the data server. The data server stores the AE's data and processes data requests from the client and the CNs. The client submits the initial AE, manages startup and execution of the main task, and obtains the final result of the application. The system's features include good generality, cross-platform operation, convenient programming, and good fault tolerance and extensibility, overcoming the disadvantages of earlier volunteer computing systems.

Owner:HUAZHONG UNIV OF SCI & TECH

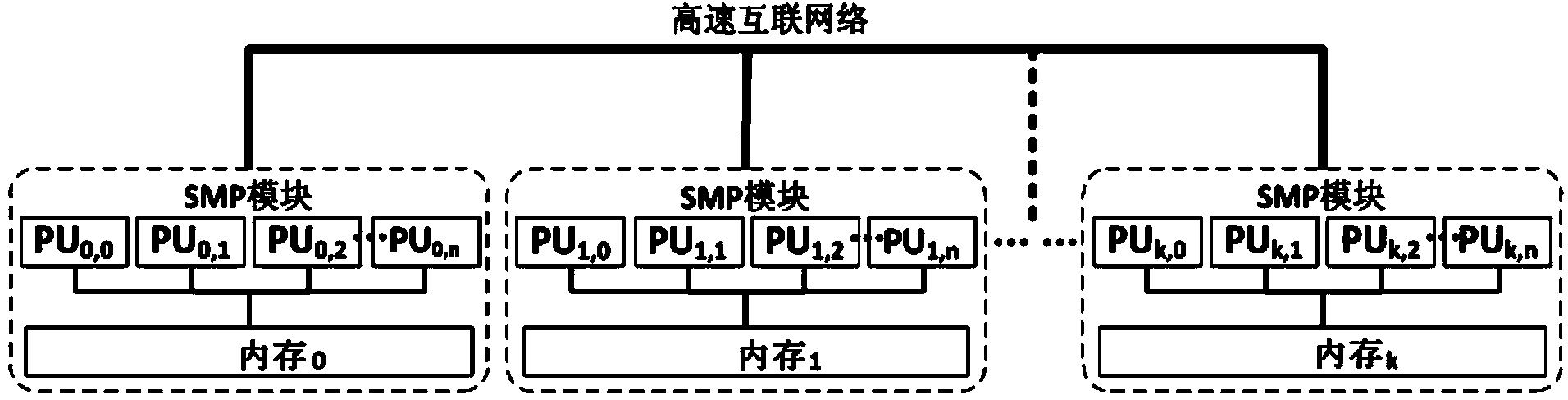

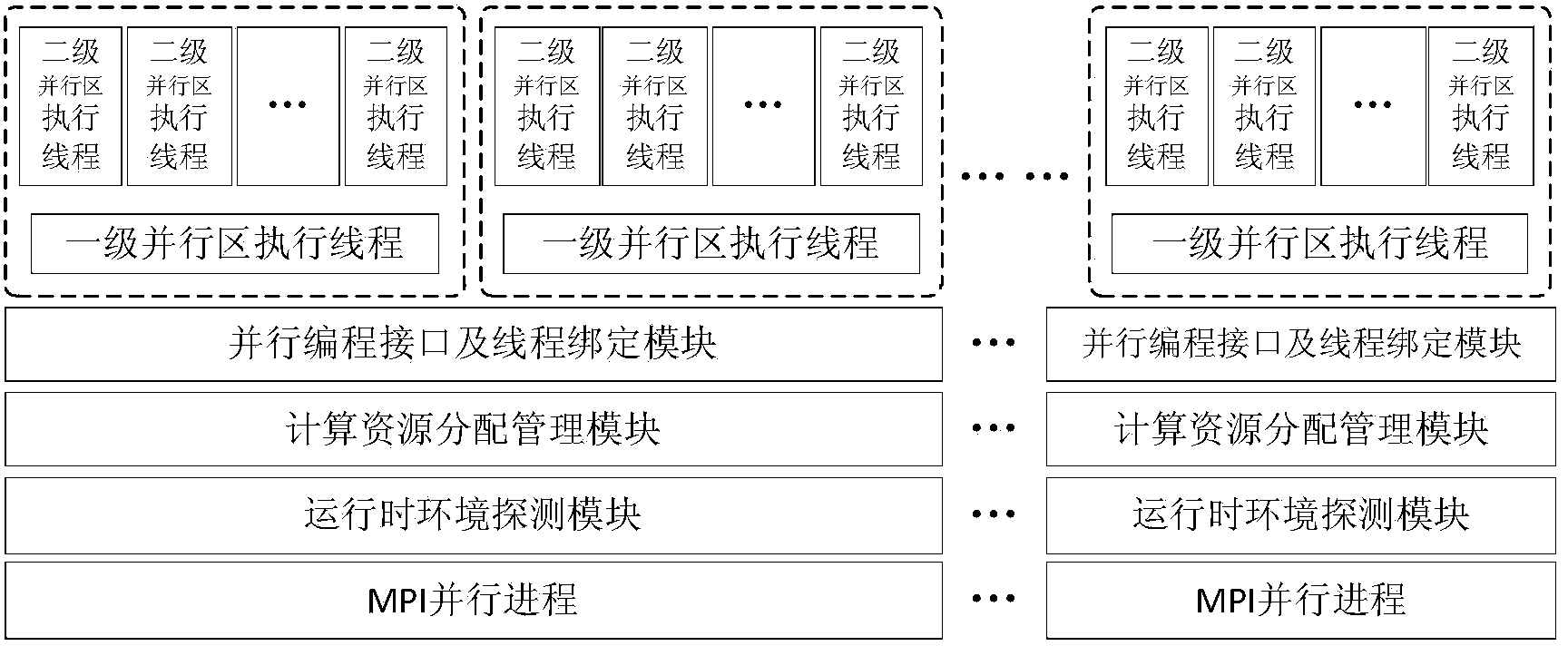

NUMA-aware thread and memory resource optimization method and system for high-performance computers

Active | CN104375899A | Solve the problem of excessive granularity of memory management, Solve fine-grained memory access requirements, Resource allocation, Computer architecture, Performance computing

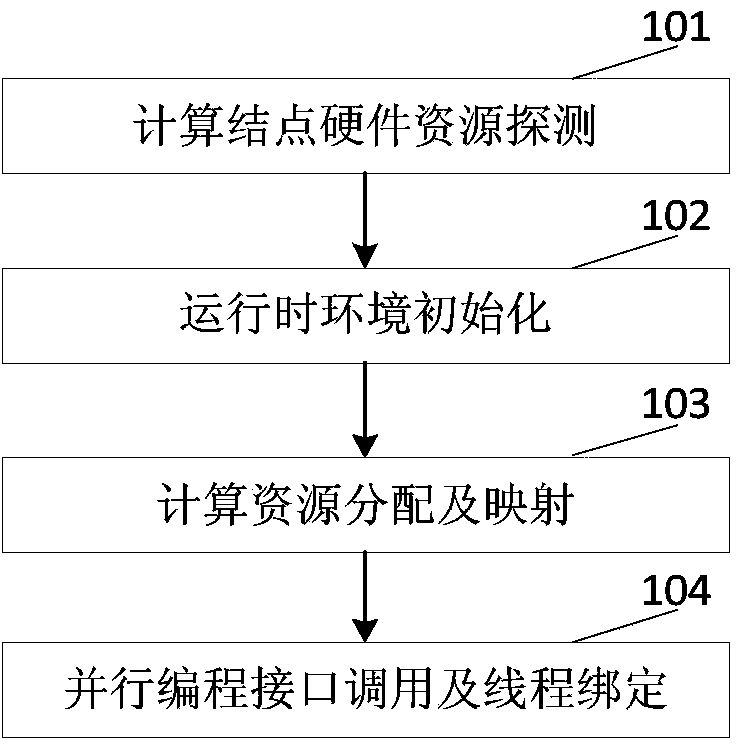

The invention discloses a NUMA-aware thread and memory resource optimization method and system for high-performance computers. The system comprises a runtime environment detection module, which detects the hardware resources of a compute node and the number of parallel processes on it; a compute resource allocation and management module, which allocates compute resources to the parallel processes and builds the mapping between the parallel processes and threads on one side and processor cores and physical memory on the other; and a parallel programming interface and thread binding module, which provides the parallel programming interface, obtains each thread's binding position mask from the mapping relations, and binds the executing thread to the corresponding CPU core. The invention further discloses a NUMA-aware multi-thread memory manager and its multi-thread memory management method. The manager comprises a DSM memory management module, which manages the SMP modules to which the MPI processes belong, and an SMP module memory pool, which manages memory allocation and release within a single SMP module. This reduces the frequency of system calls for memory operations, improves memory management performance, reduces remote memory accesses by application programs, and improves application performance.

Owner:INST OF APPLIED PHYSICS & COMPUTATIONAL MATHEMATICS
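
The thread-binding step (turning a process/thread-to-core mapping into one binding mask per thread) can be sketched in Python. The round-robin placement of processes over NUMA nodes and the `binding_masks` and `mask_to_cpus` helpers are illustrative assumptions. On Linux, the resulting CPU set could be applied with the standard `os.sched_setaffinity`, which is deliberately not called here so the sketch stays portable.

```python
def binding_masks(numa_nodes: list[list[int]], n_procs: int,
                  threads_per_proc: int) -> dict[tuple[int, int], int]:
    """Map (process, thread) -> CPU bitmask, keeping each process's threads on one NUMA node.

    numa_nodes lists the CPU-core ids of each NUMA node, as a runtime detection
    step might report them. Processes are spread round-robin over the nodes
    (an assumed policy) and each thread gets its own core on its process's node.
    """
    masks: dict[tuple[int, int], int] = {}
    next_free = [0] * len(numa_nodes)  # index of the next unused core on each node
    for p in range(n_procs):
        node = p % len(numa_nodes)
        cores = numa_nodes[node]
        if next_free[node] + threads_per_proc > len(cores):
            raise ValueError(f"NUMA node {node} has too few free cores for process {p}")
        for t in range(threads_per_proc):
            masks[(p, t)] = 1 << cores[next_free[node] + t]
        next_free[node] += threads_per_proc
    return masks


def mask_to_cpus(mask: int) -> set[int]:
    """Expand a bitmask into the set of CPU ids that os.sched_setaffinity accepts."""
    return {bit for bit in range(mask.bit_length()) if (mask >> bit) & 1}


if __name__ == "__main__":
    nodes = [[0, 1, 2, 3], [4, 5, 6, 7]]  # hypothetical two-node machine
    for (proc, thread), mask in sorted(binding_masks(nodes, 2, 2).items()):
        print(f"process {proc} thread {thread}: mask {mask:#010b} -> cpus {sorted(mask_to_cpus(mask))}")
```

Keeping all threads of a process on one node is what lets its memory be allocated from that node's local pool, in the spirit of the SMP-module memory pool described above.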

Smart card supporting high-performance computing, large-capacity storage, high-speed transmission, and new types of applications

Active | CN101004798A | Improve security, Quick exchange, Memory record carrier reading problems, Record carriers used with machines, Microcontroller, Mass storage

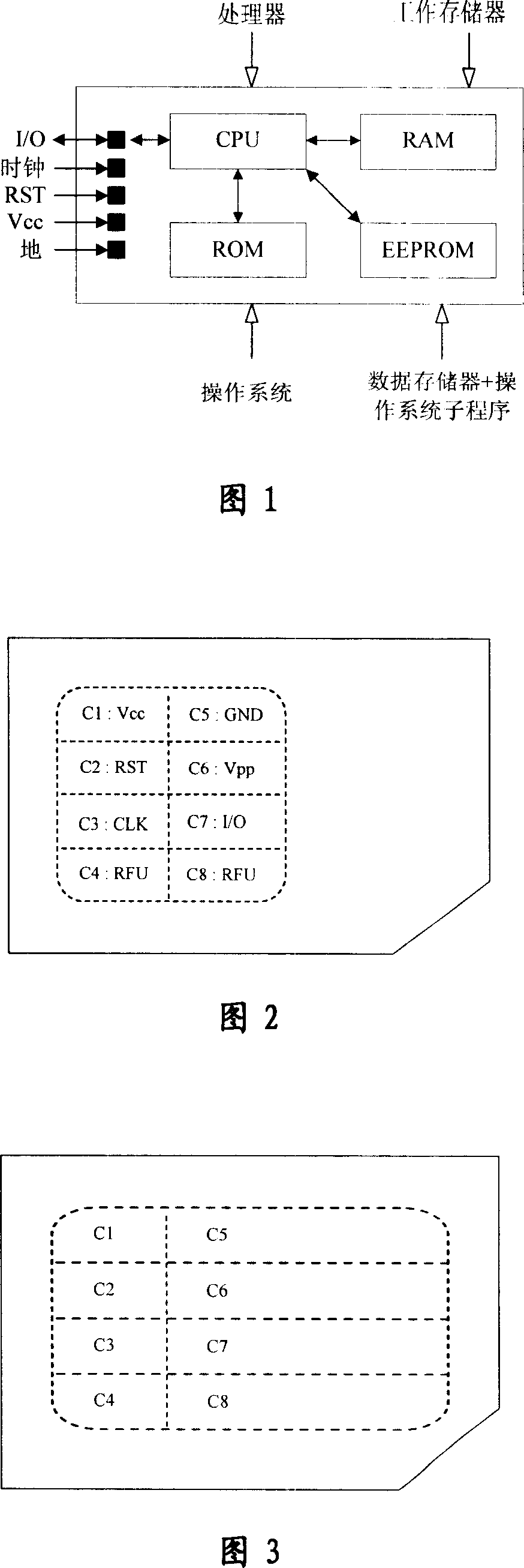

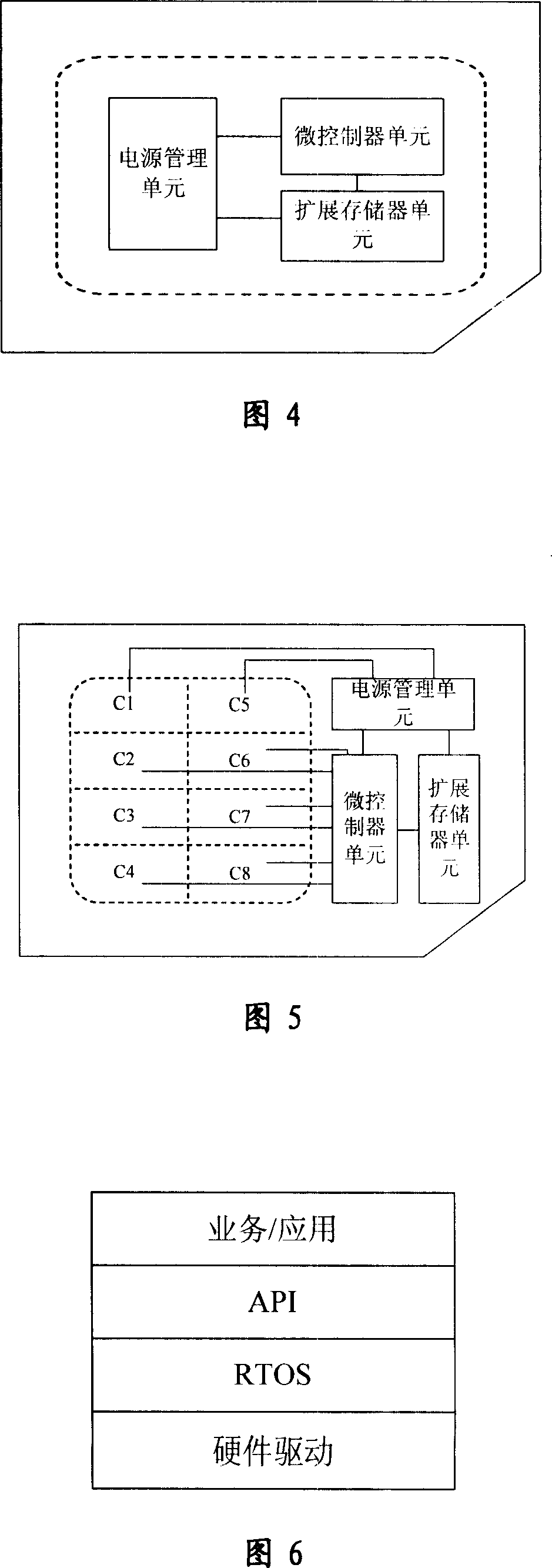

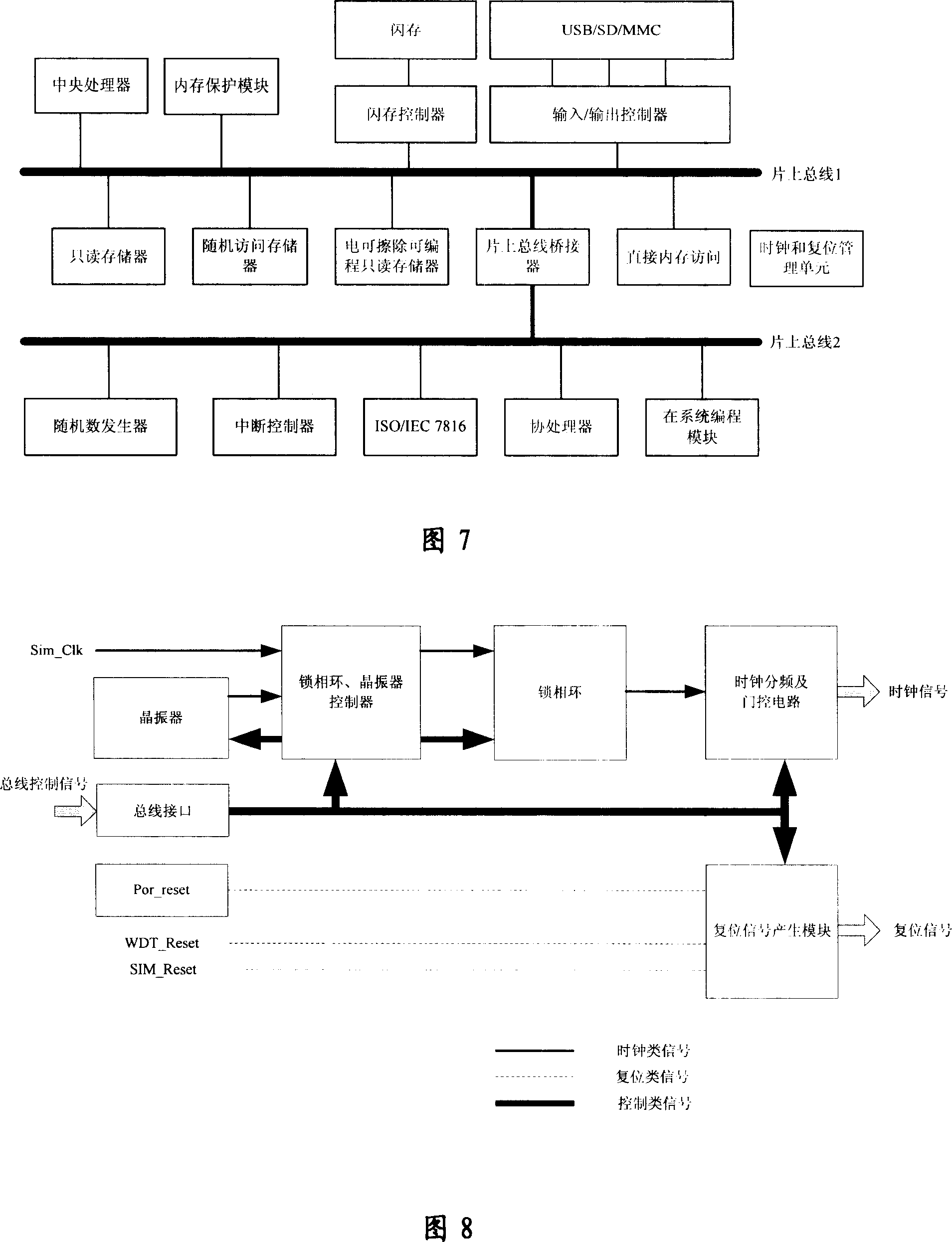

A smart card supporting high-performance computing, large-capacity storage, and high-speed transmission is constructed by connecting a power supply management unit to two of the contact pins and a microcontroller unit to the other six, following the pin assignment defined by the ISO/IEC 7816-3 protocol, and by connecting an expansion storage unit separately to the power supply management unit and to the microcontroller unit.

Owner:RDA MICROELECTRONICS SHANGHAI CO LTD

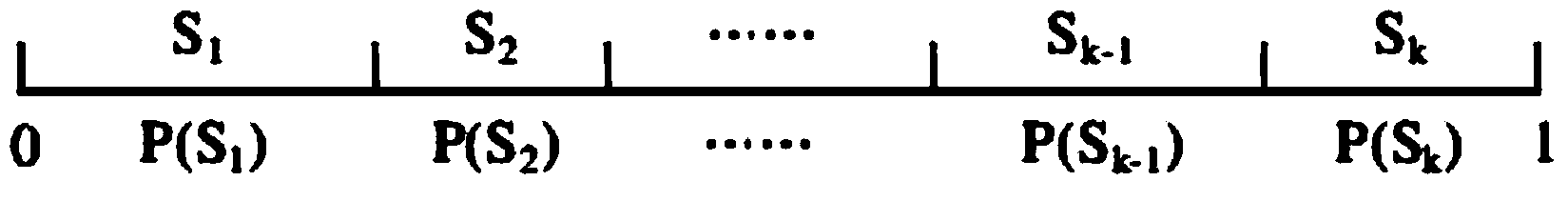

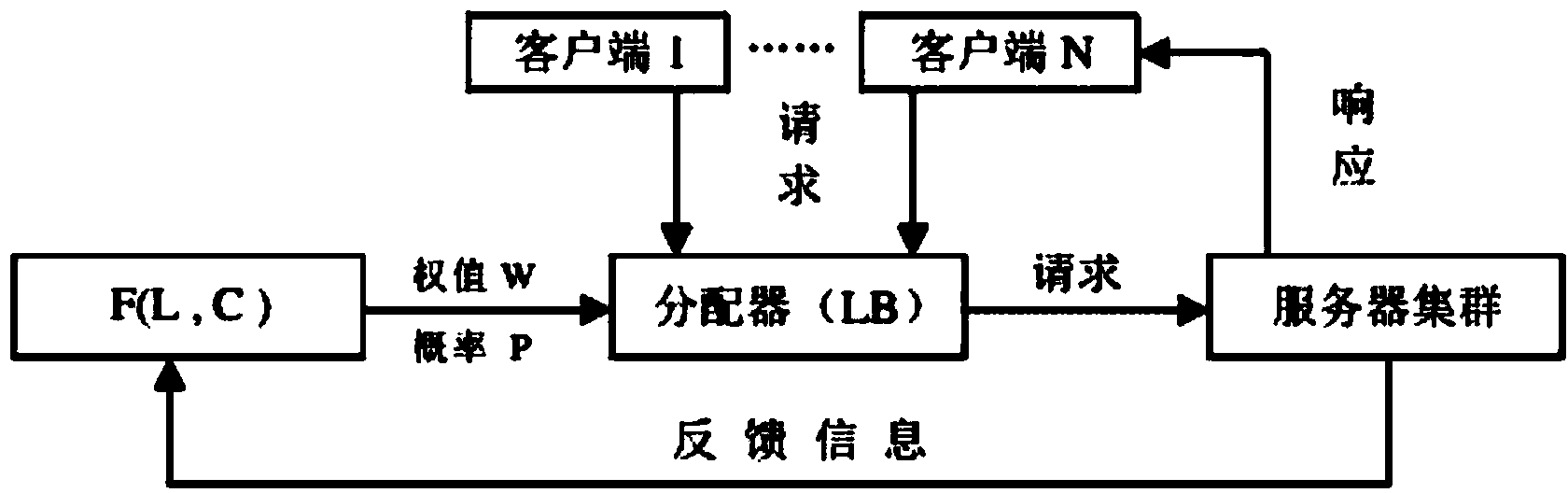

Load balance and node state monitoring method in high performance computing

Inactive | CN104168332A | Improve performance, Guaranteed stability, Data switching networks, Performance computing, Cluster systems

The invention relates to a load balancing and node state monitoring method for high-performance computing. The method aims to reduce the resources occupied in a cluster system, increase resource utilization, effectively improve server cluster performance, and provide high-quality service to users. Specifically, first, the load weight of every node is computed from the server nodes' operating state, load, and performance parameters, and a candidate node set for the next task assignment is selected. Next, the probability of assigning a task to each node in the candidate set is computed from the load differences and assignment probabilities, and new requests are assigned to the selected nodes by random probabilistic selection, addressing uneven load distribution. Finally, the loads of the nodes that received tasks are corrected with a load correction formula, improving the load balancing effect and the reliability and stability of the cluster system.

Owner:GUANGDONG POWER GRID CO LTD INFORMATION CENT
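
The three steps (load weights and candidate set, probabilistic assignment, load correction) can be sketched in Python. The weighting of CPU, memory, and network utilization, the load cutoff, the headroom-proportional probabilities, and the additive correction are all assumptions standing in for formulas the abstract does not give.

```python
import random


def load_weight(cpu: float, mem: float, net: float,
                weights: tuple[float, float, float] = (0.5, 0.3, 0.2)) -> float:
    """Combine normalized utilizations (0..1) into one load value; the weights are assumed."""
    return weights[0] * cpu + weights[1] * mem + weights[2] * net


def pick_node(loads: dict[str, float], rng: random.Random, cutoff: float = 0.9) -> str:
    """Steps 1 and 2: build the candidate set, then sample a node at random.

    Candidates are nodes whose load is below `cutoff`; each is drawn with
    probability proportional to its headroom (cutoff - load). Both rules are
    illustrative assumptions, not the patent's formulas.
    """
    candidates = {node: load for node, load in loads.items() if load < cutoff}
    if not candidates:
        raise RuntimeError("no node has spare capacity")
    nodes = list(candidates)
    headroom = [cutoff - candidates[n] for n in nodes]
    return rng.choices(nodes, weights=headroom)[0]


def correct_load(loads: dict[str, float], node: str, task_cost: float) -> None:
    """Step 3: provisionally raise the chosen node's load until its next monitoring report."""
    loads[node] += task_cost


if __name__ == "__main__":
    # Hypothetical monitoring snapshot (cpu, mem, net utilization per node)
    loads = {
        "node-a": load_weight(0.2, 0.3, 0.1),
        "node-b": load_weight(0.95, 0.9, 0.9),  # above the cutoff, so never chosen
        "node-c": load_weight(0.6, 0.5, 0.4),
    }
    rng = random.Random(42)
    for _ in range(5):
        node = pick_node(loads, rng)
        correct_load(loads, node, task_cost=0.05)
        print(node, round(loads[node], 3))
```

Random selection weighted by headroom, rather than always picking the least-loaded node, keeps a burst of requests from piling onto a single node between monitoring reports.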

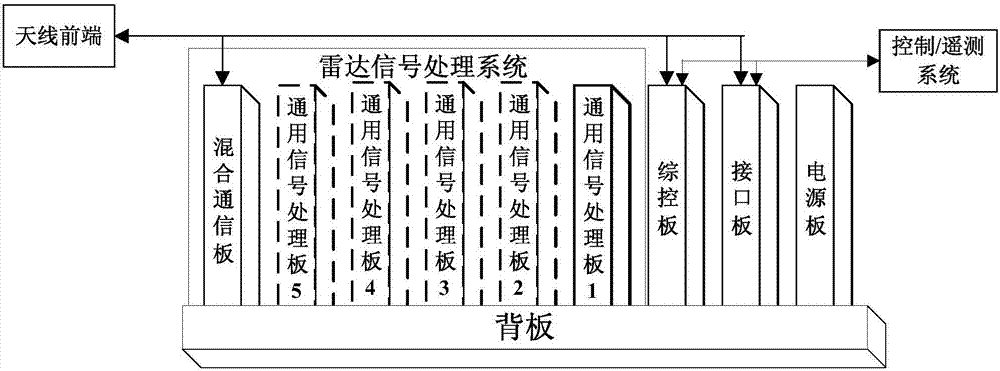

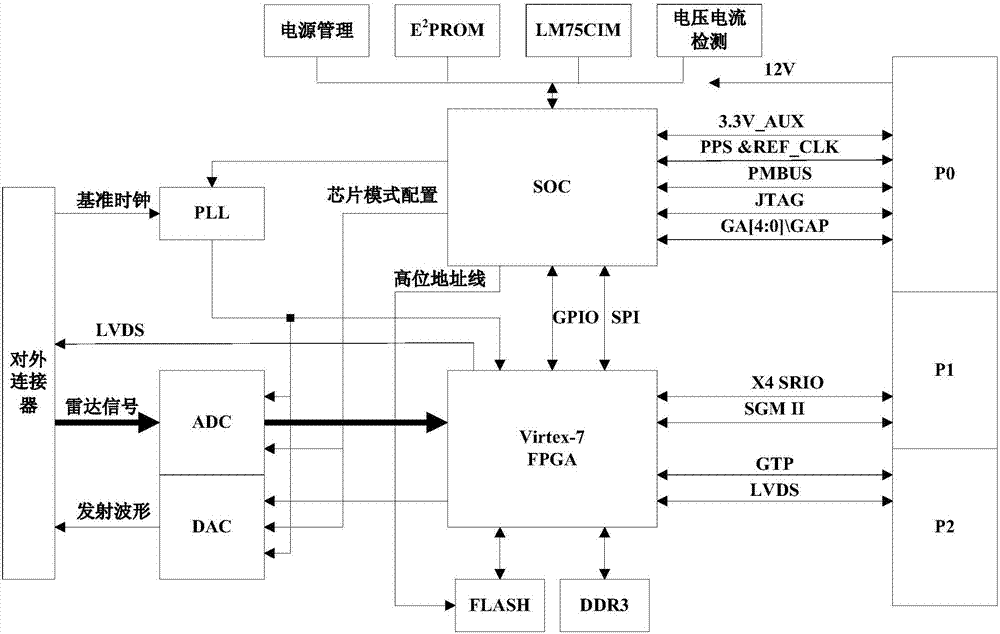

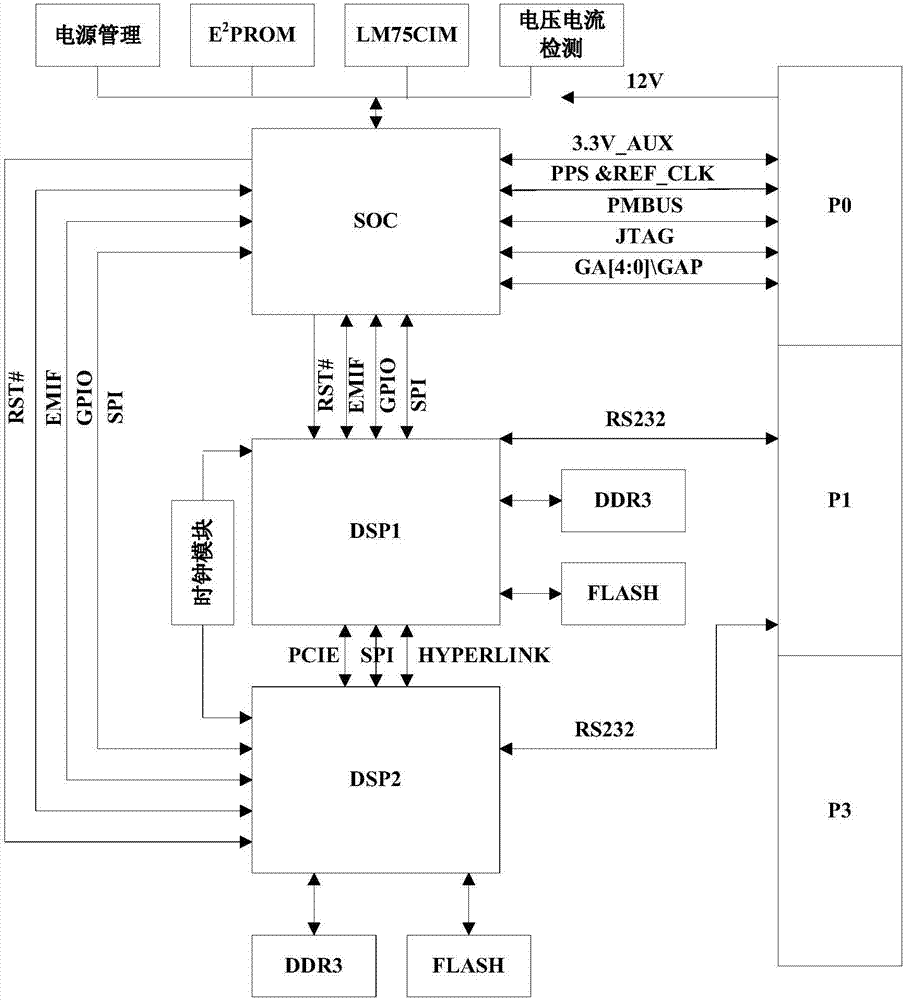

VPX-platform-based radar signal processing system and application software design method

Active | CN107167773A | Abnormal problem found, Wave based measurement systems, Extensibility, Performance computing

The invention discloses a VPX-platform-based radar signal processing system and an application software design method. The processing system, which runs on a VPX processor, comprises a hybrid communication board and a universal signal processing board. The hybrid communication board integrates multi-channel AD/DA and a Virtex-7 FPGA processor and is used for frequency-modulated waveform control, signal acquisition and the corresponding front-end preprocessing, and radar timing control. The universal signal processing board integrates multiple multi-core DSP6678 processors and provides high-performance computing, with its high processing capability coming from a parallel system formed by multiple processors. The invention builds a highly universal and extensible radar signal processing system on a VPX-architecture universal ruggedized computer. The system is small, structurally robust, and dissipates heat well, so radar signal processing becomes modular, standardized, and universal, enabling functional integration of the system across various radar modes and application scenarios.

Owner:THE GENERAL DESIGNING INST OF HUBEI SPACE TECH ACAD

Remote high-performance computing material joining and material forming modeling system and method

Inactive | US8301286B2 | Arc welding apparatus, Design optimisation/simulation, User input, Performance computing

A remote high-performance computing material joining and material forming modeling system (100) enables a remote user (10) using a user input device (20) to use high speed computing capabilities to model materials joining and material forming processes. The modeling system (100) includes an interface module (200), an application module (300), a computing module (400), and a scheduling module (500). The interface module (200) is in operative communication with the user input device (20), as well as the application module (300), the computing module (400), and the scheduling module (500). The application module (300) is in operative communication with the interface module (200) and the computing module (400). The scheduling module (500) is in operative communication with the interface module (200) and the computing module (400). Lastly, the computing module (400) is in operative communication with the interface module (200), the application module (300), and the scheduling module (500).

Owner:EDISON WELDING INSTITUTE INC

© 2025 PatSnap. All rights reserved.