706 results about "Memory pool" patented technology

Memory pools, also called fixed-size block allocation, use pools for memory management and allow dynamic memory allocation comparable to malloc or C++'s operator new. Because those general-purpose implementations suffer from fragmentation caused by variable block sizes, they are not recommended for real-time systems, where allocation performance matters. A more efficient solution is to preallocate a number of memory blocks of the same size, called the memory pool. The application can then allocate, access, and free blocks, represented by handles, at run time.
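
The idea of preallocated, equally sized blocks addressed by handles can be sketched as follows. This is a minimal illustration, not a reference implementation: the class name `FixedBlockPool`, its methods, and the choice of a block index as the handle are all assumptions made for the example.

```python
class FixedBlockPool:
    """Pool of equally sized blocks carved out of one preallocated buffer."""

    def __init__(self, block_size: int, block_count: int) -> None:
        self.block_size = block_size
        # The whole pool is allocated once, up front.
        self.buffer = bytearray(block_size * block_count)
        # Stack of free block indices; an index doubles as the handle.
        self.free_handles = list(range(block_count - 1, -1, -1))
        self.in_use = set()

    def allocate(self) -> int:
        """Return a handle in constant time; raise if the pool is exhausted."""
        if not self.free_handles:
            raise MemoryError("pool exhausted")
        handle = self.free_handles.pop()
        self.in_use.add(handle)
        return handle

    def access(self, handle: int) -> memoryview:
        """Return a writable view of the block behind a live handle."""
        if handle not in self.in_use:
            raise ValueError("invalid or freed handle")
        start = handle * self.block_size
        return memoryview(self.buffer)[start:start + self.block_size]

    def free(self, handle: int) -> None:
        """Return a block to the pool; reject double frees."""
        if handle not in self.in_use:
            raise ValueError("double free or invalid handle")
        self.in_use.remove(handle)
        self.free_handles.append(handle)
```

Because every block has the same size, any free block satisfies any request, so allocation never has to search for a fit and the pool cannot fragment.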

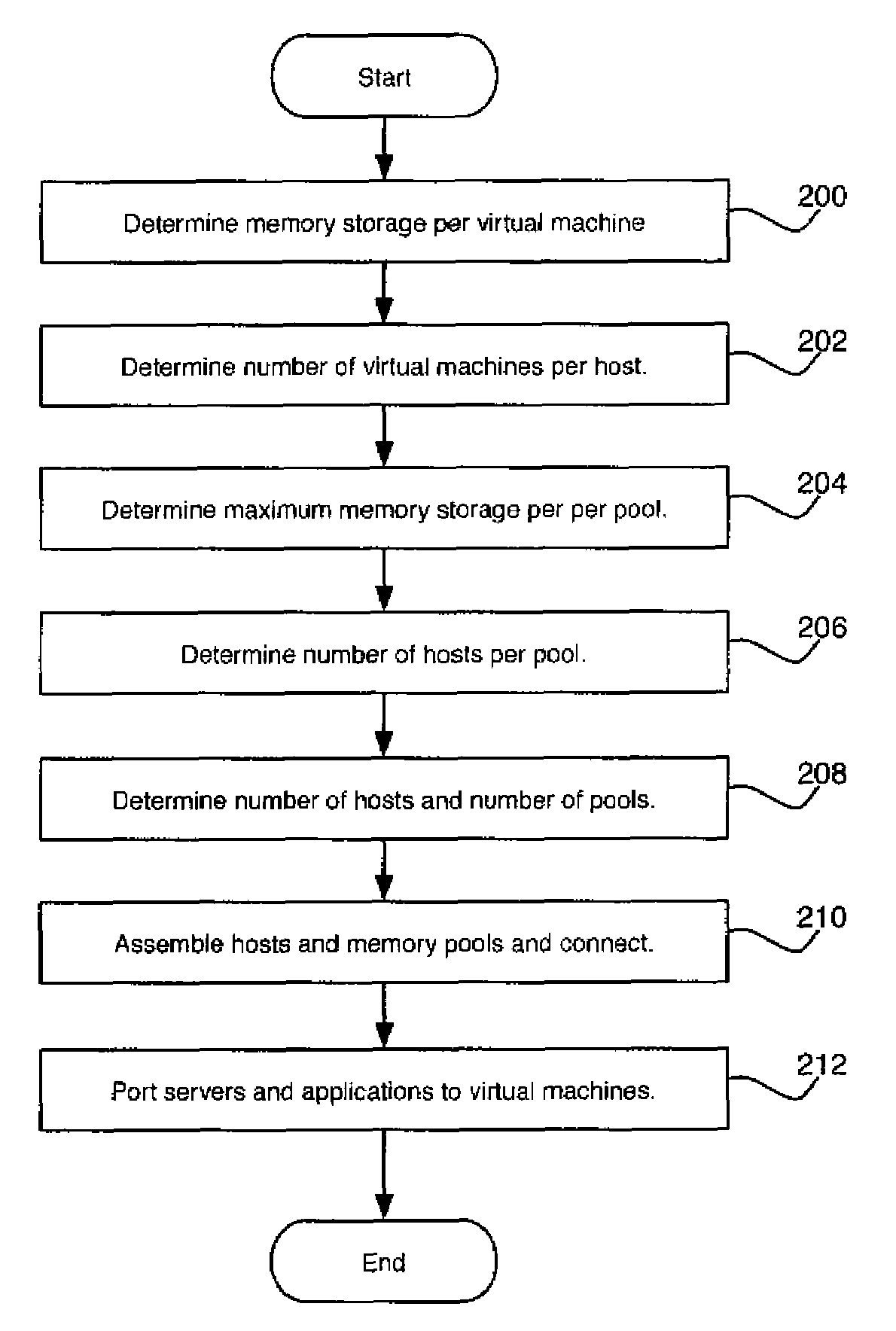

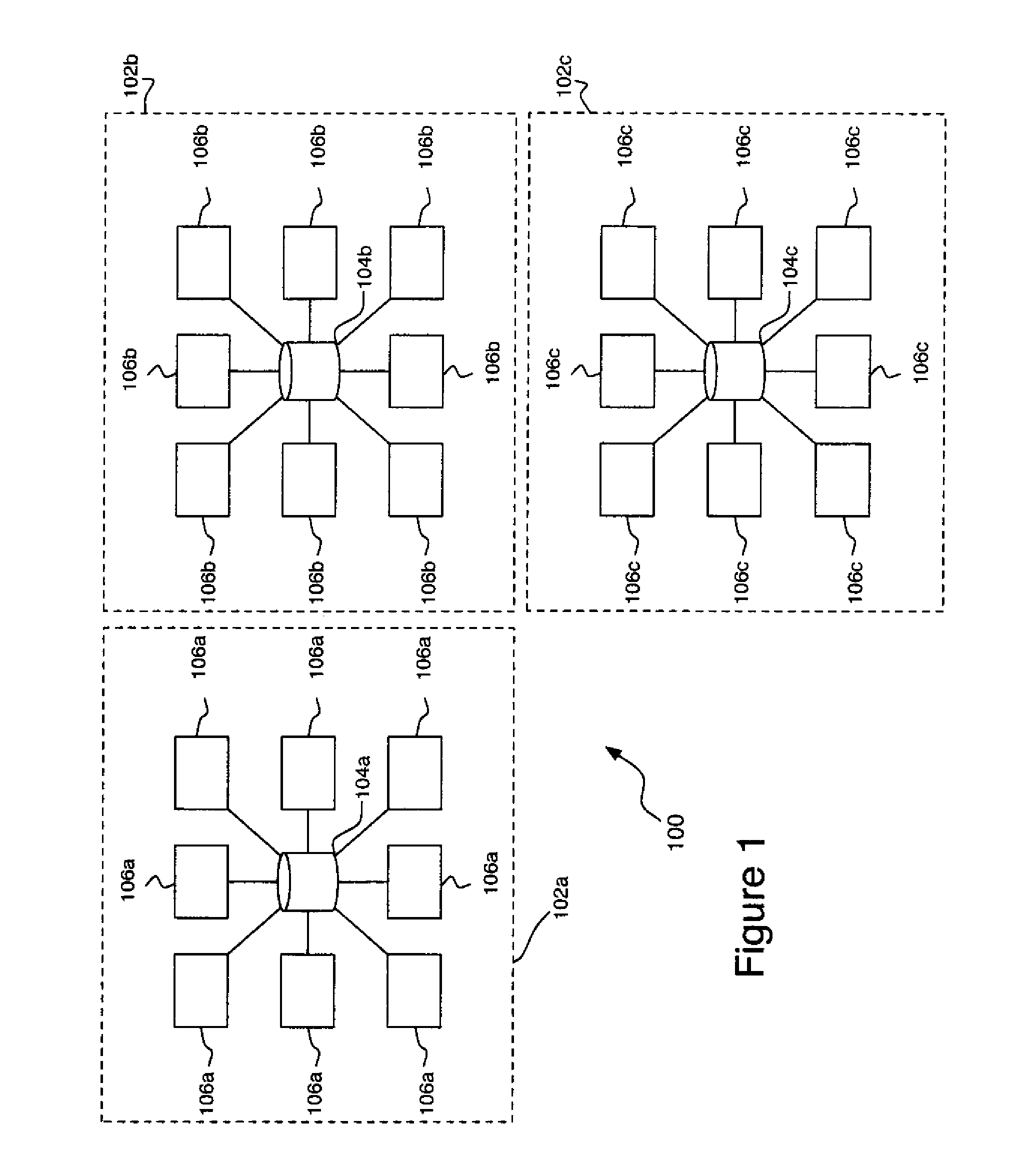

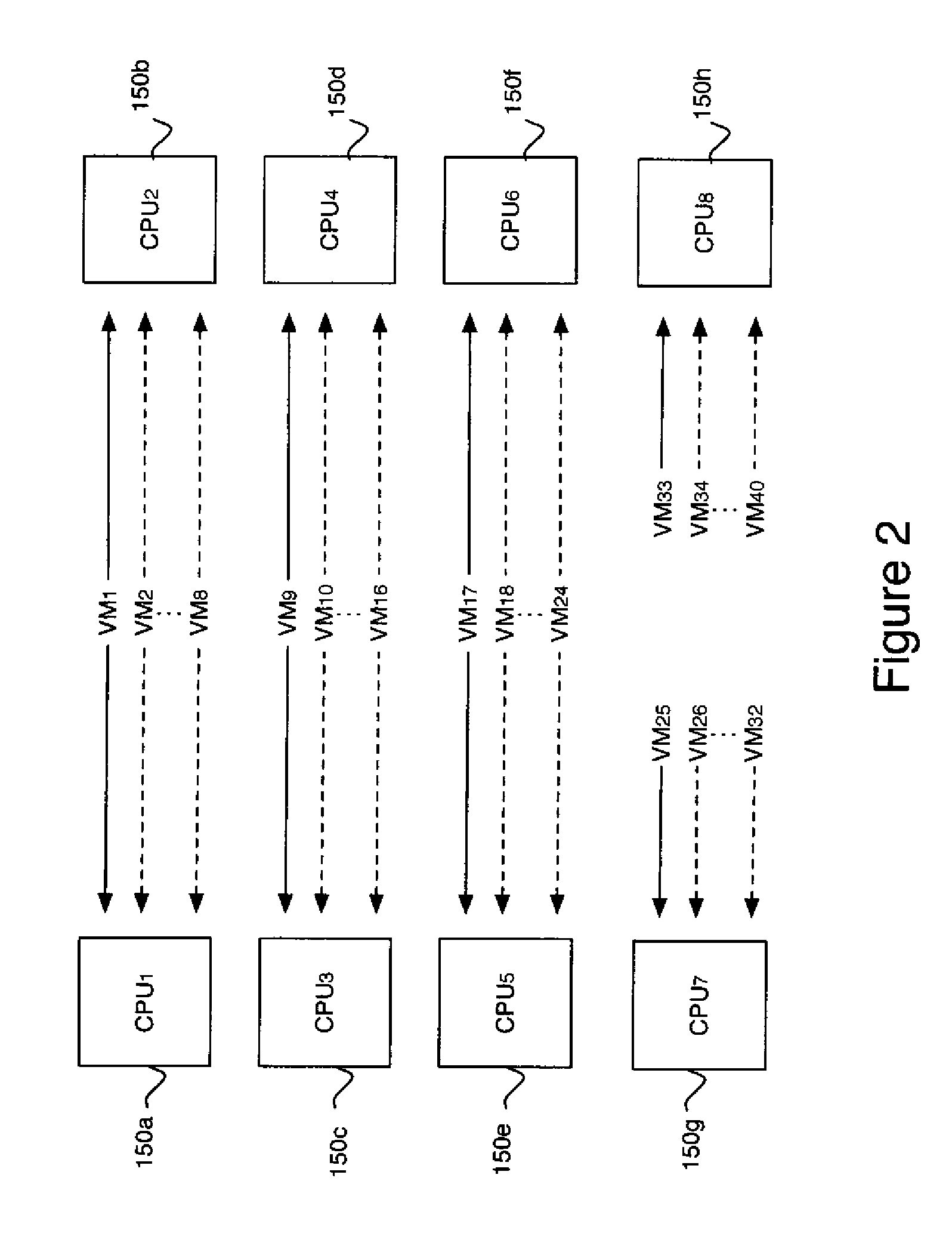

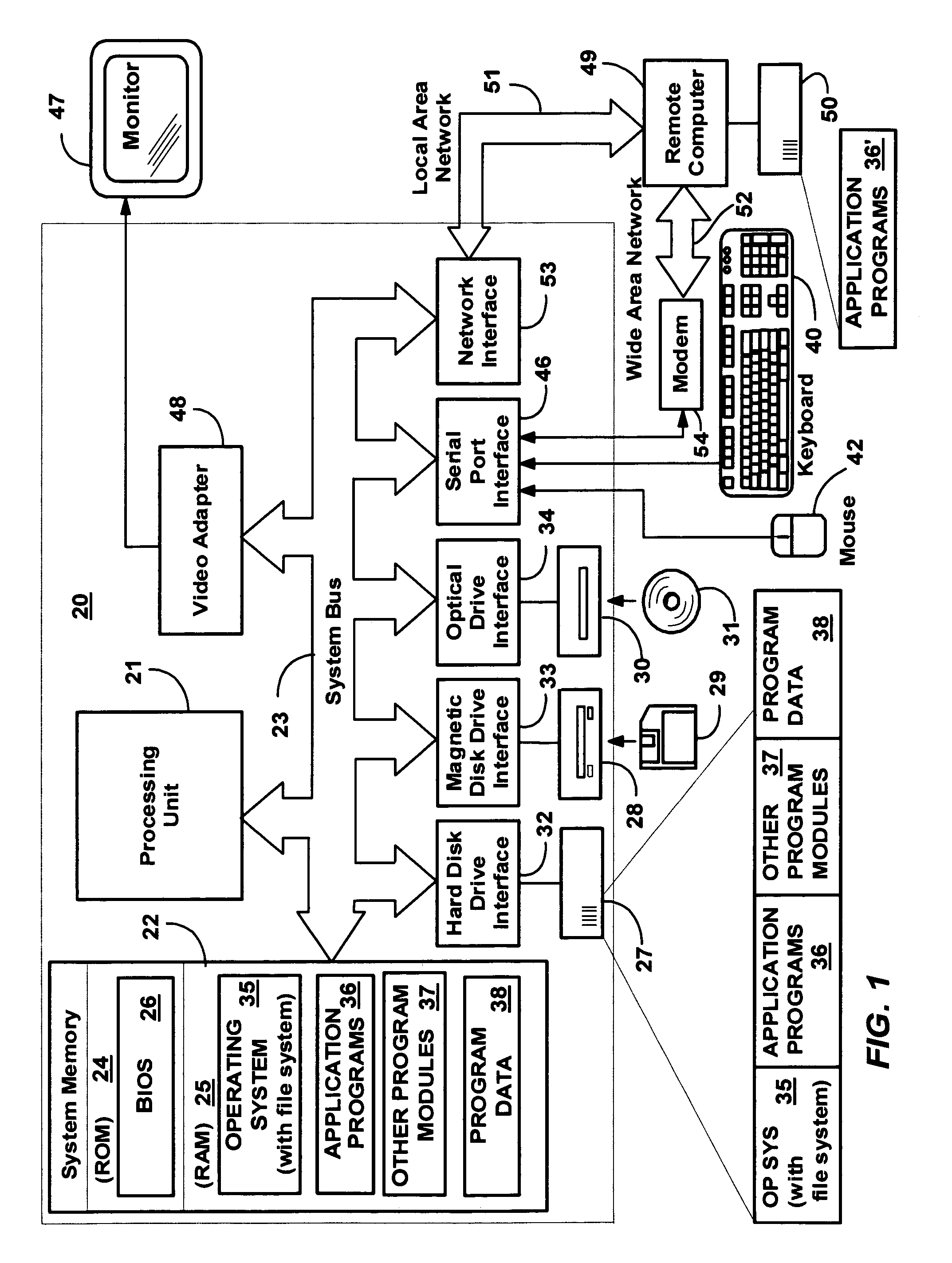

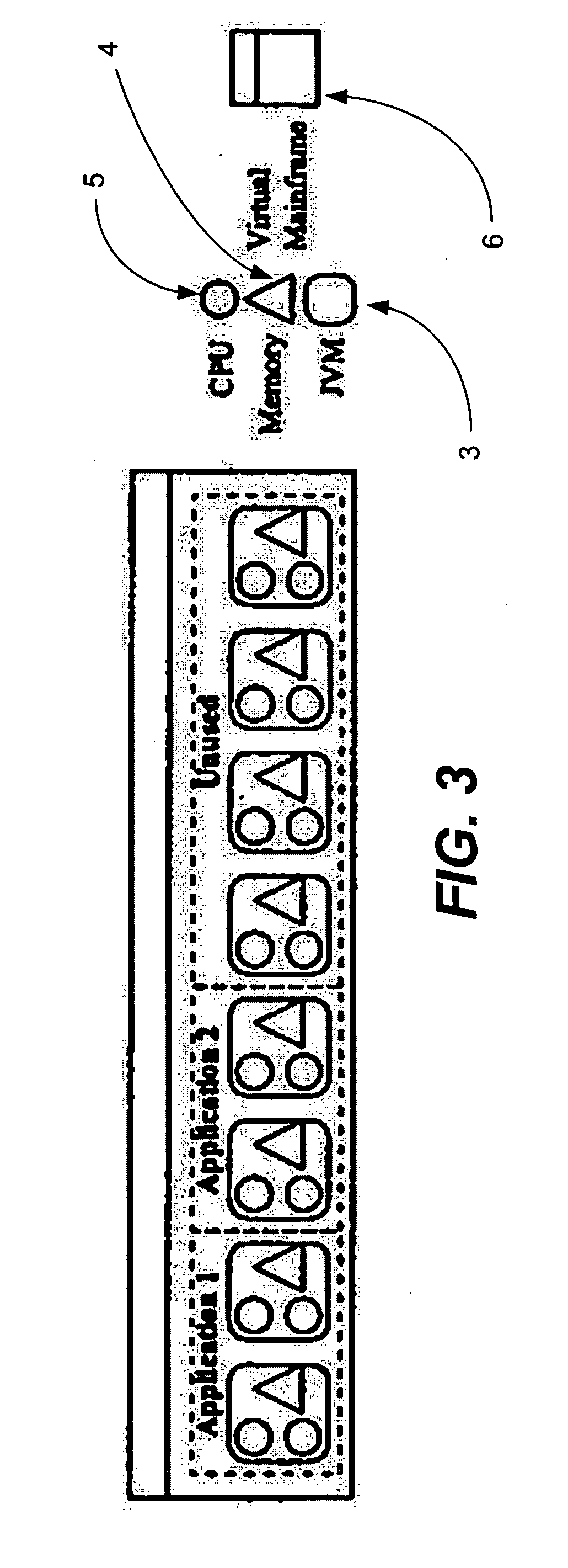

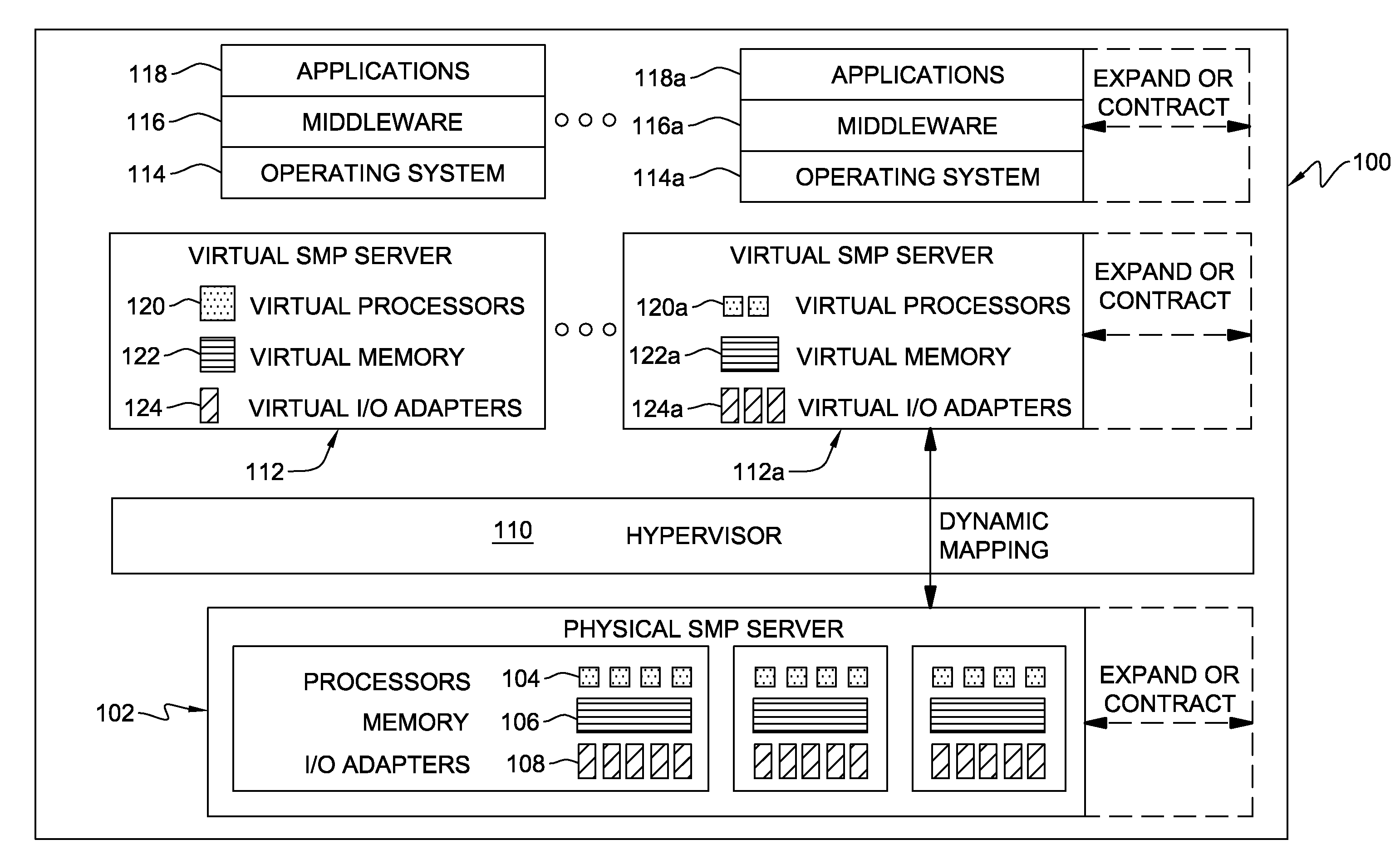

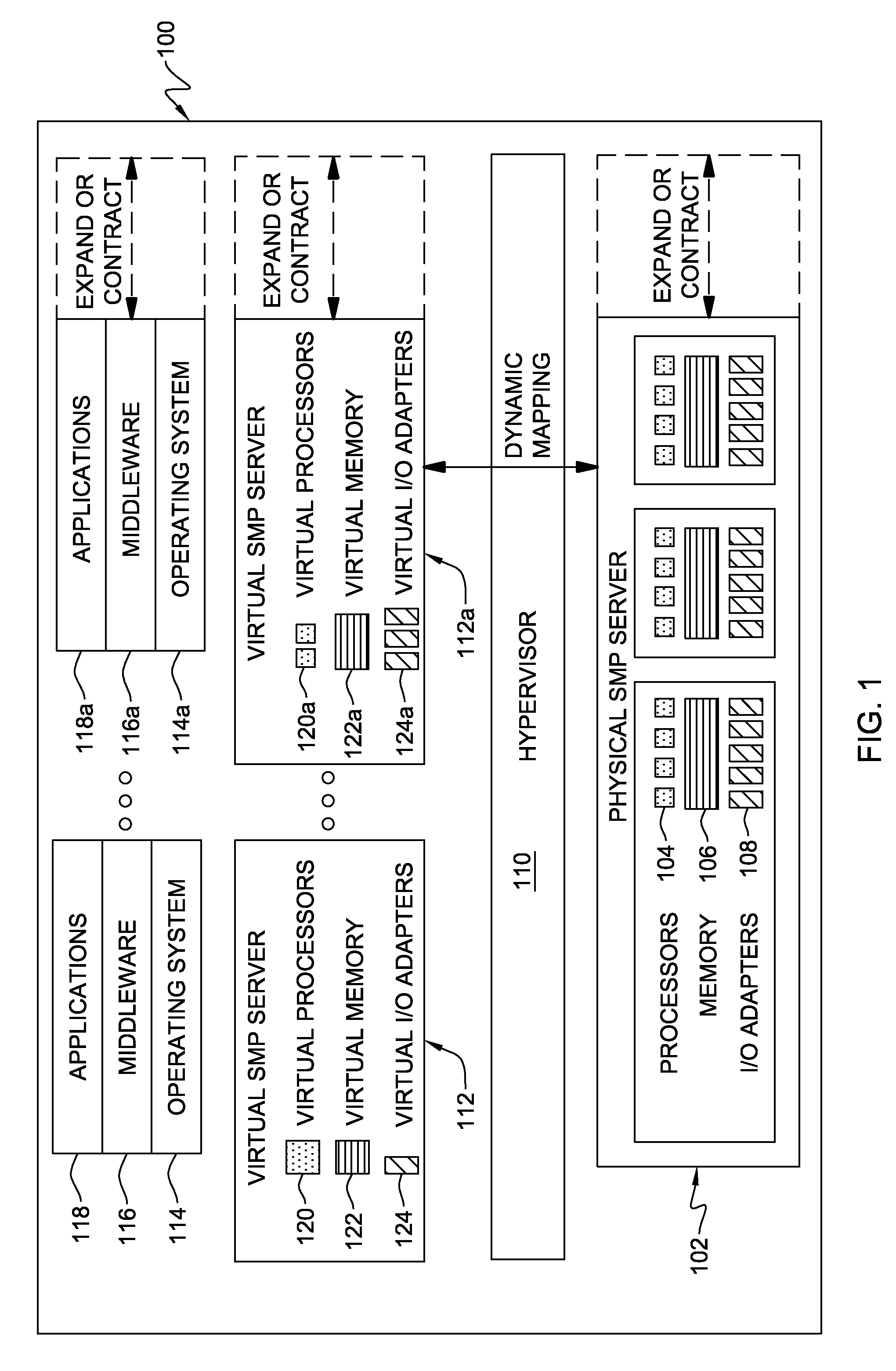

Virtual machine use and optimization of hardware configurations

Active; US7480773B1; Energy efficient ICT; Multiprogramming arrangements; Configuration optimization; Computerized system

A method of building a computer system is provided. The method comprises determining an average memory storage per virtual machine, determining an average number of virtual machines per host computer, and determining an amount of memory storage per memory pool. The method also comprises determining a maximum number of host computers per memory pool based on the average memory storage per virtual machine, the average number of virtual machines per host computer, and the amount of memory storage per memory pool. The method also includes assembling the appropriate number of host computers and memory storage, organized around memory pools, to handle a specific number of virtual machines.

Owner:T MOBILE INNOVATIONS LLC
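
The abstract names the three inputs to the sizing calculation but not the formula. One plausible reading, shown here purely as an assumption, is that each host needs the average memory per virtual machine multiplied by the average number of virtual machines per host, and that a pool can serve as many hosts as fit into its memory. The function and parameter names are hypothetical.

```python
def max_hosts_per_pool(avg_mem_per_vm_gb: int,
                       avg_vms_per_host: int,
                       mem_per_pool_gb: int) -> int:
    """Assumed formula: hosts per pool = pool memory // memory needed per host."""
    mem_per_host_gb = avg_mem_per_vm_gb * avg_vms_per_host
    return mem_per_pool_gb // mem_per_host_gb


def pools_needed(total_vms: int, avg_vms_per_host: int, hosts_per_pool: int) -> int:
    """Number of memory pools required to host a given number of virtual machines."""
    hosts = -(-total_vms // avg_vms_per_host)   # ceiling division
    return -(-hosts // hosts_per_pool)
```

For example, with 4 GB per virtual machine, 20 virtual machines per host, and 1024 GB per pool, each host needs 80 GB, so a pool serves at most 12 hosts under this reading.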

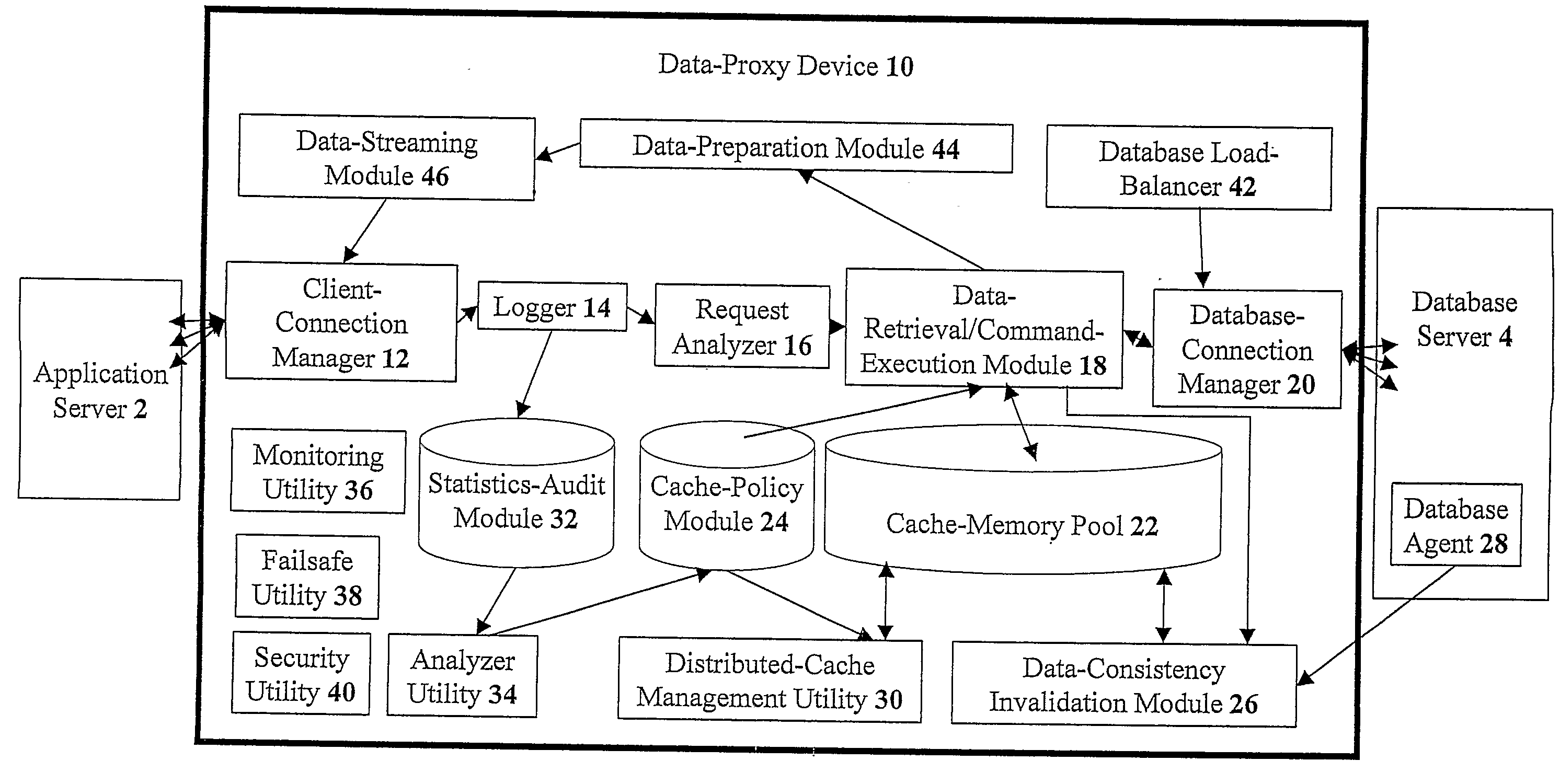

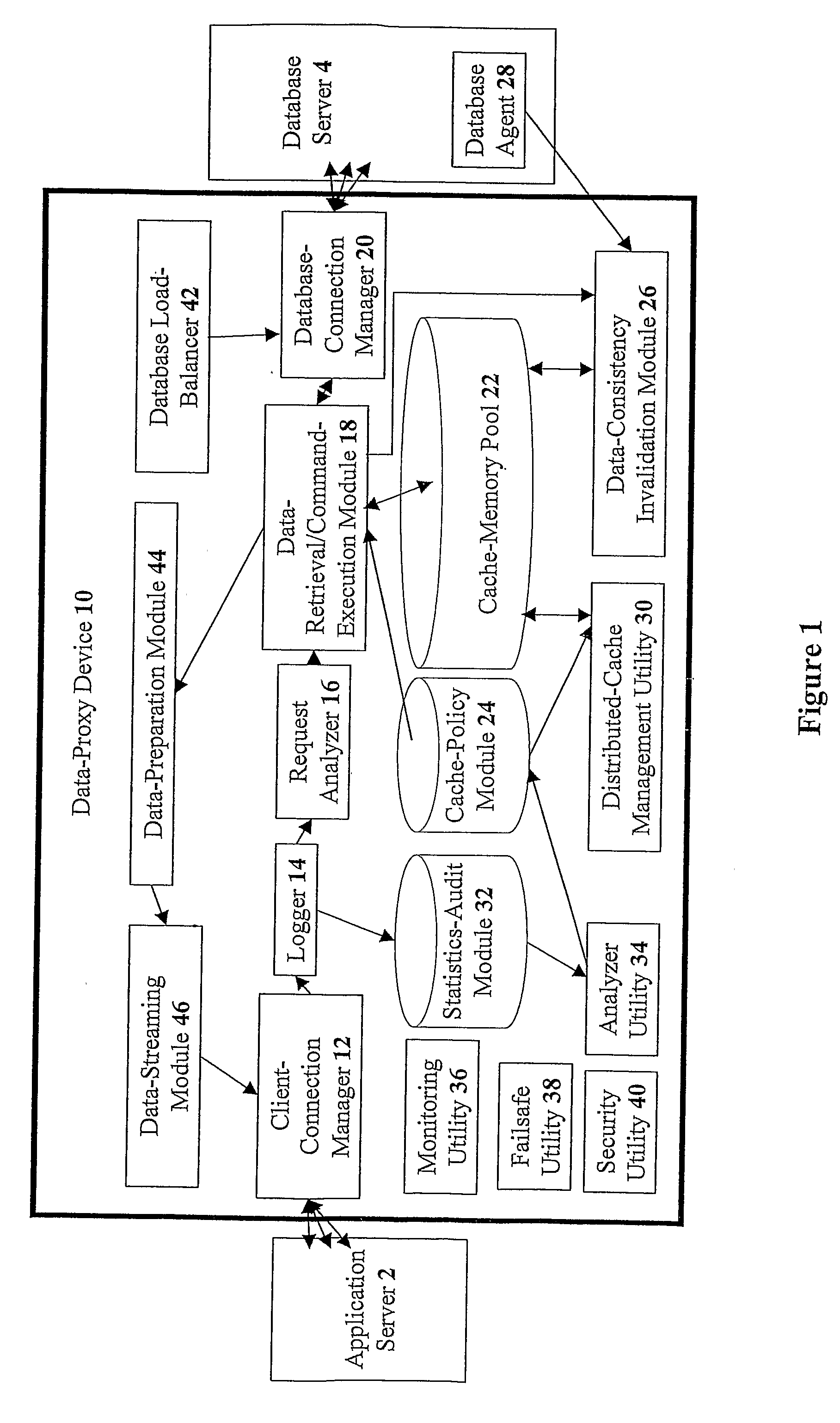

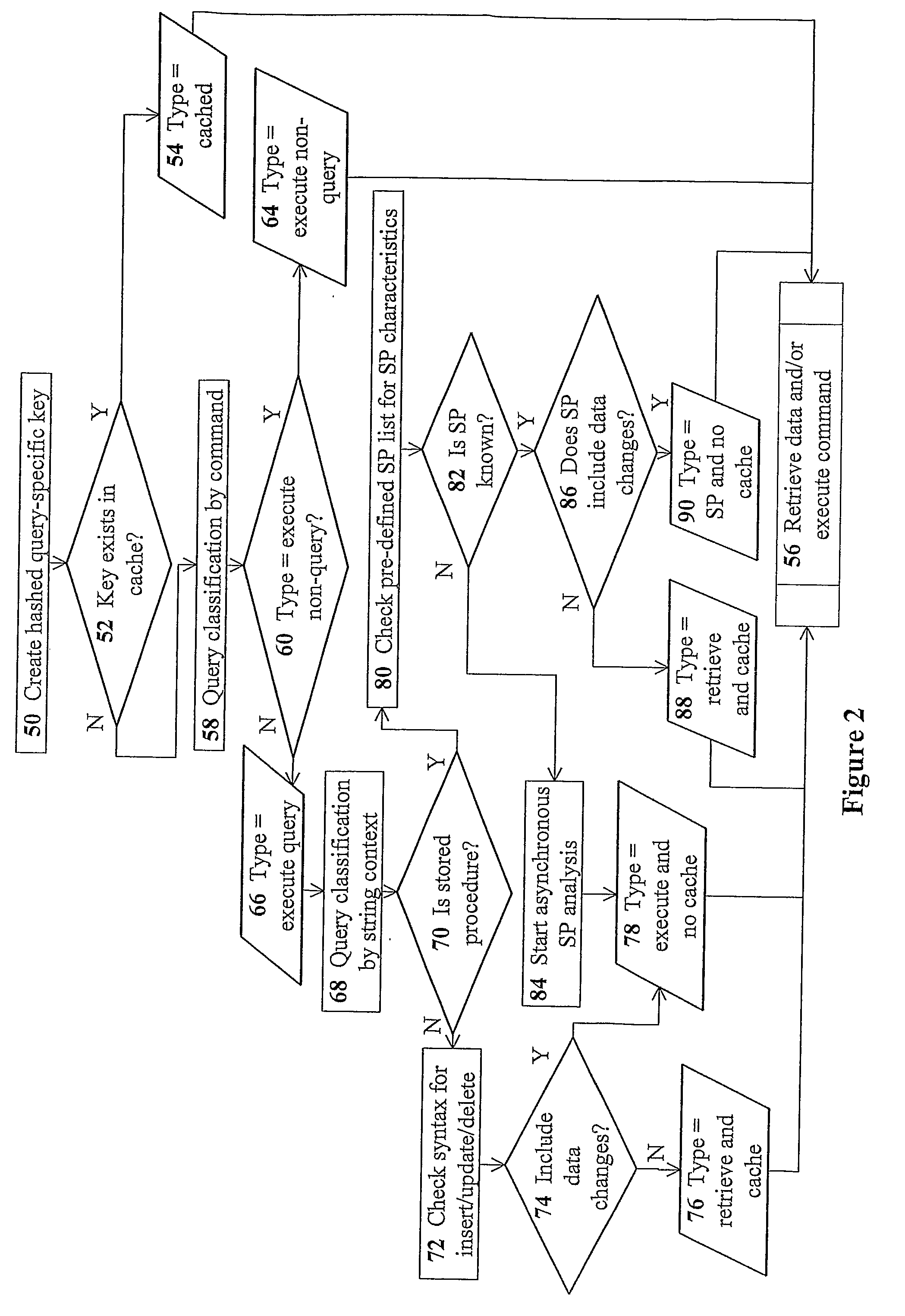

Devices for providing distributable middleware data proxy between application servers and database servers

Inactive; US20100174939A1; Shorten the time; Save resources; Digital data information retrieval; Resource allocation; Client data; Term memory

The present invention discloses devices including: a transparent client-connection manager for exchanging client data between an application server and the device; a request analyzer for analyzing query requests from at least one application server; a data-retrieval/command-execution module for executing query requests; a database connection manager for exchanging database data between at least one database server and the device; a cache-memory pool for storing data items from at least one database server; a cache-policy module for determining cache criteria for storing the data items in the cache-memory pool; and a data-consistency invalidation module for determining, based on invalidation criteria, which invalidated data items to remove from the cache-memory pool. The cache-memory pool is configured to utilize memory modules residing in data proxy devices and a distributed cache management utility, enabling the memory capacity to be used as a cluster to balance workloads.

Owner:DCF TECH

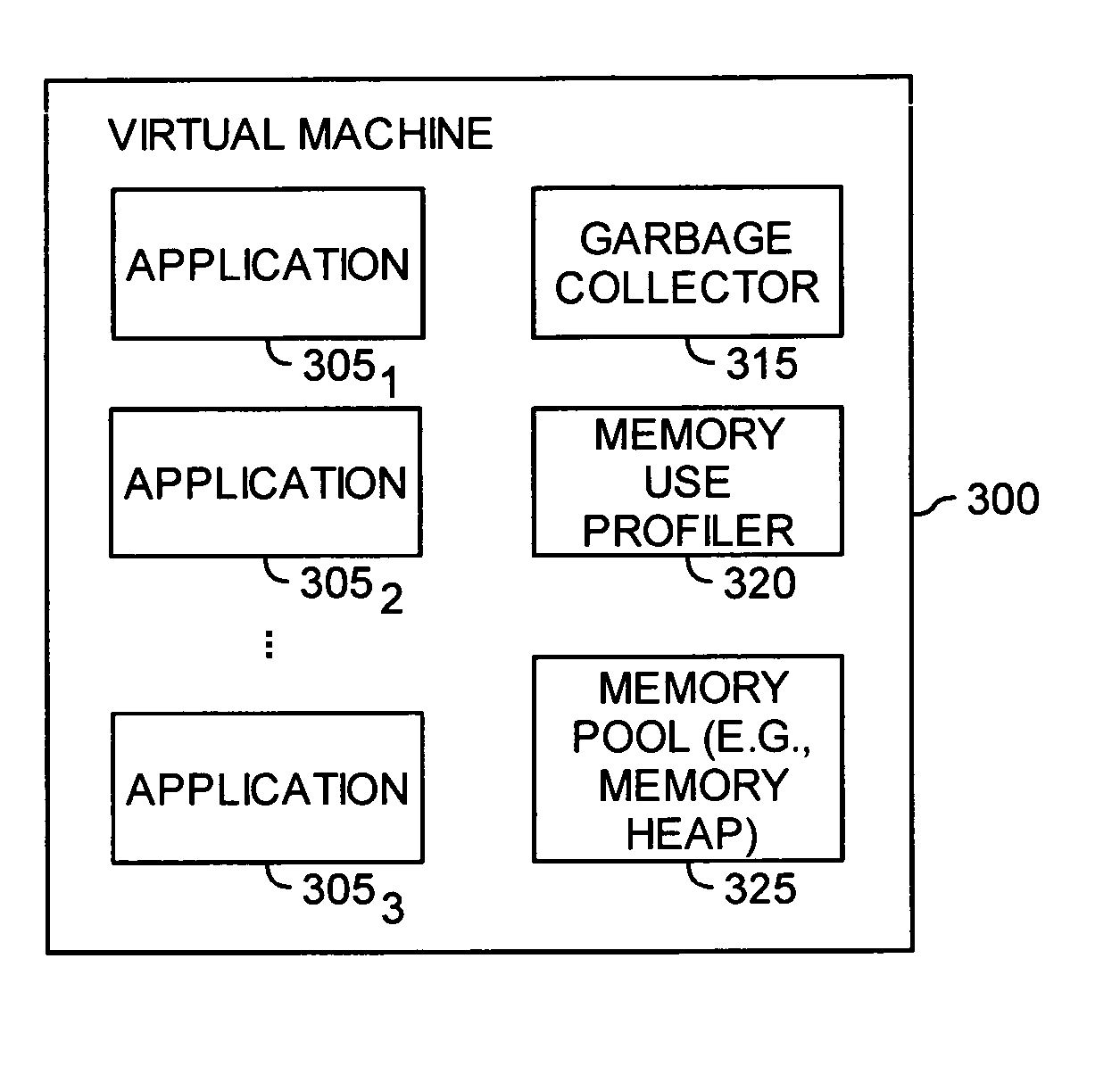

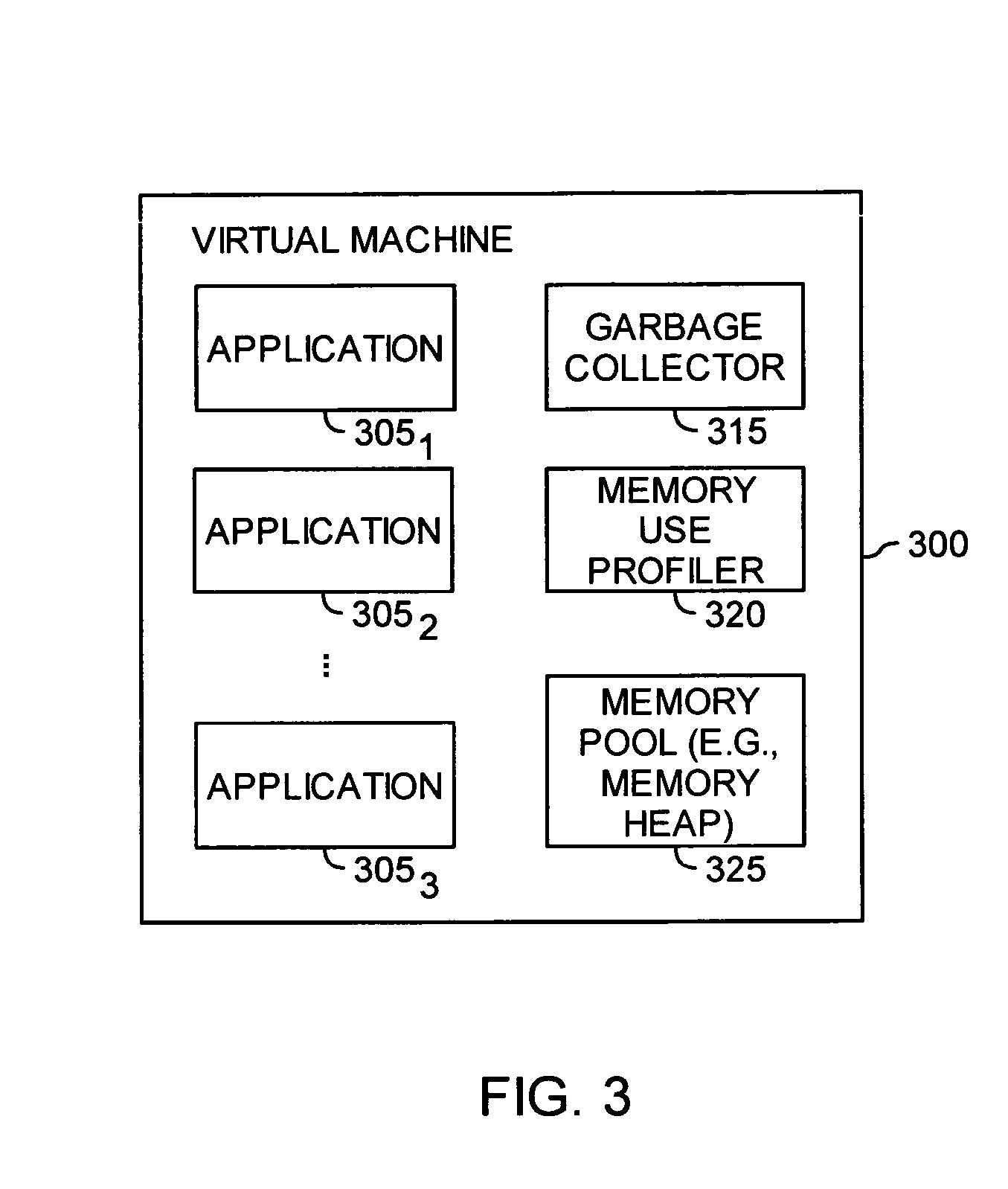

Automatic prediction of future out of memory exceptions in a garbage collected virtual machine

Inactive; US20070136402A1; Special data processing applications; Memory systems; Out of memory; Parallel computing

A method, article of manufacture and apparatus for automatically predicting out of memory exceptions in garbage collected environments are disclosed. One embodiment provides a method of predicting out of memory events that includes monitoring an amount of memory available from a memory pool during a plurality of garbage collection cycles. A memory usage profile may be generated on the basis of the monitored amount of memory available, and then used to predict whether an out of memory exception is likely to occur.

Owner:IBM CORP
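
The abstract does not say how the memory usage profile is turned into a prediction. As a rough sketch under that caveat, the example below assumes the profile is a list of free-memory readings taken after each garbage collection cycle and fits a straight line to them by least squares; the linear model and the function name are assumptions, not the patented method.

```python
def cycles_until_exhaustion(free_after_gc):
    """Estimate how many more GC cycles remain before free memory reaches zero.

    Returns None when there are too few samples or free memory is not shrinking.
    """
    n = len(free_after_gc)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(free_after_gc) / n
    var_x = sum((x - mean_x) ** 2 for x in range(n))
    slope = sum((x - mean_x) * (y - mean_y)
                for x, y in enumerate(free_after_gc)) / var_x
    if slope >= 0:
        return None                      # no downward trend, no predicted exhaustion
    intercept = mean_y - slope * mean_x
    zero_at = -intercept / slope         # cycle index where the fitted line hits zero
    return max(0.0, zero_at - (n - 1))   # cycles remaining after the latest sample
```

A caller could compare the returned value with a warning threshold to decide whether an out of memory exception is likely soon.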

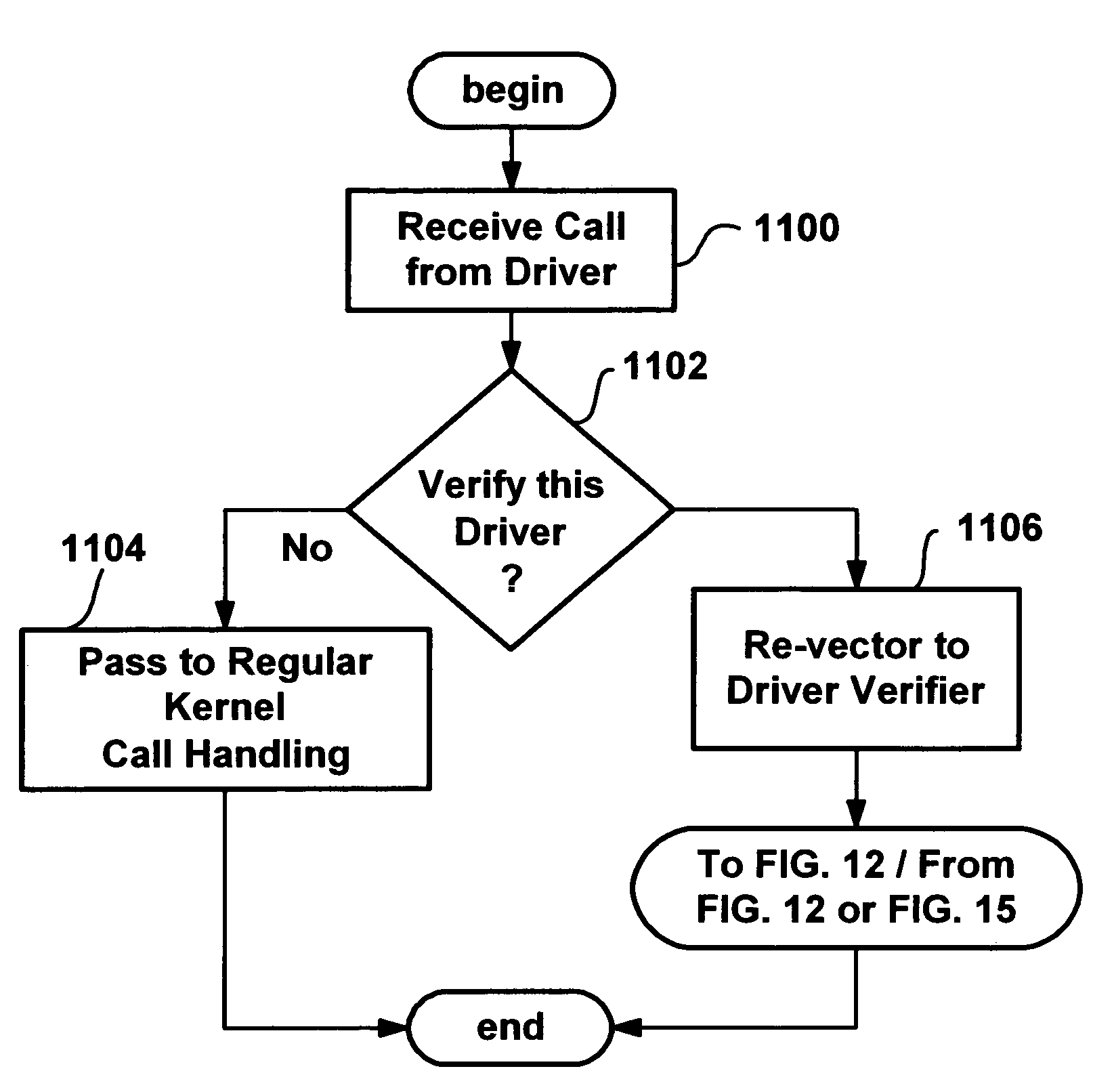

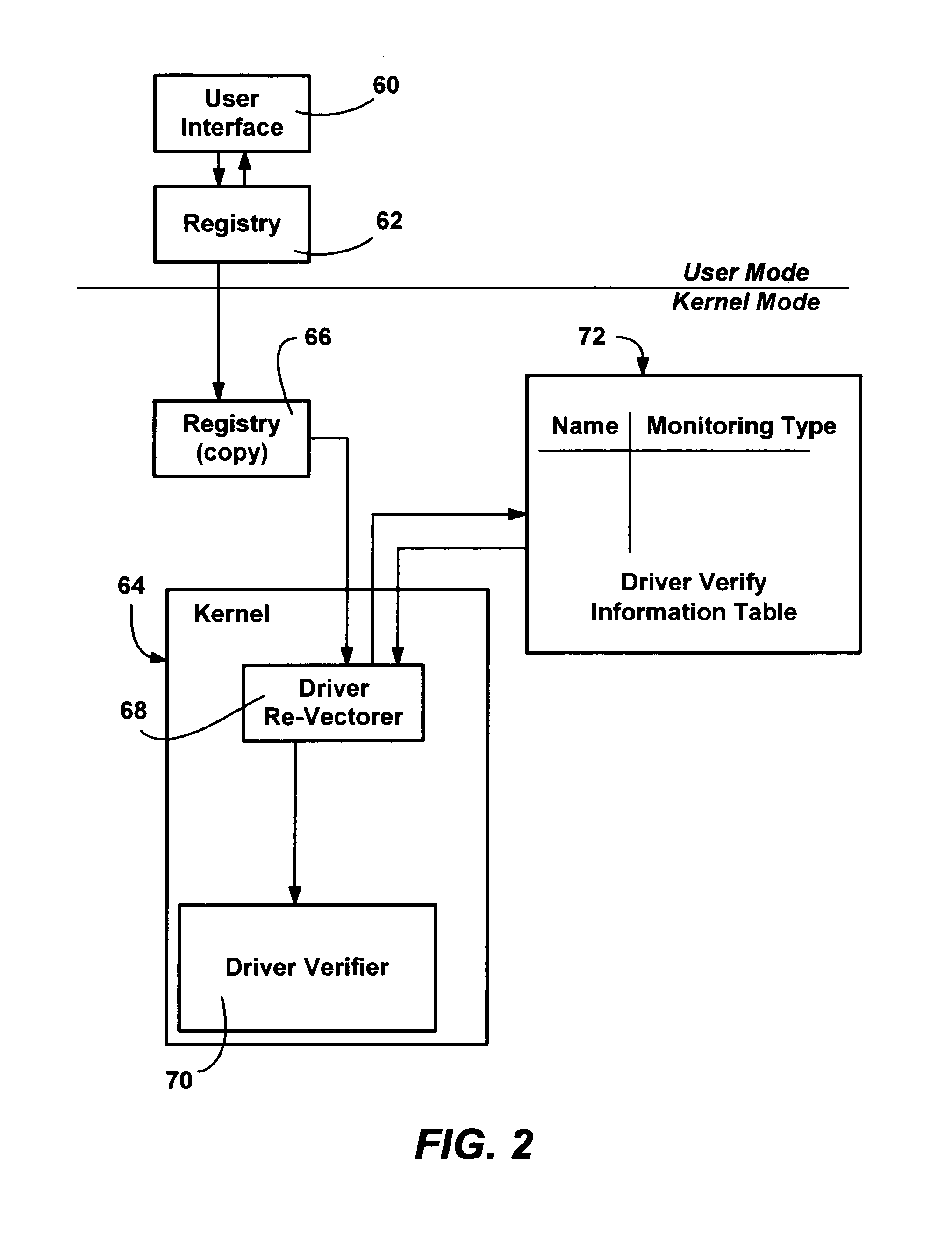

Method and system for monitoring and verifying software drivers using system resources including memory allocation and access

Inactive; US7111307B1; Error detection/correction; Multiprogramming arrangements; Computer hardware; Program validation

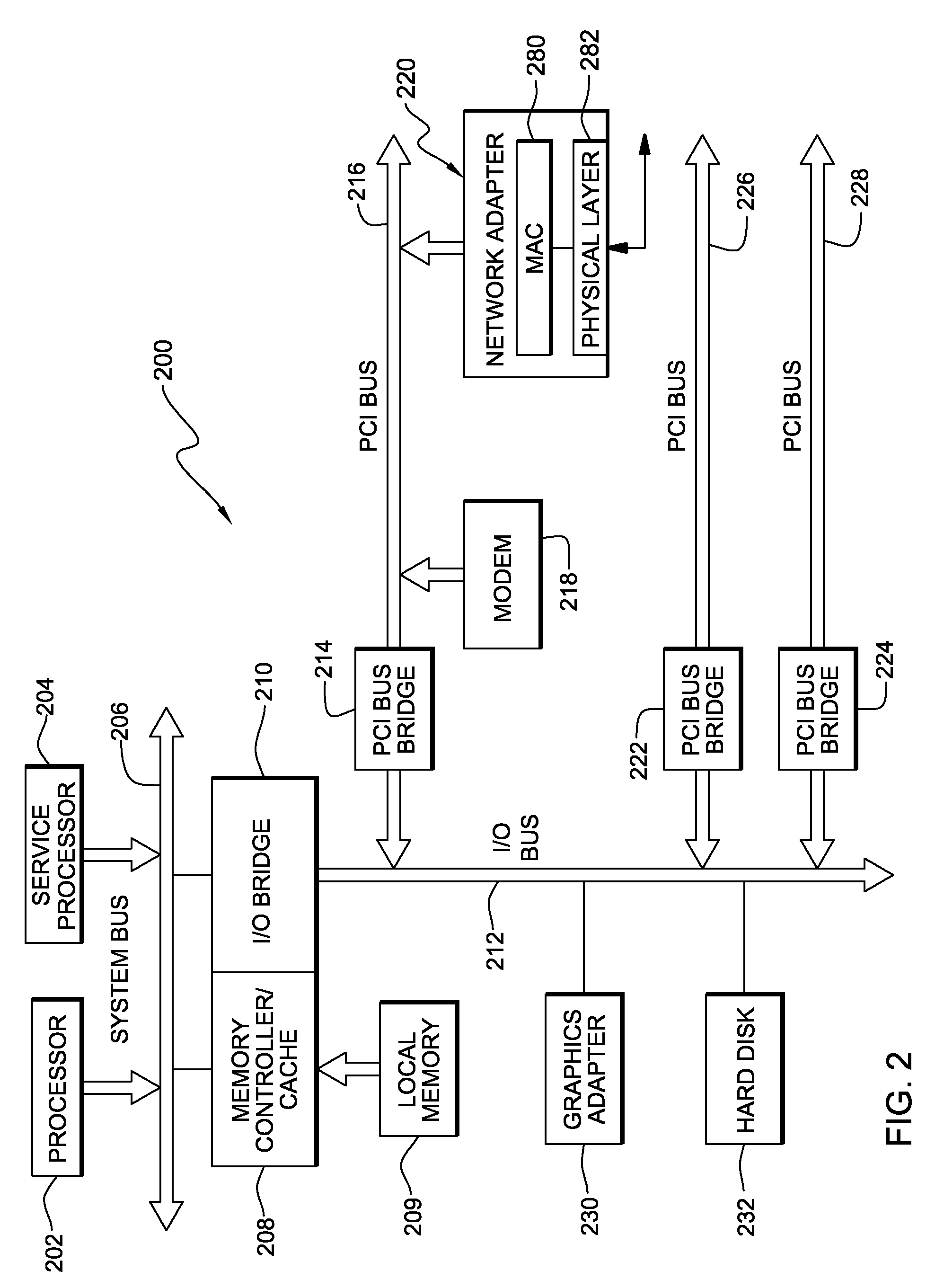

A method and system for verifying computer system drivers, such as kernel-mode drivers. A driver verifier sets up tests for specified drivers and monitors each driver's behavior for selected violations that cause system crashes. In one test, the driver verifier satisfies a driver's memory pool allocations from a special pool bounded by inaccessible memory space, in order to detect the driver accessing memory outside of the allocation. The driver verifier also marks the space as inaccessible when it is deallocated, detecting a driver that accesses deallocated space. The driver verifier may also put a specific driver under extreme memory pressure, or randomly fail requests for pool memory. The driver verifier also checks call parameters for violations, and performs checks to ensure that a driver cleans up timers when deallocating memory and cleans up memory and other resources when unloaded. An I/O verifier is also described for verifying drivers' use of I/O request packets.

Owner:ZHIGU HLDG

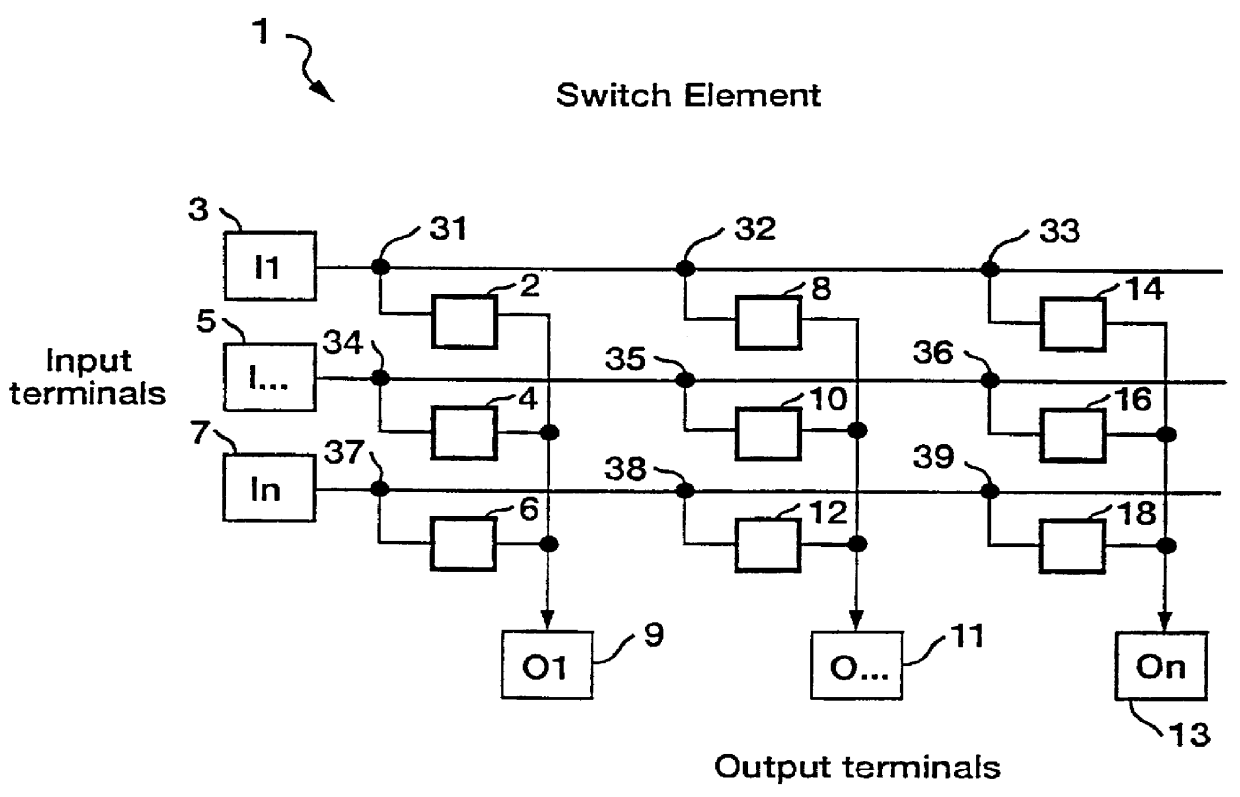

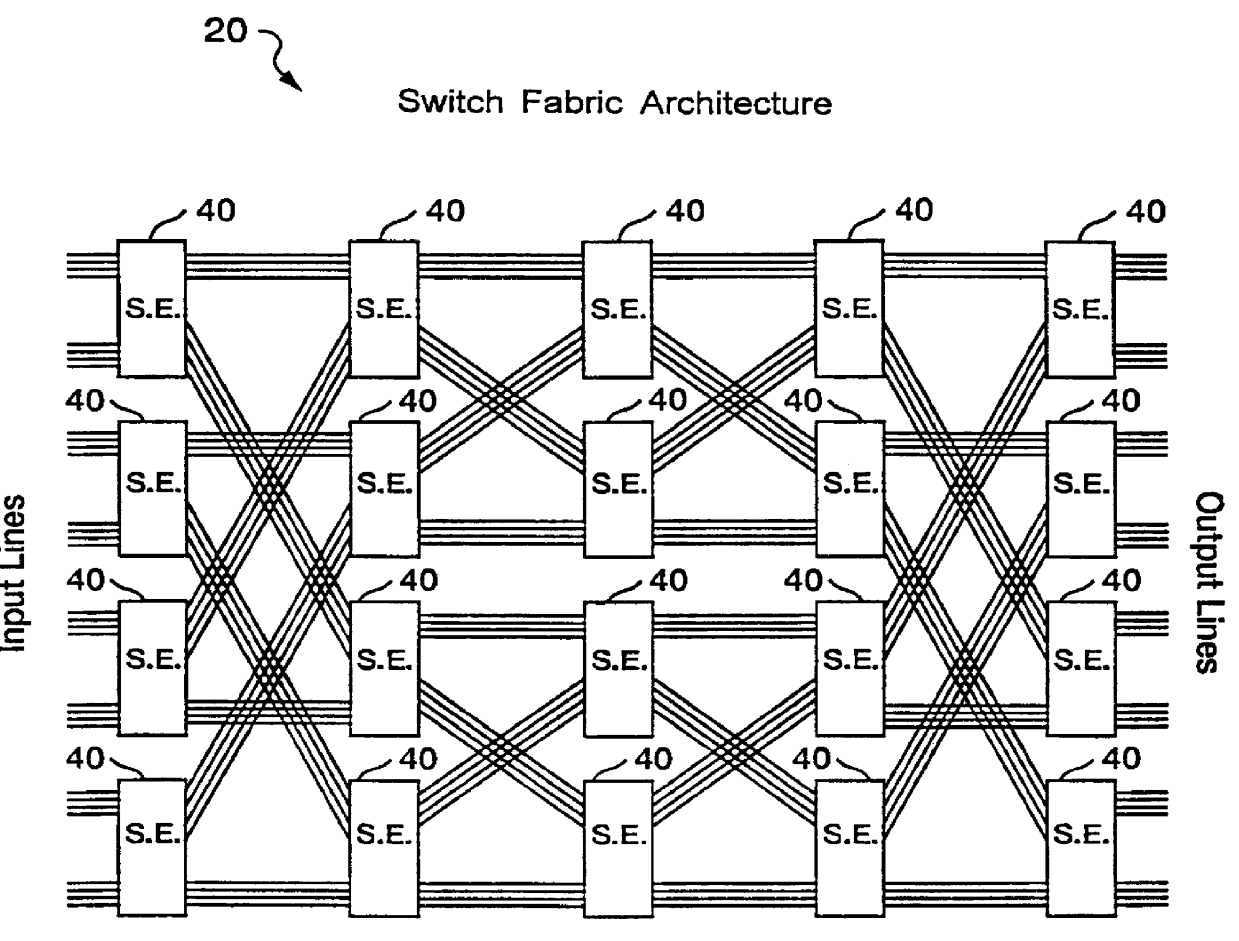

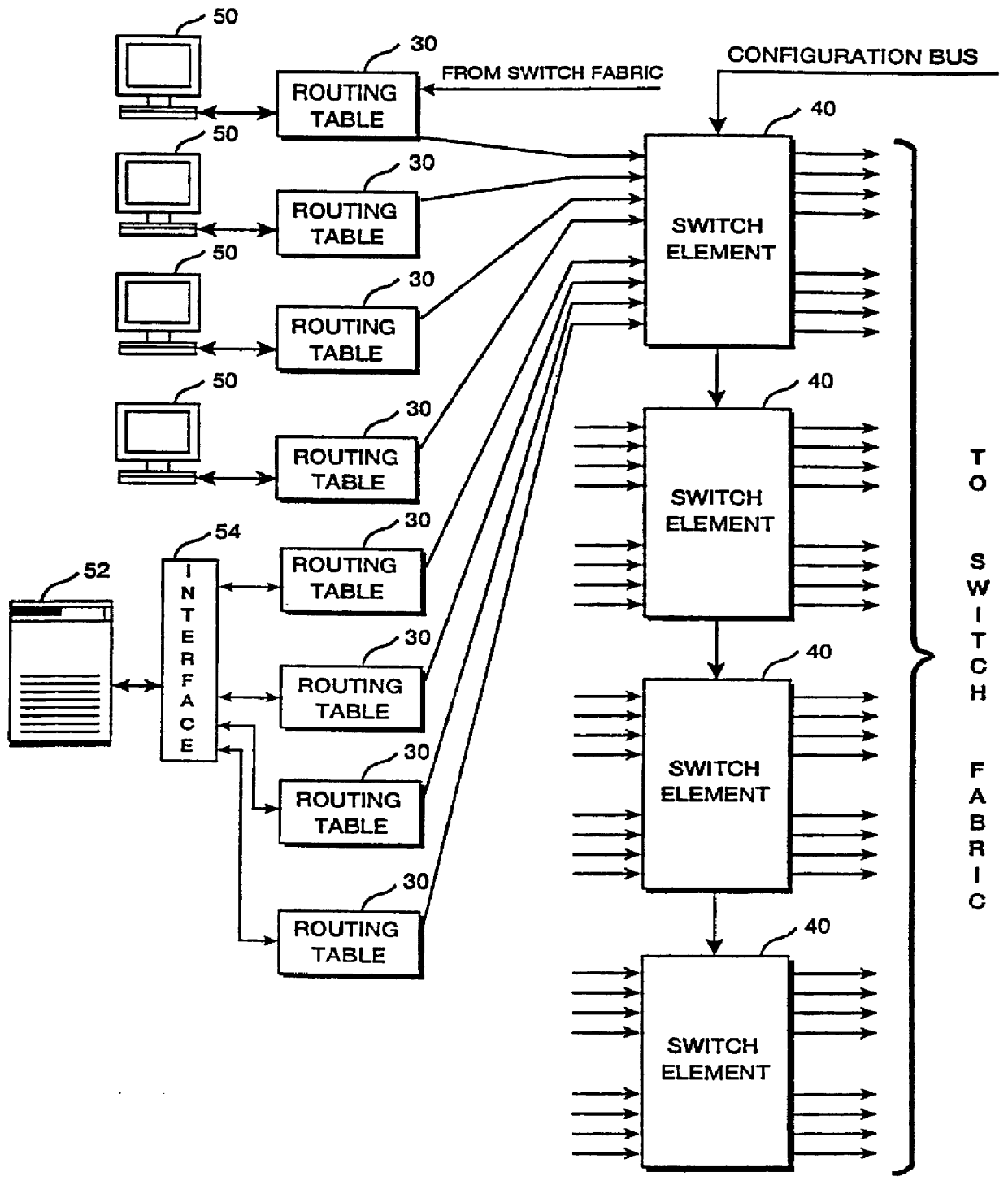

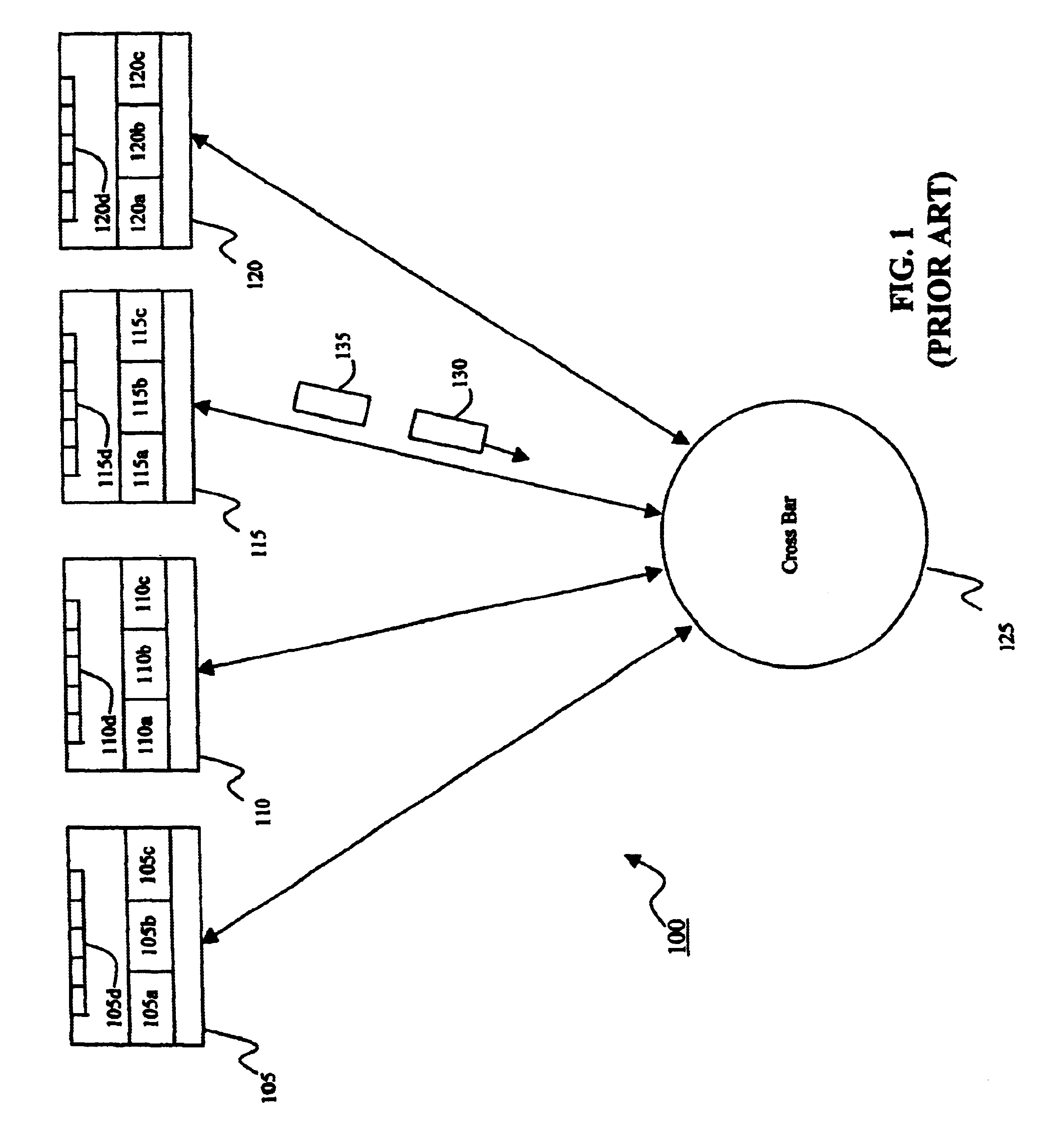

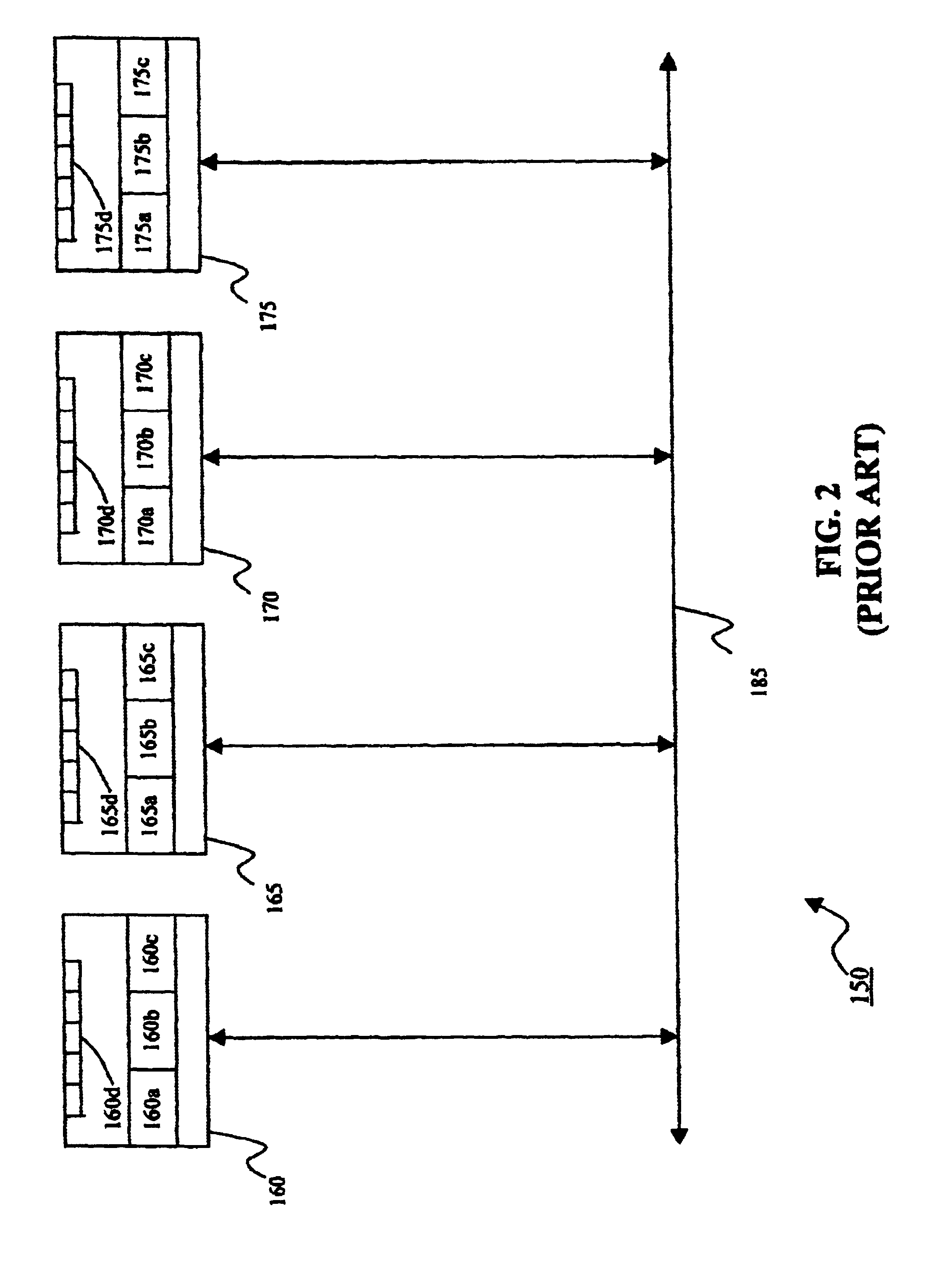

ATM architecture and switching element

An ATM switching system architecture of the switch-fabric type is built from a plurality of ATM switch element circuits and routing table circuits for each physical connection to/from the switch fabric. A shared pool of memory is employed to eliminate the need to provide memory at every crosspoint. Each routing table maintains a marked interrupt linked list for storing information about which of its virtual channels are experiencing congestion. This linked list is available to a processor in the external workstation to alert the processor when a congestion condition exists in one of the virtual channels. The switch element circuit typically has up to eight 4-bit-wide nibble inputs and eight 4-bit-wide nibble outputs and is capable of connecting cells received at any of its inputs to any of its outputs, based on the information in a routing tag uniquely associated with each cell.

Owner:PMC SEIRRA

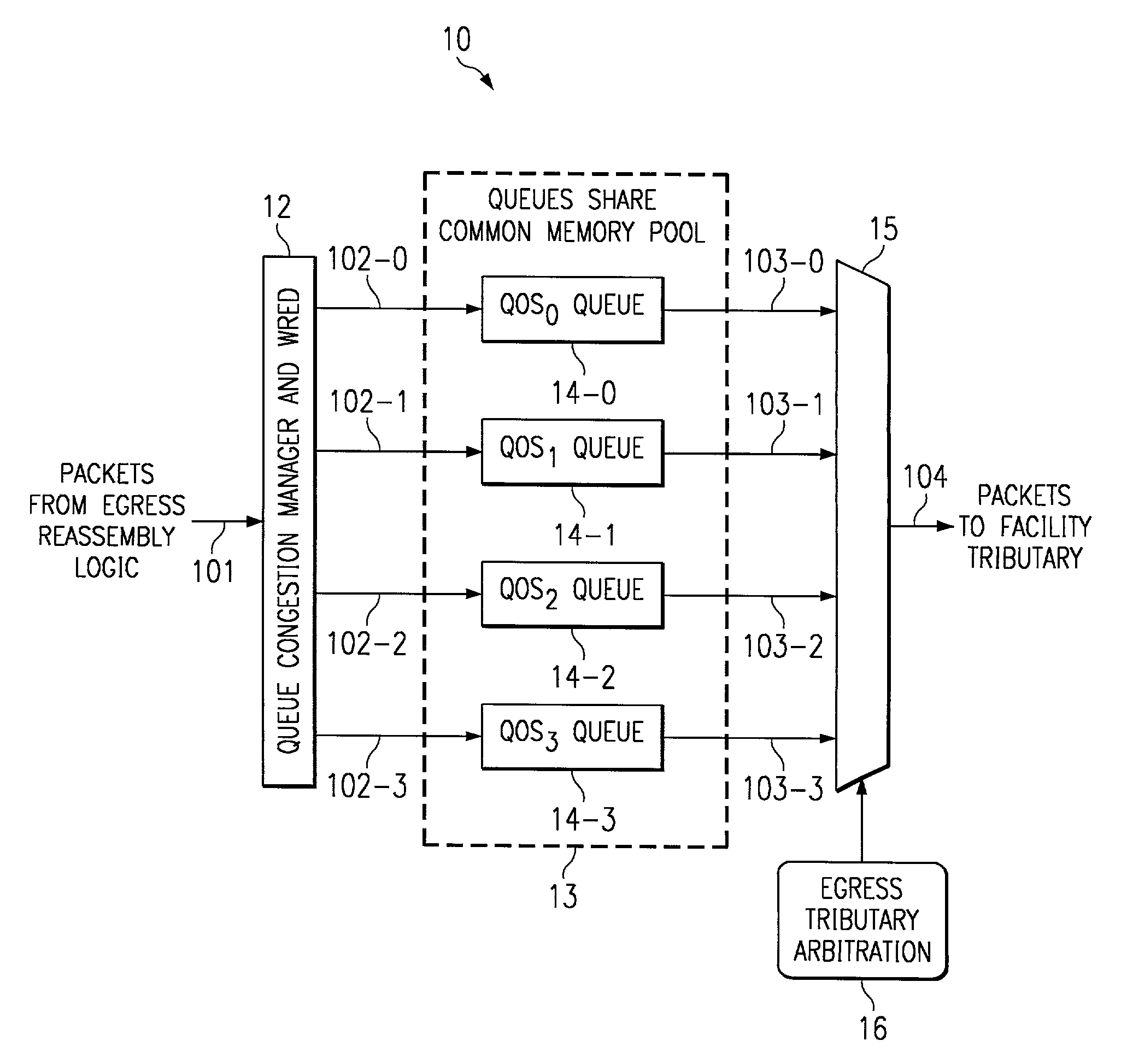

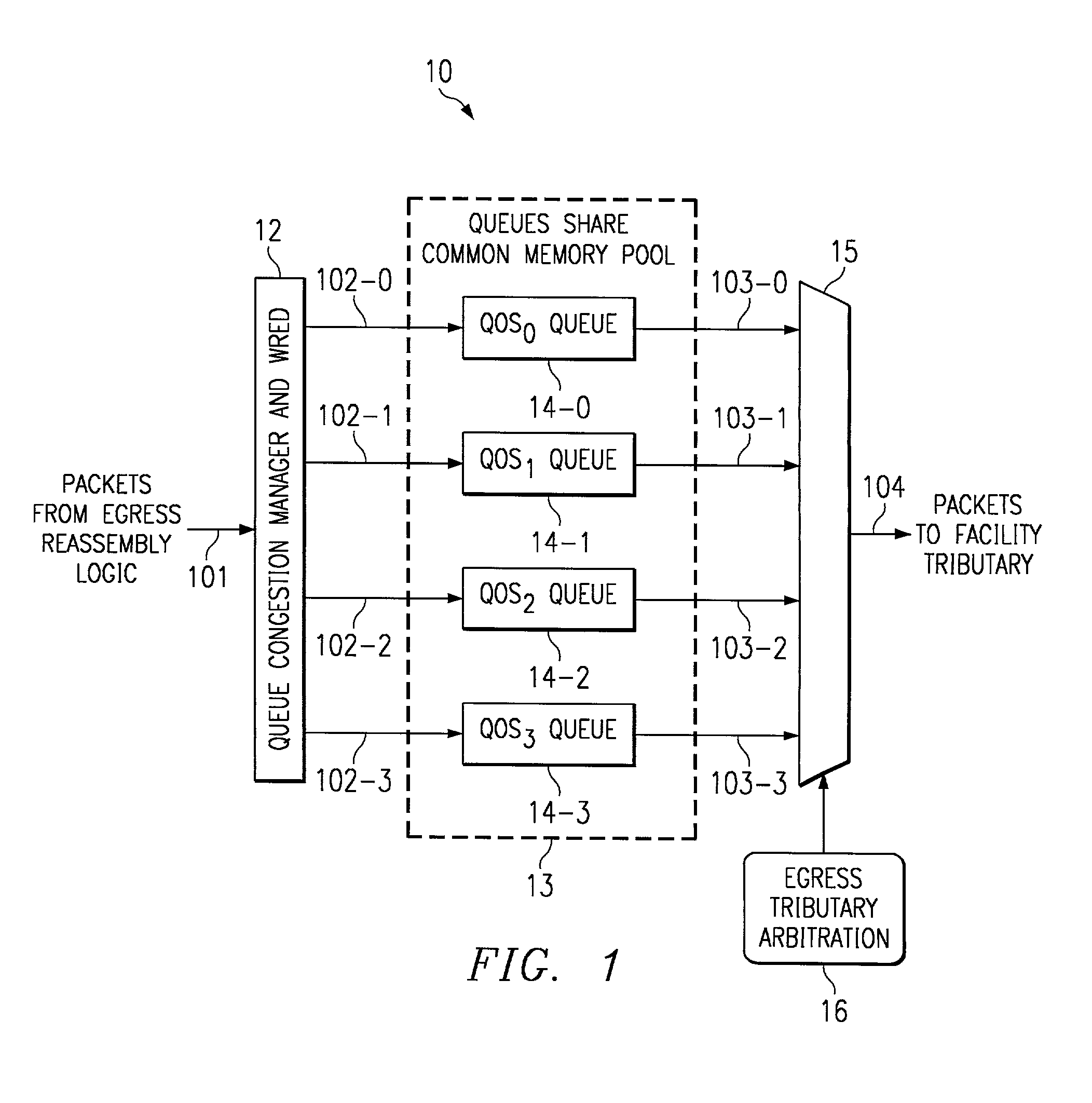

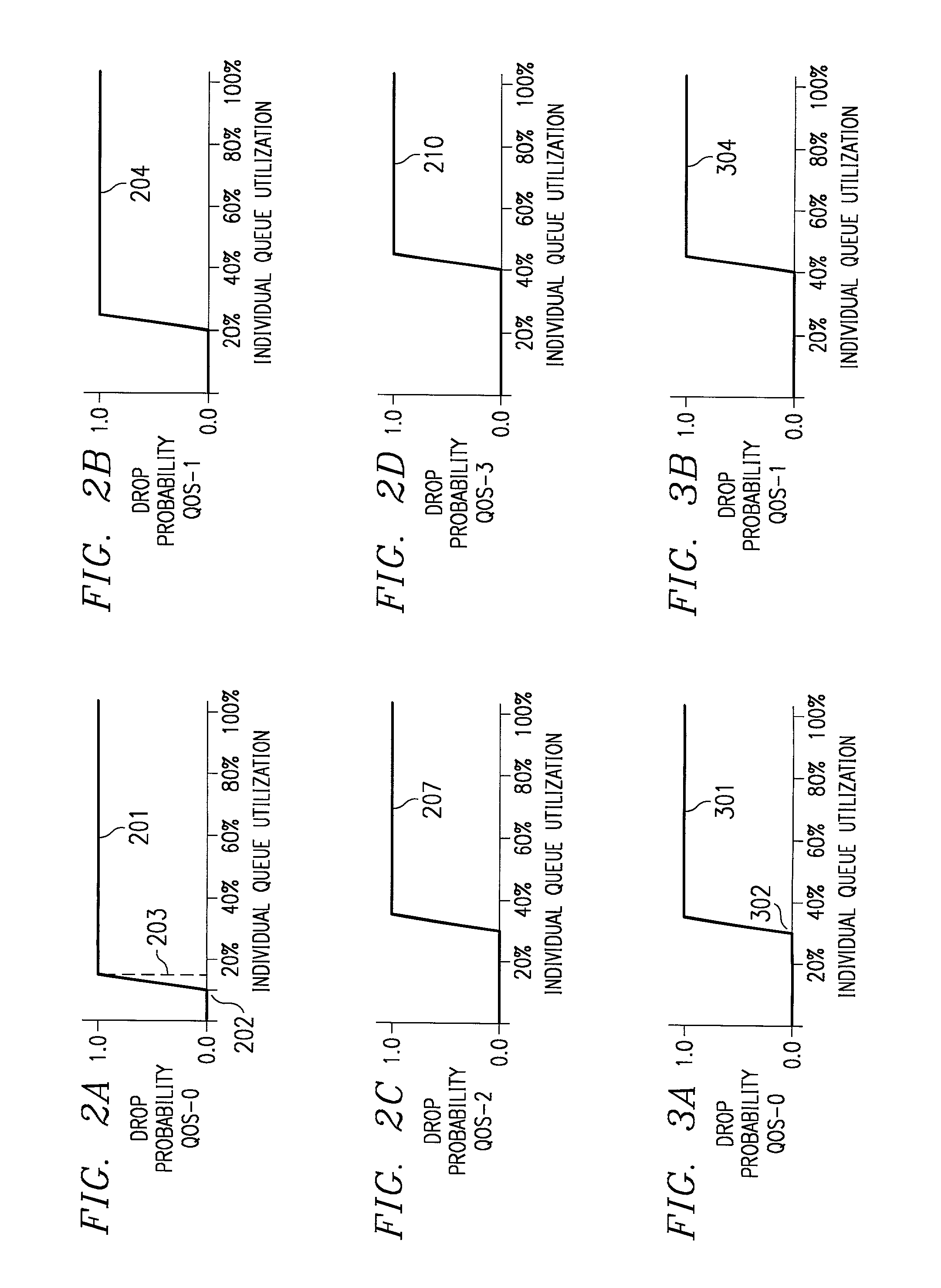

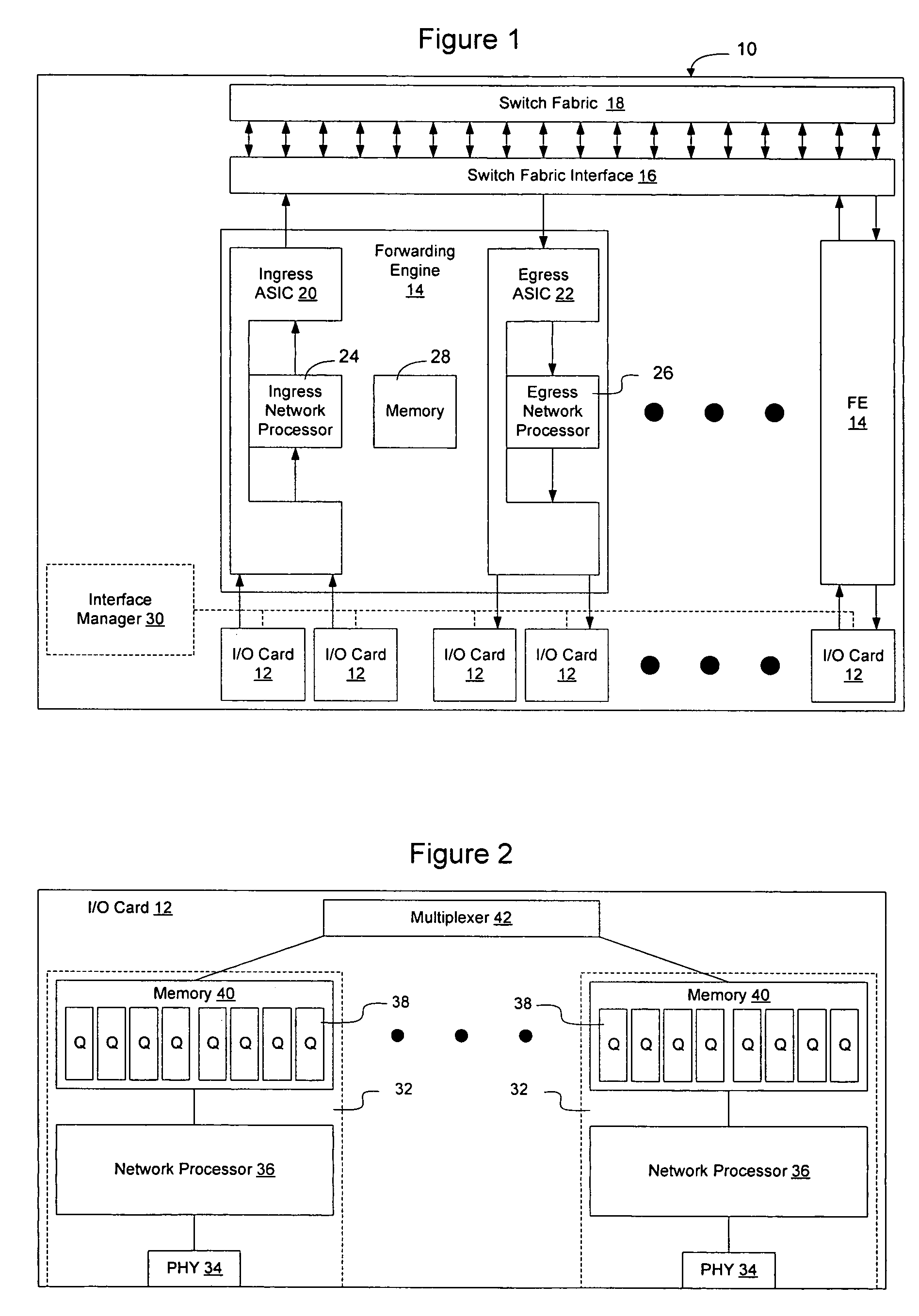

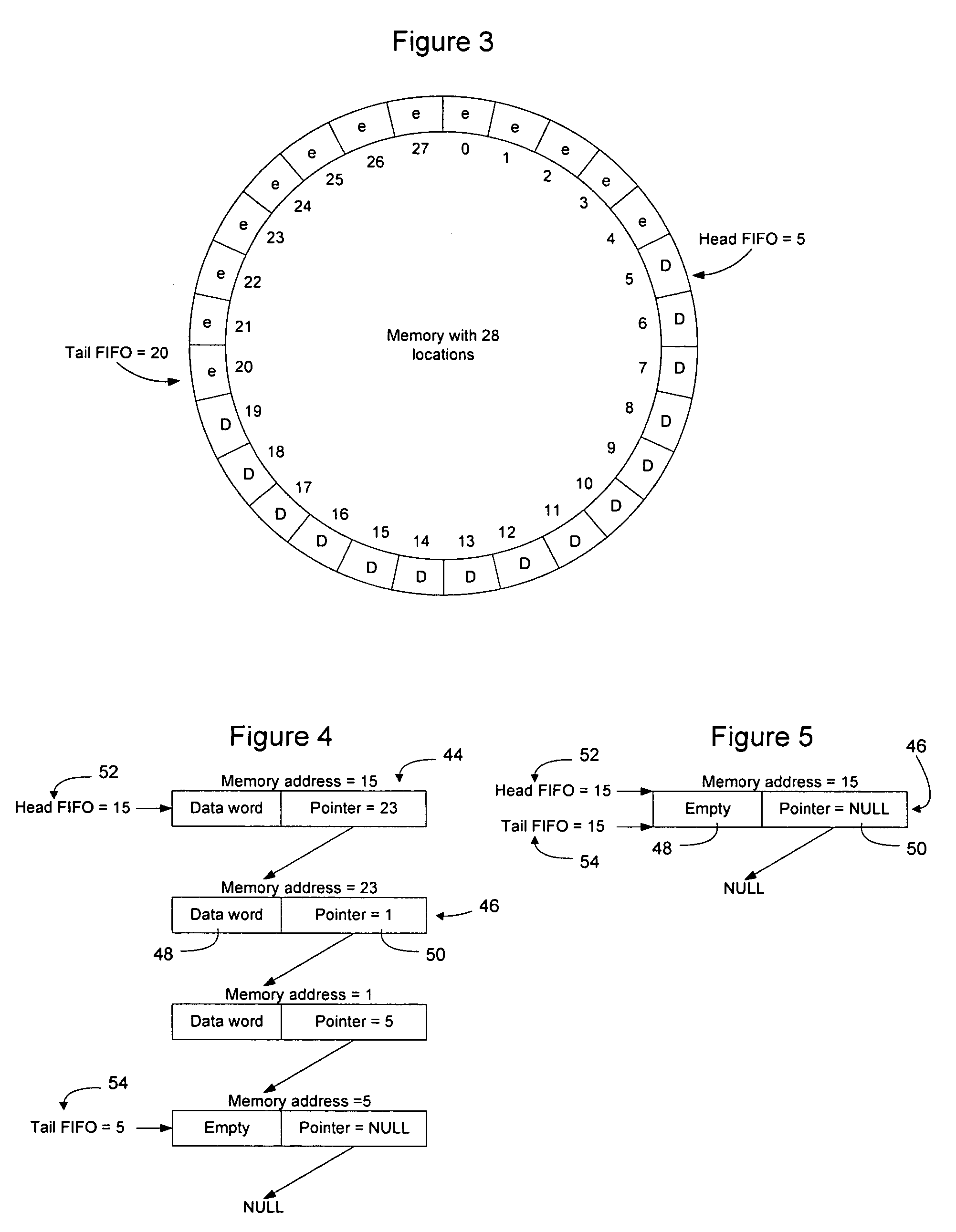

System and method for router queue and congestion management

In a multi-QOS level queuing structure, packet payload pointers are stored in multiple queues and packet payloads in a common memory pool. Algorithms control the drop probability of packets entering the queuing structure. Instantaneous drop probabilities are obtained by comparing measured instantaneous queue size with calculated minimum and maximum queue sizes. Non-utilized common memory space is allocated simultaneously to all queues. Time averaged drop probabilities follow a traditional Weighted Random Early Discard mechanism. Algorithms are adapted to a multi-level QOS structure, floating point format, and hardware implementation. Packet flow from a router egress queuing structure into a single egress port tributary is controlled by an arbitration algorithm using a rate metering mechanism. The queuing structure is replicated for each egress tributary in the router system.

Owner:AVAGO TECH INT SALES PTE LTD
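
The abstract states that instantaneous drop probabilities come from comparing the measured queue size with calculated minimum and maximum queue sizes, but it does not give the curve between those two bounds. The sketch below assumes the linear ramp used by classic Random Early Detection; the function name and the `max_p` parameter are illustrative.

```python
def drop_probability(queue_size: float, min_size: float, max_size: float,
                     max_p: float = 1.0) -> float:
    """Drop probability for a packet arriving at a queue of the given size.

    Below min_size nothing is dropped, at or above max_size everything is
    dropped, and in between the probability rises linearly (an assumption).
    """
    if queue_size <= min_size:
        return 0.0
    if queue_size >= max_size:
        return 1.0
    return max_p * (queue_size - min_size) / (max_size - min_size)
```

In a multi-QOS structure, each level would call this with its own calculated `min_size` and `max_size`, so that lower-priority queues begin dropping earlier.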

Memory control method for embedded systems

Active; CN102915276A; Improve distribution efficiency; Add status monitoring; Memory addressing/allocation/relocation; Computer architecture; Parallel computing

The invention provides a memory control method for embedded systems. The method includes: requesting memory from the operating system, and using part of it as a memory pool and the rest as a reserved memory area; establishing a cache for each thread using pool management of the memory pool and thread-caching technology; managing the reserved memory area with the TLSF (two-level segregated fit) algorithm; dividing the memory pool into memory blocks of different sizes, connecting blocks of the same size into a doubly linked list, adding a memory management unit for each memory block, and placing the memory management units and the memory blocks in separate memory areas; and establishing a memory statistics linked list for each thread that links the memory blocks requested by that thread, to facilitate troubleshooting of memory leaks. In addition, a memory overwrite checking mechanism is added without increasing the cost of the memory control method.

Owner:WUHAN POST & TELECOMM RES INST CO LTD

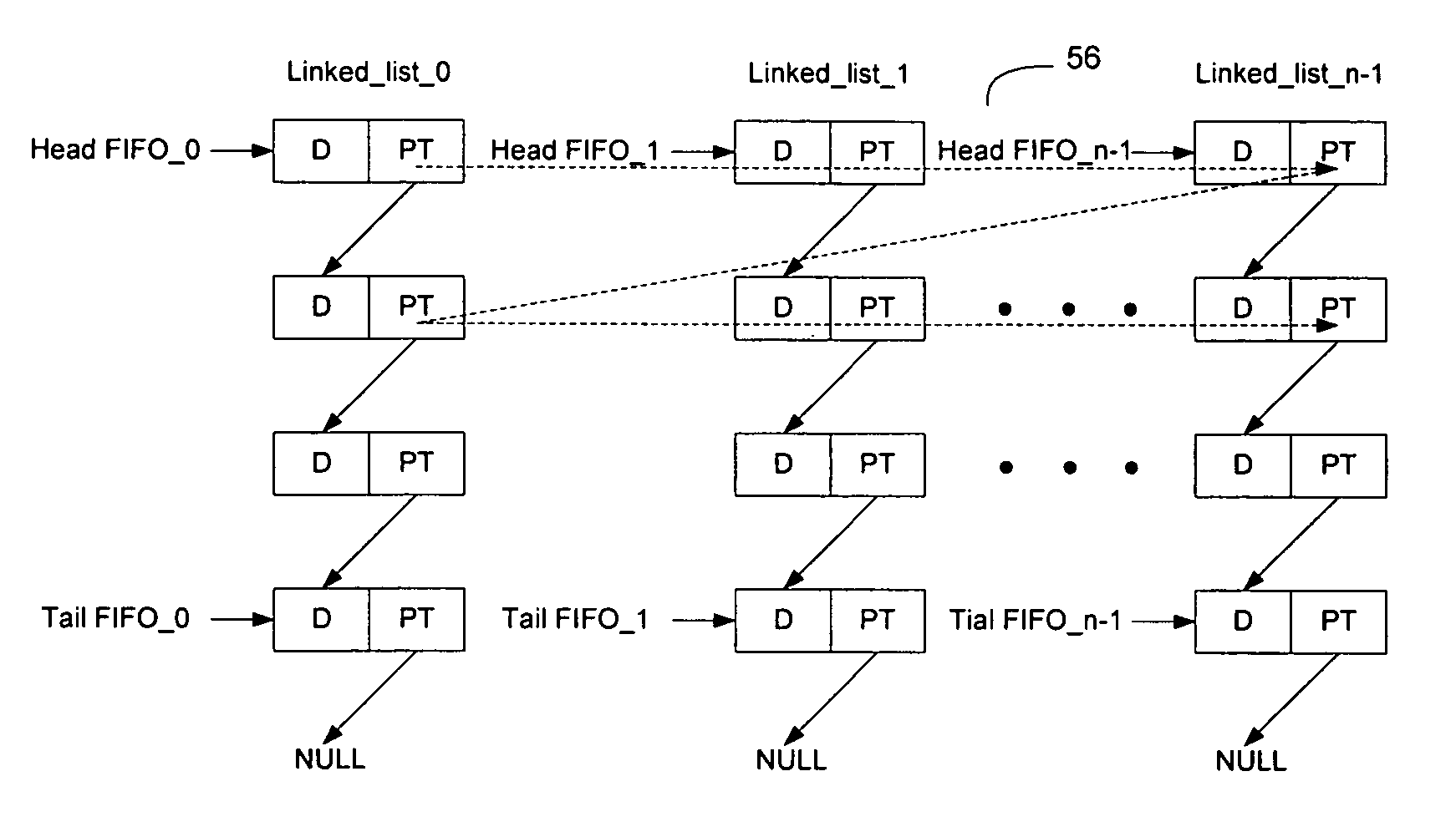

Implementing FIFOs in shared memory using linked lists and interleaved linked lists

Inactive; US20060031643A1; Easy to read; Overcomes drawback; Memory systems; Input/output processes for data processing; Data field; Term memory

Owner:AVAYA INC

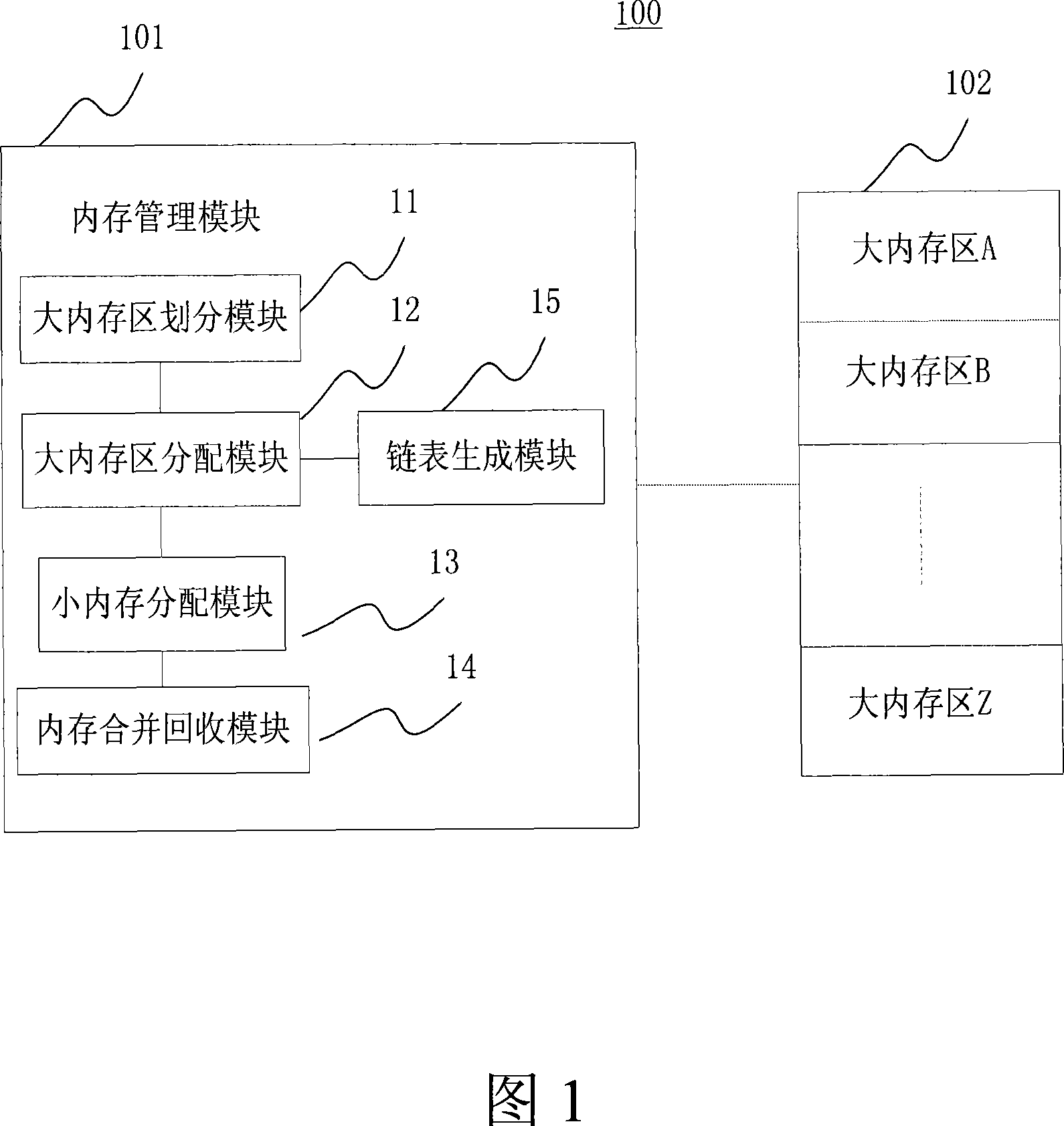

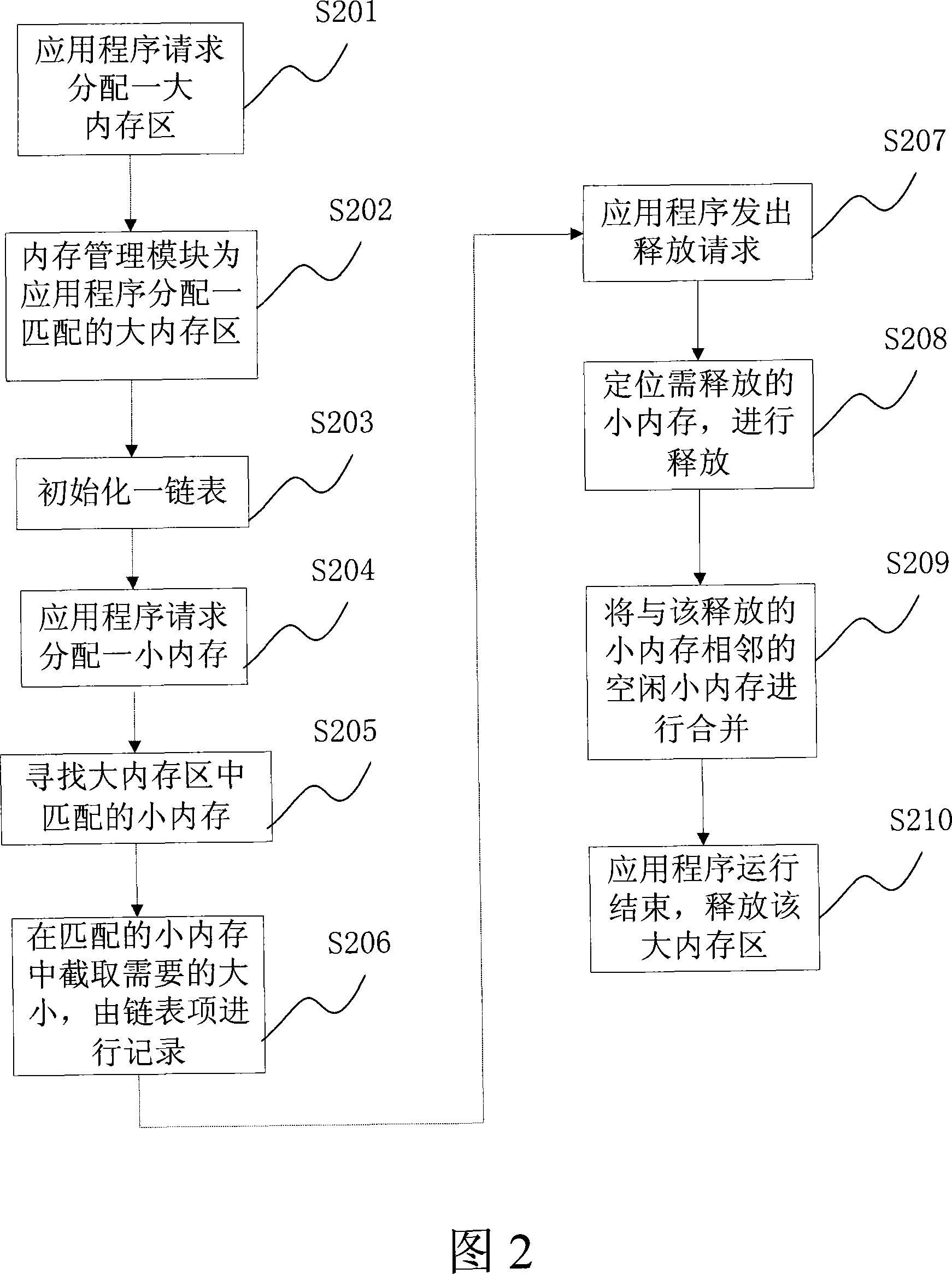

Internal memory managing method and device of embedded system

Inactive; CN101221536A; Speed up tidying up; No effect on operation; Memory addressing/allocation/relocation; Internal memory; Embedded system

The invention discloses a memory management method and device for an embedded system. The method comprises the following steps: first, memory is divided into several large memory zones of unequal capacity; second, when an application program starts running, it is allocated a large memory zone that matches its maximum memory requirement; third, small memory blocks are allocated to the application program within the matched large memory zone using heap management; fourth, when the application program releases a small memory block through heap management, the block is merged with adjacent free small blocks; and when the application program stops running, the matched large memory zone is released. The invention avoids a large amount of memory fragmentation, speeds up defragmentation, and avoids the need, inherent in memory pool approaches, to calculate the size and number of the memory blocks that each application program will allocate dynamically.

Owner:ZTE CORP

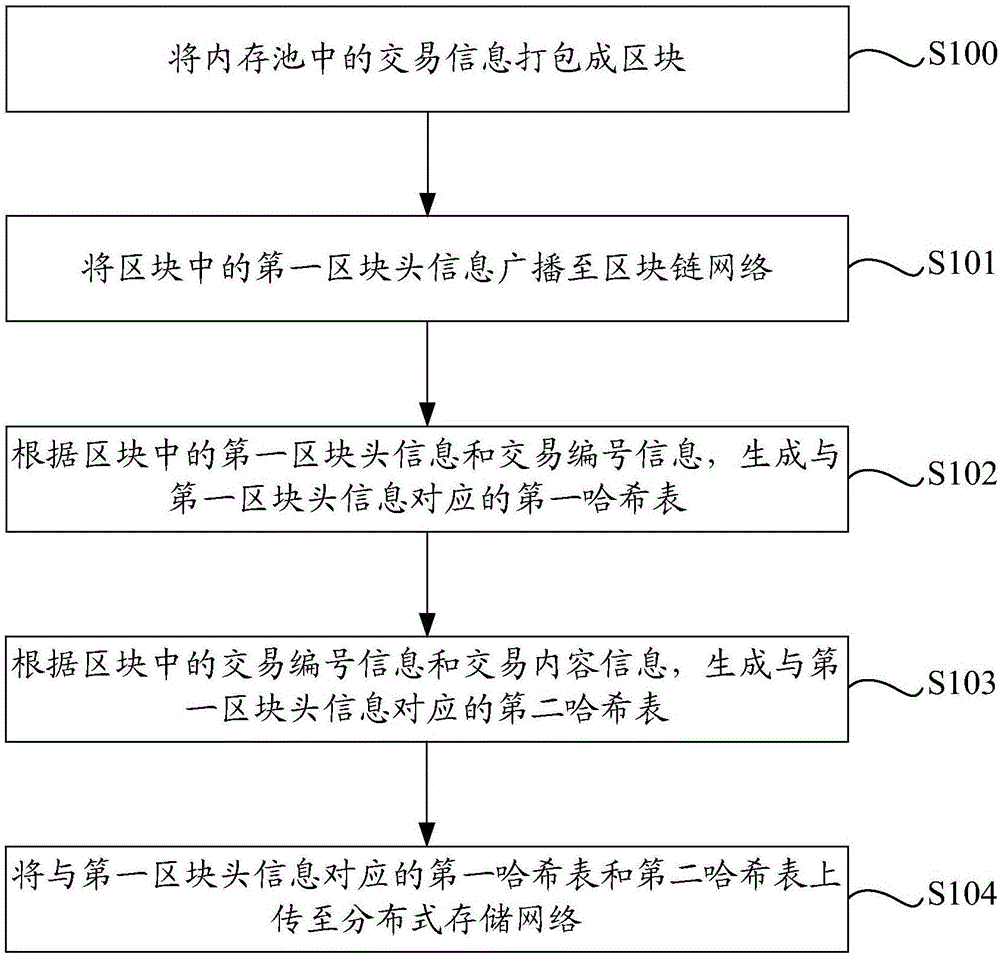

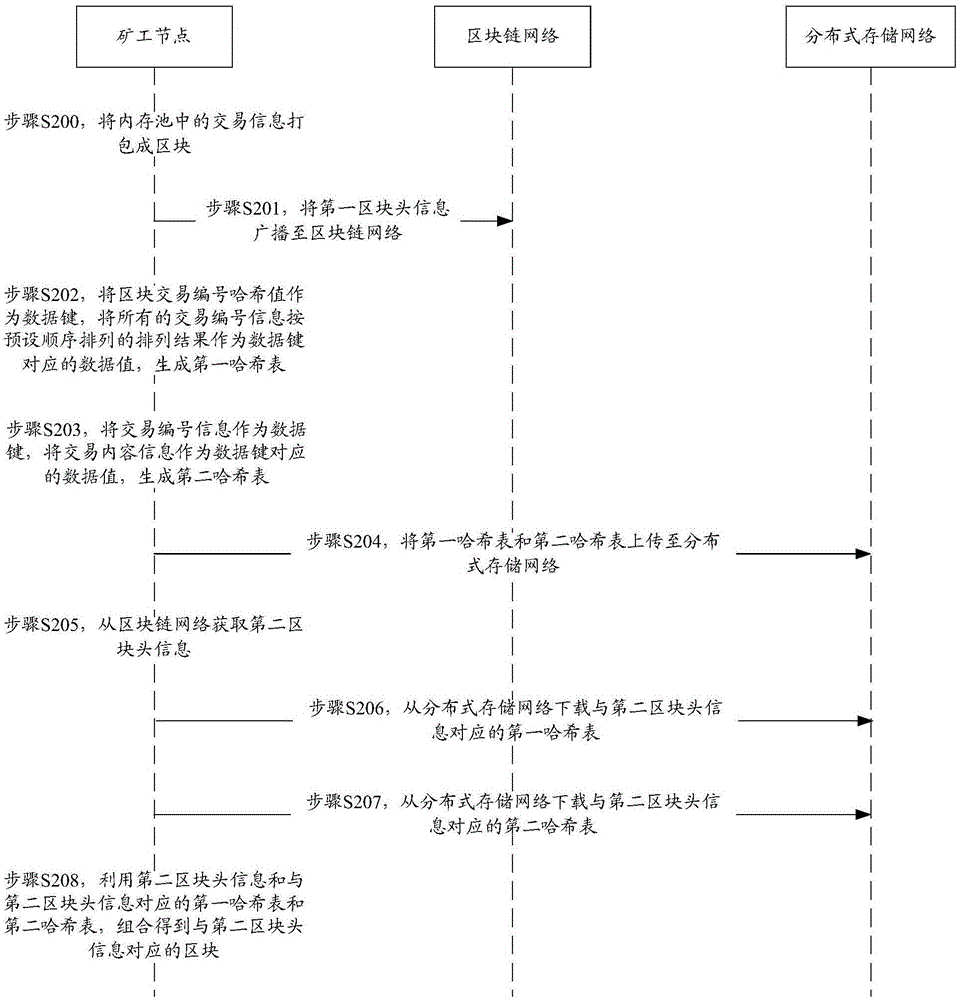

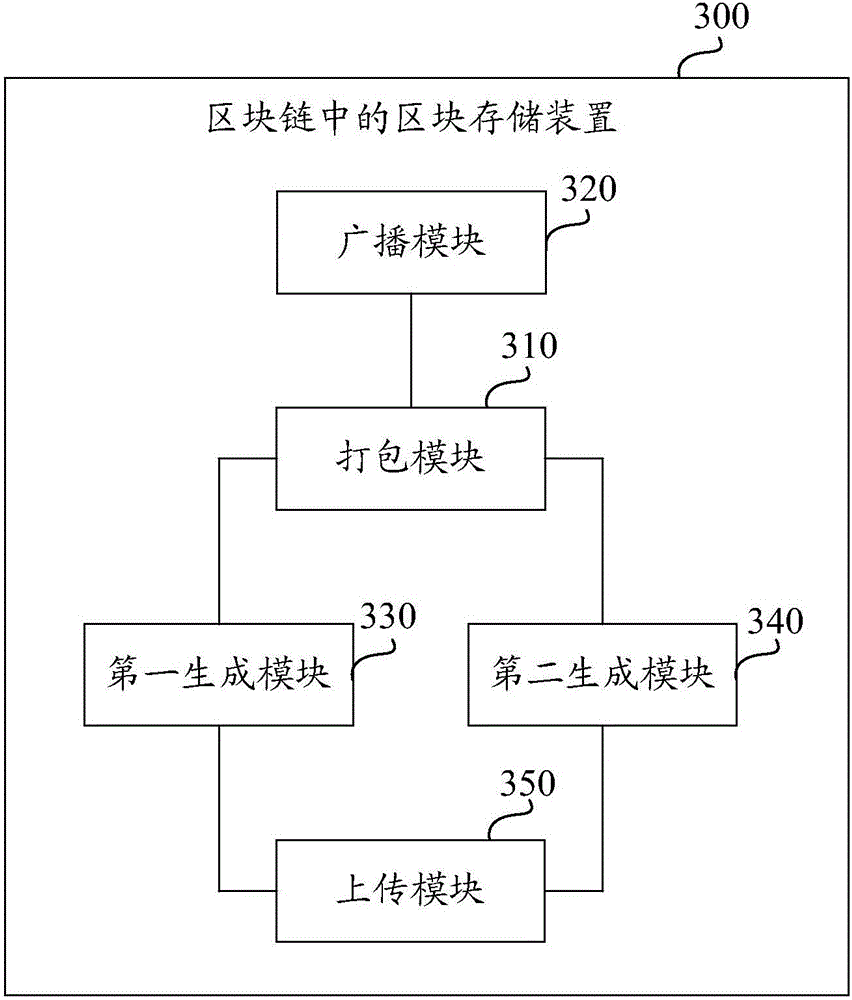

Block storage method and device in blockchain

The invention discloses a block storage method and a block storage device in a blockchain. The block storage method in the blockchain is implemented by a miner node, and comprises the steps of: packing transaction information in a memory pool into a block; broadcasting first block header information in the block to a blockchain network, wherein the first block header information comprises a block transaction serial number hash value; generating a first hash table corresponding to the first block header information according to the first block header information in the block and transaction serial number information; generating a second hash table corresponding to the first block header information according to the transaction serial number information in the block and transaction content information; and uploading the first hash table and the second hash table corresponding to the first block header information to a distributed storage network. By utilizing the block storage method and the block storage device provided by the invention, the pressure of network transmission and storage pressure of the single node are effectively alleviated.

Owner:JIANGSU PAYEGIS TECH CO LTD

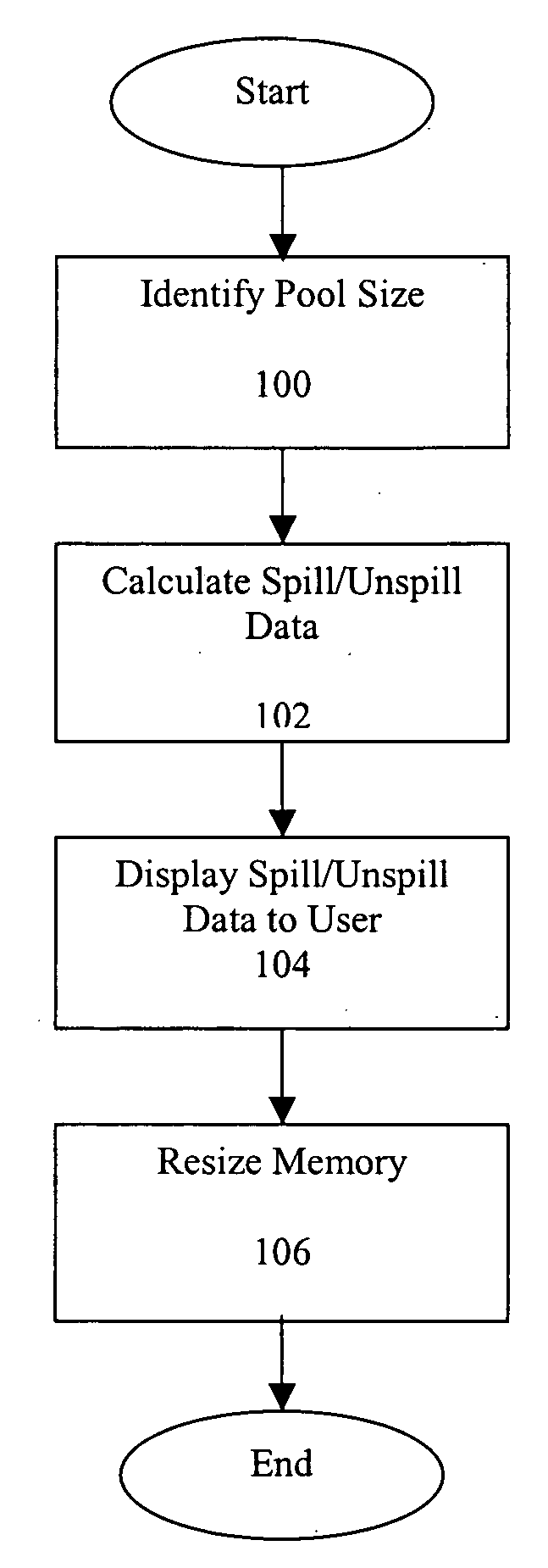

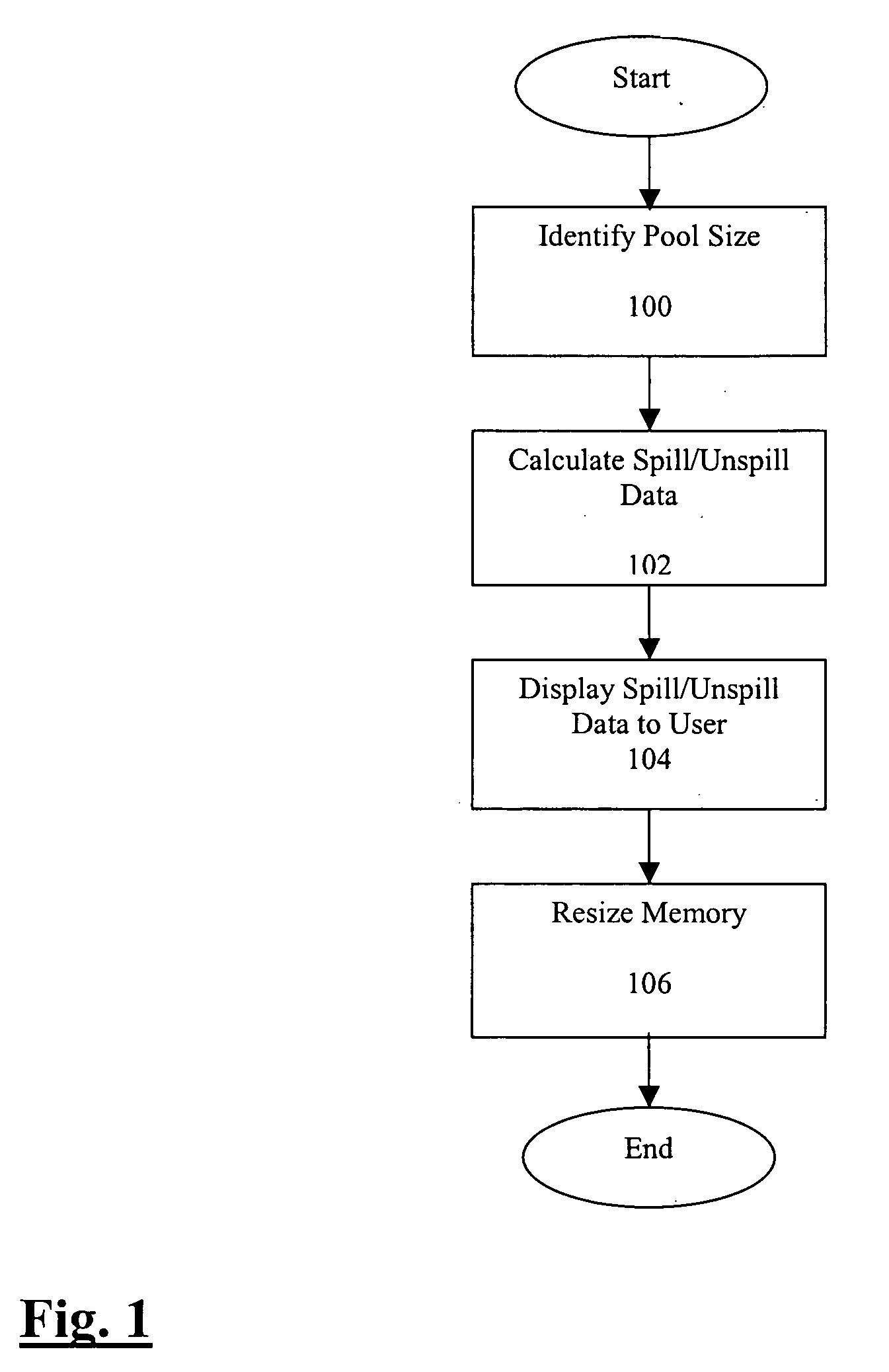

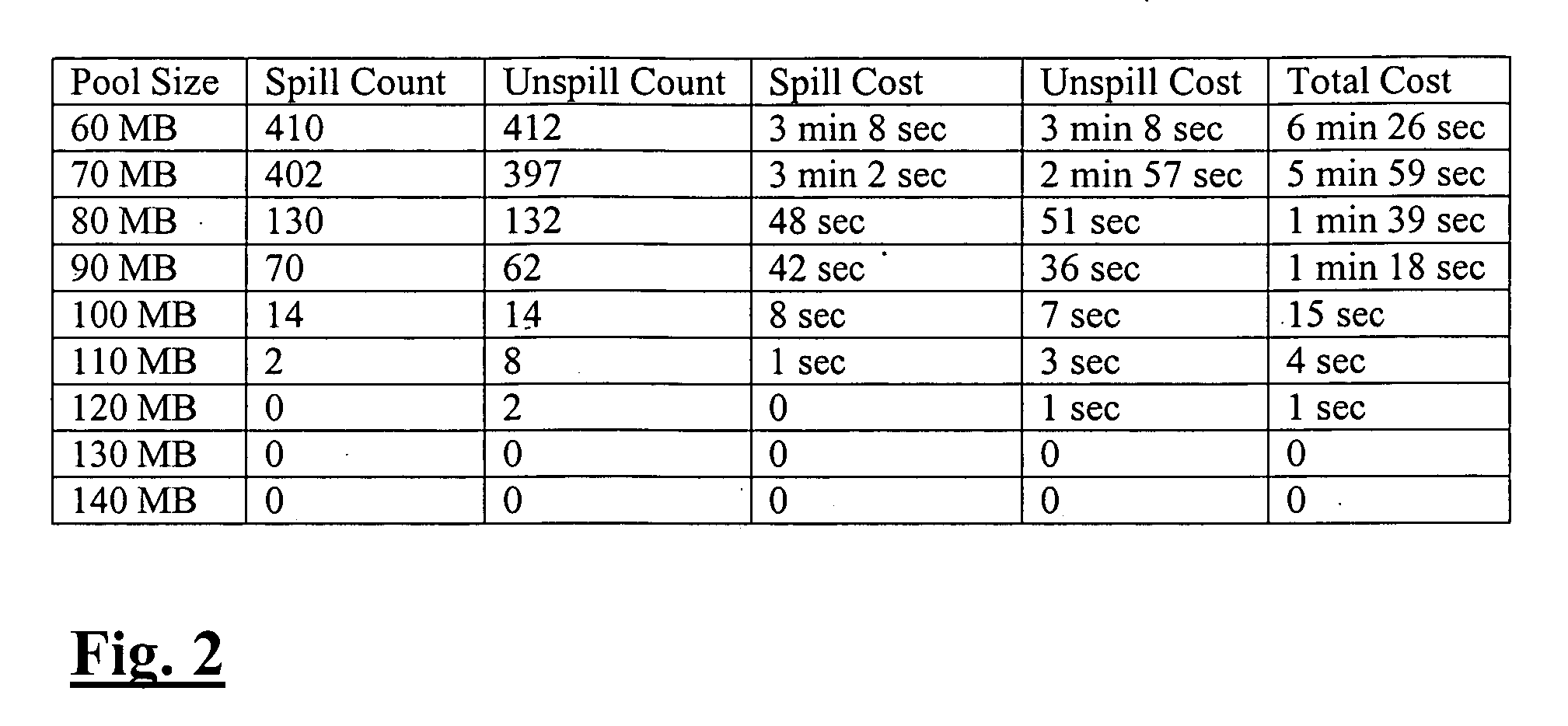

Automatic resizing of shared memory for messaging

Active; US20070118600A1; Error detection/correction; Multiprogramming arrangements; Term memory; Message passing

Methods and systems for estimating the hypothetical performance of a messaging application are disclosed. A number of pool sizes may be identified, each pool size being a potential size for the memory allocated to the messaging application. An online simulation runs during the execution of the messaging application. The online simulation tracks the requests made by the messaging application and predicts the operation of the messaging application for each pool size. The predicted data includes the number of spill and unspill operations that write to and read from disk. In addition, a method for calculating the age of the oldest message in a memory pool is disclosed. The age is used in determining the number of spill and unspill operations.

Owner:ORACLE INT CORP

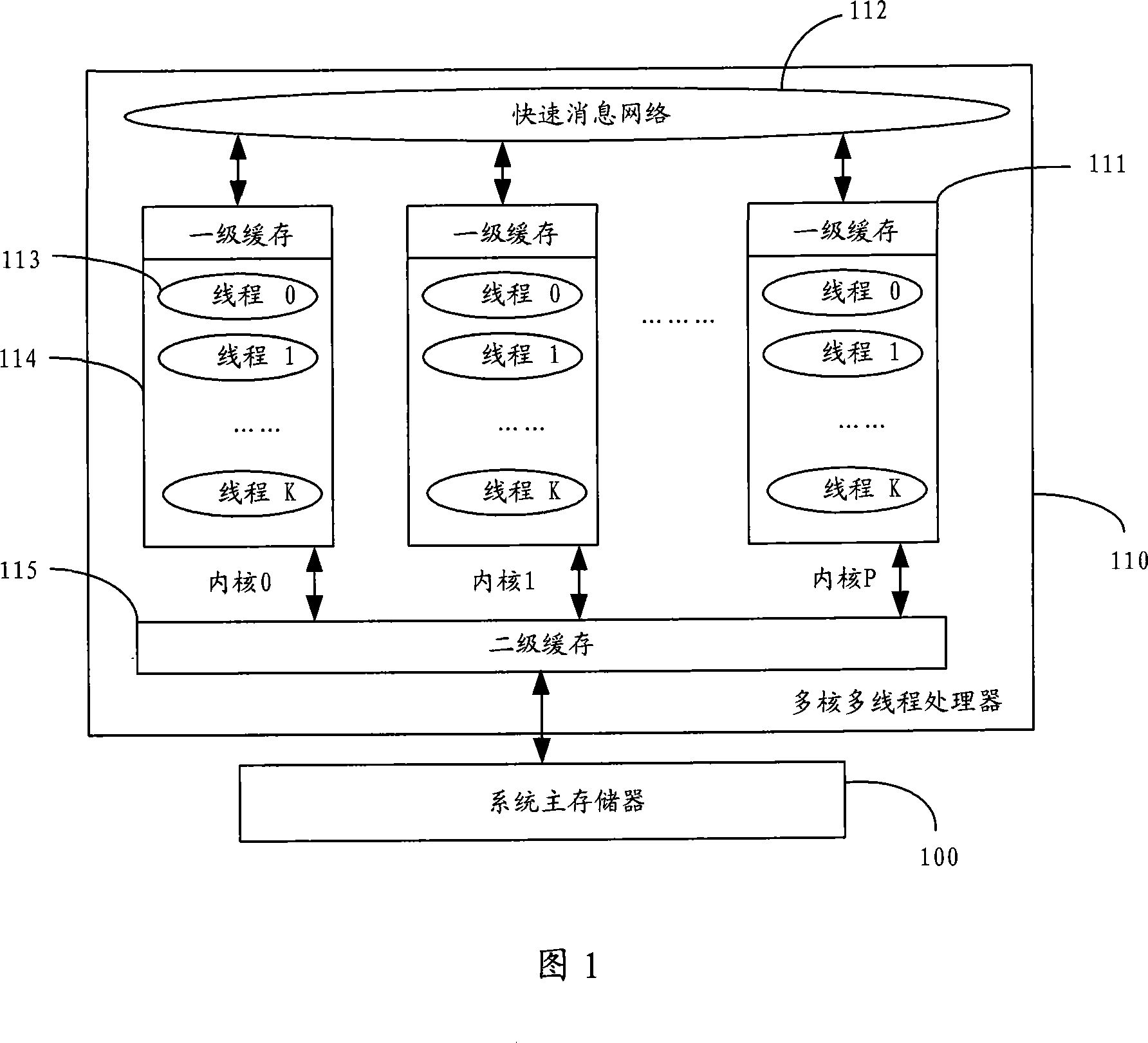

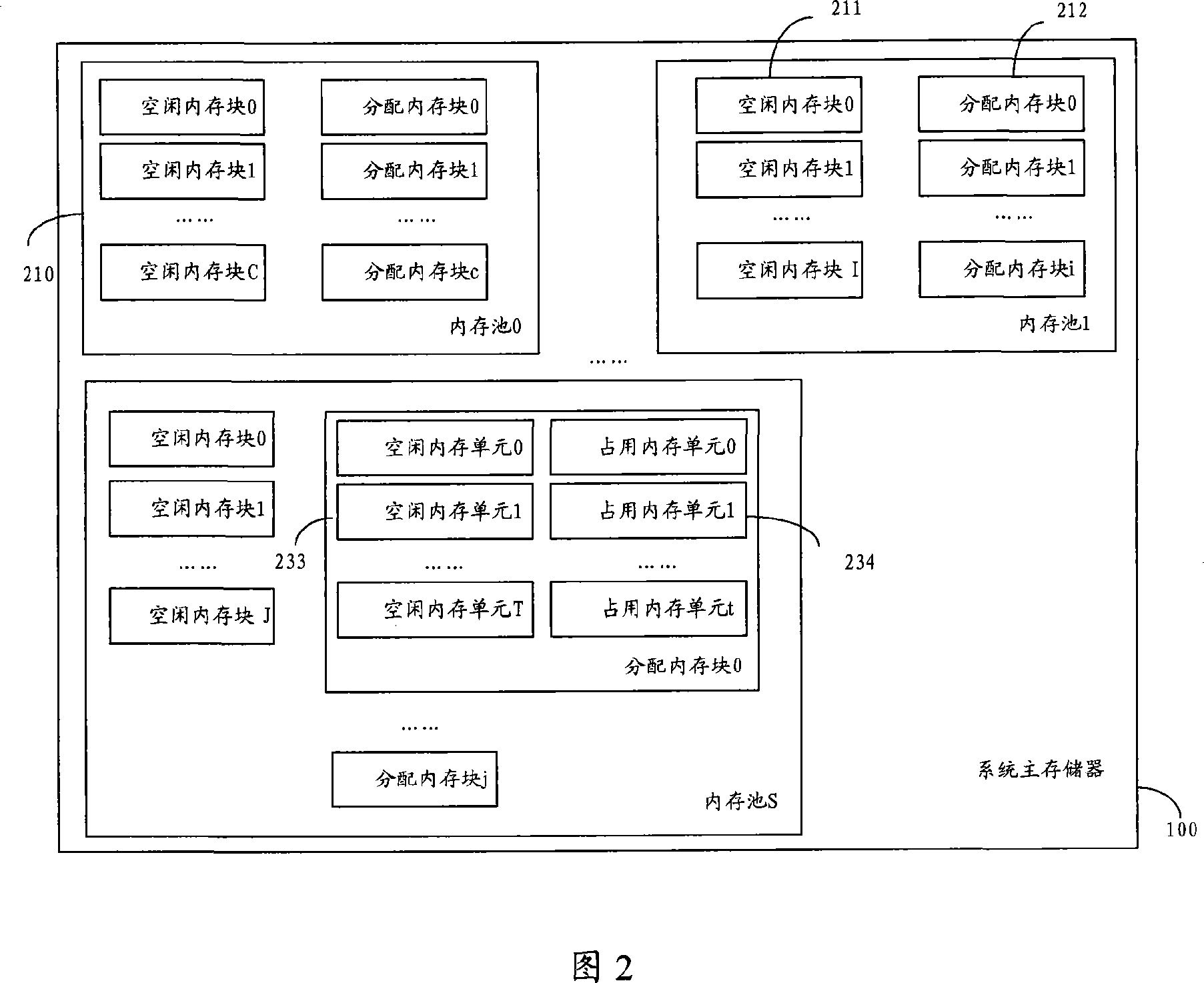

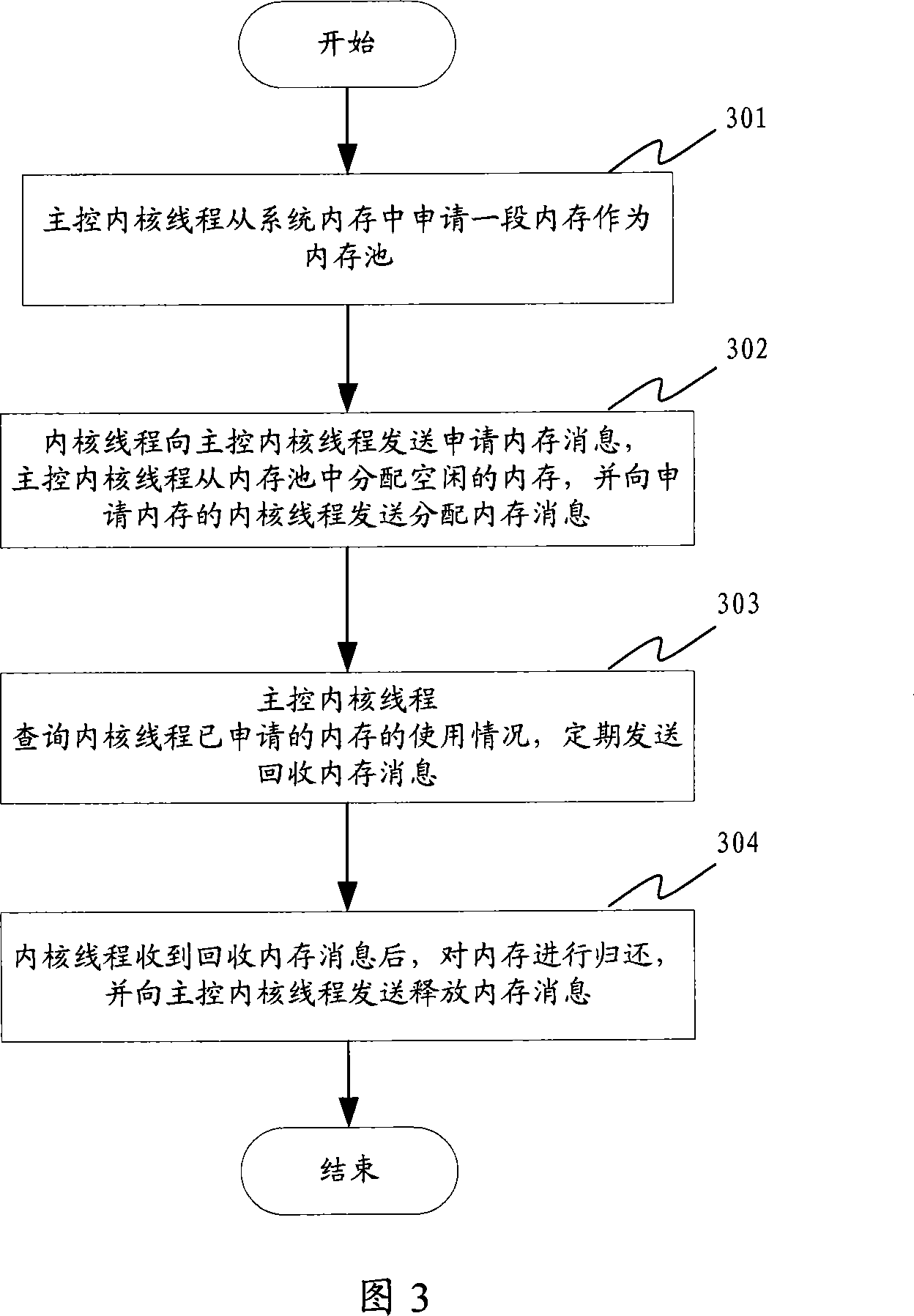

Multithreading processor dynamic EMS memory management system and method

Active; CN101055533A; Solve the problem of static memory allocation; Improve efficiency; Resource allocation; Control memory; Dynamic memory management

The invention discloses a dynamic memory management system and method for a multi-thread processor. The system includes a message processing module, a main control memory management module, and a memory monitoring module. The method includes the following steps: a main control kernel thread requests a section of memory from system memory to serve as a memory pool; each kernel thread sends a memory request message to the main control kernel thread, which, on receiving the message, allocates free memory from the memory pool to the requesting kernel thread and sends it a memory allocation message; the main control kernel thread queries how the kernel threads are using the memory they have been allocated and sends a memory recall message; and, on receiving the memory recall message, a kernel thread returns the memory and sends a memory release message to the main control kernel thread. The system and method can improve memory utilization in the system.

Owner:ZTE CORP

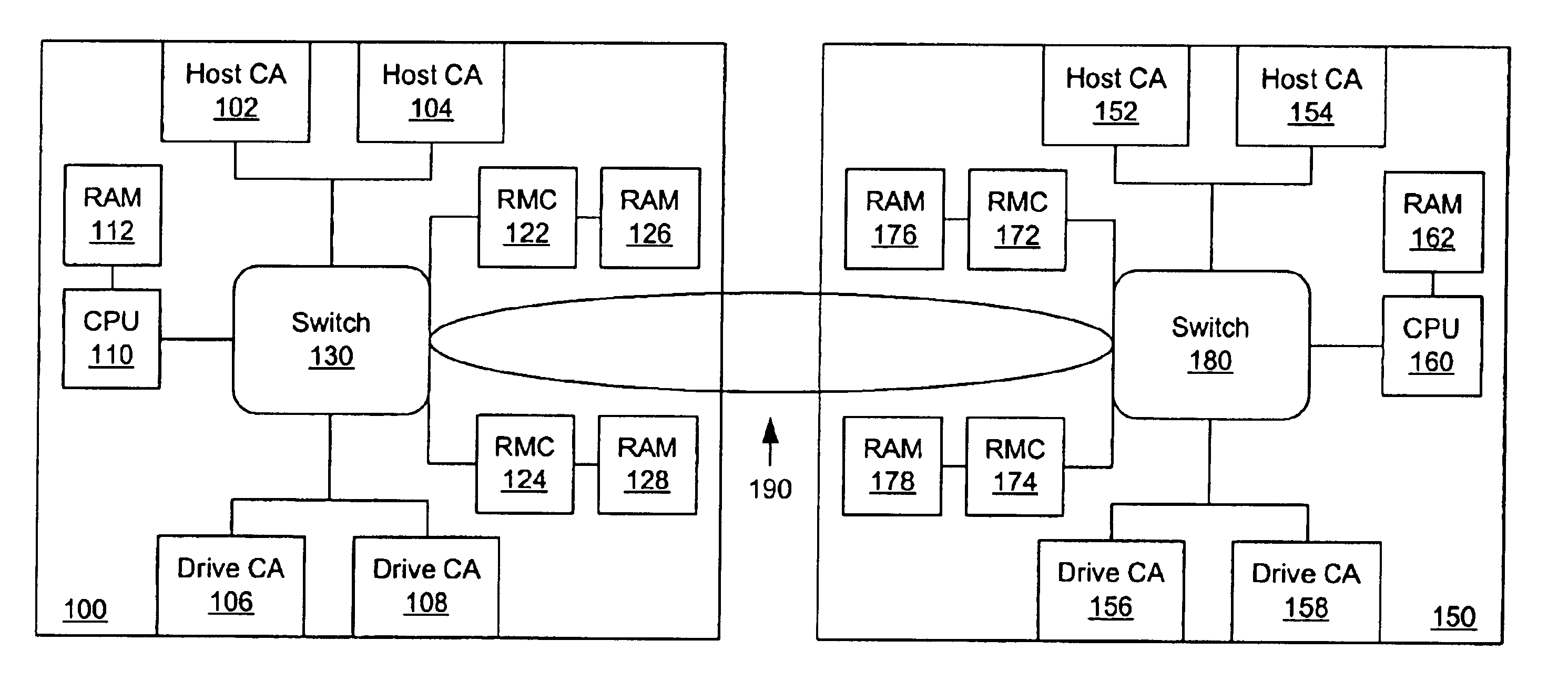

Method and apparatus to manage independent memory systems as a shared volume

Inactive; US6842829B1; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Locking mechanism; Logical block addressing

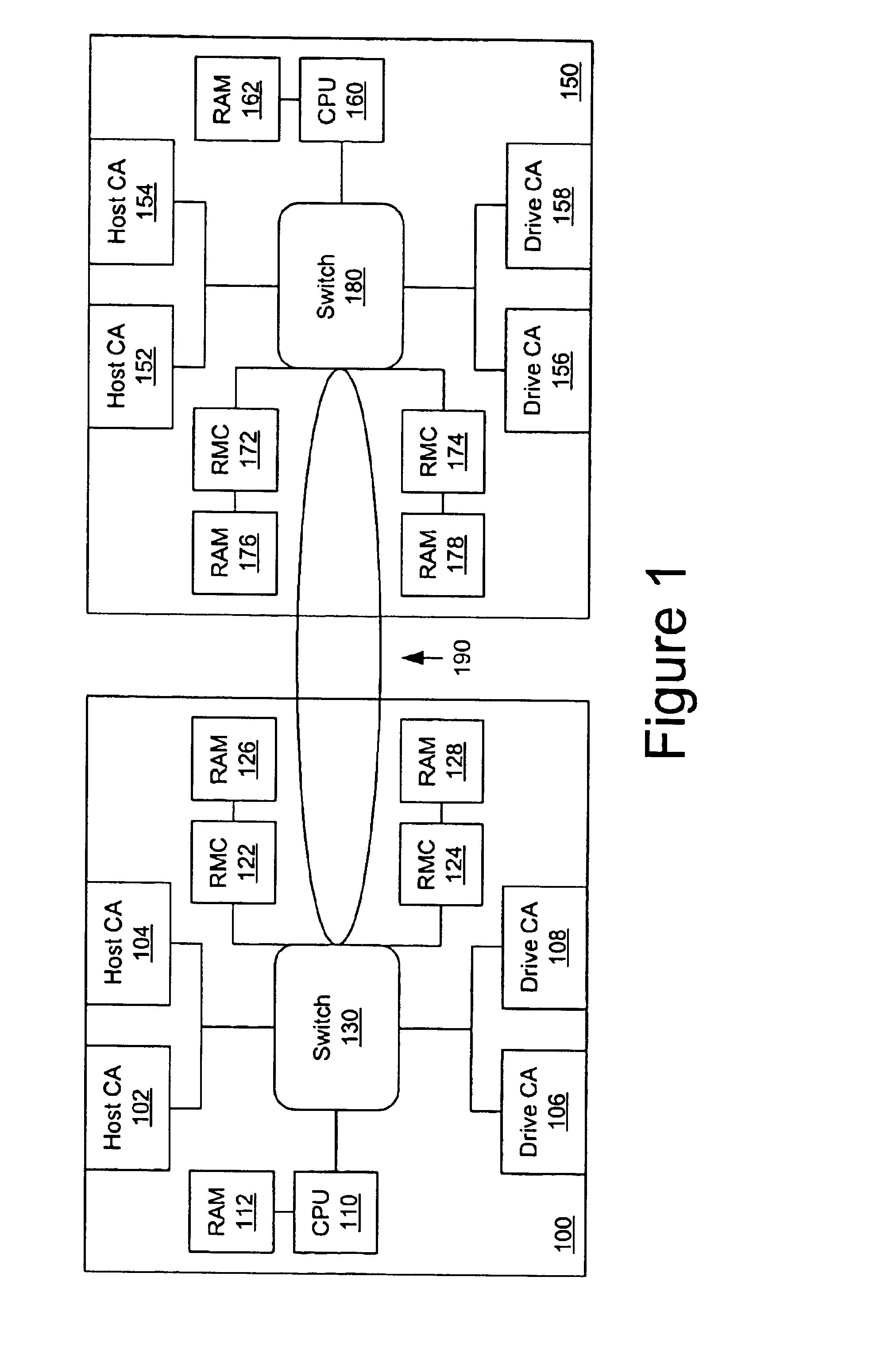

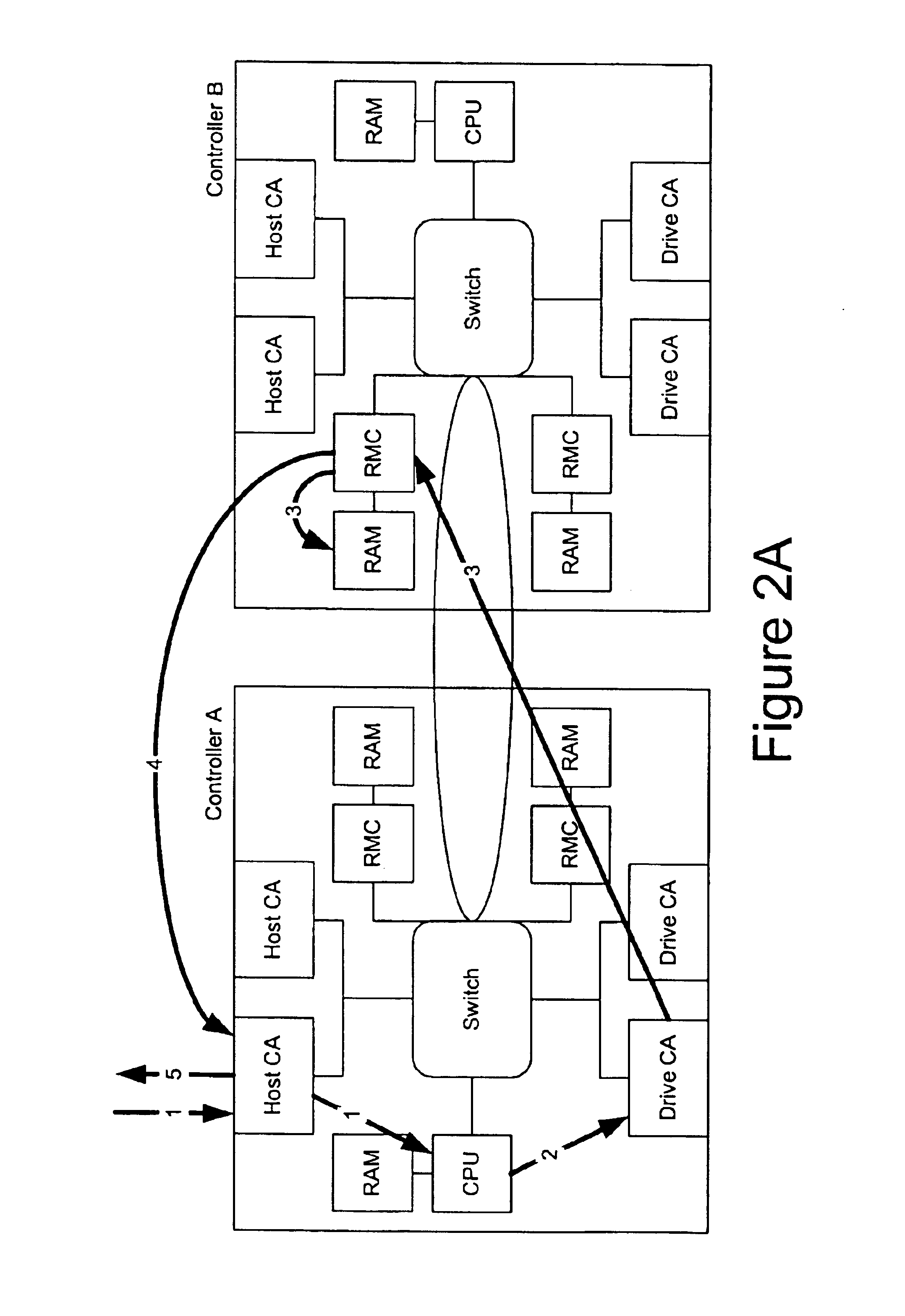

A switched architecture is provided to allow controllers to manage physically independent memory systems as a single, large memory system. The switched architecture includes a path between switches of controllers for inter-controller access to memory systems and input / output interfaces in a redundant controller environment. Controller memory systems are physically independent of each other; however, they are logically managed as a single, large memory pool. Cache coherency is concurrently maintained by both controllers through a shared locking mechanism. Volume Logical Block Address extents or individual cache blocks can be locked for either shared or exclusive access by either controller. There is no strict ownership model to determine data access. Access is managed by the controller in the pair that receives the access request. When a controller is removed or fails, a surviving controller may take appropriate action to invalidate all cache data that physically resides in the failed or missing controller's memory systems. Cached write data may be mirrored between redundant controllers to prevent a single point of failure with respect to unwritten cached write data.

Owner:AVAGO TECH INT SALES PTE LTD

Method and apparatus for performing application virtualization

Inactive; US20050005018A1; Resource allocation; Multiple digital computer combinations; Virtualization; Resource utilization

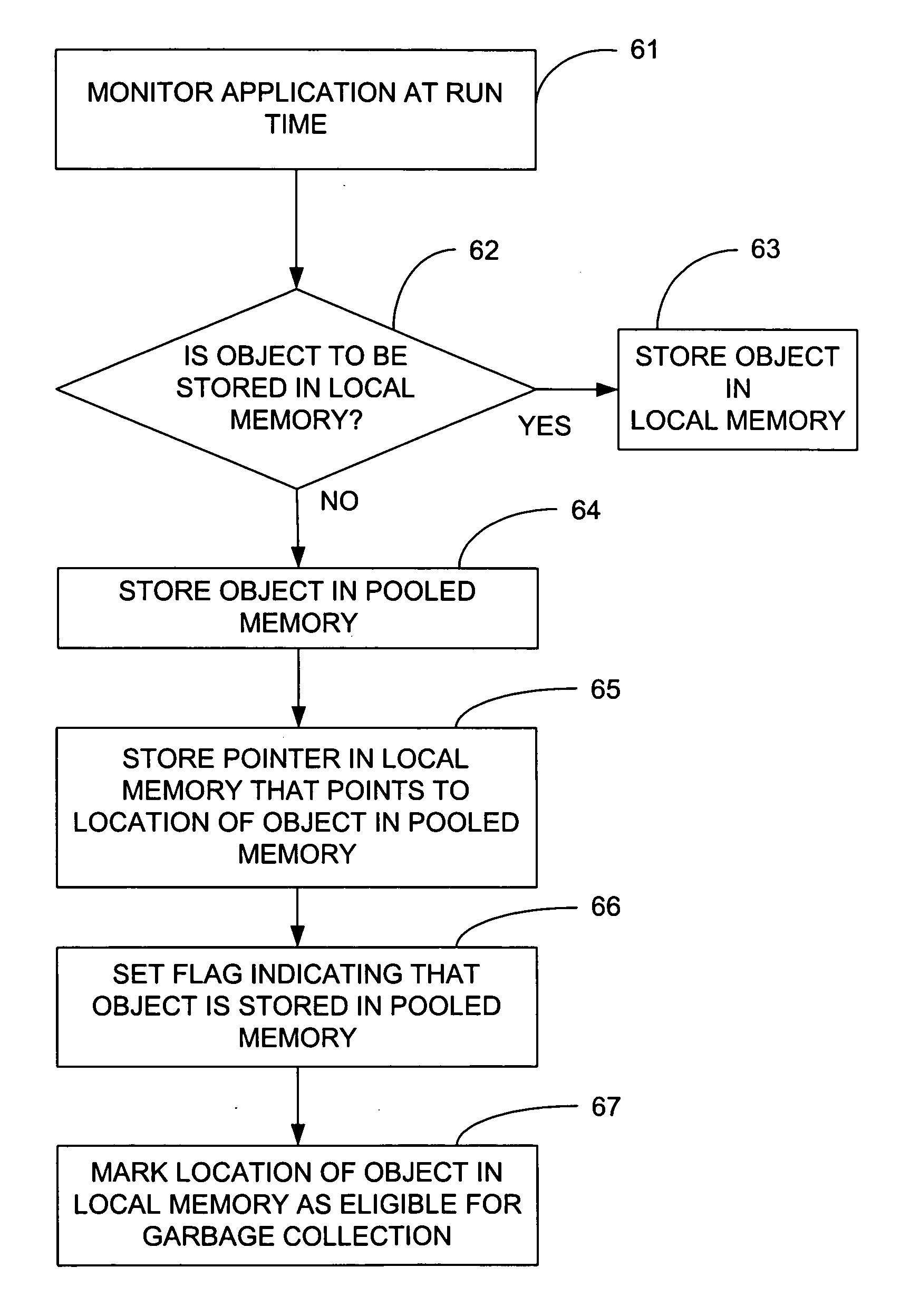

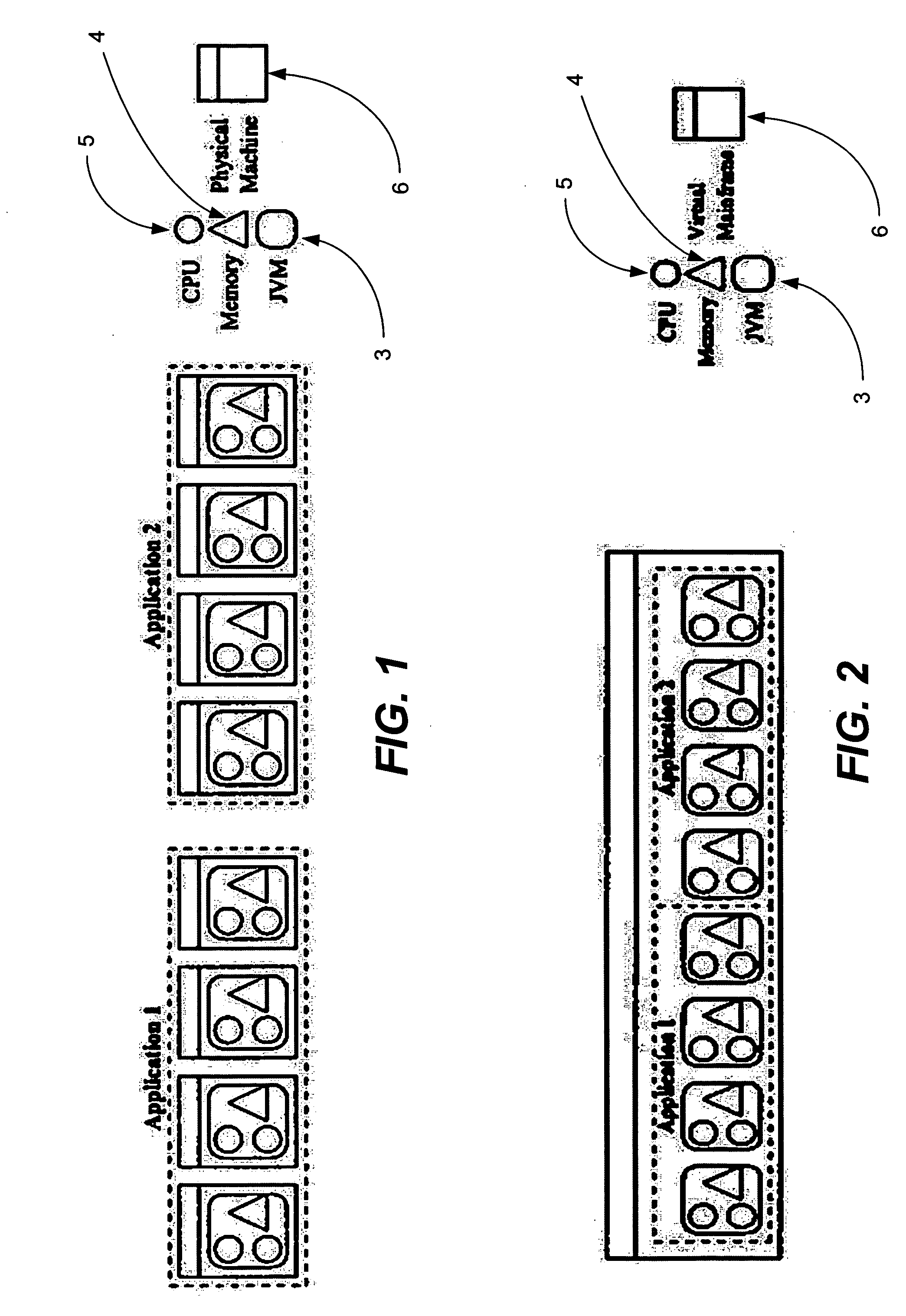

The present invention provides an application virtualization framework that allows dynamic allocation and de-allocation of compute resources, such as memory. Runtime applications are pooled across multiple application servers and compute resources are allocated and de-allocated in such a way that resource utilization is optimized. In addition, objects are either stored in local memory or in a non-local memory pool depending on certain criteria observed at runtime. Decision logic uses decision rules to determine whether an object is to be stored locally or in a memory pool. Resource management logic monitors the memory pool to determine which locations are available for storing objects and how much memory is available overall in the memory pool for storing objects.

Owner:CHUTNEY TECH

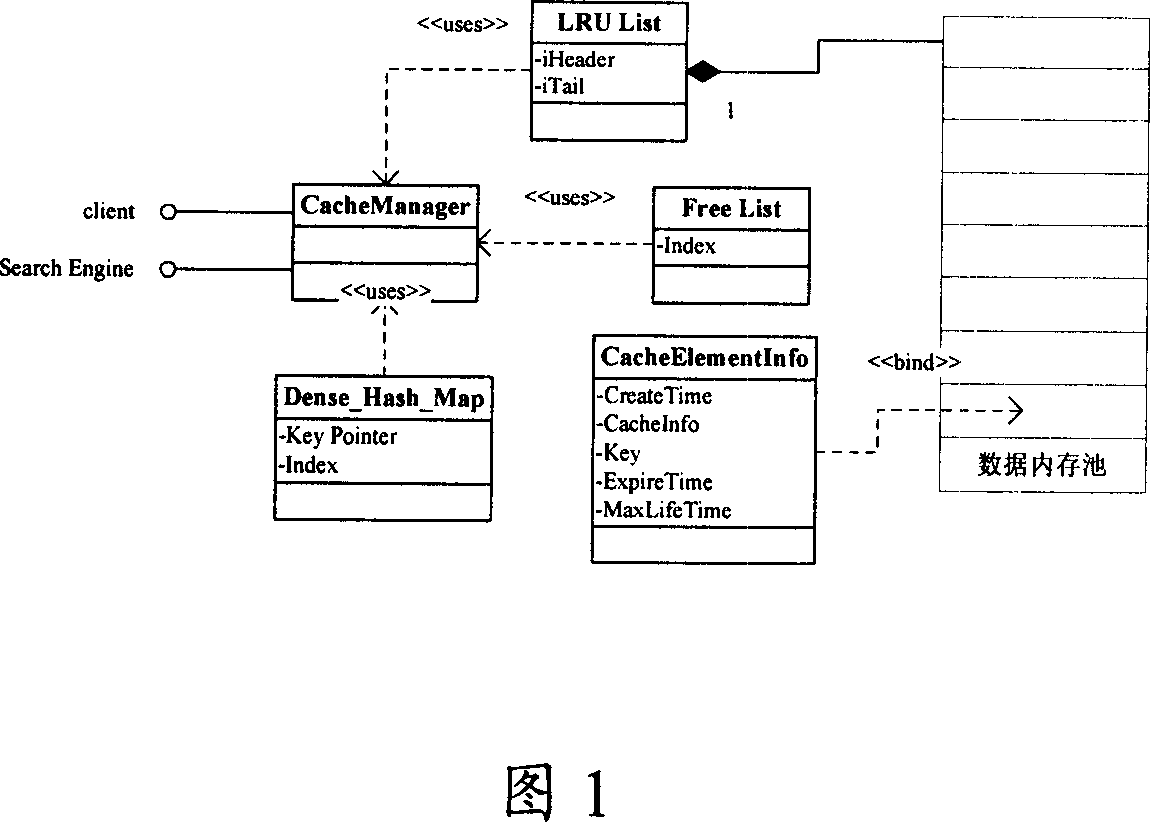

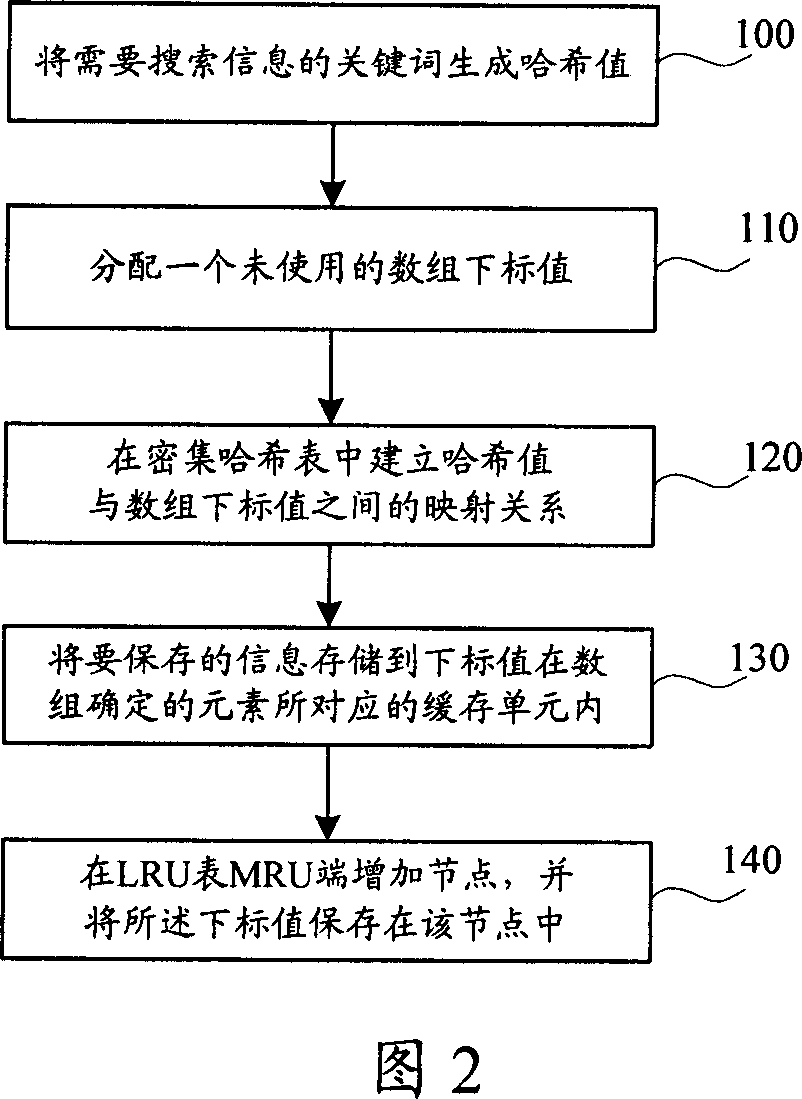

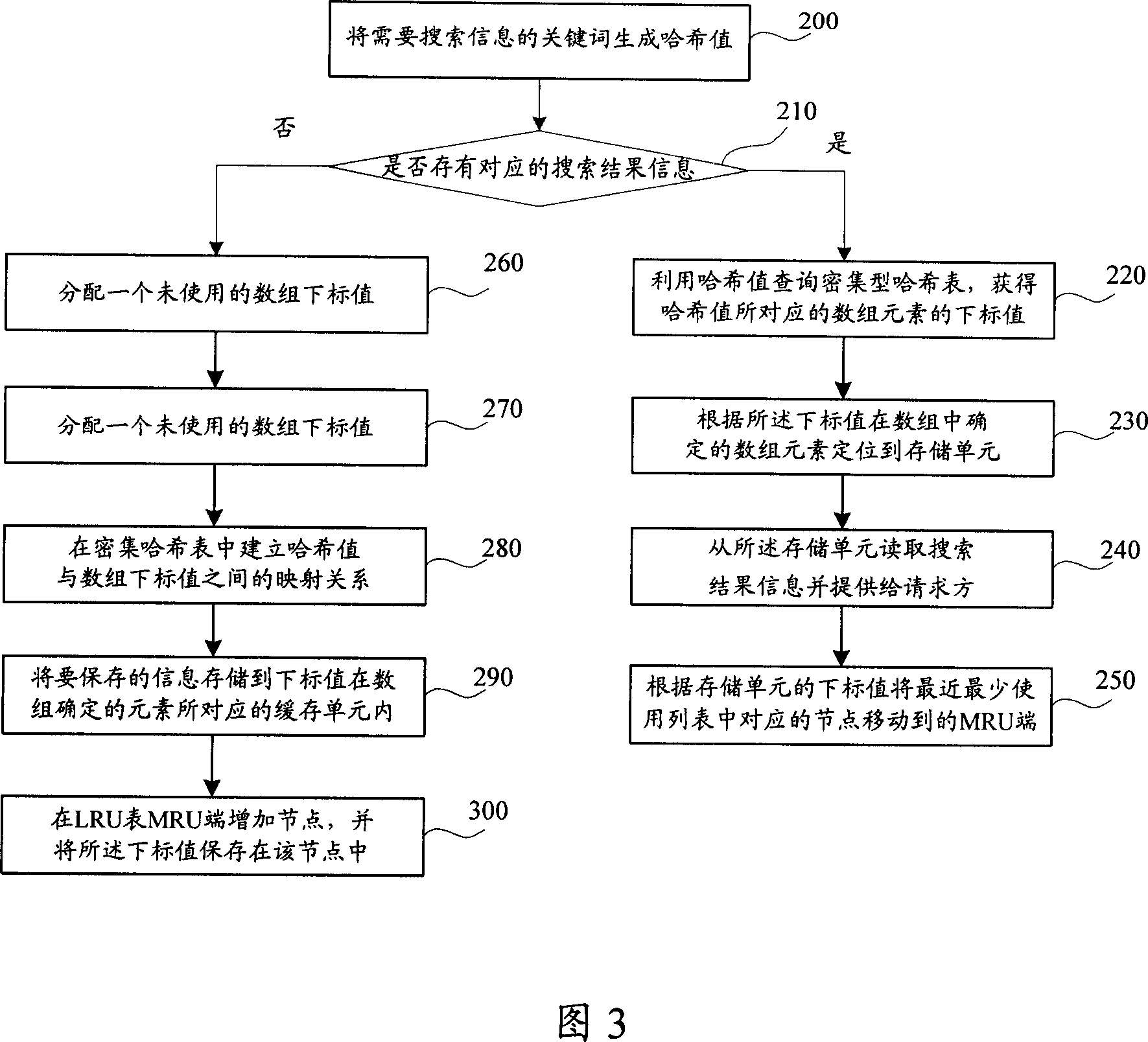

Method and system for improving information search speed

Active; CN1940922A; Improve concurrent processing performance; Rapid positioning; Memory addressing/allocation/relocation; Special data processing applications; Internal memory; Array data structure

A method for improving information search speed includes: generating a hash value from the keyword of a search request; checking a hash table with the hash value to determine whether a corresponding search result is cached; if it is, obtaining from the hash table the array element index that identifies a storage unit in the in-memory array, fetching the search result information from that storage unit, and returning it to the requesting party; otherwise, using a search engine to obtain the search result for the keyword, establishing a mapping from the hash value to an index value, storing the result information in the storage unit identified by that index, and returning it to the requesting party.

Owner:TENCENT TECH (SHENZHEN) CO LTD
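
The lookup flow in this abstract can be sketched as a hash table that maps a keyword hash to an index into a preallocated array of result slots. Everything named here is an assumption for illustration: the `SearchCache` class, the use of SHA-256 as the hash, the `search_engine` callable, and the policy of simply not caching once the array is full.

```python
import hashlib


class SearchCache:
    def __init__(self, capacity, search_engine):
        self.slots = [None] * capacity   # preallocated array of storage units
        self.index_by_hash = {}          # hash table: keyword hash -> array index
        self.next_free = 0
        self.search_engine = search_engine

    def lookup(self, keyword):
        key_hash = hashlib.sha256(keyword.encode("utf-8")).hexdigest()
        index = self.index_by_hash.get(key_hash)
        if index is not None:
            return self.slots[index]            # cached: fetch by array index
        result = self.search_engine(keyword)    # miss: fall back to the search engine
        if self.next_free < len(self.slots):
            self.slots[self.next_free] = result
            self.index_by_hash[key_hash] = self.next_free
            self.next_free += 1
        return result
```

A repeated query is then served from the array without calling the search engine again.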

Memory management method and system

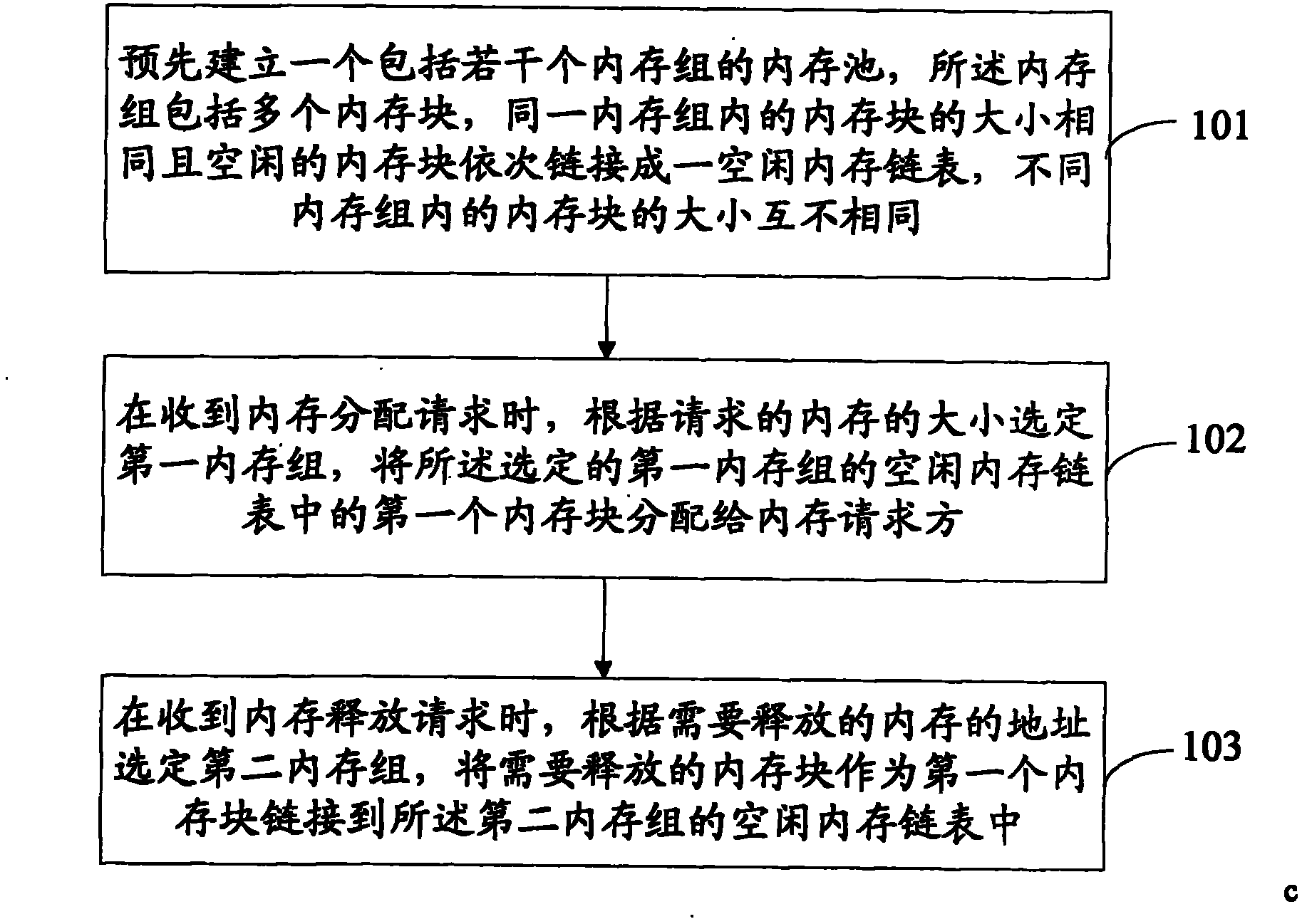

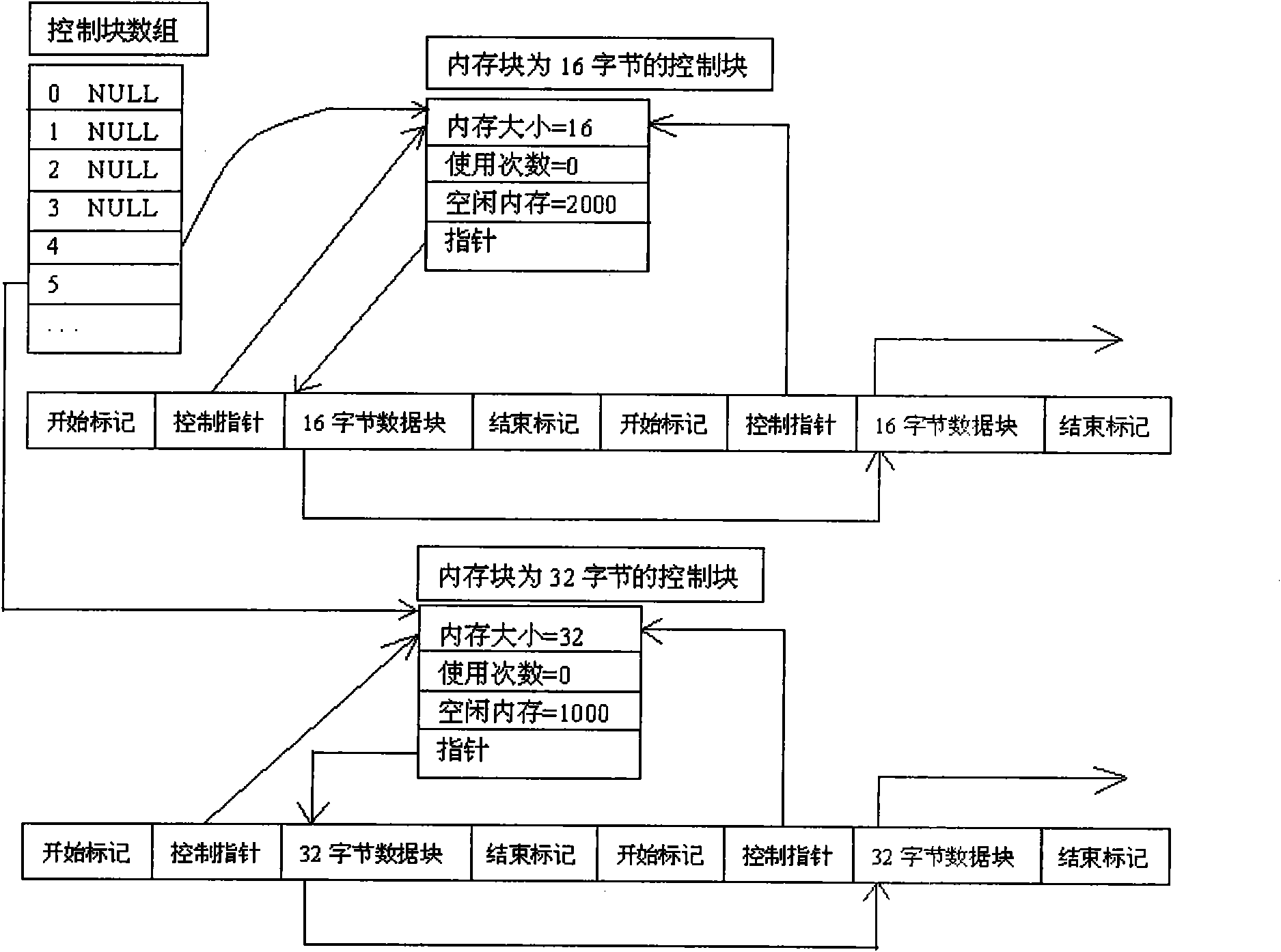

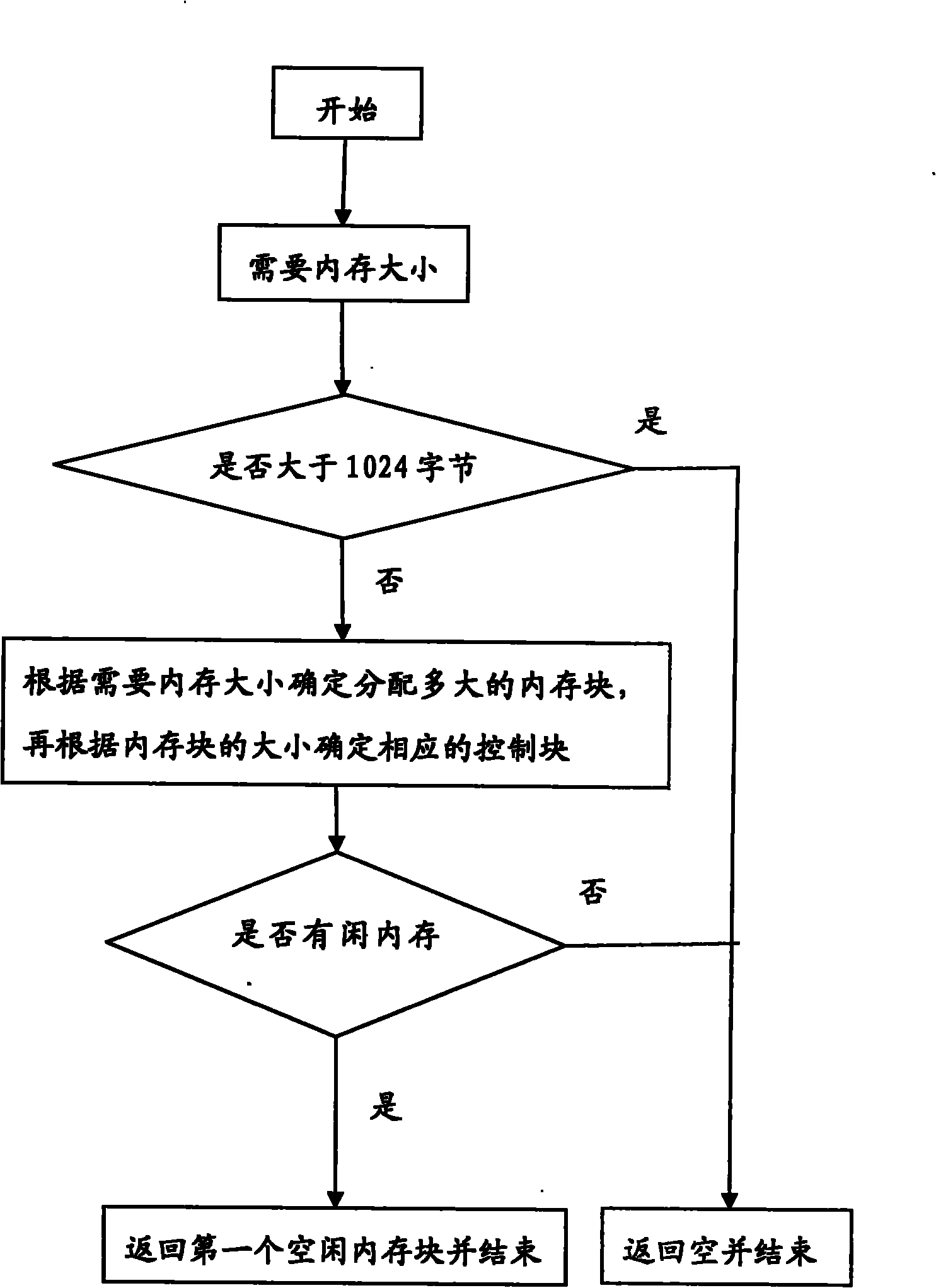

Inactive; CN102063385A; Improve distribution efficiency; Avoid it happening again; Memory addressing/allocation/relocation; Parallel computing; Memory management unit

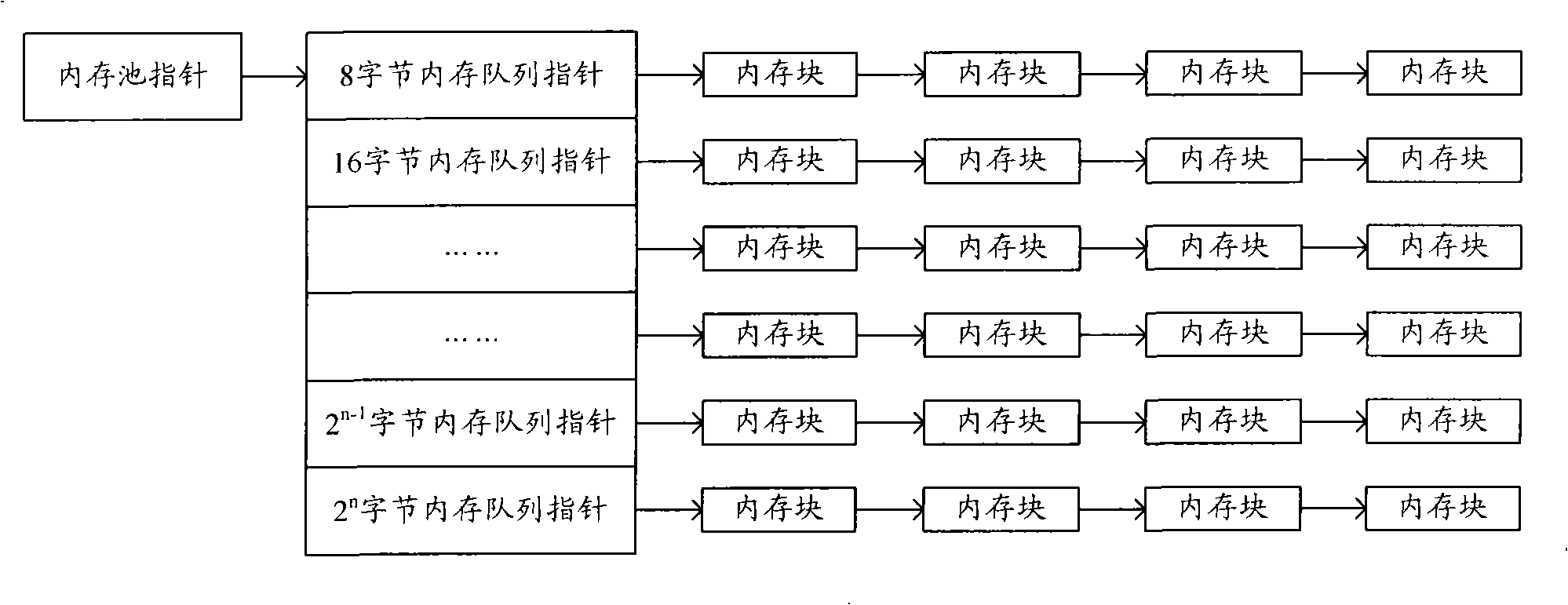

The invention discloses a memory management method. The method comprises the following steps of: creating a memory pool comprising a plurality of memory groups, wherein the memory blocks in the same memory group are the same in size and idle memory blocks are sequentially linked into an idle memory linked list, and memory blocks in different memory groups are different in size; selecting a first memory group according to the size of a requested memory when a memory allocation request is received, and allocating a first memory block in the idle memory linked list of the selected first memory group to a memory requester; and selecting a second memory group according to the address of a memory required to be released when a memory release request is received, and linking the memory block which is required to be released and is taken as the first memory block into the idle memory linked list of the second memory group. The embodiment of the invention also provides a corresponding memory management system. In the method disclosed by the invention, the memory is allocated or released in the corresponding memory group, thus no memory fragment is produced and the memory allocation efficiency can be improved.

Owner:SHENZHEN GOLDEN HIGHWAY TECH CO LTD
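
The scheme in this abstract, with groups of equally sized blocks, allocation chosen by requested size, and release chosen by address, can be sketched as below. The layout (groups placed back to back in one address range), the `GroupedPool` name, and the use of integer offsets as addresses are assumptions; group sizes are assumed to be distinct and block counts positive.

```python
import bisect
from collections import deque


class GroupedPool:
    def __init__(self, groups):
        """groups: iterable of (block_size, block_count), one entry per memory group."""
        self.sizes, self.bases, self.free_lists = [], [], []
        base = 0
        for block_size, block_count in sorted(groups):
            self.sizes.append(block_size)
            self.bases.append(base)   # start address of this group
            self.free_lists.append(
                deque(base + i * block_size for i in range(block_count)))
            base += block_size * block_count
        self.end = base

    def allocate(self, size):
        """Pick the smallest group whose blocks fit, then take its first free block."""
        group = bisect.bisect_left(self.sizes, size)
        if group == len(self.sizes) or not self.free_lists[group]:
            return None
        return self.free_lists[group].popleft()

    def release(self, address):
        """Find the owning group from the address and relink the block at the head."""
        if not 0 <= address < self.end:
            raise ValueError("address outside the pool")
        group = bisect.bisect_right(self.bases, address) - 1
        self.free_lists[group].appendleft(address)
```

Because a block always returns to the group it came from, blocks are never split or merged, which is why this kind of layout avoids external fragmentation.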

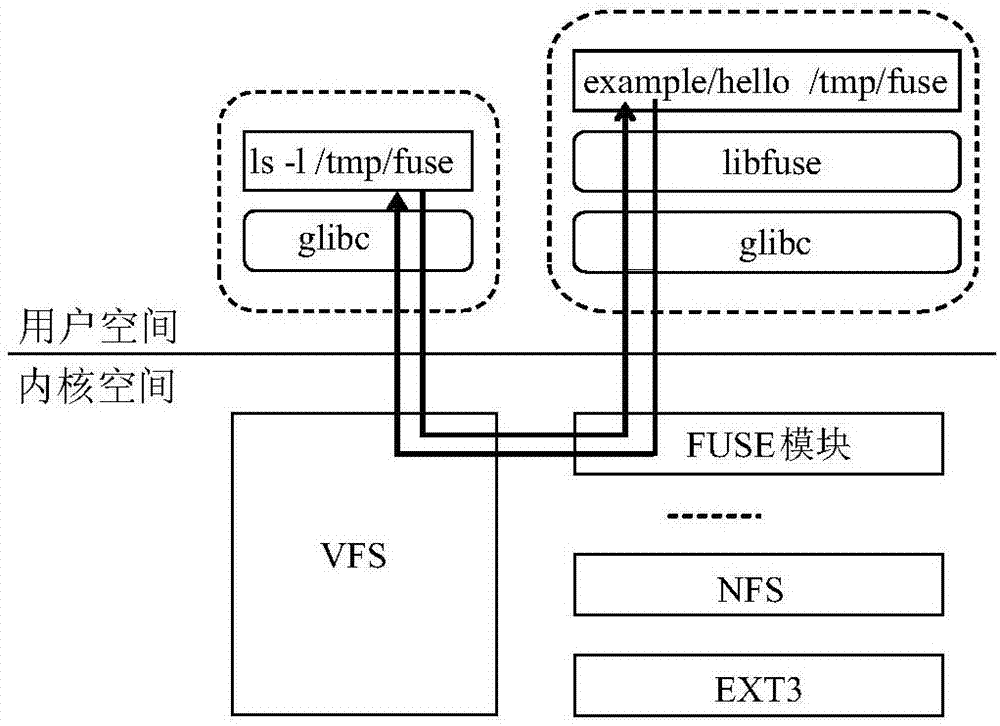

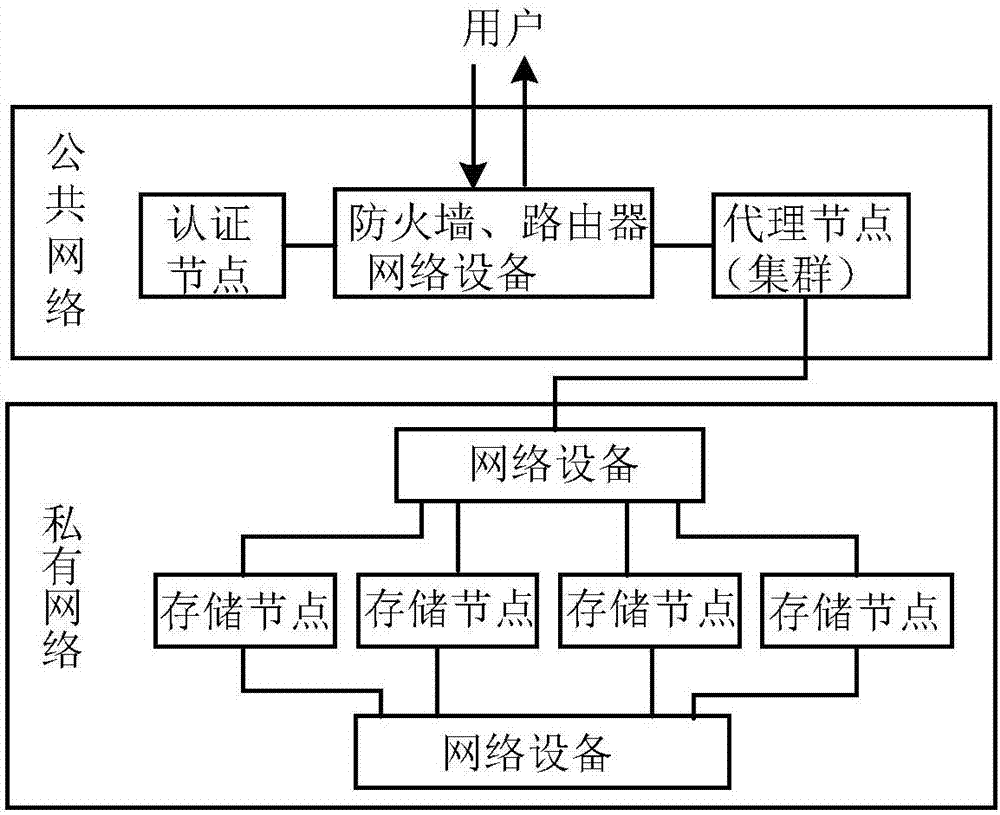

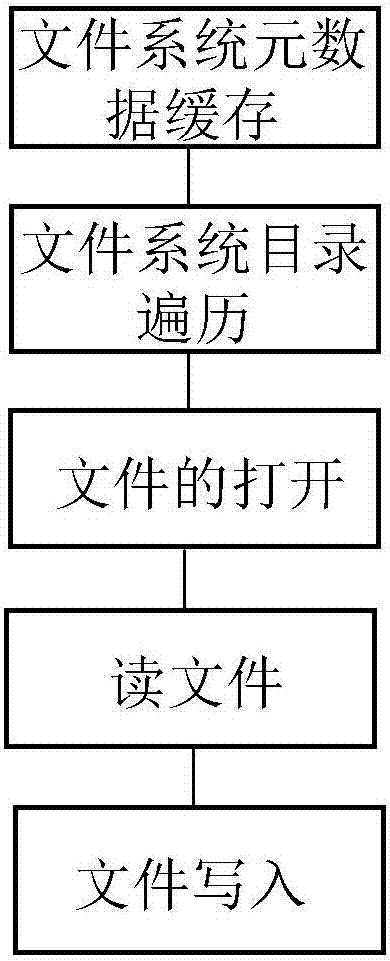

Method for realizing local file system through object storage system

Inactive; CN107045530A; Reduce the number of interactions; Improve the performance of accessing swift storage system; Special data processing applications; Application server; File system

The invention discloses a method for realizing a local file system through an object storage system. A file system metadata cache algorithm and a memory description structure are adopted, which reduces the number of interactions between an application and the back end of a swift storage system and improves the application's performance when accessing the swift storage system. A policy of pre-allocating a memory pool and reclaiming idle memory blocks in delayed batches is adopted, which improves the efficiency of traversing a directory containing a large number of subdirectories and files. A memory description structure for open file handles is adopted, so that the application can read and write files efficiently. A pre-reading policy is adopted, which effectively reduces the number of network interactions between an application server and the swift storage back end and improves the read performance of the file system. A zero-copy, block-writing policy is adopted, so that no data is copied or cached during file writing, each write system call is a complete block write operation, and file writing efficiency is improved.

Owner:HUAZHONG UNIV OF SCI & TECH

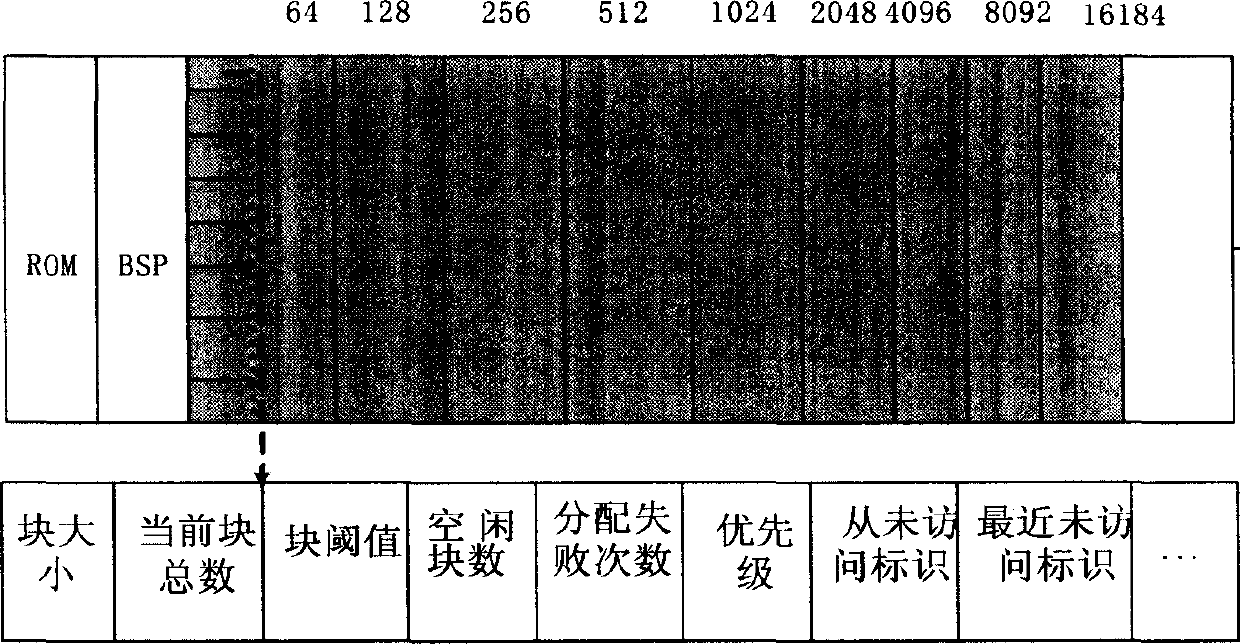

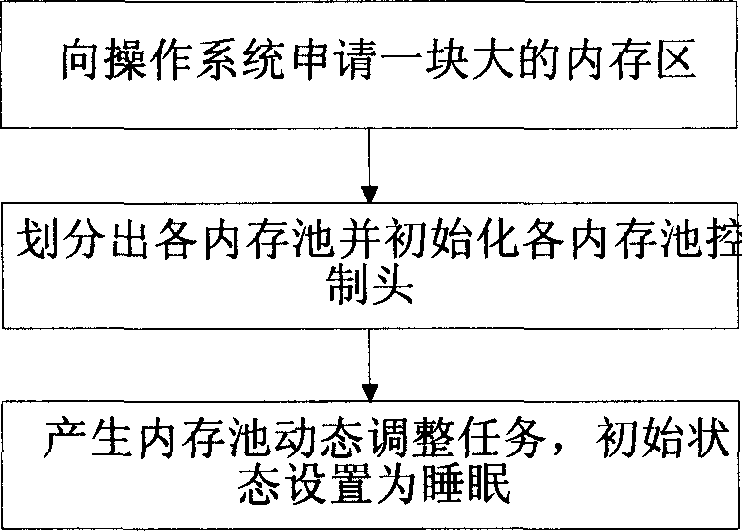

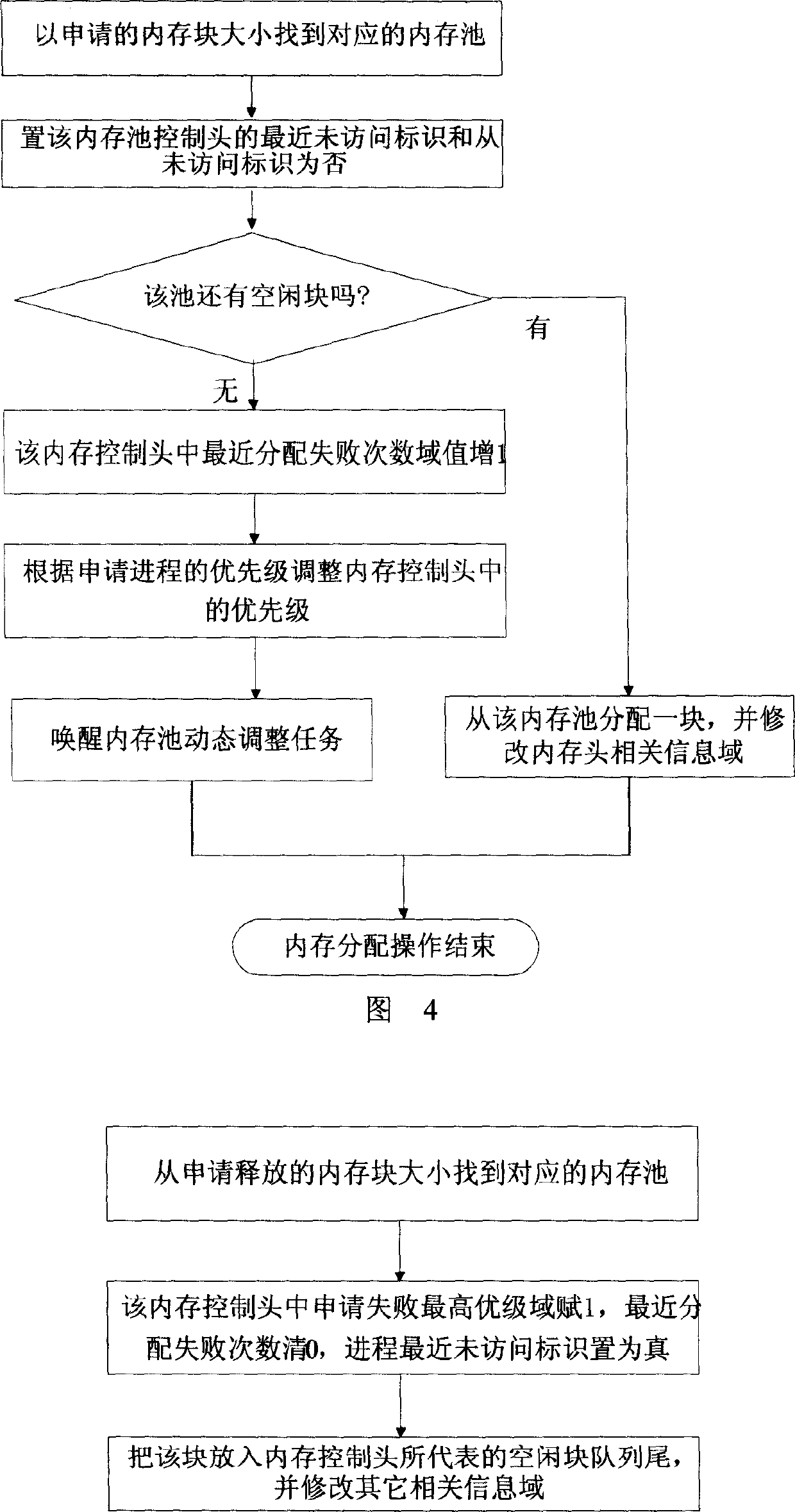

Method for internal memory allocation in the embedded real-time operation system

Inactive; CN1722106A; Guaranteed real-time; Improve reliability; Multiprogramming arrangements; Memory systems; Internal memory; Operational system

The invention relates to a memory allocation method for an embedded real-time operating system, comprising the following steps: requesting a large memory area from the operating system; dividing the large memory area into memory pools of different sizes, where each memory pool contains memory blocks of the same size; and initializing the control block of each memory pool. When a memory block is needed, the method finds the corresponding memory pool according to the requested block size and checks whether that pool has a free block. If it does, a block is taken from the head of the queue, the related information in the pool's control block is updated, and the allocation is complete; if not, the memory is adjusted dynamically. When memory is released, the method finds the corresponding memory pool according to the block size, returns the block to the tail of the queue, and updates the related information in the pool's control block.

Owner:GLOBAL INNOVATION AGGREGATORS LLC

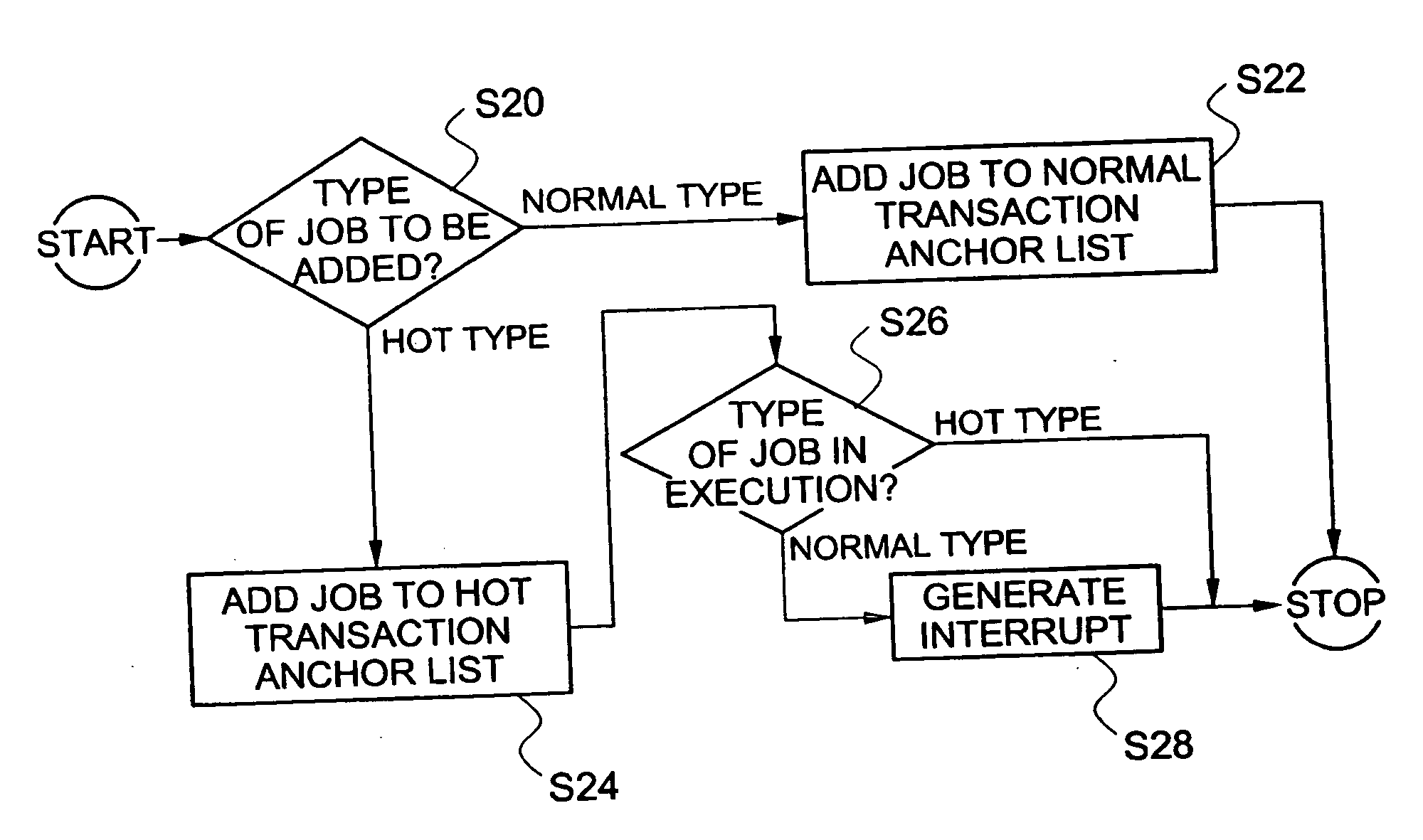

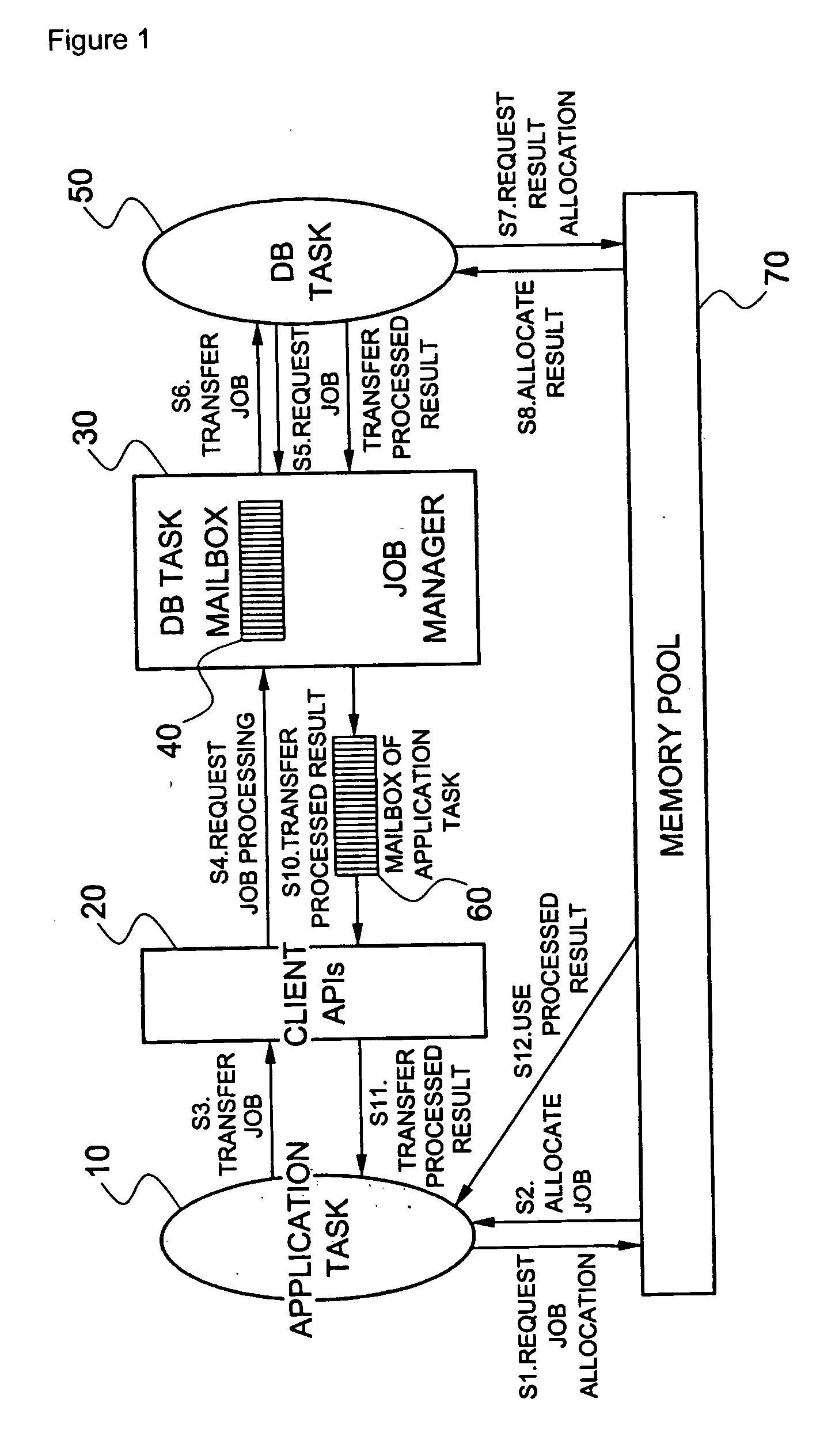

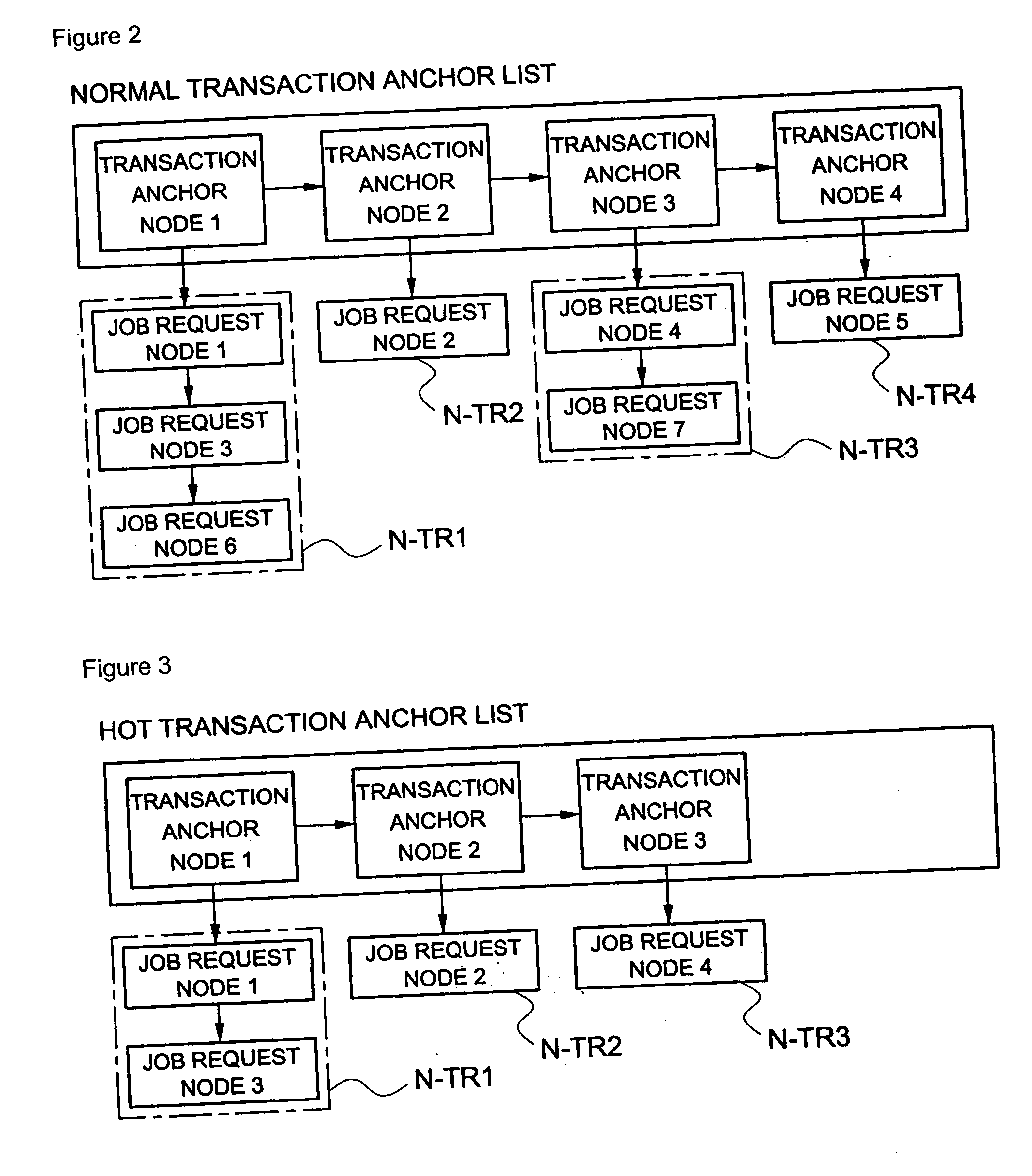

Method of scheduling jobs using database management system for real-time processing

Inactive; US20060206894A1; Easy to handle; Suitable for processing; Program control using stored programs; Multiprogramming arrangements; Operation scheduling; Public resource

A method of scheduling jobs in real time using a database management system is provided. An application task classifies each job as one of two transaction types, hot or normal. A processing area in a memory pool, which is a common resource, is allocated to the application task, and the job is transferred to a database job manager through a client application program interface (API). The job manager loads a request node for the job into the list for the corresponding transaction type in the mailbox of the DB task, which classifies job request nodes as hot or normal by transaction type and manages them so that they can be scheduled in units of transactions. The job manager transfers the job request nodes loaded in the DB task's mailbox to the DB task one by one, so that they are processed in units of transactions: a job belonging to a hot-type transaction takes priority over a job belonging to a normal-type transaction, and jobs of the same transaction type are processed in order of request time. Through the job manager, the DB task loads the processing result of the job into the mailbox of the application task that requested it, so that the application task can use the result later.

Owner:FUSIONSOFT
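
The ordering rule described in this abstract, hot before normal and request order within a type, can be sketched with one queue per transaction type. The `Mailbox` class and its method names are hypothetical, and the sketch leaves out the memory pool processing areas and the result mailbox.

```python
from collections import deque


class Mailbox:
    """Per-task mailbox holding one FIFO list of job request nodes per transaction type."""

    def __init__(self):
        self.queues = {"hot": deque(), "normal": deque()}

    def post(self, transaction_type, job):
        """Append a job request node to the list for its transaction type."""
        self.queues[transaction_type].append(job)

    def next_job(self):
        """Hand over the next job: hot before normal, oldest first within a type."""
        for transaction_type in ("hot", "normal"):
            if self.queues[transaction_type]:
                return self.queues[transaction_type].popleft()
        return None
```

Keeping two separate lists means the job manager never has to sort: priority is decided by which list is checked first, and arrival order is preserved by the queue itself.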

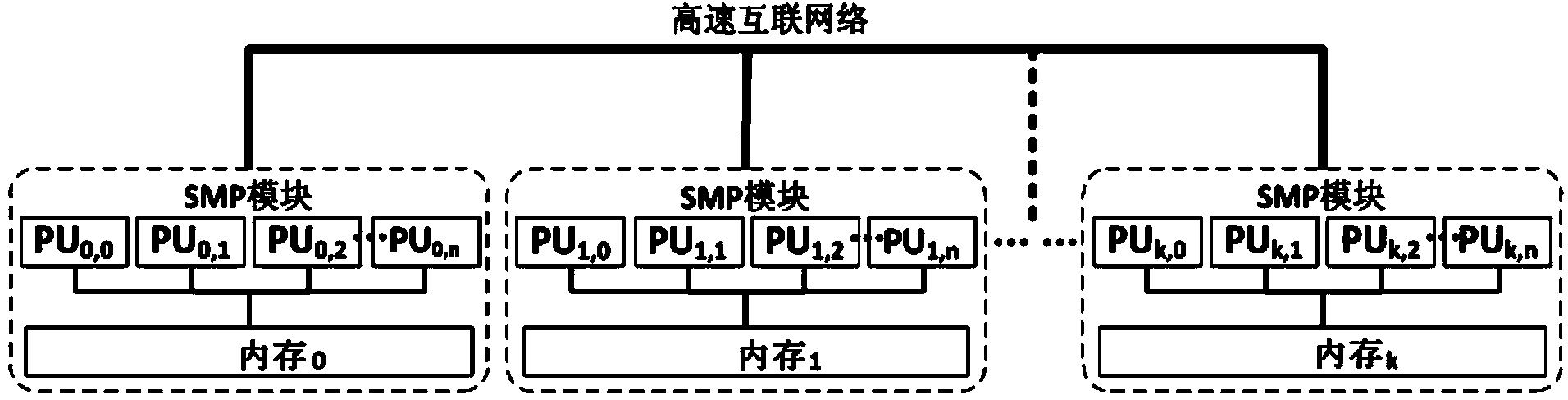

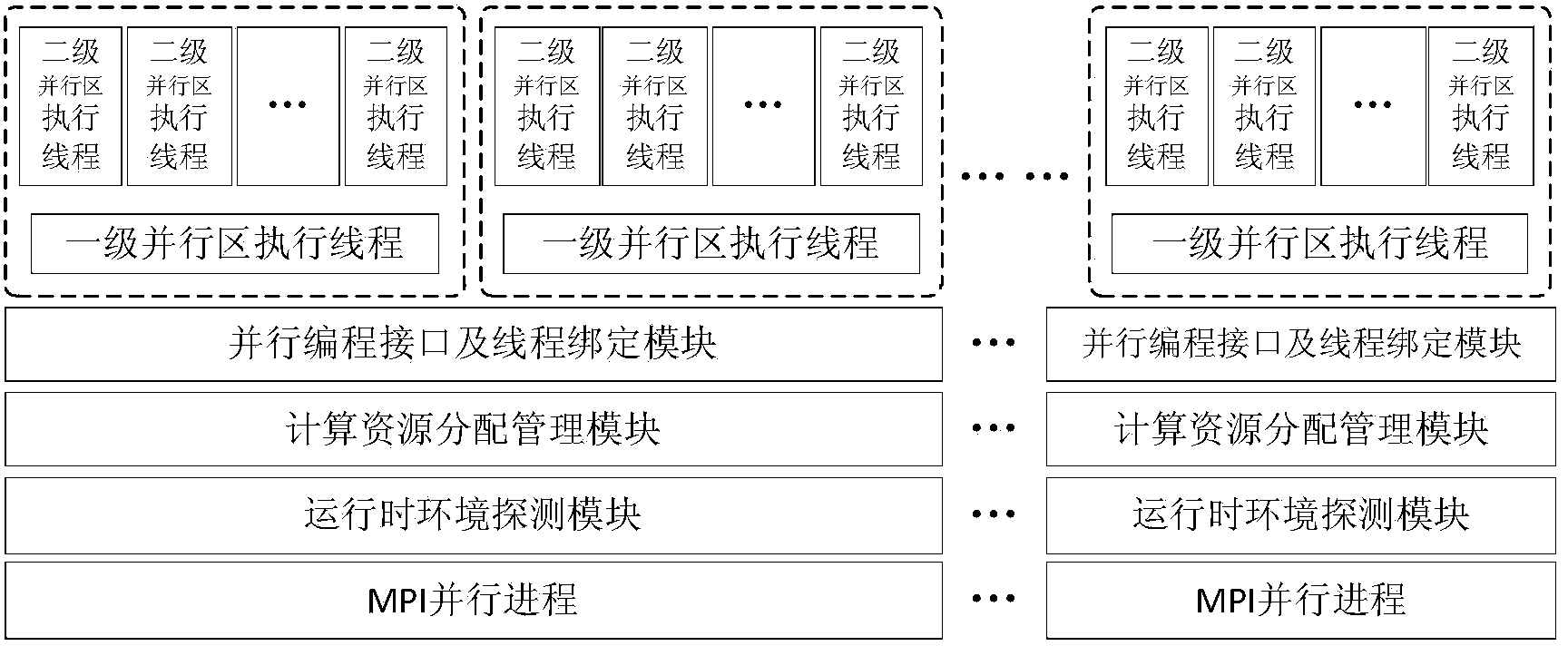

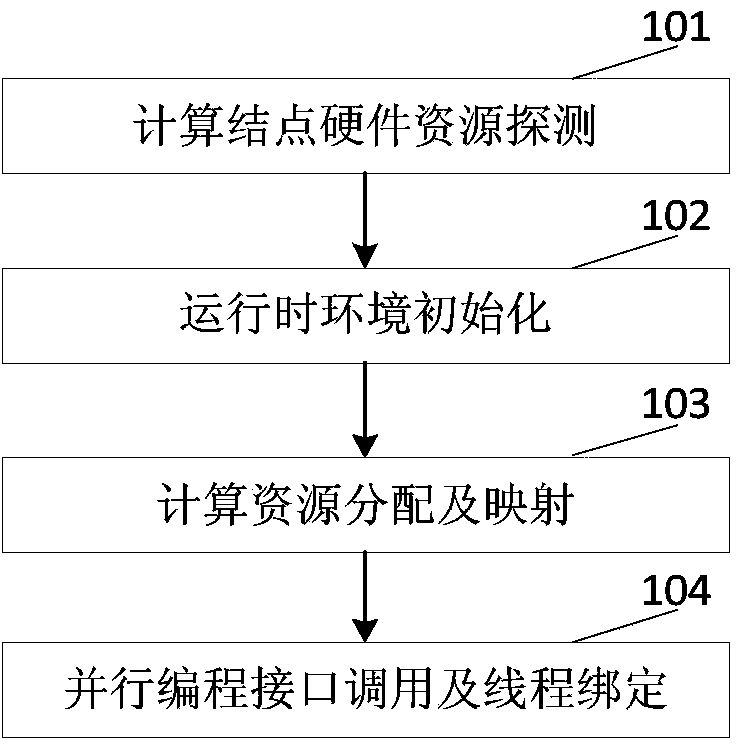

Thread for high-performance computer NUMA perception and memory resource optimizing method and system

Active; CN104375899A; Solve the problem of excessive granularity of memory management; Solve fine-grained memory access requirements; Resource allocation; Computer architecture; Performance computing

The invention discloses a thread for high-performance computer NUMA perception and a memory resource optimizing method and system. The system comprises a runtime environment detection module used for detecting hardware resources and the number of parallel processes of a calculation node, a calculation resource distribution and management module used for distributing calculation resources for parallel processes and building the mapping between the parallel processes and the thread and a processor core and physical memory, a parallel programming interface, and a thread binding module which is used for providing the parallel programming interface, obtaining a binding position mask of the thread according to mapping relations and binding the executing thread to a corresponding CPU core. The invention further discloses a multi-thread memory manager for NUMA perception and a multi-thread memory management method of the multi-thread memory manager. The manager comprises a DSM memory management module and an SMP module memory pool which manage SMP modules which the MPI processes belong to and memory distributing and releasing in the single SMP module respectively, the system calling frequency of the memory operation can be reduced, the memory management performance is improved, remote site memory access behaviors of application programs are reduced, and the performance of the application programs is improved.

Owner:INST OF APPLIED PHYSICS & COMPUTATIONAL MATHEMATICS

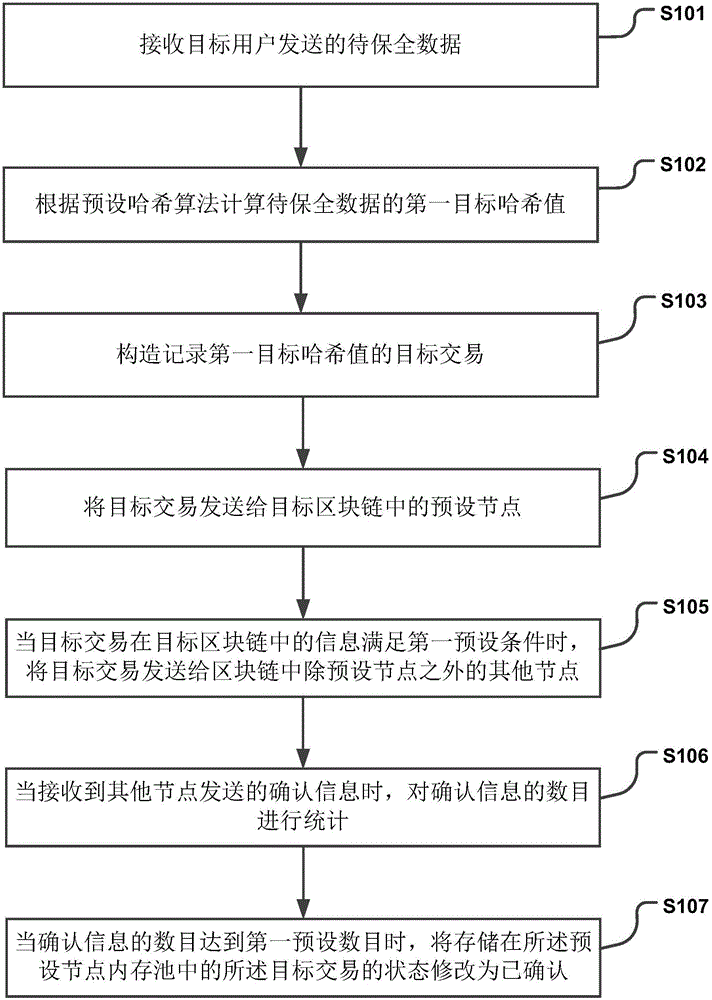

Data processing method and device

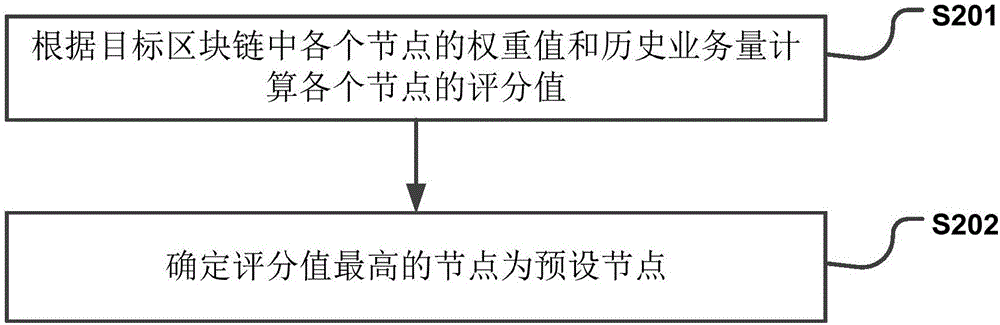

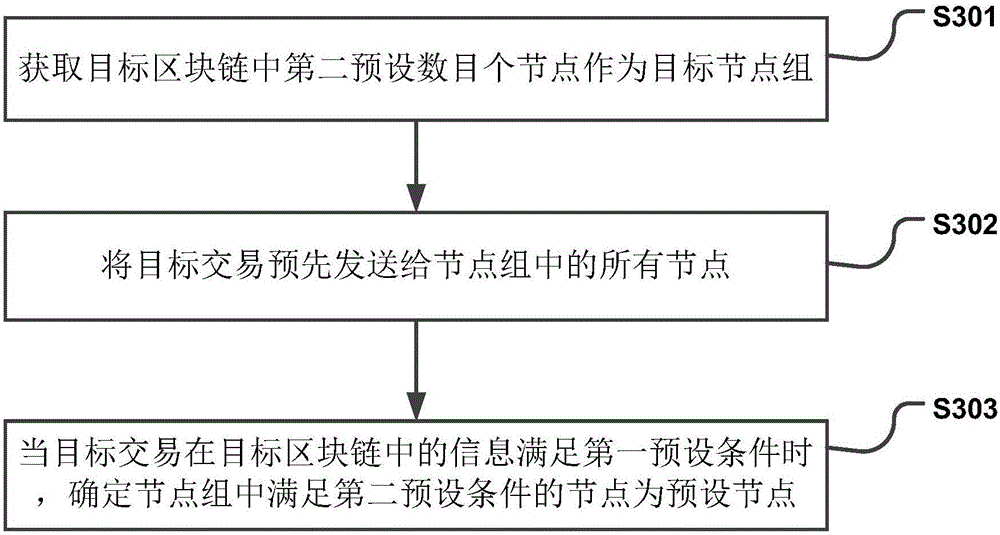

The invention discloses a data processing method and device used for improving the security of data. The method includes the steps that to-be-secured data sent by a target user is received; the first target Hash value of the to-be-secured data is calculated according to a preset Hash algorithm; a target transaction recording the first target Hash value is constructed; the target transaction is sent to a preset node in a target block chain; when information, in the target block chain, of the target transaction meets a first preset condition, the target transaction is sent to other nodes, except the preset node, in the block chain; when confirmation information sent by other nodes is received, the amount of the confirmation information is counted; when the amount of the confirmation information reaches a first preset amount, the state of the target transaction stored in a preset node memory pool is modified into a confirmed state. By the adoption of the scheme, multiple nodes can monitor the to-be-secured data jointly, and thus the security of the data is improved.

Owner:CANAAN CREATIVE (SH) CO LTD

Shared Memory Partition Data Processing System With Hypervisor Managed Paging

Inactive; US20090307445A1; Resource allocation; Non-redundant fault processing; Data processing system; Paging

Owner:IBM CORP

Memory management method used in Linux system

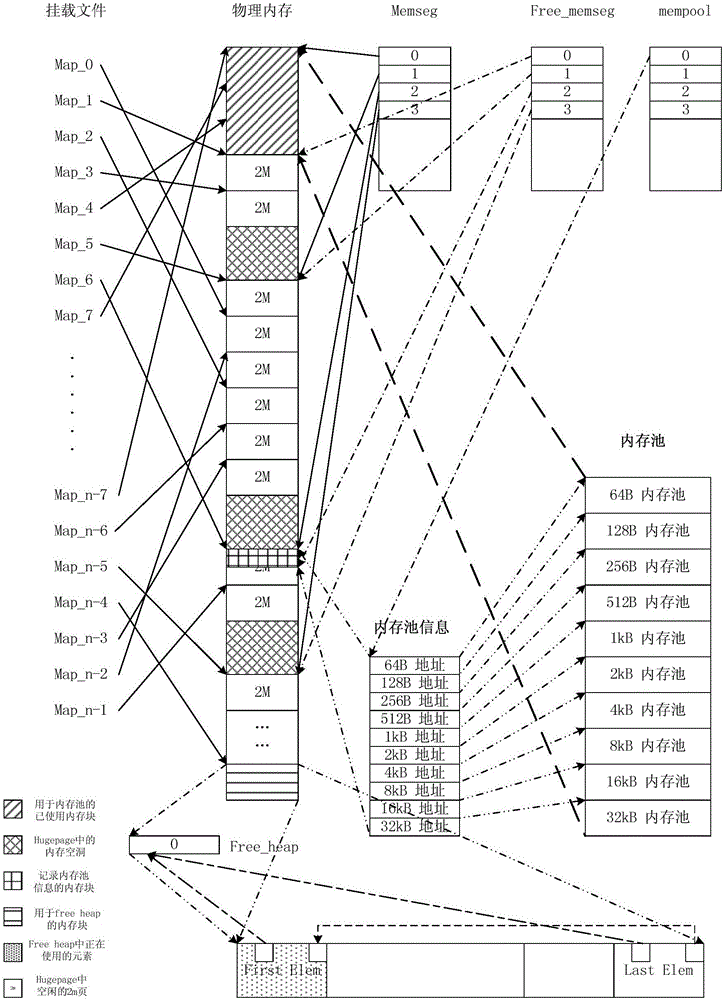

Active; CN105893269A; Improve efficiency; Improve access speed; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Static memory allocation; GNU/Linux

The invention provides a memory management method used in a Linux system. Hugepage memory is used in a Linux environment, and the memory configuration process, the memory request process, and the memory release process are all executed on top of the hugepage memory. The memory configuration process comprises calculating the relation between virtual addresses and physical addresses to determine the NUMA node to which each mapped hugepage belongs, and sorting by physical address. The memory request process comprises memory pool configuration requests and ordinary memory requests. The method keeps the efficiency advantage of static memory allocation and uses 2 MB hugepages in place of 4 KB pages, which saves page lookup time and reduces the probability of TLB misses. In addition, hugepage memory is not swapped out to disk, which ensures that the memory always remains available to the application program that requested it; local memory is preferred when memory is requested, which improves memory access speed.

Owner:WUHAN HONGXIN TECH SERVICE CO LTD

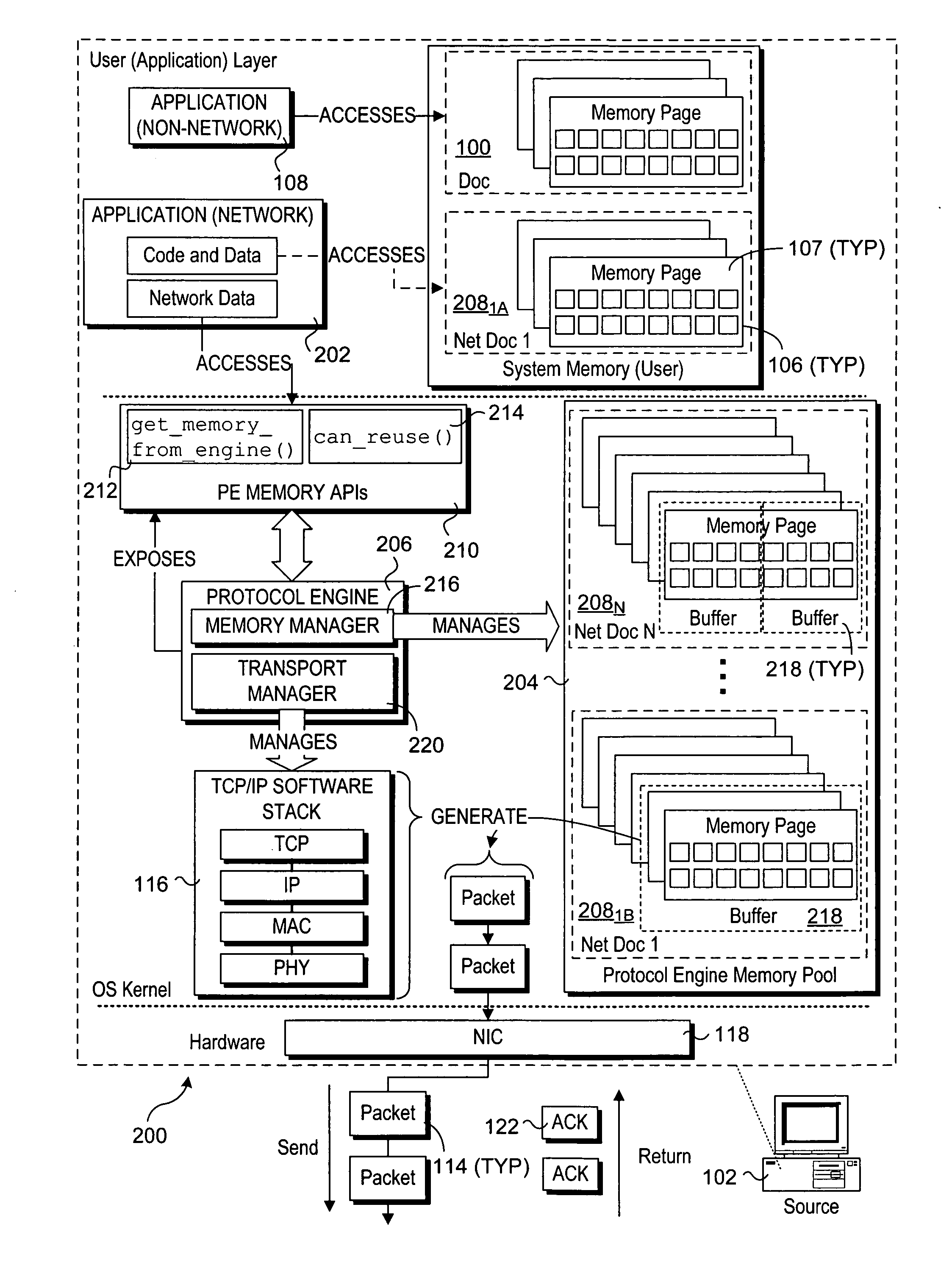

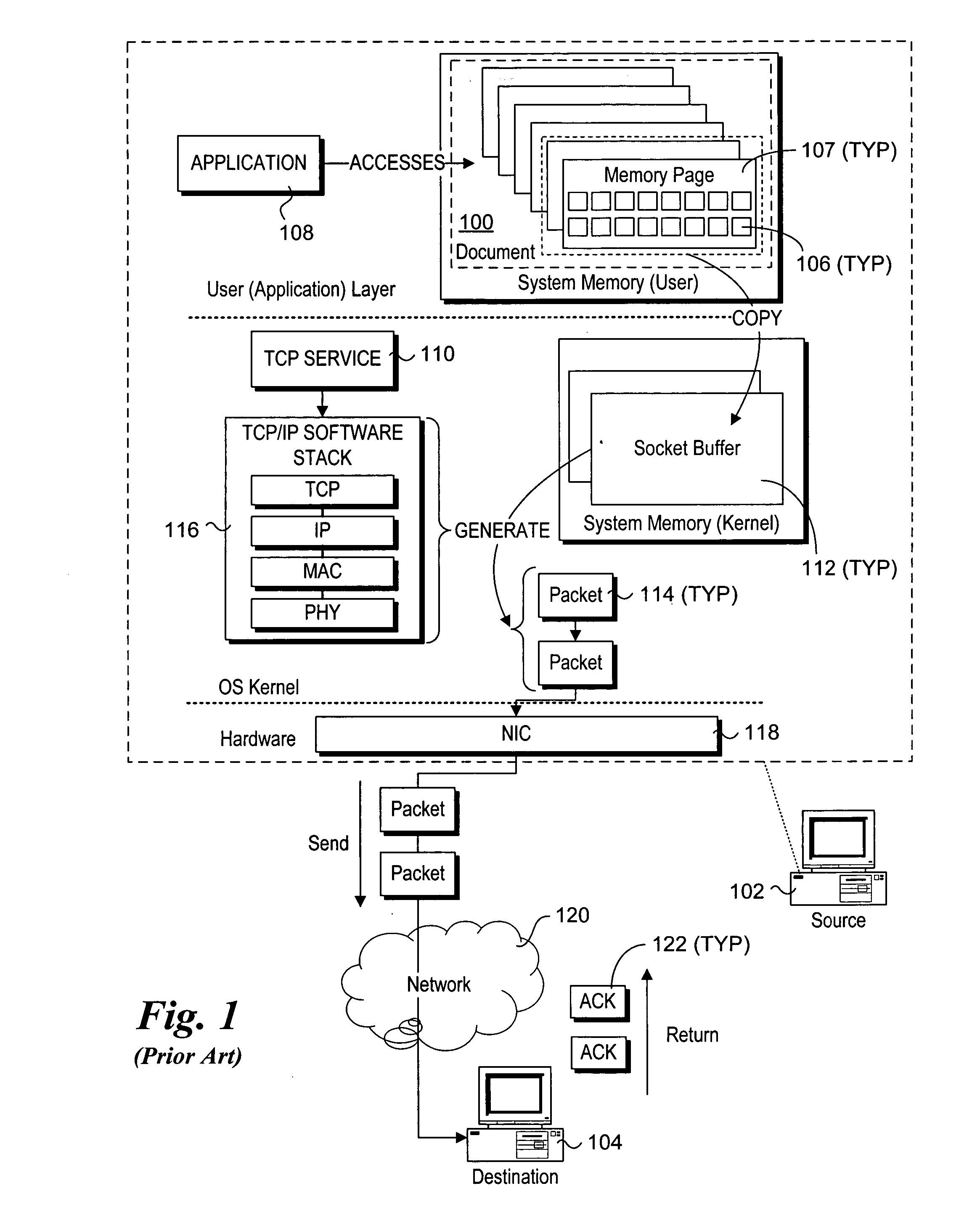

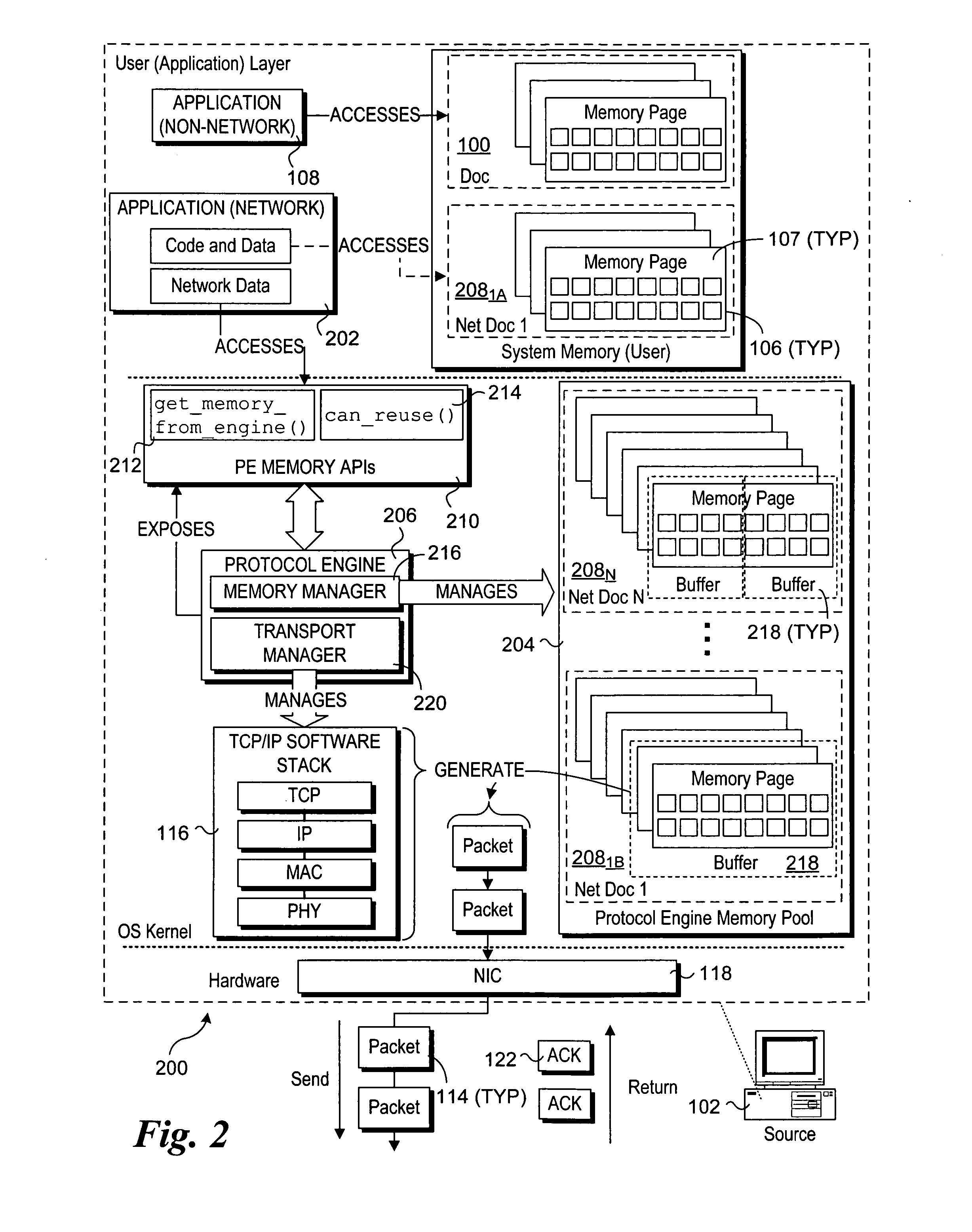

Mechanisms to implement memory management to enable protocol-aware asynchronous, zero-copy transmits

Inactive; US20070011358A1; Multiple digital computer combinations; Transmission; Zero-copy; Operational system

Mechanisms to implement memory management to enable protocol-aware asynchronous, zero-copy transmits. A transport protocol engine exposes interfaces via which memory buffers from a memory pool in operating system (OS) kernel space may be allocated to applications running in an OS user layer. The memory buffers may be used to store data that is to be transferred to a network destination using a zero-copy transmit mechanism, wherein the data is directly transmitted from the memory buffers to the network via a network interface controller. The transport protocol engine also exposes a buffer reuse API to the user layer to enable applications to obtain buffer availability information maintained by the protocol engine. In view of the buffer availability information, the application may adjust its data transfer rate.

Owner:INTEL CORP

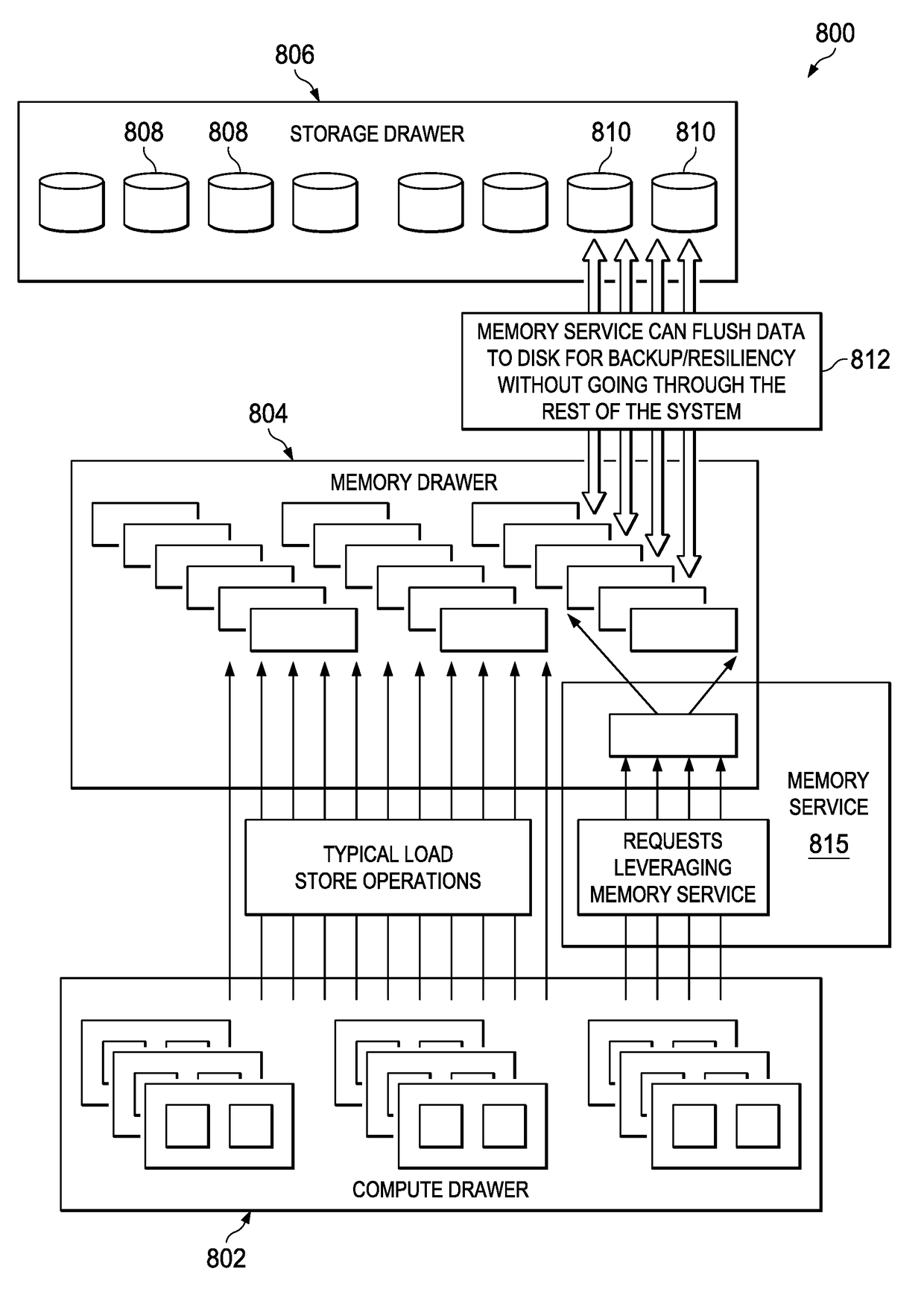

Multi-tenant memory service for memory pool architectures

Active; US20170293447A1; High resource utilization; Significant comprehensive benefits; Memory architecture accessing/allocation; Input/output to record carriers; Distribution pattern; Memory architecture

A memory management service occupies a configurable portion of an overall memory system in a disaggregate compute environment. The service provides optimized data organization capabilities over the pool of real memory accessible to the system. The service enables various types of data stores to be implemented in hardware, including at a data structure level. Storage capacity conservation is enabled through the creation and management of high-performance, re-usable data structure implementations across the memory pool, and then using analytics (e.g., multi-tenant similarity and duplicate detection) to determine when data organizations should be used. The service also may re-align memory to different data structures that may be more efficient given data usage and distribution patterns. The service also advantageously manages automated backups efficiently.

Owner:IBM CORP

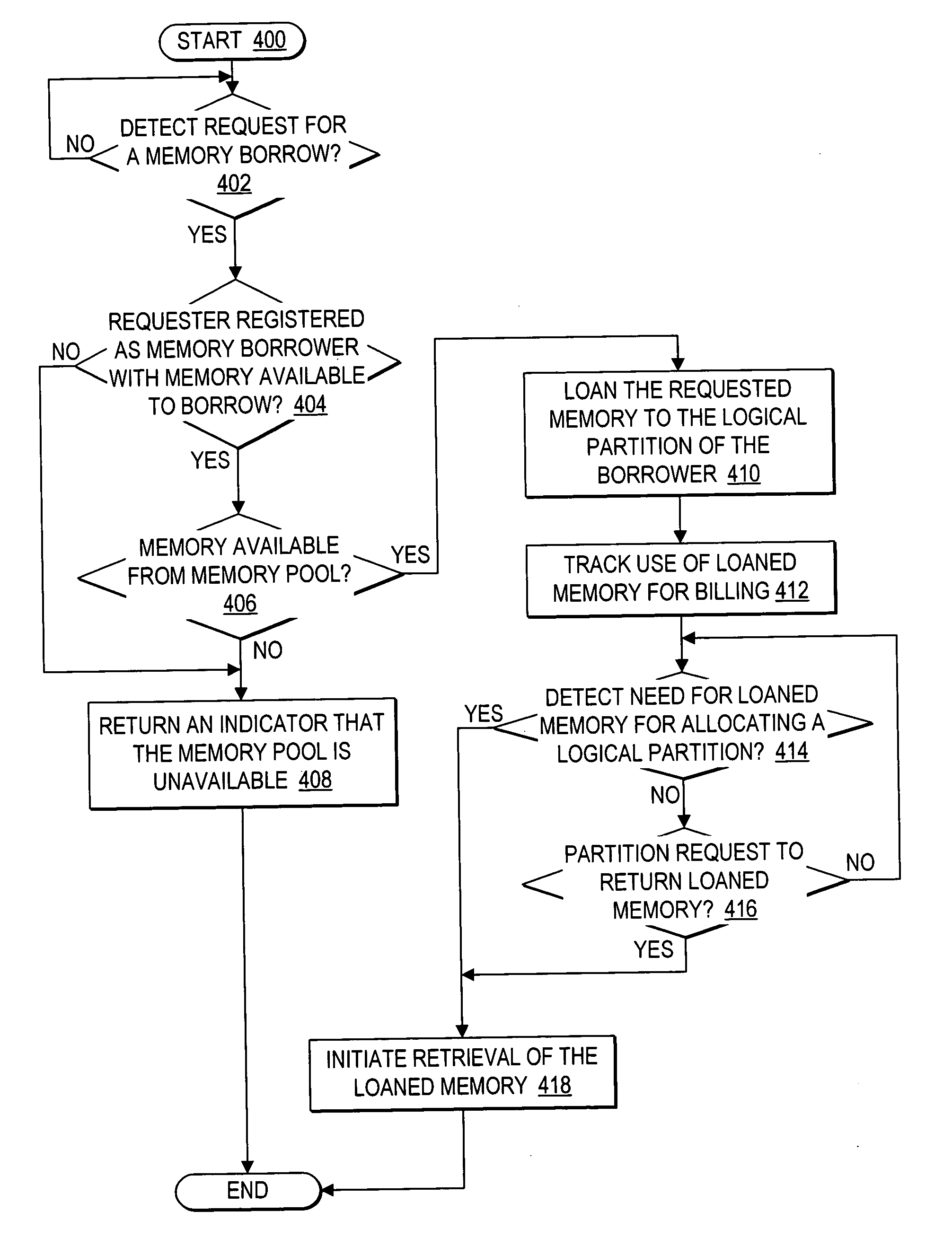

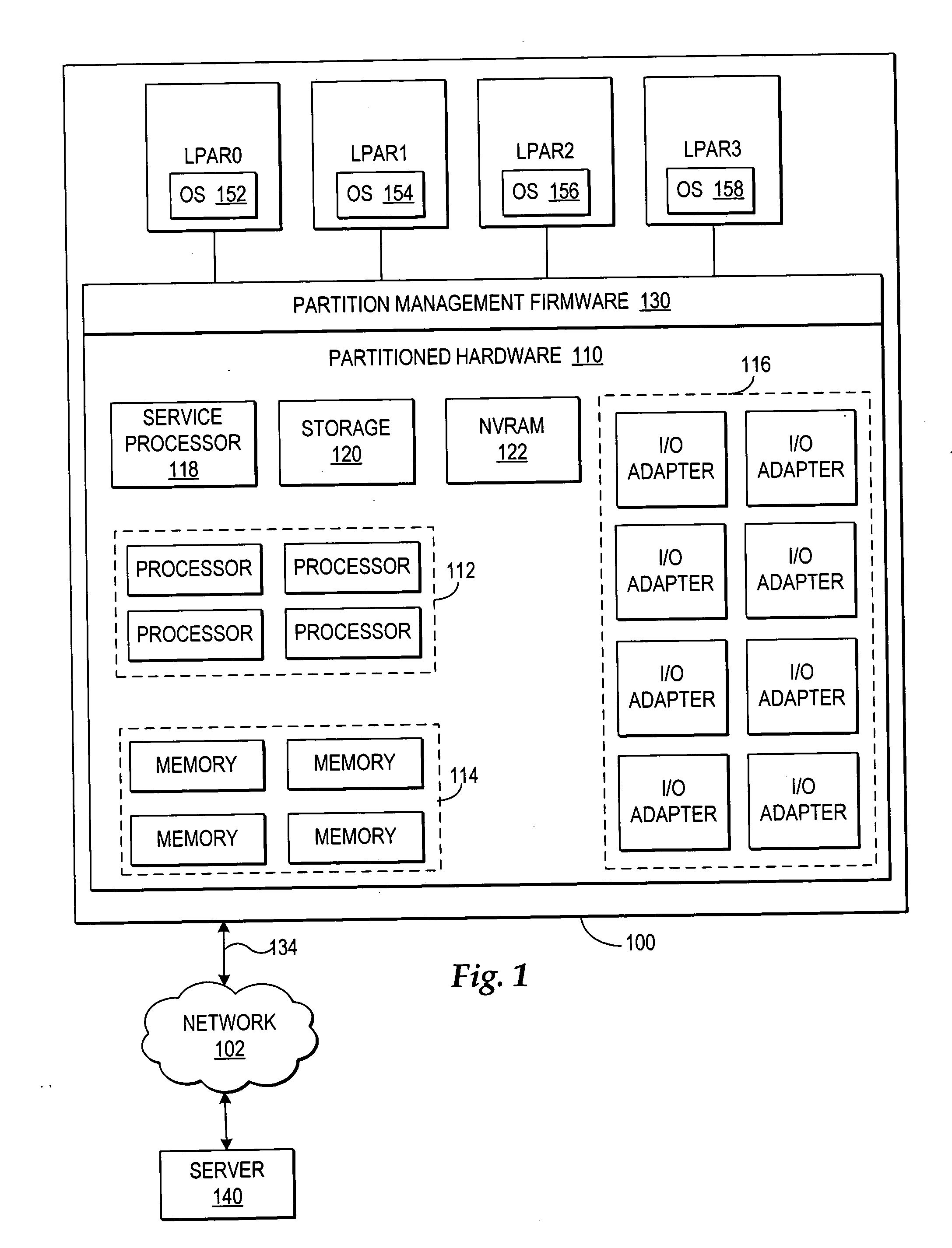

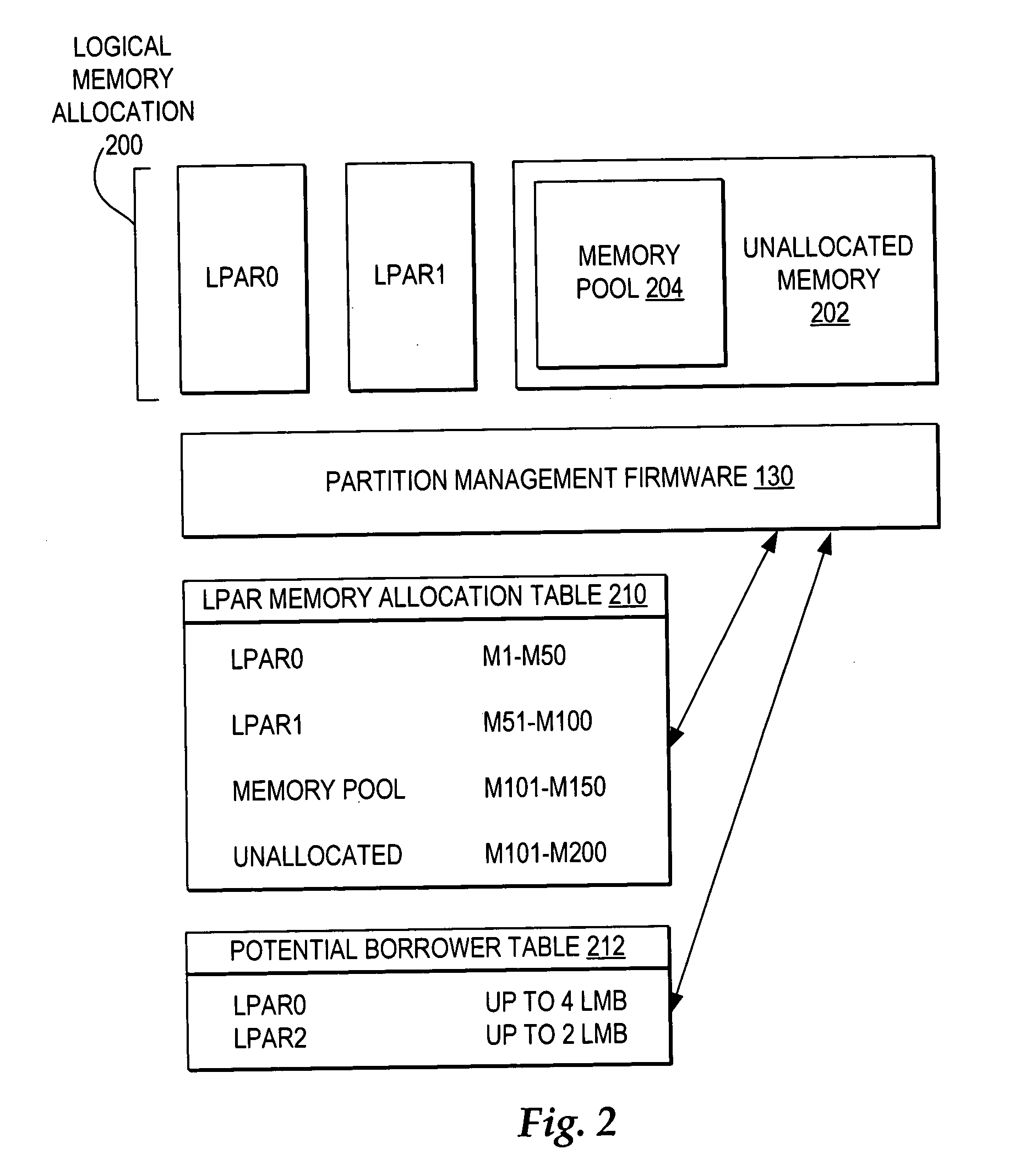

Dynamic memory management of unallocated memory in a logical partitioned data processing system

Inactive; US20050257020A1; Maximizing usage of memory; Maximize use; Resource allocation; Memory systems; Data processing system; Term memory

A method, system, and program for dynamic memory management of unallocated memory in a logical partitioned data processing system. A logical partitioned data processing system typically includes multiple memory units, processors, I / O adapters, and other resources enabled for allocation to multiple logical partitions. A partition manager operating within the data processing system manages allocation of the resources to each logical partition. In particular, the partition manager manages allocation of a first portion of the multiple memory units to at least one logical partition. In addition, the partition manager manages a memory pool of unallocated memory from among the multiple memory units. Responsive to receiving a request for a memory loan from one of the allocated logical partitions, a second selection of memory units from the memory pool is loaned to the requesting logical partition. The partition manager, however, is enabled to reclaim the loaned selection of memory units from the requesting logical partition at any time.

Owner:IBM CORP
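
The loan-and-reclaim flow in the abstract above can be sketched as a toy model. This is only an illustration under stated assumptions, not the patented implementation: memory units are plain integers, and the class name `PartitionManager`, its methods, and the partition name `lpar1` are all hypothetical.

```python
class PartitionManager:
    """Toy model of a partition manager; memory units are integer ids (assumption)."""

    def __init__(self, total_units):
        self.free_pool = set(range(total_units))  # pool of unallocated memory units
        self.allocated = {}                       # partition -> units it owns
        self.loaned = {}                          # partition -> units it holds on loan

    def _take(self, count):
        # Remove `count` units from the unallocated pool.
        if count > len(self.free_pool):
            raise MemoryError("not enough unallocated units")
        return {self.free_pool.pop() for _ in range(count)}

    def allocate(self, partition, count):
        # Regular allocation: the partition owns these units.
        units = self._take(count)
        self.allocated.setdefault(partition, set()).update(units)
        return units

    def loan(self, partition, count):
        # Loans go only to partitions that already hold an allocation.
        if partition not in self.allocated:
            raise ValueError("unknown partition")
        units = self._take(count)
        self.loaned.setdefault(partition, set()).update(units)
        return units

    def reclaim(self, partition):
        # The manager may take loaned units back at any time.
        units = self.loaned.pop(partition, set())
        self.free_pool |= units
        return units


manager = PartitionManager(total_units=8)
manager.allocate("lpar1", 4)
manager.loan("lpar1", 2)
free_during_loan = len(manager.free_pool)    # units left in the pool while the loan is out
manager.reclaim("lpar1")
free_after_reclaim = len(manager.free_pool)  # loaned units are back in the pool
```

Keeping loaned units in a separate map from owned units is what lets the manager reclaim a loan without touching the partition's regular allocation.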

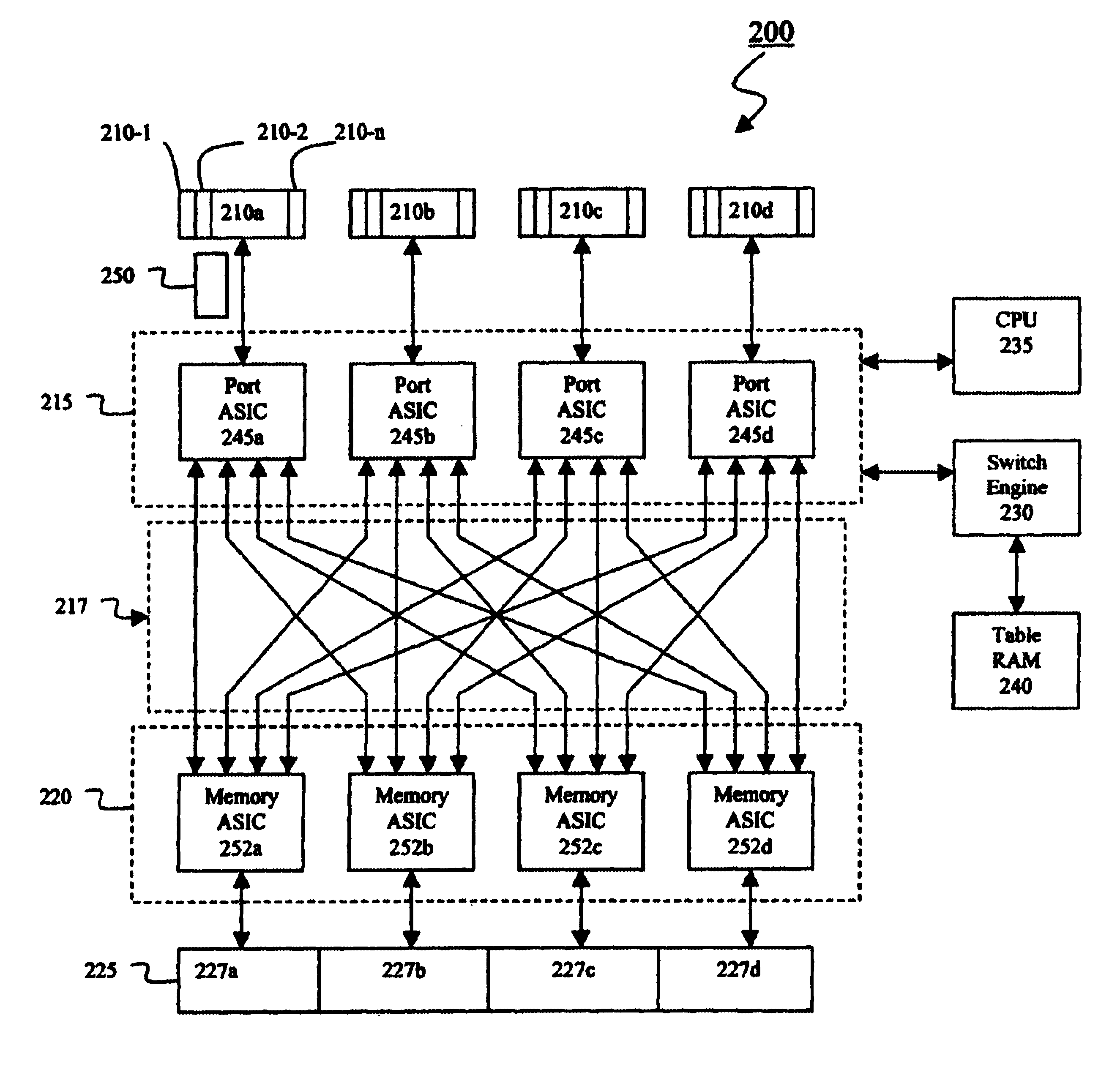

Distributed switch memory architecture

Inactive | US6697362B1 | Easy to merge | Increase speed | Multiplex system selection arrangements | Frequency-division multiplex details | Distributed memory | Interconnection

A distributed memory switch system for transmitting packets from source ports to destination ports, comprising: a plurality of ports including a source port and a destination port wherein a packet is transmitted from the source port to the destination port; a memory pool; and an interconnection stage coupled between the plurality of ports and the memory pool such that the interconnection stage permits a packet to be transmitted from the source port to the destination port via the memory pool.

Owner:INTEL CORP

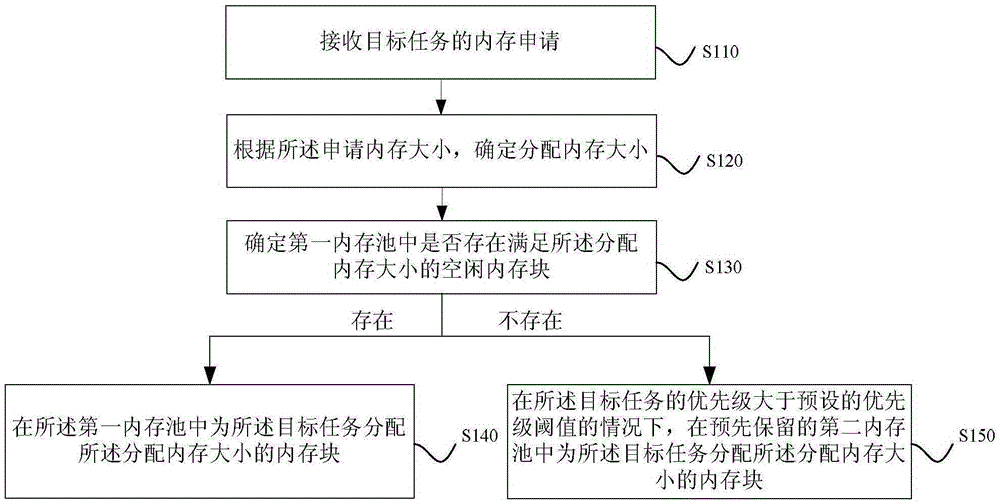

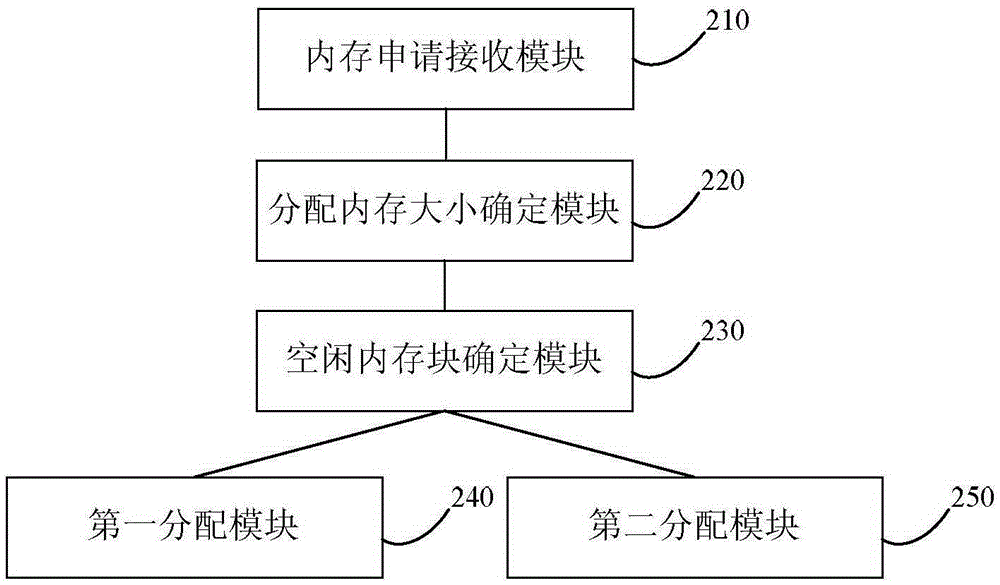

Method and device for distributing memory

Active | CN105302738A | Guaranteed diagnosis | Improve security | Memory addressing/allocation/relocation | Distributed memory | Parallel computing

The embodiment of the invention discloses a method and device for allocating ("distributing") memory. The method comprises the following steps: a memory request from a target task is received; the size of the memory to allocate is determined from the size of the requested memory; and it is determined whether the first memory pool contains a free memory block of the allocation size. If so, a memory block of that size is allocated to the target task from the first memory pool. Otherwise, a memory block of that size is allocated to the target task from a reserved second memory pool, provided that the priority of the target task is higher than a preset priority threshold. With this scheme, when the memory in the first memory pool is used up, the system can still allocate the reserved memory to critical tasks with higher priority, so the basic management and diagnostic capabilities of the system are guaranteed and its safety and reliability are improved.

Owner:KYLAND TECH CO LTD
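
A minimal sketch of the two-pool decision described in the abstract above, under simplifying assumptions: each pool is modelled as a counter of free fixed-size blocks rather than a real free list, and `BLOCK_SIZE`, `TwoTierAllocator`, and the example priorities are hypothetical names and values chosen for illustration.

```python
BLOCK_SIZE = 64  # bytes per block; hypothetical value


def blocks_needed(nbytes):
    # Round the requested size up to a whole number of blocks.
    return -(-nbytes // BLOCK_SIZE)


class TwoTierAllocator:
    """Counts free blocks in a main pool and in a second pool held in reserve."""

    def __init__(self, main_blocks, reserve_blocks, priority_threshold):
        self.main_free = main_blocks
        self.reserve_free = reserve_blocks
        self.priority_threshold = priority_threshold

    def allocate(self, nbytes, priority):
        """Return the name of the pool that served the request, or None."""
        blocks = blocks_needed(nbytes)
        if blocks <= self.main_free:
            self.main_free -= blocks
            return "main"
        # Main pool cannot serve the request: only tasks above the
        # priority threshold may draw on the reserve pool.
        if priority > self.priority_threshold and blocks <= self.reserve_free:
            self.reserve_free -= blocks
            return "reserve"
        return None


allocator = TwoTierAllocator(main_blocks=2, reserve_blocks=1, priority_threshold=5)
results = [
    allocator.allocate(100, priority=1),  # 2 blocks, served from the main pool
    allocator.allocate(10, priority=1),   # main pool exhausted, low priority
    allocator.allocate(10, priority=9),   # high priority, served from the reserve
]
```

In this run the low-priority request fails once the main pool is empty, while the high-priority request still succeeds, which is the behaviour the abstract attributes to the reserve pool.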

Internal memory operation management method and system

Inactive | CN101493787A | Improve operational efficiency | Reduce locking | Resource allocation | Internal memory | Management unit

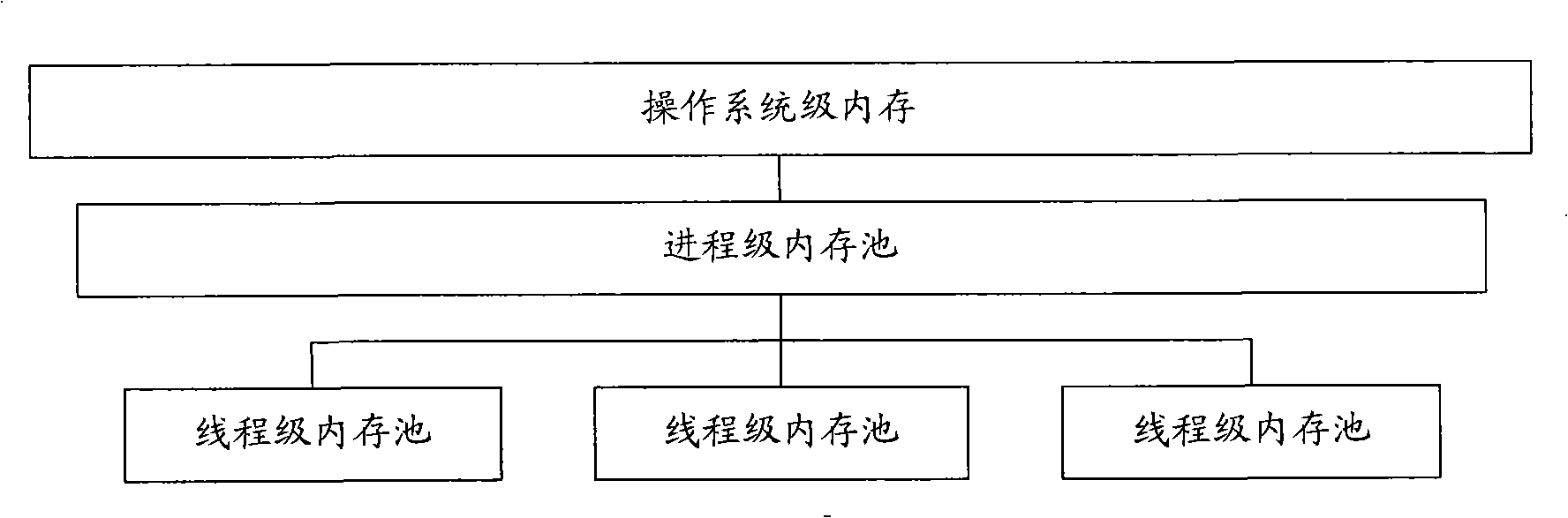

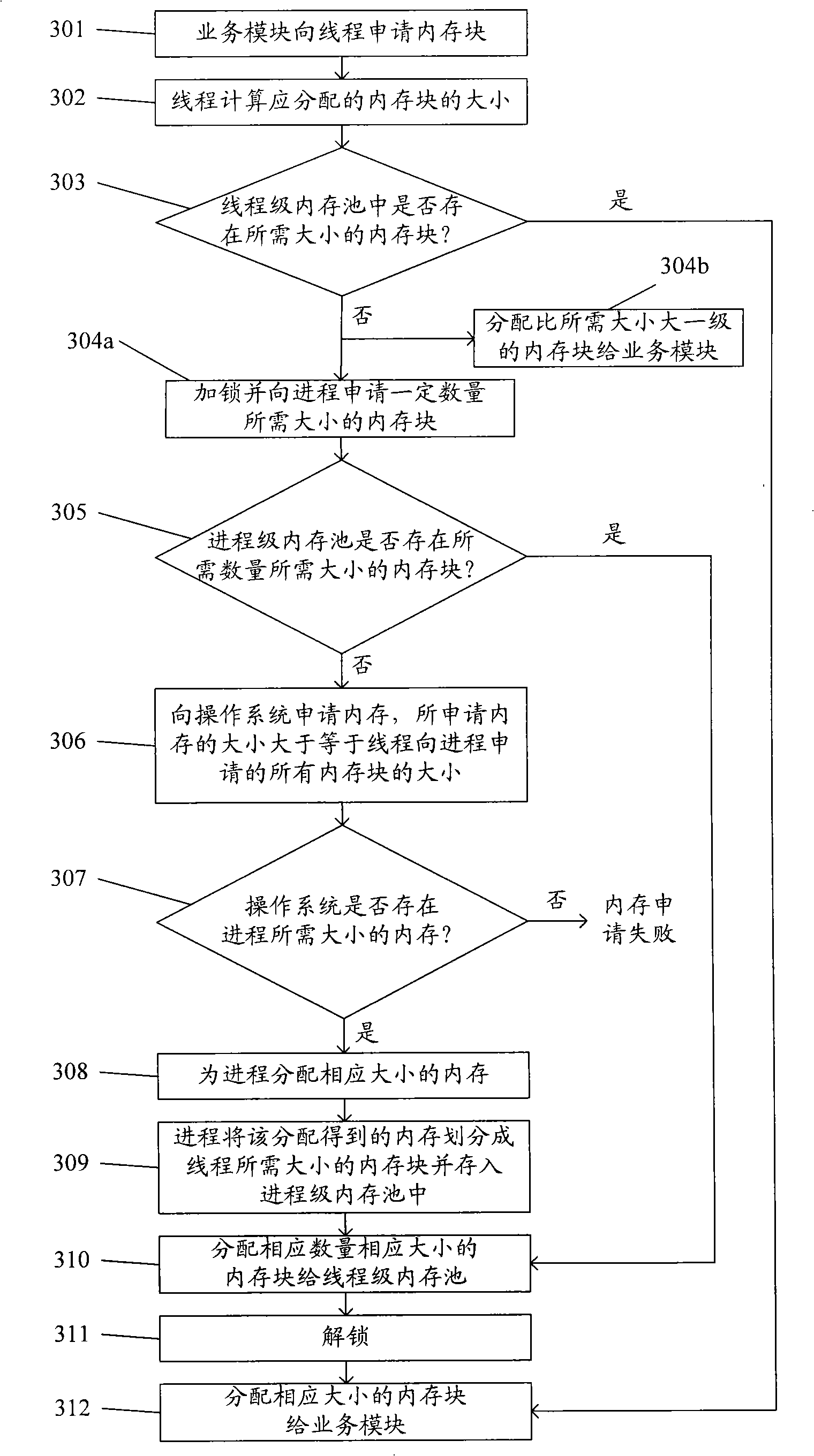

The invention discloses a method for managing memory operations in which each thread maintains its own thread-level memory pool. The method comprises the following steps: a business module requests memory from the thread when it needs a memory block; the thread calculates the size of the block to allocate and searches its own thread-level memory pool for a block of the needed size; if one exists, the thread allocates a block of that size from the thread-level memory pool to the business module and the memory request flow is complete; otherwise, the thread takes a lock and requests a certain number of memory blocks of the needed size from the process, or a memory block one size level larger than the needed size is allocated to the business module, and the memory request flow is complete. The invention further discloses a memory operation management system comprising a first-level memory management unit, a second-level memory management unit and a third-level memory management unit. The invention can improve the running efficiency of programs.

Owner:ZTE CORP
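
The thread-level pool idea in the abstract above might look roughly like the following sketch. It rests on assumptions not stated in the abstract: the size levels in `SIZE_CLASSES`, the refill count, and the class names `ProcessPool` and `ThreadPool` are hypothetical, the lock is placed inside the process-level source, and the alternative of handing out a block one size level larger is not modelled.

```python
import threading

SIZE_CLASSES = (32, 64, 128, 256)  # hypothetical size levels, in bytes
REFILL_COUNT = 4                   # blocks fetched from the process per refill (assumed)


class ProcessPool:
    """Shared source of blocks; every access takes a lock."""

    def __init__(self):
        self._lock = threading.Lock()
        self.lock_acquisitions = 0  # counts how often the slow, locked path is used

    def take(self, size, count):
        with self._lock:
            self.lock_acquisitions += 1
            return [bytearray(size) for _ in range(count)]


class ThreadPool:
    """Per-thread free lists; no lock is needed because a single thread owns them."""

    def __init__(self, process_pool):
        self._process_pool = process_pool
        self._free = {size: [] for size in SIZE_CLASSES}

    def allocate(self, nbytes):
        # Round the request up to the smallest size level that fits.
        size = next((s for s in SIZE_CLASSES if s >= nbytes), None)
        if size is None:
            raise ValueError("request exceeds the largest size level")
        if not self._free[size]:
            # Slow path: refill this thread's free list from the process.
            self._free[size] = self._process_pool.take(size, REFILL_COUNT)
        return self._free[size].pop()

    def release(self, block):
        # Return the block to this thread's free list for reuse.
        self._free[len(block)].append(block)


process_pool = ProcessPool()
pool = ThreadPool(process_pool)
blocks = [pool.allocate(50) for _ in range(4)]  # one locked refill covers all four
pool.release(blocks[0])
reused = pool.allocate(60)                      # served locally, no lock taken
```

In a multithreaded program each thread would hold its own `ThreadPool`, for example via `threading.local()`, so that the lock is touched only on refills.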

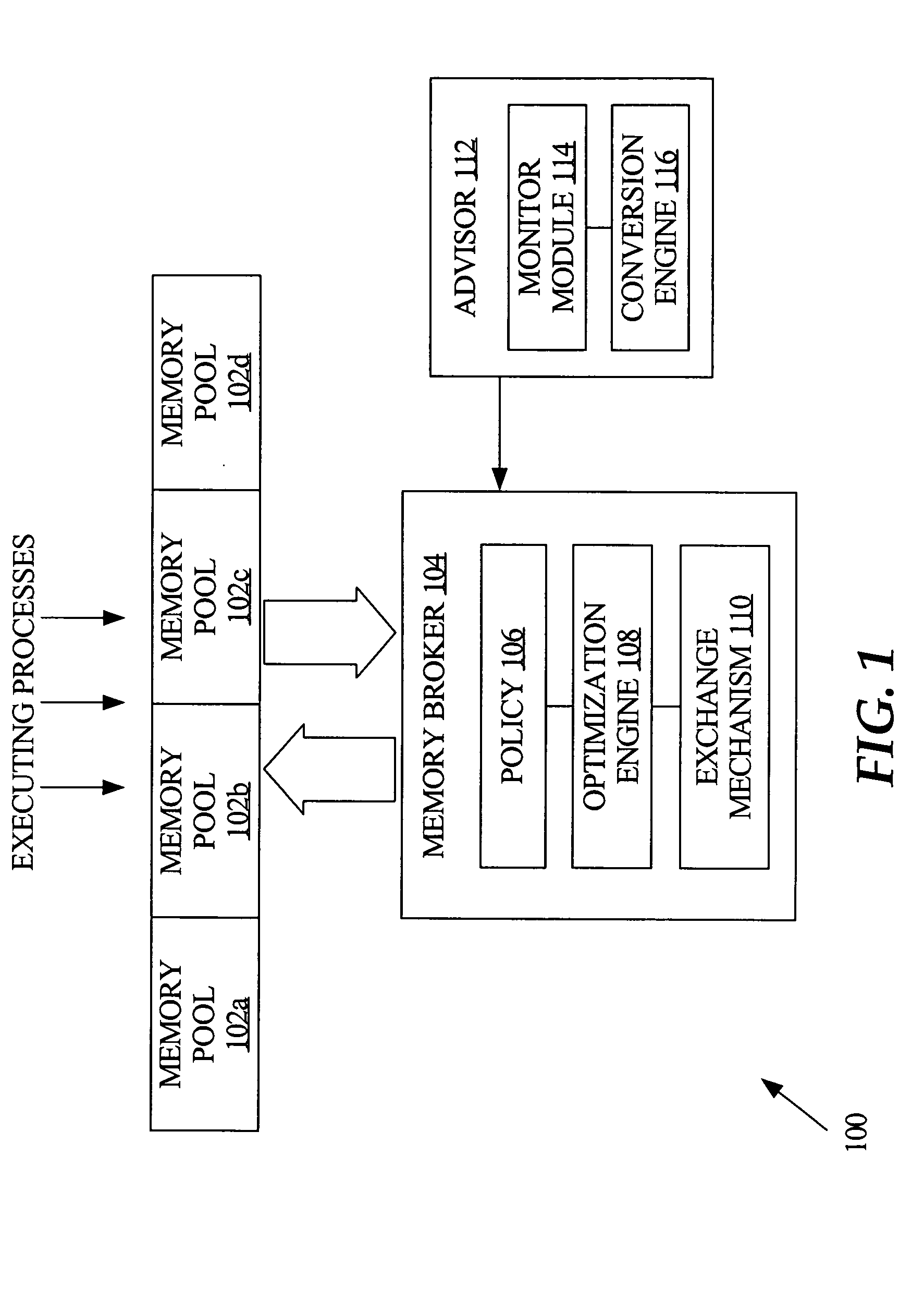

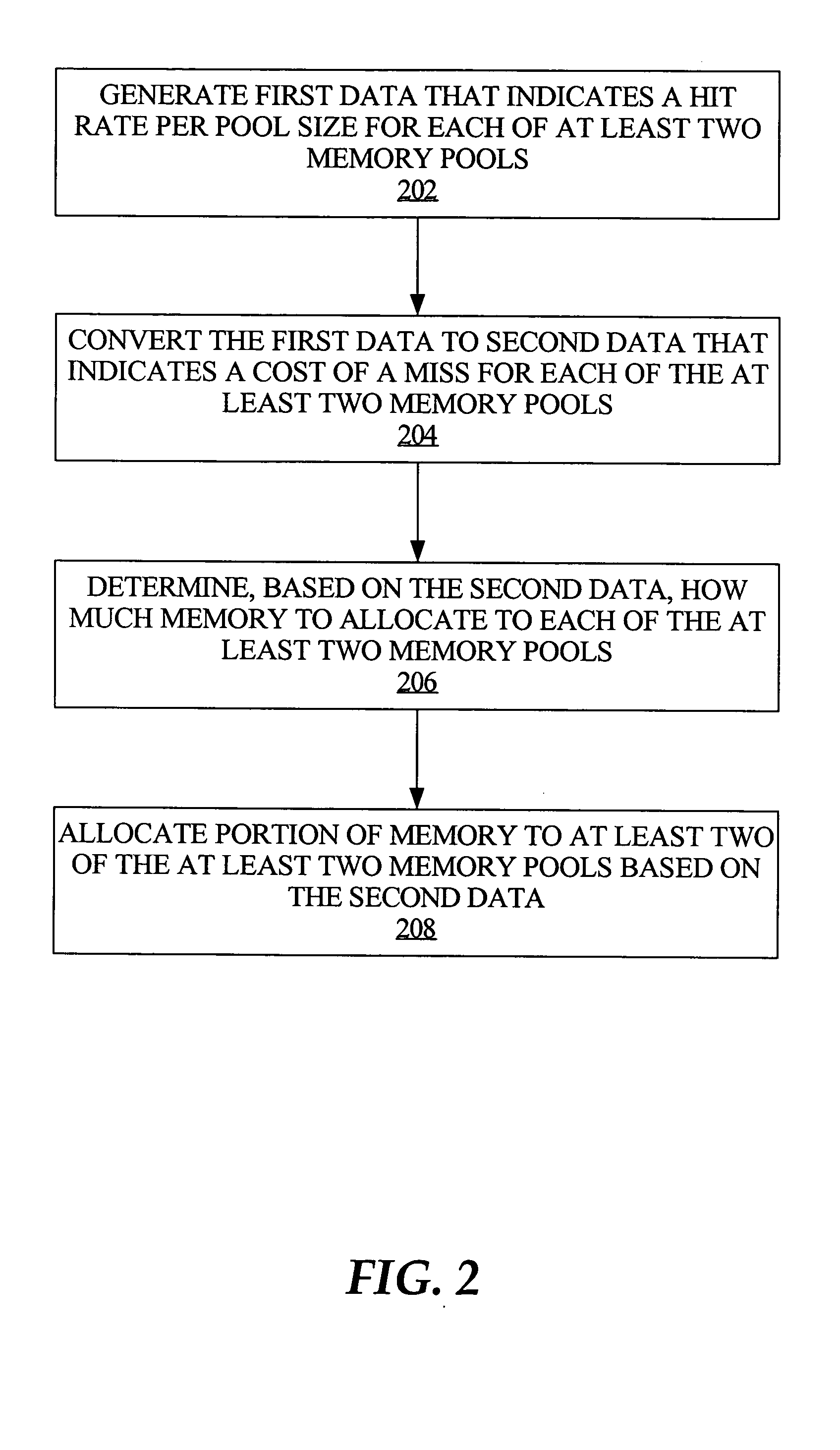

Techniques for automated allocation of memory among a plurality of pools

Active | US20050114621A1 | Resource allocation | Digital data processing details | Parallel computing | Term memory

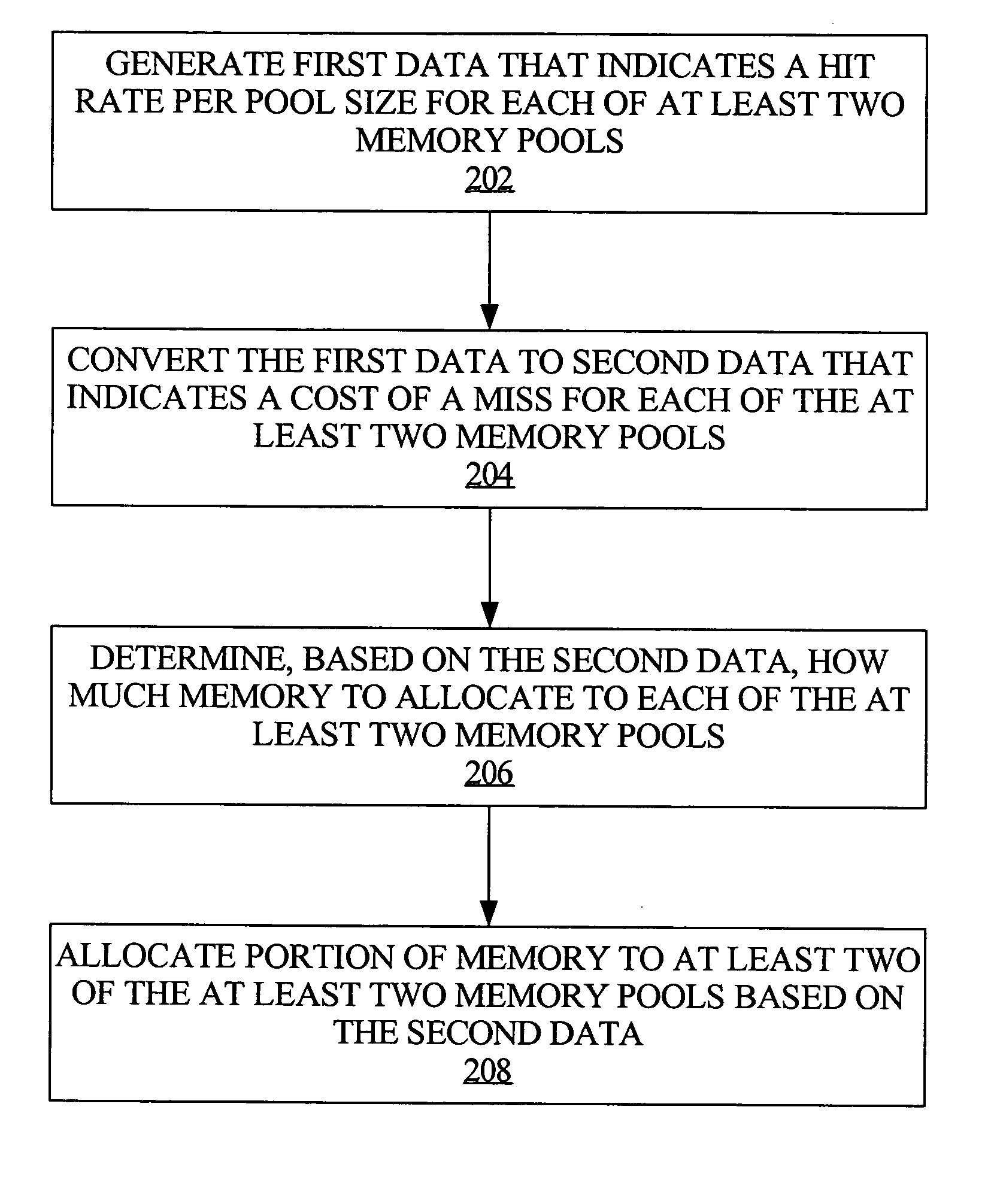

Allocation of memory is optimized across multiple pools of memory, based on minimizing the time it takes to successfully retrieve a given data item from each of the multiple pools. First data is generated that indicates a hit rate per pool size for each of multiple memory pools. In an embodiment, the generating step includes continuously monitoring attempts to access, or retrieve a data item from, each of the memory pools. The first data is converted to second data that accounts for a cost of a miss with respect to each of the memory pools. In an embodiment, the second data accounts for the cost of a miss in terms of time. How much of the memory to allocate to each of the memory pools is determined, based on the second data. In an embodiment, the steps of converting and determining are automatically performed, on a periodic basis.

Owner:ORACLE INT CORP
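
One way to picture the steps in the abstract above (hit rate per pool size, conversion to a time cost of misses, then a sizing decision) is the greedy sketch below. The greedy rule is a swapped-in technique chosen for illustration and is not taken from the patent; the pool names, hit-rate curves, access counts, and miss costs are made-up inputs.

```python
def marginal_gain(pool, current_units):
    """Miss time saved by giving `pool` one more unit of memory."""
    curve = pool["hit_rate"]  # curve[n] = hit rate when the pool holds n units
    if current_units + 1 >= len(curve):
        return 0.0            # no data beyond the measured sizes
    extra_hits = curve[current_units + 1] - curve[current_units]
    # Convert the hit-rate improvement into time: each avoided miss saves miss_cost.
    return extra_hits * pool["accesses"] * pool["miss_cost"]


def size_pools(pools, total_units):
    """Hand out memory one unit at a time to the pool that saves the most miss time."""
    sizes = {name: 0 for name in pools}
    for _ in range(total_units):
        best = max(pools, key=lambda name: marginal_gain(pools[name], sizes[name]))
        if marginal_gain(pools[best], sizes[best]) <= 0:
            break  # no pool benefits from more memory
        sizes[best] += 1
    return sizes


# Hypothetical measurements: pool_a misses are five times as costly as pool_b misses.
pools = {
    "pool_a": {"hit_rate": [0.0, 0.5, 0.7, 0.8], "accesses": 1000, "miss_cost": 0.010},
    "pool_b": {"hit_rate": [0.0, 0.6, 0.9, 0.95], "accesses": 1000, "miss_cost": 0.002},
}
sizes = size_pools(pools, total_units=4)
```

Here `pool_a` ends up with three units and `pool_b` with one, even though `pool_b` has the steeper hit-rate curve, because weighting by miss cost changes which pool benefits most. The greedy rule is only guaranteed to find the best split when each hit-rate curve shows diminishing returns.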
