2685 results about "Hash table" patented technology

In computing, a hash table (hash map) is a data structure that implements an associative array abstract data type, a structure that can map keys to values. A hash table uses a hash function to compute an index, also called a hash code, into an array of buckets or slots, from which the desired value can be found.
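
As a rough illustration of that definition, the Python sketch below stores key/value pairs in an array of buckets and resolves collisions by separate chaining. The class name `ChainedHashTable`, the initial bucket count, and the resize threshold are illustrative assumptions and are not taken from any of the patents listed here.

```python
from typing import Any, Hashable, List, Tuple


class ChainedHashTable:
    """Minimal hash map: an array of buckets, each a list of (key, value) pairs."""

    def __init__(self, num_buckets: int = 8) -> None:
        self._buckets: List[List[Tuple[Hashable, Any]]] = [[] for _ in range(num_buckets)]
        self._size = 0

    def _index(self, key: Hashable) -> int:
        # The hash function turns the key into a bucket index ("hash code").
        return hash(key) % len(self._buckets)

    def put(self, key: Hashable, value: Any) -> None:
        bucket = self._buckets[self._index(key)]
        for i, (existing, _) in enumerate(bucket):
            if existing == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))  # colliding keys share the bucket's list
        self._size += 1
        if self._size > 2 * len(self._buckets):  # keep chains short
            self._resize(2 * len(self._buckets))

    def get(self, key: Hashable, default: Any = None) -> Any:
        for existing, value in self._buckets[self._index(key)]:
            if existing == key:
                return value
        return default

    def _resize(self, new_count: int) -> None:
        items = [pair for bucket in self._buckets for pair in bucket]
        self._buckets = [[] for _ in range(new_count)]
        for key, value in items:
            self._buckets[self._index(key)].append((key, value))

    def __len__(self) -> int:
        return self._size
```

Doubling the bucket array once the load factor exceeds two keeps the average chain short, so `get` and `put` stay close to constant time on average.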

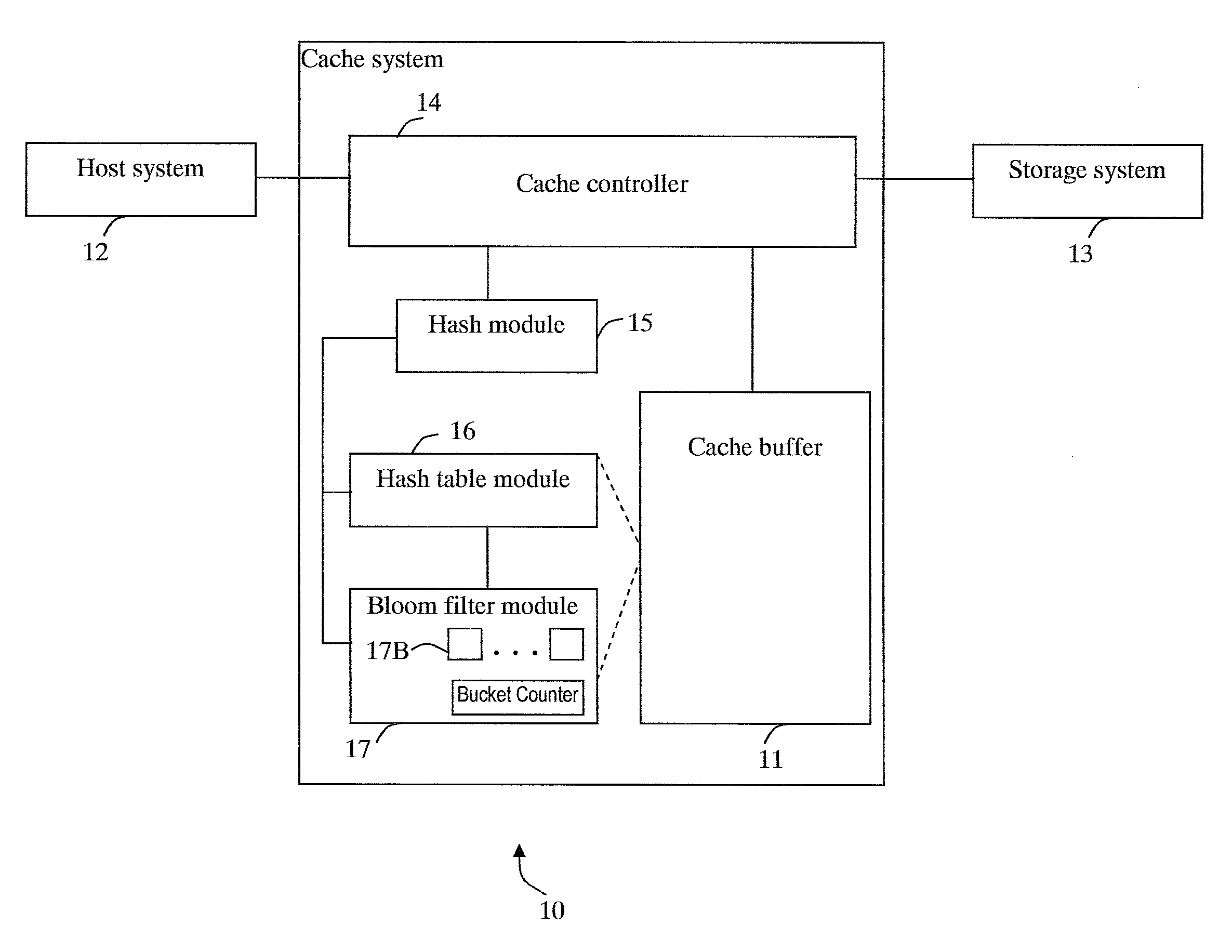

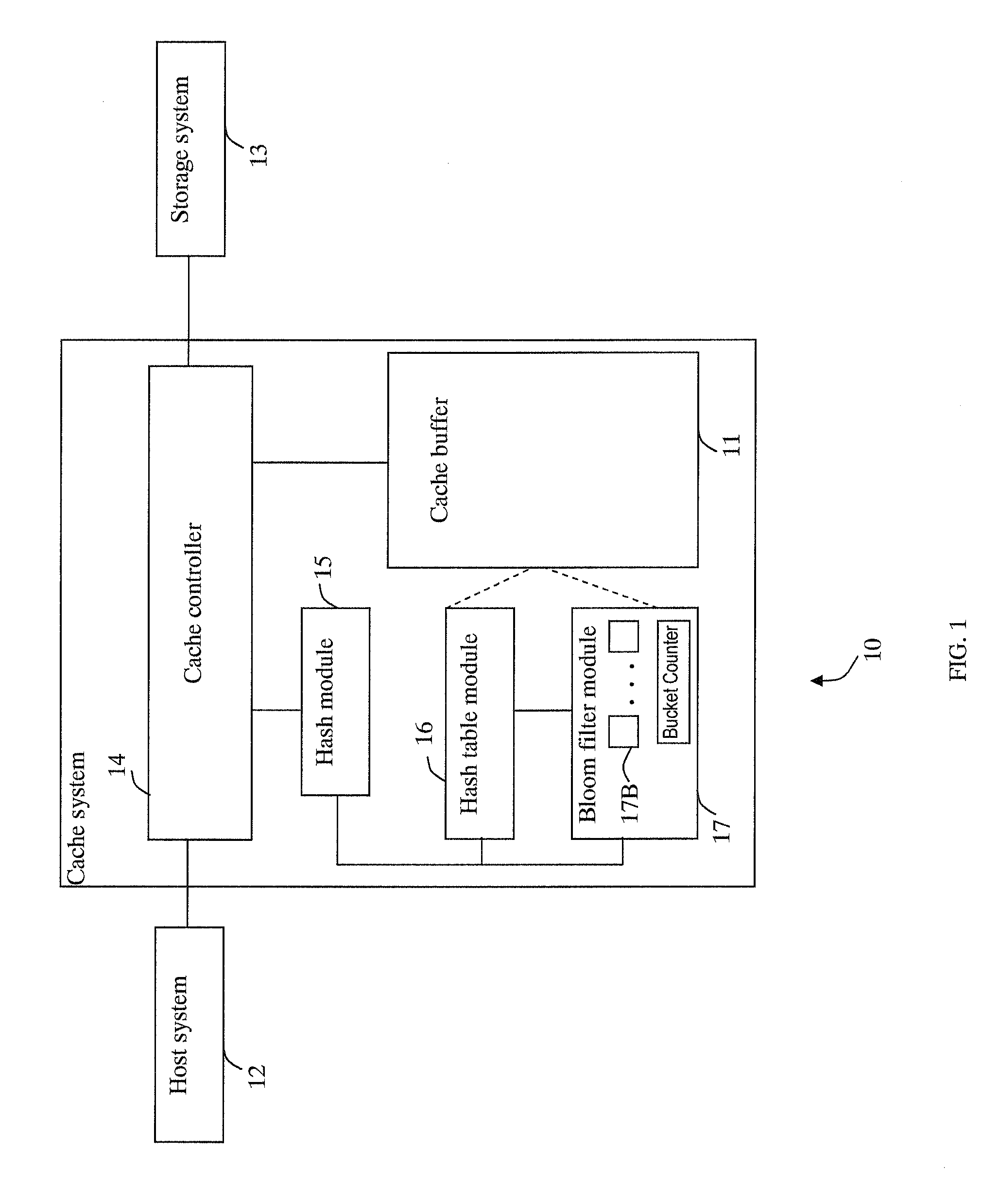

Cache system optimized for cache miss detection

Active · US20130326154A1 · Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Logical block addressing; Bloom filter

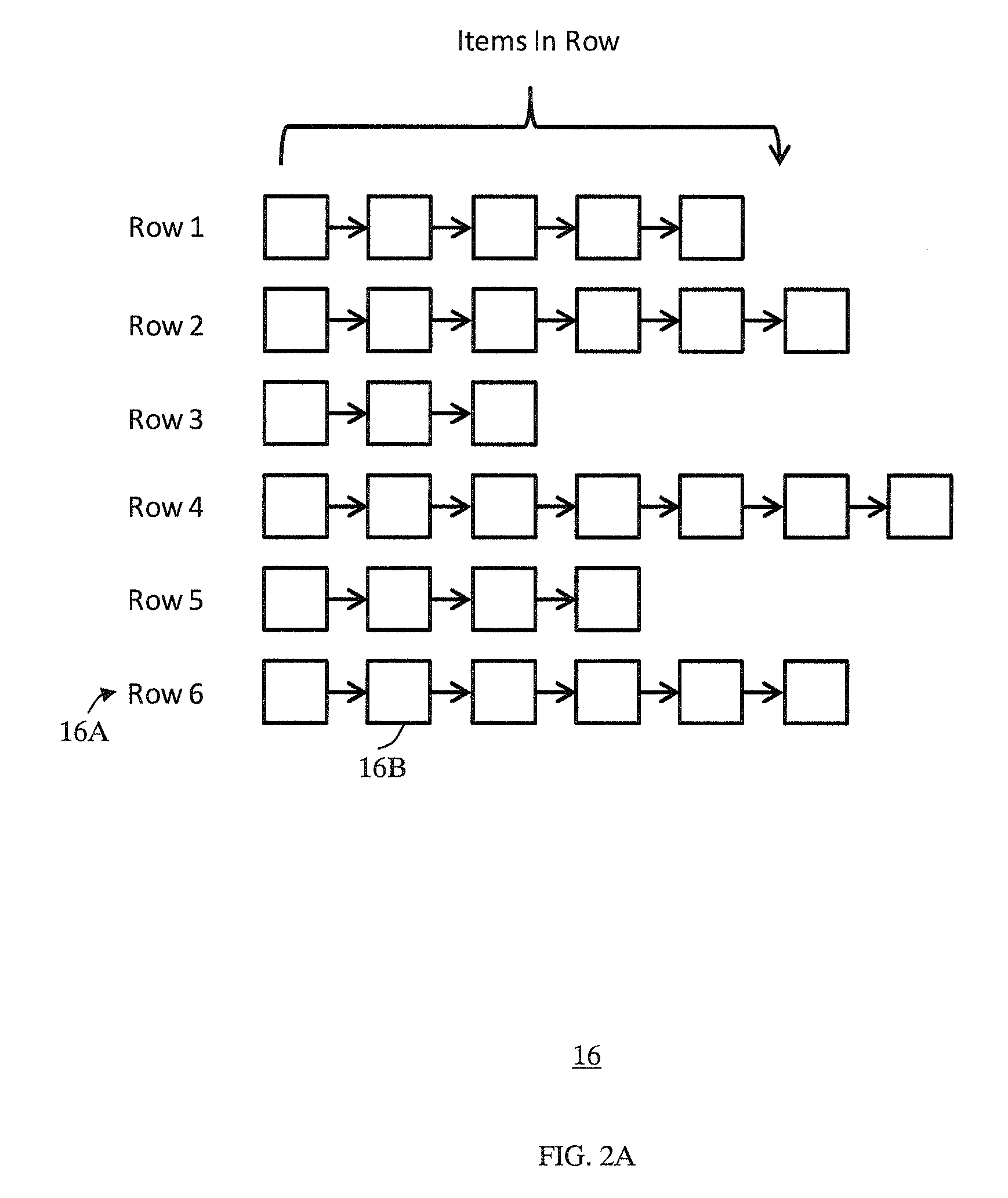

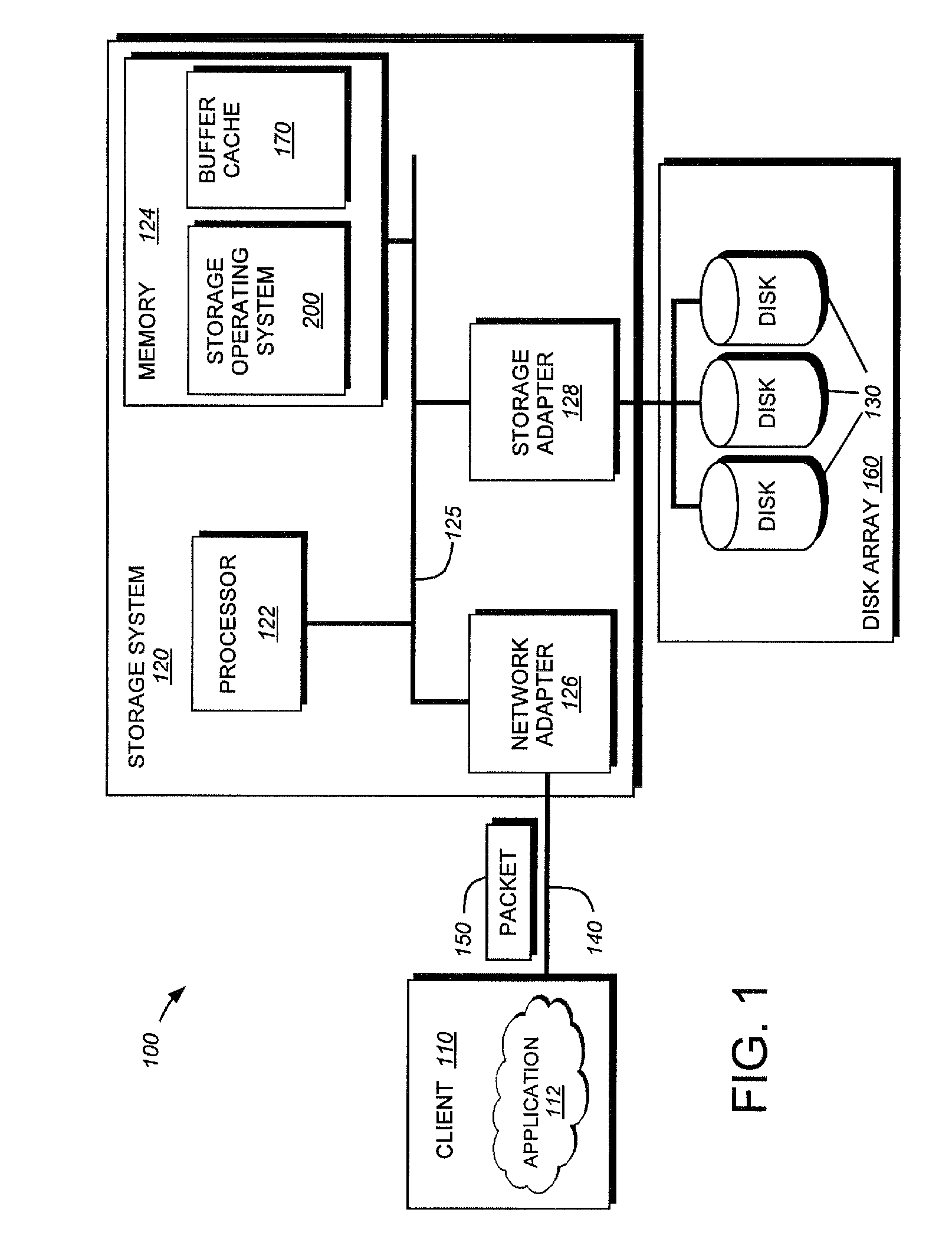

According to an embodiment of the invention, cache management comprises maintaining a cache comprising a hash table including rows of data items in the cache, wherein each row in the hash table is associated with a hash value representing a logical block address (LBA) of each data item in that row. Searching for a target data item in the cache includes calculating a hash value representing an LBA of the target data item, and using the hash value to index into a counting Bloom filter that indicates either that the target data item is not in the cache (a cache miss) or that the target data item may be in the cache. If no cache miss is indicated, the hash value is used to select a row in the hash table, and a cache miss is indicated if the target data item is not found in the selected row.

Owner:SAMSUNG ELECTRONICS CO LTD
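
The lookup order described in this abstract (Bloom filter first, then a single hash-table row) can be sketched in Python as follows. This is a minimal sketch under stated assumptions: BLAKE2b as the LBA hash, double hashing to derive the counter positions, and one dict per row are all choices made here, and `BloomGuardedCache` is a hypothetical name, not Samsung's implementation.

```python
import hashlib
from typing import Dict, List, Optional


class BloomGuardedCache:
    """Rows of cached items indexed by an LBA hash, fronted by a counting Bloom filter."""

    def __init__(self, num_rows: int = 16, num_counters: int = 256, num_hashes: int = 3) -> None:
        self.rows: List[Dict[int, bytes]] = [{} for _ in range(num_rows)]
        self.counters = [0] * num_counters
        self.num_hashes = num_hashes

    @staticmethod
    def _lba_hash(lba: int) -> int:
        digest = hashlib.blake2b(lba.to_bytes(8, "little"), digest_size=8).digest()
        return int.from_bytes(digest, "little")

    def _counter_slots(self, h: int) -> List[int]:
        # Derive k counter positions from one 64-bit hash (double hashing).
        h1, h2 = h & 0xFFFFFFFF, (h >> 32) | 1
        return [(h1 + i * h2) % len(self.counters) for i in range(self.num_hashes)]

    def _row(self, h: int) -> Dict[int, bytes]:
        return self.rows[h % len(self.rows)]

    def insert(self, lba: int, data: bytes) -> None:
        h = self._lba_hash(lba)
        row = self._row(h)
        if lba not in row:  # count each cached LBA exactly once
            for slot in self._counter_slots(h):
                self.counters[slot] += 1
        row[lba] = data

    def evict(self, lba: int) -> None:
        h = self._lba_hash(lba)
        row = self._row(h)
        if lba in row:
            del row[lba]
            for slot in self._counter_slots(h):
                self.counters[slot] -= 1  # counting filter supports deletion

    def lookup(self, lba: int) -> Optional[bytes]:
        h = self._lba_hash(lba)
        if any(self.counters[s] == 0 for s in self._counter_slots(h)):
            return None  # definite miss: the row is never searched
        return self._row(h).get(lba)  # can still miss on a Bloom false positive
```

Because the filter uses counters rather than bits, evicting an item decrements them, so definite-miss answers stay correct as the cache contents change.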

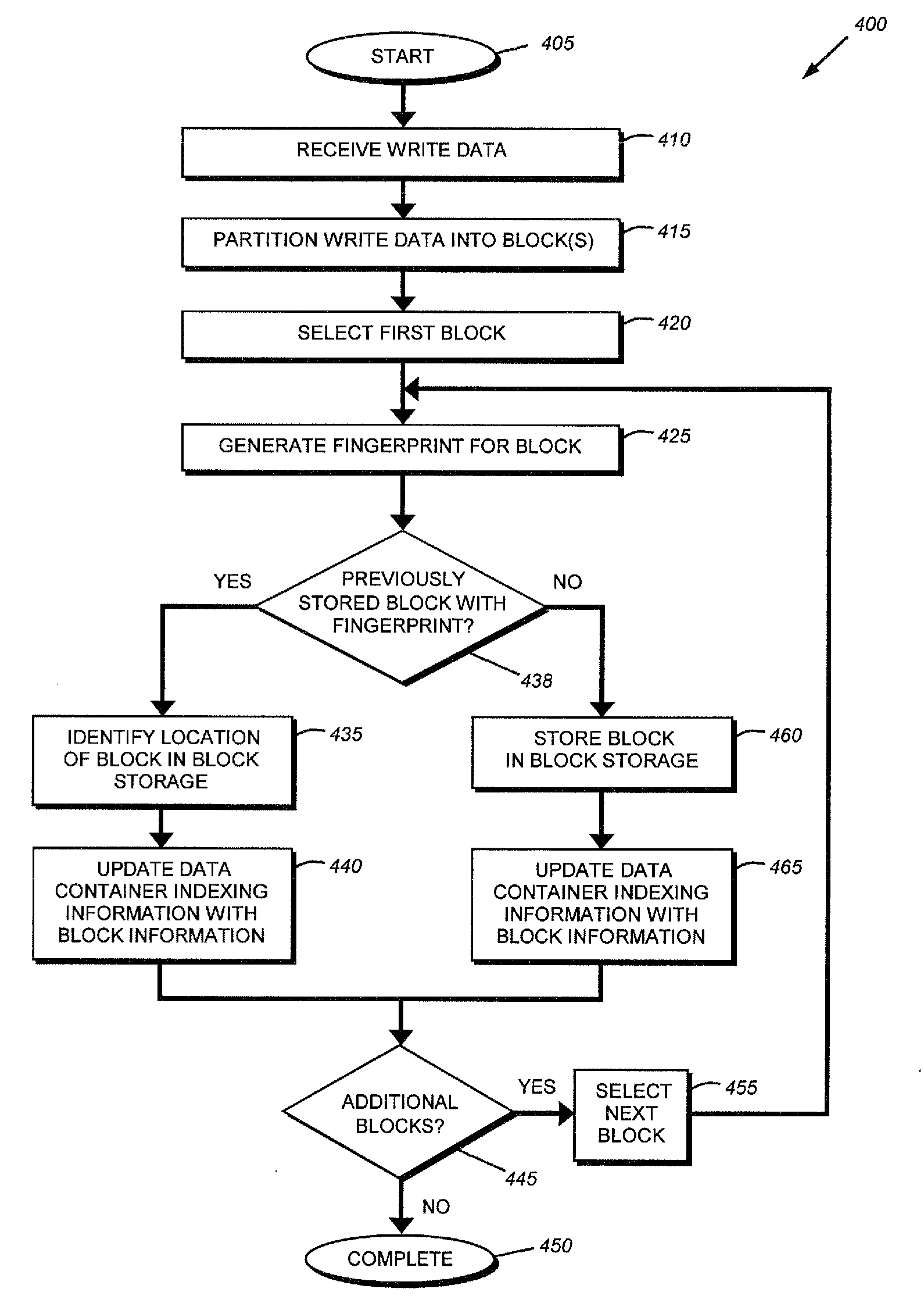

System and method for on-the-fly elimination of redundant data

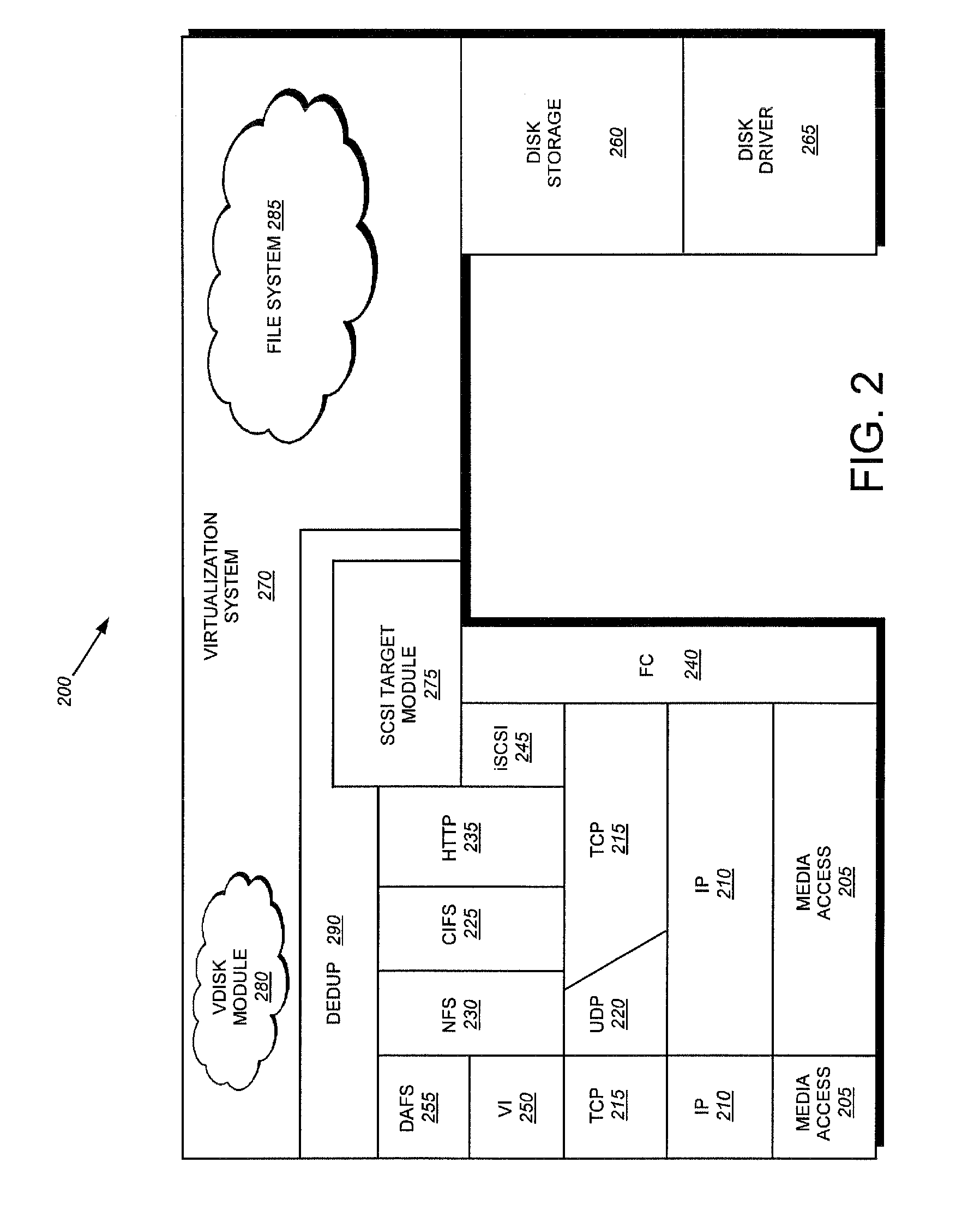

Active · US20080294696A1 · Tags: Digital data information retrieval; Digital data processing details; Operational system; File system

Owner:NETWORK APPLIANCE INC

Block chain data comparison and consensus method

Active · CN105719185A · Tags: Will not limit performance; No capacity constraints; Finance; Financial transaction; File comparison

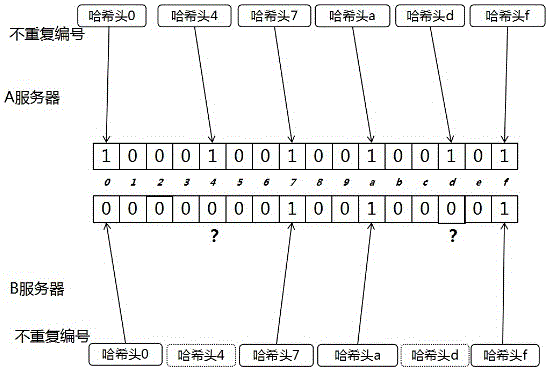

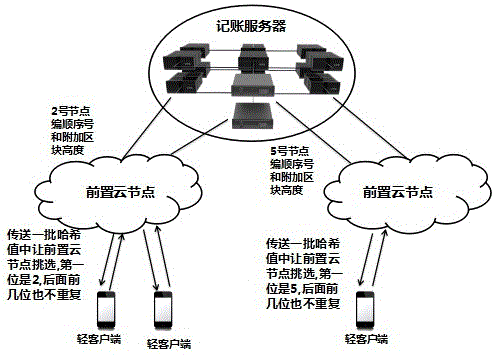

The invention discloses a blockchain data comparison and consensus method in which a hash value is calculated over the content of each transaction. By varying a random number, the values of certain preset bits of each hash value are made non-repeating within a time period, so they can serve as unique serial numbers. Accounting servers compare the values of these preset bits to determine whether transactions are identical. The comparison can be carried out by building a Merkle-like tree: because the preset bits of each transaction's hash value do not repeat and can be used as serial numbers, each hash value can be placed in the tree according to a fixed rule. As a result, the same transaction occupies exactly the same position in the Merkle trees of different accounting servers, so differences can be found quickly, less data needs to be transferred, and consensus is reached much faster.

Owner:HANGZHOU FUZAMEI TECH CO LTD

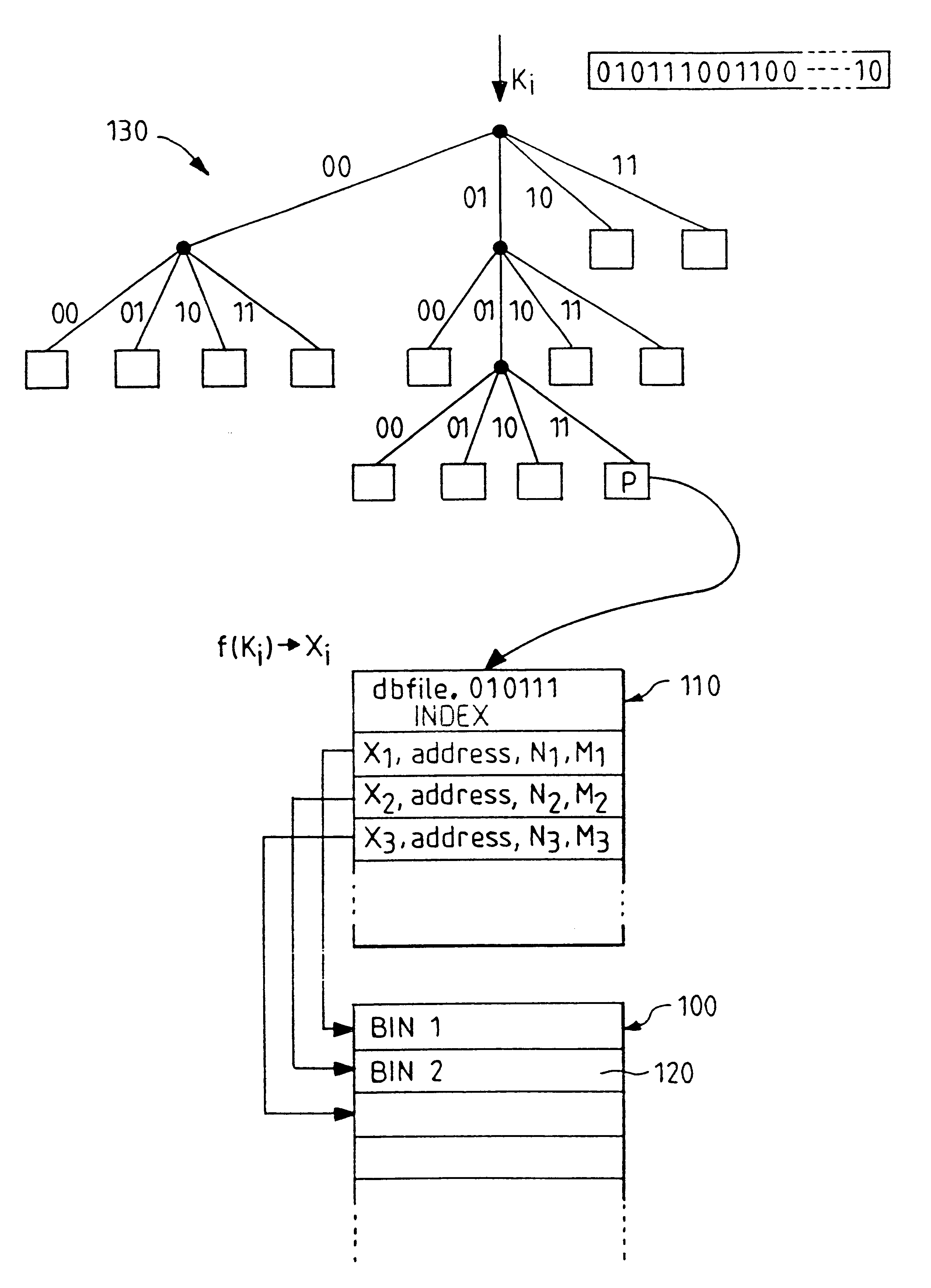

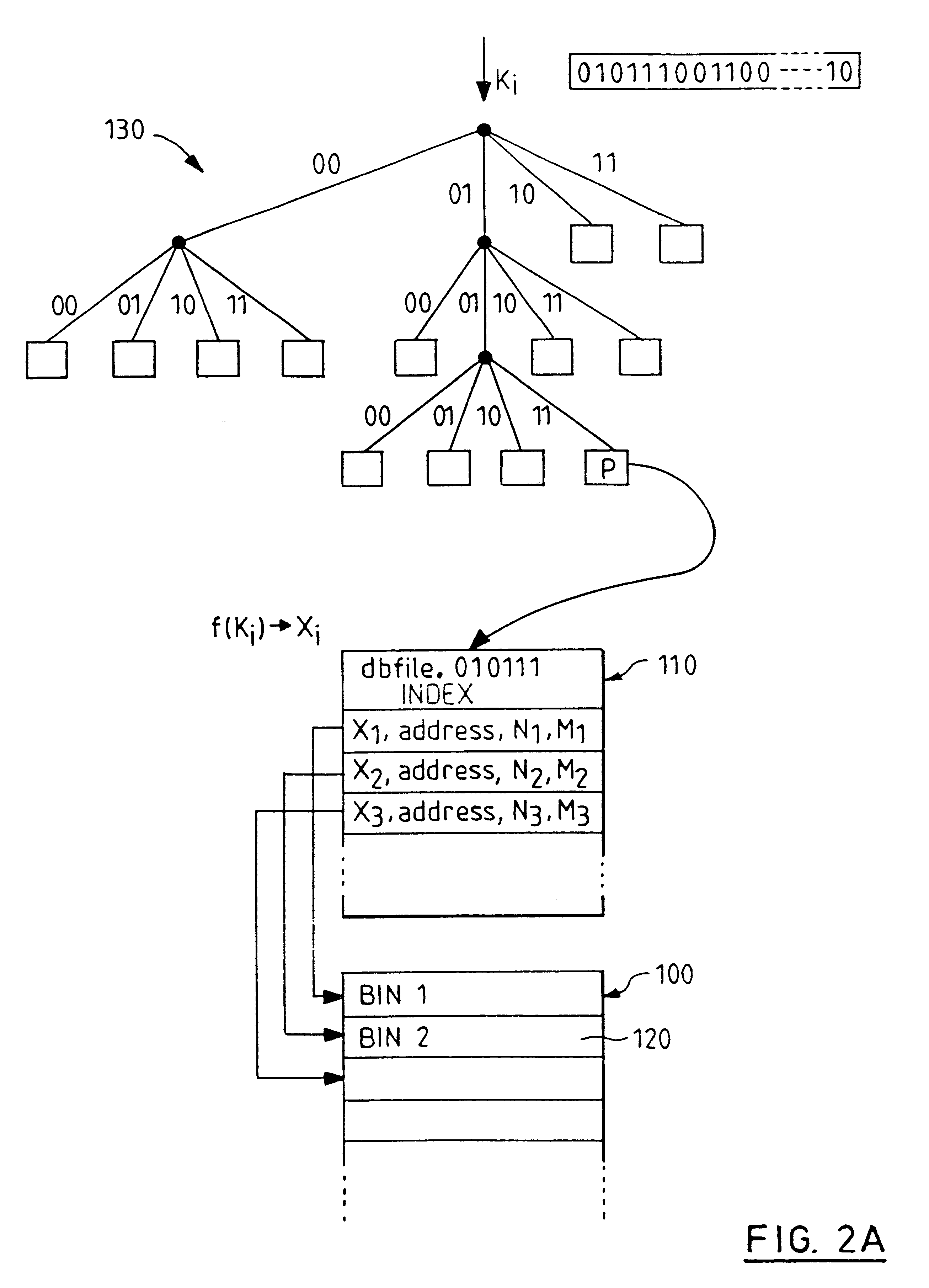

Indexed file system and a method and a mechanism for accessing data records from such a system

Inactive · US6292795B1 · Tags: Efficient access to data; Wasteful; Data processing applications; Digital data information retrieval; Extensibility; Collision detection

A computer filing system includes a data access and allocation mechanism including a directory and a plurality of indexed data files or hash tables. The directory is preferably a radix tree including directory entries which contain pointers to respective ones of the hash tables. Using a plurality of hash tables avoids the whole database ever having to be re-hashed all at once. If a hash table exceeds a preset maximum size as data is added, it is replaced by two hash tables and the directory is updated to include two separate directory entries each containing a pointer to one of the new hash tables. The directory is locally extensible such that new levels are added to the directory only where necessary to distinguish between the hash tables. Local extensibility prevents unnecessary expansion of the size of the directory while also allowing the size of the hash tables to be controlled. This allows optimisation of the data access mechanism such that an optimal combination of directory-look-up and hashing processes is used. Additionally, if the number of keys mapped to an indexed data file is less than a threshold number (corresponding to the number of entries which can be held in a reasonable index), the index for the data file is built with a one-to-one relationship between keys and index entries such that each index entry identifies a data block holding data for only one key. This avoids the overhead of the collision detection of hashing when it ceases to be useful.

Owner:IBM CORP

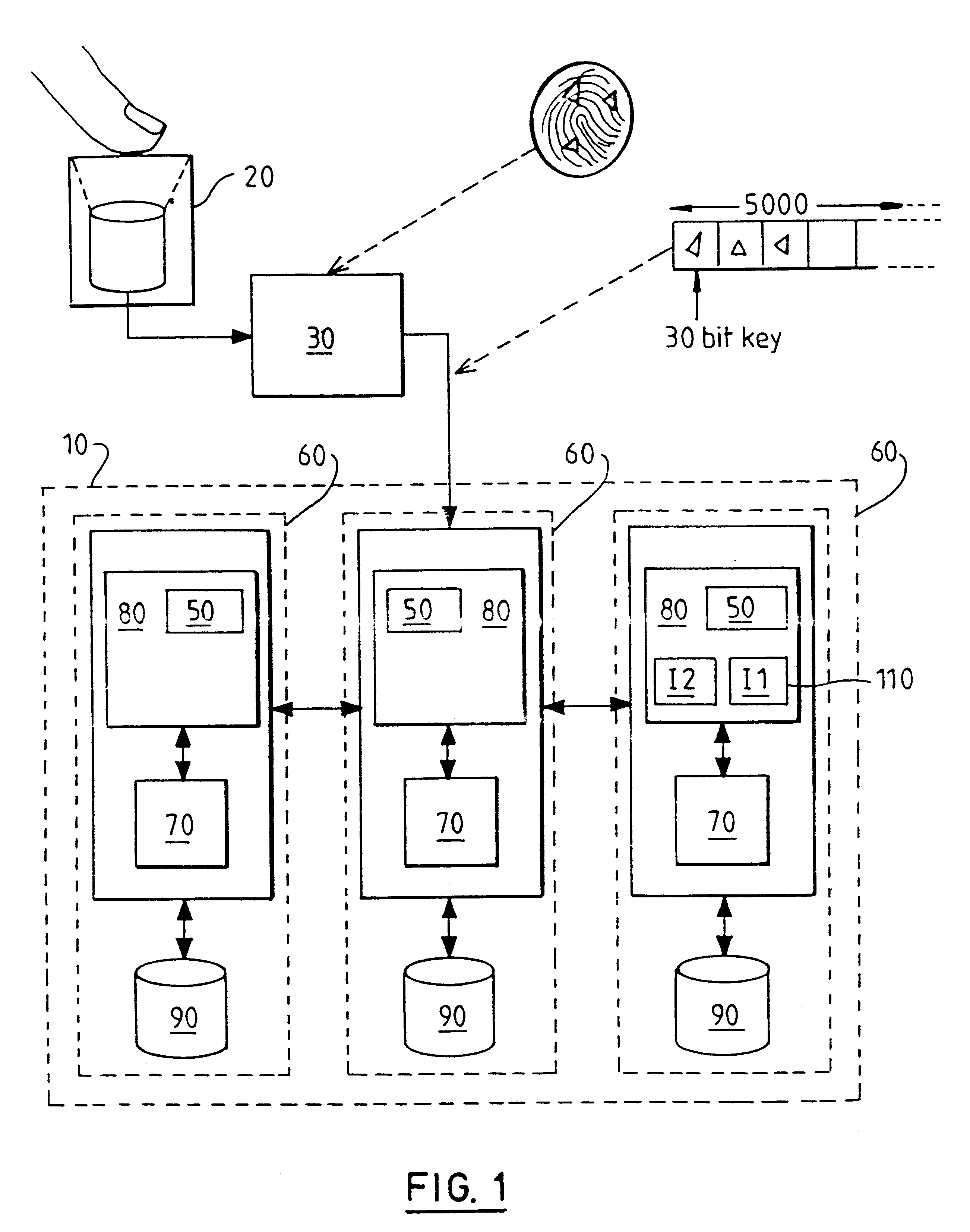

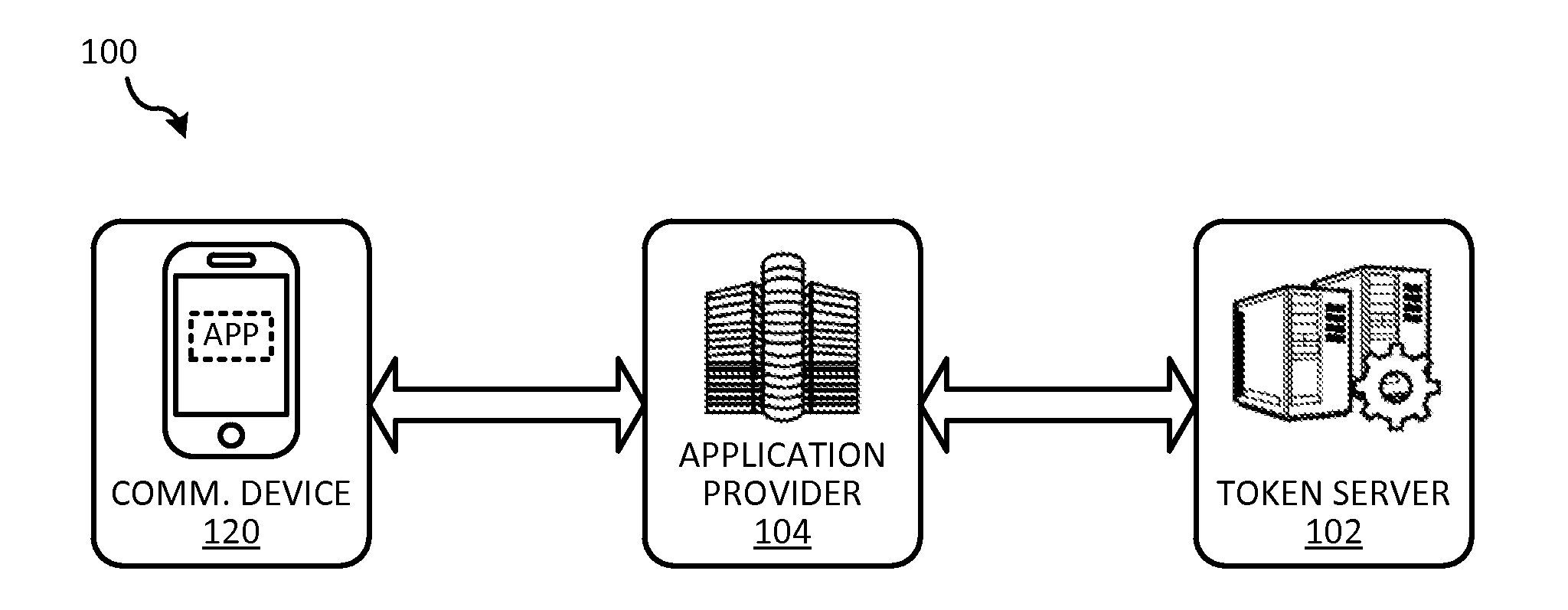

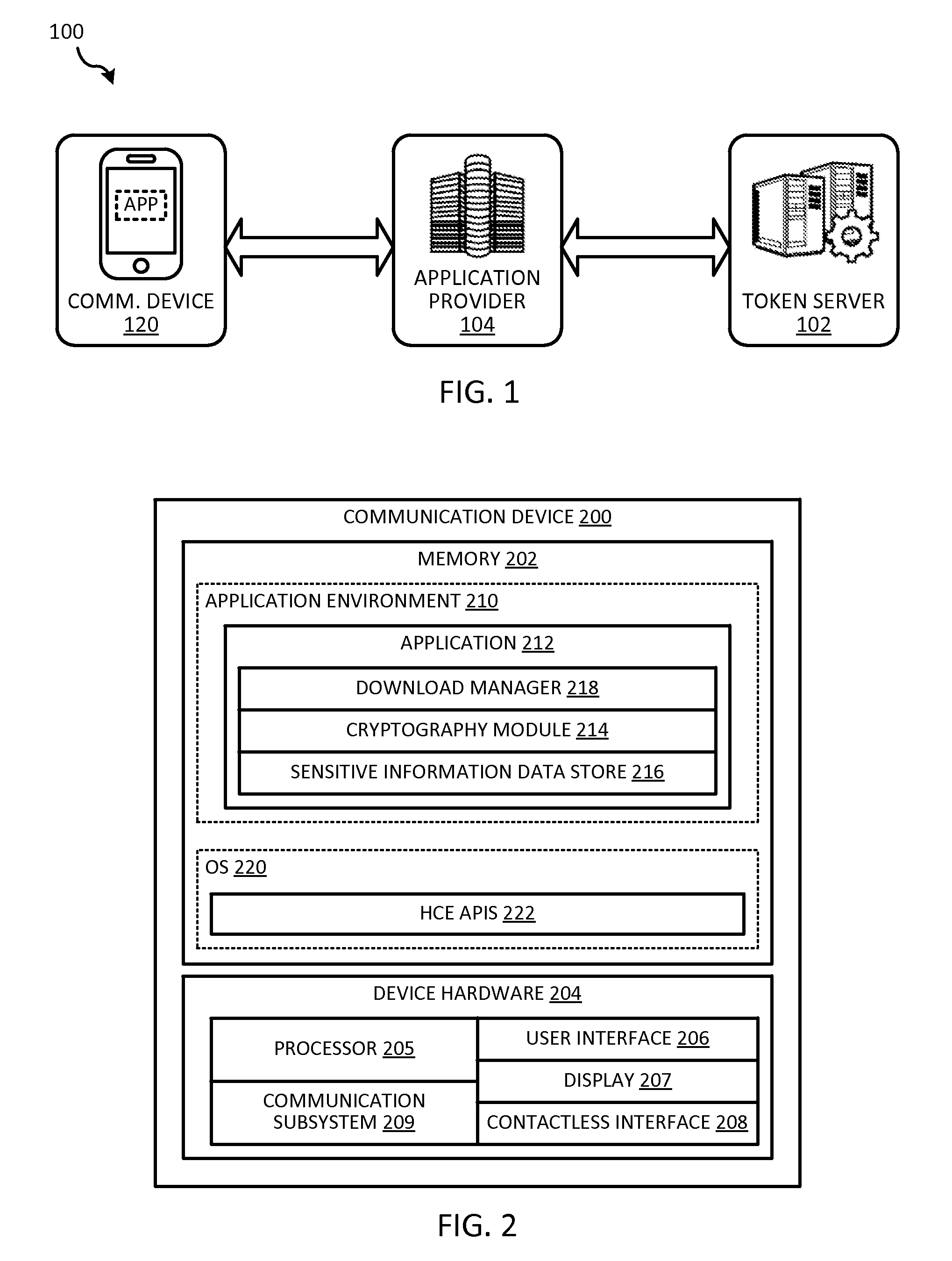

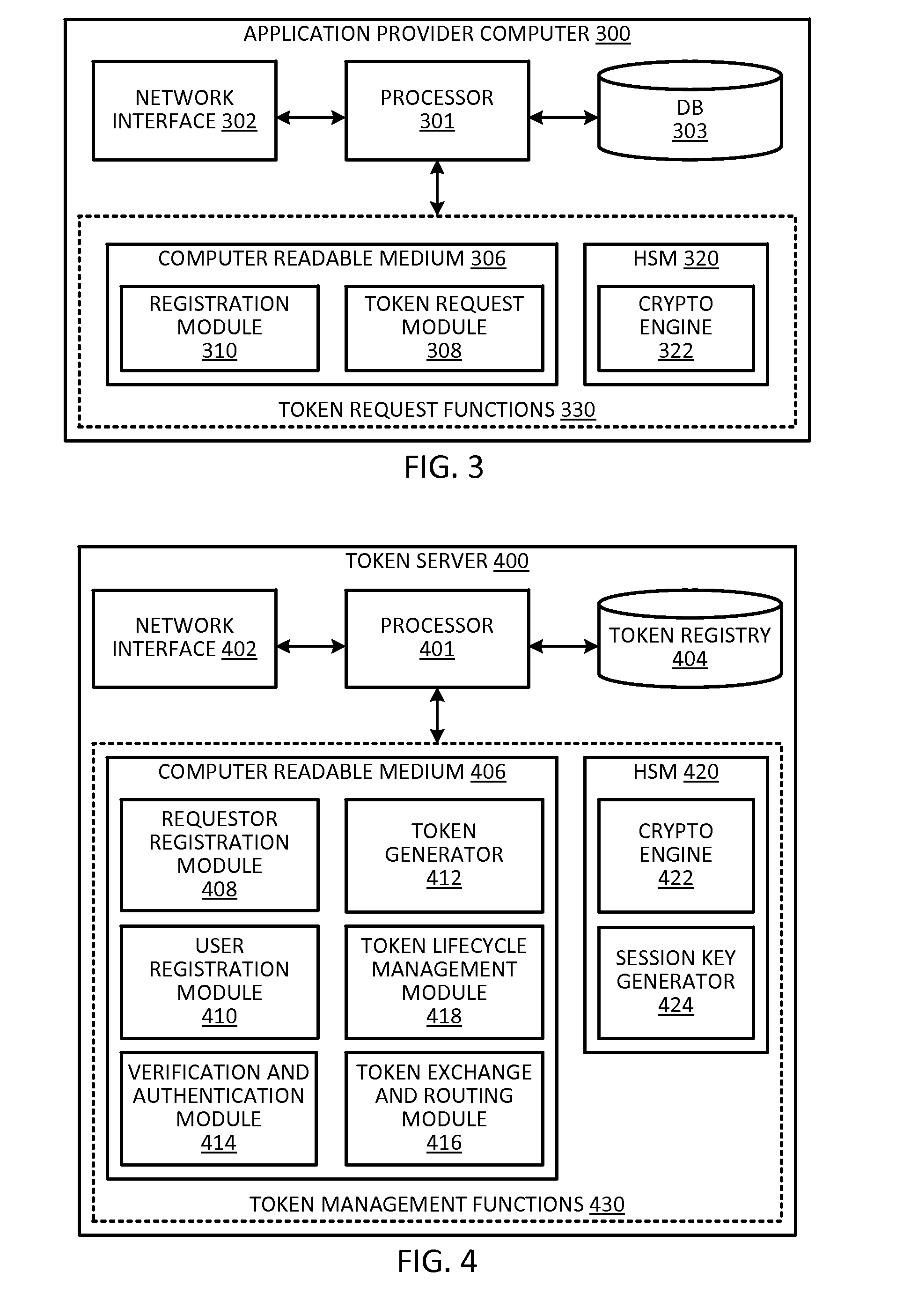

Token security on a communication device

Active · US20150312038A1 · Tags: Improve security; User identity/authority verification; Payment architecture; User authentication; Hash table

Techniques for enhancing the security of storing sensitive information or a token on a communication device may include sending a request for the sensitive information or token. The communication device may receive a session key encrypted with a hash value derived from user authentication data that authenticates the user of the communication device, and the sensitive information or token encrypted with the session key. The session key encrypted with the hash value, and the sensitive information or token encrypted with the session key can be stored in a memory of the communication device.

Owner:VISA INT SERVICE ASSOC

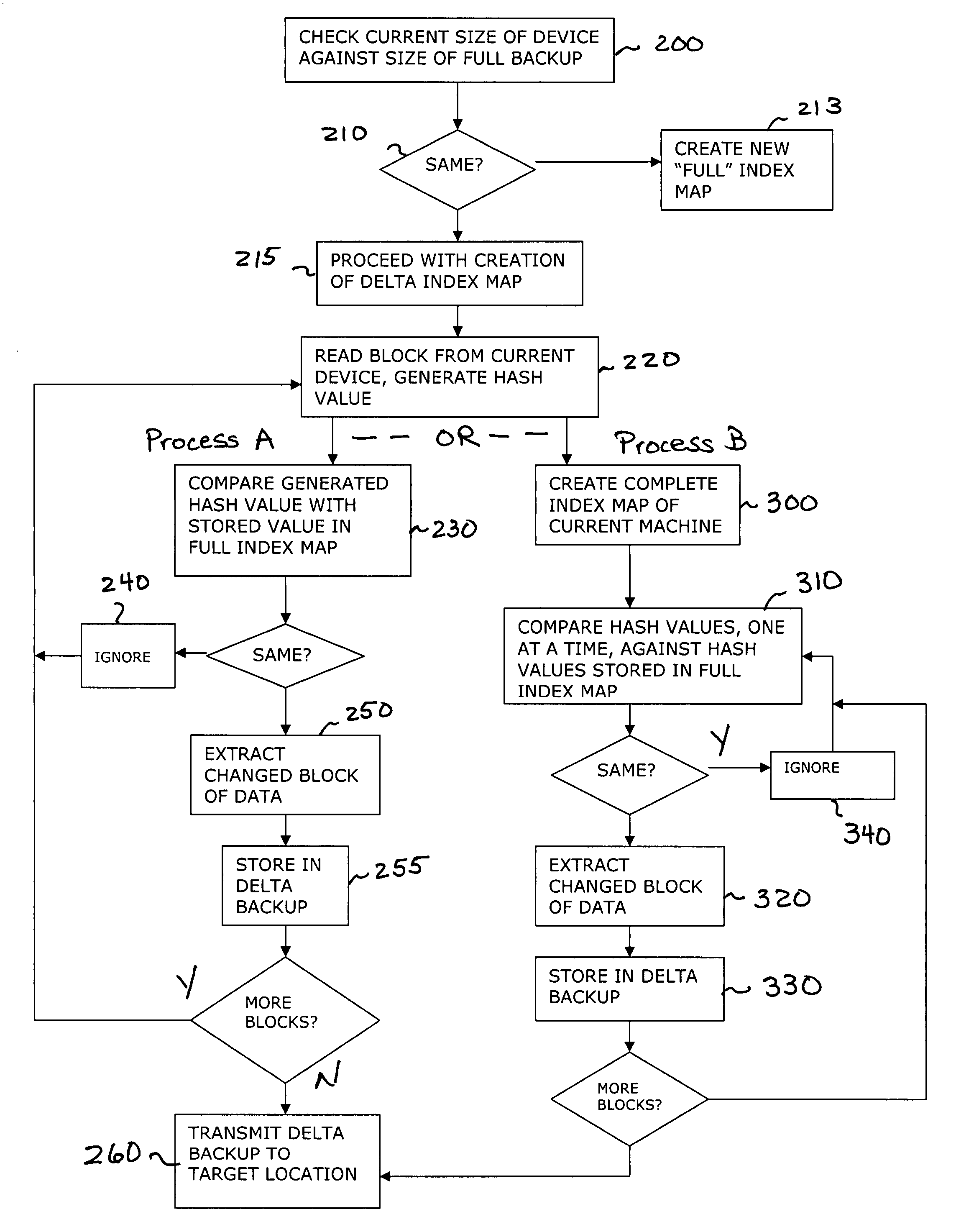

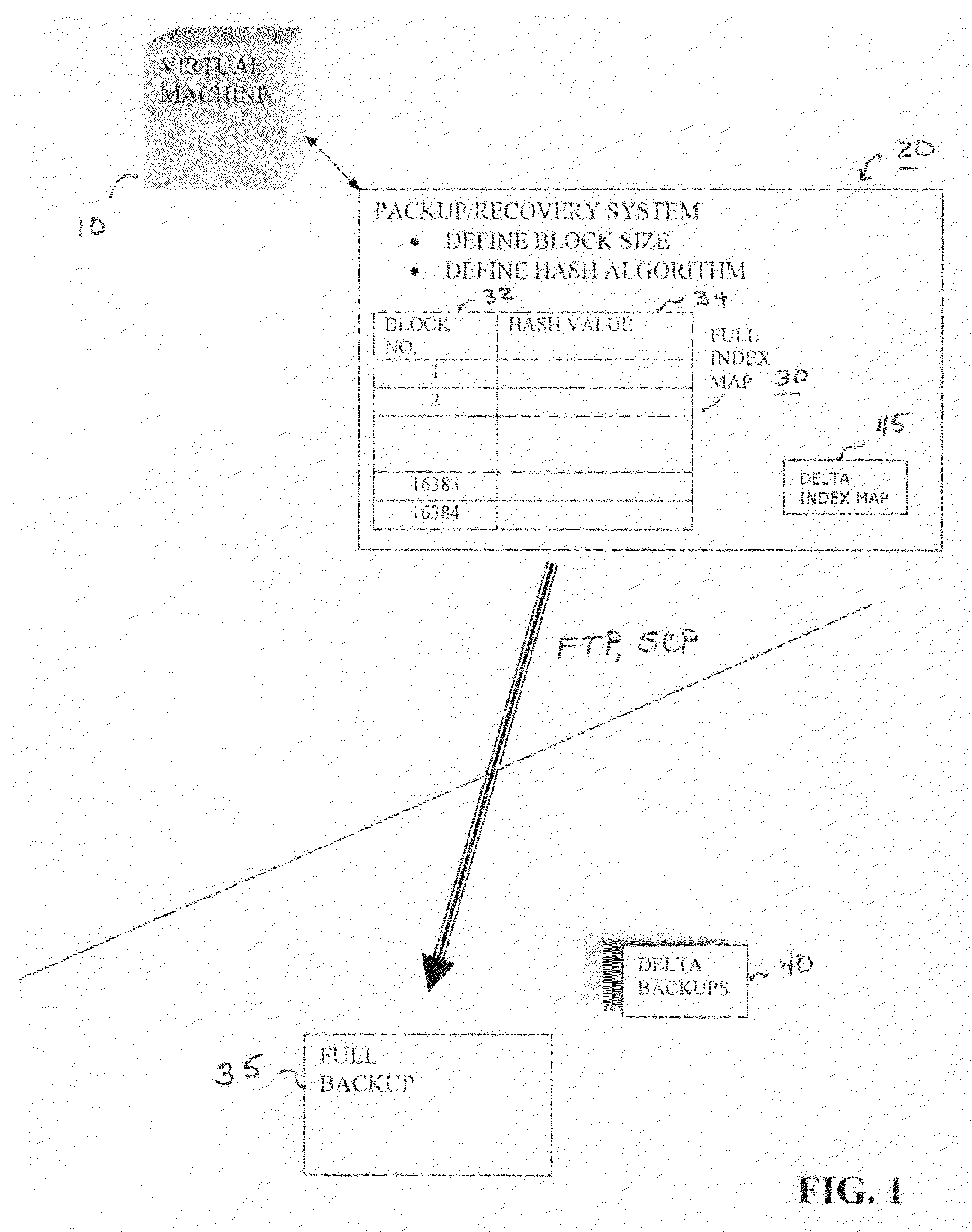

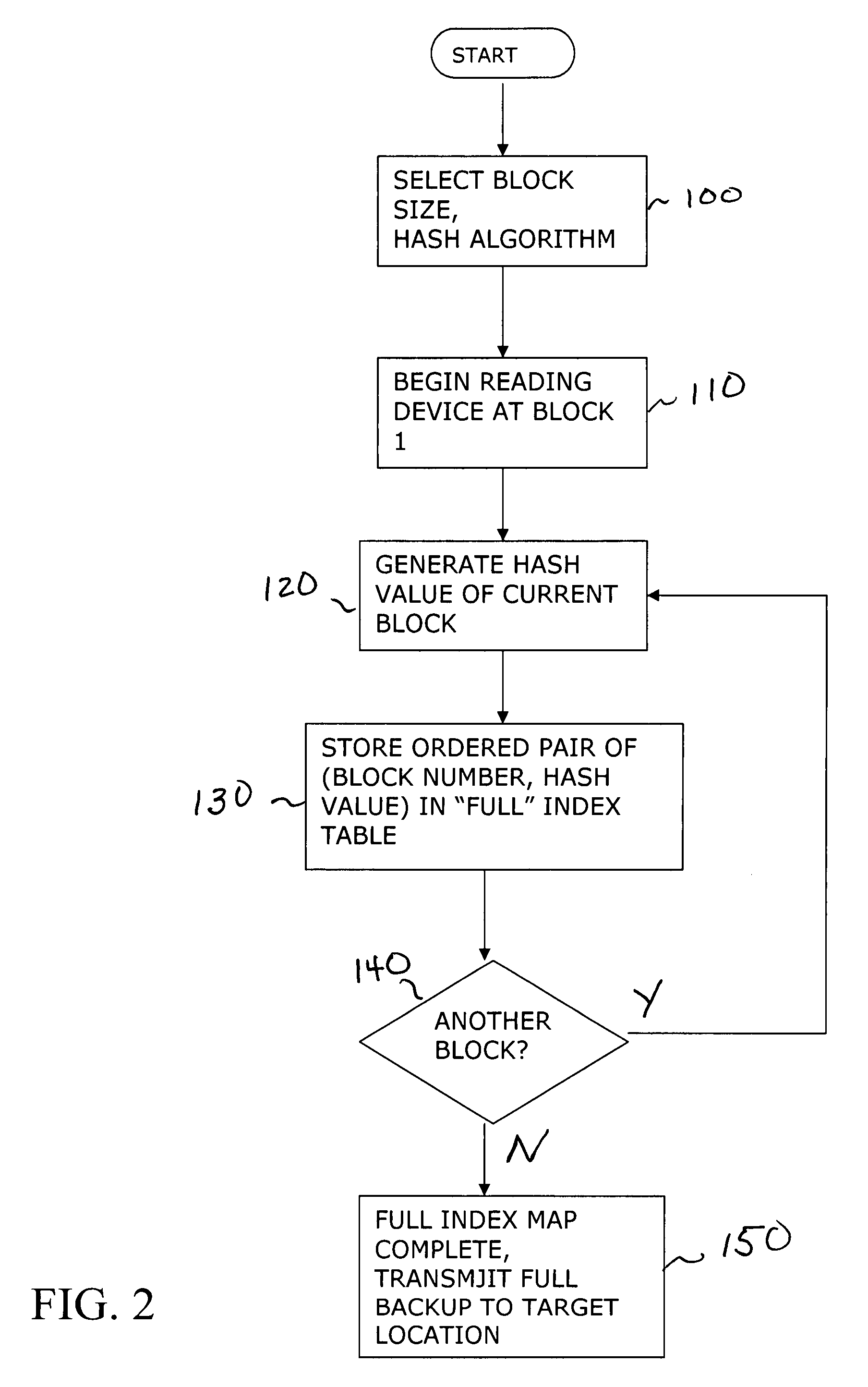

Method and apparatus for providing virtual machine backup

Inactive · US20070208918A1 · Tags: Memory loss protection; Error detection/correction; Computerized system; Computer science

A system and method for creating computer system backups, particularly well-suited for performing backups of virtual machines. The method starts by reading the current state of the machine, in blocks of a constant size, and creates a “FULL” index of block numbers and a hash value associated with the data within that block, while at the same time creating a FULL backup of the machine (the FULL backup then stored at an off-site target location). Once the FULL index map is defined, subsequent DELTA backups are created by reading the current state of the device in the same block fashion and generating updated hash values for each data block. The newly-generated hash values are compared against the values stored in the FULL index map. If the hash numbers for a particular block do not match, this is an indication that the data within that block has changed since the last FULL backup was created. Once all of the “changed” data blocks have been identified to form a DELTA backup, a communication connection is opened in the network and the DELTA backup is sent to the off-site target location.

Owner:PHD TECH
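
The FULL index map and DELTA comparison described above can be sketched in a few lines of Python. The 4096-byte block size, SHA-256 as the block hash, and the function names are assumptions made for illustration; the abstract specifies only constant-size blocks and a hash value per block.

```python
import hashlib
from typing import Dict

BLOCK_SIZE = 4096  # assumed constant block size


def block_index(image: bytes, block_size: int = BLOCK_SIZE) -> Dict[int, str]:
    """FULL index: block number -> SHA-256 digest of that block's bytes."""
    return {
        offset // block_size: hashlib.sha256(image[offset:offset + block_size]).hexdigest()
        for offset in range(0, len(image), block_size)
    }


def delta_blocks(image: bytes, full_index: Dict[int, str],
                 block_size: int = BLOCK_SIZE) -> Dict[int, bytes]:
    """DELTA: blocks whose digest differs from (or is missing in) the FULL index."""
    delta = {}
    for block_no, digest in block_index(image, block_size).items():
        if full_index.get(block_no) != digest:  # mismatch means the block changed
            start = block_no * block_size
            delta[block_no] = image[start:start + block_size]
    return delta
```

Only the changed blocks returned by `delta_blocks` would then be sent to the off-site target.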

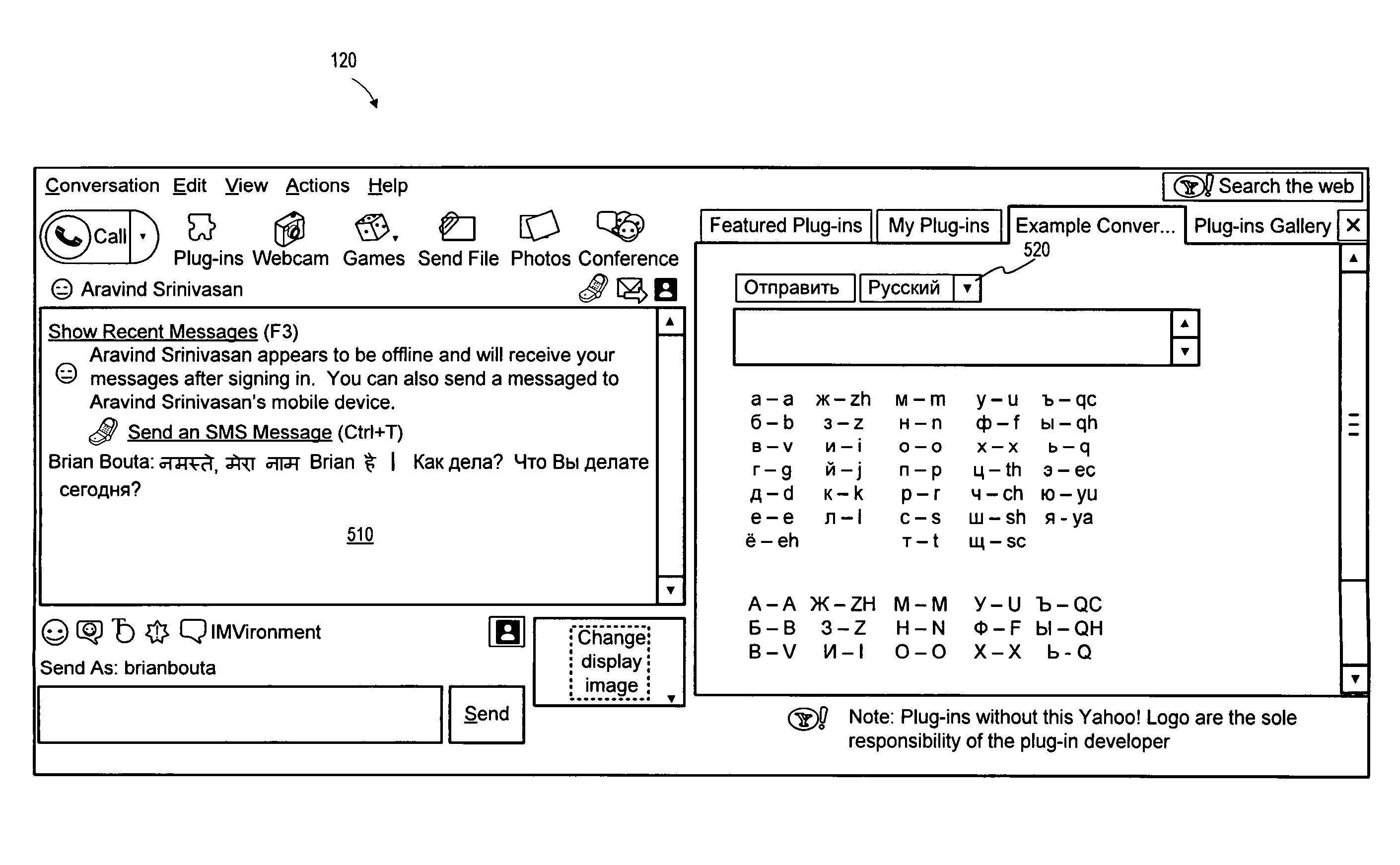

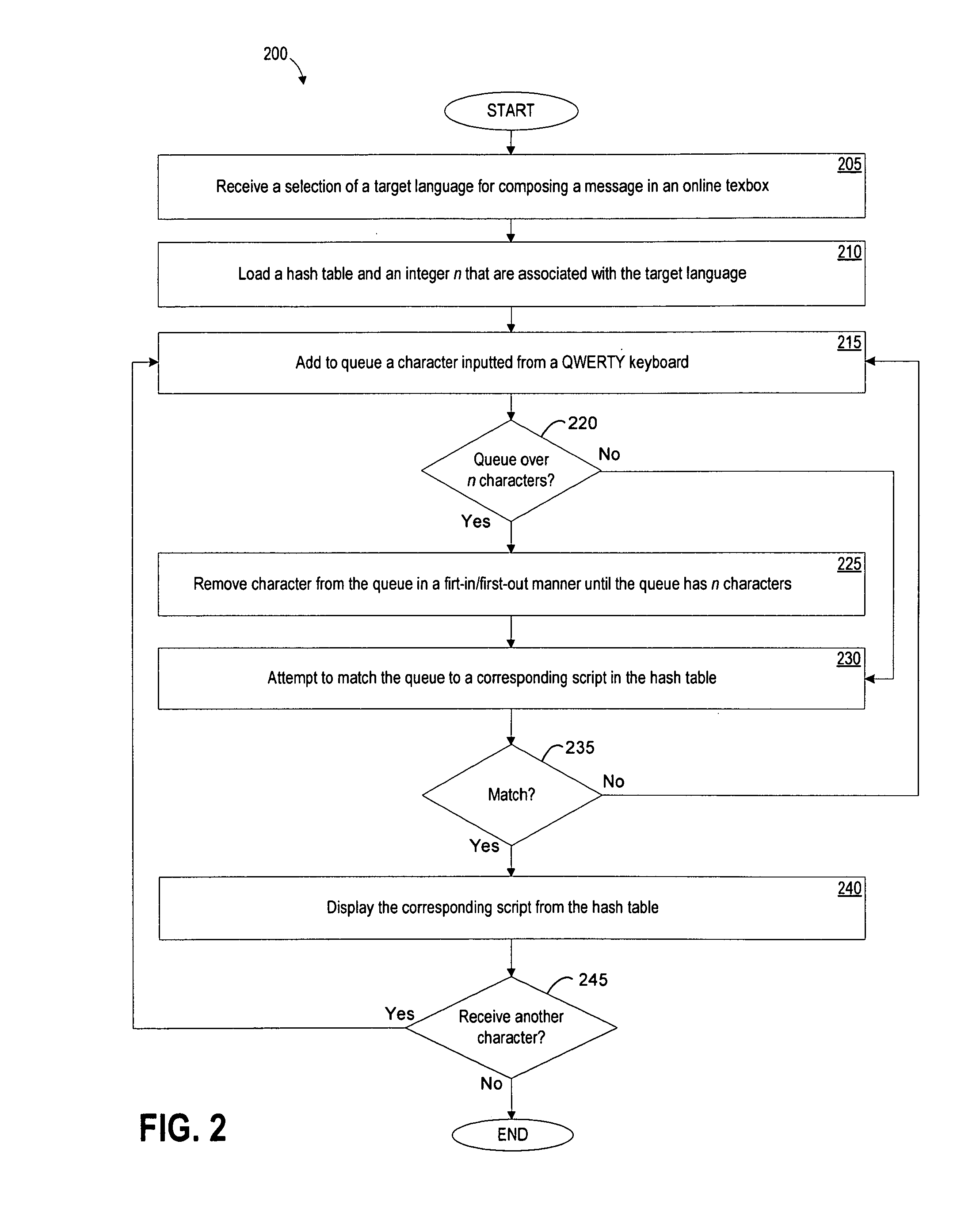

Composing a message in an online textbox using a non-latin script

Active · US8122353B2 · Tags: Input/output for user-computer interaction; Speech analysis; Latin script; Mapping techniques

A method and an apparatus are provided for composing a message in an online textbox using a non-Latin script. In one example, the method includes receiving a selection of a target language for composing the message in the online textbox, loading a hash table and an integer n that are associated with the target language, adding to a queue a character inputted from a QWERTY keyboard, and applying appropriate parsing and mapping techniques to the queue using the hash table and the integer n to display an appropriate script of the target language.

Owner:YAHOO ASSETS LLC

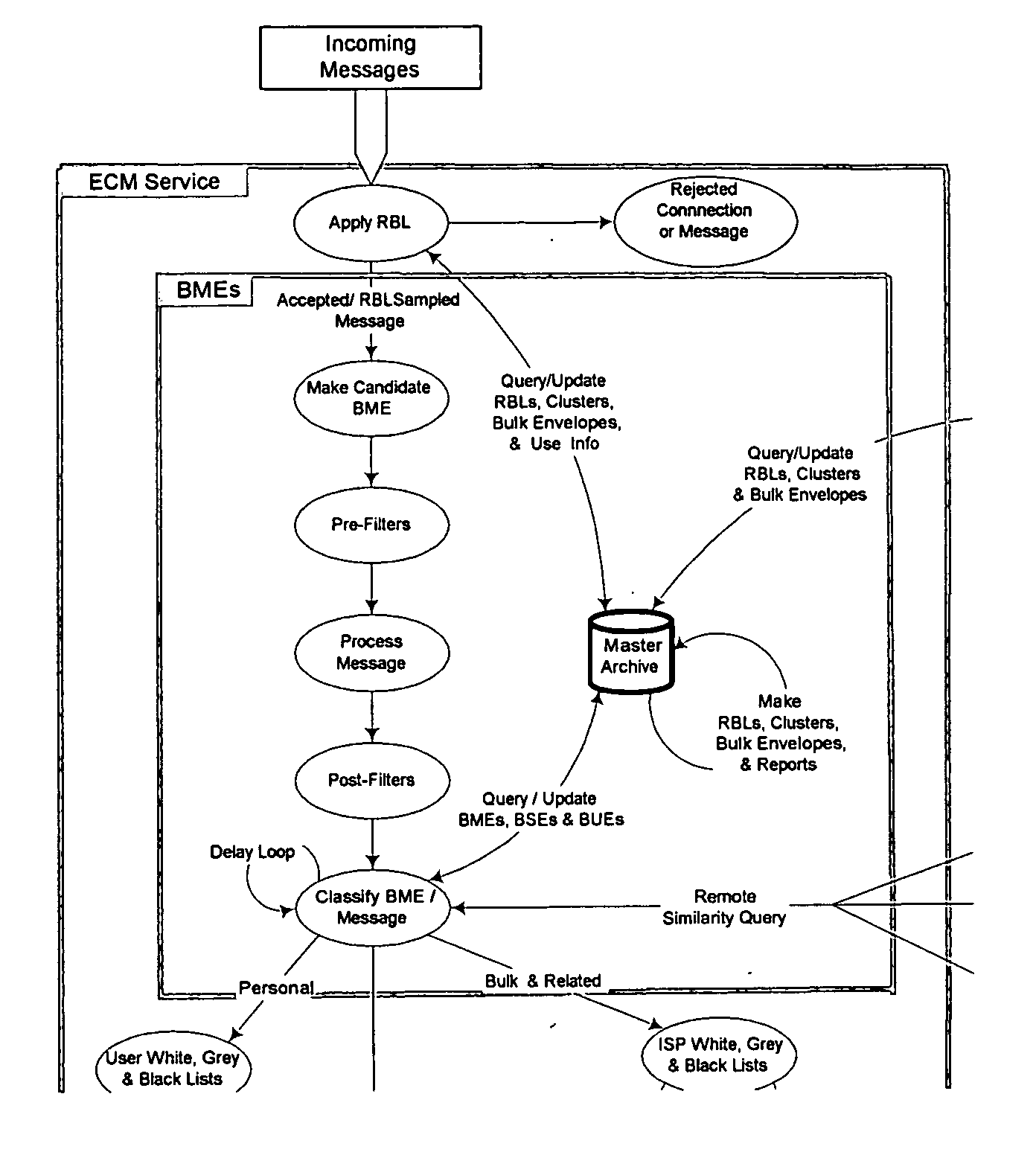

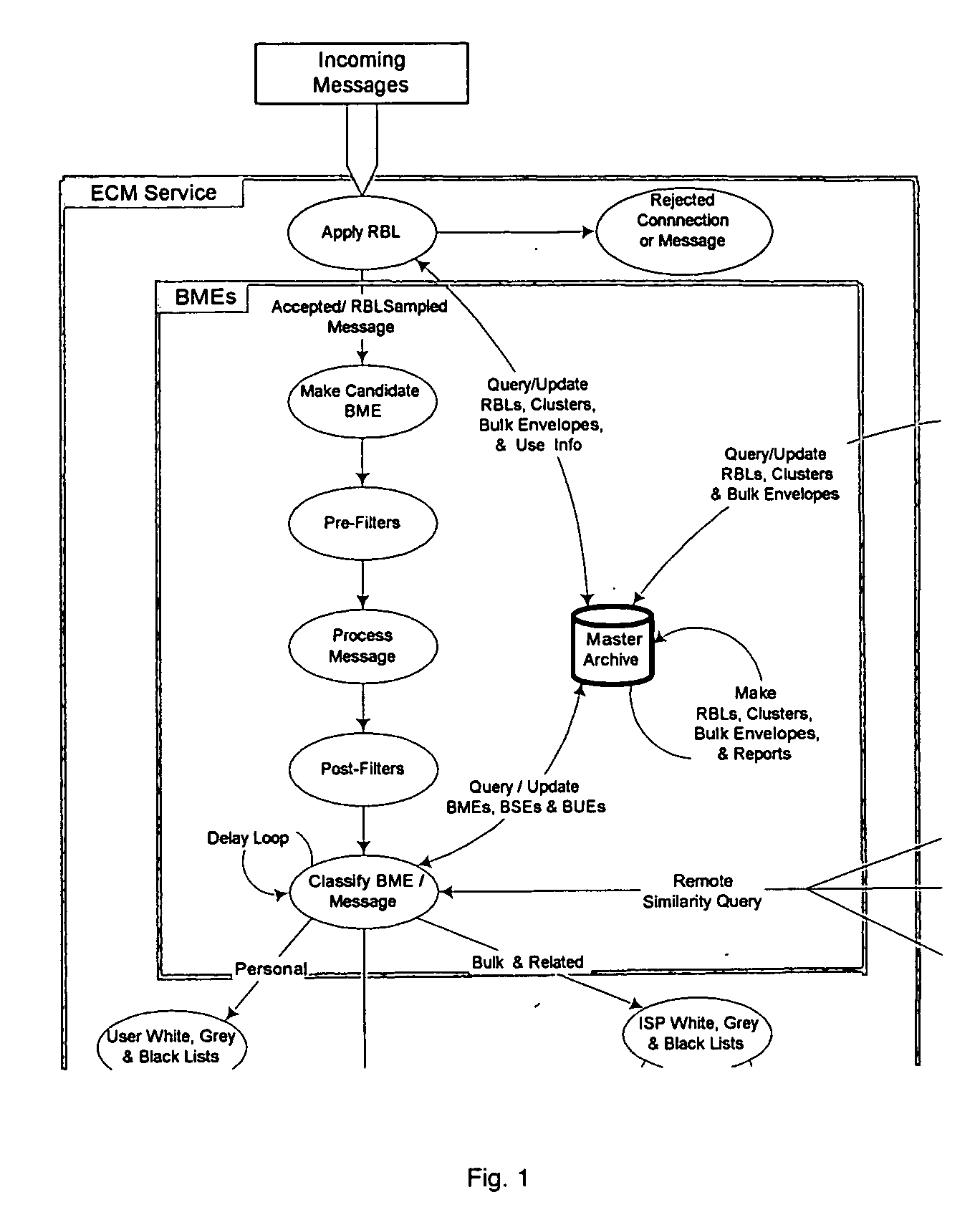

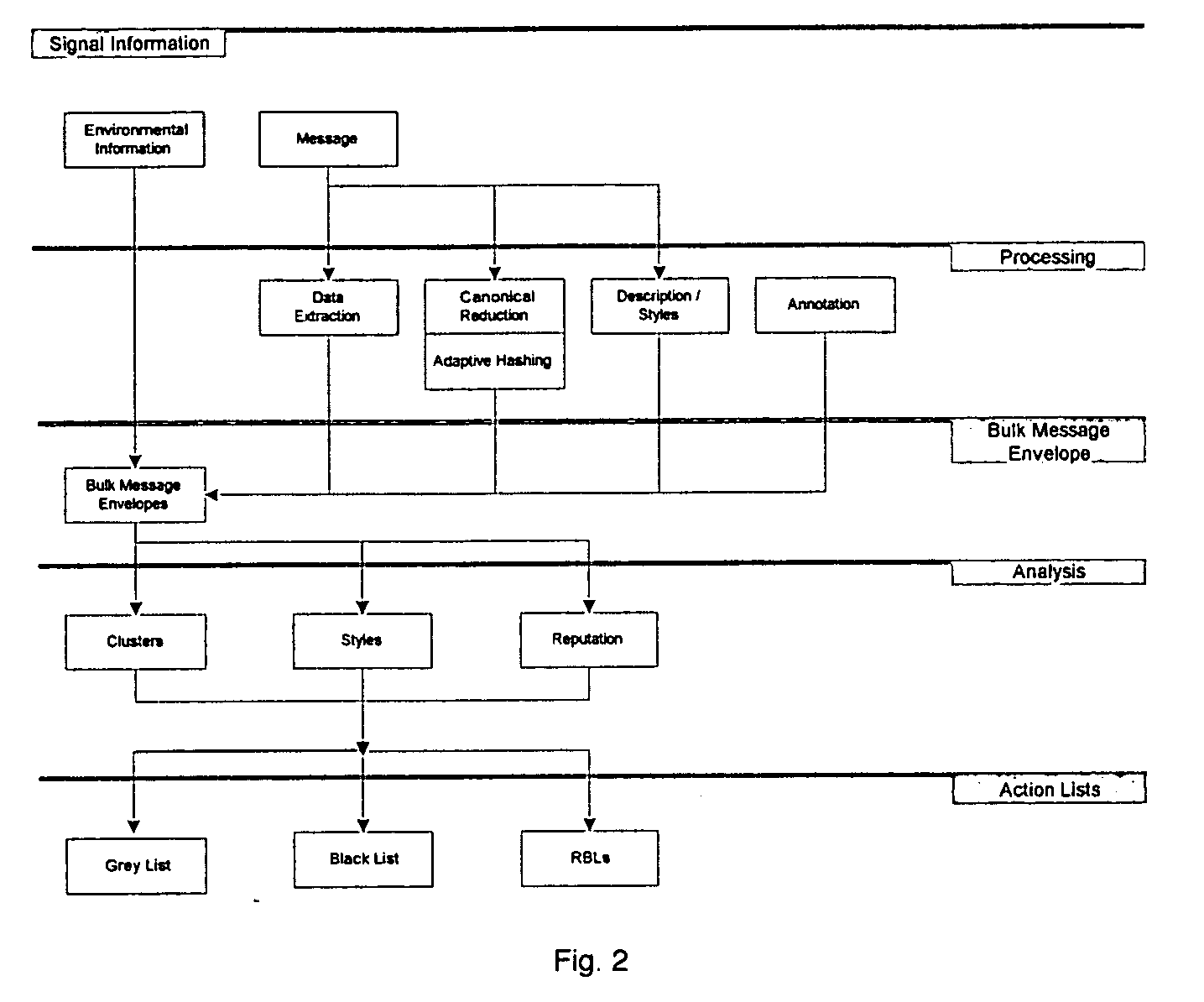

System and method for the classification of electronic communication

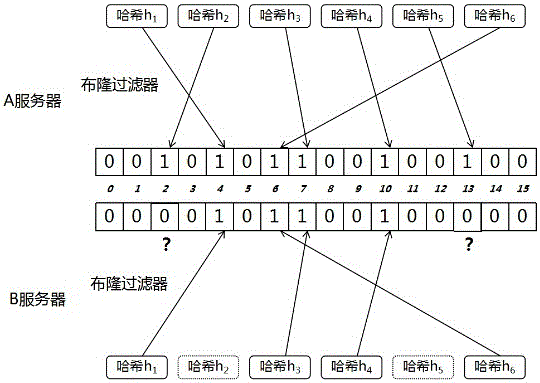

Inactive · US20060168006A1 · Tags: Multiple digital computer combinations; Data switching networks; Electronic communication; Spamming

From an electronic message, we extract any destinations in selectable links, and we reduce the message to a “canonical” (standard) form that we define. It minimizes the possible variability that a spammer can introduce, to produce unique copies of a message. We then make multiple hashes. These can be compared with those from messages received by different users to objectively find bulk messages. From these, we build hash tables of bulk messages and make a list of destinations from the most frequent messages. The destinations can be used in a Real time Blacklist (RBL) against links in bodies of messages. Similarly, the hash tables can be used to identify other messages as bulk or spam. Our method can be used by a message provider or group of users (where the group can do so in a p2p fashion) independently of whether any other provider or group does so. Each user can maintain a “gray list” of bulk mail senders that she subscribes to, to distinguish between wanted bulk mail and unwanted bulk mail (spam). The gray list can be used instead of a whitelist, and is far easier for the user to maintain.

Owner:METASWARM INC
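
A rough Python sketch of the bulk-detection idea follows. The patent defines its own canonical form, which is not given here, so the canonicalization below (lowercase, links and punctuation removed, whitespace collapsed) is a hypothetical stand-in, as are the "one hash per slice of words" scheme, the recipient threshold, and the names `canonicalize` and `BulkDetector`.

```python
import hashlib
import re
from collections import defaultdict
from typing import Dict, List, Set

# A link: group 1 captures the host; the rest of the URL is consumed as well.
URL_RE = re.compile(r"https?://([^/\s\"'<>]+)\S*", re.IGNORECASE)


def extract_destinations(message: str) -> Set[str]:
    """Hosts of selectable links, e.g. candidates for a real-time blacklist."""
    return {host.lower() for host in URL_RE.findall(message)}


def canonicalize(message: str) -> str:
    """Hypothetical canonical form: lowercase, links and punctuation dropped."""
    text = URL_RE.sub(" ", message.lower())
    text = re.sub(r"[^a-z0-9]+", " ", text)
    return " ".join(text.split())


def message_hashes(message: str, parts: int = 3) -> List[str]:
    """Several hashes per message: one per contiguous slice of canonical words."""
    words = canonicalize(message).split()
    step = max(1, -(-len(words) // parts))  # ceiling division
    slices = [" ".join(words[i:i + step]) for i in range(0, len(words), step)] or [""]
    return [hashlib.sha1(s.encode()).hexdigest() for s in slices]


class BulkDetector:
    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.recipients: Dict[str, Set[str]] = defaultdict(set)  # hash -> users

    def observe(self, user: str, message: str) -> None:
        for h in message_hashes(message):
            self.recipients[h].add(user)

    def is_bulk(self, message: str) -> bool:
        # Bulk if any of its hashes was seen by at least `threshold` users.
        return any(len(self.recipients.get(h, ())) >= self.threshold
                   for h in message_hashes(message))
```

Hashing several slices rather than the whole message means a spammer who varies one part of the text still leaves the other slice hashes intact.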

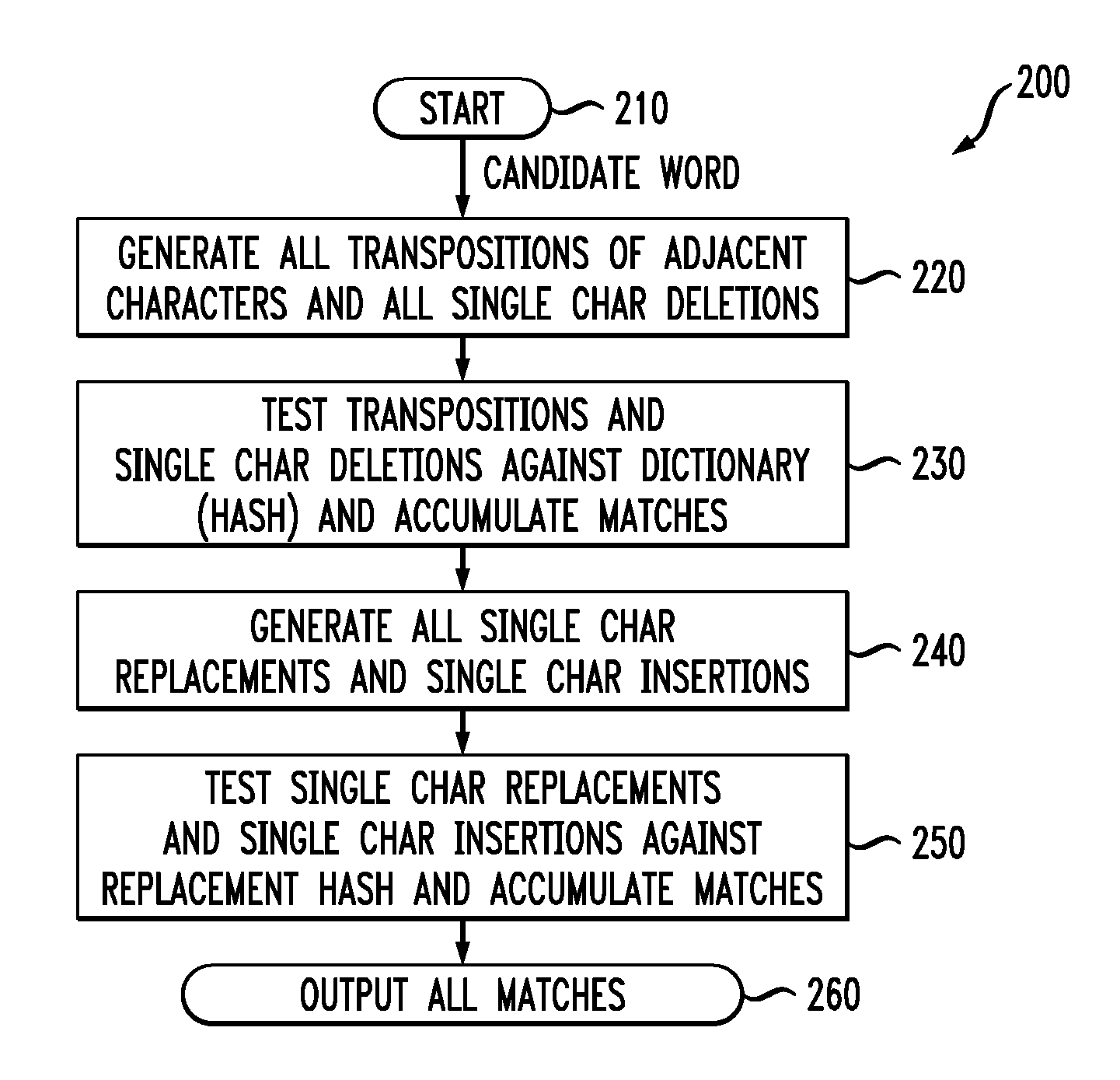

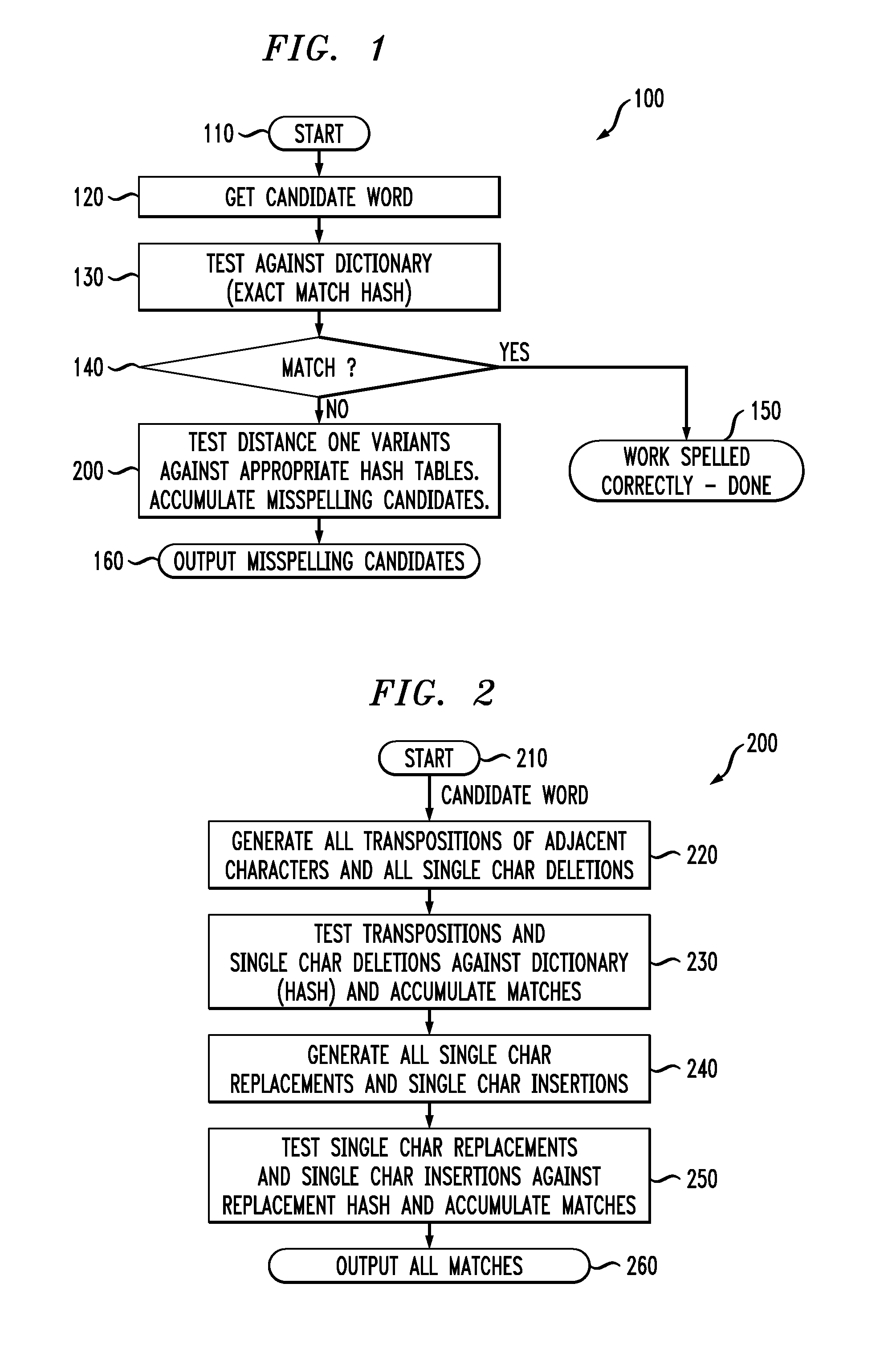

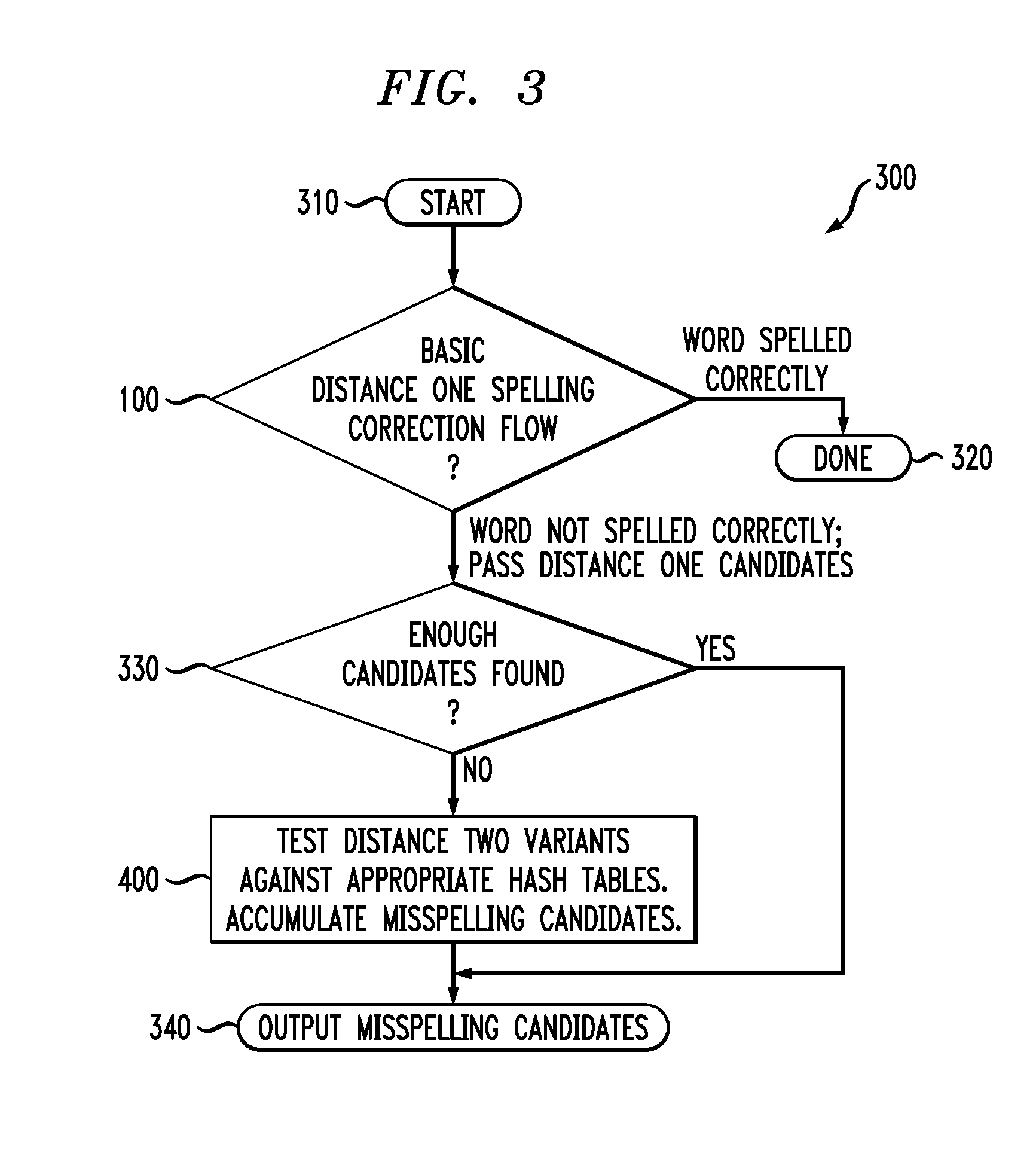

Methods and apparatus for performing spelling corrections using one or more variant hash tables

Active · US20080059876A1 · Tags: Natural language data processing; Special data processing applications; Algorithm; Hash table

Methods and apparatus are provided for performing spelling corrections using one or more variant hash tables. The spelling of at least one candidate word is corrected by obtaining at least one variant dictionary hash table based on variants of a set of known correctly spelled words, wherein the variants are obtained by applying one or more of a deletion, insertion, replacement, and transposition operation on the correctly spelled words; obtaining from the candidate word one or more lookup variants using one or more of the deletion, insertion, replacement, and transposition operations; evaluating one or more of the candidate word and the lookup variants against the at least one variant dictionary hash table; and indicating a candidate correction if there is at least one match in the at least one variant dictionary hash table.

Owner:MAPLEBEAR INC
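
The variant-hash-table idea can be sketched in Python as follows. As a simplification made here, not taken from the patent, the sketch generates only deletion and transposition variants on both sides: matching deletion variants of the dictionary word against deletion variants of the candidate also catches single insertions and substitutions without enumerating alphabet-wide insertion or replacement variants. The function names are hypothetical, and the result may include a few words two edits away, which a real system would rank.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set


def deletions(word: str) -> Set[str]:
    return {word[:i] + word[i + 1:] for i in range(len(word))}


def transpositions(word: str) -> Set[str]:
    return {word[:i] + word[i + 1] + word[i] + word[i + 2:] for i in range(len(word) - 1)}


def build_variant_table(dictionary: Iterable[str]) -> Dict[str, Set[str]]:
    """Variant hash table: variant string -> correctly spelled words producing it."""
    table: Dict[str, Set[str]] = defaultdict(set)
    for word in dictionary:
        for variant in {word} | deletions(word) | transpositions(word):
            table[variant].add(word)
    return dict(table)


def suggest(candidate: str, table: Dict[str, Set[str]]) -> Set[str]:
    if candidate in table.get(candidate, set()):
        return {candidate}  # already a known word
    lookups = {candidate} | deletions(candidate) | transpositions(candidate)
    matches: Set[str] = set()
    for variant in lookups:  # each lookup is a single hash-table probe
        matches |= table.get(variant, set())
    return matches
```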

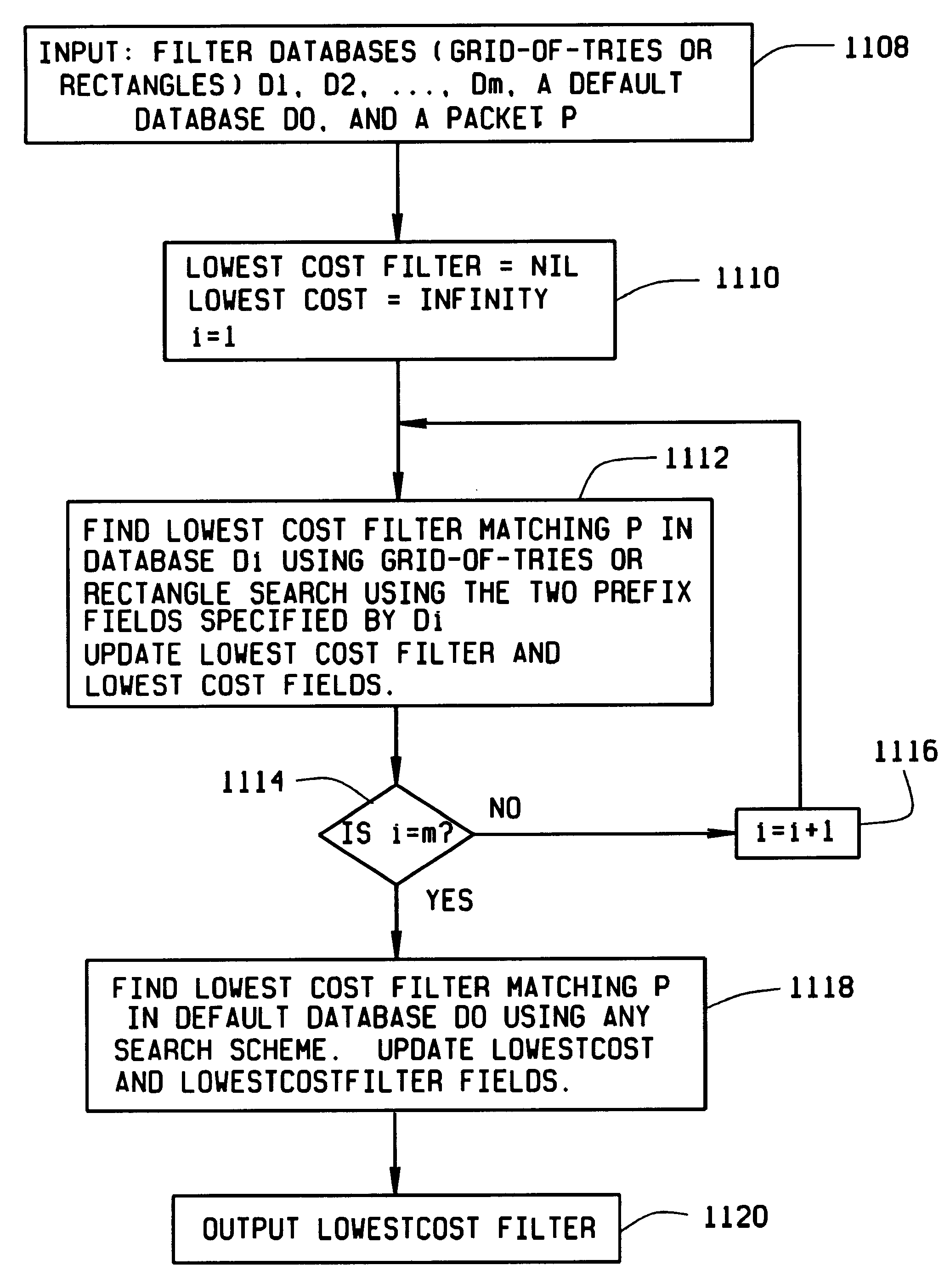

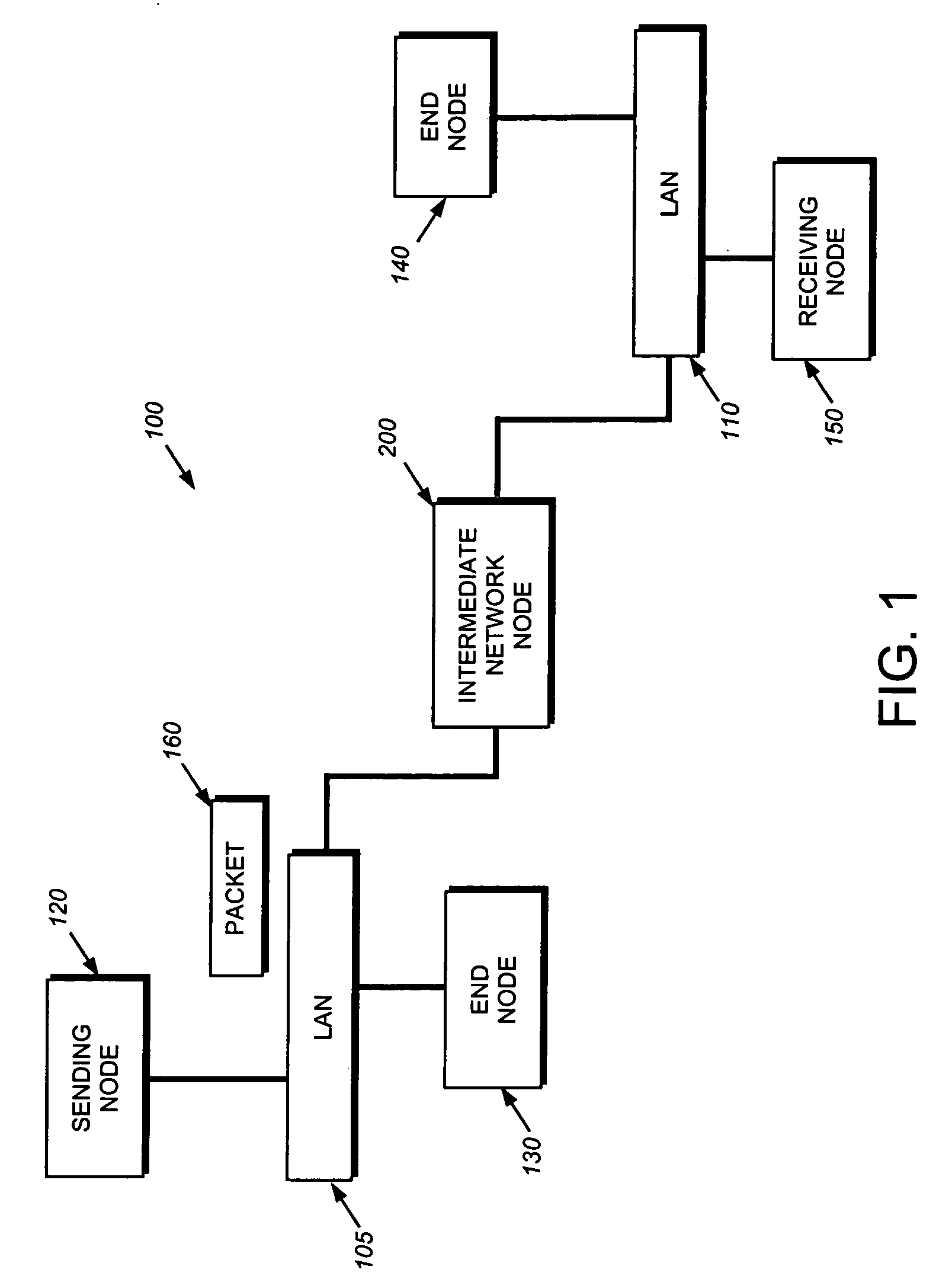

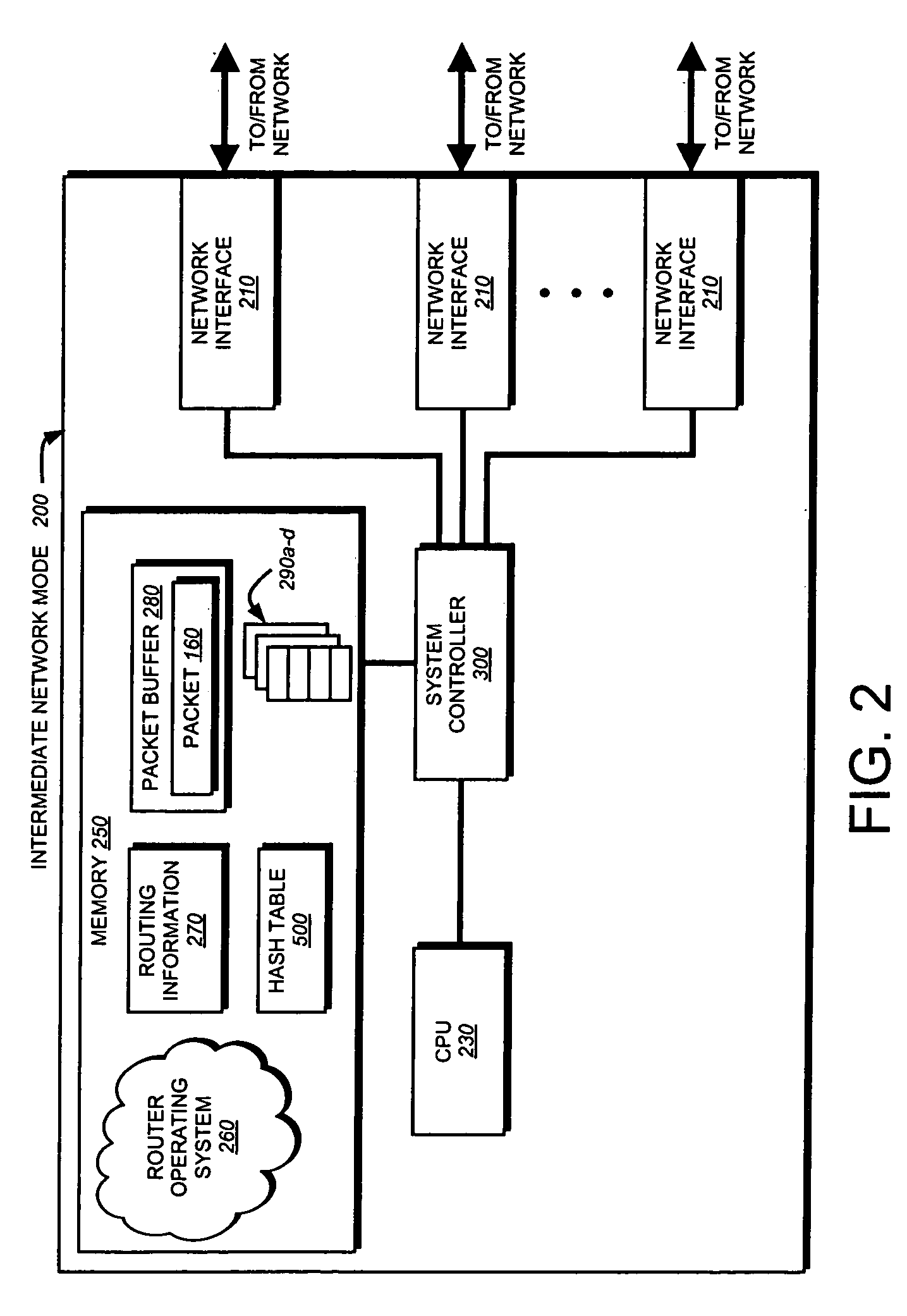

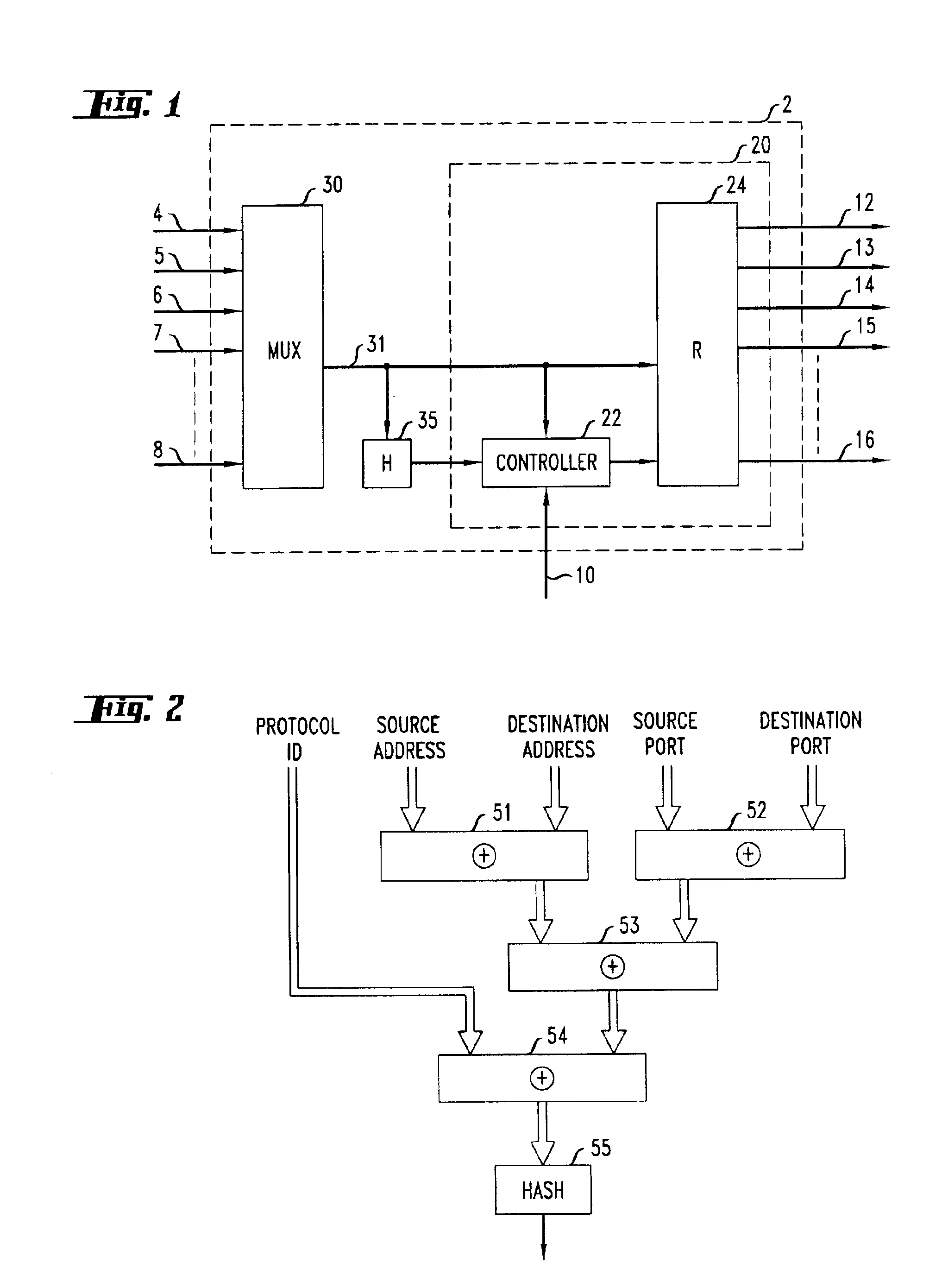

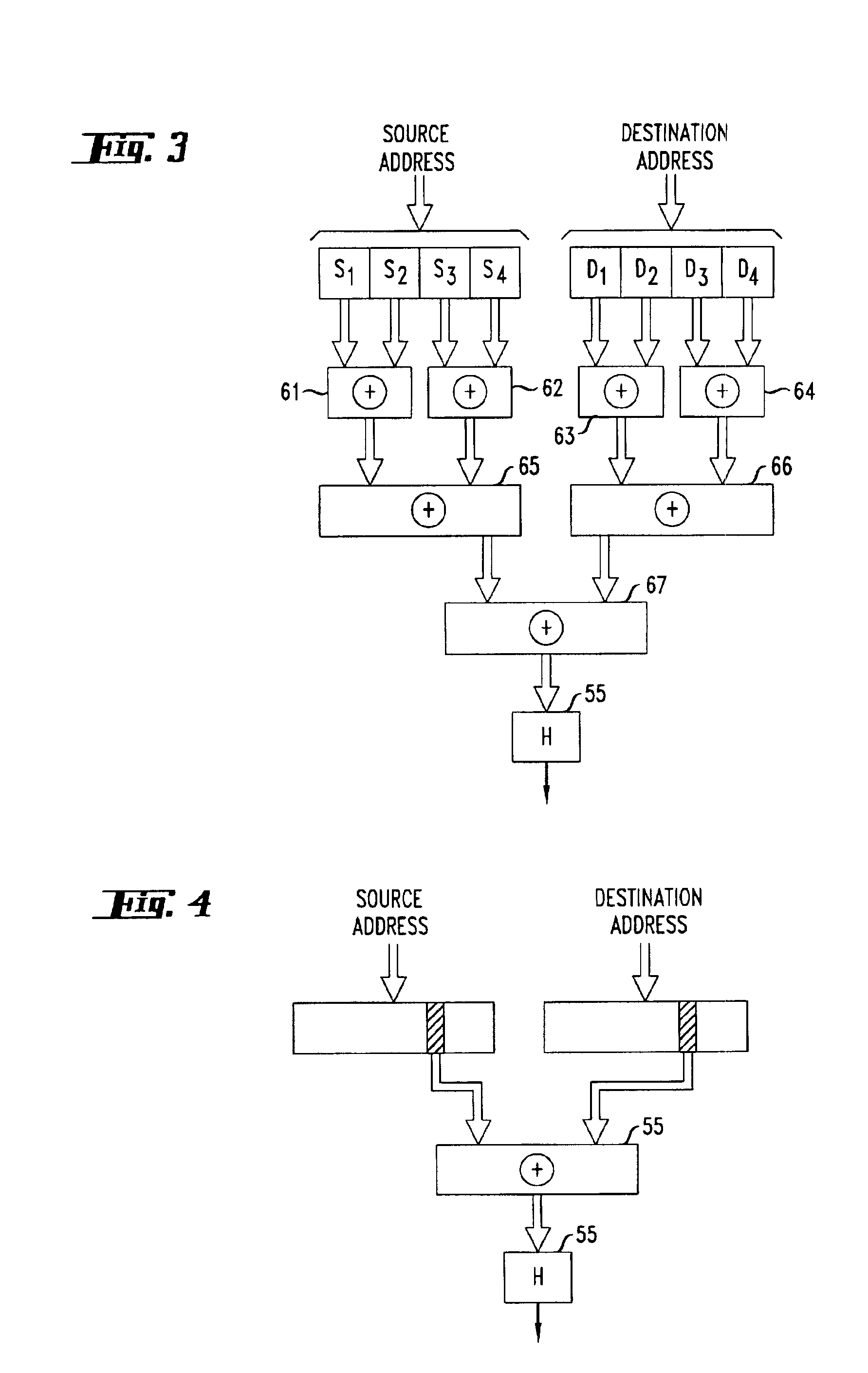

Fast scaleable methods and devices for layer four switching

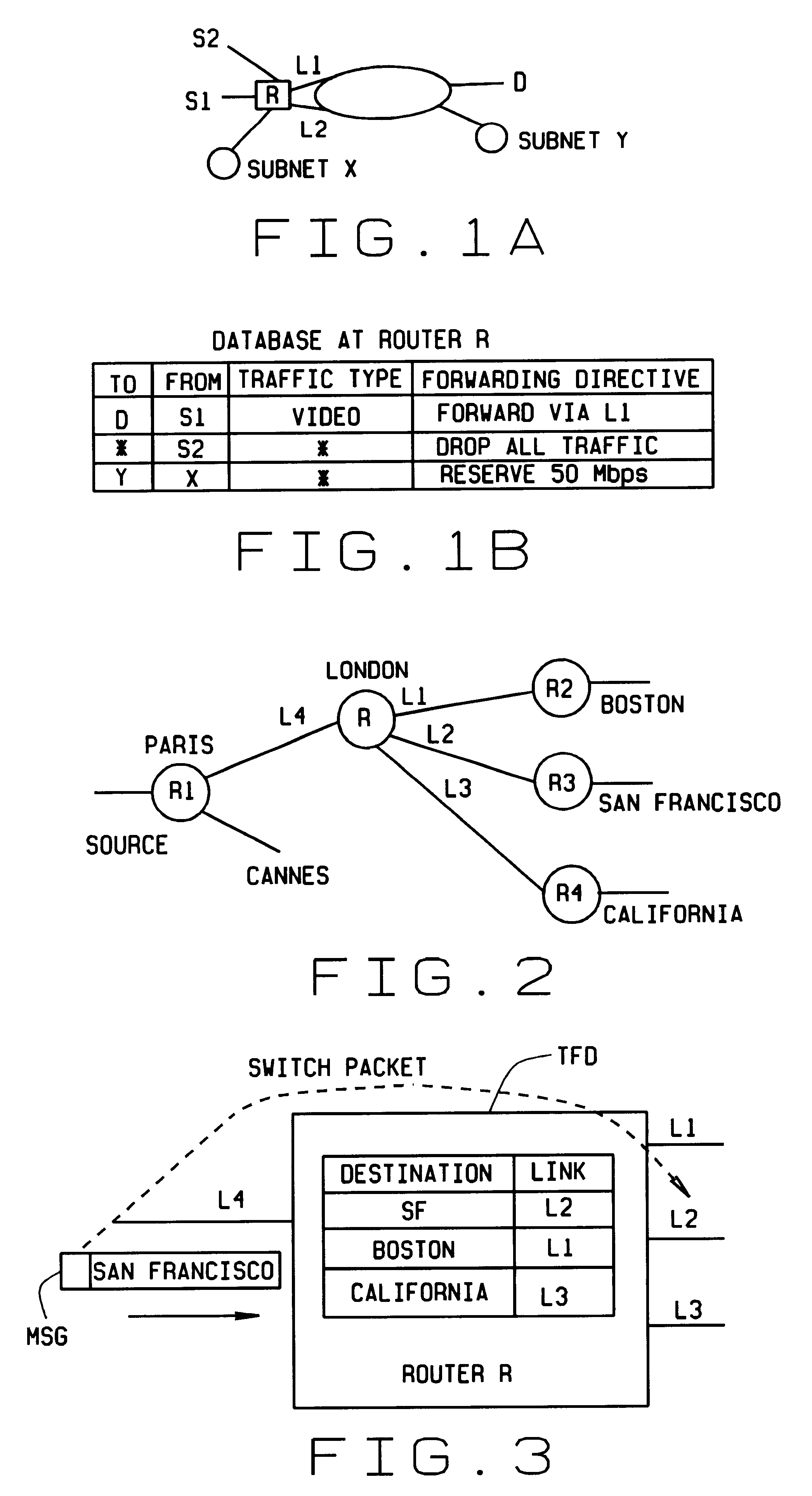

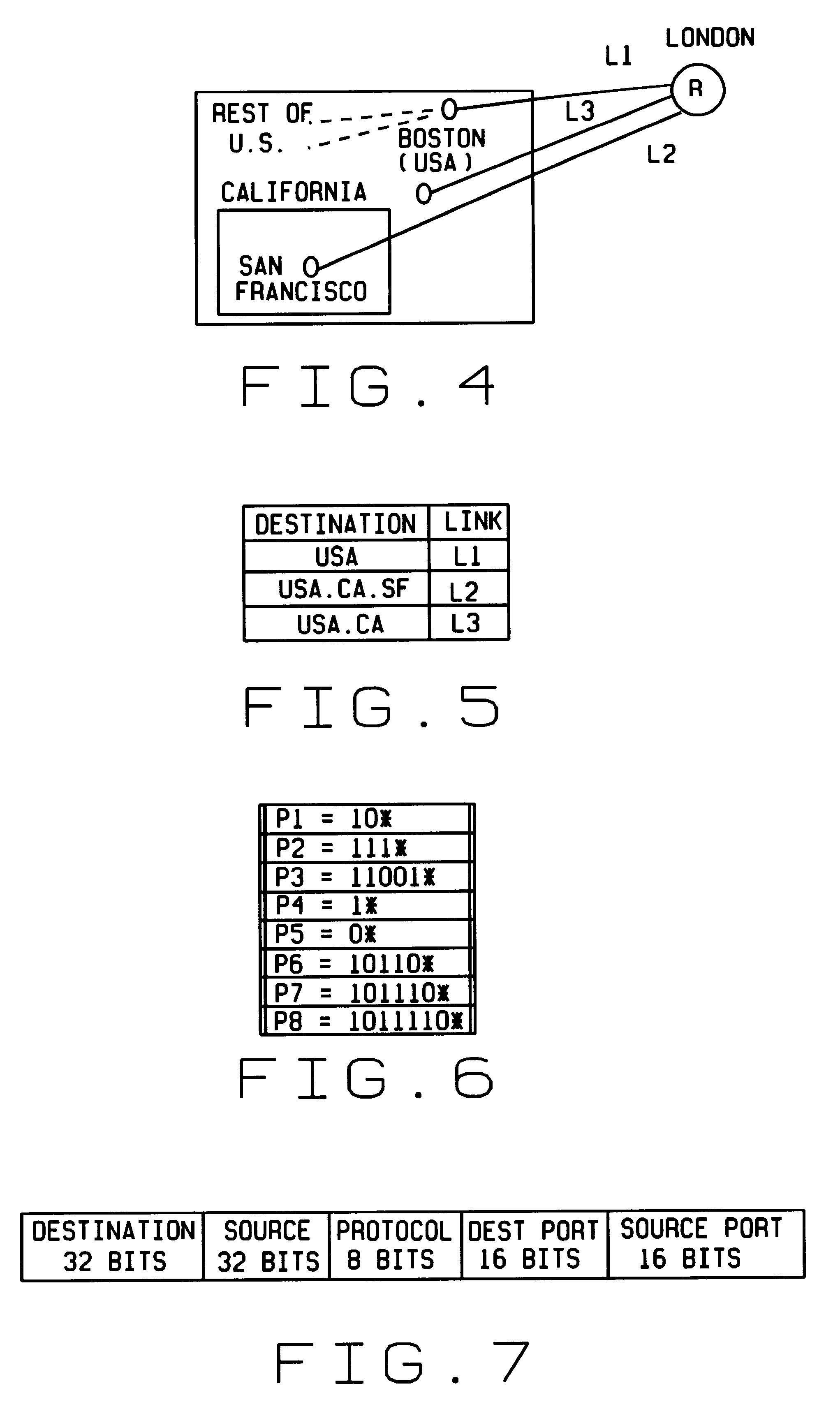

Inactive · US6212184B1 · Tags: Enormous saving in table size; Avoid the need; Error prevention; Transmission systems; Precomputation; Route filtering

Fast, scalable methods and devices are provided for layer four switching in a router as might be found in the Internet. In a first method, a grid of tries, which are binary branching trees, is constructed from the set of routing filters. The grid includes a dest-trie and a number of source tries. To avoid memory blowup, each filter is stored in exactly one trie. The tries are traversed to find the lowest cost routing. Switch pointers are used to improve the search cost. In an extension of this method, hash tables may be constructed that point to grid-of-tries structures. The hash tables may be used to handle combinations of port fields and protocol fields. Another method is based on hashing, in which searches for lowest cost matching filters take place in bit length tuple space. Rectangle searching with precomputation and markers are used to eliminate a whole column of tuple space when a match occurs, and to eliminate the rest of a row when no match is found. Various optimizations of these methods are also provided. A router incorporating memory and processors implementing these methods is capable of rapid, selective switching of data packets on various types of networks, and is particularly suited to switching on Internet Protocol networks.

Owner:WASHINGTON UNIV IN SAINT LOUIS
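
The hashing-based method searches "bit length tuple space": filters are grouped by their (source prefix length, destination prefix length) tuple, and each group is an exact-match hash table. The Python sketch below shows only that basic tuple space search over IPv4 source/destination prefixes; it deliberately omits the grid of tries, the rectangle search, precomputation, and markers described in the abstract, and the names `RouteFilter` and `TupleSpaceClassifier` are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass(frozen=True)
class RouteFilter:
    src: str     # source prefix, e.g. "10.0.0.0/8"
    dst: str     # destination prefix
    cost: int    # lower cost wins
    action: str


def _bits(addr: int, length: int) -> int:
    """Top `length` bits of a 32-bit IPv4 address (0 when length is 0)."""
    return addr >> (32 - length)


class TupleSpaceClassifier:
    def __init__(self, filters: Iterable[RouteFilter]) -> None:
        # One exact-match hash table per (source length, destination length) tuple.
        self.tables: Dict[Tuple[int, int], Dict[Tuple[int, int], RouteFilter]] = {}
        for f in filters:
            s, d = ipaddress.IPv4Network(f.src), ipaddress.IPv4Network(f.dst)
            table = self.tables.setdefault((s.prefixlen, d.prefixlen), {})
            key = (_bits(int(s.network_address), s.prefixlen),
                   _bits(int(d.network_address), d.prefixlen))
            if key not in table or f.cost < table[key].cost:
                table[key] = f

    def classify(self, src_ip: str, dst_ip: str) -> Optional[RouteFilter]:
        s, d = int(ipaddress.IPv4Address(src_ip)), int(ipaddress.IPv4Address(dst_ip))
        best: Optional[RouteFilter] = None
        for (s_len, d_len), table in self.tables.items():  # one probe per tuple
            f = table.get((_bits(s, s_len), _bits(d, d_len)))
            if f is not None and (best is None or f.cost < best.cost):
                best = f
        return best
```

The cost of a lookup here grows with the number of distinct tuples, which is the cost the patent's rectangle search and markers are meant to cut down.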

Method and apparatus for prefetching recursive data structures

Inactive · US6848029B2 · Tags: Improve cache hit ratio; Potential throughput of the computer system; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Application software; Cache hit rate

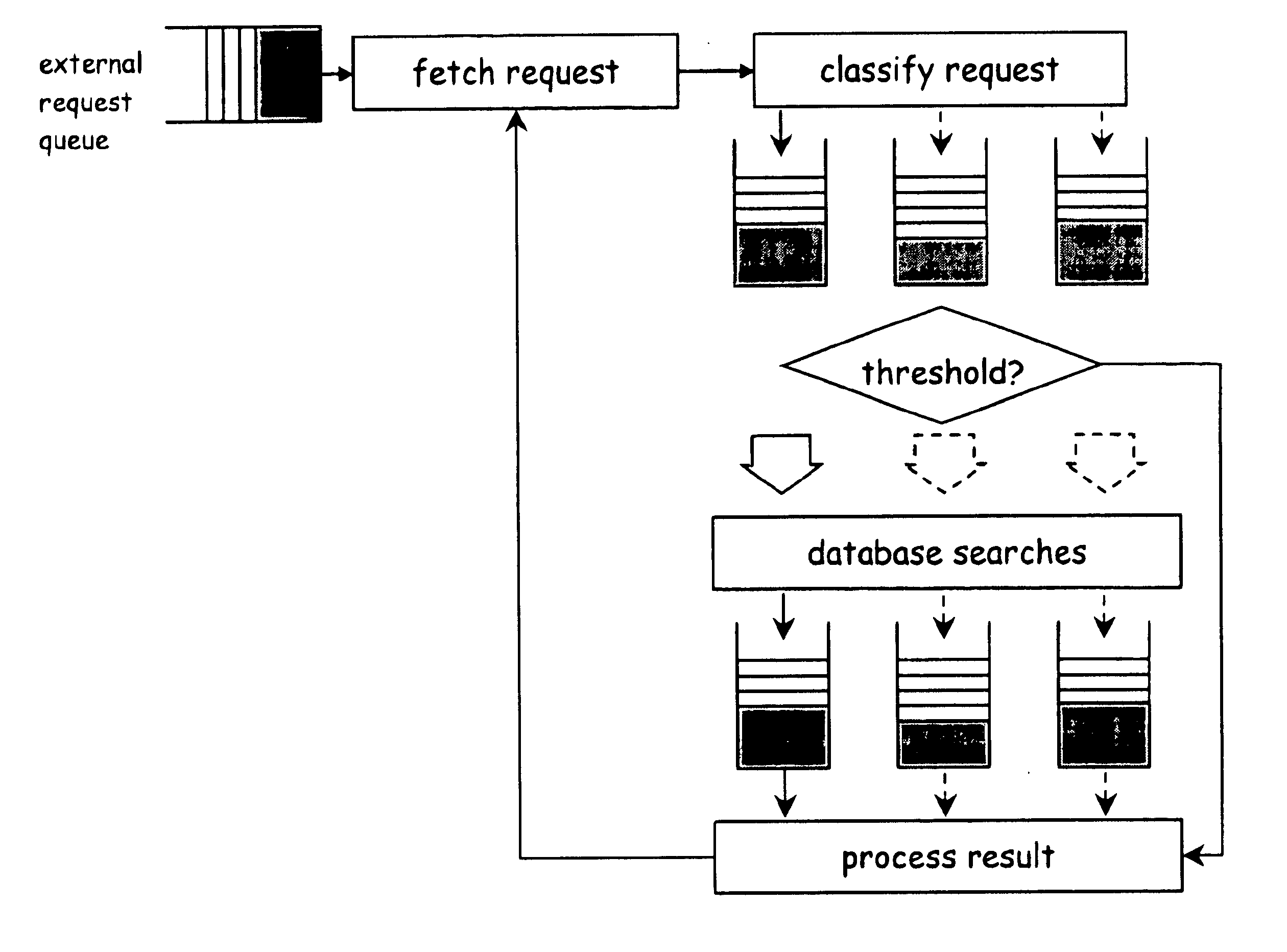

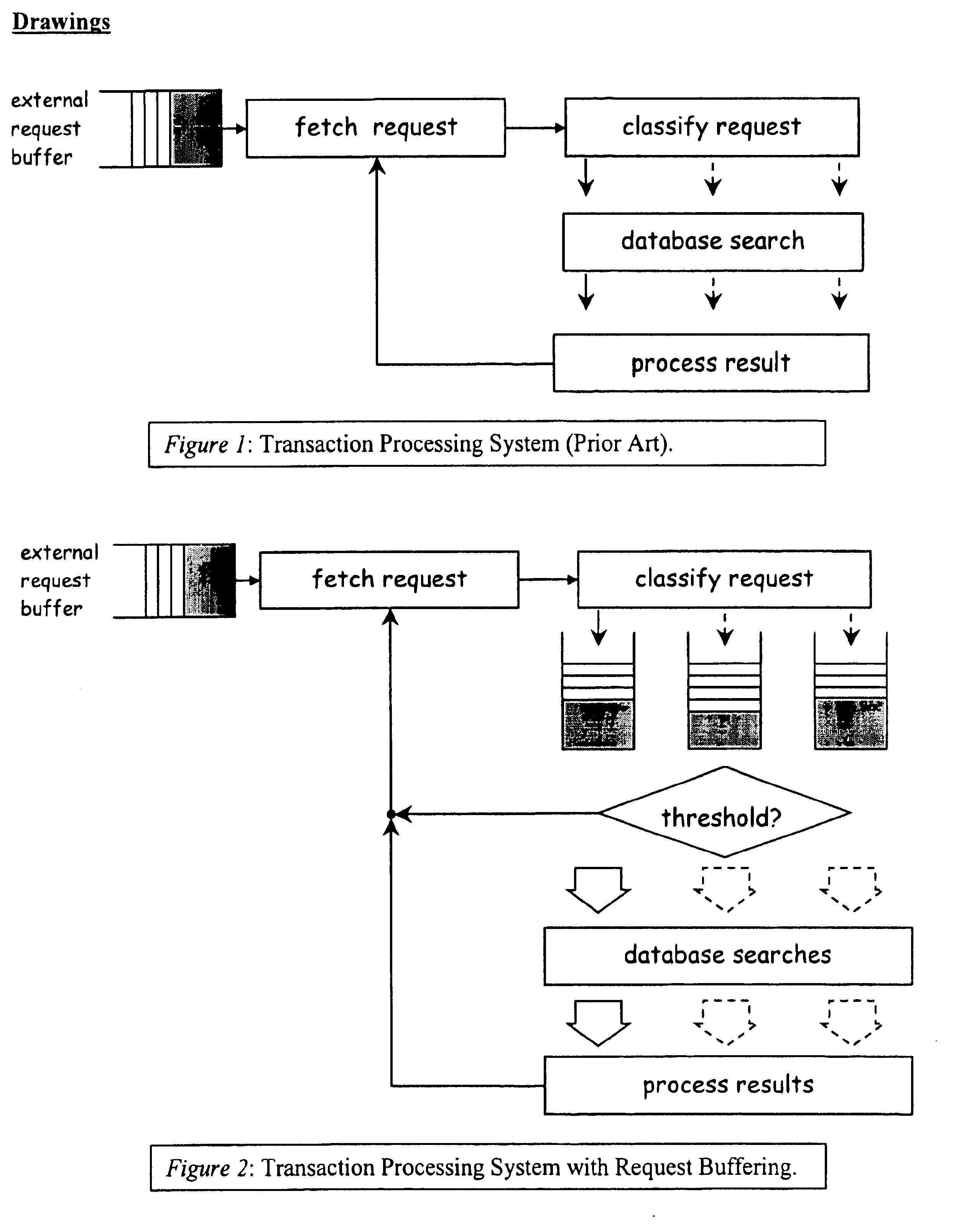

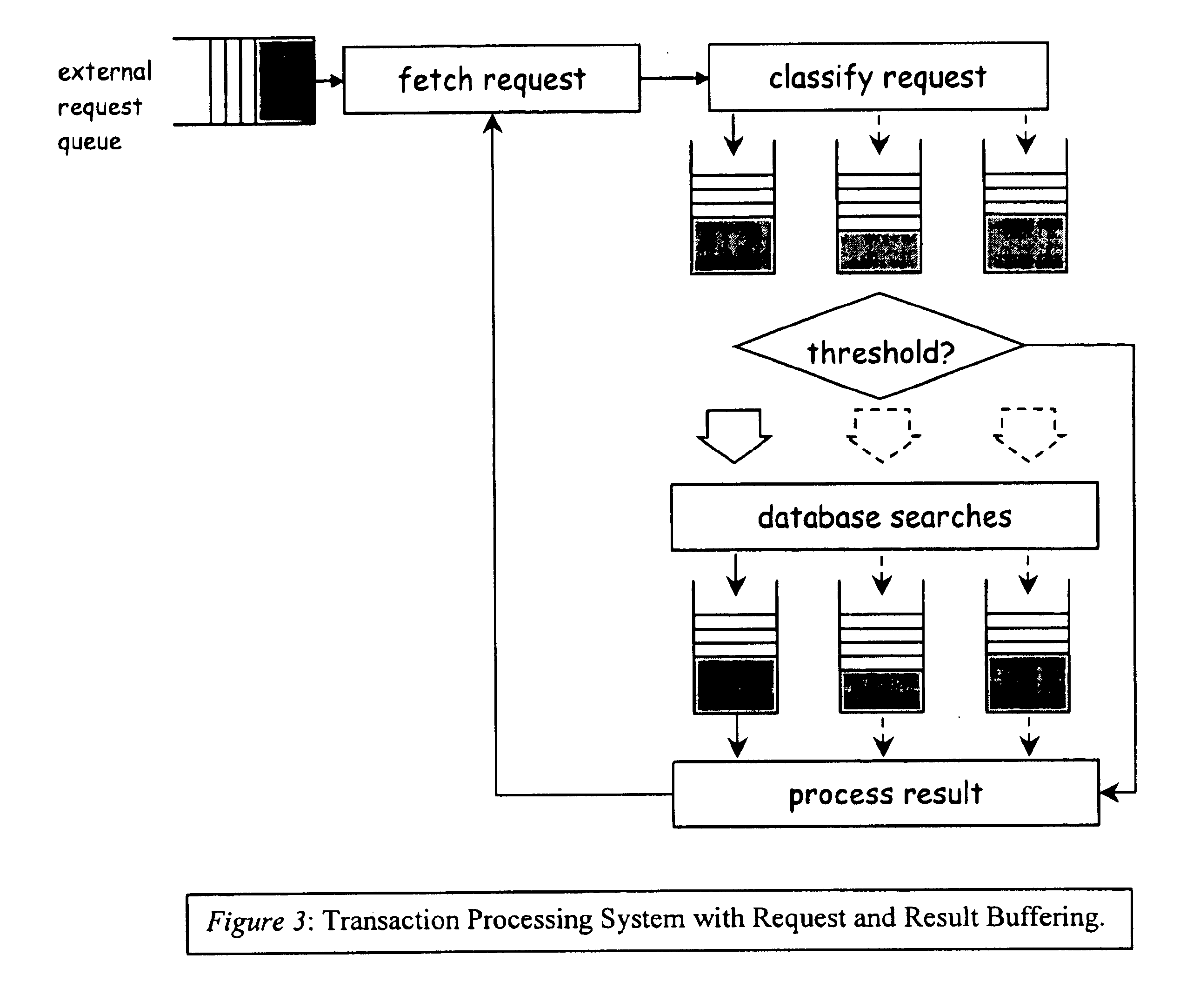

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. The invention is applicable to those data structure accesses in which the traversal path is dynamically determined. The invention does this by aggregating traversal requests and then pipelining the traversal of aggregated requests on the data structure. Once enough traversal requests have been accumulated so that most of the memory latency can be hidden by prefetching the accumulated requests, the data structure is traversed by performing software pipelining on some or all of the accumulated requests. As requests are completed and retired from the set of requests that are being traversed, additional accumulated requests are added to that set. This process is repeated until either an upper threshold of processed requests or a lower threshold of residual accumulated requests has been reached. At that point, the traversal results may be processed.

Owner:DIGITAL CACHE LLC +1

Detecting bootkits resident on compromised computers

Active · US9251343B1 · Tags: Unauthorized memory use protection; Platform integrity maintenance; Computer hardware; Hash table

Techniques detect bootkits resident on a computer by detecting a change or attempted change to contents of boot locations (e.g., the master boot record) of persistent storage, which may evidence a resident bootkit. Some embodiments may monitor computer operations seeking to change the content of boot locations of persistent storage, where the monitored operations may include API calls performing, for example, WRITE, READ or APPEND operations with respect to the contents of the boot locations. Other embodiments may generate a baseline hash of the contents of the boot locations at a first point of time and a hash snapshot of the boot locations at a second point of time, and compare the baseline hash and hash snapshot where any difference between the two hash values constitutes evidence of a resident bootkit.

Owner:FIREEYE SECURITY HLDG US LLC
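
The baseline-versus-snapshot embodiment reduces to comparing two hashes of the same boot region. A minimal Python sketch, assuming SHA-256 and a 512-byte master boot record; reading a real disk device (for example a raw device path on Linux) normally requires administrator privileges, so the path passed in is an assumption, and a disk image file works the same way.

```python
import hashlib

MBR_SIZE = 512  # the master boot record is the first 512-byte sector


def hash_boot_region(path: str, length: int = MBR_SIZE) -> str:
    """SHA-256 of the first `length` bytes of a disk or disk image at `path`."""
    with open(path, "rb") as disk:
        return hashlib.sha256(disk.read(length)).hexdigest()


def boot_region_changed(baseline: str, path: str, length: int = MBR_SIZE) -> bool:
    """Compare a fresh snapshot hash with the baseline; any difference is suspicious."""
    return hash_boot_region(path, length) != baseline
```

Writes outside the hashed region leave the hash unchanged, so the choice of which boot locations to hash determines what the check can detect.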

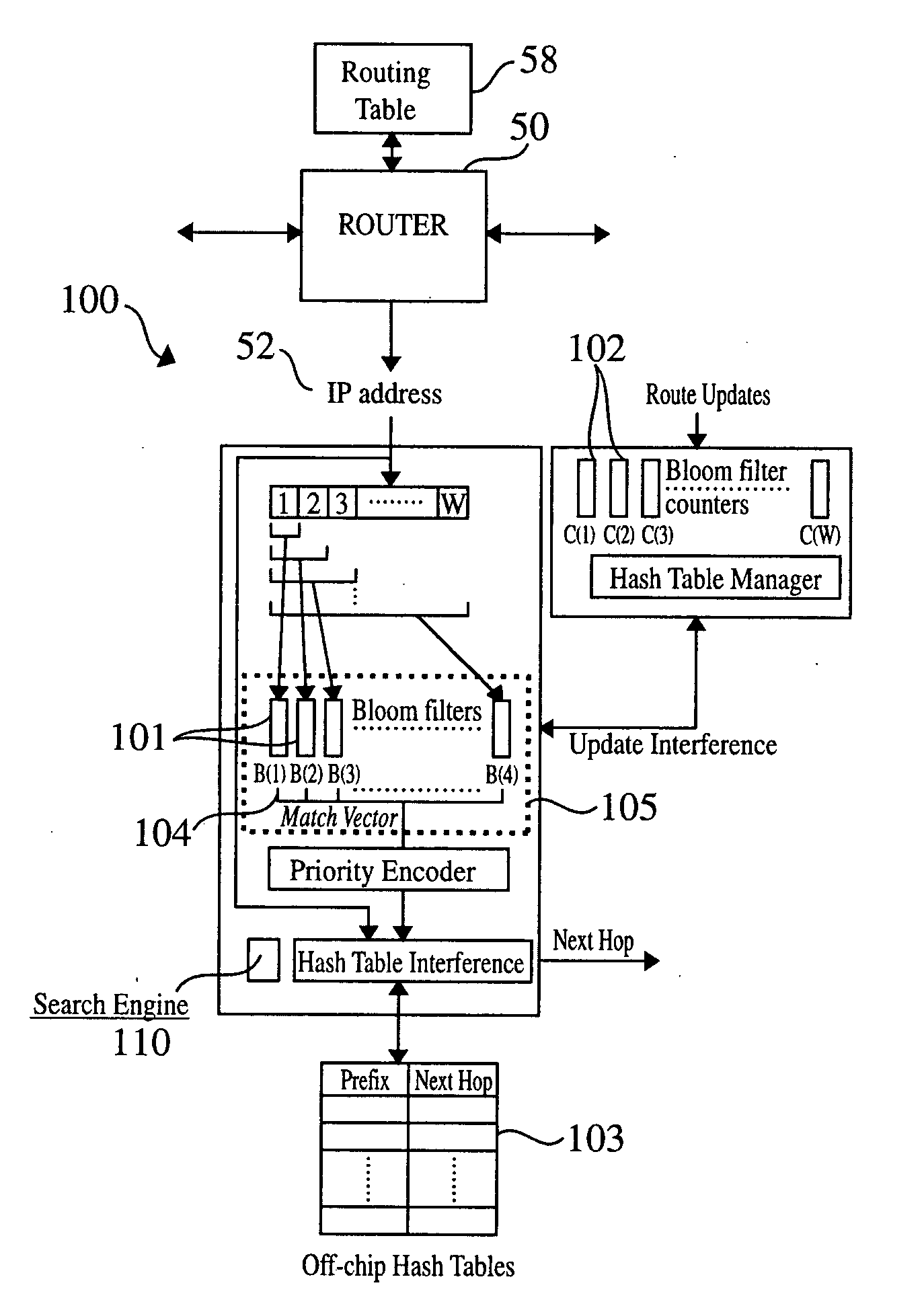

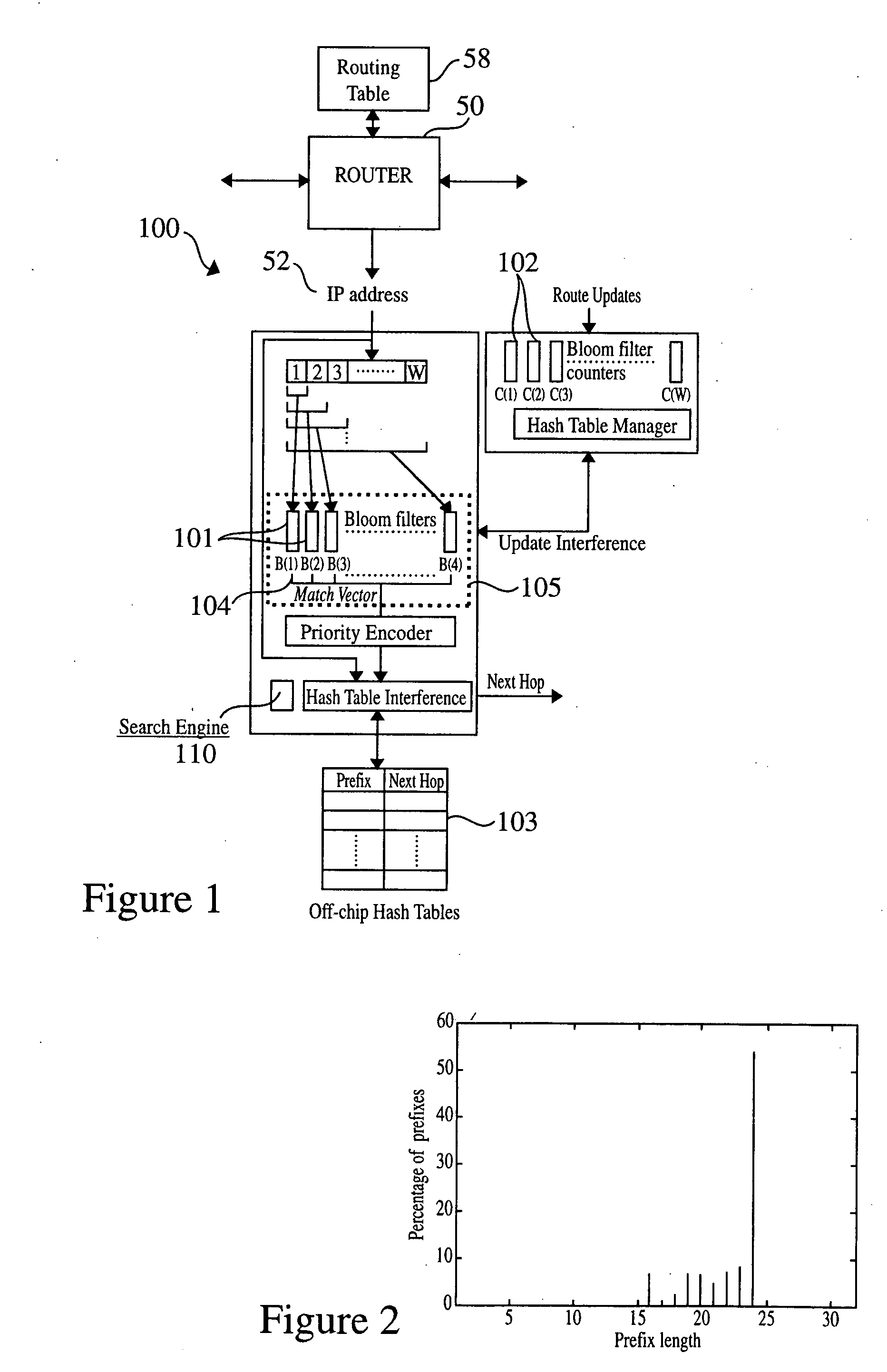

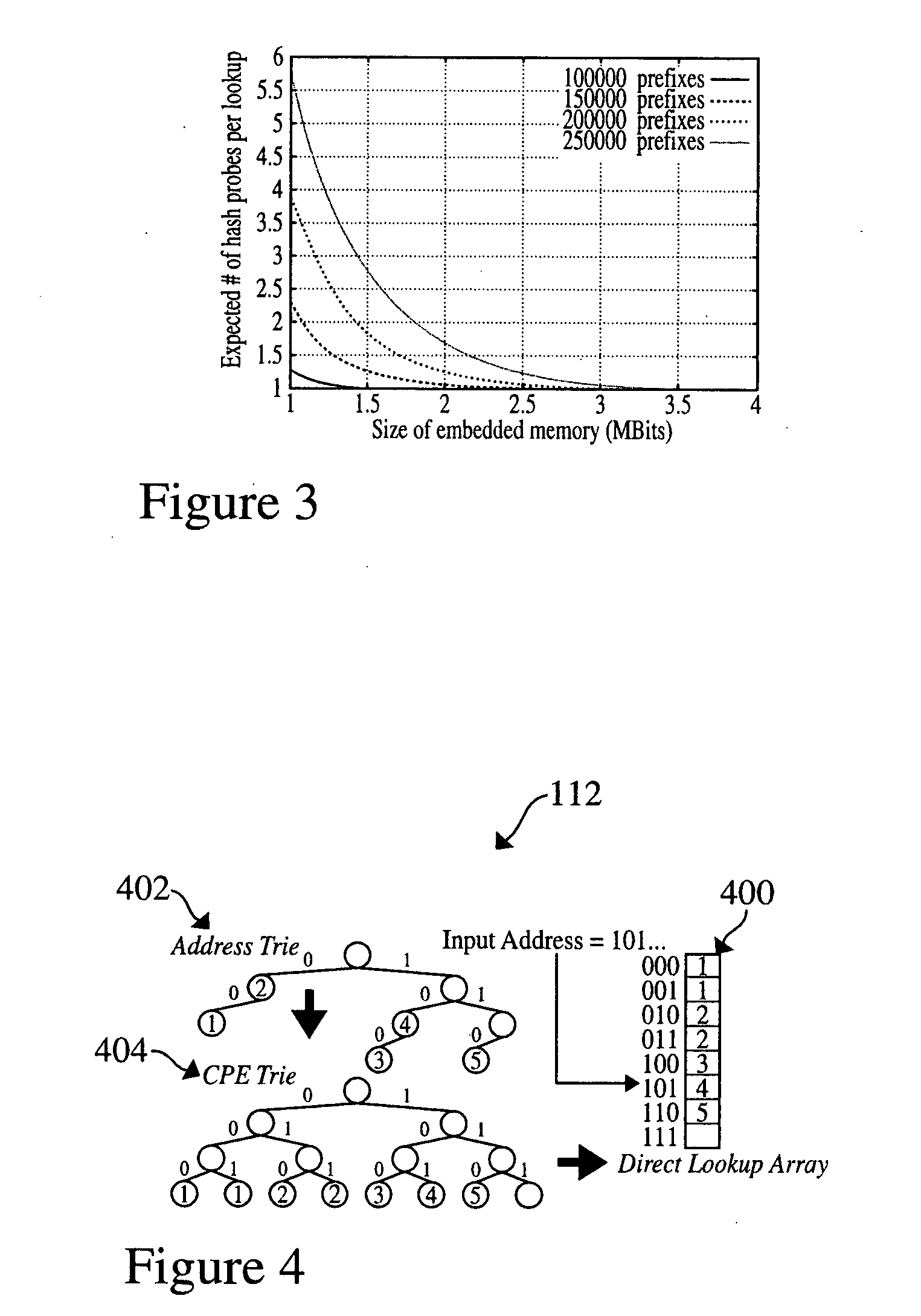

Method and system for performing longest prefix matching for network address lookup using bloom filters

Active · US20050195832A1 · Tags: Optimal average case performance; Lower performance requirements; Data switching by path configuration; Multiple digital computer combinations; Theoretical computer science; Network addressing

The present invention relates to a method and system of performing parallel membership queries to Bloom filters for Longest Prefix Matching, where address prefix memberships are determined in sets of prefixes sorted by prefix length. Hash tables corresponding to each prefix length are probed from the longest to the shortest match in the vector, terminating when a match is found or all of the lengths are searched. The performance, as determined by the number of dependent memory accesses per lookup, is held constant for longer address lengths or additional unique address prefix lengths in the forwarding table given that memory resources scale linearly with the number of prefixes in the forwarding table. For less than 2 Mb of embedded RAM and a commodity SRAM, the present technique achieves average performance of one hash probe per lookup and a worst case of two hash probes and one array access per lookup.

Owner:WASHINGTON UNIV IN SAINT LOUIS
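
The two-step lookup (query one Bloom filter per prefix length, then probe hash tables only for candidate lengths, longest first) can be sketched in Python for IPv4. The filter size, the number of hash functions, and the use of salted SHA-256 are assumptions; hardware would query all filters in parallel, while this sketch does so sequentially, and `BloomLPM` is a hypothetical name.

```python
import hashlib
import ipaddress
from typing import Dict, List, Optional


class BloomFilter:
    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.bits = bytearray(num_bits)  # one byte per bit, for readability
        self.num_hashes = num_hashes

    def _positions(self, item: bytes) -> List[int]:
        return [int.from_bytes(hashlib.sha256(bytes([i]) + item).digest()[:8], "big") % len(self.bits)
                for i in range(self.num_hashes)]

    def add(self, item: bytes) -> None:
        for p in self._positions(item):
            self.bits[p] = 1

    def might_contain(self, item: bytes) -> bool:
        return all(self.bits[p] for p in self._positions(item))


def _key(addr: int, length: int) -> int:
    return addr >> (32 - length)  # top `length` bits of an IPv4 address


class BloomLPM:
    def __init__(self, routes: Dict[str, str]) -> None:
        self.tables: Dict[int, Dict[int, str]] = {}   # prefix length -> hash table
        self.filters: Dict[int, BloomFilter] = {}     # prefix length -> Bloom filter
        for prefix, next_hop in routes.items():
            net = ipaddress.IPv4Network(prefix)
            key = _key(int(net.network_address), net.prefixlen)
            self.tables.setdefault(net.prefixlen, {})[key] = next_hop
            self.filters.setdefault(net.prefixlen, BloomFilter()).add(key.to_bytes(4, "big"))
        self.lengths = sorted(self.tables, reverse=True)  # longest first

    def lookup(self, address: str) -> Optional[str]:
        addr = int(ipaddress.IPv4Address(address))
        # Step 1: membership queries against every prefix-length filter.
        candidates = [n for n in self.lengths
                      if self.filters[n].might_contain(_key(addr, n).to_bytes(4, "big"))]
        # Step 2: probe hash tables only for candidate lengths, longest first.
        for n in candidates:
            next_hop = self.tables[n].get(_key(addr, n))
            if next_hop is not None:  # a false positive simply falls through
                return next_hop
        return None
```

Bloom filters never give false negatives, so skipping lengths whose filter says "absent" cannot skip the true longest match; false positives only cost an extra hash probe.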

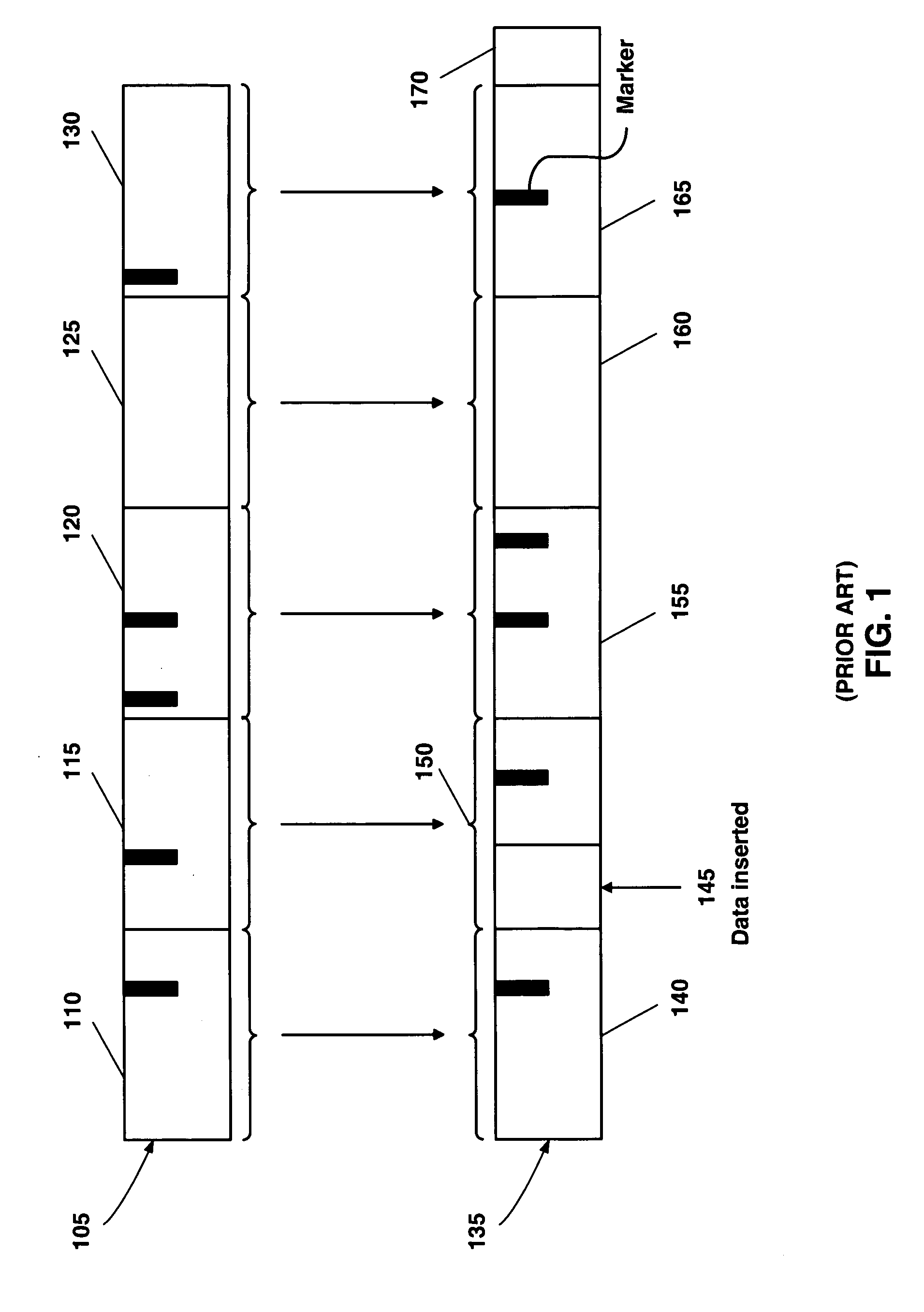

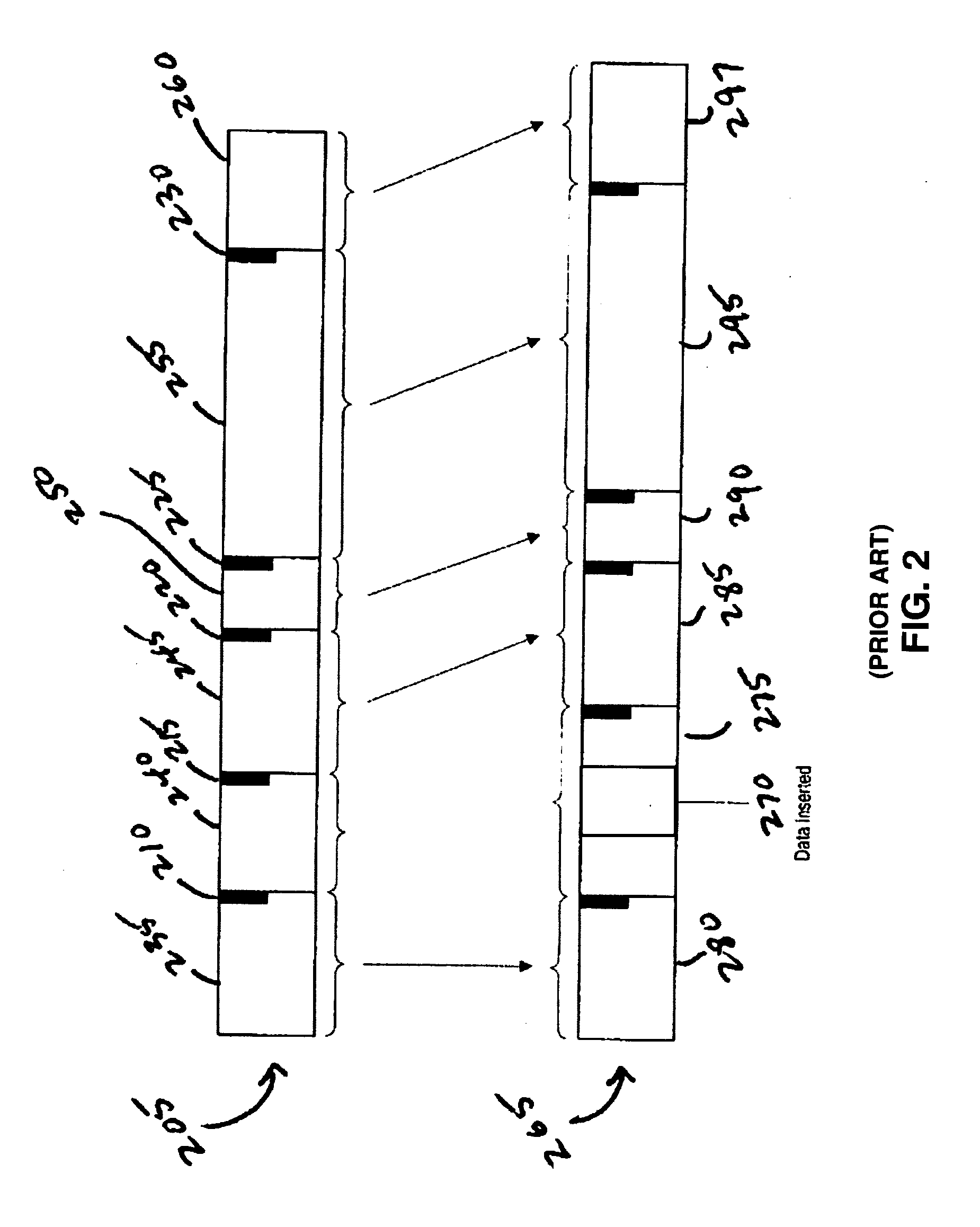

System and method for dividing data into predominantly fixed-sized chunks so that duplicate data chunks may be identified

Inactive · US20050091234A1 · Tags: Maximizes data storage efficiency; Low cost; Data processing applications; Digital data information retrieval; Data matching; Theoretical computer science

A data chunking system divides data into predominantly fixed-sized chunks such that duplicate data may be identified. The data chunking system may be used to reduce the data storage and save network bandwidth by allowing storage or transmission of primarily unique data chunks. The system may also be used to increase reliability in data storage and network transmission, by allowing an error affecting a data chunk to be repaired with an identified duplicate chunk. The data chunking system chunks data by selecting a chunk of fixed size, then moving a window along the data until a match to existing data is found. As the window moves across the data, unique chunks predominantly of fixed size are formed in the data passed over. Several embodiments provide alternate methods of determining whether a selected chunk matches existing data and methods by which the window is moved through the data. To locate duplicate data, the data chunking system remembers data by computing a mathematical function of a data chunk and inserting the computed value into a hash table.

Owner:IBM CORP
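
One reading of the sliding-window chunker is sketched below in Python: test a fixed-size window against a hash table of known chunks, slide one byte on a miss, and emit the bytes passed over as a new chunk once they reach the fixed size or a match ends them. SHA-256 as the "mathematical function", the tiny chunk size, and the function name are assumptions for illustration; recomputing a full hash at every byte is slow, and a practical system would more likely use a rolling hash.

```python
import hashlib
from typing import Dict, List, Tuple

CHUNK_SIZE = 8  # deliberately tiny so the example is easy to trace


def _digest(piece: bytes) -> str:
    return hashlib.sha256(piece).hexdigest()


def chunk_and_dedupe(data: bytes, store: Dict[str, bytes],
                     size: int = CHUNK_SIZE) -> List[Tuple[str, str]]:
    """Split `data` into ("new" | "dup", digest) records, adding new chunks to `store`."""
    out: List[Tuple[str, str]] = []

    def flush(piece: bytes) -> None:
        if piece:
            d = _digest(piece)
            out.append(("dup" if d in store else "new", d))
            store.setdefault(d, piece)

    pending = 0  # start of bytes the window has passed over without a match
    pos = 0
    while pos + size <= len(data):
        d = _digest(data[pos:pos + size])
        if d in store:                 # the window matches existing data
            flush(data[pending:pos])   # unique bytes before the match
            out.append(("dup", d))
            pos += size
            pending = pos
        else:                          # slide the window by one byte
            pos += 1
            if pos - pending == size:  # a full fixed-size unique chunk
                flush(data[pending:pos])
                pending = pos
    flush(data[pending:])  # trailing bytes
    return out
```

Inserting three bytes in front of previously stored data only produces one short new chunk; the window then realigns on the stored chunks and reports them as duplicates.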

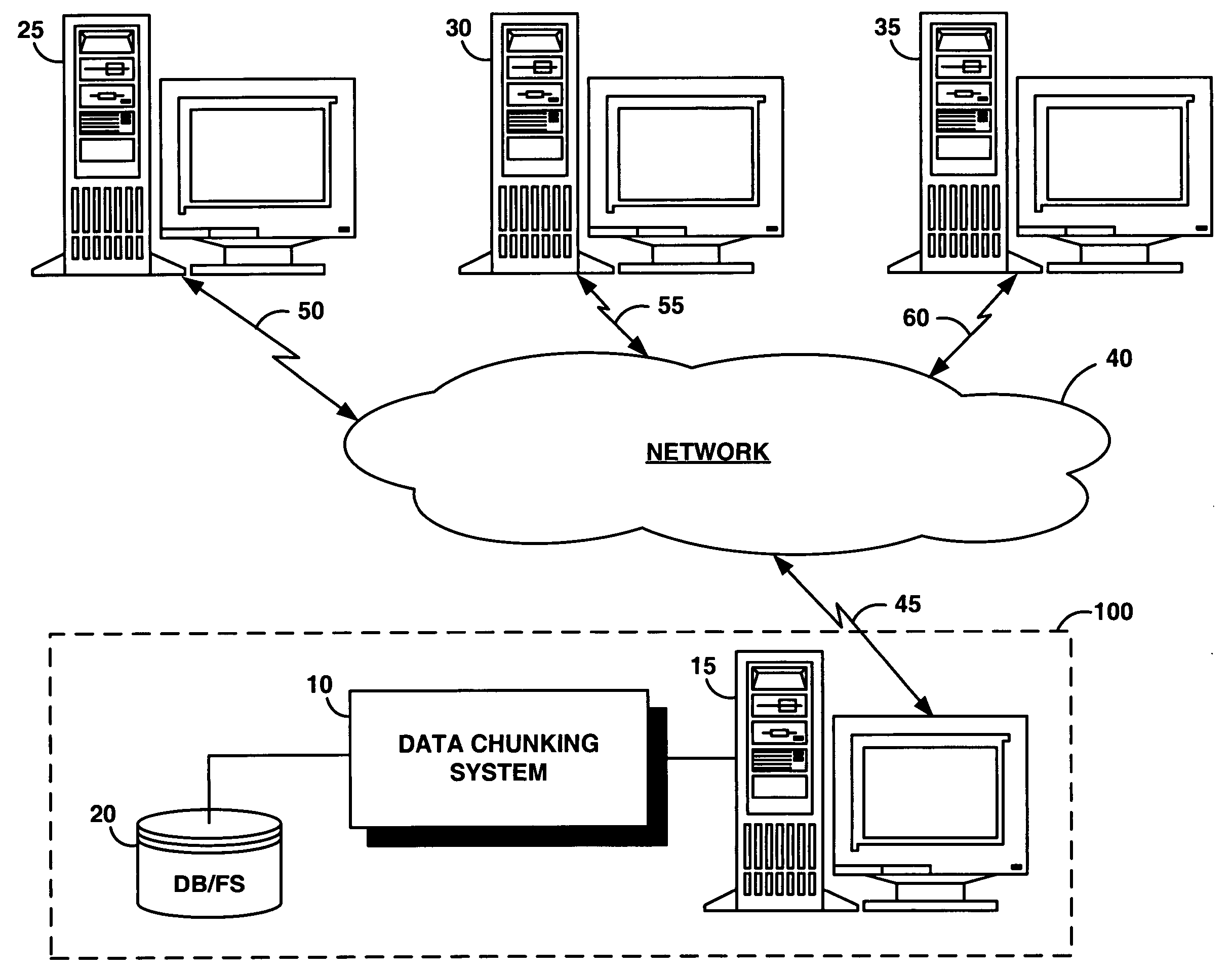

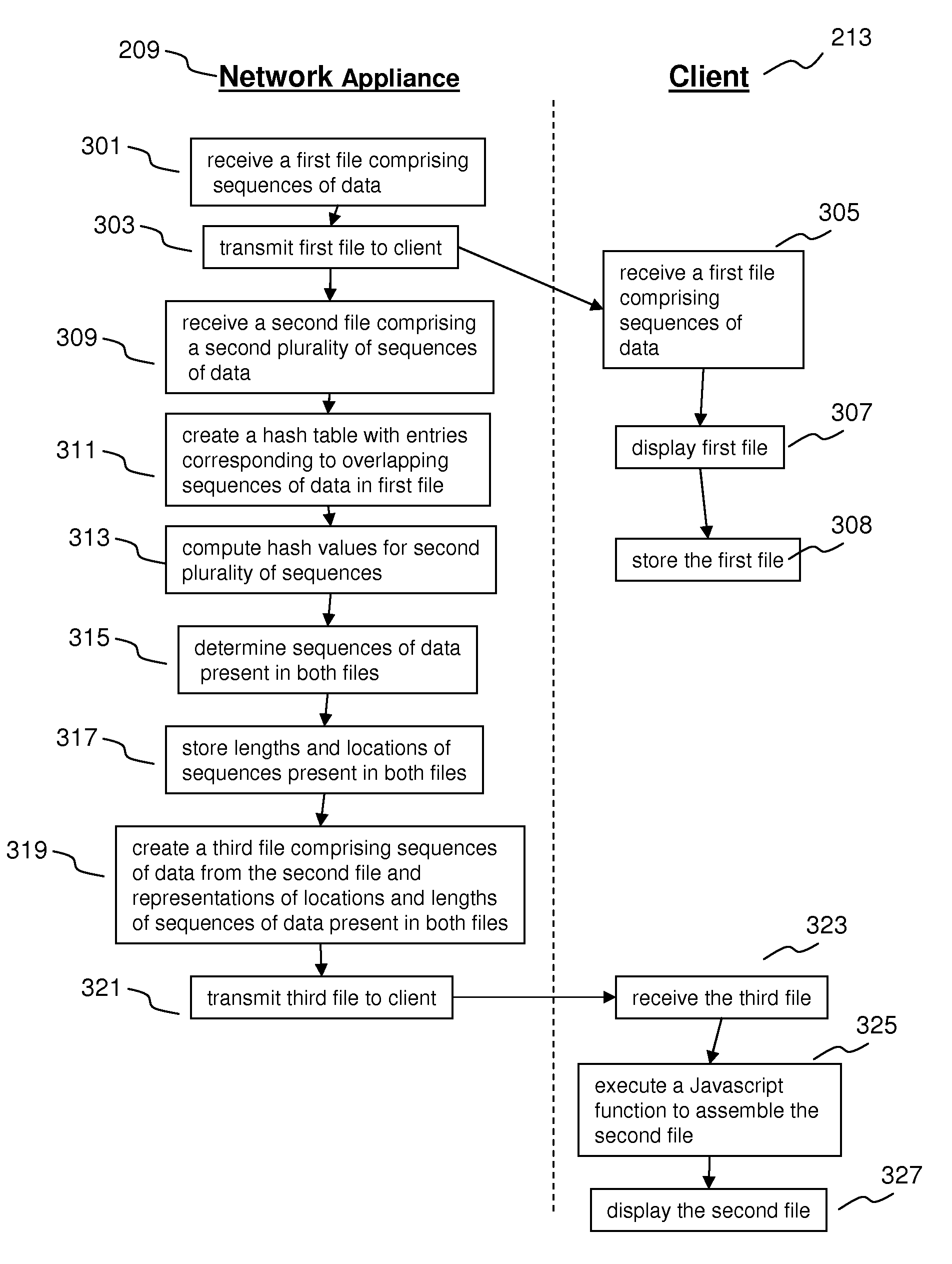

Method and systems for efficient delivery of previously stored content

Active · US7756826B2 · Tags: Reduce file size; Data processing applications; Digital data processing details; File size; Hash table

Systems and methods for reducing file sizes for files delivered over a network are disclosed. A method comprises receiving a first file comprising sequences of data; creating a hash table having entries corresponding to overlapping sequences of data; receiving a second file comprising sequences of data; comparing each of the sequences of data in the second file to the sequences of data in the hash table to determine sequences of data present in both the first and second files; and creating a third file comprising sequences of data from the second file and representations of locations and lengths of said sequences of data present in both the first and second files.

Owner:CITRIX SYST INC
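
The claimed steps map onto a small delta encoder: index every overlapping k-byte sequence of the first file in a hash table, scan the second file for hits, and emit (location, length) references for shared runs plus literal bytes for the rest. The sequence length `k = 4`, the op format, and the function names are assumptions for this Python sketch.

```python
from typing import Dict, List, Tuple, Union

Op = Union[Tuple[str, int, int], Tuple[str, bytes]]


def build_index(old: bytes, k: int) -> Dict[bytes, int]:
    """Hash table of every overlapping k-byte sequence in the first file."""
    index: Dict[bytes, int] = {}
    for i in range(len(old) - k + 1):
        index.setdefault(old[i:i + k], i)  # keep the first occurrence
    return index


def encode(old: bytes, new: bytes, k: int = 4) -> List[Op]:
    index = build_index(old, k)
    ops: List[Op] = []
    literal = bytearray()
    pos = 0
    while pos < len(new):
        start = index.get(new[pos:pos + k])
        if start is None:
            literal.append(new[pos])  # no shared sequence starts here
            pos += 1
            continue
        length = k
        while (pos + length < len(new) and start + length < len(old)
               and new[pos + length] == old[start + length]):
            length += 1  # extend the match as far as it goes
        if literal:
            ops.append(("data", bytes(literal)))
            literal.clear()
        ops.append(("copy", start, length))  # location and length in the first file
        pos += length
    if literal:
        ops.append(("data", bytes(literal)))
    return ops


def decode(old: bytes, ops: List[Op]) -> bytes:
    out = bytearray()
    for op in ops:
        if op[0] == "copy":
            out += old[op[1]:op[1] + op[2]]
        else:
            out += op[1]
    return bytes(out)
```

The receiver, which already holds the first file, rebuilds the second one with `decode`, so only the literal bytes and the short references cross the network.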

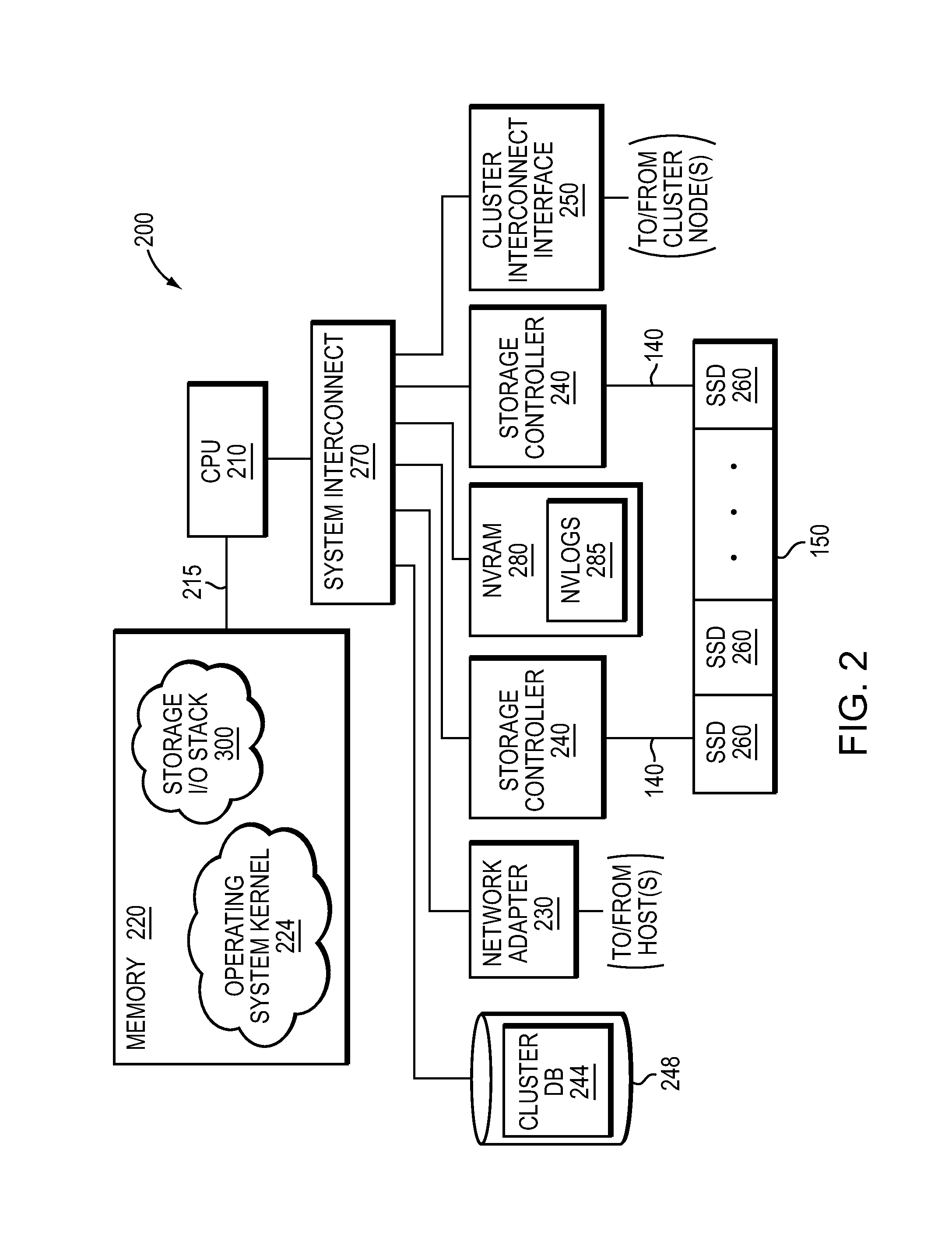

Set-associative hash table organization for efficient storage and retrieval of data in a storage system

Active · US8874842B1 · Tags: Memory architecture accessing/allocation; Input/output to record carriers; File system; Solid-state drive

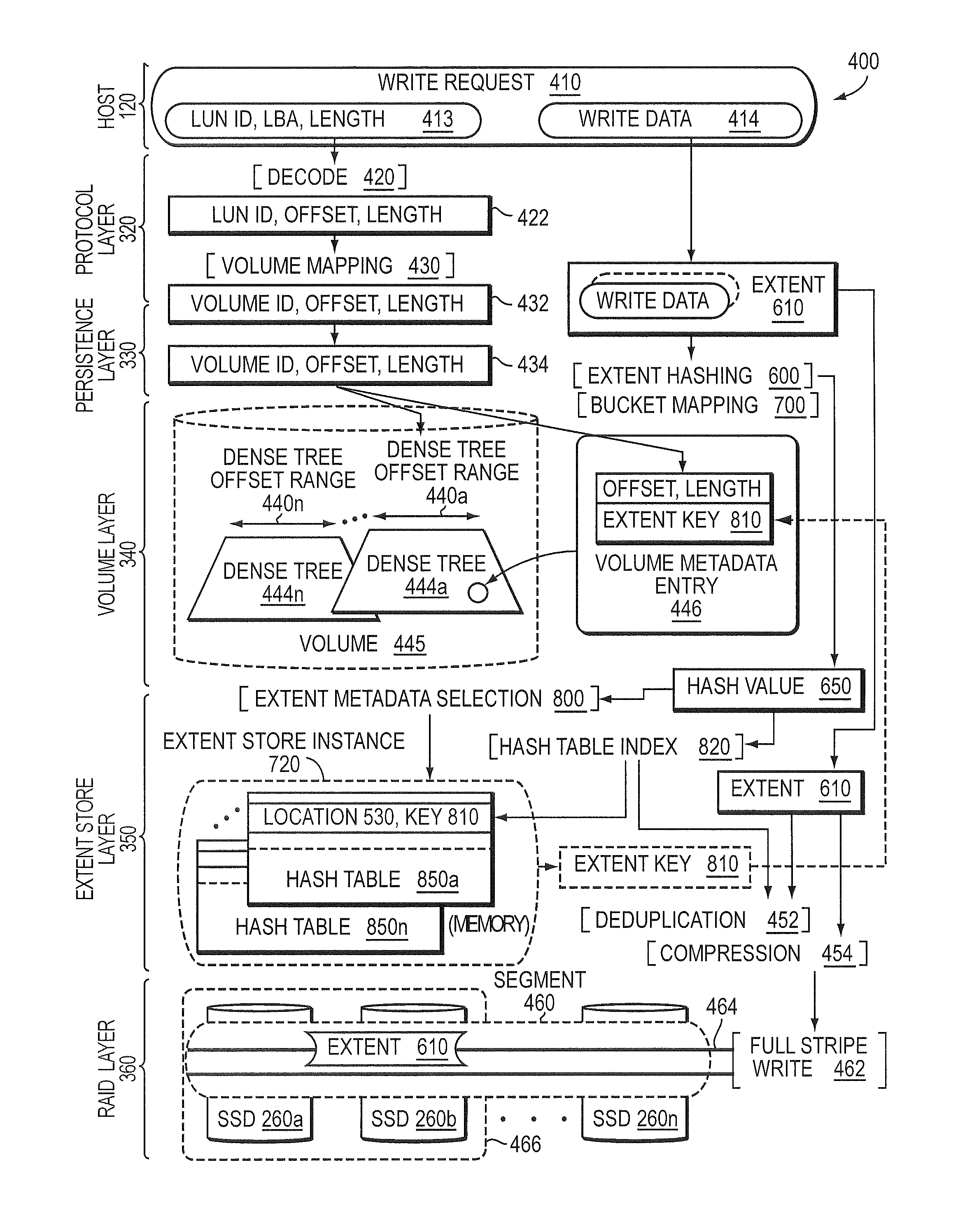

In one embodiment, use of hashing in a file system metadata arrangement reduces an amount of metadata stored in a memory of a node in a cluster and reduces the amount of metadata needed to process an input/output (I/O) request at the node. Illustratively, cuckoo hashing may be modified and applied to construct the file system metadata arrangement. The file system metadata arrangement may be illustratively configured as a key-value extent store embodied as a data structure, e.g., a cuckoo hash table, wherein a value, such as a hash table index, may be configured as an index and applied to the cuckoo hash table to obtain a key, such as an extent key, configured to reference a location of an extent on one or more storage devices, such as solid state drives.

Owner:NETWORK APPLIANCE INC
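
For readers unfamiliar with the base technique, here is textbook cuckoo hashing in Python, not the modified variant the abstract refers to: two tables, two hash functions, and each key stored in one of its two candidate slots, so a lookup needs at most two probes. The personalized BLAKE2b hash functions, the kick limit, and the doubling growth policy are assumptions, and the example keys such as `"extent-3"` are hypothetical.

```python
import hashlib
from typing import Any, Hashable, List, Optional, Tuple

Entry = Tuple[Hashable, Any]


class CuckooHashTable:
    """Two tables, two hash functions; each key lives in one of two candidate slots."""

    def __init__(self, capacity: int = 8, max_kicks: int = 32) -> None:
        self.capacity = capacity
        self.max_kicks = max_kicks
        self.tables: List[List[Optional[Entry]]] = [[None] * capacity, [None] * capacity]

    def _slot(self, which: int, key: Hashable) -> int:
        # Two independent hash functions, obtained by personalizing BLAKE2b.
        digest = hashlib.blake2b(repr(key).encode(), digest_size=8,
                                 person=b"cuckoo%d" % which).digest()
        return int.from_bytes(digest, "little") % self.capacity

    def get(self, key: Hashable) -> Any:
        for which in (0, 1):  # at most two probes per lookup
            entry = self.tables[which][self._slot(which, key)]
            if entry is not None and entry[0] == key:
                return entry[1]
        return None

    def put(self, key: Hashable, value: Any) -> None:
        for which in (0, 1):  # update in place if the key is already present
            idx = self._slot(which, key)
            entry = self.tables[which][idx]
            if entry is not None and entry[0] == key:
                self.tables[which][idx] = (key, value)
                return
        item: Optional[Entry] = (key, value)
        which = 0
        for _ in range(self.max_kicks):
            idx = self._slot(which, item[0])
            # Place the item and kick out whatever occupied the slot.
            item, self.tables[which][idx] = self.tables[which][idx], item
            if item is None:
                return
            which ^= 1  # the evicted item moves to its slot in the other table
        self._grow()  # too many kicks: likely a cycle, so enlarge and reinsert
        self.put(*item)

    def _grow(self) -> None:
        items = [e for table in self.tables for e in table if e is not None]
        self.capacity *= 2
        self.tables = [[None] * self.capacity, [None] * self.capacity]
        for key, value in items:
            self.put(key, value)
```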

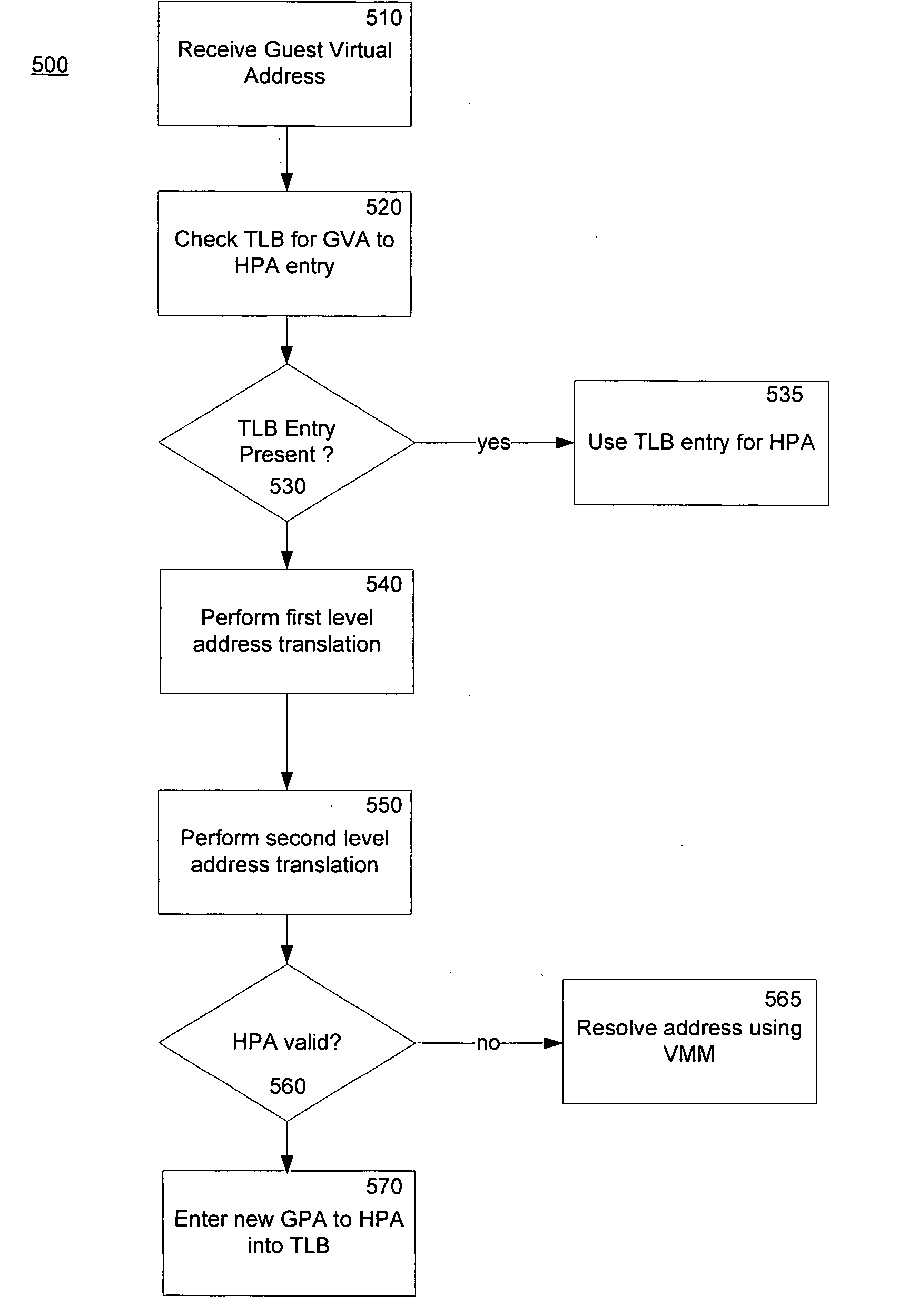

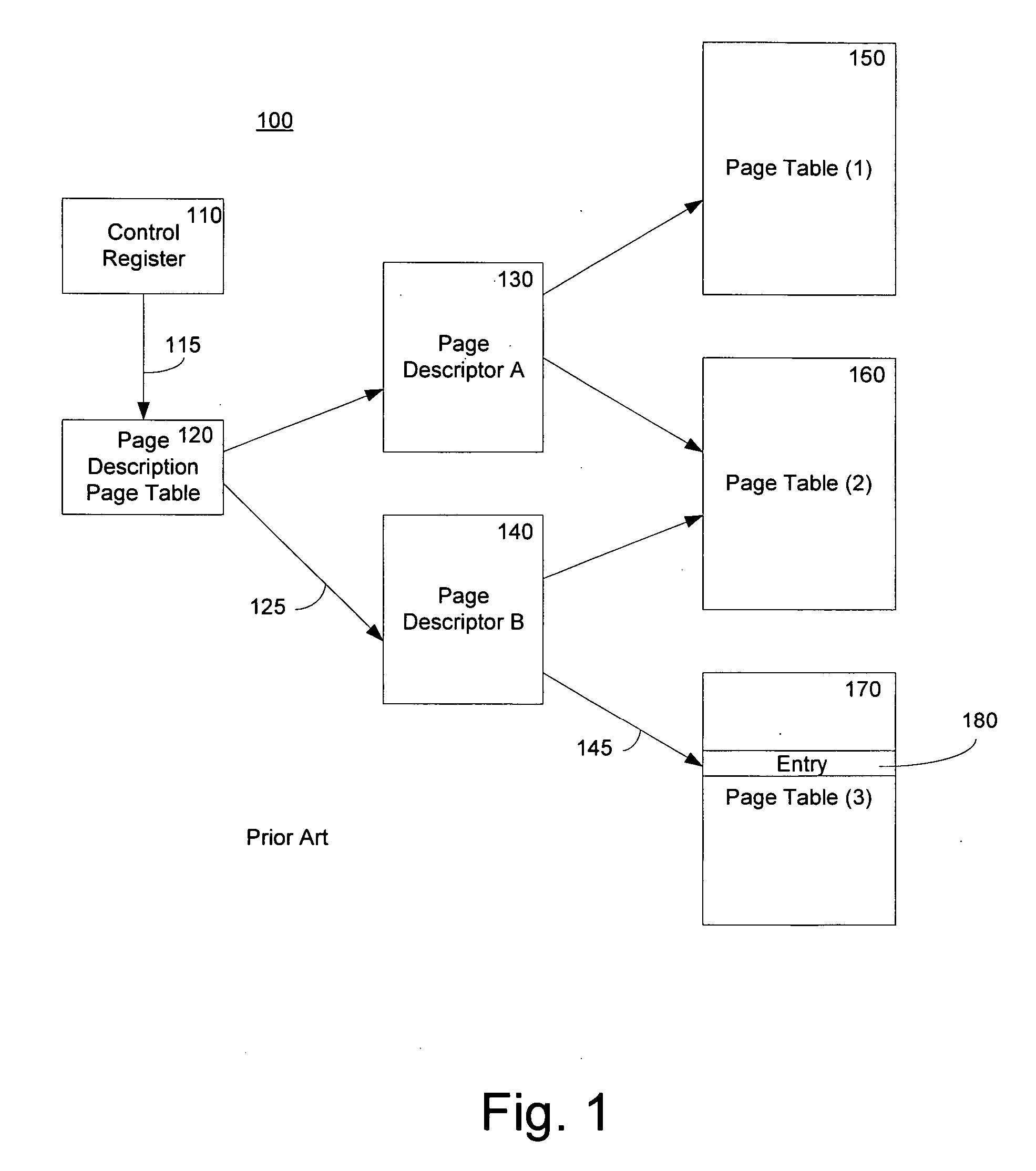

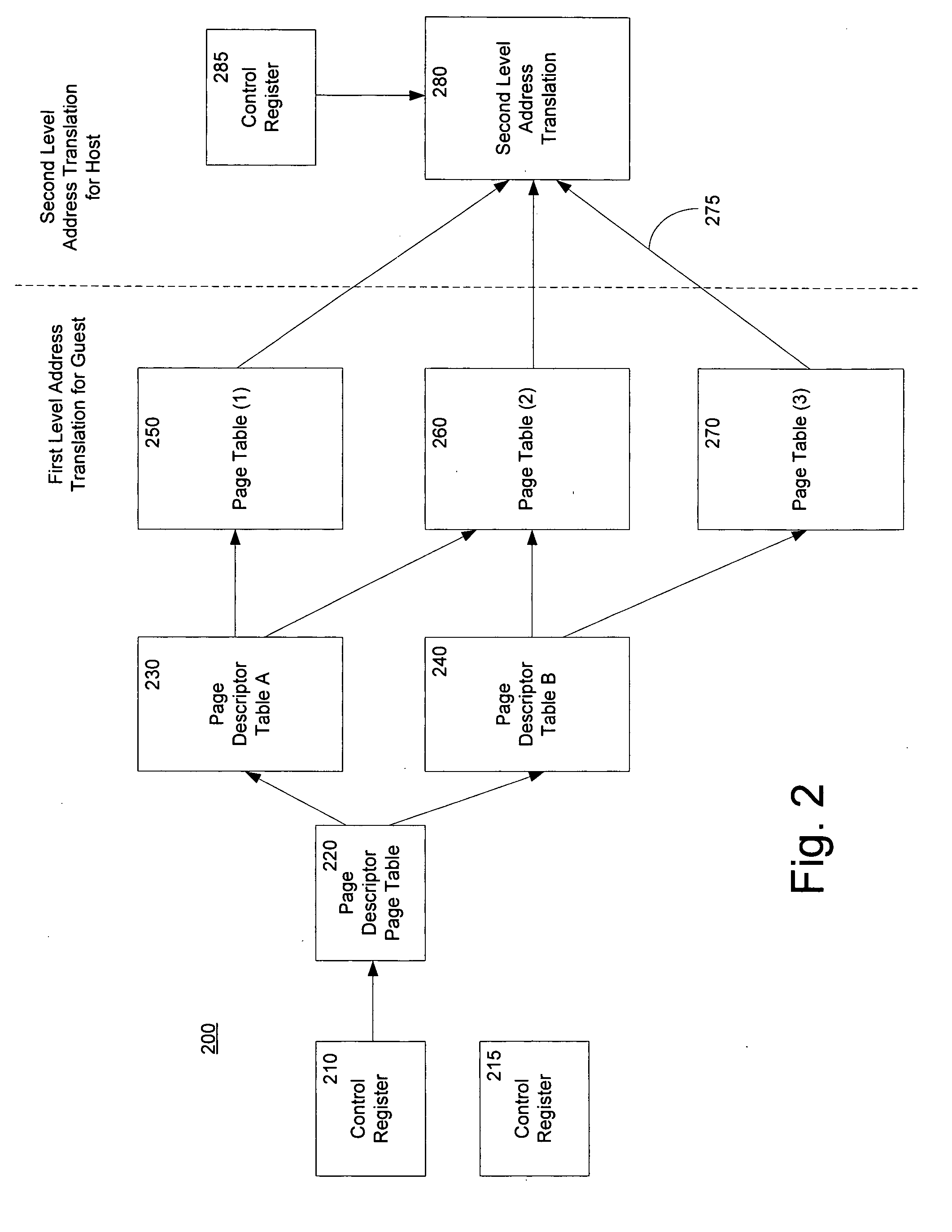

Method and system for a second level address translation in a virtual machine environment

Active · US20060206687A1 · Tags: Increase speed; Reduce usage; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Physical address; Host memory

A method of performing a translation from a guest virtual address to a host physical address in a virtual machine environment includes receiving a guest virtual address from a host computer executing a guest virtual machine program and using the hardware oriented method of the host CPU to determine the guest physical address. A second level address translation to a host physical address is then performed. In one embodiment, a multiple tier tree is traversed which translates the guest physical address into a host physical address. In another embodiment, the second level of address translation is performed by employing a hash function of the guest physical address and a reference to a hash table. One aspect of the invention is the incorporation of access overrides associated with the host physical address which can control the access permissions of the host memory.

Owner:MICROSOFT TECH LICENSING LLC

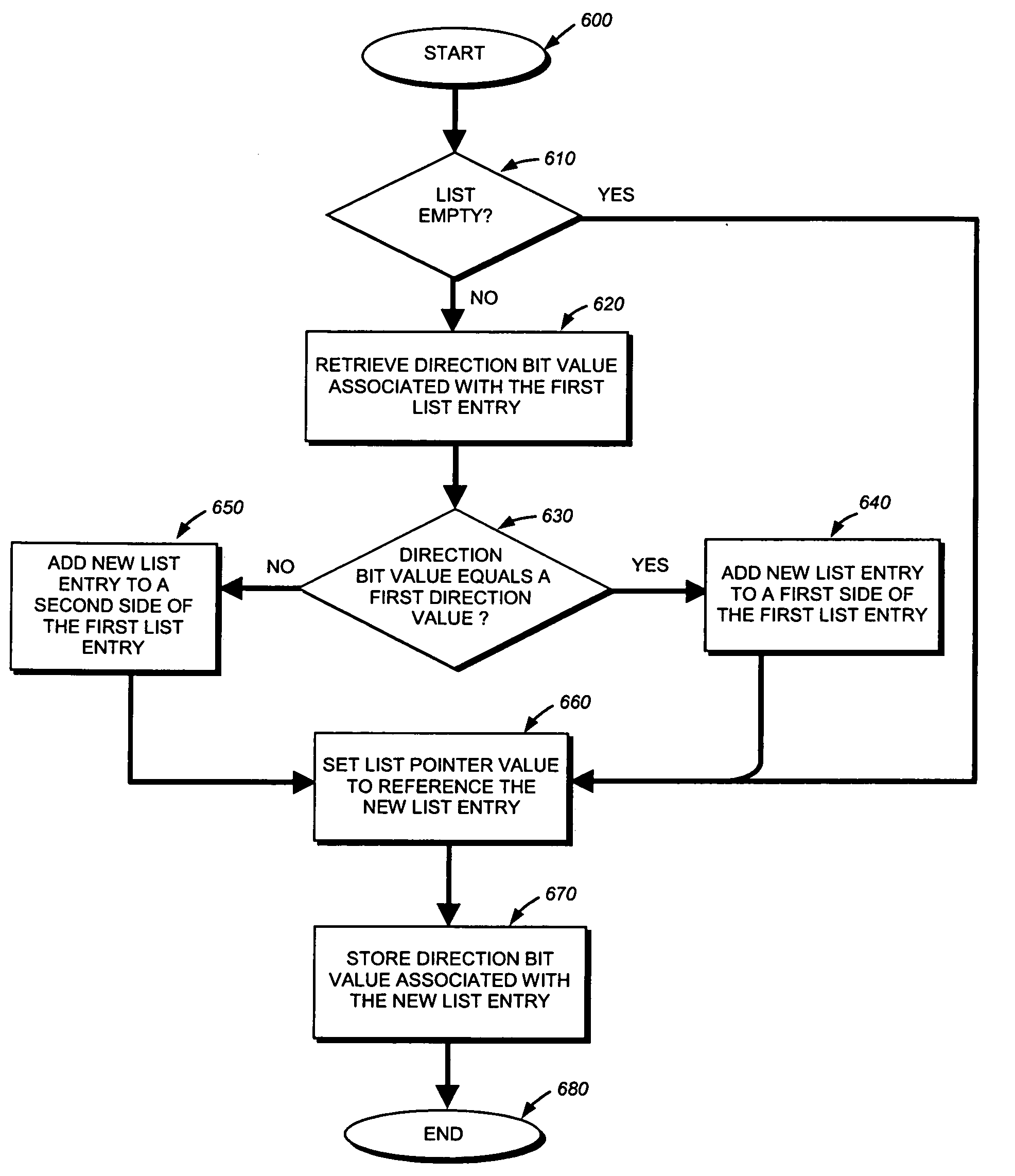

Memory efficient hashing algorithm

Inactive · US20050171937A1 · Tags: Efficient search; Less processing time; Digital data information retrieval; Special data processing applications; Term memory; Computer science

A technique efficiently searches a hash table. Conventionally, a predetermined set of “signature” information is hashed to generate a hash-table index which, in turn, is associated with a corresponding linked list accessible through the hash table. The indexed list is sequentially searched, beginning with the first list entry, until a “matching” list entry is located containing the signature information. For long list lengths, this conventional approach may search a substantially large number of list entries. In contrast, the inventive technique reduces, on average, the number of list entries that are searched to locate the matching list entry. To that end, list entries are partitioned into different groups within each linked list. Thus, by searching only a selected group (e.g., subset) of entries in the indexed list, the technique consumes fewer resources, such as processor bandwidth and processing time, than previous implementations.

Owner:CISCO TECH INC

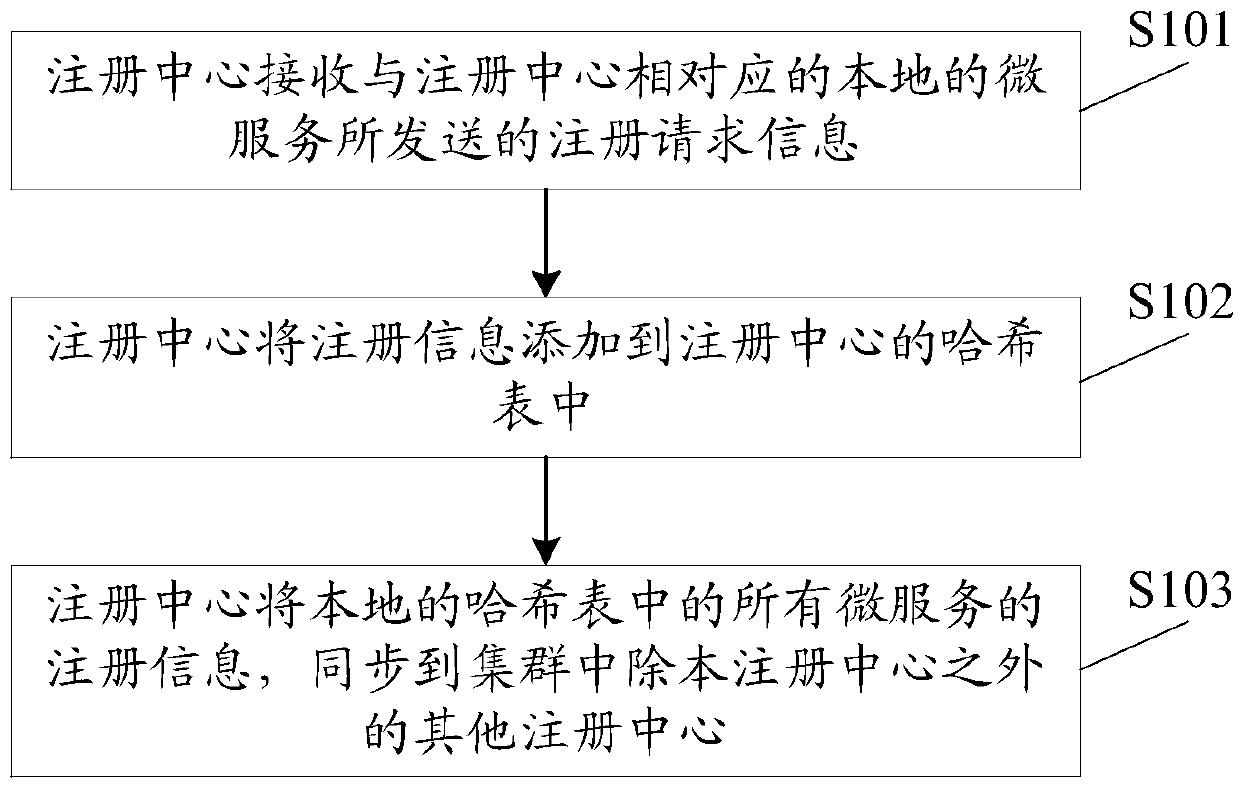

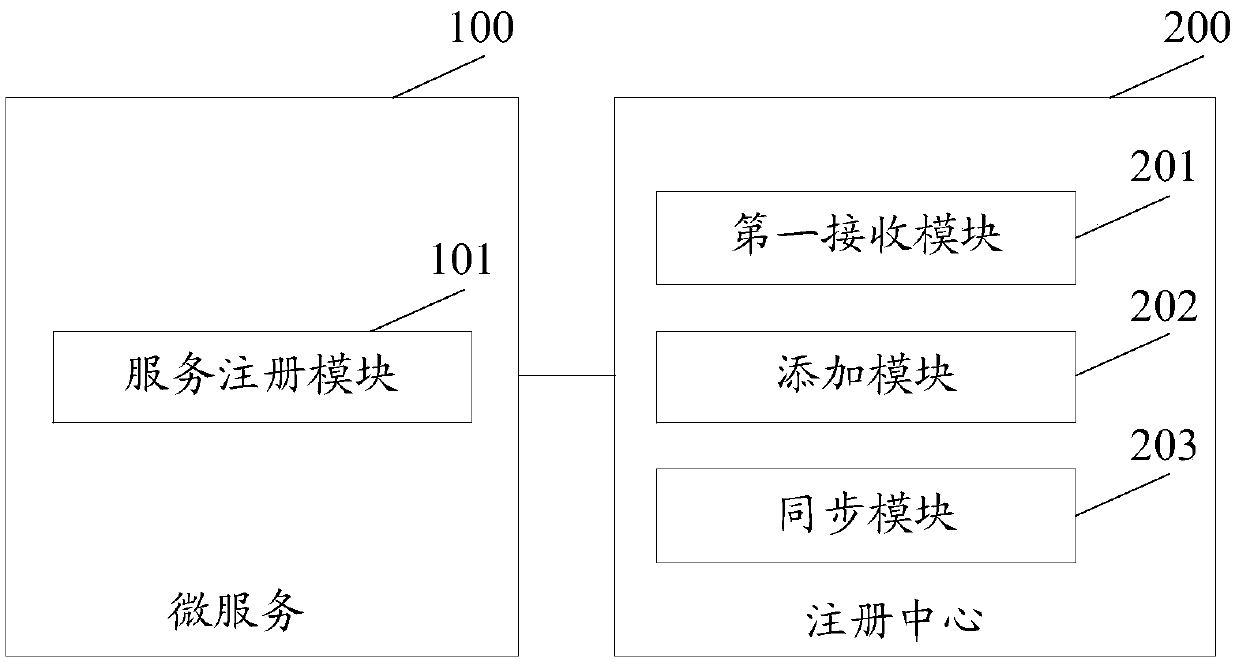

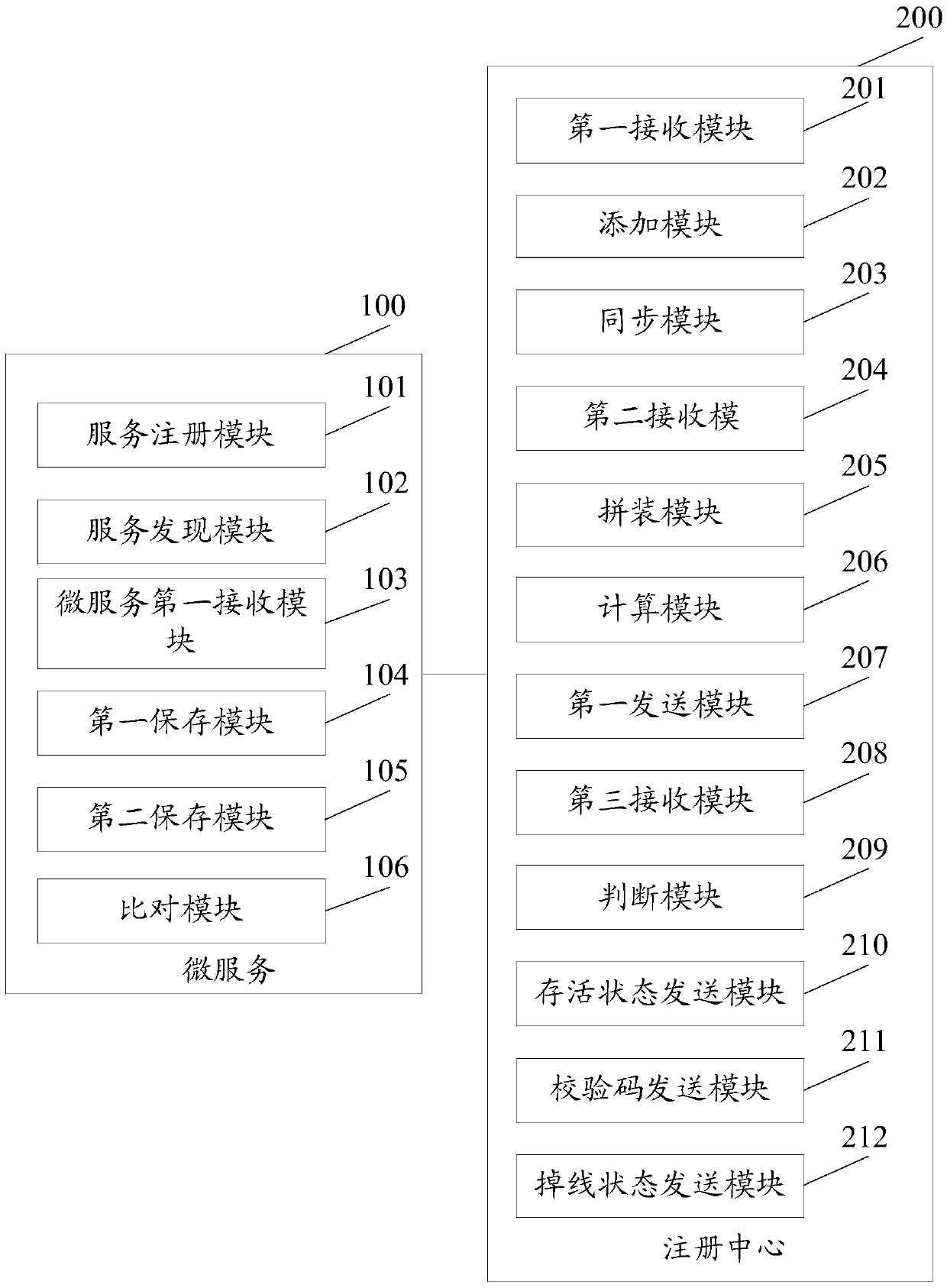

Micro service registration method and micro service registration system

Active · CN105515759A · Tags: Reduce consumption; Avoid the risk of paralysis; Encryption apparatus with shift registers/memories; Hash table; Database

The embodiment of the invention discloses a micro service registration method and system in which one registration center is started on each node in a cluster. The method comprises the following steps: the registration center receives registration request information sent by its corresponding local micro service, where the registration information carried in the request includes the micro service's access address, the services it provides, and the services it consumes; the registration center adds the registration information to its hash table; and the registration center synchronizes the registration information of all micro services in its local hash table to every other registration center in the cluster. Because a registration center runs on each node, there is no need to maintain a single highly available registration center: if one registration center crashes, the others in the cluster are unaffected, which effectively avoids the risk of a system-wide outage caused by the failure of a single registration center.

Owner:STATE GRID INFORMATION & TELECOMM GRP +2

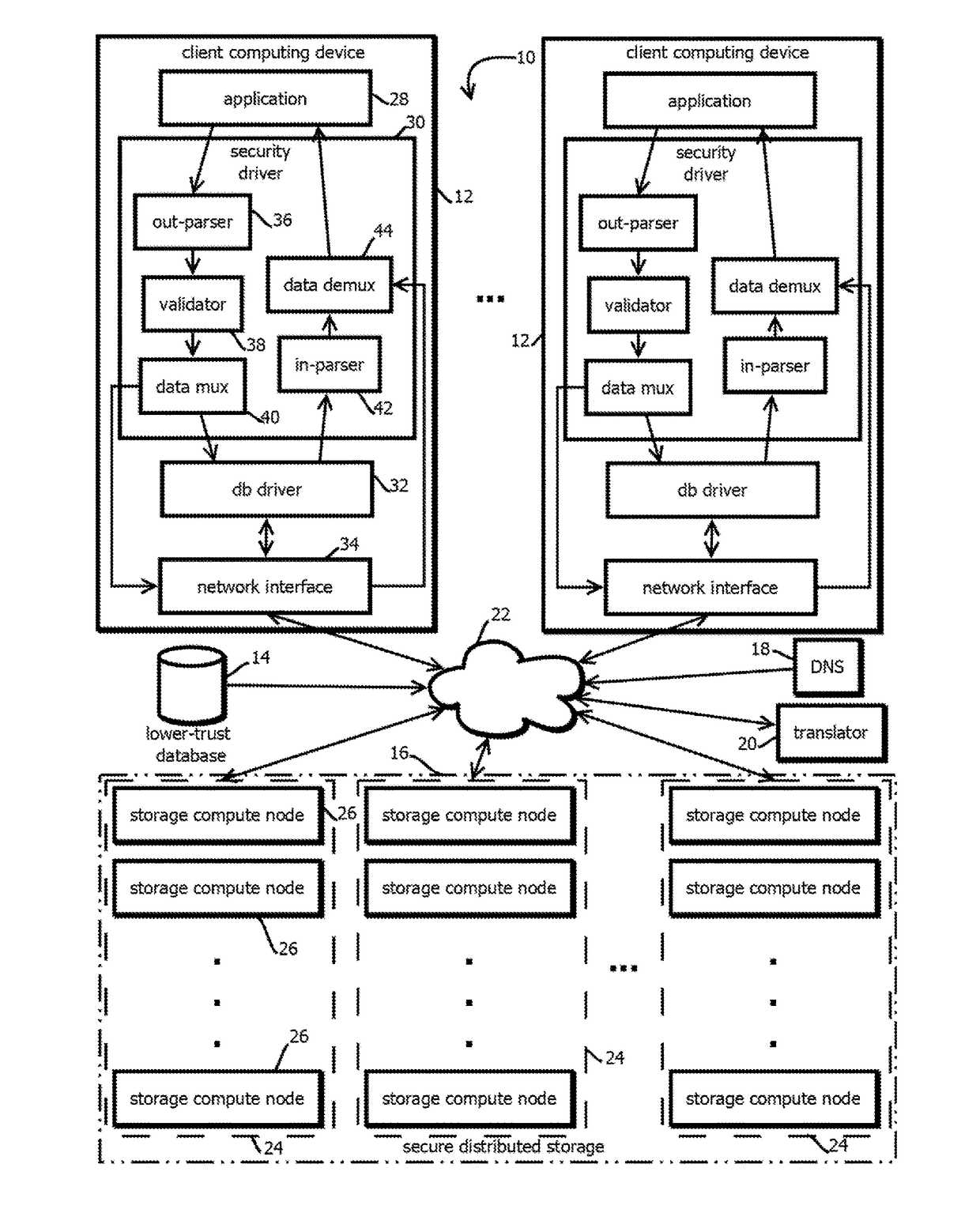

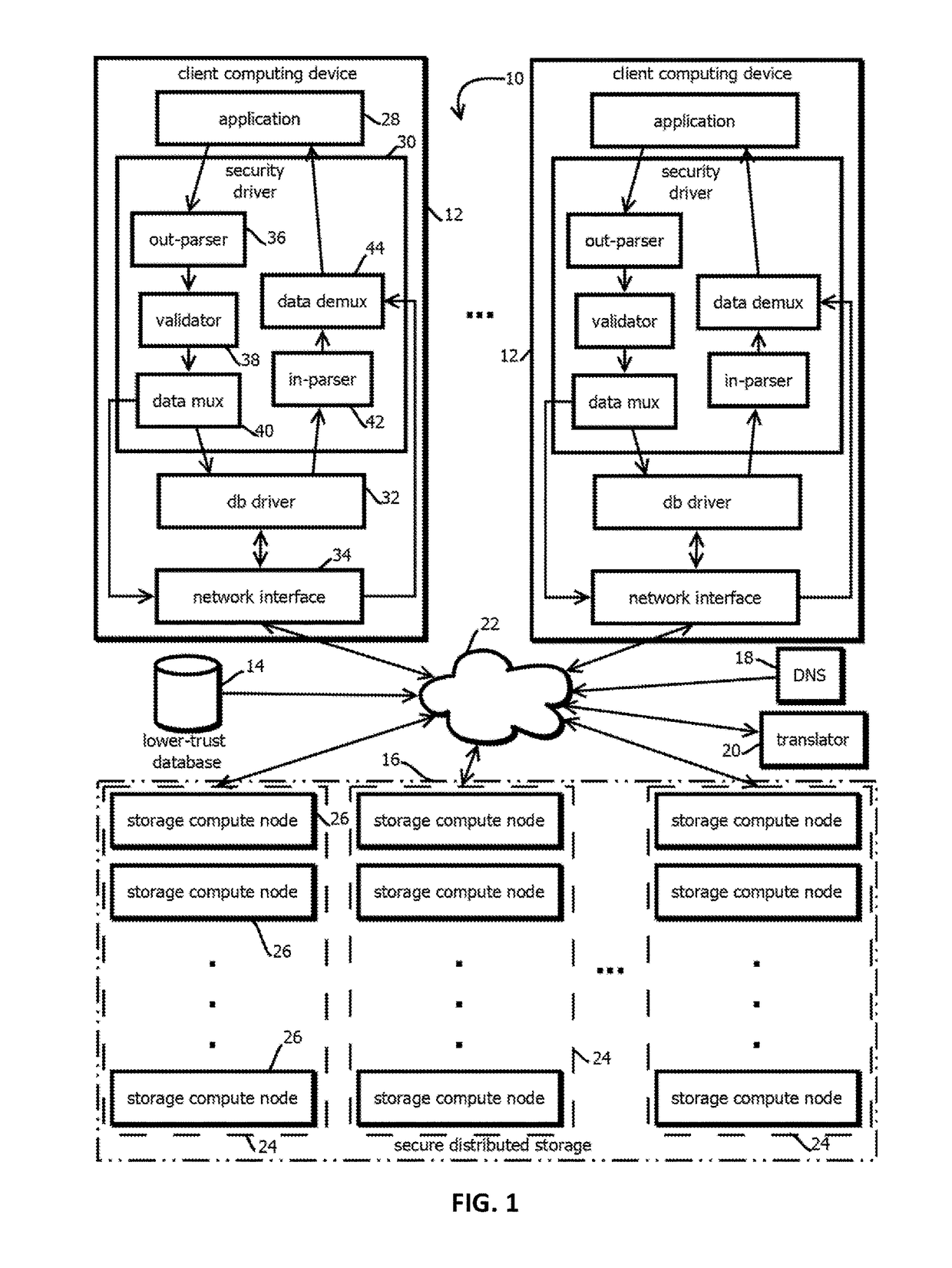

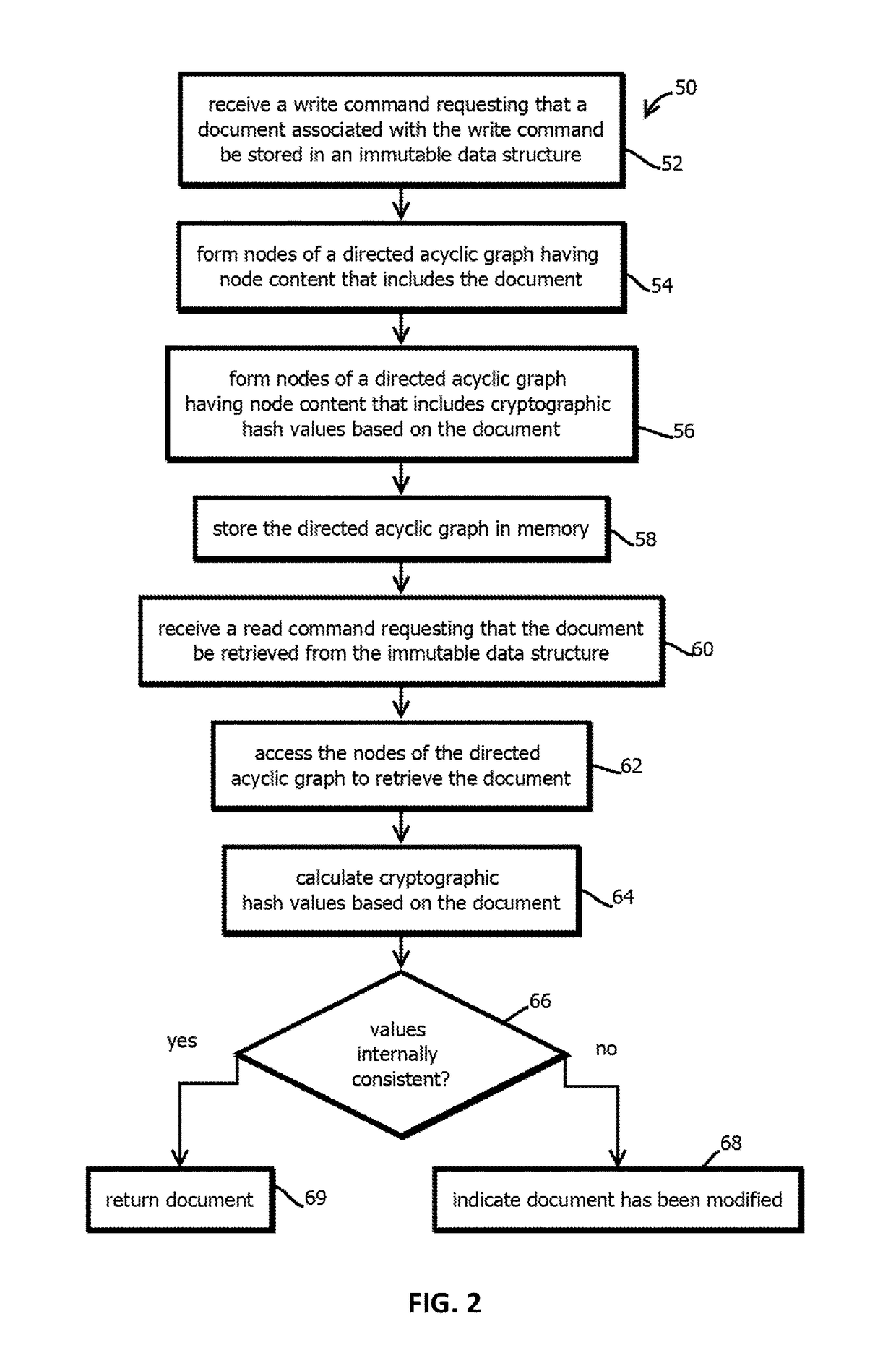

Generation of hash values within a blockchain

Active · US20170366353A1 · Tags: Encryption apparatus with shift registers/memories; User identity/authority verification; Data mining; Hash table

Owner:ALTR SOLUTIONS INC

Use of dynamic multi-level hash table for managing hierarchically structured information

Active · US7058639B1 · Tags: Data processing applications; Digital data information retrieval; Computer science; Hash table

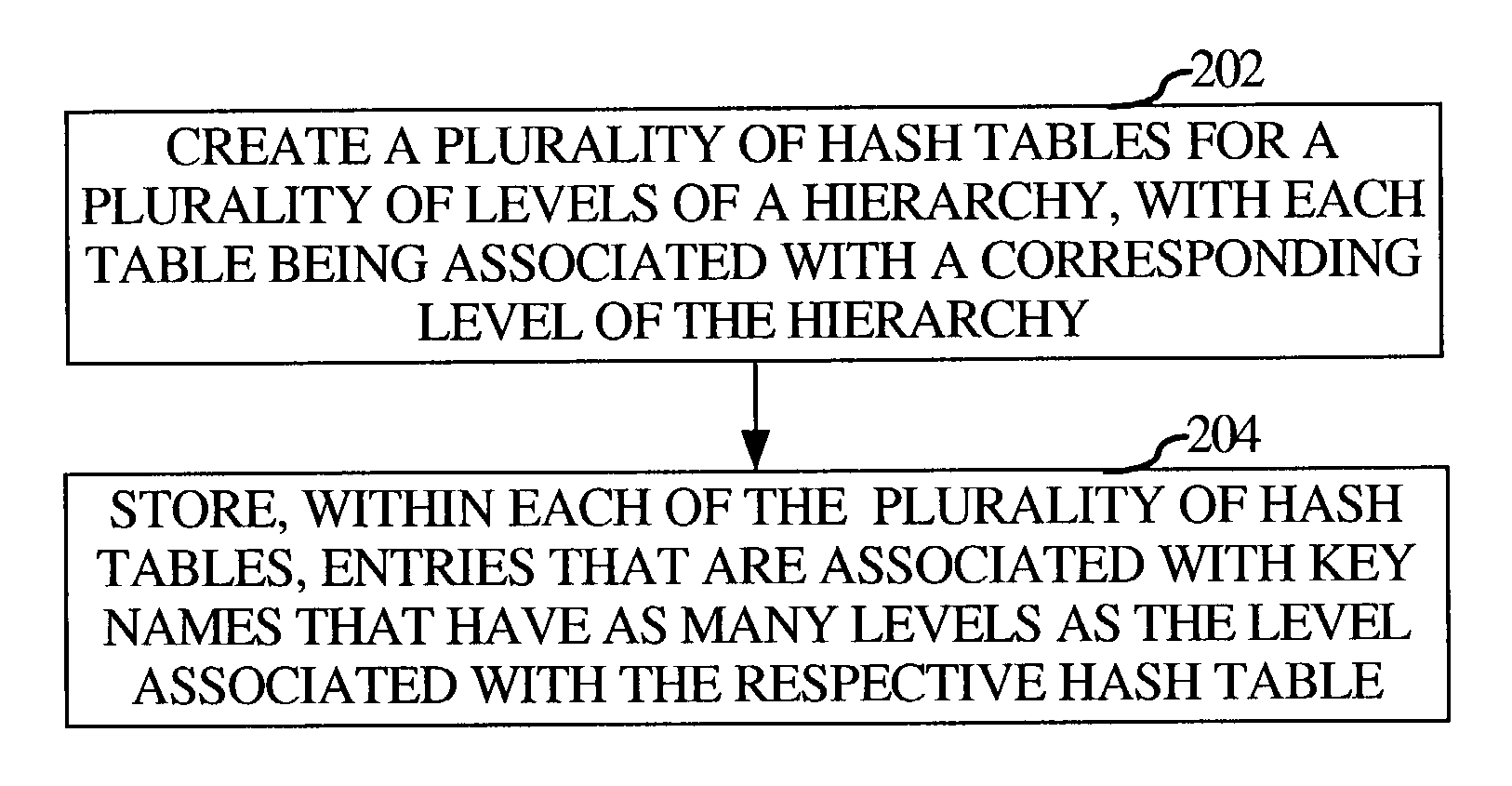

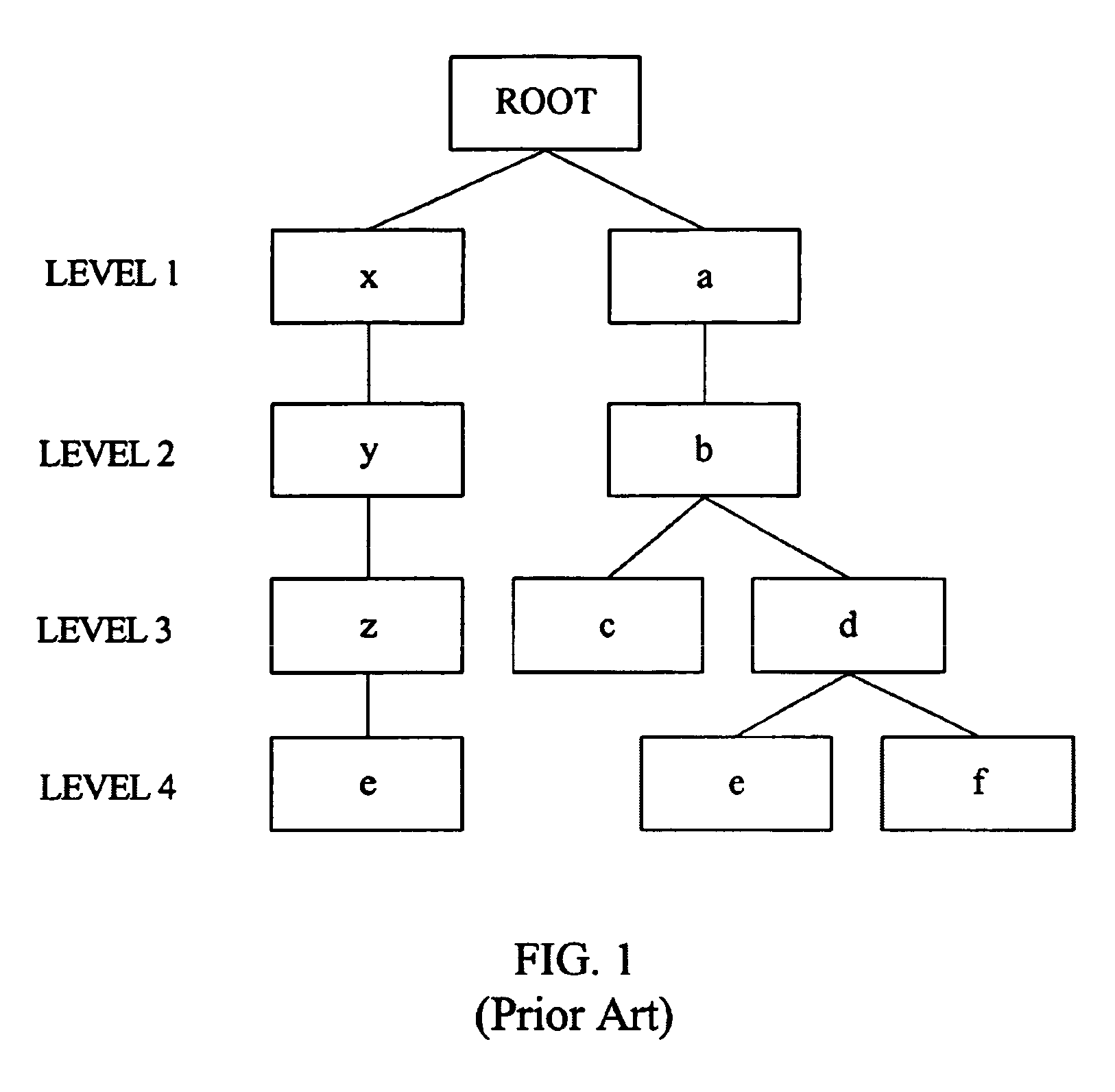

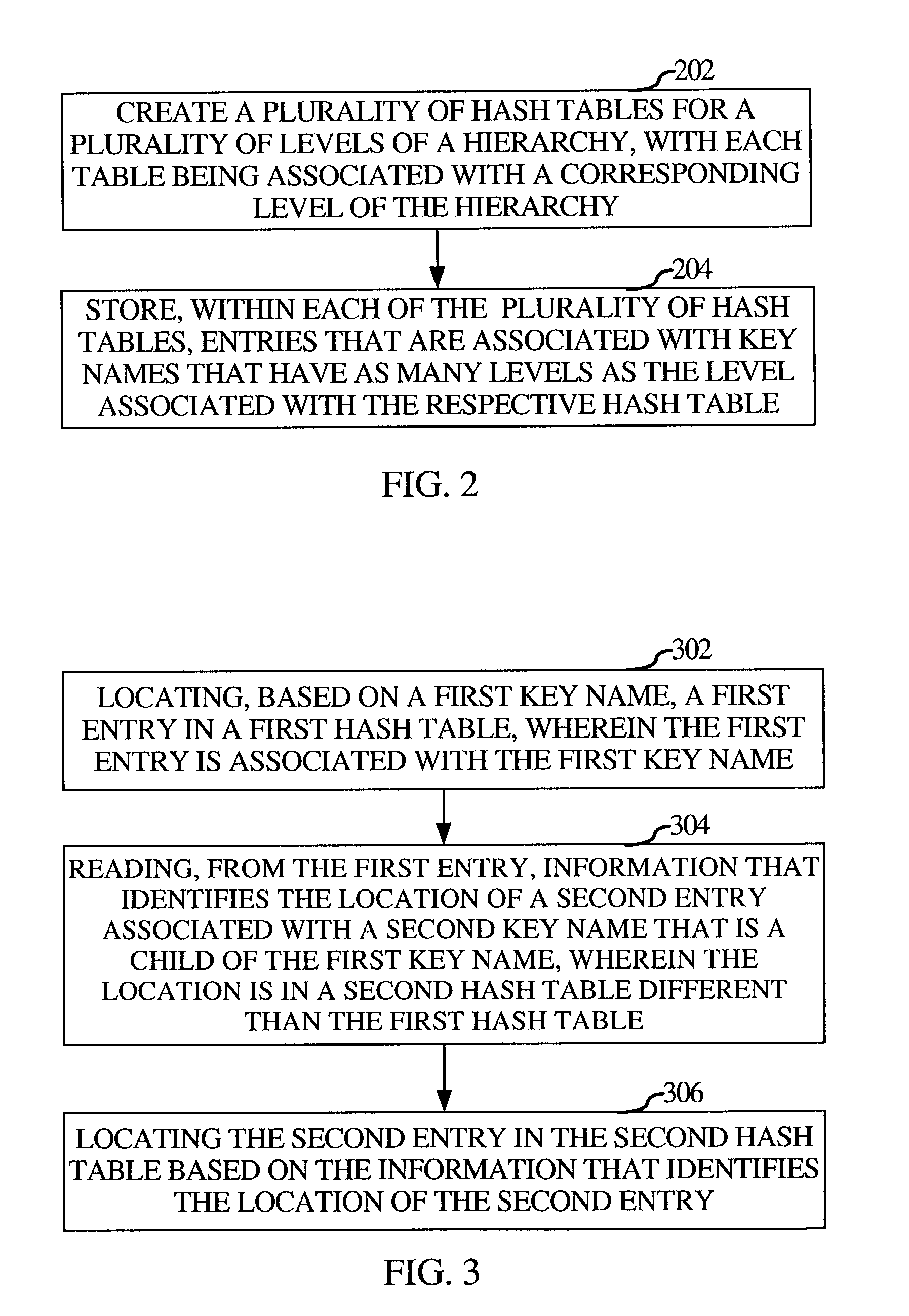

An aspect of the invention provides a method for managing information associated with a hierarchical key. A plurality of hash tables are created for a plurality of levels of a hierarchy associated with a hierarchical key, wherein each hash table is associated with a corresponding level of the hierarchy. Entries are stored within each of the plurality of hash tables, wherein the entries are associated with key names that have as many levels as the level associated with the respective hash table. Furthermore, a reference to a descendant entry that is in a respective hash table may be stored within each entry.

Owner:ORACLE INT CORP
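
A minimal Python sketch of one hash table per hierarchy level, where each entry keeps references to its child entries. Using `/` as the level separator, storing entries as small dicts, and the class name `HierarchicalStore` are assumptions made for illustration.

```python
from typing import Any, Dict, List, Optional


class HierarchicalStore:
    """One hash table per hierarchy level; entries keep references to their children."""

    def __init__(self) -> None:
        self.levels: List[Dict[str, Dict[str, Any]]] = []

    def _entry(self, key: str) -> Optional[Dict[str, Any]]:
        depth = key.count("/") + 1
        if depth > len(self.levels):
            return None
        return self.levels[depth - 1].get(key)  # one hash lookup, no tree walk

    def put(self, key: str, value: Any) -> None:
        parts = key.split("/")
        parent: Optional[Dict[str, Any]] = None
        for depth in range(1, len(parts) + 1):
            if len(self.levels) < depth:
                self.levels.append({})
            name = "/".join(parts[:depth])
            entry = self.levels[depth - 1].get(name)
            if entry is None:
                entry = {"value": None, "children": {}}
                self.levels[depth - 1][name] = entry
                if parent is not None:
                    parent["children"][parts[depth - 1]] = entry  # descendant reference
            parent = entry
        parent["value"] = value

    def get(self, key: str) -> Any:
        entry = self._entry(key)
        return entry["value"] if entry is not None else None

    def children(self, key: str) -> List[str]:
        entry = self._entry(key)
        return sorted(entry["children"]) if entry is not None else []
```

A full key is found with a single probe into the table for its level, while the stored child references still allow listing a subtree without scanning the deeper tables.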

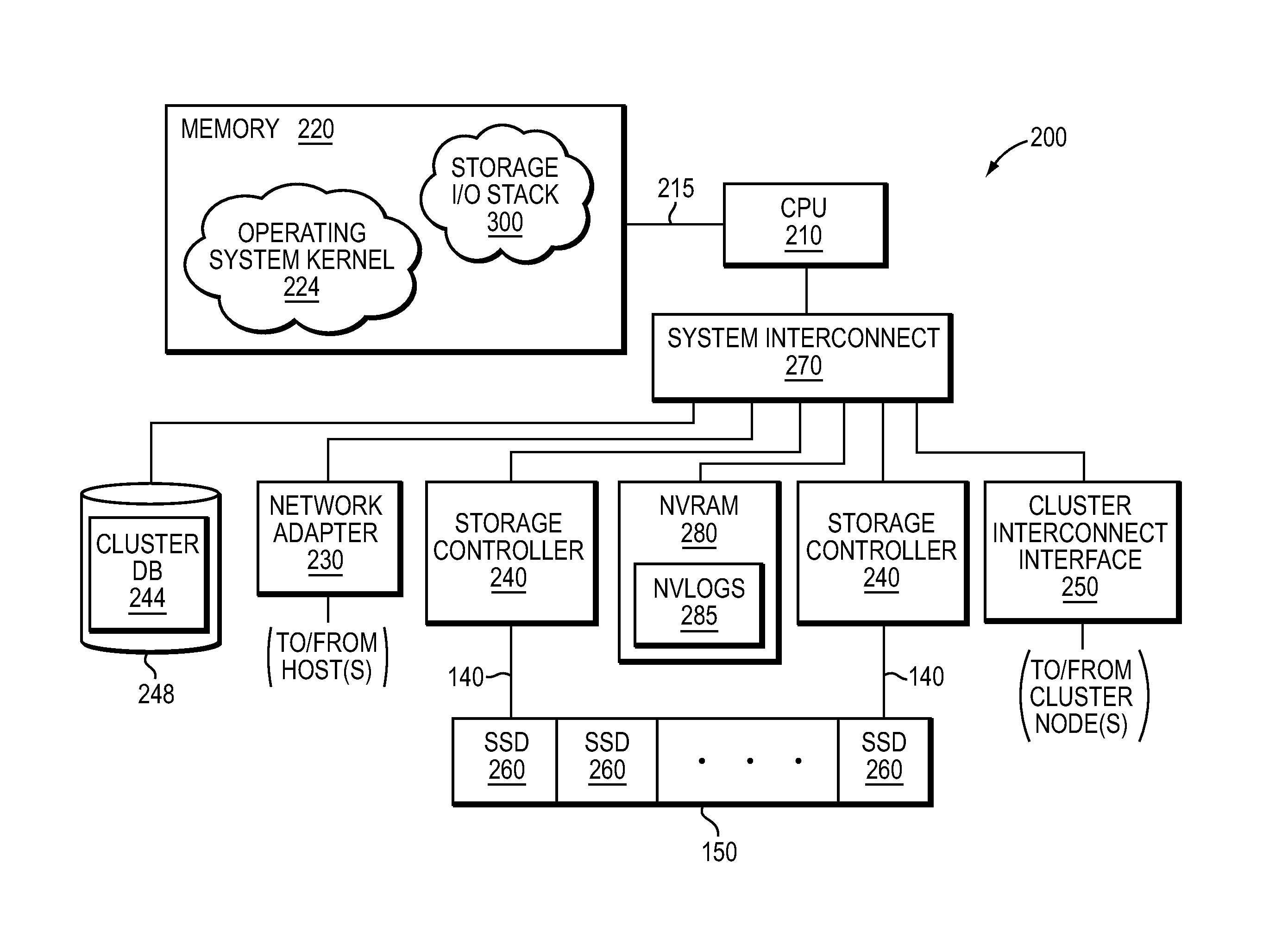

Extent metadata update logging and checkpointing

Active · US8880787B1 · Tags: Digital data information retrieval; Error detection/correction; Array data structure; Hash table

In one embodiment, an extent store layer of a storage input/output (I/O) stack executing on one or more nodes of a cluster manages efficient logging and checkpointing of metadata. The metadata managed by the extent store layer, i.e., the extent store metadata, resides in a memory (in-core) of each node and is illustratively organized as a key-value extent store embodied as one or more data structures, e.g., a set of hash tables. Changes to the set of hash tables are recorded as a continuous stream of changes to SSD embodied as an extent store layer log. A separate log stream structure (e.g., an in-core buffer) may be associated respectively with each hash table such that changed (i.e., dirtied) slots of the hash table are recorded as entries in the log stream structure. The hash tables are written to SSD using a fuzzy checkpointing technique.

Owner:NETWORK APPLIANCE INC

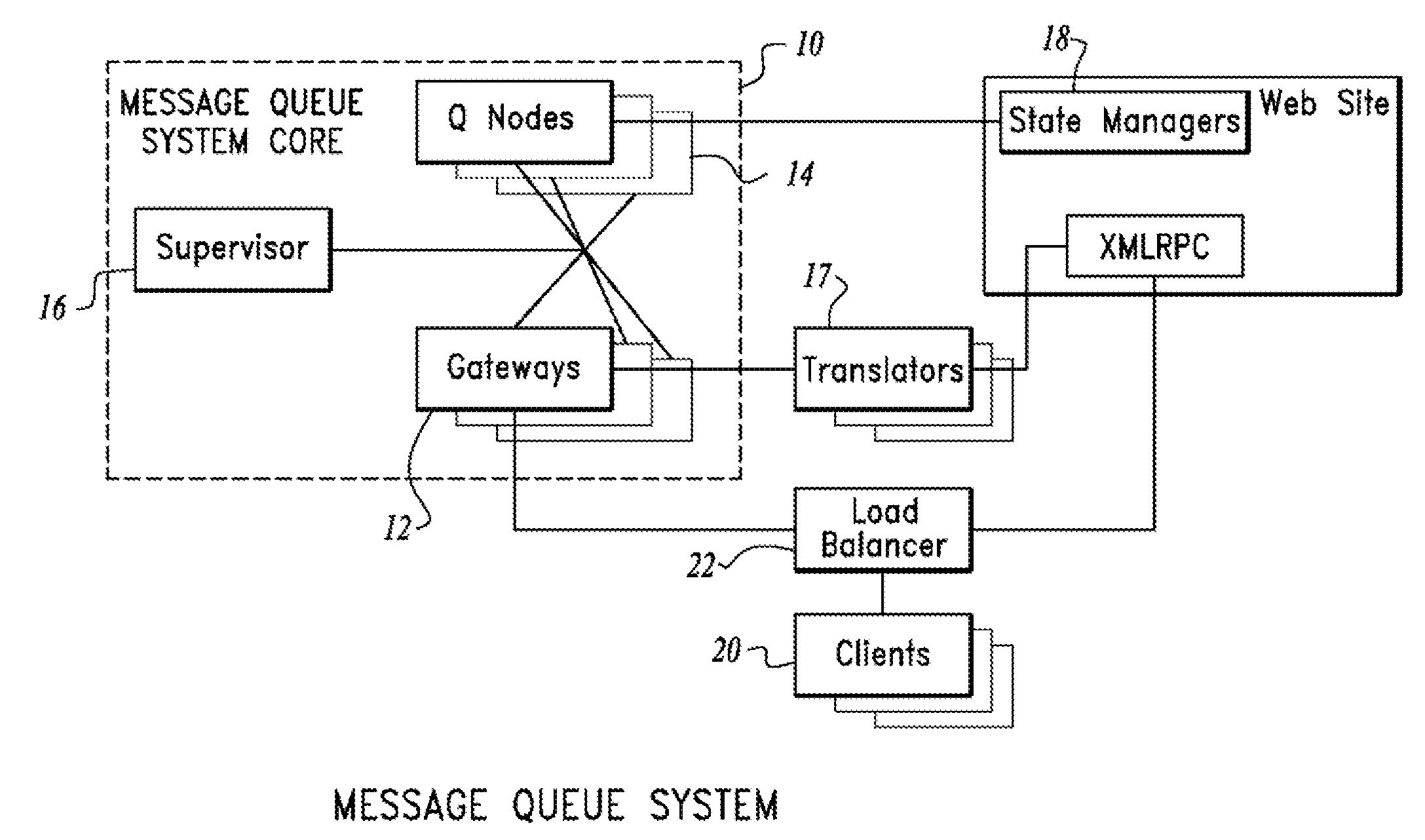

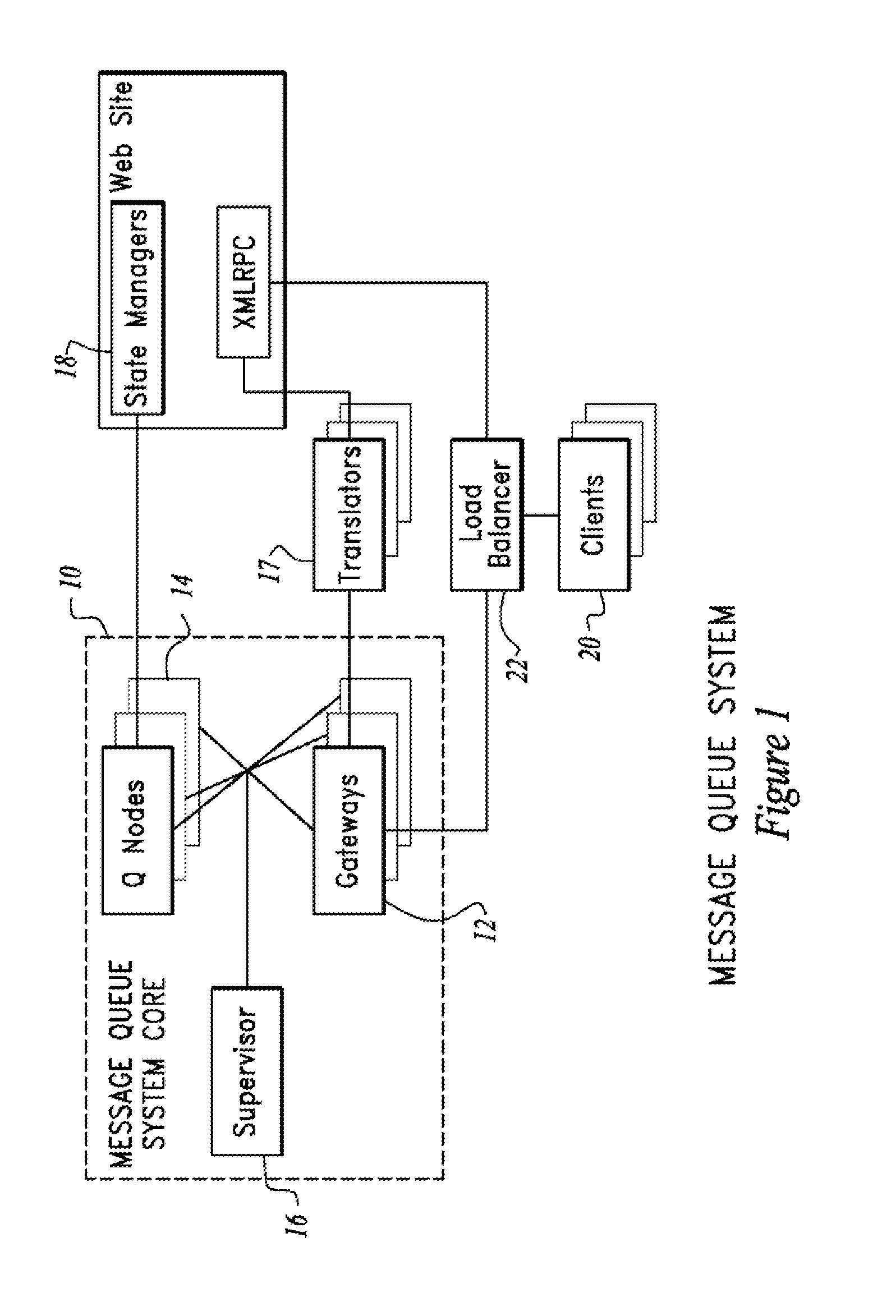

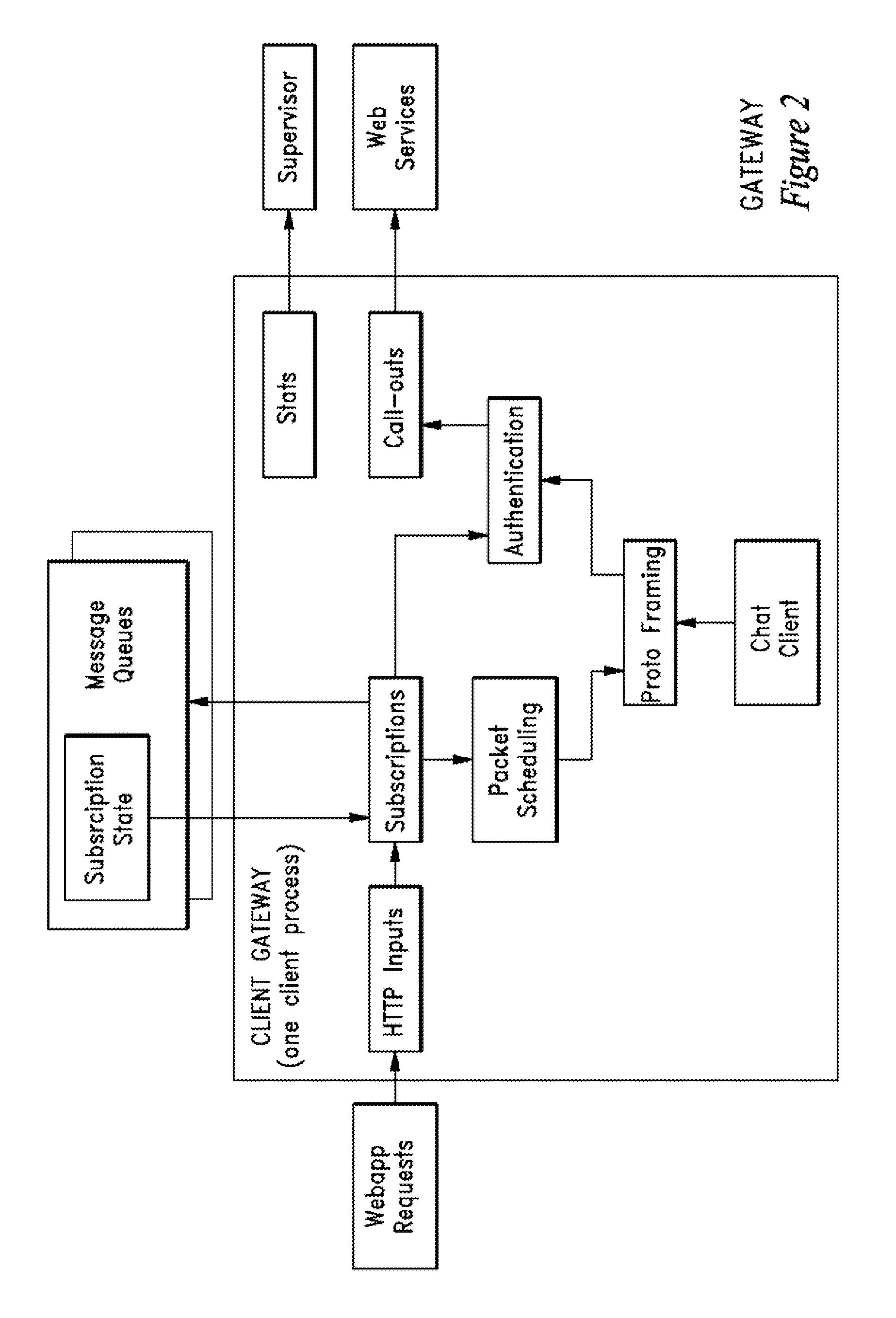

System and method for providing an actively invalidated client-side network resource cache

A system and method for providing an actively invalidated client-side network resource cache are disclosed. A particular embodiment includes: a client configured to request, for a client application, data associated with an identifier from a server; the server configured to provide the data associated with the identifier, the data being subject to subsequent change, the server being further configured to establish a queue associated with the identifier at a scalable message queuing system, the scalable message queuing system including a plurality of gateway nodes configured to receive connections from client systems over a network, a plurality of queue nodes containing subscription information about queue subscribers, and a consistent hash table mapping a queue identifier requested on a gateway node to a corresponding queue node for the requested queue identifier; the client being further configured to subscribe to the queue at the scalable message queuing system to receive invalidation information associated with the data; the server being further configured to signal the queue of an invalidation event associated with the data; the scalable message queuing system being configured to convey information indicative of the invalidation event to the client; and the client being further configured to re-request the data associated with the identifier from the server upon receipt of the information indicative of the invalidation event from the scalable message queuing system.

Owner:IMVU
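
The "consistent hash table mapping a queue identifier ... to a corresponding queue node" can be illustrated with a standard hash ring. The abstract does not describe IMVU's ring, so SHA-256 positions, 100 virtual points per node, and the class name `ConsistentHashRing` are assumptions in this Python sketch.

```python
import bisect
import hashlib
from typing import Dict, Iterable, List


def _point(label: str) -> int:
    """Position of a label on a 64-bit hash ring."""
    return int.from_bytes(hashlib.sha256(label.encode()).digest()[:8], "big")


class ConsistentHashRing:
    def __init__(self, nodes: Iterable[str], replicas: int = 100) -> None:
        self.replicas = replicas          # virtual points per node, to even out load
        self._points: List[int] = []      # sorted ring positions
        self._owner: Dict[int, str] = {}  # ring position -> queue node
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        for i in range(self.replicas):
            p = _point(f"{node}#{i}")
            bisect.insort(self._points, p)
            self._owner[p] = node

    def remove_node(self, node: str) -> None:
        for i in range(self.replicas):
            p = _point(f"{node}#{i}")
            self._points.remove(p)
            del self._owner[p]

    def node_for(self, queue_id: str) -> str:
        """Walk clockwise from the queue id's hash to the first node point."""
        if not self._points:
            raise LookupError("the ring has no nodes")
        idx = bisect.bisect(self._points, _point(queue_id)) % len(self._points)
        return self._owner[self._points[idx]]
```

Any gateway node holding the same node list computes the same mapping, and removing one queue node only remaps the queues that node owned.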

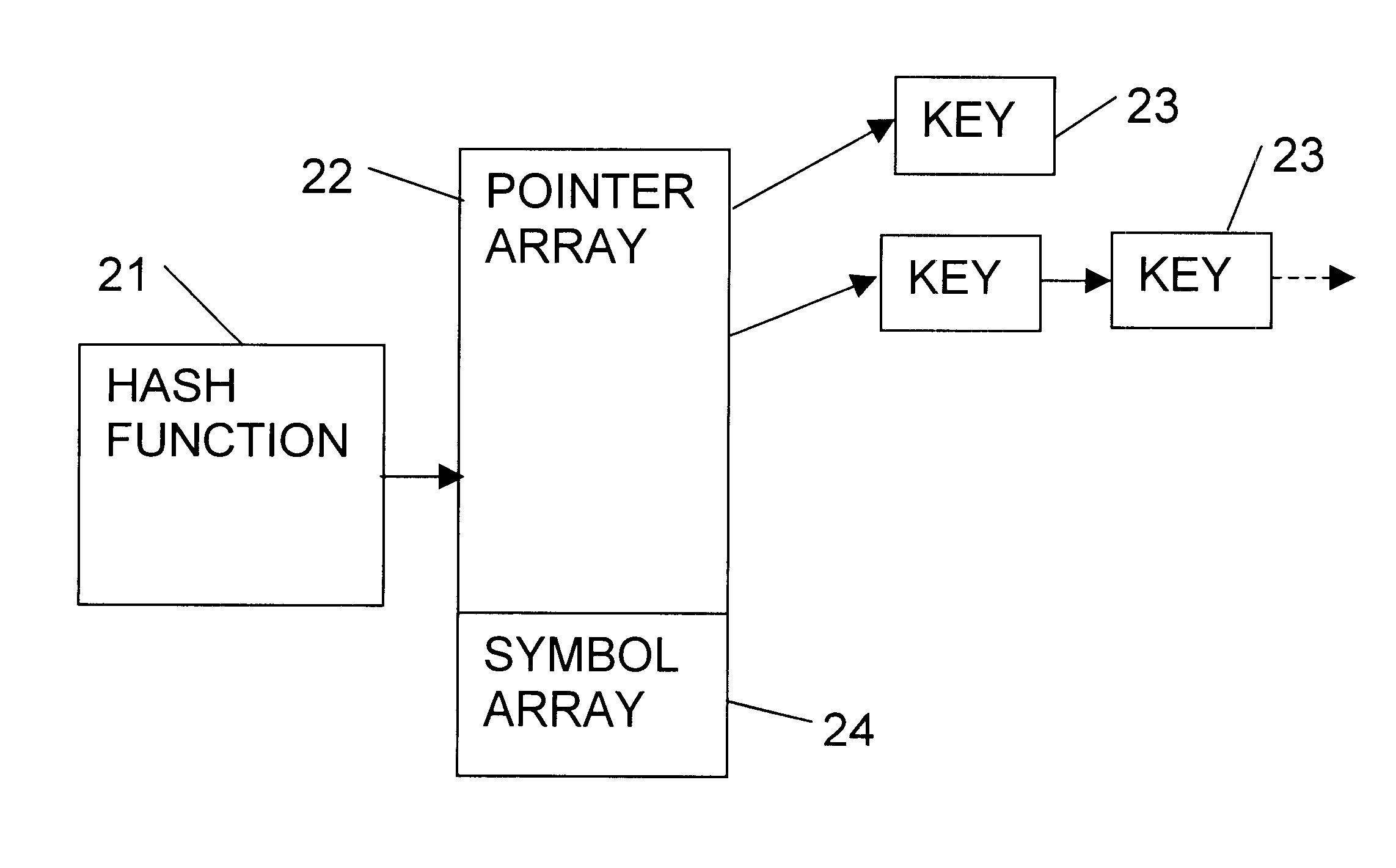

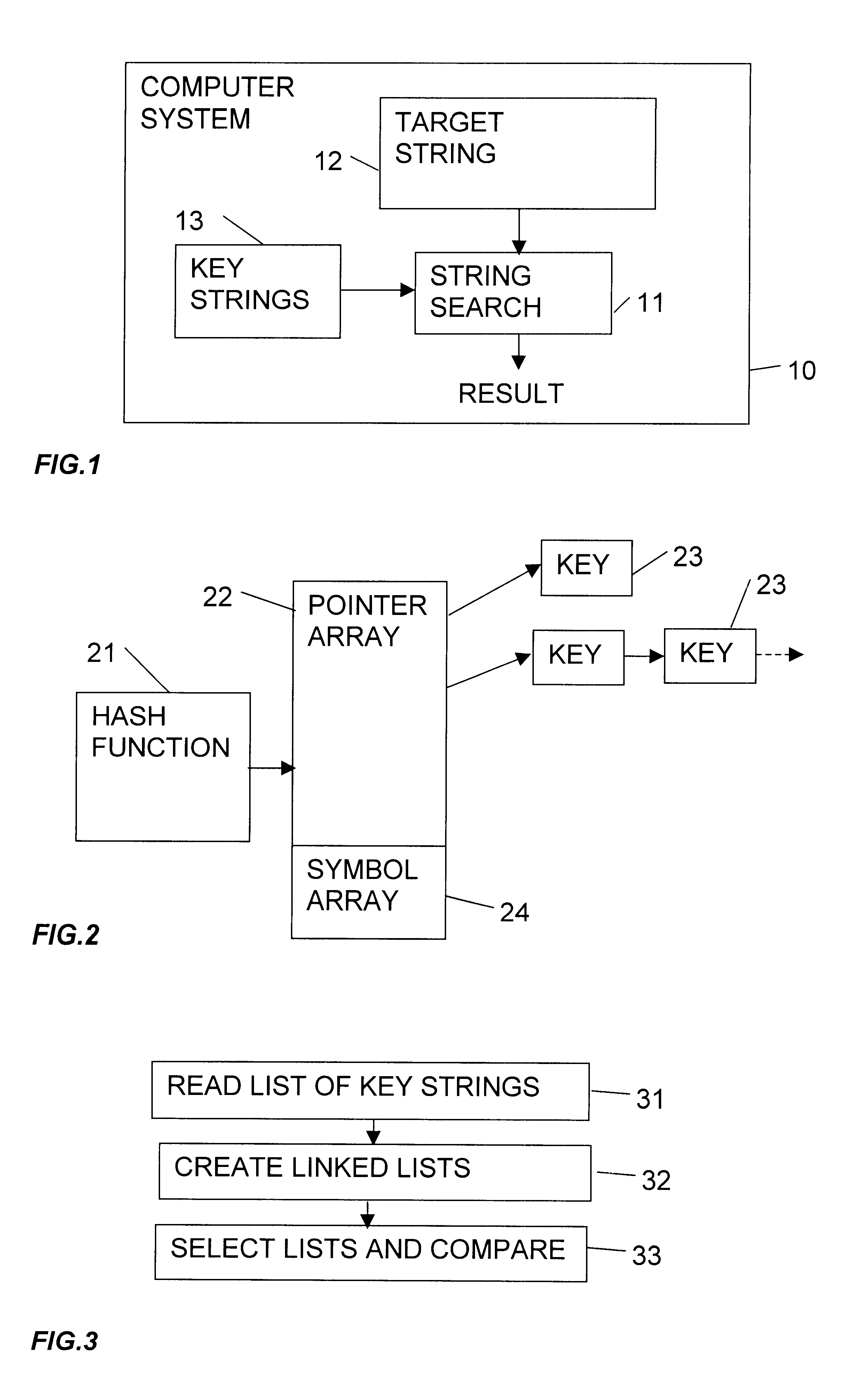

Multiple string search method

Inactive · US6377942B1 · Tags: Data processing applications; Digital data information retrieval; Theoretical computer science; Hash table

A data processing system has a searching mechanism for finding occurrences of a plurality of key strings within a target string. The searching mechanism forms a hash value from each of the key strings, and adds each key string to a collection of key strings having the same hash value. It then selects a plurality of symbol positions in the target string, and forms a hash value at each selected symbol position in the target string. This hash value is used to select one of the collections of key strings. Each key string in the selected collection of key strings is then compared with the target string.

Owner:INT COMP LTD
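
The searching mechanism can be sketched in Python as follows. Two choices here are assumptions: every key is hashed over its first `m` symbols, where `m` is the shortest key length, so keys and target windows hash comparably, and the sketch selects every symbol position in the target, whereas the patent may select fewer.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def multi_search(keys: List[str], target: str) -> List[Tuple[int, str]]:
    """Return (position, key) for every occurrence of any key in `target`."""
    if not keys:
        return []
    m = min(len(k) for k in keys)  # hash only the first m symbols of each key
    if m == 0:
        raise ValueError("empty key strings are not supported")
    collections_by_hash: Dict[int, List[str]] = defaultdict(list)
    for key in keys:
        collections_by_hash[hash(key[:m])].append(key)
    hits = []
    for pos in range(len(target) - m + 1):  # this sketch selects every position
        for key in collections_by_hash.get(hash(target[pos:pos + m]), []):
            if target.startswith(key, pos):  # confirm: hashes can collide
                hits.append((pos, key))
    return hits
```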

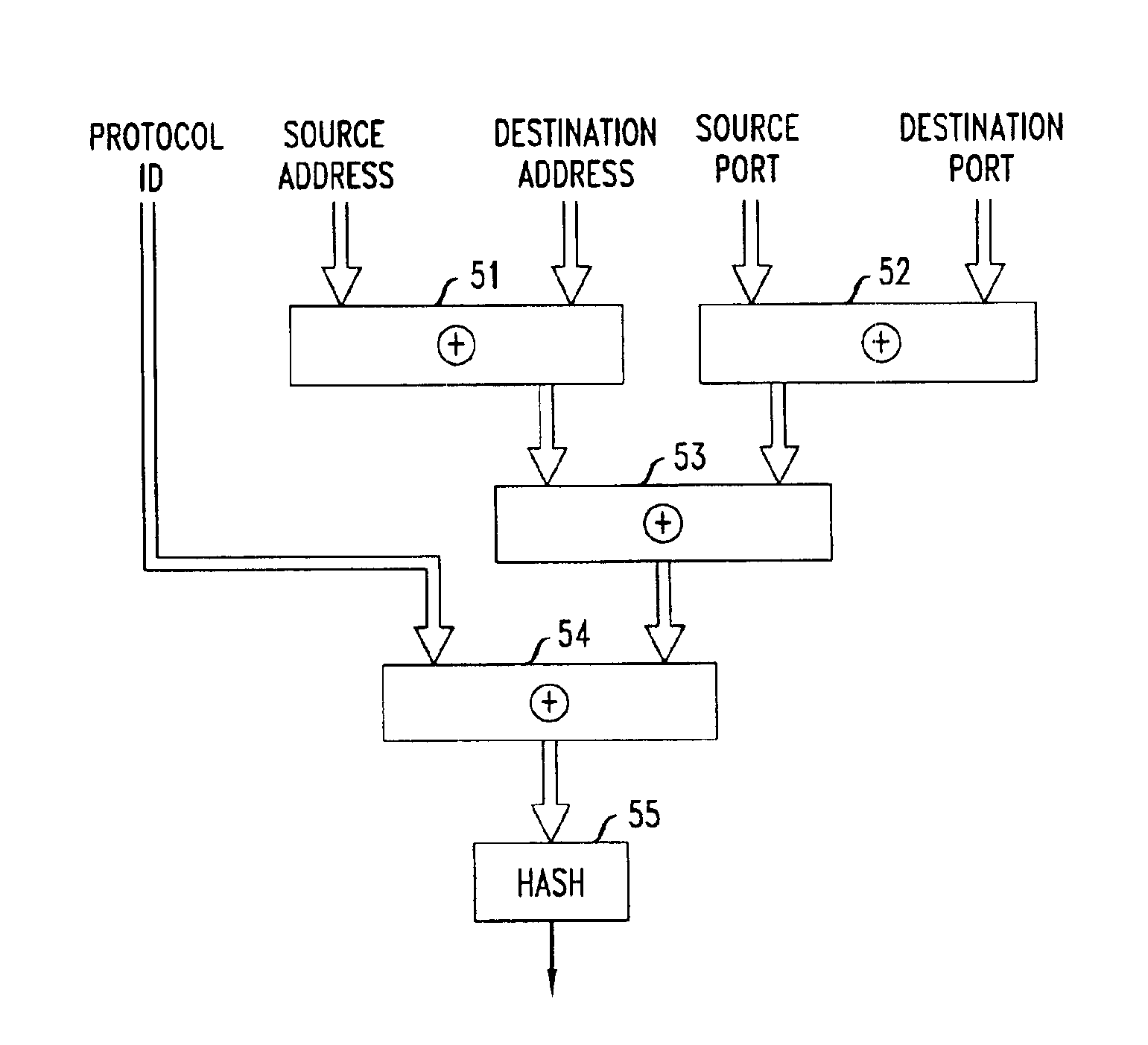

Hashing-based network load balancing

Inactive · US6888797B1 · Tags: Overcome deficiencies; Error prevention; Transmission systems; Computer network; Hash table

A hashing-based router and method for network load balancing includes calculating a hash value from header data of incoming data packets and routing incoming packets based on the calculated hash values to permissible output links in desired loading proportions.

Owner:LUCENT TECH INC
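
Routing "in desired loading proportions" can be illustrated by hashing the header fields into a slot range whose size is the total weight, with each link owning a share of that range. Hashing the usual five header fields with SHA-256, the integer weights, and the function name `pick_link` are assumptions in this Python sketch.

```python
import hashlib
from typing import Dict


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              proto: str, weights: Dict[str, int]) -> str:
    """Map a packet's header fields to an output link in proportion to `weights`."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    header = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{proto}".encode()
    h = int.from_bytes(hashlib.sha256(header).digest()[:8], "big")
    slot = h % total  # effectively uniform over [0, total)
    for link, weight in weights.items():  # each link owns `weight` consecutive slots
        if slot < weight:
            return link
        slot -= weight
    raise AssertionError("unreachable: slot is always below the total weight")
```

Because the choice depends only on header fields, every packet of a flow takes the same link, which keeps packets within a flow in order.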

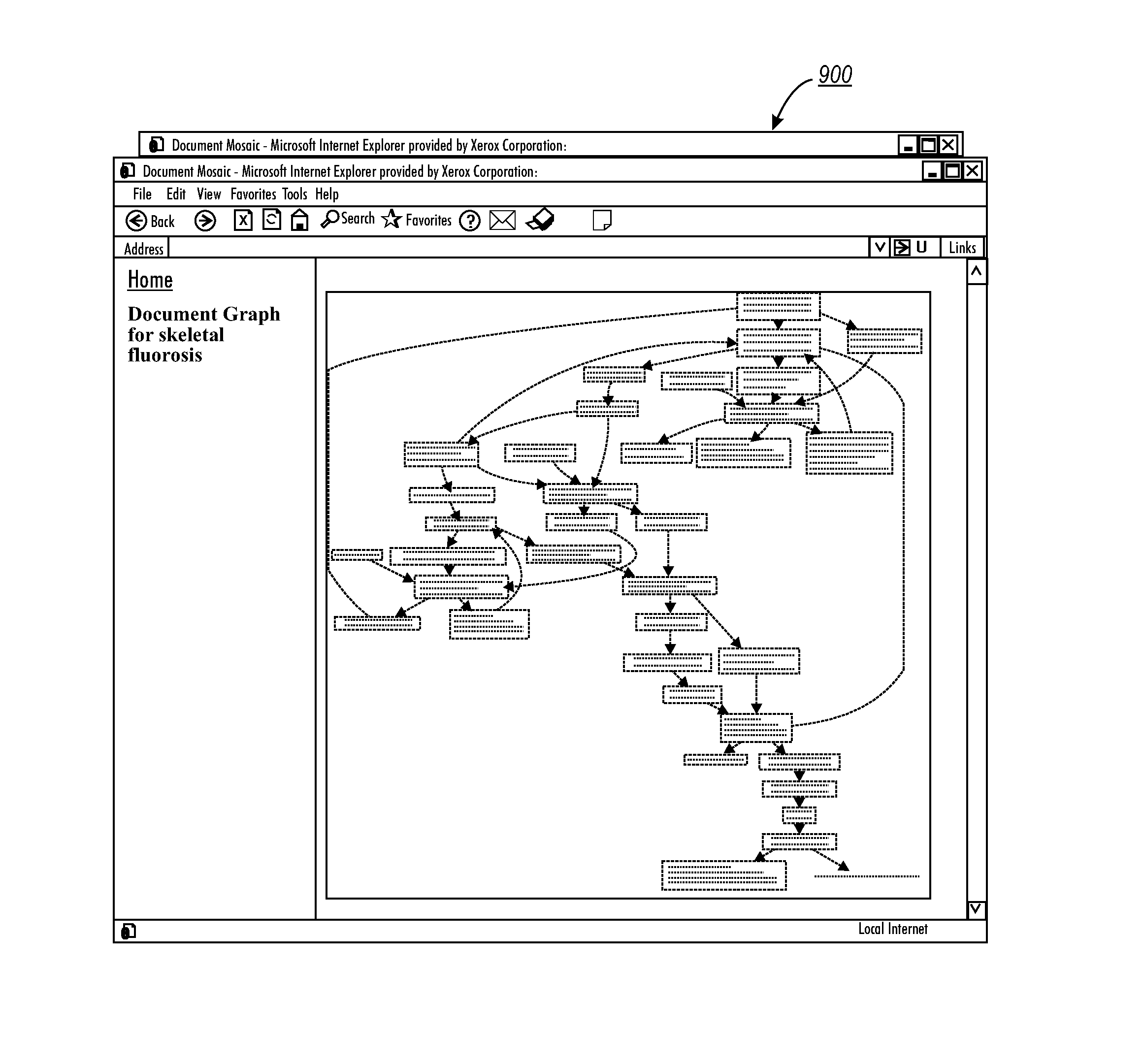

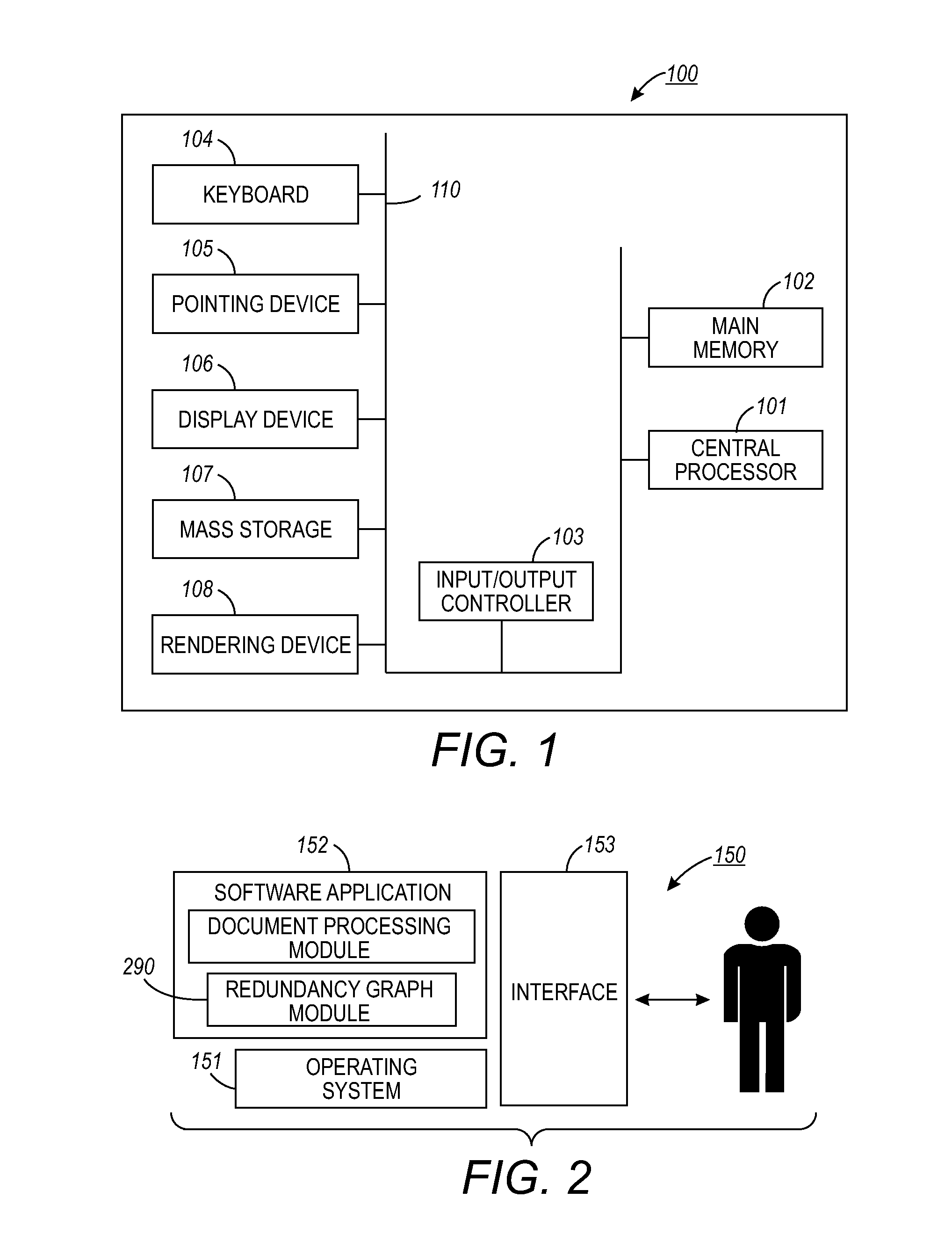

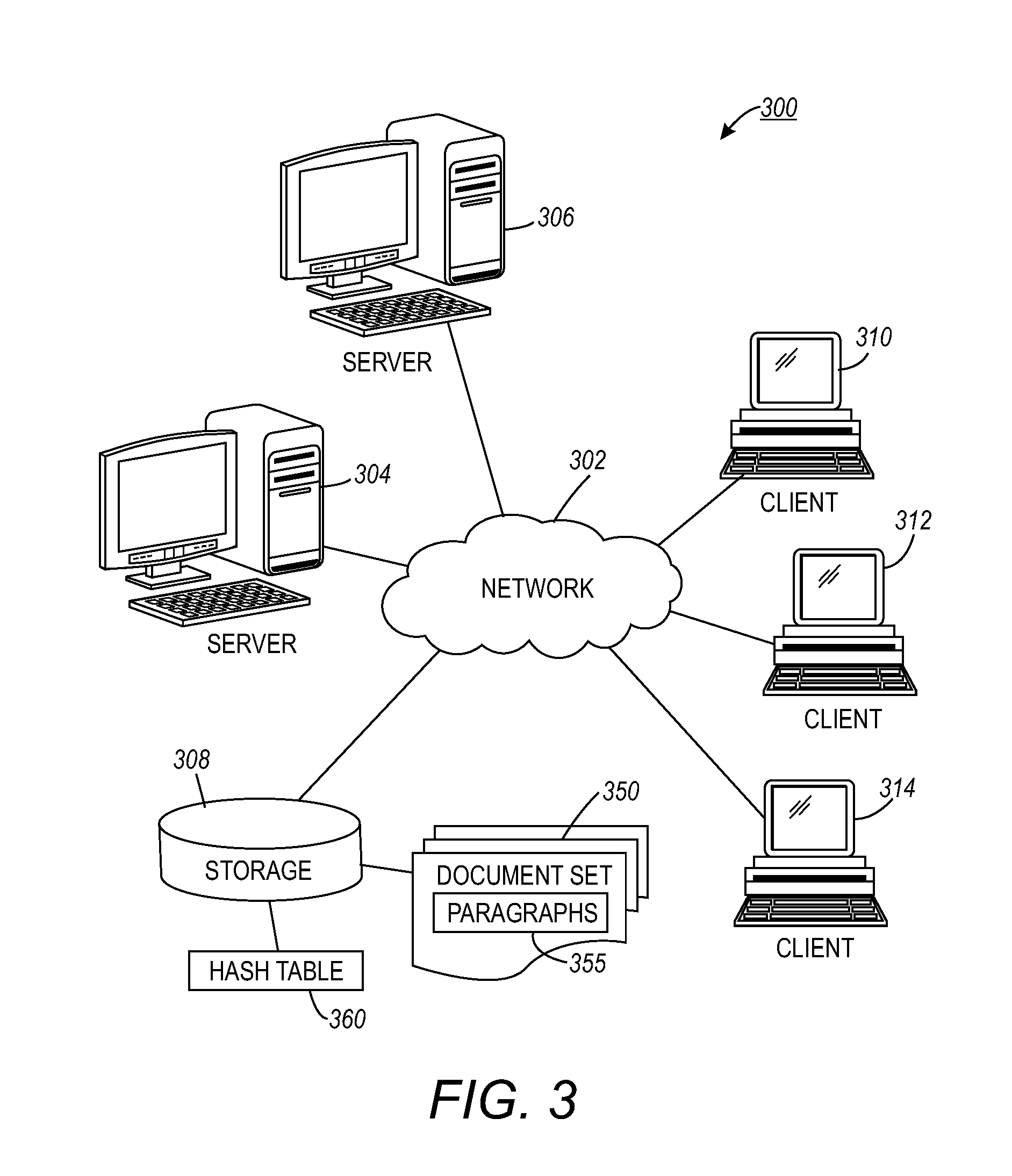

Method and system for constructing a document redundancy graph

Active · US20110029952A1 · Tags: Eliminate inconsistencies; More information; Digital data information retrieval; Natural language data processing; Hyperlink; Graphics

A system and method for constructing a document redundancy graph with respect to a document set. The redundancy graph can be constructed with a node for each paragraph associated with the document set such that each node in the redundancy graph represents a unique cluster of information. The nodes can be linked in an order with respect to the information provided in the document set and bundles of redundant information from the document set can be mapped to individual nodes. A data structure (e.g., a hash table) of a paragraph identifier associated with a probability value can be constructed for eliminating inconsistencies with respect to node redundancy. Additionally, a sequence of unique nodes can also be integrated into the graph construction process. The nodes can be connected to the paragraphs associated with the document set via a hyperlink and/or via a label with respect to each node.

Owner:XEROX CORP

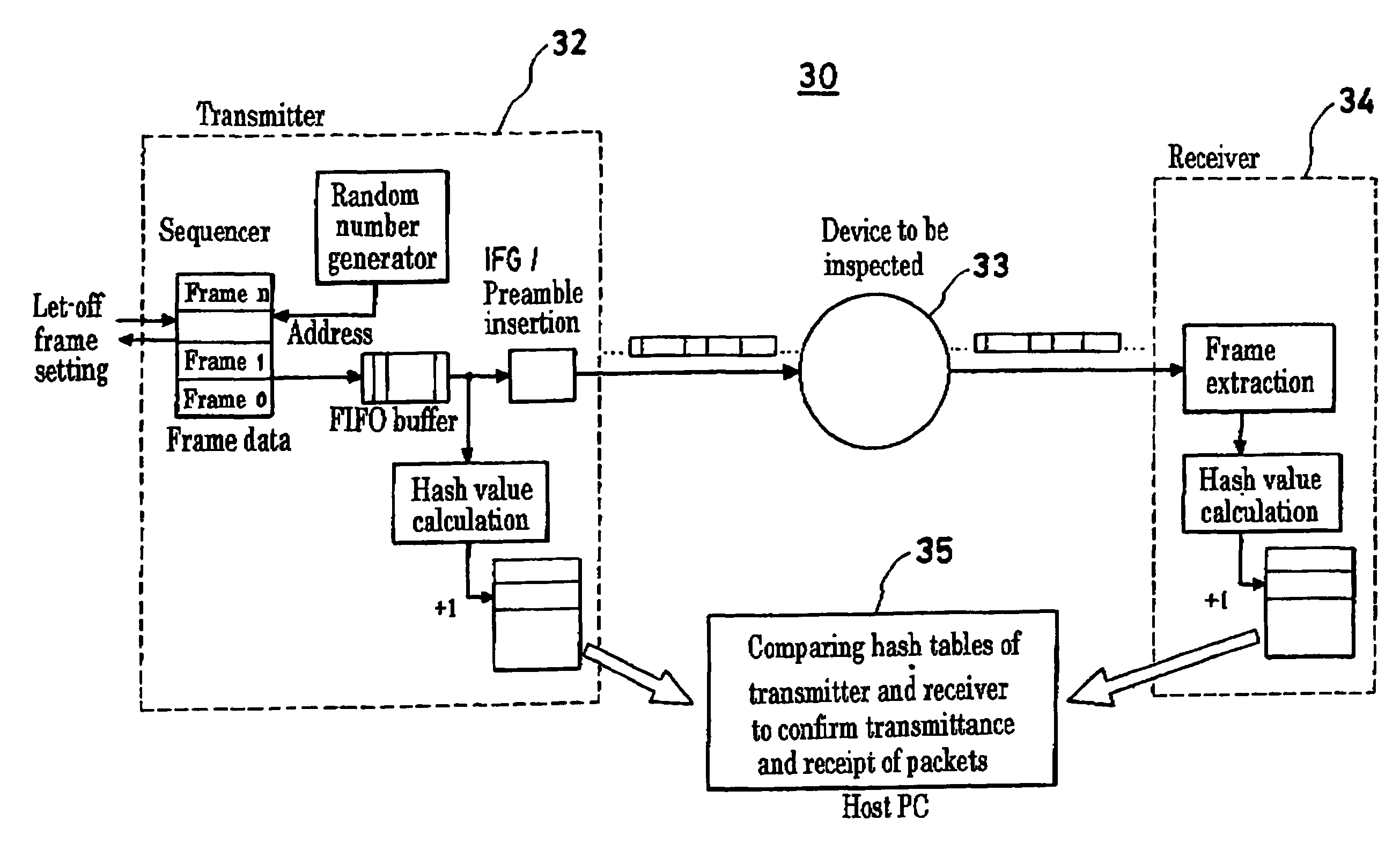

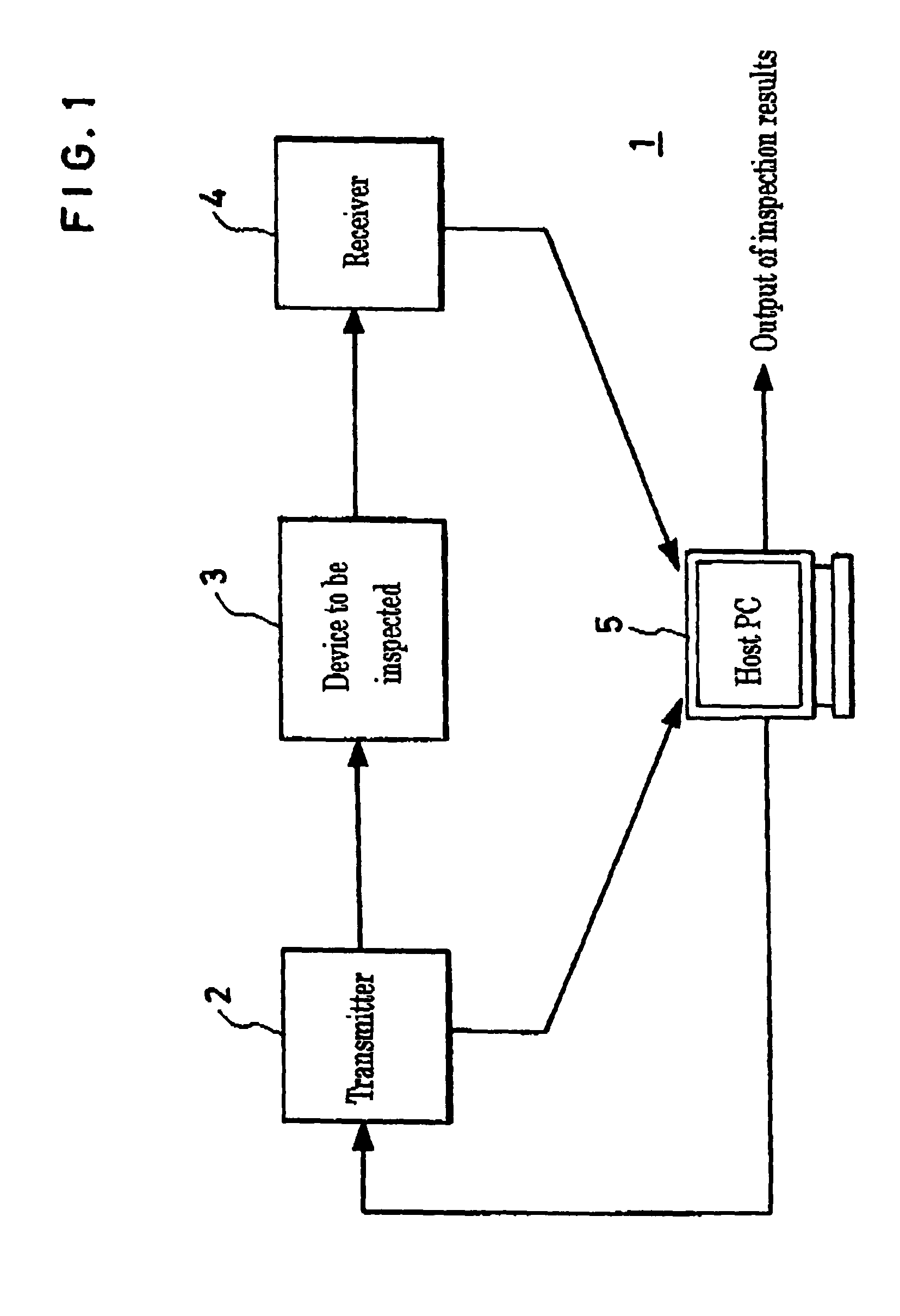

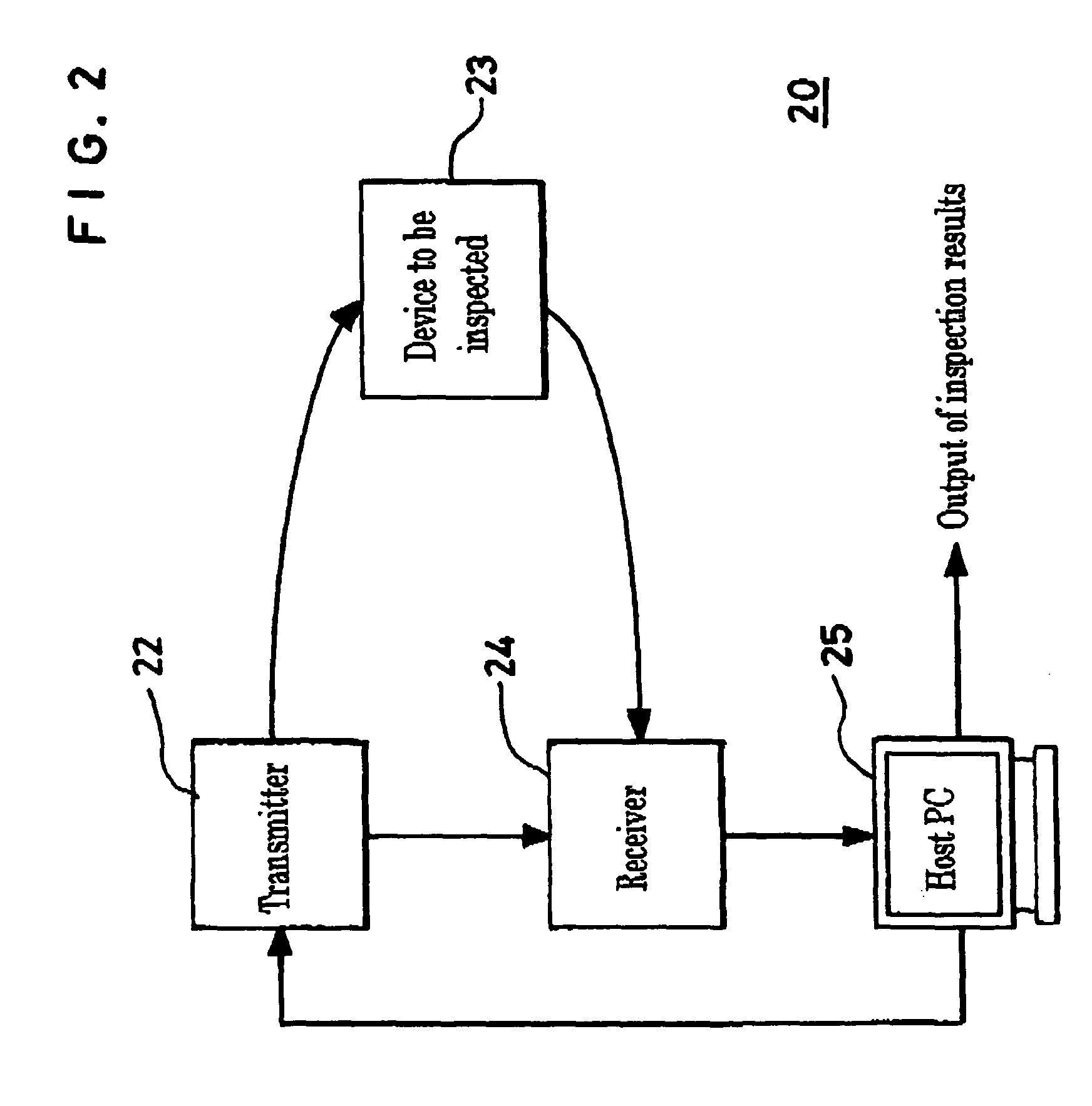

FPGA-based network device testing equipment for high load testing

Inactive · US7953014B2 · Tags: High load in test; Increase speed; Error prevention; Frequency-division multiplex details; Physical layer; Pattern generation

Network device testing equipment is described that can test network devices with small packets and verify their transfer and filtering capabilities at full media speed. In the adopted configuration, a Field Programmable Gate Array (FPGA) in the transmitter, the receiver, or both is connected directly to the physical-layer chip of the network, and computers on both the transmitting and receiving sides are connected to the FPGAs. Each FPGA integrates a packet-pattern generation function and a packet-receiving function in one circuit, enabling testing and inspection in real time. When the filtering function is inspected, a hash table is used that stores hash values together with the occurrence frequency of each hash value. To prevent different packets from sharing a hash value, the hash function is configured so that different packets are not given the same hash value, or, when packets do share a hash value, the packet is reshaped into one with a different hash value.

Owner:NAT INST OF ADVANCED IND SCI & TECH +2
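The filter-inspection scheme in the FPGA abstract above can be modeled in software. This is a hypothetical Python sketch, not the patented circuit: it assumes CRC32 (`zlib.crc32`) as the hash function and re-shapes a colliding packet by varying one appended tweak byte until its hash is unused. The receive side then builds the hash-to-occurrence-frequency table, counting only known test packets.

```python
import zlib
from collections import Counter


def make_unique_packets(payloads, max_tweaks=256):
    """Give every test packet a distinct CRC32 value, re-shaping on collision.

    Hypothetical re-shaping rule: append one tweak byte and vary it until the
    resulting packet's hash is not already used by another test packet.
    Returns (packets, by_hash) where by_hash maps hash -> packet.
    """
    by_hash = {}
    packets = []
    for payload in payloads:
        for tweak in range(max_tweaks):
            packet = payload + bytes([tweak])
            h = zlib.crc32(packet)
            if h not in by_hash:
                by_hash[h] = packet
                packets.append(packet)
                break
        else:
            raise RuntimeError("no collision-free variant found")
    return packets, by_hash


def count_received(received, by_hash):
    """Receive side: build the hash -> occurrence-frequency table."""
    freq = Counter()
    for packet in received:
        h = zlib.crc32(packet)
        if by_hash.get(h) == packet:  # count only known test packets
            freq[h] += 1
    return freq


# Send each packet three times through a hypothetical filter that drops
# every packet whose payload starts with b"b".
packets, table = make_unique_packets([b"a", b"b", b"a"])
received = [p for p in packets * 3 if not p.startswith(b"b")]
freq = count_received(received, table)
for p in packets:
    print(p, freq[zlib.crc32(p)])
```

Because every test packet has a distinct hash, one table lookup identifies which packet arrived, so the received frequencies can be compared with what was sent to see which packets the device under test filtered out.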

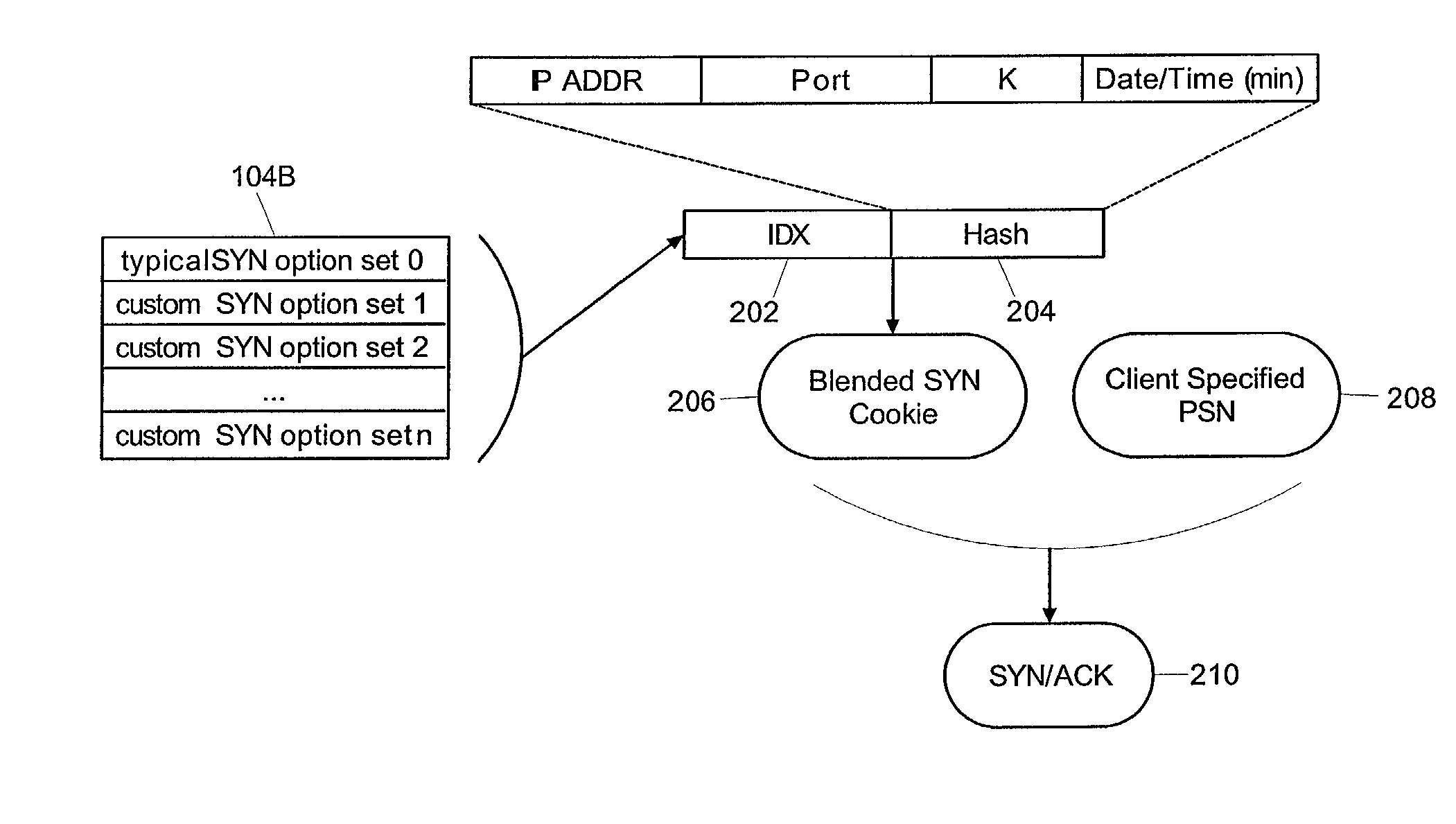

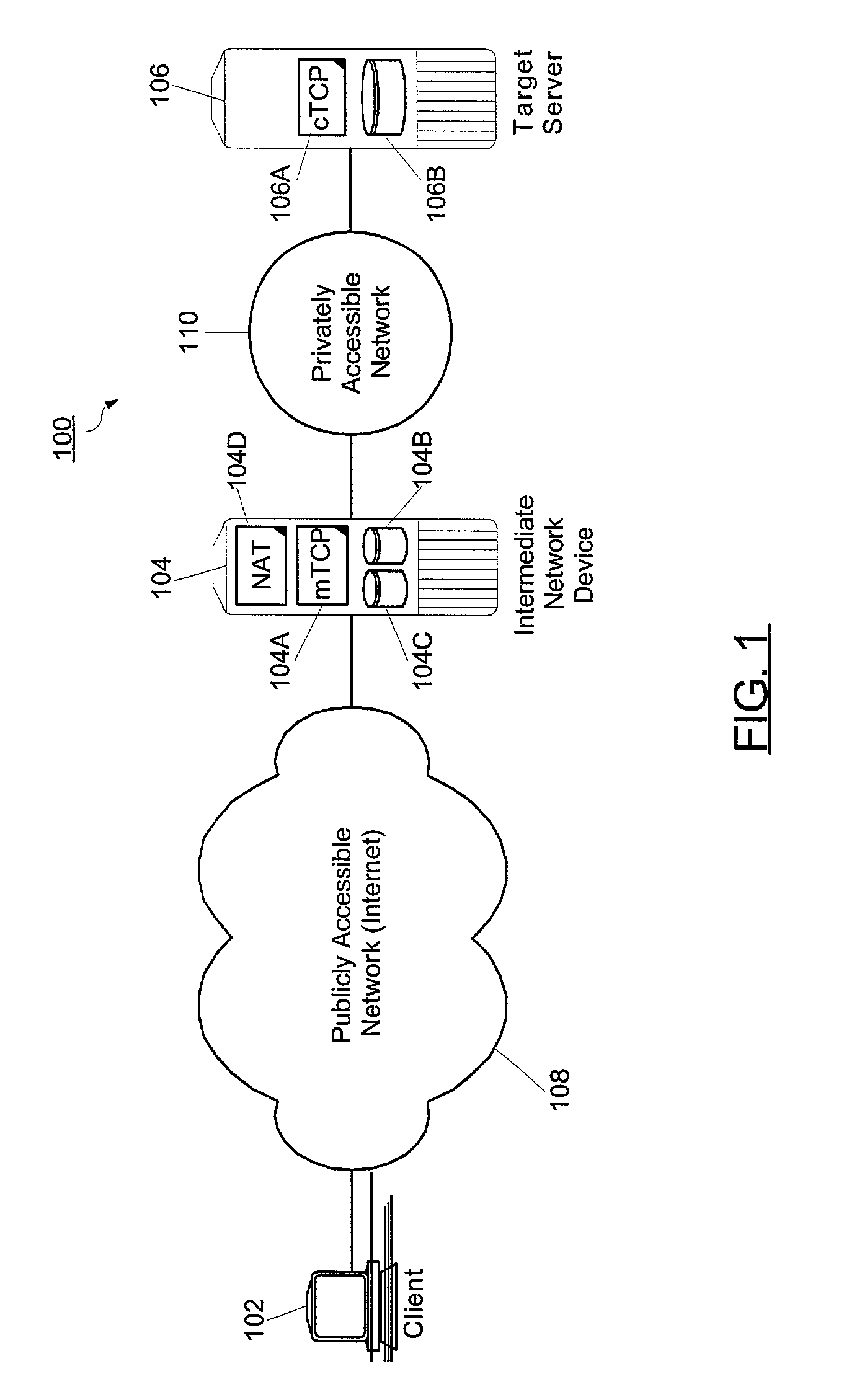

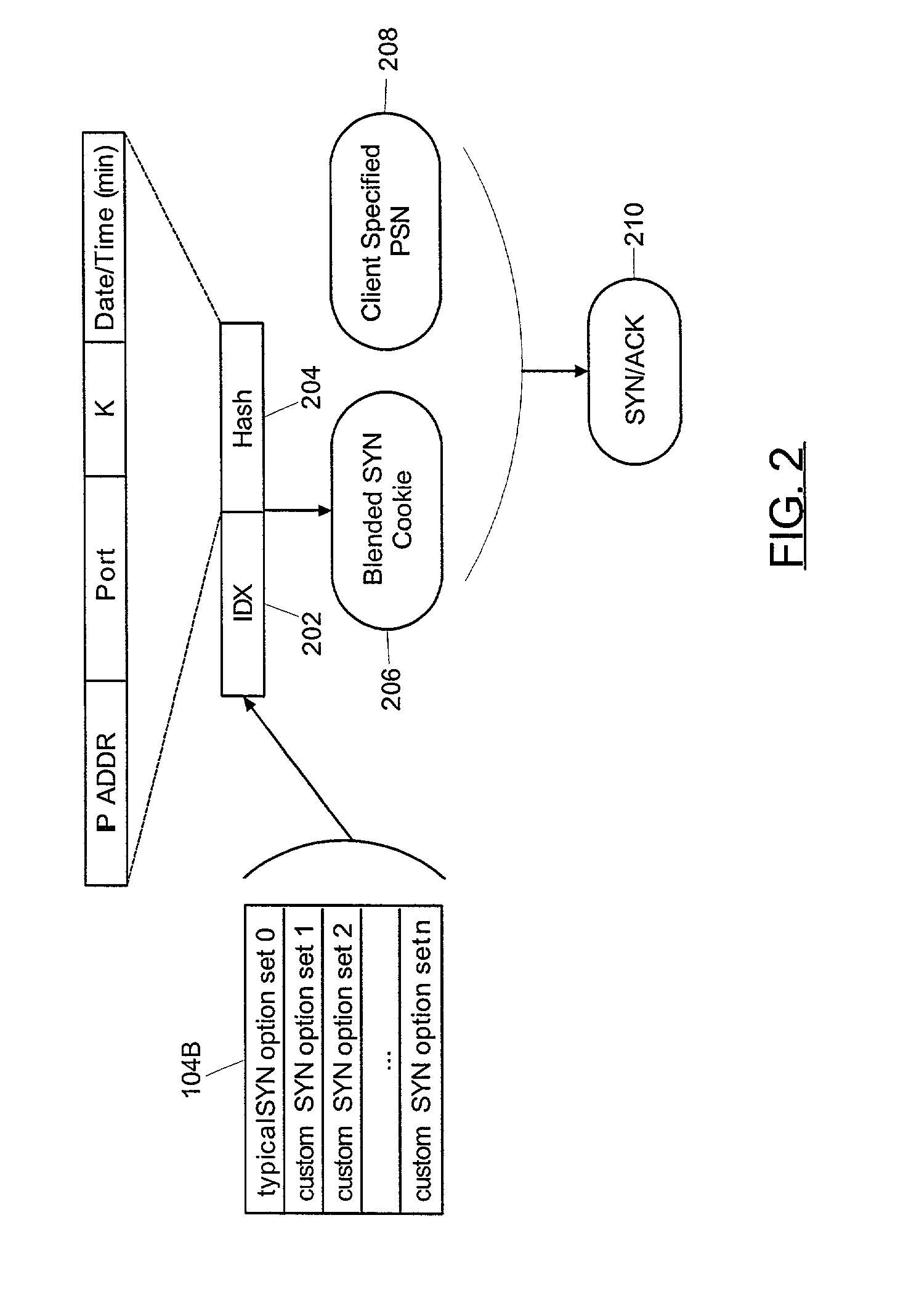

Blended SYN cookies

Active | US7058718B2 | Digital data processing details, Analogue secrecy/subscription systems, Network addressing, Network address

A method of producing a blended SYN cookie can include identifying within a SYN packet a source network address and desired communications session parameters. Subsequently, an index value into a table of pre-configured sets of communications session parameters can be retrieved. Notably, the index value can reference one of the sets which approximates the desired communications parameters. A hash value can be computed based upon the source network address, a constant seed and current date and time data. Finally, the computed hash value can be combined with the index value, the combination forming the blended SYN cookie.

Owner:TREND MICRO INC
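A blended SYN cookie as described above can be sketched in Python. Everything concrete here is an assumption for illustration: the pre-configured sets hold only an MSS value (`MSS_TABLE`), the keyed hash is HMAC-SHA256 truncated to 32 bits, "date and time data" is reduced to a one-minute time slot, and the index is combined with the hash by overwriting the cookie's two low bits. Real TCP stacks use their own layouts.

```python
import hashlib
import hmac
import ipaddress
import time

# Hypothetical pre-configured session-parameter sets (only MSS, for brevity).
MSS_TABLE = [536, 1220, 1440, 1460]
INDEX_BITS = 2                       # enough low bits to hold an index into MSS_TABLE
INDEX_MASK = (1 << INDEX_BITS) - 1
SEED = b"example-constant-seed"      # hypothetical constant seed (keep secret)


def choose_index(requested_mss: int) -> int:
    """Index of the largest pre-configured MSS not exceeding the request."""
    best = 0
    for i, mss in enumerate(MSS_TABLE):
        if mss <= requested_mss:
            best = i
    return best


def keyed_hash(src_ip: str, time_slot: int) -> int:
    """32-bit hash of source address + seed + time slot (HMAC-SHA256, truncated)."""
    msg = ipaddress.ip_address(src_ip).packed + time_slot.to_bytes(8, "big", signed=True)
    return int.from_bytes(hmac.new(SEED, msg, hashlib.sha256).digest()[:4], "big")


def current_slot(now=None) -> int:
    return int((time.time() if now is None else now) // 60)  # one-minute slots


def make_cookie(src_ip: str, requested_mss: int, now=None) -> int:
    """Blend: hash in the high 30 bits, parameter-set index in the low 2 bits."""
    h = keyed_hash(src_ip, current_slot(now))
    return (h & ~INDEX_MASK) | choose_index(requested_mss)


def check_cookie(src_ip: str, cookie: int, now=None, max_age_slots=1):
    """Return the approximated MSS if the cookie is valid, else None."""
    slot = current_slot(now)
    for age in range(max_age_slots + 1):
        if (keyed_hash(src_ip, slot - age) & ~INDEX_MASK) == (cookie & ~INDEX_MASK):
            return MSS_TABLE[cookie & INDEX_MASK]
    return None


now = 1_700_000_000
cookie = make_cookie("192.0.2.10", 1400, now=now)
print(hex(cookie), check_cookie("192.0.2.10", cookie, now=now + 60))
```

Encoding only an index, rather than the requested parameters themselves, keeps the cookie within 32 bits; the cost is that the server restores an approximation (here the largest table MSS not exceeding the request, falling back to the smallest entry) rather than the exact value the client asked for.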

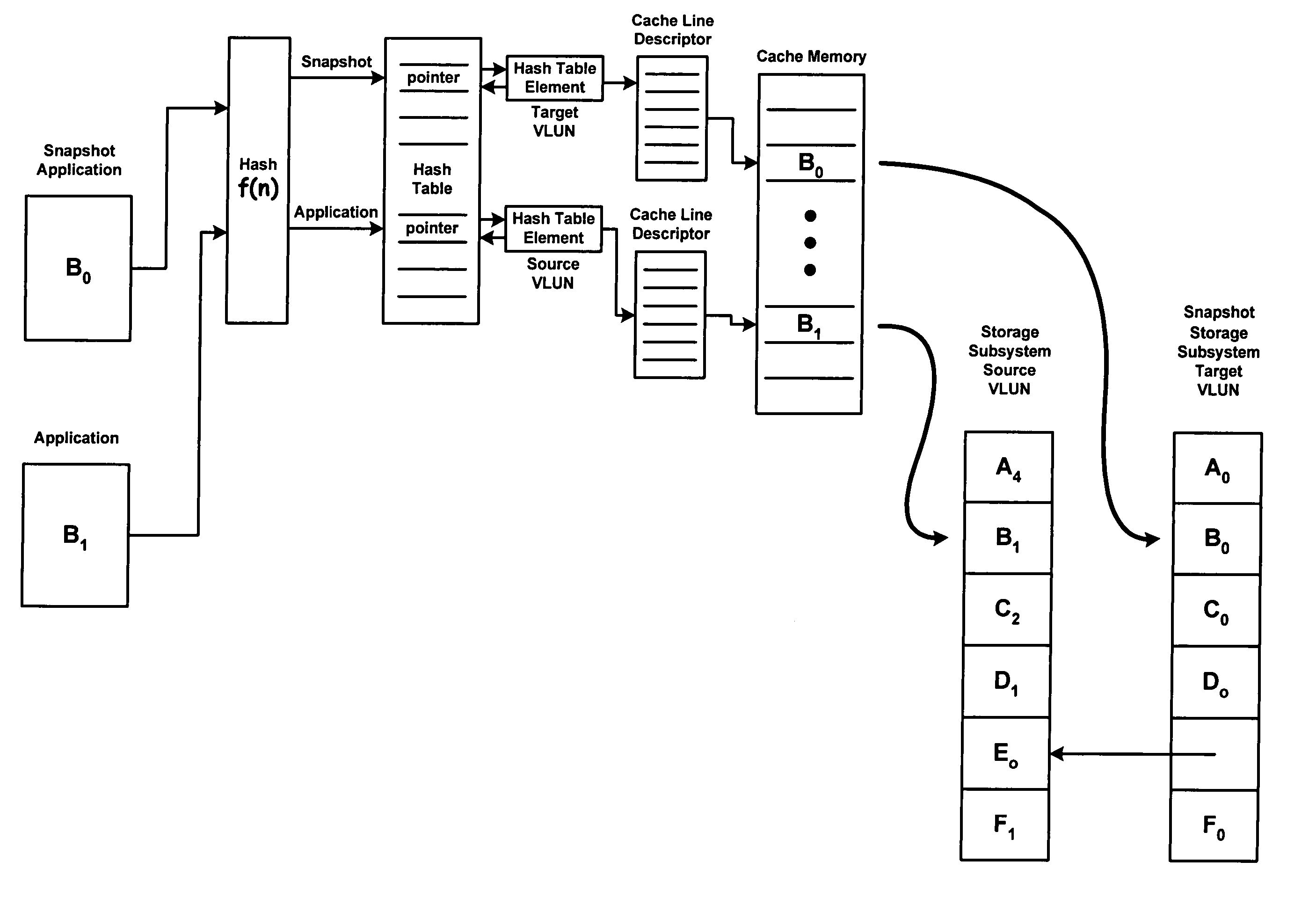

Methods and systems of cache memory management and snapshot operations

Inactive | US20060265568A1 | Memory architecture accessing/allocation, Memory loss protection, Parallel computing, Hash table

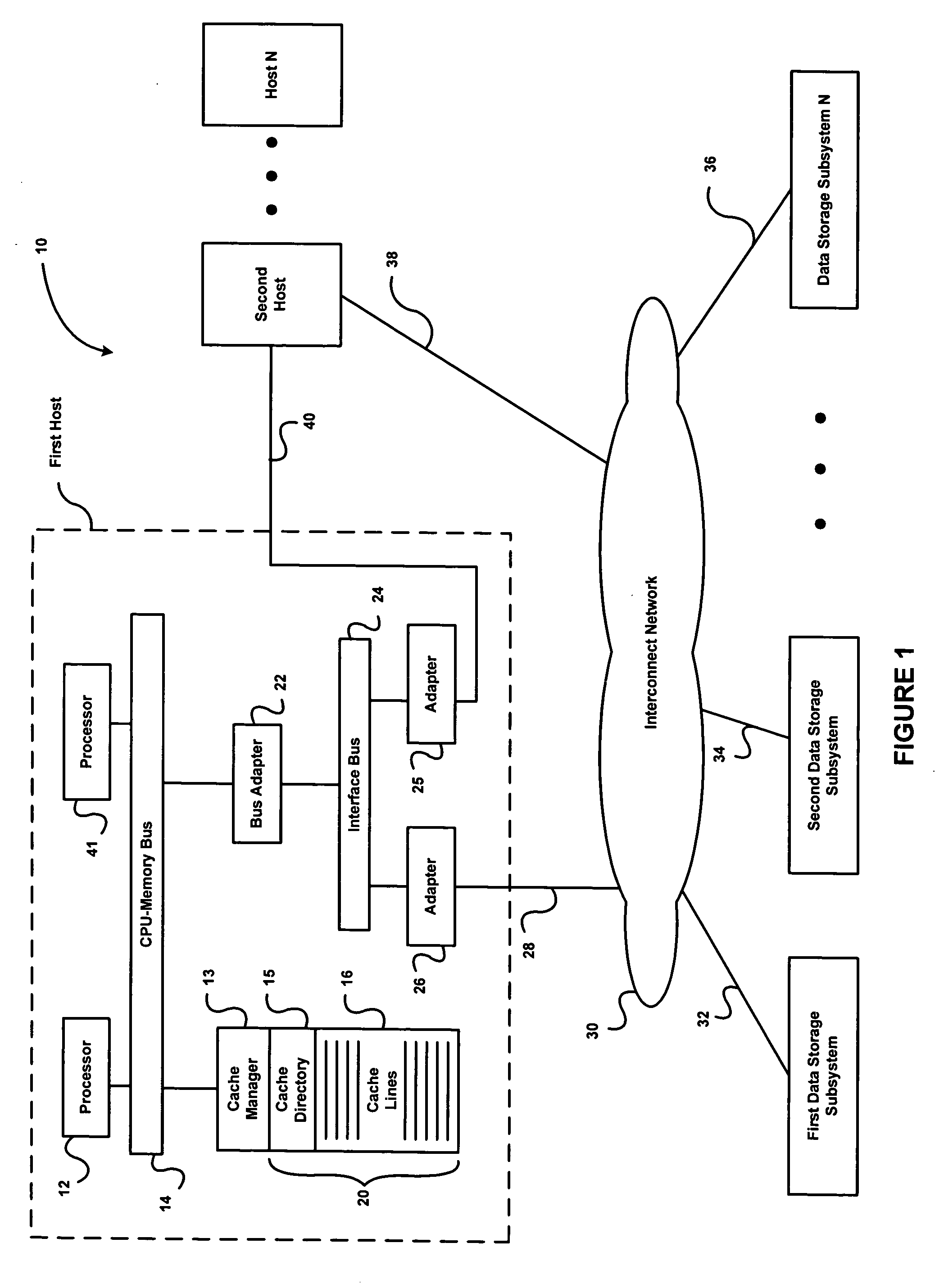

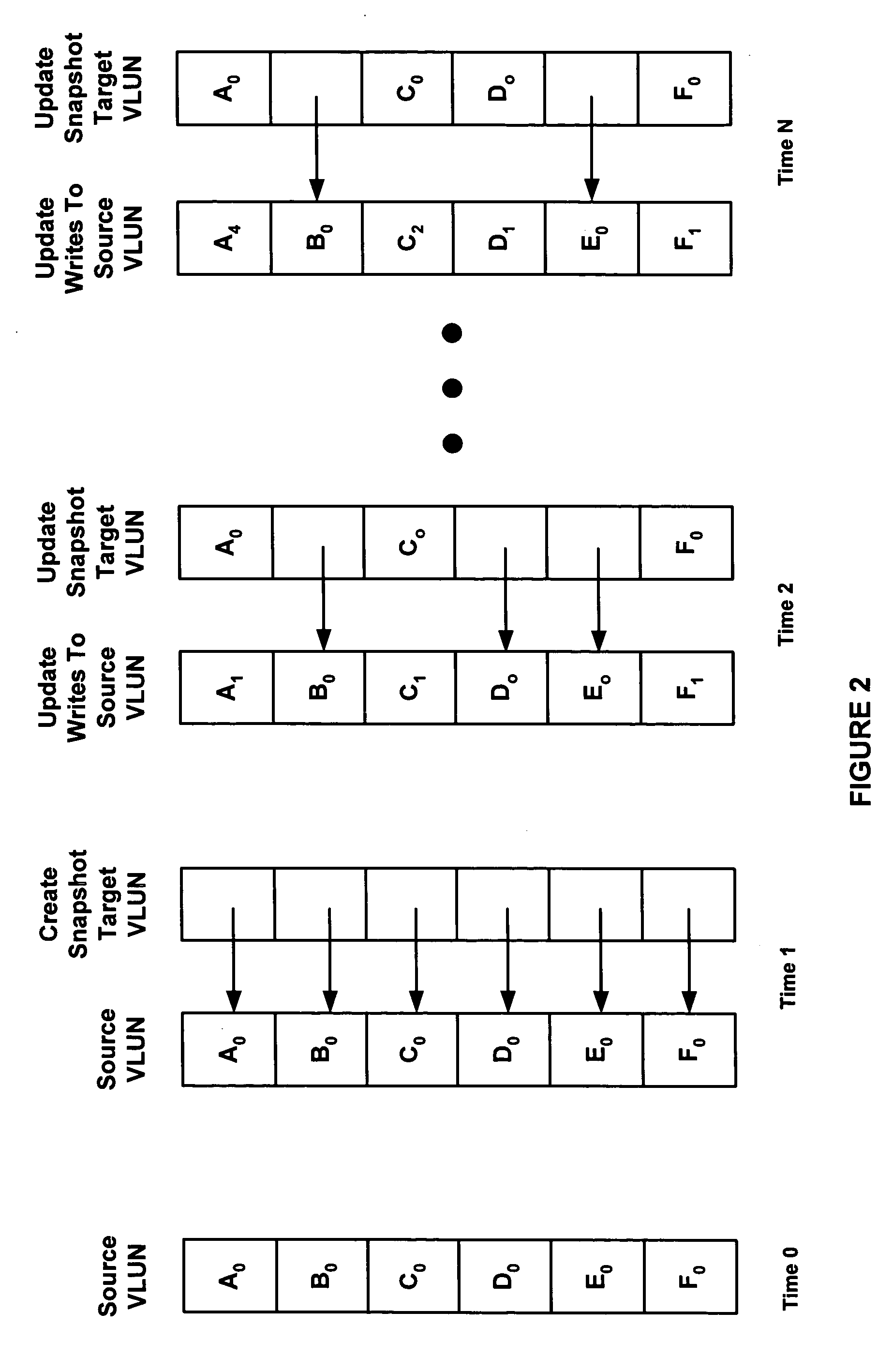

The present invention relates to a cache memory management system suitable for use with snapshot applications. The system includes a cache directory, a cache manager, and a cache memory. The cache directory includes a hash table, hash table elements, cache line descriptors, and cache line functional pointers. The cache manager runs a hashing function that converts a request for data from an application into an index to a first hash table pointer in the hash table. That pointer in turn points to a first hash table element in a linked list of hash table elements, one of which points to a first cache line descriptor in the cache directory. The cache memory includes a plurality of cache lines, and the first cache line descriptor has a one-to-one association with a first cache line. The present invention also provides a method that converts a request for data into an input to a hashing function, addresses the hash table using a first index output from the hashing function, searches the hash table elements pointed to by the first index for the requested data, determines that the requested data is not in cache memory, and allocates a first hash table element and a first cache line descriptor associated with a first cache line in the cache memory.

Owner:ORACLE INT CORP
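The cache directory in the abstract above is a chained hash table: a bucket array whose pointers lead to linked lists of elements, each element pointing to a cache line descriptor that owns exactly one cache line. The following Python sketch models only the lookup and miss-allocation path. The class and method names, the integer request keys, and the `load_from_disk` backing store are hypothetical; snapshot handling and eviction are not modeled.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class CacheLineDescriptor:
    key: int   # identifies the cached data (hypothetical integer request key)
    line: int  # index of the single cache line this descriptor owns


@dataclass
class HashTableElement:
    descriptor: CacheLineDescriptor
    next: Optional["HashTableElement"] = None  # next element in the bucket's chain


class CacheDirectory:
    def __init__(self, num_buckets: int, num_lines: int):
        self.buckets: List[Optional[HashTableElement]] = [None] * num_buckets
        self.free_lines = list(range(num_lines))
        self.lines: List[Optional[bytes]] = [None] * num_lines  # the cache memory

    def _index(self, key: int) -> int:
        # Hashing function: request -> index of a hash table pointer (bucket).
        return hash(key) % len(self.buckets)

    def lookup(self, key: int) -> Optional[CacheLineDescriptor]:
        elem = self.buckets[self._index(key)]
        while elem is not None:  # search the linked list of elements
            if elem.descriptor.key == key:
                return elem.descriptor
            elem = elem.next
        return None  # cache miss

    def allocate(self, key: int, data: bytes) -> CacheLineDescriptor:
        # On a miss: allocate an element and a descriptor bound to a free line.
        if not self.free_lines:
            raise RuntimeError("no free cache lines (eviction not modeled)")
        desc = CacheLineDescriptor(key, self.free_lines.pop())
        idx = self._index(key)
        self.buckets[idx] = HashTableElement(desc, self.buckets[idx])  # push at head
        self.lines[desc.line] = data
        return desc

    def read(self, key: int, load: Callable[[int], bytes]) -> bytes:
        desc = self.lookup(key)
        if desc is None:
            desc = self.allocate(key, load(key))
        return self.lines[desc.line]


backing_reads = []


def load_from_disk(key: int) -> bytes:  # hypothetical backing store
    backing_reads.append(key)
    return bytes([key])


cache = CacheDirectory(num_buckets=4, num_lines=2)
cache.read(5, load_from_disk)  # miss: loads and allocates
cache.read(5, load_from_disk)  # hit: served from the cache line
cache.read(9, load_from_disk)  # 9 and 5 share bucket 1, so the chain grows
print(backing_reads)  # prints: [5, 9]
```

New elements are pushed at the head of their bucket's list, so insertion is constant time; a lookup costs time proportional to the chain length, which stays short when the bucket count is sized relative to the number of cache lines.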

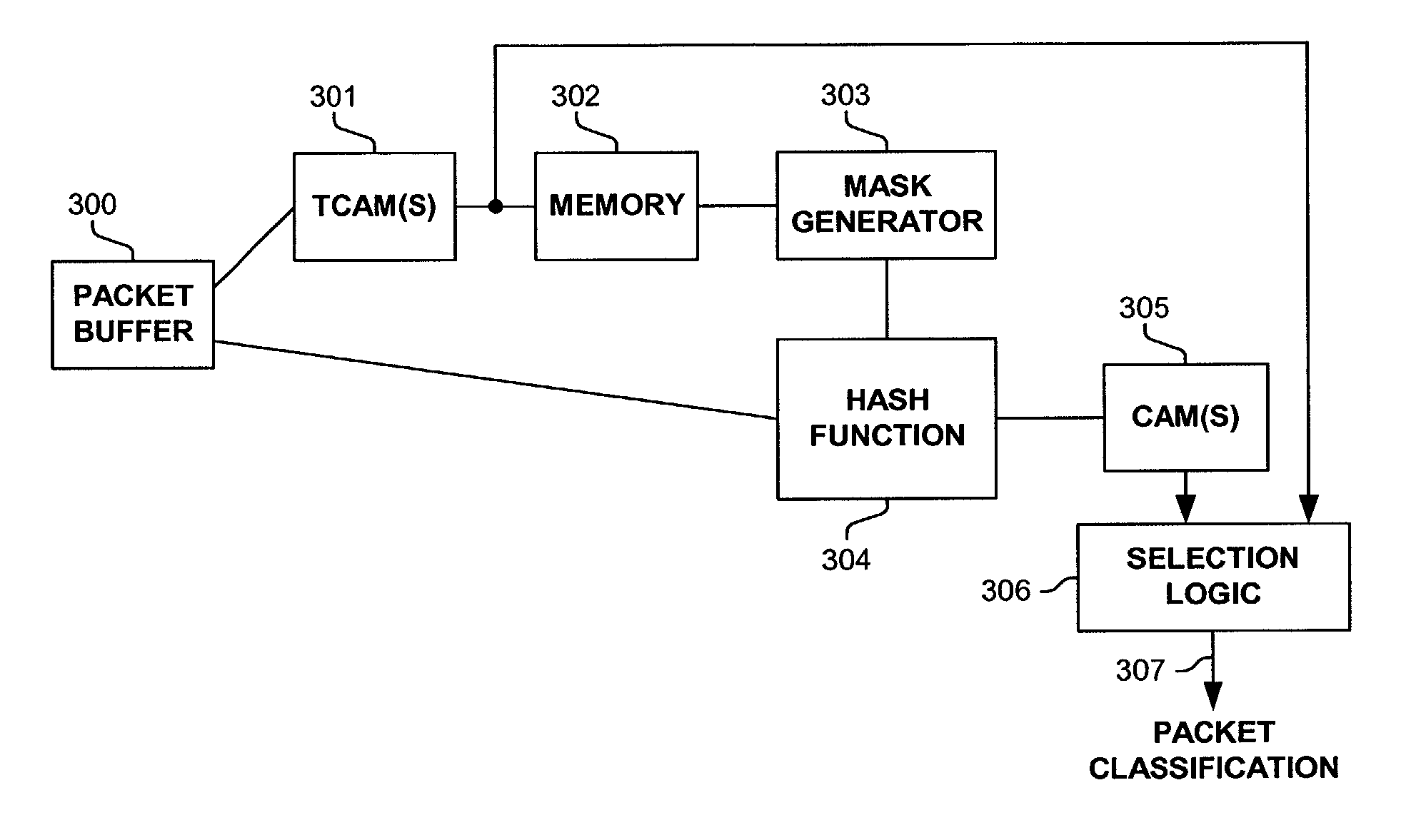

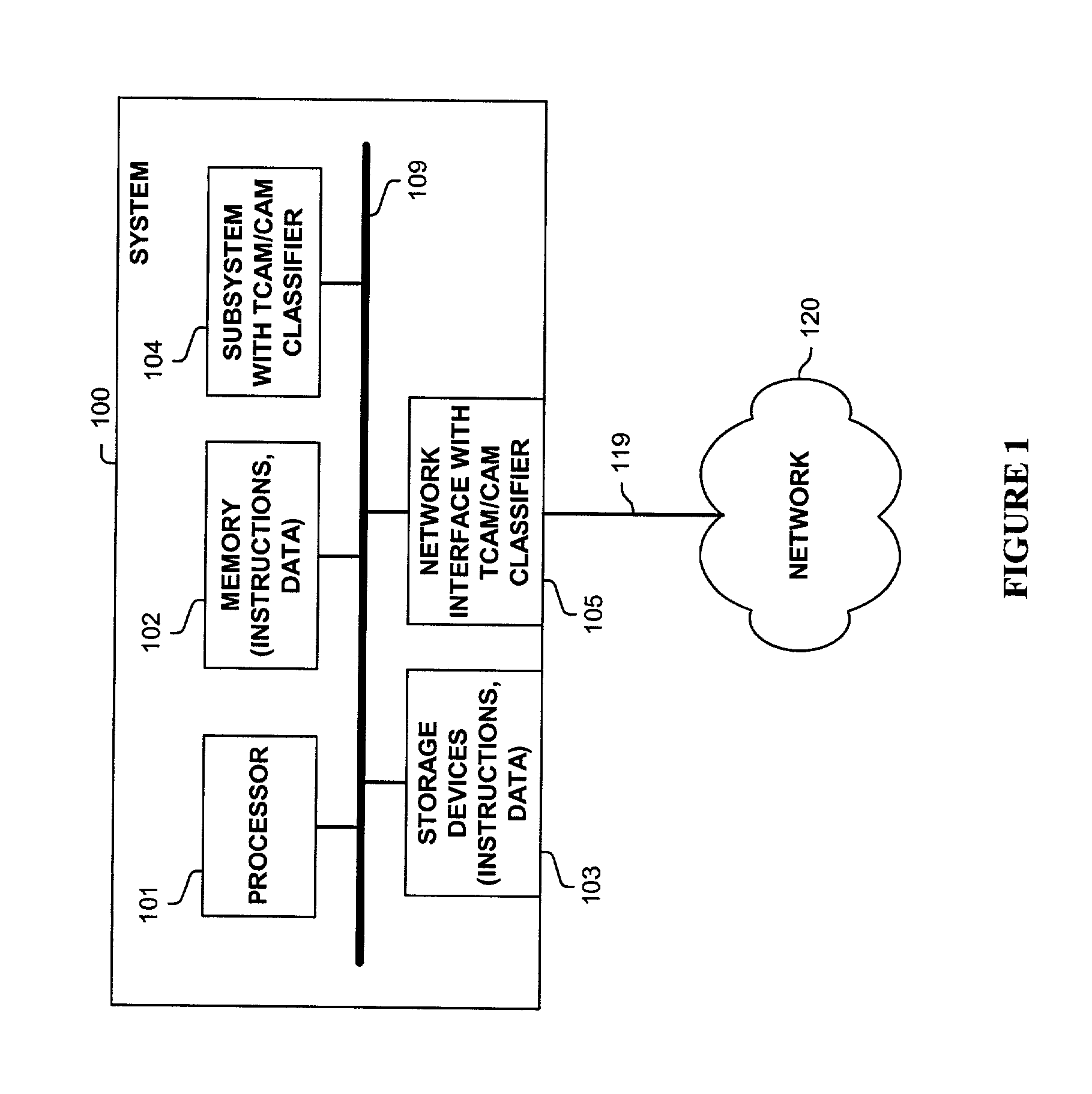

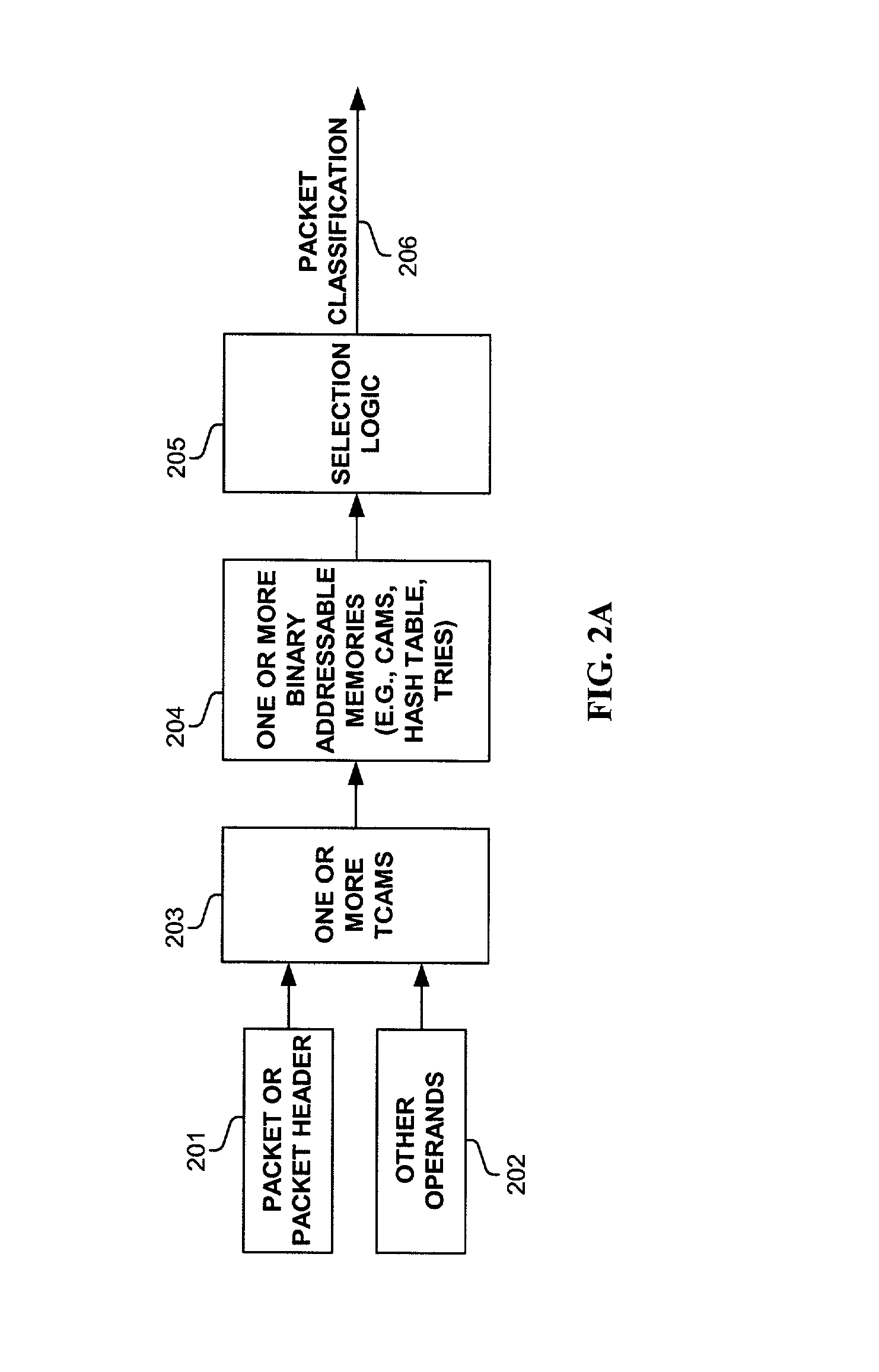

Method and apparatus for using ternary and binary content-addressable memory stages to classify packets

Inactive | US7002965B1 | Data processing applications, Digital computer details, Quality of service, Virtual LAN

Methods and apparatus are disclosed herein for classifying packets using ternary and binary content-addressable memory stages. One such system uses a stage of one or more TCAMs followed by a second stage of one or more CAMs (or, alternatively, other binary associative memories such as hash tables or tries) to classify a packet. One exemplary system includes TCAMs for handling input and output classification and a forwarding CAM that classifies packets for Internet Protocol (IP) forwarding decisions based on a flow label. This input and output classification may include, but is not limited to, routing, access control lists (ACLs), quality of service (QoS), network address translation (NAT), and encryption. The IP forwarding decisions may be based on fields including, but not limited to, IP source and destination addresses, protocol type, flags, layer 4 source and destination ports, a virtual local area network (VLAN) ID, and/or other fields.

Owner:CISCO TECH INC
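The two-stage idea in the packet-classification abstract above can be modeled in software. In this Python sketch, a first stage of ternary (value, mask) entries is scanned in priority order, emulating what a TCAM does in parallel, and produces a label; a second stage then performs an exact-match lookup, with a Python dict standing in for a binary CAM or hash table. The 88-bit key layout, the helper names, the labels, and the `"drop"`/`"default-route"` results are assumptions for illustration.

```python
import ipaddress

# Hypothetical 88-bit search key: src IP (32) | dst IP (32) | protocol (8) | dst port (16)
SRC_SHIFT, DST_SHIFT, PROTO_SHIFT = 56, 24, 16


def pack_key(src: str, dst: str, proto: int, dport: int) -> int:
    return ((int(ipaddress.IPv4Address(src)) << SRC_SHIFT)
            | (int(ipaddress.IPv4Address(dst)) << DST_SHIFT)
            | (proto << PROTO_SHIFT)
            | dport)


def ternary_entry(label, src_net=None, dst_net=None, proto=None, dport=None):
    """Build a (value, mask, label) TCAM-style entry; None means "don't care"."""
    value = mask = 0
    for net, shift in ((src_net, SRC_SHIFT), (dst_net, DST_SHIFT)):
        if net is not None:
            n = ipaddress.IPv4Network(net)
            value |= int(n.network_address) << shift
            mask |= int(n.netmask) << shift
    if proto is not None:
        value |= proto << PROTO_SHIFT
        mask |= 0xFF << PROTO_SHIFT
    if dport is not None:
        value |= dport
        mask |= 0xFFFF
    return value, mask, label


class TwoStageClassifier:
    def __init__(self, tcam, forwarding):
        self.tcam = tcam              # stage 1: ternary entries in priority order
        self.forwarding = forwarding  # stage 2: exact match, (label, dst) -> action

    def classify(self, src, dst, proto, dport):
        key = pack_key(src, dst, proto, dport)
        # A TCAM compares all entries in parallel and reports the first match;
        # software emulates that with an ordered scan.
        label = next((lbl for v, m, lbl in self.tcam if (key & m) == (v & m)), None)
        if label is None:
            return "drop"
        return self.forwarding.get((label, dst), "default-route")


tcam = [
    ternary_entry(1, src_net="10.0.0.0/8", dport=22),  # e.g. an ACL rule
    ternary_entry(2, proto=6),                          # e.g. a TCP QoS class
    ternary_entry(3),                                   # catch-all
]
forwarding = {(1, "192.0.2.1"): "port-7", (2, "192.0.2.1"): "port-3"}
clf = TwoStageClassifier(tcam, forwarding)
print(clf.classify("10.1.2.3", "192.0.2.1", 6, 22))  # prints: port-7
```

Keeping wildcard-heavy rules in the ternary stage and the second stage exact-match mirrors the abstract's division of labor: ternary matching handles prefixes and don't-care fields, while exact matching can use cheaper binary memory or hashing.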


© 2025 PatSnap. All rights reserved.