1498 results about "Data deduplication" patented technology

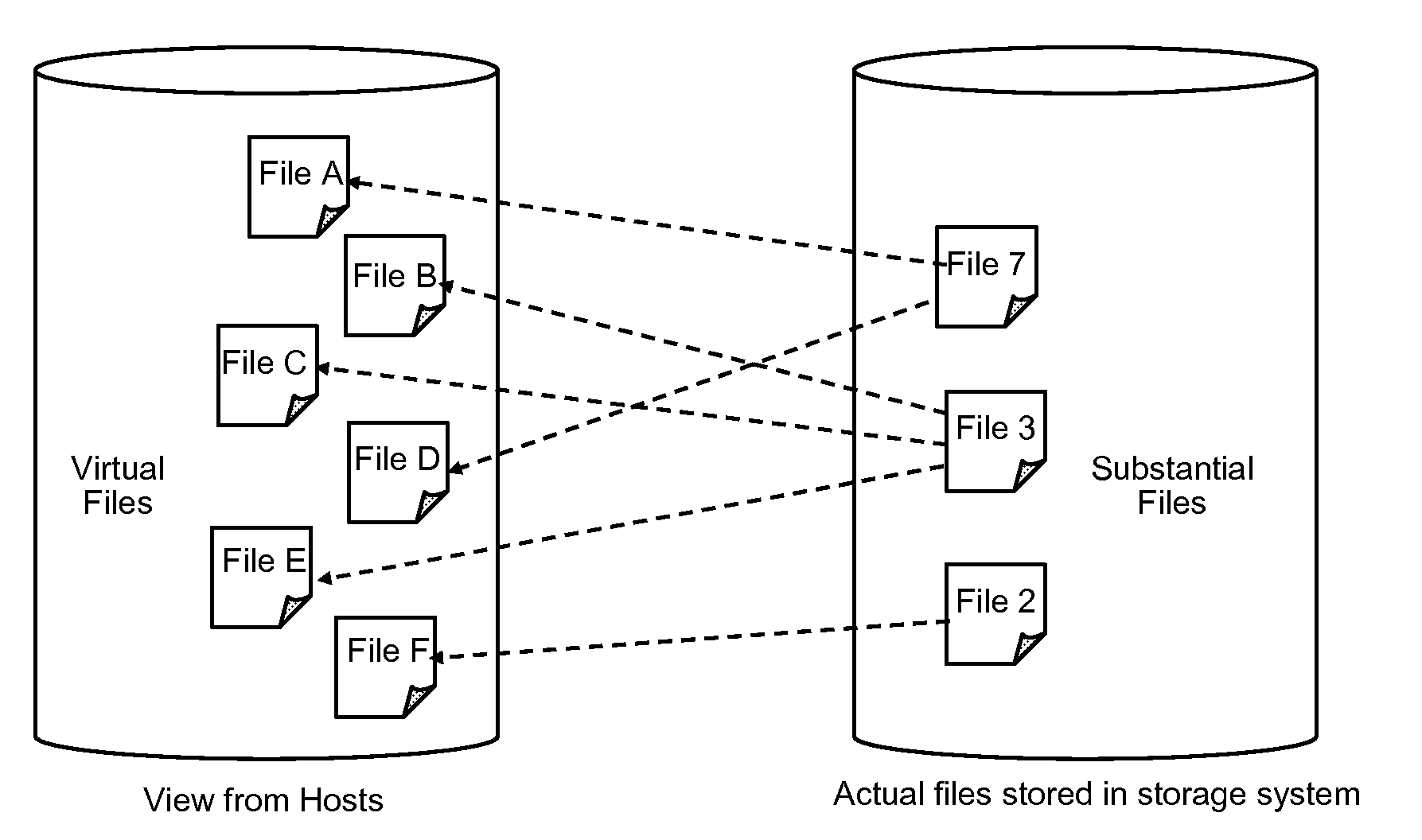

In computing, data deduplication is a technique for eliminating duplicate copies of repeating data. A related and somewhat synonymous term is single-instance (data) storage. This technique is used to improve storage utilization and can also be applied to network data transfers to reduce the number of bytes that must be sent. In the deduplication process, unique chunks of data, or byte patterns, are identified and stored during a process of analysis. As the analysis continues, other chunks are compared to the stored copy and whenever a match occurs, the redundant chunk is replaced with a small reference that points to the stored chunk. Given that the same byte pattern may occur dozens, hundreds, or even thousands of times (the match frequency is dependent on the chunk size), the amount of data that must be stored or transferred can be greatly reduced.
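The process described above can be sketched in a few lines. This is a minimal illustration, not a production design: it assumes fixed-size chunks, SHA-256 as the chunk identifier, and an in-memory dictionary as the chunk store. The names `dedup_store`, `restore` and `CHUNK_SIZE` are hypothetical.

```python
import hashlib

CHUNK_SIZE = 4  # hypothetical value, kept tiny so the example is easy to follow


def dedup_store(data: bytes, chunk_size: int = CHUNK_SIZE):
    """Split data into fixed-size chunks and store each unique chunk once.

    Returns (store, refs): store maps digest -> chunk bytes, and refs is
    the ordered list of digests needed to rebuild the original data.
    """
    store = {}
    refs = []
    for i in range(0, len(data), chunk_size):
        chunk = data[i:i + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        # Keep only the first copy; later matches become references.
        store.setdefault(digest, chunk)
        refs.append(digest)
    return store, refs


def restore(store, refs) -> bytes:
    """Rebuild the original byte string by following the references."""
    return b"".join(store[d] for d in refs)


data = b"ABCDABCDABCDWXYZ"
store, refs = dedup_store(data)
```

Here the 16-byte input yields four references but only two stored chunks, and `restore(store, refs)` returns the original bytes. Smaller chunks tend to match more often, at the cost of more references to track.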

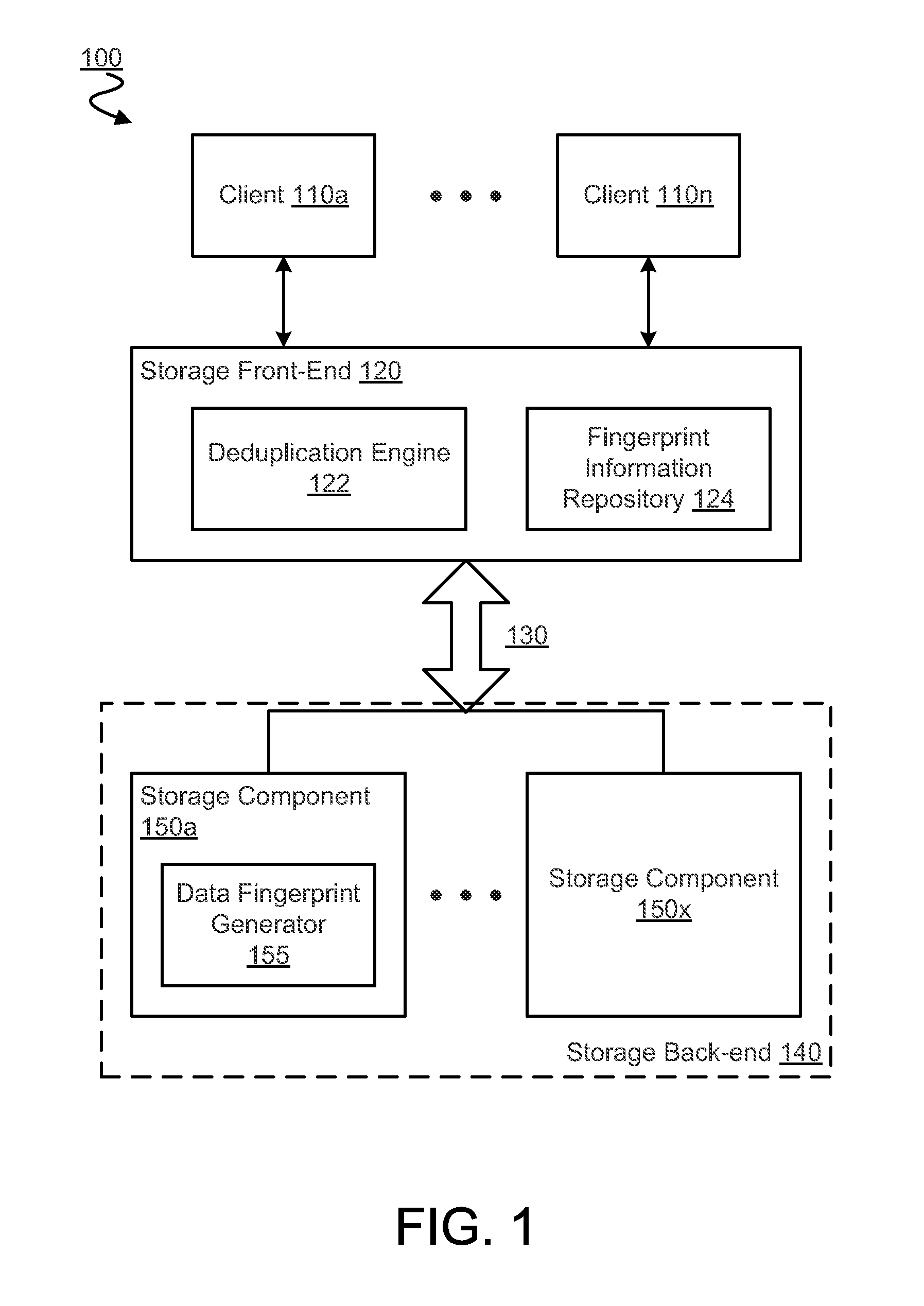

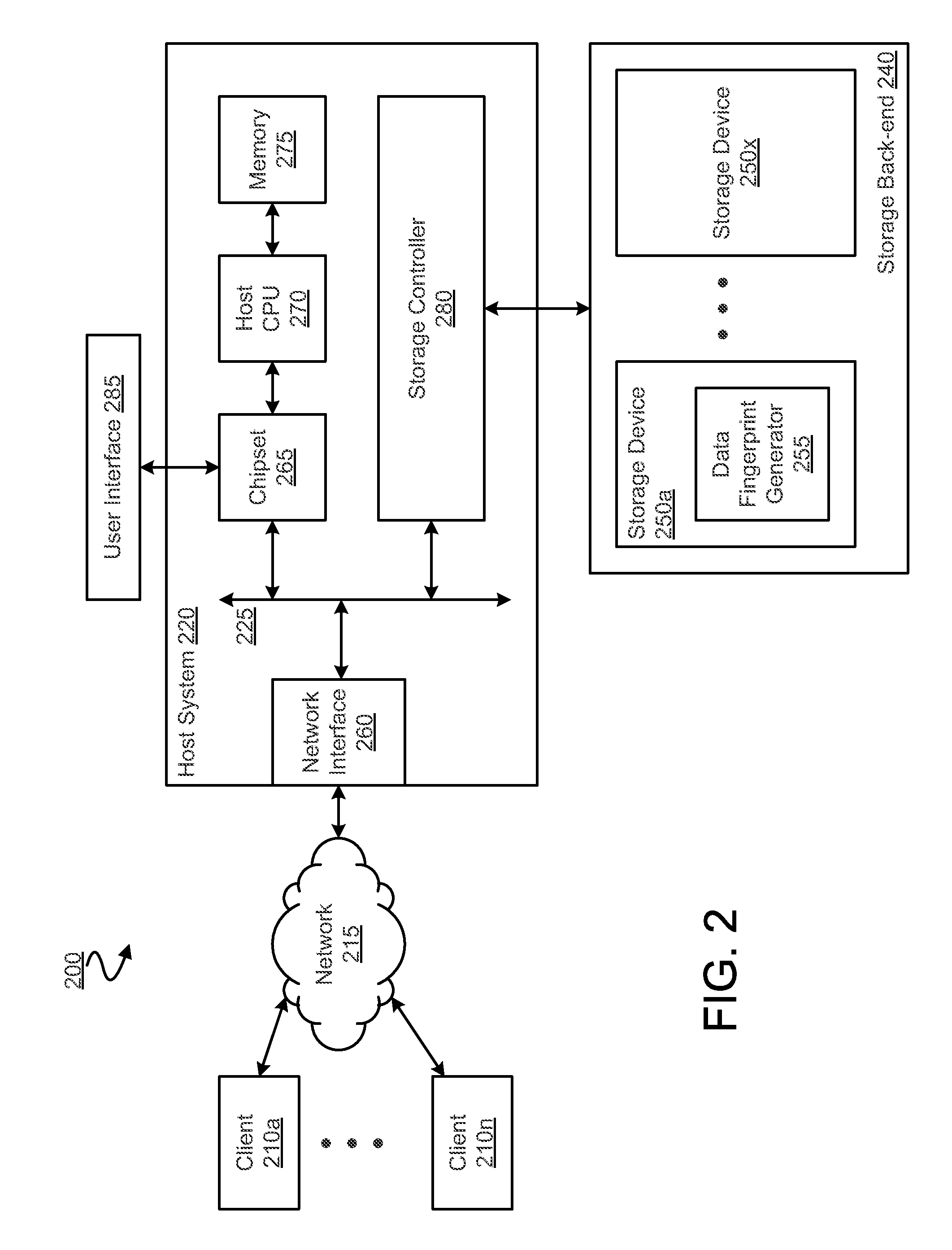

System and method for retrieving and using block fingerprints for data deduplication

Active | US20080005141A1 | Efficient identification | Improve performance | Digital data information retrieval | Digital data processing details | Data deduplication | Fingerprint database

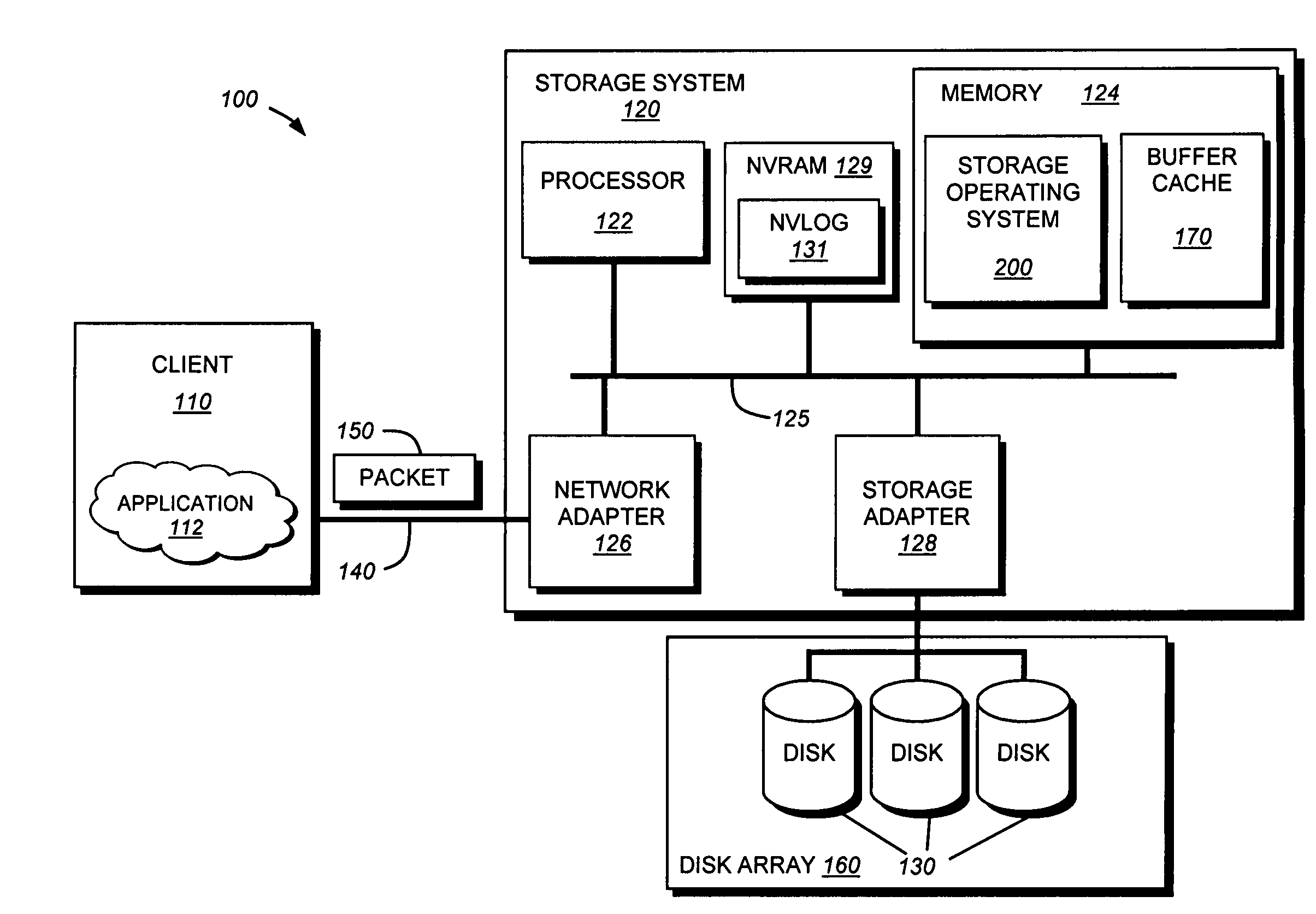

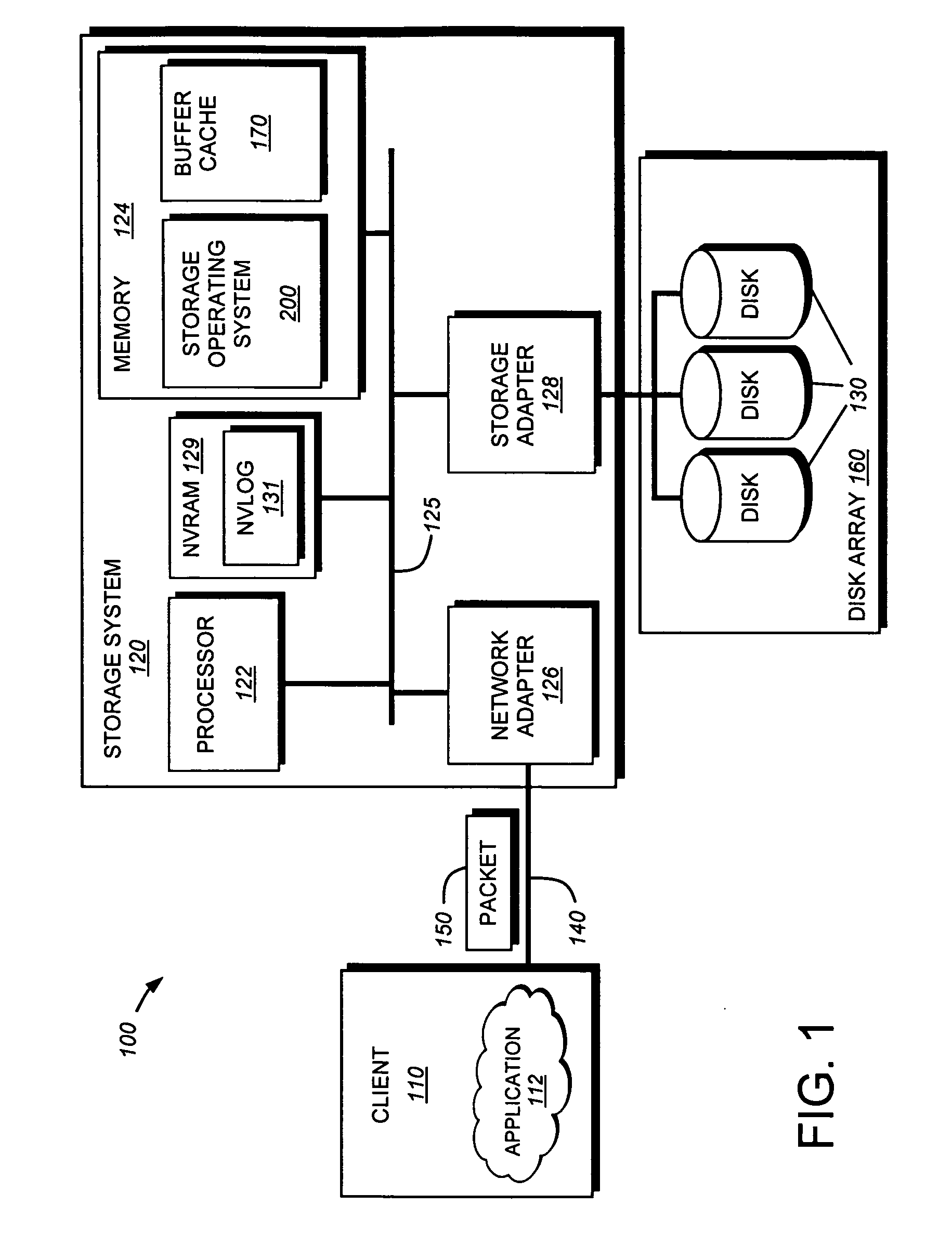

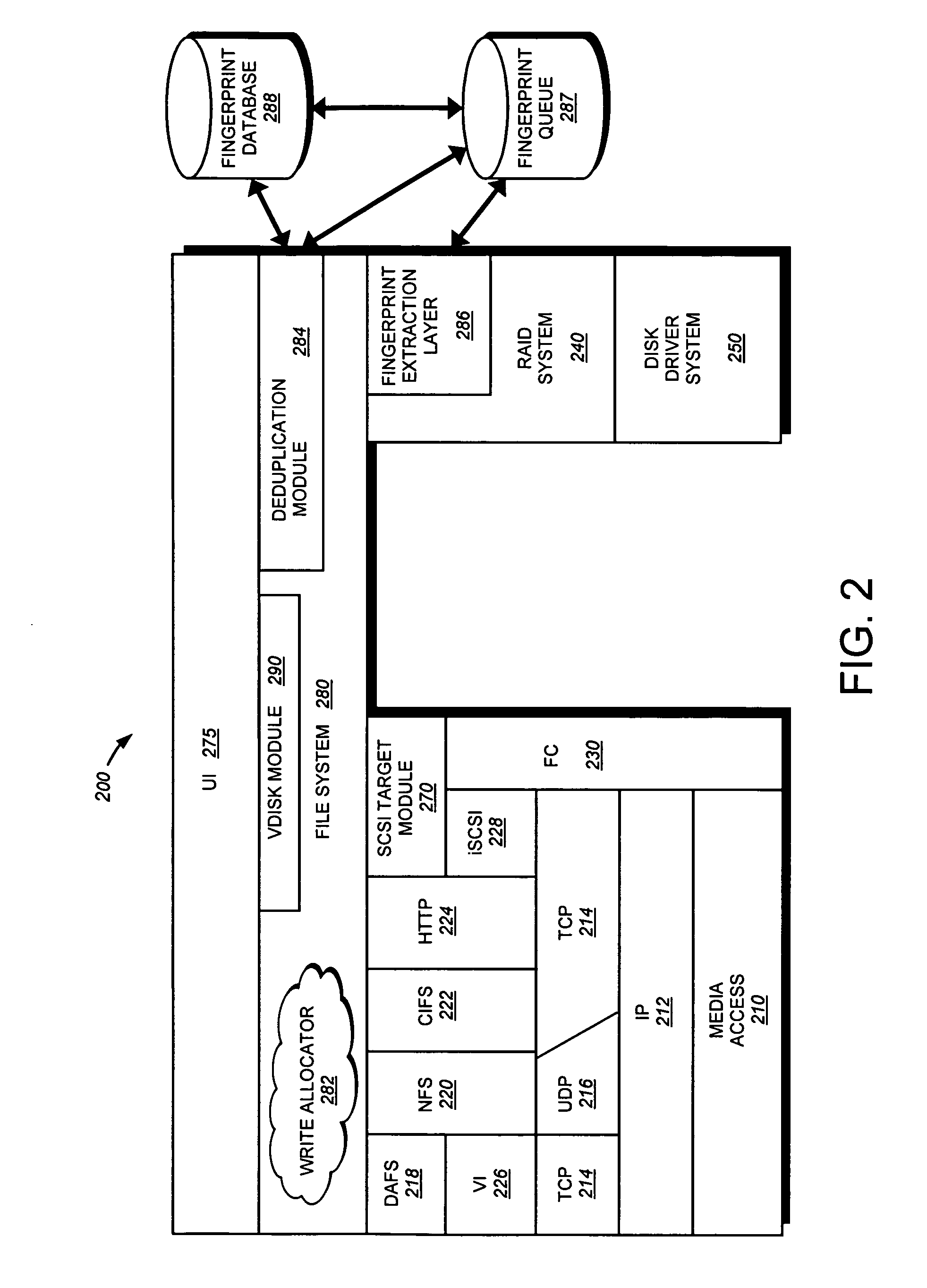

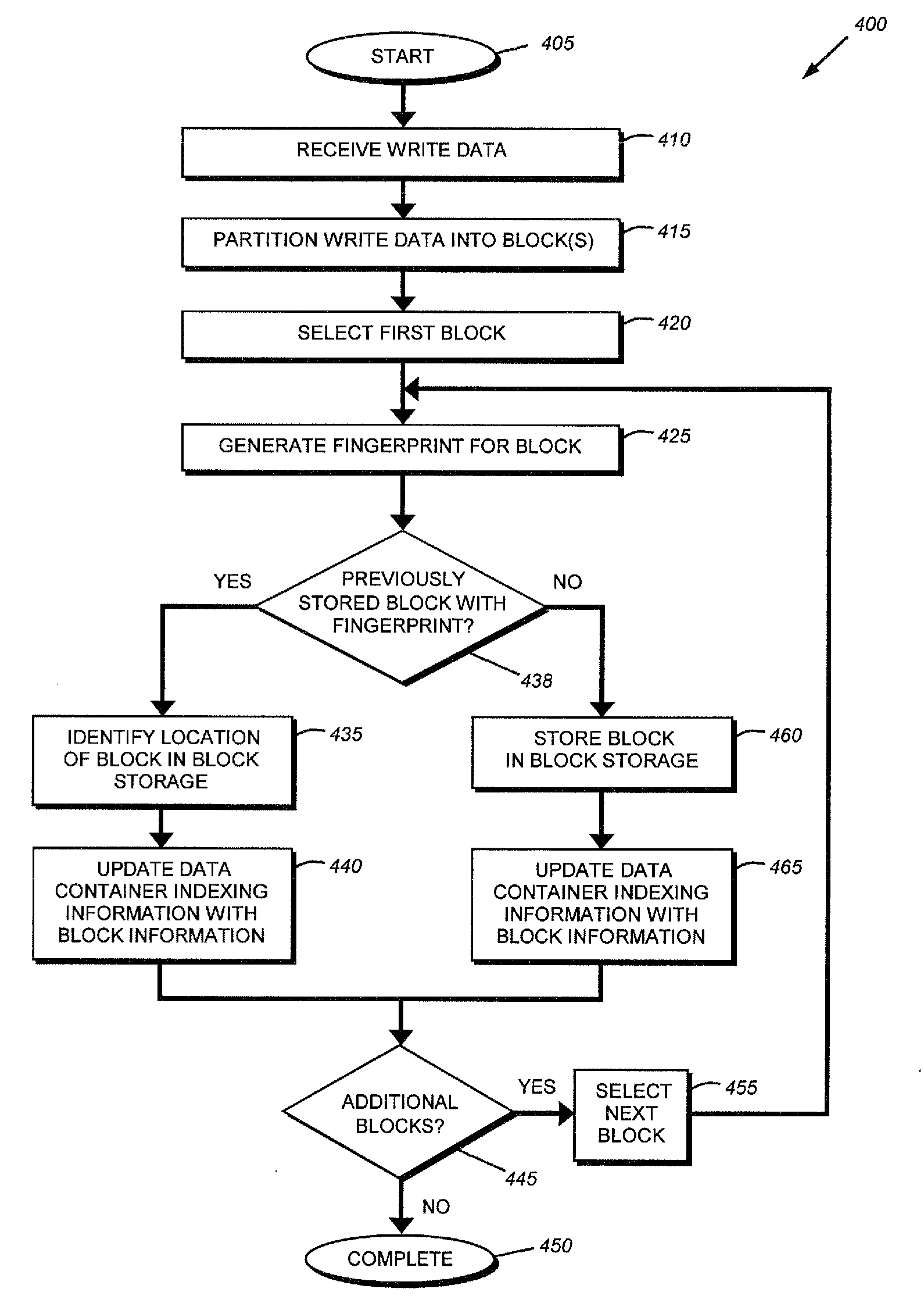

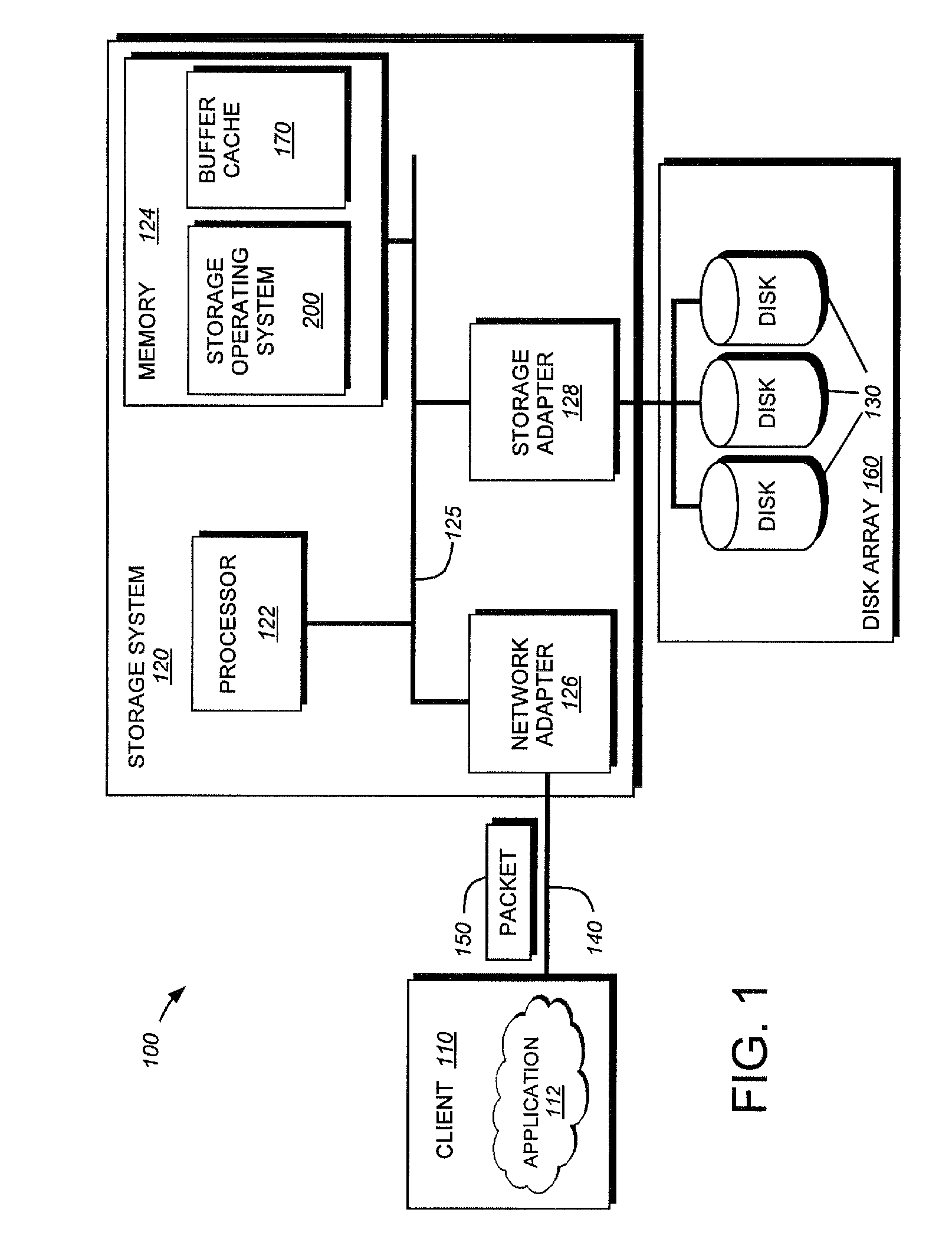

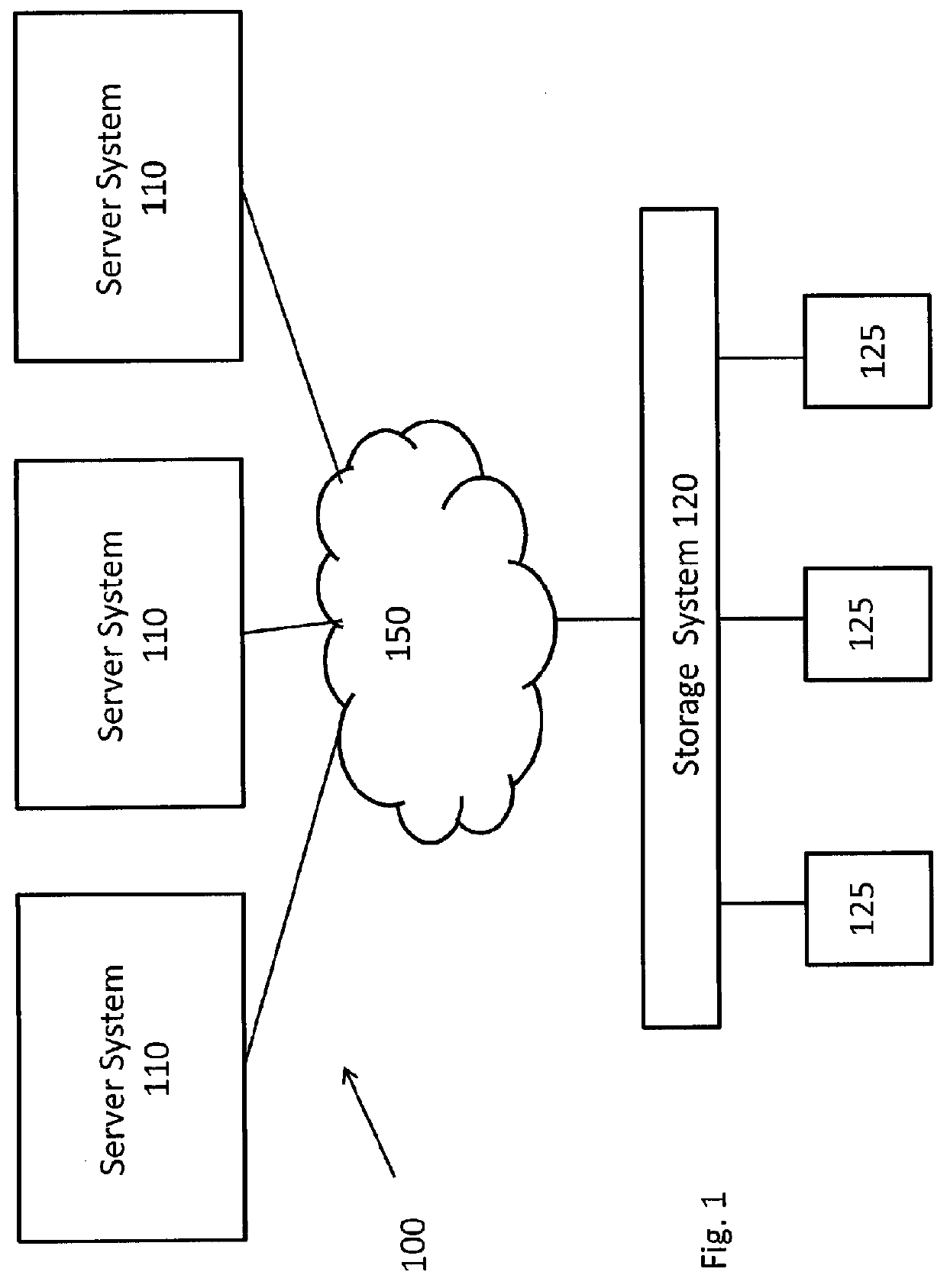

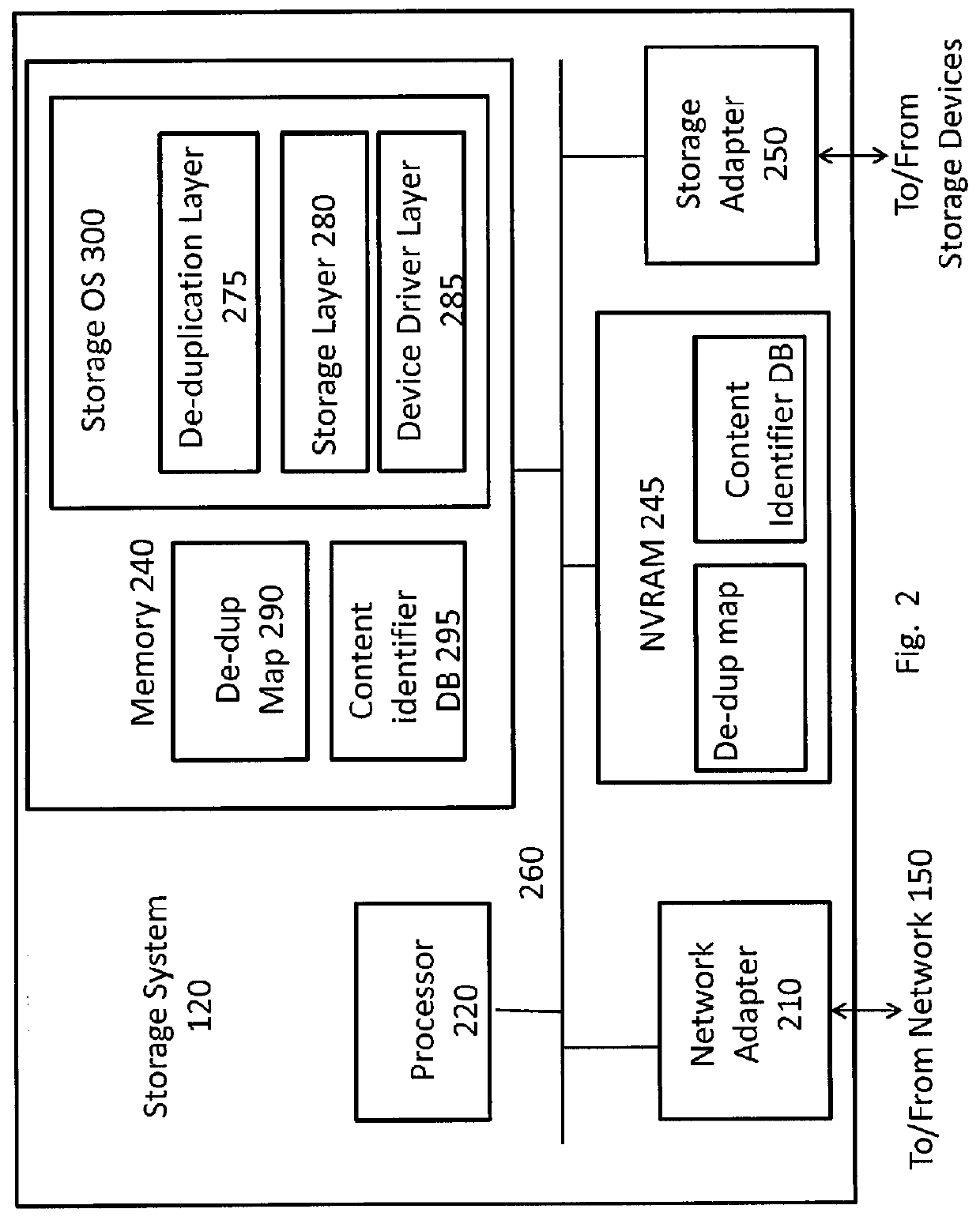

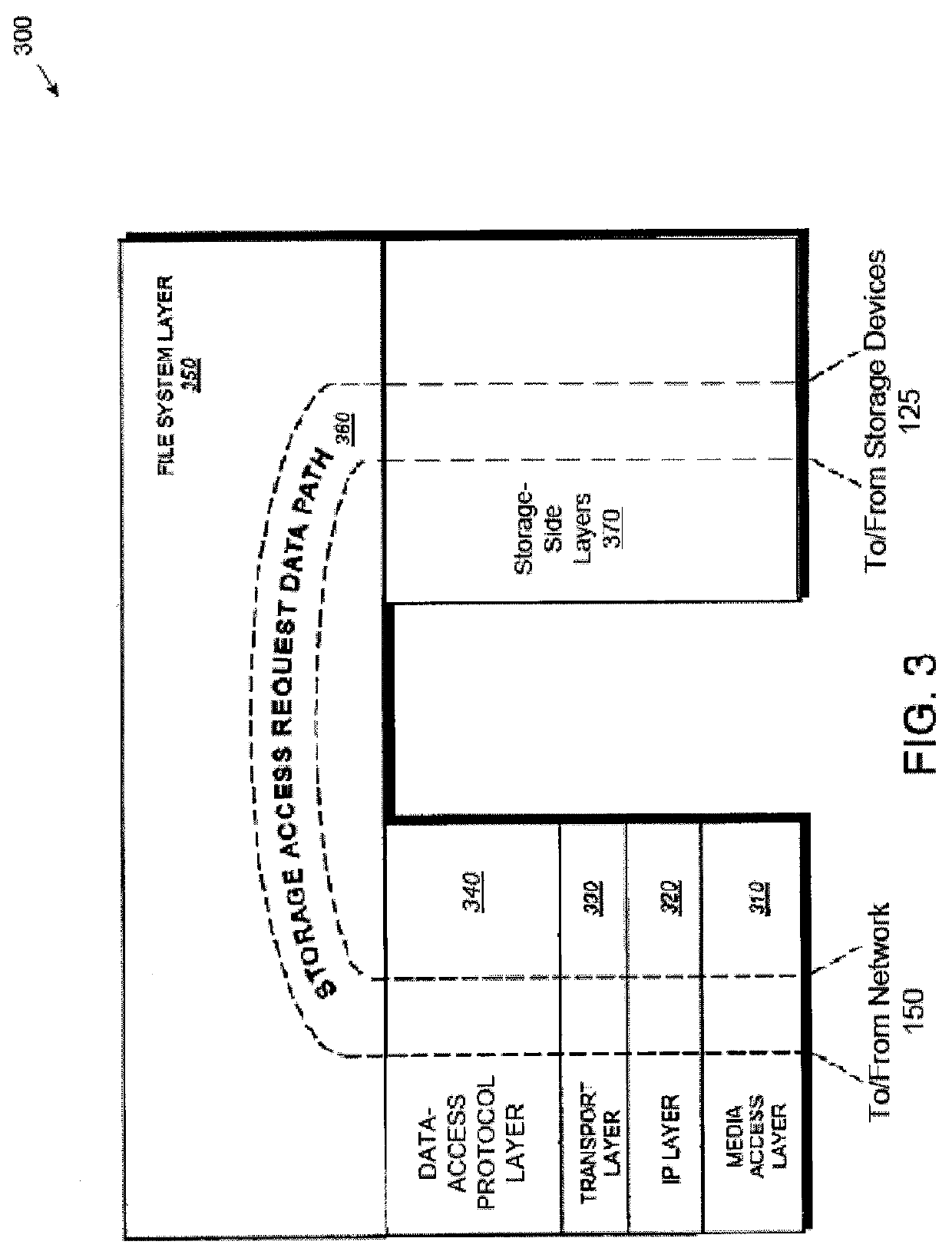

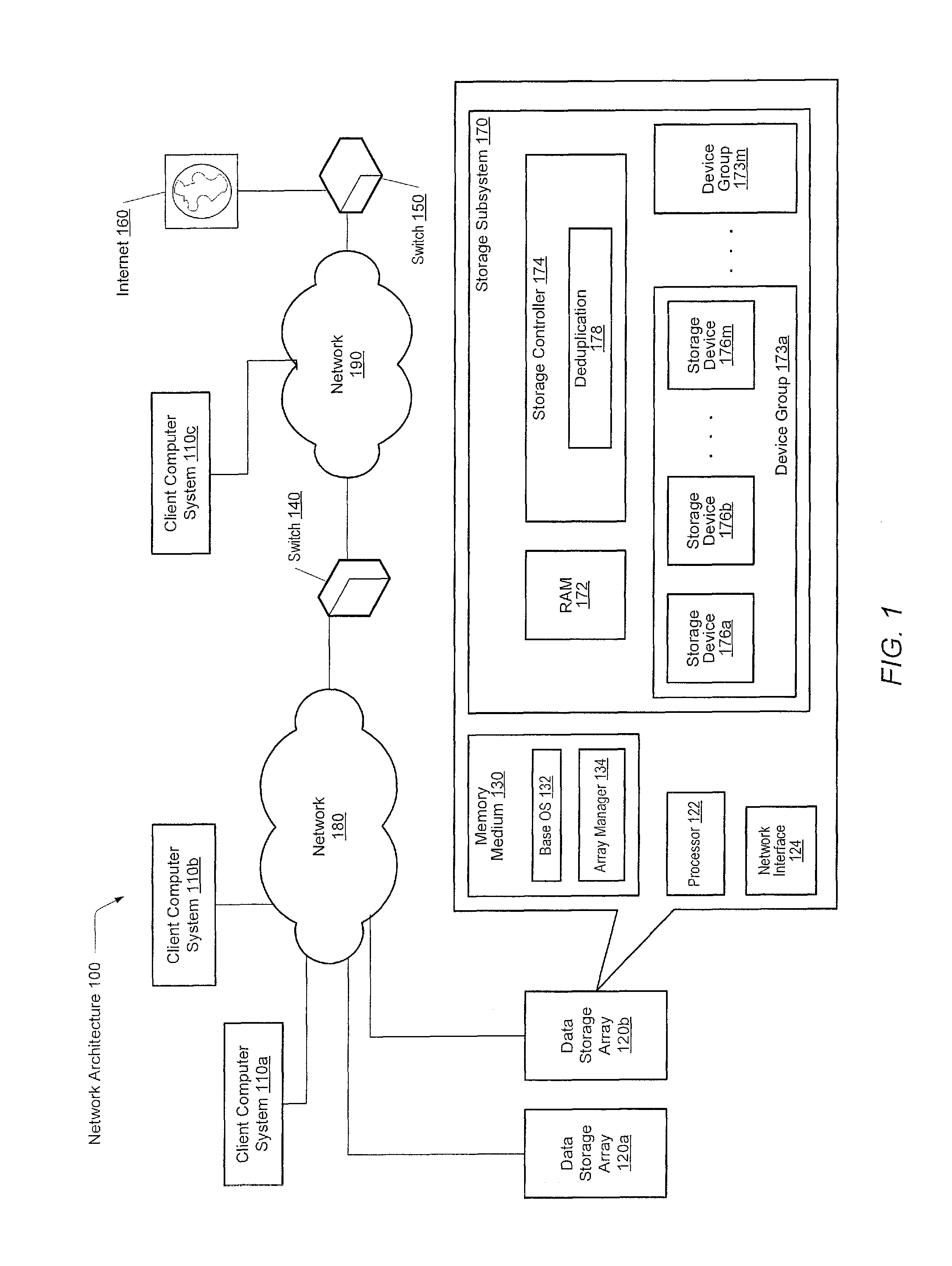

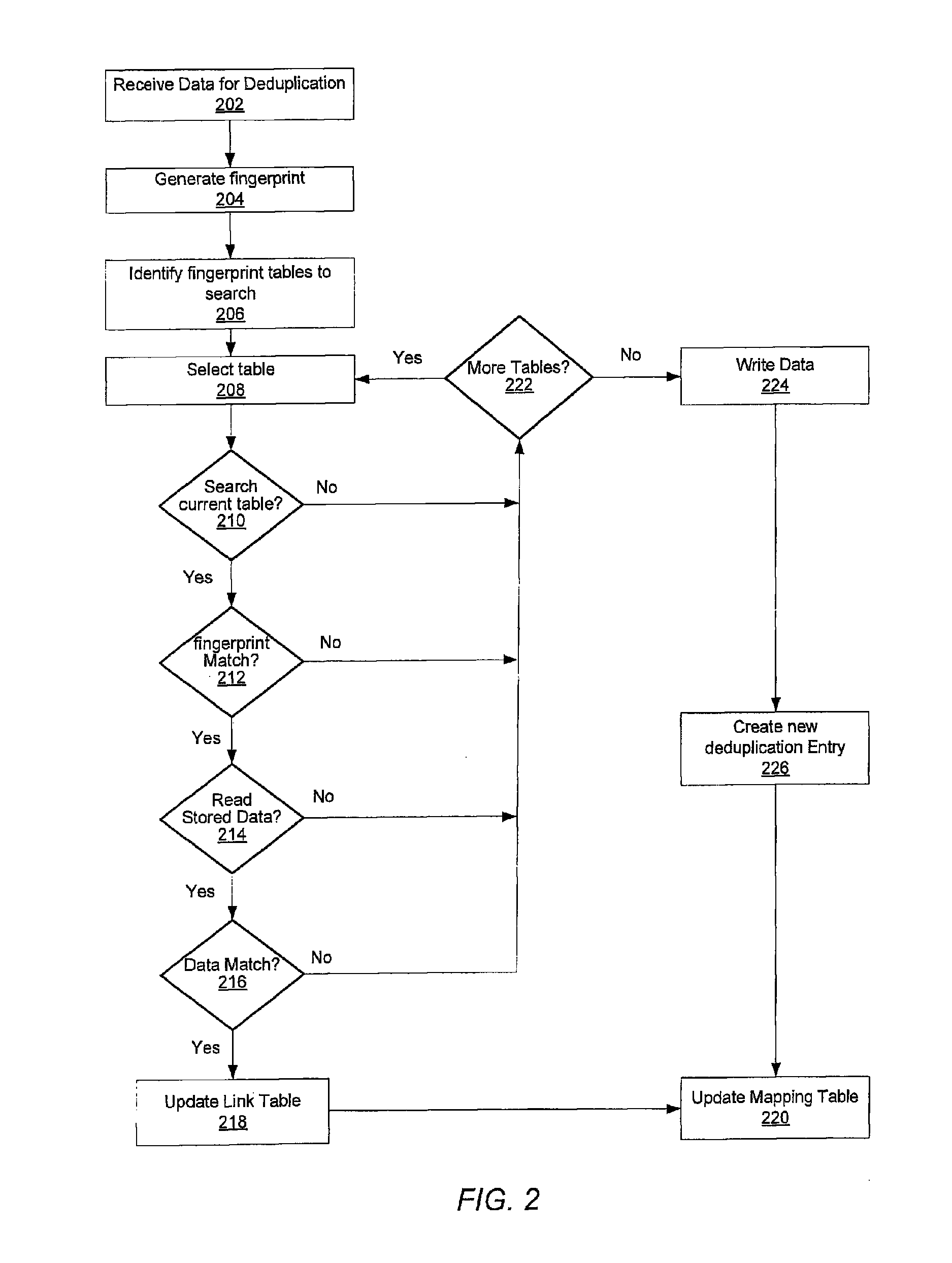

A system and method for calculating and storing block fingerprints for data deduplication. A fingerprint extraction layer generates a fingerprint of a predefined size, e.g., 64 bits, for each data block stored by a storage system. Each fingerprint is stored in a fingerprint record, and the fingerprint records are, in turn, stored in a fingerprint database for access by the data deduplication module. The data deduplication module may periodically compare the fingerprints to identify duplicate fingerprints, which, in turn, indicate duplicate data blocks.
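As a rough sketch of the fingerprint-record idea, the following assumes a 64-bit fingerprint taken from the first 8 bytes of SHA-256 and a plain list as the fingerprint database; the abstract does not say how the fingerprint is computed, so both are assumptions. The byte-for-byte check before reporting a duplicate is also an added assumption, since distinct blocks could share a 64-bit fingerprint.

```python
import hashlib
from collections import defaultdict


def fingerprint64(block: bytes) -> int:
    """Return a 64-bit fingerprint (assumed here: first 8 bytes of SHA-256)."""
    return int.from_bytes(hashlib.sha256(block).digest()[:8], "big")


def build_fingerprint_db(blocks):
    """Create one fingerprint record (fingerprint, block number) per block."""
    return [(fingerprint64(b), n) for n, b in enumerate(blocks)]


def find_duplicates(records, blocks):
    """Group records by fingerprint and return (kept, duplicate) block pairs.

    Blocks are compared byte for byte before being reported, because two
    different blocks could in principle share a 64-bit fingerprint.
    """
    by_fp = defaultdict(list)
    for fp, n in records:
        by_fp[fp].append(n)
    pairs = []
    for nums in by_fp.values():
        first = nums[0]
        for other in nums[1:]:
            if blocks[first] == blocks[other]:
                pairs.append((first, other))
    return pairs


blocks = [b"alpha", b"beta", b"alpha", b"gamma", b"beta"]
records = build_fingerprint_db(blocks)
duplicates = sorted(find_duplicates(records, blocks))
```

With this input, blocks 2 and 4 are reported as duplicates of blocks 0 and 1 respectively.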

Owner:NETWORK APPLIANCE INC

Capacity forecasting for a deduplicating storage system

Active | US8751463B1 | Digital data information retrieval | Digital data processing details | System information | Data deduplication

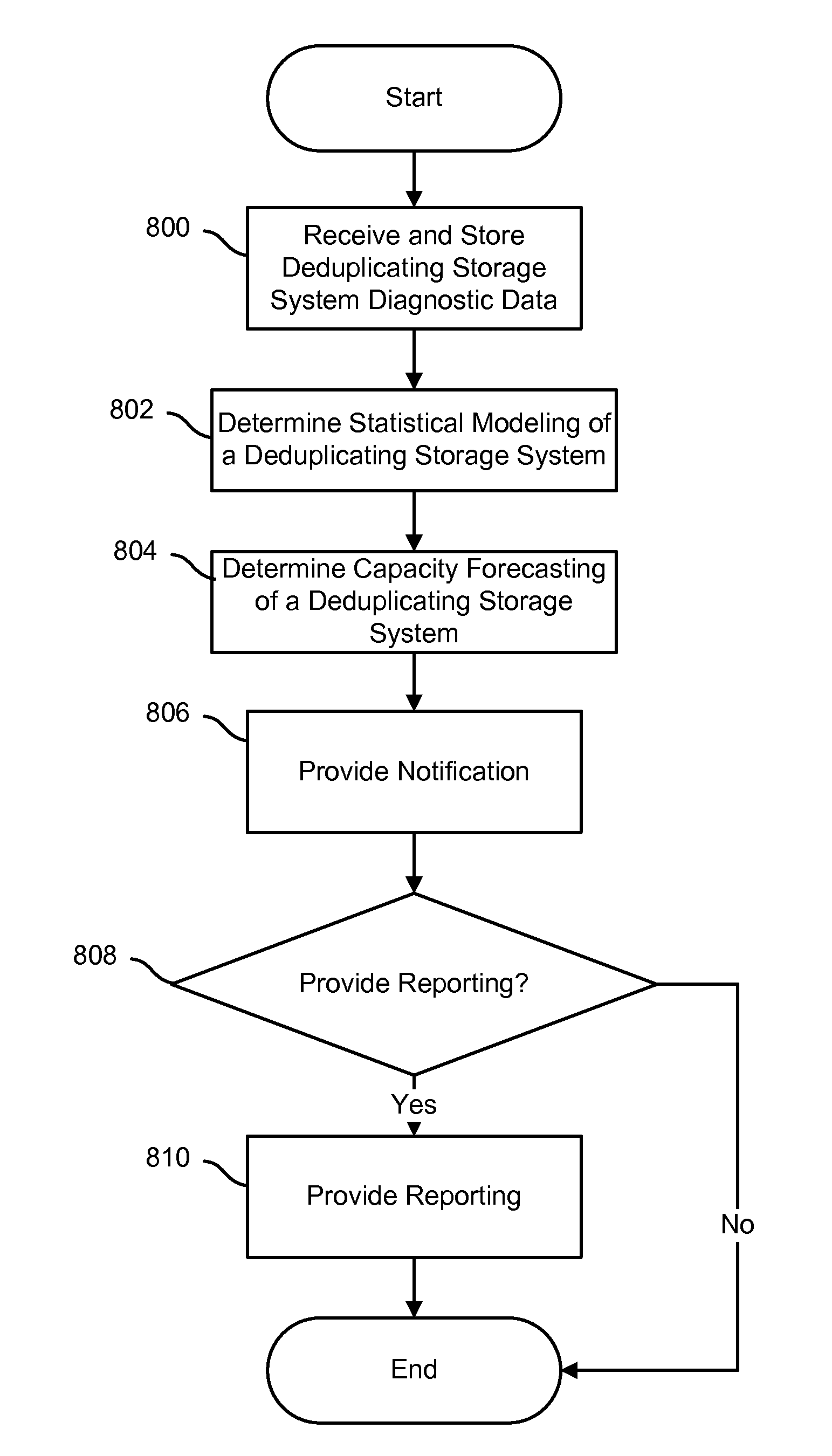

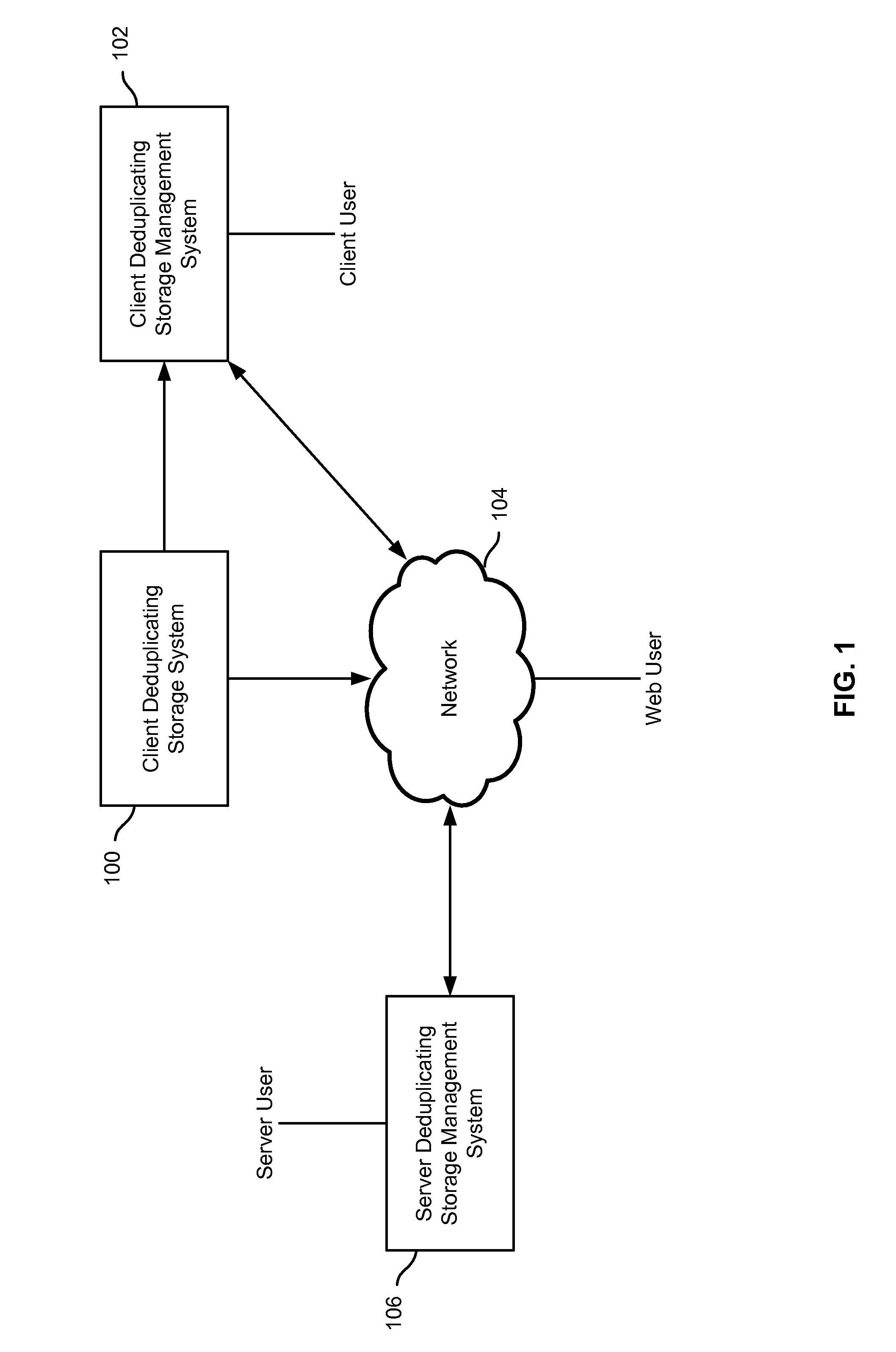

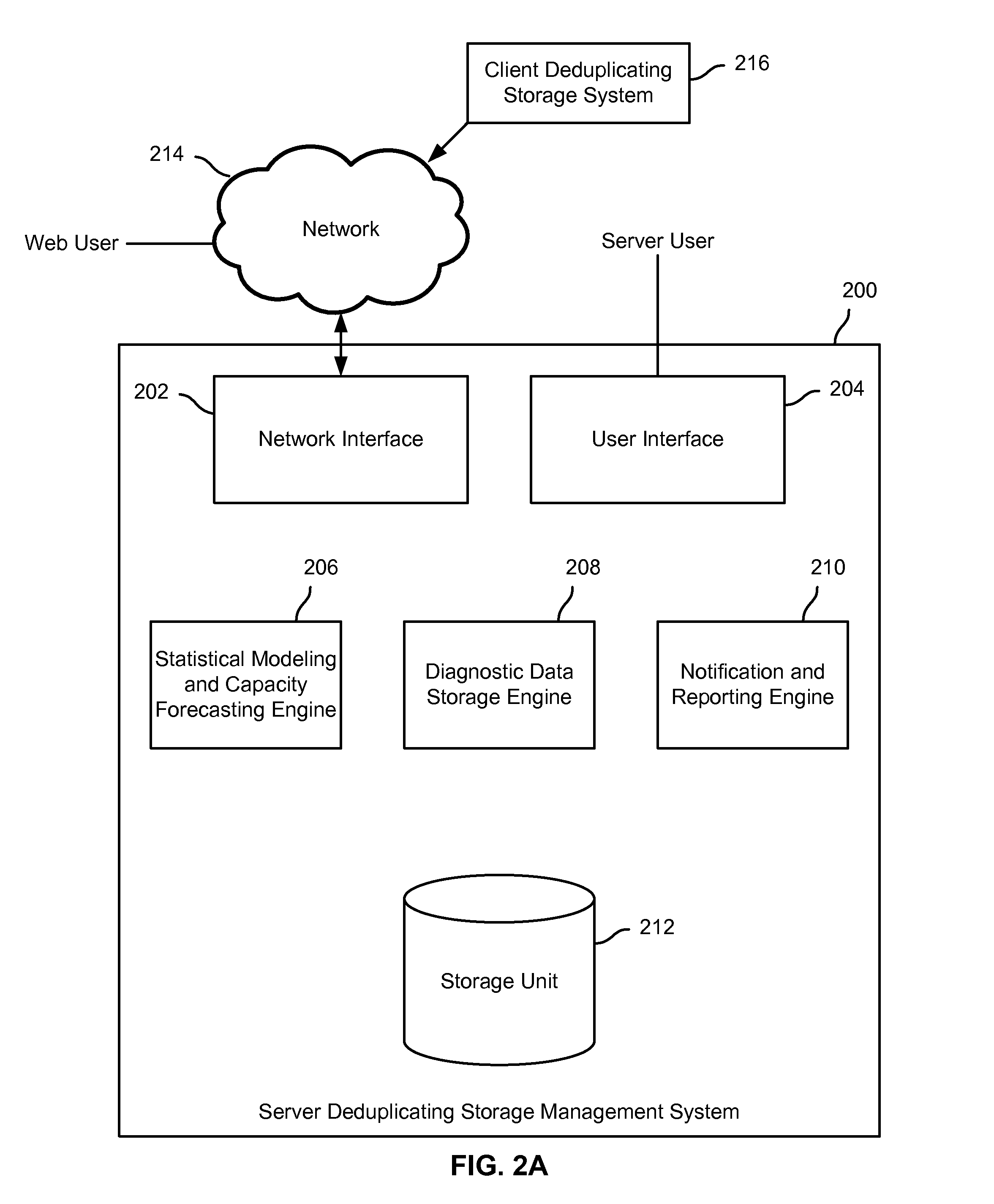

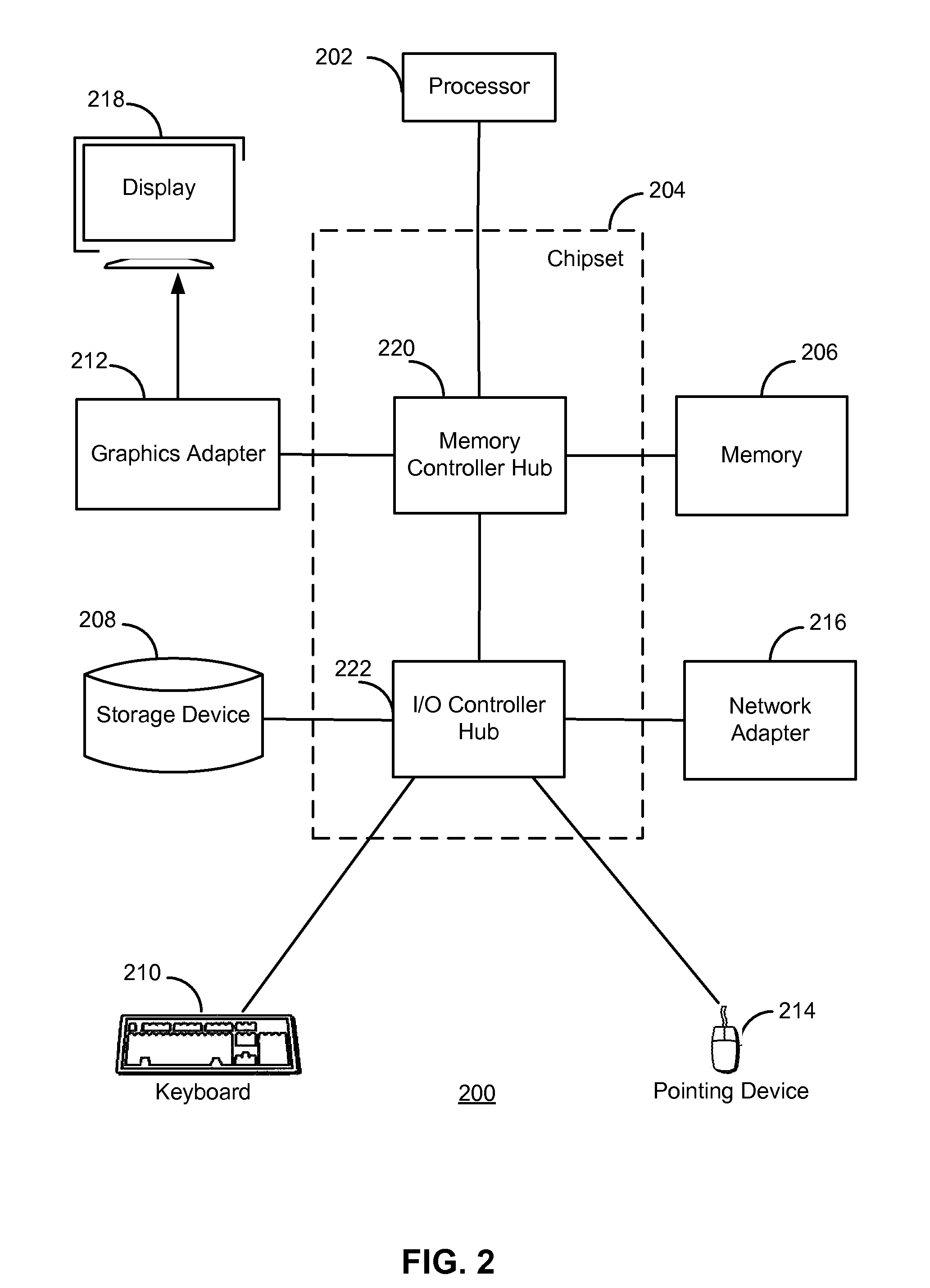

A system for managing a storage system comprises a processor and a memory. The processor is configured to receive storage system information from a deduplicating storage system. The processor is further configured to determine a capacity forecast based at least in part on the storage system information. The processor is further configured to provide a compression forecast. The memory is coupled to the processor and configured to provide the processor with instructions.

Owner:EMC IP HLDG CO LLC

System and method for on-the-fly elimination of redundant data

Active | US20080294696A1 | Digital data information retrieval | Digital data processing details | Operational system | File system

Owner:NETWORK APPLIANCE INC

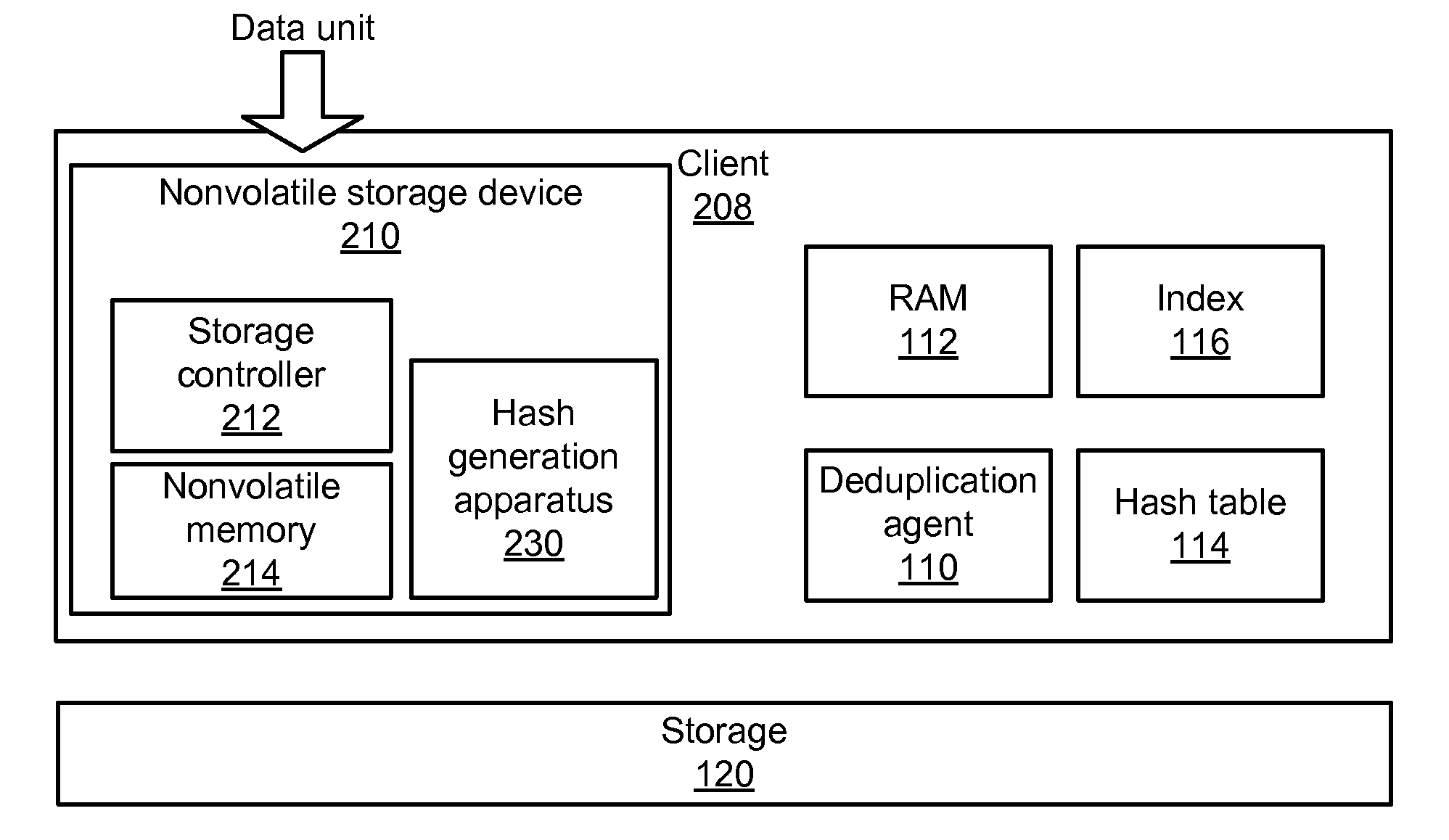

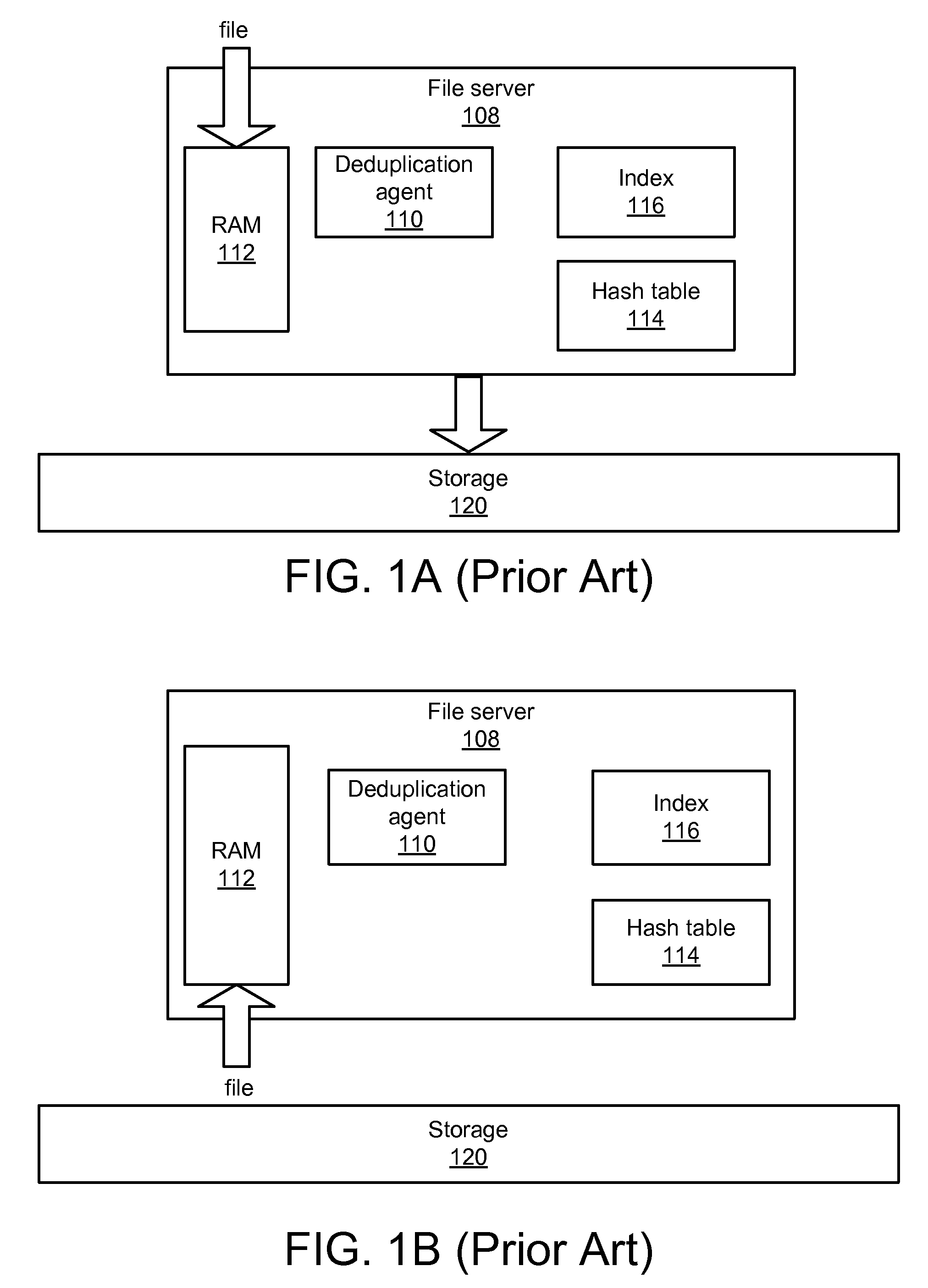

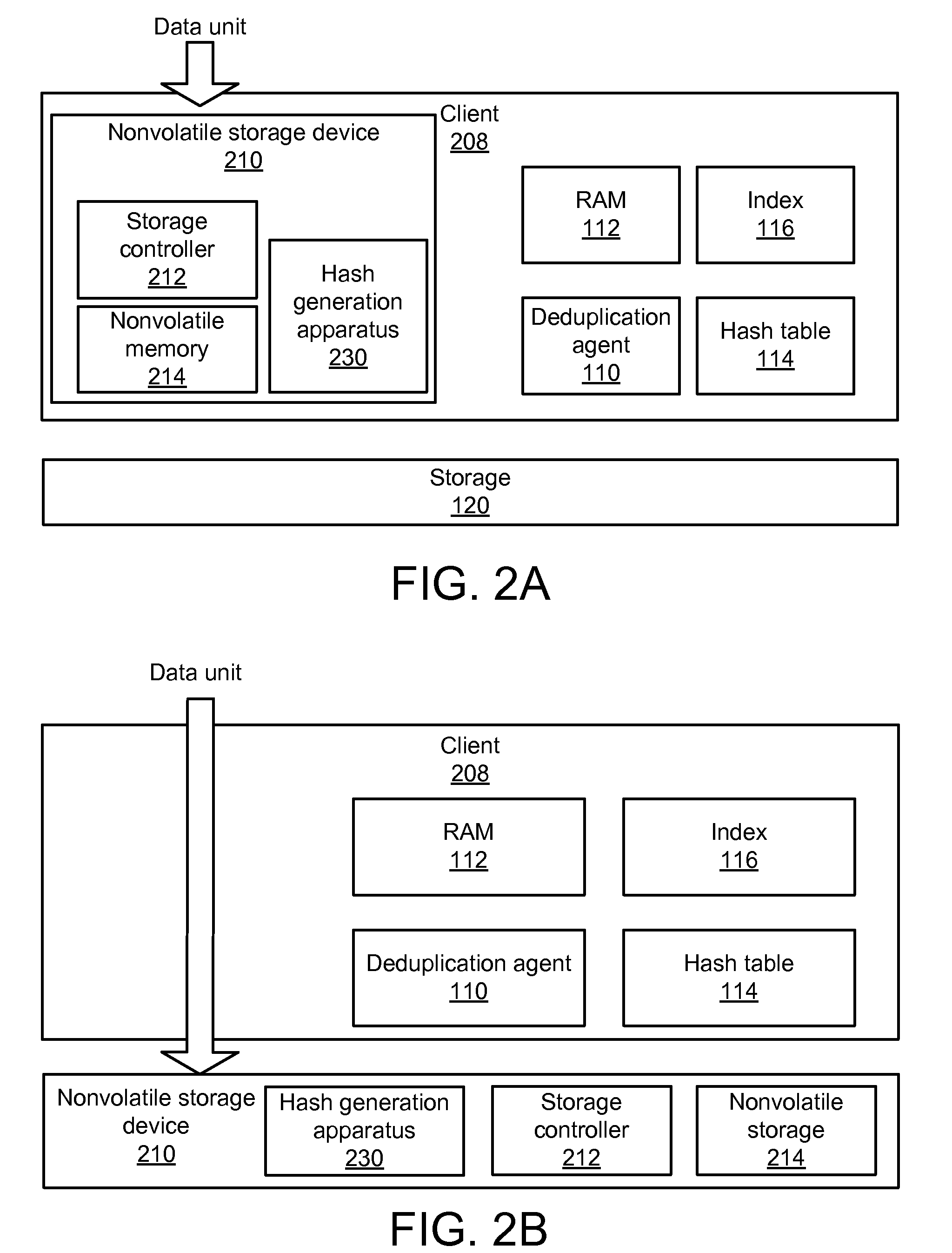

Apparatus, system, and method for improved data deduplication

Inactive | US20110055471A1 | Memory architecture accessing/allocation | Memory systems | Computer hardware | Hash function

An apparatus, system, and method are disclosed for improved deduplication. The apparatus includes an input module, a hash module, and a transmission module that are implemented in a nonvolatile storage device. The input module receives hash requests from requesting entities that may be internal or external to the nonvolatile storage device; the hash requests include a data unit identifier that identifies the data unit for which the hash is requested. The hash module generates a hash for the data unit using a hash function. The hash is generated using the computing resources of the nonvolatile storage device. The transmission module sends the hash to a receiving entity when the input module receives the hash request. A deduplication agent uses the hash to determine whether or not the data unit is a duplicate of a data unit already stored in the storage system that includes the nonvolatile storage device.

Owner:SANDISK TECH LLC

Method, apparatus and system for data deduplication

Inactive | US20130311434A1 | Digital data information retrieval | Digital data processing details | Data deduplication

Owner:INTEL CORP

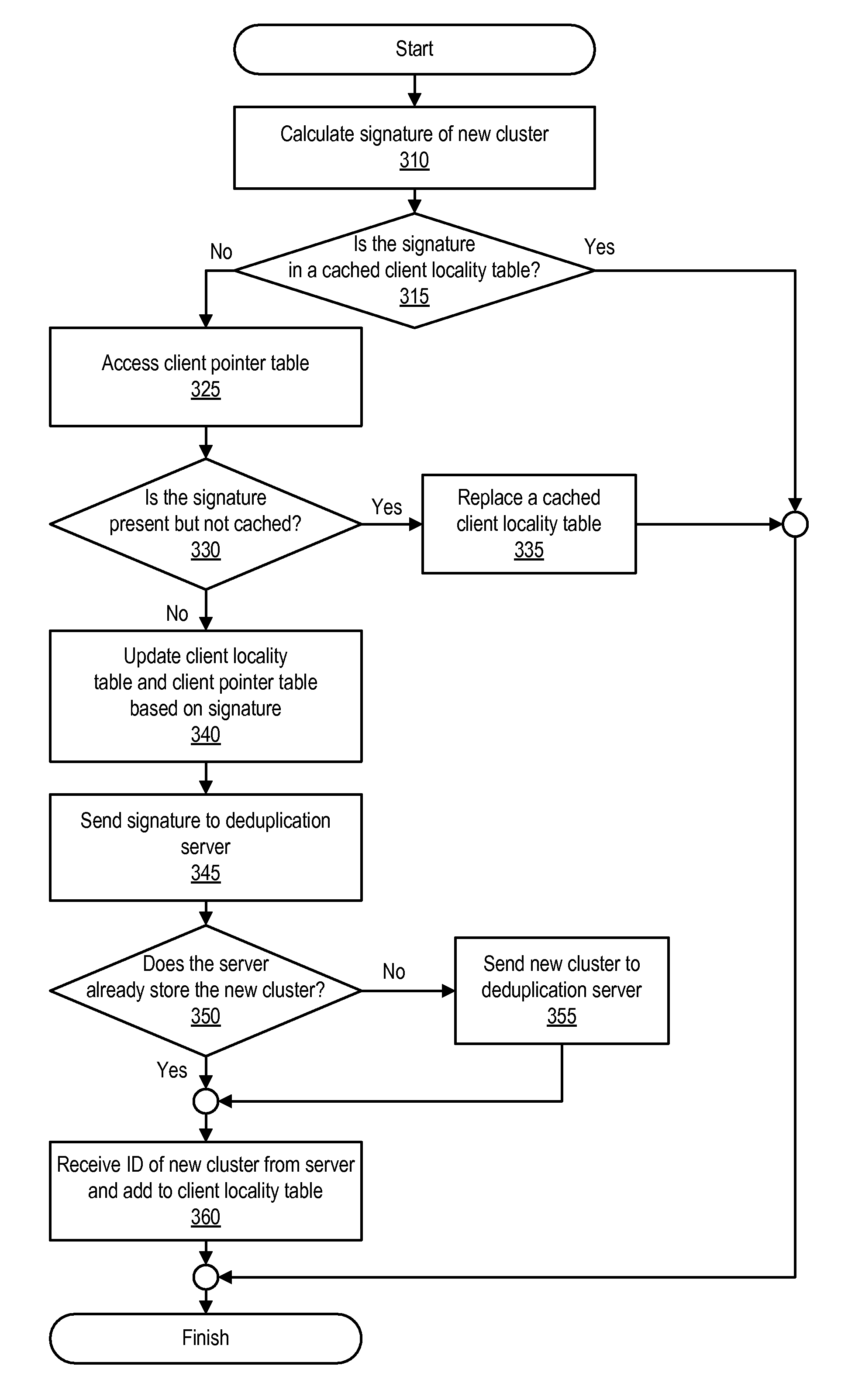

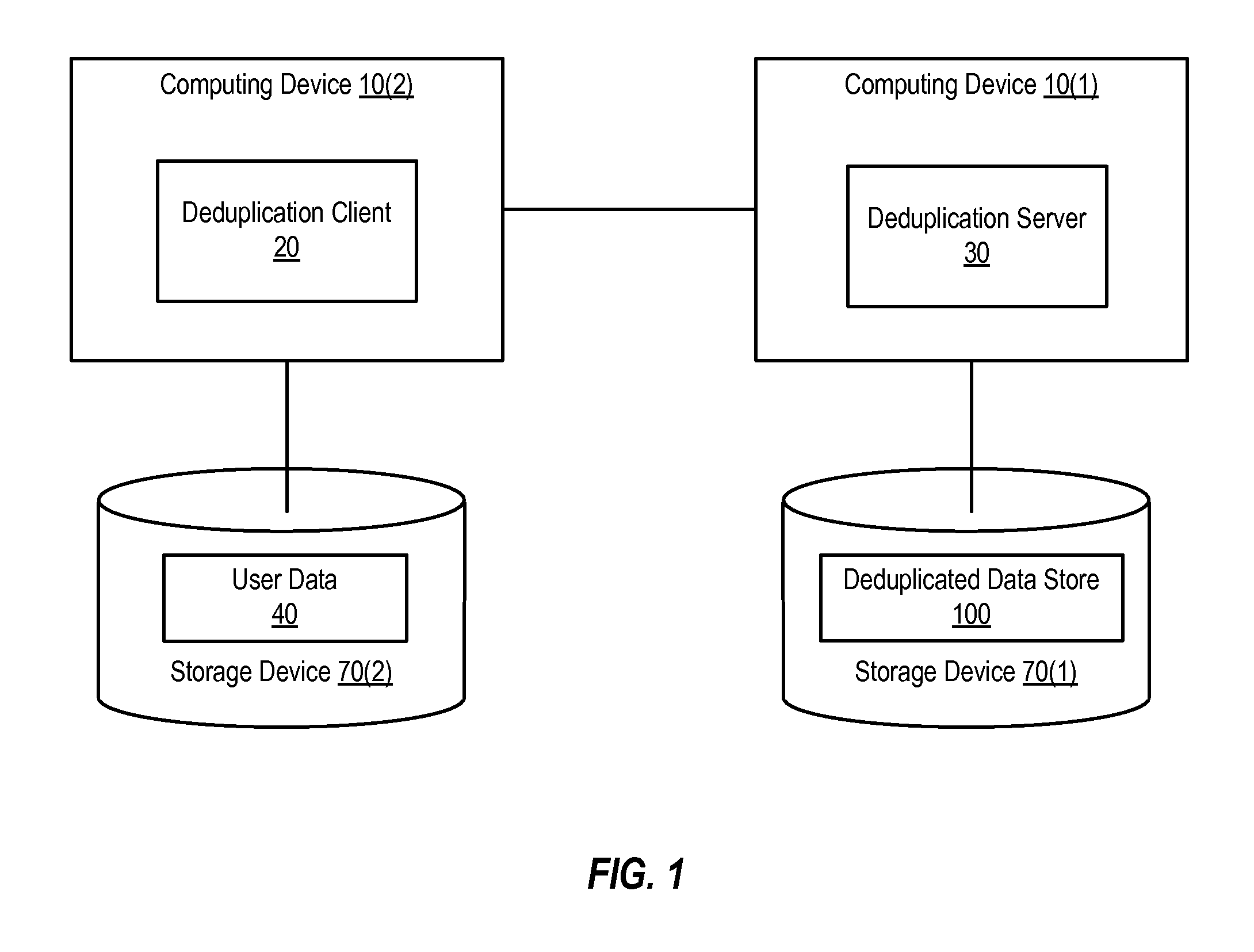

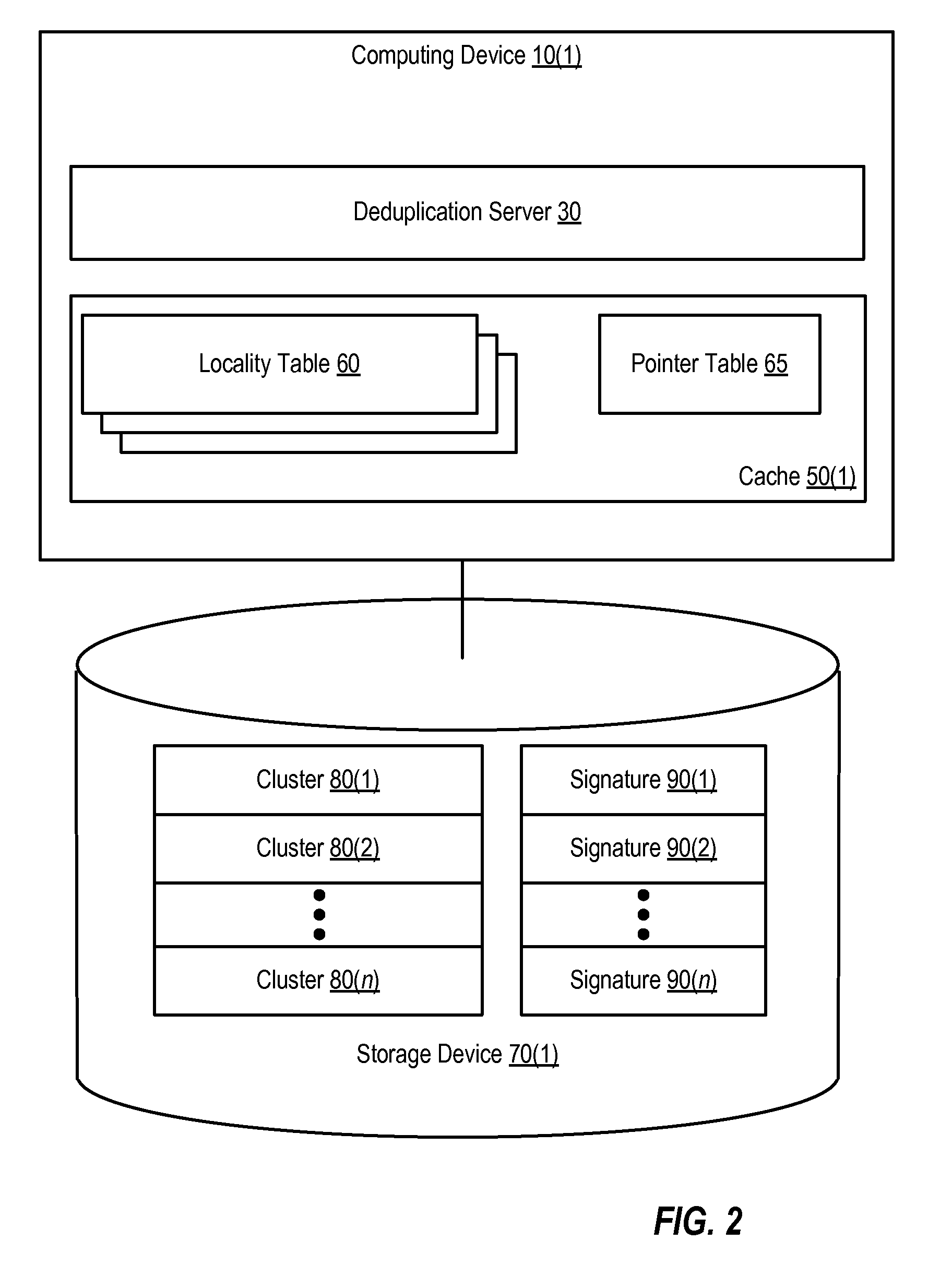

Client side data deduplication

Active | US7814149B1 | Digital data information retrieval | Data processing applications | Client-side | Data store

Methods and systems that use a client locality table when performing client-side data deduplication are disclosed. One method involves searching one or more client locality tables for the signature of a data unit (e.g., a portion of a volume or file). The client locality tables include signatures of data units stored in a deduplicated data store. If the signature is not found in the client locality tables, the signature is sent from a deduplication client to a deduplication server and added to one of the client locality tables. If instead the signature is found in the client locality tables, sending of the new signature to the deduplication server is inhibited.
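The lookup flow in this abstract might look roughly like the sketch below. It assumes a single locality table held as a Python set and SHA-256 signatures; `DedupServer`, `DedupClient` and their methods are hypothetical names used only for illustration.

```python
import hashlib


class DedupServer:
    """Hypothetical server that records which signatures it has been sent."""

    def __init__(self):
        self.received = []

    def submit(self, signature: str) -> None:
        self.received.append(signature)


class DedupClient:
    def __init__(self, server: DedupServer):
        self.server = server
        self.locality_table = set()  # signatures known to be in the data store

    def process(self, data_unit: bytes) -> bool:
        """Return True if the signature was sent to the server."""
        signature = hashlib.sha256(data_unit).hexdigest()
        if signature in self.locality_table:
            return False  # found locally: sending is inhibited
        self.server.submit(signature)
        self.locality_table.add(signature)
        return True


server = DedupServer()
client = DedupClient(server)
sent = [client.process(u) for u in (b"unit-1", b"unit-2", b"unit-1")]
```

The third data unit repeats the first, so its signature is found in the client table and nothing is sent for it.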

Owner:SYMANTEC OPERATING CORP

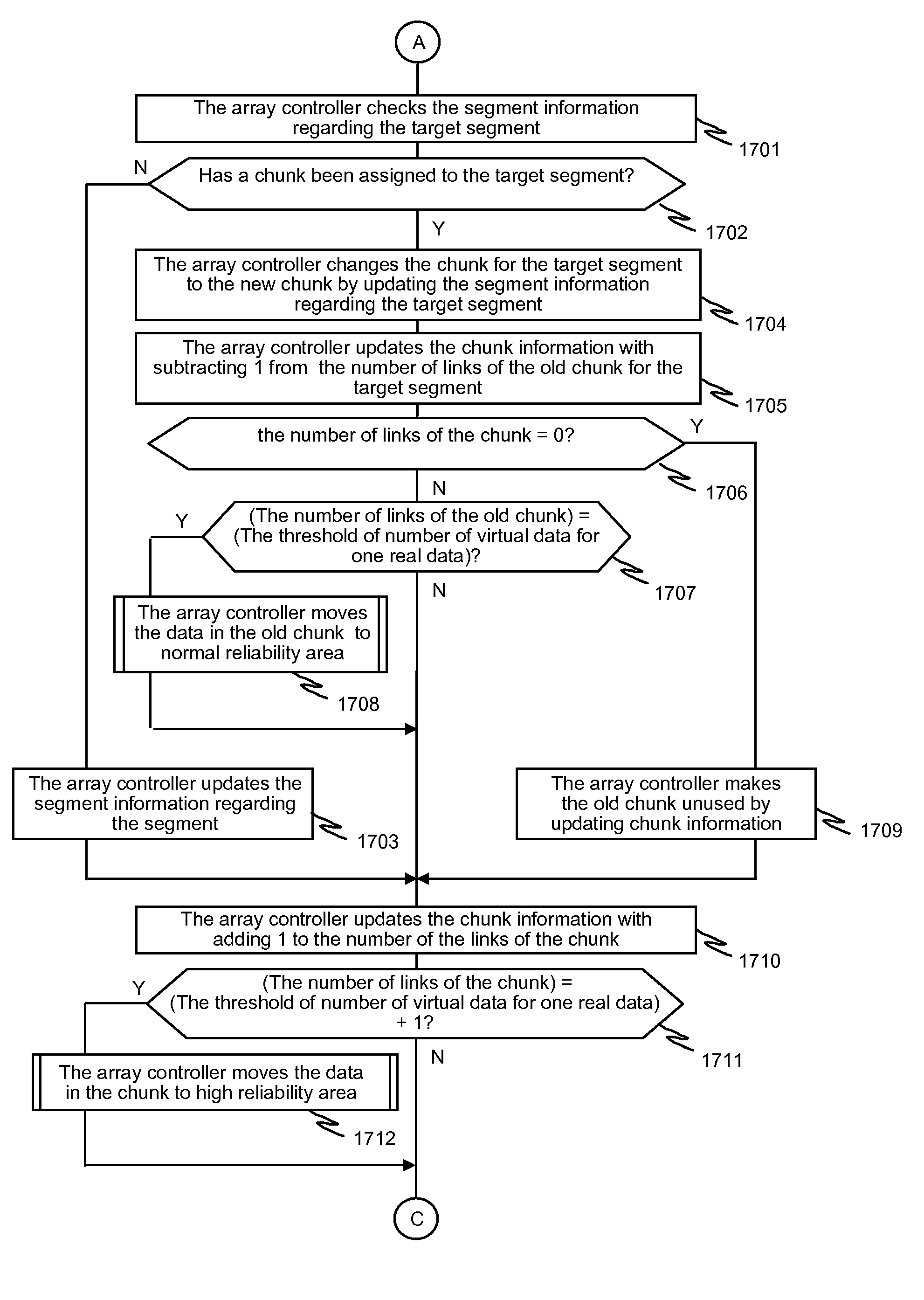

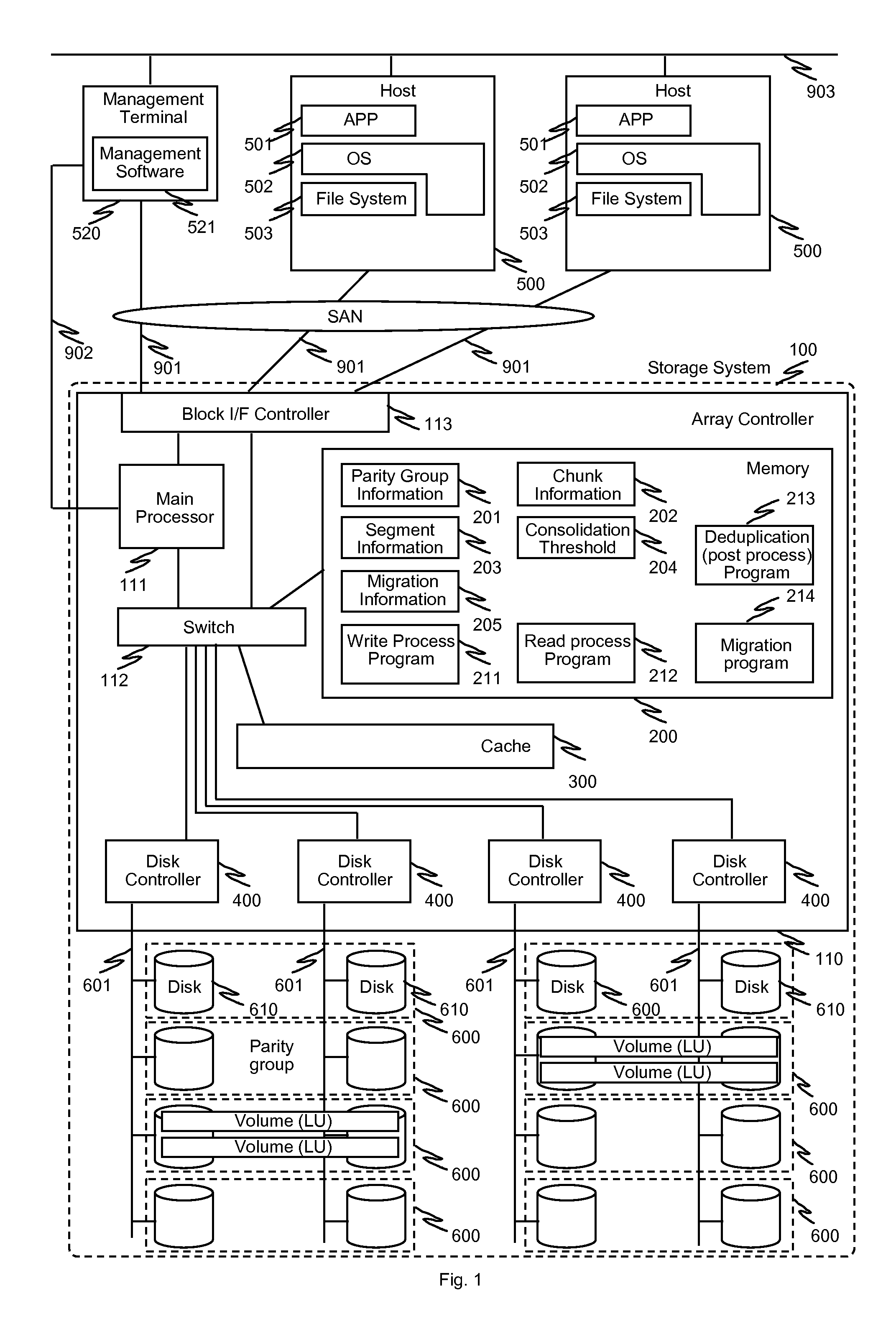

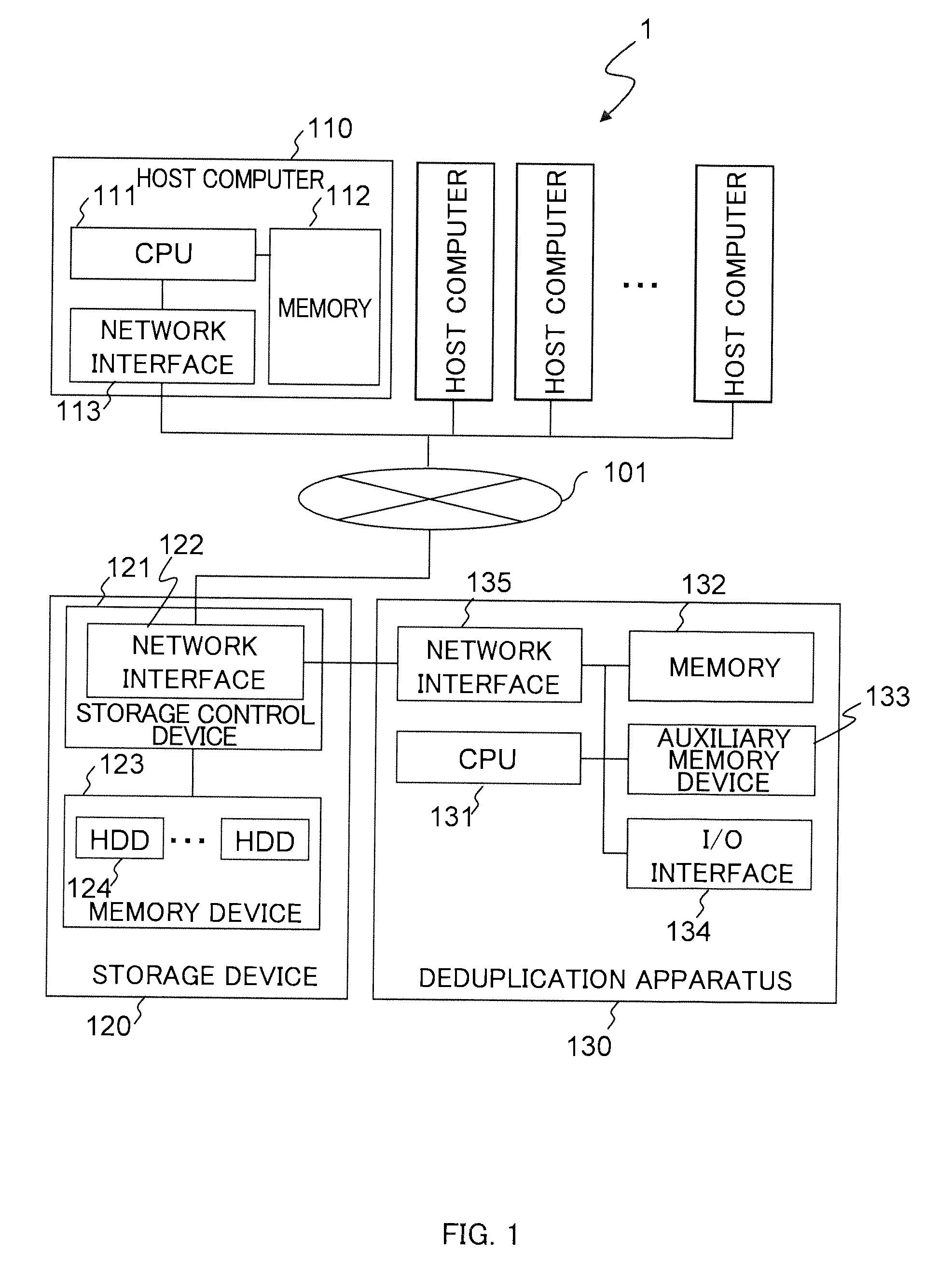

Methods and apparatus for deduplication in storage system

Inactive | US7870105B2 | Digital data processing details | Error detection/correction | Computer terminal | Data deduplication

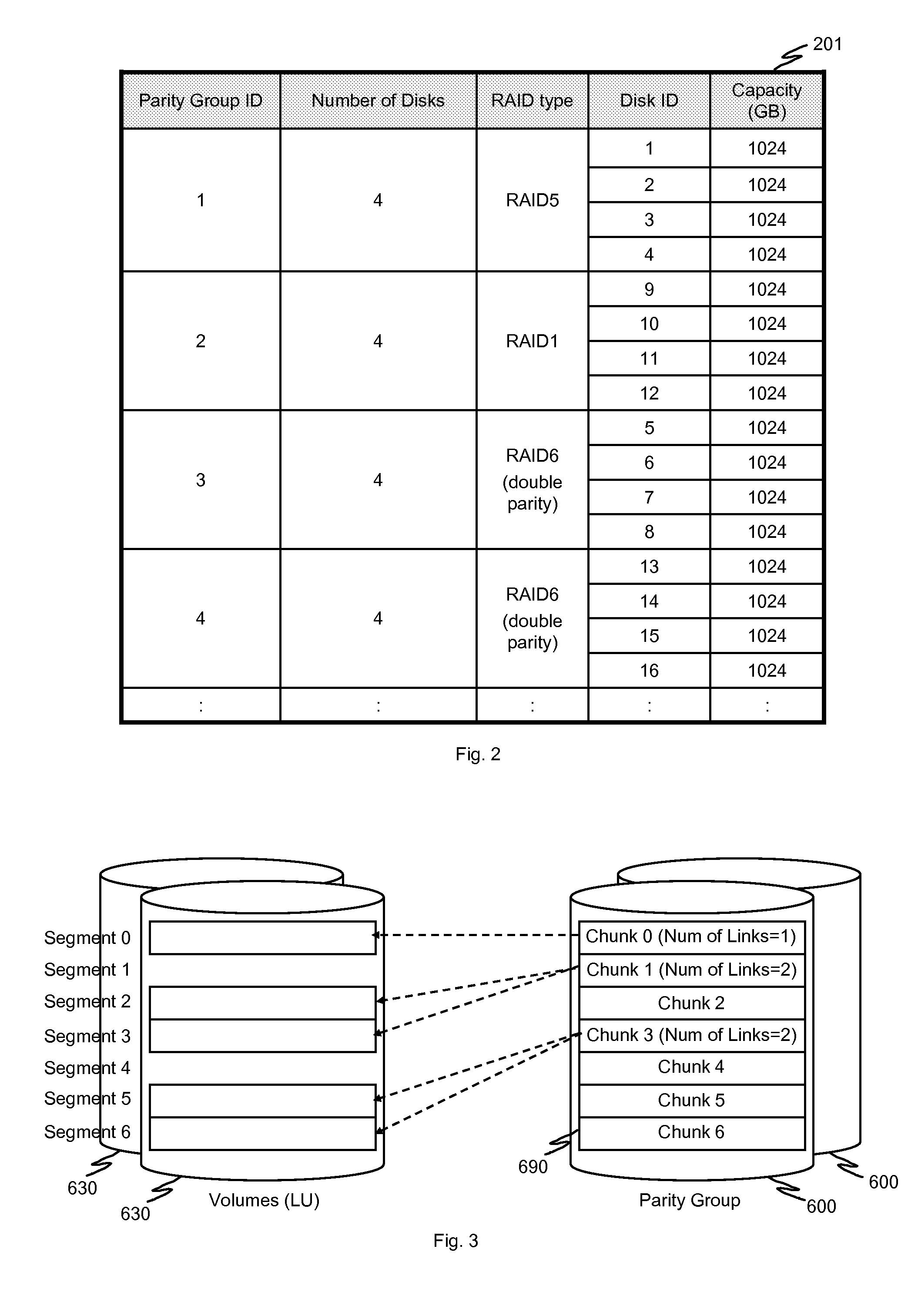

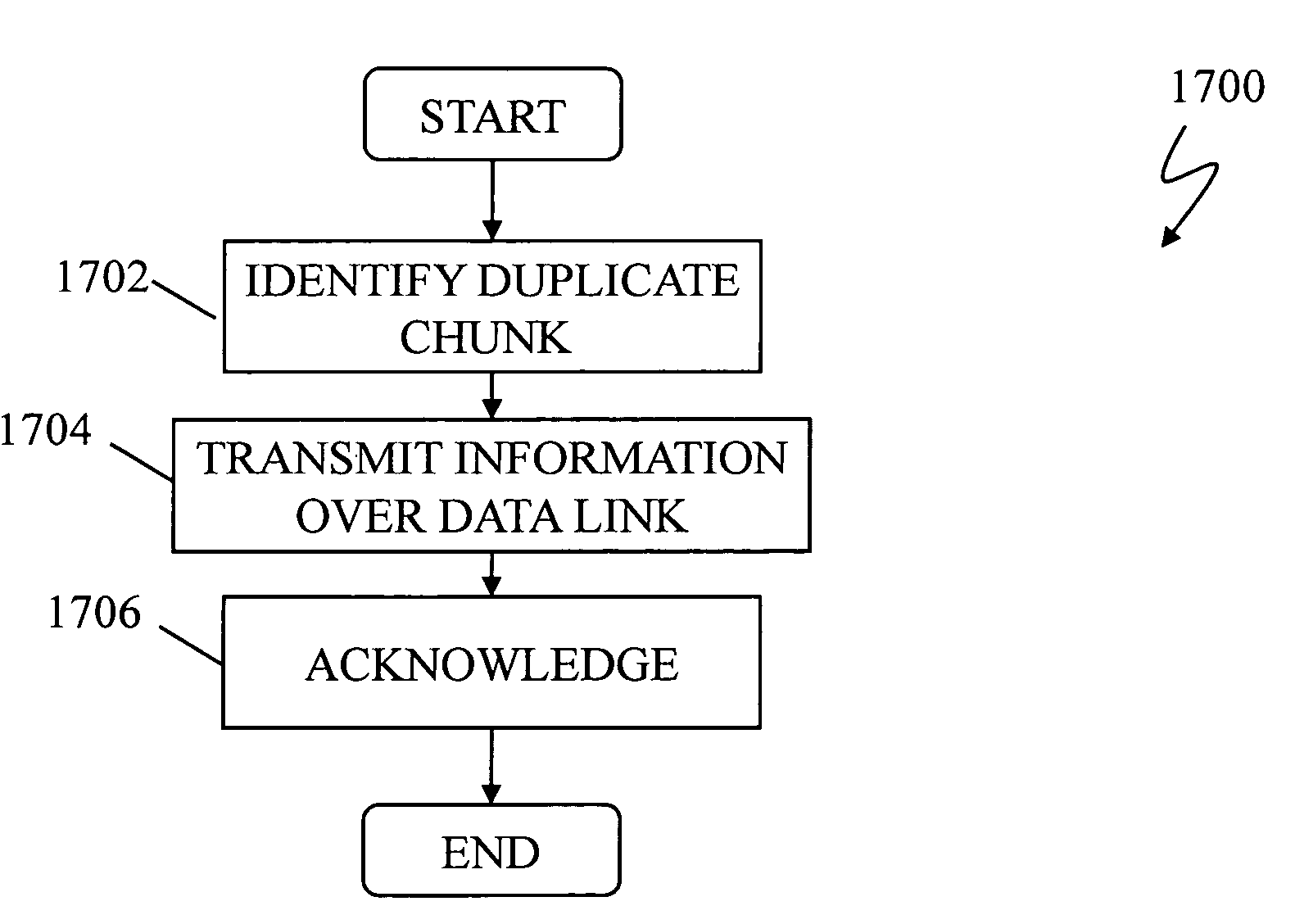

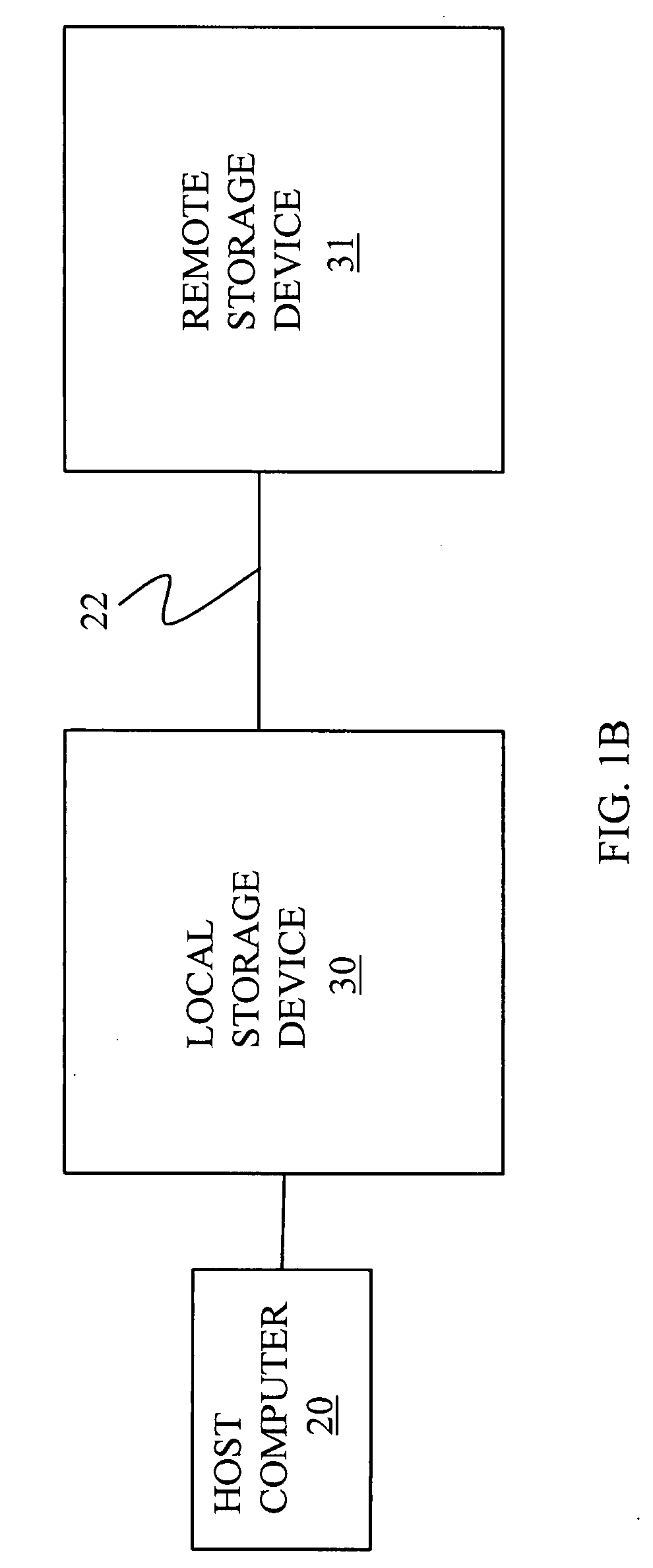

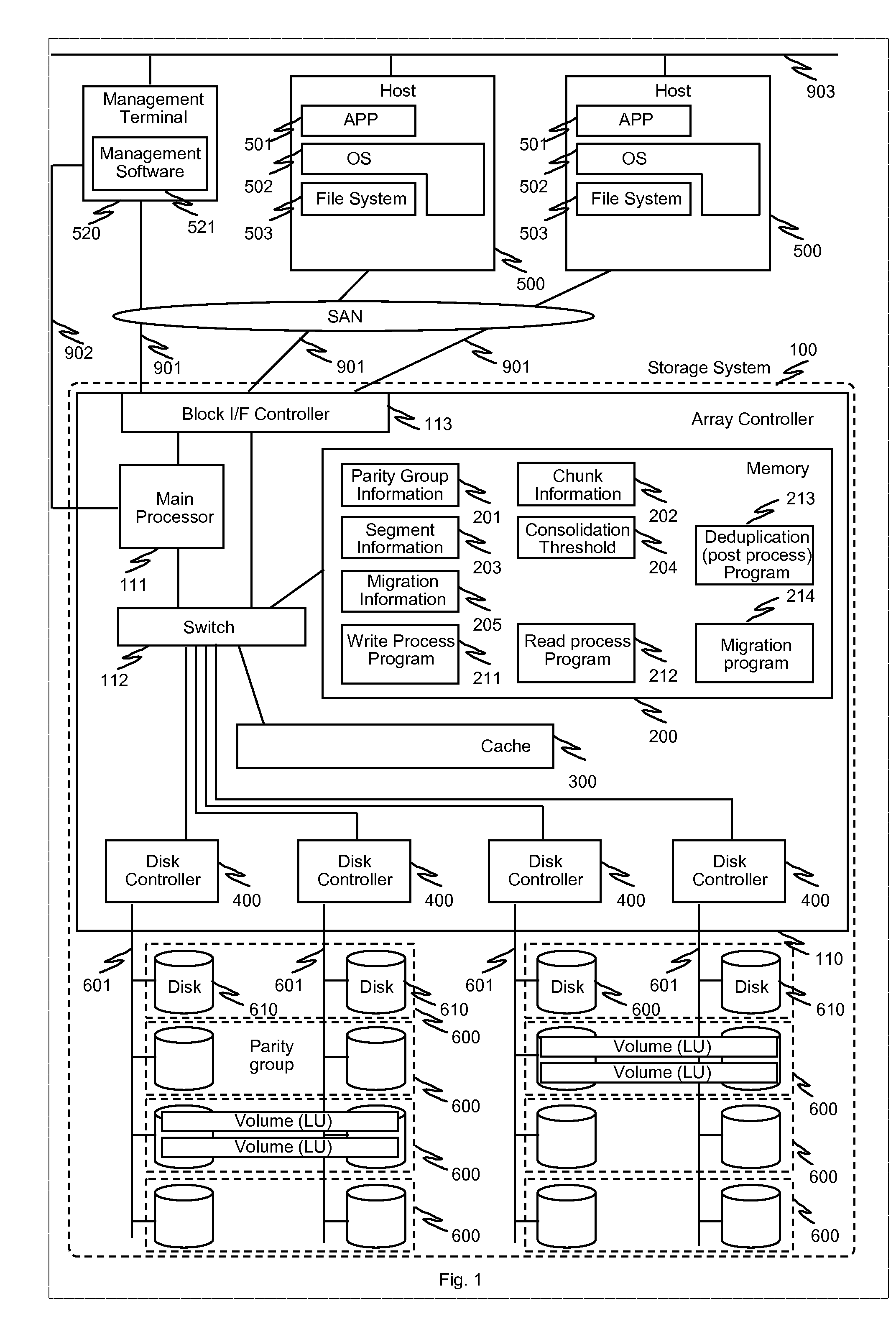

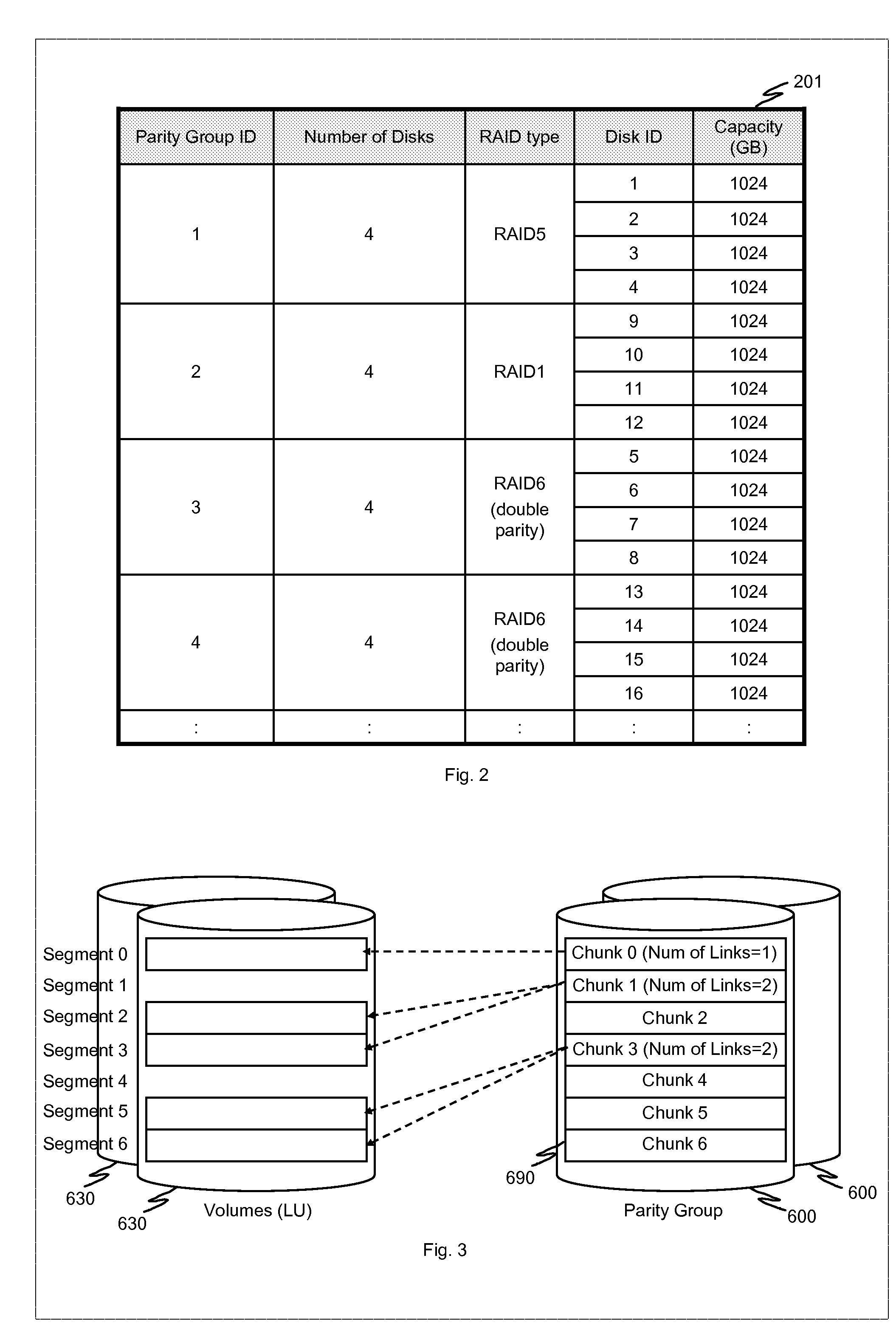

In one implementation, a storage system comprises host computers, a management terminal and a storage system having a block interface to communicate with the host computers/clients. The storage system also incorporates a deduplication capability using chunks (divided storage areas). The storage system maintains a threshold (upper limit) with respect to the degree of deduplication (i.e., the number of virtual data for one real data) specified by users or the management software. The storage system counts the number of links for each chunk and does not perform deduplication when the number of reduced data for a chunk exceeds the threshold, even if duplication is detected. In another implementation, the storage system additionally incorporates a data migration capability and migrates physical data to a high-reliability area, such as an area protected with double parity (i.e., RAID 6), when the deduplication level for a chunk exceeds the threshold.
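One way to picture the link threshold is the sketch below. It is an interpretation, not the patented design: it assumes that once a real chunk has reached `max_links` references, the next identical write gets a fresh physical copy. `ThresholdDedupStore` and its fields are hypothetical.

```python
import hashlib


class ThresholdDedupStore:
    """Chunk store that caps how many virtual chunks share one real chunk."""

    def __init__(self, max_links: int):
        self.max_links = max_links
        self.real_chunks = []   # physical copies: [data, link_count]
        self.by_hash = {}       # digest -> index of the copy accepting links

    def write(self, chunk: bytes) -> int:
        """Store a chunk and return the index of the real chunk backing it."""
        digest = hashlib.sha256(chunk).digest()
        idx = self.by_hash.get(digest)
        if idx is not None and self.real_chunks[idx][1] < self.max_links:
            self.real_chunks[idx][1] += 1   # deduplicate: add one more link
            return idx
        # New data, or the existing copy is at its limit: store a new copy.
        self.real_chunks.append([chunk, 1])
        self.by_hash[digest] = len(self.real_chunks) - 1
        return self.by_hash[digest]


store = ThresholdDedupStore(max_links=2)
placements = [store.write(b"same") for _ in range(5)]
```

Five identical writes with a limit of two links end up on three physical copies, which bounds how much logical data is lost if one physical chunk is damaged.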

Owner:HITACHI LTD

Data de-duplication using thin provisioning

Active | US7822939B1 | Digital data information retrieval | Special data processing applications | Thin provisioning | Data storing

A system for de-duplicating data includes providing a first volume including at least one pointer to a second volume that corresponds to physical storage space, wherein the first volume is a logical volume. A first set of data is detected as a duplicate of a second set of data stored on the second volume at a first data chunk. A pointer of the first volume associated with the first set of data is modified to point to the first data chunk. After modifying the pointer, no additional physical storage space is allocated for the first set of data.

Owner:EMC IP HLDG CO LLC

Use of Similarity Hash to Route Data for Improved Deduplication in a Storage Server Cluster

Active | US20110099351A1 | Efficiently routed | Avoid duplication | Memory addressing/allocation/relocation | Transmission | Data mining | Data storing

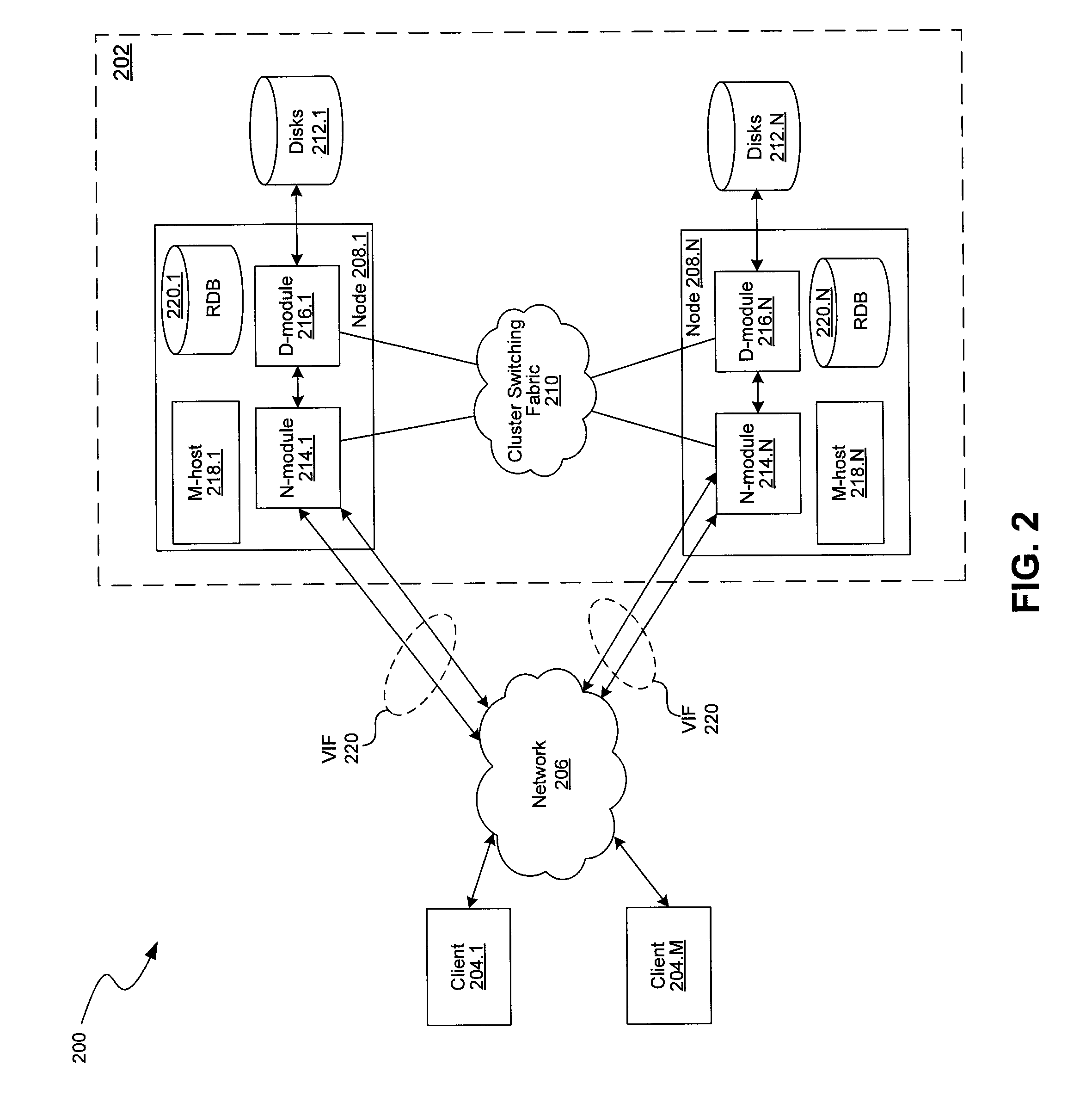

A technique for routing data for improved deduplication in a storage server cluster includes computing, for each node in the cluster, a value collectively representative of the data stored on the node, such as a “geometric center” of the node. New or modified data is routed to the node which has stored data identical or most similar to the new or modified data, as determined based on those values. Each node stores a plurality of chunks of data, where each chunk includes multiple deduplication segments. A content hash is computed for each deduplication segment in each node, and a similarity hash is computed for each chunk from the content hashes of all segments in the chunk. A geometric center of a node is computed from the similarity hashes of the chunks stored in the node.

Owner:NETWORK APPLIANCE INC

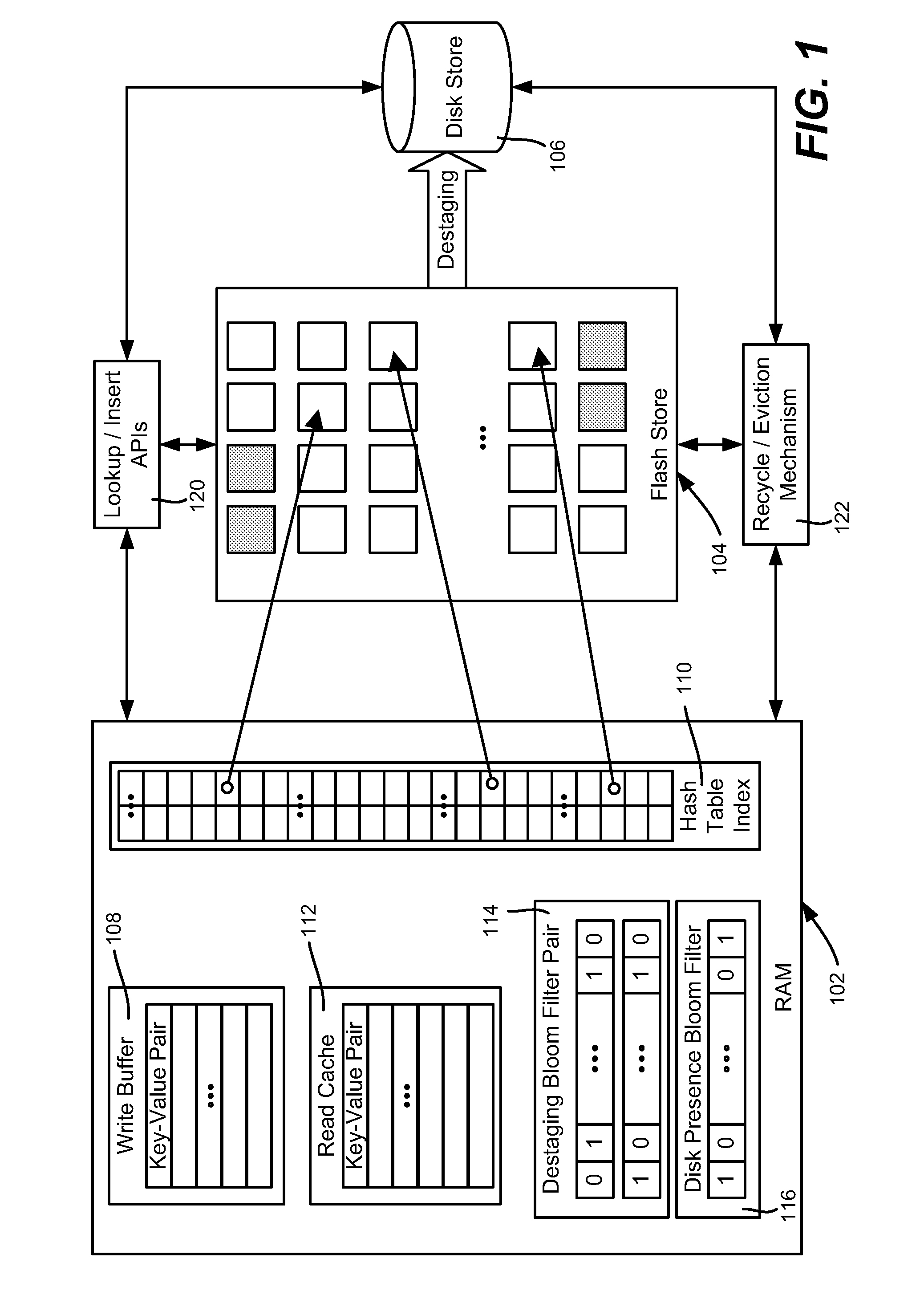

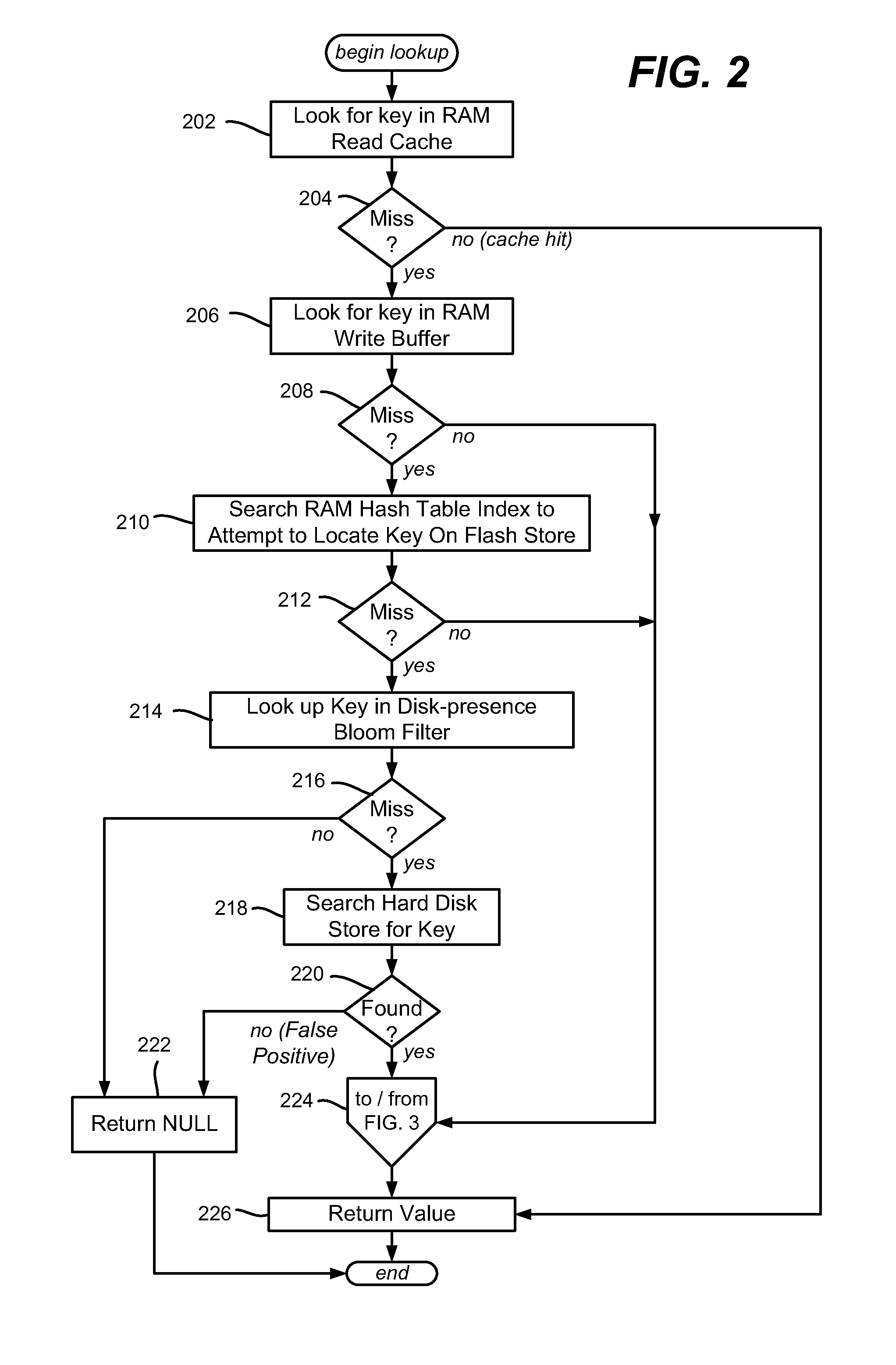

Flash memory cache including for use with persistent key-value store

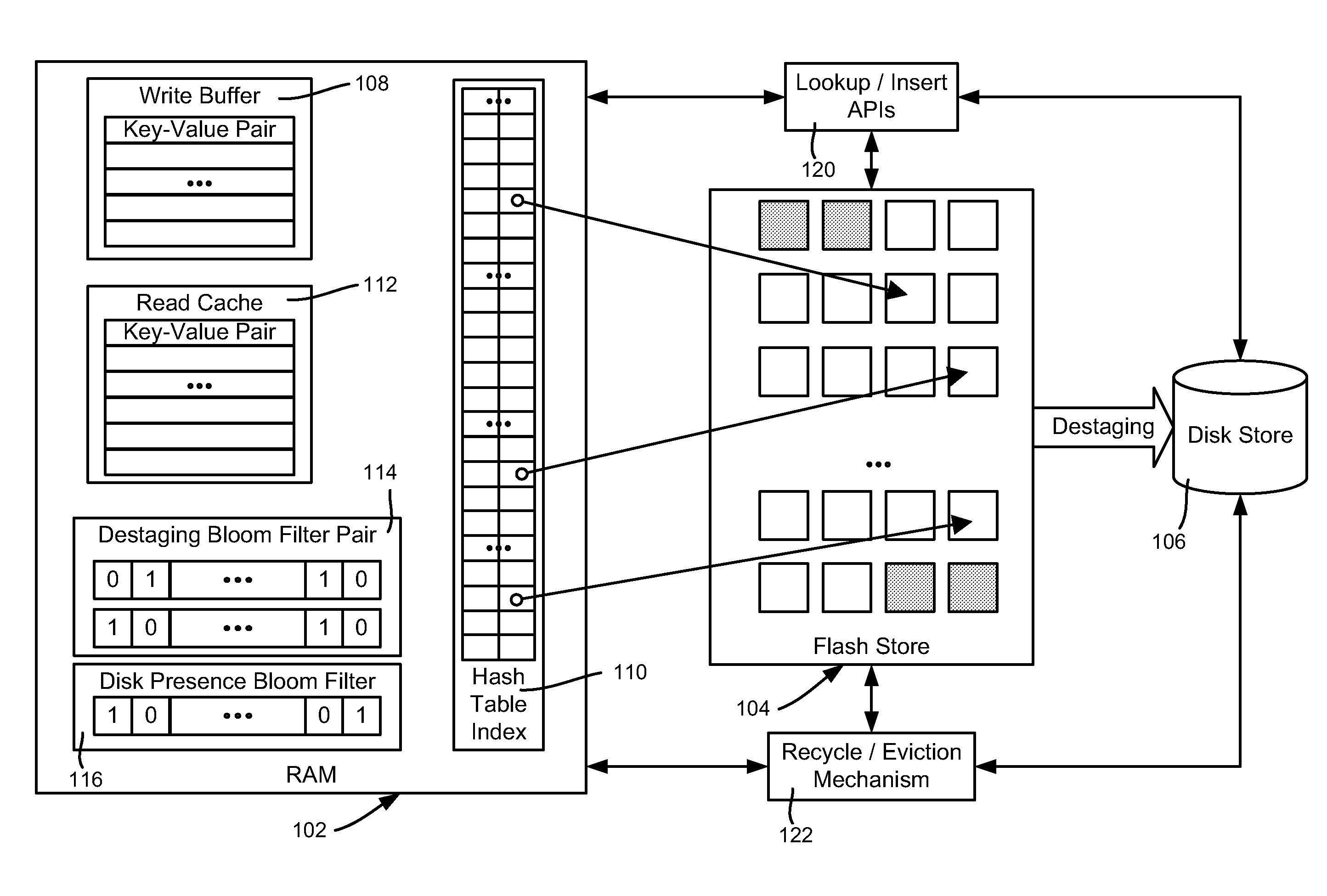

Inactive | US20110276744A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Write buffer | Engineering

Described is using flash memory, RAM-based data structures and mechanisms to provide a flash store for caching data items (e.g., key-value pairs) in flash pages. A RAM-based index maps data items to flash pages, and a RAM-based write buffer maintains data items to be written to the flash store, e.g., when a full page can be written. A recycle mechanism makes used pages in the flash store available by destaging a data item to a hard disk or reinserting it into the write buffer, based on its access pattern. The flash store may be used in a data deduplication system, in which the data items comprise chunk-identifier, metadata pairs, in which each chunk-identifier corresponds to a hash of a chunk of data that indicates. The RAM and flash are accessed with the chunk-identifier (e.g., as a key) to determine whether a chunk is a new chunk or a duplicate.

Owner:MICROSOFT TECH LICENSING LLC

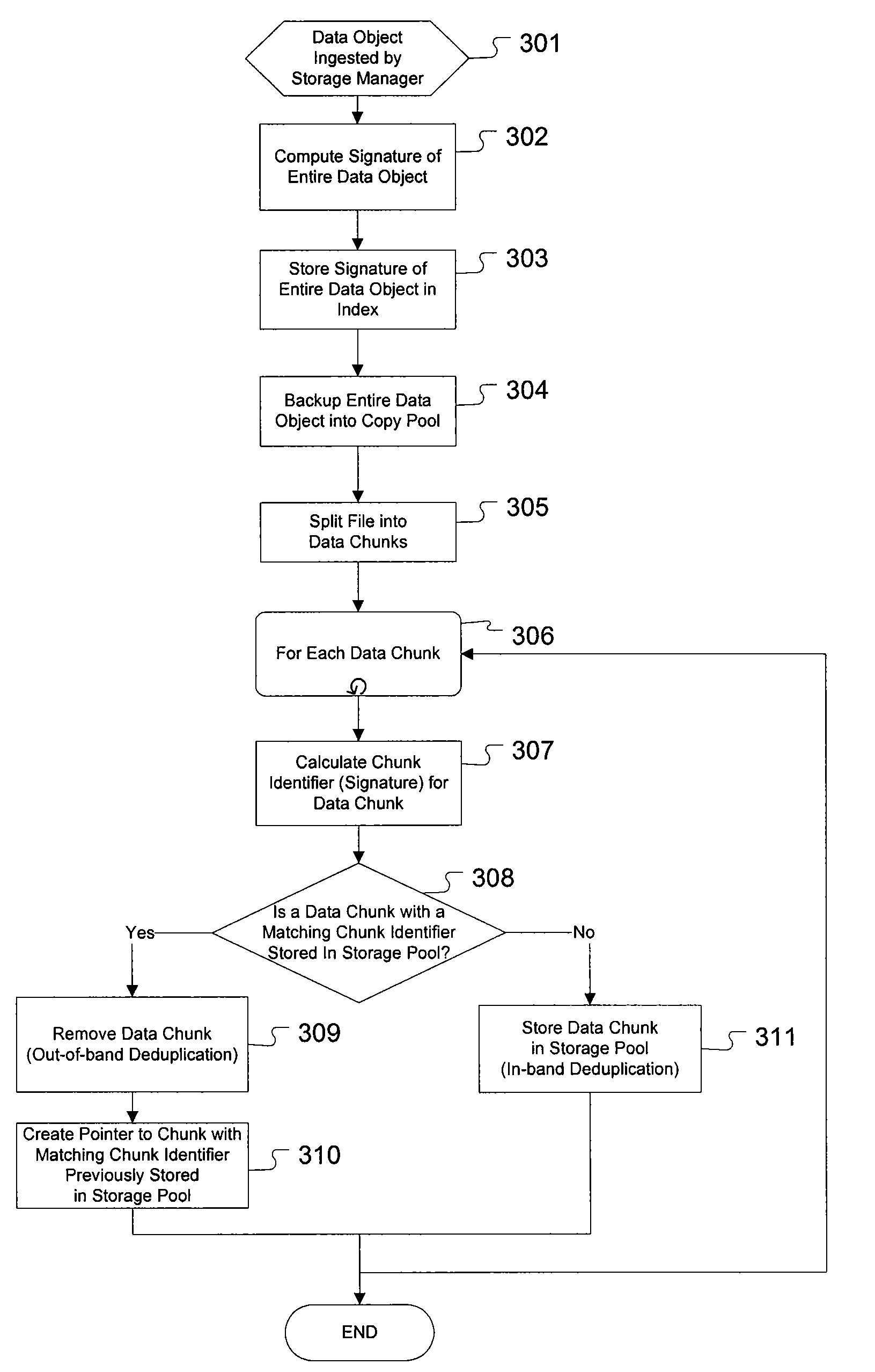

Enhanced method and system for assuring integrity of deduplicated data

Active | US20090271454A1 | Access latency | Digital data information retrieval | Digital data processing details | Hash function | Digital signature

The present invention provides for an enhanced method and system for assuring integrity of deduplicated data objects stored within a storage system. A digital signature of the data object is generated to determine if the data object reassembled from a deduplicated state is identical to its pre-deduplication state. In one embodiment, generating the object signature of a data object before deduplication comprises generating an object signature from intermediate hash values computed from a hash function operating on each data chunk within the data object, the hash function also used to determine duplicate data chunks. In an alternative embodiment, generating the object signature of a data object before deduplication comprises generating an object signature on a portion of each data chunk of the data object.
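The first embodiment, an object signature built from the per-chunk hashes, can be illustrated as follows. This sketch assumes SHA-256 for both the chunk hashes and the outer signature, and a dictionary as the deduplicated store; the abstract does not name a hash function, and `chunk_hashes` and `object_signature` are hypothetical helpers.

```python
import hashlib


def chunk_hashes(chunks):
    """Per-chunk SHA-256 digests, the same hashes used to find duplicates."""
    return [hashlib.sha256(c).digest() for c in chunks]


def object_signature(hashes) -> str:
    """Derive one object signature from the intermediate chunk hashes."""
    outer = hashlib.sha256()
    for h in hashes:
        outer.update(h)
    return outer.hexdigest()


chunks = [b"head", b"body", b"body", b"tail"]
hashes = chunk_hashes(chunks)
signature_before = object_signature(hashes)

# Deduplicate: keep one copy per hash, then reassemble from references.
store = {h: c for h, c in zip(hashes, chunks)}
reassembled = [store[h] for h in hashes]
signature_after = object_signature(chunk_hashes(reassembled))

# A corrupted stored chunk changes the recomputed signature.
store[hashes[1]] = b"b0dy"
corrupted = [store[h] for h in hashes]
signature_corrupted = object_signature(chunk_hashes(corrupted))
```

Because the chunk hashes are already computed for duplicate detection, the object signature costs only one extra pass over the short digests rather than over the full object.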

Owner:DROPBOX

Policy based tiered data deduplication strategy

Active | US7567188B1 | Promote recovery | Least risk | Digital data information retrieval | Code conversion | Data store | Data deduplication

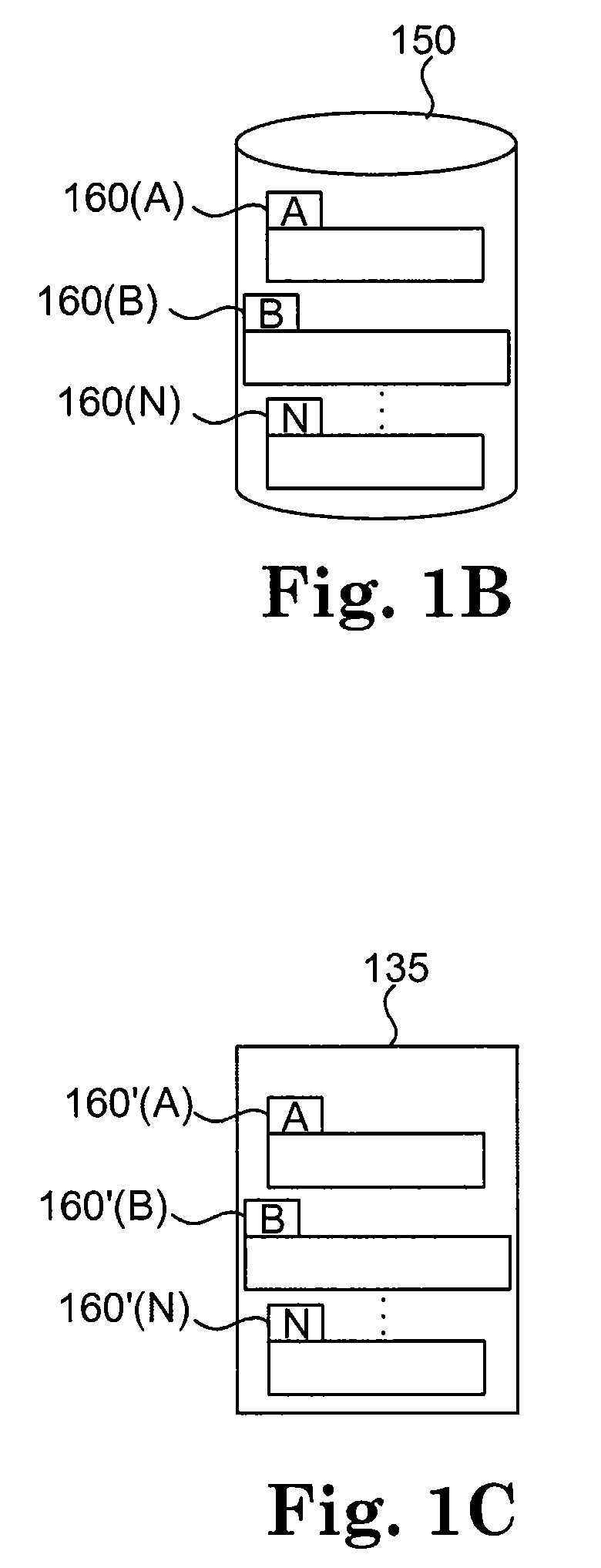

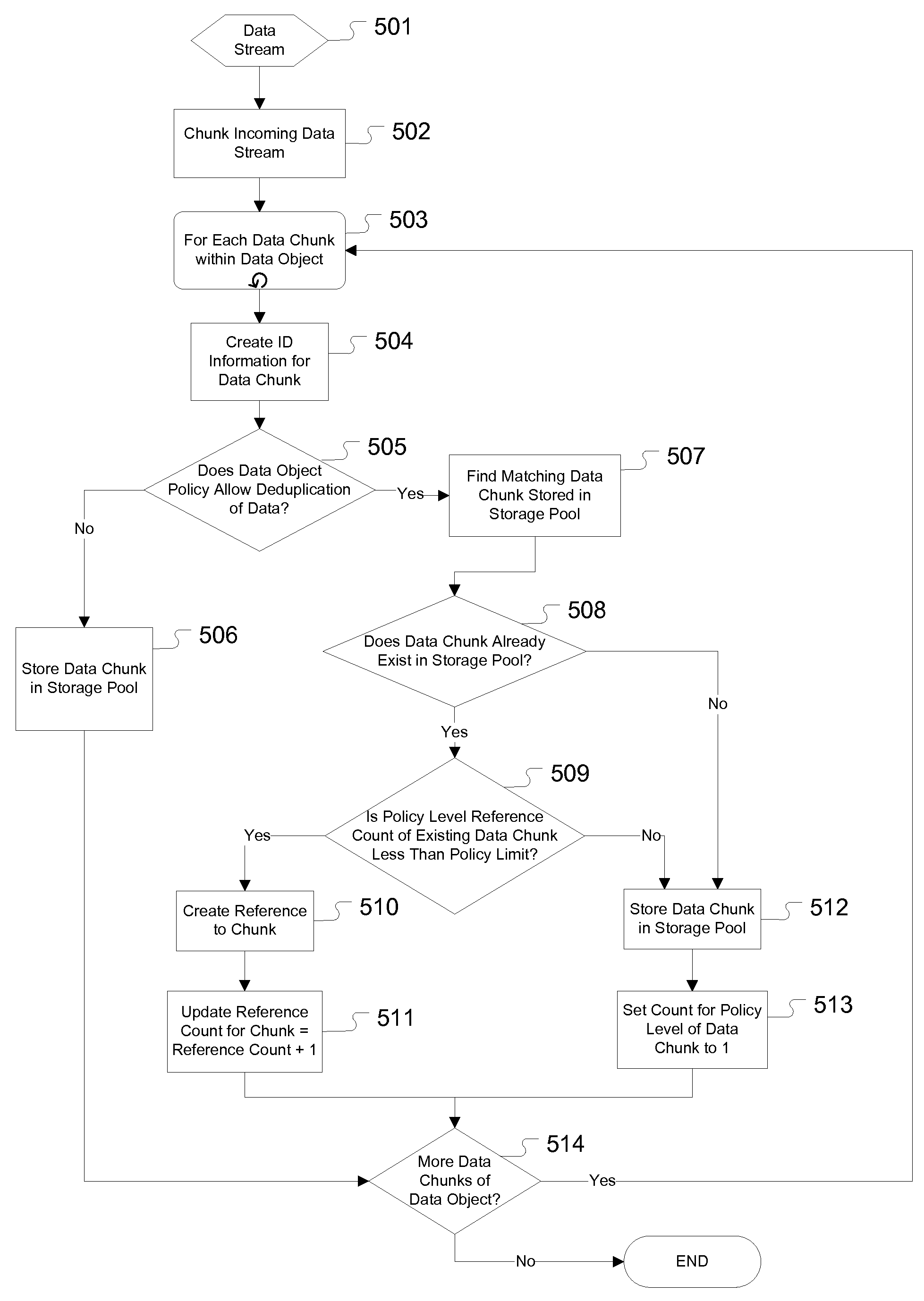

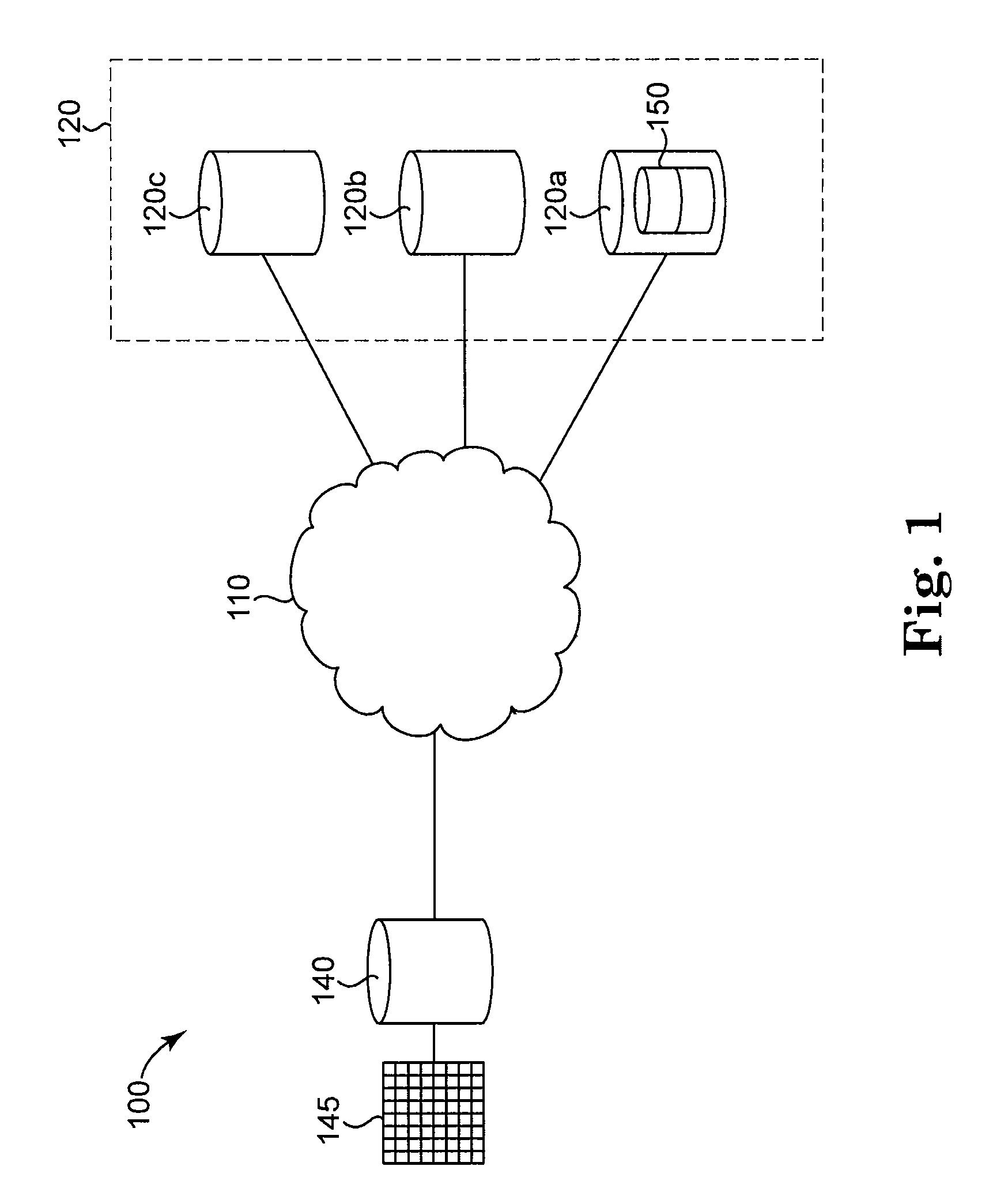

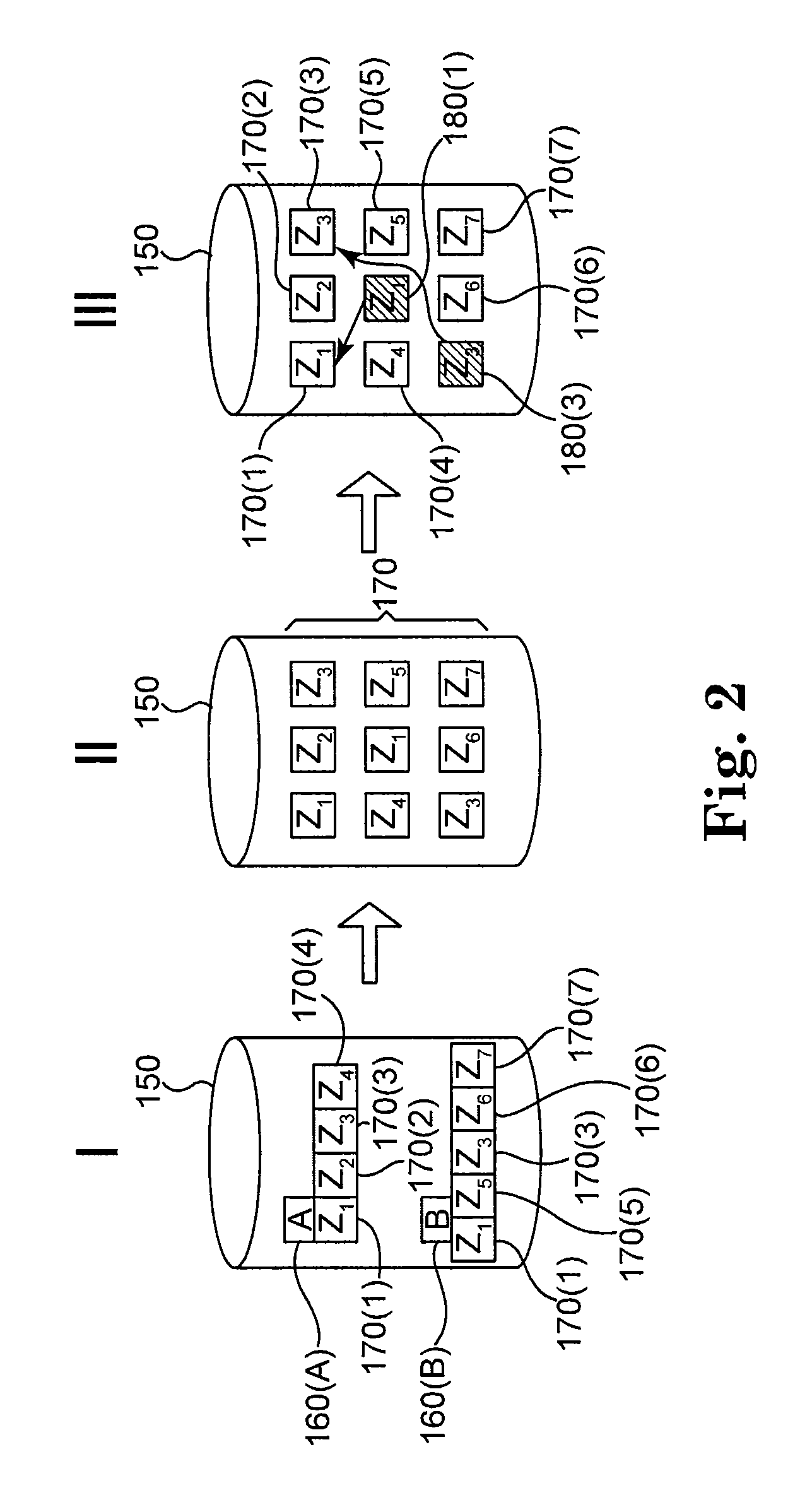

The present invention provides for a method, system, and computer program for the application of data deduplication according to a policy-based strategy of tiered data. The method operates by defining a plurality of data storage policies for data in a deduplication system, policies which may be arranged in tiers. Data objects are classified according to a selected data storage policy and are split into data chunks. If the selected data storage policy for the data object does not allow deduplication, the data chunks are stored in a deduplication pool. If the selected data storage policy for the data object allows deduplication, deduplication is performed. The data storage policy may specify a maximum number of references to data chunks, facilitating storage of new copies of the data chunks when the maximum number of references is met.

Owner:HUAWEI TECH CO LTD

Deduplication aware scheduling of requests to access data blocks

Active | US20130091102A1 | Reduce the possibility | Reduce throughput | Digital data processing details | Special data processing applications | Computer science | Throughput

Systems and methods for scheduling requests to access data may adjust the priority of such requests based on the presence of de-duplicated data blocks within the requested set of data blocks. A data de-duplication process operating on a storage device may build a de-duplication data map that stores information about the presence and location of de-duplicated data blocks on the storage drive. An I/O scheduler that manages the access requests can employ the de-duplication data map to identify and quantify any de-duplicated data blocks within an access request. The I/O scheduler can then adjust the priority of the access request, based at least in part on whether de-duplicated data blocks provide a large enough sequence of data blocks to reduce the likelihood that servicing the request, even if it causes a head seek operation, will reduce the overall global throughput of the storage system.

Owner:NETWORK APPLIANCE INC

Method for removing duplicate data from a storage array

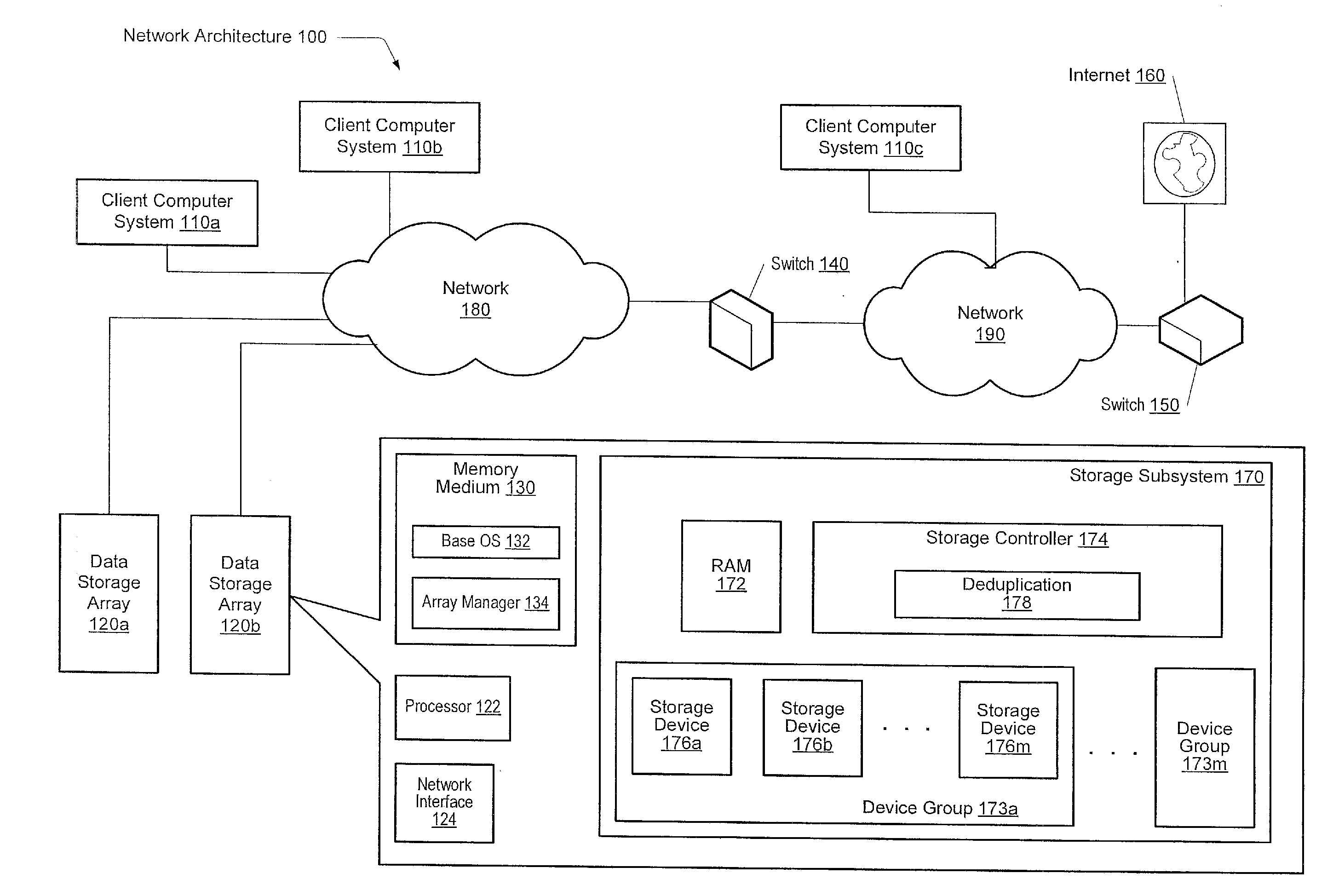

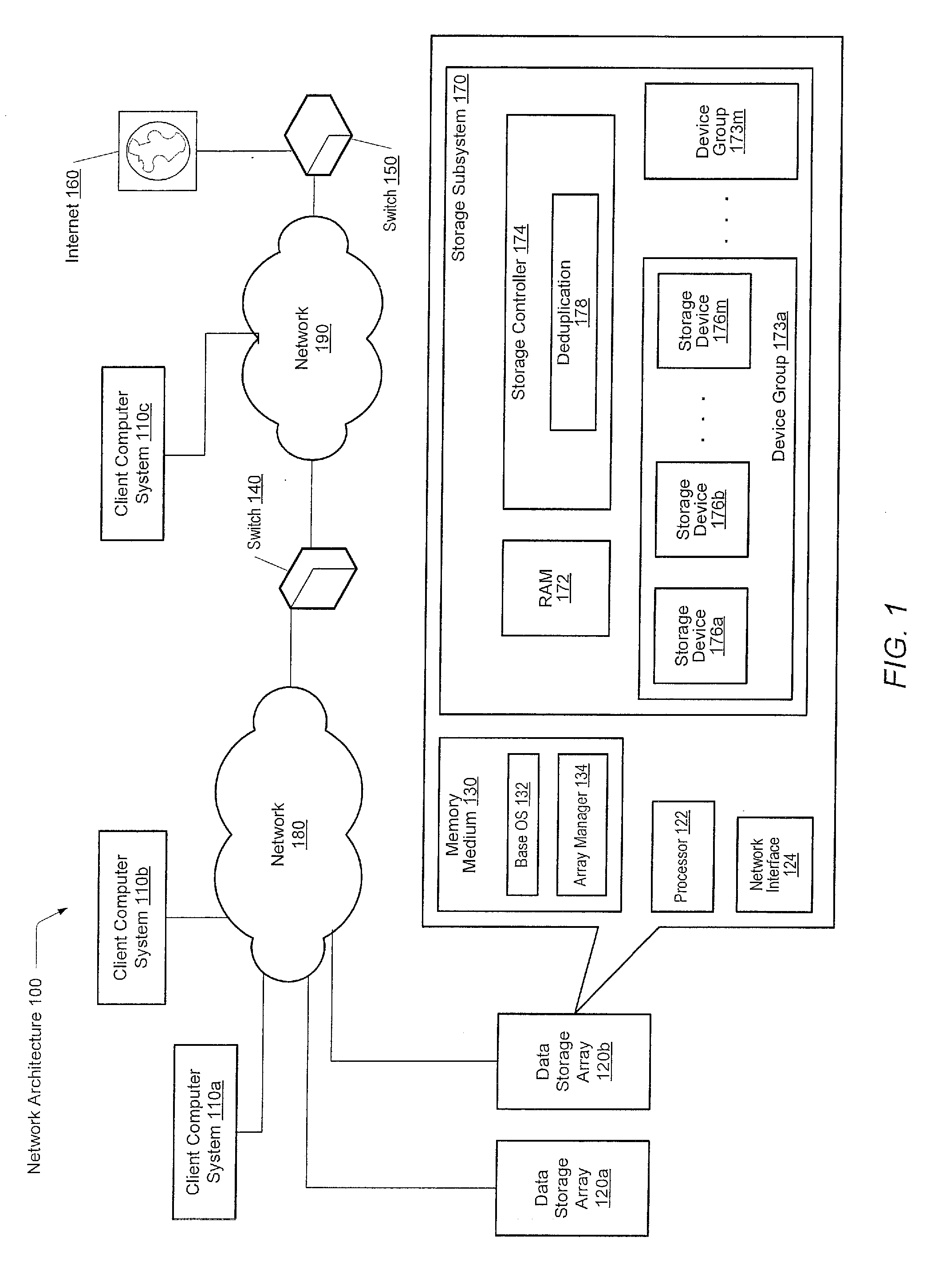

Active | US8930307B2 | Digital data information retrieval | Input/output to record carriers | Granularity | Data store

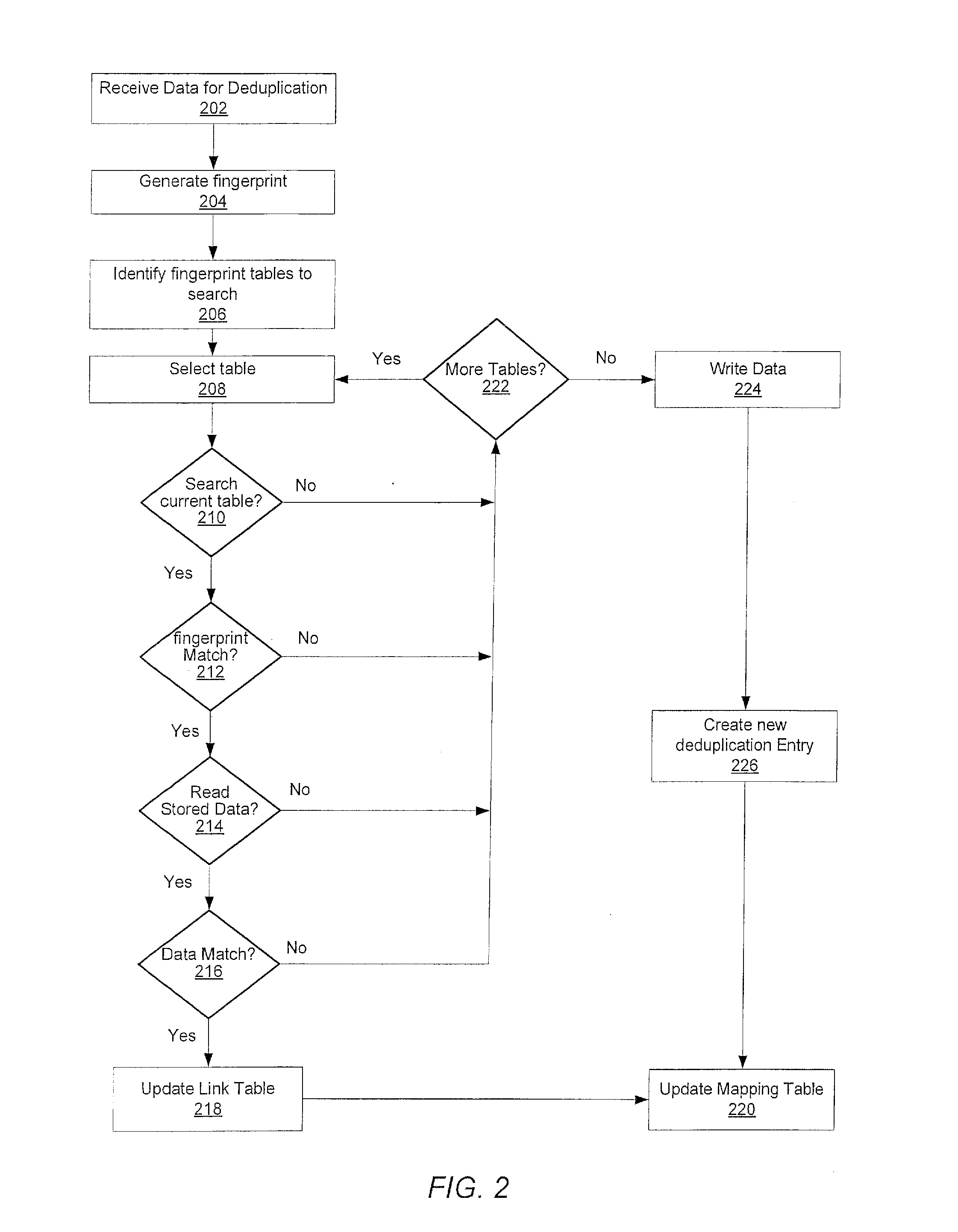

A system and method for efficiently removing duplicate data blocks at a fine granularity from a storage array. A data storage subsystem supports multiple deduplication tables. Table entries in one deduplication table have the highest associated probability of being deduplicated. Table entries may move from one deduplication table to another as the probabilities change. Additionally, a table entry may be evicted from all deduplication tables if a corresponding estimated probability falls below a given threshold. The probabilities are based on attributes associated with a data component and attributes associated with a virtual address corresponding to a received storage access request. A strategy for searches of the multiple deduplication tables may also be determined by the attributes associated with a given storage access request.

Owner:PURE STORAGE

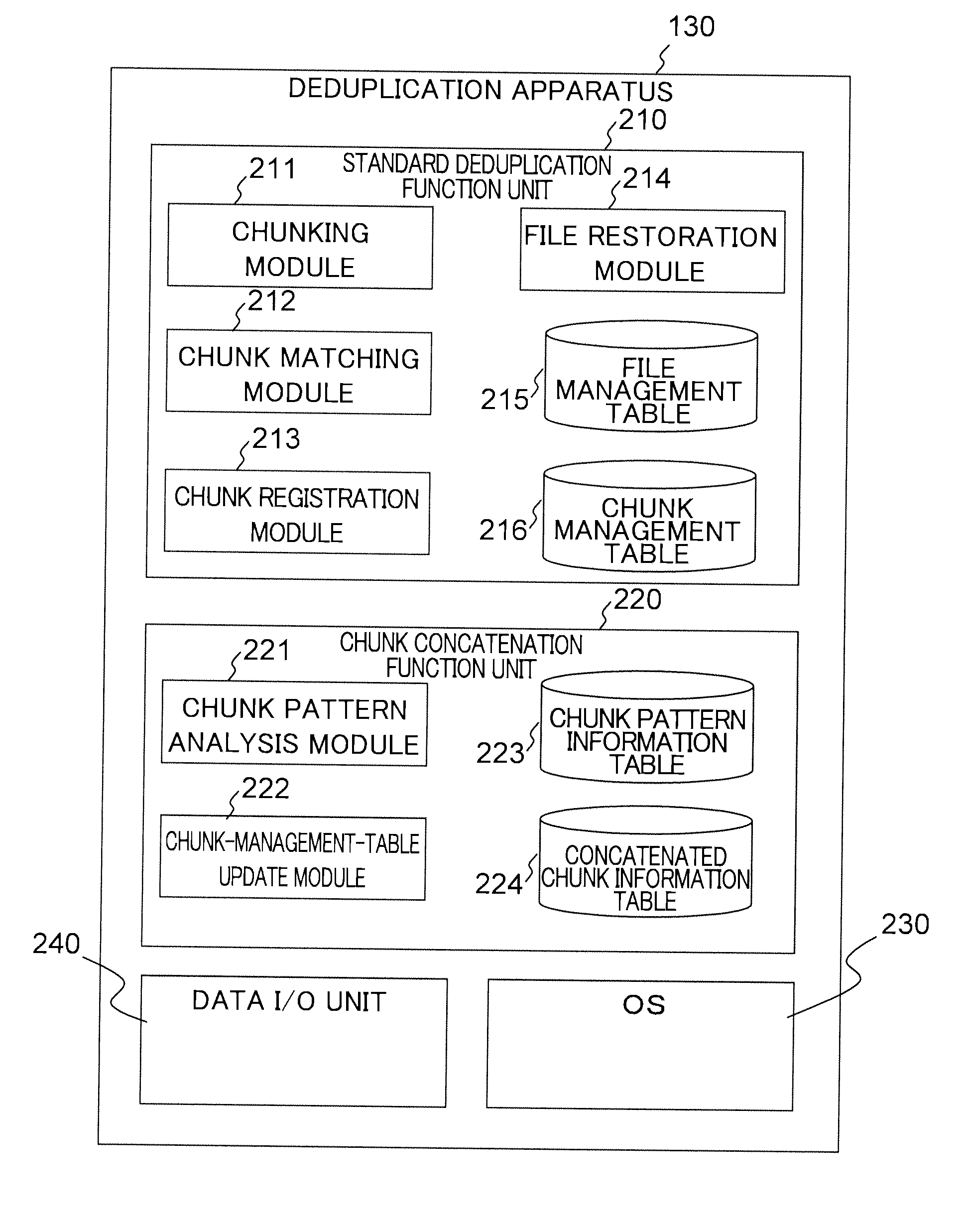

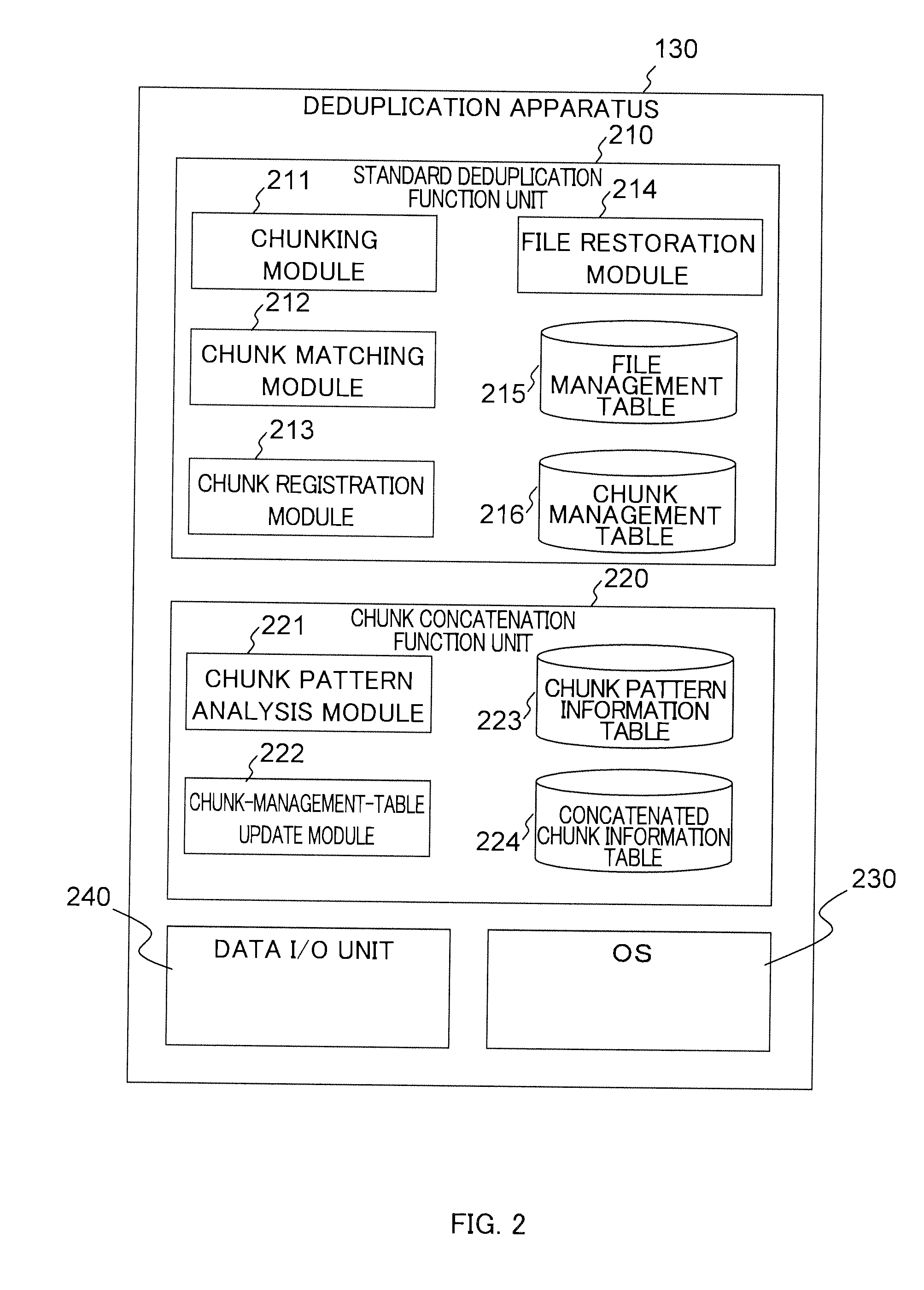

Stored data deduplication method, stored data deduplication apparatus, and deduplication program

Inactive | US20140229452A1 | Amount of time | Digital data information retrieval | Digital data processing details | Data storing | Data store

A method of dividing data to be stored in a storage device into data fragments; recording the data by using configurations of the divided data fragments; judging whether identical data fragments exist among the data fragments; when it is judged that identical data fragments exist, storing one of the identical data fragments in a storage area of the storage device, and generating and recording data-fragment attribute information indicating an attribute unique to the stored data fragment; upon receipt of a request to read data stored in the storage area of the storage device, acquiring the configurations of the data fragments forming the read-target data, reading the corresponding data fragments from the storage area of the storage device, and restoring the data; acquiring and coupling the recorded data fragments to generate concatenation target data targeted for judgment on whether chunk concatenation is possible or not, and detecting whether the concatenation target data has a repeated data pattern.

Owner:HITACHI LTD

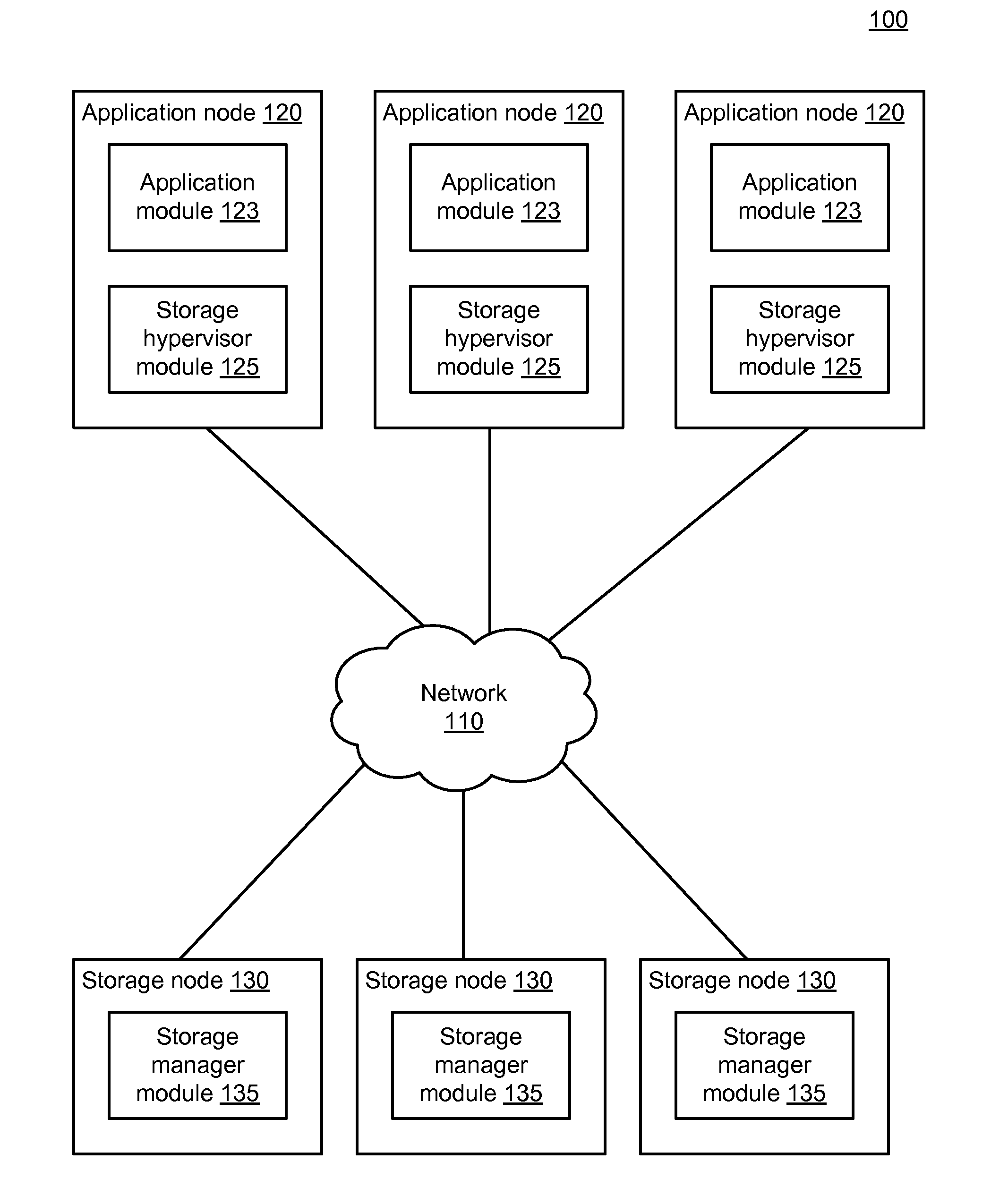

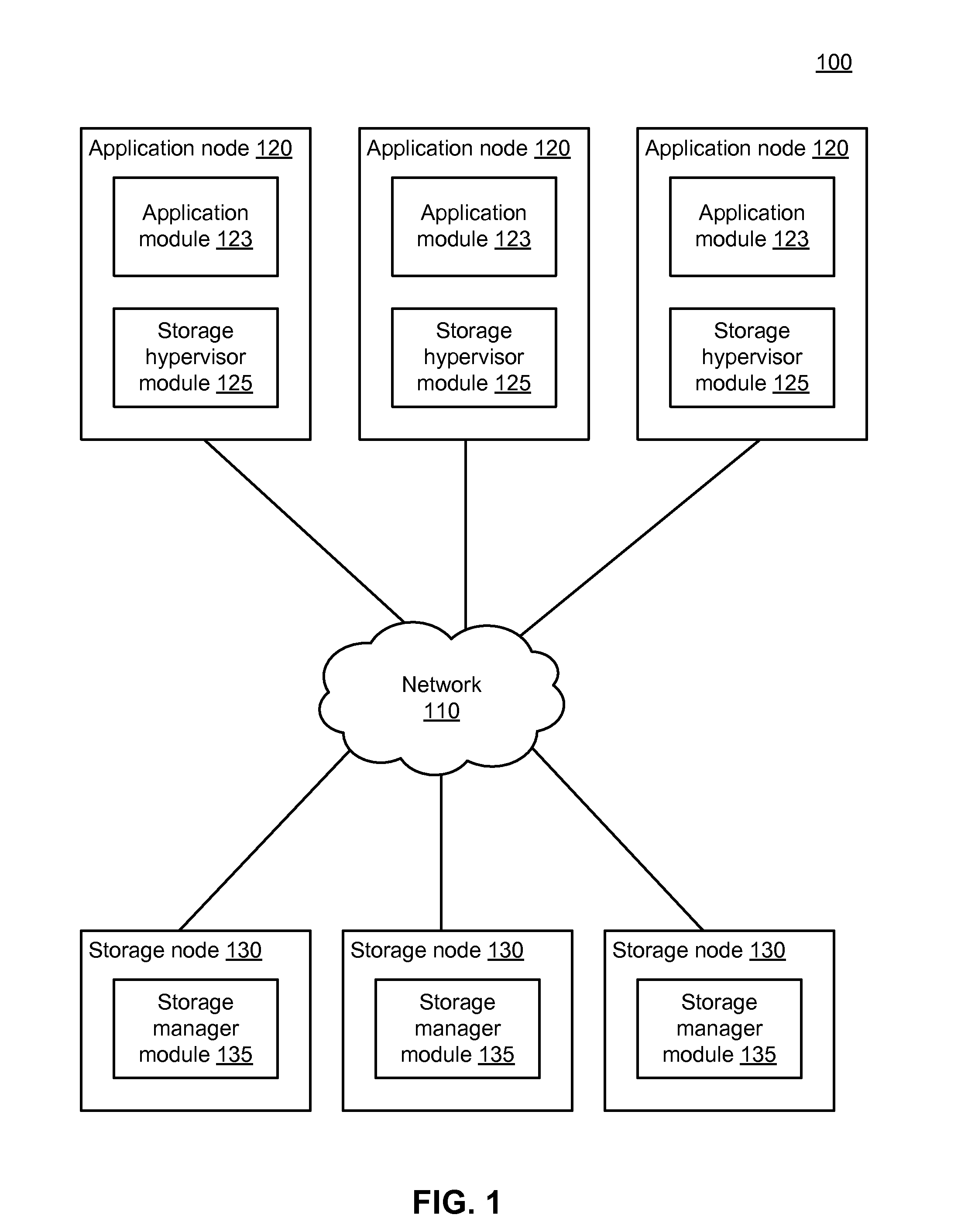

High-Performance Distributed Data Storage System with Implicit Content Routing and Data Deduplication

Inactive | US20150039645A1 | Digital data information retrieval | Digital data processing details | Hash function | Data location

A write request that includes a data object is processed. A hash function is executed on the data object, thereby generating a hash value that includes a first portion and a second portion. A data location table is queried with the first portion, thereby obtaining a storage node identifier. The data object is sent to a storage node associated with the storage node identifier. A write request that includes a data object and a pending data object identification (DOID) is processed, wherein the pending DOID comprises a hash value of the data object. The pending DOID is finalized, thereby generating a finalized data object identification (DOID). The data object is stored at a storage location. A storage manager catalog is updated by adding an entry mapping the finalized DOID to the storage location. The finalized DOID is output.
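The routing step, in which one portion of the hash selects a storage node, can be sketched as below. The choice of SHA-256, the use of the first hash byte as the "first portion", and the contents of `DATA_LOCATION_TABLE` are all assumptions made for illustration; finalizing the DOID and updating the storage manager catalog are not modeled.

```python
import hashlib

# Hypothetical data location table: first hash byte (0-255) -> storage node.
NODES = ["node-a", "node-b", "node-c", "node-d"]
DATA_LOCATION_TABLE = {prefix: NODES[prefix % len(NODES)] for prefix in range(256)}


def route(data_object: bytes):
    """Return (node_id, pending_doid) for a data object."""
    digest = hashlib.sha256(data_object).digest()
    first_portion = digest[0]          # used only to pick the node
    node_id = DATA_LOCATION_TABLE[first_portion]
    pending_doid = digest.hex()        # the full hash identifies the object
    return node_id, pending_doid


node_1, doid_1 = route(b"invoice-2024.pdf contents")
node_2, doid_2 = route(b"invoice-2024.pdf contents")
```

Identical objects always hash to the same node and the same pending identifier, which is what lets the receiving node detect the duplicate without any cluster-wide lookup.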

Owner:EBAY INC

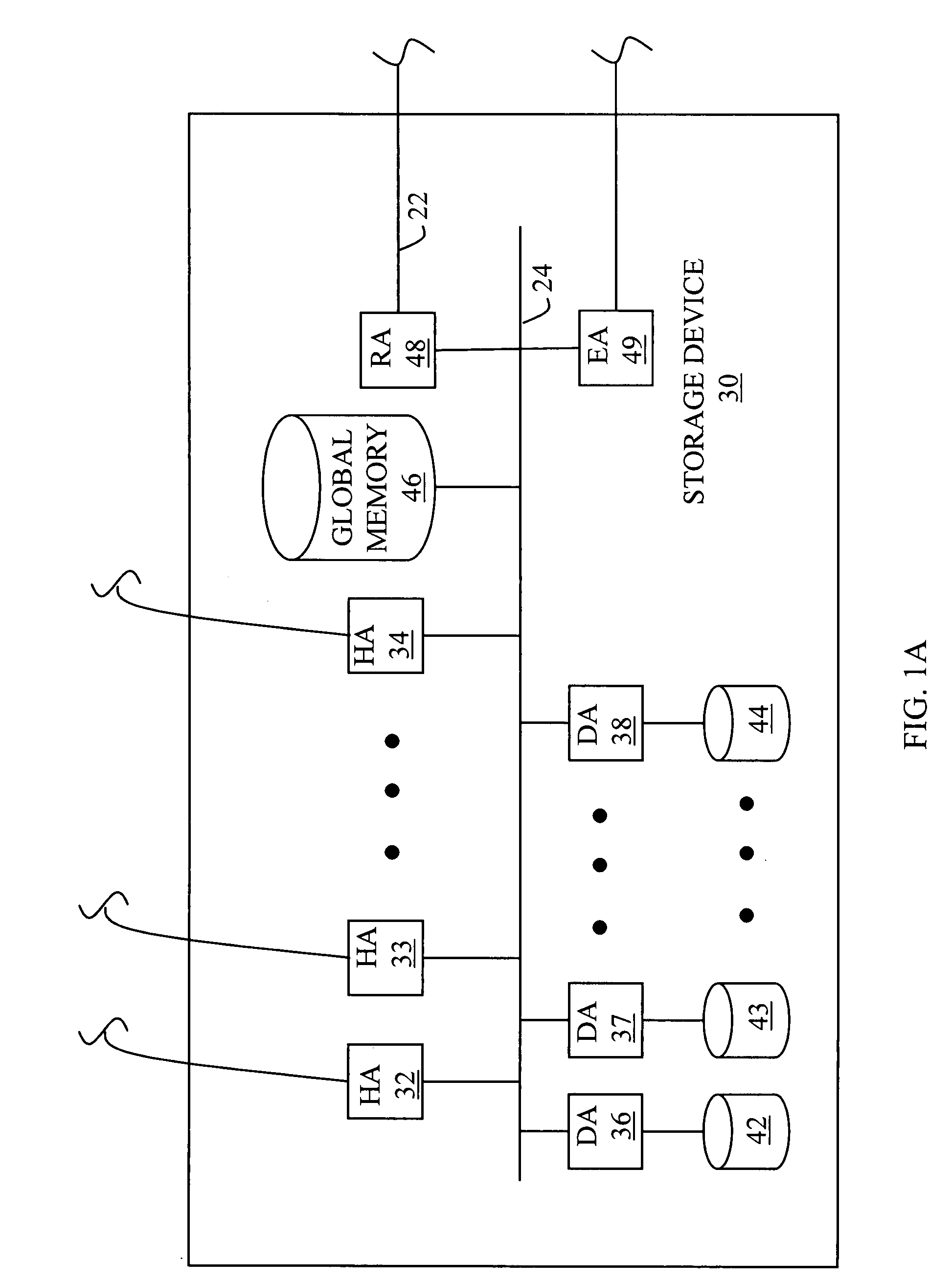

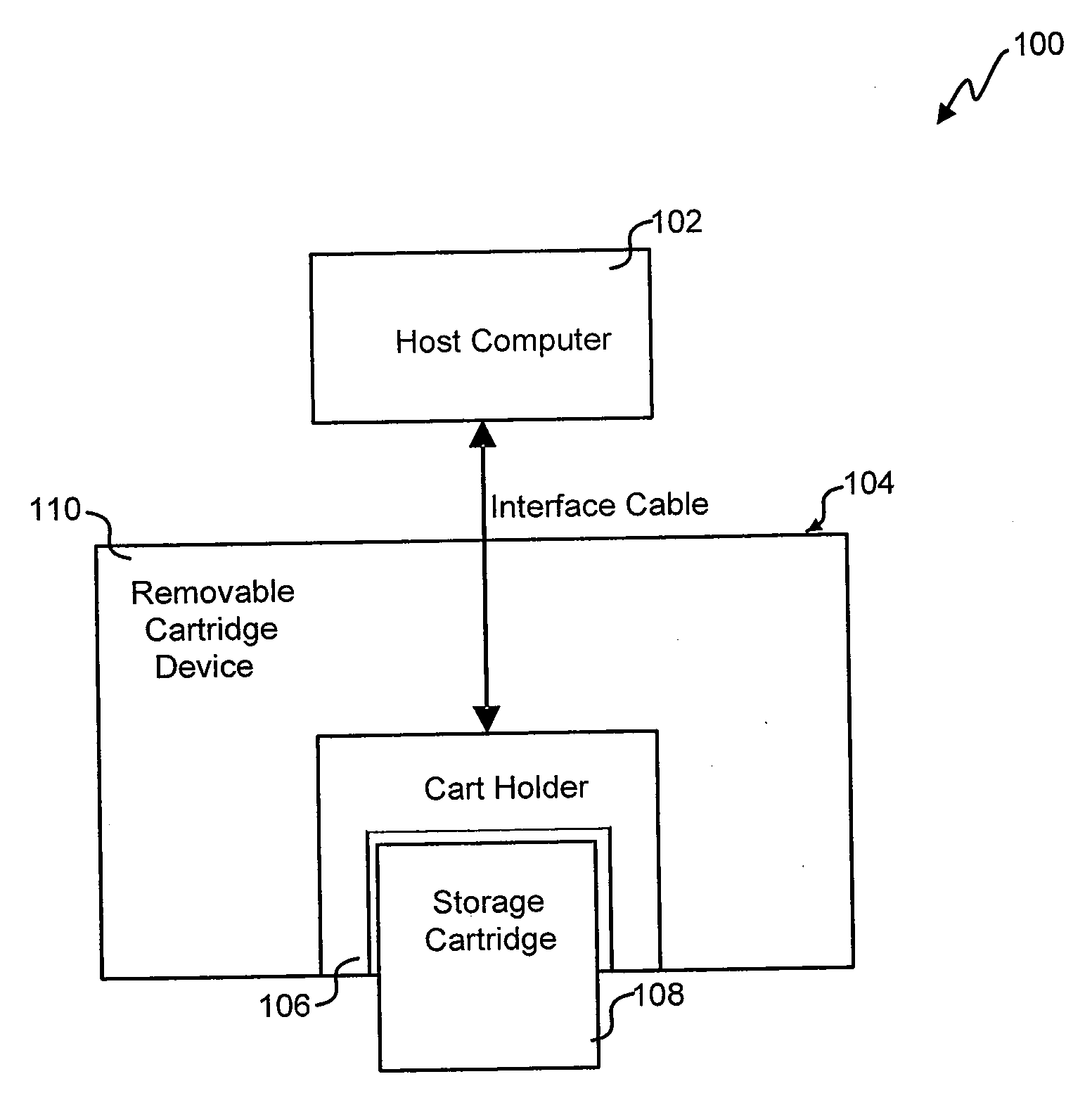

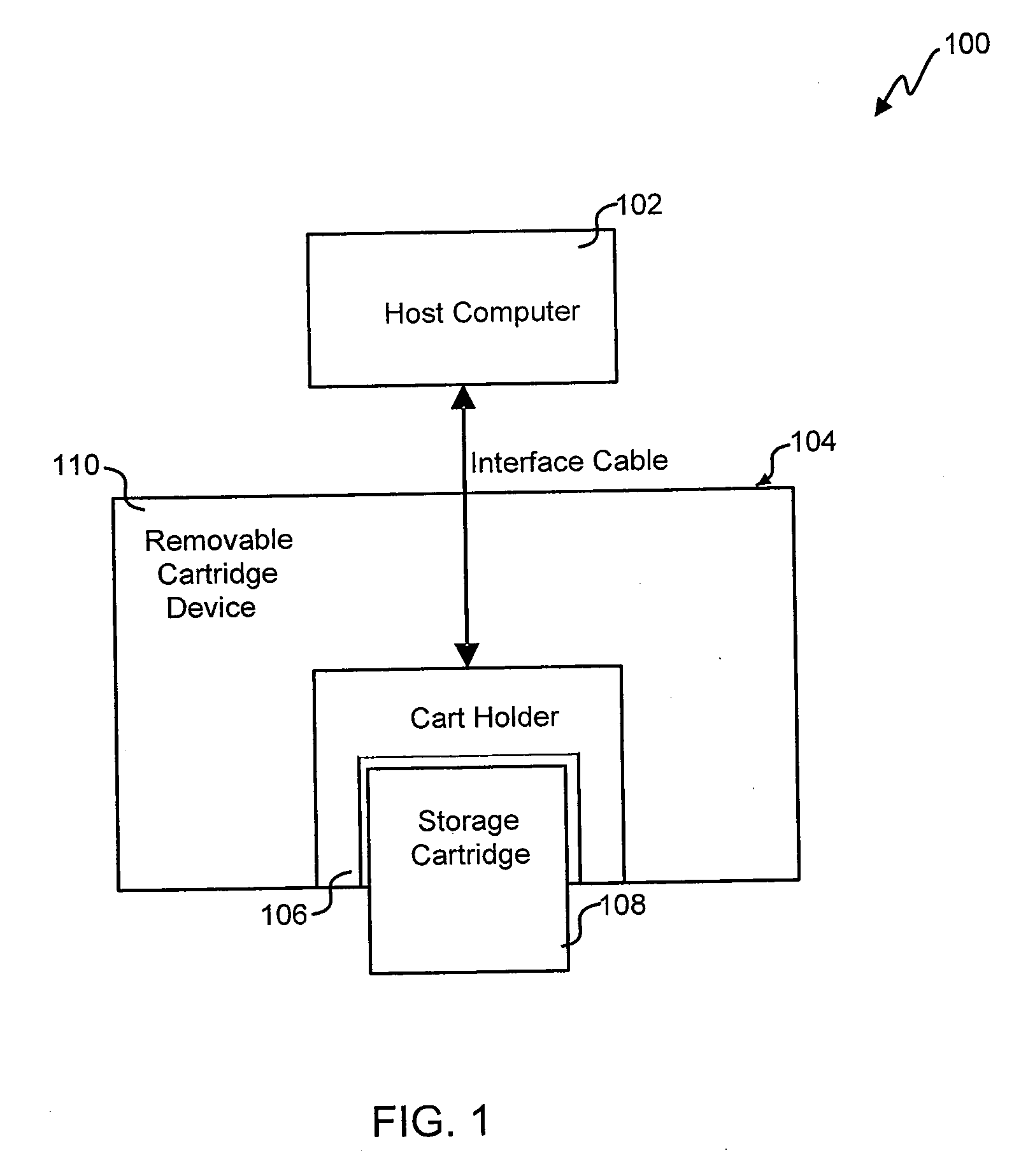

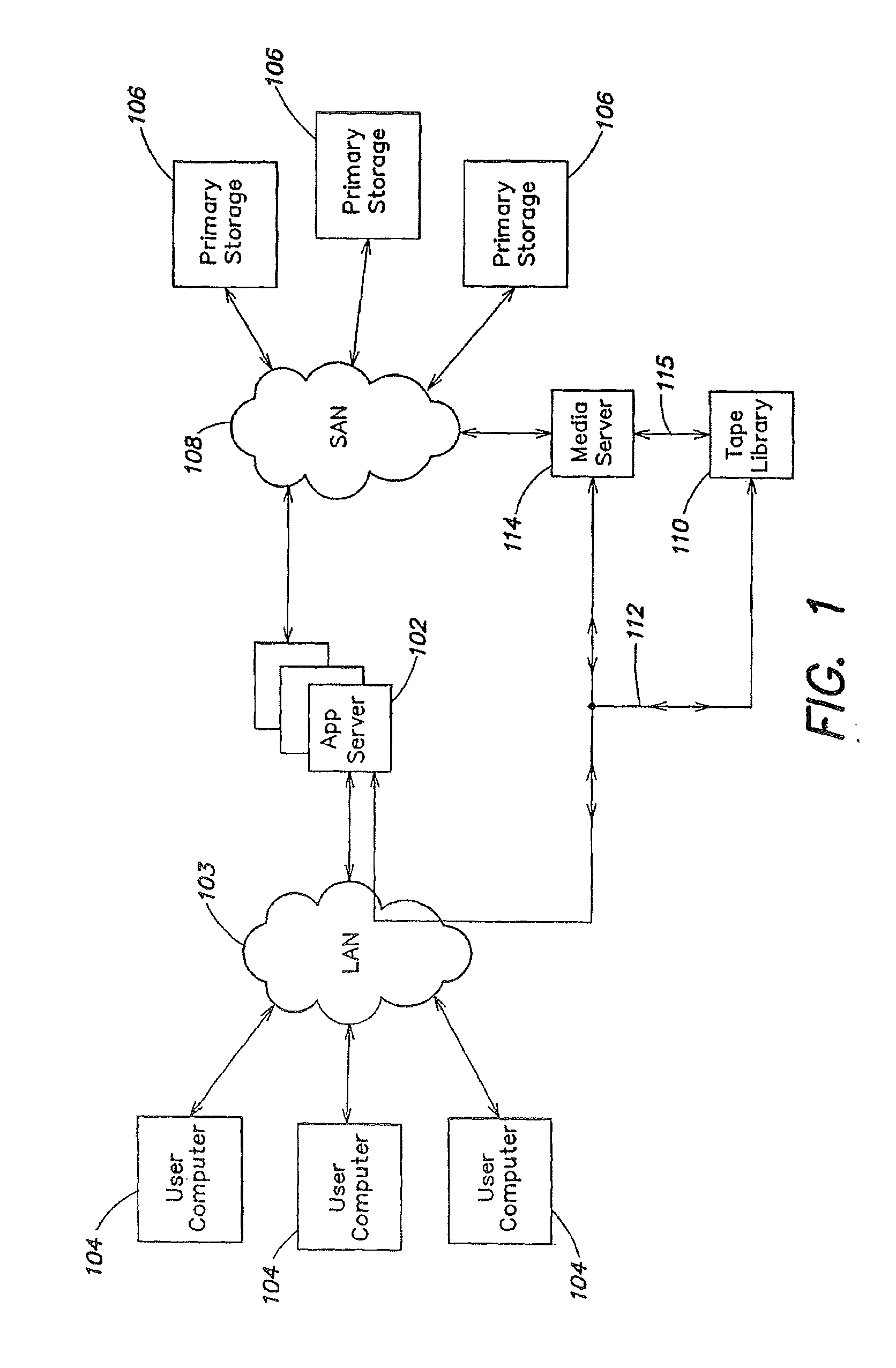

Commonality factoring for removable media

Systems and methods for commonality factoring for storing data on removable storage media are described. The systems and methods allow for highly compressed data, e.g., data compressed using archiving or backup methods including de-duplication, to be stored in an efficient manner on portable memory devices such as removable storage cartridges. The methods include breaking data, e.g., data files for backup, into unique chunks and calculating identifiers, e.g., hash identifiers, based on the unique chunks. Redundant chunks can be identified by calculating identifiers and comparing identifiers of other chunks to the identifiers of unique chunks previously calculated. When a redundant chunk is identified, a reference to the existing unique chunk is generated such that the chunk can be reconstituted in relation to other chunks in order to recreate the original data. The method further includes storing one or more of the unique chunks, the identifiers and/or the references on the removable storage medium.

Owner:IMATION

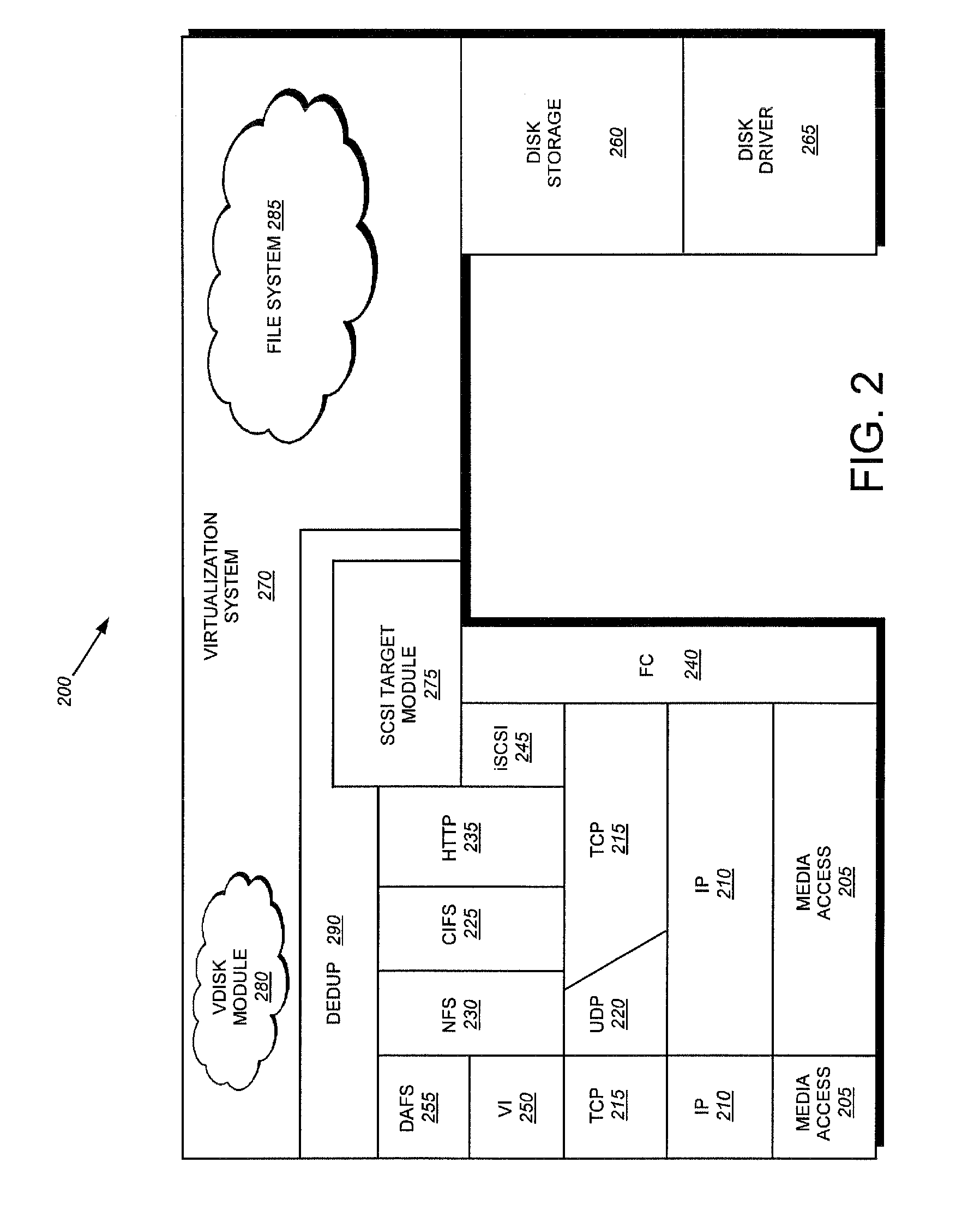

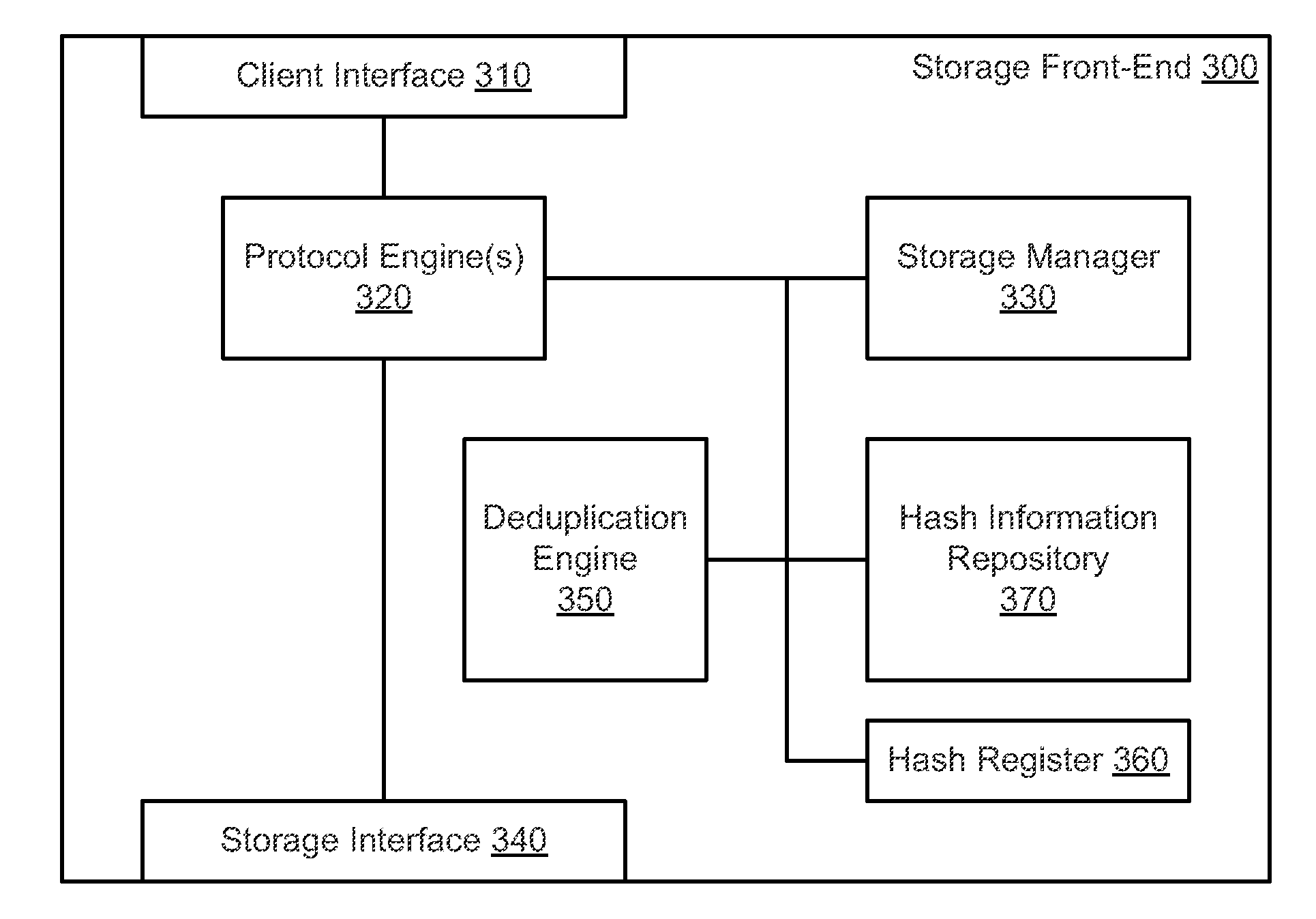

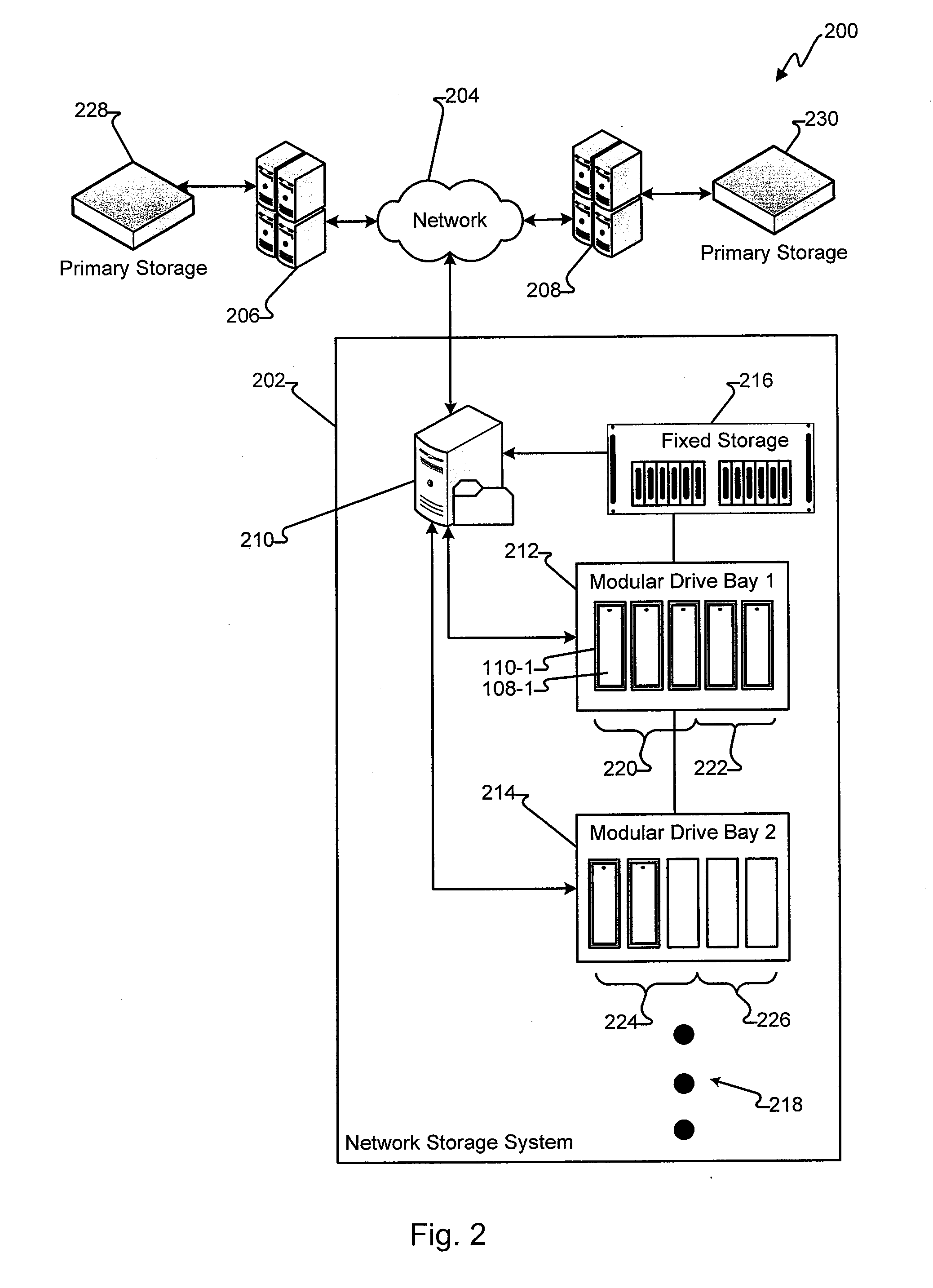

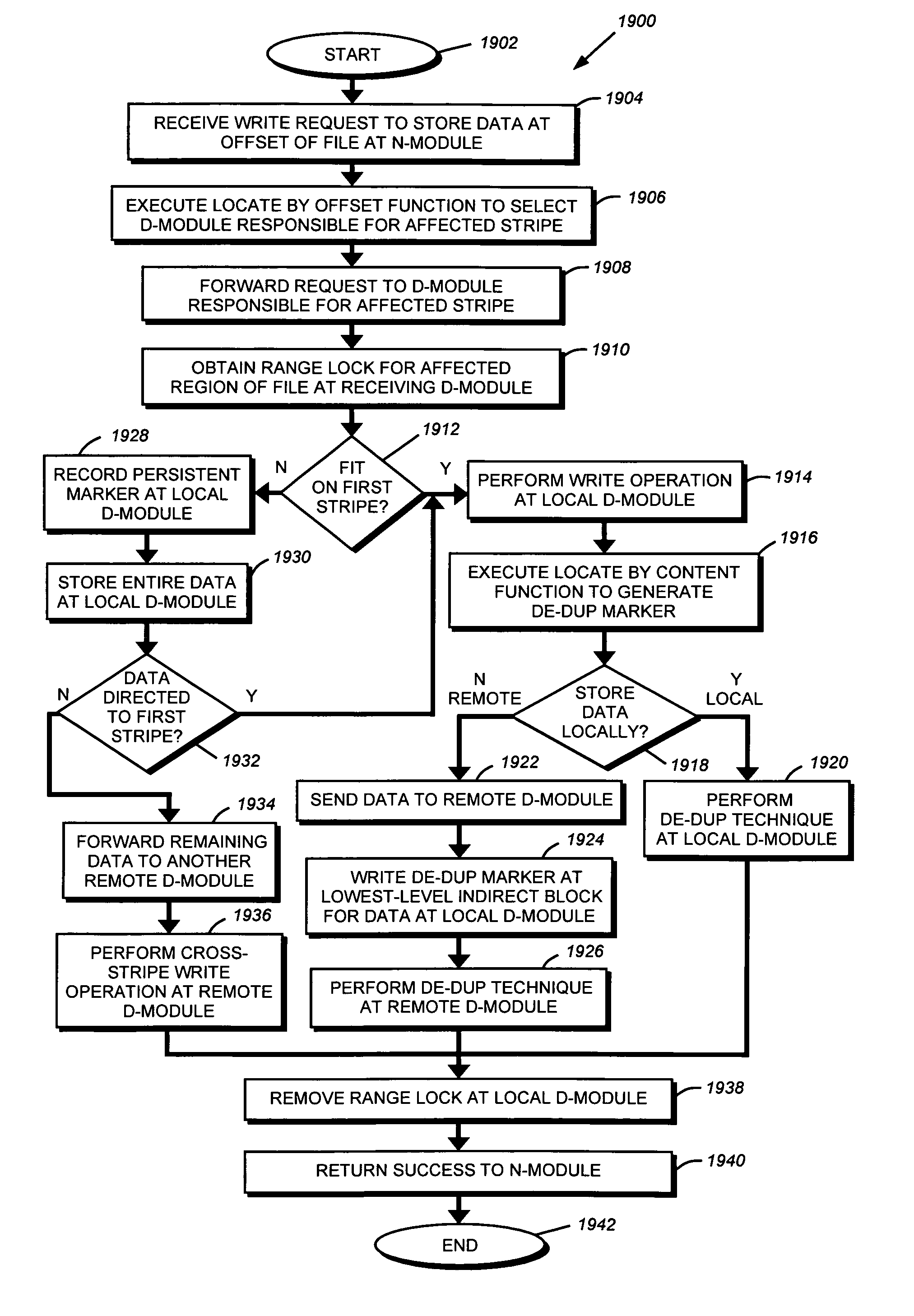

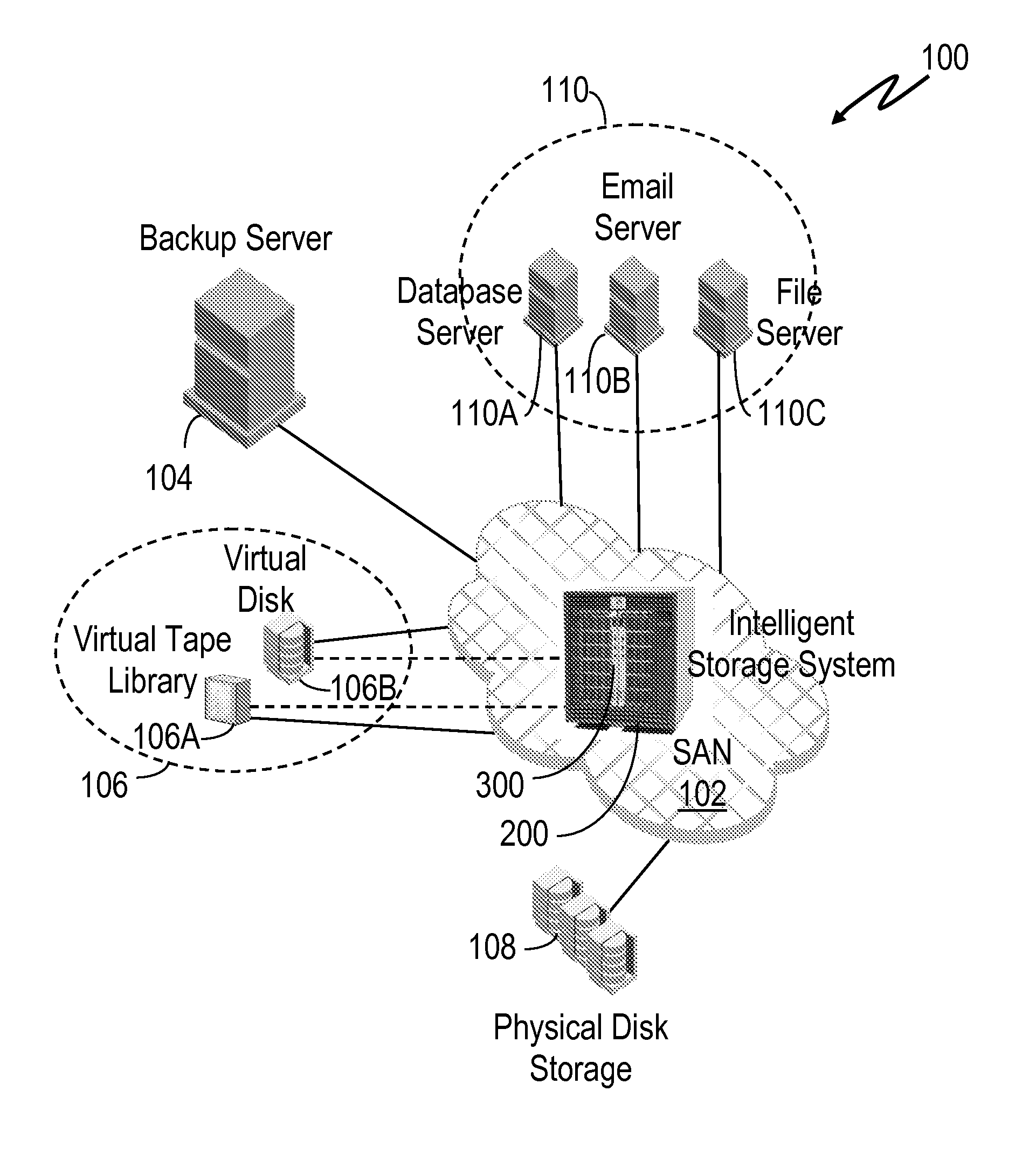

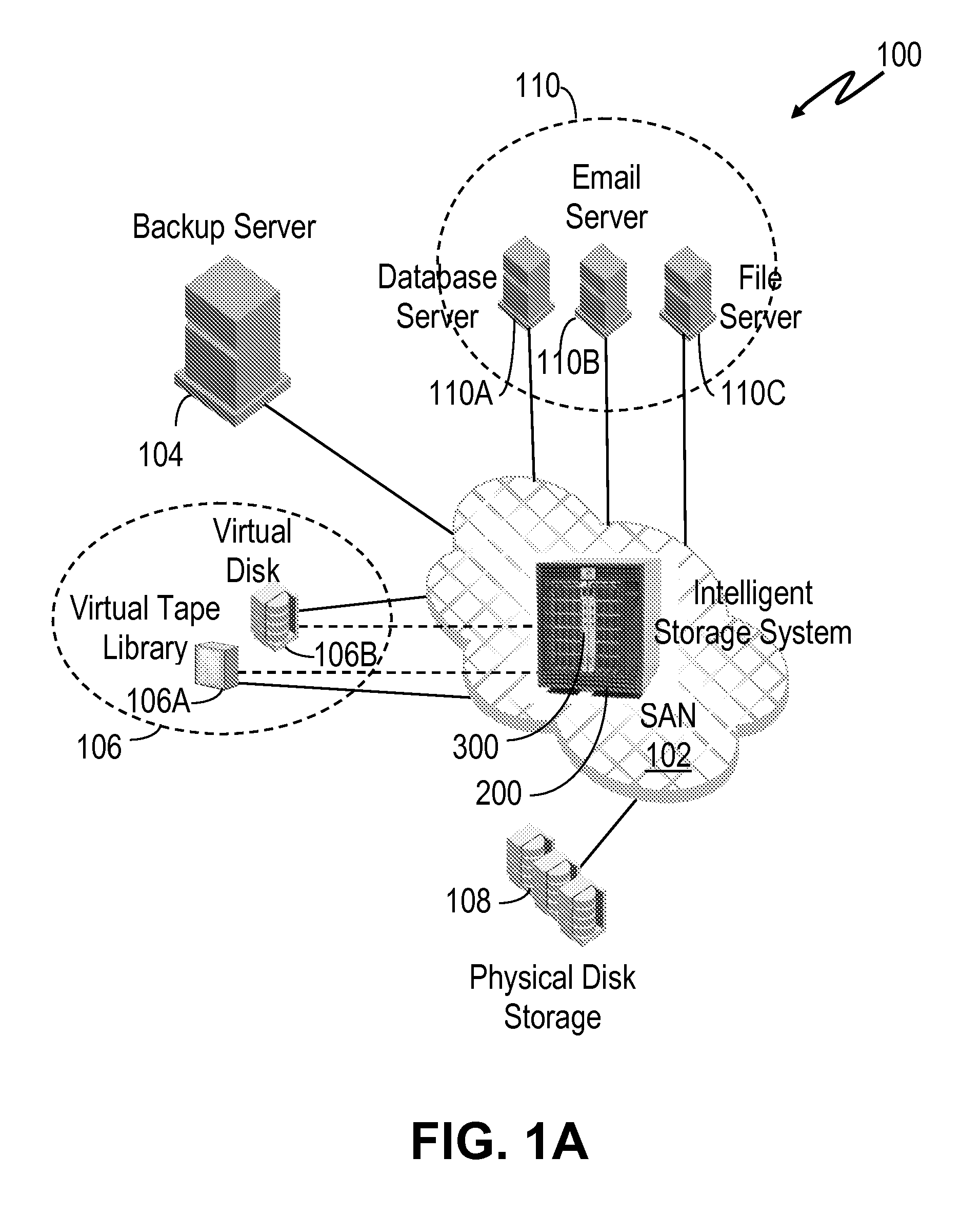

System and method for enabling de-duplication in a storage system architecture

Active | US7747584B1 | Effectively ensure | Improve efficiency | Digital data information retrieval | Digital data processing details | Data content | Data store

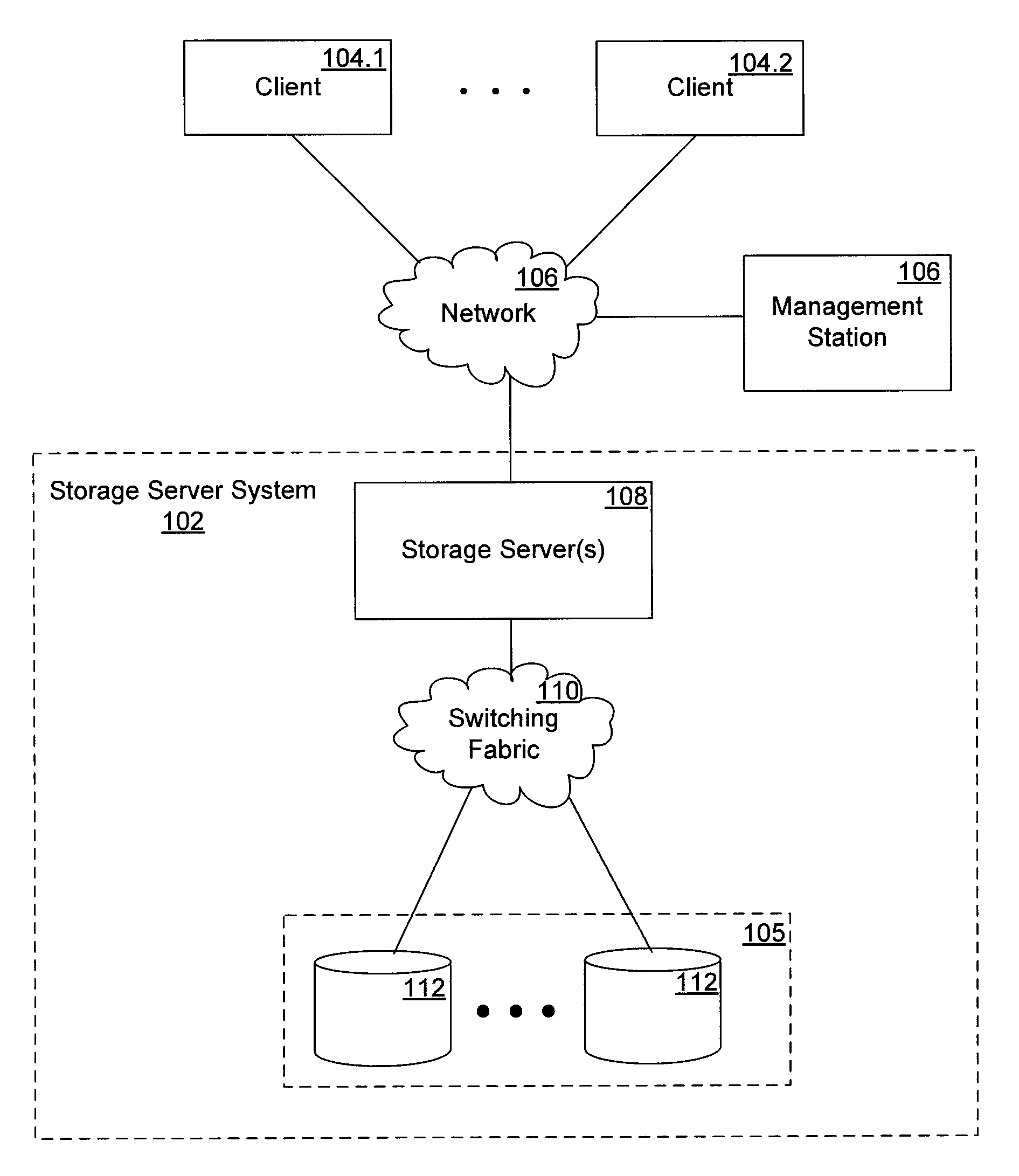

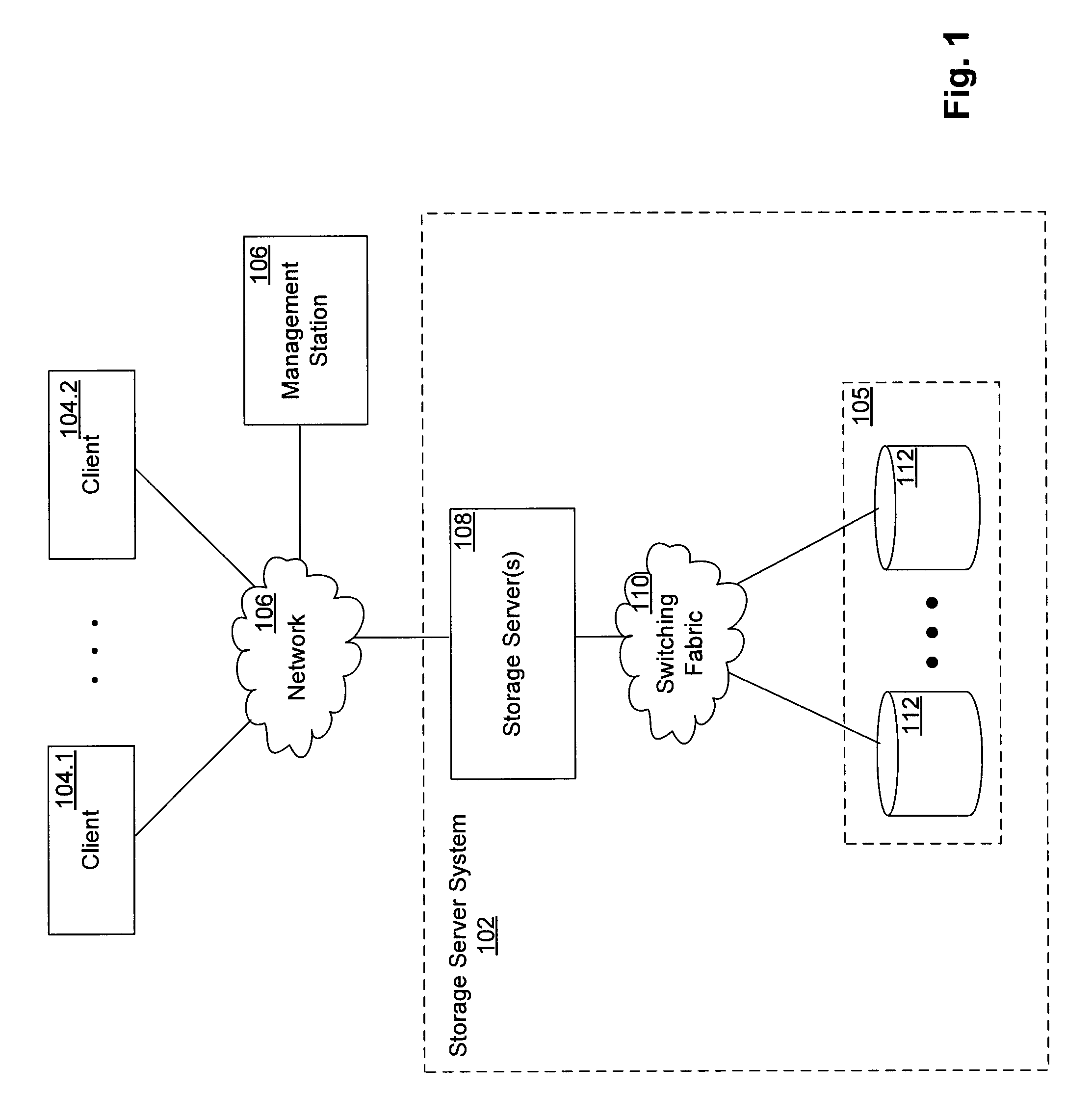

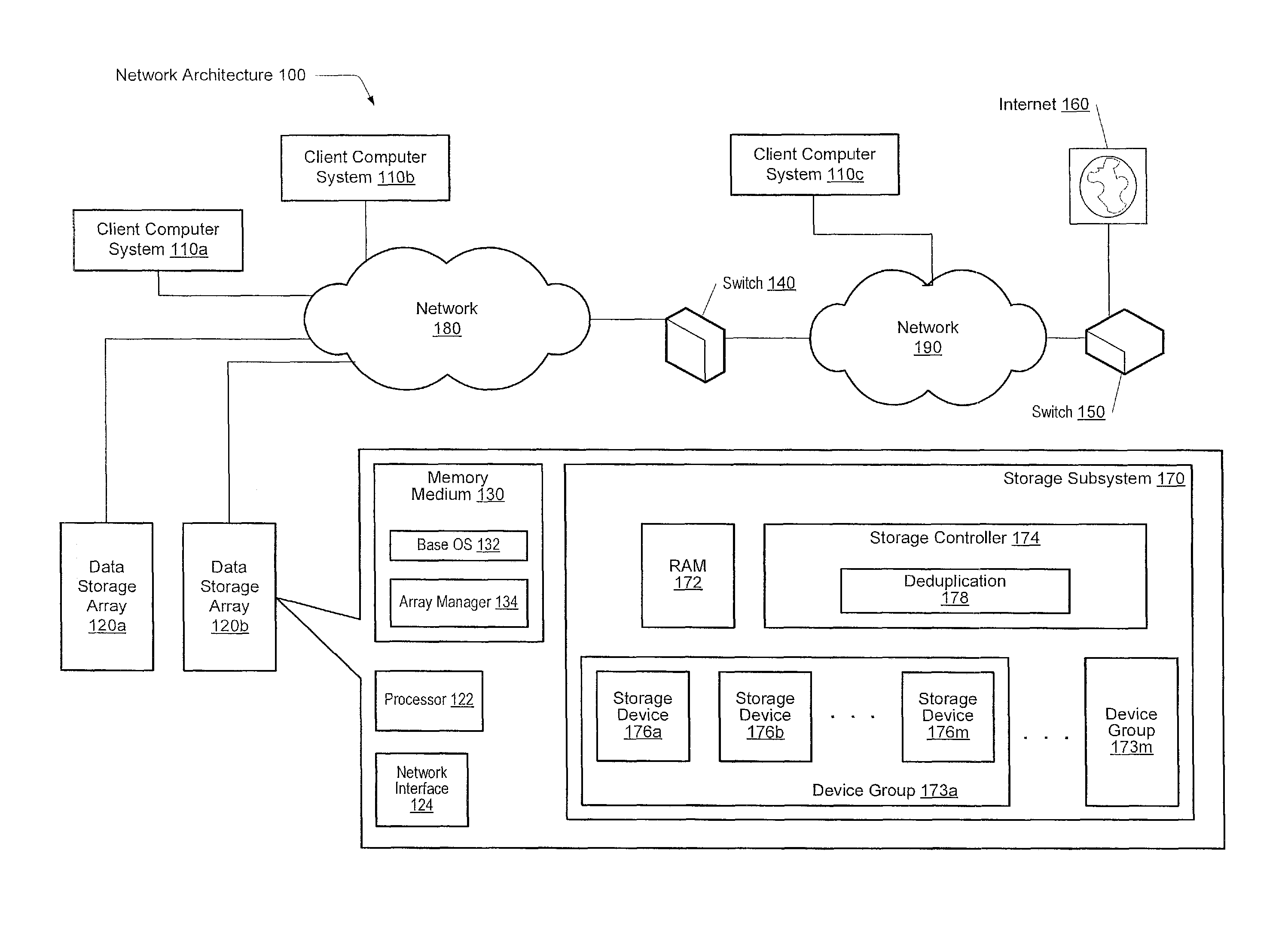

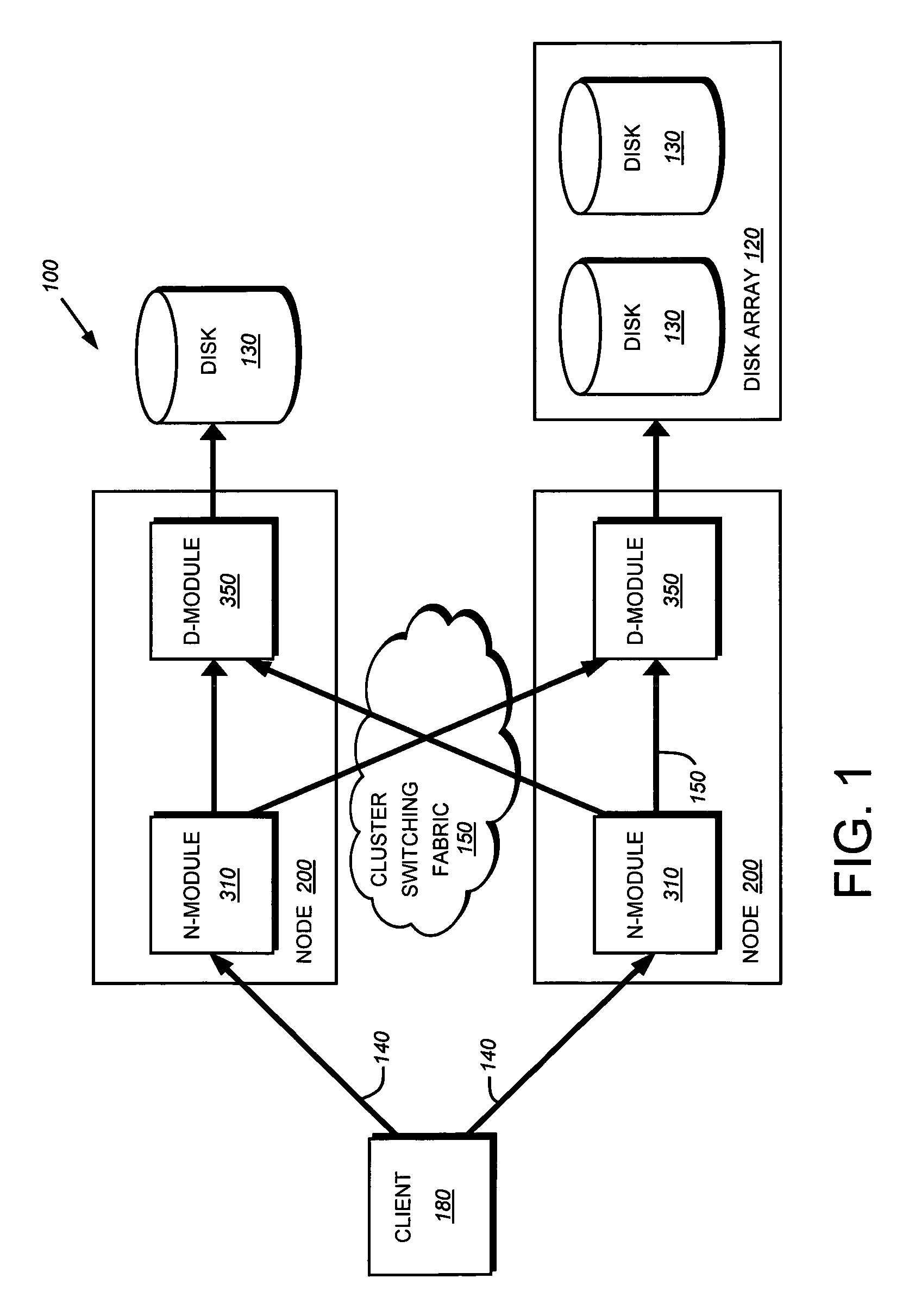

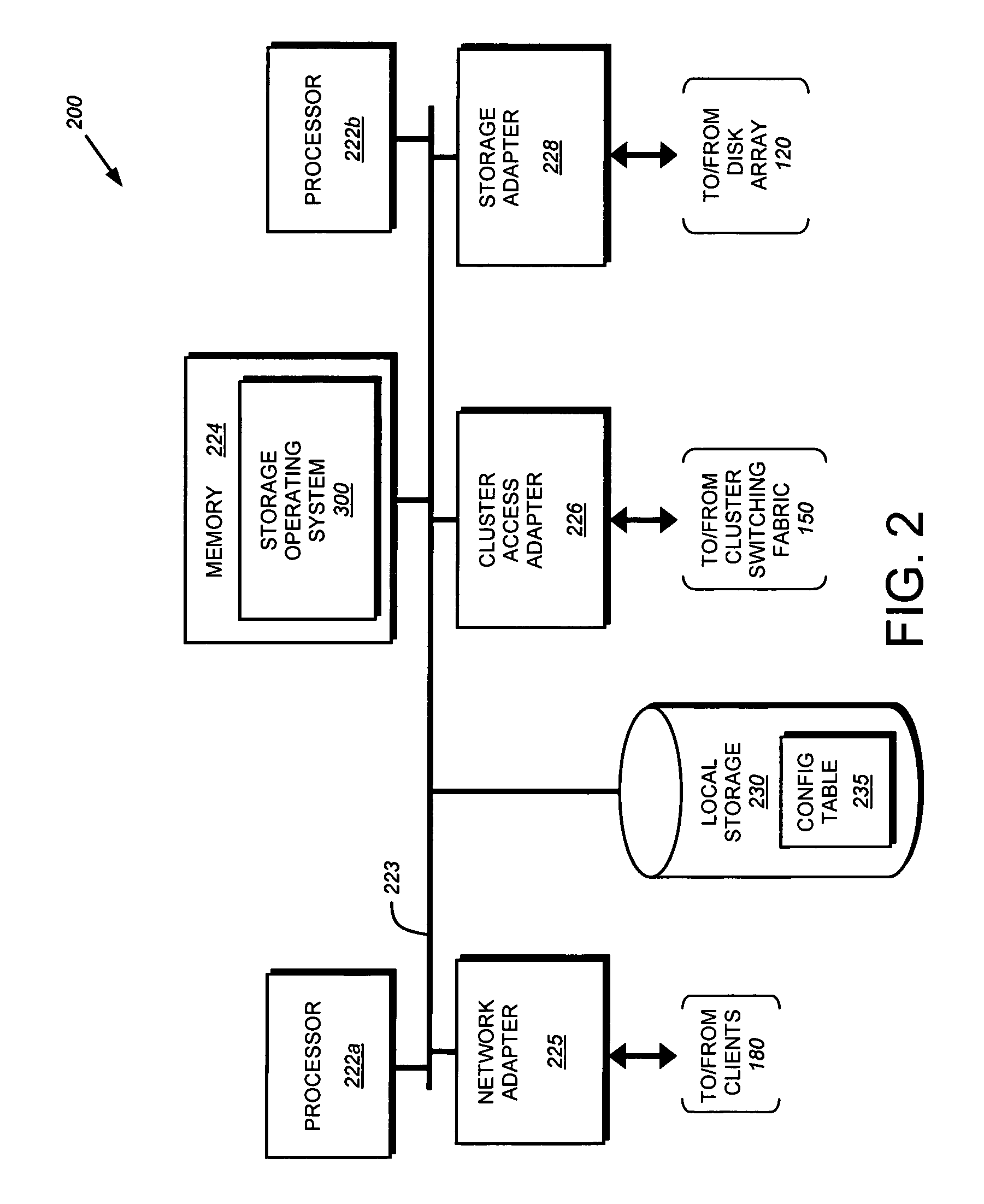

A system and method enables de-duplication in a storage system architecture comprising one or more volumes distributed across a plurality of nodes interconnected as a cluster. De-duplication is enabled through the use of file offset indexing in combination with data content redirection. File offset indexing is illustratively embodied as a Locate by offset function, while data content redirection is embodied as a novel Locate by content function. In response to input of, inter alia, a data container (file) offset, the Locate by offset function returns a data container (file) index that is used to determine a storage server that is responsible for a particular region of the file. The Locate by content function is then invoked to determine the storage server that actually stores the requested data on disk. Notably, the content function ensures that data is stored on a volume of a storage server based on the content of that data rather than based on its offset within a file. This aspect of the invention ensures that all blocks having identical data content are served by the same storage server so that it may implement de-duplication to conserve storage space on disk and increase cache efficiency of memory.

Owner:NETWORK APPLIANCE INC

Method for removing duplicate data from a storage array

Active | US20130086006A1 | Digital data processing details | Special data processing applications | Granularity | Computer science

A system and method for efficiently removing duplicate data blocks at a fine granularity from a storage array. A data storage subsystem supports multiple deduplication tables. Table entries in one deduplication table have the highest associated probability of being deduplicated. Table entries may move from one deduplication table to another as the probabilities change. Additionally, a table entry may be evicted from all deduplication tables if a corresponding estimated probability falls below a given threshold. The probabilities are based on attributes associated with a data component and attributes associated with a virtual address corresponding to a received storage access request. A strategy for searches of the multiple deduplication tables may also be determined by the attributes associated with a given storage access request.

Owner:PURE STORAGE

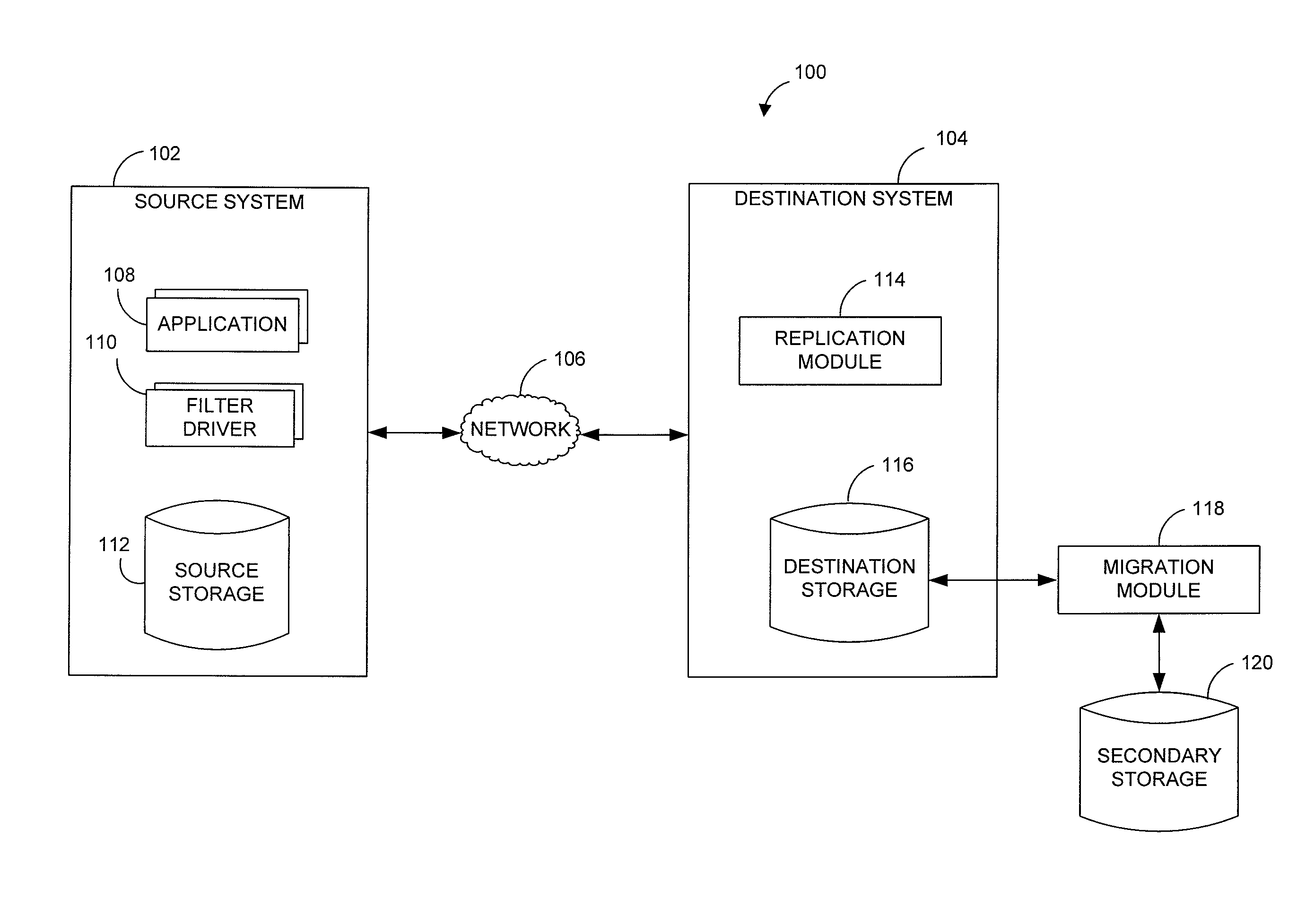

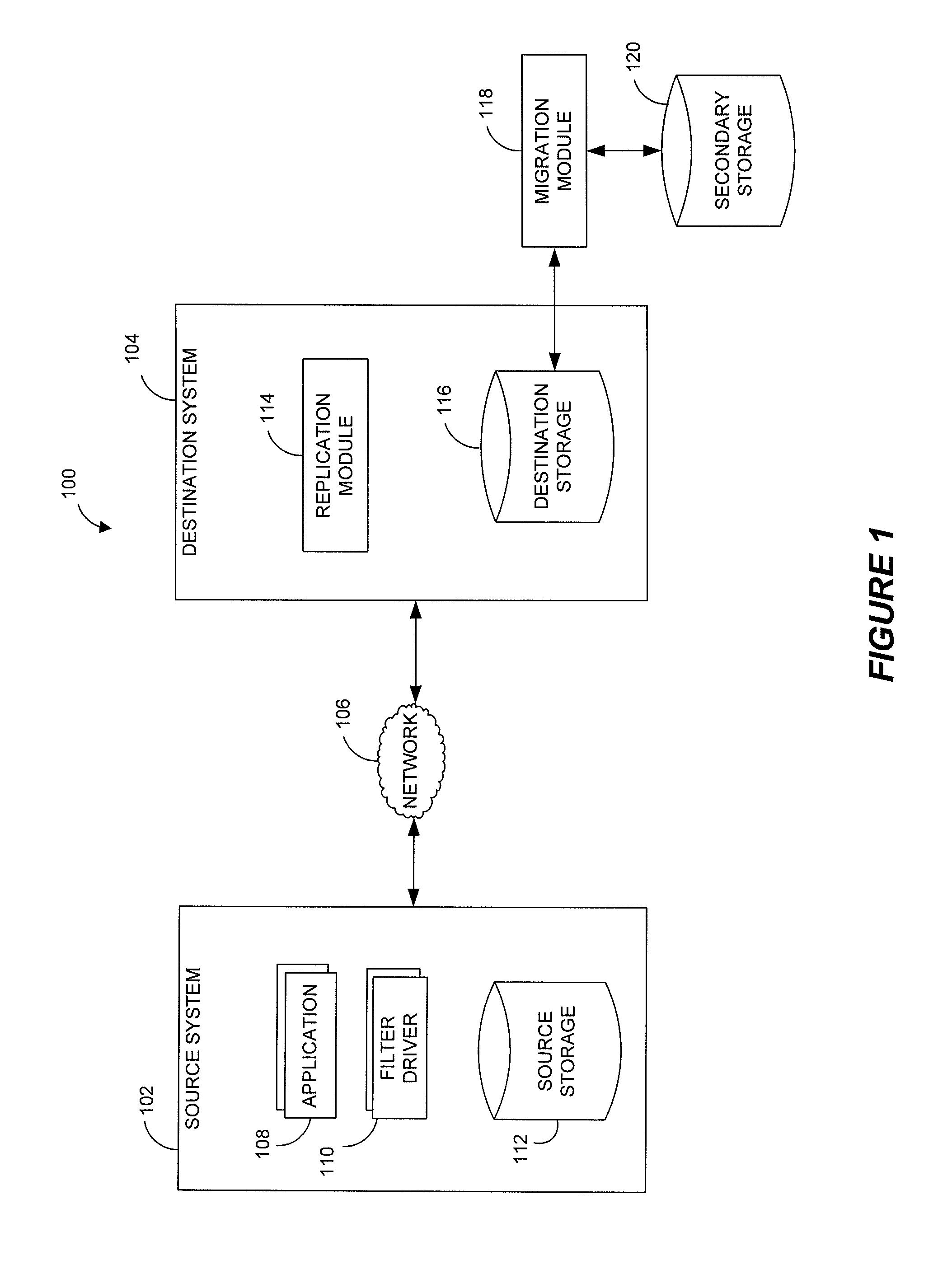

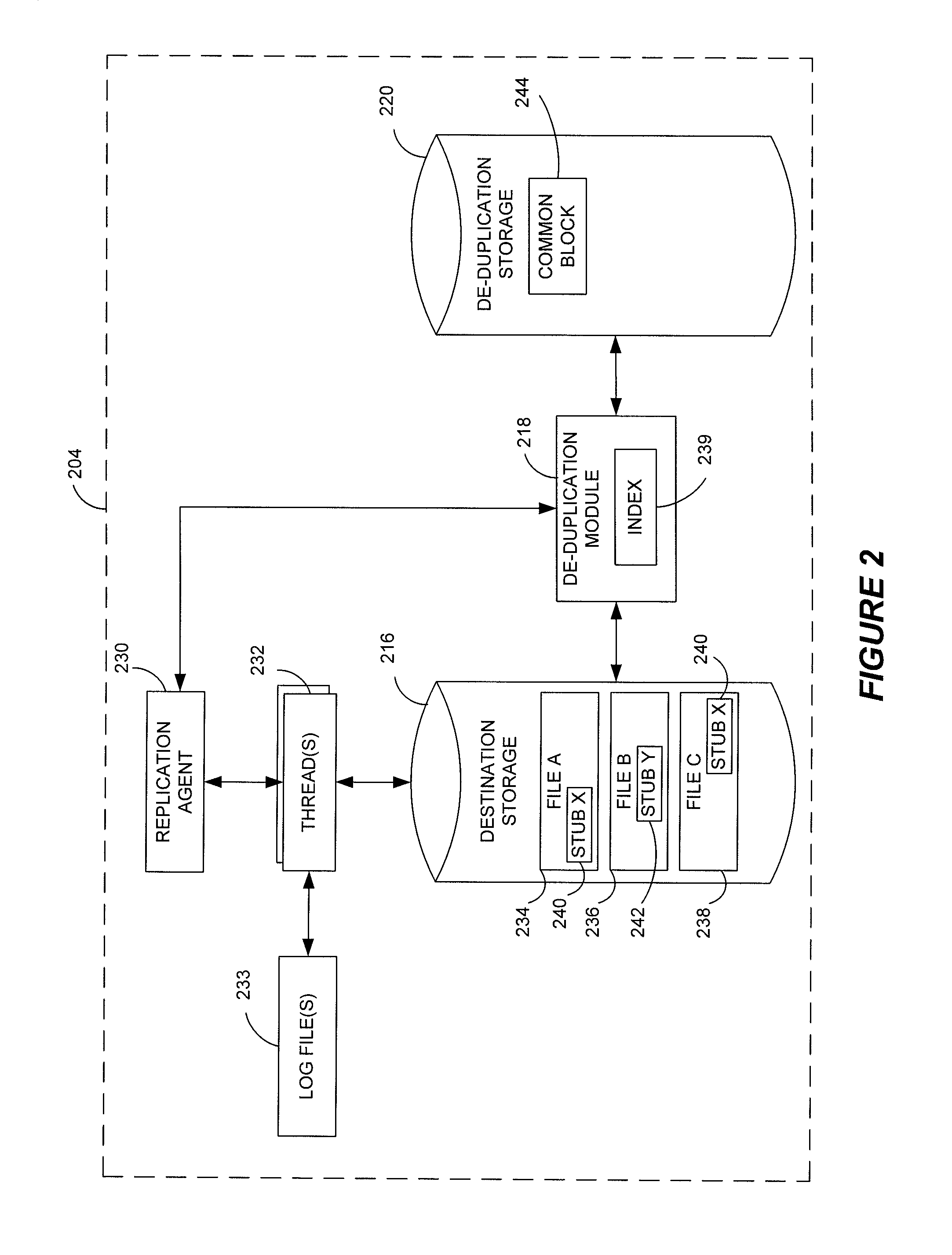

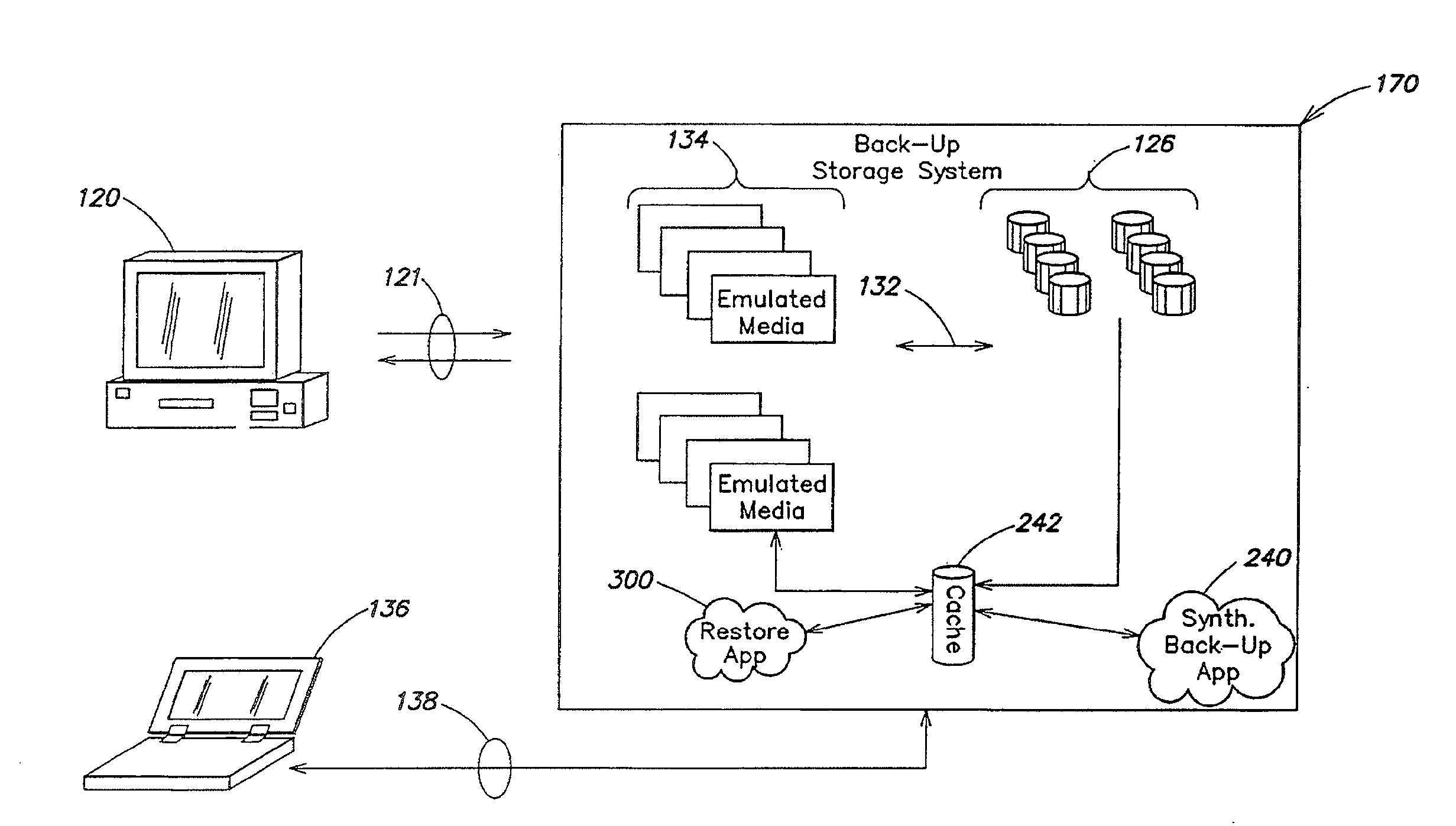

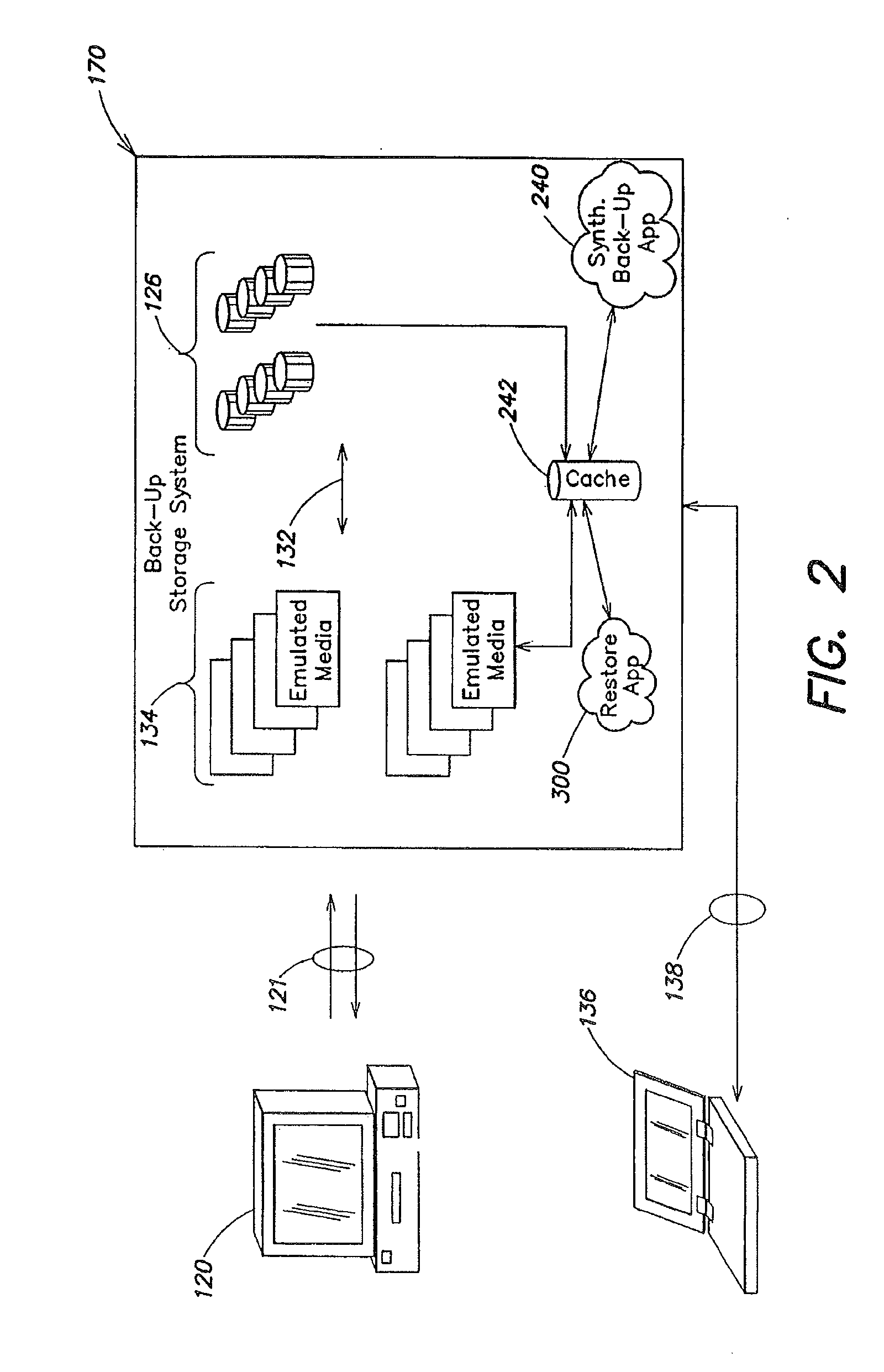

Data restore systems and methods in a replication environment

Active | US8352422B2 | Digital data information retrieval | Digital data processing details | Data management | Goal system

Stubbing systems and methods are provided for intelligent data management in a replication environment, such as by reducing the space occupied by replication data on a destination system. In certain examples, stub files or like objects replace migrated, de-duplicated or otherwise copied data that has been moved from the destination system to secondary storage. Access is further provided to the replication data in a manner that is transparent to the user and/or without substantially impacting the base replication process. In order to distinguish stub files representing migrated replication data from replicated stub files, priority tags or like identifiers can be used. Thus, when accessing a stub file on the destination system, such as to modify replication data or perform a restore process, the tagged stub files can be used to recall archived data prior to performing the requested operation so that an accurate copy of the source data is generated.

Owner:COMMVAULT SYST INC

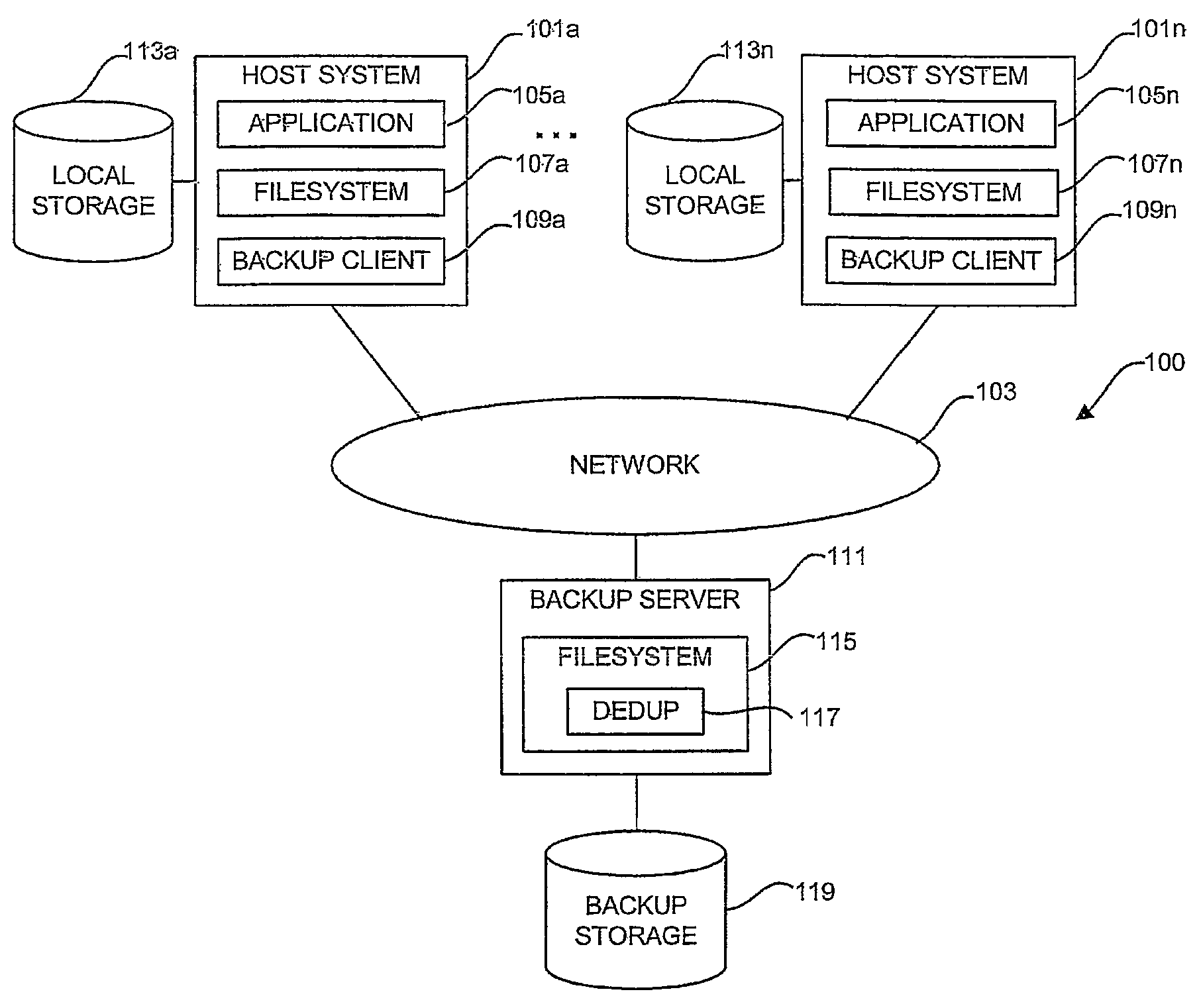

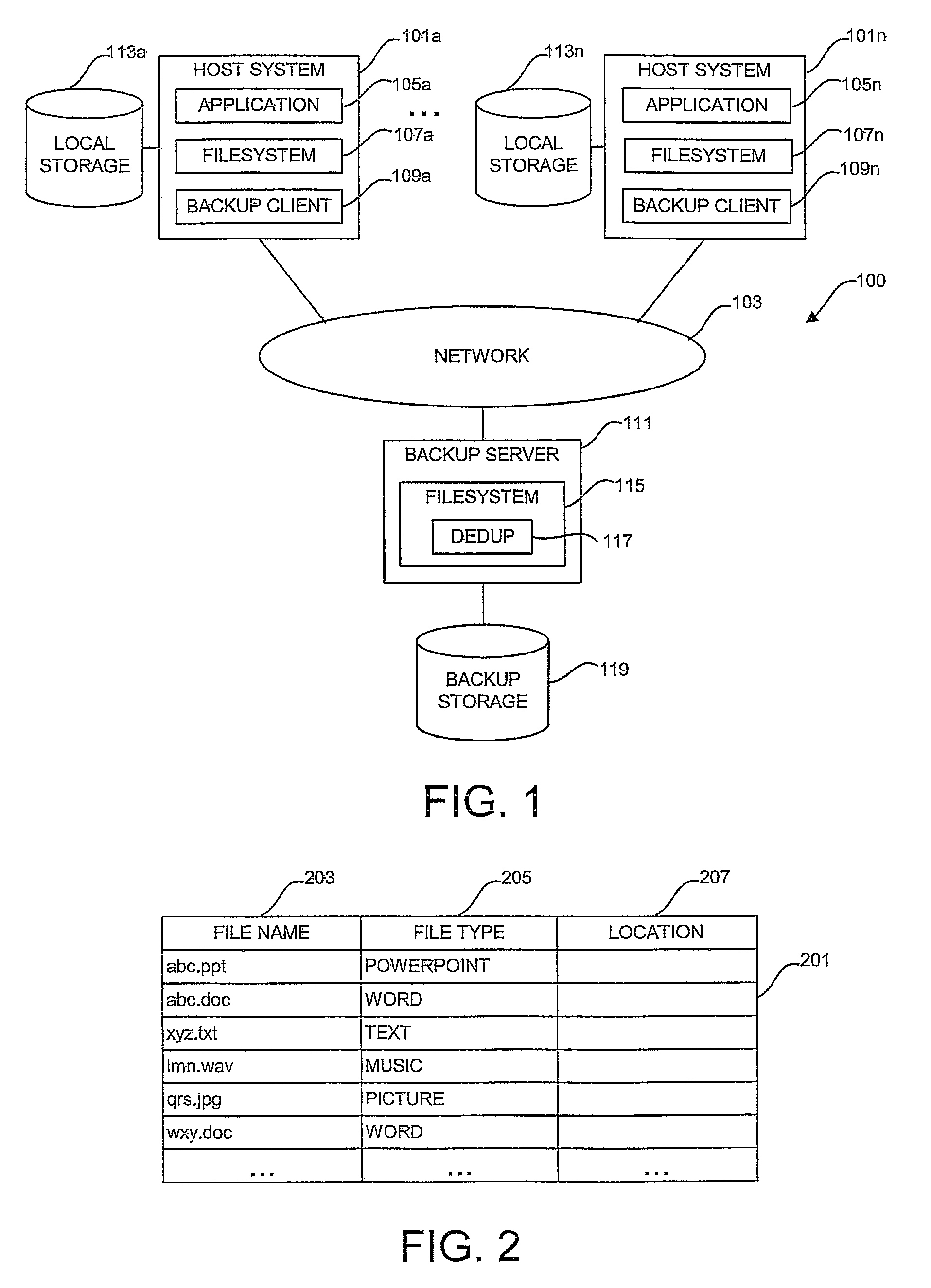

Method of and system for deduplicating backed up data in a client-server environment

Inactive | US7539710B1 | Data processing applications | Digital data information retrieval | Client-side | Data deduplication

In a method of and a system for deduplicating backed-up data, backup clients create respective backup tables comprising a list of files and respective file types to be backed up. A backup server receives backup tables from the backup clients. The backup server merges the received backup tables to form a merged backup table. The backup server sorts the merged backup table according to file type from a file type yielding a best deduplication ratio to a file type yielding a worst deduplication ratio, thereby forming a sorted backup table. The backup server requests the files listed in the sorted backup table, in order, from the backup clients. The backup server deduplicates files received from the backup clients, in order, using deduplication parameters optimized according to file type. The method calculates an updated deduplication ratio for each deduplicated file type. Examples of deduplication parameters include chunking techniques and hashing techniques.
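The merge-and-sort step can be shown with a small example. The ratio values, file names and the `merge_and_sort` helper are invented for illustration, and treating unknown file types as ratio 1.0 is an assumption rather than something the abstract states.

```python
# Hypothetical historical deduplication ratios per file type (higher is better).
DEDUP_RATIO = {".vmdk": 8.0, ".docx": 3.5, ".jpg": 1.1}


def merge_and_sort(backup_tables, ratios):
    """Merge per-client tables and order entries from best to worst ratio."""
    merged = [entry for table in backup_tables for entry in table]
    # Assumption: file types with no recorded ratio are treated as 1.0.
    return sorted(merged, key=lambda e: ratios.get(e["type"], 1.0), reverse=True)


client_a = [{"client": "a", "file": "photo.jpg", "type": ".jpg"},
            {"client": "a", "file": "disk.vmdk", "type": ".vmdk"}]
client_b = [{"client": "b", "file": "report.docx", "type": ".docx"}]

sorted_table = merge_and_sort([client_a, client_b], DEDUP_RATIO)
order = [e["file"] for e in sorted_table]
```

The server would then request files in `order`, so the file types that deduplicate best are processed first.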

Owner:IBM CORP

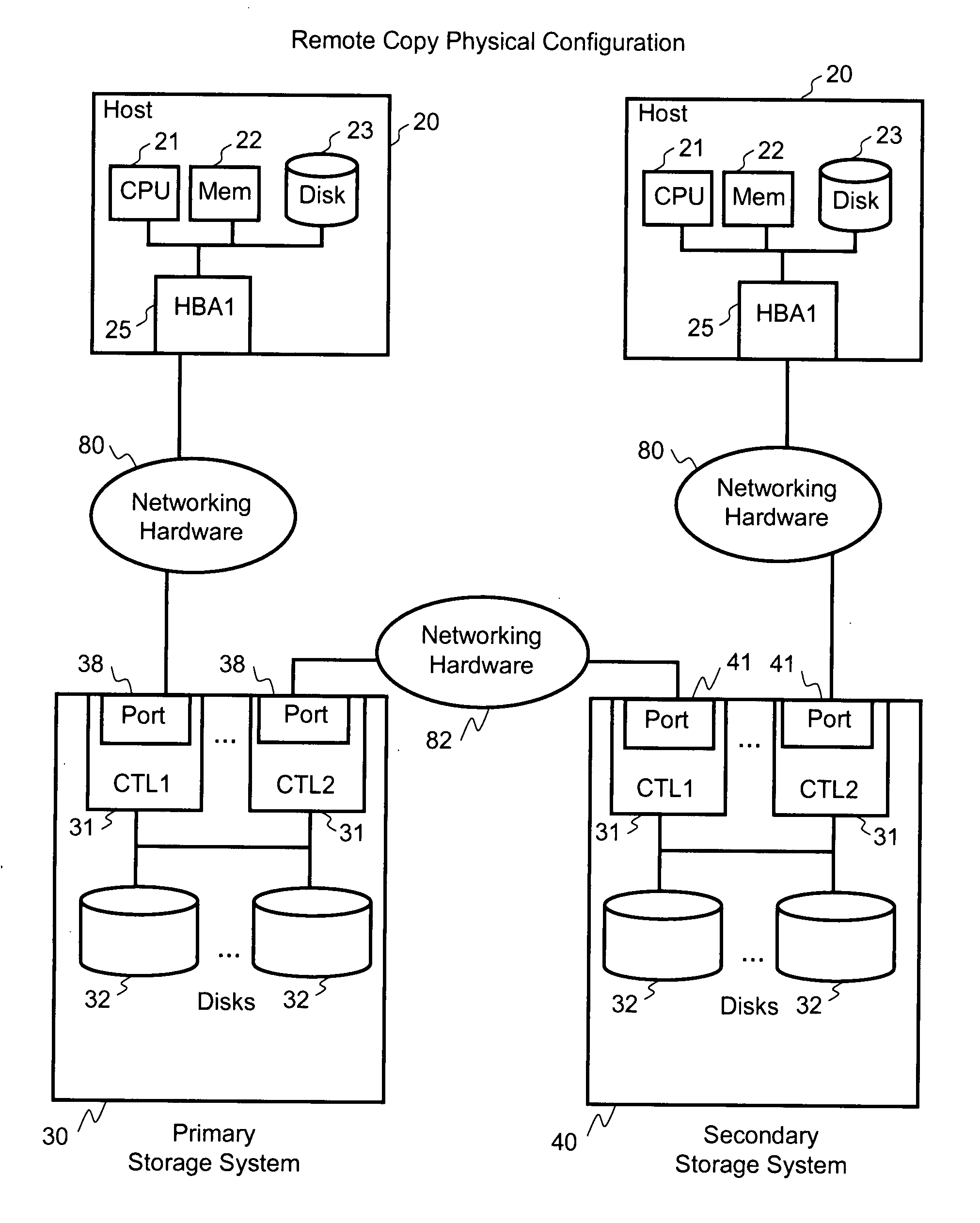

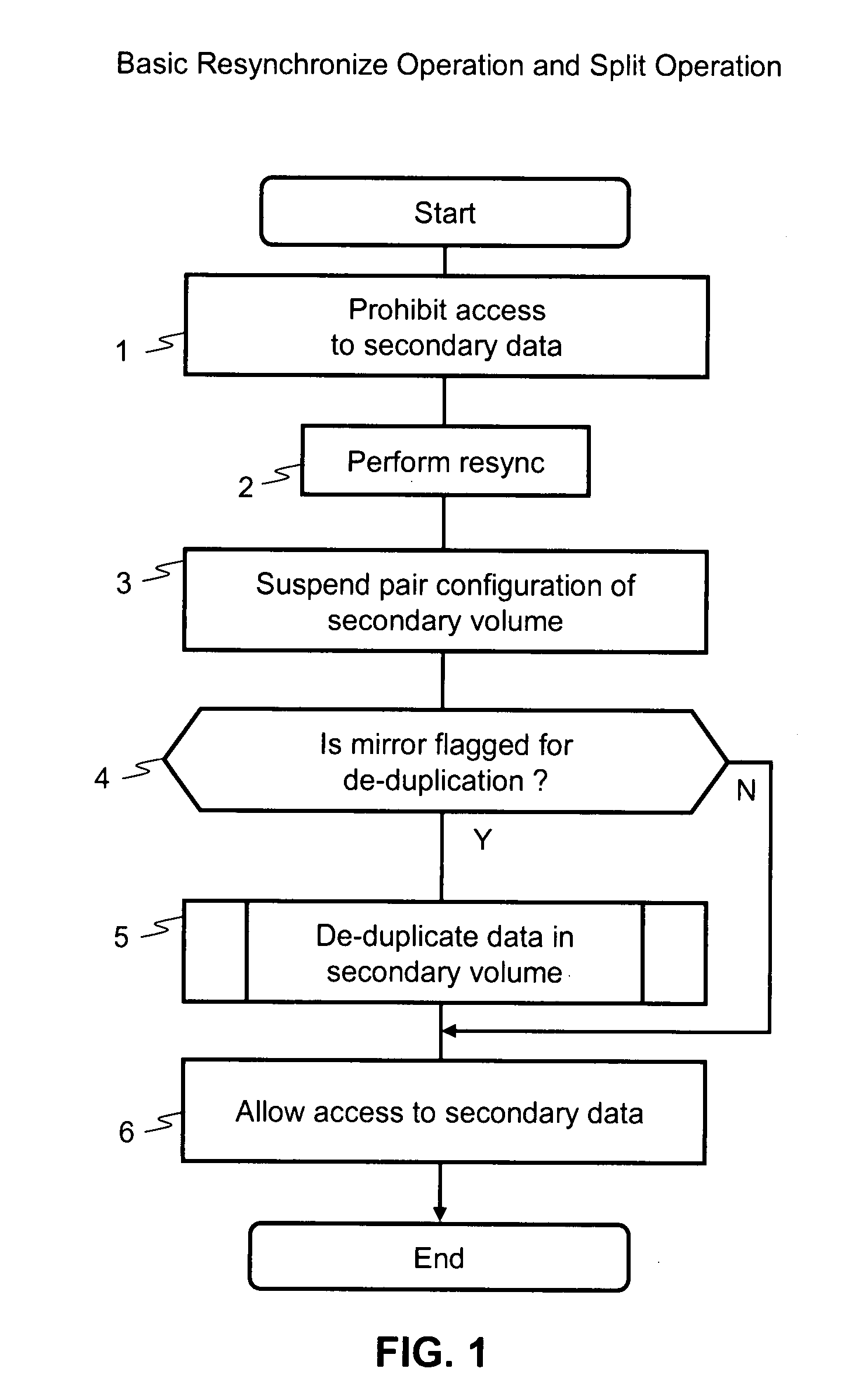

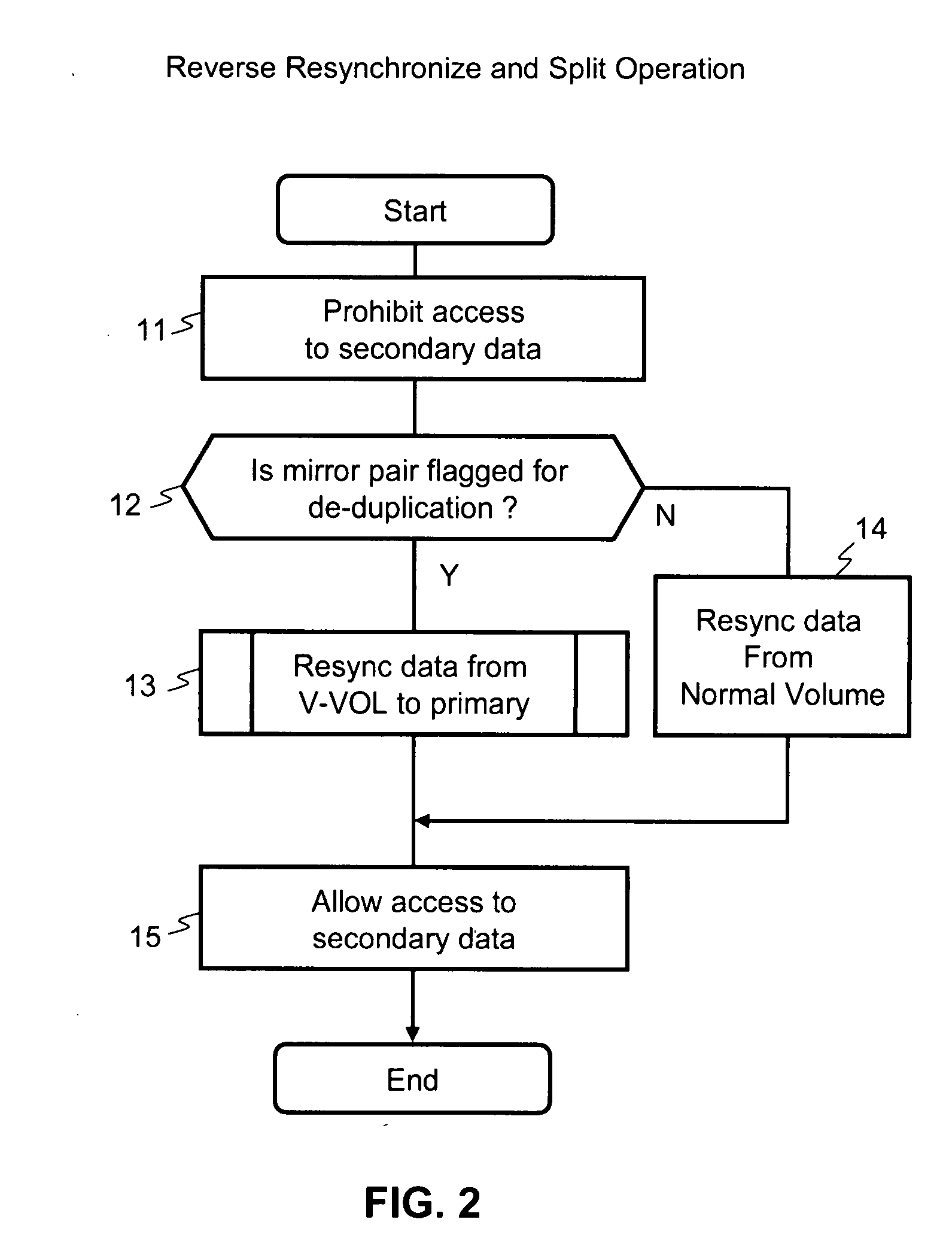

Method and apparatus for de-duplication after mirror operation

Inactive | US20080244172A1 | Digital data information retrieval | Error detection/correction | Data storing | Data store

An amount of storage capacity used during mirroring operations is reduced by applying de-duplication operations to the mirror volumes. Data stored to a first volume is mirrored to a second volume. The second volume is a virtual volume having a plurality of logical addresses, such that segments of physical storage capacity are allocated for a specified logical address as needed when data is stored to the specified logical address. A de-duplication operation is carried out on the second volume following a split from the first volume. A particular segment of the second volume is identified as having data that is the same as another segment in the second volume or in the same consistency group. A link is created from the particular segment to the other segment and the particular segment is released from the second volume so that physical storage capacity required for the second volume is reduced.

Owner:HITACHI LTD

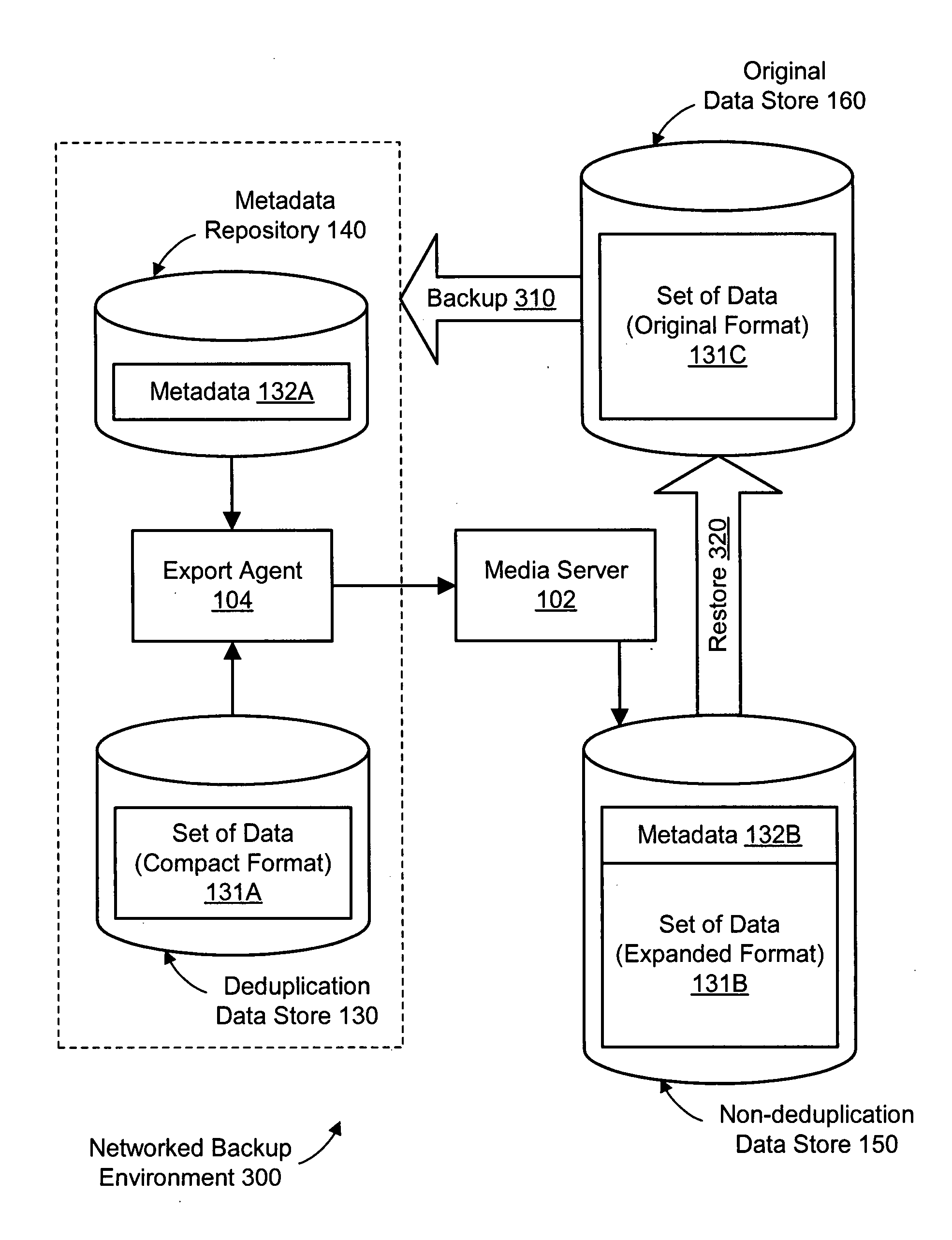

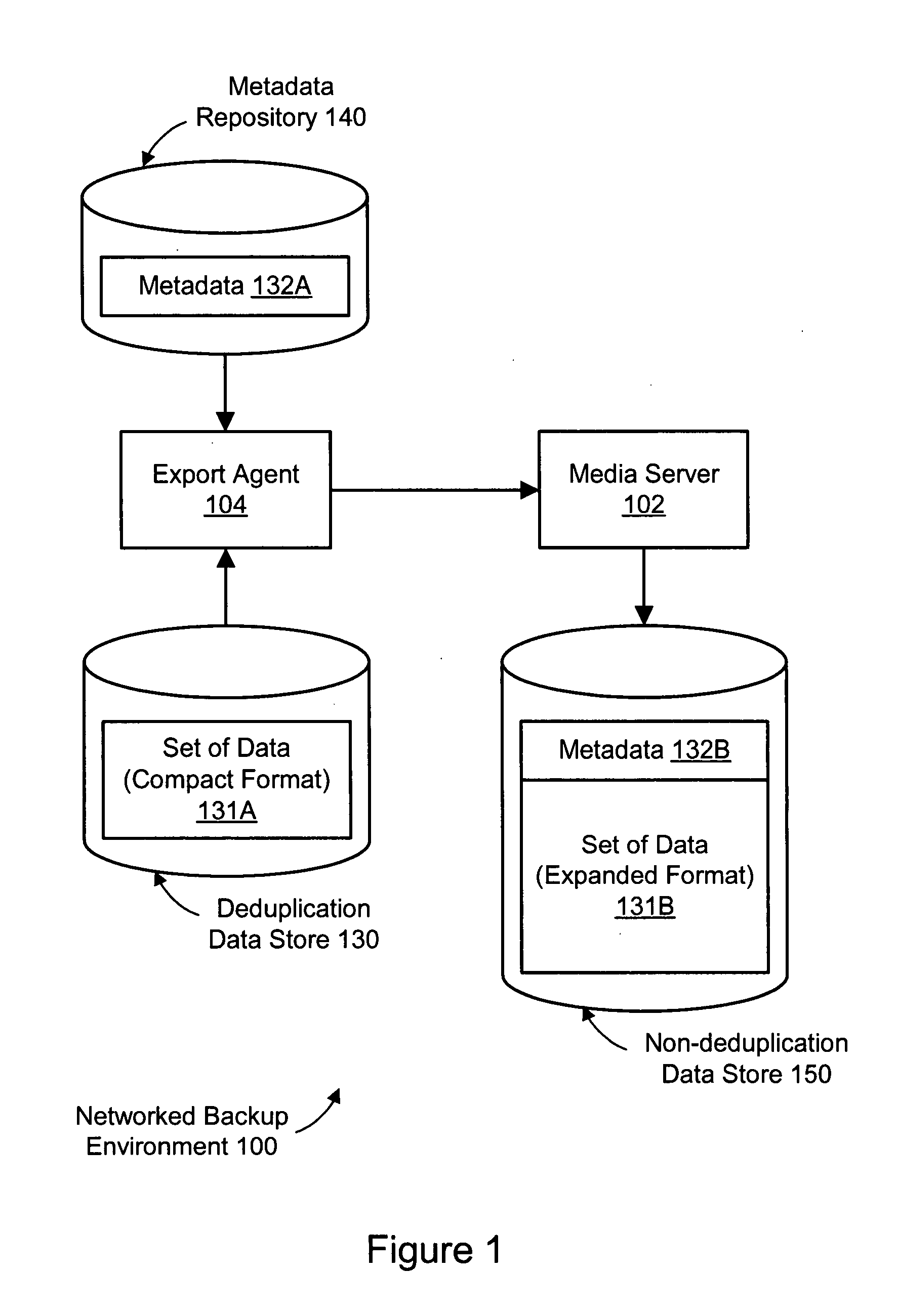

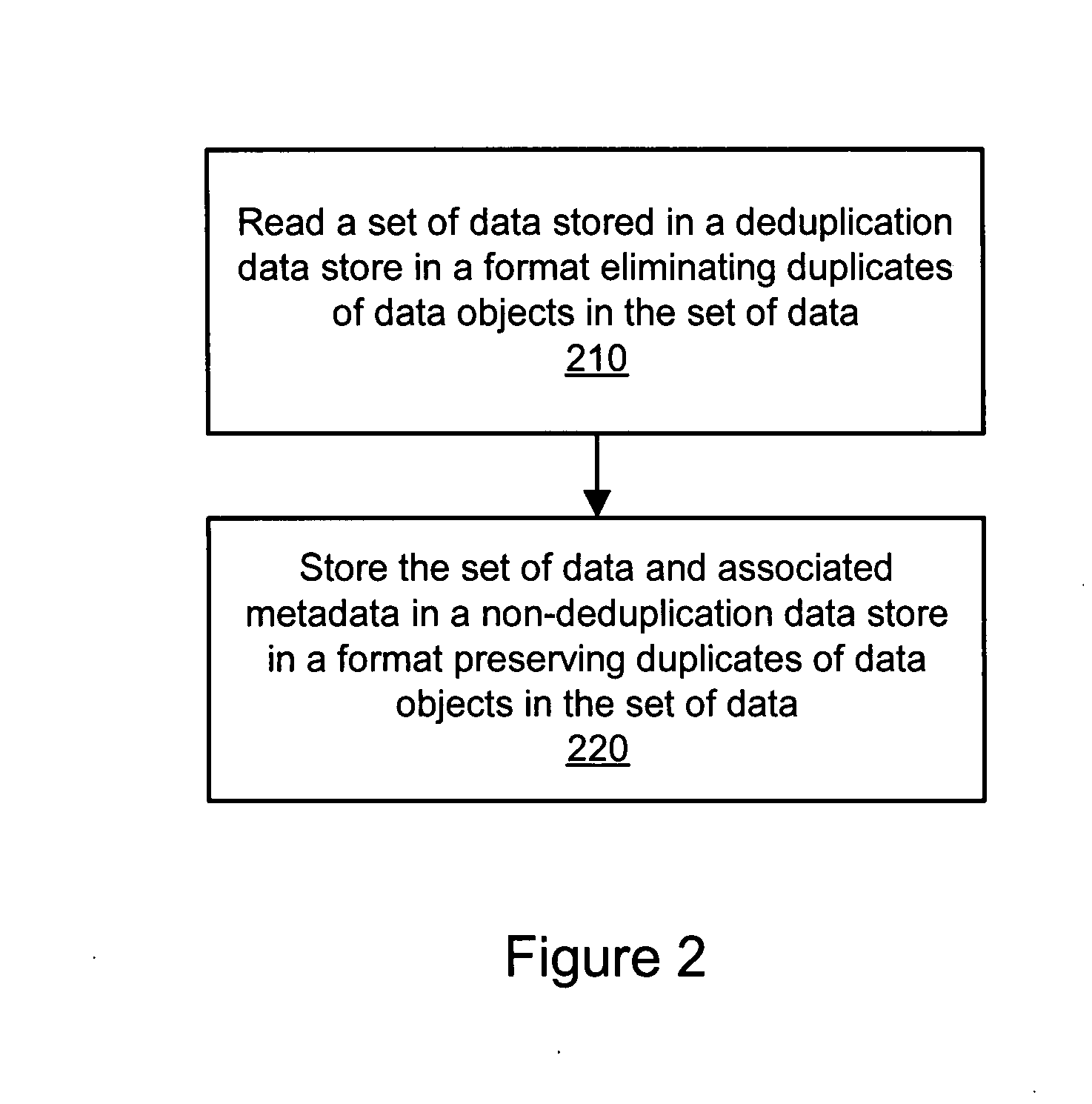

System and method for exporting data directly from deduplication storage to non-deduplication storage

Inactive | US20080243769A1 | Error detection/correction | Special data processing applications | Data set | Data storing

A method, system, and computer-readable storage medium are disclosed for exporting data from a deduplication data store to a non-deduplication data store. A set of data may be stored in the deduplication data store in a format eliminating one or more duplicates of data objects in the set of data. The set of data in the deduplication data store may be stored separately from metadata describing the set of data. The set of data stored in the deduplication data store may be read. The set of data read from the deduplication data store and the metadata may be stored in a non-deduplication data store. In the non-deduplication data store, the set of data is stored in a format preserving the one or more duplicates of data objects in the set of data.

Owner:SYMANTEC CORP

Methods and apparatus for deduplication in storage system

Inactive | US20090132619A1 | Eliminate the problem | Error detection/correction | Digital data processing details | Data deduplication | Software

In one implementation, a storage system comprises host computers, a management terminal and a storage system having a block interface to communicate with the host computers/clients. The storage system also incorporates a deduplication capability using chunks (divided storage areas). The storage system maintains a threshold (upper limit) with respect to the degree of deduplication (i.e., the number of virtual data for one real data) specified by users or the management software. The storage system counts the number of links for each chunk and does not perform deduplication when the number of reduced data for a chunk exceeds the threshold, even if duplication is detected. In another implementation, the storage system additionally incorporates a data migration capability and migrates physical data to a high-reliability area, such as an area protected with double parity (i.e., RAID 6), when the deduplication level for a chunk exceeds the threshold.

Owner:HITACHI LTD

Scalable de-duplication mechanism

Active | US20090182789A1 | Improve efficacy | Improve scalability | Digital data processing details | Error detection/correction | Protocol Application | Data deduplication

A method for removing redundant data from a backup storage system is presented. In one example, the method may include receiving the application layer data object, selecting a de-duplication domain from a plurality of de-duplication domains based at least in part on a data object characteristic associated with the de-duplication domain, determining that the application layer data object has the characteristic and directing the application layer data object to the de-duplication domain.

Owner:HITACHI VANTARA LLC

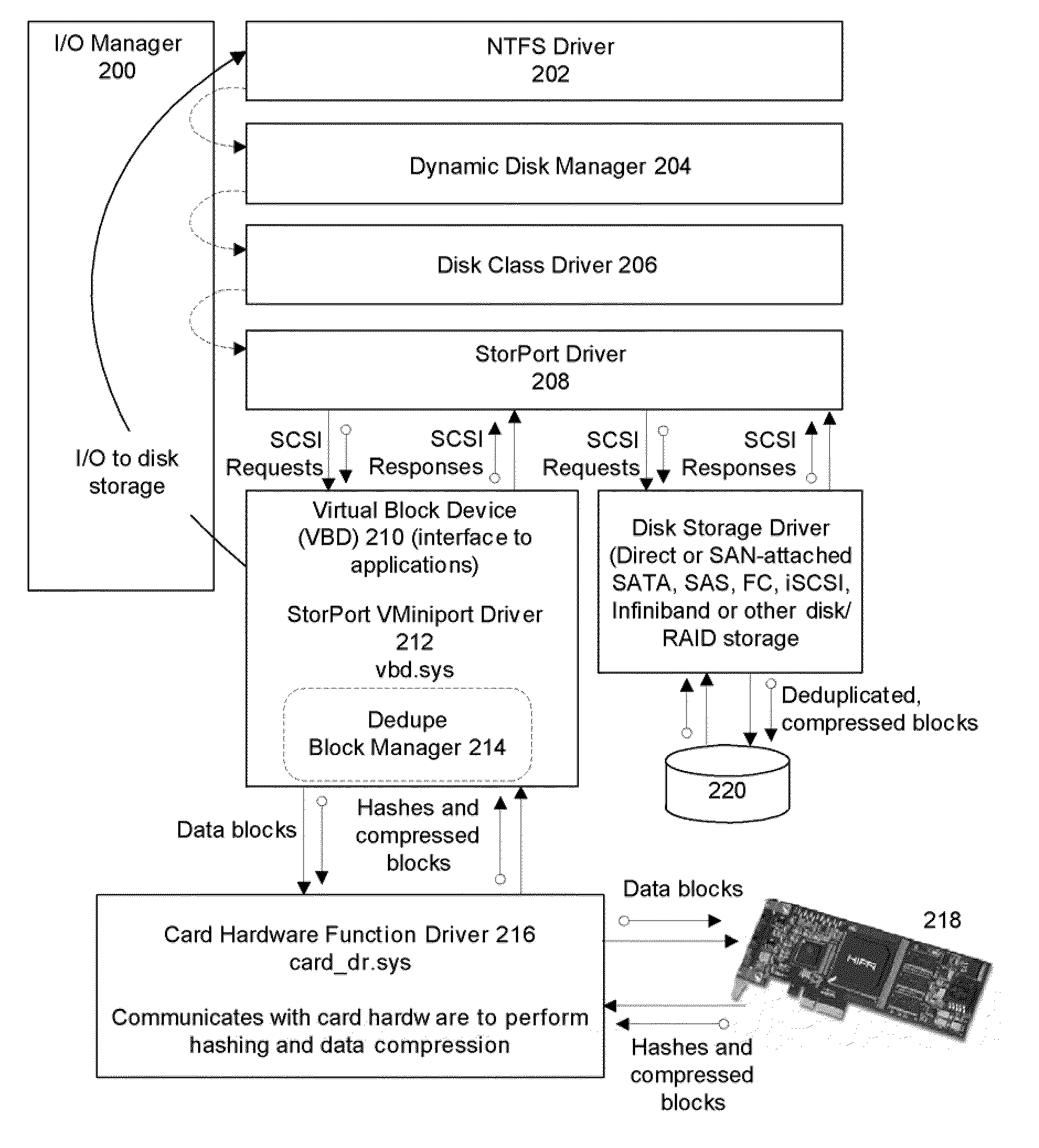

System and method for data deduplication

Active | US20100250896A1 | Digital data information retrieval | Error detection/correction | Computer hardware | Data deduplication

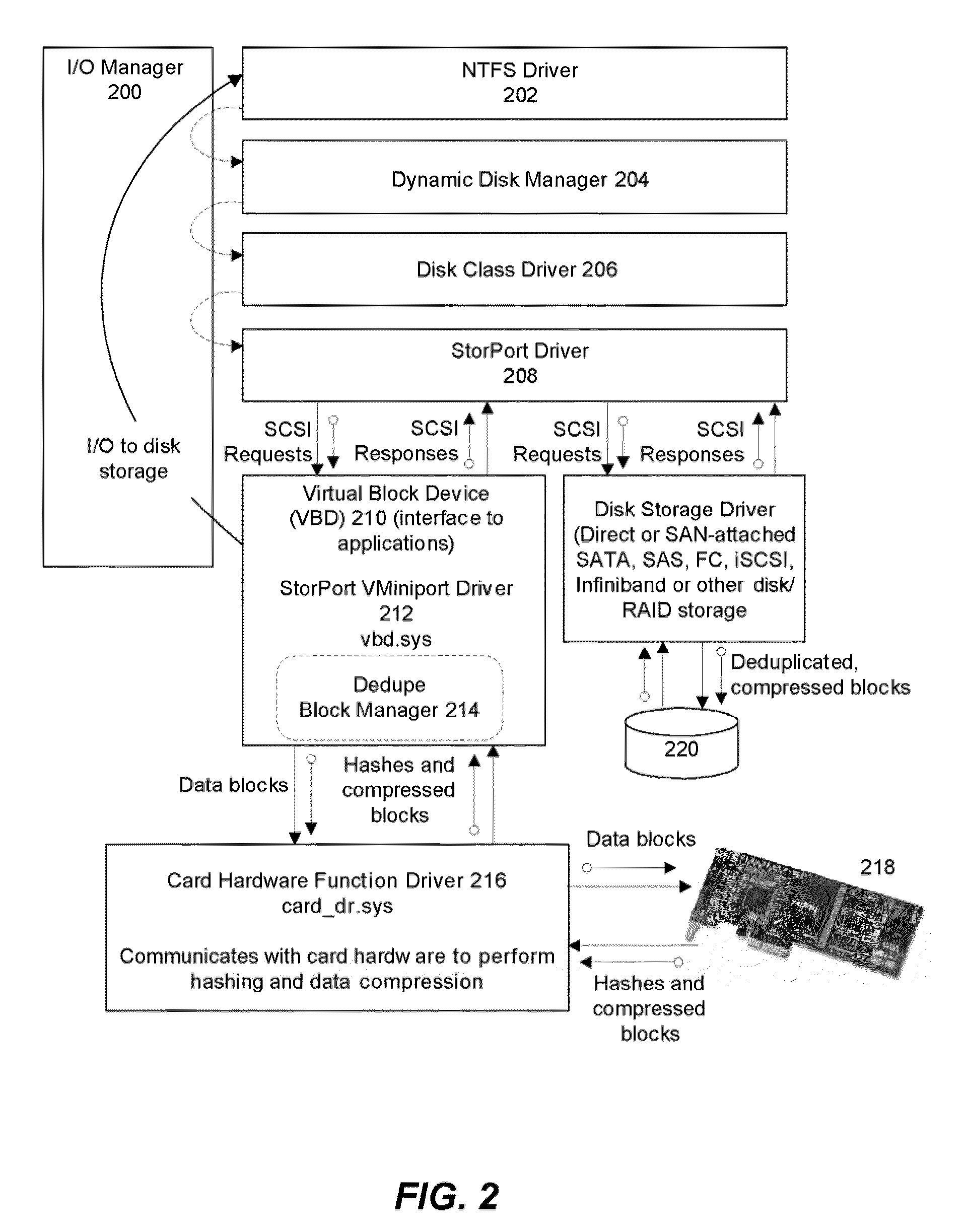

A system for deduplicating data comprises a card operable to receive at least one data block and a processor on the card that generates a hash for each data block. The system further comprises a first module that determines a processing status for the hash and a second module that discards duplicate hashes and their data blocks and writes unique hashes and their data blocks to a computer readable medium. In one embodiment, the processor also compresses each data block using a compression algorithm.

Owner:EXAR CORP

Storage management through adaptive deduplication

Active | US20100250501A1 | Digital data processing details | Memory addressing/allocation/relocation | Storage management | Data deduplication

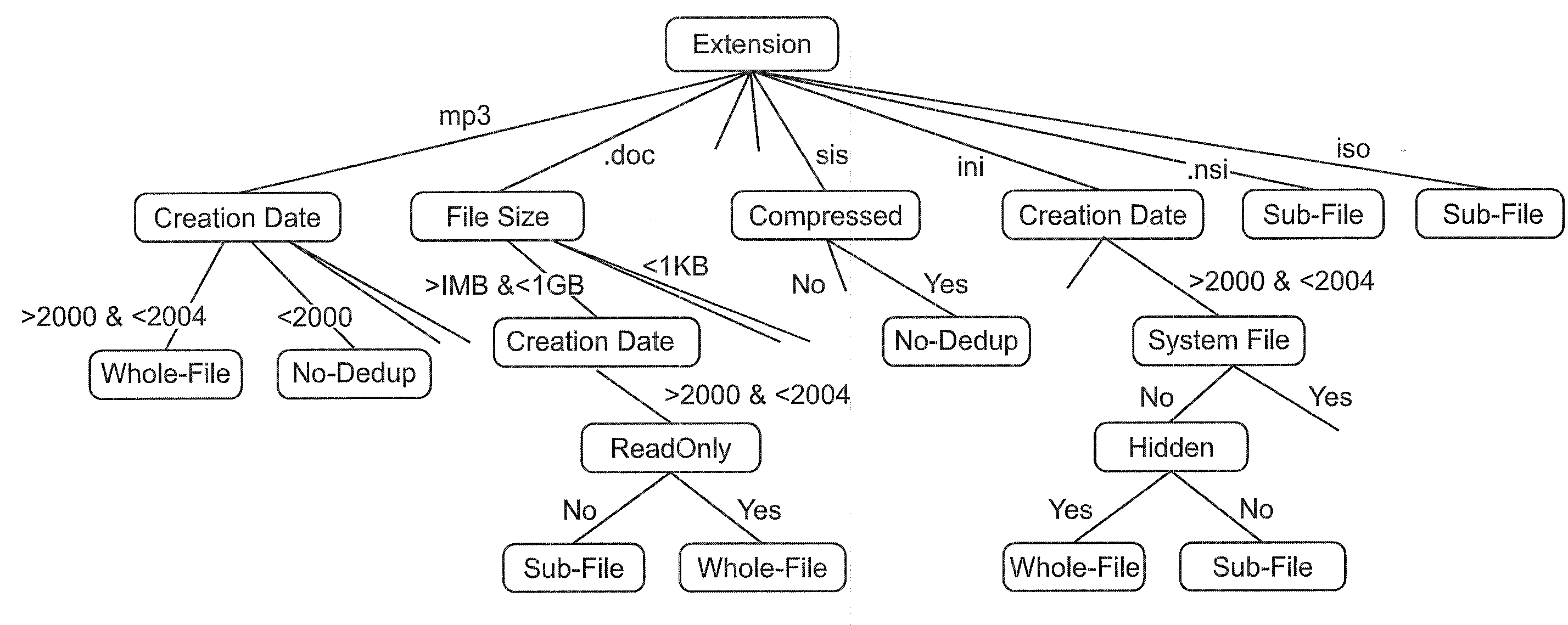

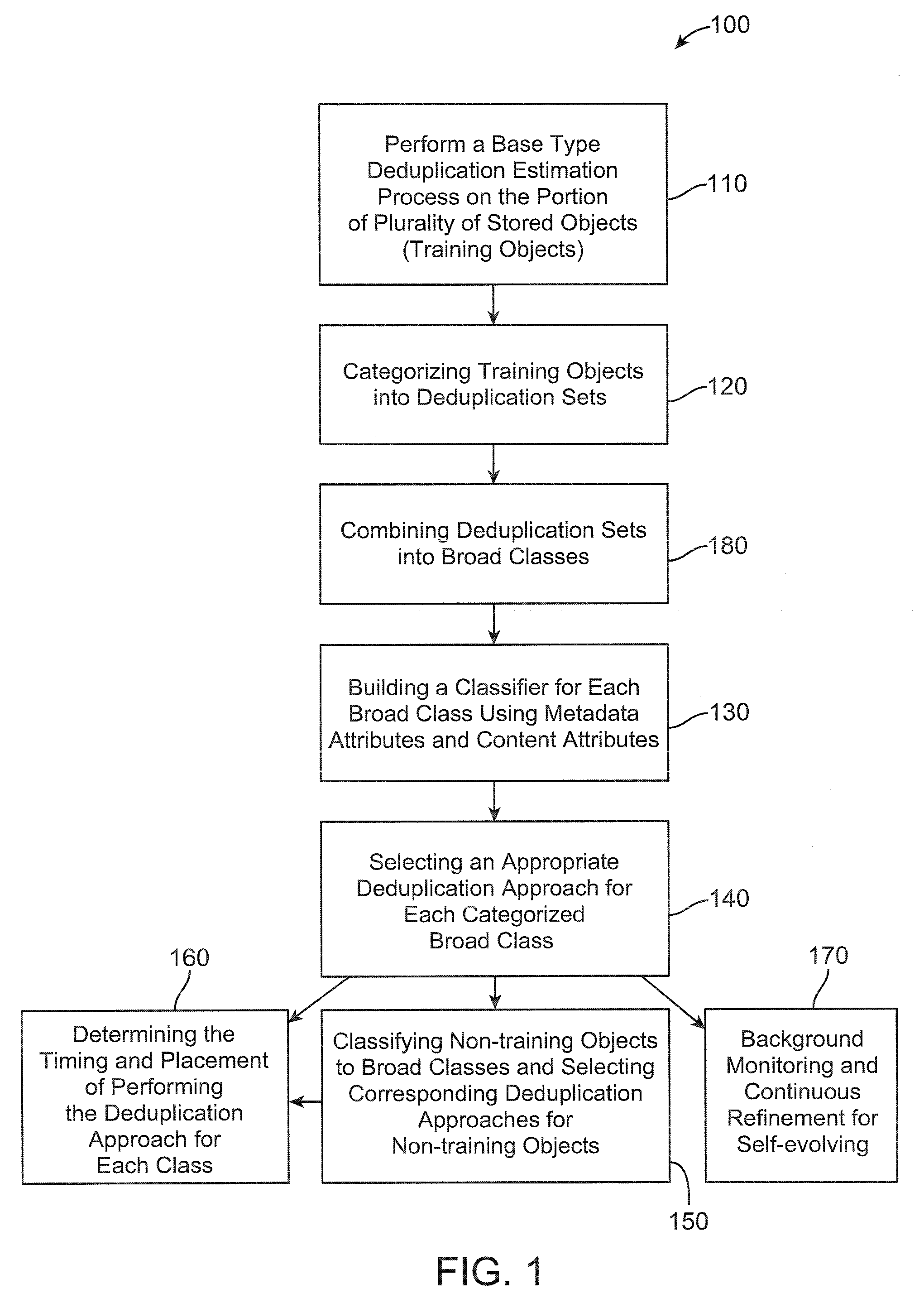

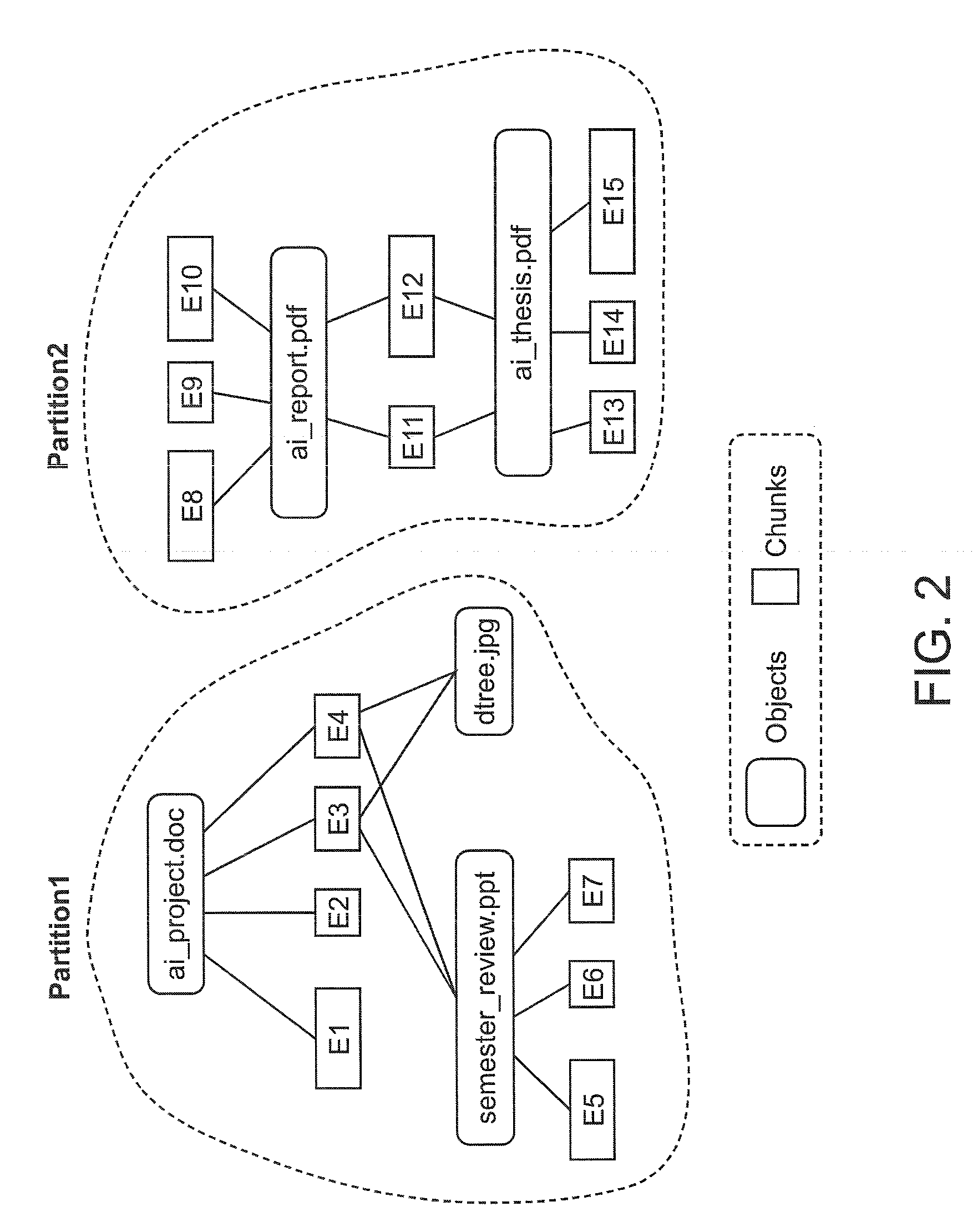

One embodiment retrieves a first portion of a plurality of stored objects from at least one storage device. The embodiment further performs a base type deduplication estimation process on the first portion of stored objects. The embodiment still further categorizes the first portion of the plurality of stored objects into deduplication sets based on a deduplication relationship of each object of the plurality of stored objects with each of the estimated first plurality of deduplication chunk portions. The embodiment further combines deduplication sets into broad classes based on deduplication characteristics of the objects in the deduplication sets. The embodiment still further classifies a second portion of the plurality of stored objects into broad classes using classifiers. The embodiment further selects an appropriate deduplication approach for each categorized class.

Owner:DAEDALUS BLUE LLC

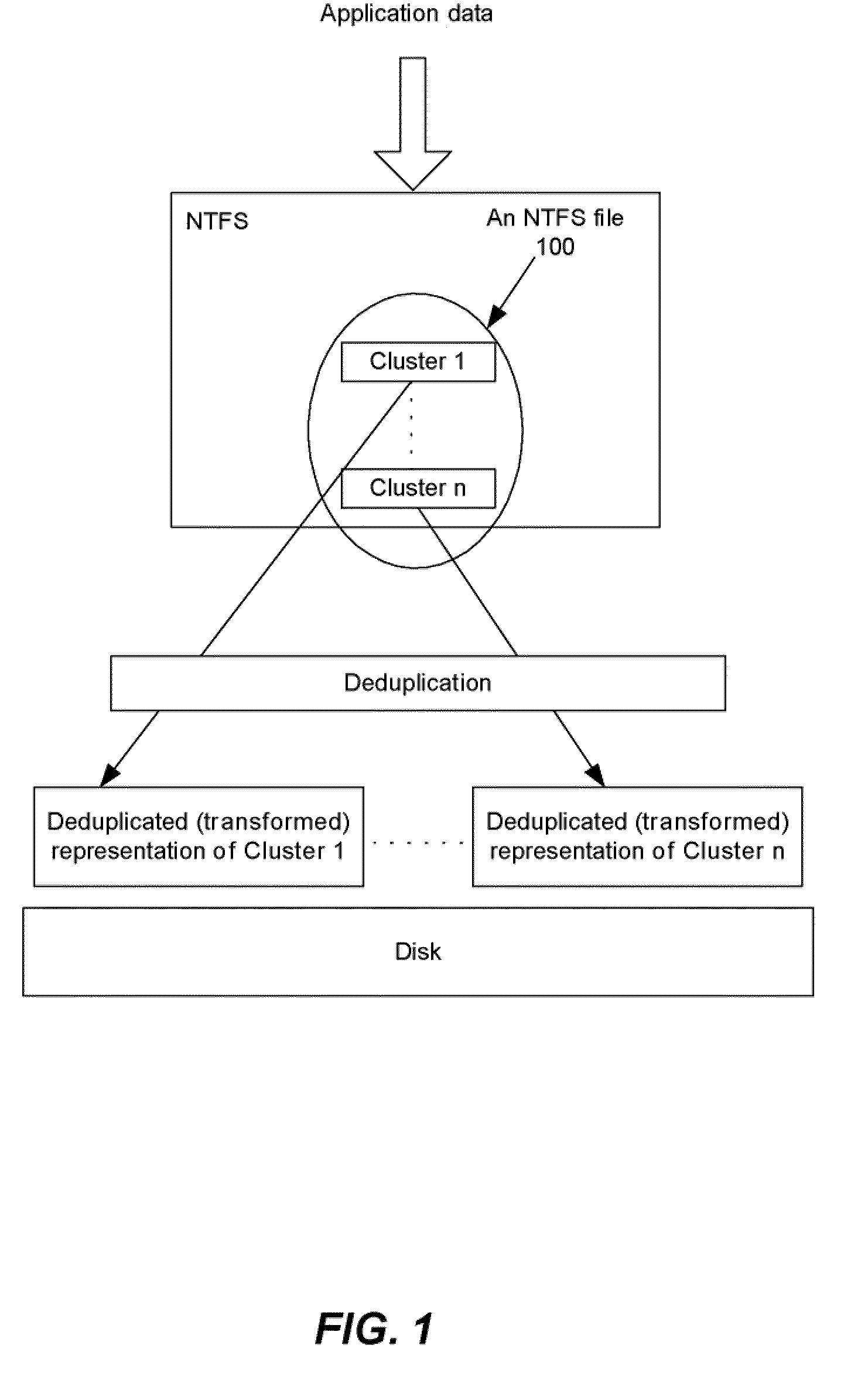

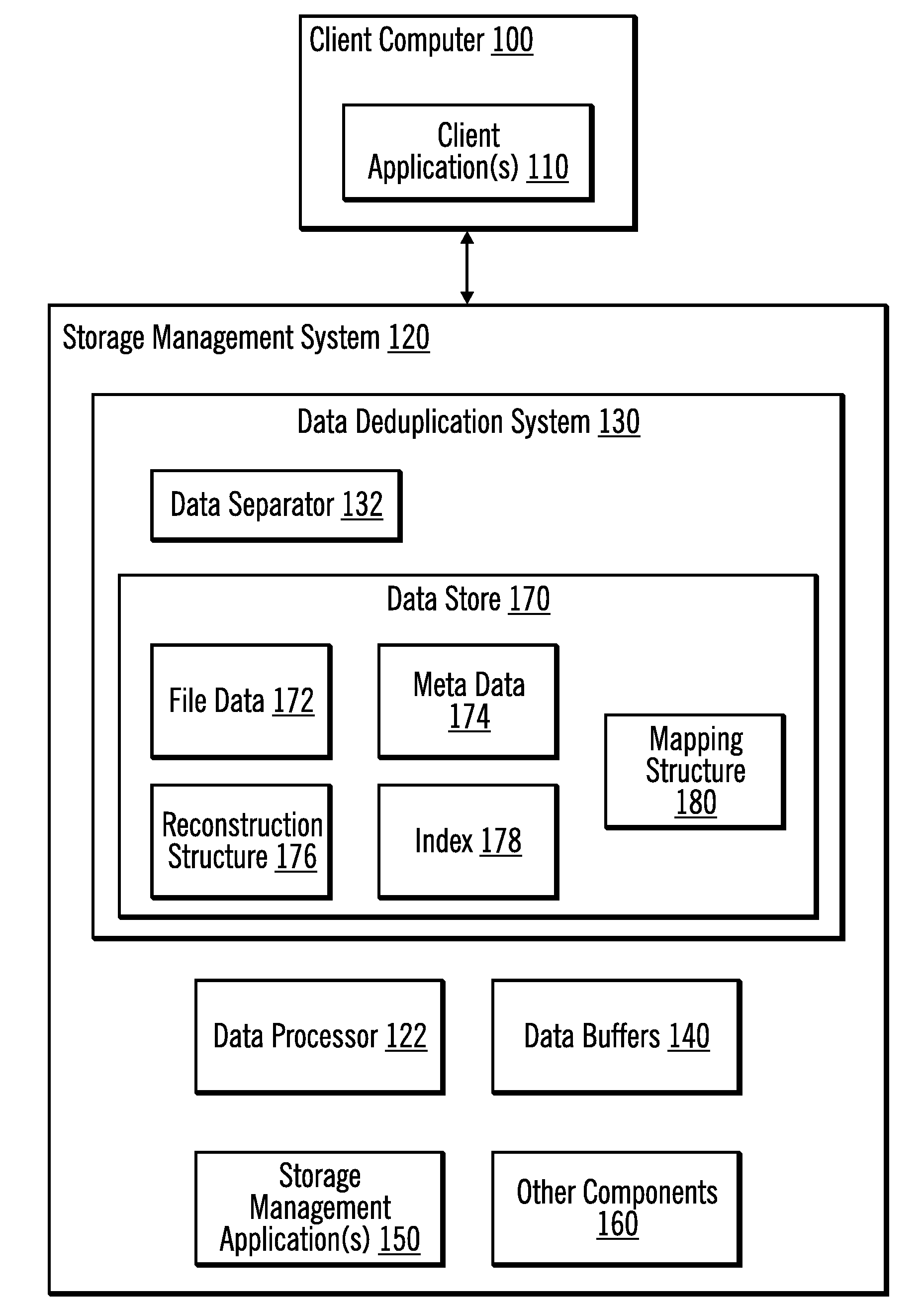

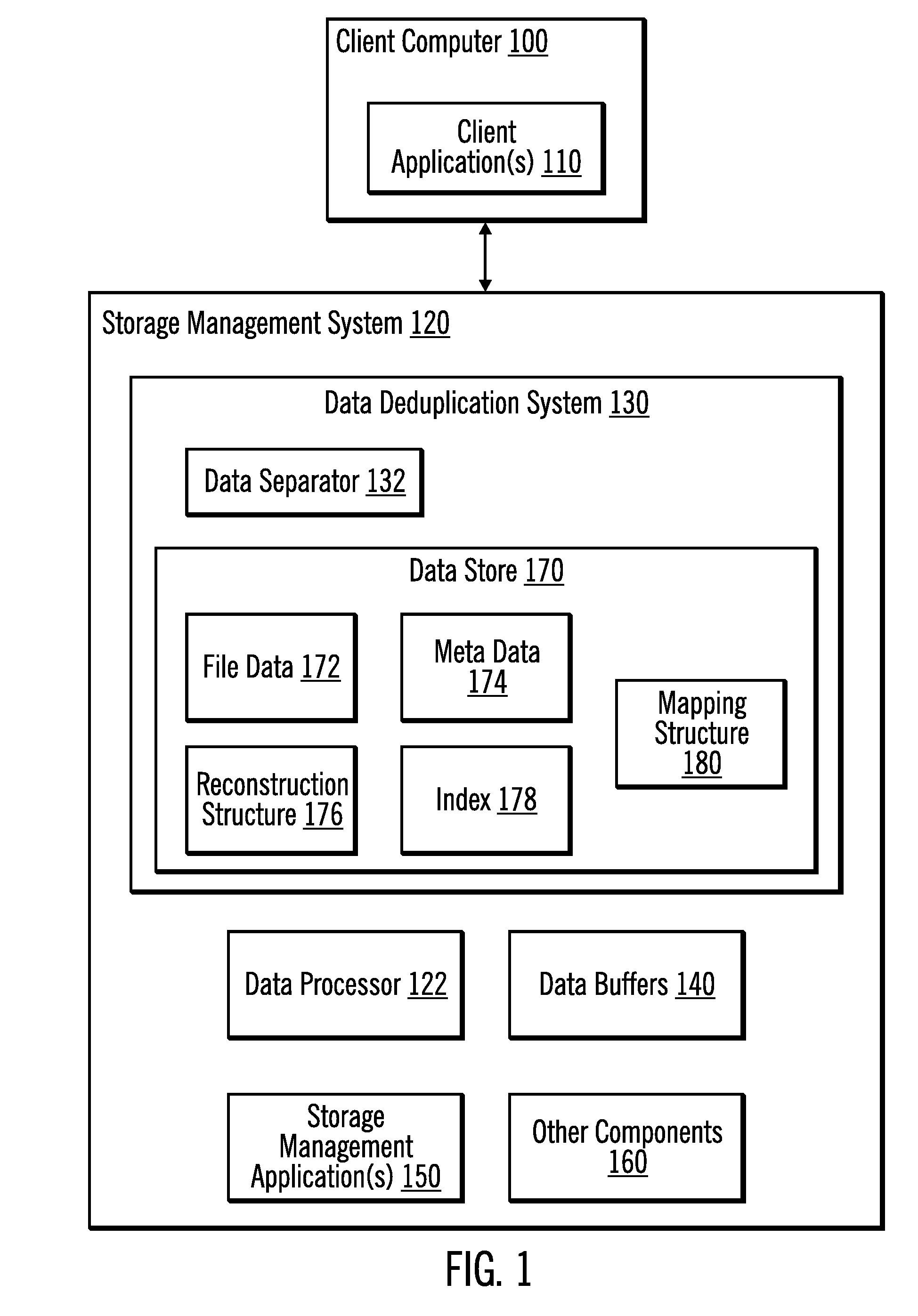

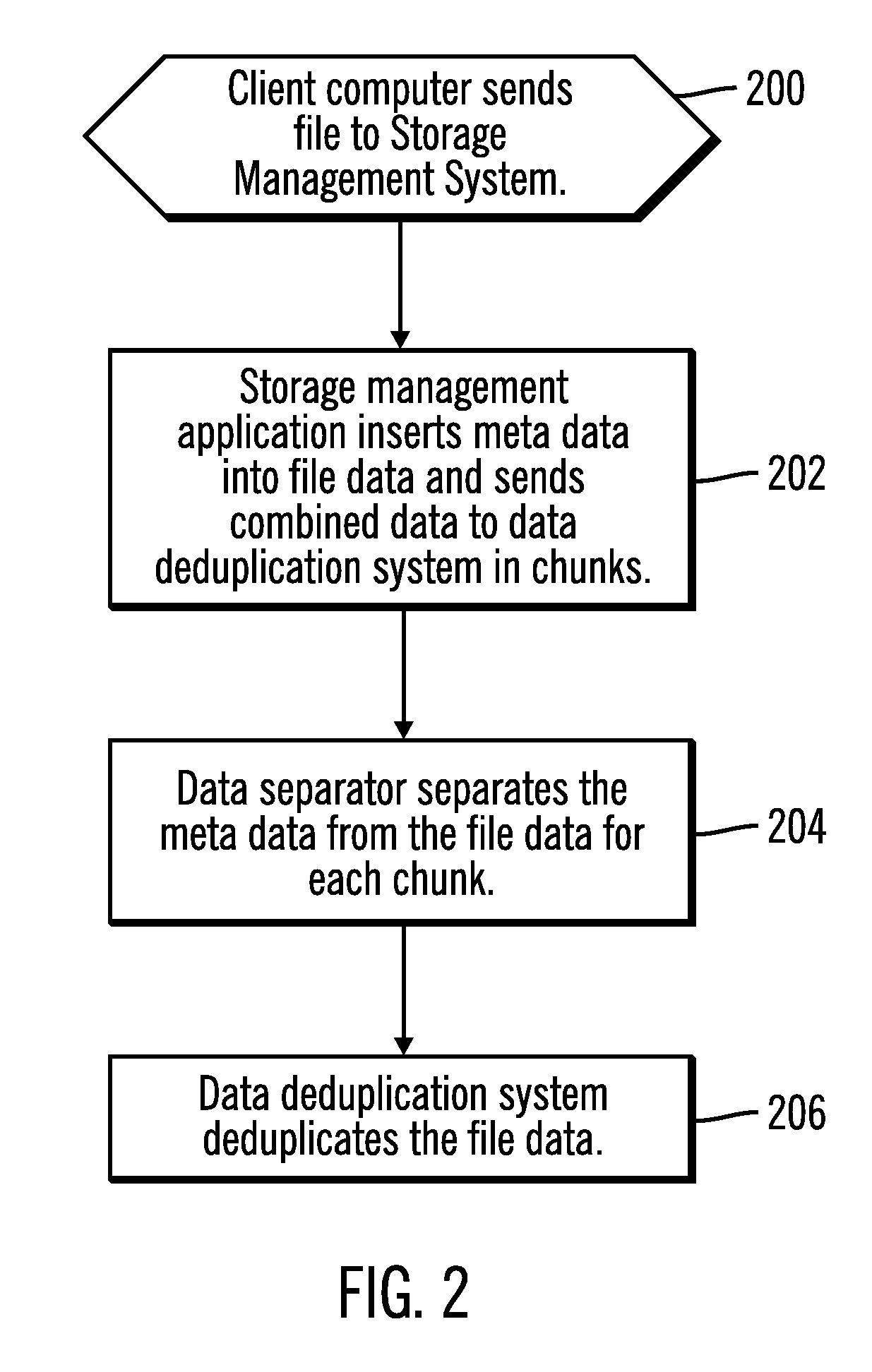

Data deduplication by separating data from meta data

Inactive | US20090171888A1 | Digital data information retrieval | Digital data processing details | Data stream | Data store

Provided are techniques for data deduplication. A chunk of data and a mapping of boundaries between file data and meta data in the chunk of data are received. The mapping is used to split the chunk of data into a file data stream and a meta data stream and to store file data from the file data stream in a first file and to store meta data from the meta data stream in a second file, wherein the first file and the second file are separate files. The file data in the first file is deduplicated.
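A small sketch shows why separating the two streams helps. It assumes the boundary mapping is a list of `(start, end, kind)` byte ranges, which the abstract does not specify; `split_chunk` and the sample header layout are hypothetical.

```python
def split_chunk(chunk: bytes, mapping):
    """Split a chunk into file data and meta data streams.

    mapping is a list of (start, end, kind) tuples, kind being "data" or "meta".
    """
    file_data = bytearray()
    meta_data = bytearray()
    for start, end, kind in mapping:
        target = file_data if kind == "data" else meta_data
        target.extend(chunk[start:end])
    return bytes(file_data), bytes(meta_data)


# Two backups of the same file content with different headers (timestamps).
chunk_1 = b"HDR:t=100;" + b"PAYLOAD-PAYLOAD"
chunk_2 = b"HDR:t=200;" + b"PAYLOAD-PAYLOAD"
mapping = [(0, 10, "meta"), (10, 25, "data")]

data_1, meta_1 = split_chunk(chunk_1, mapping)
data_2, meta_2 = split_chunk(chunk_2, mapping)
```

The two raw chunks differ because of the changing header, but once the meta data is split off the file data streams are identical and can be deduplicated.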

Owner:IBM CORP

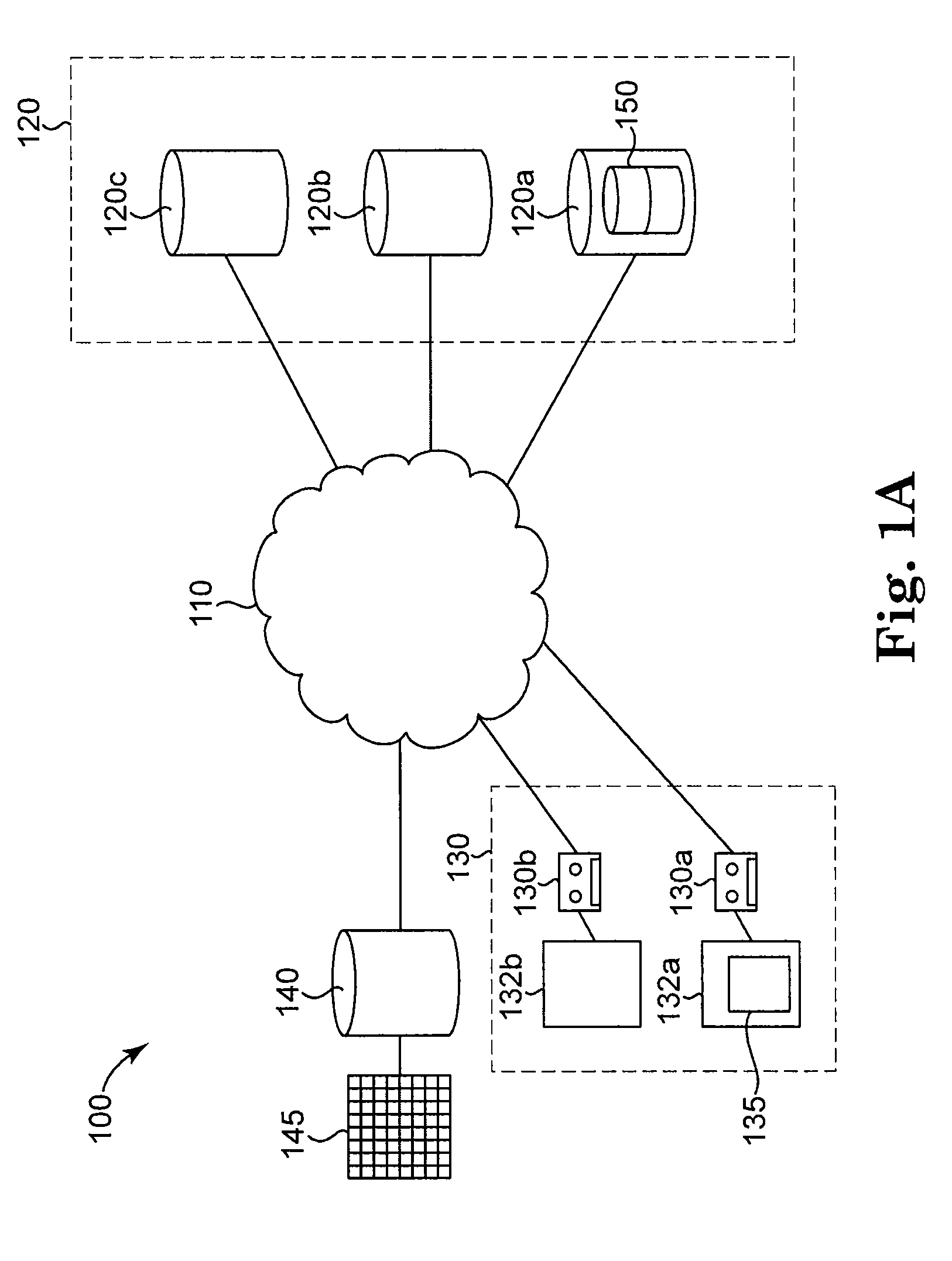

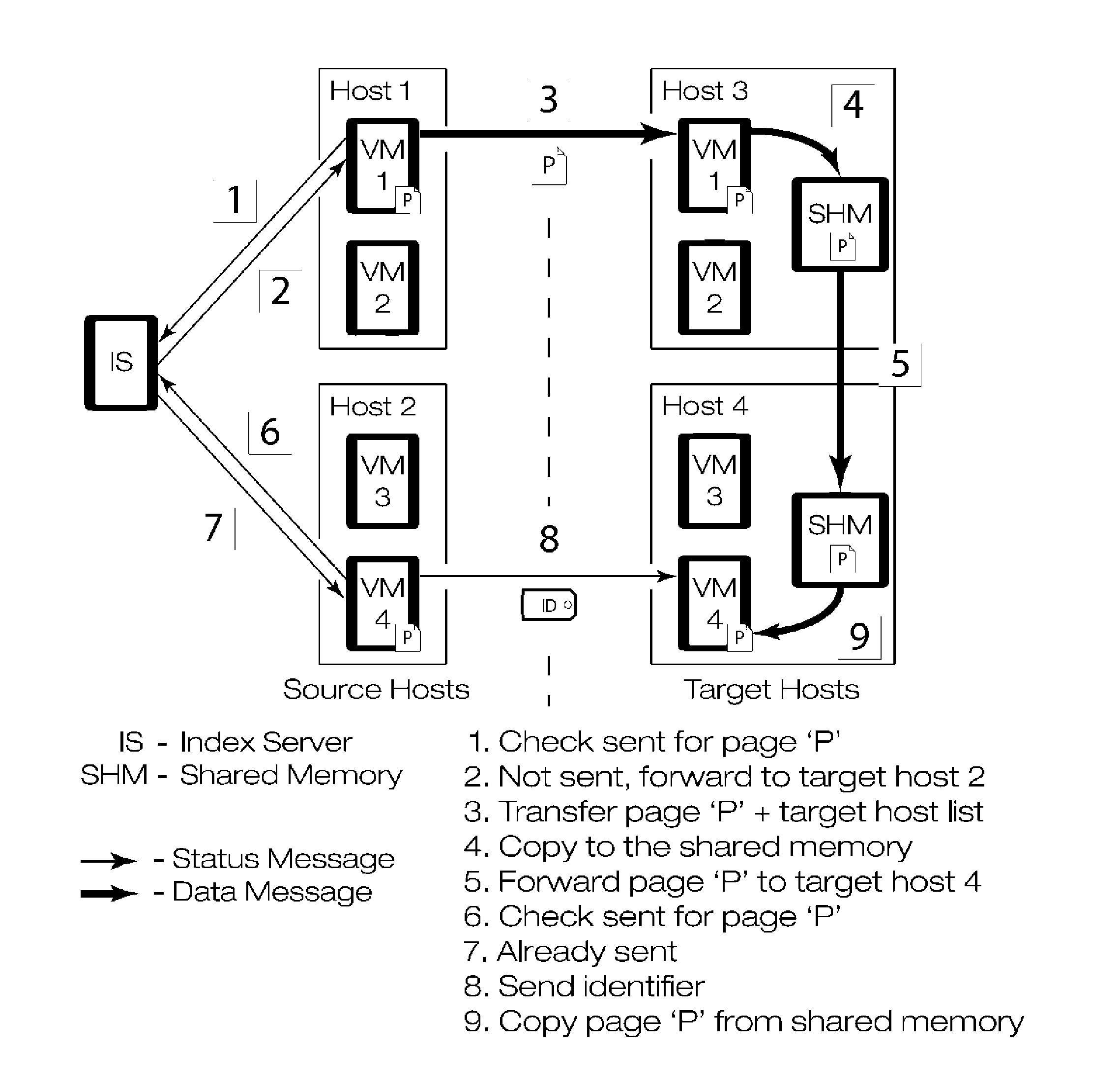

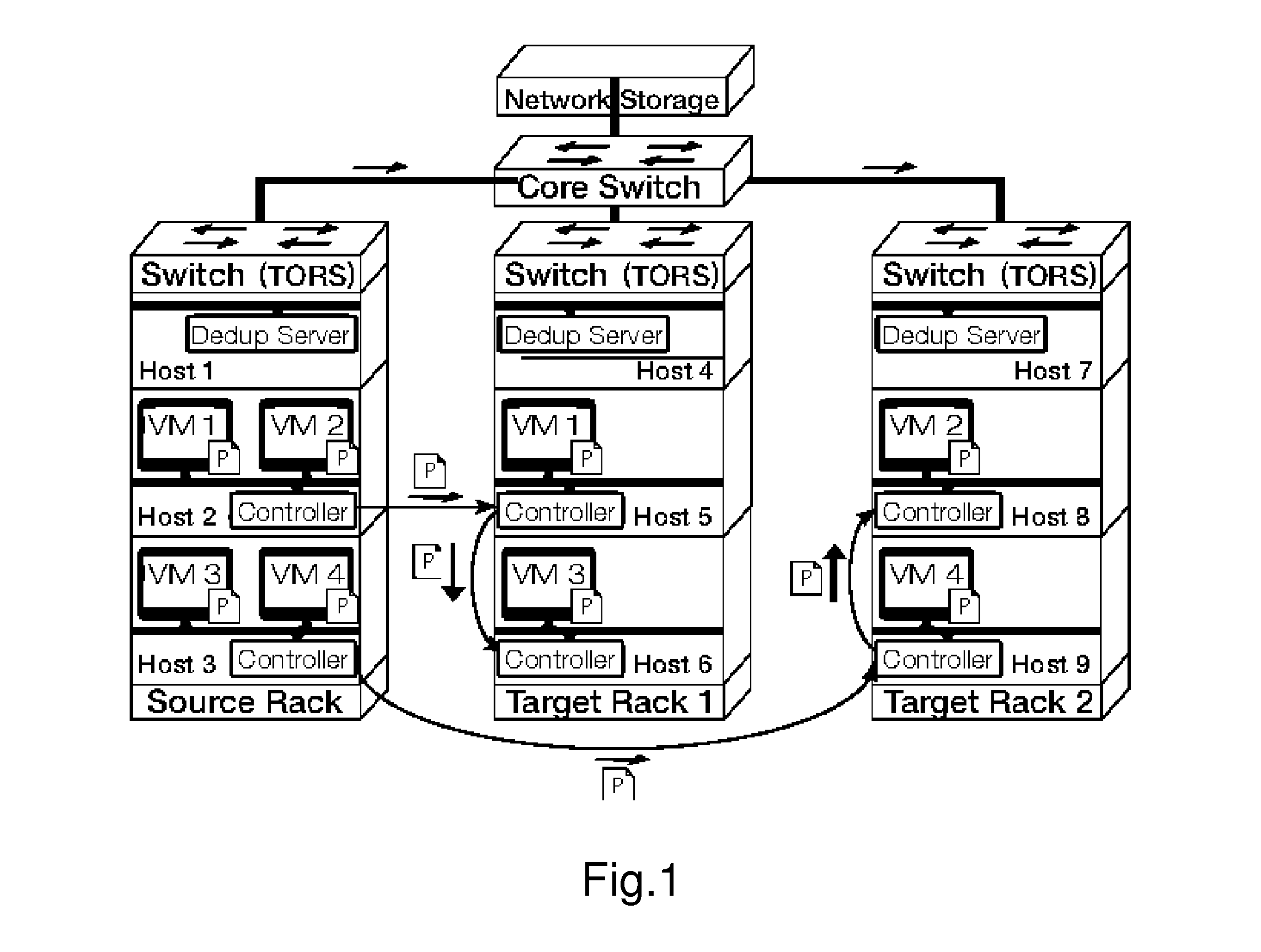

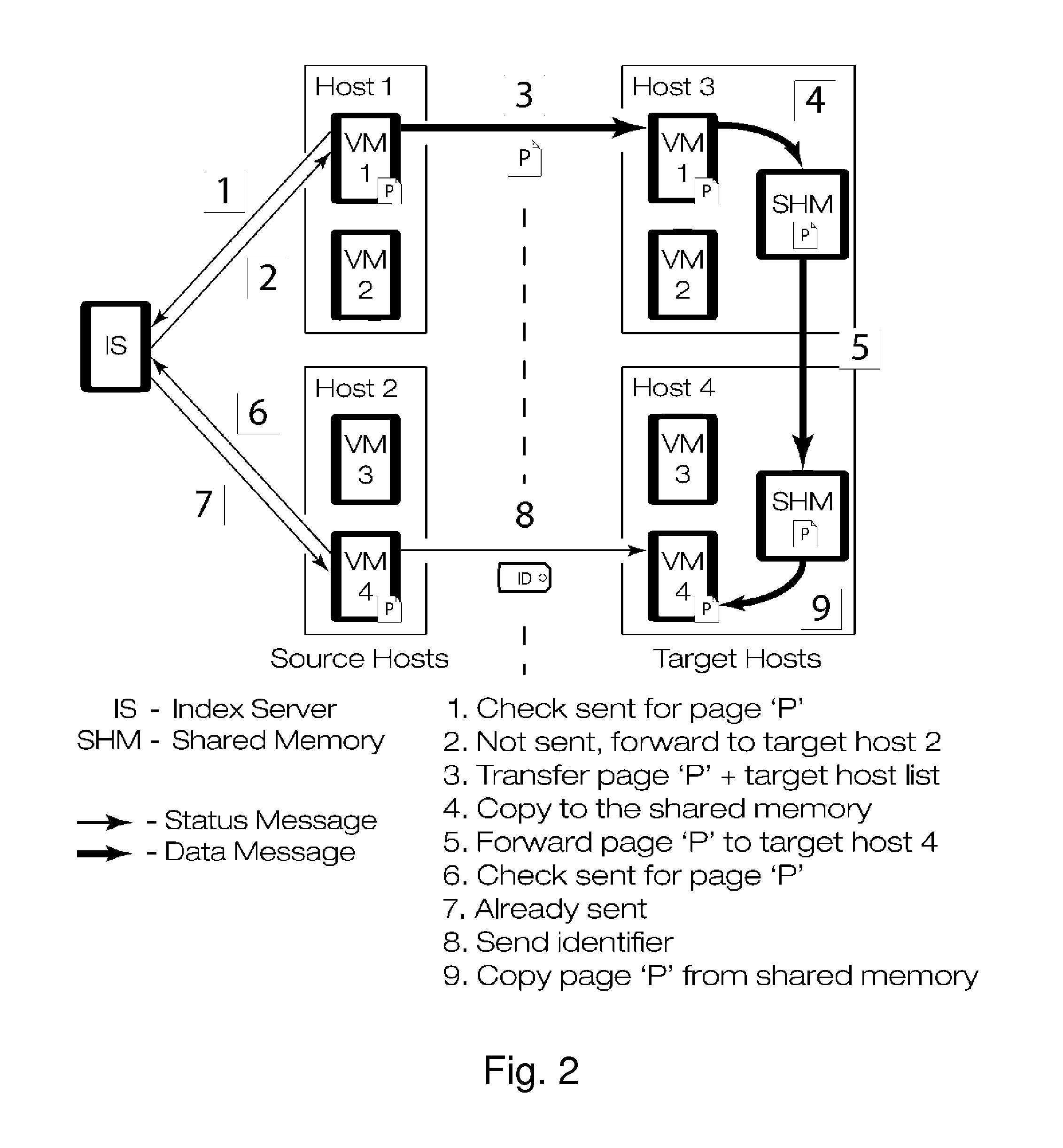

Gang migration of virtual machines using cluster-wide deduplication

Active | US20140196037A1 | Reduce throughput | Increase delay | Memory architecture accessing/allocation | Program initiation/switching | Traffic capacity | Data center

Datacenter clusters often employ live virtual machine (VM) migration to efficiently utilize cluster-wide resources. Gang migration refers to the simultaneous live migration of multiple VMs from one set of physical machines to another in response to events such as load spikes and imminent failures. Gang migration generates a large volume of network traffic and can overload the core network links and switches in a data center. The present technology reduces the network overhead of gang migration using global deduplication (GMGD). GMGD identifies and eliminates the retransmission of duplicate memory pages among VMs running on multiple physical machines in the cluster. A prototype GMGD reduces the network traffic on core links by up to 51% and the total migration time of VMs by up to 39% when compared to the default migration technique in QEMU/KVM, with reduced adverse performance impact on network-bound applications.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

Shared dictionary between devices

Active | US20130318051A1 | Digital data information retrieval | Digital data processing details | Computer hardware | Hash function

In one embodiment, a system and method for managing a network deduplication dictionary is disclosed. According to the method, the dictionary is divided between available deduplication engines (DDE) in deduplication devices that support shared dictionaries. The fingerprints are distributed to different DDEs based on a hash function. The hash function takes the fingerprint and hashes it and based on the hash result, it selects one of the DDEs. The hash function could select a few bits from the fingerprint and use those bits to select a DDE.
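The last variant mentioned, selecting a DDE from a few bits of the fingerprint, might look like the sketch below. It assumes SHA-256 fingerprints and a power-of-two number of engines so that a bit mask is enough; `NUM_DDES` and `select_dde` are hypothetical.

```python
import hashlib

NUM_DDES = 4  # hypothetical; a power of two so a bit mask selects an engine


def select_dde(fingerprint: bytes, num_ddes: int = NUM_DDES) -> int:
    """Pick a deduplication engine from the low bits of the fingerprint."""
    return fingerprint[-1] & (num_ddes - 1)


fingerprints = [hashlib.sha256(f"block-{i}".encode()).digest() for i in range(100)]
dictionary_shards = {dde: [] for dde in range(NUM_DDES)}
for fp in fingerprints:
    dictionary_shards[select_dde(fp)].append(fp)
```

Every device that applies the same rule sends a given fingerprint to the same engine, so each engine holds one slice of the shared dictionary.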

Owner:AVAGO TECH INT SALES PTE LTD
