284 results about "Memory hierarchy" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

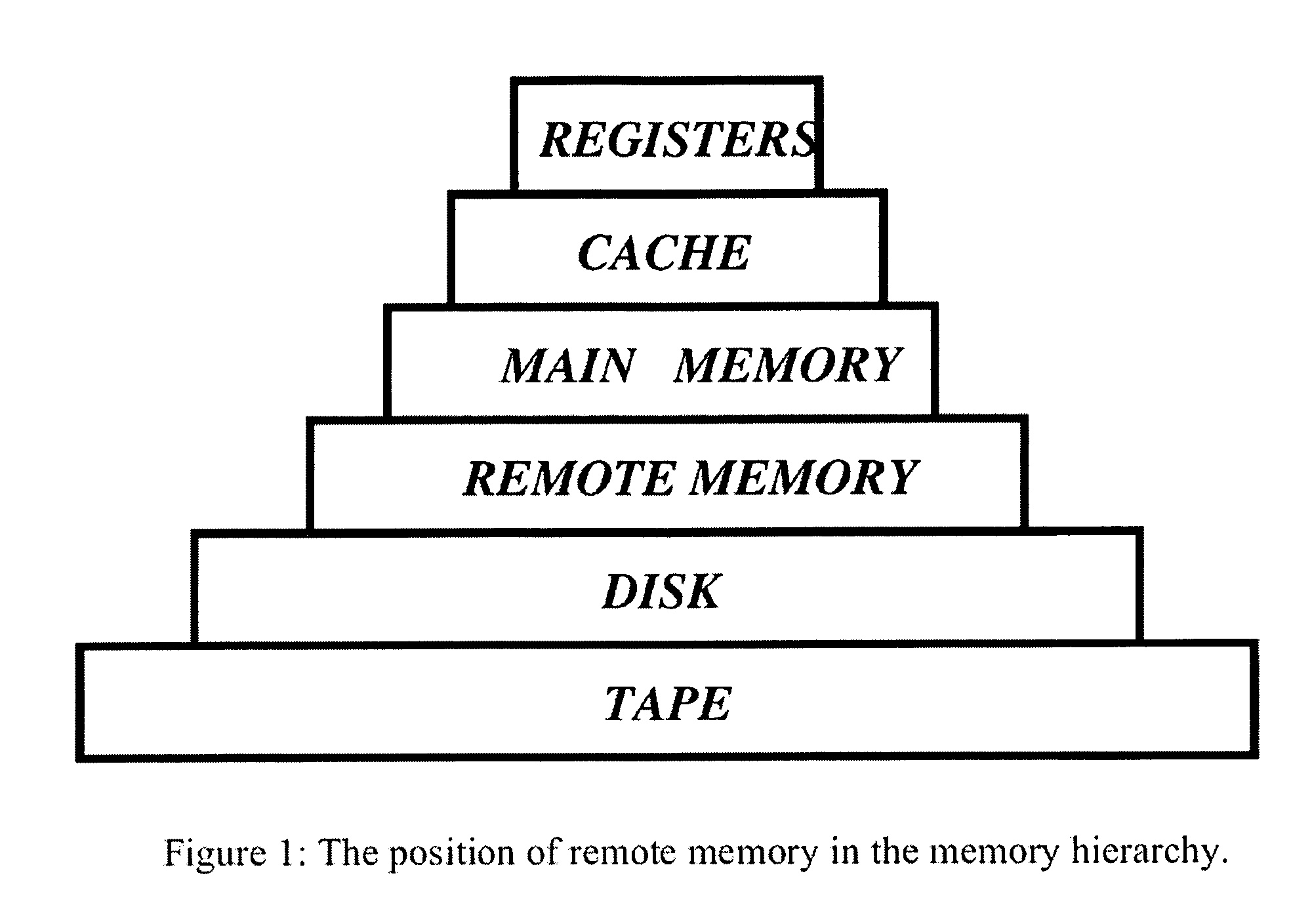

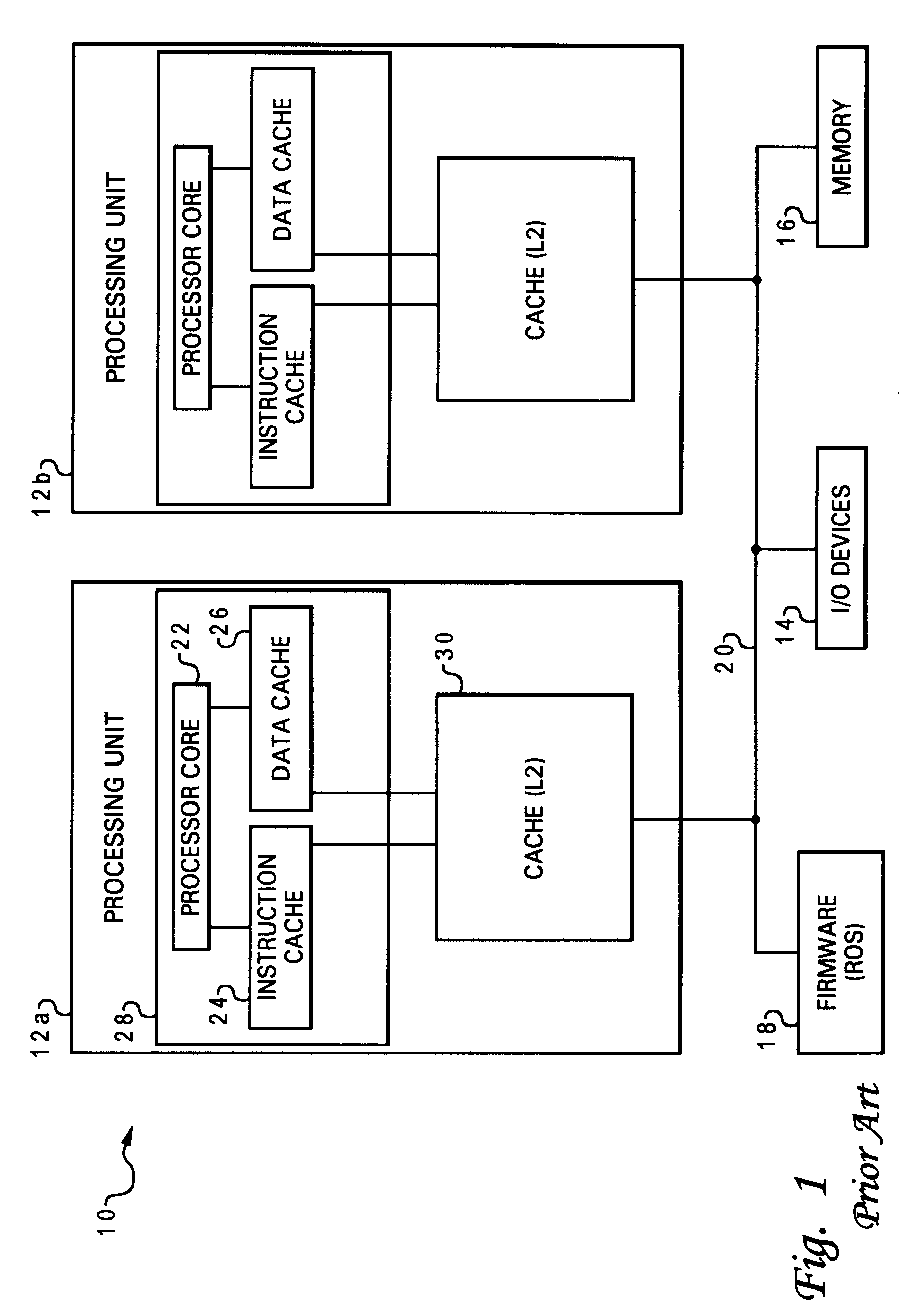

In computer architecture, the memory hierarchy separates computer storage into a hierarchy based on response time. Since response time, complexity, and capacity are related, the levels may also be distinguished by their performance and controlling technologies. Memory hierarchy affects performance in computer architectural design, algorithm predictions, and lower level programming constructs involving locality of reference.

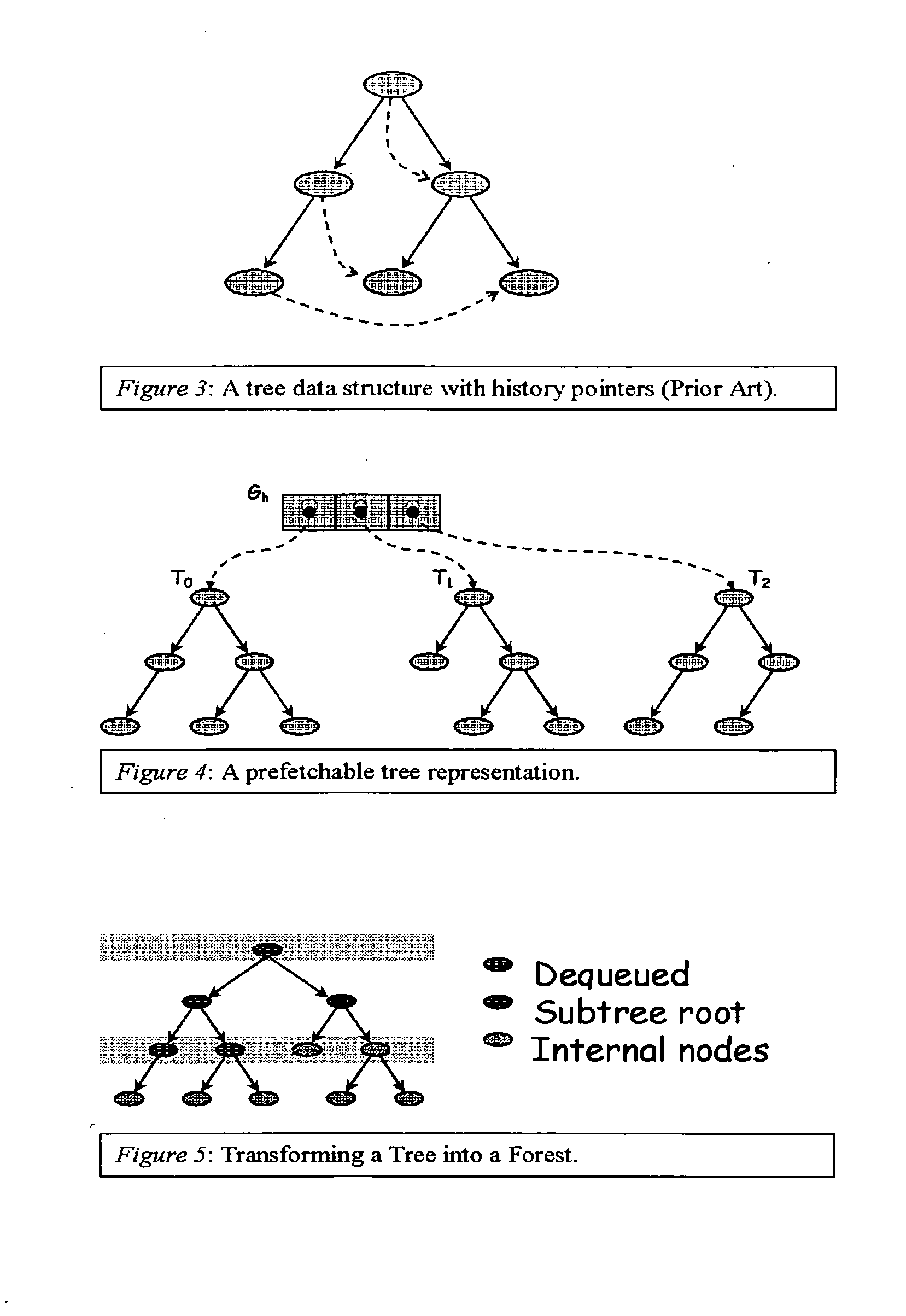

Method and apparatus for prefetching recursive data structures

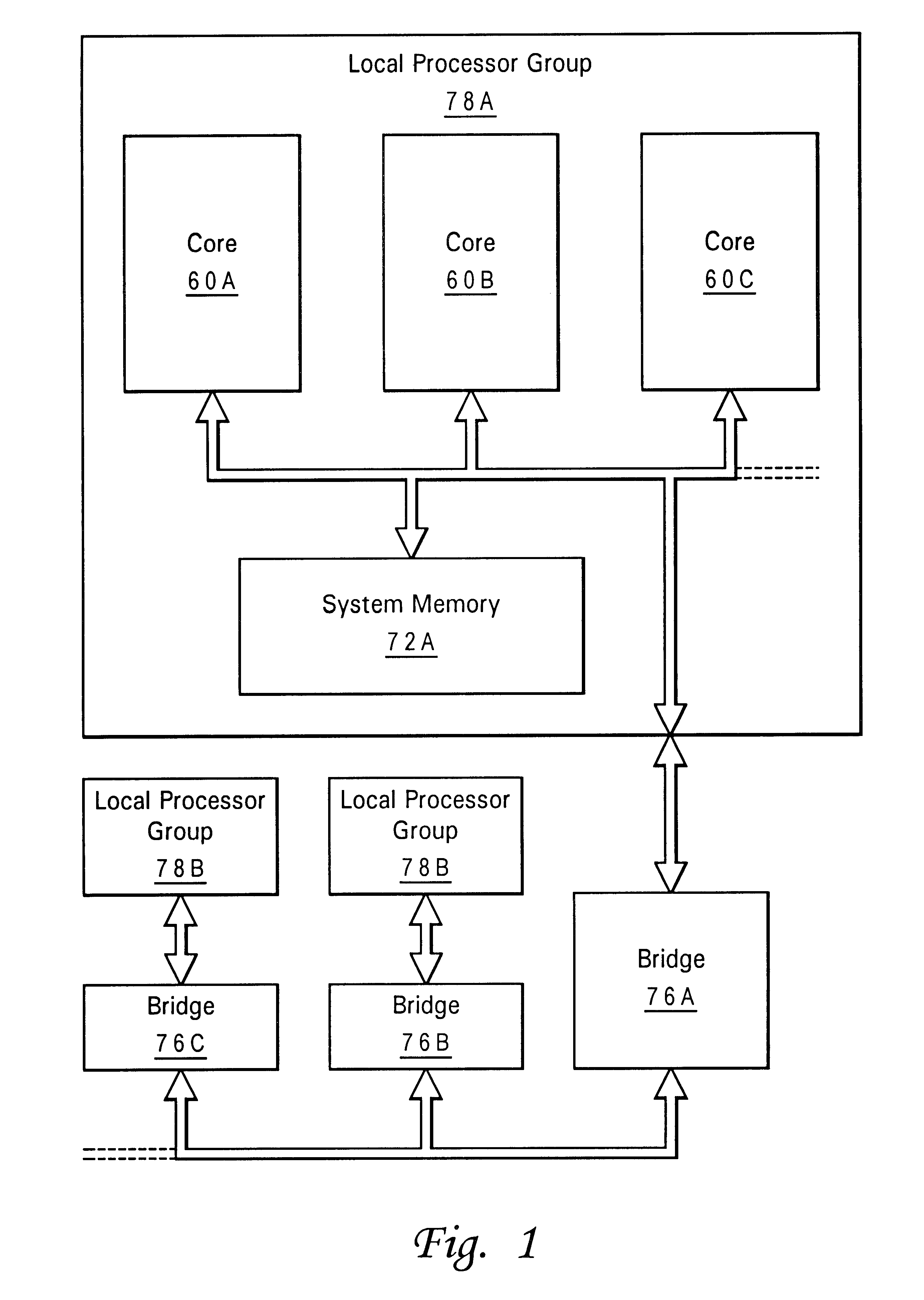

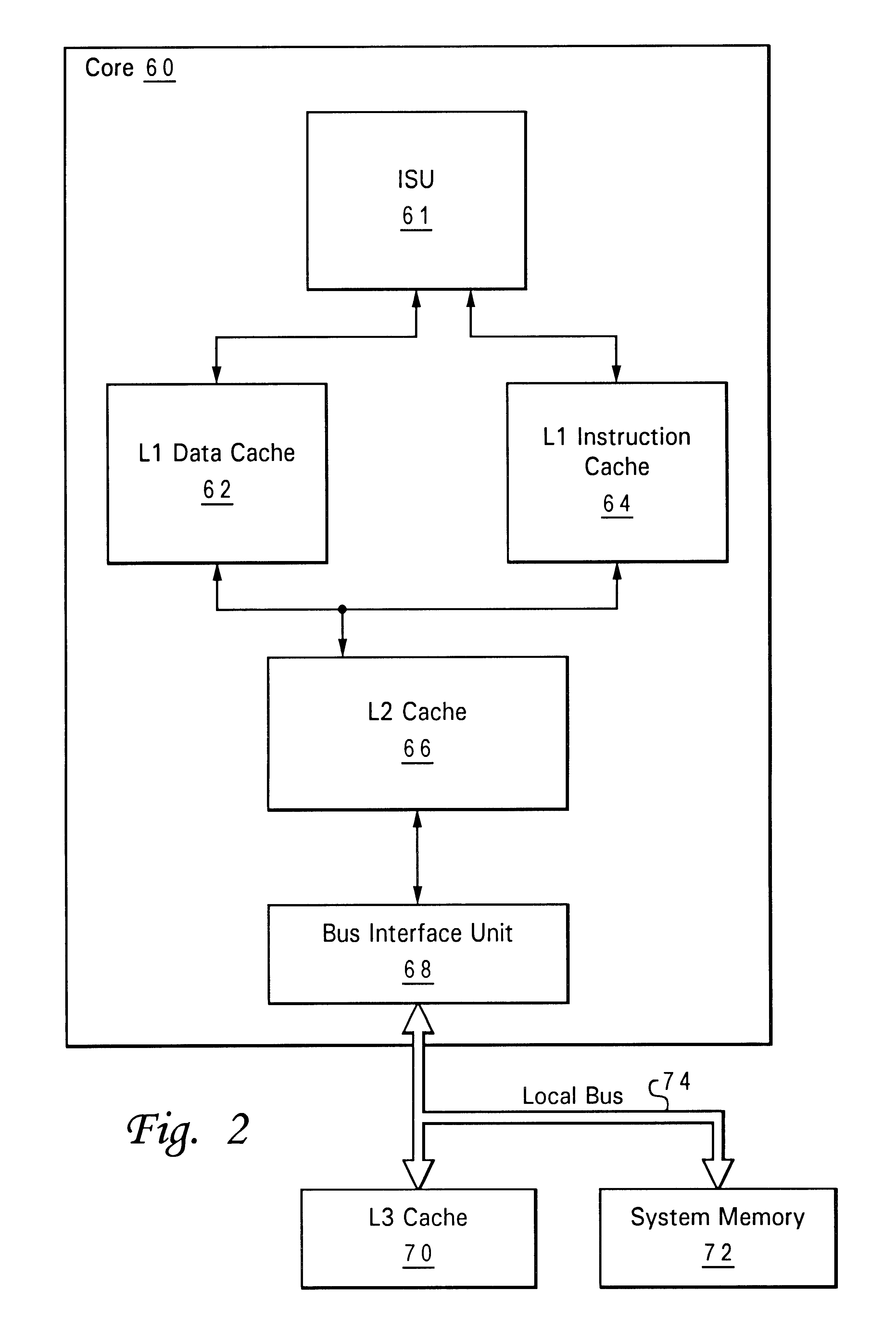

Inactive · US6848029B2 · Improve cache hit ratio · Potential throughput of the computer system · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Application software · Cache hit rate

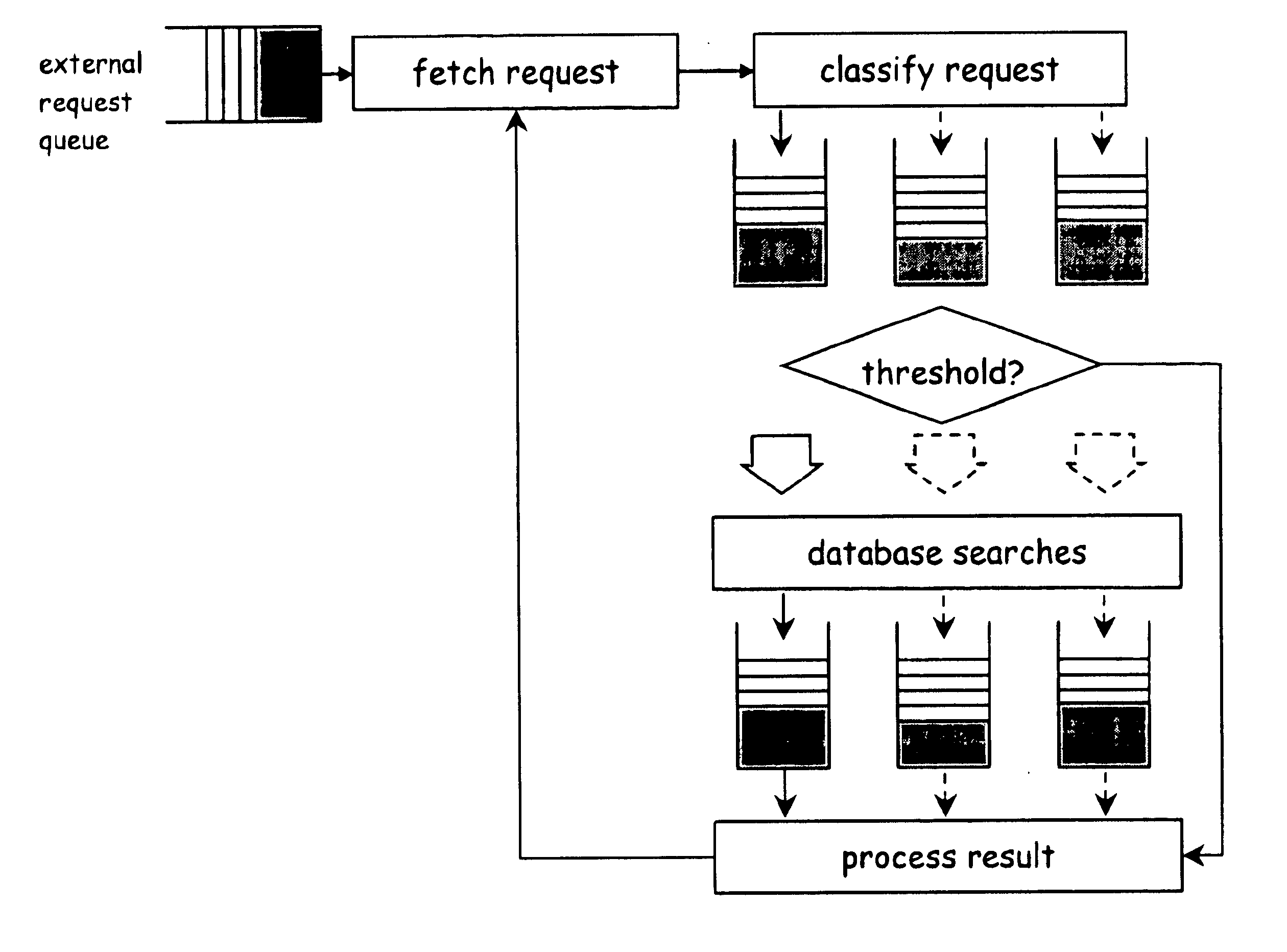

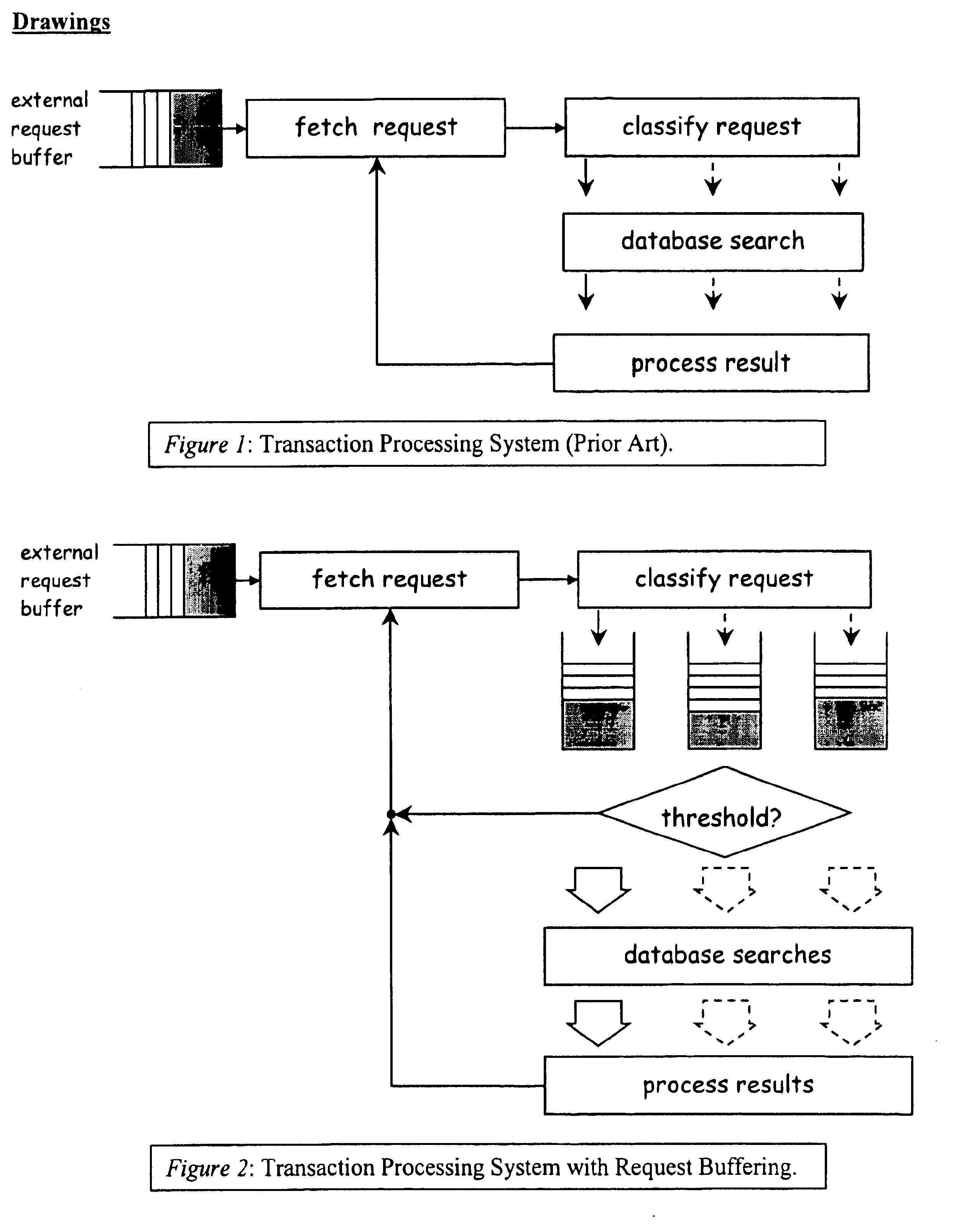

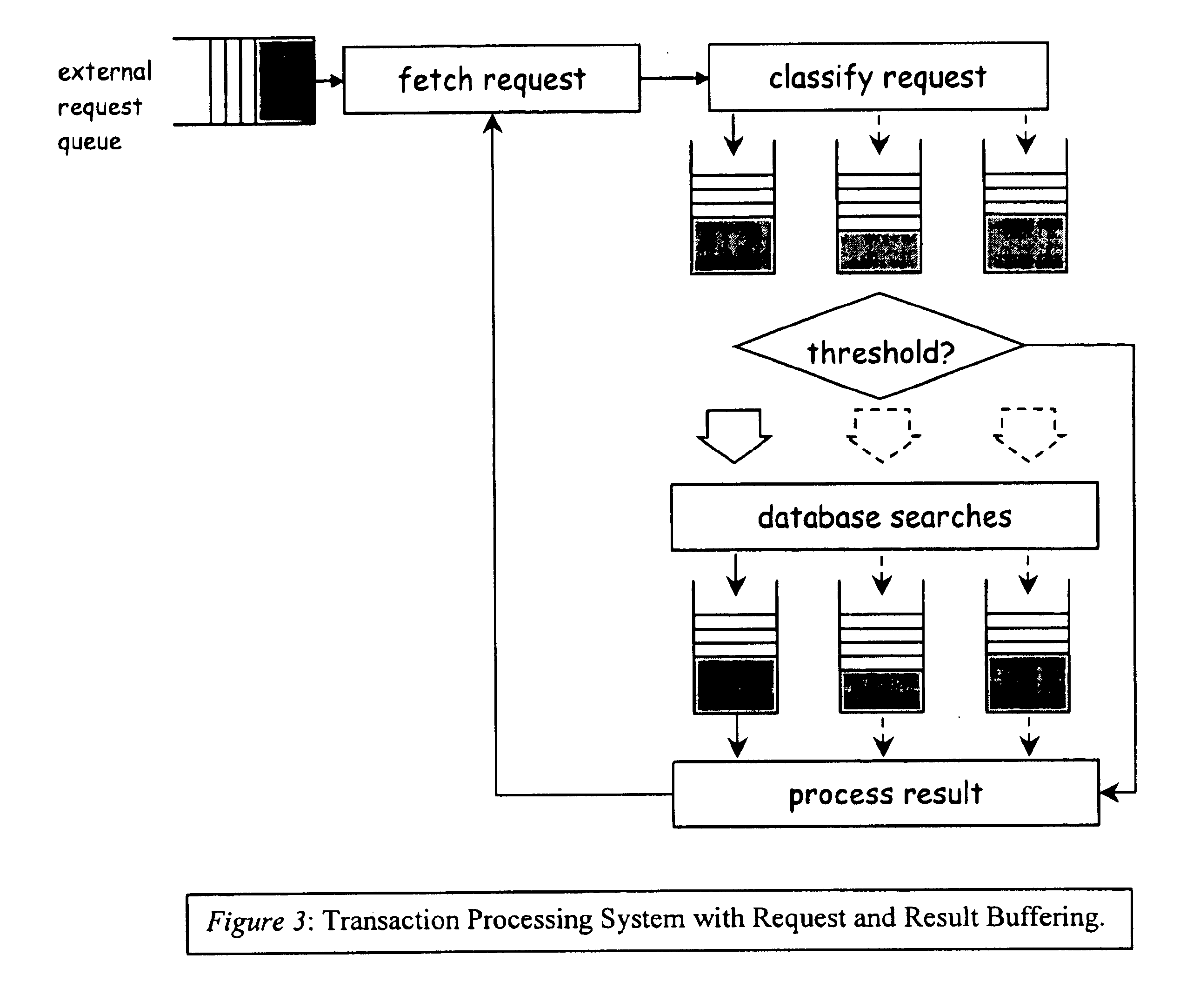

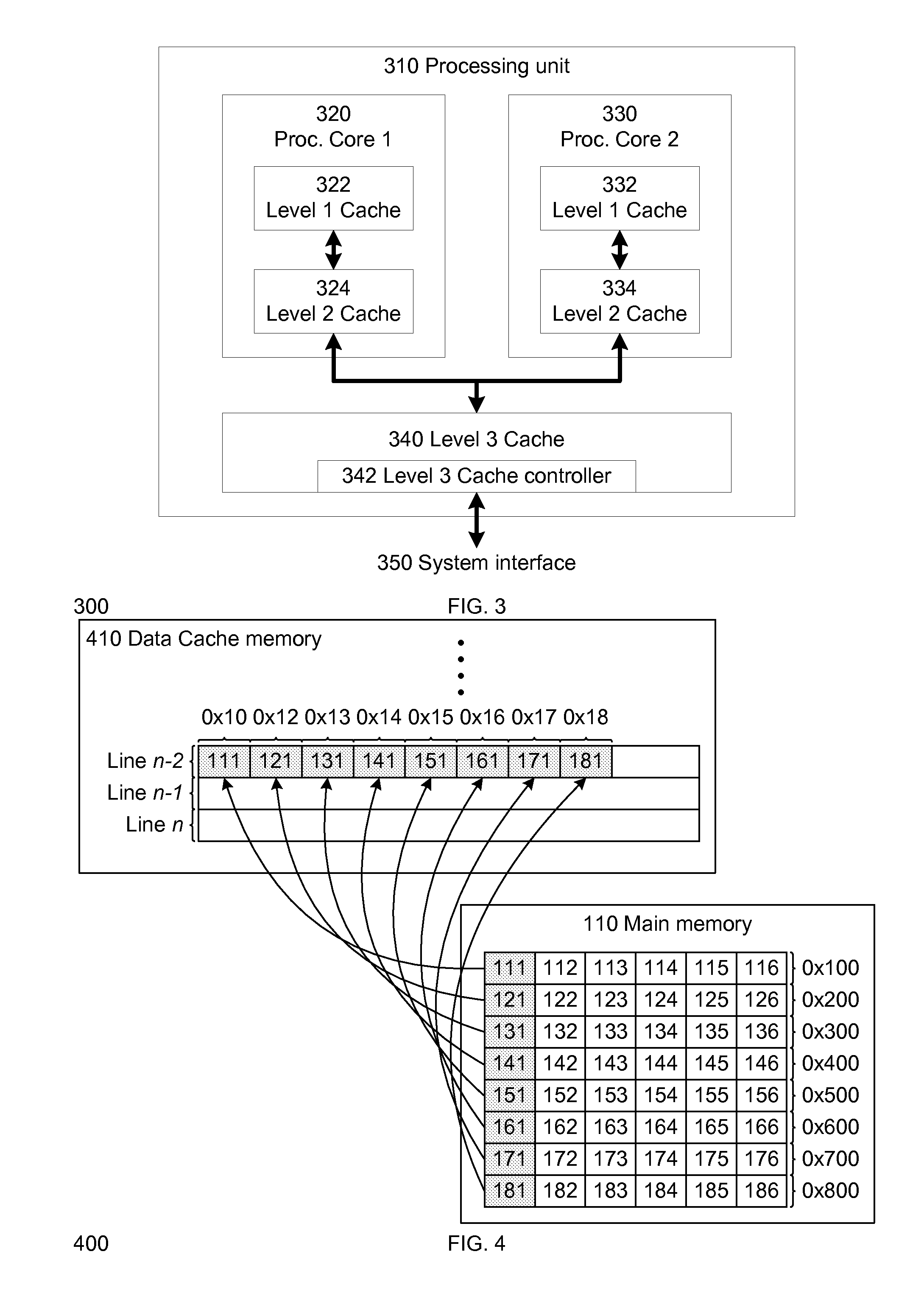

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. The invention is applicable to those data structure accesses in which the traversal path is dynamically determined. The invention does this by aggregating traversal requests and then pipelining the traversal of aggregated requests on the data structure. Once enough traversal requests have been accumulated so that most of the memory latency can be hidden by prefetching the accumulated requests, the data structure is traversed by performing software pipelining on some or all of the accumulated requests. As requests are completed and retired from the set of requests that are being traversed, additional accumulated requests are added to that set. This process is repeated until either an upper threshold of processed requests or a lower threshold of residual accumulated requests has been reached. At that point, the traversal results may be processed.

Owner:DIGITAL CACHE LLC +1
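The request-aggregation idea in the abstract above can be illustrated with a small sketch. It is not the patented implementation: it assumes a plain binary search tree, a hypothetical `window` parameter for the number of traversals kept in flight, and it only marks where a native implementation would issue a prefetch, since Python has no prefetch primitive.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(root, key):
    """Plain (unbalanced) binary-search-tree insert."""
    if root is None:
        return Node(key)
    if key < root.key:
        root.left = insert(root.left, key)
    elif key > root.key:
        root.right = insert(root.right, key)
    return root


def batched_search(root, keys, window=4):
    """Look up many keys, keeping up to `window` traversals in flight."""
    pending = deque(enumerate(keys))   # accumulated requests
    in_flight = []                     # (request index, key, current node)
    found = [False] * len(keys)
    while pending or in_flight:
        # Refill the in-flight set as earlier requests retire.
        while pending and len(in_flight) < window:
            idx, key = pending.popleft()
            in_flight.append((idx, key, root))
        still_running = []
        for idx, key, node in in_flight:
            if node is None:
                continue               # miss: the request retires
            if node.key == key:
                found[idx] = True      # hit: the request retires
                continue
            child = node.left if key < node.key else node.right
            # A native implementation would issue a prefetch for `child`
            # here and dereference it only on the next pass.
            still_running.append((idx, key, child))
        in_flight = still_running
    return found
```

Each pass advances every in-flight request by one node, so the memory latency of one request can overlap with work on the others; the results are the same as for one-at-a-time lookups.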

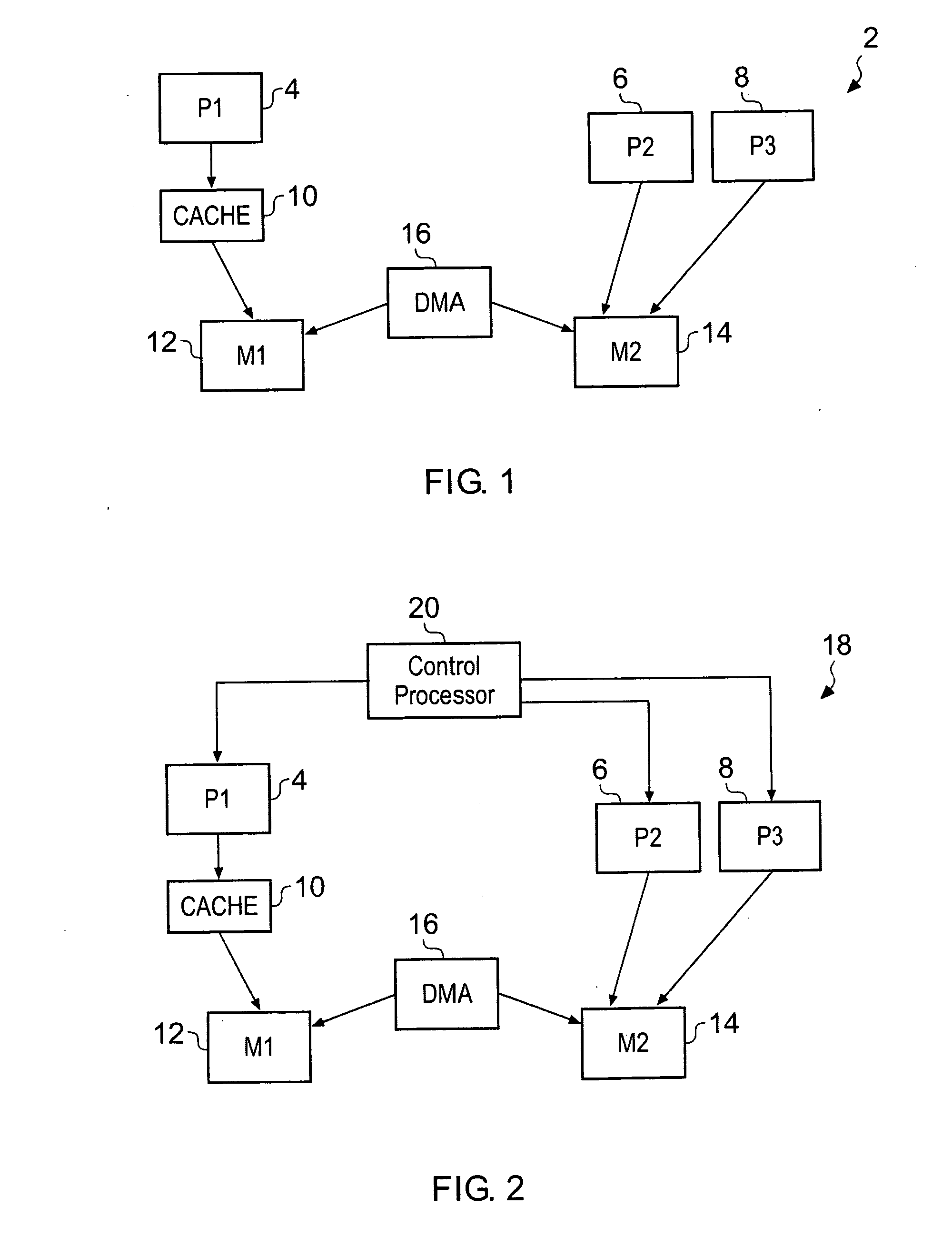

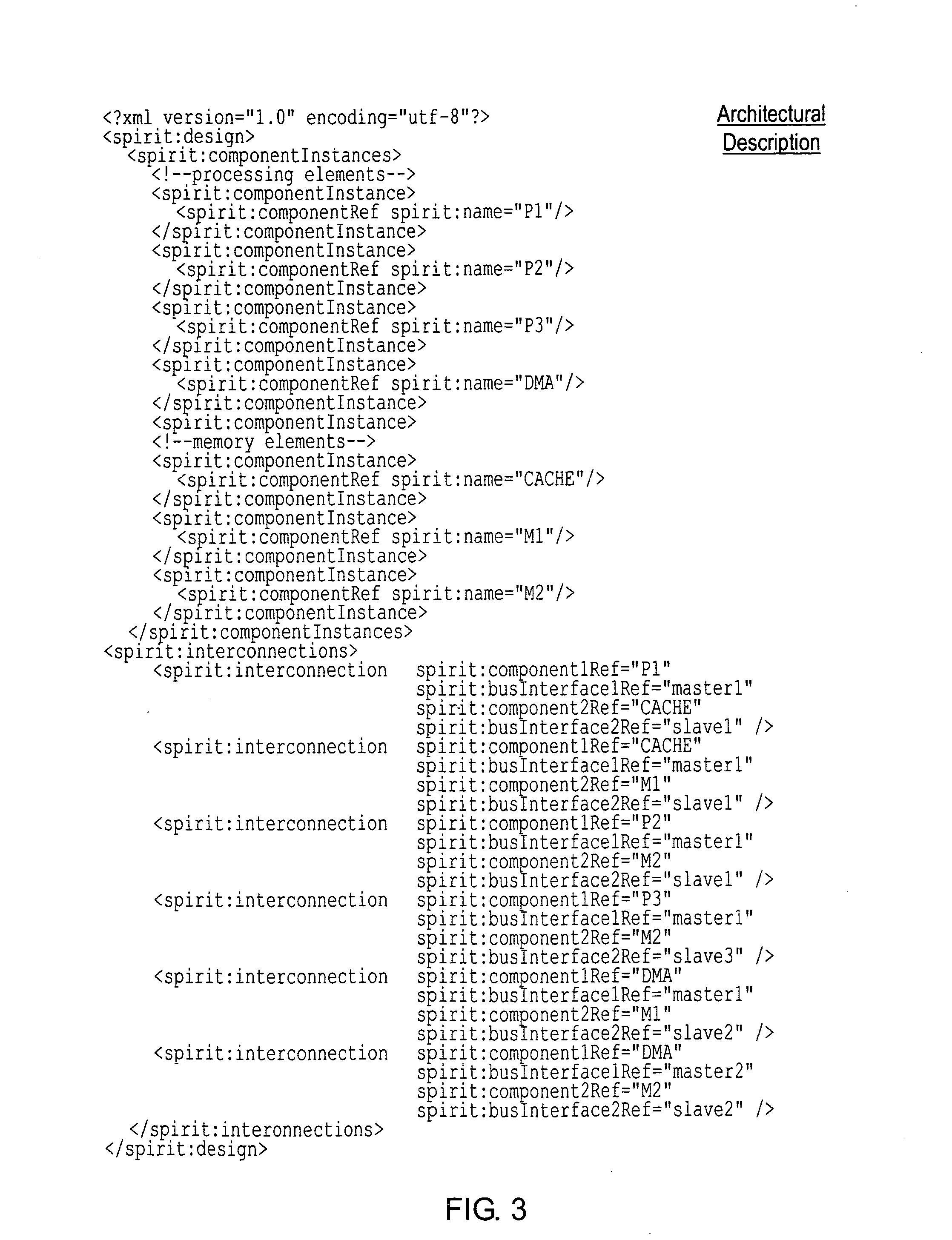

Mapping a computer program to an asymmetric multiprocessing apparatus

Active · US20080114937A1 · Quicker and less-expensive · Not easy to make mistakes · Memory addressing/allocation/relocation · Detecting faulty computer hardware · Memory hierarchy · Theoretical computer science

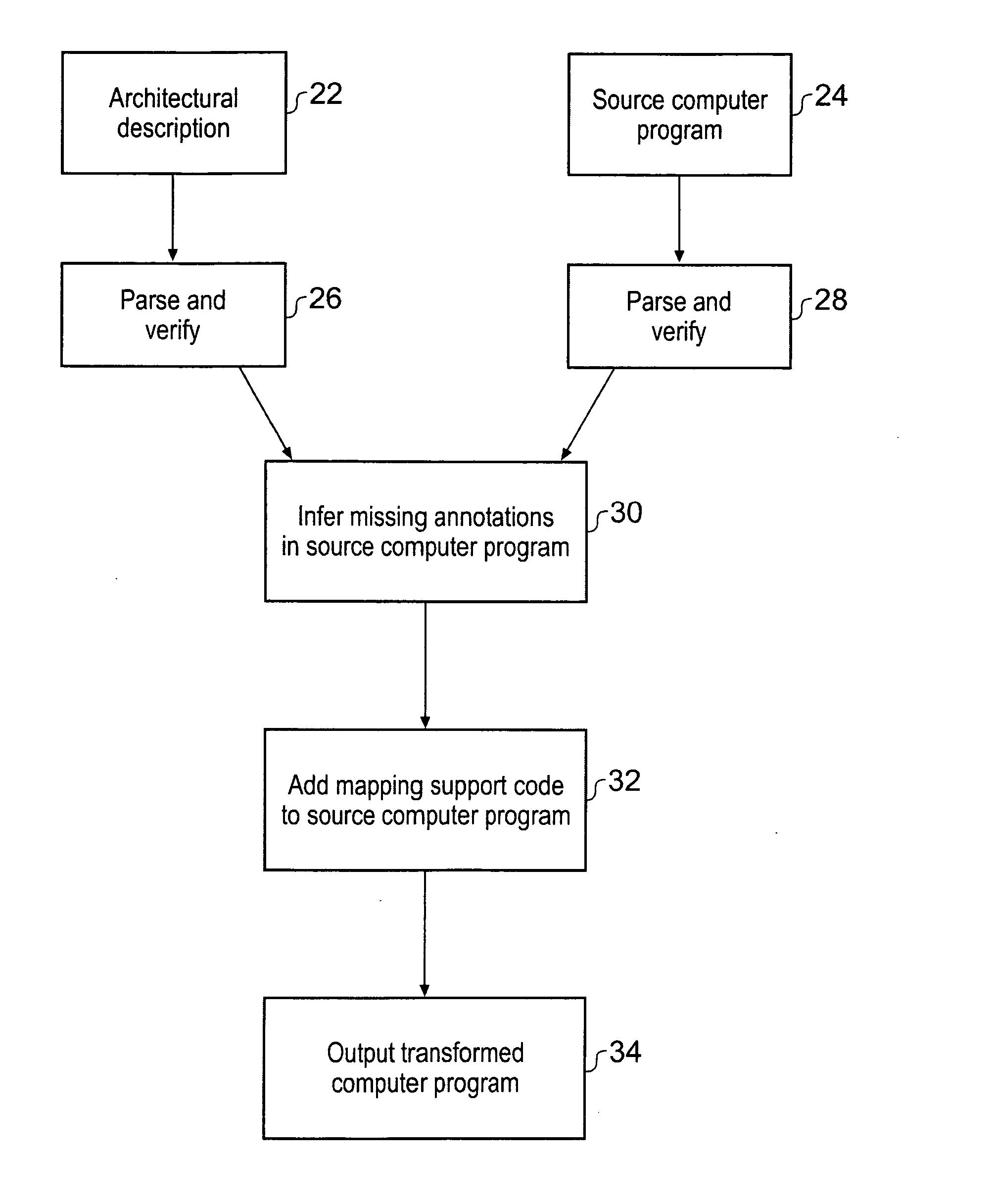

A computer implemented tool is provided for assisting in the mapping of a computer program to an asymmetric multiprocessing apparatus 2 incorporating an asymmetric memory hierarchy formed of a plurality of memories 12, 14. An at least partial architectural description 22, 40 is provided as an input variable to the tool and used to infer missing annotations within a source computer program 24, such as which functions are to be executed by which execution mechanisms 4, 6, 8 and which variables are to be stored within which memories 12, 14. The tool also adds mapping support commands, such as cache flush commands, cache invalidate commands, DMA move commands and the like as necessary to support the mapping of the computer program to the asymmetric multiprocessing apparatus 2.

Owner:ARM LTD

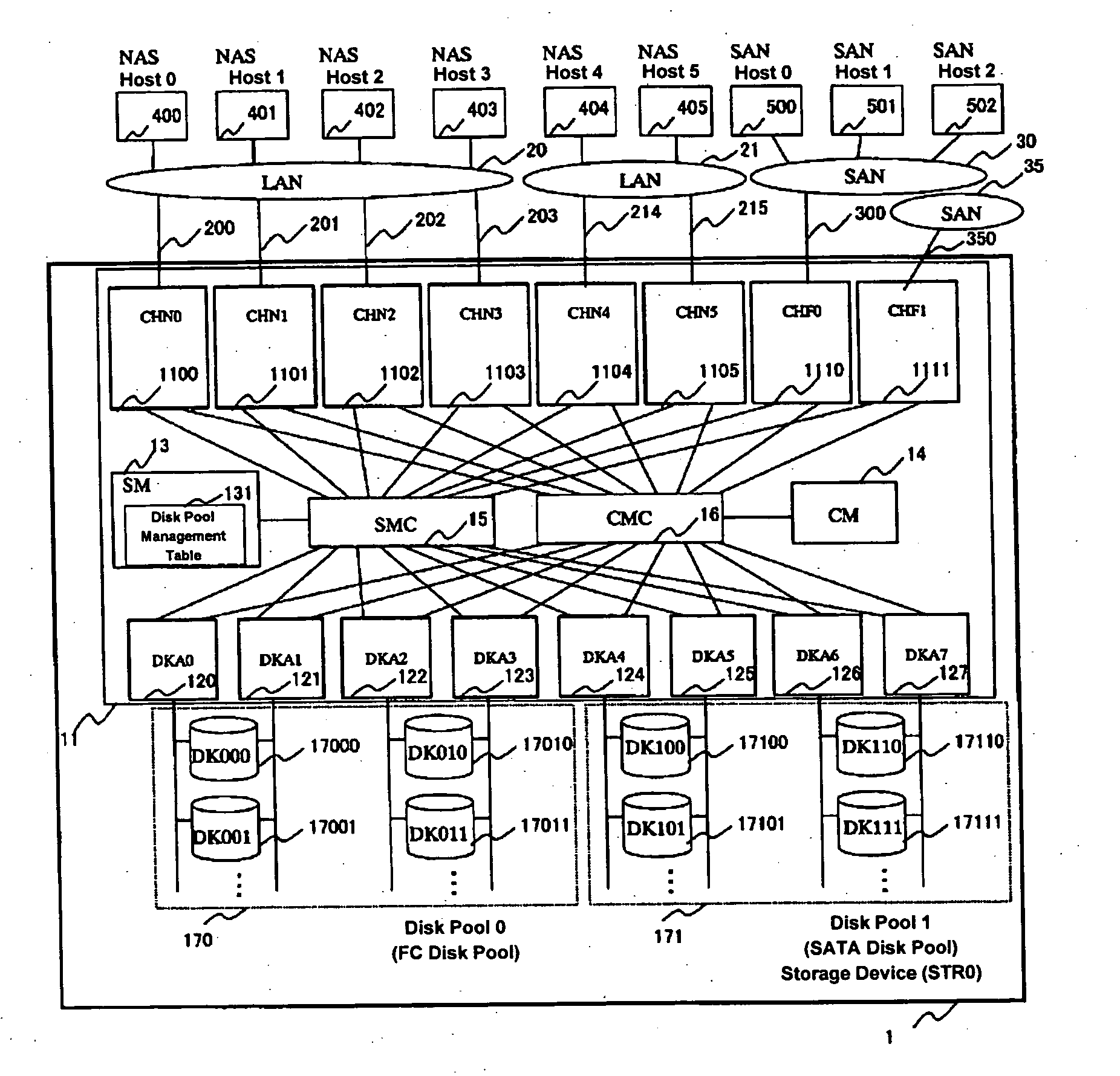

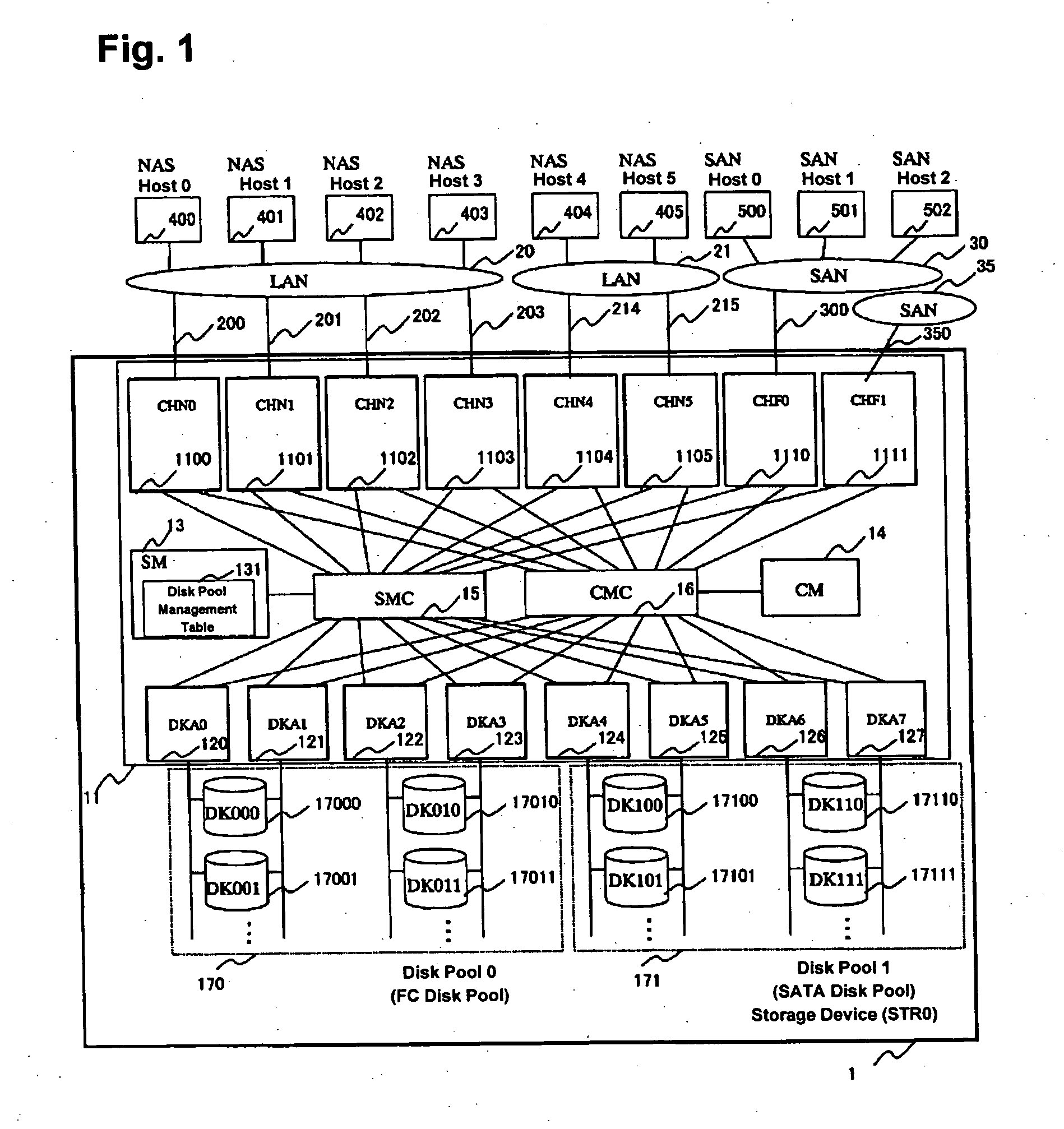

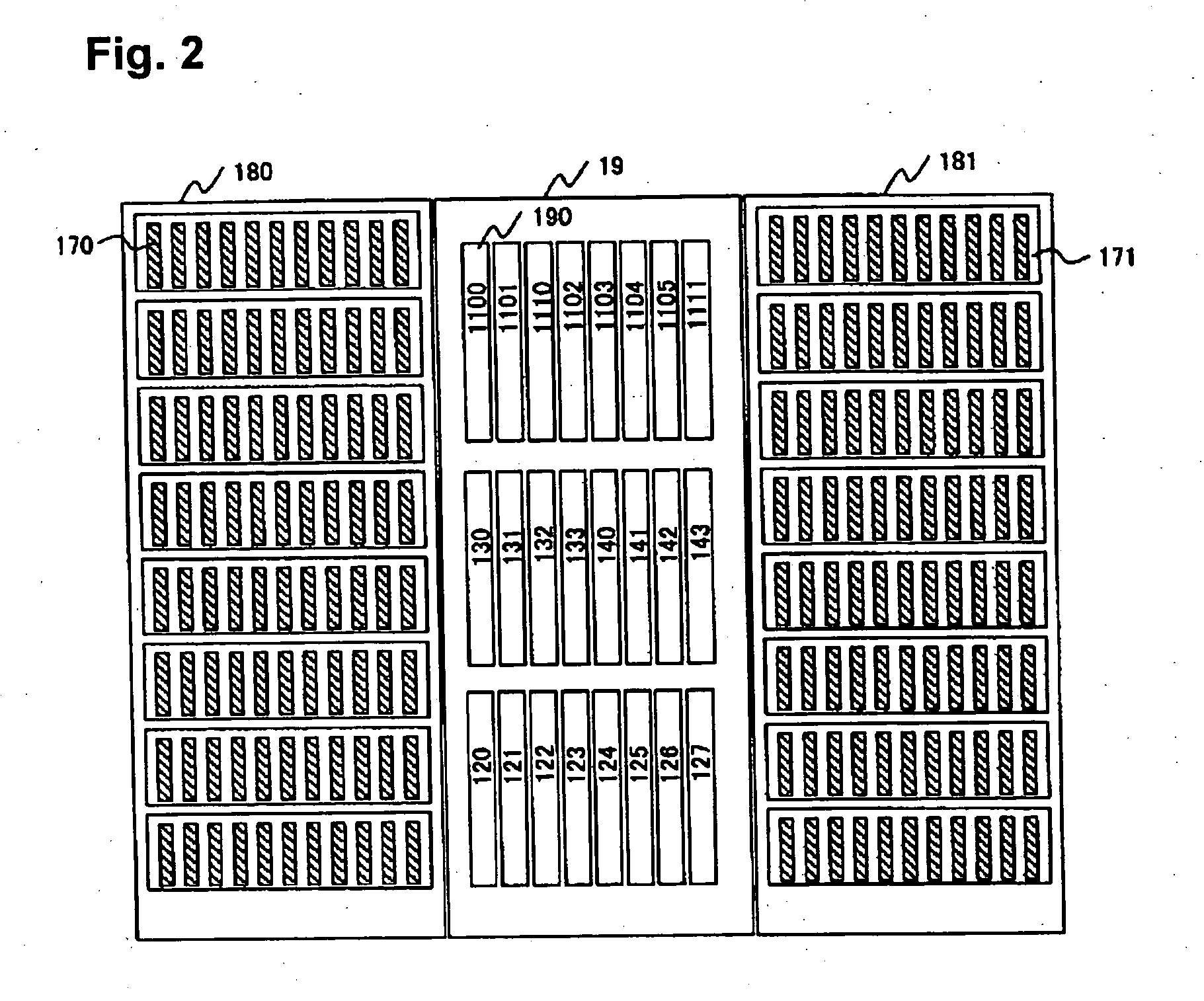

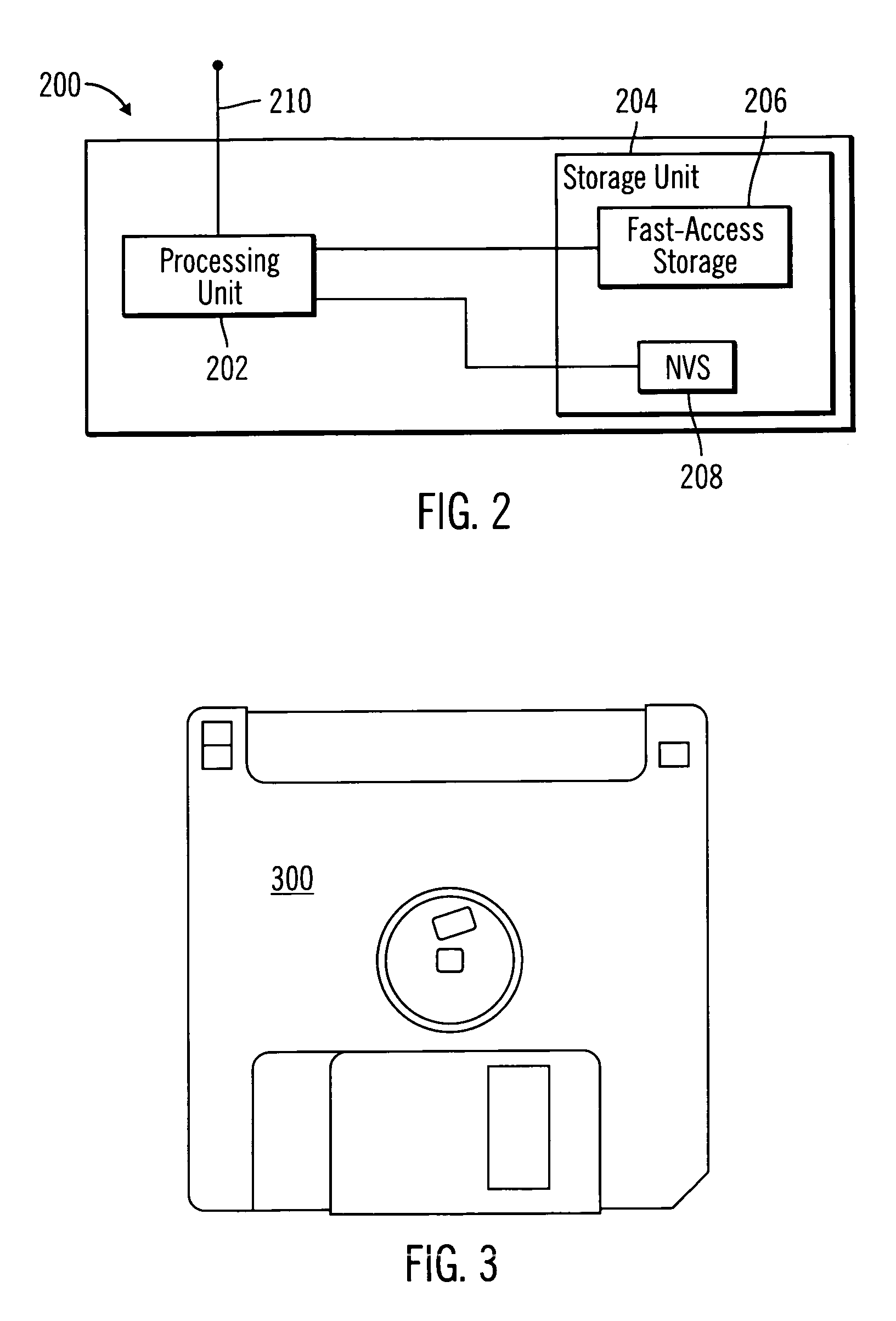

Storage device

Inactive · US20040193760A1 · Input/output to record carriers · Data processing applications · Memory hierarchy · File system

A storage device is provided with a file I/O interface control device and a plurality of disk pools. The file I/O interface control device sets one of a plurality of storage hierarchies defining storage classes, respectively, for each of the LUs within the disk pools, thereby forming a file system in each of the LUs. The file I/O interface control device migrates at least one of the files from one of the LUs to another one of the LUs of an optimal storage class, based on static properties and dynamic properties of each file.

Owner:HITACHI LTD
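As a rough illustration of migrating files between storage classes based on static and dynamic properties, the sketch below picks a target class per file and lists the moves needed. The class names (`fast`, `standard`, `archive`), the chosen properties, and all thresholds are assumptions made for this example; the abstract does not specify them.

```python
from dataclasses import dataclass


@dataclass
class FileInfo:
    name: str
    size_bytes: int           # static property
    days_since_access: int    # dynamic property
    current_class: str


def target_class(f: FileInfo) -> str:
    """Pick a storage class; the thresholds are illustrative assumptions."""
    if f.days_since_access <= 7:
        return "fast"
    if f.days_since_access <= 90 and f.size_bytes < 1_000_000_000:
        return "standard"
    return "archive"


def plan_migrations(files):
    """Return (name, from_class, to_class) for files that should move."""
    moves = []
    for f in files:
        dest = target_class(f)
        if dest != f.current_class:
            moves.append((f.name, f.current_class, dest))
    return moves
```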

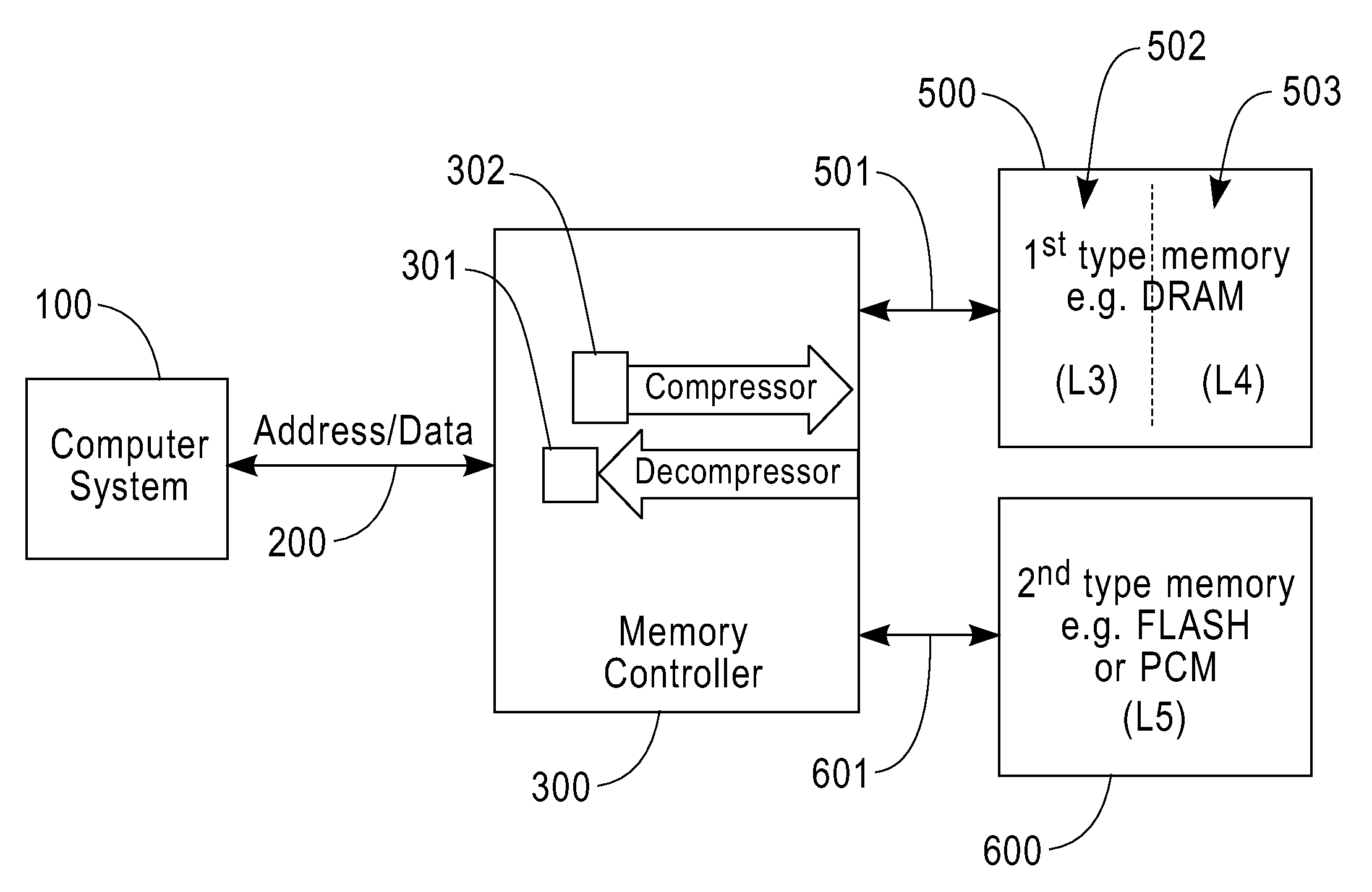

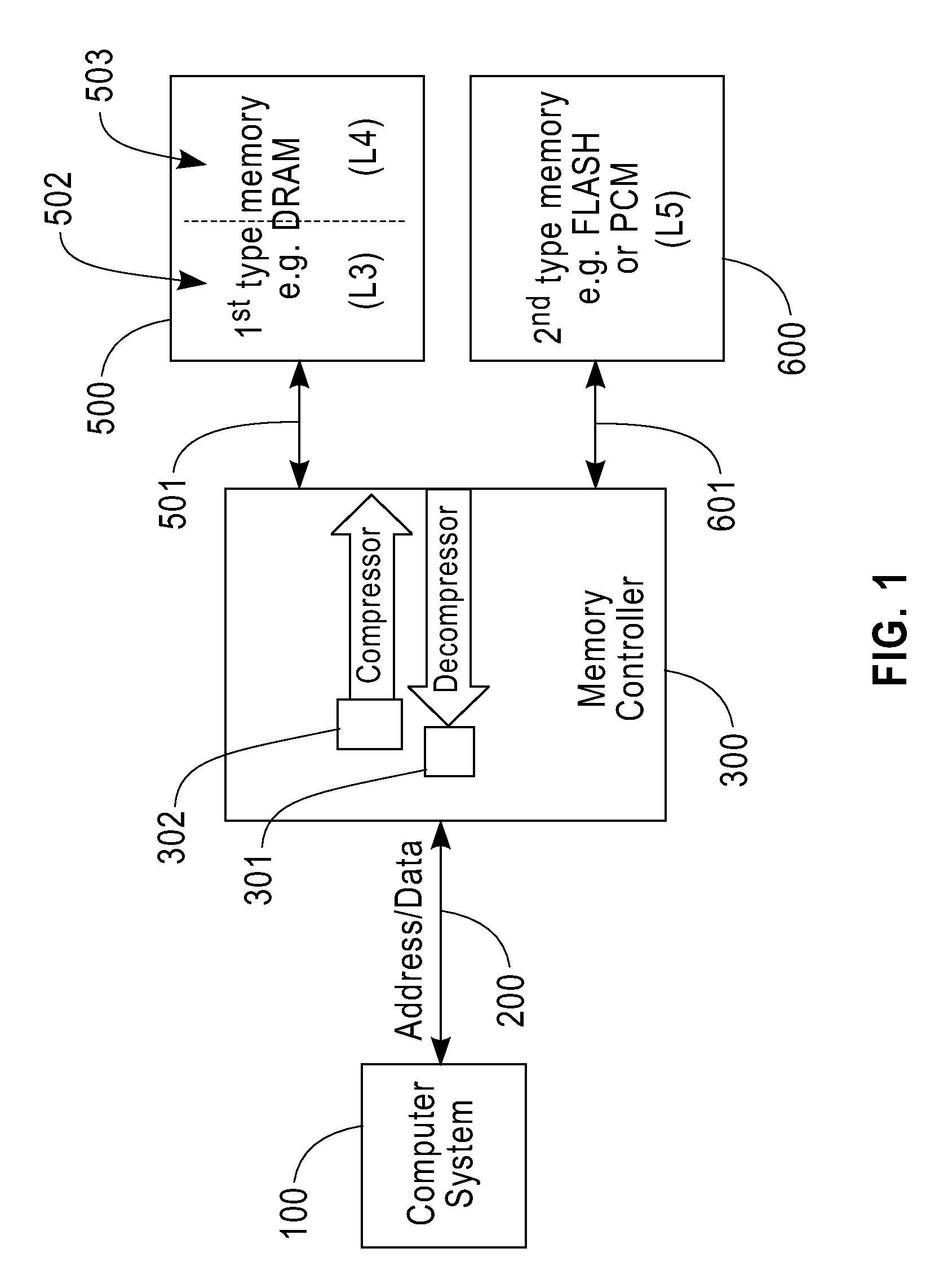

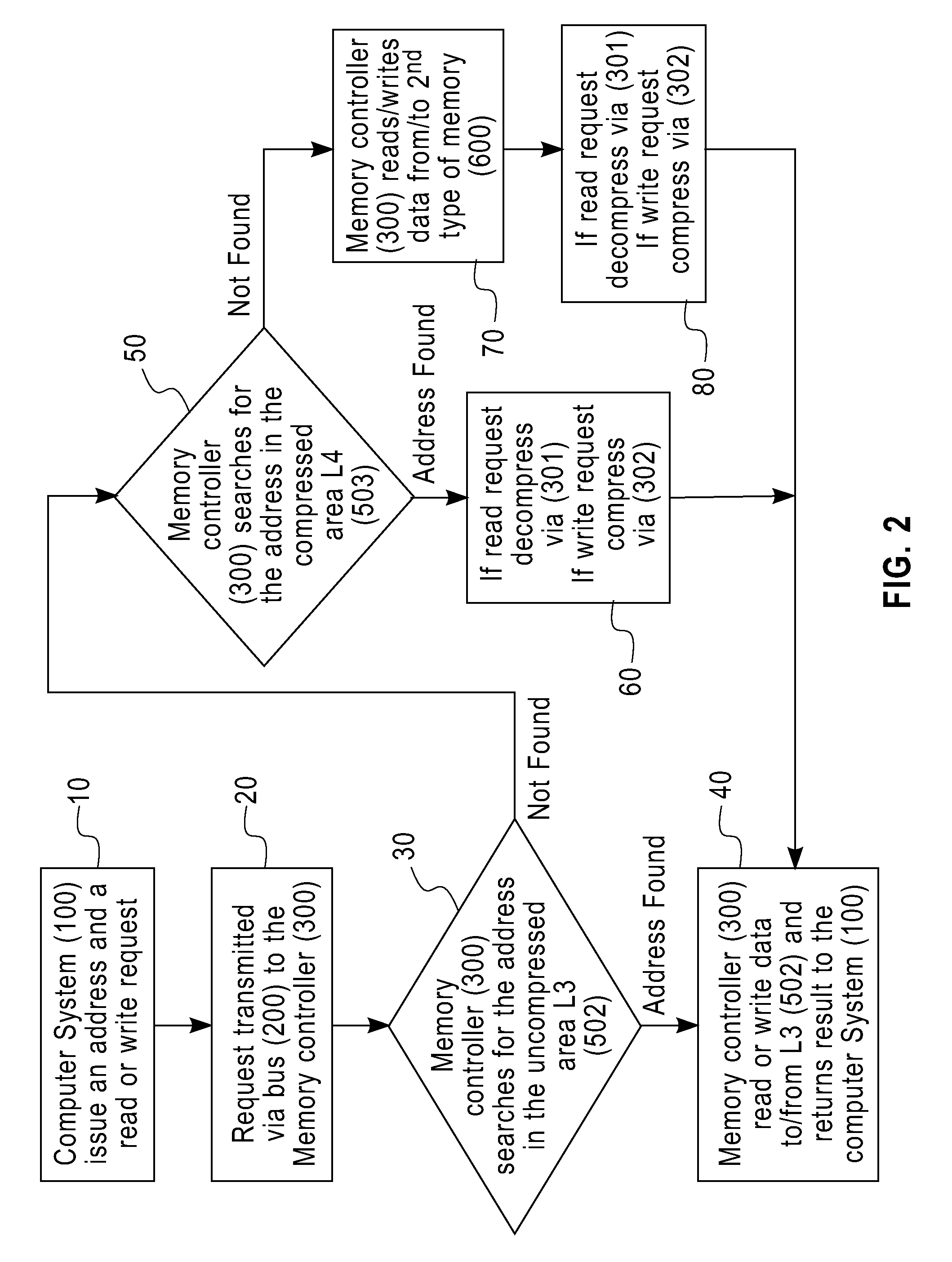

Bus attached compressed random access memory

Inactive · US20090254705A1 · Eliminate the problem · Low cost · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Three level · Memory hierarchy

A computer memory system having a three-level memory hierarchy structure is disclosed. The system includes a memory controller, a volatile memory, and a non-volatile memory. The volatile memory is divided into an uncompressed data region and a compressed data region.

Owner:IBM CORP

Cache coherent support for flash in a memory hierarchy

Active · US20100293420A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Memory hierarchy · Random access memory

System and method for using flash memory in a memory hierarchy. A computer system includes a processor coupled to a memory hierarchy via a memory controller. The memory hierarchy includes a cache memory, a first memory region of random access memory coupled to the memory controller via a first buffer, and an auxiliary memory region of flash memory coupled to the memory controller via a flash controller. The first buffer and the flash controller are coupled to the memory controller via a single interface. The memory controller receives a request to access a particular page in the first memory region. The processor detects a page fault corresponding to the request and in response, invalidates cache lines in the cache memory that correspond to the particular page, flushes the invalid cache lines, and swaps a page from the auxiliary memory region to the first memory region.

Owner:ORACLE INT CORP

Dynamic partial power down of memory-side cache in a 2-level memory hierarchy

Active · US20140304475A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Memory hierarchy · Multilevel memory

A system and method are described for flushing a specified region of a memory side cache (MSC) within a multi-level memory hierarchy. For example, a computer system according to one embodiment comprises: a memory subsystem comprised of a non-volatile system memory and a volatile memory side cache (MSC) for caching portions of the non-volatile system memory; and a flush engine for flushing a specified region of the MSC to the non-volatile system memory in response to a deactivation condition associated with the specified region of the MSC.

Owner:INTEL CORP
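Flushing a specified region of a memory-side cache, as the abstract above describes, might be modeled as follows. This is a minimal sketch under stated assumptions: the cache is a dictionary mapping an address to a `(value, dirty)` pair, only dirty entries are written back, and the deactivation condition that triggers the flush is left to the caller. None of these details come from the patent.

```python
def flush_region(msc, system_memory, start, end):
    """Flush MSC entries with start <= address < end, then drop them.

    `msc` maps address -> (value, dirty). Only dirty entries are written
    back to `system_memory`; the dict layout is an assumption of this sketch.
    Returns the number of entries written back.
    """
    flushed = 0
    for address in sorted(a for a in msc if start <= a < end):
        value, dirty = msc.pop(address)
        if dirty:
            system_memory[address] = value
            flushed += 1
    return flushed
```

After the call, the region holds no cached data, so the corresponding part of the cache could be powered down without losing state.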

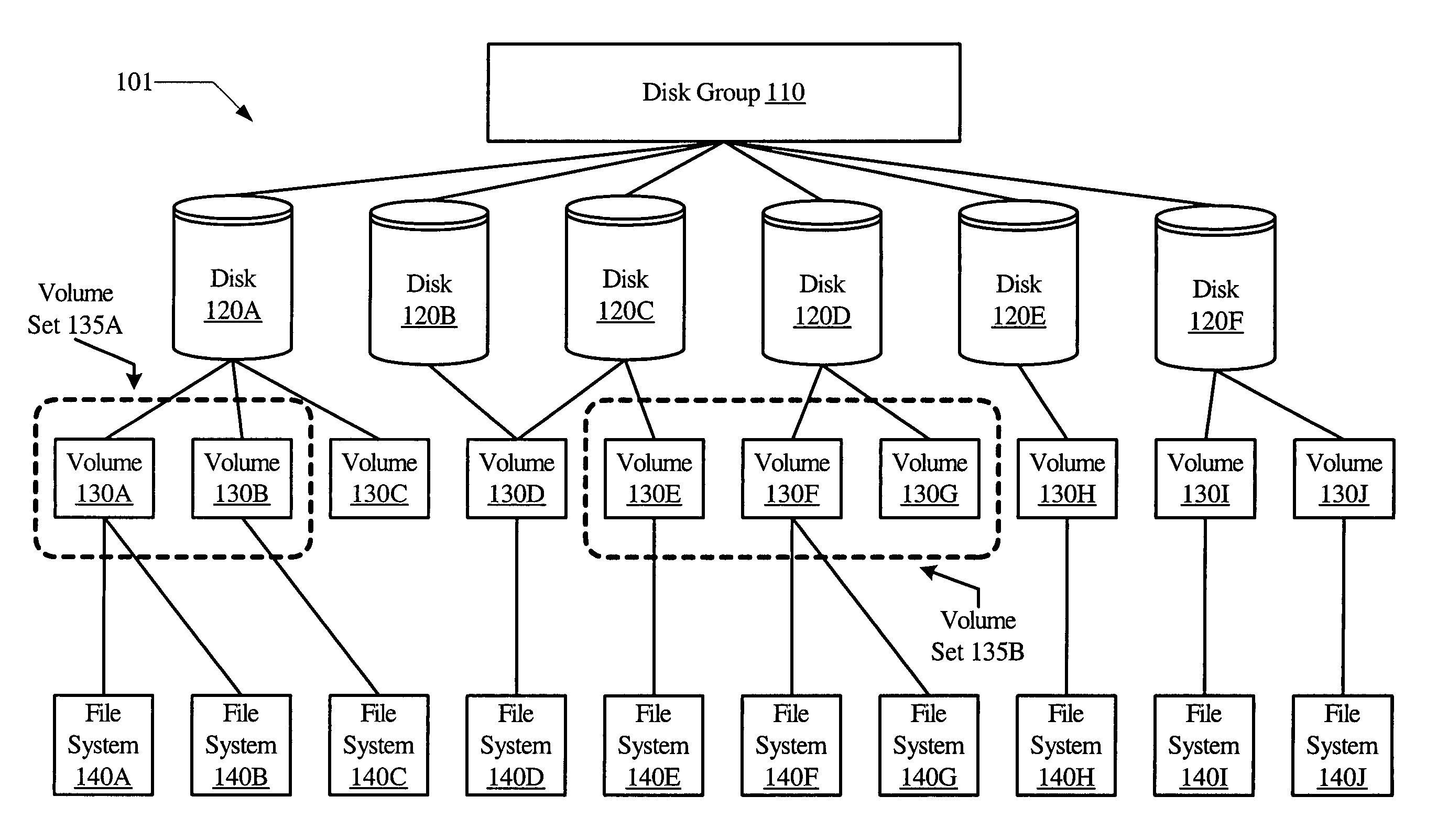

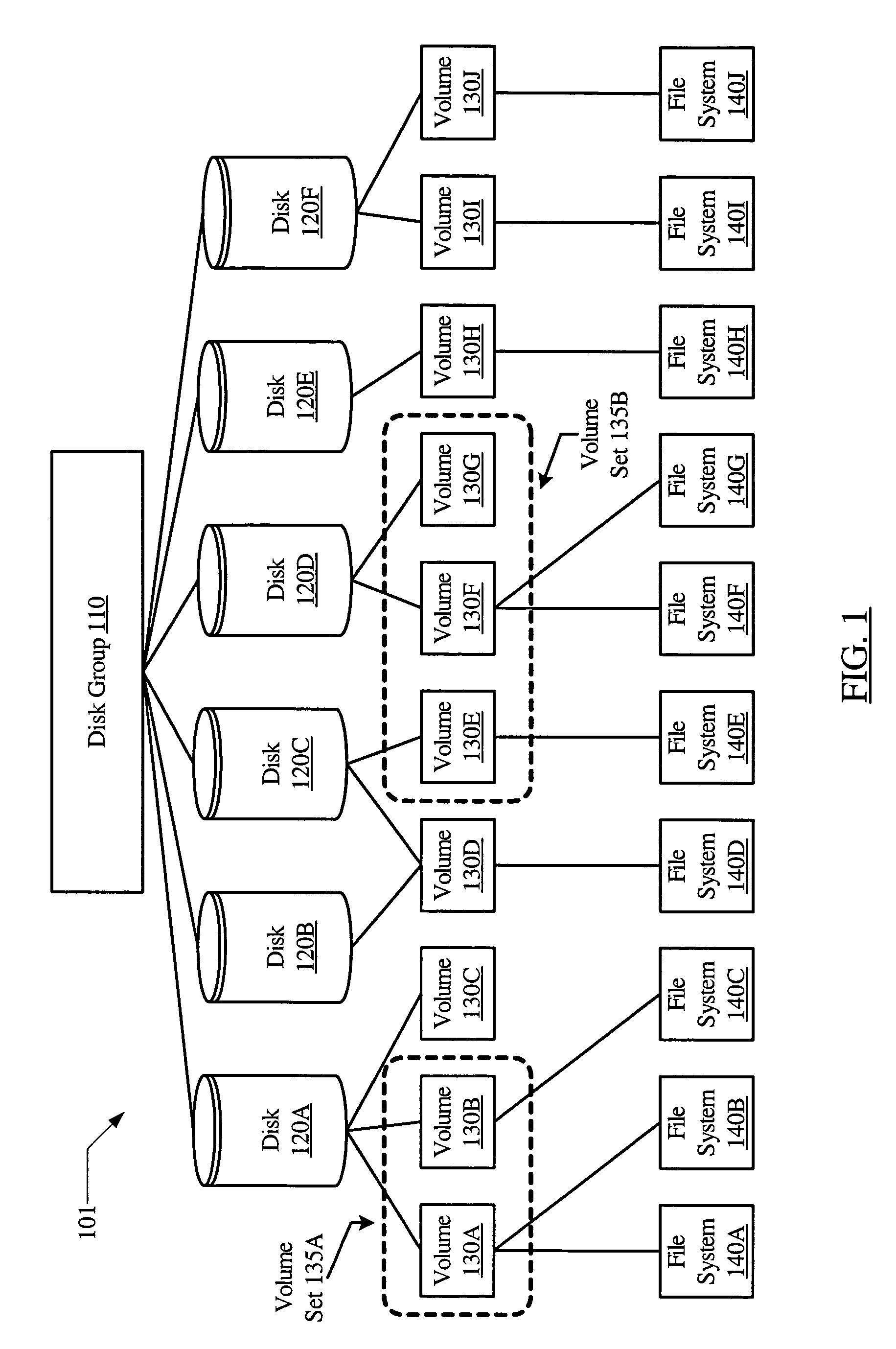

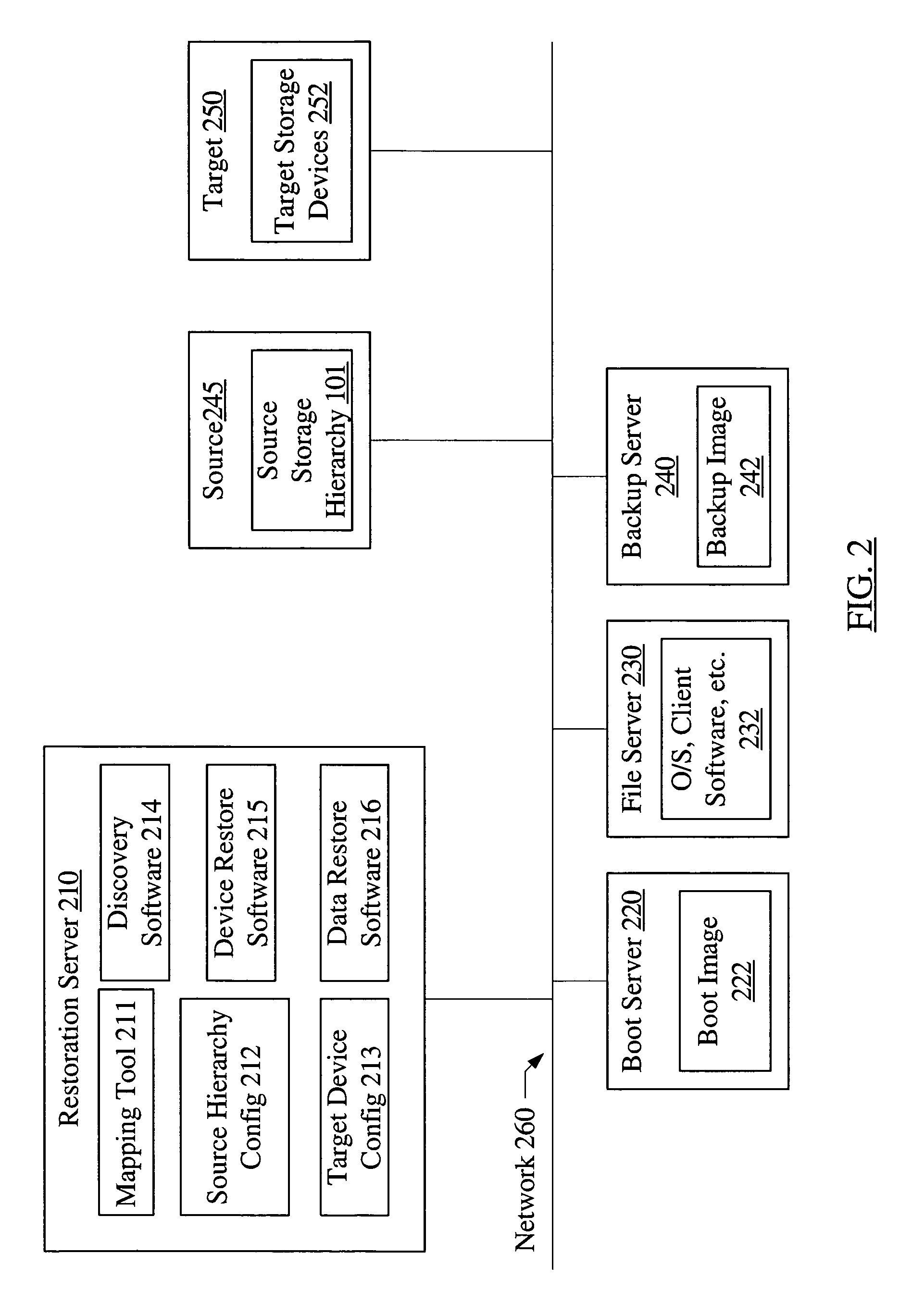

System and method for hierarchical storage mapping

A mapping tool for hierarchical storage mapping may include a storage hierarchy representation interface, a command interface and remapping software. The storage hierarchy representation interface may be configured to provide a user with representations of a source storage hierarchy and target storage devices, where the source storage hierarchy may include a source storage device with one or more contained storage devices. The command interface may allow the user to request a hierarchical mapping of the source storage device to one or more target storage devices. The remapping software may be configured to create a mapping of the source storage device and the contained storage devices to storage within the target storage devices.

Owner:SYMANTEC OPERATING CORP

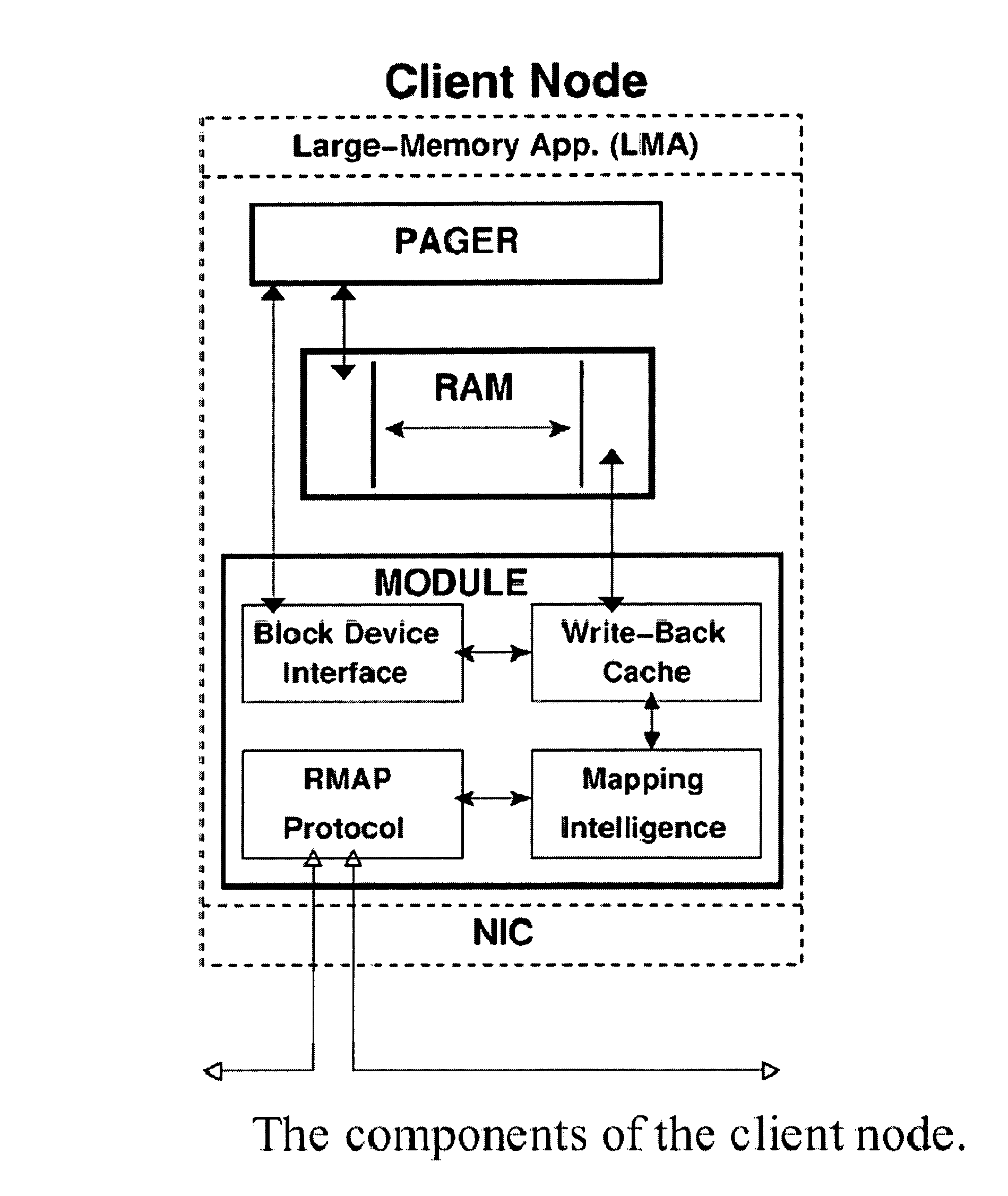

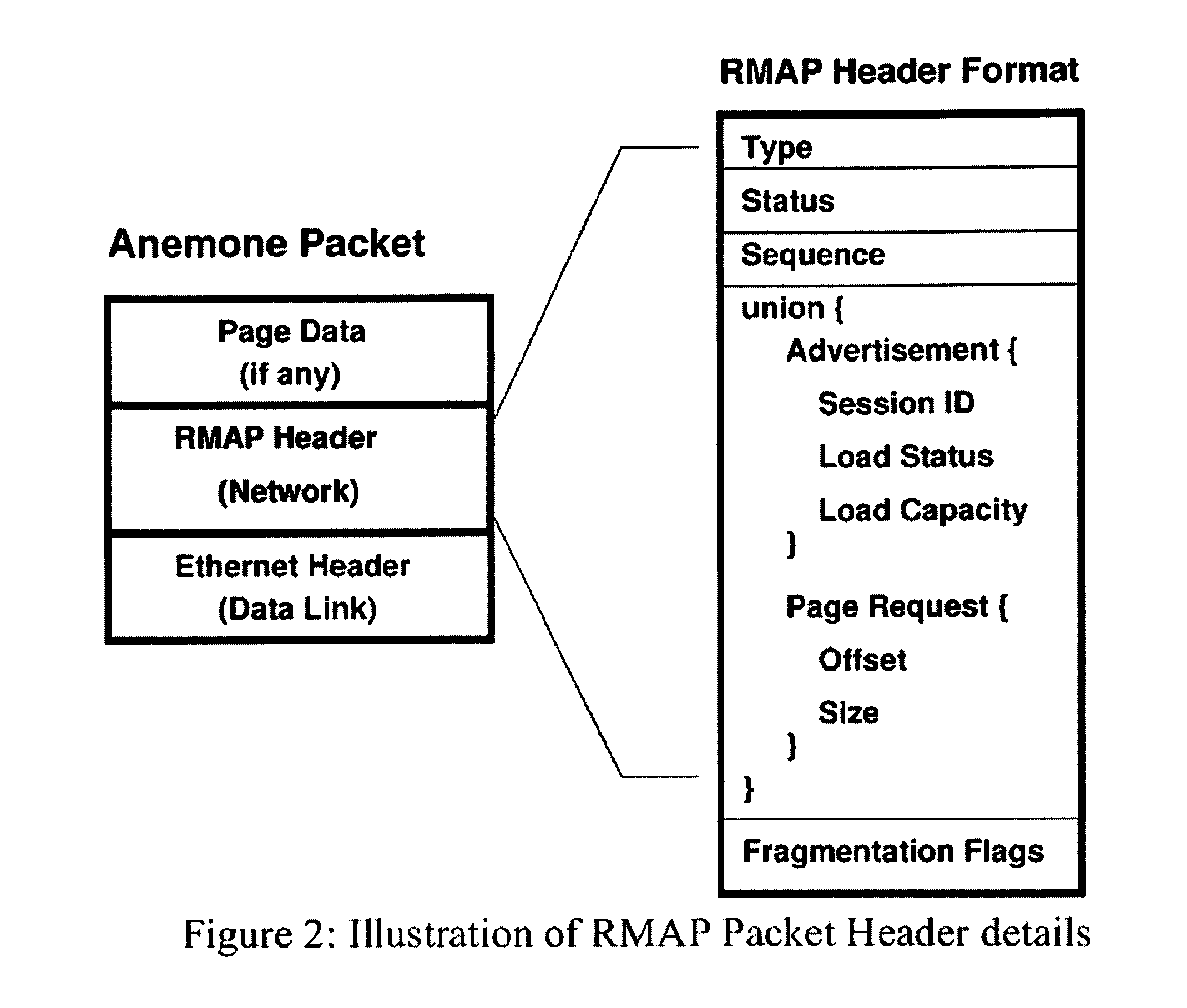

Distributed adaptive network memory engine

Active · US7917599B1 · Processing speed · Lower latency · Multiple digital computer combinations · Transmission · Mass storage · Operational system

Memory demands of large-memory applications continue to remain one step ahead of the improvements in DRAM capacities of commodity systems. Performance of such applications degrades rapidly once the system hits the physical memory limit and starts paging to the local disk. A distributed network-based virtual memory scheme is provided which treats remote memory as another level in the memory hierarchy between very fast local memory and very slow local disks. Performance over gigabit Ethernet shows significant performance gains over local disk. Large memory applications may access potentially unlimited network memory resources without requiring any application or operating system code modifications, relinking or recompilation. A preferred embodiment employs kernel-level driver software.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

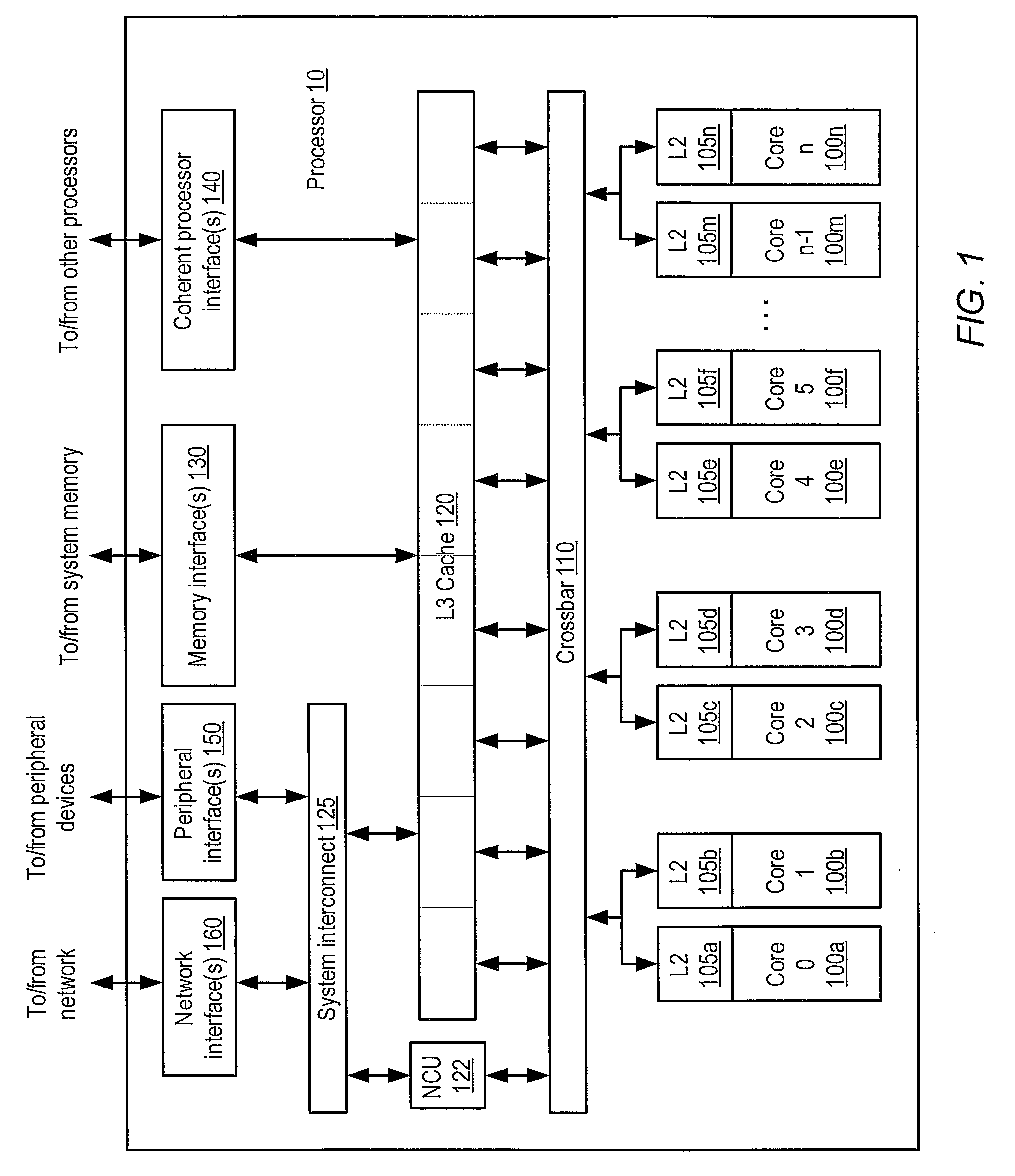

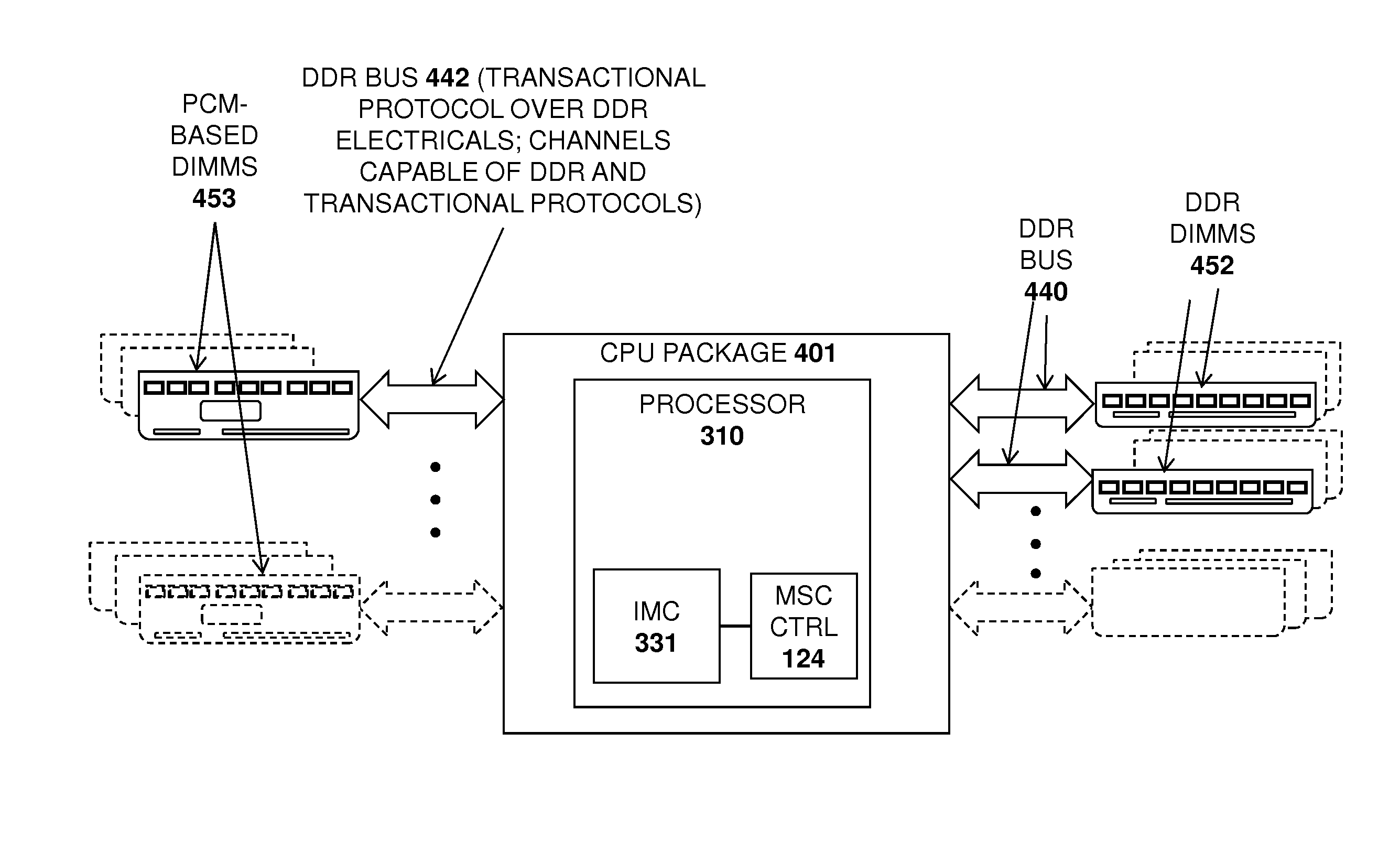

Apparatus and method for implementing a multi-level memory hierarchy over common memory channels

Active · US9317429B2 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Memory hierarchy · Parallel computing

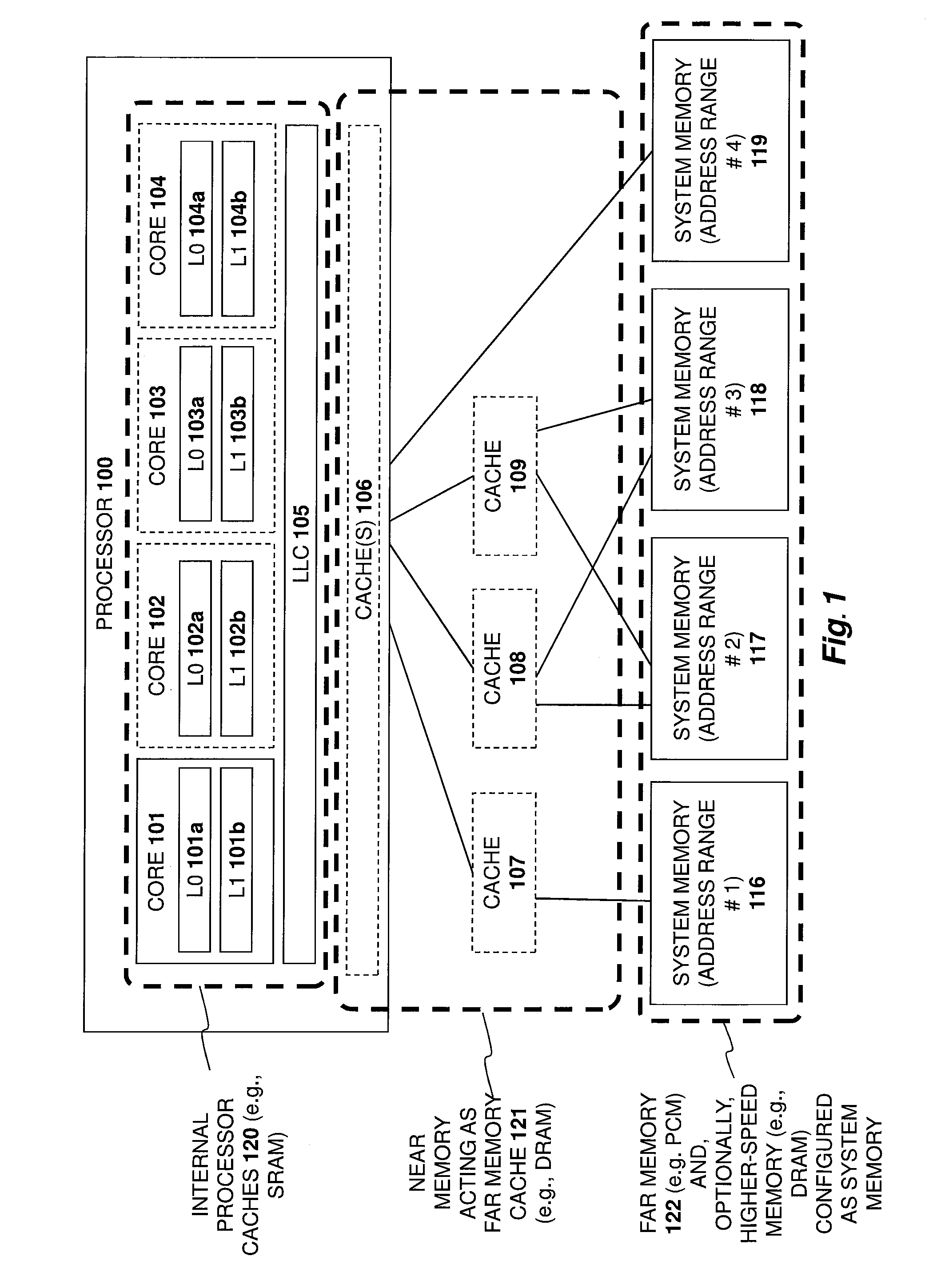

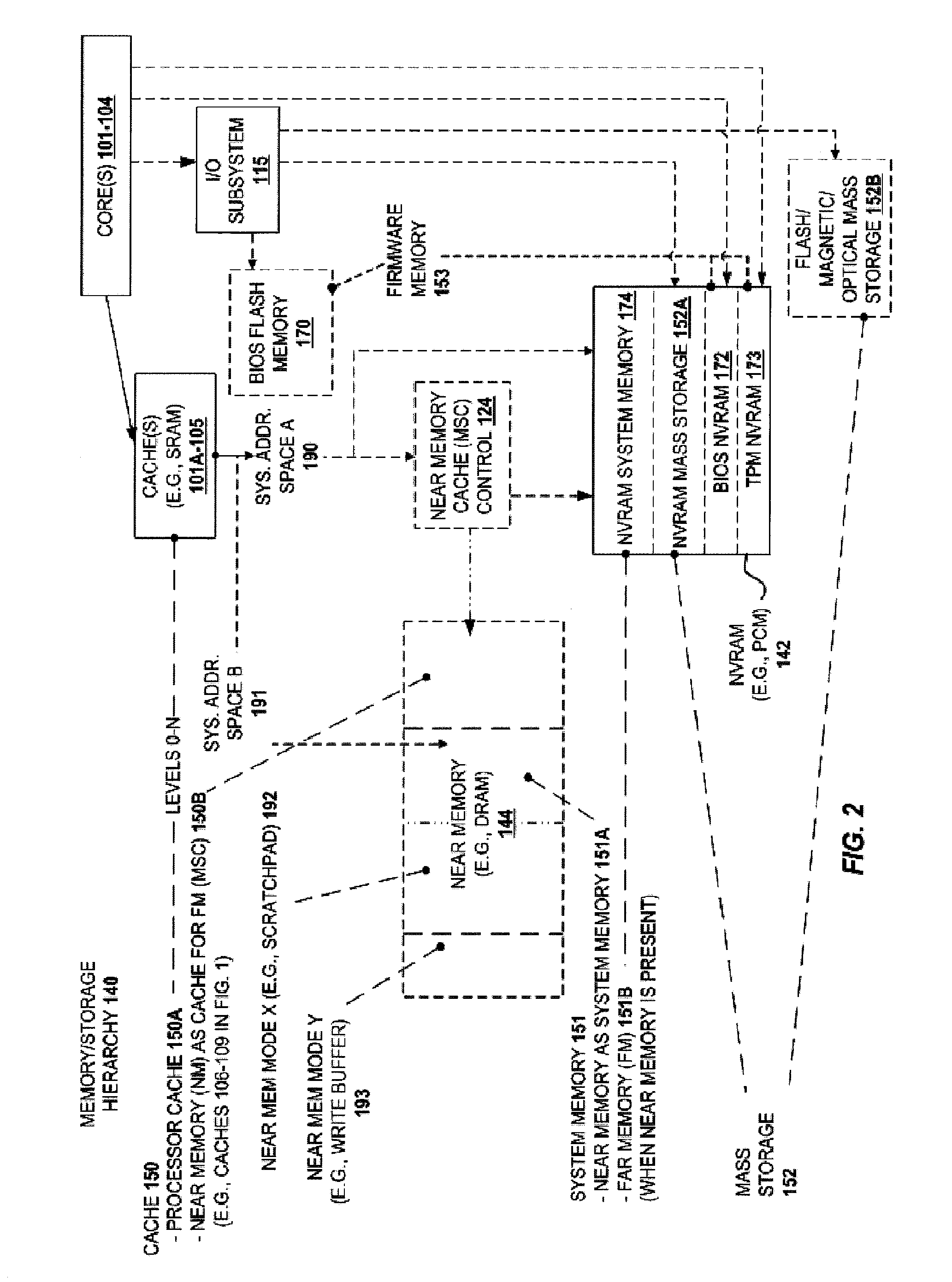

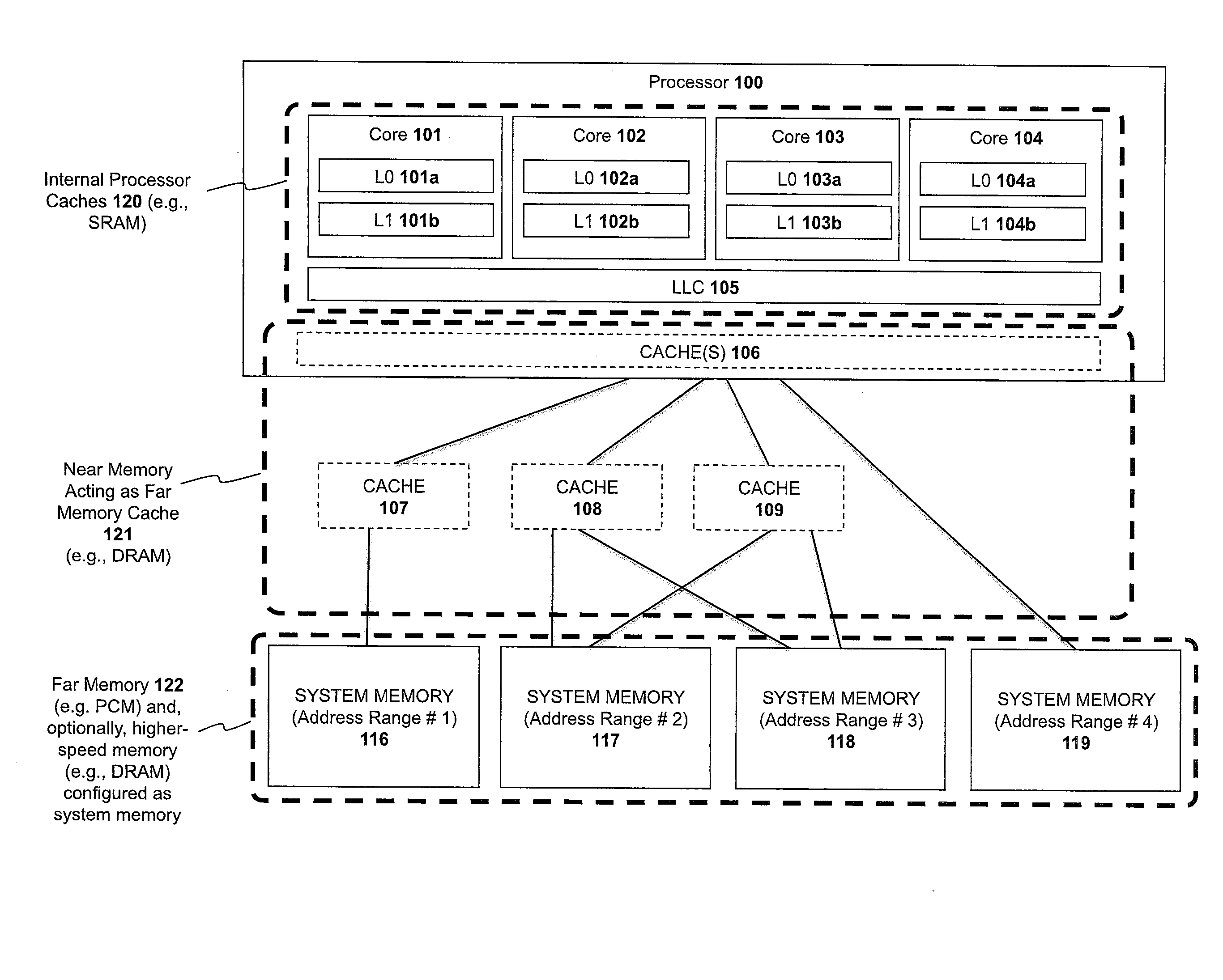

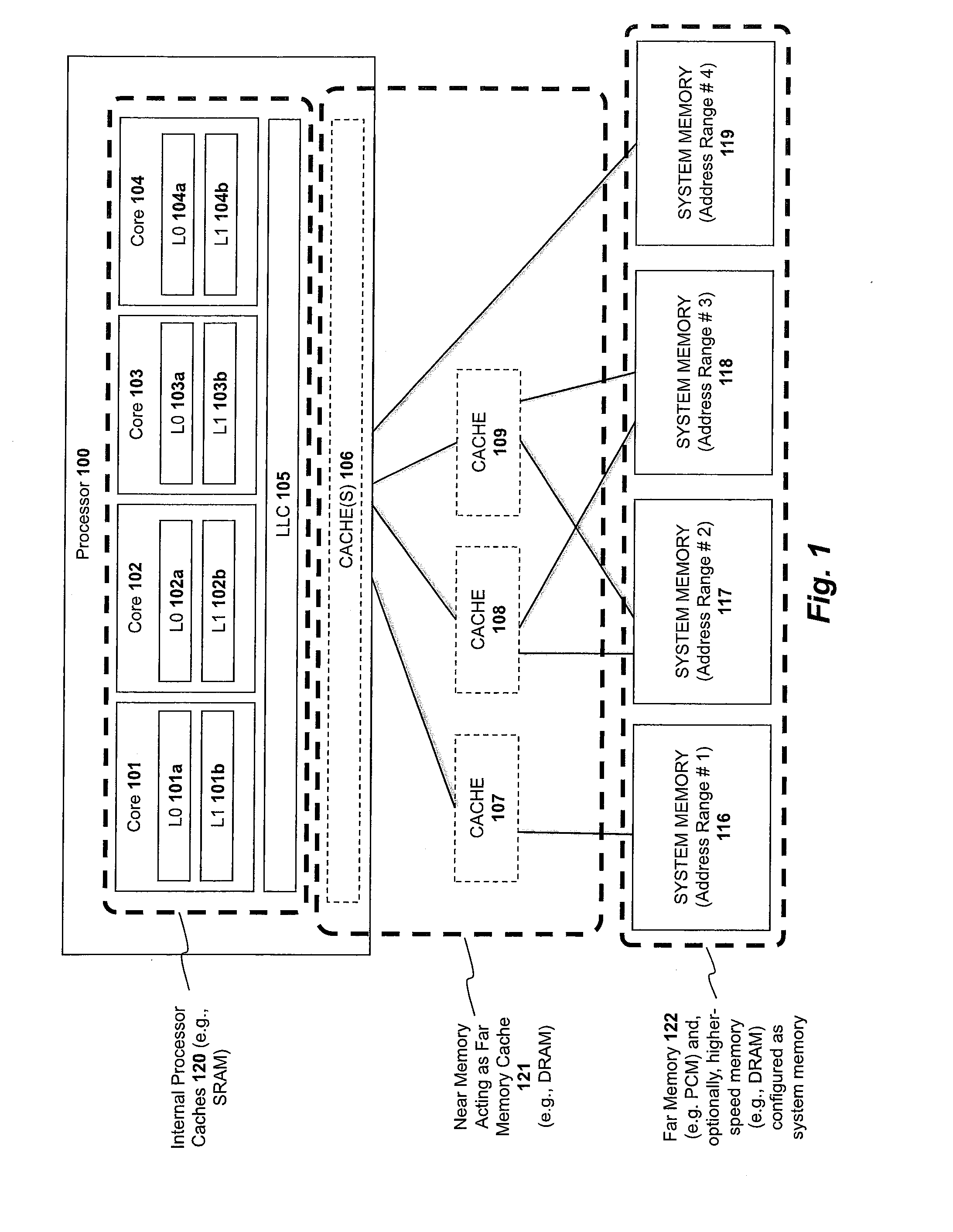

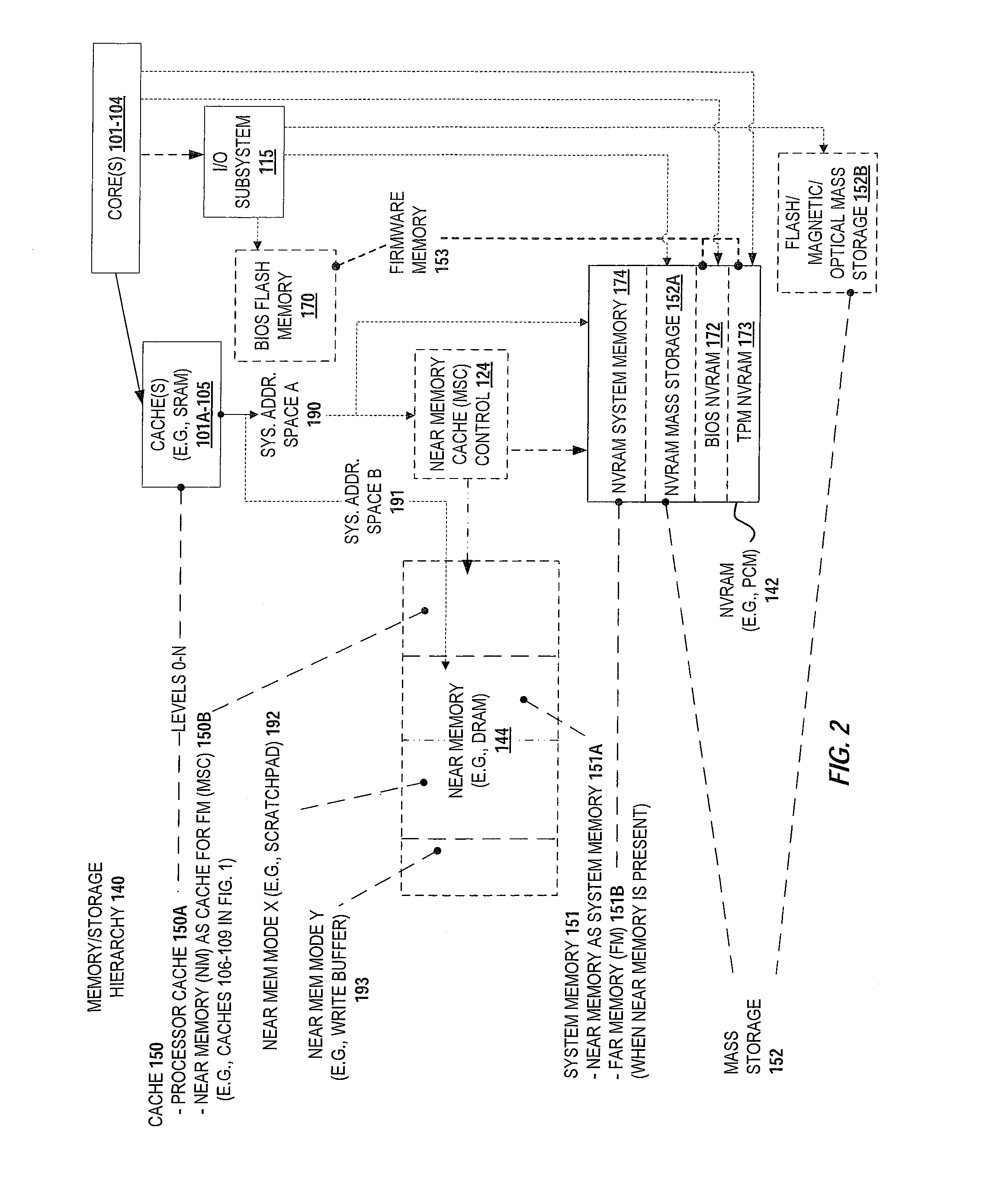

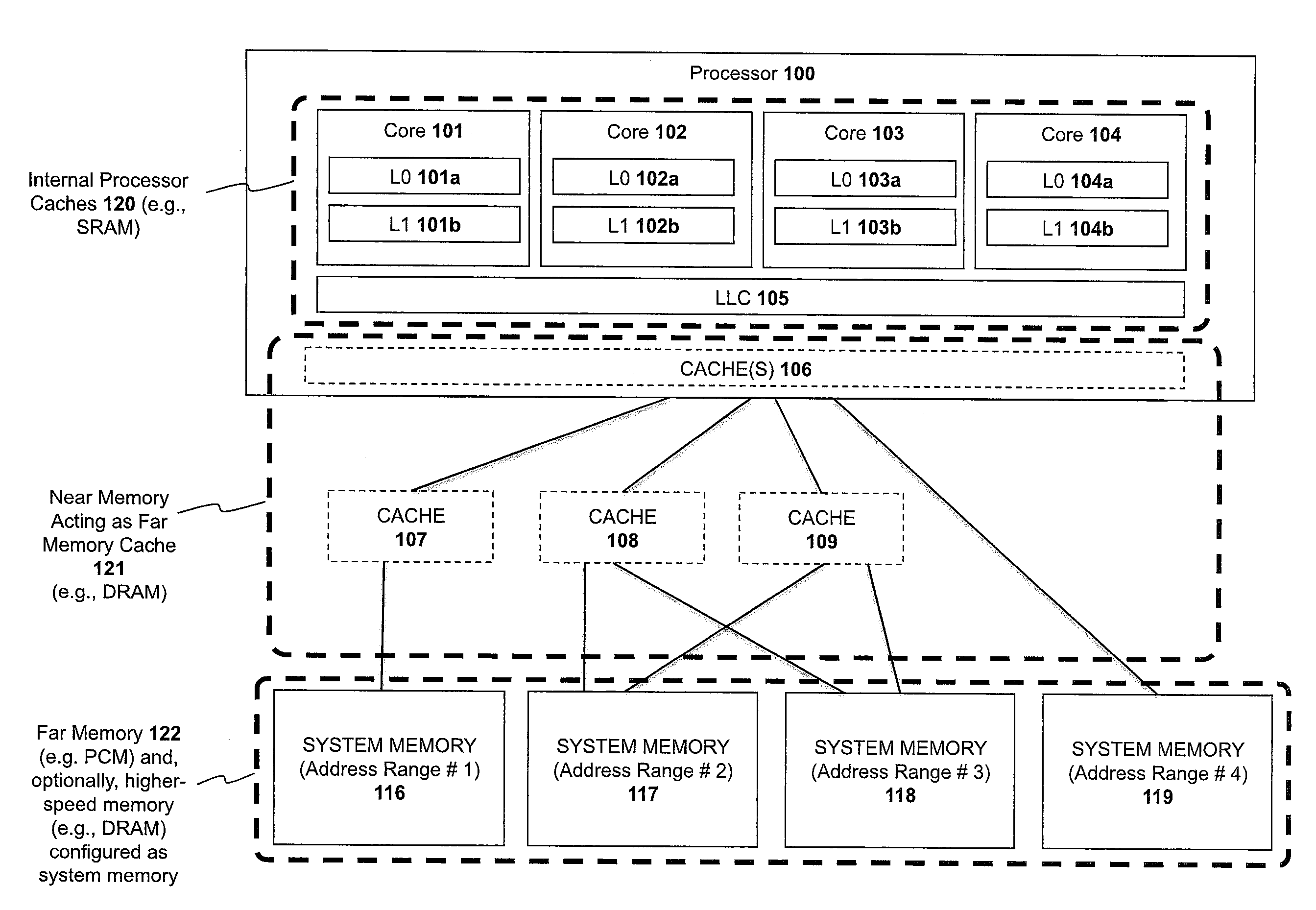

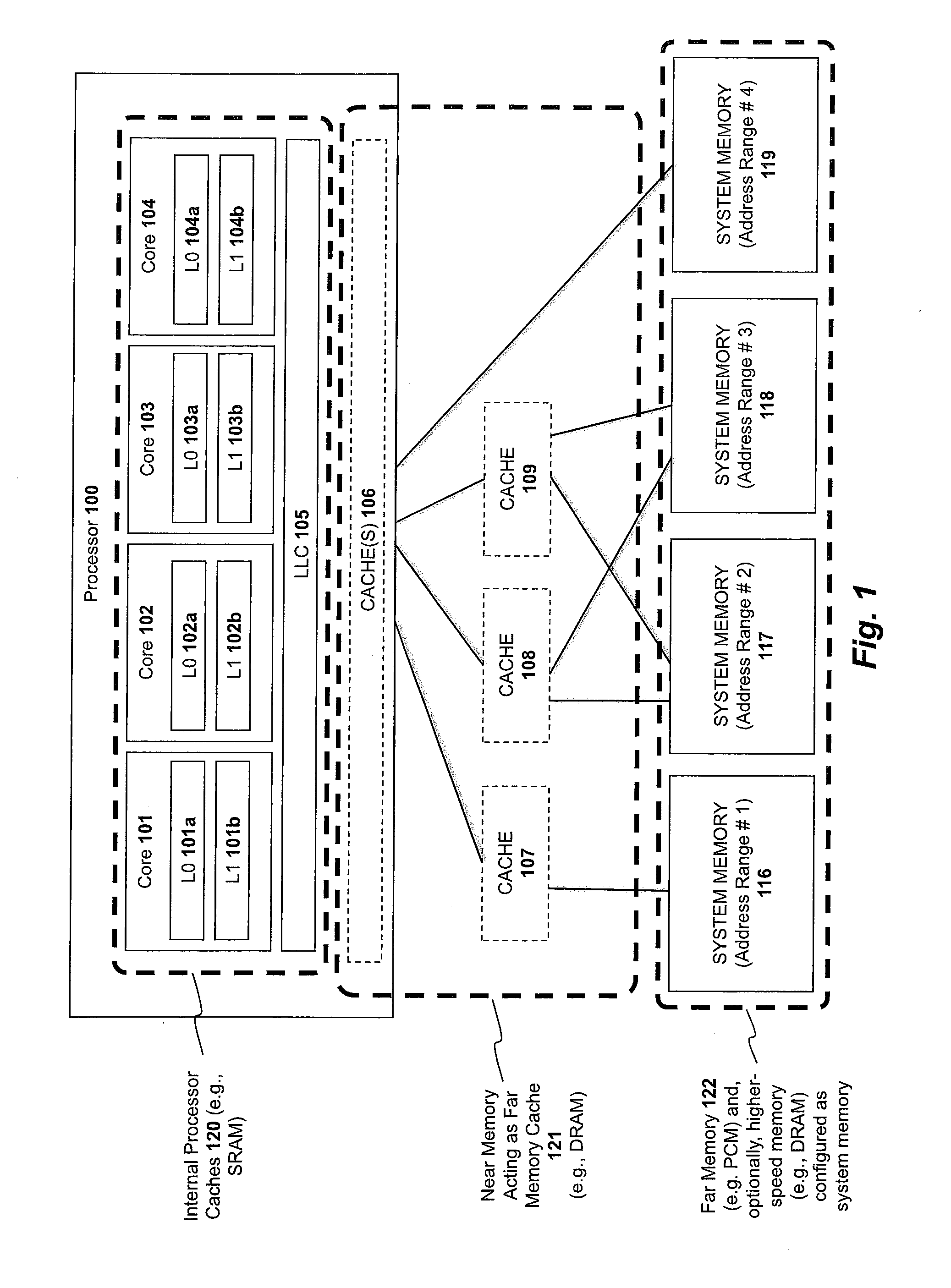

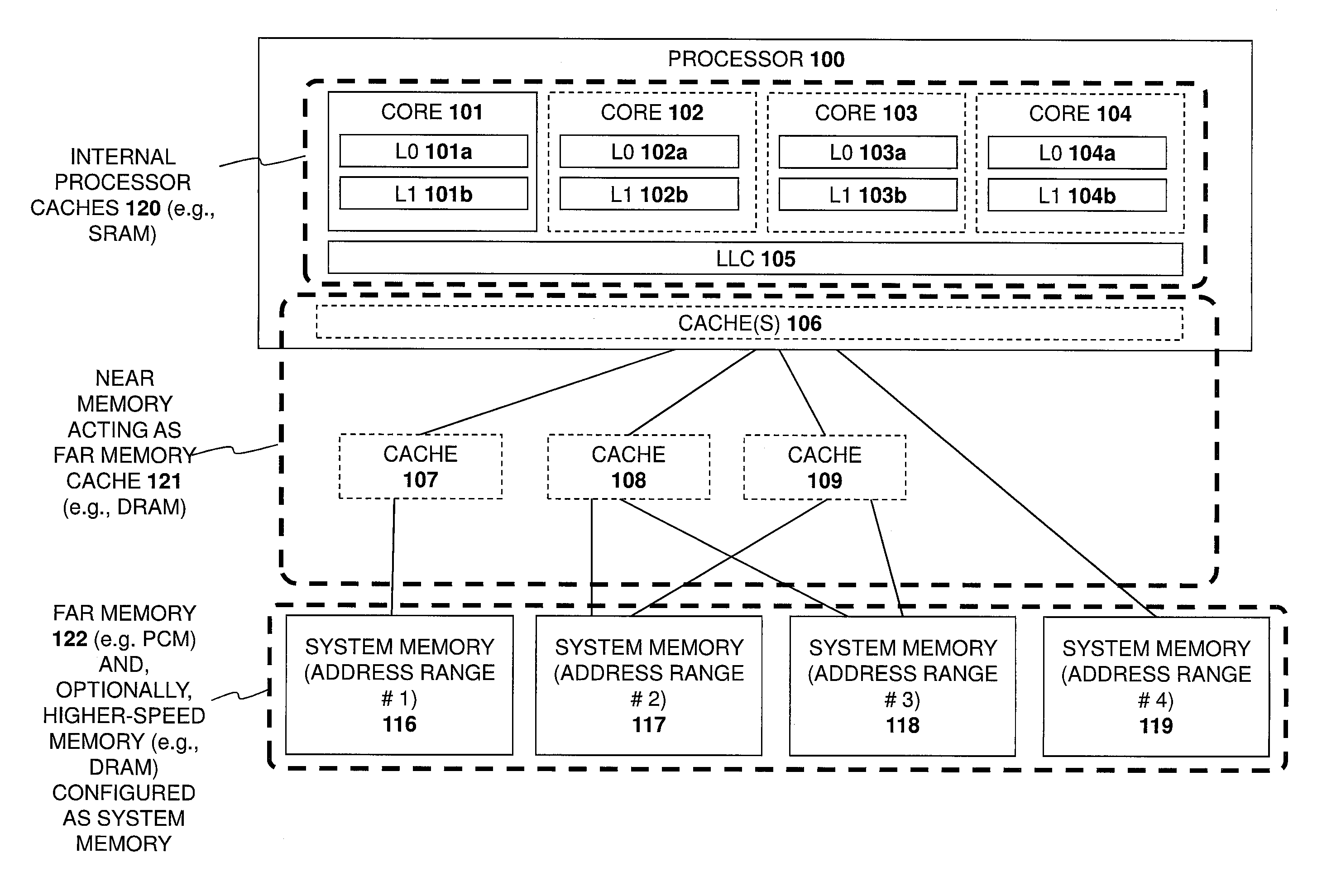

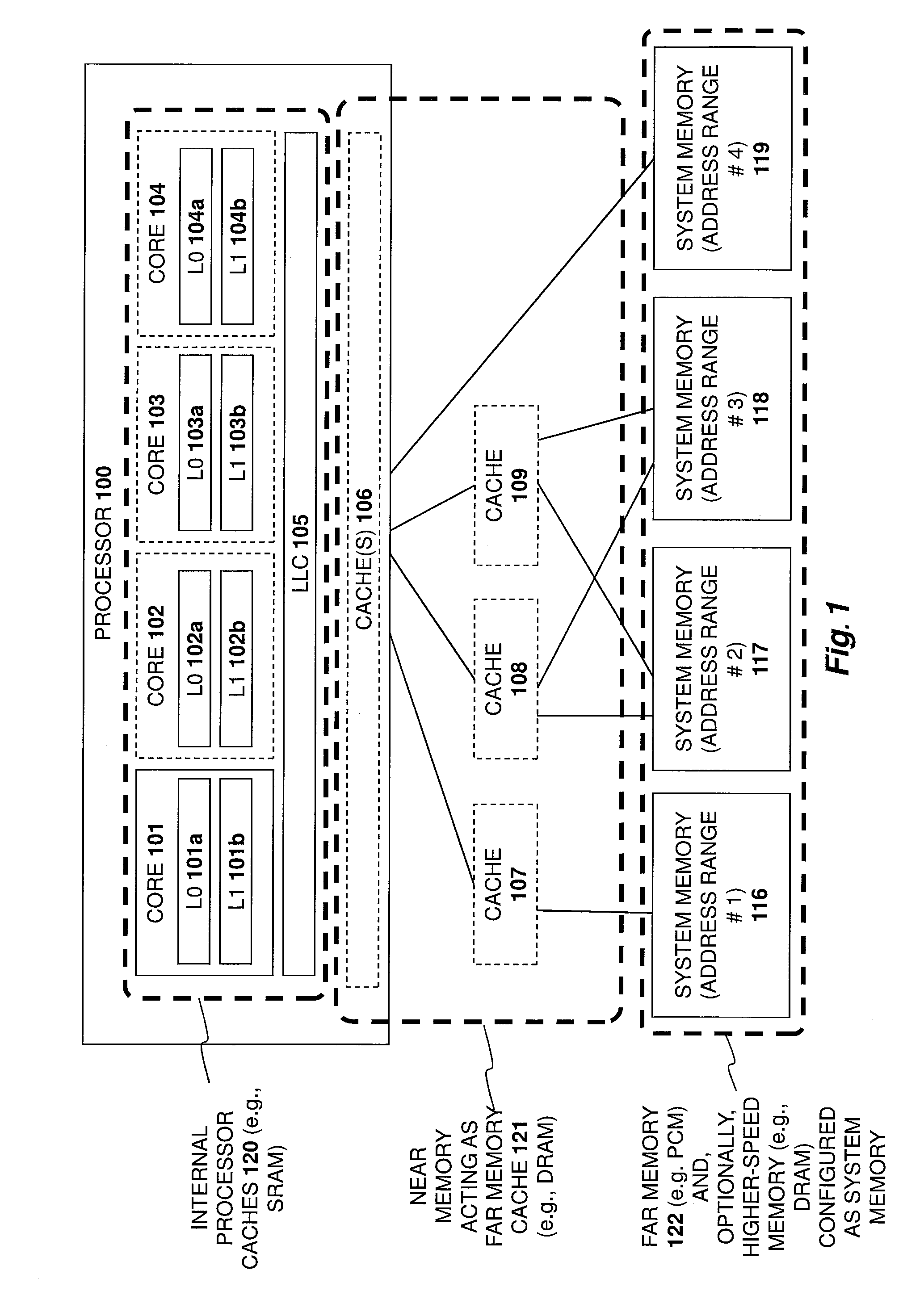

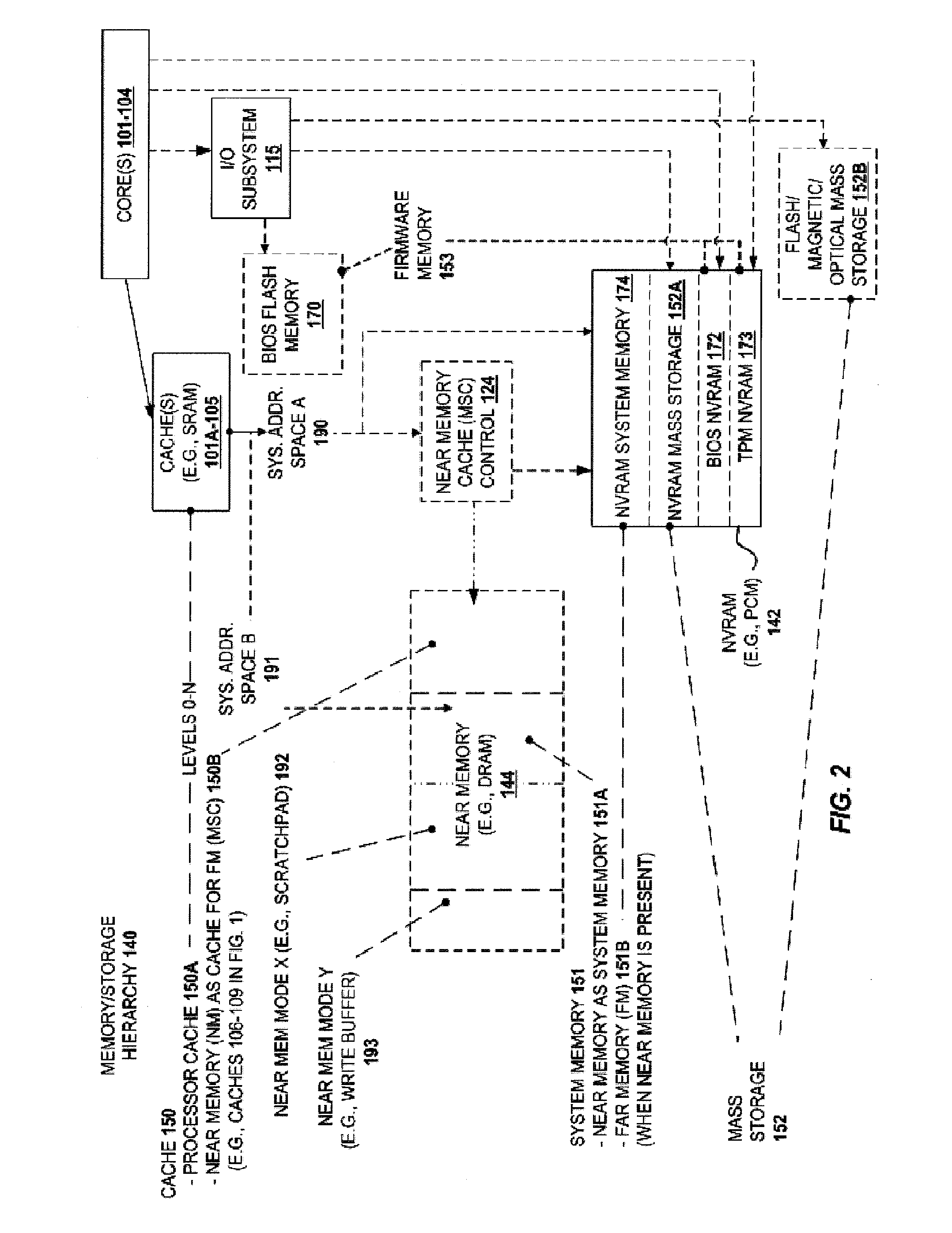

A system and method are described for integrating a memory and storage hierarchy including a non-volatile memory tier within a computer system. In one embodiment, PCMS memory devices are used as one tier in the hierarchy, sometimes referred to as “far memory.” Higher performance memory devices such as DRAM are placed in front of the far memory and are used to mask some of the performance limitations of the far memory. These higher performance memory devices are referred to as “near memory.”

Owner:INTEL CORP

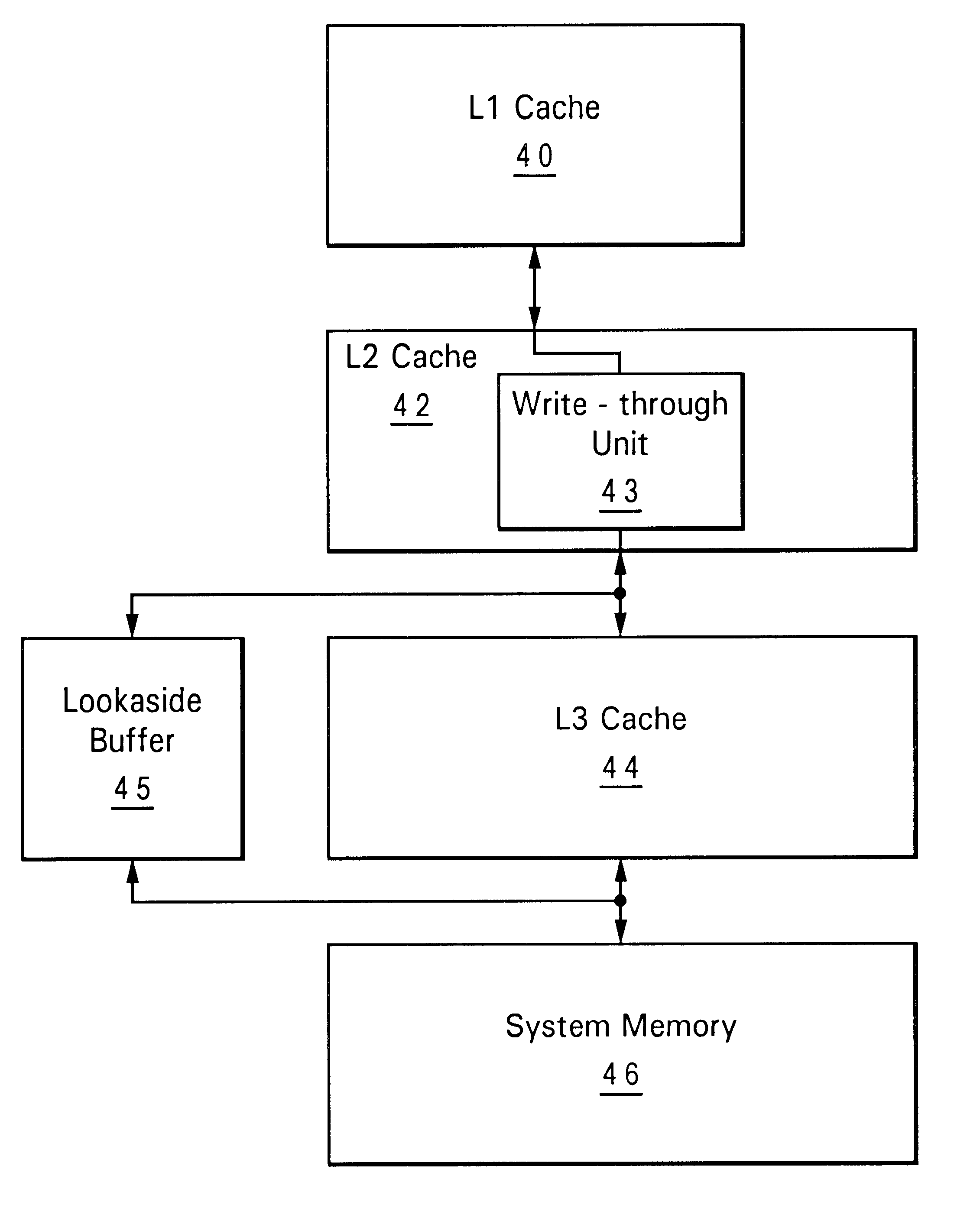

Method and system for bypassing cache levels when casting out from an upper level cache

Inactive · US6356980B1 · Easy to use · Memory addressing/allocation/relocation · Memory hierarchy · Parallel computing

A method and system for bypassing cache levels when storing data castout from an upper level cache provides a memory hierarchy that can selectively skip one or more intermediate levels when writing castout entries from a higher level cache based on a number of detected conditions. The intermediate levels may be bypassed when an intermediate cache level is busy, has an entry with an address conflict with the castout value, or may skip levels based on program control. The control providing the skipping selection may be driven by a detector that analyzes load/store operations of a processor in order to produce efficient operation under changing memory use conditions.

Owner:IBM CORP
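The bypass conditions listed in the abstract above (busy level, address conflict, program control) can be sketched as a simple selection loop. The `CacheLevel` fields, the `skip` parameter standing in for program control, and the fallback to main memory are all assumptions of this example rather than details from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class CacheLevel:
    name: str
    busy: bool = False
    conflicting_addresses: set = field(default_factory=set)
    lines: dict = field(default_factory=dict)


def cast_out(address, value, lower_levels, memory, skip=frozenset()):
    """Write a castout to the first acceptable level below the evicting cache.

    A level is bypassed when it is busy, reports an address conflict, or is
    named in `skip` (a stand-in for program control). If every level is
    bypassed, the value goes to `memory`.
    """
    for level in lower_levels:
        if level.busy or address in level.conflicting_addresses or level.name in skip:
            continue
        level.lines[address] = value
        return level.name
    memory[address] = value
    return "memory"
```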

Method for prefetching recursive data structure traversals

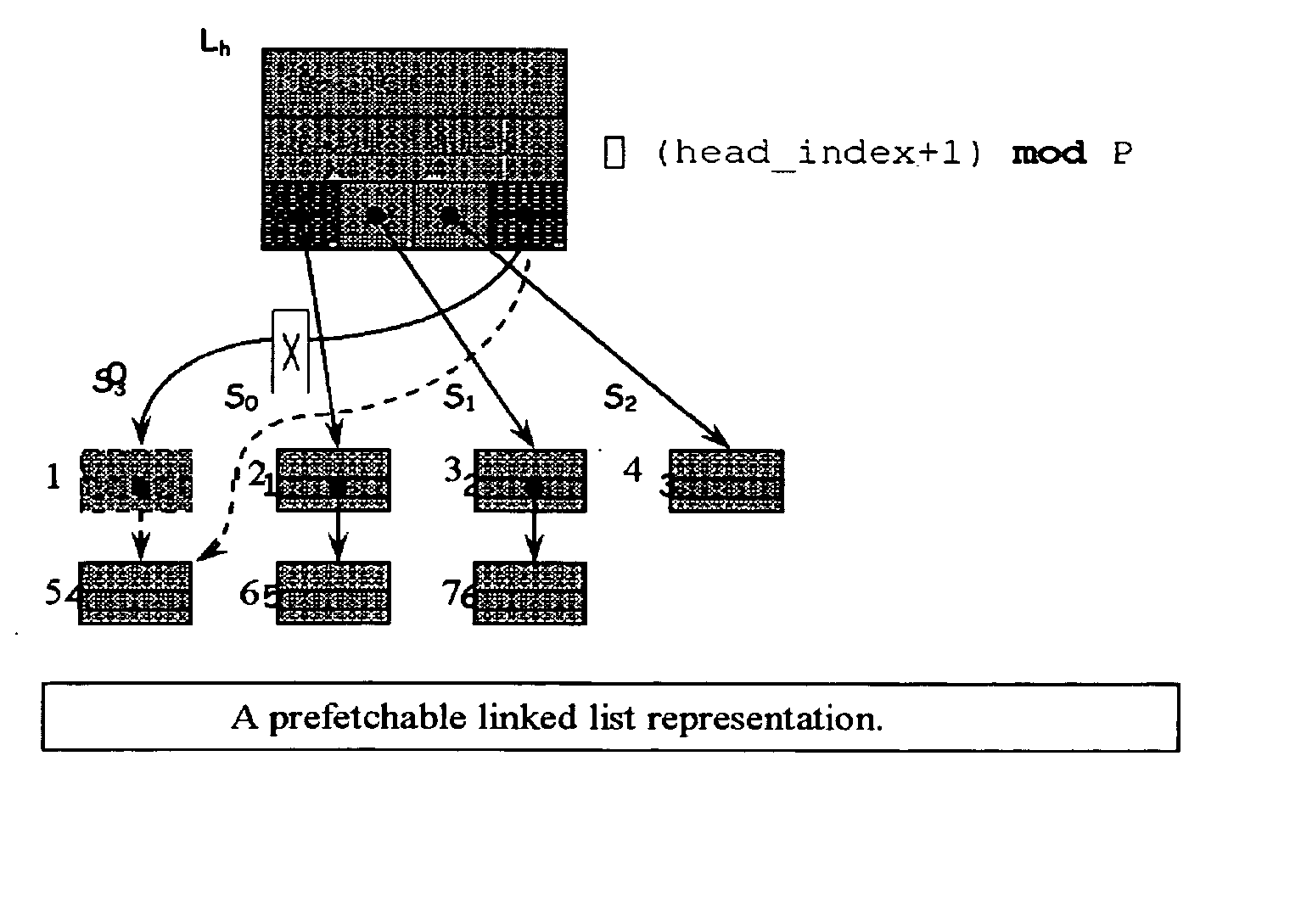

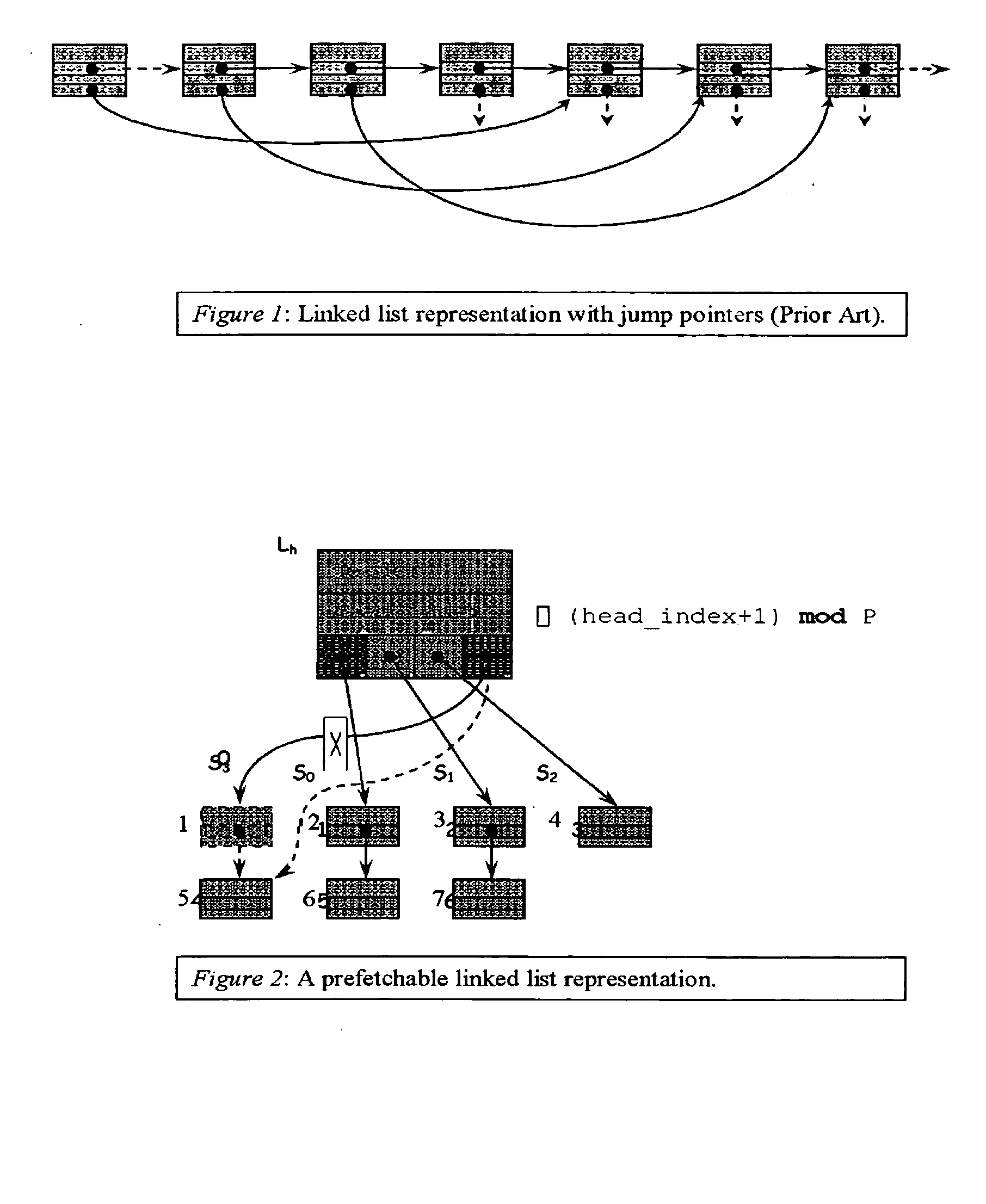

Inactive · US20050102294A1 · Improve cache hit ratio · Potential throughput of the computer system · Digital data information retrieval · Data processing applications · Operational system · Term memory

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. In modern transaction processing systems, database servers, operating systems, and other commercial and engineering applications, information is frequently organized in trees, graphs, and linked lists. Lack of spatial locality results in a high probability that a miss will be incurred at each cache in the memory hierarchy. Each cache miss causes the processor to stall while the referenced value is fetched from lower levels of the memory hierarchy. Because this is likely to be the case for a significant fraction of the nodes traversed in the data structure, processor utilization suffers. The inability to compute the address of the next node to be referenced makes prefetching difficult in such applications. The invention allows compilers and/or programmers to restructure data structures and traversals so that pointers are dereferenced in a pipelined manner, thereby making it possible to schedule prefetch operations in a consistent fashion. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. For data structure traversals in which the traversal path may be predetermined, a transformation is performed on the data structure that permits references to nodes that will be traversed in the future to be computed sufficiently far in advance to prefetch the data into cache.

Owner:DIGITAL CACHE LLC

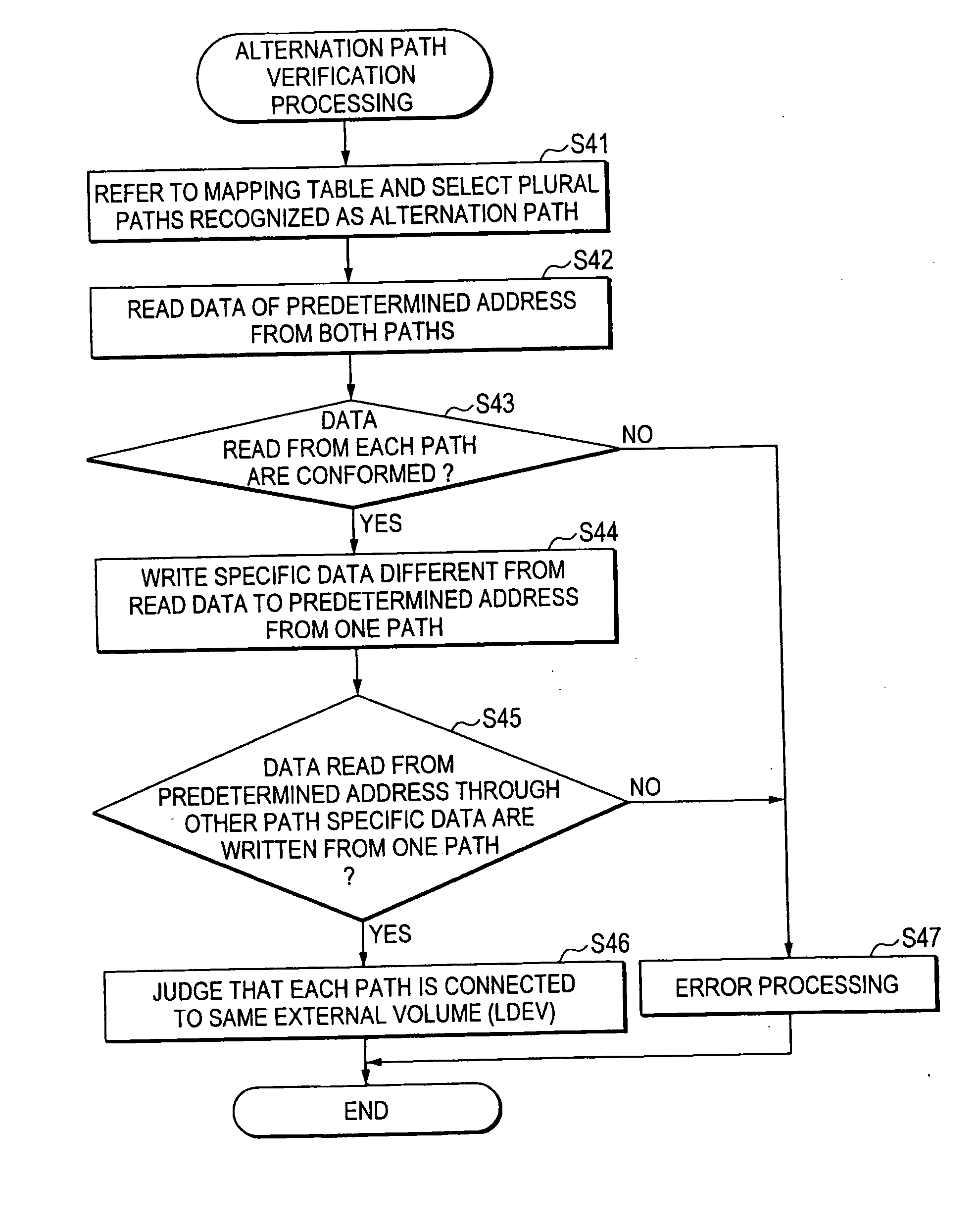

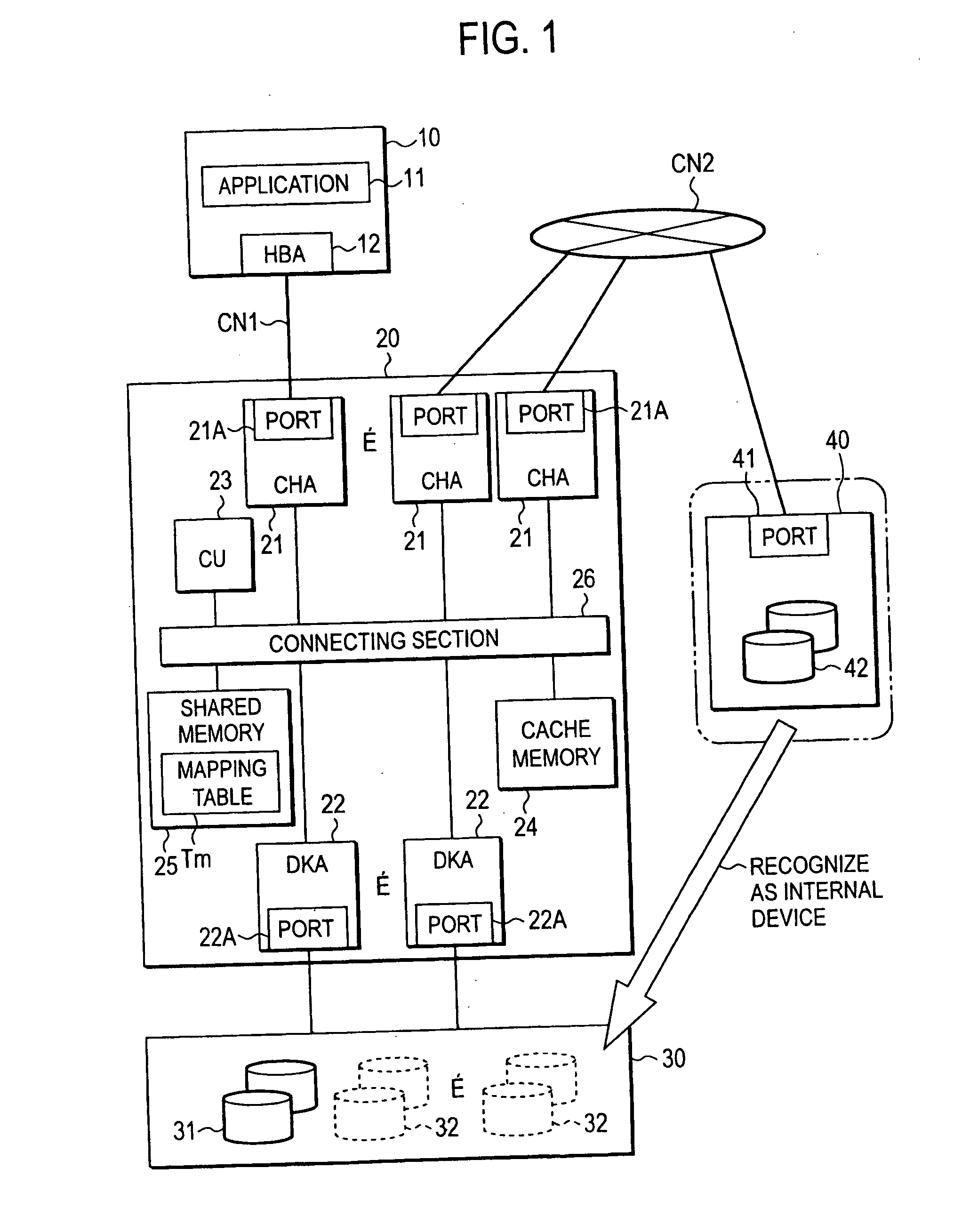

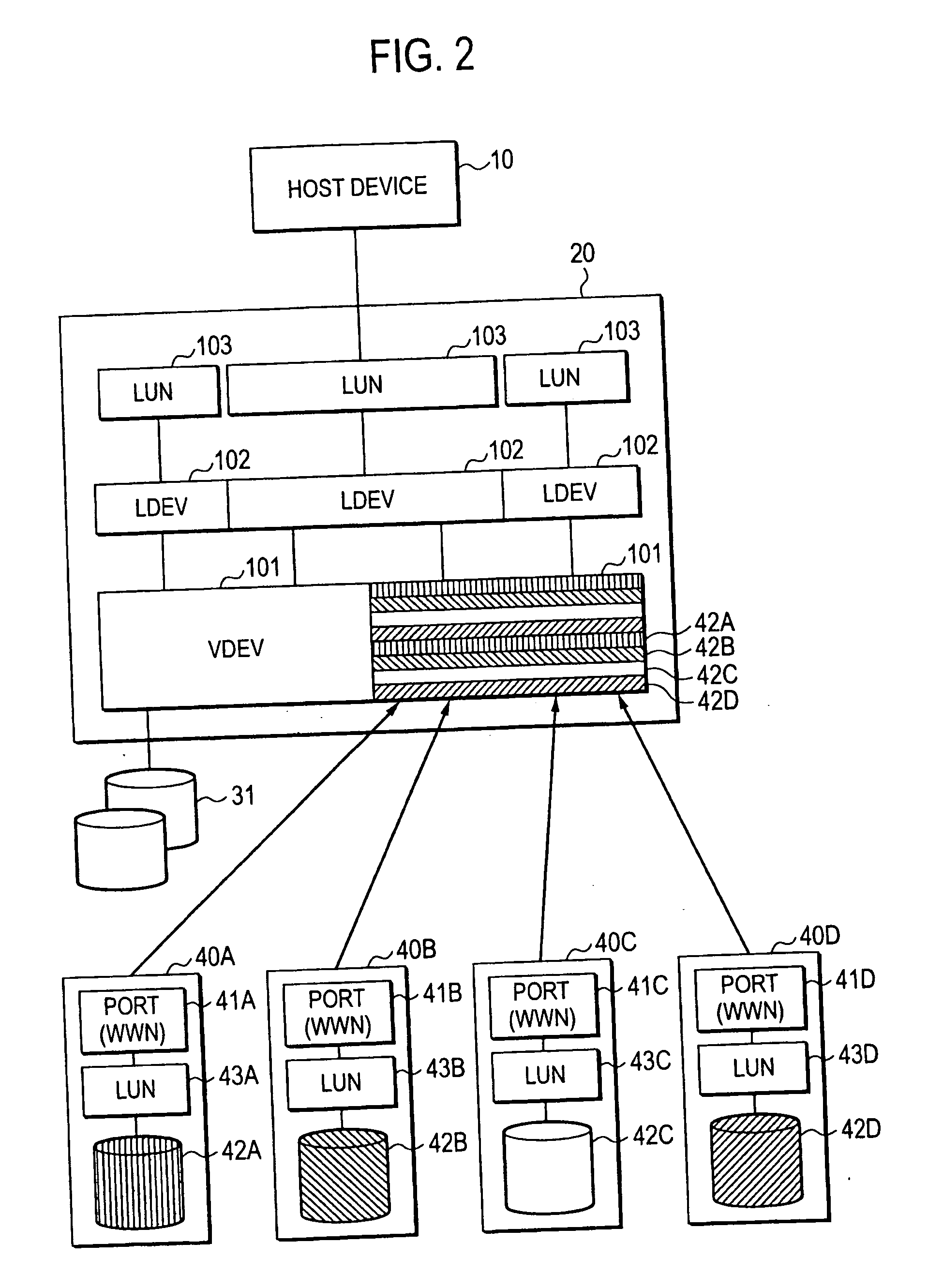

Storage system and storage controller

Active · US20050071559A1 · Efficient use of · Input/output to record carriers · Memory loss protection · Memory hierarchy · RAID

Memory resources are effectively utilized by virtually setting an external memory resource as an internal memory resource. A first storage controller has a multilayer memory hierarchy constructed by LDEV (logical device) connected from LUN, and VDEV (virtual device) connected to the lower order of the LDEV. At least one of the VDEVs is constructed by mapping the memory resources arranged in external storage controllers. The functions of a stripe, RAID, etc. can be added in the mapping. Various kinds of functions (remote copy, variable volume function, etc.) applicable to the normal internal volume can also be used in a virtual internal volume by using the external memory resource as the virtual internal memory resource so that the degree of freedom of utilization is raised.

Owner:HITACHI LTD

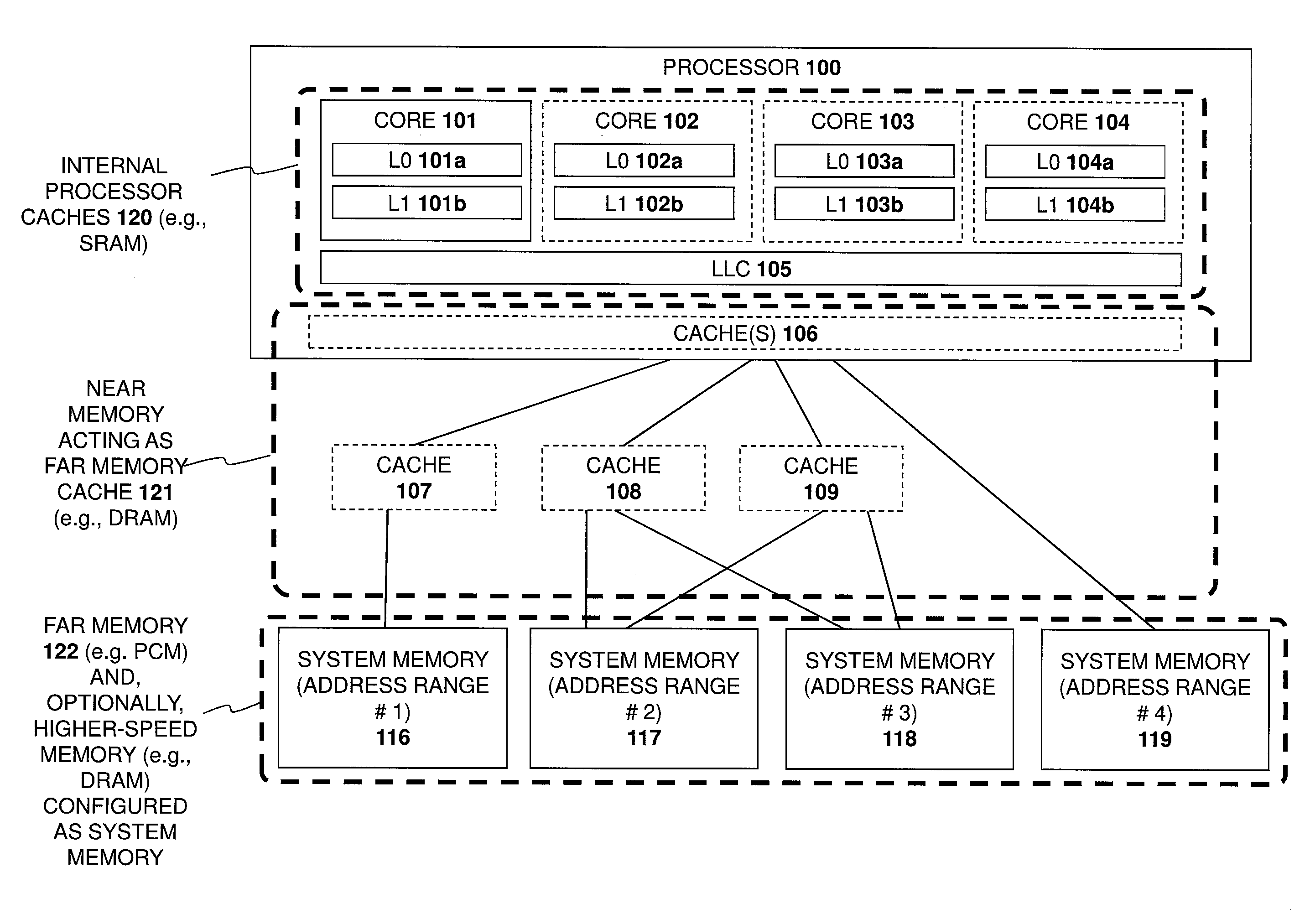

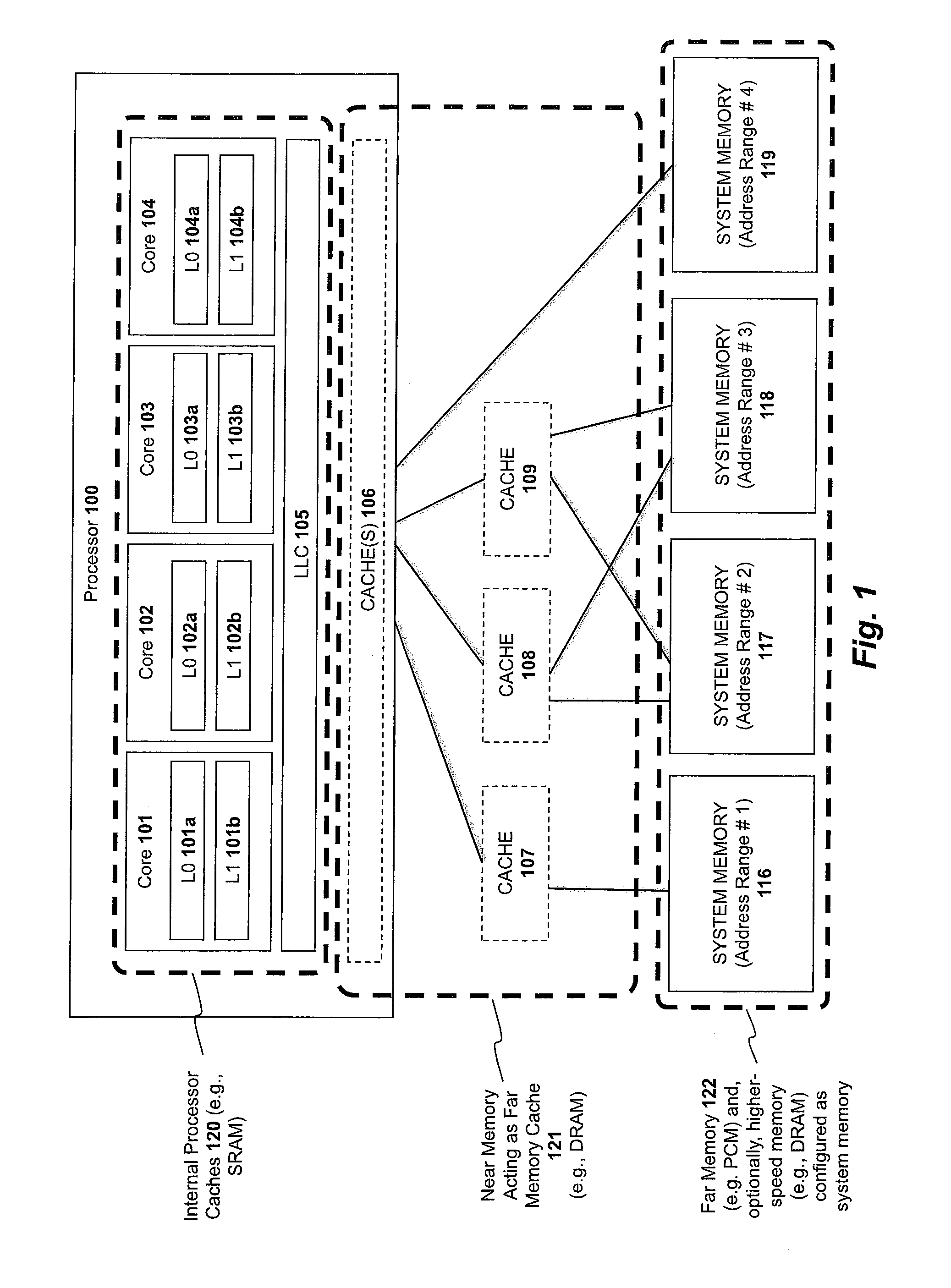

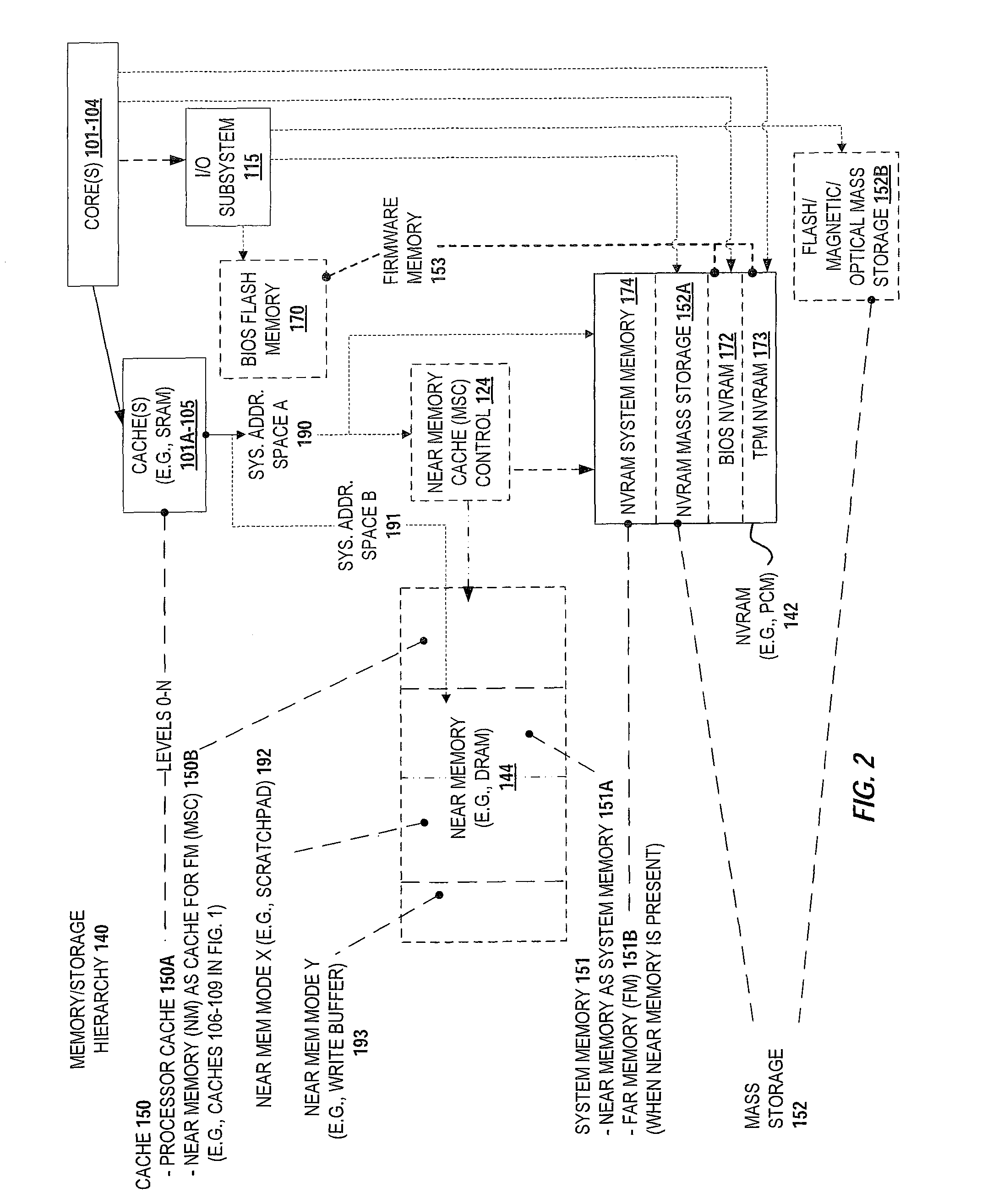

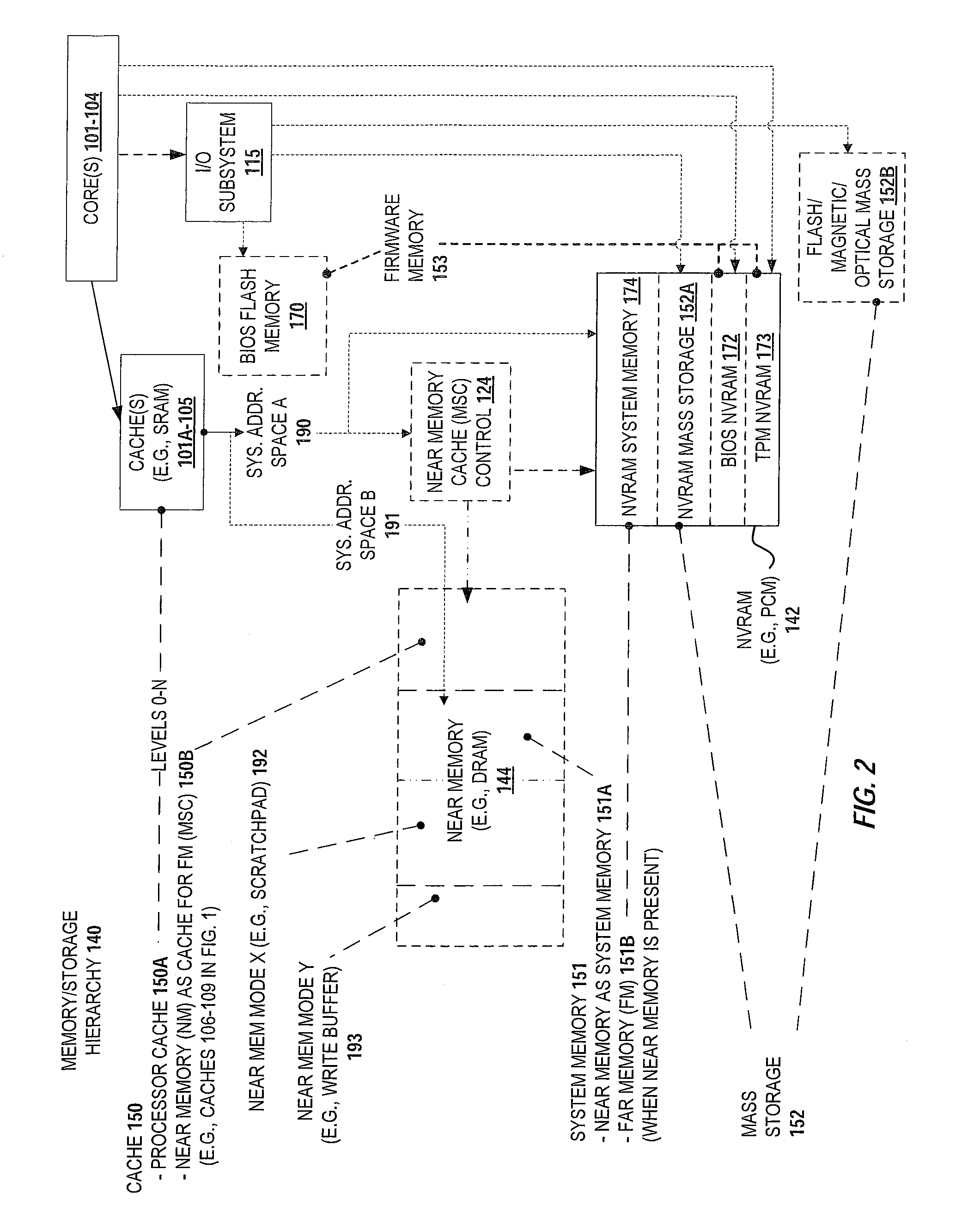

Apparatus and method for implementing a multi-level memory hierarchy having different operating modes

Active · US20130268728A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Memory hierarchy · Multilevel memory

A system and method are described for integrating a memory and storage hierarchy including a non-volatile memory tier within a computer system. In one embodiment, PCMS memory devices are used as one tier in the hierarchy, sometimes referred to as “far memory.” Higher performance memory devices such as DRAM are placed in front of the far memory and are used to mask some of the performance limitations of the far memory. These higher performance memory devices are referred to as “near memory.” In one embodiment, the “near memory” is configured to operate in a plurality of different modes of operation including (but not limited to) a first mode in which the near memory operates as a memory cache for the far memory and a second mode in which the near memory is allocated a first address range of a system address space with the far memory being allocated a second address range of the system address space, wherein the first range and second range represent the entire system address space.

Owner:SK HYNIX NAND PROD SOLUTIONS CORP
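The two near-memory modes described in the abstract above can be contrasted with a small routing function. This is only a sketch: the mode names `"cache"` and `"flat"`, the function name, and the use of a set to track cached addresses are assumptions made here, and cache eviction is omitted.

```python
def route_access(address, mode, near_size, cached):
    """Return which tier ("near" or "far") services `address`.

    mode "flat":  near memory owns addresses [0, near_size); far memory owns
                  the rest of the system address space.
    mode "cache": near memory acts as a cache for far memory; `cached` is the
                  set of addresses currently held in near memory.
    """
    if mode == "flat":
        return "near" if address < near_size else "far"
    if mode == "cache":
        if address in cached:
            return "near"
        cached.add(address)    # fill on miss; eviction is omitted
        return "far"
    raise ValueError(f"unknown mode: {mode}")
```

In flat mode the tier depends only on the address, while in cache mode the same address can be serviced by far memory on the first access and by near memory afterwards.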

Integrated cache and directory structure for multi-level caches

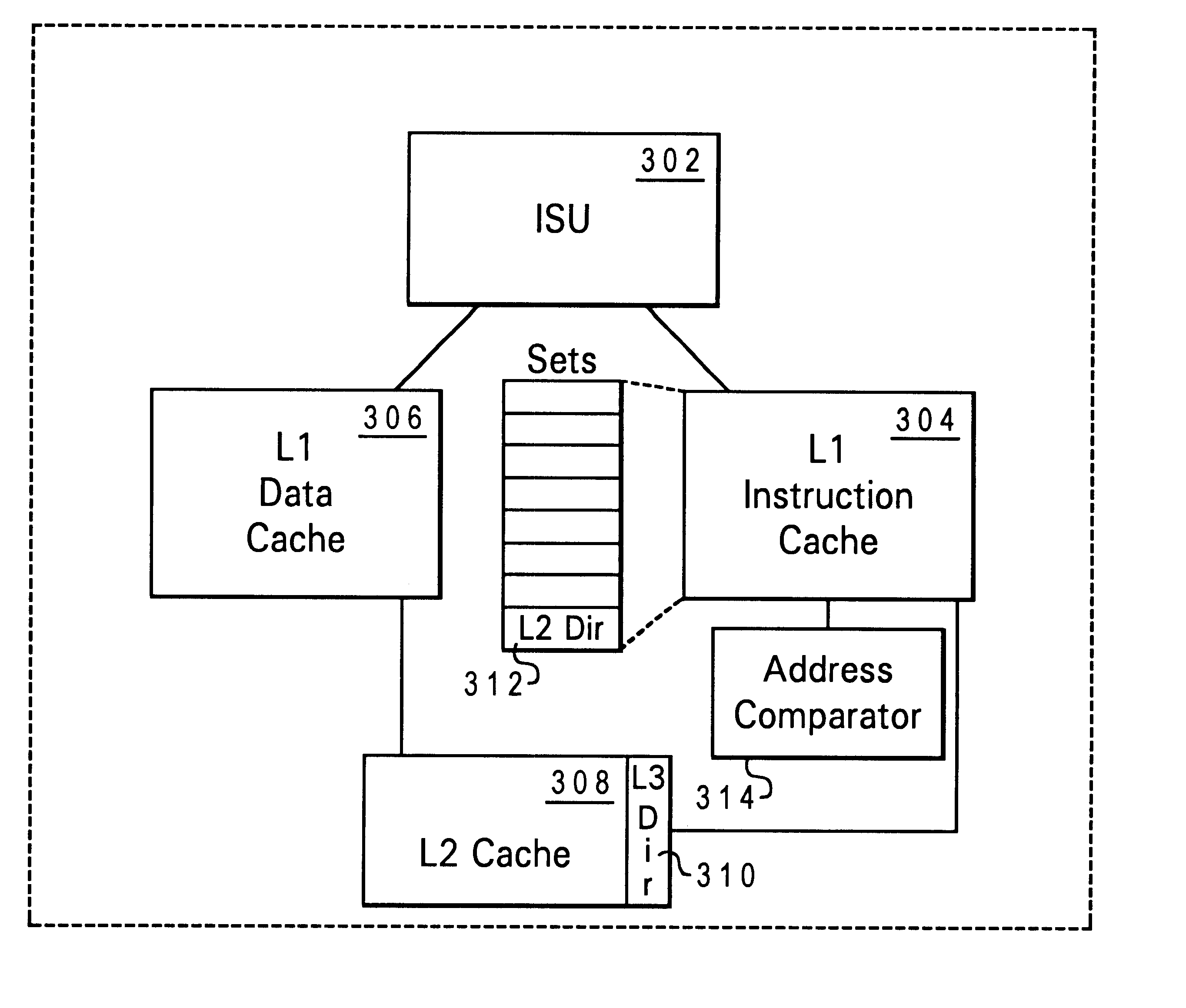

A method of operating a multi-level memory hierarchy of a computer system and an apparatus embodying the method, wherein multiple levels of storage subsystems are used to improve the performance of the computer system, each next higher level generally having a faster access time, but a smaller amount of storage. Values within a level are indexed by a directory that provides an indexing of information relating the values in that level to the next lower level. In a preferred embodiment of the invention, the directories for the various levels of storage are contained within the next higher level, providing a faster access to the directory information. Cache memories are used as the highest levels of storage, and one or more sets are allocated out of that cache memory for containing a directory of the next lower level of storage. An address comparator which is used to compare entries in a directory to address values is directly coupled to the set or sets used for the directory, reducing the time needed to compare addresses in determining whether an address is present in the cache.

Owner:IBM CORP

Method and apparatus for stream buffer management instructions

Active · US20120137074A1 · Memory architecture accessing/allocation · Memory loss protection · Memory hierarchy · Memory address

A method and system to perform stream buffer management instructions in a processor. The stream buffer management instructions facilitate the creation and usage of a dedicated memory space or stream buffer of the processor in one embodiment of the invention. The dedicated memory space is a contiguous memory space and has a sequential or linear addressing scheme in one embodiment of the invention. The processor has logic to execute a stream buffer management instruction to copy data from a source memory address to a destination memory address that is specified with a desired level of memory hierarchy.

Owner:INTEL CORP

Computer system including plural caches and utilizing access history or patterns to determine data ownership for efficient handling of software locks

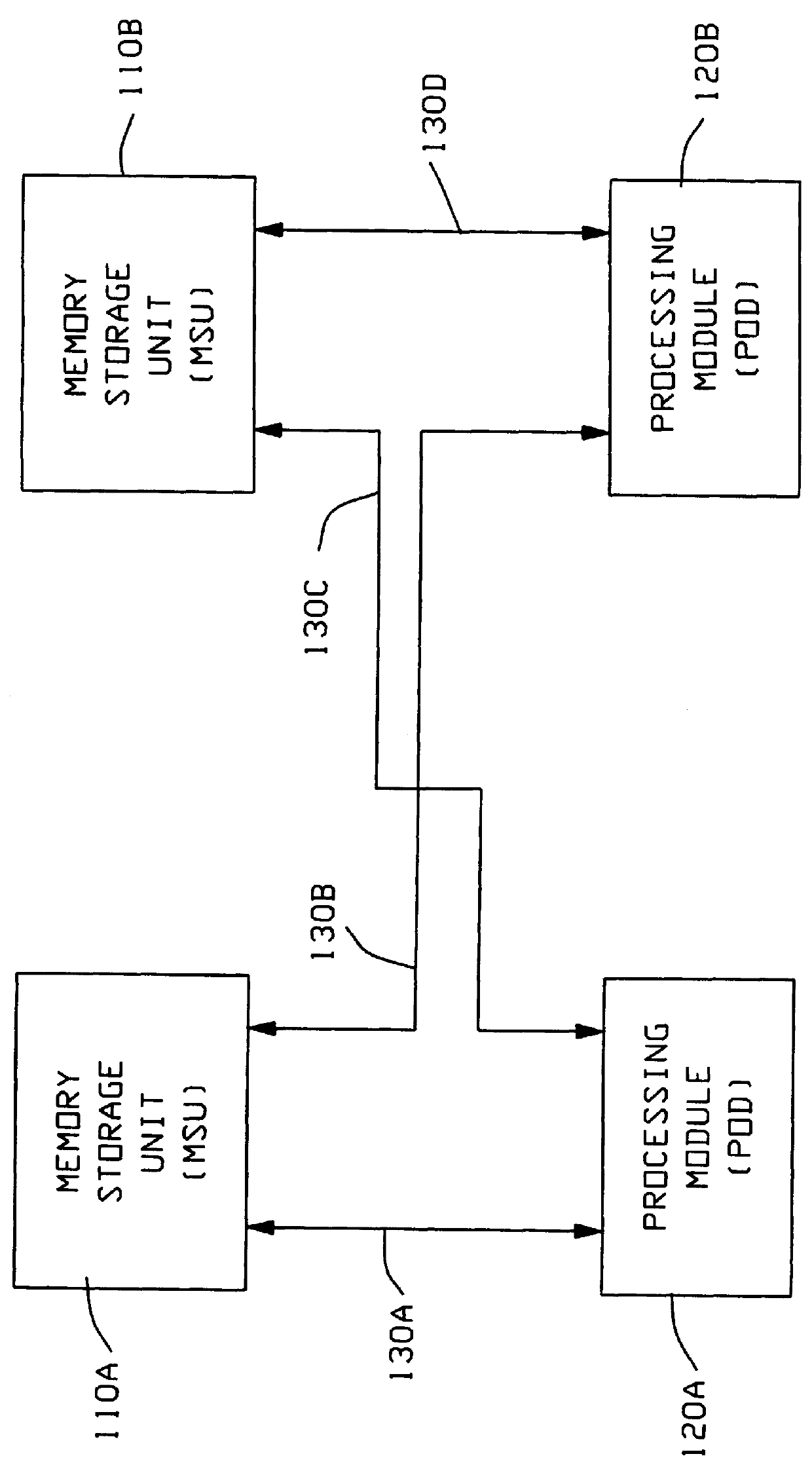

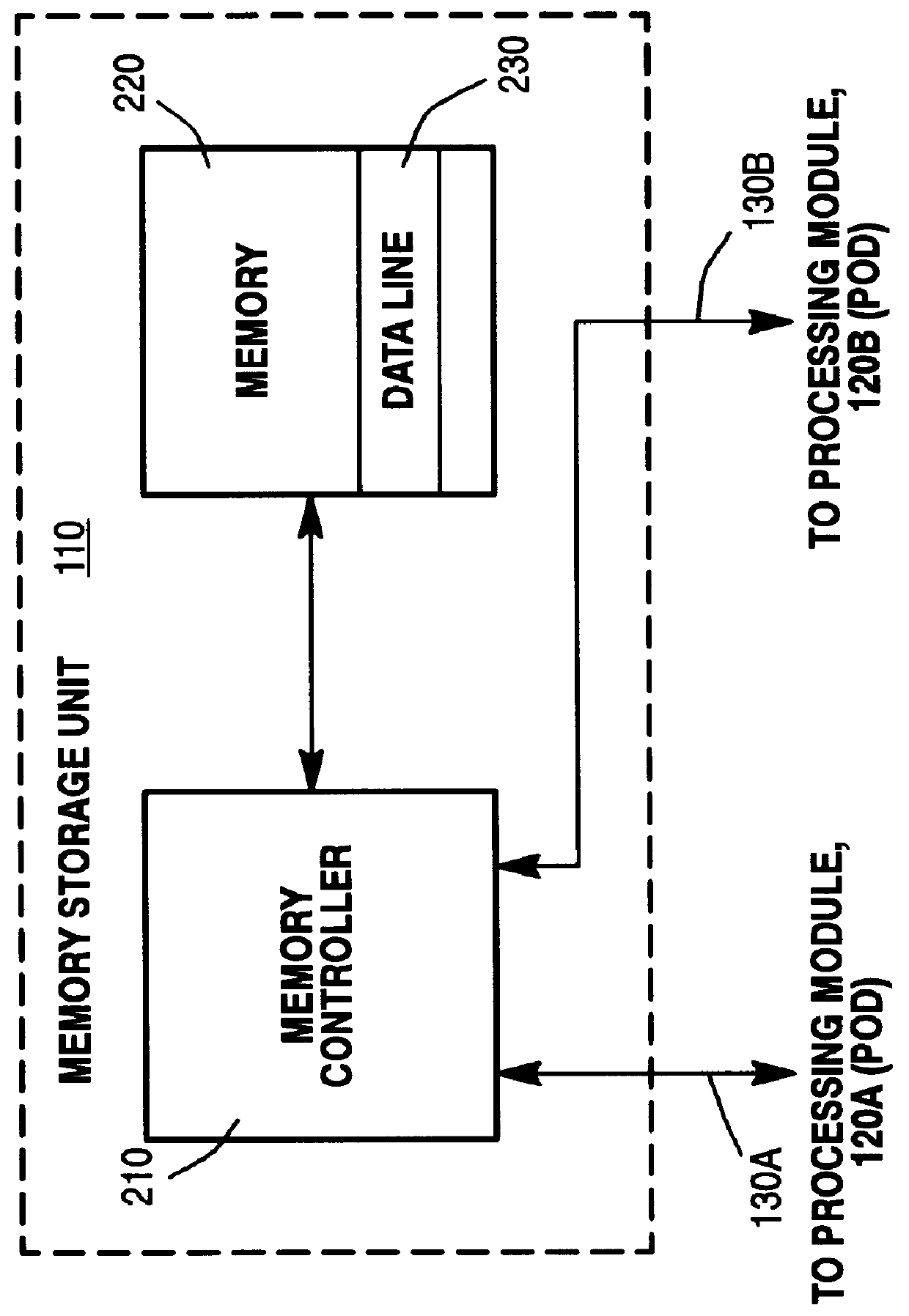

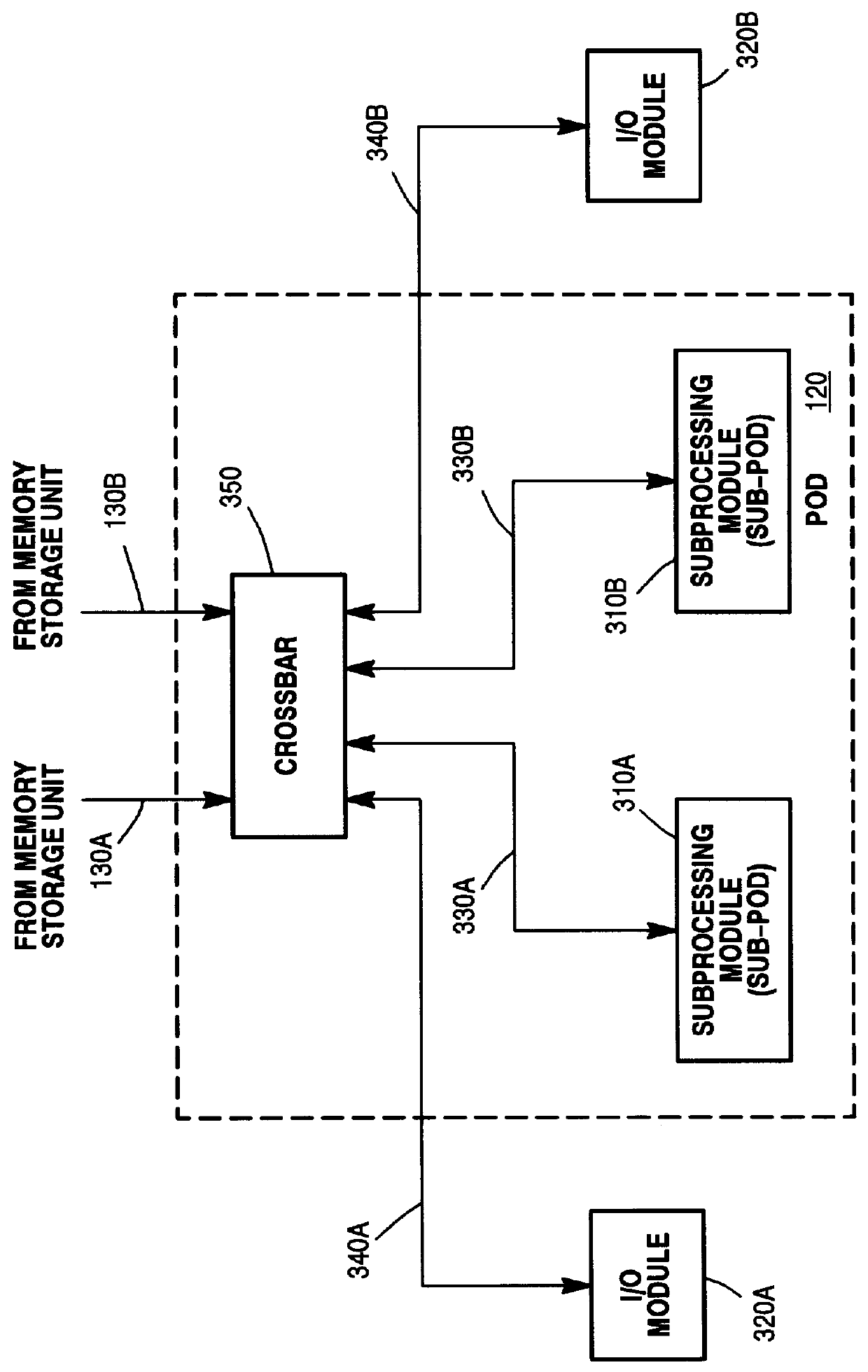

A system and method for enabling a multiprocessor system employing a memory hierarchy to identify data units or locations being used as software locks. The memory hierarchy comprises a main memory having a plurality of data units, a plurality of caches that operate independently of each other, and at least one coherent domain interfaced to each cache. Each coherent domain comprises at least two processors. The main memory maintains coherency of data among the plurality of caches using a directory that maintains information about each data line. The system of the present invention allows a requesting agent, such as a processor or cache, to request a data unit without specifying the type of ownership, where ownership may be exclusive or shared. The directory includes history information that defines the previous access pattern of the requested data unit. Prior to forwarding the requested data unit to the requesting agent, the main memory checks, using a conditional fetch command, the history information to determine what type of ownership to associate with the requested data unit. The requested data unit is then delivered to the requesting agent with ownership rights specified by the history information. The processors may utilize a directory-based protocol such as MESI (modified, exclusive, shared, invalid) to maintain coherence among the processors, with each processor snooping a shared bus to track the status of cache lines in the other processors.

Owner:UNISYS CORP
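A toy model of the history-driven ownership decision described above is sketched below. The policy shown (grant exclusive ownership when the previous holder wrote the line, as a lock holder typically would, otherwise grant shared) is an assumption chosen for illustration; the abstract states only that history information determines the ownership type.

```python
class Directory:
    """Toy directory keeping one history bit per data line."""

    def __init__(self):
        # line address -> did the previous holder write the line?
        self.wrote_last_time = {}

    def record_access(self, line, wrote):
        """Update the history for `line` when a holder gives it back."""
        self.wrote_last_time[line] = wrote

    def conditional_fetch(self, line):
        """Choose ownership from history; the requester does not specify it."""
        if self.wrote_last_time.get(line, False):
            return "exclusive"
        return "shared"
```

A line that behaves like a lock (read, then written) would be handed out exclusively on the next request, which saves the requester a second transaction to upgrade from shared to exclusive.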

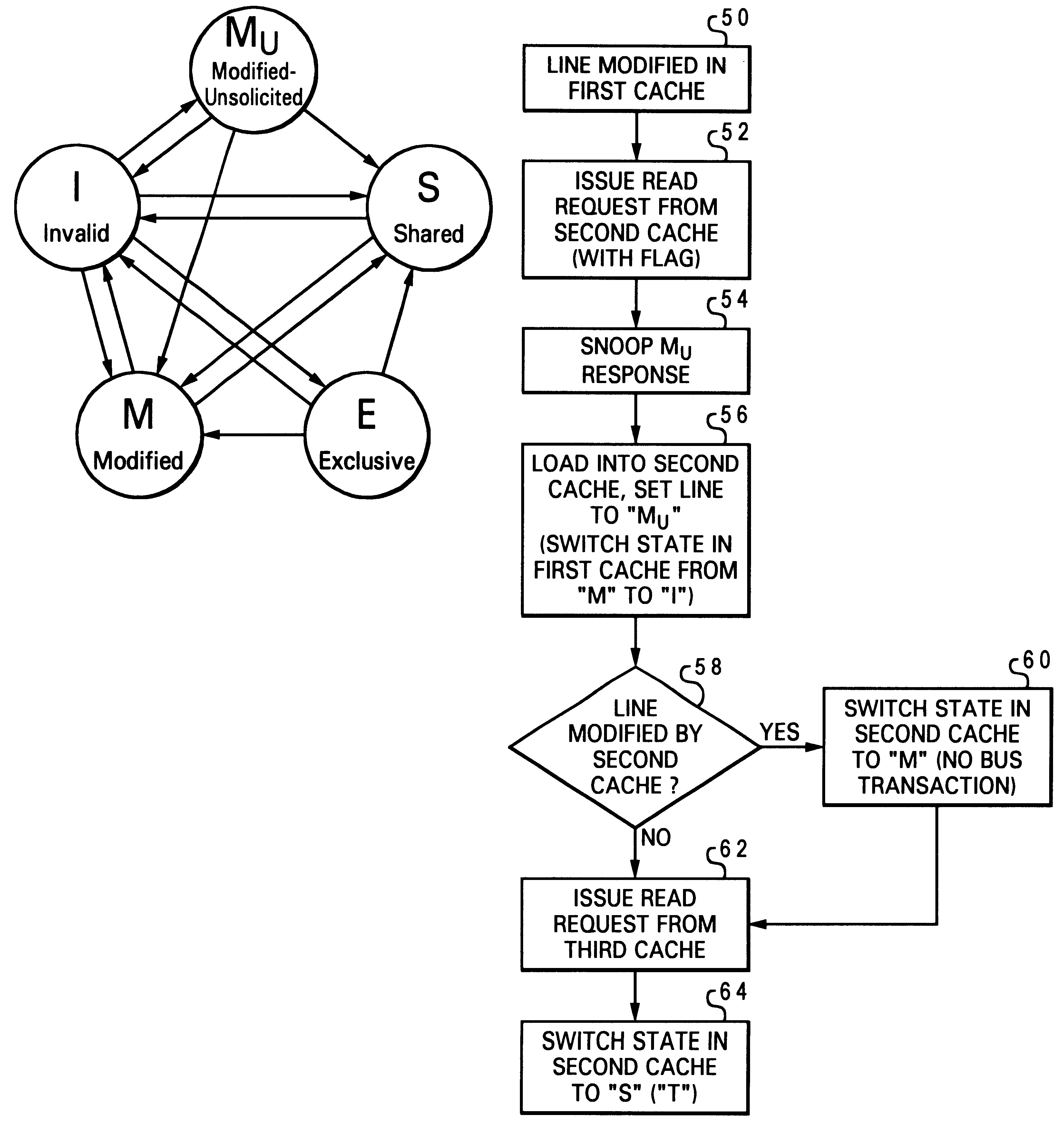

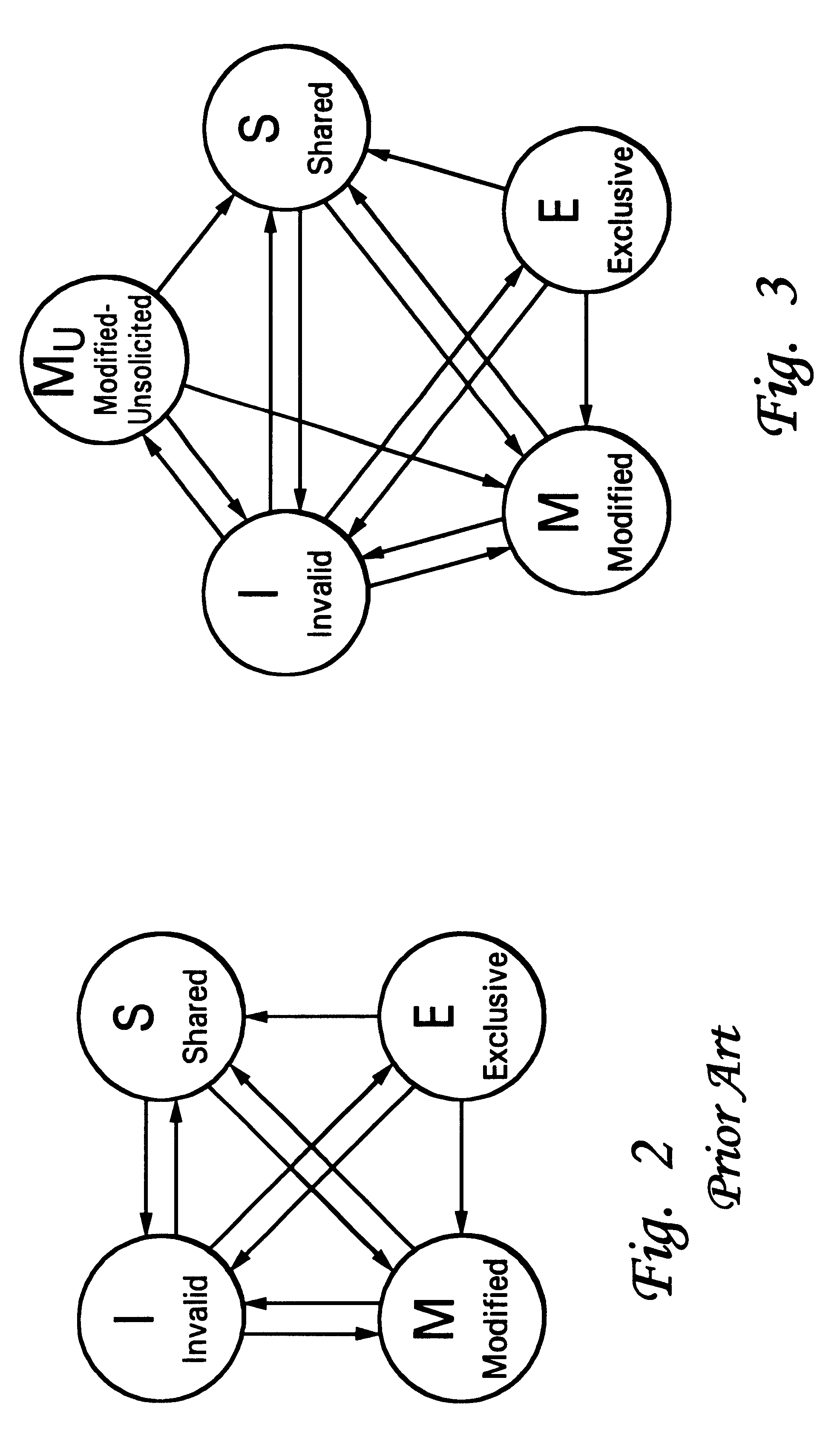

Cache coherency protocol employing a read operation including a programmable flag to indicate deallocation of an intervened cache line

A novel cache coherency protocol provides a modified-unsolicited (Mu) cache state to indicate that a value held in a cache line has been modified (i.e., is not currently consistent with system memory), but was modified by another processing unit, not by the processing unit associated with the cache that currently contains the value in the Mu state, and that the value is held exclusive of any other horizontally adjacent caches. Because the value is exclusively held, it may be modified in that cache without the necessity of issuing a bus transaction to other horizontal caches in the memory hierarchy. The Mu state may be applied as a result of a snoop response to a read request. The read request can include a flag to indicate that the requesting cache is capable of utilizing the Mu state. Alternatively, a flag may be provided with intervention data to indicate that the requesting cache should utilize the modified-unsolicited state.

Owner:INTEL CORP

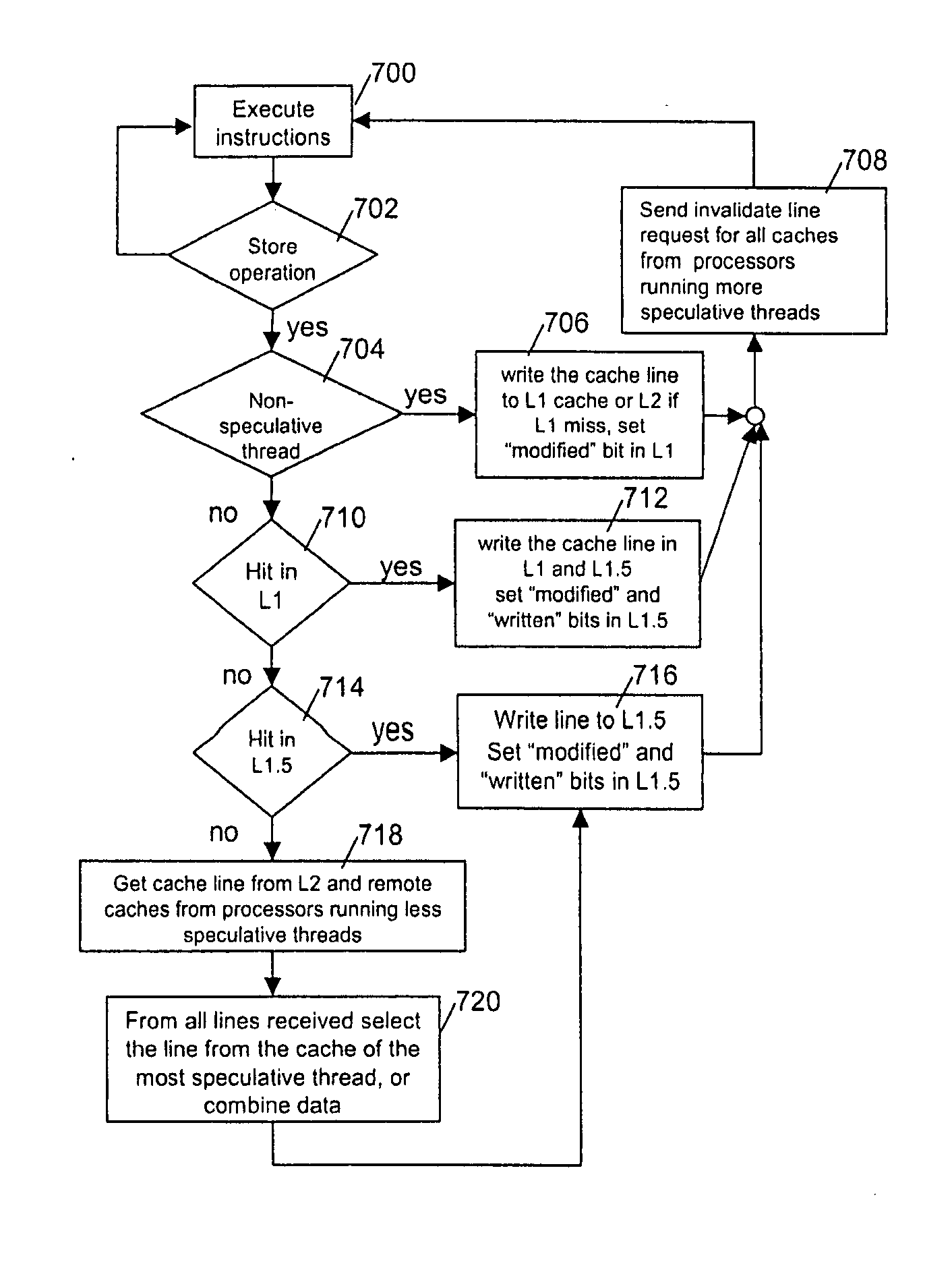

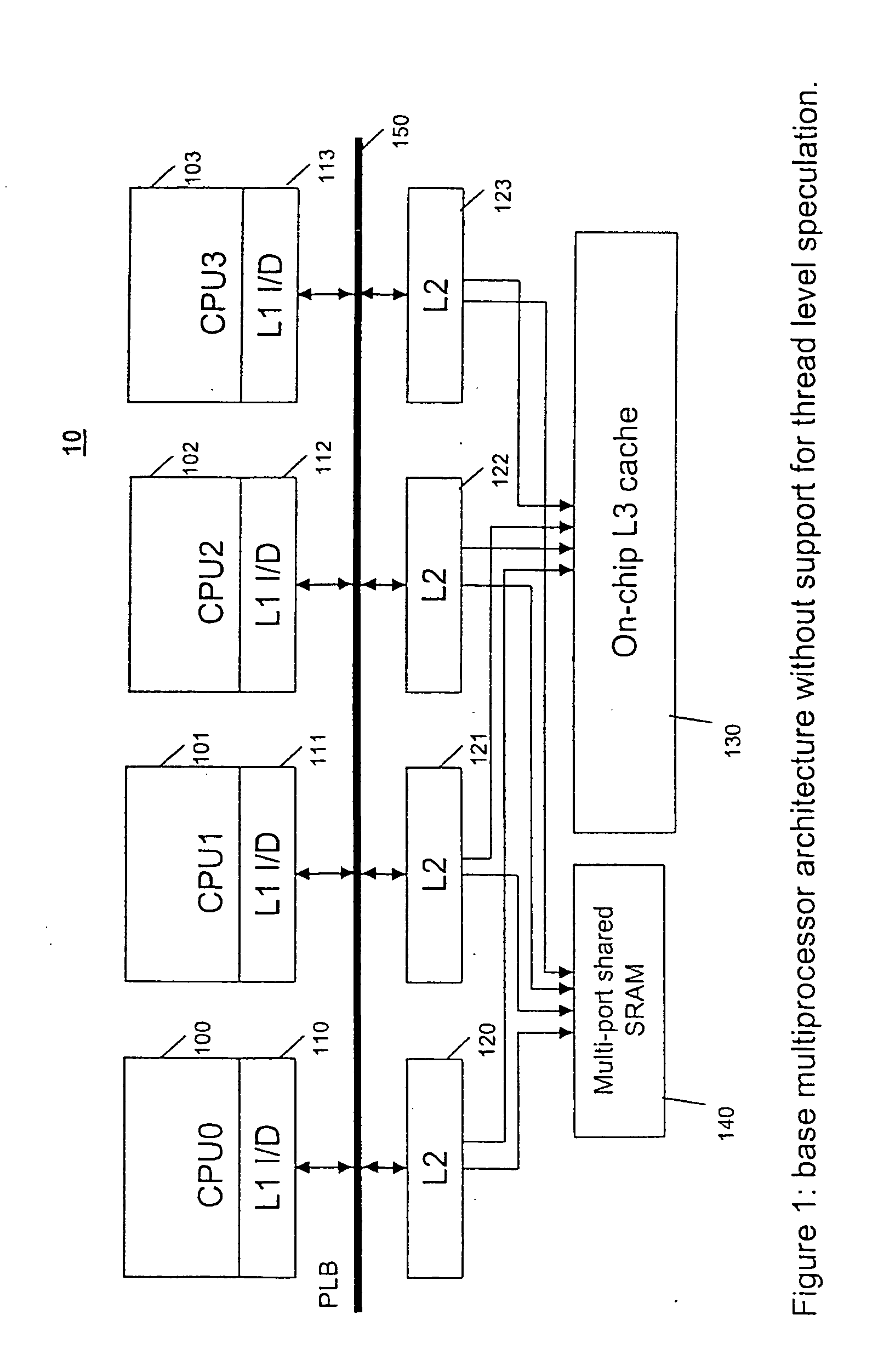

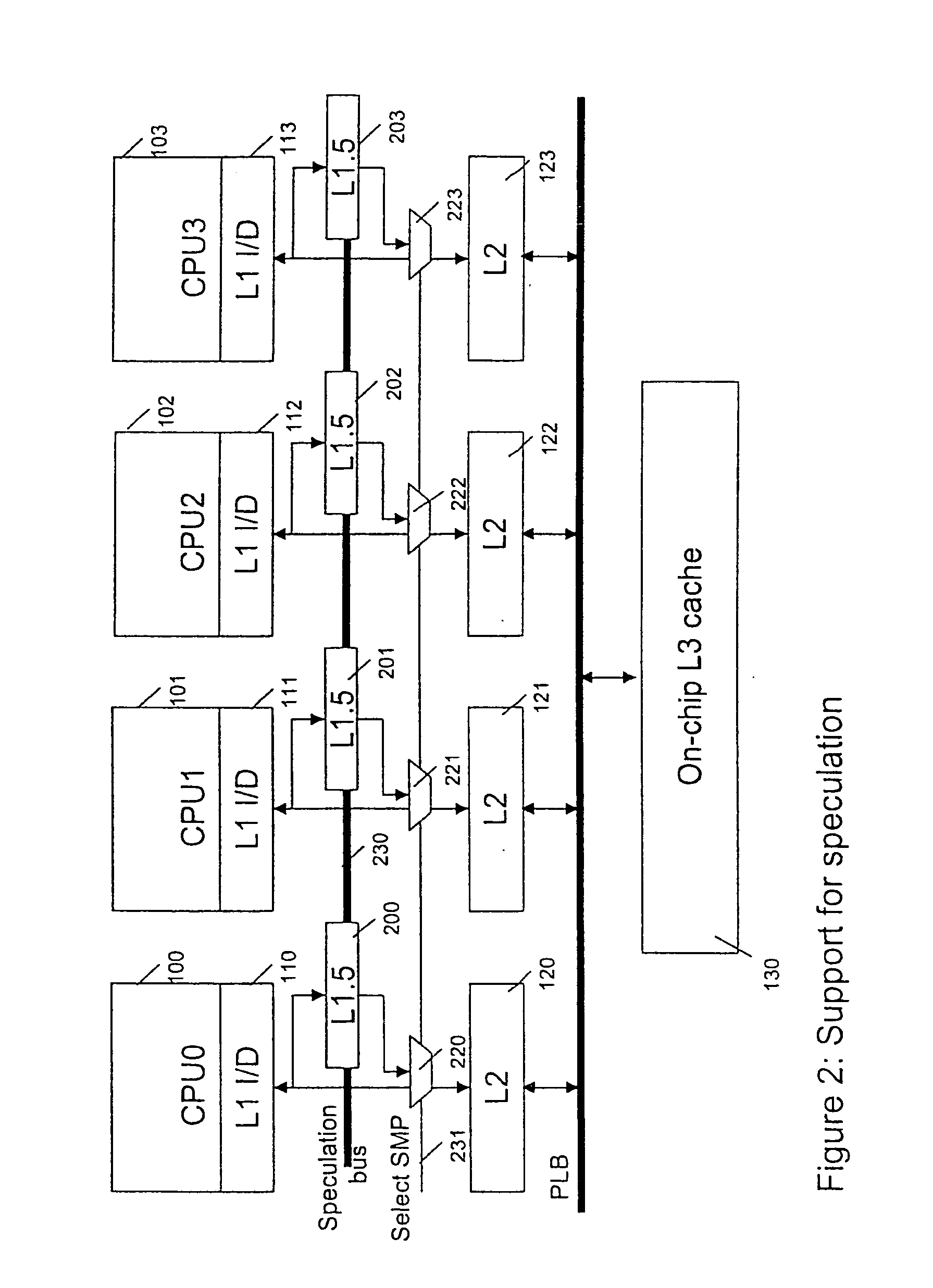

Low complexity speculative multithreading system based on unmodified microprocessor core

Inactive · US20070192545A1 · Efficient use of · Memory architecture accessing/allocation · Program control · Memory hierarchy · Speculative execution

A system, method and computer program product for supporting thread level speculative execution in a computing environment having multiple processing units adapted for concurrent execution of threads in speculative and non-speculative modes. Each processing unit includes a cache memory hierarchy of caches operatively connected therewith. The apparatus includes an additional cache level local to each processing unit for use only in a thread level speculation mode, each additional cache for storing speculative results and status associated with its associated processor when handling speculative threads. The additional local cache level at each processing unit are interconnected so that speculative values and control data may be forwarded between parallel executing threads. A control implementation is provided that enables speculative coherence between speculative threads executing in the computing environment.

Owner:IBM CORP

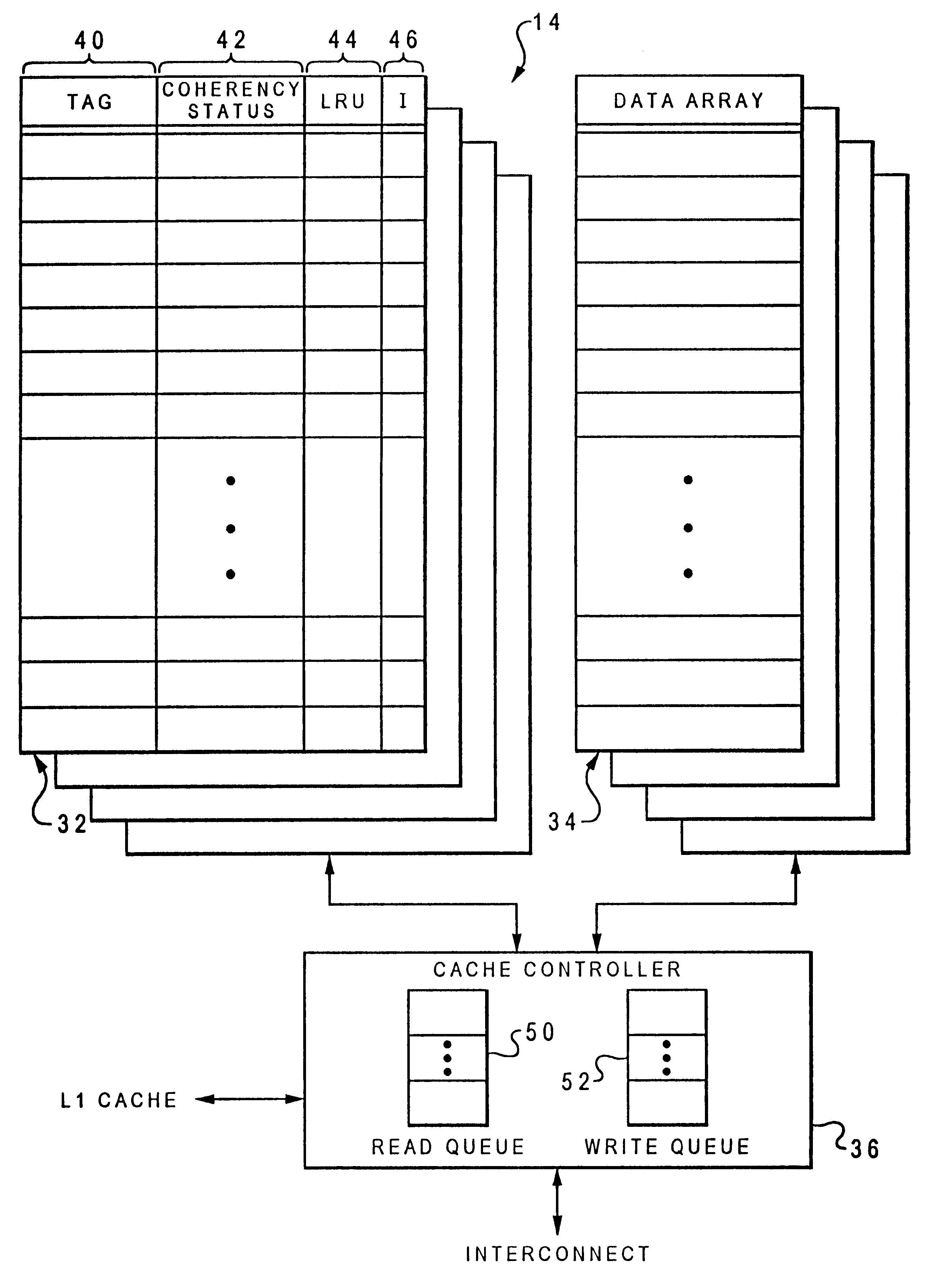

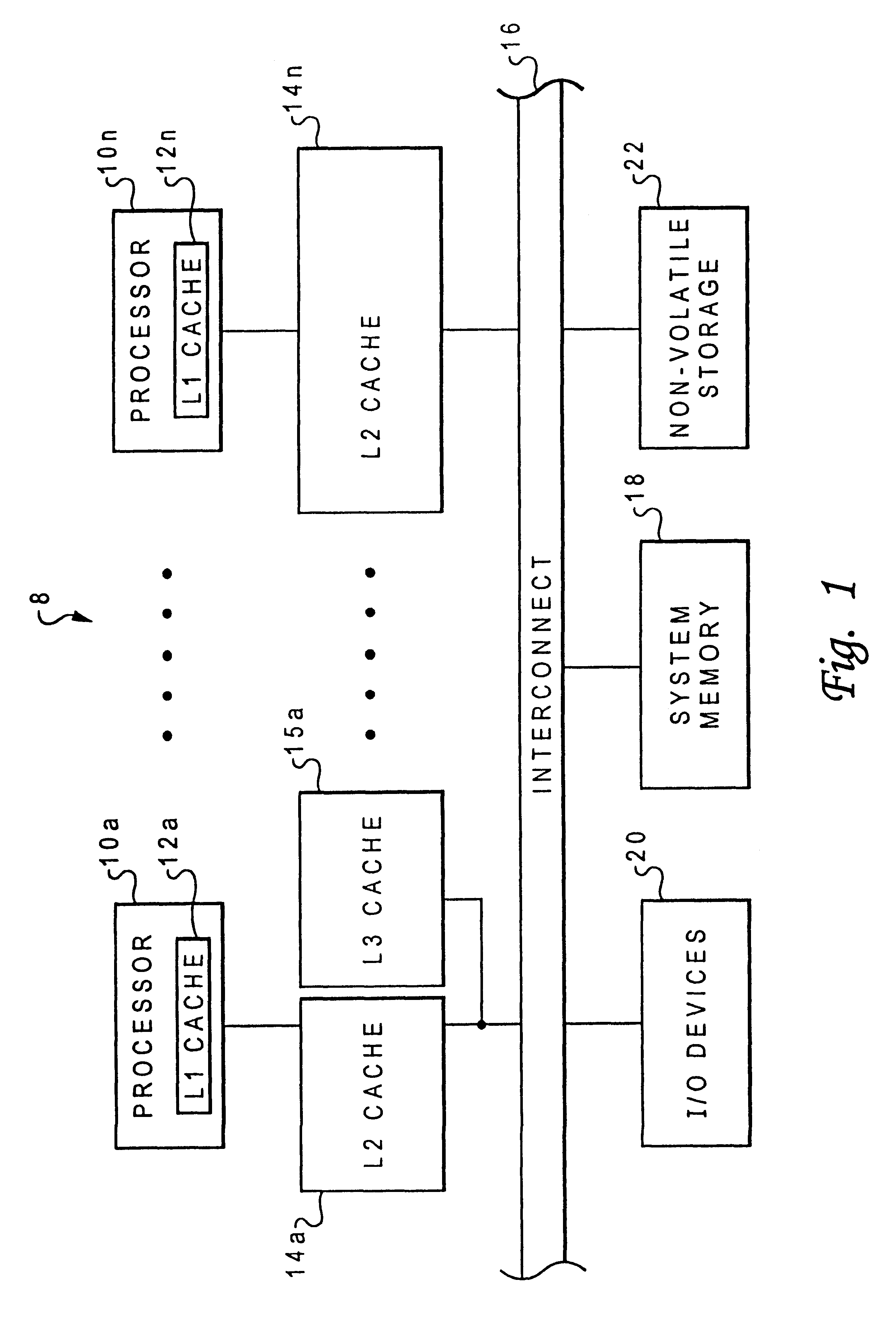

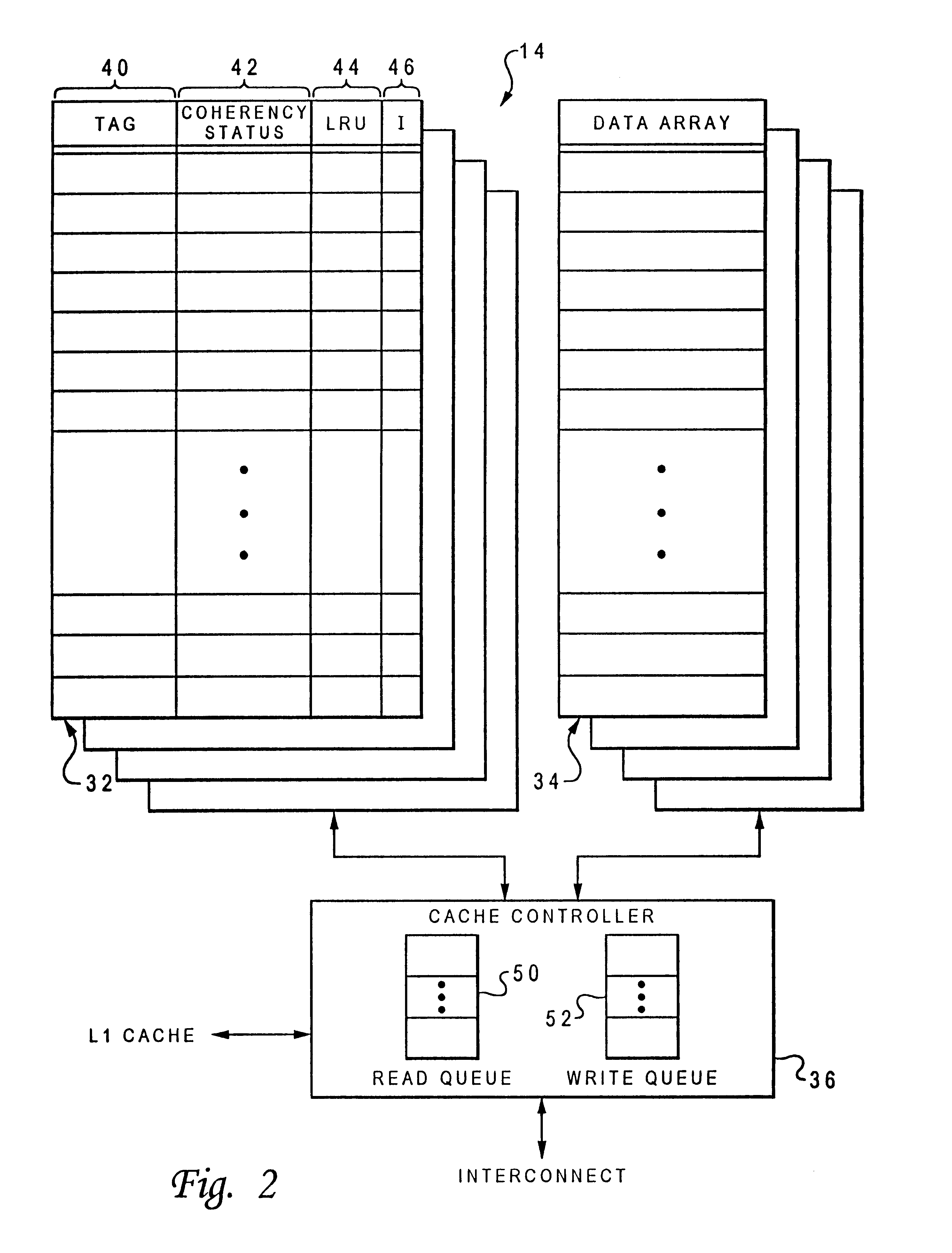

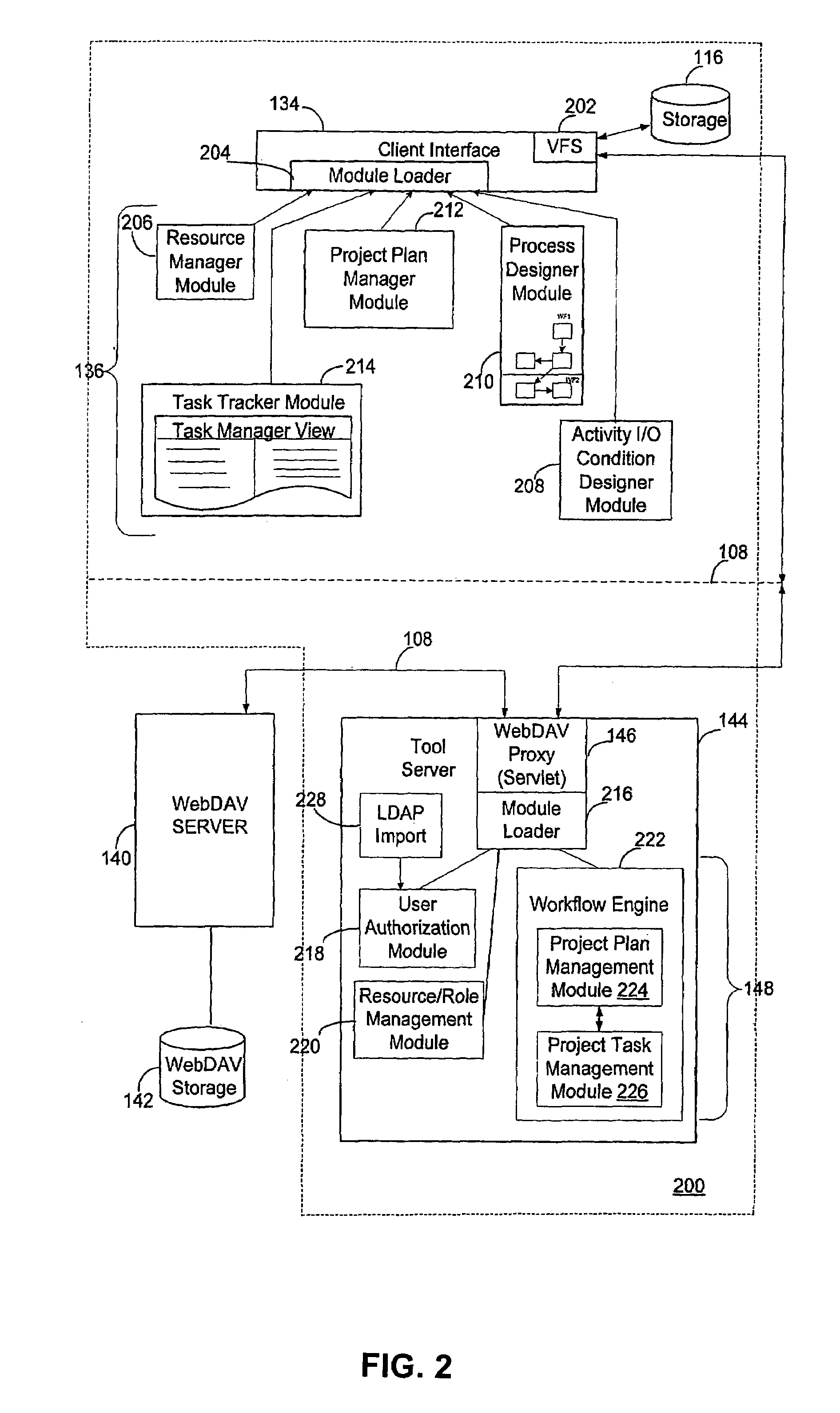

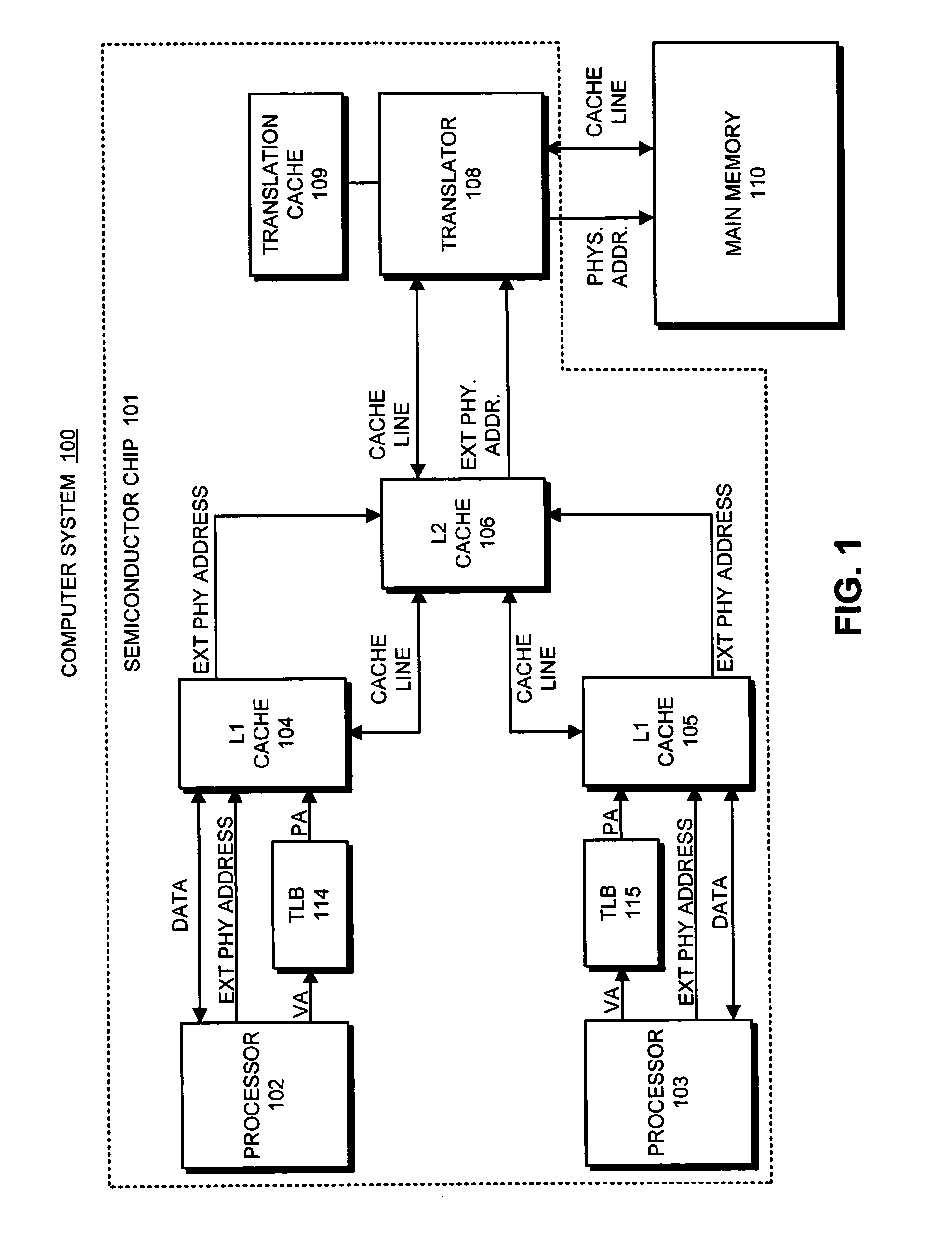

Cache coherency protocol for a data processing system including a multi-level memory hierarchy

Inactive · US6192451B1 · Memory addressing/allocation/relocation · Unauthorized memory use protection · Data processing system · Memory hierarchy

A data processing system and method of maintaining cache coherency in a data processing system are described. The data processing system includes a plurality of caches and a plurality of processors grouped into at least first and second clusters, where each of the first and second clusters has at least one upper level cache and at least one lower level cache. According to the method, a first data item in the upper level cache of the first cluster is stored in association with an address tag indicating a particular address. A coherency indicator in the upper level cache of the first cluster is set to a first state that indicates that the address tag is valid and that the first data item is invalid. Similarly, in the upper level cache of the second cluster, a second data item is stored in association with an address tag indicating the particular address. In addition, a coherency indicator in the upper level cache of the second cluster is set to the first state. Thus, the data processing system implements a coherency protocol that permits a coherency indicator in the upper level caches of both of the first and second clusters to be set to the first state.

Owner:IBM CORP

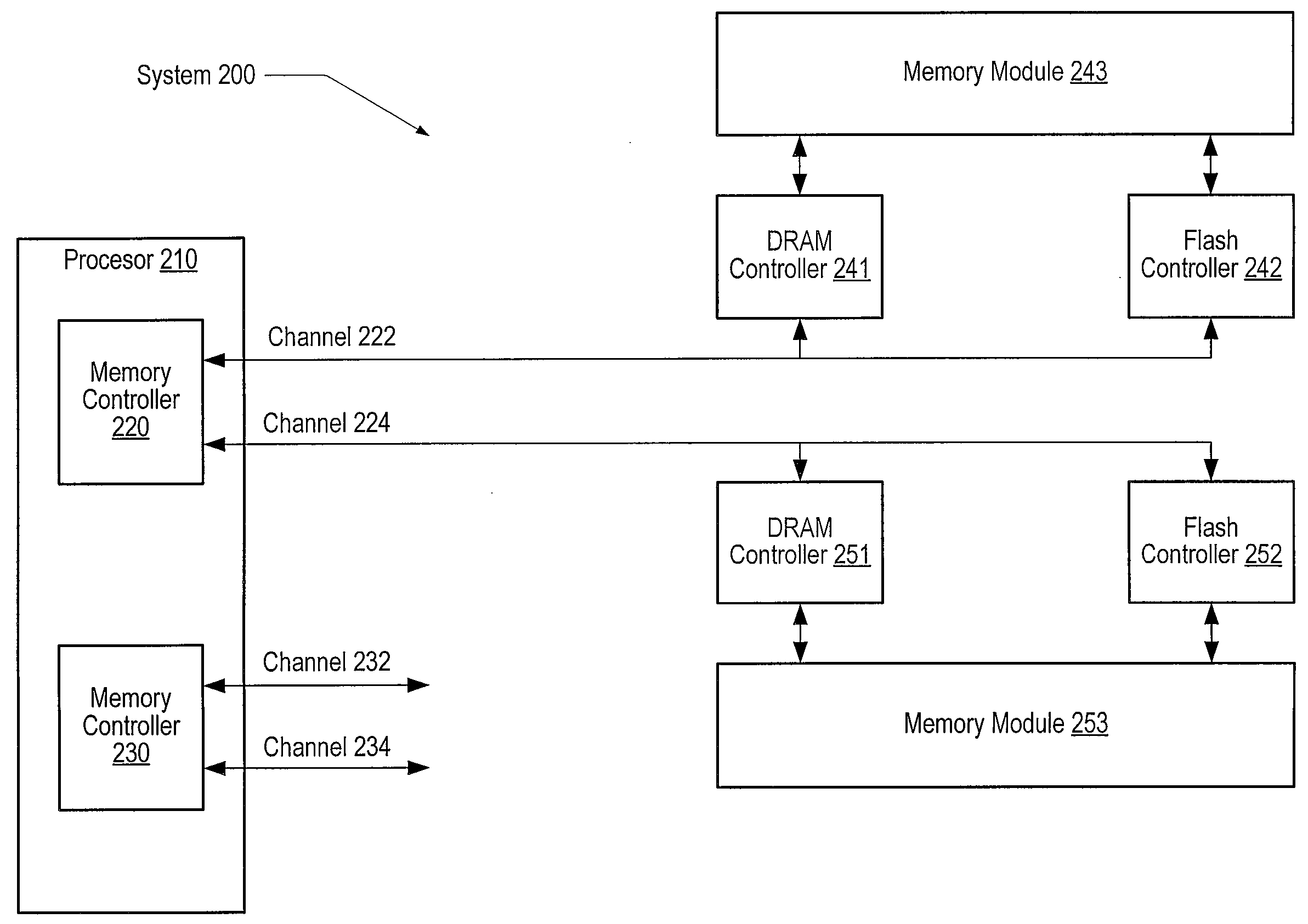

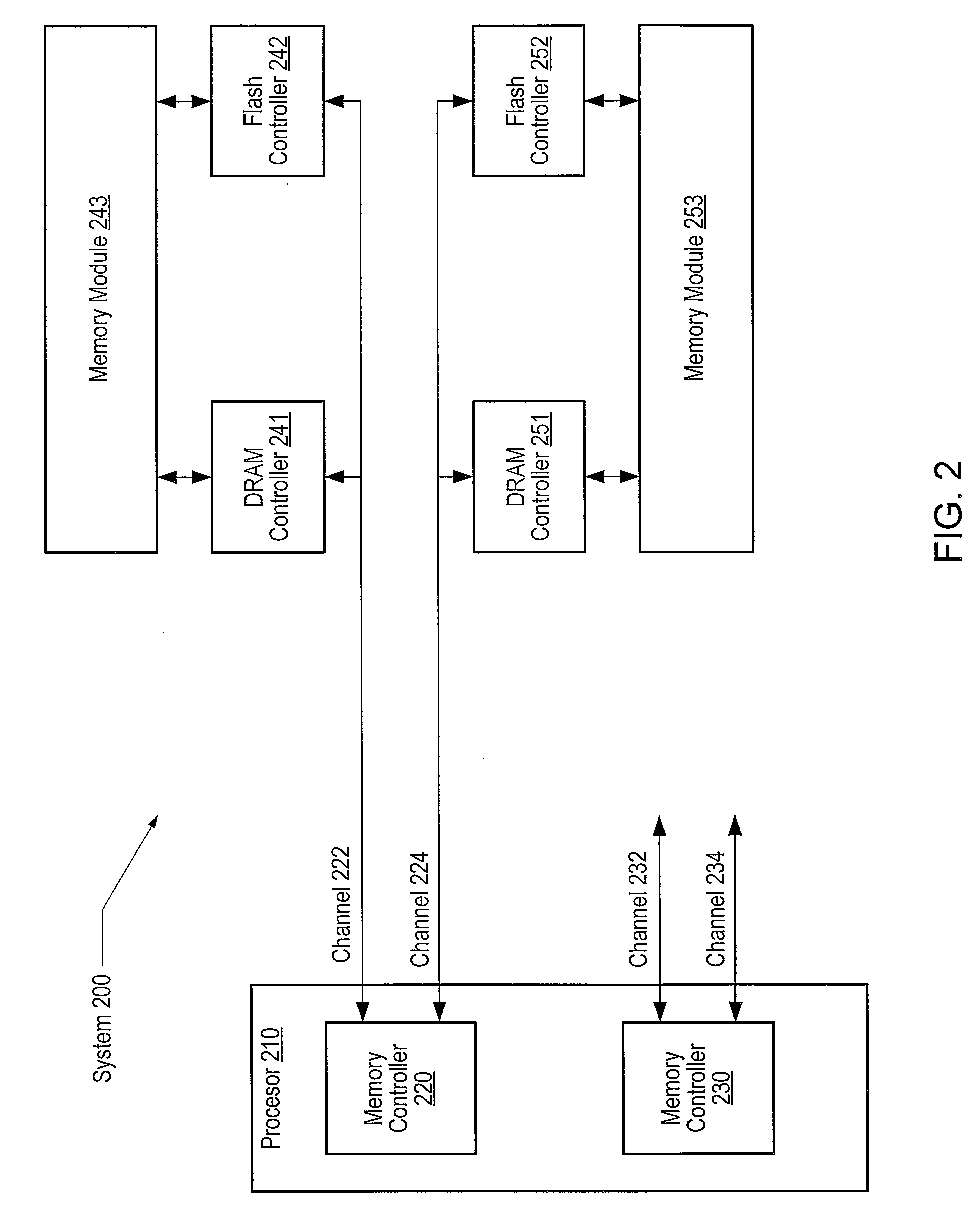

Apparatus and method for implementing a multi-level memory hierarchy over common memory channels

Active · US20130275682A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Memory hierarchy · Operating system

A system and method are described for integrating a memory and storage hierarchy including a non-volatile memory tier within a computer system. In one embodiment, PCMS memory devices are used as one tier in the hierarchy, sometimes referred to as “far memory.” Higher performance memory devices such as DRAM are placed in front of the far memory and are used to mask some of the performance limitations of the far memory. These higher performance memory devices are referred to as “near memory.”

Owner:INTEL CORP

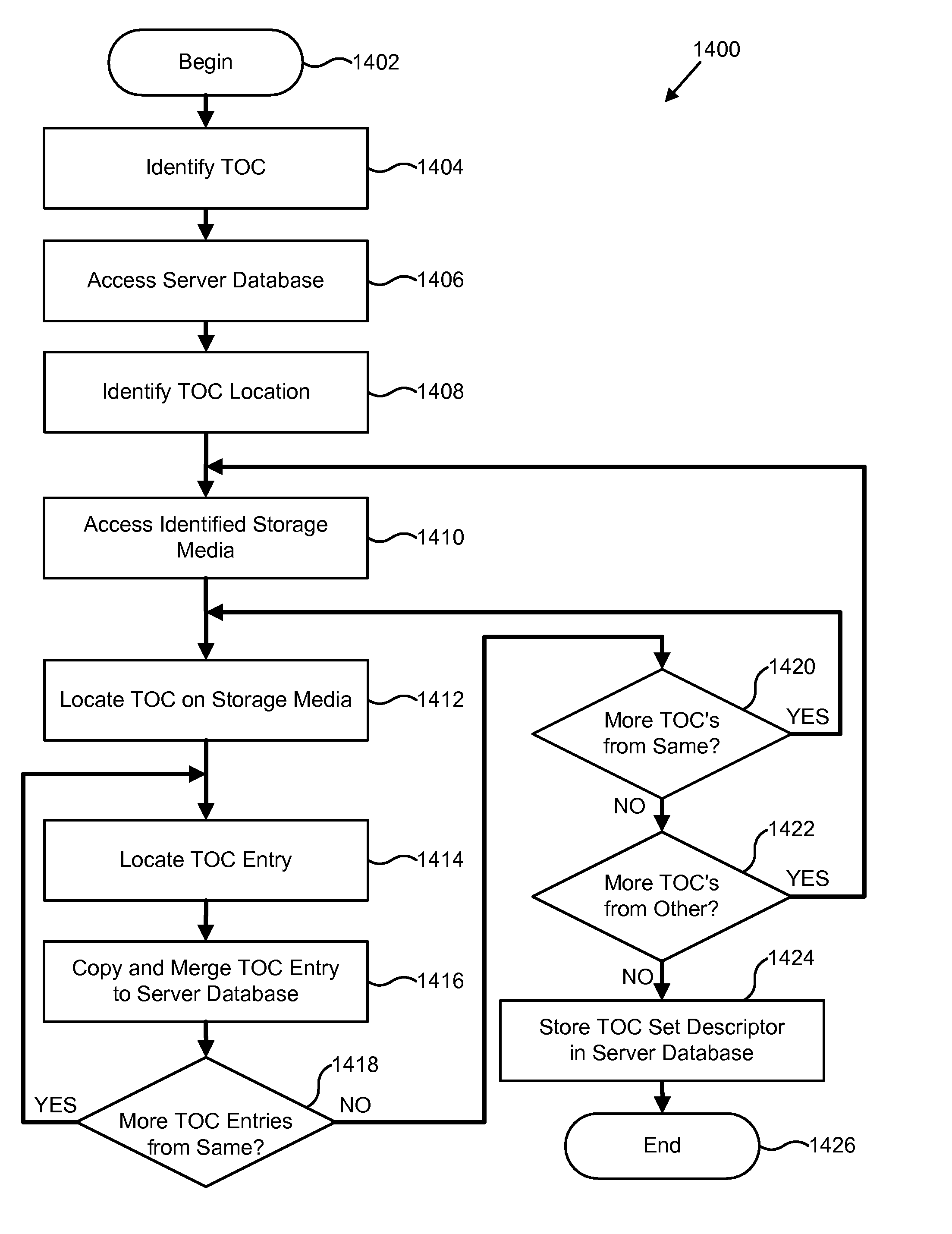

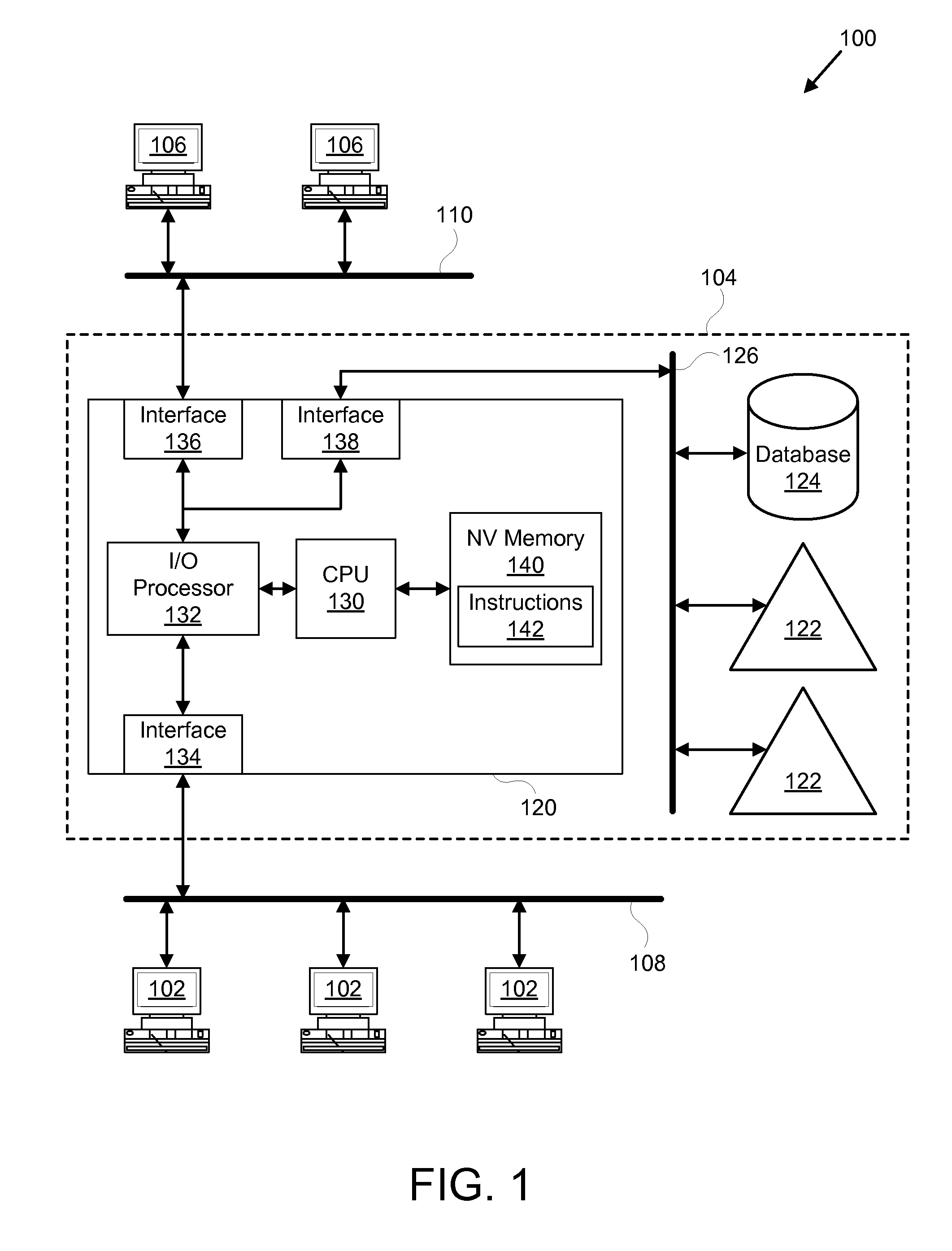

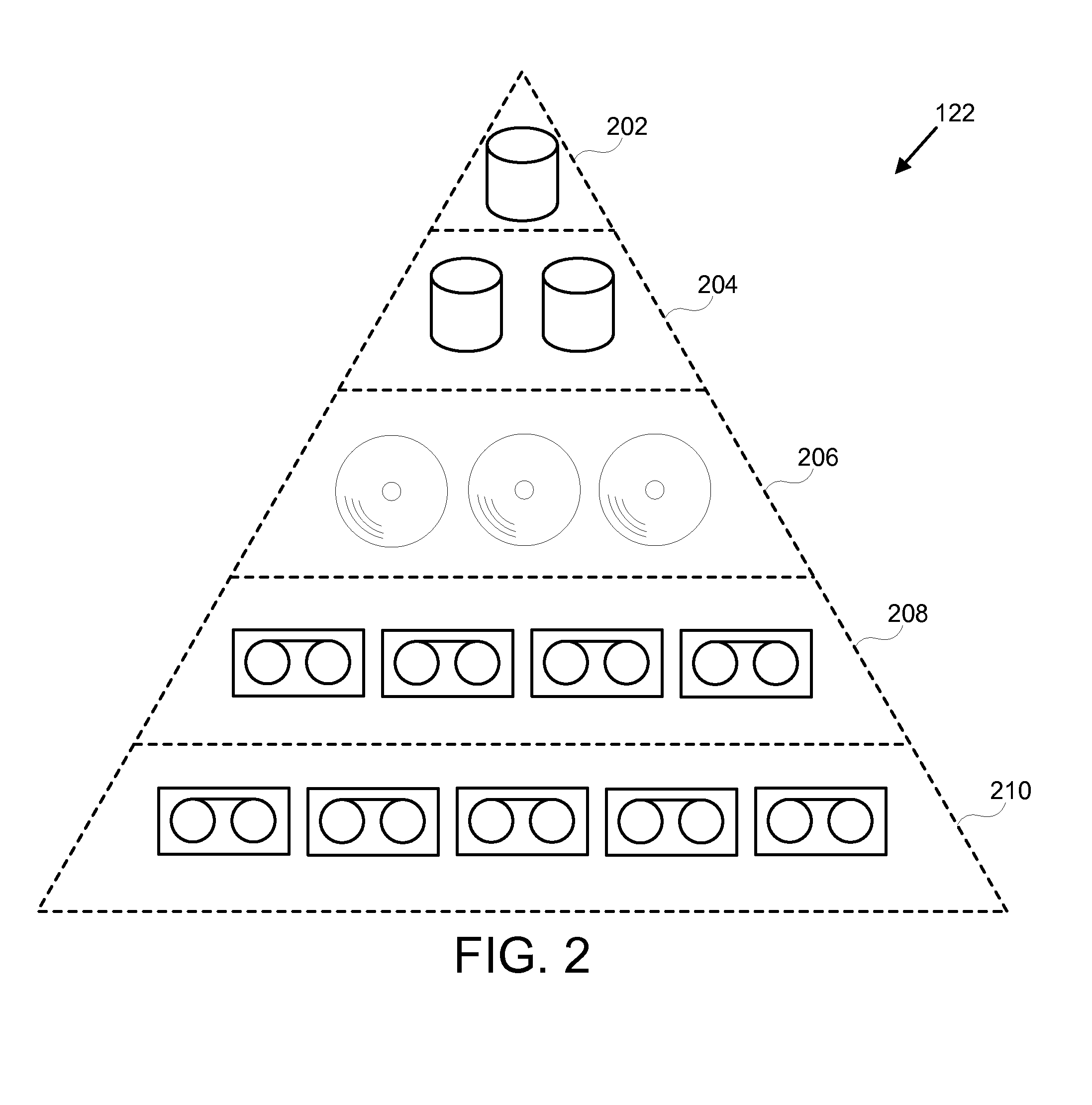

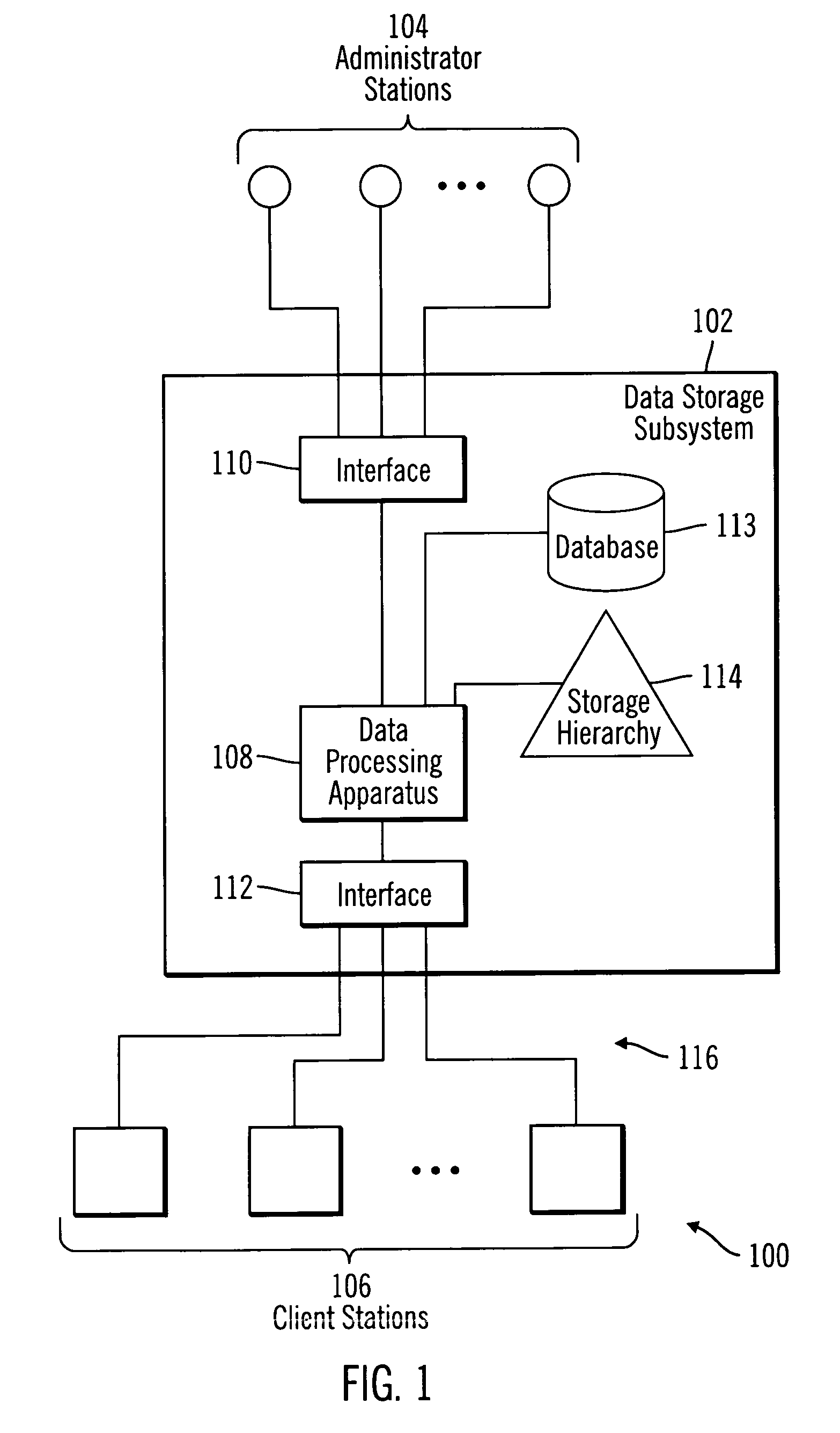

Hierarchical storage management using dynamic tables of contents and sets of tables of contents

Inactive · US20080294611A1 · Overcomes shortcoming · Data processing applications · Digital data processing details · Memory hierarchy · Storage management

A system, apparatus, and process creates a table of contents (TOC), including one or more table of contents (TOC) entries, to manage data in a hierarchical storage management system. Each TOC entry contains metadata describing the contents and attributes of a data object within an image, which is an aggregation of multiple data objects into a single object for storage management purposes. The TOC is stored in a storage hierarchy, such as magnetic disk, for fast access of and efficient operation on the aggregated TOC entries. The system, apparatus, and process also provide for aggregating the TOC entries from one or more TOCs into a TOC set in the storage management server database. The TOC set may be manipulated and queried in order to find a particular data object or image referenced by a TOC entry. The TOC entries, TOCs, and TOC sets may be dynamically managed by the hierarchical data storage management system through implementation of a set of policy management constructs that define appropriate creation, retention, and movement of the objects within the database and storage hierarchy.

Owner:IBM CORP

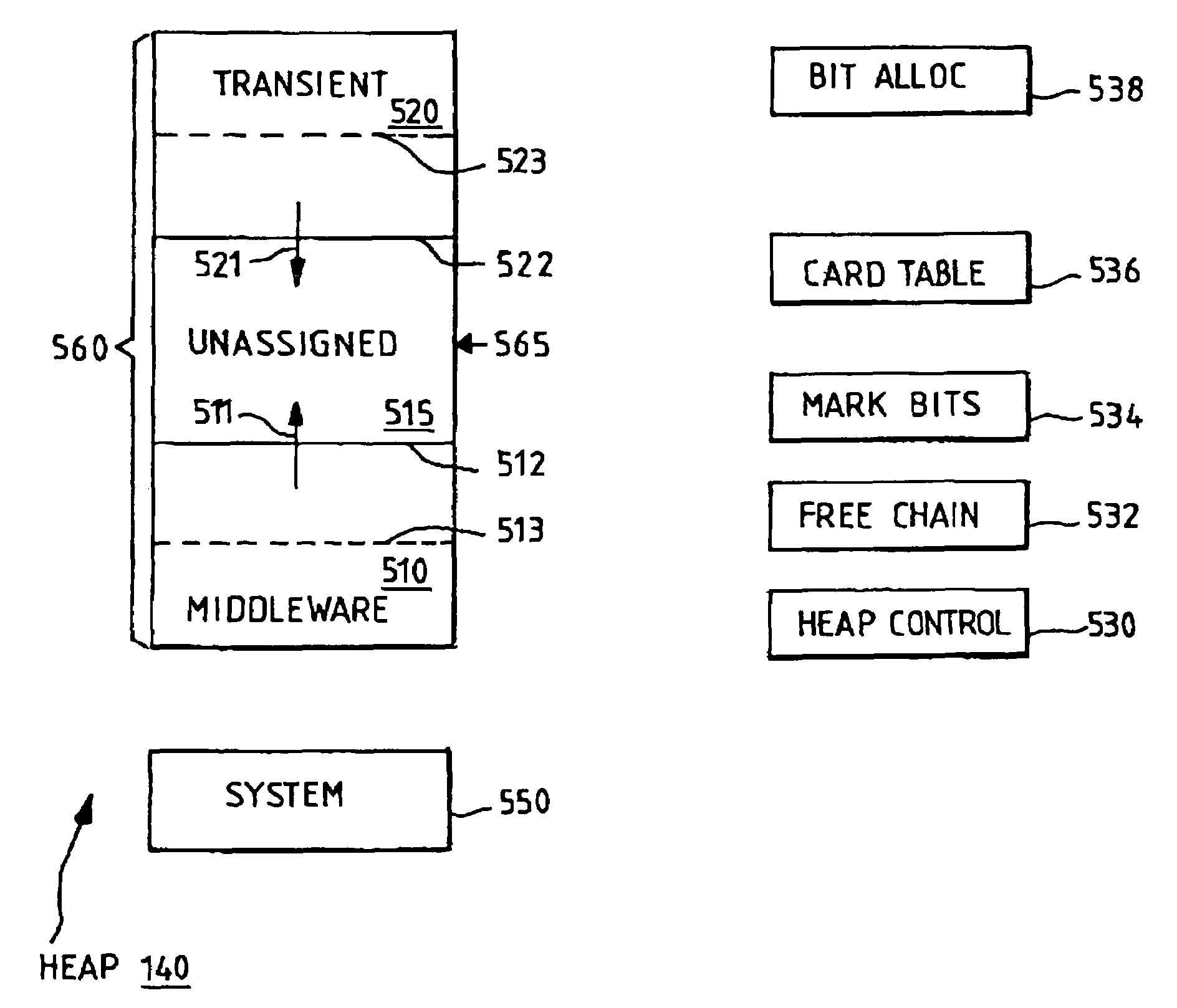

Serially, reusable virtual machine

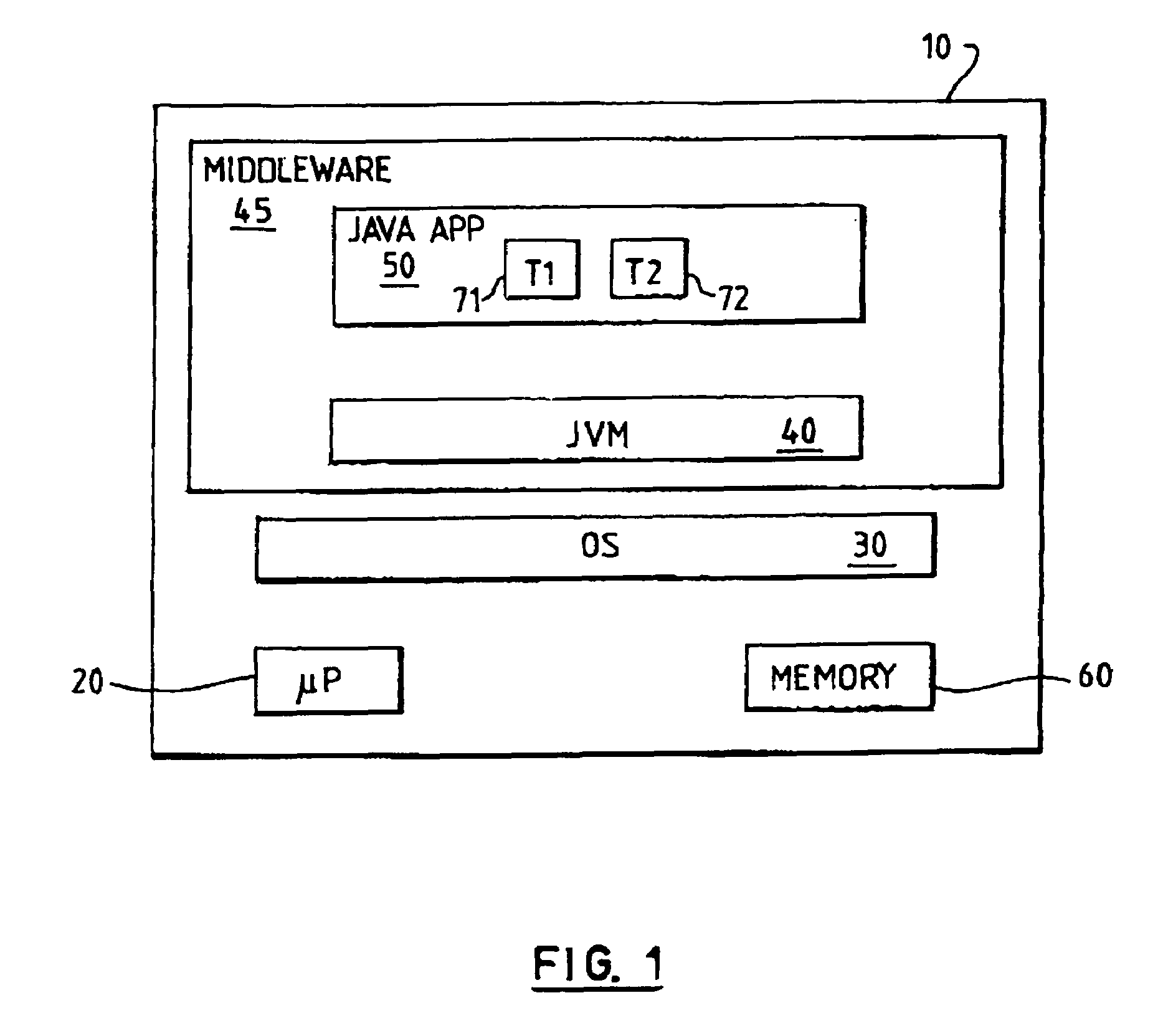

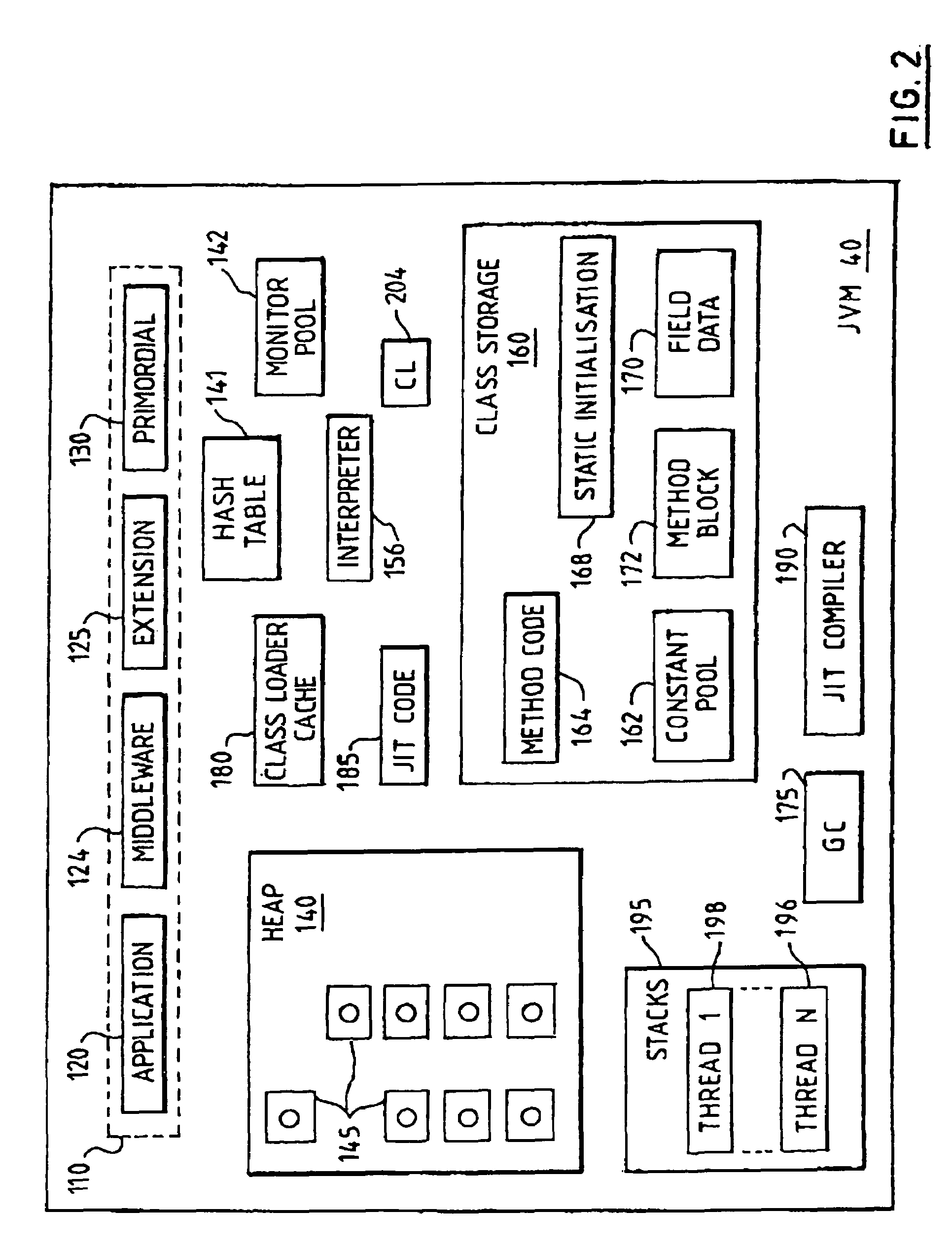

Inactive · US7263700B1 · Conserve cost · Low cost · Data processing applications · Program loading/initiating · Memory hierarchy · Waste collection

In a virtual machine environment, a method and apparatus for the use of multiple heaps to retain persistent data and transient data wherein the multiple heaps enable a single virtual machine to be easily resettable, thus avoiding the need to terminate and start a new Virtual Machine as well as enabling a single virtual machine to retain data and objects across multiple applications, thus avoiding the computing resource overhead of relinking, reloading, reverifying, and recompiling classes. The memory hierarchy includes a System Heap, a Middleware Heap and a Transient Heap. The use of three heaps enables garbage collection to be selectively targeted to one heap at a time in between applications, thus avoiding this overhead during the life of an application.

Owner:GOOGLE LLC
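The three-heap arrangement in the abstract above can be mimicked with a toy class. The class name `ResettableVM`, the `run` and `reset` methods, and the choice to keep loaded classes in the middleware heap are assumptions of this sketch, not details of the patented virtual machine.

```python
class ResettableVM:
    """Toy VM with separate heaps so it can be reset between applications."""

    def __init__(self):
        self.system_heap = {}      # persists across applications
        self.middleware_heap = {}  # persists across applications
        self.transient_heap = {}   # per-application data, cleared on reset

    def run(self, app_name, classes, data):
        """Run one application; return how many classes had to be loaded."""
        loaded = 0
        for cls in classes:
            if cls not in self.middleware_heap:
                self.middleware_heap[cls] = f"loaded:{cls}"
                loaded += 1
        self.transient_heap[app_name] = data
        return loaded

    def reset(self):
        """Discard only transient data; persistent heaps stay intact."""
        self.transient_heap.clear()
```

A second application that reuses an already loaded class pays no reload cost after `reset()`, which is the benefit the abstract attributes to keeping persistent and transient data in separate heaps.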

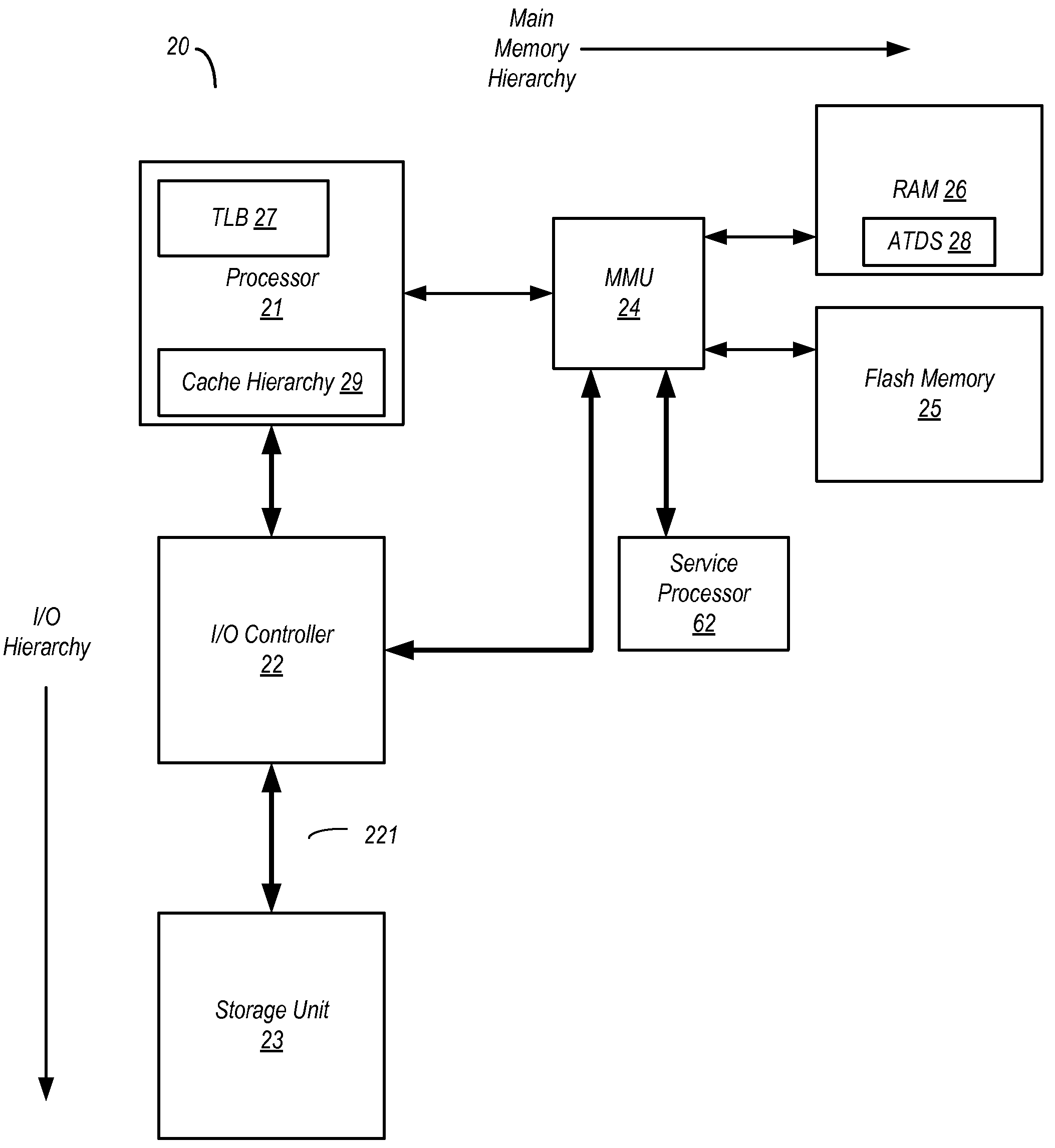

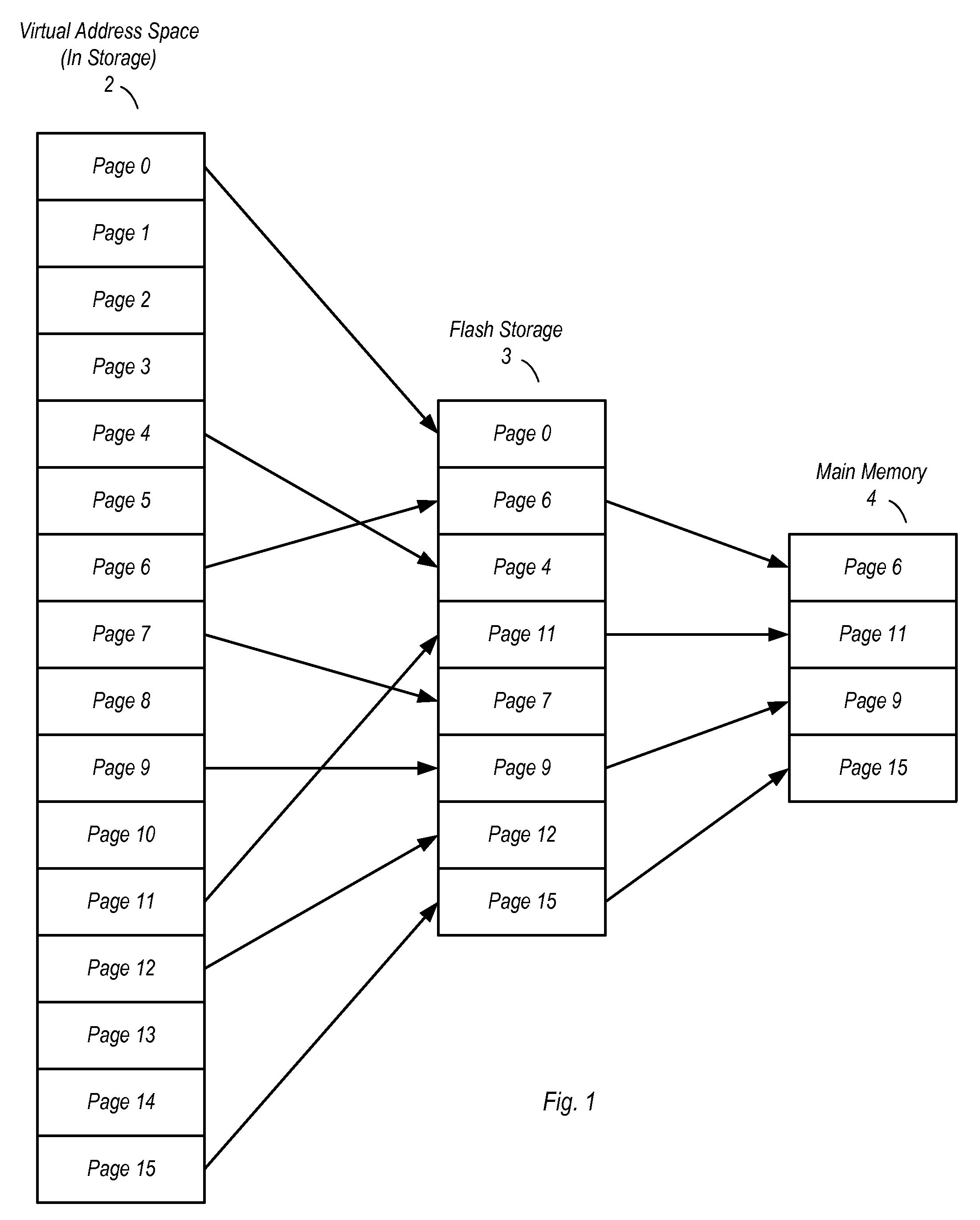

Extended main memory hierarchy having flash memory for page fault handling

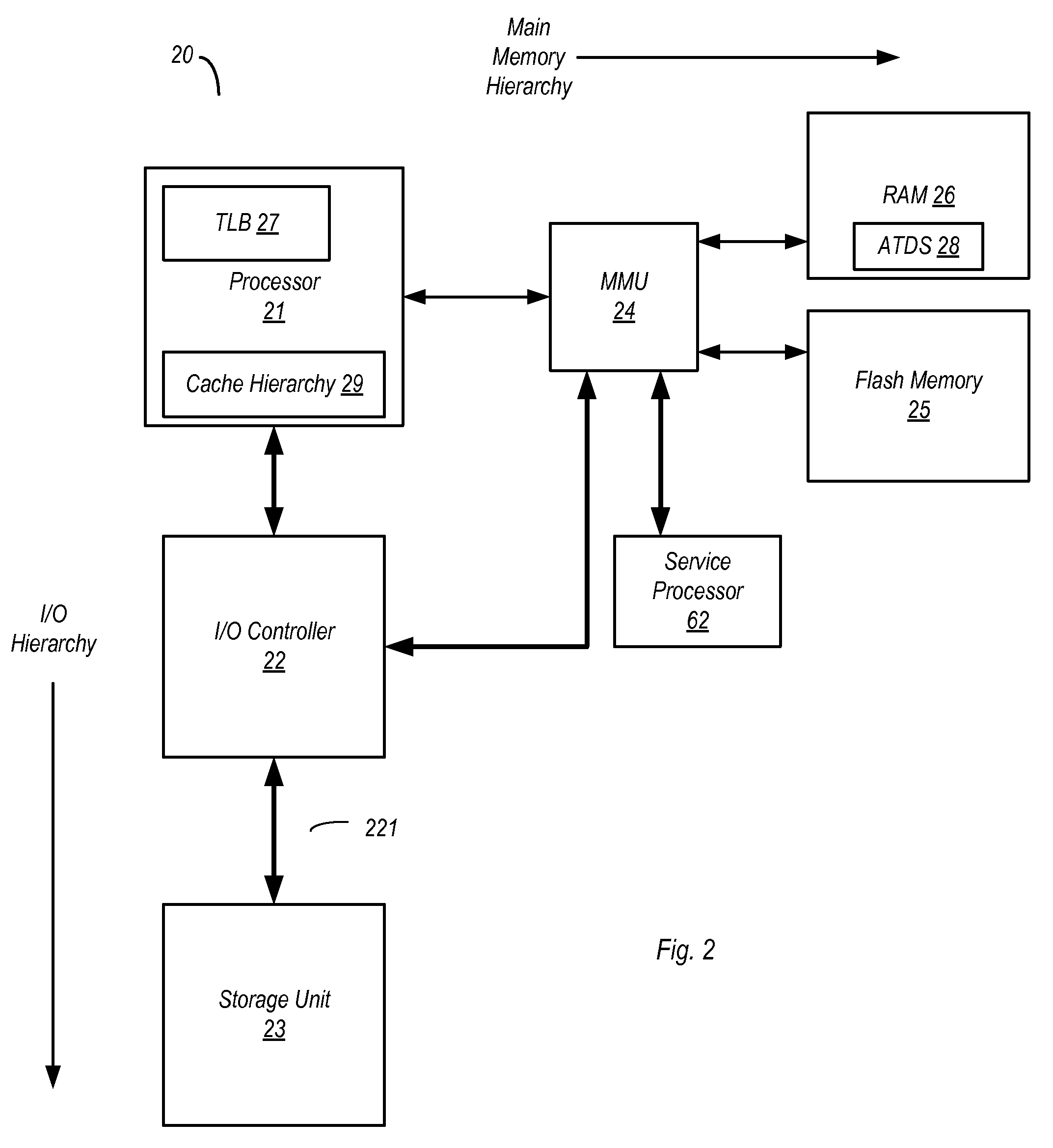

Active · US20100332727A1 · Lower performance requirements · Performance penalty · Memory architecture accessing/allocation · Energy efficient ICT · Memory hierarchy · Management unit

A computer system with flash memory in the main memory hierarchy is disclosed. In an embodiment, the computer system includes at least one processor, a memory management unit coupled to the at least one processor, and a random access memory (RAM) coupled to the memory management unit. The computer system may also include a flash memory coupled to the memory management unit, wherein the computer system is configured to store at least a subset of a plurality of pages in the flash memory during operation. Responsive to a page fault, the memory management unit may determine, without invoking an I/O driver, whether a requested page associated with the page fault is stored in the flash memory and, if the page is stored in the flash memory, transfer the page into RAM.

Owner:SUN MICROSYSTEMS INC
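The page-fault path described above can be outlined as a lookup that tries flash before any slower backing store. In this sketch the dictionaries standing in for RAM, flash, and disk, and the fallback to disk when the page is not in flash, are assumptions; the abstract covers only the flash check and the transfer into RAM.

```python
def handle_page_fault(page, ram, flash, disk):
    """Bring `page` into `ram` and report where it came from.

    The flash check models the memory management unit's lookup that needs
    no I/O driver. The disk fallback is an assumption added for completeness.
    """
    if page in flash:
        ram[page] = flash[page]
        return "flash"
    ram[page] = disk[page]
    return "disk"
```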

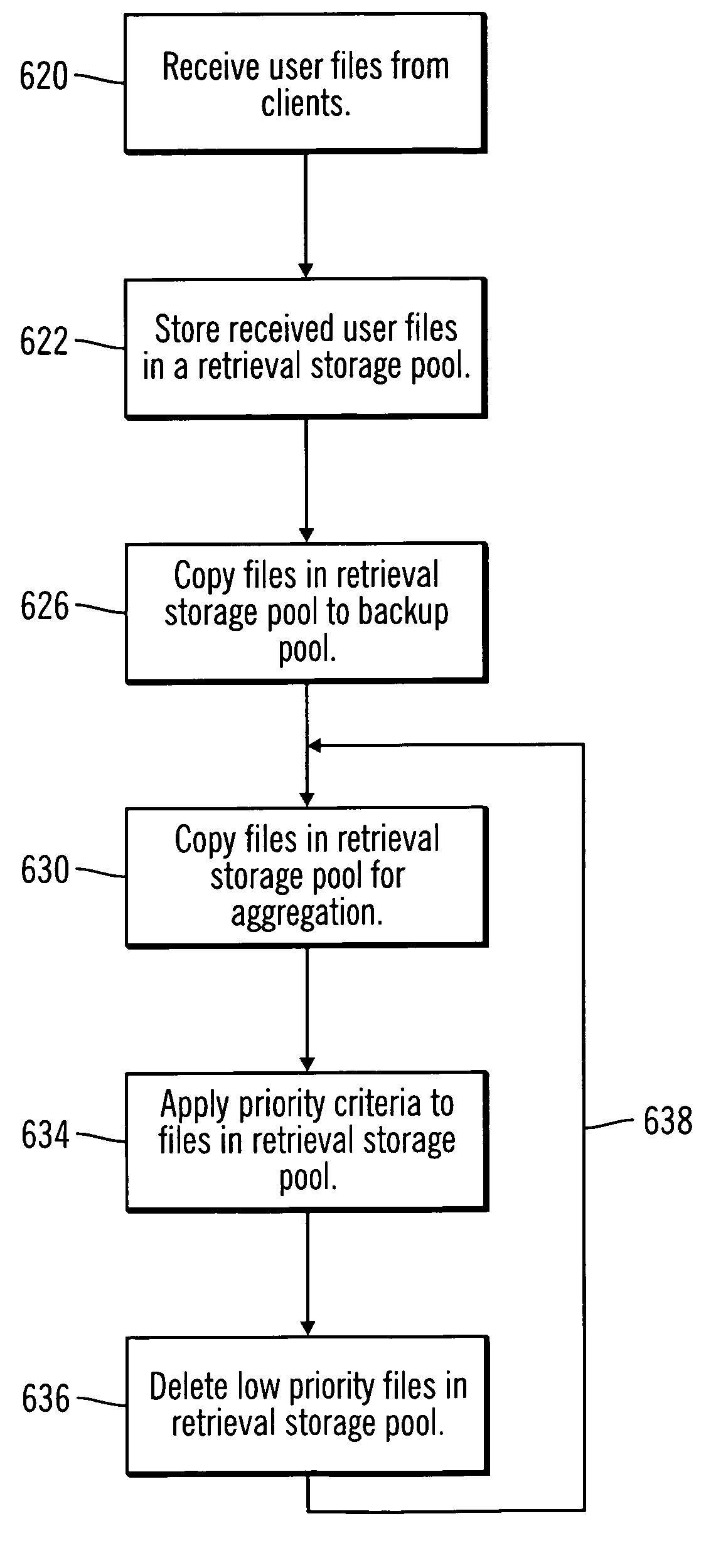

Method, system, and program for storing data for retrieval and transfer

Active · US7418464B2 · Data processing applications · Digital data processing details · Memory hierarchy · Data store

Owner:INT BUSINESS MASCH CORP

Apparatus and method for implementing a multi-level memory hierarchy

Active · US20140297919A1 · Memory architecture accessing/allocation · Memory loss protection · Memory hierarchy · Memory controller

A system and method are described for intelligently flushing data from a processor cache. For example, a system according to one embodiment of the invention comprises: a processor having a cache from which data is flushed, the data associated with a particular system address range; and a PCM memory controller for managing access to data stored in a PCM memory device corresponding to the particular system address range; the processor determining whether memory flush hints are enabled for the specified system address range, wherein if memory flush hints are enabled for the specified system address range then the processor sending a memory flush hint to a PCM memory controller of the PCM memory device and wherein the PCM memory controller uses the memory flush hint to determine whether to save the flushed data to the PCM memory device.

Owner:TAHOE RES LTD

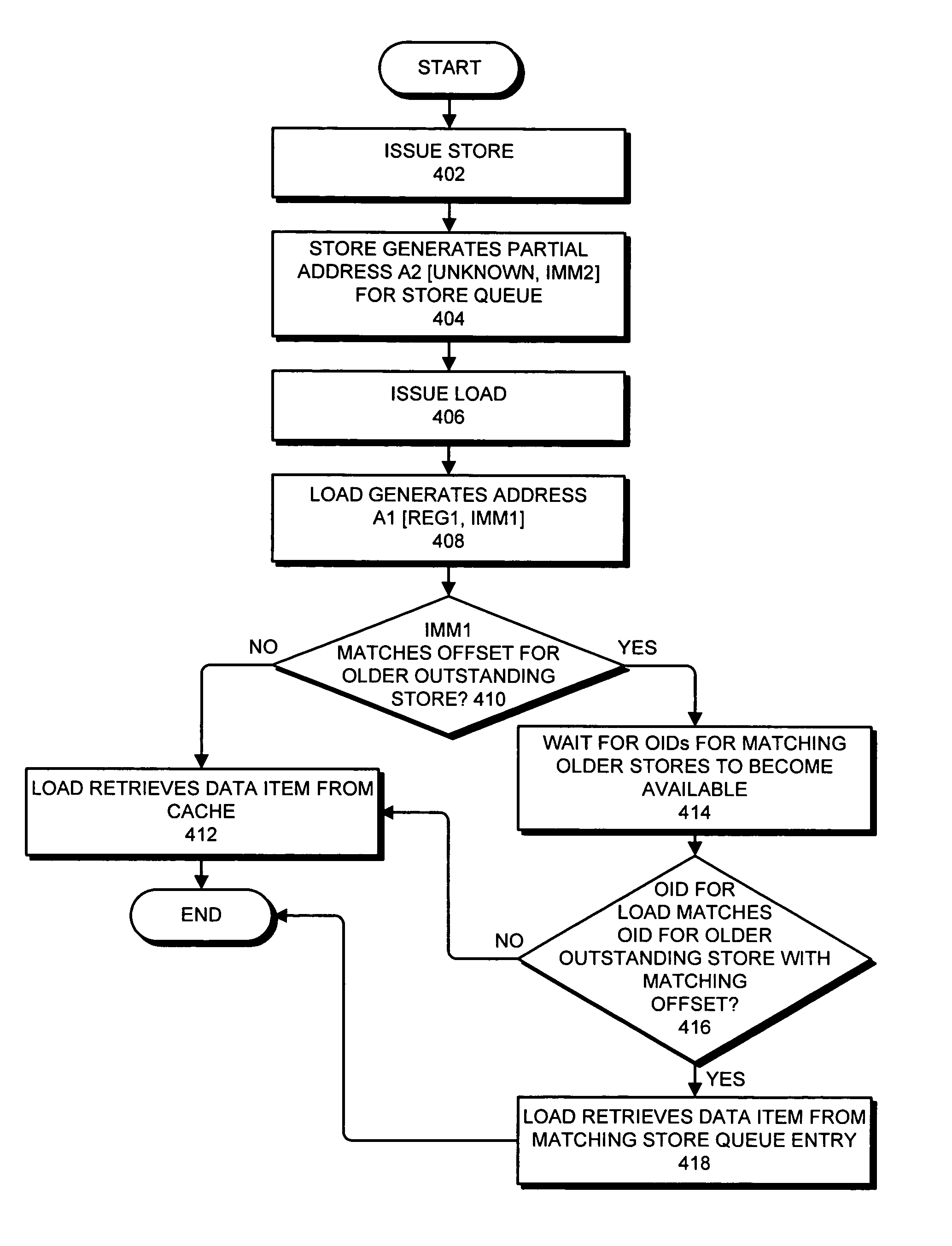

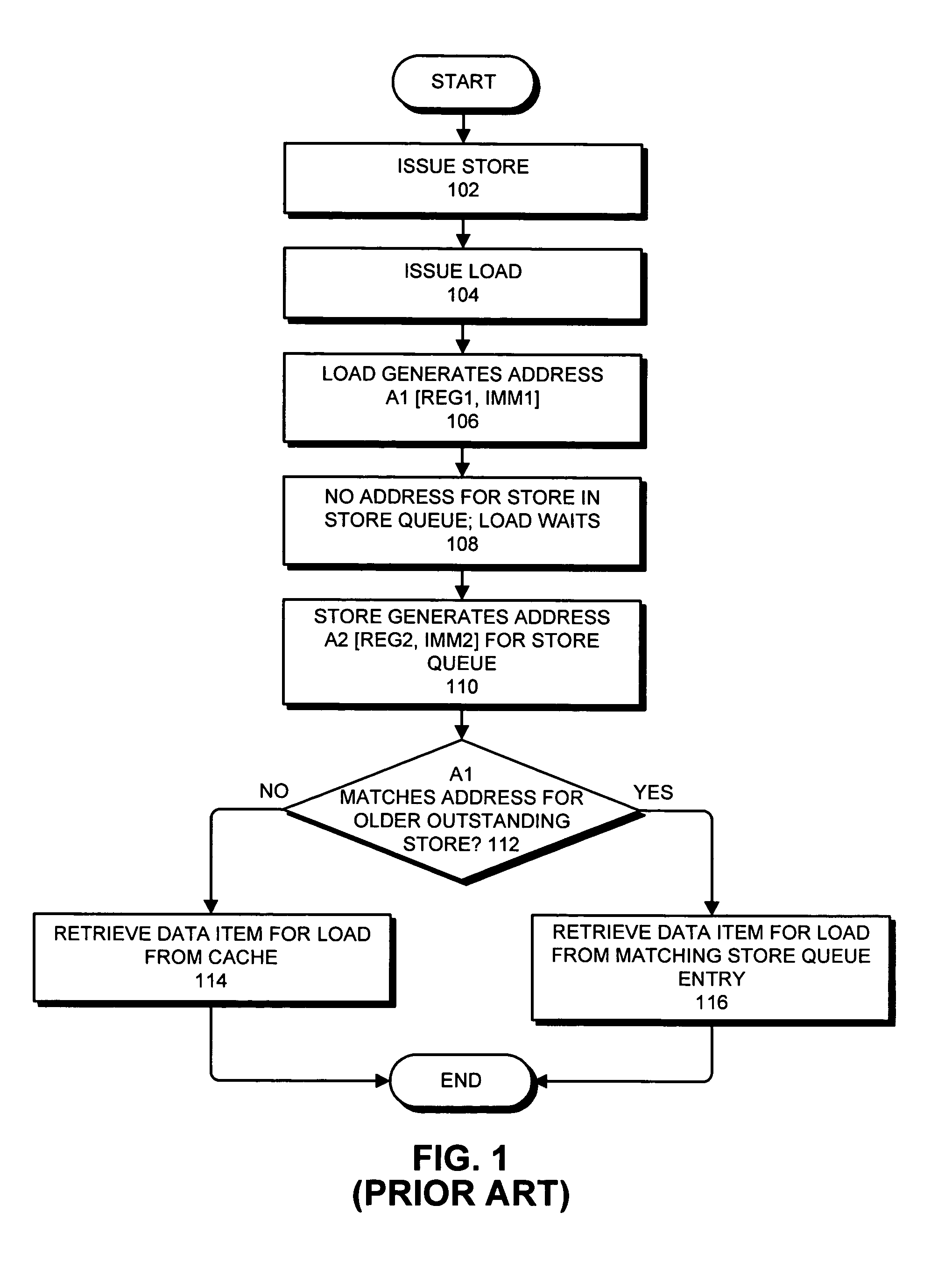

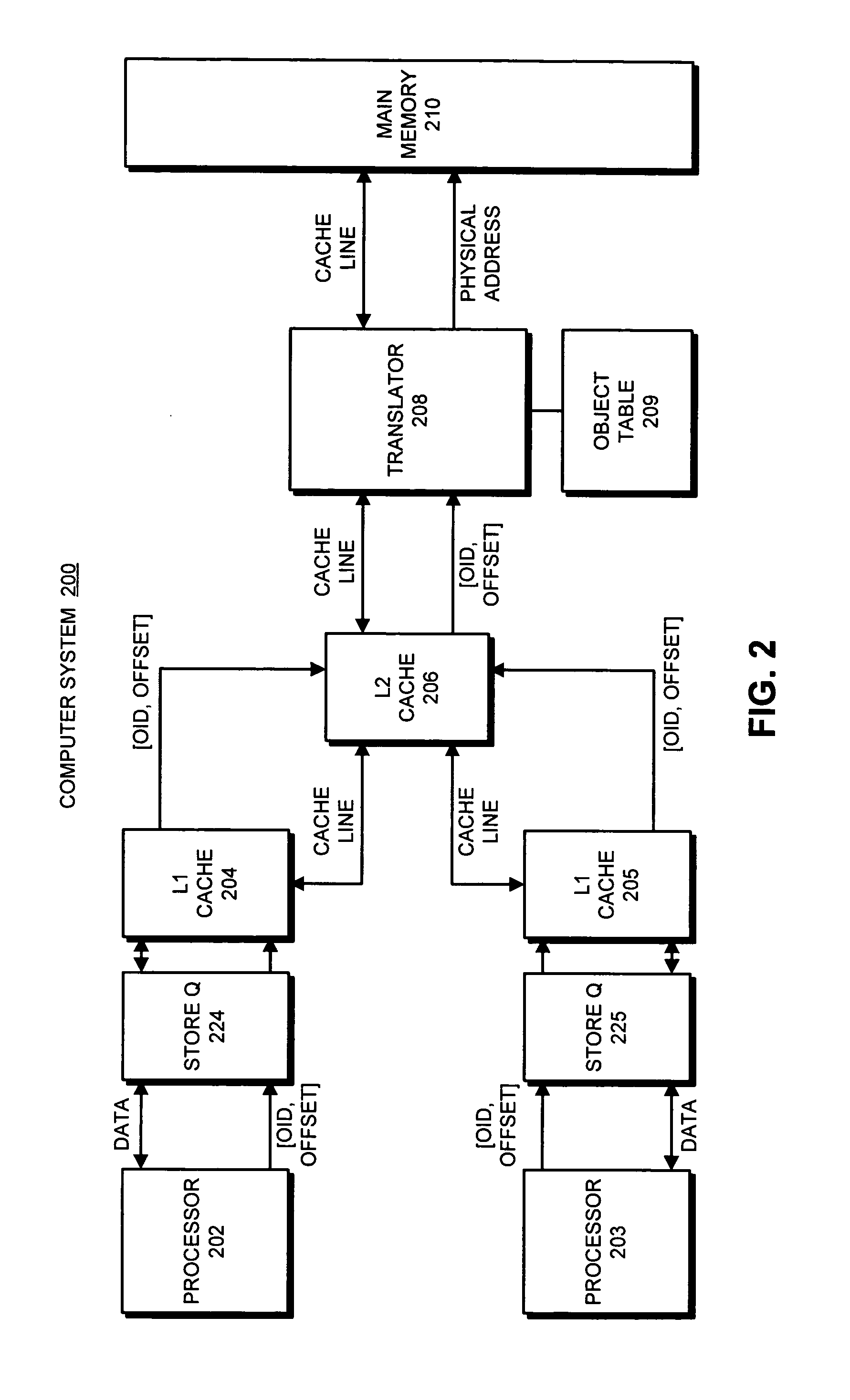

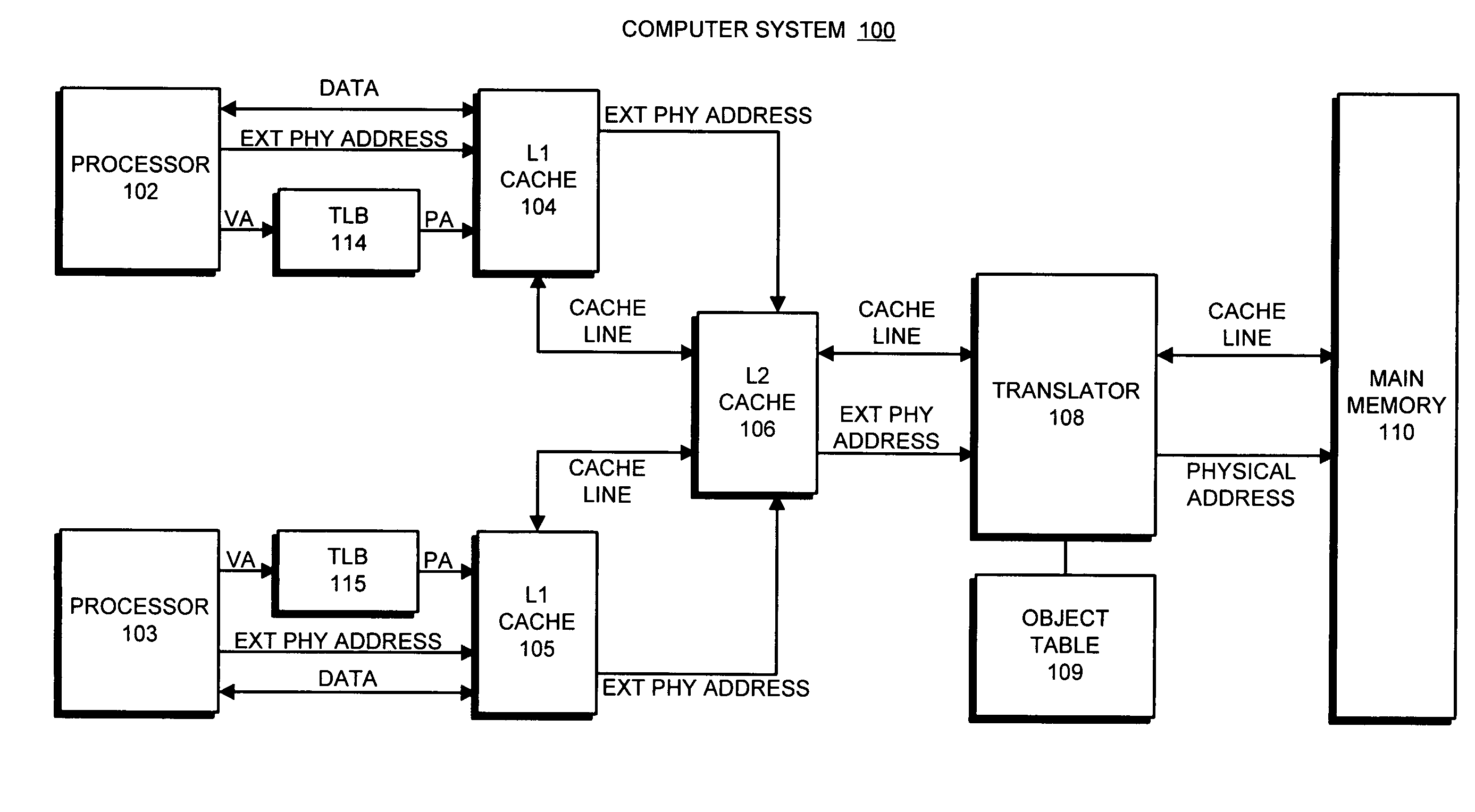

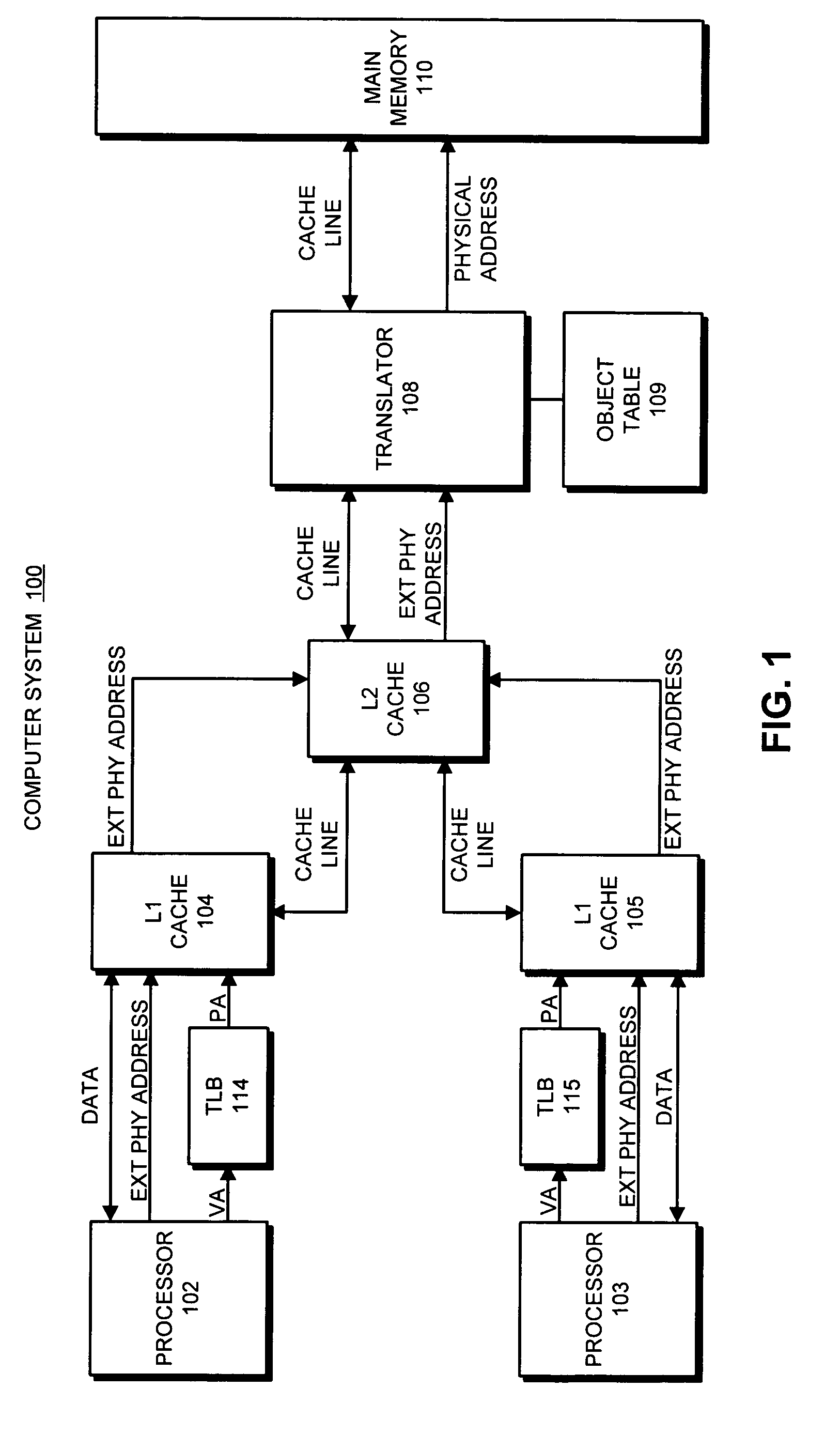

Detecting raw hazards in an object-addressed memory hierarchy by comparing an object identifier and offset for a load instruction to object identifiers and offsets in a store queue

One embodiment of the present invention provides a system that processes memory-access instructions in an object-addressed memory hierarchy. During operation, the system receives a load instruction to be executed, wherein the load instruction loads a data item from an object, and wherein the load instruction specifies an object identifier (OID) for the object and an offset for the data item within the object. Next, the system compares the OID and the offset for the data item against OIDs and offsets for outstanding store instructions in a store queue. If the offset for the data item does not match any of the offsets for the outstanding store instructions in the store queue, and hence no read-after-write (RAW) hazard exists, the system performs a cache access to retrieve the data item for the load instruction.

Owner:ORACLE INT CORP
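The store-queue comparison in the abstract above can be sketched as follows. The store queue is modeled as a list of `(oid, offset, value)` tuples in program order, which is an assumption of this example. Forwarding the matching store's value on a hazard is also an assumption, since the abstract describes only the no-hazard path.

```python
def find_raw_hazard(load_oid, load_offset, store_queue):
    """Return the youngest store matching (OID, offset), or None."""
    for oid, offset, value in reversed(store_queue):
        if oid == load_oid and offset == load_offset:
            return (oid, offset, value)
    return None


def execute_load(load_oid, load_offset, store_queue, object_cache):
    """Service a load addressed by object identifier and offset."""
    hazard = find_raw_hazard(load_oid, load_offset, store_queue)
    if hazard is not None:
        # Assumption: forward the pending store's value to the load.
        return hazard[2]
    # No read-after-write hazard: a normal cache access is safe.
    return object_cache[(load_oid, load_offset)]
```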

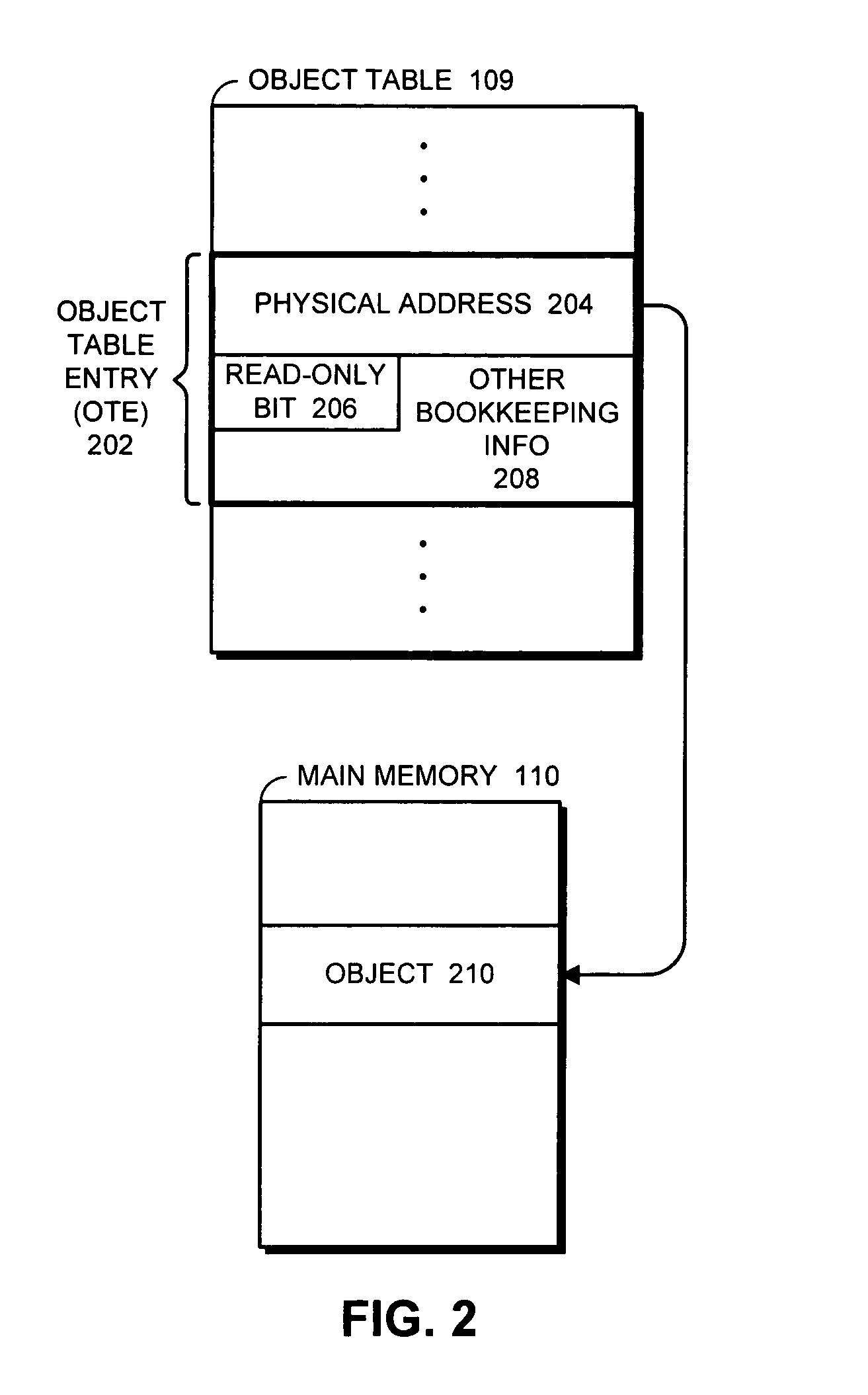

Method and apparatus for supporting read-only objects within an object-addressed memory hierarchy

Active · US7249225B1 · Data processing applications · Unauthorized memory use protection · Memory hierarchy · System usage

One embodiment of the present invention provides a system that supports read-only objects within an object-addressed memory hierarchy. During operation, the system receives a request to access an object, wherein the request includes an object identifier for the object that is used to reference the object within the object-addressed memory hierarchy. In response to this request, the system uses the object identifier to retrieve an object table entry associated with the object. If the request is a write request, the system examines a read-only indicator within the object table entry. If this read-only indicator specifies that the object is a read-only object, the system performs a corrective action to deal with the fact that the write request is directed to a read-only object.

Owner:ORACLE INT CORP
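The read-only check described above might look like this in a toy object table. The table layout (a dictionary with `read_only` and `fields` keys), the function name, and the choice of raising an exception as the "corrective action" are all assumptions of this sketch.

```python
class ReadOnlyObjectError(Exception):
    """Raised when a write targets an object marked read-only."""


def access_object(object_table, oid, offset, new_value=None):
    """Read or write one field of an object addressed by its identifier.

    Passing `new_value` makes the request a write. Writes consult the
    read-only indicator in the object table entry first.
    """
    entry = object_table[oid]
    if new_value is not None:
        if entry["read_only"]:
            raise ReadOnlyObjectError(f"object {oid} is read-only")
        entry["fields"][offset] = new_value
    return entry["fields"][offset]
```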

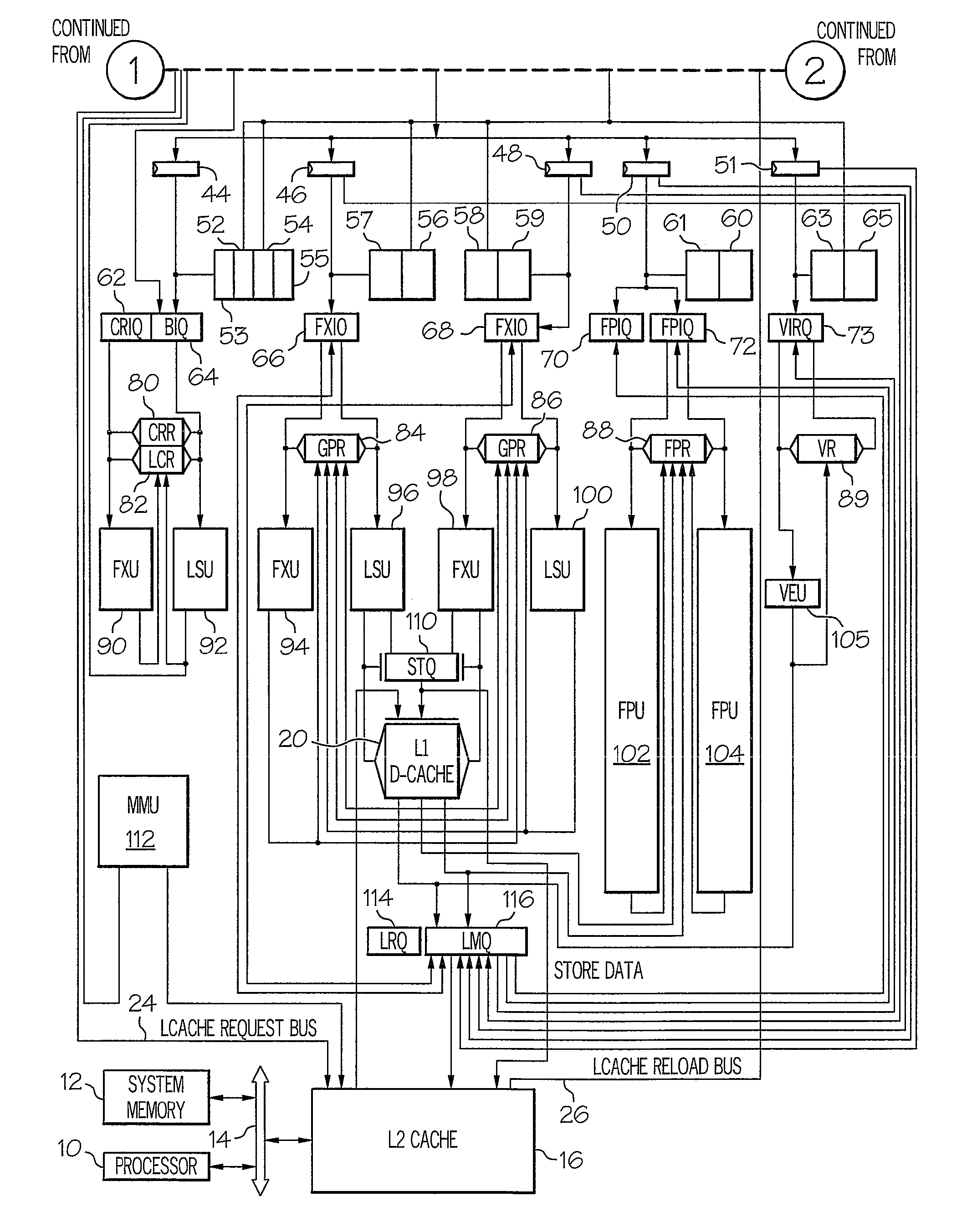

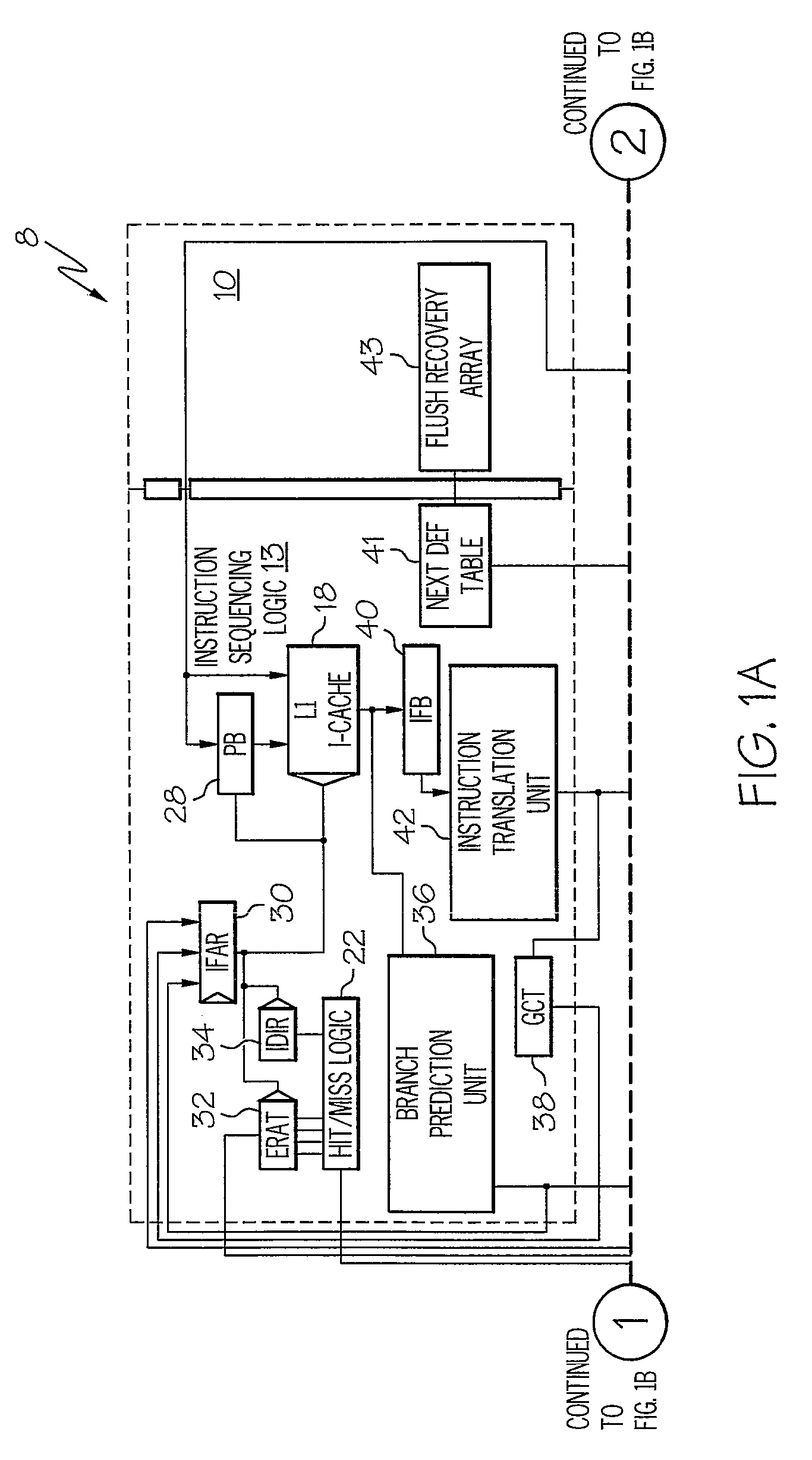

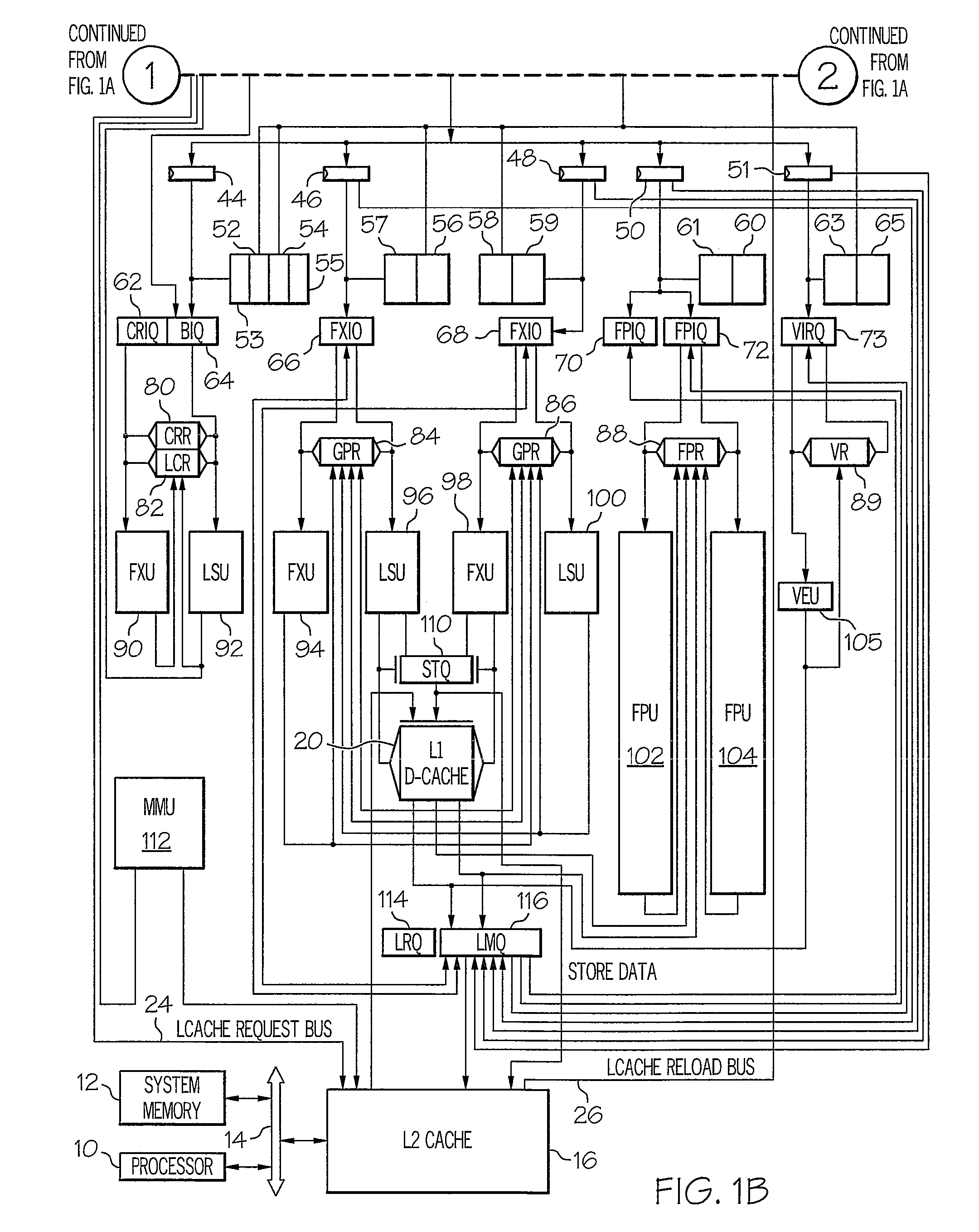

System and Method for Issuing Load-Dependent Instructions from an Issue Queue in a Processing Unit

Inactive · US20090113182A1 · Conditional code generation · Register arrangements · Data processing system · Memory hierarchy

A system and method for issuing load-dependent instructions from an issue queue in a processing unit in a data processing system. In response to a load-store unit (LSU) determining that a load request from a load instruction missed a first level in a memory hierarchy, a load-miss queue (LMQ) allocates a load-miss queue entry corresponding to the load instruction. The LMQ associates at least one instruction dependent on the load request with the load-miss queue entry. Once data associated with the load request is retrieved, the LMQ selects at least one instruction dependent on the load request for execution on the next cycle. At least one instruction dependent on the load request is executed and a result is outputted.

Owner:IBM CORP
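The bookkeeping described in the abstract above (allocate an entry on a miss, attach dependent instructions, release them when data returns) can be modeled with a small class. The method names and the dictionary-based layout are assumptions of this sketch, and cycle-level timing is not modeled.

```python
class LoadMissQueue:
    """Toy load-miss queue tracking instructions that wait on a load."""

    def __init__(self):
        # load id -> dependent instructions, in the order they were added
        self.entries = {}

    def allocate(self, load_id):
        """Create an entry when a load misses the first cache level."""
        self.entries[load_id] = []

    def add_dependent(self, load_id, instruction):
        """Associate an instruction that needs the load's result."""
        self.entries[load_id].append(instruction)

    def data_returned(self, load_id):
        """Retire the entry and return the dependents that may now issue."""
        return self.entries.pop(load_id)
```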

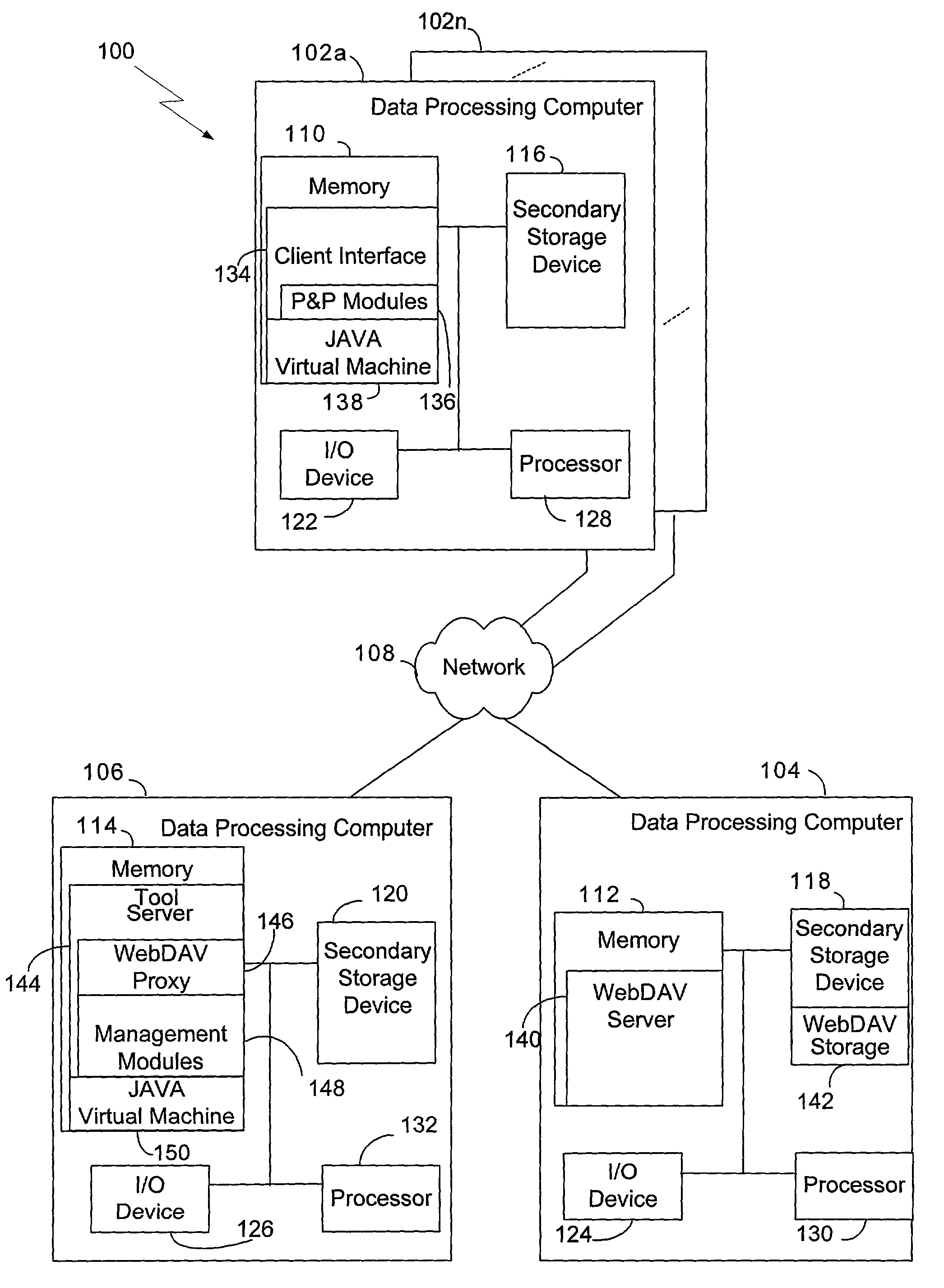

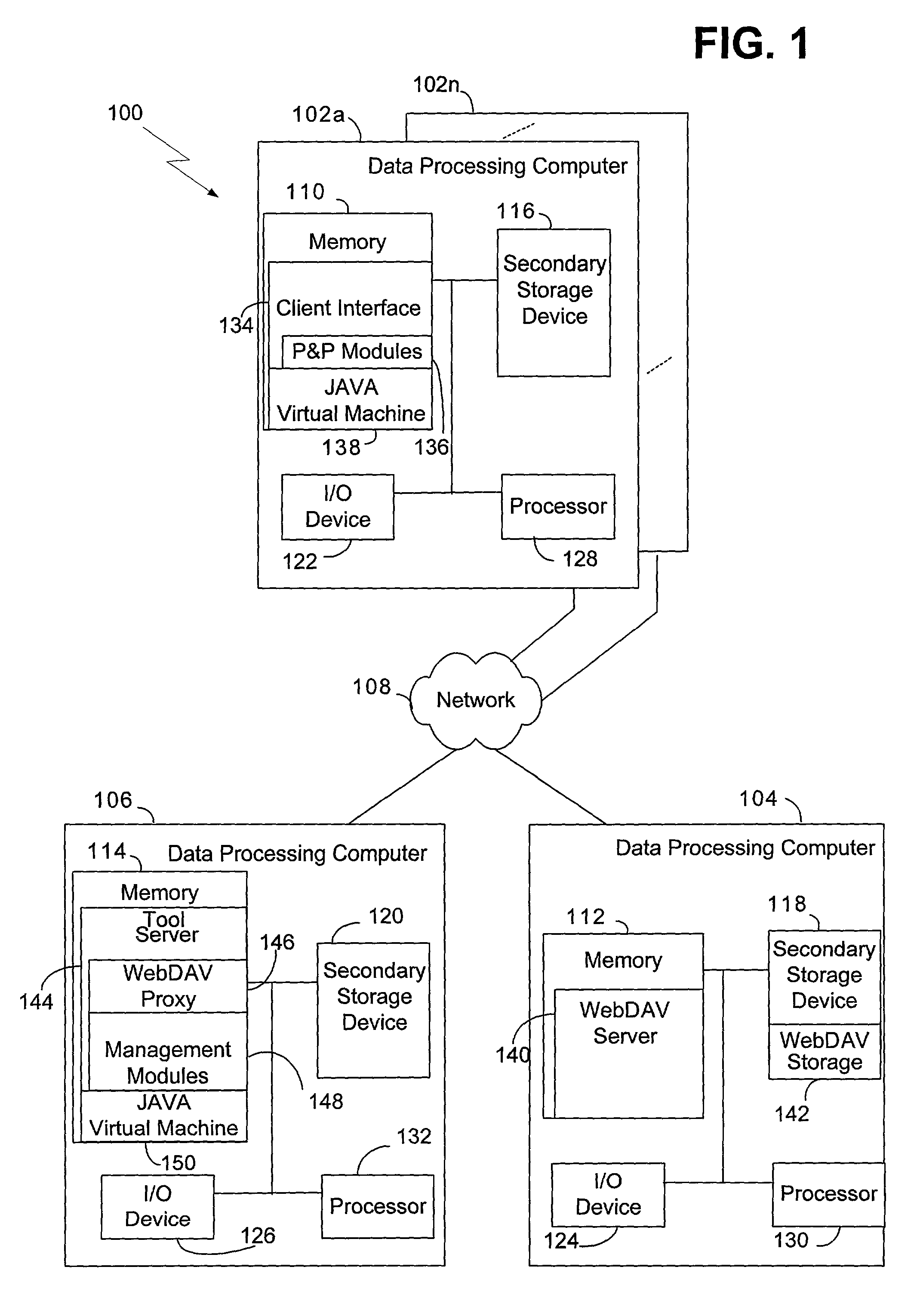

Methods and systems for auto-instantiation of storage hierarchy for project plan

Inactive · US7096222B2 · Improve efficiency · Easy to move · Office automation · Buying/selling/leasing transactions · Memory hierarchy · Project planning

Methods and systems consistent with the present invention allow a user to create a storage hierarchy definition in association with a workflow that models a process, to generate a plan from the workflow that reflects an instance of the process, and to generate a container in accordance with the storage hierarchy definition when the plan is generated from the workflow. The container may then be used to store an artifact that is used or produced by the plan in accordance with methods and systems of the present invention.

Owner:JPMORGAN CHASE BANK N A AS SUCCESSOR AGENT

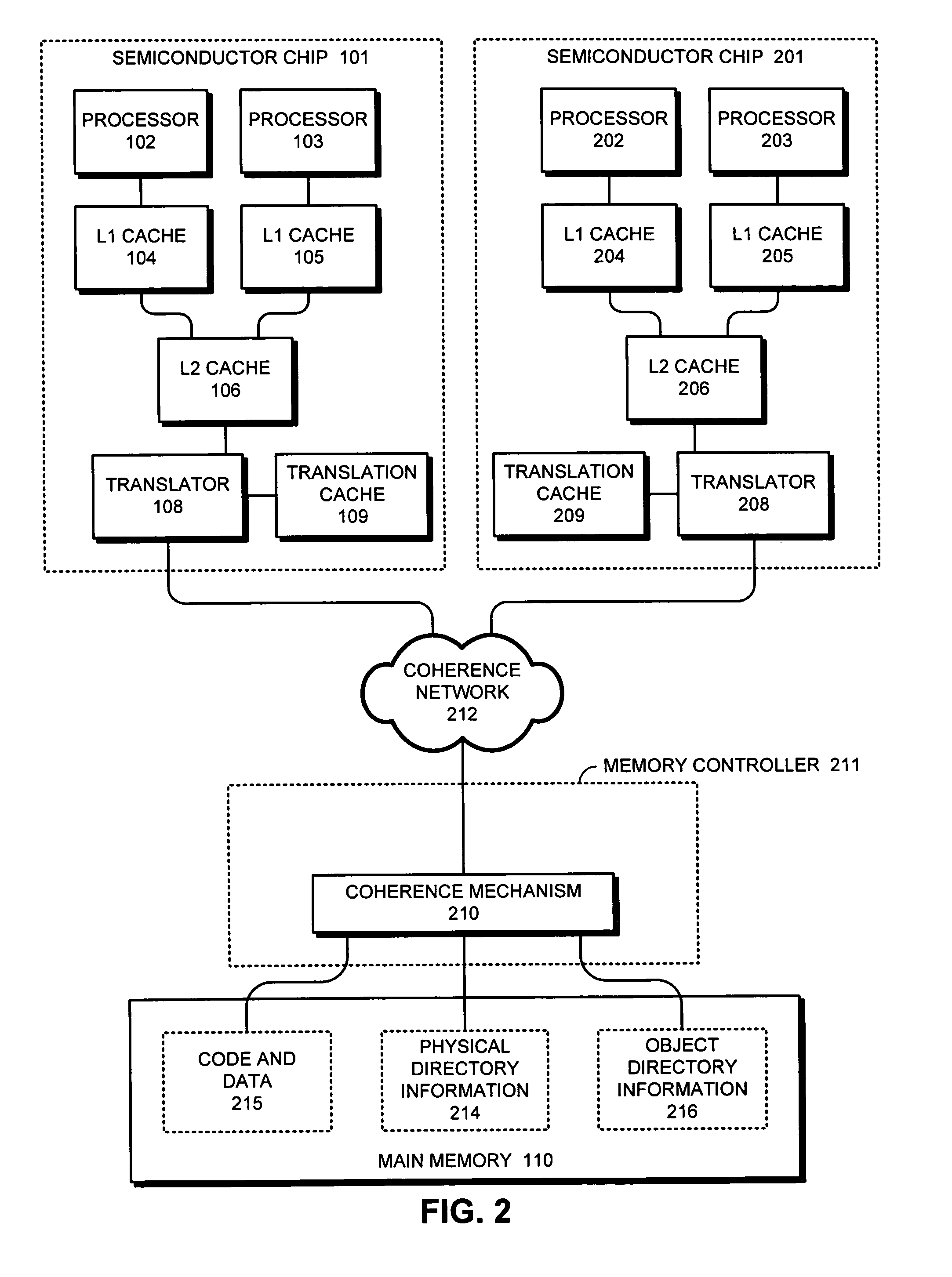

Avoiding inconsistencies between multiple translators in an object-addressed memory hierarchy

Active · US7167956B1 · Avoid inconsistencies · Memory addressing/allocation/relocation · Micro-instruction address formation · Memory hierarchy · Object based

One embodiment of the present invention provides a system that avoids inconsistencies between multiple translators in an object-addressed memory hierarchy. This object-addressed memory hierarchy includes an object cache, which supports references to object cache lines based on object identifiers instead of physical addresses. During operation, the system receives a read-to-share (RTS) signal for an object cache line, wherein the RTS signal is received from a requesting processor as part of a cache-coherence operation. If no processor owns the object cache line, the system causes the requesting processor to become the owner of the object cache line instead of merely holding a copy of the object cache line in the shared state. The system also generates a translation for the object cache line in a translator associated with the requesting processor, wherein the translation maps an object identifier and a corresponding offset to a physical address for the object cache line and reconstructs the contents of the object cache line by reading from memory at that physical address. In this way, if the requesting processor owns the object cache line, a subsequent processor that requests the same object cache line will receive the object cache line from the requesting processor, and will not generate an additional translation for the object cache line. This ensures that multiple translators will not generate inconsistent translations for the same object cache line.

Owner:ORACLE INT CORP
