279 results about "Page fault" patented technology

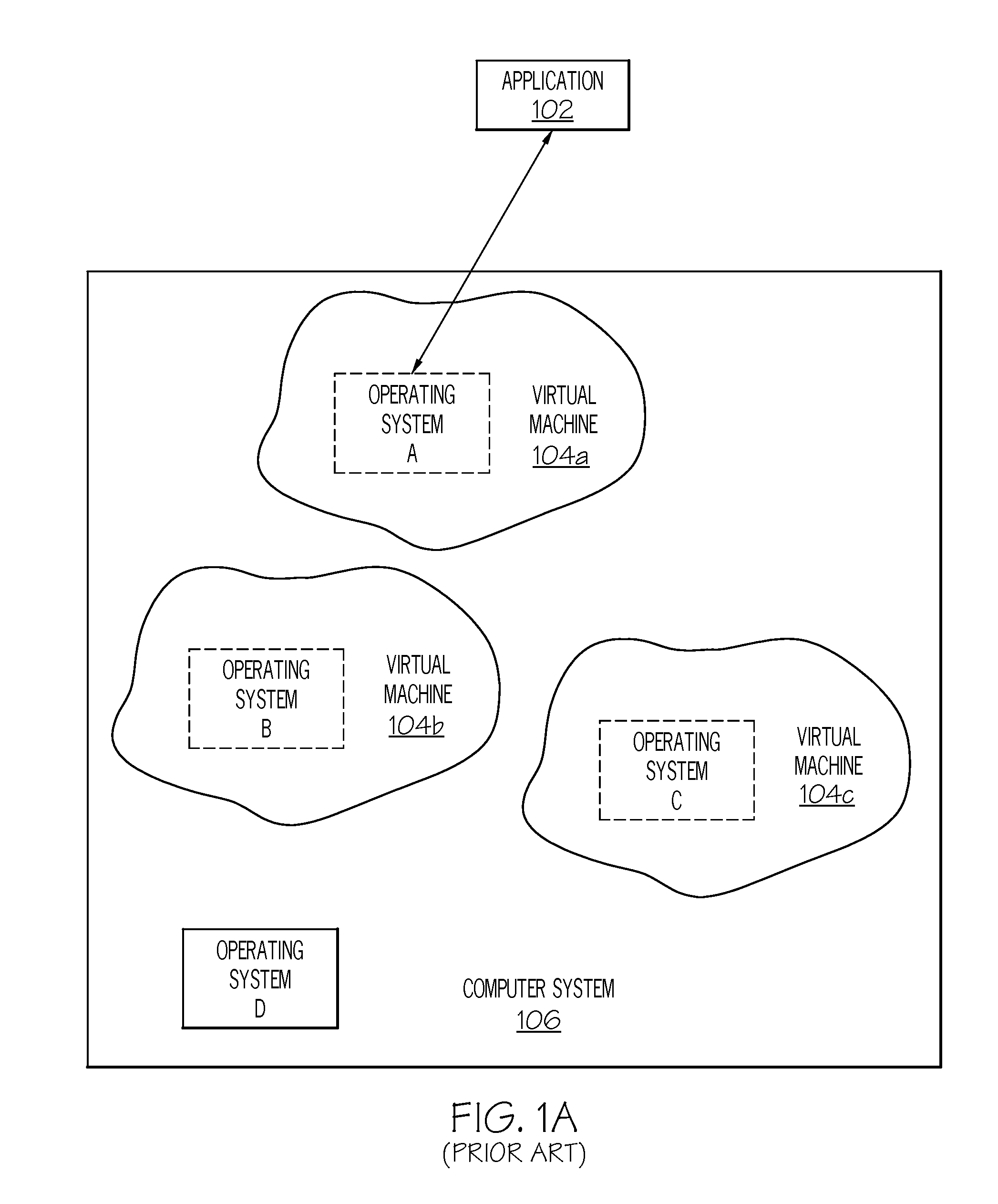

A page fault (sometimes called #PF, PF or hard fault) is a type of exception raised by computer hardware when a running program accesses a memory page that is not currently mapped by the memory management unit (MMU) into the virtual address space of a process. Logically, the page may be accessible to the process, but requires a mapping to be added to the process page tables, and may additionally require the actual page contents to be loaded from a backing store such as a disk. The processor's MMU detects the page fault, while the exception handling software that handles page faults is generally a part of the operating system kernel. When handling a page fault, the operating system generally tries to make the required page accessible at the location in physical memory, or terminates the program in case of an illegal memory access.
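The fault-handling flow described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: `ToyAddressSpace`, `SegmentationFault`, and the dictionary standing in for the backing store are hypothetical names invented for illustration, and a real MMU and kernel handler are far more involved.

```python
PAGE_SIZE = 4096


class SegmentationFault(Exception):
    """Raised when an access falls outside every page the process may use."""


class ToyAddressSpace:
    """Hypothetical model of one process's address space (illustration only)."""

    def __init__(self, valid_pages, backing_store):
        self.valid_pages = set(valid_pages)  # pages the process may legally touch
        self.backing_store = backing_store   # page number -> bytes (stands in for disk)
        self.page_table = {}                 # page number -> resident page contents
        self.fault_count = 0

    def read(self, address):
        page, offset = divmod(address, PAGE_SIZE)
        if page not in self.page_table:      # the "MMU" finds no mapping
            self._handle_page_fault(page)    # the "kernel" takes over
        return self.page_table[page][offset]

    def _handle_page_fault(self, page):
        self.fault_count += 1
        if page not in self.valid_pages:
            # Illegal access: the handler cannot repair it.
            raise SegmentationFault(f"illegal access to page {page}")
        # Legal access: load the contents (zero-filled if never written)
        # and add the missing mapping to the page table.
        self.page_table[page] = self.backing_store.get(page, bytes(PAGE_SIZE))
```

The first read of a legal page faults once and installs the mapping; later reads of the same page hit the page table directly, while a read outside `valid_pages` raises `SegmentationFault`.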

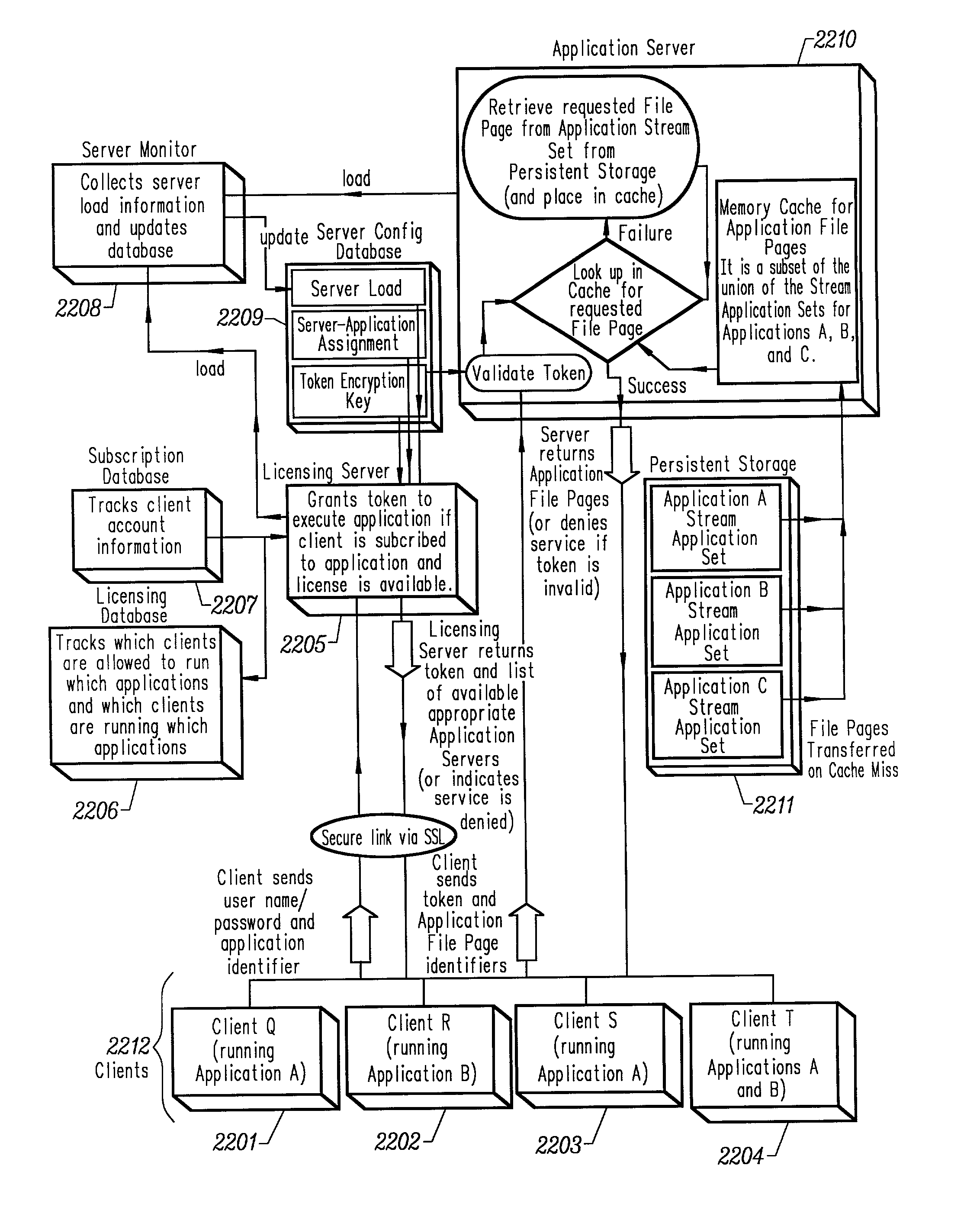

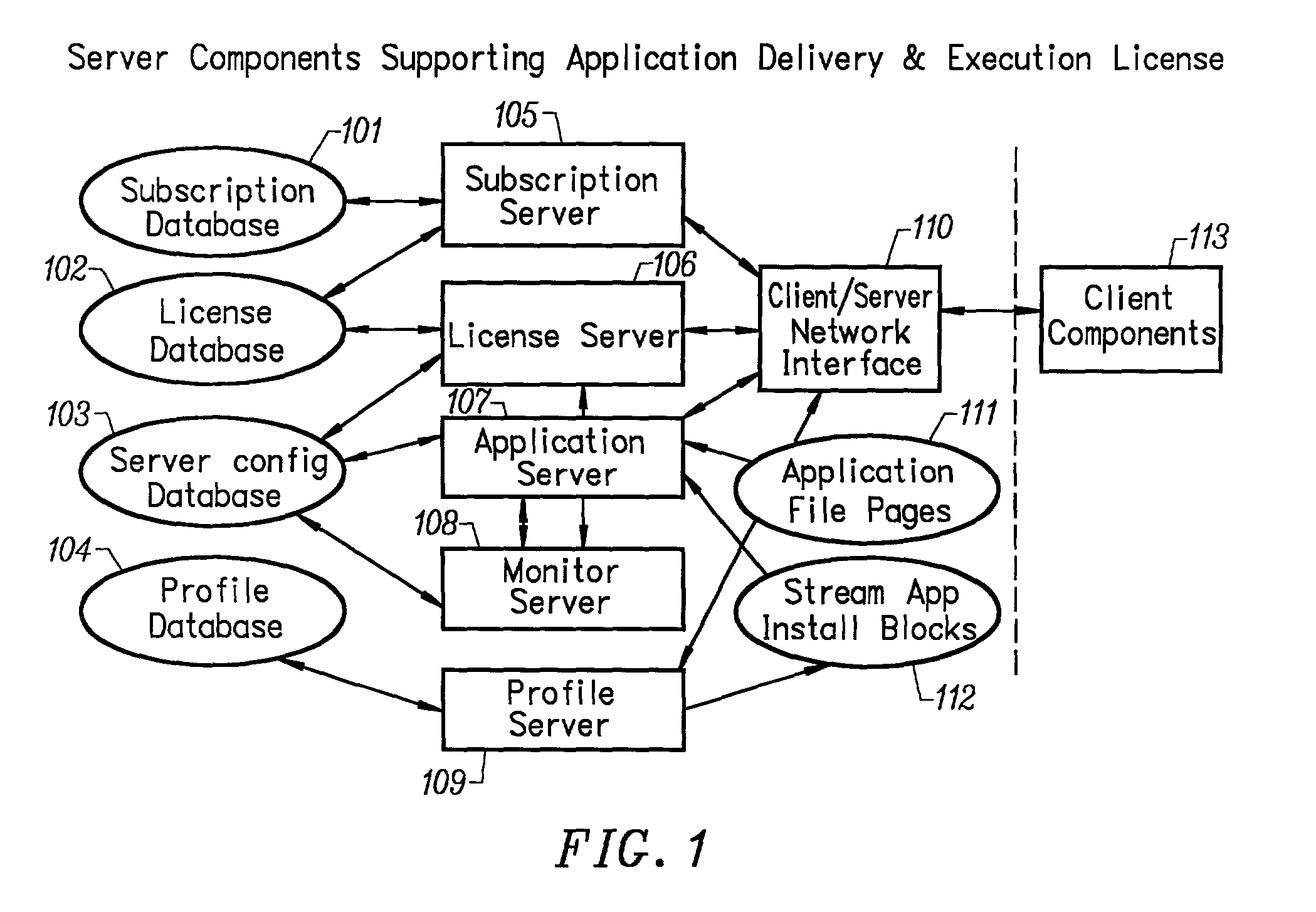

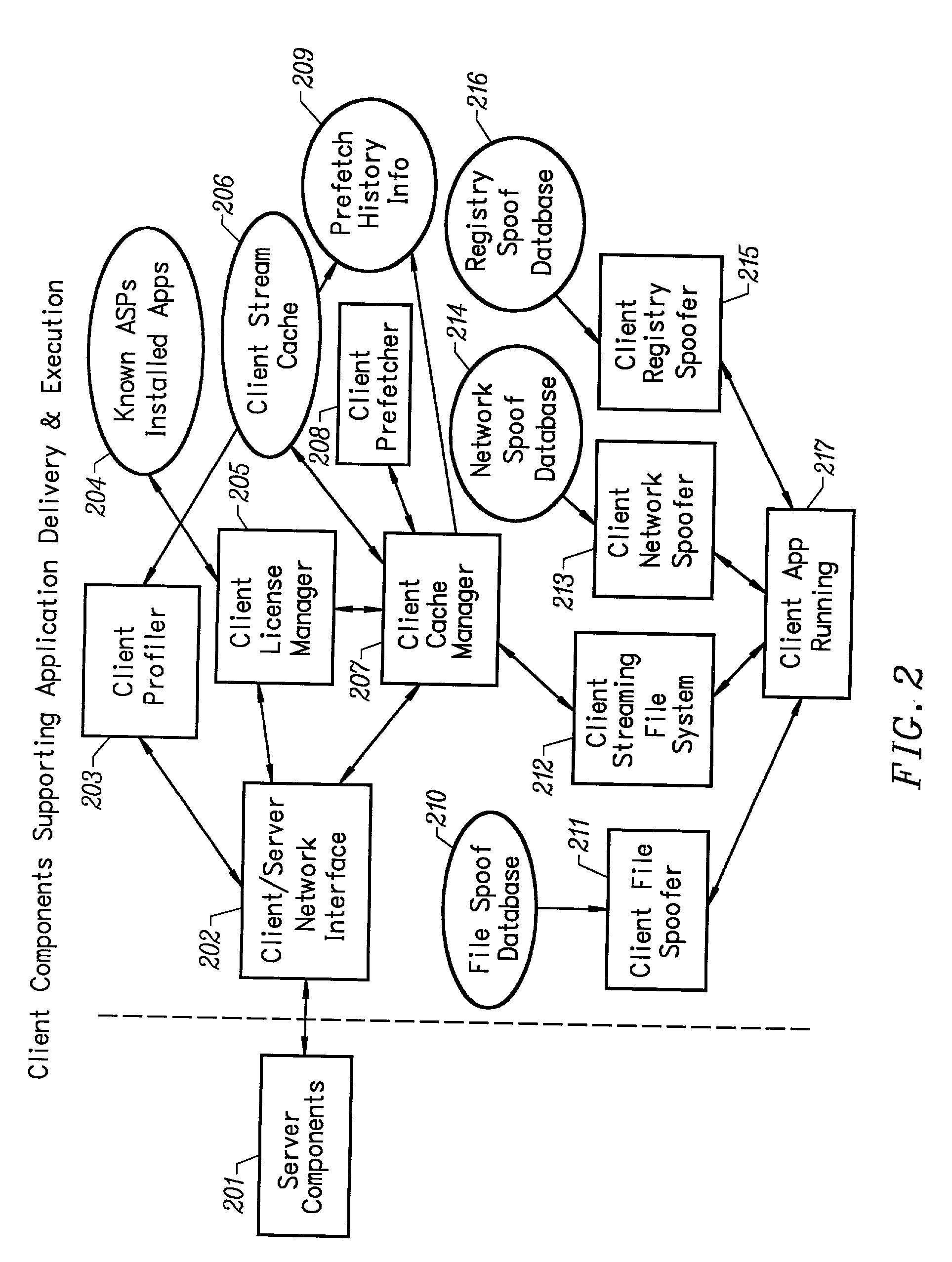

Intelligent network streaming and execution system for conventionally coded applications

Inactive · US7062567B2 · Multiple digital computer combinations · Digital data authentication · Intelligent Network · Application server

An intelligent network streaming and execution system for conventionally coded applications provides a system that partitions an application program into page segments by observing the manner in which the application program is conventionally installed. A minimal portion of the application program is installed on a client system and the user launches the application in the same ways that applications on other client file systems are started. An application program server streams the page segments to the client as the application program executes on the client and the client stores the page segments in a cache. Page segments are requested by the client from the application server whenever a page fault occurs in the cache for the application program. The client prefetches page segments from the application server or the application server pushes additional page segments to the client based on the pattern of page segment requests for that particular application. The user subscribes and unsubscribes to application programs; whenever the user accesses an application program, a securely encrypted access token is obtained from a license server if the user has a valid subscription to the application program. The application server begins streaming the requested page segments to the client when it receives a valid access token from the client. The client performs server load balancing across a plurality of application servers. If the client observes a non-response or slow response condition from an application server or license server, it switches to another application or license server.

Owner:NUMECENT HLDG

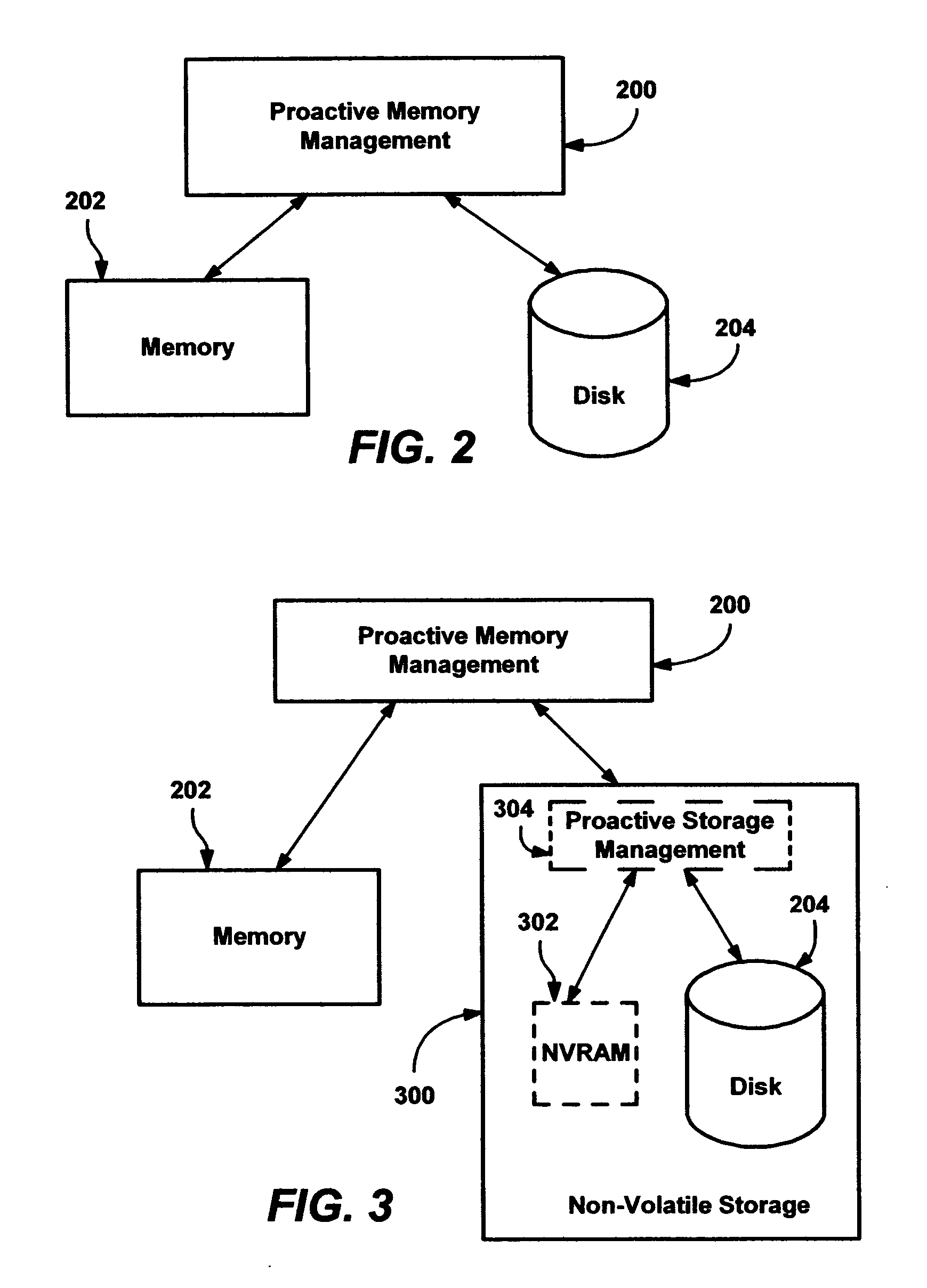

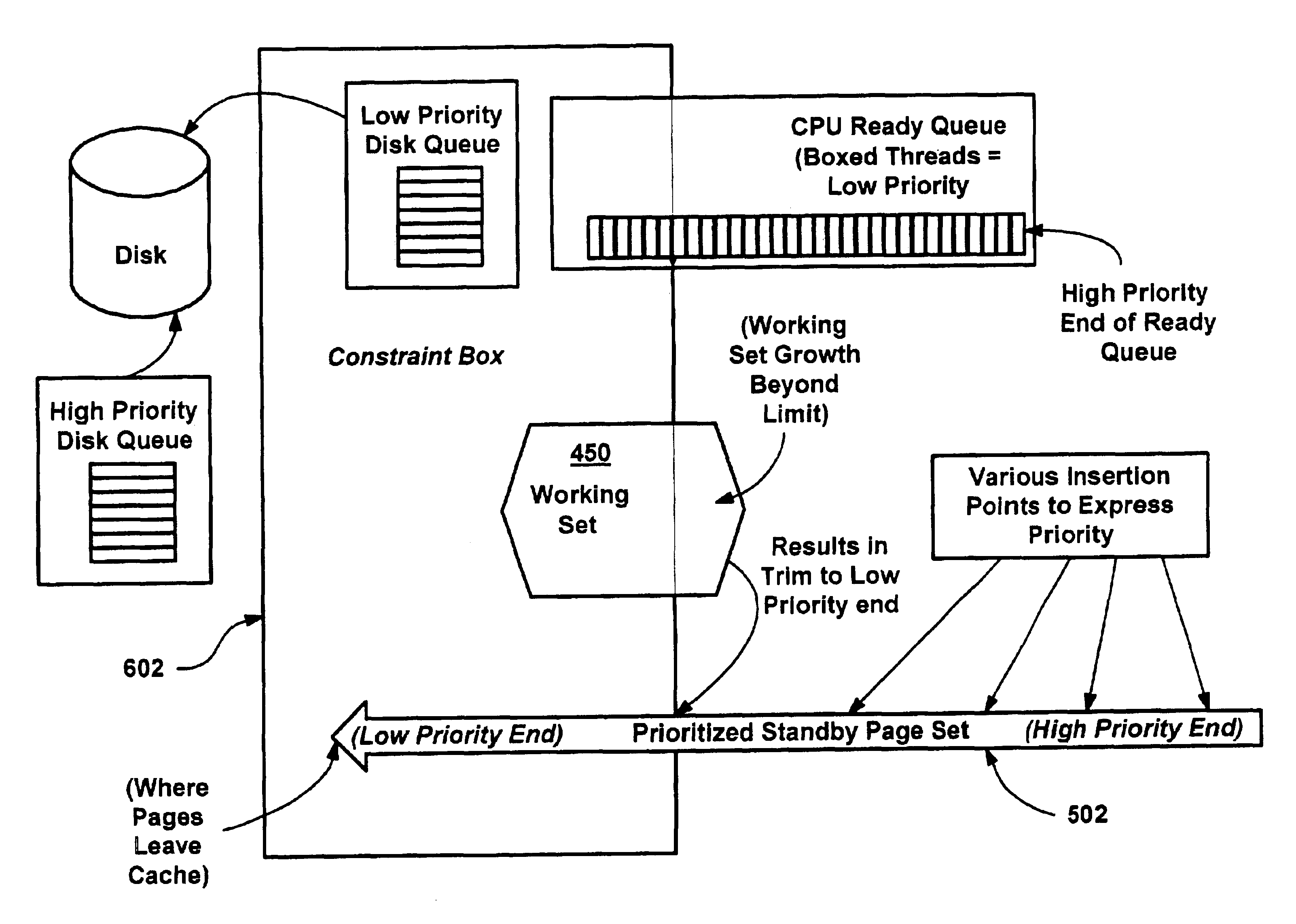

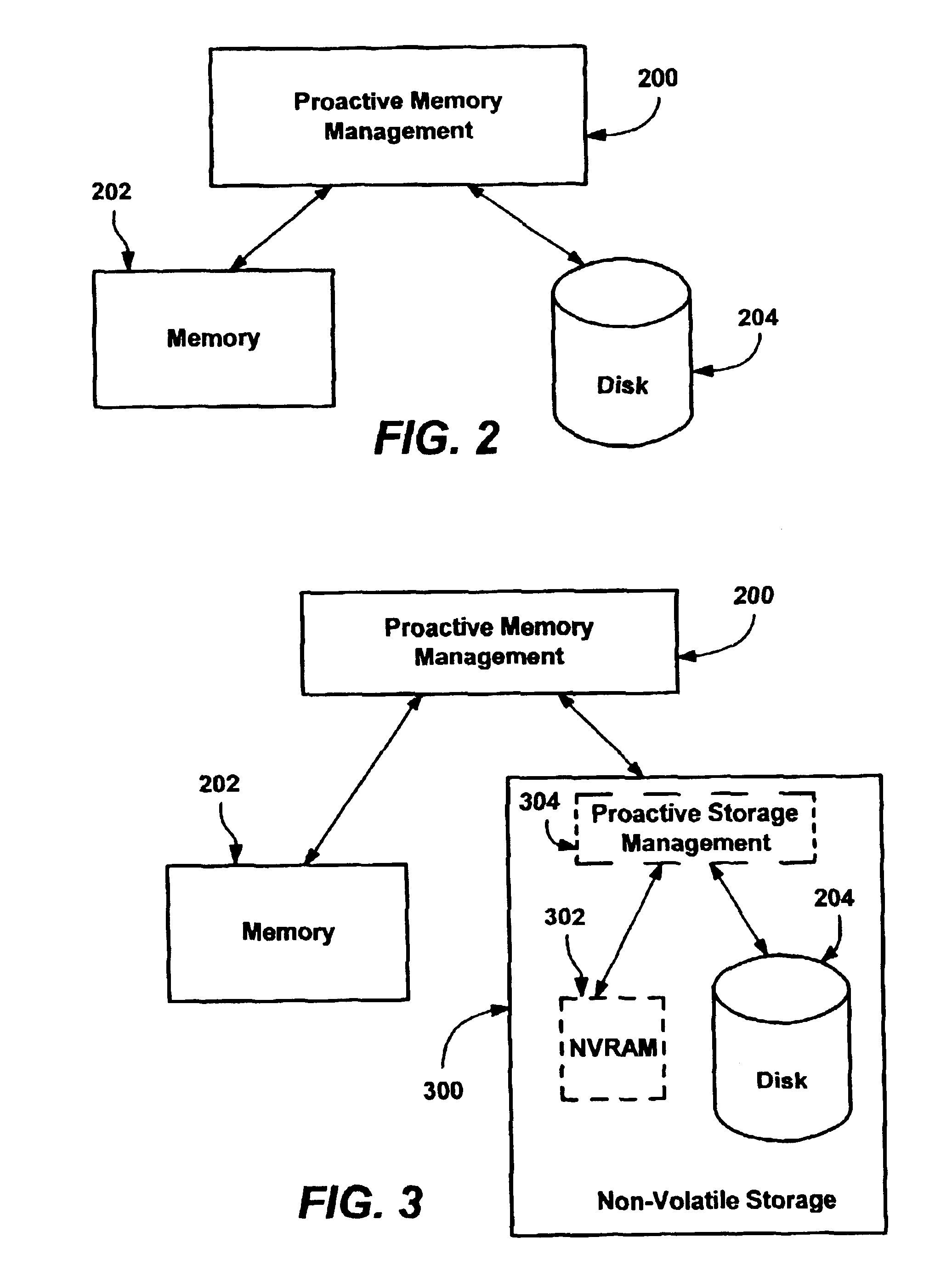

Methods and mechanisms for proactive memory management

Inactive · US20050228964A1 · Reduces or eliminates on-demand disk transfer operations · Reduces or eliminates I/O bottlenecks · Input/output to record carriers · Fault response · Self-tuning · Term memory

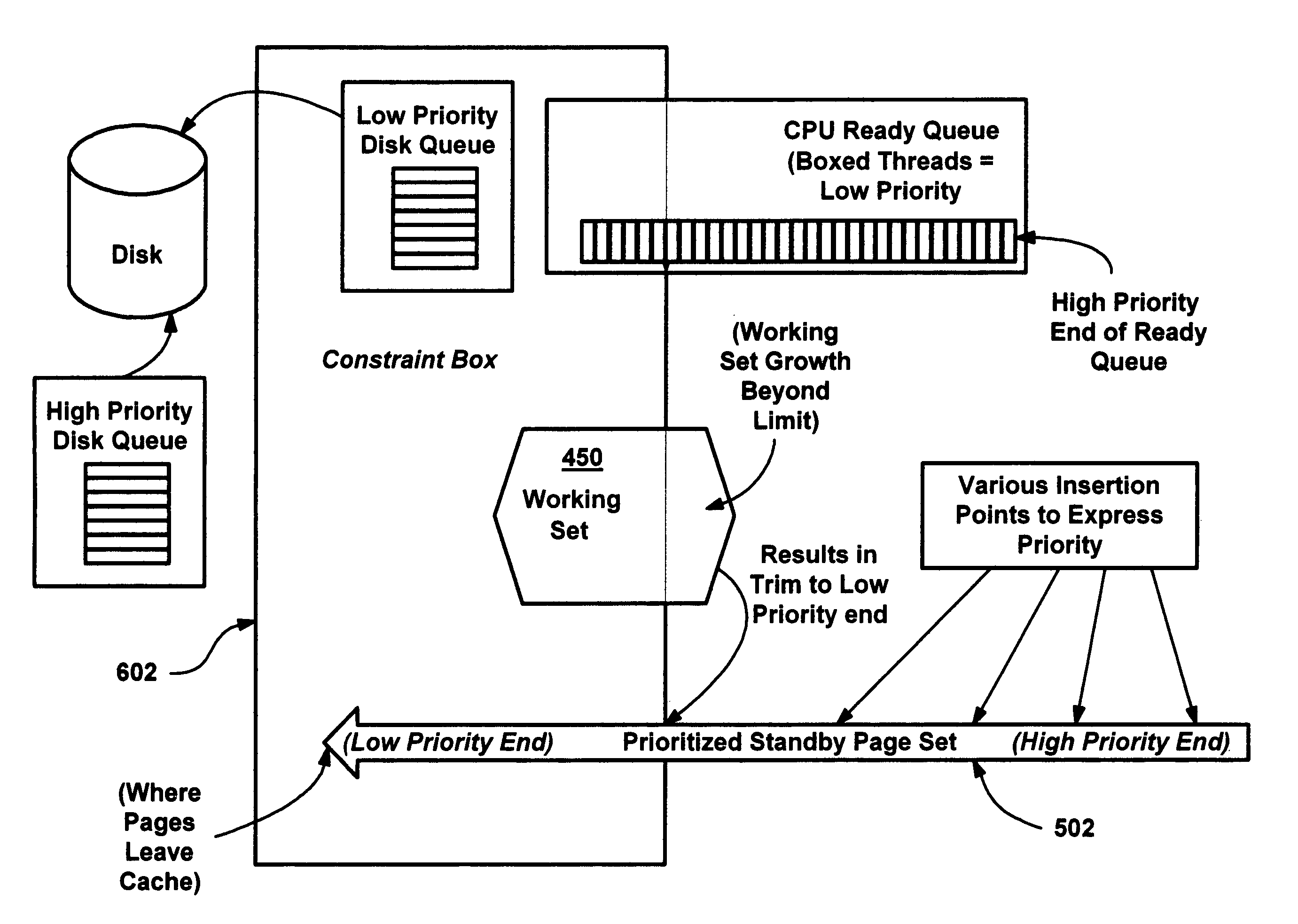

A proactive, resilient and self-tuning memory management system and method that result in actual and perceived performance improvements in memory management, by loading and maintaining data that is likely to be needed into memory, before the data is actually needed. The system includes mechanisms directed towards historical memory usage monitoring, memory usage analysis, refreshing memory with highly-valued (e.g., highly utilized) pages, I/O pre-fetching efficiency, and aggressive disk management. Based on the memory usage information, pages are prioritized with relative values, and mechanisms work to pre-fetch and/or maintain the more valuable pages in memory. Pages are pre-fetched and maintained in a prioritized standby page set that includes a number of subsets, by which more valuable pages remain in memory over less valuable pages. Valuable data that is paged out may be automatically brought back, in a resilient manner. Benefits include significantly reducing or even eliminating disk I/O due to memory page faults.

Owner:MICROSOFT TECH LICENSING LLC

Methods and mechanisms for proactive memory management

Inactive · US6910106B2 · Raise priority · Increase profit · Input/output to record carriers · Fault response · Self-tuning · Paging

A proactive, resilient and self-tuning memory management system and method that result in actual and perceived performance improvements in memory management, by loading and maintaining data that is likely to be needed into memory, before the data is actually needed. The system includes mechanisms directed towards historical memory usage monitoring, memory usage analysis, refreshing memory with highly-valued (e.g., highly utilized) pages, I/O pre-fetching efficiency, and aggressive disk management. Based on the memory usage information, pages are prioritized with relative values, and mechanisms work to pre-fetch and/or maintain the more valuable pages in memory. Pages are pre-fetched and maintained in a prioritized standby page set that includes a number of subsets, by which more valuable pages remain in memory over less valuable pages. Valuable data that is paged out may be automatically brought back, in a resilient manner. Benefits include significantly reducing or even eliminating disk I/O due to memory page faults.

Owner:MICROSOFT TECH LICENSING LLC

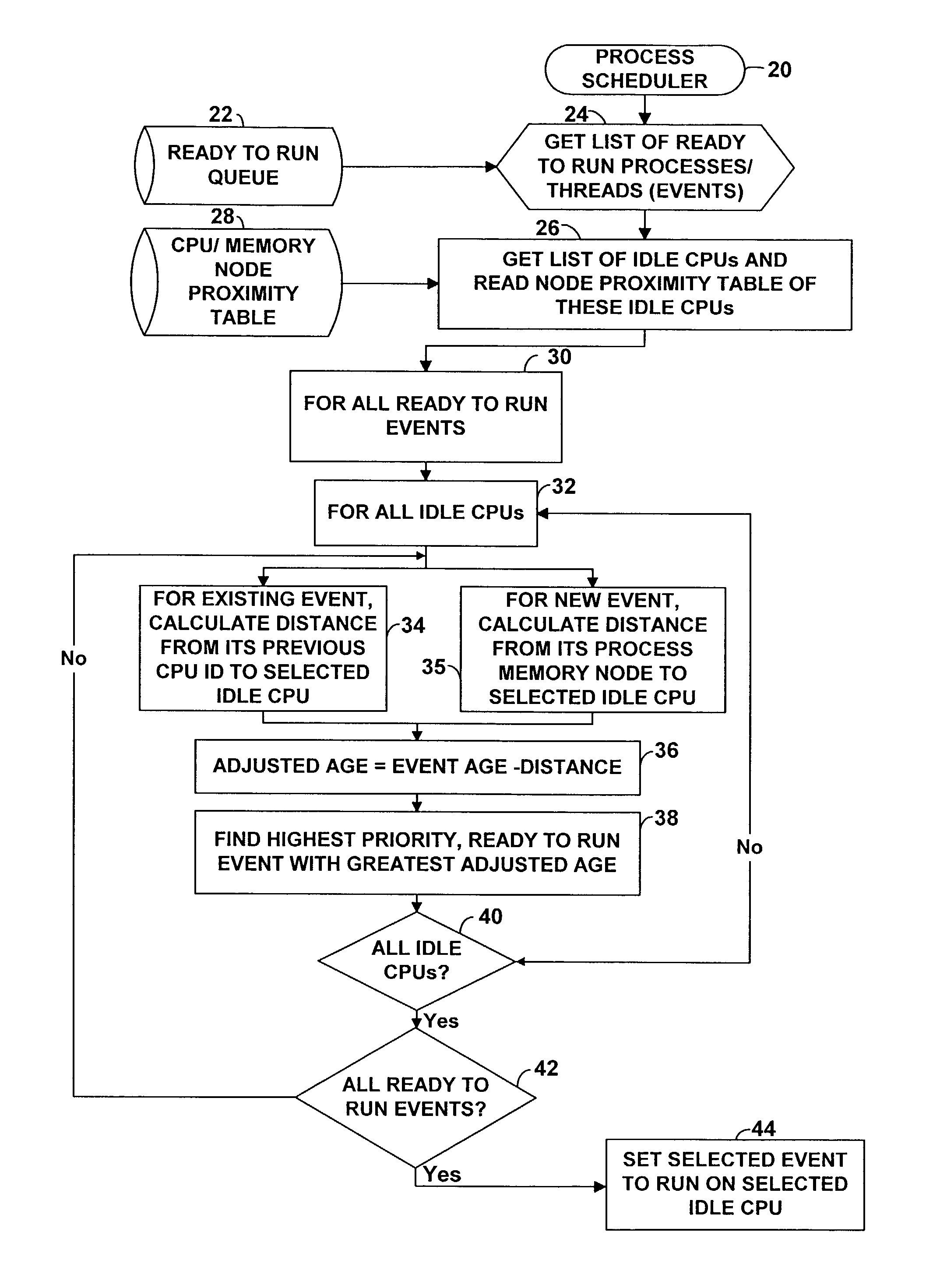

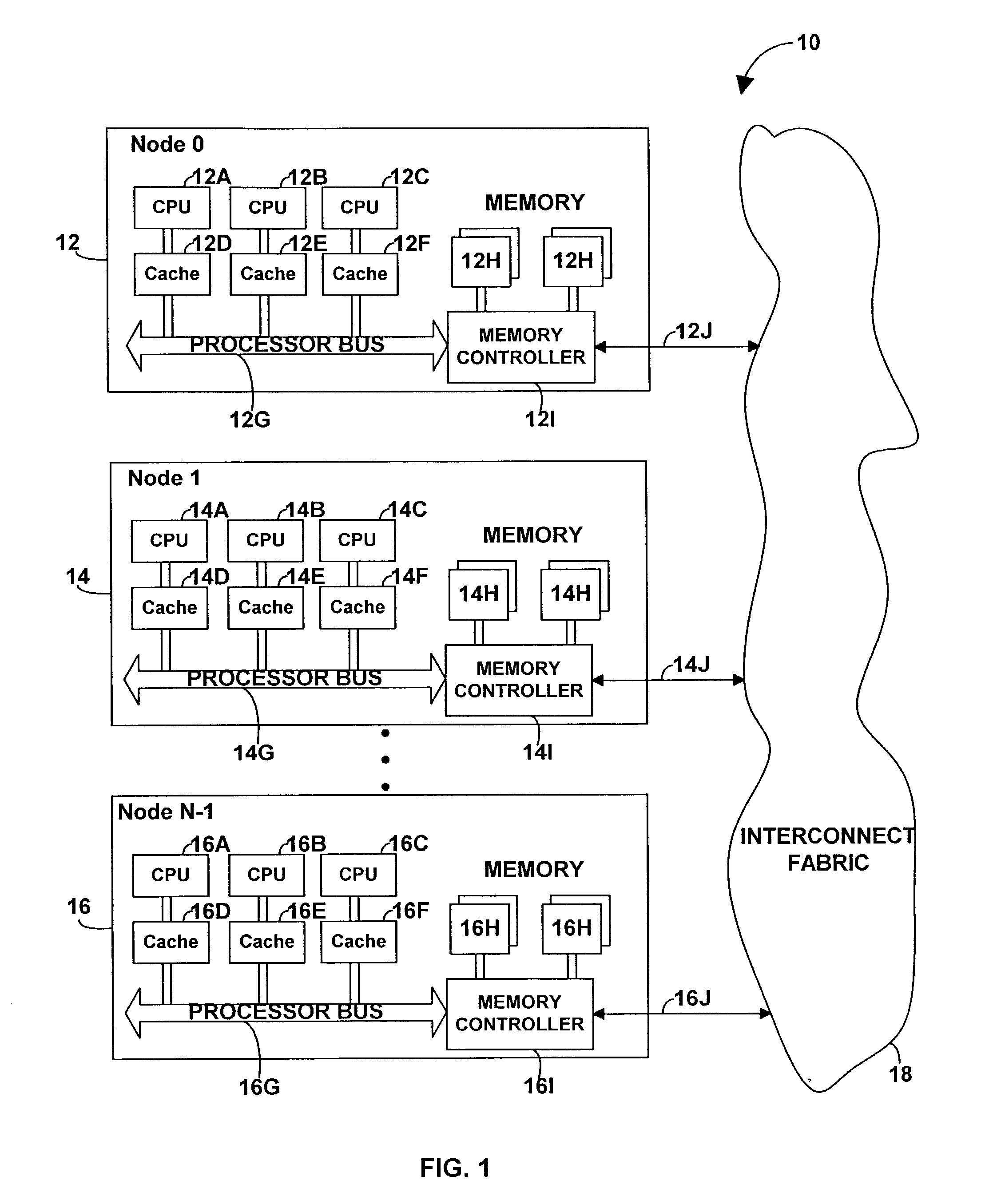

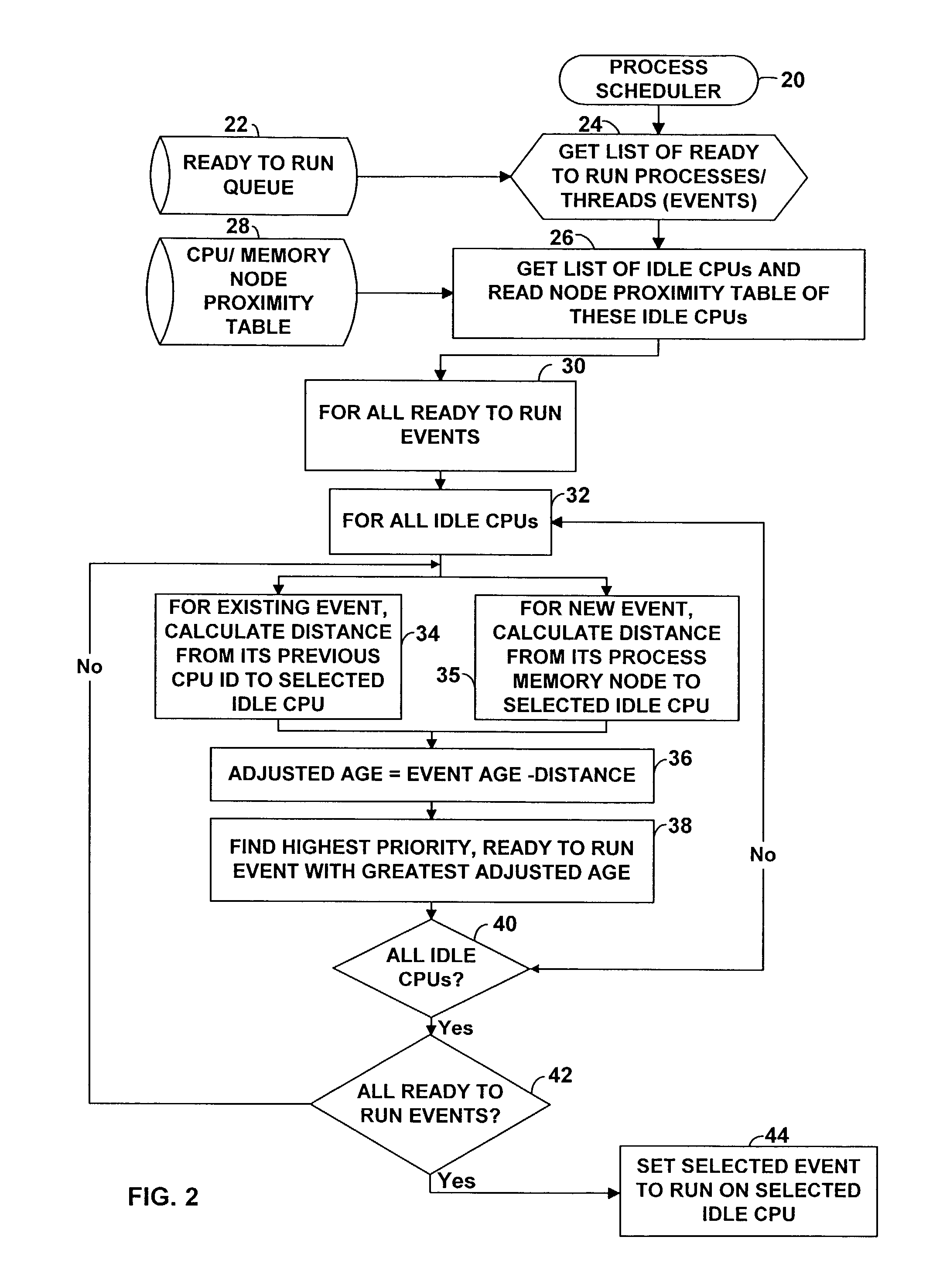

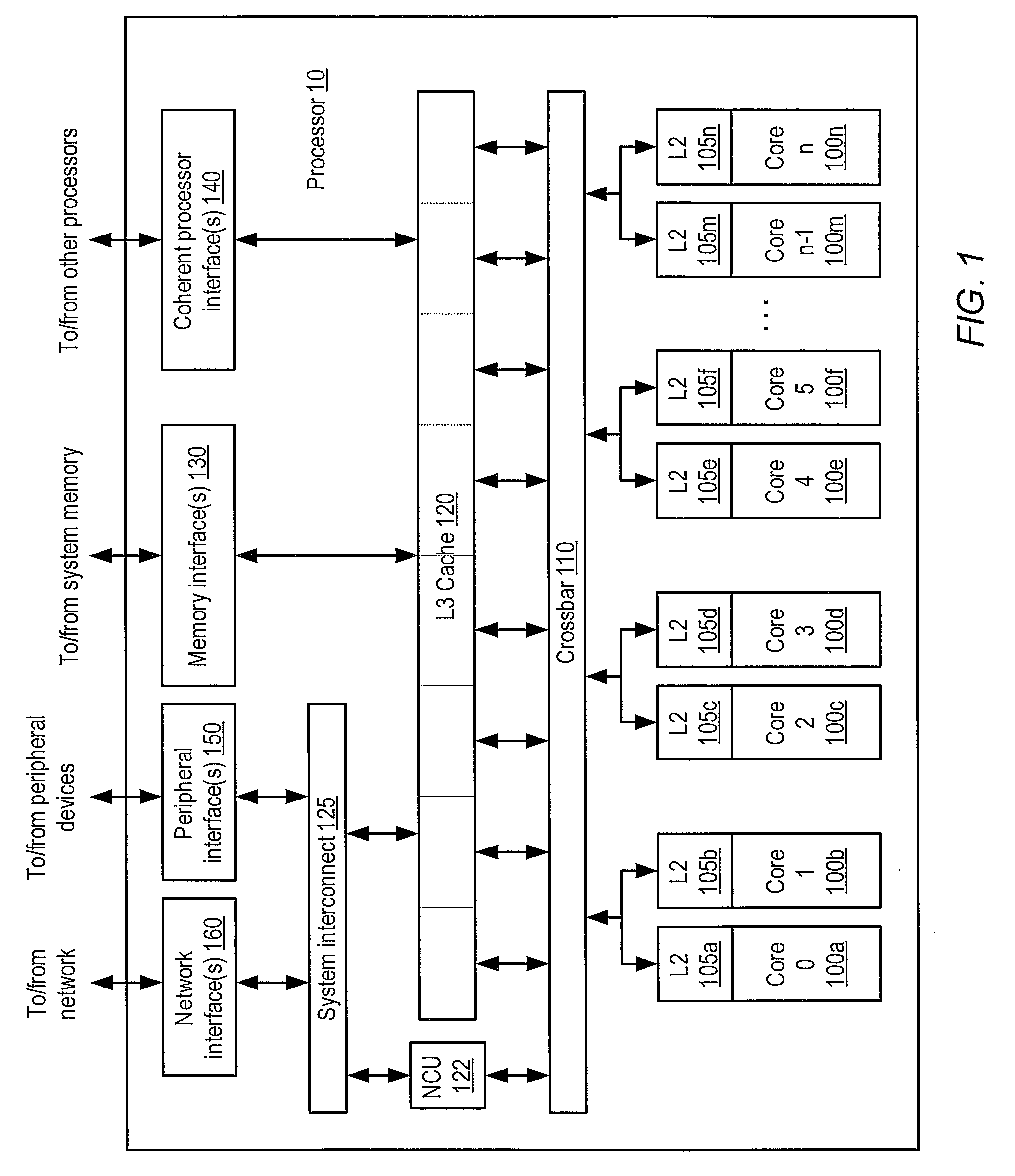

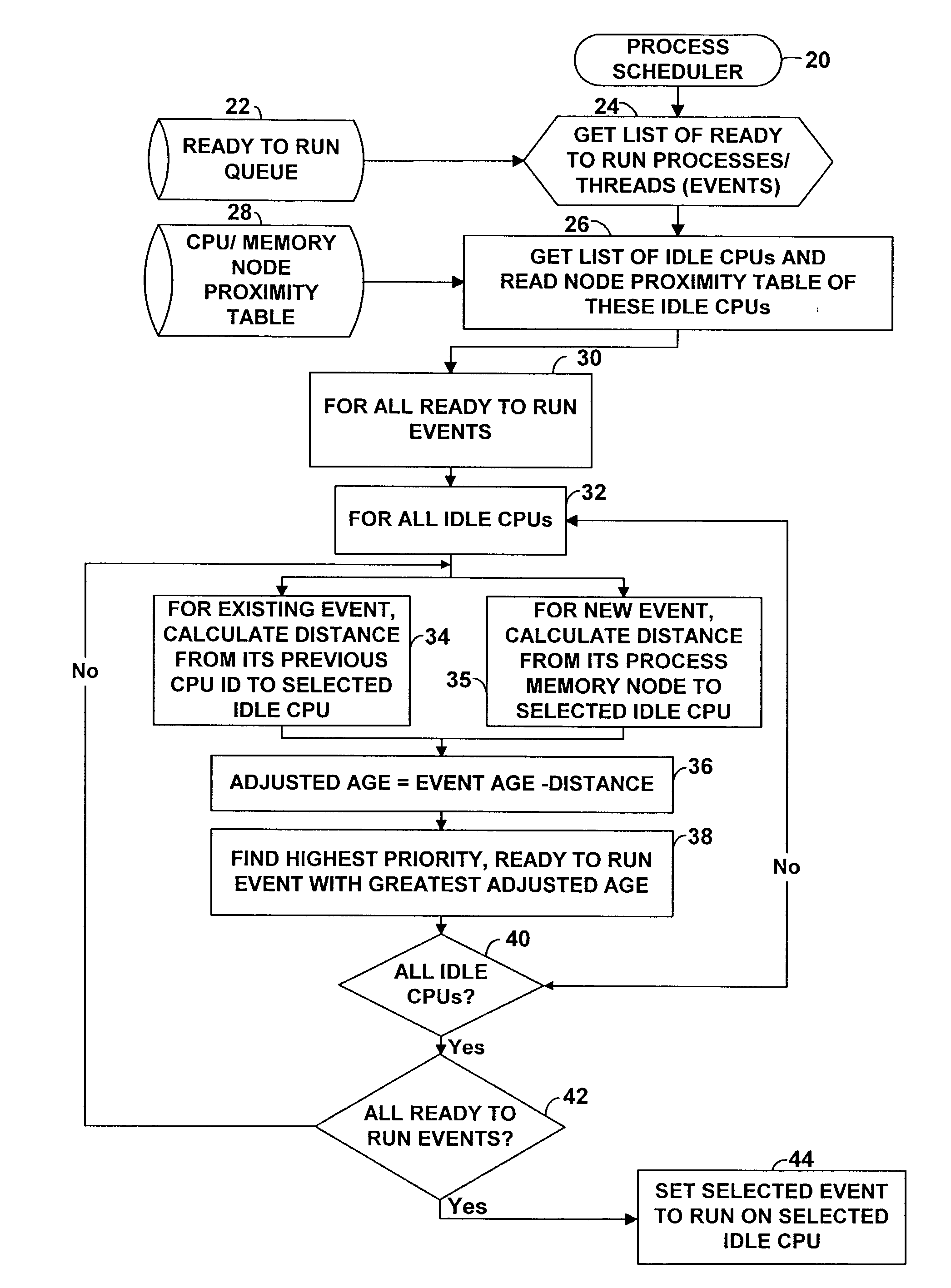

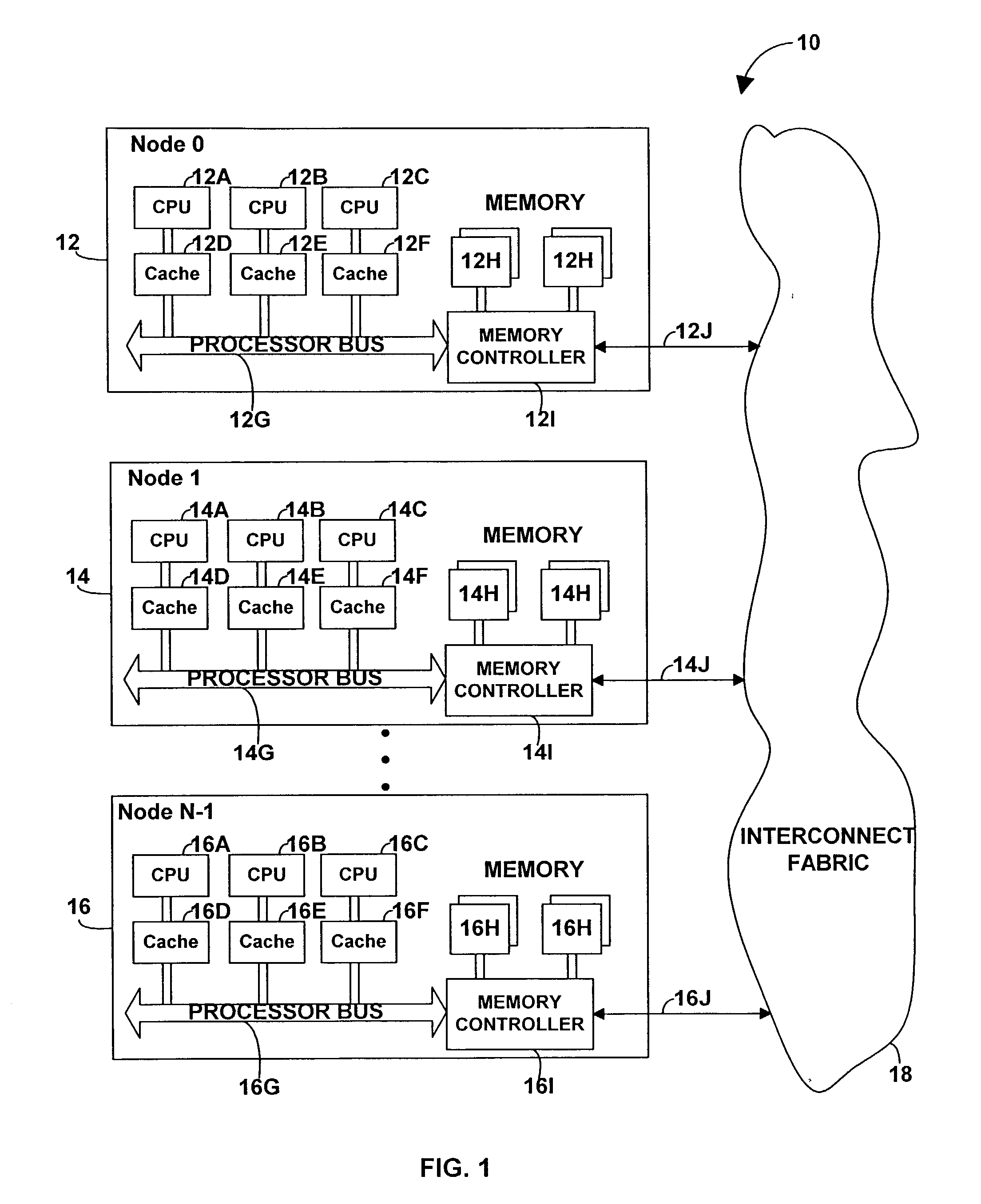

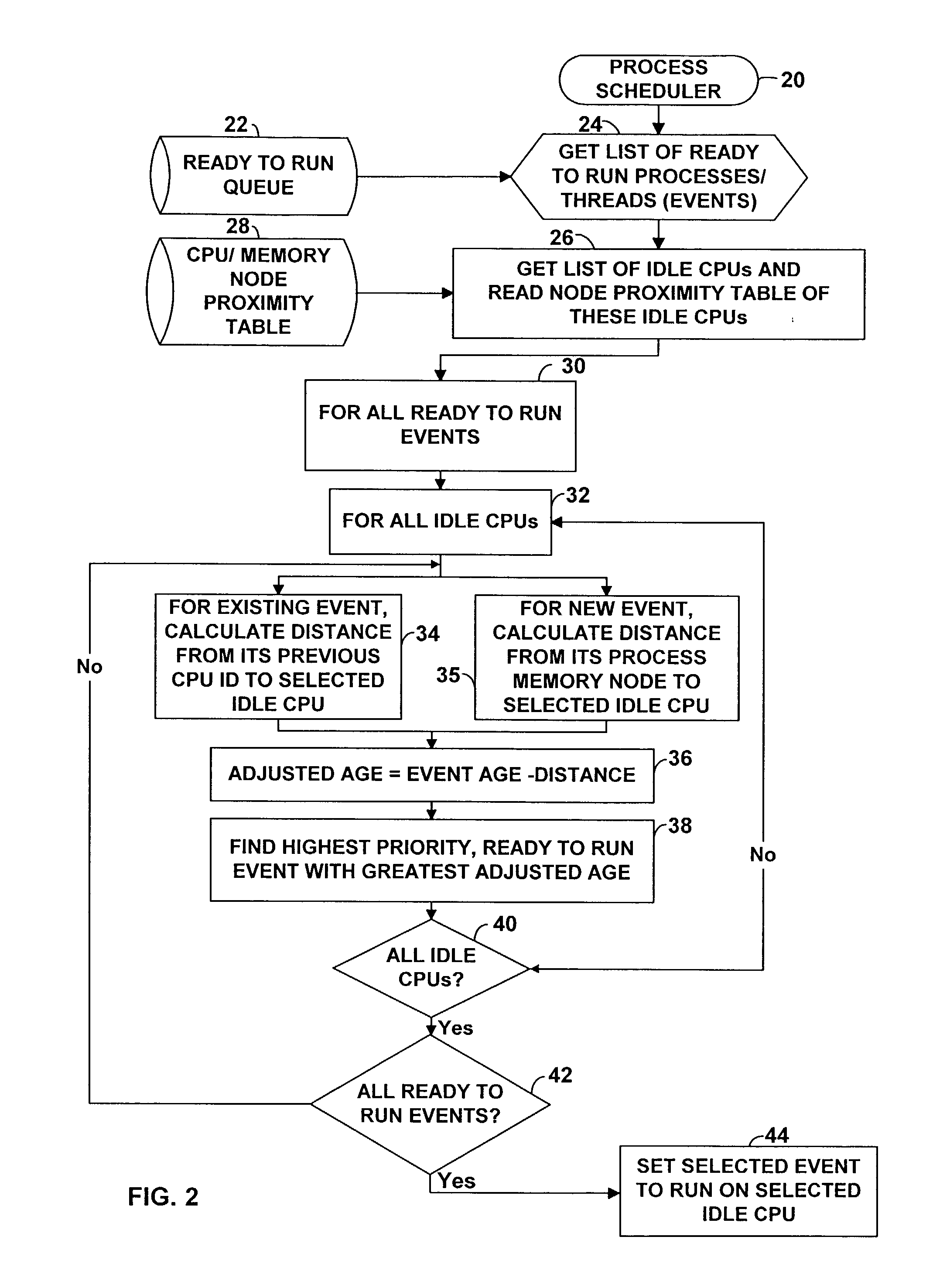

Method and apparatus for optimizing performance in a multi-processing system

Inactive · US7143412B2 · Interprogram communication · Digital computer details · Error processing · Thread scheduling

A technique for improving performance in a multi-processor system by correlating processor, node and memory allocation to reduce access latency. Specifically, a Process/Thread Scheduler is modified such that system mapping and node proximity tables may be referenced to help determine processor assignments for ready-to-run processes/threads. Processors are chosen to minimize access latency. Further, the Page Fault Handler is modified such that free memory pages are assigned to a process based partially on the proximity of the memory with respect to the processor requesting memory allocation.
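The proximity-aware page assignment mentioned in this abstract might look roughly like the following. This is a simplified sketch, not the patented method: the function name, the shape of the `free_pages` and `proximity` tables, and the rule of always taking the nearest node with free memory are all assumptions made for illustration (the abstract says proximity is only one of the factors).

```python
def allocate_page_near(cpu_node, free_pages, proximity):
    """Pick a free page frame from the node closest to `cpu_node`.

    free_pages: dict mapping node id -> list of free page frame numbers
    proximity:  dict mapping (from_node, to_node) -> relative access distance
    Both tables are hypothetical stand-ins for the node proximity tables.
    """
    candidates = [node for node, pages in free_pages.items() if pages]
    if not candidates:
        raise MemoryError("no free pages on any node")
    # Nearest node first; ties are broken by the lower node id.
    best = min(candidates, key=lambda node: (proximity[(cpu_node, node)], node))
    return best, free_pages[best].pop()
```

When the local node has no free pages, the handler falls back to the next-nearest node rather than failing, which keeps access latency as low as the current free lists allow.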

Owner:HEWLETT PACKARD DEV CO LP

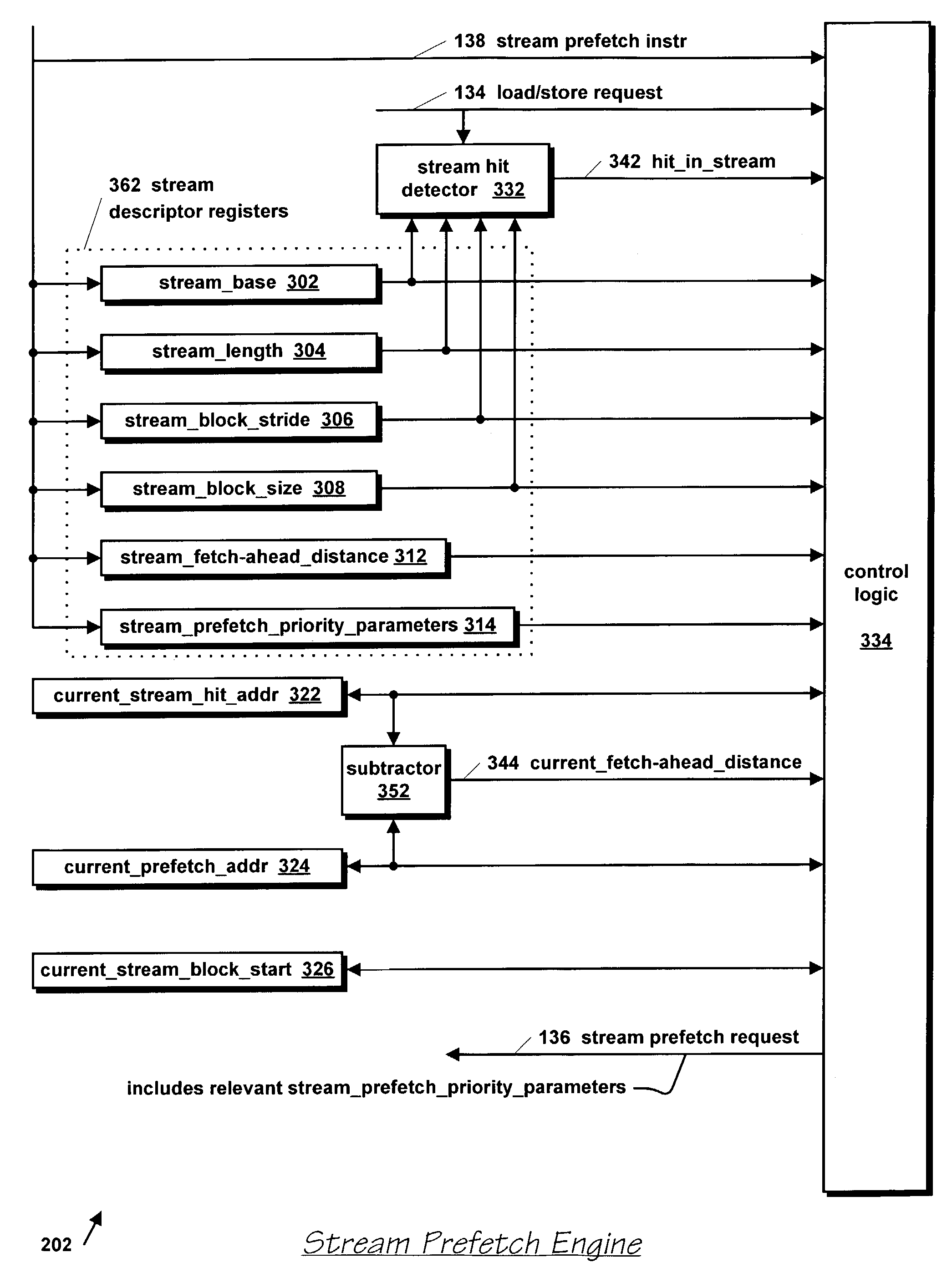

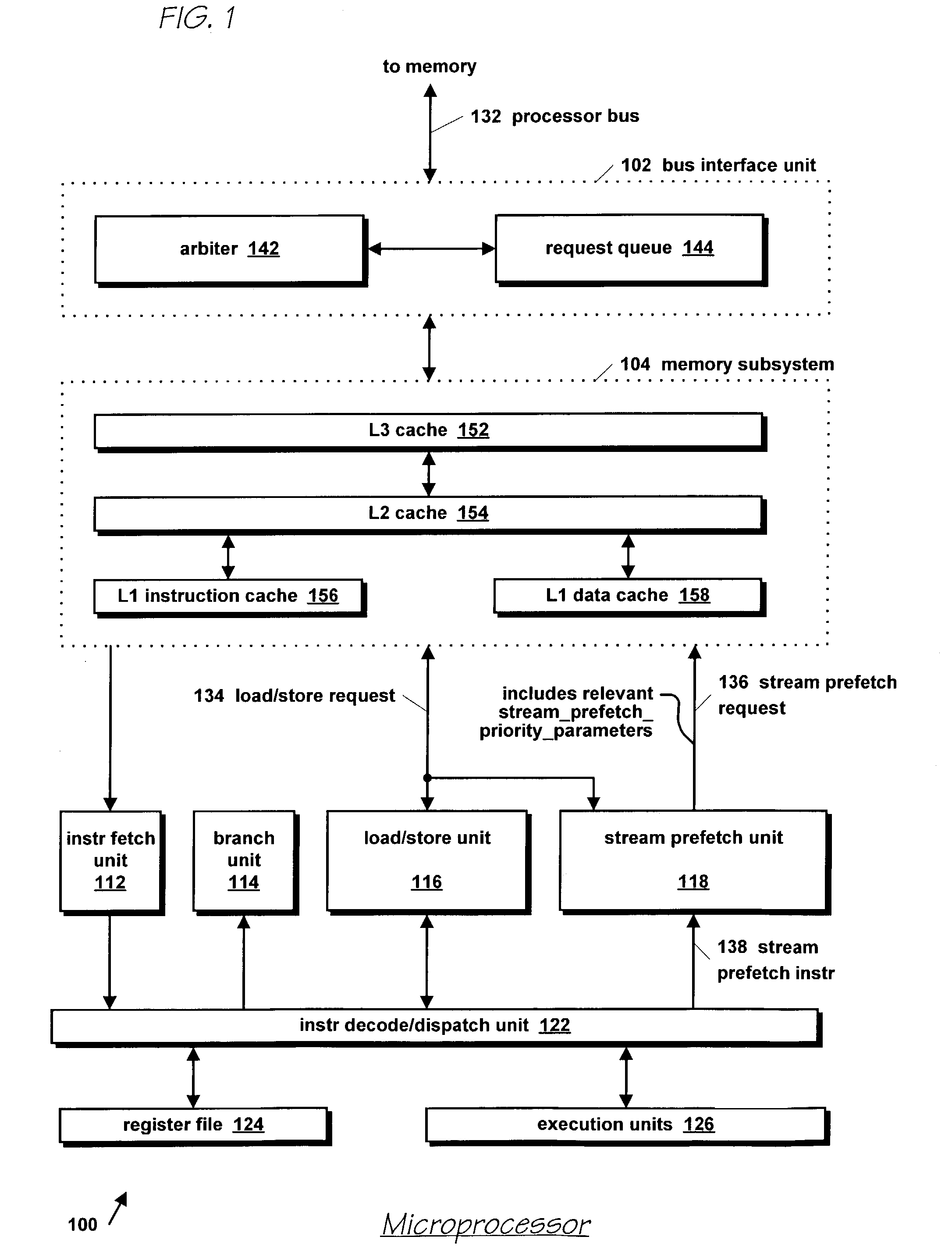

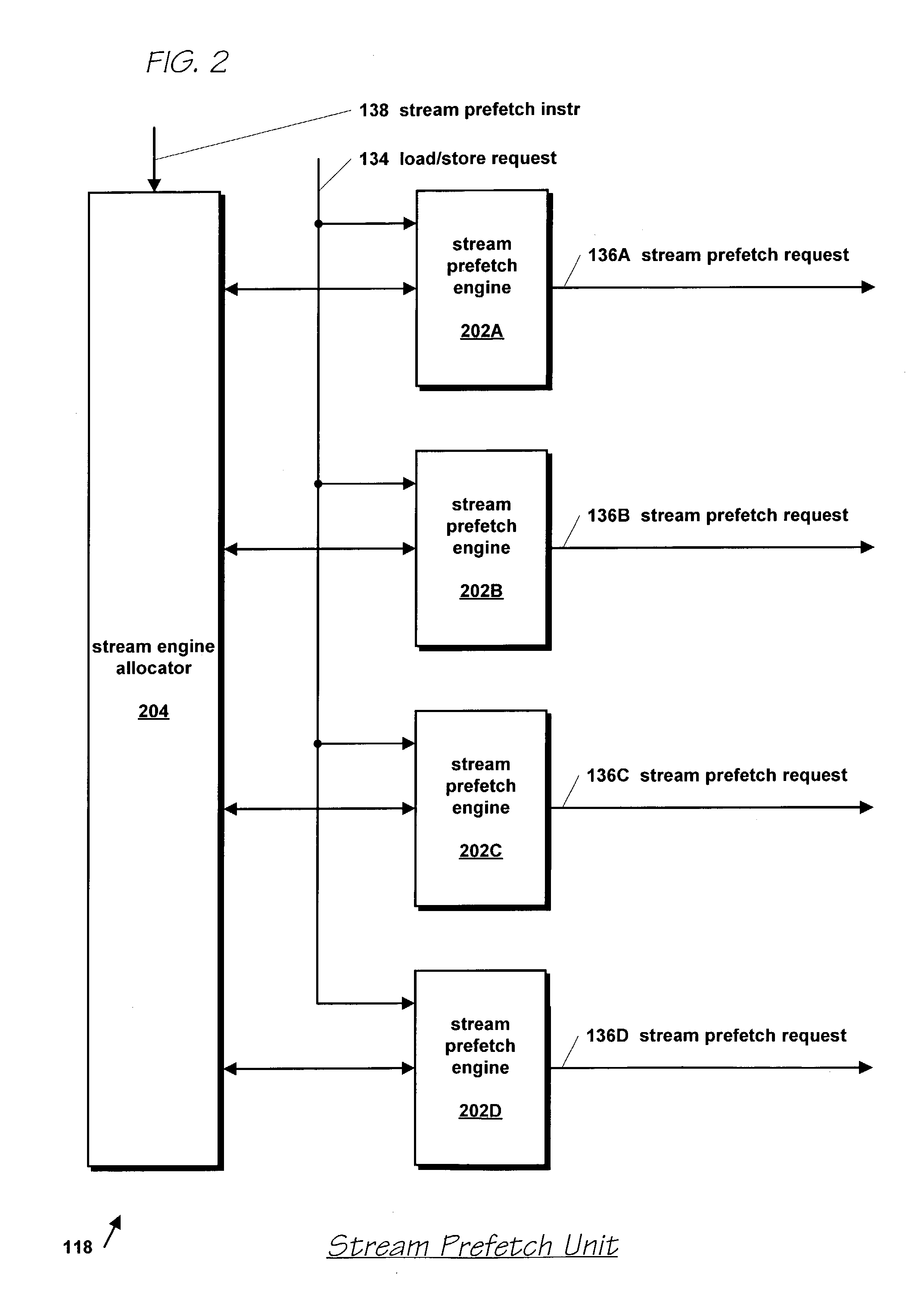

Microprocessor with improved data stream prefetching

Active · US7177985B1 · Improving data stream prefetching · Memory architecture accessing/allocation · Digital computer details · Cache hierarchy · Page fault

A microprocessor with multiple stream prefetch engines each executing a stream prefetch instruction to prefetch a complex data stream specified by the instruction in a manner synchronized with program execution of loads from the stream is provided. The stream prefetch engine stays at least a fetch-ahead distance (specified in the instruction) ahead of the program loads, which may randomly access the stream. The instruction specifies a level in the cache hierarchy to prefetch into, a locality indicator to specify the urgency and ephemerality of the stream, a stream prefetch priority, a TLB miss policy, a page fault miss policy, a protection violation policy, and a hysteresis value, specifying a minimum number of bytes to prefetch when the stream prefetch engine resumes prefetching. The memory subsystem includes a separate TLB for stream prefetches; or a joint TLB backing the stream prefetch TLB and load/store TLB; or a separate TLB for each prefetch engine.

Owner:ARM FINANCE OVERSEAS LTD

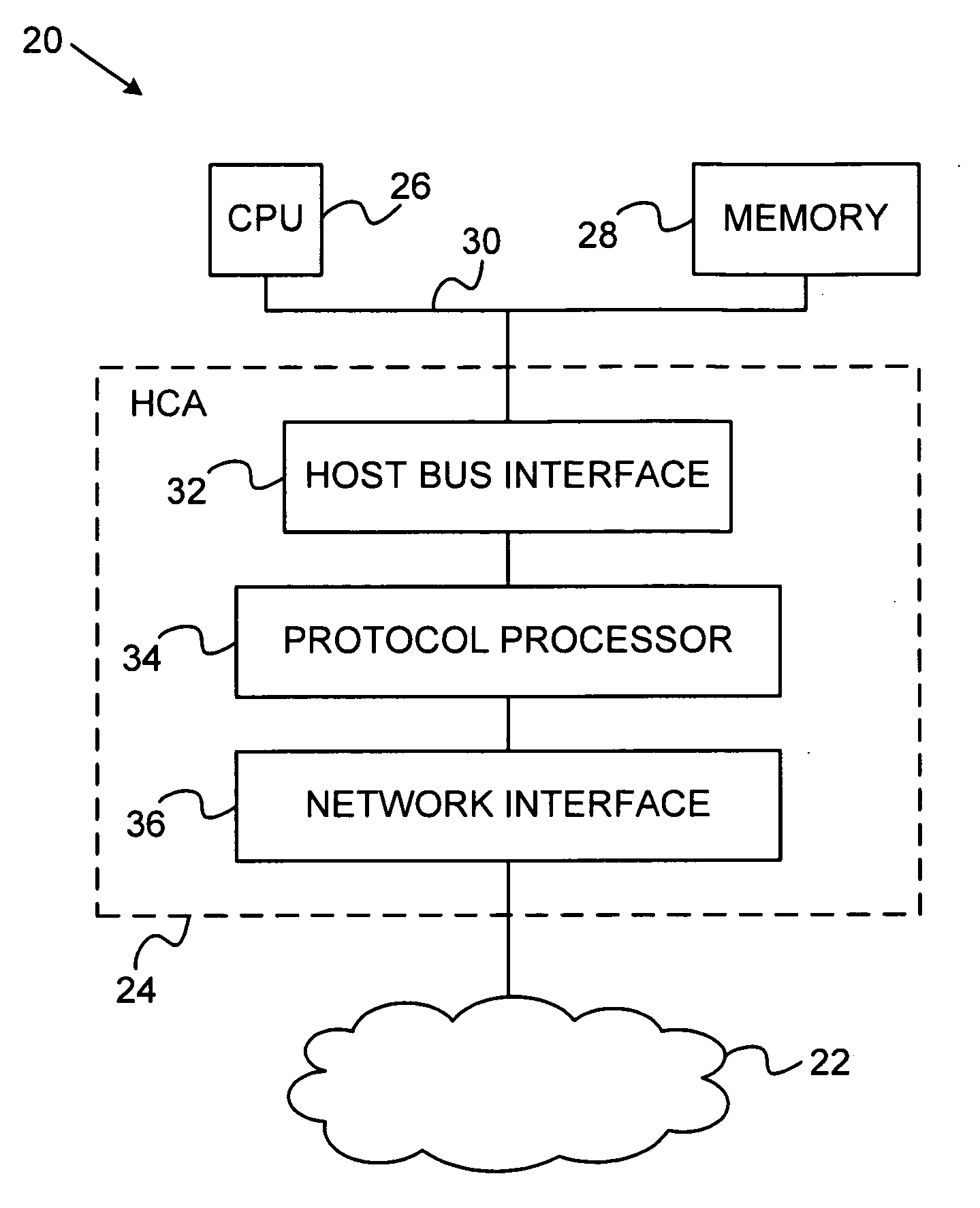

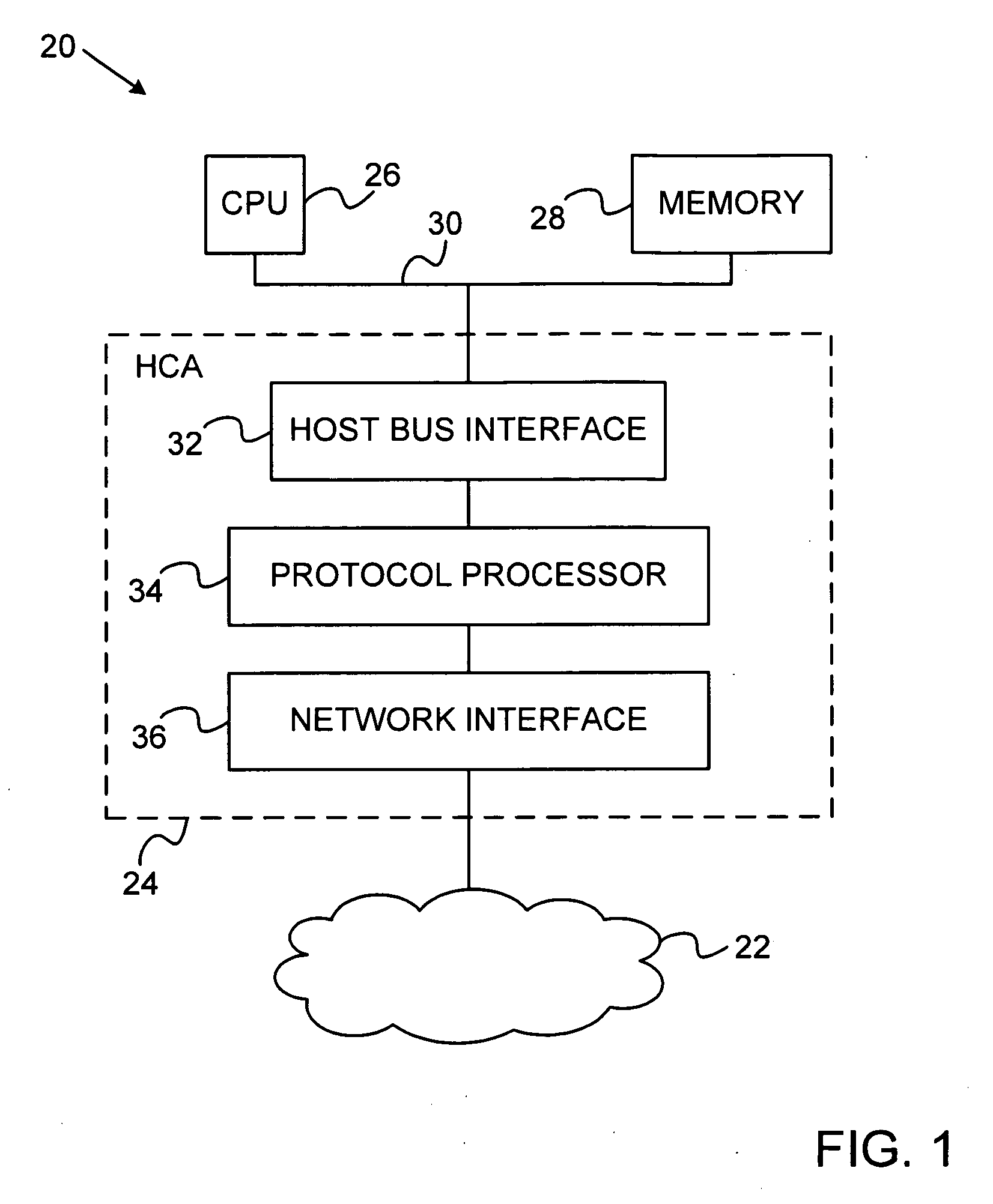

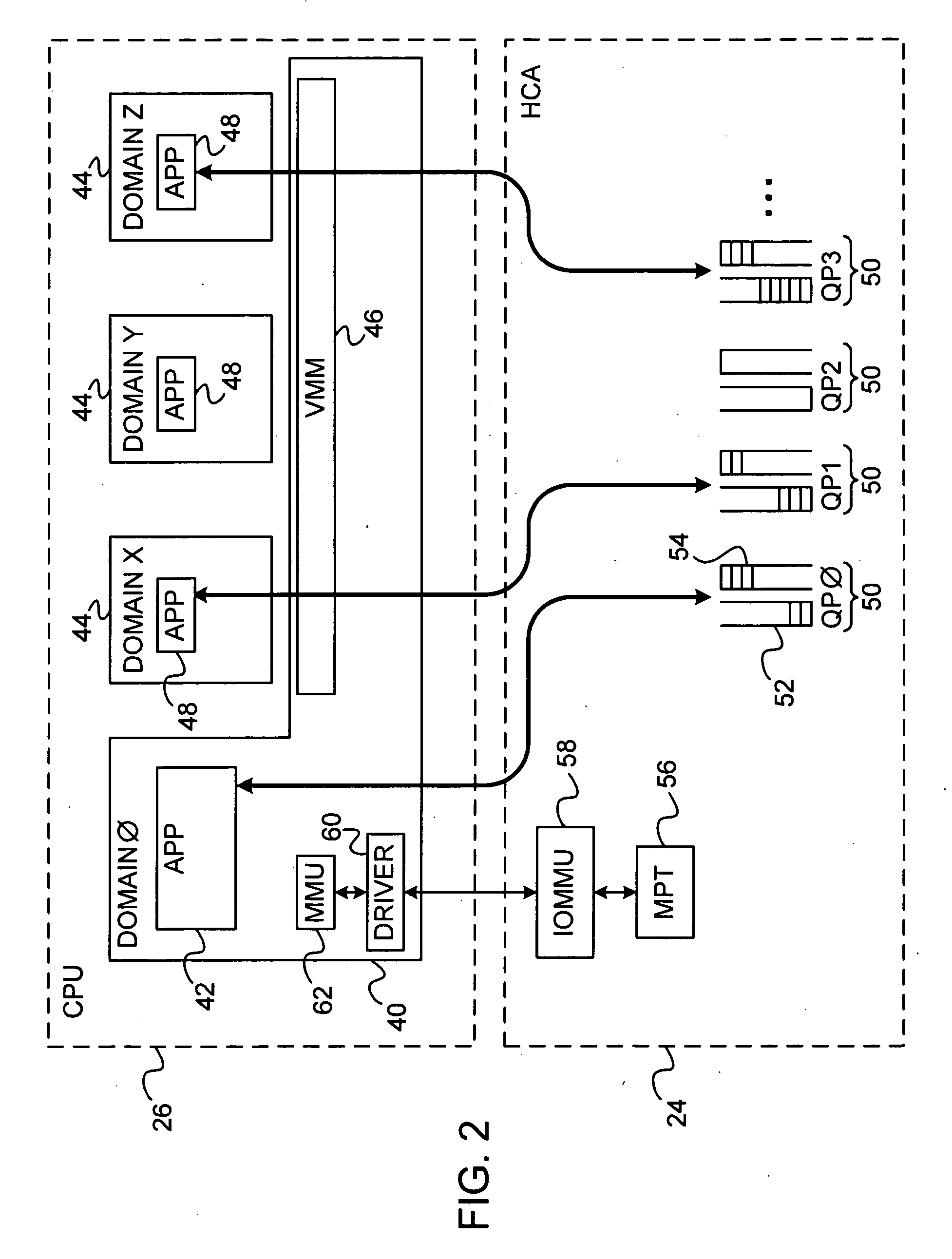

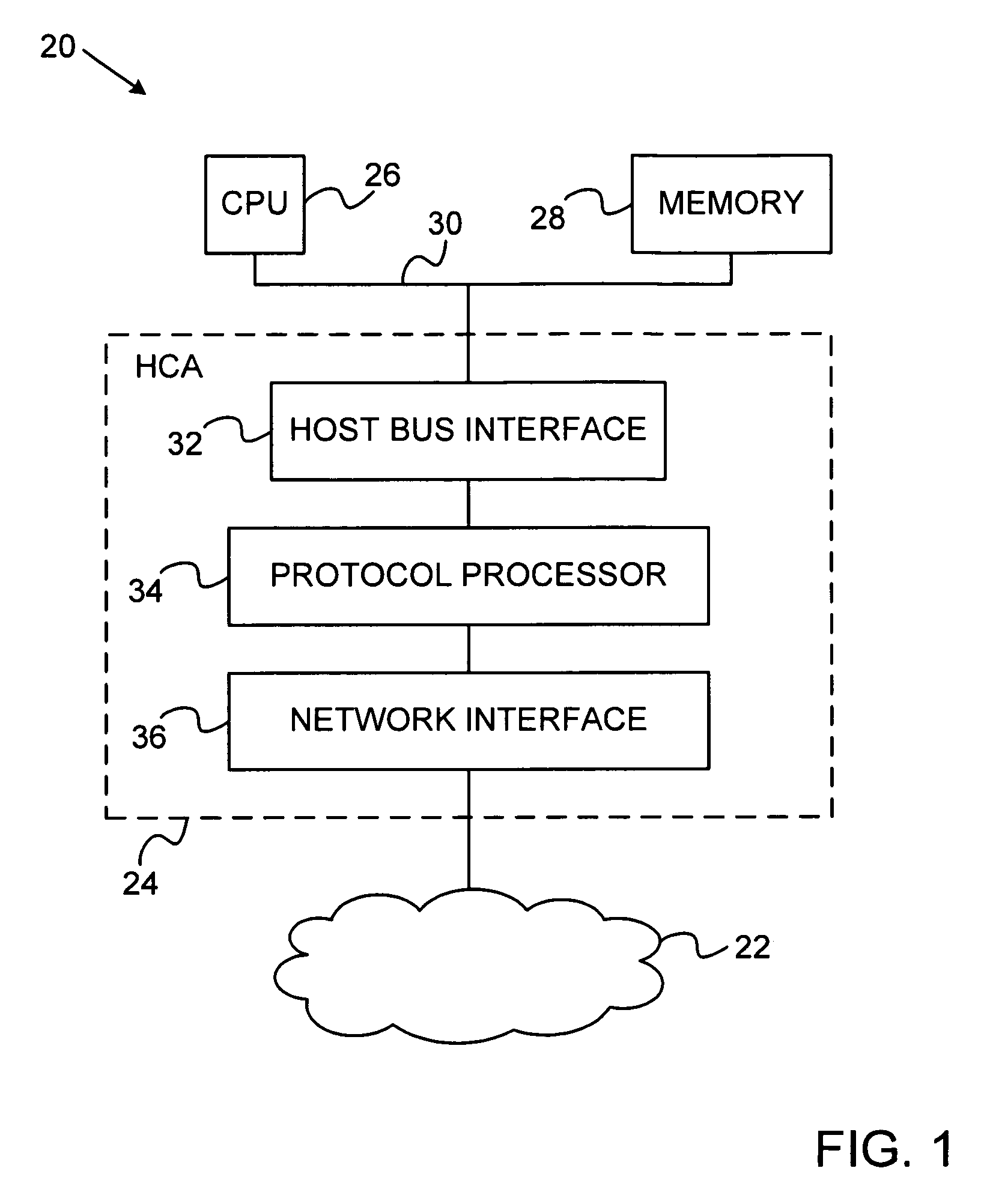

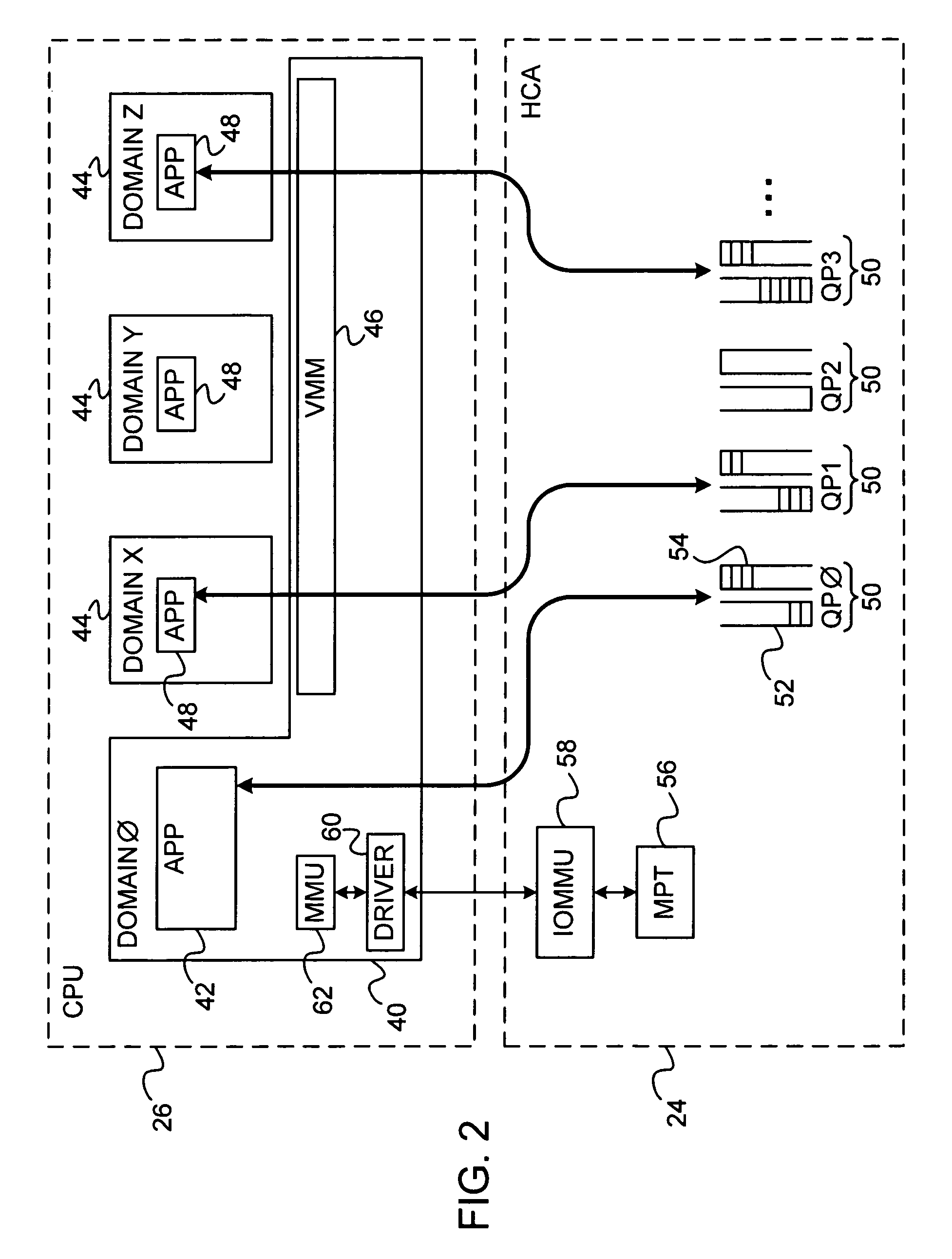

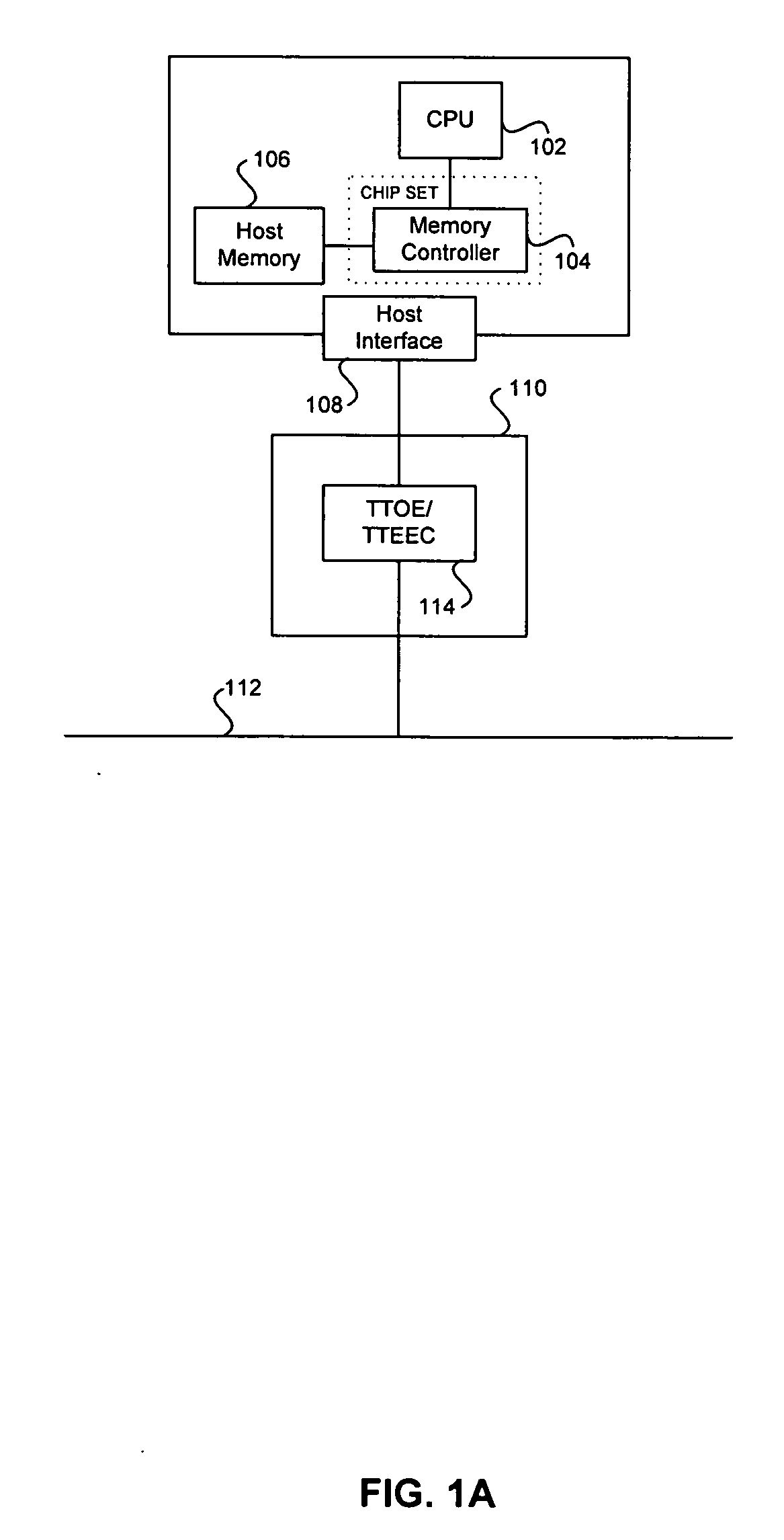

Network interface device with memory management capabilities

Active · US20100274876A1 · Multiple digital computer combinations · Memory systems · Network interface device · Physical address

An input/output (I/O) device includes a host interface for connection to a host device having a memory and a network interface, which is configured to receive, over a network, data packets associated with I/O operations directed to specified virtual addresses in the memory. Packet processing hardware is configured to translate the virtual addresses into physical addresses and to perform the I/O operations using the physical addresses, and upon an occurrence of a page fault in translating one of the virtual addresses, to transmit a response packet over the network to a source of the data packets so as to cause the source to refrain from transmitting further data packets while the page fault is serviced.

Owner:MELLANOX TECHNOLOGIES LTD

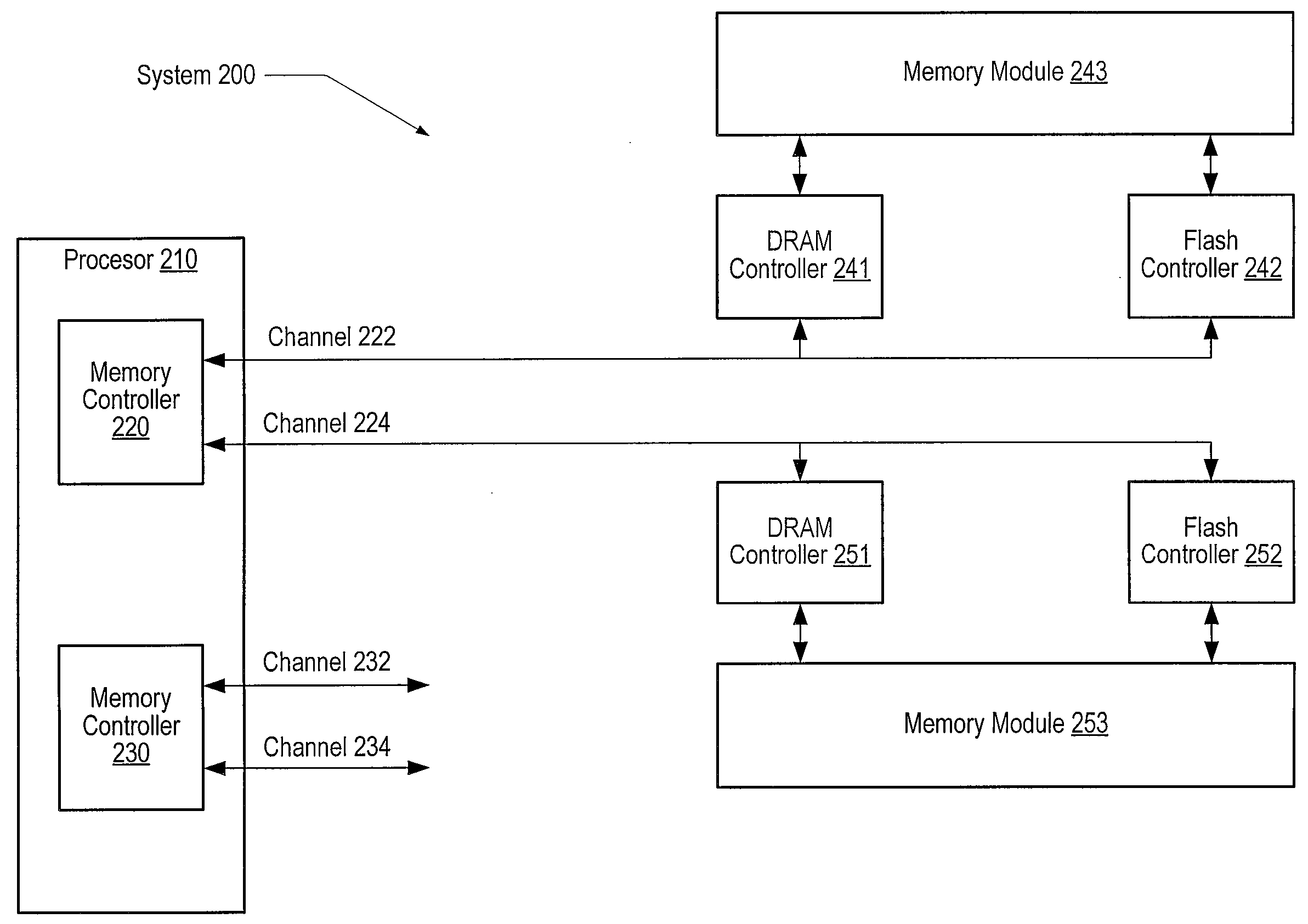

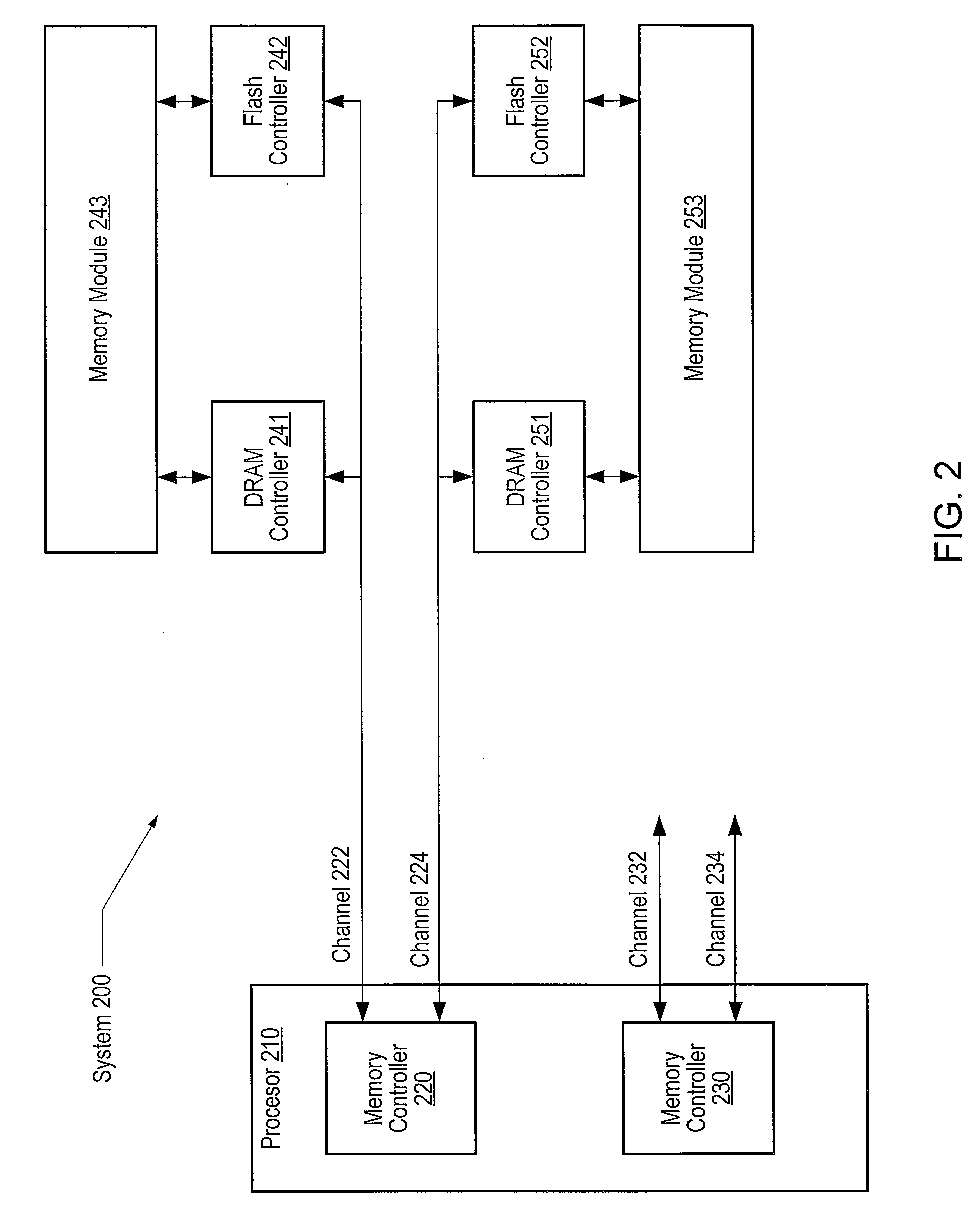

Cache coherent support for flash in a memory hierarchy

Active · US20100293420A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Memory hierarchy · Random access memory

System and method for using flash memory in a memory hierarchy. A computer system includes a processor coupled to a memory hierarchy via a memory controller. The memory hierarchy includes a cache memory, a first memory region of random access memory coupled to the memory controller via a first buffer, and an auxiliary memory region of flash memory coupled to the memory controller via a flash controller. The first buffer and the flash controller are coupled to the memory controller via a single interface. The memory controller receives a request to access a particular page in the first memory region. The processor detects a page fault corresponding to the request and in response, invalidates cache lines in the cache memory that correspond to the particular page, flushes the invalid cache lines, and swaps a page from the auxiliary memory region to the first memory region.

Owner:ORACLE INT CORP

Method and apparatus for optimizing performance in a multi-processing system

Inactive · US20040019891A1 · Interprogram communication · Digital computer details · Error processing · Thread scheduling

A technique for improving performance in a multi-processor system by correlating processor, node and memory allocation to reduce access latency. Specifically, a Process/Thread Scheduler is modified such that system mapping and node proximity tables may be referenced to help determine processor assignments for ready-to-run processes/threads. Processors are chosen to minimize access latency. Further, the Page Fault Handler is modified such that free memory pages are assigned to a process based partially on the proximity of the memory with respect to the processor requesting memory allocation.

Owner:HEWLETT PACKARD DEV CO LP

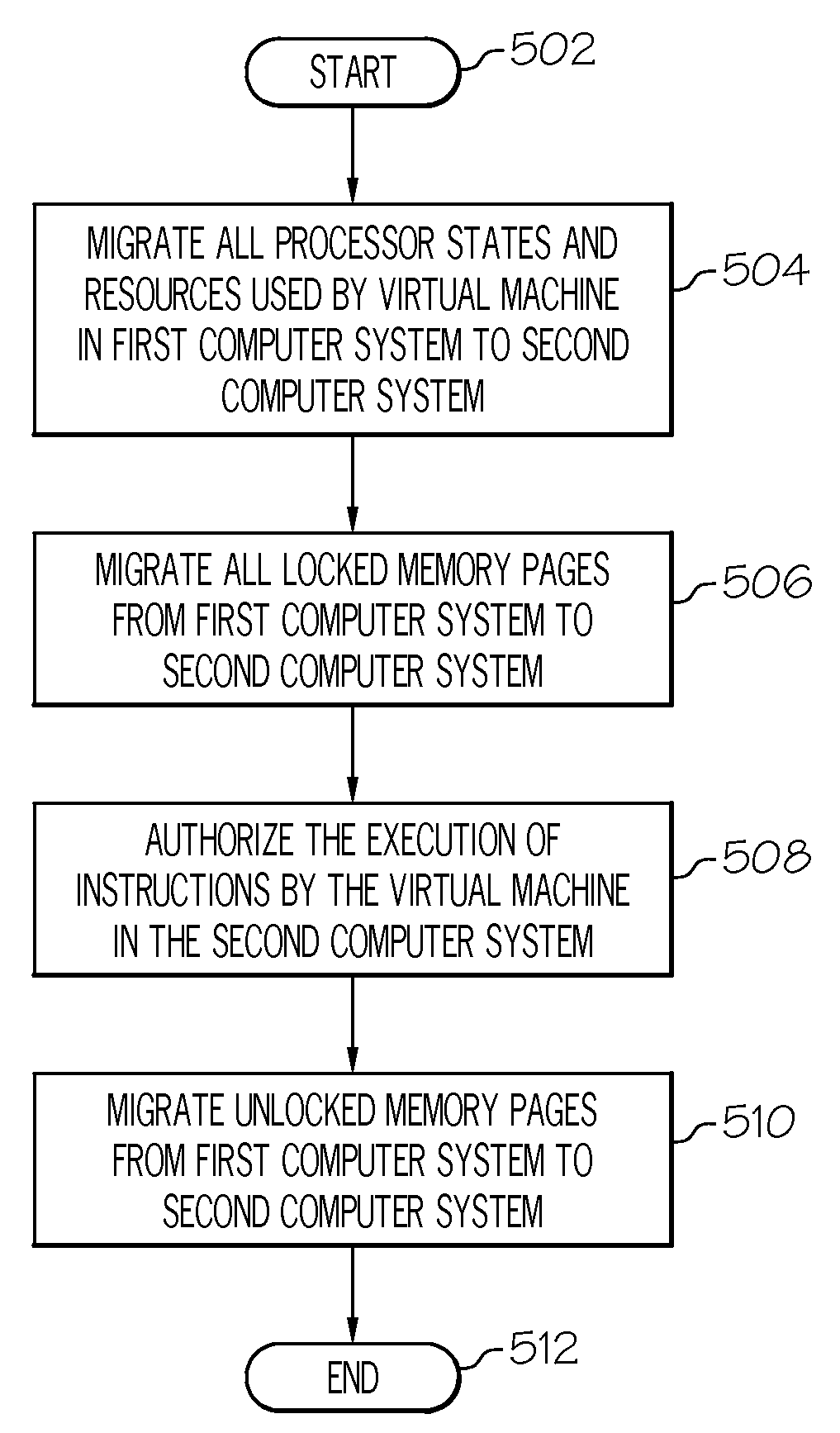

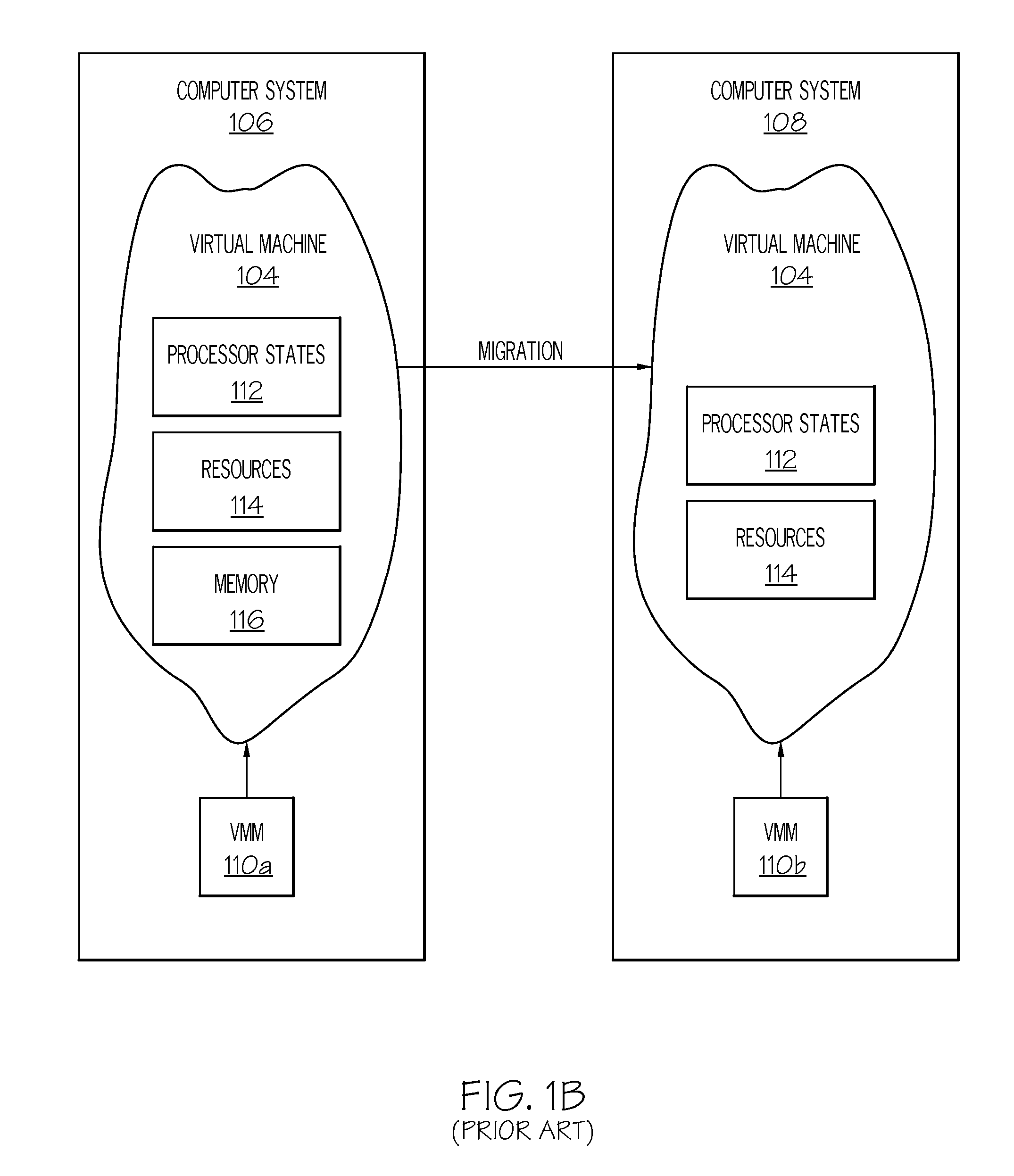

Managing Memory Pages During Virtual Machine Migration

Inactive · US20080127182A1 · Avoids fatal page fault · Memory architecture accessing/allocation · Software simulation/interpretation/emulation · Virtual storage · Virtual machine

A method, system and computer-readable medium are presented for migrating a virtual machine from a first computer to a second computer in a manner that avoids fatal page faults in the second computer. In a preferred embodiment, the method includes the steps of determining which memory pages of virtual memory are locked memory pages; migrating the virtual machine from the first computer to the second computer without migrating the locked memory pages; and prohibiting execution of a first instruction by the virtual machine in the second computer until the locked memory pages are migrated from the first computer to the second computer.

Owner:IBM CORP

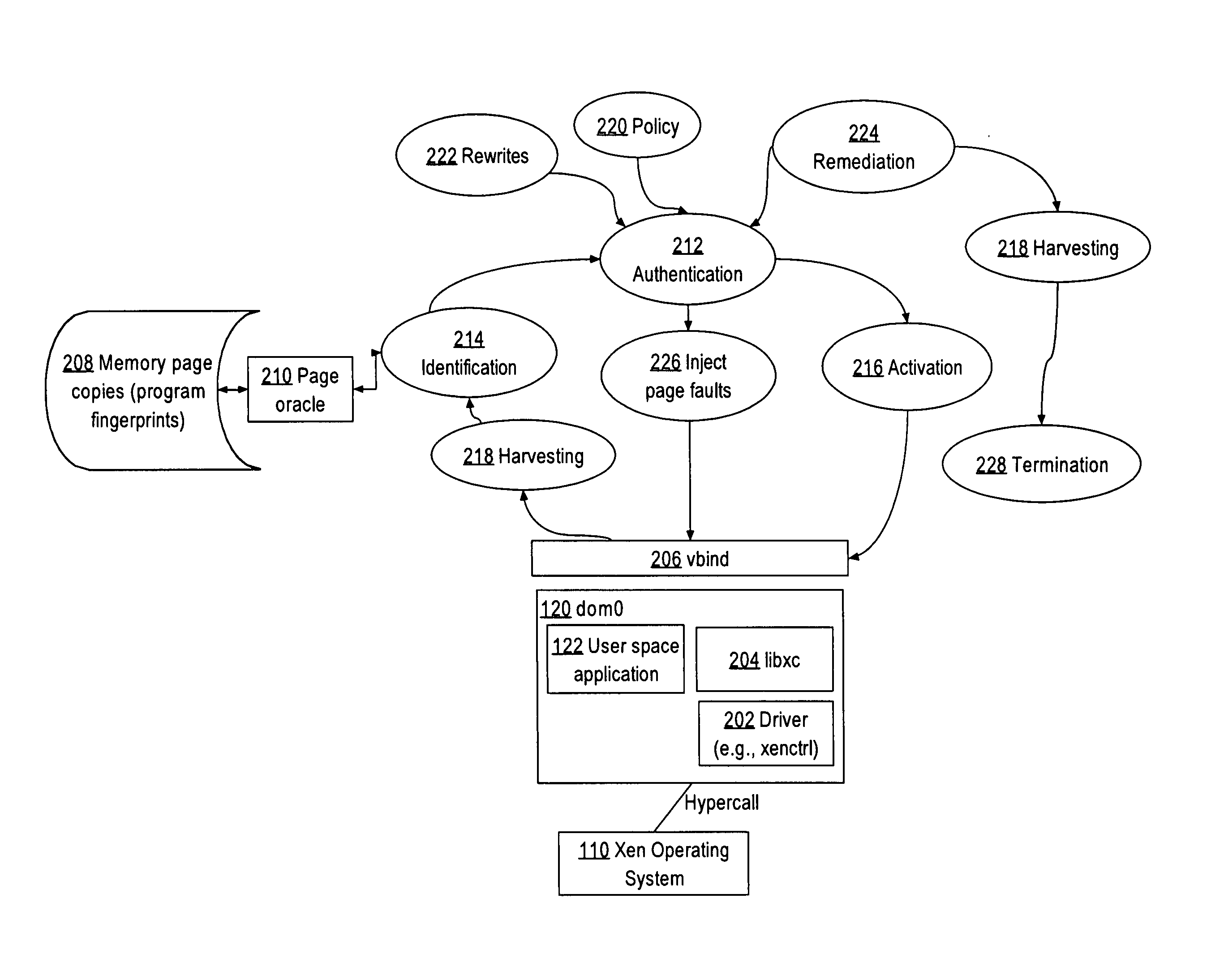

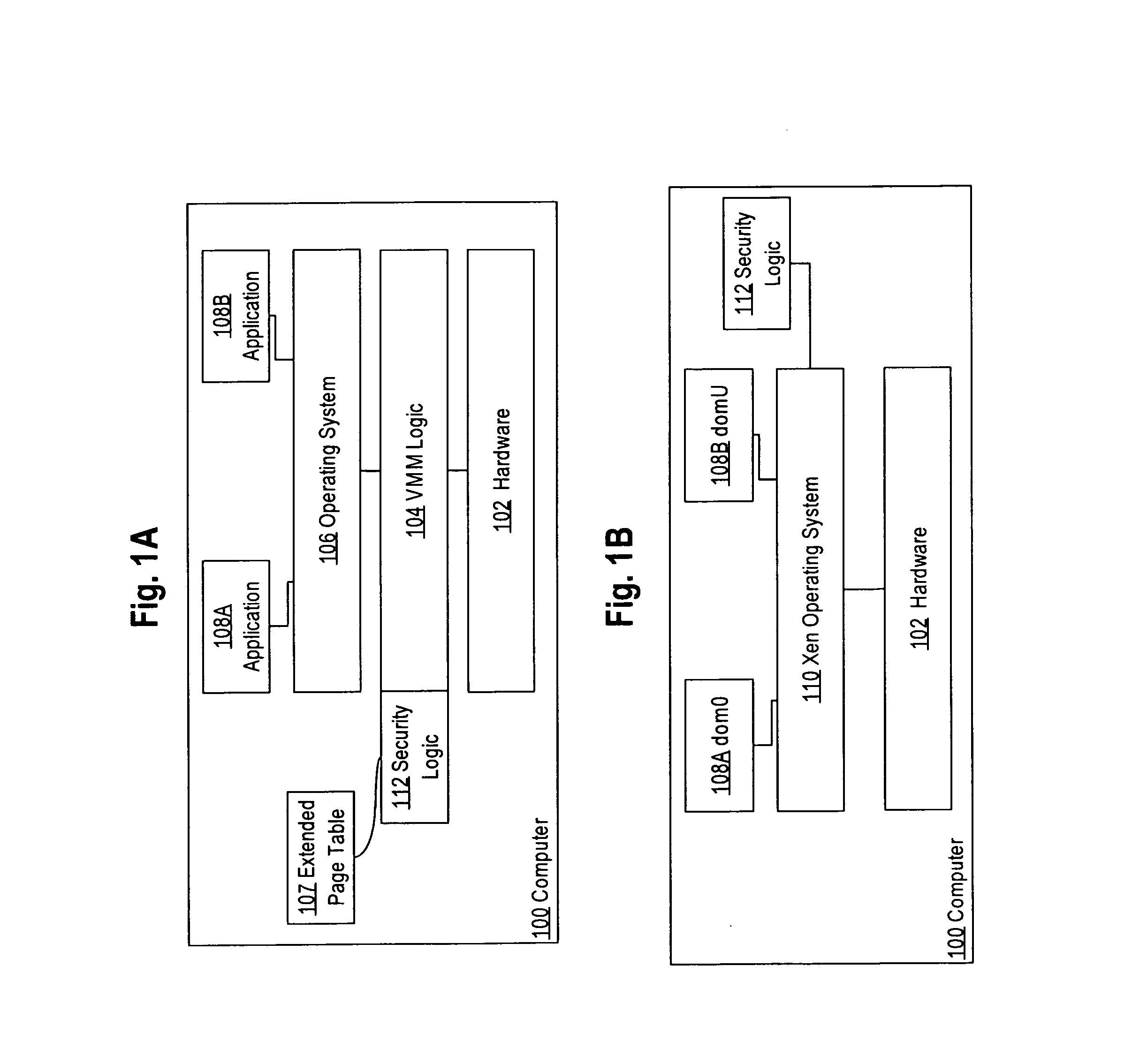

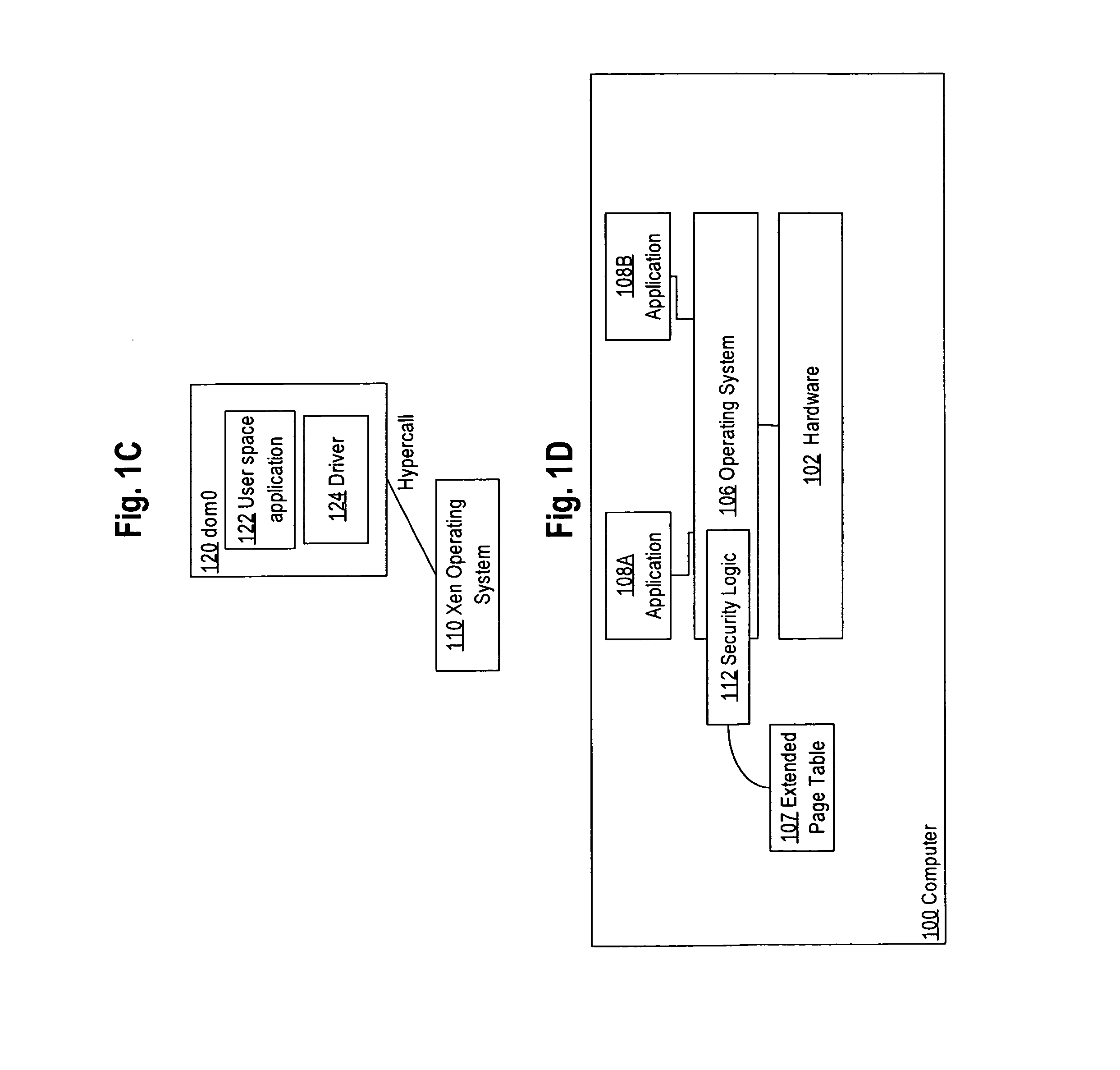

Security in virtualized computer programs

Active · US20130086299A1 · Binary to binary · Memory architecture accessing/allocation · Operational system · Permission system

In an embodiment, a data processing method comprises implementing a memory event interface to a hypercall interface of a hypervisor or virtual machine operating system to intercept page faults associated with writing pages of memory that contain a computer program; receiving a page fault resulting from a guest domain attempting to write a memory page that is marked as not executable in a memory page permissions system; determining a first set of memory page permissions for the memory page that are maintained by the hypervisor or virtual machine operating system; determining a second set of memory page permissions for the memory page that are maintained independent of the hypervisor or virtual machine operating system; determining a particular memory page permission for the memory page based on the first set and the second set; processing the page fault based on the particular memory page permission, including performing at least one security function associated with regulating access of the guest domain to the memory page.

Owner:CISCO TECH INC

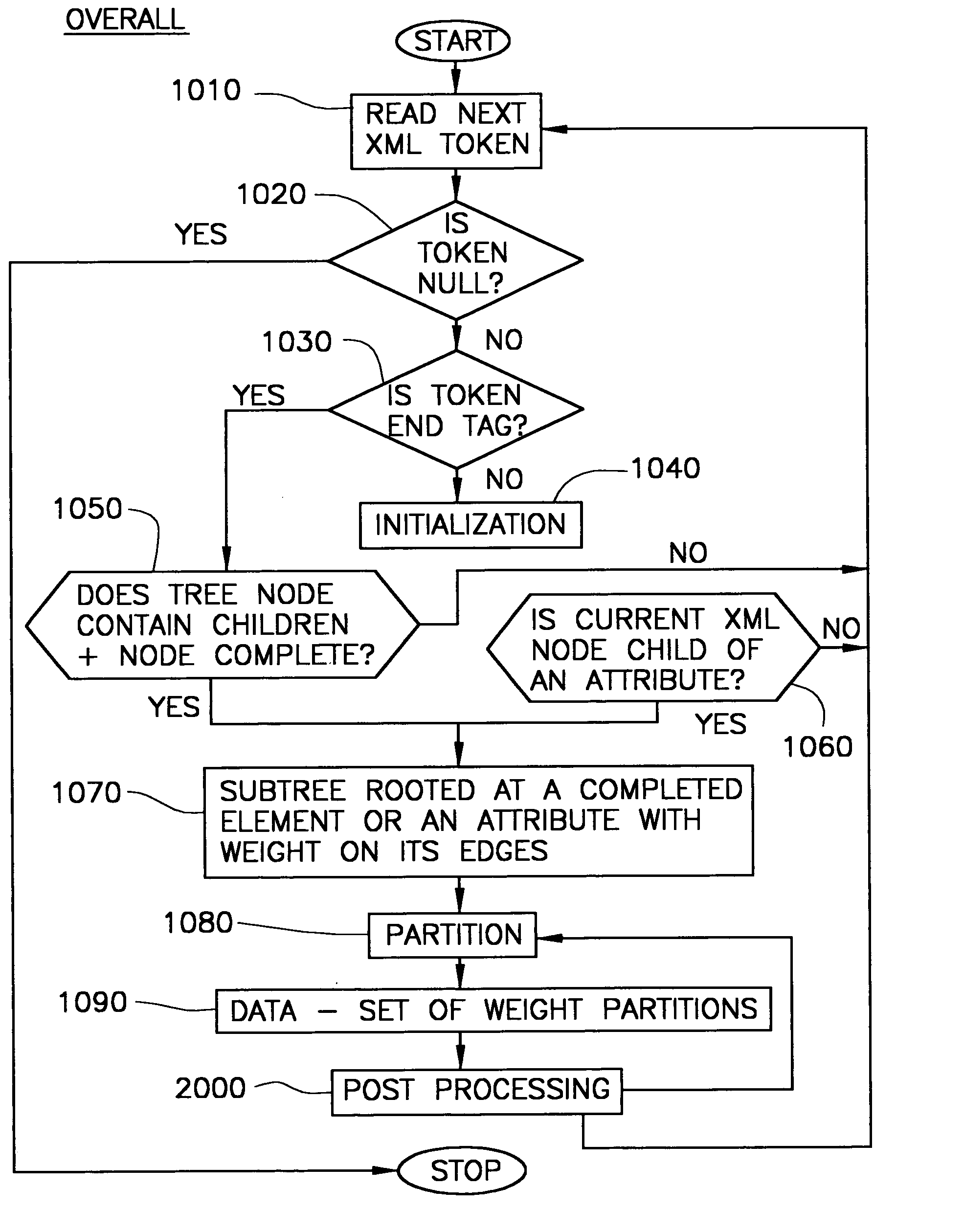

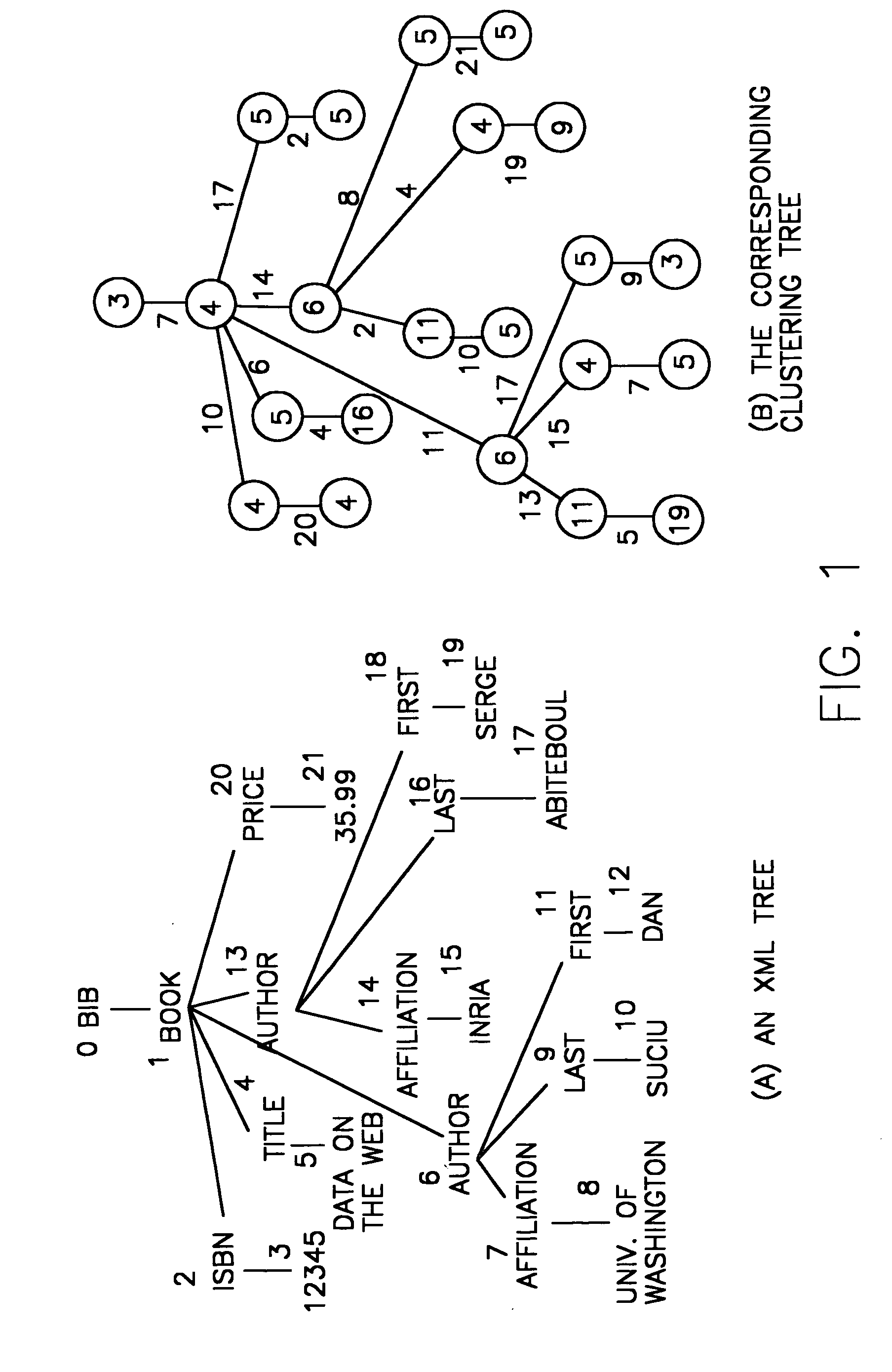

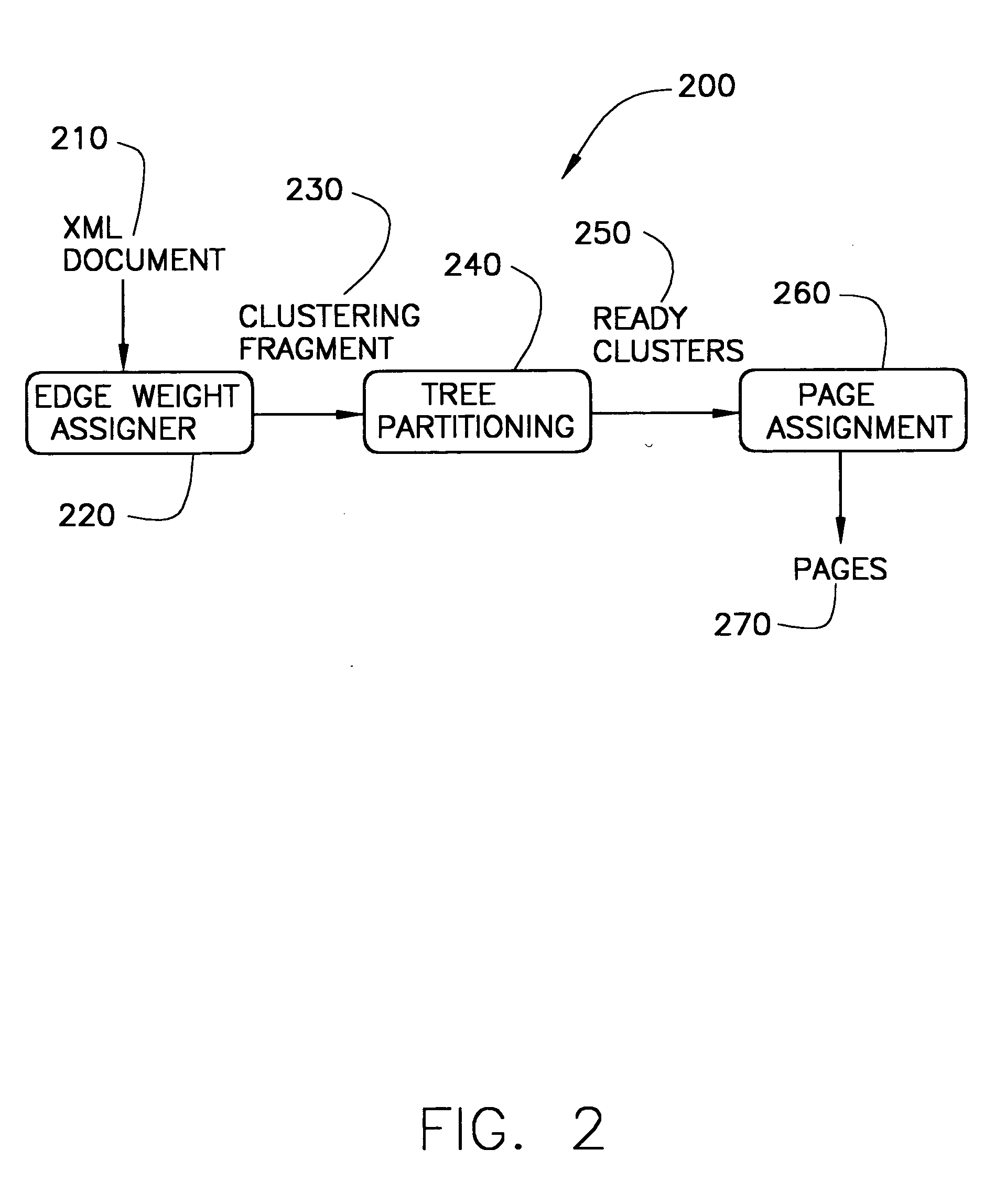

Single pass workload directed clustering of XML documents

Inactive · US20050102256A1 · Data processing applications · Digital data processing details · XPath · Paper document

A method and system for clustering of XML documents is disclosed. The method operates under specified memory-use constraints. The system implements the method and scans an XML document, assigns edge-weights according to the application workload, and maps clusters of XML nodes to disk pages, all in a single parser-controlled pass over the XML data. Application workload information is used to generate XML clustering solutions that lead to substantial reduction in page faults for the workload under consideration. Several approaches for representing workload information are disclosed. For example, the workload may list the XPath operators invoked during the application along with their invocation frequencies. The application workload can be further refined by incorporating additional features such as query importance or query compilation costs. XML access patterns could be also modeled using stochastic approaches.

Owner:IBM CORP

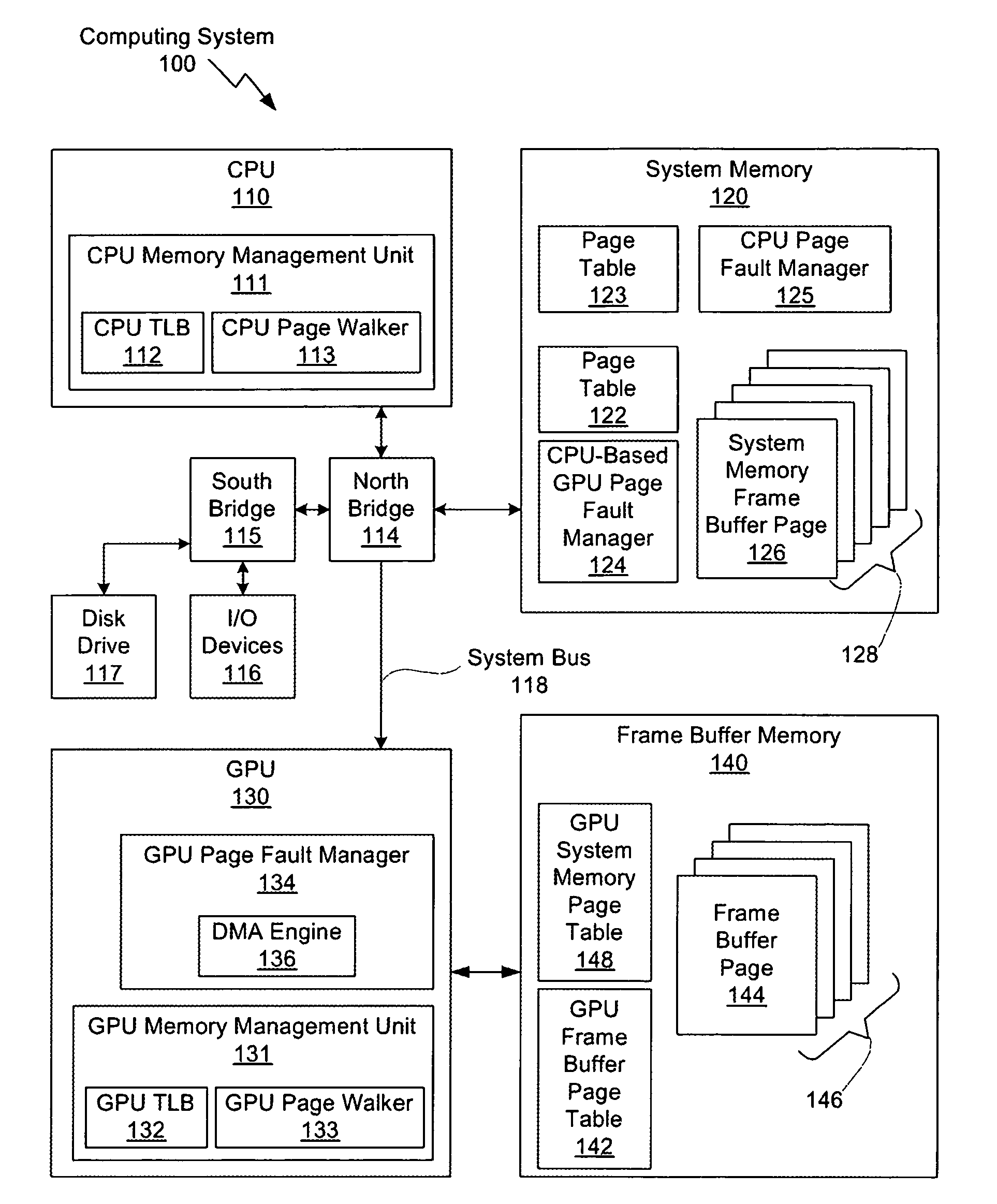

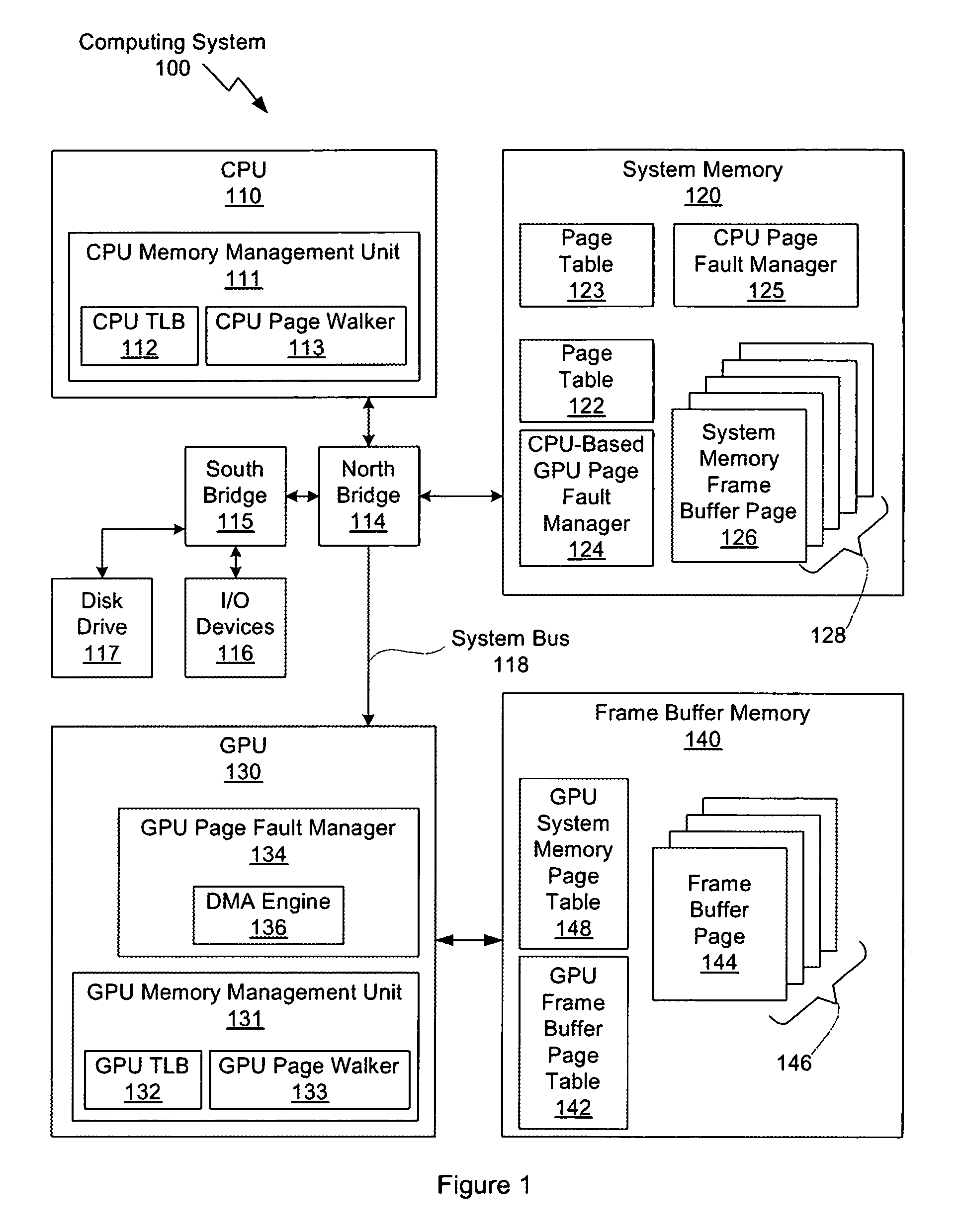

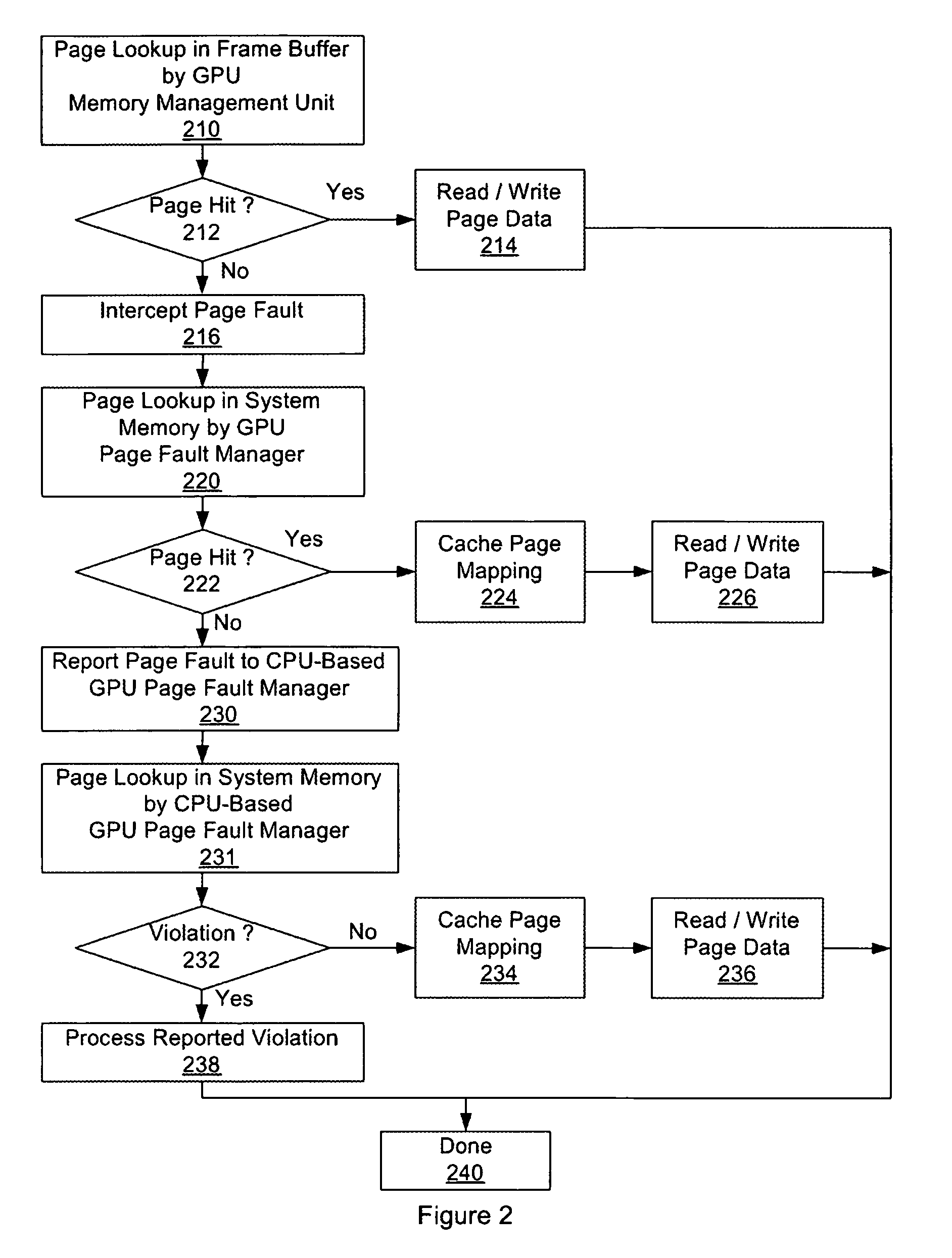

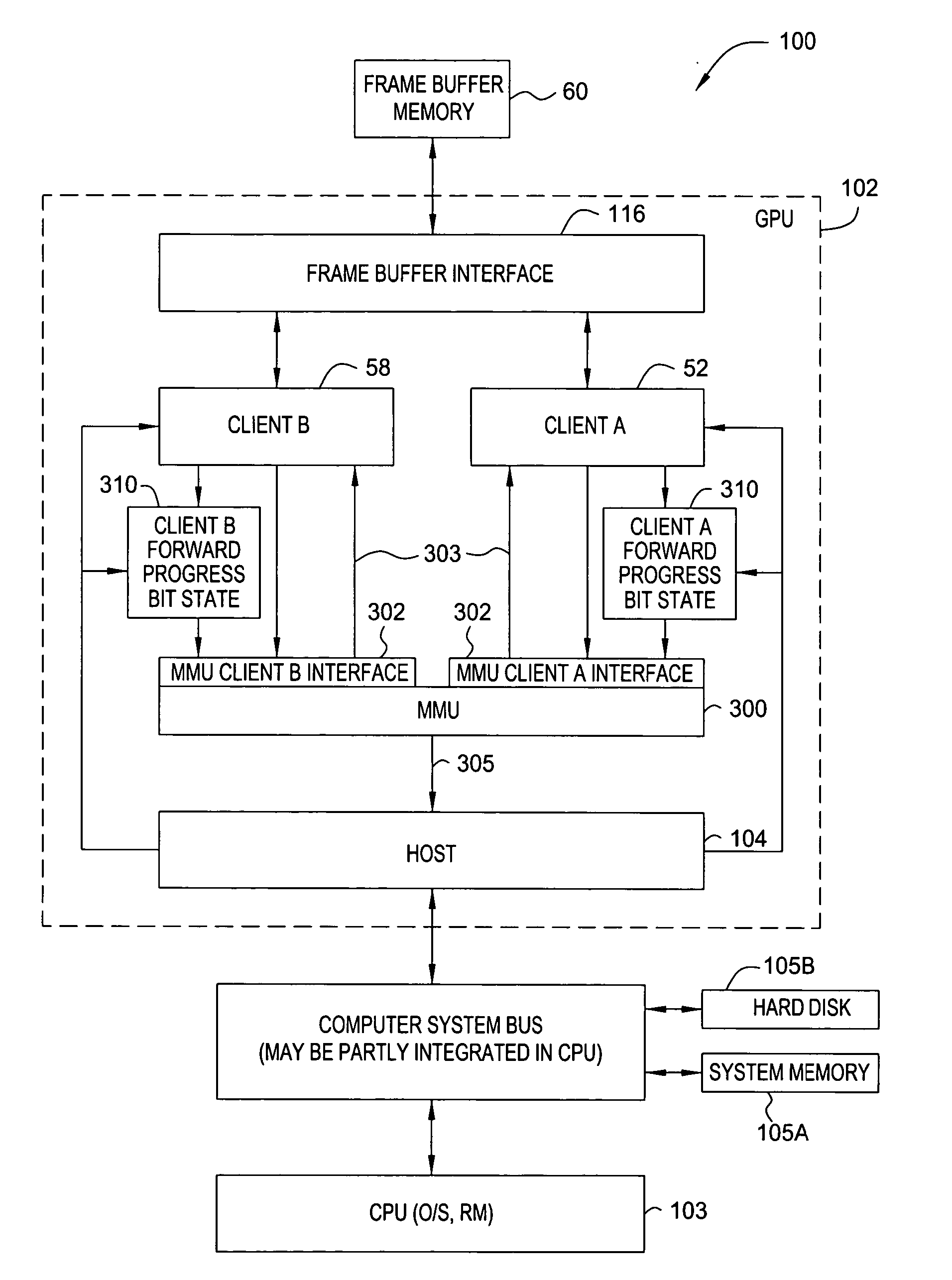

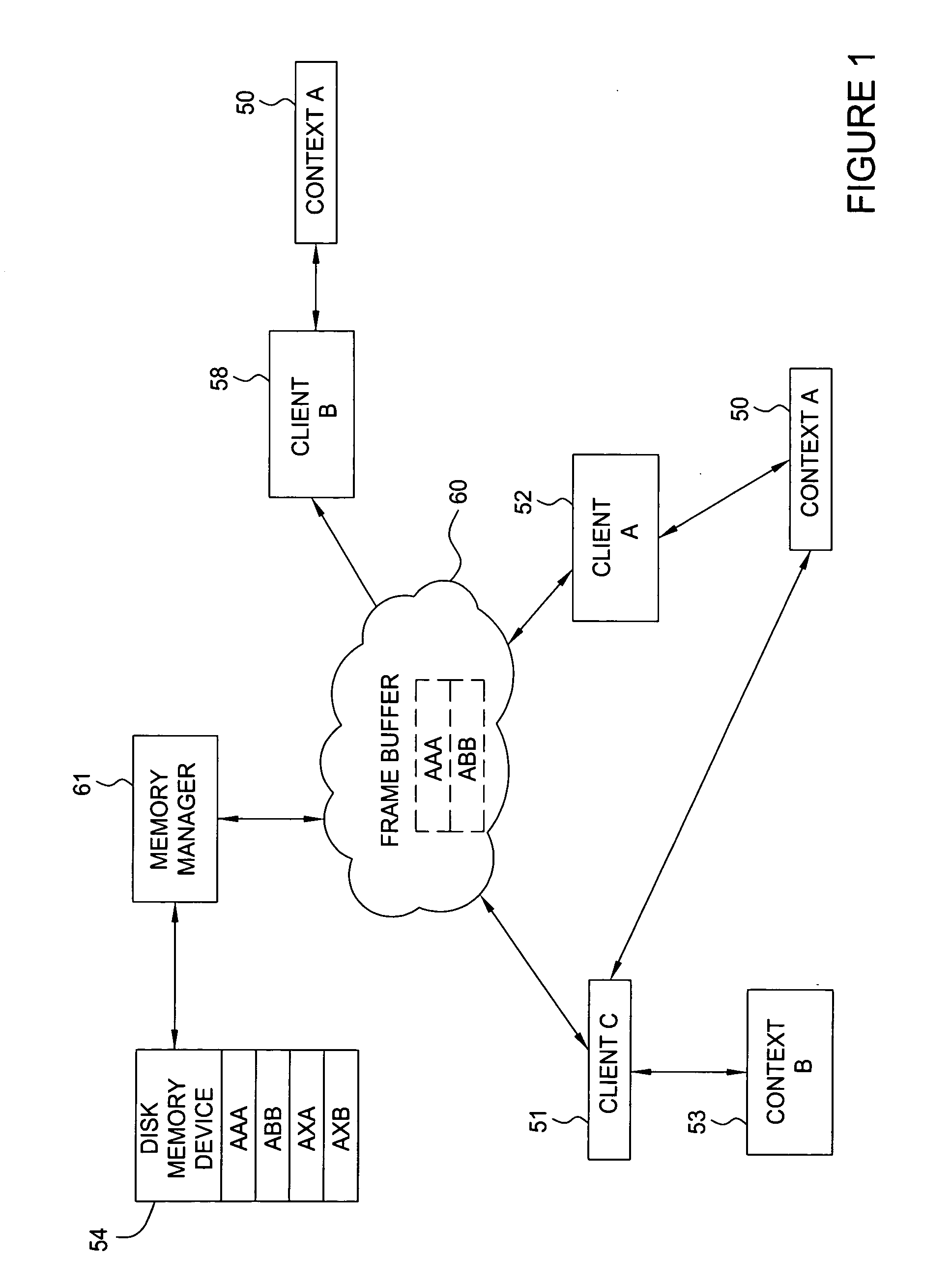

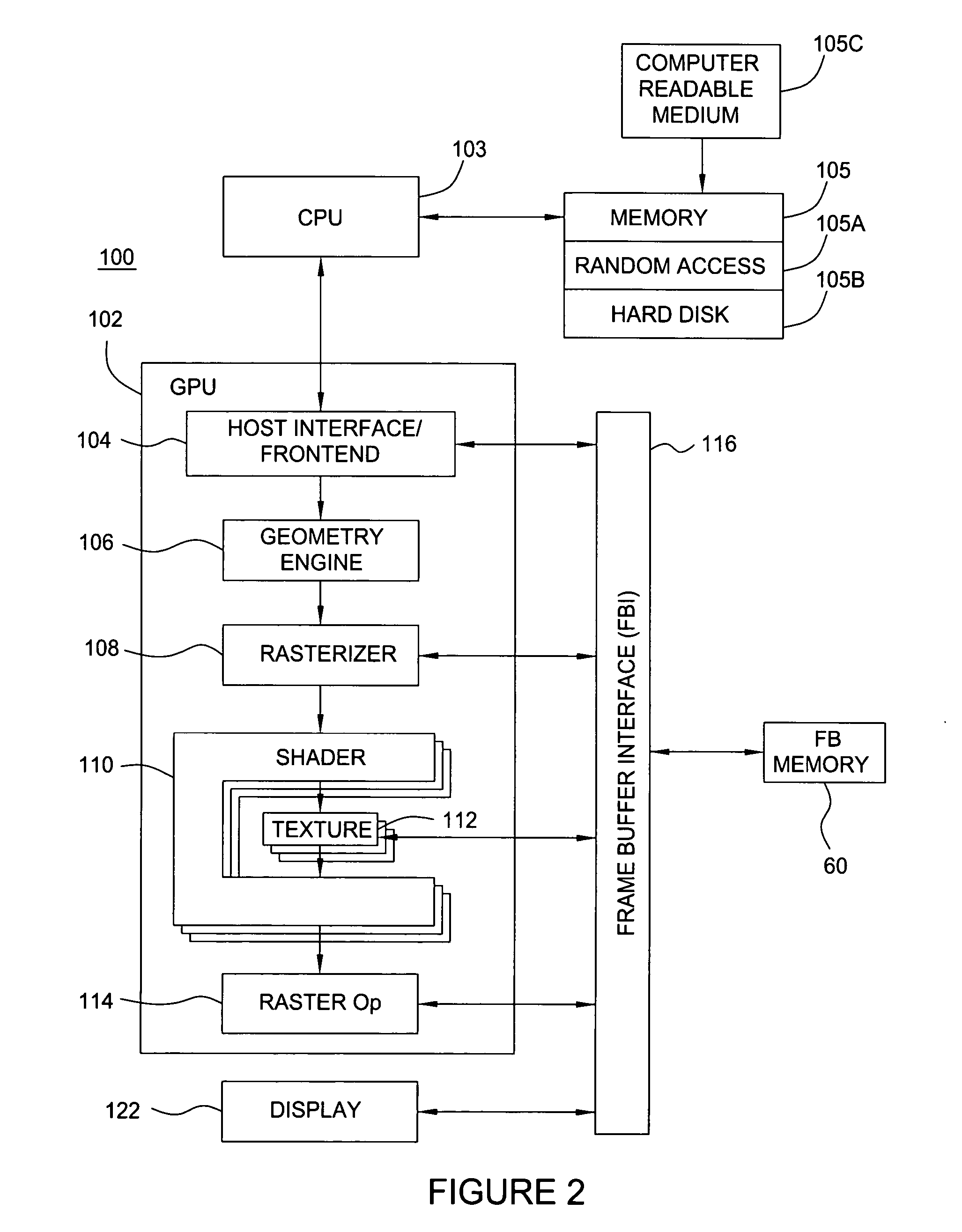

System and method for hardware-based GPU paging to system memory

Active · US7623134B1 · Improve system performance · Reduce load · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Operational system · Parallel computing

One embodiment of the present invention sets forth a technique for processing address page requests in a GPU system that is implementing a virtual memory model. A hardware-based page fault manager included in the GPU system intercepts page faults otherwise processed by a software-based page fault manager executing on a host CPU. The hardware-based page fault manager in the GPU includes a DMA engine capable of reading and writing pages between system memory and frame buffer memory without involving the CPU or operating system. A net improvement in system performance is achieved by processing a significant portion of page faults within the GPU, reducing the overall load on the host CPU.

Owner:NVIDIA CORP

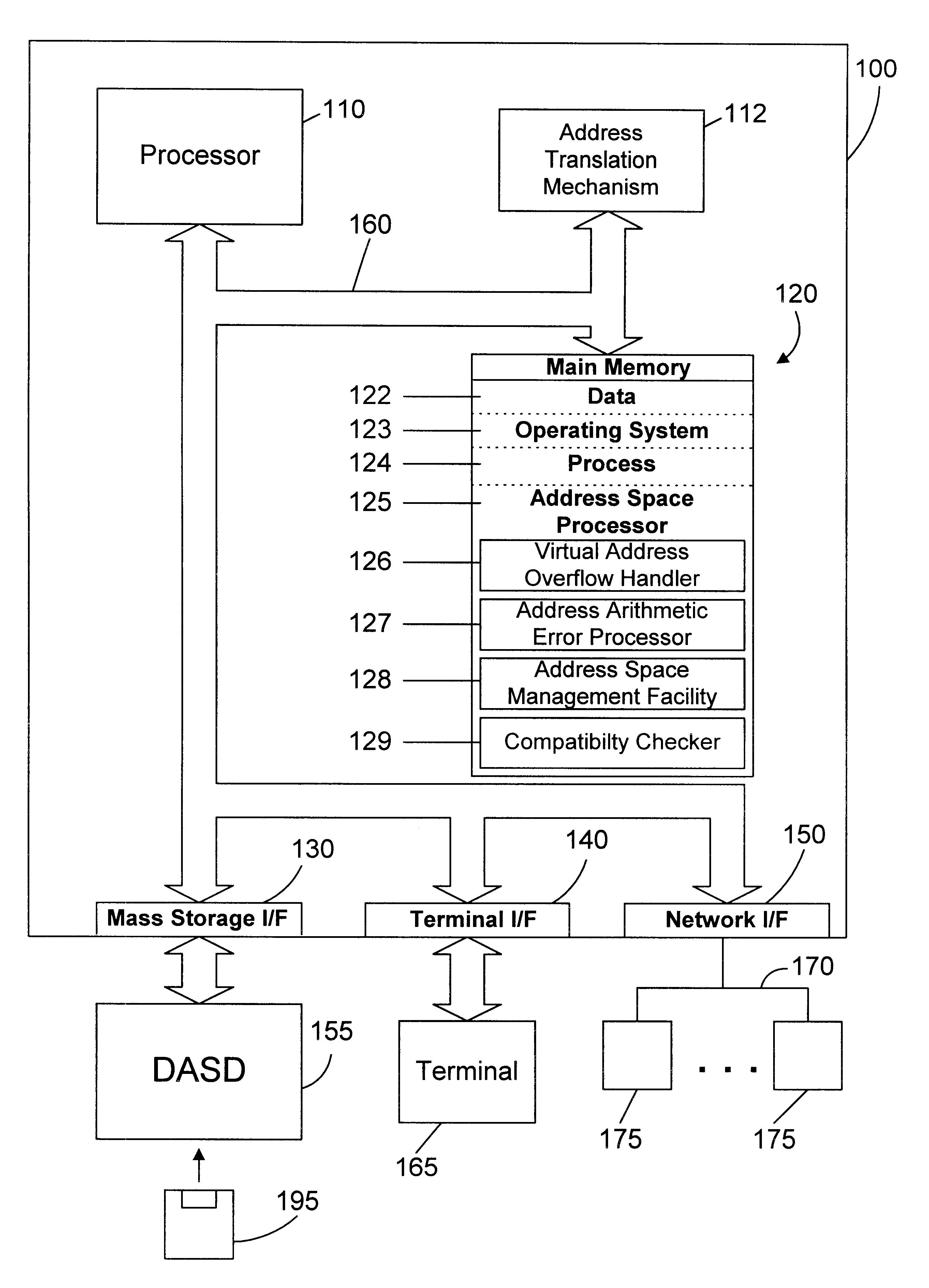

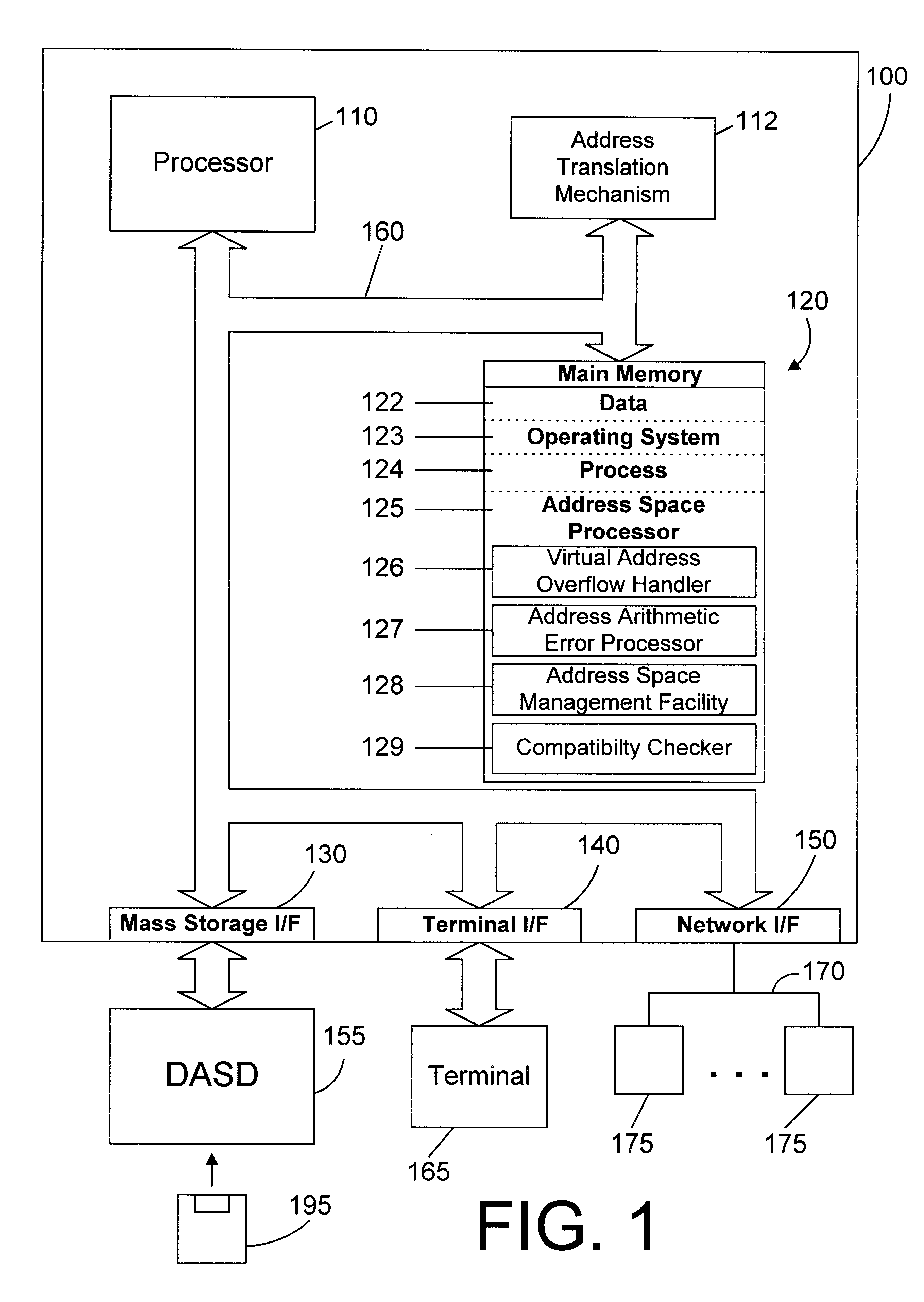

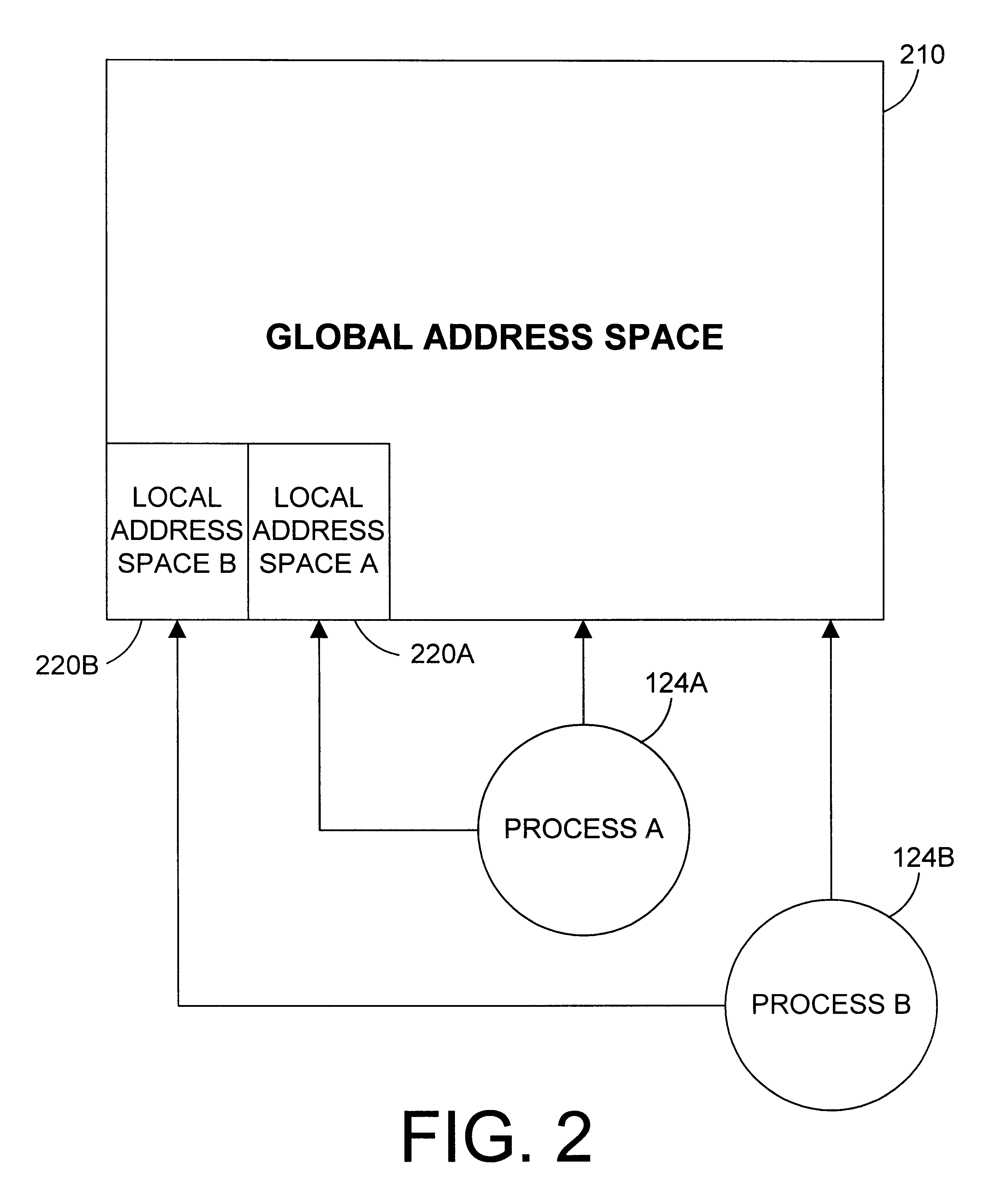

Apparatus and method for providing simultaneous local and global addressing using software to distinguish between local and global addresses

Inactive · US6574721B1 · Good flexibility · Memory addressing/allocation/relocation · Micro-instruction address formation · Global address space · Computer compatibility

An apparatus and method provide simultaneous local and global addressing capabilities in a computer system. A global address space is defined that may be accessed by all processes. In addition, each process has a local address space that is local (and therefore available) only to that process. An address space processor is implemented in software to perform system functions that distinguish between local addresses and global addresses. In the preferred embodiments, the local address space has a size that is a multiple of the size of a segment of global address space. When the hardware indicates a page fault, the address space processor determines whether the address being translated is a local address or a global address. If the address is a local address, the address space processor uses a local directory to process the page fault. If the address is a global address, the address space processor uses a global directory to process the page fault. When the hardware indicates an addressing error because a computed address crosses a global segment boundary, the address space processor determines whether the address is a local address or a global address. If the address is a global address, the address space processor indicates an addressing error. If the address is a local address, the address space processor determines whether the address is within the process' local address space, and indicates an addressing error if the address is outside the process' local address space. Instructions are allowed to operate on both local and global addresses because the address space processor handles either type of address whenever software assistance is required, such as for servicing a page fault or checking a segment boundary crossing. In addition, the address space processor dynamically checks the addressing compatibility of called code before passing control to the called code.

Owner:IBM CORP

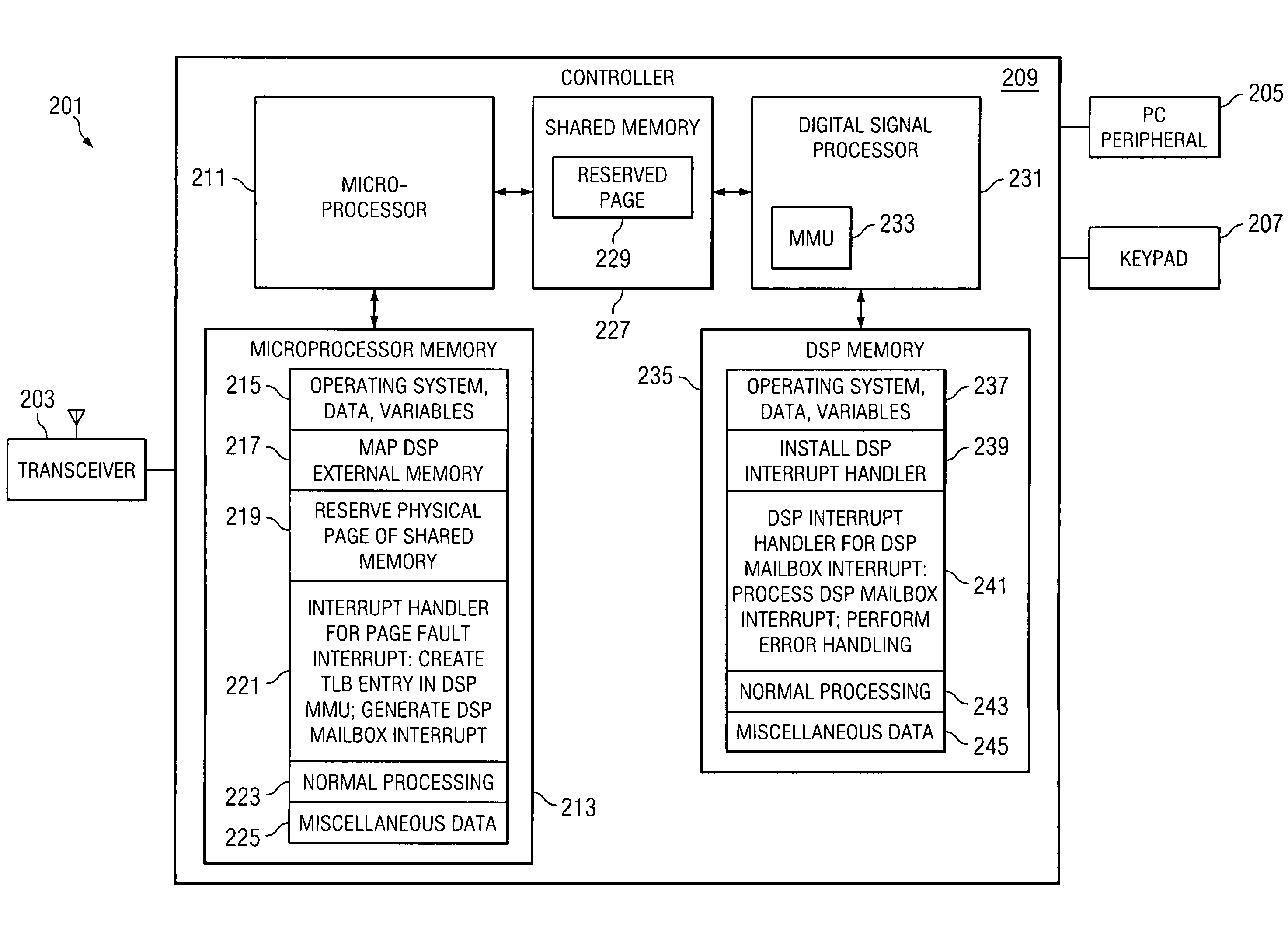

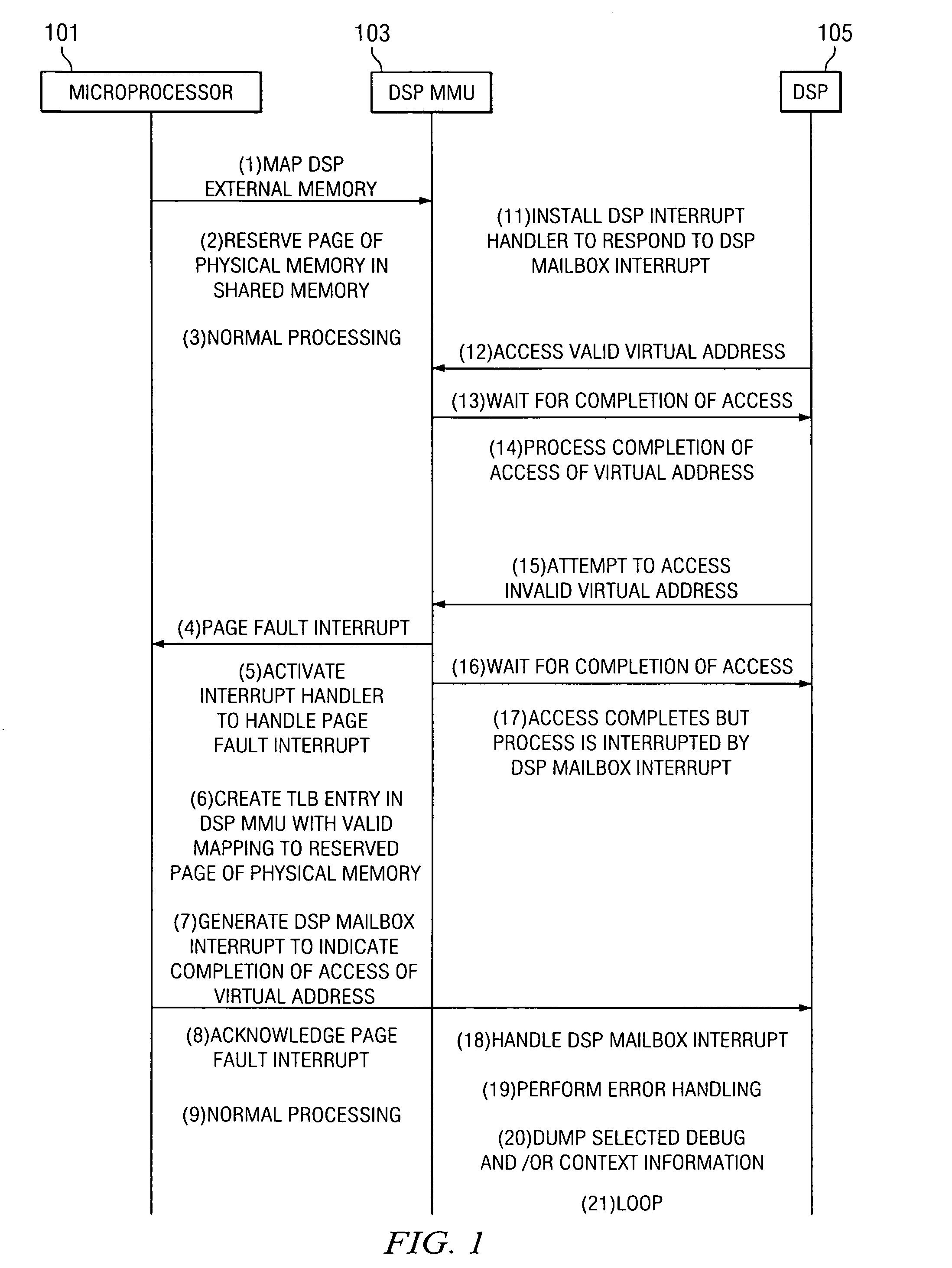

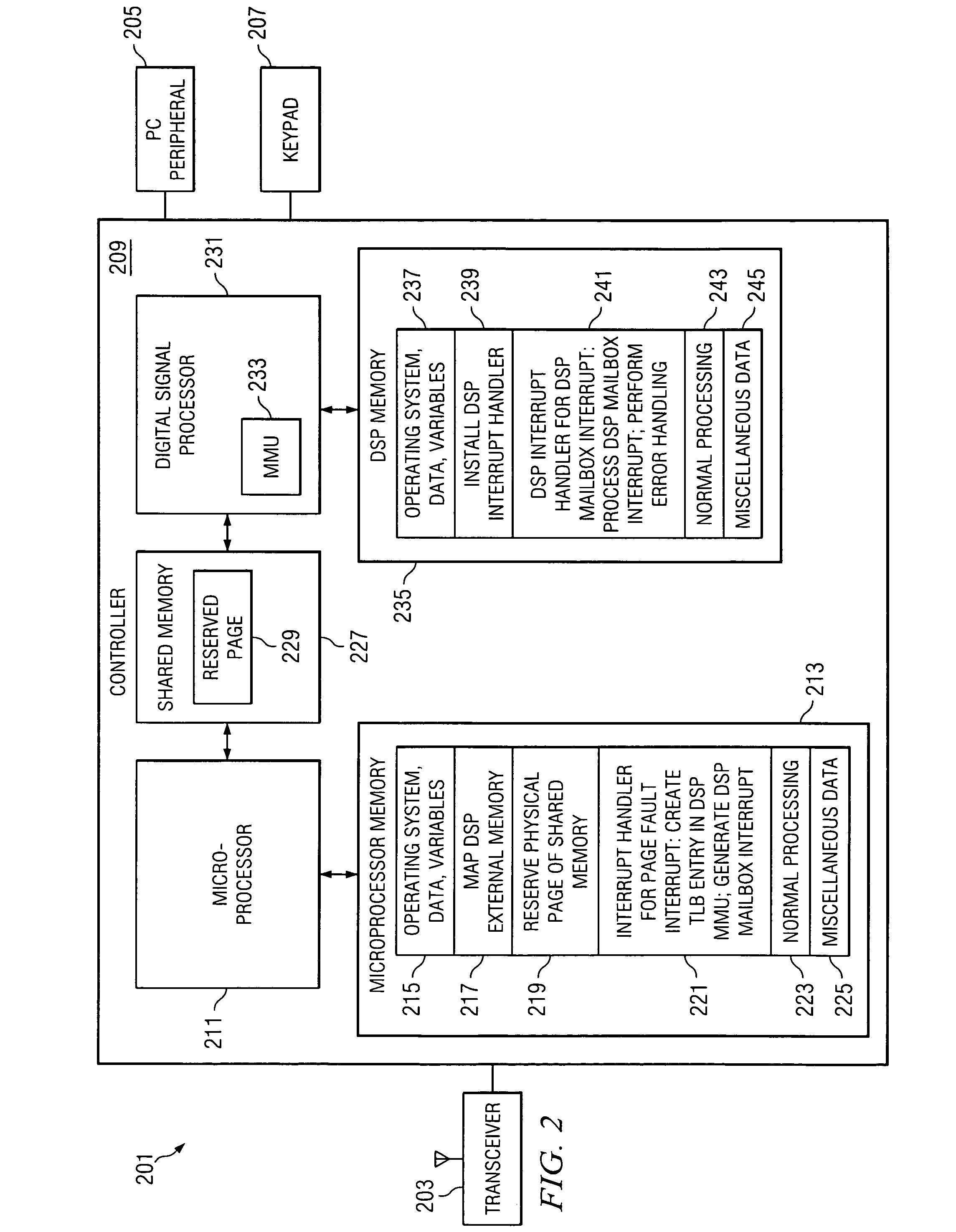

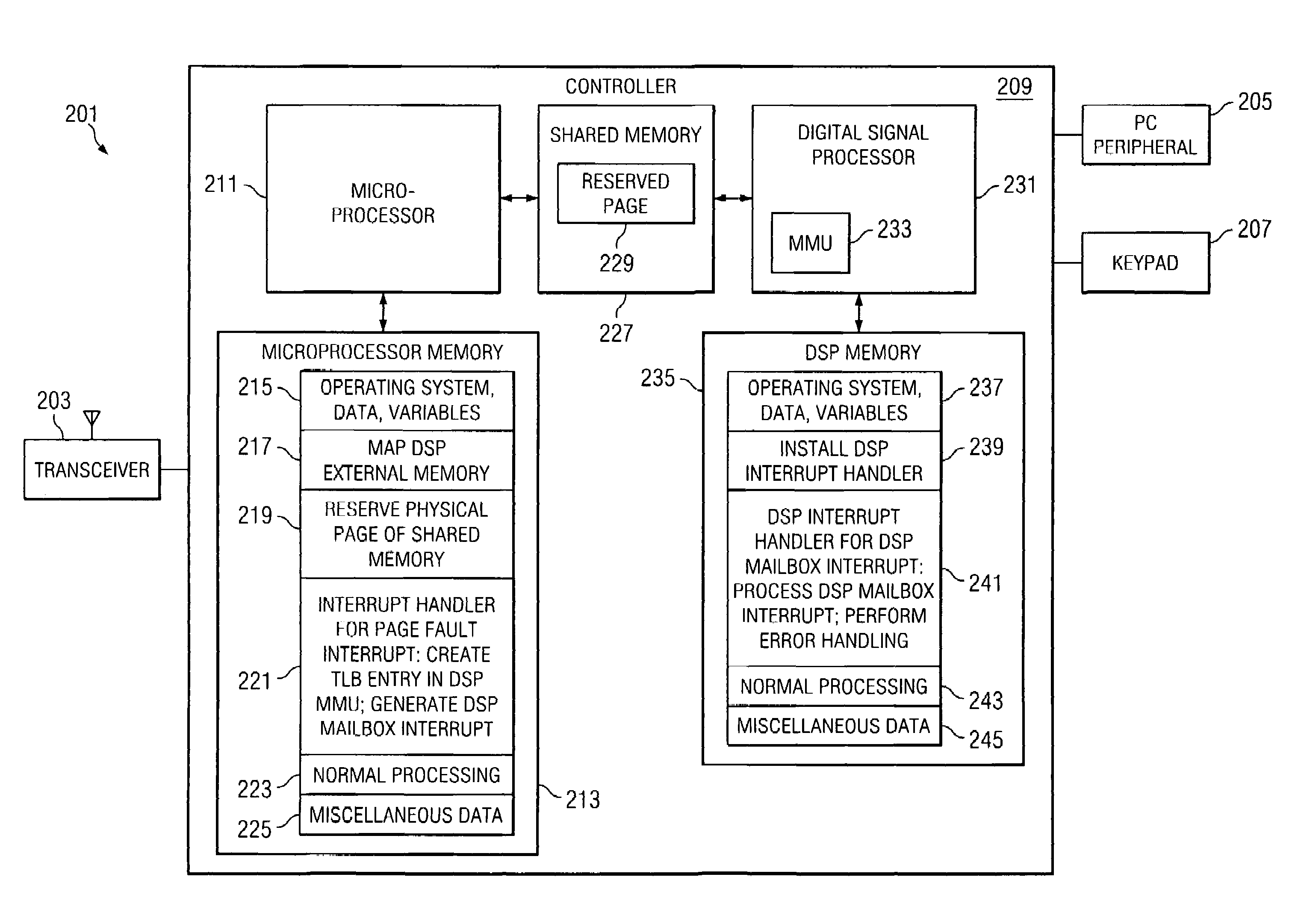

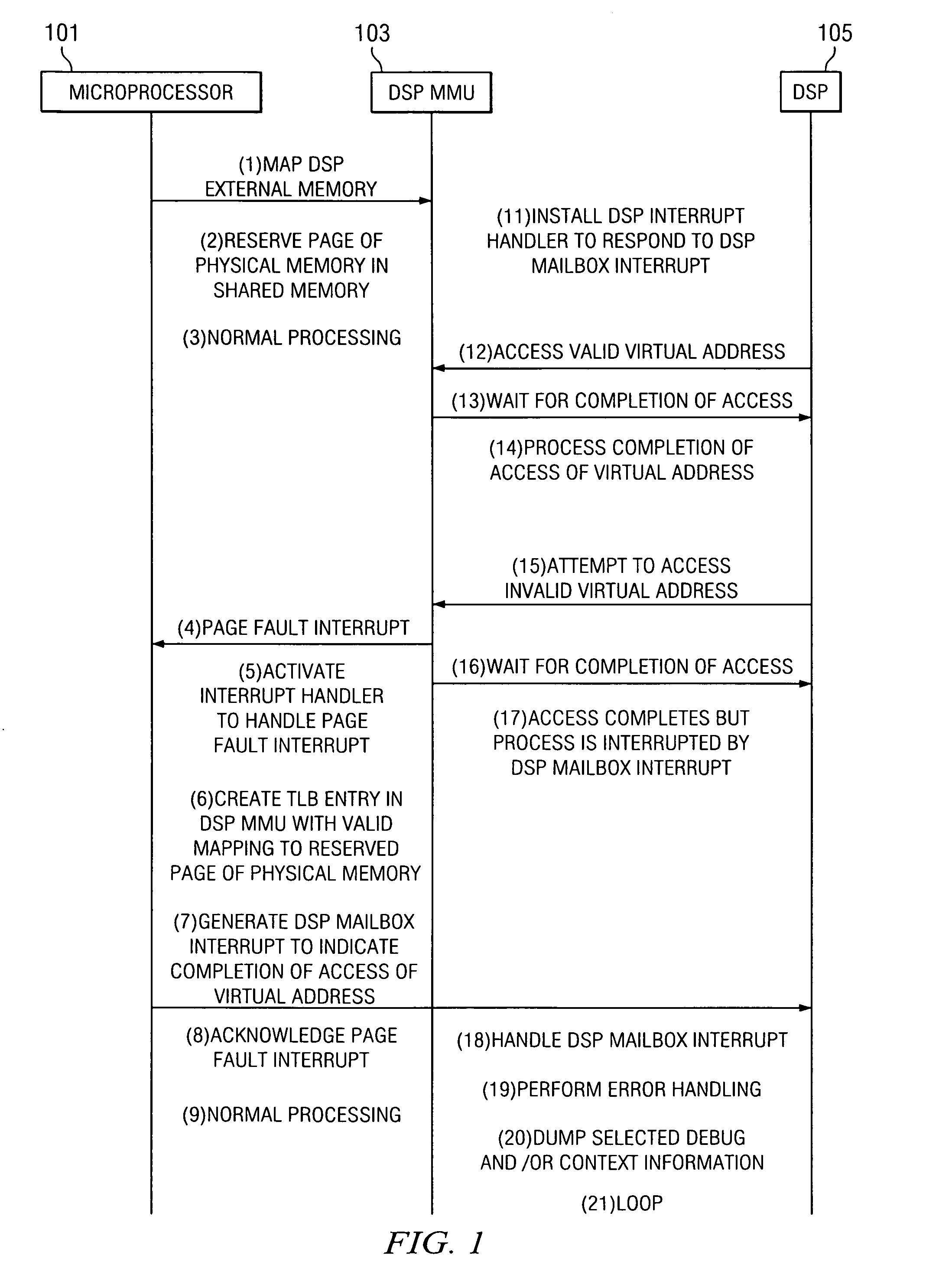

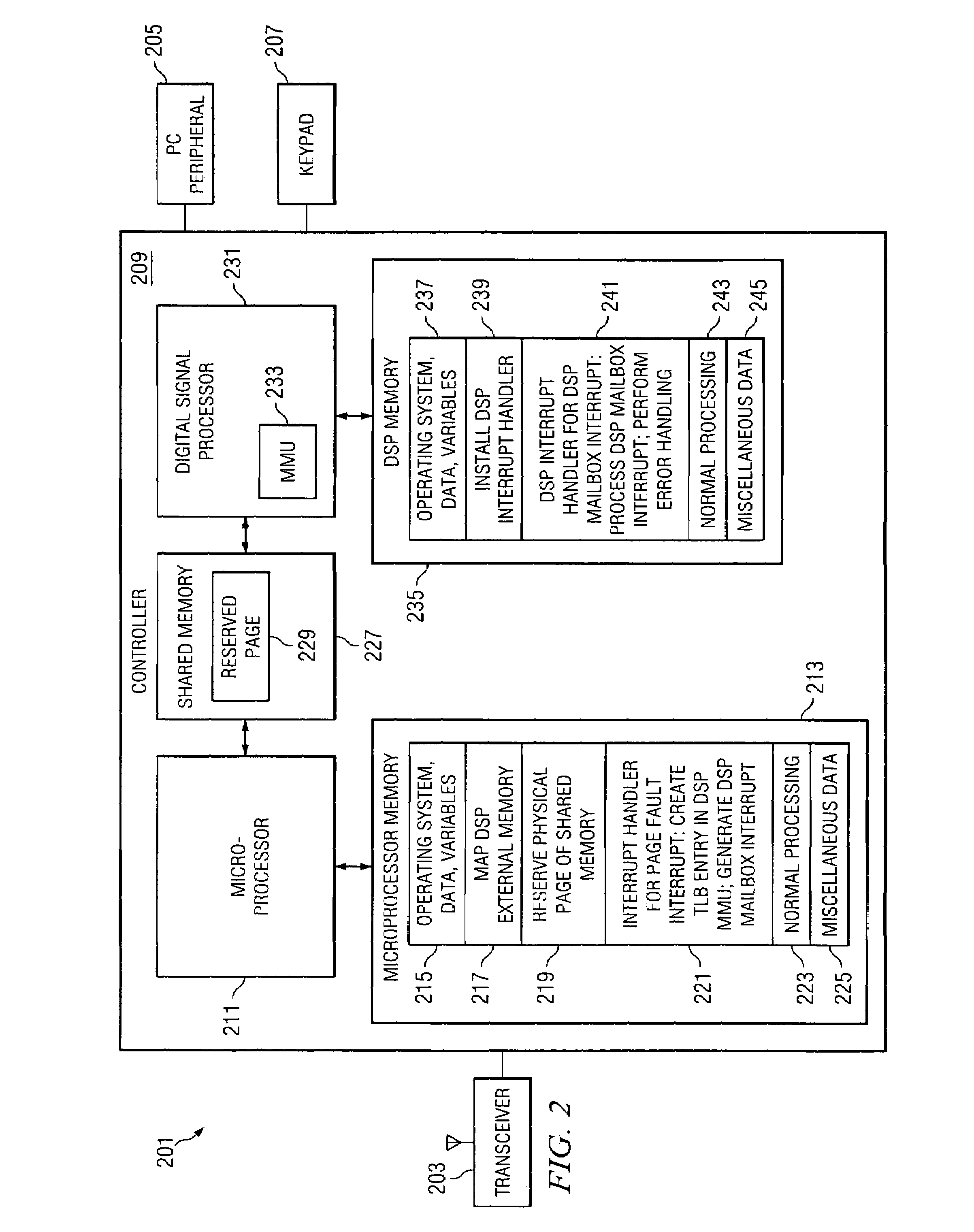

Method, system and device for handling a memory management fault in a multiple processor device

Active · US20080183931A1 · Memory architecture accessing/allocation · Fault response · Computer architecture · Multi processor

A method or device handles memory management faults in a device having a digital signal processor (“DSP”) and a microprocessor. The DSP includes a memory management unit (“DSP MMU”) to manage memory access by the DSP, and the DSP and the microprocessor access shared physical memory. Upon the DSP executing an instruction attempting to access a virtual address wherein the virtual address is invalid, a page fault interrupt is generated by the DSP MMU. A microprocessor interrupt handler in the microprocessor is activated in direct response to the page fault interrupt. Thereafter in the microprocessor, a translation lookaside buffer (“TLB”) entry is created in the DSP MMU, which includes a valid mapping between the virtual address and a page of physical memory. After creating the TLB entry, the microprocessor indicates to the DSP that the access by the DSP of the virtual address is completed.

Owner:TEXAS INSTR INC

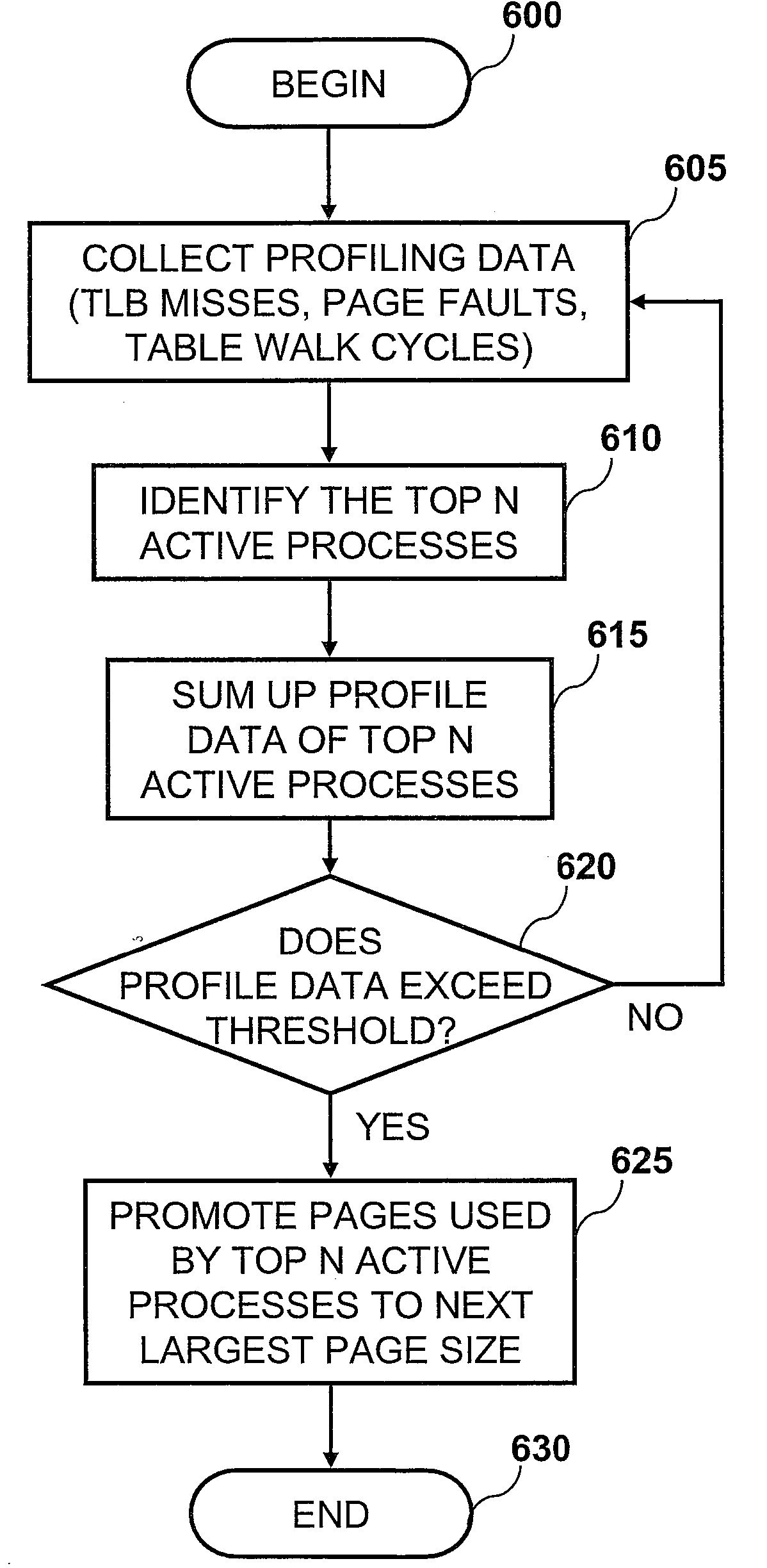

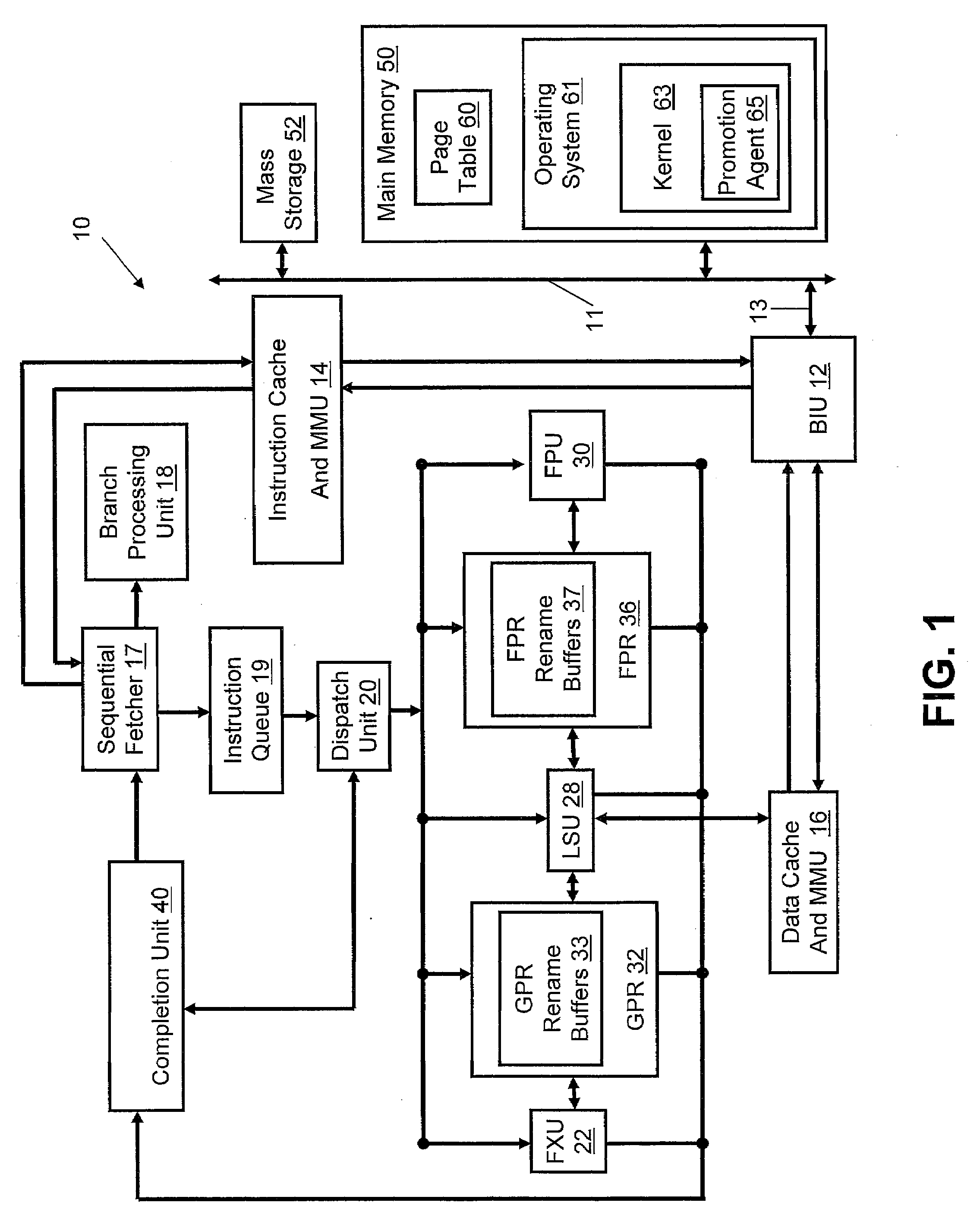

Method and System for Performance-Driven Memory Page Size Promotion

A method, system, and computer program product enable the selective adjustment in the size of memory pages allocated from system memory. In one embodiment, the method includes, but is not limited to, the steps of: collecting profile data (e.g., the number of Translation Lookaside Buffer (TLB) misses, the number of page faults, and the time spent by the Memory Management Unit (MMU) performing page table walks); identifying the top N active processes, where N is an integer that may be user-defined; evaluating the profile data of the top N active processes within a given time period; and in response to a determination that the profile data indicates that a threshold has been exceeded, promoting the pages used by the top N active processes to a larger page size and updating the Page Table Entries (PTEs) accordingly.
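The selection step in this abstract (rank processes, keep the top N, compare their profile data against thresholds) can be sketched as follows. The names `ProcessProfile`, `select_for_promotion`, the `activity` ranking key, and the rule that exceeding any single threshold triggers promotion are assumptions for illustration; the abstract does not say how activity is measured or how the counters are combined.

```python
from dataclasses import dataclass


@dataclass
class ProcessProfile:
    pid: int
    activity: float    # hypothetical ranking key for "top N active processes"
    tlb_misses: int    # TLB misses in the sampling period
    page_faults: int   # page faults in the sampling period
    walk_time_us: int  # time the MMU spent on page table walks


def select_for_promotion(profiles, top_n, thresholds):
    """Return pids among the top-N active processes that exceed a threshold."""
    most_active = sorted(profiles, key=lambda p: p.activity, reverse=True)[:top_n]
    return [
        p.pid
        for p in most_active
        if p.tlb_misses > thresholds["tlb_misses"]
        or p.page_faults > thresholds["page_faults"]
        or p.walk_time_us > thresholds["walk_time_us"]
    ]
```

A process with heavy TLB pressure but low activity is ignored here, since only the top N active processes are evaluated; the actual promotion (remapping to larger pages and updating PTEs) is outside this sketch.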

Owner:IBM CORP

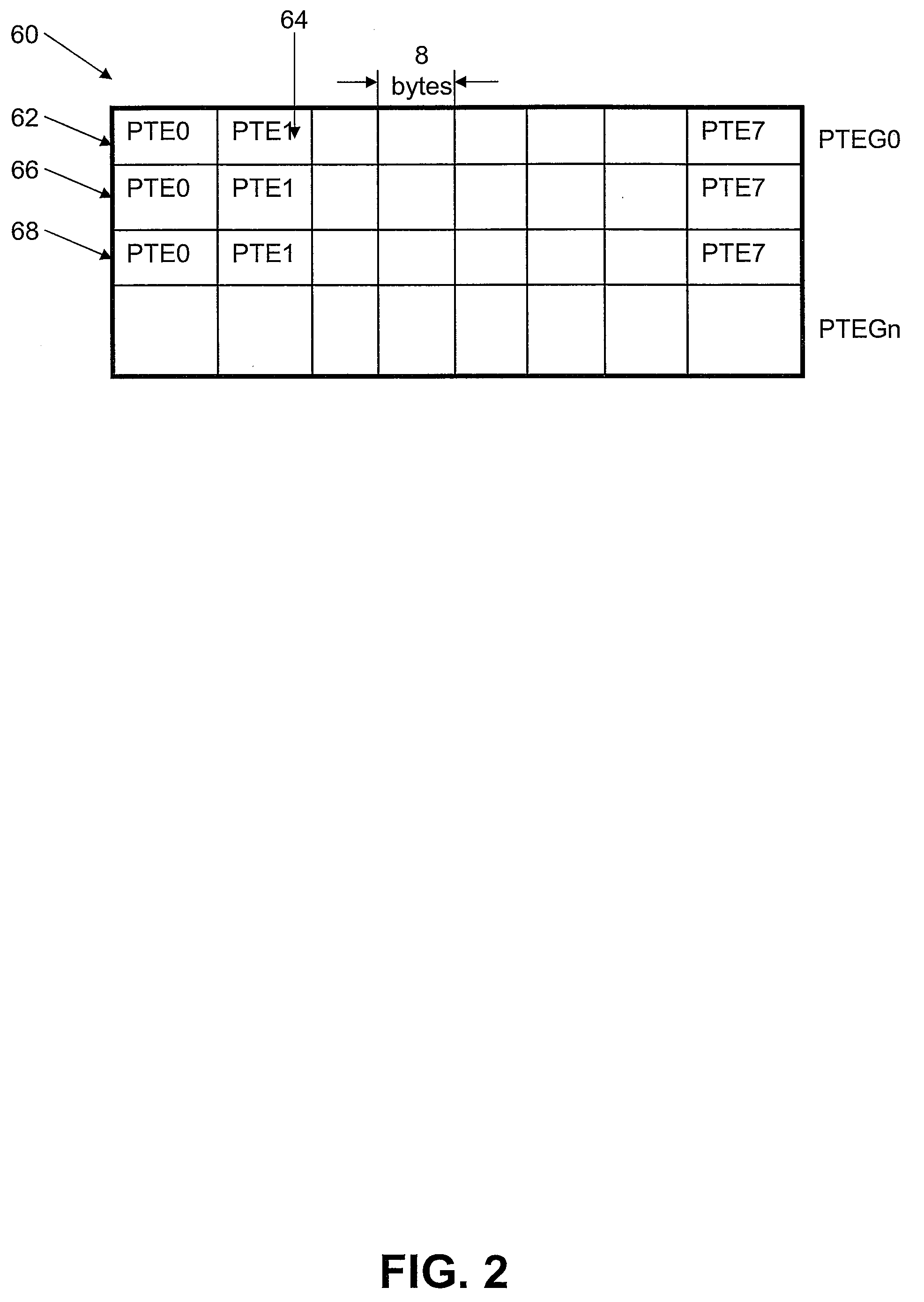

Memory management system having a forward progress bit

A virtual memory system that maintains a list of pages that are required to be resident in a frame buffer to guarantee the eventual forward progress of a graphics application context running on a graphics system composed of multiple clients. Pages that are required to be in the frame buffer memory are never swapped out of that memory. The required page list can be dynamically sized or fixed sized. A tag file is used to prevent page swapping of a page from the frame buffer that is required to make forward progress. A forward progress indicator signifies that a page faulting client has made forward progress on behalf of a context. The presence of a forward progress indicator is used to clear the tag file, thus enabling page swapping of the previously tagged pages from the frame buffer memory.

Owner:NVIDIA CORP

Extended main memory hierarchy having flash memory for page fault handling

Active · US20100332727A1 · Lower performance requirements · Performance penalty · Memory architecture accessing/allocation · Energy efficient ICT · Memory hierarchy · Management unit

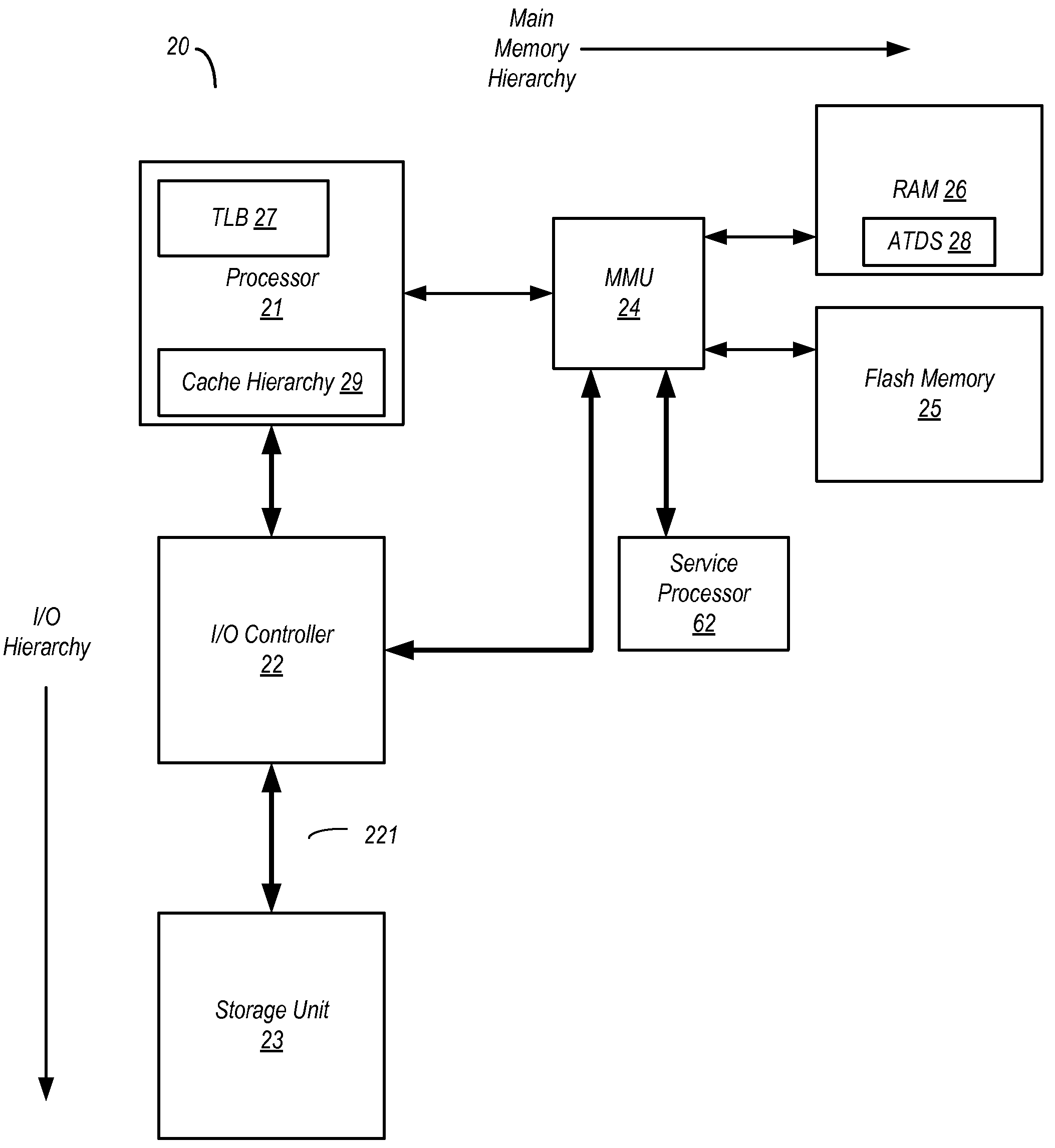

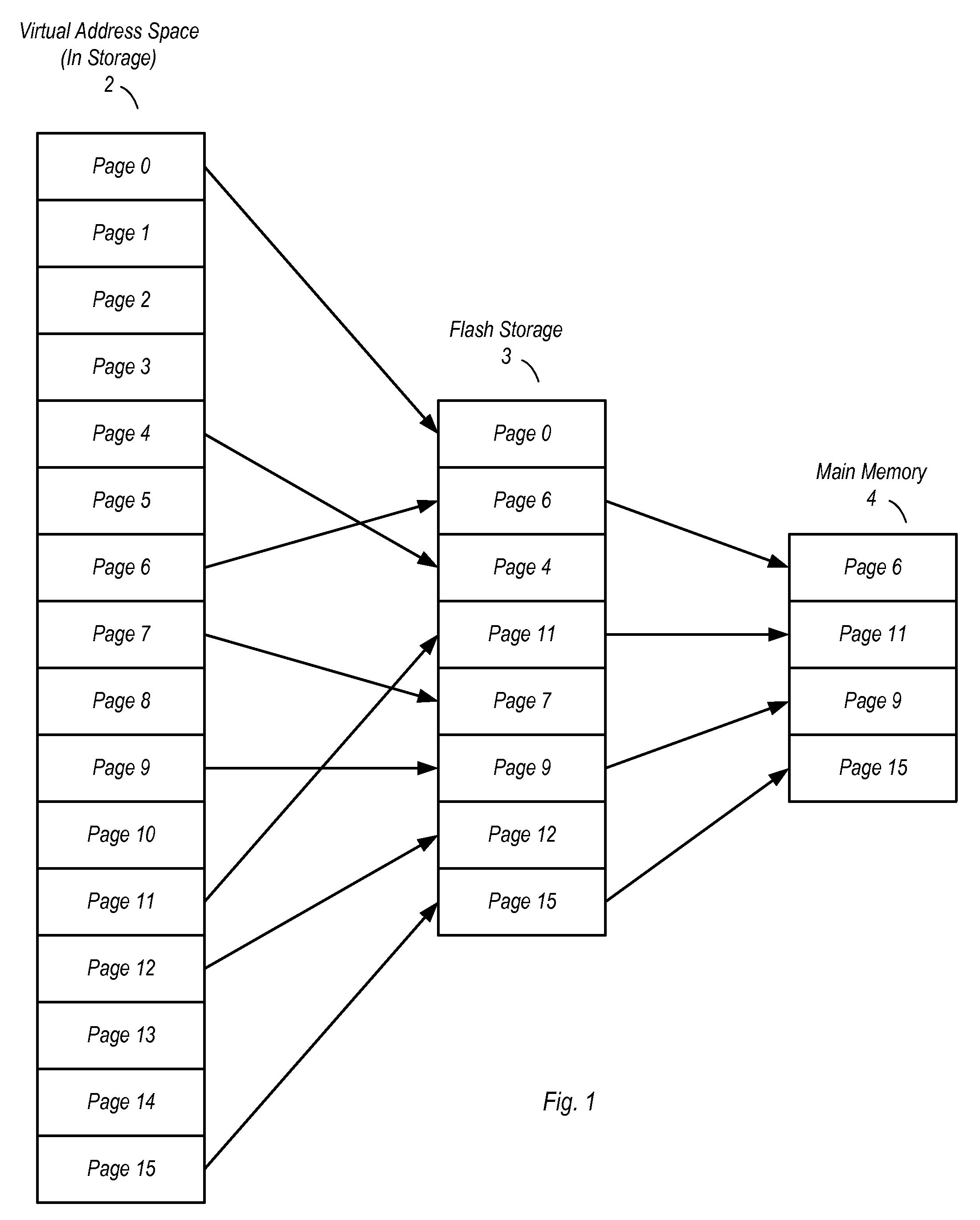

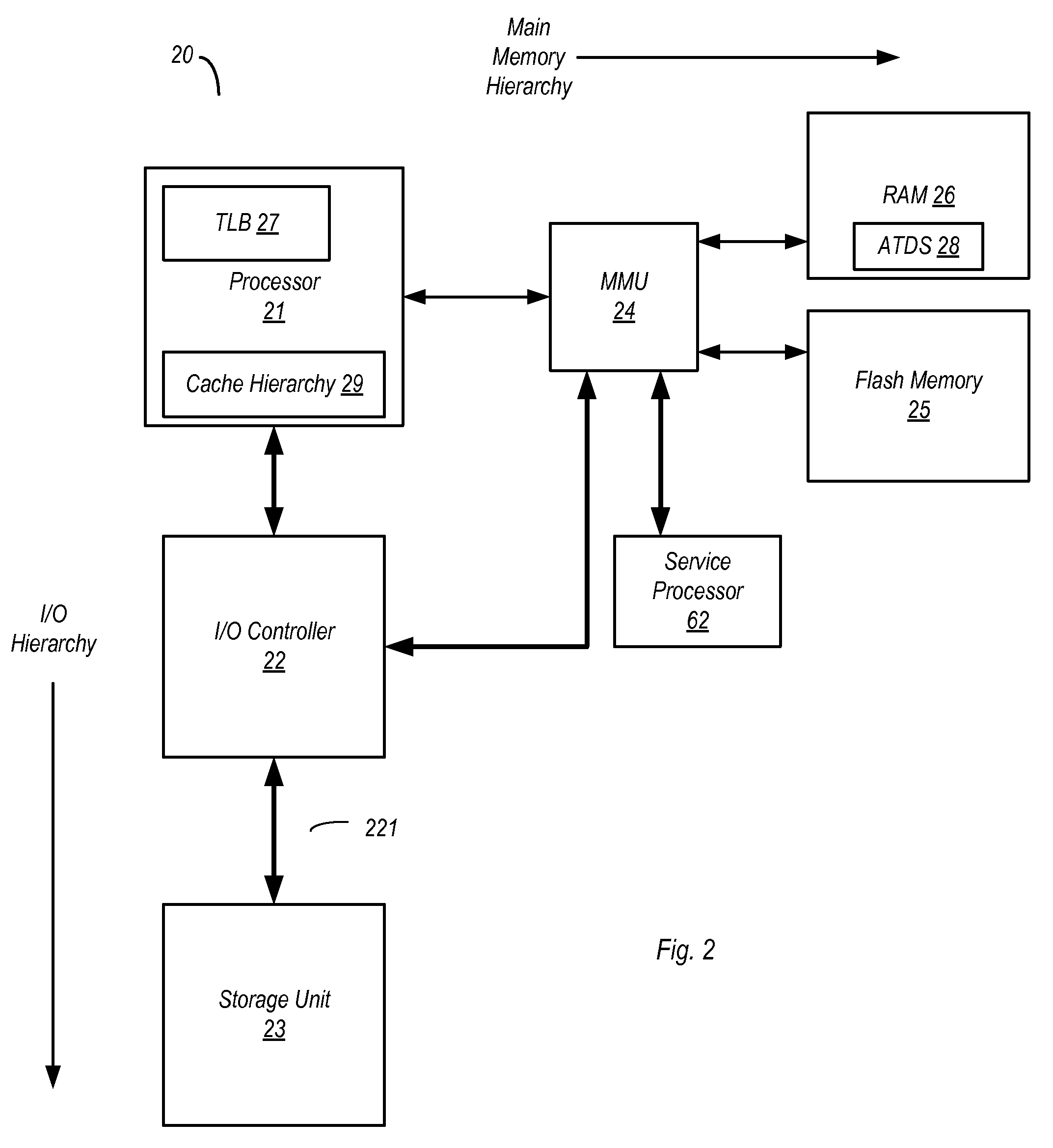

A computer system with flash memory in the main memory hierarchy is disclosed. In an embodiment, the computer system includes at least one processor, a memory management unit coupled to the at least one processor, and a random access memory (RAM) coupled to the memory management unit. The computer system may also include a flash memory coupled to the memory management unit, wherein the computer system is configured to store at least a subset of a plurality of pages in the flash memory during operation. Responsive to a page fault, the memory management unit may determine, without invoking an I/O driver, whether a requested page associated with the page fault is stored in the flash memory and, if the page is stored in the flash memory, transfer the page into RAM.

Owner:SUN MICROSYSTEMS INC

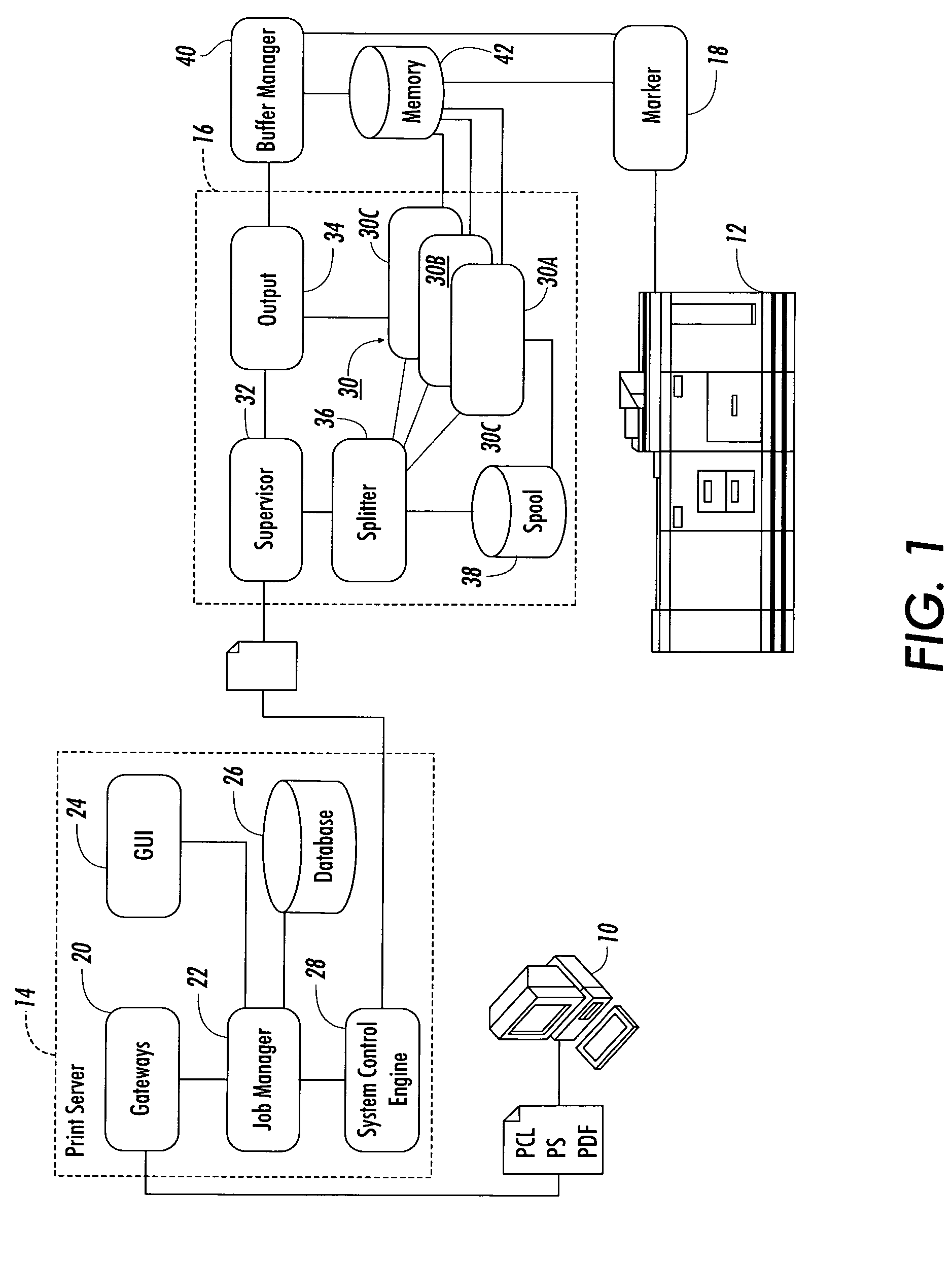

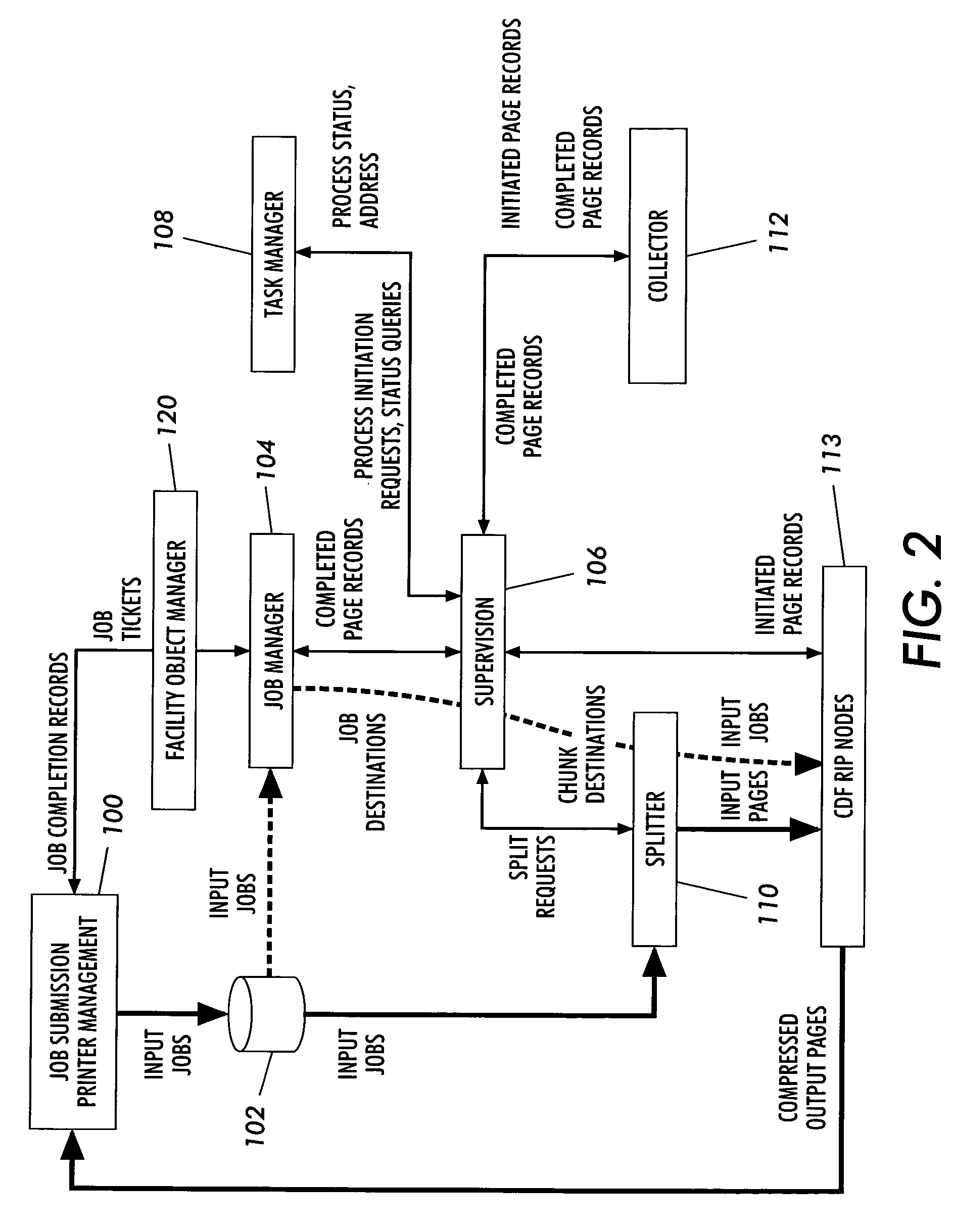

Parallel printing system having modes for auto-recovery, auto-discovery of resources, and parallel processing of unprotected postscript jobs

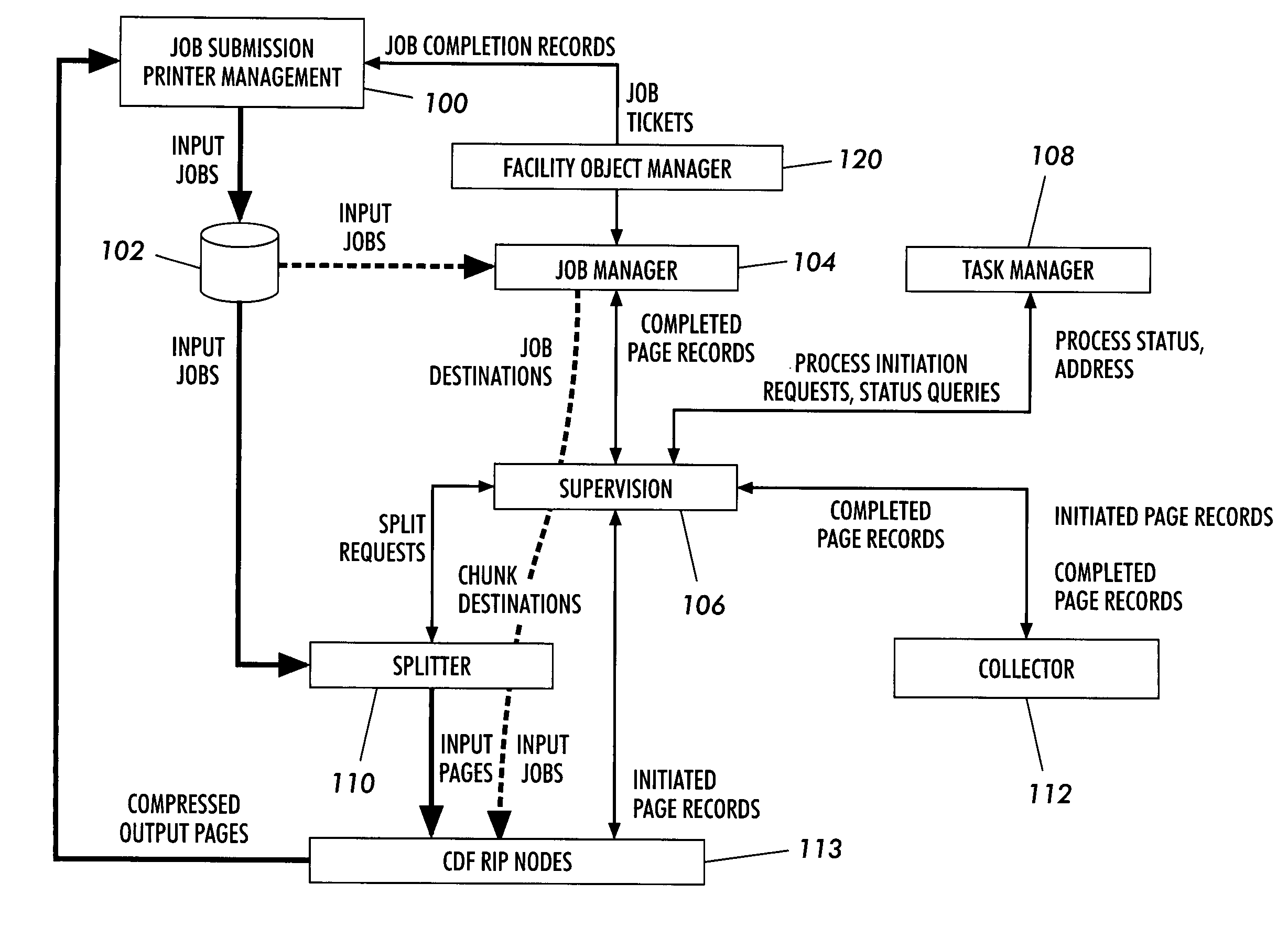

Active · US7161705B2 · Improve processing efficiency · Improve efficiency · Visual presentation · Pictorial communication · Operation mode · Parallel processing

A printing system comprised of a printer, a plurality of processing nodes, each processing node being disposed for processing a portion of a print job into a printer dependent format, and a processing manager for spooling the print job into selectively sized chunks and assigning the chunks to selected ones of the nodes for parallel processing of the chunks by the processing nodes into the printer dependent format. The chunks are selectively sized from at least one page to an entire size of the print job in accordance with predetermined splitting factors for enhancing printer printing efficiency. The operating of the printing system includes a method for parallel processing of a print job with a plurality of processing nodes into a printer-ready format for printing the print job, wherein operating modes are provided for: auto-recovery in response to a page fault by executing an auto recovery in serial mode of operation; auto-discovery of system hardware resources; and parallel processing of protected PostScript print jobs.

Owner:XEROX CORP

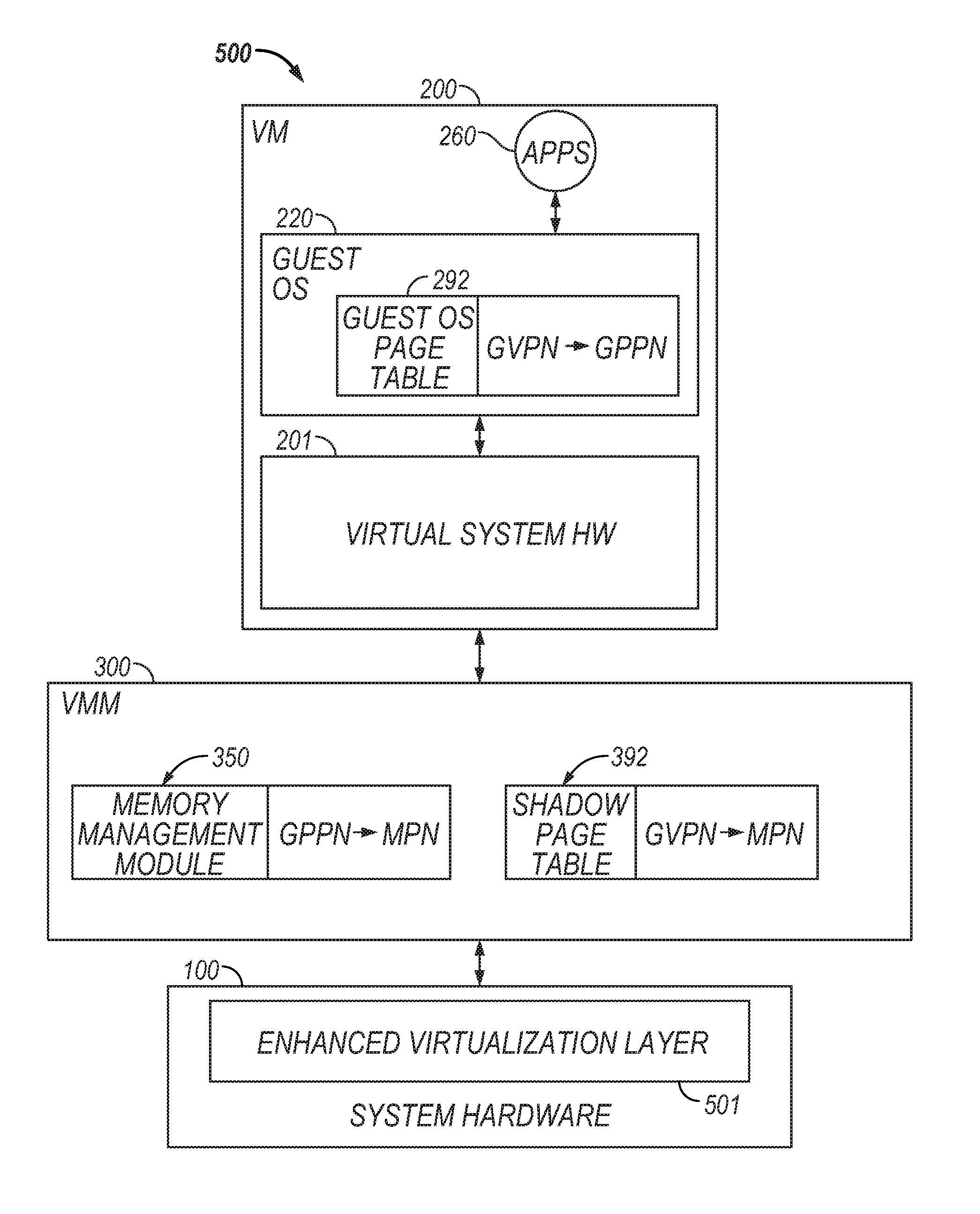

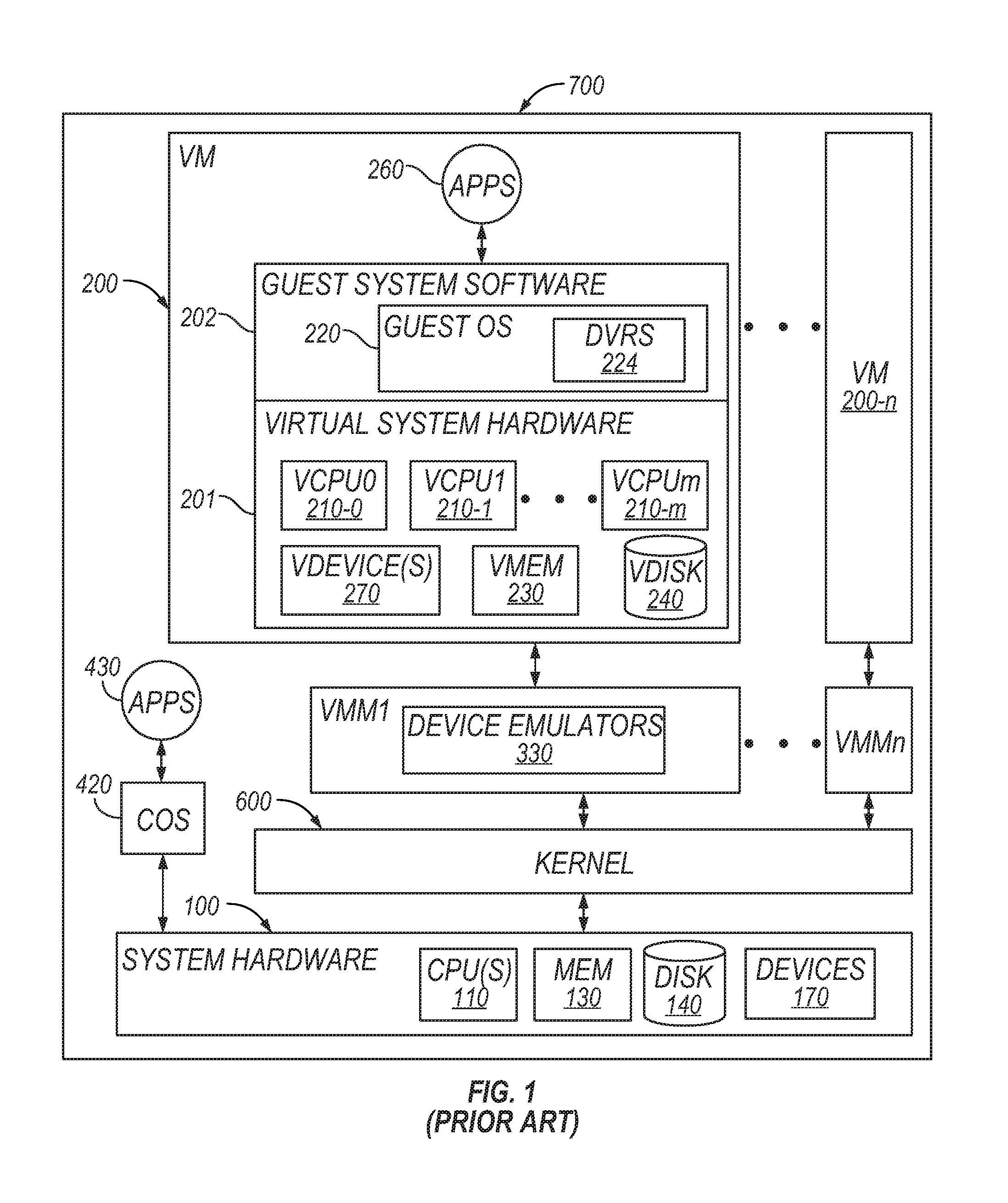

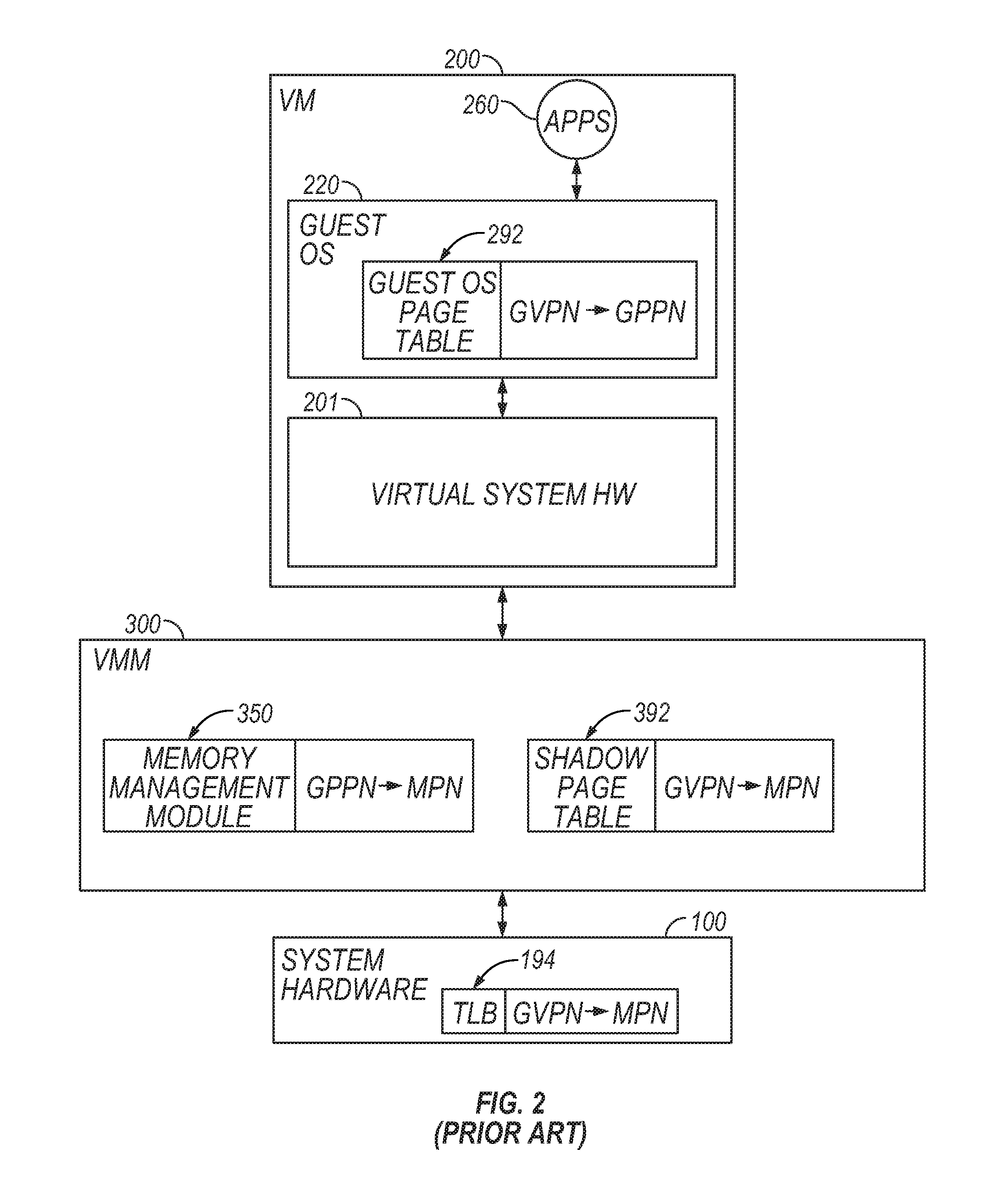

Page fault handling in a virtualized computer system

Active · US8307191B1 · Memory architecture accessing/allocation · Memory systems · Virtualization · Error processing

The invention relates to page fault handling in a virtualized computer system in which at least one guest page table maps virtual addresses to guest physical addresses, some of which are backed by machine addresses, and wherein at least one shadow page table and at least one translation look-aside buffer map the virtual addresses to the corresponding machine addresses. Indicators are maintained in entries of at least one shadow page table, wherein each indicator denotes a state of its associated entry from a group of states consisting of: a first state and a second state. An enhanced virtualization layer processes hardware page faults. States of shadow page table entries corresponding to hardware page faults are determined. Responsive to a shadow page table entry corresponding to a hardware page fault being in the first state, that page fault is delivered to a guest operating system for processing without activating a virtualization software component. On the other hand, responsive to a shadow page table entry corresponding to a hardware page fault being in the second state, that page fault is delivered to a virtualization software component for processing.
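The state-based routing described in this abstract reduces to a lookup followed by a two-way dispatch. The sketch below is only an illustration: `EntryState`, `route_page_fault`, the dictionary used as a shadow page table, and the string return values are hypothetical, and the abstract does not define what the two states mean beyond "first" and "second".

```python
from enum import Enum

PAGE_SIZE = 4096


class EntryState(Enum):
    FIRST = 1   # fault can go straight to the guest operating system
    SECOND = 2  # fault must be handled by the virtualization software


def route_page_fault(shadow_page_table, virtual_address):
    """Decide who processes a hardware page fault.

    shadow_page_table: dict mapping virtual page number -> EntryState
    (a hypothetical stand-in for the indicator kept in each shadow entry).
    """
    state = shadow_page_table[virtual_address // PAGE_SIZE]
    if state is EntryState.FIRST:
        return "guest_os"
    return "virtualization_software"
```

The benefit claimed in the abstract comes from the first branch: faults that belong to the guest never activate the heavier virtualization software component.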

Owner:VMWARE INC

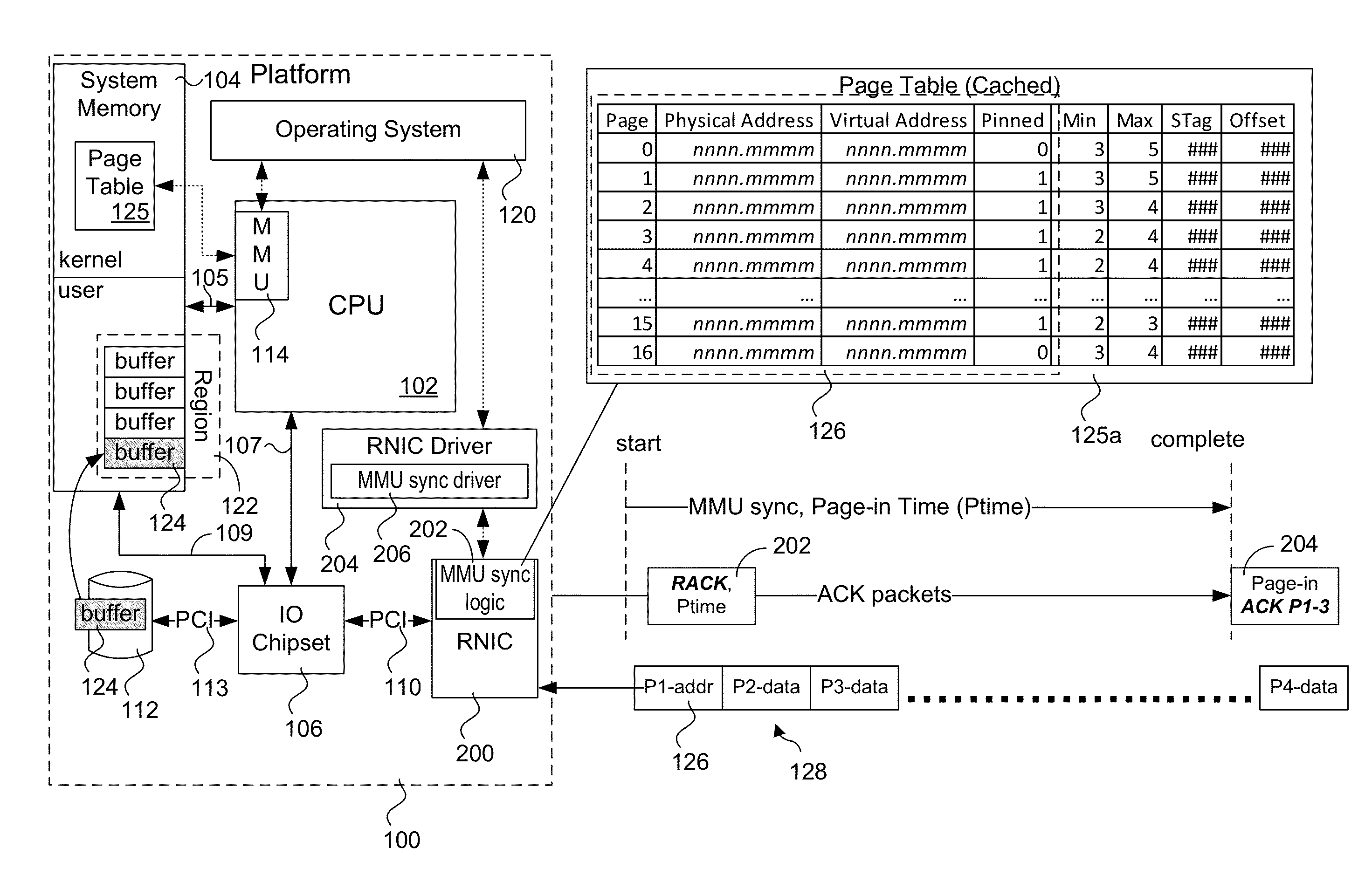

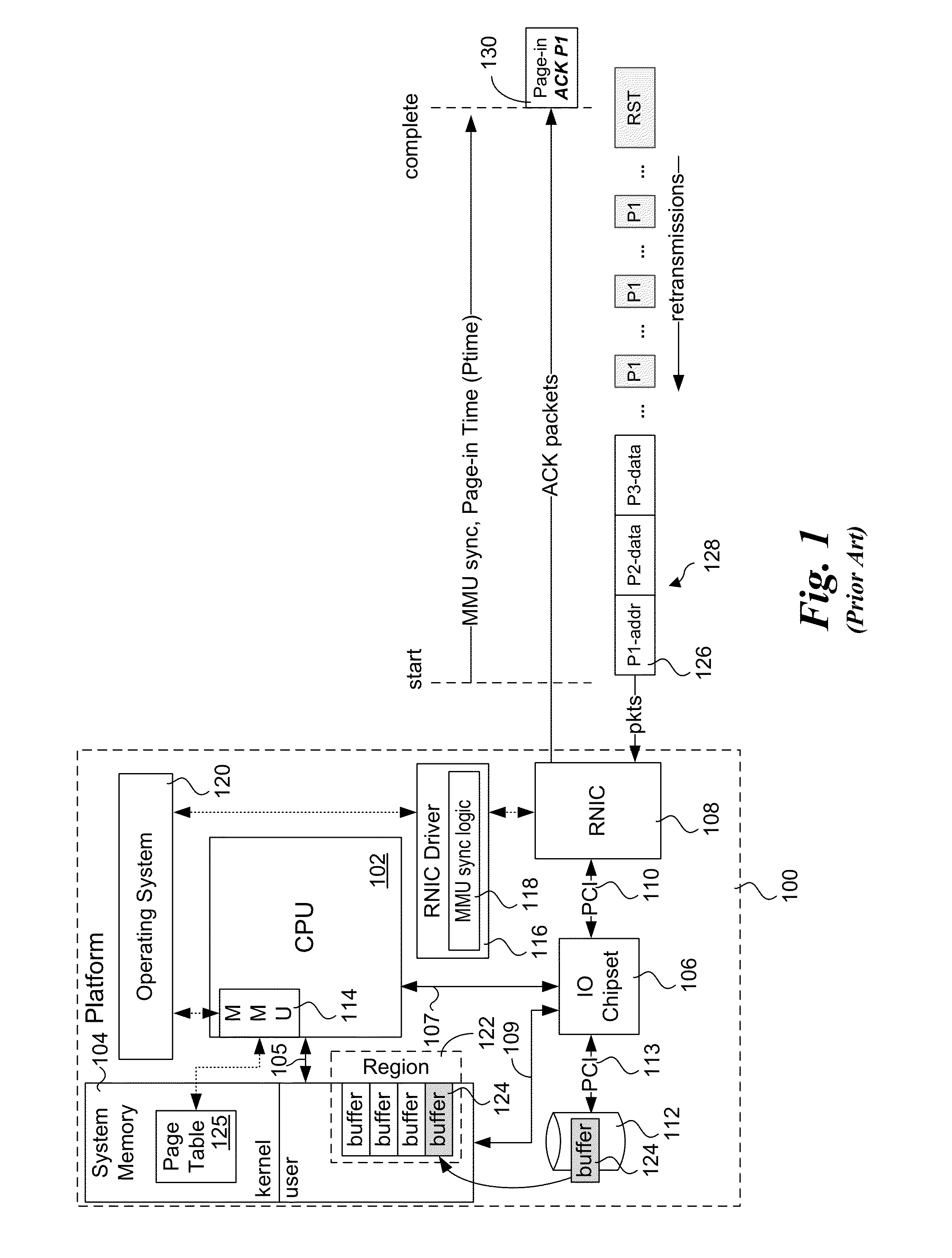

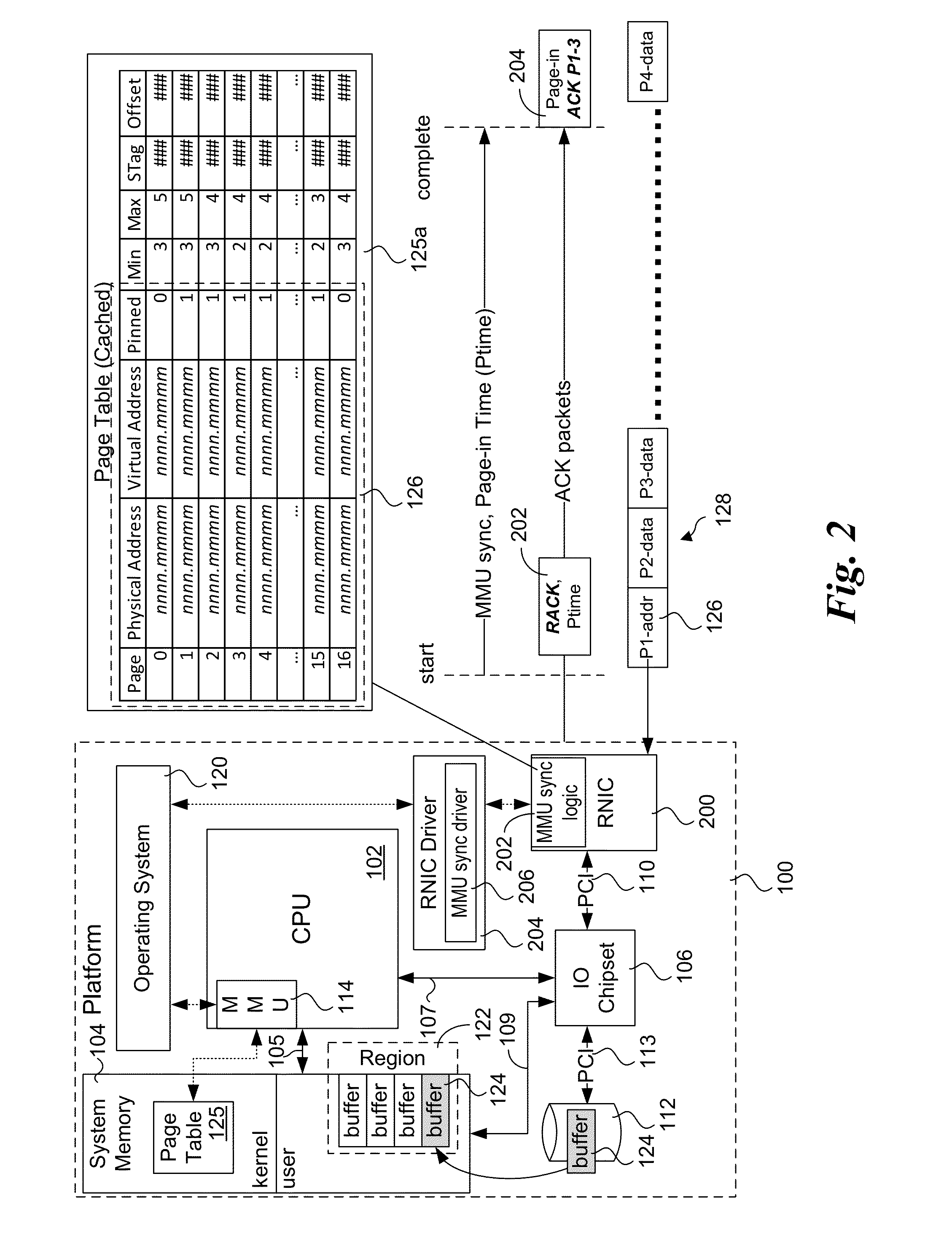

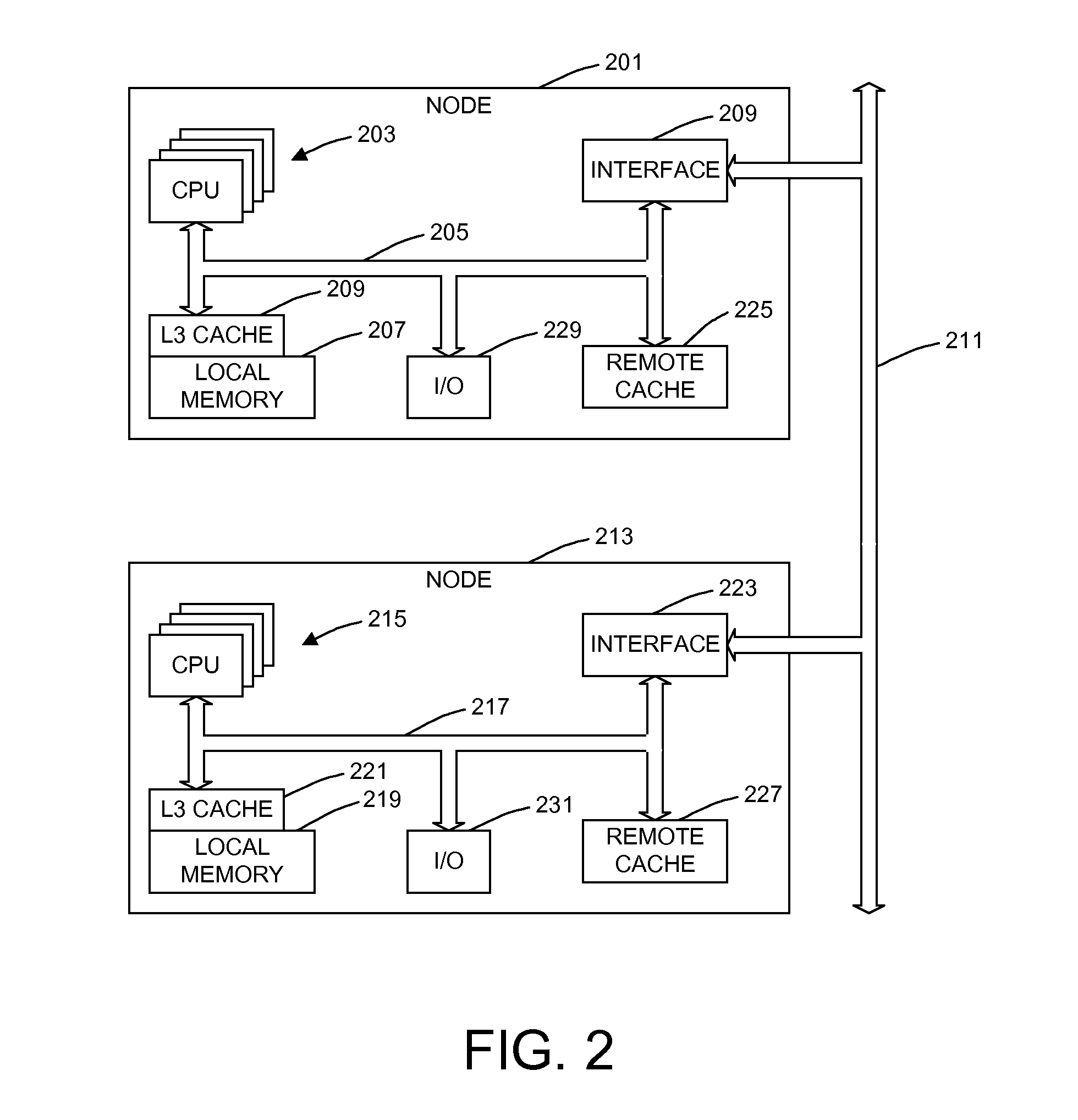

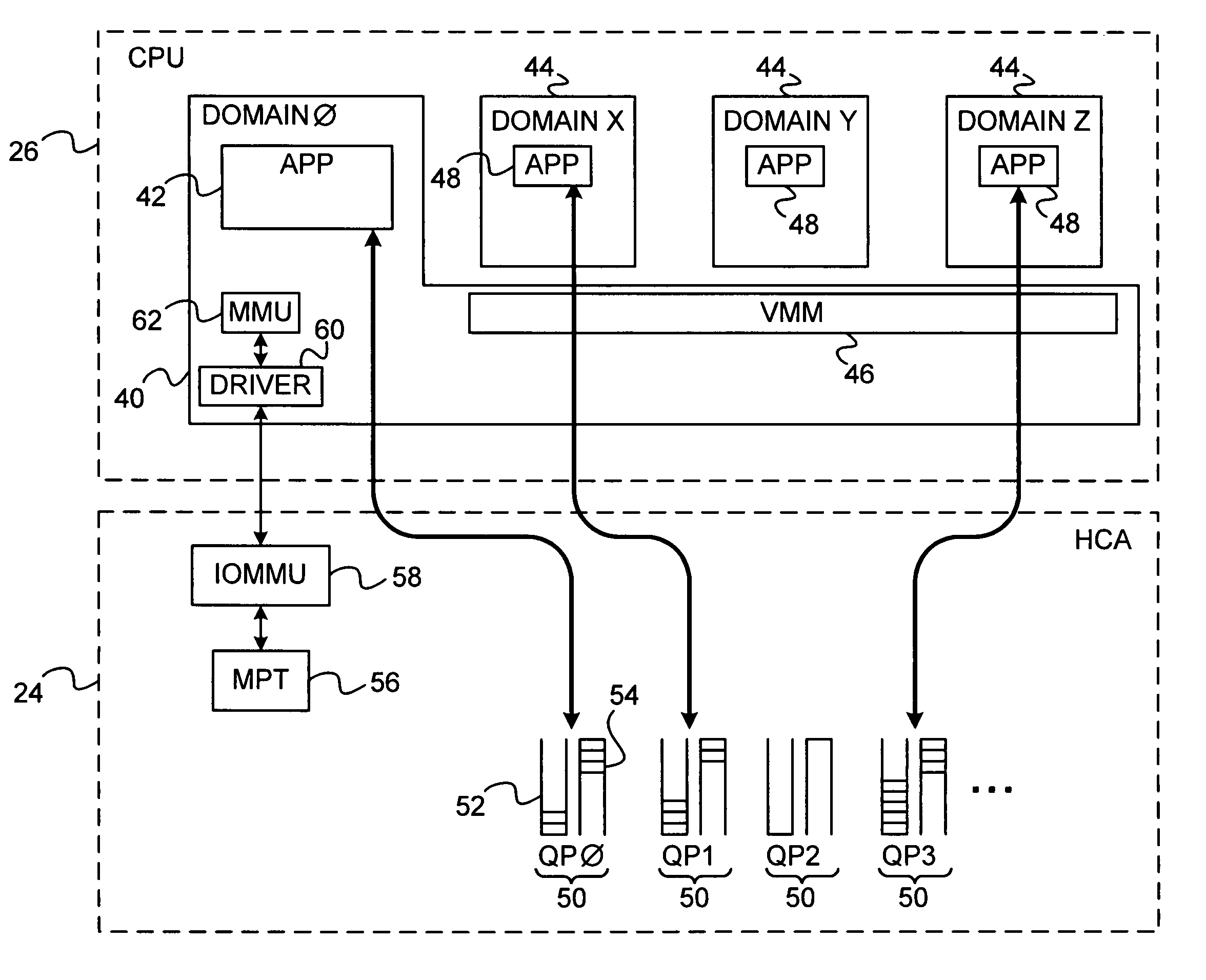

Explicit flow control for implicit memory registration

Active · US20140164545A1 · Digital computer details · Electric digital data processing · Implicit memory · Retransmission timeout

Methods, apparatus and systems for facilitating explicit flow control for RDMA transfers using implicit memory registration. To set up an RDMA data transfer, a source RNIC sends a request to allocate a destination buffer at a destination RNIC using implicit memory registration. Under implicit memory registration, the page or pages to be registered are not explicitly identified by the source RNIC, and may correspond to pages that are paged out to virtual memory. As a result, registration of such pages results in page faults, leading to a page fault delay before registration and pinning of the pages is completed. In response to detection of a page fault, the destination RNIC returns an acknowledgment indicating that a page fault delay is occurring. In response to receiving the acknowledgment, the source RNIC temporarily stops sending packets, and does not retransmit packets for which ACKs are not received prior to retransmission timeout expiration.

Owner:INTEL CORP

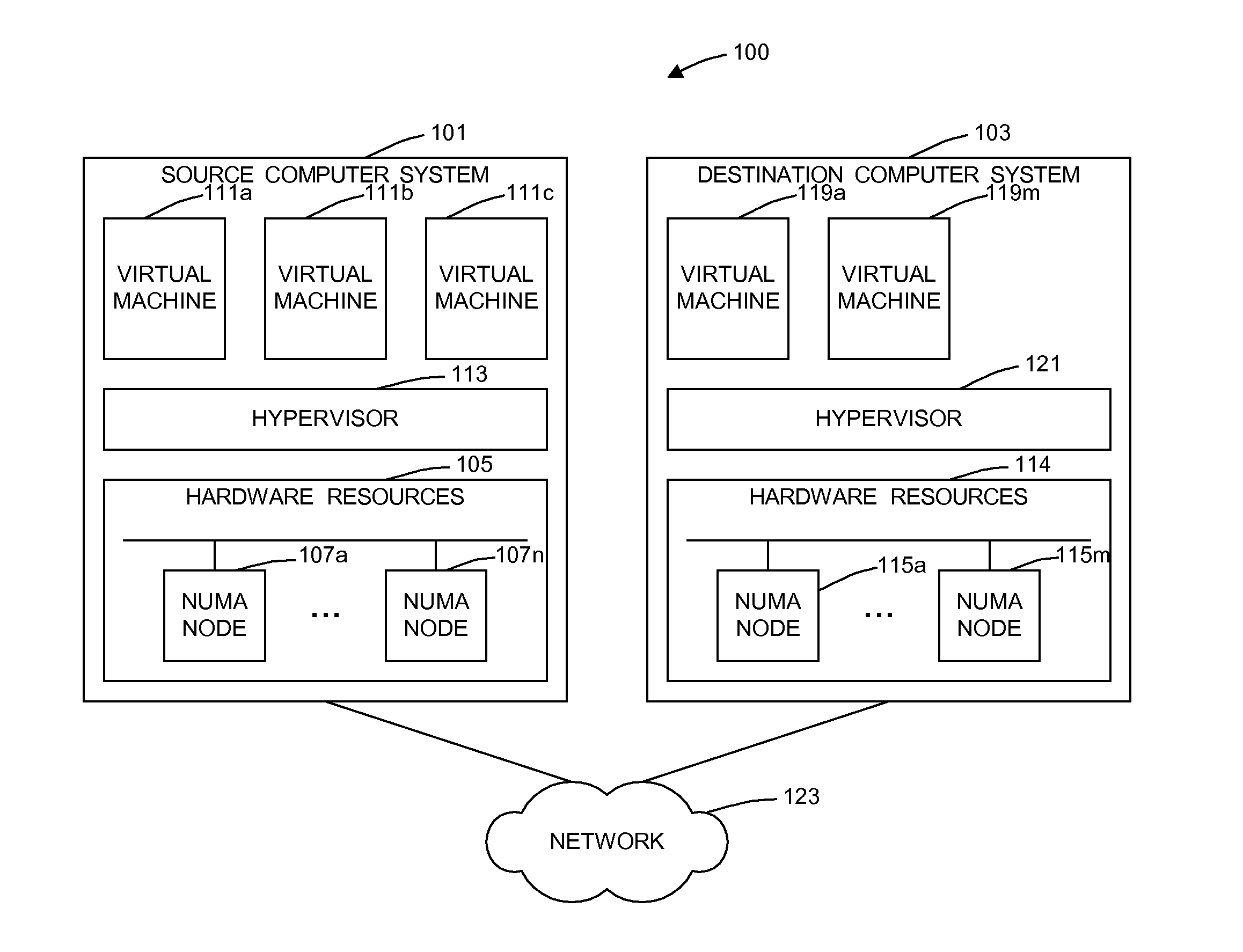

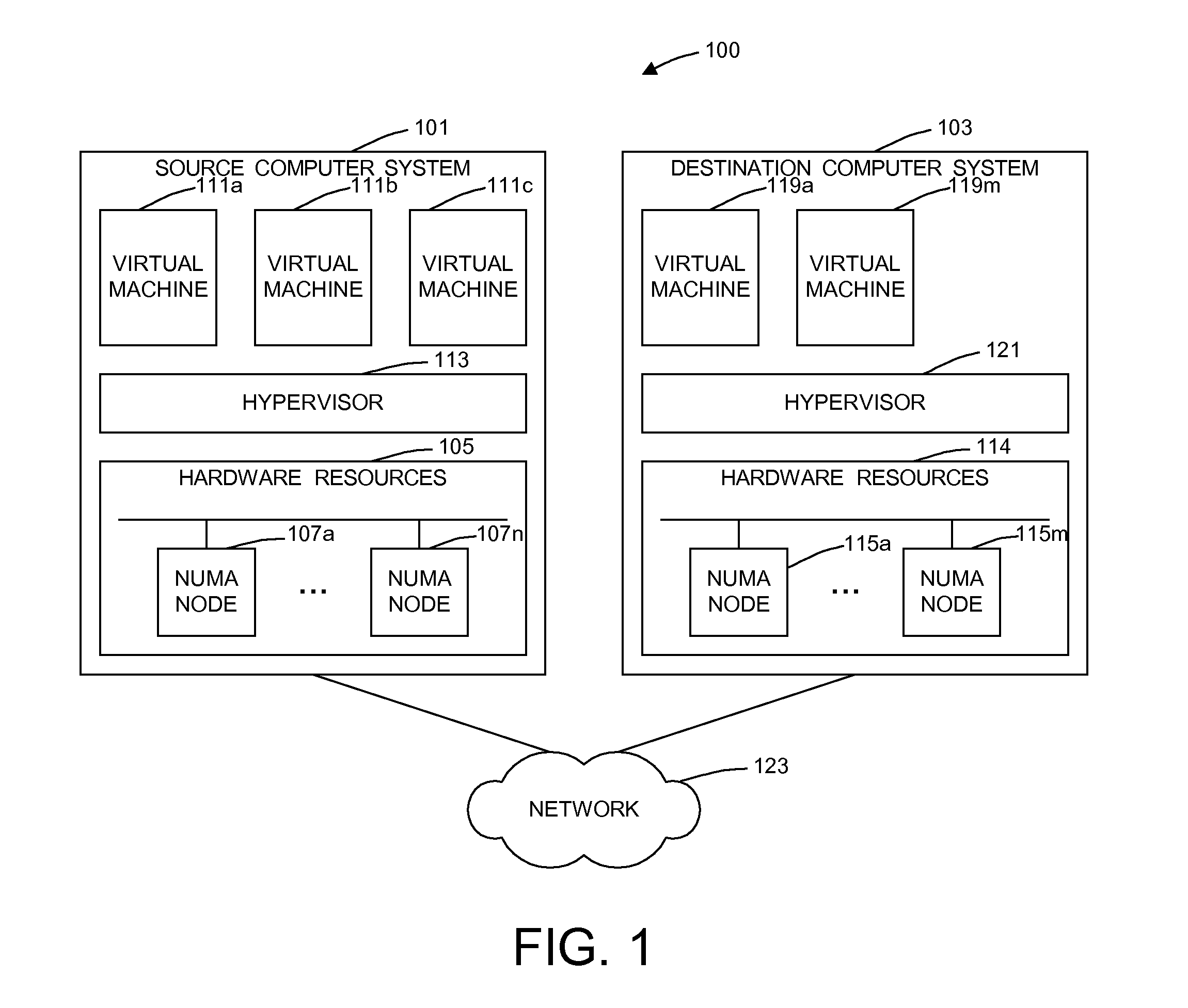

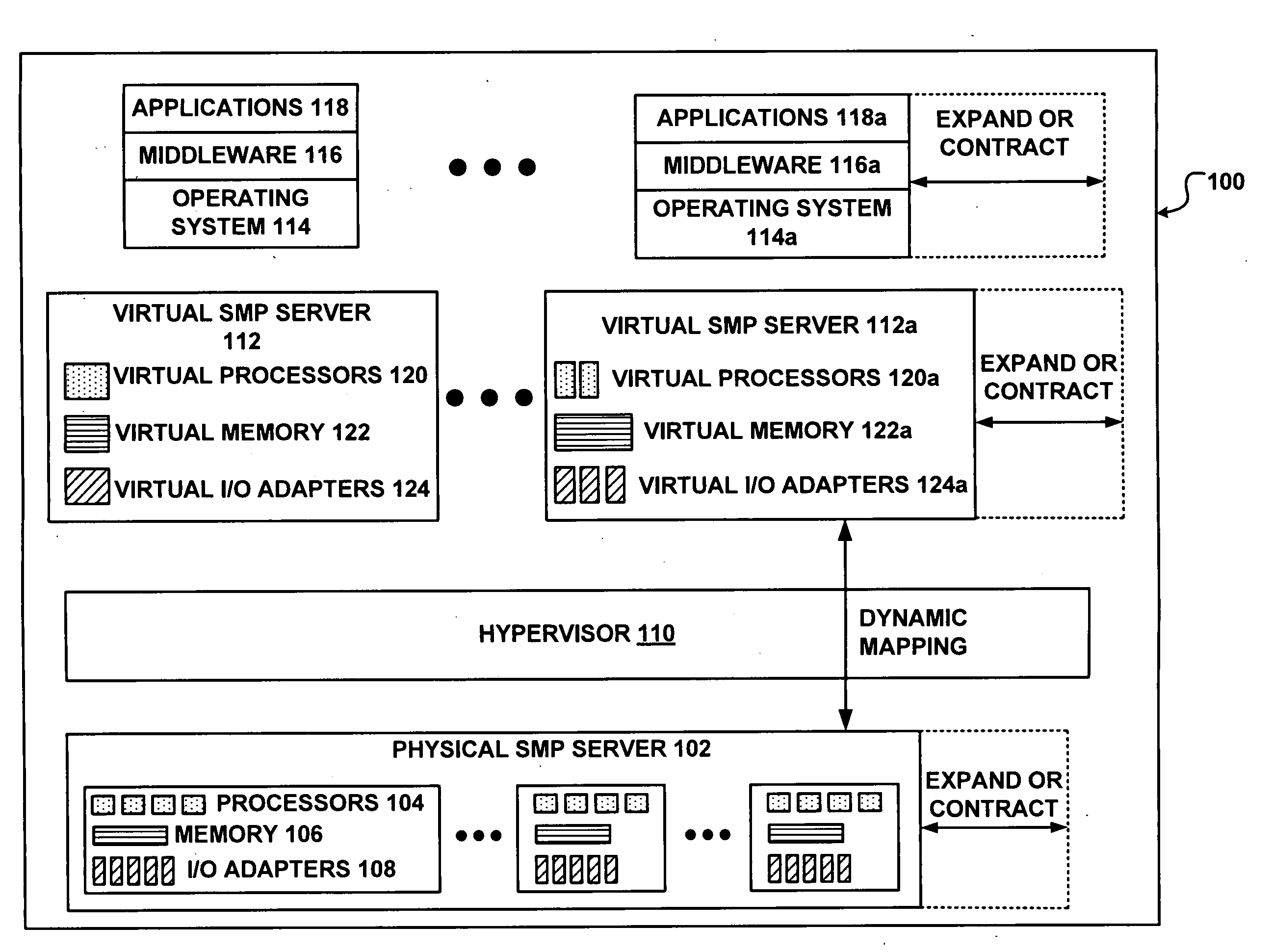

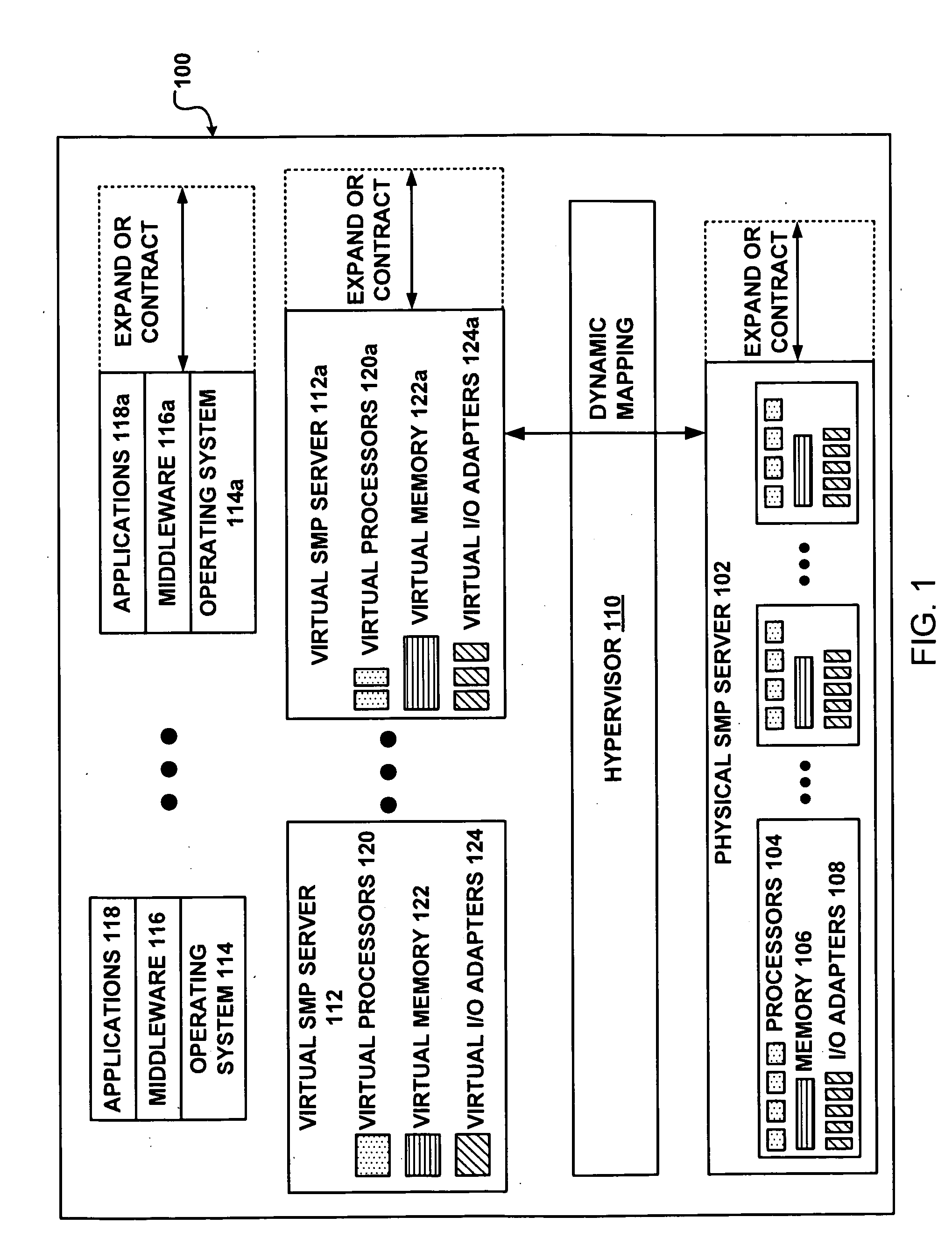

Dynamic memory affinity reallocation after partition migration

Active · US20120102258A1 · Memory addressing/allocation/relocation · Computer security arrangements · Processor node · Computerized system

A method of dynamically reallocating memory affinity in a virtual machine after migrating the virtual machine from a source computer system to a destination computer system migrates processor states and resources used by the virtual machine from the source computer system to the destination computer system. The method maps memory of the virtual machine to processor nodes of the destination computer system. The method deletes memory mappings in processor hardware, such as translation lookaside buffers and effective-to-real address tables, for the virtual machine on the destination computer system. The method starts the virtual machine on the destination computer system in virtual real memory mode. A hypervisor running on the destination computer system receives a page fault and virtual address of a page for said virtual machine from a processor of the destination computer system and determines if the page is in local memory of the processor. If the hypervisor determines the page to be in the local memory of the processor, the hypervisor returns a physical address mapping for the page to the processor. If the hypervisor determines the page not to be in the local memory of the processor, the hypervisor moves the page to local memory of the processor and returns a physical address mapping for said page to the processor.

Owner:IBM CORP

Network interface device with memory management capabilities

An input/output (I/O) device includes a host interface for connection to a host device having a memory and a network interface, which is configured to receive, over a network, data packets associated with I/O operations directed to specified virtual addresses in the memory. Packet processing hardware is configured to translate the virtual addresses into physical addresses and to perform the I/O operations using the physical addresses, and upon an occurrence of a page fault in translating one of the virtual addresses, to transmit a response packet over the network to a source of the data packets so as to cause the source to refrain from transmitting further data packets while the page fault is serviced.

Owner:MELLANOX TECHNOLOGIES LTD

Method, system and device for handling a memory management fault in a multiple processor device

Active · US7681012B2 · Memory architecture accessing/allocation · Error detection/correction · Management unit · Multi processor

A method or device handles memory management faults in a device having a digital signal processor (“DSP”) and a microprocessor. The DSP includes a memory management unit (“DSP MMU”) to manage memory access by the DSP, and the DSP and the microprocessor access shared physical memory. Upon the DSP executing an instruction attempting to access a virtual address wherein the virtual address is invalid, a page fault interrupt is generated by the DSP MMU. A microprocessor interrupt handler in the microprocessor is activated in direct response to the page fault interrupt. Thereafter in the microprocessor, a translation lookaside buffer (“TLB”) entry is created in the DSP MMU, which includes a valid mapping between the virtual address and a page of physical memory. After creating the TLB entry, the microprocessor indicates to the DSP that the access by the DSP of the virtual address is completed.

Owner:TEXAS INSTR INC

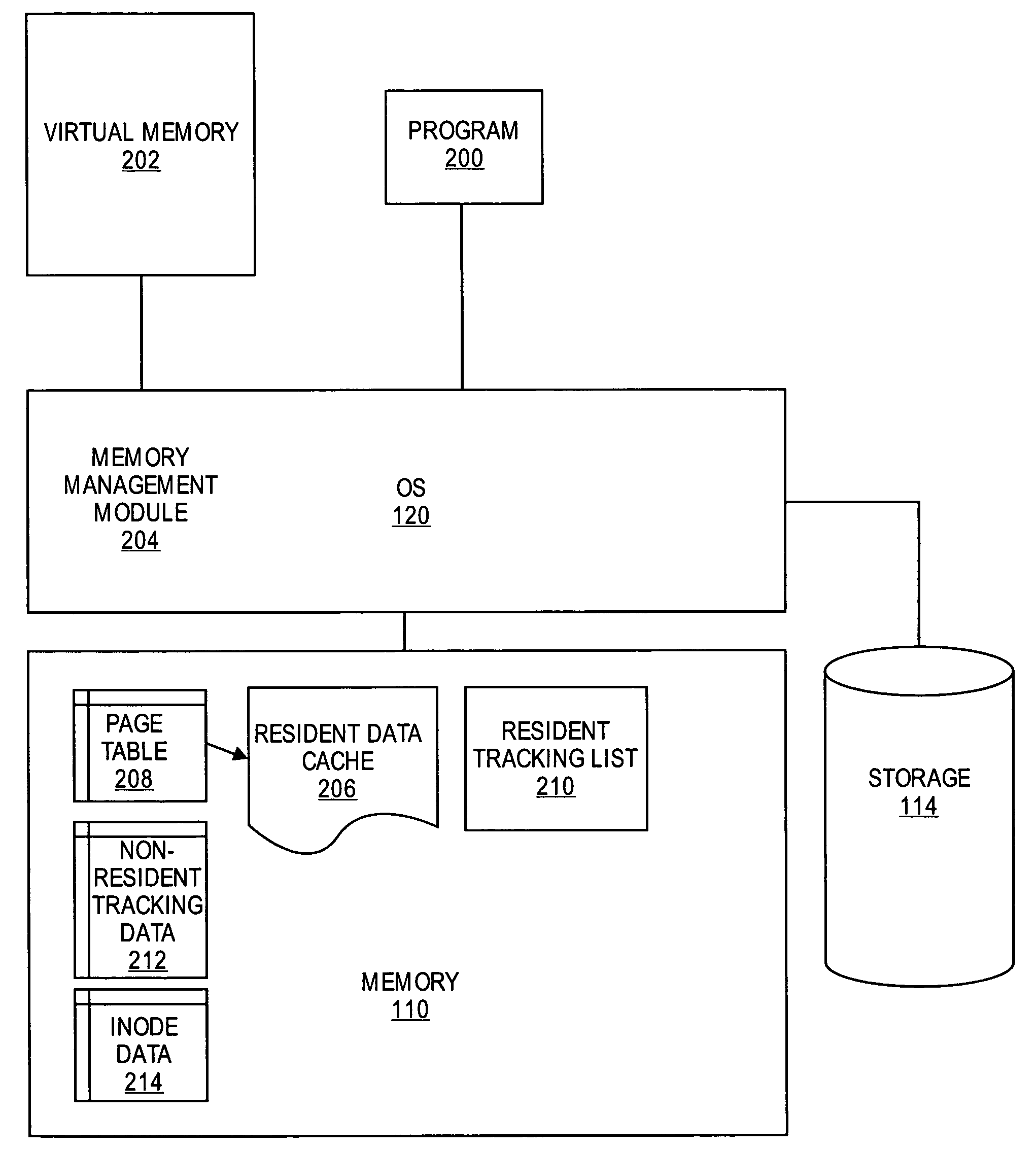

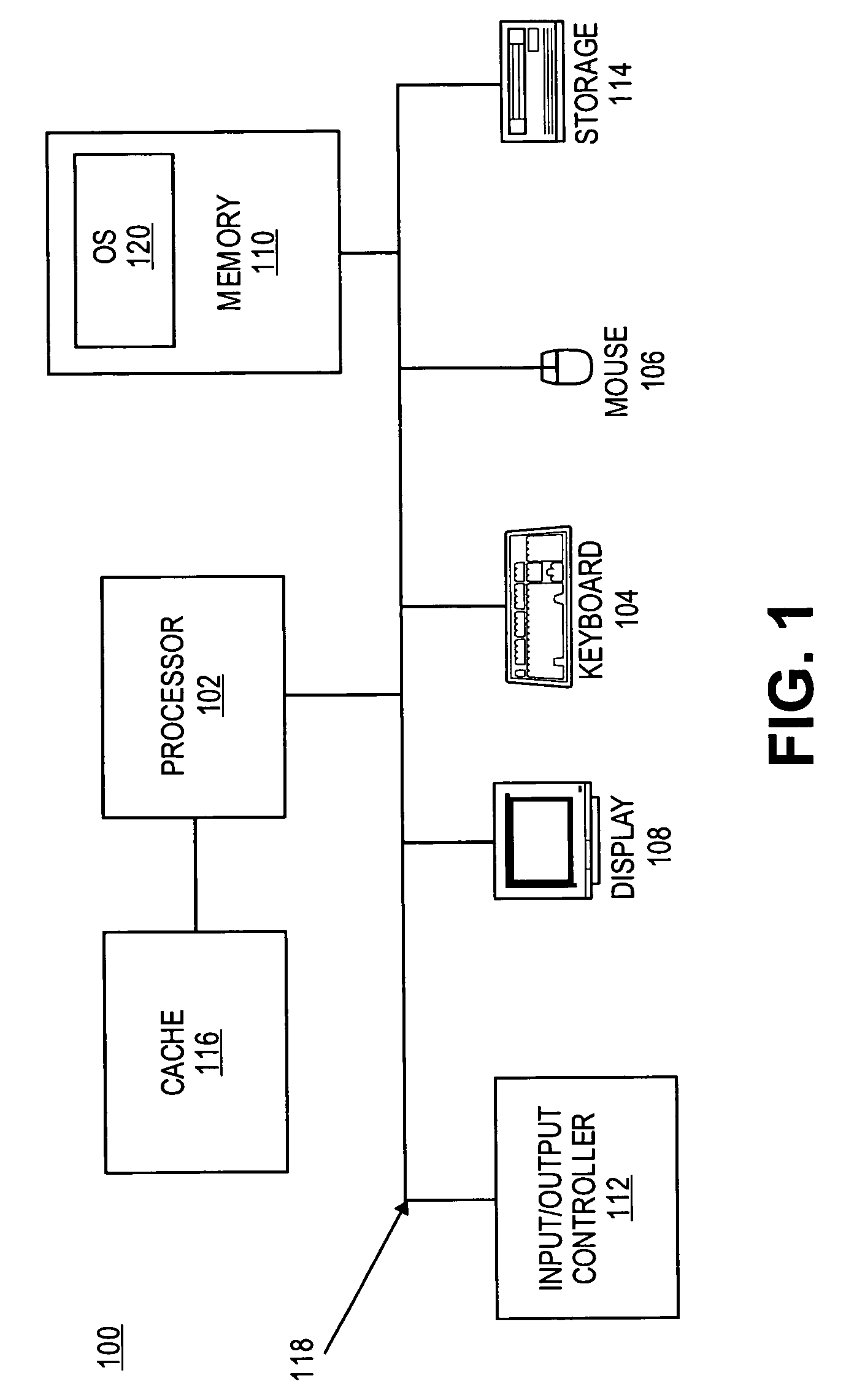

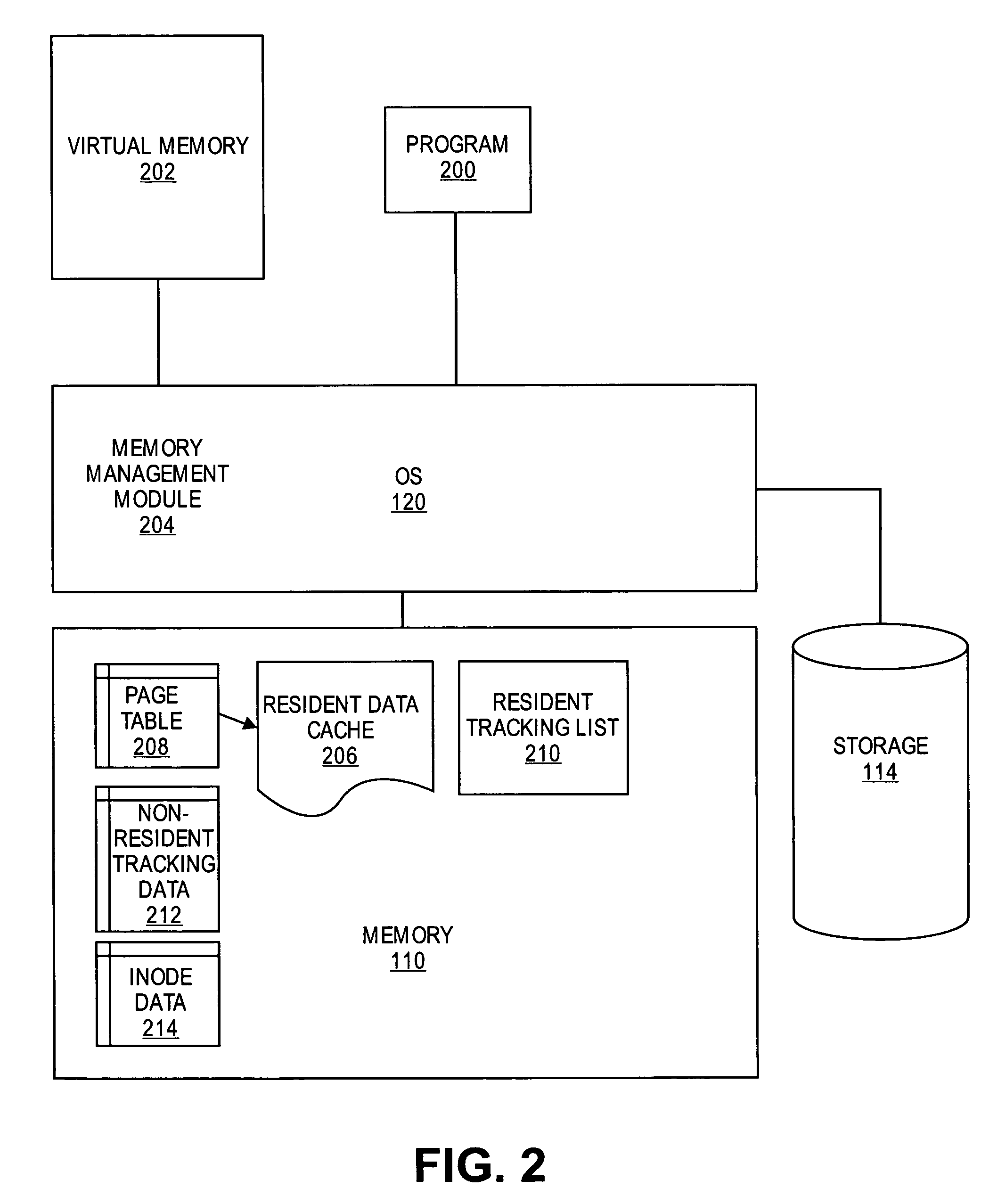

Method for tuning a cache

Embodiments of the present invention provide methods and systems for tuning the size of the cache. In particular, when a page fault occurs, non-resident page data is checked to determine if that page was previously accessed. If the page is found in the non-resident page data, an inter-reference distance for the faulted page is determined and the distance of the oldest resident page is determined. The size of the cache may then be tuned based on comparing the inter-reference distance of the newly faulted page relative to the distance of the oldest resident page.

Owner:RED HAT

Managing Paging I/O Errors During Hypervisor Page Fault Processing

Active · US20090307538A1 · Facilitates paging-in · Memory addressing/allocation/relocation · Non-redundant fault processing · External storage · Error processing

In response to a hypervisor page fault for memory that is not resident in a shared memory pool, an I/O paging request is sent to an external storage paging space. In response to a paging service partition encountering an I/O paging error, a paging failure indication is sent to the hypervisor. A simulated machine check interrupt instruction is sent from the hypervisor to the shared memory partition and a machine check handler obtains control. The machine check handler performs data analysis utilizing an error log in an attempt to isolate the I/O paging error to a process or a set of processes in the shared memory partition. The process or set of processes associated with the I/O paging error, or the shared memory partition itself, may be terminated. Finally, the shared memory partition may clear or initialize the page associated with the I/O paging error.

Owner:IBM CORP

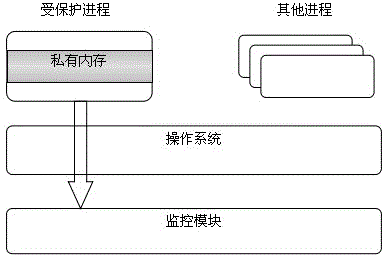

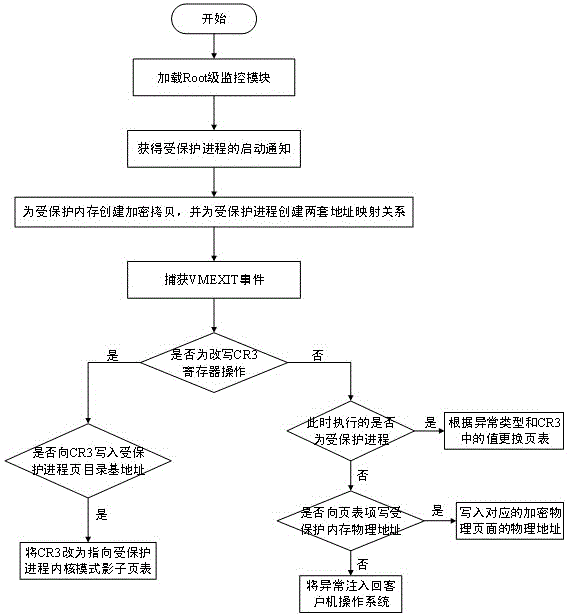

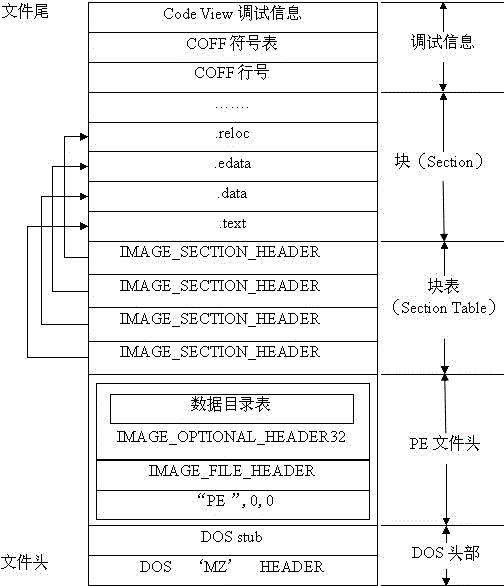

Process memory protecting method based on auxiliary virtualization technology for hardware

Inactive · CN103955438A · Effective protection · Avoid access · Unauthorized memory use protection · Internal memory · Data Execution Prevention

The invention provides a process memory protection method based on hardware-assisted virtualization. The method comprises the following steps: step 1, loading a process memory monitoring module; step 2, notifying the monitoring module when a protected process starts; step 3, creating an encrypted copy of the protected memory space of the protected process; step 4, virtualizing memory for the virtual machine system by using a shadow page table mechanism; step 5, intercepting write operations to the CR3 register and page fault exceptions. The method has the following advantages: a monitoring module running in root mode monitors modifications to the page directories, page tables, and page directory register of all processes, so that no process other than the protected process can access data in the protected process's memory space; when the protected process switches to kernel mode, the pages in its user-mode space are replaced to guard against code-injection attacks from kernel mode; and data execution prevention is used to mark the pages of the protected process's data area as non-executable, guarding against code-injection attacks from user mode.

Owner:NANJING UNIV

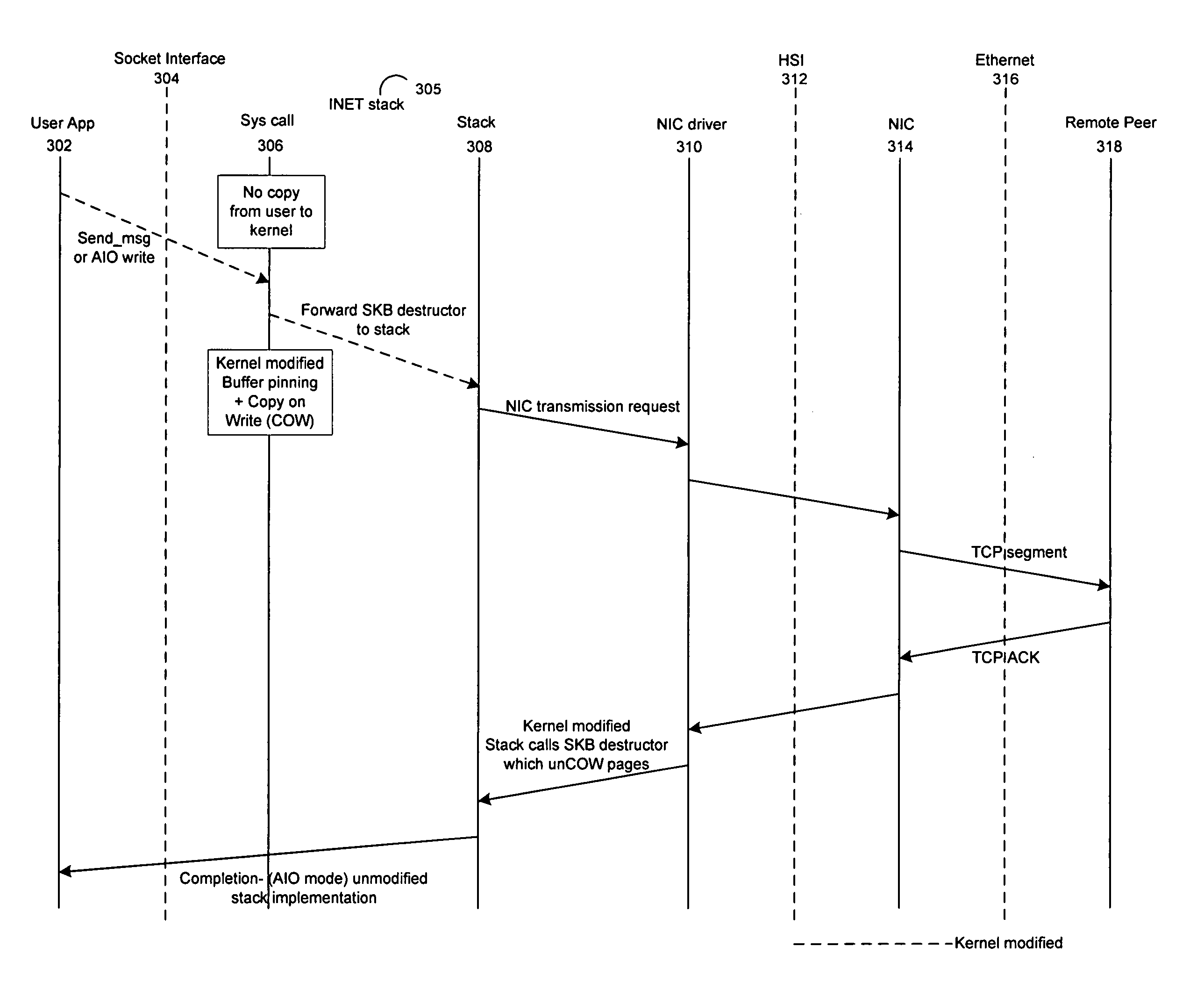

Method and system for transparent TCP offload with dynamic zero copy sending

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE +1

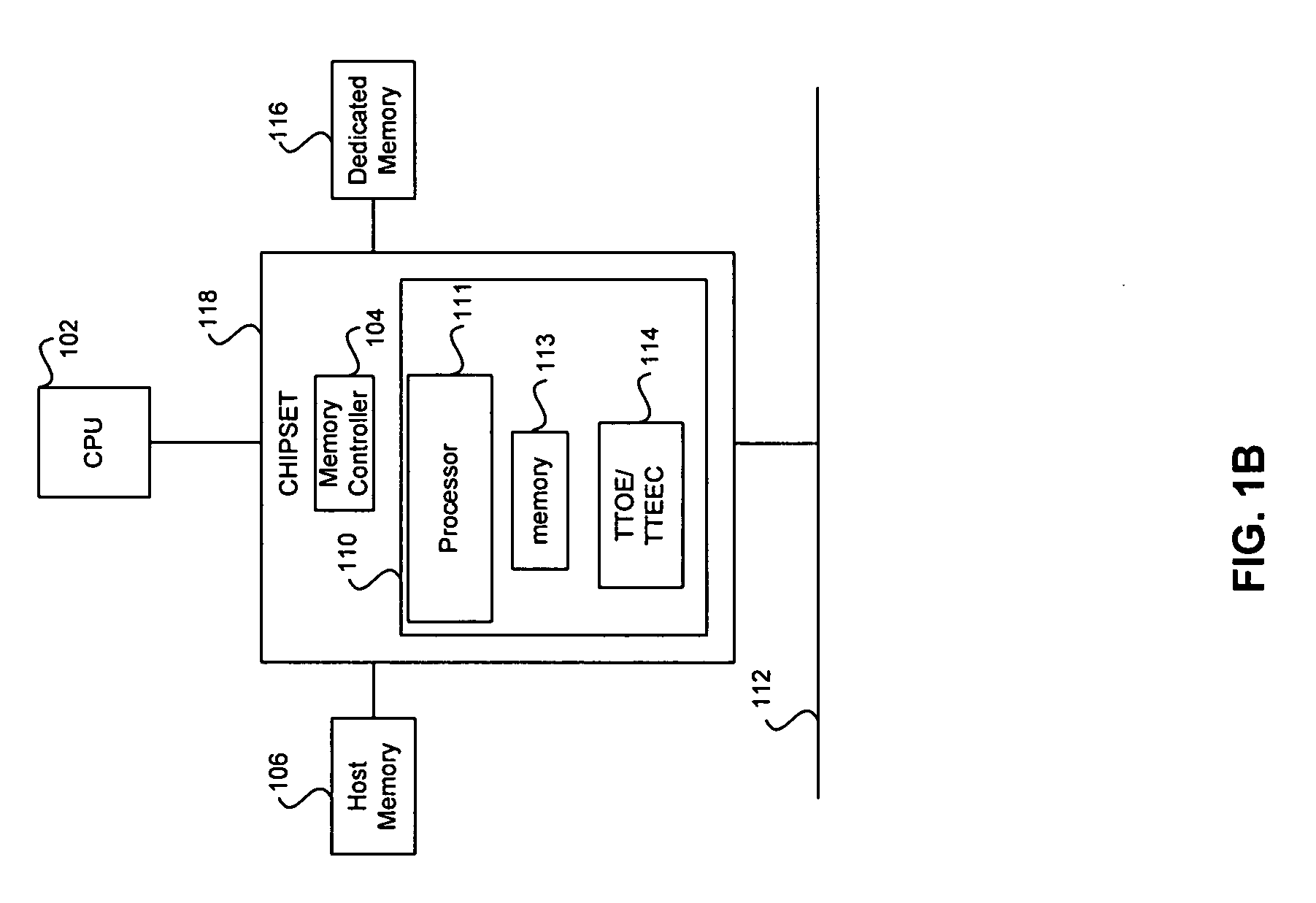

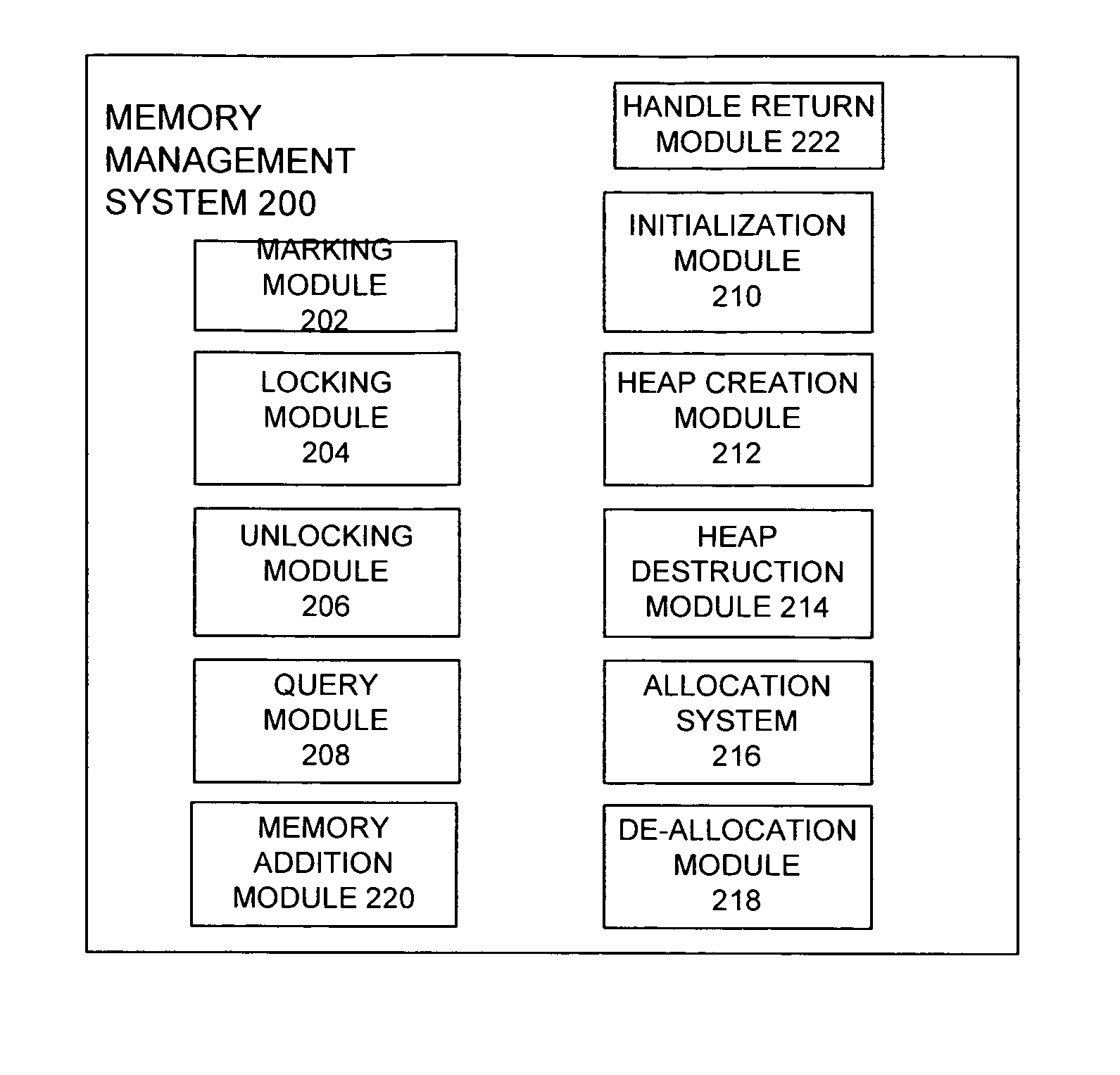

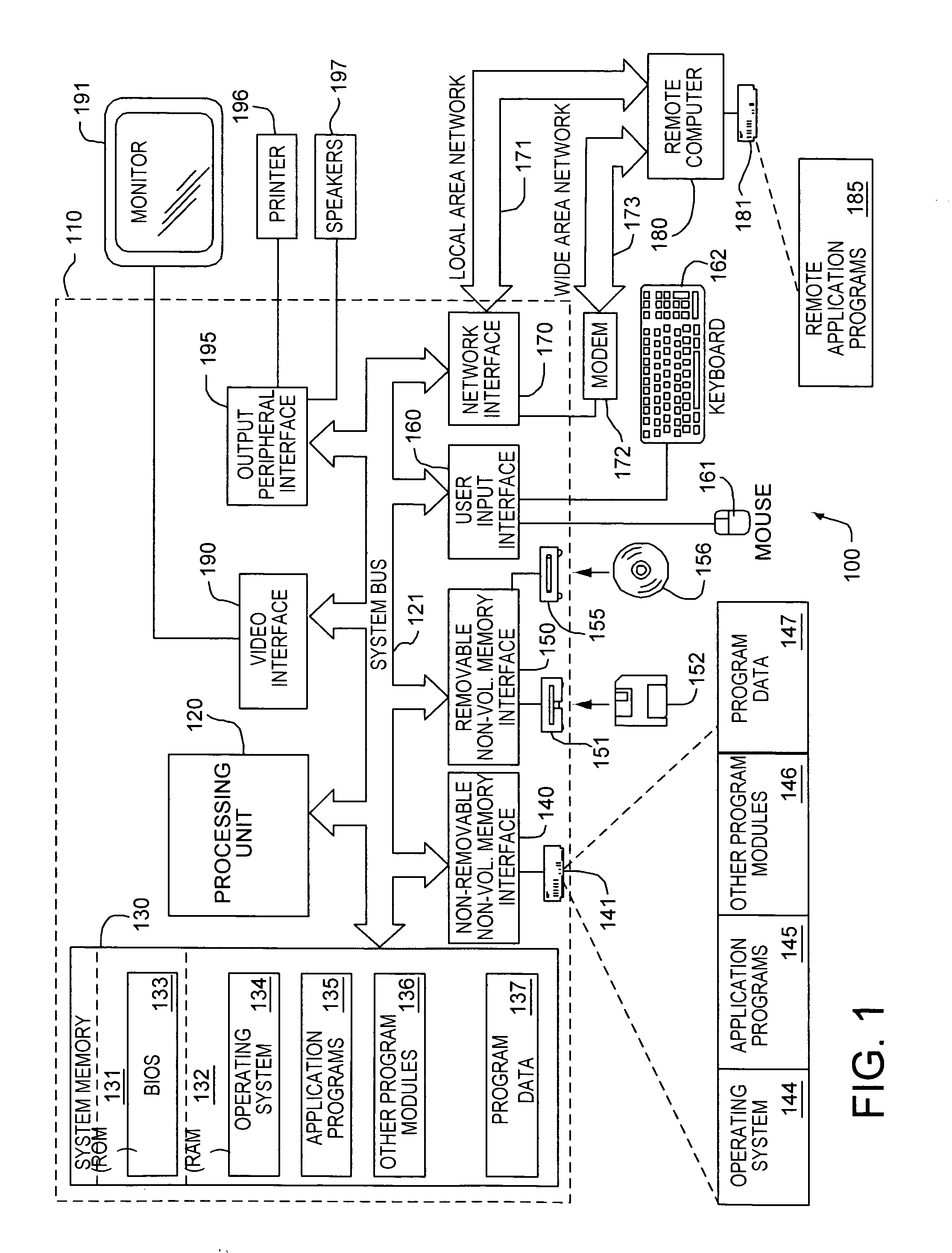

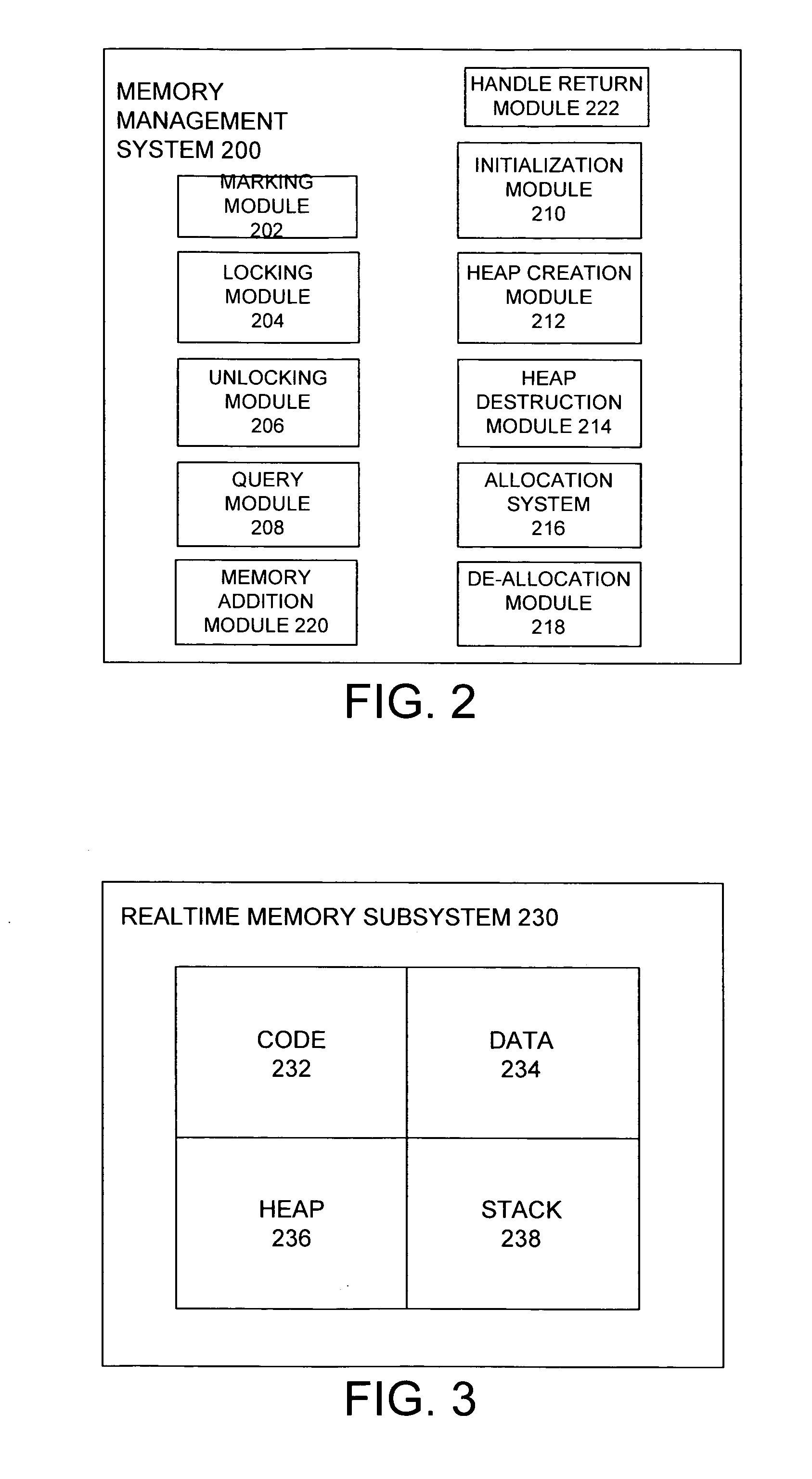

System and method for memory management

Inactive | US20050188164A1 | Minimizing memory access latency | Maximizing and memory access | Resource allocation | Memory addressing/allocation/relocation | Handling Code | Parallel computing

The present invention is directed to a method and system for minimizing memory access latency during realtime processing. The method includes a mechanism for marking information that will be accessed during realtime processing. The marked information may include code, data, heaps, stacks, as well as other information. The method includes support for locking down all of the marked information so that it is present in a computing machine's physical memory so that no page faults will be incurred during realtime processing. The method additionally enables realtime processing code to allocate and free memory in a non-blocking manner. It does so by enabling the creation of heaps for use during realtime processing, wherein each heap supports allocating and freeing memory in a non-blocking fashion. Each heap tracks freed memory blocks using individual non-blocking tracking lists for each memory block size supported by that heap. If a memory allocation request to a heap can be satisfied by using a memory block available on one of the lists of freed memory blocks, the method includes allocating the available memory block by popping the memory block from the tracking list. If no freed memory blocks of the desired size are available, then the method includes traversing a separate set of source memory blocks for that heap, and making the allocation in a non-blocking fashion from one of those blocks.

Owner:MICROSOFT TECH LICENSING LLC
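
The allocation path described in this abstract (pop from a per-size list of freed blocks, otherwise carve from a source block) can be sketched as below. This is a single-threaded model of the bookkeeping only. A real non-blocking heap would update these lists with atomic operations such as compare-and-swap, which plain Python lists do not provide. The class name `RealtimeHeap`, the integer "addresses", and the bump-style carving of source blocks are assumptions made for illustration.

```python
class RealtimeHeap:
    """Size-class heap model: one freed-block list per supported size."""

    def __init__(self, block_sizes, source_block_bytes, source_block_count):
        # One tracking list (used as a stack) per supported block size.
        self.free_lists = {size: [] for size in block_sizes}
        self.capacity = source_block_bytes
        # Each source block is [base_address, bytes_used].
        self.sources = [
            [i * source_block_bytes, 0] for i in range(source_block_count)
        ]

    def allocate(self, size):
        """Return an address, or None if no memory of that size is left."""
        if size not in self.free_lists:
            raise ValueError(f"unsupported block size: {size}")
        freed = self.free_lists[size]
        if freed:
            # Fast path: reuse a previously freed block of this size.
            return freed.pop()
        # Slow path: traverse the source blocks and carve a new block.
        for source in self.sources:
            base, used = source
            if used + size <= self.capacity:
                source[1] = used + size
                return base + used
        return None

    def free(self, address, size):
        """Push the block onto the tracking list for its size."""
        self.free_lists[size].append(address)


heap = RealtimeHeap(block_sizes=[16, 32], source_block_bytes=64,
                    source_block_count=2)
a = heap.allocate(16)   # carved from the first source block
b = heap.allocate(32)   # carved right after it
heap.free(a, 16)
c = heap.allocate(16)   # reuses the freed block
d = heap.allocate(32)   # first source block is too full, uses the second
```

Keeping a separate list per block size means an allocation never has to search or split blocks, which is what makes a constant-time pop possible on the fast path.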

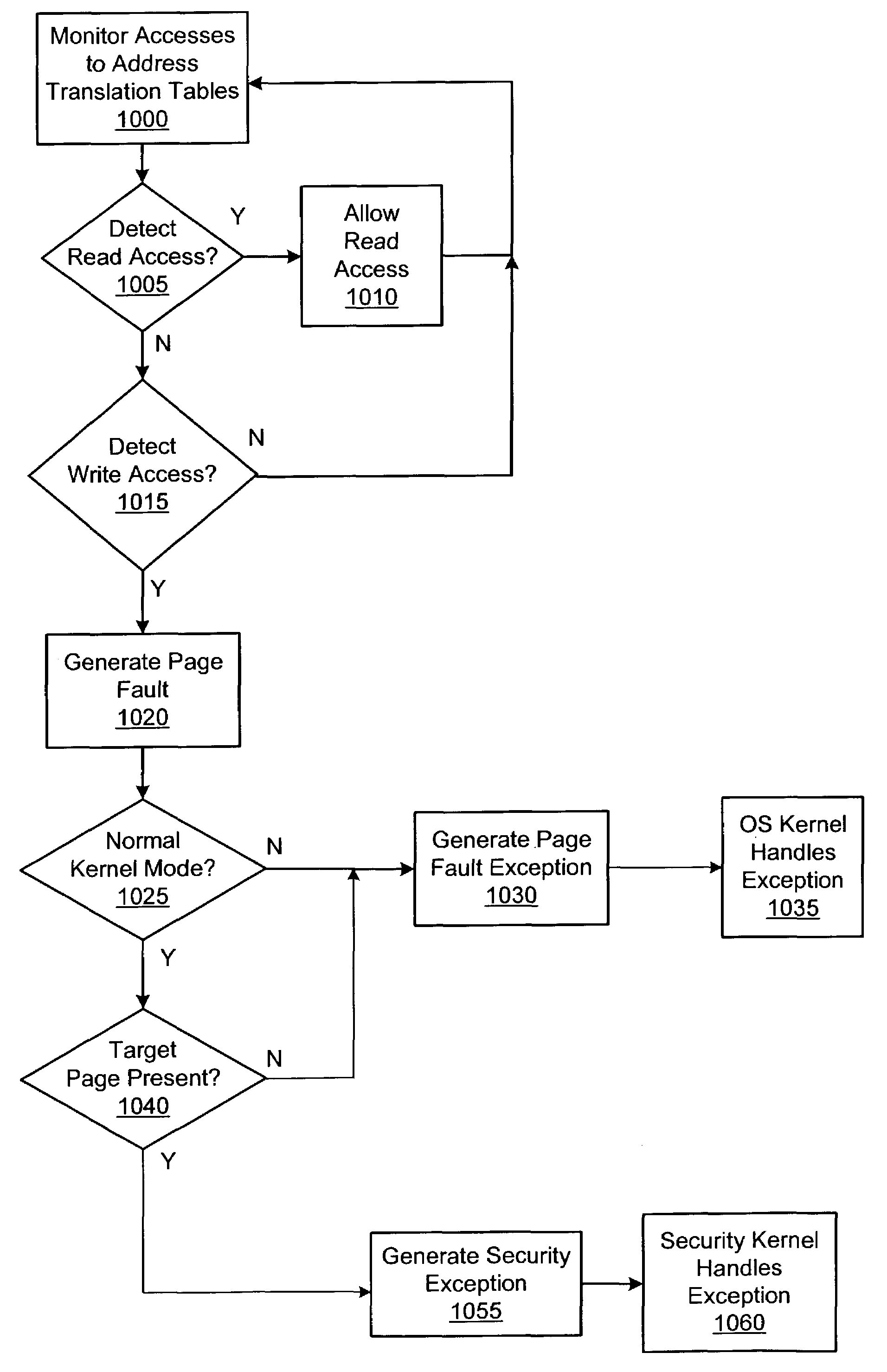

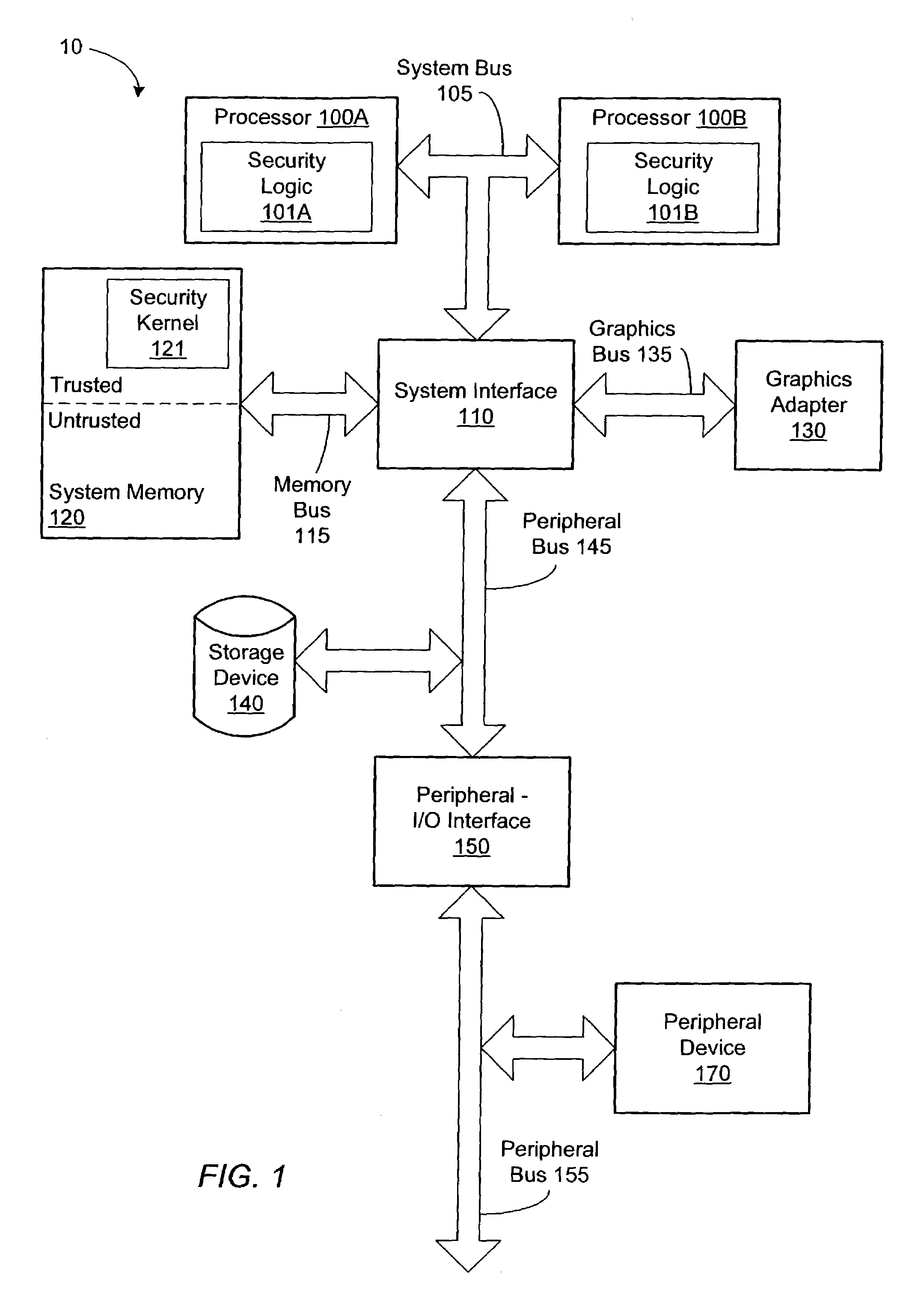

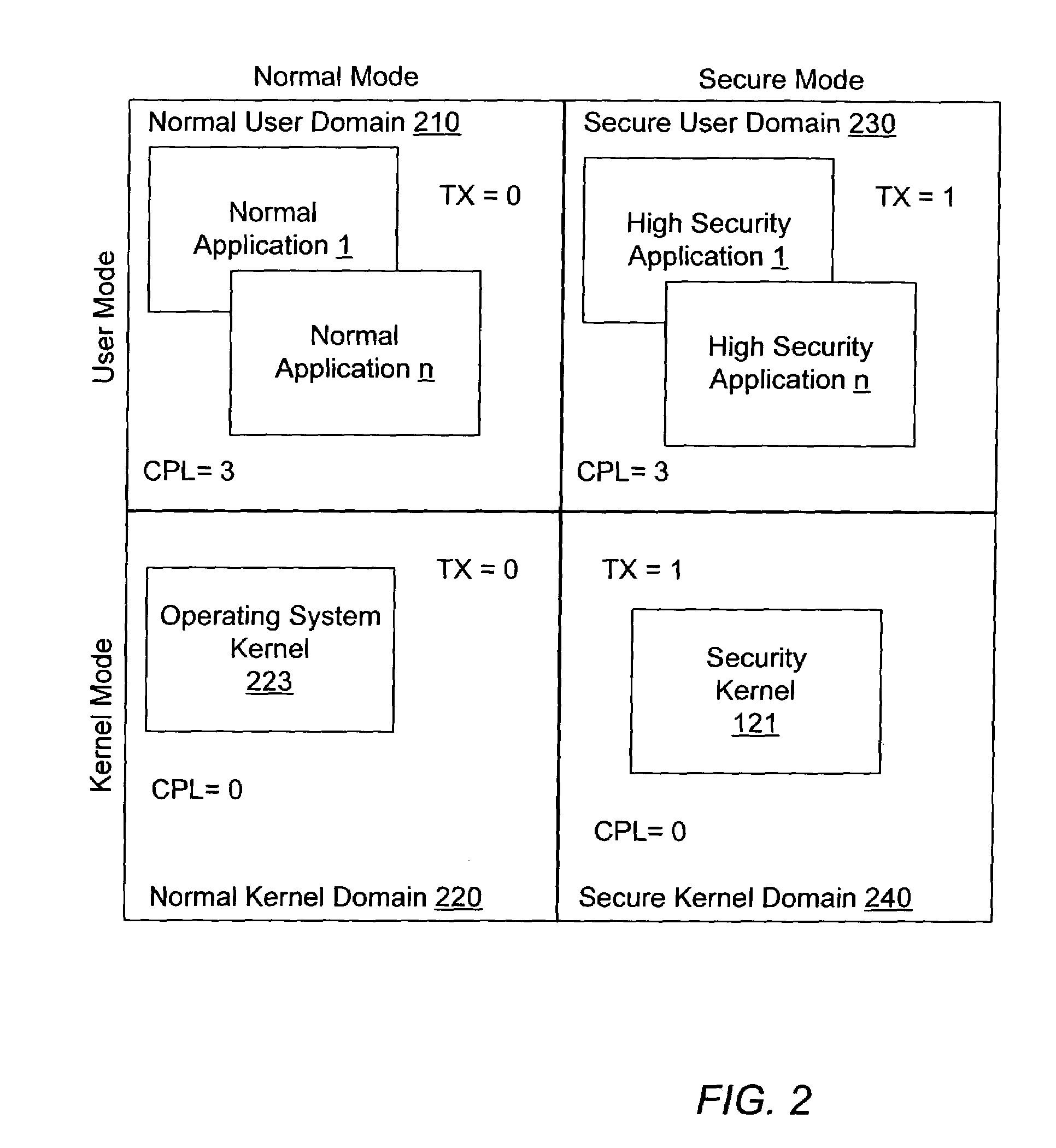

Method of controlling access to an address translation data structure of a computer system

Active | US7082507B1 | Memory addressing/allocation/relocation | Unauthorized memory use protection | Computer hardware | Physical address

A method of controlling access to an address translation data structure of a computer system. The computer system includes a processor having a normal execution mode and a secure execution mode. The method includes executing code and generating a linear address. During translation of the linear address into a physical address, the method also includes generating a read-only page fault exception during the normal execution mode in response to detecting a software-invoked write access to an address translation data structure having a read/write attribute set to be read-only. The method further includes selectively generating either the read-only page fault exception or a security exception during the secure execution mode in response to detecting the software-invoked write access.

Owner:ADVANCED SILICON TECH
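
The mode-dependent outcome of a write access can be summarized in a short decision function. The abstract does not say how the processor chooses between the two exceptions in secure mode, so the `escalate_in_secure_mode` flag below is a hypothetical stand-in for that selection, and the names `Mode`, `Outcome`, and `check_write` are likewise illustrative.

```python
from enum import Enum


class Mode(Enum):
    NORMAL = "normal"
    SECURE = "secure"


class Outcome(Enum):
    ALLOWED = "allowed"
    READ_ONLY_PAGE_FAULT = "read-only page fault"
    SECURITY_EXCEPTION = "security exception"


def check_write(mode, targets_translation_structure, read_only,
                escalate_in_secure_mode=True):
    """Decide what a software-invoked write access results in."""
    if not (targets_translation_structure and read_only):
        # Not a write to a read-only address translation structure.
        return Outcome.ALLOWED
    if mode is Mode.NORMAL:
        return Outcome.READ_ONLY_PAGE_FAULT
    # Secure mode: one of the two exceptions is selected (assumed flag).
    if escalate_in_secure_mode:
        return Outcome.SECURITY_EXCEPTION
    return Outcome.READ_ONLY_PAGE_FAULT


normal_result = check_write(Mode.NORMAL, True, True)
secure_result = check_write(Mode.SECURE, True, True)
```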

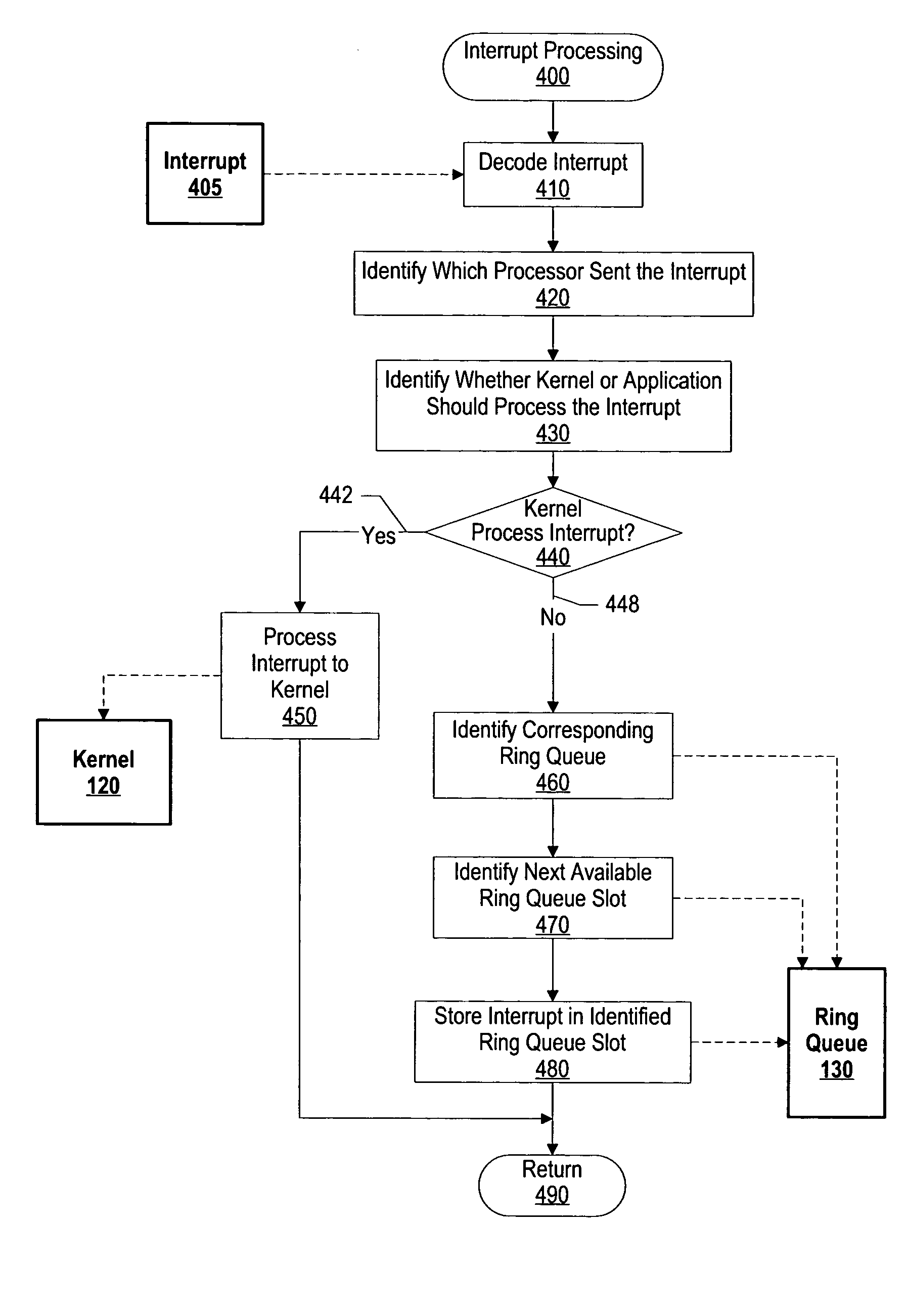

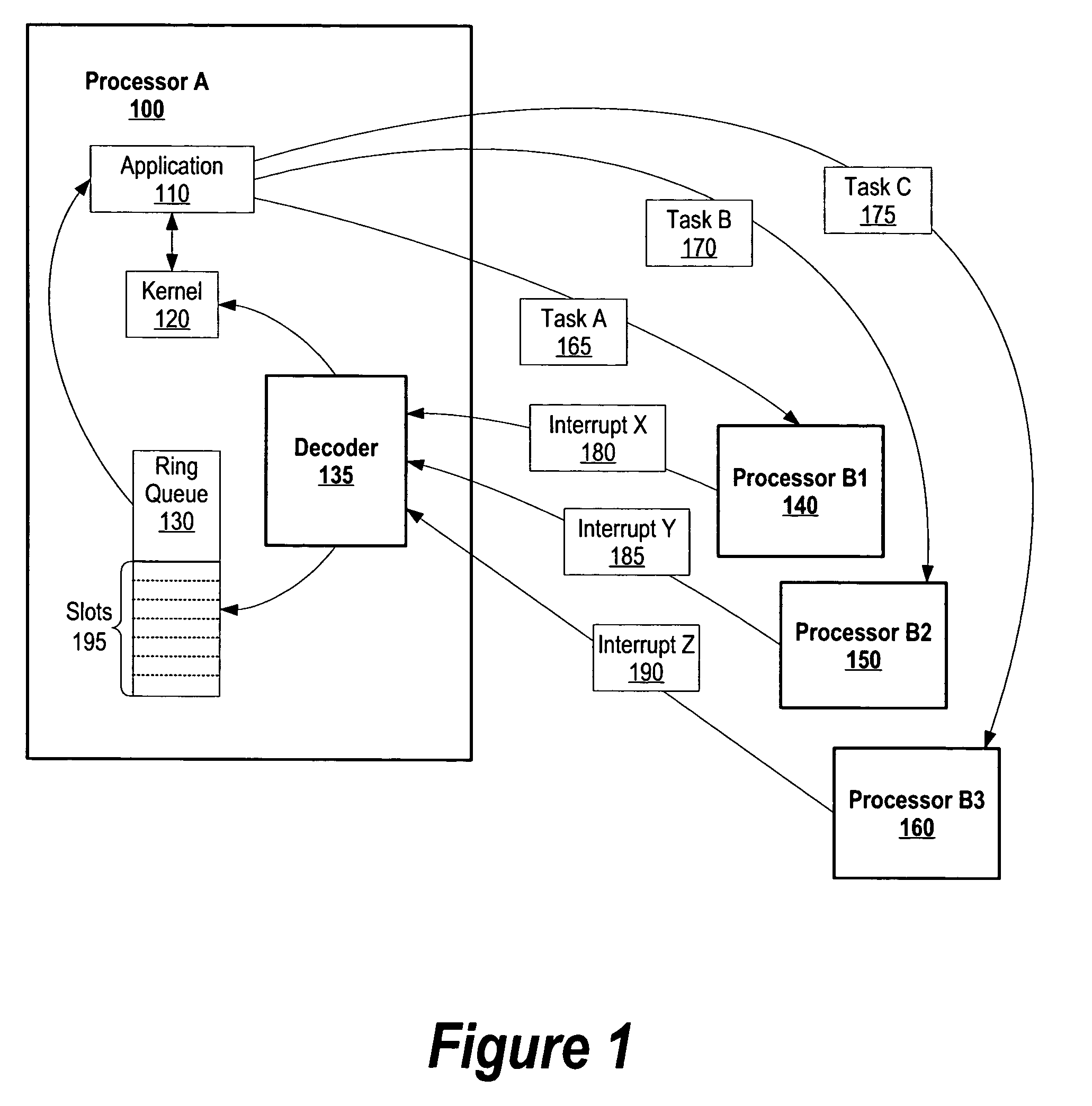

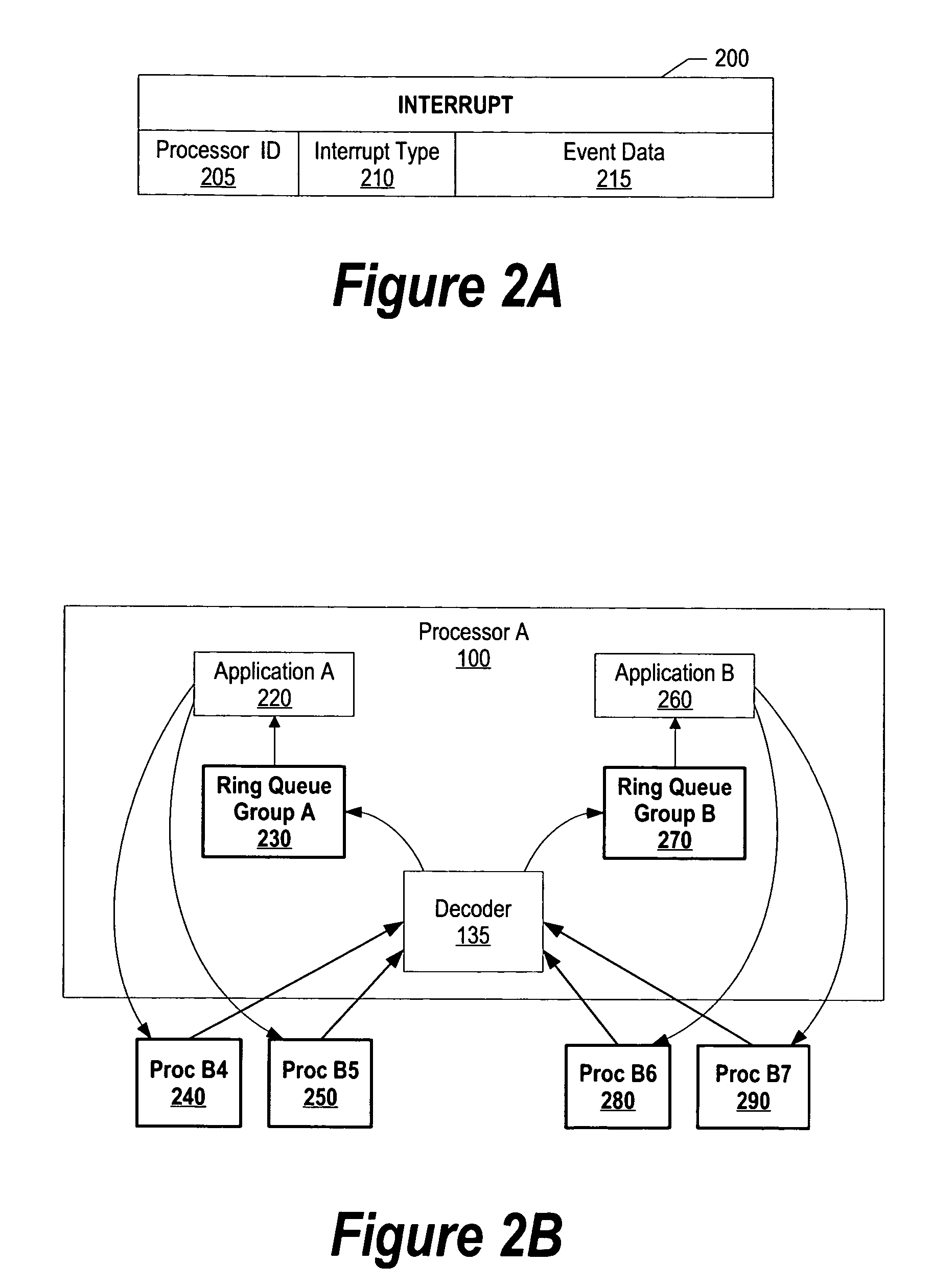

System and method for message delivery across a plurality of processors

A system and method are provided to deliver messages to processors operating in a multi-processing environment. In a multi-processor environment, interrupts are managed by storing events in a queue that corresponds to a particular support processor. A main processor decodes an interrupt and determines which support processor generated the interrupt. The main processor then determines whether a kernel or an application should process the interrupt. Interrupts such as page faults, segment faults, and alignment errors are handled by the kernel, while “informational” signals, such as stop and signal requests, halt requests, mailbox requests, and DMC tag complete requests are handled by the application. In addition, multiple identical events are maintained, and event data may be included in the interrupt using the invention described herein.

Owner:IBM CORP
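
The routing described in this abstract, with one event queue per support processor and a kernel-versus-application decision per interrupt, might look like the following sketch. The event name strings, the `InterruptRouter` class, and the tuple layout of queued events are assumptions chosen for illustration; only the grouping of events into kernel-handled and application-handled sets comes from the abstract.

```python
from collections import deque

# Grouping taken from the abstract; the string names are illustrative.
KERNEL_EVENTS = {"page_fault", "segment_fault", "alignment_error"}
APPLICATION_EVENTS = {"stop_and_signal", "halt", "mailbox", "dmc_tag_complete"}


class InterruptRouter:
    """Main-processor side: queue events per support processor."""

    def __init__(self, processor_ids):
        self.queues = {pid: deque() for pid in processor_ids}

    def dispatch(self, processor_id, event, data=None):
        """Queue the event and return who should handle it."""
        if event in KERNEL_EVENTS:
            handler = "kernel"
        elif event in APPLICATION_EVENTS:
            handler = "application"
        else:
            raise ValueError(f"unknown event: {event}")
        # A deque keeps duplicates, so identical events are all retained.
        self.queues[processor_id].append((handler, event, data))
        return handler


router = InterruptRouter(processor_ids=[0, 1])
first = router.dispatch(0, "page_fault", {"address": 0x1000})
second = router.dispatch(1, "mailbox")
third = router.dispatch(1, "mailbox")
```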


© 2025 PatSnap. All rights reserved.