5569 results about "Micro-instruction address formation" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

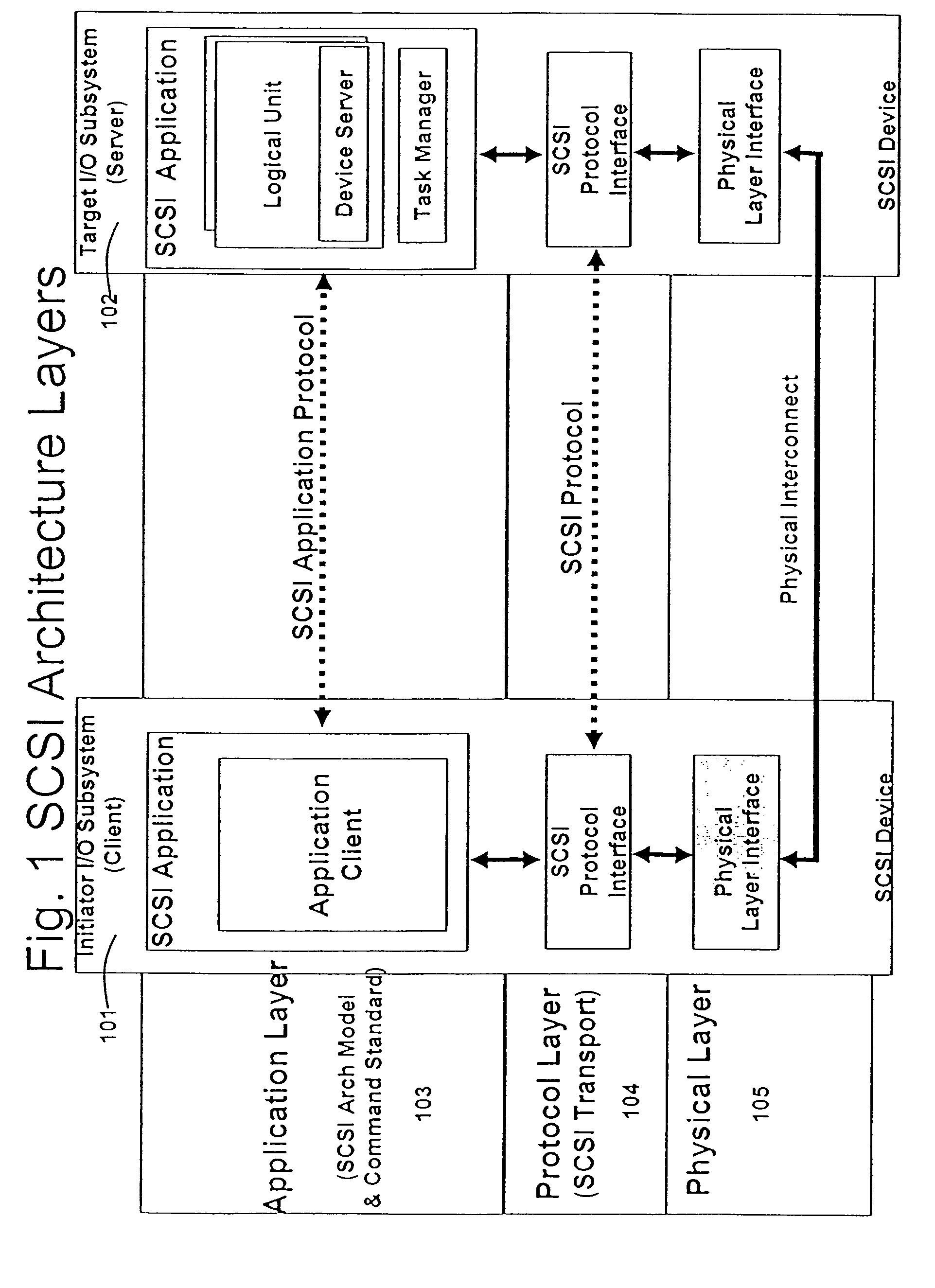

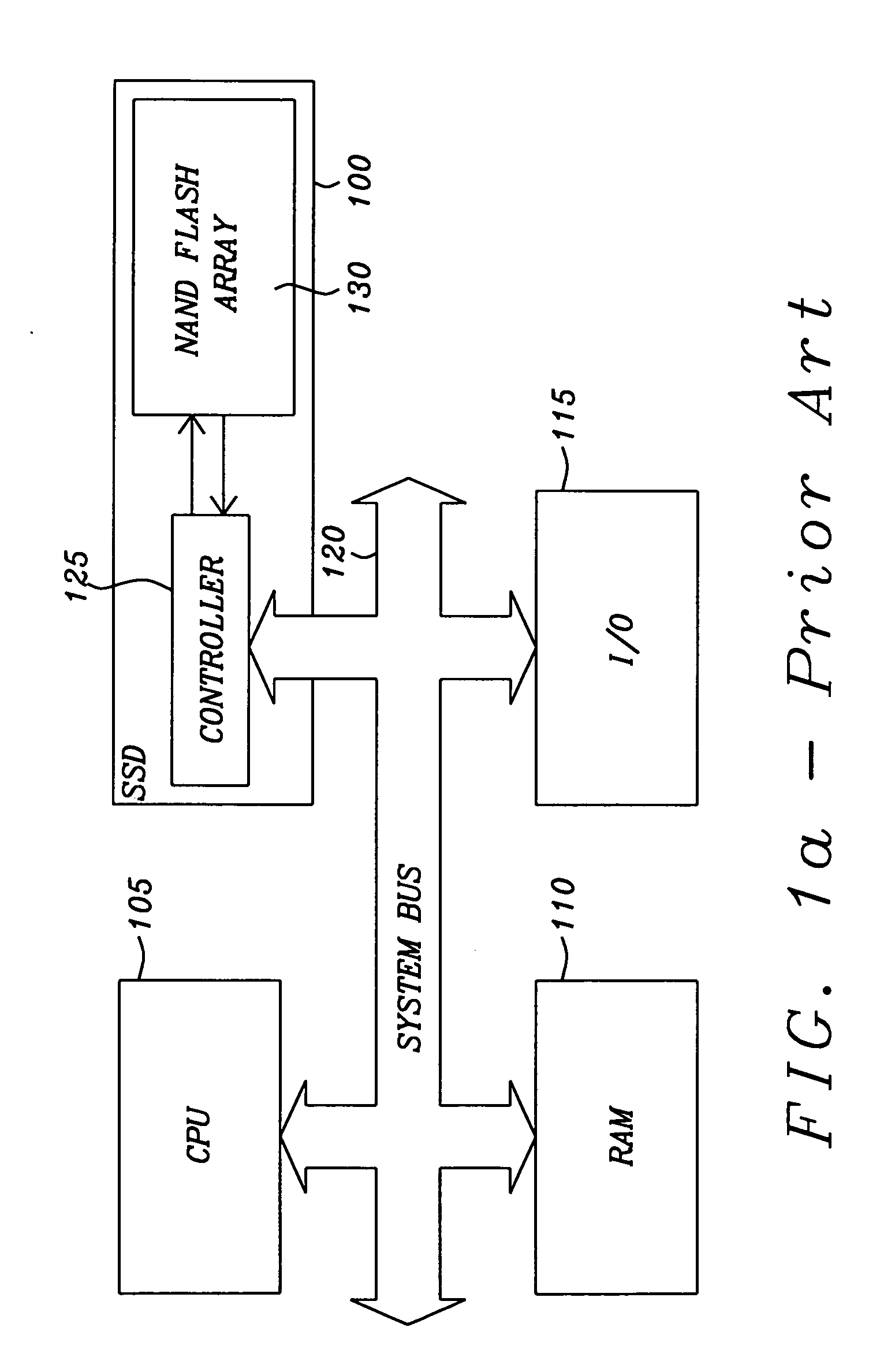

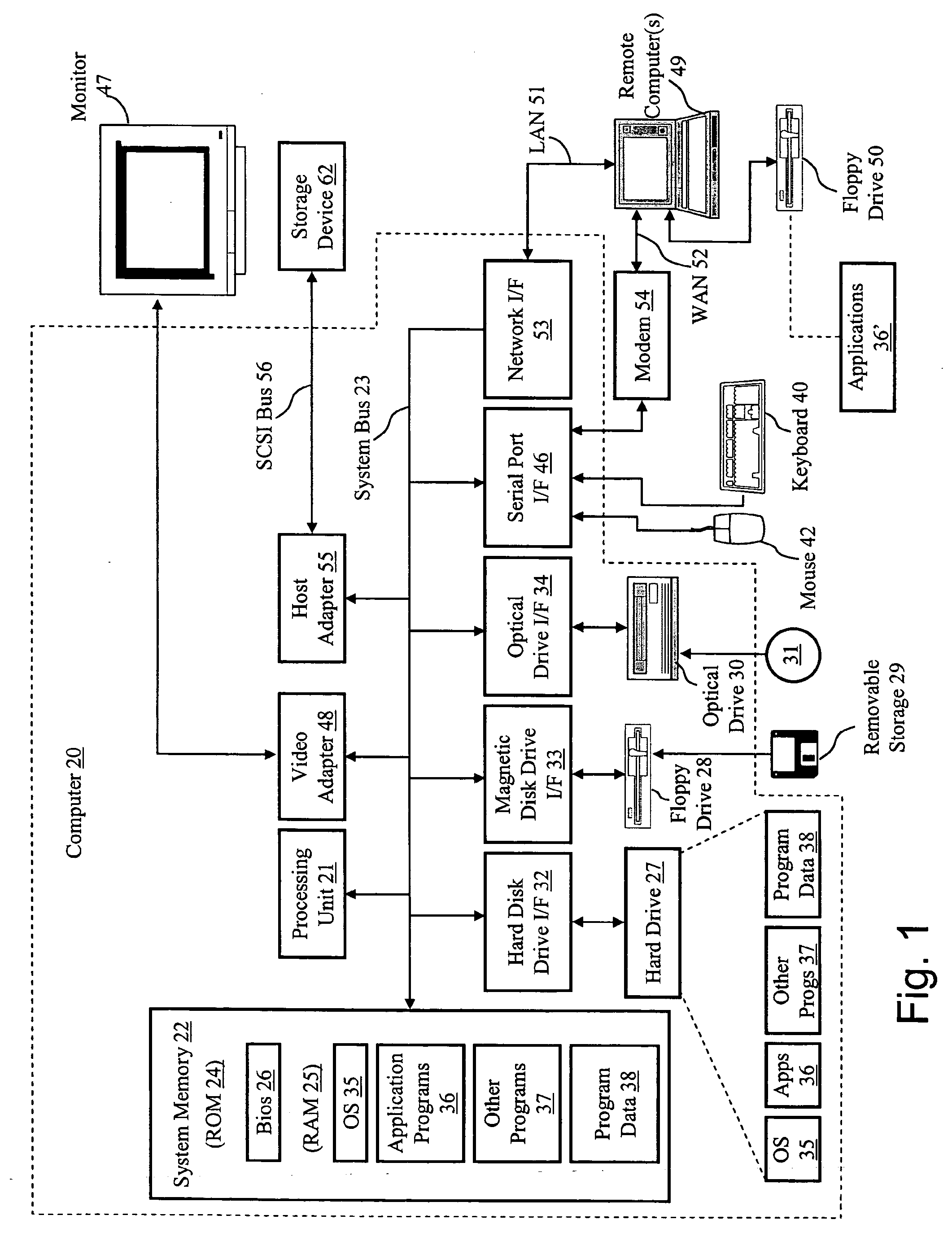

Storage virtualization system and methods

Inactive | US20050125593A1 | Reduce consumption | Without impact | Input/output to record carriers | Memory addressing/allocation/relocation | RAID | Islanding

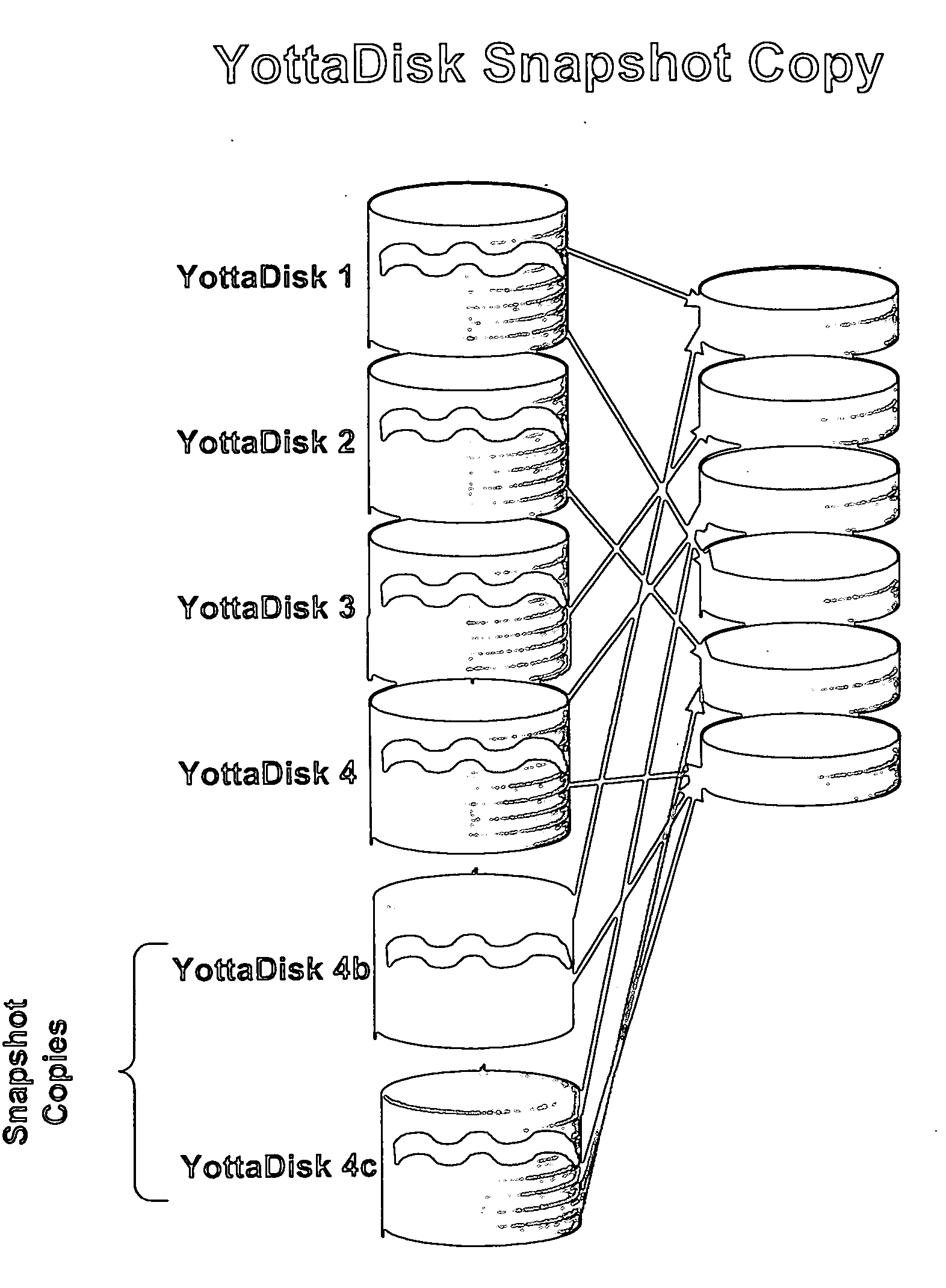

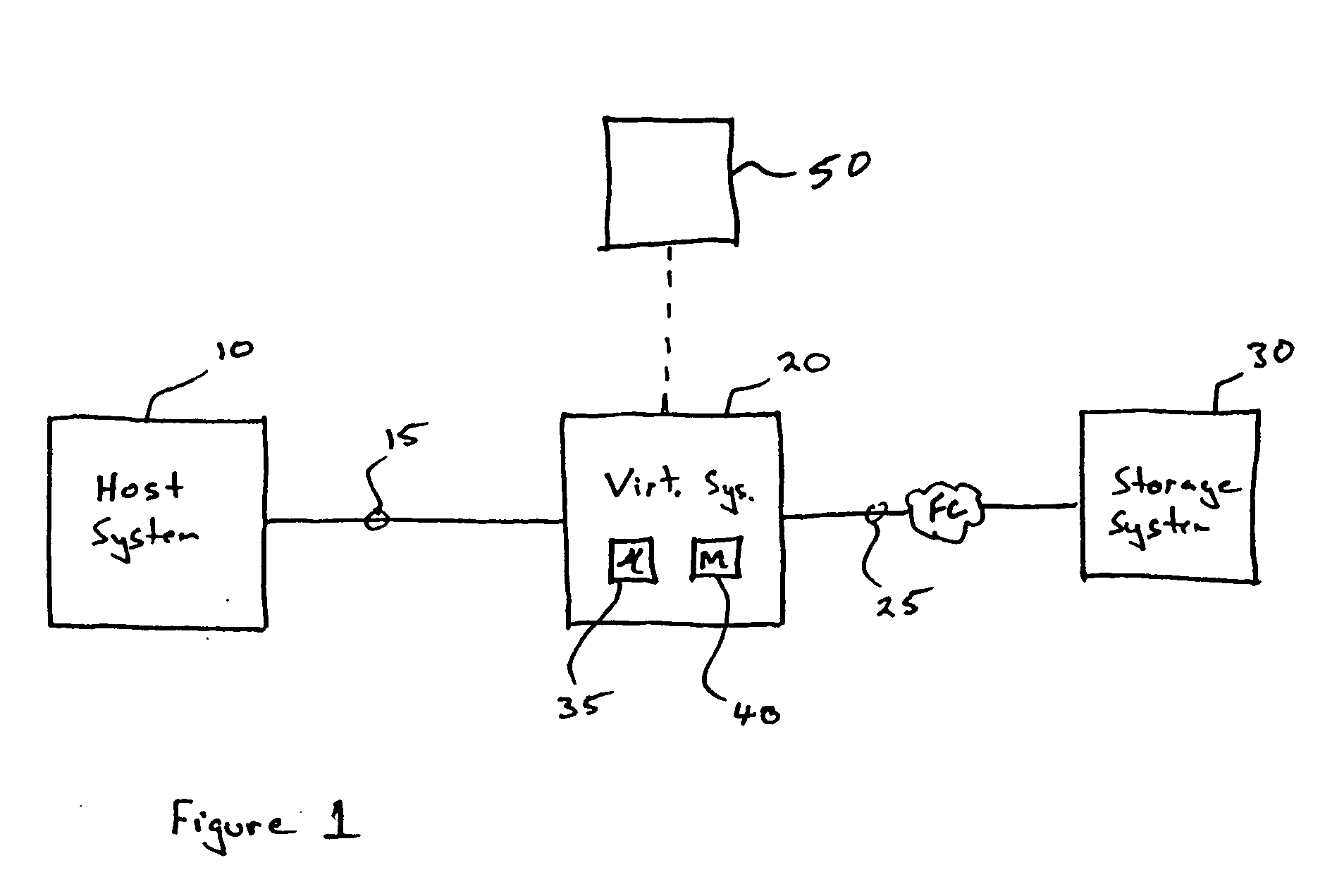

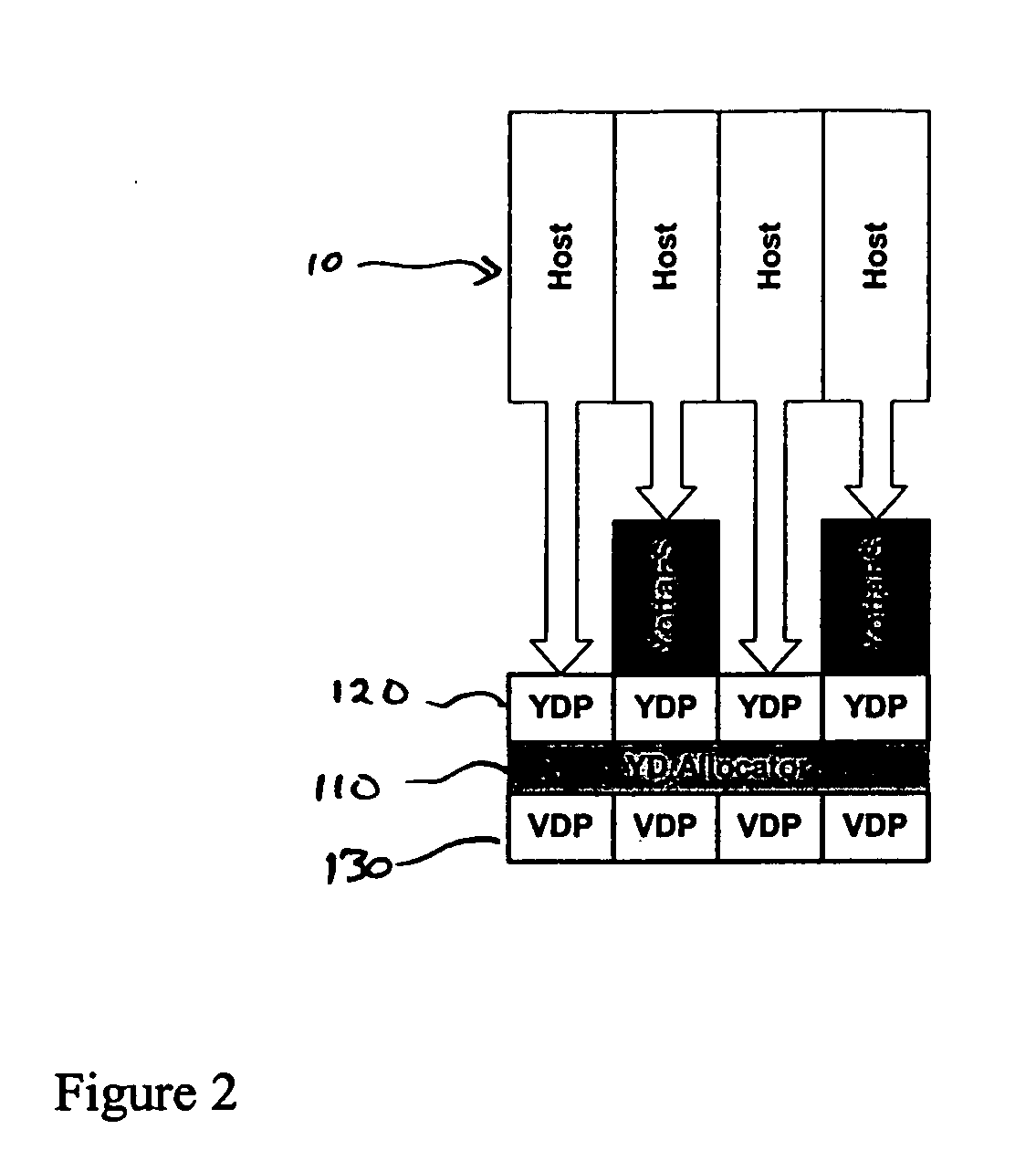

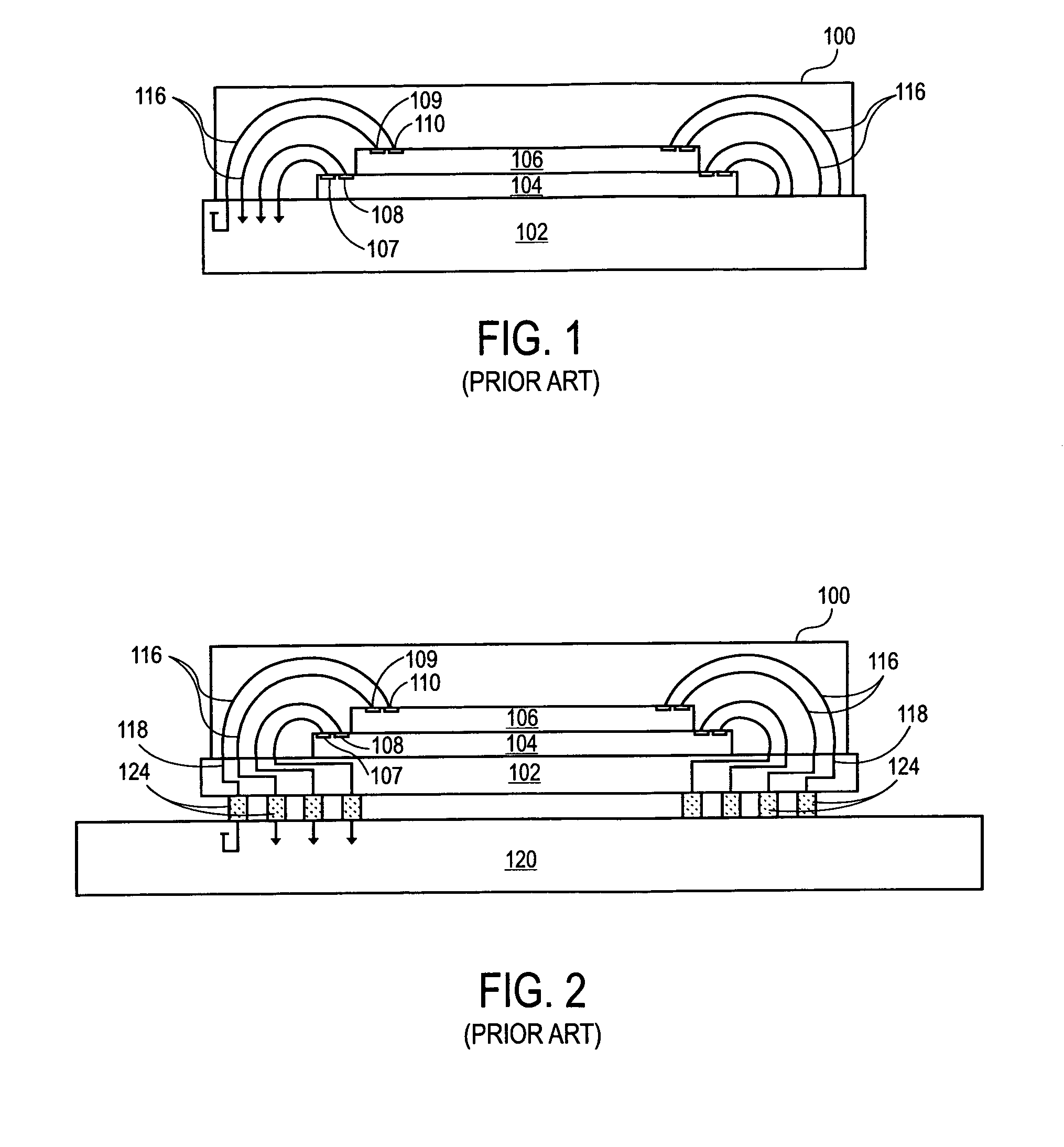

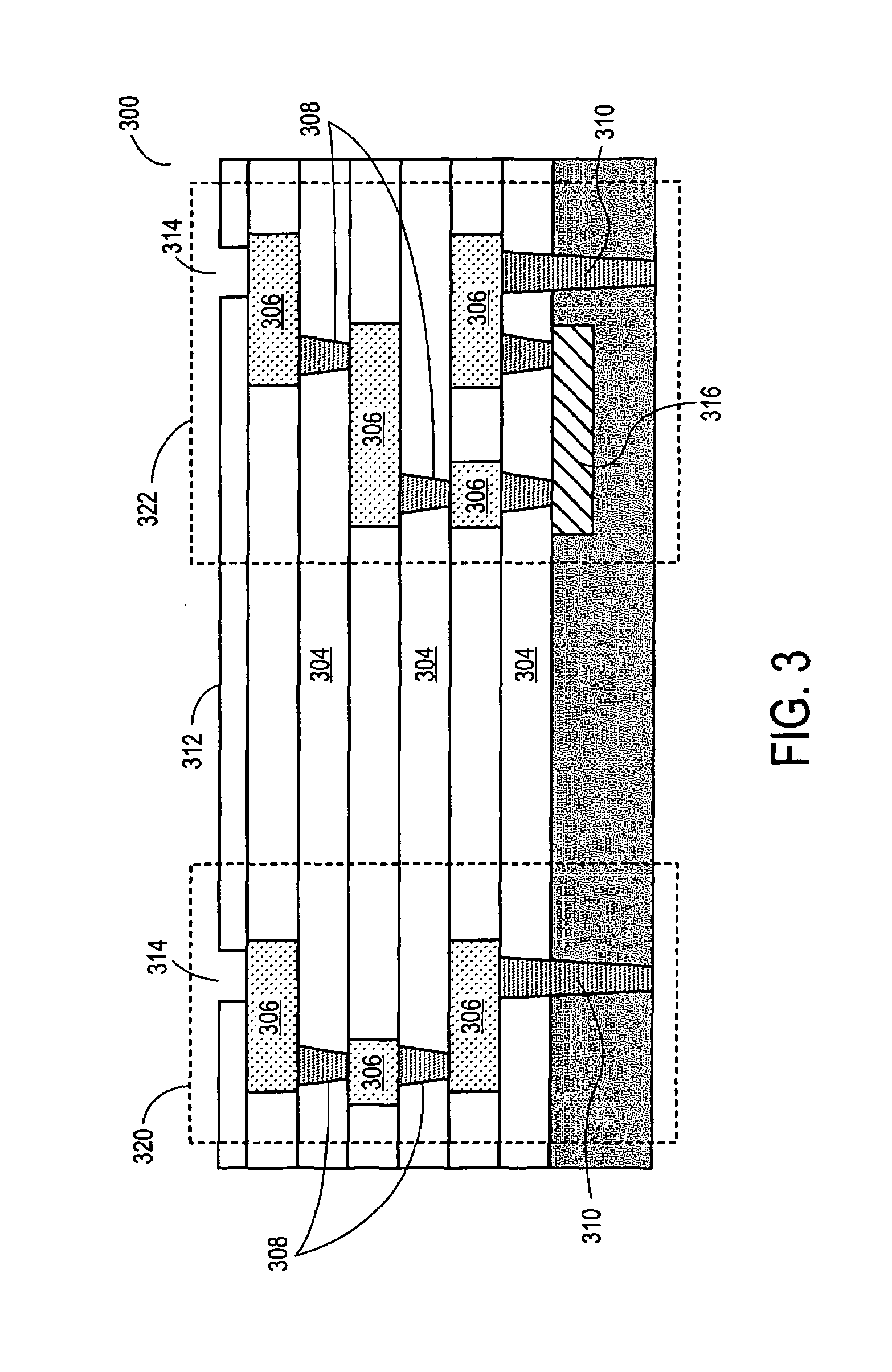

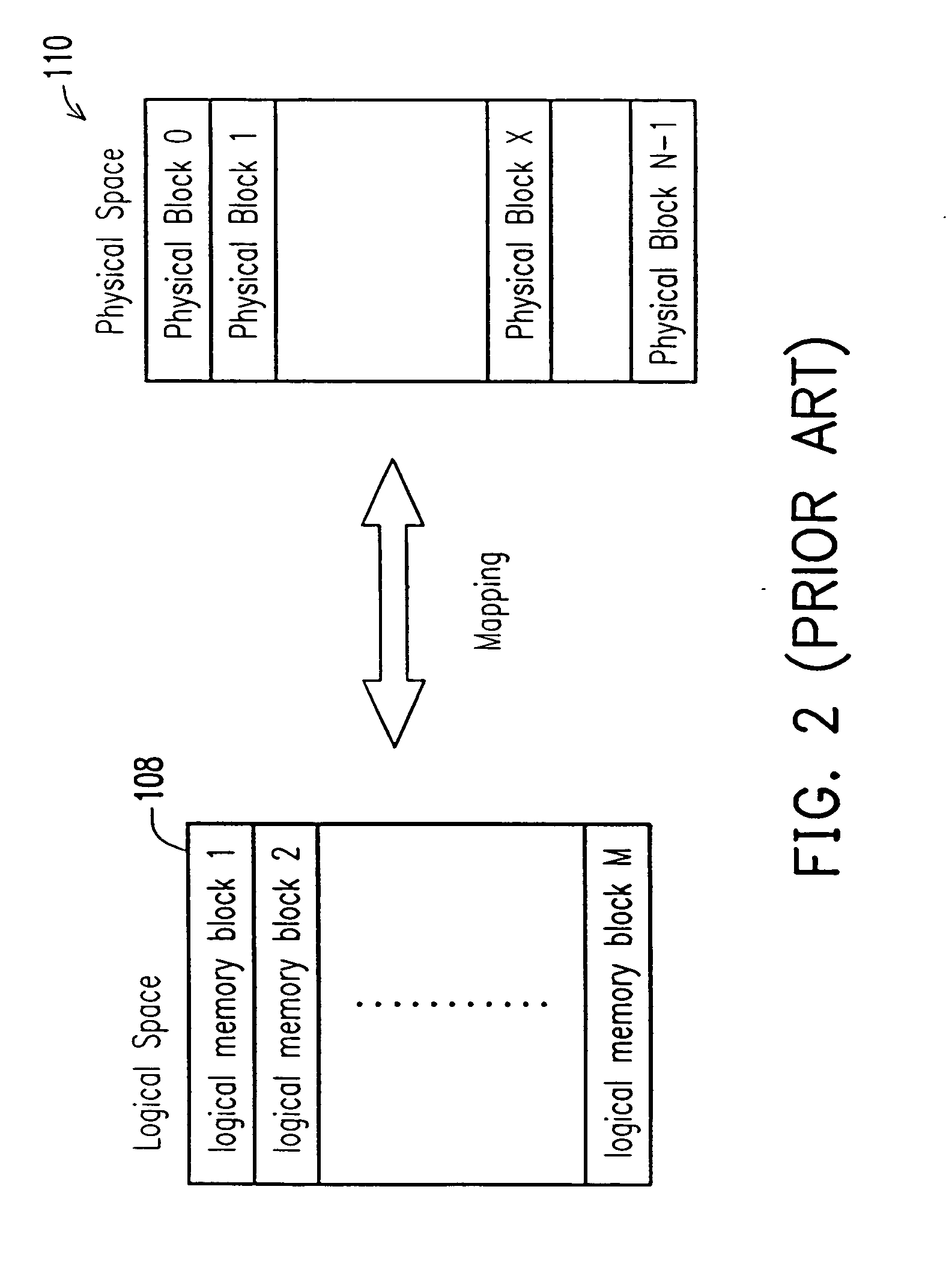

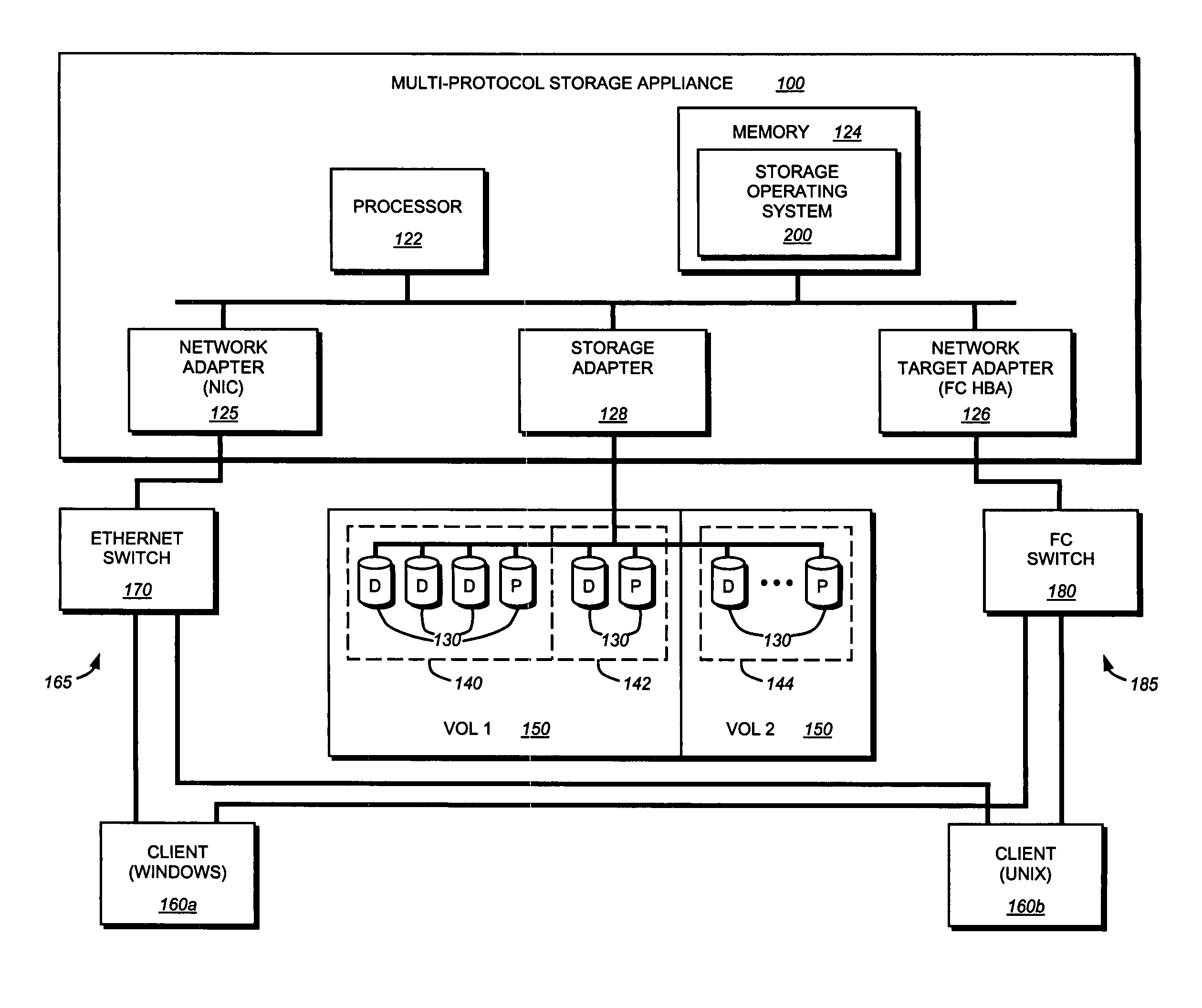

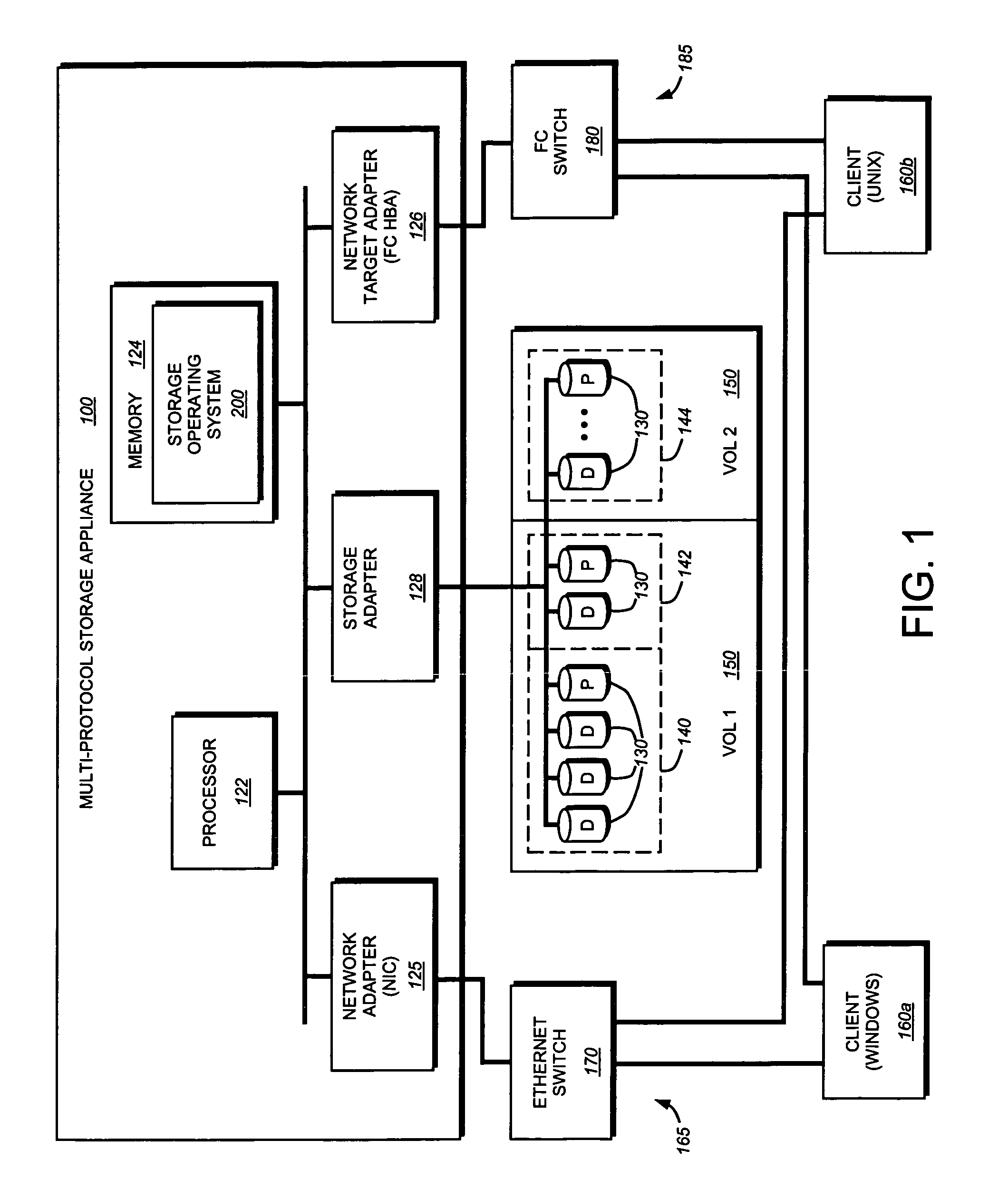

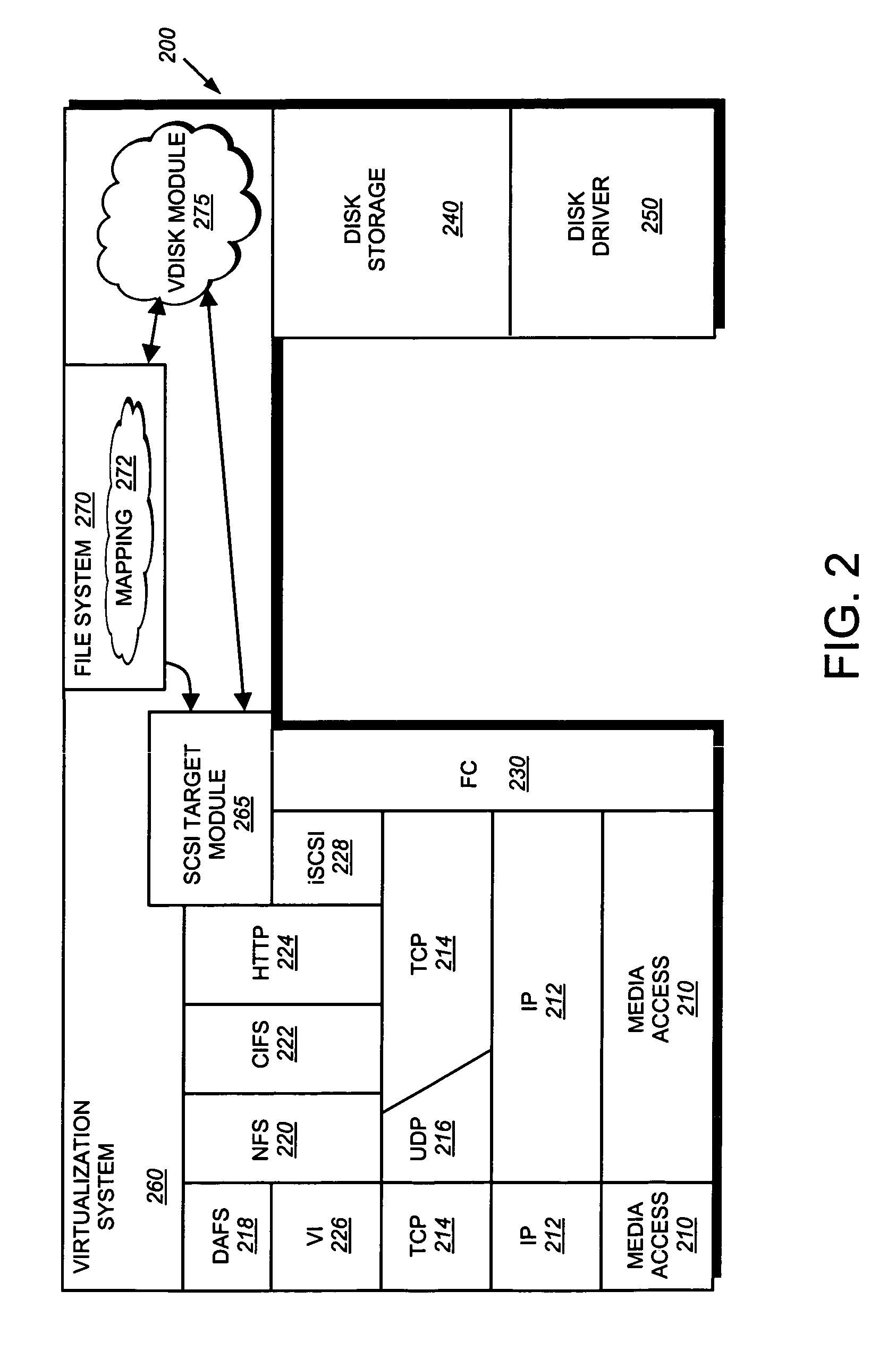

Storage virtualization systems and methods that allow customers to manage storage as a utility rather than as islands of storage which are independent of each other. A demand-mapped virtual disk image of up to an arbitrarily large size is presented to a host system. The virtualization system allocates physical storage from a storage pool dynamically in response to host I/O requests, e.g., SCSI I/O requests, allowing for the amortization of storage resources through a disk subsystem while maintaining coherency amongst I/O RAID traffic. In one embodiment, the virtualization functionality is implemented in a controller device, such as a controller card residing in a switch device or other network device, coupled to a storage system on a storage area network (SAN). The resulting virtual disk image that is observed by the host computer is larger than the amount of physical storage actually consumed.

Owner:EMC IP HLDG CO LLC
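
The demand-mapped allocation described in this abstract can be illustrated with a minimal sketch. It is not the patented implementation: the class `ThinDisk`, the extent granularity, and the choice to return empty bytes for unmapped reads are all assumptions made for illustration.

```python
class ThinDisk:
    """Hypothetical demand-mapped virtual disk: a physical extent is taken
    from a shared pool only when a virtual extent is first written."""

    def __init__(self, virtual_extents, pool):
        self.virtual_extents = virtual_extents  # advertised size, in extents
        self.pool = pool                        # shared list of free physical extent ids
        self.mapping = {}                       # virtual extent -> physical extent
        self.store = {}                         # physical extent -> data

    def _check(self, vext):
        if not 0 <= vext < self.virtual_extents:
            raise IndexError("virtual extent out of range")

    def write(self, vext, data):
        self._check(vext)
        if vext not in self.mapping:            # allocate on first write only
            if not self.pool:
                raise RuntimeError("storage pool exhausted")
            self.mapping[vext] = self.pool.pop()
        self.store[self.mapping[vext]] = data

    def read(self, vext):
        self._check(vext)
        phys = self.mapping.get(vext)
        # Assumption: an unmapped extent reads back as empty data.
        return self.store[phys] if phys is not None else b""


pool = list(range(4))                           # only 4 physical extents exist
disk = ThinDisk(virtual_extents=1_000_000, pool=pool)
disk.write(42, b"hello")
```

Here the host sees a million extents while a single physical extent has been consumed, which mirrors the abstract's point that the virtual image is larger than the storage actually used.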

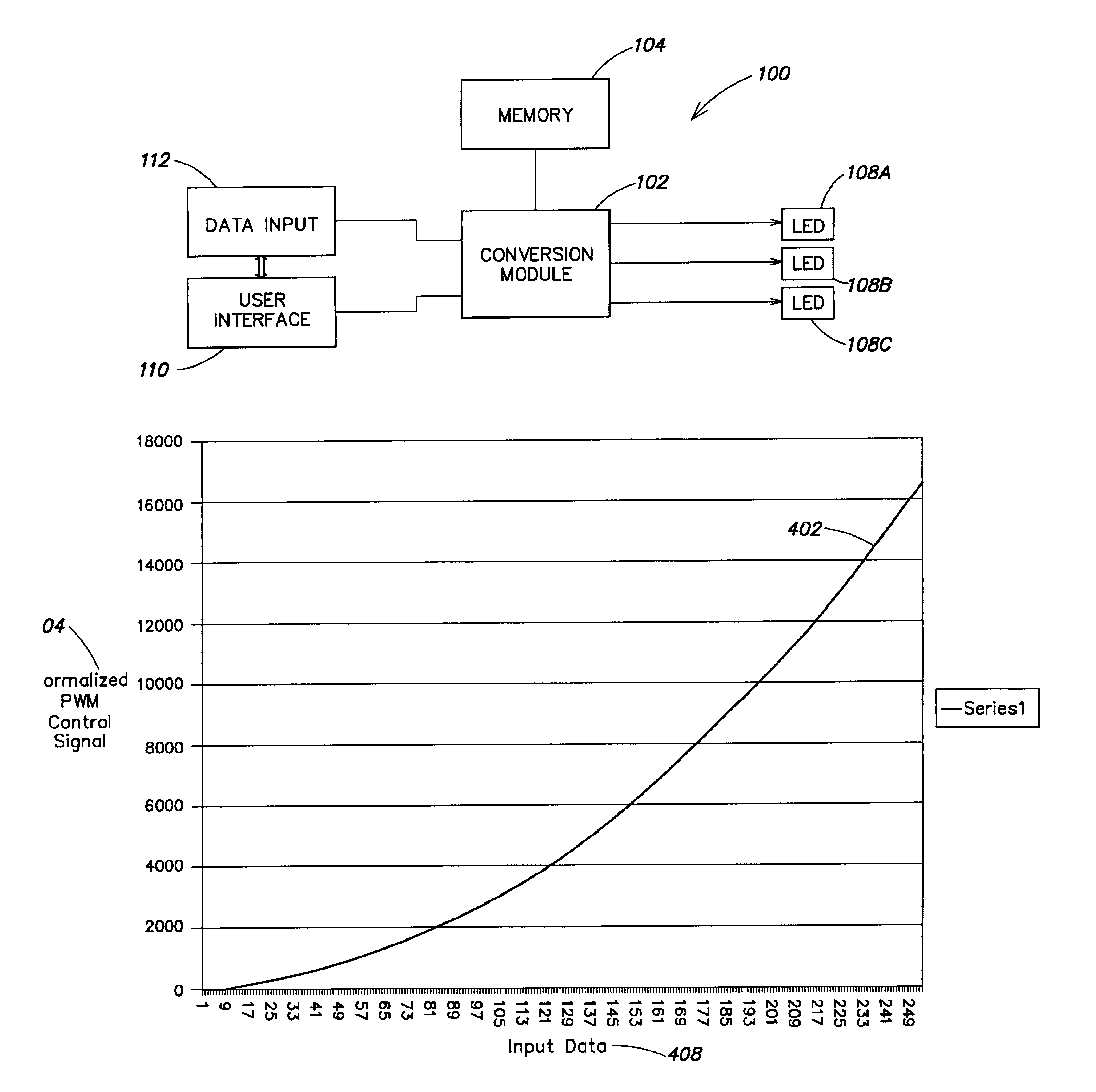

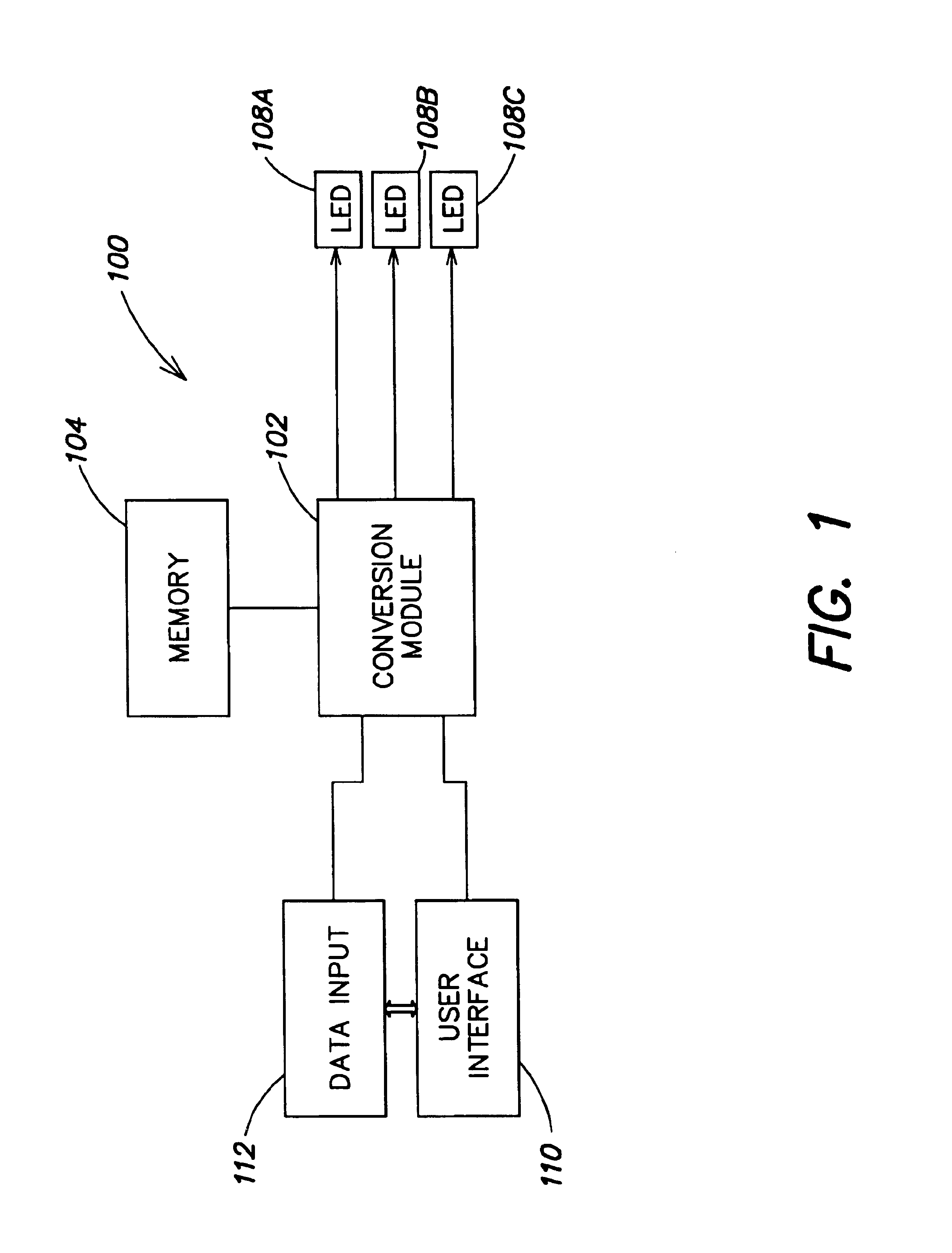

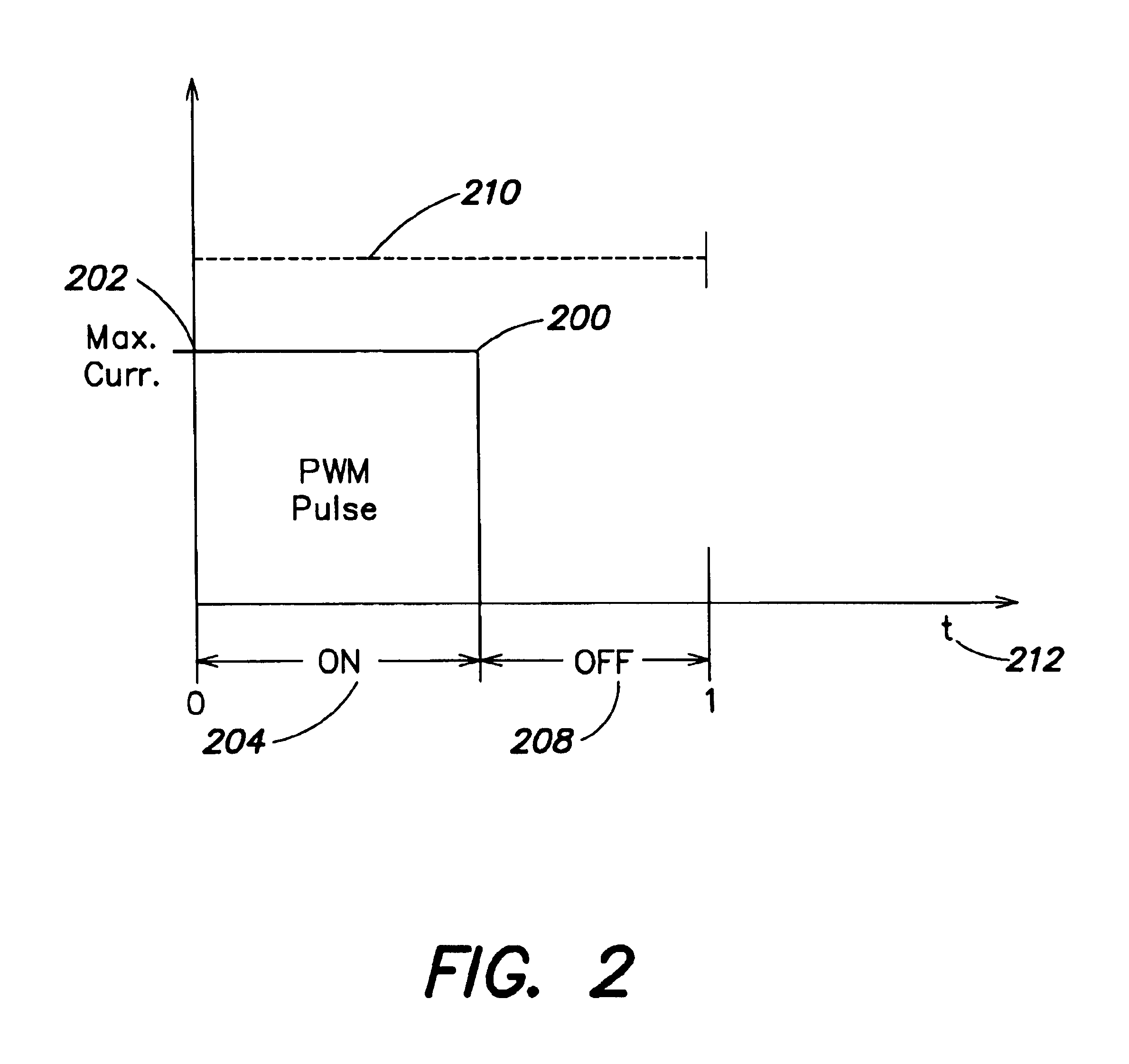

Systems and methods for controlling illumination sources

Provided are methods and systems for controlling the conversion of data inputs to a computer-based light system into lighting control signals. The methods and systems include facilities for controlling a nonlinear relationship between data inputs and lighting control signal outputs. The nonlinear relationship may be programmed to account for varying responses of the viewer of a light source to different light source intensities.

Owner:PHILIPS LIGHTING NORTH AMERICA CORPORATION
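
One common way to realize a nonlinear input-to-output relationship of this kind is a power-law (gamma) curve. The sketch below assumes that curve, an 8-bit input and output range, and the hypothetical function name `to_control_signal`; the abstract itself does not specify any of these.

```python
def to_control_signal(level, gamma=2.2, max_in=255, max_out=255):
    """Map a linear data input to a lighting control value.

    Assumption: a power-law curve with exponent `gamma` stands in for the
    programmable nonlinear relationship mentioned in the abstract.
    """
    if not 0 <= level <= max_in:
        raise ValueError("level out of range")
    normalized = level / max_in
    return round((normalized ** gamma) * max_out)


mid_signal = to_control_signal(128)  # a mid-scale input yields a lower drive value
```

With a gamma above 1, low input values map to small steps in drive level, which is one way to account for a viewer's greater sensitivity to changes at low intensities.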

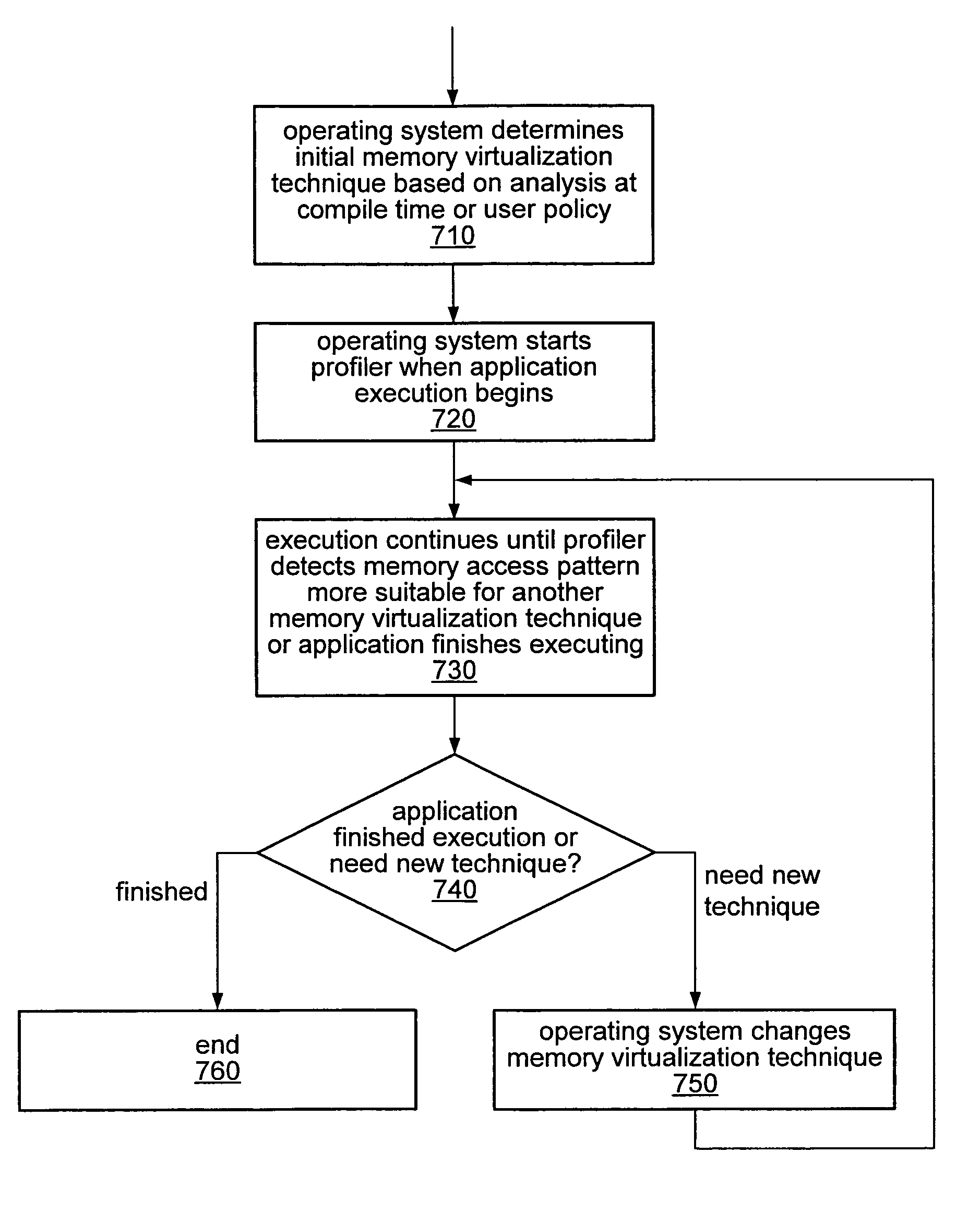

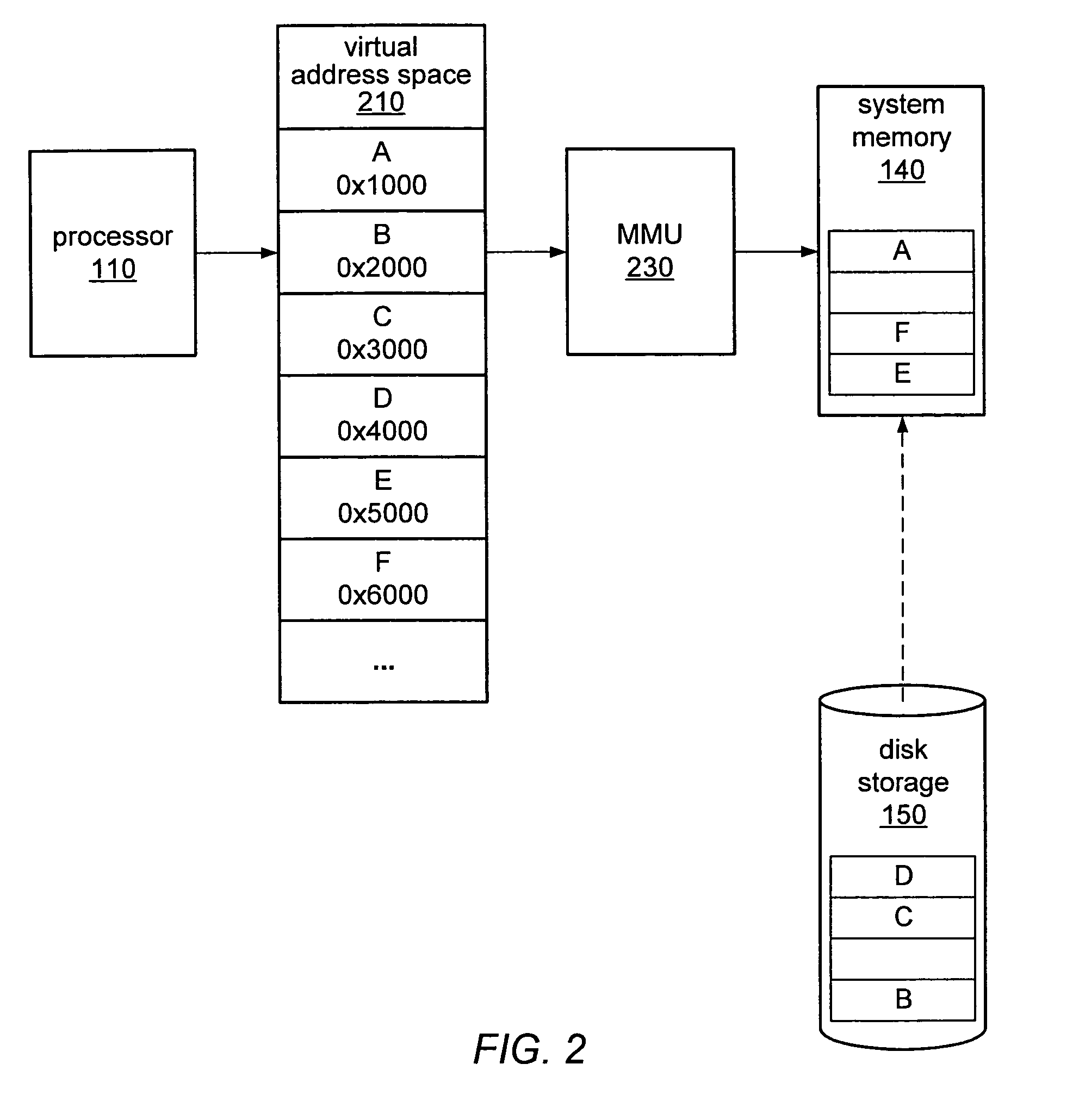

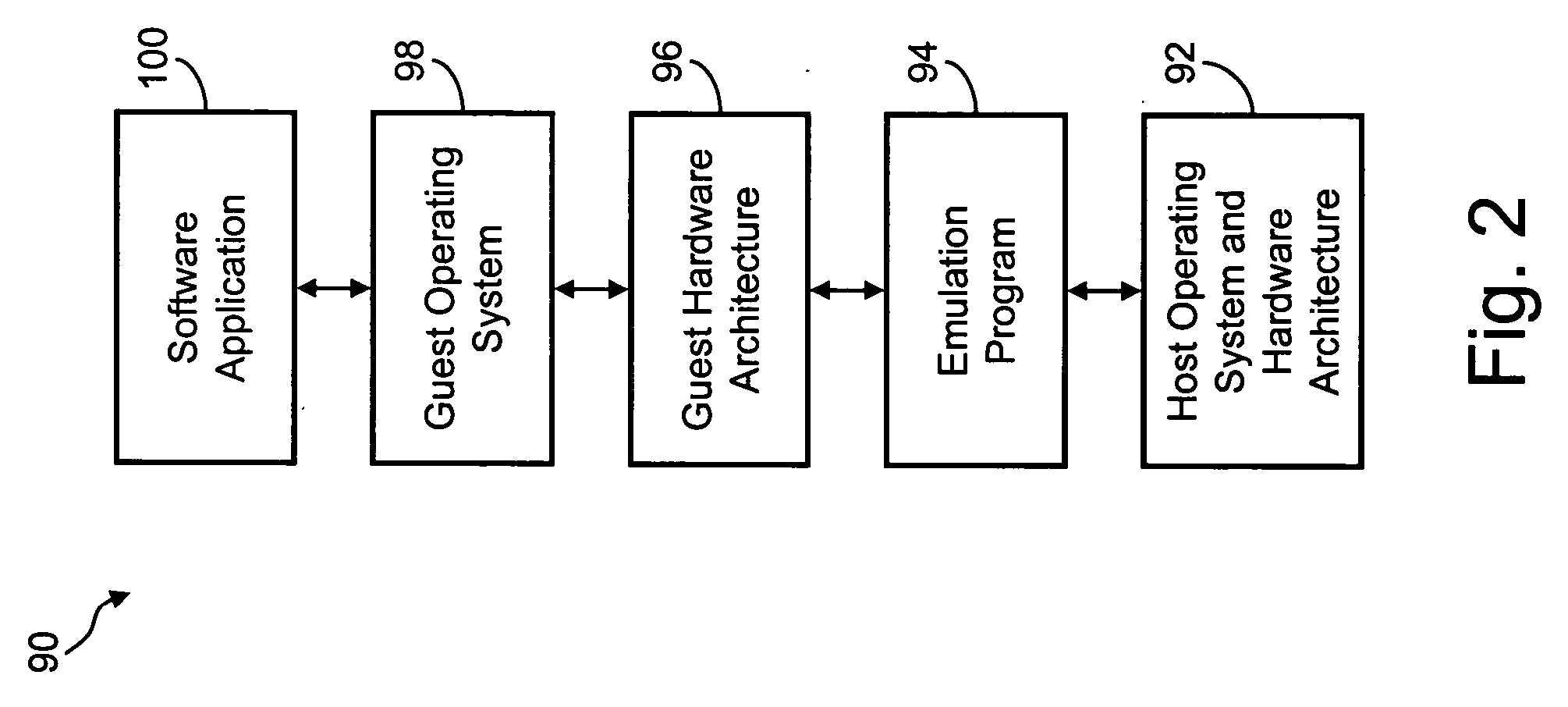

Dynamic selection of memory virtualization techniques

Active | US7752417B2 | Error detection/correction | Memory addressing/allocation/relocation | Virtualization | Computerized system

A computer system may be configured to dynamically select a memory virtualization and corresponding virtual-to-physical address translation technique during execution of an application and to dynamically employ the selected technique in place of a current technique without re-initializing the application. The computer system may be configured to determine that a current address translation technique incurs a high overhead for the application's current workload and may be configured to select a different technique dependent on various performance criteria and/or a user policy. Dynamically employing the selected technique may include reorganizing a memory, reorganizing a translation table, allocating a different block of memory to the application, changing a page or segment size, or moving to or from a page-based, segment-based, or function-based address translation technique. A selected translation technique may be dynamically employed for the application independent of a translation technique employed for a different application.

Owner:ORACLE INT CORP

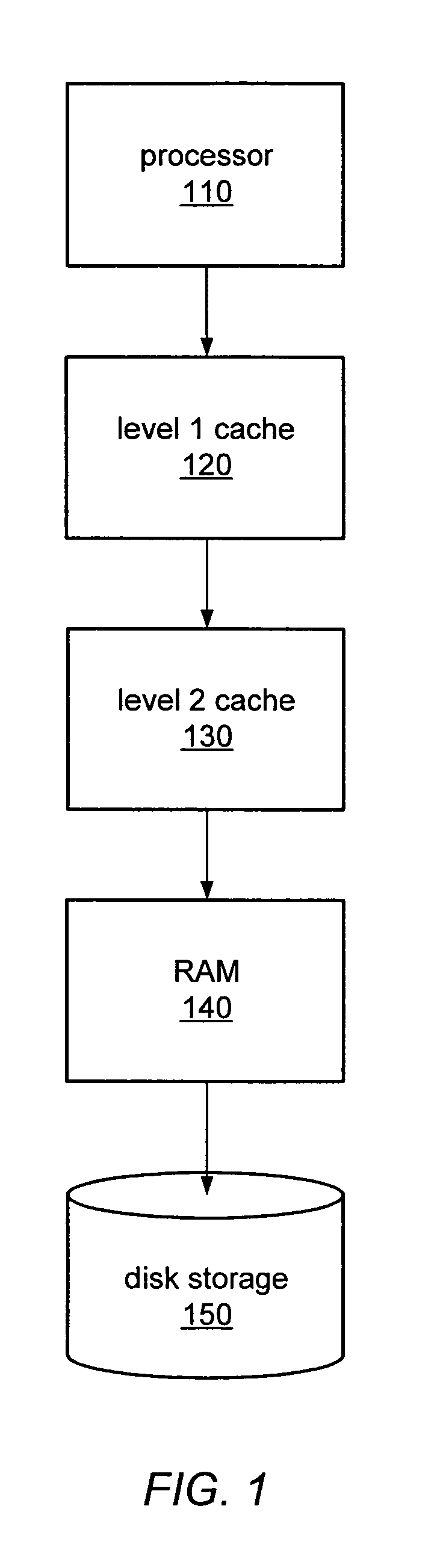

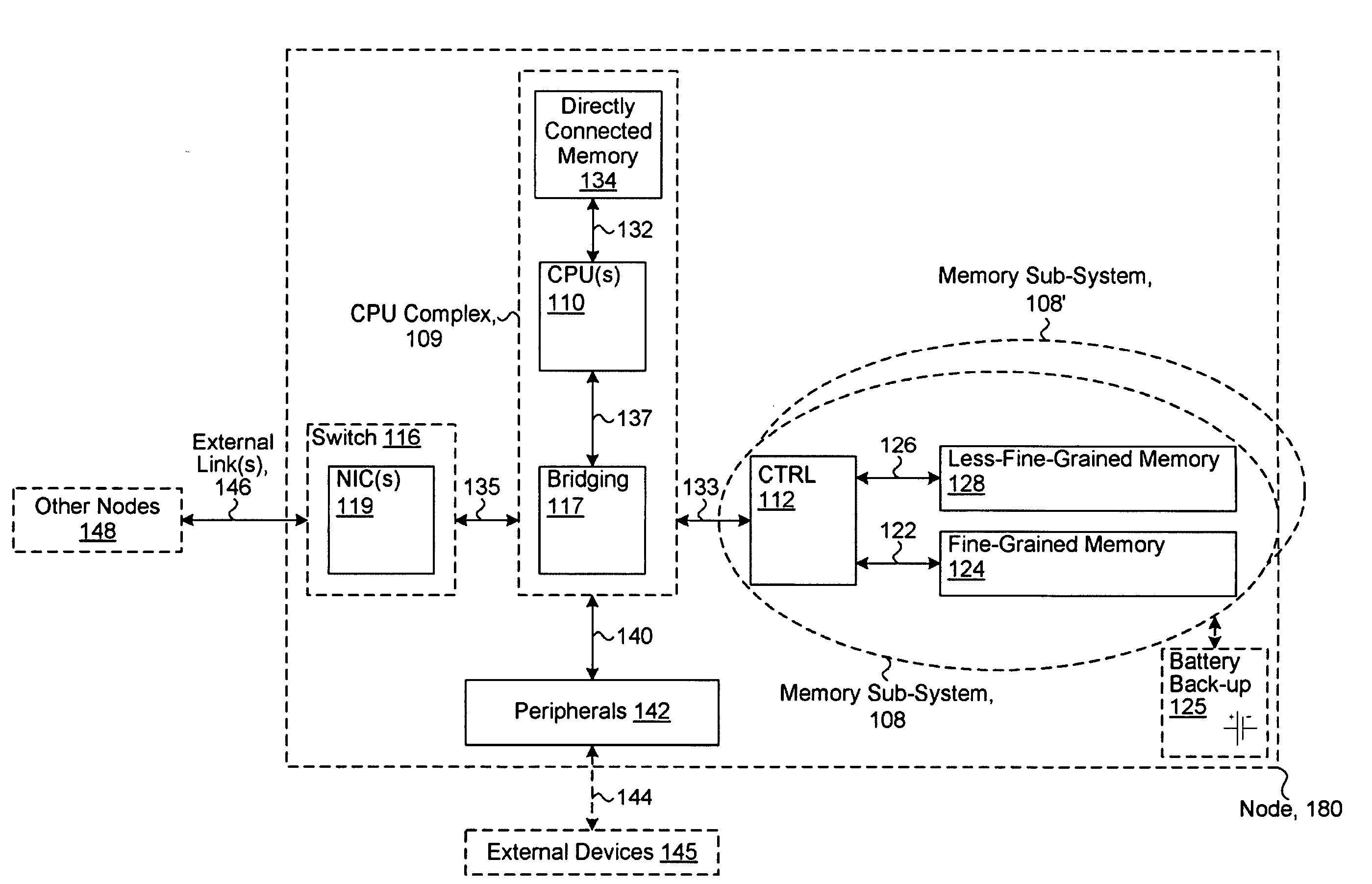

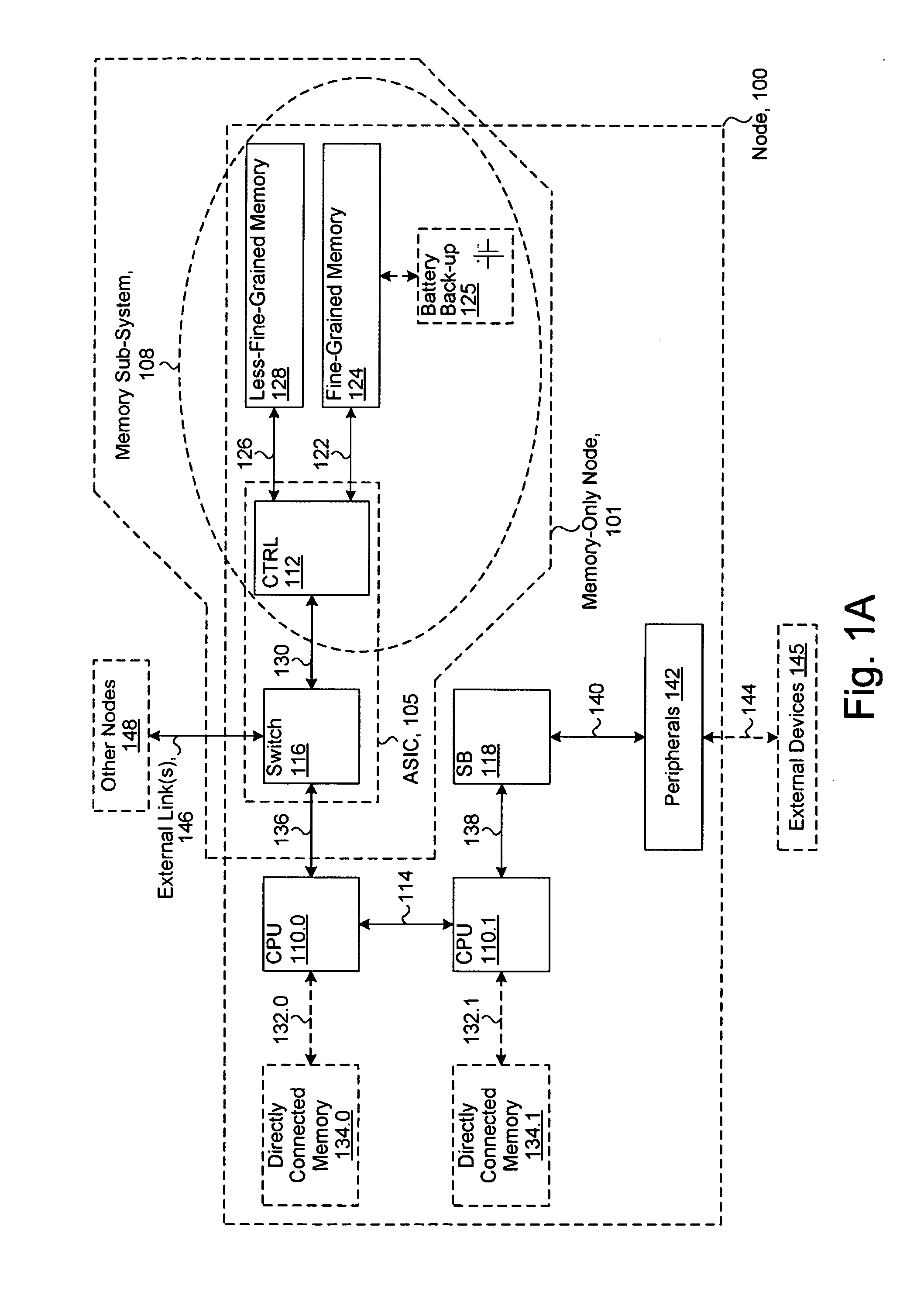

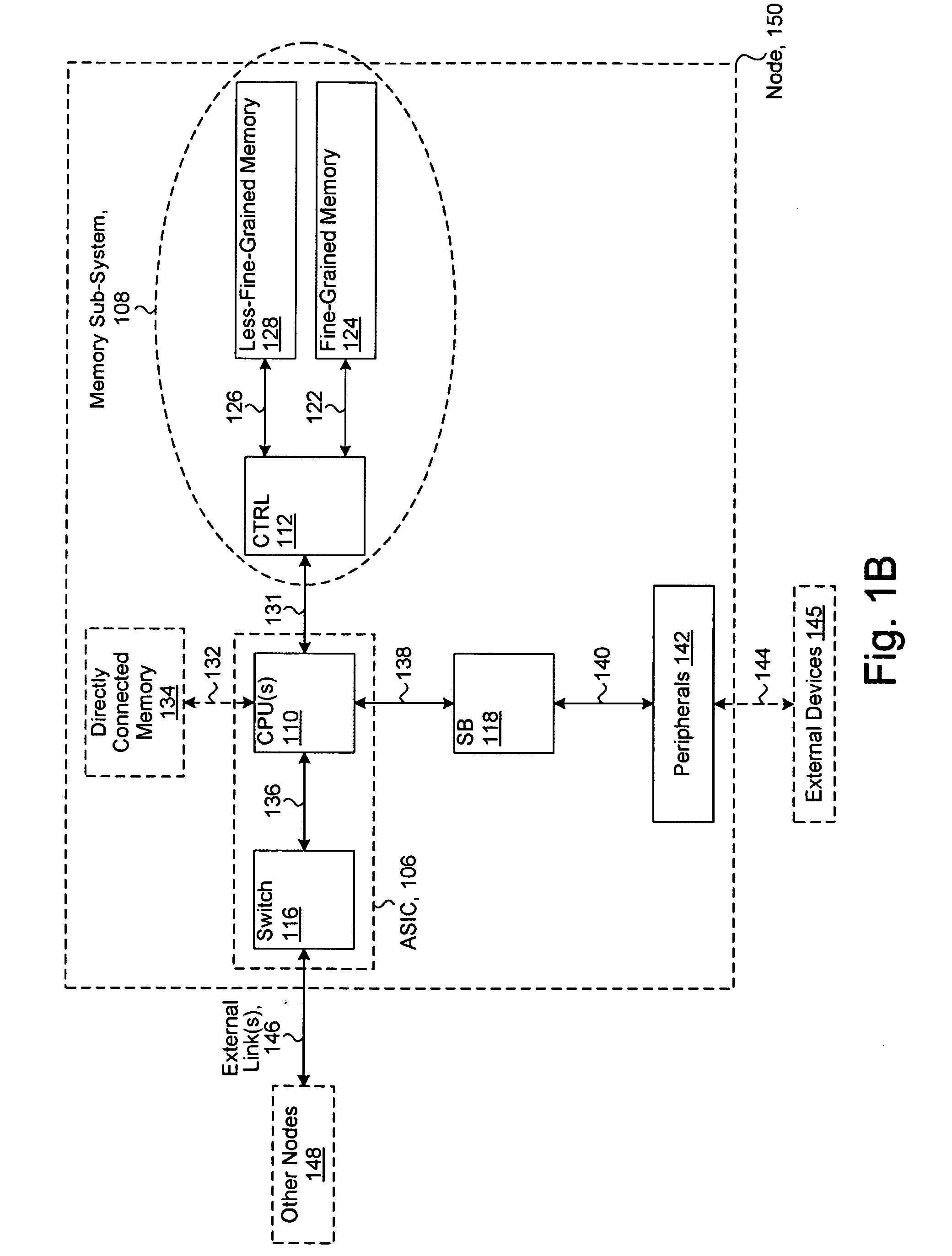

System including a fine-grained memory and a less-fine-grained memory

Active | US20080301256A1 | Memory architecture accessing/allocation | Energy efficient ICT | Data processing system | Write buffer

A data processing system includes one or more nodes, each node including a memory sub-system. The sub-system includes a fine-grained memory and a less-fine-grained (e.g., page-based) memory. The fine-grained memory optionally serves as a cache and/or as a write buffer for the page-based memory. Software executing on the system uses a node address space which enables access to the page-based memories of all nodes. Each node optionally provides ACID memory properties for at least a portion of the space. In at least a portion of the space, memory elements are mapped to locations in the page-based memory. In various embodiments, some of the elements are compressed, the compressed elements are packed into pages, the pages are written into available locations in the page-based memory, and a map maintains an association between some of the elements and the locations.

Owner:SANDISK TECH LLC

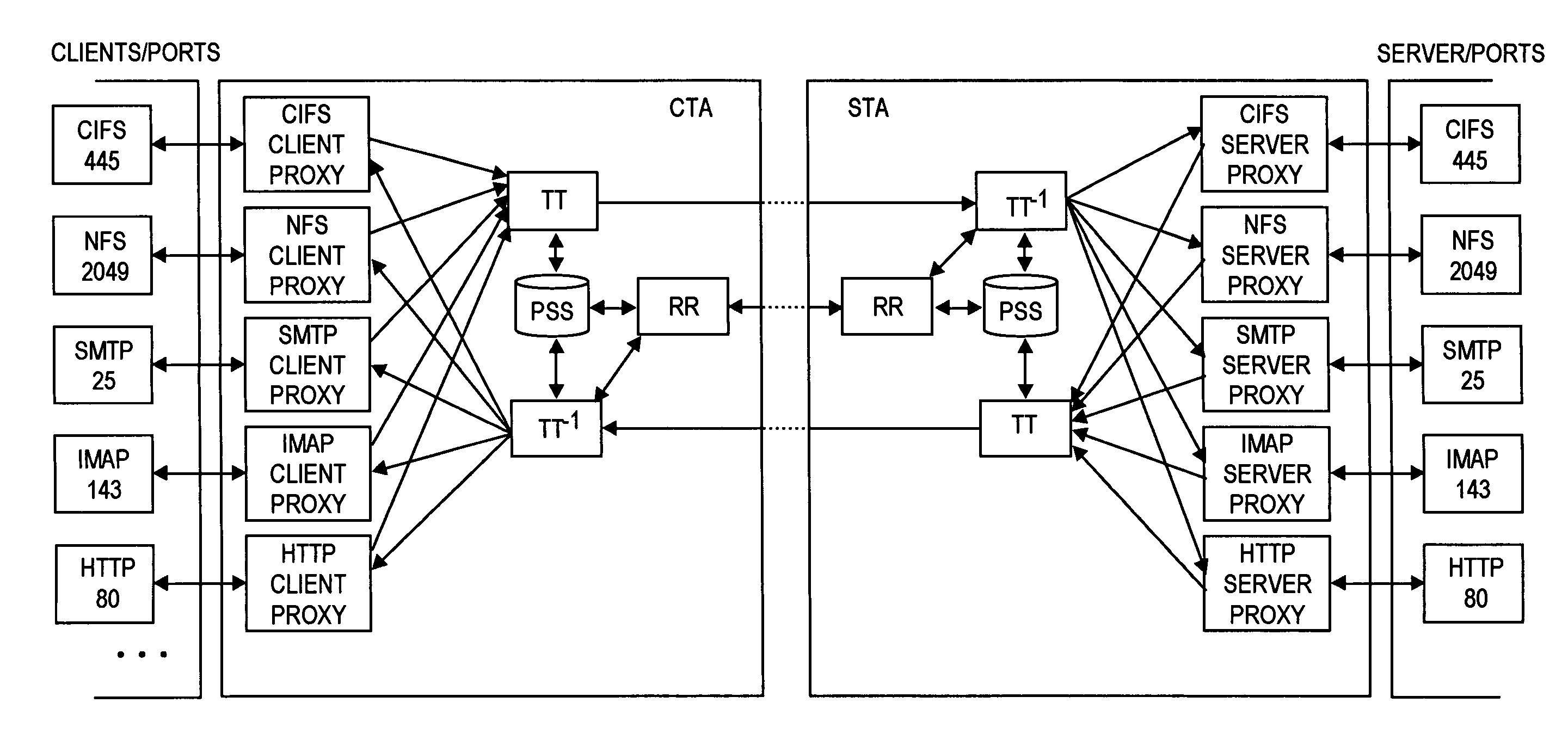

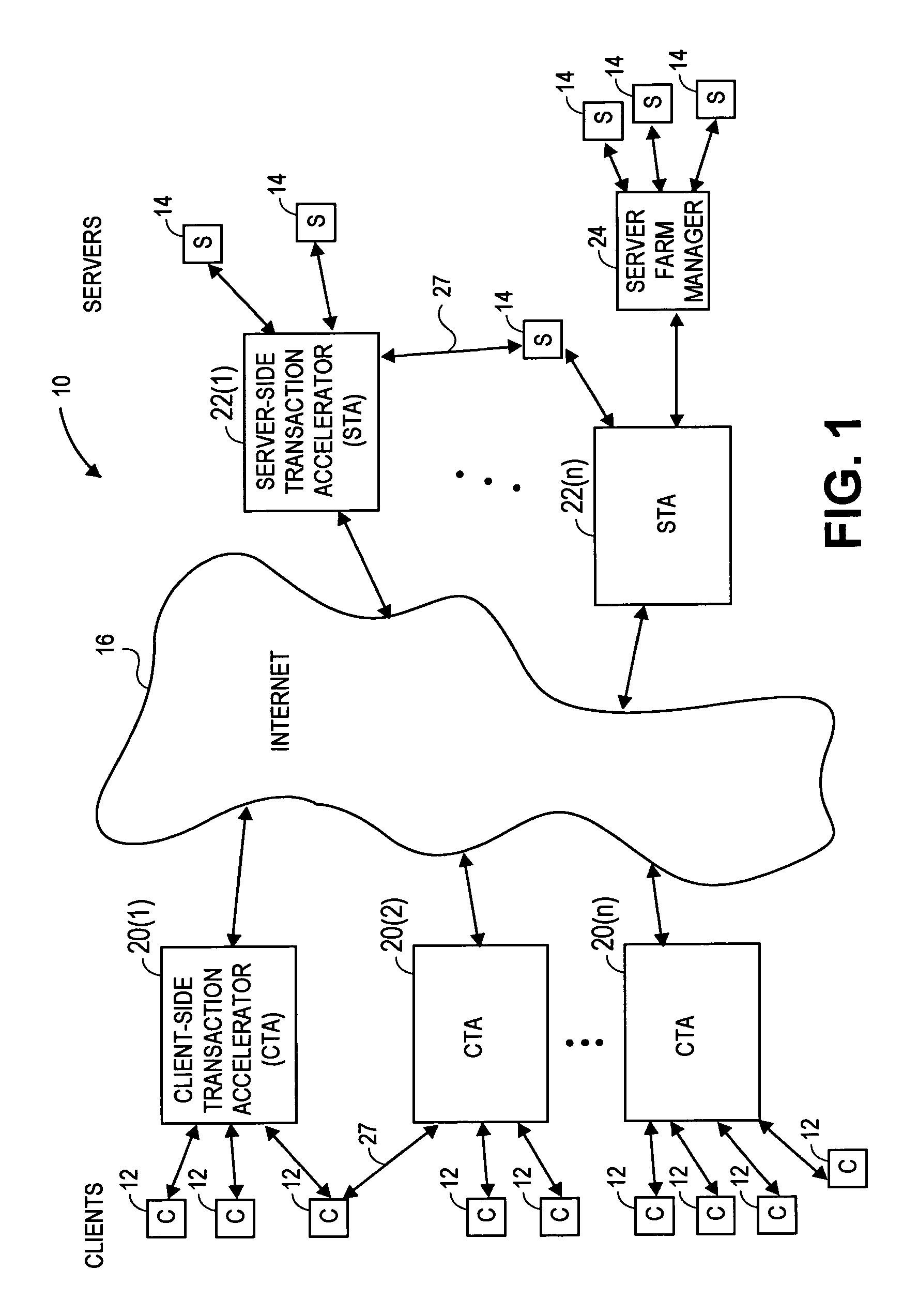

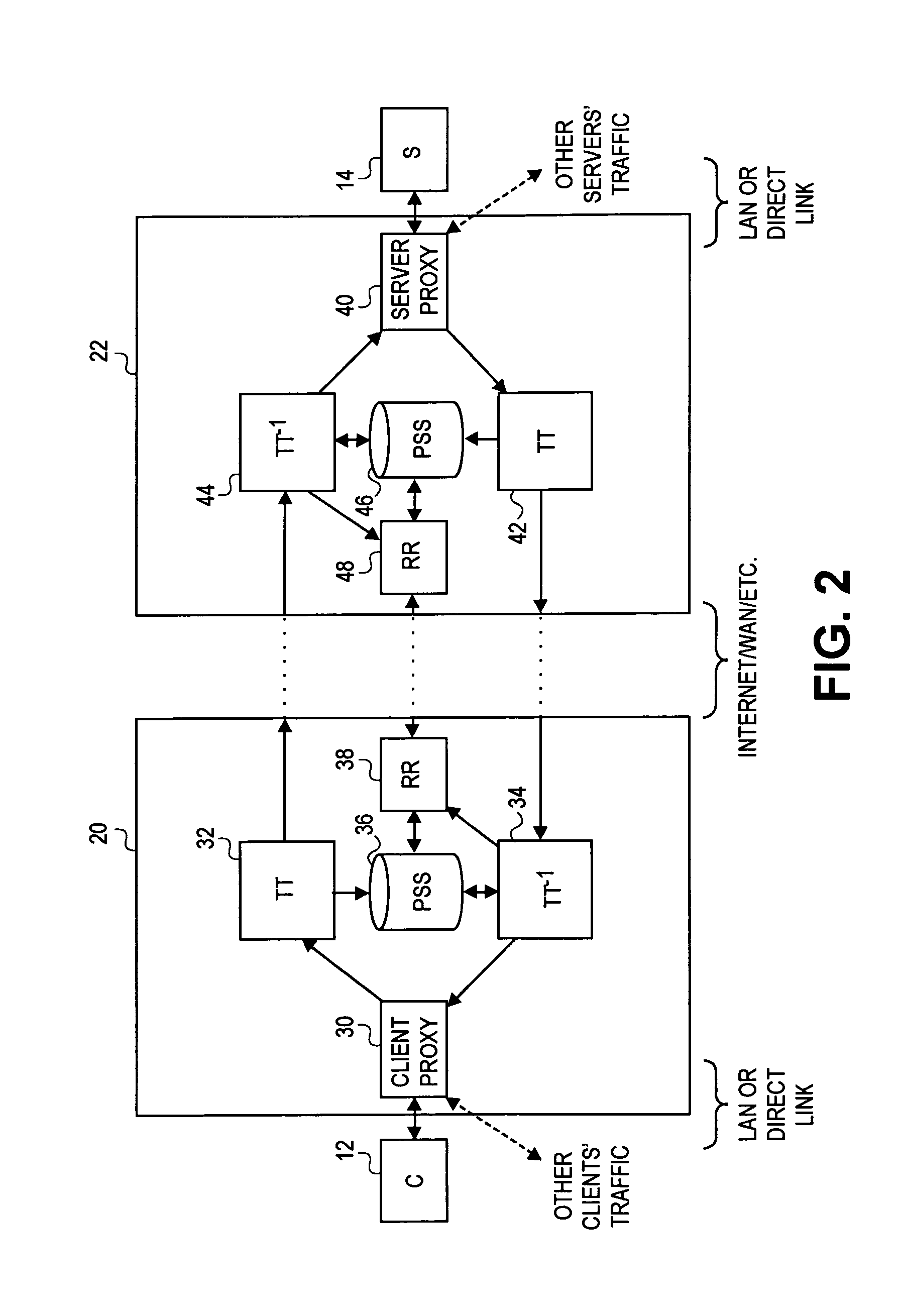

Transaction accelerator for client-server communication systems

Inactive | US7120666B2 | Reduce network bandwidth usage | Accelerating transaction | Individual digits conversion | Multiple digital computer combinations | Communications system | Client-side

In a network having transaction acceleration, for an accelerated transaction, a client directs a request to a client-side transaction handler that forwards the request to a server-side transaction handler, which in turn provides the request, or a representation thereof, to a server for responding to the request. The server sends the response to the server-side transaction handler, which forwards the response to the client-side transaction handler, which in turn provides the response to the client. Transactions are accelerated by the transaction handlers by storing segments of data used in the transactions in persistent segment storage accessible to the server-side transaction handler and in persistent segment storage accessible to the client-side transaction handler. When data is to be sent between the transaction handlers, the sending transaction handler compares the segments of the data to be sent with segments stored in its persistent segment storage and replaces segments of data with references to entries in its persistent segment storage that match or closely match the segments of data to be replaced. The receiving transaction store reconstructs the data sent by replacing segment references with corresponding segment data from its persistent segment storage, requesting missing segments from the sender as needed. The transaction accelerators could handle multiple clients and/or multiple servers and the segments stored in the persistent segment stores can relate to different transactions, different clients and/or different servers. Persistent segment stores can be prepopulated with segment data from other transaction accelerators.

Owner:RIVERBED TECH LLC
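
The segment-replacement idea in this abstract can be sketched as follows. The sketch makes several simplifying assumptions that are not in the source: fixed-size segments, SHA-256 digests as segment references, exact matching only (the abstract also allows close matches), and in-memory dictionaries standing in for persistent segment stores.

```python
import hashlib

SEGMENT_SIZE = 8  # hypothetical fixed segment length, chosen for illustration


def segment(data):
    """Split a payload into fixed-size segments."""
    return [data[i:i + SEGMENT_SIZE] for i in range(0, len(data), SEGMENT_SIZE)]


def encode(data, store):
    """Sender side: replace segments already in the store with references."""
    message = []
    for seg in segment(data):
        ref = hashlib.sha256(seg).hexdigest()
        if ref in store:
            message.append(("ref", ref))   # send only the short reference
        else:
            store[ref] = seg               # remember the segment for next time
            message.append(("raw", seg))
    return message


def decode(message, store):
    """Receiver side: rebuild the payload, learning raw segments as they arrive."""
    parts = []
    for kind, value in message:
        if kind == "raw":
            store[hashlib.sha256(value).hexdigest()] = value
            parts.append(value)
        else:
            # A KeyError here stands in for "request the missing segment
            # from the sender", which this sketch does not implement.
            parts.append(store[value])
    return b"".join(parts)


sender_store, receiver_store = {}, {}
payload = b"GET /index.html HTTP/1.1"
first = encode(payload, sender_store)    # all segments sent raw
second = encode(payload, sender_store)   # all segments sent as references
rebuilt_first = decode(first, receiver_store)
rebuilt_second = decode(second, receiver_store)
```

The second transfer of the same payload carries only references, which is where the bandwidth saving comes from.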

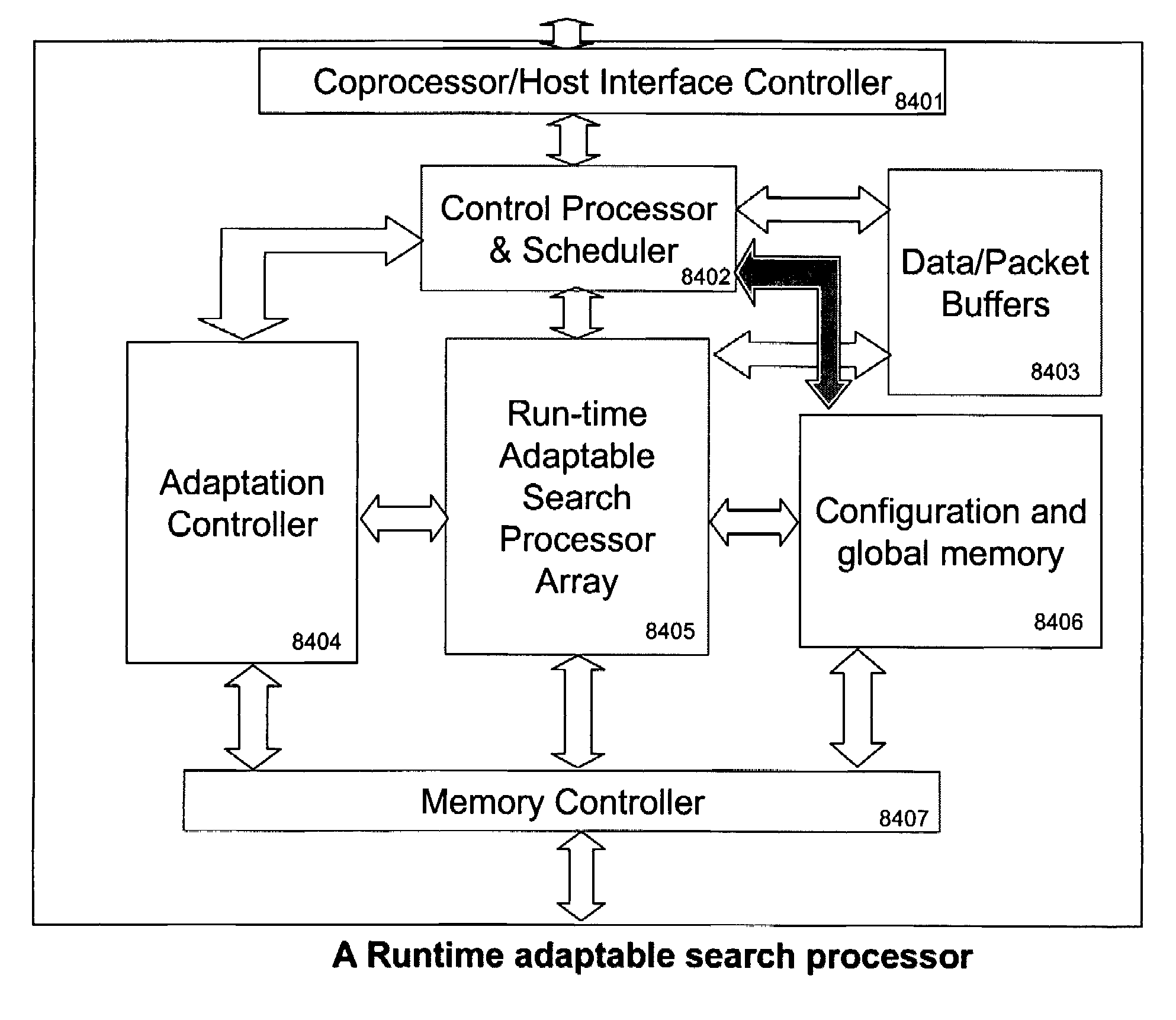

Runtime adaptable search processor

Active | US7685254B2 | Improve application performance | Large capacity | Web data indexing | Memory addressing/allocation/relocation | Packet scheduling | Schema for Object-Oriented XML

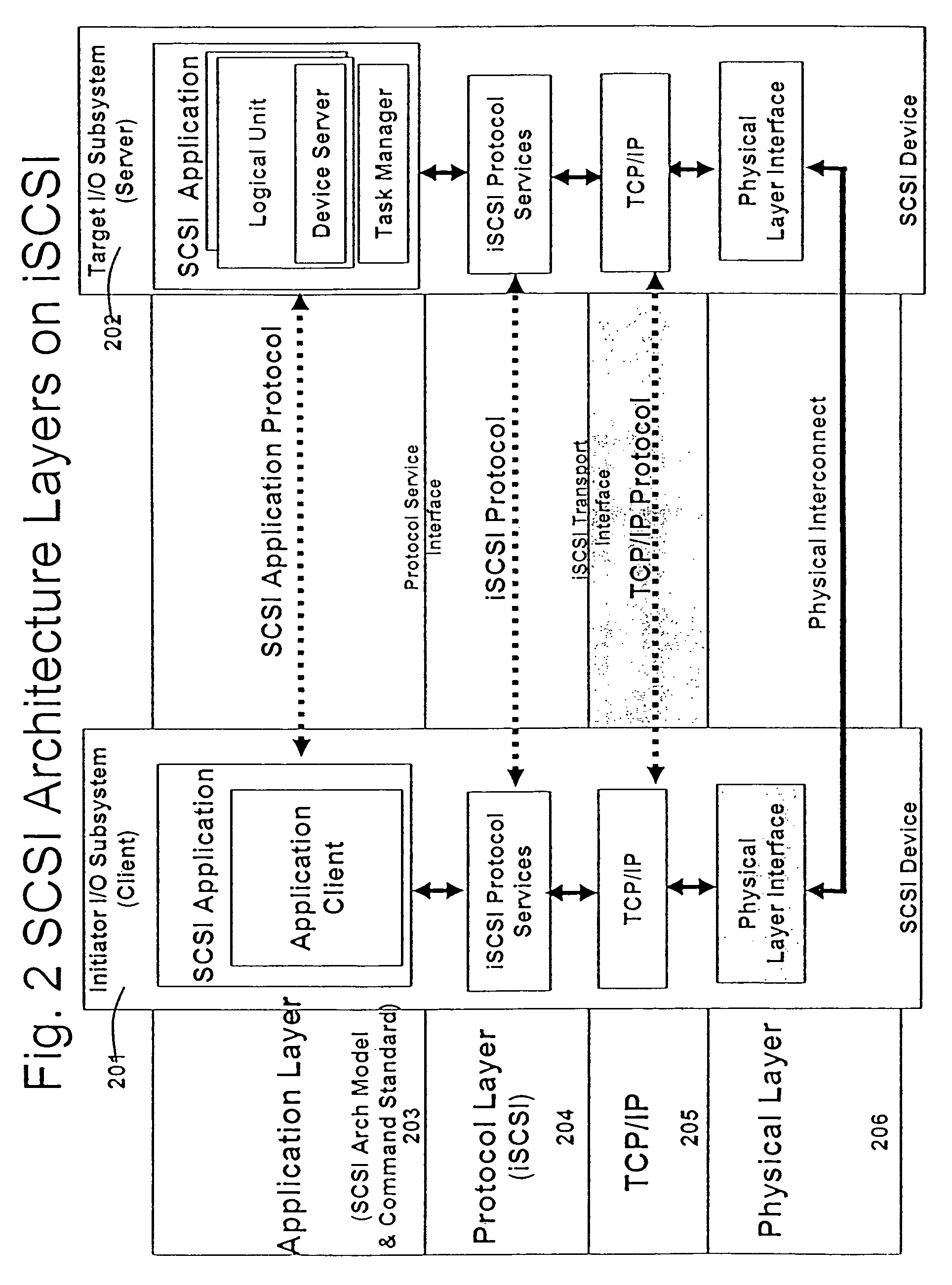

A runtime adaptable search processor is disclosed. The search processor provides high speed content search capability to meet the performance need of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA and DFA based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing enabling packet streaming through the architecture at nearly the full line rate. A high performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored and retrieved to/from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer. A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using a protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

Content-based, transparent sharing of memory units

Inactive | US6789156B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computer hardware | Multiple context

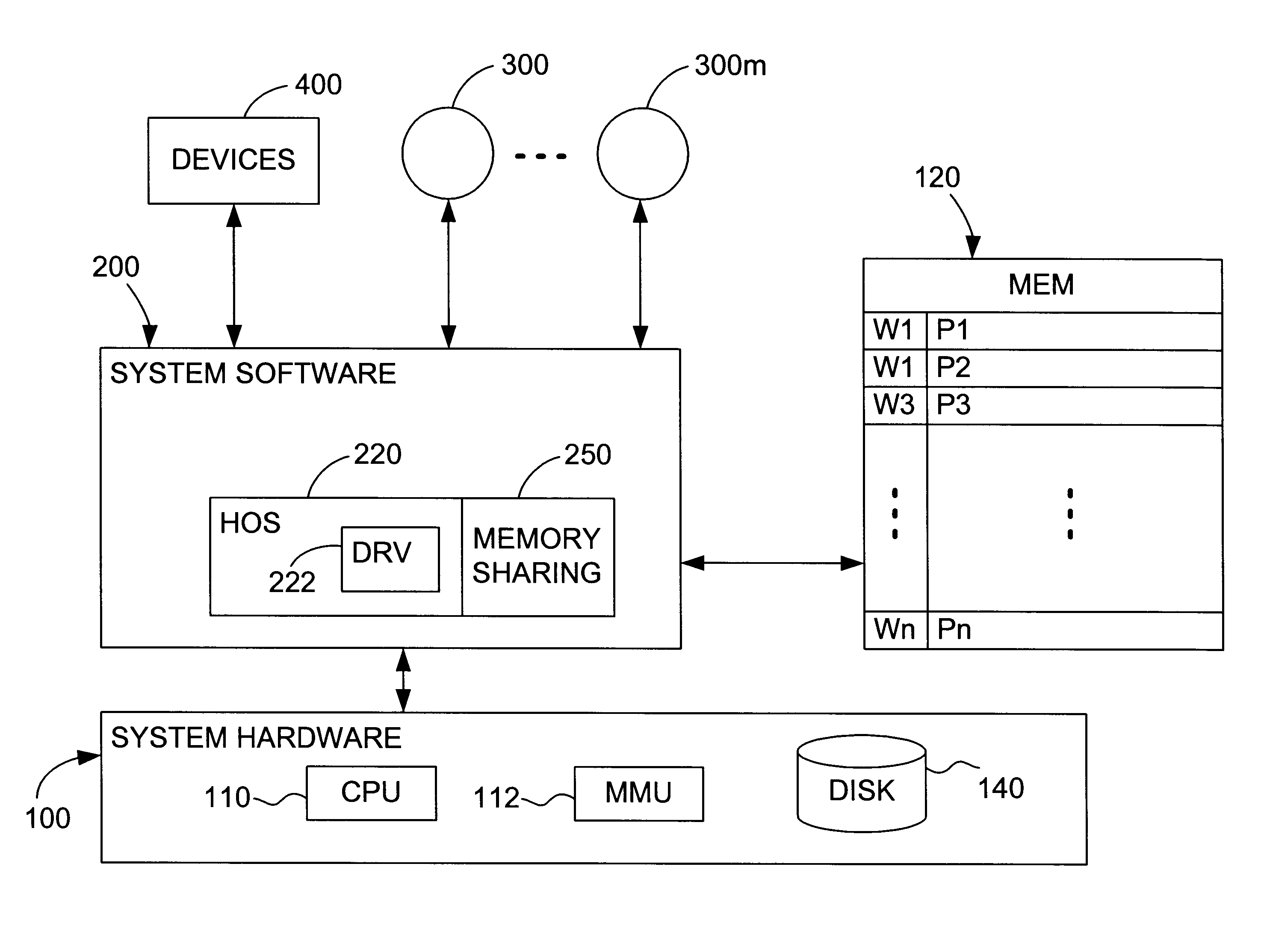

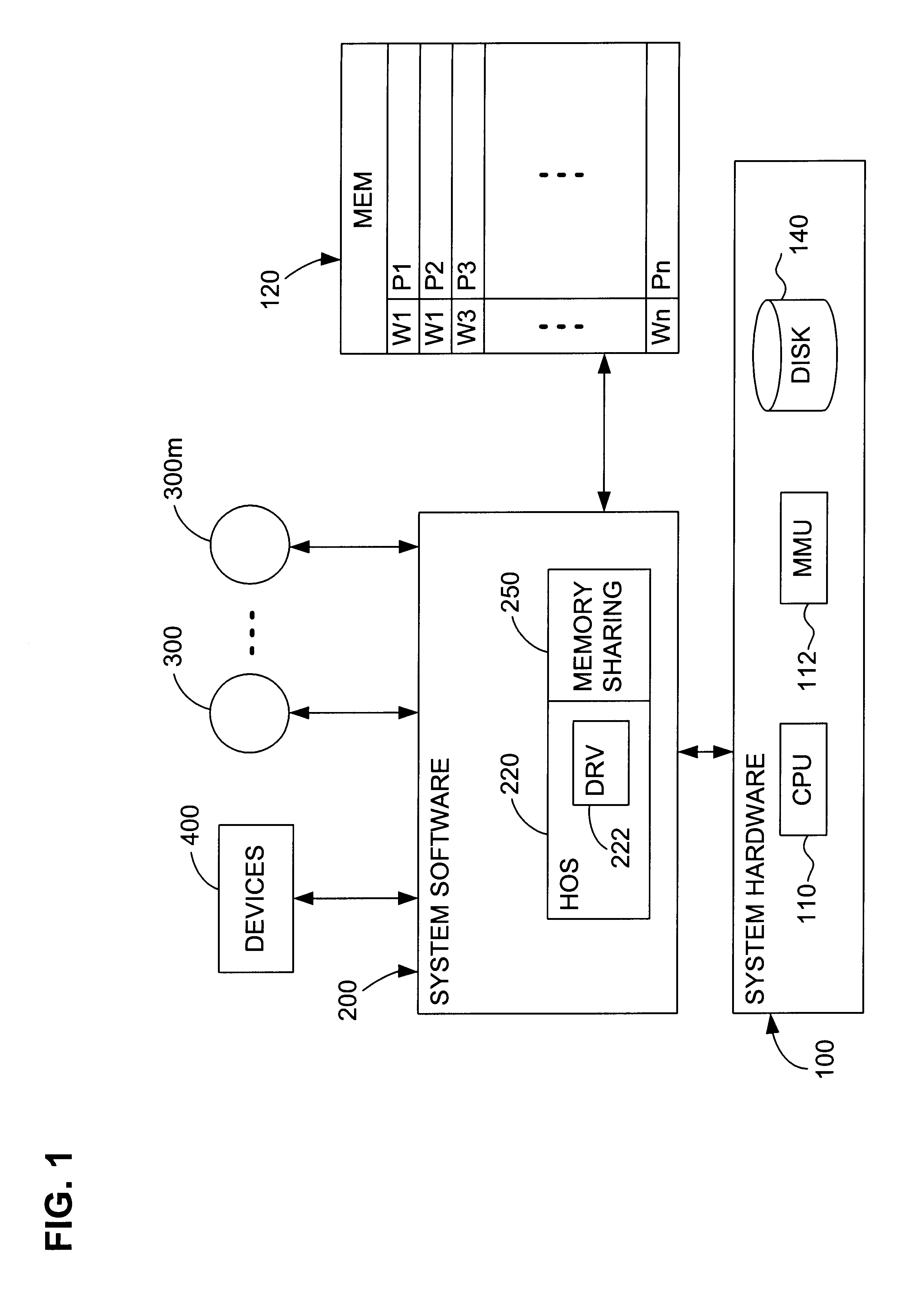

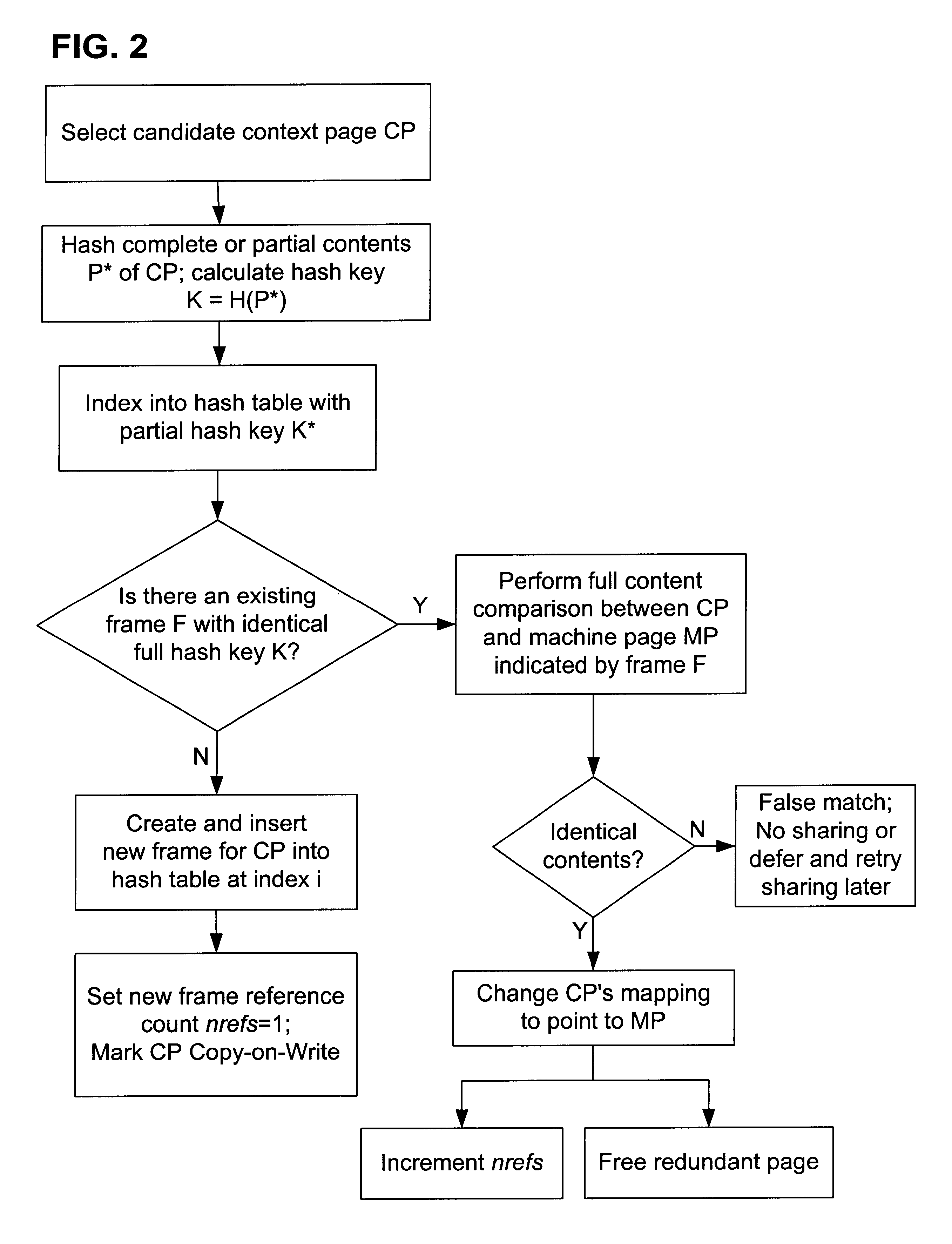

A computer system has one or more software contexts that share use of a memory that is divided into units such as pages. In the preferred embodiment of the invention, the contexts are, or include, virtual machines running on a common hardware platform. The contents, as opposed to merely the addresses or page numbers, of virtual memory pages that are accessible to one or more contexts are examined. If two or more context pages are identical, then their memory mappings are changed to point to a single, shared copy of the page in the hardware memory, thereby freeing the memory space taken up by the redundant copies. The shared copy is then preferably marked copy-on-write. Sharing is preferably dynamic, whereby the presence of redundant copies of pages is preferably determined by hashing page contents and performing full content comparisons only when two or more pages hash to the same key.

Owner:VMWARE INC
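
The hash-then-compare sharing scheme in this abstract can be sketched in a few lines. The data layout (`page_tables` as nested dictionaries, `memory` as a frame-to-bytes dictionary), the function name `share_pages`, and the use of SHA-1 as the hash are assumptions for illustration only.

```python
import hashlib


def share_pages(page_tables, memory):
    """Remap identical pages to one shared frame.

    page_tables: {context: {virtual_page: frame}}  (hypothetical layout)
    memory:      {frame: bytes}
    Returns the set of frames that should be marked copy-on-write.
    """
    by_hash = {}   # digest -> frames already seen with that digest
    cow = set()
    for table in page_tables.values():
        for vpn, frame in table.items():
            content = memory[frame]
            digest = hashlib.sha1(content).digest()
            candidates = by_hash.setdefault(digest, [])
            for candidate in candidates:
                # Full comparison only when the hashes collide.
                if candidate != frame and memory[candidate] == content:
                    table[vpn] = candidate   # point at the shared copy
                    cow.add(candidate)
                    break
            else:
                if frame not in candidates:
                    candidates.append(frame)
    # Free frames that no mapping refers to any more.
    live = {f for table in page_tables.values() for f in table.values()}
    for frame in list(memory):
        if frame not in live:
            del memory[frame]
    return cow


memory = {0: b"A" * 4096, 1: b"B" * 4096, 2: b"A" * 4096}
tables = {"vm1": {0: 0, 1: 1}, "vm2": {0: 2}}
cow_frames = share_pages(tables, memory)
```

After the call, both virtual machines map their first page to frame 0, frame 2 has been reclaimed, and frame 0 is flagged copy-on-write so that a later write by either context would trigger a private copy (the write path itself is not shown).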

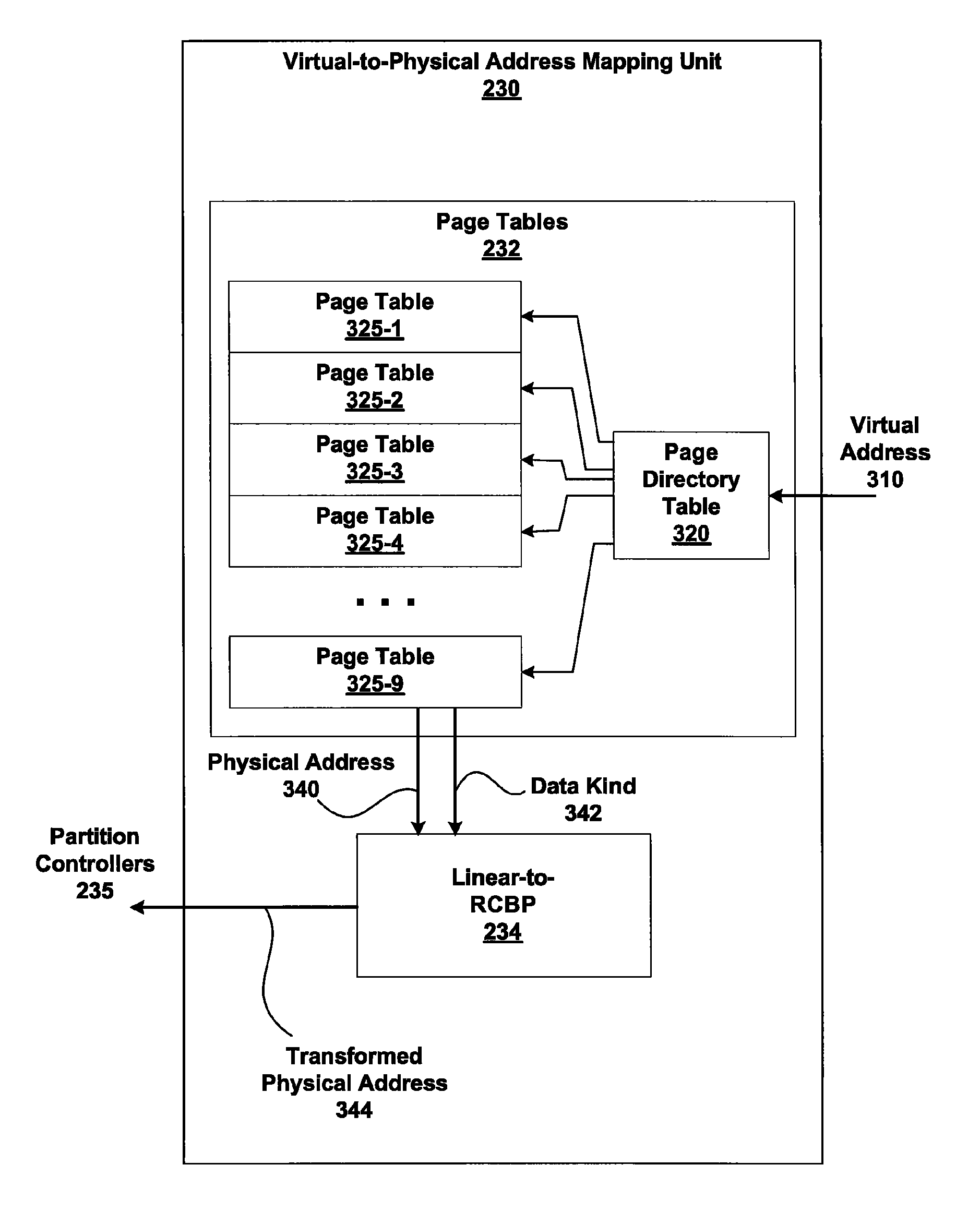

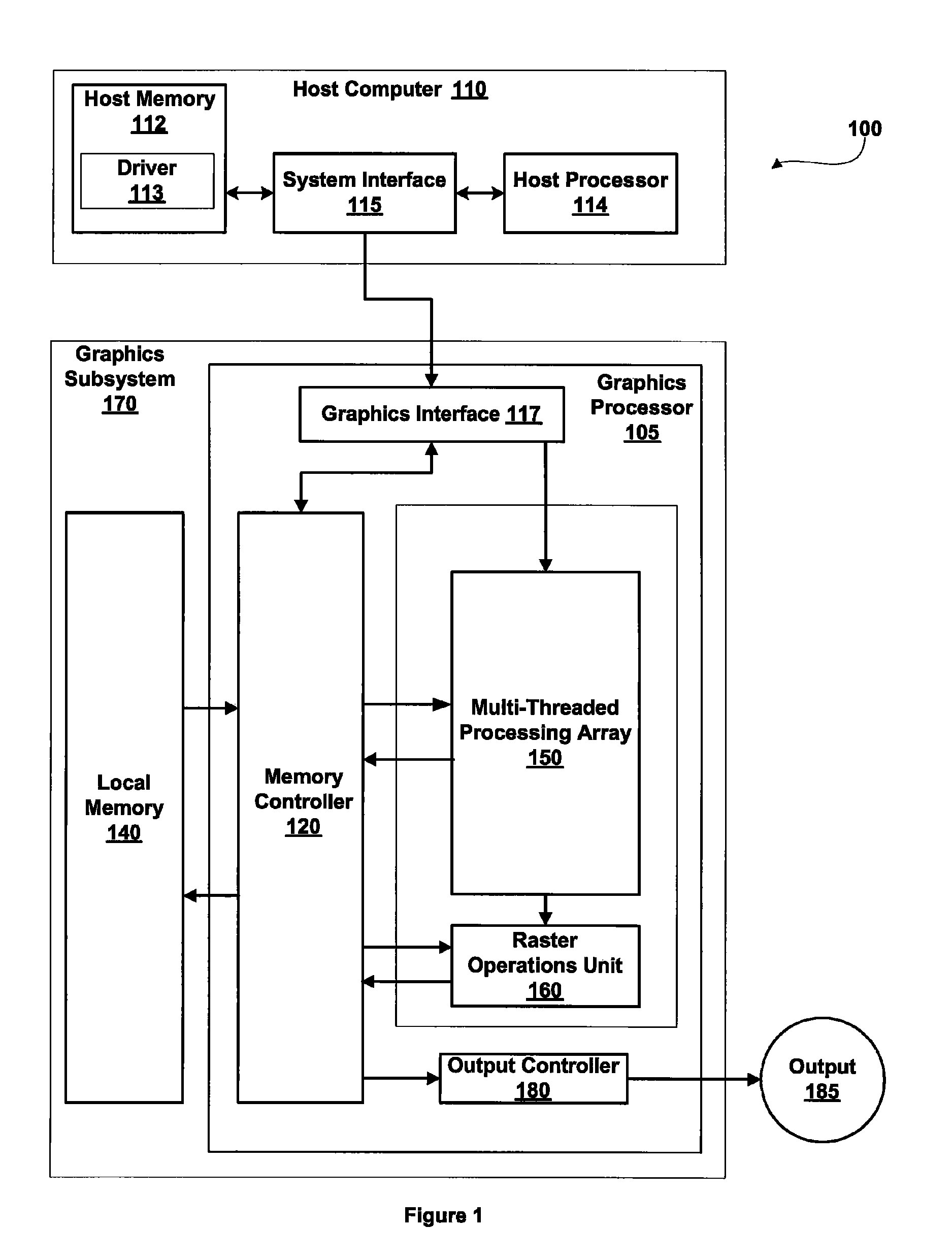

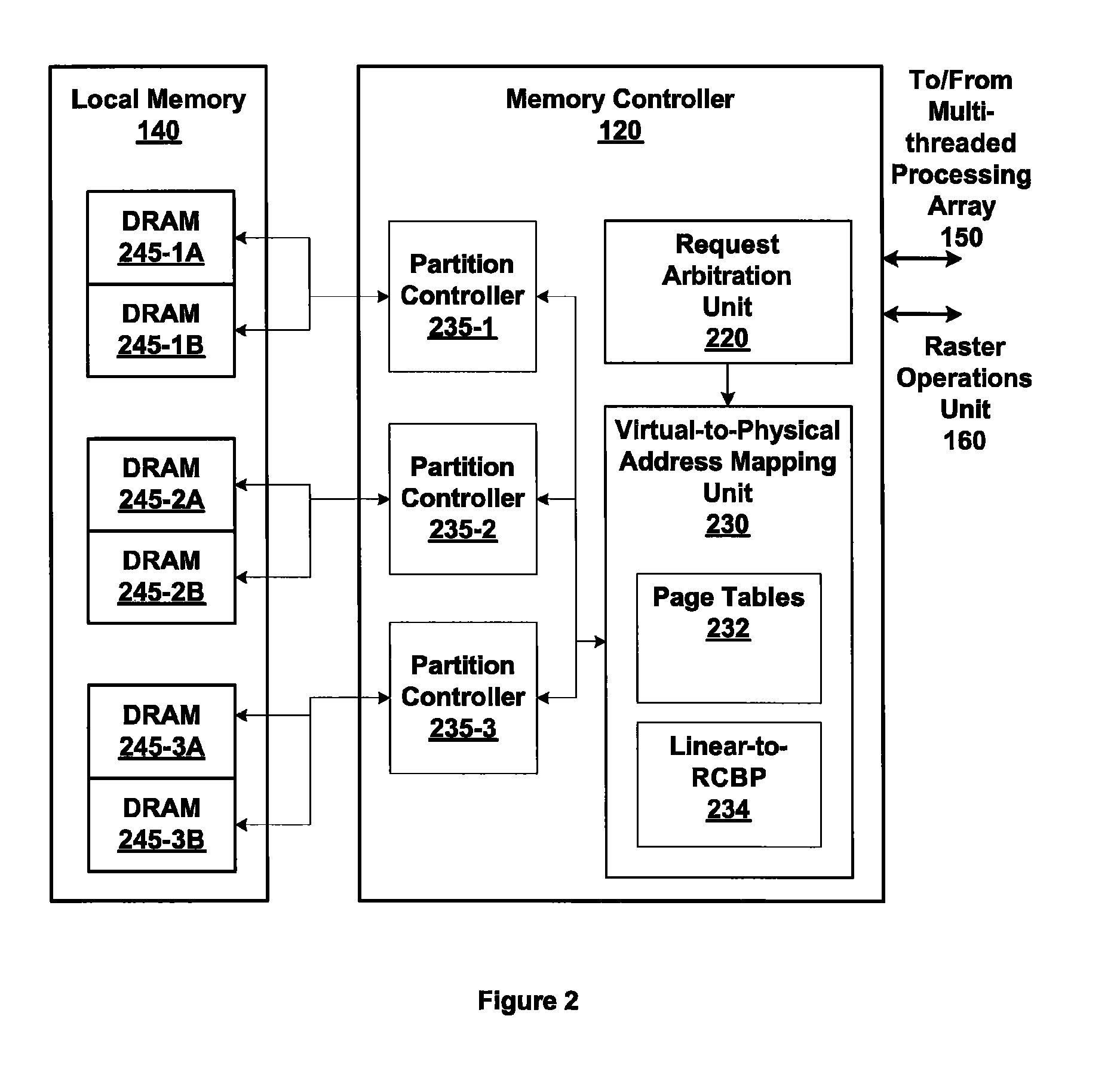

Memory addressing controlled by PTE fields

Active | US7805587B1 | Reduce access | Memory addressing/allocation/relocation | Computer security arrangements | Memory address | Dram memory

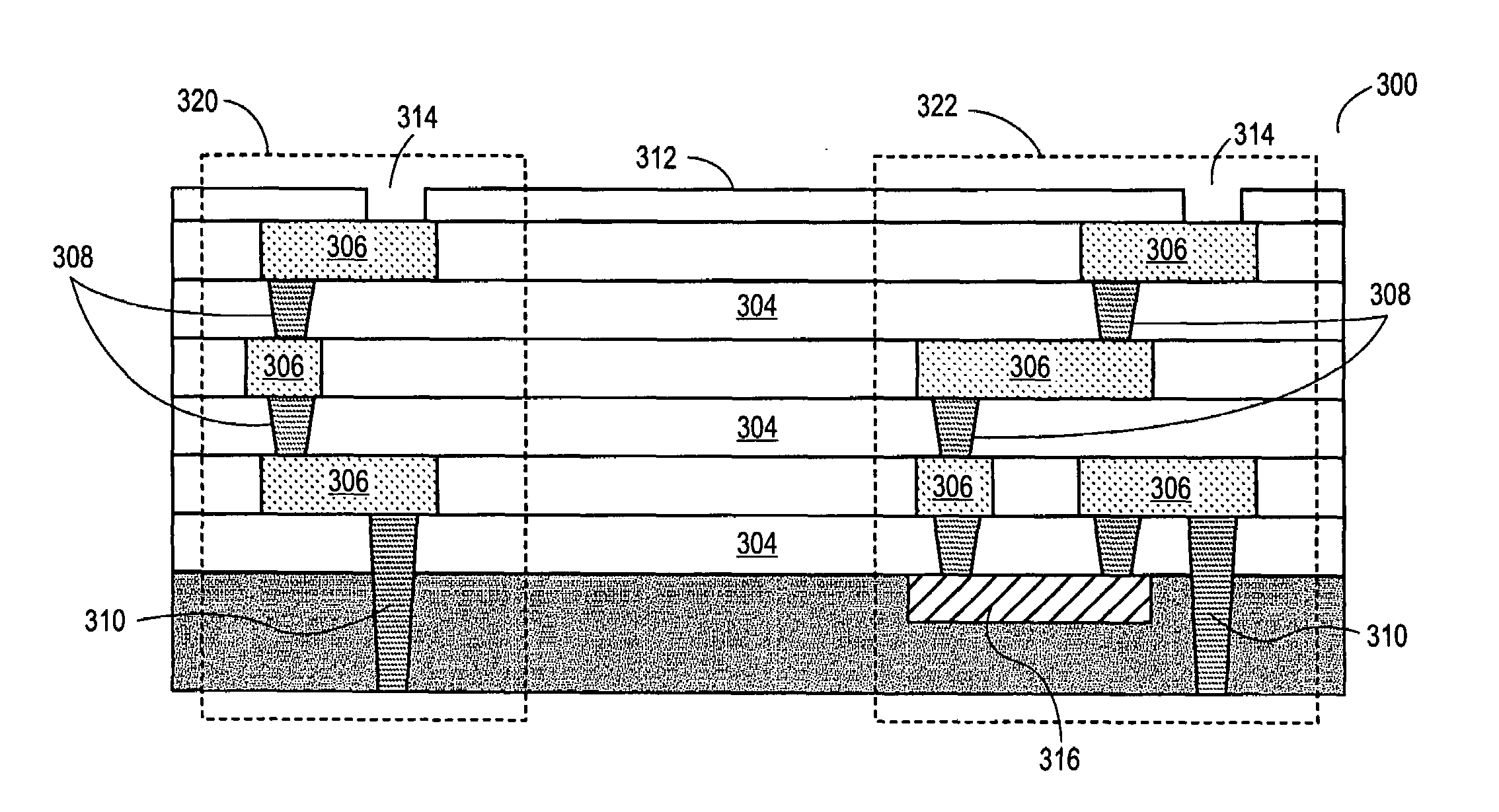

Embodiments of the present invention enable virtual-to-physical memory address translation using optimized bank and partition interleave patterns to improve memory bandwidth by distributing data accesses over multiple banks and multiple partitions. Each virtual page has a corresponding page table entry that specifies the physical address of the virtual page in linear physical address space. The page table entry also includes a data kind field that is used to guide and optimize the mapping process from the linear physical address space to the DRAM physical address space, which is used to directly access one or more DRAM. The DRAM physical address space includes a row, bank and column address. The data kind field is also used to optimize the starting partition number and partition interleave pattern that defines the organization of the selected physical page of memory within the DRAM memory system.

Owner:NVIDIA CORP

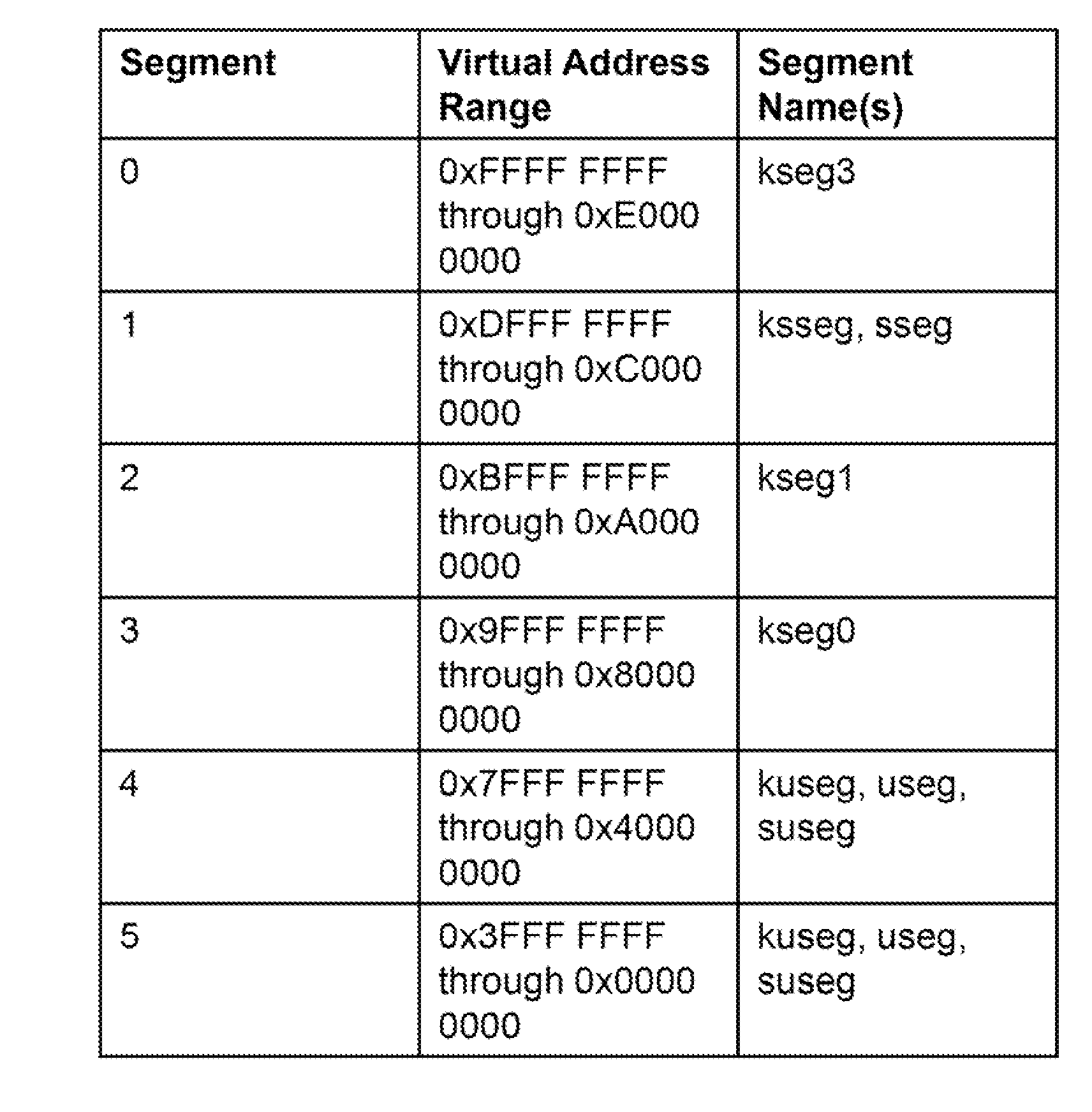

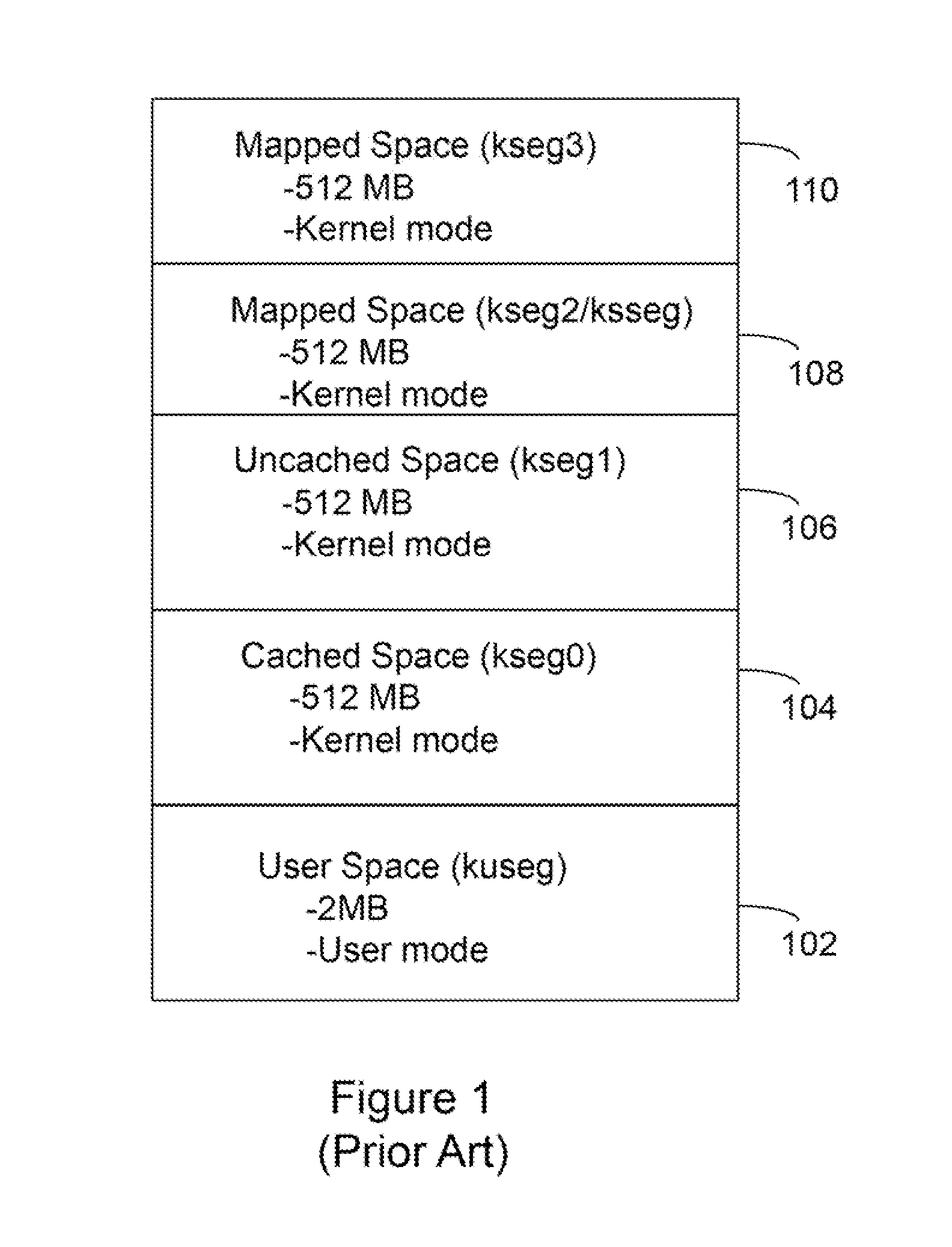

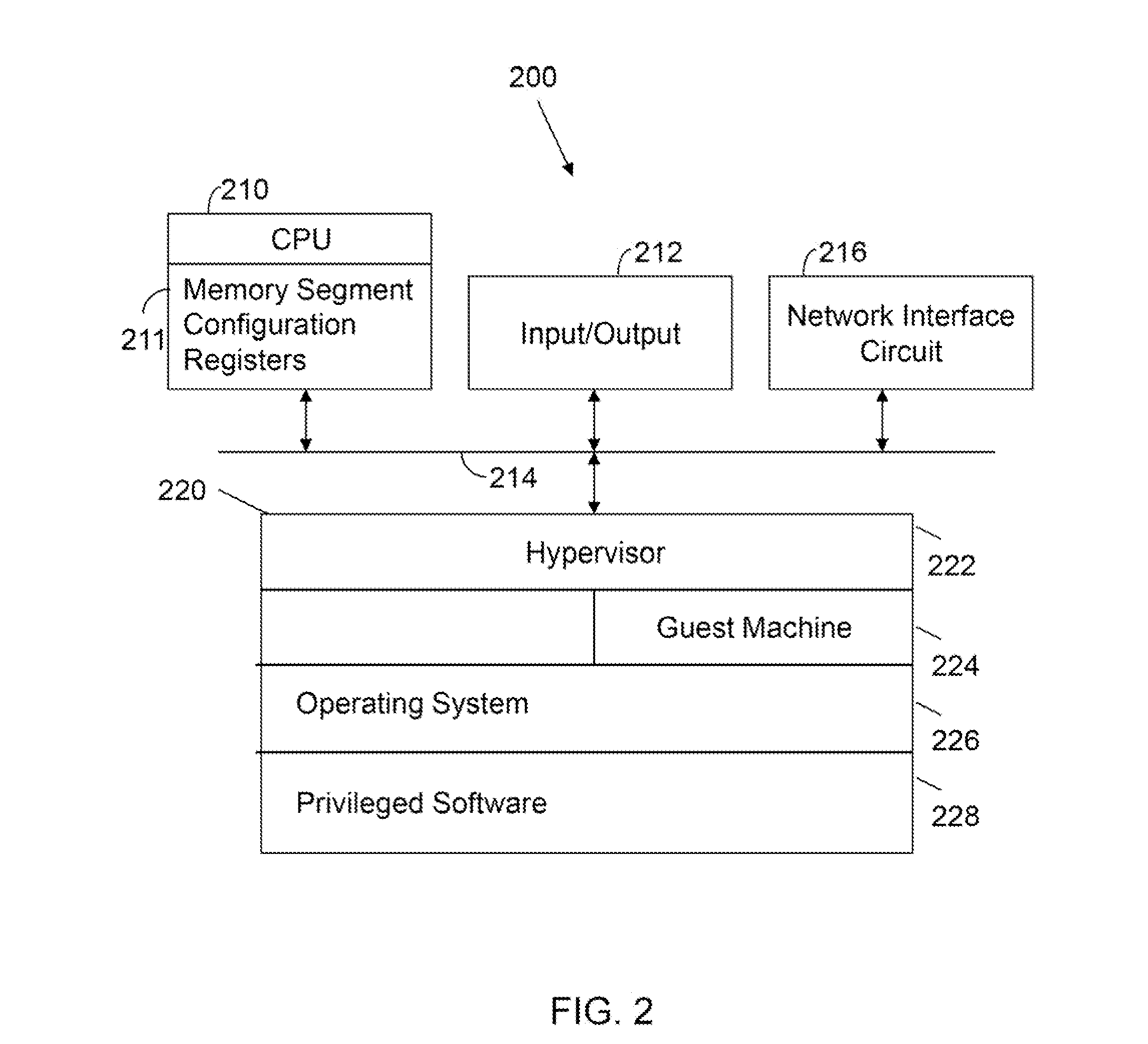

Processor with Kernel Mode Access to User Space Virtual Addresses

Active | US20130132702A1 | Enhanced kernel mode memory capacity | Increase memory capacity | Memory addressing/allocation/relocation | Unauthorized memory use protection | Memory address | Parallel computing

A computer includes a memory and a processor connected to the memory. The processor includes memory segment configuration registers to store defined memory address segments and defined memory address segment attributes such that the processor operates in accordance with the defined memory address segments and defined memory address segment attributes to allow kernel mode access to user space virtual addresses for enhanced kernel mode memory capacity.

Owner:MIPS TECH INC

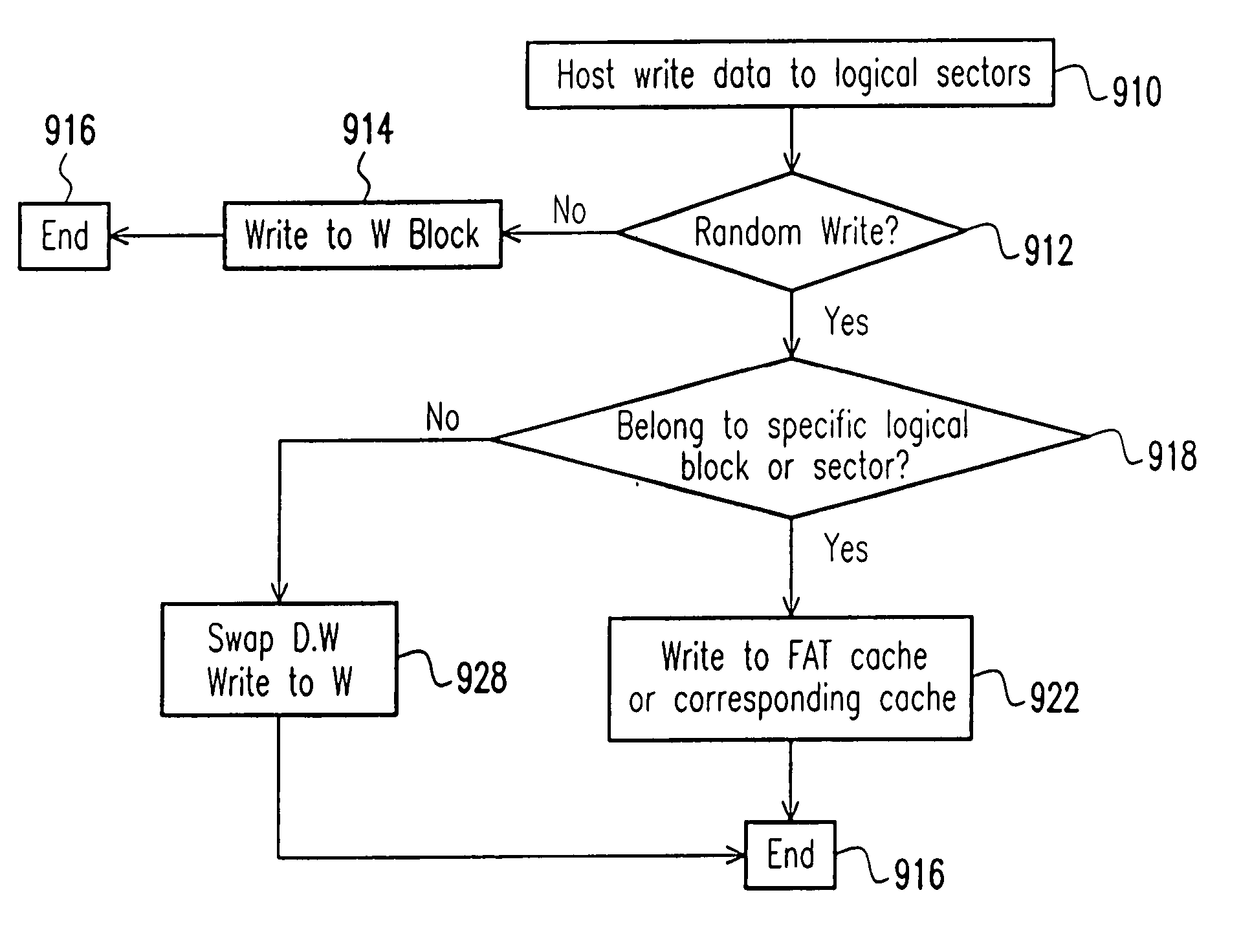

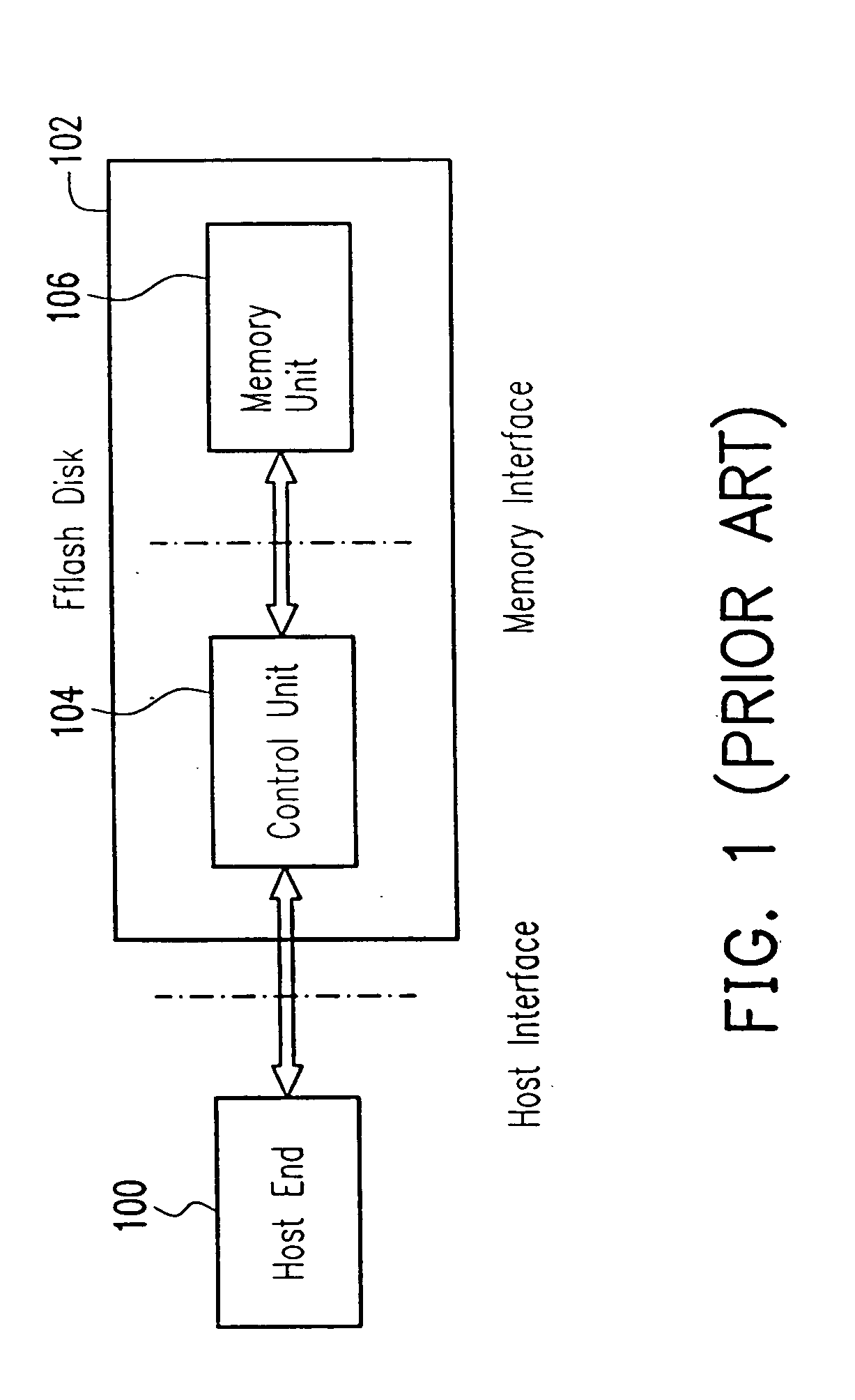

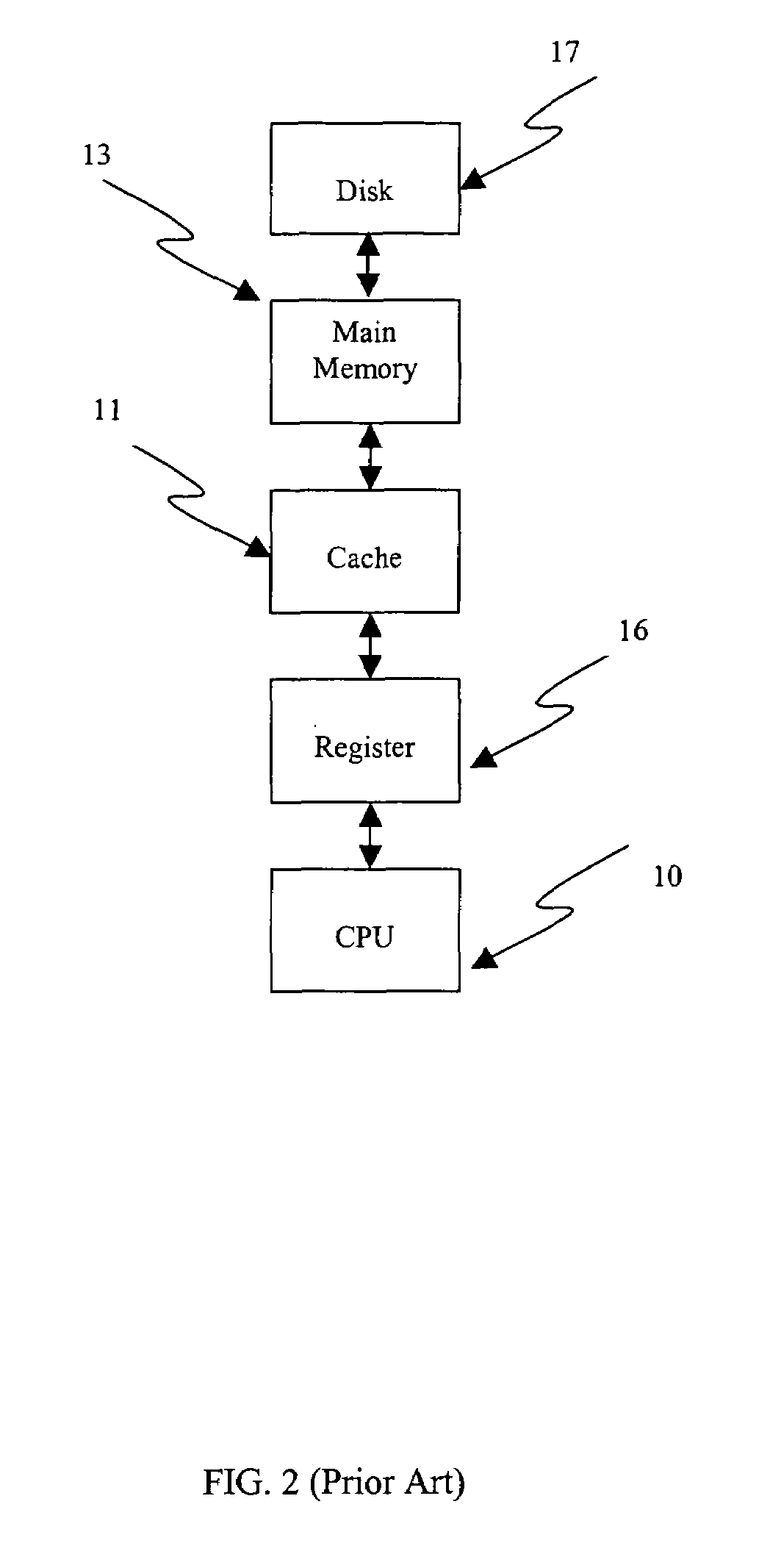

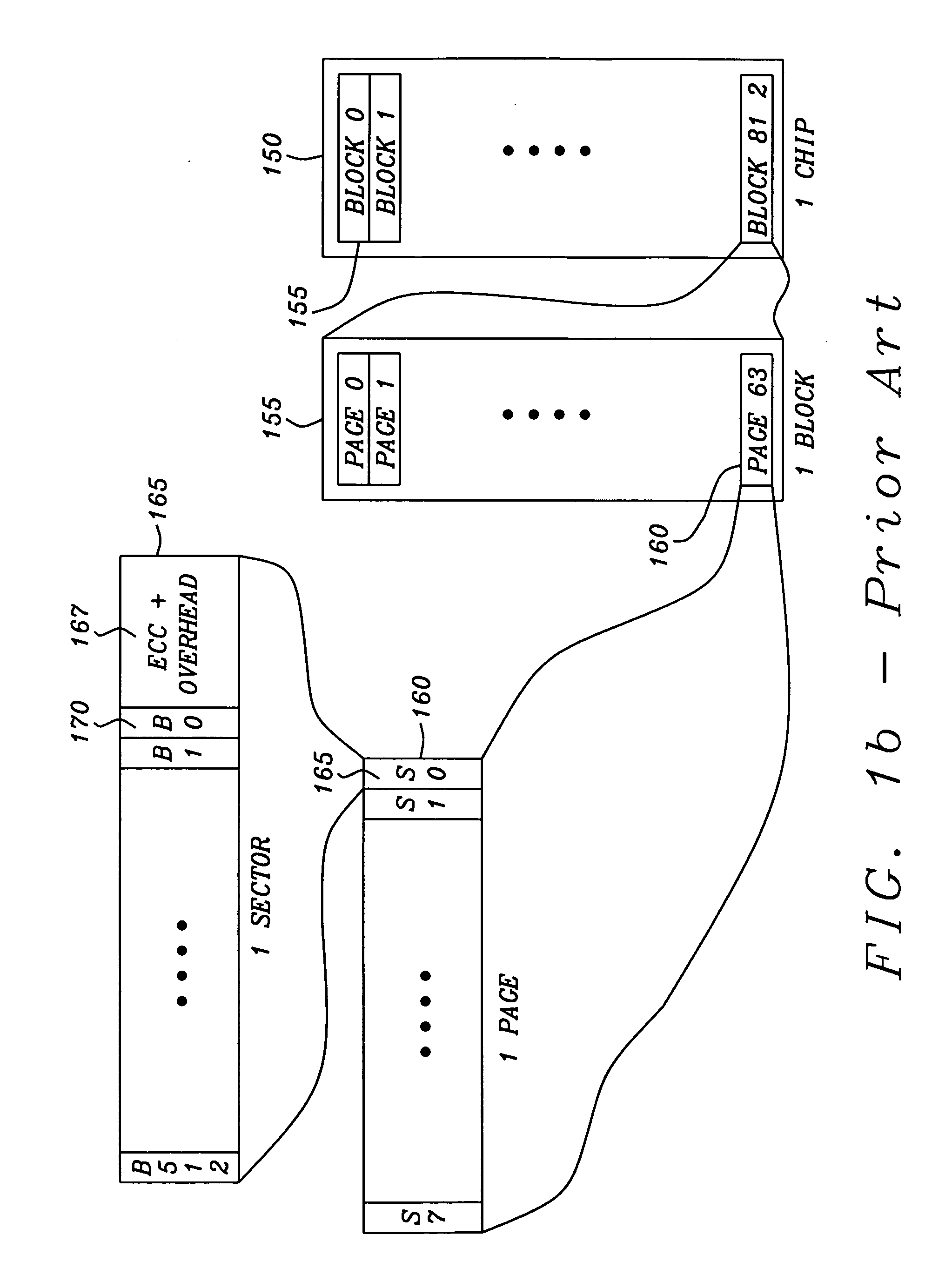

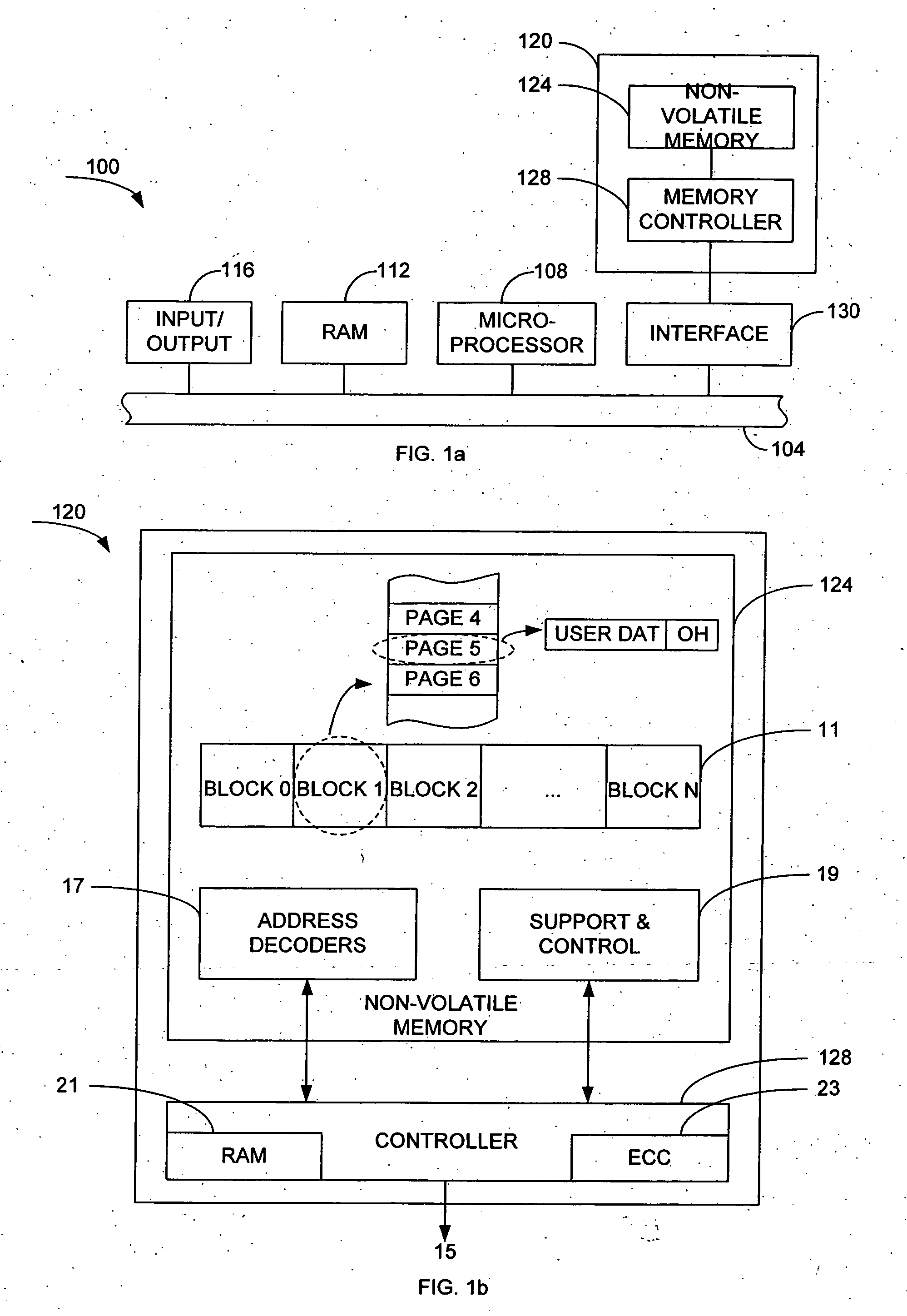

Nonvolatile memory unit with specific cache

Inactive | US20050015557A1 | Reduce frequency | Avoid action | Memory architecture accessing/allocation | Input/output to record carriers | Operating system | Volatile memory

The invention provides a method for organizing a writing operation to a nonvolatile memory. The method comprises setting a specific cache area, into which specific data belonging to a specific group of logical blocks is to be written. It is determined whether or not the writing operation is a random write. If the writing operation is a random write, then the following steps are performed: determining whether or not the writing operation is to write data that belongs to the specific group of logical blocks; and writing the data into the specific cache area if the data belongs to the specific group of logical blocks. As a result, a swap action between a data block and a writing block can be avoided during a random write operation. A storage structure in a nonvolatile memory device is organized to perform the foregoing writing operation.

Owner:SOLID STATE SYST

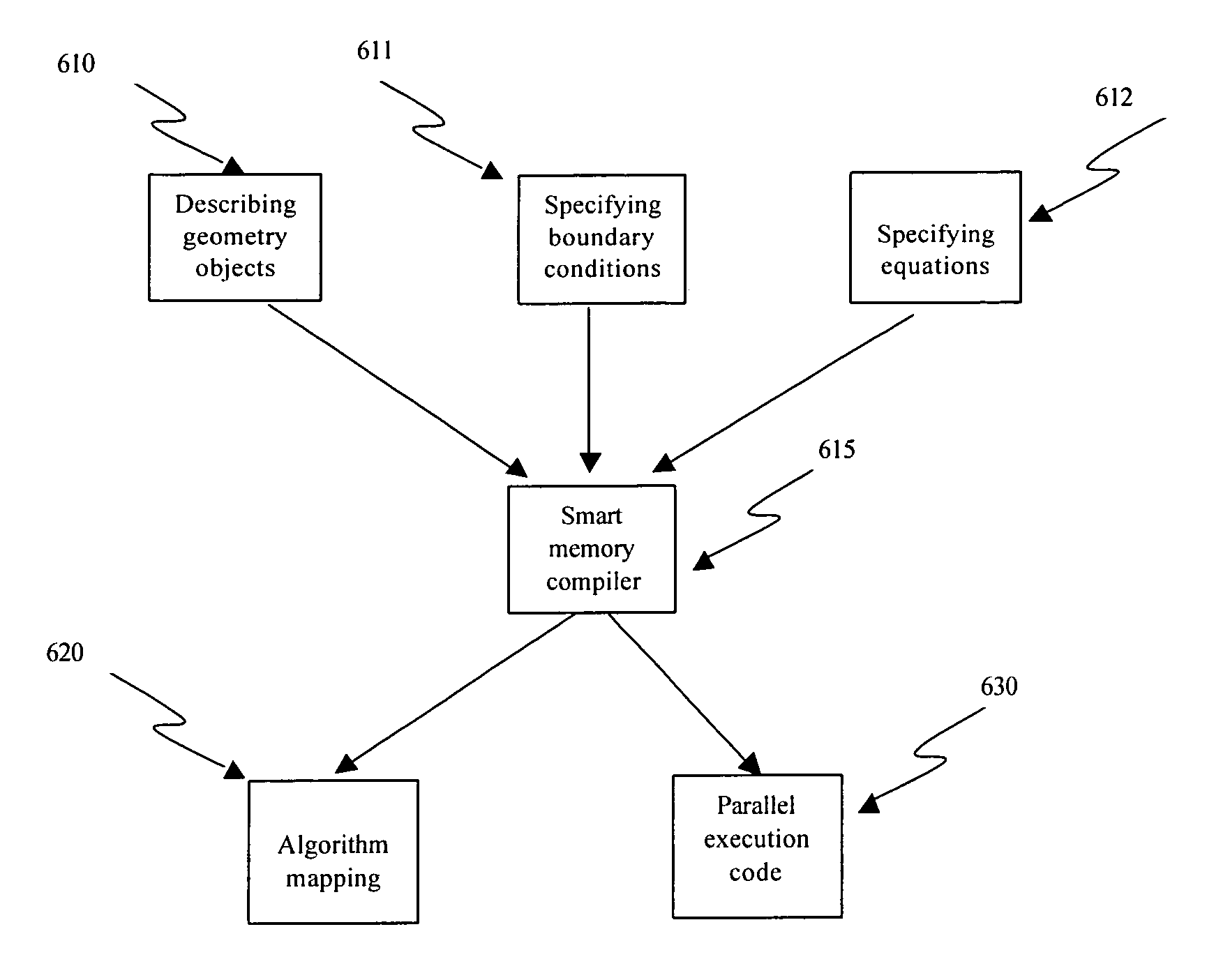

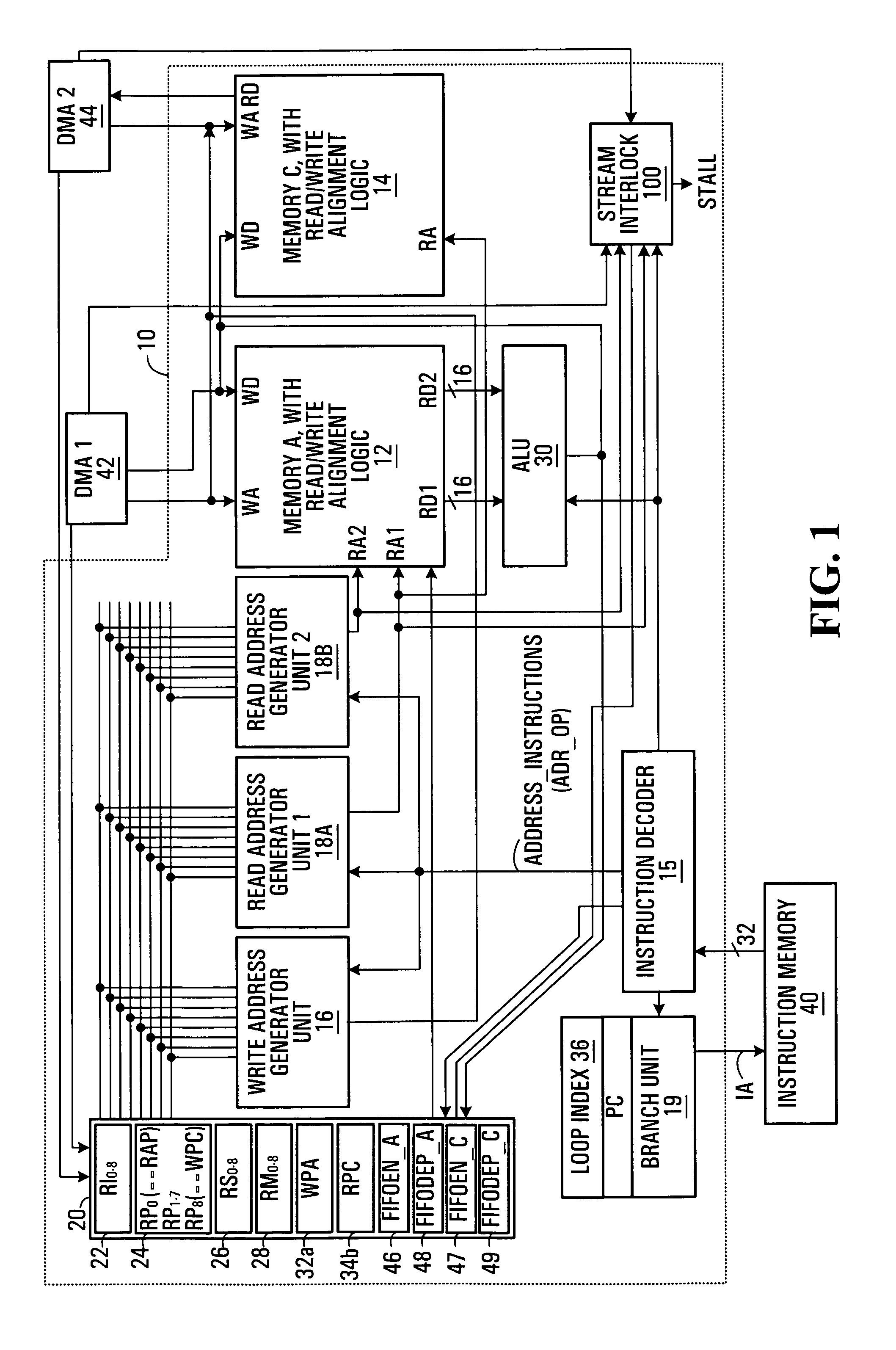

Algorithm mapping, specialized instructions and architecture features for smart memory computing

Inactive | US7546438B2 | Improve performance | Low cost | Multiplex system selection arrangements | Digital computer details | Smart memory | Execution unit

A smart memory computing system that uses smart memory for massive data storage as well as for massive parallel execution is disclosed. The data stored in the smart memory can be accessed just like the conventional main memory, but the smart memory also has many execution units to process data in situ. The smart memory computing system offers improved performance and reduced costs for those programs having massive data-level parallelism. This smart memory computing system is able to take advantage of data-level parallelism to improve execution speed by, for example, use of inventive aspects such as algorithm mapping, compiler techniques, architecture features, and specialized instruction sets.

Owner:STRIPE INC

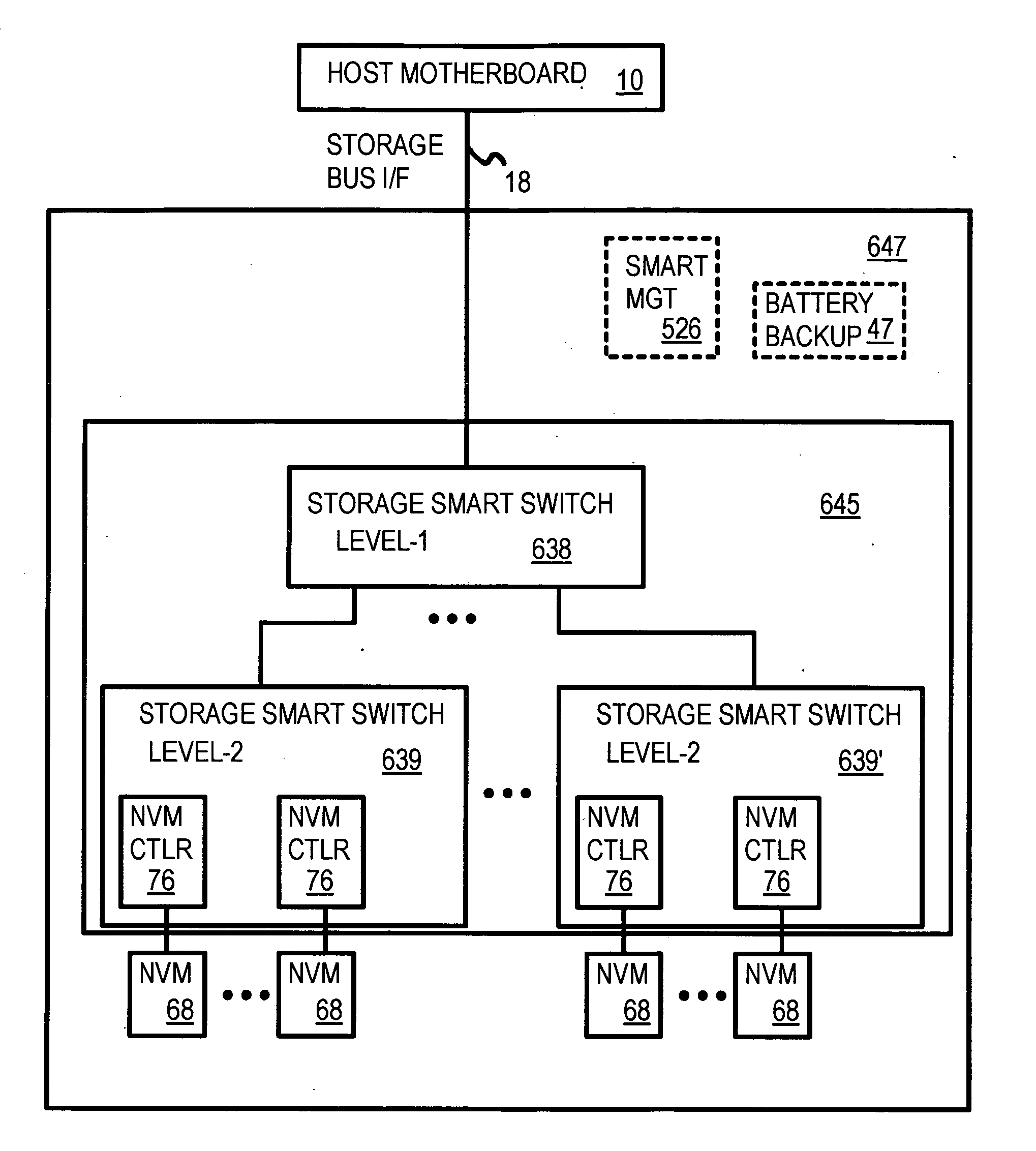

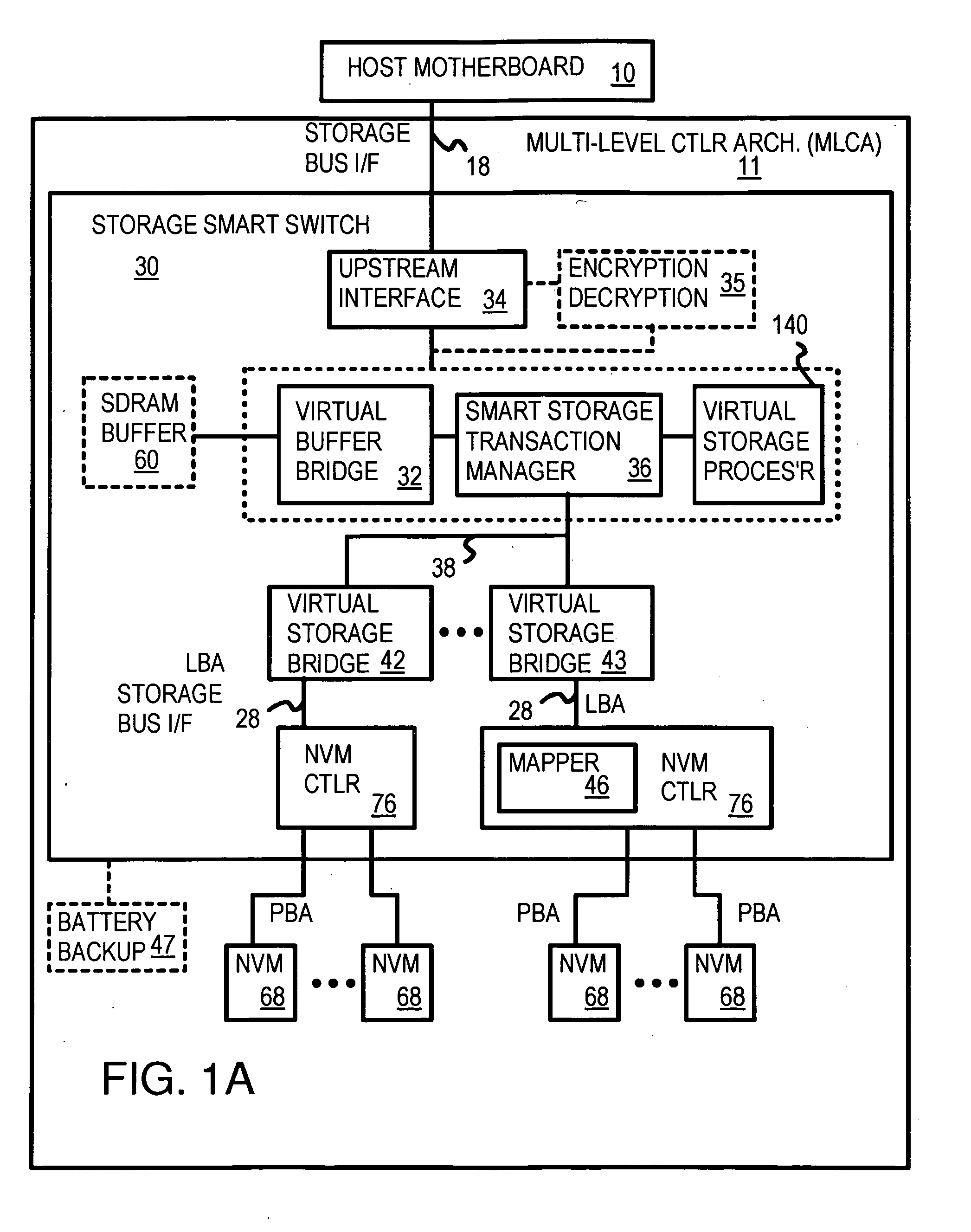

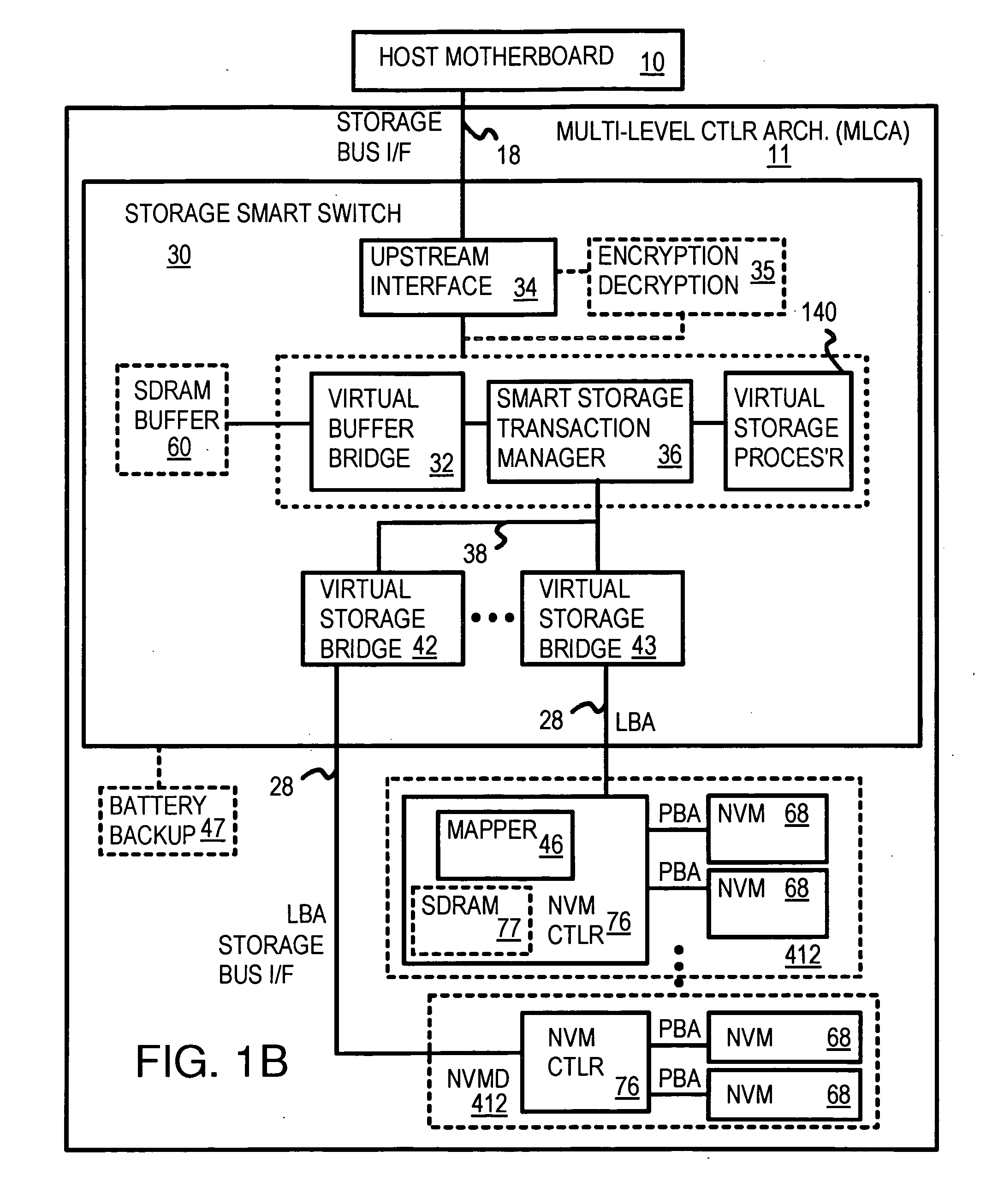

Multi-Level Striping and Truncation Channel-Equalization for Flash-Memory System

Inactive | US20090240873A1 | Memory architecture accessing/allocation | Error prevention | Logical block addressing | Data access

Truncation reduces the available striped data capacity of all flash channels to the capacity of the smallest flash channel. A solid-state disk (SSD) has a smart storage switch that salvages flash storage removed from the striped data capacity by truncation. Extra storage beyond the striped data capacity is accessed as scattered data that is not striped. The size of the striped data capacity is reduced over time as more bad blocks appear. A first-level striping map stores striped and scattered capacities of all flash channels and maps scattered and striped data. Each flash channel has a Non-Volatile Memory Device (NVMD) with a lower-level controller that converts logical block addresses (LBA) to physical block addresses (PBA) that access flash memory in the NVMD. Wear-leveling and bad block remapping are performed by each NVMD. Source and shadow flash blocks are recycled by the NVMD. Two levels of smart storage switches enable three-level controllers.

Owner:SUPER TALENT TECH CORP
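
The truncation arithmetic in this abstract is simple enough to show directly. The function name `split_capacity` and the capacity figures are hypothetical; the sketch only illustrates how the striped capacity is bounded by the smallest channel and how the remainder becomes scattered capacity.

```python
def split_capacity(channel_capacities):
    """Return (total striped capacity, per-channel scattered capacity).

    Every channel contributes only as much as the smallest channel to the
    striped region; whatever is left over on a channel is usable as
    scattered (non-striped) storage.
    """
    smallest = min(channel_capacities)
    striped = smallest * len(channel_capacities)
    scattered = [capacity - smallest for capacity in channel_capacities]
    return striped, scattered


# Hypothetical usable capacities (in blocks) of four flash channels.
striped, scattered = split_capacity([100, 96, 120, 98])
```

As bad blocks accumulate on the smallest channel, rerunning the same calculation yields a smaller striped region, matching the abstract's note that striped capacity shrinks over time.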

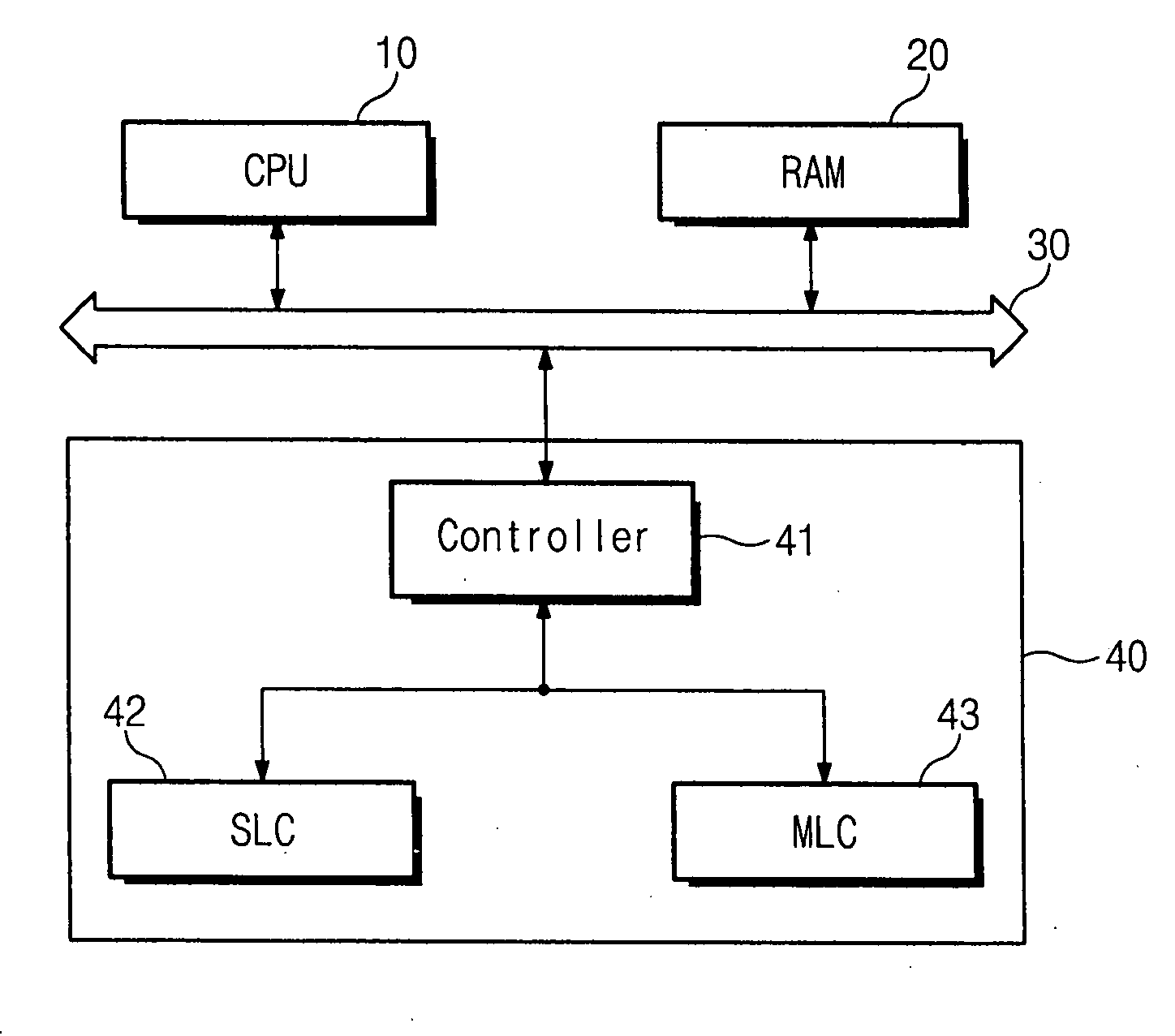

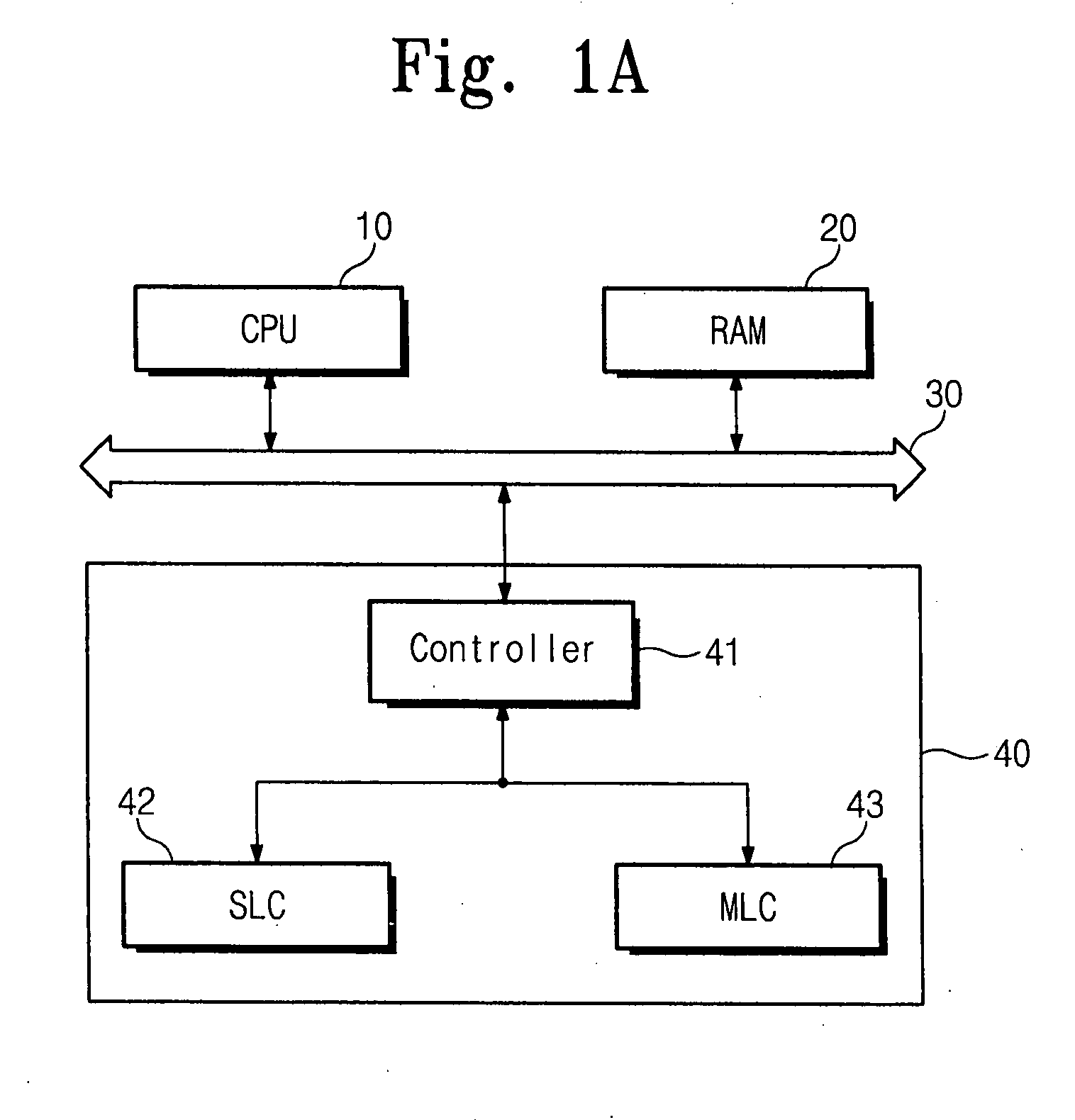

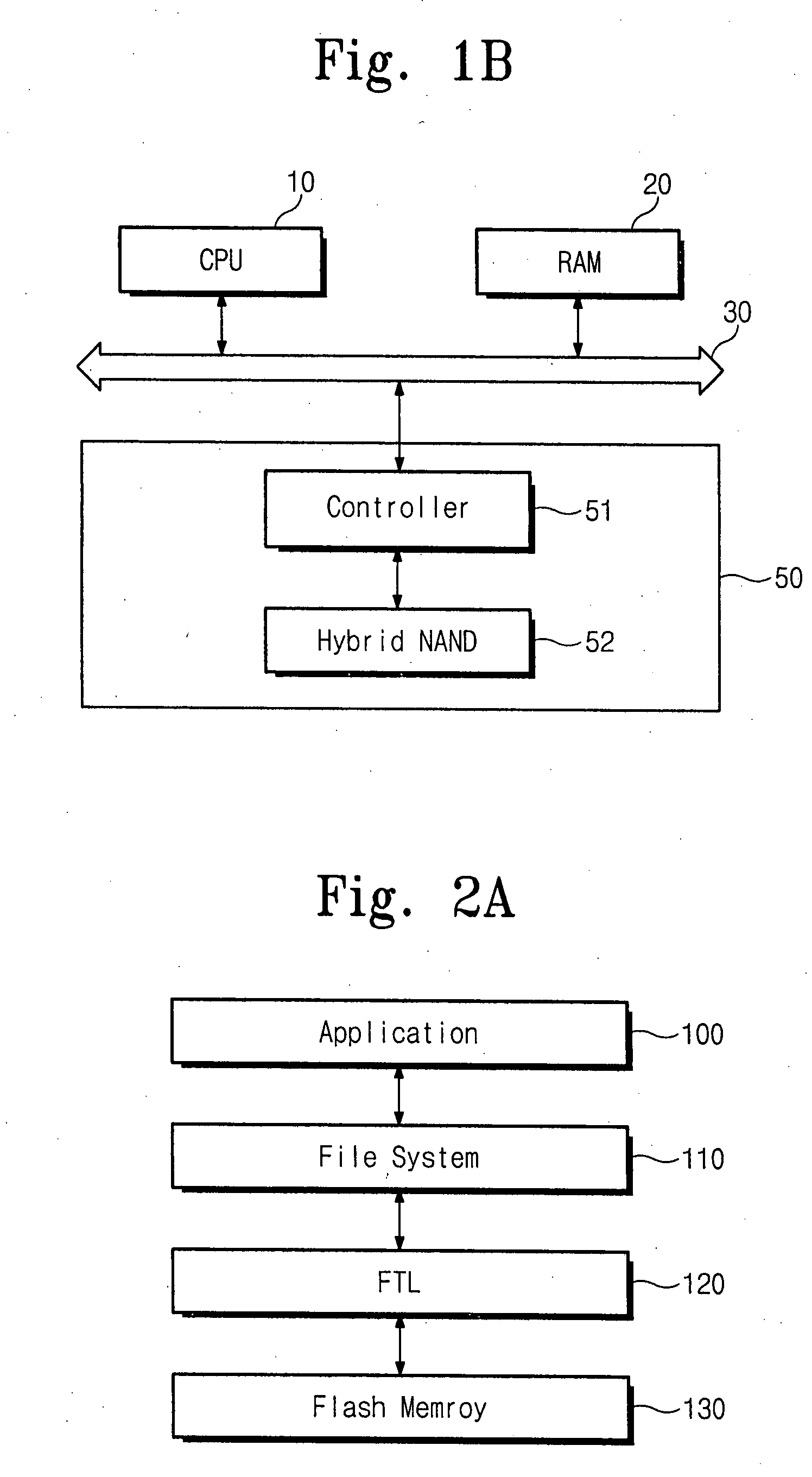

Flash memory device with multi-level cells and method of writing data therein

Active | US20080104309A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Multi-level cell | Address mapping

In one aspect, a method of writing data in a flash memory system is provided. The flash memory system forms an address mapping pattern according to a log block mapping scheme. The method includes determining a writing pattern of data to be written in a log block, and allocating one of SLC and MLC blocks to the log block in accordance with the writing pattern of the data.

Owner:SAMSUNG ELECTRONICS CO LTD

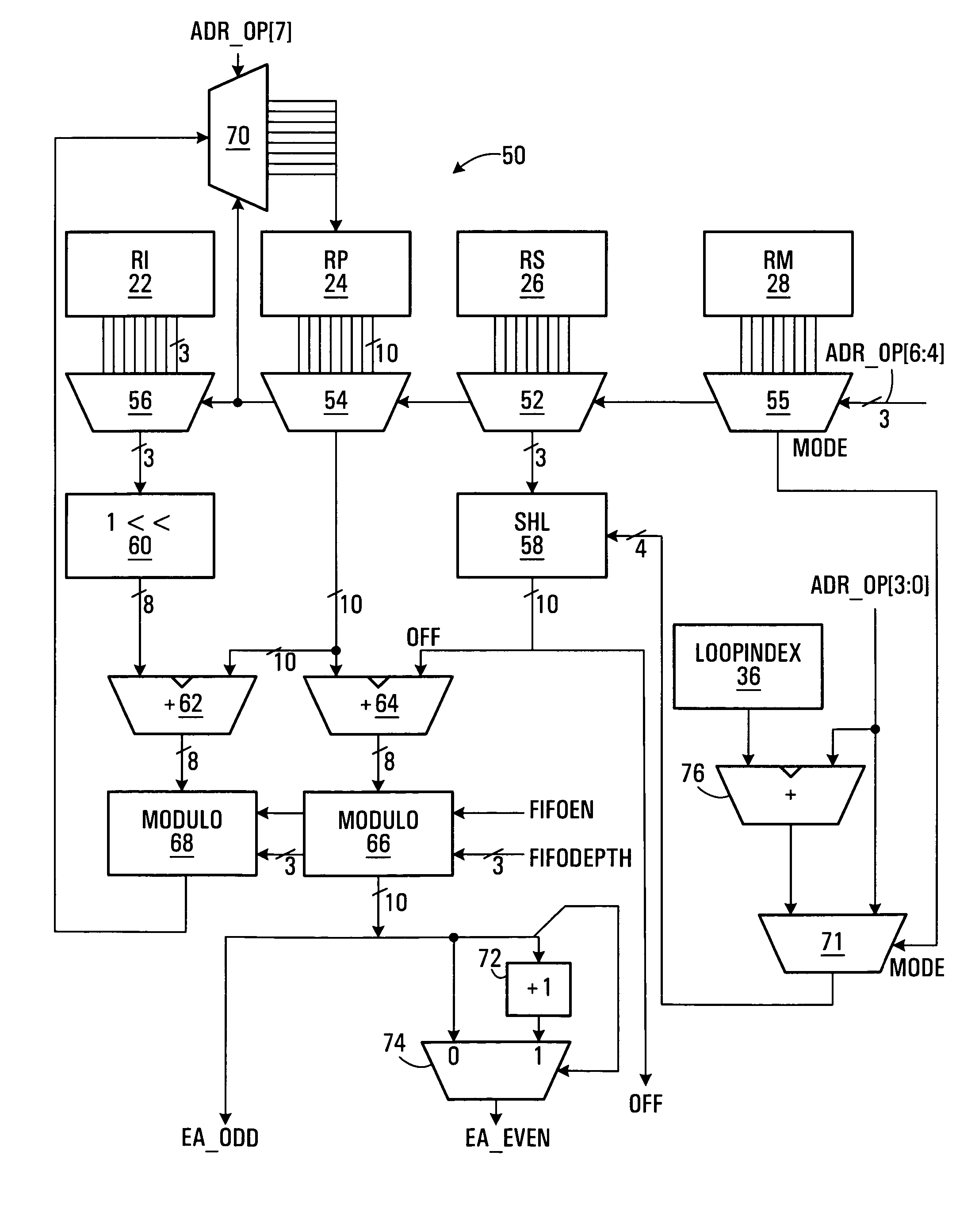

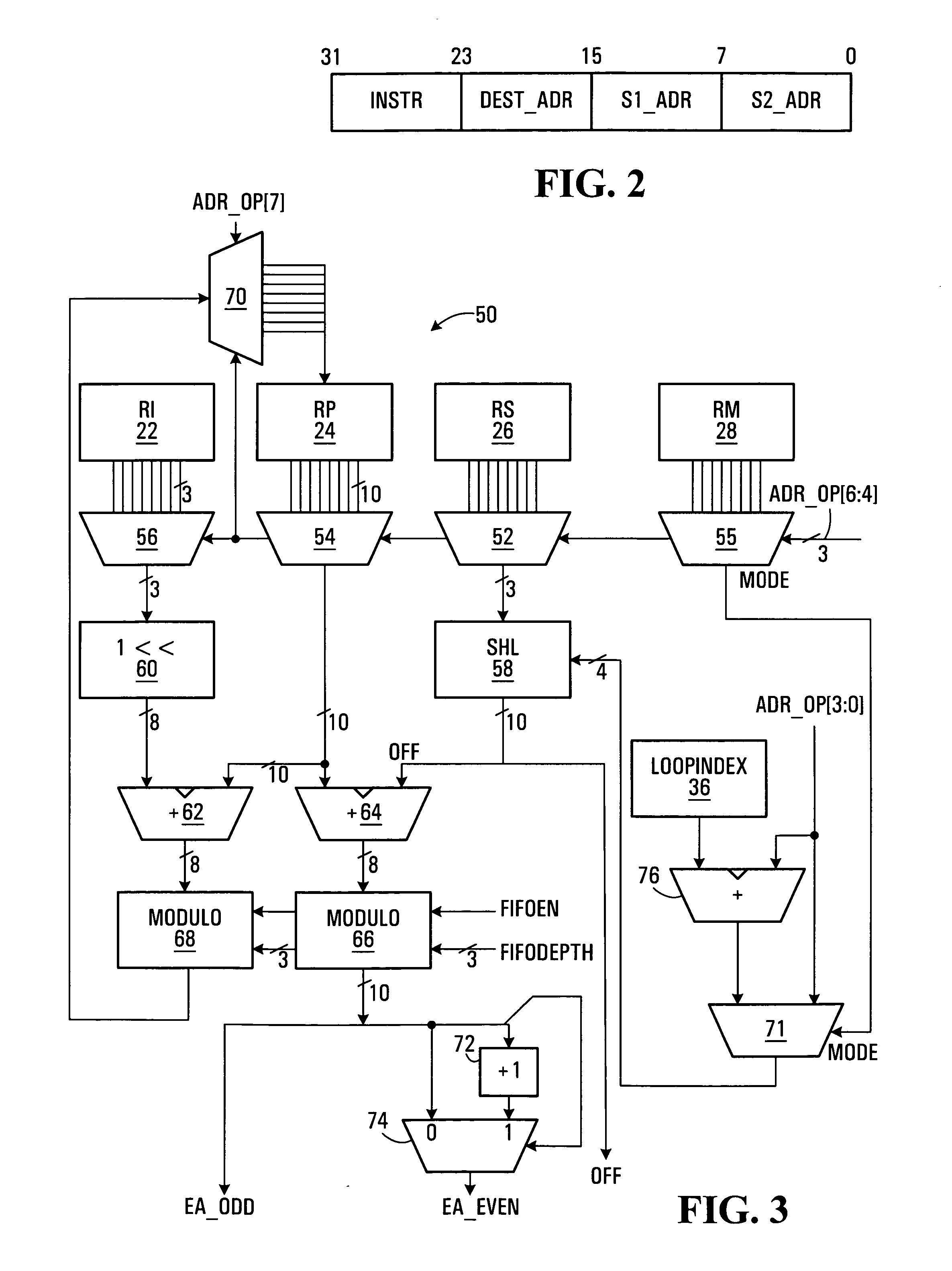

SIMD processor and addressing method

Inactive | US20060047937A1 | Without unduly consuming processor resources | Memory addressing/allocation/relocation | Micro-instruction address formation | Memory address | Processor register

A single instruction, multiple data (SIMD) processor including a plurality of addressing register sets, used to flexibly calculate effective operand source and destination memory addresses is disclosed. Two or more address generators calculate effective addresses using the register sets. Each register set includes a pointer register, and a scale register. An address generator forms effective addresses from a selected register set's pointer register and scale register; and an offset. For example, the effective memory address may be formed by multiplying the scale value by an offset value and summing the pointer and the scale value multiplied by the offset value.

Owner:AVAGO TECH INT SALES PTE LTD
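
The effective-address formula given at the end of this abstract (pointer plus scale multiplied by offset) can be written out as a short sketch. The names `AddressRegisterSet` and `effective_address` and the register values are illustrative assumptions; the real hardware computes this in address generators rather than software.

```python
from dataclasses import dataclass


@dataclass
class AddressRegisterSet:
    """One addressing register set: a pointer register and a scale register."""
    pointer: int
    scale: int


def effective_address(reg, offset):
    """Effective address = pointer + scale * offset, as the abstract describes."""
    return reg.pointer + reg.scale * offset


# Two hypothetical register sets, e.g. one for 4-byte and one for 16-byte elements.
regs = [AddressRegisterSet(0x1000, 4), AddressRegisterSet(0x2000, 16)]
addrs = [effective_address(regs[1], i) for i in range(3)]
```

Selecting a different register set changes both the base and the stride without touching the offset encoded in the instruction.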

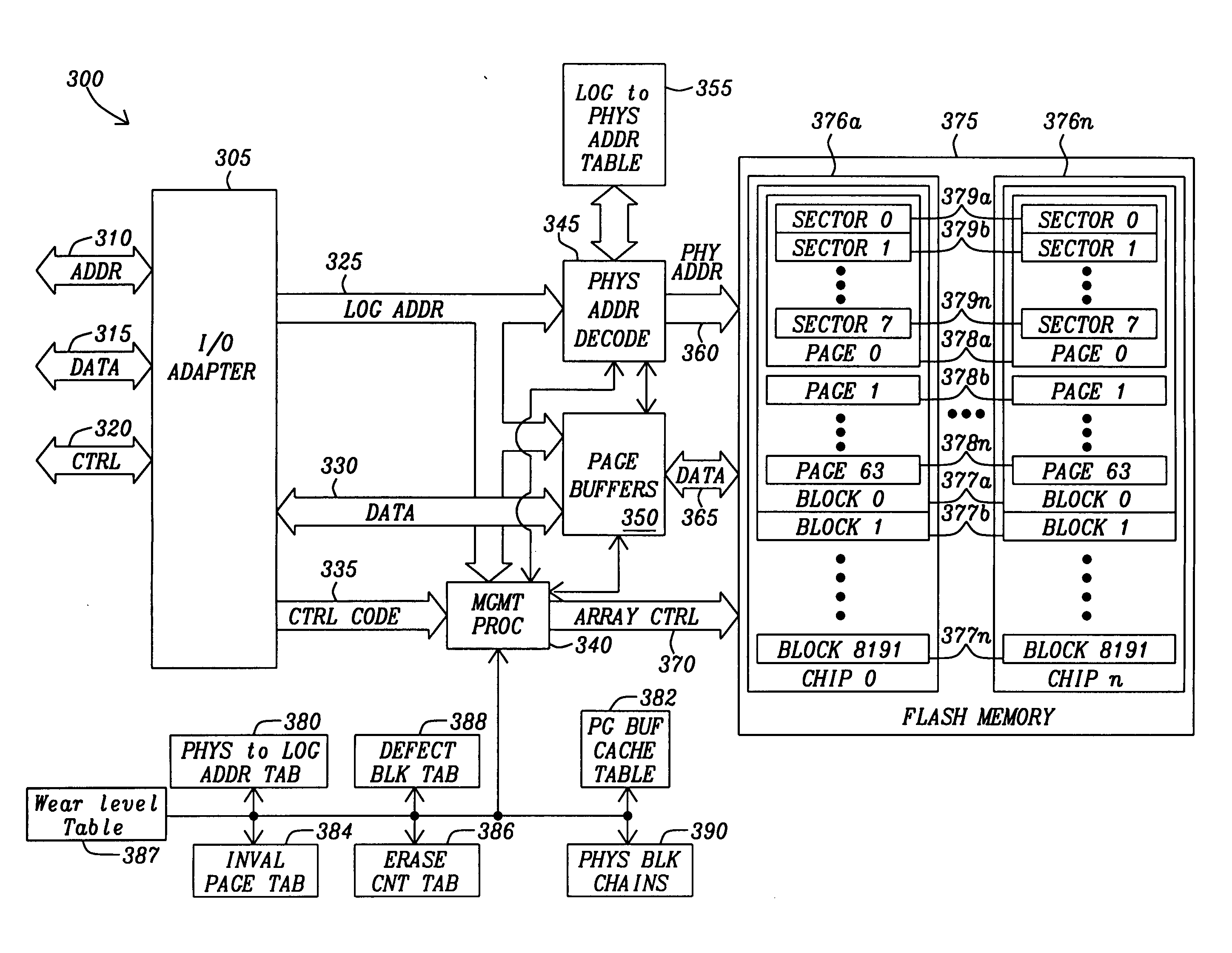

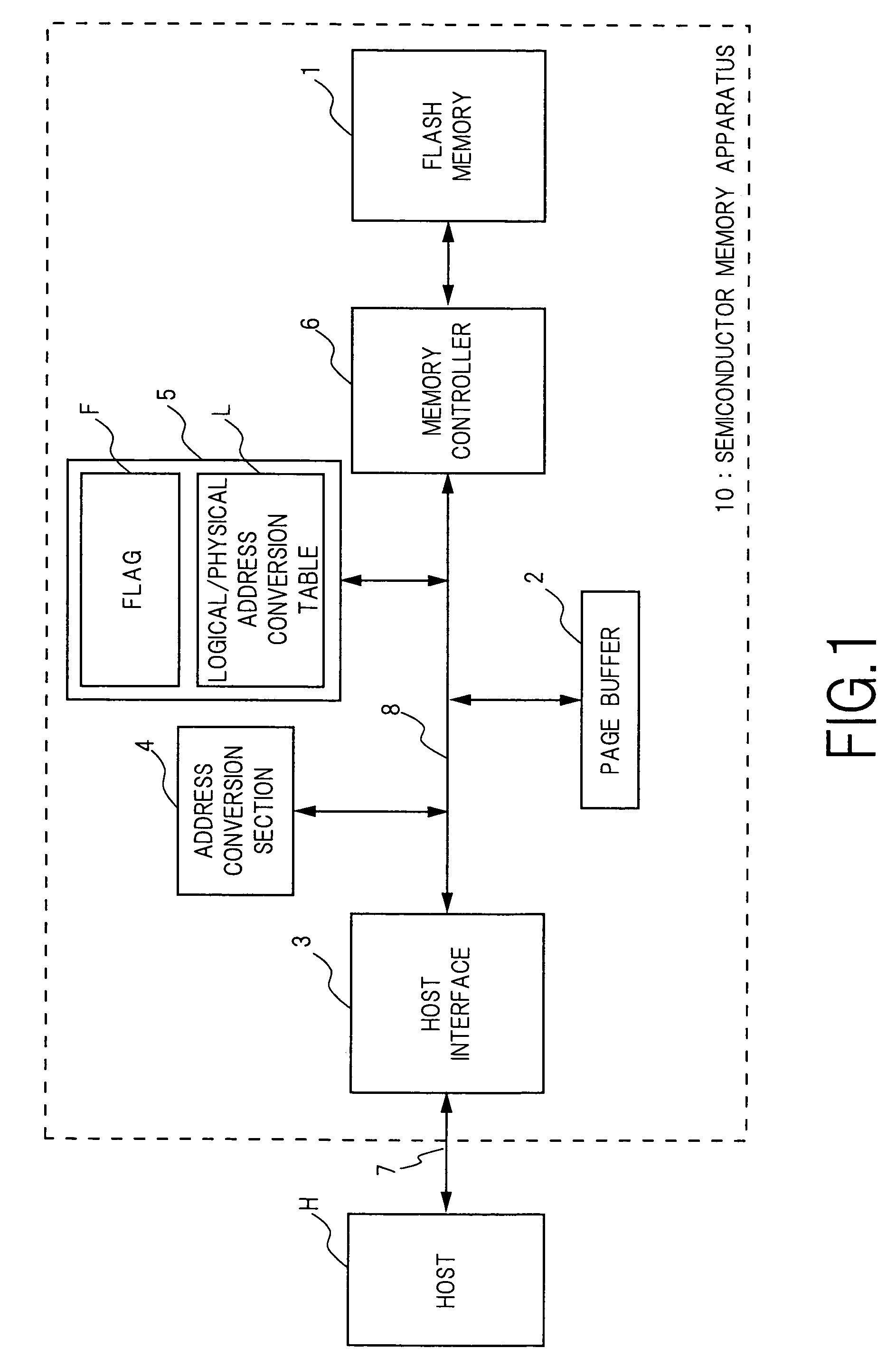

Page based management of flash storage

Inactive | US20110055458A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Control signal | Paging

Methods and circuits for page-based management of an array of Flash RAM nonvolatile memory devices provide page-based reading and writing and block erasure of a flash storage system. The memory management system includes a management processor, a page buffer, and a logical-to-physical translation table. The management processor is in communication with an array of nonvolatile memory devices within the flash storage system to provide control signals for the programming of selected pages, erasing selected blocks, and reading selected pages of the array of nonvolatile memory devices.

Owner:PIONEER CHIP TECH

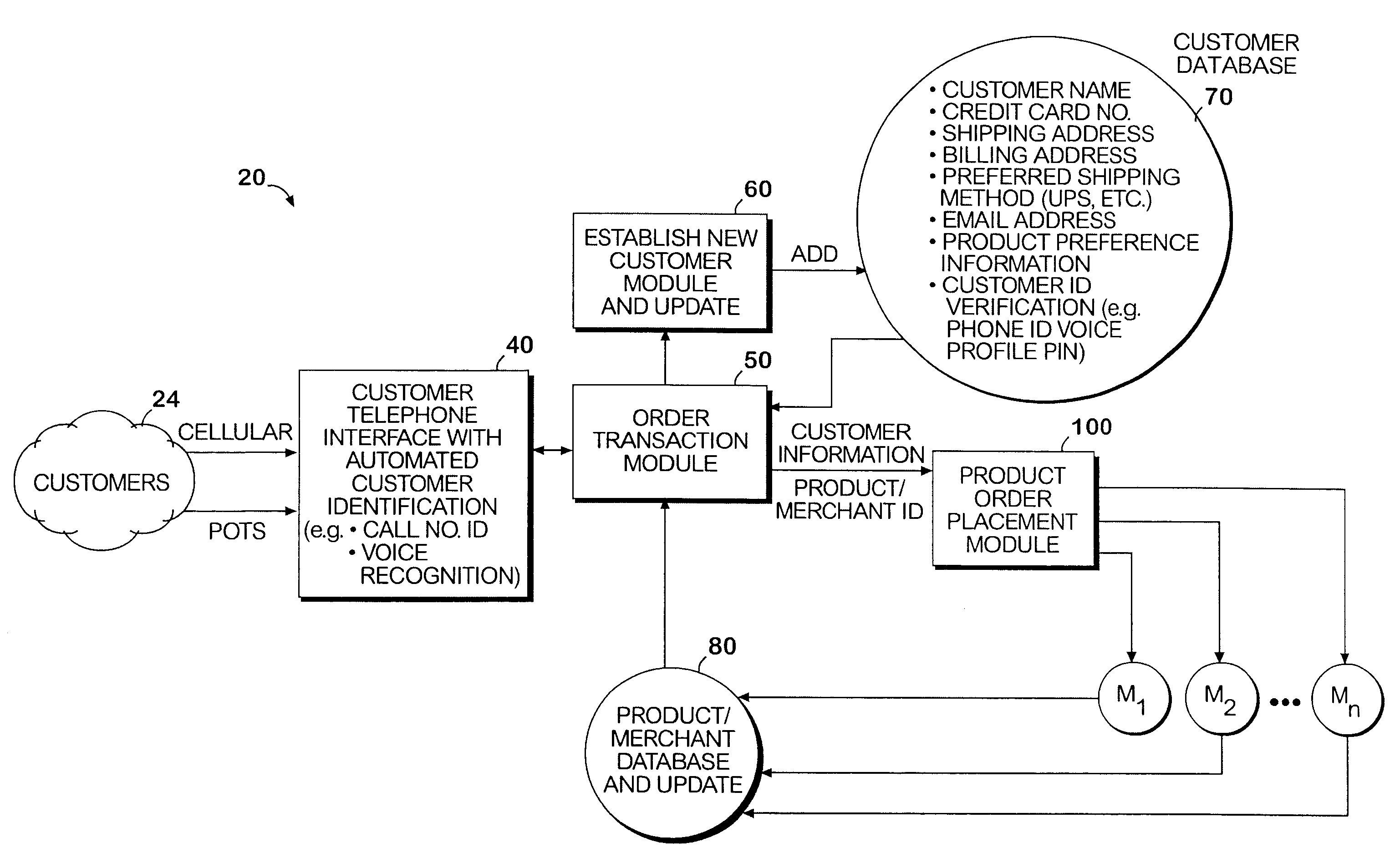

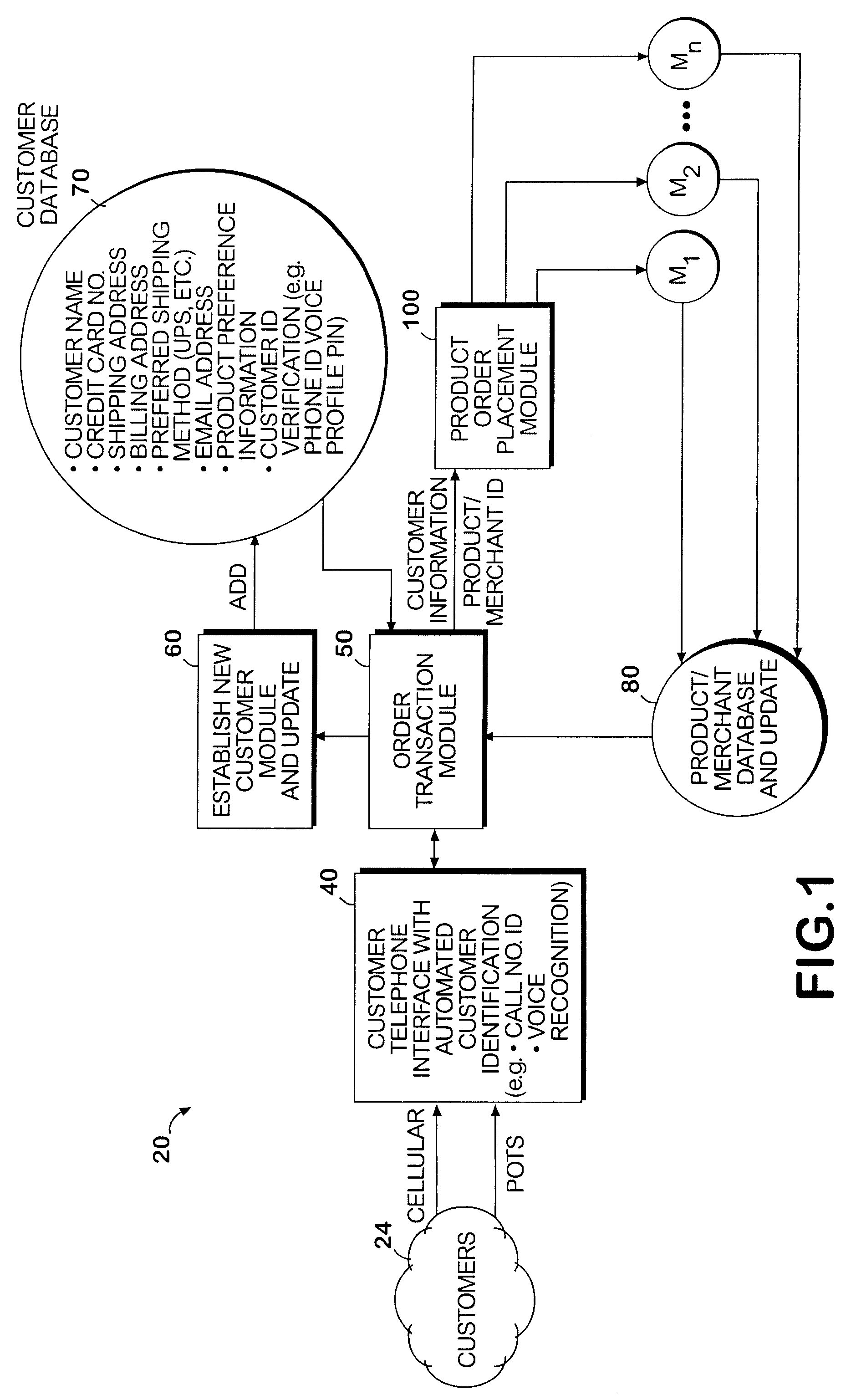

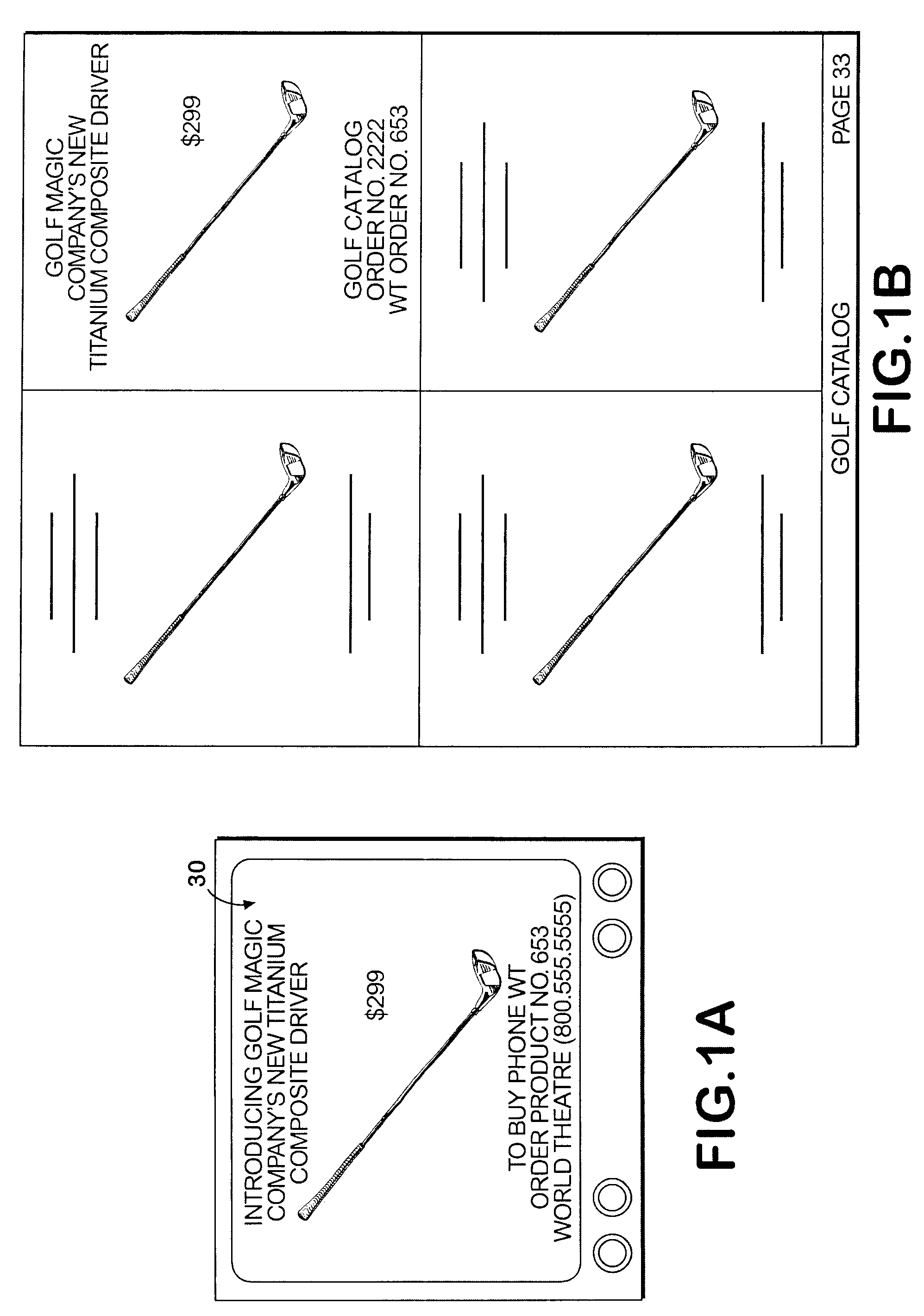

System and method permitting customers to order products from multiple participating merchants

A universal automated order processing system represents multiple (e.g., hundreds or thousands) participating merchants who offer their products through the system. Customers become qualified for using the system by supplying a set of information (e.g., name, credit card number, shipping address) that is stored in a customer database. When a customer wishes to order a product, the customer calls the system, customer identity is automatically confirmed, the customer enters a product order number and the complete order is routed to the appropriate merchant with the information necessary for the merchant to fulfill the order. Available credit verification and other aspects of credit card transactions may be handled by either the system operator or the merchant. The system operator may offer revolving credit. The system may also be used to provide potential customers of the merchants with free product information.

Owner:WORLD THEATRE INC +1

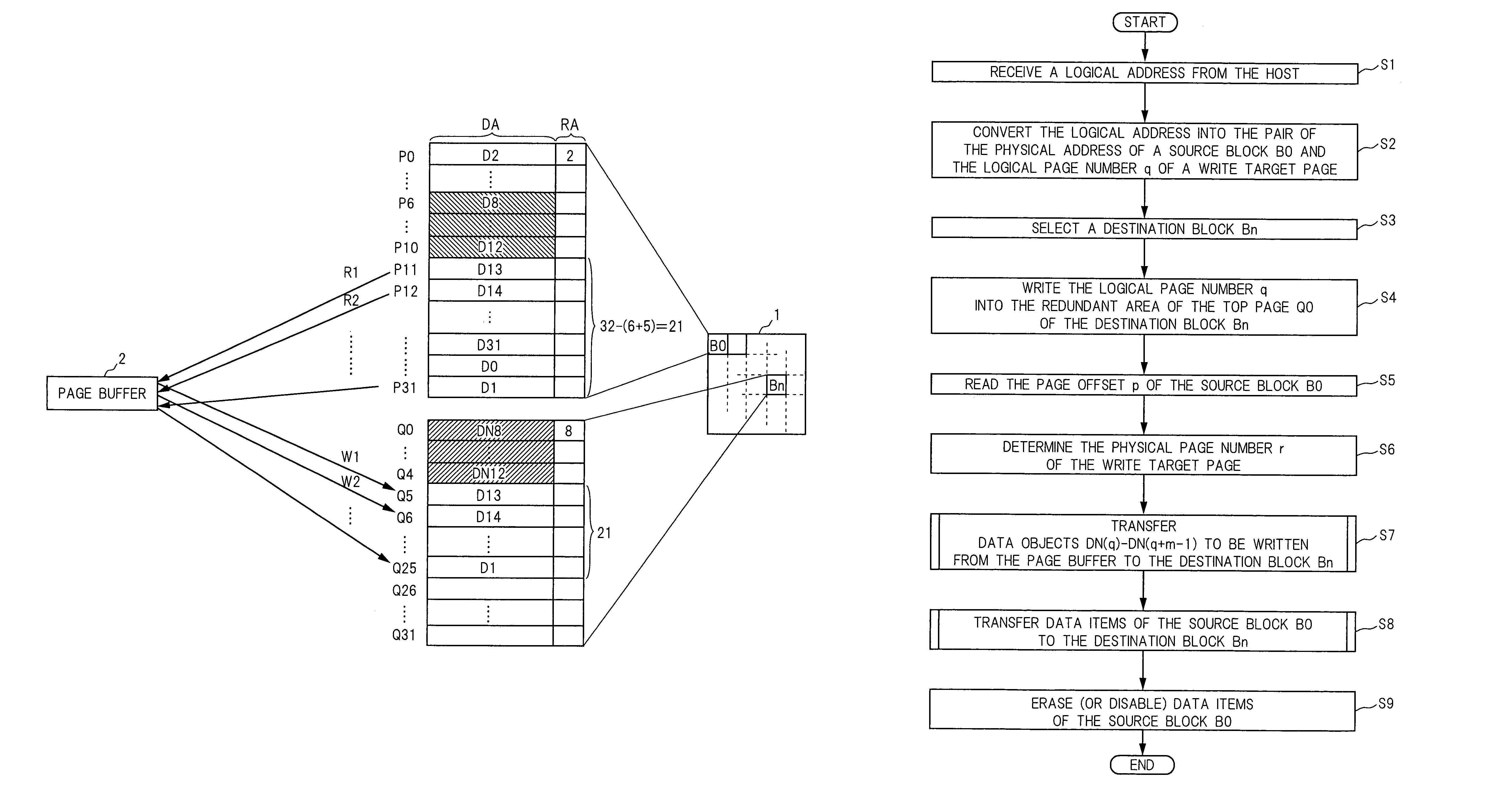

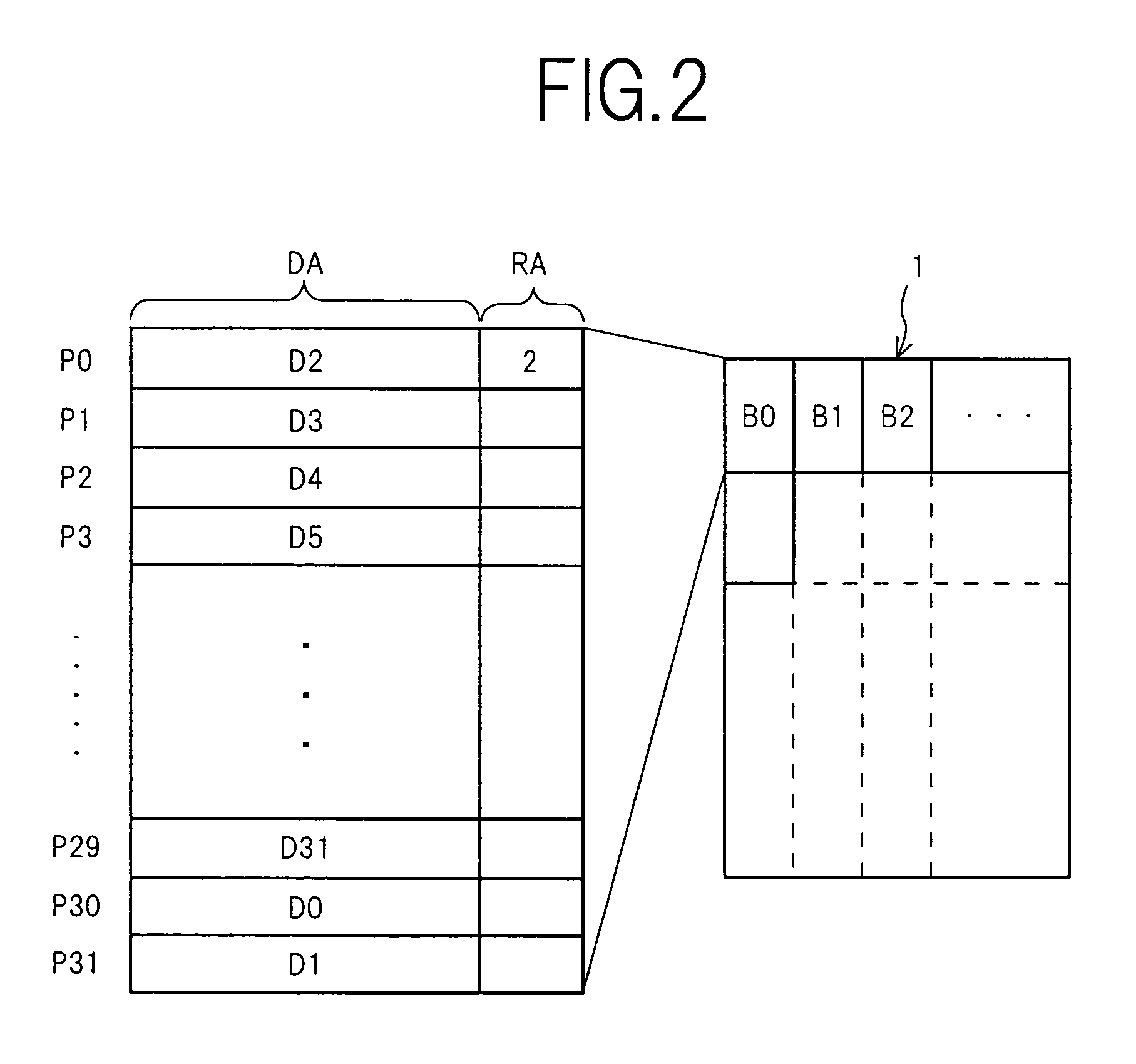

Semiconductor memory device and method for writing data into flash memory

Active | US7107389B2 | Small size | Reduce waiting time | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Home page | Database

A source block (B0) and the logical page number (“8”) of a write target page are identified from the logical address of the write target page. Data objects (DN8, DN9, . . . , DN12) to be written, which a host stores in a page buffer (2), are written into the data areas (DA) of the pages (Q0, Q1, . . . , Q4) of a destination block (Bn), starting from the top page (Q0) in sequence. The logical page number (“8”) of the write target page is written into the redundant area (RA) of the top page (Q0). The physical page number (“6=8−2”) of the write target page is identified, based on the logical page number (“8”) of the write target page and the page offset (“2”) of the source block (B0). When notified by the host of the end of the sending of the data objects (DN8, . . . , DN12), the data items (D13, . . . , D31, D0, D1, . . . , D7) in the source block (B0) are transferred to the pages (Q5, Q6, . . . , Q31) in the destination block (Bn) via the page buffer (2) sequentially and cyclically, starting from the page (P11) situated cyclically behind the write target page (P6) by the number (“5”) of pages of the data objects (DN8, . . . , DN12).

Owner:PANASONIC CORP
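
The page arithmetic in this abstract can be checked with a small sketch. The 32-page block size is inferred from the page labels Q0 to Q31 in the abstract, and the modulo wrap-around is an interpretation of "cyclically"; the function names are hypothetical.

```python
PAGES_PER_BLOCK = 32  # inferred from pages Q0..Q31 in the abstract


def physical_page(logical_page, page_offset, pages=PAGES_PER_BLOCK):
    """Physical page number = logical page number minus the block's page offset,
    wrapped around the block (the wrap is an assumption based on 'cyclically')."""
    return (logical_page - page_offset) % pages


def copy_order(write_target_physical, written_count, pages=PAGES_PER_BLOCK):
    """Source pages to copy after the new data, starting just past the
    overwritten range and wrapping around the block."""
    start = (write_target_physical + written_count) % pages
    return [(start + i) % pages for i in range(pages - written_count)]


target = physical_page(8, 2)   # the abstract's example: 6 = 8 - 2
order = copy_order(target, 5)  # five data objects DN8..DN12 were written
```

With the abstract's numbers, the copy starts at source page 11 and covers the 27 remaining pages, which fill destination pages Q5 to Q31.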

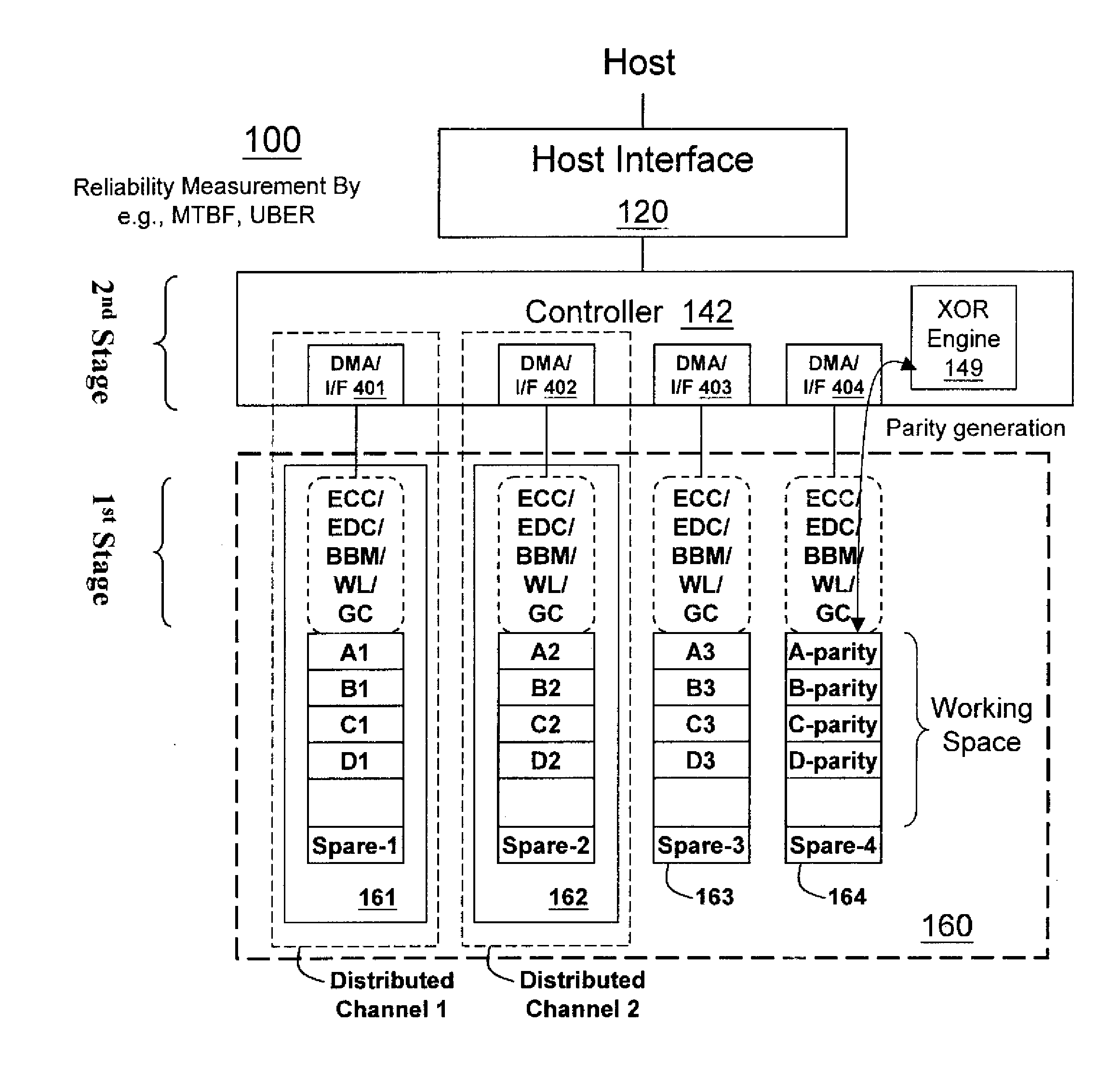

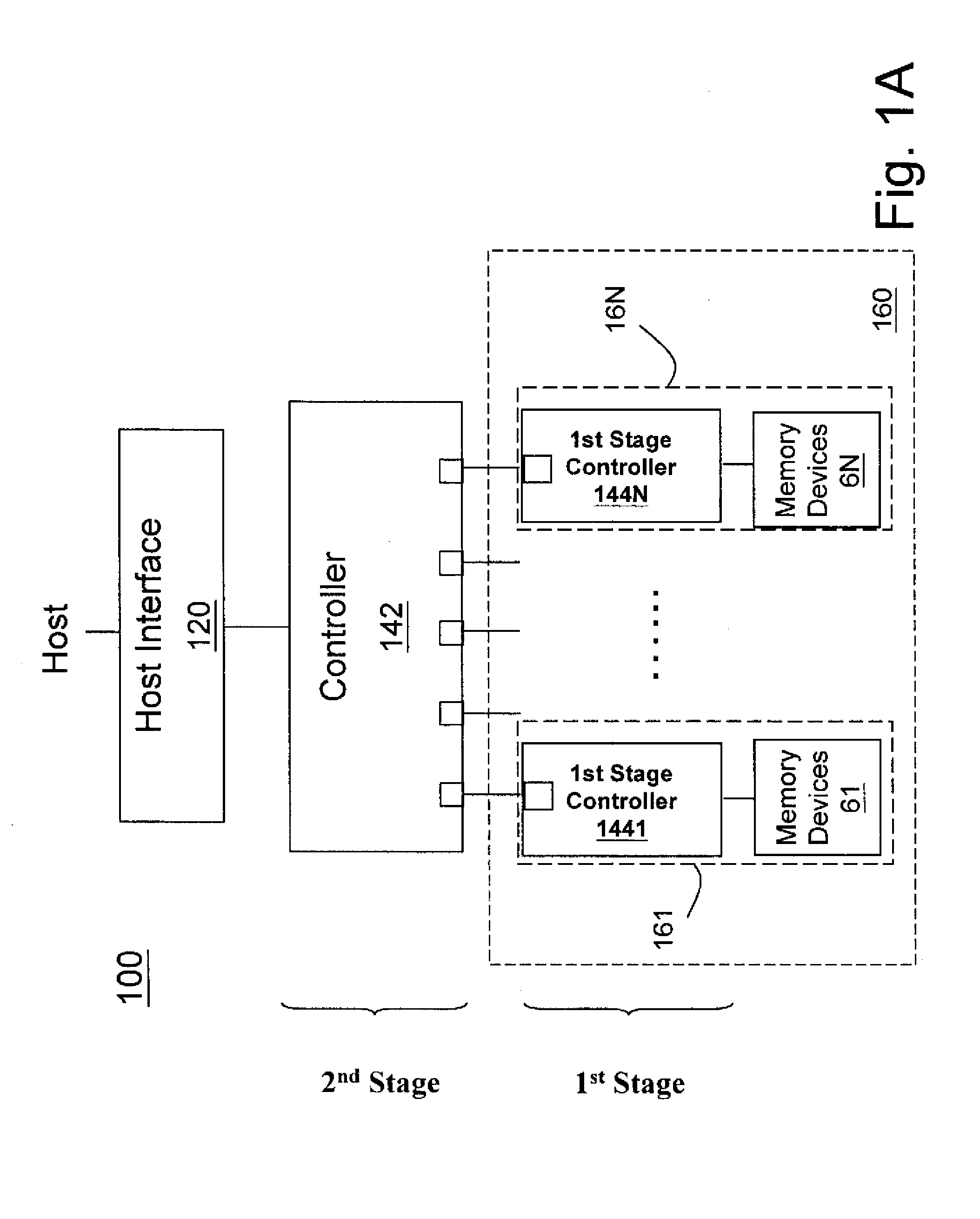

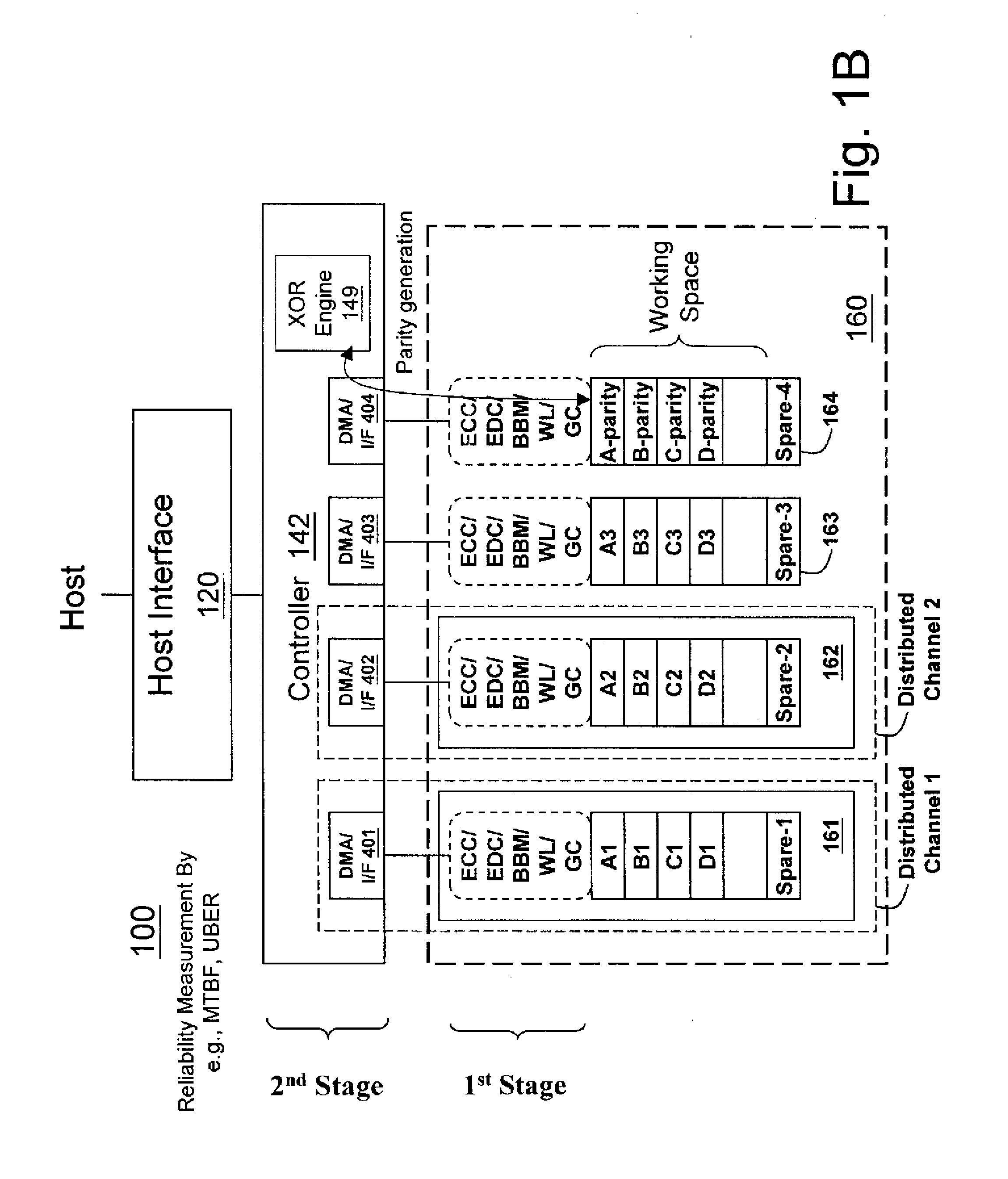

Non-volatile memory data storage system with reliability management

Inactive | US20100017650A1 | Improve reliability | Reliable management | Memory loss protection | Memory addressing/allocation/relocation | RAID | Data recovery

A non-volatile memory data storage system, comprising: a host interface for communicating with an external host; a main storage including a first plurality of flash memory devices, wherein each memory device includes a second plurality of memory blocks, and a third plurality of first stage controllers coupled to the first plurality of flash memory devices; and a second stage controller coupled to the host interface and the third plurality of first stage controllers through an internal interface, the second stage controller being configured to perform RAID operation for data recovery according to at least one parity.

Owner:NANOSTAR CORP
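
Parity-based recovery of the kind this abstract mentions is often done with XOR parity, as in RAID 5. The sketch below assumes single XOR parity across equal-sized blocks; the abstract only says "at least one parity" and does not name the scheme, so this is an illustration rather than the patented method.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three hypothetical data blocks, one per flash device.
data = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = xor_blocks(data)

# Suppose the device holding data[1] fails: XOR the survivors with the parity.
recovered = xor_blocks([data[0], data[2], parity])
```

Because XOR is its own inverse, any single missing block can be rebuilt from the remaining blocks plus the parity block.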

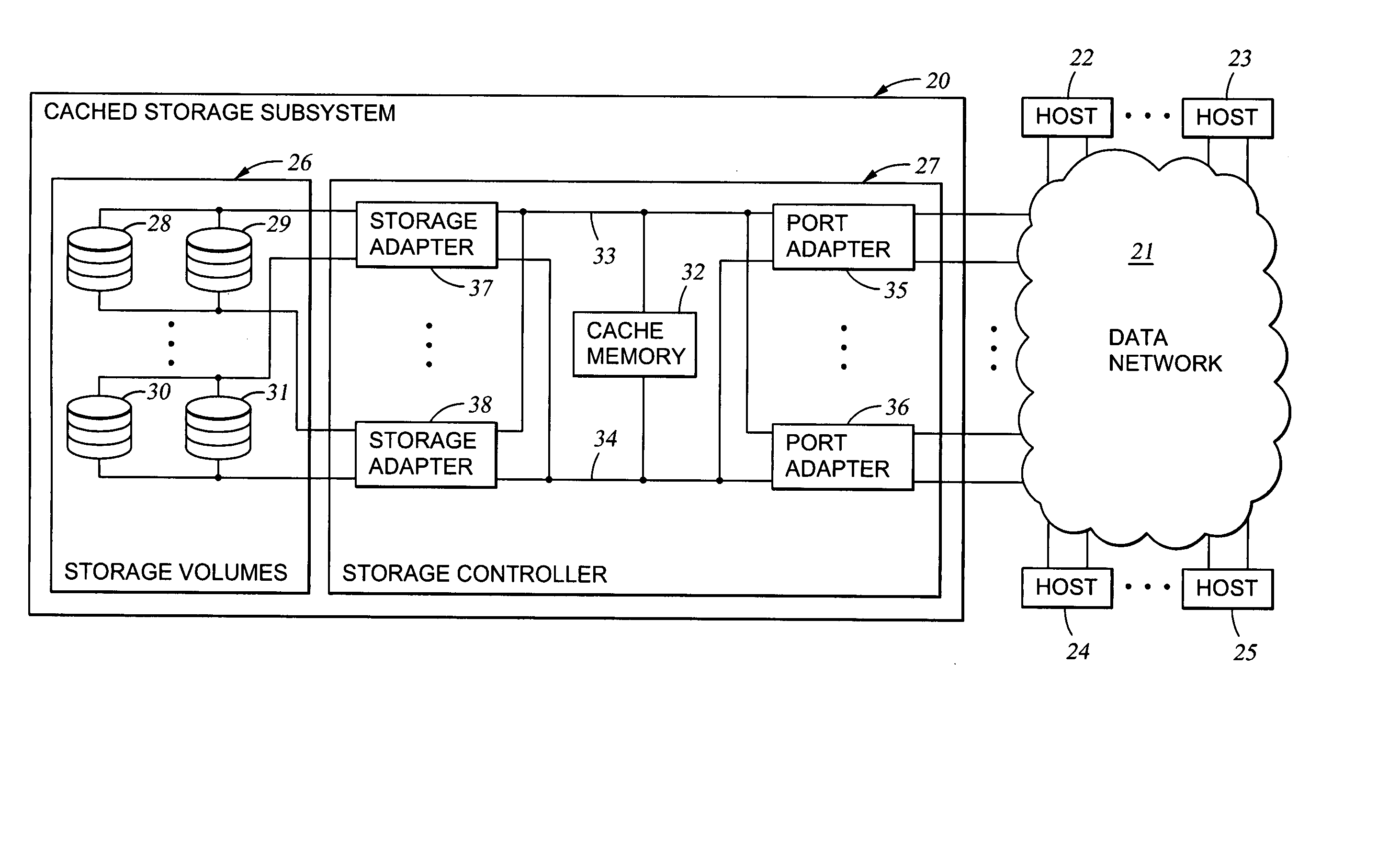

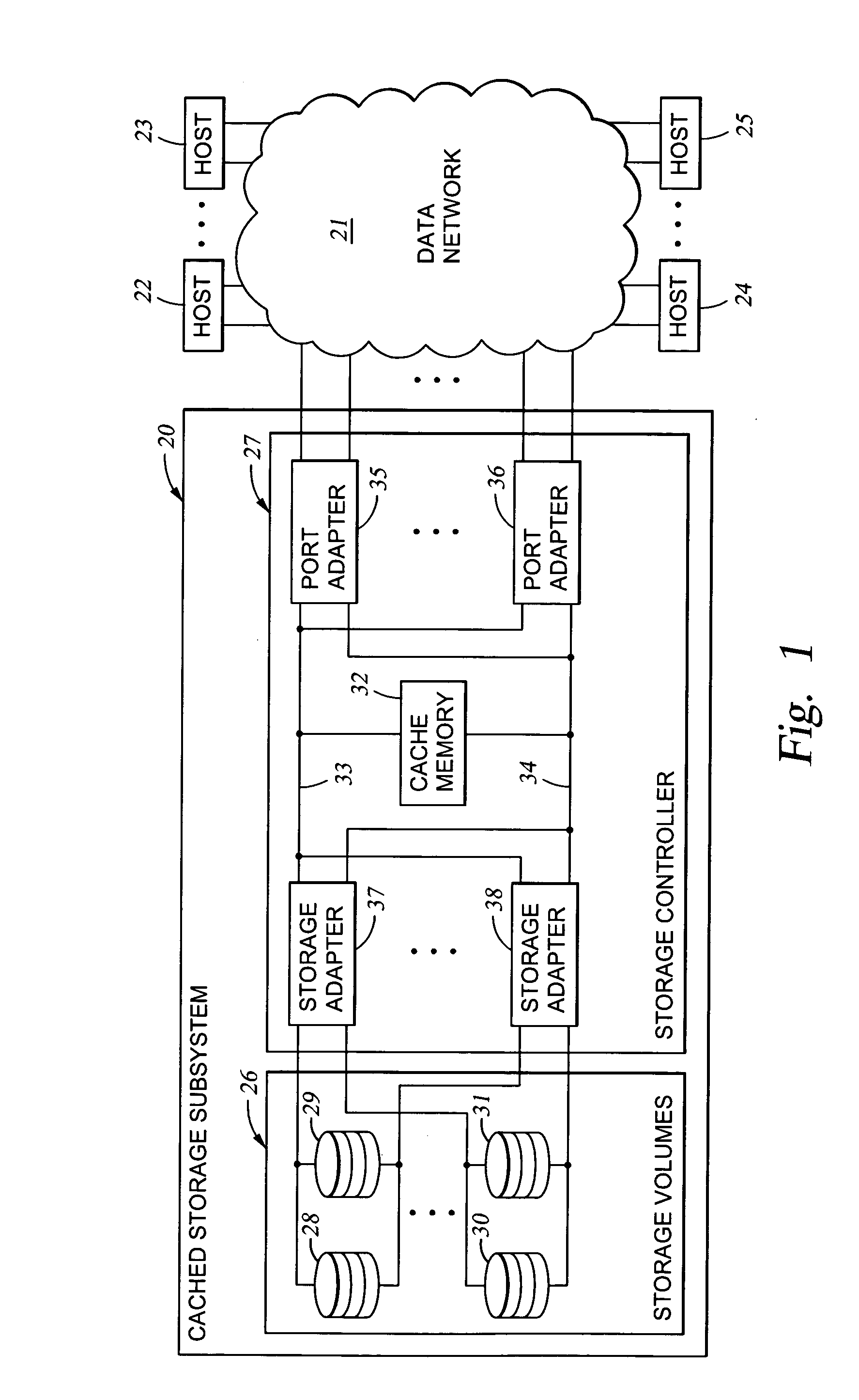

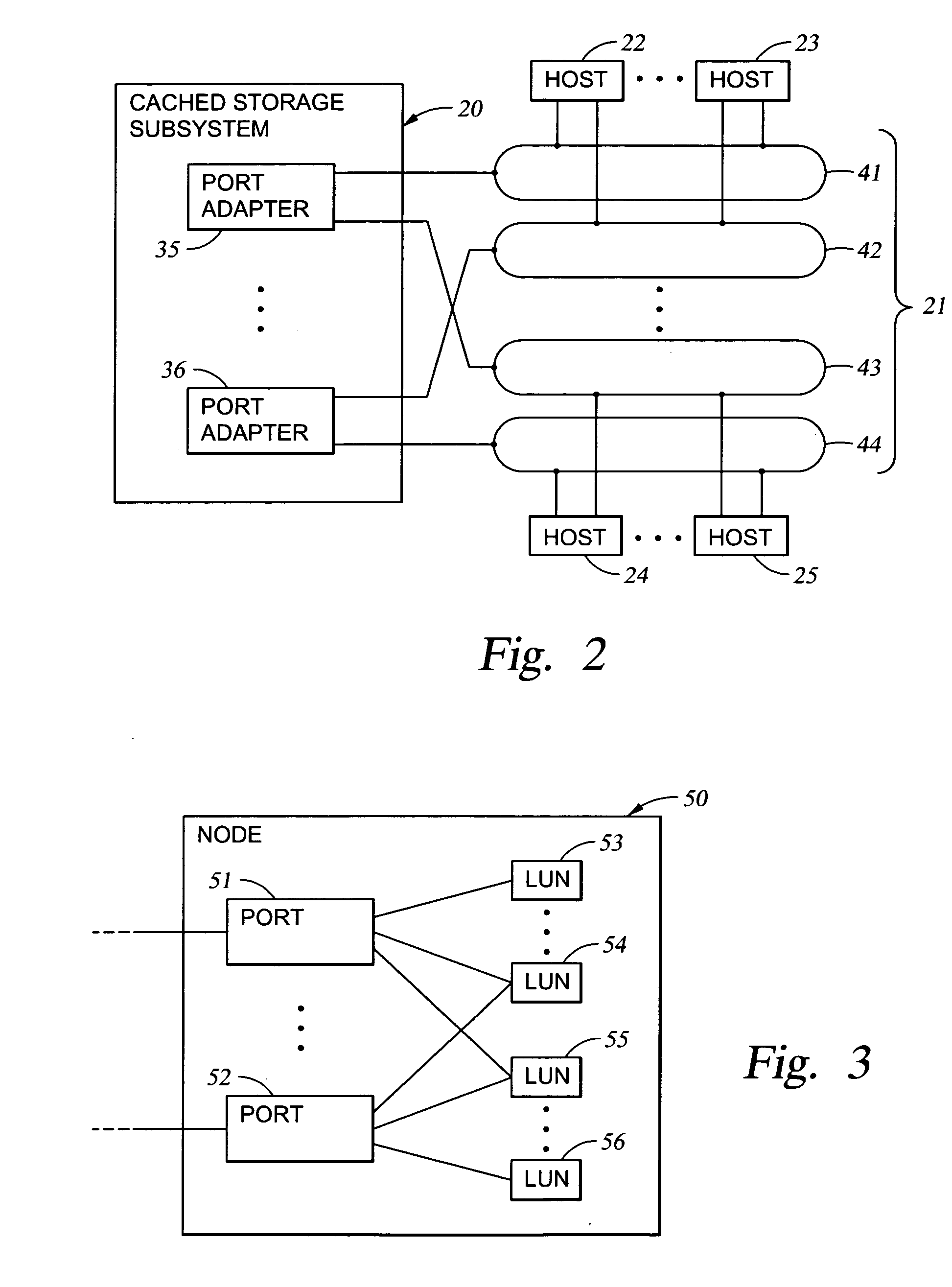

Mapping of hosts to logical storage units and data storage ports in a data processing system

Inactive | US20040054866A1 | Input/output to record carriers | Multiple digital computer combinations | Data processing system | Data access

An apparatus has host ports for coupling hosts to data storage devices. The data storage devices are configured into logical storage units, and the apparatus is programmed with a mapping of the hosts to respective logical storage units. The apparatus decodes a host identifier and a logical storage unit specification from each data access request received at each host port, and determines whether or not the decoded host identifier and logical storage unit specification are in conformance with the mapping in order to permit or deny data access of the logical storage unit through the host port. For example, the apparatus includes a switch for routing the data storage access requests from the host ports to ports that provide access to the data storage, and a set of logical volumes of storage are accessible from each of the ports that provide access to the data storage.

Owner:EMC IP HLDG CO LLC
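
The permit-or-deny check described in this abstract amounts to a lookup of the decoded host identifier and logical storage unit against the programmed mapping. The sketch below assumes a dictionary from host identifier to a set of permitted logical unit numbers; the host names and the function name `is_permitted` are hypothetical.

```python
def is_permitted(mapping, host_id, lun):
    """Allow access only if the mapping assigns this logical unit to this host.

    mapping: {host_id: set of permitted logical unit numbers} (assumed layout)
    Unknown hosts are denied.
    """
    return lun in mapping.get(host_id, set())


# Hypothetical mapping programmed into the apparatus.
host_map = {"host-a": {0, 1}, "host-b": {2}}

allowed = is_permitted(host_map, "host-a", 1)
denied = is_permitted(host_map, "host-b", 1)
```

A real port controller would decode the host identifier and logical unit from each incoming request before this check; only the decision step is shown here.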

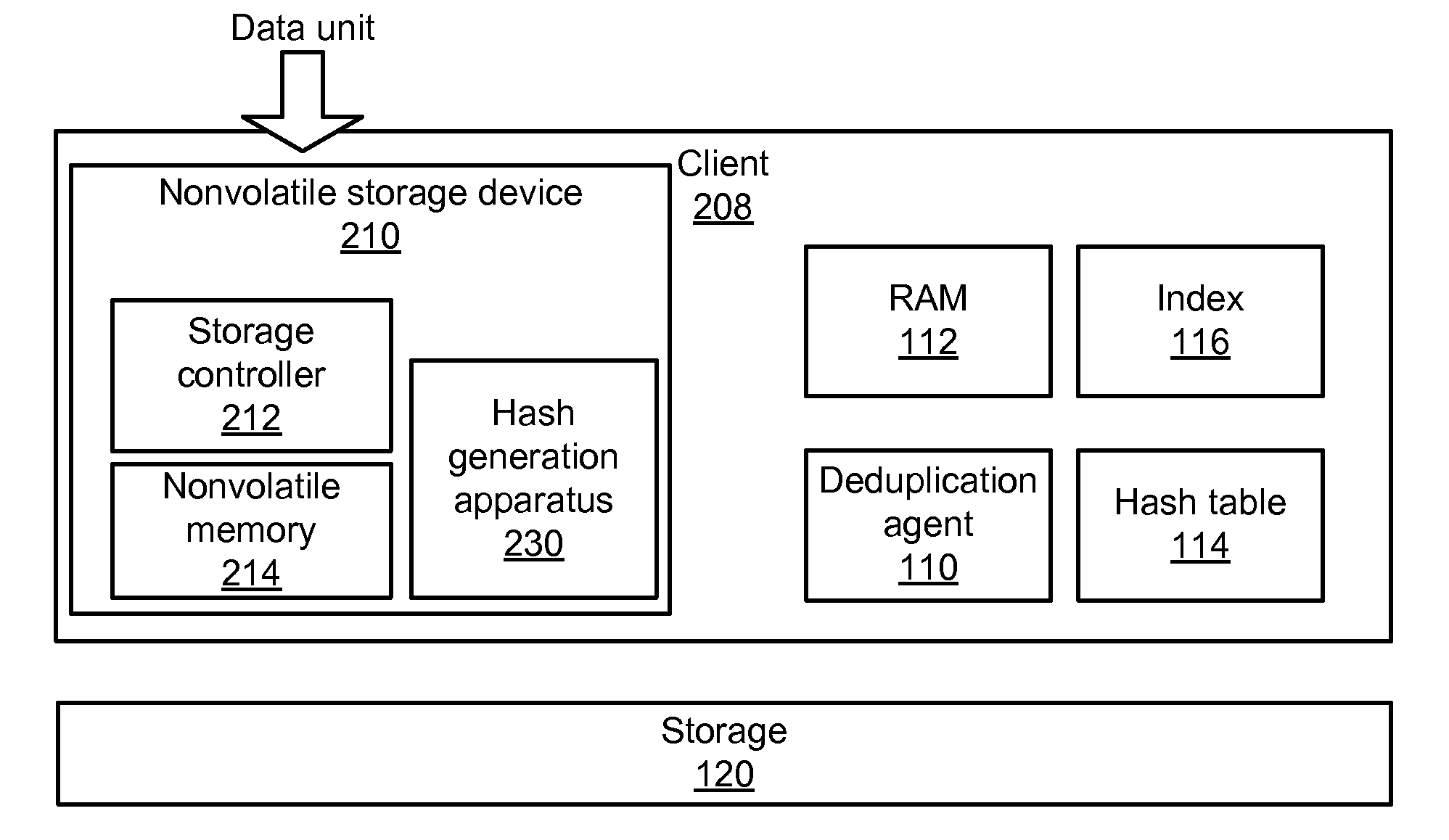

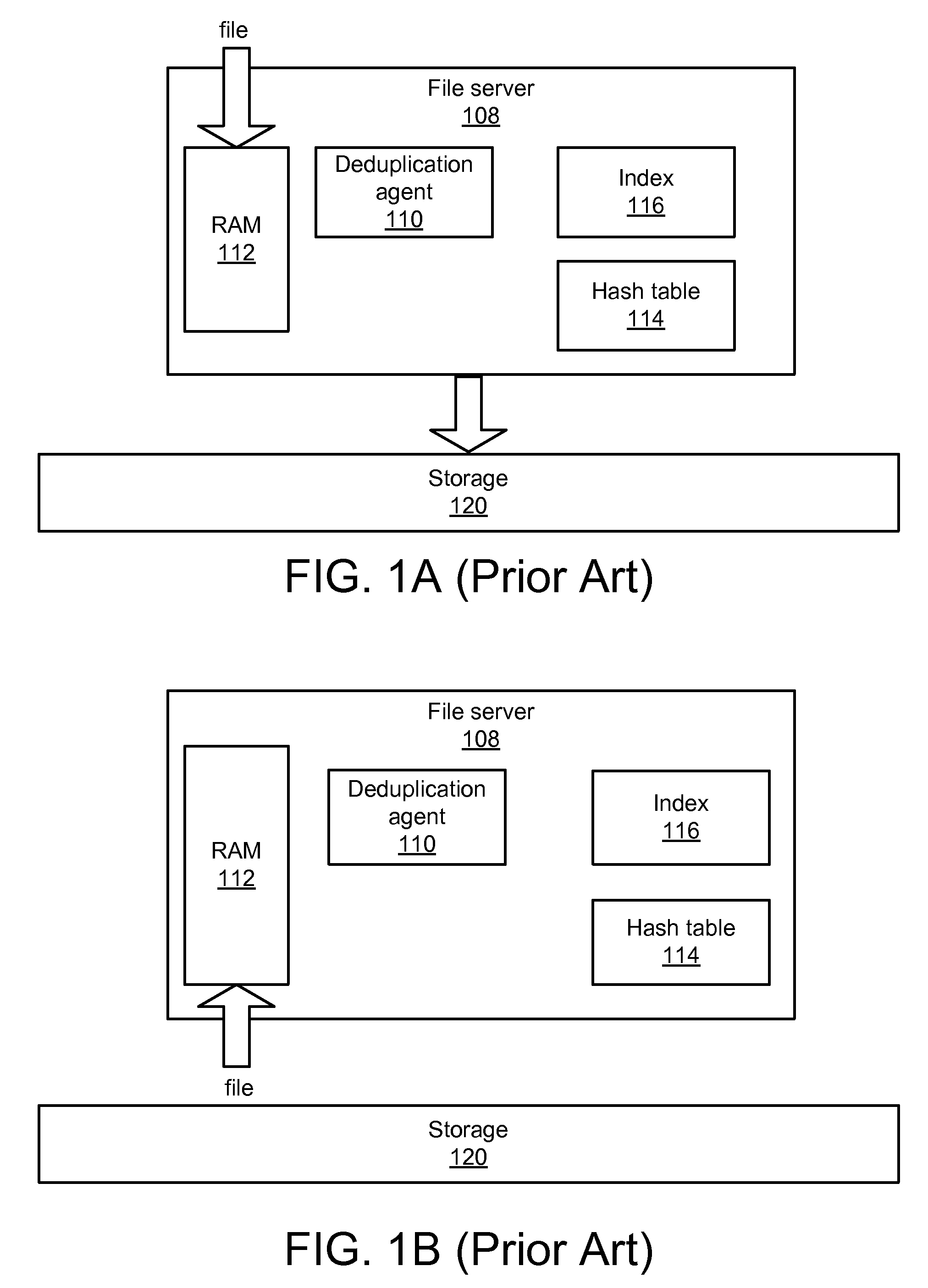

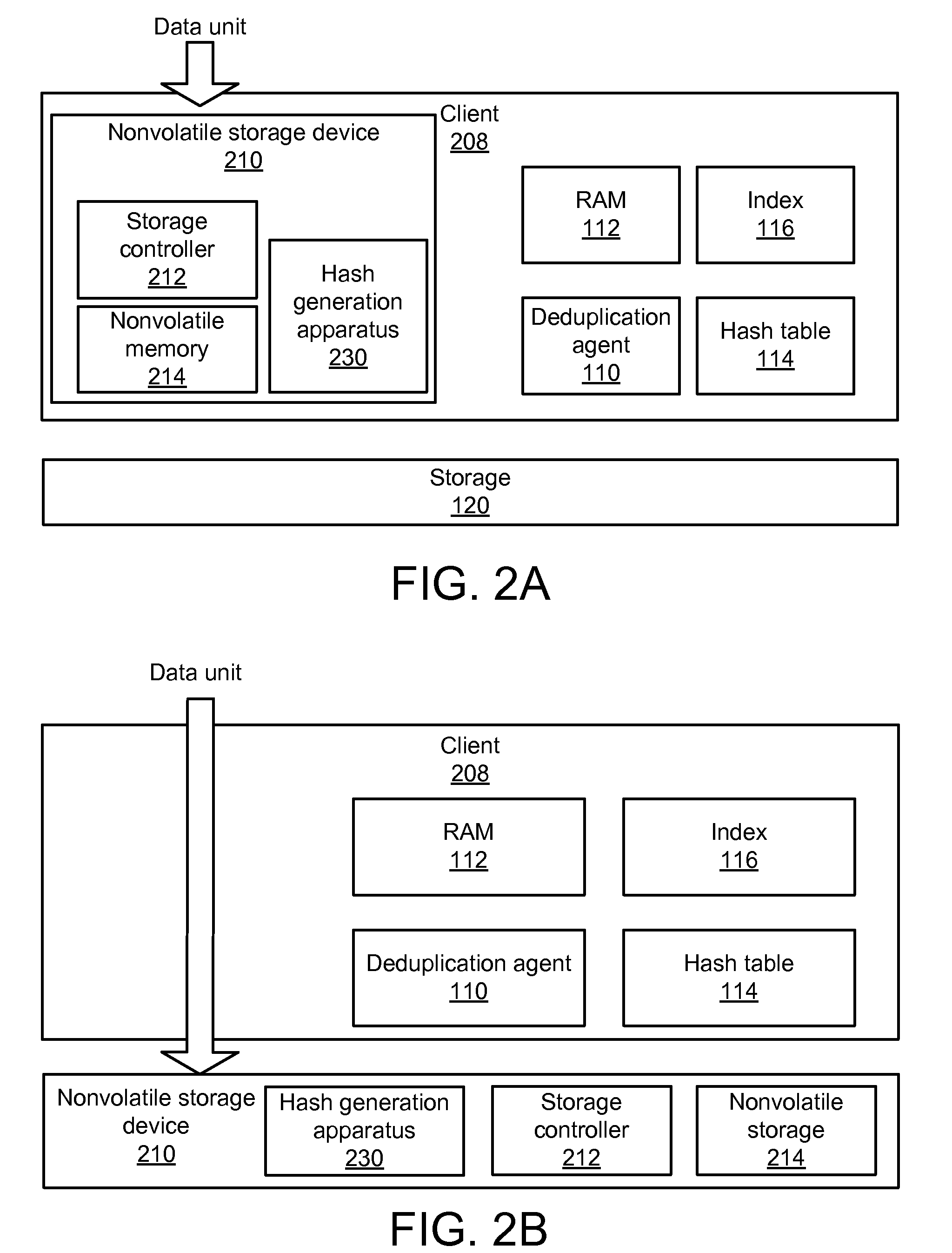

Apparatus, system, and method for improved data deduplication

Inactive | US20110055471A1 | Memory architecture accessing/allocation | Memory systems | Computer hardware | Hash function

An apparatus, system, and method are disclosed for improved deduplication. The apparatus includes an input module, a hash module, and a transmission module that are implemented in a nonvolatile storage device. The input module receives hash requests from requesting entities that may be internal or external to the nonvolatile storage device; the hash requests include a data unit identifier that identifies the data unit for which the hash is requested. The hash module generates a hash for the data unit using a hash function. The hash is generated using the computing resources of the nonvolatile storage device. The transmission module sends the hash to a receiving entity when the input module receives the hash request. A deduplication agent uses the hash to determine whether or not the data unit is a duplicate of a data unit already stored in the storage system that includes the nonvolatile storage device.

Owner:SANDISK TECH LLC

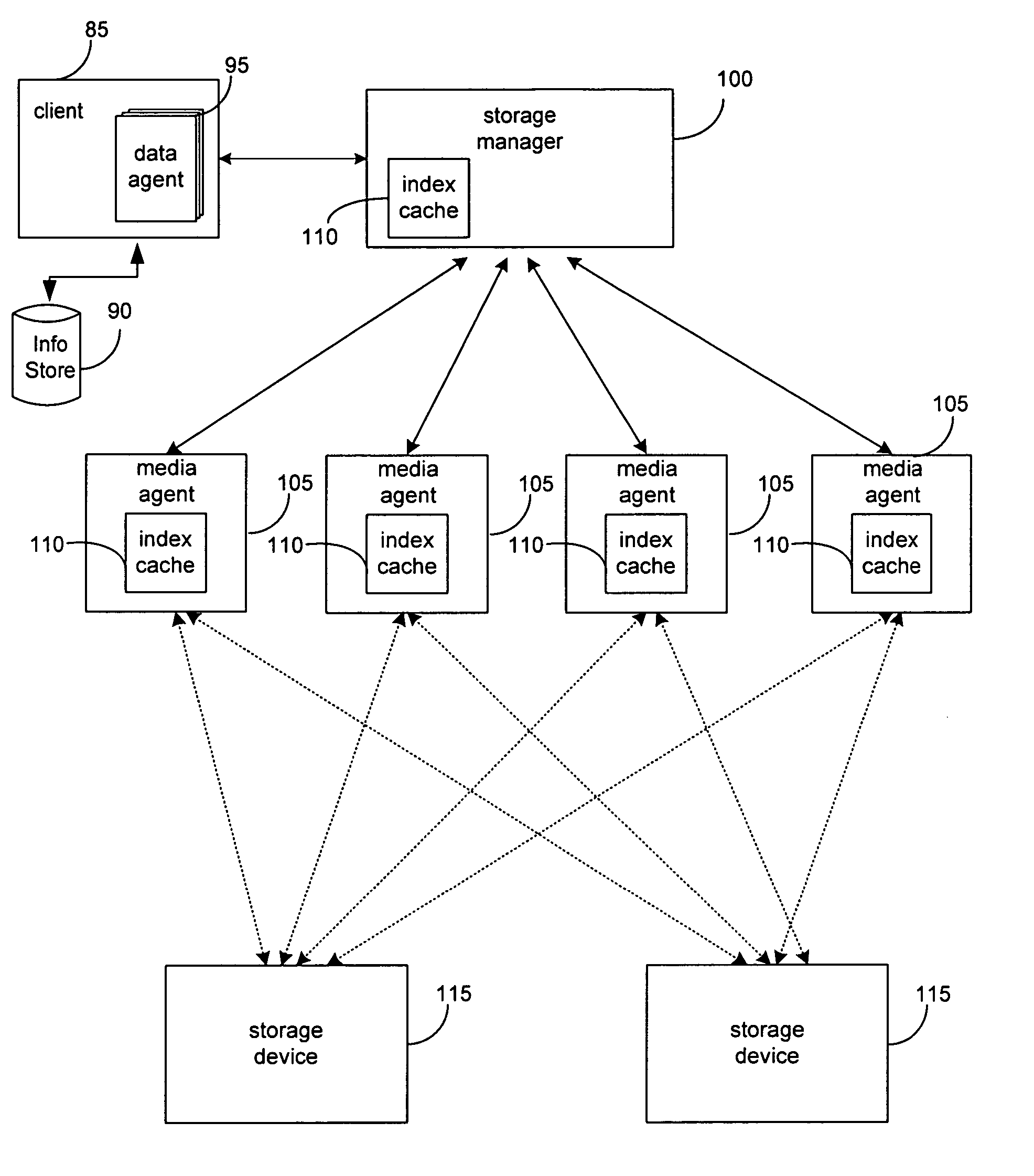

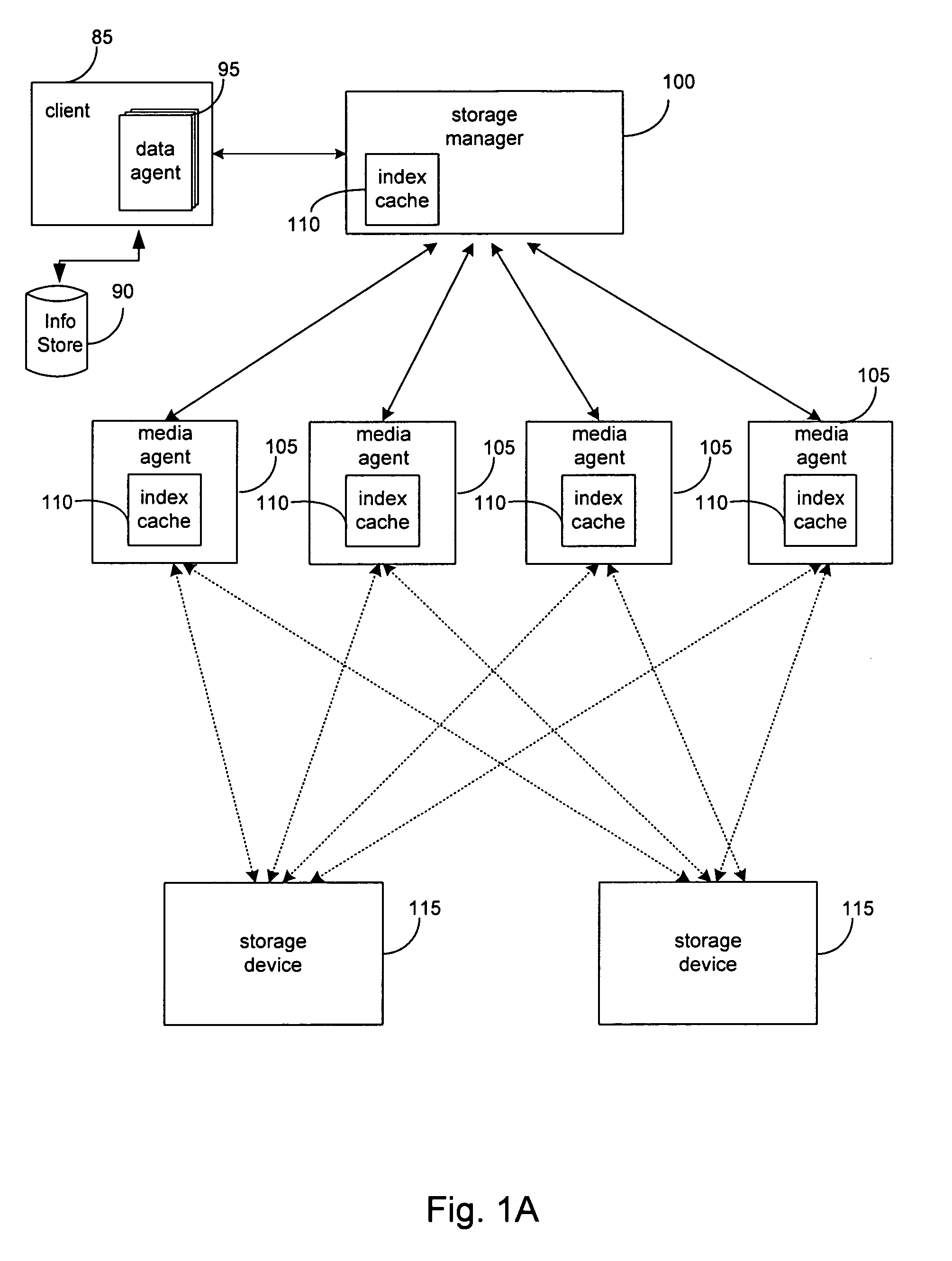

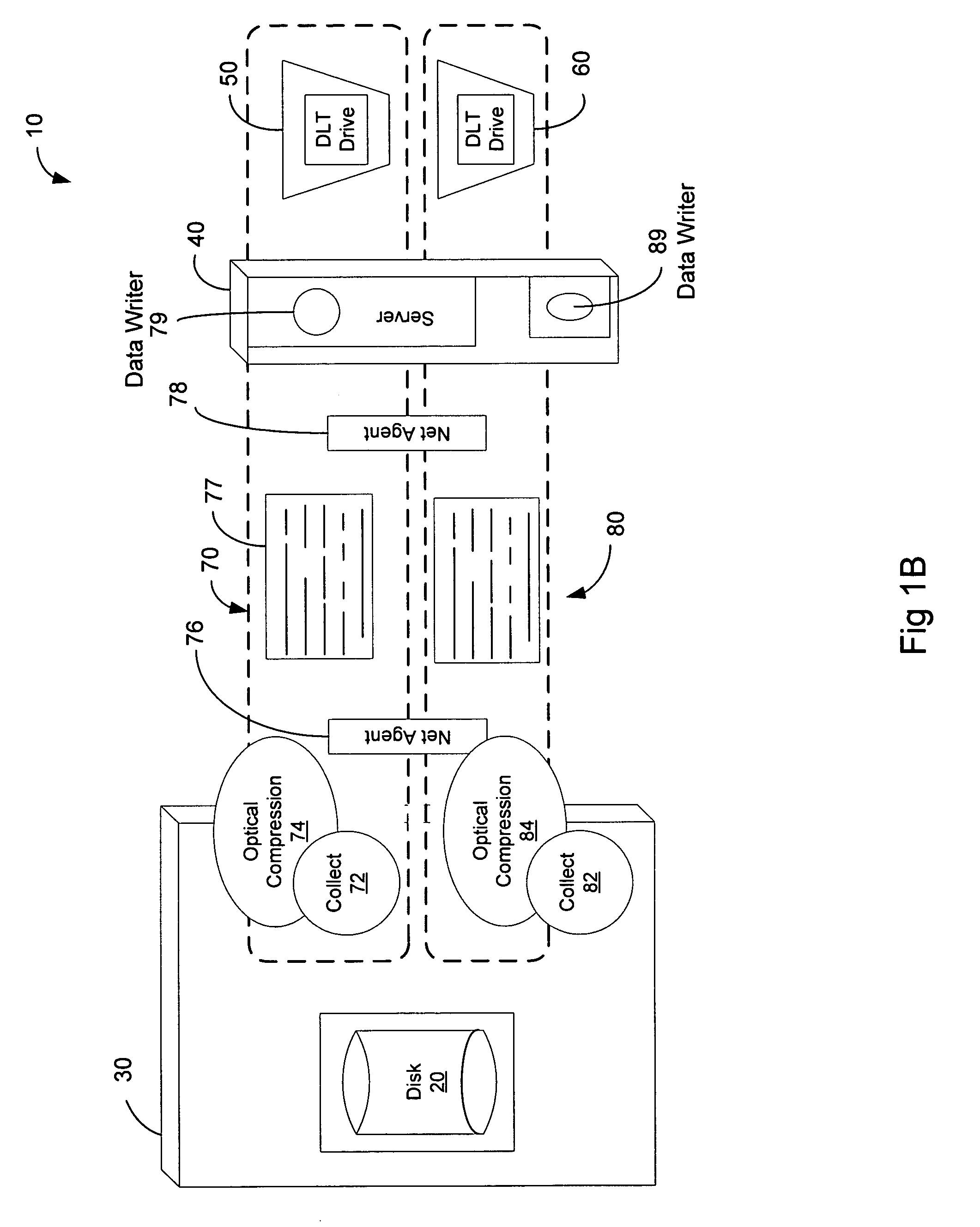

Method and system for transferring data in a storage operation

Inactive | US7581077B2 | Input/output to record carriers | Redundant operation error correction | Data storing | Data store

The invention provides a system and method for storing a copy of data stored in an information store. In one embodiment, a data agent reads one or more blocks containing the data from the information store. The data agent maps the one or more blocks to provide a mapping of the blocks, and transmits the one or more blocks and mapping to a media agent for a storage device. The media agent stores the one or more blocks in the storage device according to the mapping.

Owner:COMMVAULT SYST INC

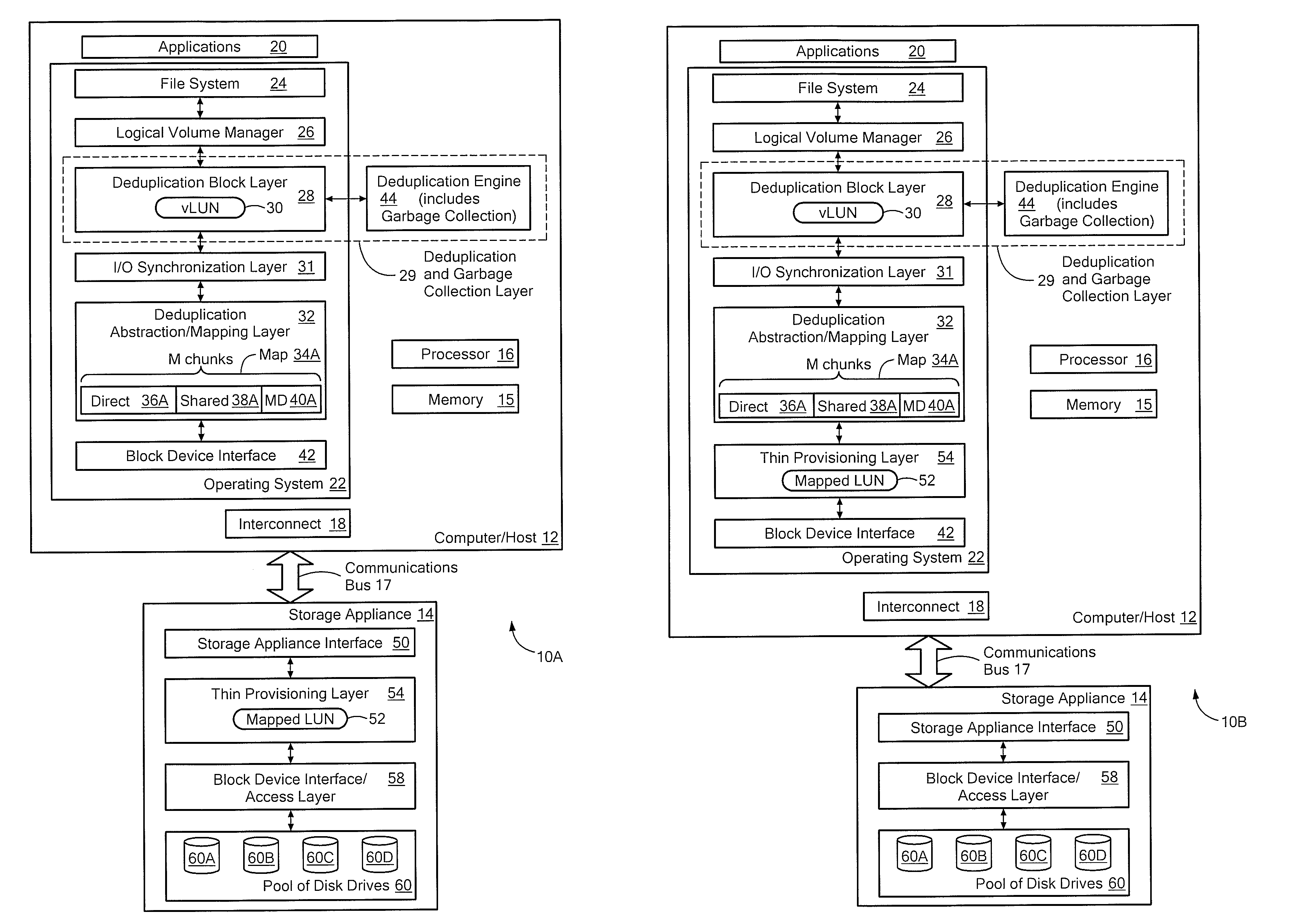

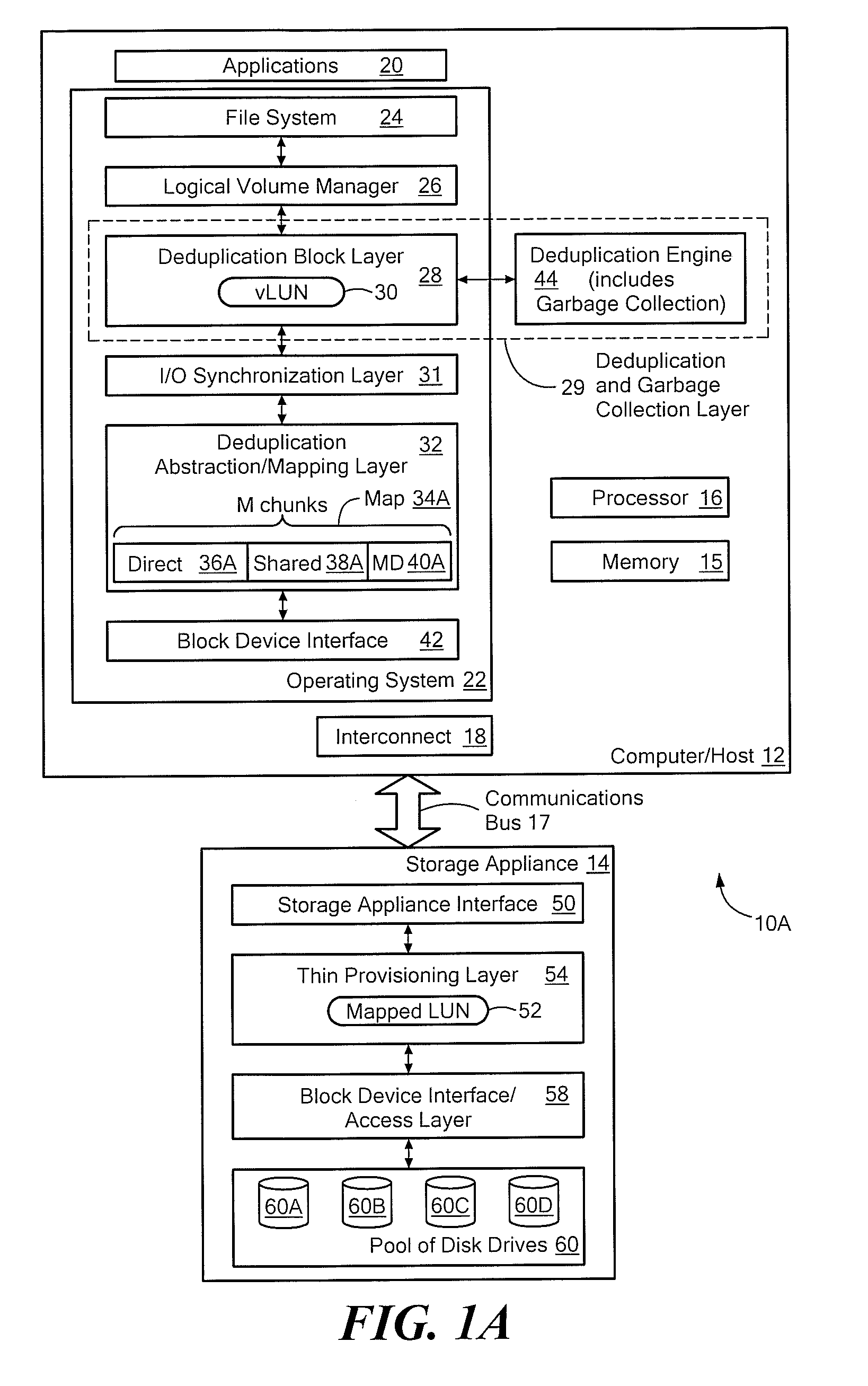

Efficient read/write algorithms and associated mapping for block-level data reduction processes

Active | US8140821B1 | Well formed | Facilitates efficient read and write access | Error detection/correction | Digital data processing details | Theoretical computer science | Block level

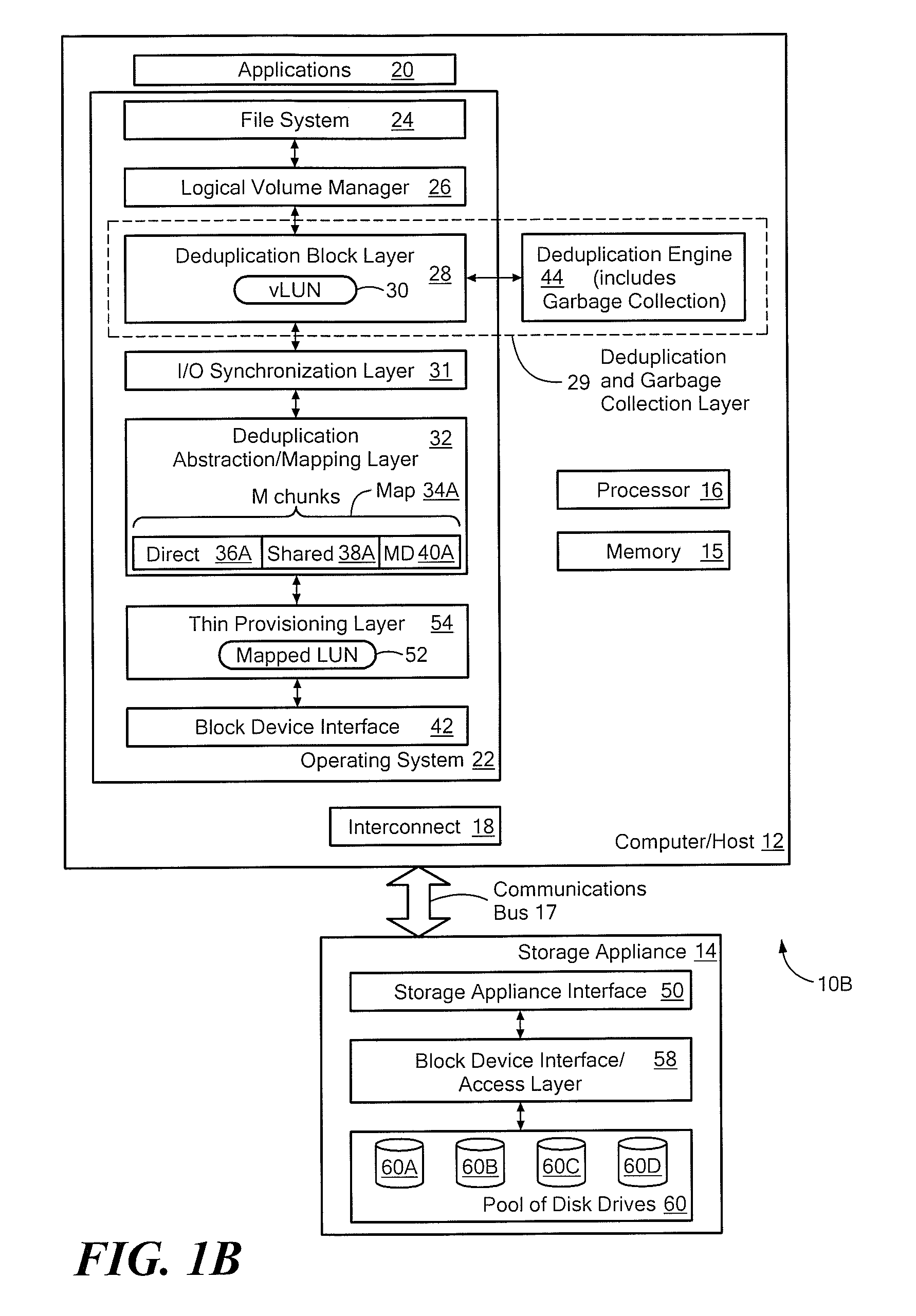

A system configured to optimize access to stored chunks of data is provided. The system comprises a vLUN layer, a mapped LUN layer, and a mapping layer disposed between the vLUN and the mapped LUN. The vLUN provides a plurality of logical chunk addresses (LCAs) and the mapped LUN provides a plurality of physical chunk addresses (PCAs), where each LCA or PCA stores a respective chunk of data. The mapping layer defines a layout of the mapped LUN that facilitates efficient read and write access to the mapped LUN.

Owner:EMC IP HLDG CO LLC

System and method for mapping file block numbers to logical block addresses

Active | US7437530B1 | Improve performance | Raise transfer to | Multiple digital computer combinations | Transmission | Operational system | Logical block addressing

A system and method for mapping file block numbers (FBNs) to logical block addresses (LBAs) is provided. The system and method perform the mapping of FBNs to LBAs in a file system layer of a storage operating system, thereby enabling the use of clients in a storage environment that have not been modified to incorporate mapping tables. As a result, a client may send data access requests to the storage system utilizing FBNs and have the storage system perform the appropriate mapping to LBAs.

Owner:NETWORK APPLIANCE INC

Method and system for providing consistent data modification information to clients in a storage system

Inactive | US6952758B2 | Minimize overhead | Rapid data replication | Data processing applications | Input/output to record carriers | Client-side | Data store

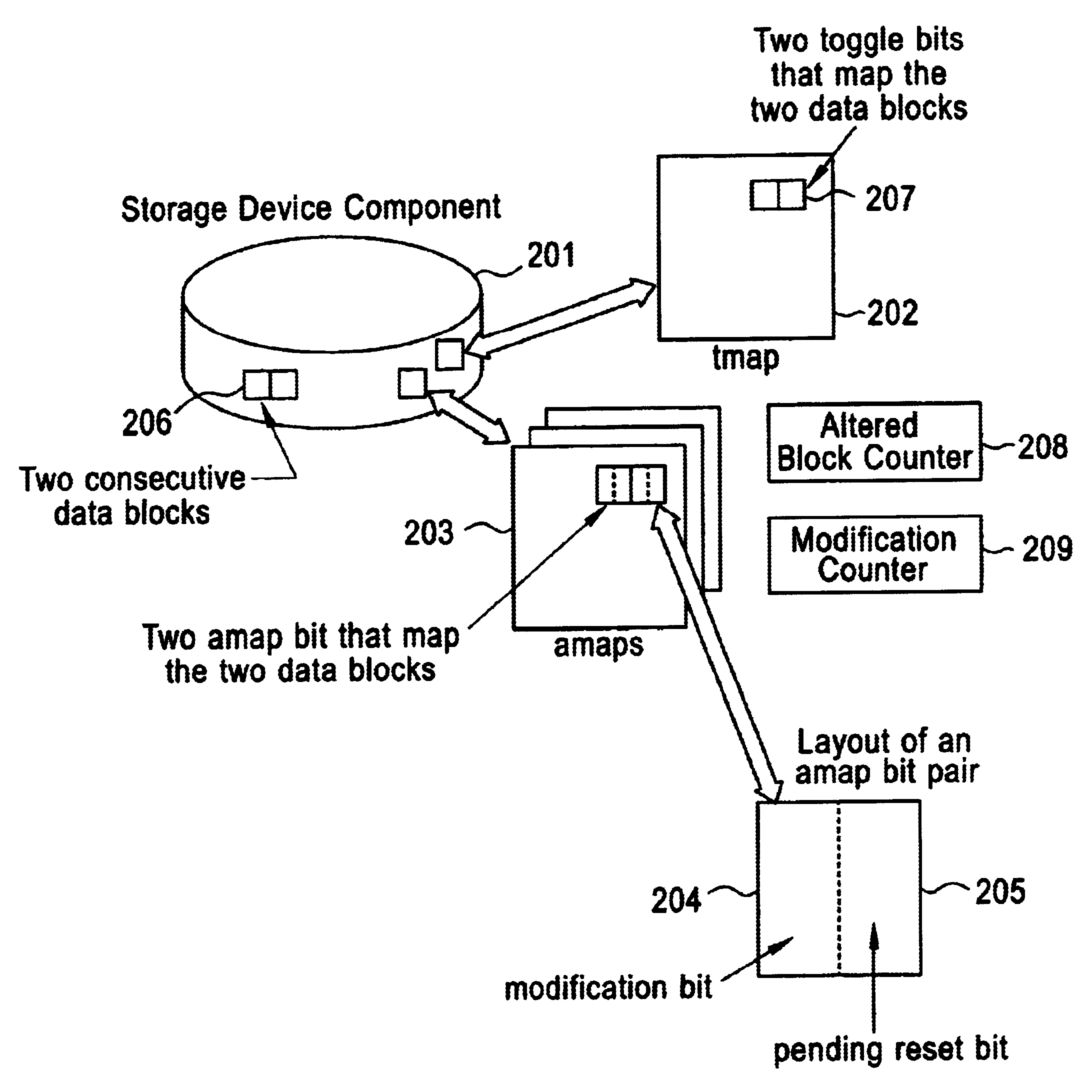

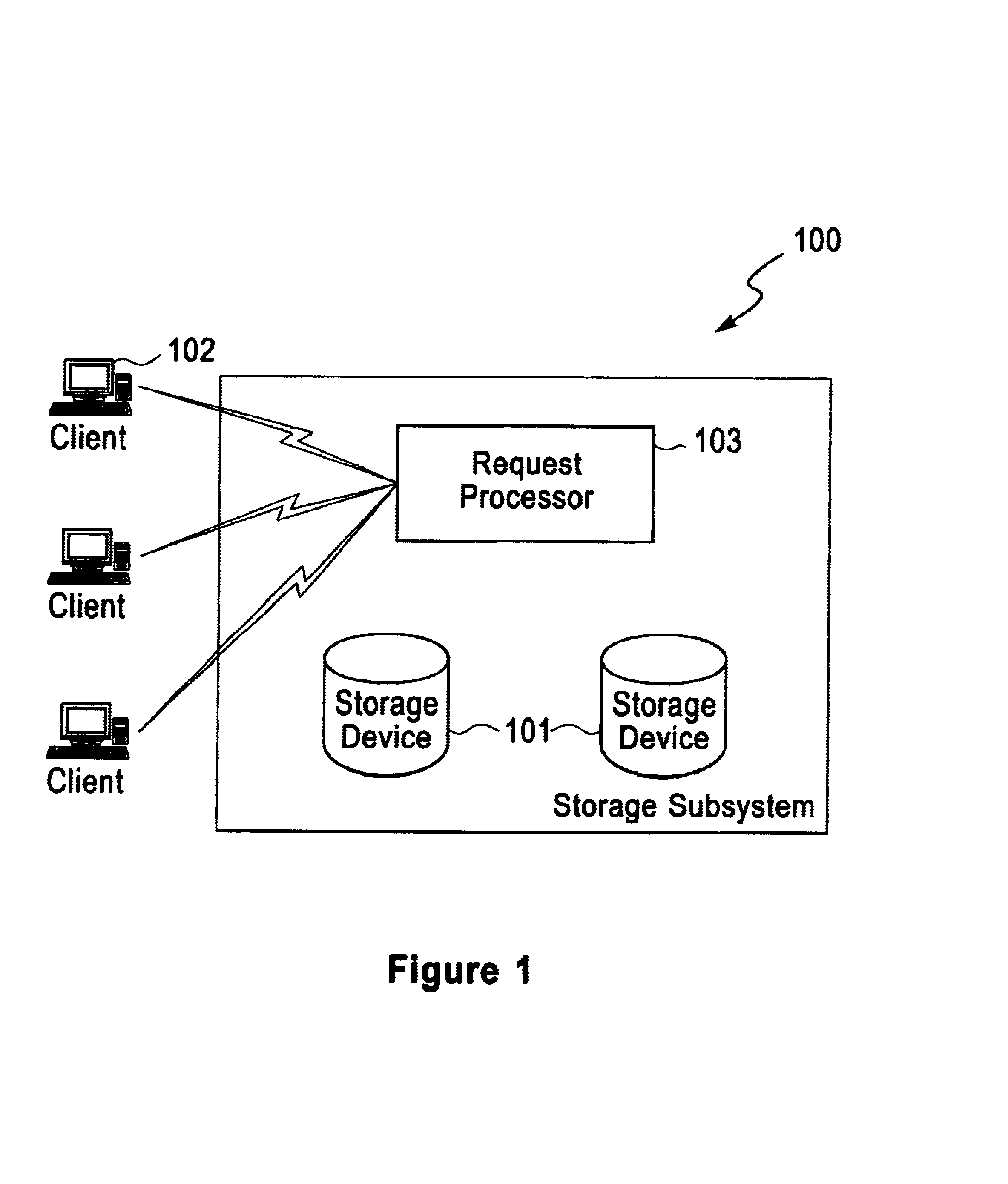

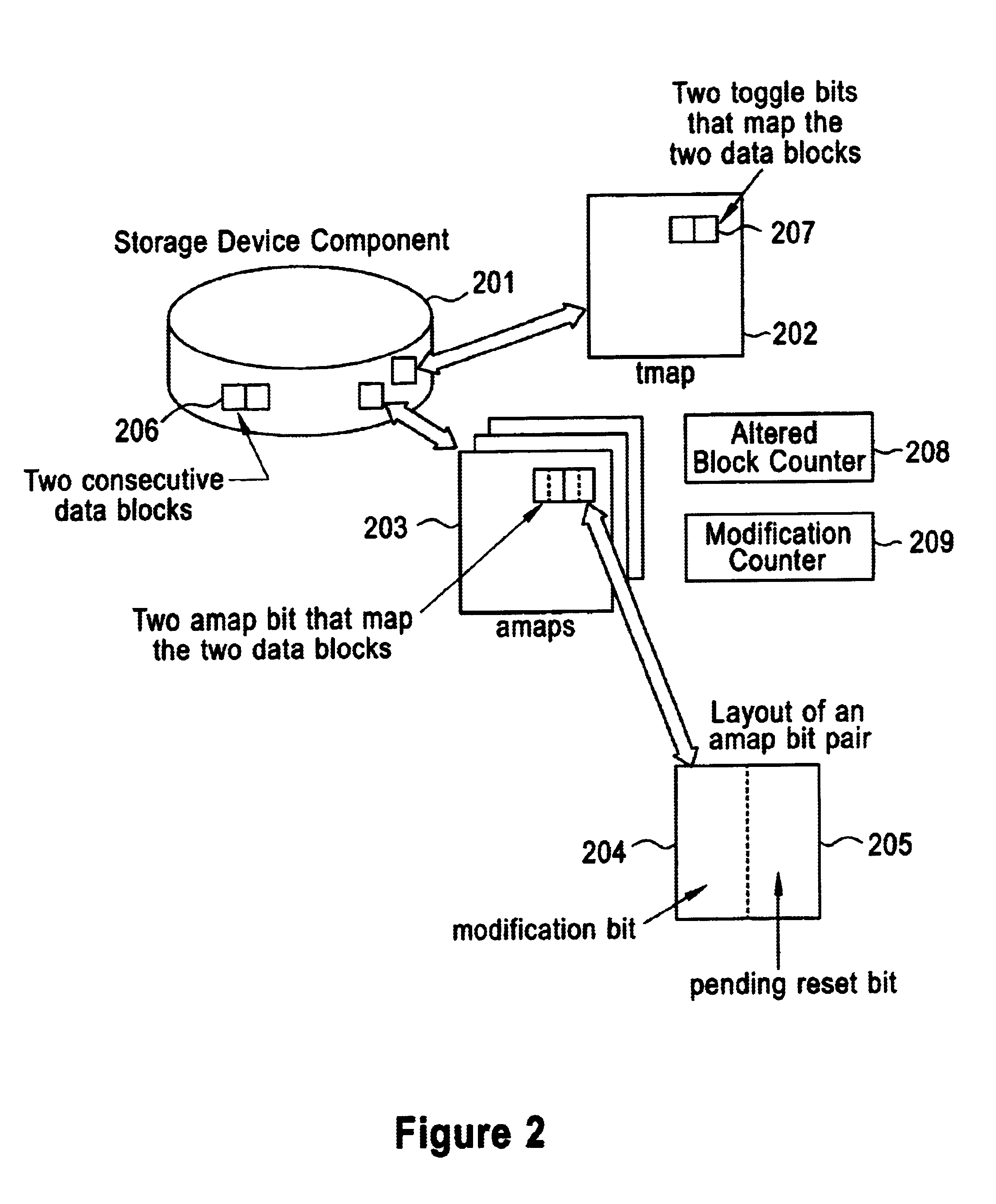

A data storage system and method for providing consistent data to multiple clients based on data modification information as existing data is updated and new data is written to the system. The information indicates the modification status of each data block and identifies which data blocks have been modified during a certain time interval. The clients may query and update the modification information by submitting requests through a request processor. The data modification information includes an Altered Block Map that indicates block modification status and a Toggle Block Map that identifies which blocks have been modified. The system further includes a Modification Counter and a Pending Reset Counter for improved recognition and handling of the modified data.

Owner:IBM CORP

Management of non-volatile memory systems having large erase blocks

Active | US20050144358A1 | Reduce amount | Improve system performance | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Original data | Term memory

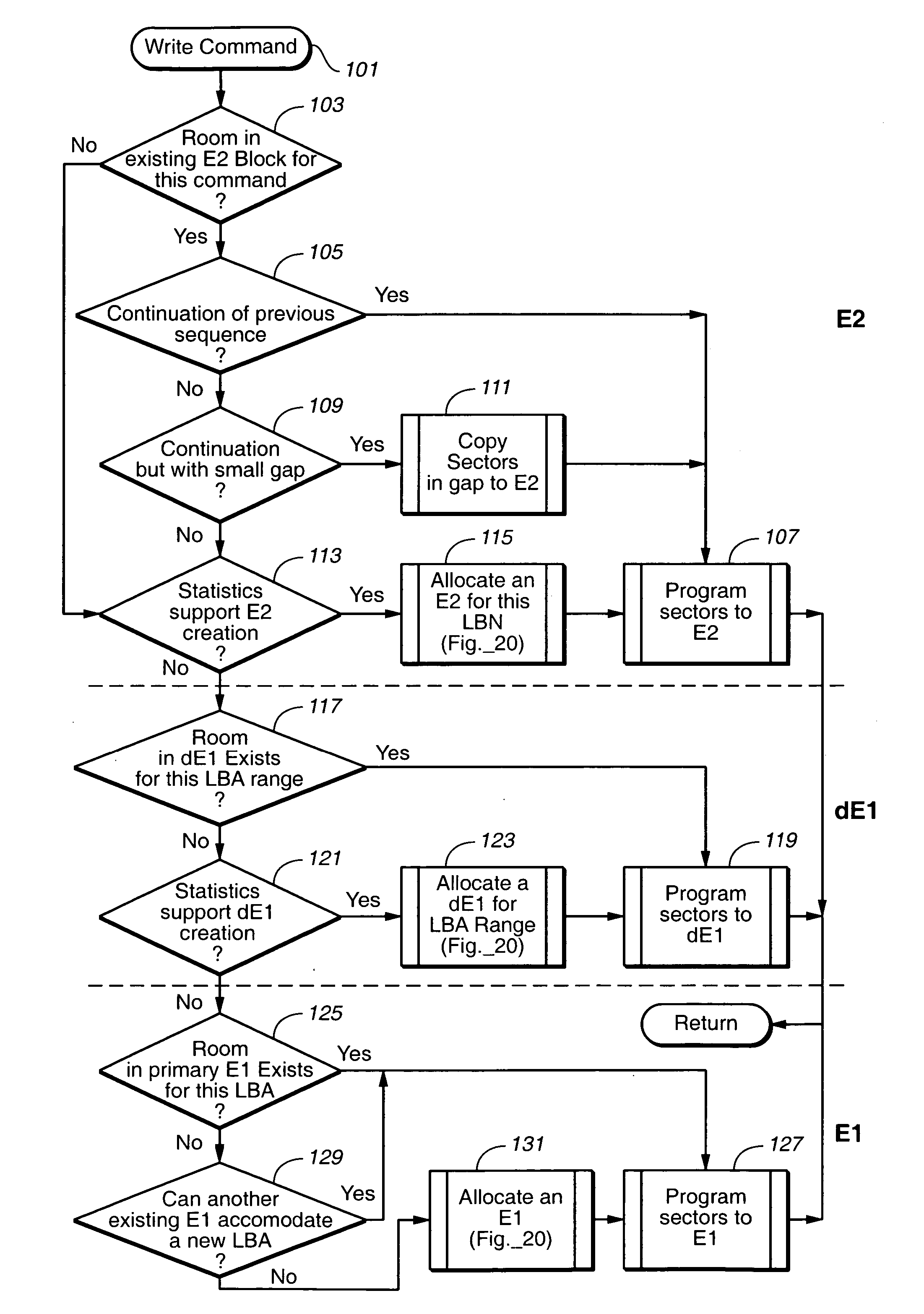

A non-volatile memory system of a type having blocks of memory cells erased together and which are programmable from an erased state in units of a large number of pages per block. If the data of only a few pages of a block are to be updated, the updated pages are written into another block provided for this purpose. Updated pages from multiple blocks are programmed into this other block in an order that does not necessarily correspond with their original address offsets. The valid original and updated data are then combined at a later time, when doing so does not impact on the performance of the memory. If the data of a large number of pages of a block are to be updated, however, the updated pages are written into an unused erased block and the unchanged pages are also written to the same unused block. By handling the updating of a few pages differently, memory performance is improved when small updates are being made. The memory controller can dynamically create and operate these other blocks in response to usage by the host of the memory system.

Owner:SANDISK TECH LLC

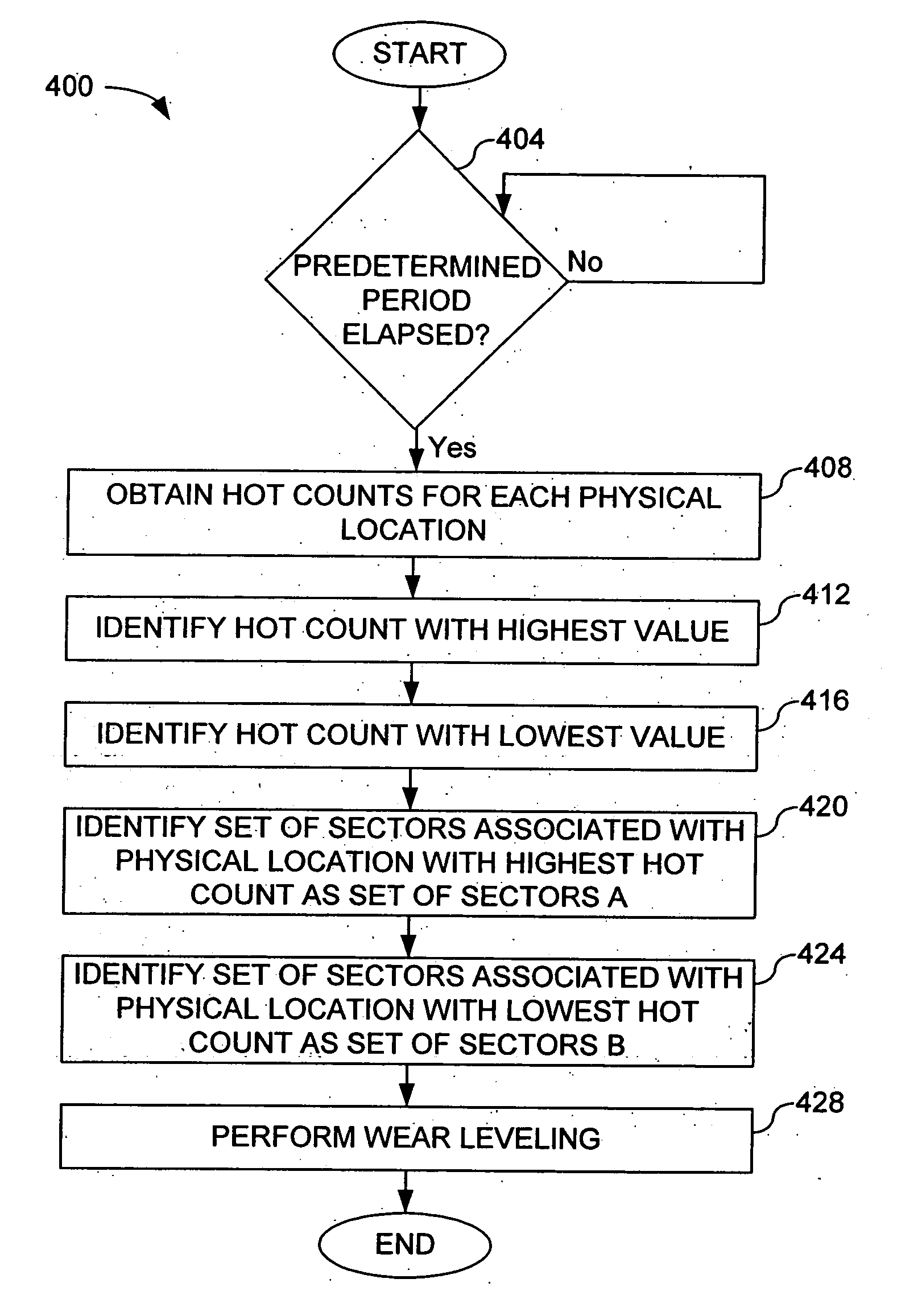

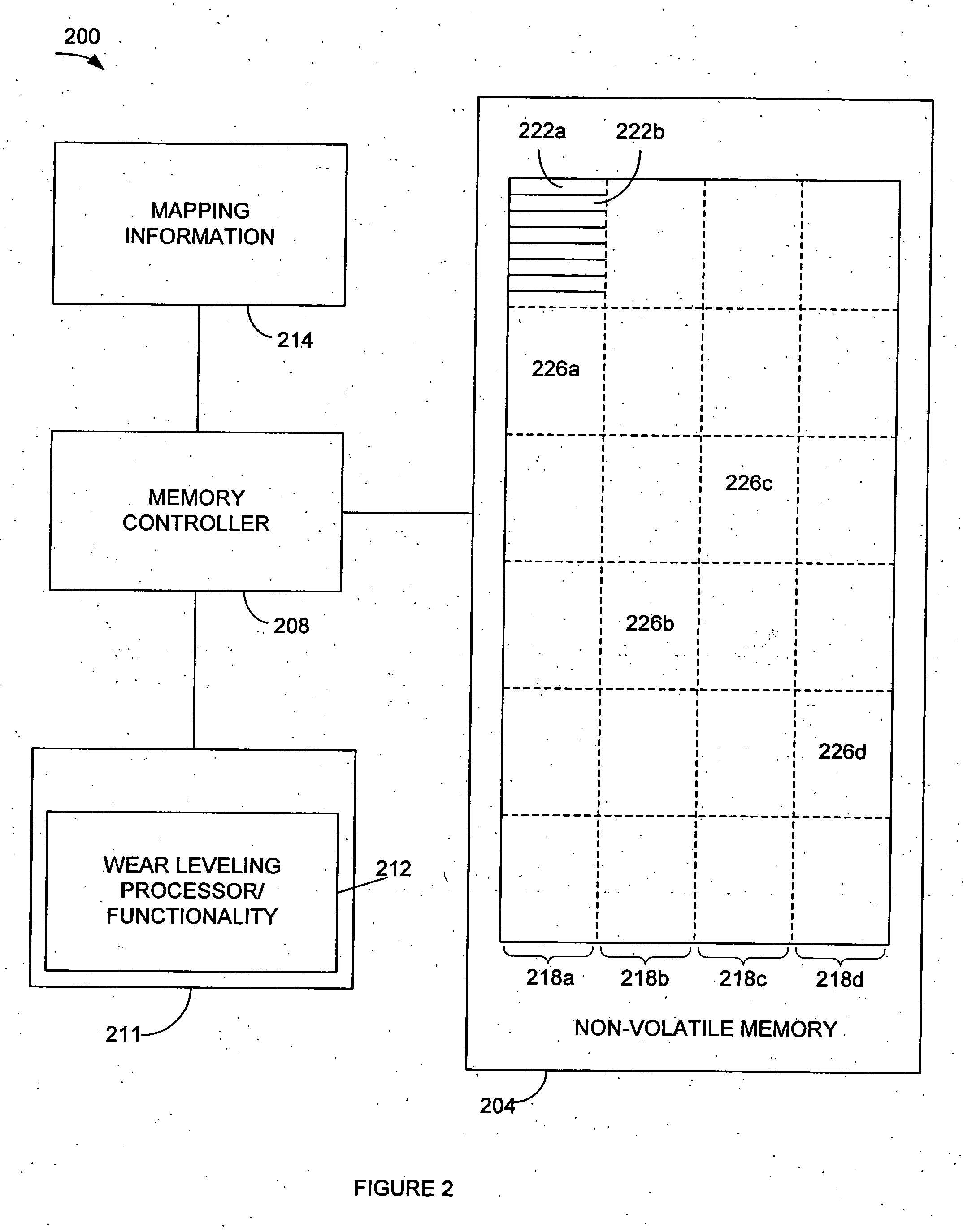

Automated wear leveling in non-volatile storage systems

Active | US20040083335A1 | Precise positioning | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Non-volatile memory

Methods and apparatus for performing wear leveling in a non-volatile memory system are disclosed. One such method, for a memory system that includes a first zone, which has a first memory element that includes contents, and a second zone, includes identifying the first memory element and associating the contents of the first memory element with the second zone while disassociating the contents of the first memory element from the first zone. In one embodiment, associating the contents of the first memory element with the second zone involves moving contents of a second memory element into a third memory element, then copying the contents of the first memory element into the second memory element.

Owner:SANDISK TECH LLC

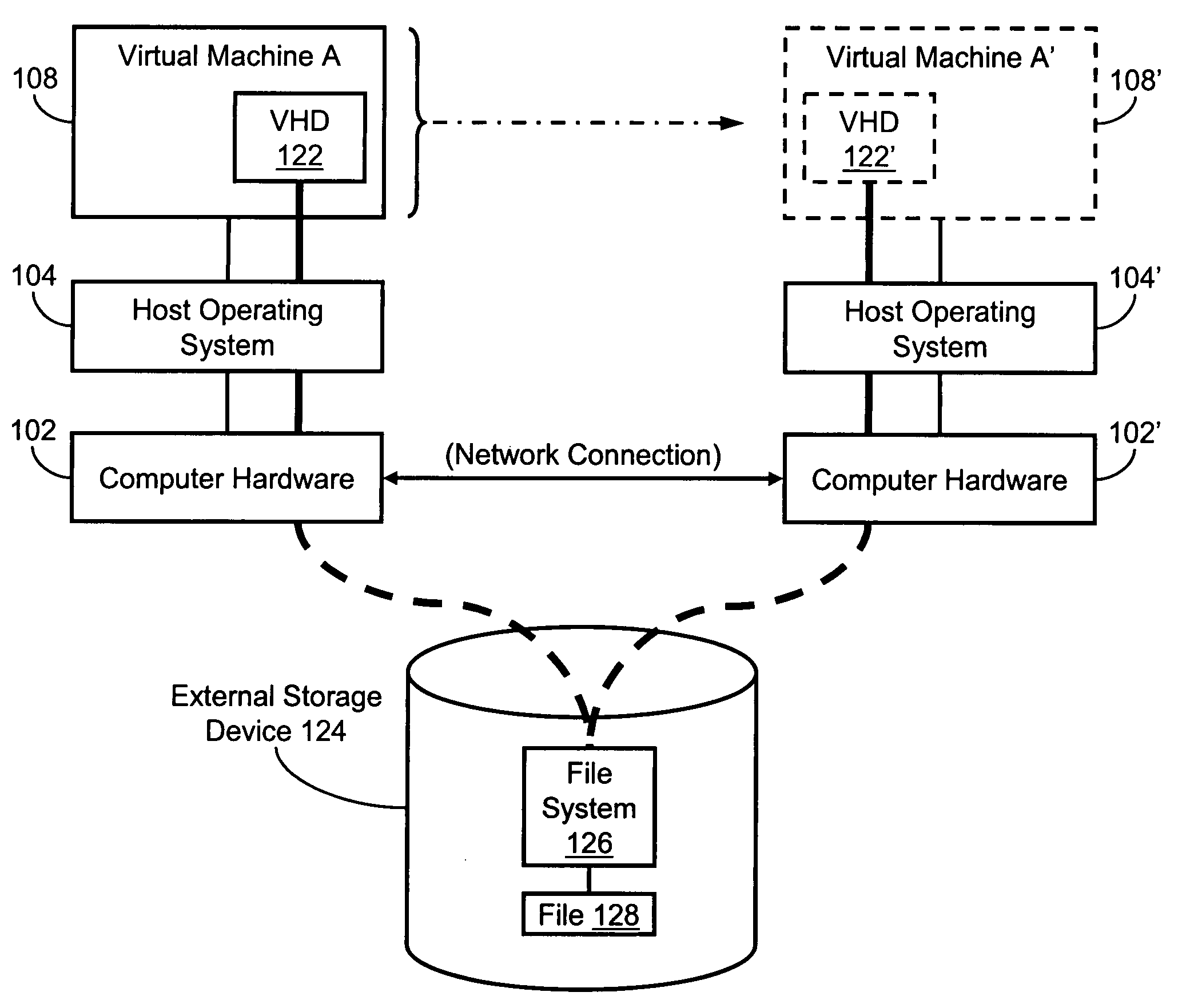

Systems and methods for voluntary migration of a virtual machine between hosts with common storage connectivity

Inactive | US20060005189A1 | Improving flexibility and efficiency | The process is convenient and fast | Multiprogramming arrangements | Software simulation/interpretation/emulation | Load Shedding | Software upgrade

The present invention is a system for and method of performing disk migration in a virtual machine environment. The present invention provides a means for quickly and easily migrating a virtual machine from one host to another and, thus, improving flexibility and efficiency in a virtual machine environment for “load balancing” systems, performing hardware or software upgrades, handling disaster recovery, and so on. Certain of these embodiments are specifically directed to providing a mechanism for migrating the disk state along with the device and memory states, wherein the disk data resides in a remotely located storage device that is common to multiple host computer systems in a virtual machine environment. The virtual machine migration process of the present invention, which includes disk data migration, occurs without the user's awareness and, therefore, without the user's experiencing any noticeable interruption.

Owner:MICROSOFT TECH LICENSING LLC

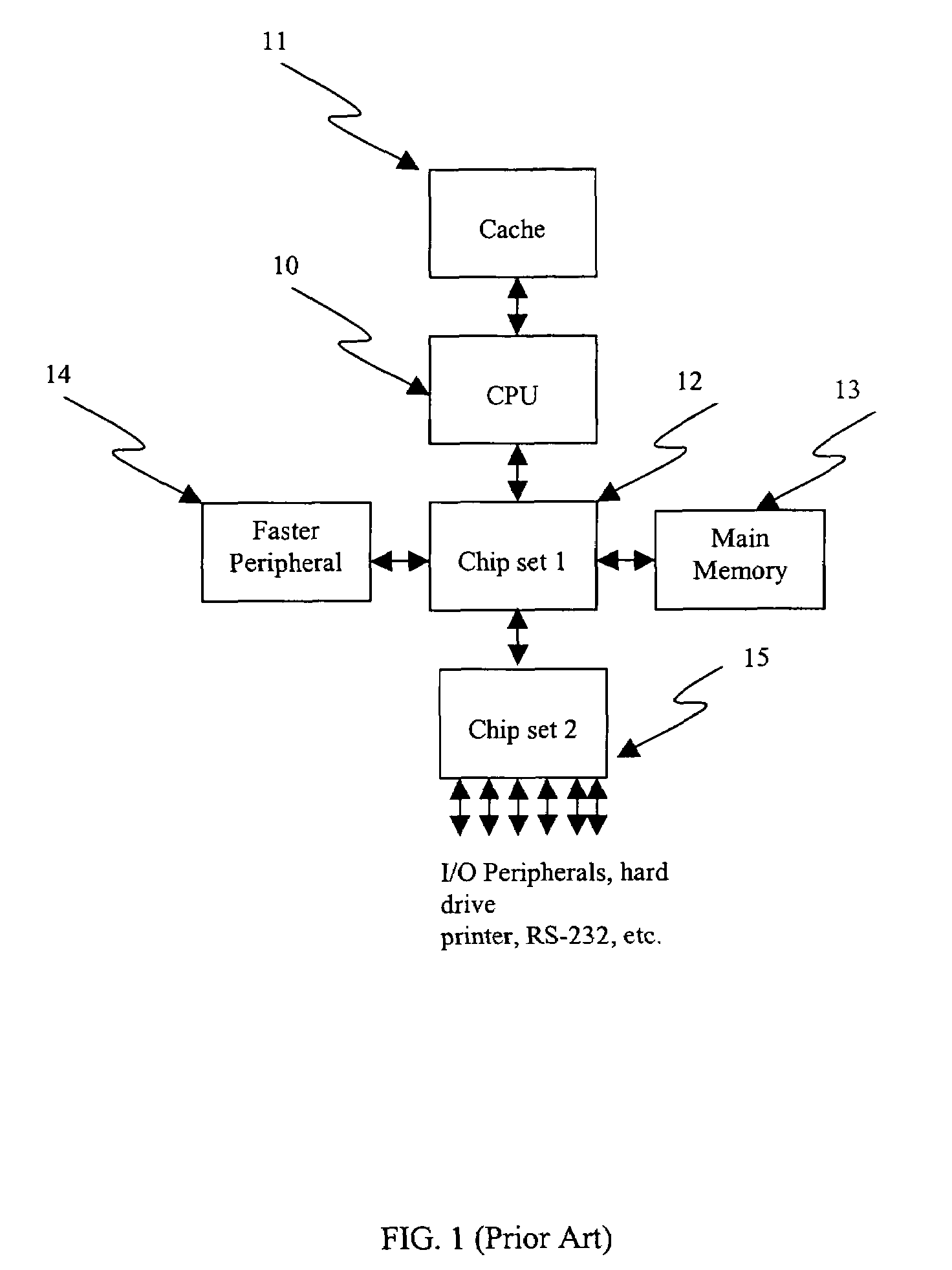

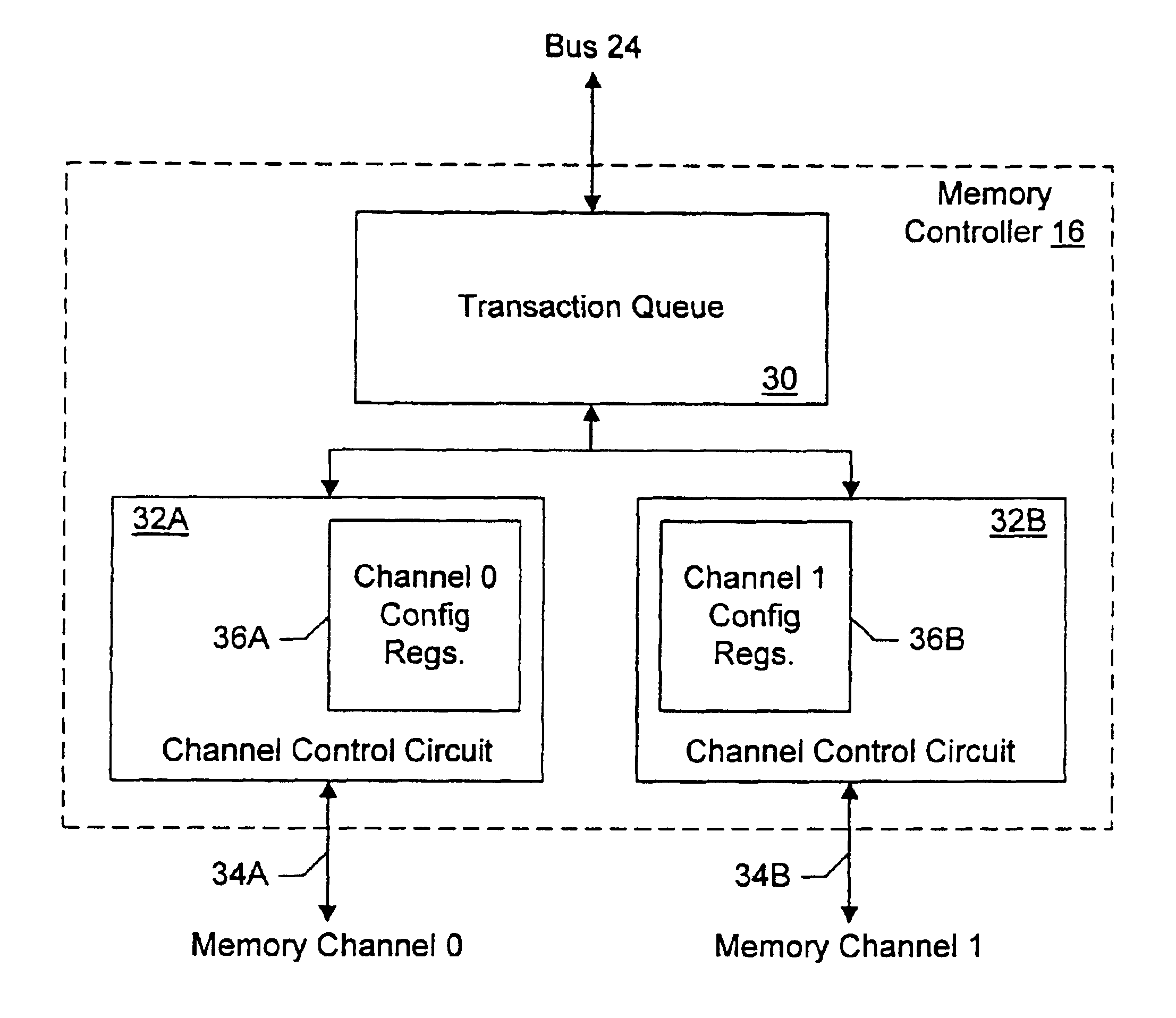

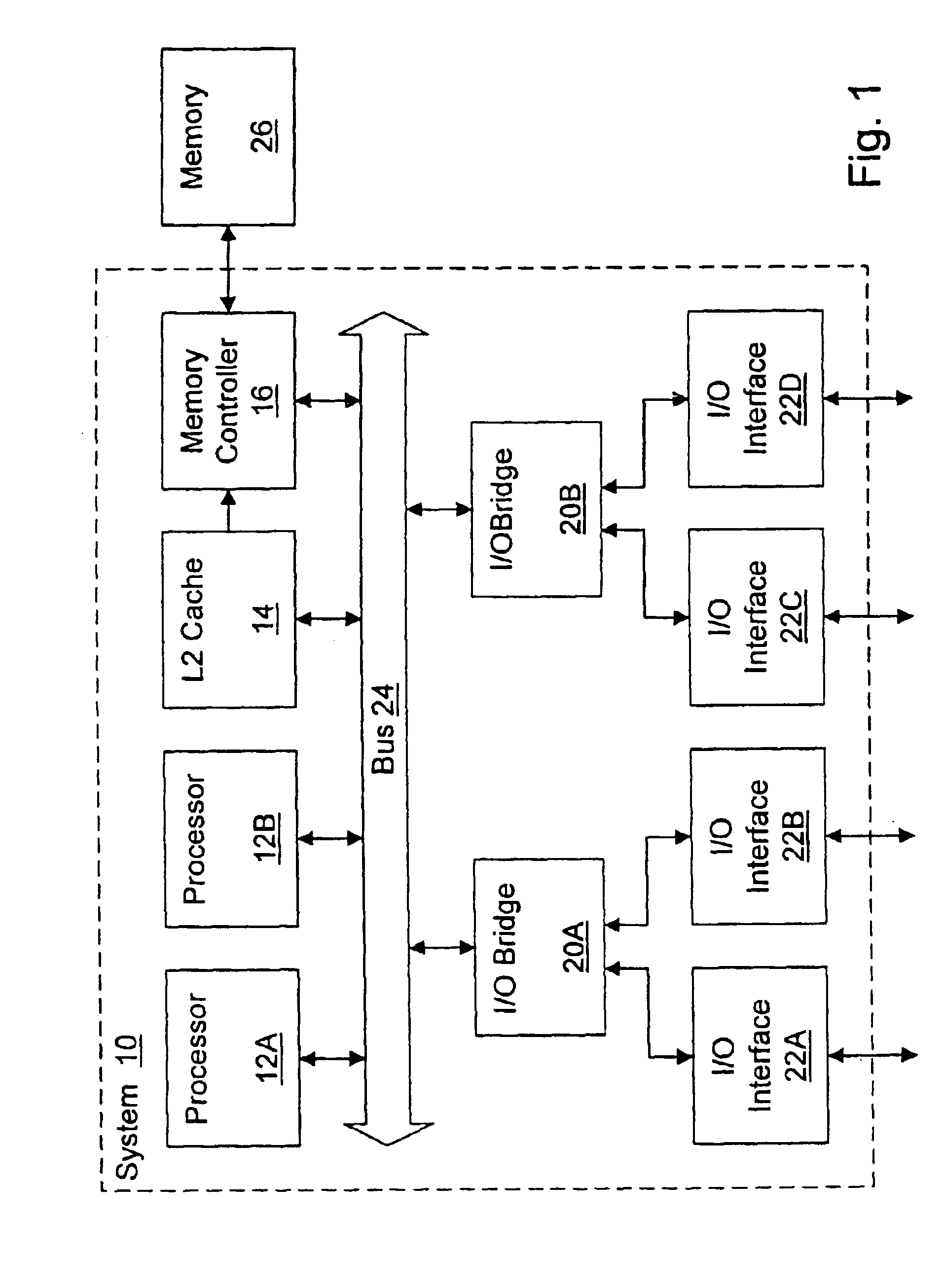

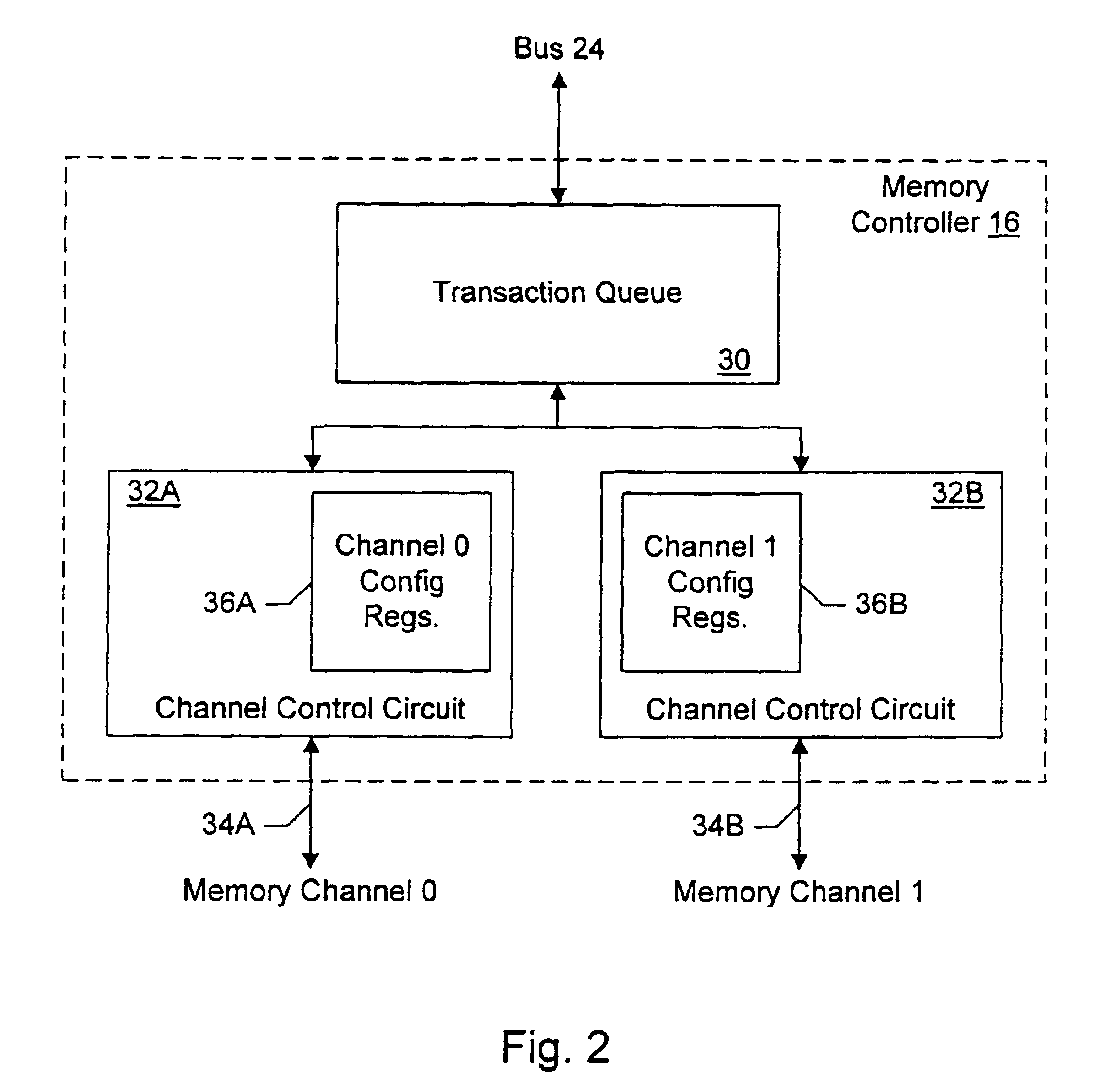

Memory controller with programmable configuration

Inactive | US6877076B1 | Flexible configuration | Open in time | Memory addressing/allocation/relocation | Micro-instruction address formation | Processor register | Parallel computing

Owner:AVAGO TECH INT SALES PTE LTD

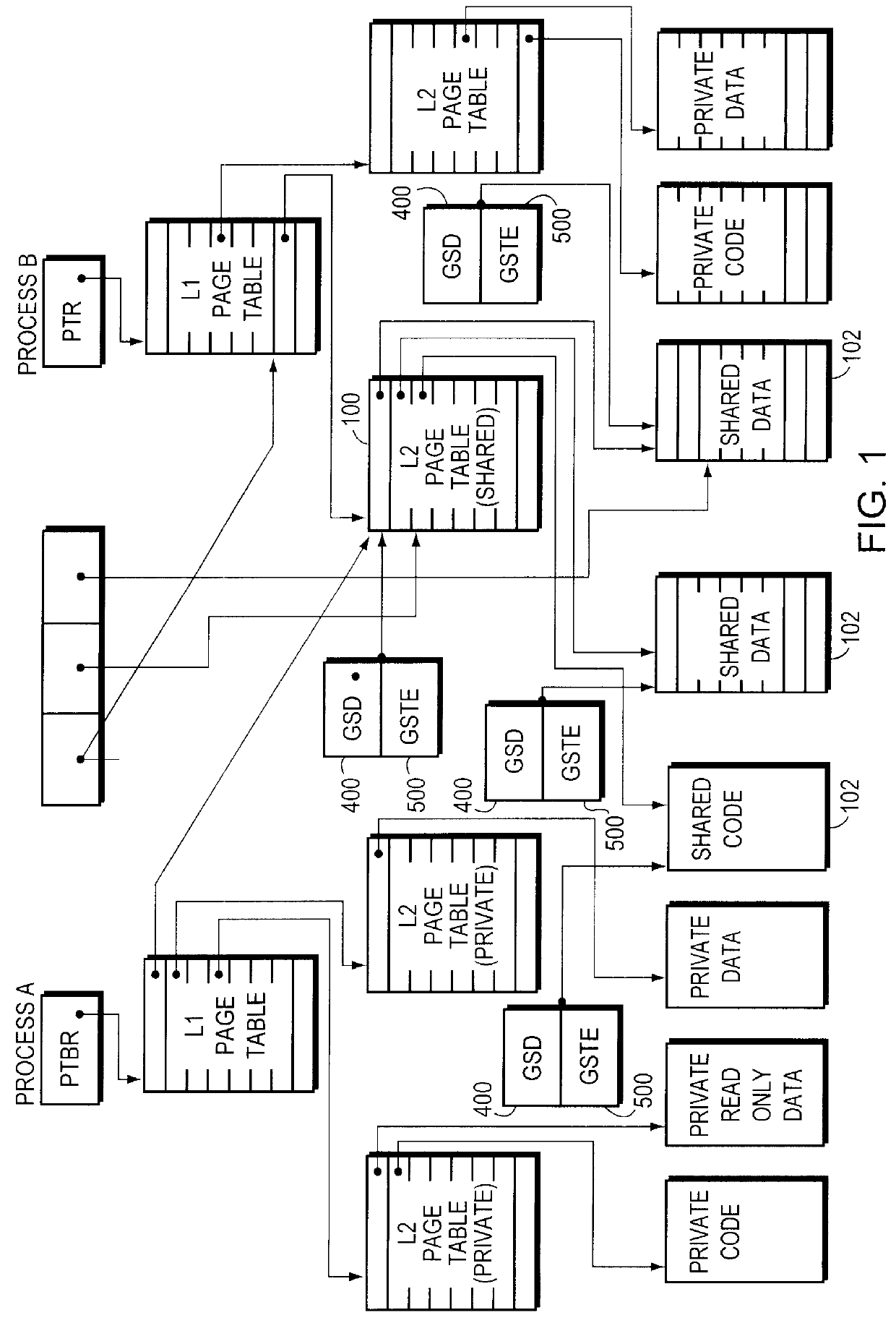

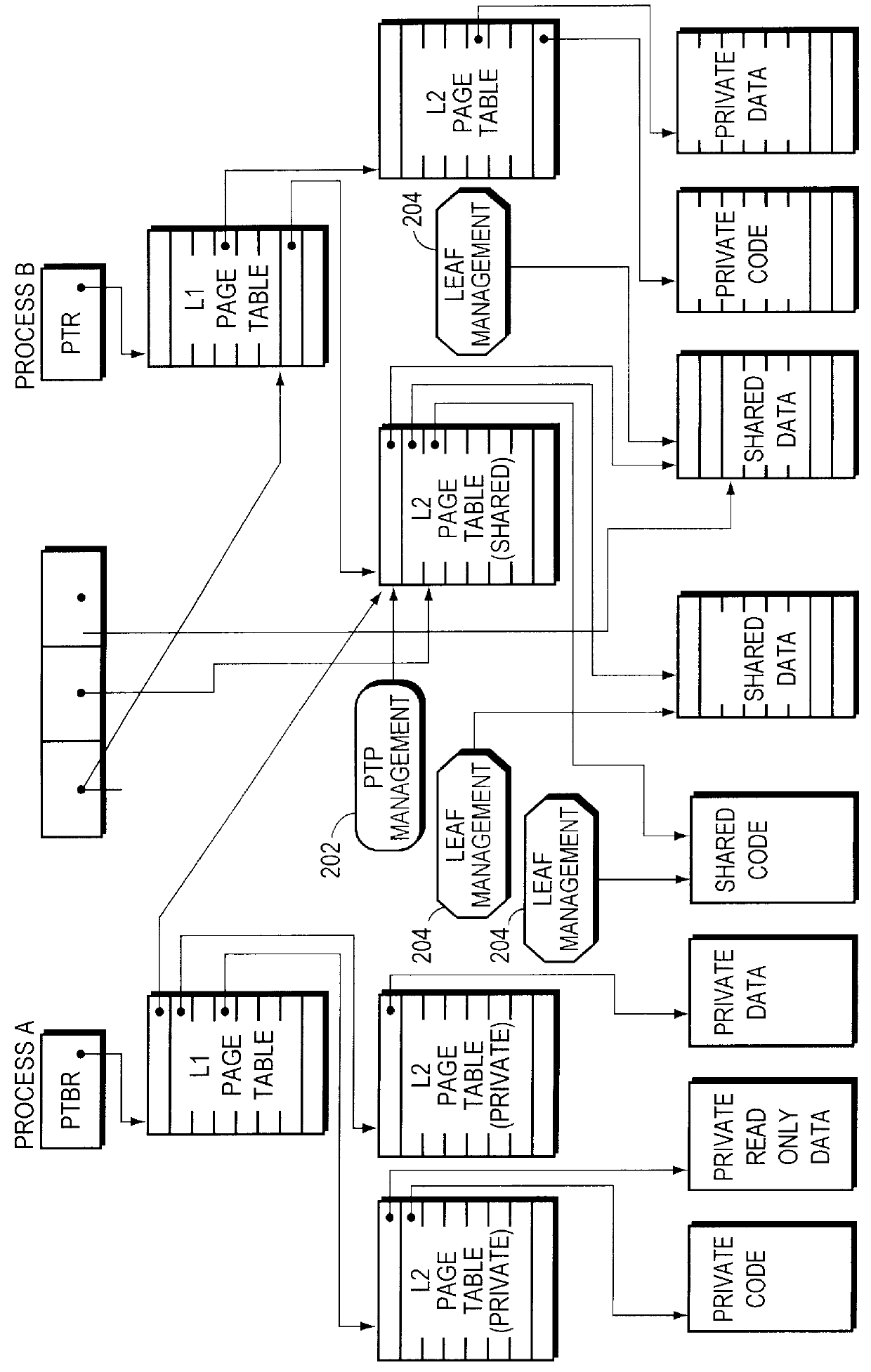

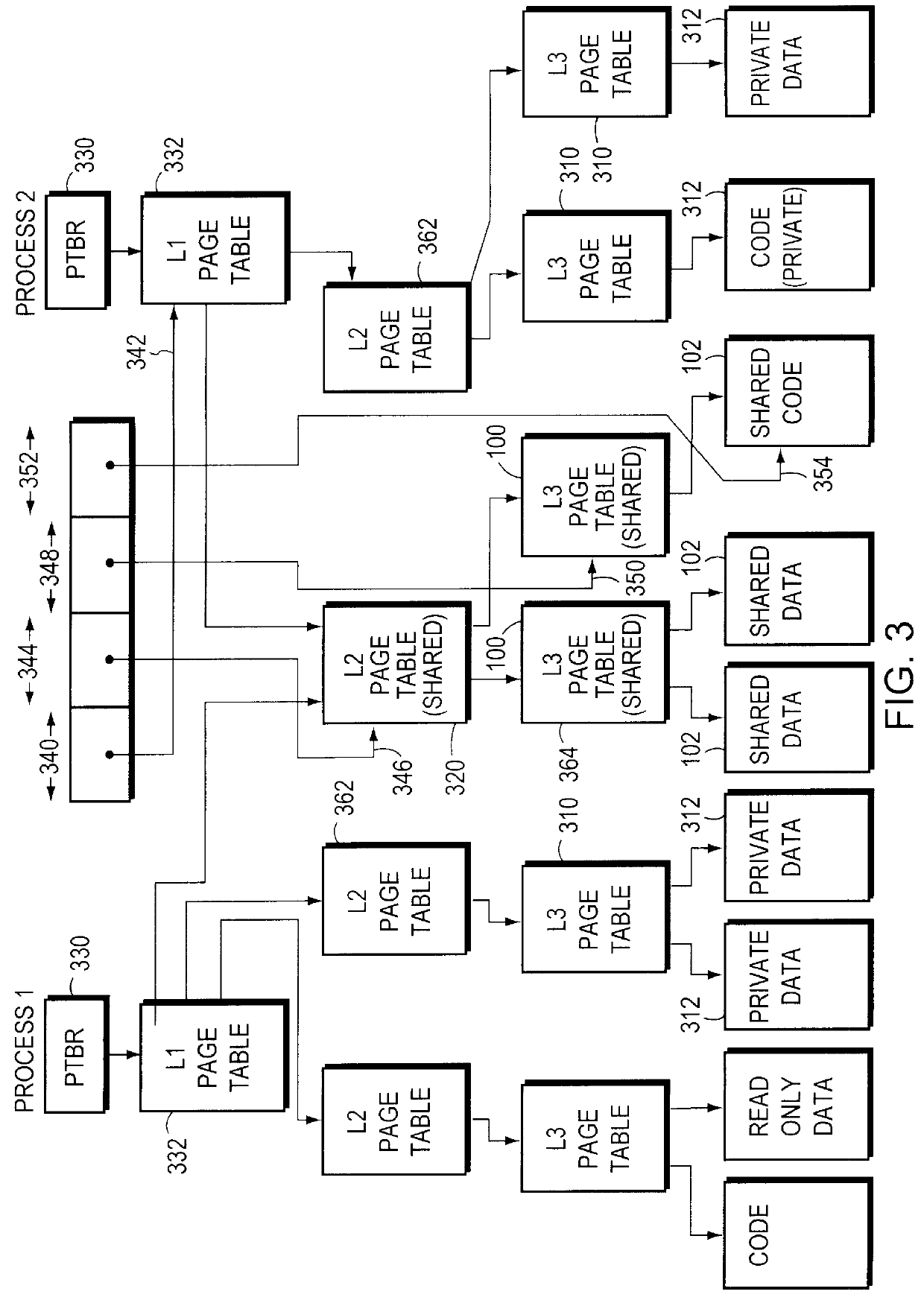

Sharing memory pages and page tables among computer processes

Inactive | US6085296A | Optimization | Reduce fragmentation | Memory addressing/allocation/relocation | Micro-instruction address formation | Page table | Computer memory

A method of managing computer memory pages. The sharing of a program-accessible page between two processes is managed by a predefined mechanism of a memory manager. The sharing of a page table page between the processes is managed by the same predefined mechanism. The data structures used by the mechanism are equally applicable to sharing program-accessible pages or page table pages.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
