11521 results about "Redundant data error correction" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

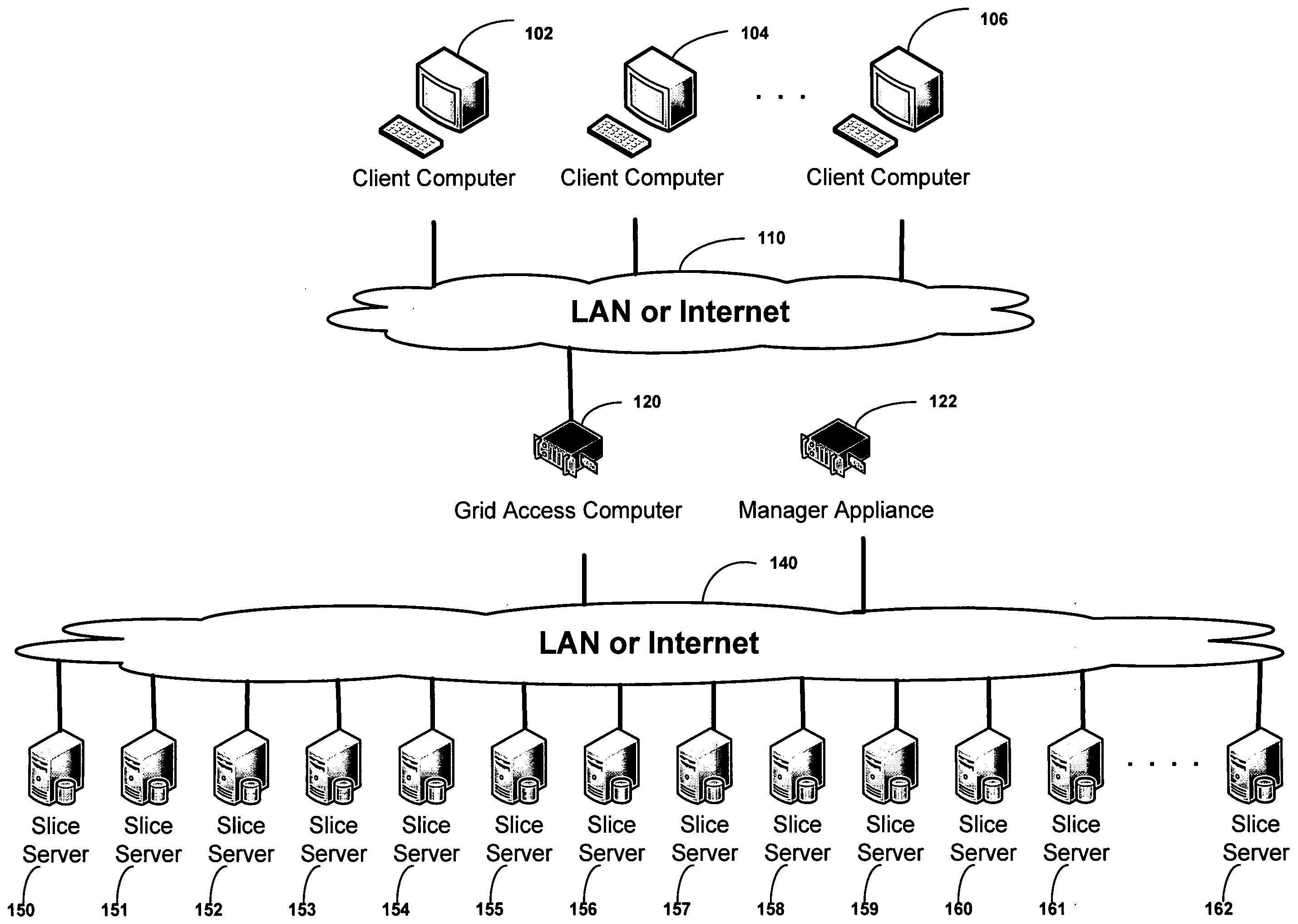

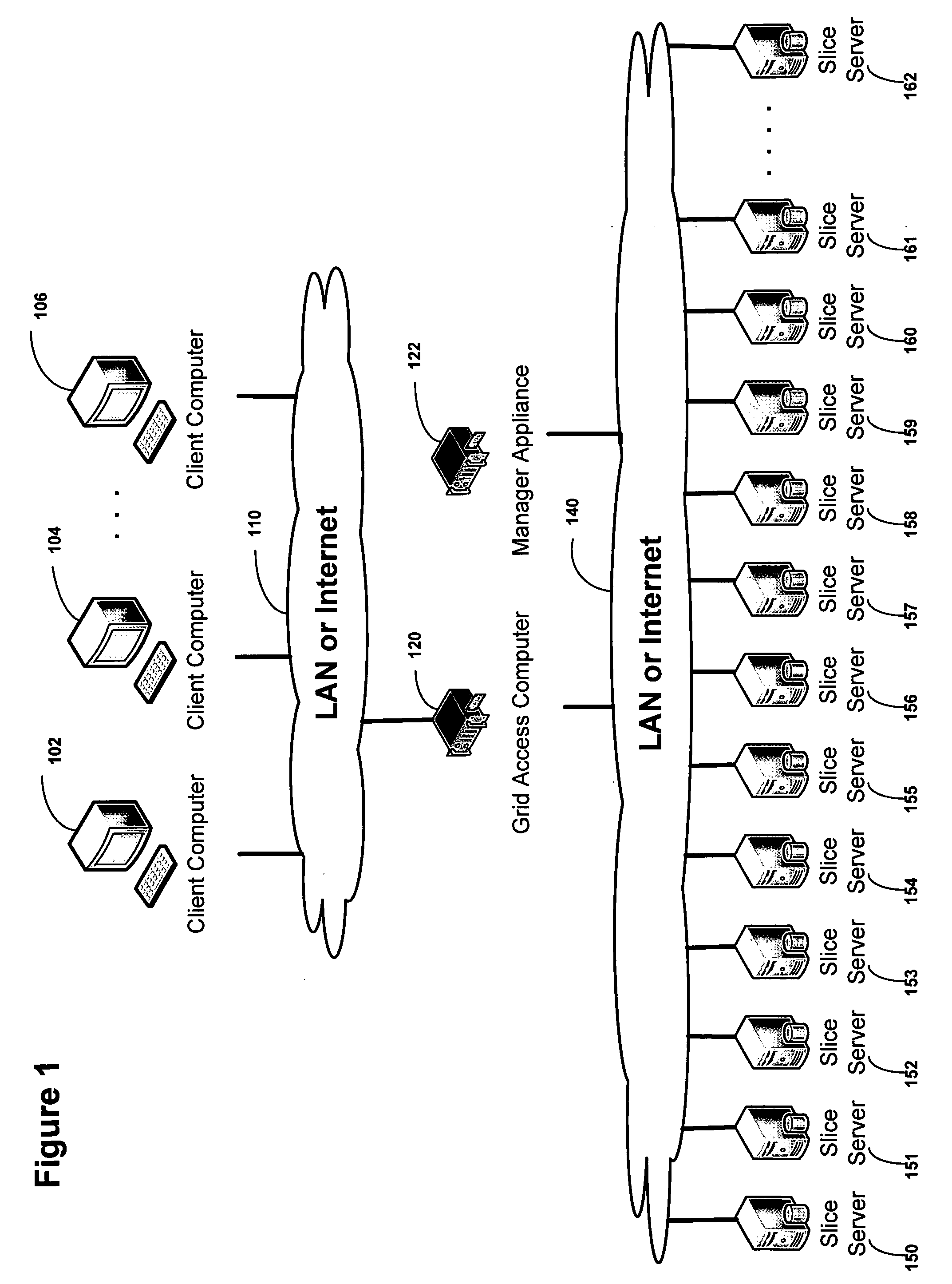

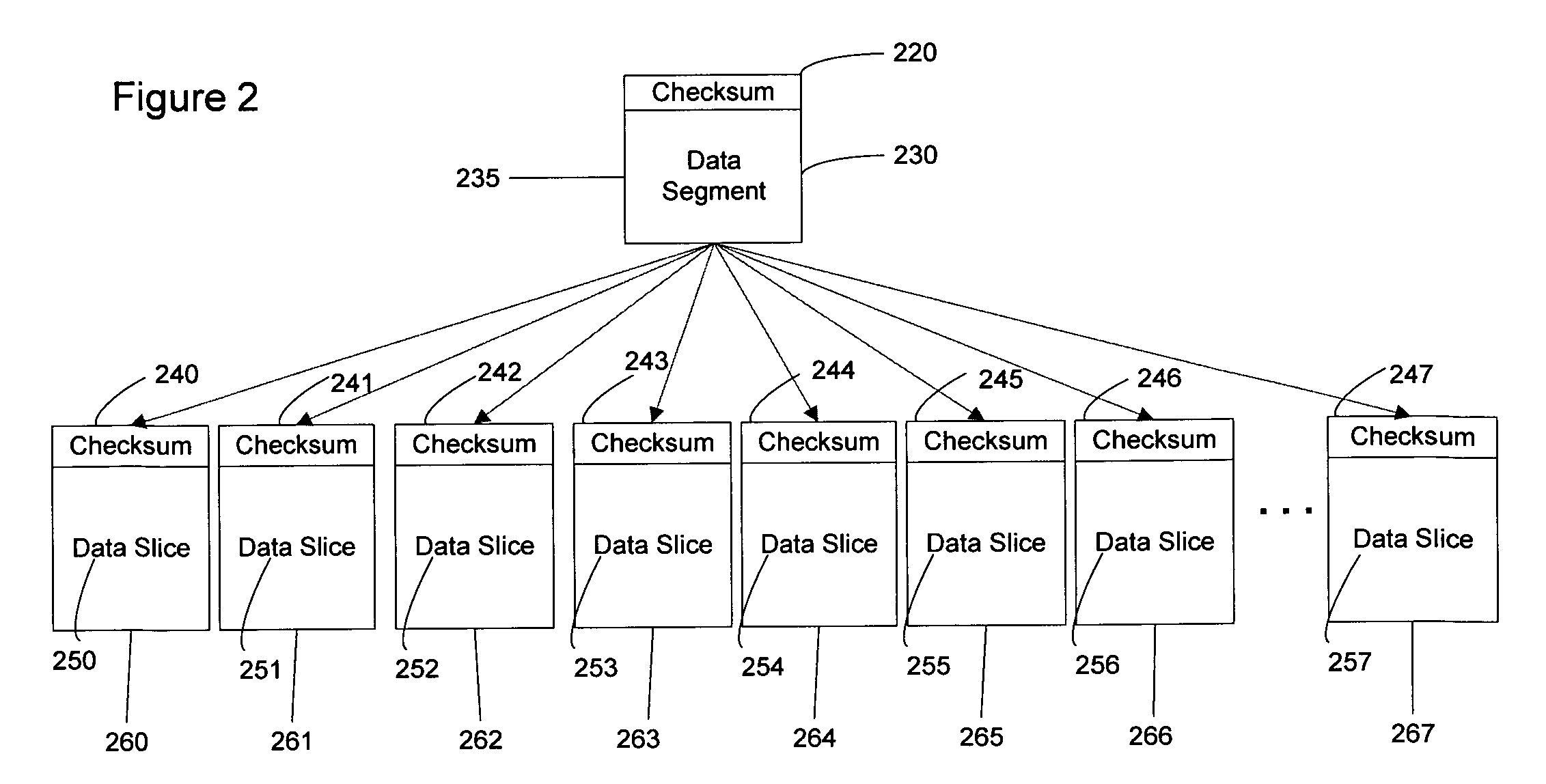

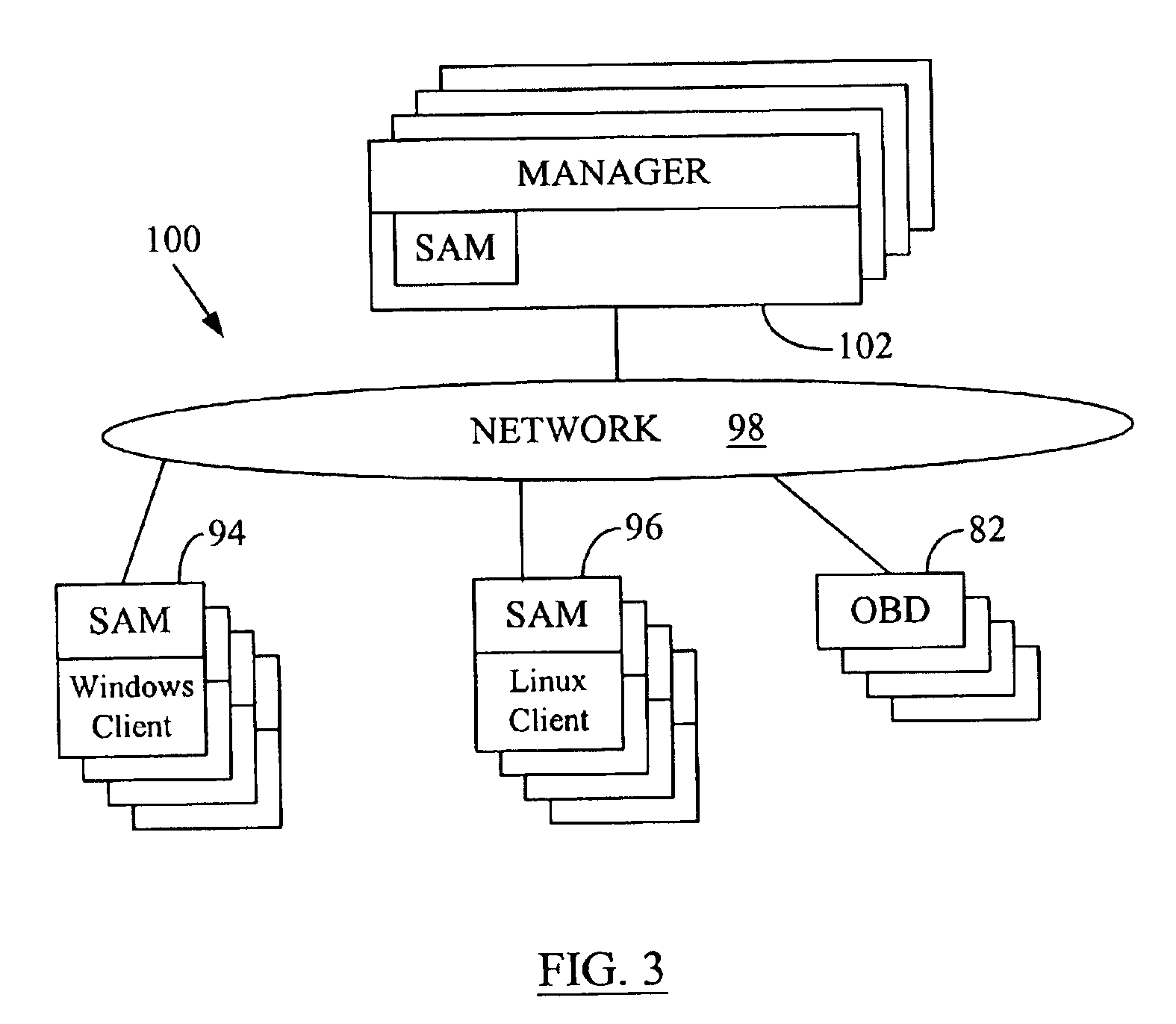

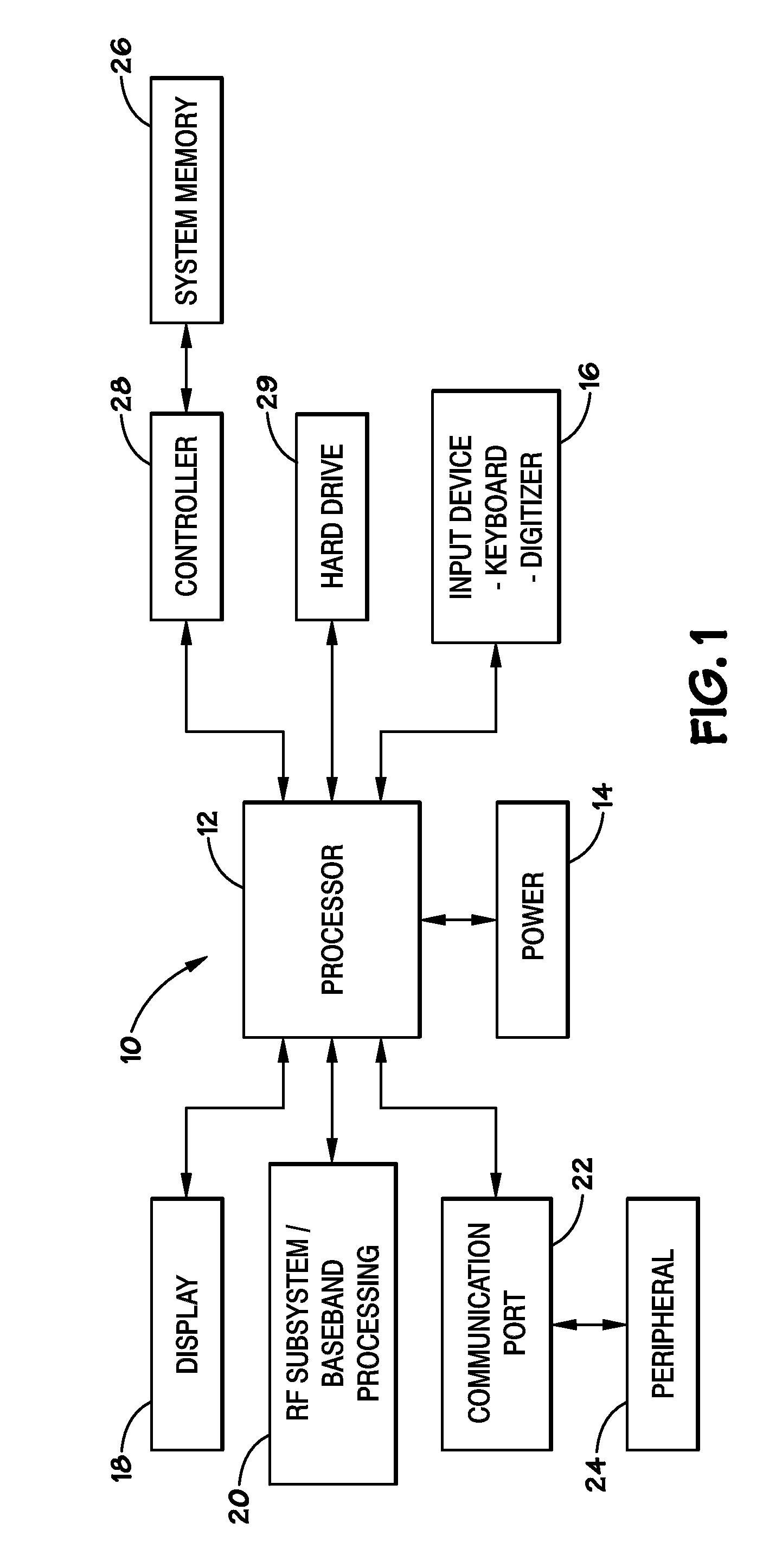

Computer system and process for transferring multiple high bandwidth streams of data between multiple storage units and multiple applications in a scalable and reliable manner

Inactive | US7111115B2 | Improve reliability | Improve scalability | Input/output to record carriers | Data processing applications | High bandwidth | Data segment

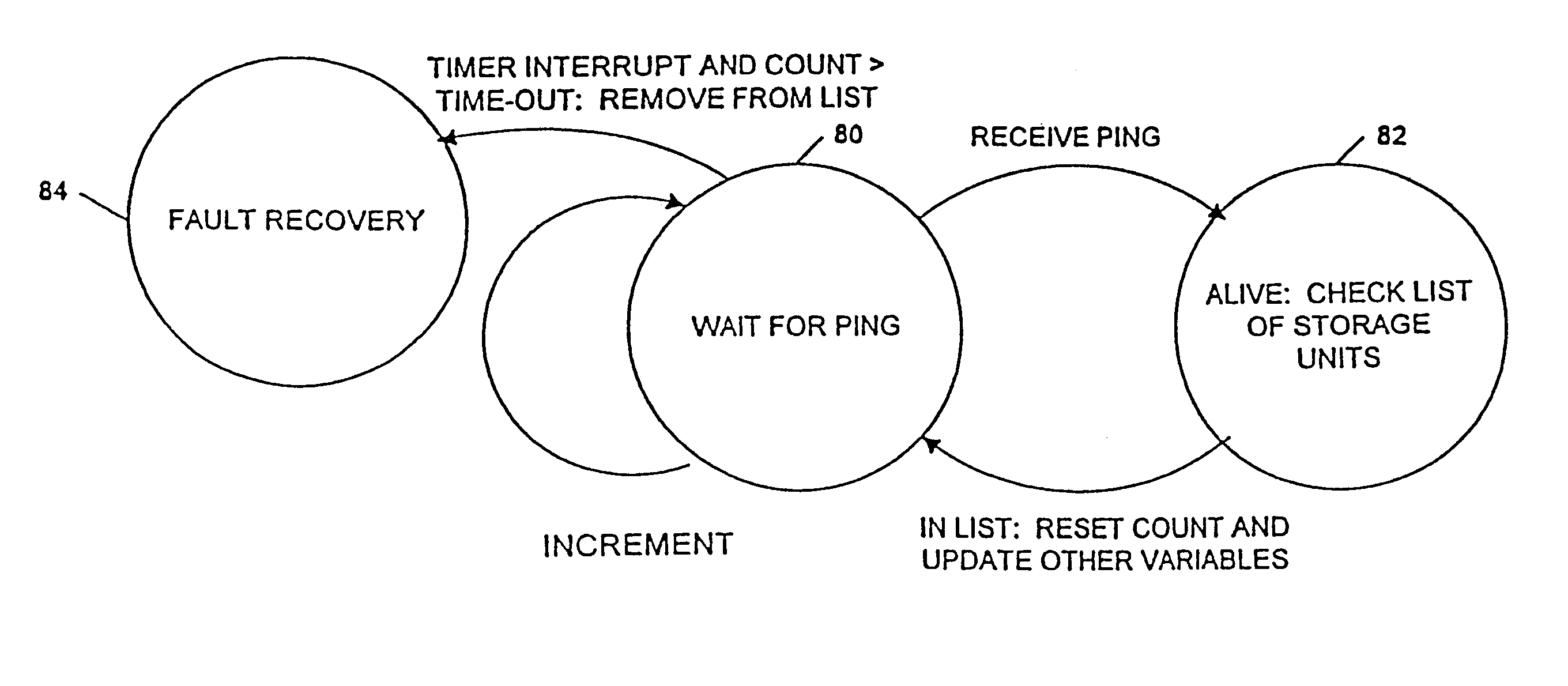

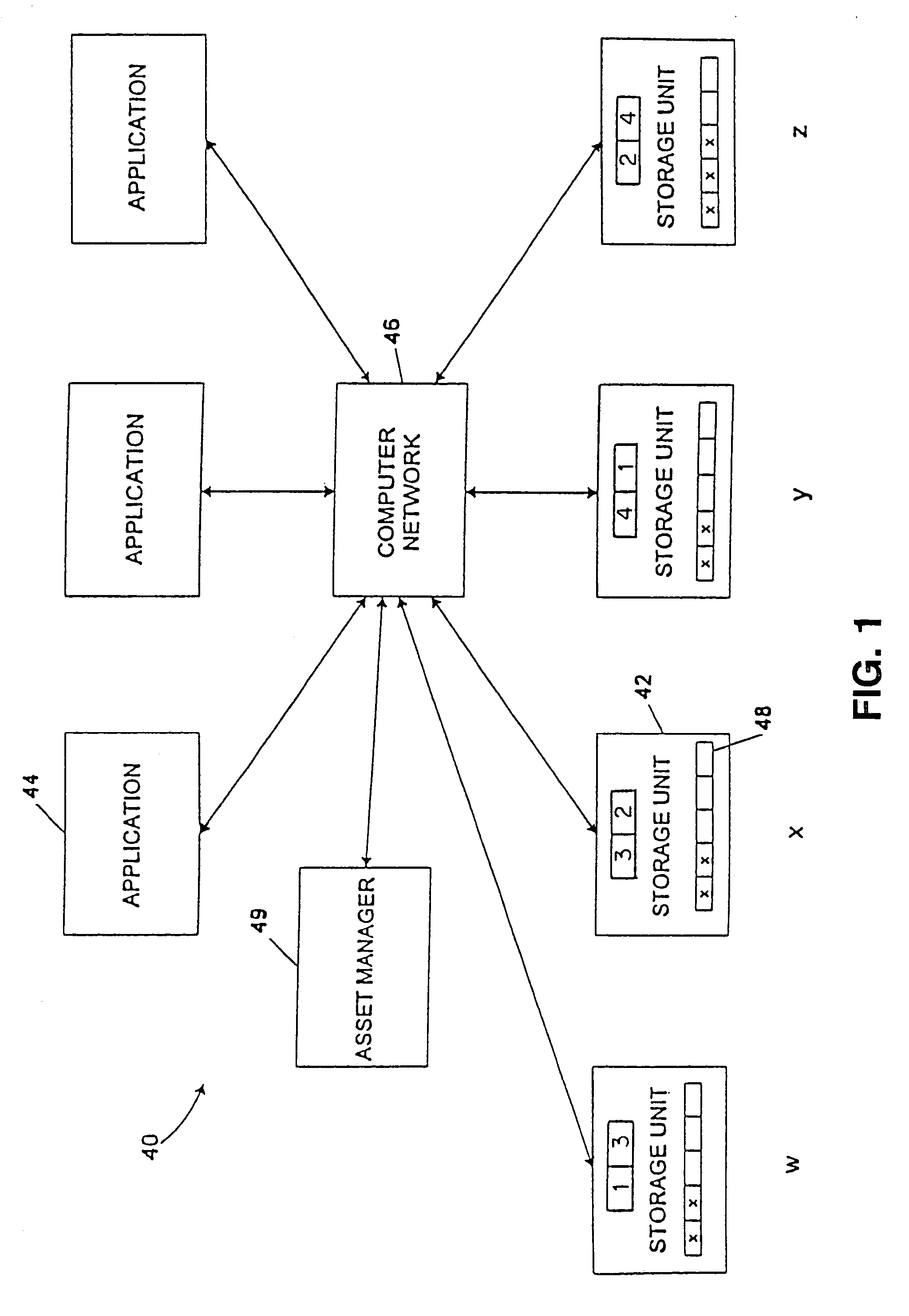

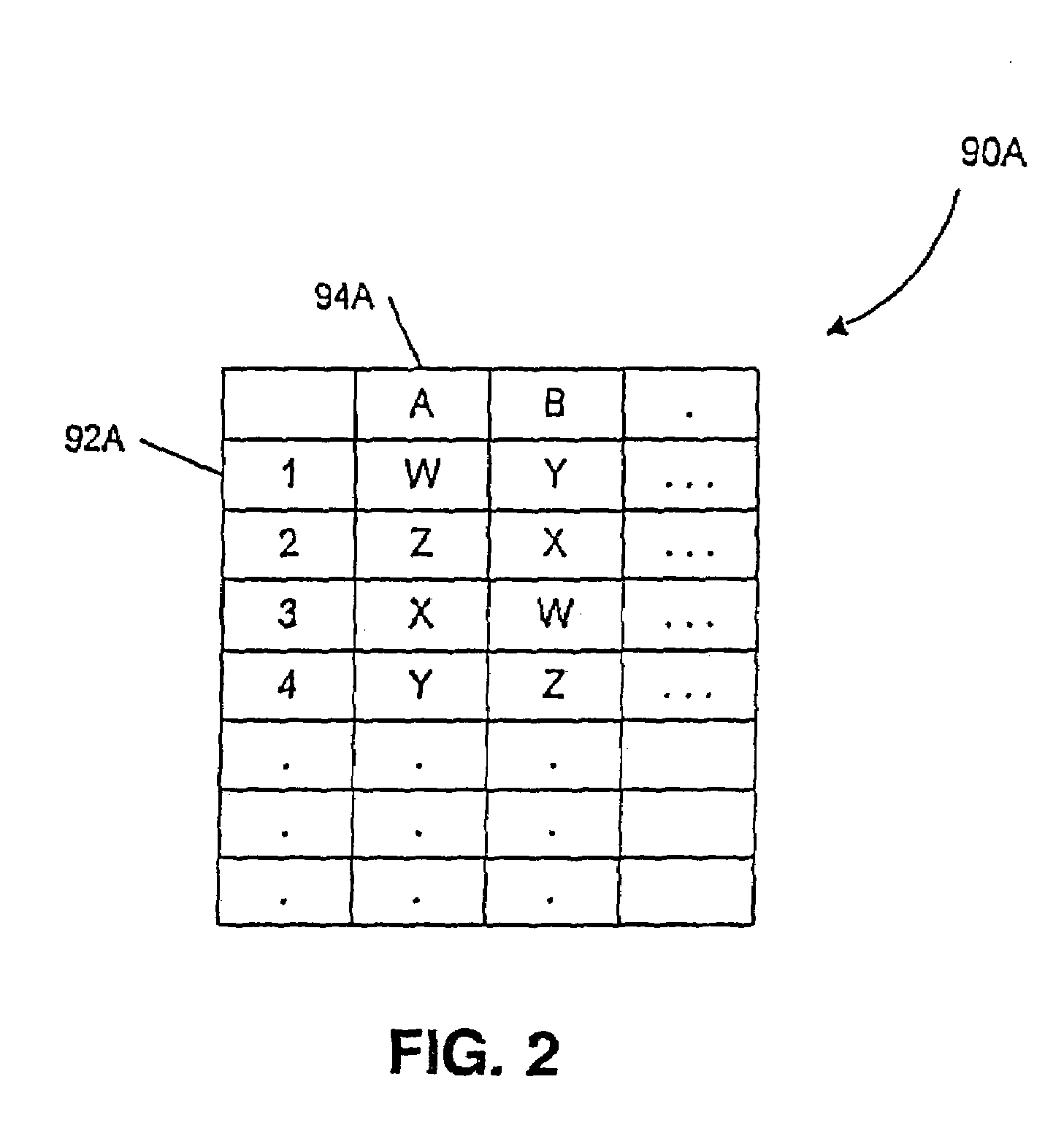

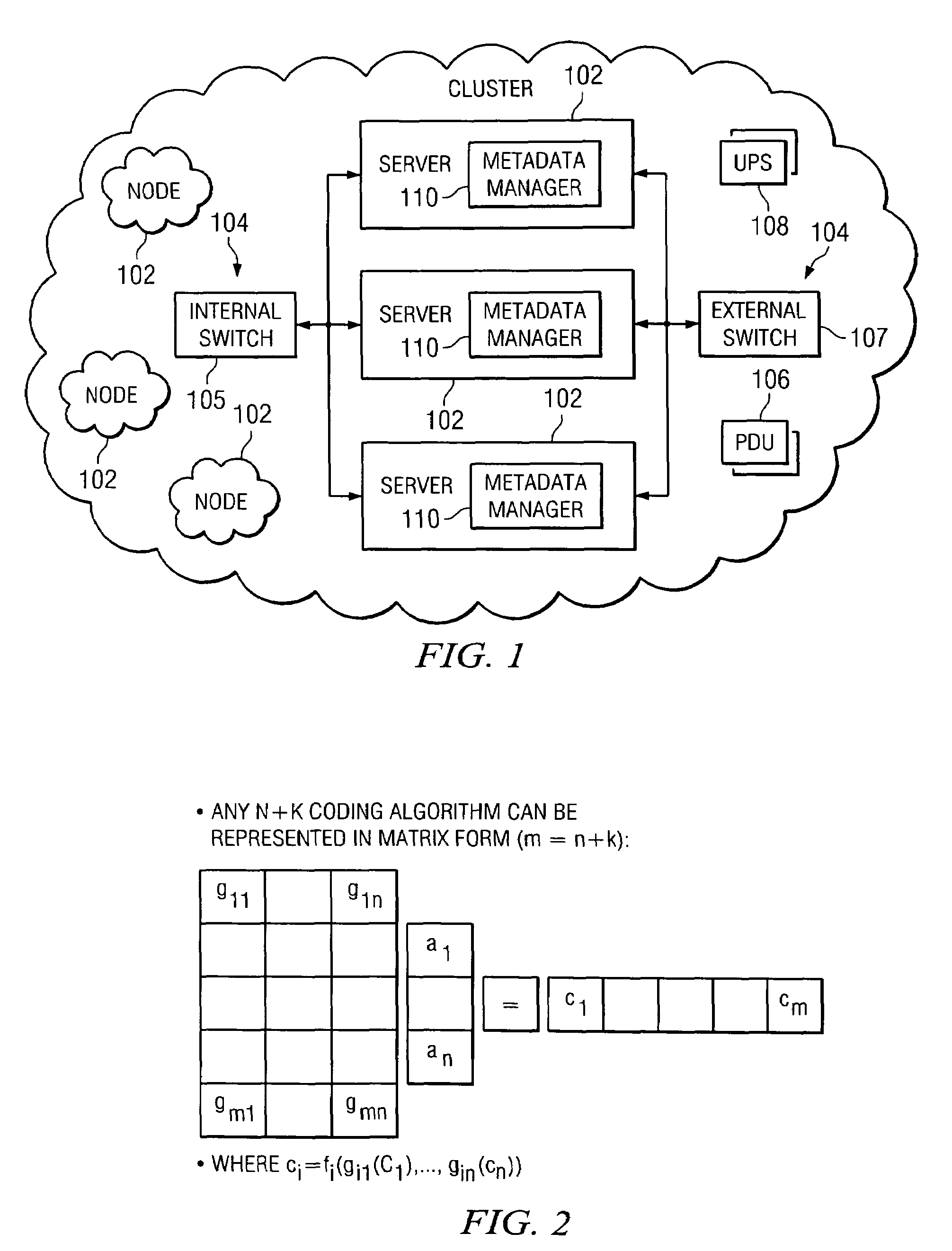

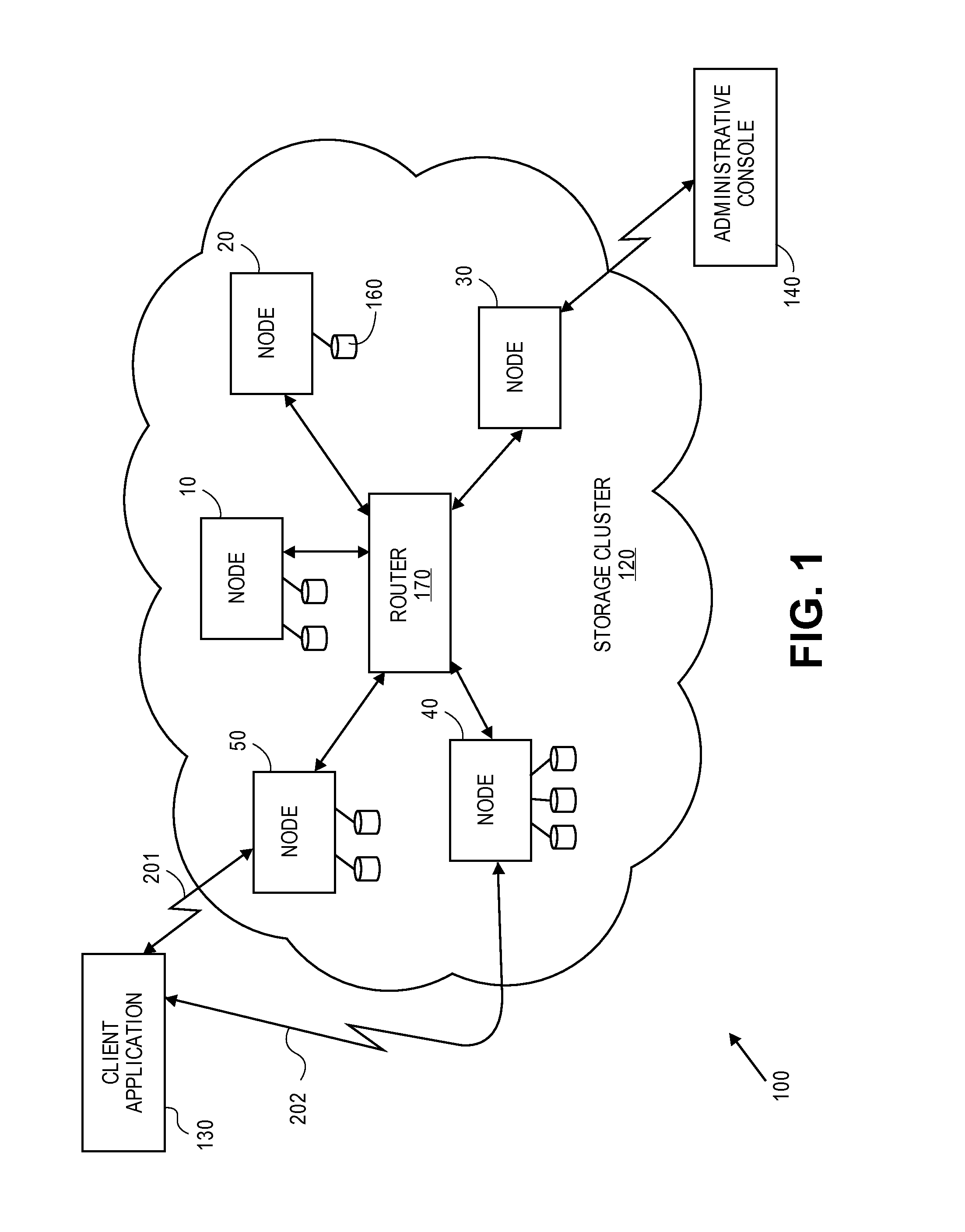

Multiple applications request data from multiple storage units over a computer network. The data is divided into segments and each segment is distributed randomly on one of several storage units, independent of the storage units on which other segments of the media data are stored. At least one additional copy of each segment also is distributed randomly over the storage units, such that each segment is stored on at least two storage units. This random distribution of multiple copies of segments of data improves both scalability and reliability. When an application requests a selected segment of data, the request is processed by the storage unit with the shortest queue of requests. Random fluctuations in the load applied by multiple applications on multiple storage units are balanced nearly equally over all of the storage units. This combination of techniques results in a system which can transfer multiple, independent high-bandwidth streams of data in a scalable manner in both directions between multiple applications and multiple storage units.

Owner:AVID TECHNOLOGY
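
The placement and dispatch idea in the abstract above can be illustrated with a minimal sketch. It assumes two copies per segment and models each storage unit's queue as a simple counter of outstanding requests; the function names `place_segments` and `serve` are hypothetical and not taken from the patent.

```python
import random

def place_segments(num_segments, num_units, copies=2, seed=0):
    """Assign each segment to `copies` distinct storage units chosen at random,
    independently of where any other segment is stored."""
    rng = random.Random(seed)
    return {seg: rng.sample(range(num_units), copies) for seg in range(num_segments)}

def serve(segment, placement, queues):
    """Route a request to the unit holding the segment that has the shortest queue."""
    unit = min(placement[segment], key=lambda u: queues[u])
    queues[unit] += 1  # simplification: requests are never completed in this sketch
    return unit

placement = place_segments(num_segments=1000, num_units=8)
queues = [0] * 8
for seg in range(1000):
    serve(seg, placement, queues)
print(queues)  # per-unit request counts after 1000 requests
```

Because every segment has a second, independently placed copy, the dispatcher always has a choice between two queues, which is what lets random load fluctuations even out.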

Multi-dimensional data protection and mirroring method for micro level data

Active | US7103824B2 | Detection error | Low common data size | Code conversion | Cyclic codes | Data validation | Data integrity

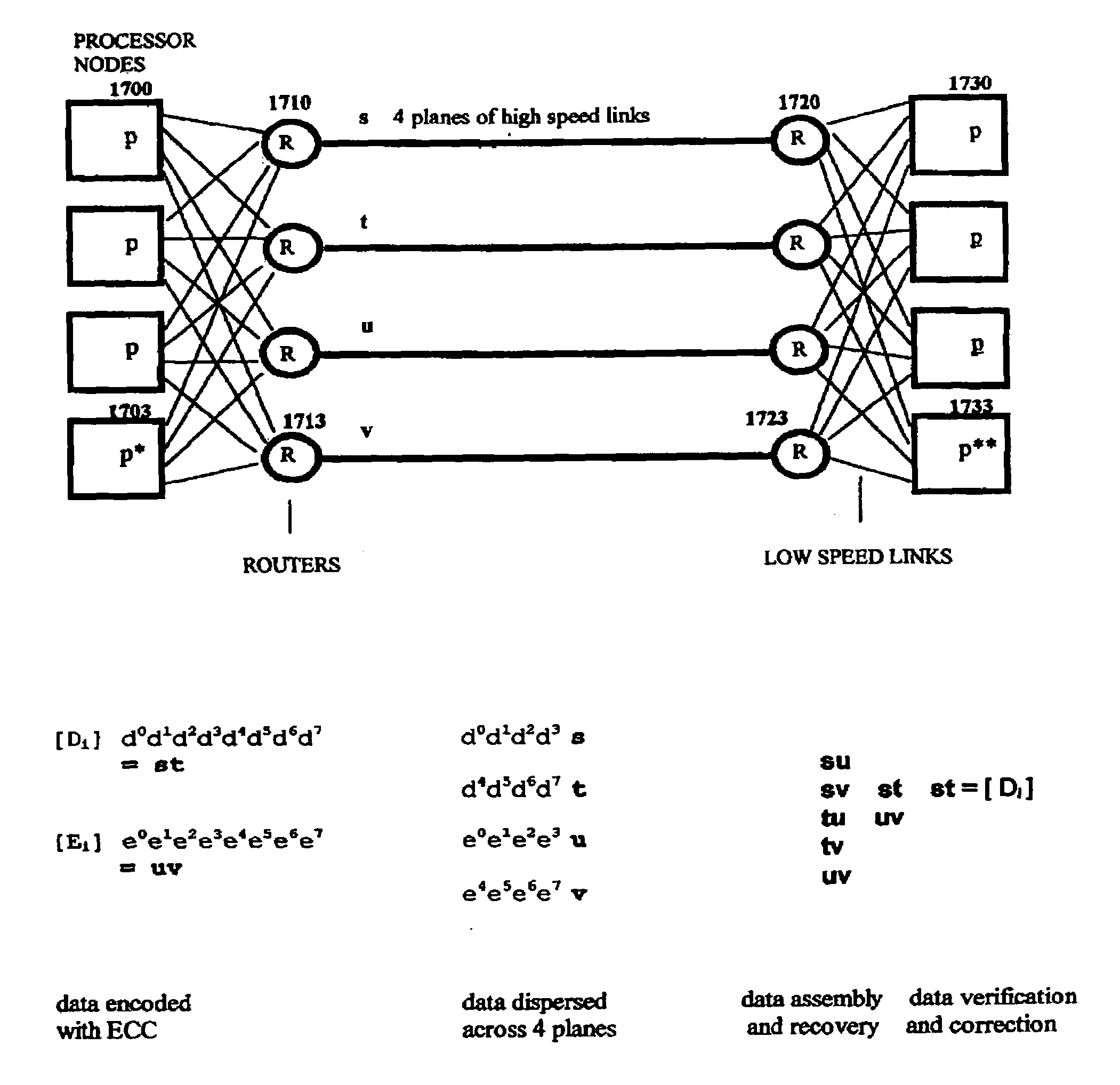

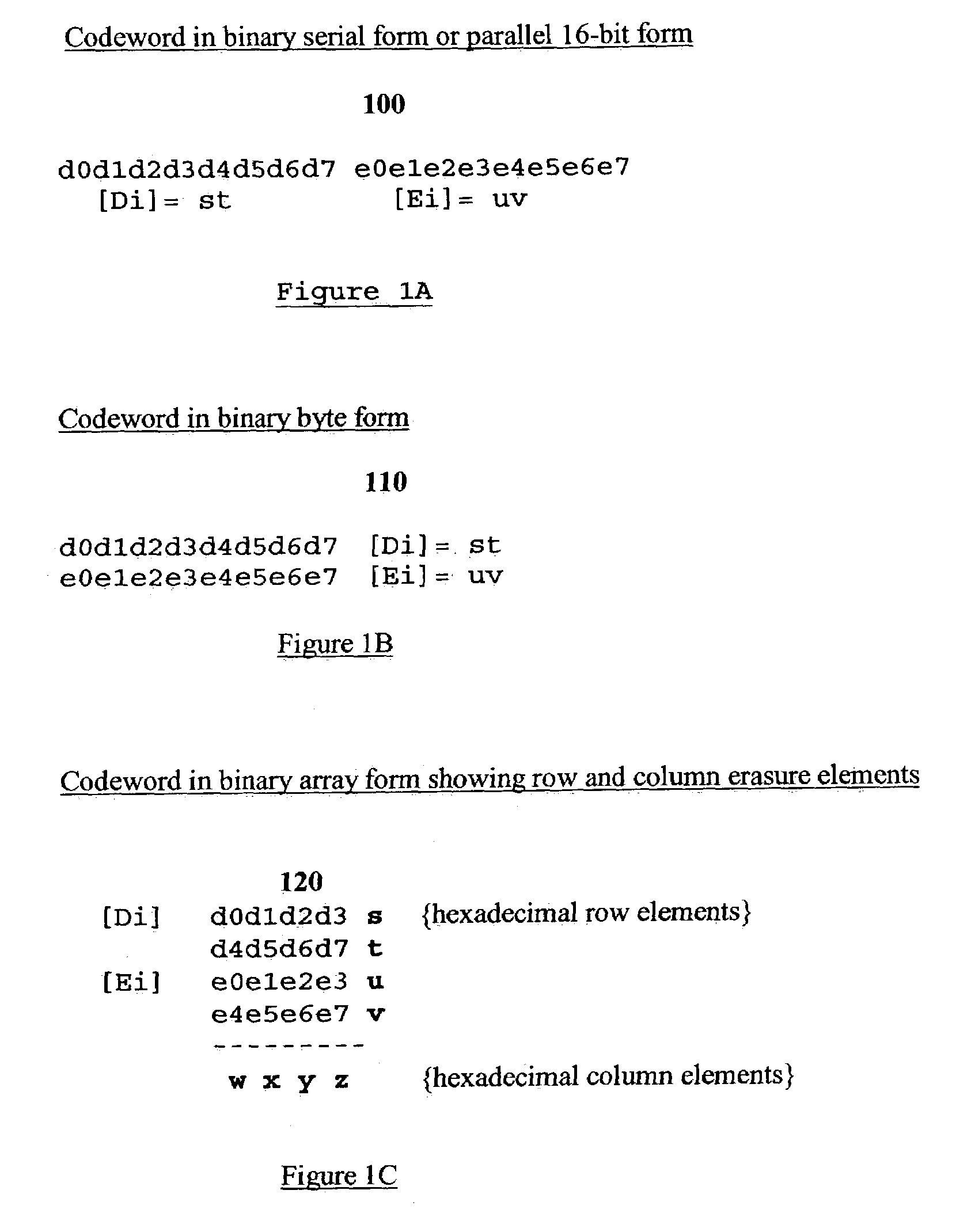

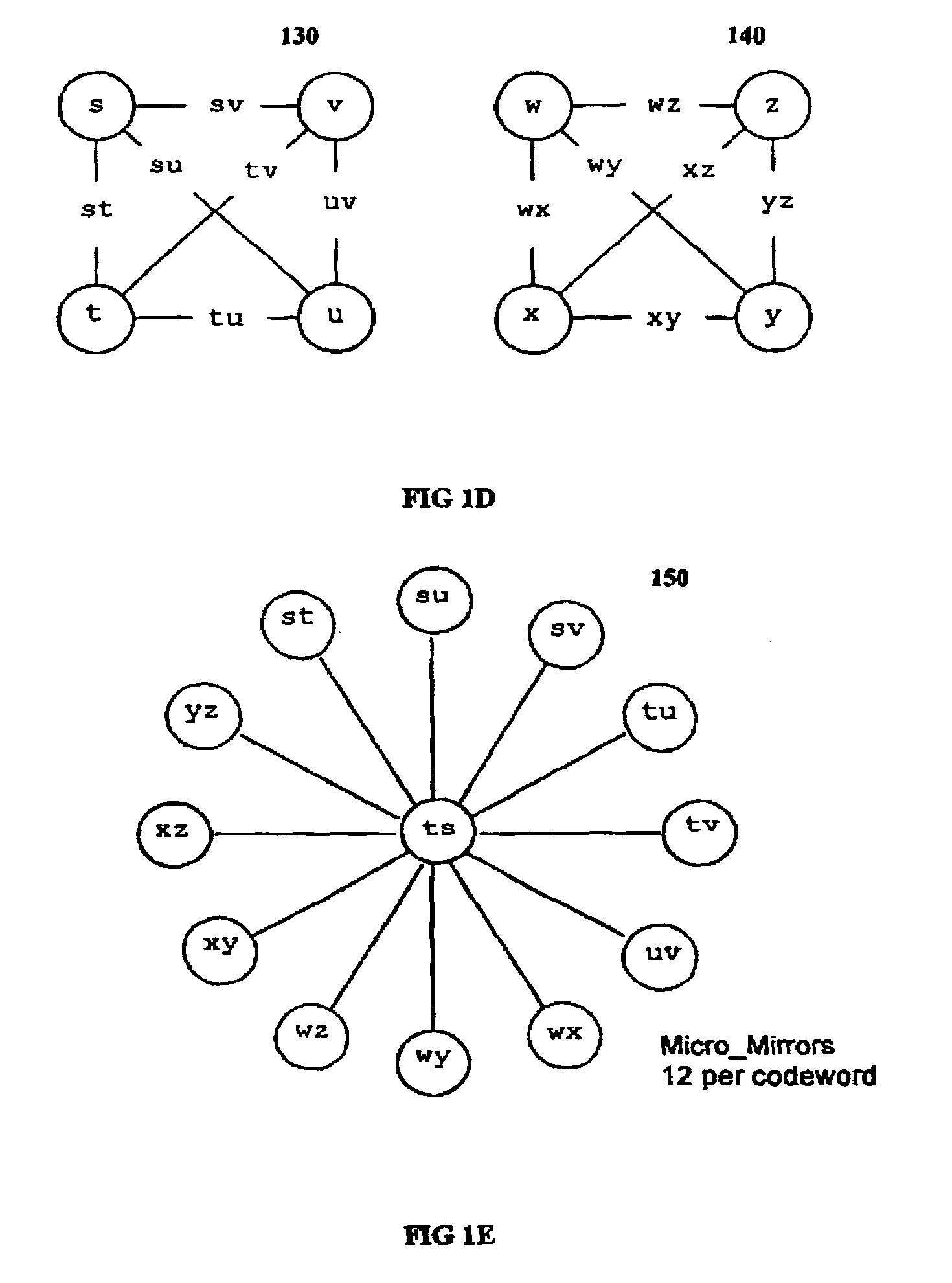

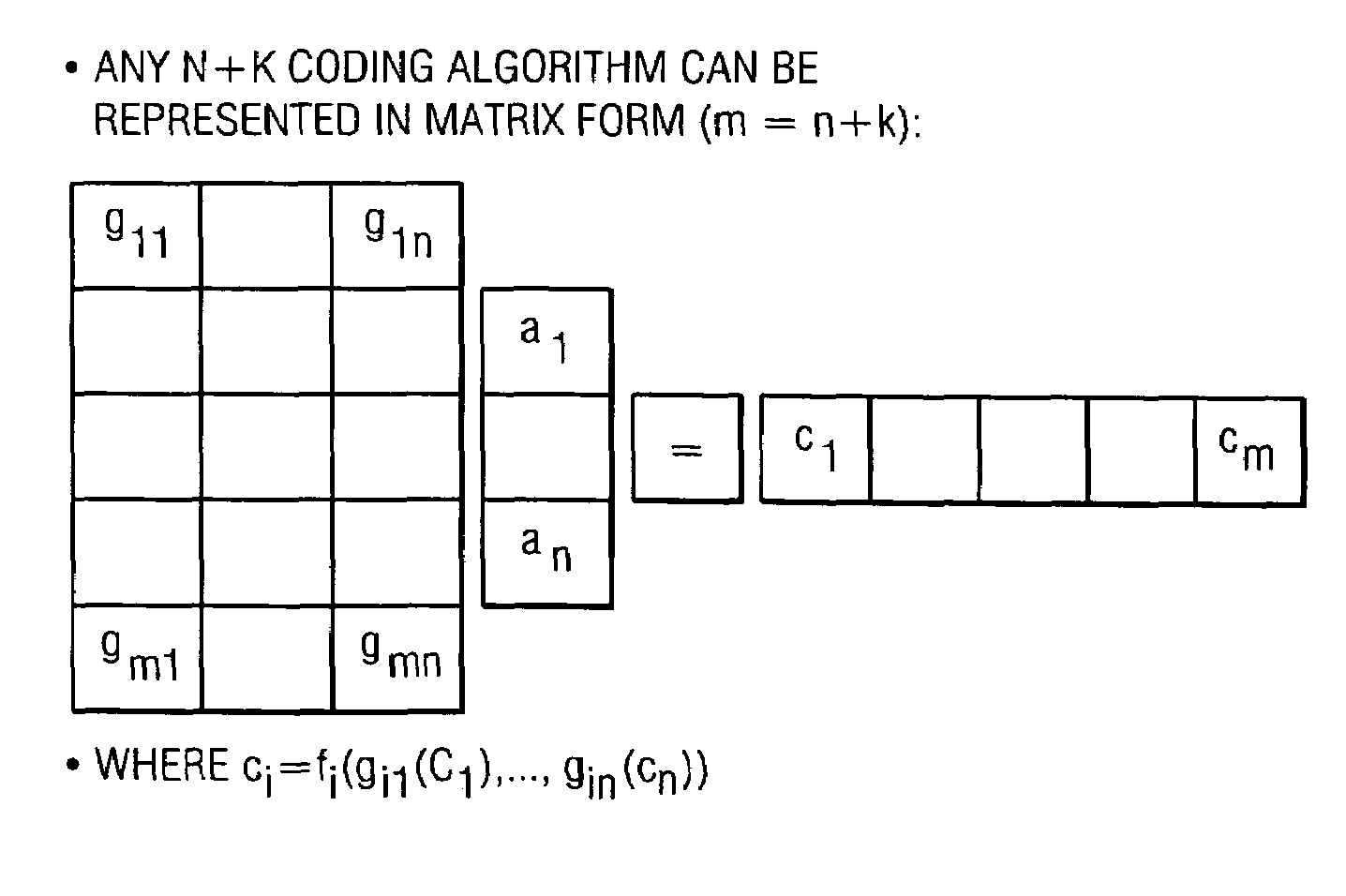

The invention discloses a data validation, mirroring and error/erasure correction method for the dispersal and protection of one- and two-dimensional data at the micro level for computer, communication and storage systems. Each of the 256 possible 8-bit data bytes is mirrored with a unique 8-bit ECC byte. The ECC enables 8-bit burst and 4-bit random error detection plus 2-bit random error correction for each encoded data byte. With the data byte and ECC byte configured into a 4-bit × 4-bit codeword array and dispersed in either row, column or both dimensions, the method can perform dual 4-bit row and column erasure recovery. It is shown that for each codeword there are 12 possible combinations of row and column elements, called couplets, capable of mirroring the data byte. These byte-level micro-mirrors outperform conventional mirroring in that each byte and its ECC mirror can self-detect and self-correct random errors and can recover all dual erasure combinations over four elements. Encoding at the byte quanta level maximizes application flexibility. Also disclosed are fast encode, decode and reconstruction methods via Boolean logic, processor instructions and software table look-up with the intent to run at line and application speeds. The new error control method can augment ARQ algorithms and bring resiliency to system fabrics including routers and links previously limited to the recovery of transient errors. Image storage and storage over arrays of static devices can benefit from the two-dimensional capabilities. Applications with critical data integrity requirements can utilize the method for end-to-end protection and validation. An extra ECC byte per codeword extends both the resiliency and dimensionality.

Owner:HALFORD ROBERT

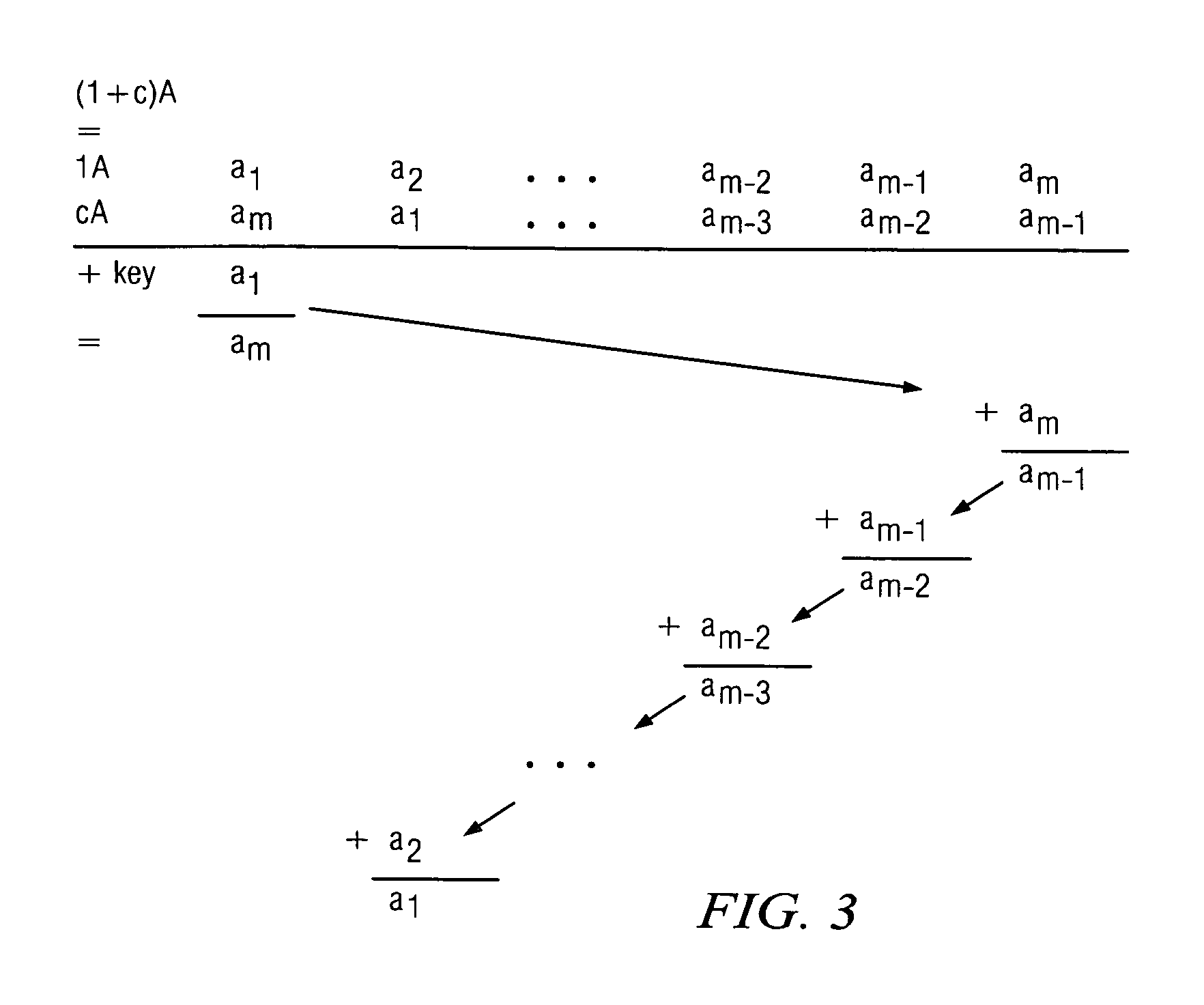

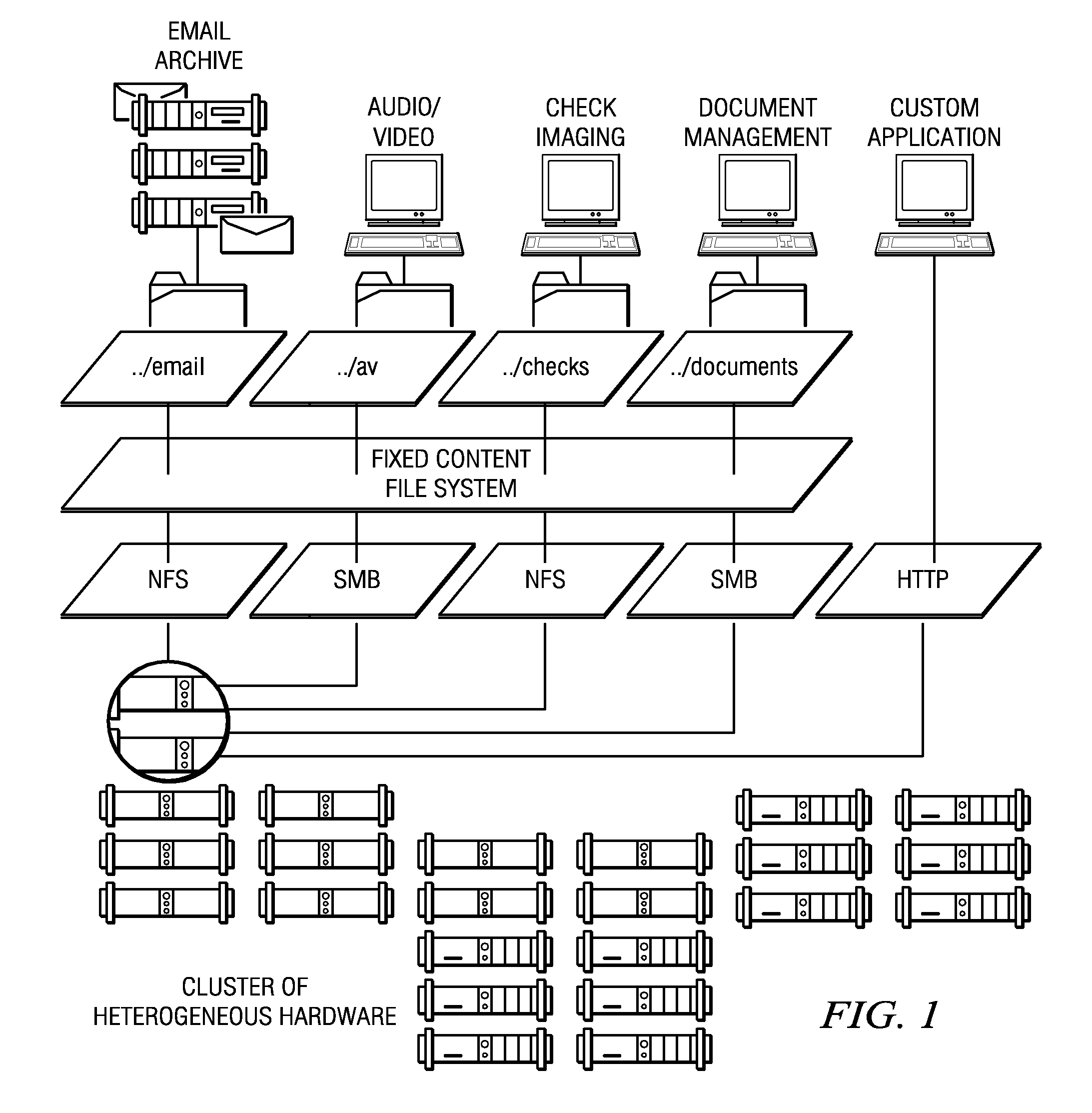

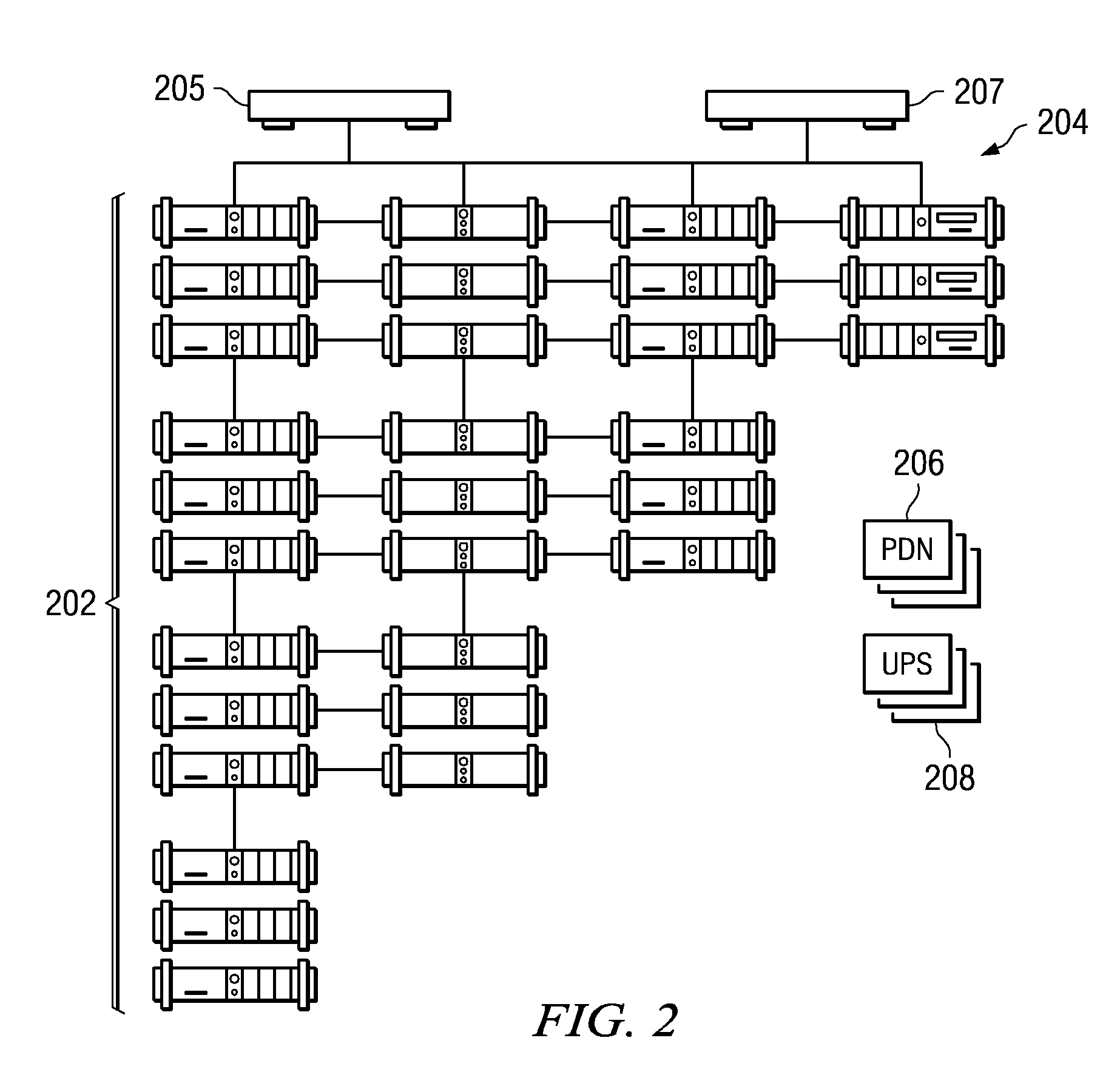

Fixed content distributed data storage using permutation ring encoding

Inactive | US7240236B2 | Highly available and reliable and persistent storage | Error correction/detection using multiple parity bits | Code conversion | Coding block | Database

A file protection scheme for fixed content in a distributed data archive uses computations that leverage permutation operators of a cyclic code. In an illustrative embodiment, an N+K coding technique is described for use to protect data that is being distributed in a redundant array of independent nodes (RAIN). The data itself may be of any type, and it may also include system metadata. According to the invention, the data to be distributed is encoded by a dispersal operation that uses a group of permutation ring operators. In a preferred embodiment, the dispersal operation is carried out using a matrix of the form [I_N - C] where I_N is an n×n identity sub-matrix and C is a k×n sub-matrix of code blocks. The identity sub-matrix is used to preserve the data blocks intact. The sub-matrix C preferably comprises a set of permutation ring operators that are used to generate the code blocks. The operators are preferably superpositions that are selected from a group ring of a permutation group with base ring Z2.

Owner:HITACHI VANTARA CORP

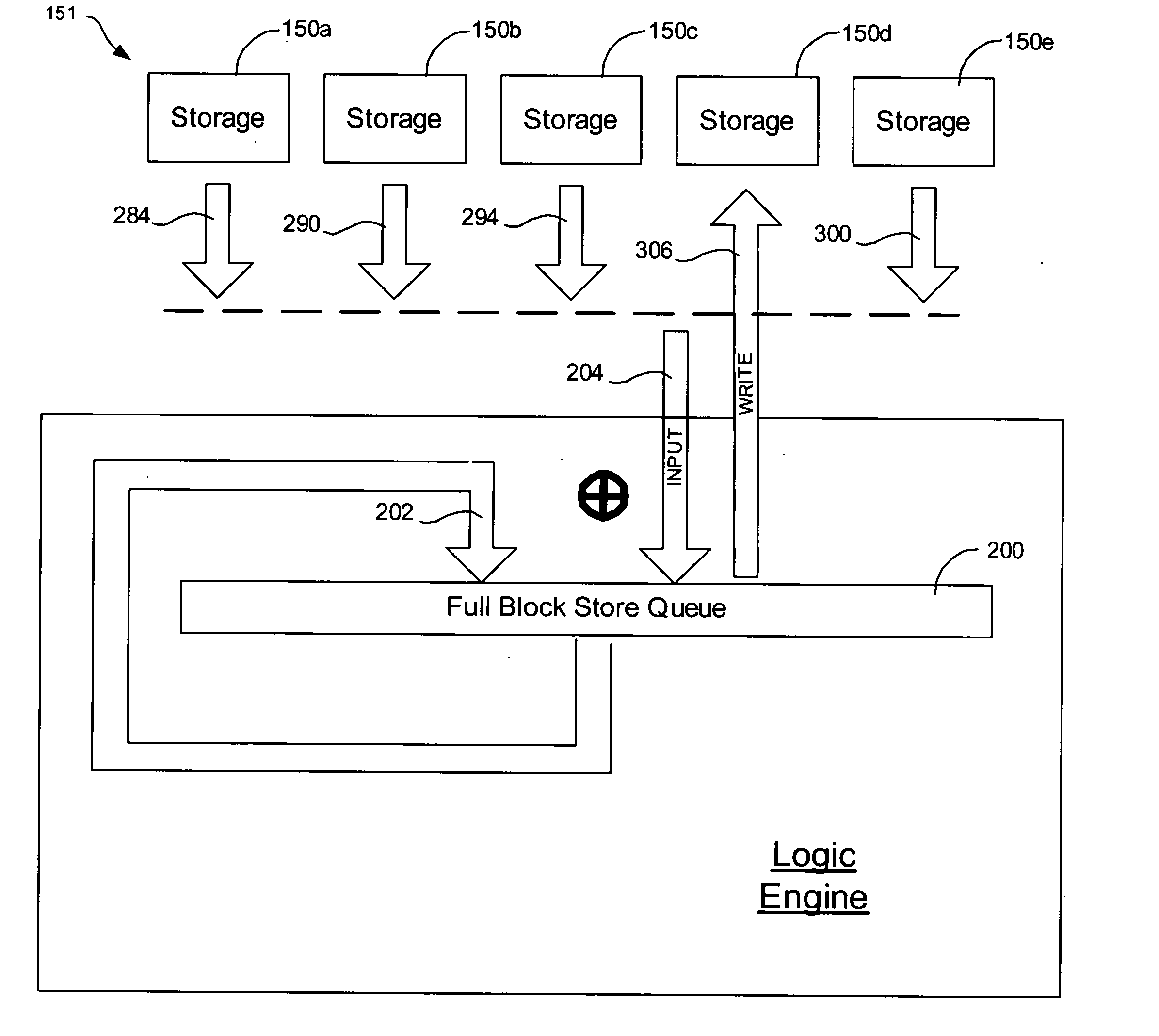

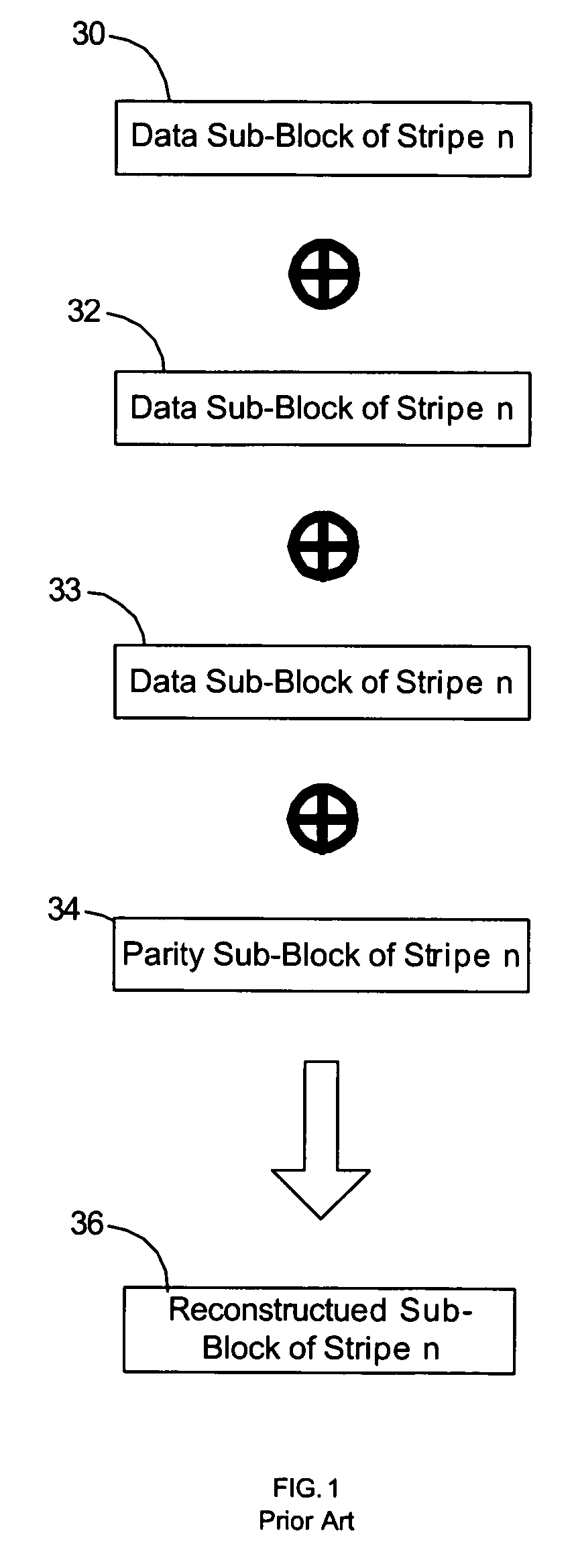

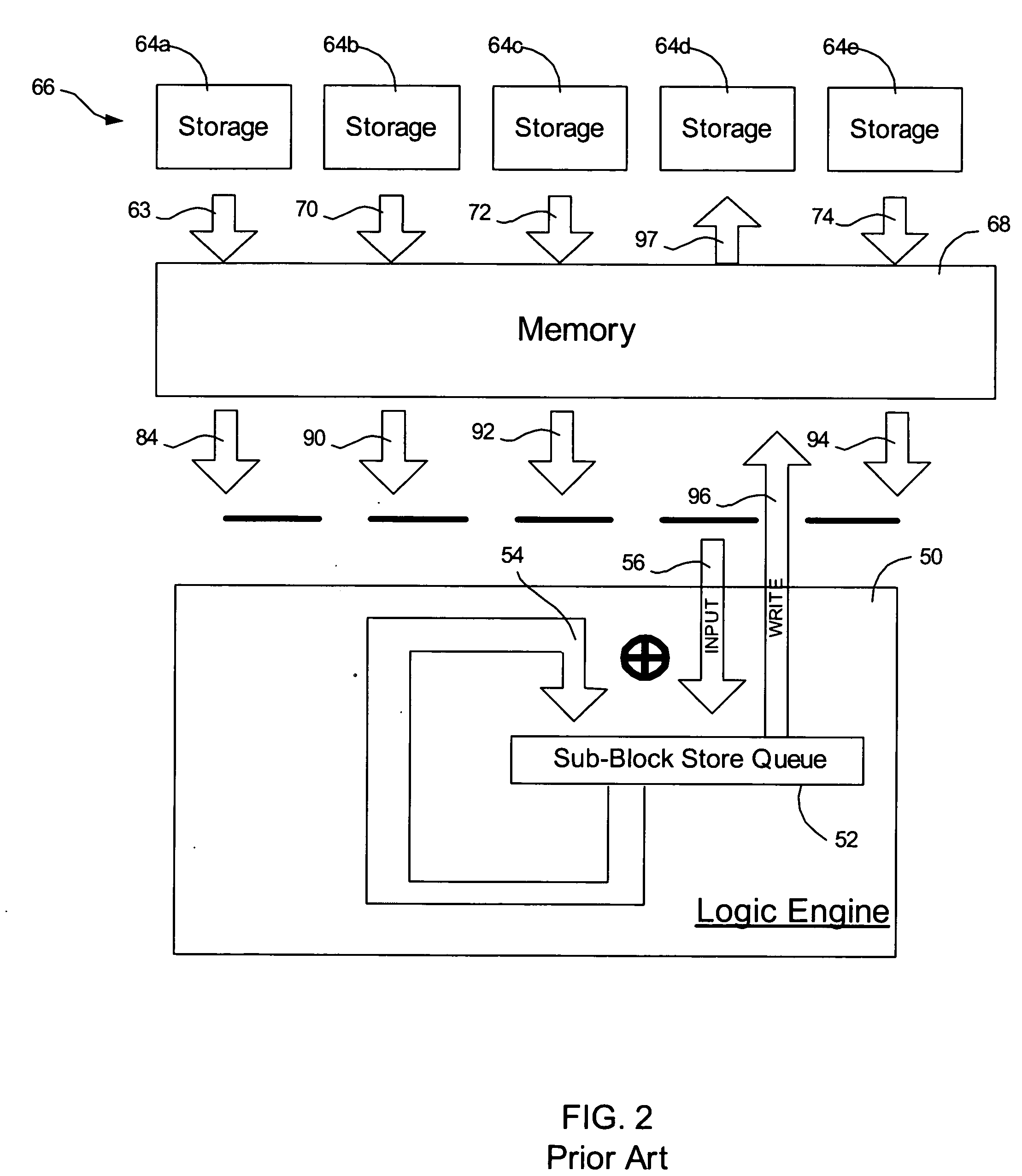

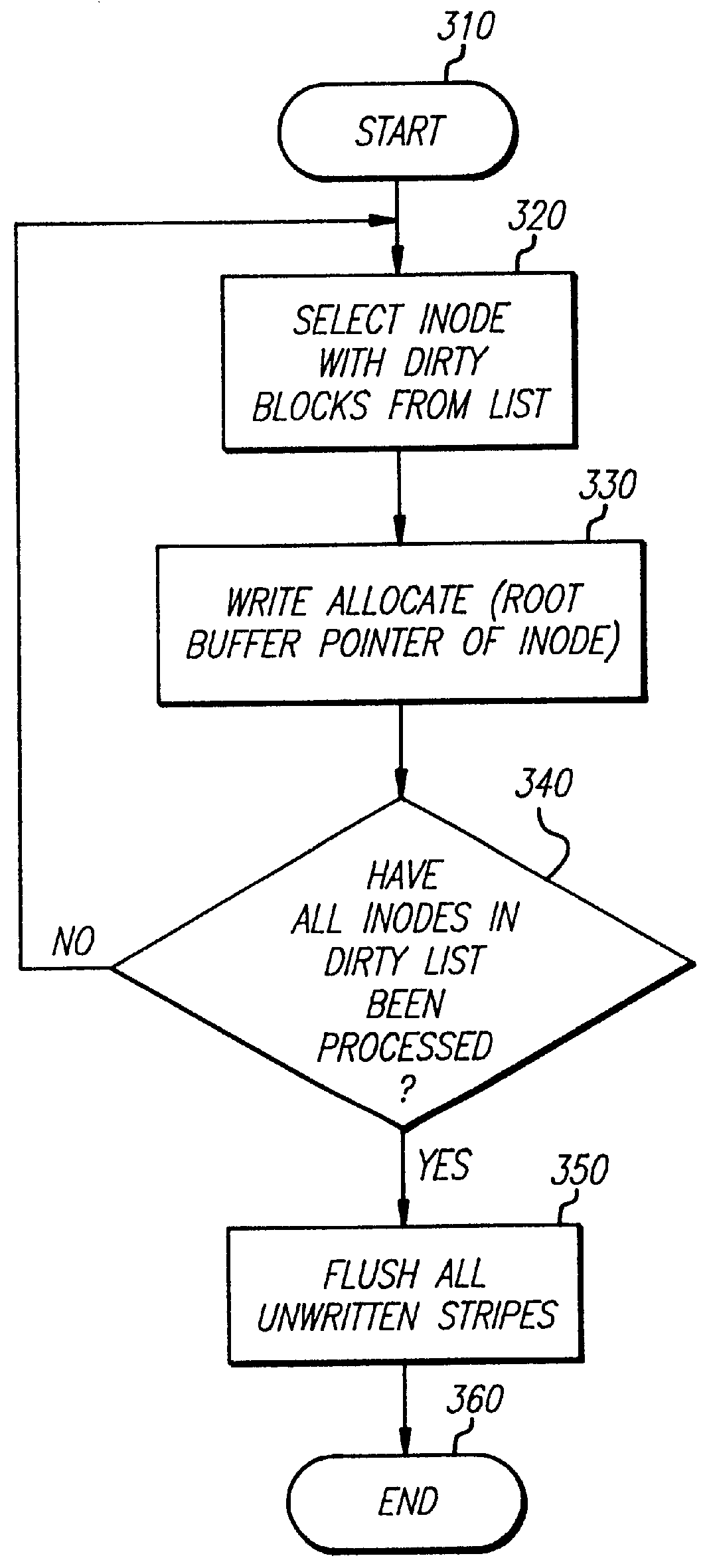

Method, system, and program for managing data organization

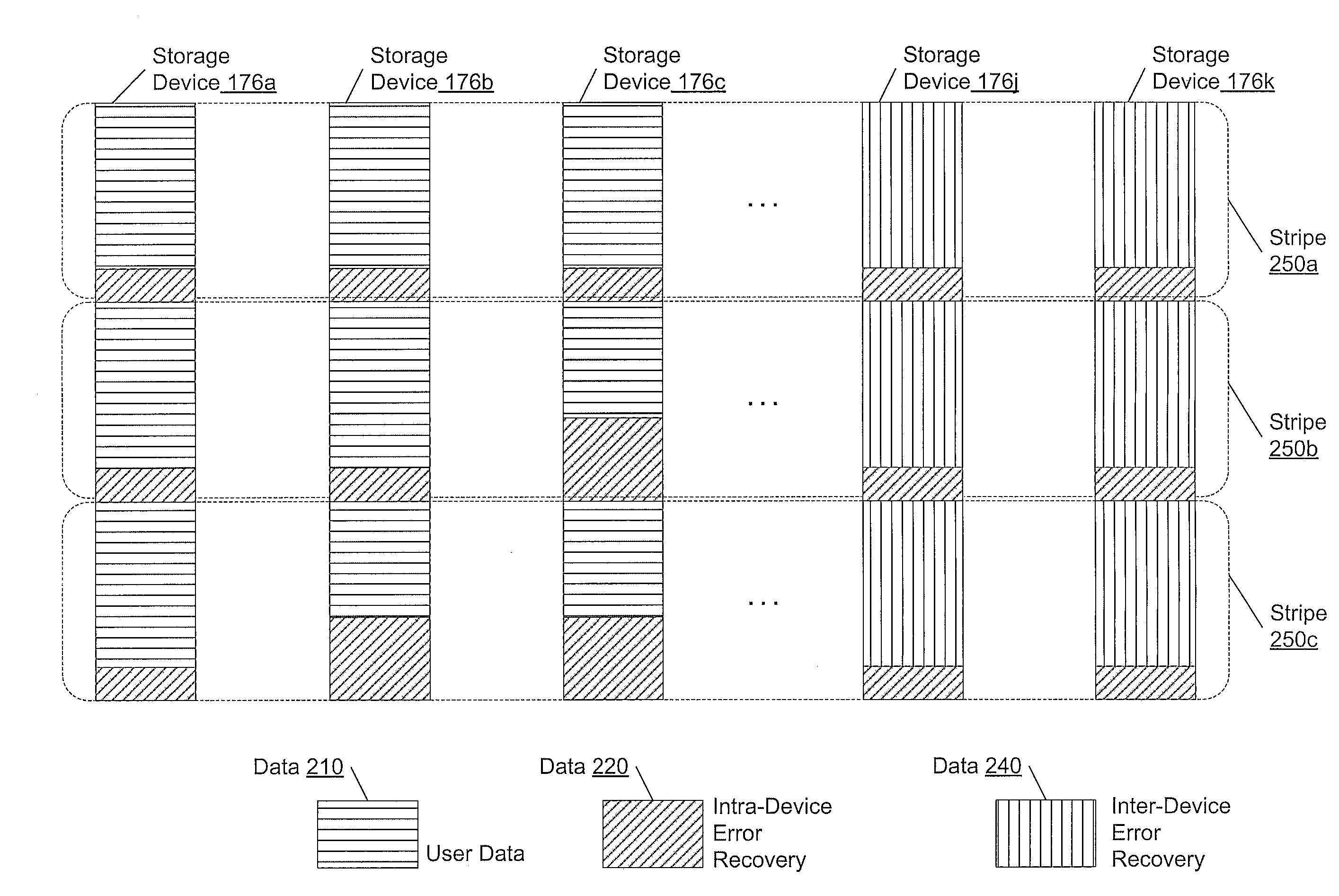

Provided are a method, system, and program for constructing data, including reconstructing data organized in a data organization type that permits data reconstruction, such as a Redundant Array of Independent Disks (RAID) organization. In one embodiment, blocks of data are transferred from a stripe of data stored across storage units, such as disk drives in a RAID array, to a logic engine of a storage processor, bypassing the cache memory of the storage processor. A store queue performs a logic function, such as Exclusive-OR, on each block of data as it is transferred from the disk drives, to reconstruct a block of data from the stripe. The constructed block of data may be subsequently transferred to a disk drive of the RAID array to replace a lost block of data in the stripe of data across the RAID array or to replace an old block of parity data.

Owner:INTEL CORP
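
The Exclusive-OR reconstruction described in the abstract above can be sketched as follows. This is a toy illustration with single-parity striping and 4-byte blocks; `accumulate` is a hypothetical stand-in for the store queue's running XOR, not the patented hardware path.

```python
def accumulate(acc, block):
    """XOR one incoming block into the running result, byte by byte."""
    return bytes(a ^ b for a, b in zip(acc, block))

def xor_all(blocks, block_size):
    """Fold blocks through the accumulator one at a time, as they 'arrive'."""
    acc = bytes(block_size)  # starts as all zeros
    for block in blocks:
        acc = accumulate(acc, block)
    return acc

data = [b"AAAA", b"BBBB", b"CCCC"]   # data blocks of one stripe
parity = xor_all(data, 4)            # parity block for the stripe

# Suppose the drive holding data[1] is lost: XOR the survivors with the parity.
rebuilt = xor_all([data[0], data[2], parity], 4)
print(rebuilt)  # b'BBBB'
```

The same fold regenerates a parity block when it is run over the data blocks only, which matches the abstract's second use case of replacing an old block of parity data.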

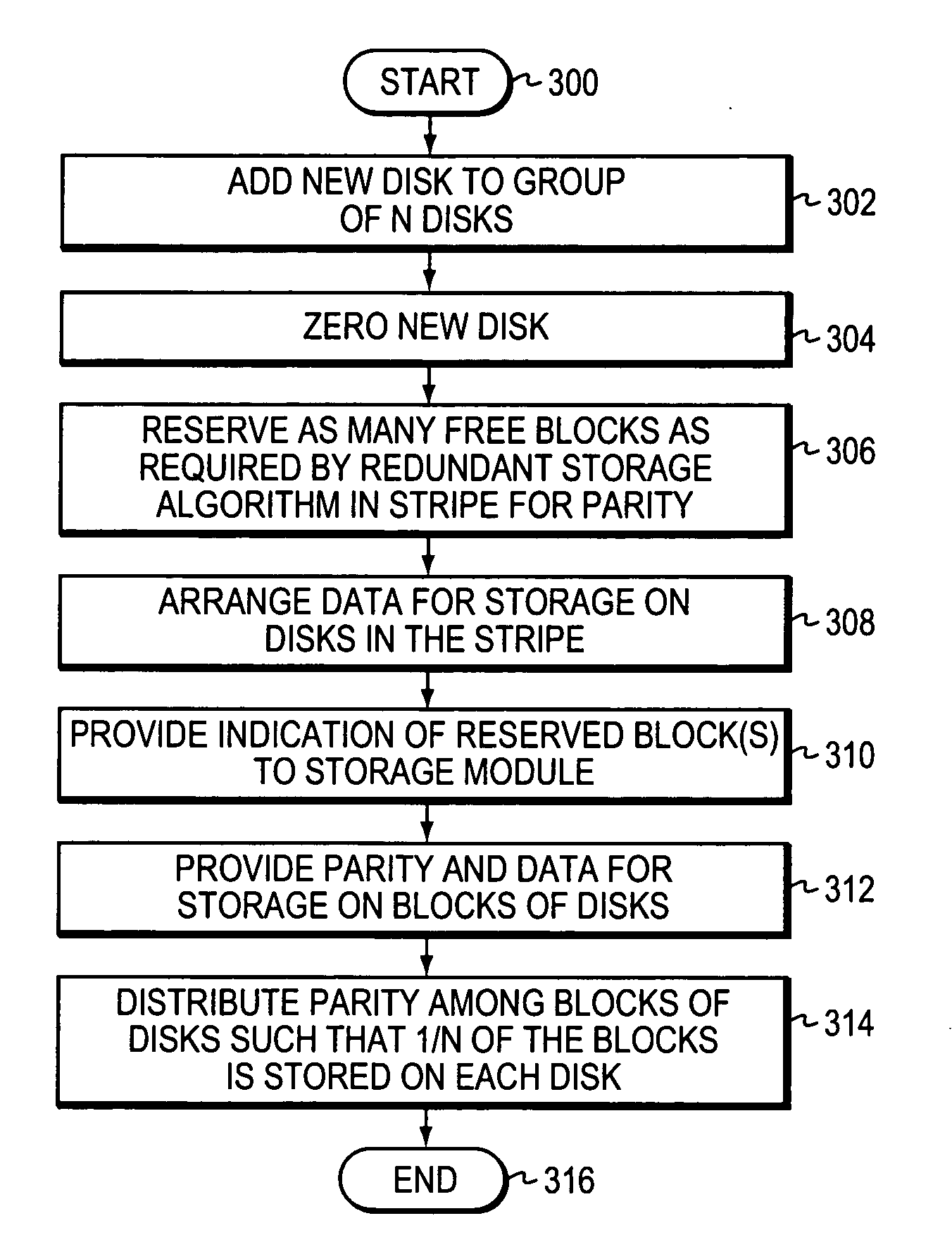

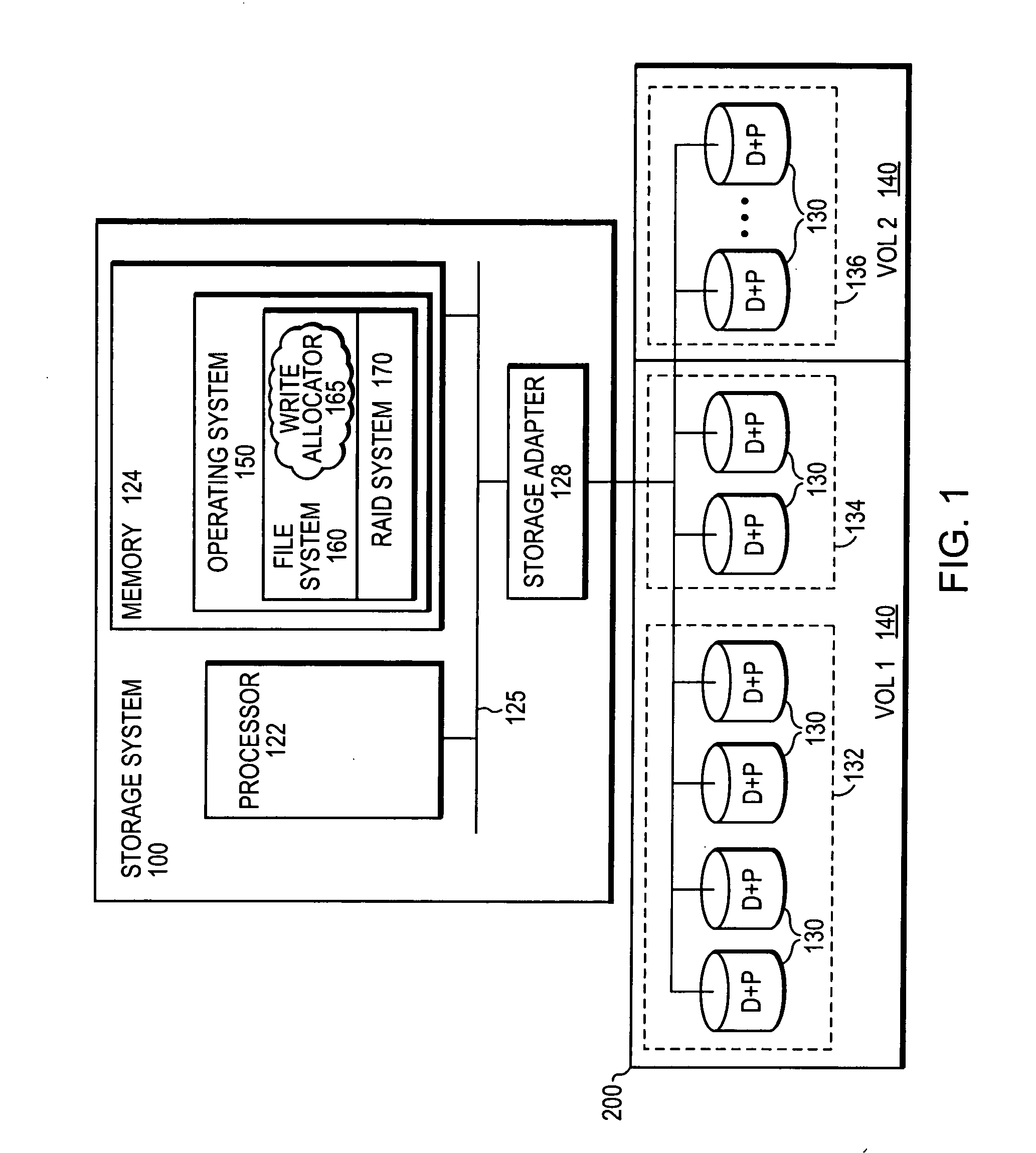

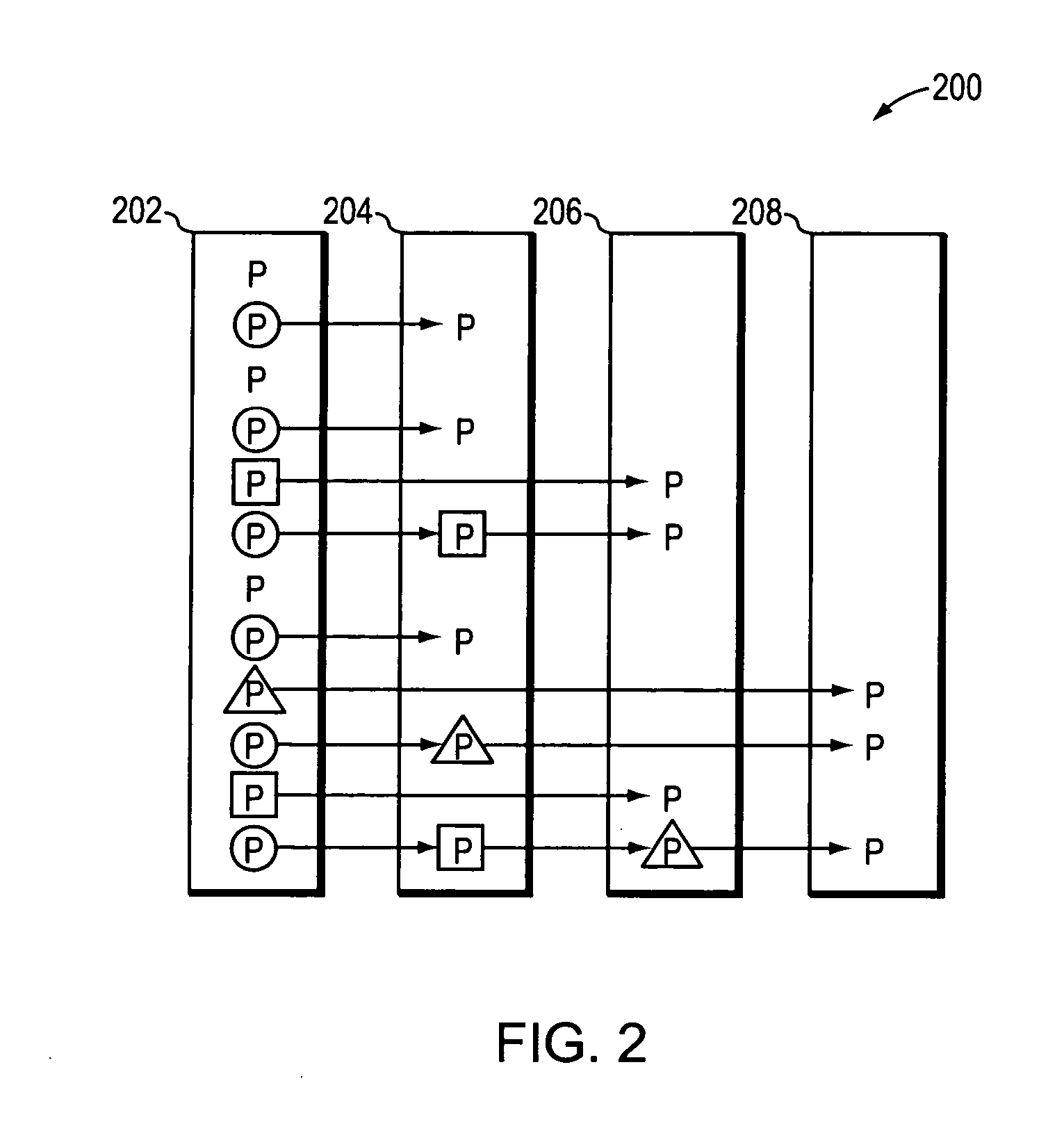

Semi-static distribution technique

Active | US20050114594A1 | Improve performance | Case blocked | Memory loss protection | Redundant data error correction | Computer science | Pre-existing

Owner:NETWORK APPLIANCE INC

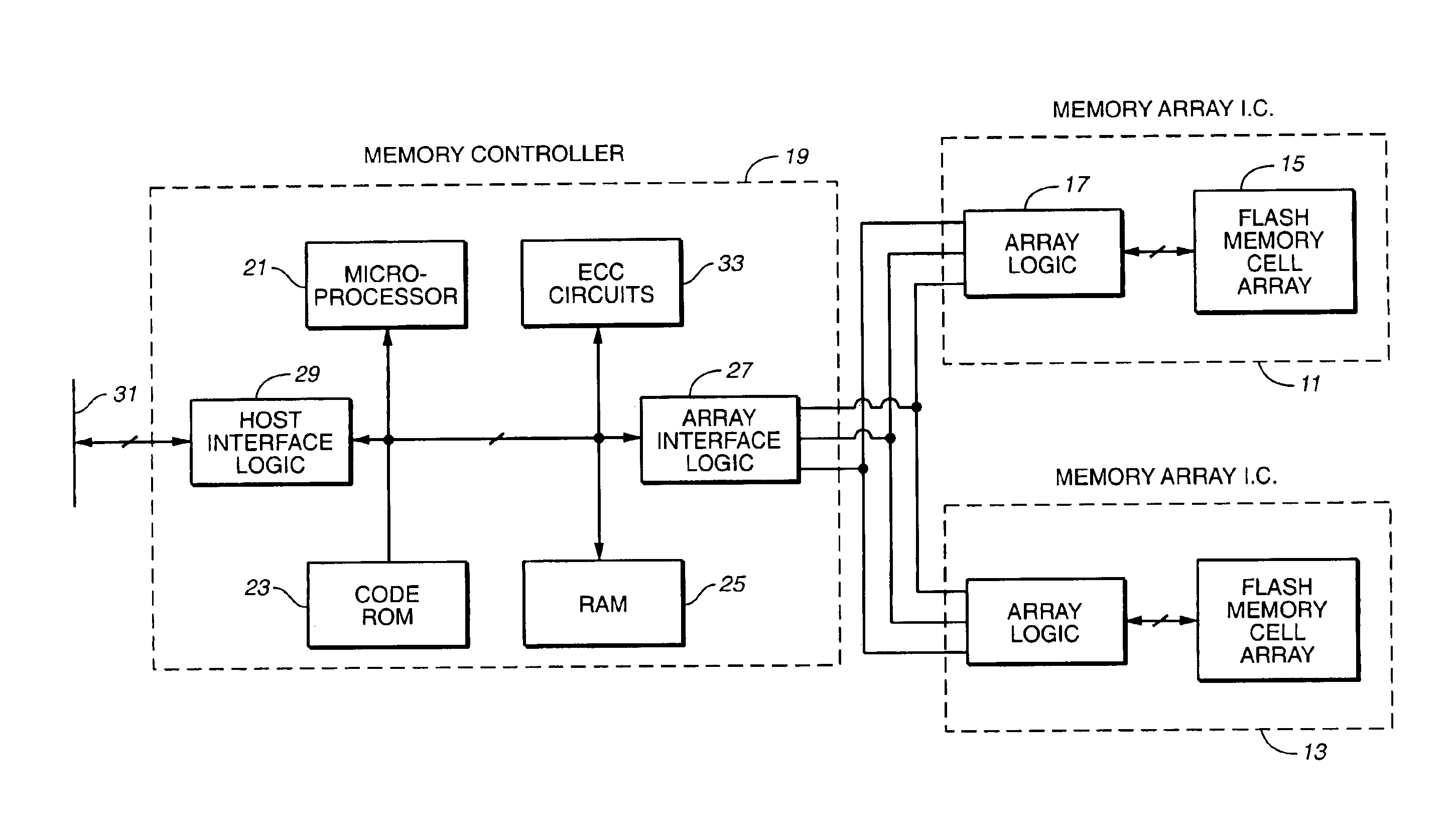

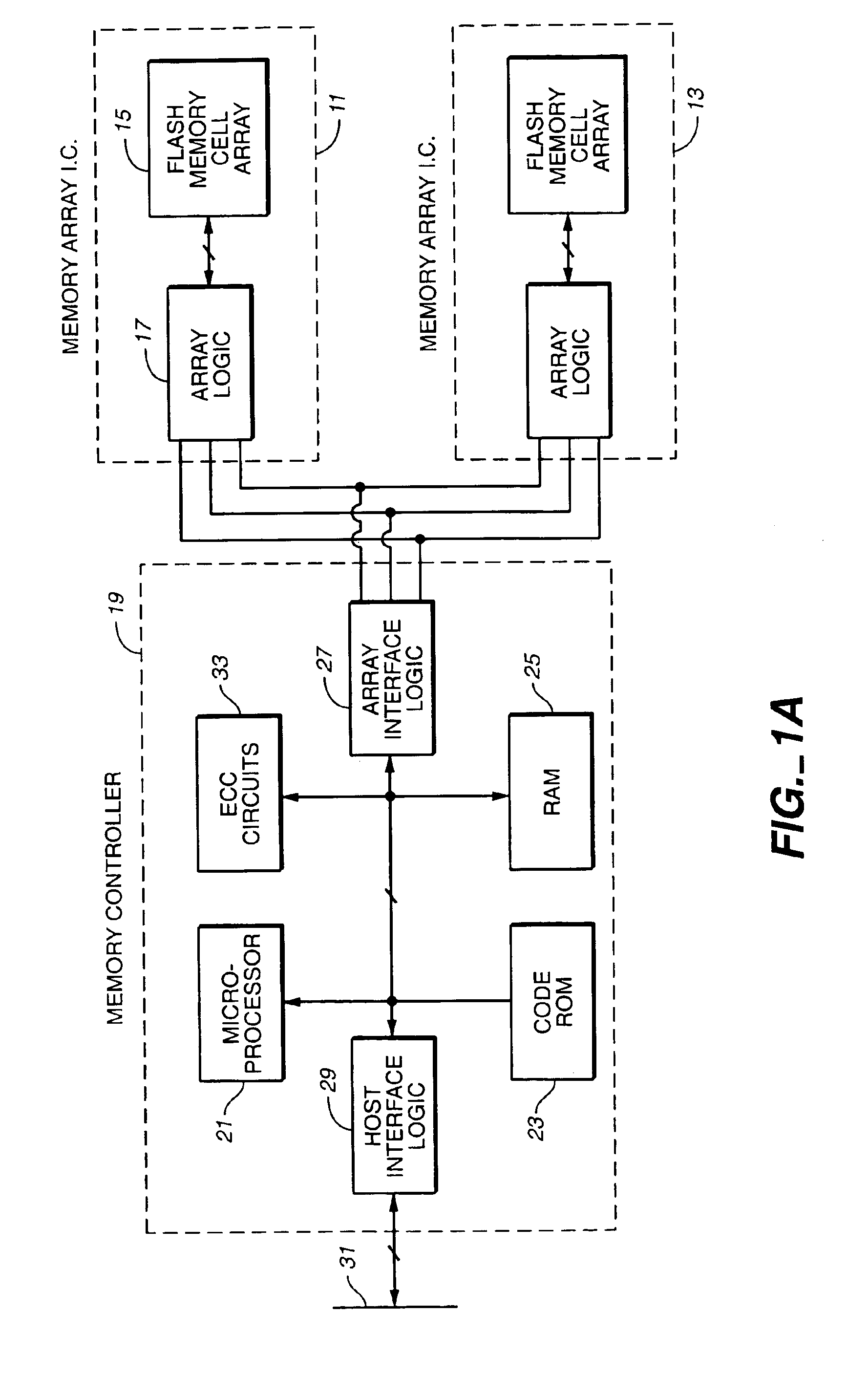

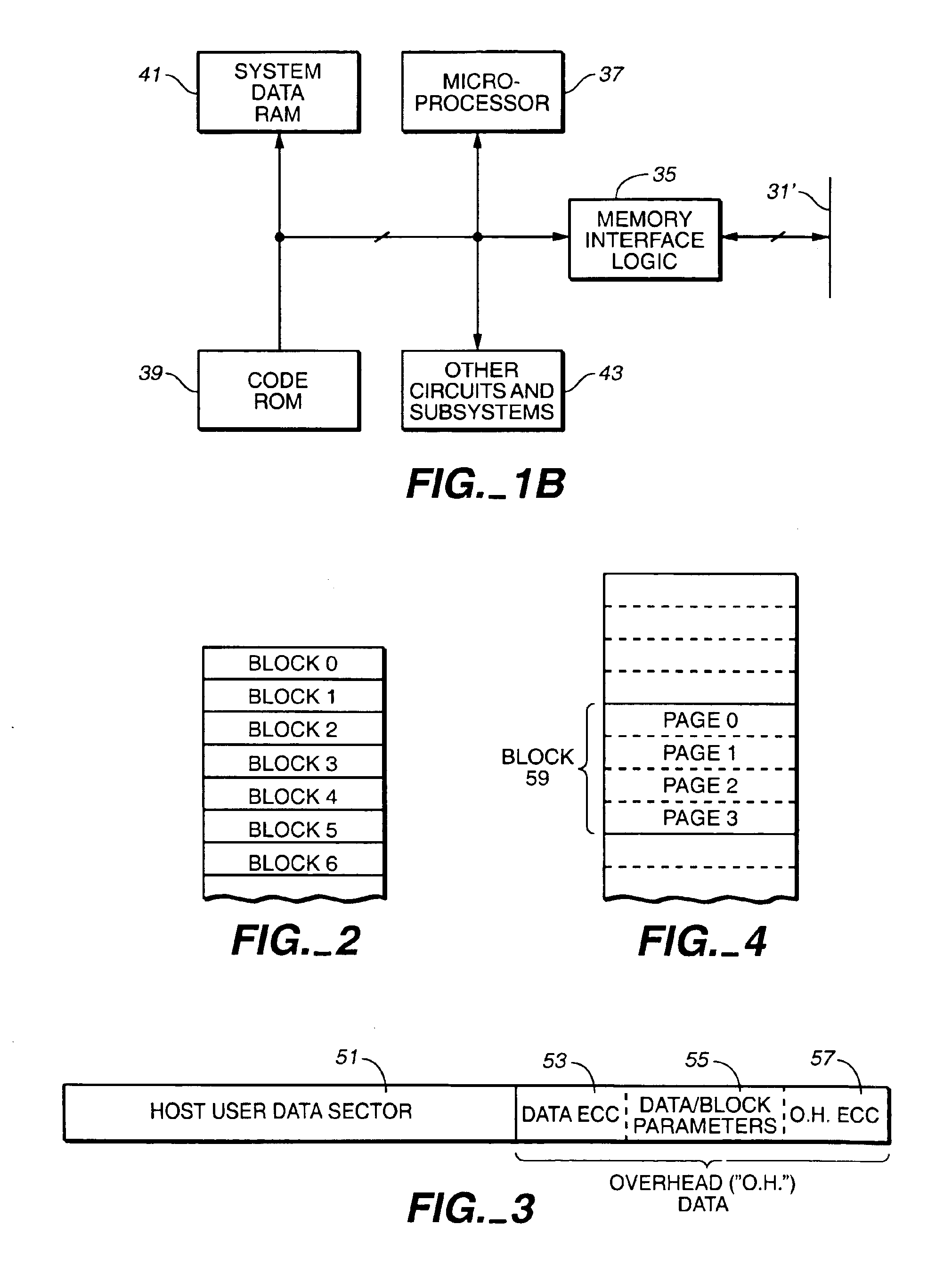

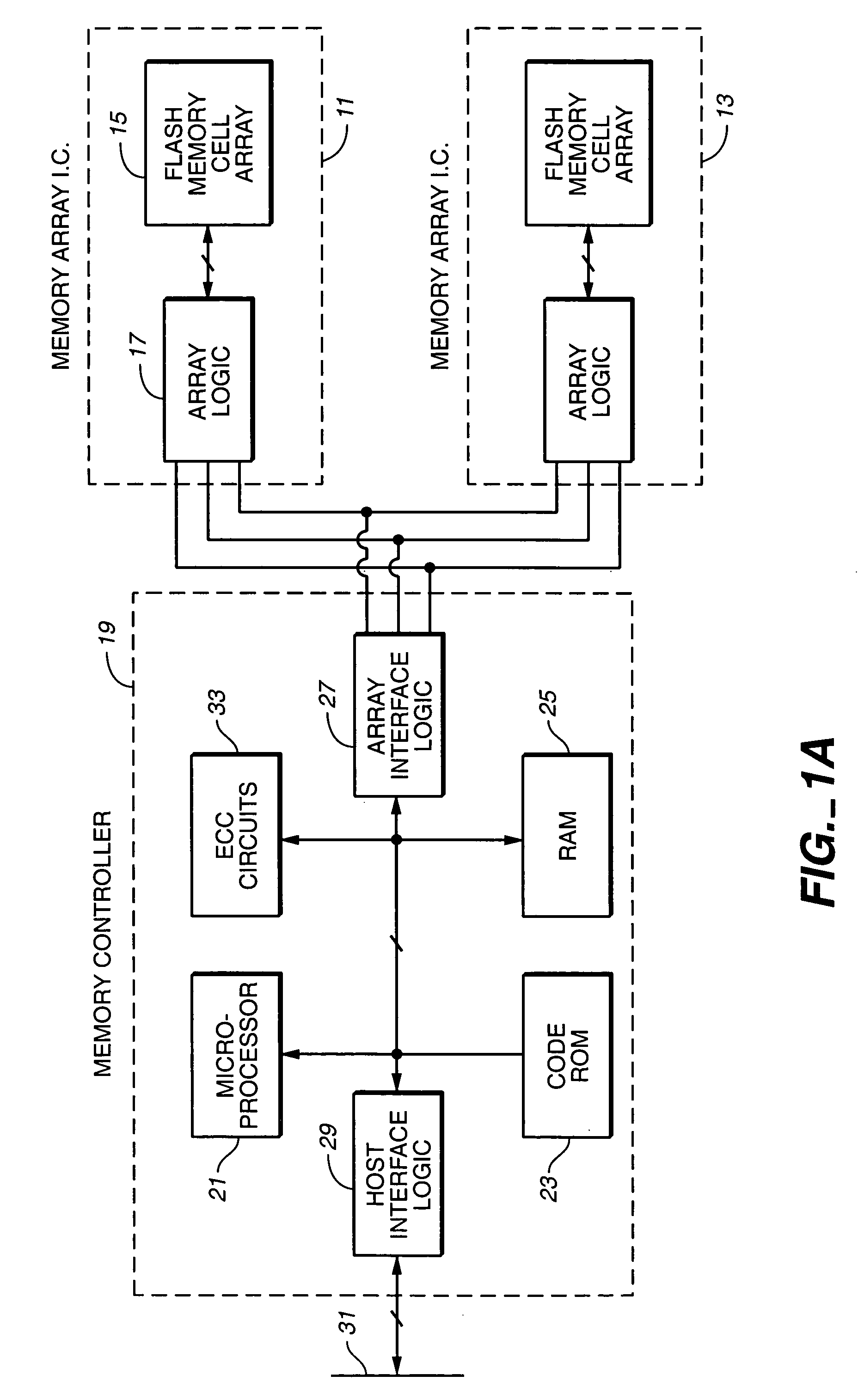

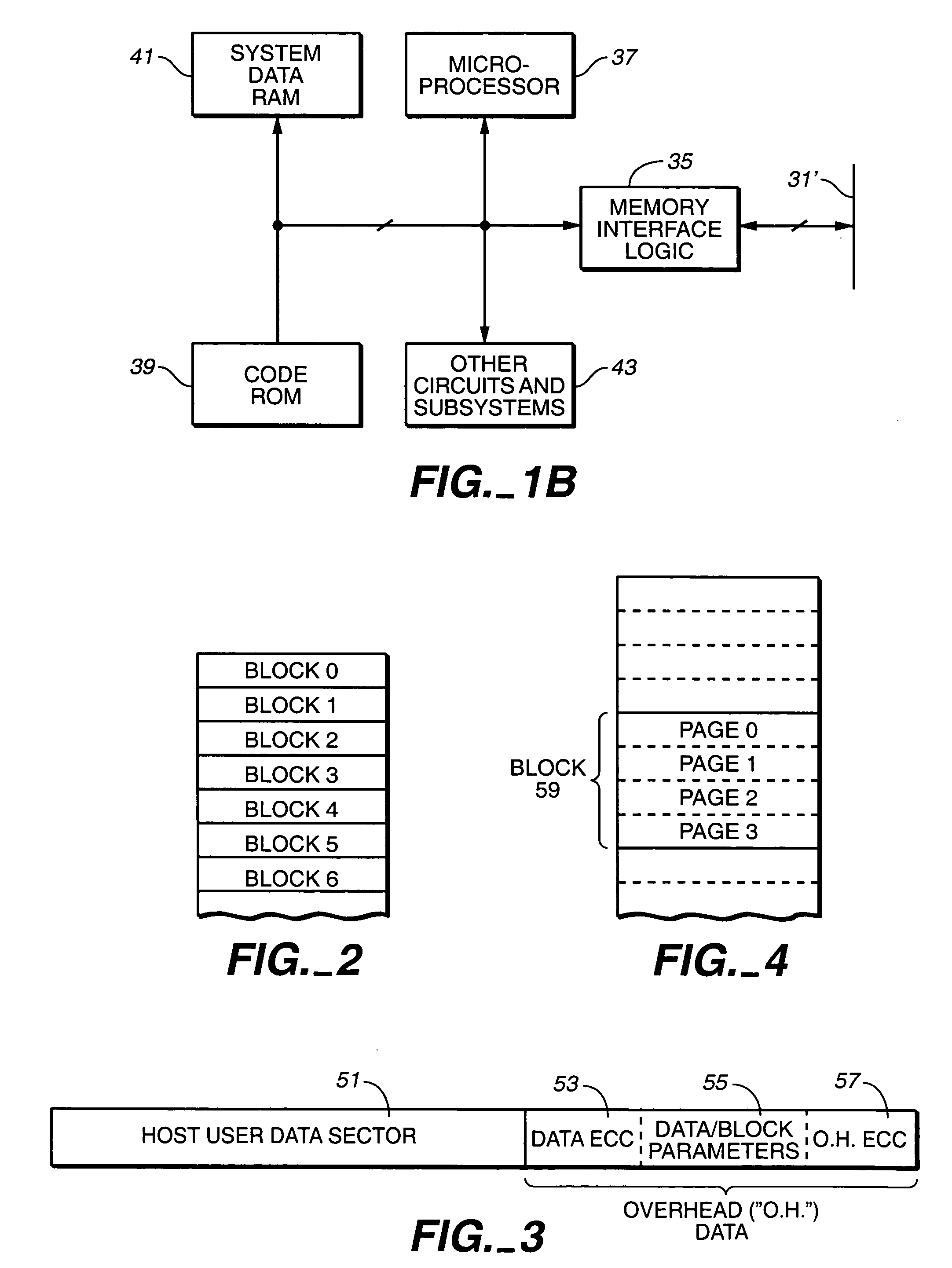

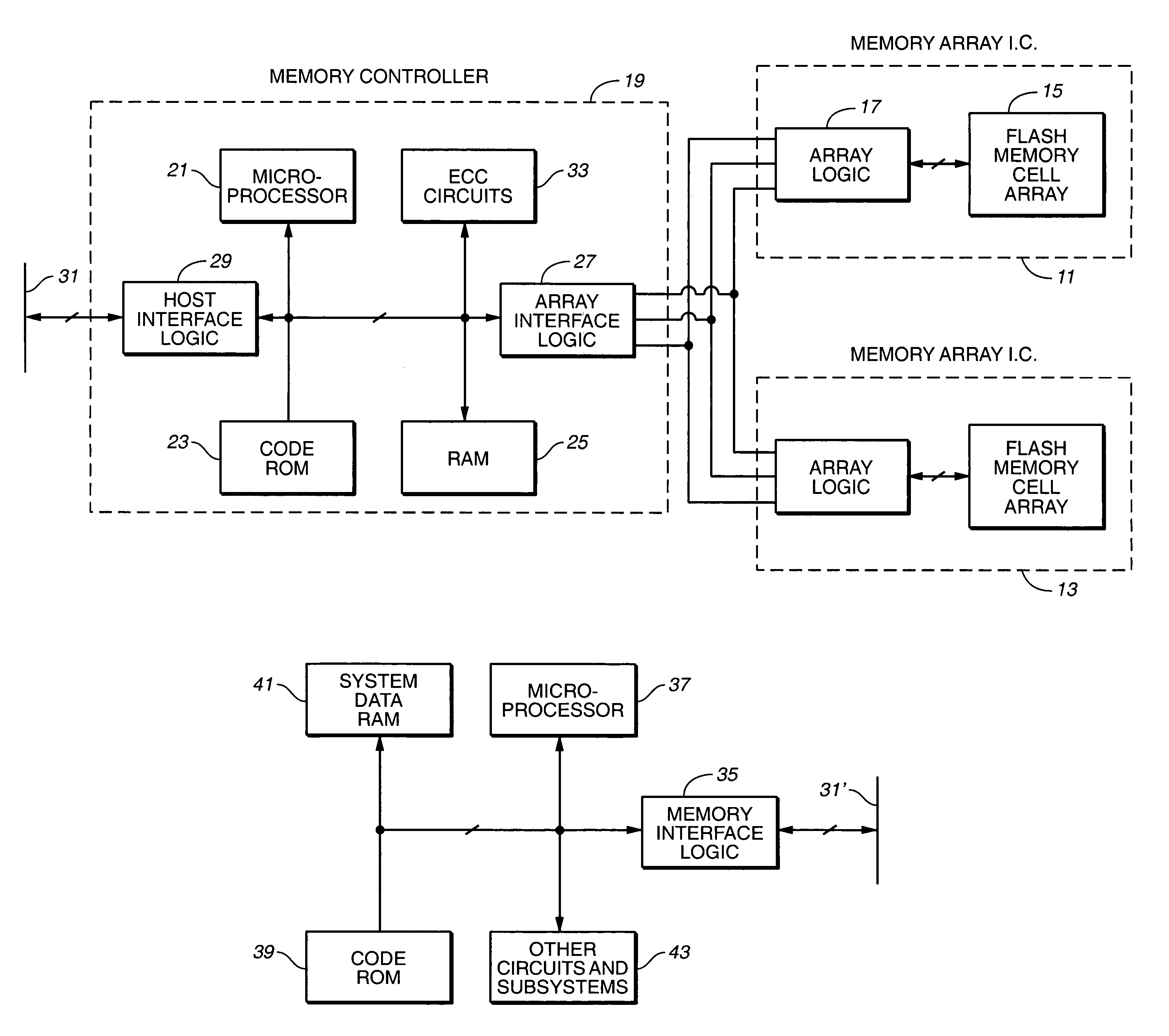

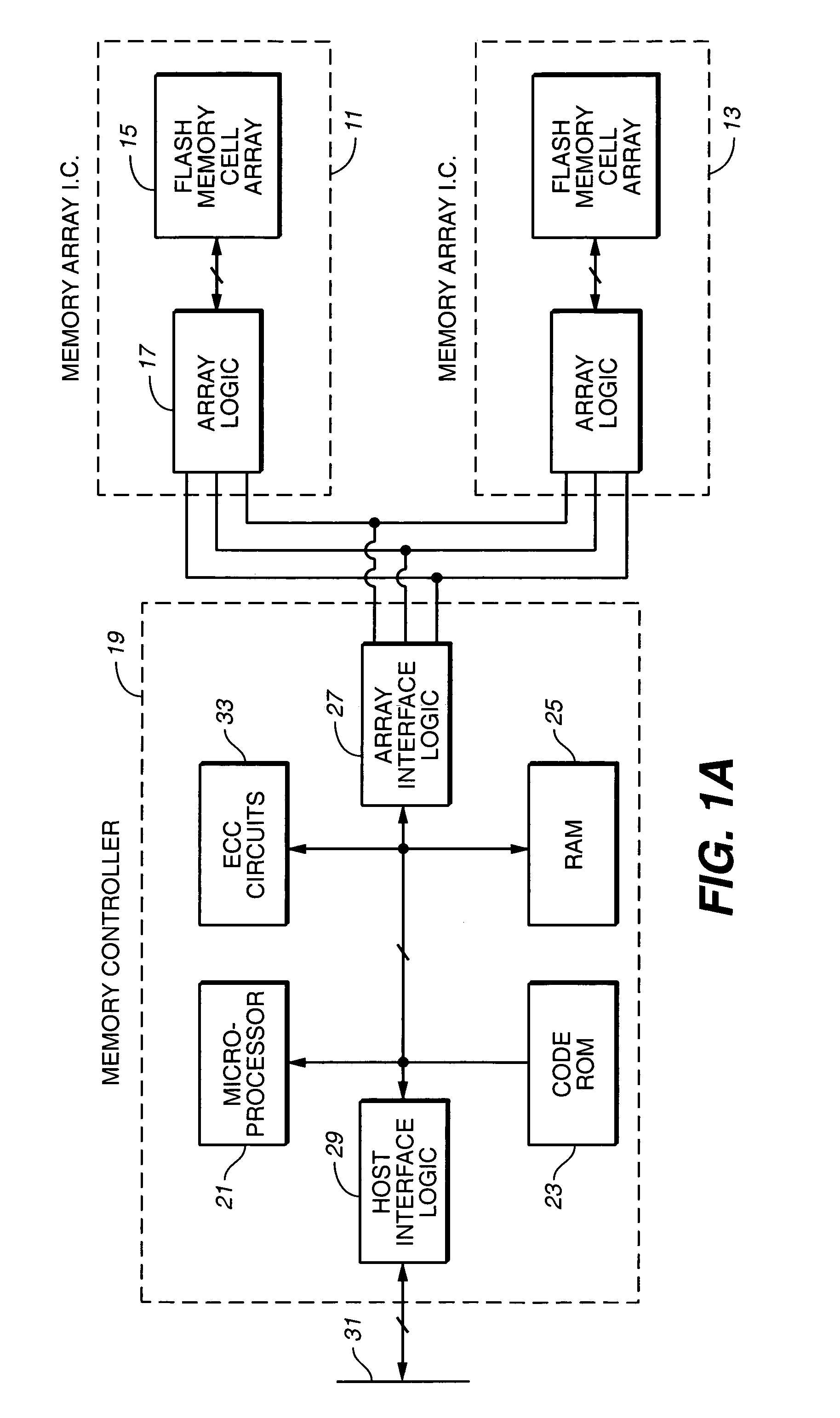

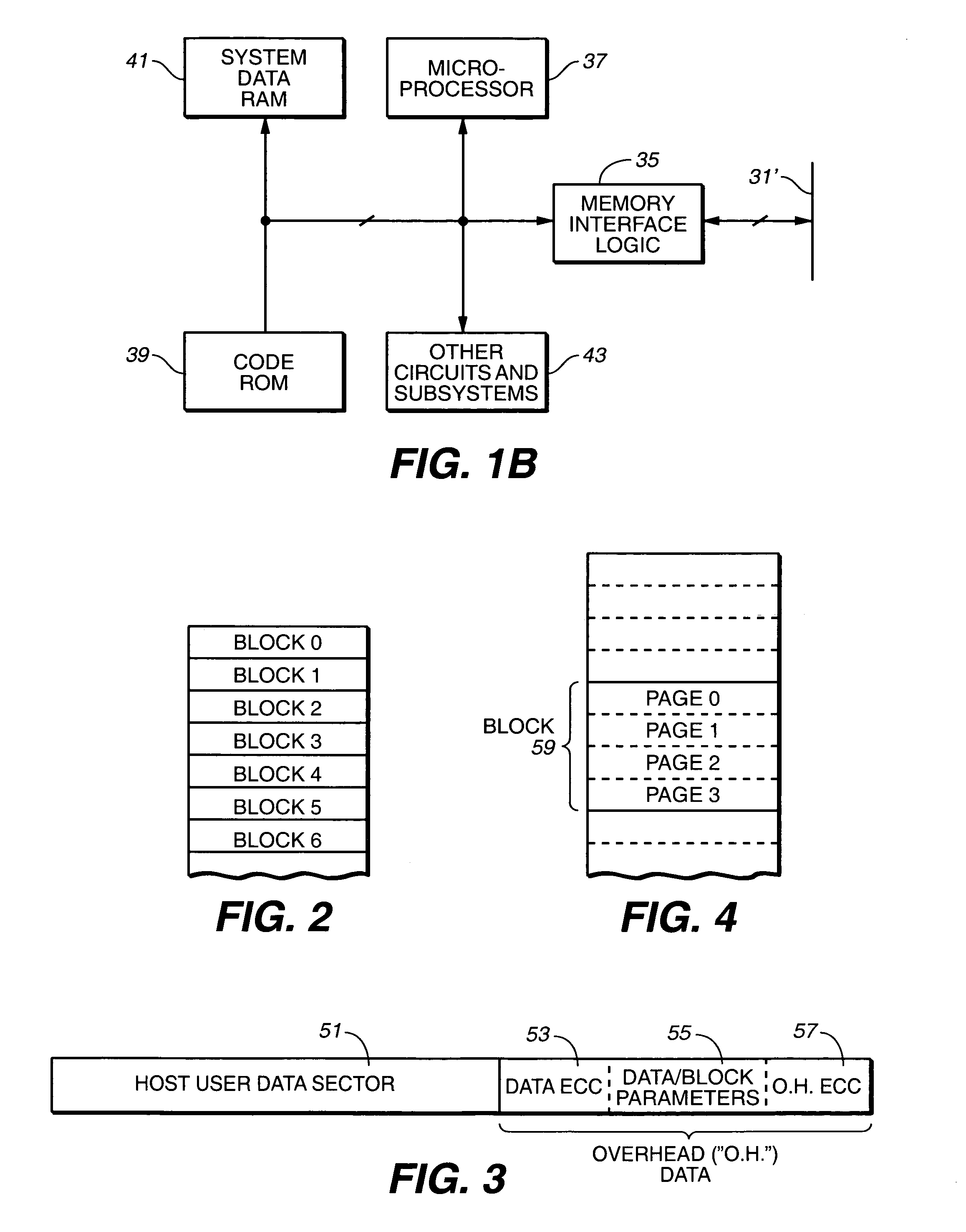

Flash memory data correction and scrub techniques

Active | US7012835B2 | Data disturbance | Reduce storage data | Memory loss protection | Read-only memories | Data integrity | Data storing

In order to maintain the integrity of data stored in a flash memory that are susceptible to being disturbed by operations in adjacent regions of the memory, disturb events cause the data to be read, corrected and re-written before becoming so corrupted that valid data cannot be recovered. The sometimes conflicting needs to maintain data integrity and system performance are balanced by deferring execution of some of the corrective action when the memory system has other high priority operations to perform. In a memory system utilizing very large units of erase, the corrective process is executed in a manner that is consistent with efficiently rewriting an amount of data much less than the capacity of a unit of erase.

Owner:SANDISK TECH LLC

Method for allocating files in a file system integrated with a RAID disk sub-system

The present invention is a method for integrating a file system with a RAID array that exports precise information about the arrangement of data blocks in the RAID subsystem. The file system examines this information and uses it to optimize the location of blocks as they are written to the RAID system. Thus, the system uses explicit knowledge of the underlying RAID disk layout to schedule disk allocation. The present invention uses separate current-write location (CWL) pointers for each disk in the disk array where the pointers simply advance through the disks as writes occur. The algorithm used has two primary goals. The first goal is to keep the CWL pointers as close together as possible, thereby improving RAID efficiency by writing to multiple blocks in the stripe simultaneously. The second goal is to allocate adjacent blocks in a file on the same disk, thereby improving read back performance. The present invention satisfies the first goal by always writing on the disk with the lowest CWL pointer. For the second goal, a new disk is chosen only when the algorithm starts allocating space for a new file, or when it has allocated N blocks on the same disk for a single file. A sufficient number of blocks is defined as all the buffers in a chunk of N sequential buffers in a file. The result is that CWL pointers are never more than N blocks apart on different disks, and large files have N consecutive blocks on the same disk.

Owner:NETWORK APPLIANCE INC
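
The two allocation rules in the abstract above (write on the disk with the lowest CWL pointer; switch disks only at a new file or after N blocks) can be sketched roughly as below. The class name `CwlAllocator` and its fields are hypothetical, and the sketch ignores parity, free-space maps and buffering entirely.

```python
class CwlAllocator:
    def __init__(self, num_disks, chunk):
        self.cwl = [0] * num_disks  # current-write-location pointer per disk
        self.chunk = chunk          # N: consecutive blocks of one file per disk
        self.disk = None            # disk currently being written
        self.file = None            # file currently being allocated
        self.run = 0                # blocks placed on self.disk for self.file

    def allocate(self, file_id):
        """Return (disk, block) for the next block of file_id."""
        if file_id != self.file or self.run == self.chunk:
            # New file, or N blocks already placed: pick the lowest CWL pointer.
            self.disk = min(range(len(self.cwl)), key=self.cwl.__getitem__)
            self.file, self.run = file_id, 0
        block = self.cwl[self.disk]
        self.cwl[self.disk] += 1    # the pointer only ever advances
        self.run += 1
        return self.disk, block

alloc = CwlAllocator(num_disks=3, chunk=4)
layout = [alloc.allocate("a") for _ in range(10)]
print([disk for disk, _ in layout])  # [0, 0, 0, 0, 1, 1, 1, 1, 2, 2]
```

Since a new disk is always the one with the lowest pointer and receives at most N blocks before the next choice, the pointers in this sketch stay within N blocks of each other.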

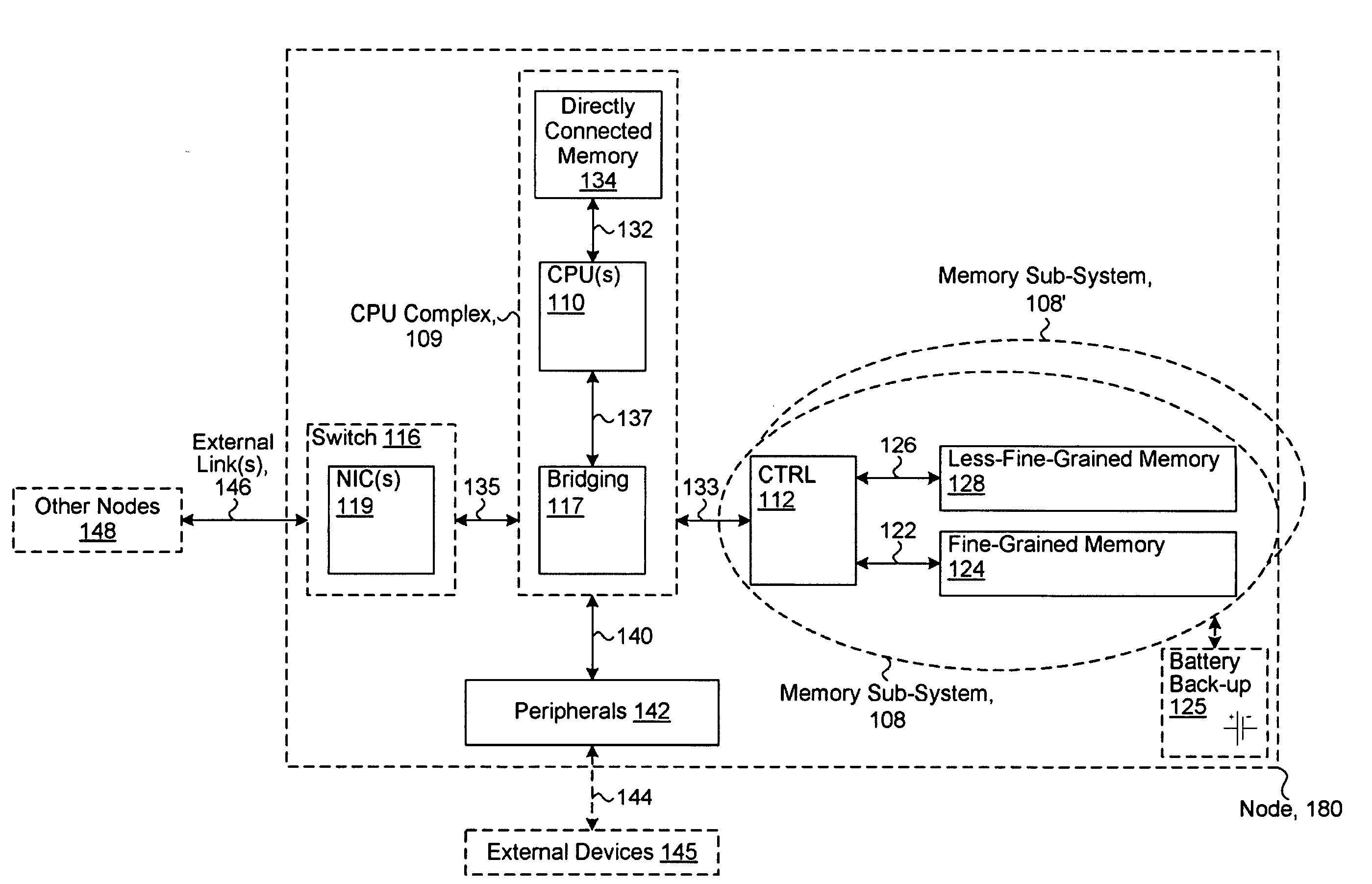

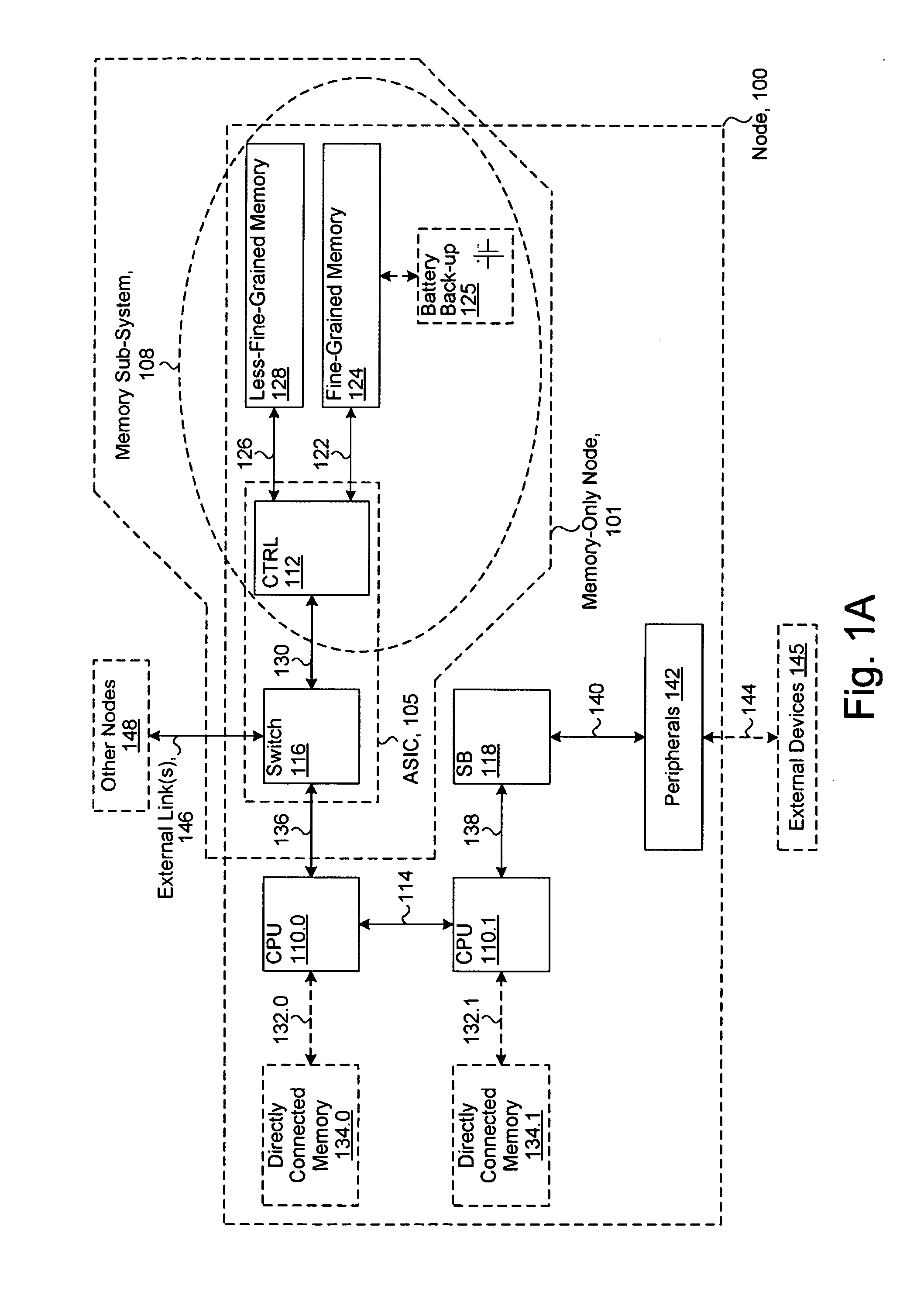

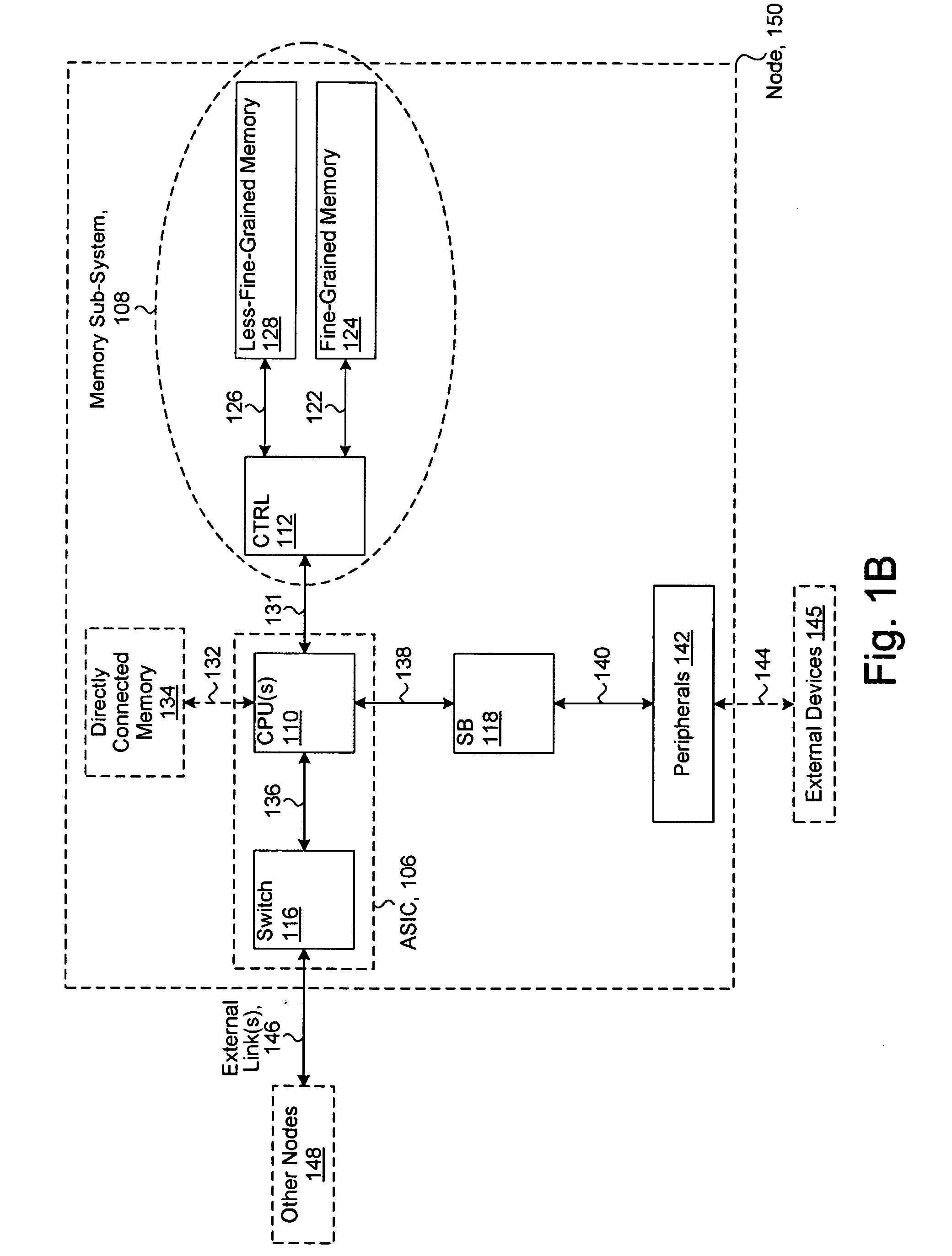

System including a fine-grained memory and a less-fine-grained memory

Active | US20080301256A1 | Memory architecture accessing/allocation | Energy efficient ICT | Data processing system | Write buffer

A data processing system includes one or more nodes, each node including a memory sub-system. The sub-system includes a fine-grained memory and a less-fine-grained (e.g., page-based) memory. The fine-grained memory optionally serves as a cache and/or as a write buffer for the page-based memory. Software executing on the system uses a node address space which enables access to the page-based memories of all nodes. Each node optionally provides ACID memory properties for at least a portion of the space. In at least a portion of the space, memory elements are mapped to locations in the page-based memory. In various embodiments, some of the elements are compressed, the compressed elements are packed into pages, the pages are written into available locations in the page-based memory, and a map maintains an association between some of the elements and the locations.

Owner:SANDISK TECH LLC

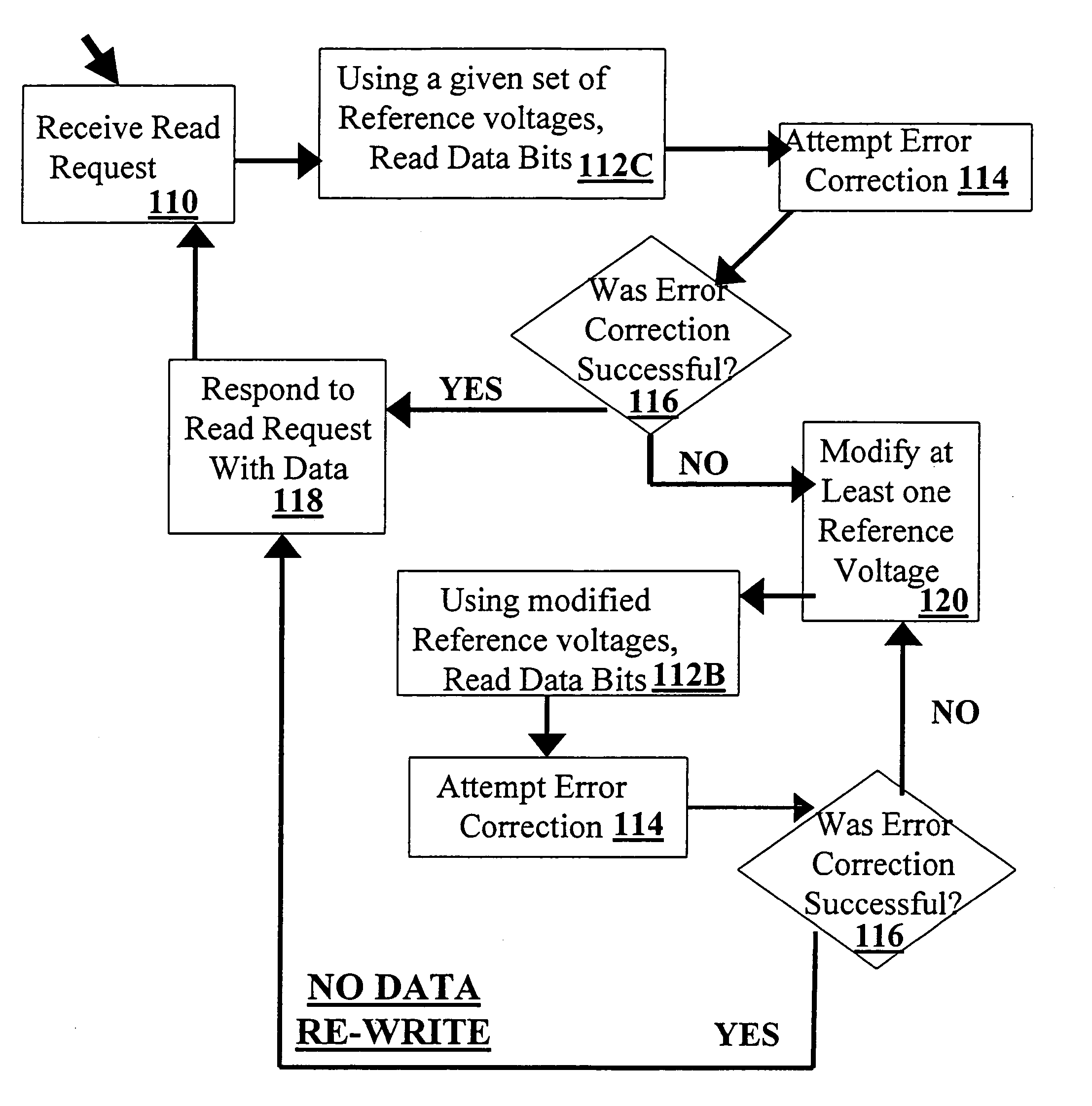

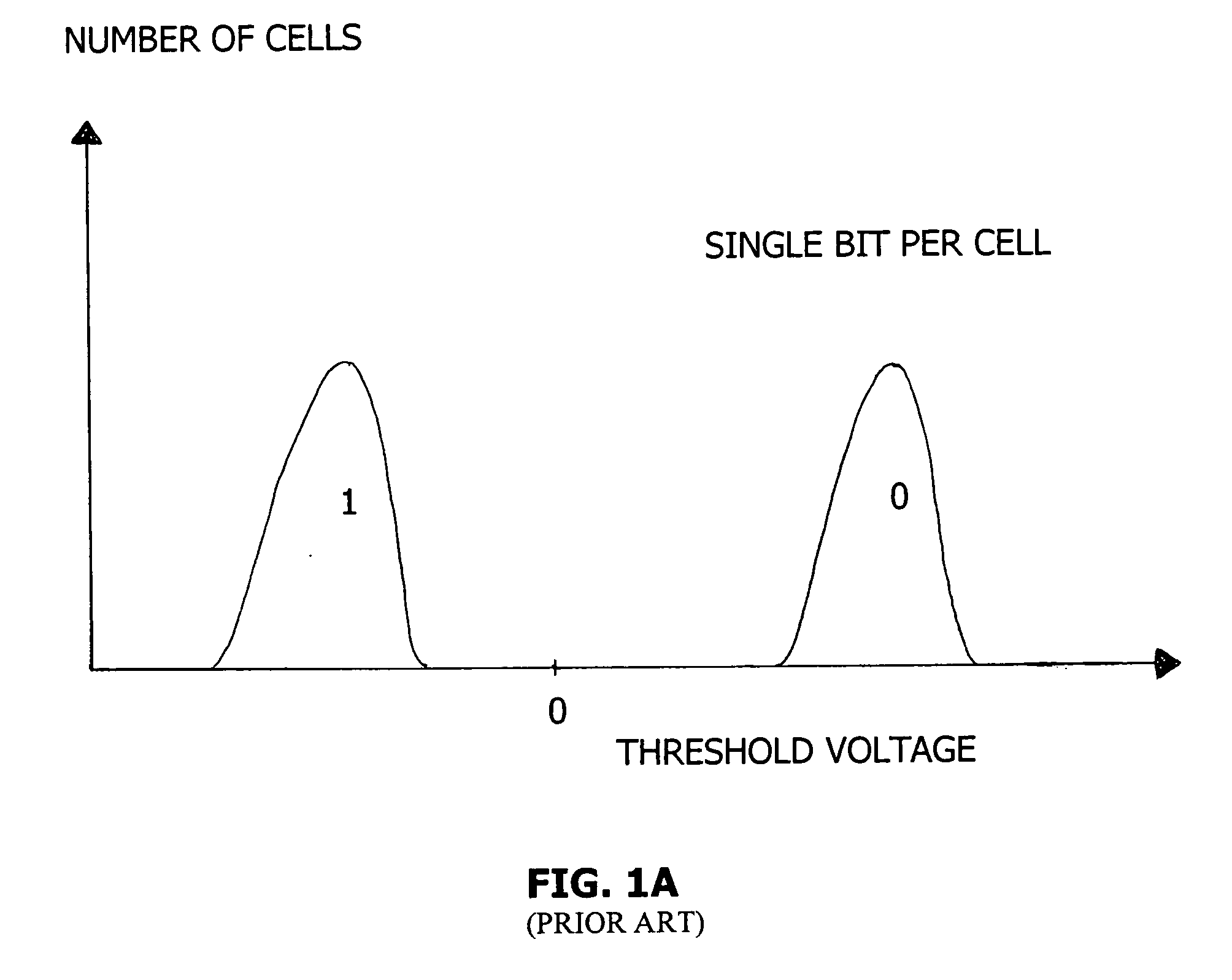

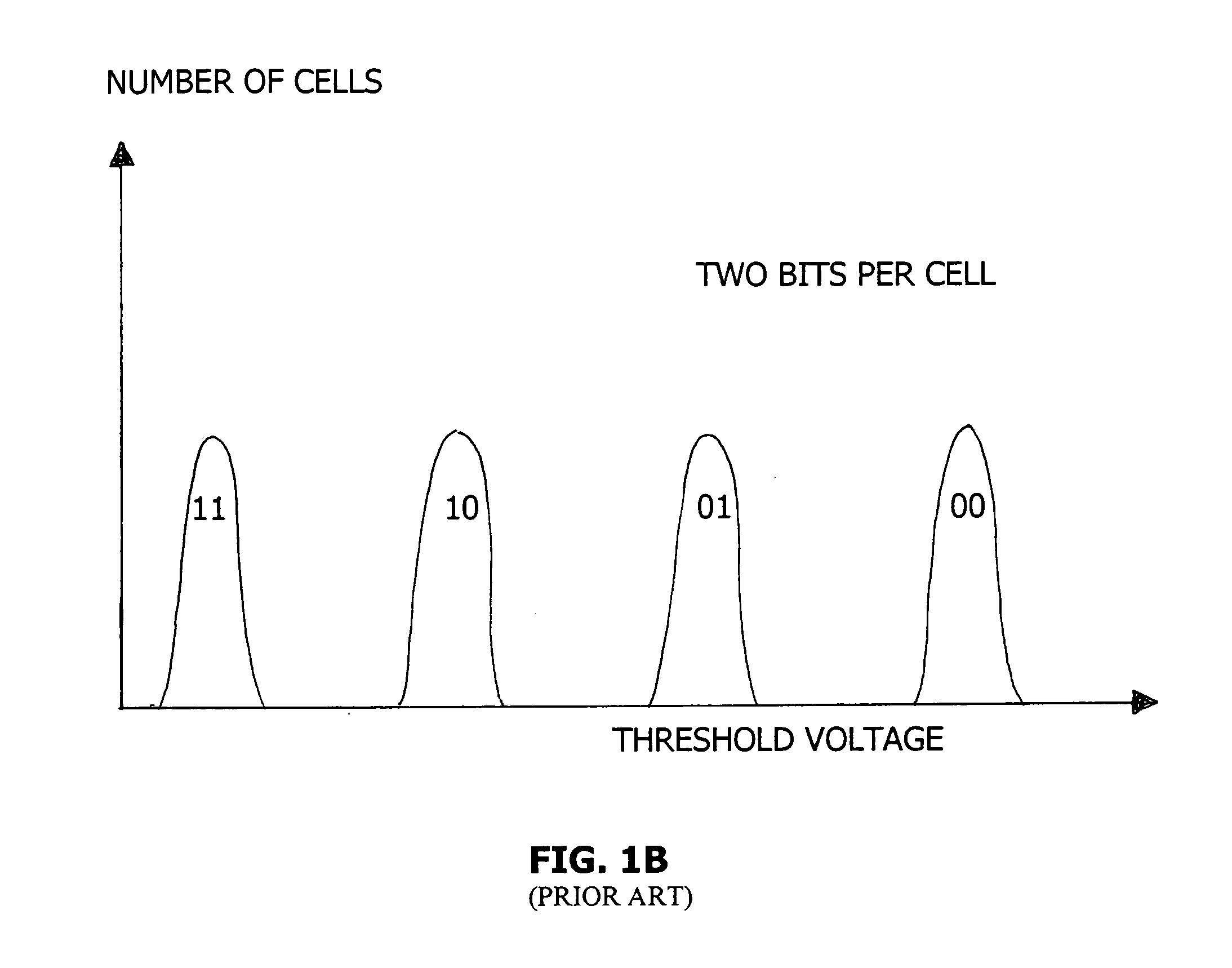

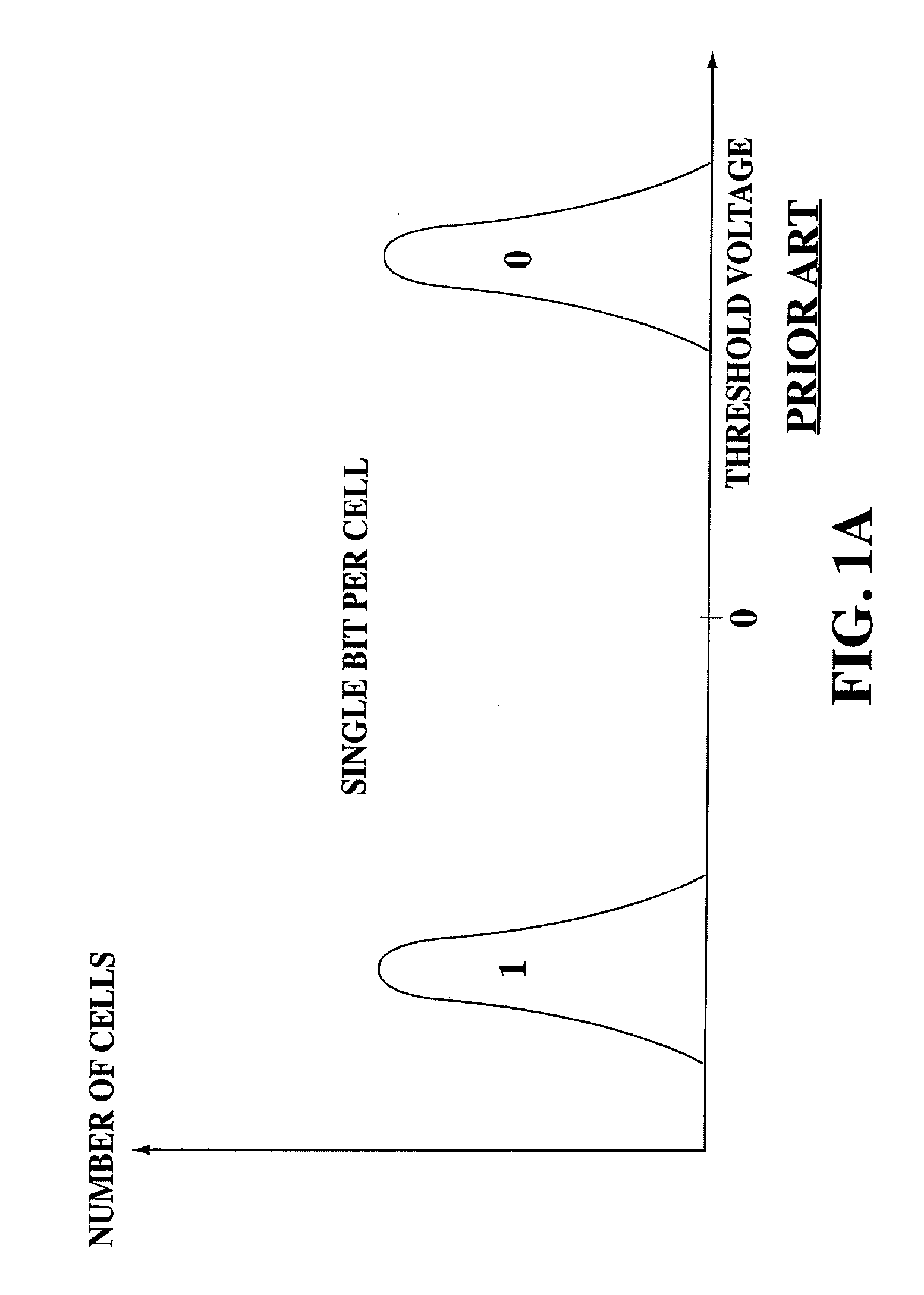

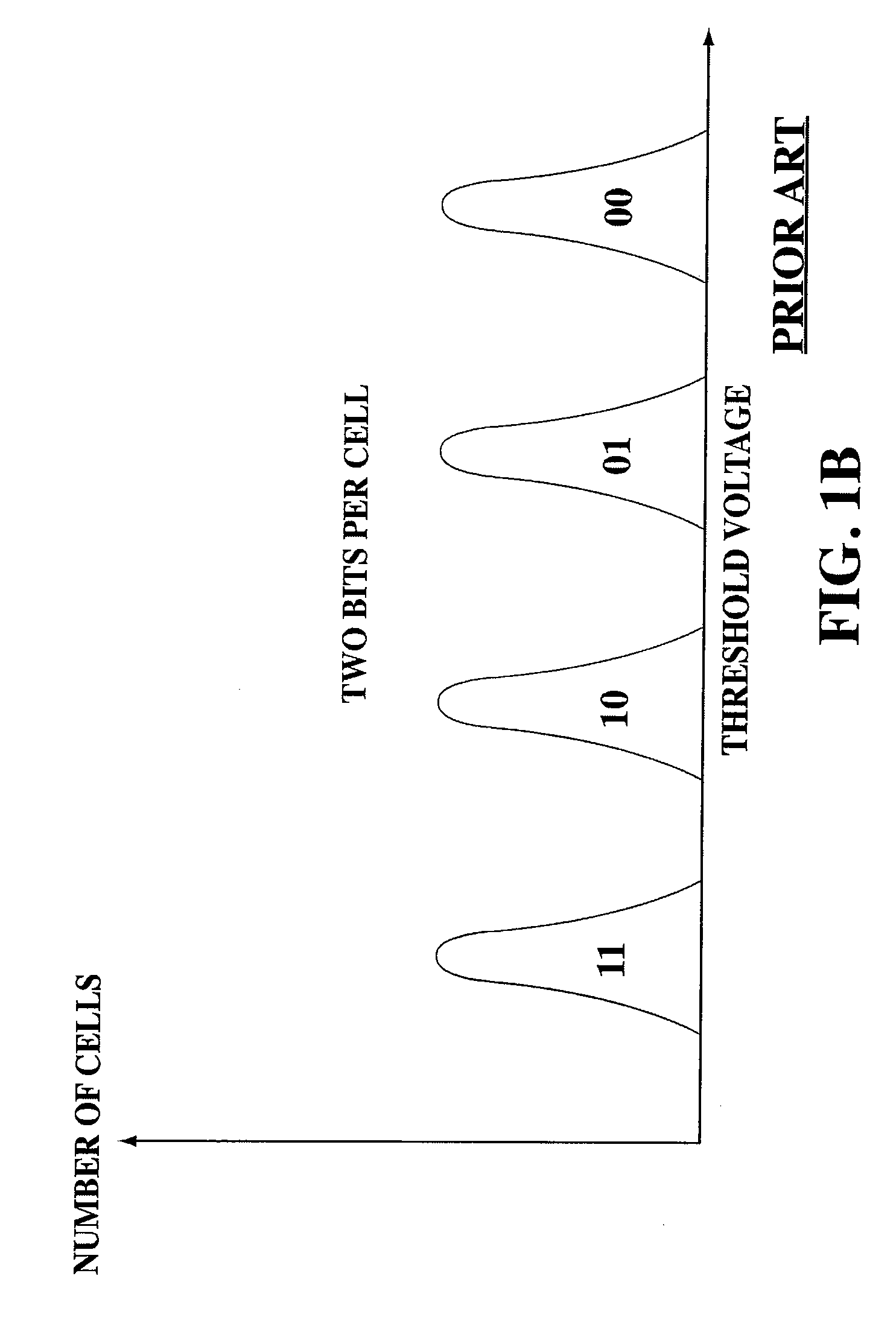

Method for recovering from errors in flash memory

Active | US20070091677A1 | Satisfies need | Read-only memories | Digital storage | Voltage reference | Correction code

Methods, devices and computer readable code for reading data from one or more flash memory cells, and for recovering from read errors are disclosed. In some embodiments, in the event of an error correction failure by an error detection and correction module, the flash memory cells are re-read at least once using one or more modified reference voltages, for example, until a successful error correction may be carried out. In some embodiments, after successful error correction a subsequent read request is handled without re-writing data (for example, reliable values of the read data) to the flash memory cells in the interim. In some embodiments, reference voltages associated with a reading where errors are corrected may be stored in memory, and retrieved when responding to a subsequent read request. In some embodiments, the modified reference voltages are predetermined reference voltages. Alternatively or additionally, these modified reference voltages may be determined as needed, for example, using randomly generated values or in accordance with information provided by the error detection and correction module. Methods, devices and computer readable code for reading data for situations where there is no error correction failure are also provided.

Owner:WESTERN DIGITAL ISRAEL LTD
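
The re-read loop in the abstract above can be sketched as a retry over candidate reference voltages. Everything here is a toy assumption: the cell voltages, the offsets, and the even-parity check in `decode`, which merely stands in for a real error detection and correction module.

```python
def read_with_retry(read_cells, decode, default_ref, offsets):
    """Read at the default reference voltage, then at modified ones,
    until decoding succeeds. Returns the data and the voltage that worked."""
    for offset in (0.0, *offsets):
        ref = default_ref + offset
        ok, data = decode(read_cells(ref))
        if ok:
            return data, ref
    raise IOError("error correction failed at every reference voltage")

# Toy model: bits 0,1,0,1 were stored, but the second cell has drifted low.
cell_voltages = [0.9, 2.1, 0.8, 2.6]

def read_cells(ref):
    return [1 if v > ref else 0 for v in cell_voltages]

def decode(bits):
    # Stand-in for ECC: the stored word is assumed to have even parity.
    return sum(bits) % 2 == 0, bits

data, ref_used = read_with_retry(read_cells, decode, default_ref=2.5,
                                 offsets=[-0.25, -0.5])
print(data, ref_used)  # [0, 1, 0, 1] 2.0
```

Returning the successful voltage lets a caller cache it and reuse it for a subsequent read request, in the spirit of the embodiment that stores reference voltages in memory.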

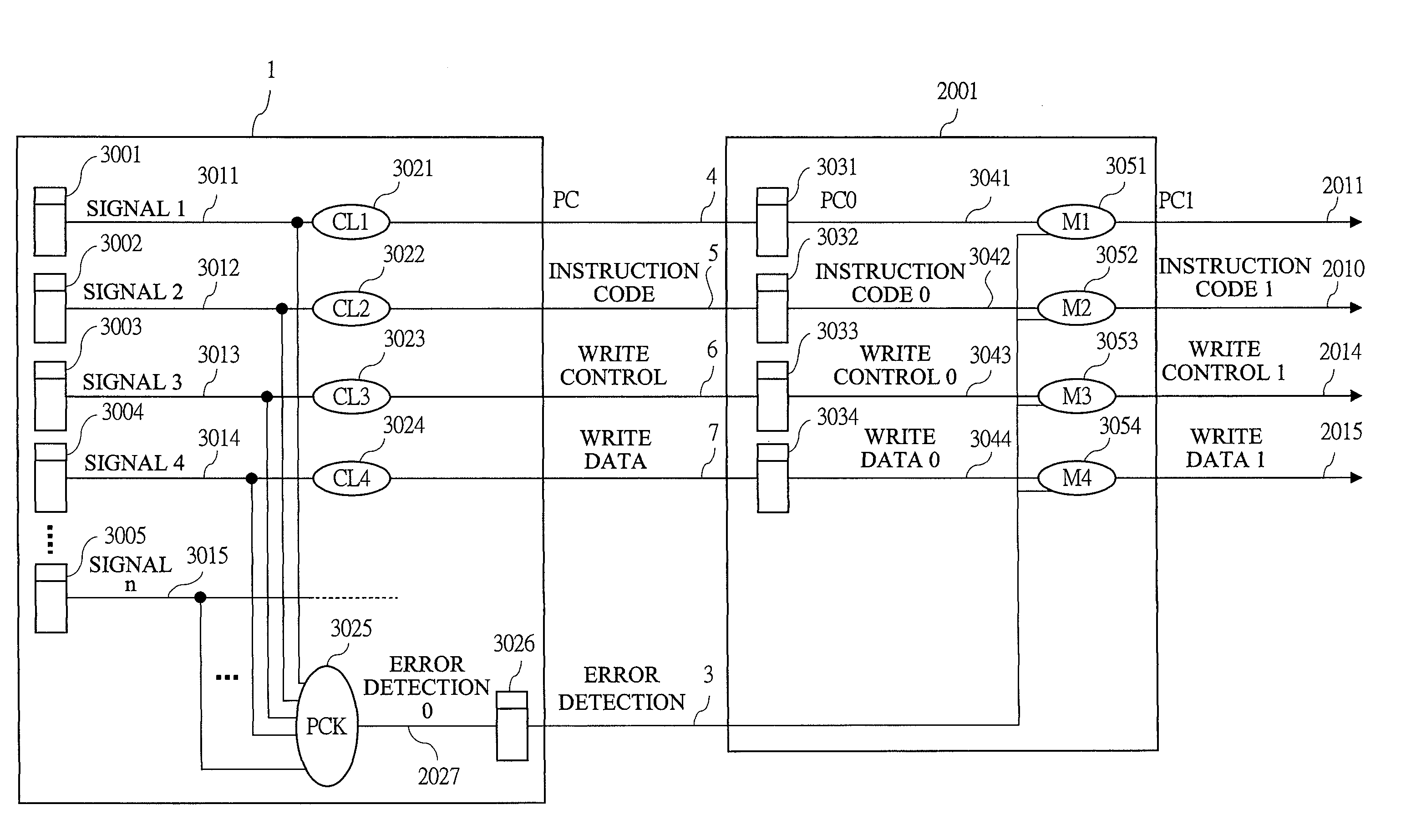

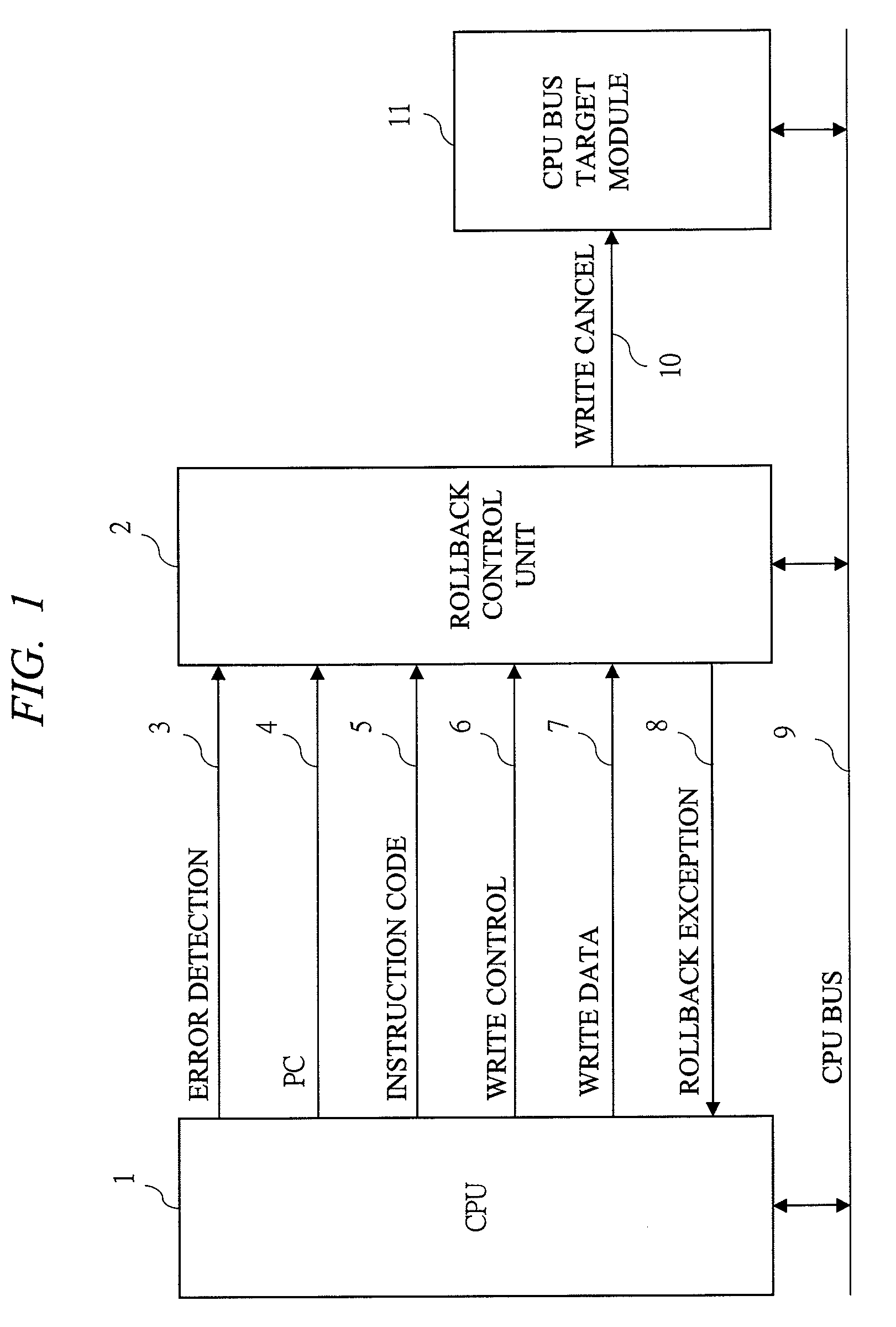

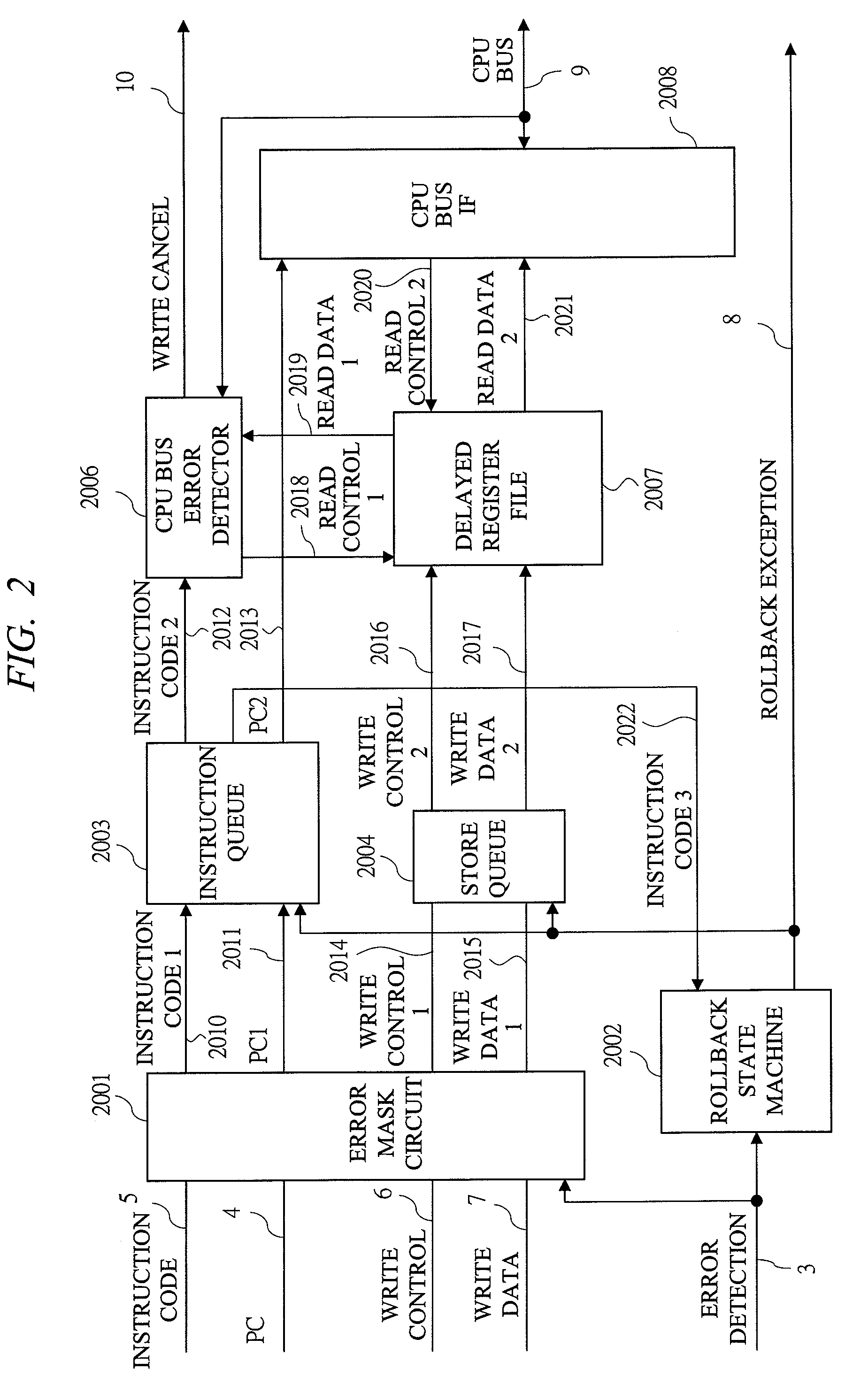

Error correction method with instruction level rollback

This method is an error correction method such that, when an error is detected in a CPU with a pipeline structure, the content of the register file is restored from a delayed register file, which holds the execution-completion state of an [Instruction N] correctly executed before the error, and rollback control is performed that re-executes instructions starting from [Instruction N+1], the instruction following [Instruction N]. The method collects parity check results from arbitrary flip-flops inside the CPU to detect an error. As a result, the content of the register file is restored to the instruction-completion state preceding the instruction range likely to have malfunctioned because of the error, and execution can be rolled back to the beginning of that range.

Owner:RENESAS ELECTRONICS CORP

Rebuilding data on a dispersed storage network

A rebuilder application operates on a dispersed data storage grid and rebuilds stored data segments that have been compromised in some manner. The rebuilder application actively scans for compromised data segments, and is also notified during partially failed writes to the dispersed data storage network, and during reads from the dispersed data storage network when a data slice is detected that is compromised. Records are created for compromised data segments, and put into a rebuild list, which the rebuilder application processes.

Owner:PURE STORAGE

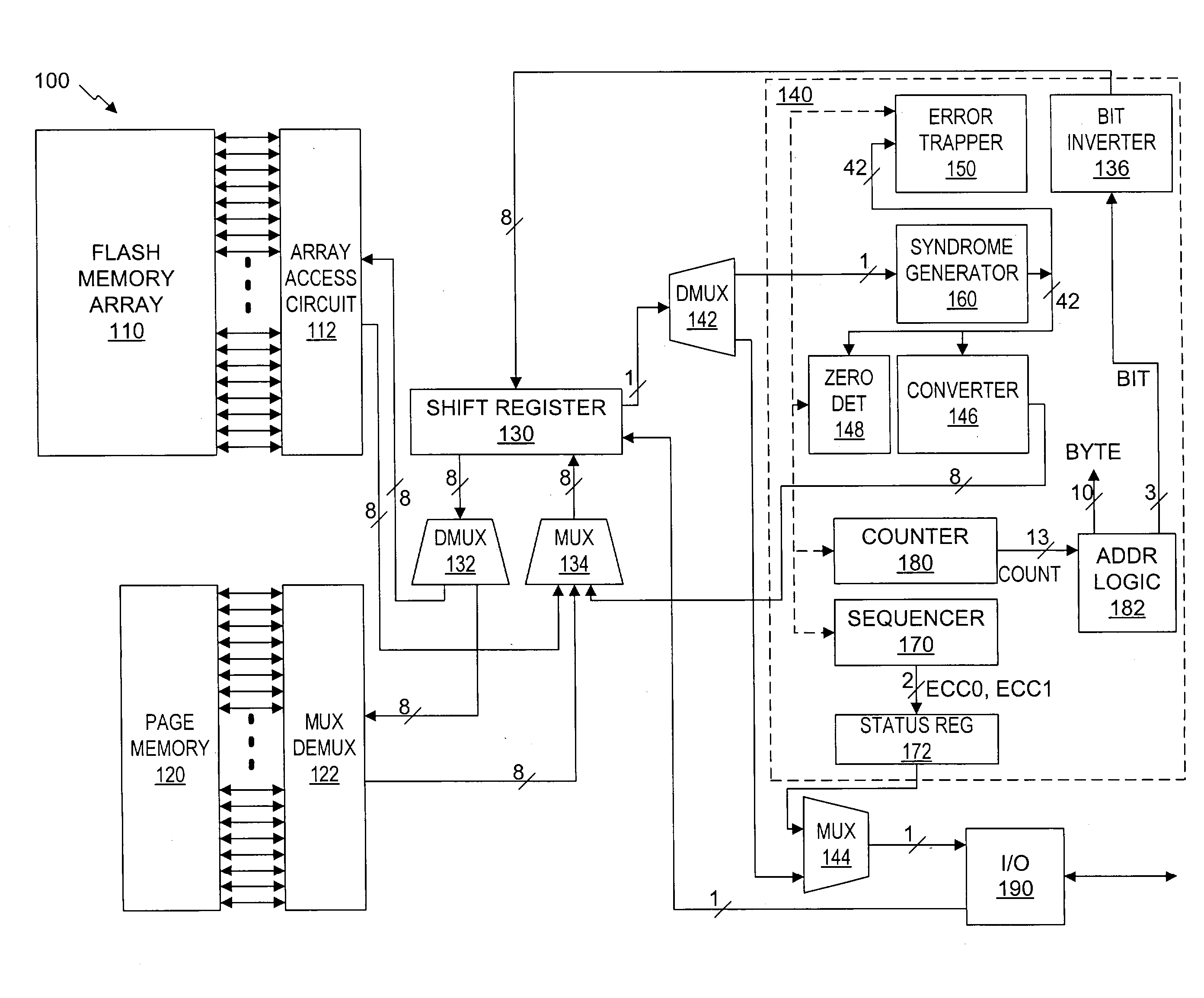

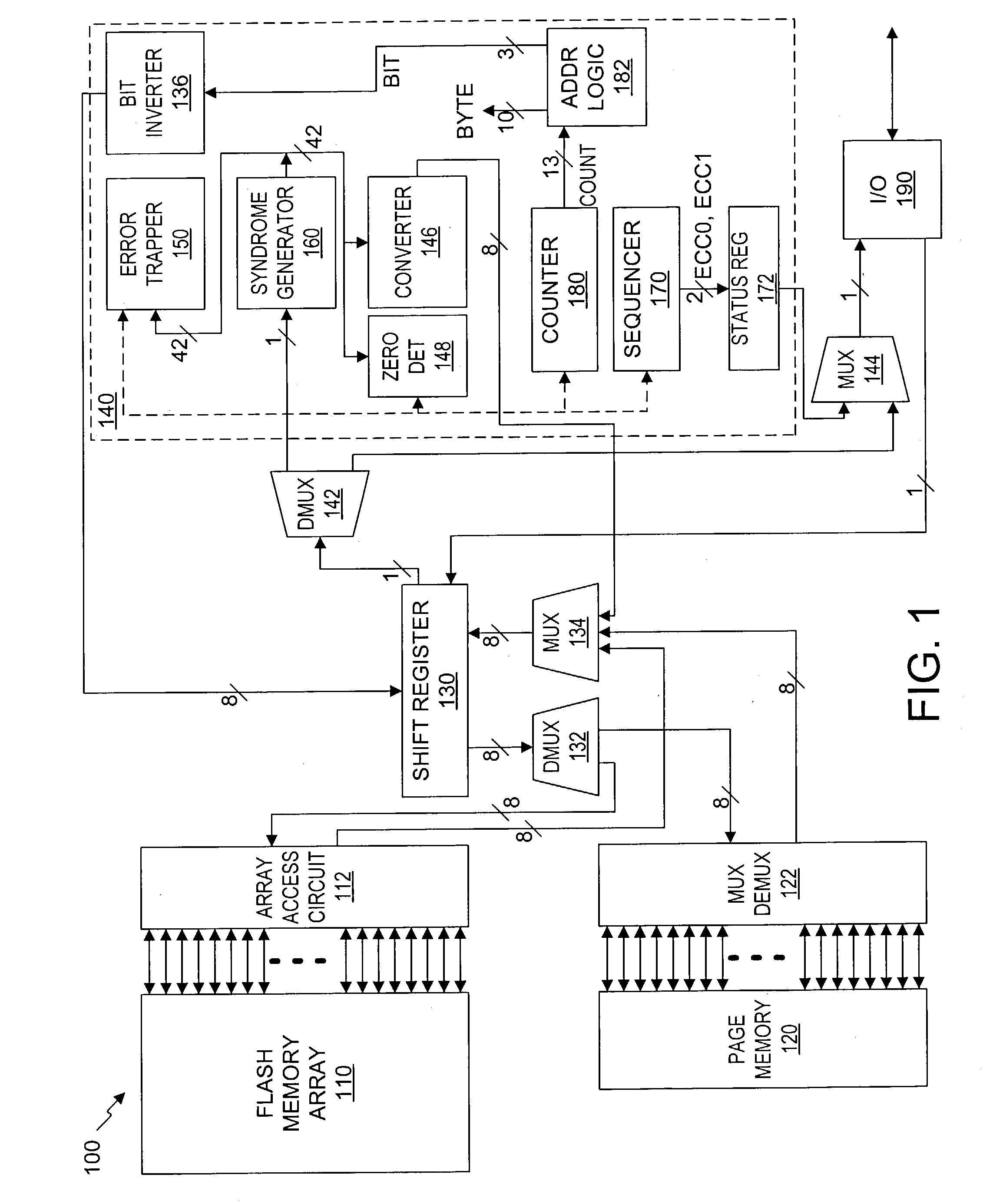

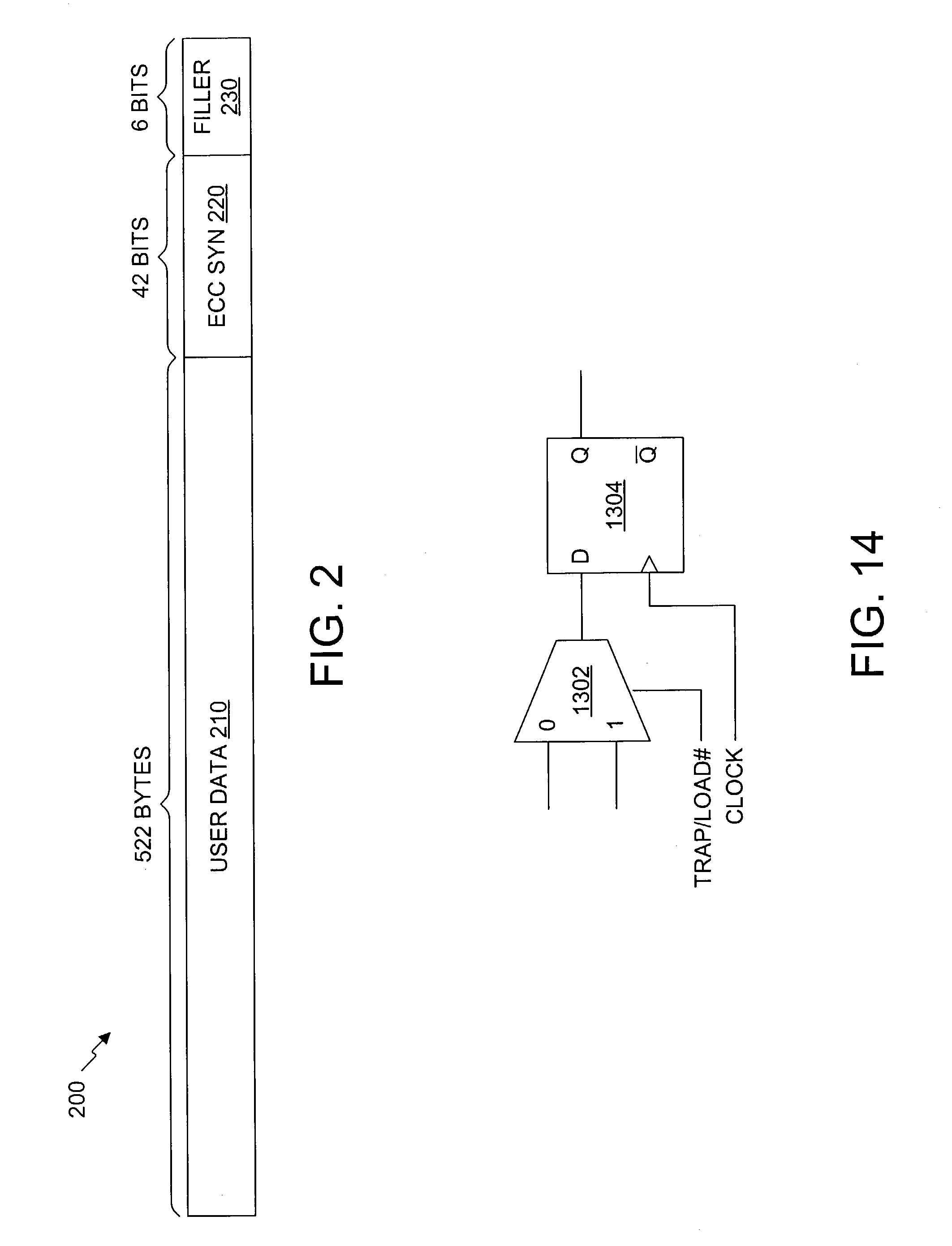

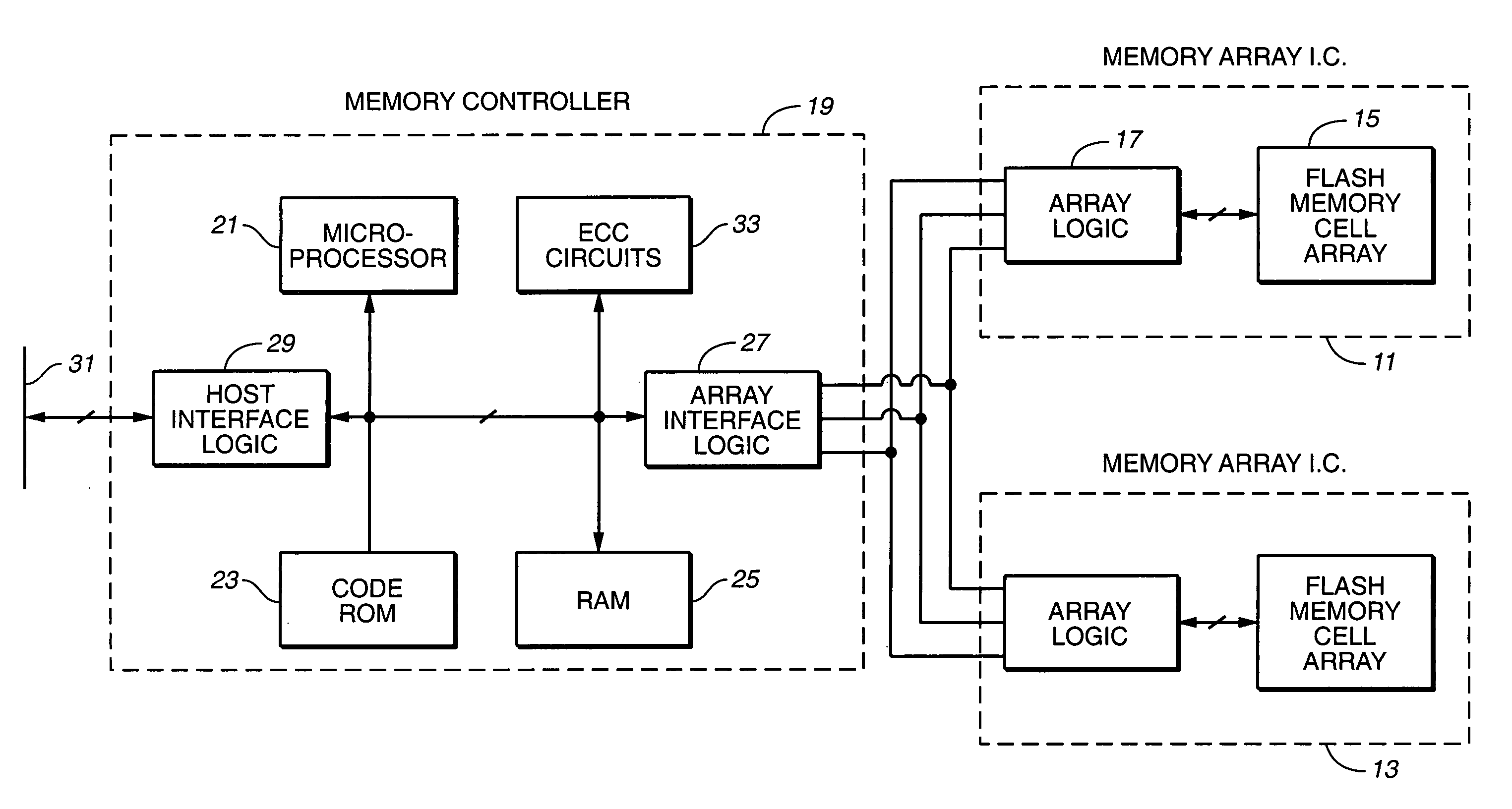

Serial flash integrated circuit having error detection and correction

Inactive | US20040153902A1 | Static storage | Redundant data error correction | Correction code | Integrated circuit

A serial flash integrated circuit is provided with an integrated error correction coding ("ECC") system that is used with an integrated volatile page memory for fast automatic data correction. The ECC code has the capability of correcting any one or two bit errors that might occur on a page of the flash memory array. One bit corrections are done automatically in hardware during reads or transfer to the page memory, while two-bit corrections are handled in external software, firmware or hardware. The ECC system uses a syndrome generator for generating both write and read syndromes, and an error trapper to identify the location of single bit errors using very little additional chip space. The flash memory array may be refreshed from the page memory to correct any detected errors. Data status is made available to the application prior to the data. The use of the ECC is optional.

Owner:WINBOND ELECTRONICS CORP
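
The abstract above does not say which code the chip uses, so the following sketch swaps in a textbook Hamming(7,4) code purely to show how a syndrome can point at the position of a single-bit error. The function names are illustrative only.

```python
def encode(d):
    """Hamming(7,4): 4 data bits -> 7-bit codeword, laid out as positions 1..7
    = p1, p2, d1, p3, d2, d3, d4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p3, d2, d3, d4]

def syndrome(c):
    """Return 0 for a clean codeword, else the 1-based position of a single flipped bit."""
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # parity over positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # parity over positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # parity over positions 4, 5, 6, 7
    return s1 + 2 * s2 + 4 * s3

def correct(c):
    """Flip the bit the syndrome points at, if any. Returns (codeword, position)."""
    pos = syndrome(c)
    fixed = list(c)
    if pos:
        fixed[pos - 1] ^= 1
    return fixed, pos

word = encode([1, 0, 1, 1])
damaged = list(word)
damaged[4] ^= 1                  # flip the bit at position 5
print(correct(damaged)[1])       # 5
```

Hamming(7,4) corrects only one bit per codeword; a code that also handles two-bit errors per page, as the abstract describes, would need more redundancy than this sketch carries.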

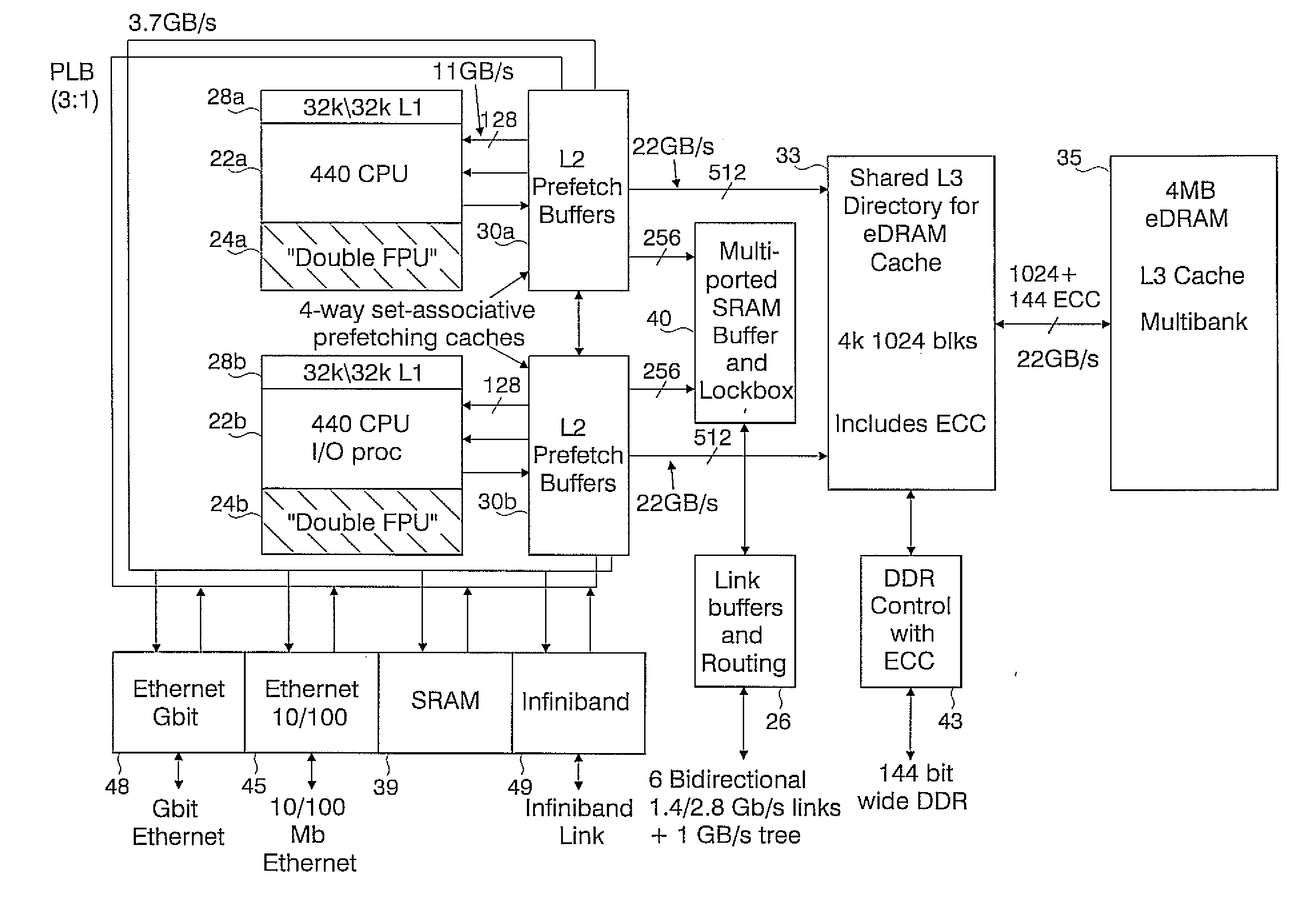

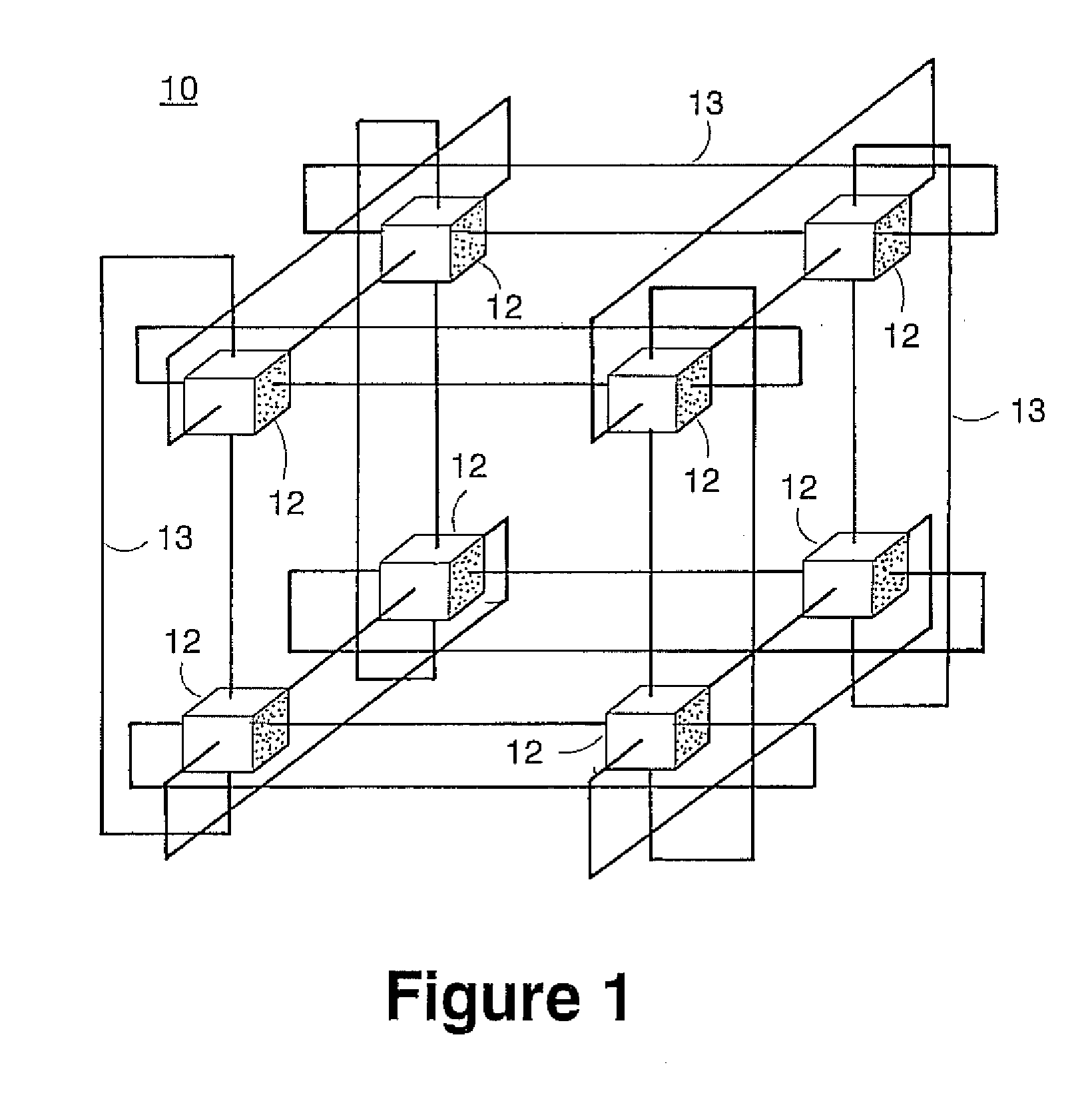

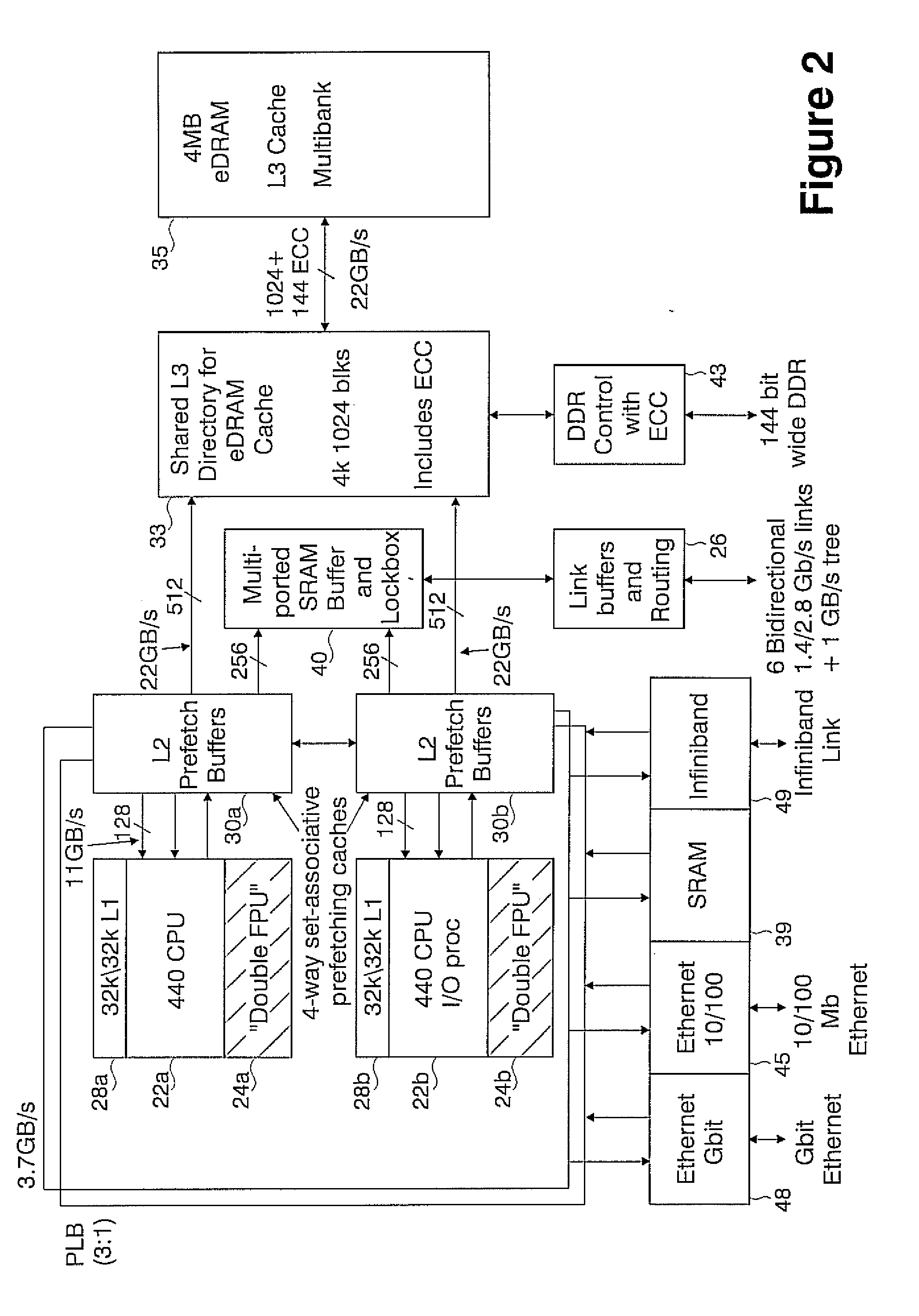

Novel massively parallel supercomputer

Inactive | US20090259713A1 | Low cost | Reduced footprint | Error prevention | Program synchronisation | Supercomputer | Packet communication

Owner:INT BUSINESS MASCH CORP

Flash memory data correction and scrub techniques

Active | US20050073884A1 | Data disturbance | Reduce storage data | Memory loss protection | Read-only memories | Data integrity | Data storing

In order to maintain the integrity of data stored in a flash memory that are susceptible to being disturbed by operations in adjacent regions of the memory, disturb events cause the data to be read, corrected and re-written before becoming so corrupted that valid data cannot be recovered. The sometimes conflicting needs to maintain data integrity and system performance are balanced by deferring execution of some of the corrective action when the memory system has other high priority operations to perform. In a memory system utilizing very large units of erase, the corrective process is executed in a manner that is consistent with efficiently rewriting an amount of data much less than the capacity of a unit of erase.

Owner:SANDISK TECH LLC

Corrected data storage and handling methods

Inactive | US7173852B2 | Data disturbance | Reduce storage data | Read-only memories | Digital storage | Data integrity | Time limit

In order to maintain the integrity of data stored in a flash memory that are susceptible to being disturbed by operations in adjacent regions of the memory, disturb events cause the data to be read, corrected and re-written before becoming so corrupted that valid data cannot be recovered. The sometimes conflicting needs to maintain data integrity and system performance are balanced by deferring execution of some of the corrective action when the memory system has other high priority operations to perform. In a memory system utilizing very large units of erase, the corrective process is executed in a manner that is consistent with efficiently rewriting an amount of data much less than the capacity of a unit of erase. Data is rewritten when severe errors are found during read operations. Portions of data are corrected and copied within the time limit for read operation. Corrected portions are written to dedicated blocks.

Owner:SANDISK TECH LLC

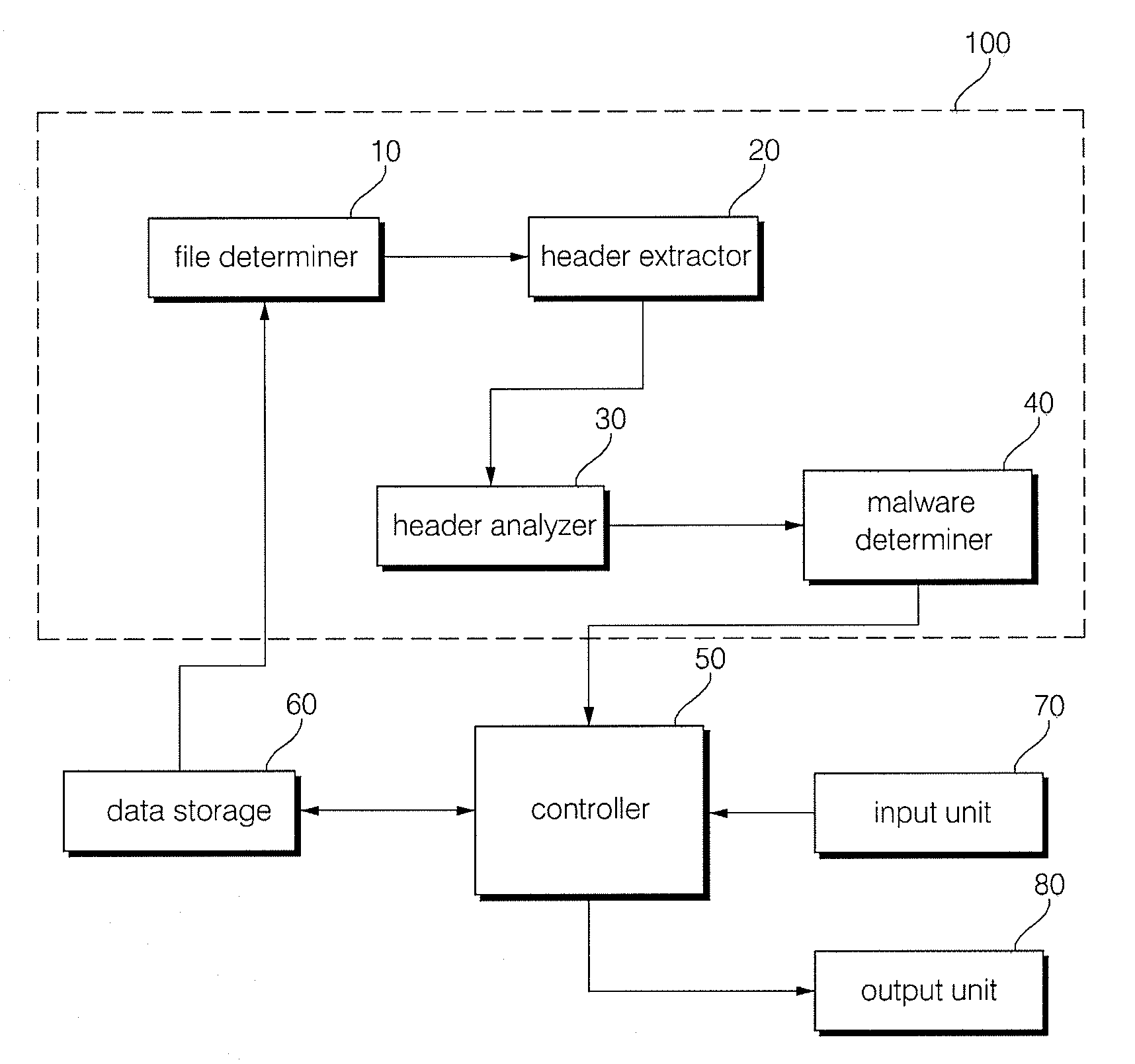

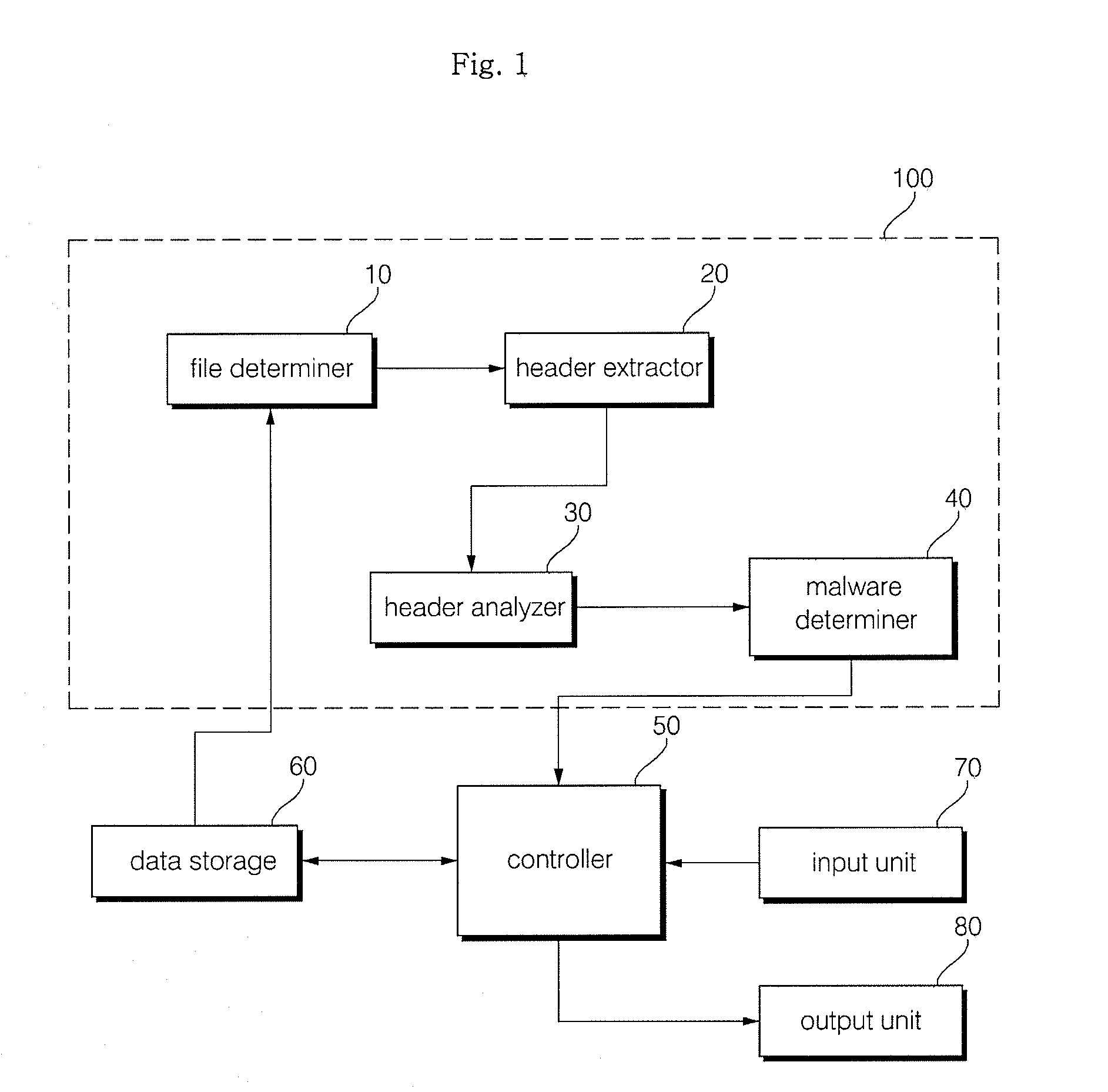

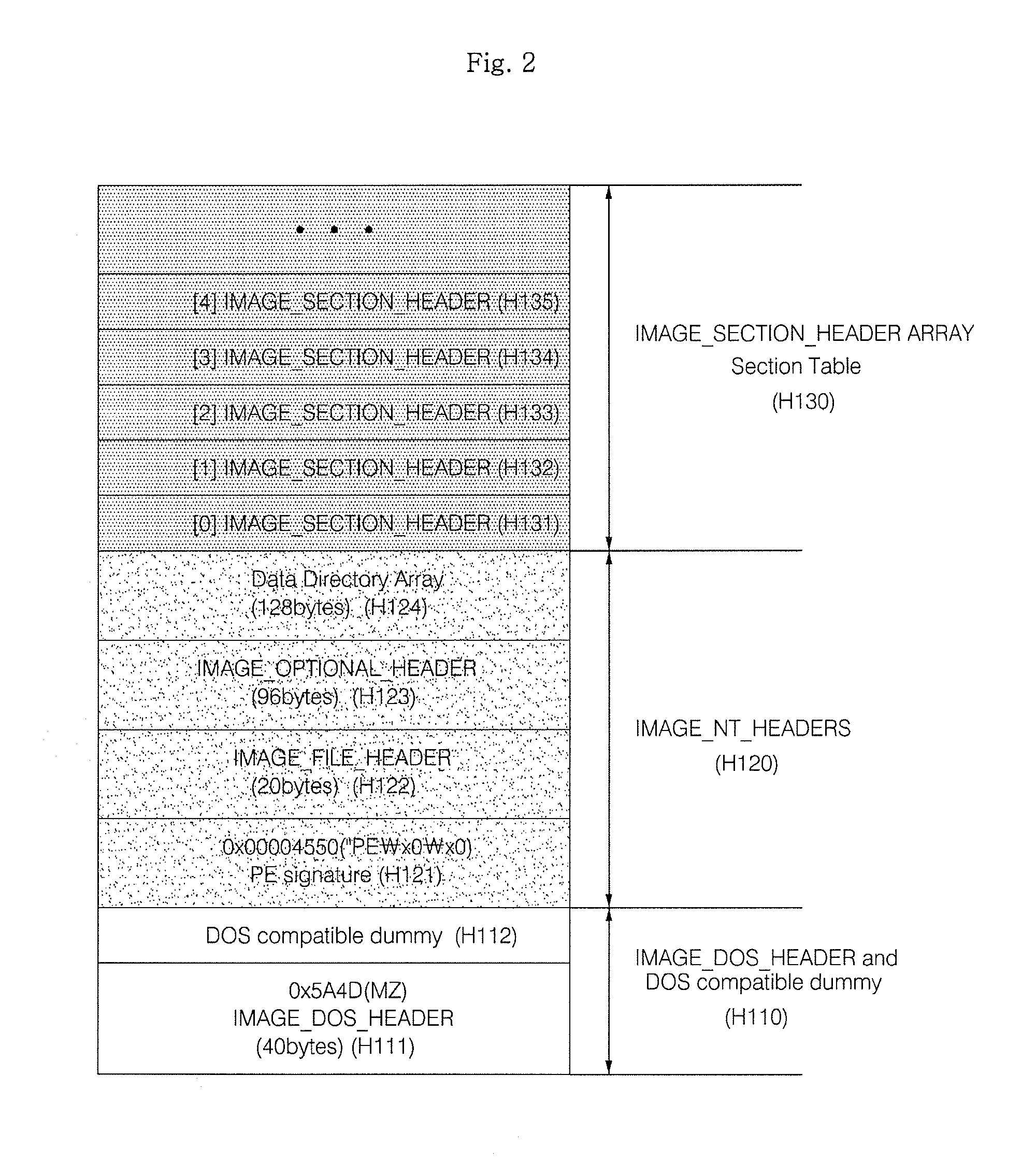

Method and apparatus for malware detection

Inactive | US20090133125A1 | Shorten detection time | Improve security level | Memory loss protection | Unauthorized memory use protection | Malware | Protection system

The present invention relates to an apparatus and method for detecting malware. The malware detection apparatus and method of the present invention determine whether a file is malware by analyzing the header of an executable file. Because the apparatus and method can quickly detect the presence of malware, they can shorten detection time considerably. They can also detect unknown as well as known malware, and thereby estimate and determine the presence of malware. Therefore, it is possible to cope with malware in advance, protect a system with a program, and increase the security level remarkably.

Owner:ELECTRONICS & TELECOMM RES INST

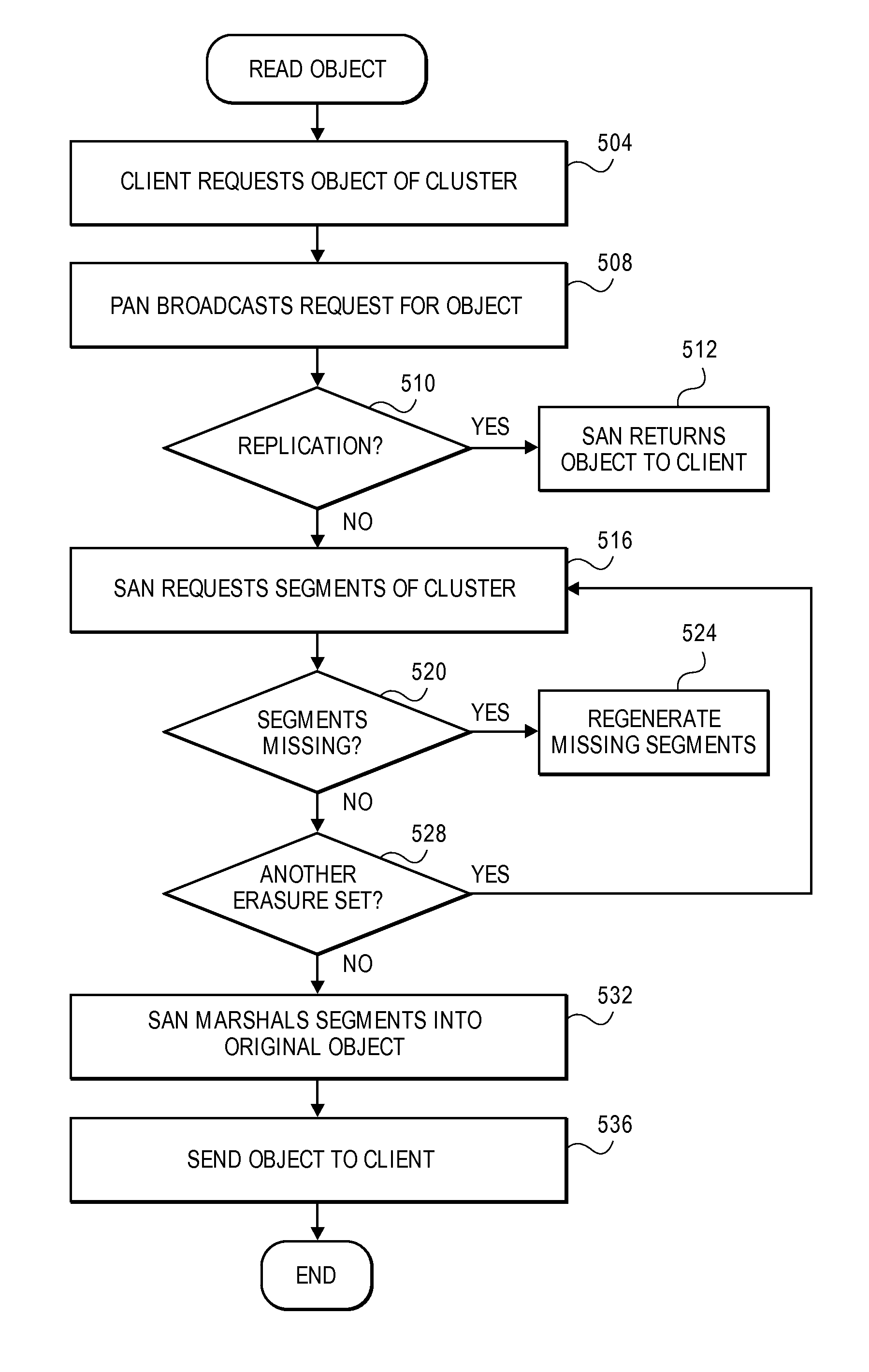

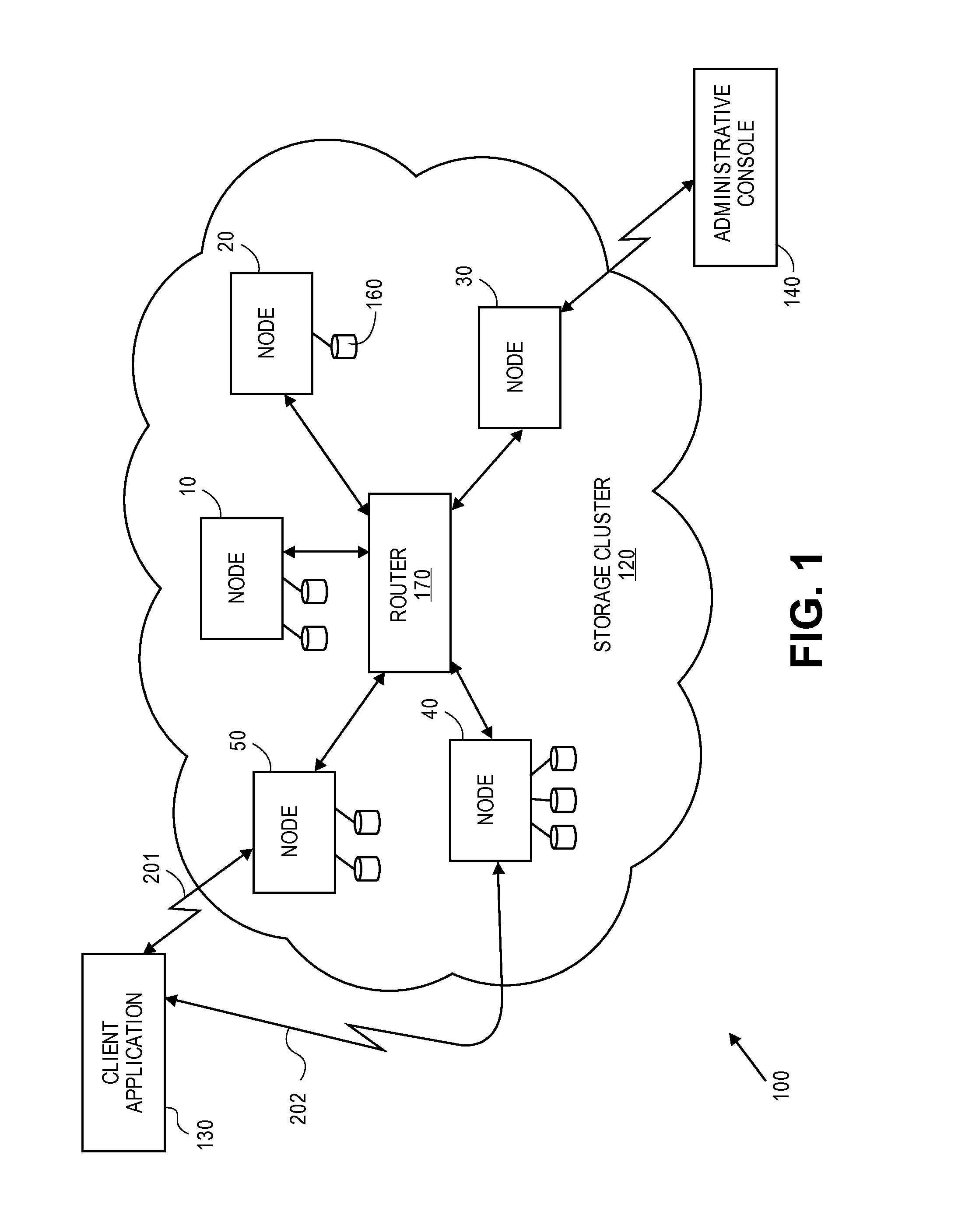

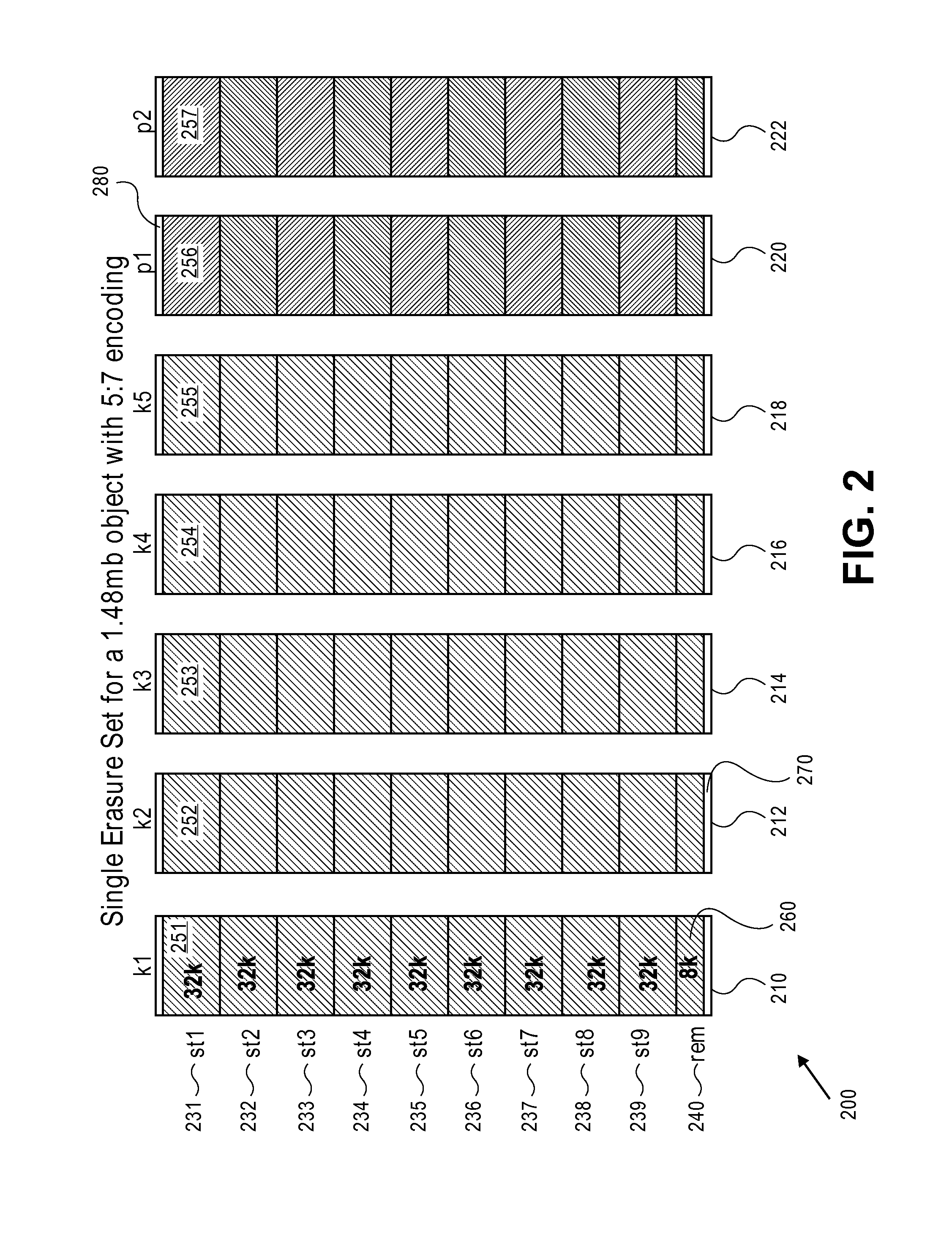

Erasure coding and replication in storage clusters

Active | US20130339818A1 | Error correction/detection using block single space coding | Code conversion | Unique identifier | Operating system

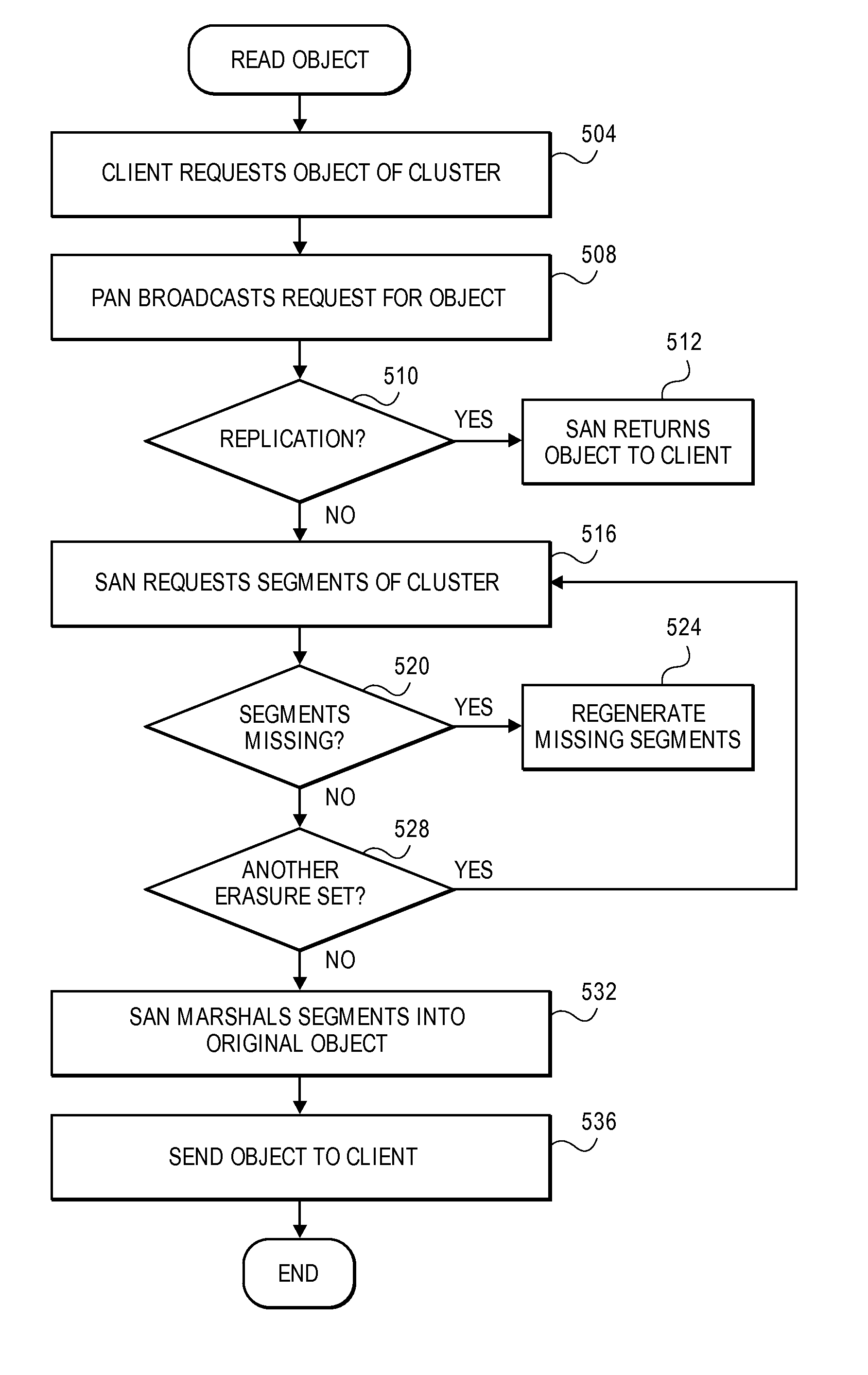

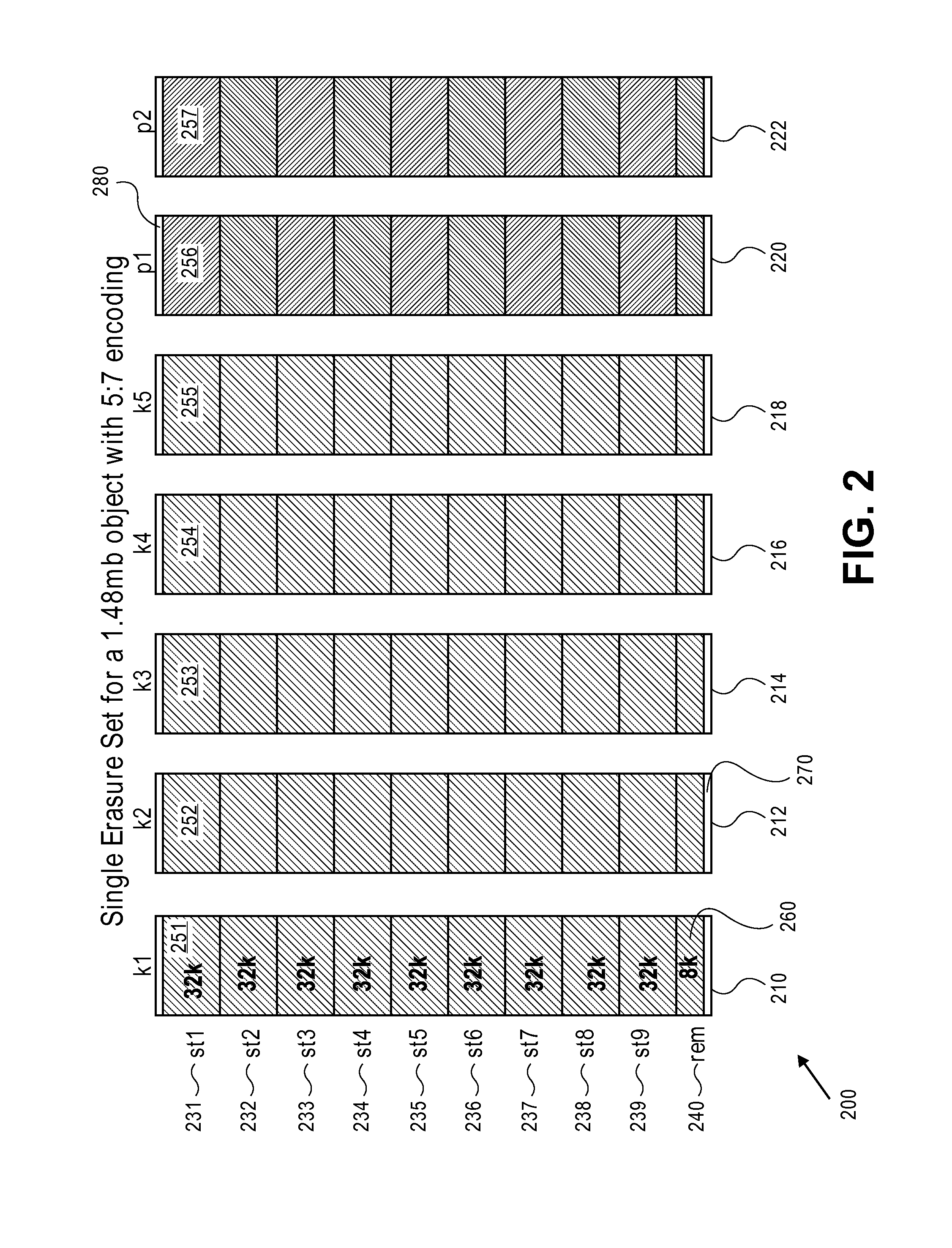

A cluster receives a request to store an object using replication or erasure coding. The cluster writes the object using erasure coding. A manifest is written that includes an indication of erasure coding and a unique identifier for each segment. The cluster returns a unique identifier of the manifest. The cluster receives a request from a client that includes a unique identifier. The cluster determines whether the object has been stored using replication or erasure coding. If using erasure coding, the method reads a manifest. The method identifies segments within the cluster using unique segment identifiers of the manifest. Using these unique segment identifiers, the method reconstructs the object. A persistent storage area of another disk is scanned to find a unique identifier of a failed disk. If using erasure coding, a missing segment previously stored on the disk is identified. The method locates other segments. Missing segments are regenerated.

Owner:DATACORE SOFTWARE

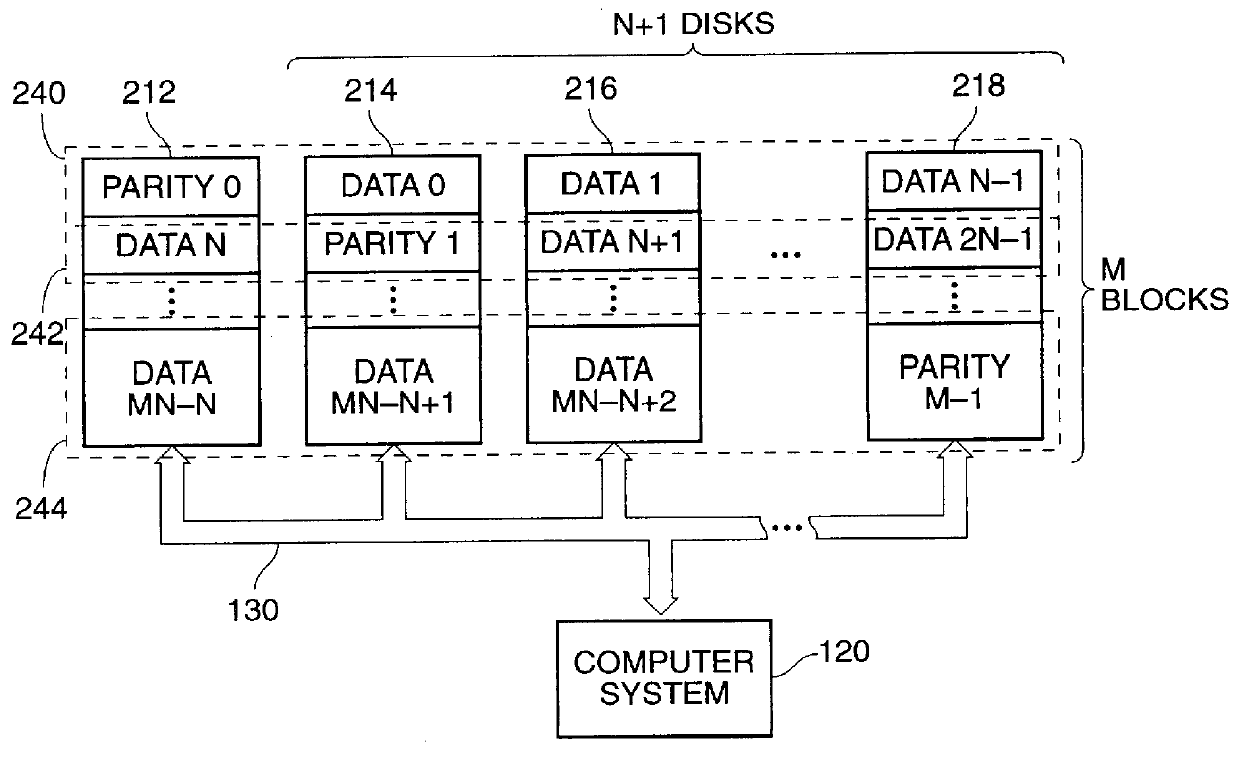

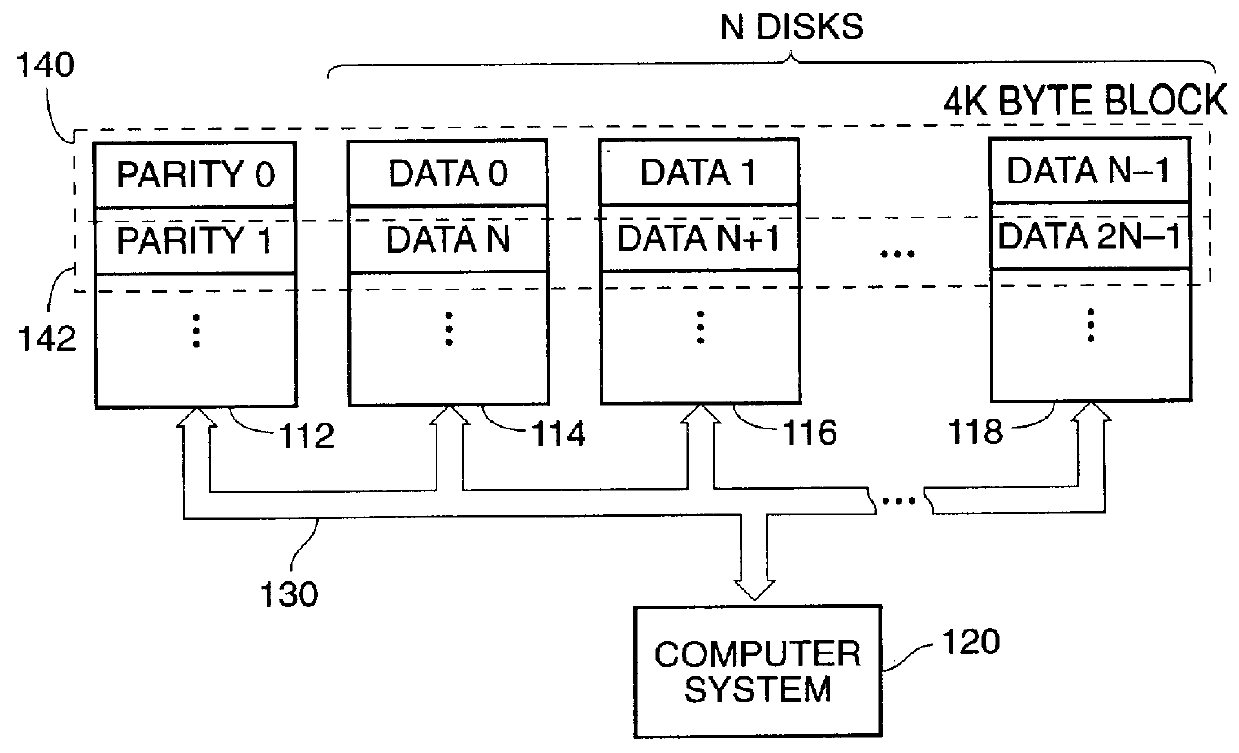

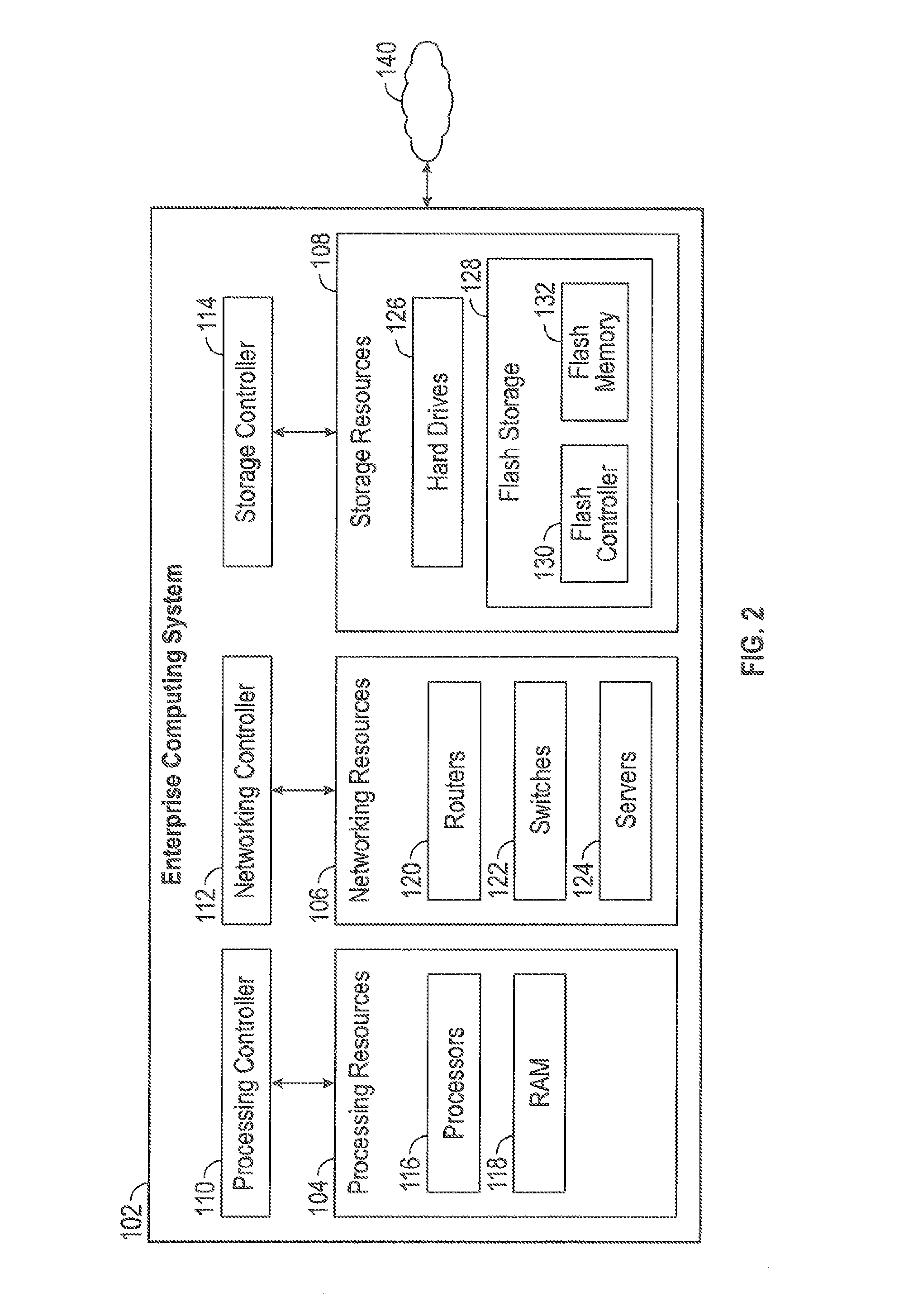

Adaptive RAID for an SSD environment

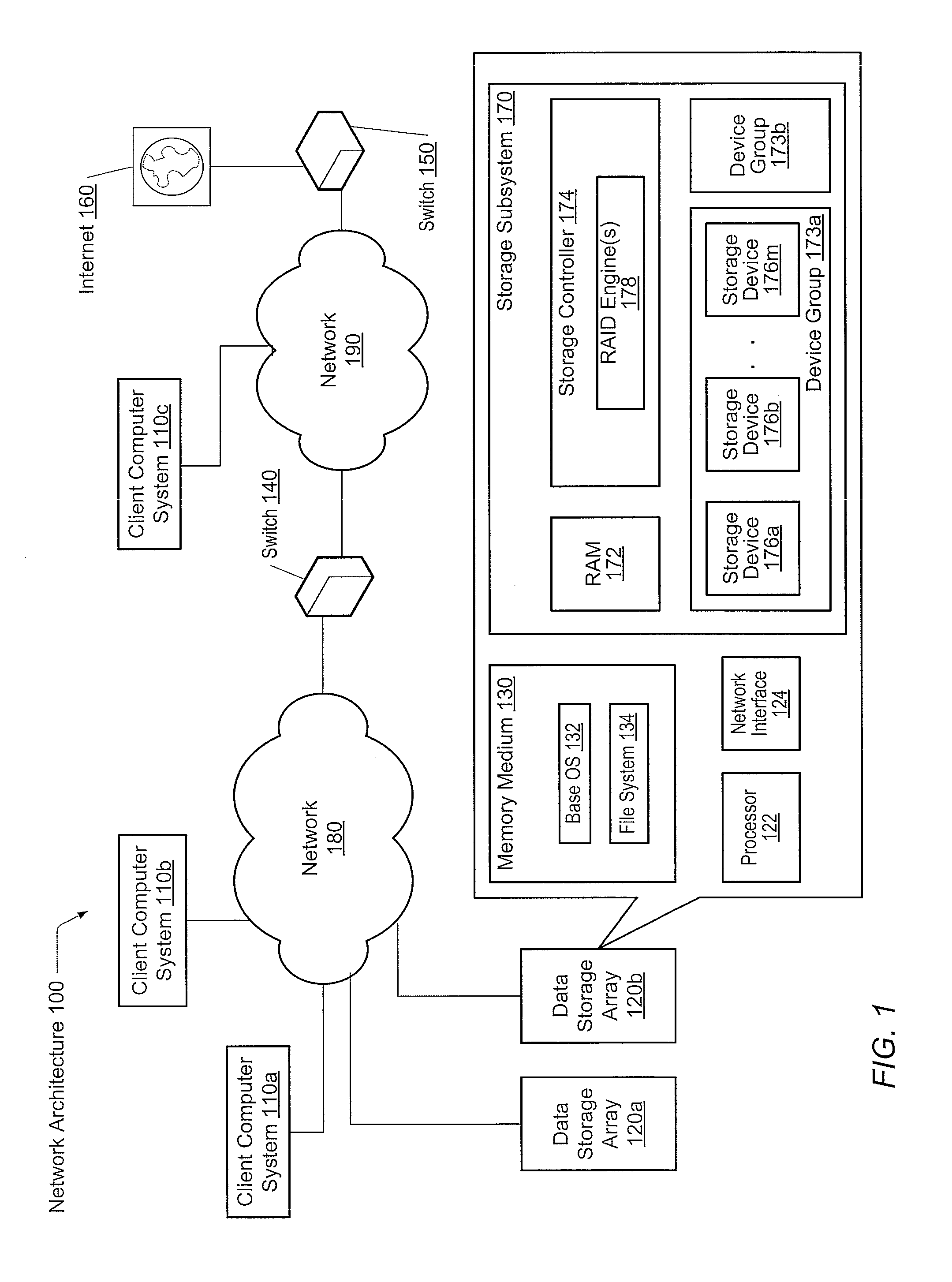

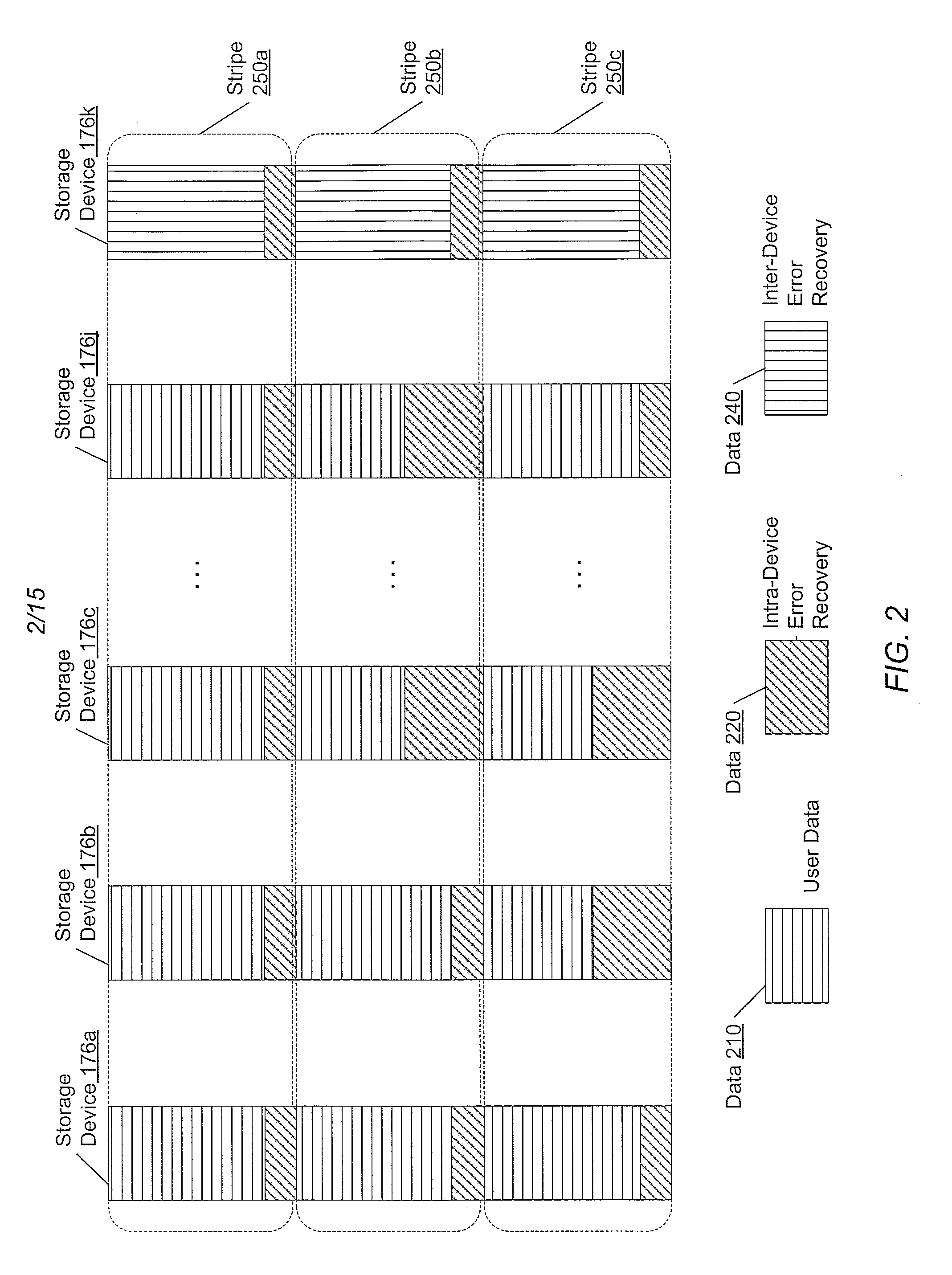

A system and method for adaptive RAID geometries. A computer system comprises client computers and data storage arrays coupled to one another via a network. A data storage array utilizes solid-state drives and Flash memory cells for data storage. A storage controller within a data storage array is configured to determine a first RAID layout for use in storing data, and write a first RAID stripe to the device group according to the first RAID layout. In response to detecting a first condition, the controller is configured to determine a second RAID layout which is different from the first RAID layout, and write a second RAID stripe to the device group according to the second layout, whereby the device group concurrently stores data according to both the first RAID layout and the second RAID layout.

Owner:PURE STORAGE

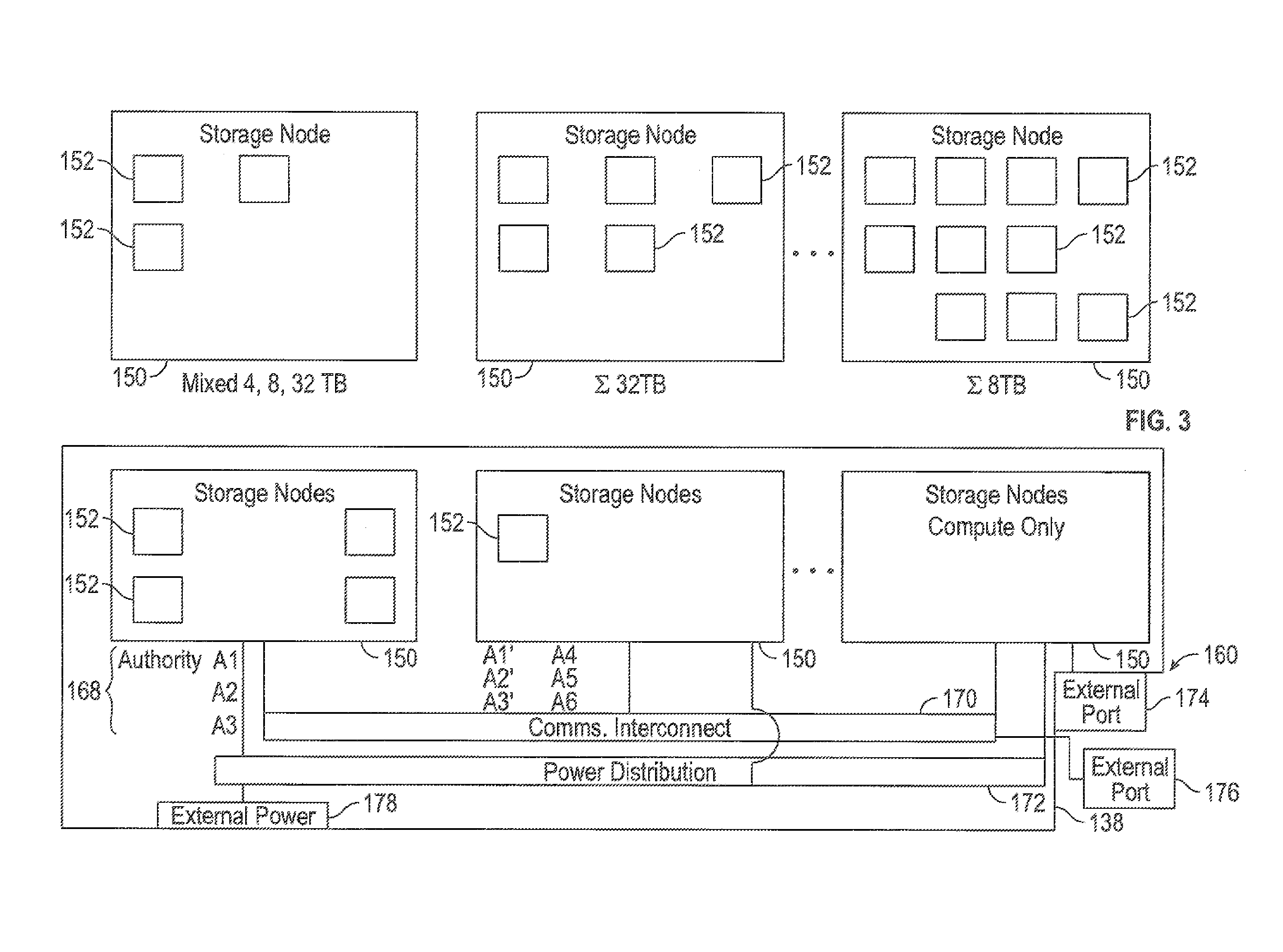

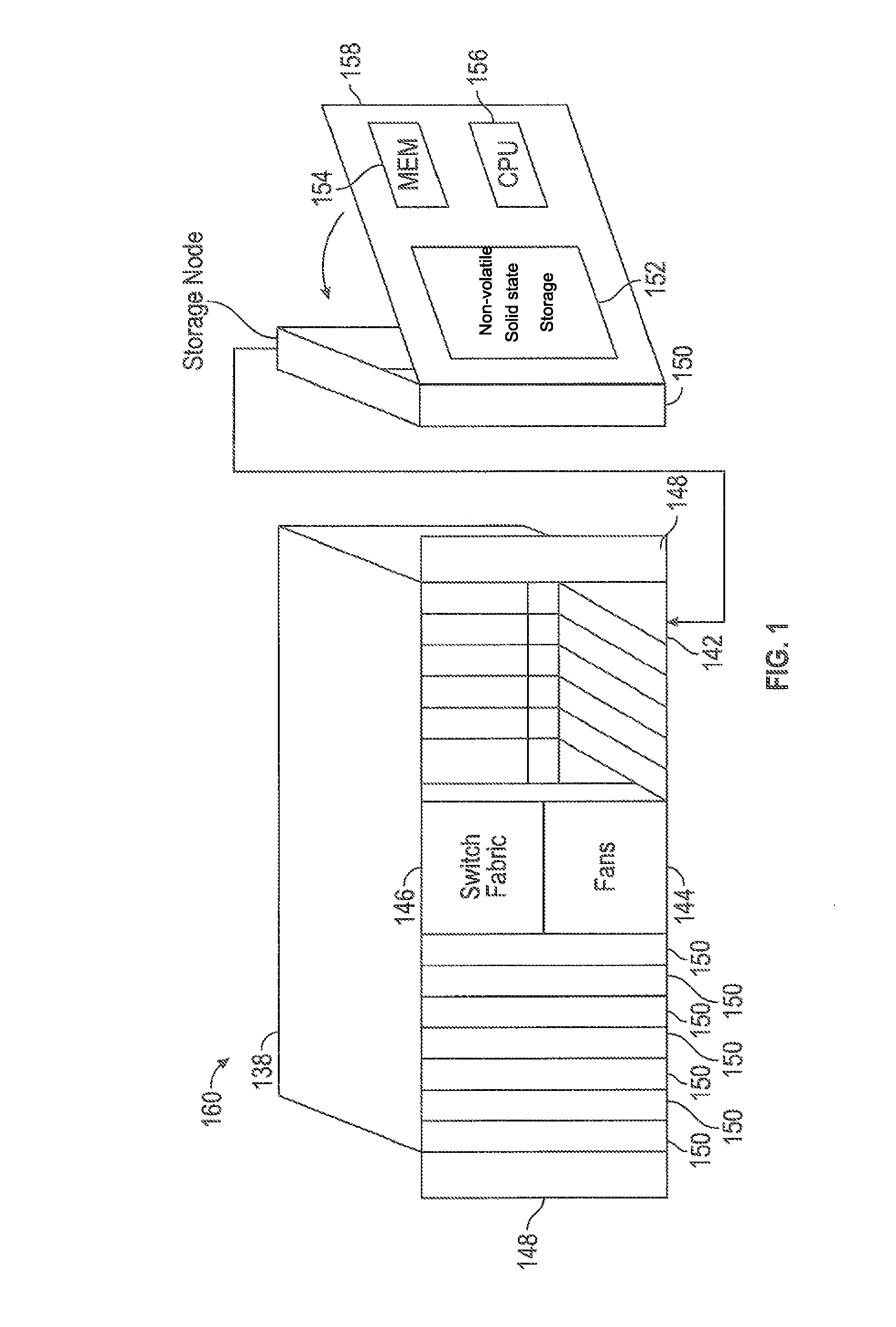

Storage cluster

Active | US8850108B1 | Maintain ability | Memory architecture accessing/allocation | Input/output to record carriers | Data store | Solid state memory

A plurality of storage nodes in a single chassis is provided. The plurality of storage nodes in the single chassis is configured to communicate together as a storage cluster. Each of the plurality of storage nodes includes nonvolatile solid-state memory for user data storage. The plurality of storage nodes is configured to distribute the user data and metadata associated with the user data throughout the plurality of storage nodes such that the plurality of storage nodes maintain the ability to read the user data, using erasure coding, despite a loss of two of the plurality of storage nodes. The chassis includes power distribution, a high speed communication bus and the ability to install one or more storage nodes which may use the power distribution and communication bus. A method for accessing user data in a plurality of storage nodes having nonvolatile solid-state memory is also provided.

Owner:PURE STORAGE

Erasure coding and replication in storage clusters

Active | US8799746B2 | Error correction/detection using block single space coding | Code conversion | Unique identifier | Operating system

Owner:DATACORE SOFTWARE

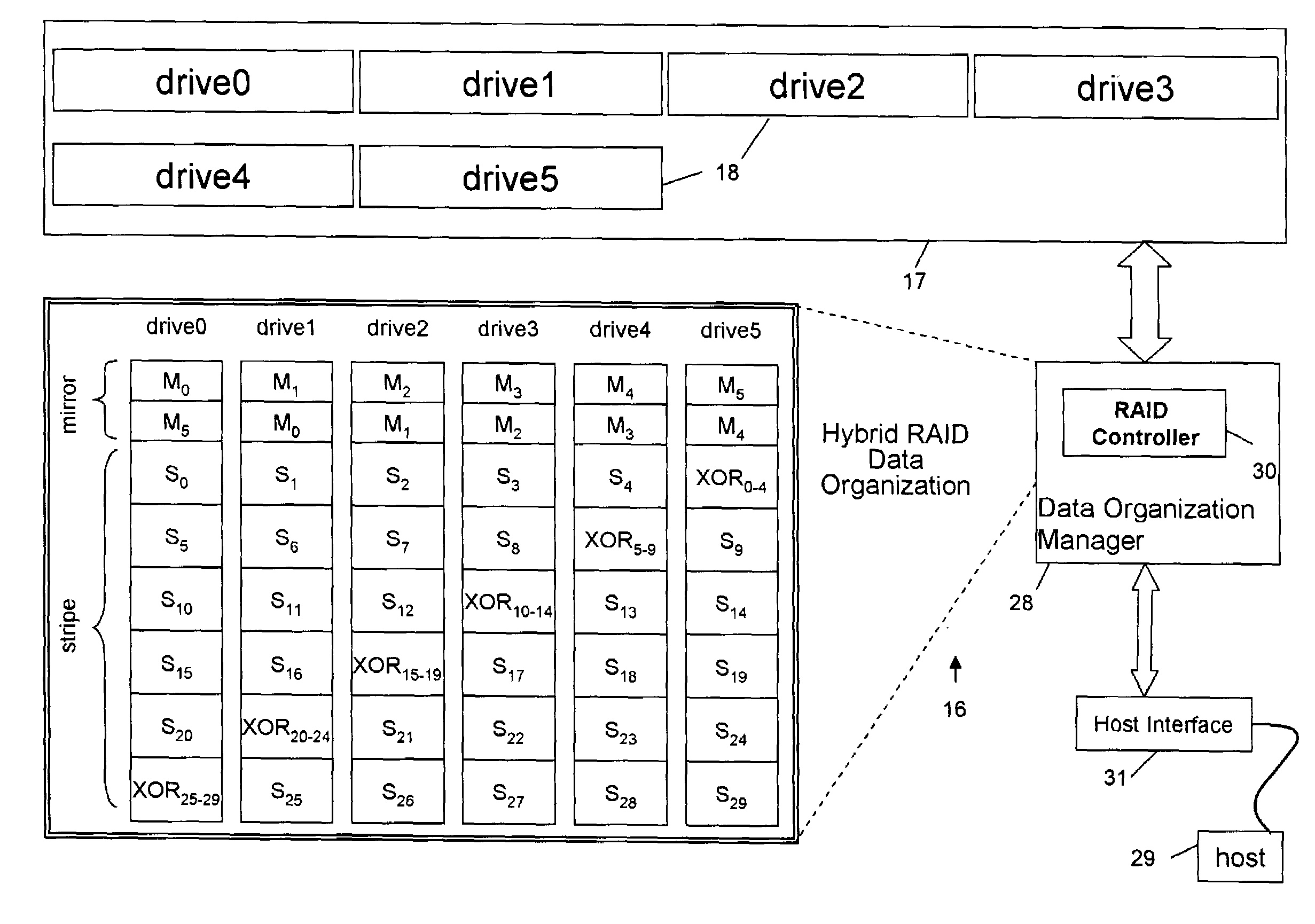

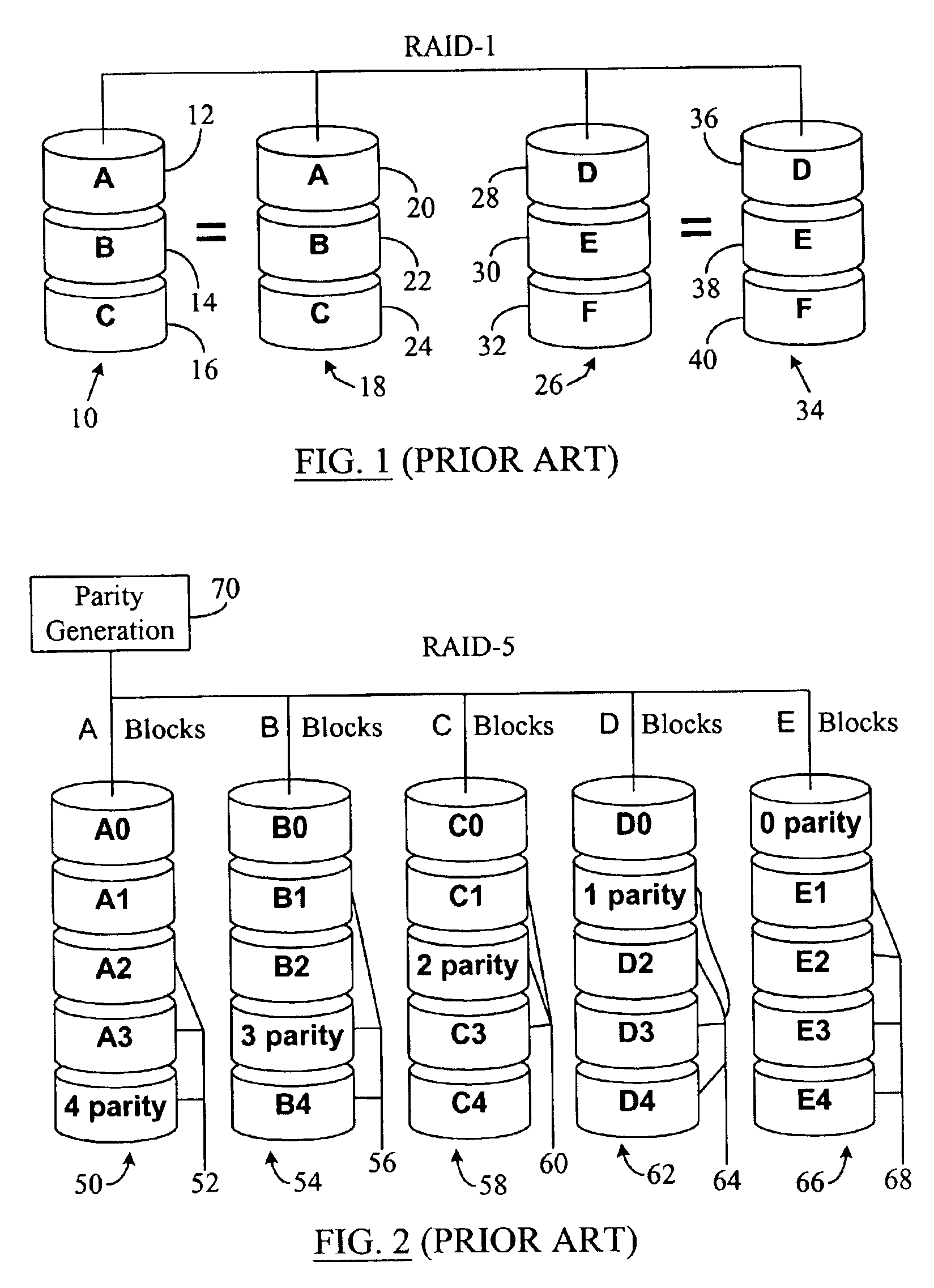

Accelerated RAID with rewind capability

Inactive | US7076606B2 | Increase exposure | Improve read performance | Input/output to record carriers | Data processing applications | RAID | Data store

A method for storing data in a fault-tolerant storage subsystem having an array of failure independent data storage units, by dividing the storage area on the storage units into a logical mirror area and a logical stripe area, such that when storing data in the mirror area, duplicating the data by keeping a duplicate copy of the data on a pair of storage units, and when storing data in the stripe area, storing data as stripes of blocks, including data blocks and associated error-correction blocks.

Owner:QUANTUM CORP

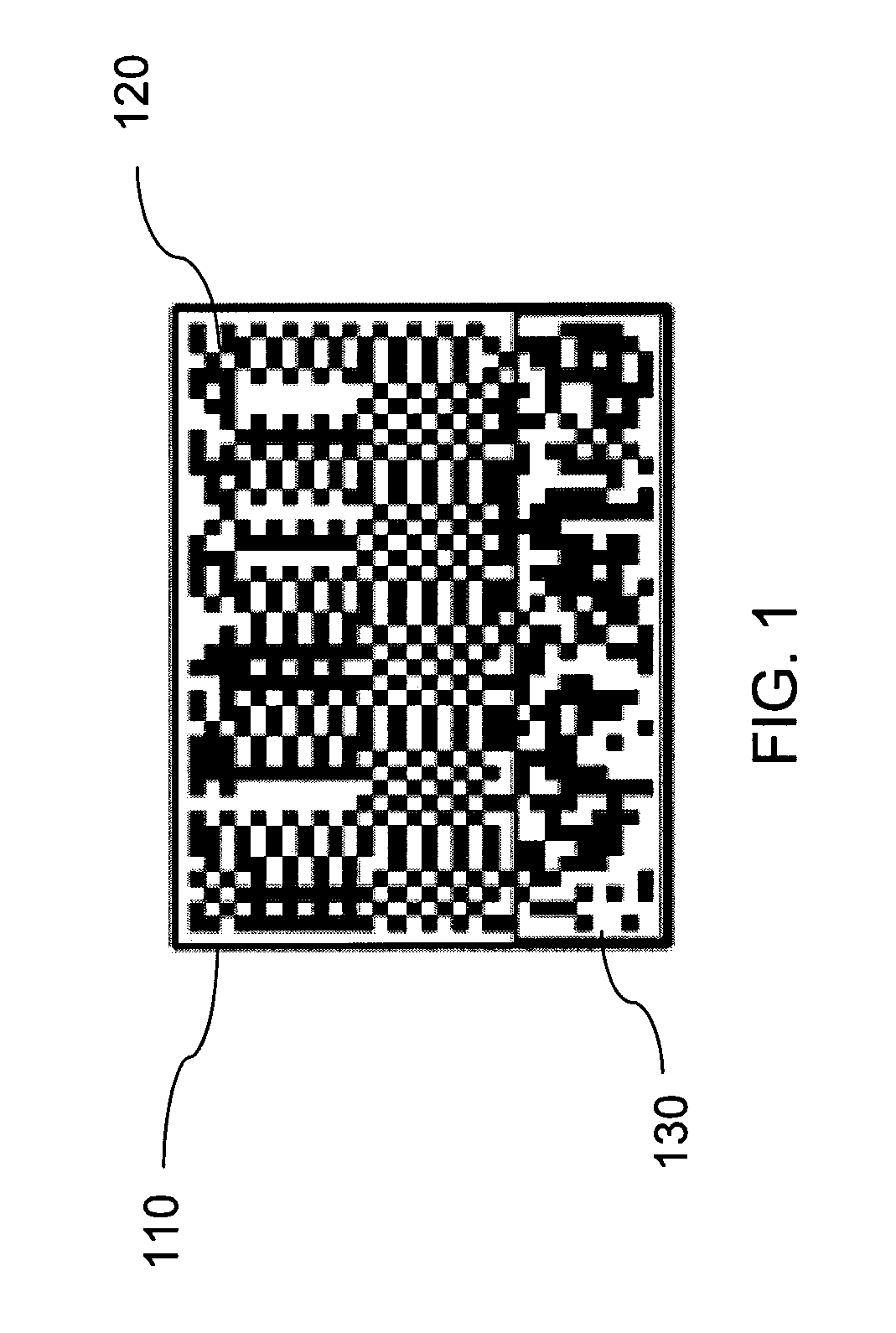

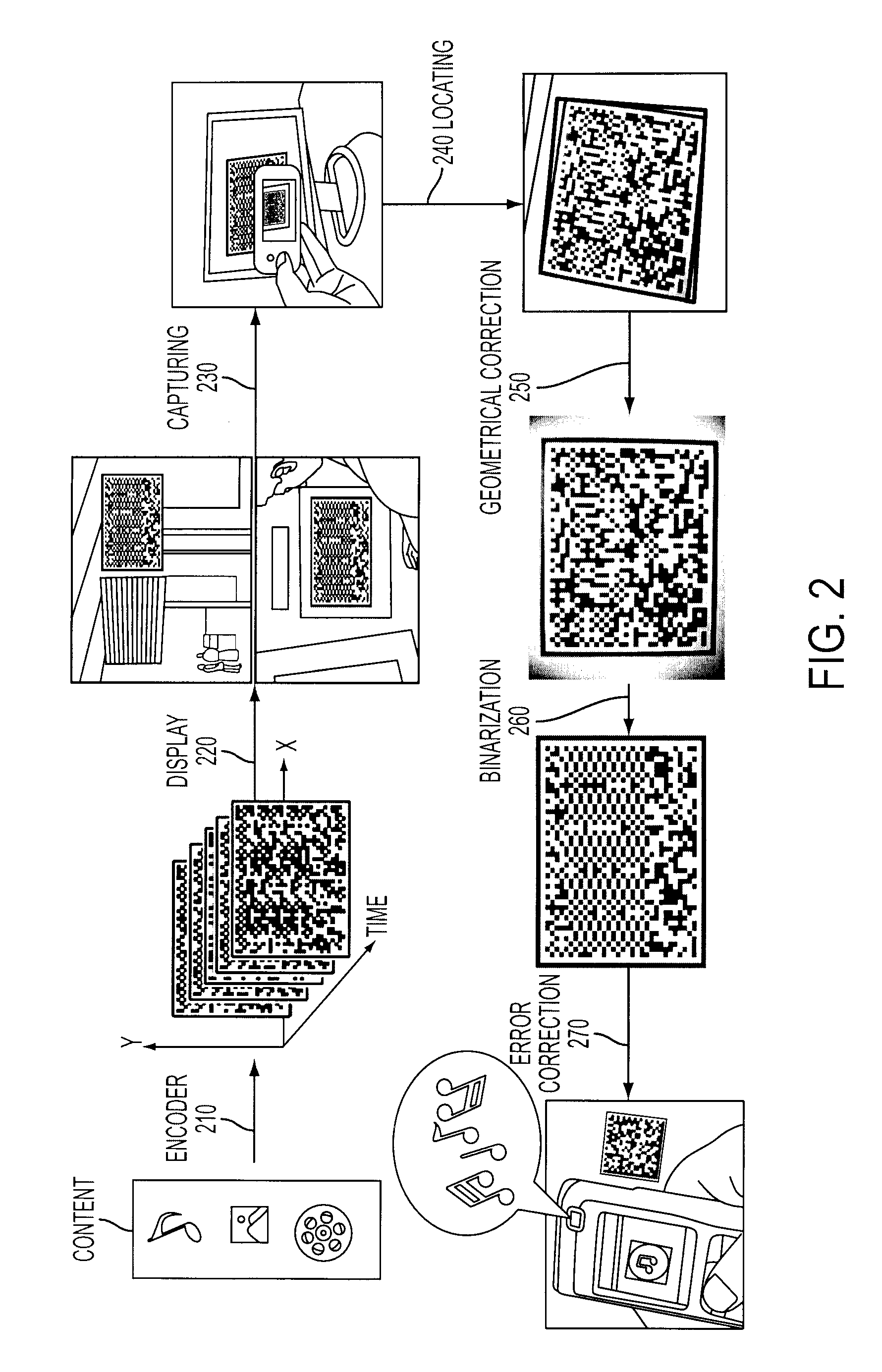

System And Method For Camera Imaging Data Channel

Inactive | US20100020970A1 | Low data cost | Low cost | Code conversion | Secret communication | Barcode | Display device

A system and method for using cameras to download data to cell phones or other devices as an alternative to CDMA/GPRS, Bluetooth, infrared or cable connections. The data is encoded as a sequence of images such as 2D bar codes, which can be displayed on any flat panel display, acquired by a camera, and decoded by software embedded in the device. The decoded data is written to a file. The system and method meet the following challenges: (1) to encode arbitrary data as a sequence of images; (2) to process captured images under various lighting variations and perspective distortions while maintaining real-time performance; (3) to decode the processed images robustly even when partial data is lost.

Owner:LIU XU +2

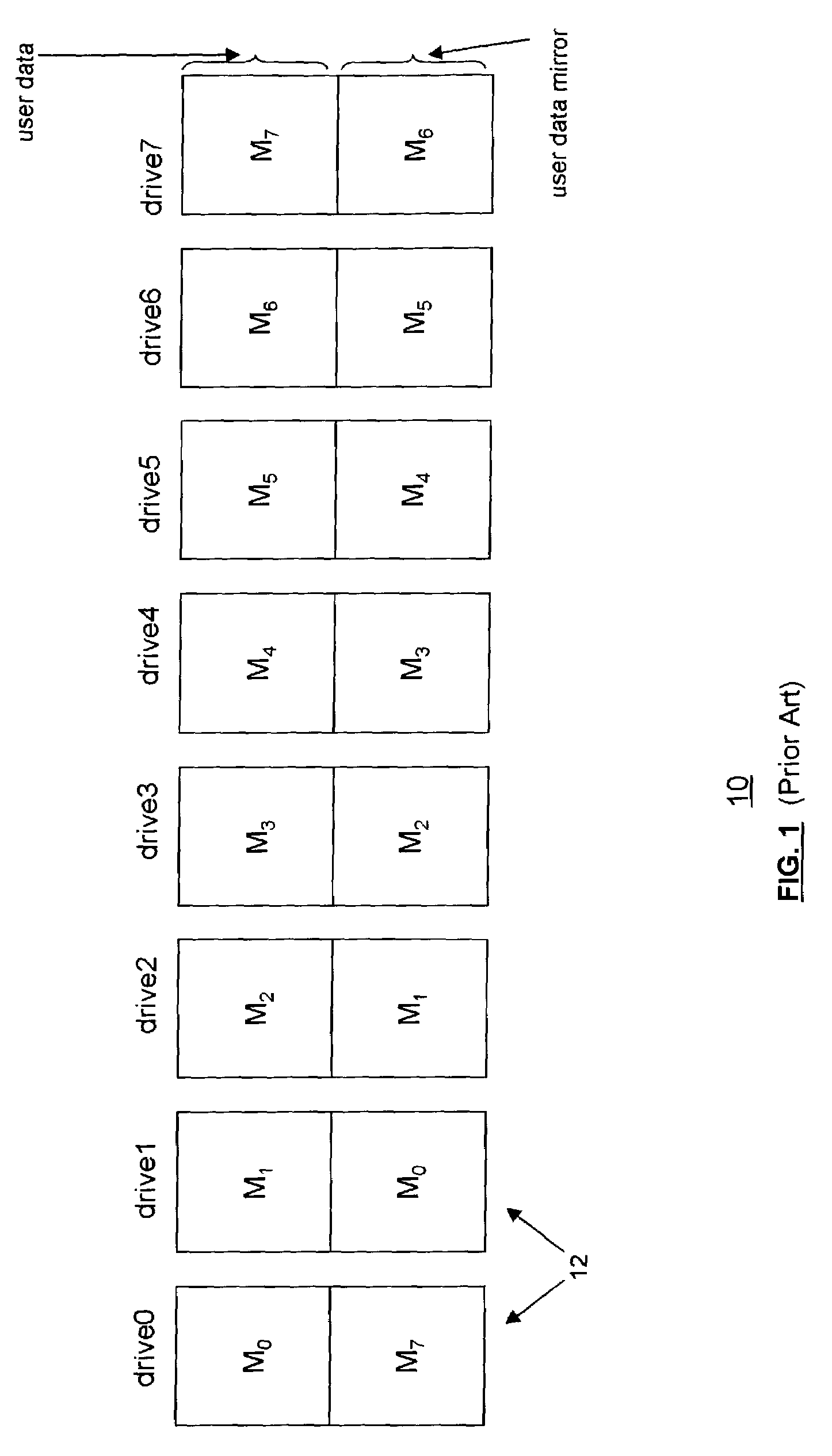

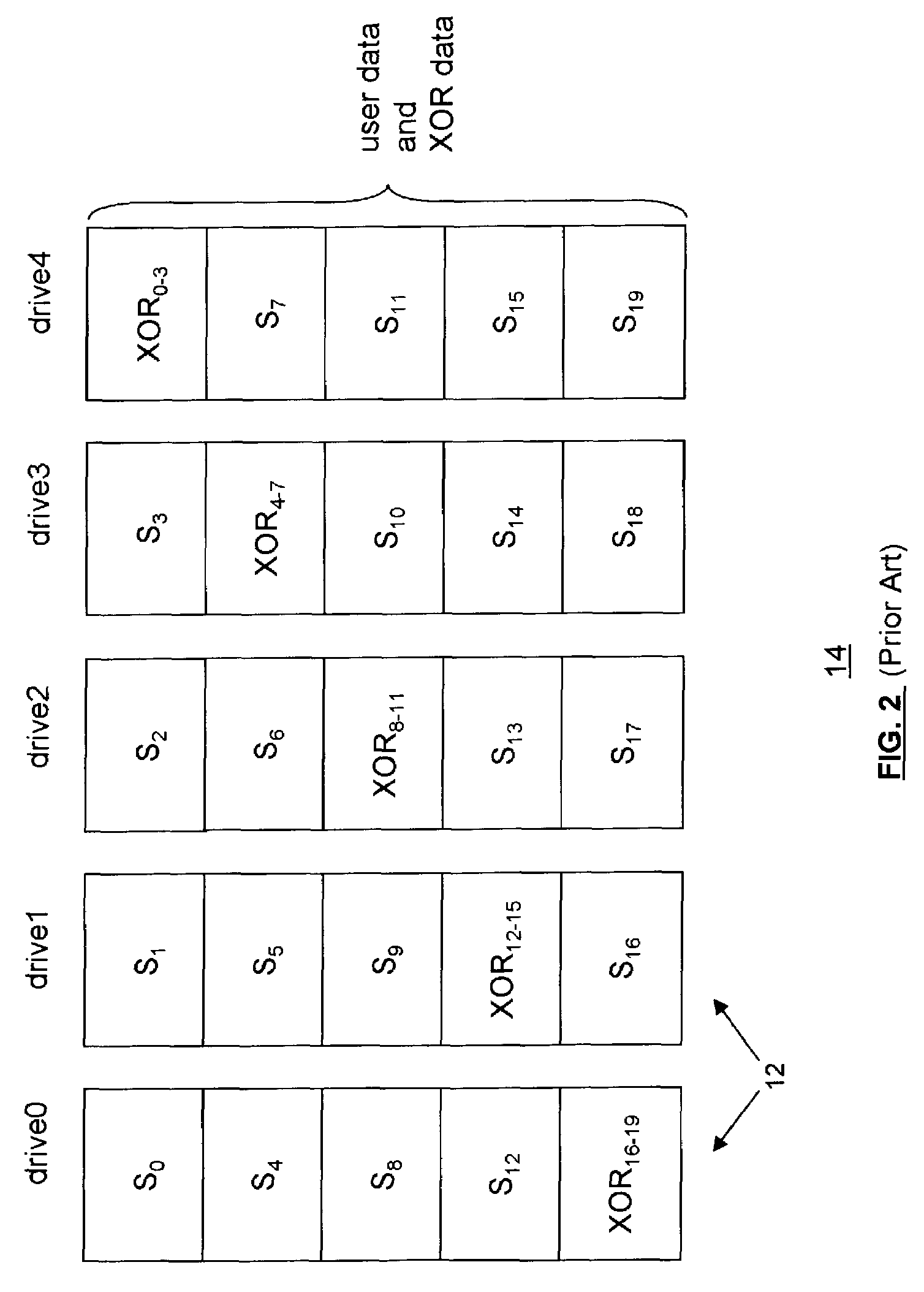

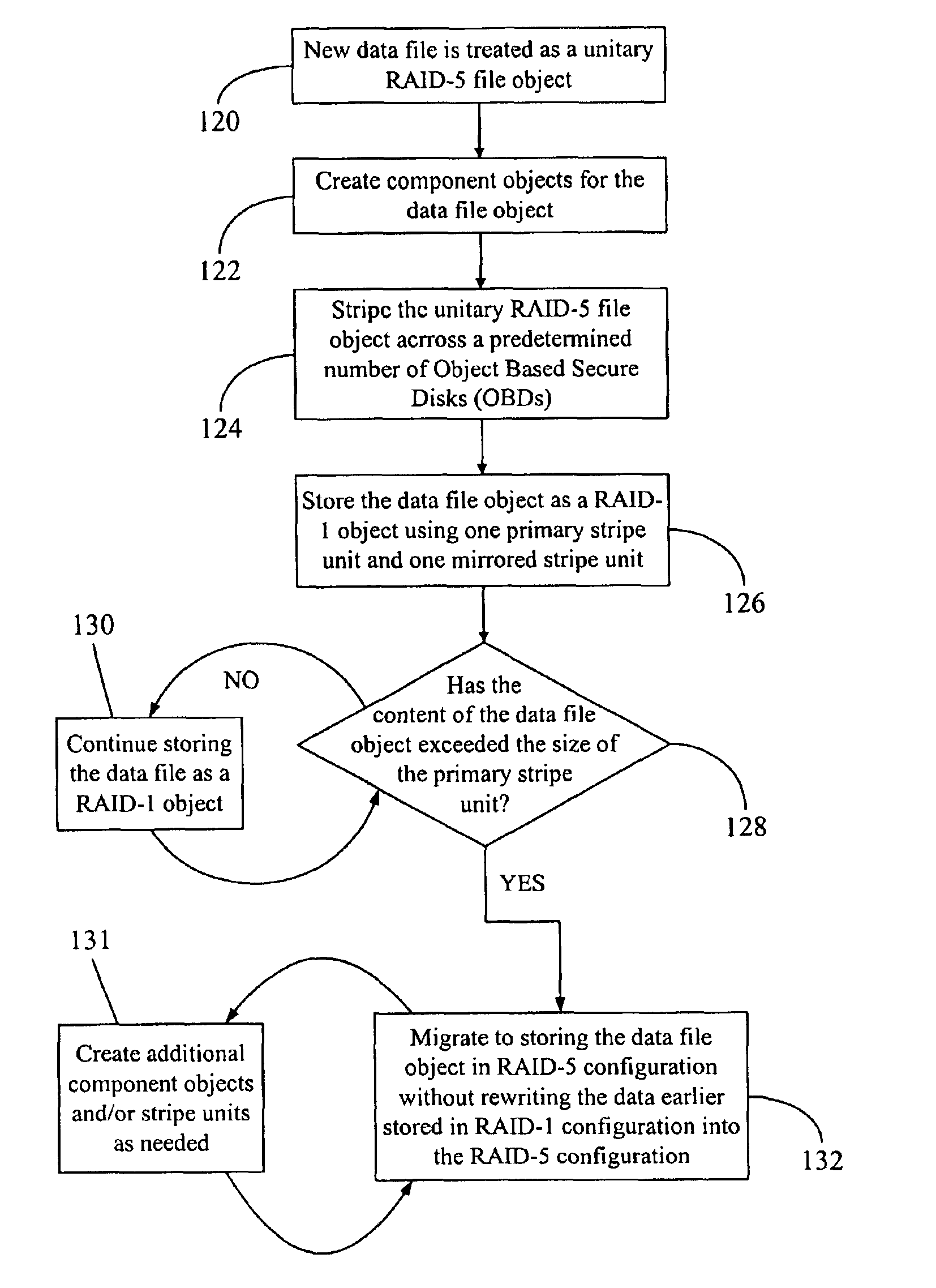

Data file migration from a mirrored RAID to a non-mirrored XOR-based RAID without rewriting the data

Inactive | US6985995B2 | Improve performance | Improve reliability | Data processing applications | Memory loss protection | RAID | Object based

A data storage methodology wherein a data file is initially stored in a format consistent with RAID-1 and RAID-X and then migrated to a format consistent with RAID-X and inconsistent with RAID-1 when the data file grows in size beyond a certain threshold. Here, RAID-X refers to any non-mirrored storage scheme employing XOR-based error correction coding (e.g., a RAID-5 configuration). Each component object (including the data objects and the parity object) for the data file is configured to be stored in a different stripe unit per object-based secure disk. Each stripe unit may store, for example, 64 KB of data. So long as the data file does not grow beyond the size threshold of a stripe unit (e.g., 64 KB), the parity stripe unit contains a mirrored copy of the data stored in one of the data stripe units because of the exclusive-ORing of the input data with “all zeros” assumed to be contained in empty or partially-filled stripe units. When the file grows beyond the size threshold, the parity stripe unit starts storing parity information instead of a mirrored copy of the file data. Thus, the data file can be automatically migrated from a format consistent with RAID-1 and RAID-X to a format consistent with RAID-X and inconsistent with RAID-1 without the necessity to duplicate or rewrite the stored data.

Owner:PANASAS INC
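
The key observation in the abstract above, that XOR parity over one filled stripe unit and otherwise empty (all-zero) units is a mirror of the data, can be checked with a small sketch. The 8-byte `STRIPE_UNIT` is a toy stand-in for the 64 KB example, and `parity` is a hypothetical helper.

```python
STRIPE_UNIT = 8  # bytes; the abstract's example uses 64 KB

def parity(units):
    """XOR parity over stripe units, treating unwritten space as zeros."""
    out = bytearray(STRIPE_UNIT)
    for unit in units:
        padded = unit.ljust(STRIPE_UNIT, b"\x00")  # empty or partial units read as zeros
        for i, byte in enumerate(padded):
            out[i] ^= byte
    return bytes(out)

# Small file: everything fits in the first data stripe unit.
small = [b"hello", b"", b""]
print(parity(small))  # b'hello\x00\x00\x00' -> a mirrored copy (RAID-1 view)

# File grows past one stripe unit: the same parity unit now holds real parity.
grown = [b"hello wo", b"rld", b""]
print(parity(grown) == grown[0])  # False -> no longer a mirror
```

No data is moved in either case: only the parity unit is recomputed, which is why the migration needs no rewrite of the stored data.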

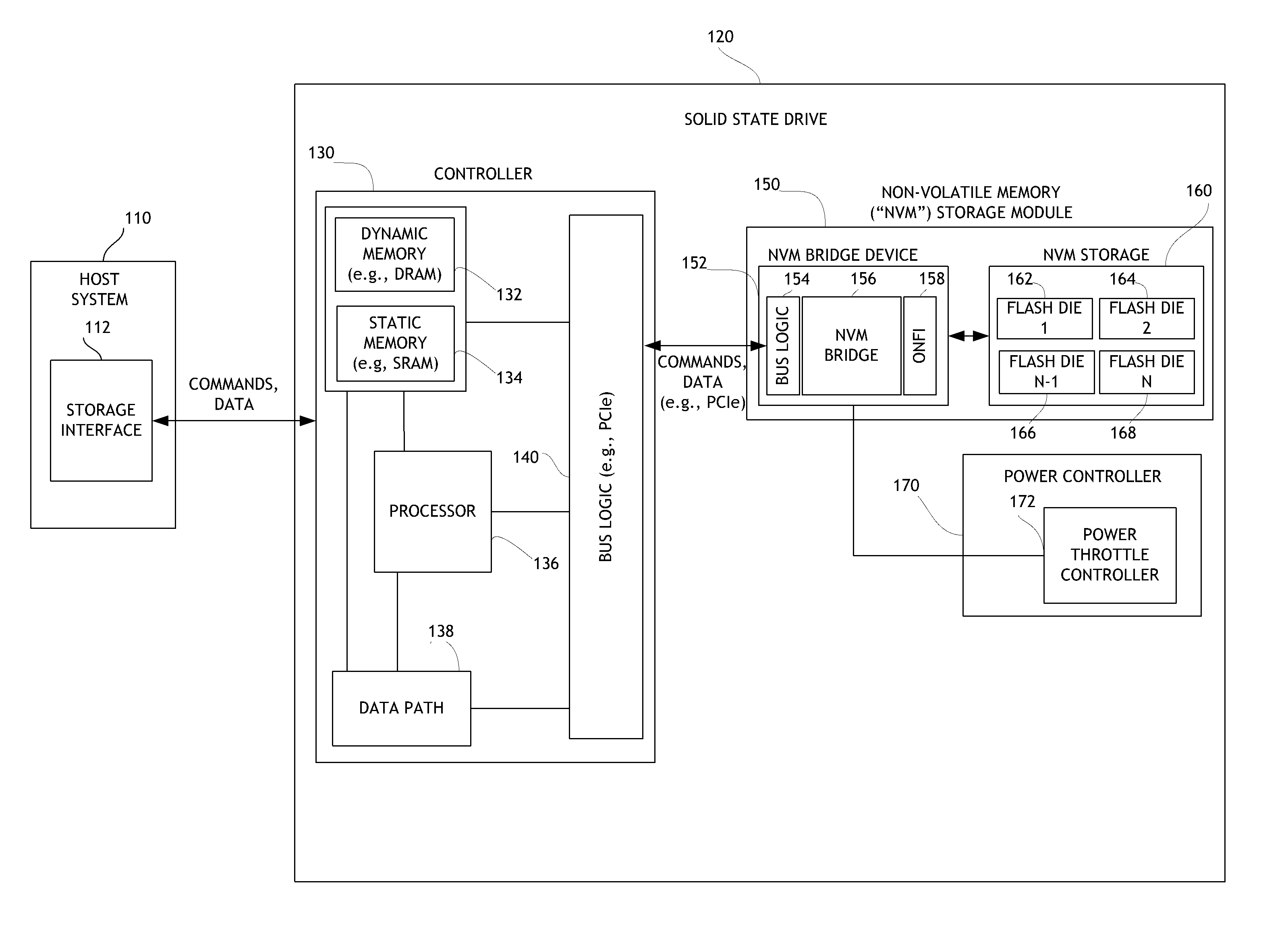

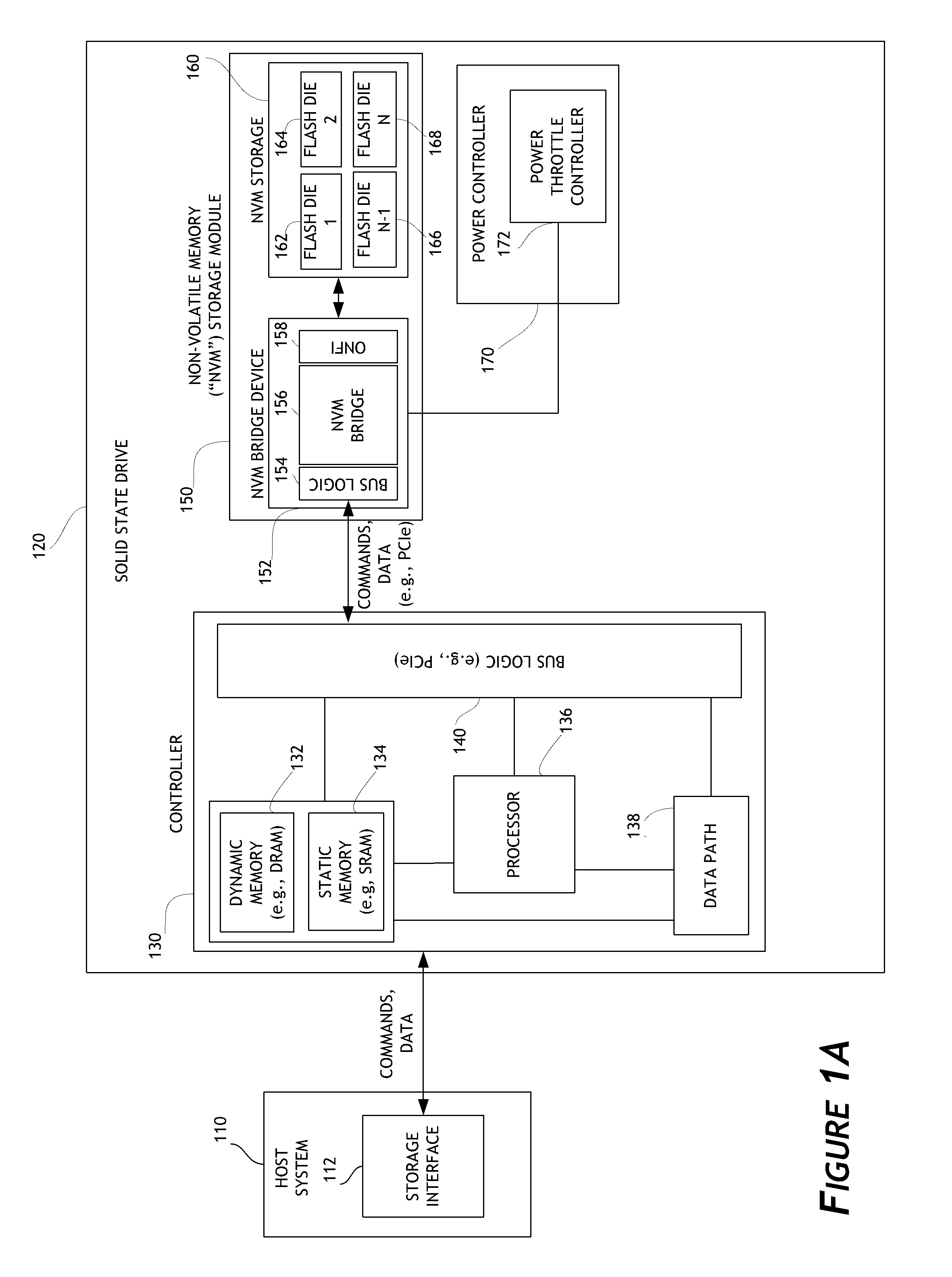

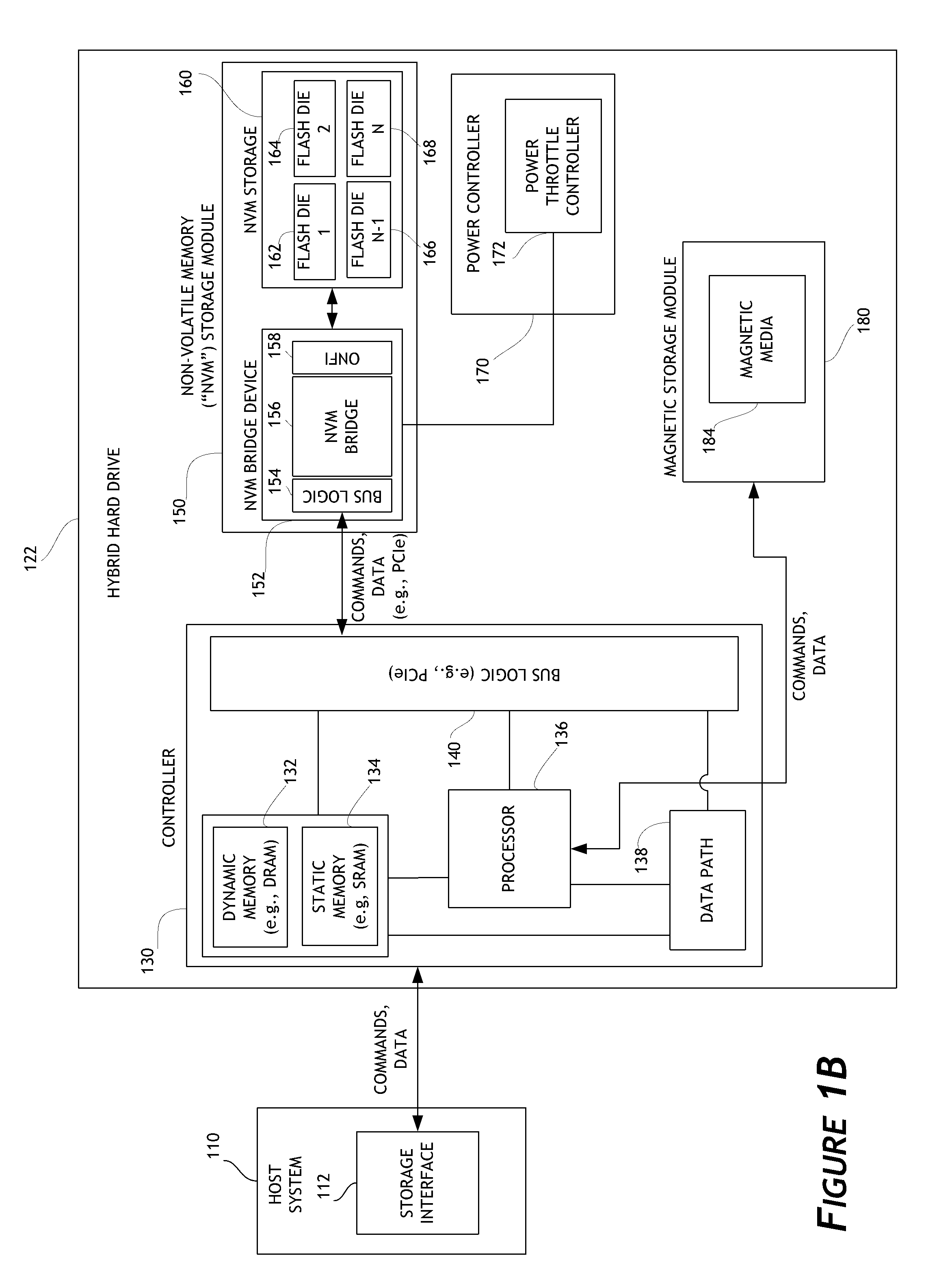

Systems and methods for error injection in data storage systems

Active | US8707104B1 | Solving precise measurements | Tested and reliable | Detecting faulty computer hardware | Read-only memories | Solid-state storage | Hybrid storage system

Embodiments of the solid-state storage system provided herein are configured to perform improved mechanisms for testing of error recovery of solid state storage devices. In some embodiments, the system is configured to introduce or inject errors into data storage commands or operations performed in the non-volatile memory. Injected errors include corruption of data stored in the non-volatile memory, deliberate failure to execute storage operations, and errors injected into communication protocols used between various elements of the device. In some embodiments, injected errors can include direct errors that trigger an immediate execution of error recovery mechanisms and delayed errors that trigger execution of error recovery mechanisms at a later time. Error recovery mechanisms can be tested in an efficient, reliable, and deterministic manner to help ensure effective operation of storage devices. The integrity of non-volatile memory can also be tested.

Owner:WESTERN DIGITAL TECH INC

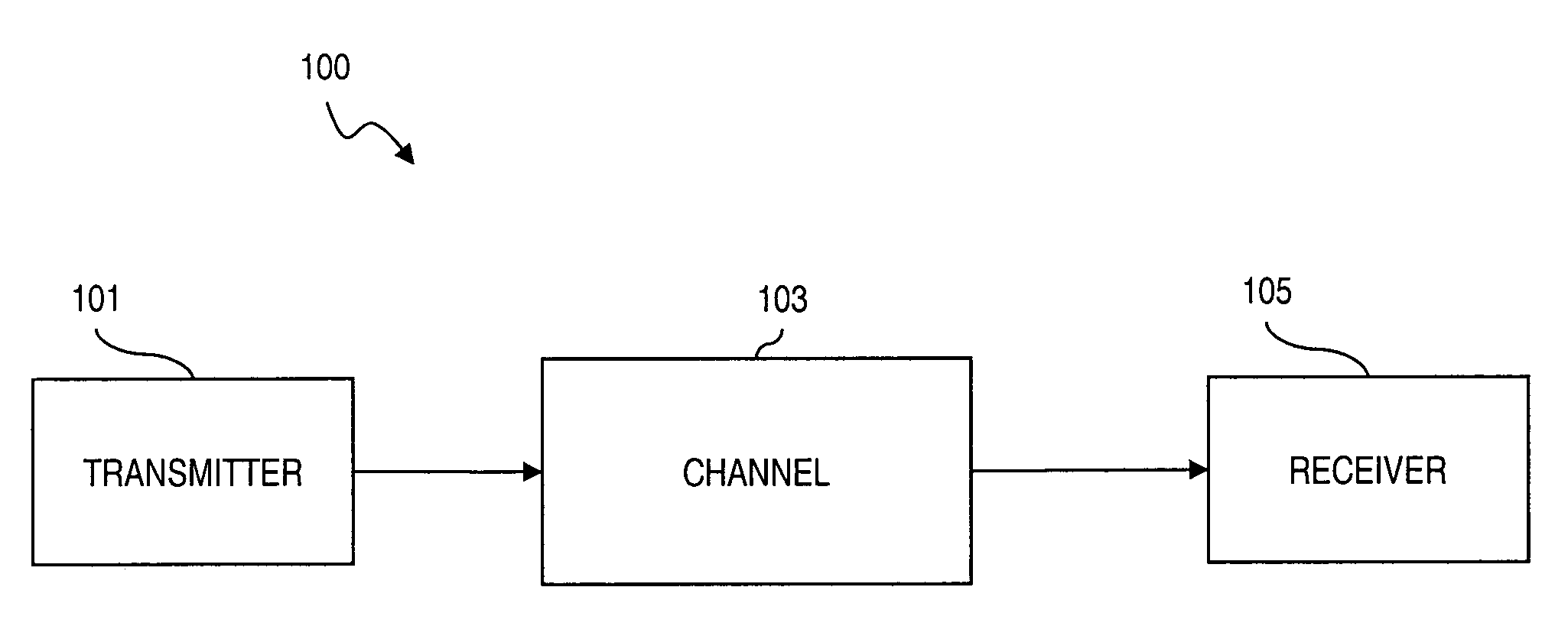

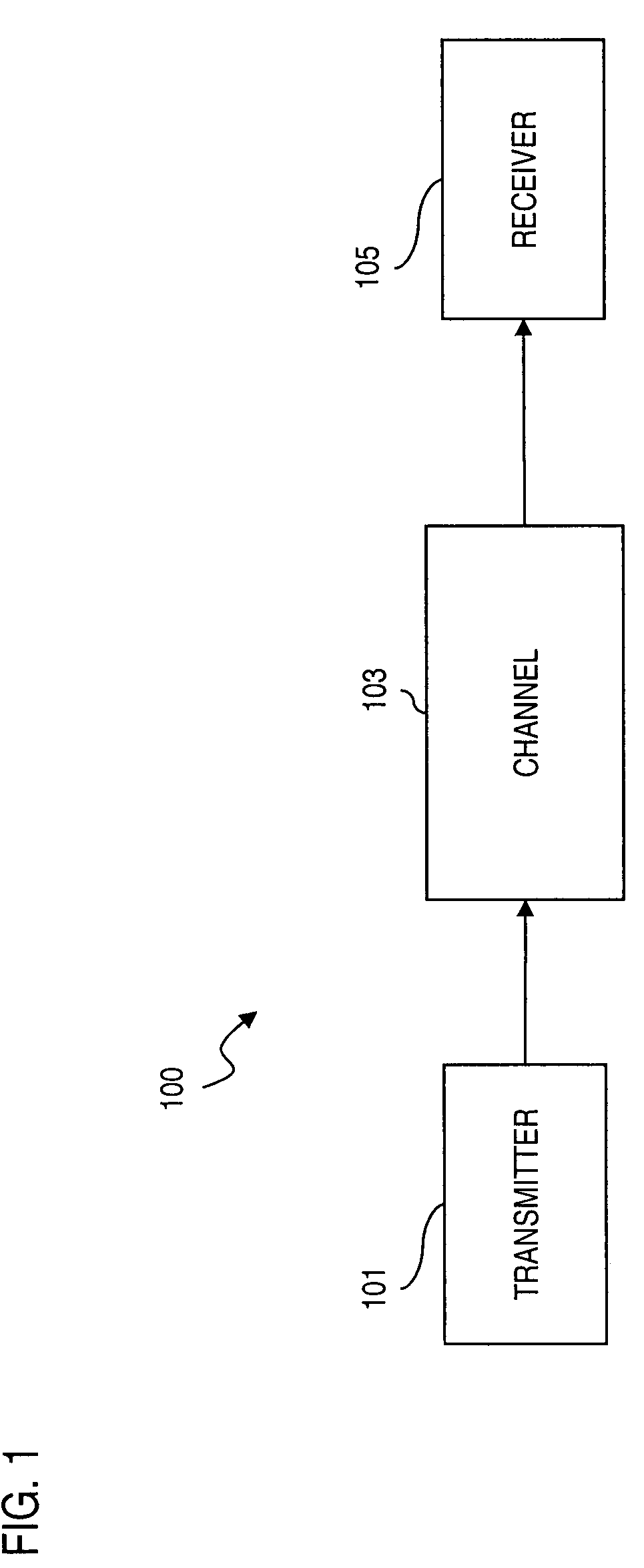

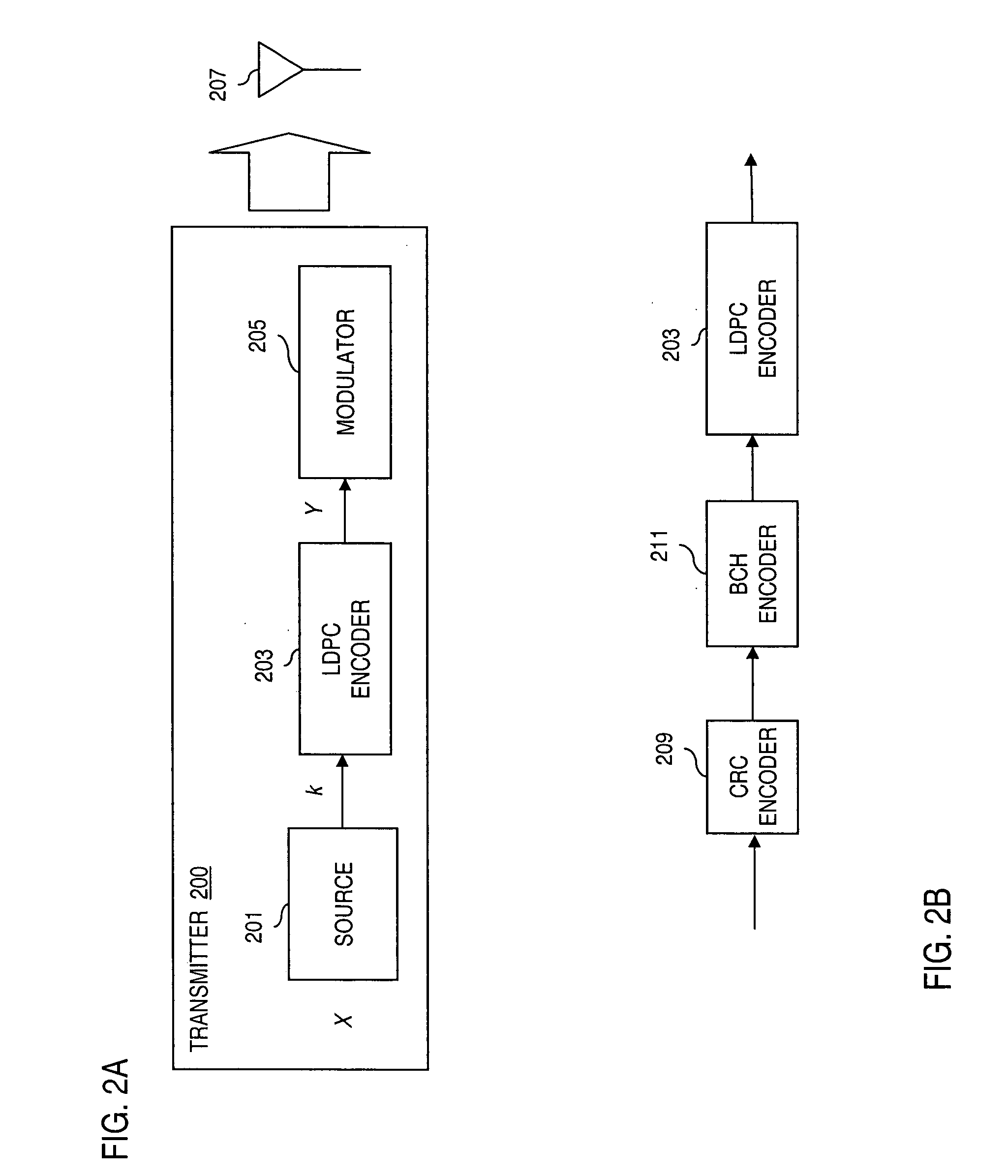

Method and system for providing low density parity check (LDPC) encoding

Active | US7191378B2 | Readily apparent | Interconnection arrangements | Error correction/detection using LDPC codes | Algorithm | Parity-check matrix

An approach is provided for a method of encoding structured Low Density Parity Check (LDPC) codes. Memory storing information representing a structured parity check matrix of the LDPC codes is accessed during the encoding process. The information is organized in tabular form, wherein each row represents occurrences of ones within a first column of a group of columns of the parity check matrix. The rows correspond to groups of columns of the parity check matrix, wherein subsequent columns within each of the groups are derived according to a predetermined operation. An LDPC coded signal is output based on the stored information representing the parity check matrix.

Owner:DTVG LICENSING INC

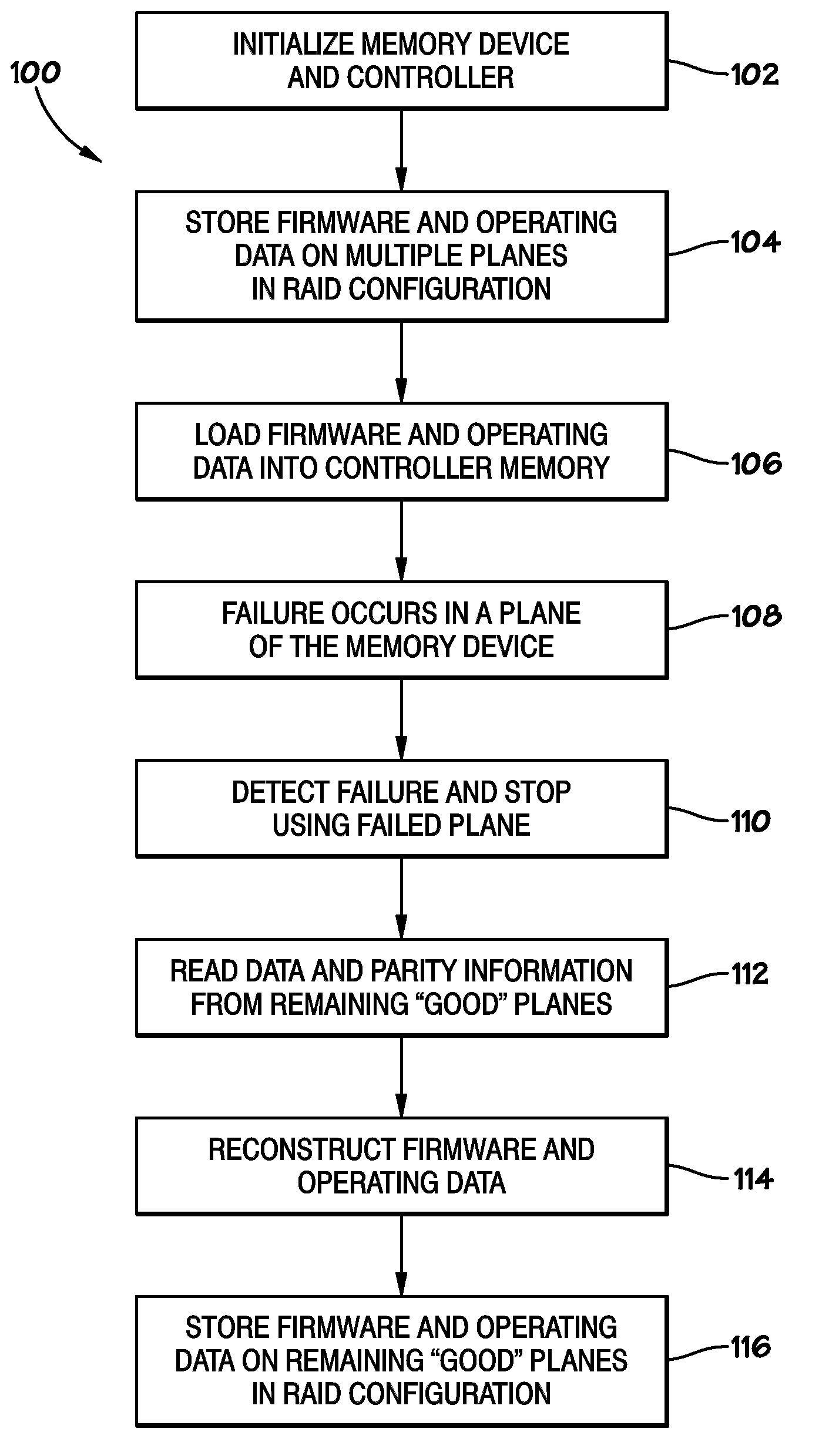

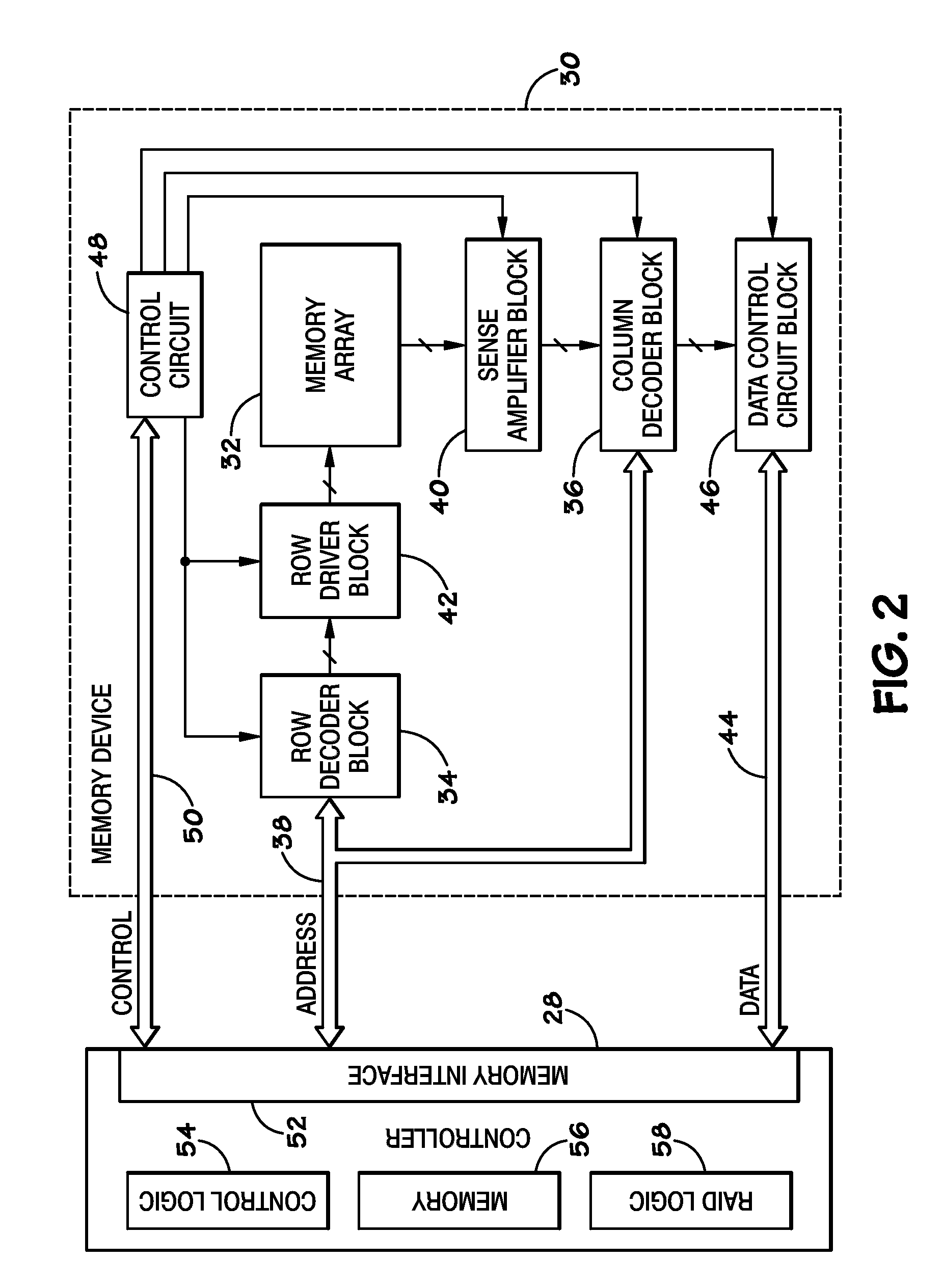

Systems and Methods for Storing and Recovering Controller Data in Non-Volatile Memory Devices

Systems and methods are disclosed for storing the firmware and other data of a flash memory controller, such as using a RAID configuration across multiple flash memory devices or portions of a single memory device. In various embodiments, the firmware and other data used by a controller, and error correction information, such as parity information for a RAID configuration, may be stored across multiple flash memory devices, multiple planes of a multi-plane flash memory device, or across multiple blocks or pages of a single flash memory device. The controller may detect the failure of a memory device or a portion thereof, and reconstruct the firmware and/or other data from the other memory devices or portions thereof.

Owner:MICRON TECH INC

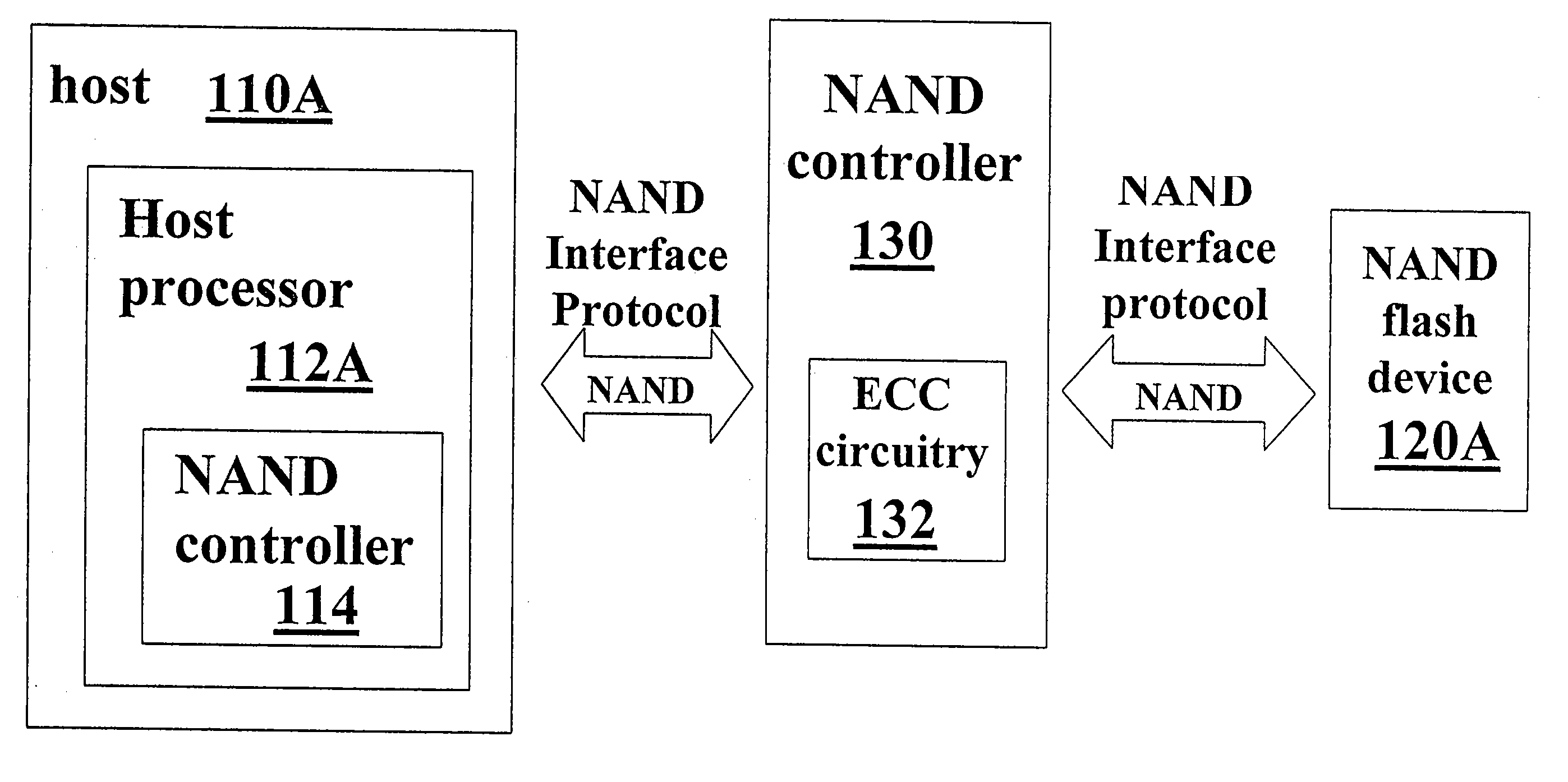

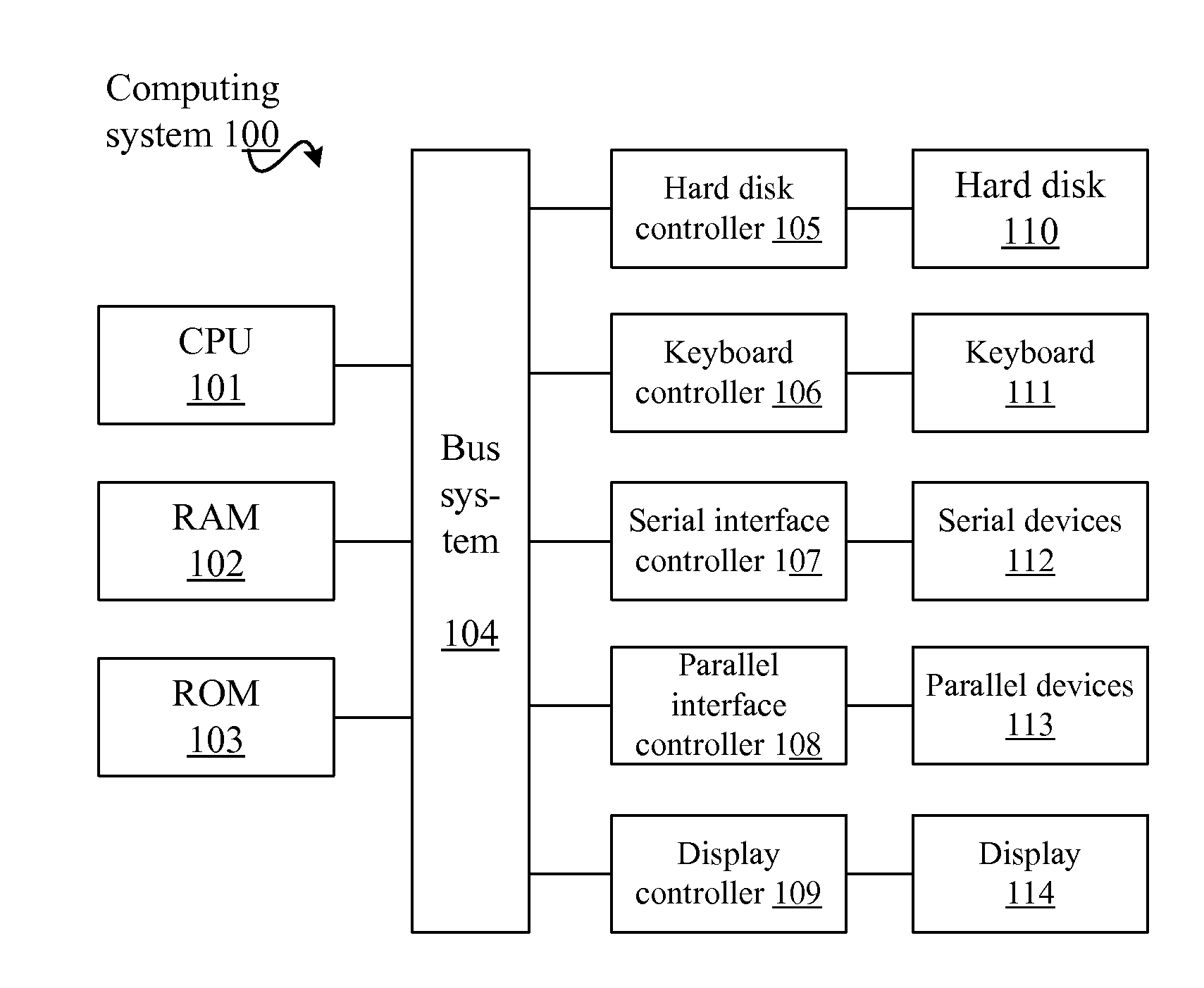

NAND Flash Memory Controller Exporting a NAND Interface

Active | US20100023800A1 | Satisfies need | Memory architecture accessing/allocation | Data representation error detection/correction | Host type | Host machine

A NAND controller for interfacing between a host device and a flash memory device (e.g., a NAND flash memory device) fabricated on a flash die is disclosed. In some embodiments, the presently disclosed NAND controller includes electronic circuitry fabricated on a controller die, the controller die being distinct from the flash die, a first interface (e.g. a host-type interface, for example, a NAND interface) for interfacing between the electronic circuitry and the flash memory device, and a second interface (e.g. a flash-type interface) for interfacing between the controller and the host device, wherein the second interface is a NAND interface. According to some embodiments, the first interface is an inter-die interface. According to some embodiments, the first interface is a NAND interface. Systems including the presently disclosed NAND controller are also disclosed. Methods for assembling the aforementioned systems, and for reading and writing data using NAND controllers are also disclosed.

Owner:INNOVATIVE MEMORY SYST INC

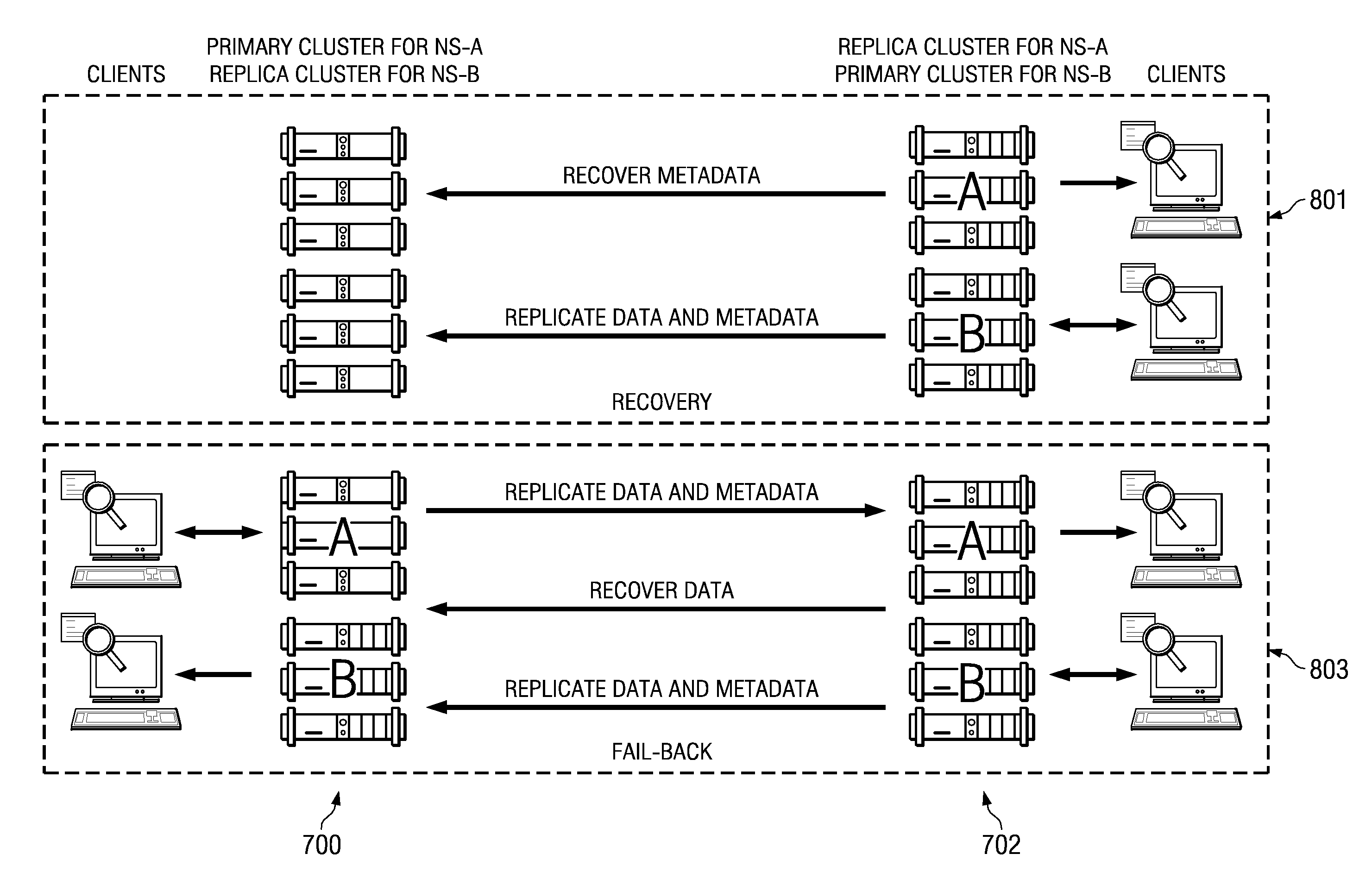

Fast primary cluster recovery

Active | US20090006888A1 | Easy to copy | Digital data processing details | Redundant data error correction | Failover | Object based

A cluster recovery process is implemented across a set of distributed archives, where each individual archive is a storage cluster of preferably symmetric nodes. Each node of a cluster typically executes an instance of an application that provides object-based storage of fixed content data and associated metadata. According to the storage method, an association or “link” between a first cluster and a second cluster is first established to facilitate replication. The first cluster is sometimes referred to as a “primary” whereas the second cluster is sometimes referred to as a “replica.” Once the link is made, the first cluster's fixed content data and metadata are then replicated from the first cluster to the second cluster, preferably in a continuous manner. Upon a failure of the first cluster, however, a failover operation occurs, and clients of the first cluster are redirected to the second cluster. Upon repair or replacement of the first cluster (a “restore”), the repaired or replaced first cluster resumes authority for servicing the clients of the first cluster. This restore operation preferably occurs in two stages: a “fast recovery” stage that involves preferably “bulk” transfer of the first cluster metadata, followed by a “fail back” stage that involves the transfer of the fixed content data. Upon receipt of the metadata from the second cluster, the repaired or replaced first cluster resumes authority for the clients irrespective of whether the fail back stage has completed or even begun.

Owner:HITACHI VANTARA LLC

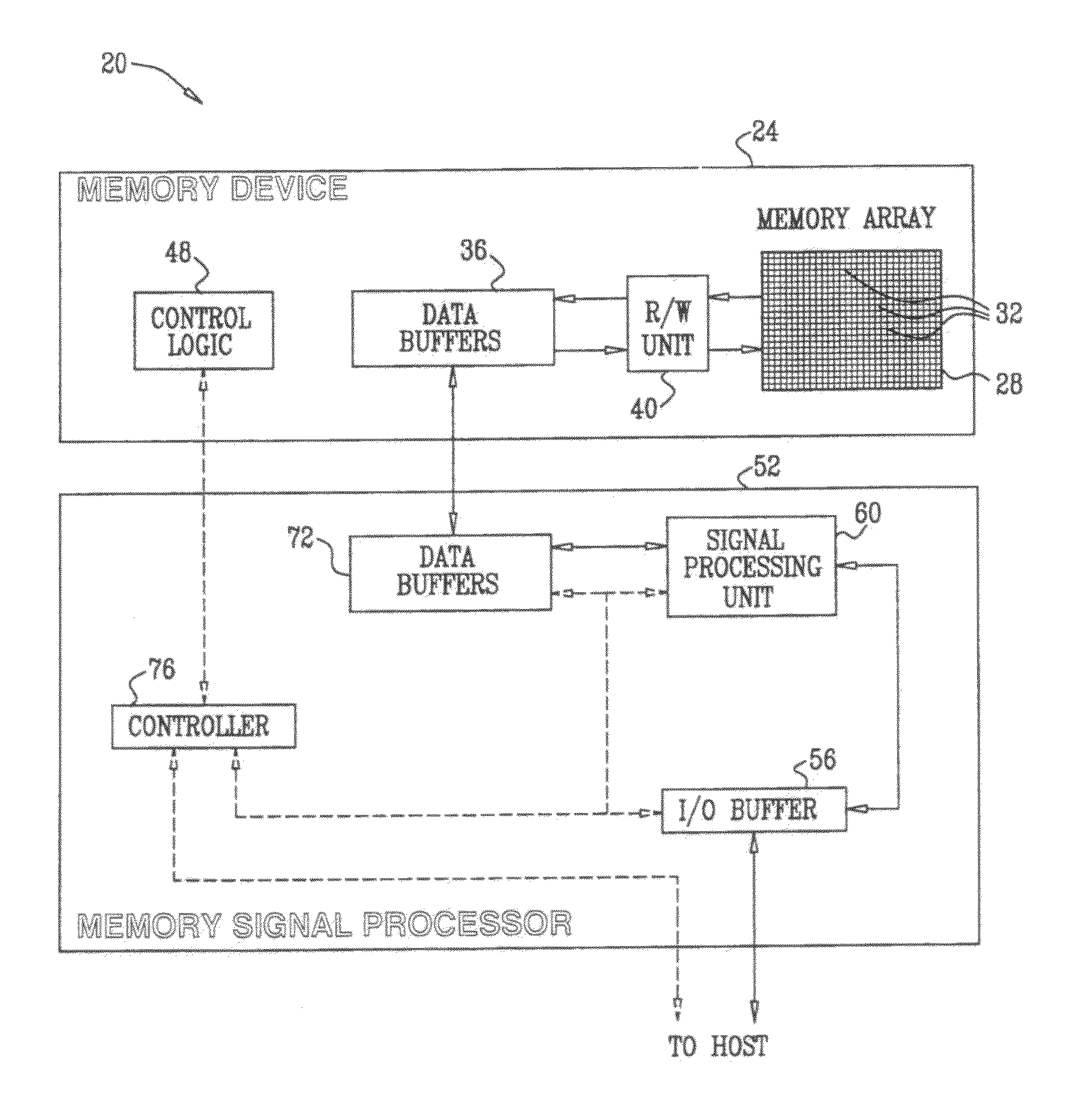

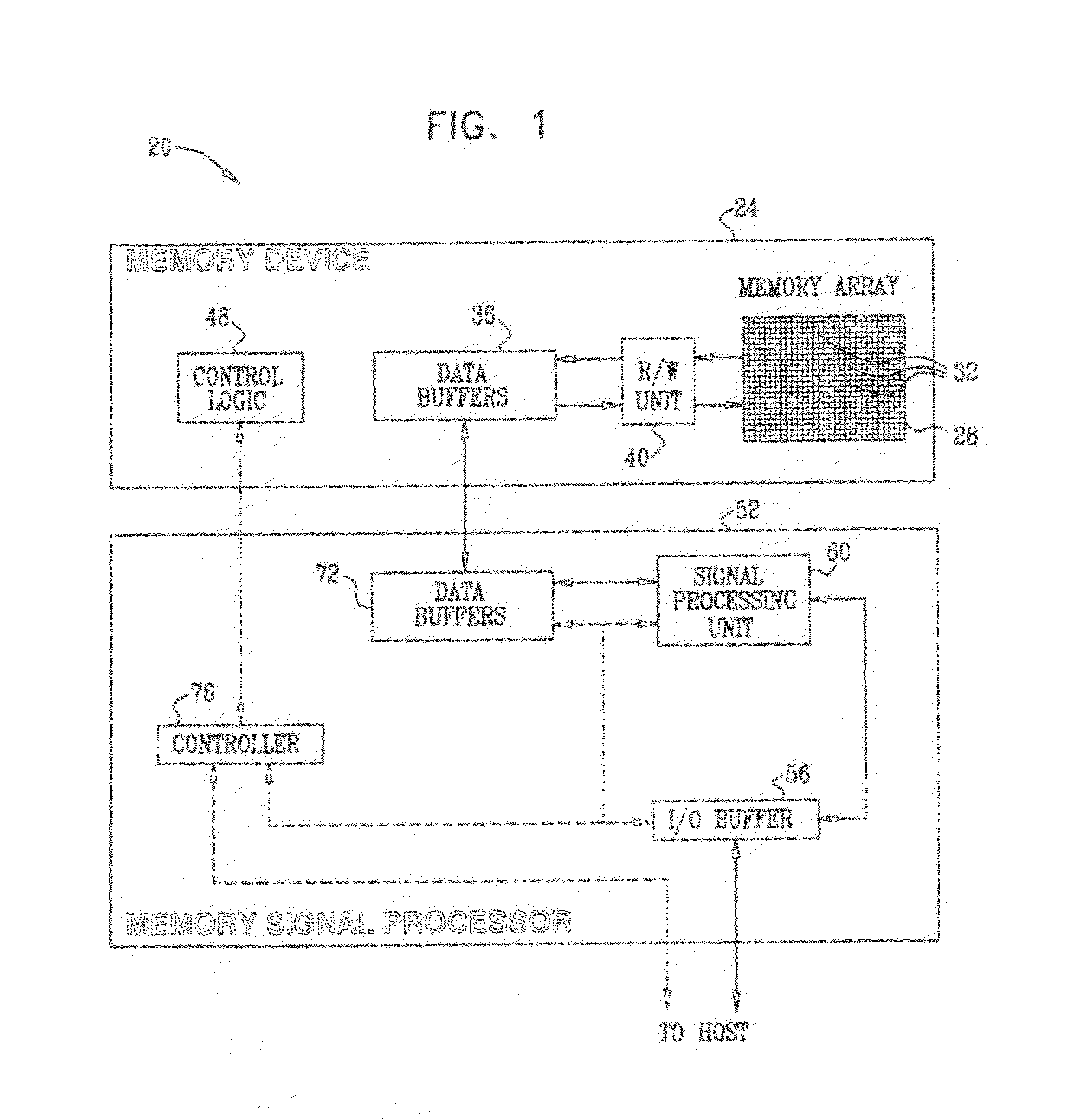

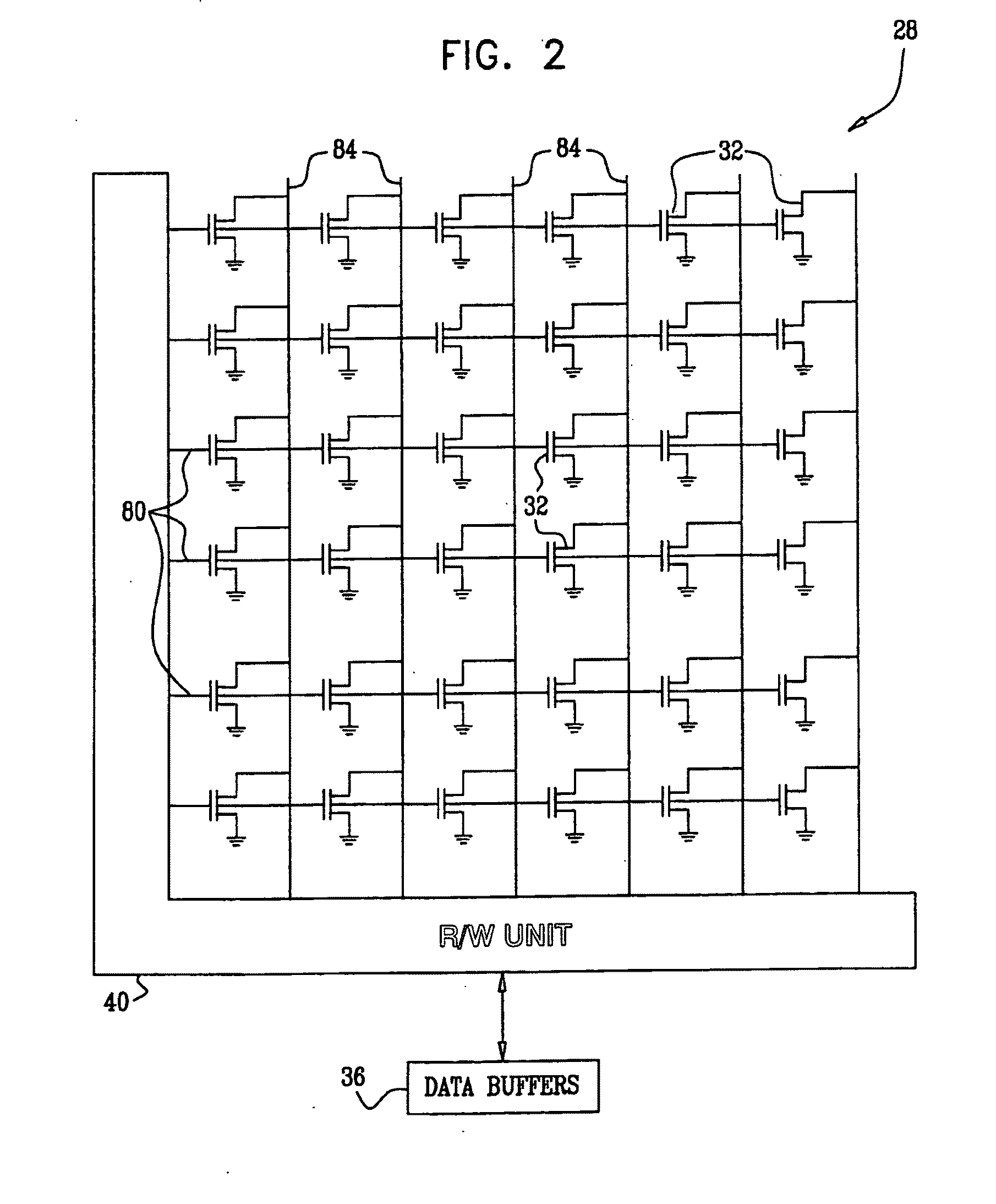

Automatic defect management in memory devices

Active | US20100115376A1 | Code conversion | Error correction/detection using block codes | Alternative methods | Computer science

A method for storing data in a memory (28) that includes analog memory cells (32) includes identifying one or more defective memory cells in a group of the analog memory cells. An Error Correction Code (ECC) is selected responsively to a characteristic of the identified defective memory cells. The data is encoded using the selected ECC and the encoded data is stored in the group of the analog memory cells. In an alternative method, an identification of one or more defective memory cells among the analog memory cells is generated. Analog values are read from the analog memory cells in which the encoded data were stored, including at least one of the defective memory cells. The analog values are processed using an ECC decoding process responsively to the identification of the at least one of the defective memory cells, so as to reconstruct the data.

Owner:APPLE INC

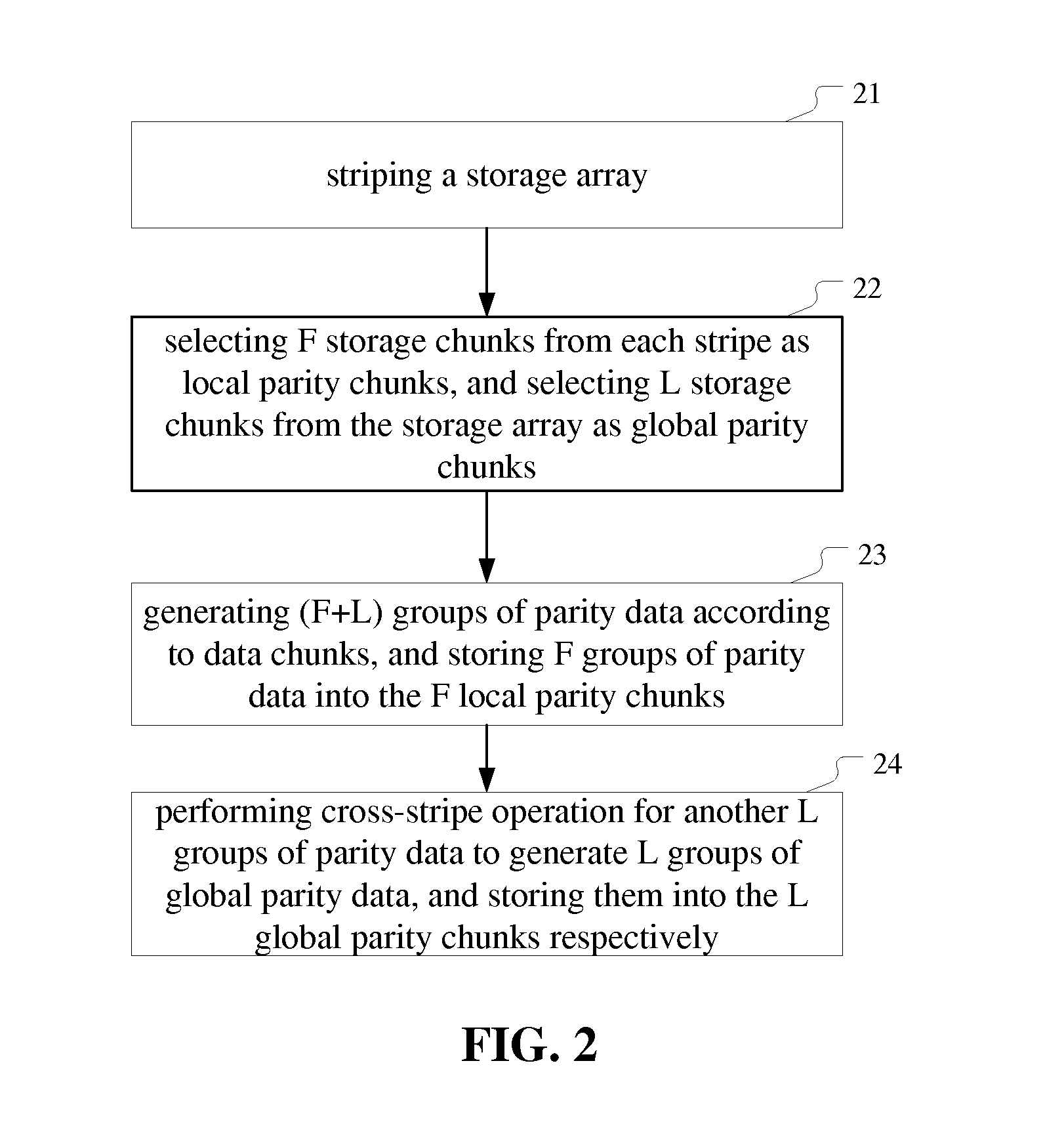

Managing a storage array

Active | US20140040702A1 | Easy to manage | Error capability | Code conversion | Error correction/detection using interleaving techniques | Fault tolerance | Erasure code

The present invention provides a method and apparatus for managing a storage array. The method comprises: striping the storage array to form a plurality of stripes; selecting F storage chunks from each stripe as local parity chunks, and selecting another L storage chunks from the storage array as global parity chunks; performing (F+L) fault tolerant erasure coding on all data chunks in a stripe to generate (F+L) groups of parity data, and storing F groups of the parity data into the F local parity chunks; performing a cross-stripe operation on the other L groups of parity data to generate L groups of global parity data, and storing them into the L global parity chunks, respectively. The apparatus corresponds to the method. With the invention, a plurality of errors in the storage array can be detected and/or recovered to improve fault tolerance and space utilization of the storage array.

Owner:IBM CORP
