2636 results about "Data integrity" patented technology

Data integrity is the maintenance of, and the assurance of the accuracy and consistency of, data over its entire life cycle. It is a critical aspect of the design, implementation and usage of any system that stores, processes, or retrieves data. The term is broad in scope and may have widely different meanings depending on the specific context, even under the same general umbrella of computing. It is at times used as a proxy term for data quality, while data validation is a prerequisite for data integrity. Data integrity is the opposite of data corruption. The overall intent of any data integrity technique is the same: ensure data is recorded exactly as intended (such as a database correctly rejecting mutually exclusive possibilities), and upon later retrieval, ensure the data is the same as it was when it was originally recorded. In short, data integrity aims to prevent unintentional changes to information. Data integrity is not to be confused with data security, the discipline of protecting data from unauthorized parties.

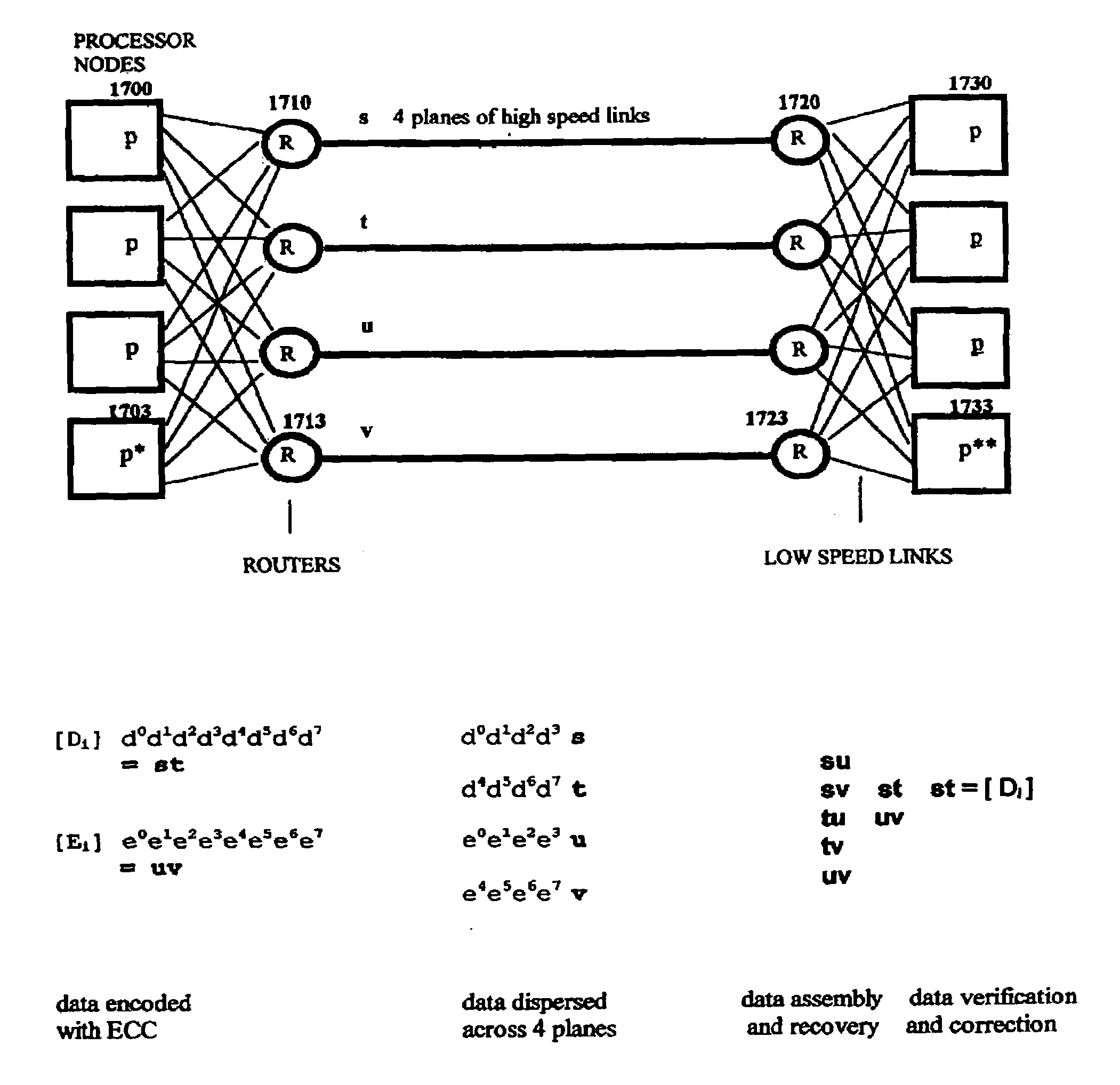

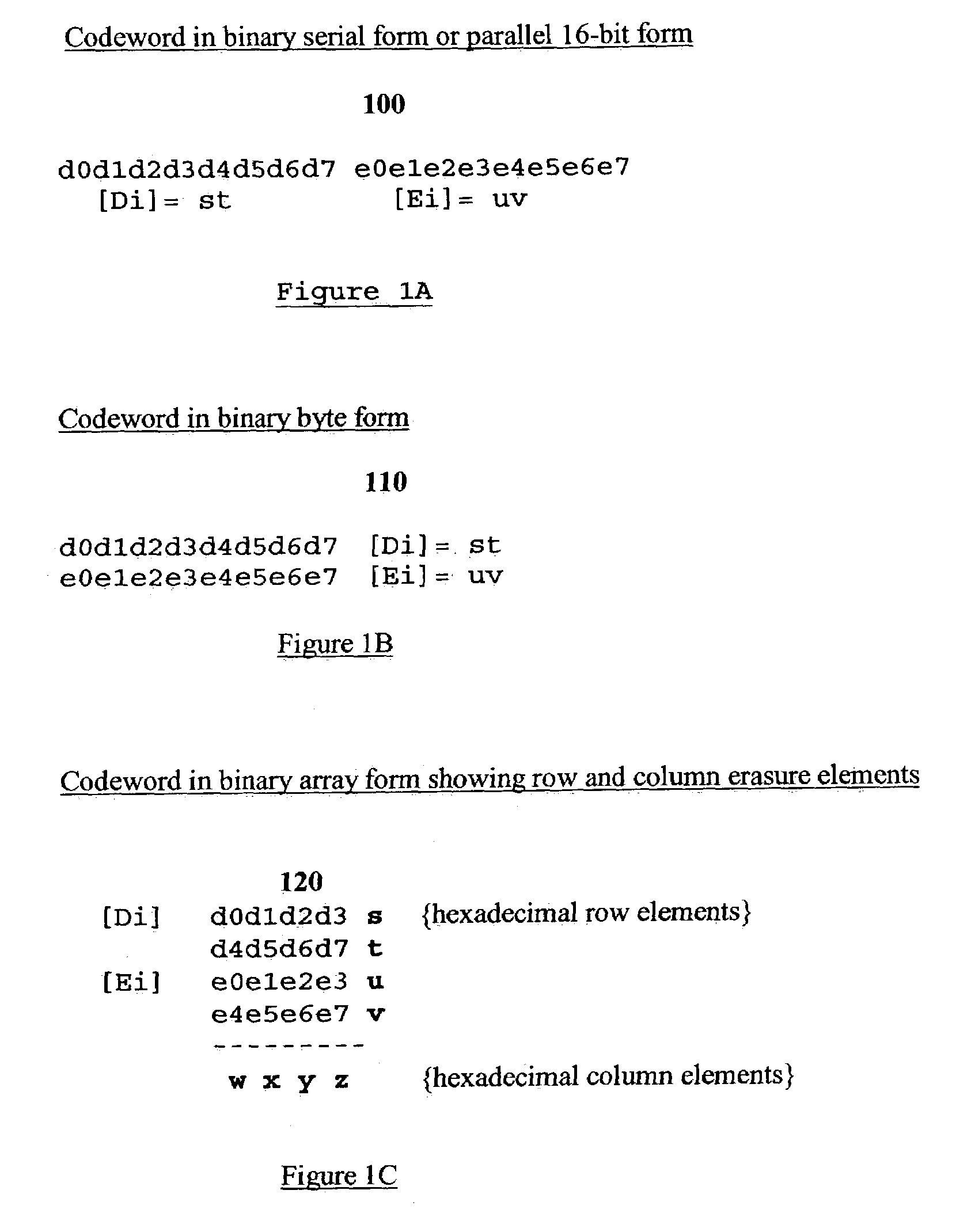

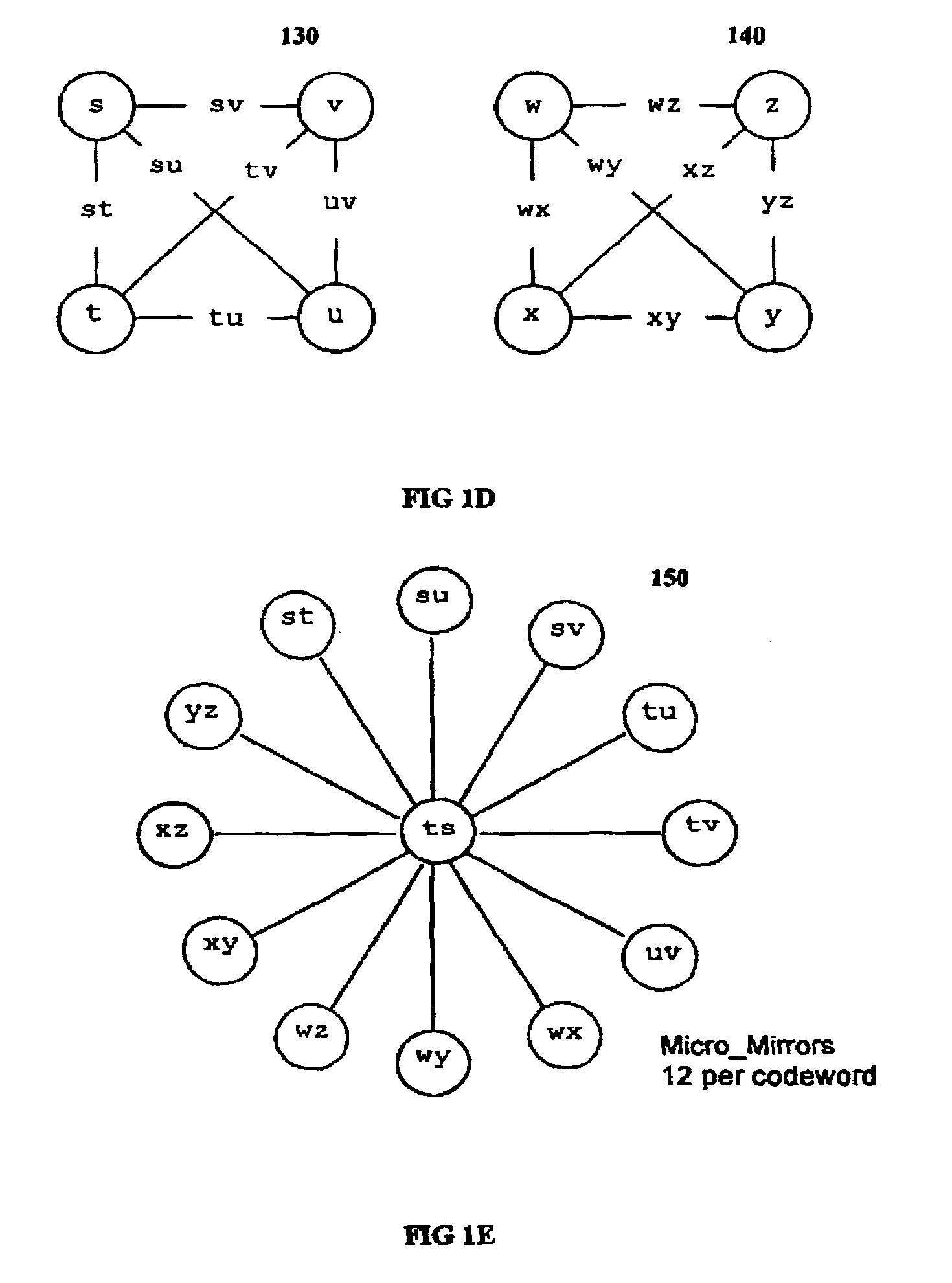

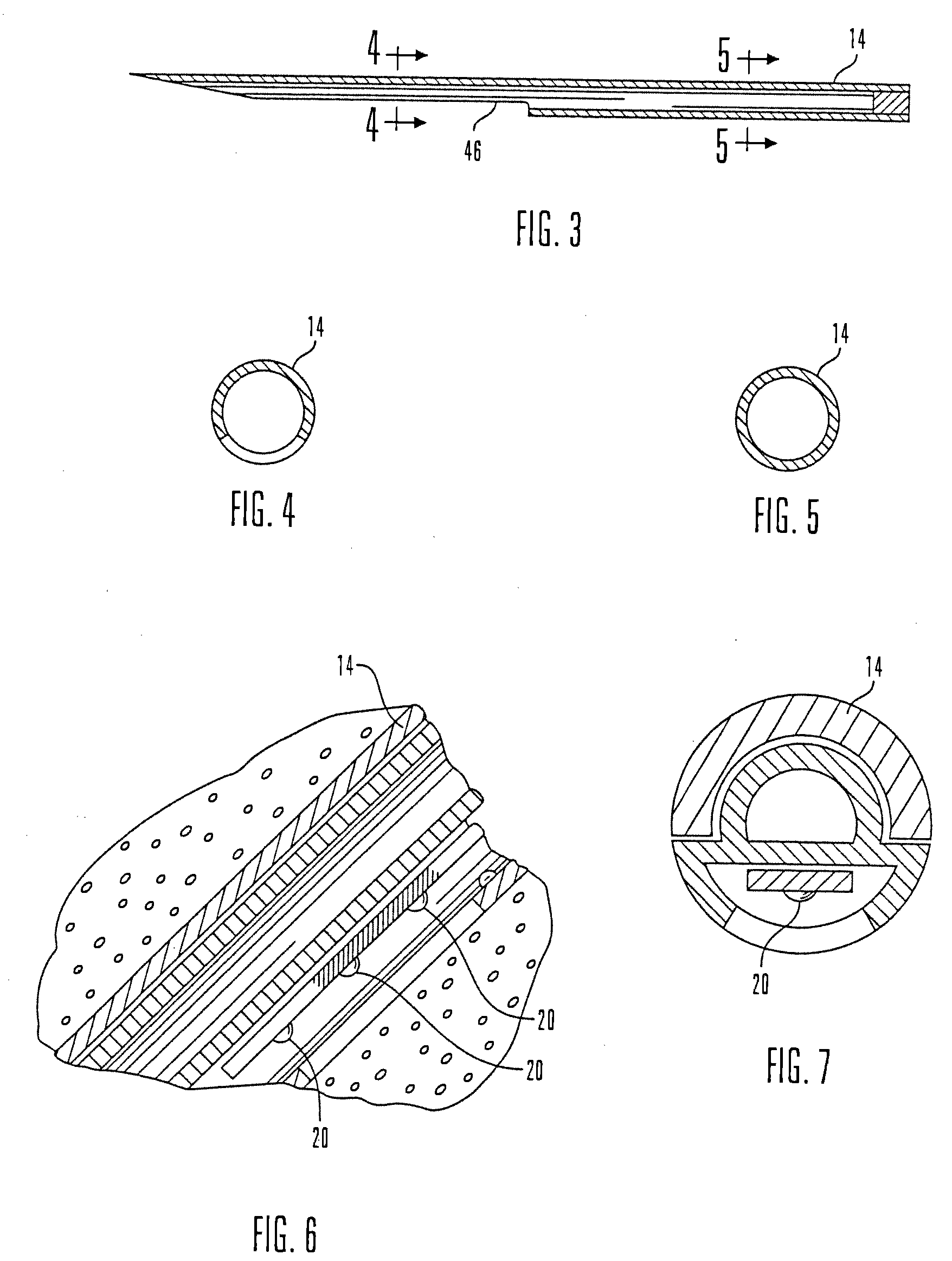

Multi-dimensional data protection and mirroring method for micro level data

Active | US7103824B2 | Detection error | Low common data size | Code conversion | Cyclic codes | Data validation | Data integrity

The invention discloses a data validation, mirroring and error/erasure correction method for the dispersal and protection of one- and two-dimensional data at the micro level for computer, communication and storage systems. Each of the 256 possible 8-bit data bytes is mirrored with a unique 8-bit ECC byte. The ECC enables 8-bit burst and 4-bit random error detection plus 2-bit random error correction for each encoded data byte. With the data byte and ECC byte configured into a 4-bit × 4-bit codeword array and dispersed in either row, column or both dimensions, the method can perform dual 4-bit row and column erasure recovery. It is shown that for each codeword there are 12 possible combinations of row and column elements, called couplets, capable of mirroring the data byte. These byte-level micro-mirrors outperform conventional mirroring in that each byte and its ECC mirror can self-detect and self-correct random errors and can recover all dual erasure combinations over four elements. Encoding at the byte quanta level maximizes application flexibility. Also disclosed are fast encode, decode and reconstruction methods via Boolean logic, processor instructions and software table look-up with the intent to run at line and application speeds. The new error control method can augment ARQ algorithms and bring resiliency to system fabrics including routers and links previously limited to the recovery of transient errors. Image storage and storage over arrays of static devices can benefit from the two-dimensional capabilities. Applications with critical data integrity requirements can utilize the method for end-to-end protection and validation. An extra ECC byte per codeword extends both the resiliency and dimensionality.

Owner:HALFORD ROBERT

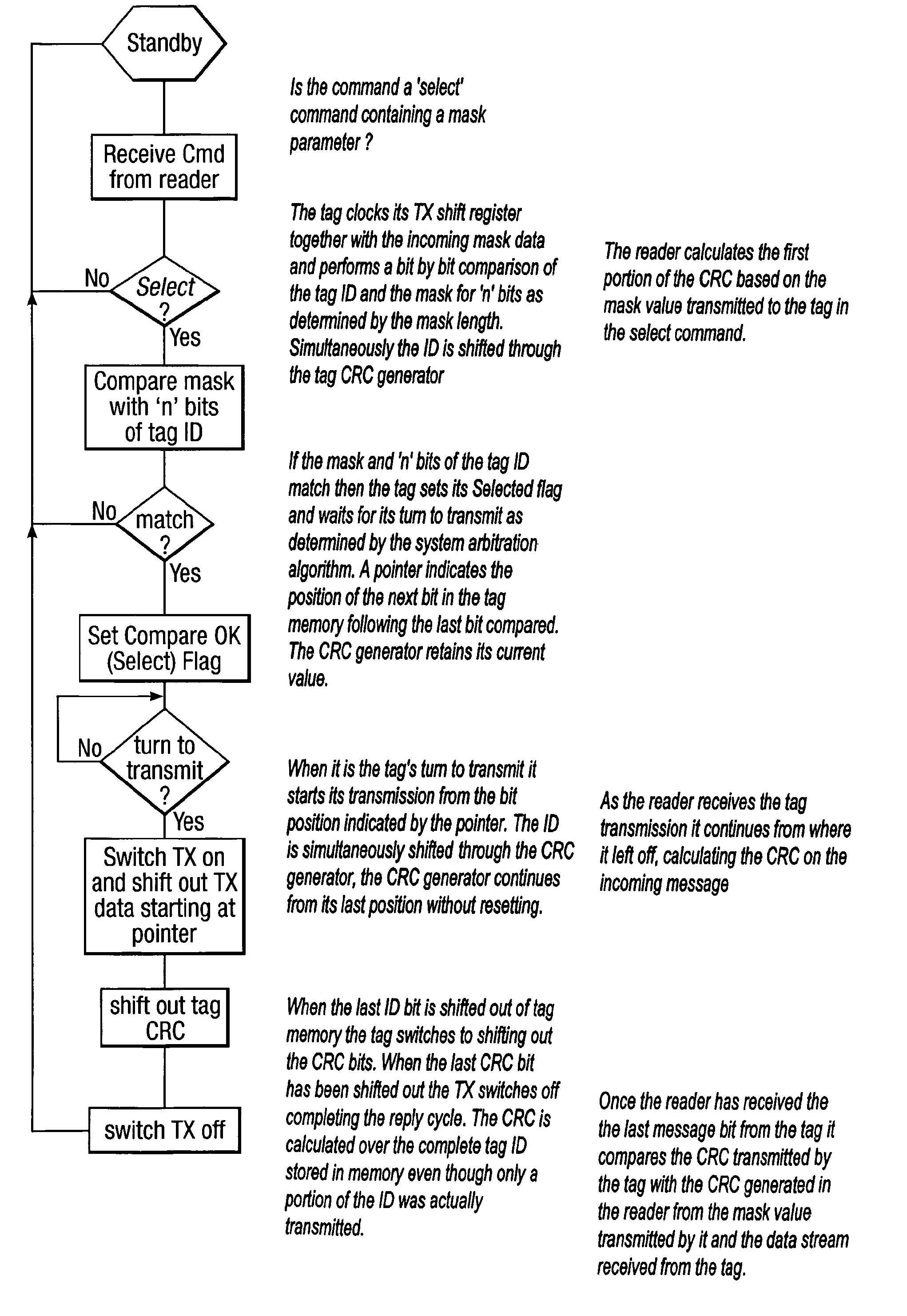

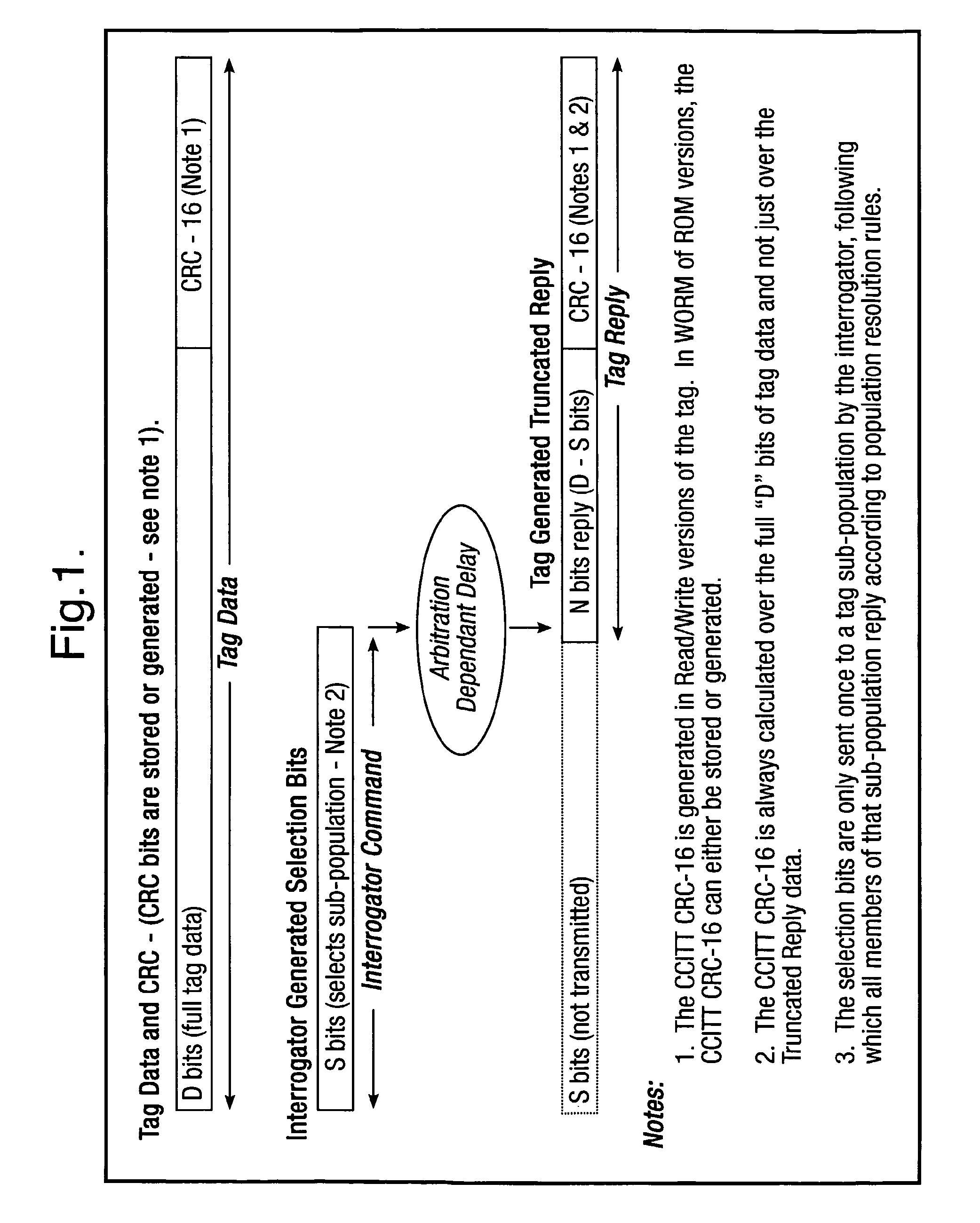

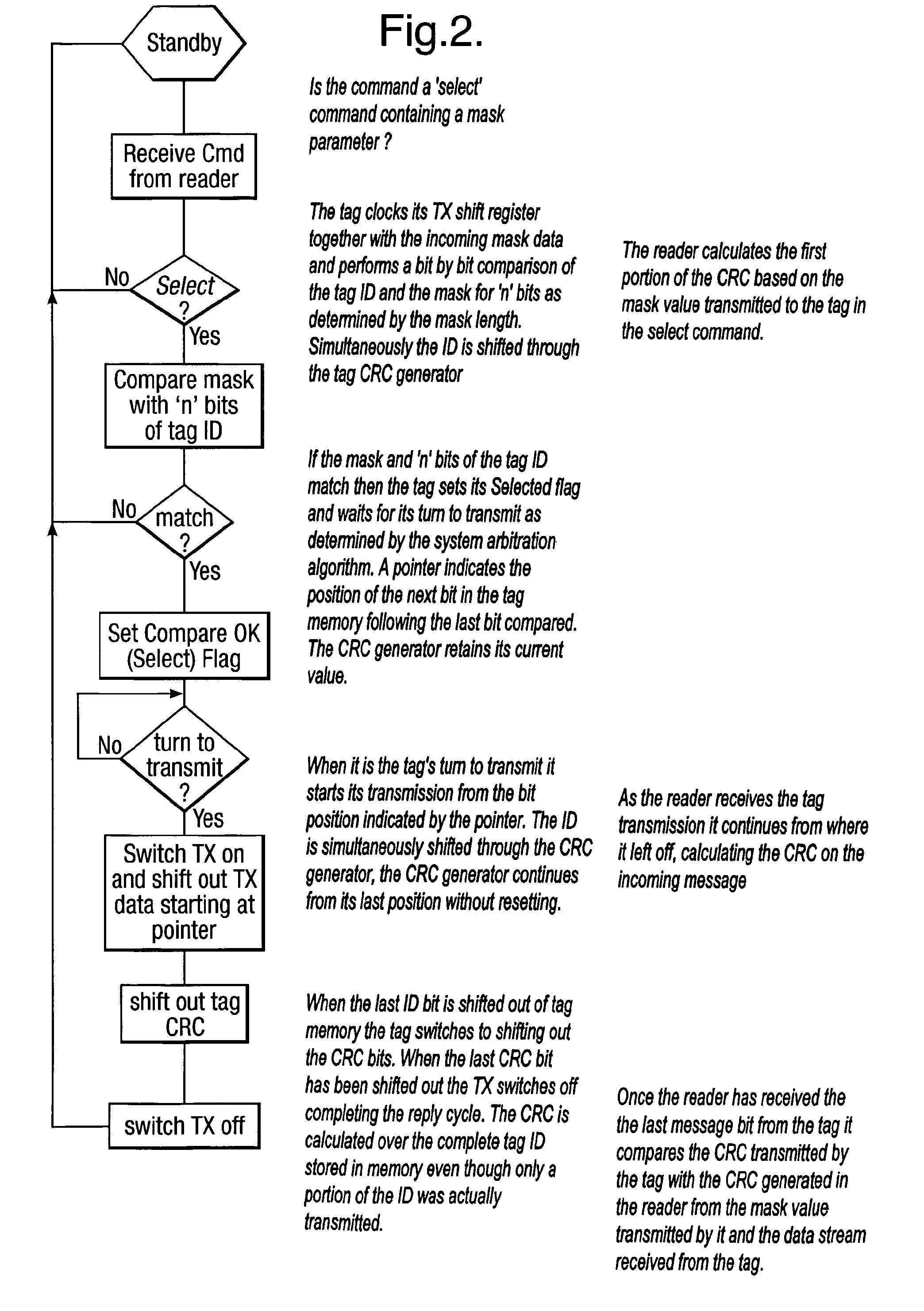

Method and system for calculating and verifying the integrity of data in a data transmission system

Active | US7987405B2 | Not having capability to accurately | Stay flexible | Error prevention | Memory record carrier reading problems | Computer hardware | Data integrity

A method is described of calculating and verifying the integrity of data in a data communication system. The system comprises a base station and one or more remote stations, such as in an RFID system. The method includes transmitting a select instruction from the base station to the one or more remote stations, the select instruction containing a data field which matches a portion of an identity or other data field in one or more of the remote stations; transmitting from a selected remote station or stations a truncated reply containing identity data or other data of the remote station but omitting the portion transmitted by the base station; calculating in the base station a check sum or CRC from the data field originally sent and the truncated reply data received and comparing the calculated check sum or CRC with the check sum or CRC sent by the remote station.

Owner:ZEBRA TECH CORP
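
The check described in this abstract can be illustrated with a minimal sketch. It is not the patented implementation: it assumes the select field is a prefix of the remote station's identity and uses CRC-32 from Python's `zlib` as a stand-in for whatever checksum or CRC the system actually uses. The function names `station_reply` and `base_verify` are hypothetical.

```python
import zlib


def station_reply(identity: bytes, select_len: int) -> tuple[bytes, int]:
    """Remote station side (hypothetical): omit the selected prefix from the
    reply, but compute the CRC over the full identity."""
    crc = zlib.crc32(identity)
    return identity[select_len:], crc


def base_verify(select_field: bytes, truncated_reply: bytes, received_crc: int) -> bool:
    """Base station side (hypothetical): rebuild the full data from the field
    it originally sent plus the truncated reply, then compare CRCs."""
    return zlib.crc32(select_field + truncated_reply) == received_crc


if __name__ == "__main__":
    identity = b"\x12\x34\x56\x78\x9a"   # arbitrary example identity
    select_field = identity[:2]          # assumption: selection matches a prefix
    reply, crc = station_reply(identity, len(select_field))
    print(base_verify(select_field, reply, crc))
```

Because the CRC covers the bytes the station left out, the base station can still detect a corrupted reply even though the reply itself is shorter than the full identity.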

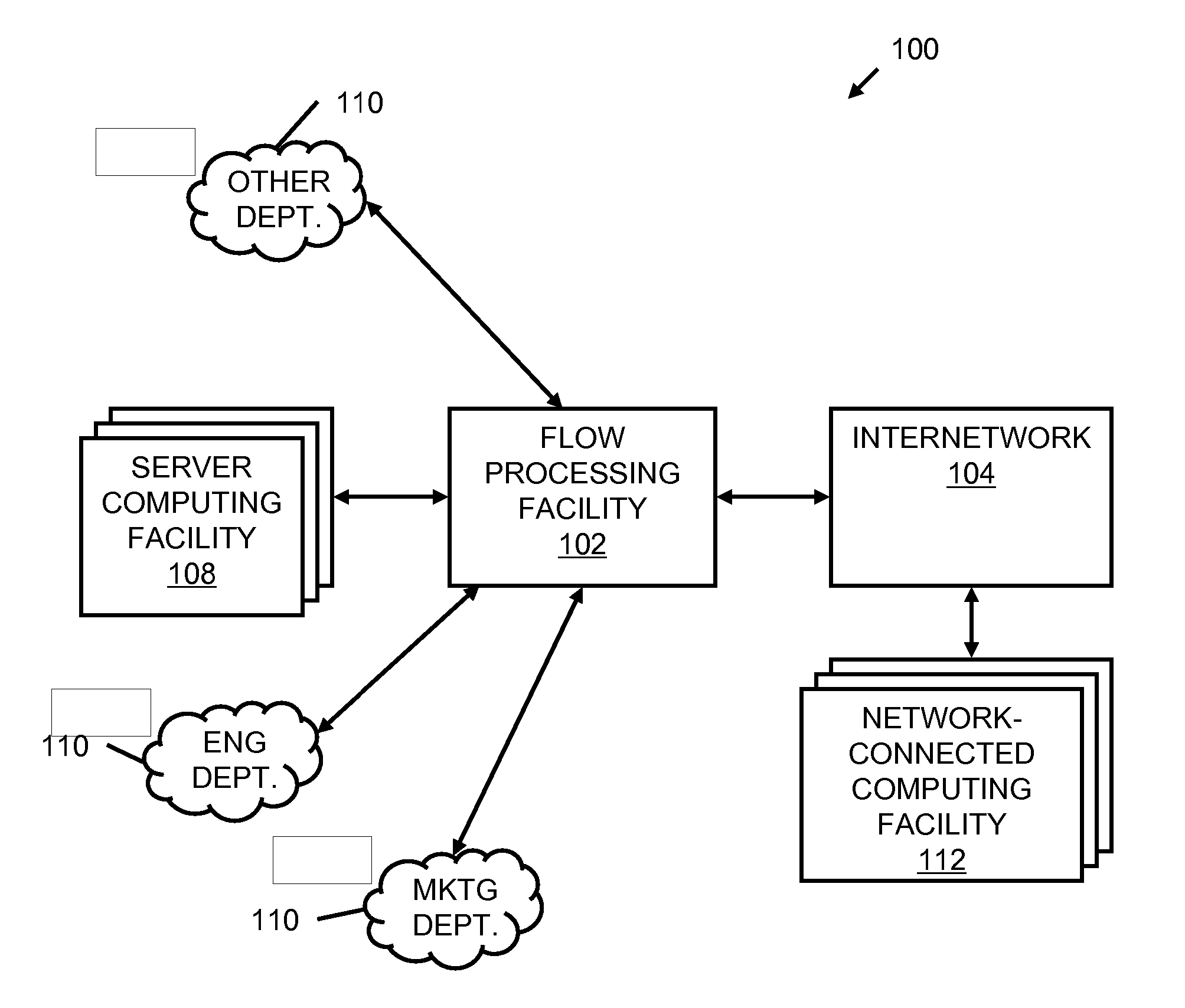

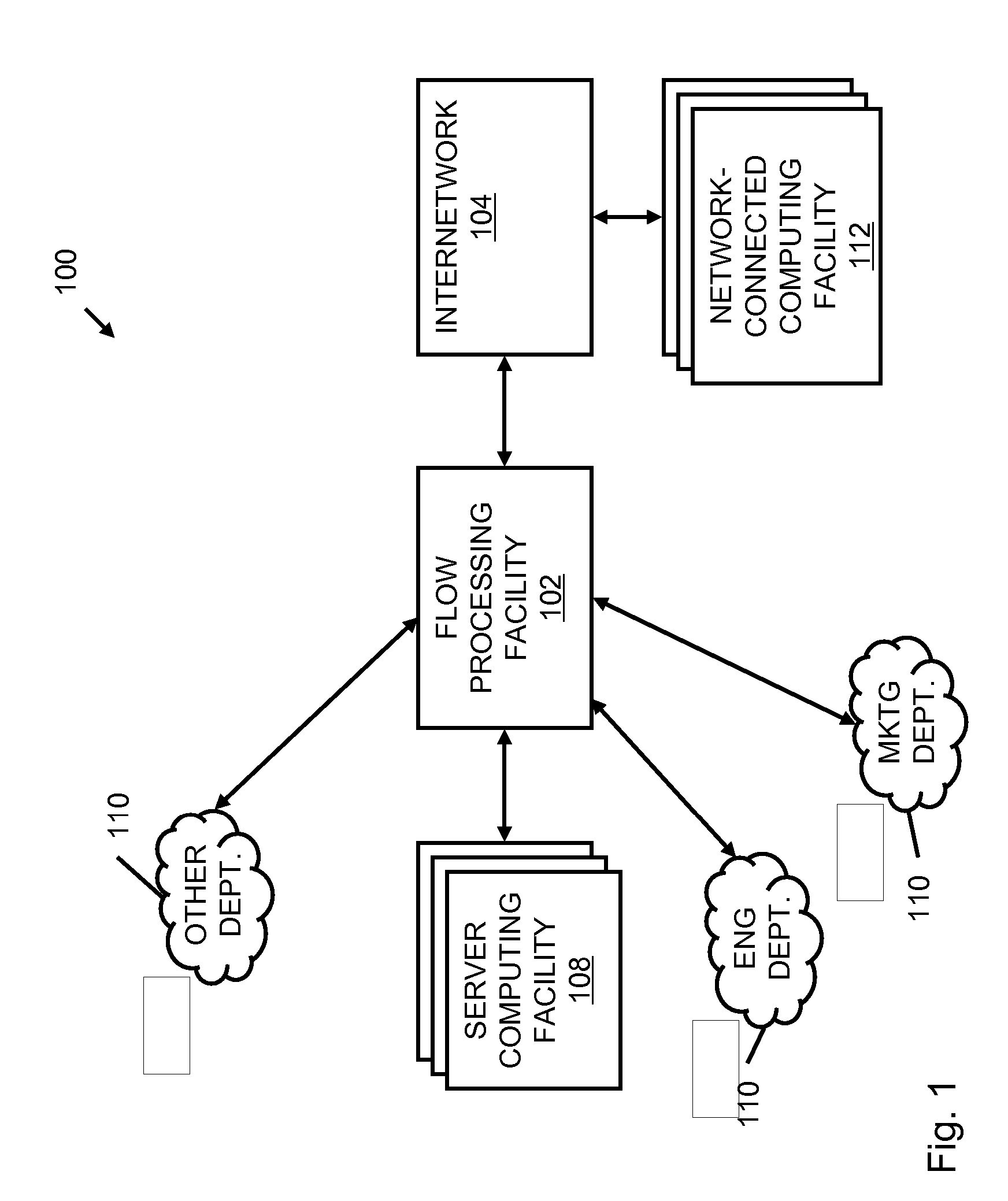

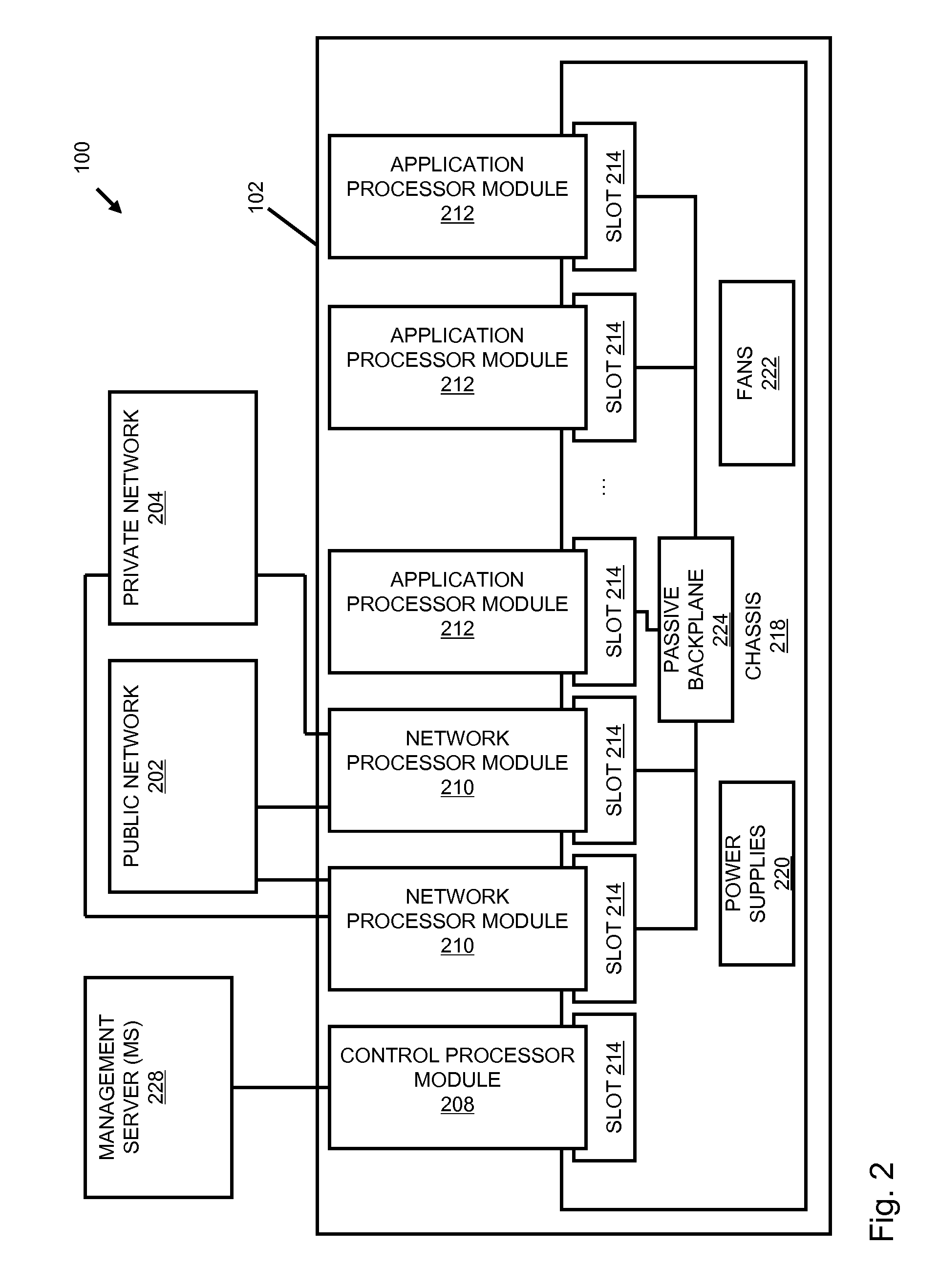

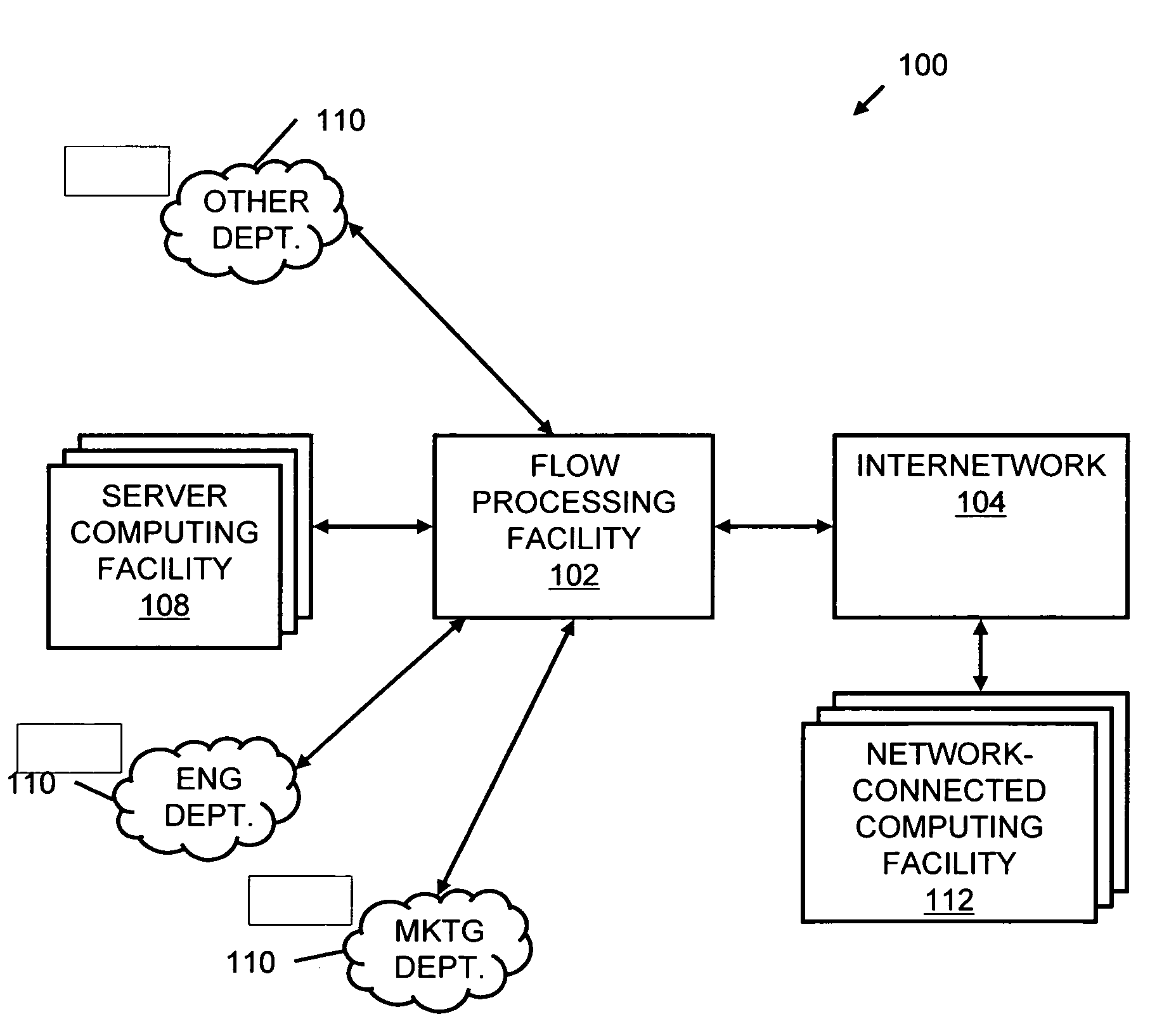

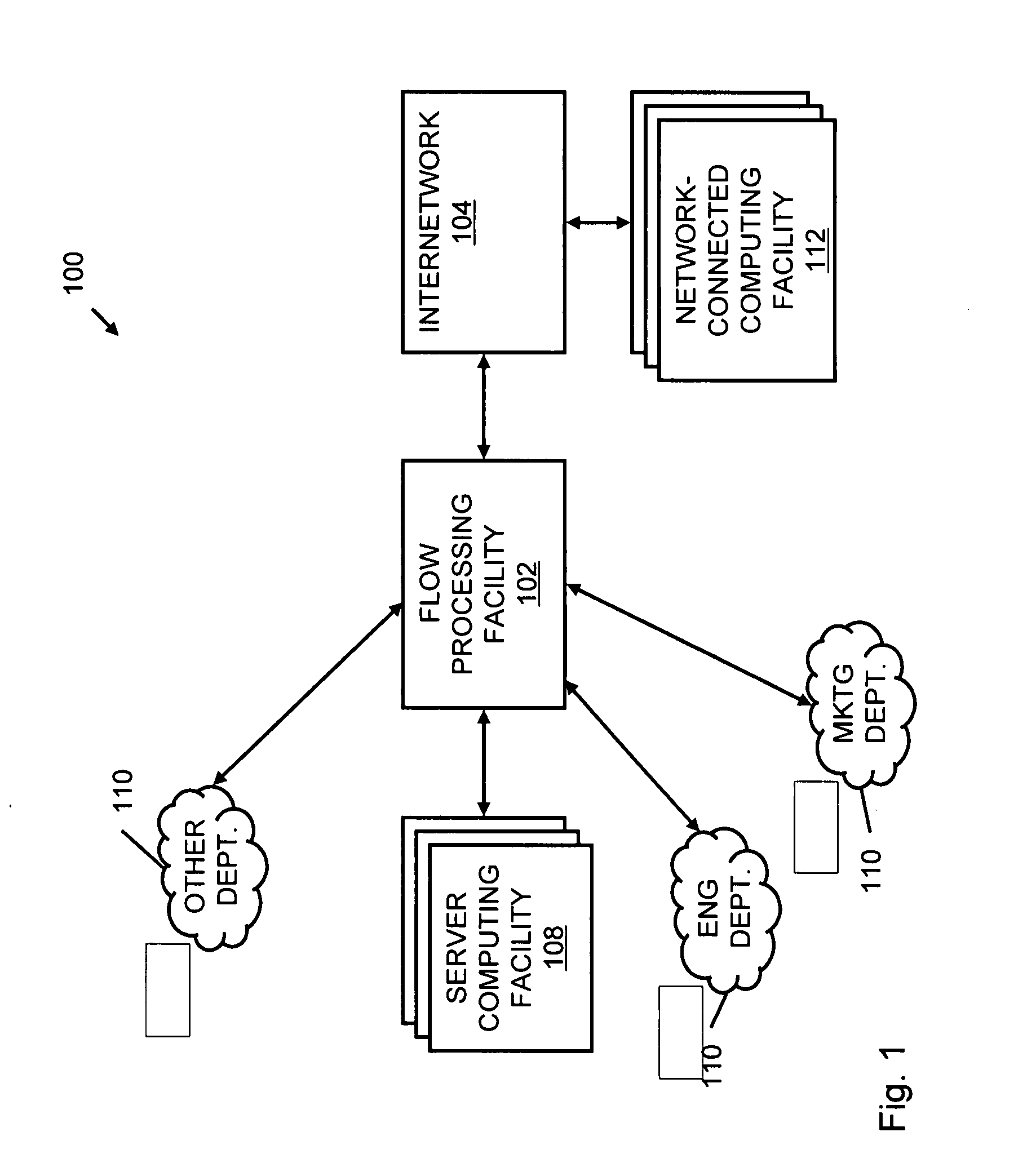

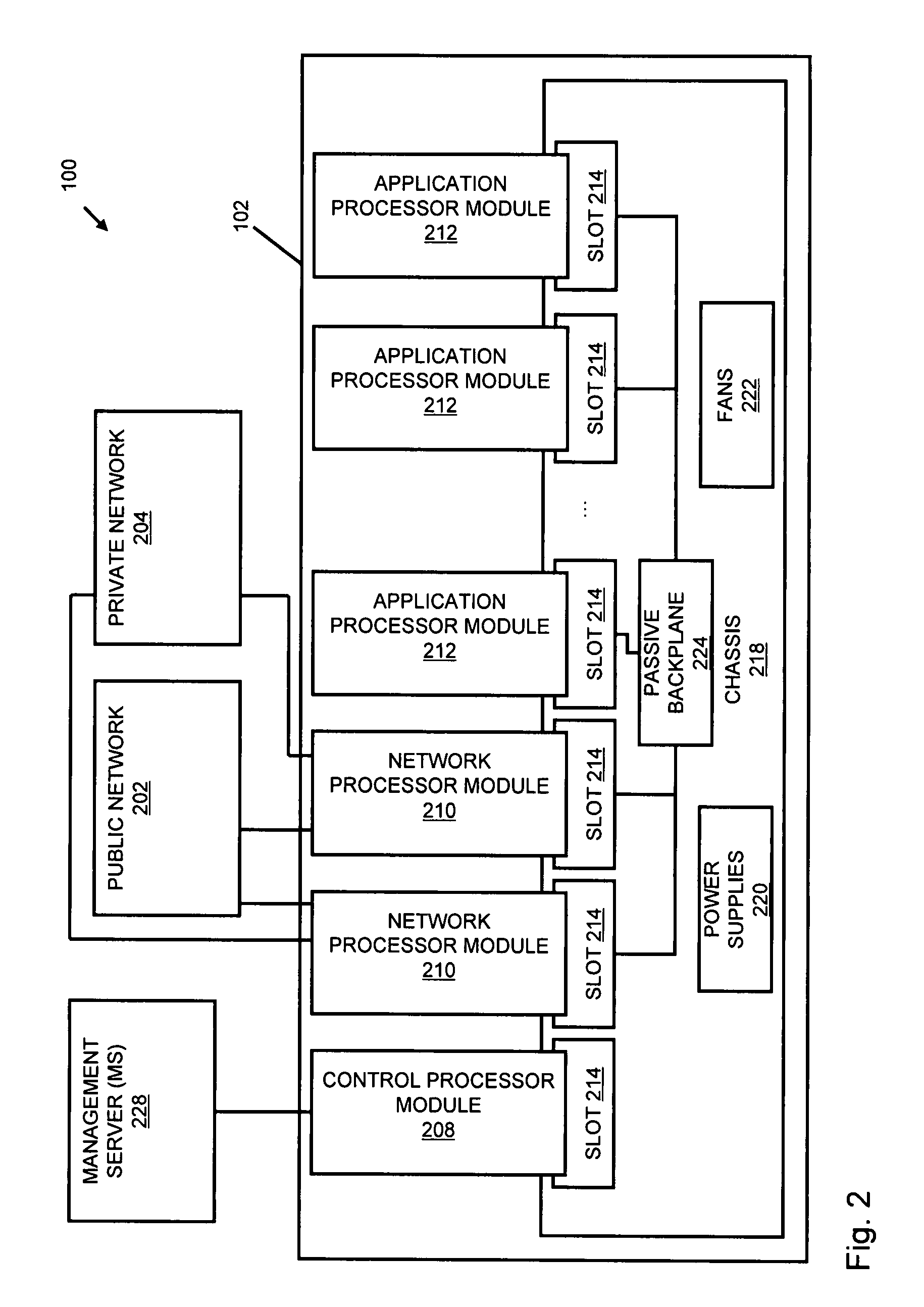

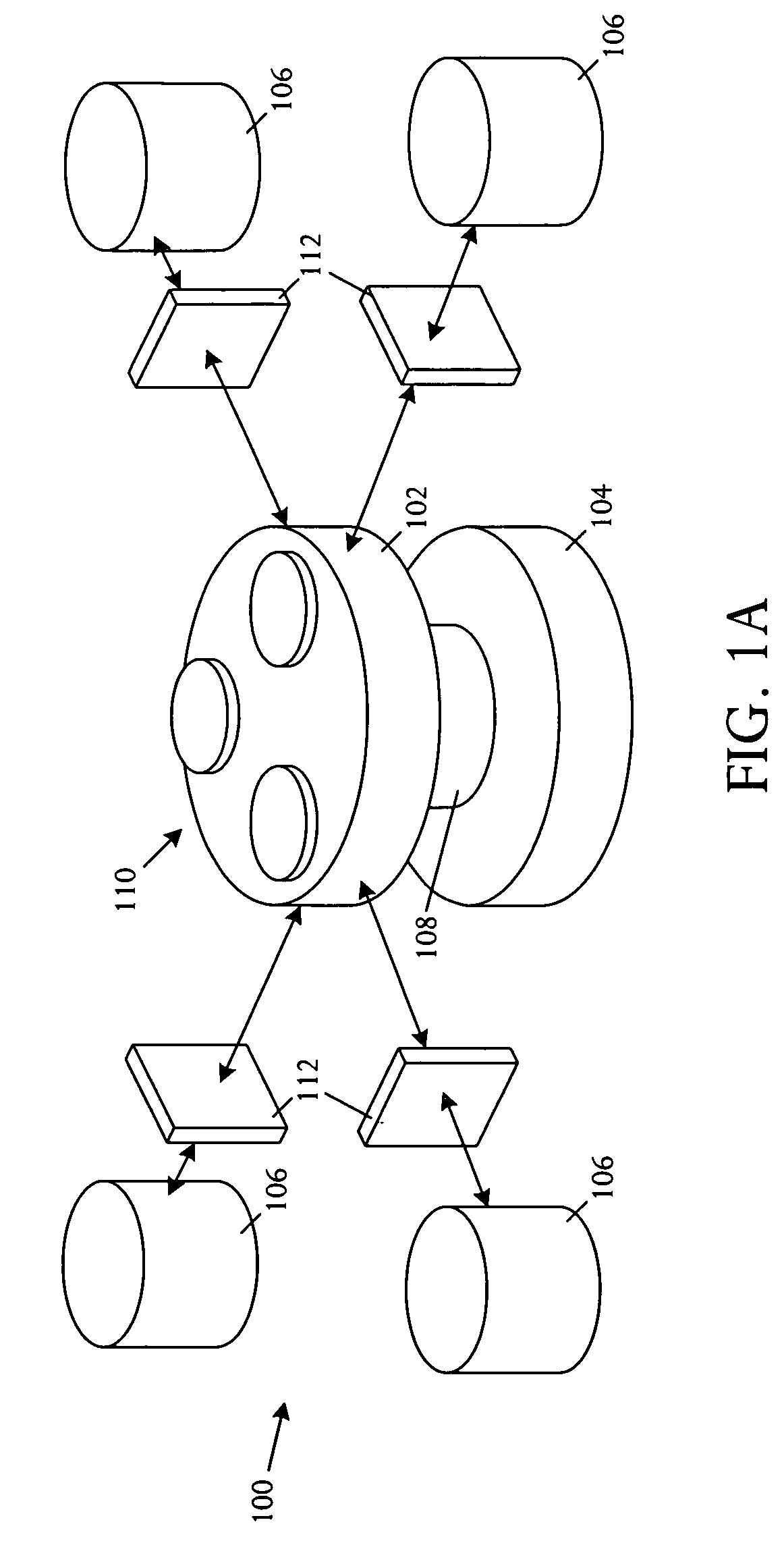

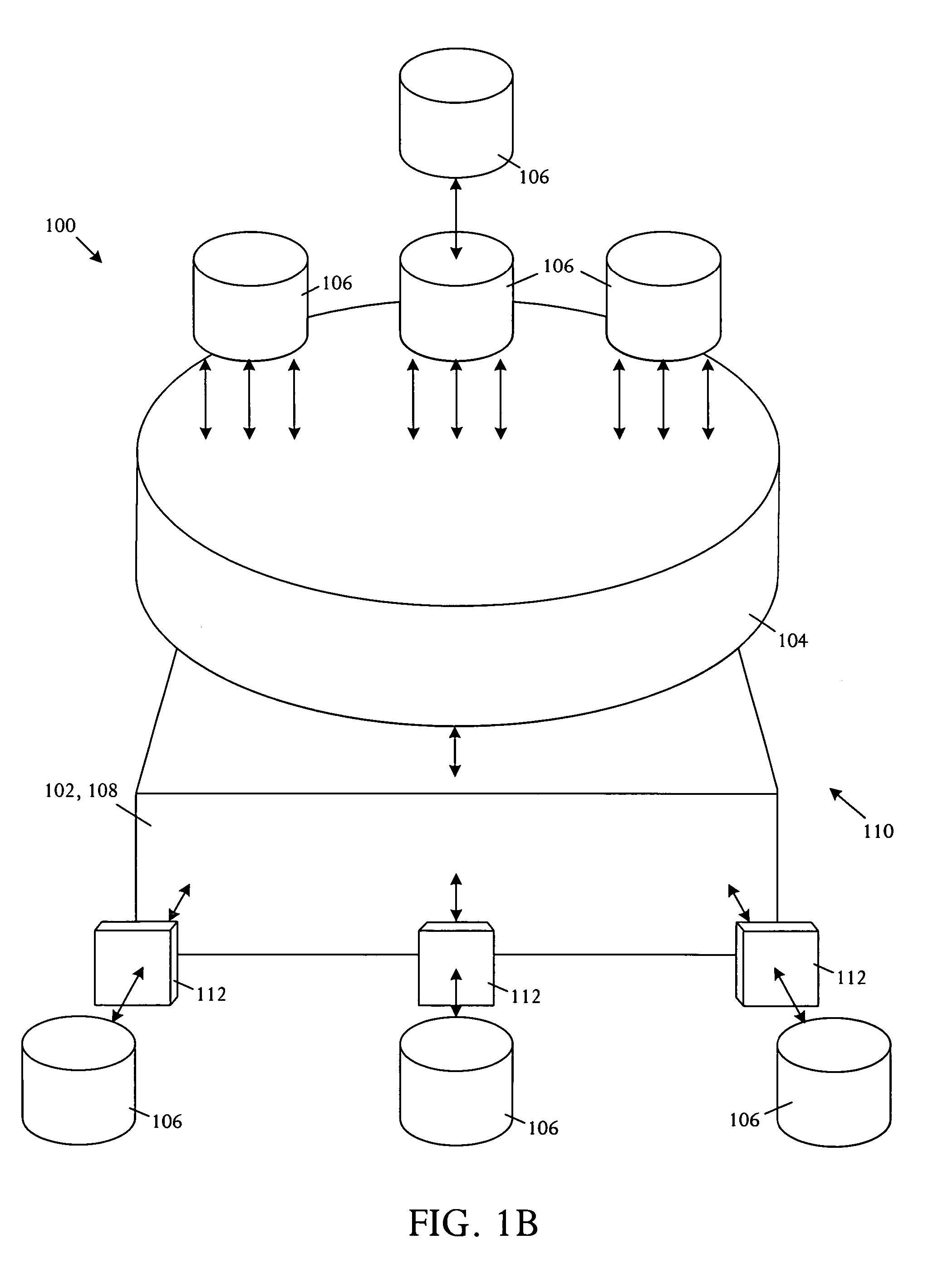

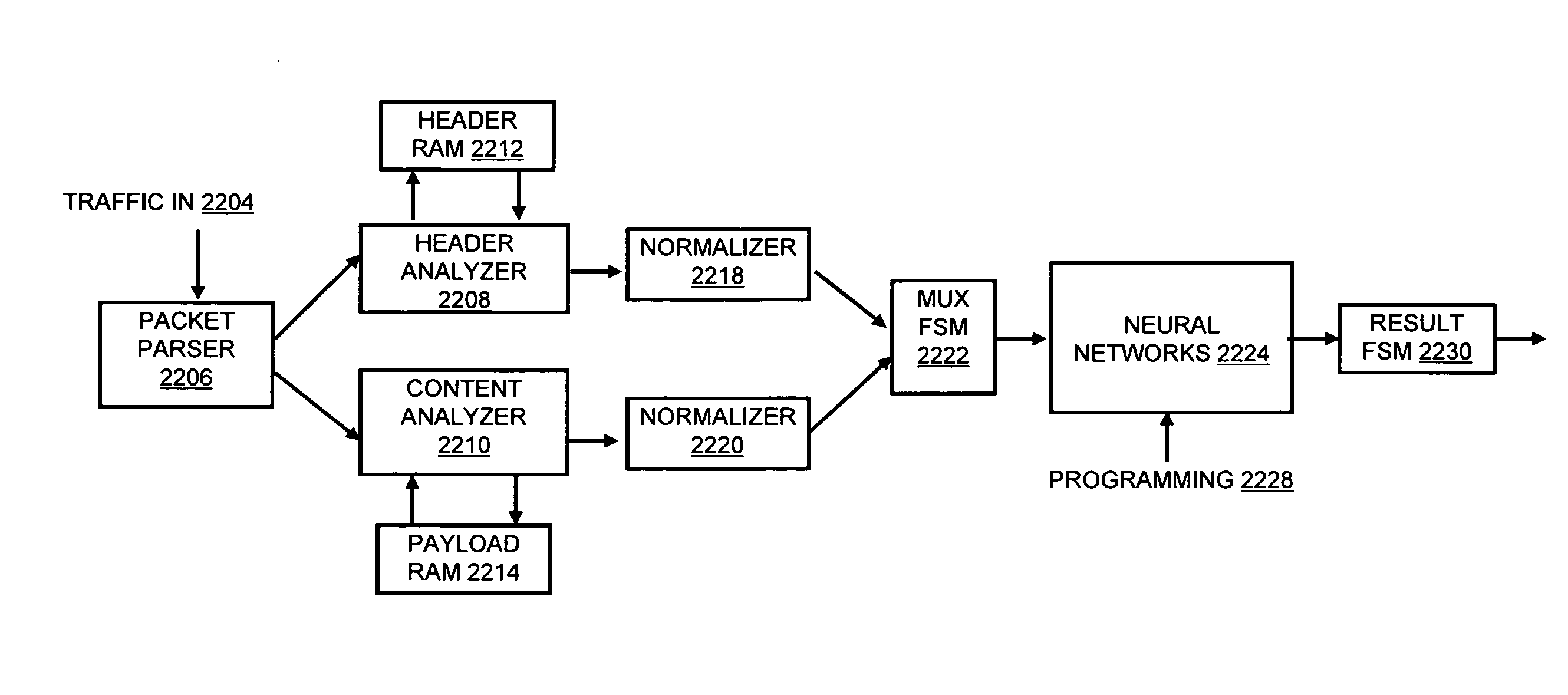

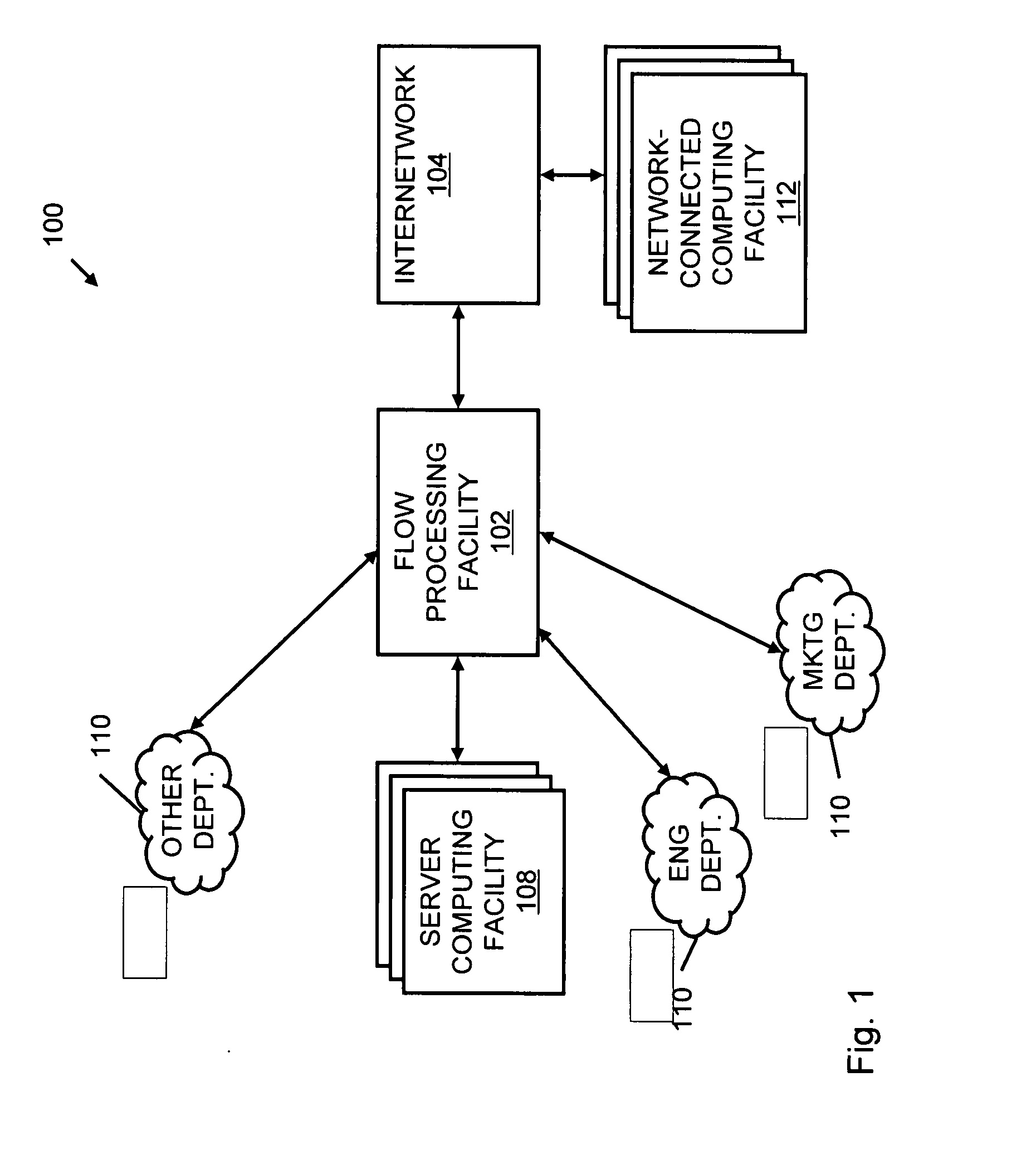

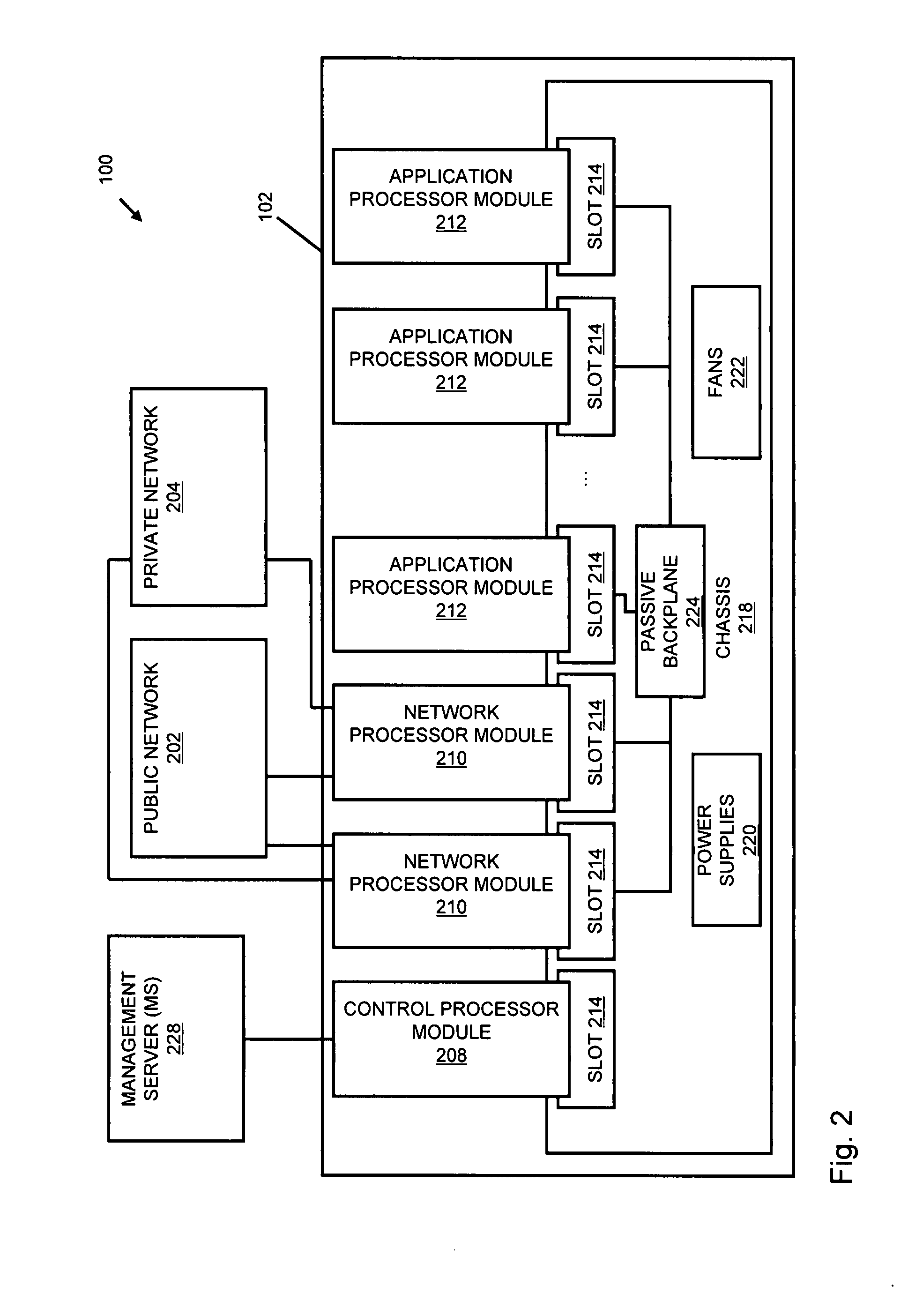

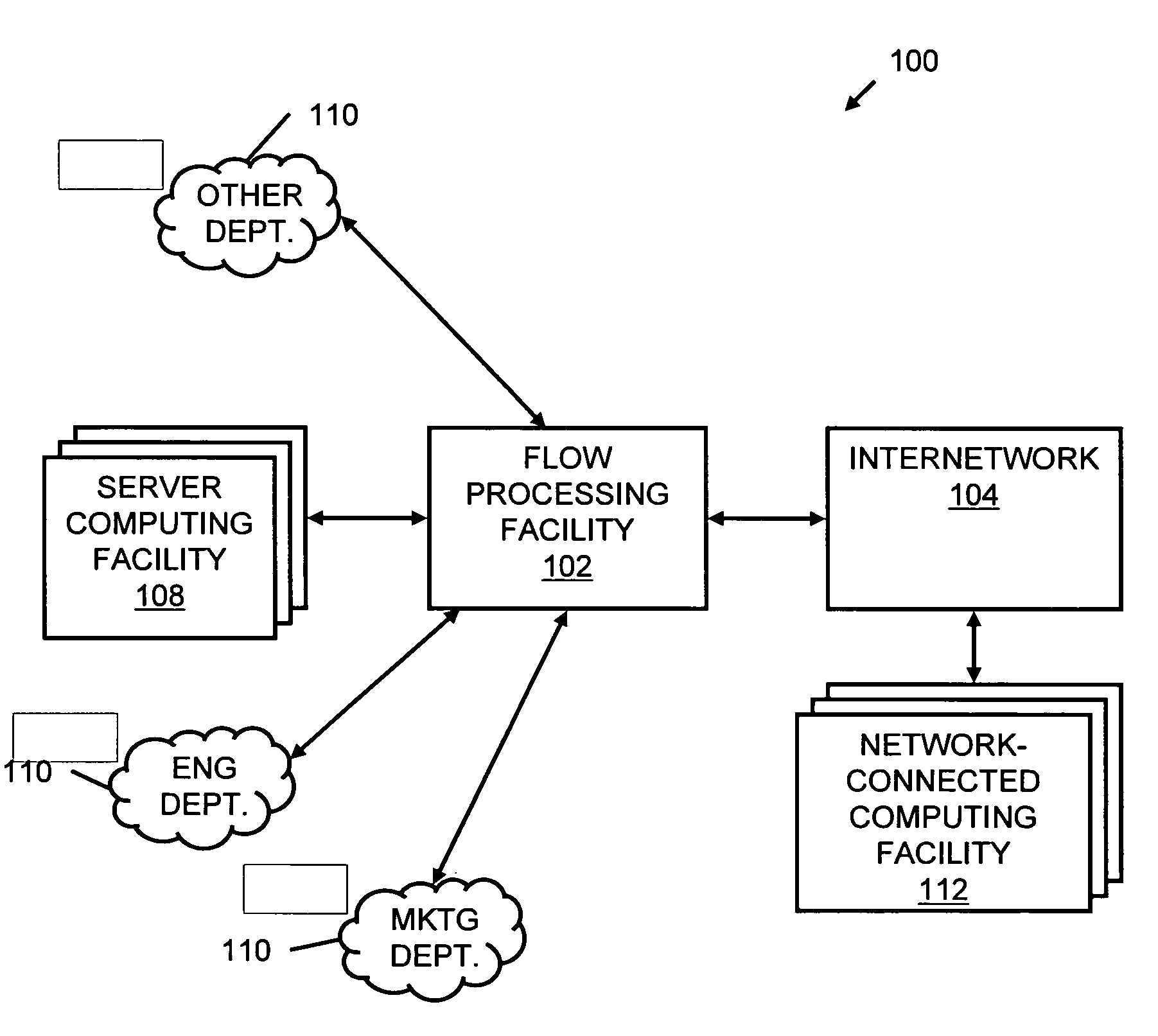

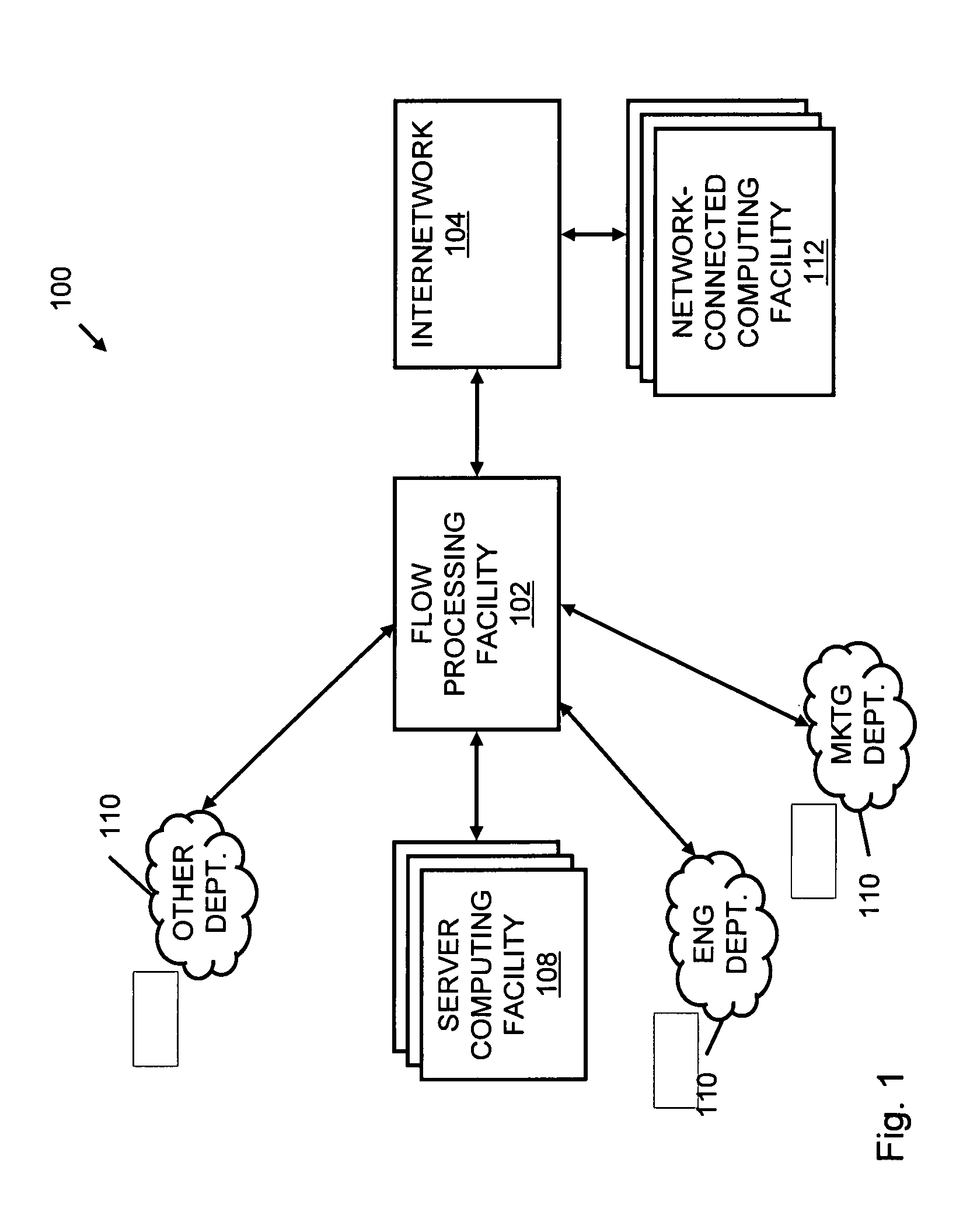

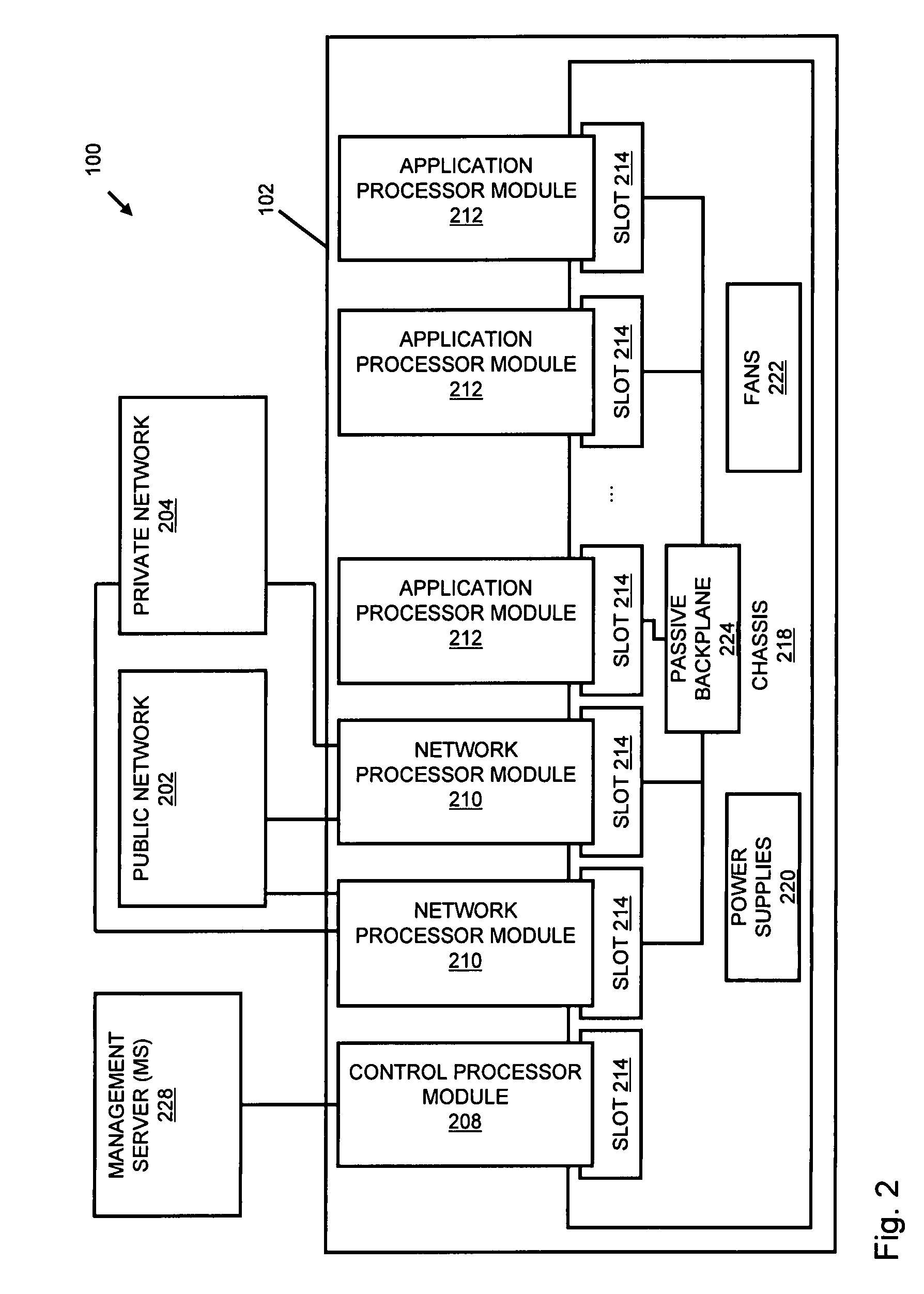

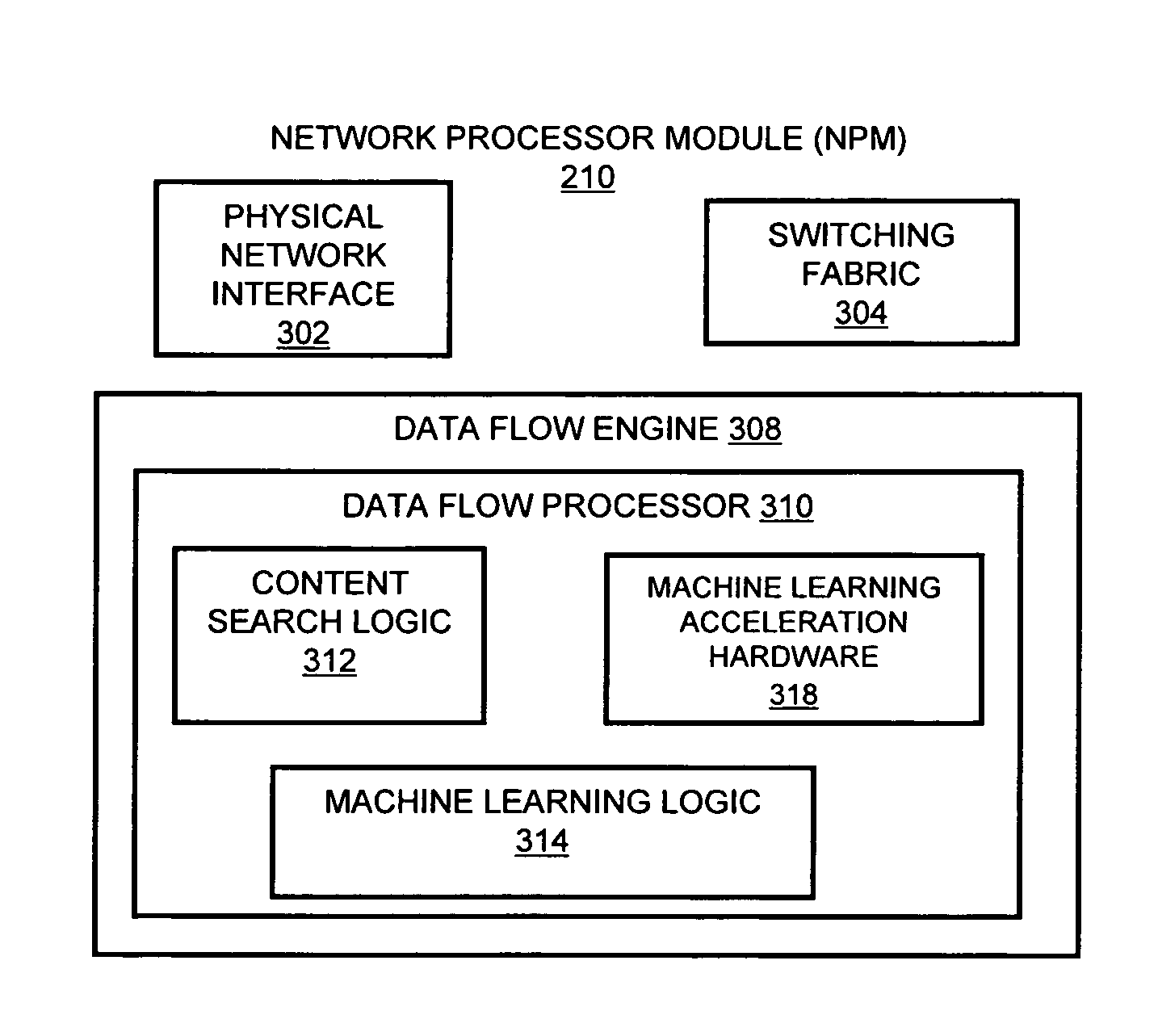

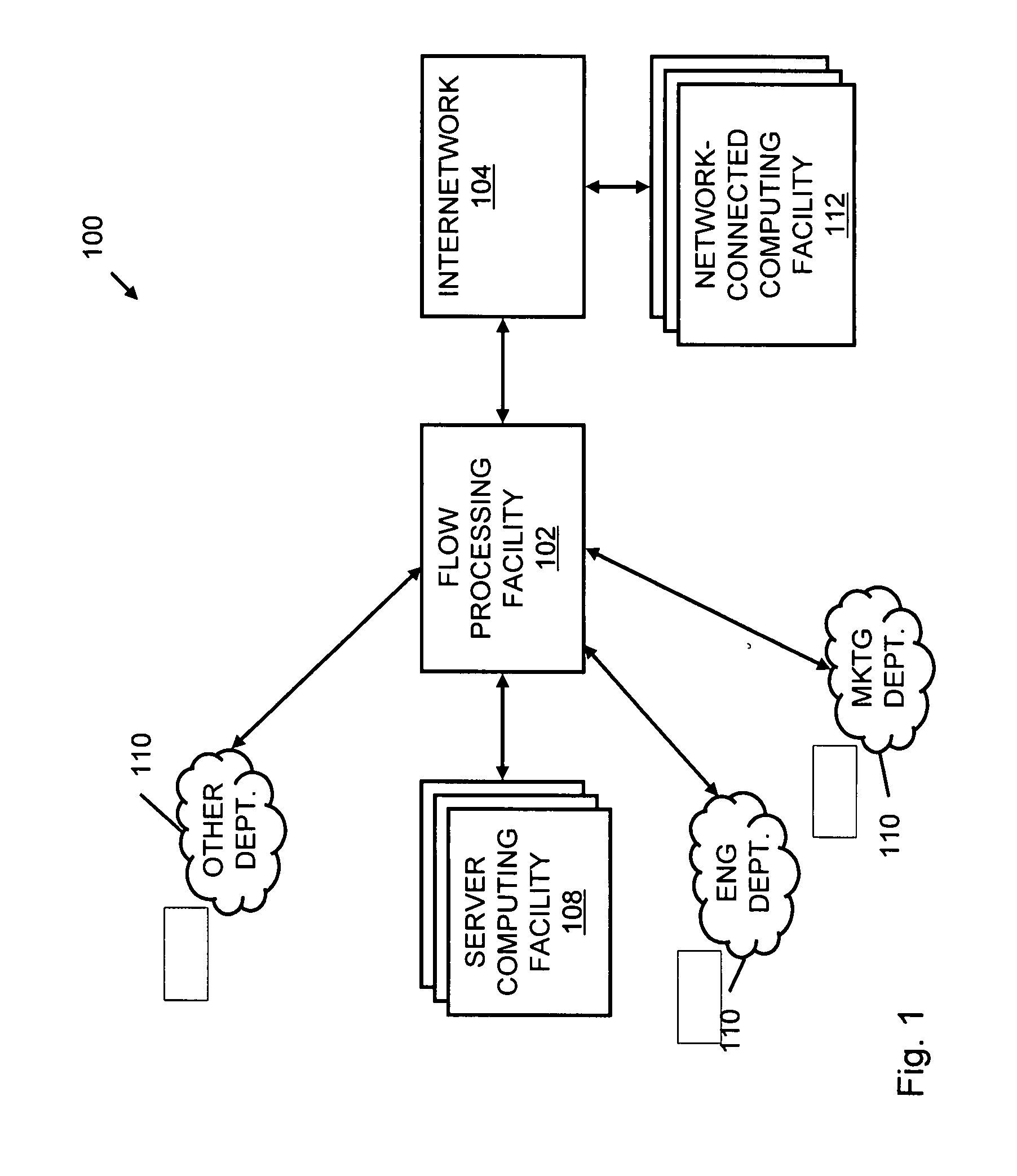

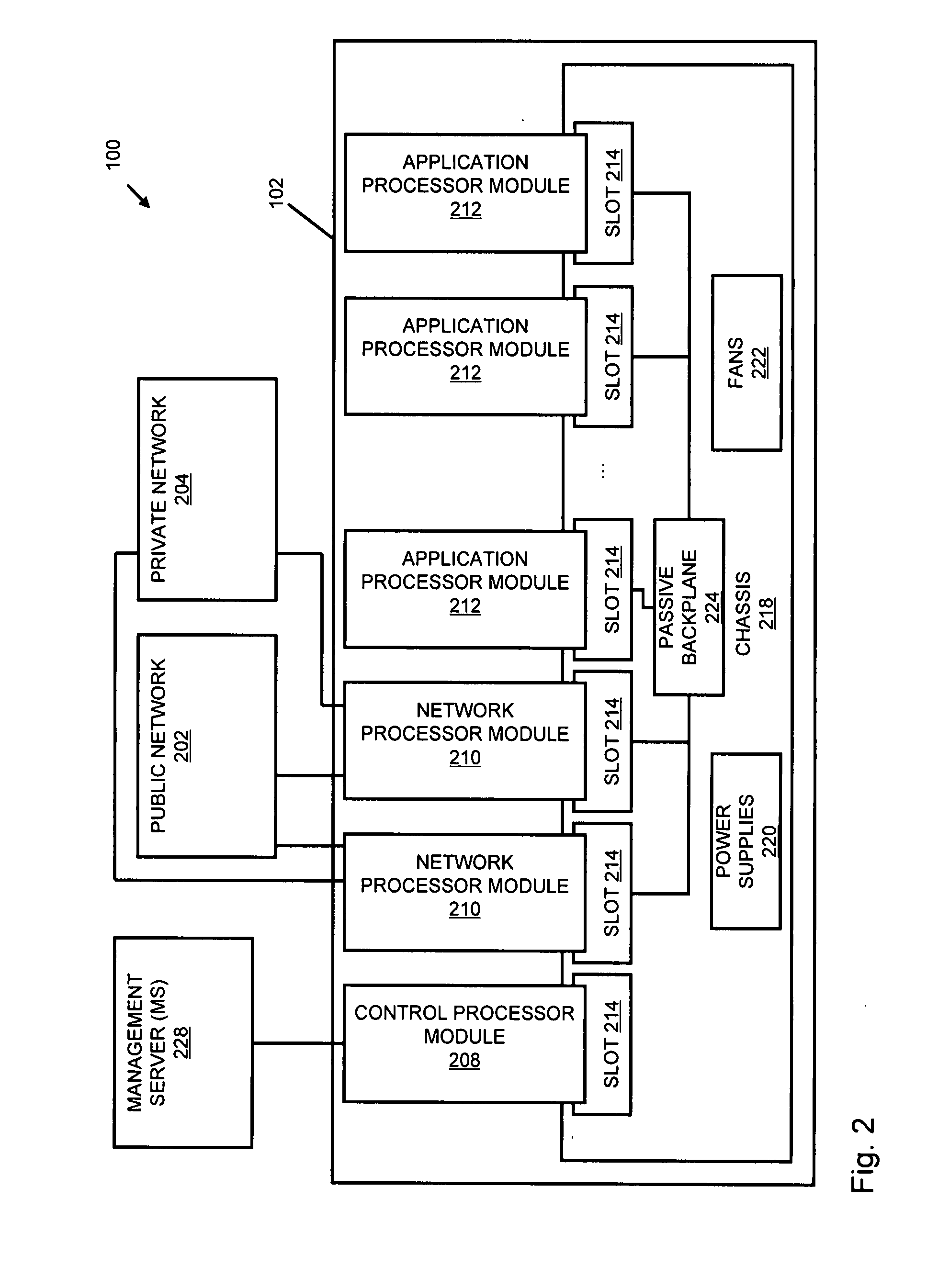

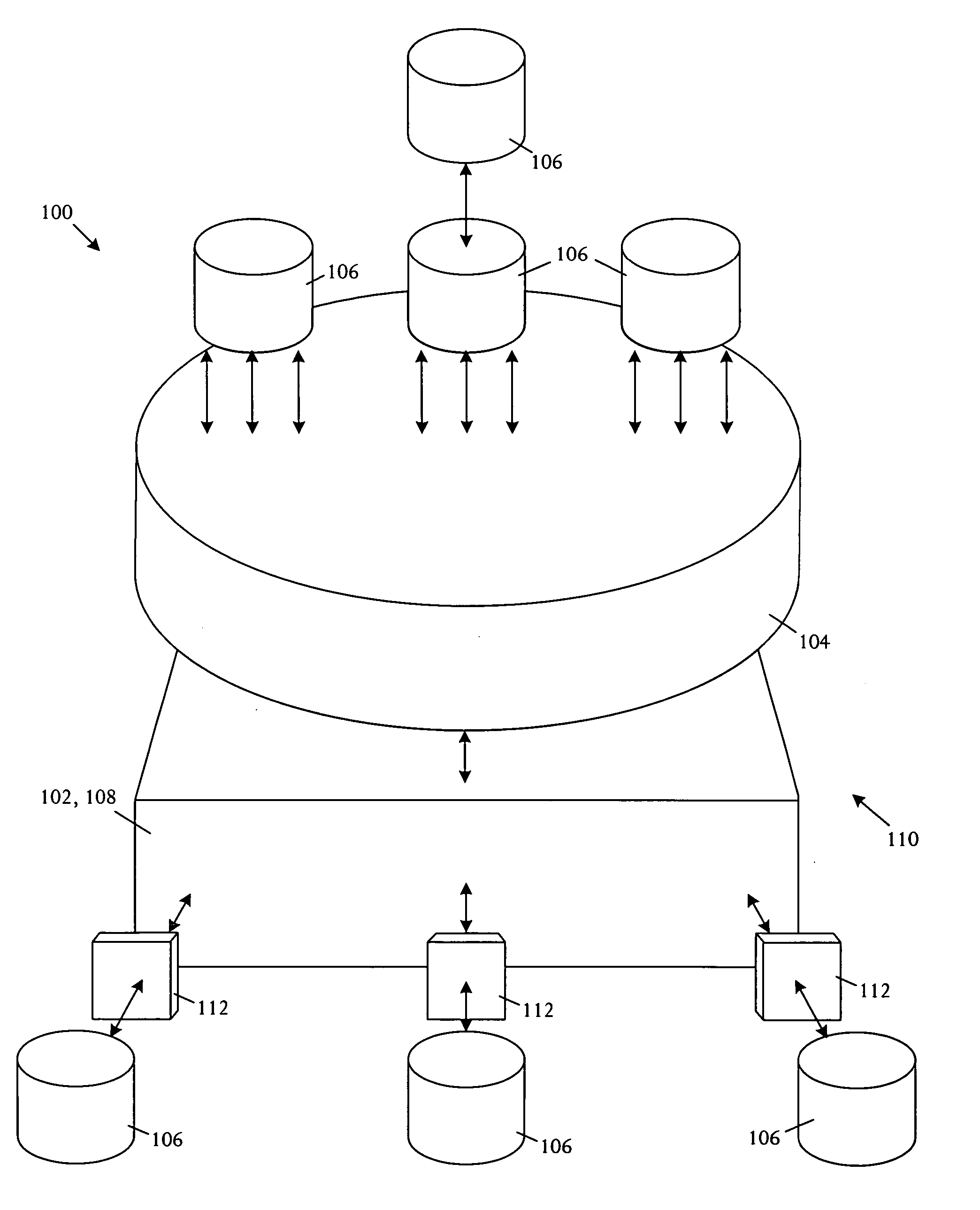

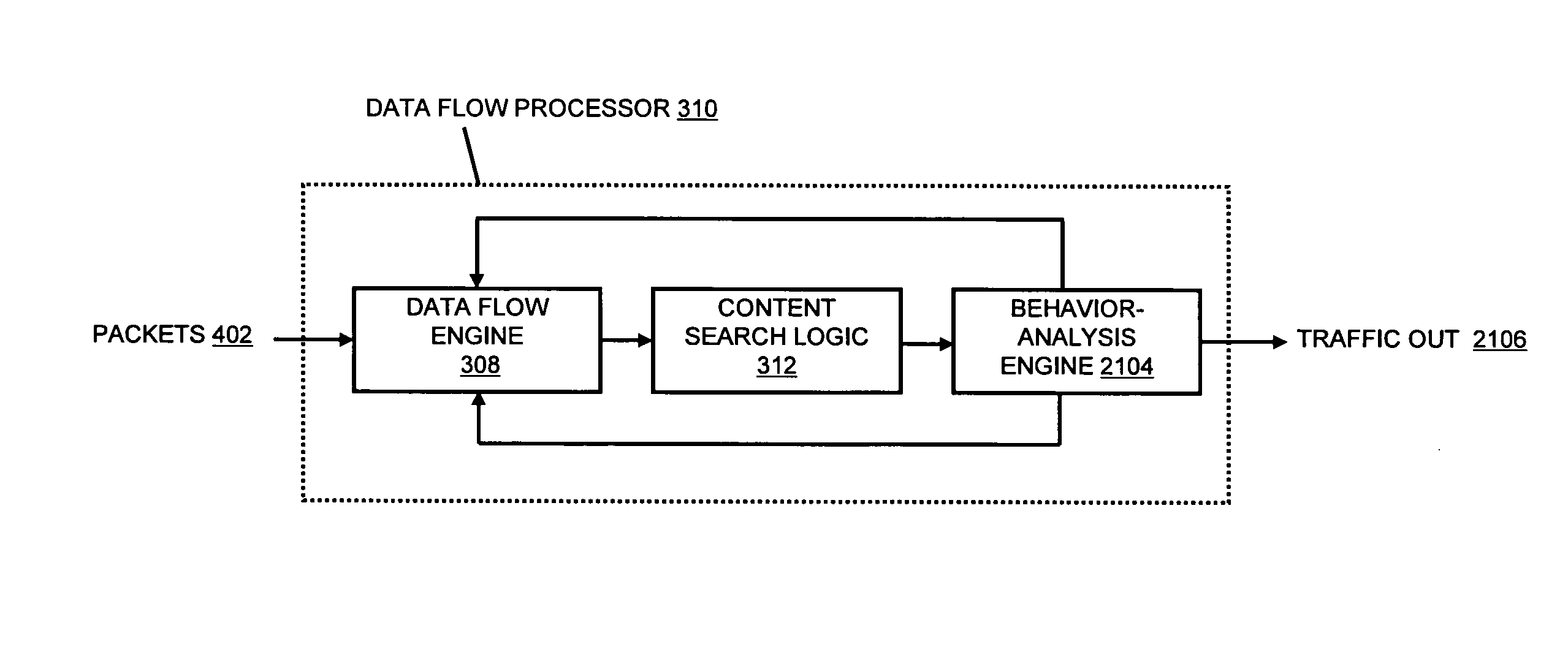

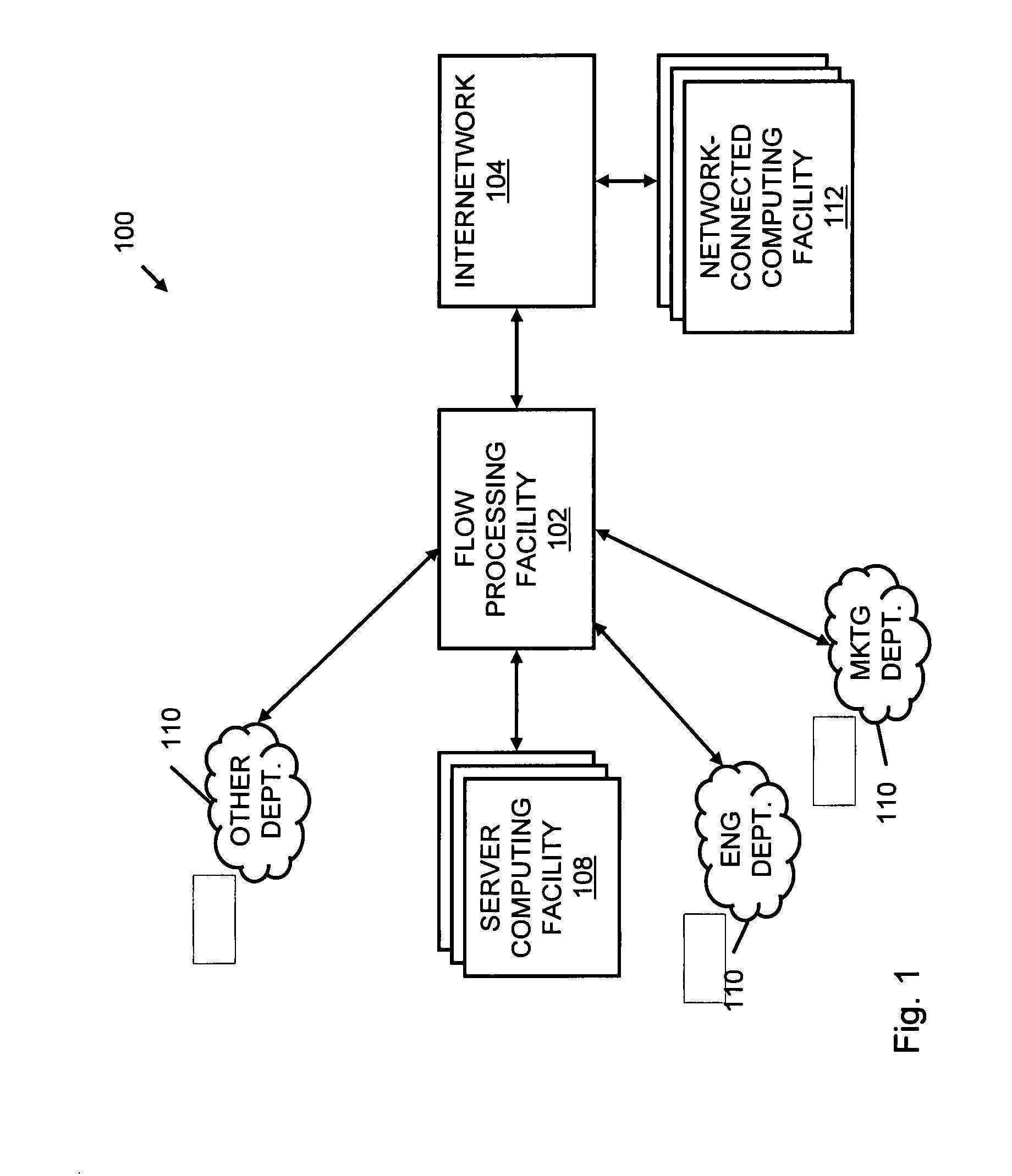

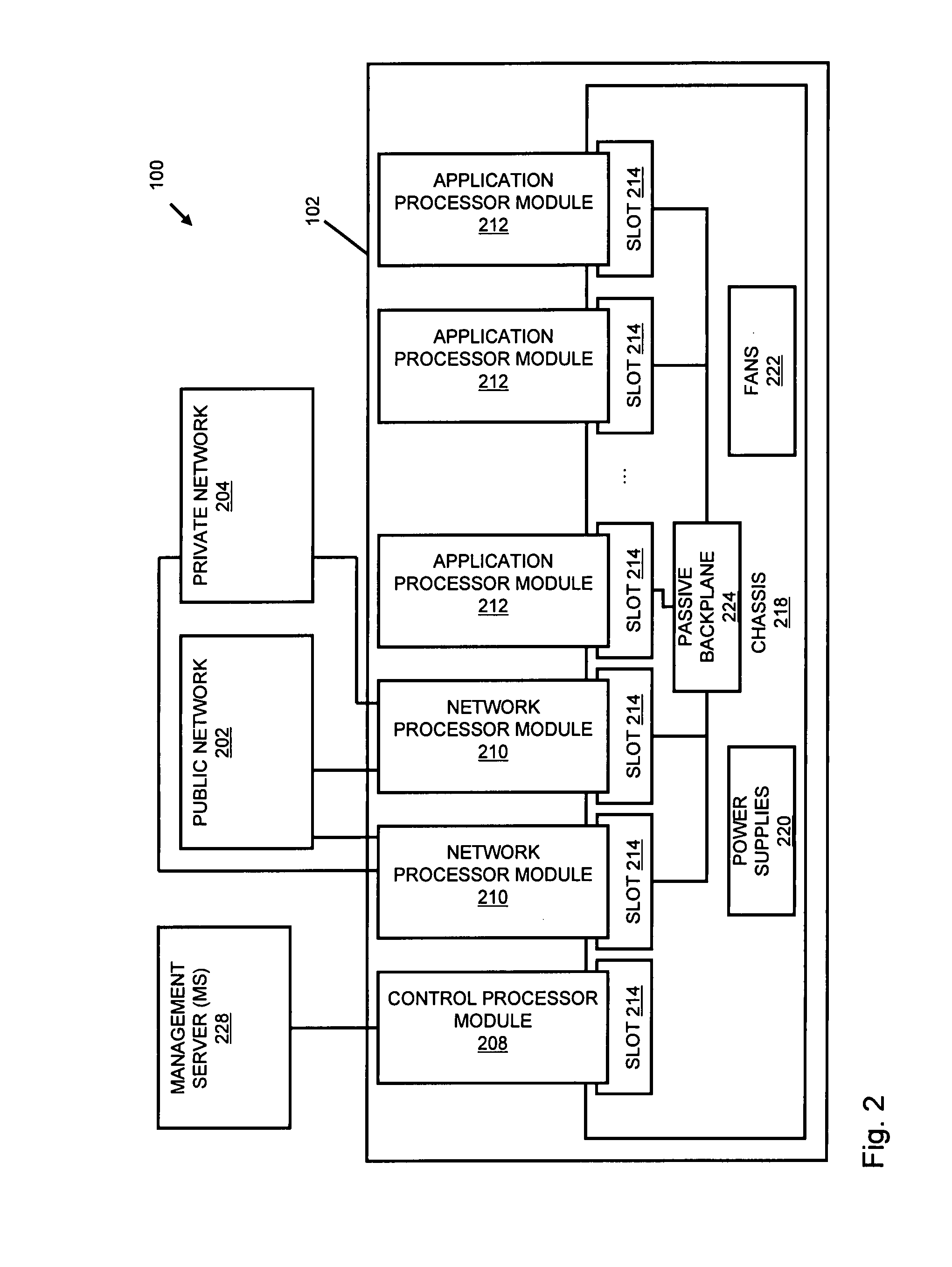

Systems and methods for processing data flows

Inactive | US20070192863A1 | Increased complexity | Significant expense | Memory loss protection | Error detection/correction | Data pack | Data stream

A flow processing facility, which uses a set of artificial neurons for pattern recognition, such as a self-organizing map, in order to provide security and protection to a computer or computer system supports unified threat management based at least in part on patterns relevant to a variety of types of threats that relate to computer systems, including computer networks. Flow processing for switching, security, and other network applications, including a facility that processes a data flow to address patterns relevant to a variety of conditions are directed at internal network security, virtualization, and web connection security. A flow processing facility for inspecting payloads of network traffic packets detects security threats and intrusions across accessible layers of the IP-stack by applying content matching and behavioral anomaly detection techniques based on regular expression matching and self-organizing maps. Exposing threats and intrusions within packet payload at or near real-time rates enhances network security from both external and internal sources while ensuring security policy is rigorously applied to data and system resources. Intrusion Detection and Protection (IDP) is provided by a flow processing facility that processes a data flow to address patterns relevant to a variety of types of network and data integrity threats that relate to computer systems, including computer networks.

Owner:BLUE COAT SYSTEMS

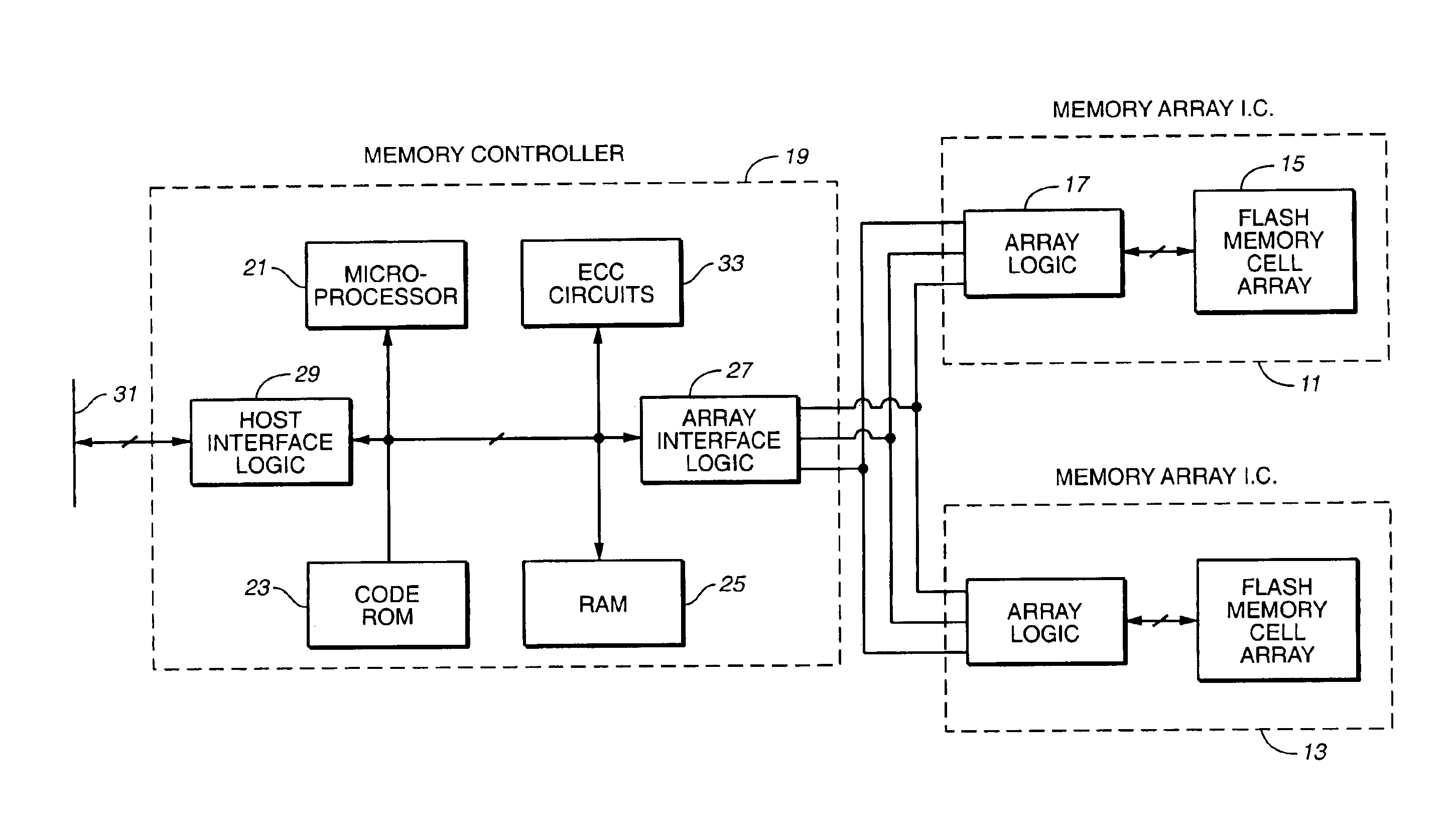

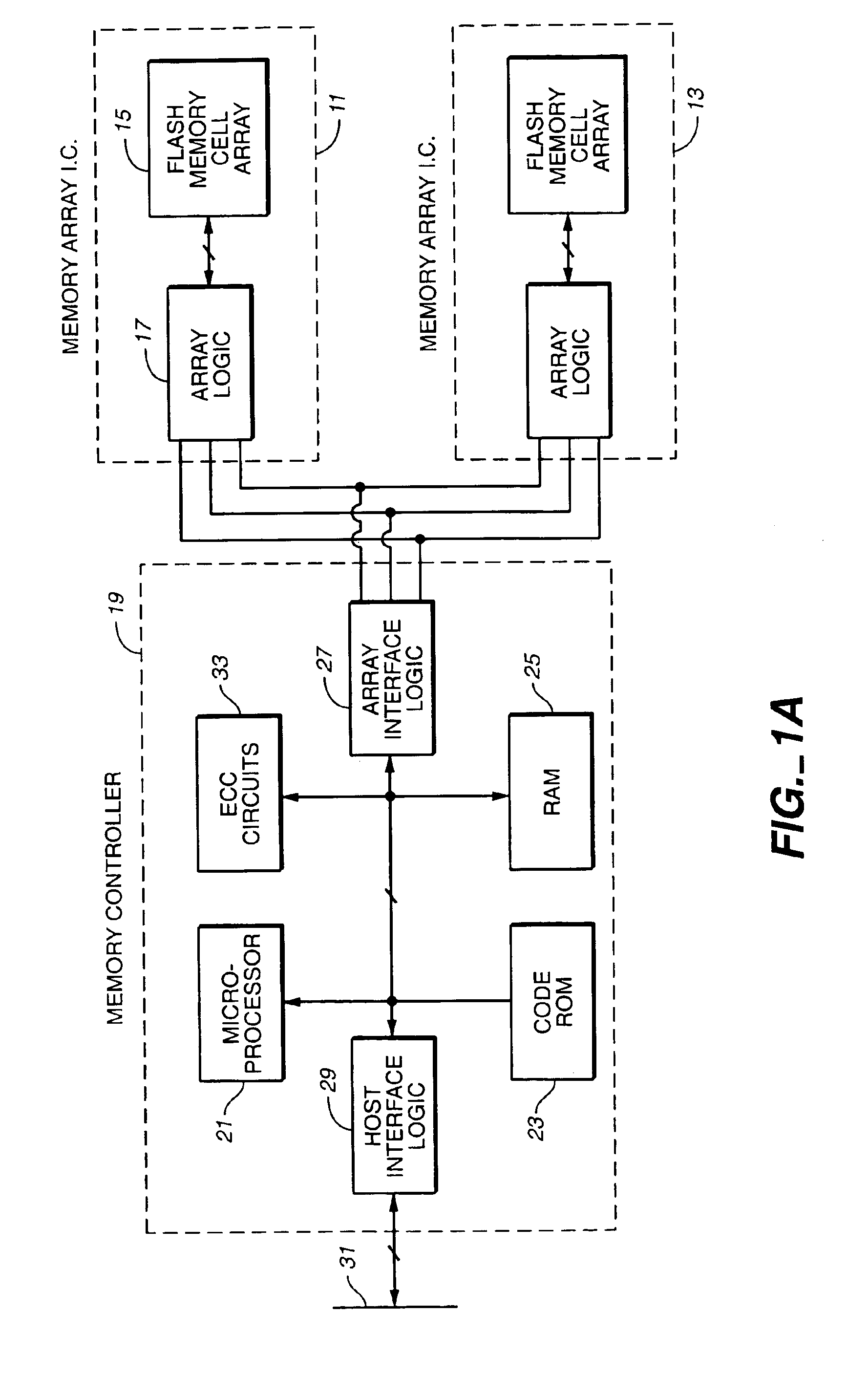

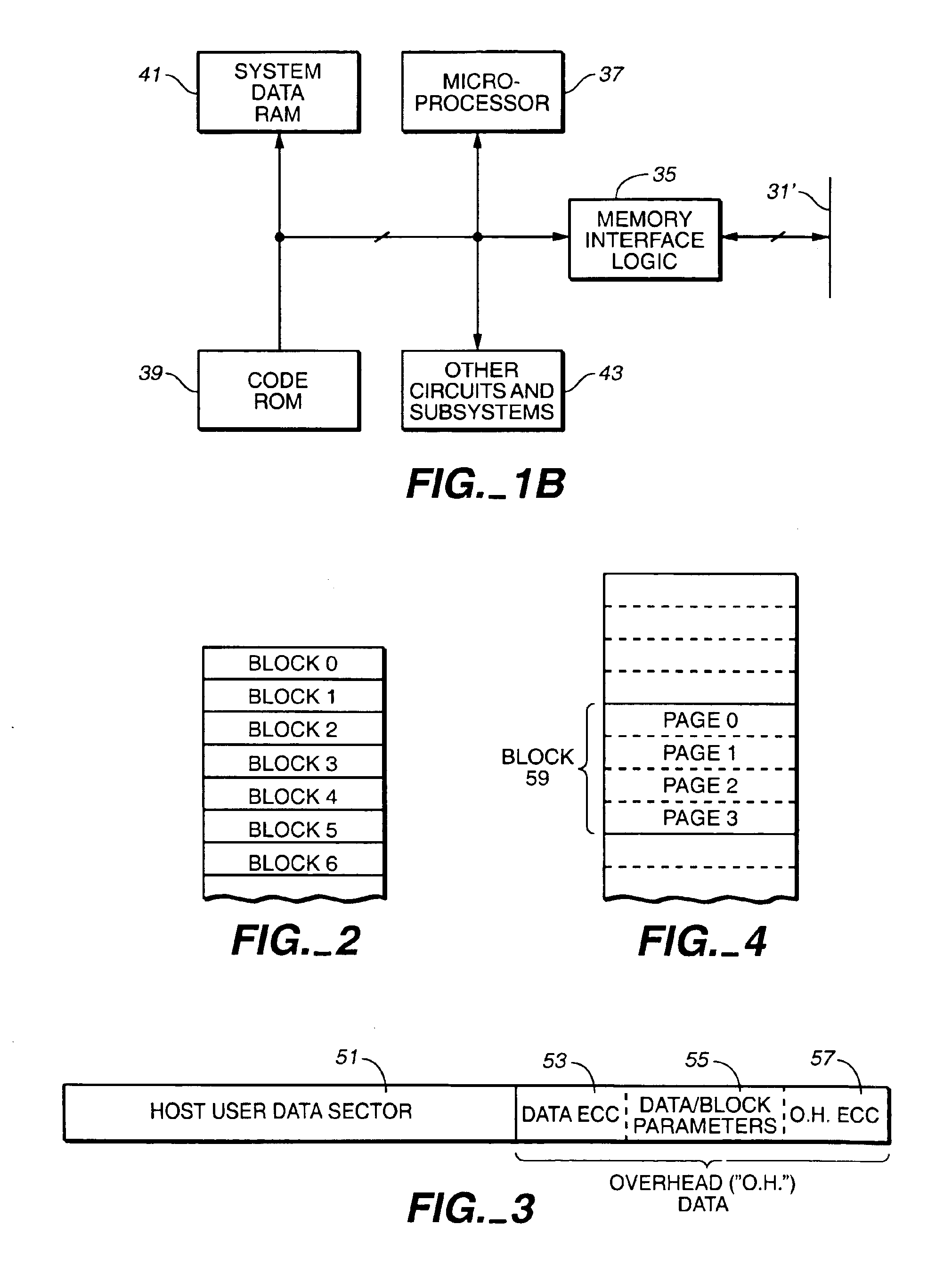

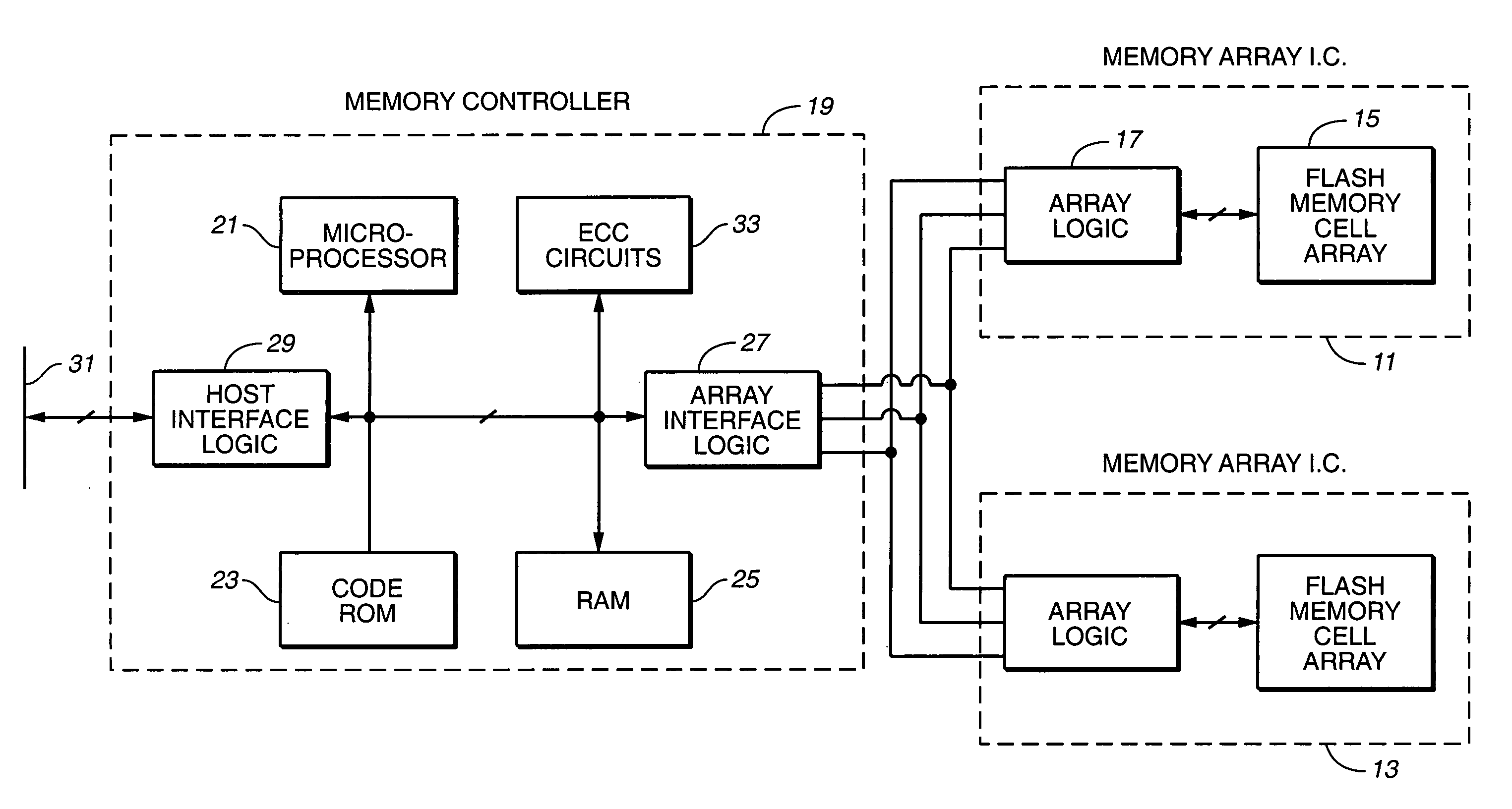

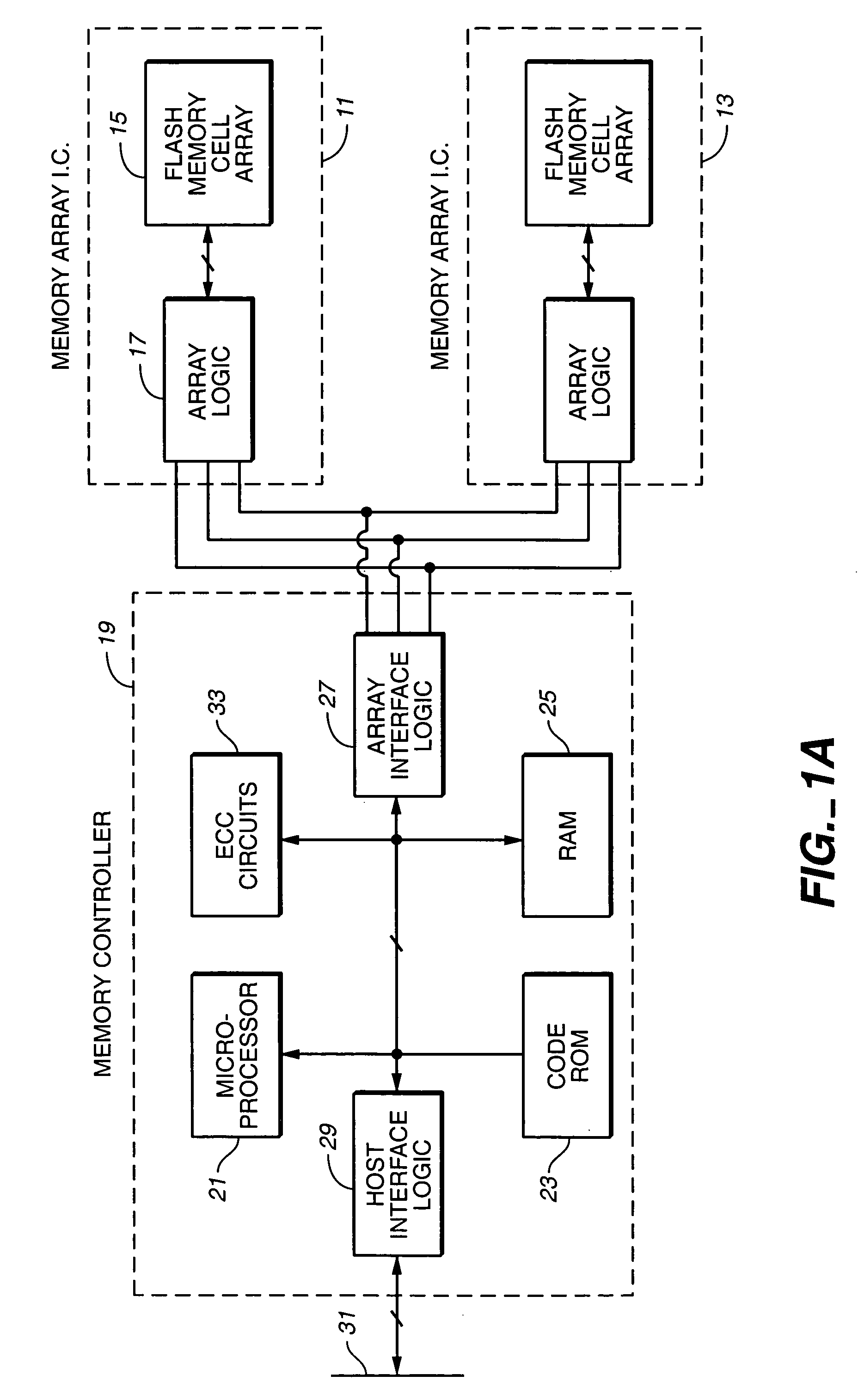

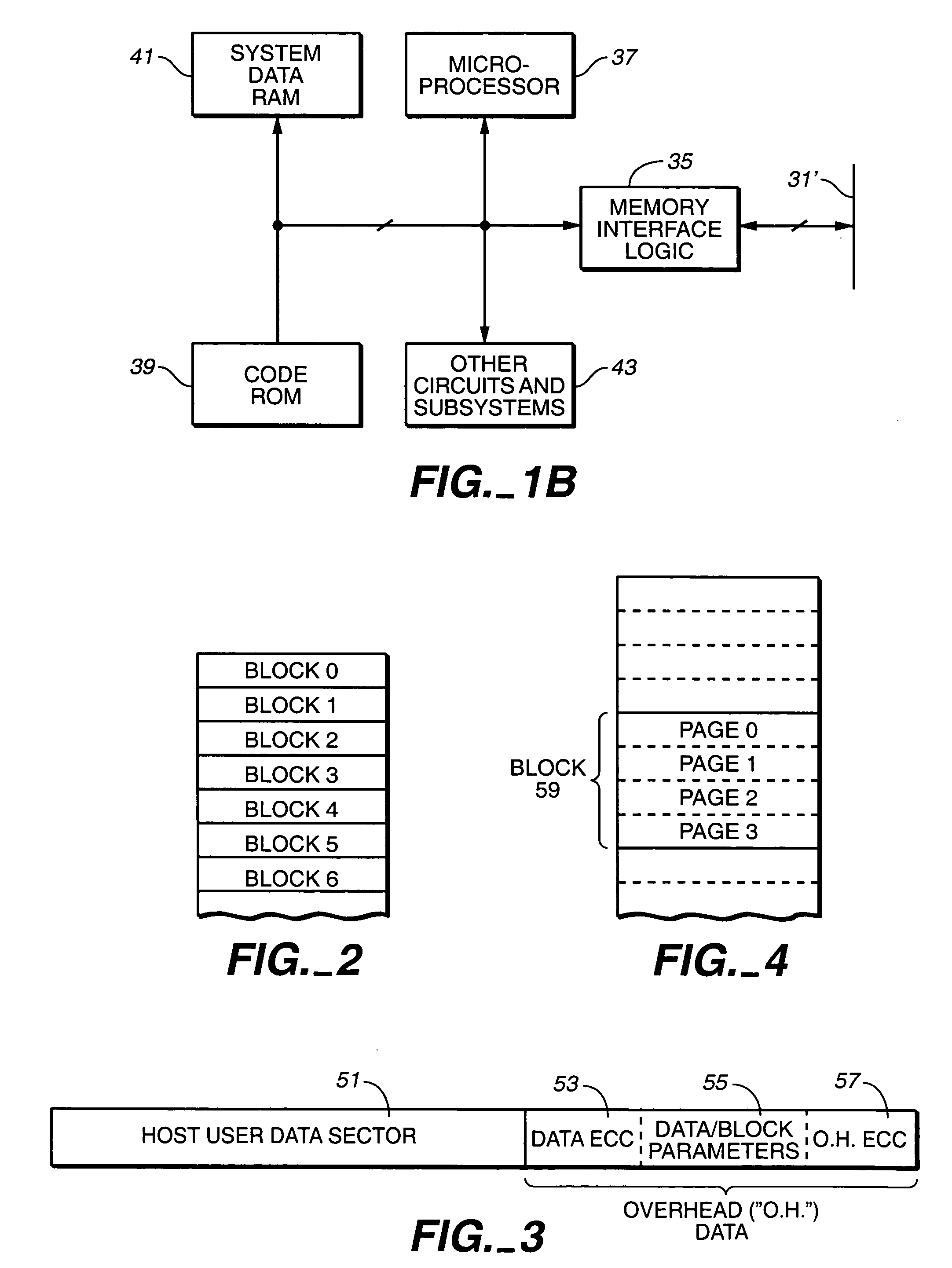

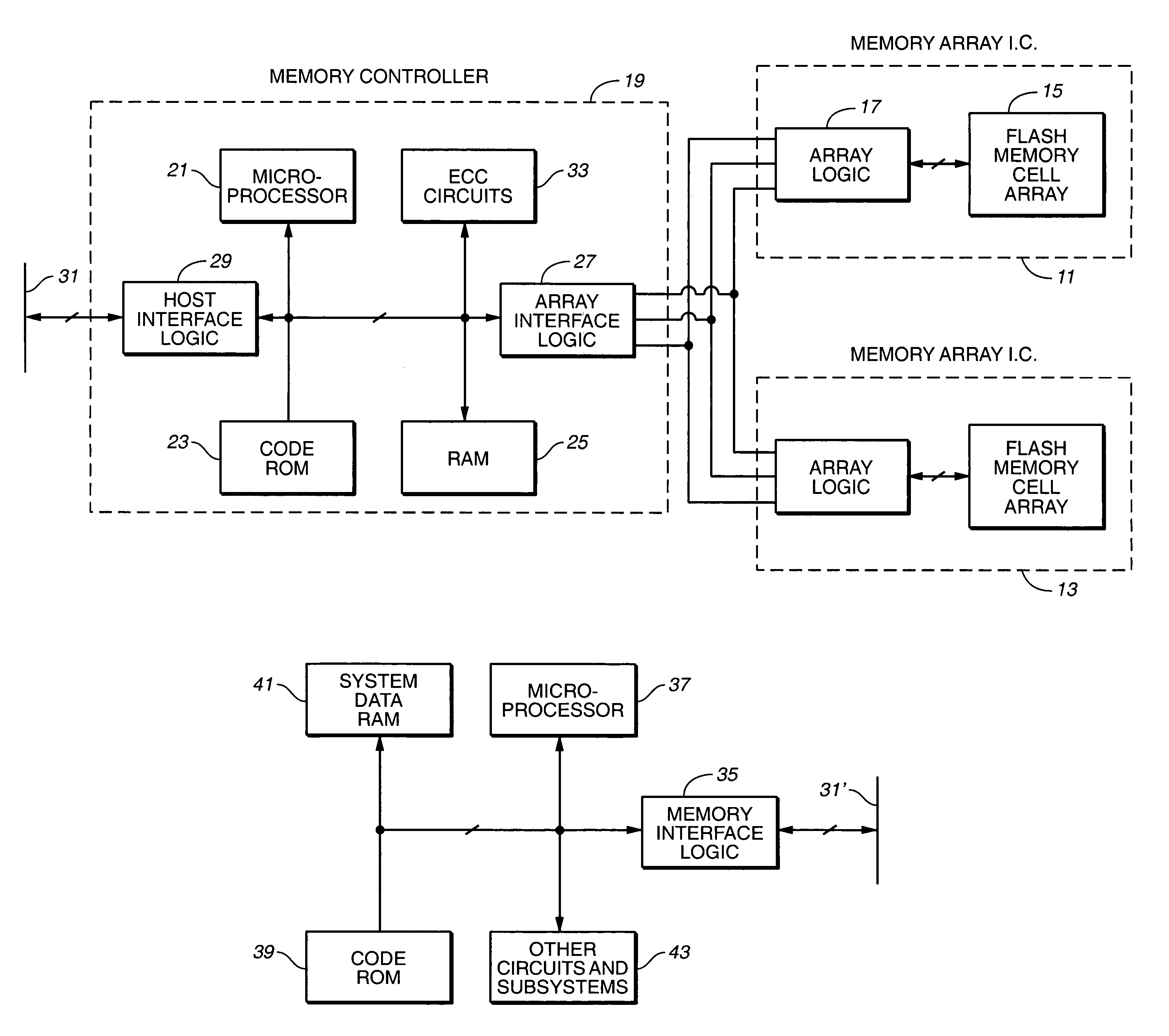

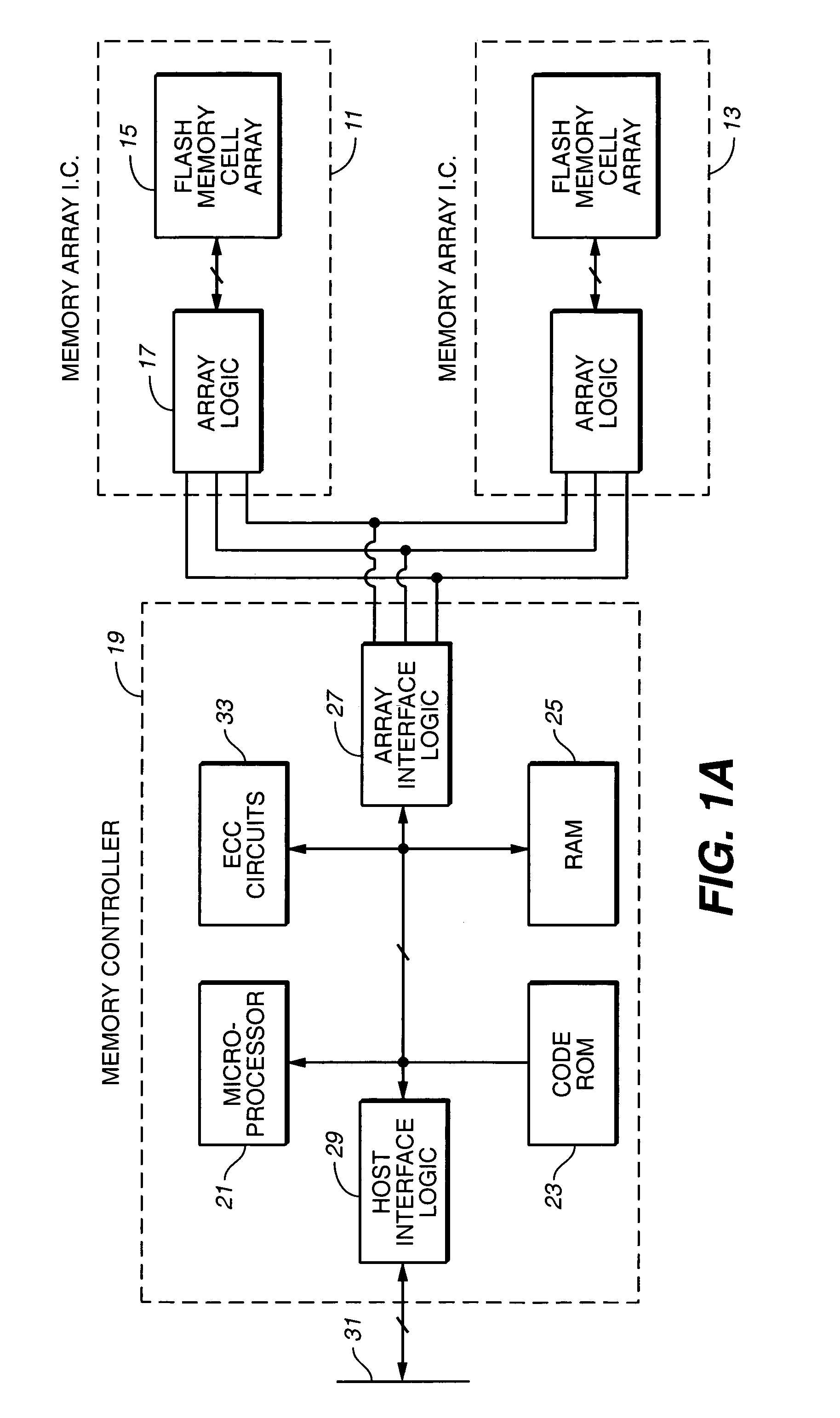

Flash memory data correction and scrub techniques

Active | US7012835B2 | Data disturbance | Reduce storage data | Memory loss protection | Read-only memories | Data integrity | Data storing

In order to maintain the integrity of data stored in a flash memory that are susceptible to being disturbed by operations in adjacent regions of the memory, disturb events cause the data to be read, corrected and re-written before becoming so corrupted that valid data cannot be recovered. The sometimes conflicting needs to maintain data integrity and system performance are balanced by deferring execution of some of the corrective action when the memory system has other high priority operations to perform. In a memory system utilizing very large units of erase, the corrective process is executed in a manner that is consistent with efficiently rewriting an amount of data much less than the capacity of a unit of erase.

Owner:SANDISK TECH LLC

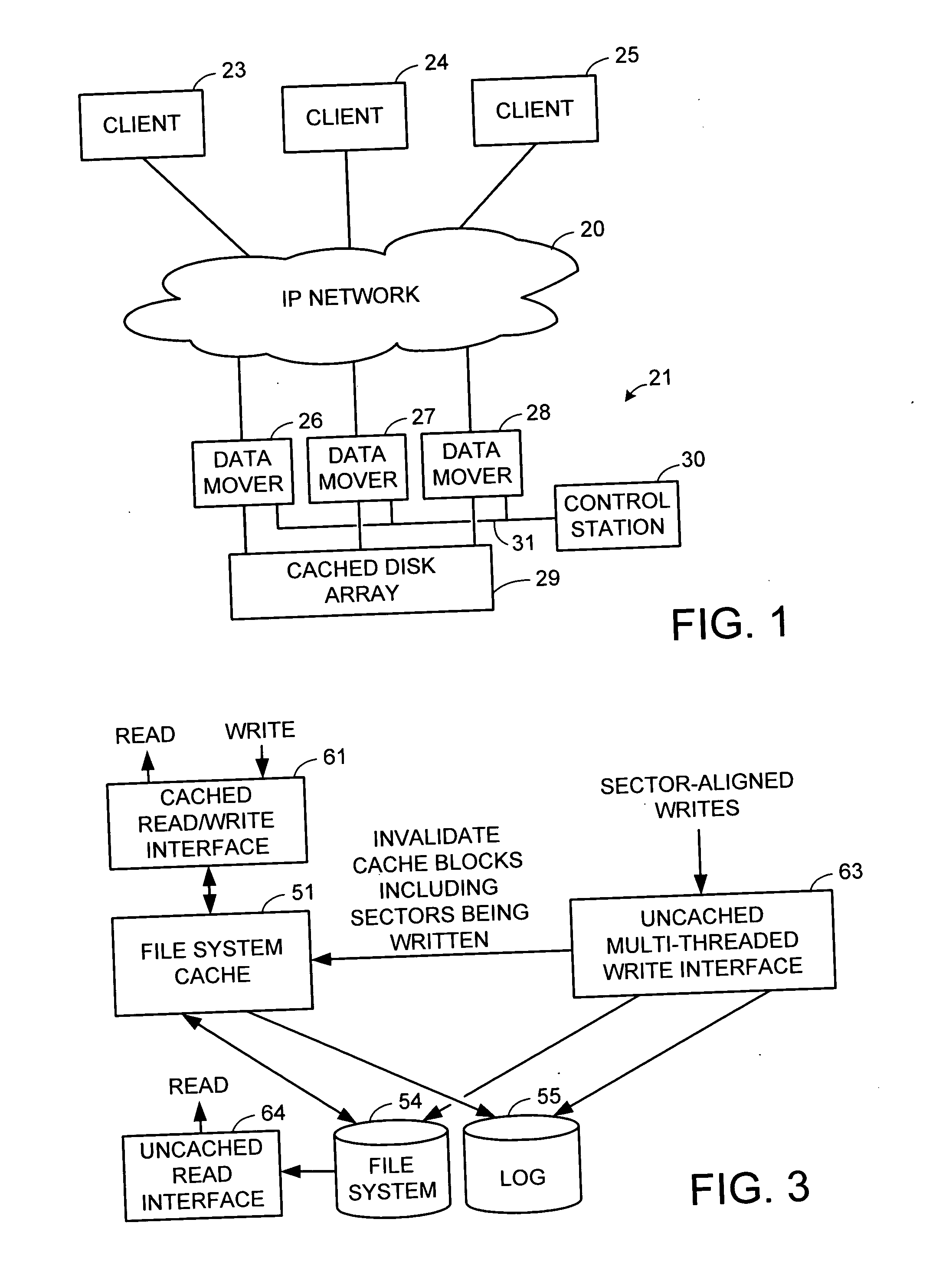

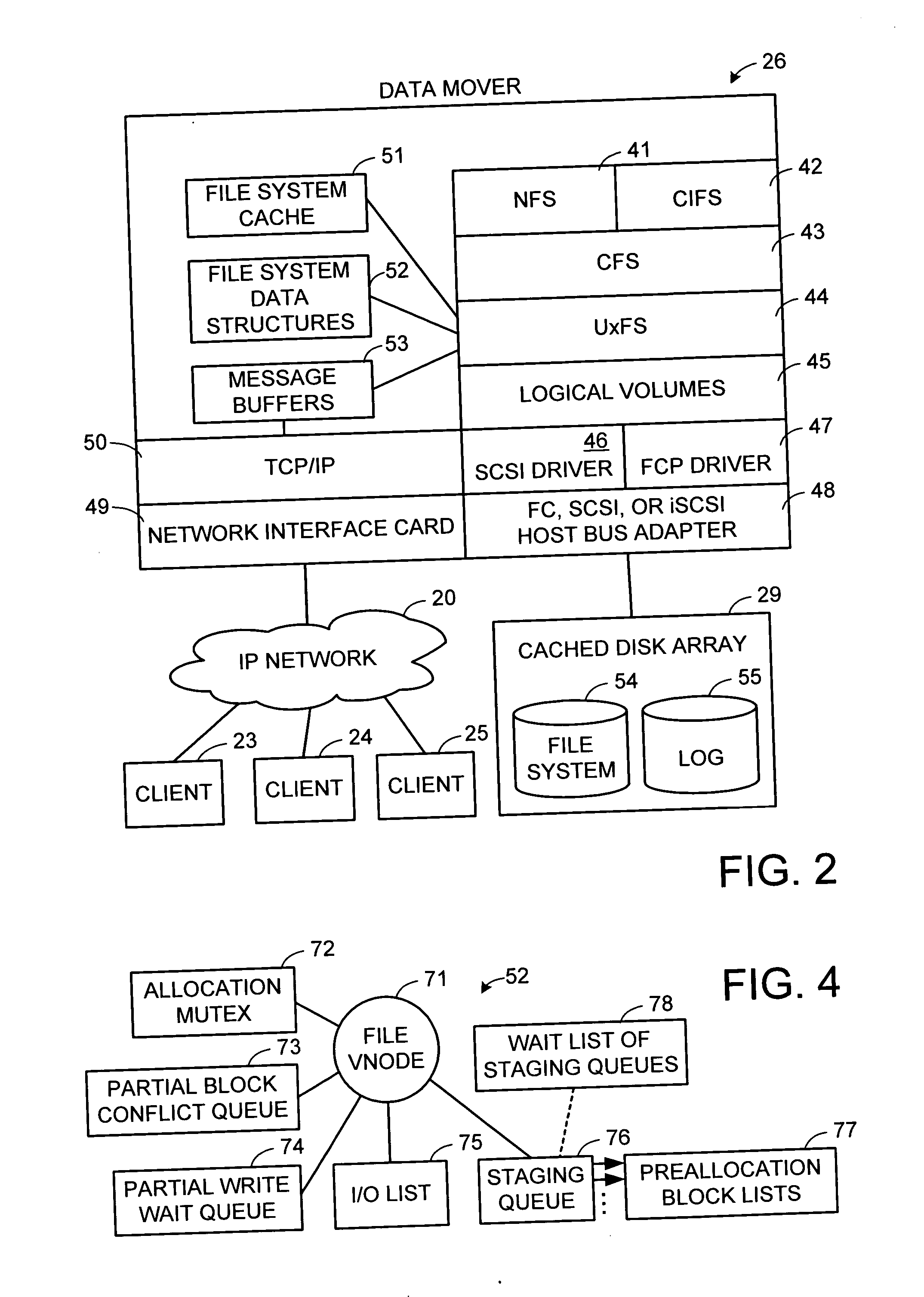

Multi-threaded write interface and methods for increasing the single file read and write throughput of a file server

Active | US20050066095A1 | Digital data information retrieval | Digital data processing details | Data integrity | File allocation

A write interface in a file server provides permission management for concurrent access to data blocks of a file, ensures correct use and update of indirect blocks in a tree of the file, preallocates file blocks when the file is extended, solves access conflicts for concurrent reads and writes to the same block, and permits the use of pipelined processors. For example, a write operation includes obtaining a per file allocation mutex (mutually exclusive lock), preallocating a metadata block, releasing the allocation mutex, issuing an asynchronous write request for writing to the file, waiting for the asynchronous write request to complete, obtaining the allocation mutex, committing the preallocated metadata block, and releasing the allocation mutex. Since no locks are held during the writing of data to the on-disk storage and this data write takes the majority of the time, the method enhances concurrency while maintaining data integrity.

Owner:EMC IP HLDG CO LLC
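
The write sequence listed in this abstract (lock, preallocate, unlock, asynchronous write, wait, lock, commit, unlock) can be sketched roughly as follows. This is an illustrative toy, not the file server's code: the class `FileSketch`, its in-memory `disk` dictionary, and the thread pool standing in for asynchronous I/O are all assumptions made for the example.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class FileSketch:
    """Toy model of one file with a per-file allocation mutex (hypothetical)."""

    def __init__(self) -> None:
        self.alloc_mutex = threading.Lock()
        self.preallocated: set[int] = set()   # metadata reserved but not committed
        self.committed: dict[int, int] = {}   # block number -> committed length
        self.disk: dict[int, bytes] = {}      # stand-in for on-disk storage
        self._io = ThreadPoolExecutor(max_workers=4)

    def _write_to_disk(self, block_no: int, data: bytes) -> None:
        self.disk[block_no] = data

    def write(self, block_no: int, data: bytes) -> None:
        # 1. Take the allocation mutex only long enough to preallocate metadata.
        with self.alloc_mutex:
            self.preallocated.add(block_no)
        # 2. Issue the data write with no lock held, then wait for it to finish.
        future = self._io.submit(self._write_to_disk, block_no, data)
        future.result()
        # 3. Retake the mutex to commit the preallocated metadata.
        with self.alloc_mutex:
            self.preallocated.discard(block_no)
            self.committed[block_no] = len(data)


if __name__ == "__main__":
    f = FileSketch()
    writers = [threading.Thread(target=f.write, args=(i, bytes([i]) * 4)) for i in range(8)]
    for t in writers:
        t.start()
    for t in writers:
        t.join()
    print(sorted(f.committed))
```

The point of the ordering is that the slow step, the data write, runs outside the critical section, so concurrent writers only contend for the short metadata updates.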

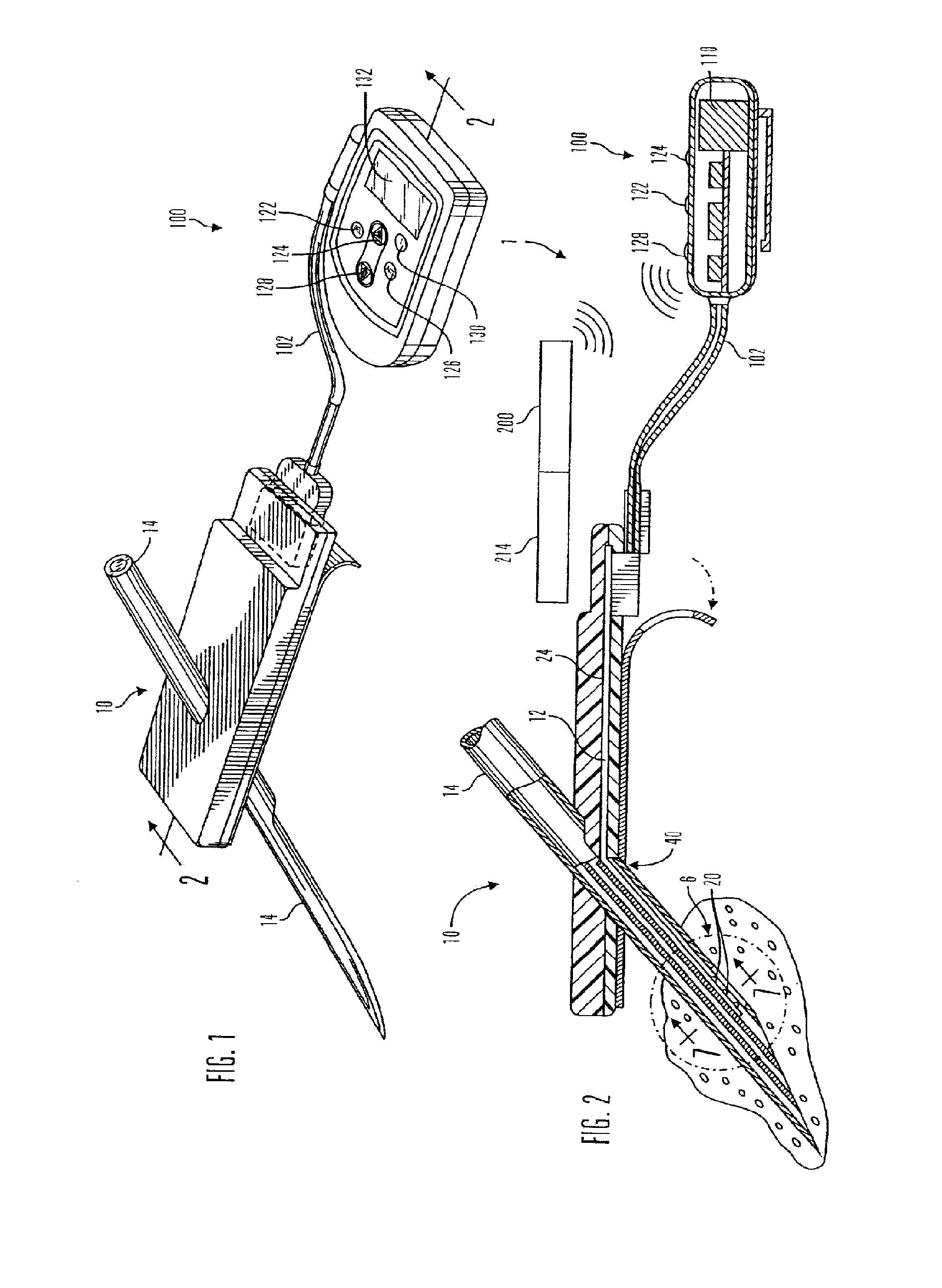

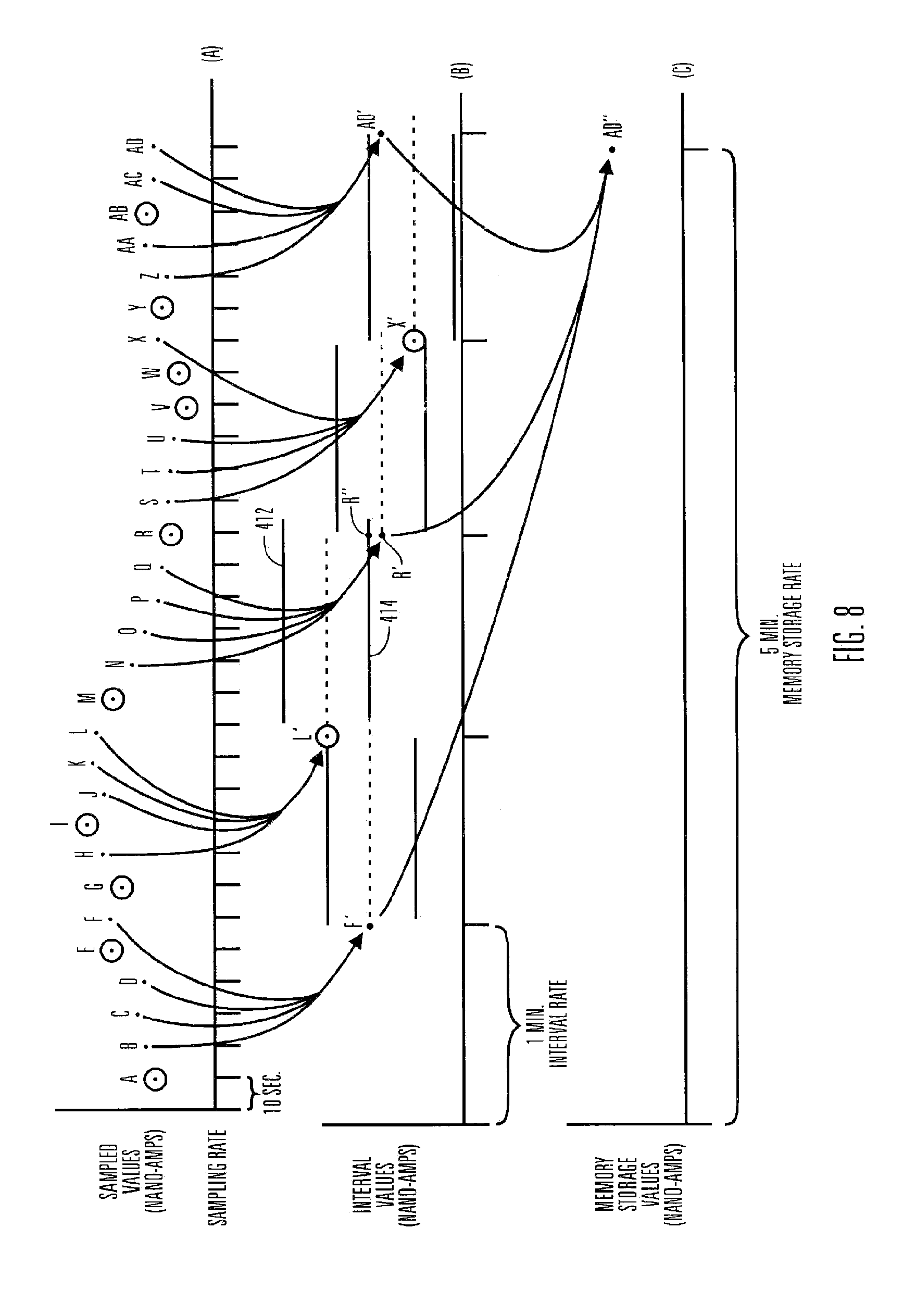

Real time self-adjusting calibration algorithm

A method of calibrating glucose monitor data includes collecting the glucose monitor data over a period of time at predetermined intervals. It also includes obtaining at least two reference glucose values from a reference source that temporally correspond with the glucose monitor data obtained at the predetermined intervals. Also included is calculating the calibration characteristics using the reference glucose values and corresponding glucose monitor data to regress the obtained glucose monitor data. And, calibrating the obtained glucose monitor data using the calibration characteristics is included. In preferred embodiments, the reference source is a blood glucose meter, and the at least two reference glucose values are obtained from blood tests. In additional embodiments, calculation of the calibration characteristics includes linear regression and, in particular embodiments, least squares linear regression. Alternatively, calculation of the calibration characteristics includes non-linear regression. Data integrity may be verified and the data may be filtered.

Owner:MEDTRONIC MINIMED INC
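
As a rough illustration of the least squares linear regression mentioned in this abstract, the sketch below fits a slope and offset from paired sensor and reference values and applies them to new readings. The function names and the sample numbers are invented for the example; the patent's actual calibration characteristics, filtering, and integrity checks are not reproduced here.

```python
def fit_calibration(sensor: list[float], reference: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of reference = slope * sensor + offset."""
    n = len(sensor)
    if n < 2 or n != len(reference):
        raise ValueError("need at least two paired values")
    mean_x = sum(sensor) / n
    mean_y = sum(reference) / n
    sxx = sum((x - mean_x) ** 2 for x in sensor)
    if sxx == 0:
        raise ValueError("sensor values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sensor, reference))
    slope = sxy / sxx
    offset = mean_y - slope * mean_x
    return slope, offset


def calibrate(readings: list[float], slope: float, offset: float) -> list[float]:
    """Apply the fitted calibration to raw monitor readings."""
    return [slope * r + offset for r in readings]


if __name__ == "__main__":
    # Arbitrary illustrative numbers, not clinical data.
    sensor_at_reference_times = [10.0, 20.0, 30.0]
    reference_values = [50.0, 100.0, 150.0]
    slope, offset = fit_calibration(sensor_at_reference_times, reference_values)
    print(calibrate([15.0, 25.0], slope, offset))
```

At least two reference values are required because a line has two free parameters, which matches the abstract's requirement of at least two reference glucose values.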

Systems and methods for processing data flows

Inactive | US20080229415A1 | Easy to detect | Preventing data flow | Memory loss protection | Error detection/correction | Data integrity | Data stream

A flow processing facility, which uses a set of artificial neurons for pattern recognition, such as a self-organizing map, in order to provide security and protection to a computer or computer system supports unified threat management based at least in part on patterns relevant to a variety of types of threats that relate to computer systems, including computer networks. Flow processing for switching, security, and other network applications, including a facility that processes a data flow to address patterns relevant to a variety of conditions are directed at internal network security, virtualization, and web connection security. A flow processing facility for inspecting payloads of network traffic packets detects security threats and intrusions across accessible layers of the IP-stack by applying content matching and behavioral anomaly detection techniques based on regular expression matching and self-organizing maps. Exposing threats and intrusions within packet payload at or near real-time rates enhances network security from both external and internal sources while ensuring security policy is rigorously applied to data and system resources. Intrusion Detection and Protection (IDP) is provided by a flow processing facility that processes a data flow to address patterns relevant to a variety of types of network and data integrity threats that relate to computer systems, including computer networks.

Owner:BLUE COAT SYSTEMS

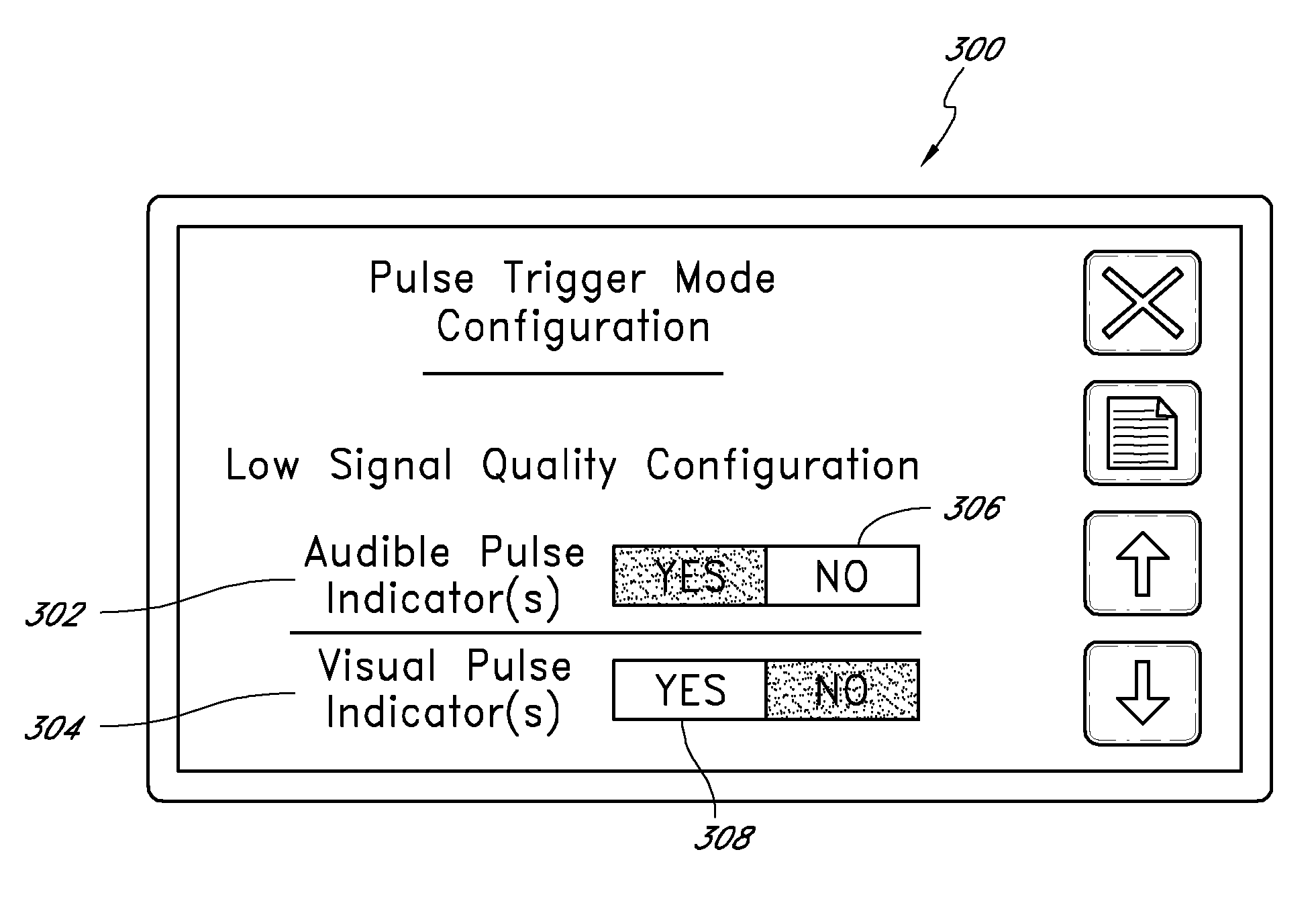

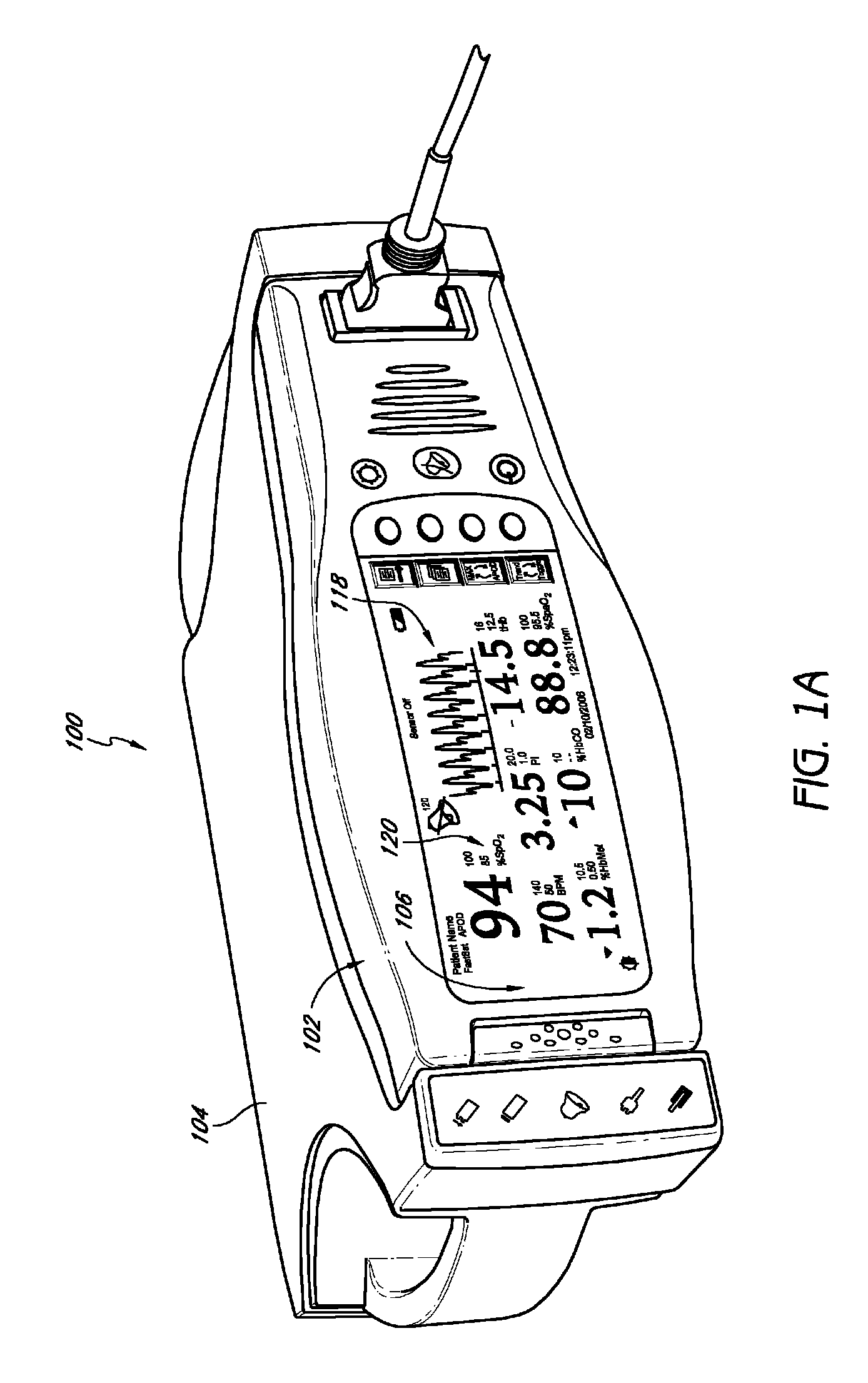

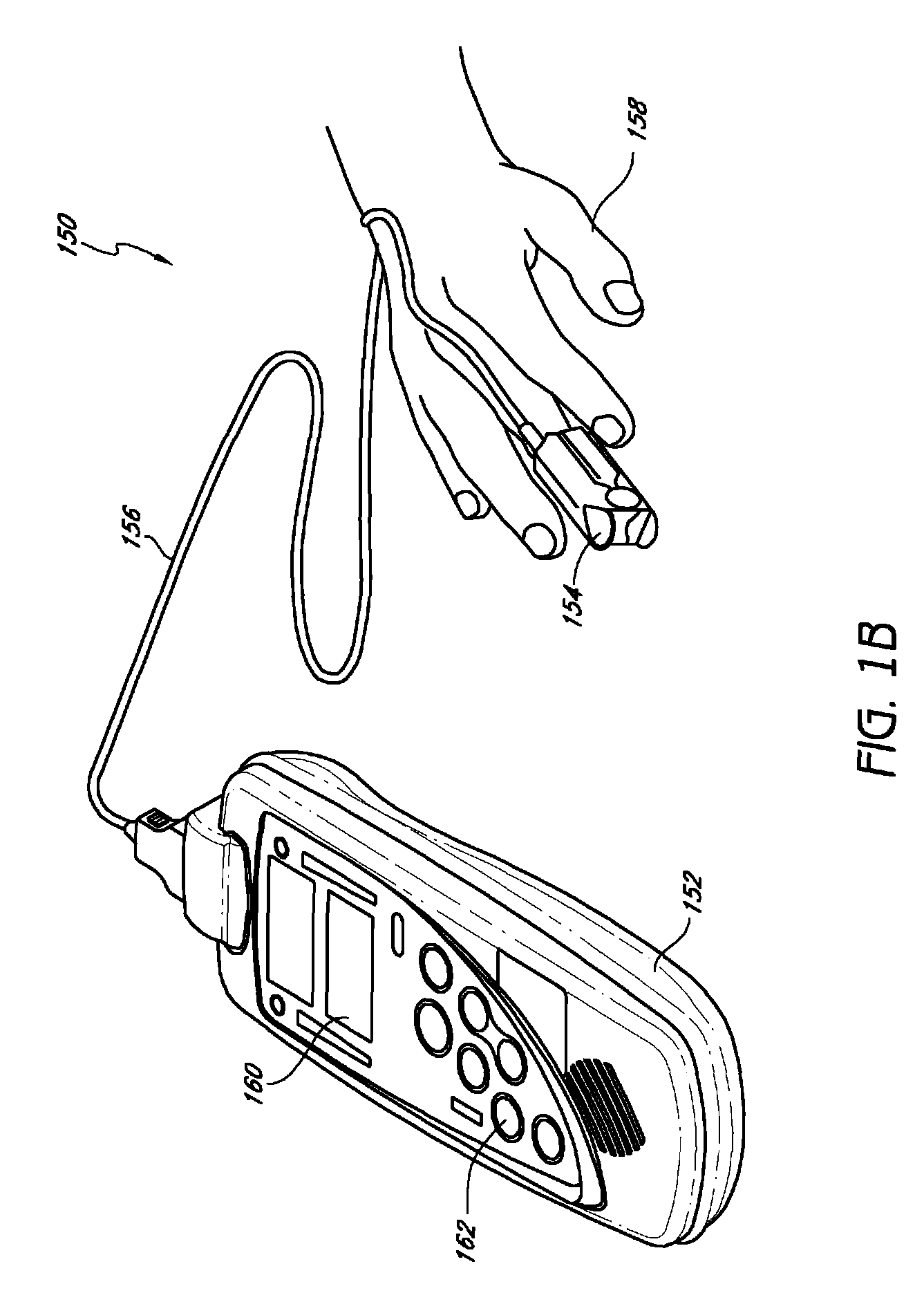

Variable mode pulse indicator

A user-configurable variable mode pulse indicator provides a user the ability to influence outputs indicative of a pulse occurrence at least during distortion or high-noise events. For example, when configured to provide or trigger pulse indication outputs, a pulse indicator designates the occurrence of each pulse in a pulse oximeter-derived photo-plethysmograph waveform, through waveform analysis or some statistical measure of the pulse rate, such as an averaged pulse rate. When configured to block outputs or not trigger pulse indication outputs, a pulse indicator disables the output for one or more of an audio or visual pulse occurrence indication. The outputs can be used to initiate an audible tone “beep” or a visual pulse indication on a display, such as a vertical spike on a horizontal trace or a corresponding indication on a bar display. The amplitude output is used to indicate data integrity and corresponding confidence in the computed values of saturation and pulse rate. The amplitude output can vary a characteristic of the pulse indicator, such as beep volume or frequency or the height of the visual display spike.

Owner:JPMORGAN CHASE BANK NA

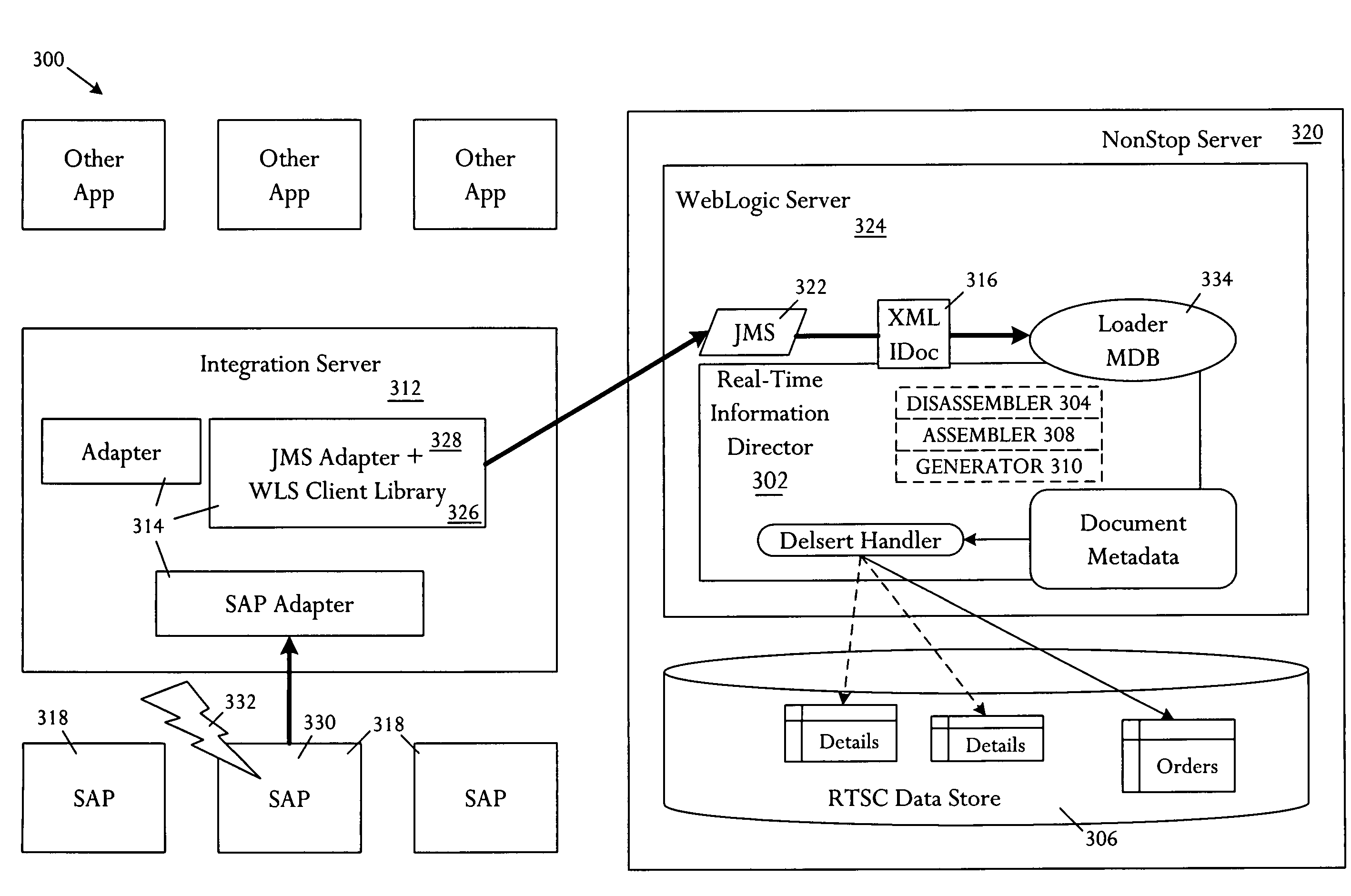

Data services handler

Active | US7313575B2 | Digital data processing details | Multiple digital computer combinations | Time information | Data integrity

A data services handler comprises an interface between a data store and applications that supply and consume data, and a real time information director (RTID) that transforms data under direction of polymorphic metadata that defines a security model and data integrity rules for application to the data.

Owner:VALTRUS INNOVATIONS LTD

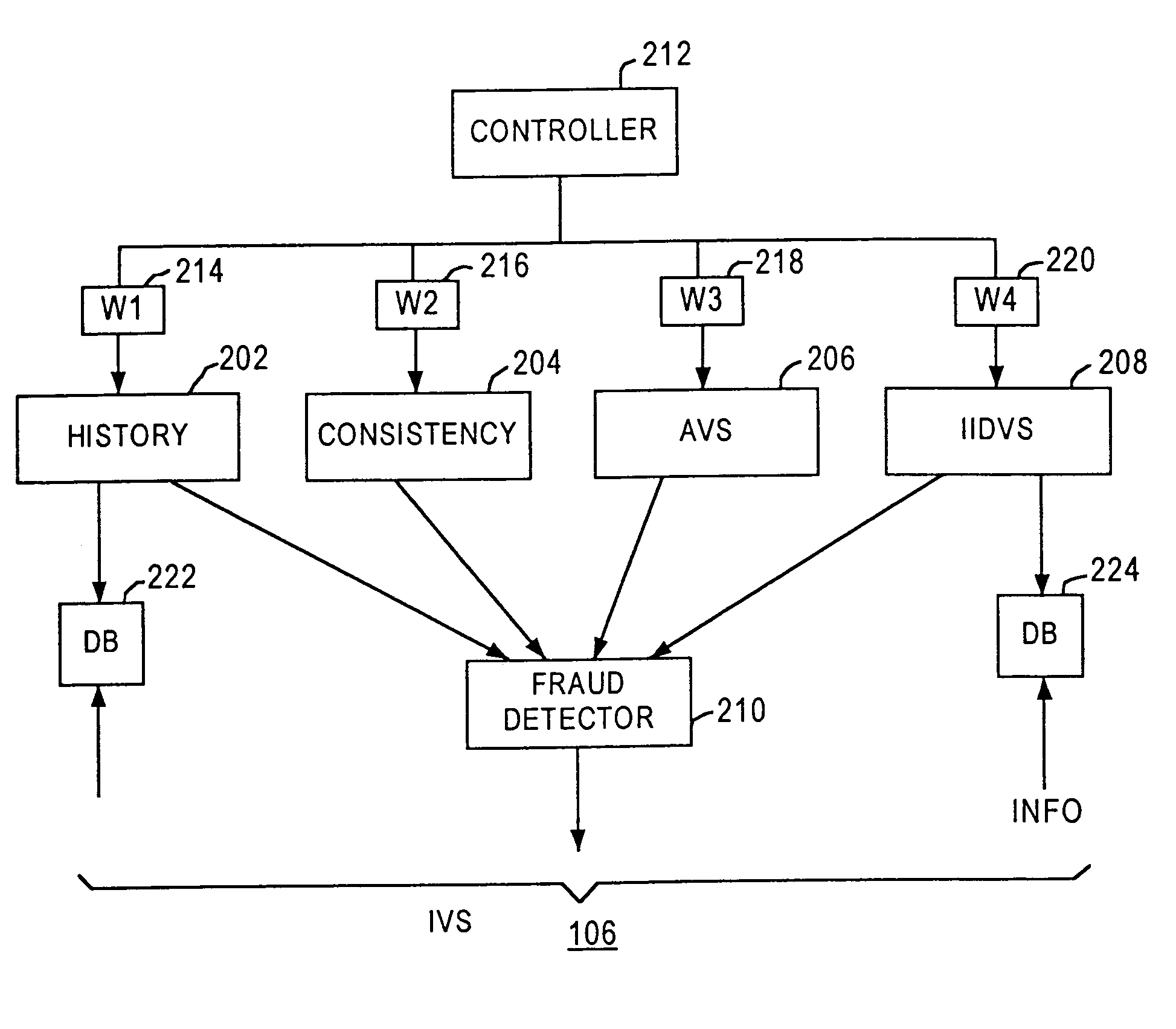

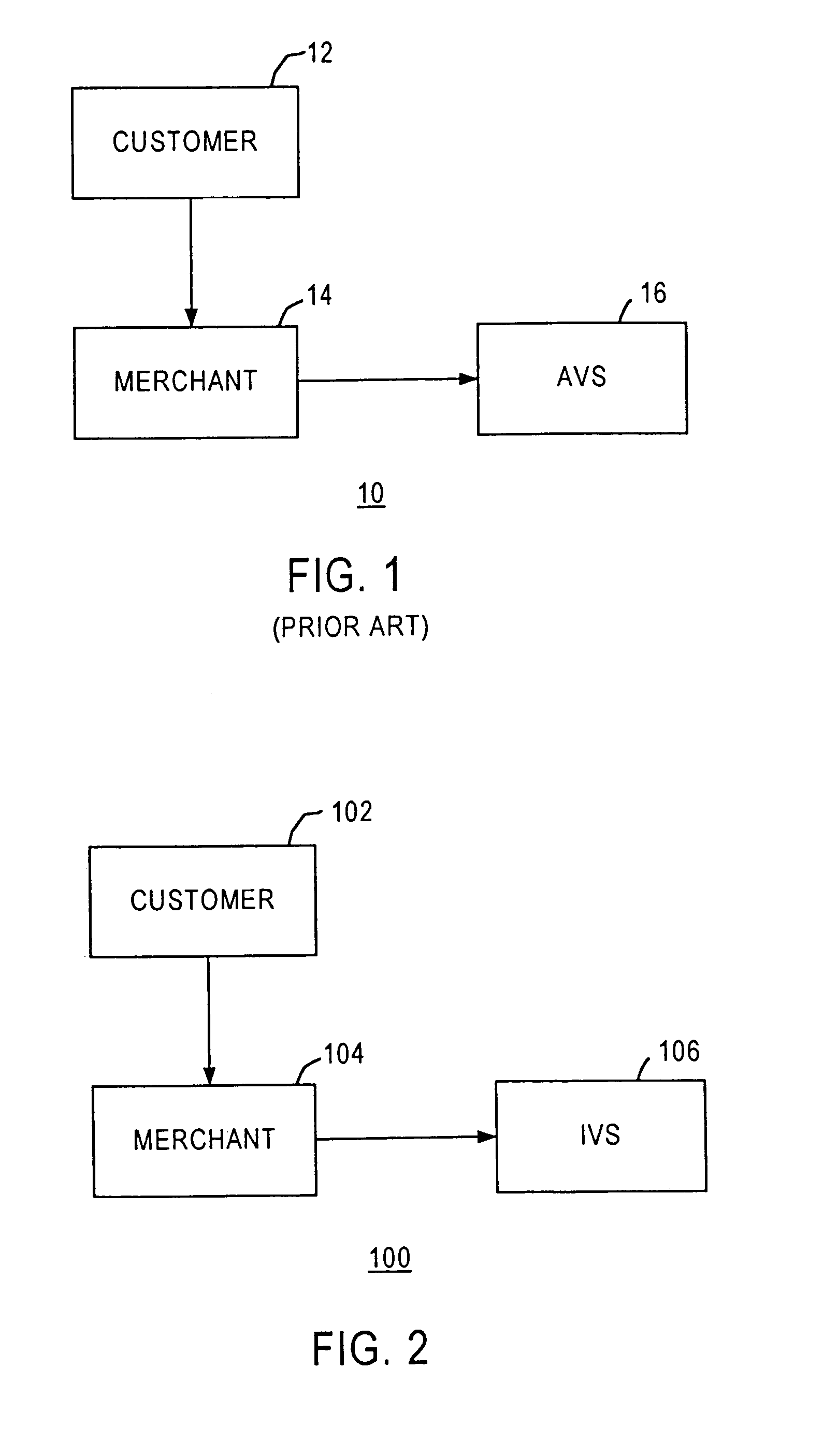

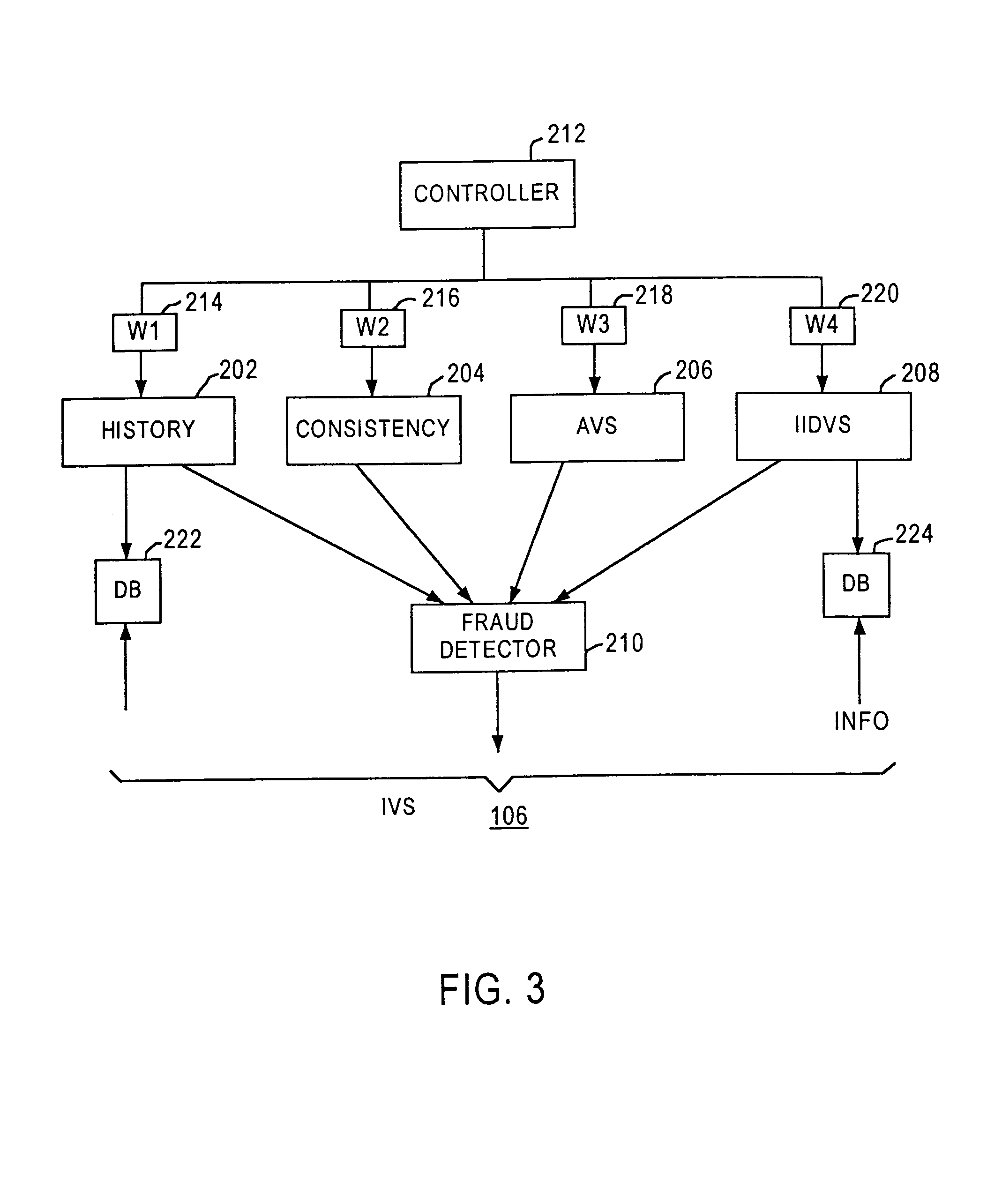

Method and apparatus for evaluating fraud risk in an electronic commerce transaction

A technique for evaluating fraud risk in e-commerce transactions between consumer and a merchant is disclosed. The merchant requests service from the system using a secure, open messaging protocol. An e-commerce transaction or electronic purchase order is received from the merchant, the level of risk associated with each order is measured, and a risk score is returned. In one embodiment, data validation, highly predictive artificial intelligence pattern matching, network data aggregation and negative file checks are used. The system performs analysis including data integrity checks and correlation analyses based on characteristics of the transaction. Other analysis includes comparison of the current transaction against known fraudulent transactions, and a search of a transaction history database to identify abnormal patterns, name and address changes, and defrauders. In one alternative, scoring algorithms are refined through use of a closed-loop risk modeling process enabling the service to adapt to new or changing fraud patterns.

Owner:CYBERSOURCE CORP

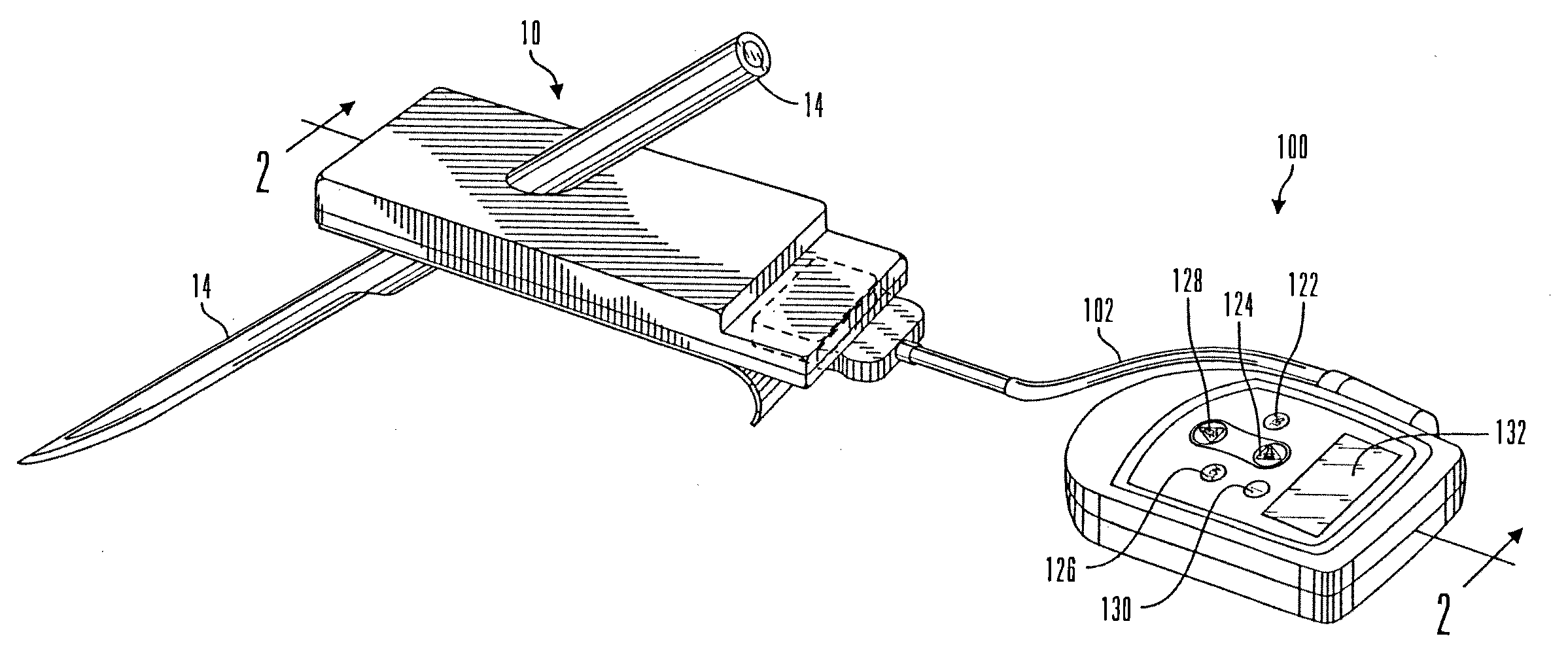

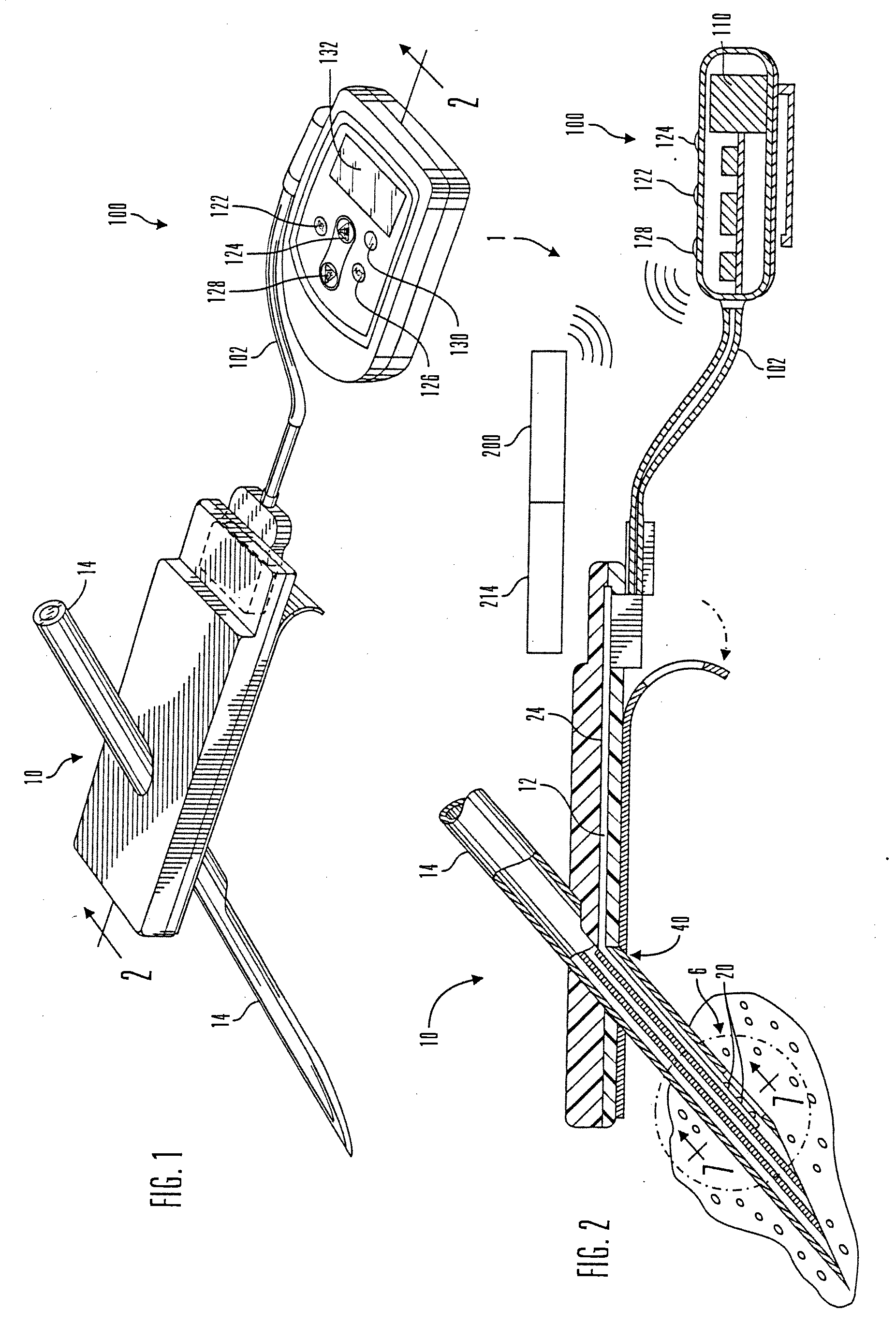

Modified Sensor Calibration Algorithm

Active | US20090112478A1 | Reduce weight | Quick change | Testing/calibration apparatus | Speed measurement using gyroscopic effects | Data integrity | Glucose polymers

A method of calibrating glucose monitor data includes collecting the glucose monitor data over a period of time at predetermined intervals, obtaining reference glucose values from a reference source that temporally correspond with the glucose monitor data obtained at the predetermined intervals, calculating the calibration characteristics using the reference glucose values and corresponding glucose monitor data to regress the obtained glucose monitor data, and calibrating the obtained glucose monitor data using the calibration characteristics. In additional embodiments, calculation of the calibration characteristics includes linear regression and, in particular embodiments, least squares linear regression. Alternatively, calculation of the calibration characteristics includes non-linear regression. Data integrity may be verified and the data may be filtered. Further, calibration techniques may be modified during a fast rate of change in the patient's blood glucose level to increase sensor accuracy.

Owner:MEDTRONIC MINIMED INC

Systems and methods for processing data flows

Inactive | US20080262991A1 | Easy to detect | Preventing data flow | Digital computer details | Biological neural network models | Data integrity | Data stream

A flow processing facility, which uses a set of artificial neurons for pattern recognition, such as a self-organizing map, in order to provide security and protection to a computer or computer system supports unified threat management based at least in part on patterns relevant to a variety of types of threats that relate to computer systems, including computer networks. Flow processing for switching, security, and other network applications, including a facility that processes a data flow to address patterns relevant to a variety of conditions are directed at internal network security, virtualization, and web connection security. A flow processing facility for inspecting payloads of network traffic packets detects security threats and intrusions across accessible layers of the IP-stack by applying content matching and behavioral anomaly detection techniques based on regular expression matching and self-organizing maps. Exposing threats and intrusions within packet payload at or near real-time rates enhances network security from both external and internal sources while ensuring security policy is rigorously applied to data and system resources. Intrusion Detection and Protection (IDP) is provided by a flow processing facility that processes a data flow to address patterns relevant to a variety of types of network and data integrity threats that relate to computer systems, including computer networks.

Owner:CA TECH INC

Systems and methods for processing data flows

Inactive | US20080134330A1 | Increased complexity | Avoid problems | Memory loss protection | Error detection/correction | Data integrity | Data stream

A flow processing facility, which uses a set of artificial neurons for pattern recognition, such as a self-organizing map, in order to provide security and protection to a computer or computer system supports unified threat management based at least in part on patterns relevant to a variety of types of threats that relate to computer systems, including computer networks. Flow processing for switching, security, and other network applications, including a facility that processes a data flow to address patterns relevant to a variety of conditions are directed at internal network security, virtualization, and web connection security. A flow processing facility for inspecting payloads of network traffic packets detects security threats and intrusions across accessible layers of the IP-stack by applying content matching and behavioral anomaly detection techniques based on regular expression matching and self-organizing maps. Exposing threats and intrusions within packet payload at or near real-time rates enhances network security from both external and internal sources while ensuring security policy is rigorously applied to data and system resources. Intrusion Detection and Protection (IDP) is provided by a flow processing facility that processes a data flow to address patterns relevant to a variety of types of network and data integrity threats that relate to computer systems, including computer networks.

Owner:BLUE COAT SYSTEMS

Flash memory data correction and scrub techniques

Active | US20050073884A1 | Data disturbance | Reduce storage data | Memory loss protection | Read-only memories | Data integrity | Data storing

In order to maintain the integrity of data stored in a flash memory that are susceptible to being disturbed by operations in adjacent regions of the memory, disturb events cause the data to be read, corrected and re-written before becoming so corrupted that valid data cannot be recovered. The sometimes conflicting needs to maintain data integrity and system performance are balanced by deferring execution of some of the corrective action when the memory system has other high priority operations to perform. In a memory system utilizing very large units of erase, the corrective process is executed in a manner that is consistent with efficiently rewriting an amount of data much less than the capacity of a unit of erase.

Owner:SANDISK TECH LLC

Systems and methods for processing data flows

Inactive | US20080262990A1 | Easy to detect | Preventing data flow | Memory loss protection | Error detection/correction | Data stream | Data integrity

A flow processing facility, which uses a set of artificial neurons for pattern recognition, such as a self-organizing map, in order to provide security and protection to a computer or computer system supports unified threat management based at least in part on patterns relevant to a variety of types of threats that relate to computer systems, including computer networks. Flow processing for switching, security, and other network applications, including a facility that processes a data flow to address patterns relevant to a variety of conditions are directed at internal network security, virtualization, and web connection security. A flow processing facility for inspecting payloads of network traffic packets detects security threats and intrusions across accessible layers of the IP-stack by applying content matching and behavioral anomaly detection techniques based on regular expression matching and self-organizing maps. Exposing threats and intrusions within packet payload at or near real-time rates enhances network security from both external and internal sources while ensuring security policy is rigorously applied to data and system resources. Intrusion Detection and Protection (IDP) is provided by a flow processing facility that processes a data flow to address patterns relevant to a variety of types of network and data integrity threats that relate to computer systems, including computer networks.

Owner:CA TECH INC

Corrected data storage and handling methods

Inactive | US7173852B2 | Data disturbance | Reduce storage data | Read-only memories | Digital storage | Data integrity | Time limit

In order to maintain the integrity of data stored in a flash memory that are susceptible to being disturbed by operations in adjacent regions of the memory, disturb events cause the data to be read, corrected and re-written before becoming so corrupted that valid data cannot be recovered. The sometimes conflicting needs to maintain data integrity and system performance are balanced by deferring execution of some of the corrective action when the memory system has other high priority operations to perform. In a memory system utilizing very large units of erase, the corrective process is executed in a manner that is consistent with efficiently rewriting an amount of data much less than the capacity of a unit of erase. Data is rewritten when severe errors are found during read operations. Portions of data are corrected and copied within the time limit for read operation. Corrected portions are written to dedicated blocks.

Owner:SANDISK TECH LLC

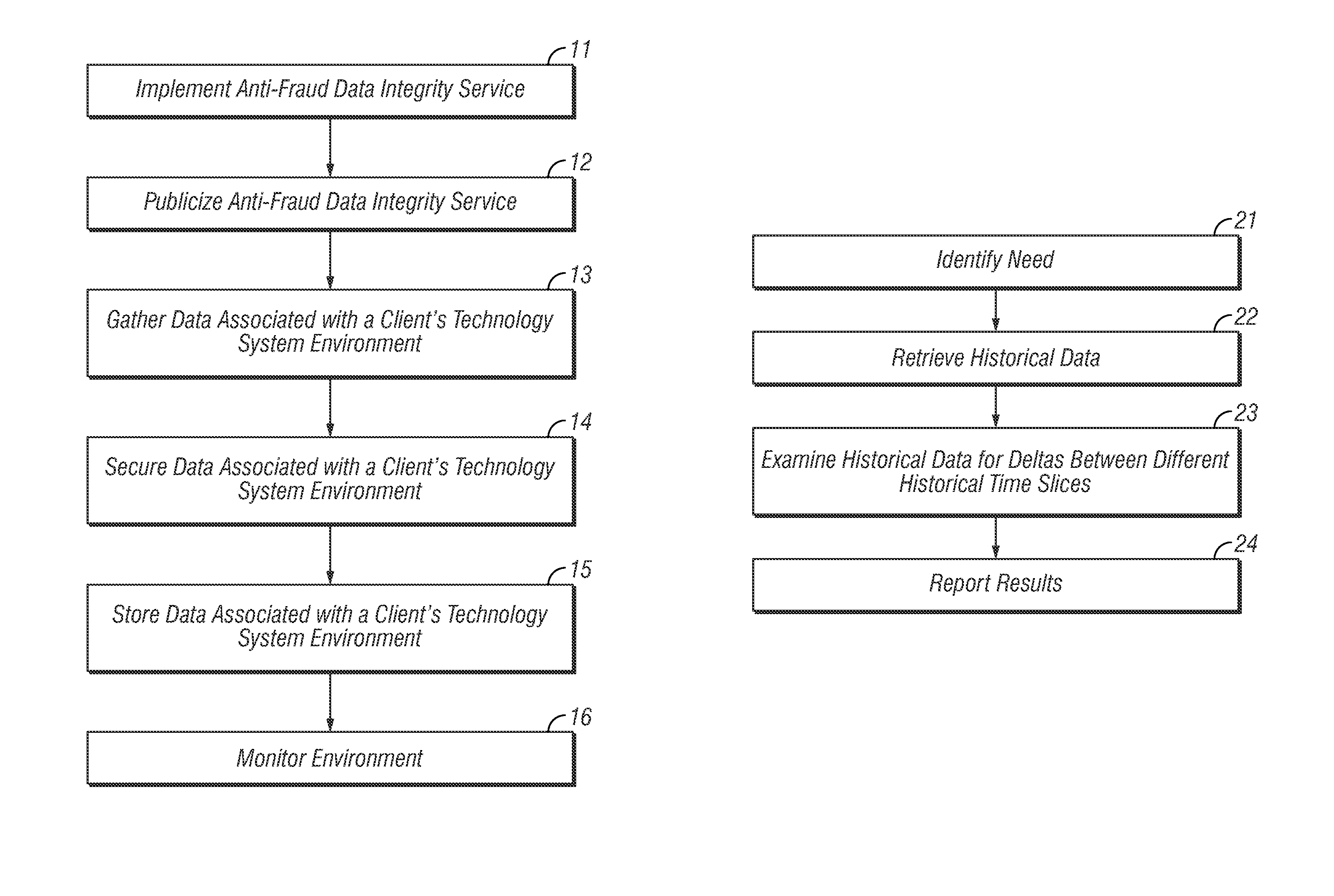

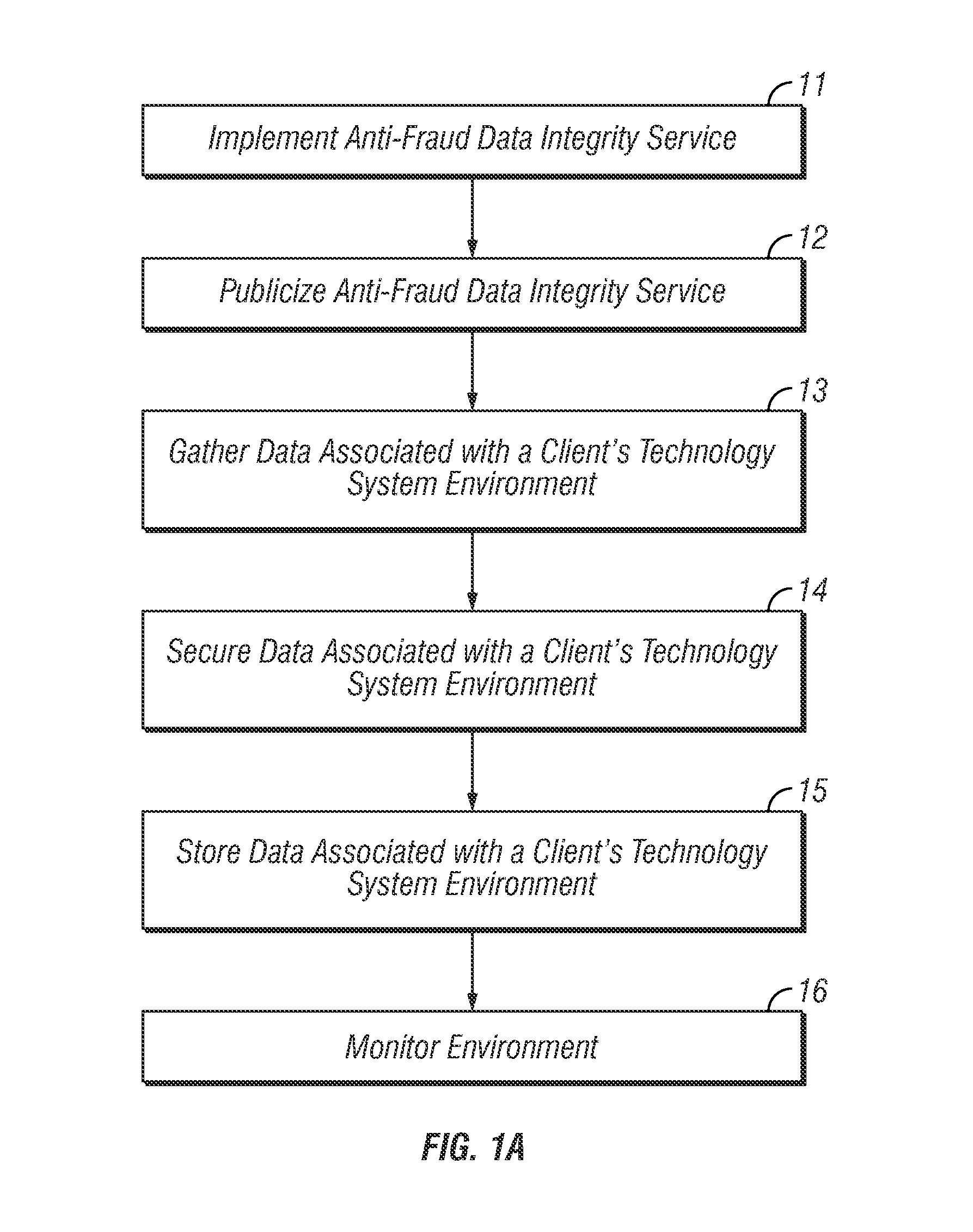

Method and apparatus for maintaining high data integrity and for providing a secure audit for fraud prevention and detection

Active | US8805925B2 | Easy to find | Reduce the burden on | Complete banking machines | Finance | Data integrity | Invoice

Owner:NBRELLA

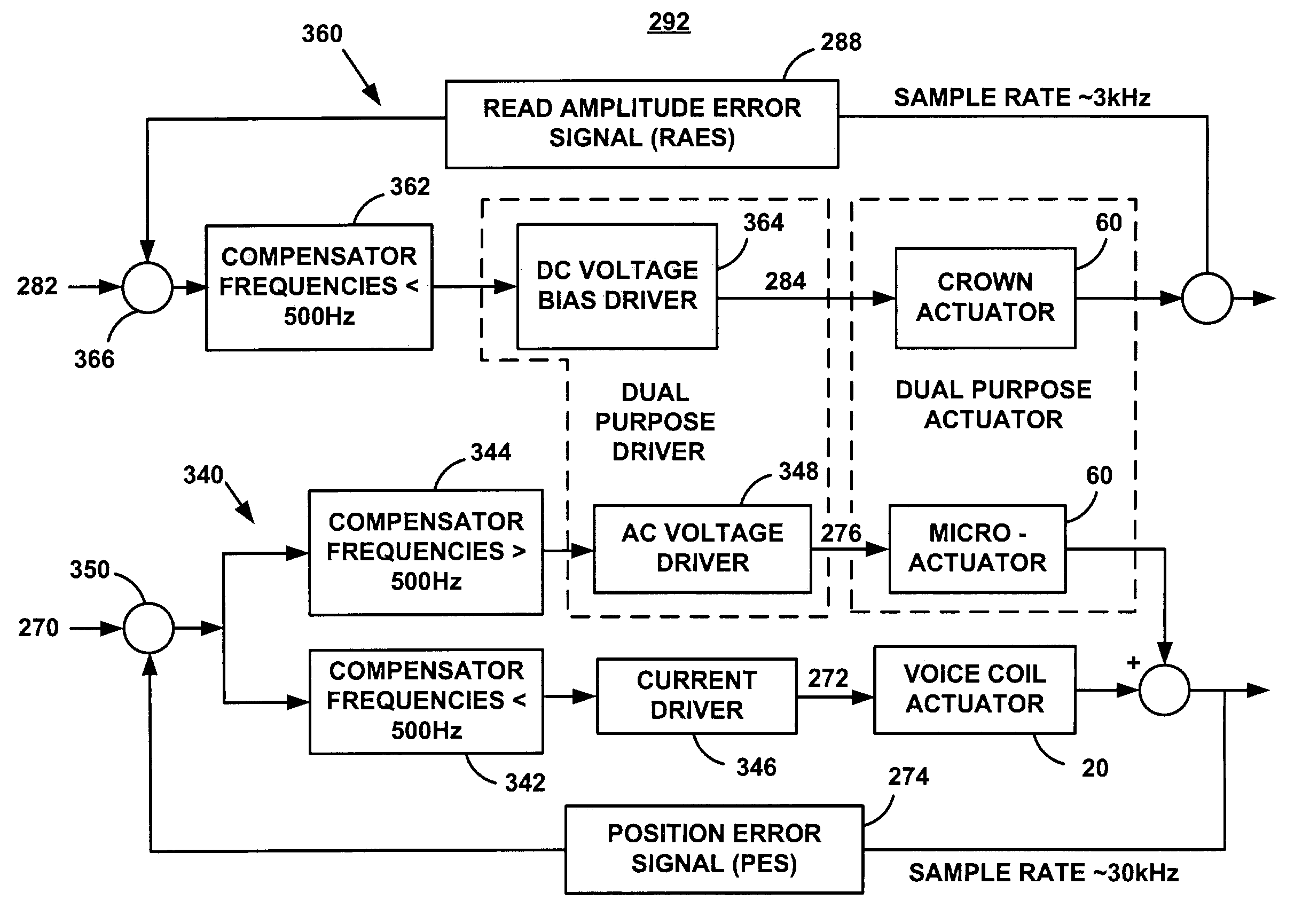

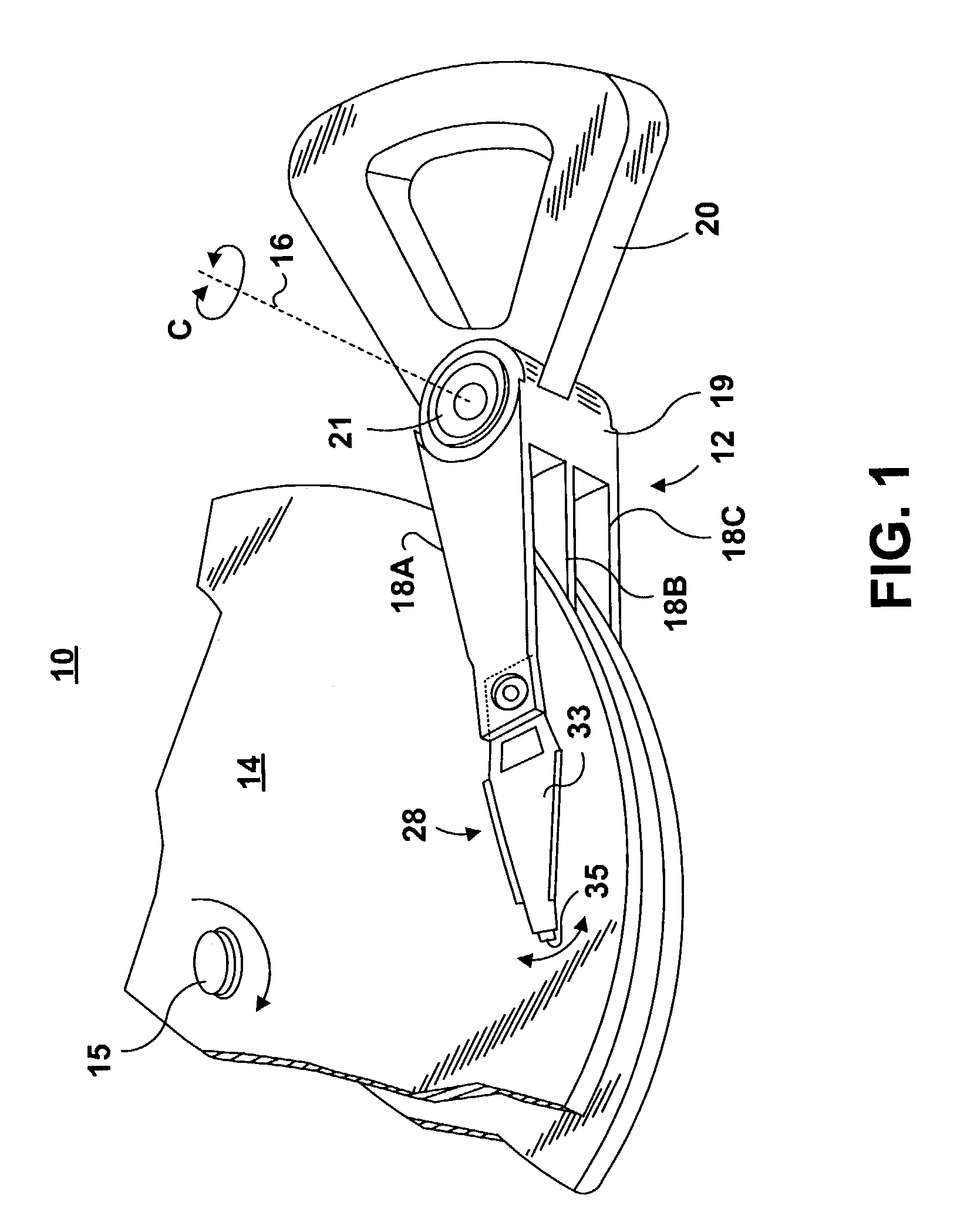

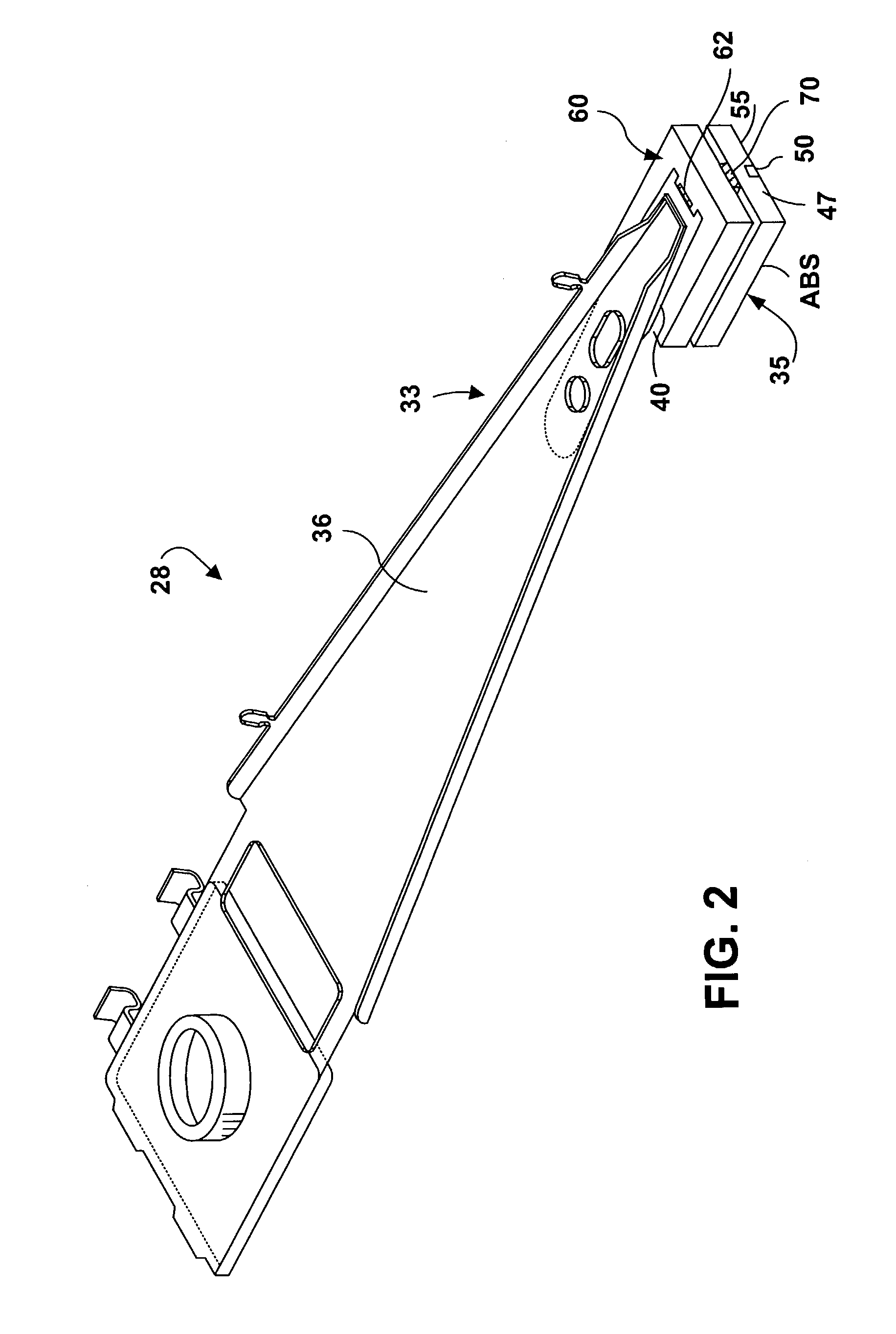

Active fly height control crown actuator

Inactive | US6950266B1 | Improve production efficiency | Reduced altitude sensitivity | Analogue recording/reproducing | Driving/moving recording heads | Data integrity | Micro actuator

A micro-actuator comprises a piezoelectric motor mounted on a flexure tongue with offsetting hinges to perform fine positioning of the magnetic read/write head. The substantial gain in the frequency response greatly improves the performance and accuracy of the track-follow control for fine positioning. The simplicity of the enhanced micro-actuator design results in a manufacturing efficiency that enables high-volume, low-cost production. The micro-actuator is interposed between a flexure tongue and a slider to perform active control of the fly height of the magnetic read/write head. The induced slider crown and camber are used to compensate for thermal expansion of the magnetic read/write head, which causes the slider to be displaced at an unintended fly height position relative to the surface of the magnetic recording disk. The enhanced micro-actuator design results in reduced altitude sensitivity, ABS tolerances, and reduced stiction. The controlled fly height of the magnetic read/write head prevents the possibility of a head crash while improving performance and data integrity.

Owner:WESTERN DIGITAL TECH INC

Data services handler

A data services handler comprises an interface between a data store and applications that supply and consume data, and a real time information director (RTID) that transforms data under direction of polymorphic metadata that defines a security model and data integrity rules for application to the data.

Owner:VALTRUS INNOVATIONS LTD

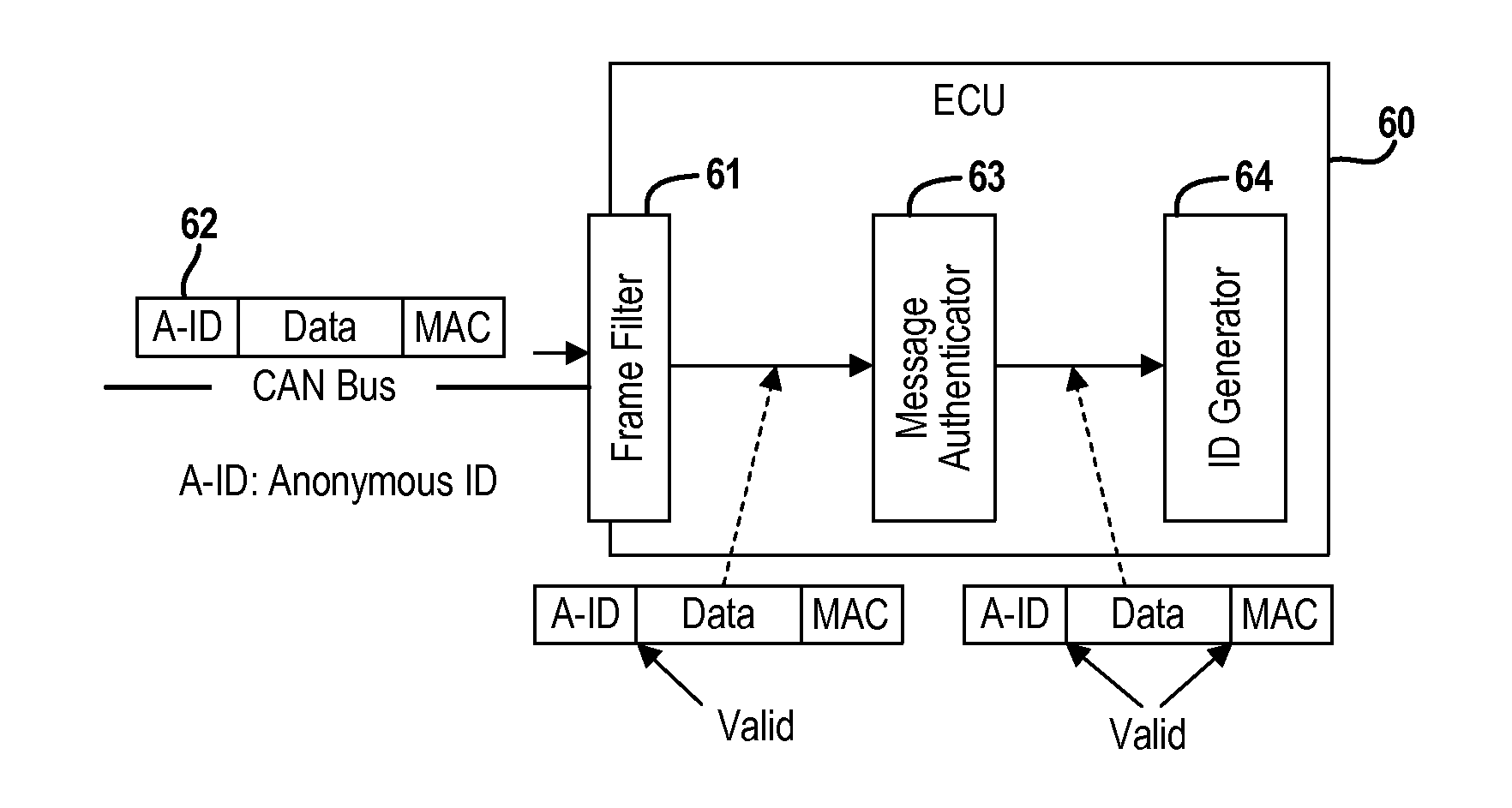

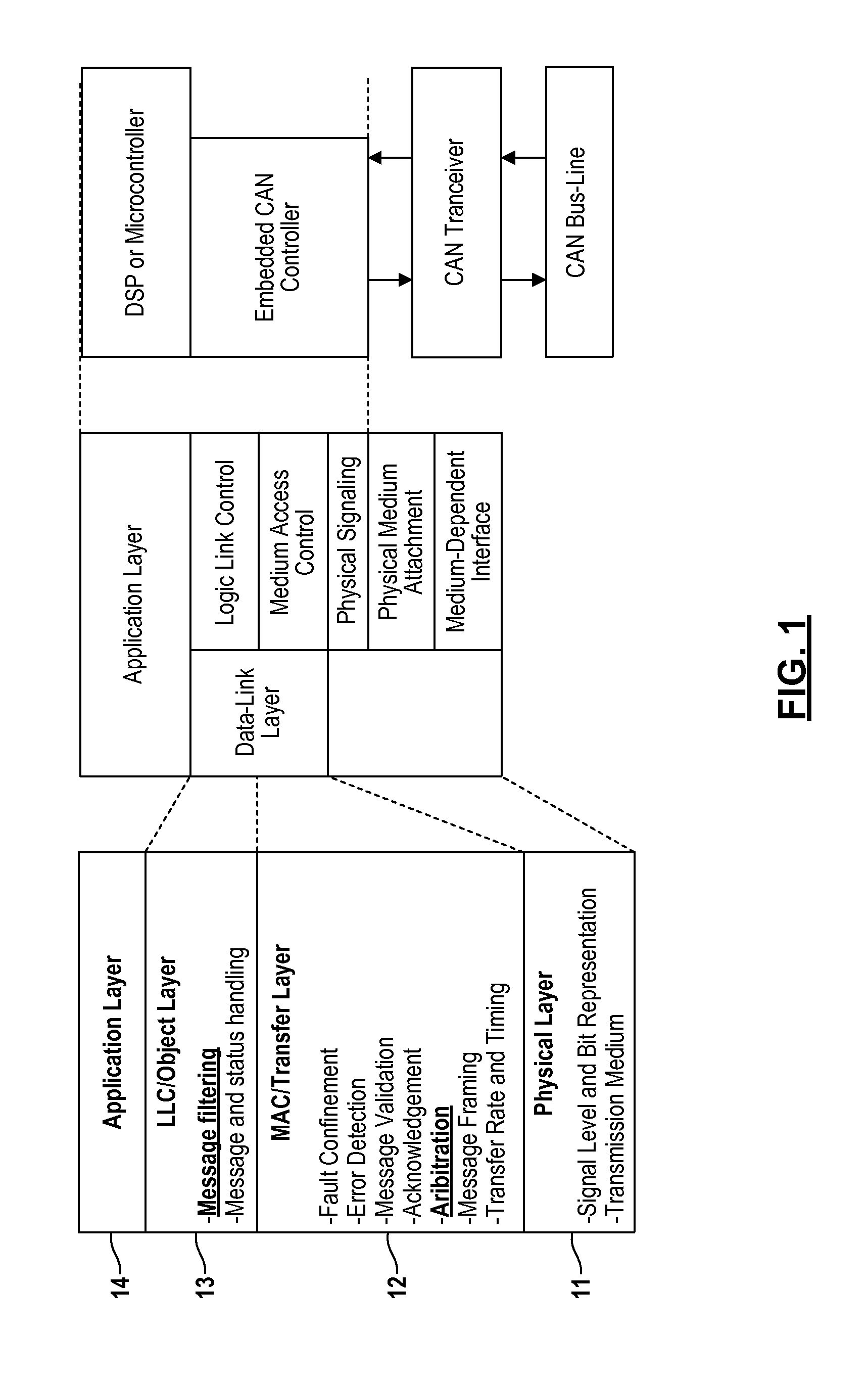

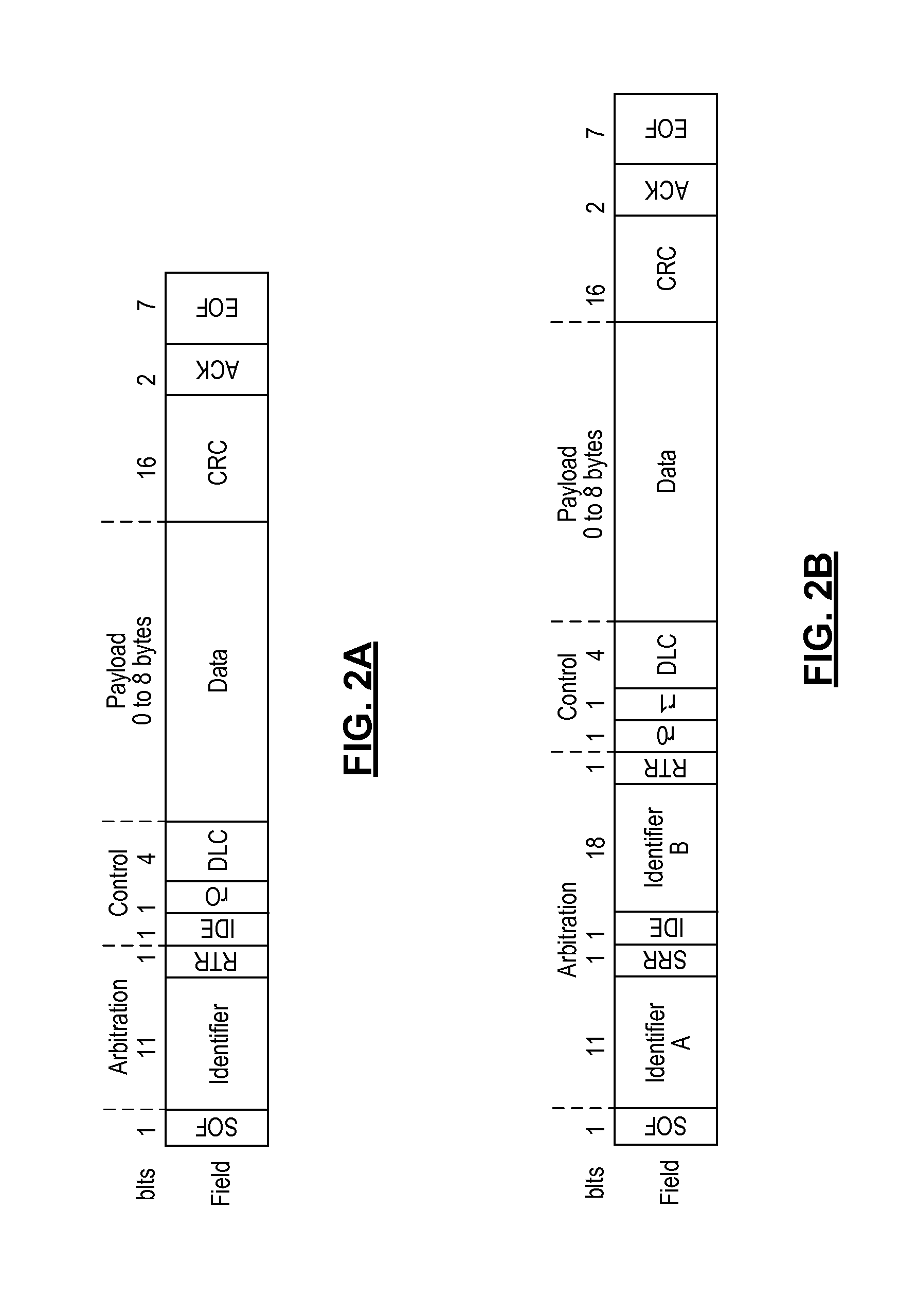

Real-Time Frame Authentication Using ID Anonymization In Automotive Networks

Active | US20150089236A1 | Reduce message authentication delay tm | Reduce delays | Key distribution for secure communication | Synchronising transmission/receiving encryption devices | Computer network | Data integrity

A real-time frame authentication protocol is presented for in-vehicle networks. A frame identifier is made anonymous to unauthorized entities but identifiable by the authorized entities. Anonymous identifiers are generated on a per-frame basis and embedded into each data frame transmitted by a sending ECU. Receiving ECUs use the anonymous identifiers to filter incoming data frames before verifying data integrity. Invalid data frames are filtered without requiring any additional run-time computations.

Owner:RGT UNIV OF MICHIGAN

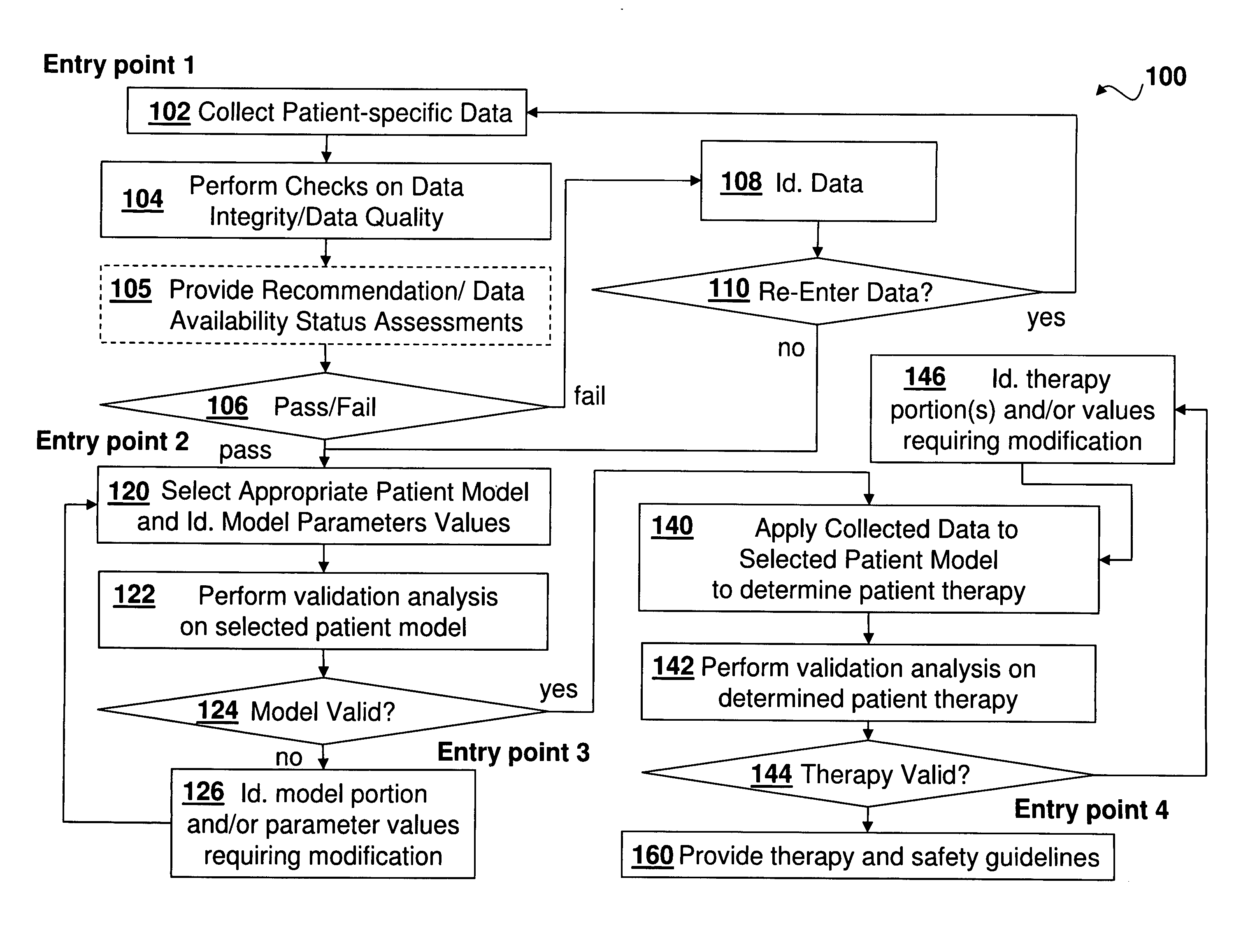

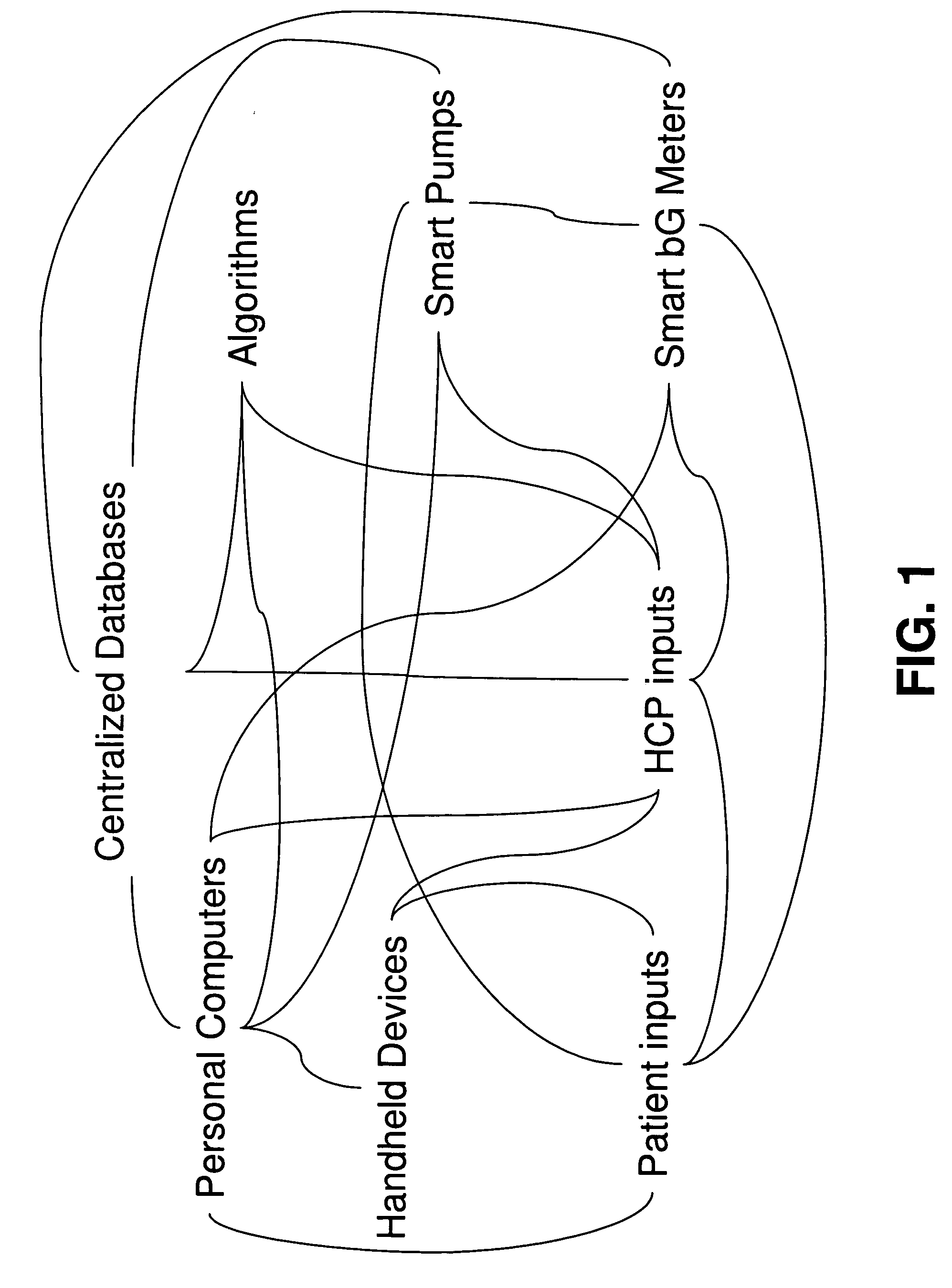

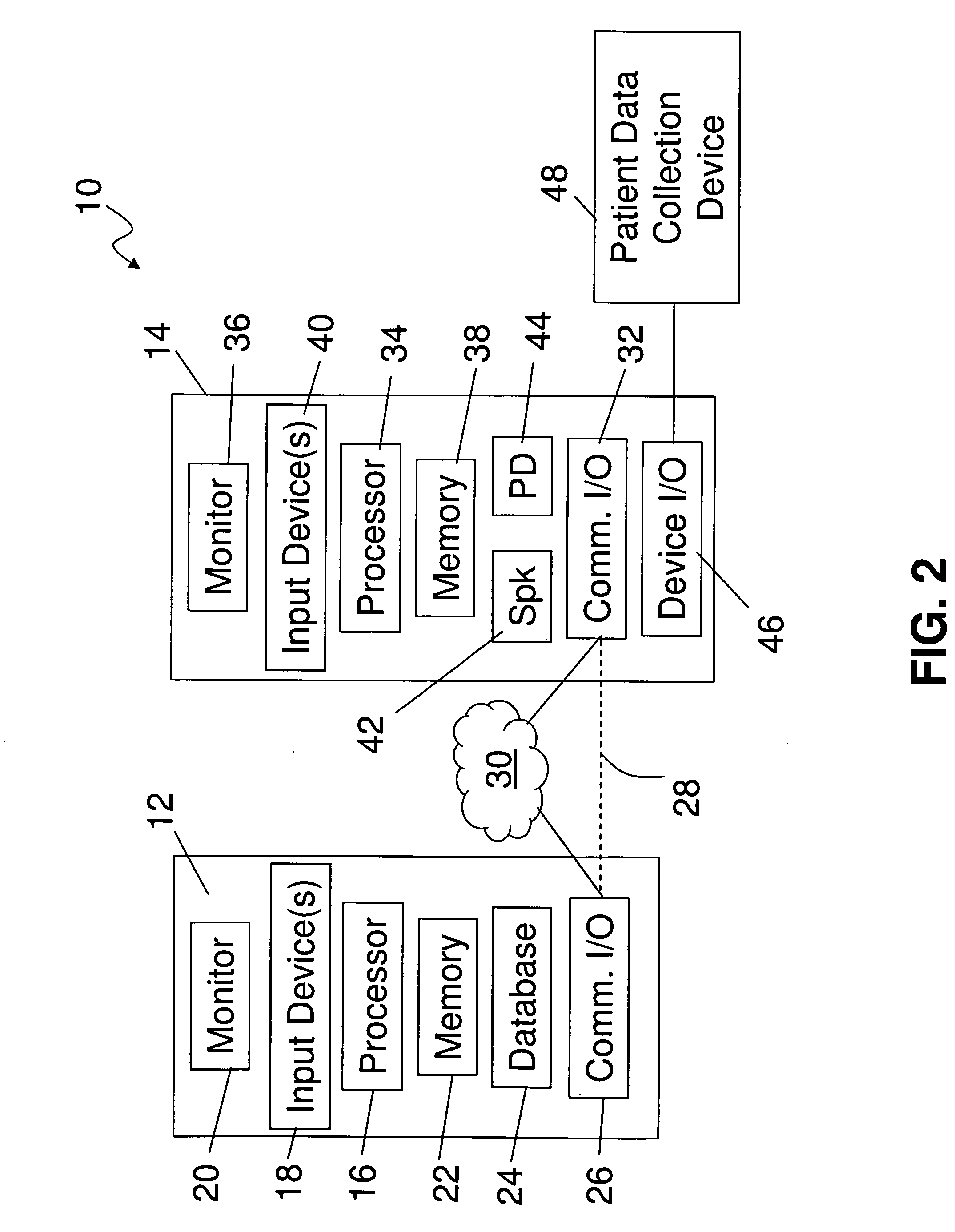

Medical Diagnosis, Therapy, And Prognosis System For Invoked Events And Methods Thereof

A diagnosis, therapy and prognosis system (DTPS) and method thereof to help either the healthcare provider or the patient in diagnosing, treating and interpreting data are disclosed. The apparatus provides data collection based on protocols, and a mechanism for testing data integrity and accuracy. The data is then driven through an analysis engine to characterize, in a quantitative sense, the metabolic state of the patient's body. The characterization is then used in diagnosing the patient, determining therapy, evaluating algorithm strategies and offering prognosis of potential use case scenarios.

Owner:ROCHE DIABETES CARE INC

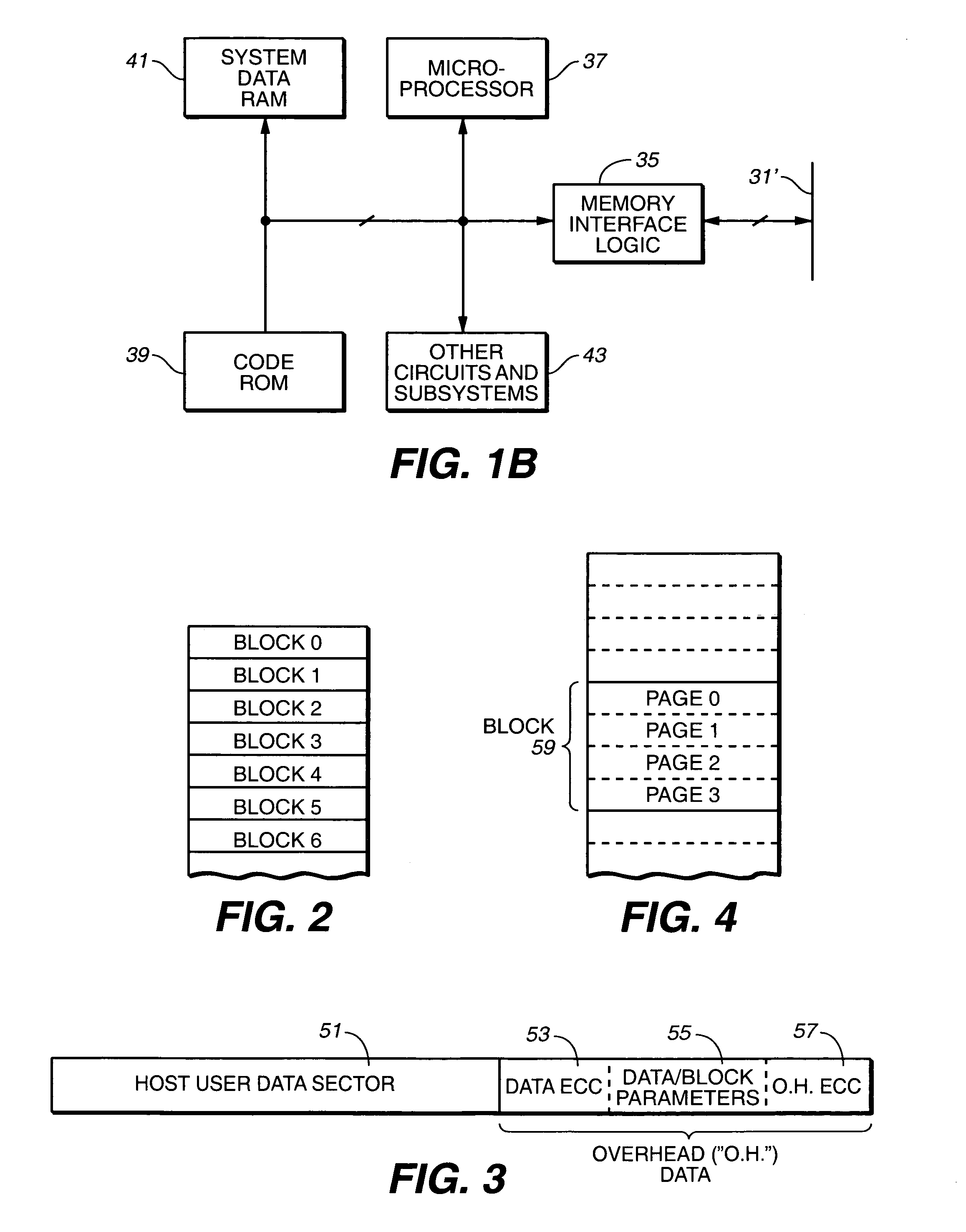

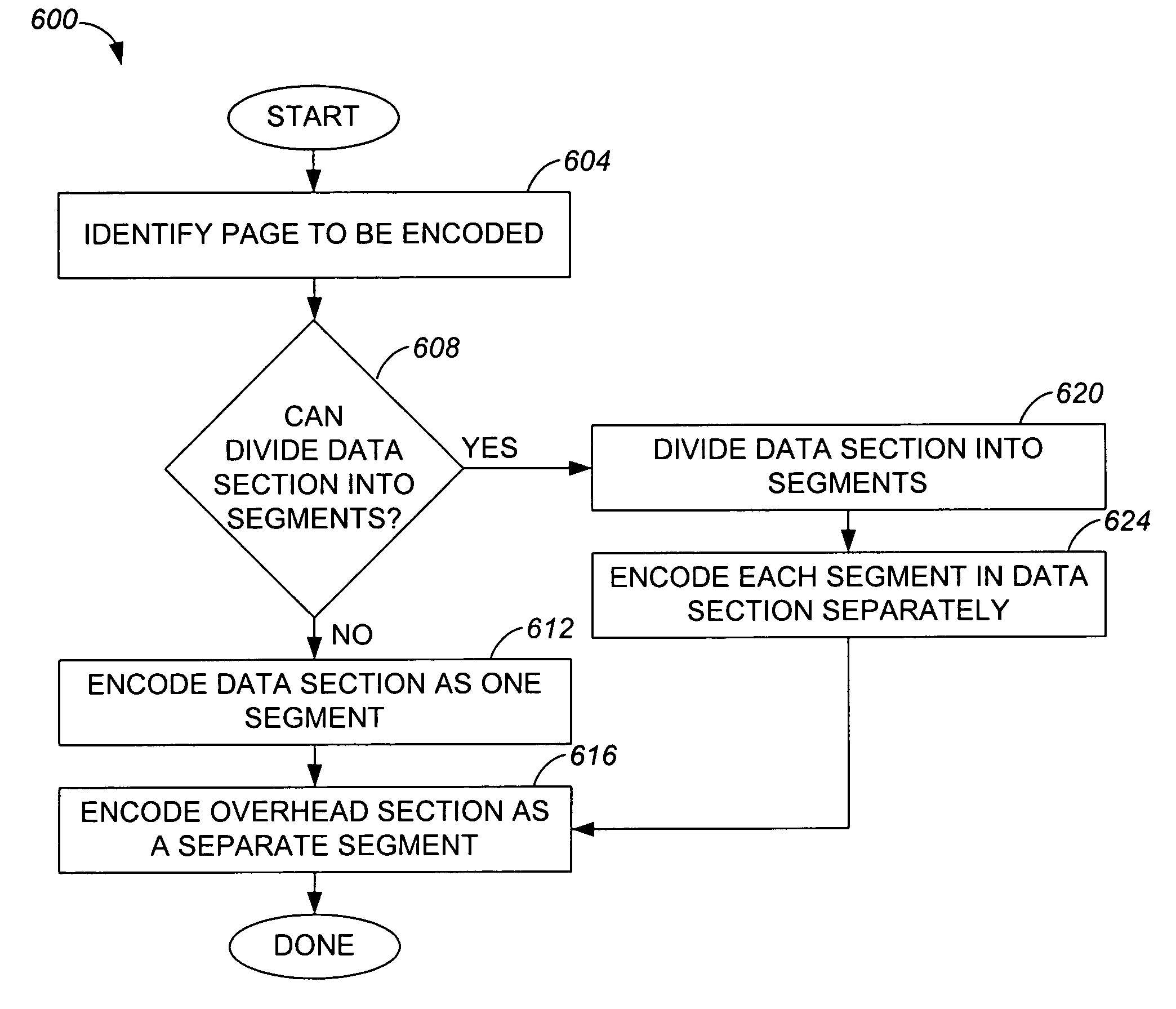

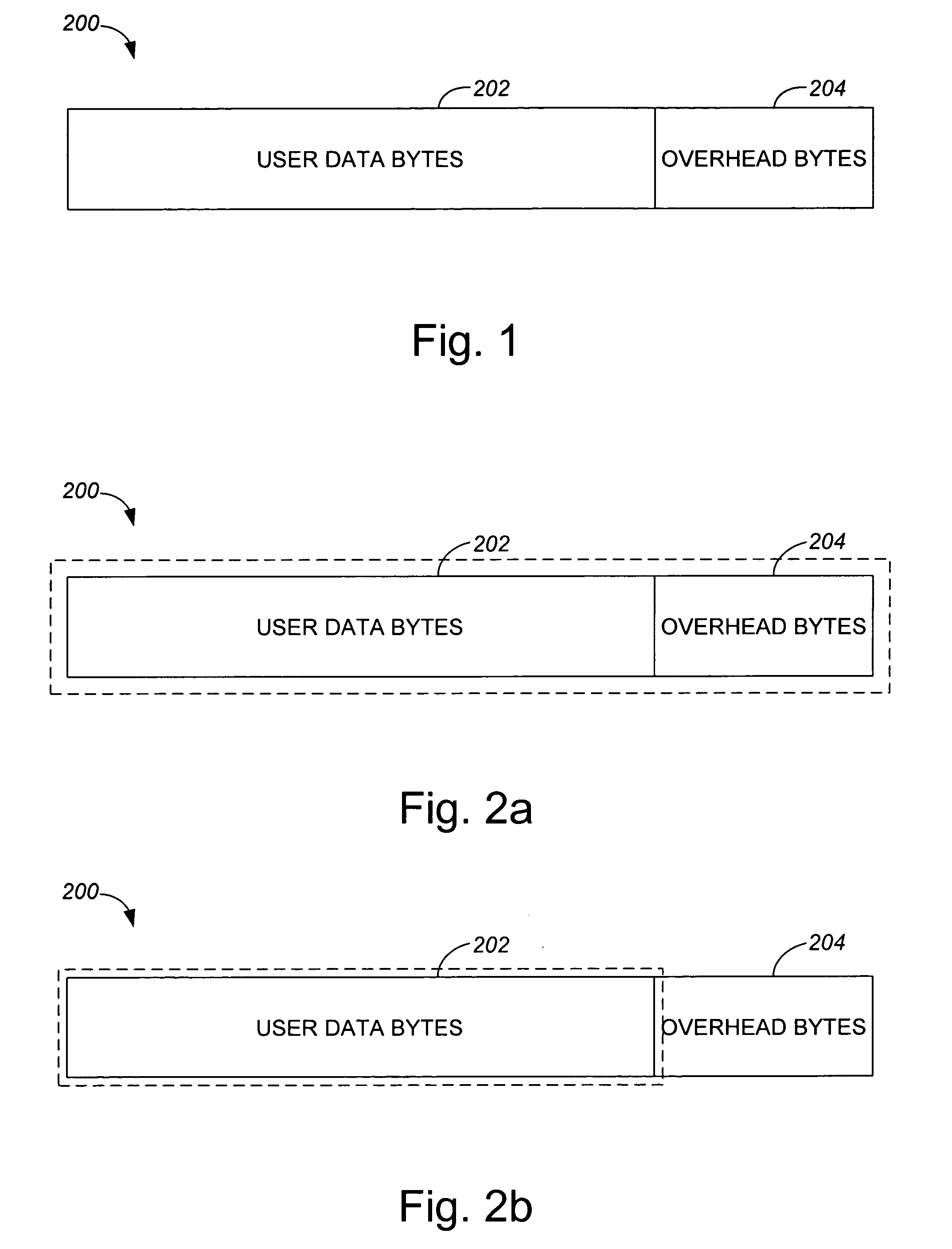

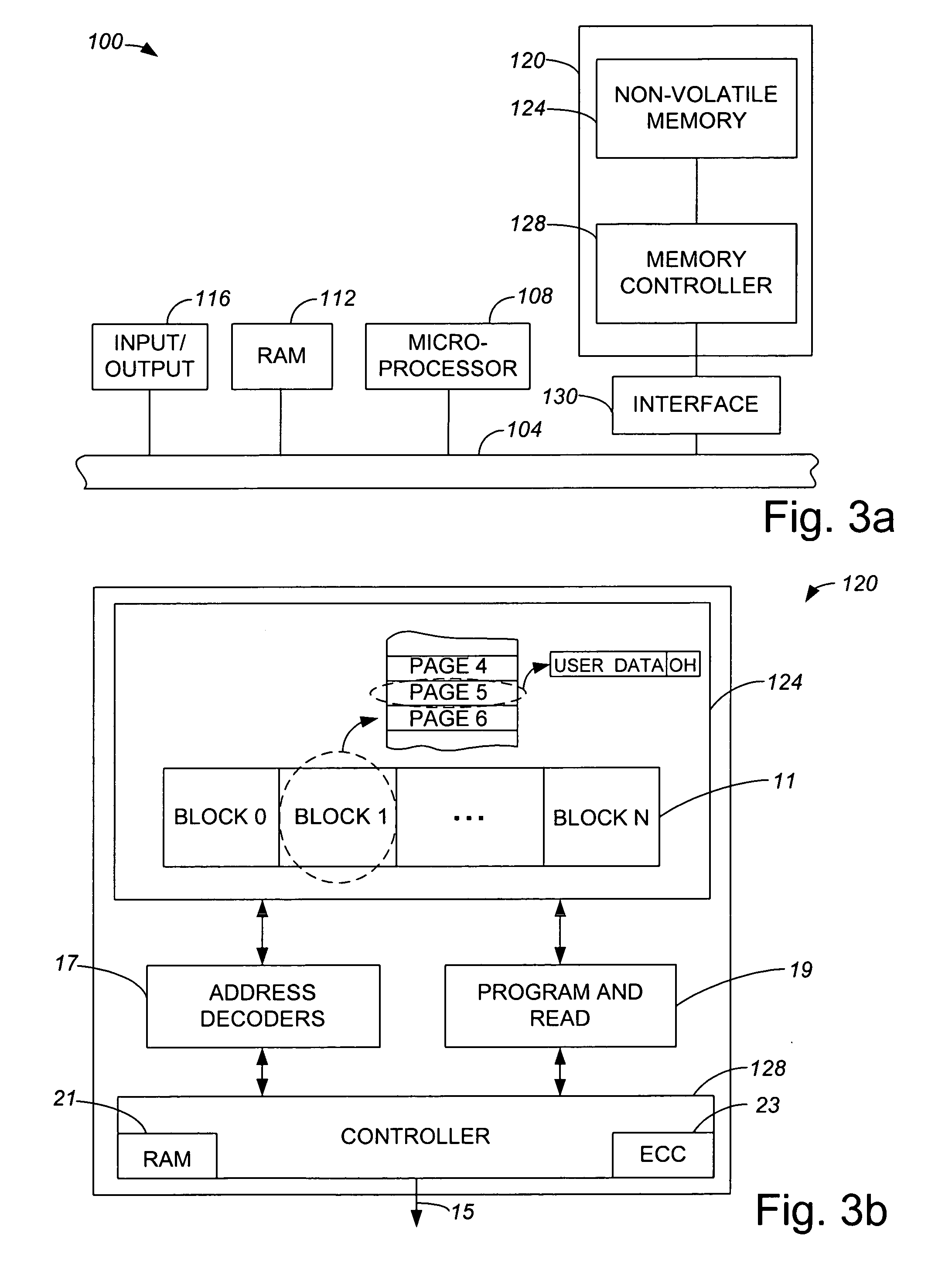

Method and apparatus for managing the integrity of data in non-volatile memory system

Methods and apparatus for encoding data associated with a page by dividing the page into segments and separately encoding the segments using extended error correction code (ECC) calculations are disclosed. According to one aspect of the present invention, a method for encoding data associated with a page which has a data area and an overhead area within a non-volatile memory of a memory system includes dividing at least a part of the page into at least two segments of the data, the at least two segments of the data including a first segment and a second segment, and performing ECC calculations on the first segment to encode the first segment. The method also includes performing the ECC calculations on the second segment to encode the second segment substantially separately from the first segment.

Owner:SANDISK TECH LLC

Systems and methods for processing data flows

Inactive | US20080133518A1 | Increased complexity | Avoid problems | Platform integrity maintenance | Program control | Data integrity | Data stream

A flow processing facility, which uses a set of artificial neurons for pattern recognition, such as a self-organizing map, in order to provide security and protection to a computer or computer system supports unified threat management based at least in part on patterns relevant to a variety of types of threats that relate to computer systems, including computer networks. Flow processing for switching, security, and other network applications, including a facility that processes a data flow to address patterns relevant to a variety of conditions are directed at internal network security, virtualization, and web connection security. A flow processing facility for inspecting payloads of network traffic packets detects security threats and intrusions across accessible layers of the IP-stack by applying content matching and behavioral anomaly detection techniques based on regular expression matching and self-organizing maps. Exposing threats and intrusions within packet payload at or near real-time rates enhances network security from both external and internal sources while ensuring security policy is rigorously applied to data and system resources. Intrusion Detection and Protection (IDP) is provided by a flow processing facility that processes a data flow to address patterns relevant to a variety of types of network and data integrity threats that relate to computer systems, including computer networks.

Owner:BLUE COAT SYST INC

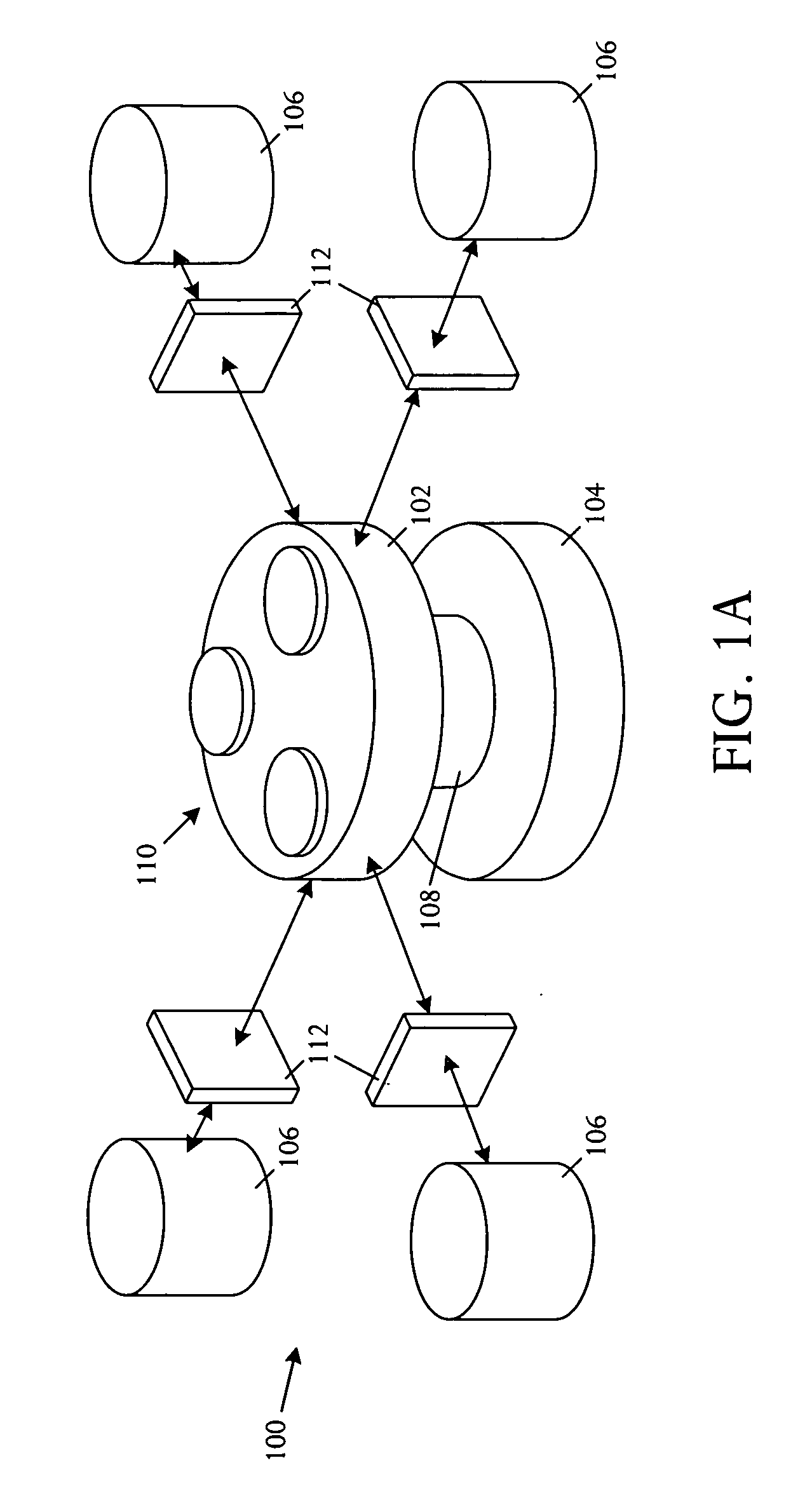

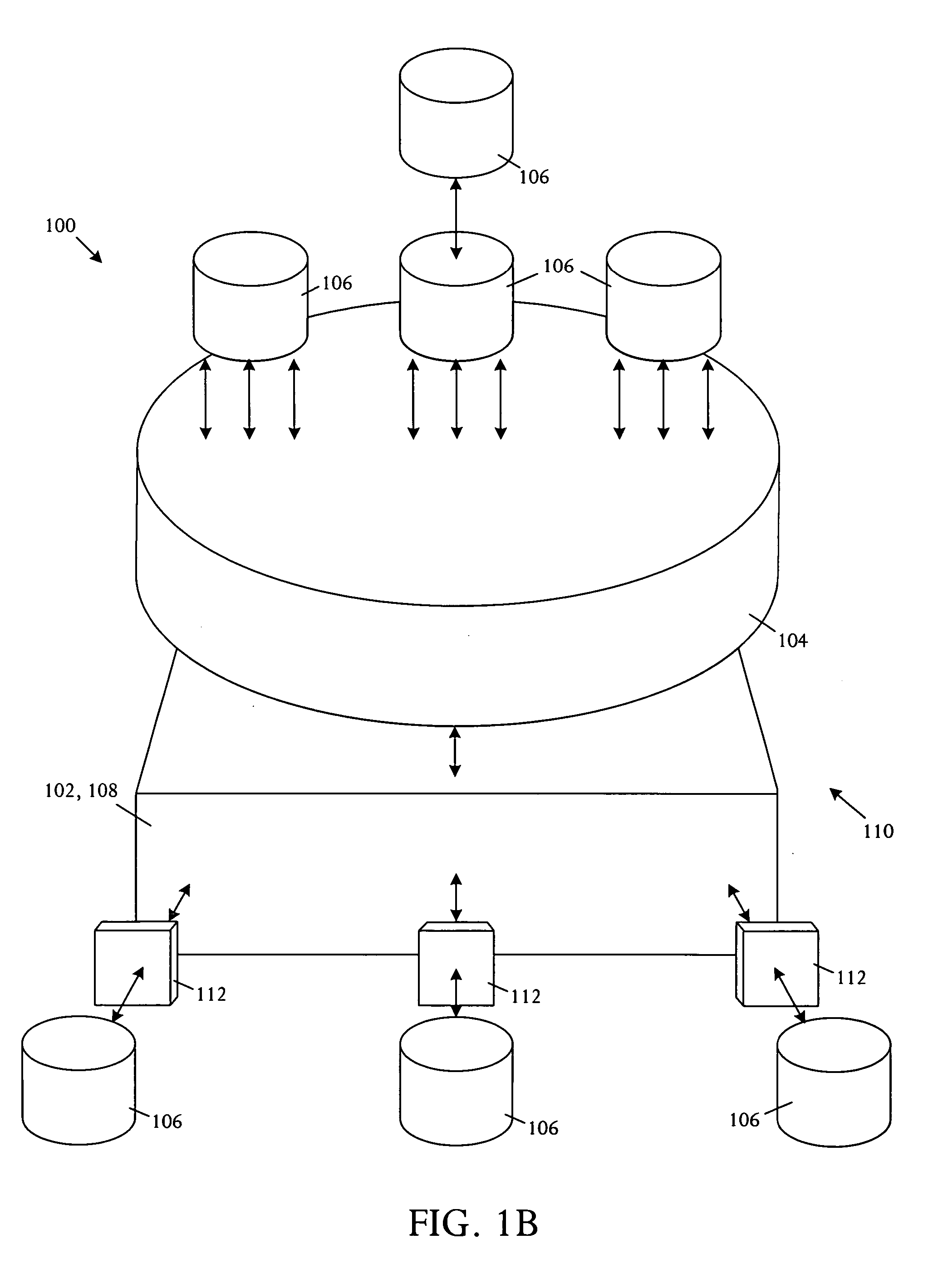

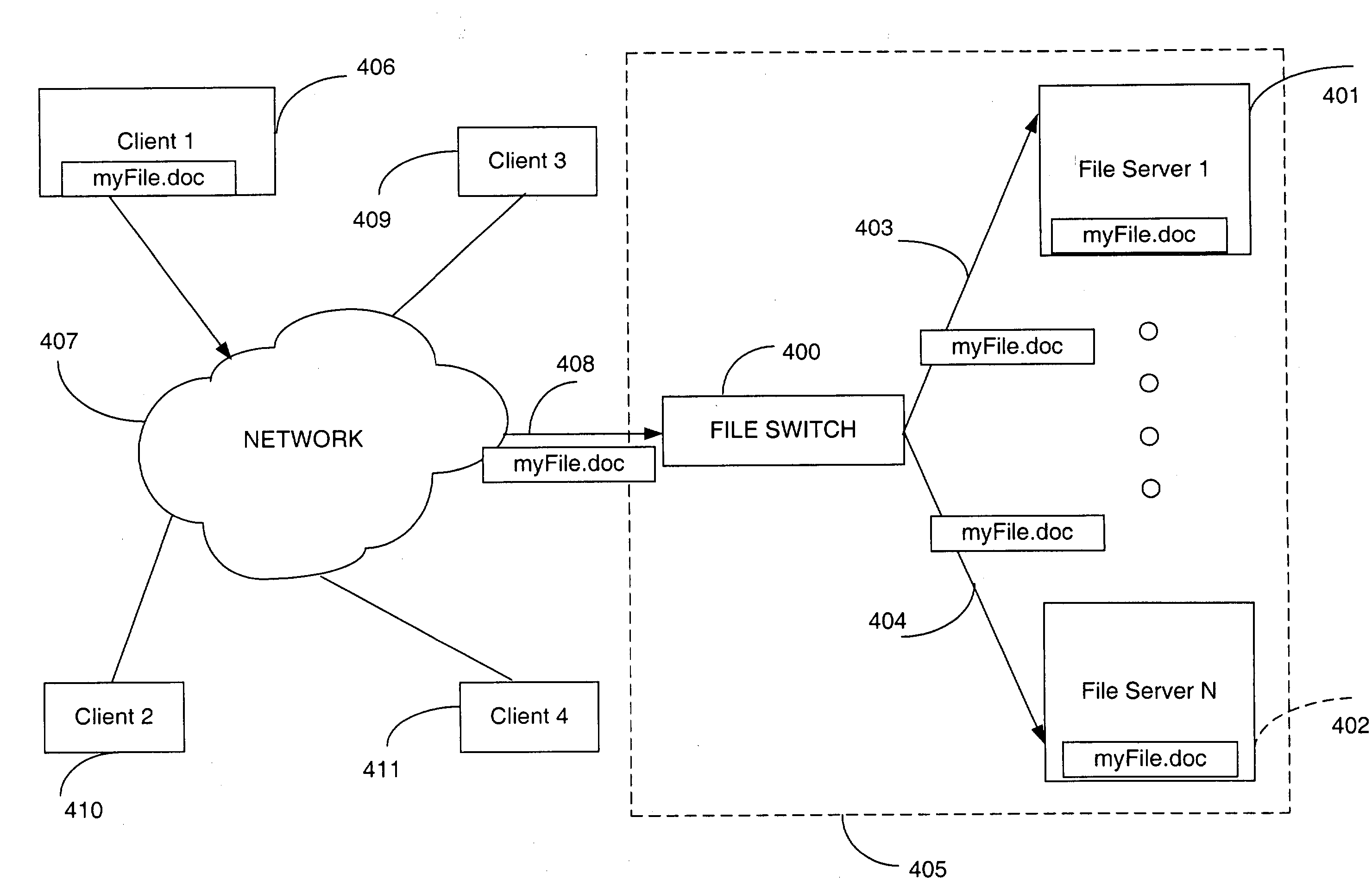

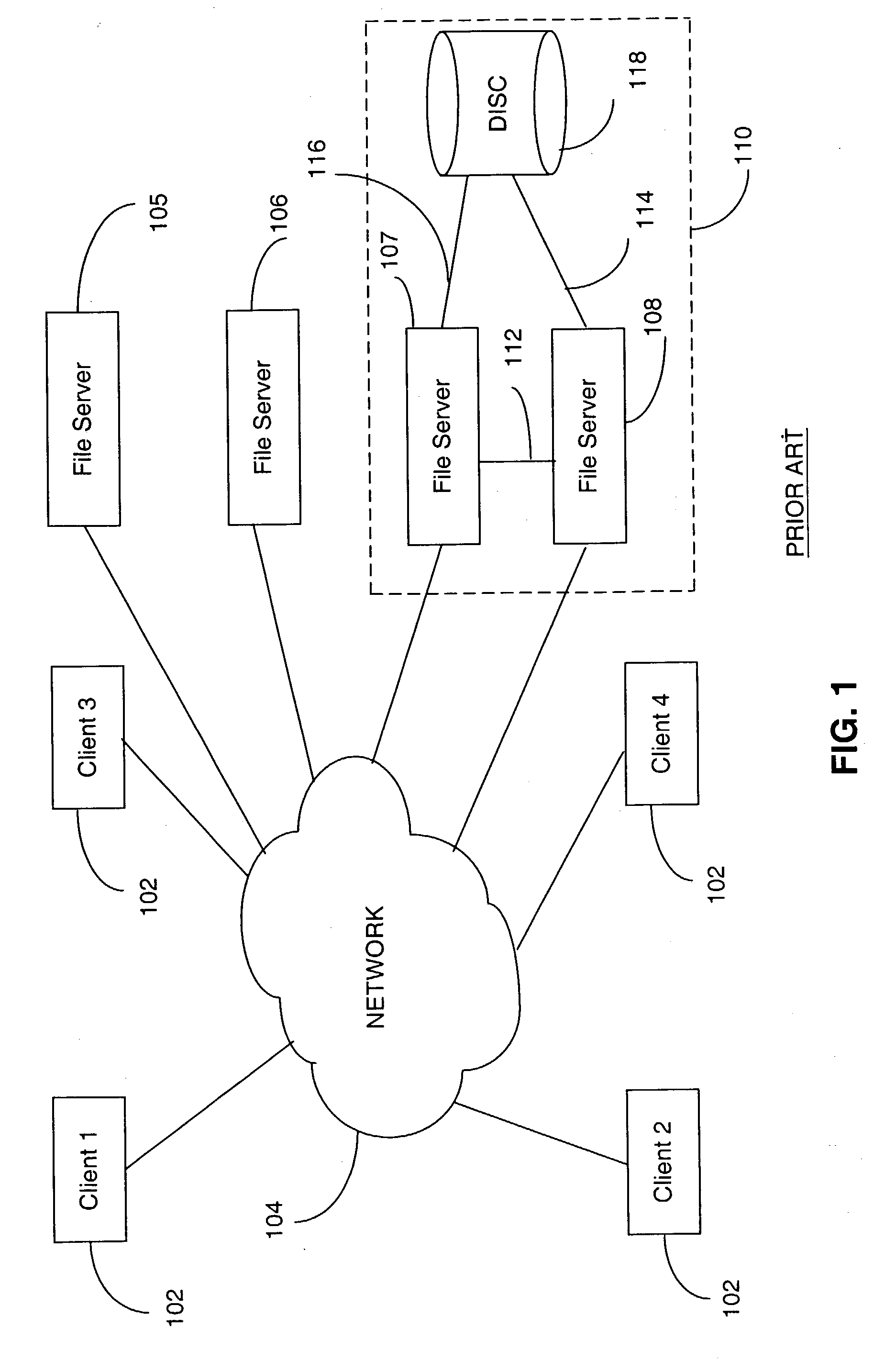

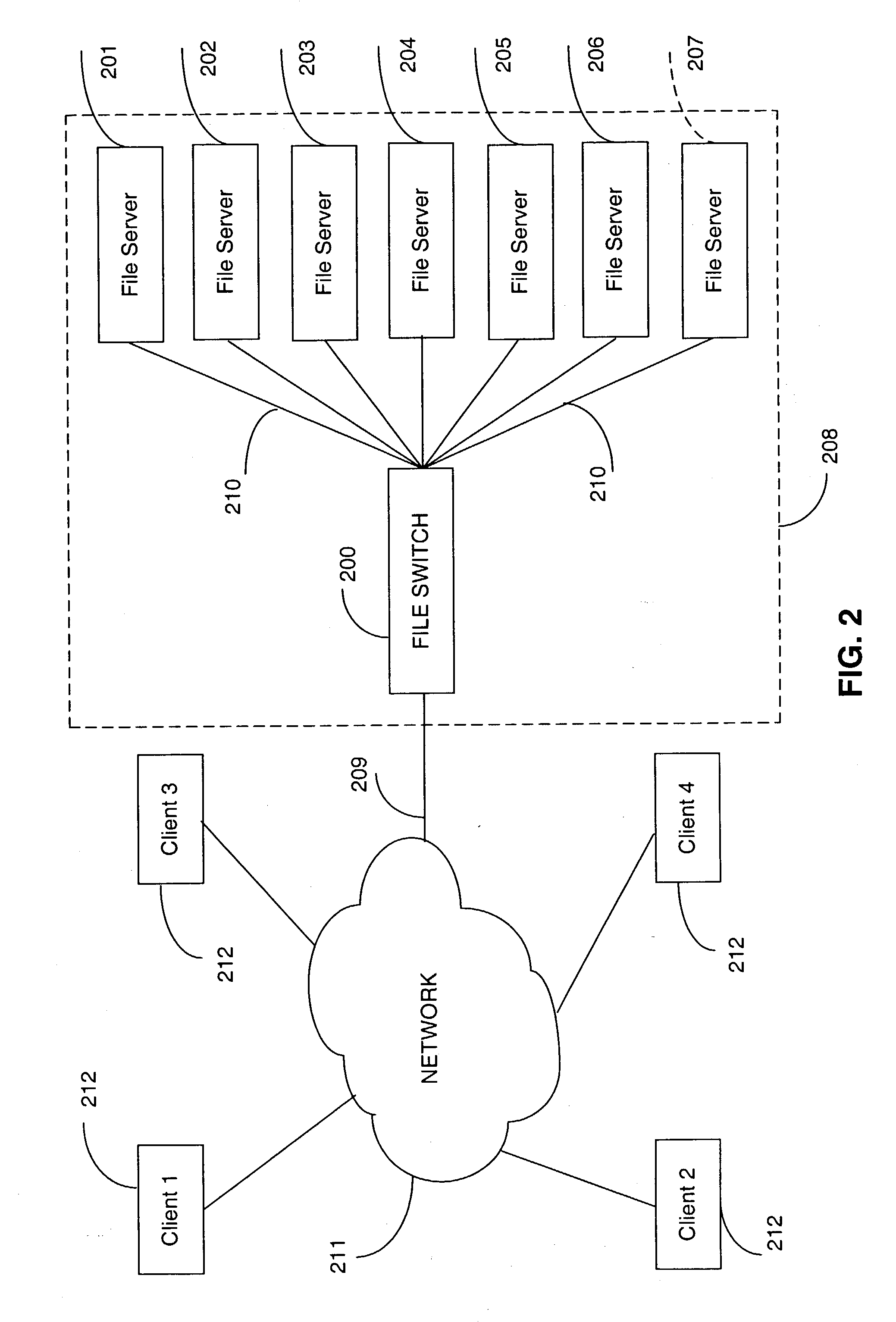

Aggregated opportunistic lock and aggregated implicit lock management for locking aggregated files in a switched file system

Inactive | US20040133652A1 | Improve performance | Digital data information retrieval | Error detection/correction | Data integrity | File system

A switched file system, also termed a file switch, is logically positioned between client computers and file servers in a computer network. The file switch distributes user files among multiple file servers using aggregated file, transaction and directory mechanisms. The file switch supports caching of a particular aggregated data file either locally in a client computer or in the file switch in accordance with the exclusivity level of an opportunistic lock granted to the entity that requested caching. The opportunistic lock can be obtained either on the individual data files stored in the file servers or on the metadata files that contain the location of each individual data file in the file servers. The opportunistic lock can be broken if another client tries to access the aggregated data file. Opportunistic locks allow client-side caching while preserving data integrity and consistency, which increases the performance of the switched file system.

Owner:RPX CORP

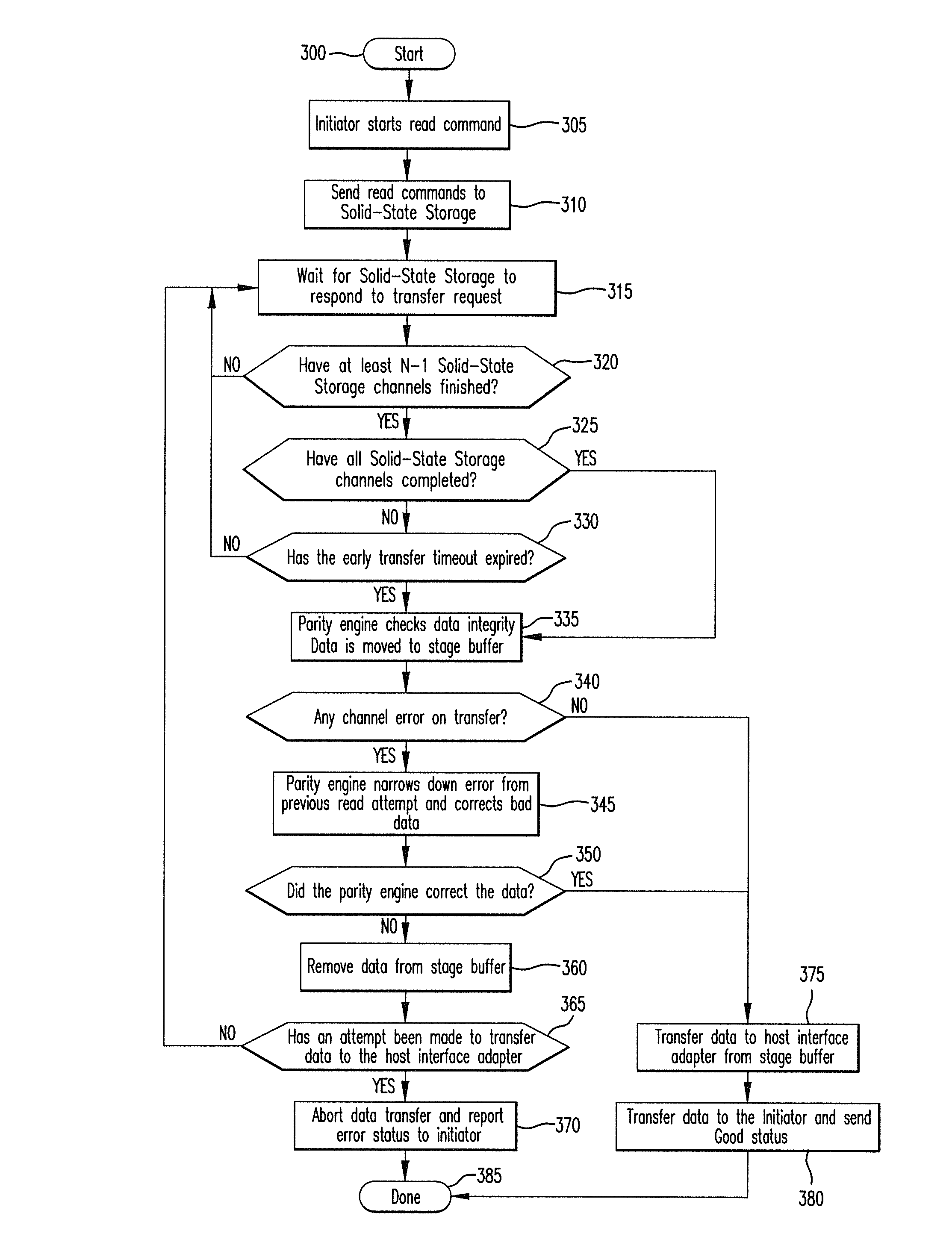

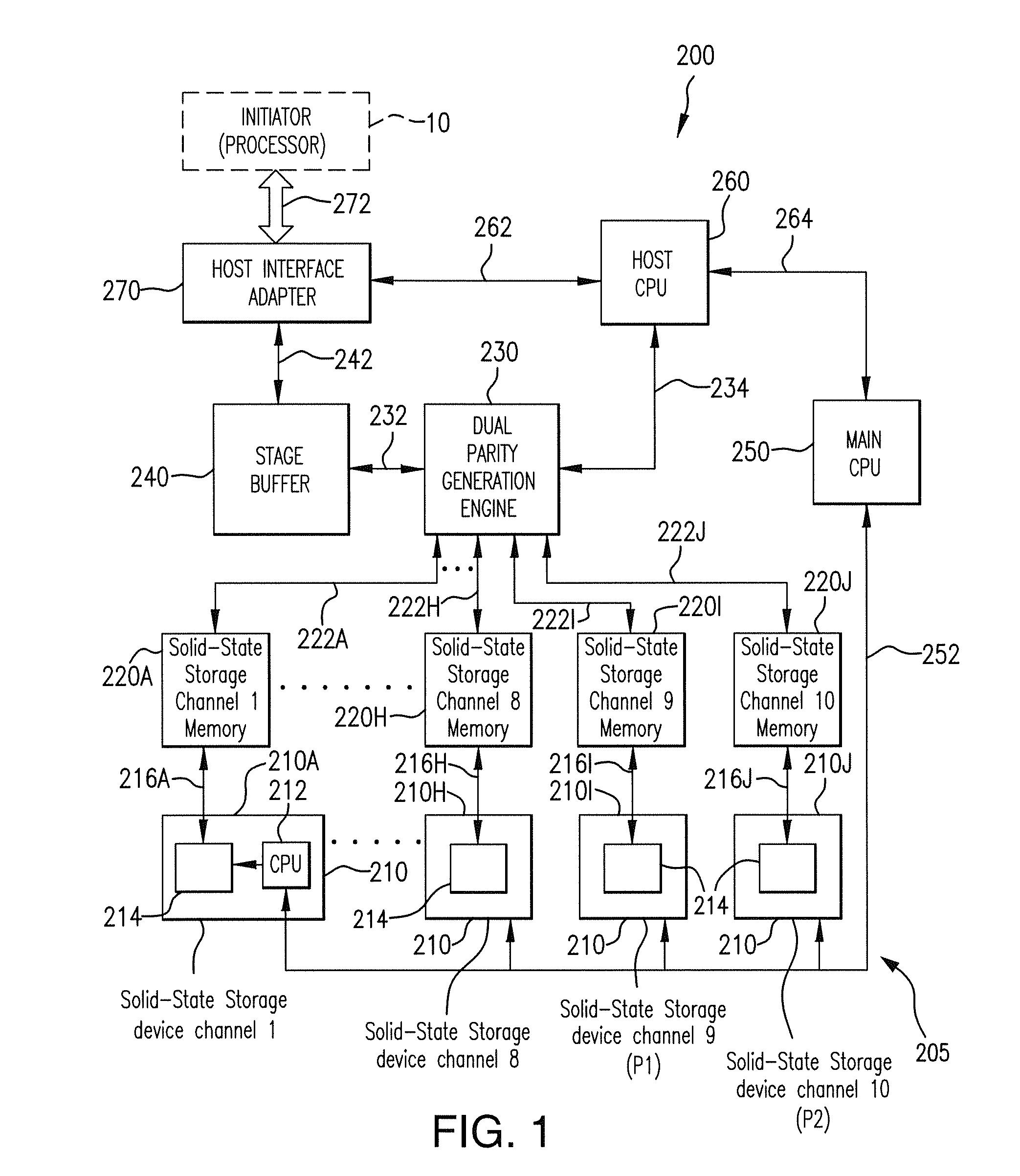

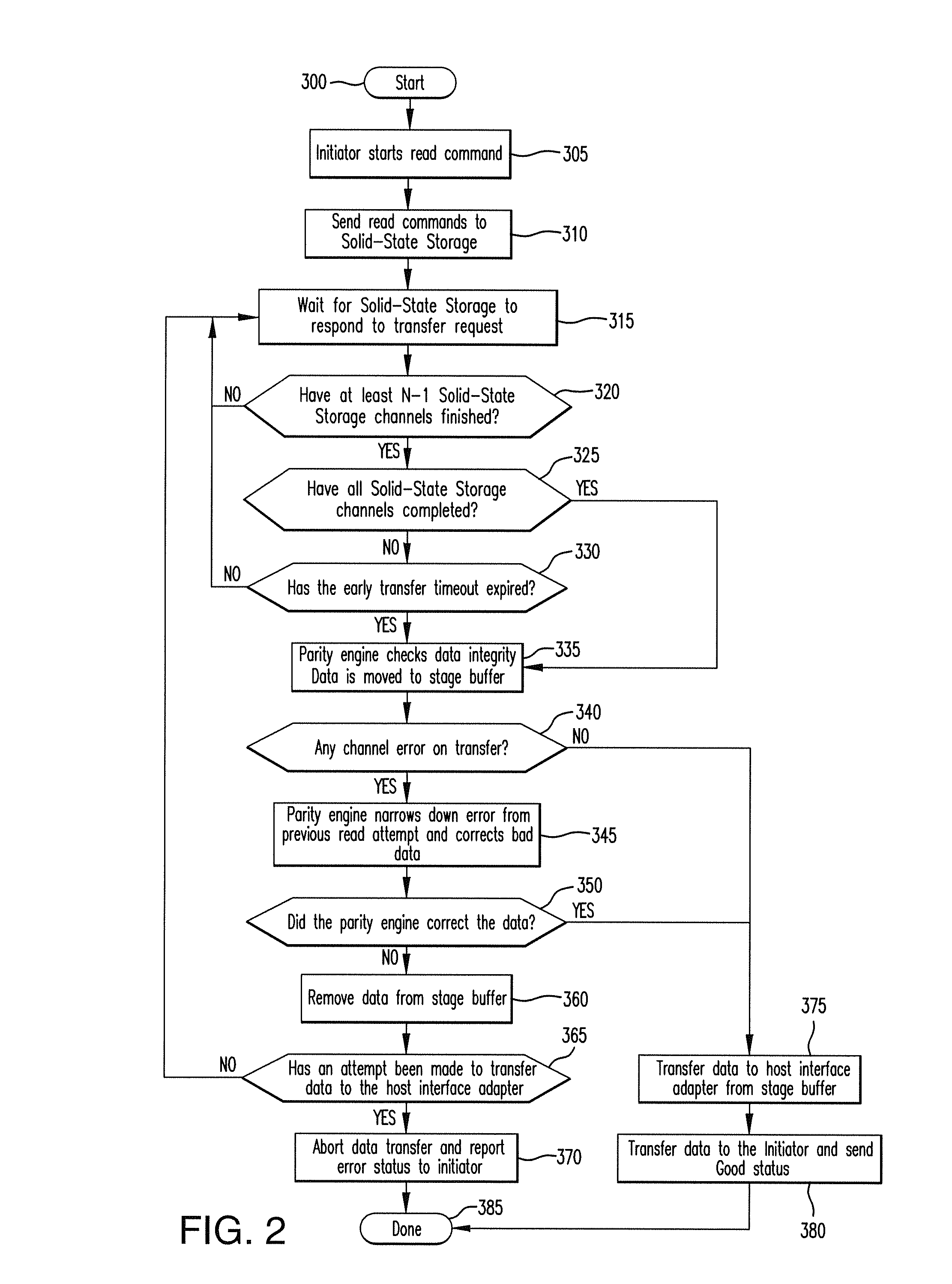

Method for reducing latency in a solid-state memory system while maintaining data integrity

Active | US8661218B1 | Easy access | Reduce energy consumption | Error prevention/detection by using return channel | Transmission systems | Solid-state storage | Fault tolerance

A latency reduction method for read operations of an array of N solid-state storage devices having n solid-state storage devices for data storage and p solid-state storage devices for storing parity data is provided. Utilizing the parity generation engine fault tolerance for a loss of valid data from at least two of the N solid-state storage devices, the integrity of the data is determined when N−1 of the solid-state storage devices have completed executing a read command. If the data is determined to be valid, the missing data of the Nth solid-state storage device is reconstructed and the data transmitted to the requesting processor. By that arrangement the time necessary for the Nth solid-state storage device to complete execution of the read command is saved, thereby improving the performance of the solid-state memory system.

Owner:DATADIRECT NETWORKS

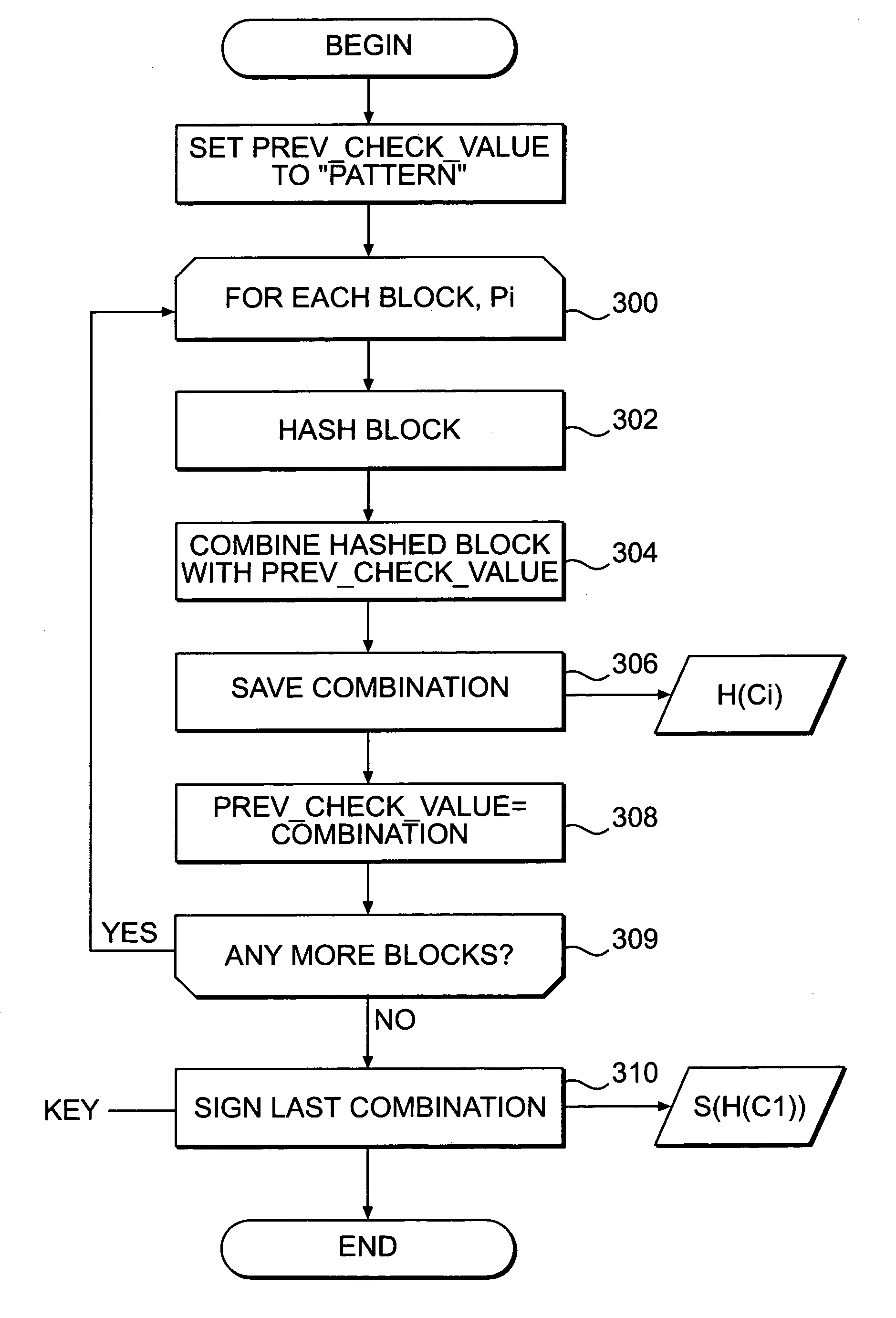

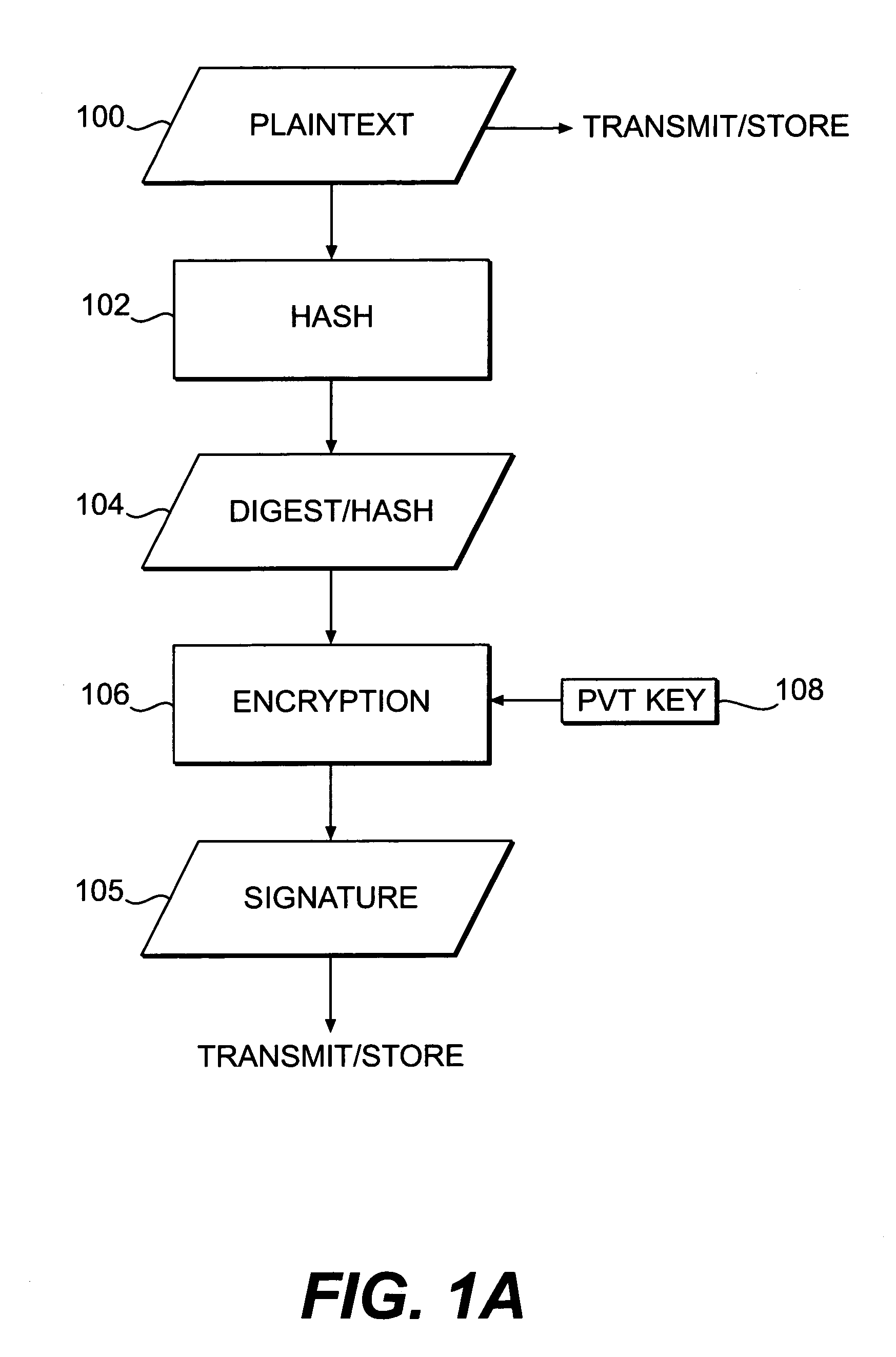

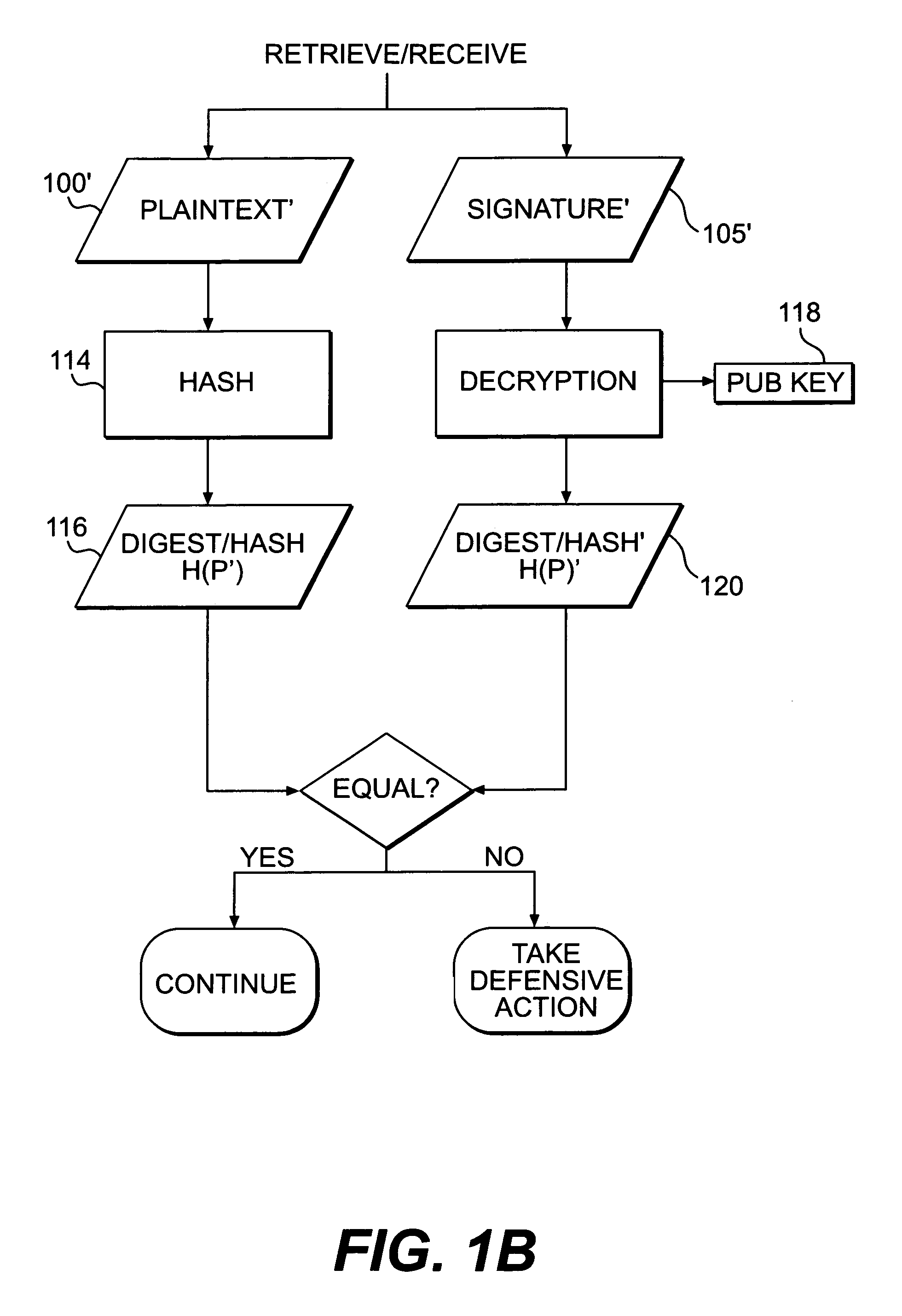

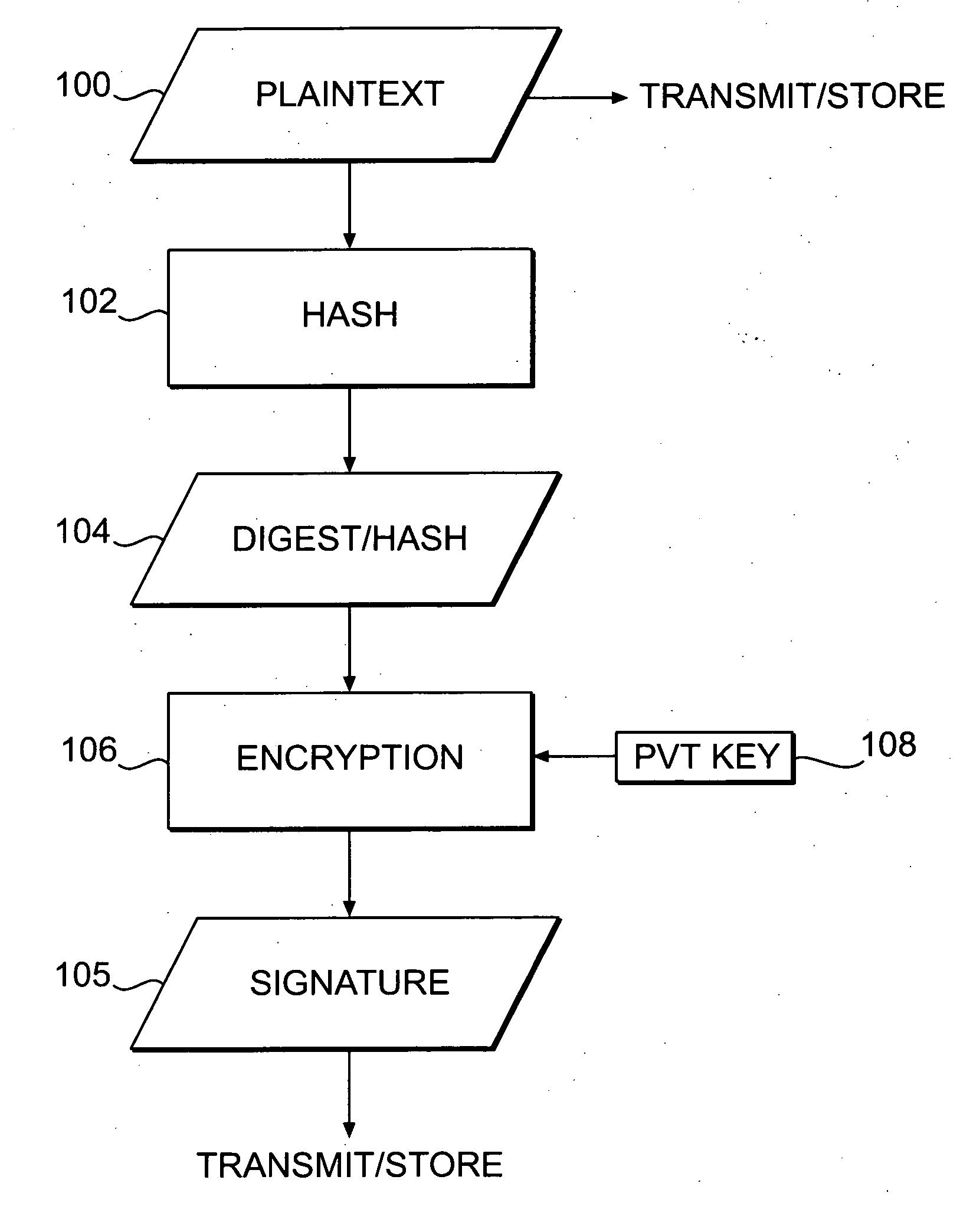

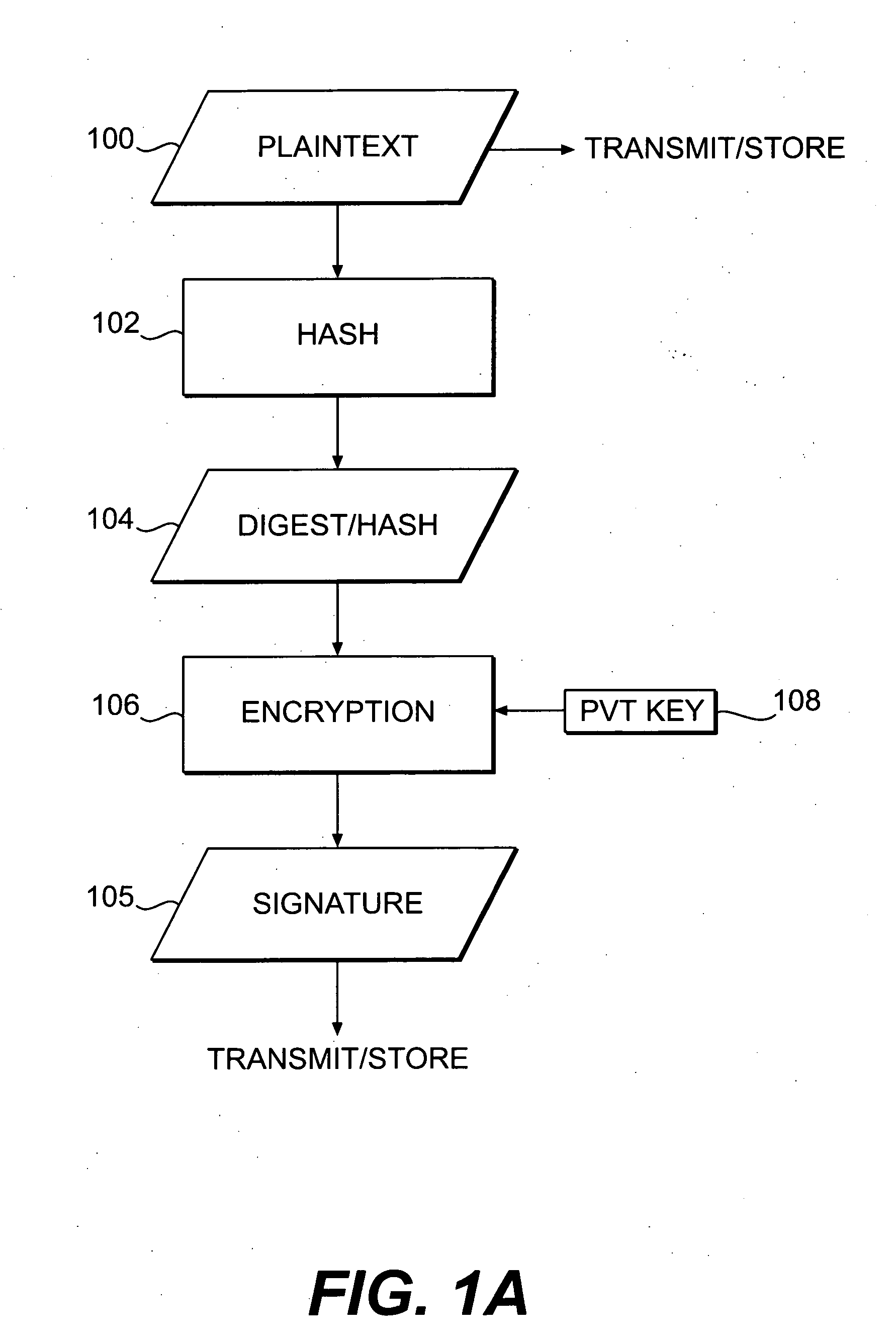

Systems and methods for authenticating and protecting the integrity of data streams and other data

InactiveUS6959384B1Authentication is convenientDetecting errorUser identity/authority verificationDigital data protectionError checkFault tolerance

Systems and methods are disclosed for enabling a recipient of a cryptographically-signed electronic communication to verify the authenticity of the communication on-the-fly using a signed chain of check values, the chain being constructed from the original content of the communication, and each check value in the chain being at least partially dependent on the signed root of the chain and a portion of the communication. Fault tolerance can be provided by including error-check values in the communication that enable a decoding device to maintain the chain's security in the face of communication errors. In one embodiment, systems and methods are provided for enabling secure quasi-random access to a content file by constructing a hierarchy of hash values from the file, the hierarchy deriving its security in a manner similar to that used by the above-described chain. The hierarchy culminates with a signed hash that can be used to verify the integrity of other hash values in the hierarchy, and these other hash values can, in turn, be used to efficiently verify the authenticity of arbitrary portions of the content file.
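One common way to build a chain of check values that can be verified on the fly is sketched below. It is an illustration rather than the claimed construction: each packet carries a block plus the hash of the remainder of the stream, and only the first hash (the root) is authenticated. HMAC-SHA256 with the hypothetical shared key `KEY` stands in for a real digital signature, and the error-check values for fault tolerance are omitted.

```python
import hashlib
import hmac
from typing import Iterator, List, Tuple

KEY = b"demo-key"  # hypothetical key; HMAC is a stand-in for a signature

Packet = Tuple[bytes, bytes]  # (block, check value covering the rest)


def digest(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_chain(blocks: List[bytes]) -> Tuple[bytes, bytes, List[Packet]]:
    """Return (root, tag over root, packets), built from the last block back."""
    next_hash = b""
    packets: List[Packet] = []
    for block in reversed(blocks):
        packets.append((block, next_hash))
        next_hash = digest(block + next_hash)
    packets.reverse()
    root = next_hash
    tag = hmac.new(KEY, root, hashlib.sha256).digest()
    return root, tag, packets


def verify_stream(root: bytes, tag: bytes, packets: List[Packet]) -> Iterator[bytes]:
    """Yield each block as soon as it has been checked against the chain."""
    expected_tag = hmac.new(KEY, root, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected_tag):
        raise ValueError("root check value is not authentic")
    expected = root
    for block, next_hash in packets:
        if not hmac.compare_digest(digest(block + next_hash), expected):
            raise ValueError("block failed verification")
        yield block
        expected = next_hash  # authenticated by the check that just passed
```

Because every packet authenticates the check value of the next one, the receiver can release each block immediately instead of buffering the whole communication.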

Owner:INTERTRUST TECH CORP

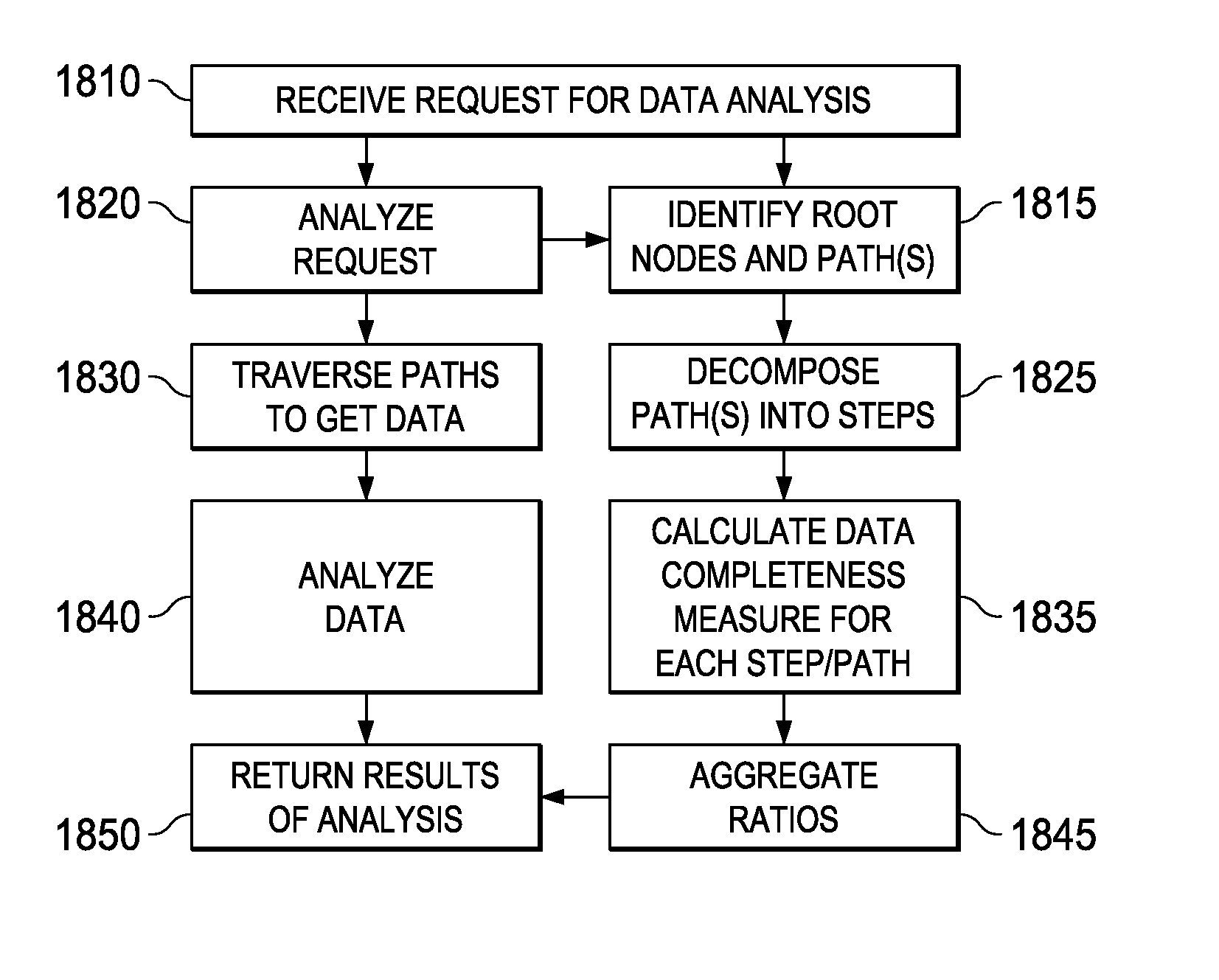

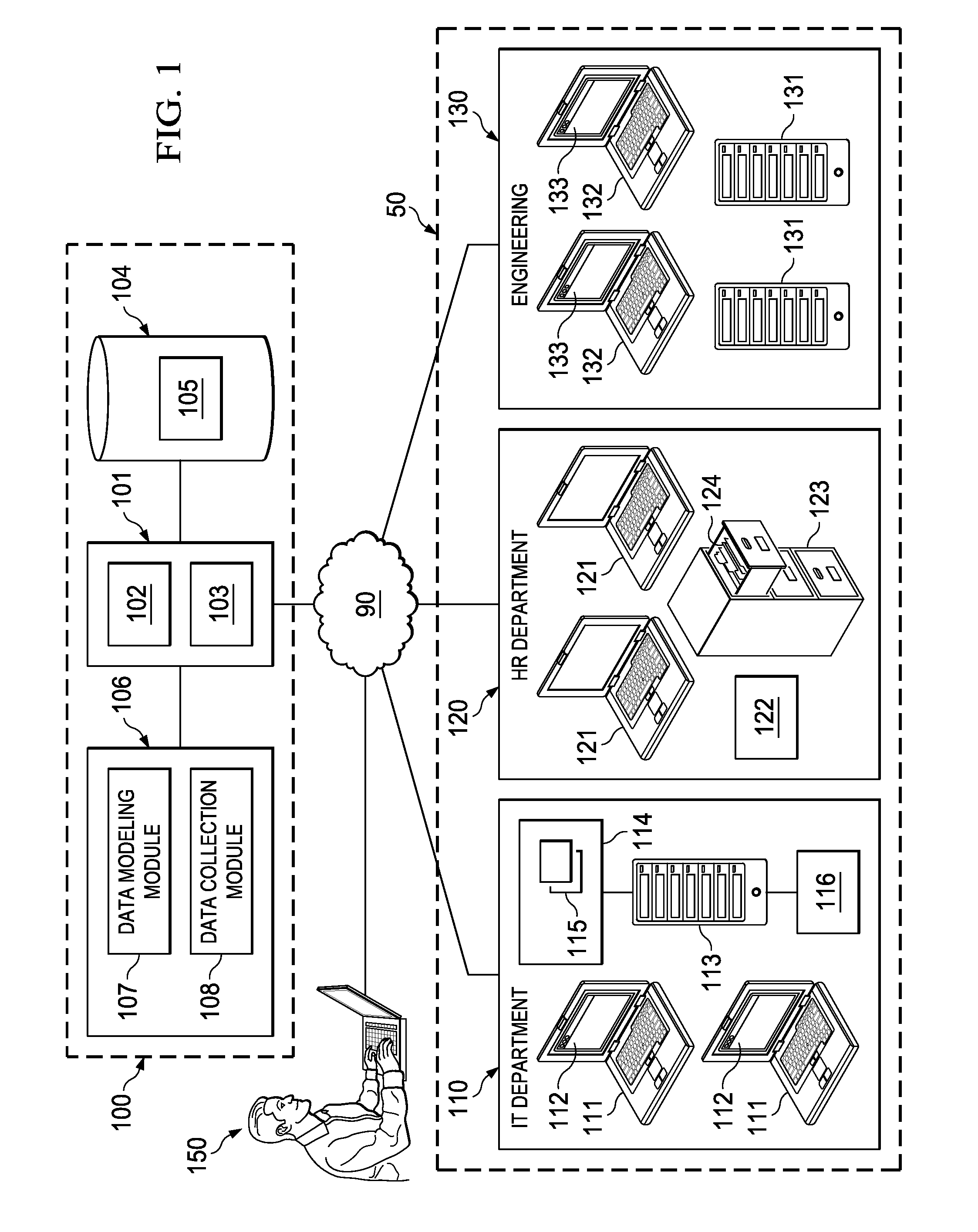

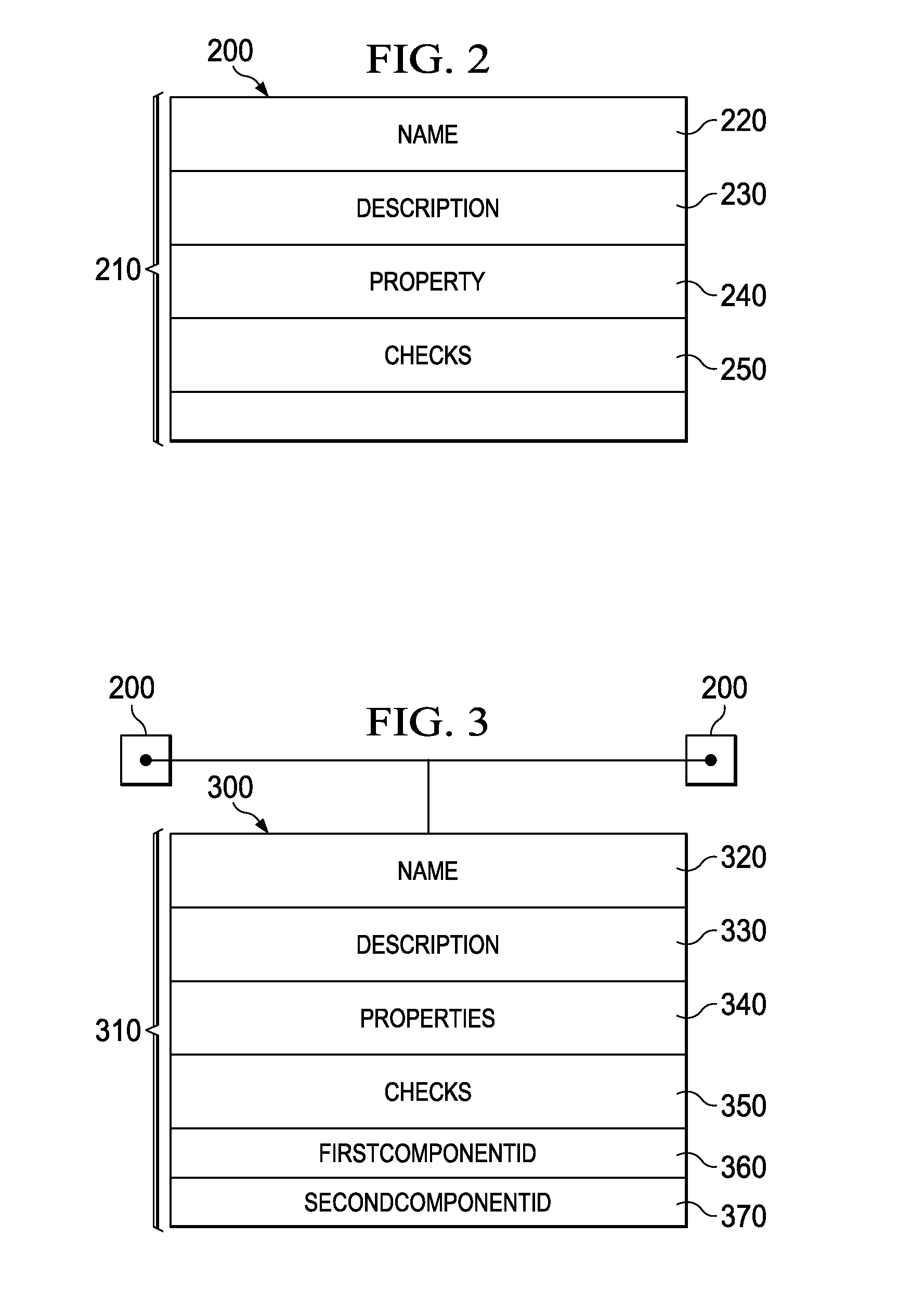

Method and system for determination of data completeness for analytic data calculations

ActiveUS9280581B1Digital data information retrievalComparison of digital valuesData integrityAnalysis data

A system, method and computer program product determine the data completeness associated with an analysis based on a data model while the data in the data model is being analyzed. A root node may be determined, and all paths from the root node discovered. Each path is decomposed into steps, and a ratio is calculated for each step. The ratios may be multiplied for each path, and the aggregate of the paths may determine a measure of the data completeness corresponding to the analysis. The results of the analysis and the measure of data completeness are returned at the same time.
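The path-and-ratio calculation can be sketched as follows. Several details are assumptions, since the abstract leaves them open: the data model is taken to be an acyclic graph given as an adjacency dictionary, the per-step ratio is supplied by the caller (for example, the fraction of source records with a populated link), and the aggregate over paths is taken to be the arithmetic mean. The function names and the sample model are hypothetical.

```python
from math import prod
from typing import Dict, List, Tuple

Graph = Dict[str, List[str]]
StepRatios = Dict[Tuple[str, str], float]


def find_paths(graph: Graph, root: str) -> List[List[str]]:
    """Enumerate every root-to-leaf path in an acyclic model, depth first."""
    children = graph.get(root, [])
    if not children:
        return [[root]]
    return [[root] + rest for child in children for rest in find_paths(graph, child)]


def completeness(graph: Graph, ratios: StepRatios, root: str) -> float:
    """Multiply the step ratios along each path, then average over paths."""
    paths = find_paths(graph, root)
    per_path = [
        prod(ratios[(a, b)] for a, b in zip(path, path[1:]))
        for path in paths
    ]
    return sum(per_path) / len(per_path)


# Hypothetical model: two paths starting at the root node "App".
model = {"App": ["Server", "Owner"], "Server": ["DataCenter"]}
step_ratios = {
    ("App", "Server"): 0.5,
    ("Server", "DataCenter"): 0.5,
    ("App", "Owner"): 1.0,
}
score = completeness(model, step_ratios, "App")  # (0.25 + 1.0) / 2 = 0.625
```

A weighted aggregate or a minimum over paths would fit the same structure; the mean is used here only to keep the example short.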

Owner:TROUX TECHNOLOGIES

Systems and methods for authenticating and protecting the integrity of data streams and other data

InactiveUS20050235154A1Authentication is convenientDetecting errorUser identity/authority verificationDigital data protectionError checkFault tolerance

Systems and methods are disclosed for enabling a recipient of a cryptographically-signed electronic communication to verify the authenticity of the communication on-the-fly using a signed chain of check values, the chain being constructed from the original content of the communication, and each check value in the chain being at least partially dependent on the signed root of the chain and a portion of the communication. Fault tolerance can be provided by including error-check values in the communication that enable a decoding device to maintain the chain's security in the face of communication errors. In one embodiment, systems and methods are provided for enabling secure quasi-random access to a content file by constructing a hierarchy of hash values from the file, the hierarchy deriving its security in a manner similar to that used by the above-described chain. The hierarchy culminates with a signed hash that can be used to verify the integrity of other hash values in the hierarchy, and these other hash values can, in turn, be used to efficiently verify the authenticity of arbitrary portions of the content file.
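The hierarchy of hash values resembles a Merkle tree, which the sketch below uses to verify an arbitrary block of a file against a single root. This is a generic textbook construction, not necessarily the one claimed: the function names are hypothetical, an odd node is paired with itself, and signing of the root is left out (in practice the root would be signed and its signature verified before use).

```python
import hashlib
from typing import List, Tuple


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_tree(blocks: List[bytes]) -> List[List[bytes]]:
    """Return the tree as a list of levels: leaf hashes first, root level last."""
    level = [h(block) for block in blocks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]  # pair an odd node with itself
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def proof_for(levels: List[List[bytes]], index: int) -> List[Tuple[bytes, bool]]:
    """Collect (sibling hash, node_is_left) pairs from the leaf up to the root."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        if sibling >= len(level):
            sibling = index  # odd node was paired with itself
        proof.append((level[sibling], index % 2 == 0))
        index //= 2
    return proof


def verify_block(block: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path to the root using only the block and its proof."""
    node = h(block)
    for sibling, node_is_left in proof:
        node = h(node + sibling) if node_is_left else h(sibling + node)
    return node == root
```

Verifying one block needs only a logarithmic number of sibling hashes, which is what makes quasi-random access to a large content file practical.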

Owner:INTERTRUST TECH CORP

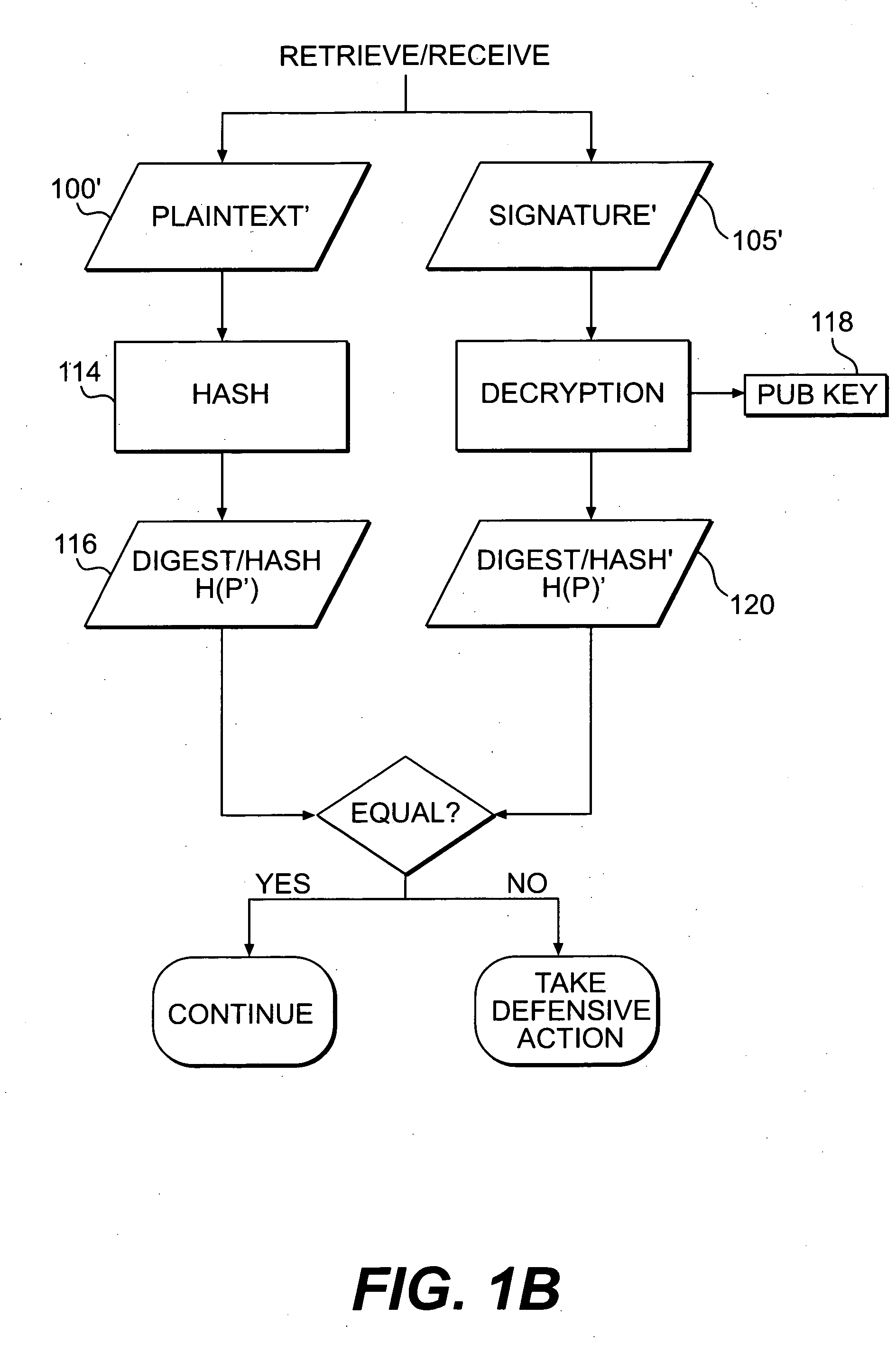

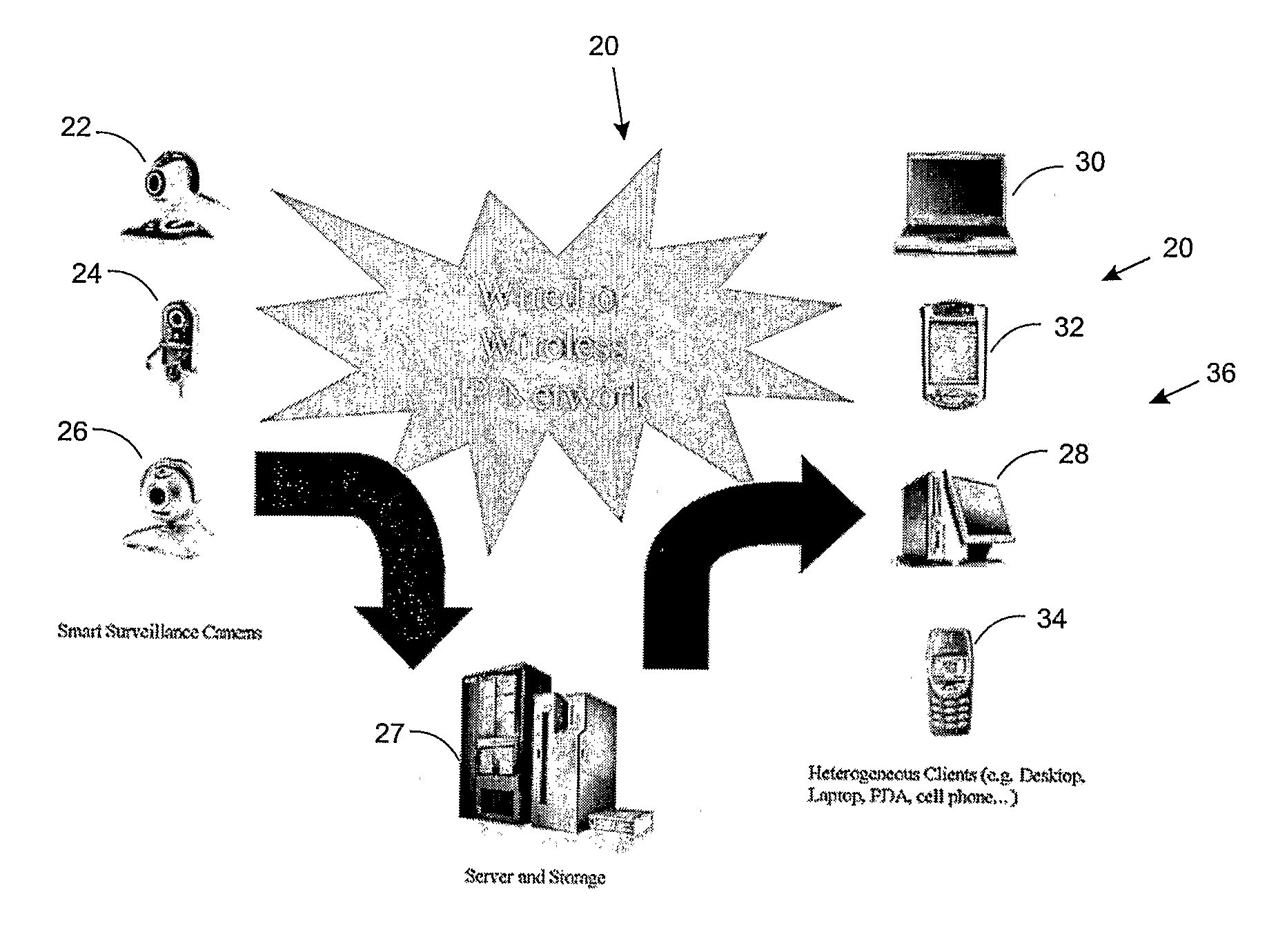

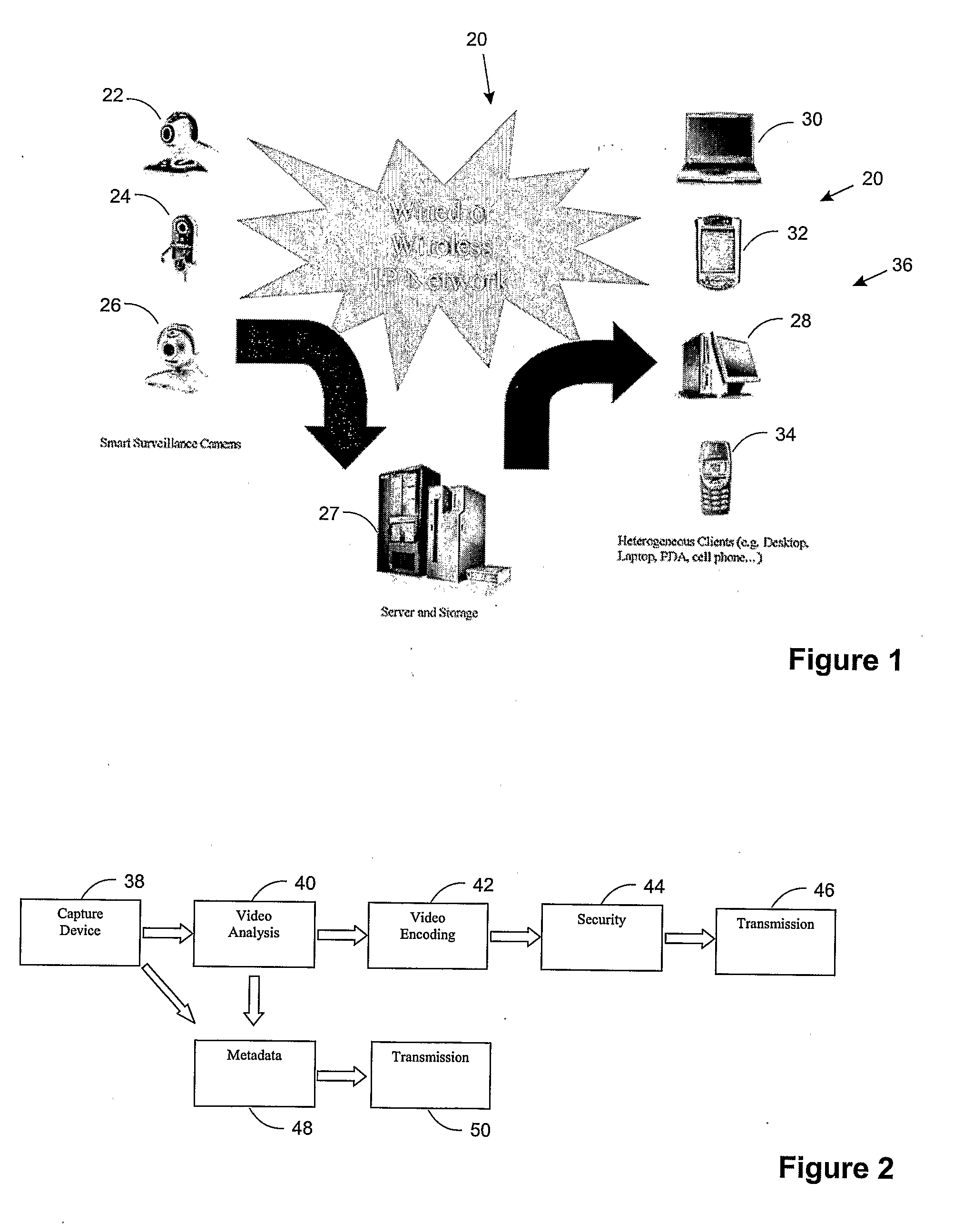

Smart Video Surveillance System Ensuring Privacy

InactiveUS20070296817A1Closed circuit television systemsBurglar alarmComputer hardwareVideo monitoring

This invention describes a video surveillance system composed of three key components: (1) smart camera(s), (2) server(s), and (3) client(s), connected through IP networks in wired or wireless configurations. The system has been designed to protect the privacy of people and goods under surveillance. Smart cameras are based on JPEG 2000 compression, where an analysis module allows for efficient use of security tools for scrambling and event detection. The analysis is also used to provide better quality in regions of interest in the scene. Compressed video streams leaving the camera(s) are scrambled and signed for the purpose of privacy and data integrity verification using JPSEC-compliant methods. The same bit stream is also protected using JPWL-compliant methods for robustness to transmission errors. The operations of the smart camera are optimized to provide the best compromise between the perceived visual quality of the decoded video and the amount of power consumed. The smart camera(s) can be wireless in both power and communication connections. The server(s) receive(s), store(s), manage(s) and dispatch(es) the video sequences on wired and wireless channels to a variety of clients and users with different device capabilities, channel characteristics and preferences. Use of seamless scalable coding of video sequences avoids any need for transcoding operations at any point in the system.

Owner:EMITALL SURVEILLANCE

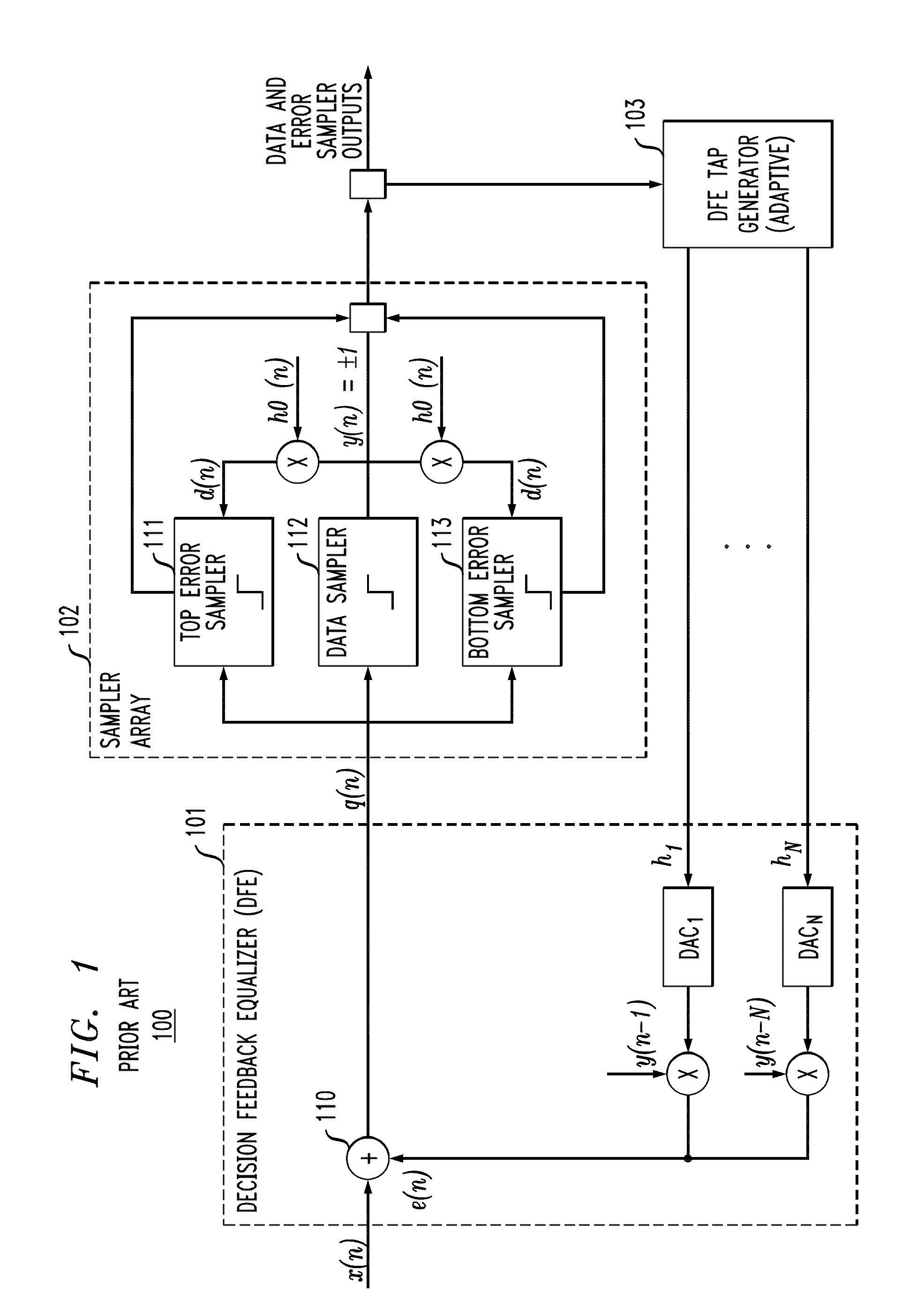

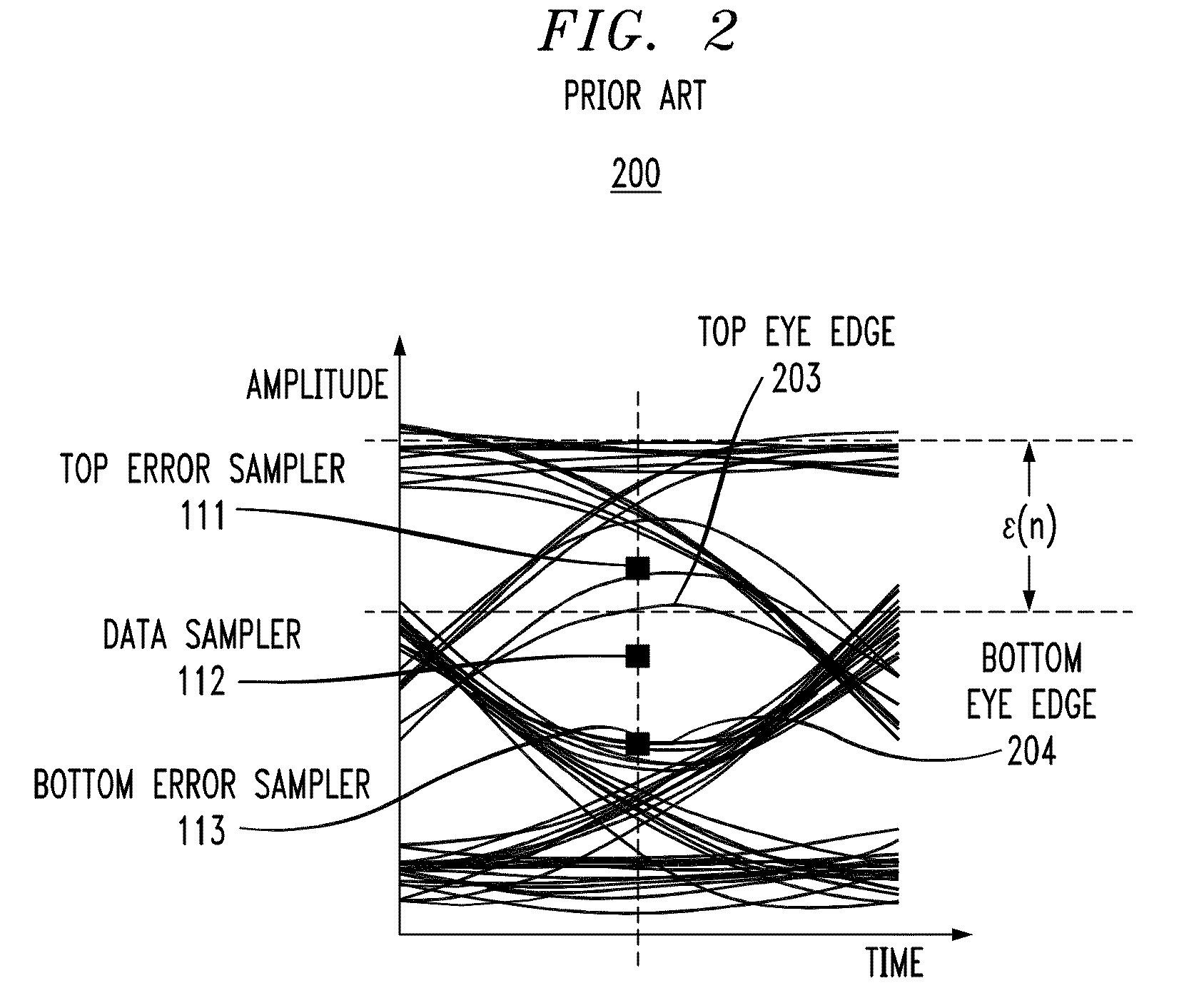

Statistically-Adapted Receiver and Transmitter Equalization

InactiveUS20100329325A1High error rateReduce error rateMultiple-port networksDelay line applicationsTraining periodData integrity

In described embodiments, adaptive equalization of a signal in, for example, Serializer/De-serializer transceivers is performed by a) monitoring a data eye in a data path with an eye detector for signal amplitude and/or transition; b) setting the equalizer response of at least one equalizer in the signal path while the signal is present for statistical calibration of the data eye; and c) monitoring the data eye and setting the equalizer both during periods in which received data is allowed to contain errors (such as link initiation and training periods) and during periods in which received data integrity is to be maintained (such as normal data communication).

Owner:LSI CORPORATION

© 2025 PatSnap. All rights reserved.