1081 results about "Page table" patented technology

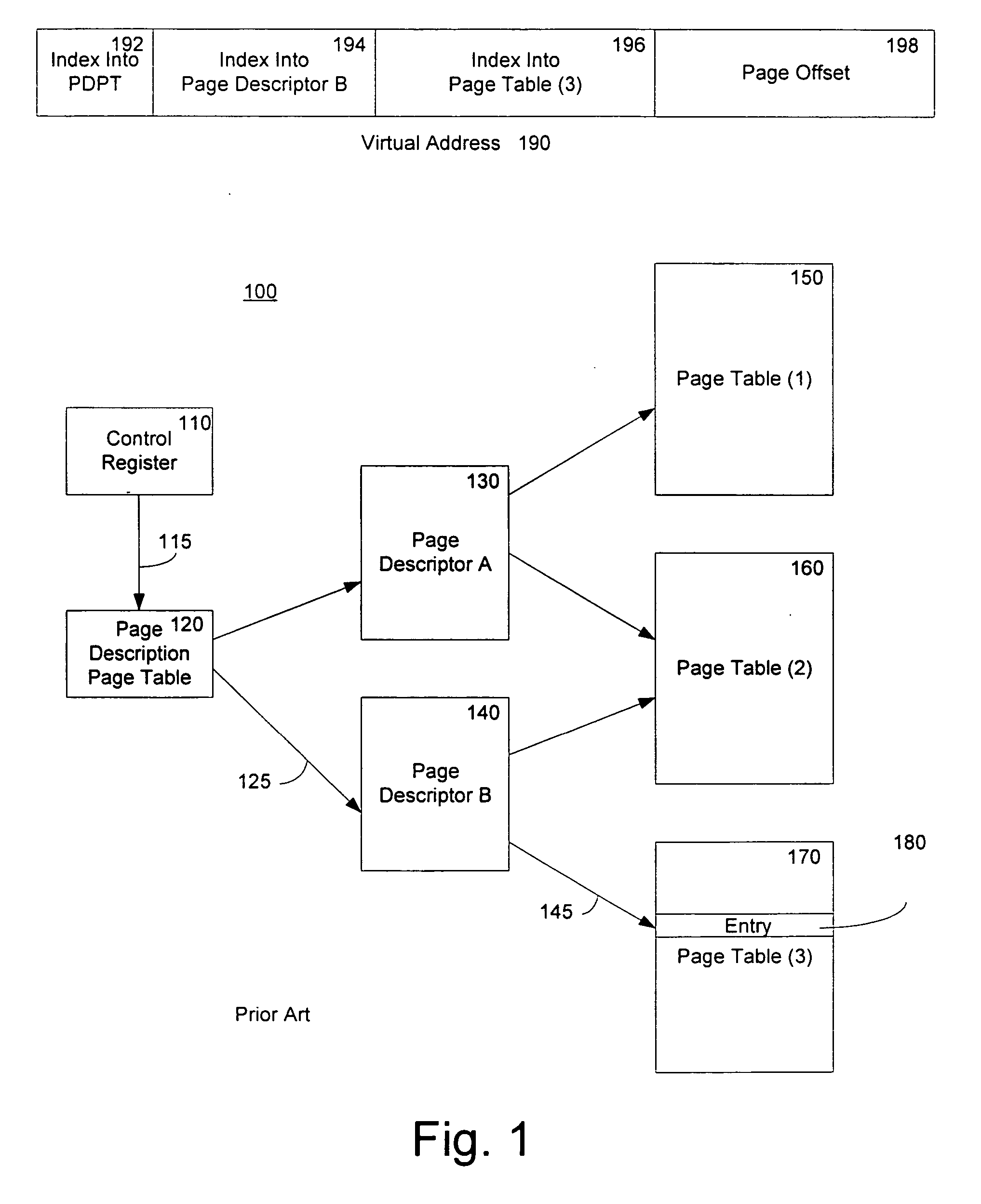

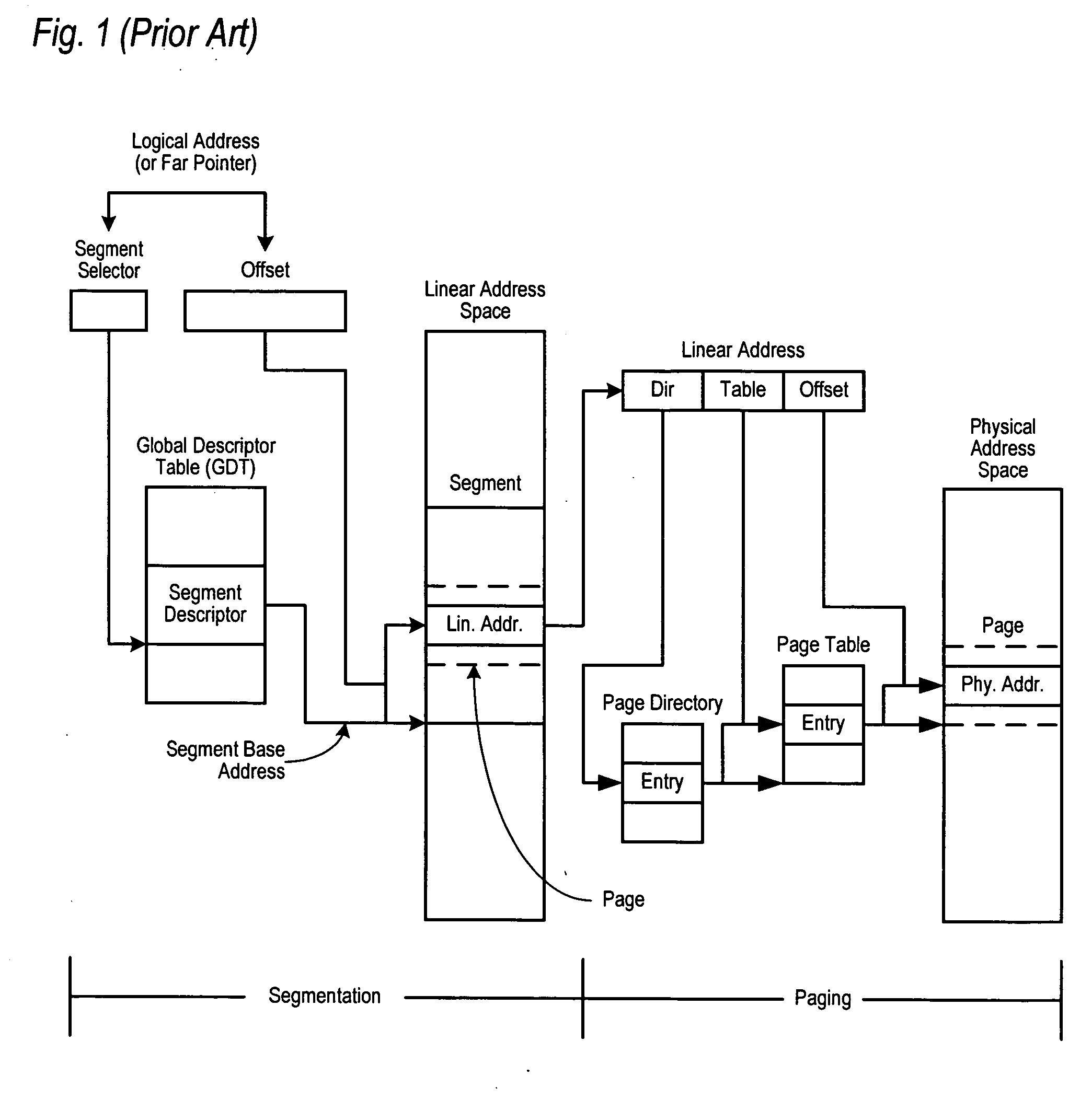

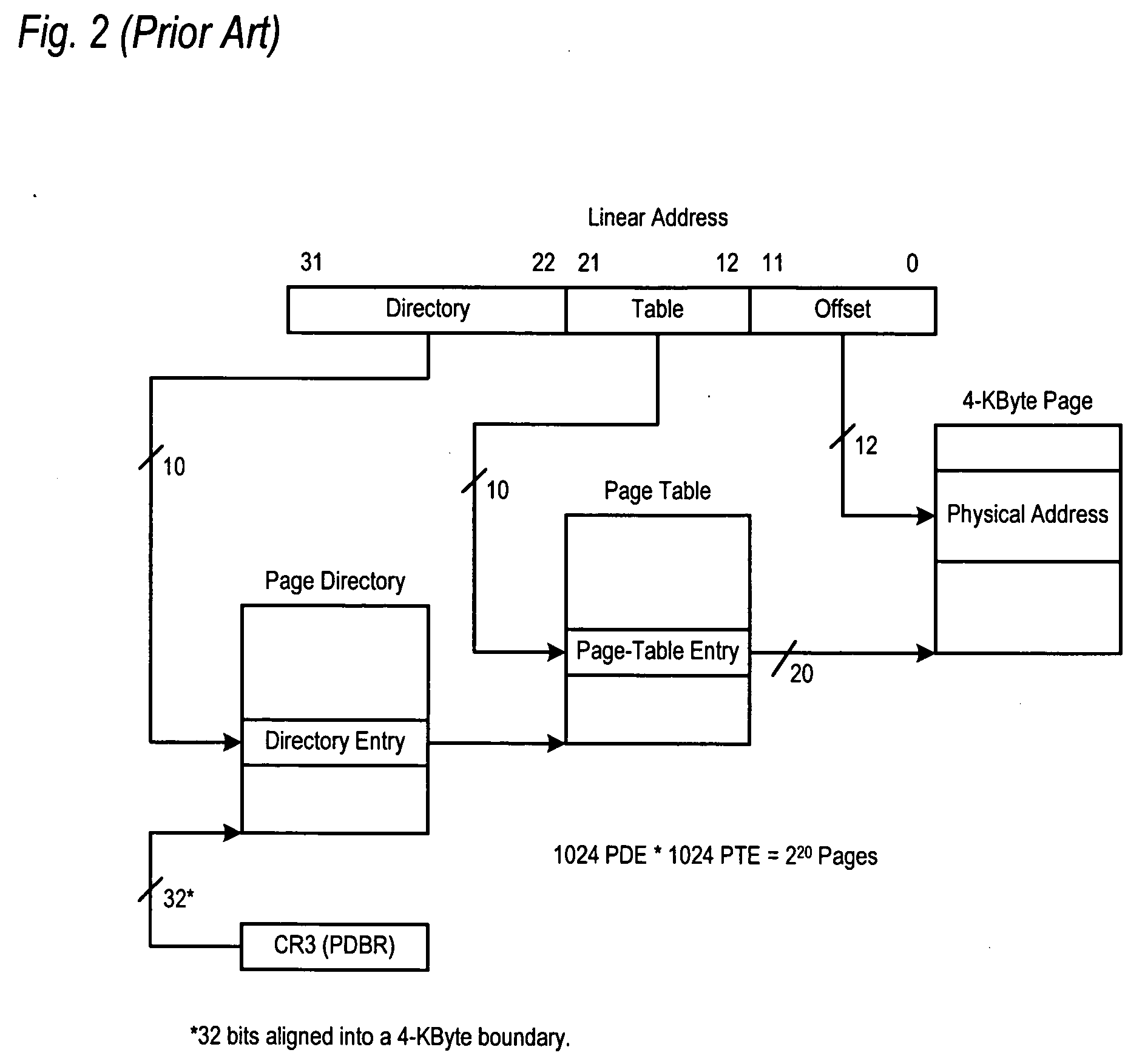

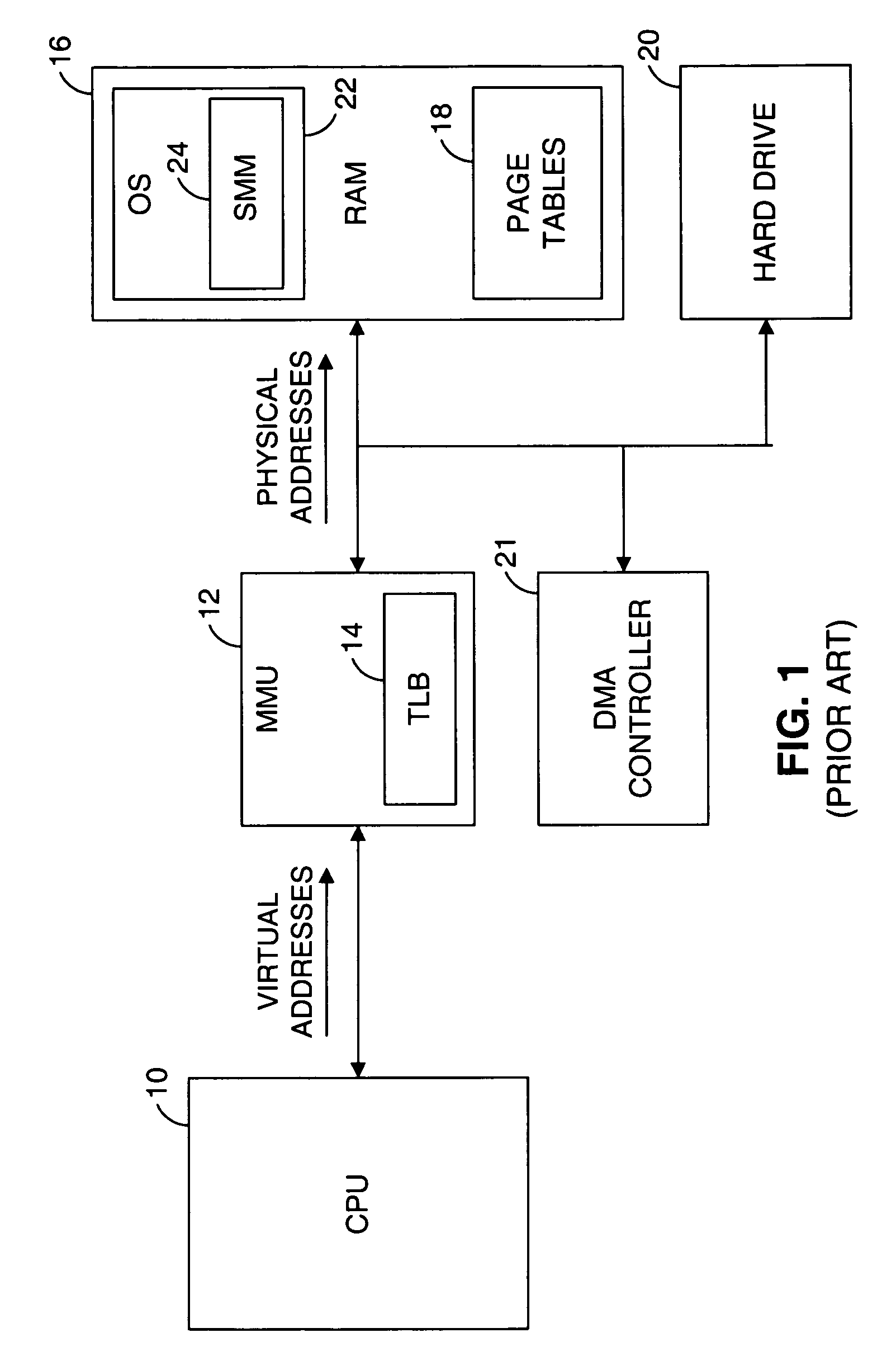

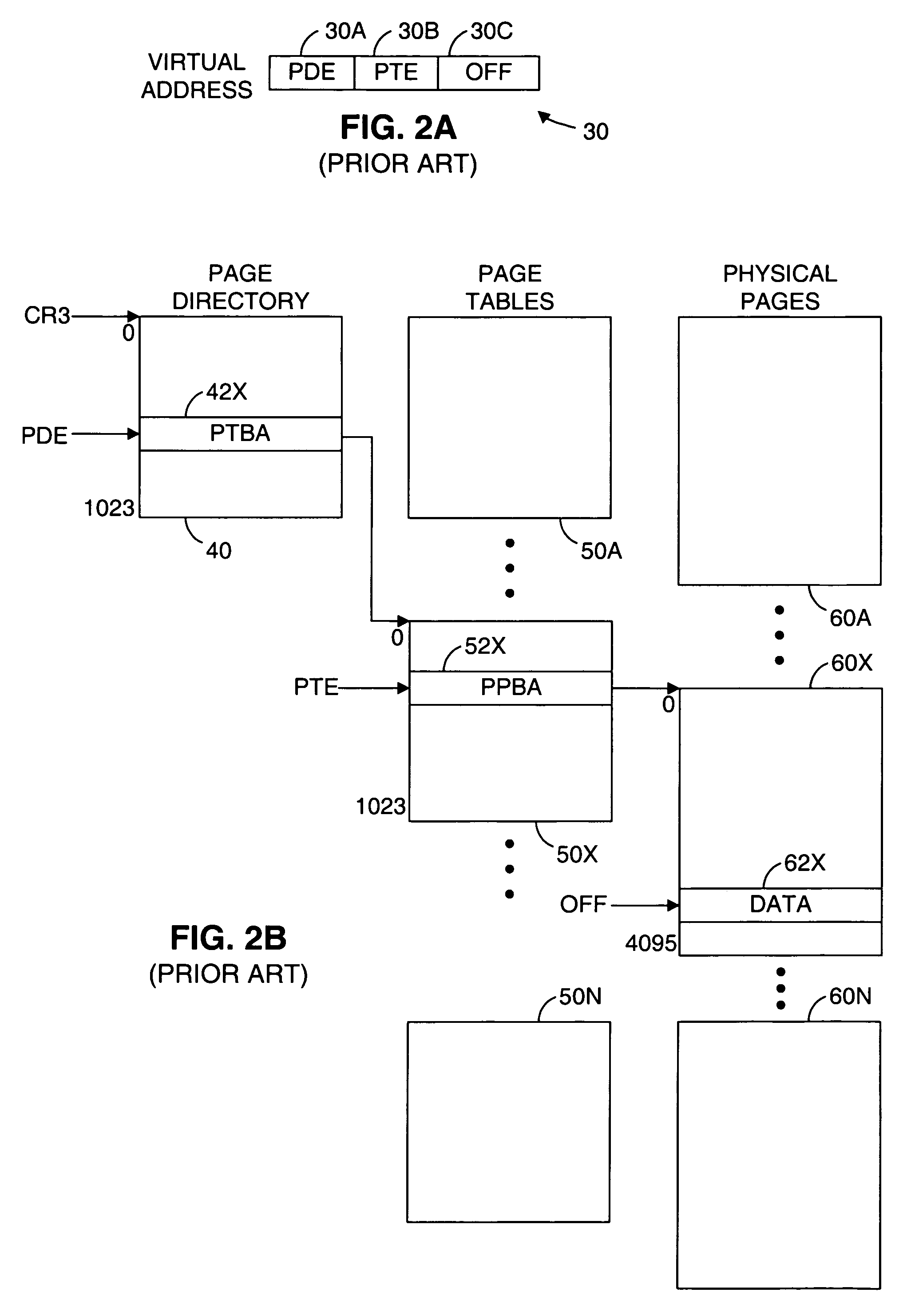

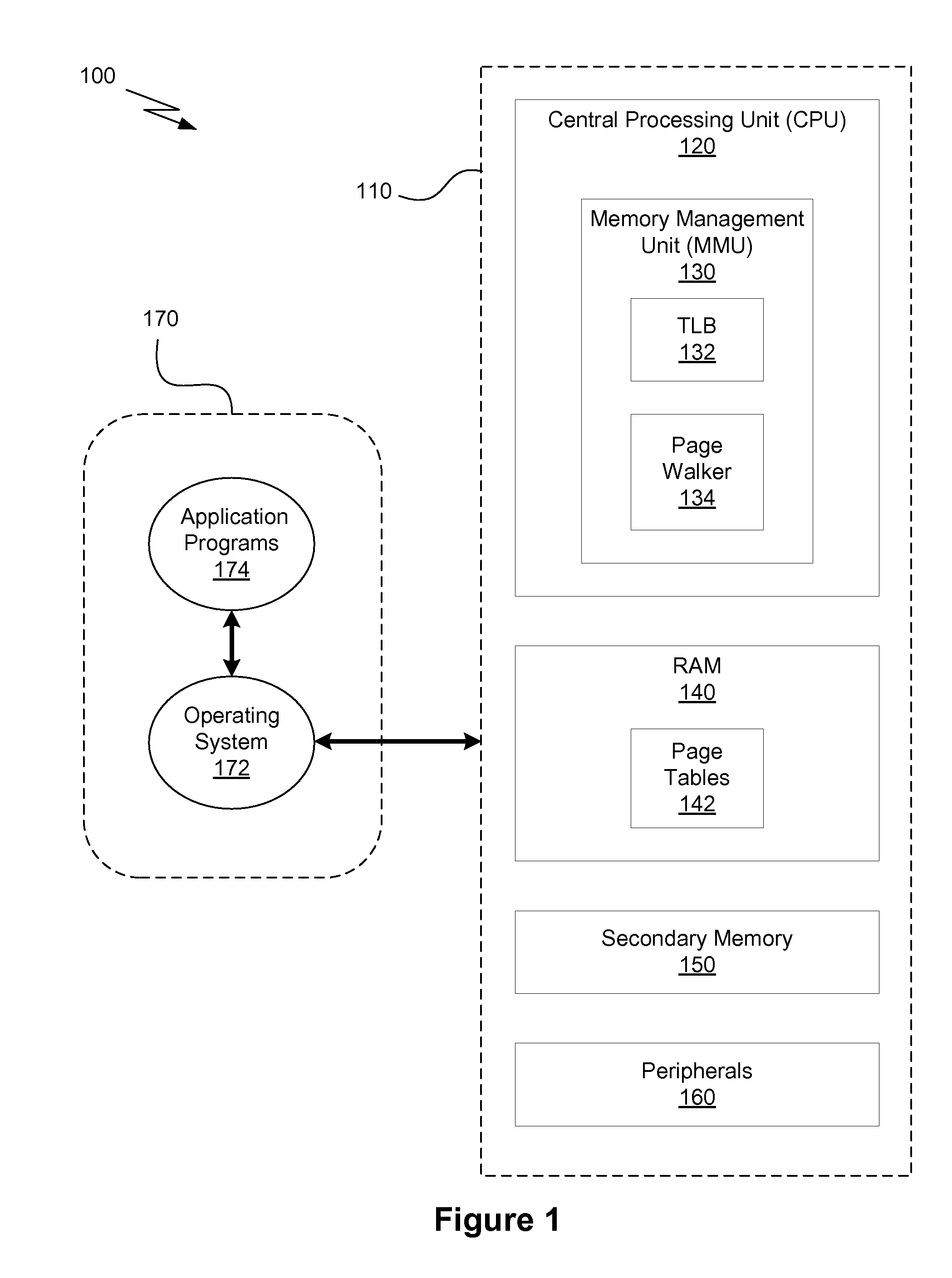

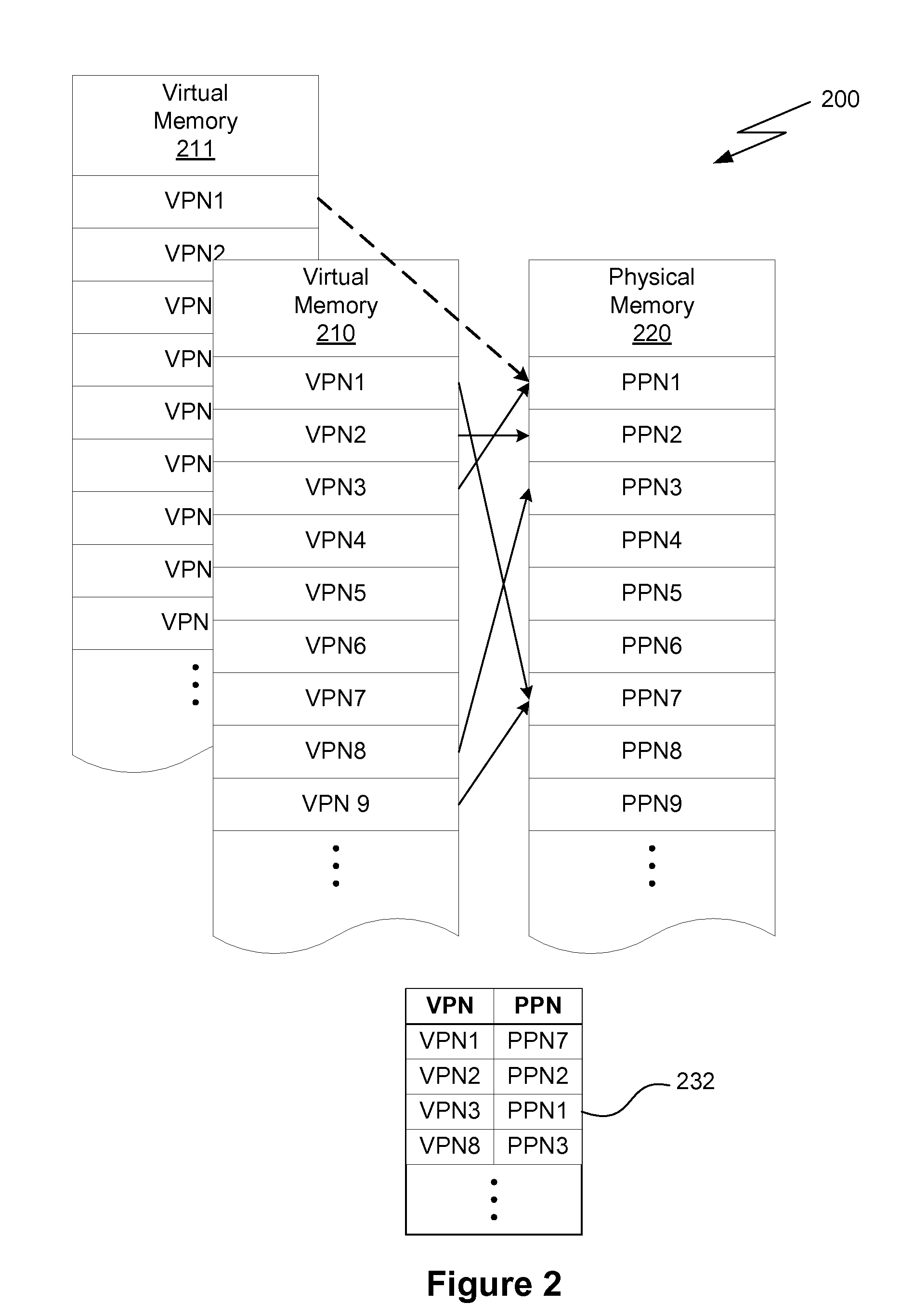

A page table is the data structure used by a virtual memory system in a computer operating system to store the mapping between virtual addresses and physical addresses. Virtual addresses are used by the program executed by the accessing process, while physical addresses are used by the hardware, or more specifically, by the RAM subsystem. The page table is a key component of virtual address translation, which is necessary to access data in memory.
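As a rough illustration of the mapping described above, the sketch below models a single-level page table as a dictionary. The page size, the `translate` function, and the sample mappings are assumptions made for this example; real systems typically use multi-level tables walked by hardware.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def translate(page_table, vaddr):
    """Map a virtual address to a physical address via a single-level table."""
    # Split the address into a virtual page number and an offset in the page.
    vpn, offset = divmod(vaddr, PAGE_SIZE)
    if vpn not in page_table:
        raise KeyError(f"page fault: virtual page {vpn} is not mapped")
    # The table stores the physical frame number; the offset is unchanged.
    return page_table[vpn] * PAGE_SIZE + offset


# Hypothetical mappings: virtual page number -> physical frame number.
page_table = {0: 7, 1: 3}
paddr = translate(page_table, 4100)  # virtual page 1, offset 4 -> frame 3
```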

Caching using virtual memory

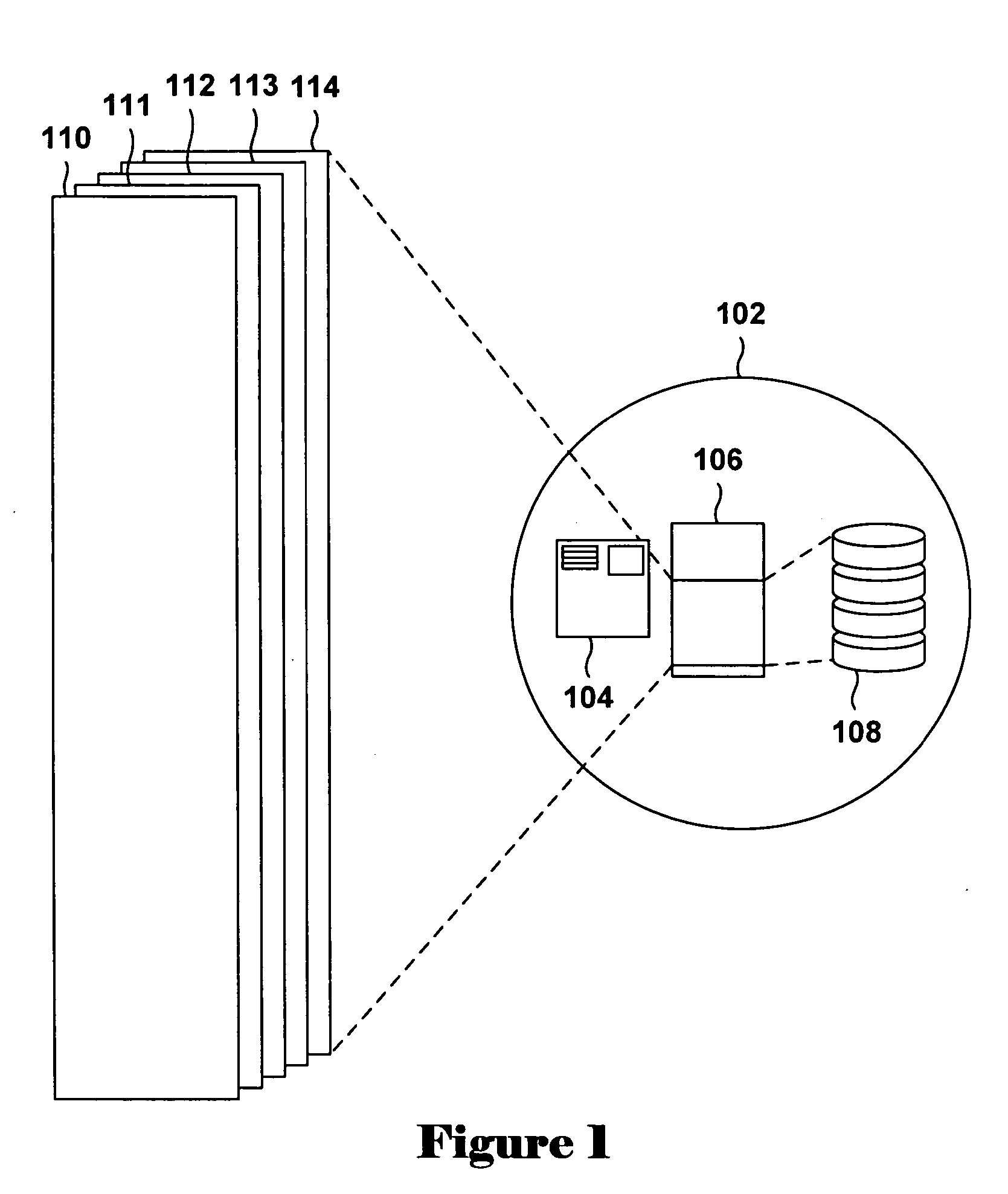

Inactive · US20120017039A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Virtual memory · Page table

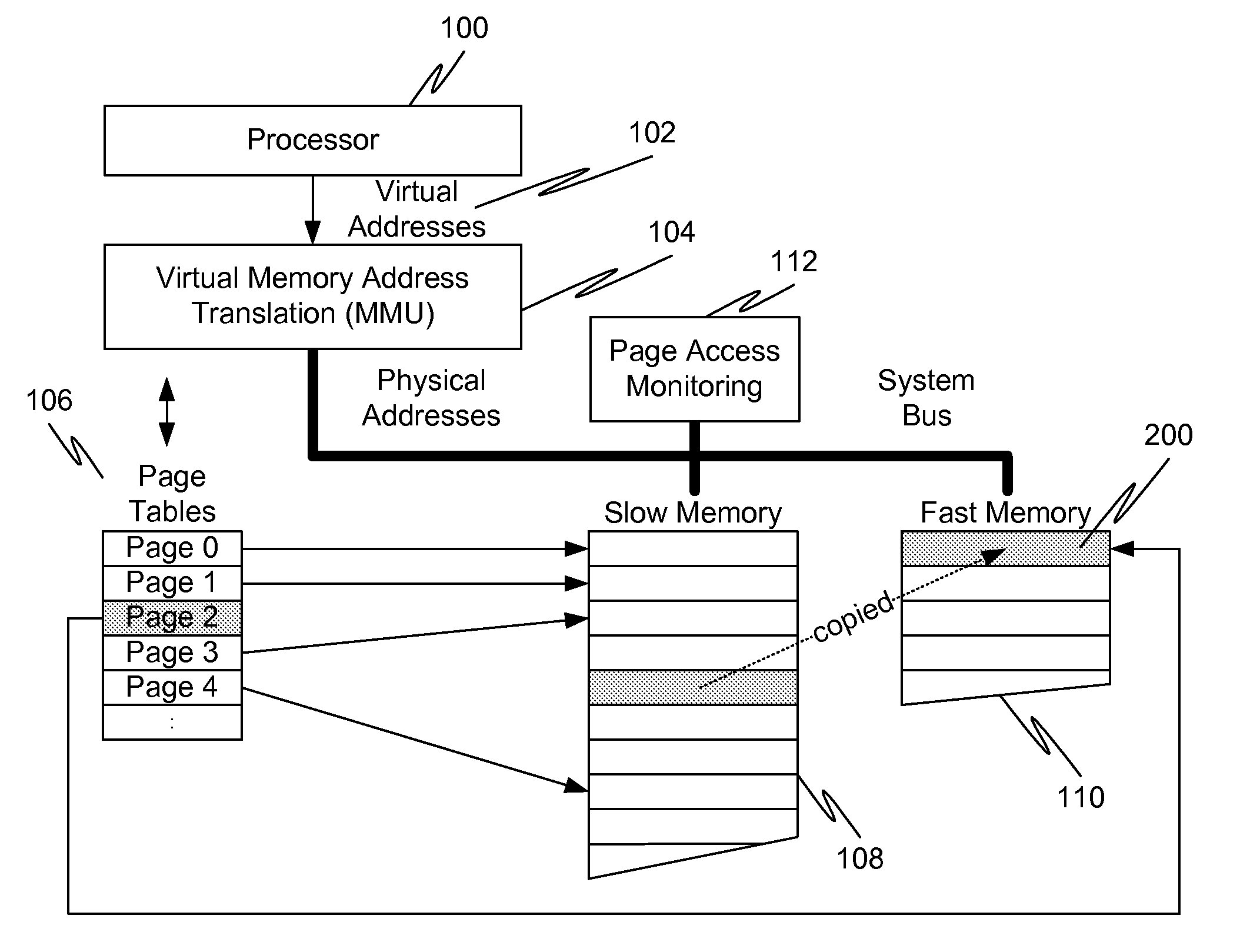

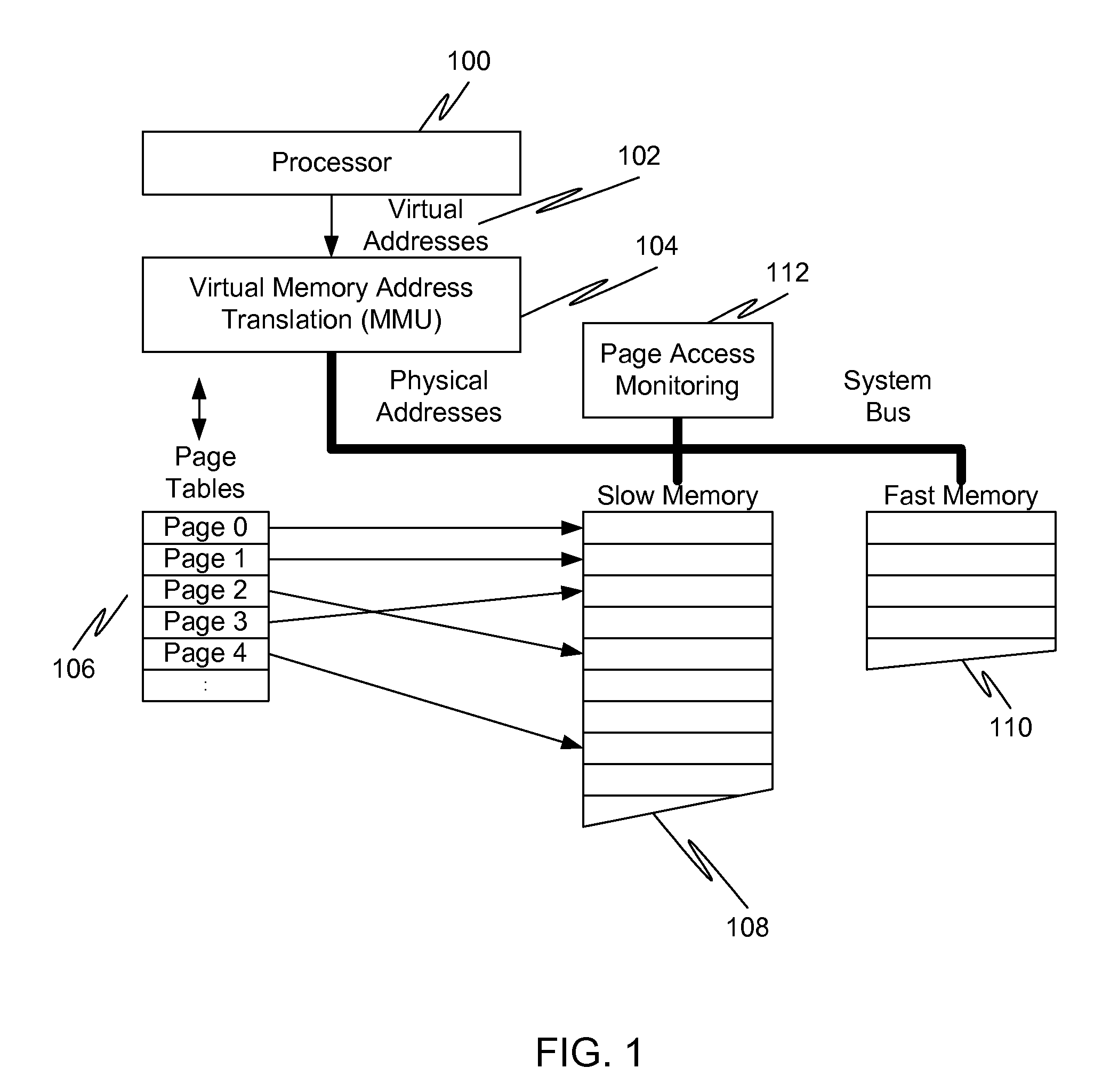

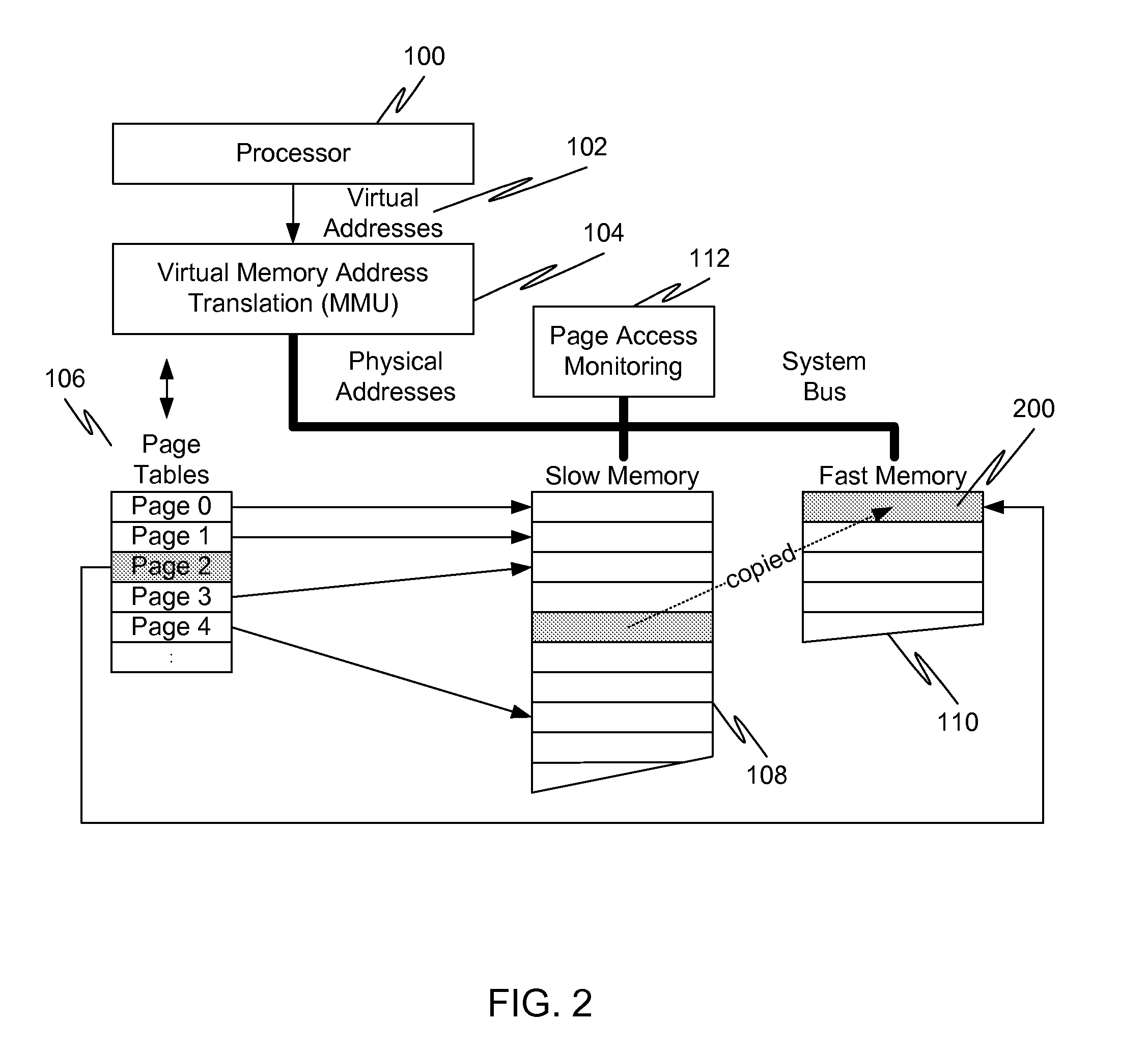

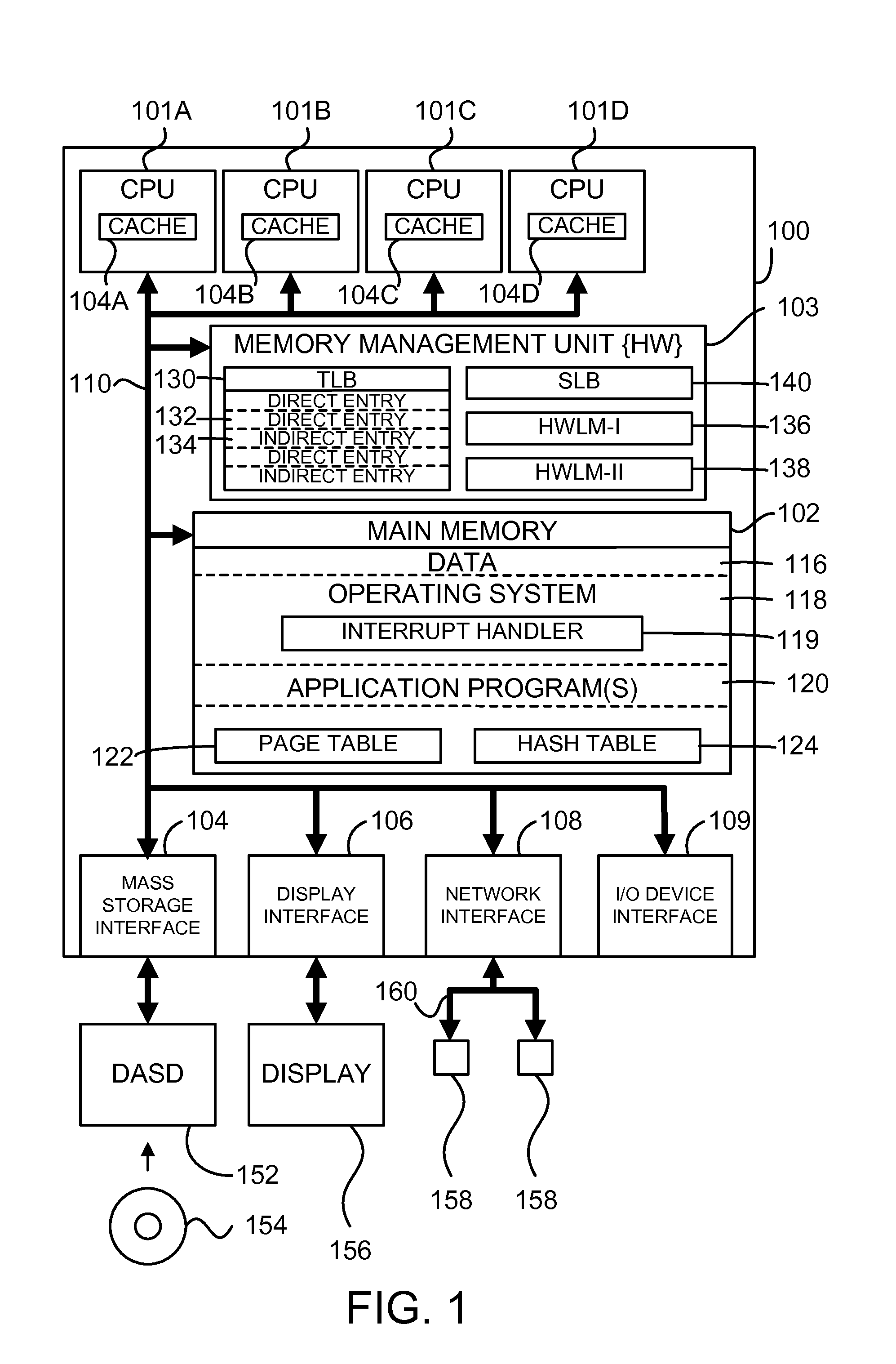

In a first embodiment of the present invention, a method for caching in a processor system having virtual memory is provided, the method comprising: monitoring slow memory in the processor system to determine frequently accessed pages; and, for a frequently accessed page in slow memory, copying the page from slow memory to a location in fast memory and updating the virtual address page tables to reflect the location of the page in fast memory.
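The monitor, copy, and update steps in this abstract can be pictured with a small simulation. This is only a sketch under stated assumptions: the `TieredMemory` class, the access-count threshold, and the dictionary-based memories are hypothetical and are not taken from the patent.

```python
from collections import Counter

HOT_THRESHOLD = 3  # assumed number of slow-memory accesses that marks a page as hot


class TieredMemory:
    """Toy model: pages start in slow memory and are promoted when hot."""

    def __init__(self):
        self.page_table = {}   # virtual page -> (tier, frame)
        self.slow = {}         # slow-memory frame -> data
        self.fast = {}         # fast-memory frame -> data
        self.hits = Counter()  # per-page access counts for slow memory
        self.next_fast_frame = 0

    def map_slow(self, vpn, frame, data):
        self.slow[frame] = data
        self.page_table[vpn] = ("slow", frame)

    def read(self, vpn):
        tier, frame = self.page_table[vpn]
        if tier == "fast":
            return self.fast[frame]
        # Monitor slow memory to find frequently accessed pages.
        self.hits[vpn] += 1
        data = self.slow[frame]
        if self.hits[vpn] >= HOT_THRESHOLD:
            self._promote(vpn, frame)
        return data

    def _promote(self, vpn, slow_frame):
        # Copy the page to fast memory, then update the page table entry.
        fast_frame = self.next_fast_frame
        self.next_fast_frame += 1
        self.fast[fast_frame] = self.slow[slow_frame]
        self.page_table[vpn] = ("fast", fast_frame)
```

After the third read of a page in this model, later reads are served from the fast copy because the page table entry now points there.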

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Memory addressing controlled by PTE fields

Active · US7805587B1 · Reduce access · Memory addressing/allocation/relocation · Computer security arrangements · Memory address · DRAM memory

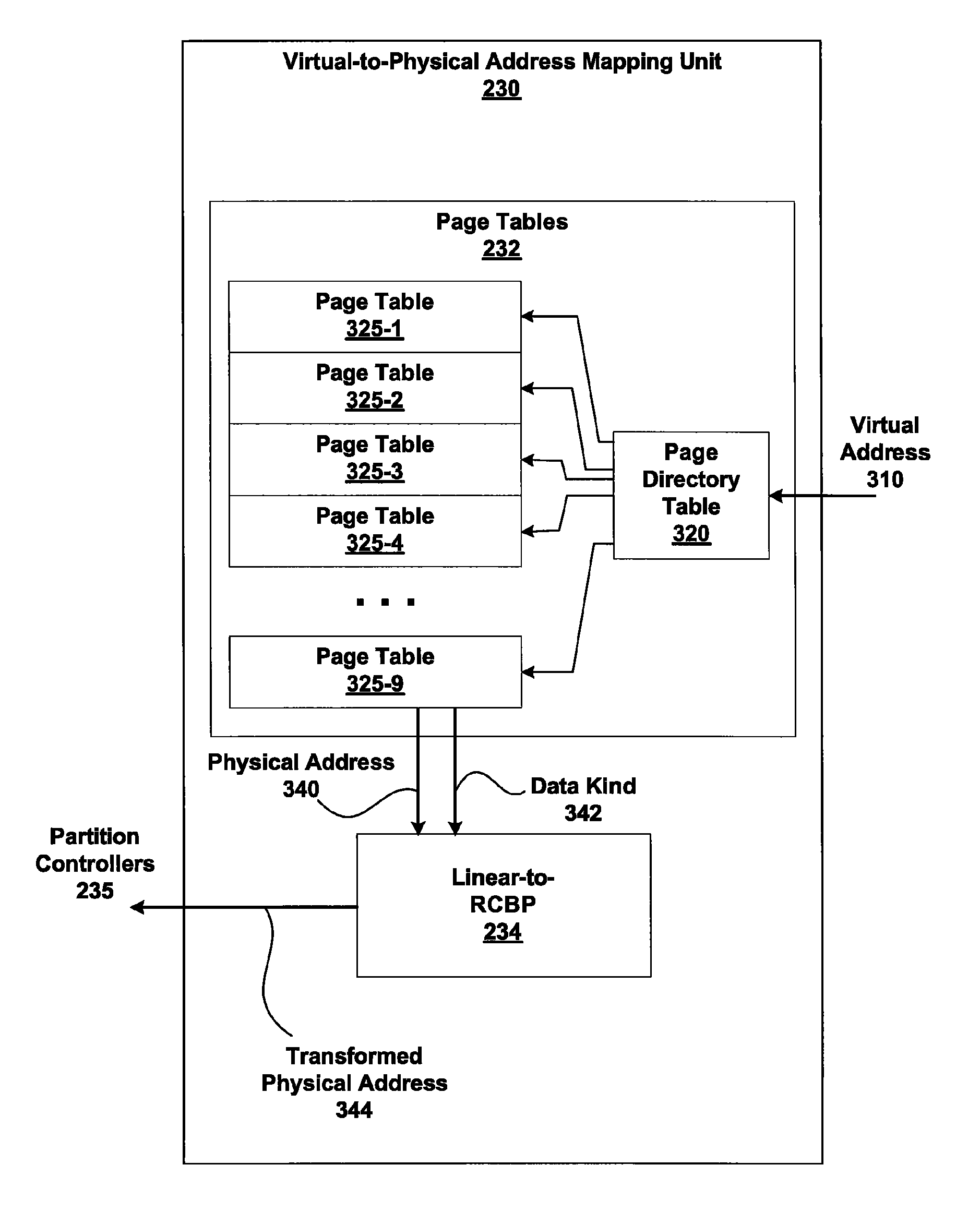

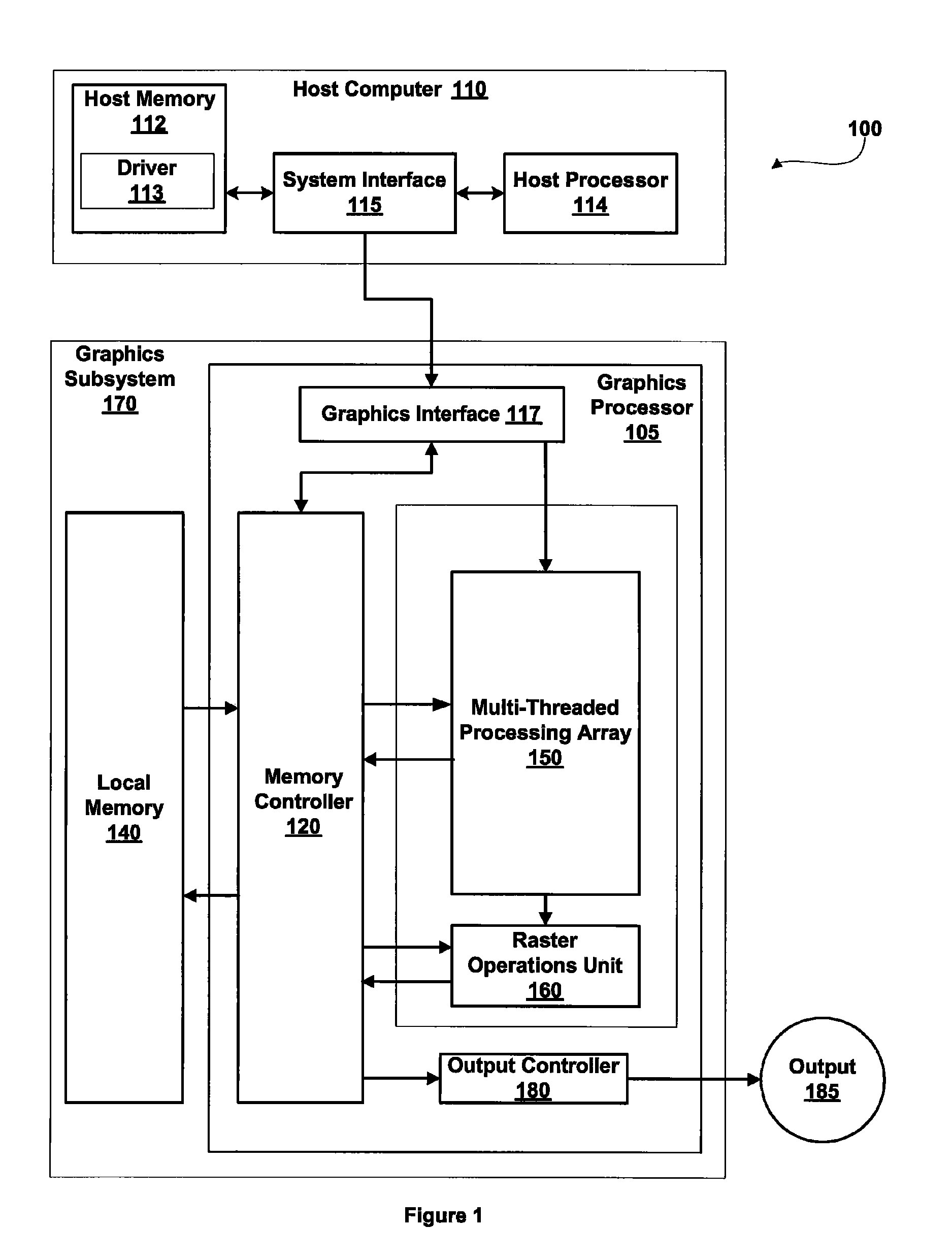

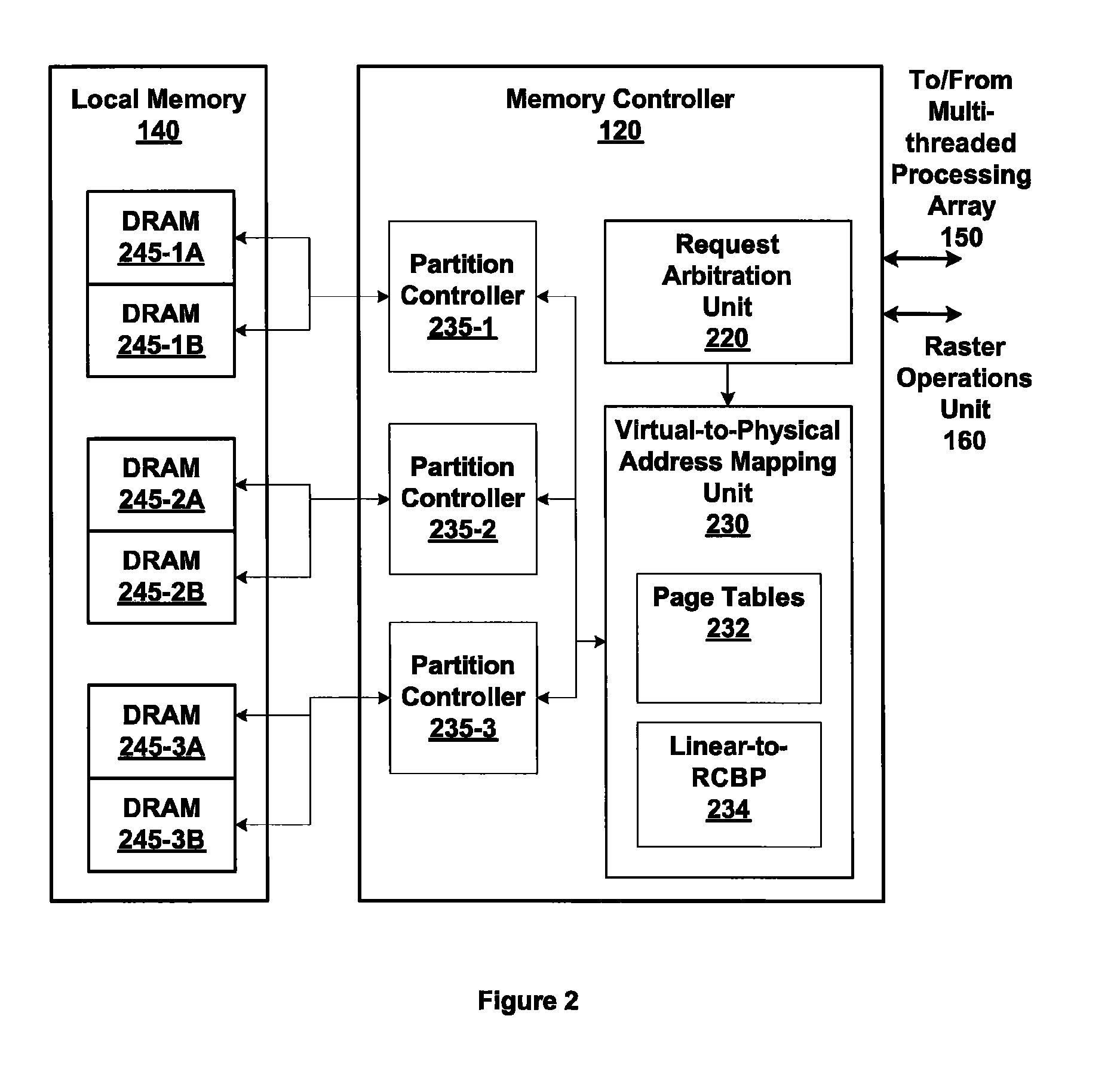

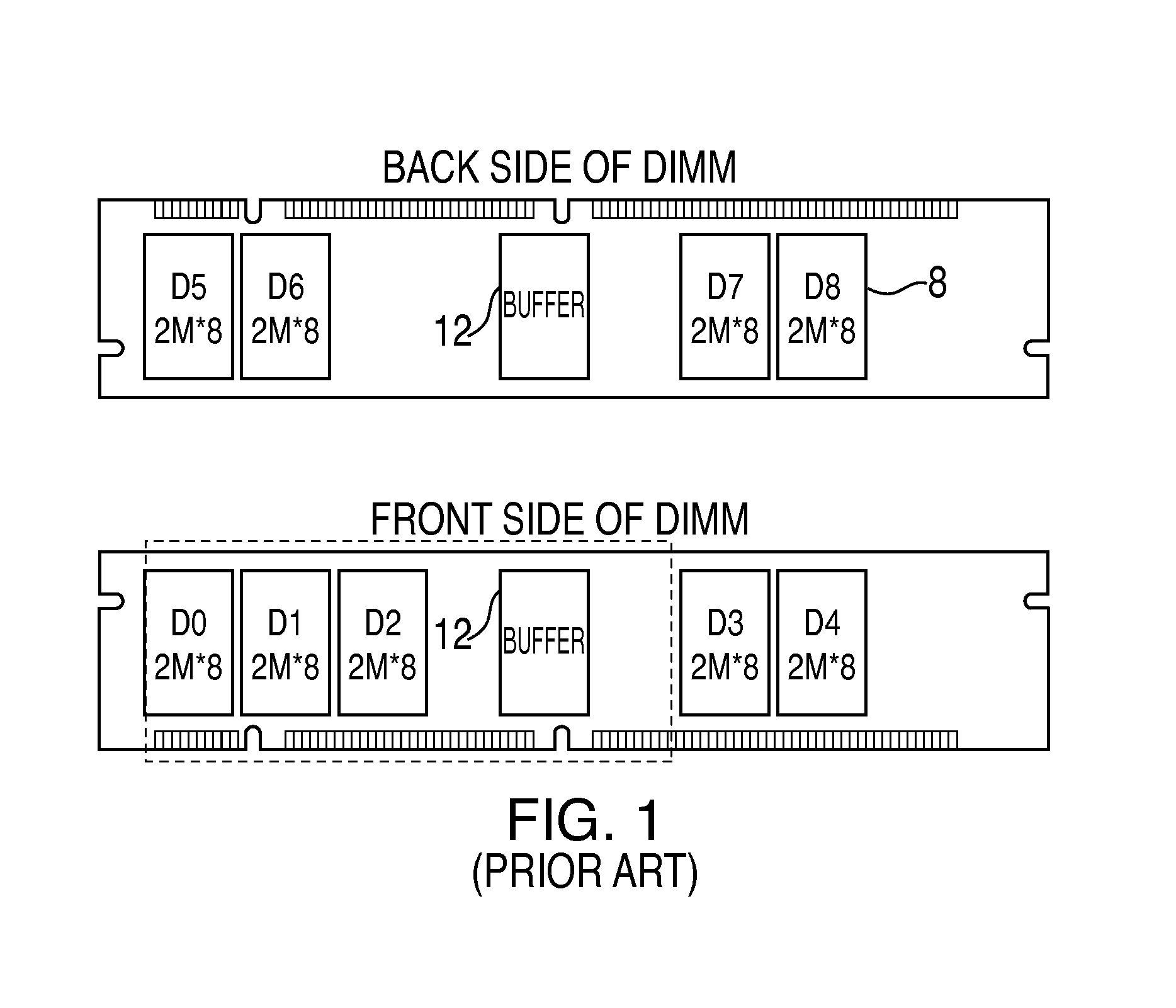

Embodiments of the present invention enable virtual-to-physical memory address translation using optimized bank and partition interleave patterns to improve memory bandwidth by distributing data accesses over multiple banks and multiple partitions. Each virtual page has a corresponding page table entry that specifies the physical address of the virtual page in linear physical address space. The page table entry also includes a data kind field that is used to guide and optimize the mapping process from the linear physical address space to the DRAM physical address space, which is used to directly access one or more DRAM. The DRAM physical address space includes a row, bank and column address. The data kind field is also used to optimize the starting partition number and partition interleave pattern that defines the organization of the selected physical page of memory within the DRAM memory system.

Owner:NVIDIA CORP

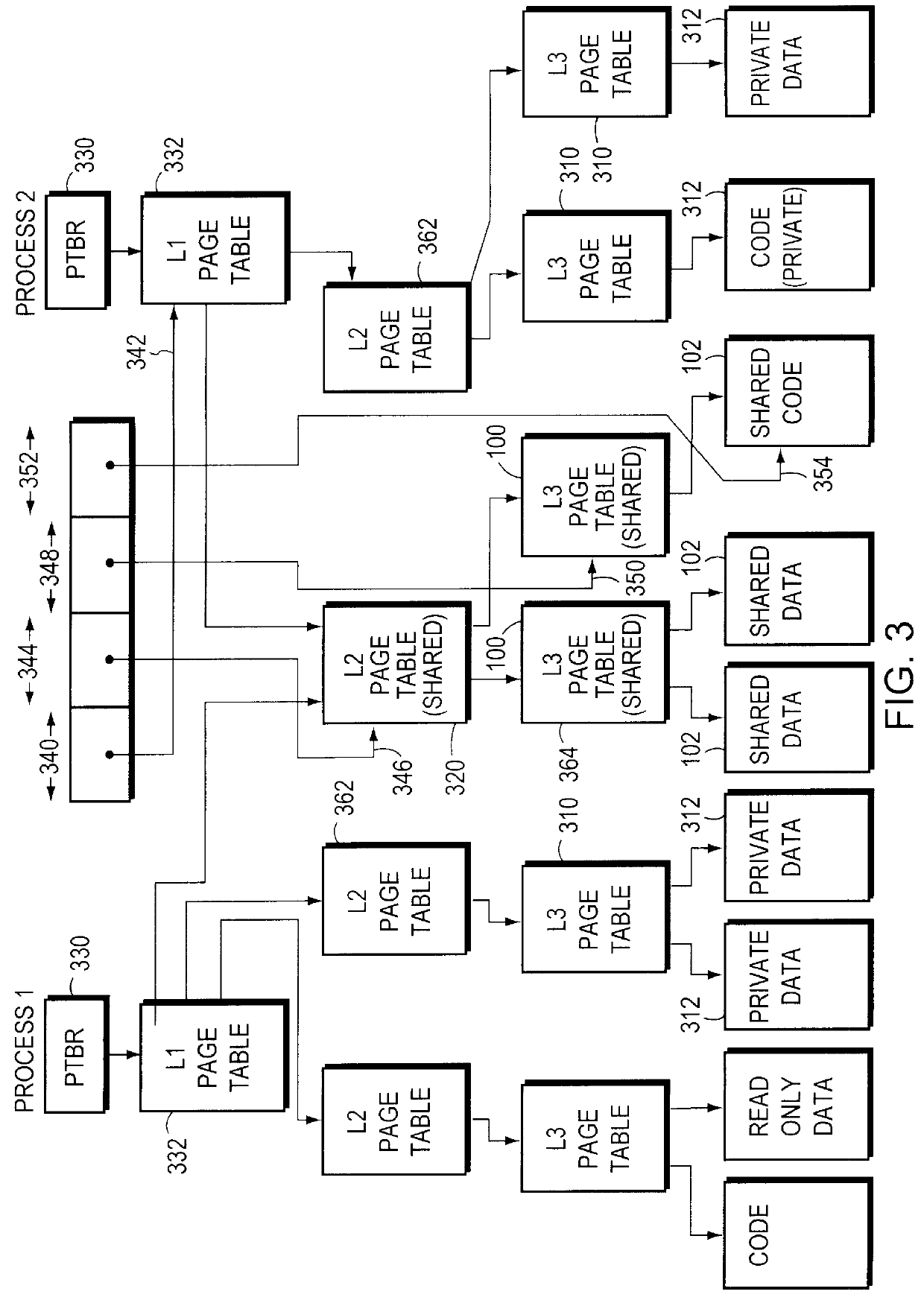

Sharing memory pages and page tables among computer processes

Inactive · US6085296A · Optimization · Reduce fragmentation · Memory addressing/allocation/relocation · Micro-instruction address formation · Page table · Computer memory

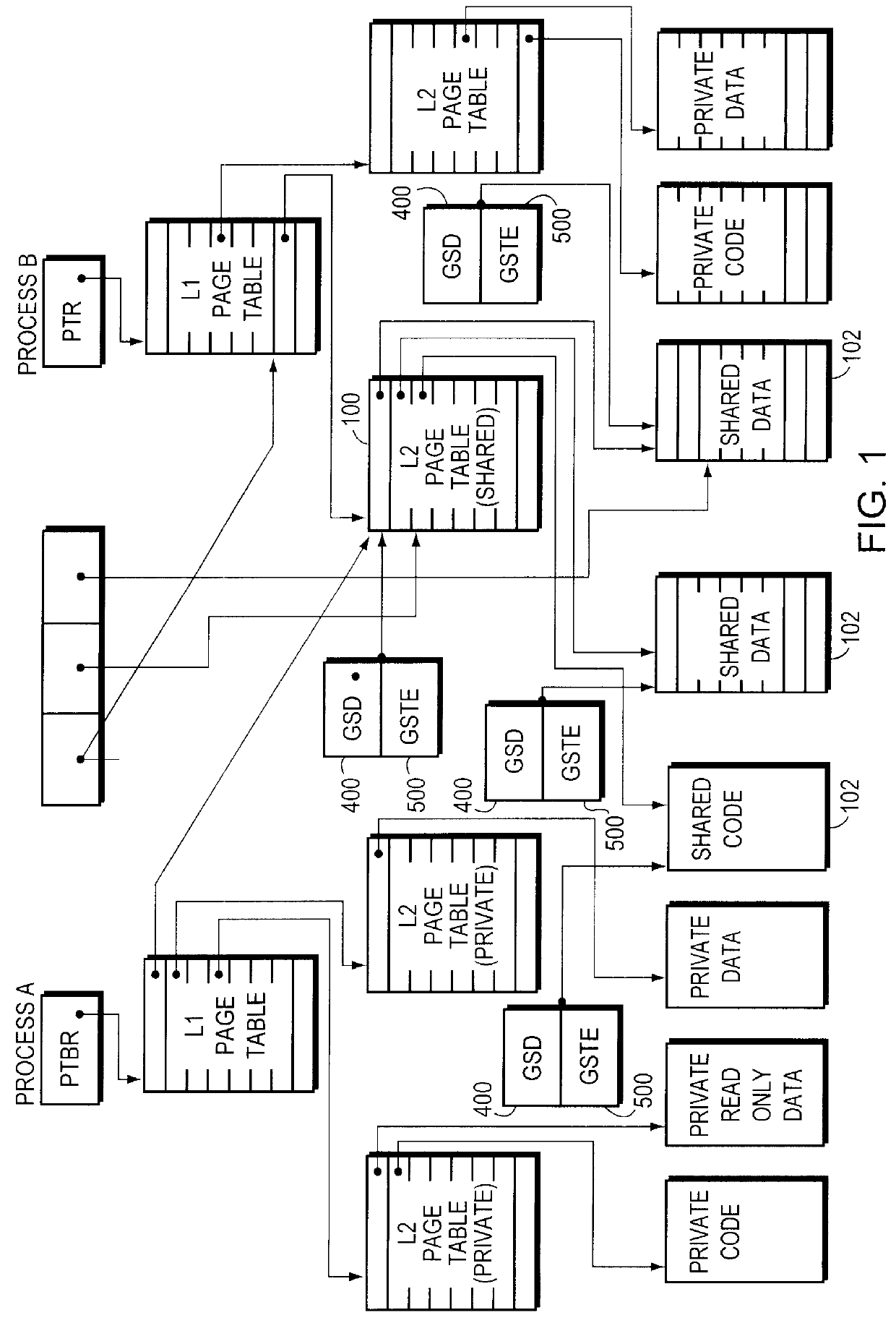

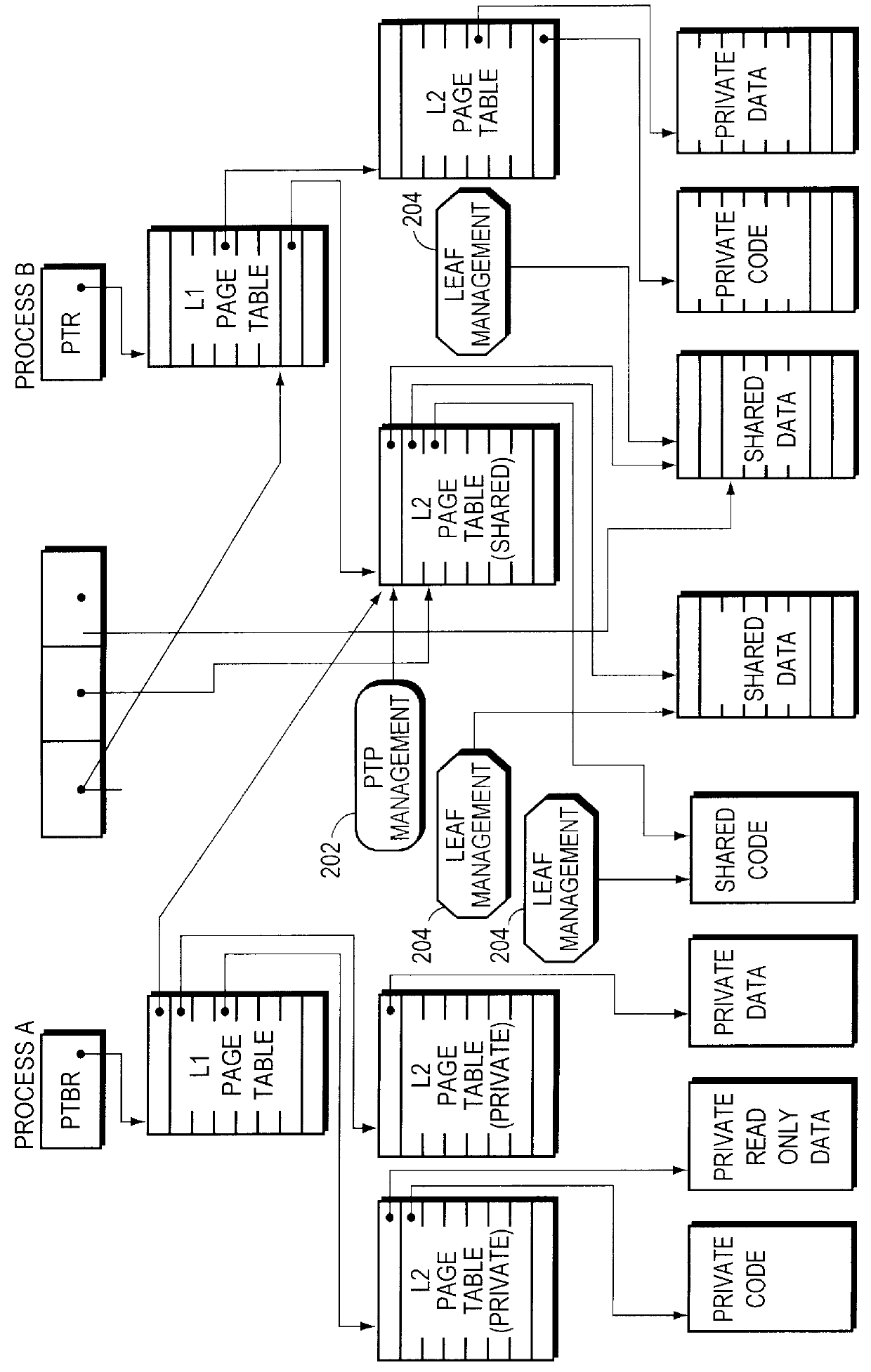

A method of managing computer memory pages. The sharing of a program-accessible page between two processes is managed by a predefined mechanism of a memory manager. The sharing of a page table page between the processes is managed by the same predefined mechanism. The data structures used by the mechanism are equally applicable to sharing program-accessible pages or page table pages.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1

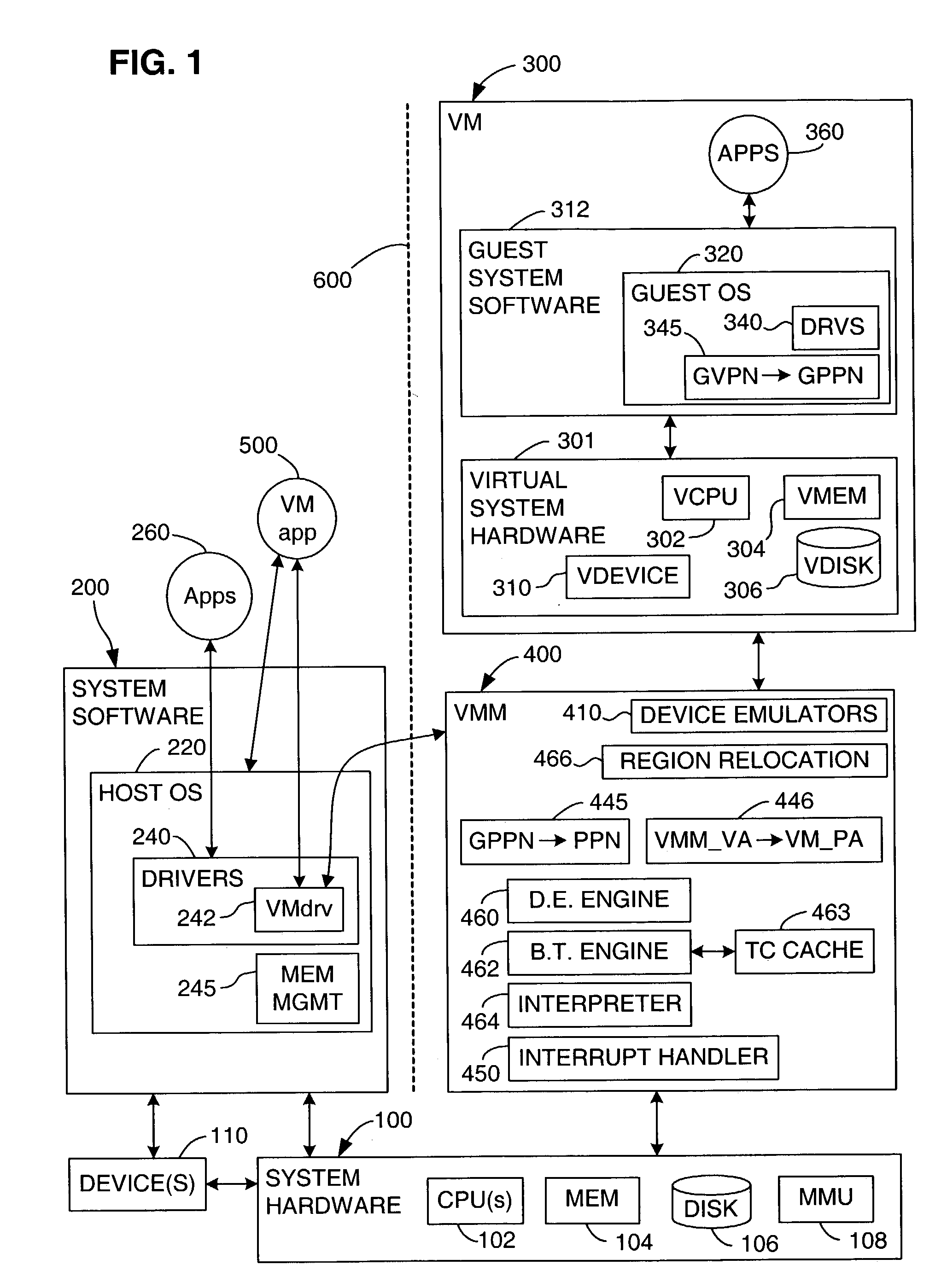

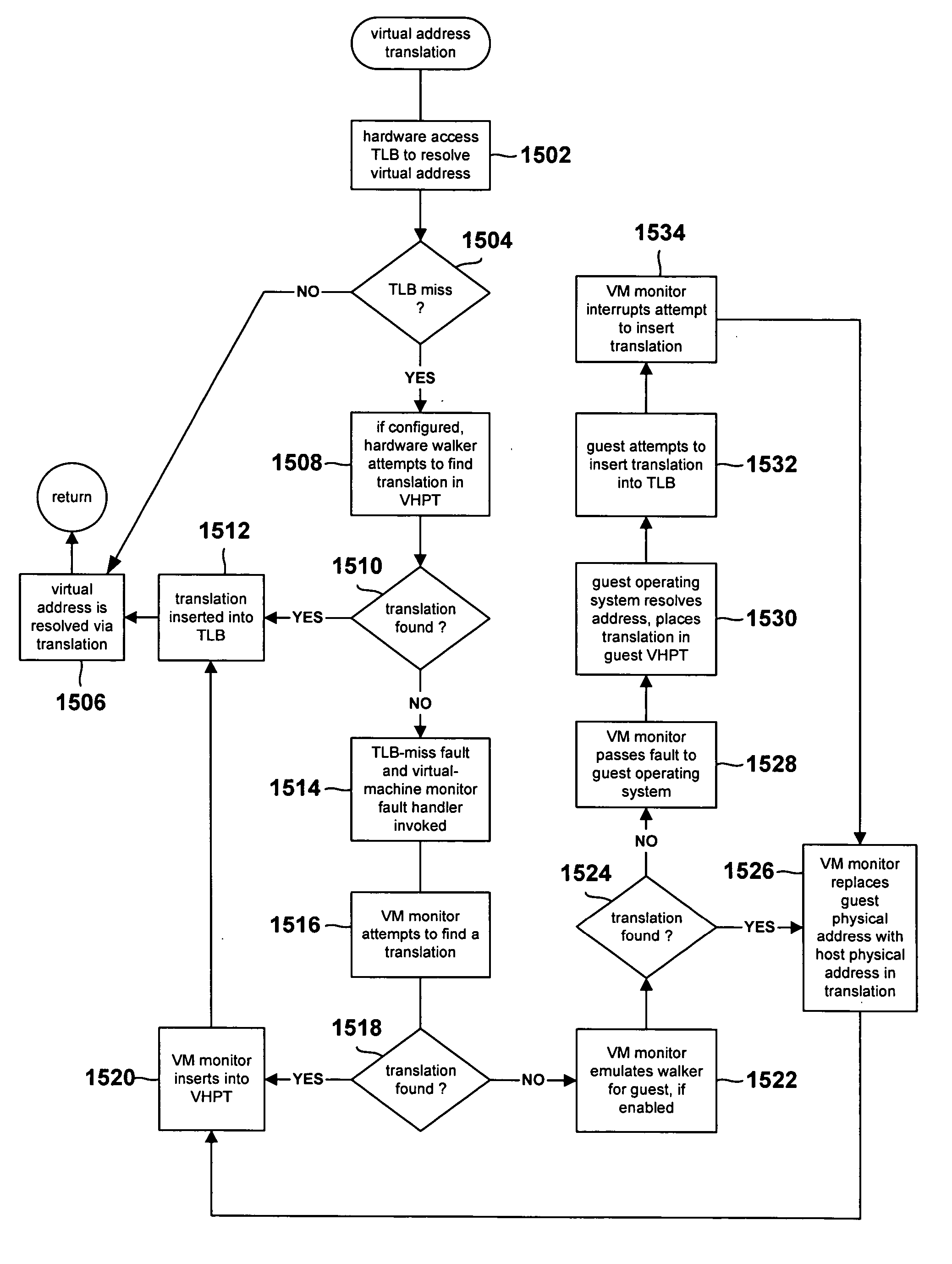

TLB miss fault handler and method for accessing multiple page tables

Active · US7111145B1 · Memory addressing/allocation/relocation · Computer security arrangements · Virtual memory · Error processing

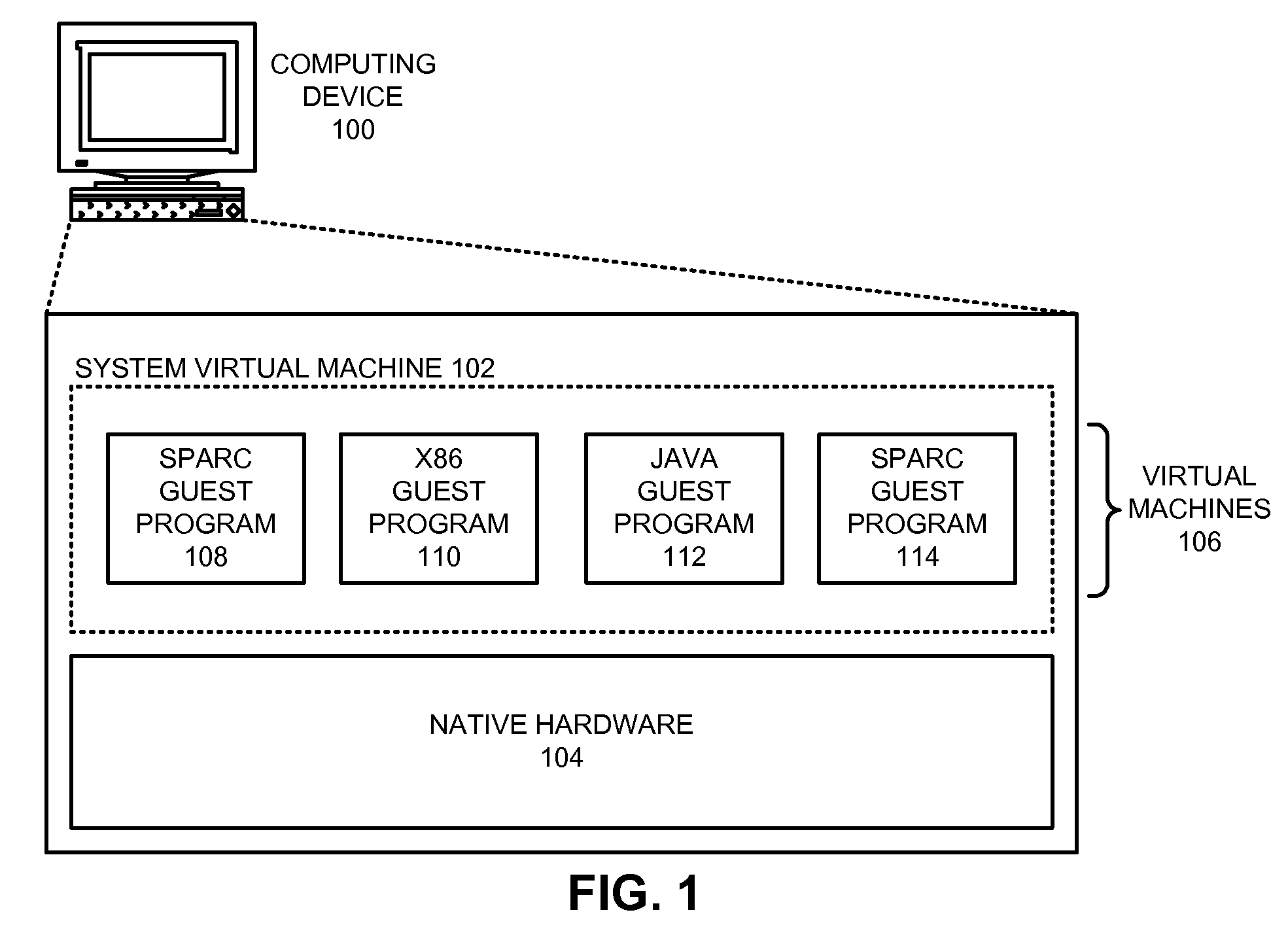

A virtual memory system implementing the invention provides concurrent access to translations for virtual addresses from multiple address spaces. One embodiment of the invention is implemented in a virtual computer system, in which a virtual machine monitor supports a virtual machine. In this embodiment, the invention provides concurrent access to translations for virtual addresses from the respective address spaces of both the virtual machine monitor and the virtual machine. Multiple page tables contain the translations for the multiple address spaces. Information about an operating state of the computer system, as well as an address space identifier, are used to determine whether, and under what circumstances, an attempted memory access is permissible. If the attempted memory access is permissible, the address space identifier is also used to determine which of the multiple page tables contains the translation for the attempted memory access.

Owner:VMWARE INC

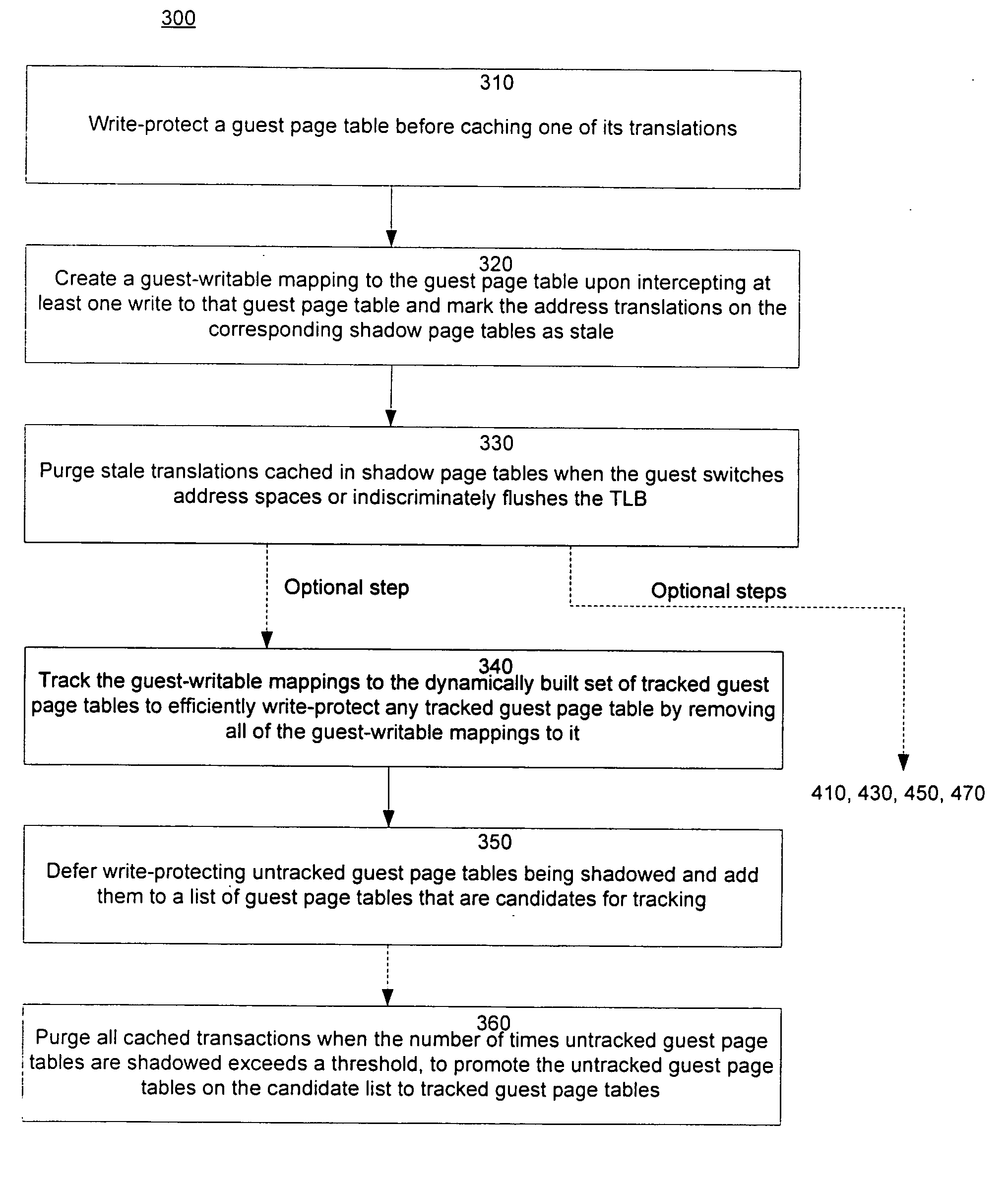

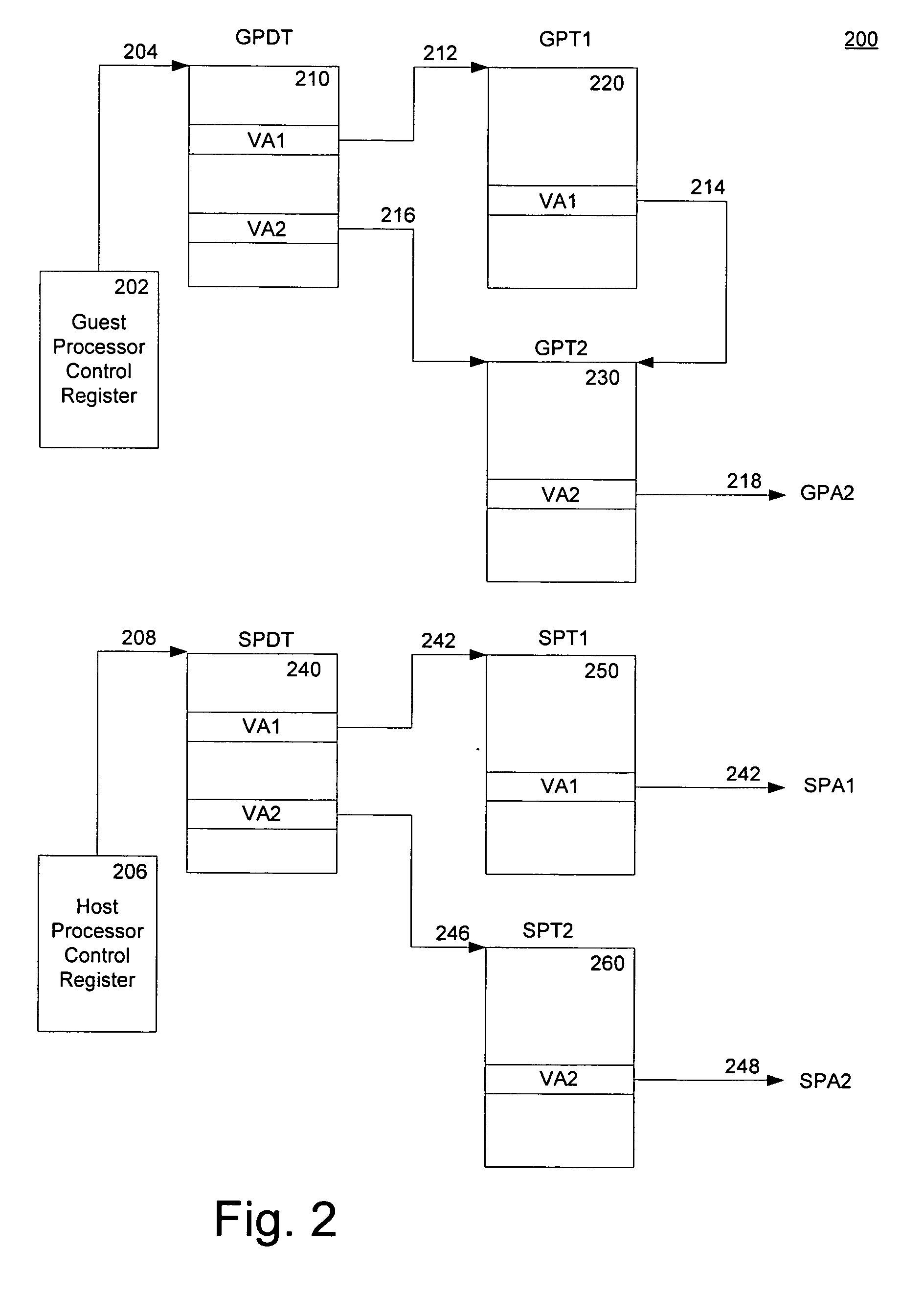

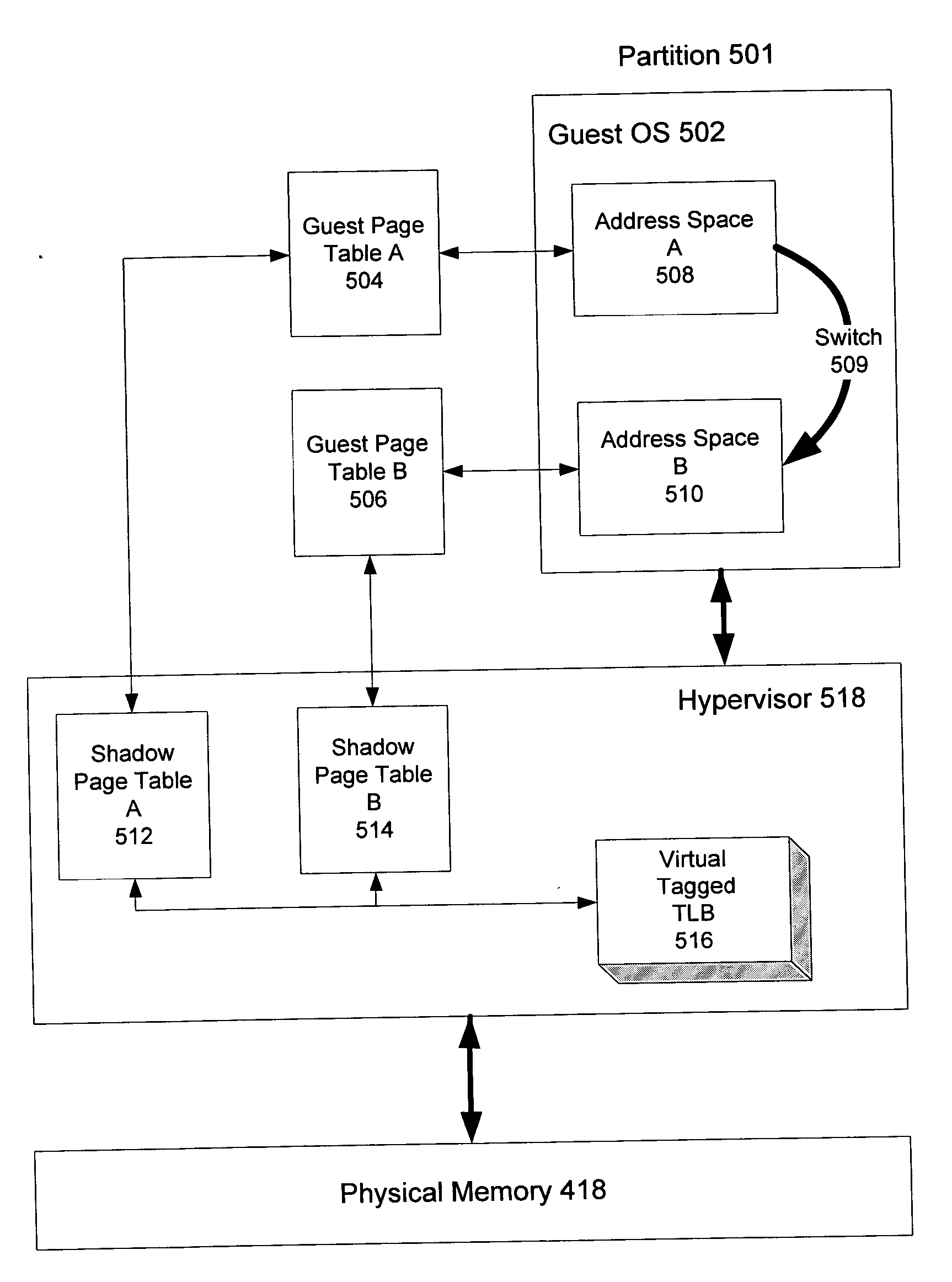

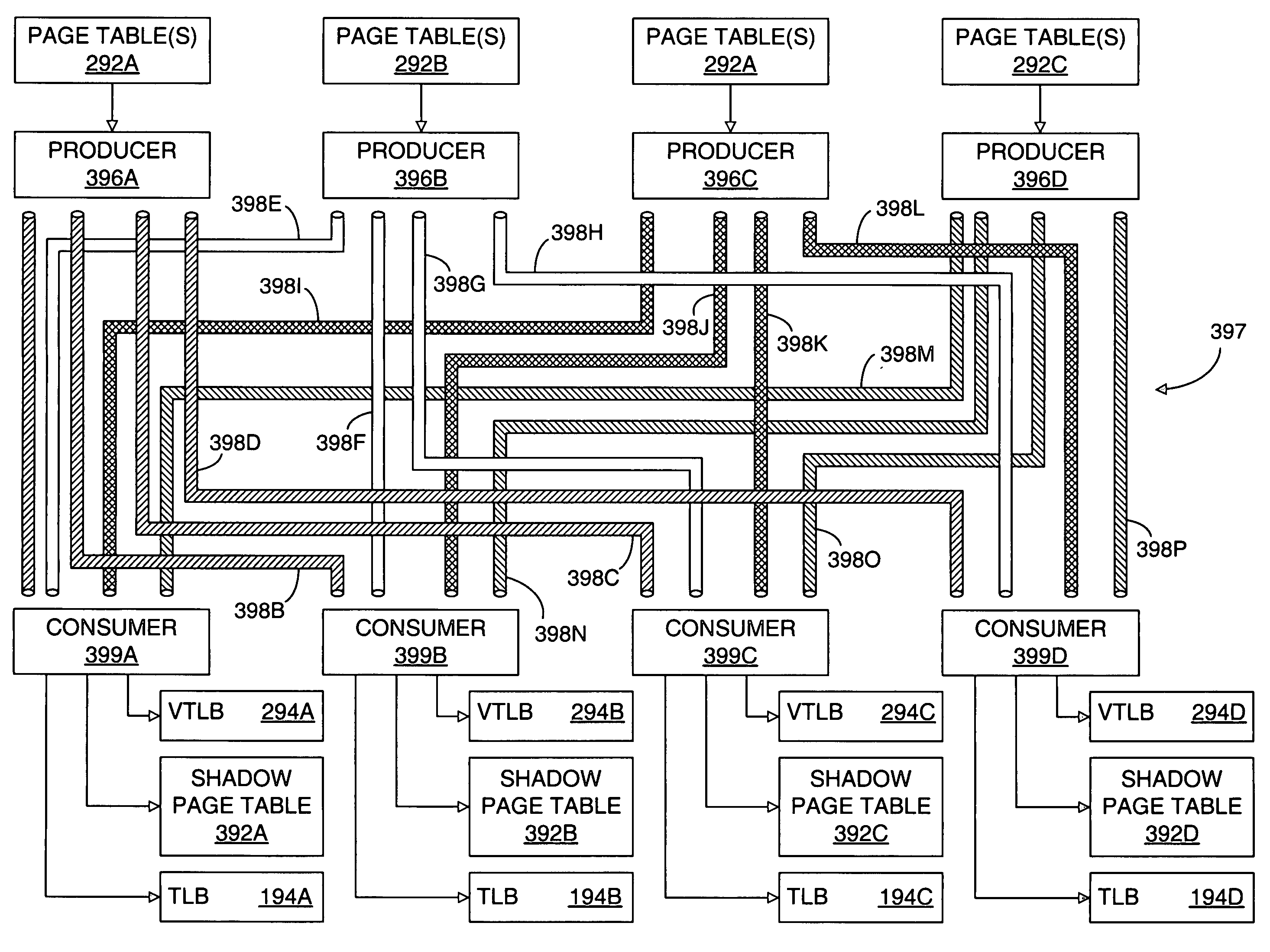

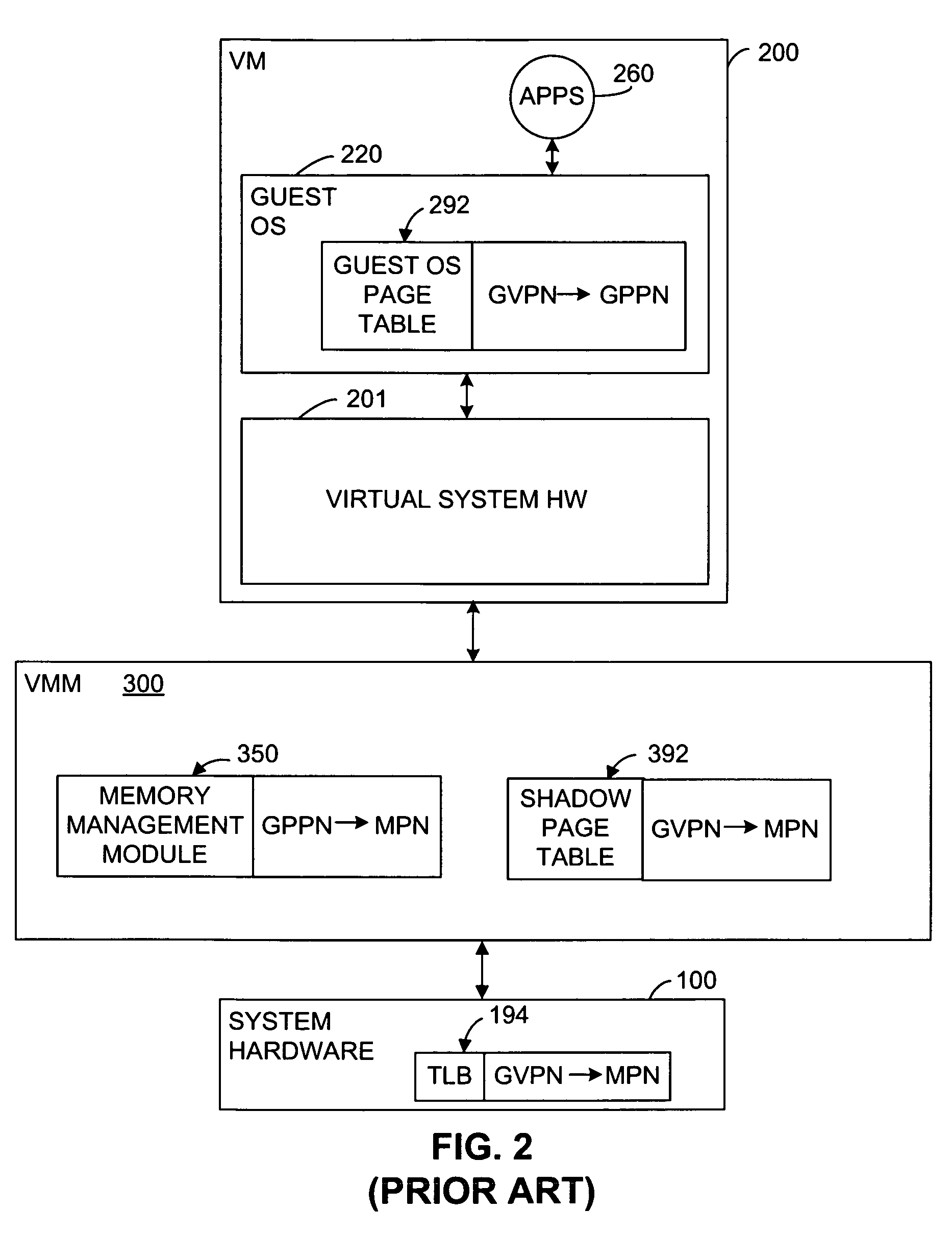

Method and system for caching address translations from multiple address spaces in virtual machines

Inactive · US20060259734A1 · Reduce memory overhead · Low cost · Memory architecture accessing/allocation · Memory systems · Virtualization · Page table

A method of virtualizing memory through shadow page tables that cache translations from multiple guest address spaces in a virtual machine includes a software version of a hardware tagged translation look-aside buffer. Edits to guest page tables are detected by intercepting the creation of guest-writable mappings to guest page tables with translations cached in shadow page tables. The affected cached translations are marked as stale and purged upon an address space switch or an indiscriminate flush of translations by the guest. Thereby, non-stale translations remain cached but stale translations are discarded. The method includes tracking the guest-writable mappings to guest page tables, deferring discovery of such mappings to a guest page table for the first time until a purge of all cached translations when the number of untracked guest page tables exceeds a threshold, and sharing shadow page tables between shadow address spaces and between virtual processors.

Owner:MICROSOFT TECH LICENSING LLC

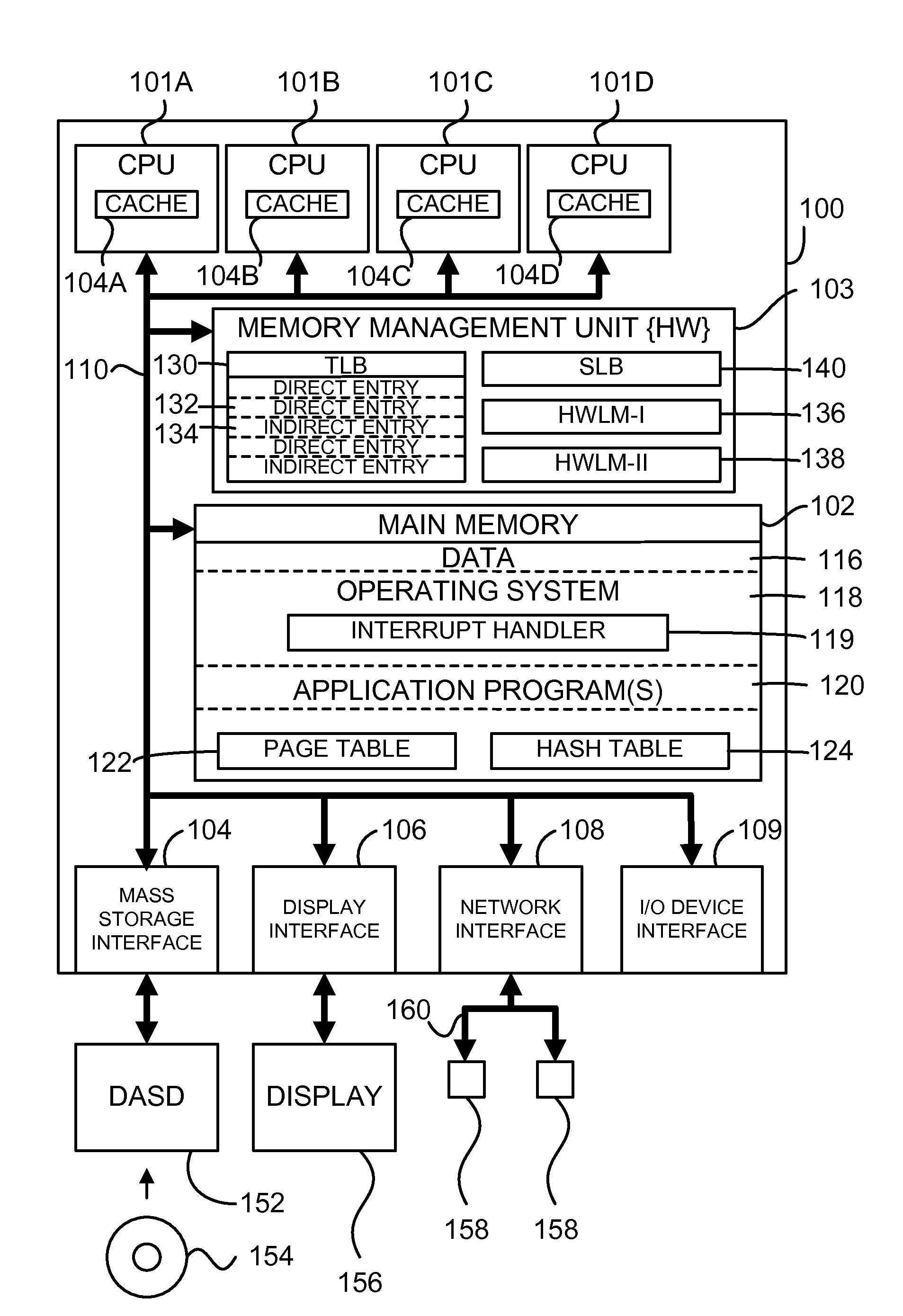

Loading entries into a TLB in hardware via indirect TLB entries

Active · US20100058026A1 · Eliminate area · Eliminates power · Memory addressing/allocation/relocation · Micro-instruction address formation · Page table · Translation lookaside buffer

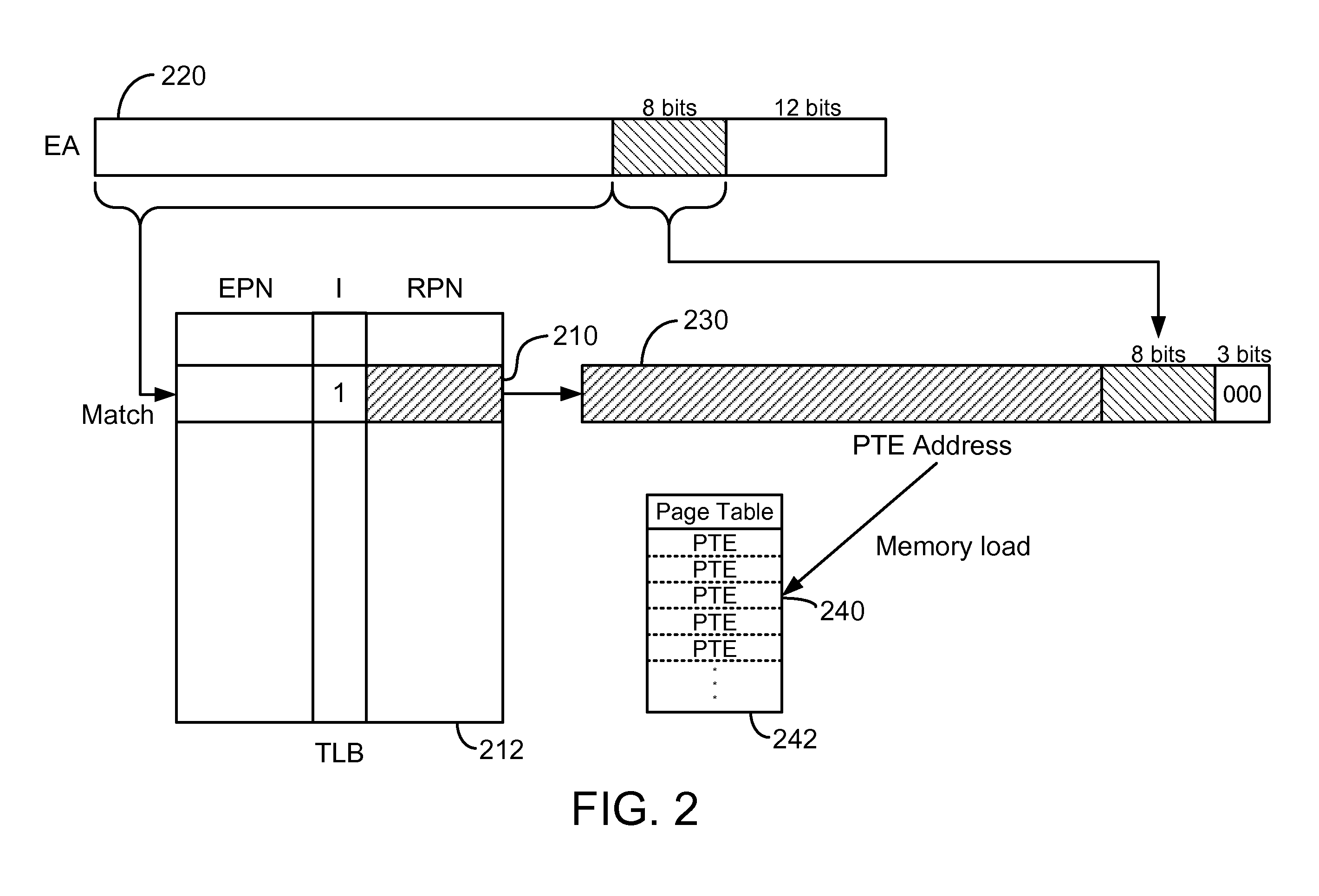

An enhanced mechanism for loading entries into a translation lookaside buffer (TLB) in hardware via indirect TLB entries. In one embodiment, if no direct TLB entry associated with the given virtual address is found in the TLB, the TLB is checked for an indirect TLB entry associated with the given virtual address. Each indirect TLB entry provides the real address of a page table associated with a specified range of virtual addresses and comprises an array of page table entries. If an indirect TLB entry associated with the given virtual address is found in the TLB, a computed address is generated by combining a real address field from the indirect TLB entry and bits from the given virtual address, a page table entry (PTE) is obtained by reading a word from a memory at the computed address, and the PTE is loaded into the TLB as a direct TLB entry.
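The lookup order in this abstract (direct entry first, then indirect entry, then a computed PTE address) can be sketched as follows. The constants, the `tlb_lookup` name, and the use of dictionaries for the TLBs and for memory are assumptions for illustration only; the abstract does not specify entry sizes or table spans.

```python
PAGE_SHIFT = 12          # assumed 4 KiB pages
PTE_SIZE = 8             # assumed bytes per page table entry
ENTRIES_PER_TABLE = 512  # assumed number of pages covered by one indirect entry


def tlb_lookup(direct_tlb, indirect_tlb, memory, vaddr):
    """Return the PTE for vaddr, or None if neither TLB kind has an entry."""
    vpn = vaddr >> PAGE_SHIFT
    if vpn in direct_tlb:
        return direct_tlb[vpn]
    # No direct entry: look for an indirect entry covering this address range.
    region = vpn // ENTRIES_PER_TABLE
    if region not in indirect_tlb:
        return None
    # Combine the table's real address with bits from the virtual address.
    table_real_addr = indirect_tlb[region]
    computed = table_real_addr + (vpn % ENTRIES_PER_TABLE) * PTE_SIZE
    pte = memory[computed]   # read one word at the computed address
    direct_tlb[vpn] = pte    # load the PTE as a direct TLB entry
    return pte
```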

Owner:IBM CORP

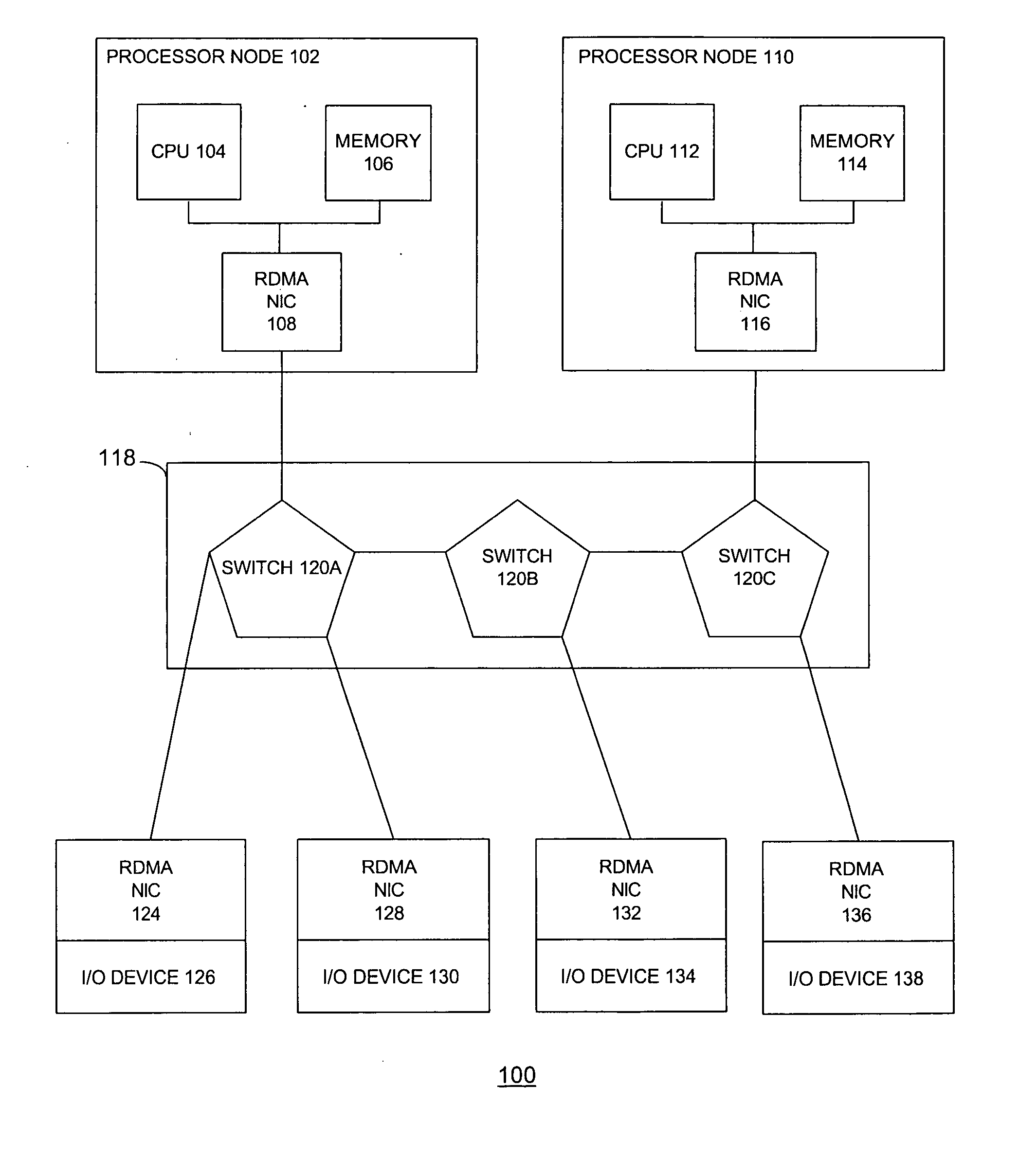

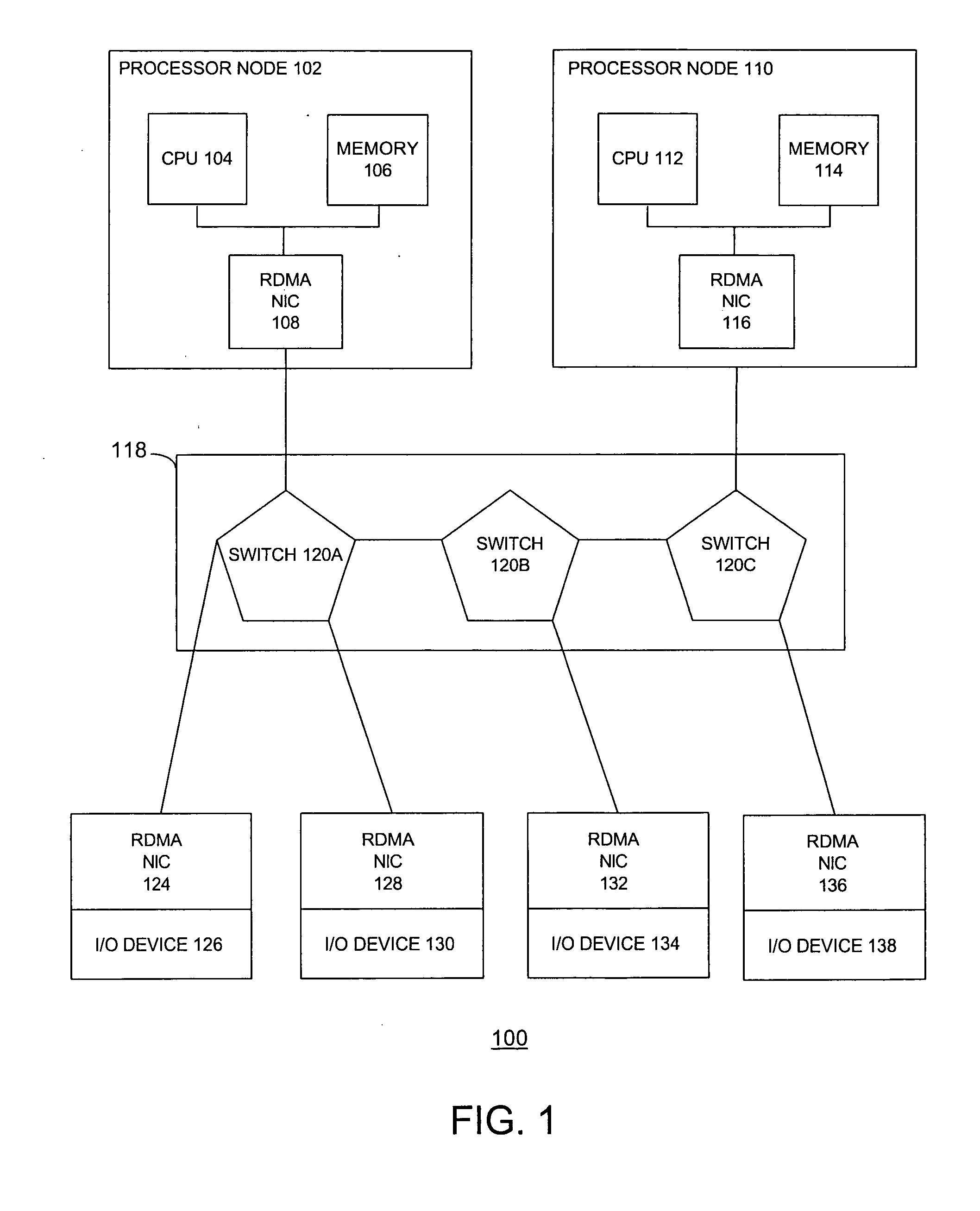

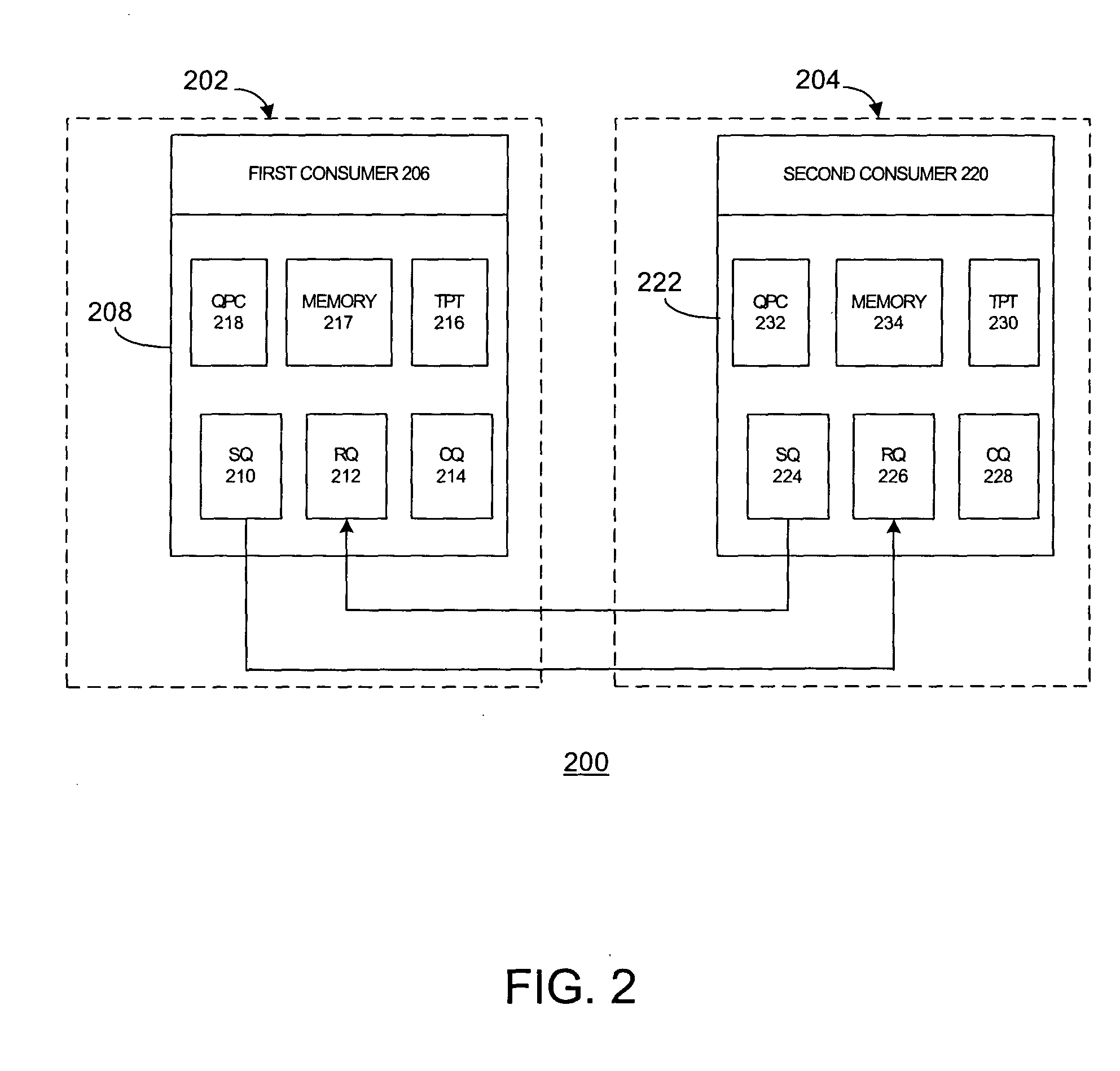

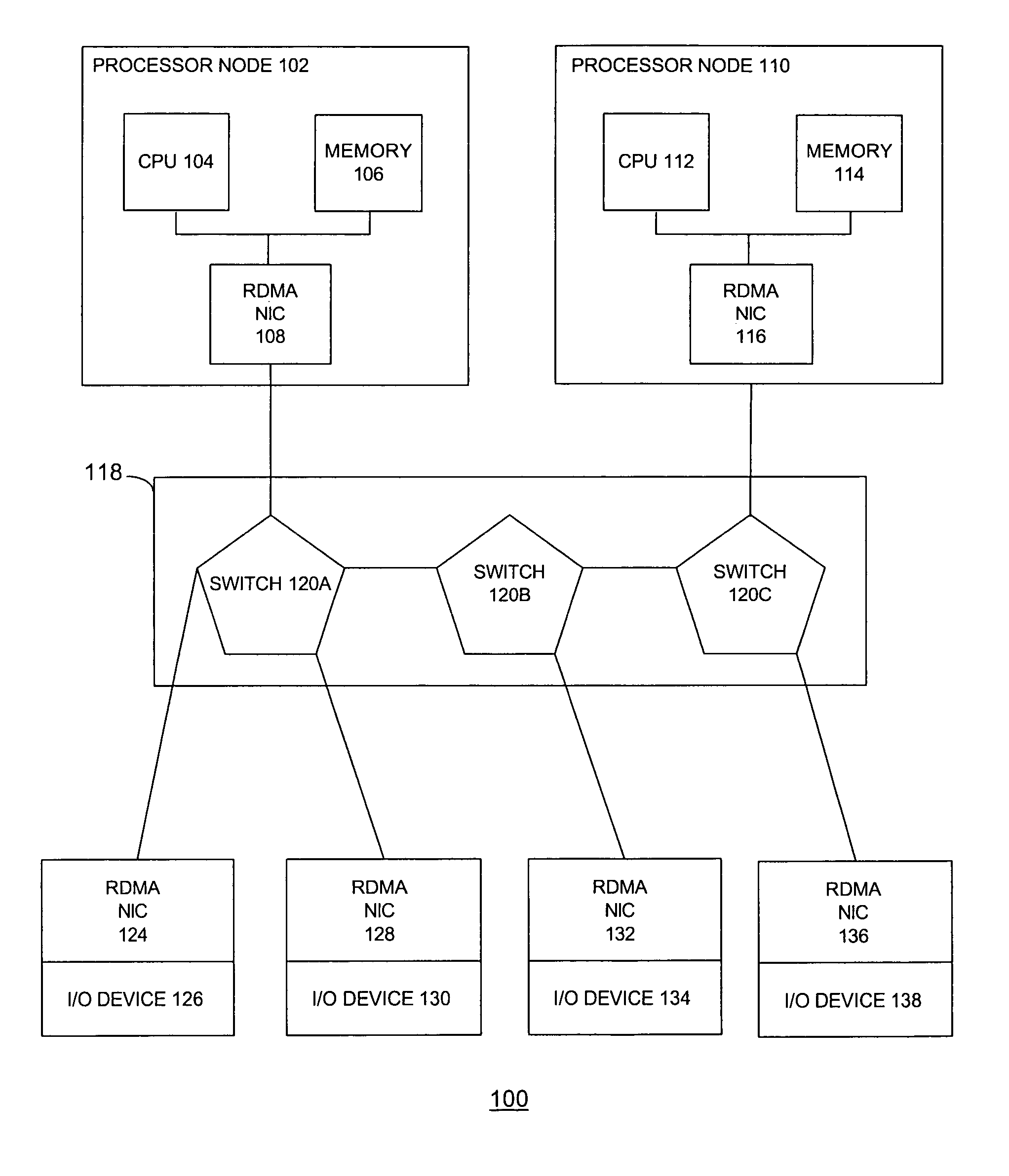

RDMA enabled I/O adapter performing efficient memory management

Inactive · US20060236063A1 · Reduce the number · Reduce the amount required · Memory systems · Physical address · Term memory

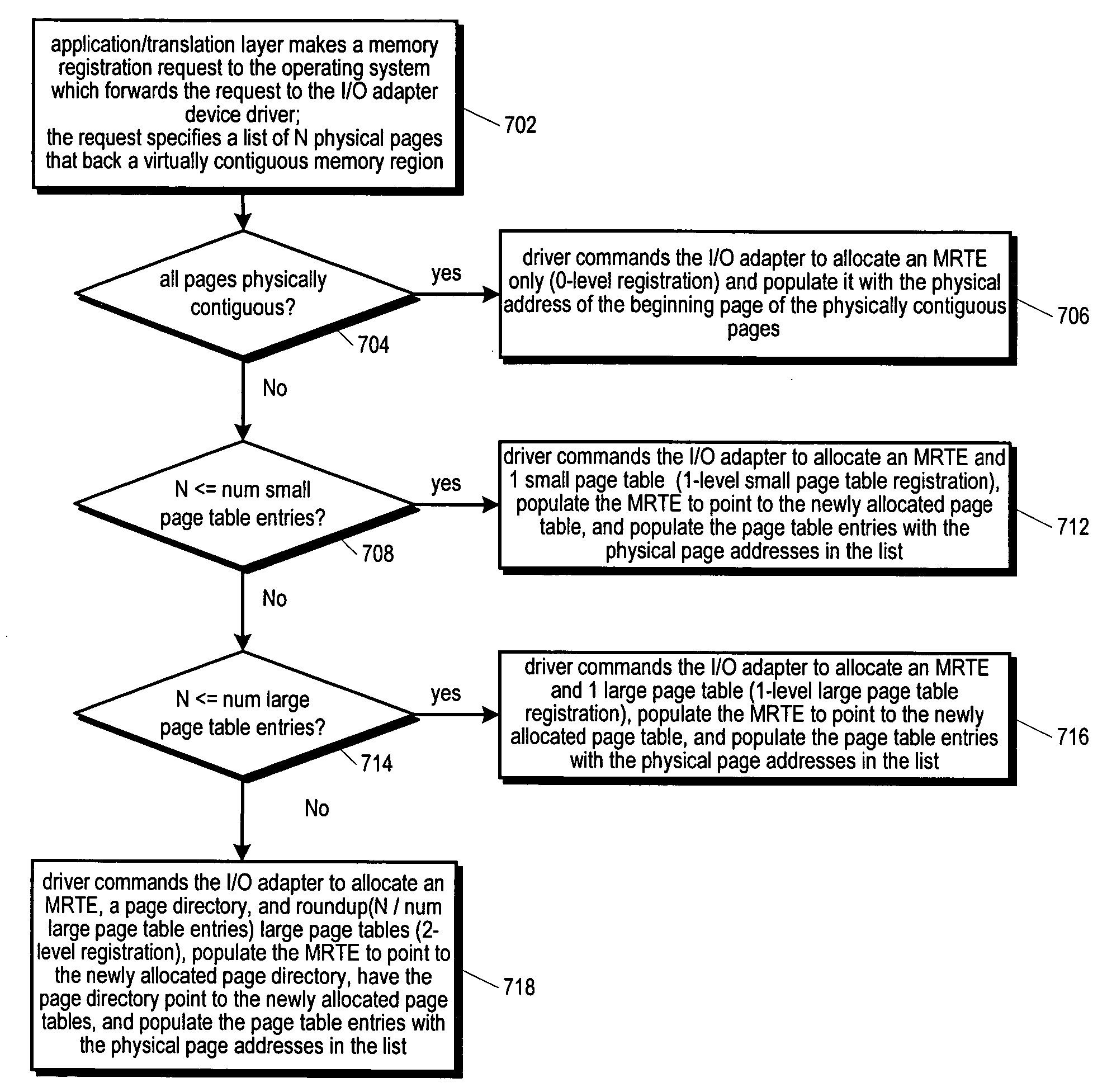

An RDMA enabled I/O adapter and device driver are disclosed. In response to a memory registration that includes a list of physical memory pages backing a virtually contiguous memory region, an entry in a table in the adapter memory is allocated. A variable size data structure to store the physical addresses of the pages is also allocated as follows: if the pages are physically contiguous, the physical page address of the beginning page is stored directly in the table entry and no other allocations are made; otherwise, one small page table is allocated if the addresses will fit in a small page table; otherwise, one large page table is allocated if the addresses will fit in a large page table; otherwise, a page directory is allocated and enough page tables to store the addresses are allocated. The size and number of the small and large page tables are programmable.
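The allocation cascade above reads naturally as a chain of checks. The sketch below is a hypothetical rendering: `choose_layout`, its return tuples, and the choice to fill the page directory with large page tables are assumptions, since the abstract does not say which table size the directory uses.

```python
def choose_layout(page_addrs, page_size, small_capacity, large_capacity):
    """Pick the structure used to store the page addresses of a region.

    page_addrs must be a non-empty list of physical page addresses.
    """
    contiguous = all(
        nxt - cur == page_size for cur, nxt in zip(page_addrs, page_addrs[1:])
    )
    if contiguous:
        # Only the first page address is stored, directly in the table entry.
        return ("direct", page_addrs[0])
    count = len(page_addrs)
    if count <= small_capacity:
        return ("small_table", 1)
    if count <= large_capacity:
        return ("large_table", 1)
    # Assumption: the directory points at large page tables.
    tables_needed = -(-count // large_capacity)  # ceiling division
    return ("directory", tables_needed)
```

The capacities are passed as parameters to mirror the statement that the table sizes are programmable.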

Owner:INTEL CORP

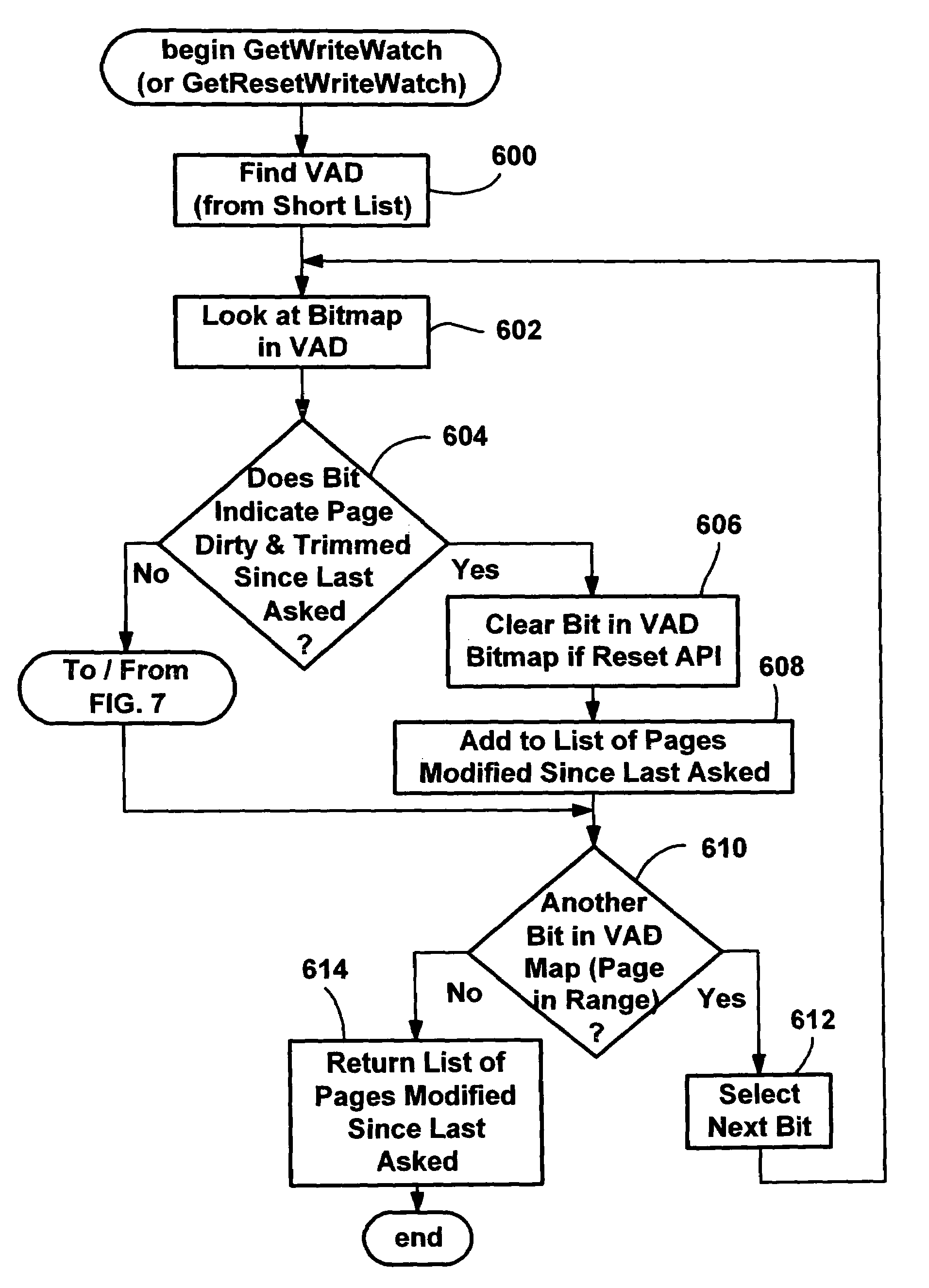

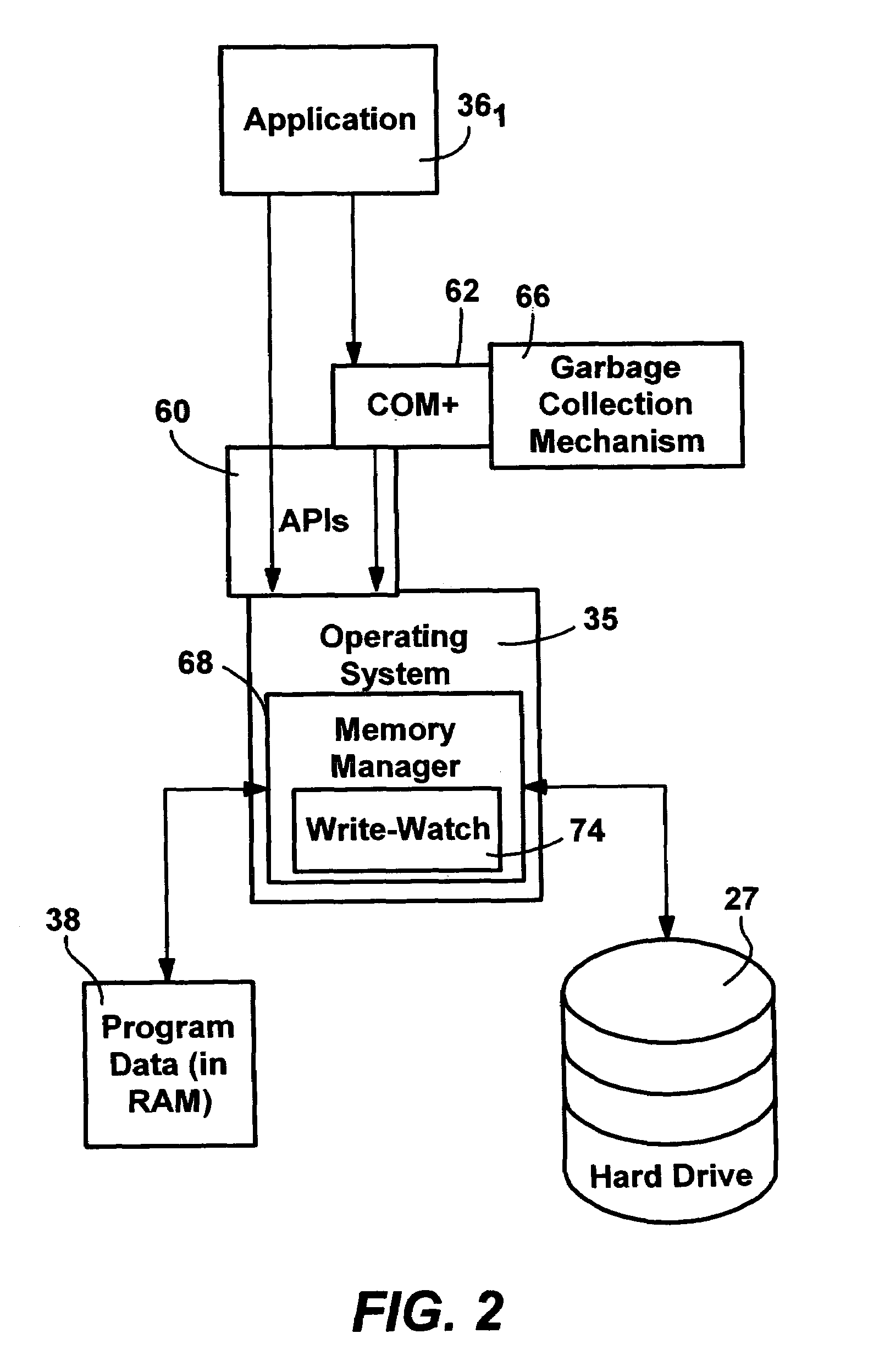

Efficient write-watch mechanism useful for garbage collection in a computer system

Inactive · US7065617B2 · Efficient write-watch · Reduce performance · Data processing applications · Memory addressing/allocation/relocation · Computerized system · Waste collection

An efficient write-watch mechanism and process. A bitmap is associated with the virtual address descriptor (VAD) for a process, one bit for each virtual page address allocated to a process having write-watch enabled. As part of the write-watch mechanism, if a virtual address is trimmed to disk and that virtual address page is marked as modified, then the corresponding bit in the VAD is set for that virtual address page. In response to an API call (e.g., from a garbage collection mechanism) seeking to know which virtual addresses in a process have been modified since last checked, the memory manager walks the bitmap in the relevant VAD for the specified virtual address range for the requested process. If a bit is set, then the page corresponding to that bit is known to have been modified since last asked. If specified by the API, the bit is cleared in the VAD bitmap so that it will reflect the state since this time of asking. If the bit is not set, to determine if the page was modified, the page table entry (PTE) is checked for that page, and if the PTE indicates the page was modified, the page is known to be modified, otherwise that page is known to be unmodified since the last call. One enhancement uses page directory tables to locate a series of trimmed pages, sometimes avoiding the need to access the PTE.
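A toy model may help show how the VAD bitmap and the PTE modified bit combine. The `WriteWatch` class and its method names are hypothetical, and clearing the PTE modified flag on reset is an assumption of this sketch; the abstract only states that the VAD bit is cleared.

```python
class WriteWatch:
    """Toy write-watch state for one address range of num_pages pages."""

    def __init__(self, num_pages):
        self.vad_bits = [False] * num_pages   # bitmap kept with the VAD
        self.pte_dirty = [False] * num_pages  # modified bit in each PTE

    def write(self, page):
        # A store marks the page as modified in its PTE.
        self.pte_dirty[page] = True

    def trim(self, page):
        # When a modified page is trimmed, record that in the VAD bitmap.
        if self.pte_dirty[page]:
            self.vad_bits[page] = True
            self.pte_dirty[page] = False

    def get_modified(self, first, last, reset=False):
        """Return pages in [first, last] modified since the last reset."""
        modified = []
        for page in range(first, last + 1):
            # Check the bitmap first; fall back to the PTE if the bit is clear.
            if self.vad_bits[page] or self.pte_dirty[page]:
                modified.append(page)
                if reset:
                    self.vad_bits[page] = False
                    self.pte_dirty[page] = False  # assumption, see lead-in
        return modified
```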

Owner:MICROSOFT TECH LICENSING LLC

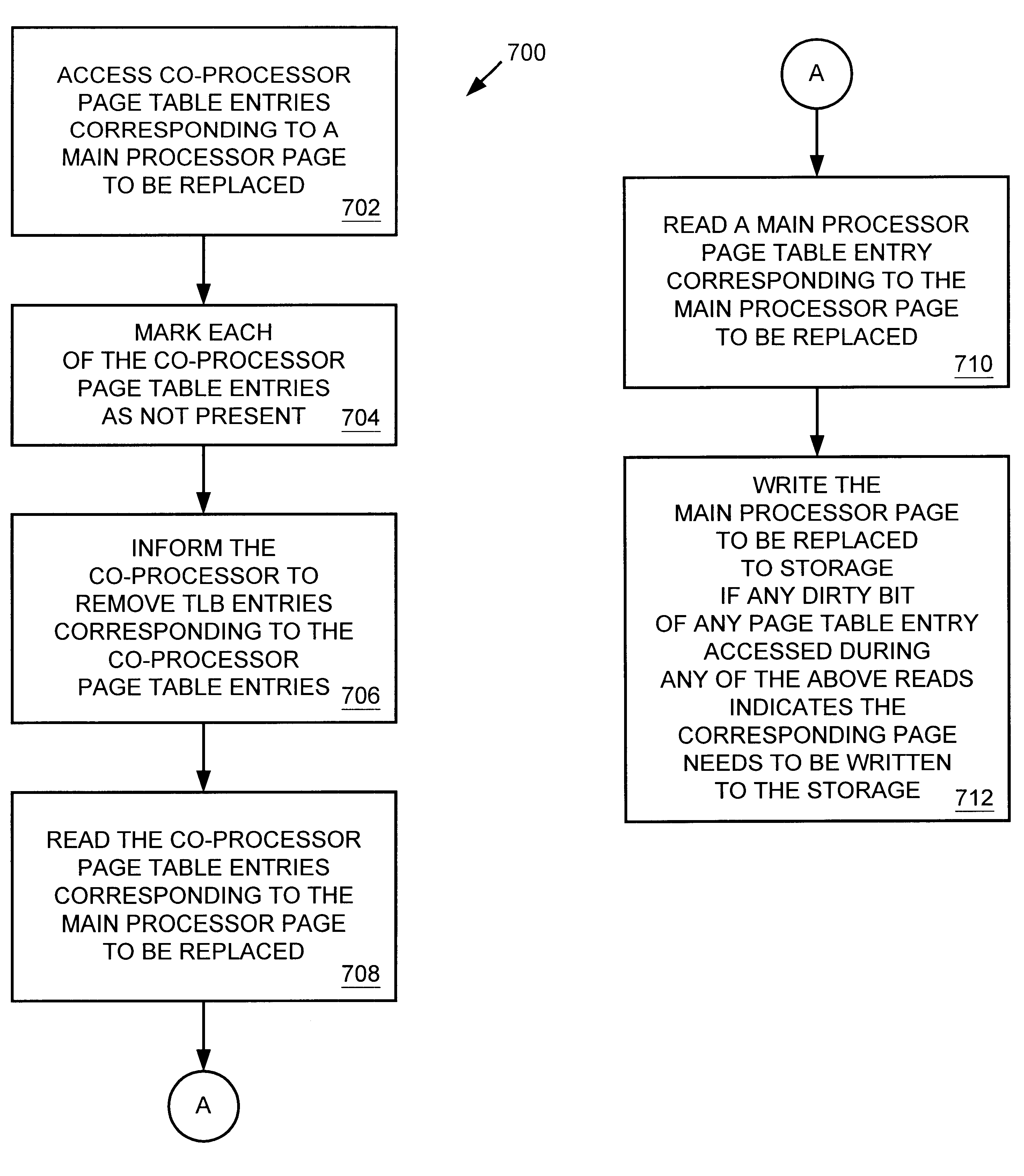

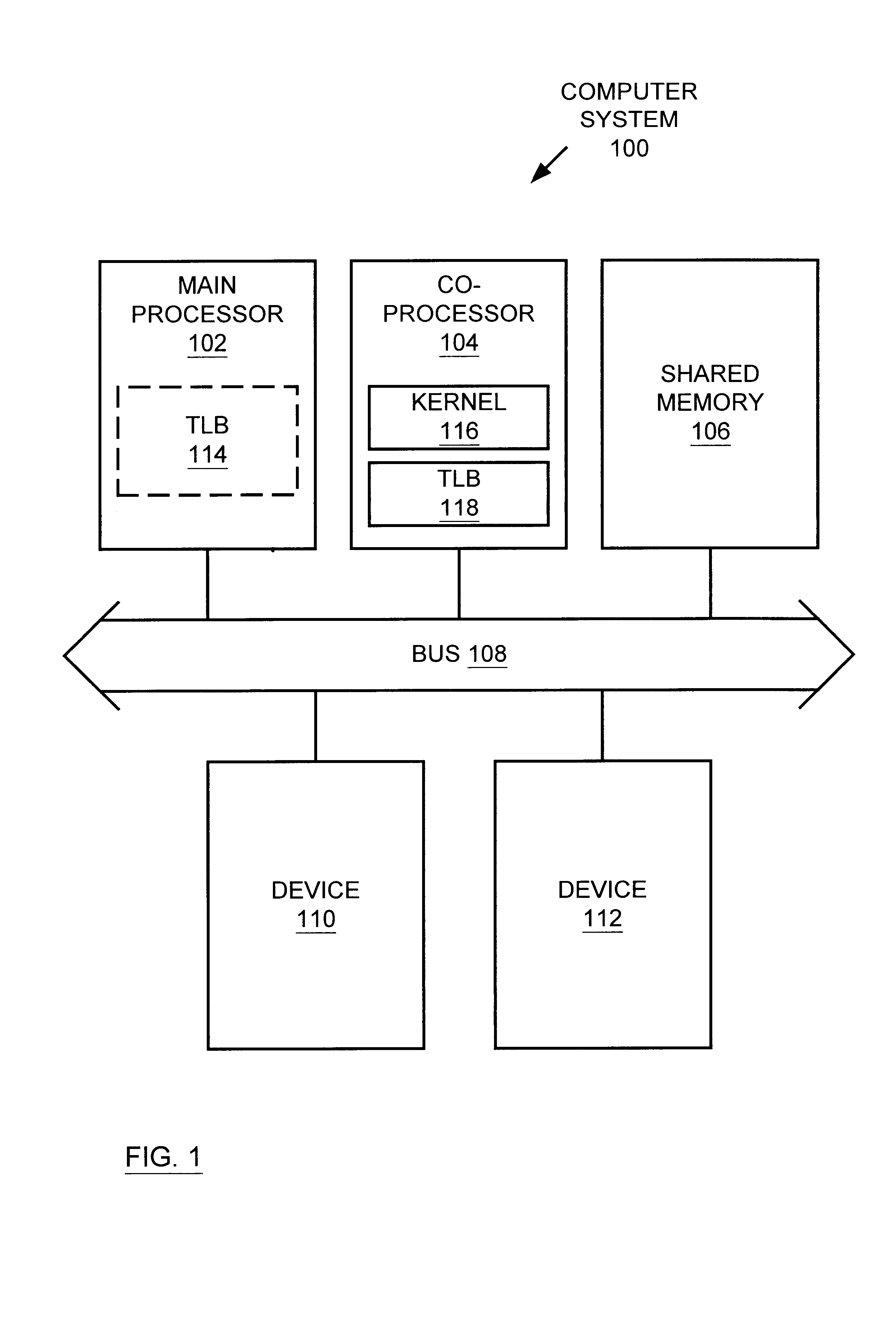

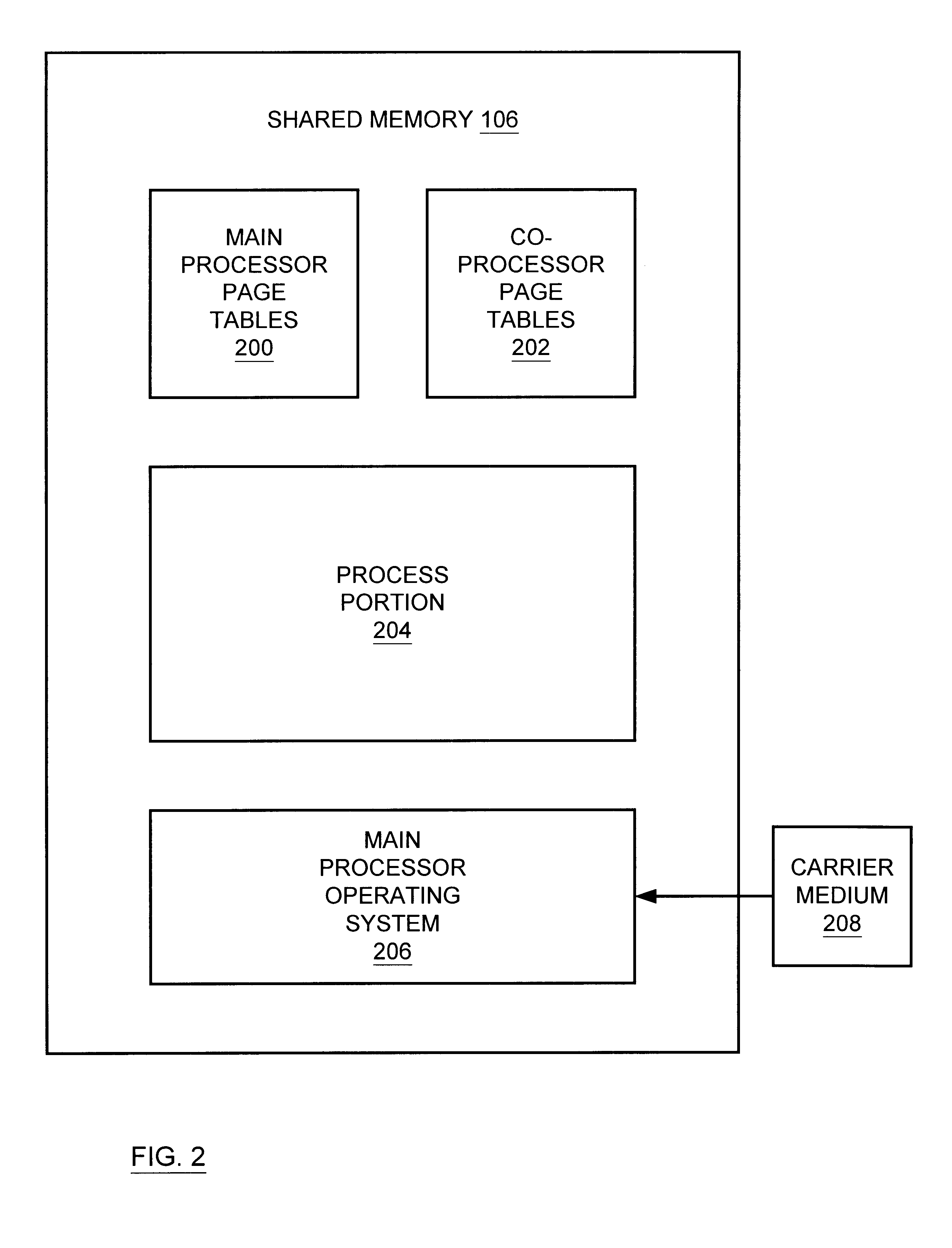

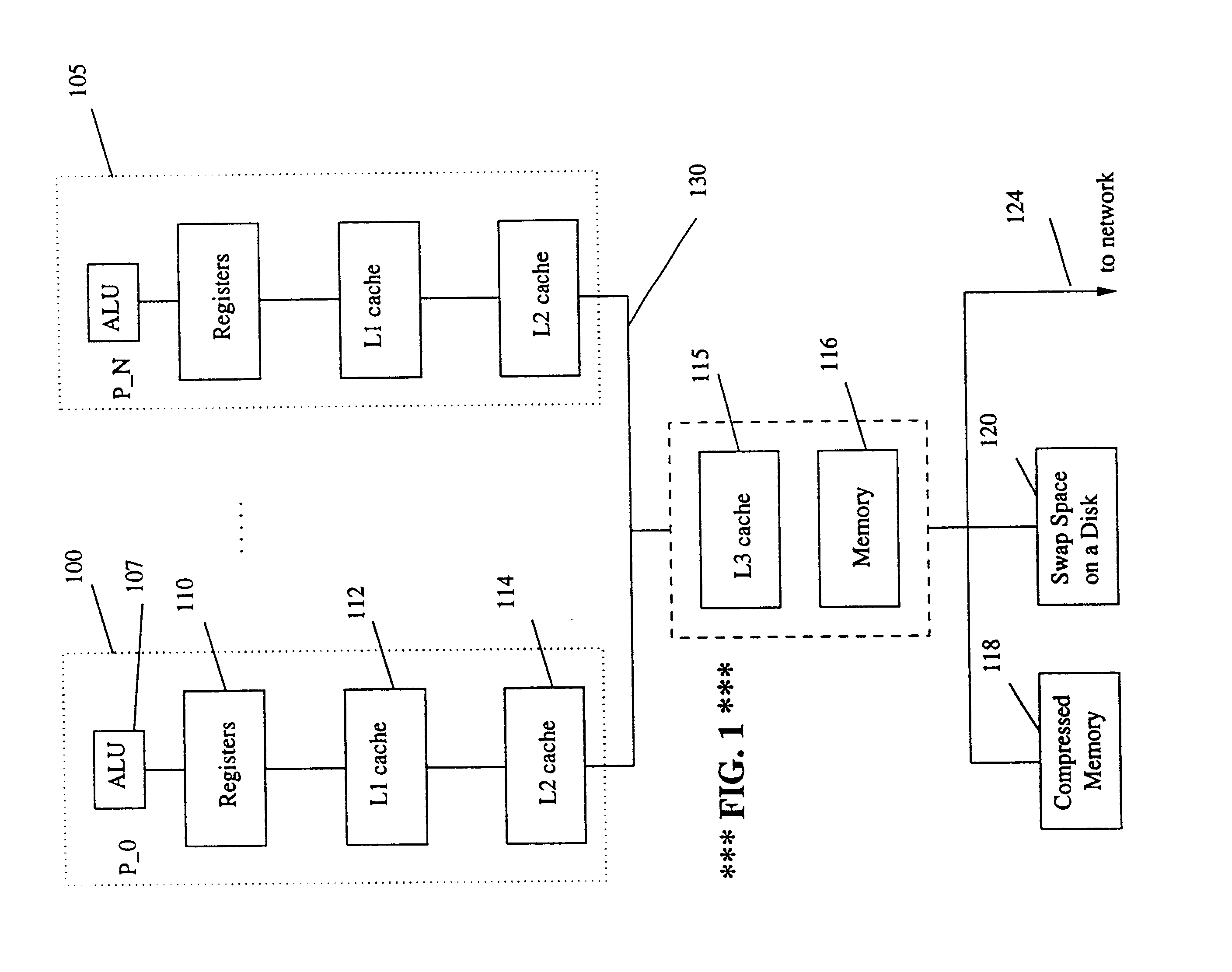

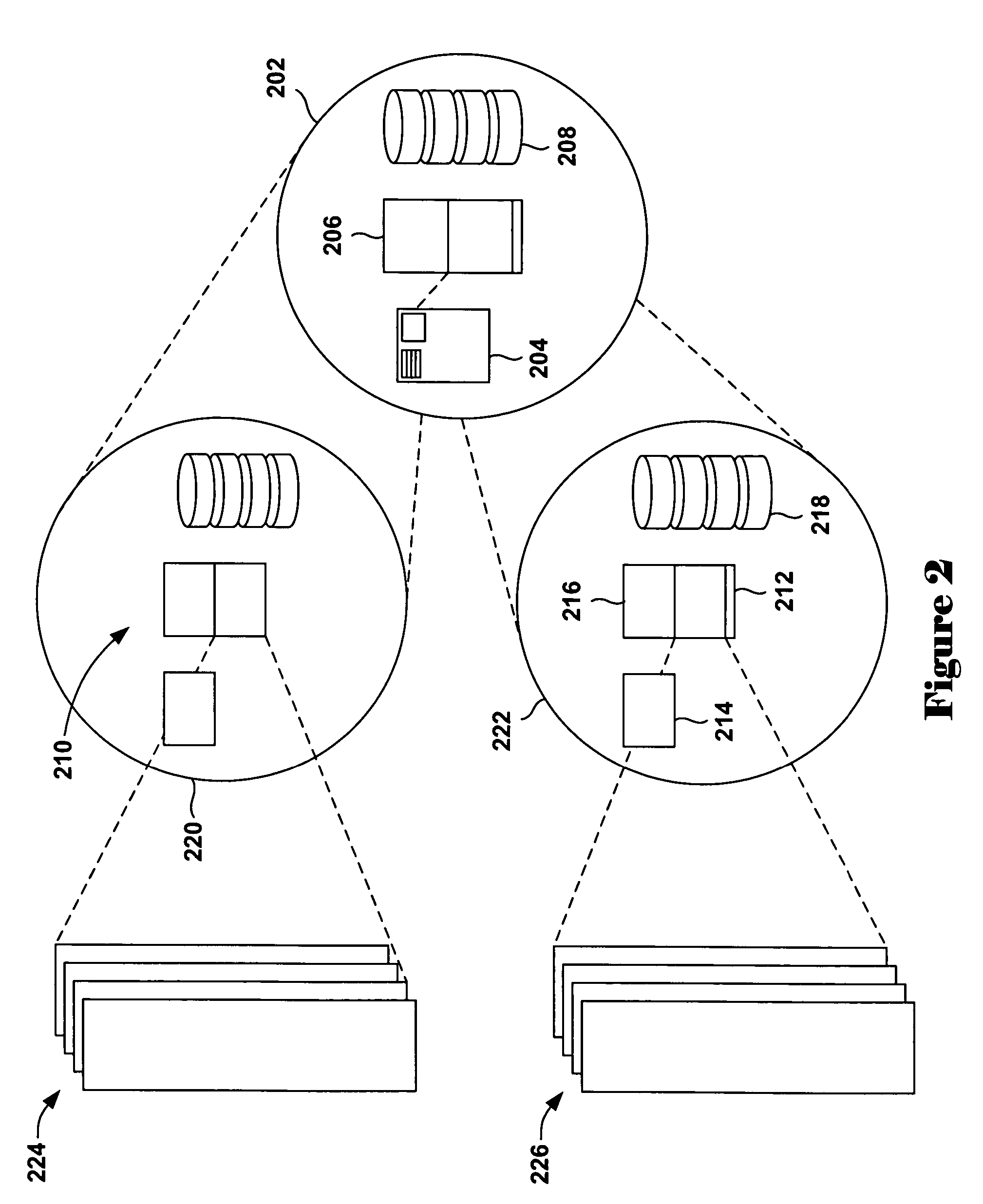

Multiprocessor system implementing virtual memory using a shared memory, and a page replacement method for maintaining paged memory coherence

Inactive · US6684305B1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Virtual memory · Computer architecture

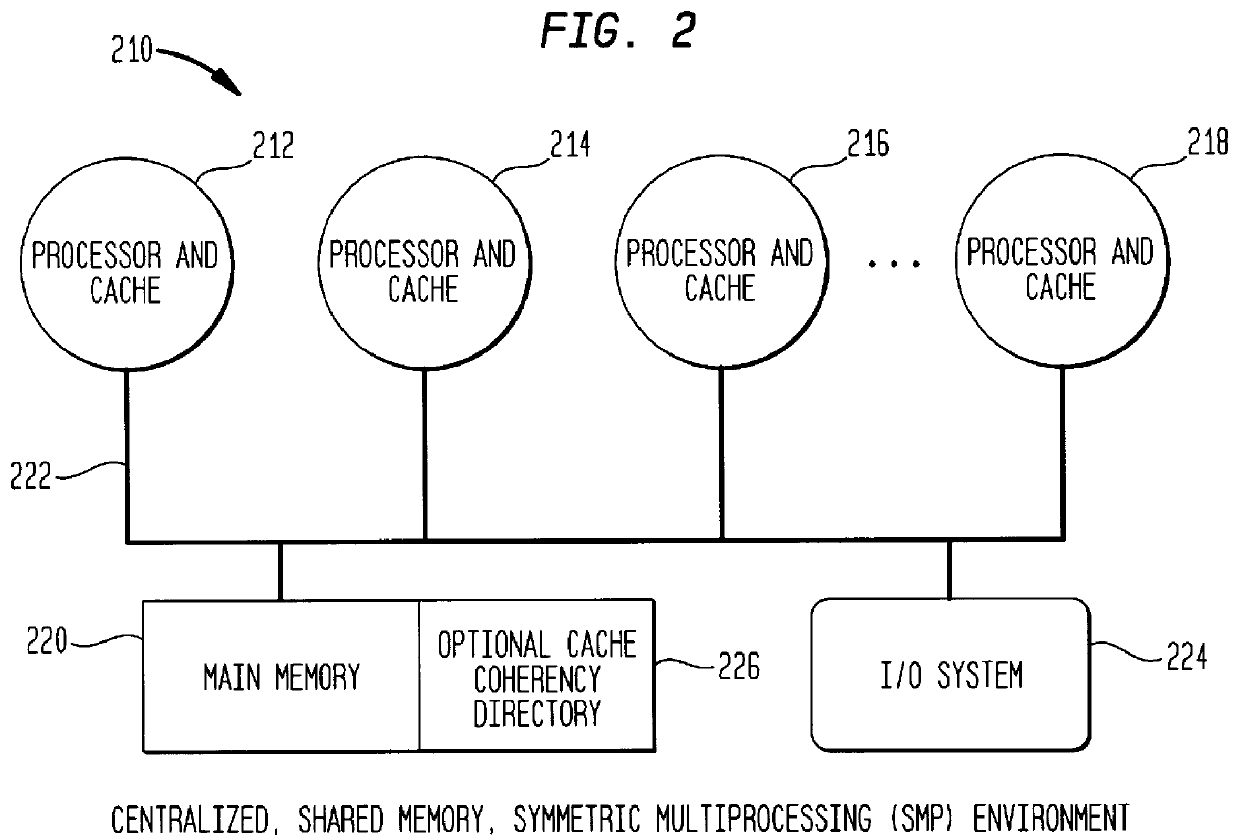

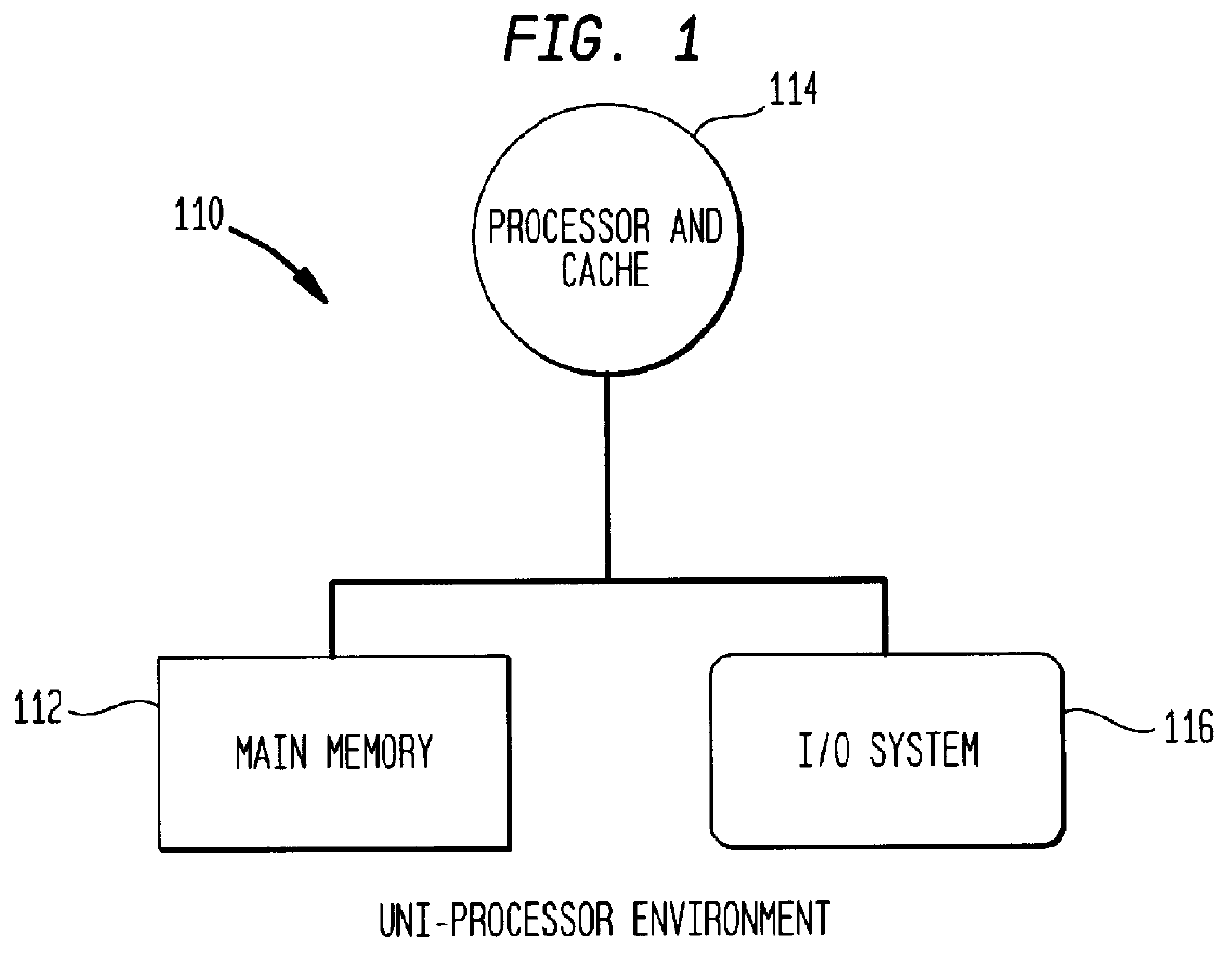

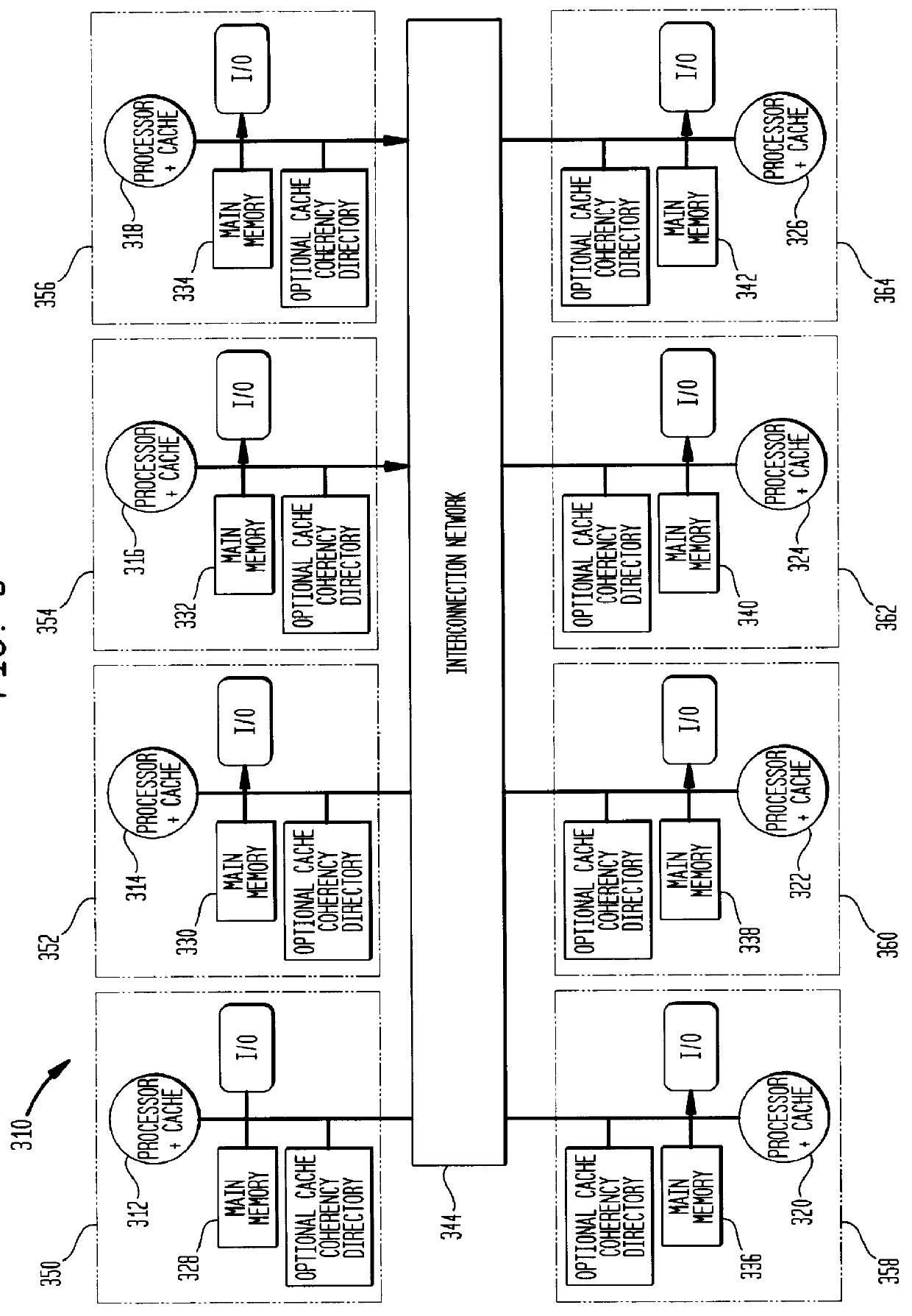

A computer system including a first processor, a second processor in communication with the first processor, a memory coupled to the first and second processors (i.e., a shared memory) and including multiple memory locations, and a storage device coupled to the first processor. The first and second processors implement virtual memory using the memory. The first processor maintains a first set of page tables and a second set of page tables in the memory. The first processor uses the first set of page tables to access the memory locations within the memory. The second processor uses the second set of page tables, maintained by the first processor, to access the memory locations within the memory. A virtual memory page replacement method is described for use in the computer system, wherein the virtual memory page replacement method is designed to help maintain paged memory coherence within the multiprocessor computer system.

Owner:GLOBALFOUNDRIES US INC

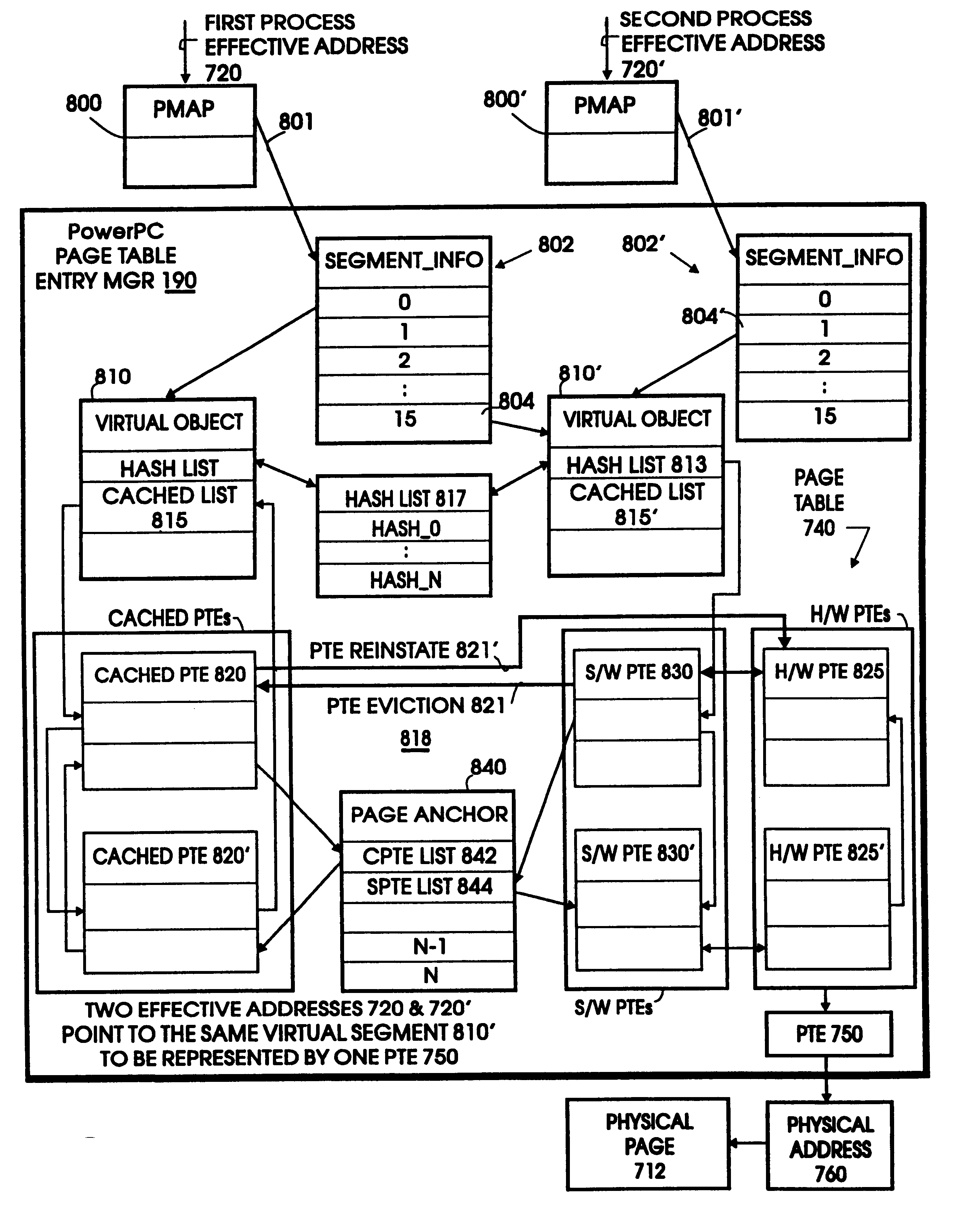

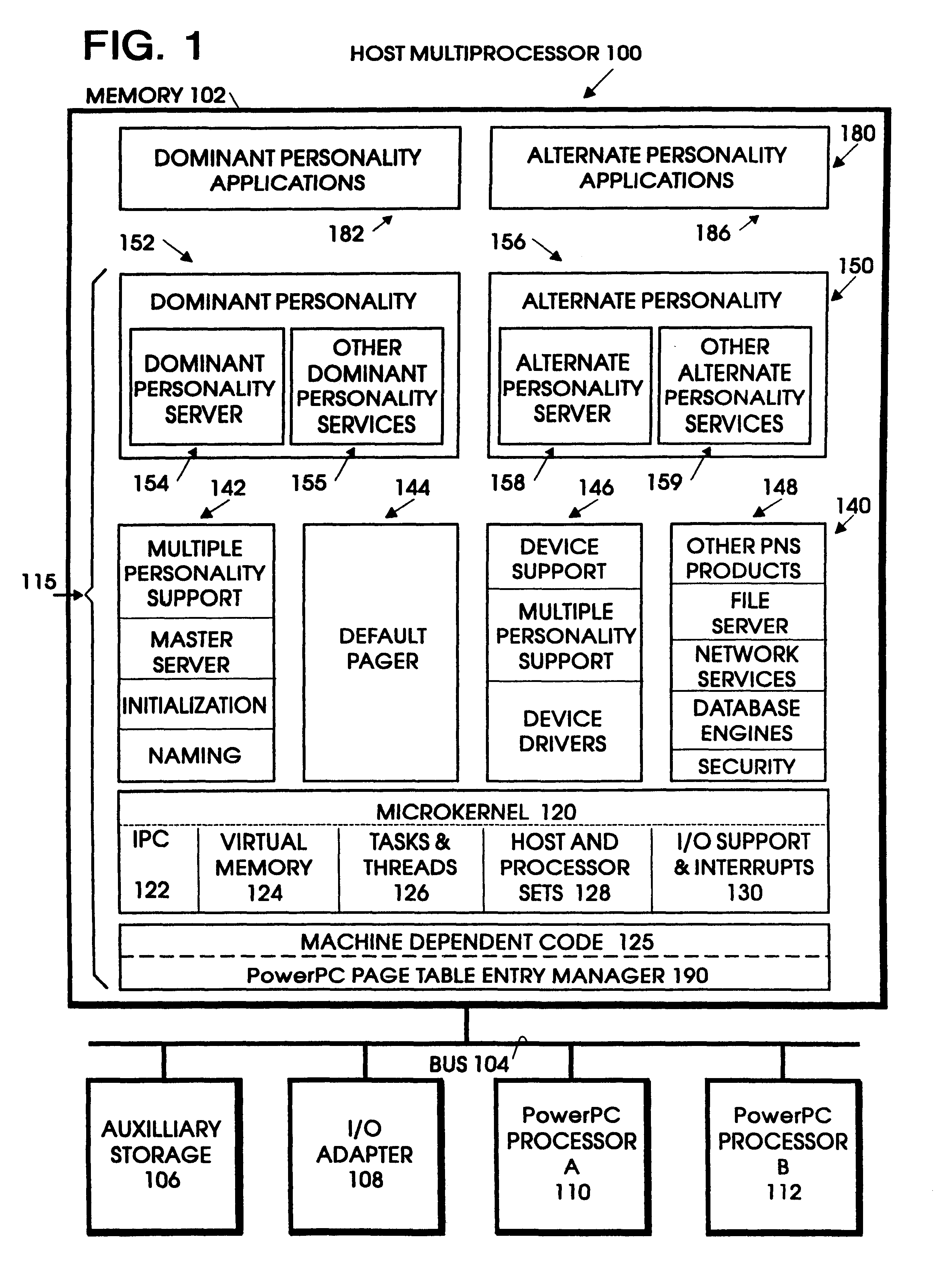

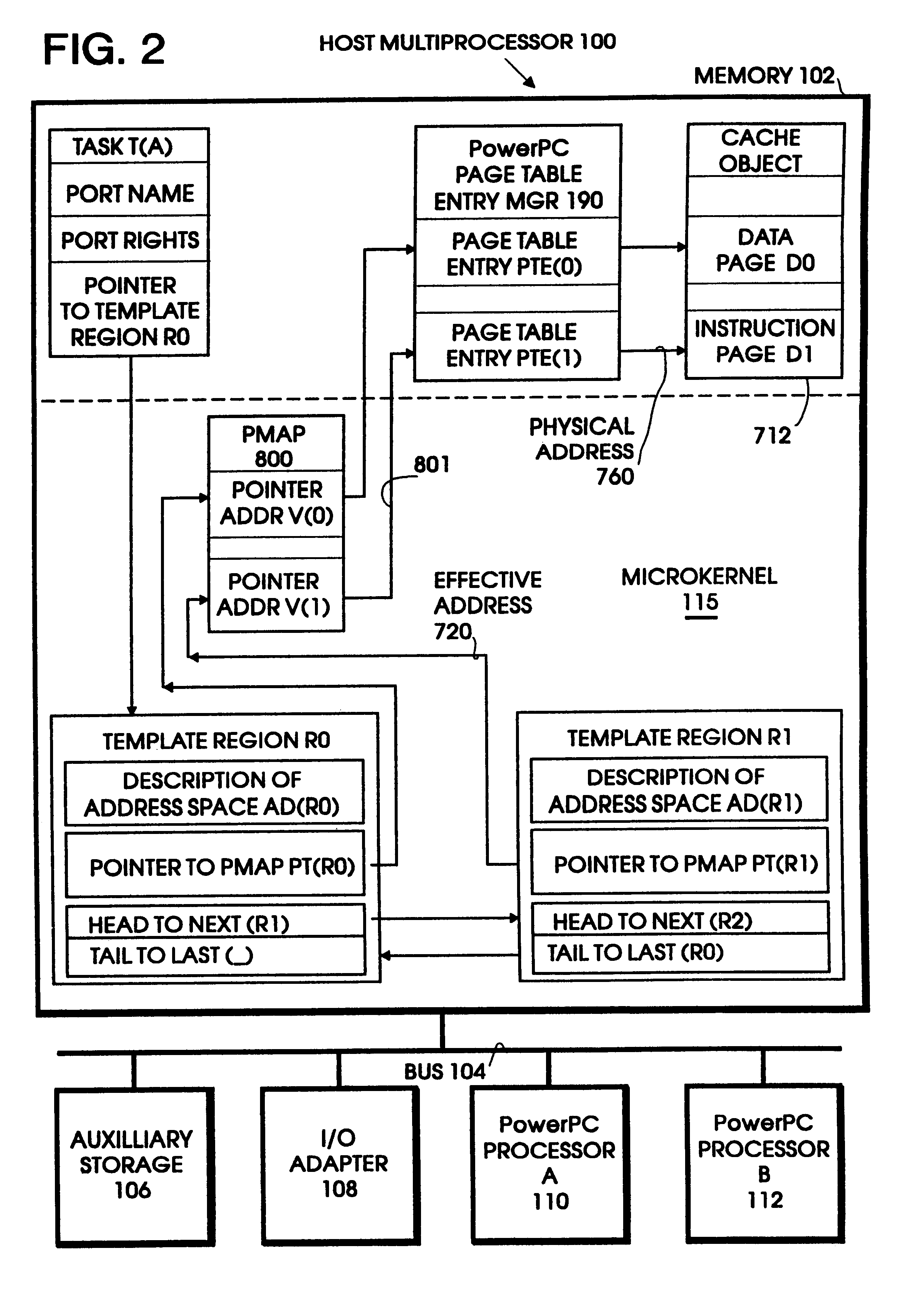

Page table entry management method and apparatus for a microkernel data processing system

Inactive · US6308247B1 · Maximize system performance · Easy to manage · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Data processing system · Management unit

A page table entry management method and apparatus provide the Microkernel System with the capability to program the memory management unit on the PowerPC family of processors. The PowerPC processors define a limited set of page table entries (PTEs) to maintain virtual to physical mappings. The page table entry management method and apparatus solve the problem of a limited number of PTEs by segment aliasing when two or more user processes share a segment of memory. The segments are aliased rather than duplicating the PTEs. This significantly reduces the number of PTEs. In addition, the method provides for caching existing PTEs when the system actually runs out of PTEs. A cache of recently discarded PTEs provides a fast fault resolution when a recently used page is accessed again.
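The cache of recently discarded PTEs can be illustrated with two bounded maps. This is a sketch only: the `PteTable` class, the oldest-first eviction order, and both capacities are assumptions, and segment aliasing is not modeled.

```python
from collections import OrderedDict


class PteTable:
    """Fixed-size PTE set backed by a small cache of discarded entries."""

    def __init__(self, capacity, cache_capacity):
        self.capacity = capacity
        self.cache_capacity = cache_capacity
        self.active = OrderedDict()     # virtual page -> frame, oldest first
        self.discarded = OrderedDict()  # recently evicted PTEs

    def insert(self, vpn, frame):
        if vpn not in self.active and len(self.active) >= self.capacity:
            # Out of PTEs: evict the oldest entry but remember it.
            old_vpn, old_frame = self.active.popitem(last=False)
            self.discarded[old_vpn] = old_frame
            if len(self.discarded) > self.cache_capacity:
                self.discarded.popitem(last=False)
        self.active[vpn] = frame

    def resolve_fault(self, vpn):
        """Fast path: reinstate a recently discarded PTE, if one is cached."""
        frame = self.discarded.pop(vpn, None)
        if frame is not None:
            self.insert(vpn, frame)
        return frame  # None means the slow path is needed
```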

Owner:IBM CORP

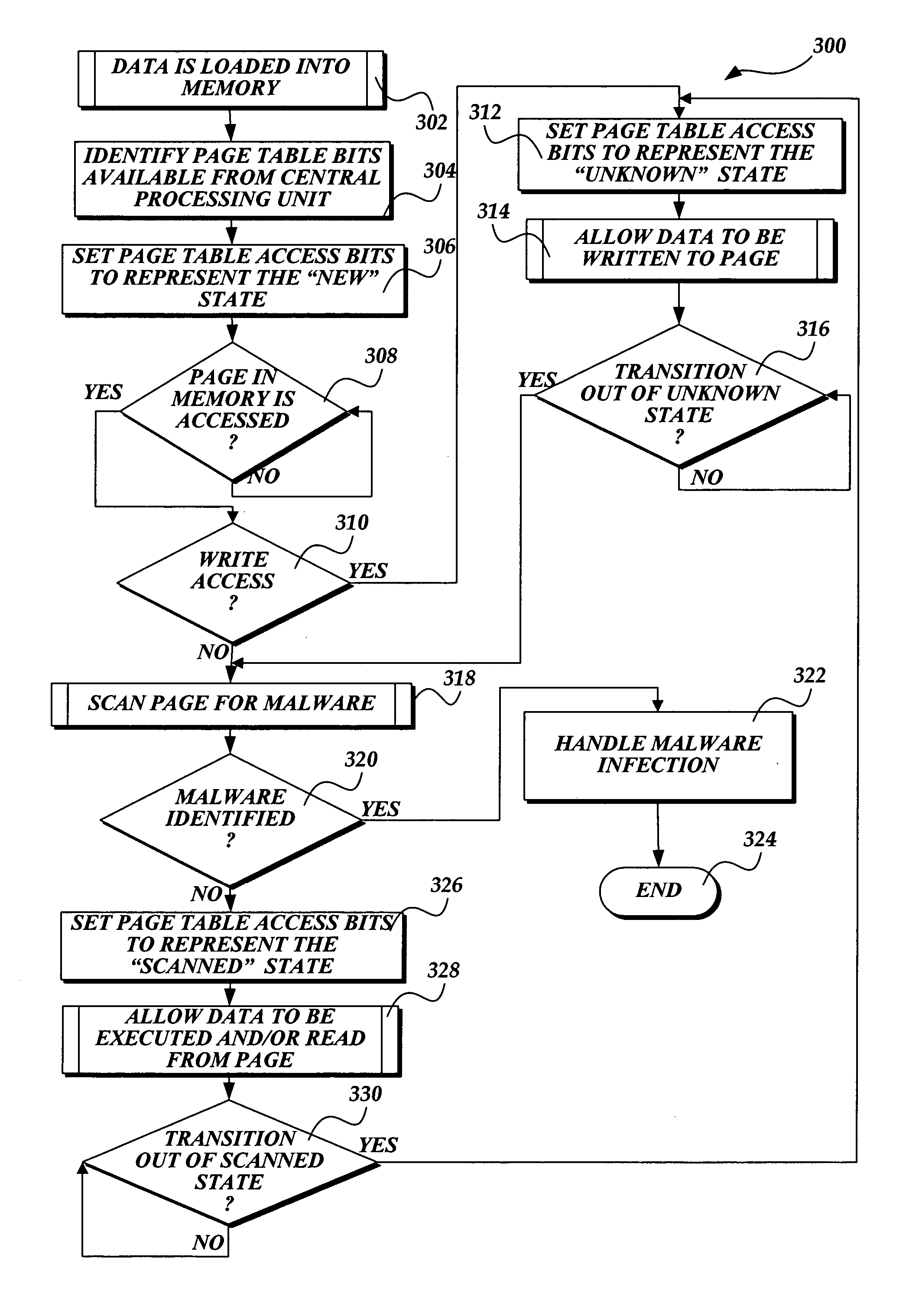

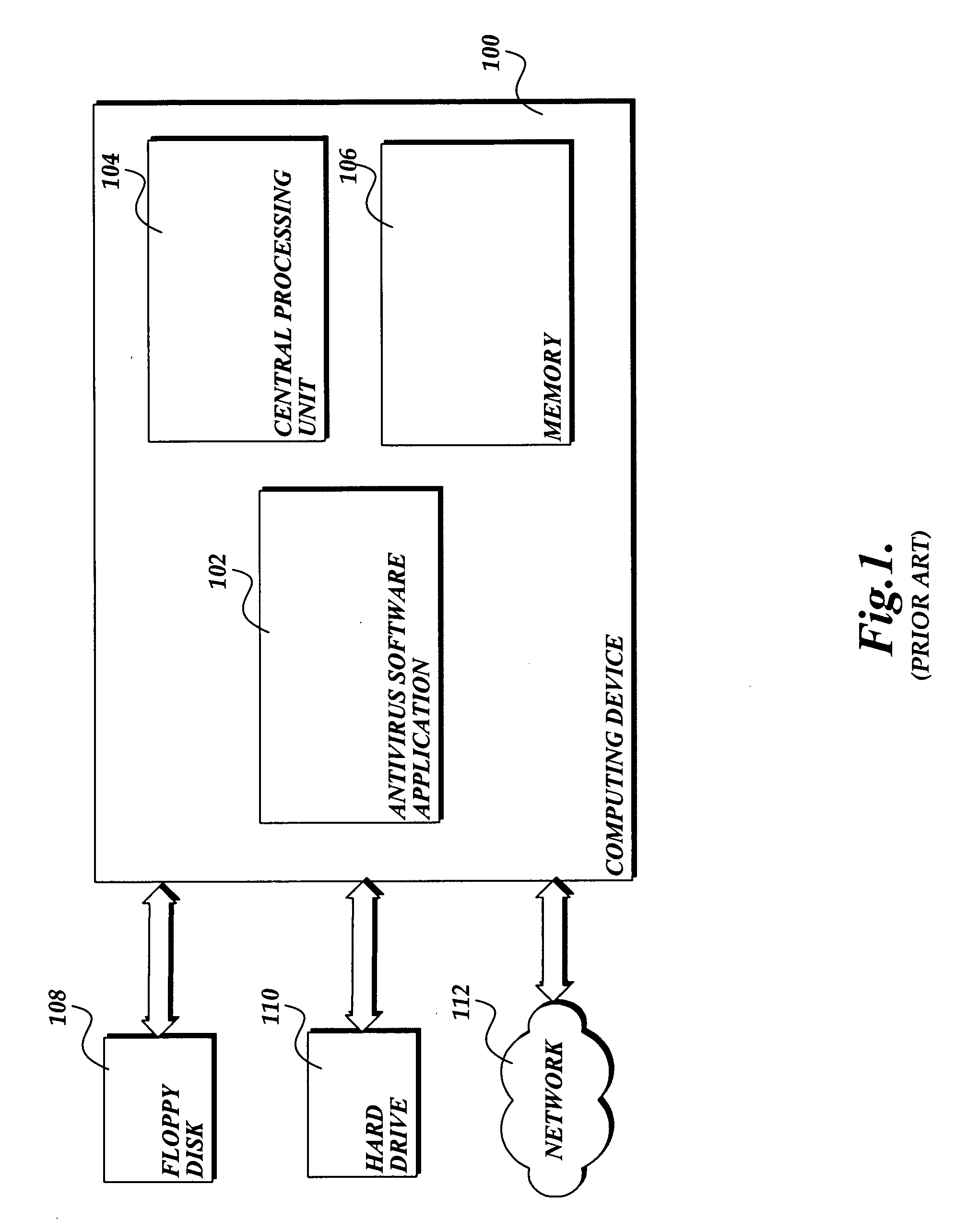

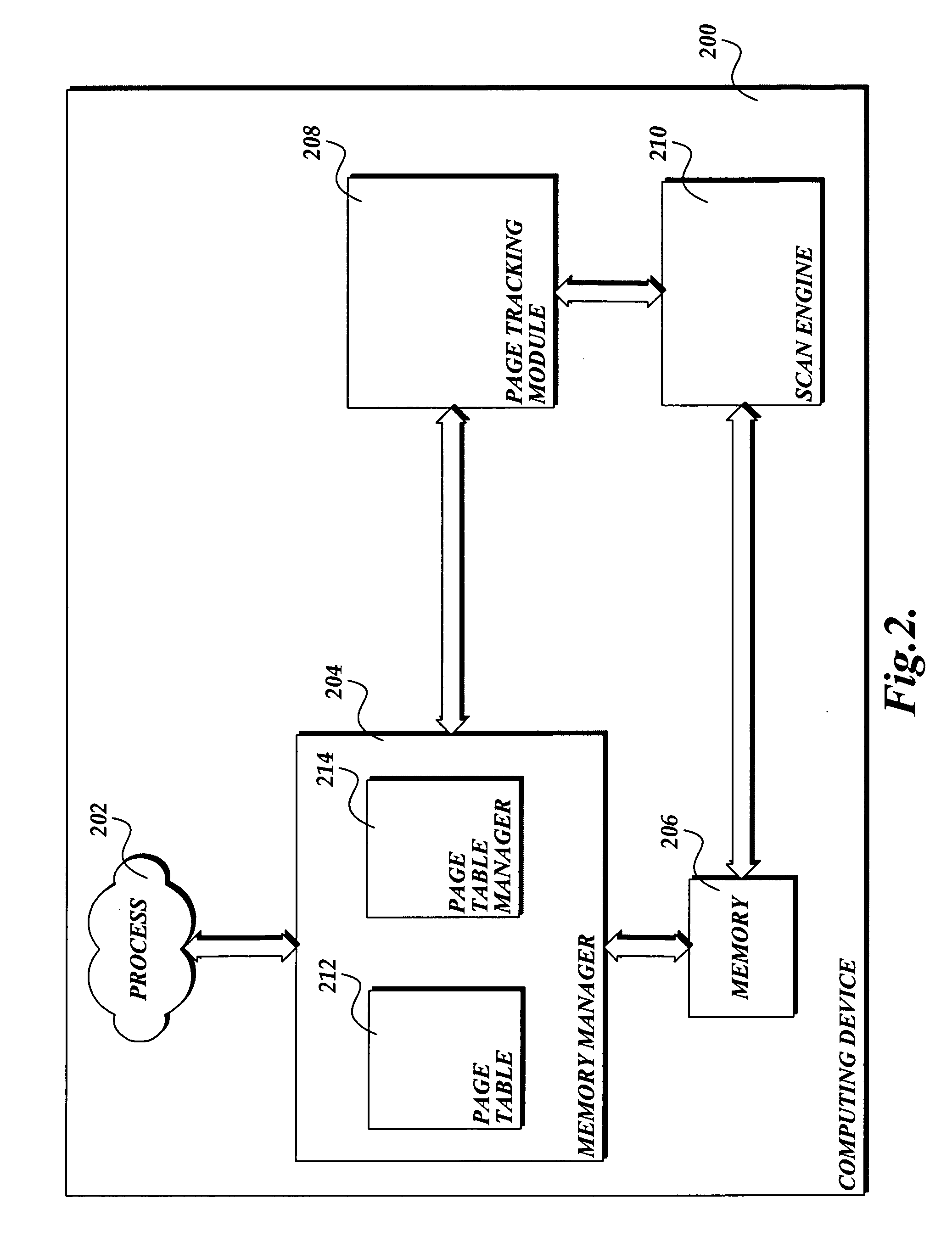

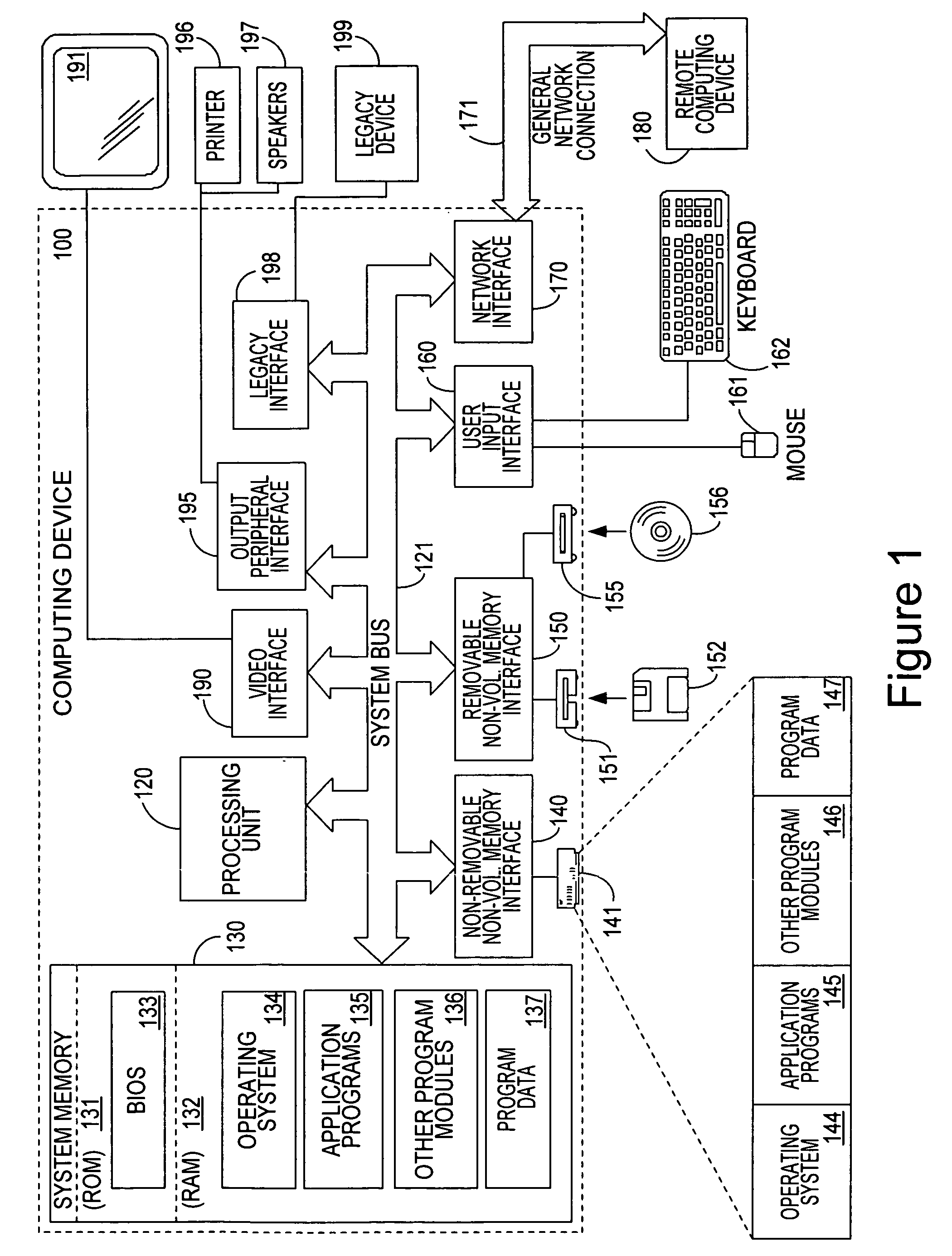

On-access scan of memory for malware

Active · US20060200863A1 · Effective infrastructure · Good antivirus performance · Memory loss protection · Error detection/correction · Page table · Malware

The present invention provides a system, method, and computer-readable medium for identifying malware that is loaded in the memory of a computing device. Software routines implemented by the present invention track the state of pages loaded in memory using page table access bits available from a central processing unit. A page in memory may be in a state that is “unsafe” or potentially infected with malware. In this instance, the present invention calls a scan engine to search a page for malware before information on the page is executed.

Owner:MICROSOFT TECH LICENSING LLC

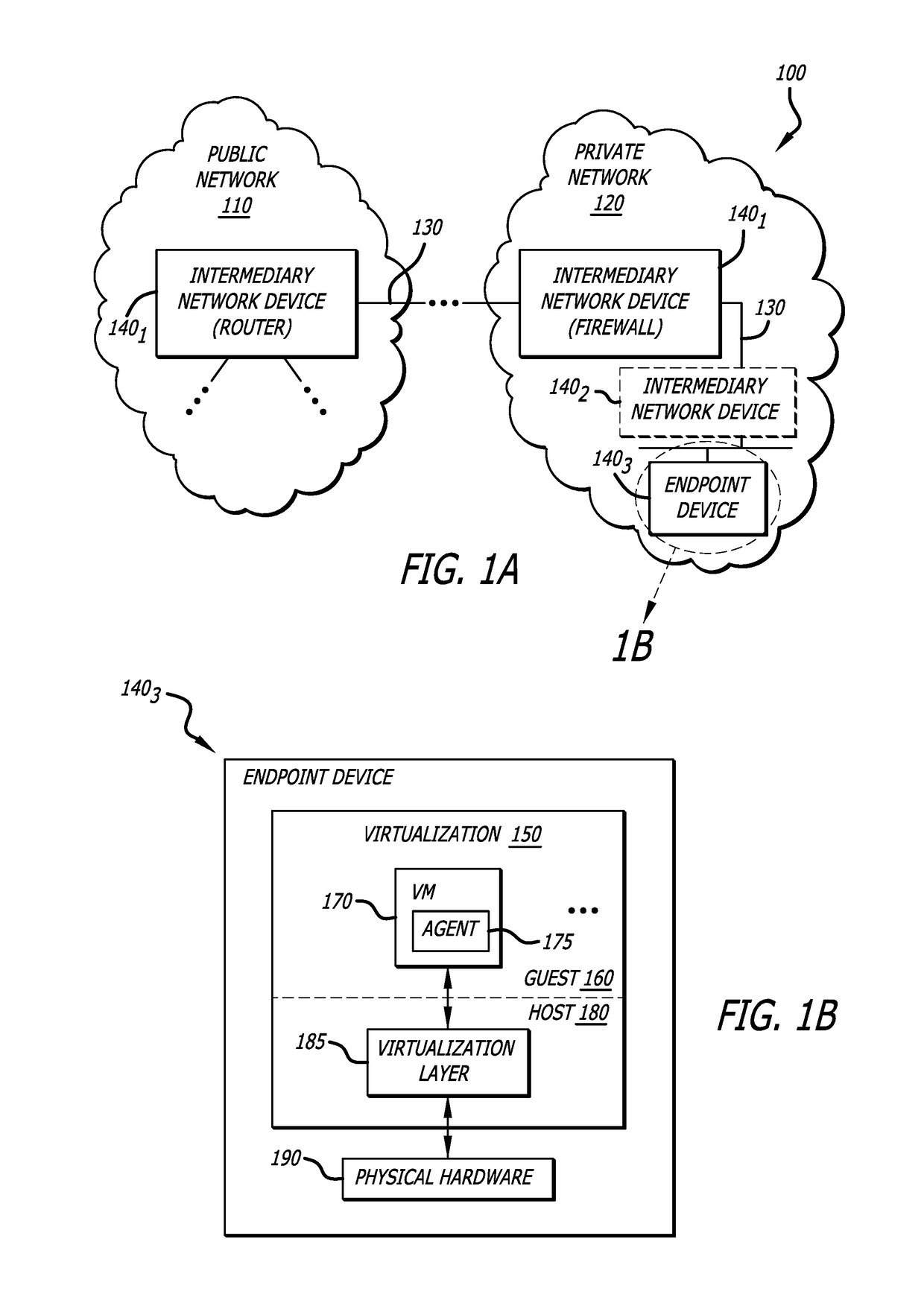

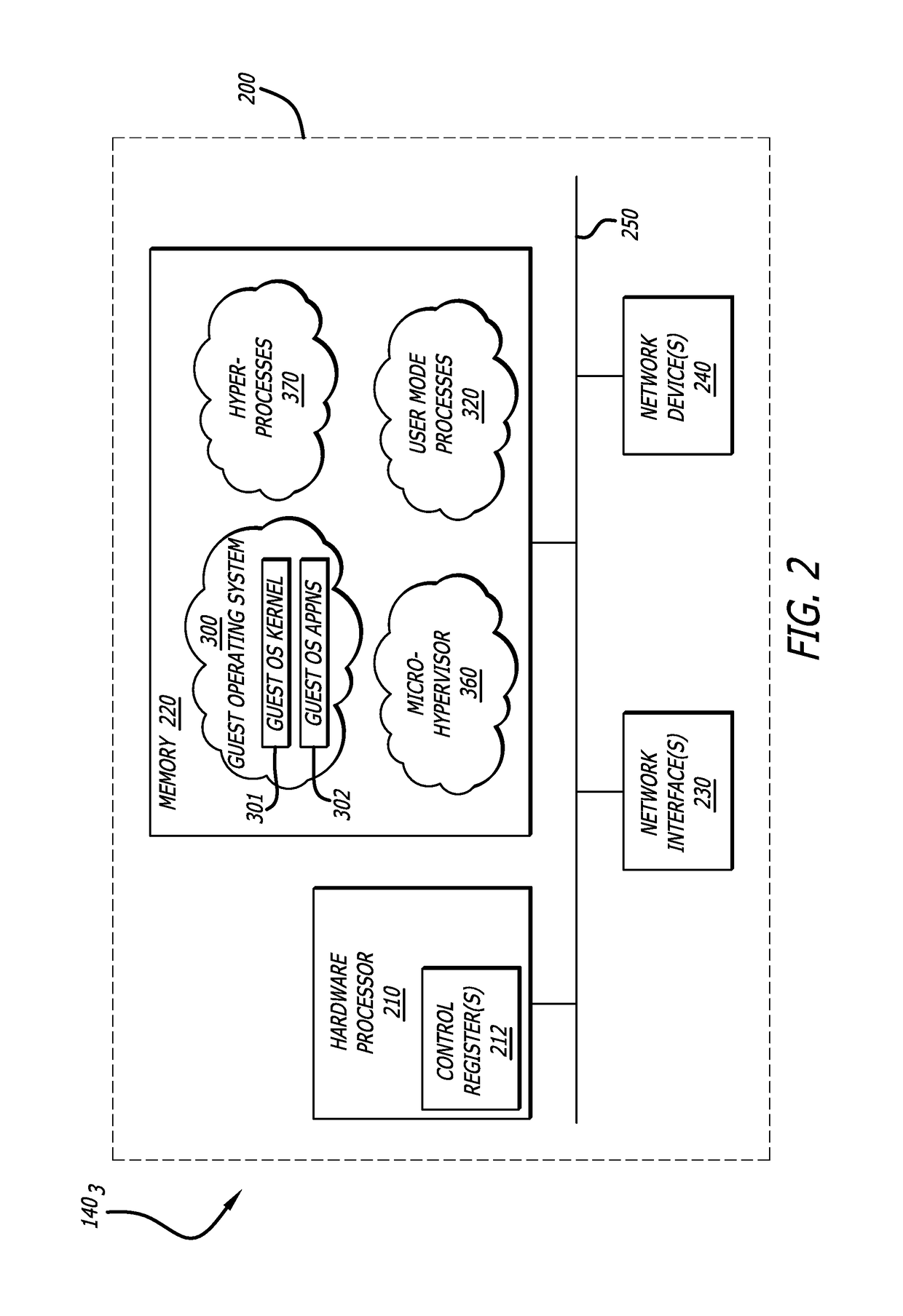

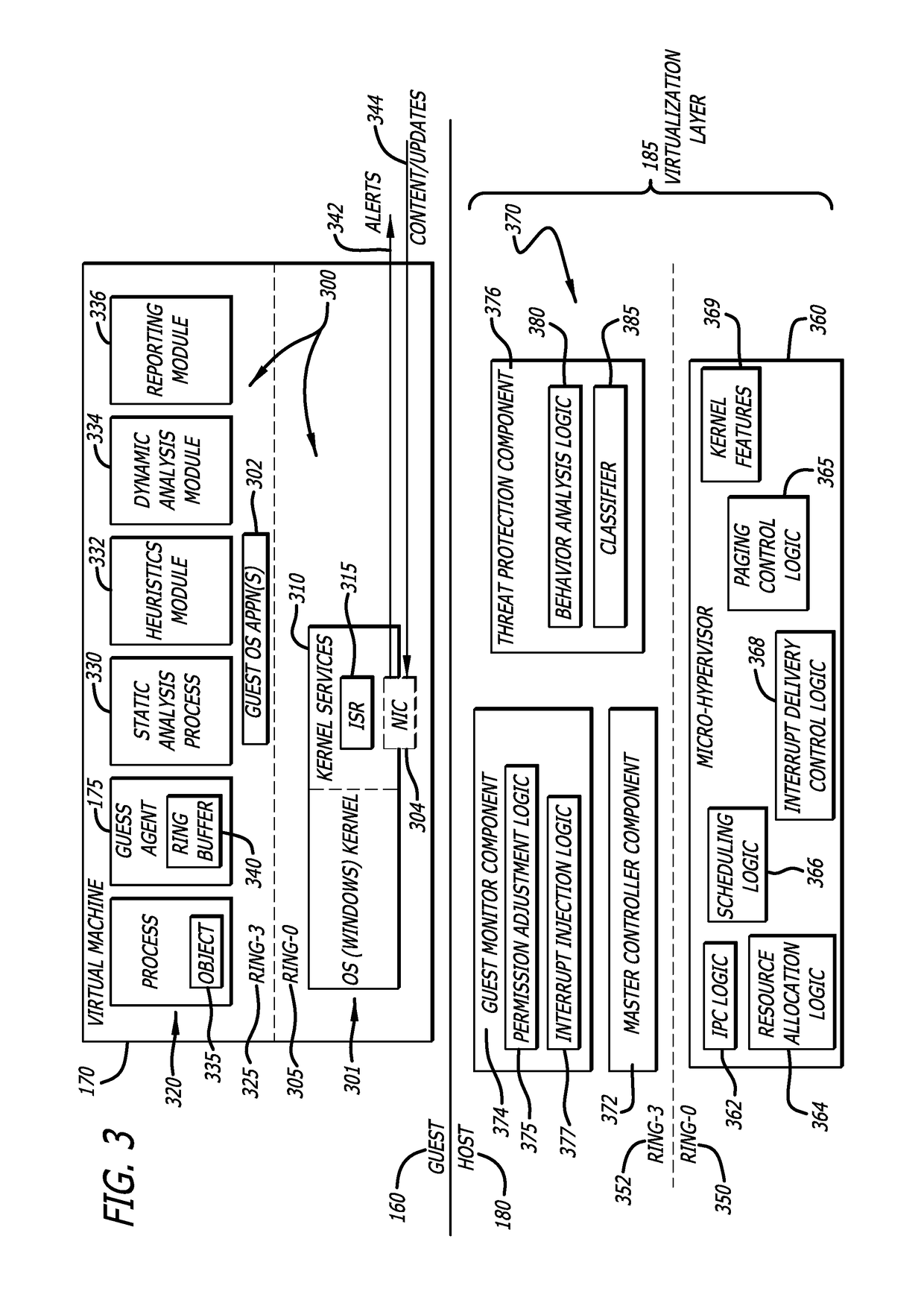

System and method for protecting memory pages associated with a process using a virtualization layer

Active · US10216927B1 · Memory architecture accessing/allocation · Unauthorized memory use protection · Virtualization · Page table

A computerized method is provided for protecting processes operating within a computing device. The method comprises an operation for identifying, by a virtualization layer operating in a host mode, when a guest process switch has occurred. The guest process switch corresponds to a change as to an operating state of a process within a virtual machine. Responsive to an identified guest process switch, an operation is conducted to determine, by the virtualization layer, whether hardware circuitry within the computing device is to access a different nested page table for use in memory address translations. The different nested page table alters page permissions for one or more memory pages associated with at least the process that are executable in the virtual machine.

Owner:FIREEYE SECURITY HLDG US LLC

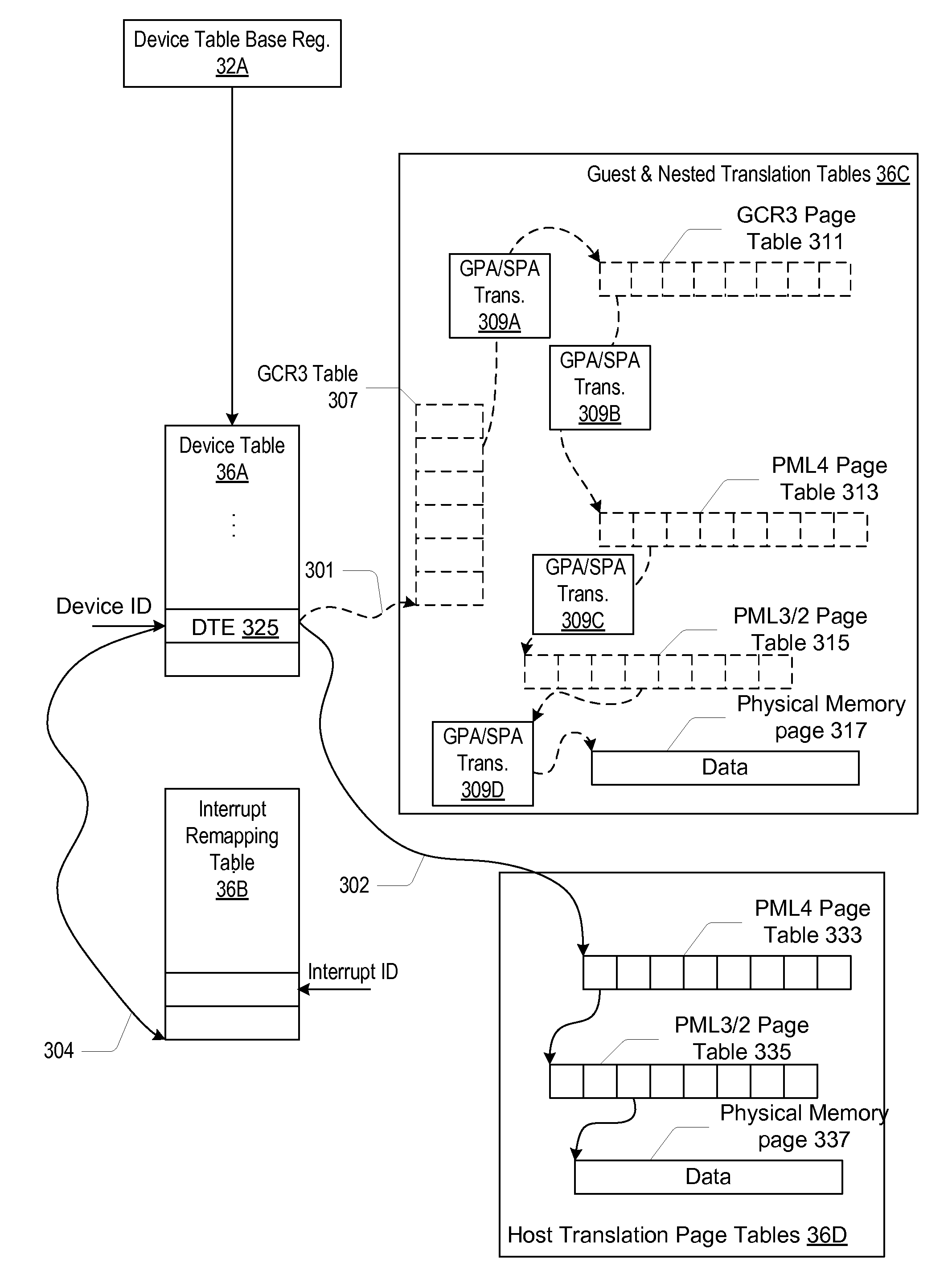

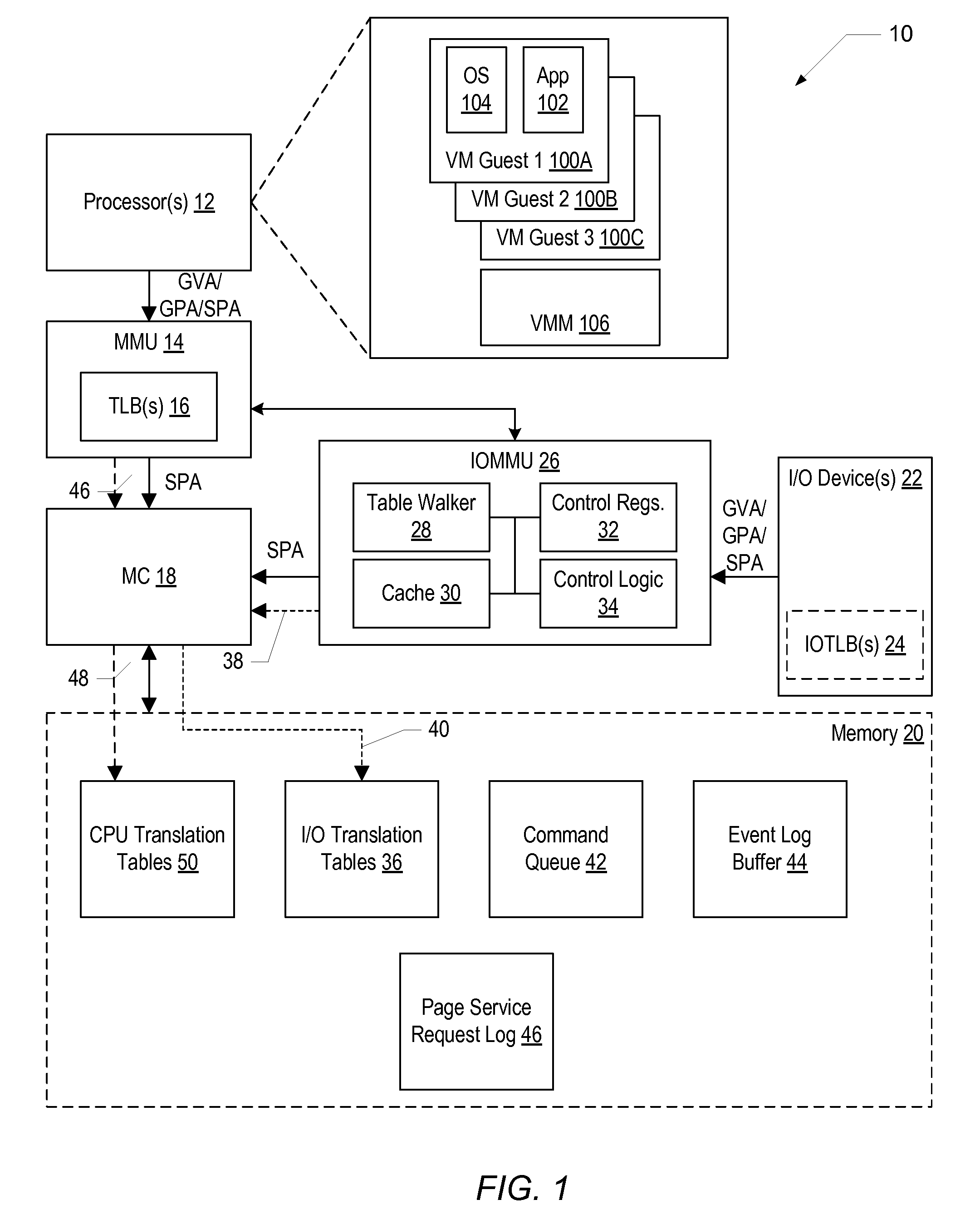

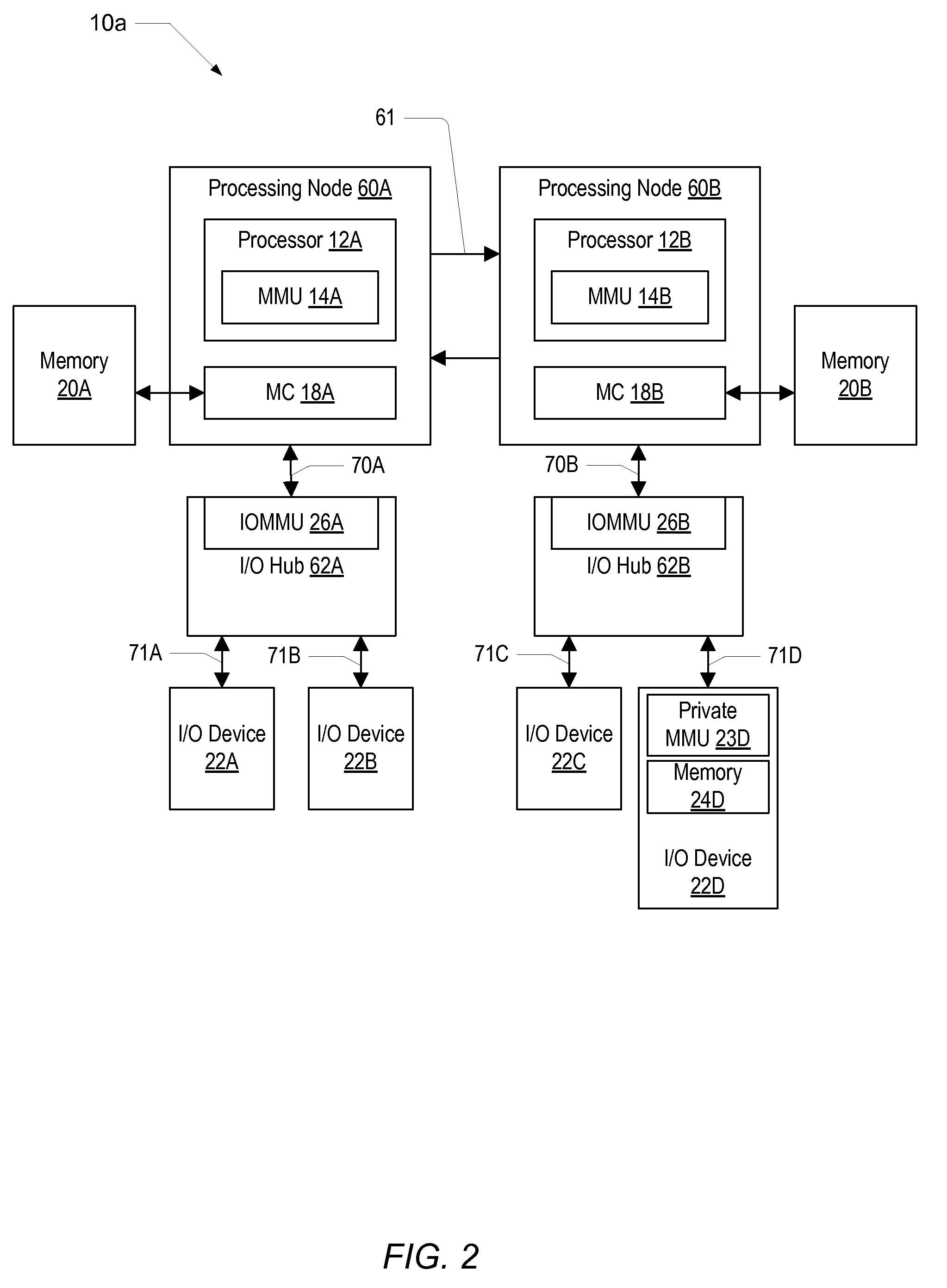

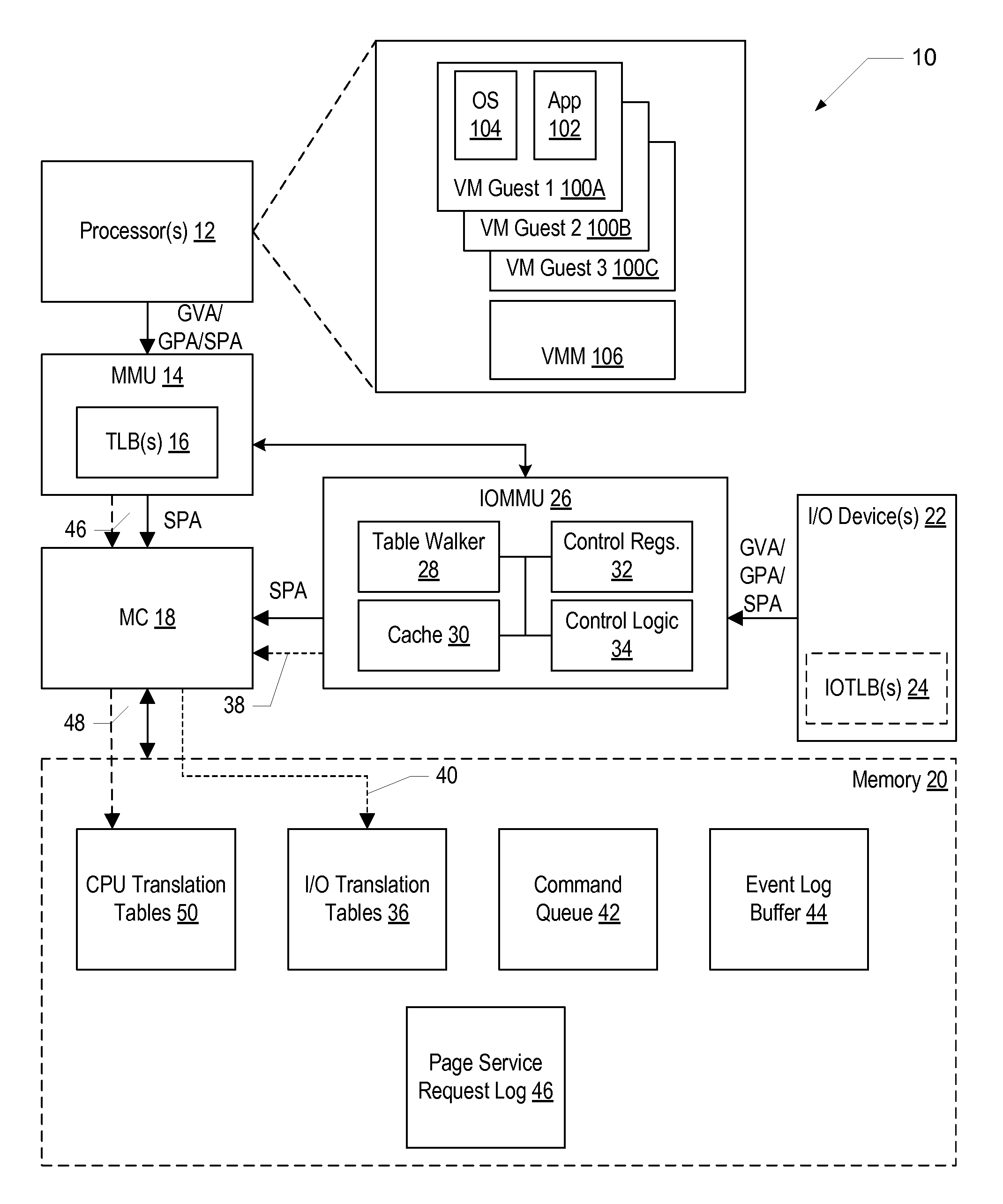

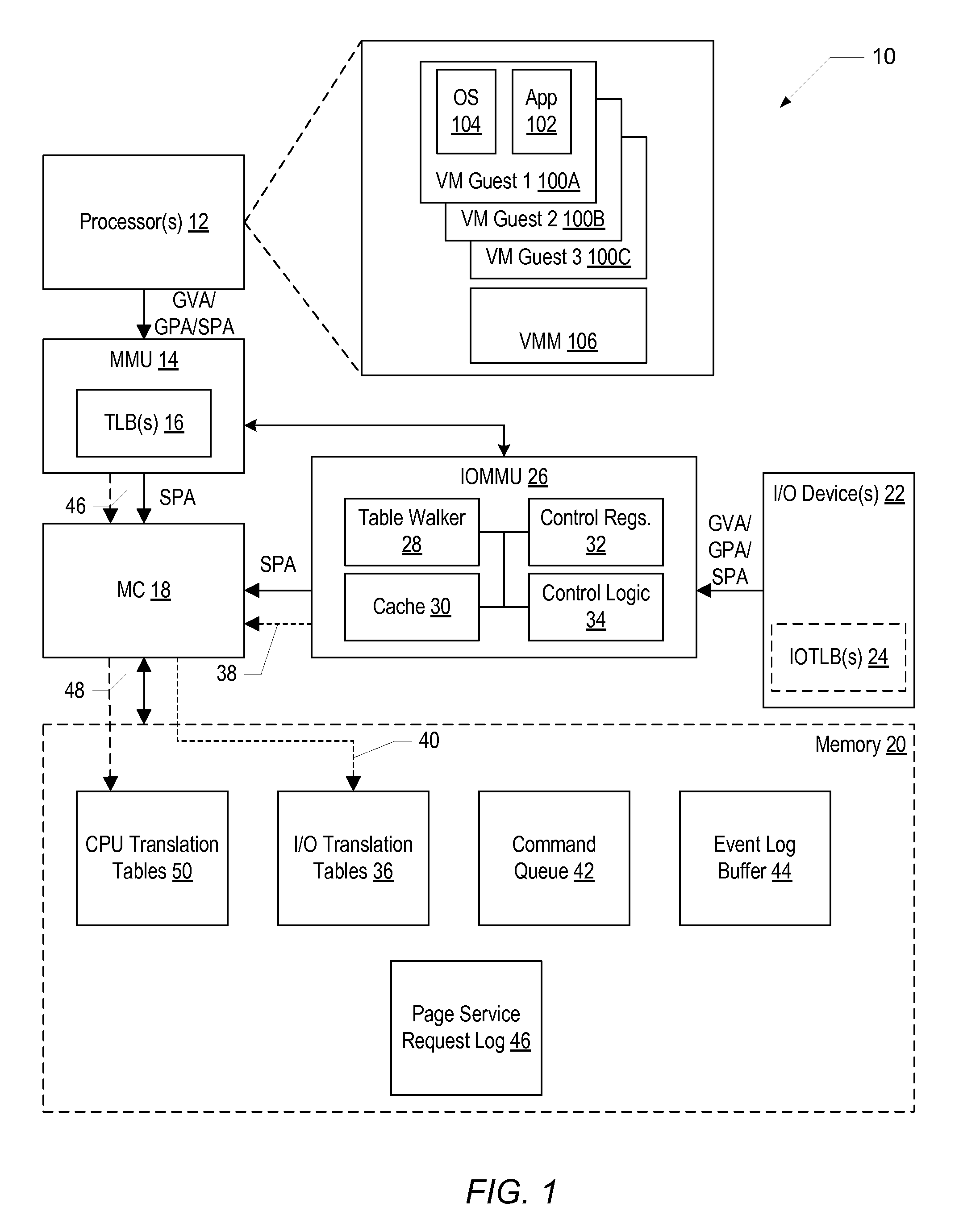

DMA Address Translation in an IOMMU

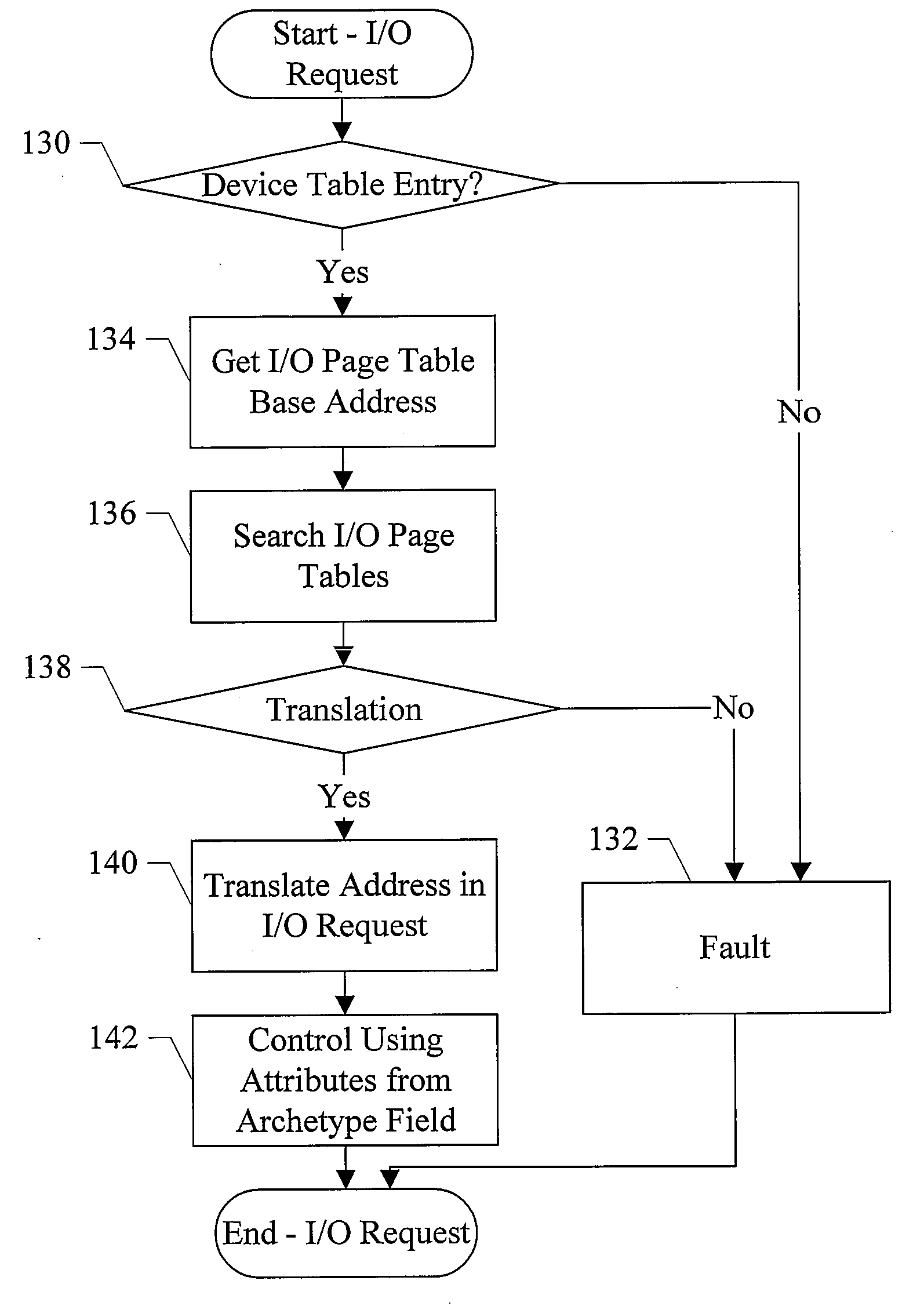

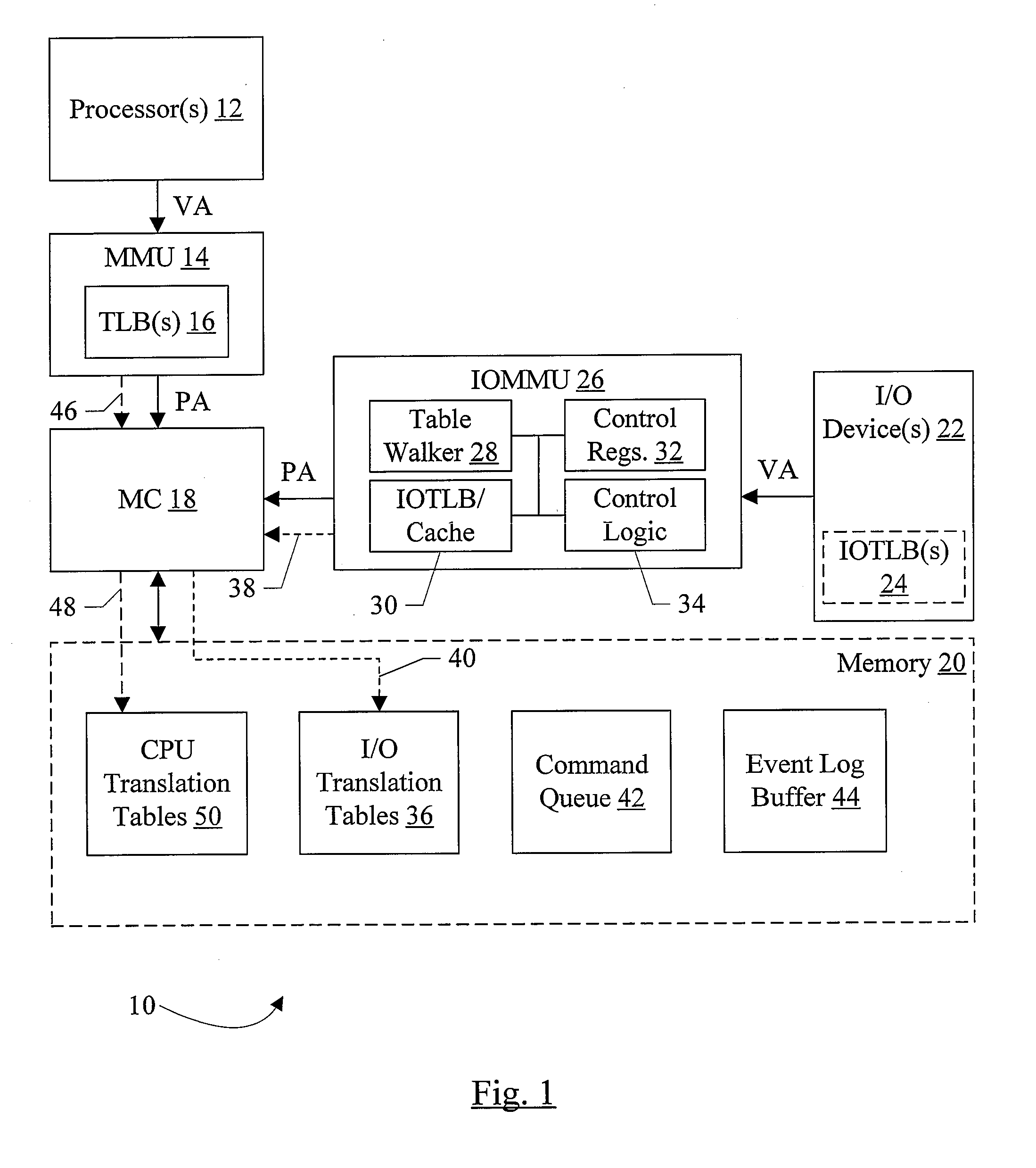

Active · US20070168643A1 · Memory architecture accessing/allocation · Memory systems · Page table · Memory management unit

In an embodiment, an input/output (I/O) memory management unit (IOMMU) comprises at least one memory configured to store translation data; and control logic coupled to the memory and configured to translate an I/O device-generated memory request using the translation data. The translation data corresponds to one or more device table entries in a device table stored in a memory system of a computer system that includes the IOMMU, wherein the device table entry for a given request is selected by an identifier corresponding to the I/O device that generates the request. The translation data further corresponds to one or more I/O page tables, wherein the selected device table entry for the given request includes a pointer to a set of I/O page tables to be used to translate the given request.

Owner:MEDIATEK INC

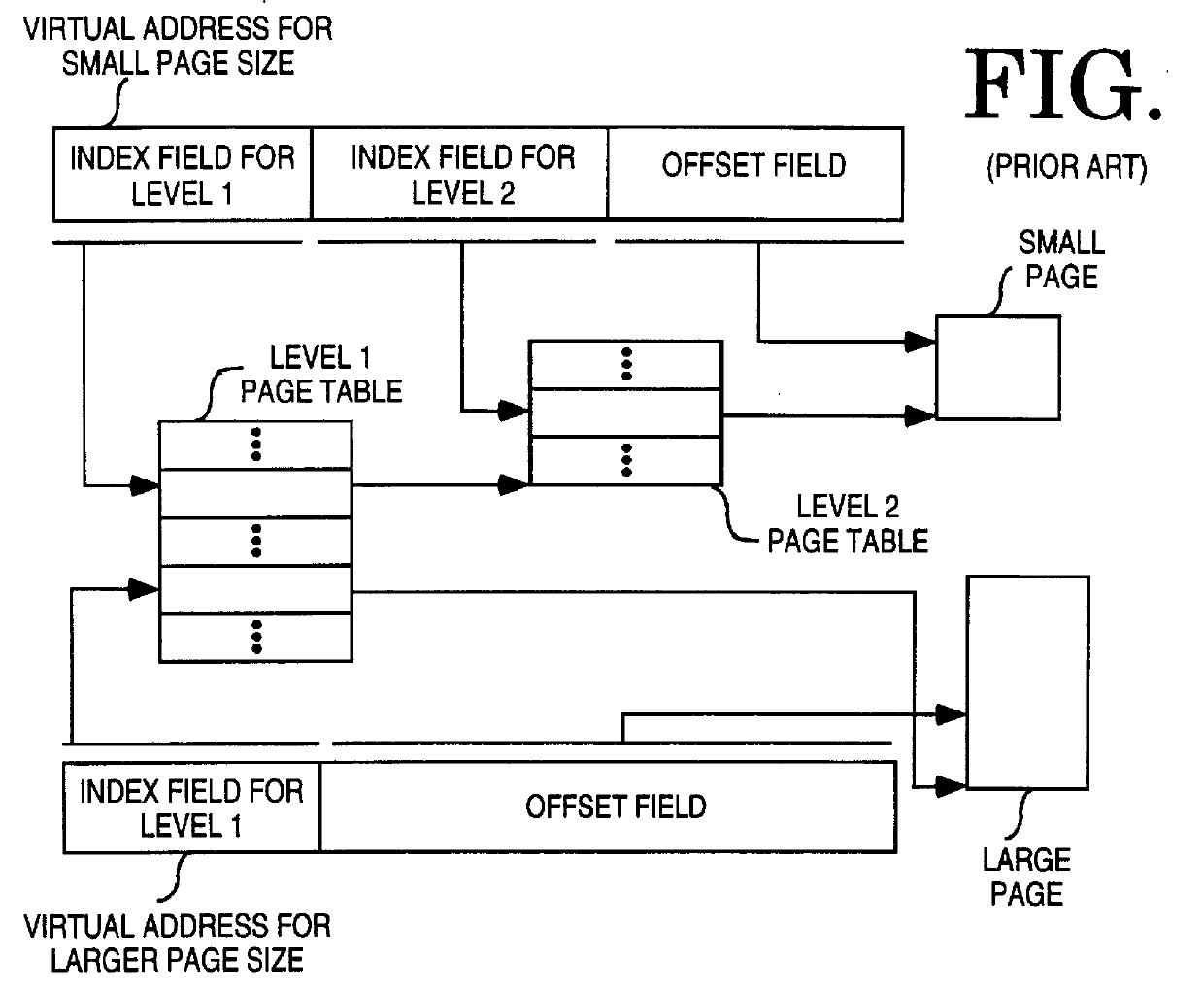

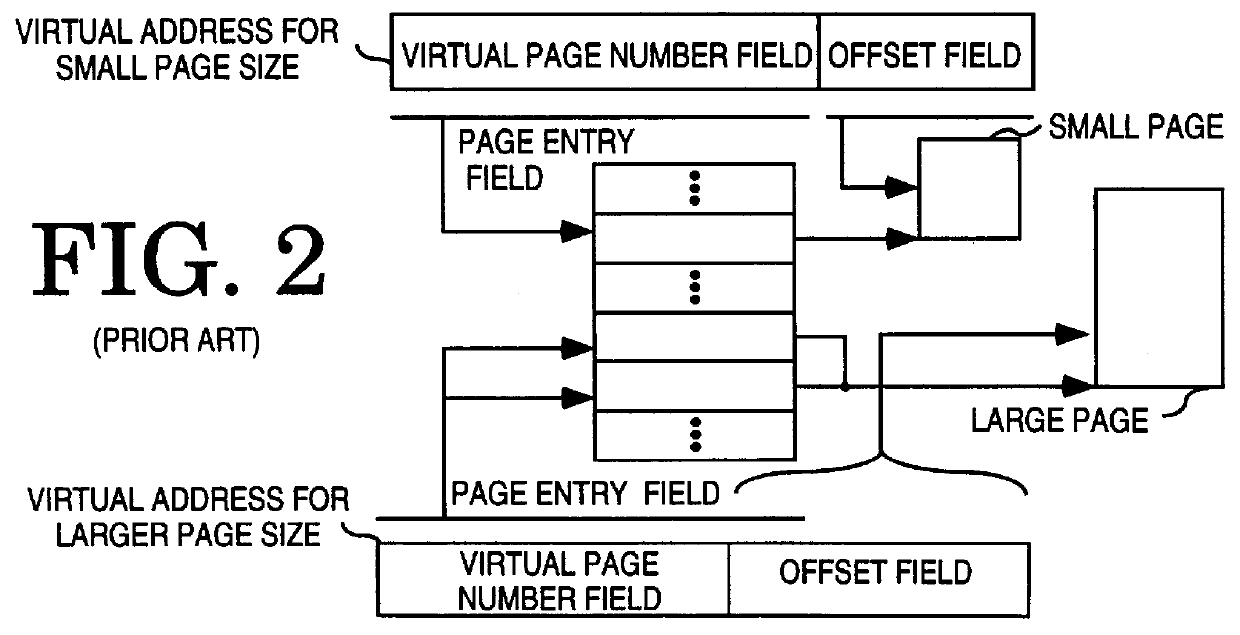

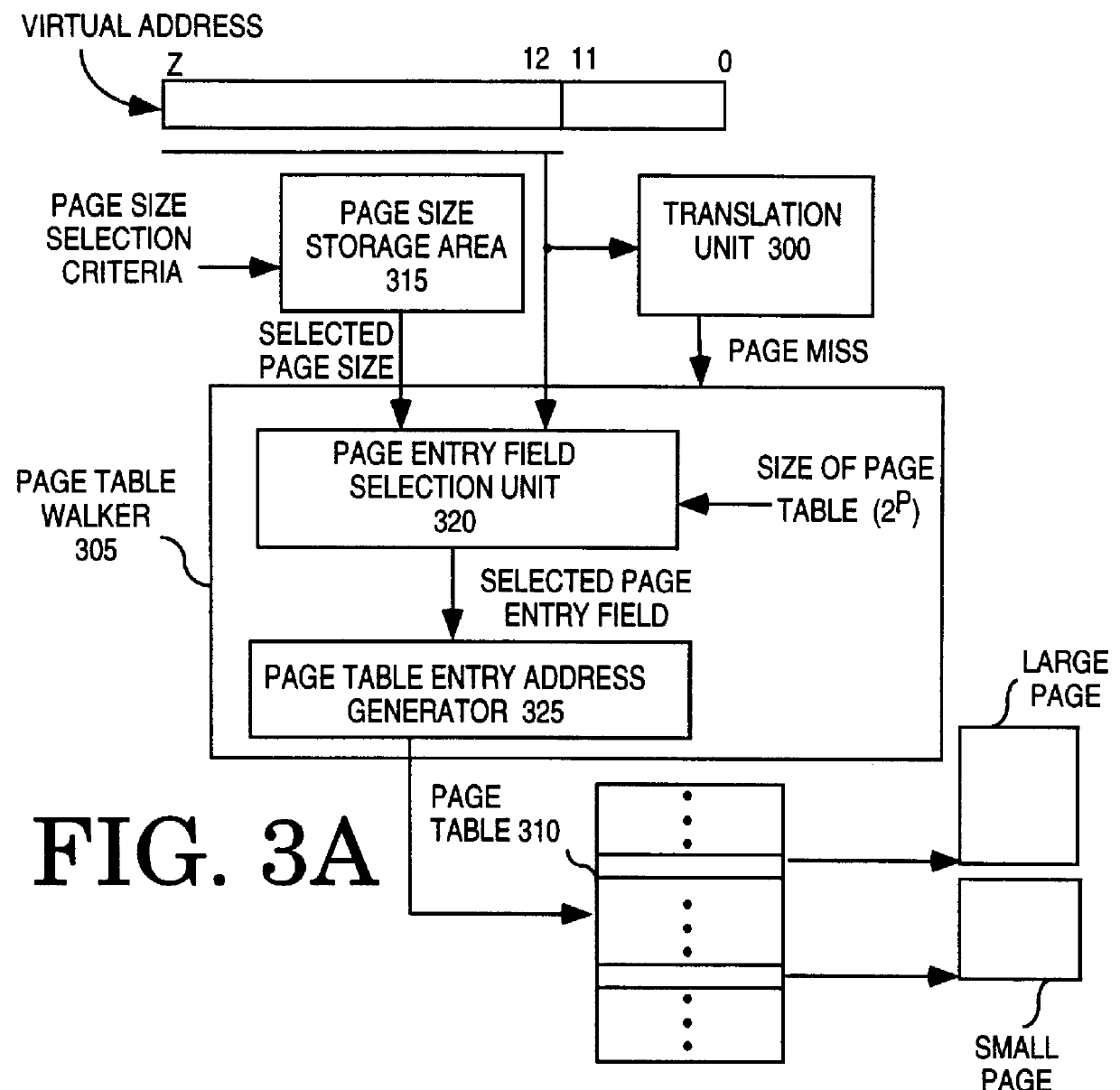

Page table walker that uses at least one of a default page size and a page size selected for a virtual address space to position a sliding field in a virtual address

Inactive · US6088780A · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Address generator · Computerized system

A method and apparatus for implementing a page table walker that uses at least one of a default page size and a page size selected for a virtual address space to position a sliding field in a virtual address. According to one aspect of the invention, an apparatus for use in a computer system is provided that includes a page size storage area and a page table walker. The page size storage area is used to store a number of page sizes each selected for translating a different set of virtual addresses. The page table walker includes a selection unit coupled to the page size storage area, as well as a page entry address generator coupled to the selection unit. For each of the virtual addresses received, the selection unit positions a field in that virtual address based on the page size selected for translating the set of virtual addresses to which that virtual address belongs. In response to receiving the bits in the field identified for each of the virtual addresses, the page entry address generator identifies an entry in a page table based on those bits.

Owner:INST FOR THE DEVMENT OF EMERGING ARCHITECTURES L L C

Enhanced shadow page table algorithms

Inactive · US20060259732A1 · Reduce process · Improve efficiency · Memory systems · Micro-instruction address formation · Operational system · Page table

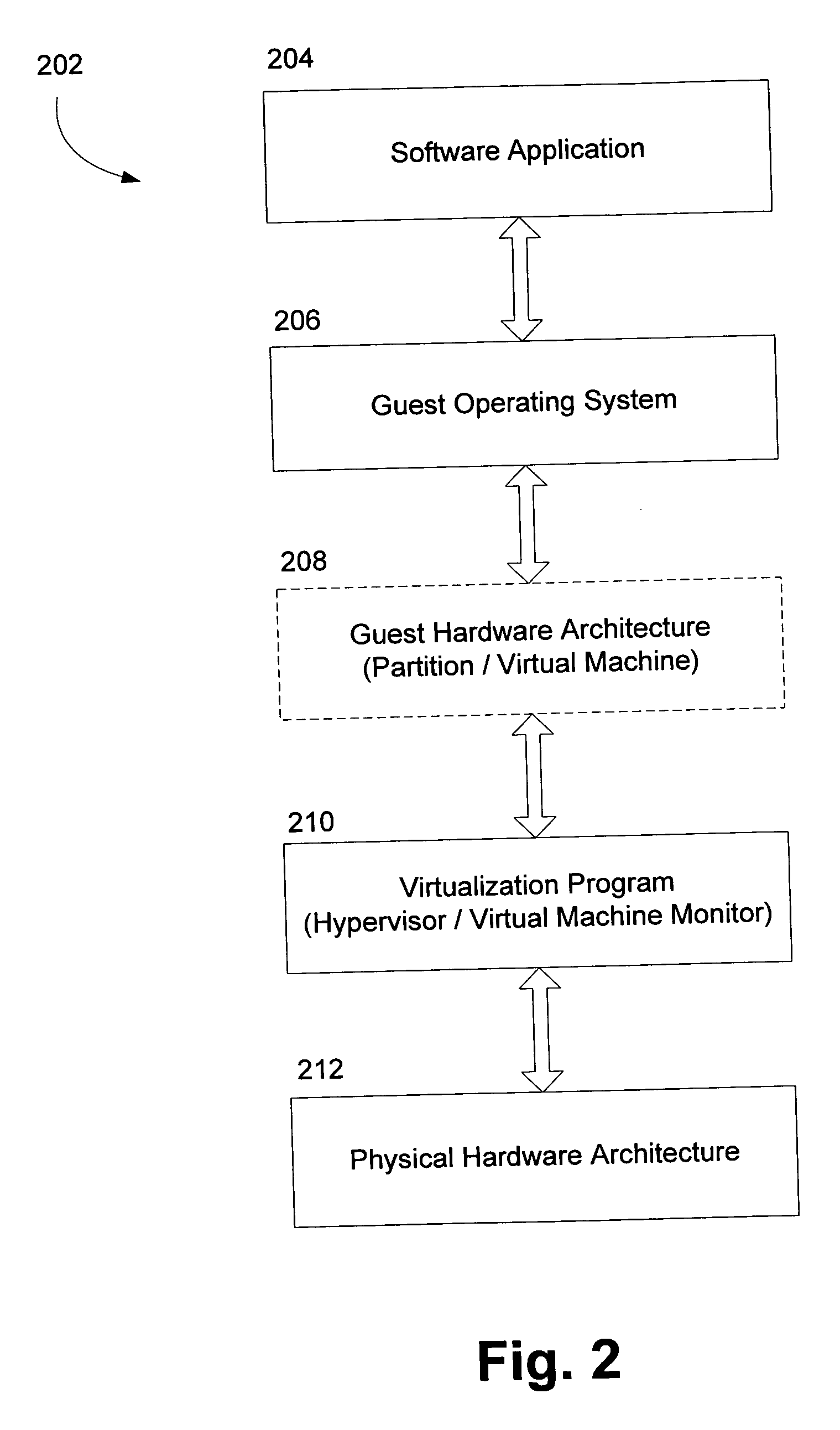

Enhanced shadow page table algorithms are presented for enhancing typical page table algorithms. In a virtual machine environment, where an operating system may be running within a partition, the operating system maintains its own guest page tables. These page tables are not the real page tables that map to the real physical memory. Instead, the memory is mapped by shadow page tables maintained by a virtualizing program, such as a hypervisor, that virtualizes the partition containing the operating system. Enhanced shadow page table algorithms provide efficient ways to harmonize the shadow page tables and the guest page tables. Specifically, by using tagged translation lookaside buffers, batched shadow page table population, lazy flags, and cross-processor shoot downs, the algorithms make sure that changes in the guest page tables are reflected in the shadow page tables.

Owner:MICROSOFT TECH LICENSING LLC

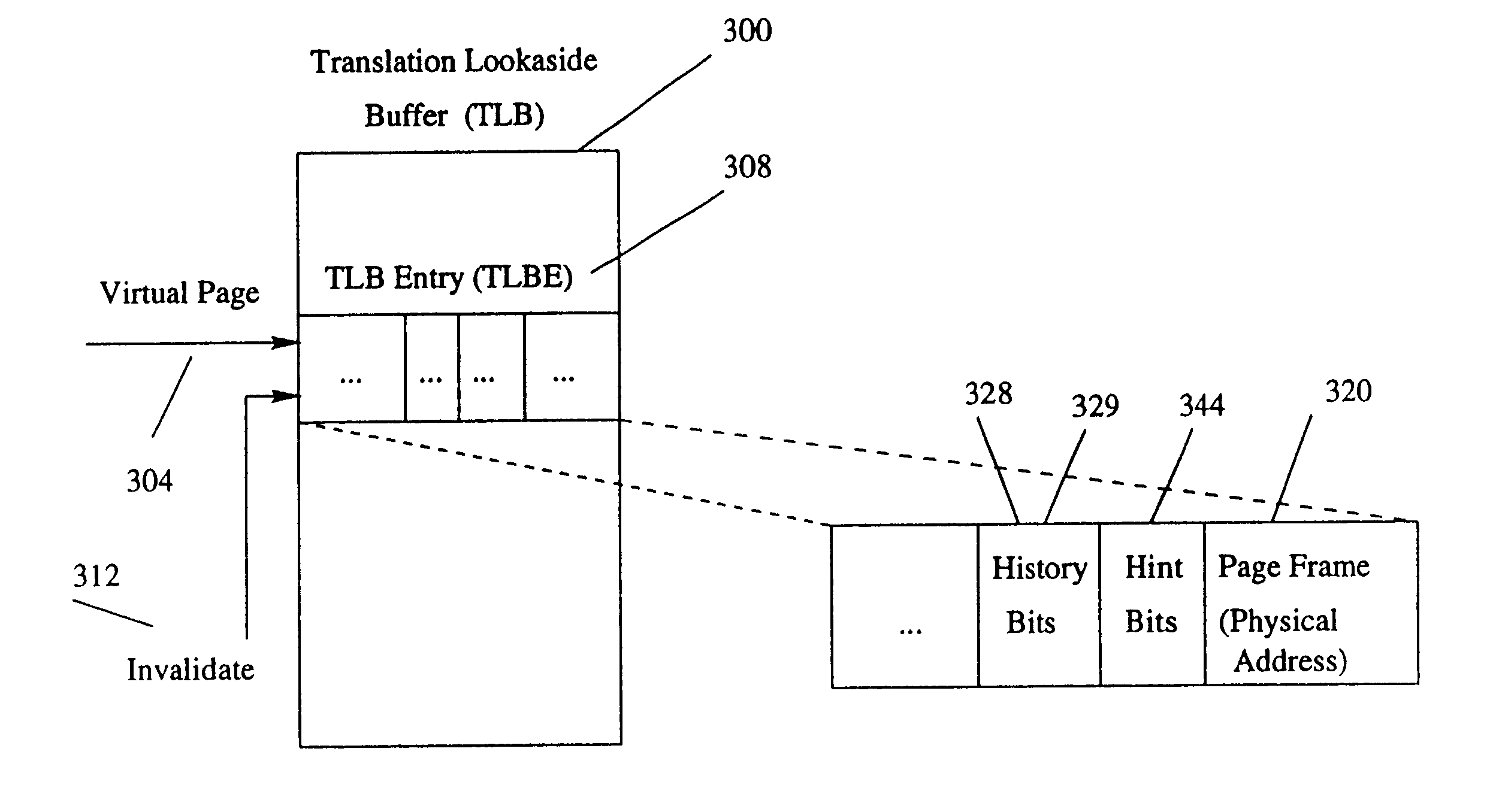

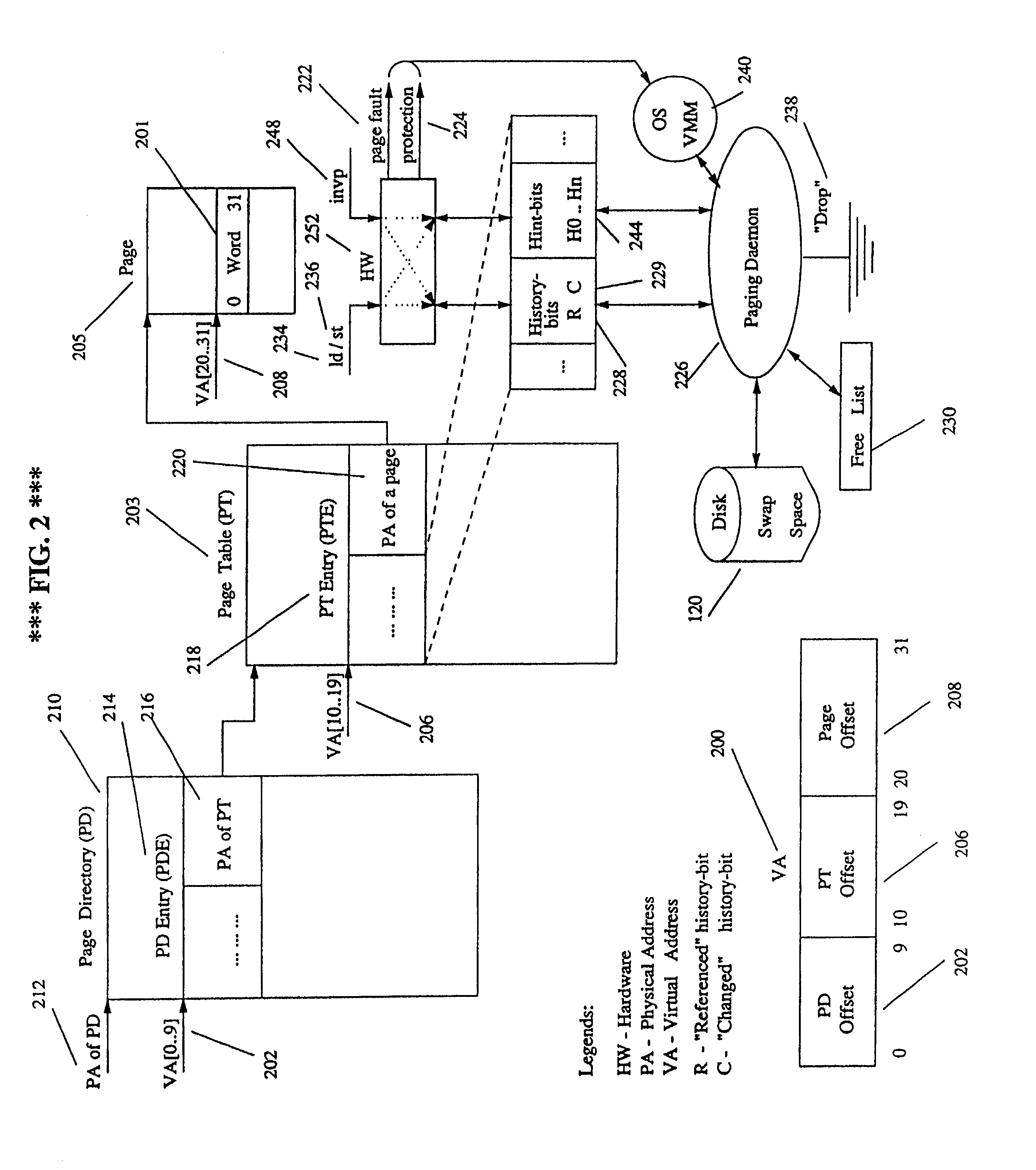

System and method for maintaining translation look-aside buffer (TLB) consistency

Inactive · US6105113A · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Virtual memory · Memory address

A system and method for maintaining consistency between translational look-aside buffers (TLB) and page tables. A TLB has a TLB table for storing a list of virtual memory address-to-physical memory address translations, or page table entries (PTEs), and a hardware-based controller for invalidating a translation that is stored in the TLB table when a corresponding page table entry changes. The TLB table includes a virtual memory (VM) page tag and a page table entry address tag for indexing the list of translations. The VM page tag can be searched for VM pages that are referenced by a process. If a referenced VM page is found, an associated physical address is retrieved for use by the processor. The TLB controller includes a snooping controller for snooping a cache-memory interconnect for activity that affects PTEs. The page table entry address tag can be searched by a search engine in the TLB controller for snooped page table entry addresses. The TLB controller includes an updating module for invalidating or updating translations associated with snooped page table entry addresses. Translations in TLBs are thus updated or invalidated through hardware when an operating system changes a PTE, without intervention by an operating system or other software.

Owner:RPX CORP +1

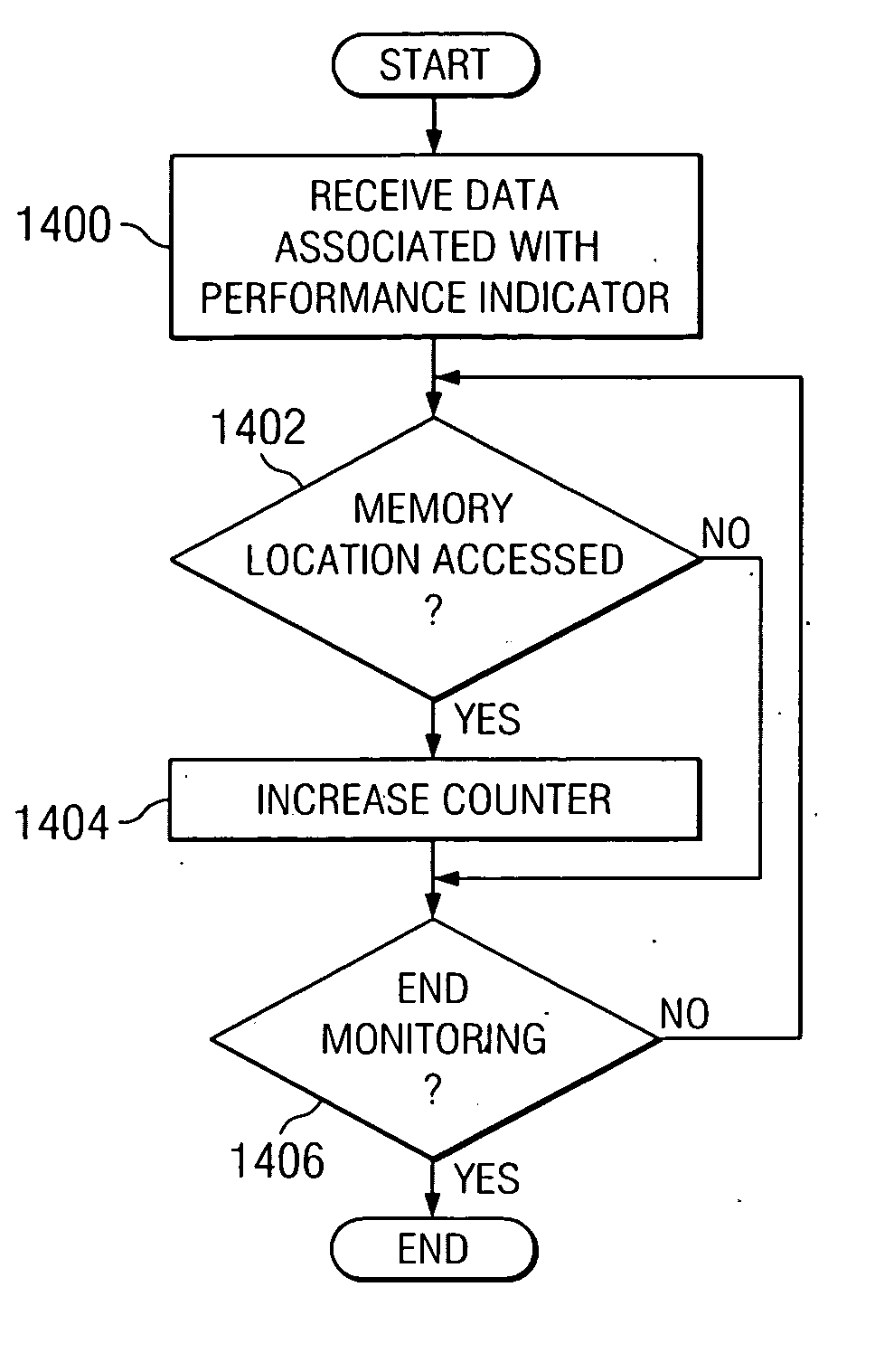

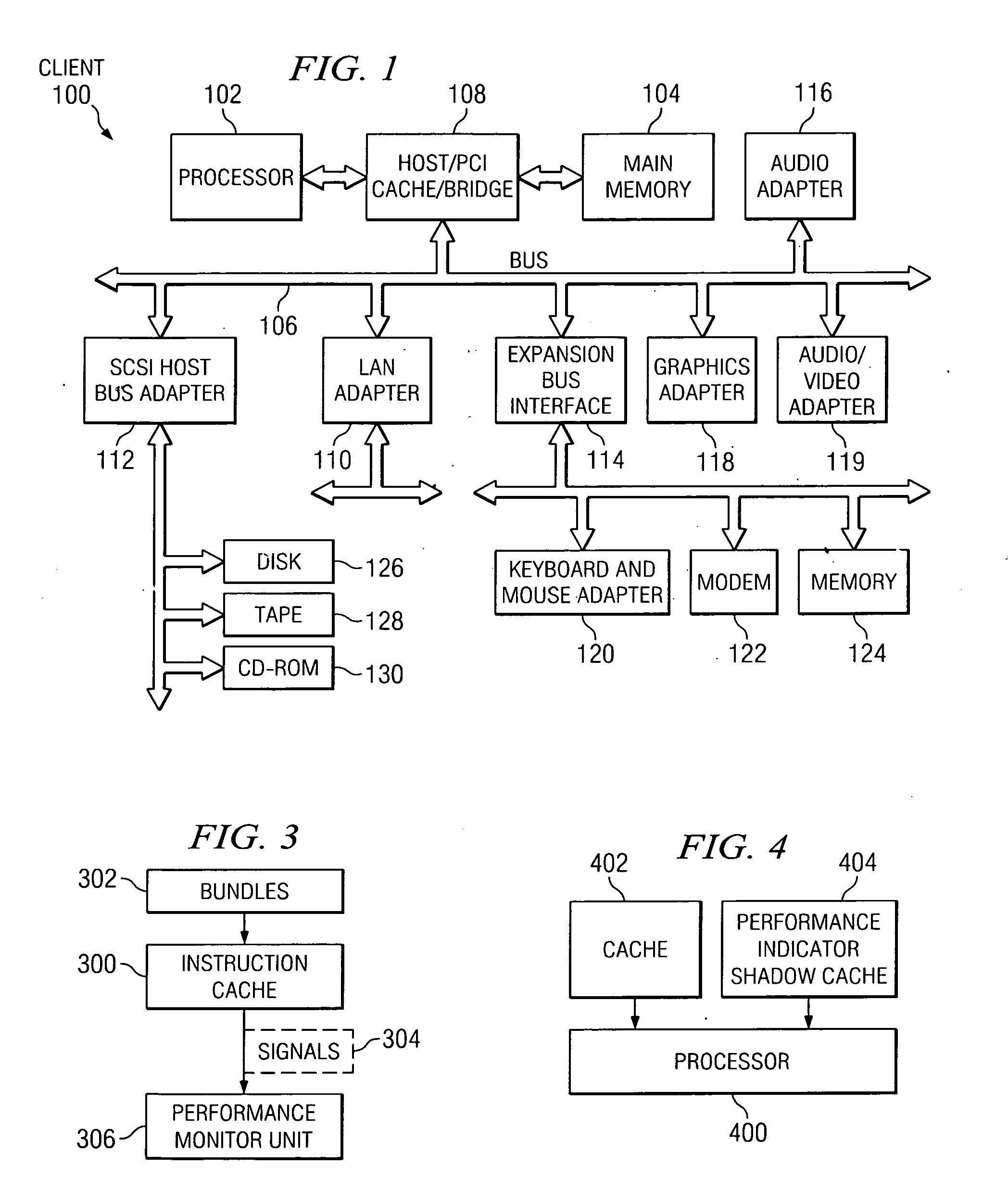

Method and apparatus for maintaining performance monitoring structures in a page table for use in monitoring performance of a computer program

Inactive · US20050155019A1 · Improve performance · Error detection/correction · Memory addressing/allocation/relocation · Data processing system · Application software

A method and apparatus in a data processing system for measuring events associated with the execution of instructions are provided. Instructions are received at a processor in the data processing system. If a selected indicator is associated with the instruction, counting of each event associated with the execution of the instruction is enabled. In some embodiments, the performance indicators, counters, thresholds, and other performance monitoring structures may be stored in a page table that is used to translate virtual addresses into physical storage addresses. A standard page table is augmented with additional fields for storing the performance monitoring structures. These structures may be set by the performance monitoring application and may be queried and modified as events occur that require access to physical storage.

Owner:IBM CORP

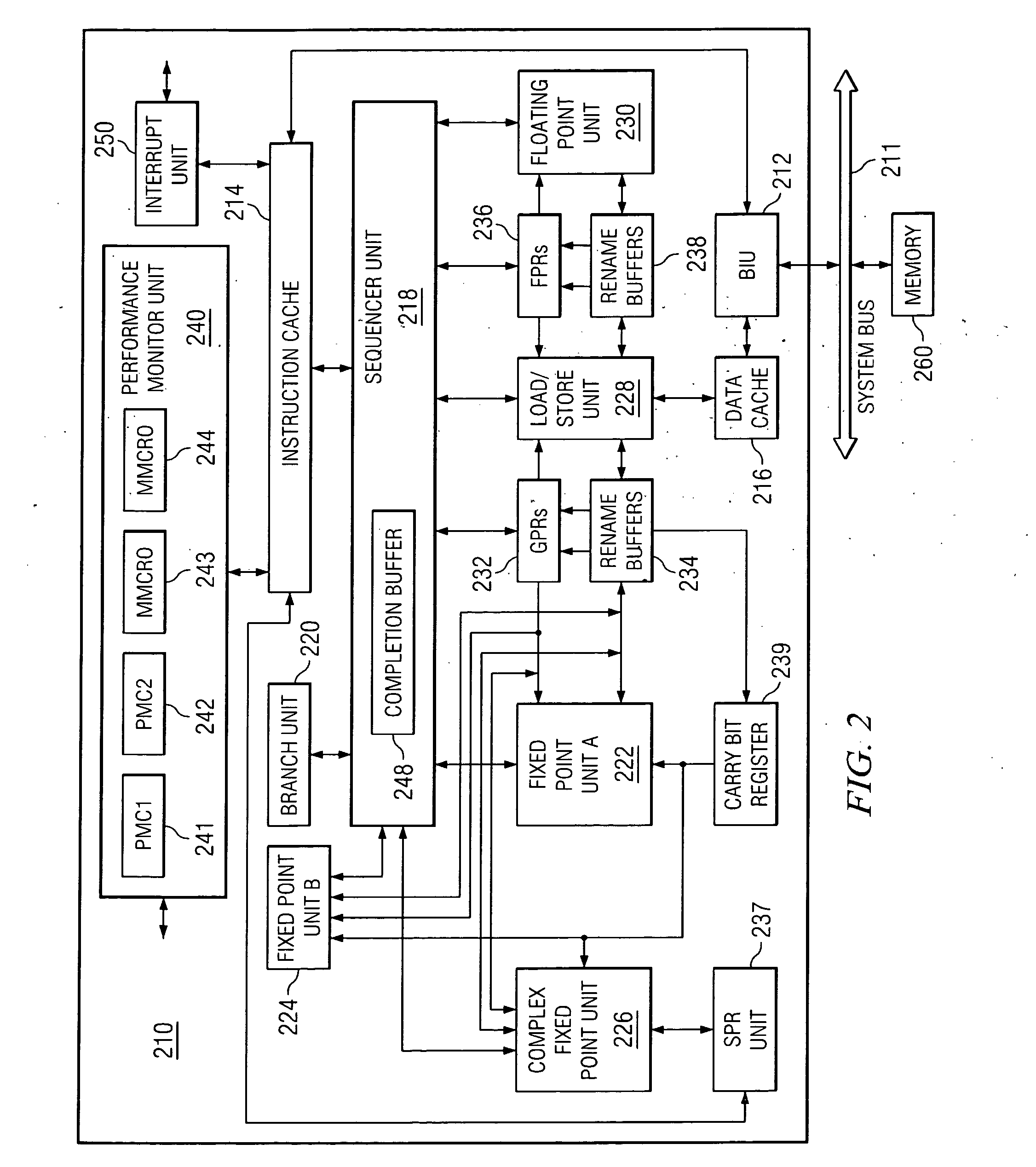

Maintaining coherency of derived data in a computer system

Active · US7222221B1 · Memory architecture accessing/allocation · Data processing applications · Operational system · Multi processor

A computer system has secondary data that is derived from primary data, such as entries in a TLB being derived from entries in a page table. When an actor changes the primary data, a producer indicates the change in a set data structure, such as a data array, in memory that is shared by the producer and a consumer. There may be multiple producers and multiple consumers and each producer/consumer pair has a separate channel. At coherency events, at which incoherencies between the primary data and the secondary data should be removed, consumers read the channels to determine the changes, and update the secondary data accordingly. The system may be a multiprocessor virtual computer system, the actor may be a guest operating system, and the producers and consumers may be subsystems within a virtual machine monitor, wherein each subsystem exports a separate virtual central processing unit.

Owner:VMWARE INC

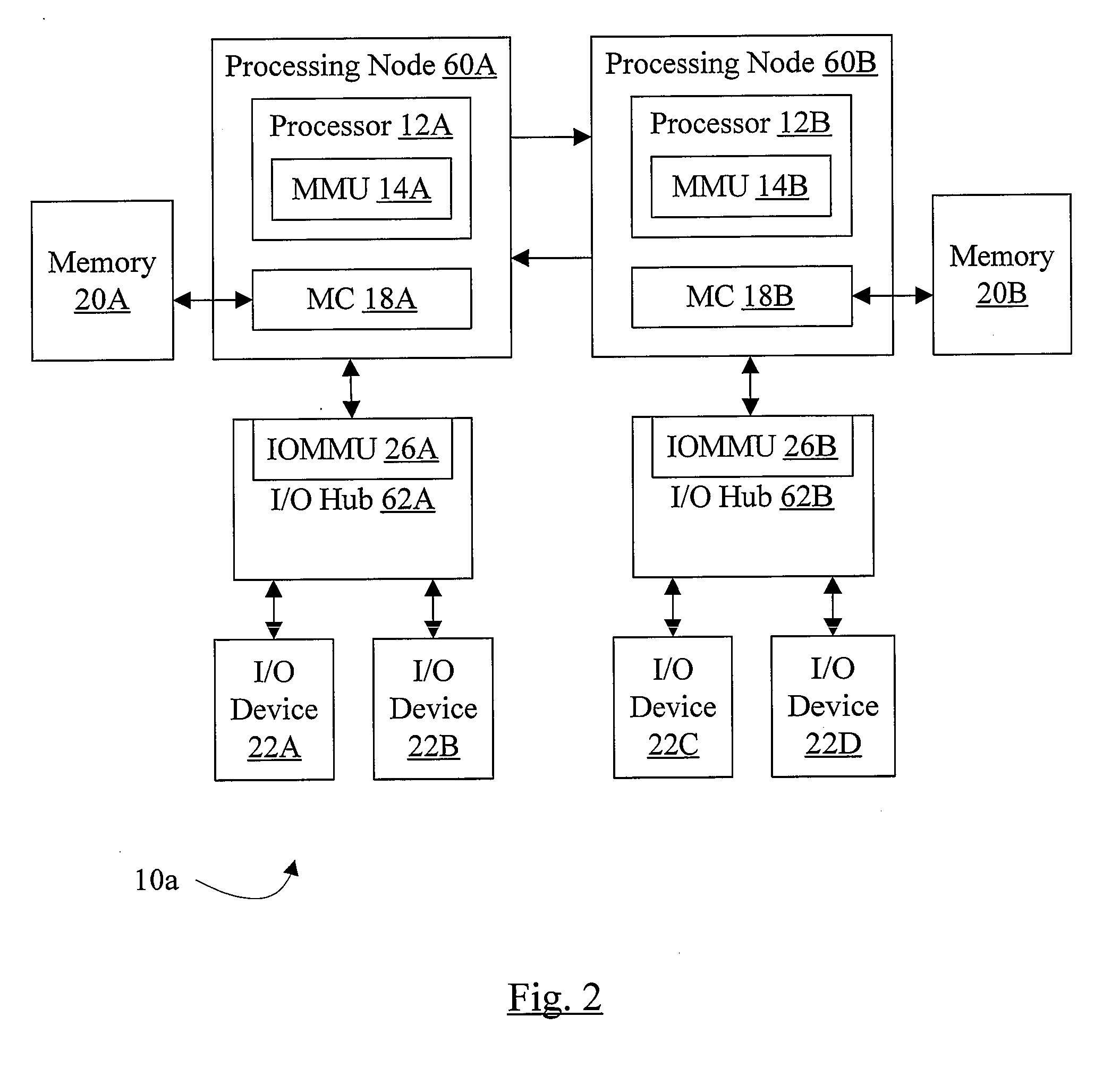

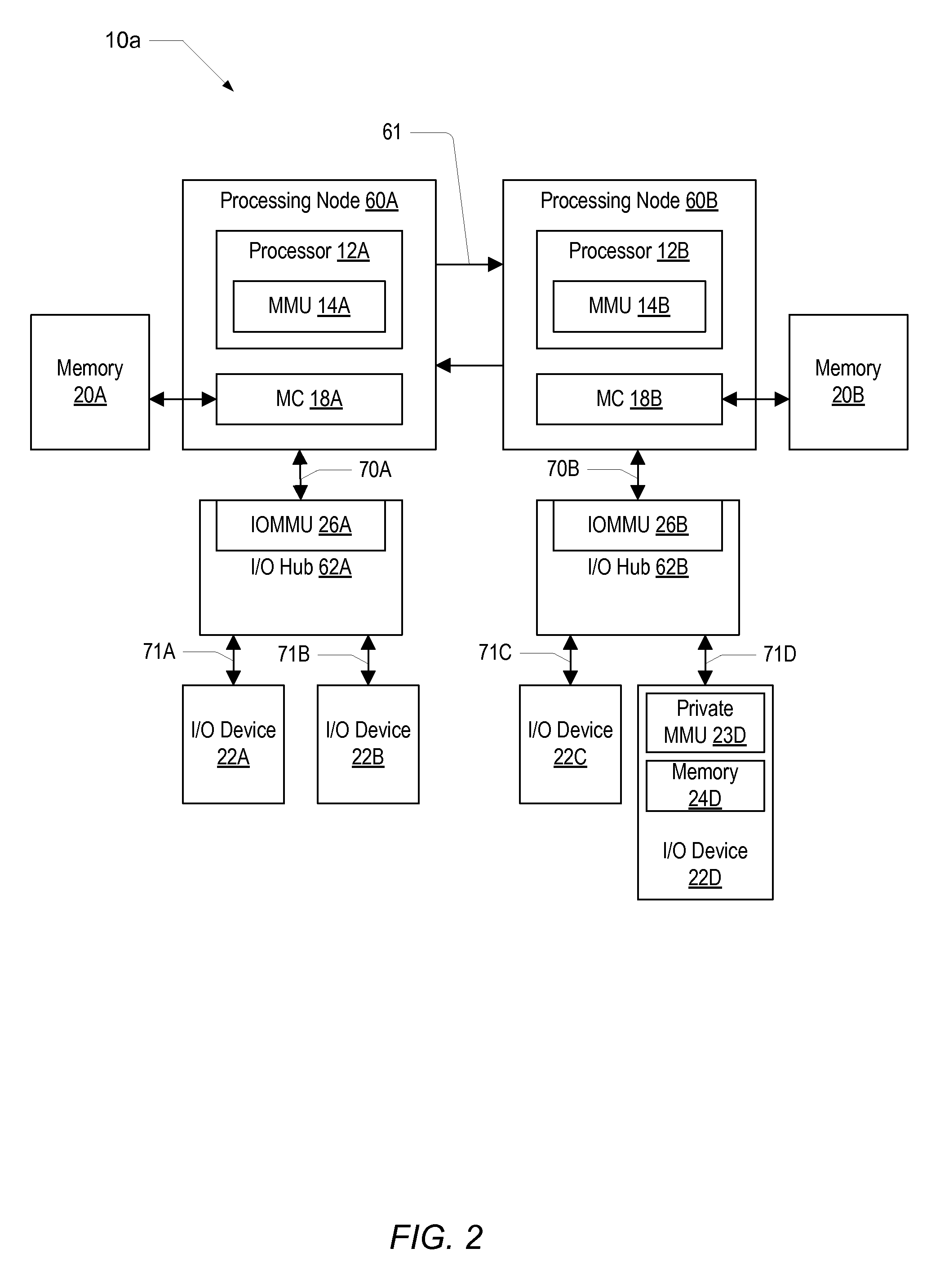

I/O memory management unit including multilevel address translation for I/O and computation offload

Active · US20110023027A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Translation table · Page table

An input/output memory management unit (IOMMU) configured to control requests by an I/O device to a system memory includes control logic that may perform a two-level guest translation to translate an address associated with an I/O device-generated request using translation data stored in the system memory. The translation data includes a device table having a number of entries. The control logic may select the device table entry for a given request by using a device identifier that corresponds to the I/O device that generates the request. The translation data may also include a first set of I/O page tables including a set of guest page tables and a set of nested page tables. The selected device table entry for the given request may include a pointer to the set of guest translation tables, and a last guest translation table includes a pointer to the set of nested page tables.

Owner:ADVANCED MICRO DEVICES INC

Method and apparatus for efficient virtual memory management

Inactive · US6886085B1 · Reduce the amount of data · Reduce the number of data · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Waste collection · Virtual memory management

A method and an apparatus that improve virtual memory management. The proposed method and apparatus provide an application with an efficient channel for communicating information about future behavior of an application with respect to the use of memory and other resources to the OS, a paging daemon, and other system software. The state of hint bits, which are integrated into page table entries and TLB entries and are used for communicating information to the OS, can be changed explicitly with a special instruction or implicitly as a result of referencing the associated page. The latter is useful for canceling hints. The method and apparatus enable memory allocators, garbage collectors, and compilers (such as those used by the Java platform) to use a page-aligned heap and a page-aligned stack to assist the OS in effective management of memory resources. This mechanism can also be used in other system software.

Owner:IBM CORP

Method and apparatus for accessing a memory

Inactive · US20050038941A1 · Minimized on demand · Memory addressing/allocation/relocation · Transmission · Term memory · Page table

The disclosed embodiments relate to an optimized memory registration mechanism that may comprise an upper layer protocol that associates I/O buffers with memory regions and that manages steering tags. The memory regions may be associated with a translation page table. The upper layer protocol may allocate one of the steering tags associated with at least one of the memory regions for a memory operation.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

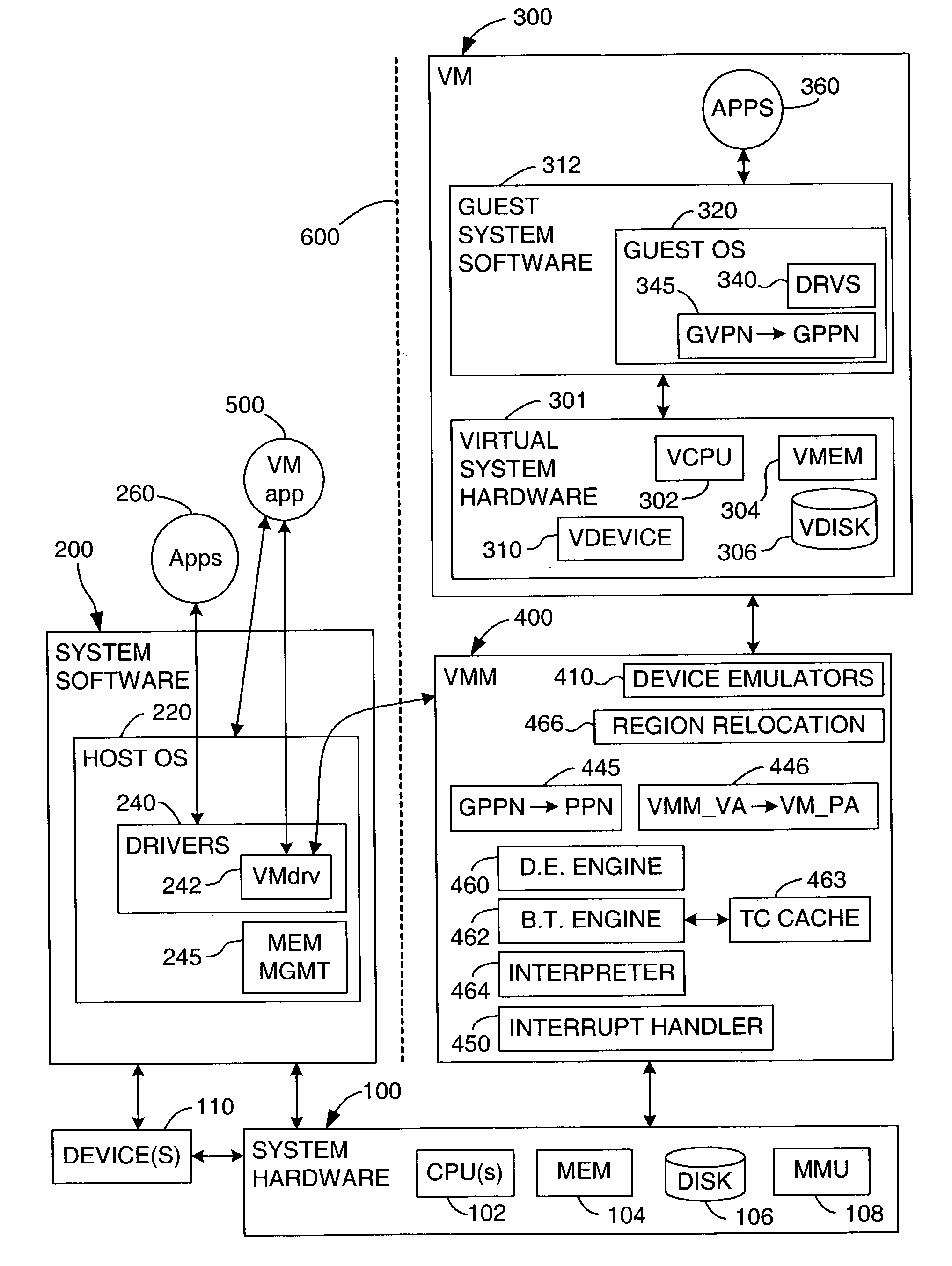

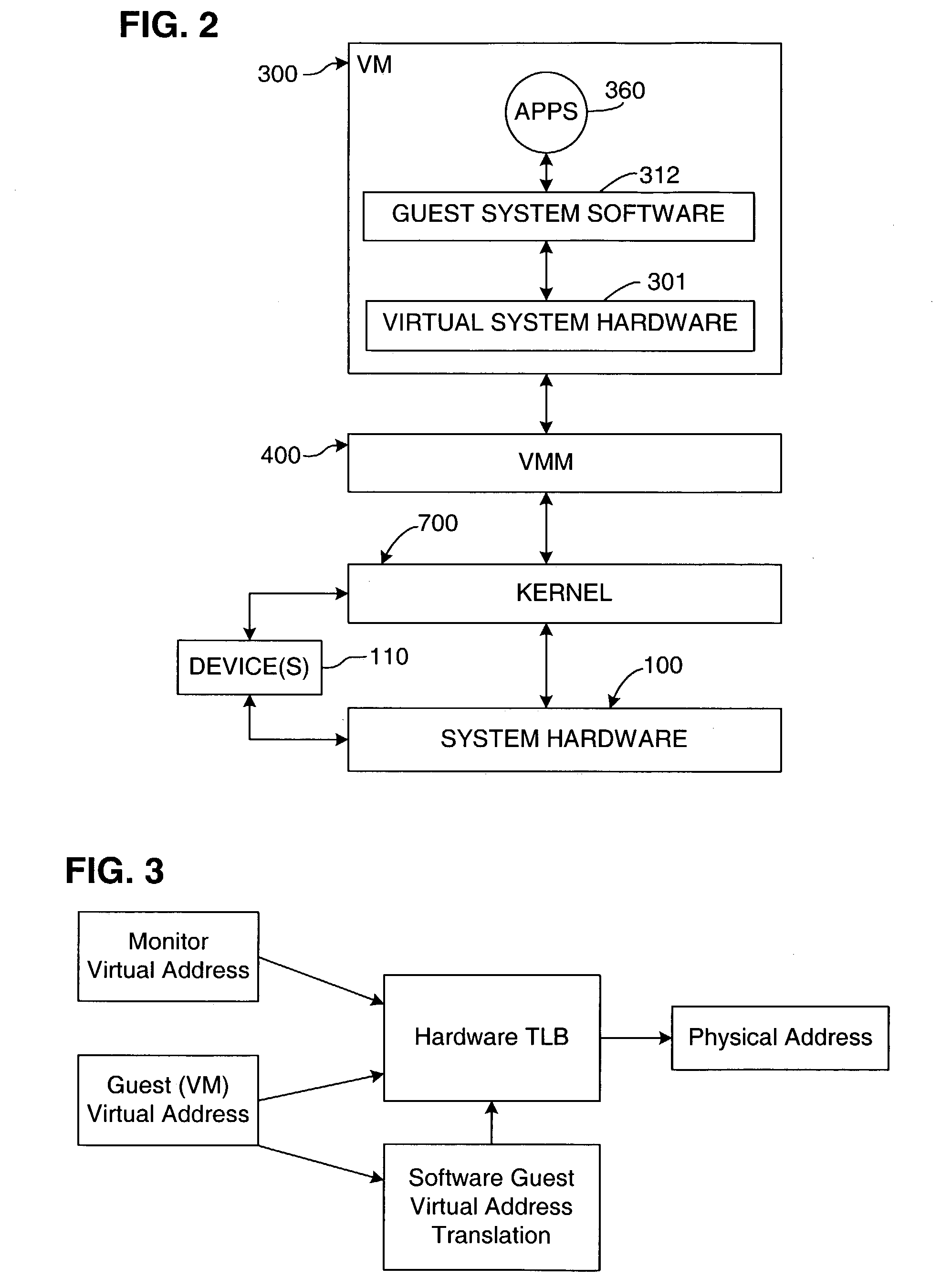

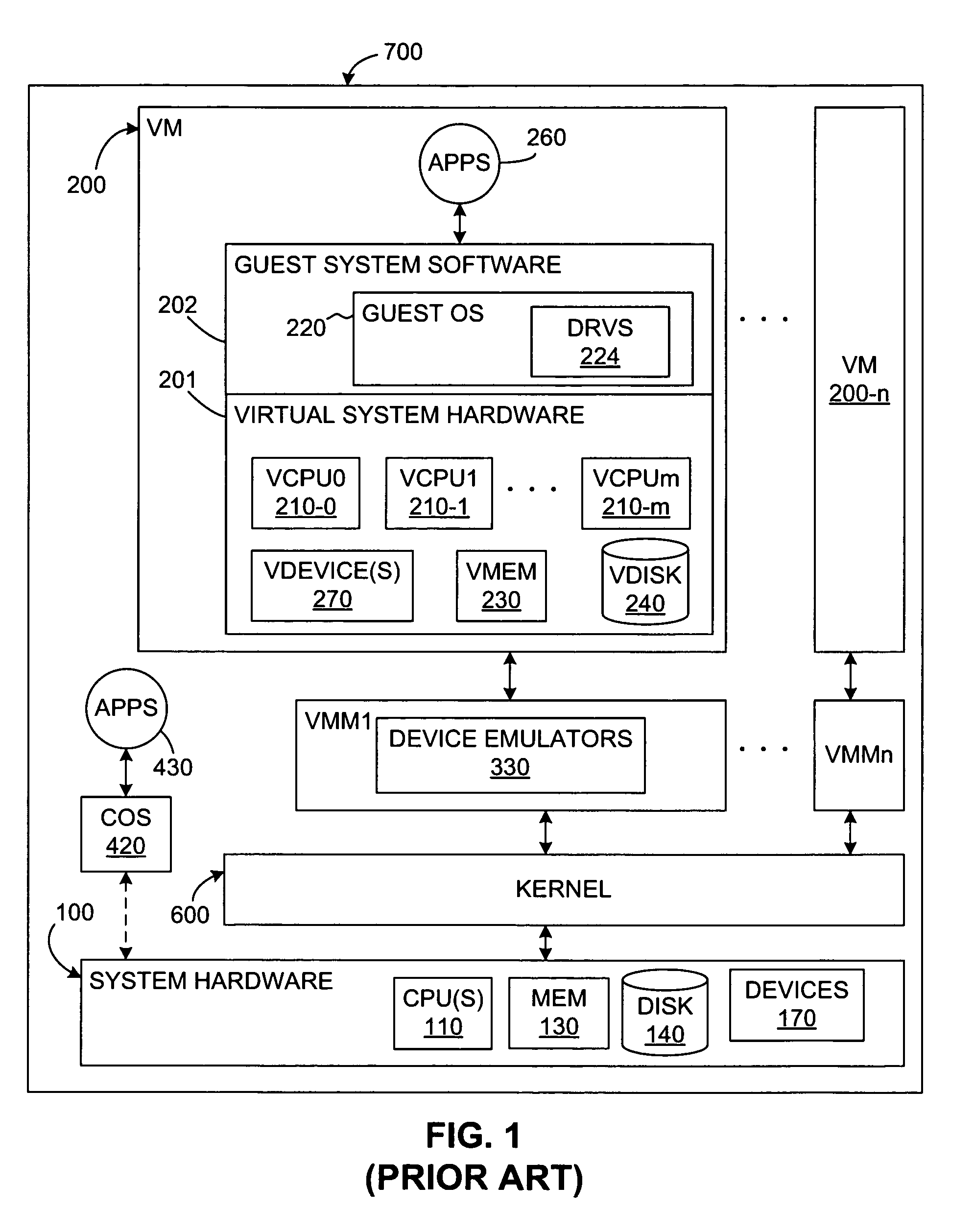

Method for efficient virtualization of physical memory in a virtual-machine monitor

Active · US20060026383A1 · Introducing excessive overhead · Introducing inefficiency · Memory addressing/allocation/relocation · Computer security arrangements · Page table · Virtualization

Various embodiments of the present invention are directed to efficient provision, by a virtual-machine monitor, of a virtual, physical memory interface to guest operating systems and other programs and routines interfacing to a computer system through a virtual-machine interface. In one embodiment of the present invention, a virtual-machine monitor maintains control over a translation lookaside buffer (“TLB”), machine registers which control virtual memory translations, and a processor page table, providing each concurrently executing guest operating system with a guest-processor-page table and guest-physical memory-to-physical memory translations. In one embodiment, a virtual-machine monitor can rely on hardware virtual-address-translation mechanisms for the bulk of virtual-address translations needed during guest-operating-system execution, thus providing a guest-physical memory interface without introducing excessive overhead and inefficiency.

Owner:VALTRUS INNOVATIONS LTD +1

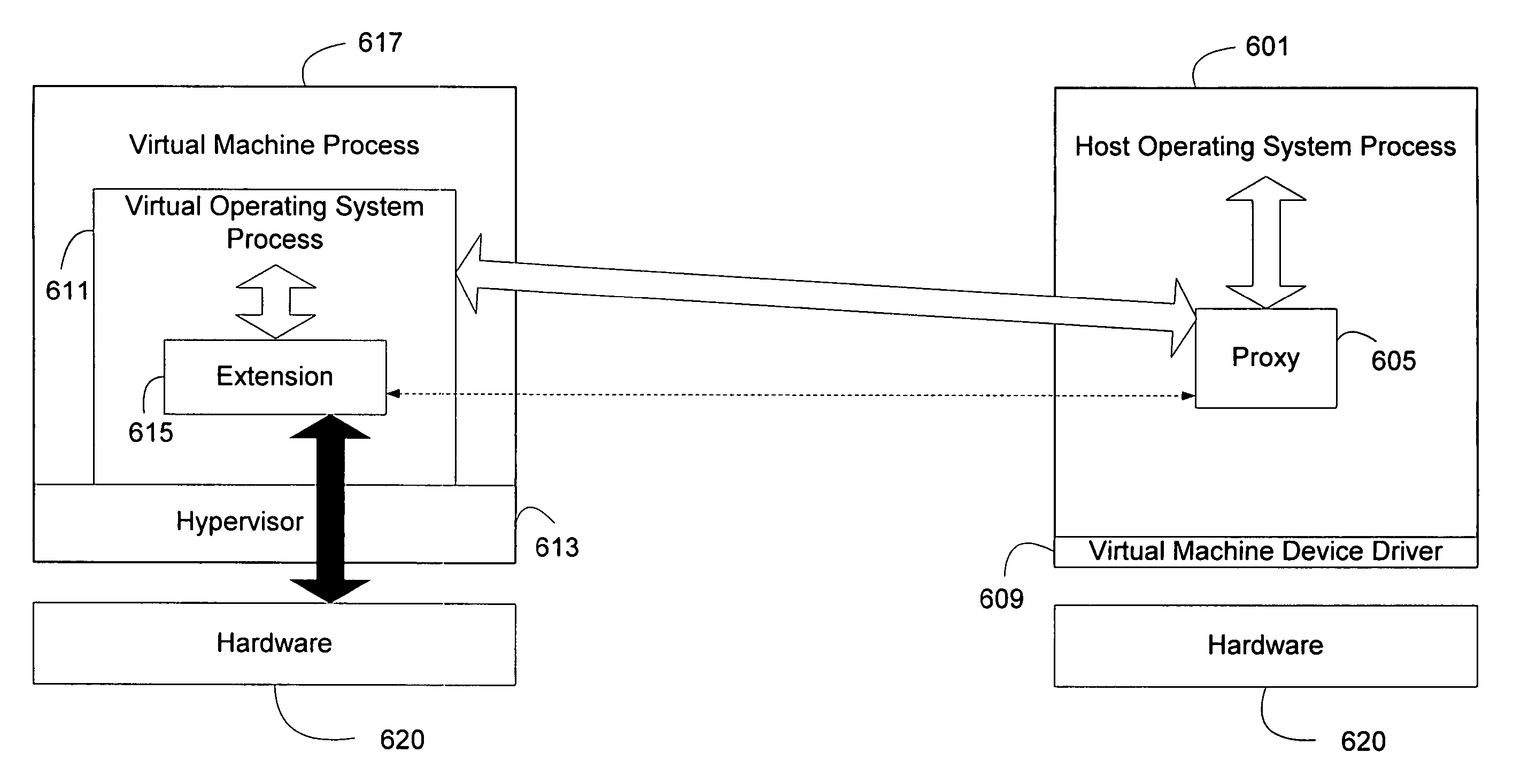

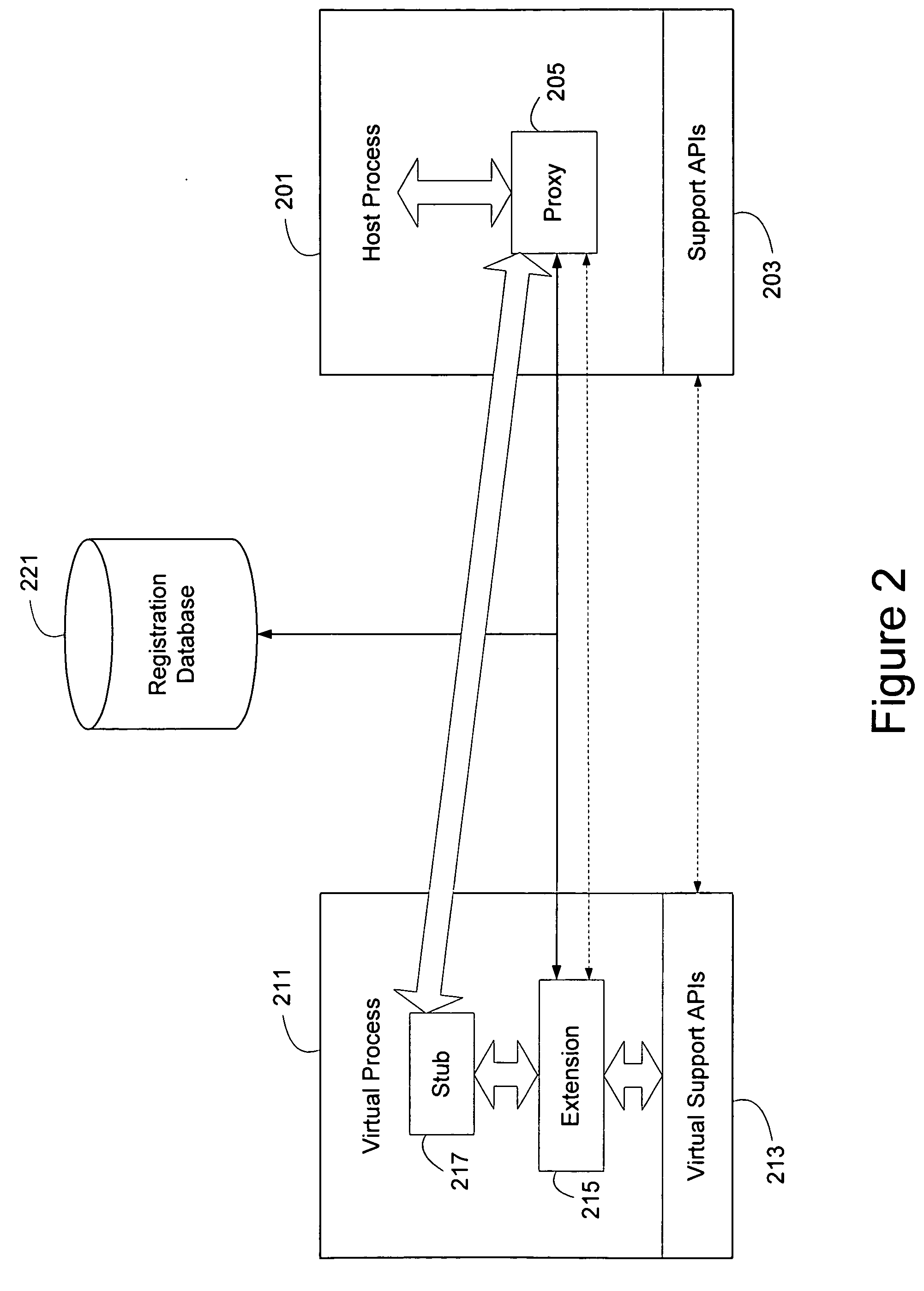

VEX-virtual extension framework

Inactive · US20050246718A1 · Provide benefits · Improve instability · Fault response · Interprogram communication · Operational system · Application software

Extensions to operating systems or software applications can be hosted in virtual environments to fault isolate the extension. A generic proxy extension invoked by a host process can coordinate the invocation of an appropriate extension in a virtual process that can provide the same support APIs as the host process. Furthermore, a user mode context can be provided to the extension in the virtual process through memory copying or page table modifications. In addition, the virtual process, especially a virtual operating system process running on a virtual machine, can be efficiently started by cloning a coherent state. A coherent state can be created when a virtual machine starts up, or when the computing device starts up and the appropriate parameters are observed and saved. Alternatively, the operating system can create a coherent state by believing there is an additional CPU during the boot process.

Owner:MICROSOFT TECH LICENSING LLC

IOMMU using two-level address translation for I/O and computation offload devices on a peripheral interconnect

Active · US20110022818A1 · Memory addressing/allocation/relocation · Software simulation/interpretation/emulation · Computerized system · Physical address

An IOMMU for controlling requests by an I/O device to a system memory of a computer system includes control logic and a cache memory. The control logic may translate an address received in a request from the I/O device. If the request includes a transaction layer protocol (TLP) packet with a process address space identifier (PASID) prefix, the control logic may perform a two-level guest translation. Accordingly, the control logic may access a set of guest page tables to translate the address received in the request. A pointer in a last guest page table points to a first table in a set of nested page tables. The control logic may use the pointer in a last guest page table to access the set of nested page tables to obtain a system physical address (SPA) that corresponds to a physical page in the system memory. The cache memory stores completed translations.
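The two-level translation can be sketched by chaining two lookups and caching the result. This is a deliberately flattened model under stated assumptions: each set of page tables is collapsed into a single dictionary, and `iommu_translate` and its parameters are hypothetical names. PASID handling and multi-level table walks are not modeled.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def iommu_translate(cache, guest_pt, nested_pt, gva):
    """Translate a guest virtual address to a system physical address."""
    gvpn, offset = divmod(gva, PAGE_SIZE)
    if gvpn not in cache:
        # First level: guest page tables map guest virtual to guest physical.
        gpfn = guest_pt[gvpn]
        # Second level: nested page tables map guest physical to system physical.
        cache[gvpn] = nested_pt[gpfn]
    # The cache holds completed translations, so repeat requests skip both walks.
    return cache[gvpn] * PAGE_SIZE + offset
```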

Owner:ADVANCED MICRO DEVICES INC

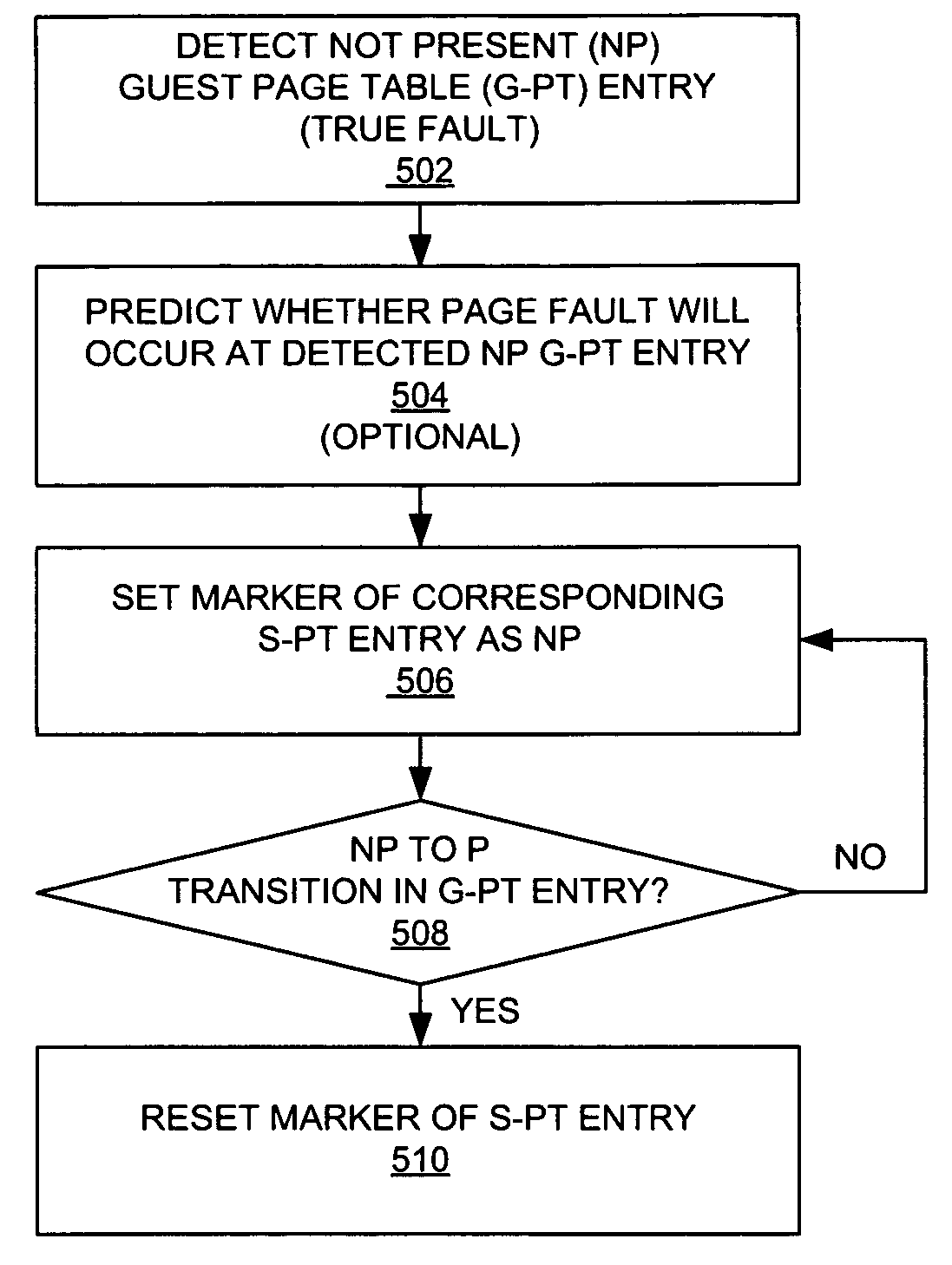

Bypassing guest page table walk for shadow page table entries not present in guest page table

Active · US8015388B1 · Less of computational burden · Reduce the burden on · Memory architecture accessing/allocation · Memory systems · Virtual memory · Page table

A method and system are provided that do not perform a page walk on the guest page tables if the shadow page table entry corresponding to the guest virtual address for accessing the virtual memory indicates that a corresponding mapping from the guest virtual address to a guest physical address is not present in the guest page tables. A marker or indicator is stored in the shadow page table entries to indicate that a mapping corresponding to the guest virtual address of the shadow page table entry is not present in the guest page table.
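One way to picture the marker is as a sentinel value stored in the shadow entry. The `ShadowMMU` class, the `NOT_IN_GUEST` sentinel, the returned strings, and the `guest_walks` counter are all hypothetical names used for illustration; the tables are flattened into dictionaries.

```python
NOT_IN_GUEST = object()  # marker: the guest page table has no mapping here


class ShadowMMU:
    def __init__(self, guest_page_table, guest_to_host):
        self.guest_pt = guest_page_table  # guest virtual page -> guest frame
        self.g2h = guest_to_host          # guest frame -> host frame
        self.shadow = {}                  # guest virtual page -> host frame or marker
        self.guest_walks = 0              # counts guest page table walks

    def handle_fault(self, gvpn):
        if self.shadow.get(gvpn) is NOT_IN_GUEST:
            # Marker present: skip the guest walk and let the guest handle it.
            return "forward_fault_to_guest"
        self.guest_walks += 1
        gpfn = self.guest_pt.get(gvpn)
        if gpfn is None:
            # Remember the miss so later faults bypass the walk.
            self.shadow[gvpn] = NOT_IN_GUEST
            return "forward_fault_to_guest"
        self.shadow[gvpn] = self.g2h[gpfn]
        return "shadow_filled"
```

A fuller model would also need to clear the marker when the guest later installs a mapping for that address; that step is omitted here.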

Owner:VMWARE INC

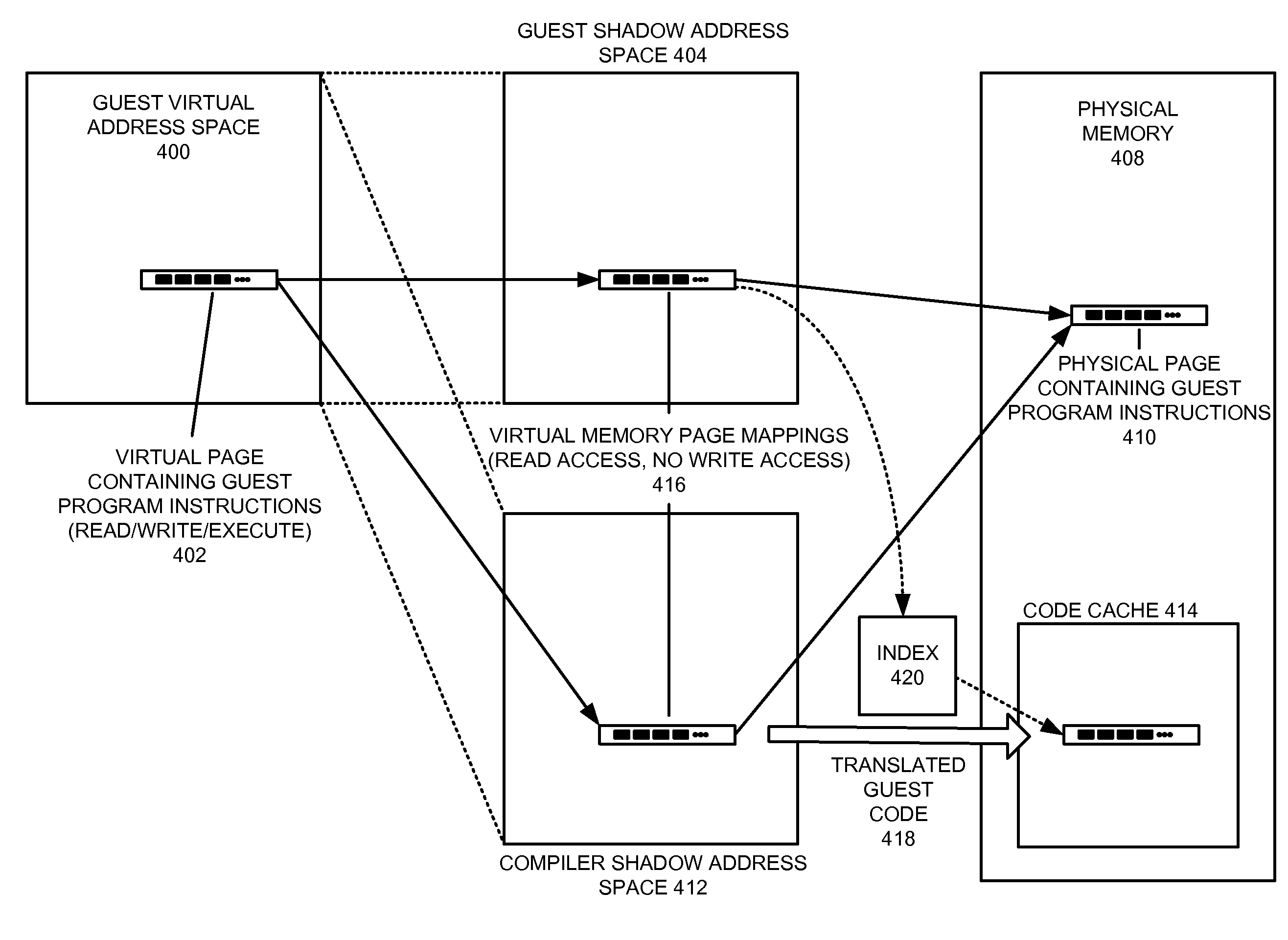

Method and apparatus for protecting translated code in a virtual machine

Active · US8799879B2 · Reduced overhead and check · Software simulation/interpretation/emulation · Memory systems · Dynamic compilation · Virtual memory

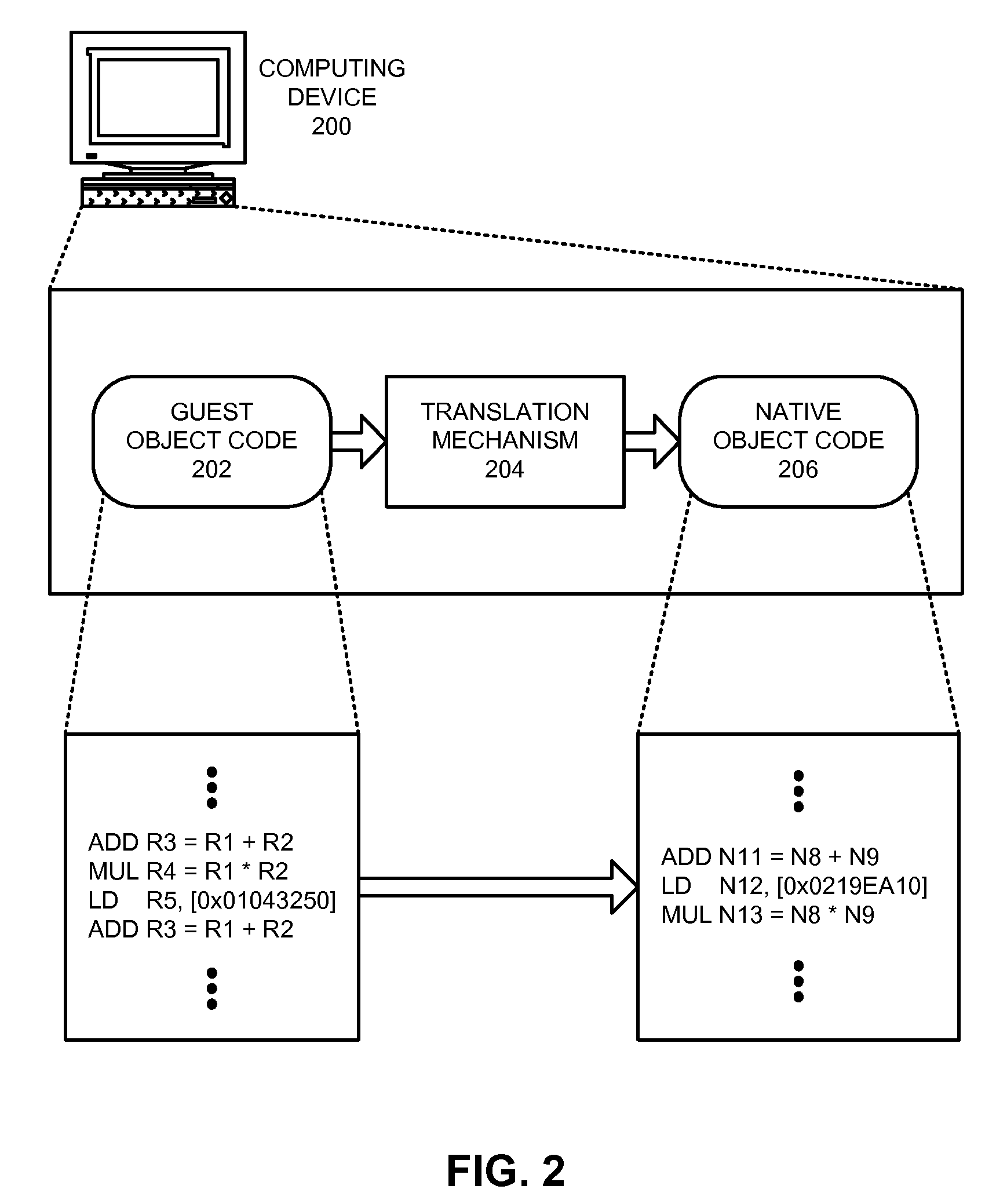

One embodiment provides a system that protects translated guest program code in a virtual machine that supports self-modifying program code. While executing a guest program in the virtual machine, the system uses a guest shadow page table associated with the guest program and the virtual machine to map a virtual memory page for the guest program to a physical memory page on the host computing device. The system then uses a dynamic compiler to translate guest program code in the virtual memory page into translated guest program code (e.g., native program instructions for the computing device). During compilation, the dynamic compiler stores in a compiler shadow page table and the guest shadow page table information that tracks whether the guest program code in the virtual memory page has been translated. The compiler subsequently uses the information stored in the guest shadow page table to detect attempts to modify the contents of the virtual memory page. Upon detecting such an attempt, the system invalidates the translated guest program code associated with the virtual memory page.

Owner:SUN MICROSYSTEMS INC

Systems and methods for program directed memory access patterns

Inactive · US20080046666A1 · Memory addressing/allocation/relocation · Micro-instruction address formation · Direct memory access · Virtual memory management

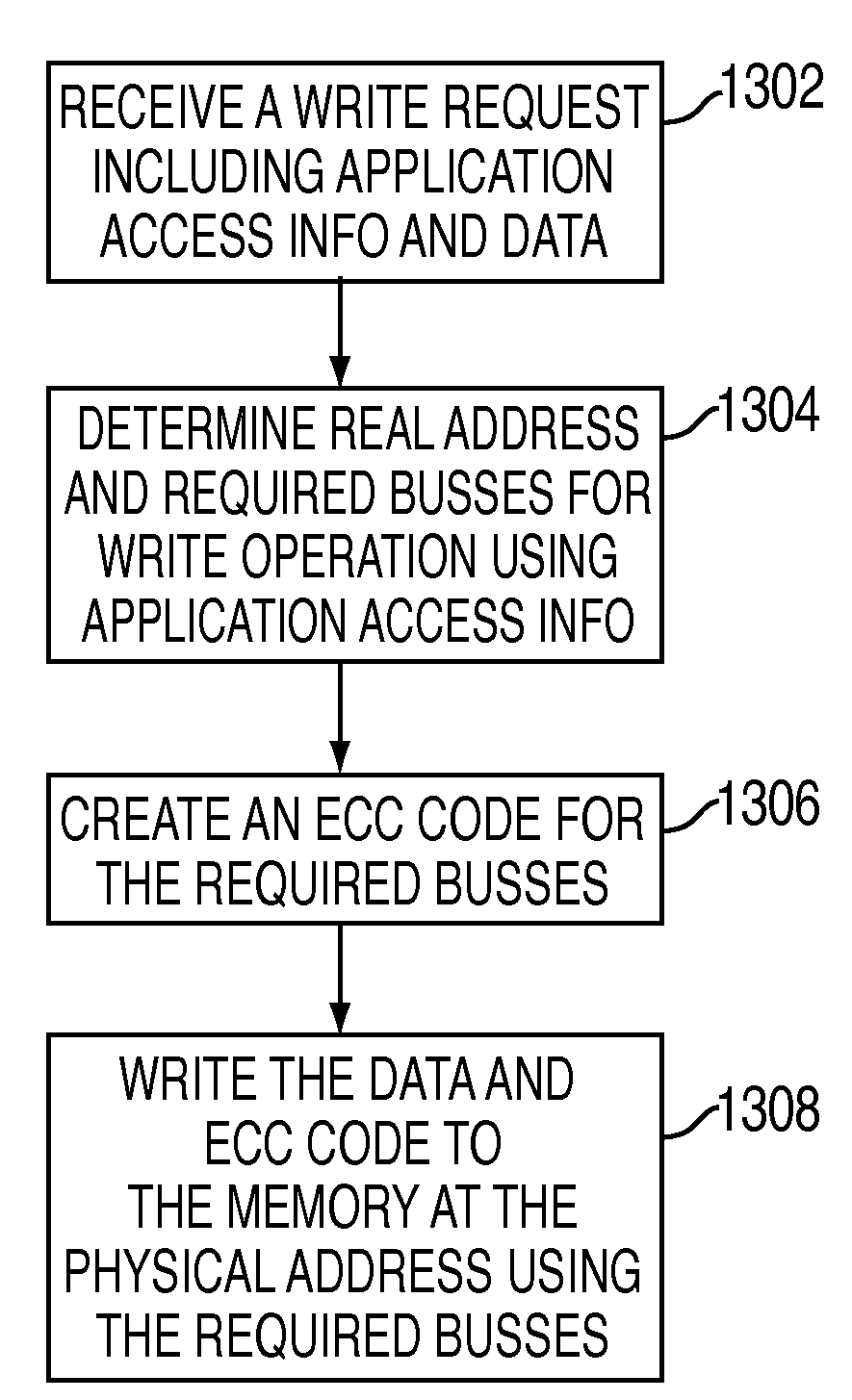

Systems and methods for program directed memory access patterns including a memory system with a memory, a memory controller and a virtual memory management system. The memory includes a plurality of memory devices organized into one or more physical groups accessible via associated busses for transferring data and control information. The memory controller receives and responds to memory access requests that contain application access information to control access pattern and data organization within the memory. Responding to a memory access request includes accessing one or more memory devices. The virtual memory management system includes: a plurality of page table entries for mapping virtual memory addresses to real addresses in the memory; a hint state responsive to application access information for indicating how real memory for associated pages is to be physically organized within the memory; and a means for conveying the hint state to the memory controller.

Owner:IBM CORP
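
As a rough illustration of the abstract above, a page table entry can carry a hint alongside the real page number, and the translation step can pass that hint on to the memory controller. Everything named here is an assumption made for the sketch: the `Hint` values, the 4 KiB `PAGE_SIZE`, and the `ToyMemoryController` class do not come from the patent.

```python
from dataclasses import dataclass
from enum import Enum

PAGE_SIZE = 4096  # assumed page size for this sketch


class Hint(Enum):
    # Hypothetical hint states; the abstract does not enumerate them.
    DEFAULT = 0
    SEQUENTIAL = 1
    STRIDED = 2


@dataclass
class PageTableEntry:
    real_page: int             # real (physical) page number
    hint: Hint = Hint.DEFAULT  # how the page should be organized in memory


class ToyMemoryController:
    def __init__(self):
        self.requests = []  # log of (real address, hint) pairs received

    def access(self, real_addr, hint):
        # A real controller would choose a device/bank layout based on the hint.
        self.requests.append((real_addr, hint))
        return real_addr, hint


def translate_and_access(page_table, controller, vaddr):
    """Translate vaddr and convey the page's hint state to the controller."""
    vpage, offset = divmod(vaddr, PAGE_SIZE)
    pte = page_table[vpage]
    real_addr = pte.real_page * PAGE_SIZE + offset
    return controller.access(real_addr, pte.hint)
```

Keeping the hint in the page table entry means it travels with every translation, so the application only has to declare its access pattern once per page.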

Large-Page Optimization in Virtual Memory Paging Systems

Active | US20090182976A1 | Improving virtual memory system performance | Improve system performance | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Virtualization | Virtual memory

A computer system that is programmed with virtual memory accesses to physical memory employs multi-bit counters associated with its page table entries. When a page walker visits a page table entry, the multi-bit counter associated with that page table entry is incremented by one. The computer operating system uses the counts in the multi-bit counters of different page table entries to determine where large pages can be deployed effectively. In a virtualized computer system having a nested paging system, multi-bit counters associated with both its primary page table entries and its nested page table entries are used. These multi-bit counters are incremented during nested page walks. Subsequently, the guest operating systems and the virtual machine monitors use the counts in the appropriate multi-bit counters to determine where large pages can be deployed effectively.

Owner:VMWARE INC
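
The counting scheme in the abstract above can be modelled in a few lines: each page walk bumps a small counter on the visited entry, and the operating system later sums the counters per large-page-sized region to find promotion candidates. This is a sketch under stated assumptions: the 4-bit counter width, the saturating behaviour, the 512-pages-per-large-page ratio, and the threshold policy are all illustrative choices, not details from the patent.

```python
from collections import defaultdict

COUNTER_BITS = 4                        # assumed counter width
COUNTER_MAX = (1 << COUNTER_BITS) - 1   # counters saturate here (assumption)
PAGES_PER_LARGE = 512                   # e.g. 2 MiB large page / 4 KiB base page


class ToyPageTable:
    def __init__(self):
        # Virtual page number -> multi-bit counter for its page table entry.
        self.counters = defaultdict(int)

    def page_walk(self, vpage):
        """Model the page walker visiting the entry for vpage."""
        if self.counters[vpage] < COUNTER_MAX:
            self.counters[vpage] += 1


def large_page_candidates(table, threshold):
    """Return large-page region indices whose summed counts meet the threshold."""
    totals = defaultdict(int)
    for vpage, count in table.counters.items():
        totals[vpage // PAGES_PER_LARGE] += count
    return sorted(region for region, total in totals.items() if total >= threshold)
```

A region with many page walks is one where the TLB misses often, which is where a single large-page mapping is most likely to pay off.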

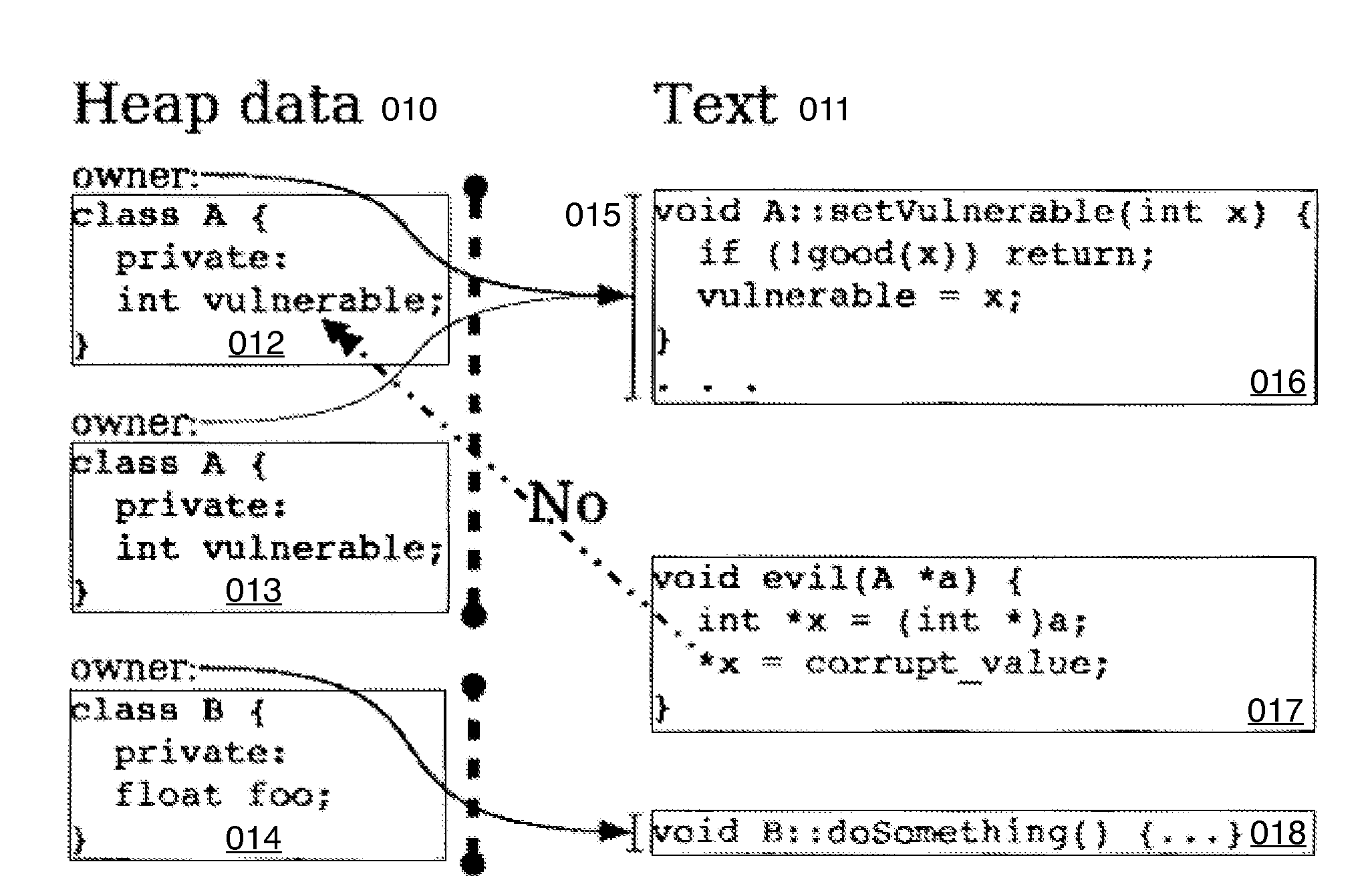

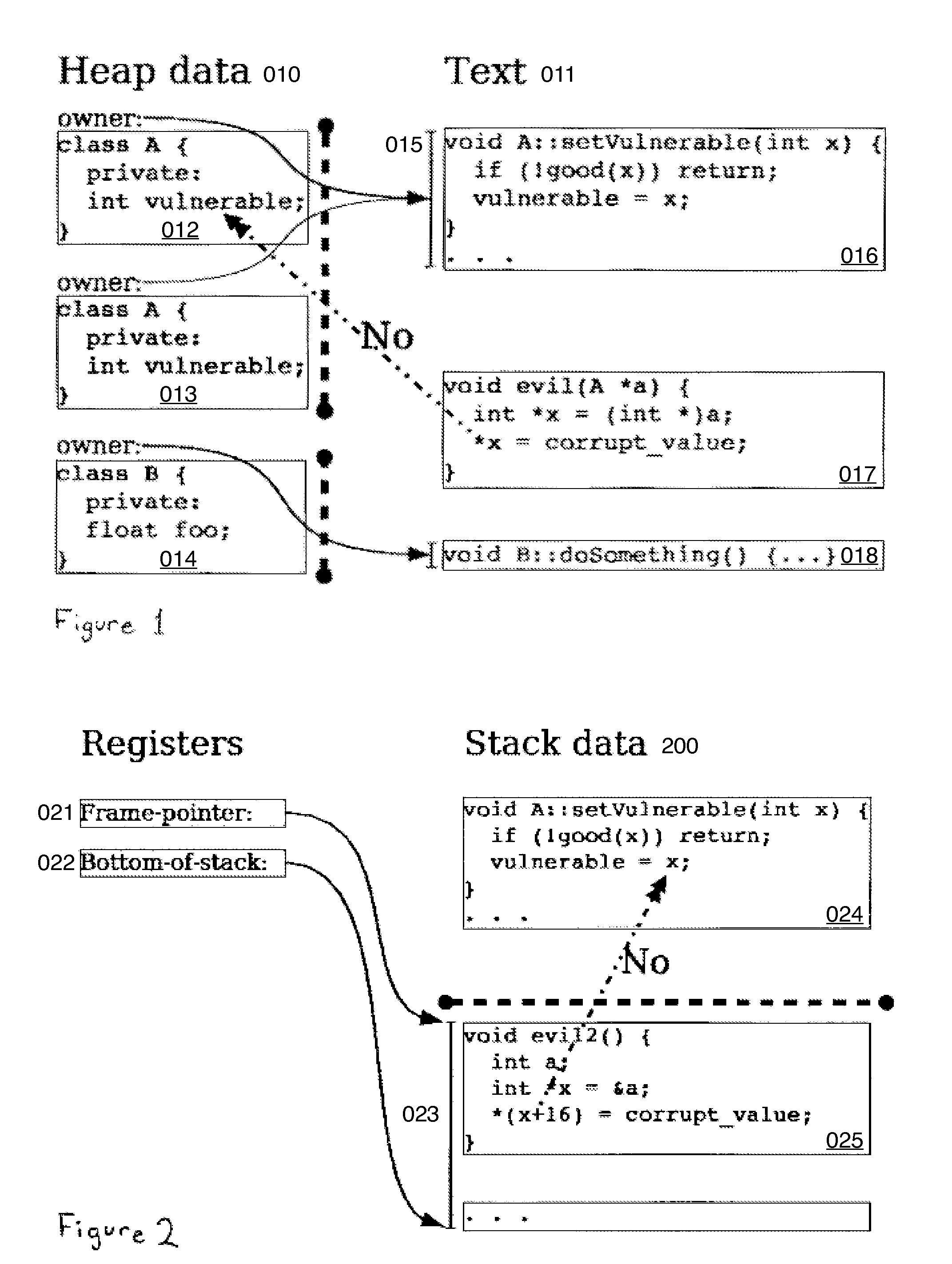

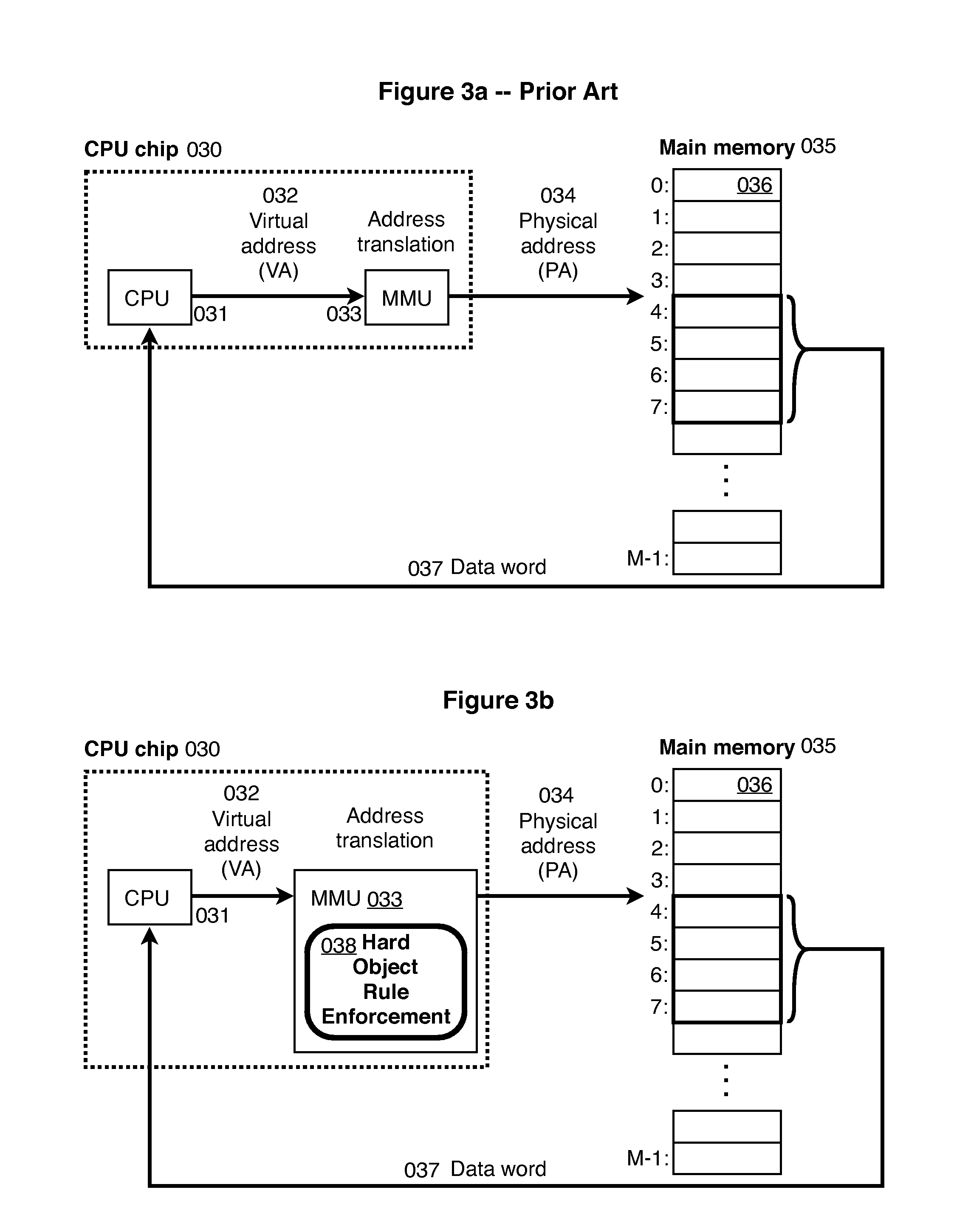

Hard Object: Hardware Protection for Software Objects

Active | US20080222397A1 | Efficiently implement enforceable separation of program | Digital computer details | Analogue secrecy/subscription systems | Processor register | Physical address

In accordance with one embodiment, additions to the standard computer microprocessor architecture hardware are disclosed comprising novel page table entry fields (015, 062), special registers (021, 022), instructions for modifying these fields (120, 122) and registers (124, 126), and hardware-implemented (038) runtime checks and operations involving these fields and registers. More specifically, in the above embodiment of a Hard Object system, there is additional meta-data (061) in each page table entry beyond what it commonly holds, and each time a data load or store is issued from the CPU and the virtual address (032) is translated to the physical address (034), the Hard Object system uses its additional PTE meta-data (061) to perform memory access checks additional to those done in current systems. Together with changes to software, these access checks can be arranged carefully to provide more fine-grained access control for data than current systems do.

Owner:WILKERSON DANIEL SHAWCROSS +1
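
To make the idea of an extra check at translation time concrete, the sketch below adds one hypothetical piece of metadata to each page table entry: a range of code addresses allowed to touch the page. The abstract above does not say what the metadata contains, so `owner_start`, `owner_end`, and the program-counter comparison are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumed page size for this sketch


class AccessFault(Exception):
    """Raised when a load or store fails a check during translation."""


@dataclass
class PTE:
    frame: int        # physical frame number
    writable: bool    # conventional permission bit
    owner_start: int  # hypothetical metadata: first code address allowed access
    owner_end: int    # hypothetical metadata: end of that range (exclusive)


def access_allowed(pte, pc, is_store):
    """Conventional check plus the additional metadata check."""
    if is_store and not pte.writable:
        return False
    # Additional check: the accessing instruction must lie in the owner range.
    return pte.owner_start <= pc < pte.owner_end


def translate(page_table, vaddr, pc, is_store):
    """Translate vaddr for the instruction at pc, enforcing both checks."""
    vpage, offset = divmod(vaddr, PAGE_SIZE)
    pte = page_table[vpage]
    if not access_allowed(pte, pc, is_store):
        raise AccessFault(f"access to {vaddr:#x} from pc {pc:#x} denied")
    return pte.frame * PAGE_SIZE + offset
```

Because the check runs on every translated load or store, a page that is readable and writable in the conventional sense can still be restricted to a specific piece of code.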

Method and apparatus for accessing a memory

Inactive | US7617376B2 | Minimized on demand | Memory addressing/allocation/relocation | Transmission | Page table | Memory operation

The disclosed embodiments relate to an optimized memory registration mechanism that may comprise an upper layer protocol that associates I/O buffers with memory regions and that manages steering tags. The memory regions may be associated with a translation page table. The upper layer protocol may allocate one of the steering tags associated with at least one of the memory regions for a memory operation.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

© 2025 PatSnap. All rights reserved.