Patents

Literature

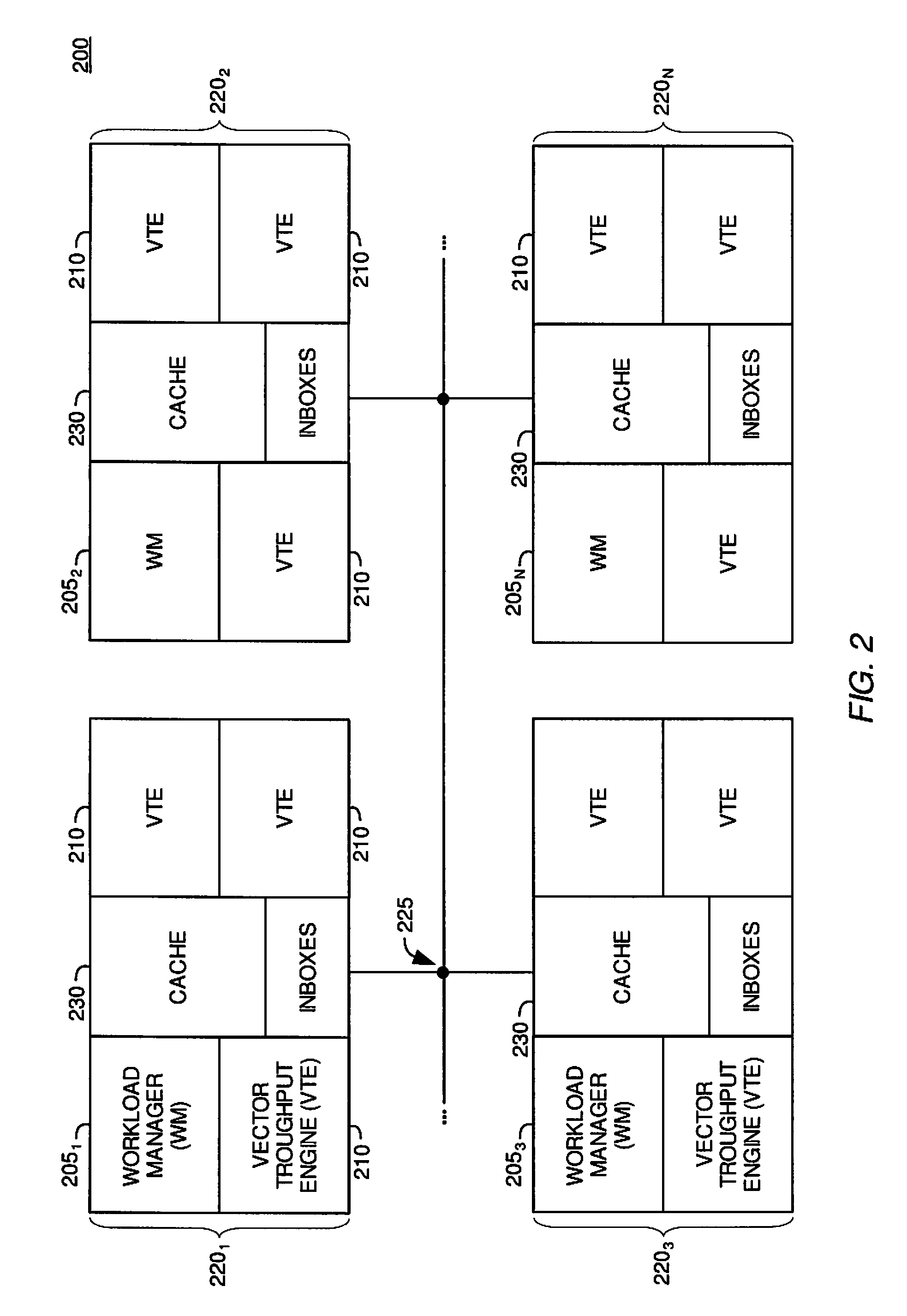

564 results about "Memory bandwidth" patented technology

Memory bandwidth is the rate at which data can be read from or stored into a semiconductor memory by a processor. Memory bandwidth is usually expressed in units of bytes/second, though this can vary for systems with natural data sizes that are not a multiple of the commonly used 8-bit bytes.
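As a rough illustration of the definition above, theoretical peak bandwidth can be estimated from the transfer rate, the bus width, and the number of channels. The figures below are hypothetical and not taken from any patent listed here; sustained bandwidth in practice is lower.

```python
def peak_bandwidth_bytes_per_s(transfers_per_s: float,
                               bus_width_bits: int,
                               channels: int = 1) -> float:
    """Theoretical peak: transfers/s x bytes per transfer x number of channels."""
    return transfers_per_s * (bus_width_bits / 8) * channels


# Hypothetical example: 3200 mega-transfers/s on a 64-bit bus, two channels.
bw = peak_bandwidth_bytes_per_s(3200e6, 64, channels=2)
print(f"{bw / 1e9:.1f} GB/s")  # prints "51.2 GB/s"
```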

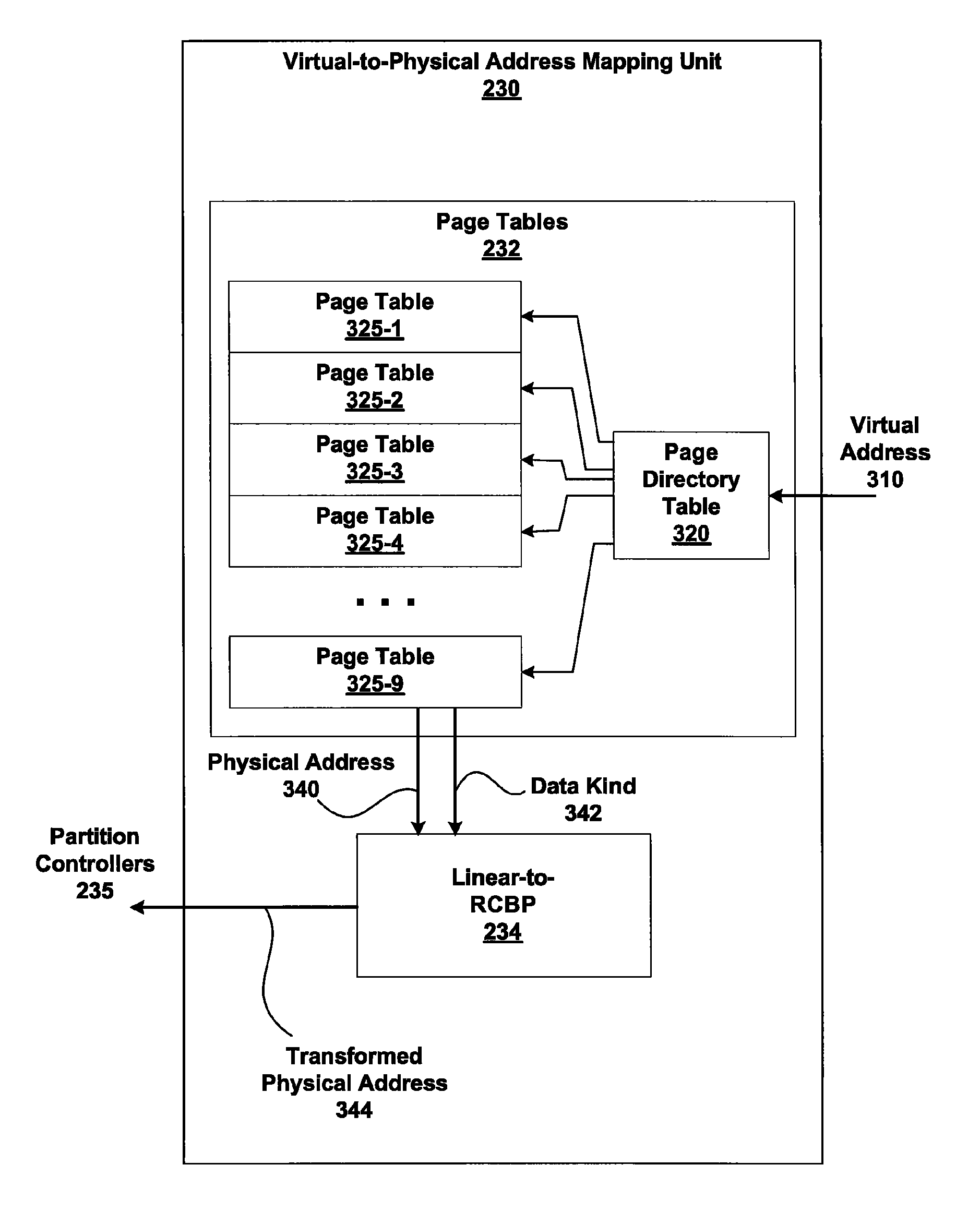

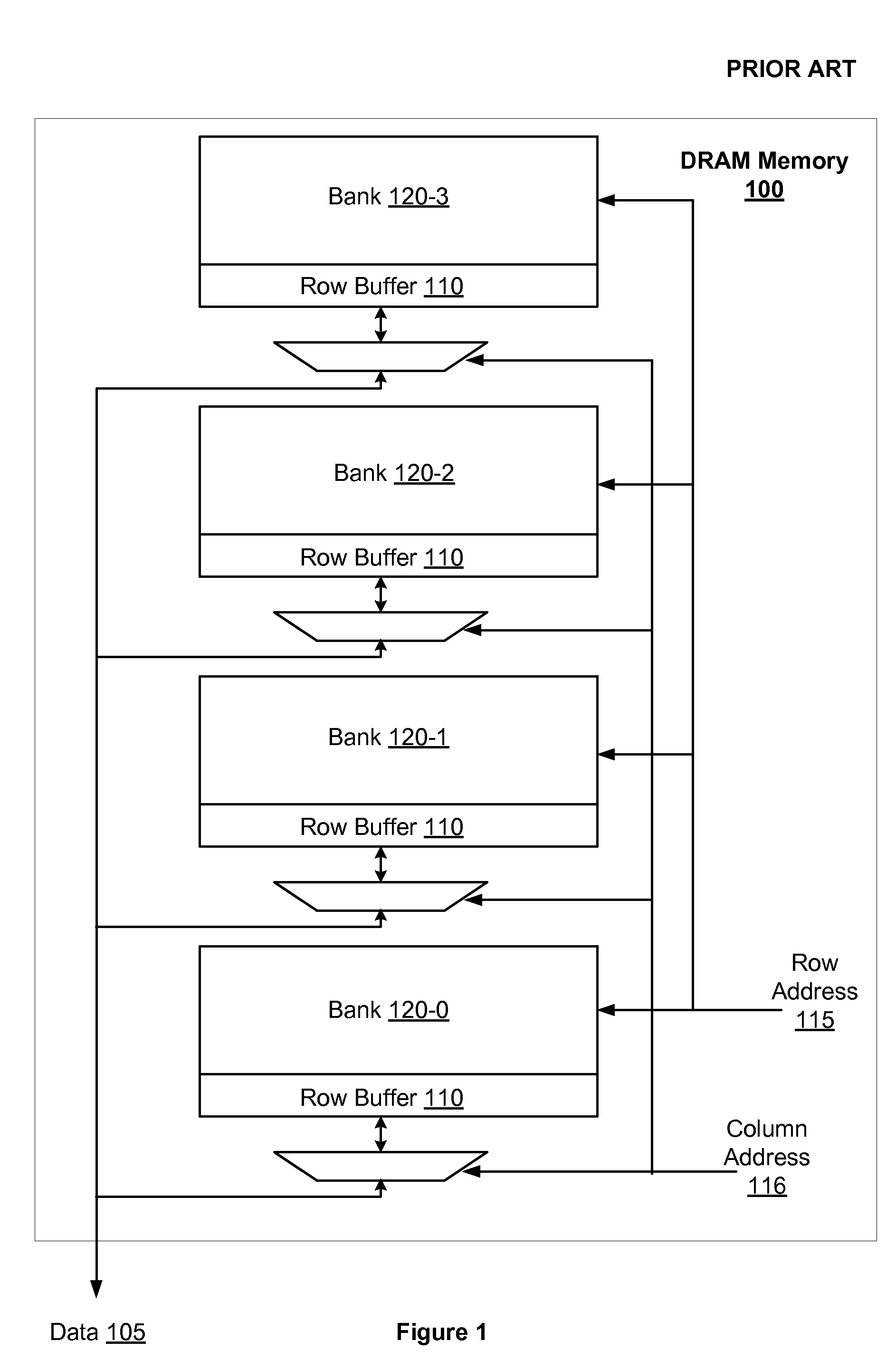

Memory addressing controlled by PTE fields

Active | US7805587B1 | Reduce access | Memory addressing/allocation/relocation | Computer security arrangements | Memory address | DRAM memory

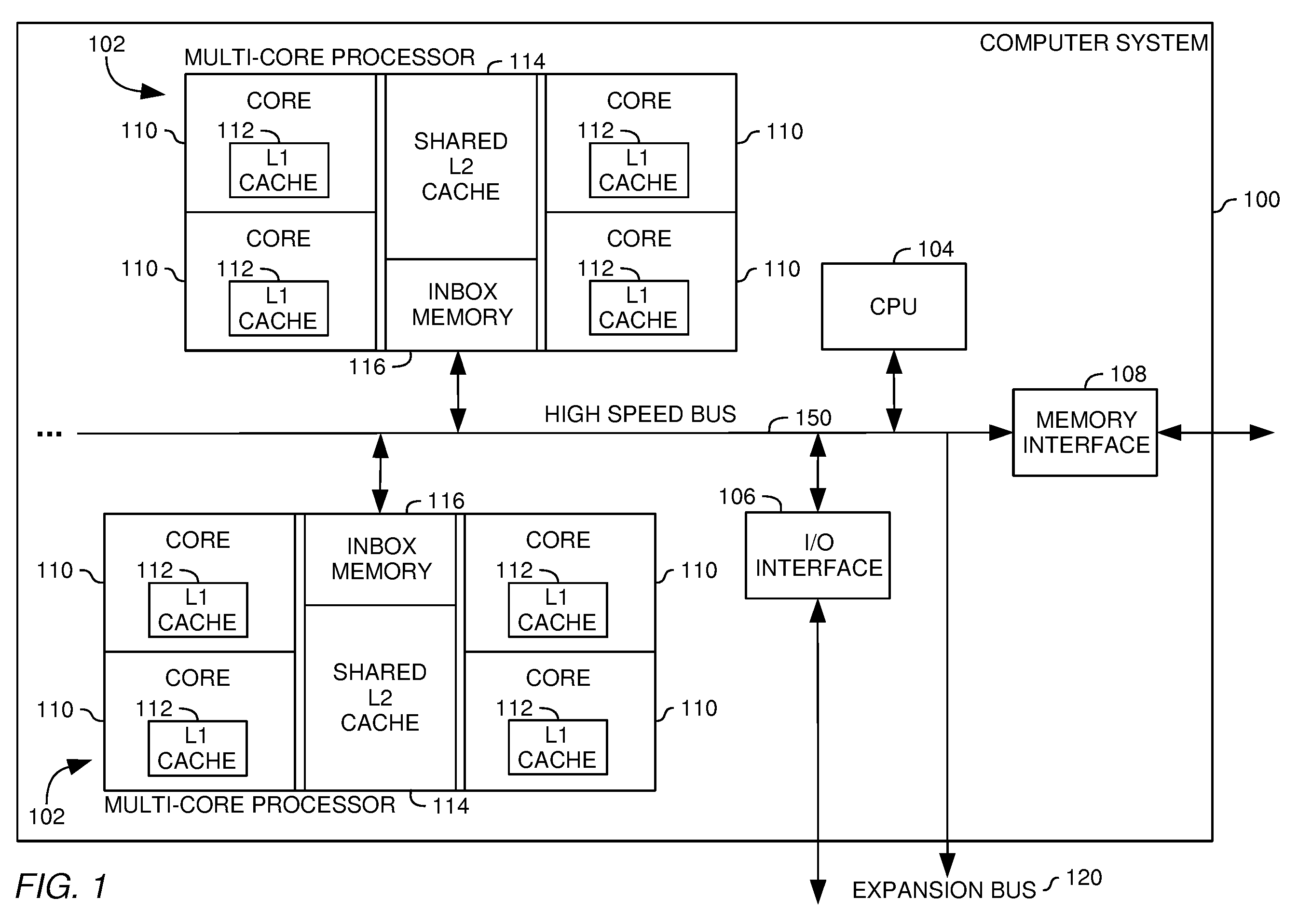

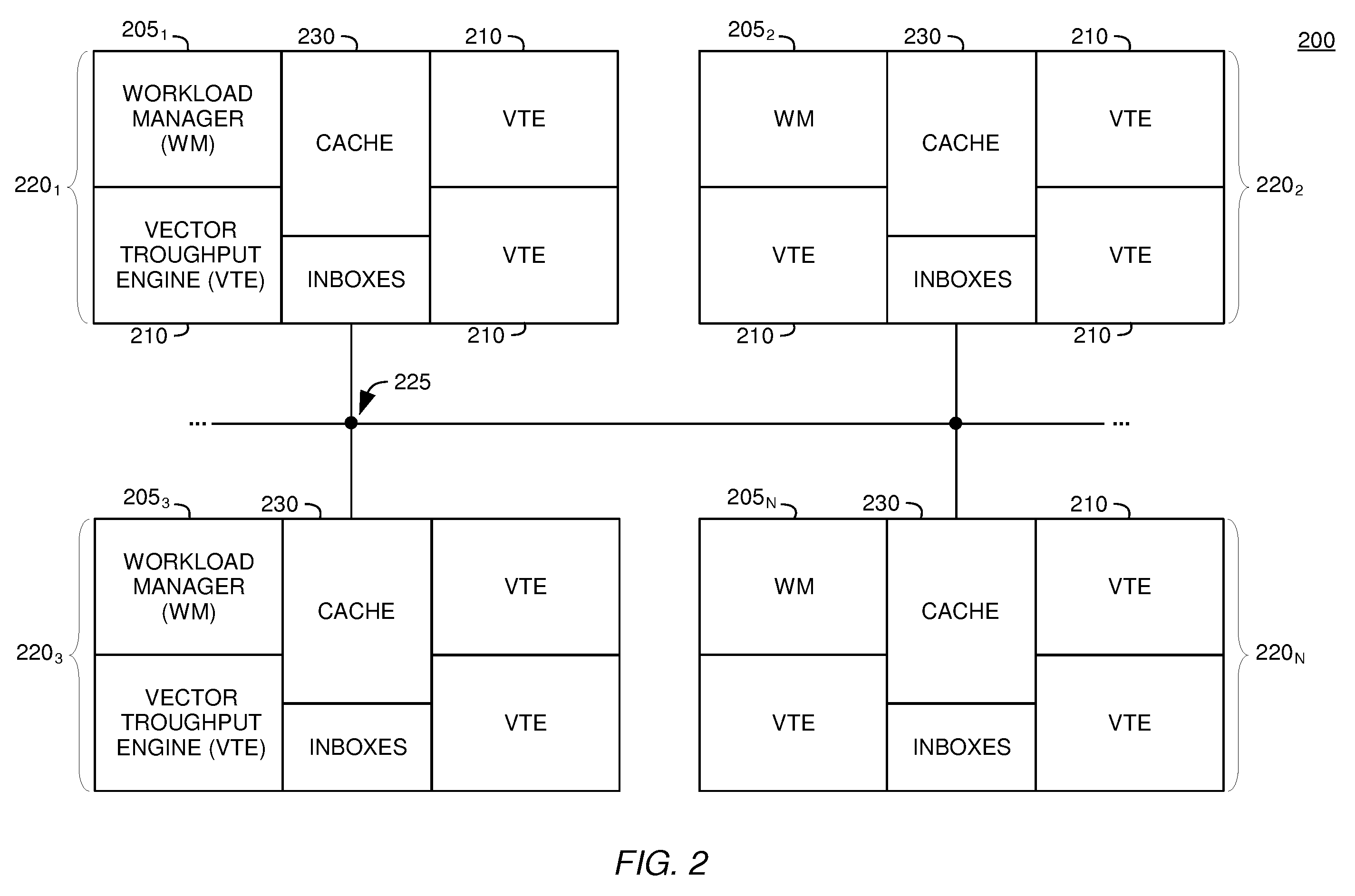

Embodiments of the present invention enable virtual-to-physical memory address translation using optimized bank and partition interleave patterns to improve memory bandwidth by distributing data accesses over multiple banks and multiple partitions. Each virtual page has a corresponding page table entry that specifies the physical address of the virtual page in linear physical address space. The page table entry also includes a data kind field that is used to guide and optimize the mapping from the linear physical address space to the DRAM physical address space, which is used to directly access one or more DRAMs. The DRAM physical address space includes a row, bank and column address. The data kind field is also used to optimize the starting partition number and the partition interleave pattern that define the organization of the selected physical page of memory within the DRAM memory system.

Owner:NVIDIA CORP
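The kind of mapping this abstract describes, from a linear physical address to a partition, bank, row and column, can be sketched as follows. This is a minimal illustration and not the patented scheme: the geometry constants, the one-byte-per-column simplification, and the `start_partition` parameter (standing in for what the data kind field might select) are all assumptions.

```python
from typing import NamedTuple


class DramAddress(NamedTuple):
    partition: int
    bank: int
    row: int
    column: int


# Hypothetical geometry, chosen only for illustration.
NUM_PARTITIONS = 4
NUM_BANKS = 8
COLUMNS_PER_ROW = 1024   # one byte per column, for simplicity
PARTITION_STRIDE = 256   # bytes mapped to one partition before moving on


def map_linear_to_dram(linear_addr: int, start_partition: int = 0) -> DramAddress:
    """Interleave consecutive strides across partitions, then rows across banks."""
    chunk, offset = divmod(linear_addr, PARTITION_STRIDE)
    partition = (start_partition + chunk) % NUM_PARTITIONS
    # Address as seen inside the selected partition.
    local = (chunk // NUM_PARTITIONS) * PARTITION_STRIDE + offset
    column = local % COLUMNS_PER_ROW
    bank = (local // COLUMNS_PER_ROW) % NUM_BANKS
    row = local // (COLUMNS_PER_ROW * NUM_BANKS)
    return DramAddress(partition, bank, row, column)


# Consecutive 256-byte strides land in different partitions.
for addr in (0, 256, 512, 768, 1024):
    print(hex(addr), map_linear_to_dram(addr))
```

Changing `start_partition` per page shifts which partition receives the first stride, which is one simple way to keep pages that are accessed together from all starting in the same partition.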

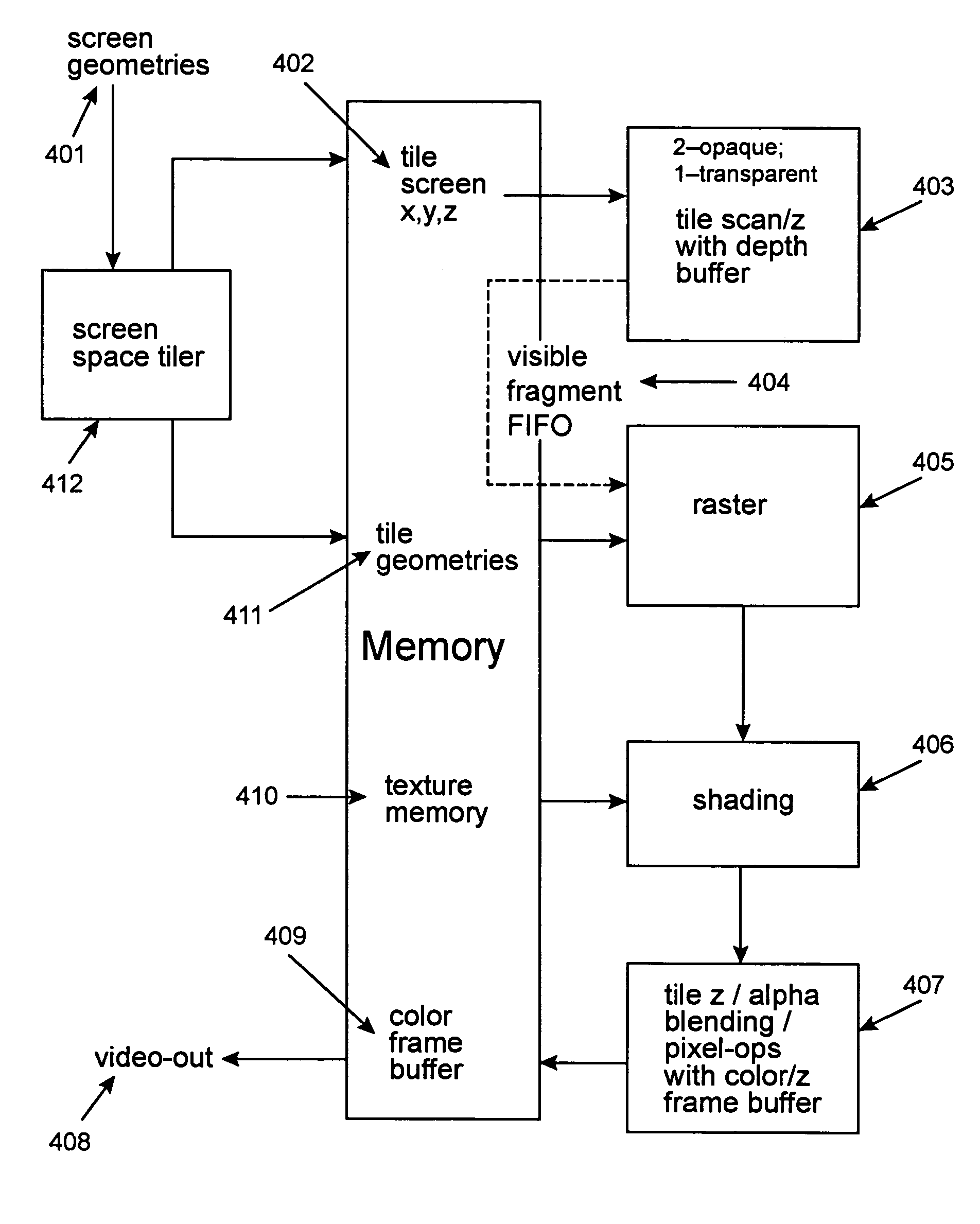

Rendering pipeline

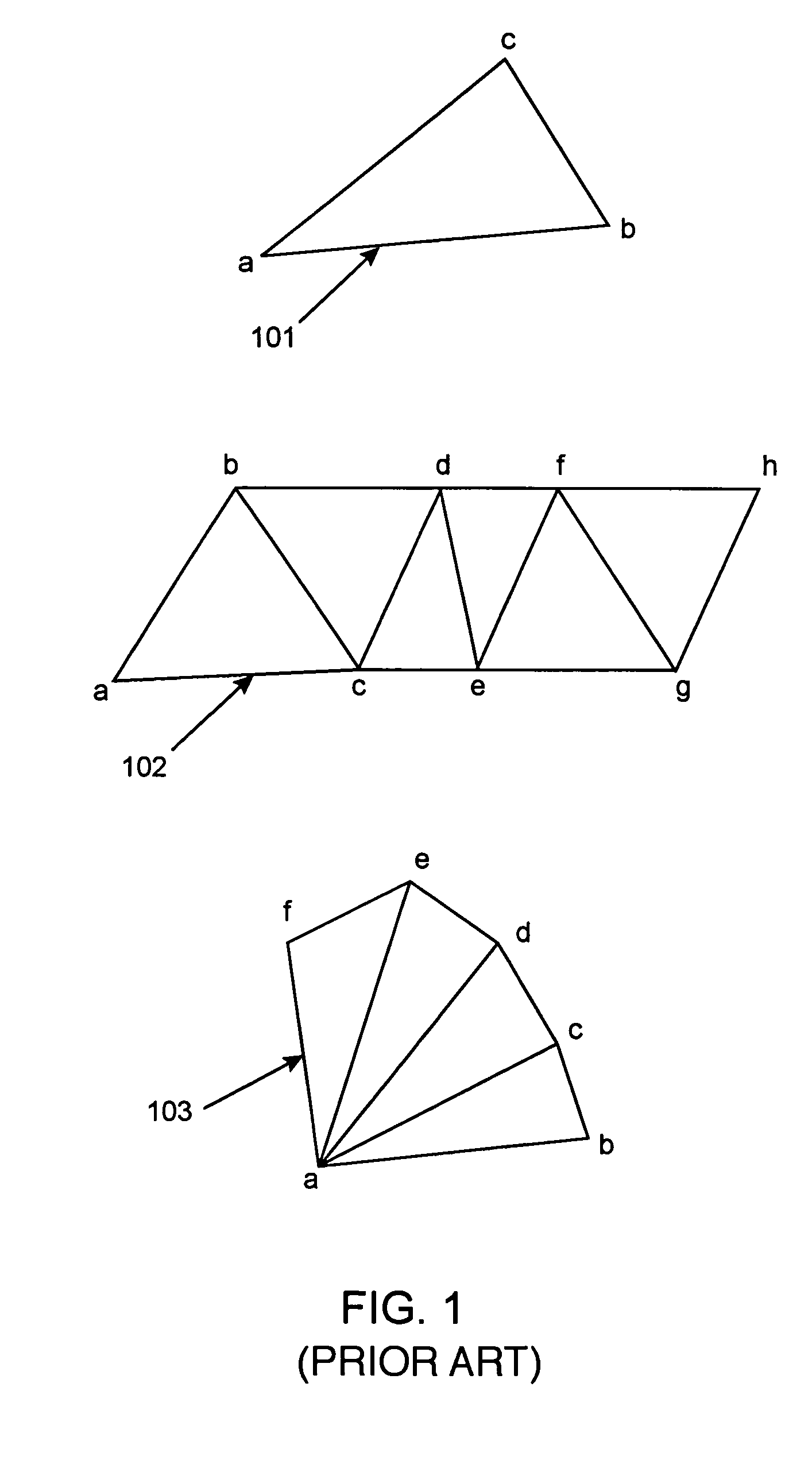

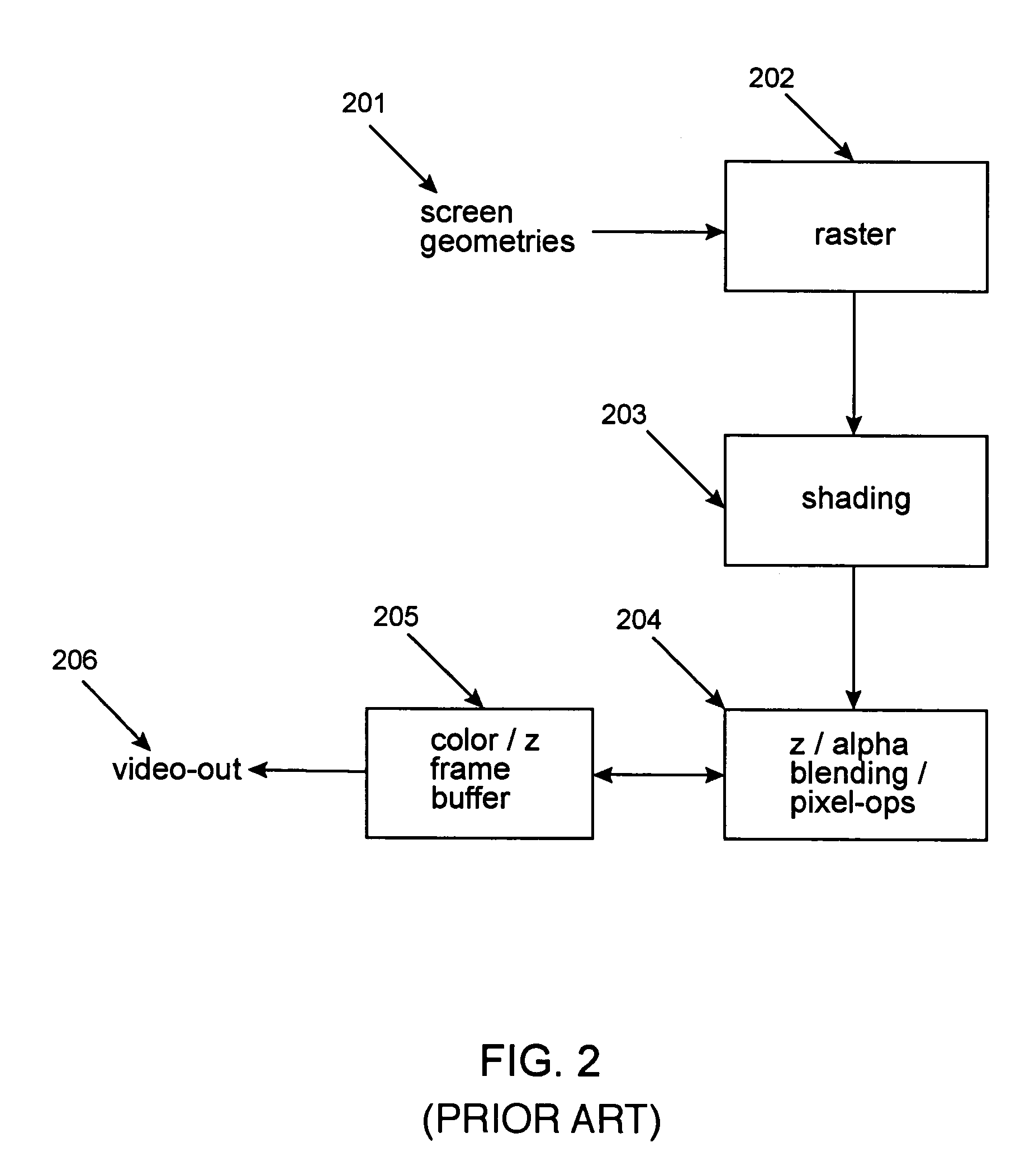

Inactive | US7170515B1 | Efficiently | Reduce memory bandwidth | 3D-image rendering | 3D modelling | Computational science | Visibility

A rendering pipeline system for a computer environment uses screen space tiling (SST) to eliminate the memory bandwidth bottleneck due to frame buffer access and performs screen space tiling efficiently, while avoiding the breaking up of primitives. The system also reduces the buffering size required by SST. High quality, full-scene anti-aliasing is easily achieved because only the on-chip multi-sample memory corresponding to a single tile of the screen is needed. The invention uses a double-z scheme that decouples the scan conversion / depth-buffer processing from the more general rasterization and shading processing through a scan / z engine. The scan / z engine externally appears as a fragment generator but internally resolves visibility and allows the rest of the rendering pipeline to perform setup for only visible primitives and shade only visible fragments. The resulting reduced raster / shading requirements can lead to reduced hardware costs because one can process all parameters with generic parameter computing units instead of with dedicated parameter computing units. The invention processes both opaque and transparent geometries.

Owner:NVIDIA CORP

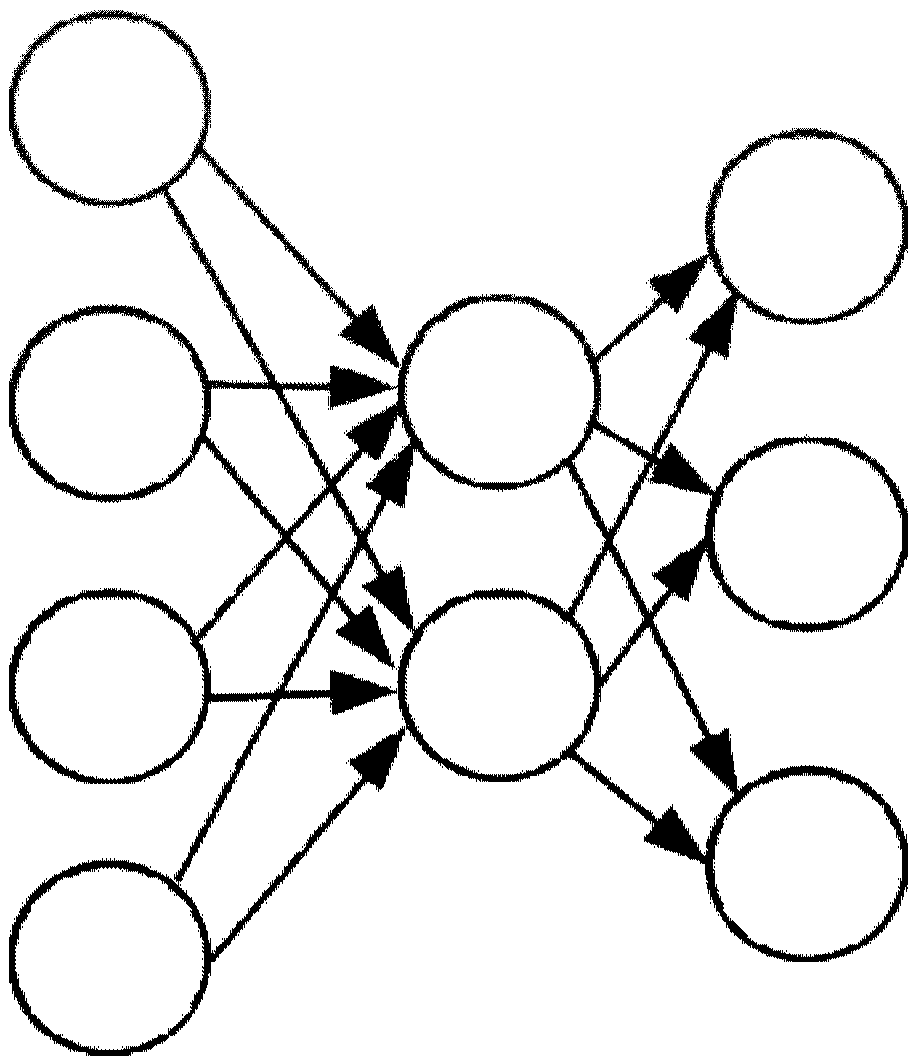

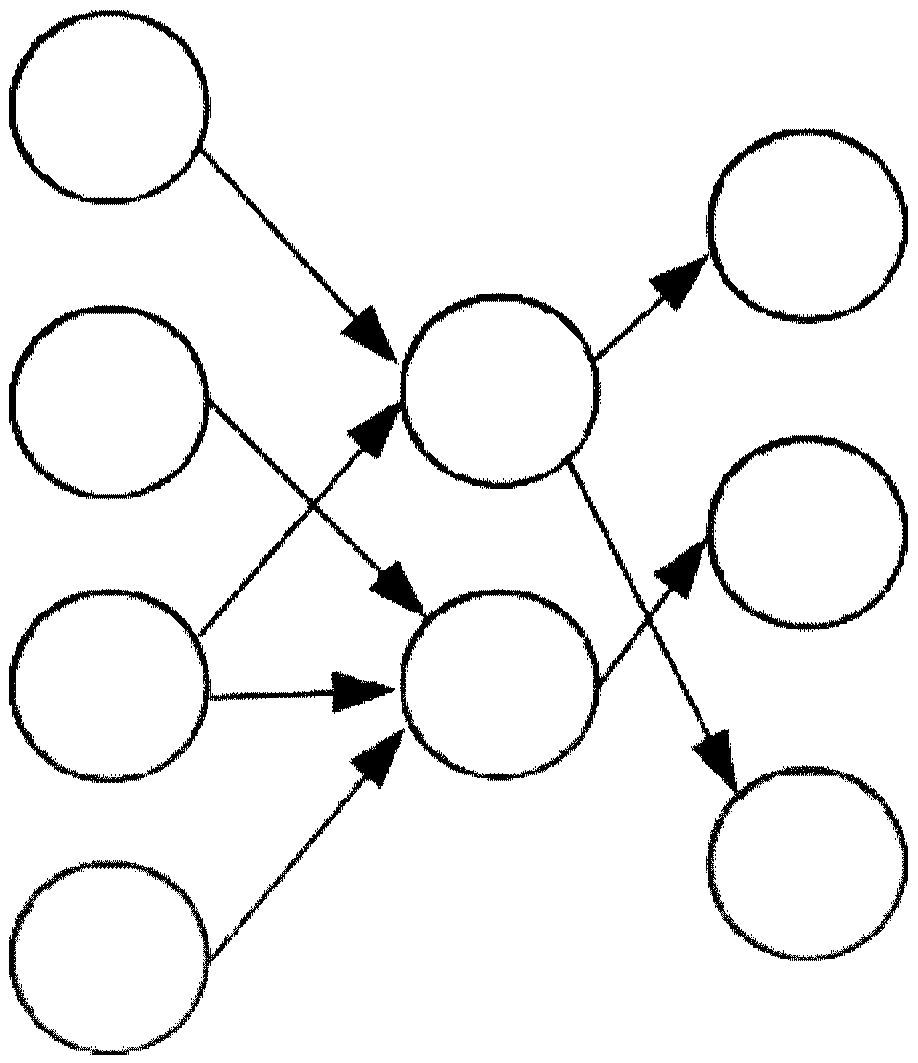

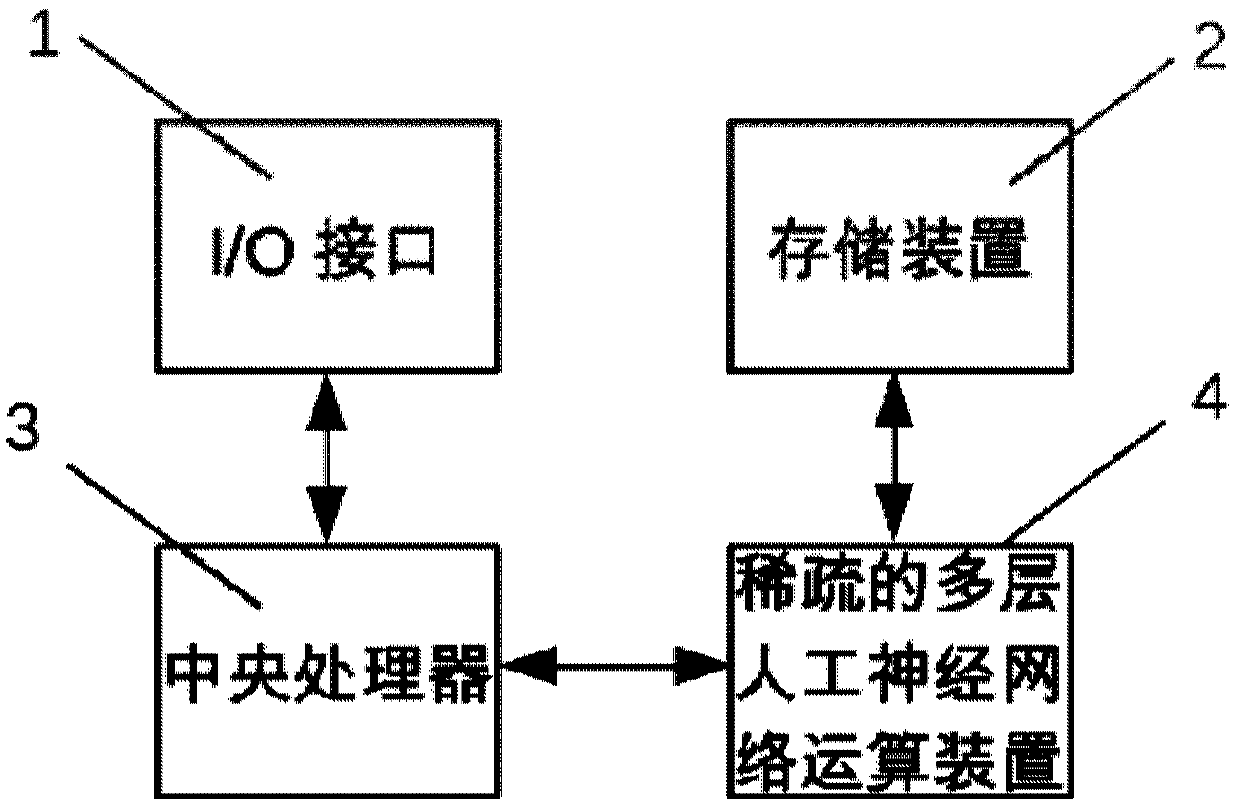

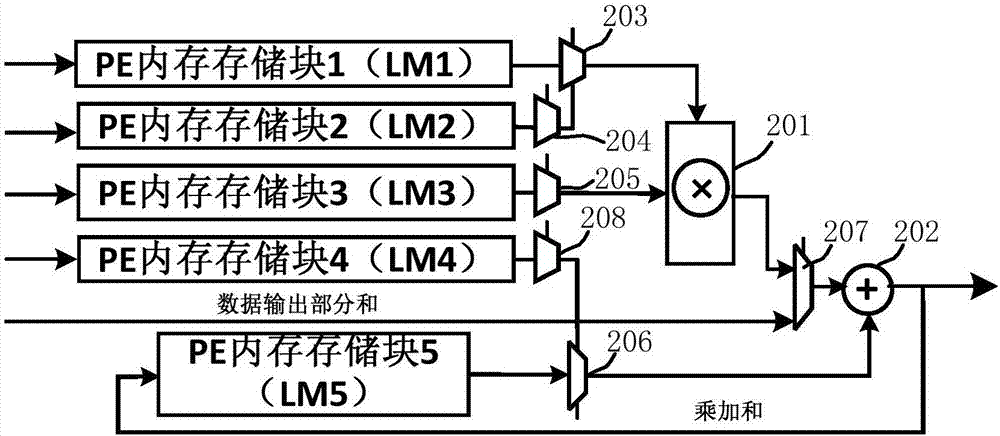

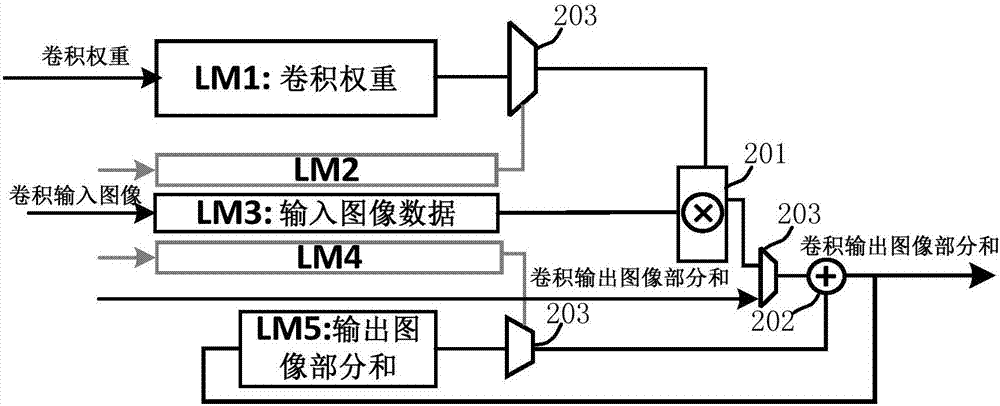

Artificial neural network calculating device and method for sparse connection

Active | CN105512723A | Solve the problem of insufficient computing performance and high front-end decoding overhead | Add support | Memory architecture accessing/allocation | Digital data processing details | Activation function | Memory bandwidth

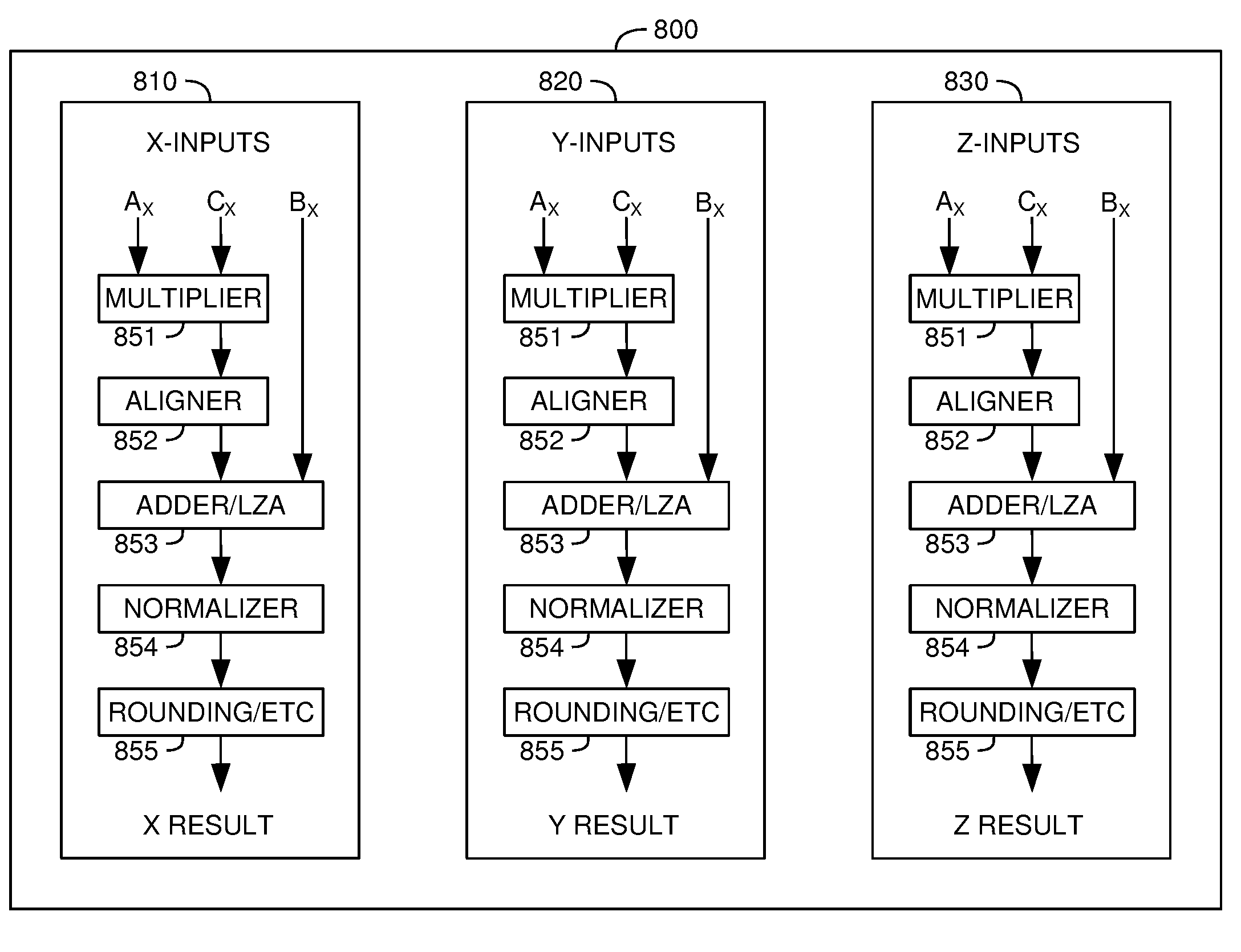

An artificial neural network calculating device for sparse connection comprises a mapping unit that converts input data into a storage format in which input neurons and weights correspond one to one, a storage unit that stores data and instructions, and an operation unit that executes the corresponding operations on the data according to the instructions. The operation unit mainly executes three steps. In the first step, the input neurons are multiplied by the weight data. In the second step, an adder tree operation is executed: the weighted output neurons from the first step are added level by level through an adder tree, or a bias is added to the output neurons to obtain biased output neurons. In the third step, an activation function operation is executed to obtain the final output neurons. The device addresses the insufficient operation performance of CPUs and GPUs and the high front-end decoding overhead, effectively improves support for multi-layer artificial neural network operation algorithms, and addresses the problem of memory bandwidth becoming a bottleneck for the performance of multi-layer artificial neural network operations and their training algorithms.

Owner:CAMBRICON TECH CO LTD
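The three steps named in the abstract (multiply, adder tree, activation), preceded by the mapping step, can be sketched for a single output neuron as below. This is an illustrative software model only: the function names, the index-list encoding of the sparse connections, and the choice of a sigmoid activation are assumptions, not details from the patent.

```python
import math


def map_sparse(inputs, weights, connections):
    """Mapping step: pair each stored weight with the input it connects to."""
    return [(inputs[i], w) for i, w in zip(connections, weights)]


def adder_tree(values):
    """Sum values level by level, adding neighbouring pairs at each level."""
    values = list(values)
    if not values:
        return 0.0
    while len(values) > 1:
        if len(values) % 2:          # pad odd levels with a zero
            values.append(0.0)
        values = [values[i] + values[i + 1] for i in range(0, len(values), 2)]
    return values[0]


def sparse_neuron(inputs, weights, connections, bias=0.0):
    pairs = map_sparse(inputs, weights, connections)
    products = [x * w for x, w in pairs]       # step 1: multiply
    total = adder_tree(products) + bias        # step 2: adder tree plus bias
    return 1.0 / (1.0 + math.exp(-total))      # step 3: activation (sigmoid, assumed)


# Only inputs 0 and 2 are connected, so only two weights are stored and fetched.
print(sparse_neuron([1.0, 2.0, 3.0, 4.0], [0.5, -0.25], [0, 2]))
```

Storing and fetching only the connected weights is what reduces memory traffic relative to a dense layout in this simplified model.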

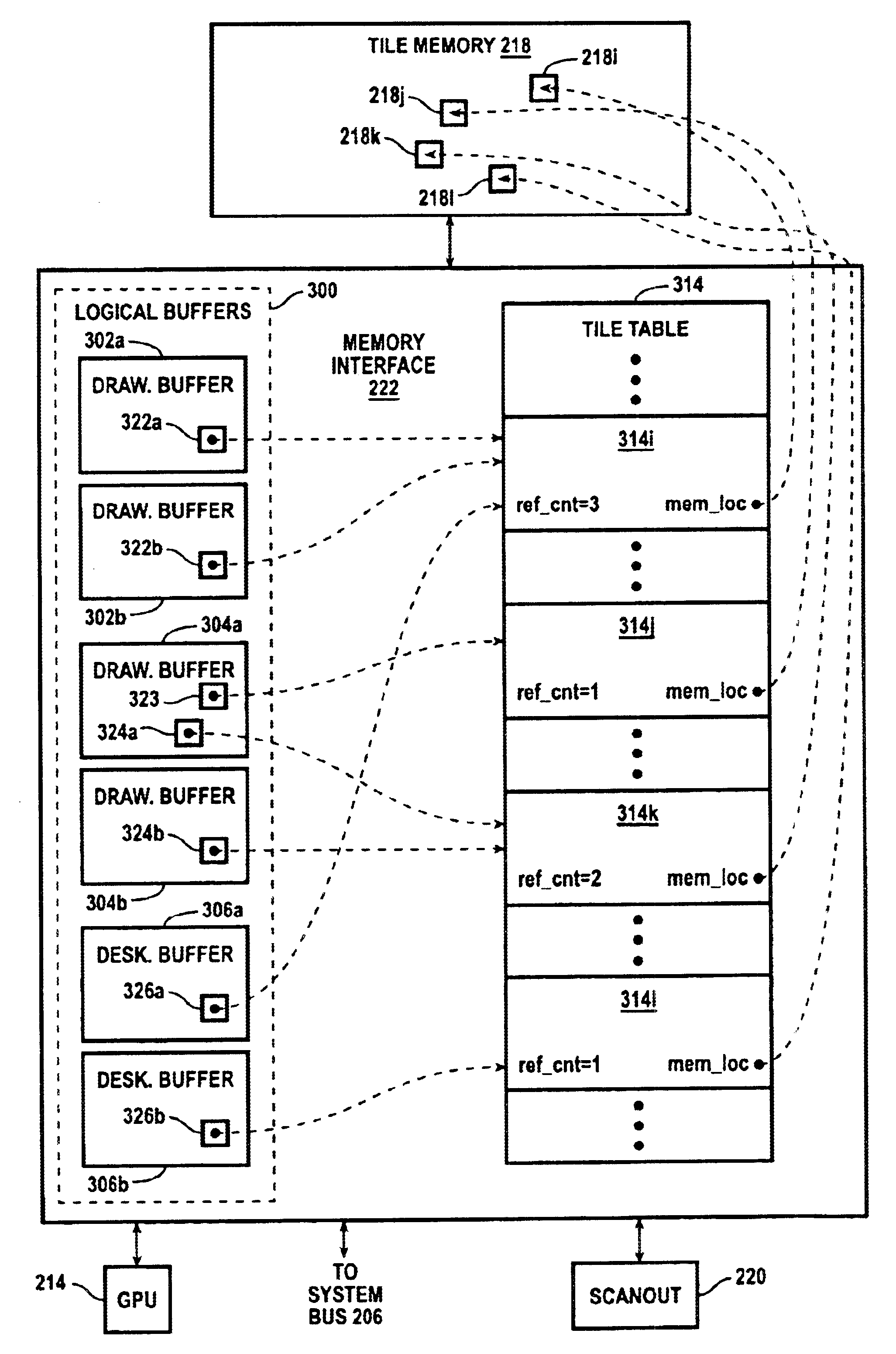

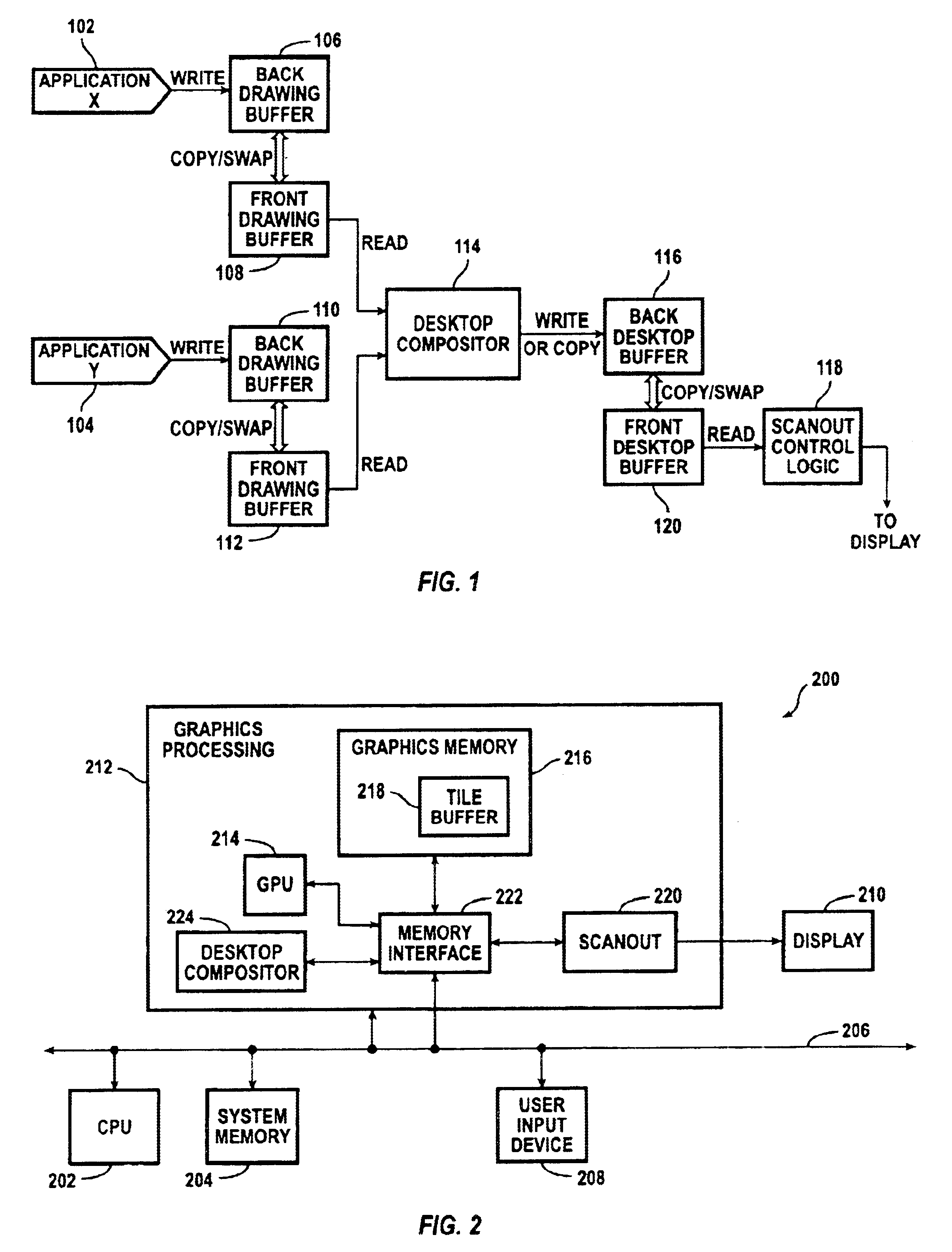

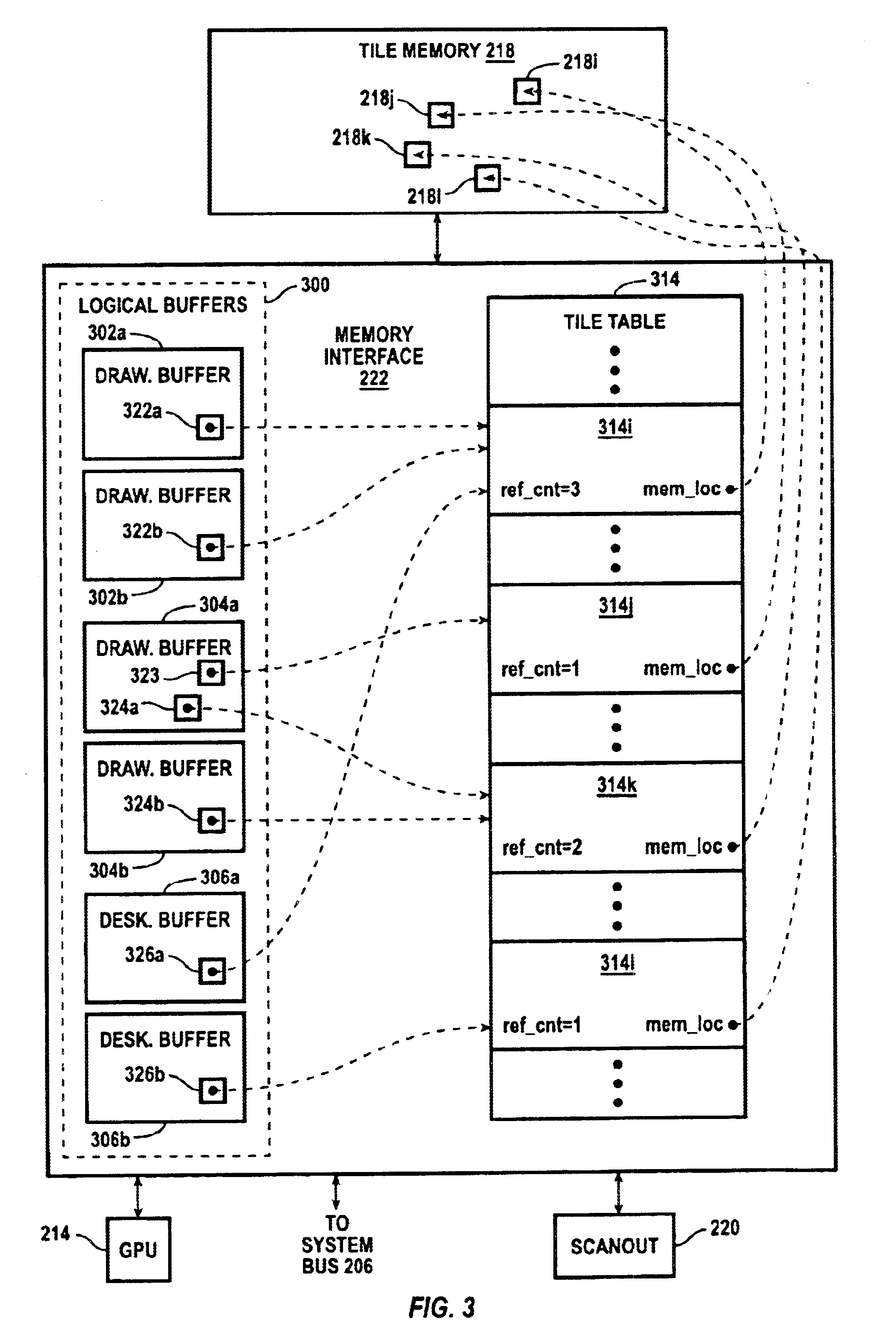

Desktop compositor using copy-on-write semantics

Active | US6911984B2 | Reduce memory bandwidth | Cathode-ray tube indicators | Multiple digital computer combinations | Parallel computing | Semantics

Tile data for drawing and desktop buffers in a desktop compositor system is managed using “copy-on-write” semantics, in which tile data stored in a memory location is not transferred to another location until the tile data for one of the buffers is modified. For each tile in drawing buffers and desktop buffers, an association is maintained with a location in a tile memory, and the number of buffer tiles associated with each location is tracked. To copy a tile from one buffer to another, the tile association for the tile in the destination buffer is modified. New data for a tile of a buffer is written to the tile memory location associated with the buffer after ensuring that the tile memory location is not associated with any other tiles of any of the buffers. As a result, memory bandwidth can be considerably reduced.

Owner:NVIDIA CORP
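The copy-on-write bookkeeping described in this abstract, an association from each buffer tile to a tile memory location plus a count of tiles per location, can be sketched as follows. The class and method names are hypothetical, and a real compositor would manage fixed-size tile memory rather than Python dictionaries.

```python
class TileStore:
    """Minimal copy-on-write tile store with per-location reference counts."""

    def __init__(self):
        self.data = {}        # location -> tile contents
        self.refcount = {}    # location -> number of buffer tiles using it
        self.assoc = {}       # (buffer, tile_index) -> location
        self._next = 0

    def _alloc(self, contents):
        loc = self._next
        self._next += 1
        self.data[loc] = contents
        self.refcount[loc] = 0
        return loc

    def _attach(self, key, loc):
        self.assoc[key] = loc
        self.refcount[loc] += 1

    def _release(self, key):
        loc = self.assoc.pop(key, None)
        if loc is not None:
            self.refcount[loc] -= 1
            if self.refcount[loc] == 0:   # no tile uses this location any more
                del self.data[loc]
                del self.refcount[loc]

    def copy(self, src, dst):
        """Copy a tile by changing the association only; no tile data moves."""
        loc = self.assoc[src]
        if self.assoc.get(dst) == loc:
            return
        self._release(dst)
        self._attach(dst, loc)

    def write(self, key, contents):
        """Write in place if the location is unshared, otherwise to a new location."""
        loc = self.assoc.get(key)
        if loc is not None and self.refcount[loc] == 1:
            self.data[loc] = contents
            return
        self._release(key)
        self._attach(key, self._alloc(contents))

    def read(self, key):
        return self.data[self.assoc[key]]


store = TileStore()
store.write(("drawing", 0), "A")
store.copy(("drawing", 0), ("desktop", 0))   # shares one location
store.write(("drawing", 0), "B")             # data is duplicated only now
print(store.read(("desktop", 0)), store.read(("drawing", 0)))  # prints "A B"
```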

Reverse-time depth migration with reduced memory requirements

Inactive | US20100054082A1 | Accurate source wave modeling | Enhance computer storage | Seismic signal processing | Reverse time | Mass storage

A method of prestack reverse-time migration of seismic data that yields significant gains in computer storage and memory bandwidth efficiency is disclosed. Only the values of the source wave incident on the boundaries of a simulation domain are saved, rather than all of the values of the wavefield throughout the entire simulation domain. This data allows an accurate and robust approximation of the forward-propagated source wave for all finite-difference approximation orders of the acoustic wave equation. The method reduces the amount of data storage required by an order of magnitude and removes the need for special large-memory hardware, allowing 3D prestack reverse-time migration to be implemented on off-the-shelf platforms.

Owner:ACCELEWARE CORP
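The storage saving from keeping only boundary values can be illustrated with simple counting. The sketch below compares the number of values saved per time step for a full 3D grid against a boundary shell of fixed thickness. The grid size and the shell thickness of 4 cells are assumptions made for illustration, and the abstract does not state how the saved boundary region relates to the finite-difference order.

```python
def full_wavefield_values(nx: int, ny: int, nz: int) -> int:
    """Values saved per time step when the whole wavefield is stored."""
    return nx * ny * nz


def boundary_shell_values(nx: int, ny: int, nz: int, thickness: int) -> int:
    """Values saved per time step when only a boundary shell is stored."""
    inner = (max(nx - 2 * thickness, 0)
             * max(ny - 2 * thickness, 0)
             * max(nz - 2 * thickness, 0))
    return nx * ny * nz - inner


# Hypothetical 500 x 500 x 500 grid with an assumed 4-cell boundary shell.
full = full_wavefield_values(500, 500, 500)
shell = boundary_shell_values(500, 500, 500, thickness=4)
print(f"full={full}, shell={shell}, ratio={full / shell:.1f}")
```

Under these assumed numbers the shell holds roughly one twentieth of the values, which is consistent with the order-of-magnitude reduction the abstract claims.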

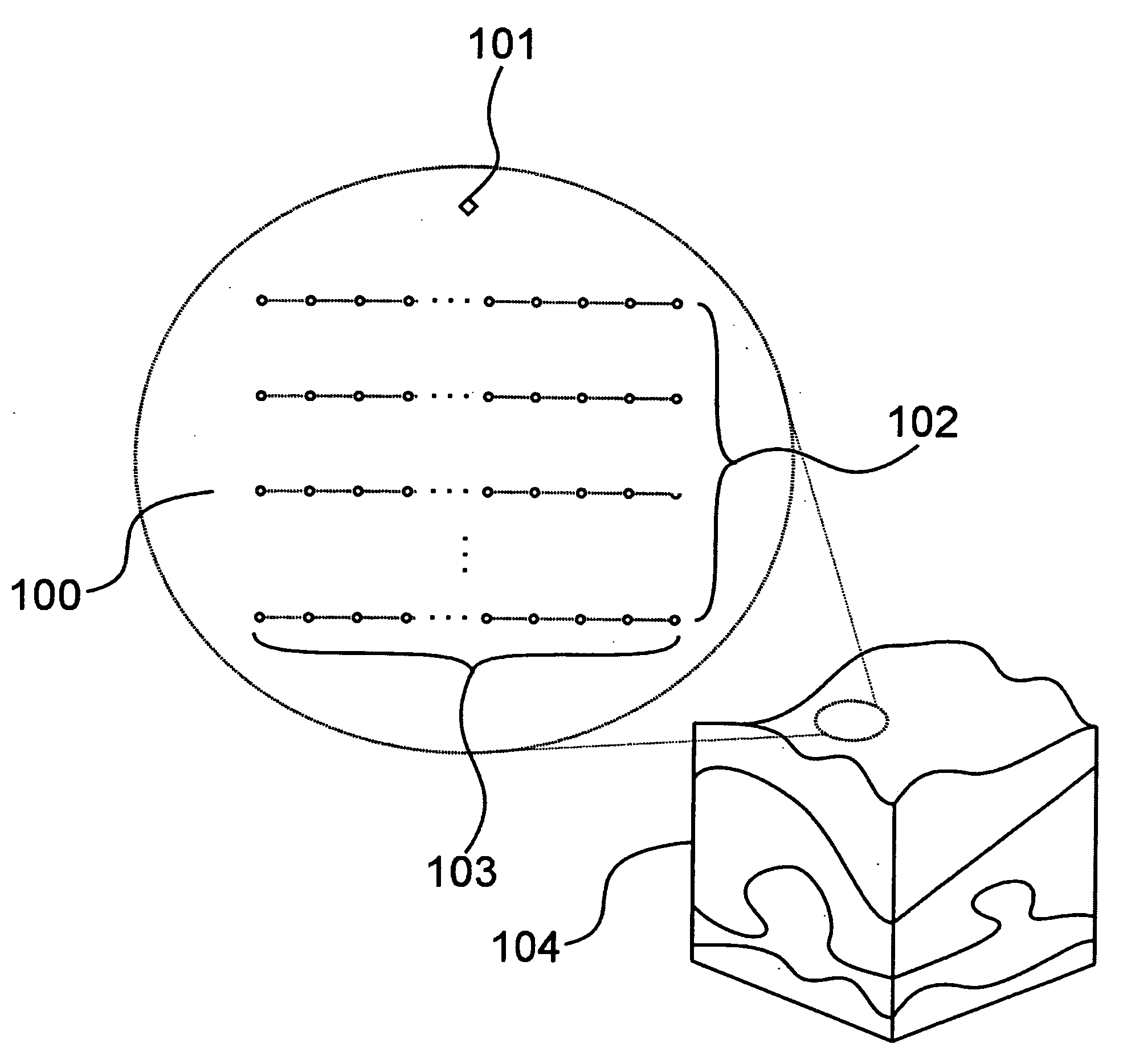

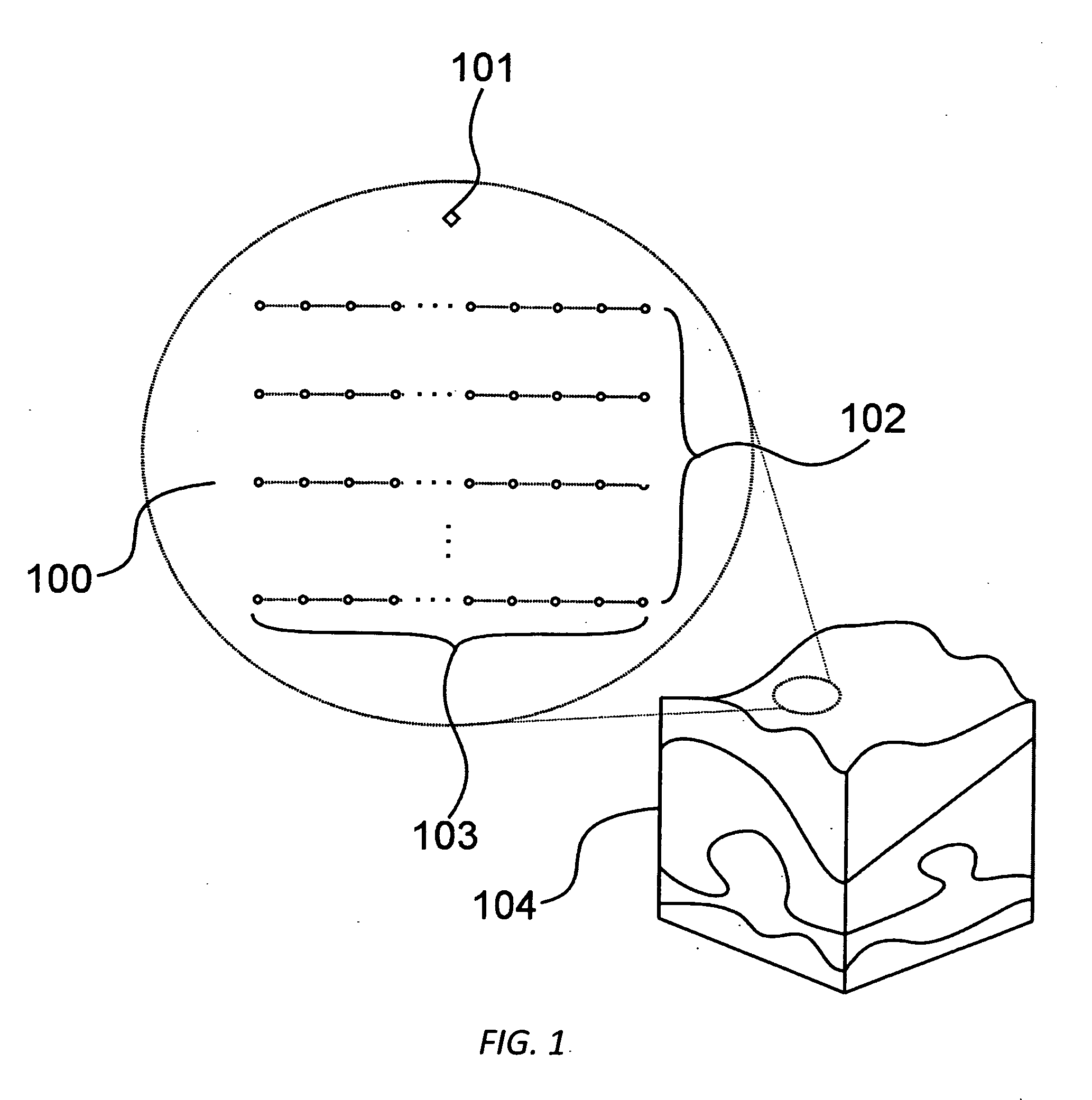

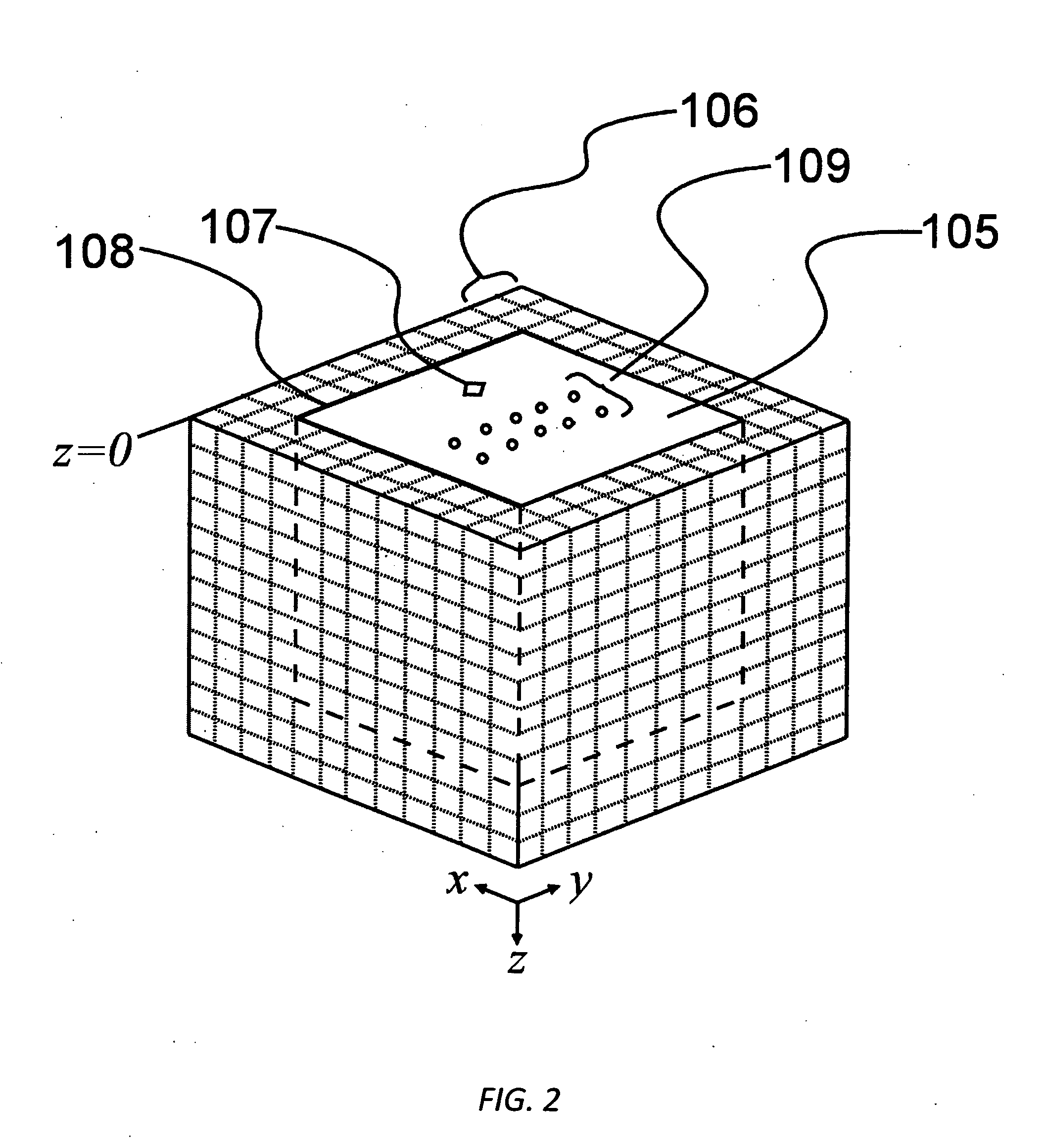

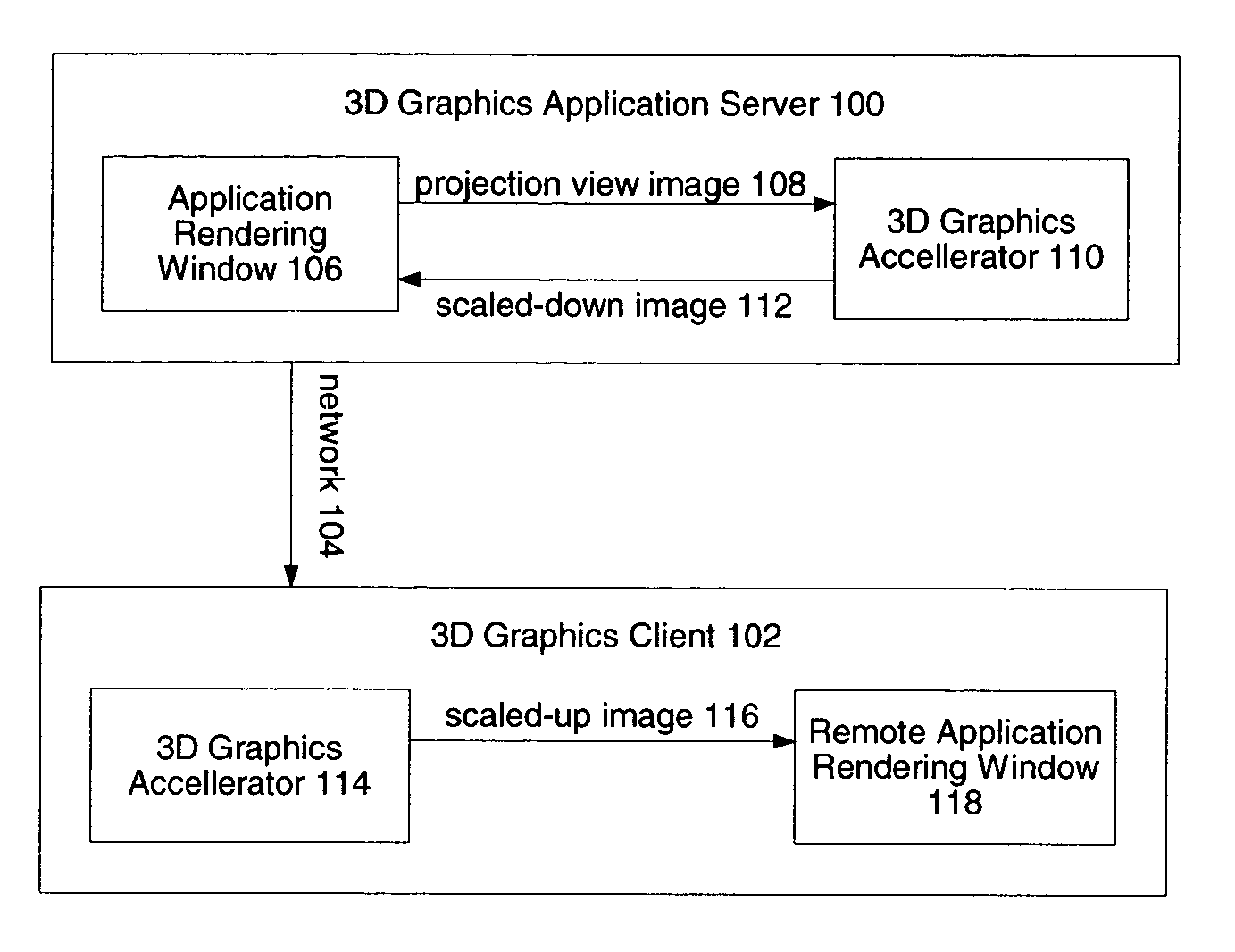

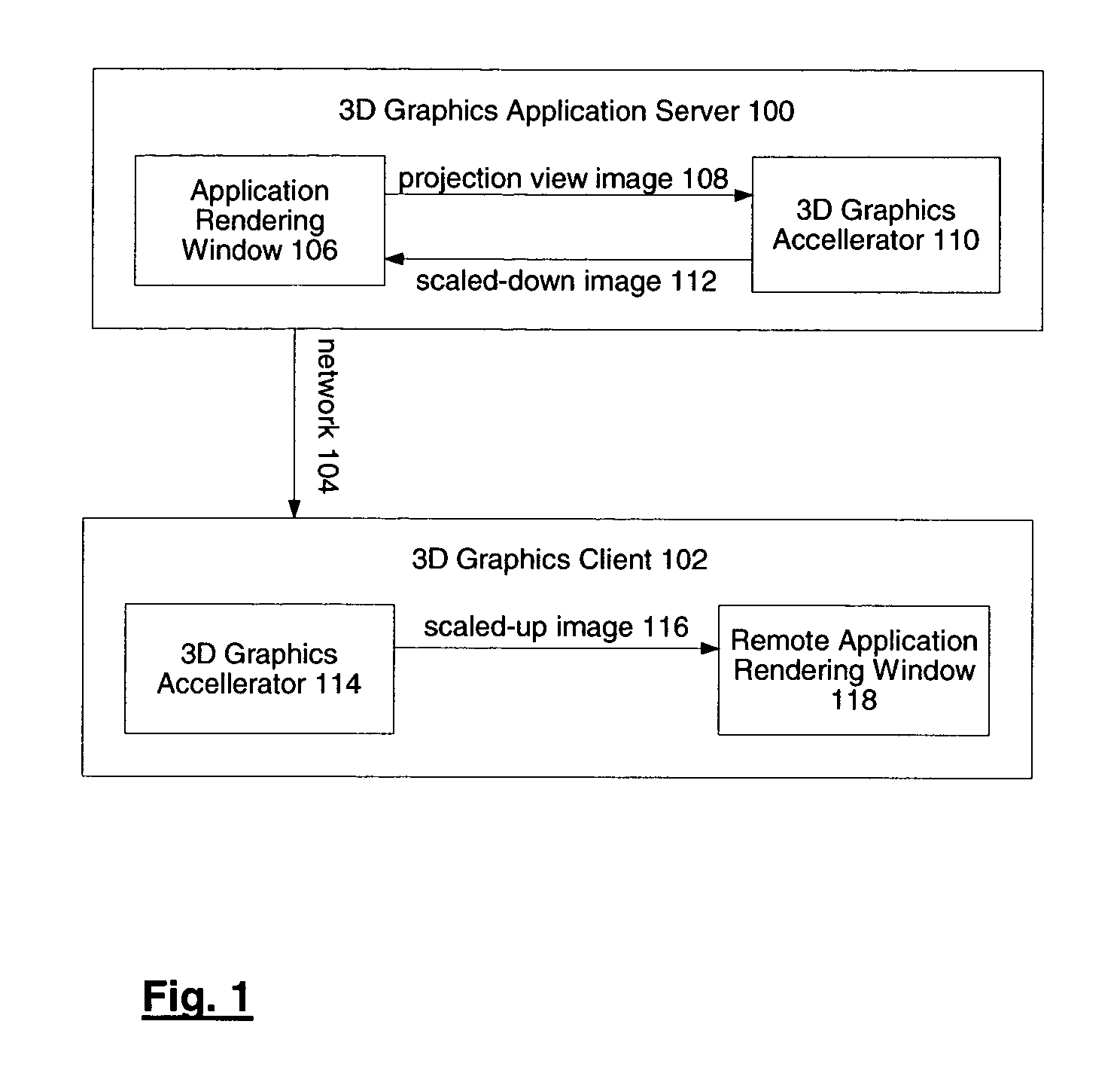

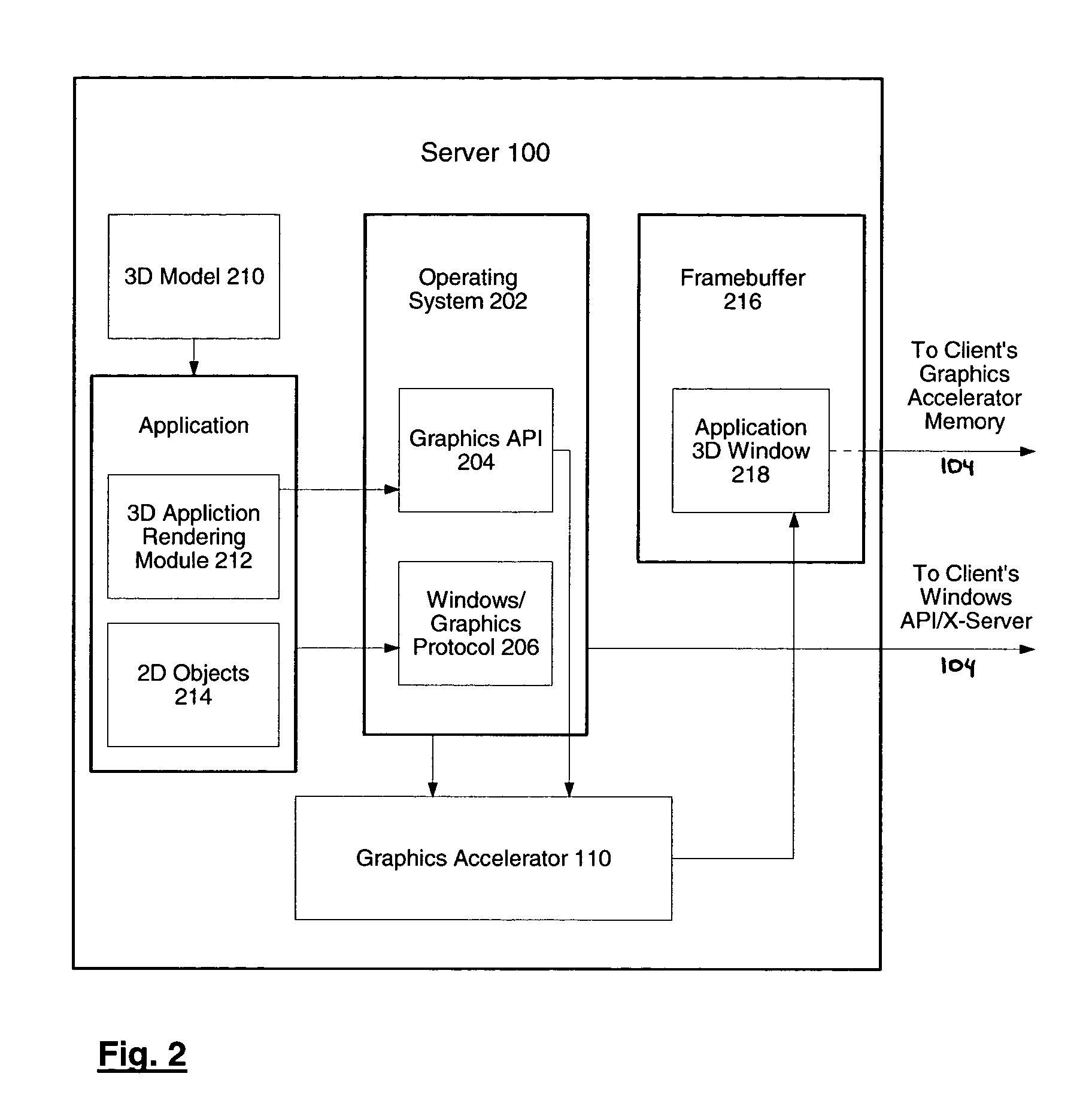

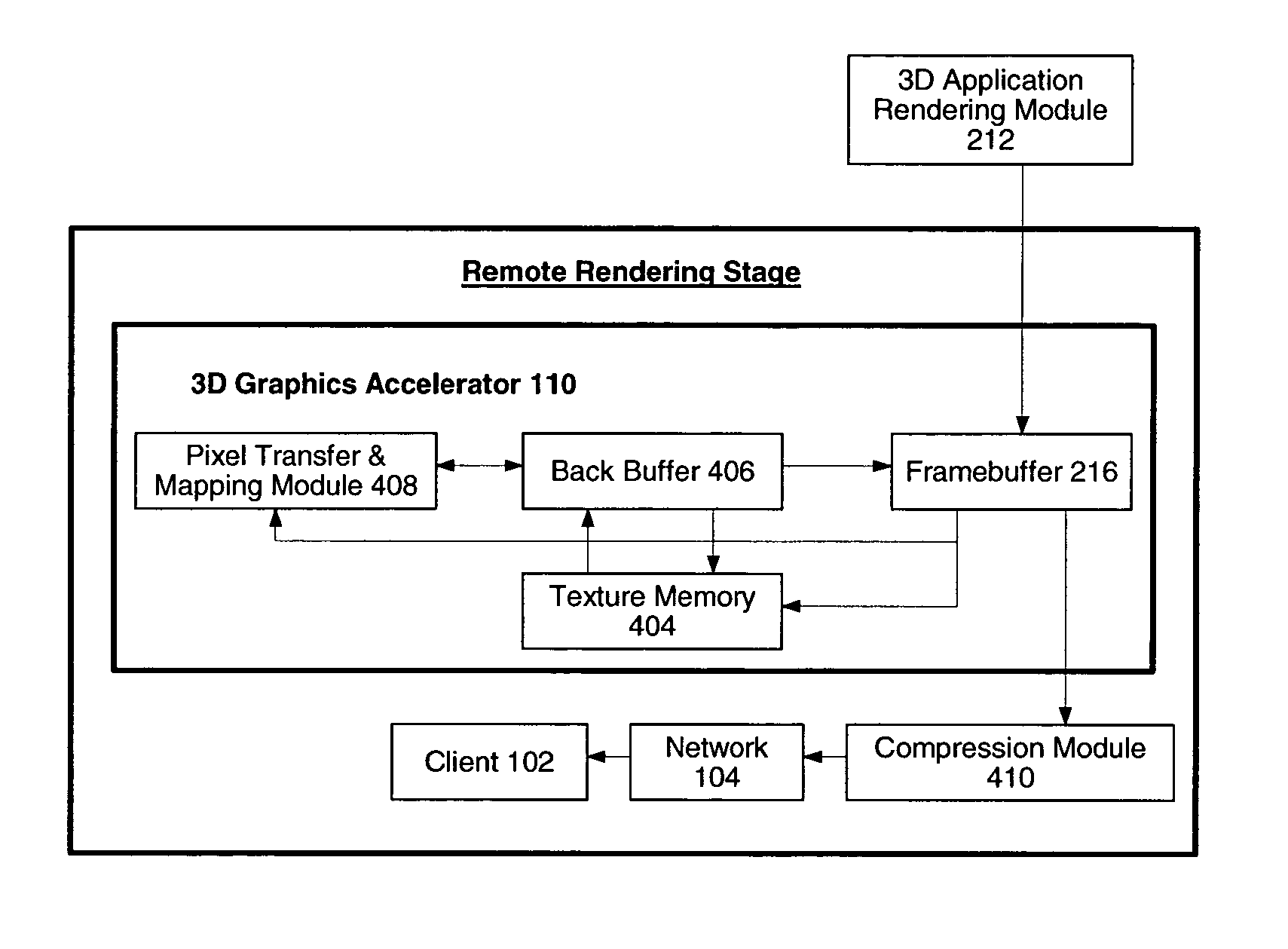

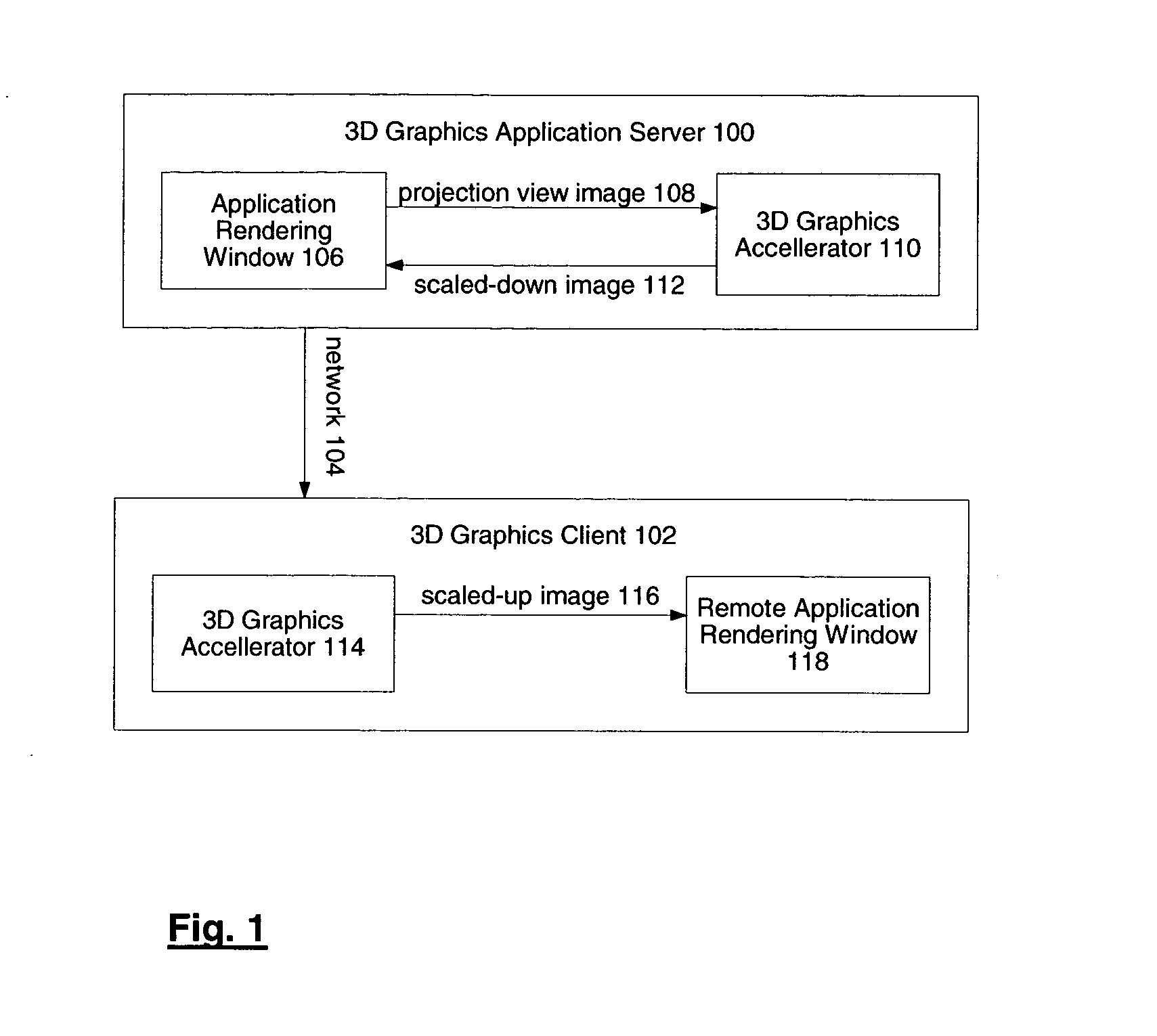

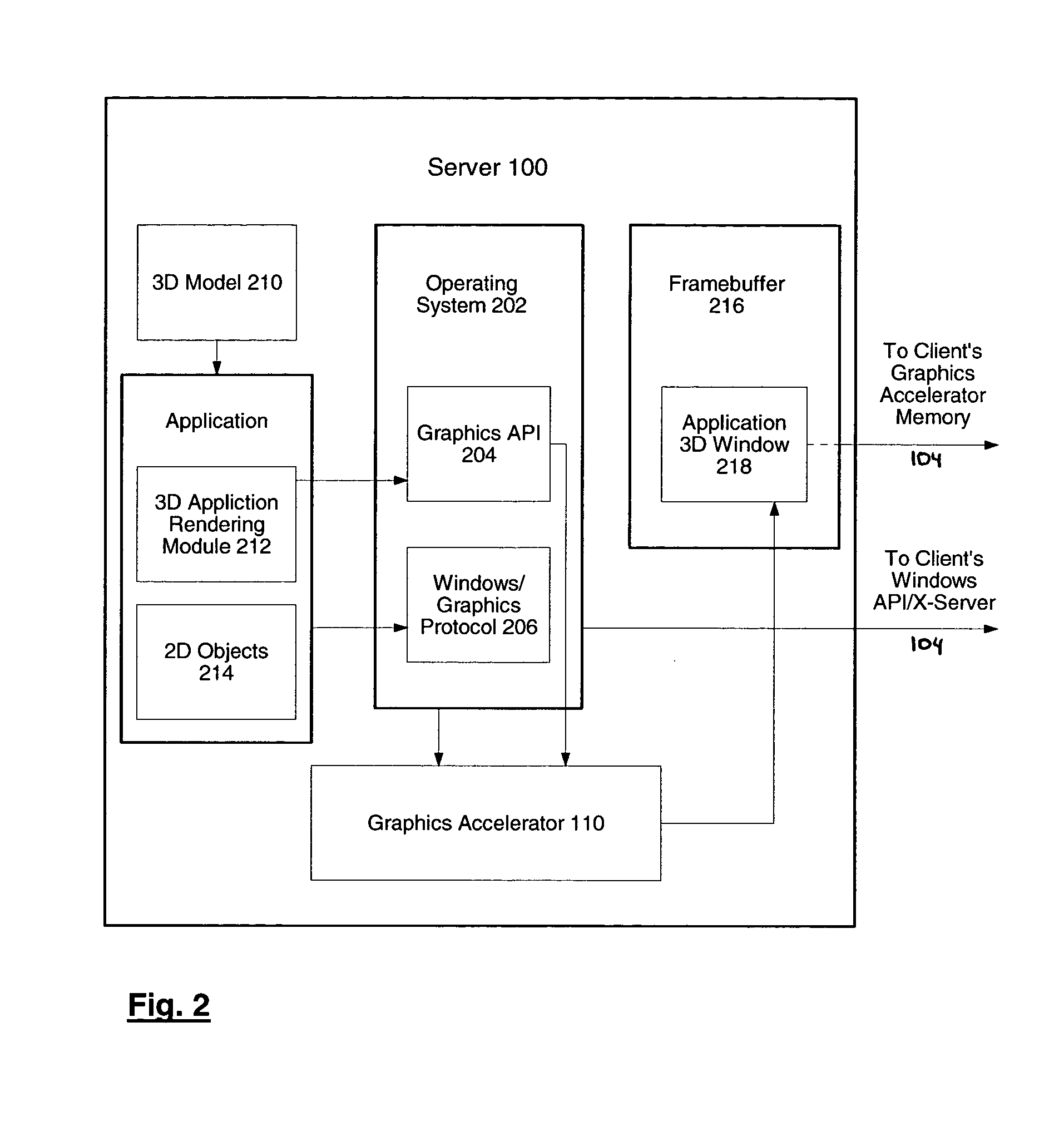

System and method for network transmission of graphical data through a distributed application

Inactive | US7076735B2 | Reduce network bandwidth requirements | Cathode-ray tube indicators | Image data processing details | Graphic card | Networked Transport of RTCM via Internet Protocol

Systems and methods for network transmission of three-dimensional graphical data are disclosed. A single graphical application instance can virtually and efficiently exist on multiple local or remote display systems by directly sharing its raw rendered framebuffer memory information among all local or remote graphics accelerators, thus avoiding the need to re-render any application information again on each node. An internal graphics card is used to scale the rendered data prior to transmission. This graphics scaling eliminates the need for data compression or image compression and achieves an adaptive, hardware-accelerated reduction in network bandwidth. Furthermore, since all memory and remote processing support tasks are performed within the graphics card, the CPU, system bus, and memory bandwidth remain available to the system and other applications.

Owner:LANDMARK GRAPHICS CORP

System and method for network transmission of graphical data through a distributed application

Inactive | US20050021656A1 | Reduce network bandwidth requirements | Cathode-ray tube indicators | Multiple digital computer combinations | Graphics | Graphic card

Systems and methods for network transmission of three-dimensional graphical data are disclosed. A single graphical application instance can virtually and efficiently exist on multiple local or remote display systems by directly sharing its raw rendered framebuffer memory information among all local or remote graphics accelerators, thus avoiding the need to re-render any application information again on each node. An internal graphics card is used to scale the rendered data prior to transmission. This graphics scaling eliminates the need for data compression or image compression and achieves an adaptive, hardware-accelerated reduction in network bandwidth. Furthermore, since all memory and remote processing support tasks are performed within the graphics card, the CPU, system bus, and memory bandwidth remain available to the system and other applications.

Owner:LANDMARK GRAPHICS

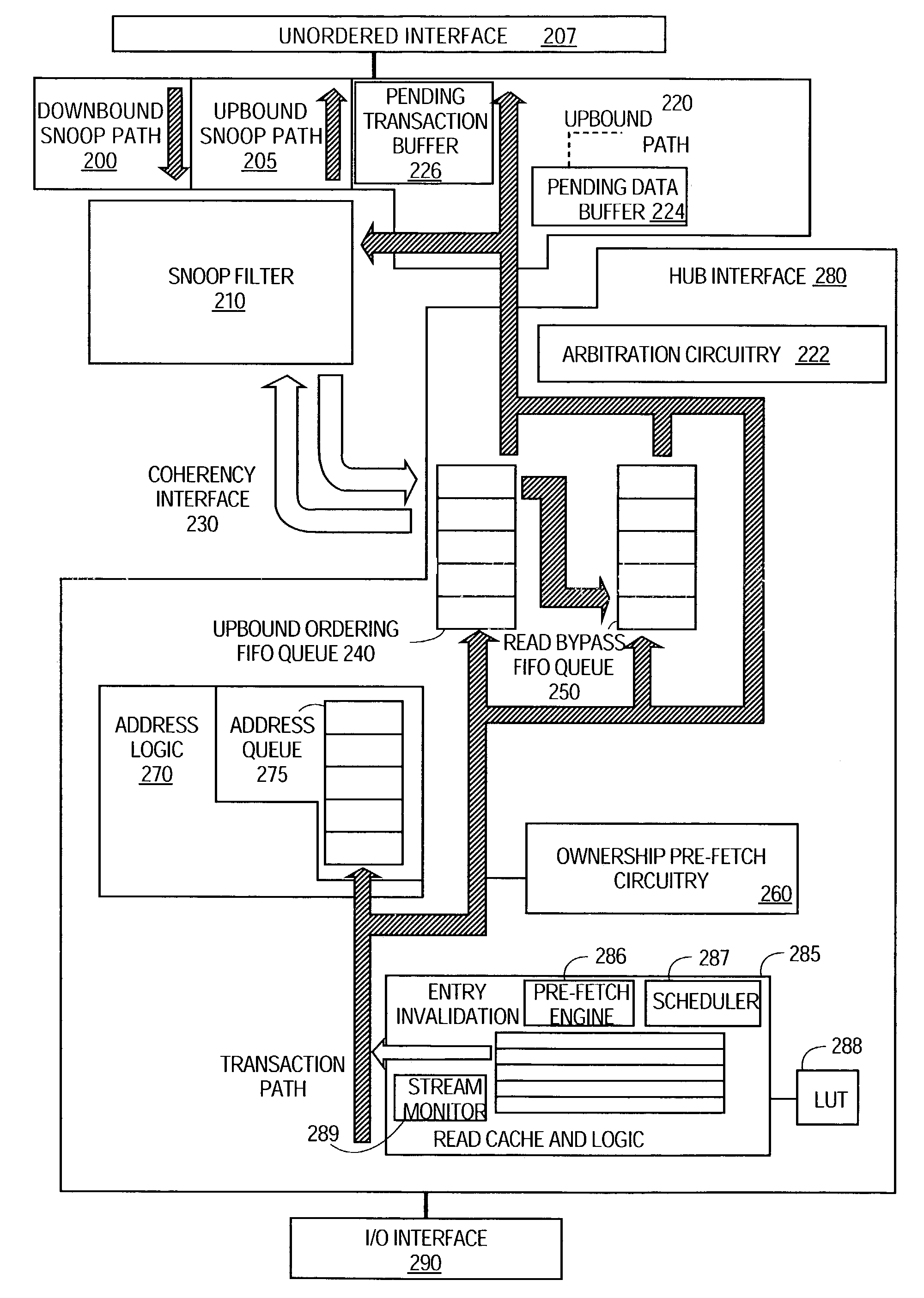

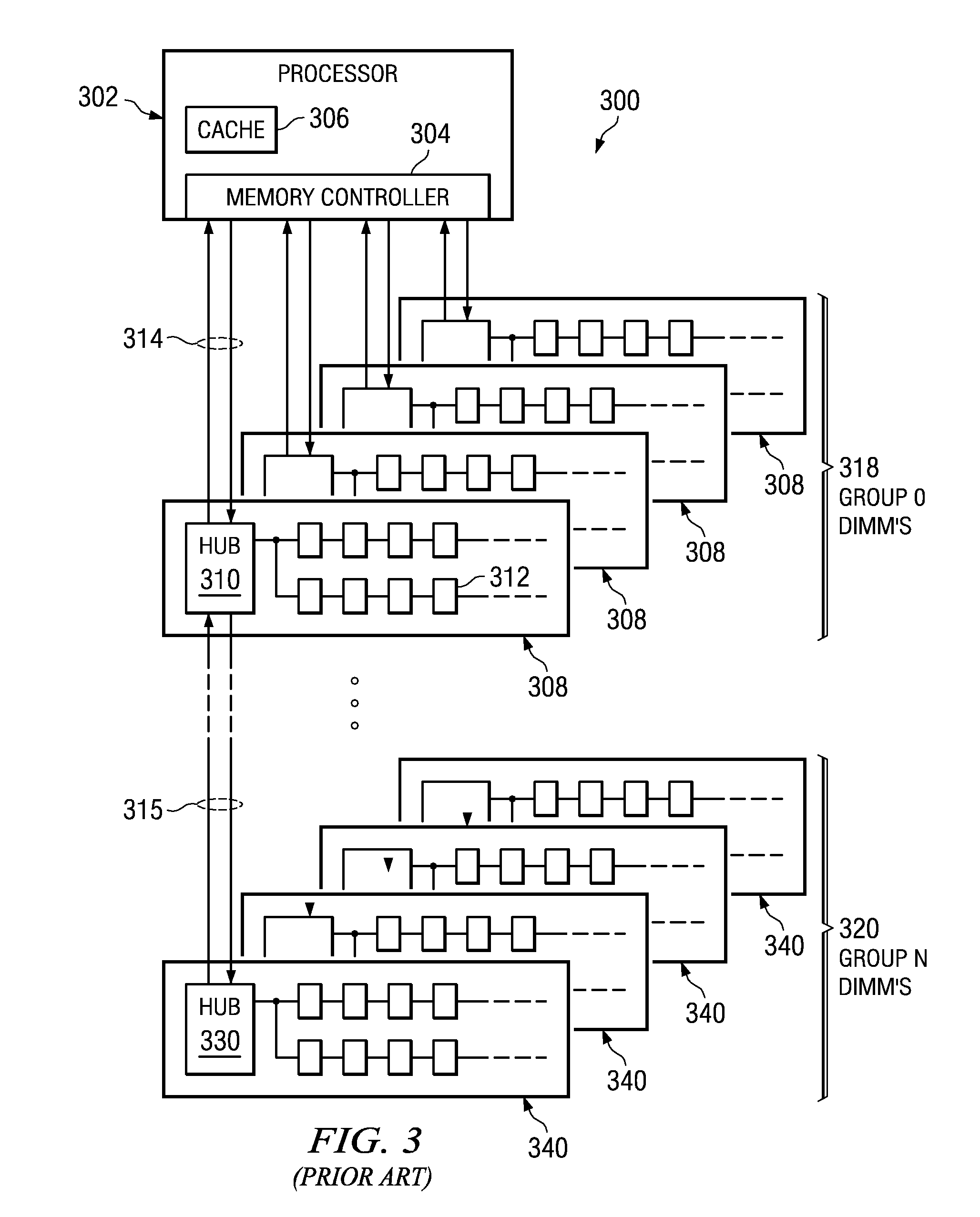

Memory read/write reordering

Inactive | US7047374B2 | Memory addressing/allocation/relocation | Input/output processes for data processing | DIMM | Memory bandwidth

Owner:INTEL CORP

Data transfer error checking

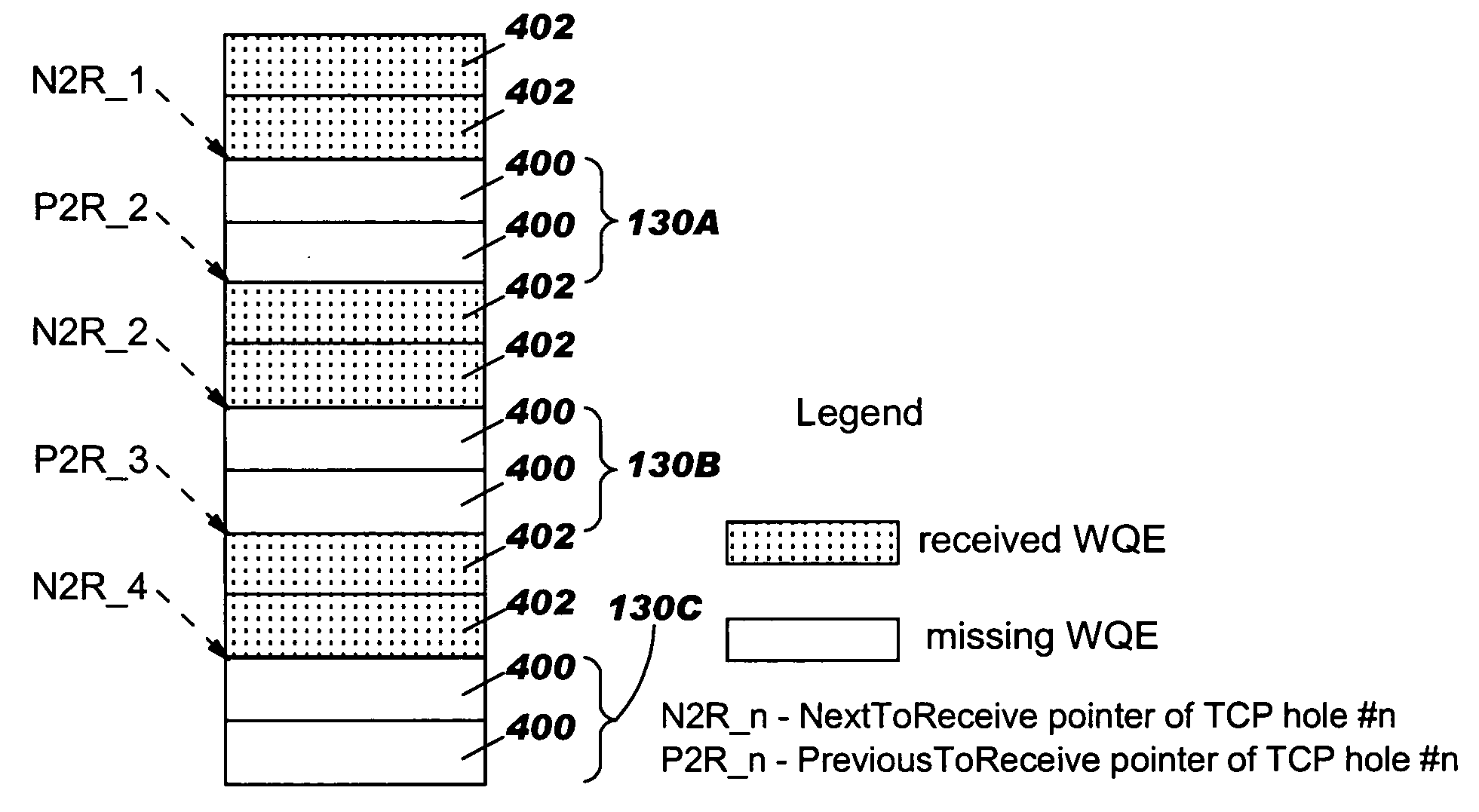

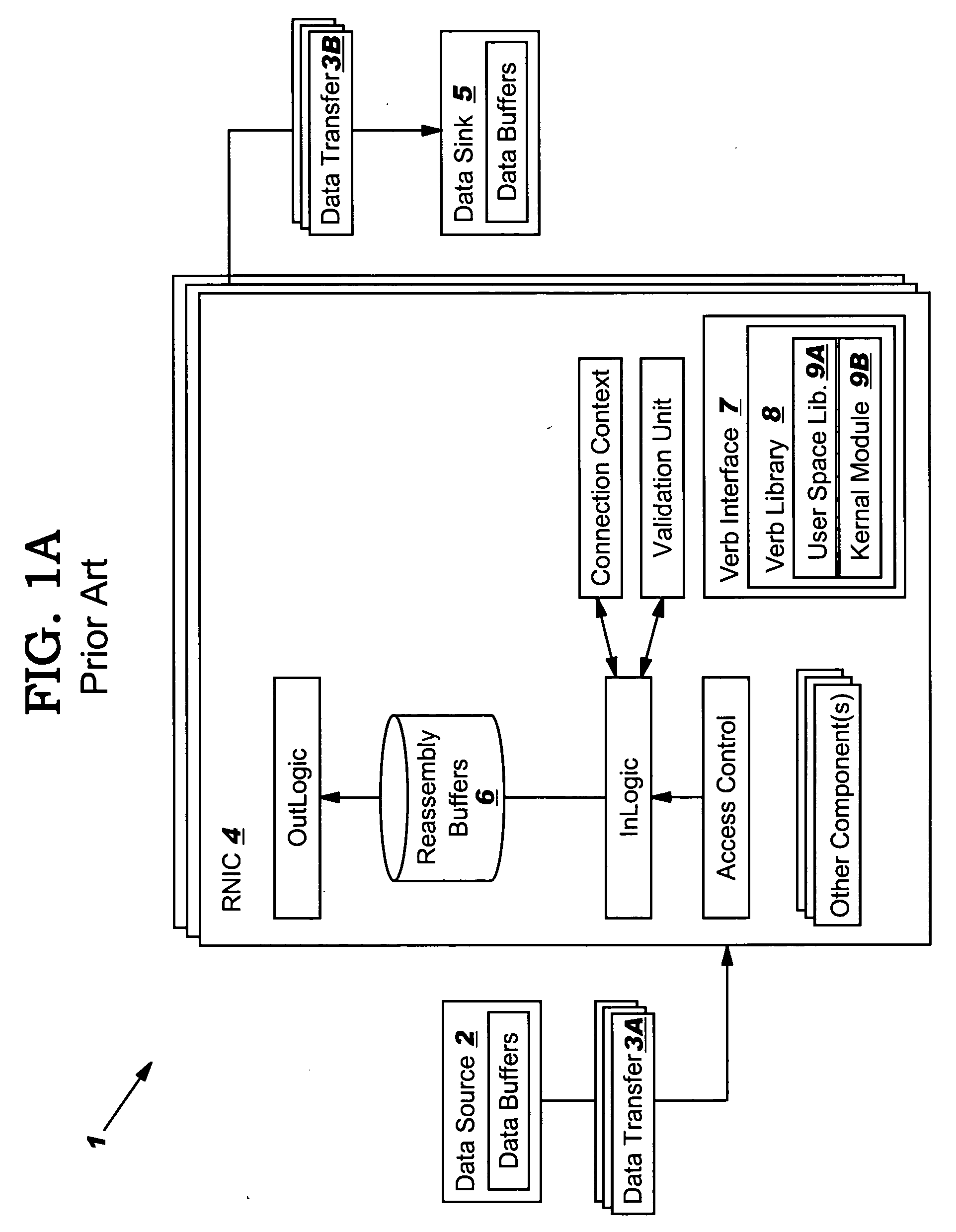

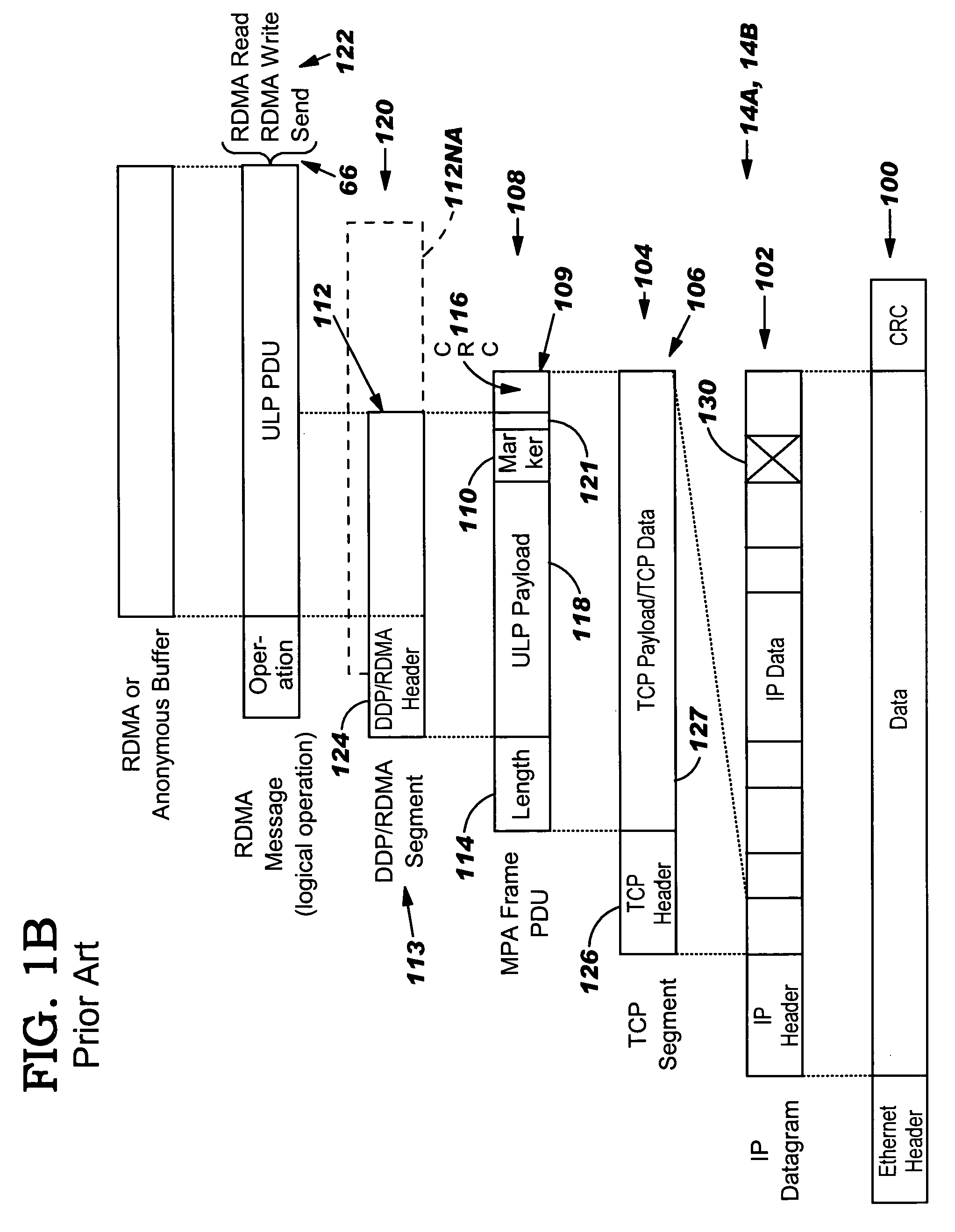

Inactive | US20050149817A1 | Reduce memory bandwidth | Reduce latency | Code conversion | Error detection only | Connection type | Data placement

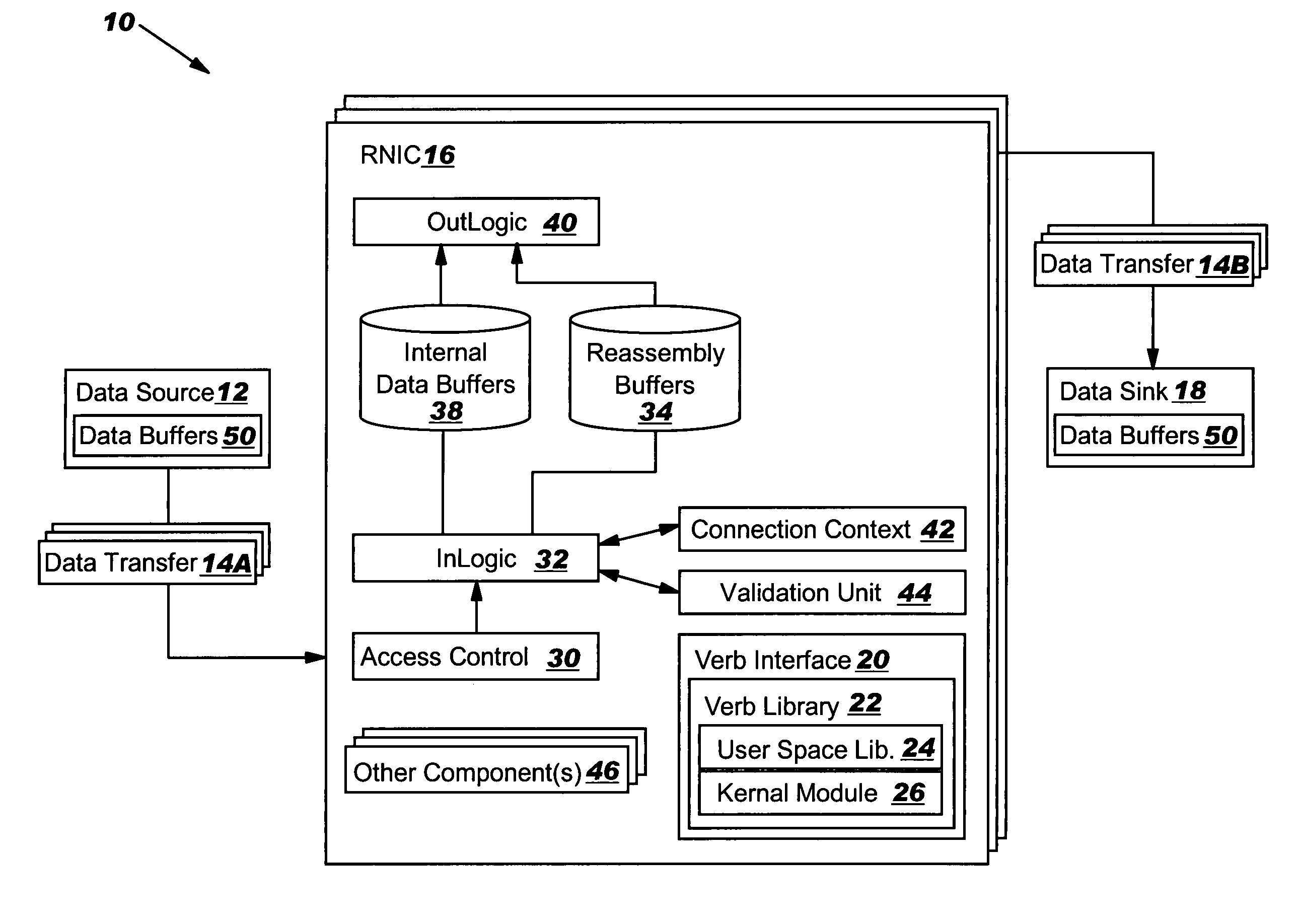

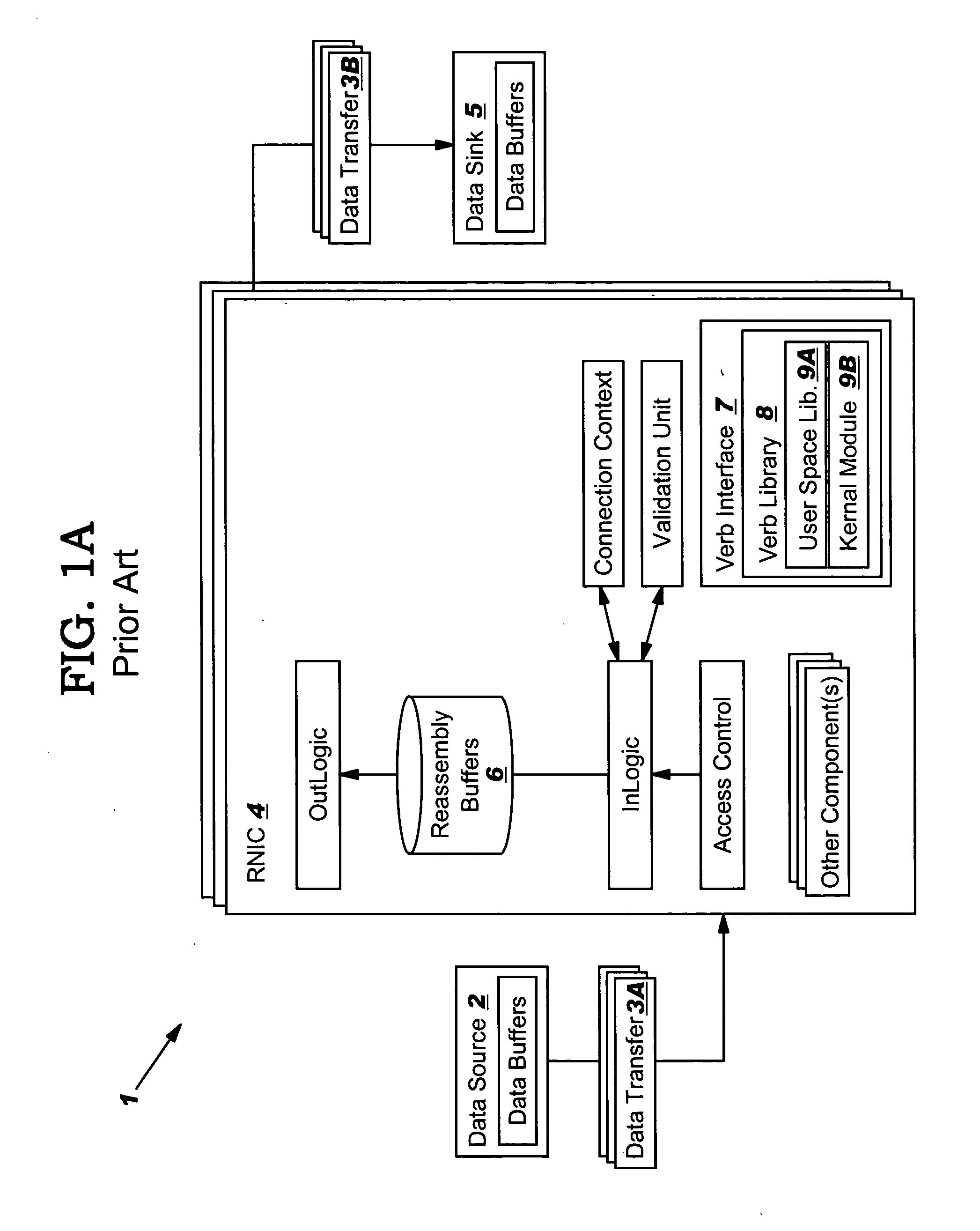

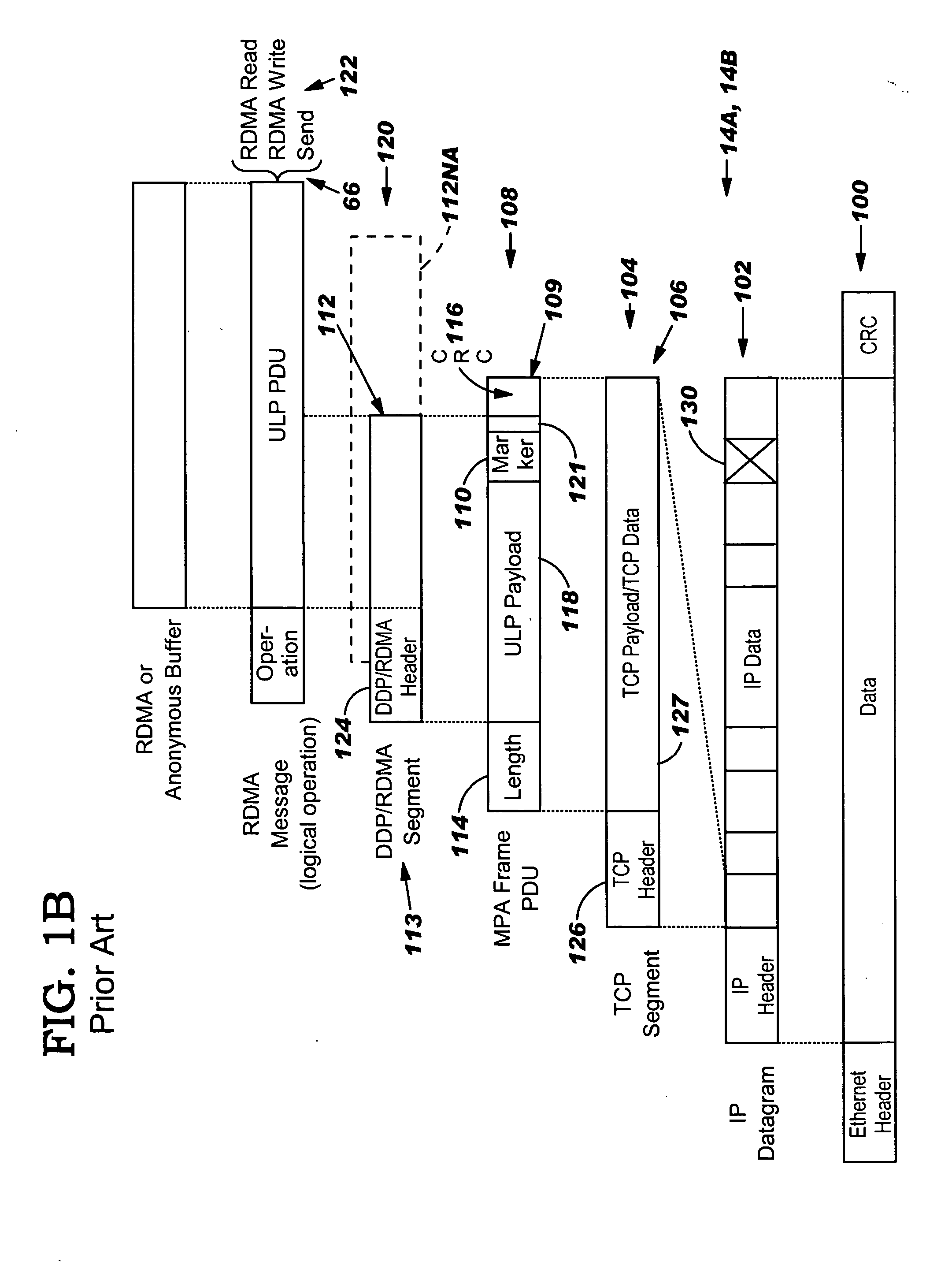

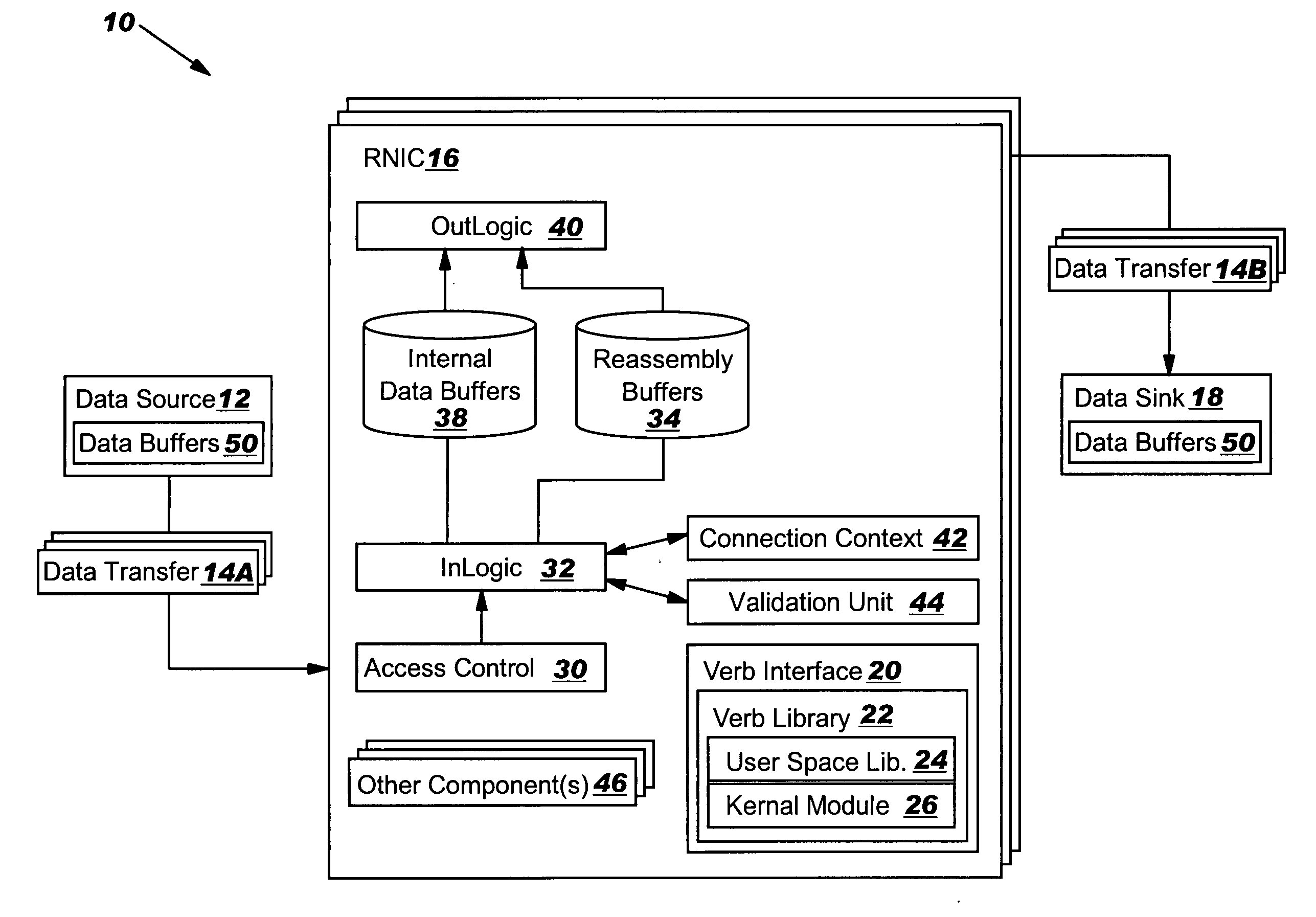

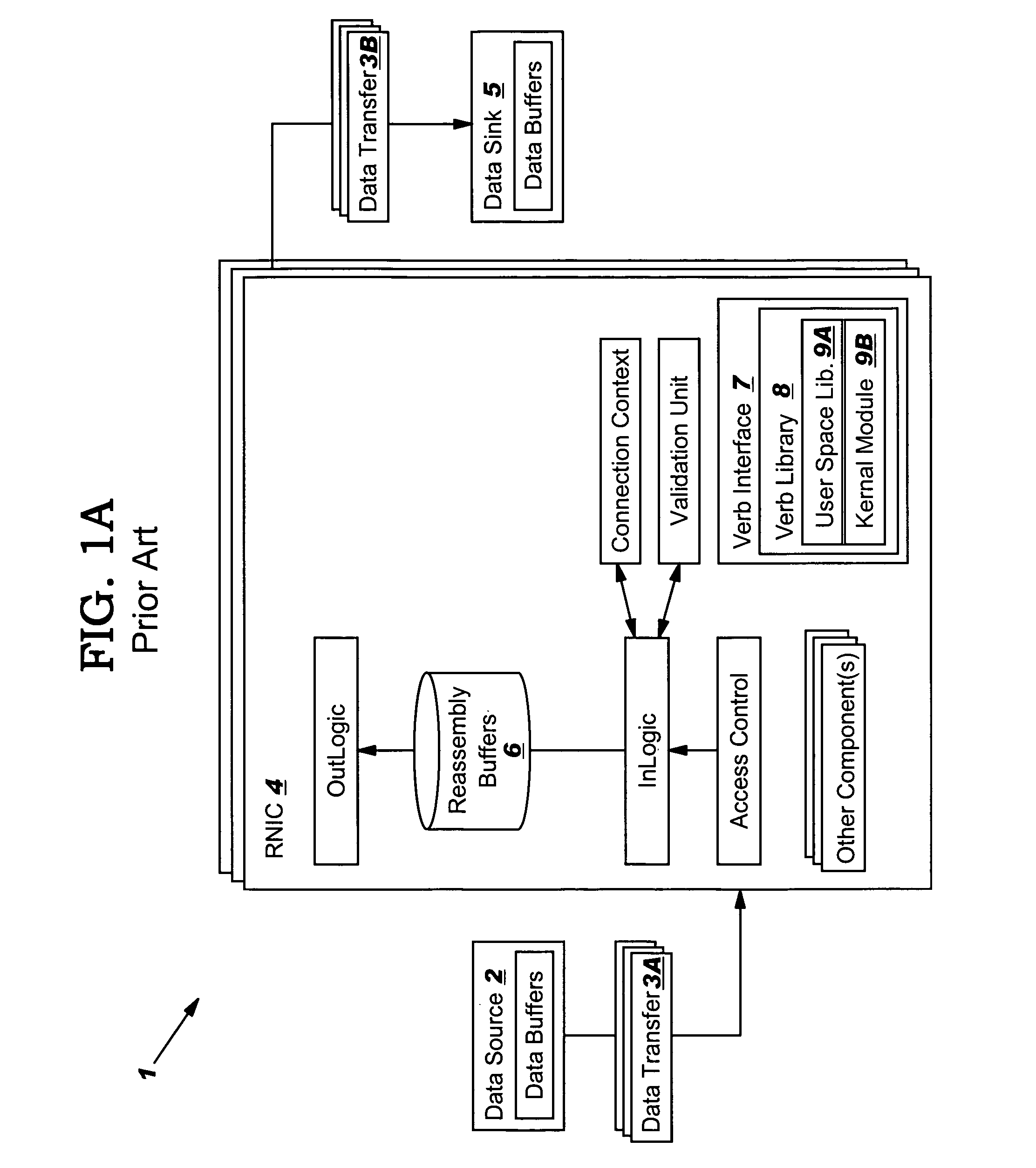

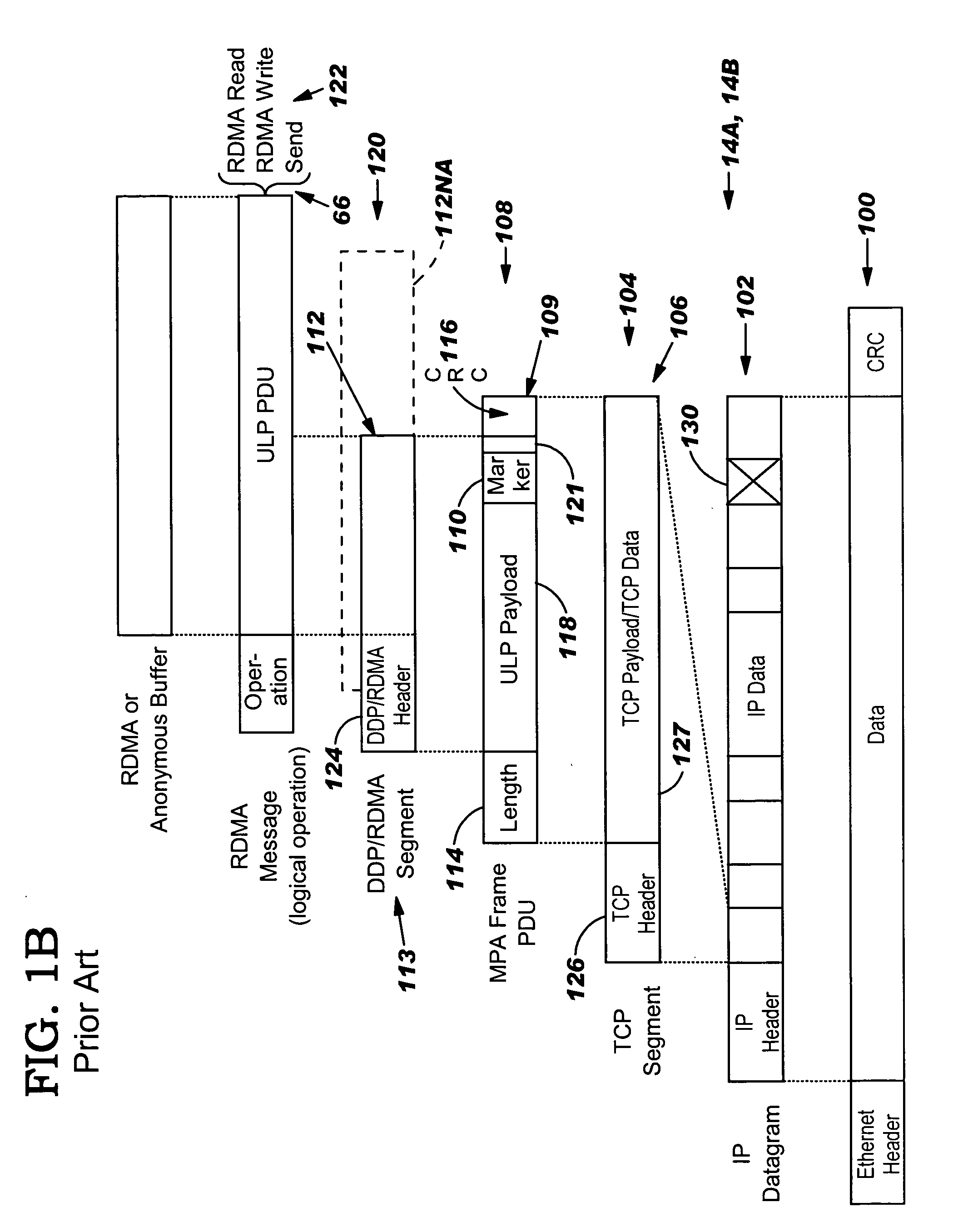

An RNIC implementation that performs direct data placement to memory where all segments of a particular connection are aligned, or moves data through reassembly buffers where all segments of a particular connection are non-aligned. The type of connection that cuts through without accessing the reassembly buffers is referred to as a “Fast” connection because it is highly likely to be aligned, while the other type is referred to as a “Slow” connection. When a consumer establishes a connection, it specifies a connection type. The connection type can change from Fast to Slow and back. The invention reduces memory bandwidth, latency, and error recovery using TCP retransmit, and provides for a “graceful recovery” from an empty receive queue. The implementation may also conduct CRC validation for a majority of inbound DDP segments in the Fast connection before sending a TCP acknowledgement (Ack) confirming segment reception.

Owner:IBM CORP

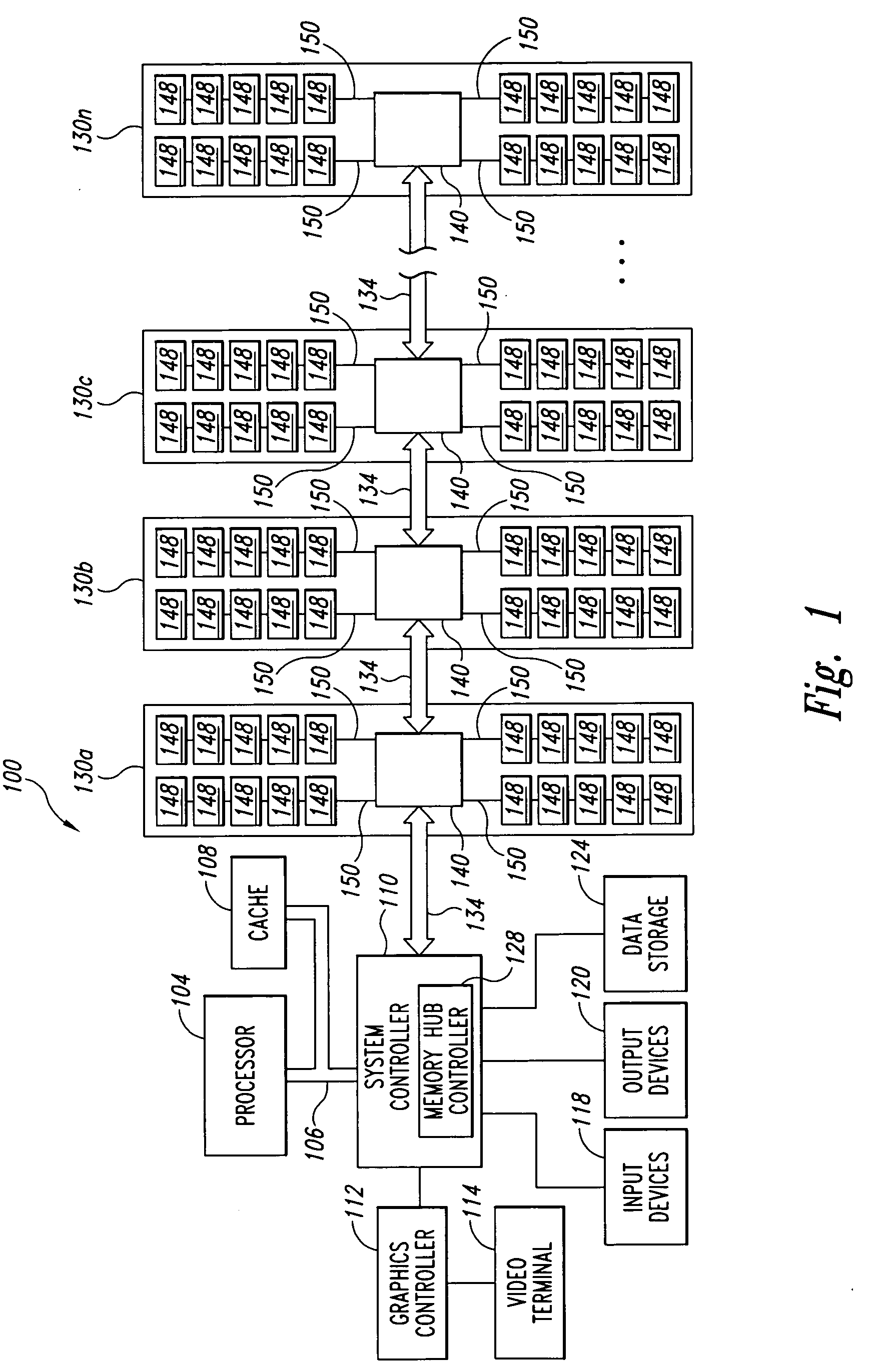

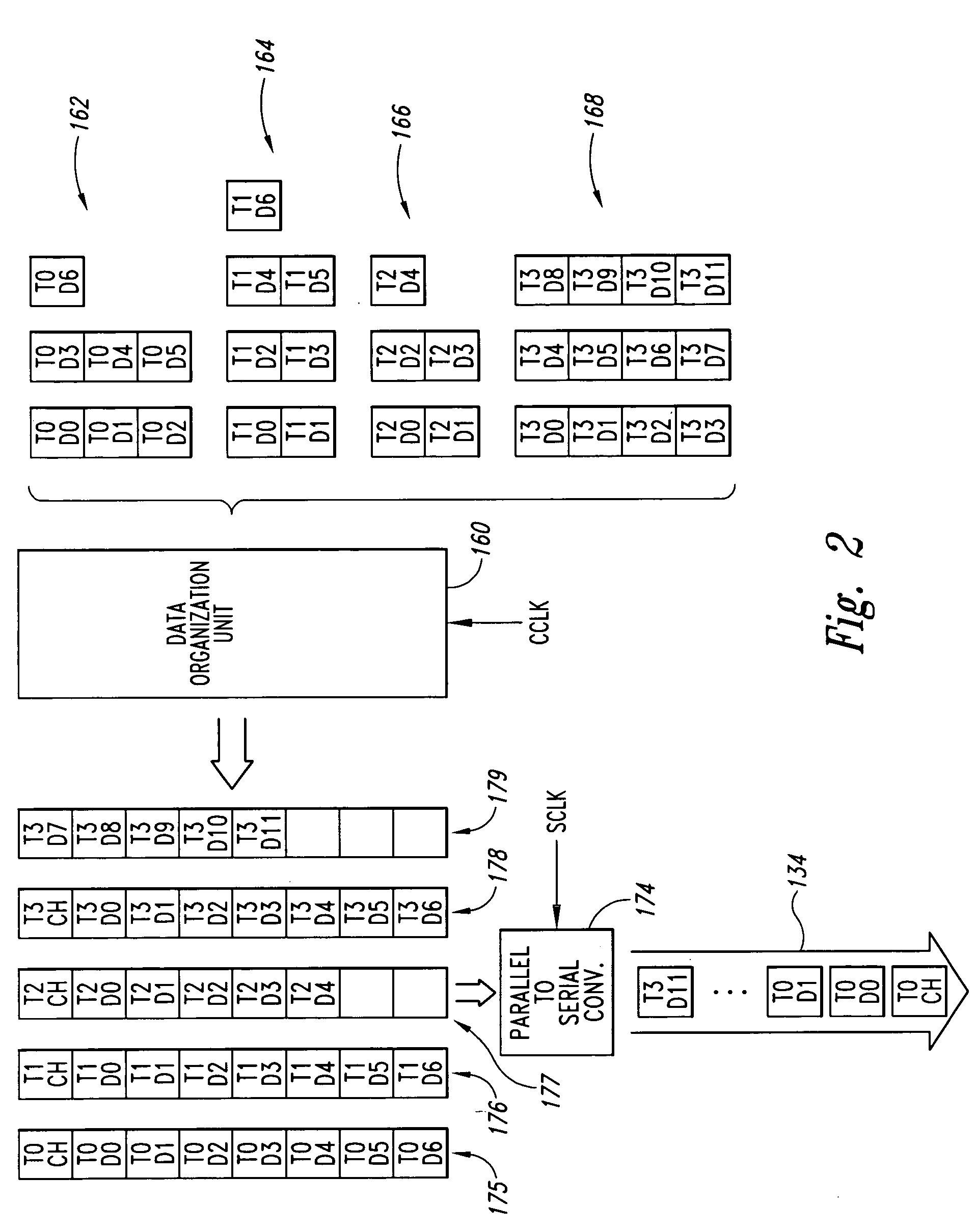

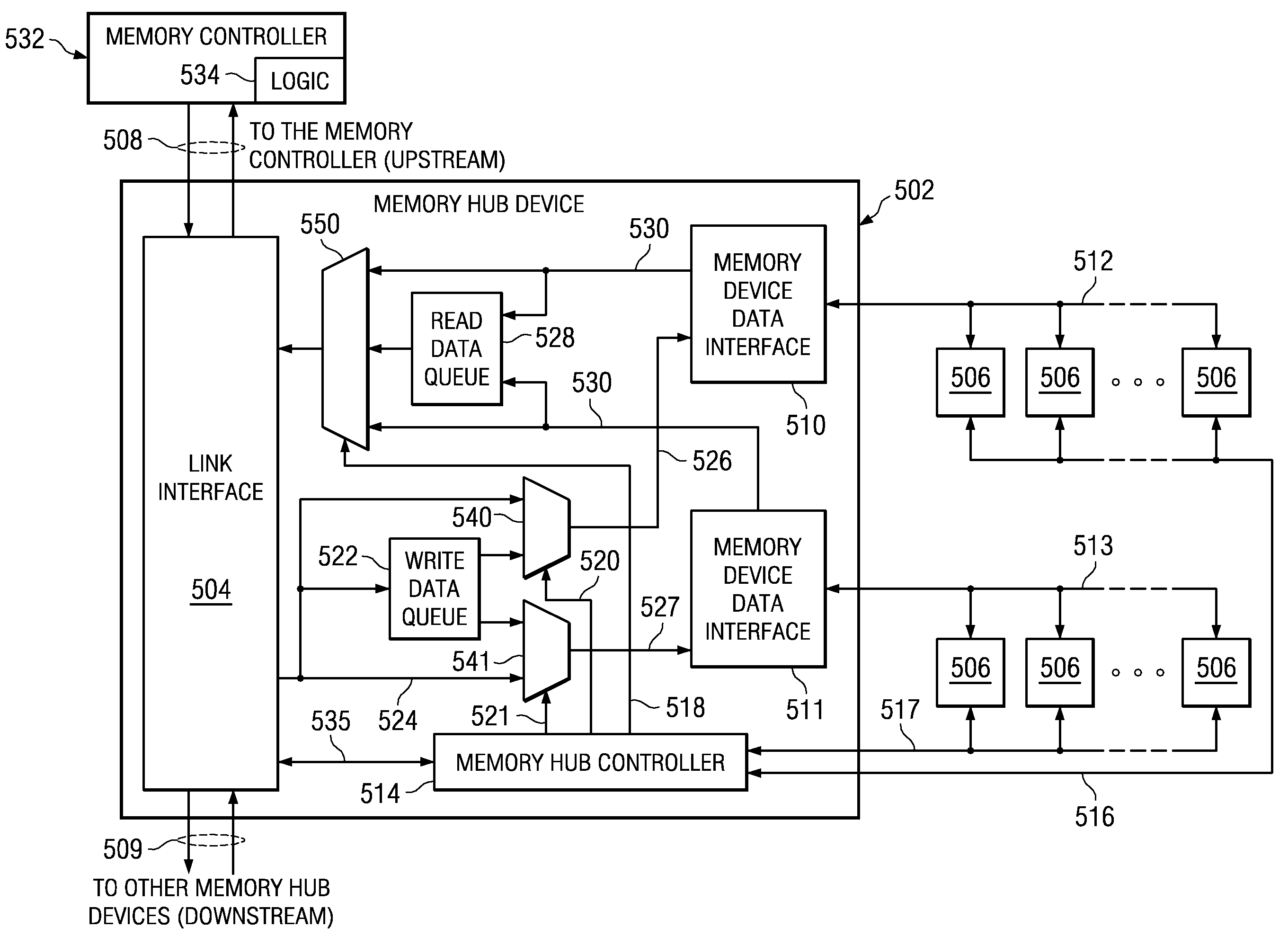

System and method for organizing data transfers with memory hub memory modules

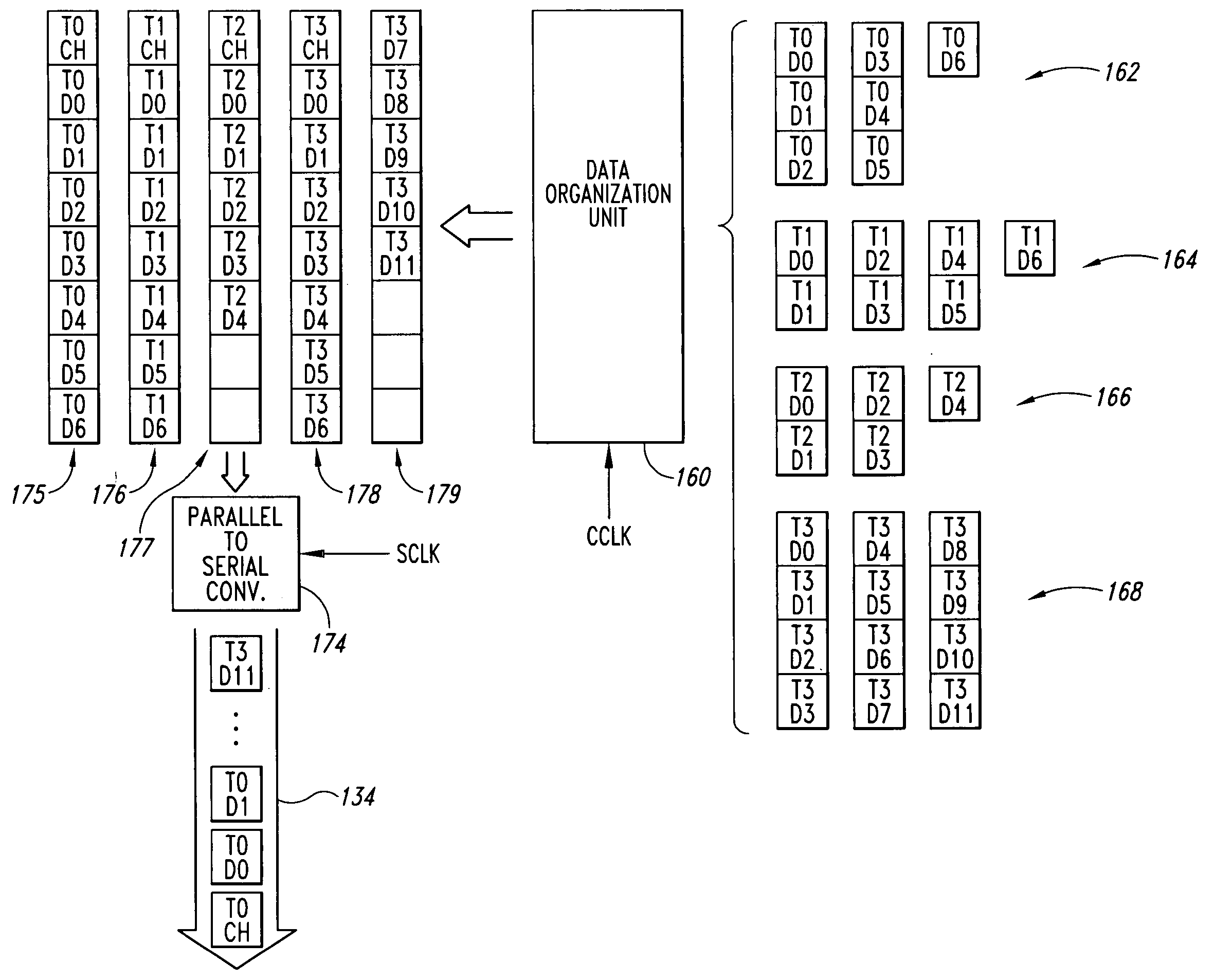

A memory system includes a memory hub controller coupled to a plurality of memory modules, each of which includes a memory hub. The memory hubs each include a transmit interface having a data organization system that organizes a command header and data for each of a plurality of memory transactions into lane groups, each of which contains a predetermined number of lanes. Each of the lanes contains either parallel command header bits or parallel data bits. The lane groups are then converted to a serial stream of lanes and transmitted from the memory hubs through a high-speed bus. The lane groups are organized so that they are always filled with lanes containing either a command header or data. As a result, the high-speed bus is never idle during transmission of memory transactions from the memory hub, thereby maximizing the memory bandwidth of the memory system.

Owner:MICRON TECH INC

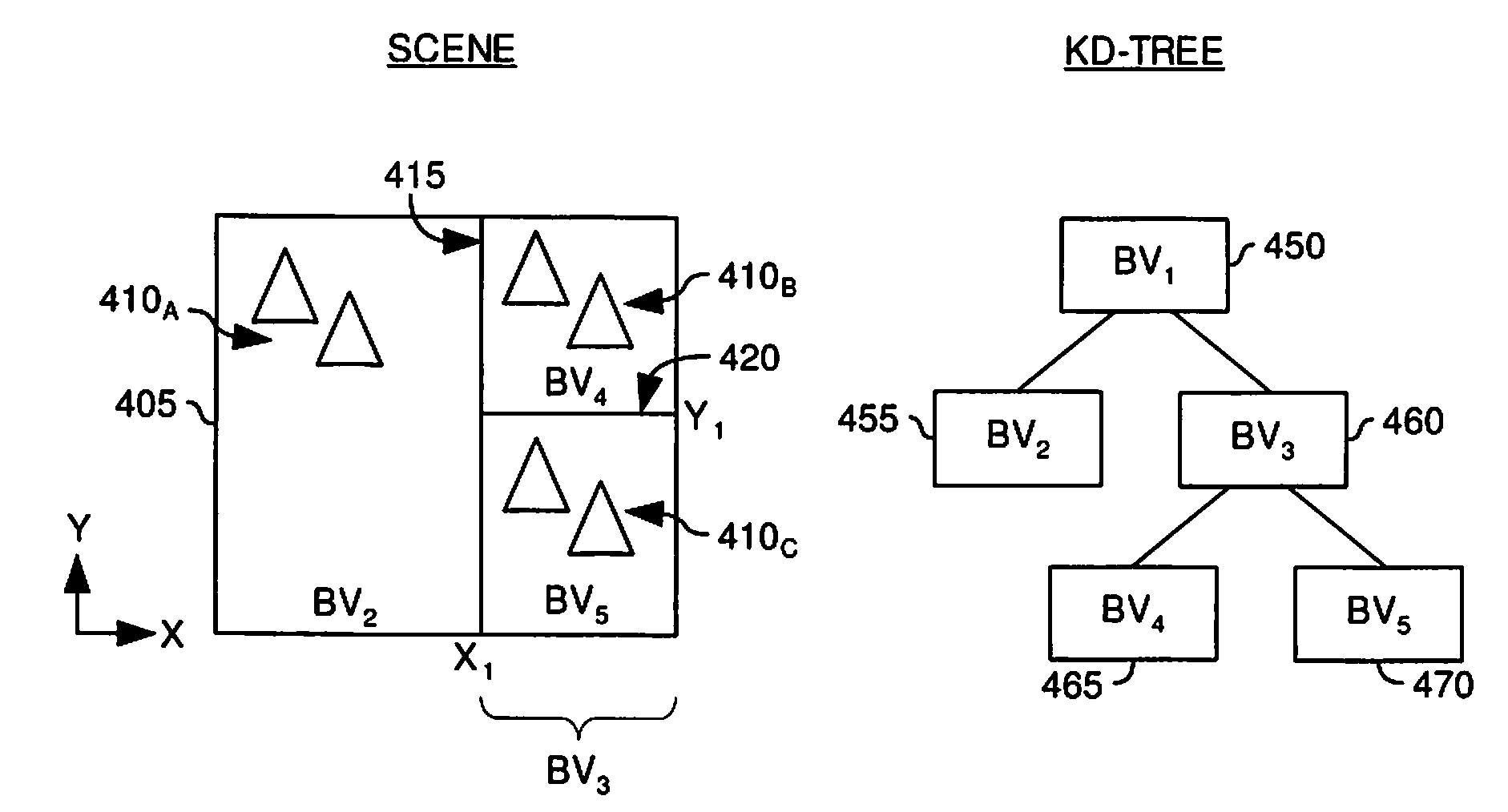

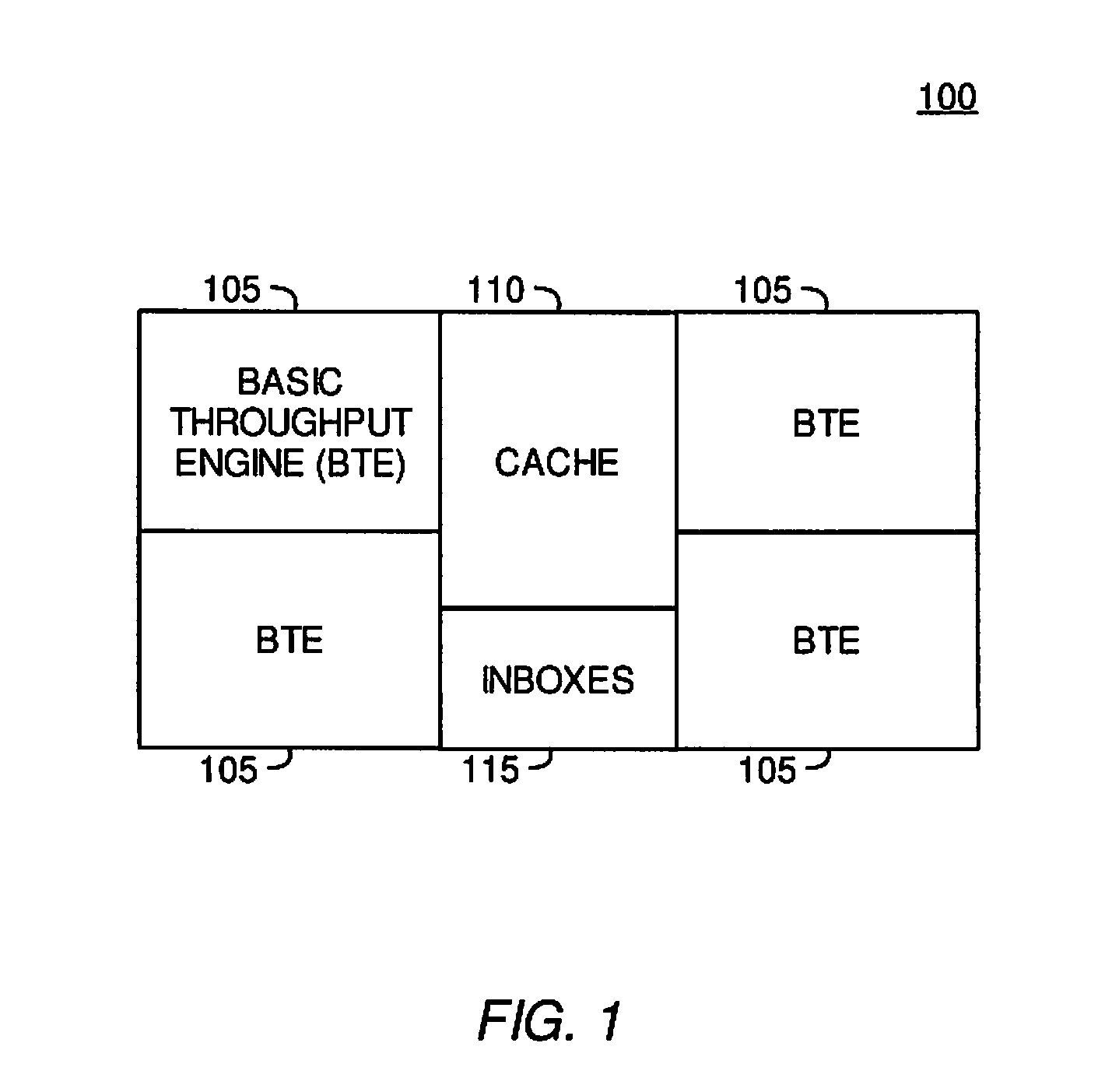

Cache Utilization Optimized Ray Traversal Algorithm with Minimized Memory Bandwidth Requirements

Embodiments of the invention provide methods and apparatus for recording the traversal history of a ray through a spatial index structure and utilizing the recorded traversal history. An image processing system may initially determine which nodes a ray intersects as it traverses through a spatial index. Results of the node intersection determinations may be recorded as the ray traverses the spatial index, and the recorded determinations may be associated with the ray. Furthermore, the image processing system may decide upon a traversal path based upon some probability of striking primitives corresponding to the nodes which make up the spatial index. This traversal path may also be recorded and associated with the ray. If the image processing system needs to re-traverse the spatial index at a later time, the recorded traversal history may be used to eliminate the need to recalculate ray-node intersections, and eliminate incorrect traversal path determinations.

Owner:IBM CORP

Limiting number of retransmission attempts for data transfer via network interface controller

Active | US20050129045A1 | Reduce memory bandwidth | Reduce latency | Error prevention/detection by using return channel | Transmission systems | Connection type | Data placement

An RNIC implementation that performs direct data placement to memory where all segments of a particular connection are aligned, or moves data through reassembly buffers where all segments of a particular connection are non-aligned. The type of connection that cuts through without accessing the reassembly buffers is referred to as a “Fast” connection because it is highly likely to be aligned, while the other type is referred to as a “Slow” connection. When a consumer establishes a connection, it specifies a connection type. The connection type can change from Fast to Slow and back. The invention reduces memory bandwidth, latency, and error recovery using TCP retransmit, and provides for a “graceful recovery” from an empty receive queue. The implementation may also conduct CRC validation for a majority of inbound DDP segments in the Fast connection before sending a TCP acknowledgement (Ack) confirming segment reception.

Owner:MELLANOX TECHNOLOGIES LTD

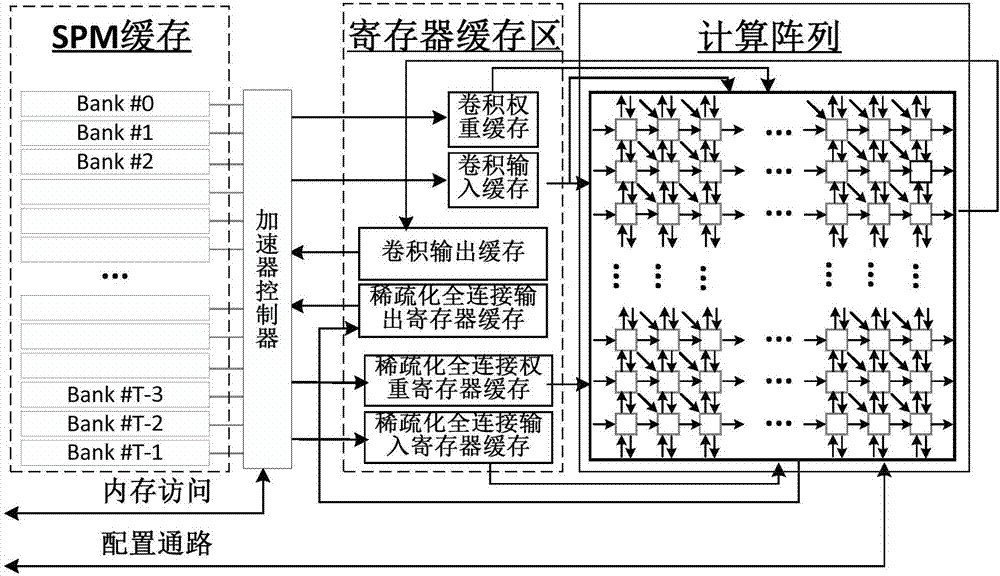

Separable array-based reconfigurable accelerator and realization method thereof

Active | CN107341544A | Increase computing resources | Increase profit | Physical realisation | Neural learning methods | Processor register | Parallel computing

The invention provides a separable array-based reconfigurable accelerator and a realization method thereof. The reconfigurable accelerator comprises a scratchpad memory cache area, separable calculation arrays, and a register cache area. The scratchpad memory cache area enables data reuse for convolution calculation and sparse fully connected calculation. The separable calculation arrays comprise multiple reconfigurable calculation units and are divided into a convolution calculation array and a sparse fully connected calculation array. The register cache area is a storage area formed by multiple registers, and provides input data, weight data and corresponding output results for convolution calculation and sparse fully connected calculation. Input data and weight data for convolution calculation are fed into the convolution calculation array, which outputs the convolution result; input data and weight data for sparse fully connected calculation are fed into the sparse fully connected calculation array, which outputs the sparse fully connected result. The characteristics of the two kinds of neural network are fused, so that the utilization of the chip's calculation resources and memory bandwidth is improved.

Owner:TSINGHUA UNIV

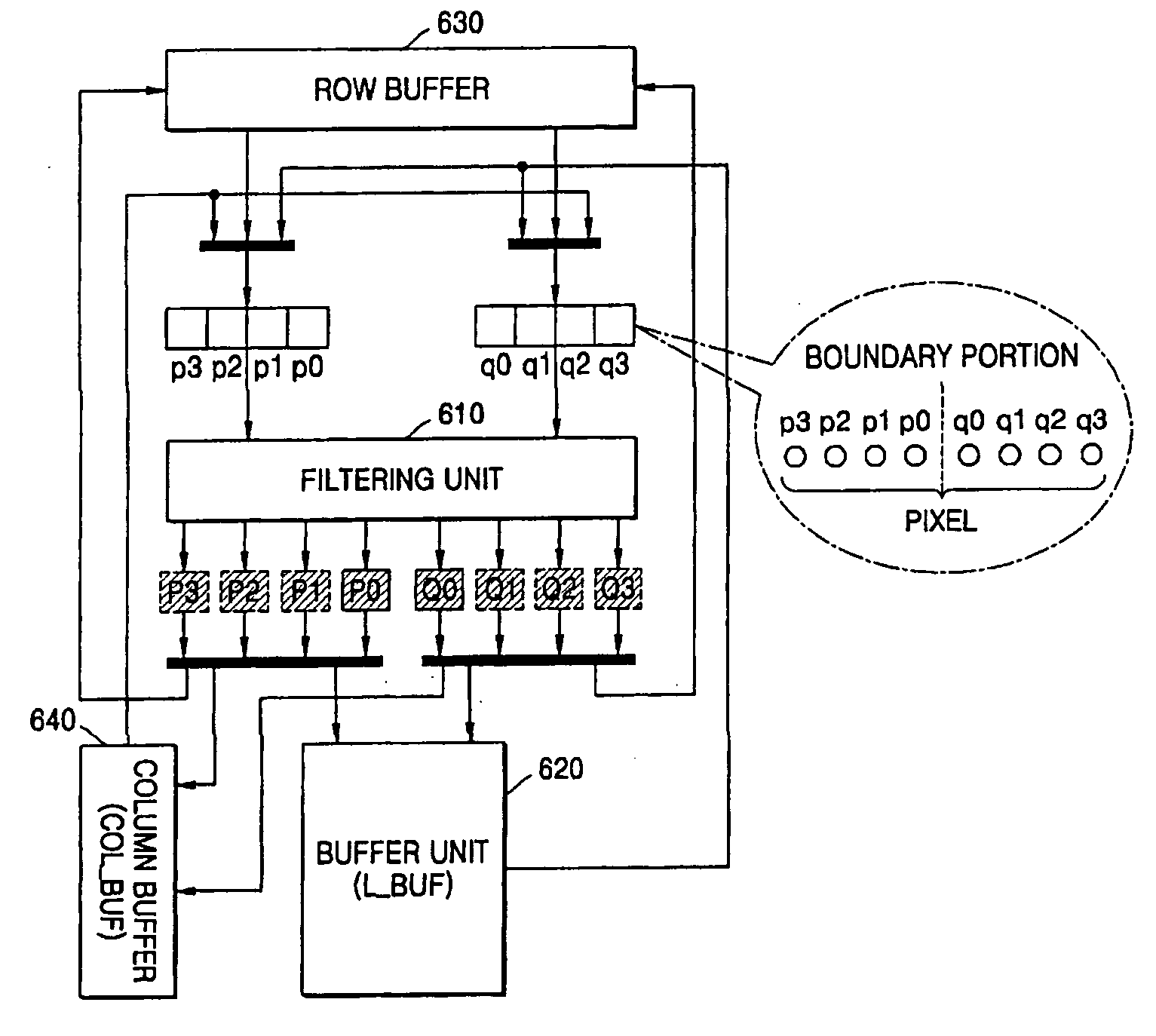

Filtering method, apparatus, and medium used in audio-video codec

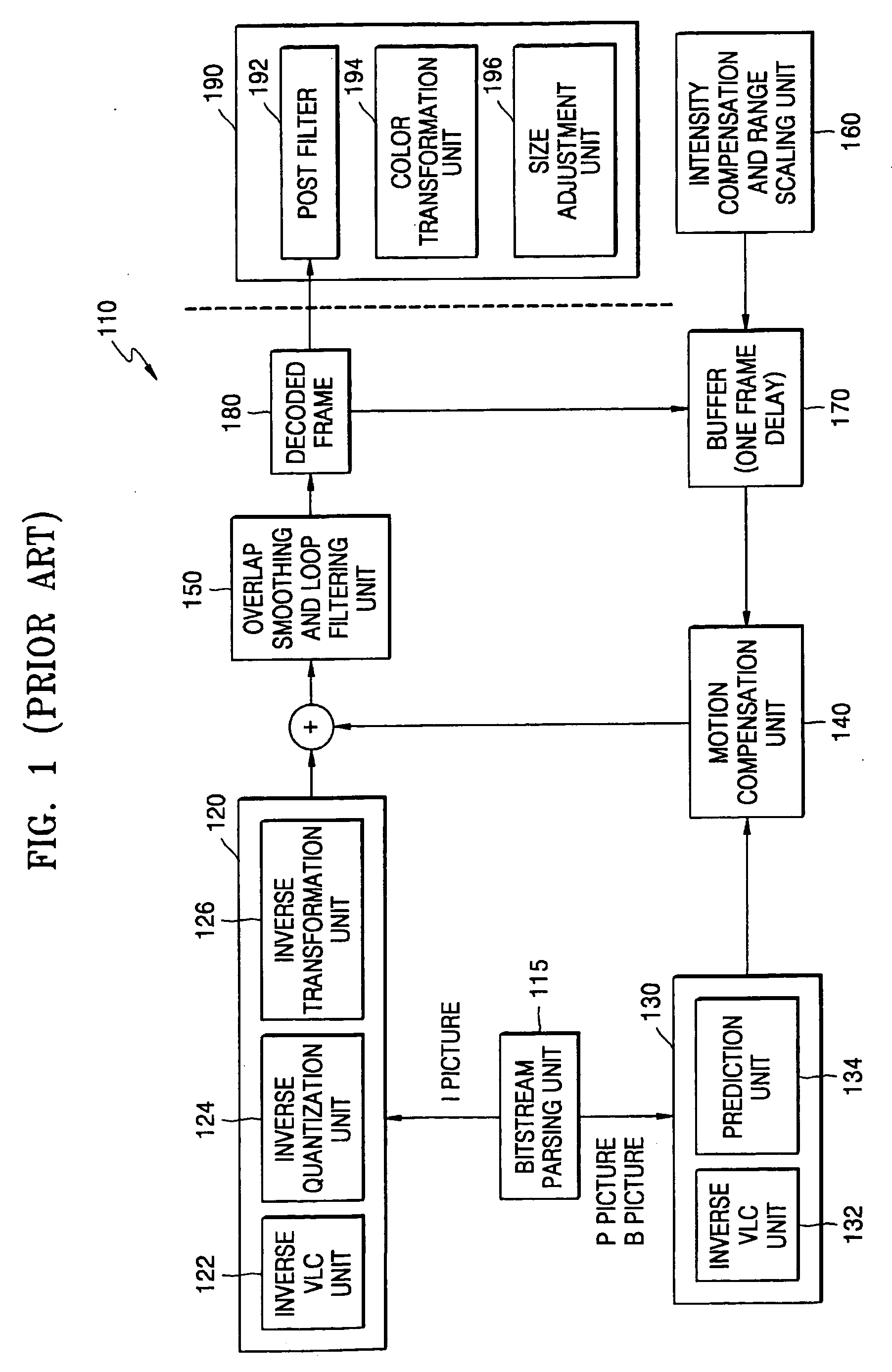

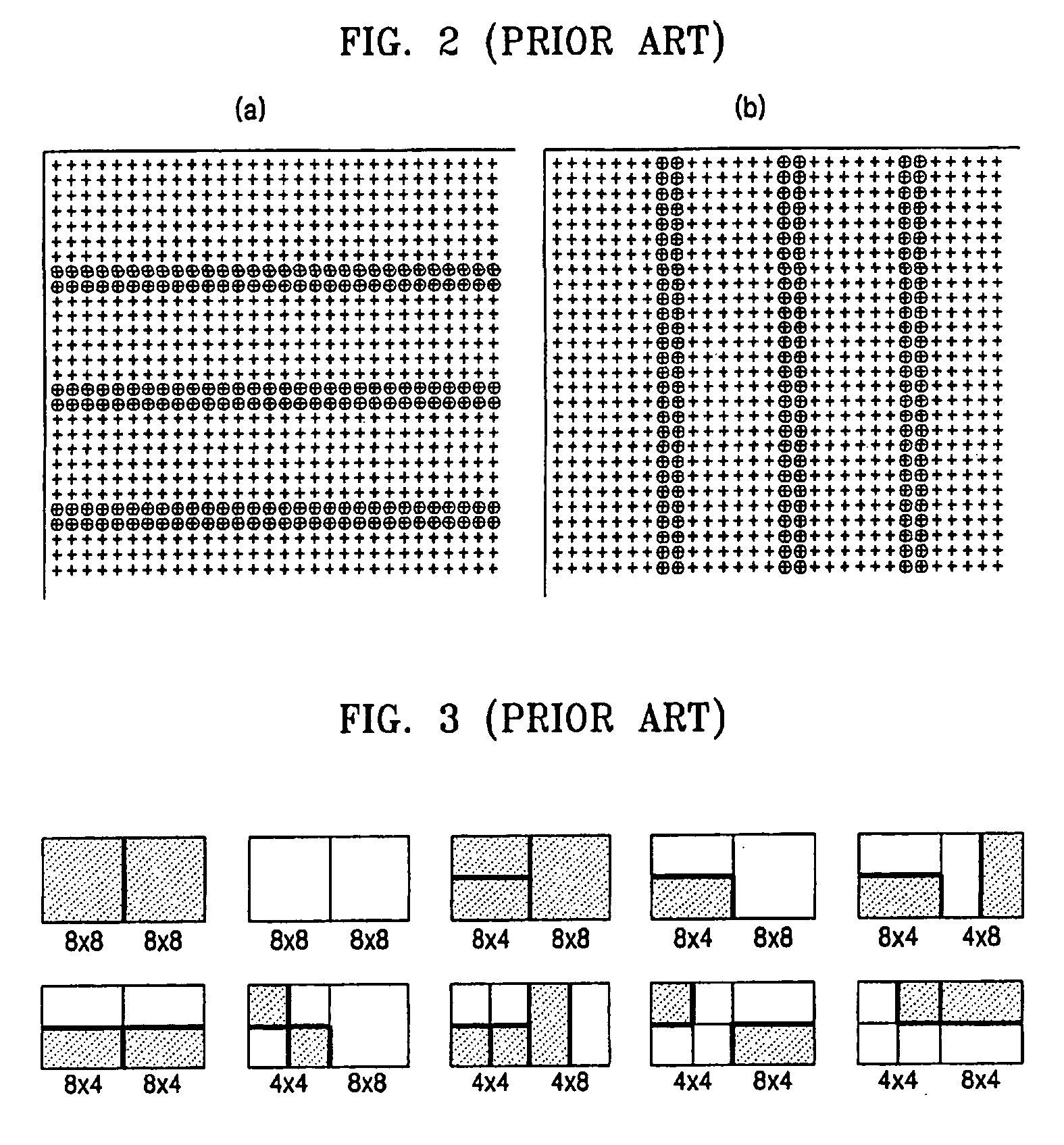

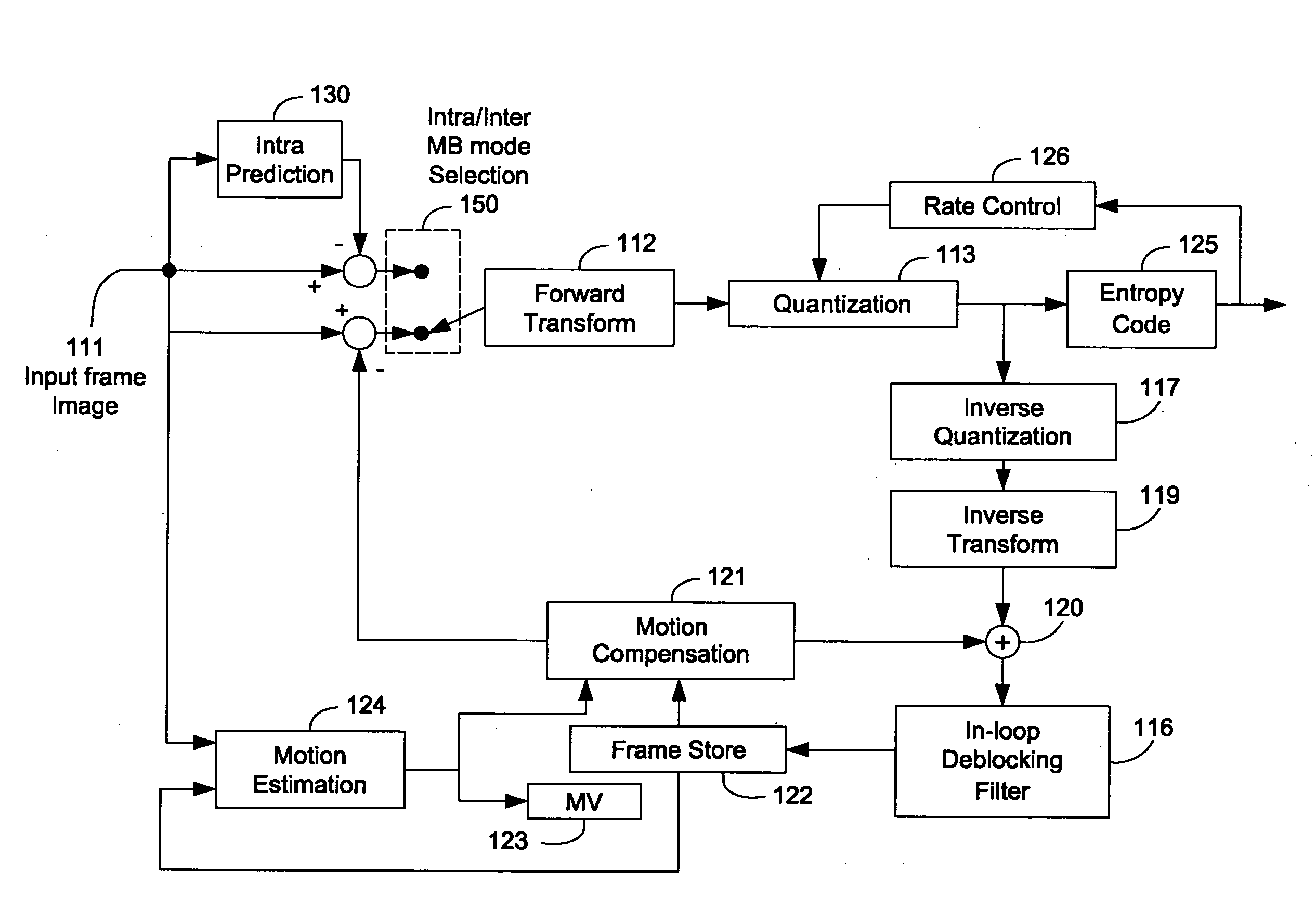

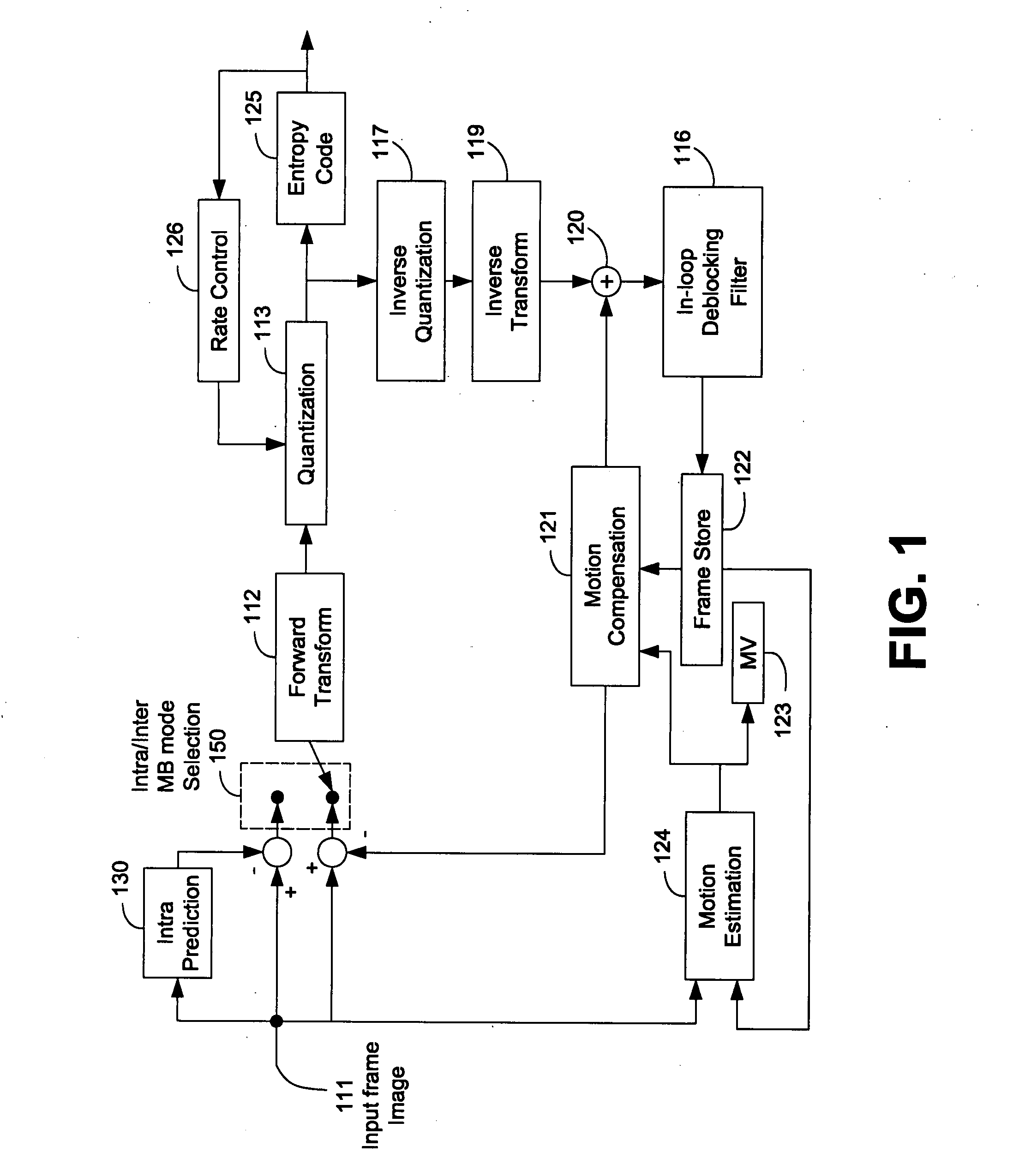

Inactive | US20060013315A1 | Efficient execution | Expand memory | Color television with pulse code modulation | Color television with bandwidth reduction | Pattern recognition | Loop filter

A filtering method, apparatus, and medium used in an audio-video codec are provided. The filtering method may include determining a predetermined macroblock as a filtering region; expanding the filtering region to further include a portion of a macroblock adjacent to the predetermined macroblock near a boundary between the predetermined macroblock and the macroblock adjacent to the predetermined macroblock; and performing a deblocking filtering operation on the expanded filtering region in a causal manner according to the passage of time. The filtering method, apparatus, and medium can be easily realized using a non-causal loop filter and buffers. The filtering method, apparatus, and medium can efficiently filter blocking artefacts without increasing a required memory bandwidth and causing frame delays.

Owner:SAMSUNG ELECTRONICS CO LTD

Memory latency and bandwidth optimizations

Inactive | US20040073767A1 | Memory addressing/allocation/relocation | Micro-instruction address formation | RAID | Computer architecture

Owner:HEWLETT PACKARD DEV CO LP

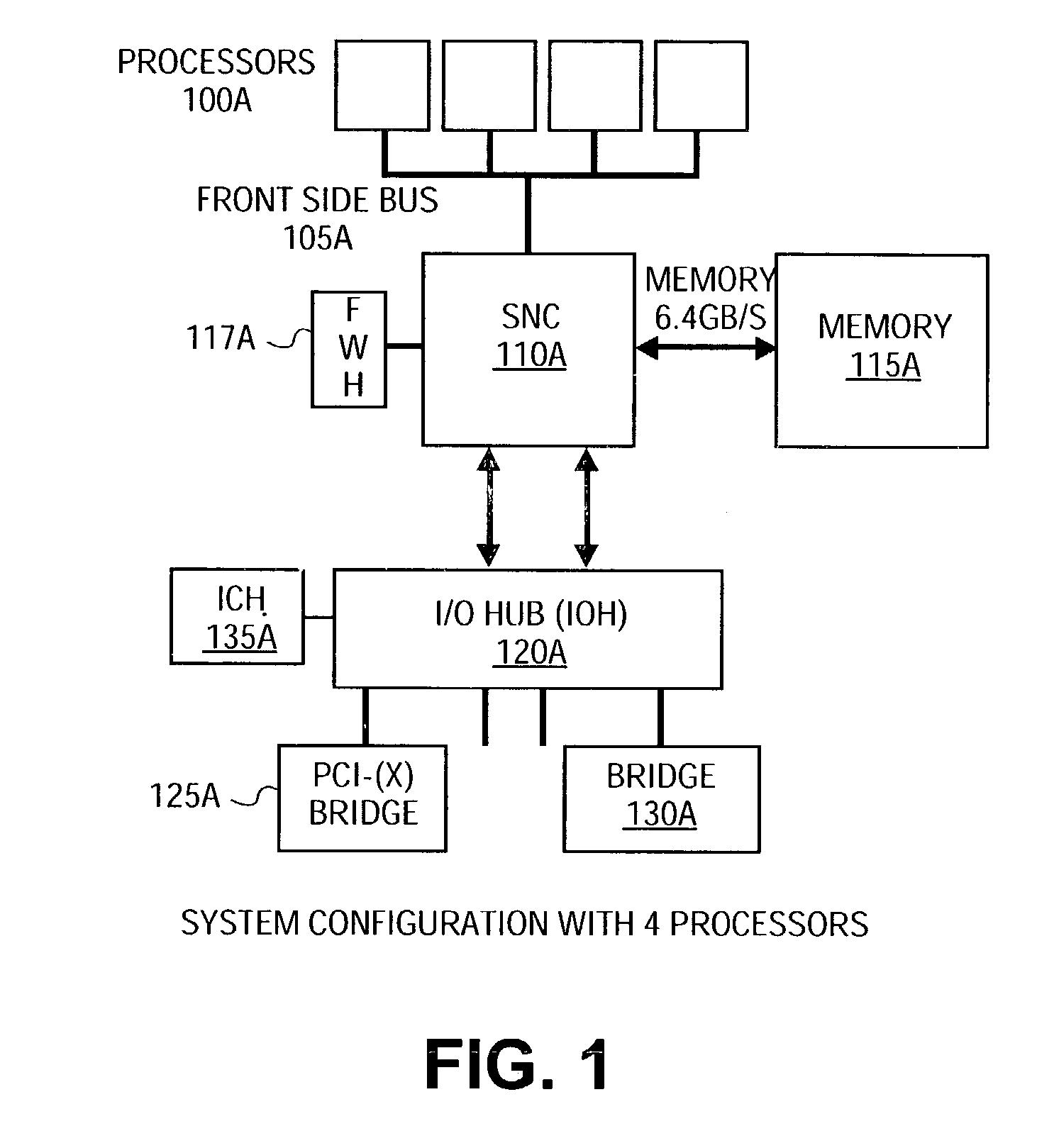

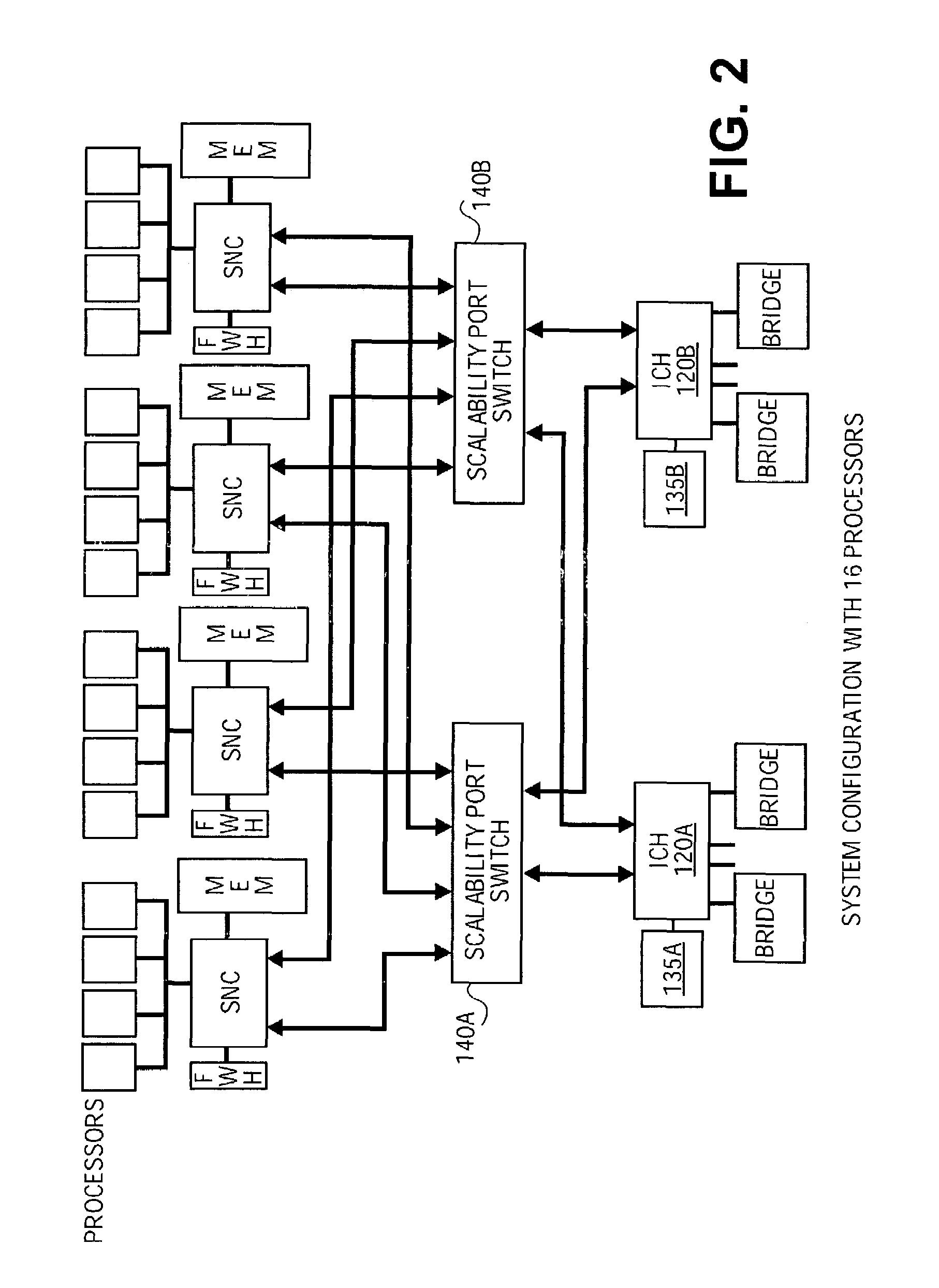

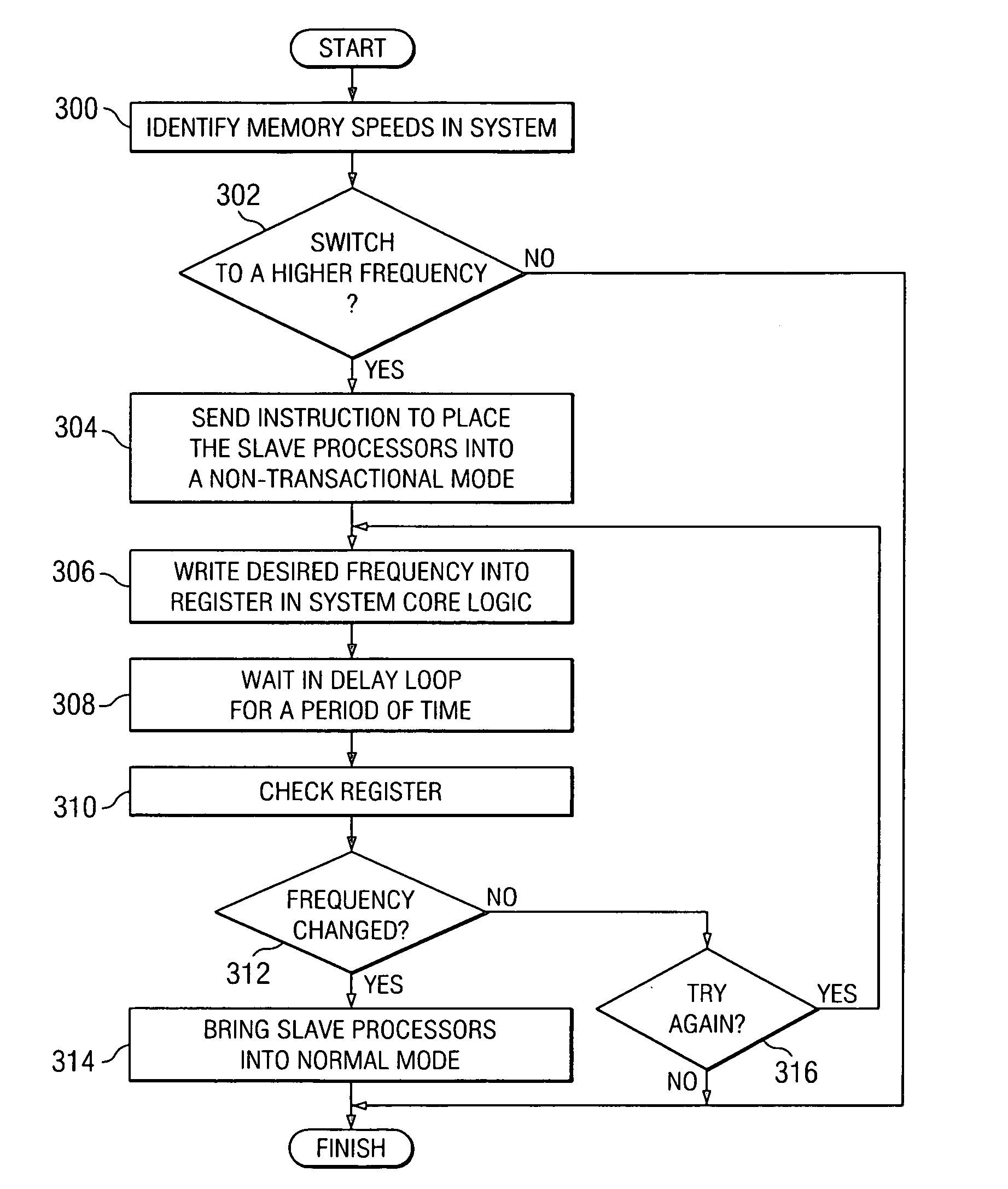

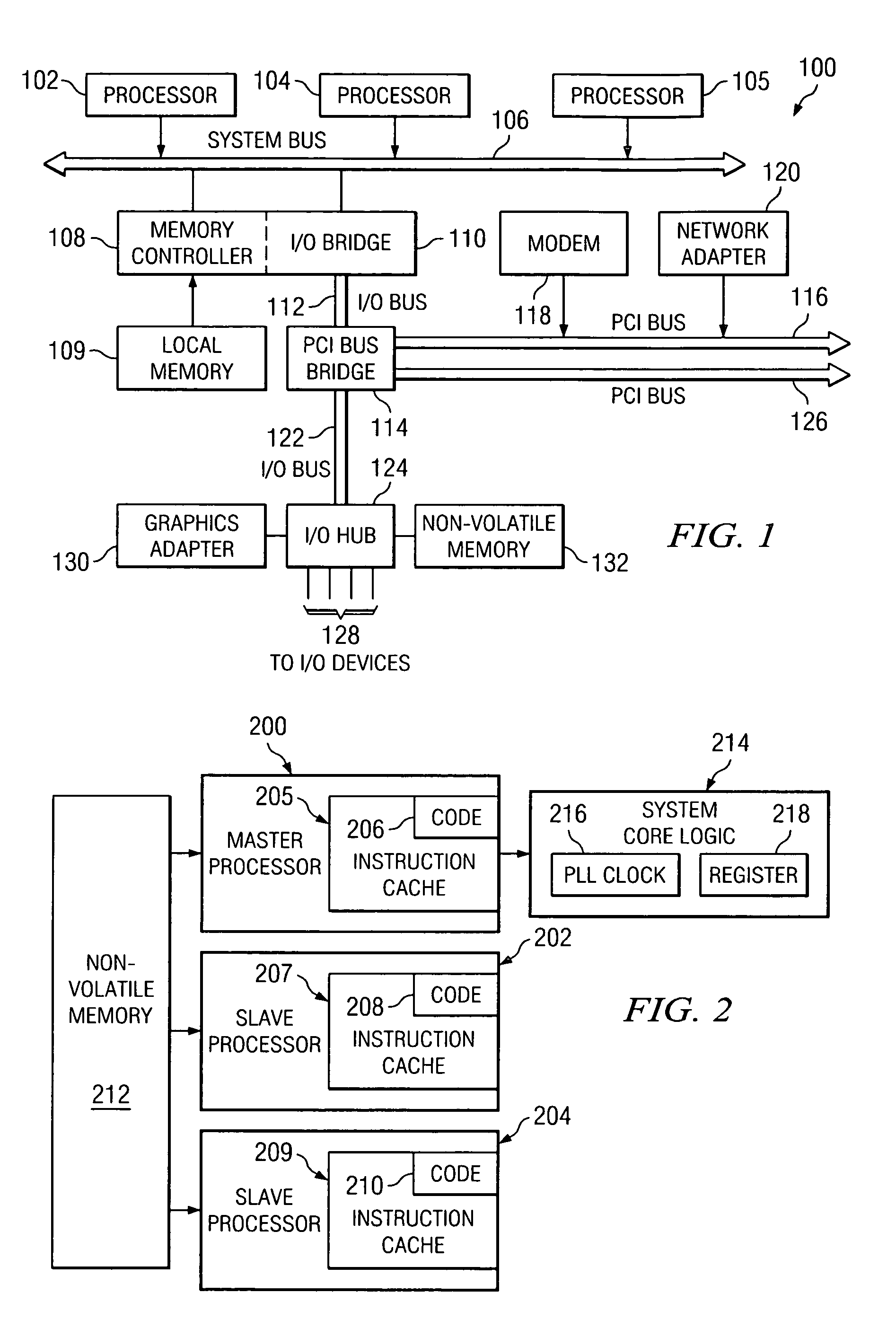

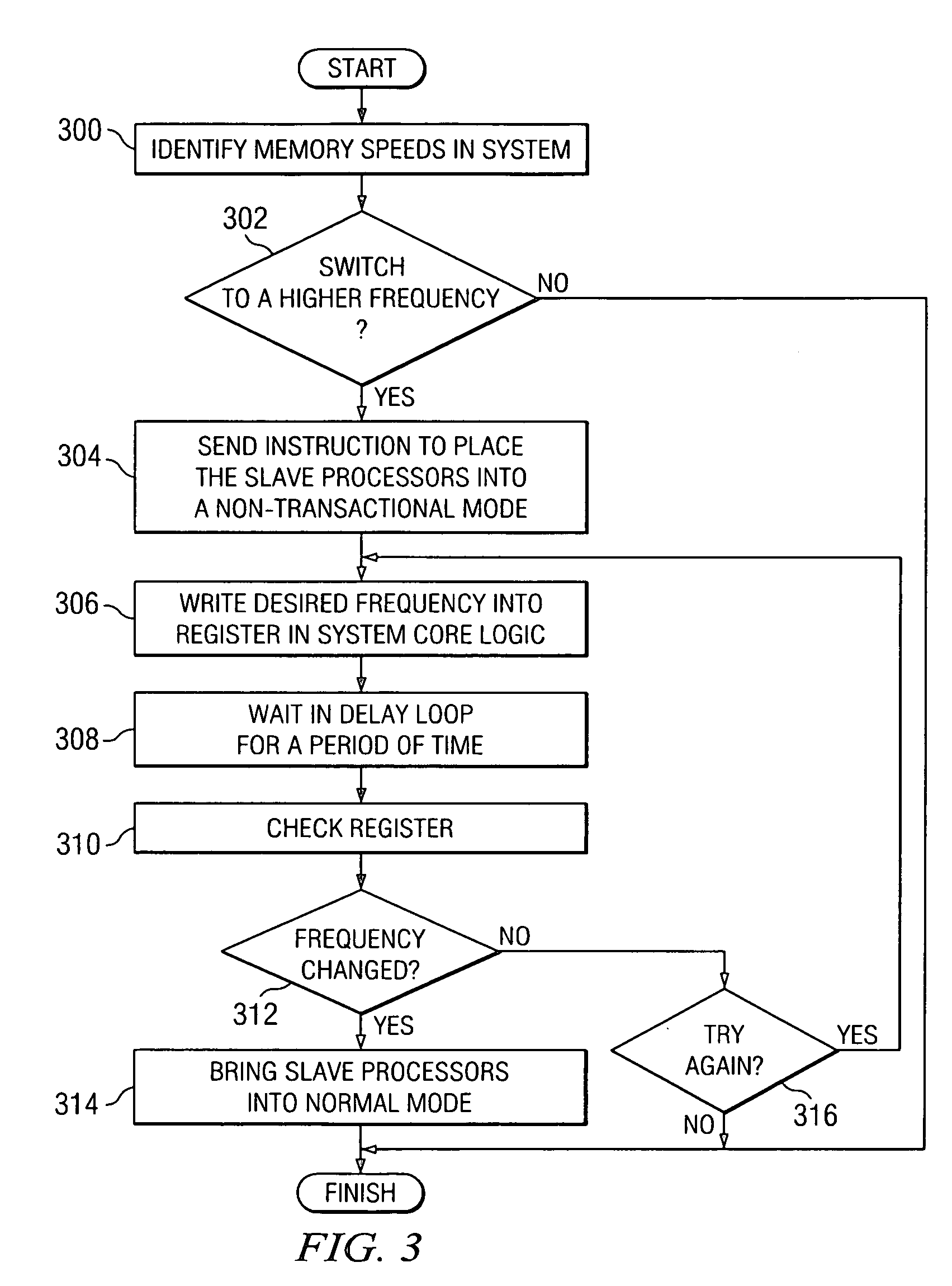

Method and apparatus to change the operating frequency of system core logic to maximize system memory bandwidth

Inactive | US7321979B2 | Energy efficient ICT | Volume/mass flow measurement | Data processing system | Multi processor

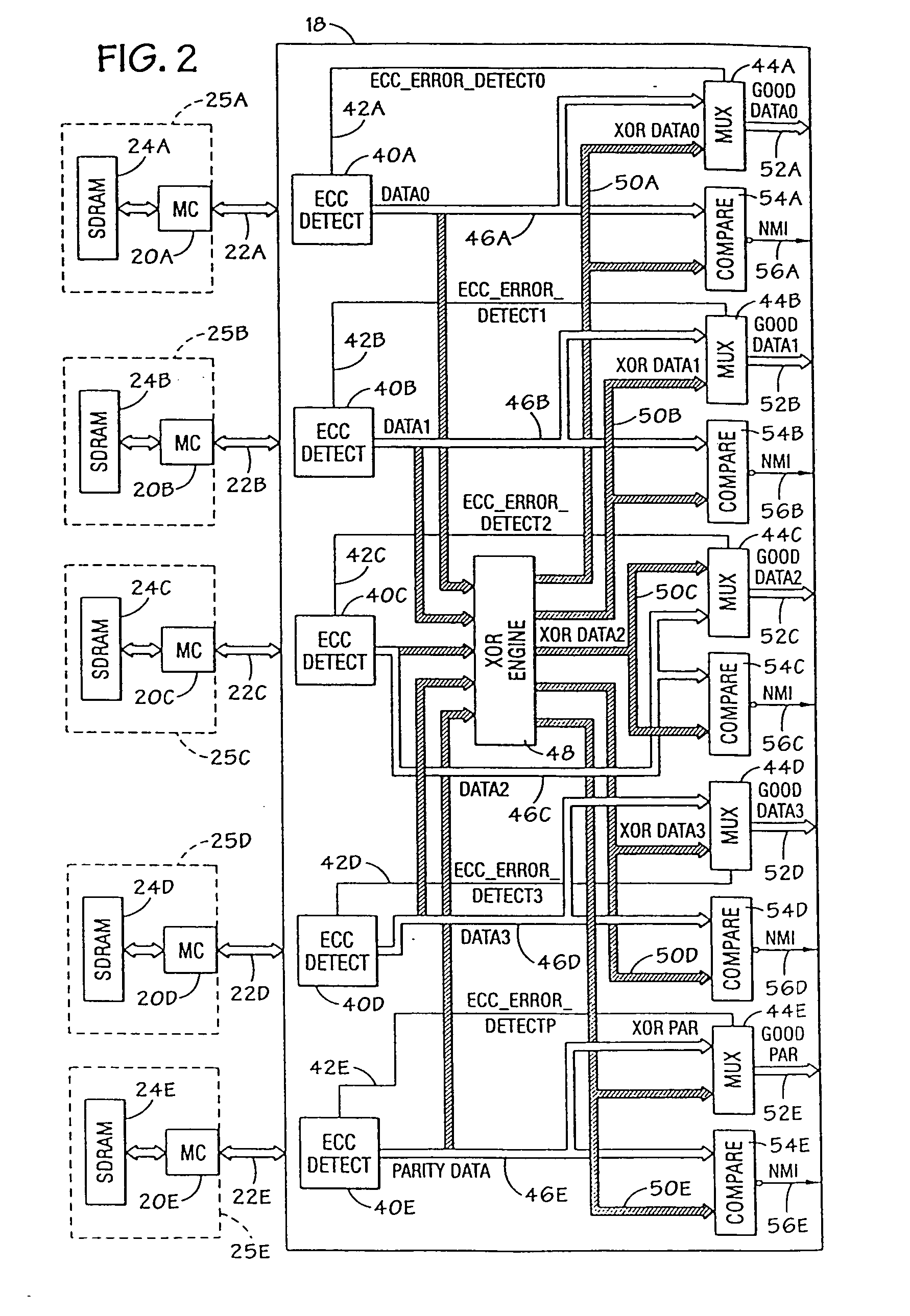

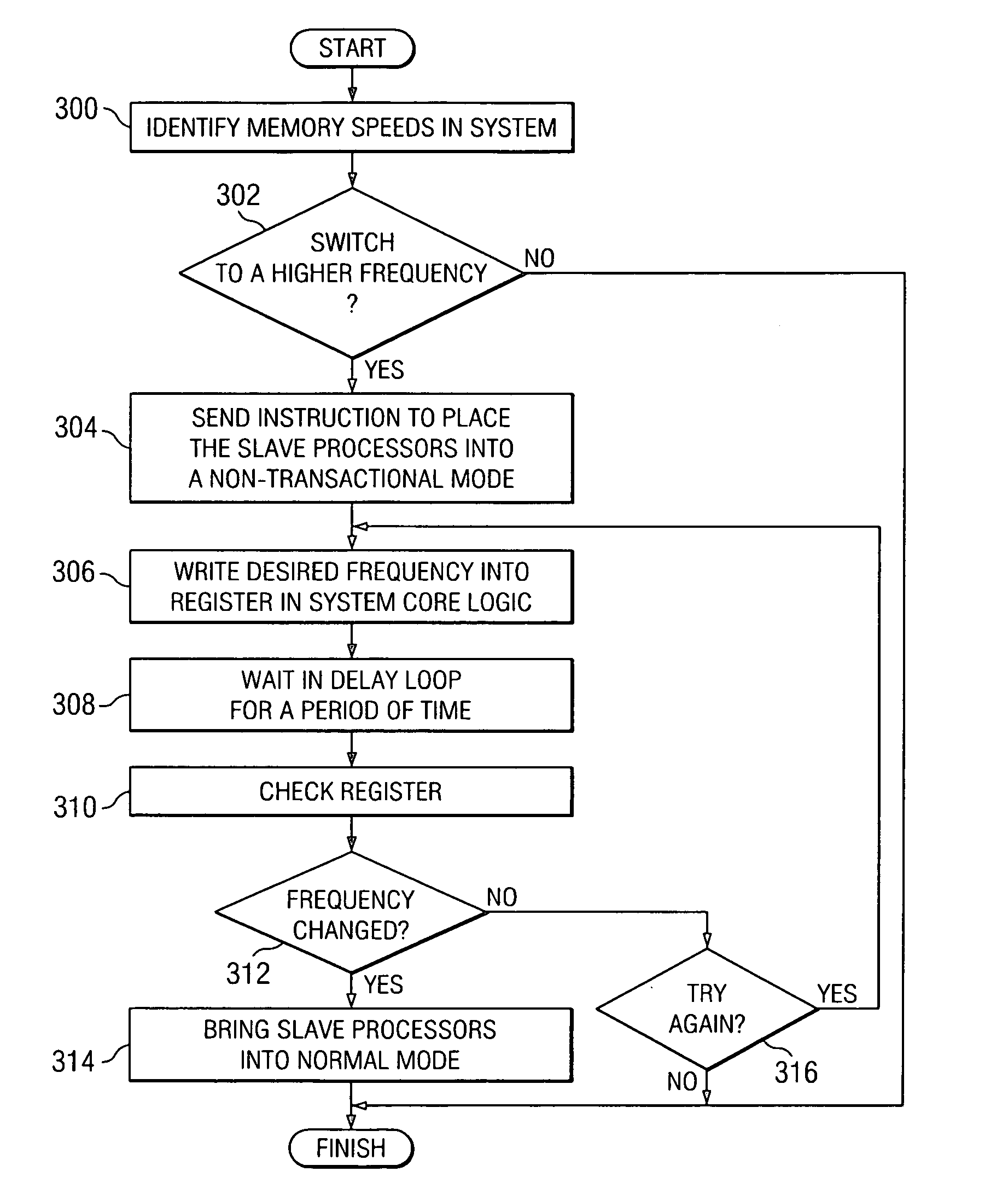

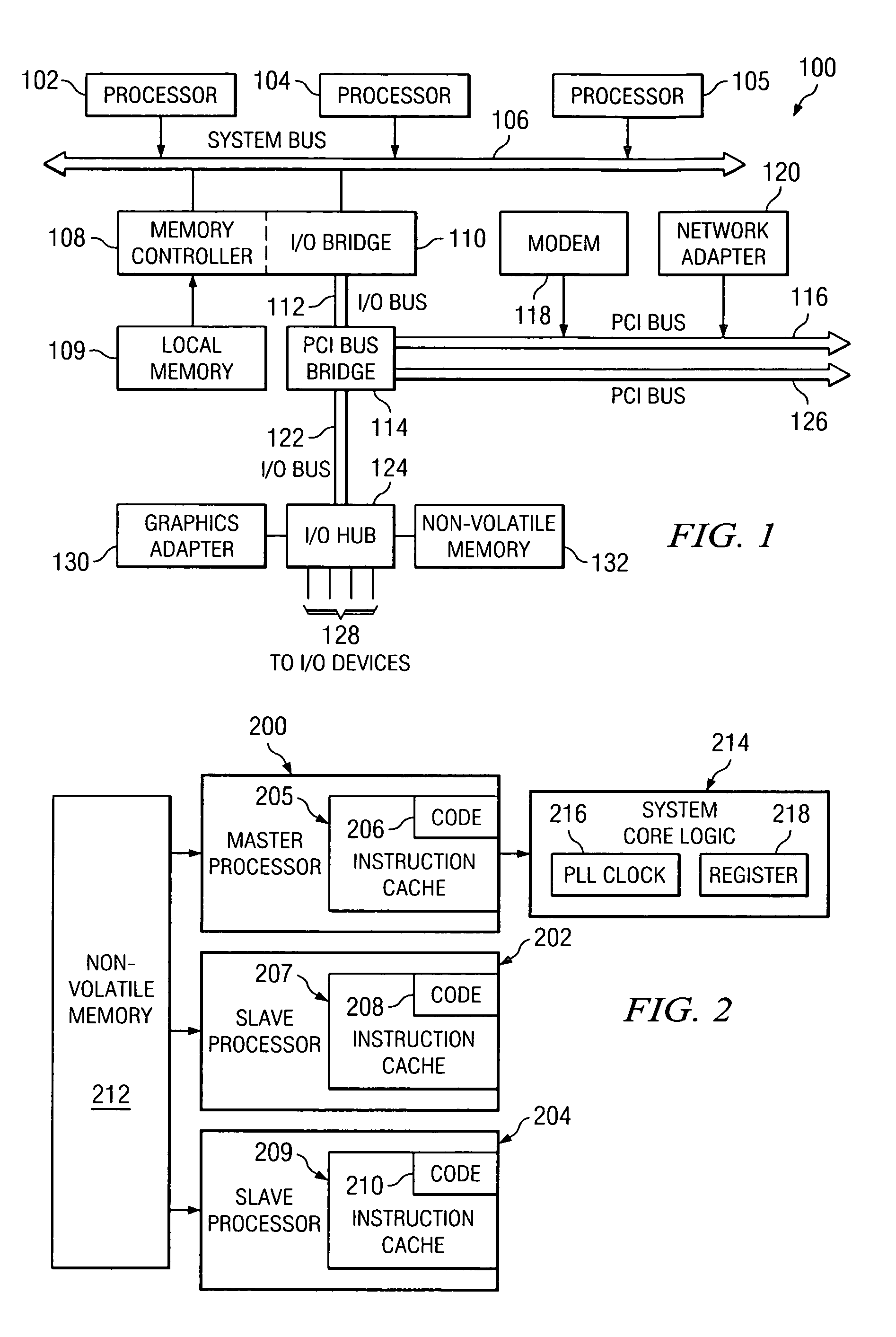

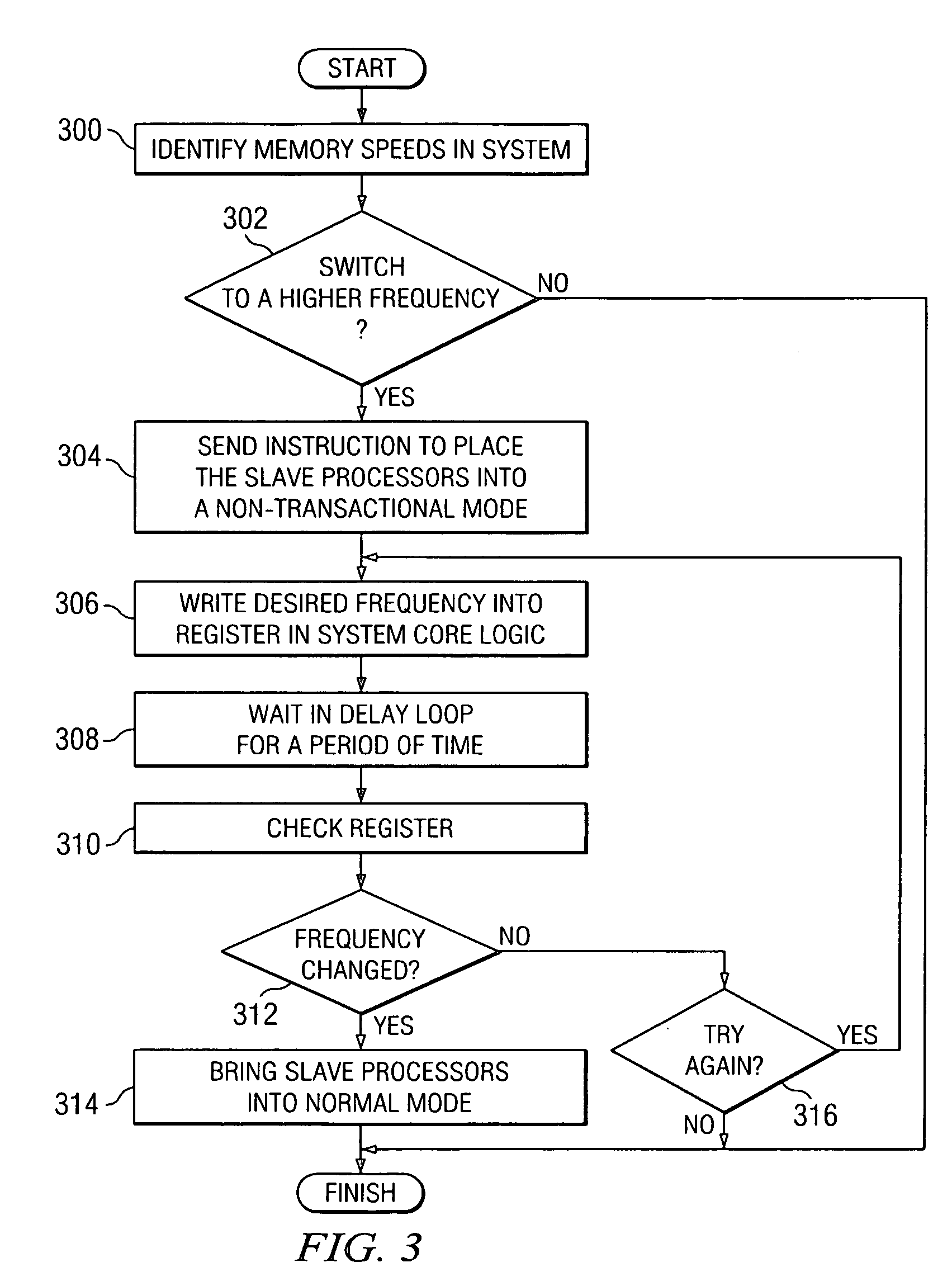

A method, apparatus, and computer instructions are provided for changing the operating frequency of the system core logic used to interface to memory in a multi-processor data processing system. A determination is made as to whether the operating frequency should be changed from a default frequency to another frequency. In response to determining that the operating frequency should be changed from the default operating frequency to the other operating frequency, the slave processors in the multi-processor data processing system are placed into a non-transactional mode. While the slave processors are in the non-transactional mode, the master processor changes the operating frequency of the system core logic to the other operating frequency.

Owner:IBM CORP

Method and apparatus for writing network packets into computer memory

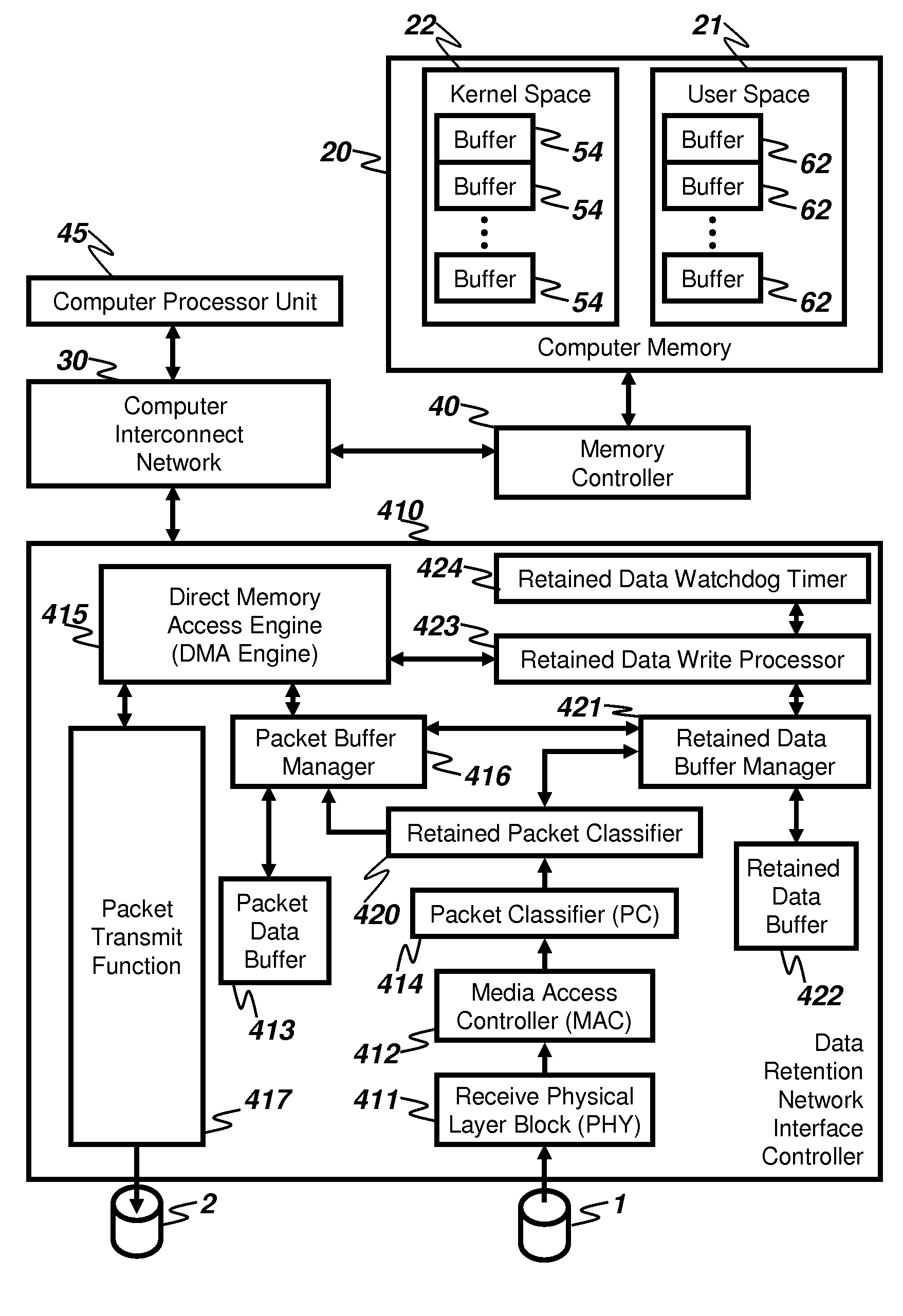

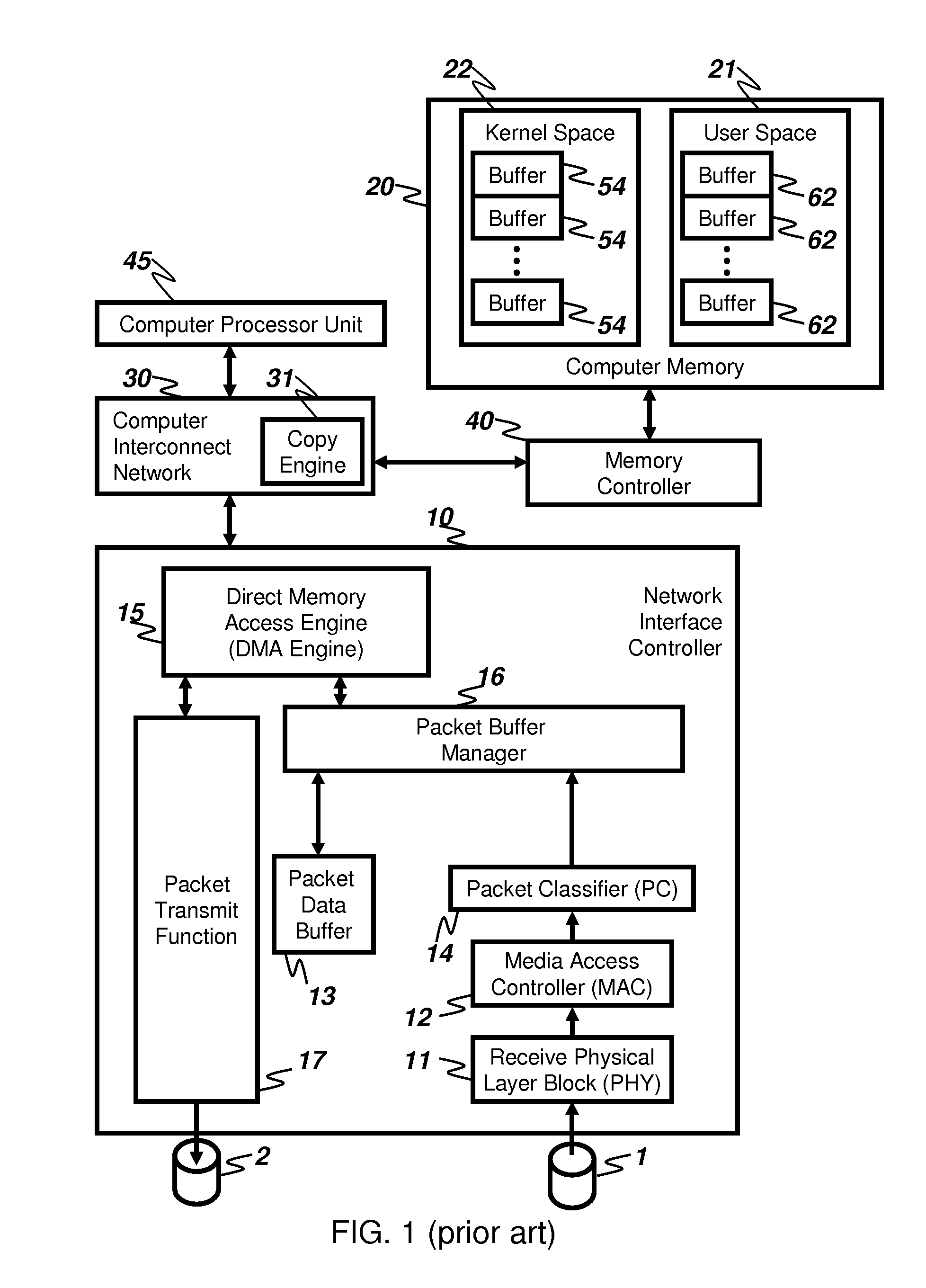

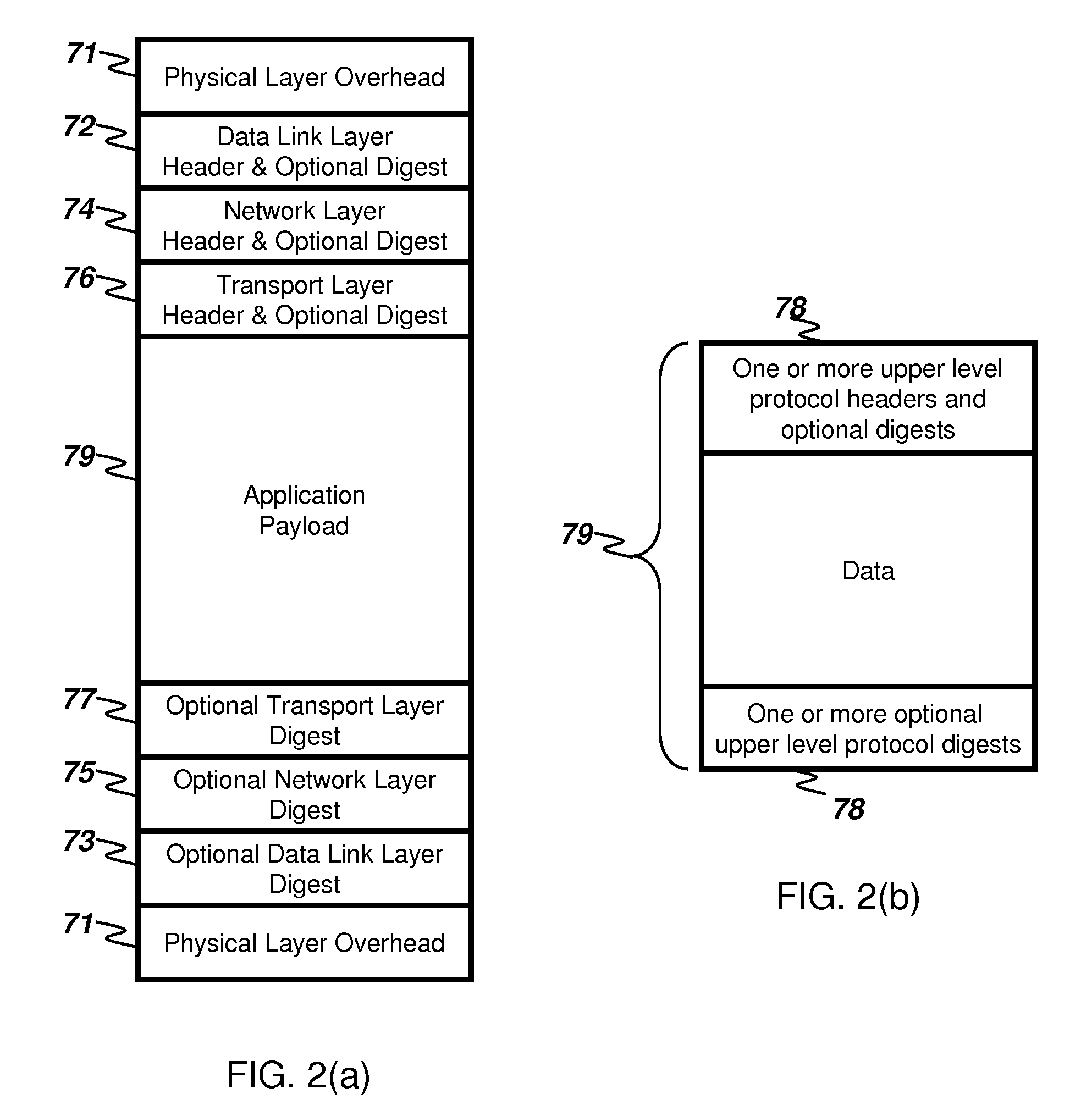

An apparatus receives packets from a network and inserts the packet data into the memory of a computer system. The invention reduces the amount of computer system memory bandwidth required to receive packets through the use of a retained data buffer. Packet headers and a summary of packet validation results are processed by network stacks within the computer system to identify the intended memory buffer in which the packet payload is to be placed. Packet payload data is directly placed from the retained data buffer to the intended memory buffer without the use of intermediate buffers. A preferred embodiment includes a data retention network interface card (DRNIC) which includes the required data retention buffer for use in direct placement of received data.

Owner:GADELRAB SERAG

Load Misaligned Vector with Permute and Mask Insert

Inactive | US20090037694A1 | General purpose stored program computer | Memory systems | Datapath | Memory bandwidth

Embodiments of the invention provide logic within the store data path between a processor and a memory array. The logic may be configured to misalign vector data as it is stored to memory. By misaligning vector data as it is stored to memory, memory bandwidth may be maximized while processing bandwidth required to store vector data misaligned is minimized. Furthermore, embodiments of the invention provide logic within the load data path which allows vector data which is stored misaligned to be aligned as it is loaded into a vector register. By aligning misaligned vector data as it is loaded into a vector register, memory bandwidth may be maximized while processing bandwidth required to align misaligned vector data may be minimized.

Owner:IBM CORP

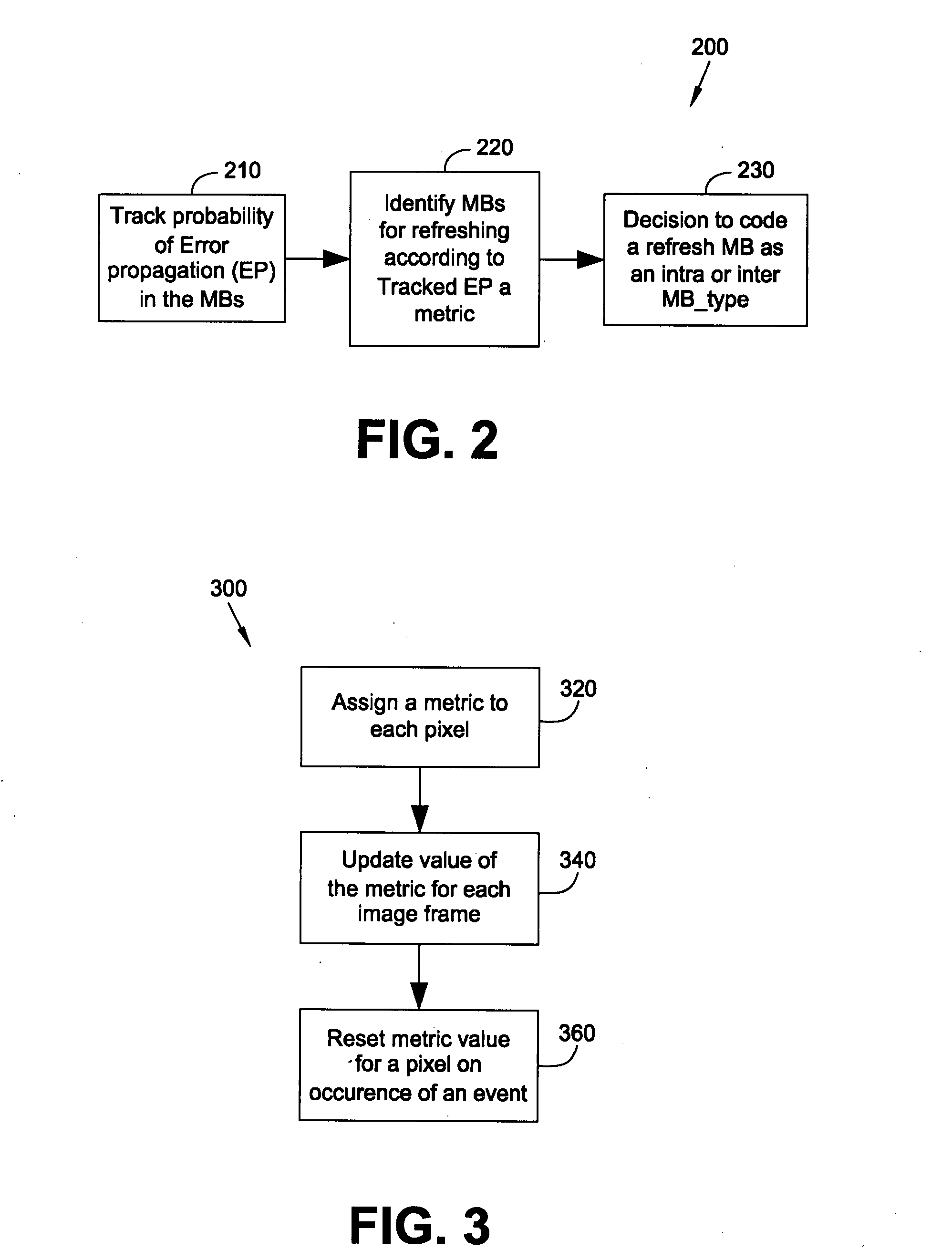

Method and device for tracking error propagation and refreshing a video stream

Active | US20080247469A1 | Reduce probability | Error propagation | Color television with pulse code modulation | Color television with bandwidth reduction | Packet loss | Interframe coding

A method and device for tracking error propagation and refreshing a video stream is provided. The proposed subject matter comprises an error propagation tracking method that works in the sub-sampled domain to reduce computational cycles and memory bandwidth. Further, the tracking-based update of the error propagation metric is done differently for static and non-static regions to avoid unnecessary refresh of static areas. Through suitable thresholding of the metric at a macroblock (MB) level, a set of refresh MBs is selected for each frame. These refresh MBs are coded either as an intra MB or as an inter MB that is predicted from one or more reliable reference frames (frames that are known to be available at the decoder with negligible errors). Such inter coding of refresh MBs improves the compression efficiency compared to pure intra coding of refresh MBs. Further, variants of the threshold selection are presented that result in a temporally uniform distribution of the number of refresh MBs and a strict refresh scheme in which all MBs are guaranteed to have negligible errors within a committed refresh period following a packet loss. In addition to using the error propagation metric, spatial connectivity to already chosen refresh MBs is used in the selection of additional refresh MBs within a frame and across frames; this reduces the rate of error propagation caused by part of a macroblock predicting from older, erroneous neighboring MBs, which would in turn require more refresh MBs per frame on average.

Owner:ITTIAM SYST P

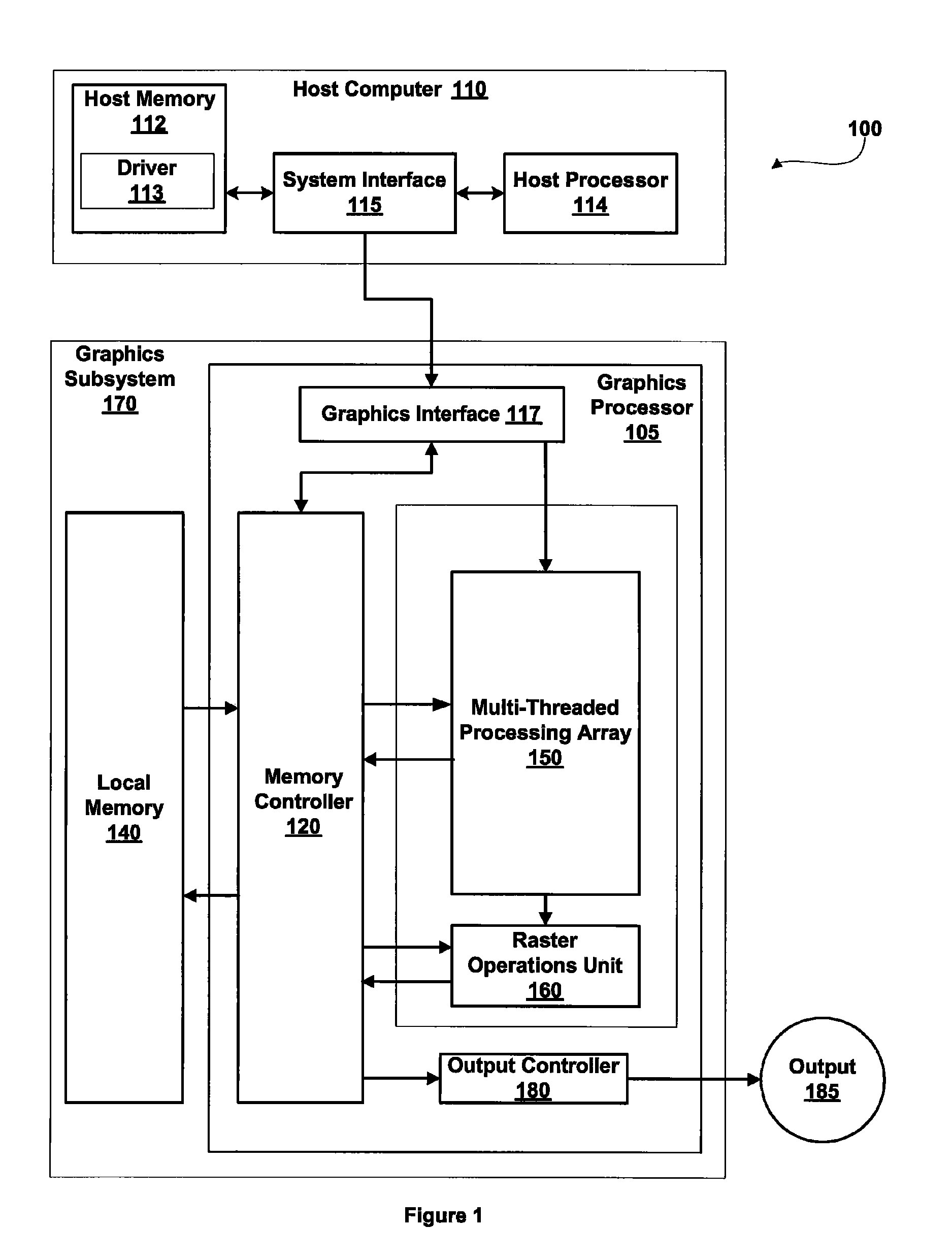

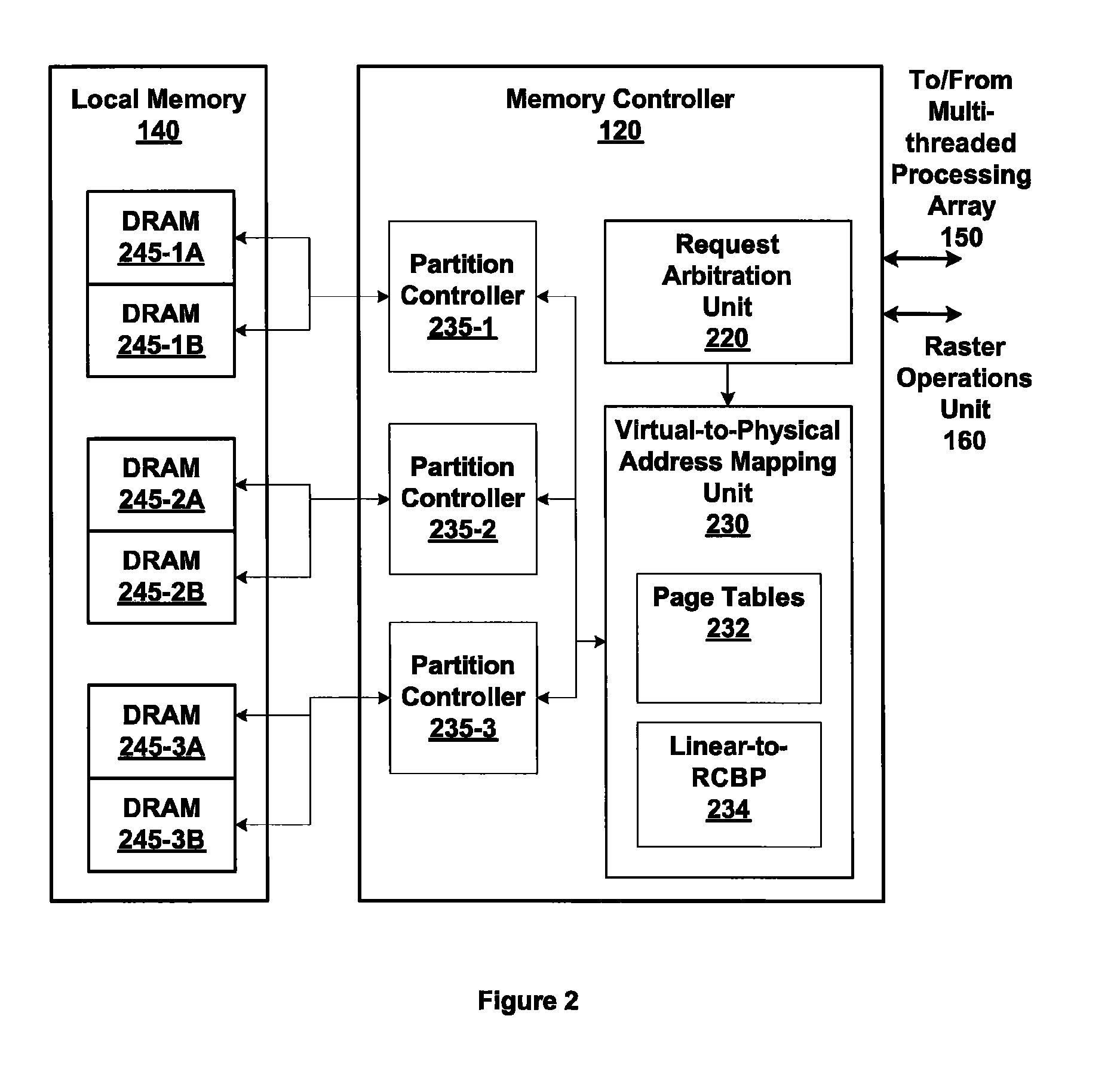

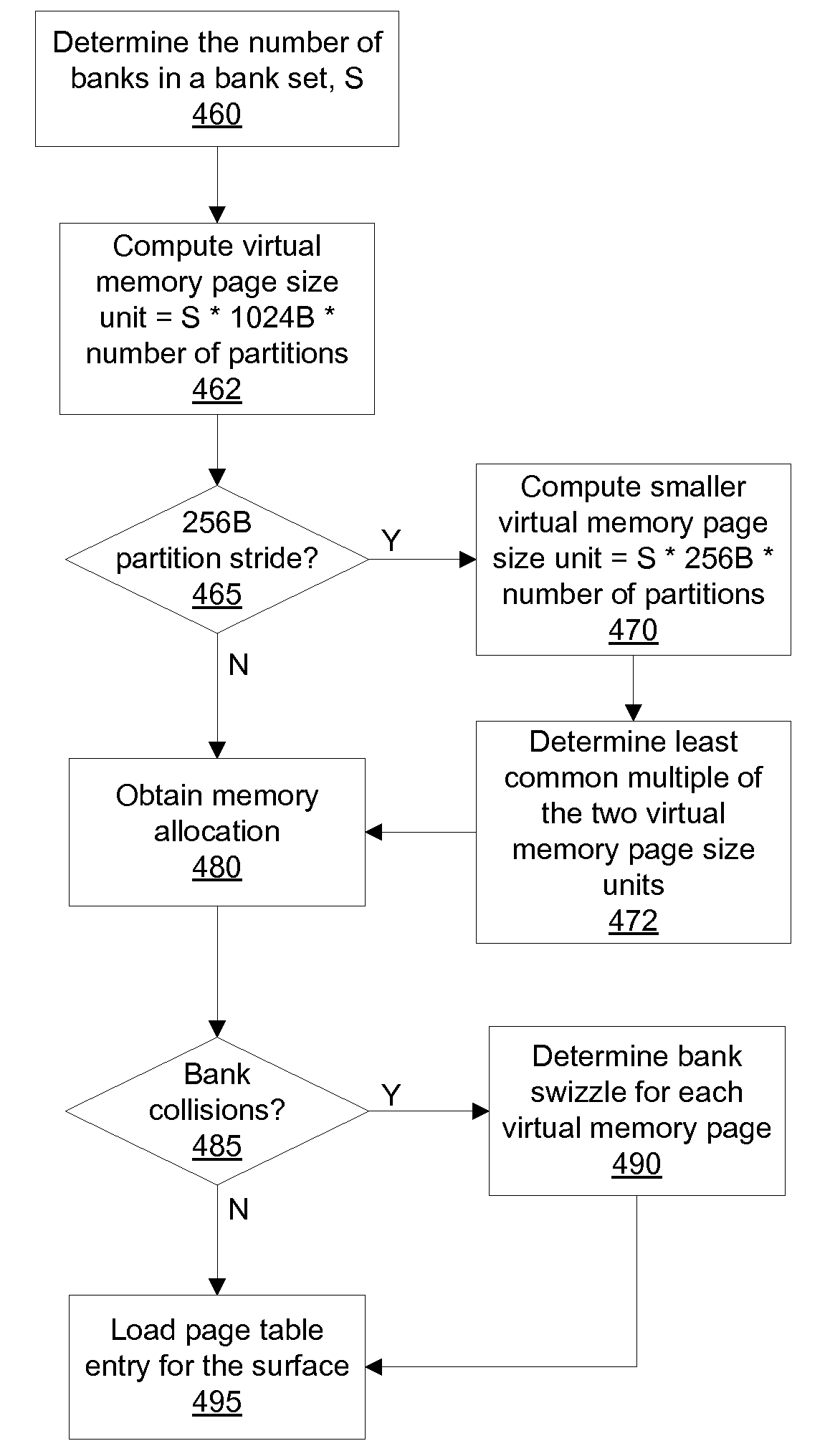

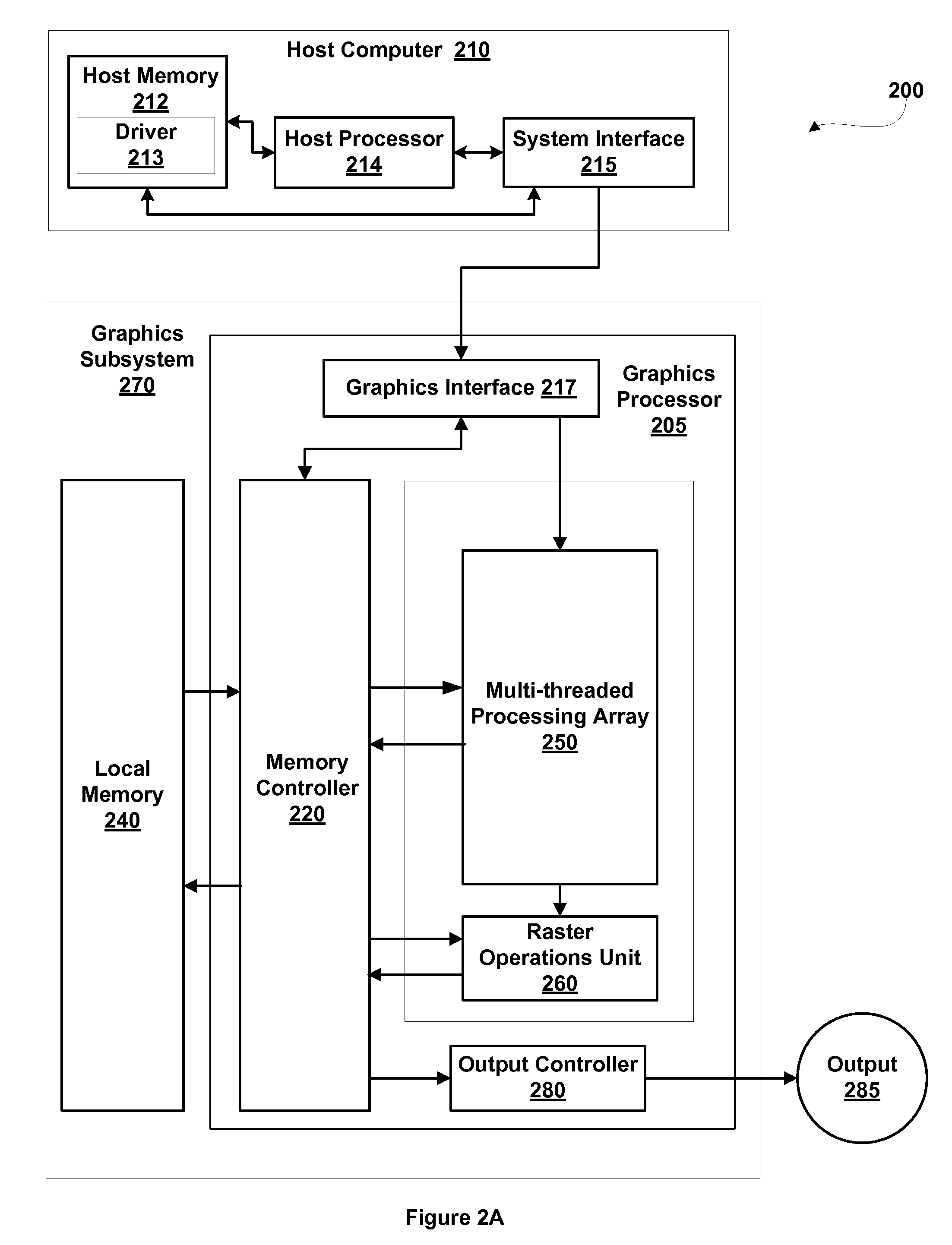

Mapping memory partitions to virtual memory pages

Active | US7620793B1 | Improve graphic memory bandwidth | High bandwidth | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Virtual memory | Graphics

Systems and methods for addressing memory using non-power-of-two virtual memory page sizes improve graphics memory bandwidth by distributing graphics data for efficient access during rendering. Various partition strides may be selected for each virtual memory page to modify the number of sequential addresses mapped to each physical memory partition and change the interleaving granularity. The addressing scheme allows for modification of a bank interleave pattern for each virtual memory page to reduce bank conflicts and improve memory bandwidth utilization. The addressing scheme also allows for modification of a partition interleave pattern for each virtual memory page to distribute accesses amongst multiple partitions and improve memory bandwidth utilization.

Owner:NVIDIA CORP

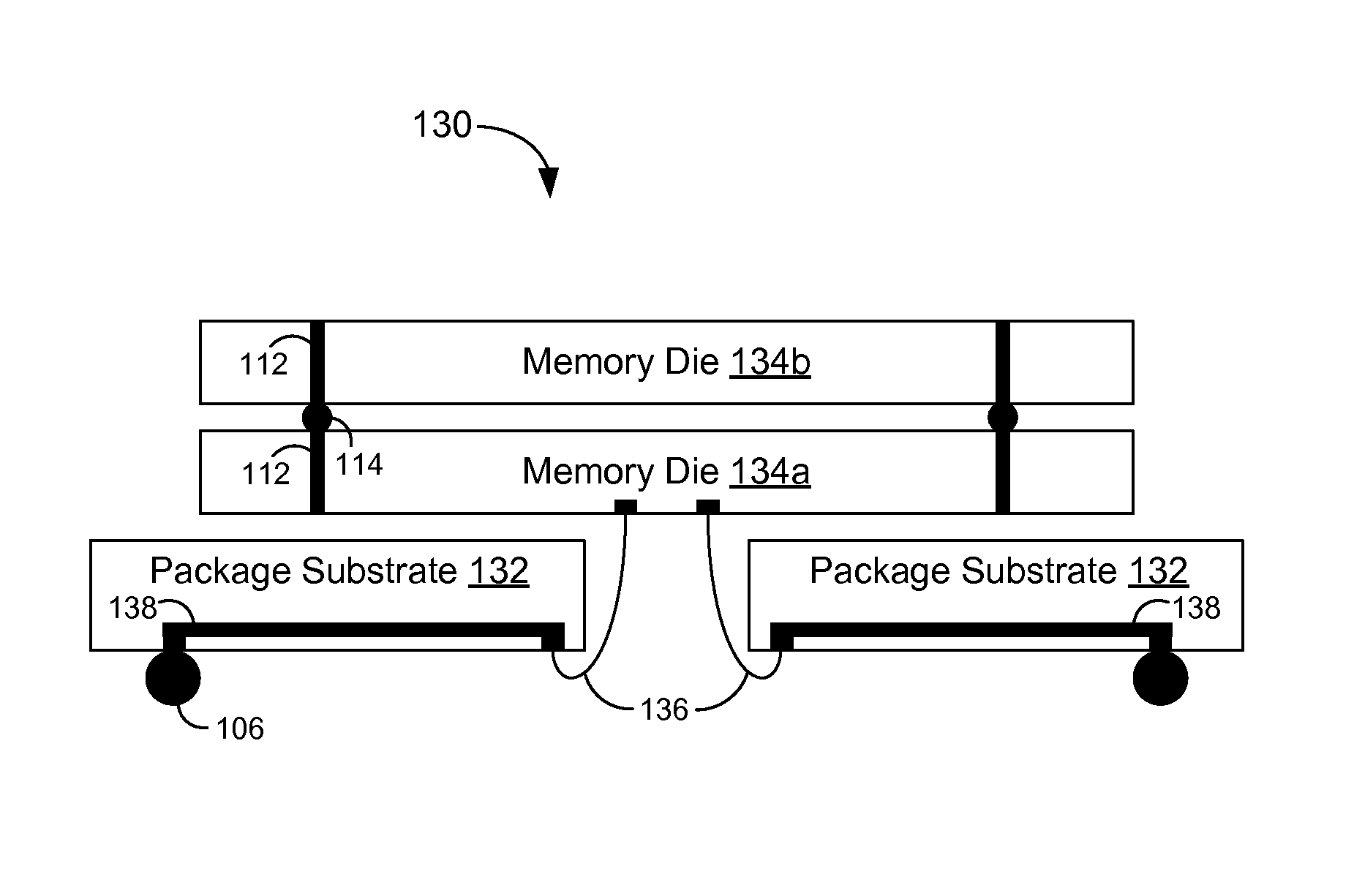

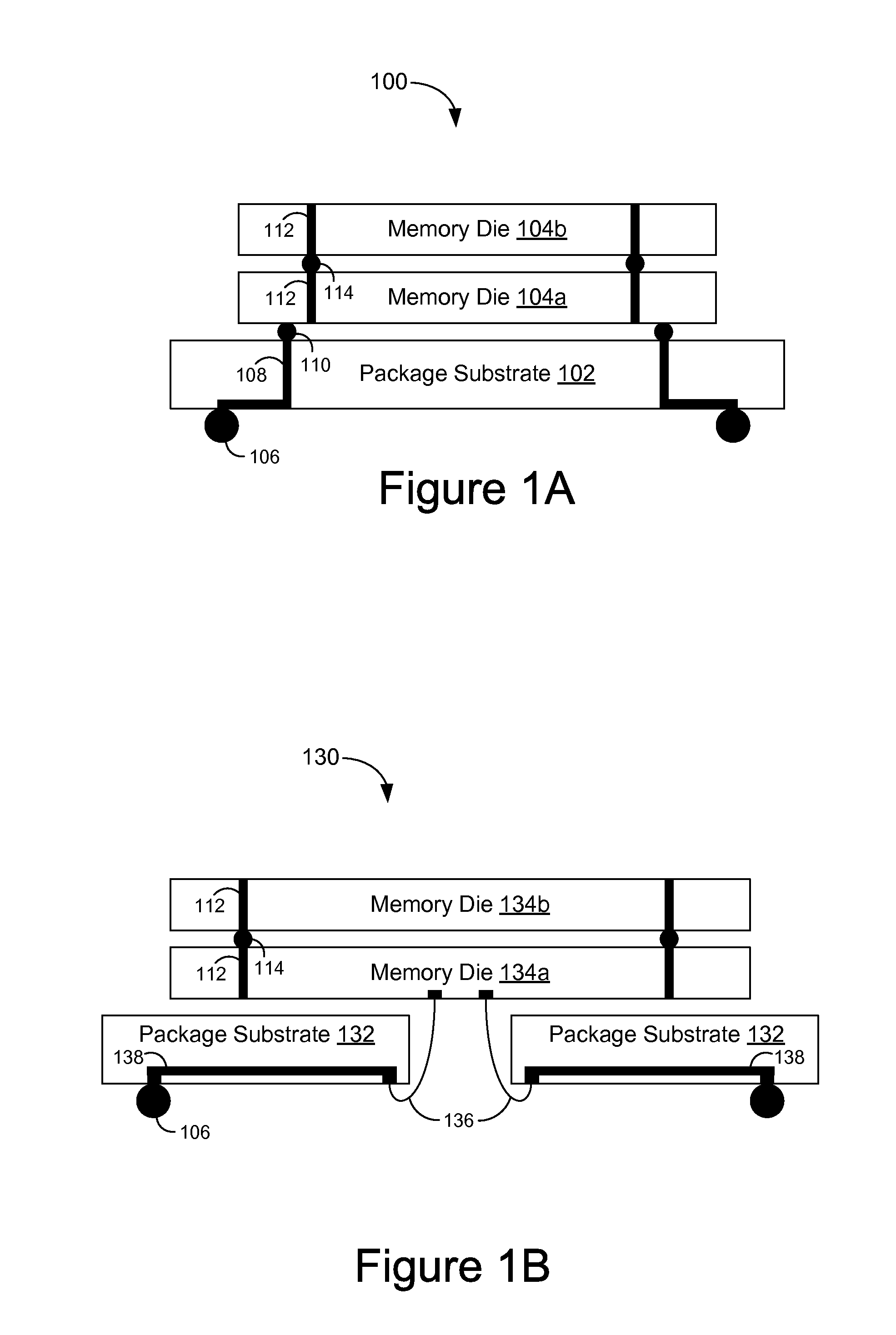

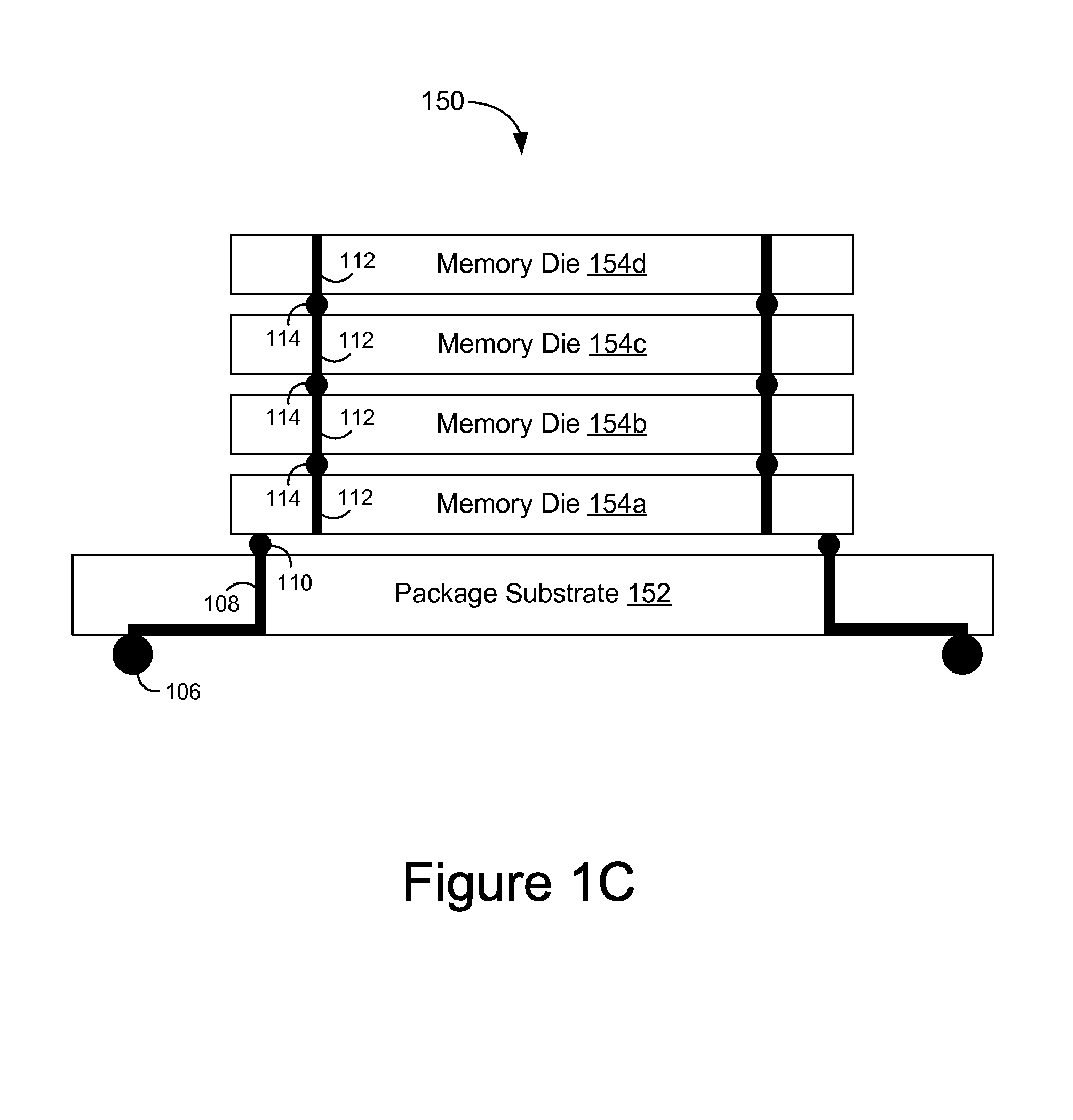

Memory bandwidth aggregation using simultaneous access of stacked semiconductor memory die

A packaged semiconductor device includes a data pin, a first memory die, and a second memory die stacked with the first memory die. The first memory die includes a first data interface coupled to the data pin and a first memory core having a plurality of banks. The second memory die includes a second memory core having a plurality of banks. A respective bank of the first memory core and a respective bank of the second memory core perform parallel row access operations in response to a first command signal and parallel column access operations in response to a second command signal. The first data interface of the first die provides aggregated data from the parallel column access operations in the first and second die to the data pin.

Owner:RAMBUS INC

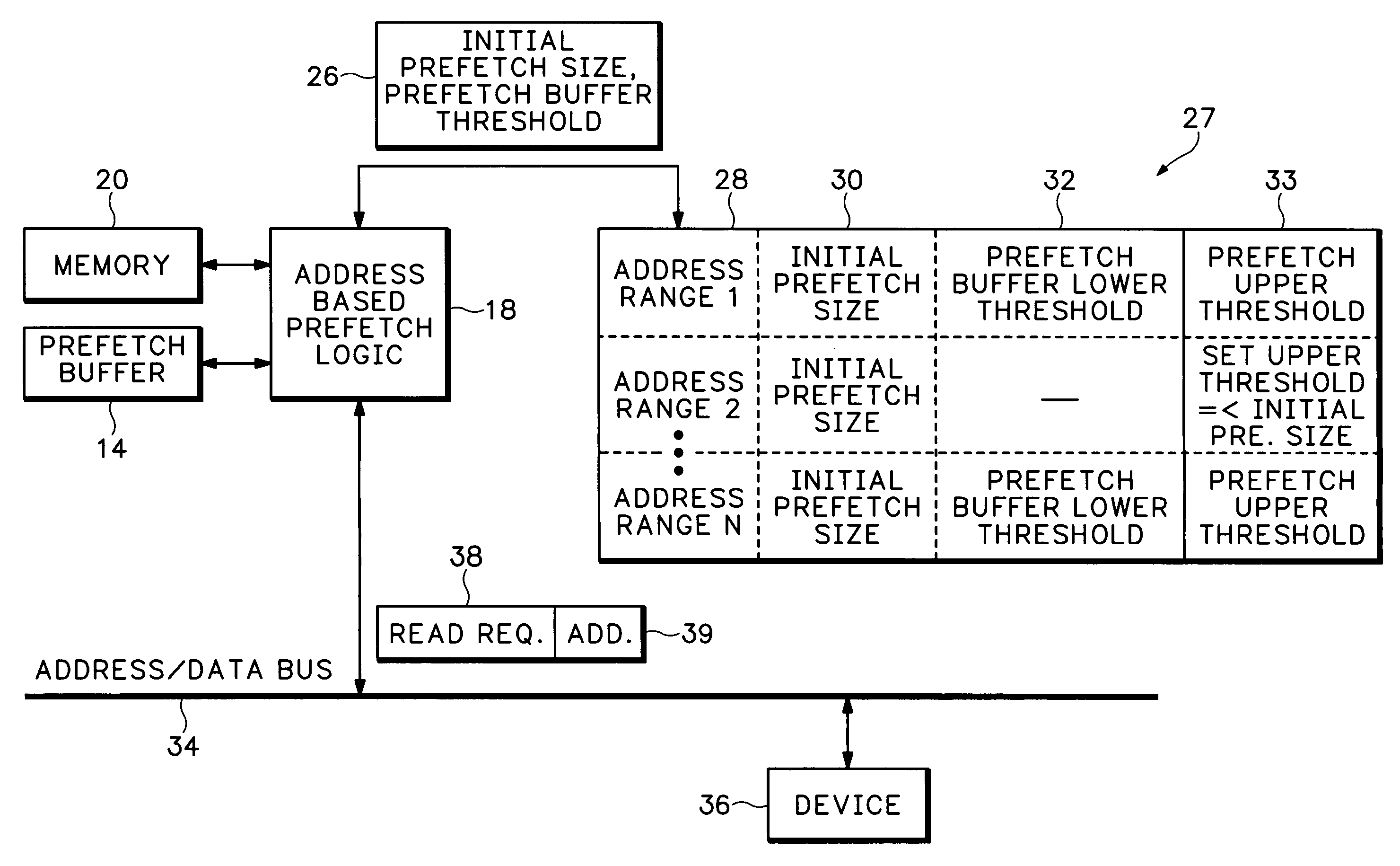

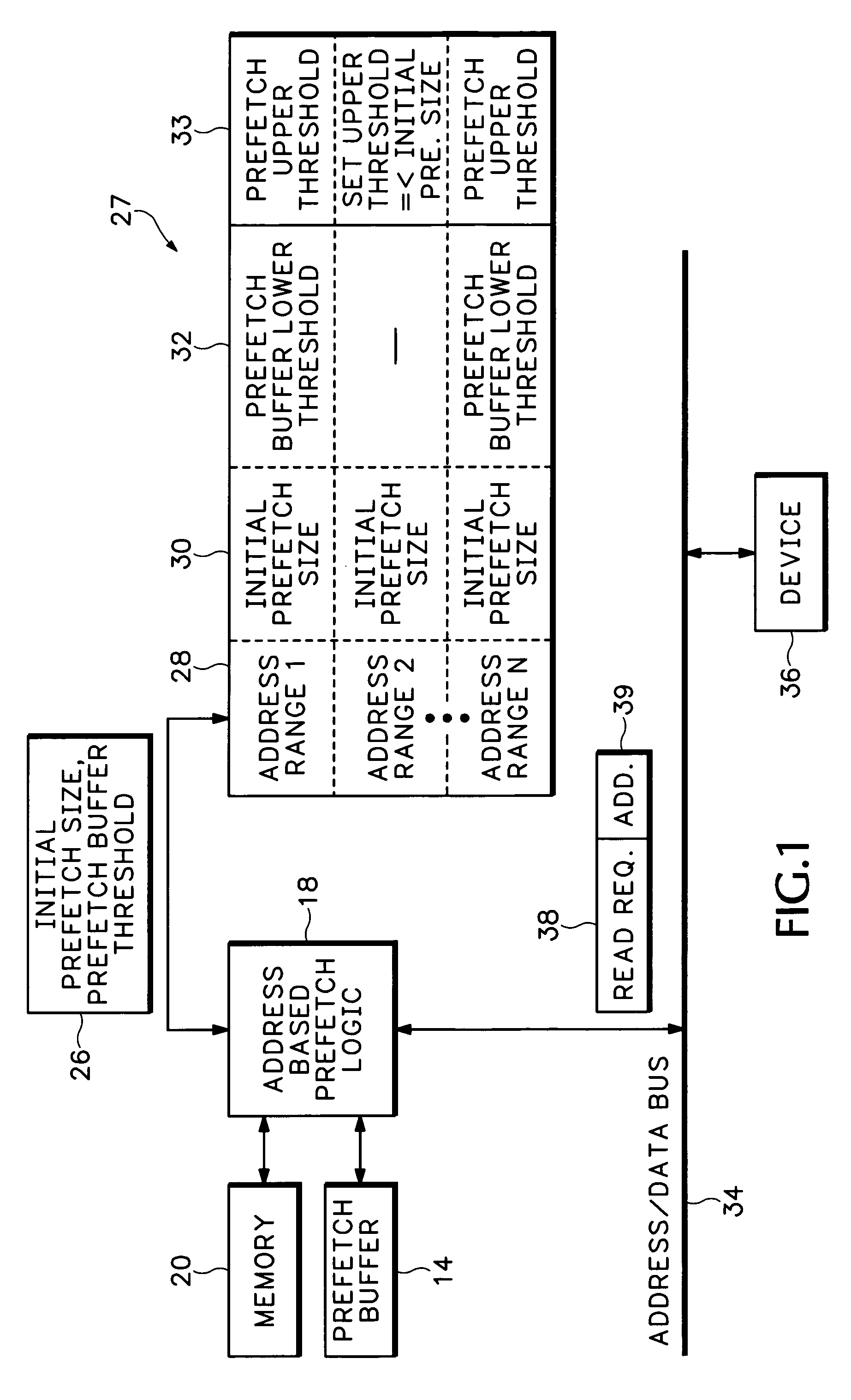

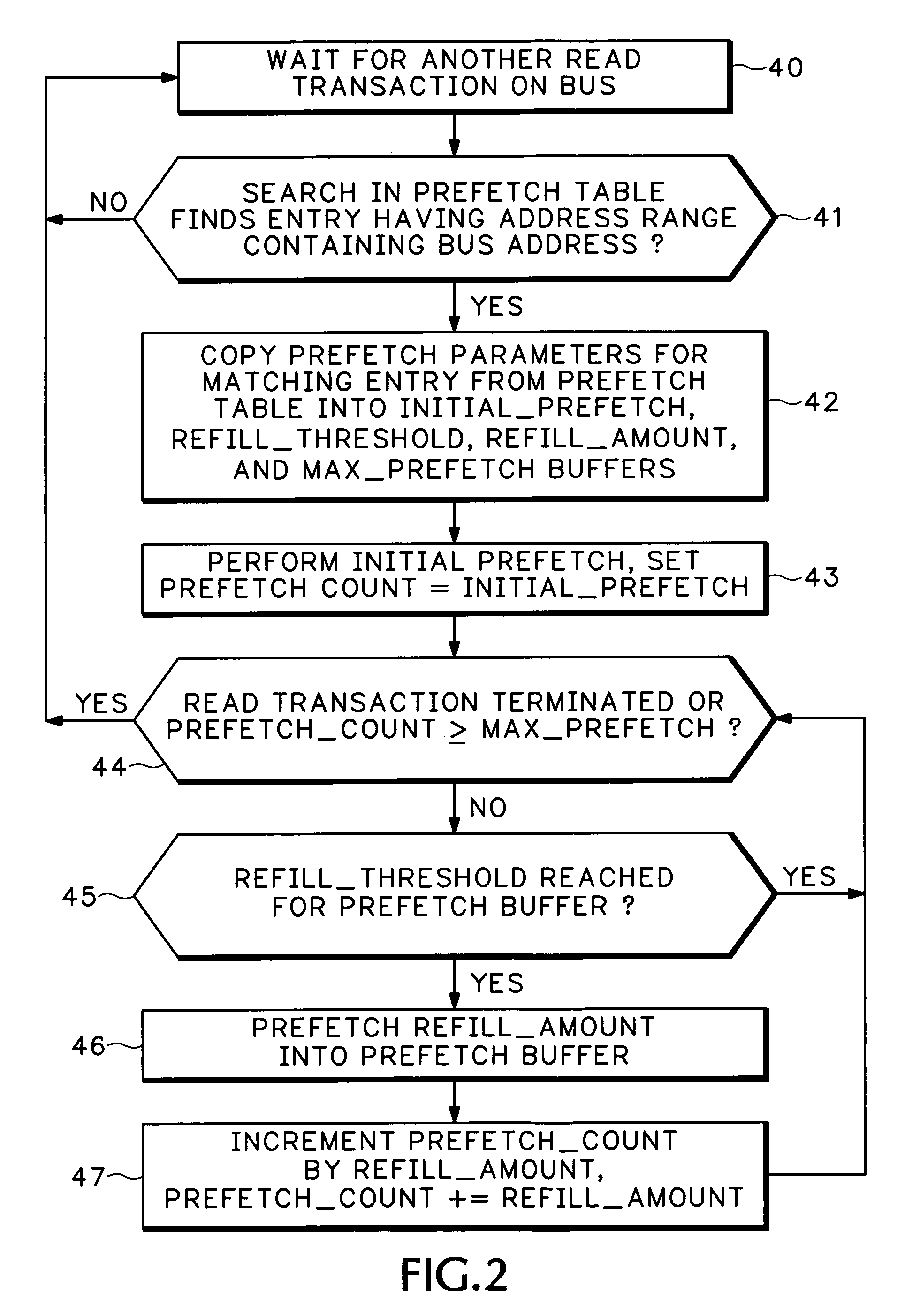

Method and apparatus for optimizing prefetching based on memory addresses

Inactive | US6963954B1 | Reduces unnecessary prefetching | Preserving system resources | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Memory address | Memory bandwidth

Address-based prefetch logic varies prefetching according to address values in read requests. The address-based prefetch logic can vary how much data is initially read into a prefetch buffer or when a prefetch buffer is refilled to an initial prefetch amount. One advantage of the address-based prefetch logic is that prefetching and prefetch buffer refill rates are tuned for a particular application. This is important since the system controller ordinarily does not know how much data the master is requesting beyond the first data phase. The requested read address is used as a hint to determine how much prefetching needs to occur. Over-prefetching wastes memory bandwidth and potentially adds latency for other masters sharing common busses. Under-prefetching may cause the system controller that is acting as a PCI target to terminate the master's read request, thus wasting PCI bandwidth and adding latency.

Owner:CISCO TECH INC
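Using the requested read address as a hint for the prefetch amount can be sketched with a small lookup. The address ranges, prefetch sizes, and the `prefetch_bytes` helper below are hypothetical; the abstract does not specify how addresses map to prefetch amounts.

```python
# Hypothetical rules: (first address, last address, bytes to prefetch).
PREFETCH_RULES = [
    (0x0000_0000, 0x0FFF_FFFF, 32),    # e.g. a region read in short bursts
    (0x1000_0000, 0x1FFF_FFFF, 128),   # e.g. a region read in long bursts
]
DEFAULT_PREFETCH = 64


def prefetch_bytes(read_addr: int) -> int:
    """Pick the initial prefetch amount from the address of the read request."""
    for first, last, amount in PREFETCH_RULES:
        if first <= read_addr <= last:
            return amount
    return DEFAULT_PREFETCH


for addr in (0x0000_1000, 0x1000_0040, 0x8000_0000):
    print(hex(addr), prefetch_bytes(addr))
```

Keeping the rules in a table means the amounts can be tuned per application without changing the lookup logic, which matches the tuning motivation stated in the abstract.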

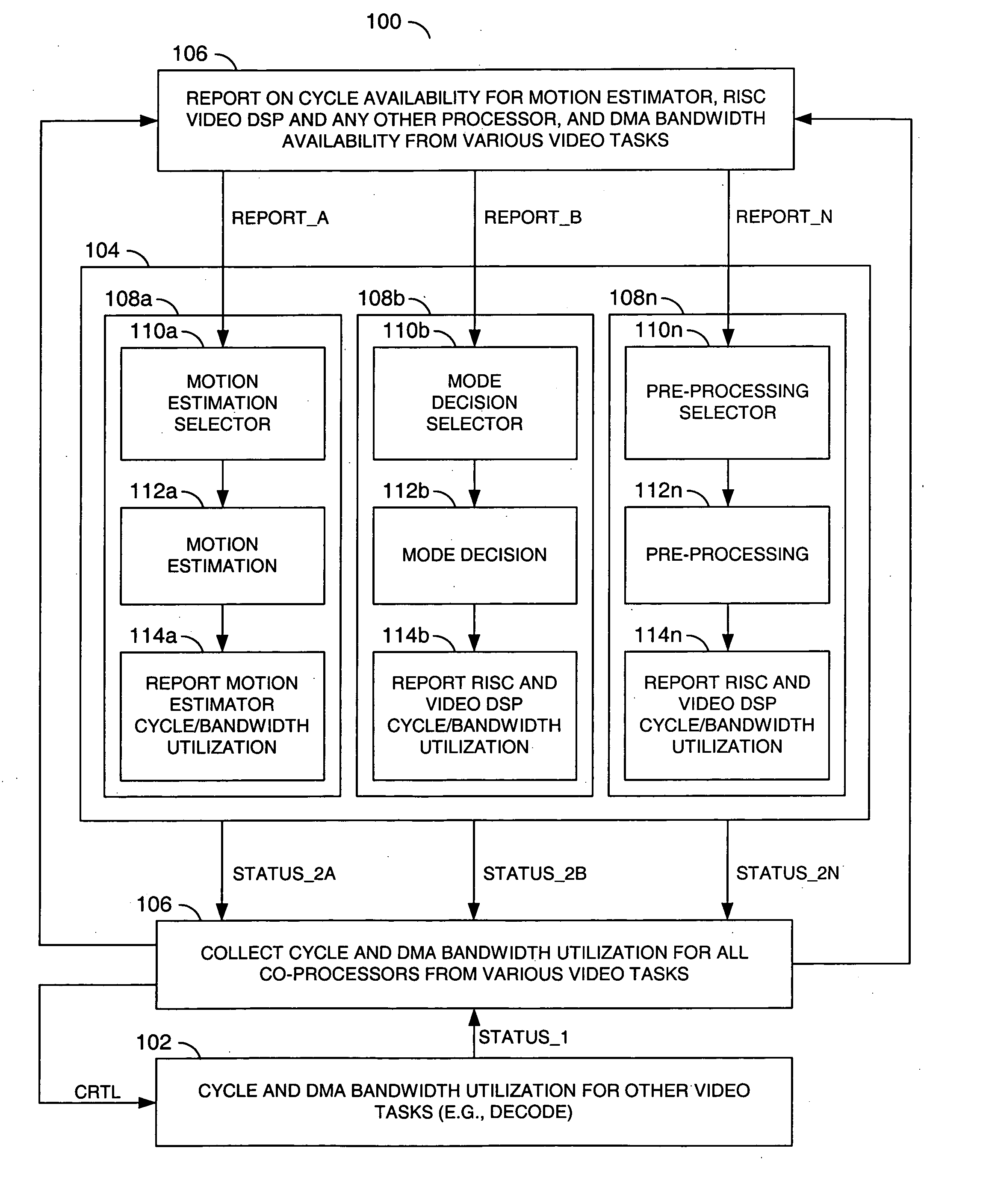

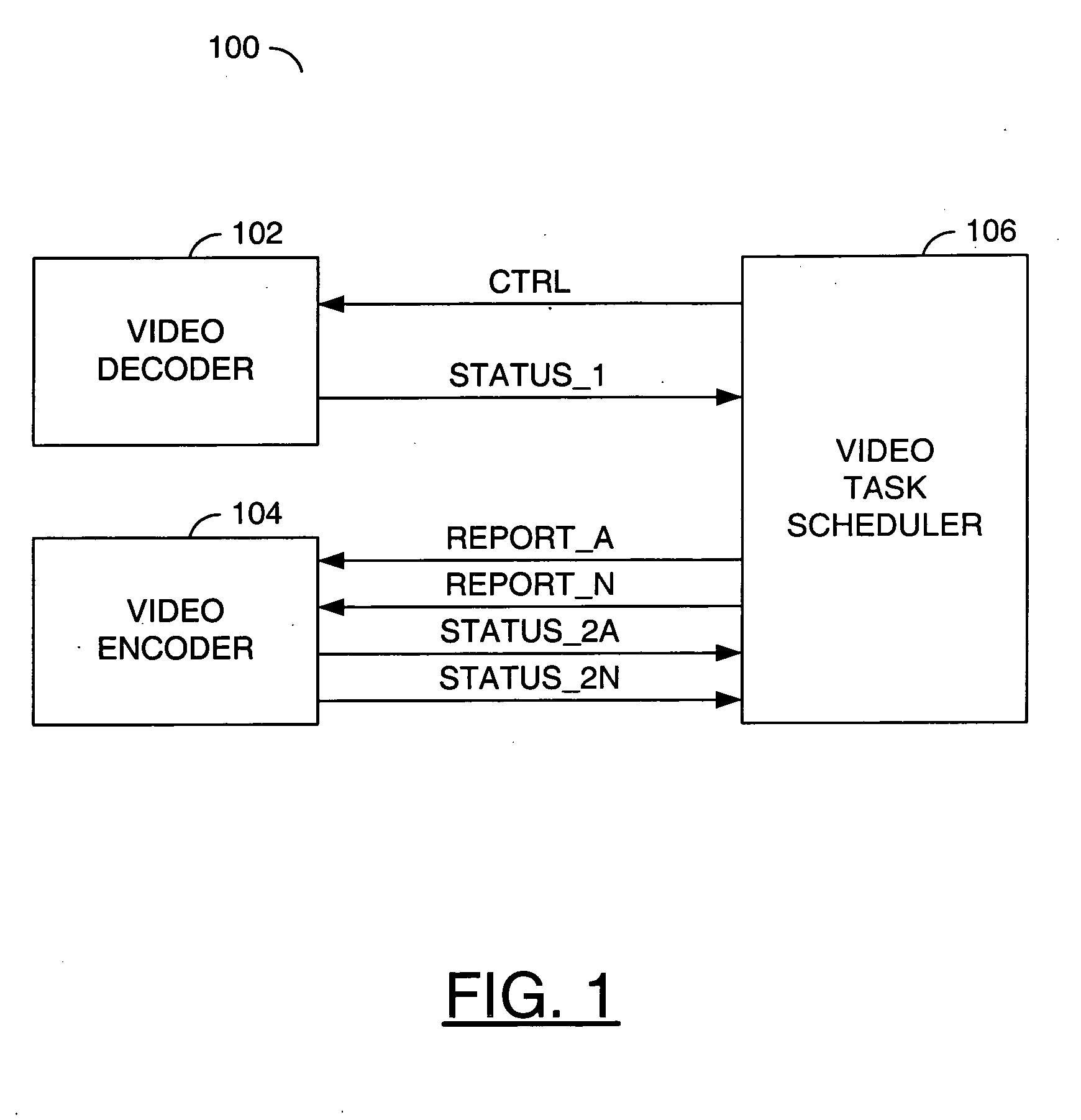

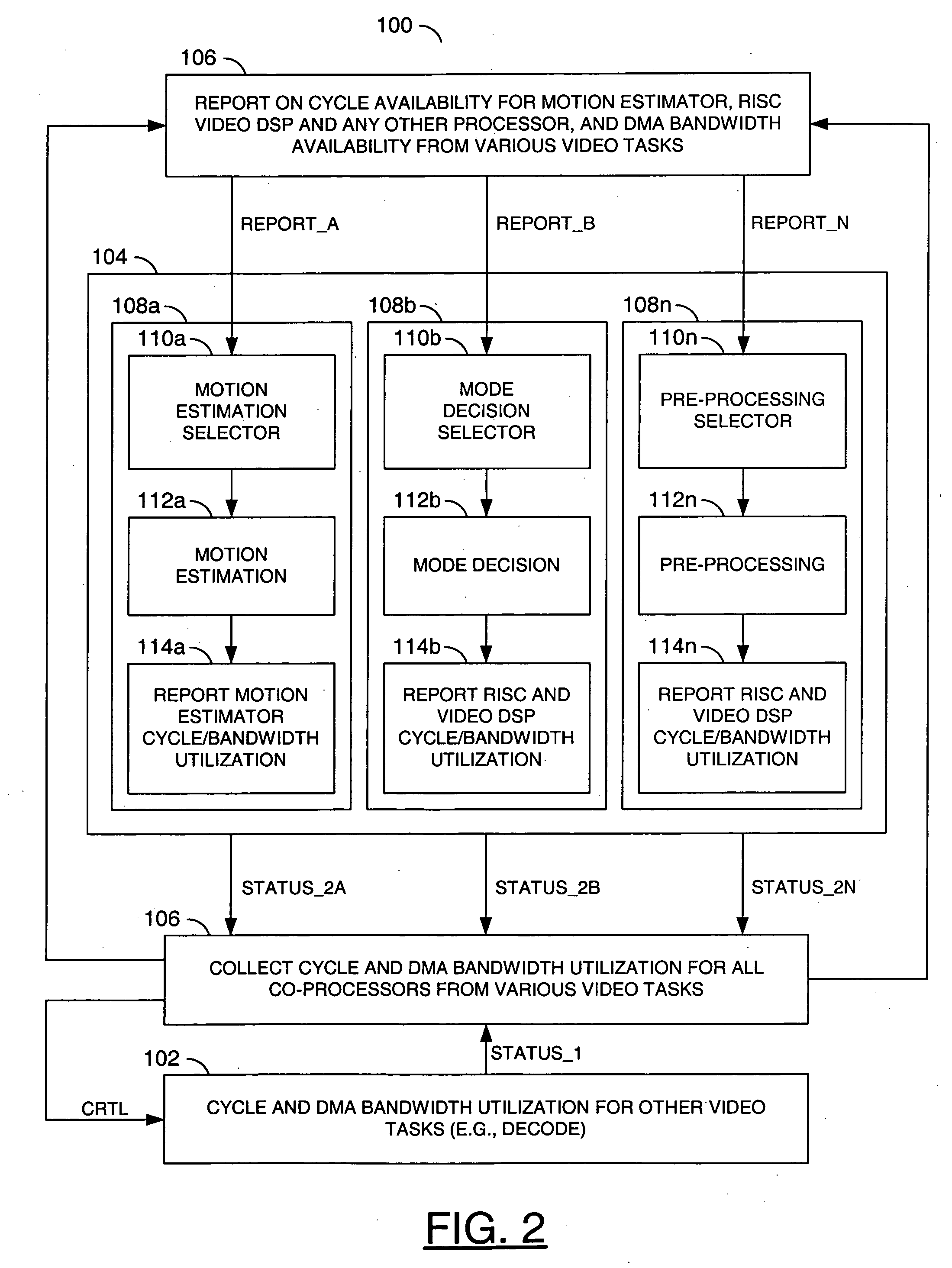

Performance adaptive video encoding with concurrent decoding

Active | US20070030898A1 | Improve video quality | Improve codec efficiency | Color television with pulse code modulation | Color television with bandwidth reduction | Computer architecture | Control signal

An apparatus comprising an encoder circuit, a task scheduler circuit and a decoder circuit. The encoder circuit may be configured to (i) generate one or more first status signals in response to one or more report signals and (ii) perform video encoding tasks based on available central processing unit (CPU) cycles and memory bandwidth. The task scheduler circuit may be configured to generate a control signal and the one or more report signals in response to the one or more first status signals. The decoder circuit may be configured to (i) generate one or more second status signals and (ii) perform concurrent decoding while the encoder circuit performs adaptive video encoding tasks in response to the control signal.

Owner:AVAGO TECH INT SALES PTE LTD

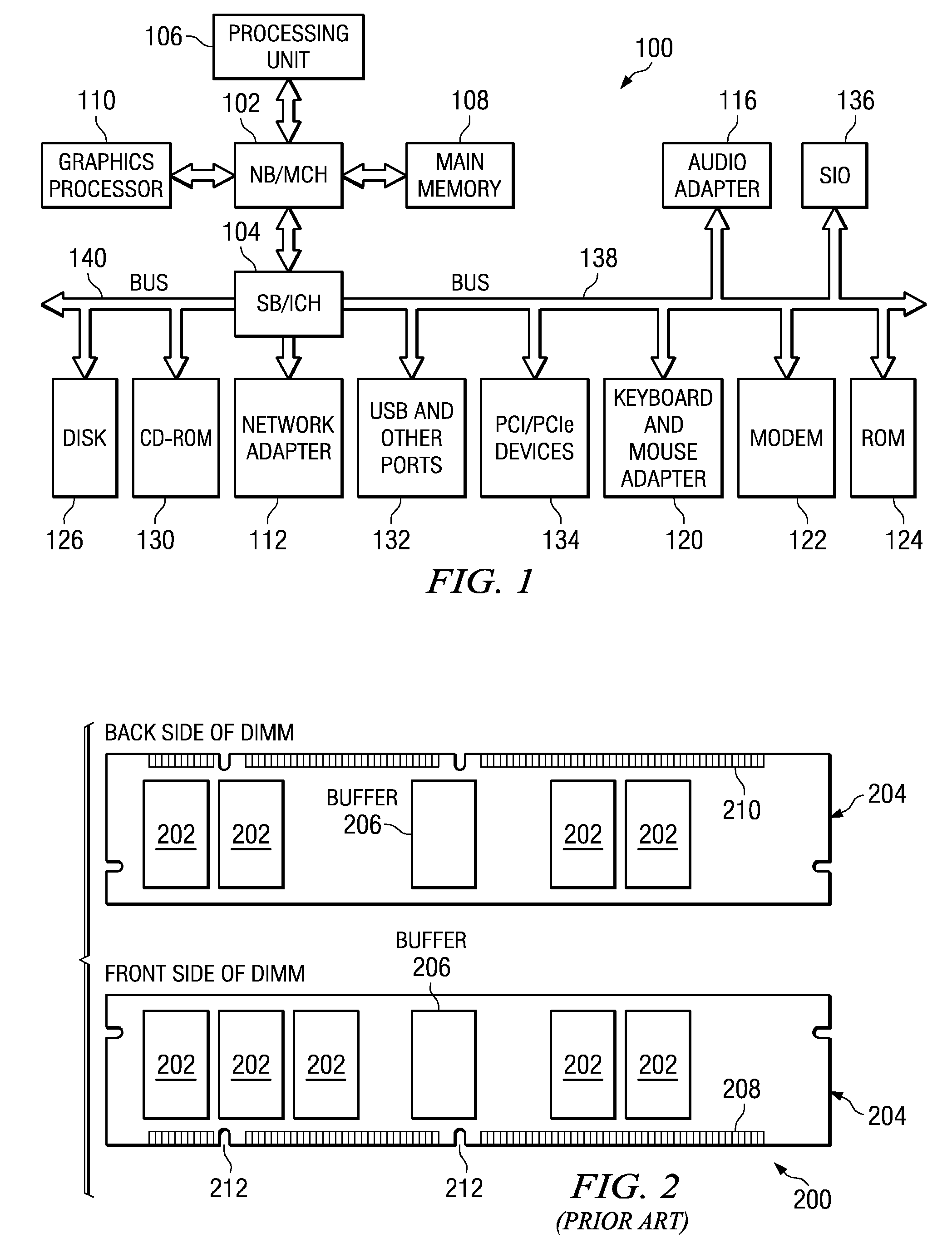

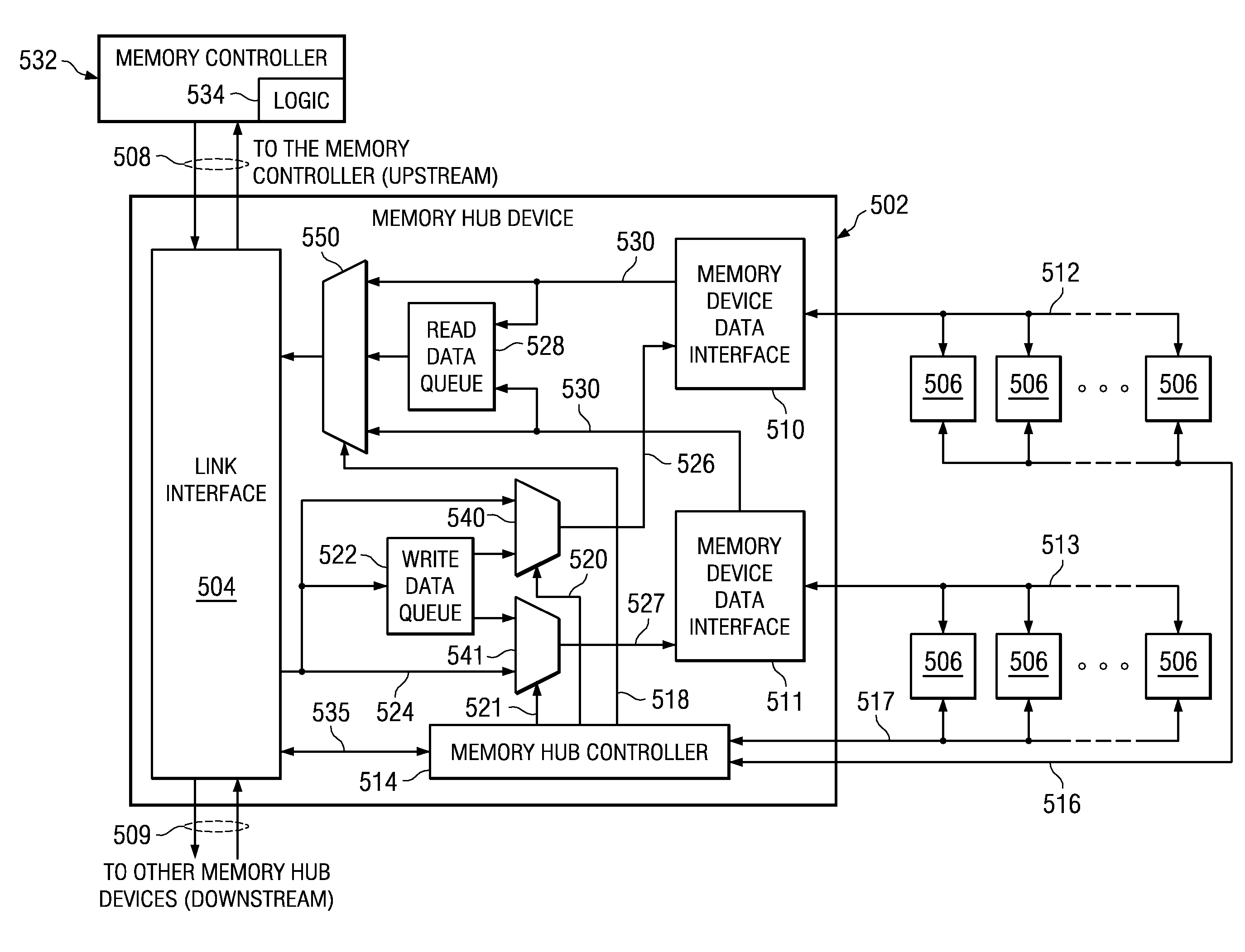

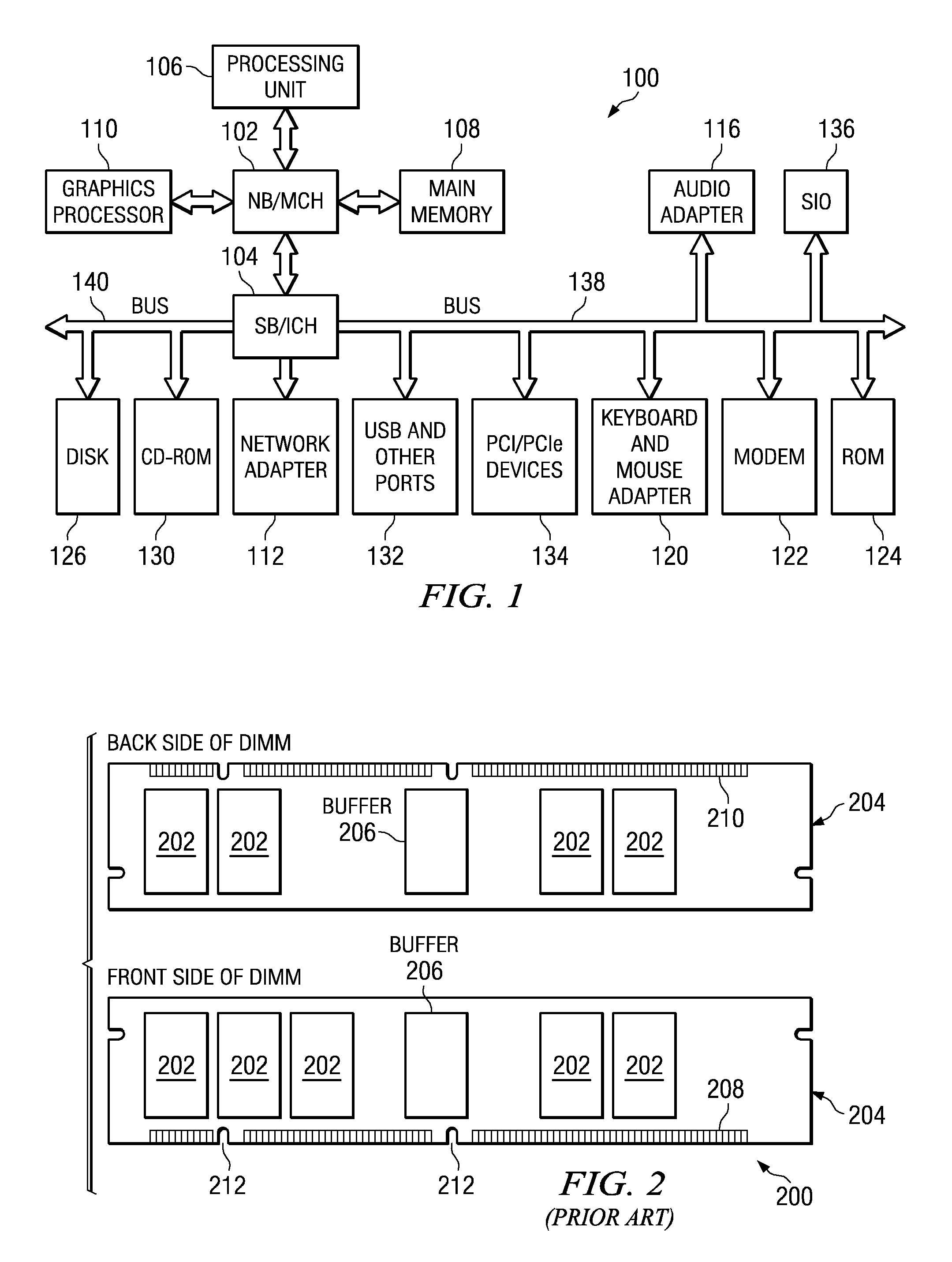

Buffered Memory Module Supporting Double the Memory Device Data Width in the Same Physical Space as a Conventional Memory Module

Inactive | US20090063785A1 | Increased memory bandwidth | Double storage capacity | Final product manufacture | Printed circuit aspects | Conventional memory | Physical space

A memory system is provided that enhances the memory bandwidth available through a memory module. The memory system includes a memory hub device integrated into a memory module, a first memory device data interface that communicates with a first set of memory devices, and a second memory device data interface that communicates with a second set of memory devices. In the memory system, the first set of memory devices are spaced in a first plane and coupled to a substrate of the memory module, and the second set of memory devices are spaced in a second plane above the first plane and coupled to the substrate. In the memory system, data buses of the first set of memory devices are coupled to the substrate separately from data buses of the second set of memory devices.

Owner:IBM CORP

In-order delivery of plurality of RDMA messages

Inactive | US20050144310A1 | Increase probability | Attenuation bandwidth | Multiple digital computer combinations | Transmission | Connection type | Data placement

An RNIC implementation that performs direct data placement to memory where all segments of a particular connection are aligned, or moves data through reassembly buffers where all segments of a particular connection are non-aligned. The type of connection that cuts through without accessing the reassembly buffers is referred to as a “Fast” connection because it is highly likely to be aligned, while the other type is referred to as a “Slow” connection. When a consumer establishes a connection, it specifies a connection type. The connection type can change from Fast to Slow and back. The invention reduces memory bandwidth, latency, and error recovery using TCP retransmit, and provides for a “graceful recovery” from an empty receive queue. The implementation may also conduct CRC validation for a majority of inbound DDP segments in the Fast connection before sending a TCP acknowledgement (Ack) confirming segment reception.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Method and apparatus to change the operating frequency of system core logic to maximize system memory bandwidth

Inactive | US20050166073A1 | Energy efficient ICT | Volume/mass flow measurement | Data processing system | Multi processor

A method, apparatus, and computer instructions are provided for changing the operating frequency of the system core logic used to interface to memory in a multi-processor data processing system. A determination is made as to whether the operating frequency should be changed from a default frequency to another frequency. In response to determining that the operating frequency should be changed from the default operating frequency to the other operating frequency, the slave processors in the multi-processor data processing system are placed into a non-transactional mode. While the slave processors are in the non-transactional mode, the master processor changes the operating frequency of the system core logic to the other operating frequency.

Owner:IBM CORP

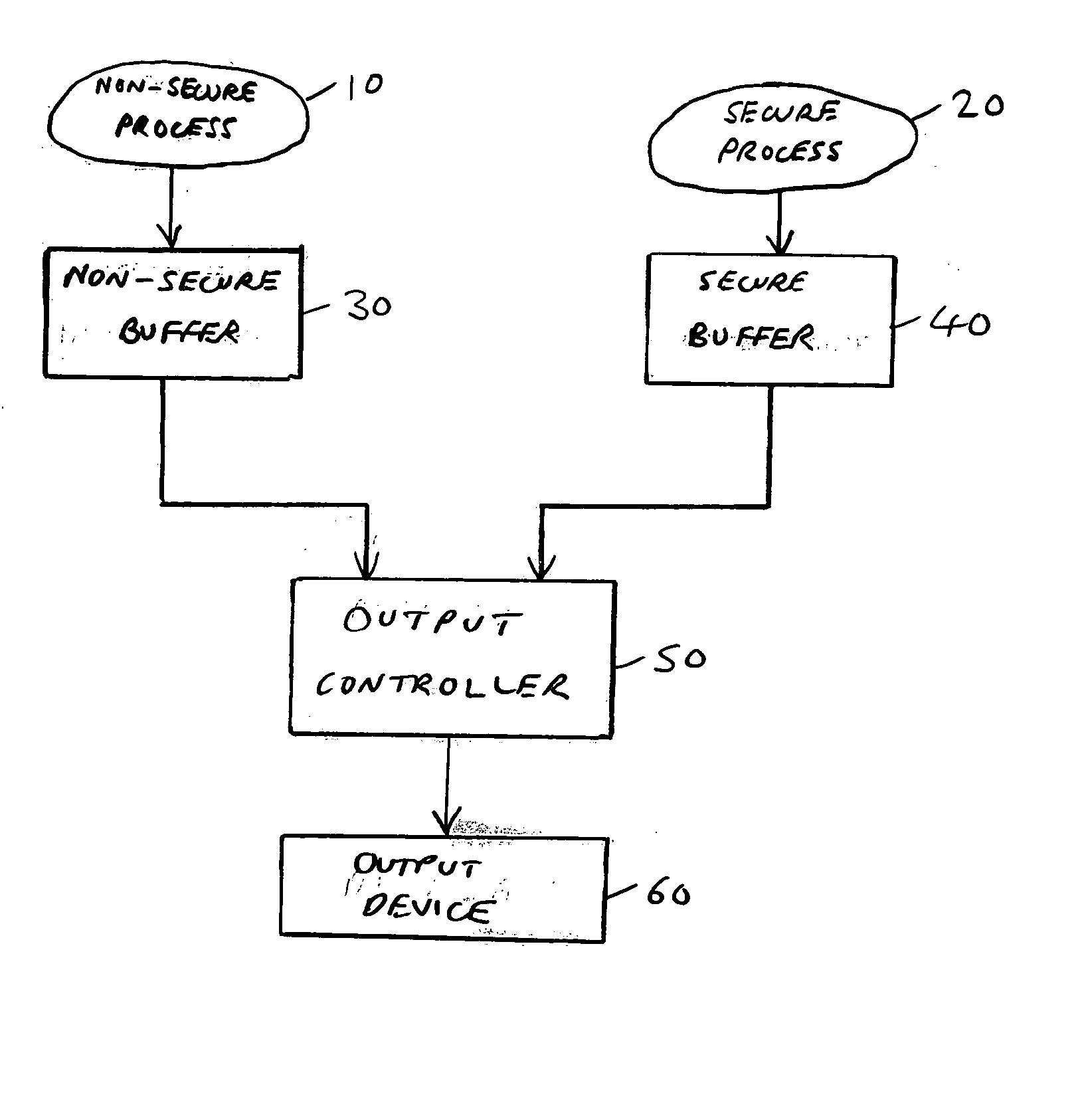

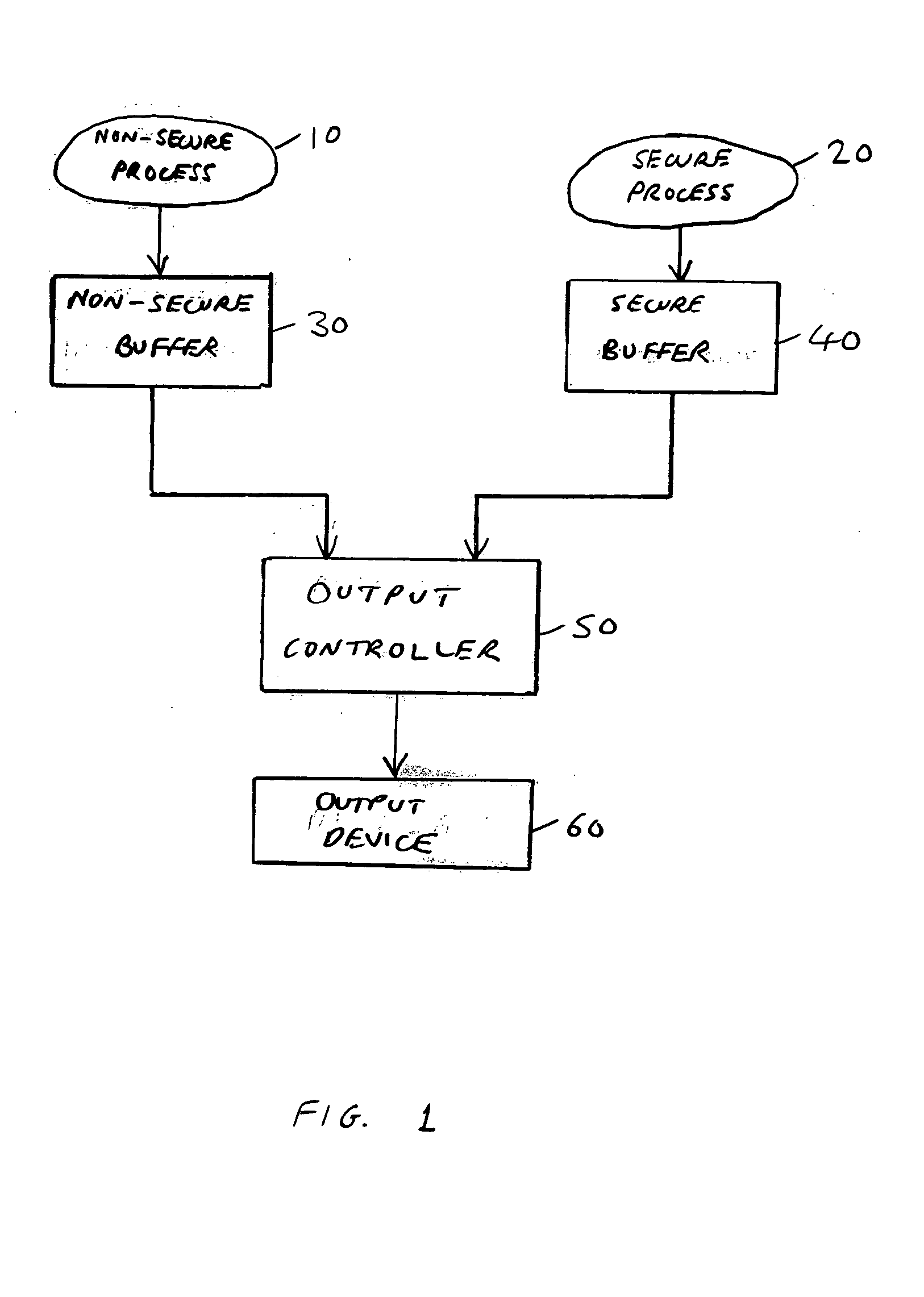

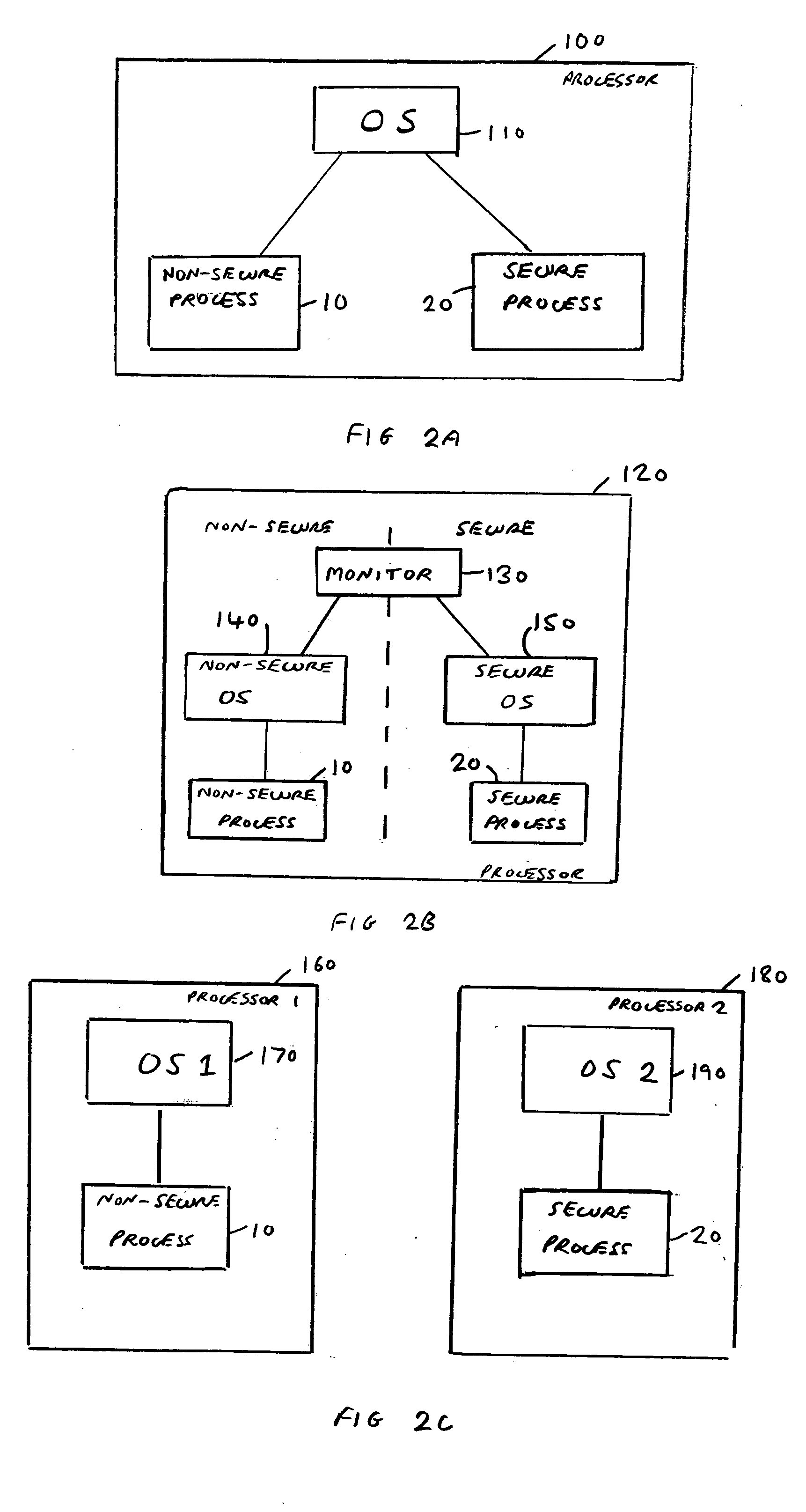

Data processing apparatus and method for merging secure and non-secure data into an output data stream

Active | US20050097341A1 | Reduce memory bandwidth | Reduce processing requirements | Digital data processing details | User identity/authority verification | Data stream | Memory bandwidth

The present invention provides a data processing apparatus and method for merging secure and non-secure data. The apparatus comprises at least one processor operable to execute a non-secure process to produce non-secure data to be included in an output data stream, and to execute a secure process to produce secure data to be included in the output data stream. A non-secure buffer is provided for receiving the non-secure data produced by the non-secure process, and in addition a secure buffer is provided for receiving the secure data produced by the secure process, the secure buffer not being accessible by the non-secure process. An output controller is then arranged to read the non-secure data from the non-secure buffer and the secure data from the secure buffer, and to merge the non-secure data and the secure data in order to produce a combined data stream, the output data stream then being derivable from the combined data stream. It has been found that such an approach assists in improving the security of the secure data, and in reducing memory bandwidth requirements and the processing requirements of the processor.

Owner:ARM LTD

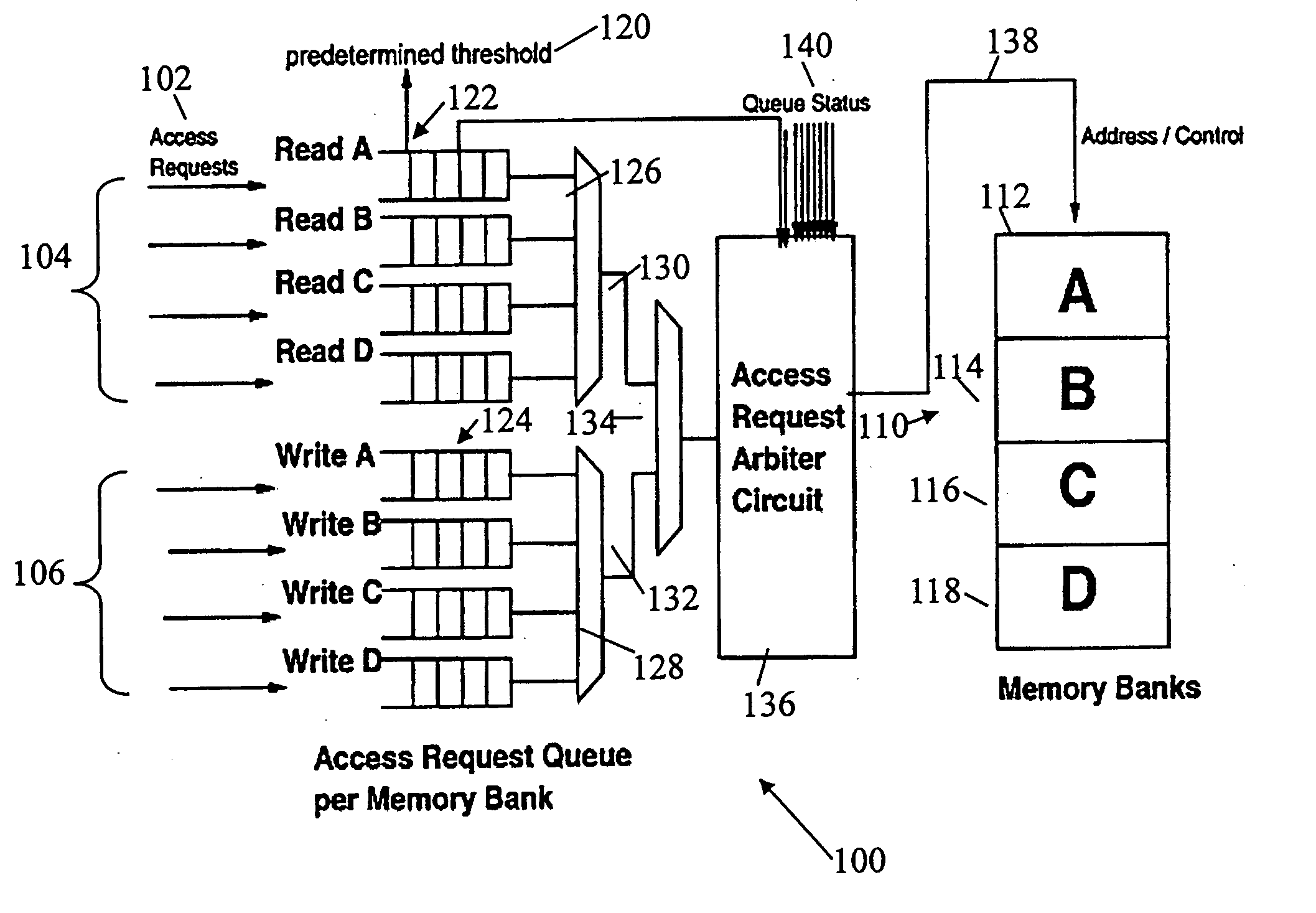

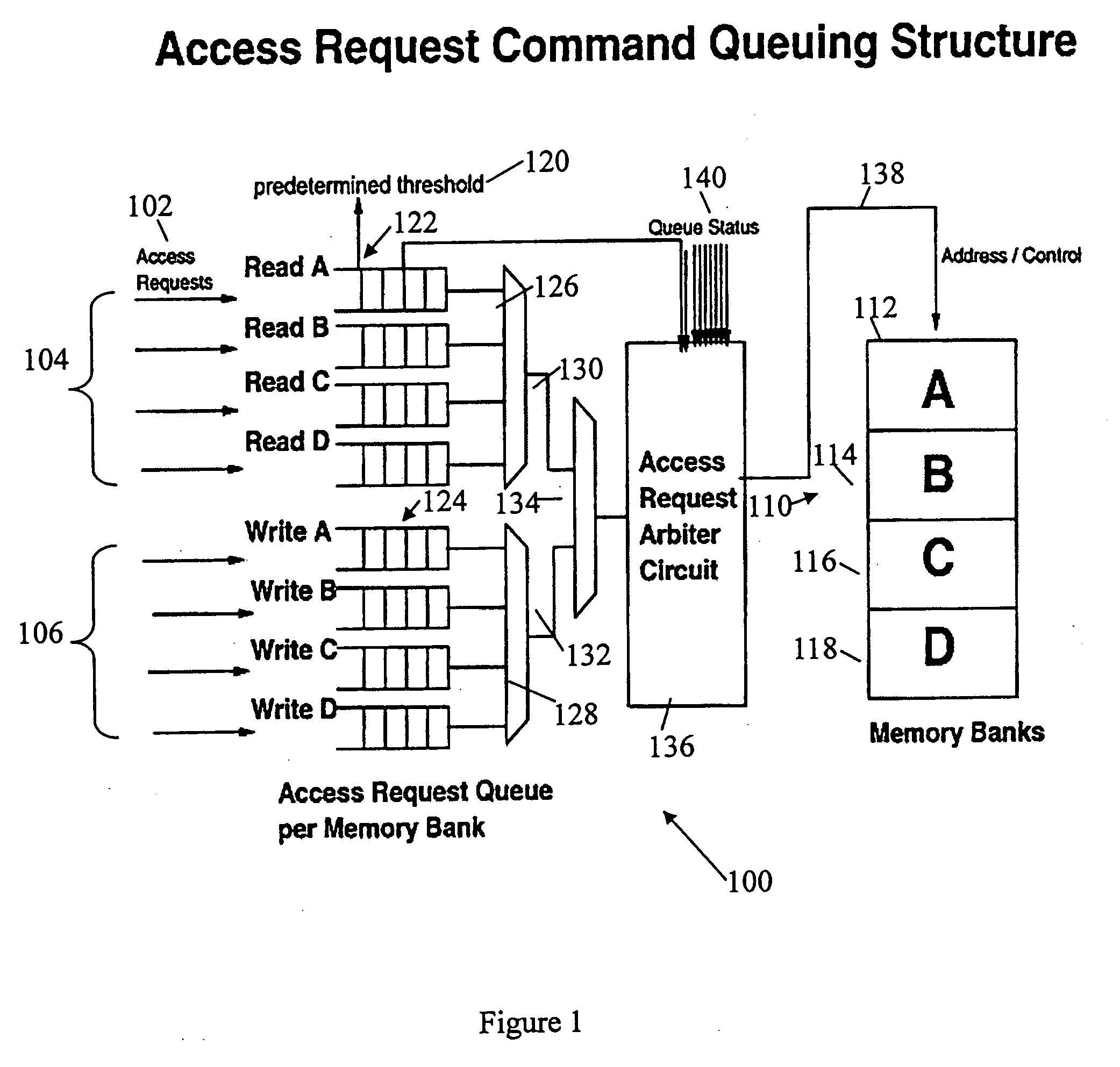

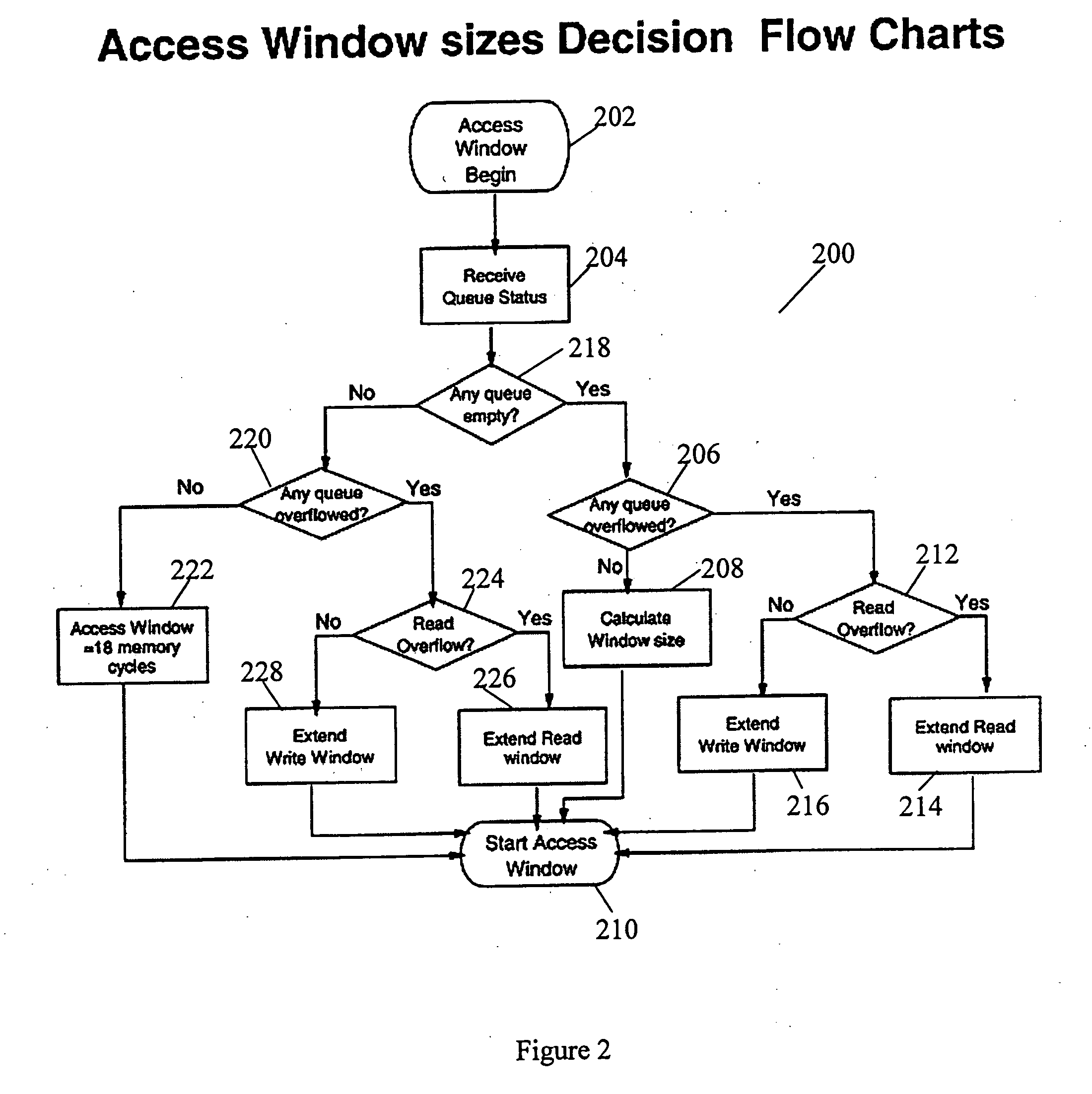

DRAM access command queuing structure

Active | US20060026342A1 | Solve insufficient bandwidth | Avoid bottleneck and conflict | Memory addressing/allocation/relocation | Memory systems | Memory bank | DRAM memory

Access arbiters are used to prioritize read and write access requests to individual memory banks in DRAM memory devices, particularly fast cycle DRAMs. This serves to optimize the memory bandwidth available for the read and the write operations by avoiding consecutive accesses to the same memory bank and by minimizing dead cycles. The arbiter first divides DRAM accesses into write accesses and read accesses. The access requests are divided into accesses per memory bank with a threshold limit imposed on the number of accesses to each memory bank. The write receive packets are rotated among the banks based on the write queue status. The status of the write queue for each memory bank may also be used for system flow control. The arbiter also typically includes the ability to determine access windows based on the status of the command queues, and to perform arbitration on each access window.

Owner:GLOBALFOUNDRIES US INC
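
The per-bank queuing idea above can be illustrated with a small sketch. It models a single direction (reads or writes) only, and its selection rule (prefer a bank other than the last one served, then the longest queue) is an assumption for illustration rather than the patented arbitration policy. `BankArbiter` and its methods are hypothetical names.

```python
from collections import deque


class BankArbiter:
    """Hypothetical per-bank request arbiter for one access direction."""

    def __init__(self, num_banks, threshold):
        self.queues = [deque() for _ in range(num_banks)]
        self.threshold = threshold  # maximum pending accesses per bank
        self.last_bank = None

    def enqueue(self, bank, request):
        """Queue a request; return False when the bank is at its threshold."""
        if len(self.queues[bank]) >= self.threshold:
            return False  # the caller can treat this as a flow-control signal
        self.queues[bank].append(request)
        return True

    def next_access(self):
        """Pick the next request, avoiding back-to-back accesses to one bank."""
        candidates = [b for b, q in enumerate(self.queues) if q]
        if not candidates:
            return None
        # Prefer any bank other than the one just accessed, if one is waiting.
        preferred = [b for b in candidates if b != self.last_bank] or candidates
        bank = max(preferred, key=lambda b: len(self.queues[b]))
        self.last_bank = bank
        return bank, self.queues[bank].popleft()
```

With two requests queued for bank 0 and one for bank 1, this sketch issues them in the order bank 0, bank 1, bank 0, so the same bank is not accessed twice in a row while another bank has work pending.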

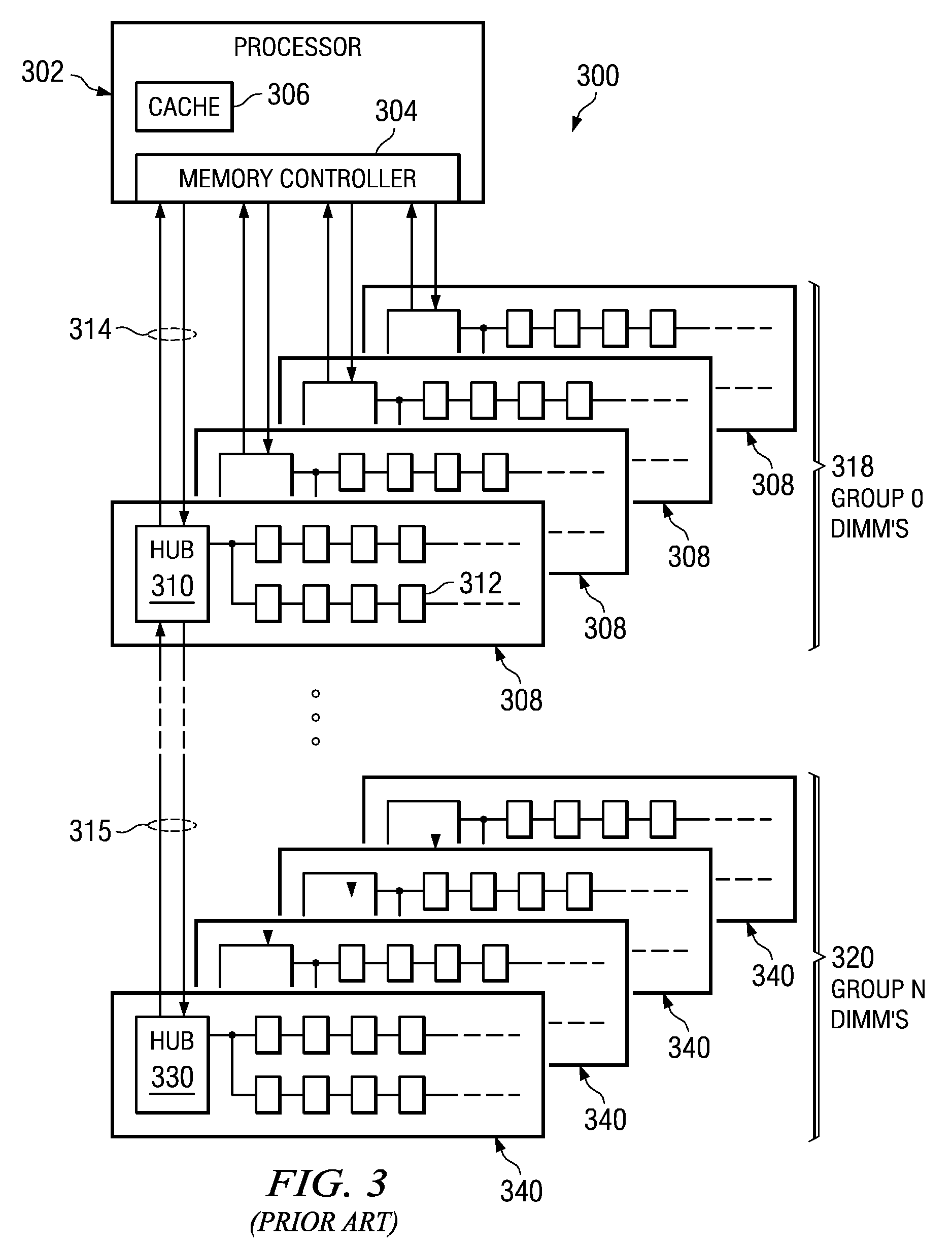

Buffered Memory Module Supporting Two Independent Memory Channels

Inactive | US20090063761A1 | Increased memory bandwidth | Double storage capacity | Memory systems | Channel coupling | Memory controller

A memory system is provided that enhances the memory bandwidth available through a memory module. The memory system includes a memory controller and a memory module coupled to the memory controller. In the memory system, the memory controller is coupled to the memory module via at least two independent memory channels. In the memory system, the at least two independent memory channels are coupled to one or more memory hub devices of the memory module.

Owner:IBM CORP

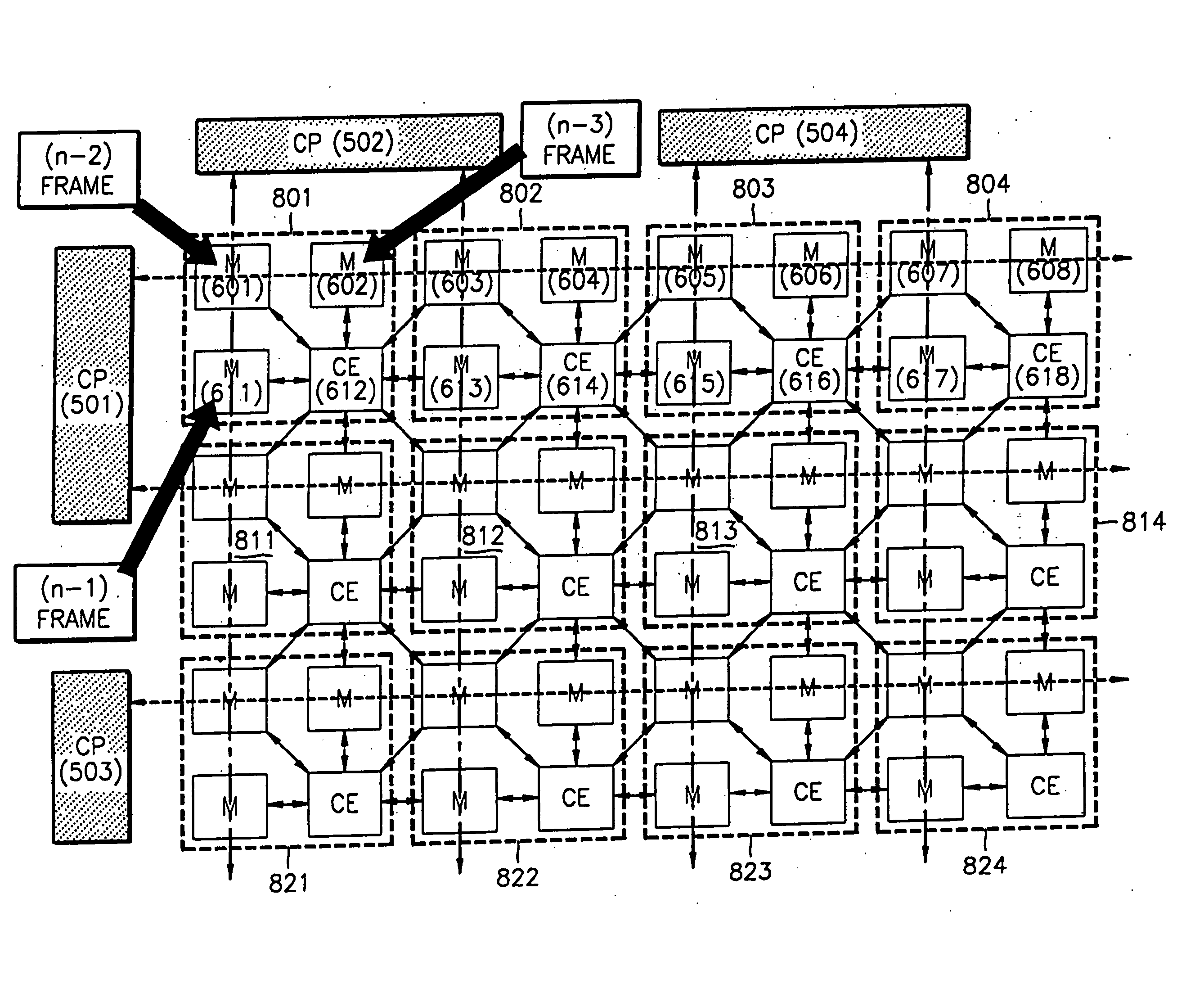

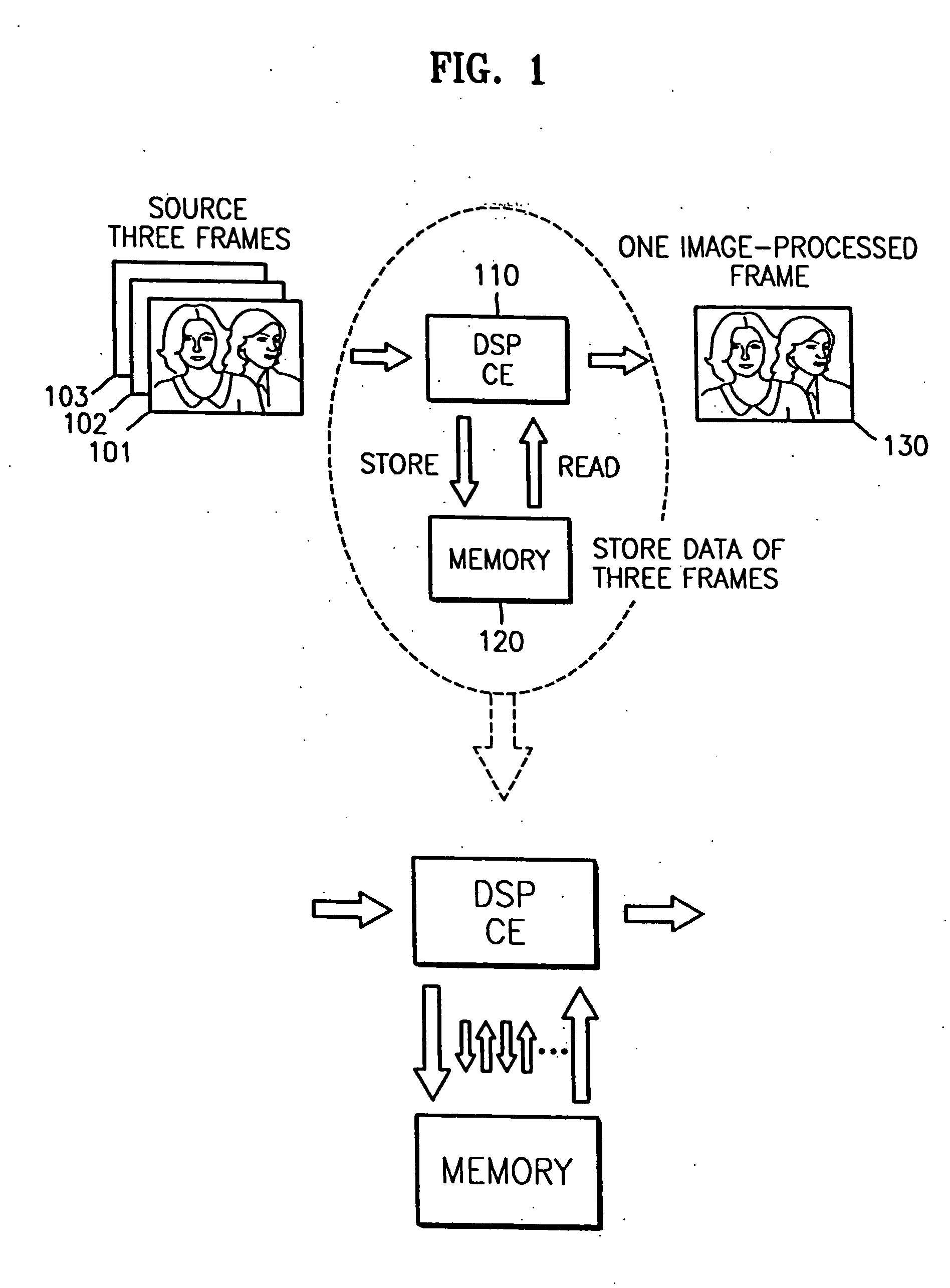

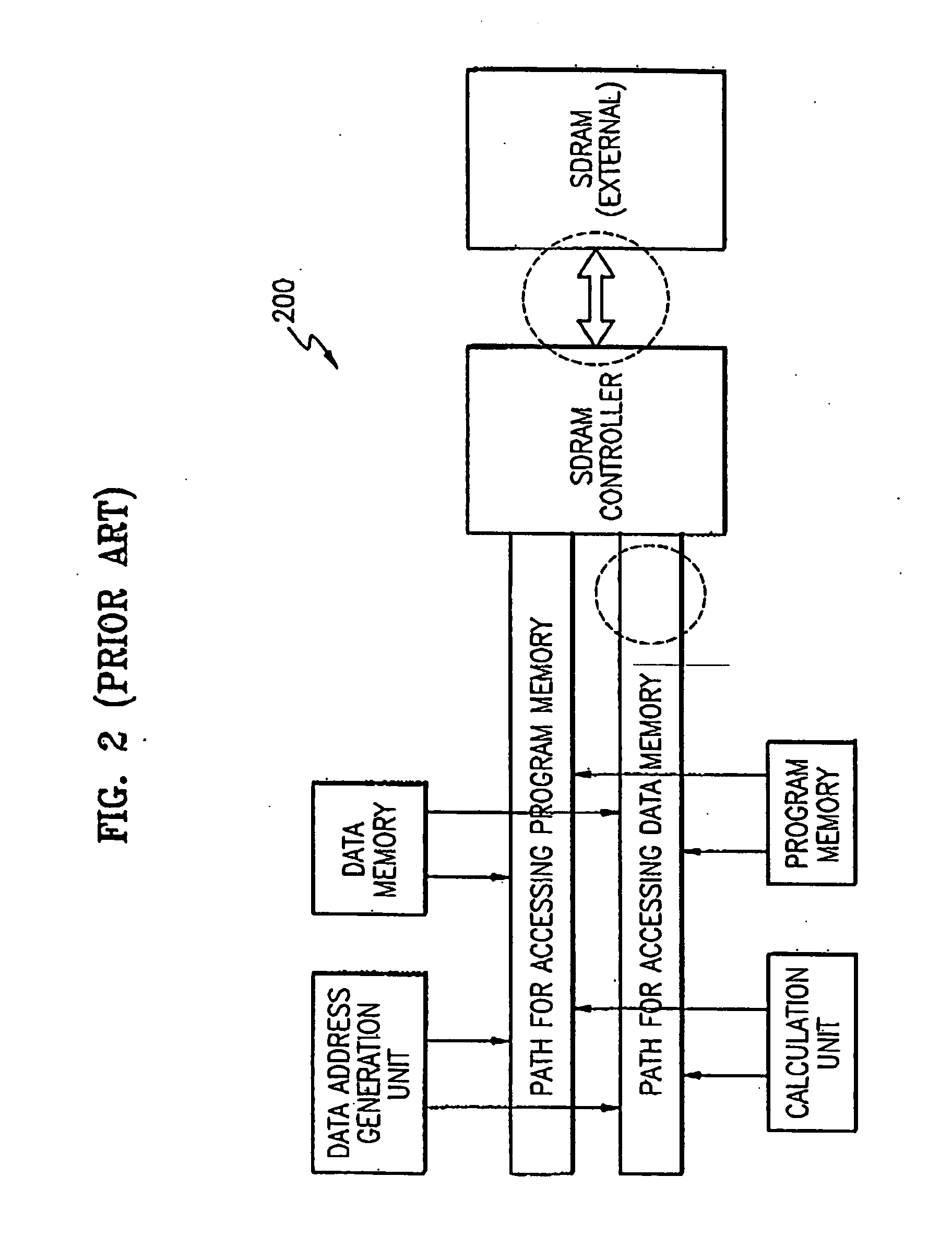

DSP (digital signal processing) architecture with a wide memory bandwidth and a memory mapping method thereof

Inactive | US20050083338A1 | Reduce memory access time | Increase data rate | Single instruction multiple data multiprocessors | Image data processing details | DSP architecture | Computer architecture

A DSP (Digital Signal Processing) architecture with a wide memory bandwidth and a memory mapping method thereof. The DSP architecture includes: a first communication port; first, second, and third memory devices, which are connected with the first communication port and are arranged in a first row direction of the DSP architecture; a fourth memory device, a calculation element, and a fifth memory device, which are arranged in a second row direction below the first row direction of the DSP architecture; and sixth, seventh, and eighth memory devices, which are connected with the first communication port and arranged in a third row direction of the DSP architecture, wherein the calculation element is connected with the first through the eighth memory devices. In the DSP architecture, the calculation element and the first through the eighth memory devices form one arrangement unit, wherein the calculation element is disposed in the center of the arrangement unit, the first through the eighth memory devices are connected to the calculation element, and a plurality of arrangement units are arranged in row directions and column directions of the DSP architecture. Therefore, since a wide data bandwidth is provided between the calculation element of the DSP architecture and the memory devices, it is possible to reduce memory access times when data is processed, and accordingly, to process data with a high data rate, such as a moving image with a high resolution.

Owner:SAMSUNG ELECTRONICS CO LTD
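
The arrangement unit described above (three rows, with the calculation element at the center of eight memory devices) can be pictured with a small layout sketch. The labels `M1` to `M8` and `CE`, and the `tile` and `memories_around` helpers, are hypothetical names chosen for illustration; the sketch models only the placement, not the communication port or the memory mapping method.

```python
# One arrangement unit: rows 1 and 3 hold three memories each,
# row 2 holds a memory, the calculation element (CE), and a memory.
UNIT = [
    ["M1", "M2", "M3"],
    ["M4", "CE", "M5"],
    ["M6", "M7", "M8"],
]


def tile(unit_rows, unit_cols):
    """Repeat the unit in row and column directions; cells are tagged with their unit index."""
    grid = []
    for ur in range(unit_rows):
        for row in UNIT:
            grid.append([f"{cell}@{ur},{uc}" for uc in range(unit_cols) for cell in row])
    return grid


def memories_around(grid, r, c):
    """Return the eight cells surrounding position (r, c)."""
    return [
        grid[r + dr][c + dc]
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    ]
```

In a tiled grid, each calculation element sits at row `3 * ur + 1`, column `3 * uc + 1`, and `memories_around` at that position returns exactly the eight memory devices of the same unit.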

© 2025 PatSnap. All rights reserved.