279 results about "Scratchpad memory" patented technology

Scratchpad memory (SPM), also known as scratchpad, scratchpad RAM, or local store, is a high-speed internal memory used for temporary storage of calculations, data, and other work in progress. In a microprocessor (CPU), a scratchpad is a special high-speed memory circuit that holds small items of data for rapid retrieval. The name comes from the everyday scratchpad, a small pad of paper for preliminary notes and sketches, which it resembles in both purpose and size.

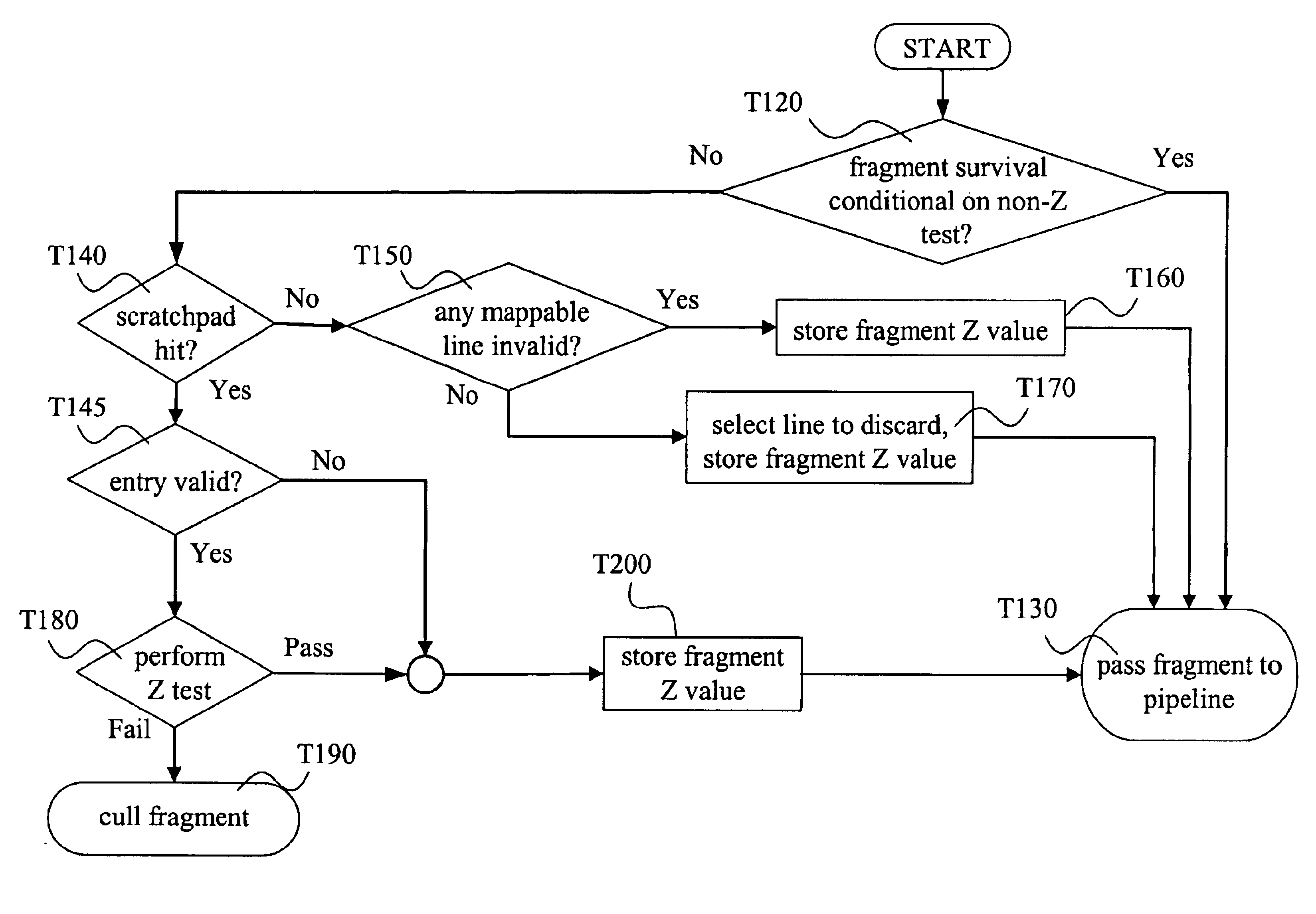

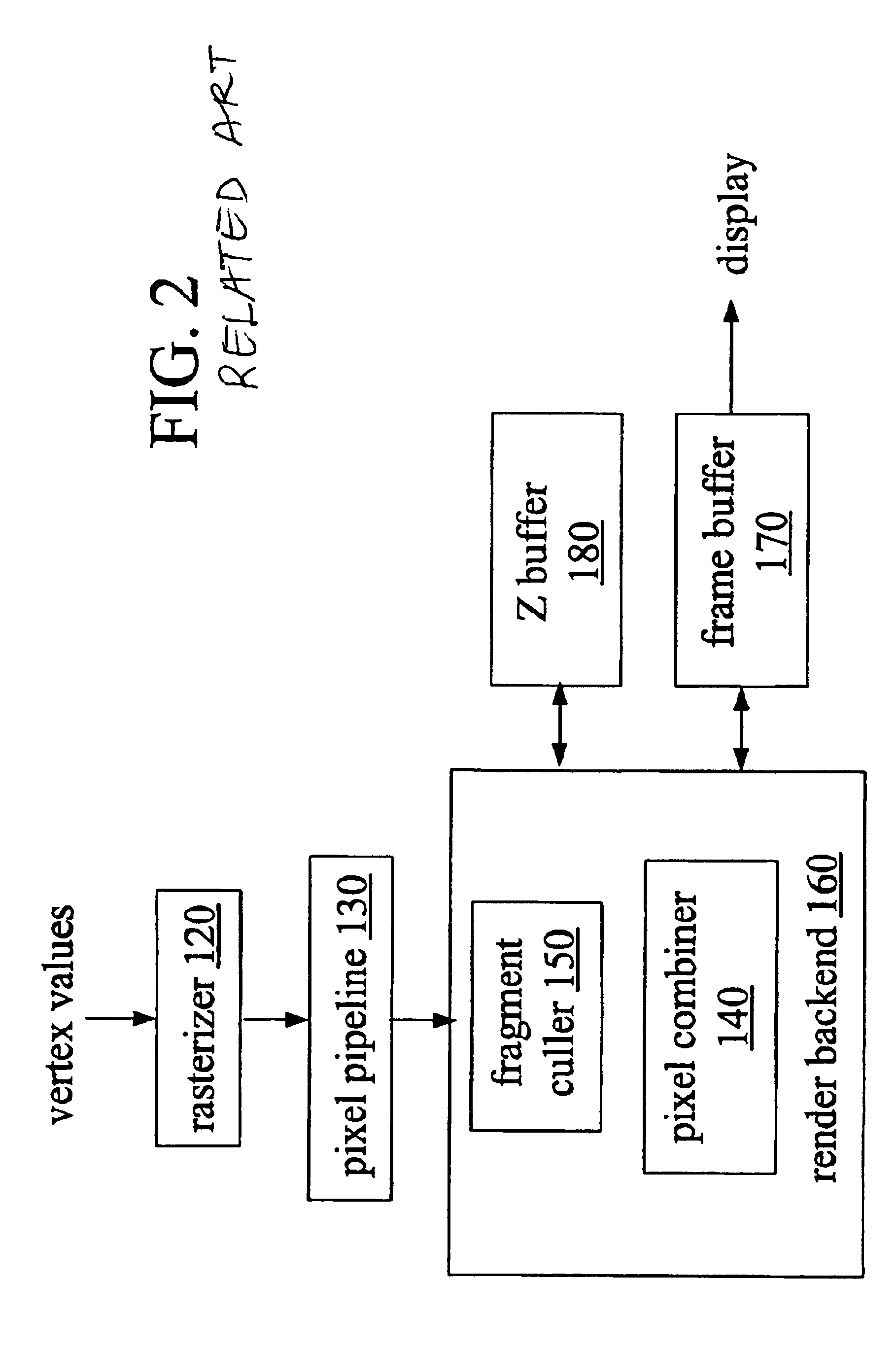

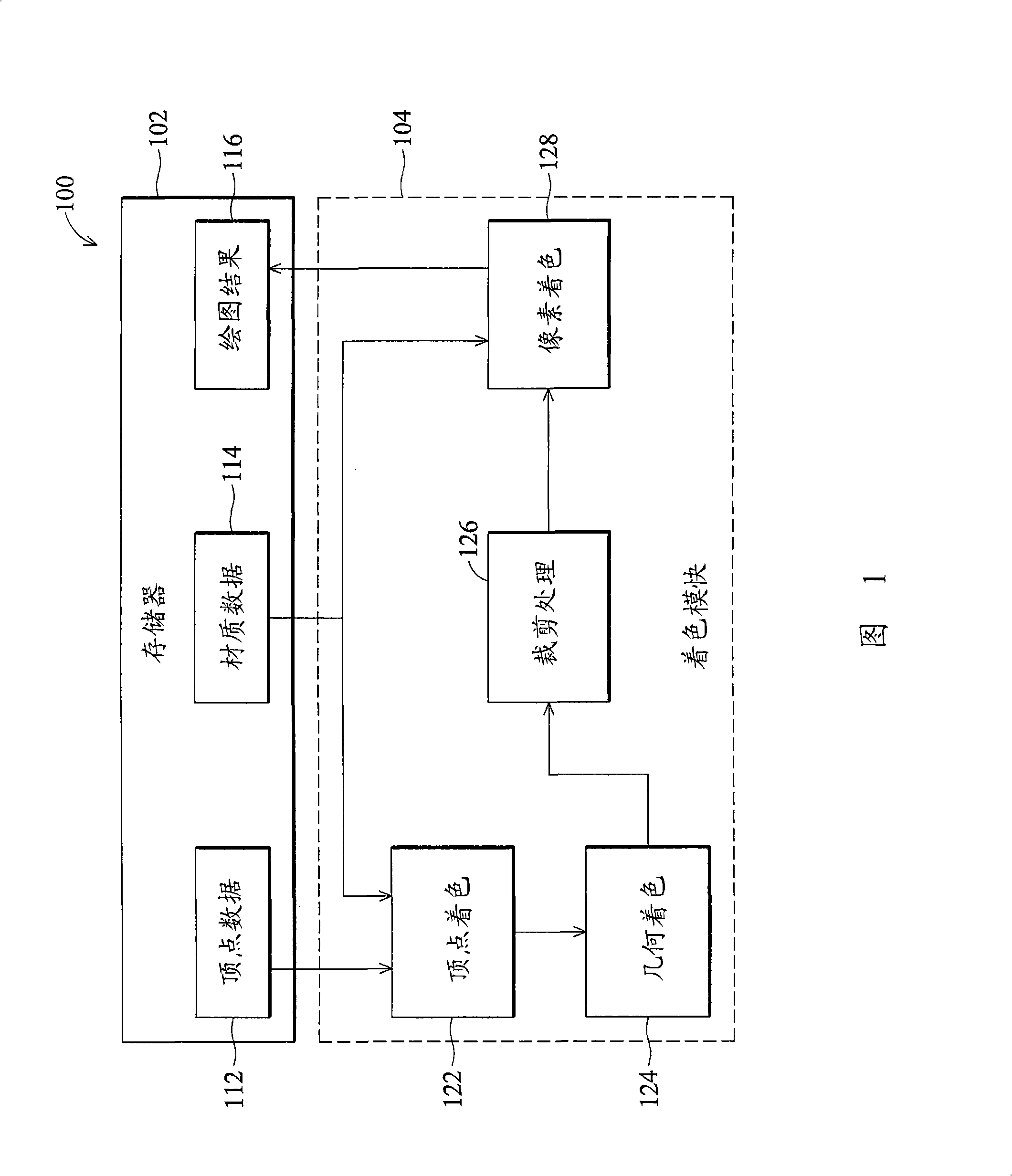

System, method, and apparatus for early culling

Inactive | US6999076B2 | Cathode-ray tube indicators | Details involving image processing hardware | Computational science | Graphics

A method of graphics processing includes determining a non-depth conditional status and an occlusion status of a fragment. Such a method may be used in culling occluded fragments before expending resources such as processing cycles and memory bus usage. In one example, a scratchpad stores depth values of robust fragments and is used for occlusion testing. Graphics architectures, and methods that include use of representative Z values, are also disclosed.

Owner:ADVANCED SILICON TECH

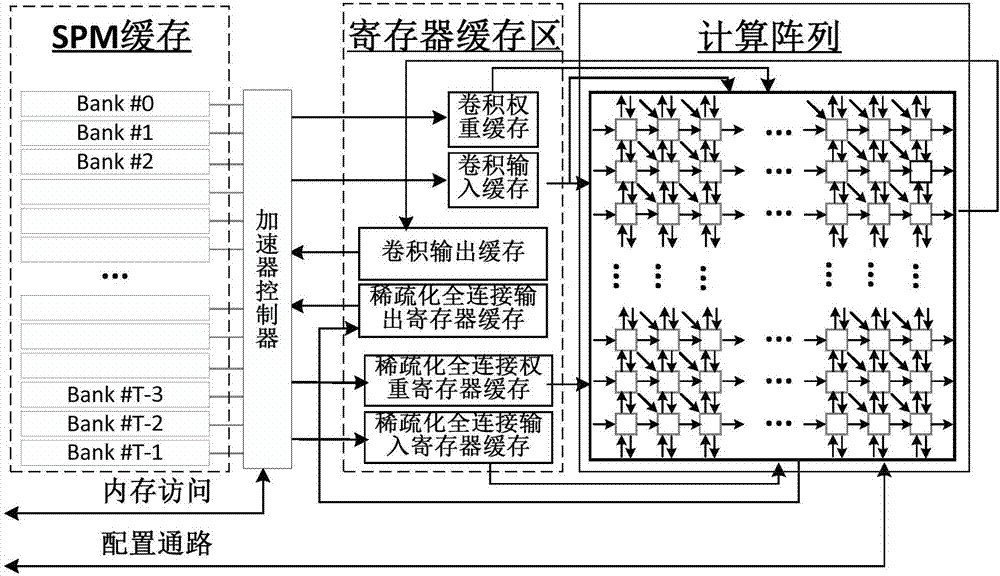

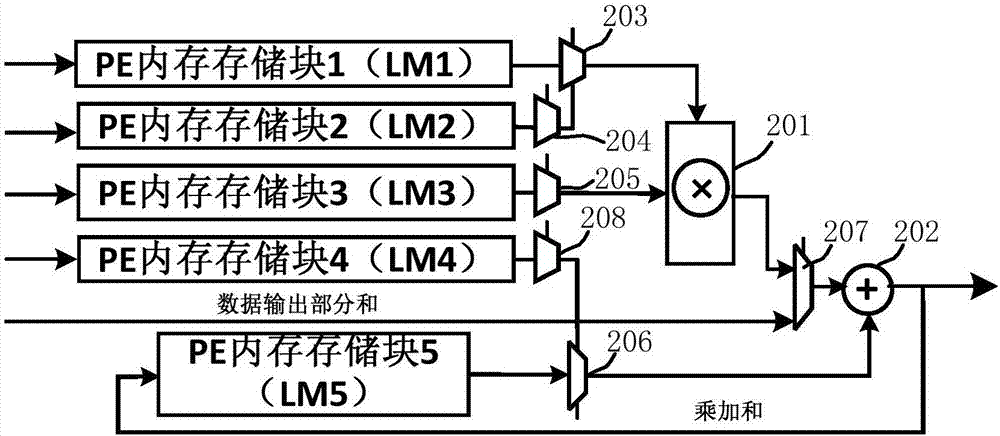

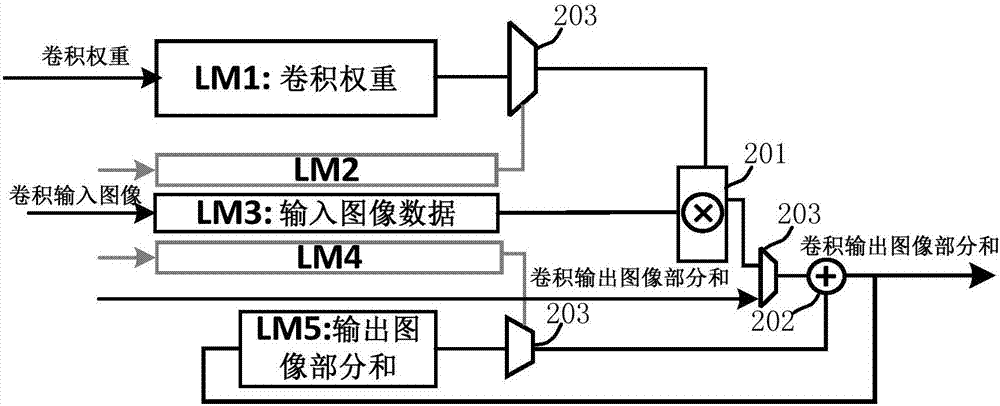

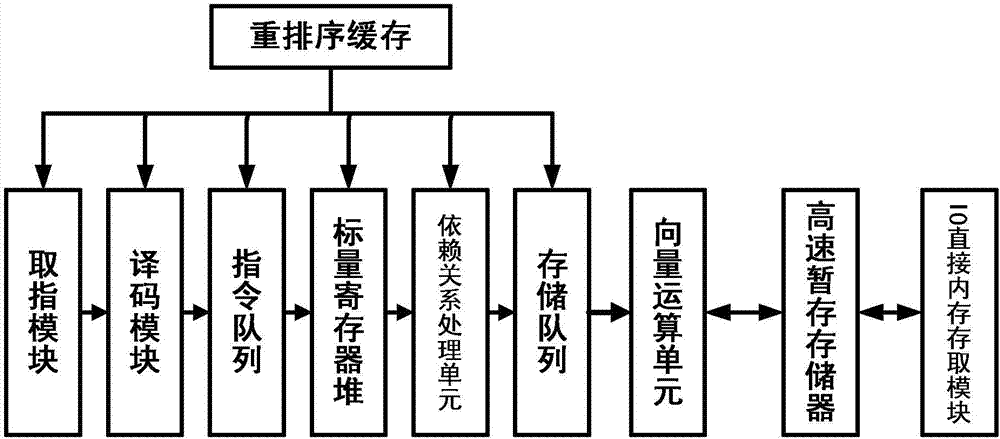

Separable array-based reconfigurable accelerator and realization method thereof

Active | CN107341544A | Increase computing resources | Increase profit | Physical realisation | Neural learning methods | Processor register | Parallel computing

The invention provides a separable array-based reconfigurable accelerator and a method of realizing it. The accelerator comprises a scratchpad memory cache area, separable calculation arrays, and a register cache area. The scratchpad memory cache area enables data reuse for convolution calculation and sparse fully connected calculation. The separable calculation arrays comprise multiple reconfigurable calculation units and are divided into a convolution calculation array and a sparse fully connected calculation array. The register cache area is a storage area formed by multiple registers; it provides input data, weight data, and the corresponding output results for both kinds of calculation. Input data and weight data for convolution are fed to the convolution calculation array, which outputs the convolution result; input data and weight data for the sparse fully connected calculation are fed to the sparse fully connected calculation array, which outputs the sparse fully connected result. Because the characteristics of the two kinds of neural network are combined, utilization of the chip's computing resources and memory bandwidth is improved.

Owner:TSINGHUA UNIV

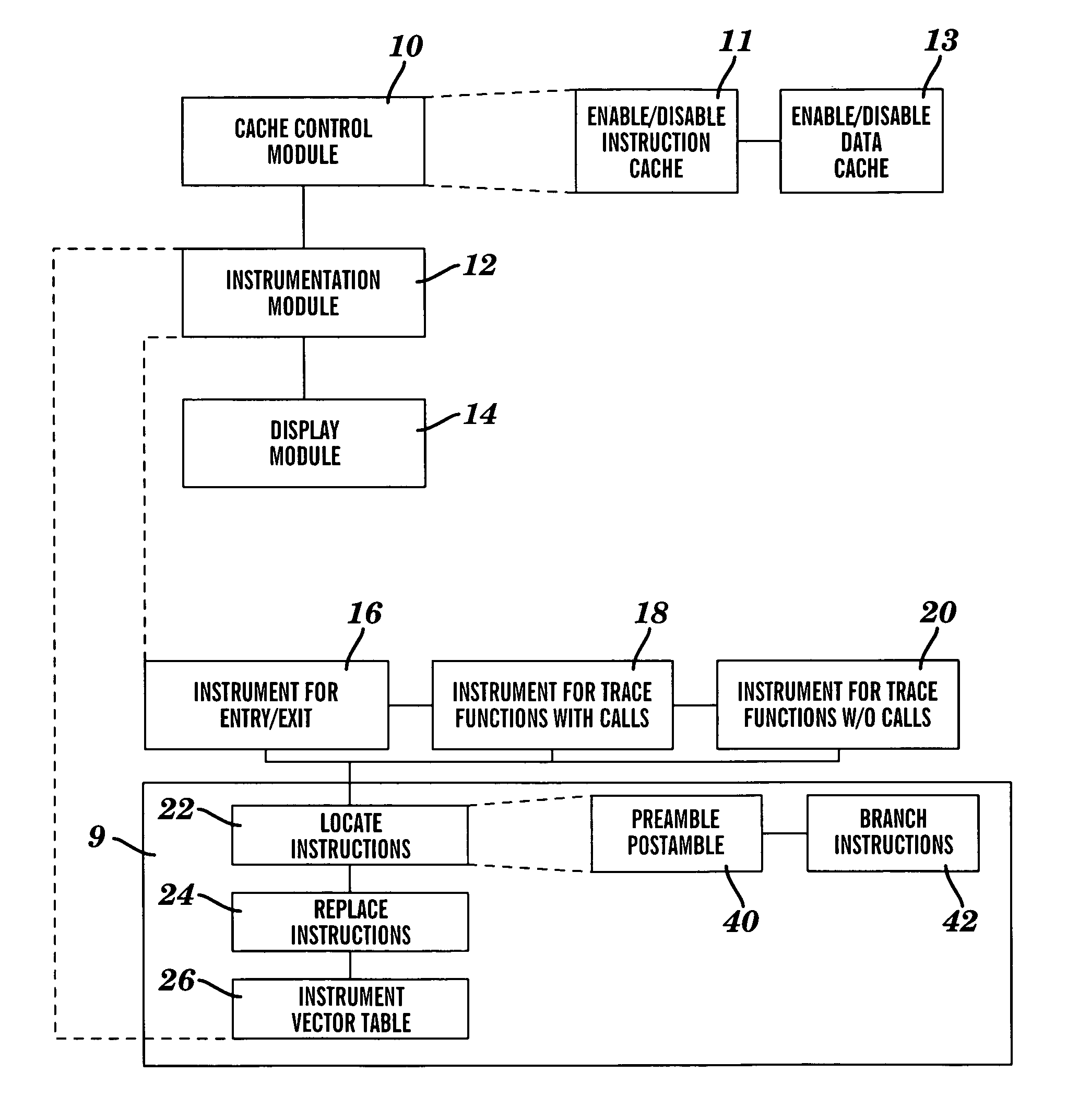

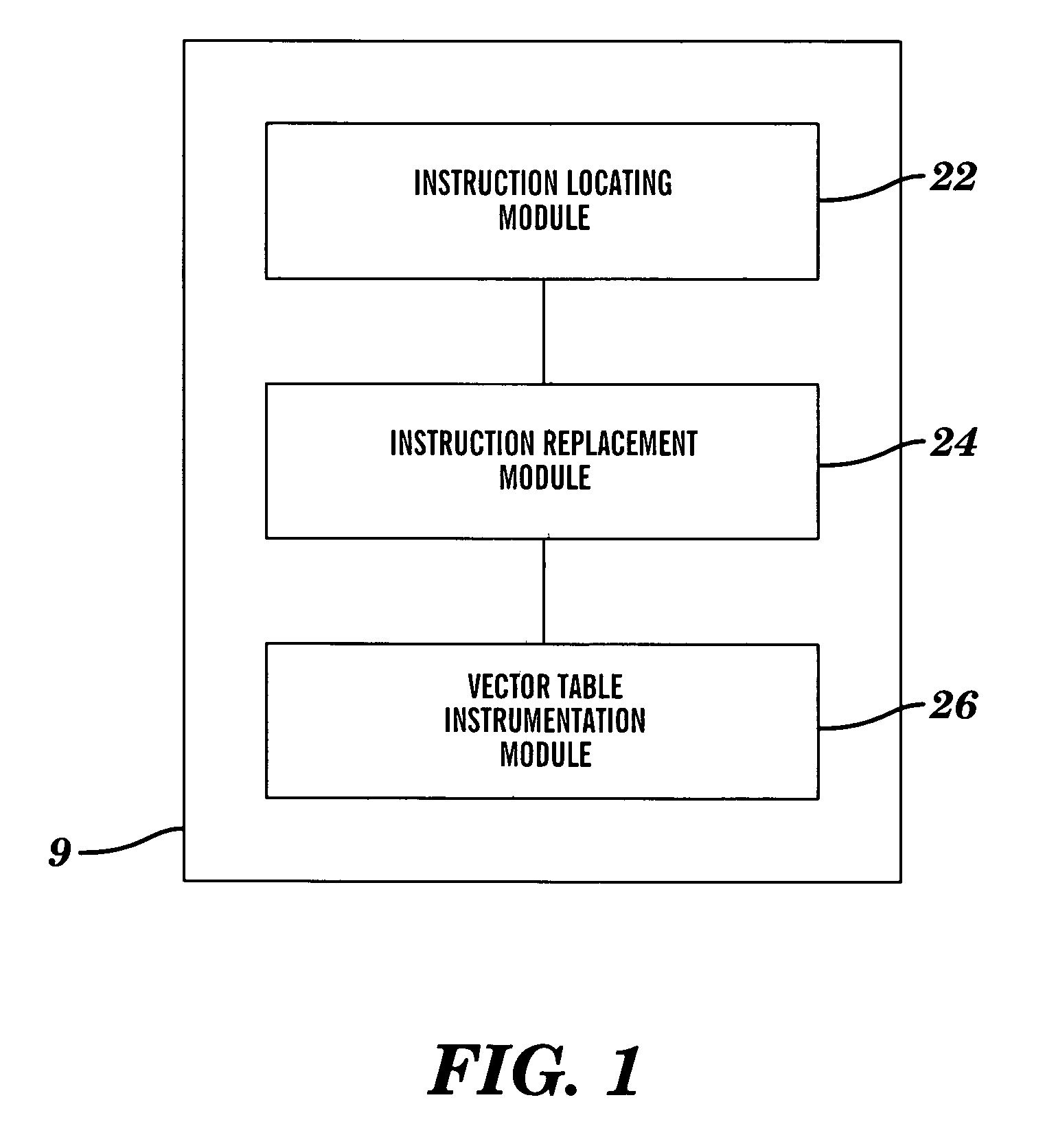

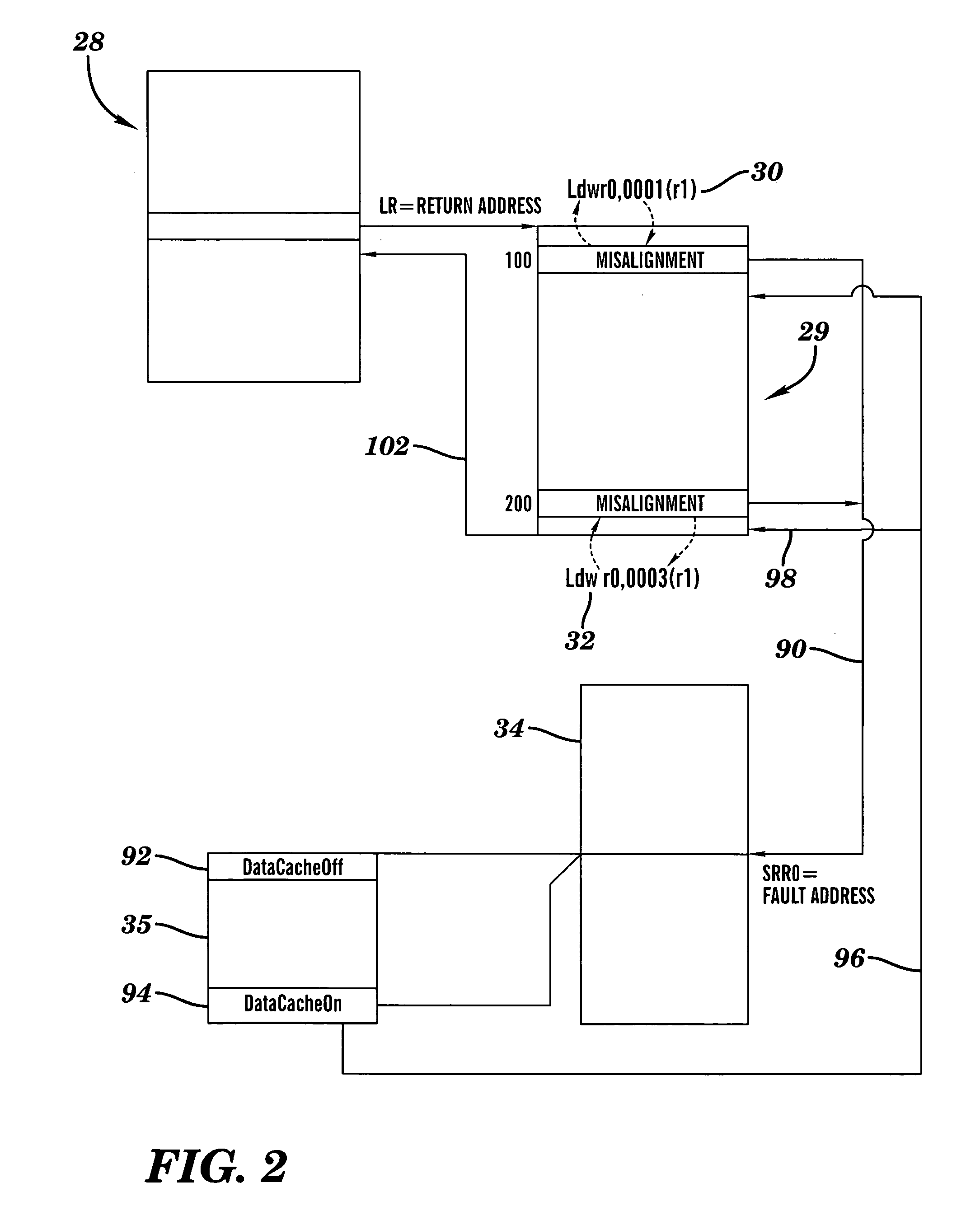

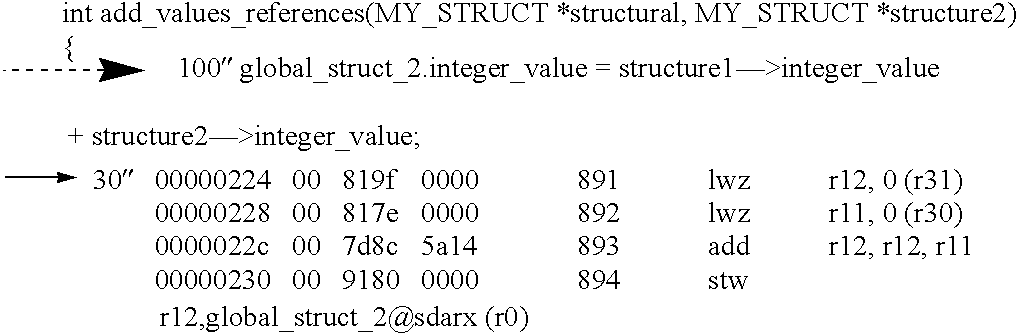

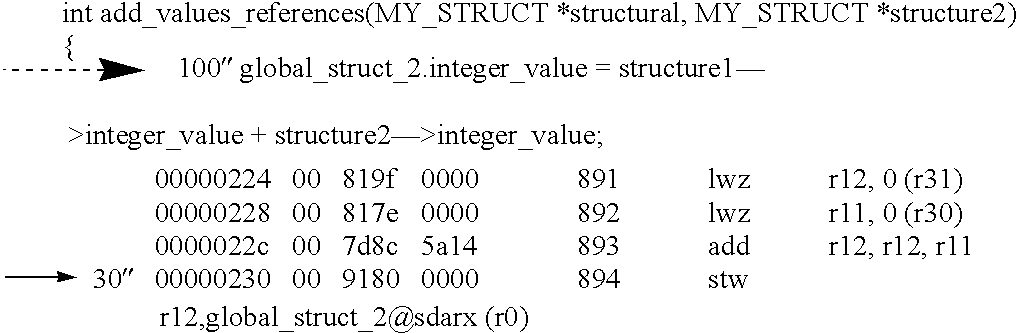

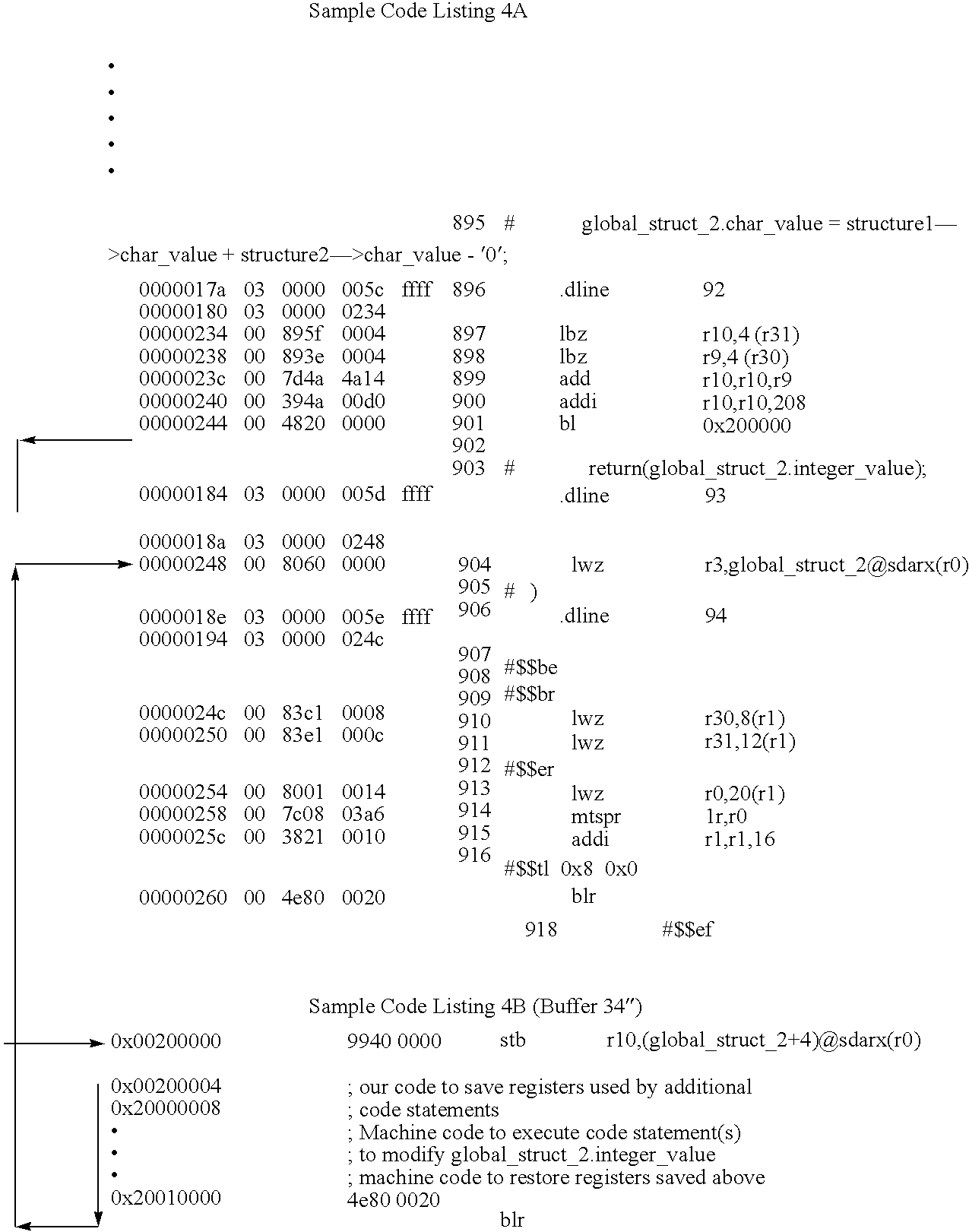

Dynamic software code instrumentation method and system

A method and system for monitoring code after it has been compiled or assembled is provided. Software code instructions, such as a function preamble and/or a data manipulation instruction, are identified using debug information and then dynamically replaced with an instruction that branches execution to a scratchpad buffer. A routine is inserted into the scratchpad buffer and includes instruction(s) to generate output to a trace buffer. Subsequent instructions in the scratchpad may execute the replaced instruction(s) and branch back to the address of the program code immediately following the original address of the replaced instruction.

Owner:WIND RIVER SYSTEMS

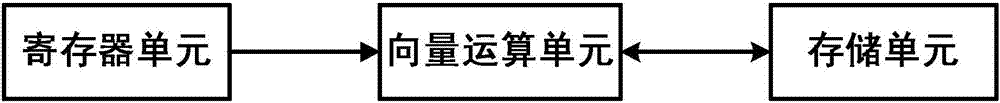

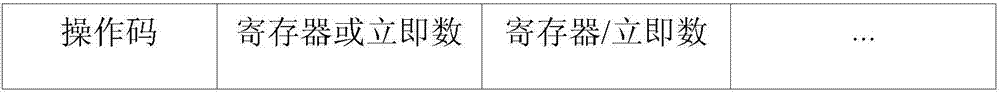

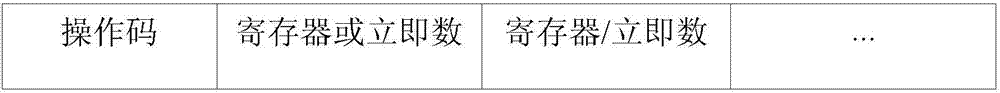

Vector calculating device

Active | CN106990940A | Improve execution performance | Simple format | Register arrangements | Complex mathematical operations | Processor register | Scratchpad memory

The invention provides a vector calculating device comprising a memory cell, a register unit, and a vector operation unit. Vectors are stored in the memory cell, and the addresses at which the vectors are stored are held in the register unit. In response to a vector operation instruction, the vector operation unit obtains a vector address from the register unit, fetches the corresponding vector from the memory cell at that address, and performs the vector operation on the fetched vector to obtain a result. Because the vector data taking part in the calculation is held temporarily in a scratchpad memory, data of different widths can be supported flexibly and effectively during vector operations, and the execution performance of tasks that include many vector calculations is improved.

Owner:CAMBRICON TECH CO LTD
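
A minimal Python sketch of the indirection described in the abstract above: registers hold scratchpad addresses, and an instruction names registers rather than raw addresses. The class `VectorUnit`, the `vadd` operation, and its explicit `length` operand are hypothetical names chosen for illustration; the patent's actual instruction format is not given in this listing.

```python
class VectorUnit:
    """Toy model: a scratchpad for vector data plus registers holding addresses."""

    def __init__(self, spad_words, num_regs=8):
        self.spad = [0] * spad_words   # scratchpad memory holding vector elements
        self.regs = [0] * num_regs     # each register stores a scratchpad address

    def store(self, reg, addr, values):
        """Place a vector in the scratchpad and record its address in a register."""
        self.regs[reg] = addr
        self.spad[addr:addr + len(values)] = values

    def vadd(self, dst, a, b, length):
        """Element-wise add of two vectors located through their registers."""
        pa, pb, pd = self.regs[a], self.regs[b], self.regs[dst]
        for i in range(length):
            self.spad[pd + i] = self.spad[pa + i] + self.spad[pb + i]

    def load(self, reg, length):
        """Read back `length` elements starting at the address held in `reg`."""
        p = self.regs[reg]
        return self.spad[p:p + length]


vu = VectorUnit(spad_words=16)
vu.store(0, 0, [1, 2, 3])        # vector A at address 0
vu.store(1, 4, [10, 20, 30])     # vector B at address 4
vu.regs[2] = 8                   # destination vector lives at address 8
vu.vadd(dst=2, a=0, b=1, length=3)
result = vu.load(2, 3)
```

Because the length travels with the instruction in this sketch, the same unit can handle vectors of different sizes without fixed-width vector registers.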

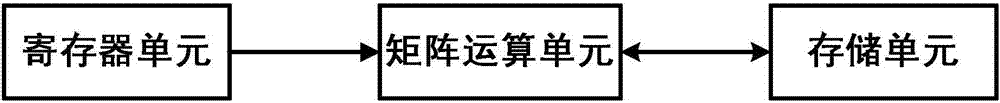

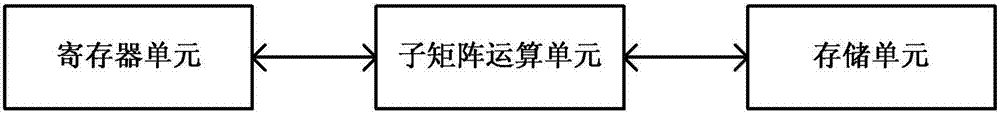

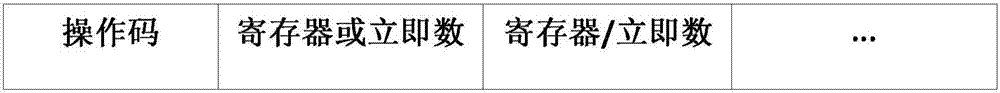

Matrix calculating device

Pending | CN106991077A | Flexible length | Simple format | Complex mathematical operations | Processor register | Parallel computing

The invention provides a matrix calculating device comprising a memory cell, a register unit, and a matrix operation unit. Matrices are stored in the memory cell, and the addresses at which the matrices are stored are held in the register unit. In response to a matrix operation instruction, the matrix operation unit obtains a matrix address from the register unit, fetches the corresponding matrix from the memory cell at that address, and performs the matrix operation on the fetched matrix to obtain a result. Because the matrix data taking part in the calculation is held temporarily in a scratchpad memory, data of different widths can be supported flexibly and effectively during matrix operations, and the execution performance of tasks that include many matrix calculations is improved.

Owner:CAMBRICON TECH CO LTD

Dynamic software code instrumentation method and system

A method and system of monitoring code after being compiled or assembled is provided. Software code instructions, such as a function preamble and / or a data manipulation instruction, are identified using debug information and then dynamically replaced with an instruction that will branch execution to a scratchpad buffer. A routine is inserted into the scratchpad buffer, and includes instruction(s) to generate output to a trace buffer. Subsequent instructions in the scratchpad may execute the replaced instruction(s) and branch back to the address of the program code immediately following the original address of the replaced instruction.

Owner:WIND RIVER SYSTEMS

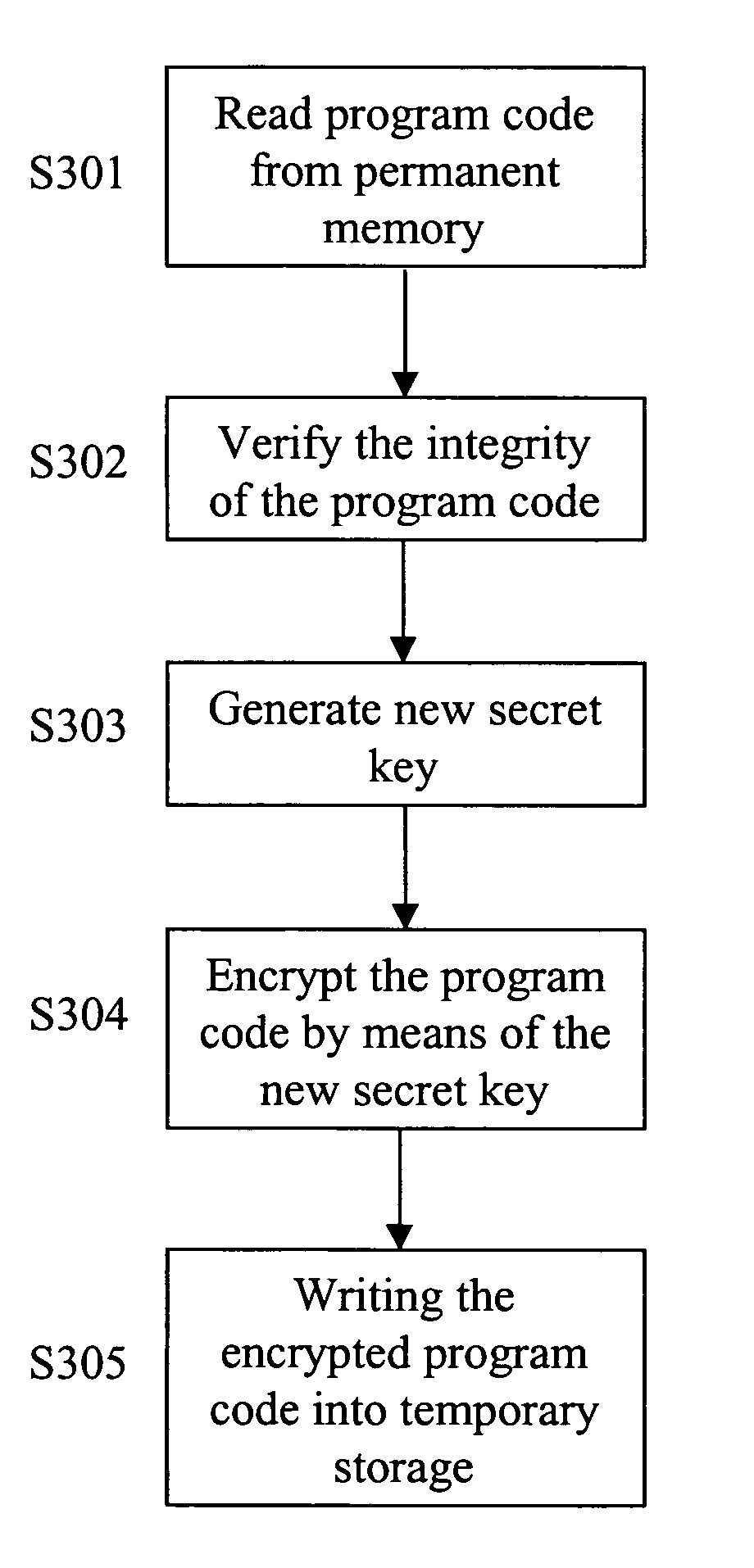

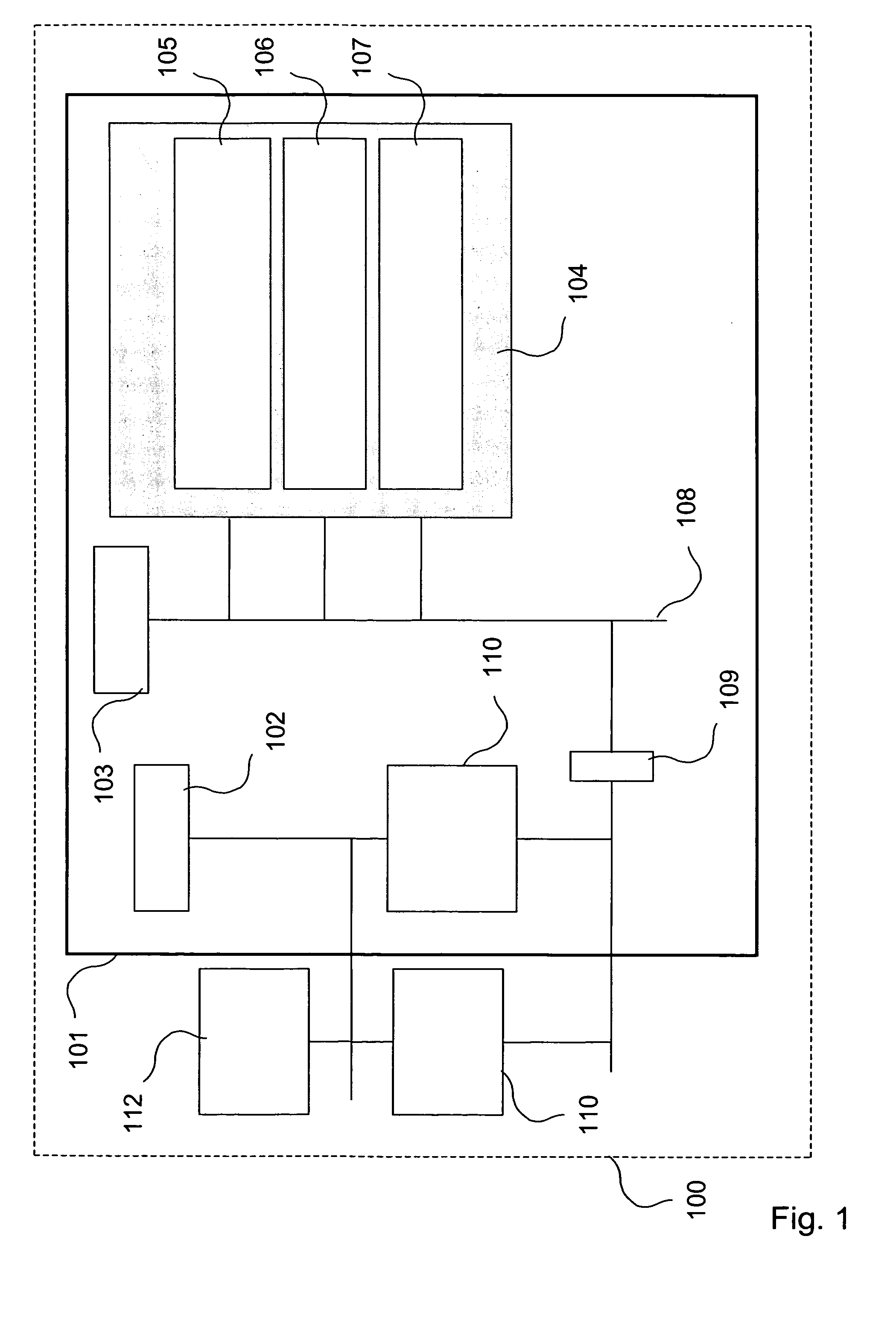

Secure mode controlled memory

Active | US7500098B2 | Less-strong algorithm | Comprehensive performance is small | Digital data processing details | Unauthorized memory use protection | Computer hardware | Mode control

The present invention relates to a method of, and a system for, enhancing data security, which data is to be executed in an electronic device (101) comprising a secure execution environment (104) to which access is restricted. A basic idea of the present invention is that, at device boot, data in the form of e.g. program code is copied from permanent memory (112) to temporary memory (110). The integrity of this program code must be verified to ensure that the program code has not been altered during the transmission between the memories. Further, a new secret key is generated in the secure execution environment. This new secret key is used by a device processor (103) to encrypt the program code to be stored in the temporary memory in order to ensure that the program code is kept secret during transmission. The device processor thereafter writes the encrypted program code into the temporary memory.

Owner:RPX CORP

Local scratchpad and data caching system

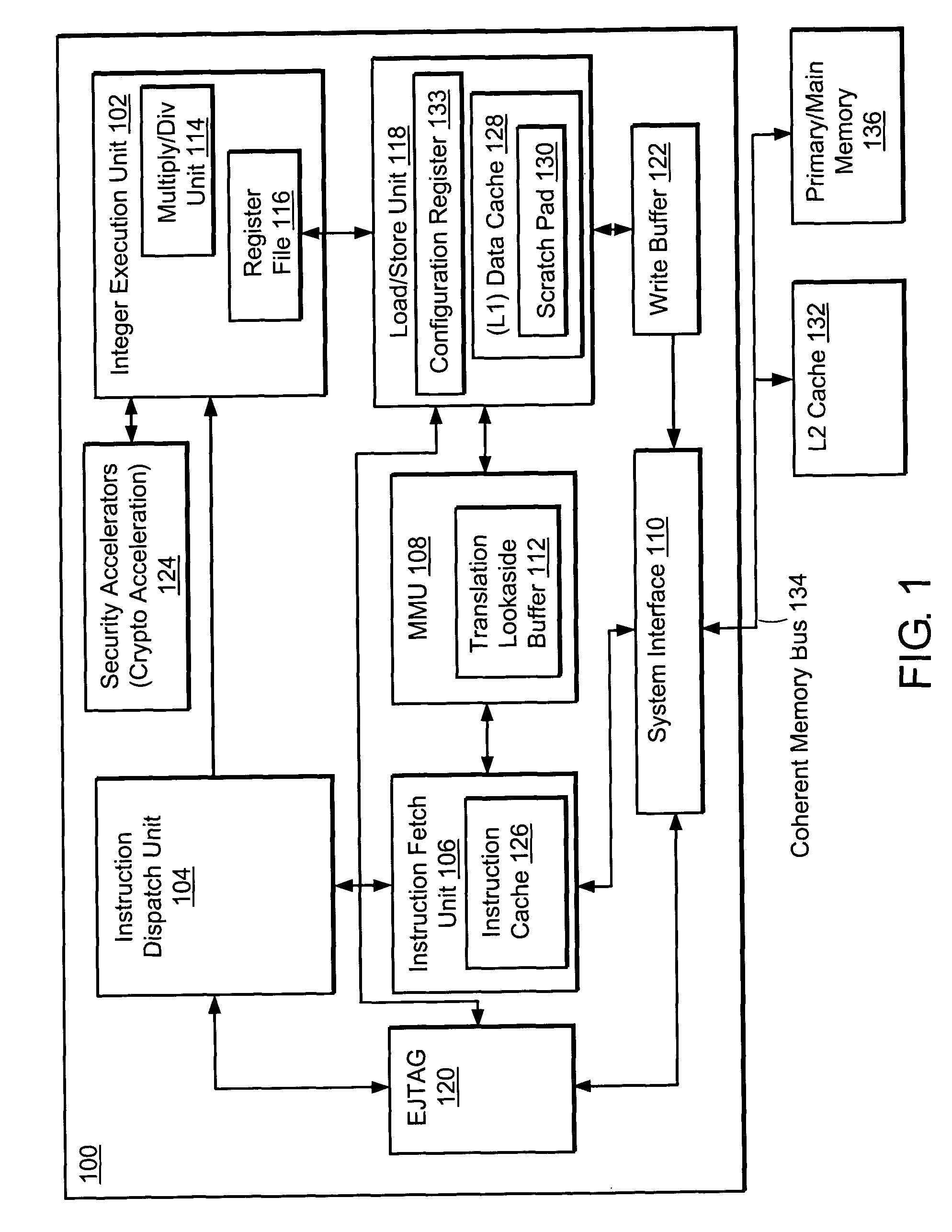

Inactive | US20060059310A1 | Memory architecture accessing/allocation | Error detection/correction | Scratchpad memory | Local memories

A RISC-type processor includes a main register file and a data cache. The data cache can be partitioned to include a local memory, the size of which can be changed dynamically, on a cache-block basis, while the processor is executing instructions that use the main register file. The local memory can act as an additional register file for the processor and can reside at a virtual address. The local memory can be further partitioned for prefetching data from a non-cacheable address to be stored/loaded into the main register file.

Owner:MARVELL ASIA PTE LTD

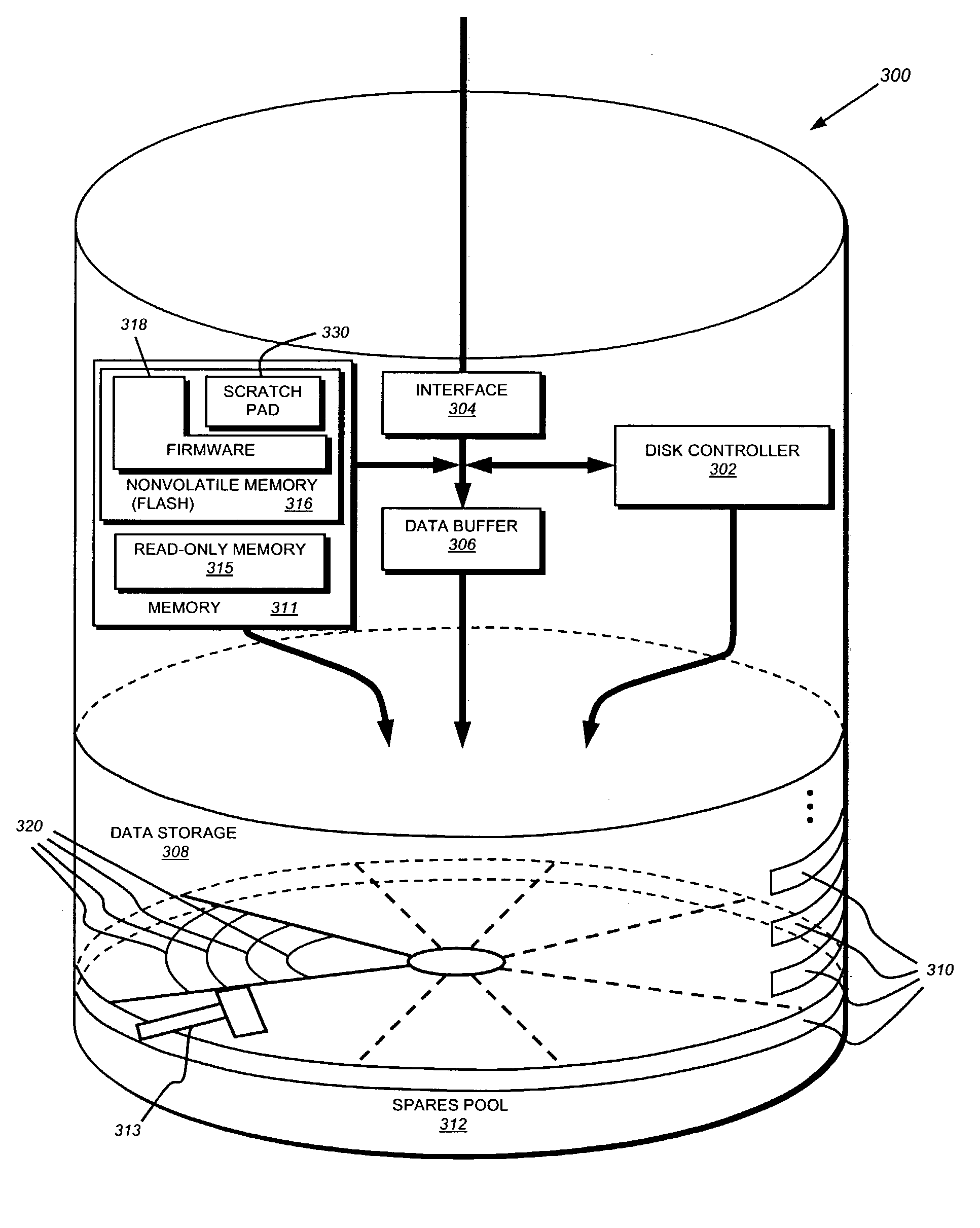

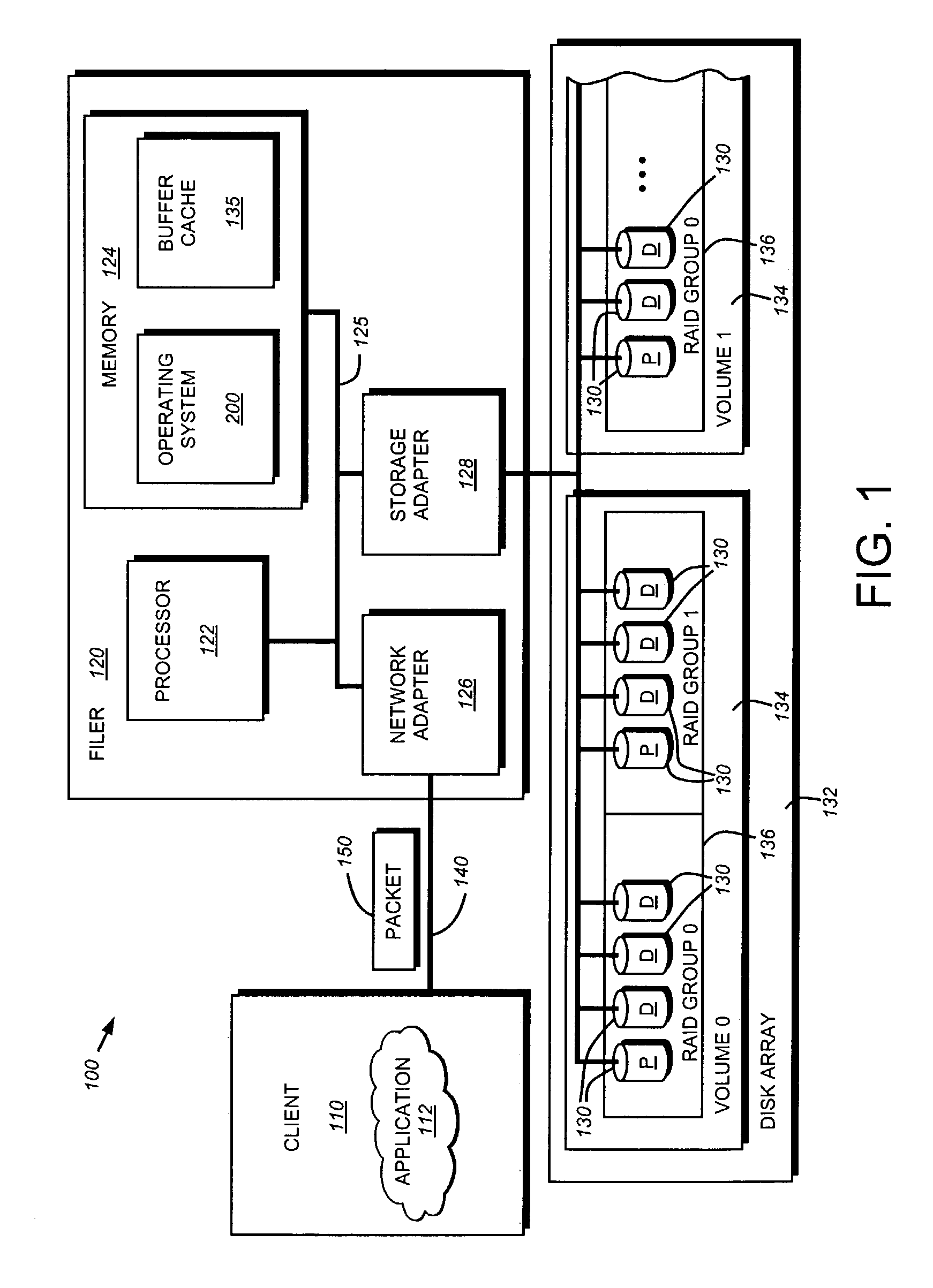

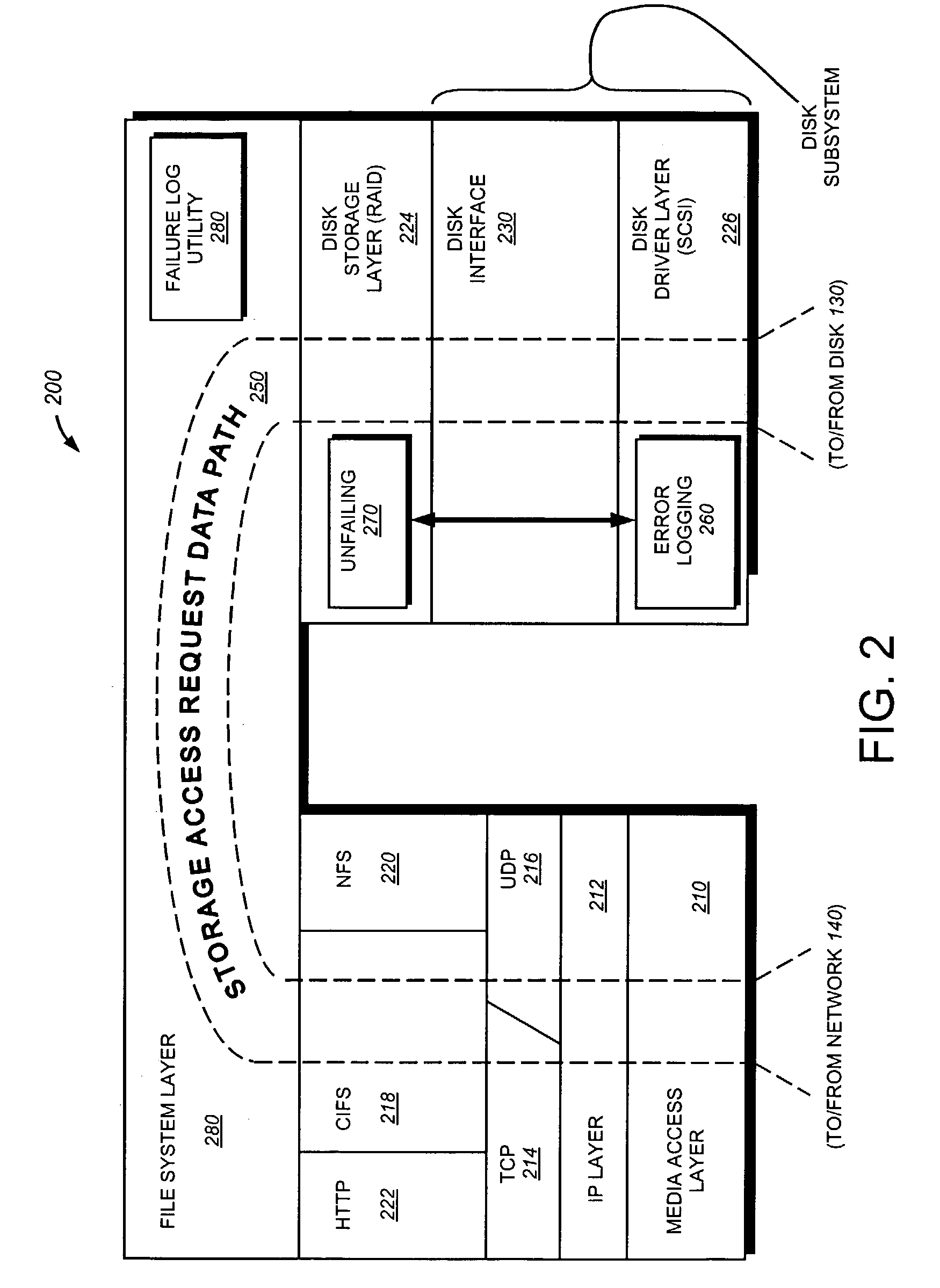

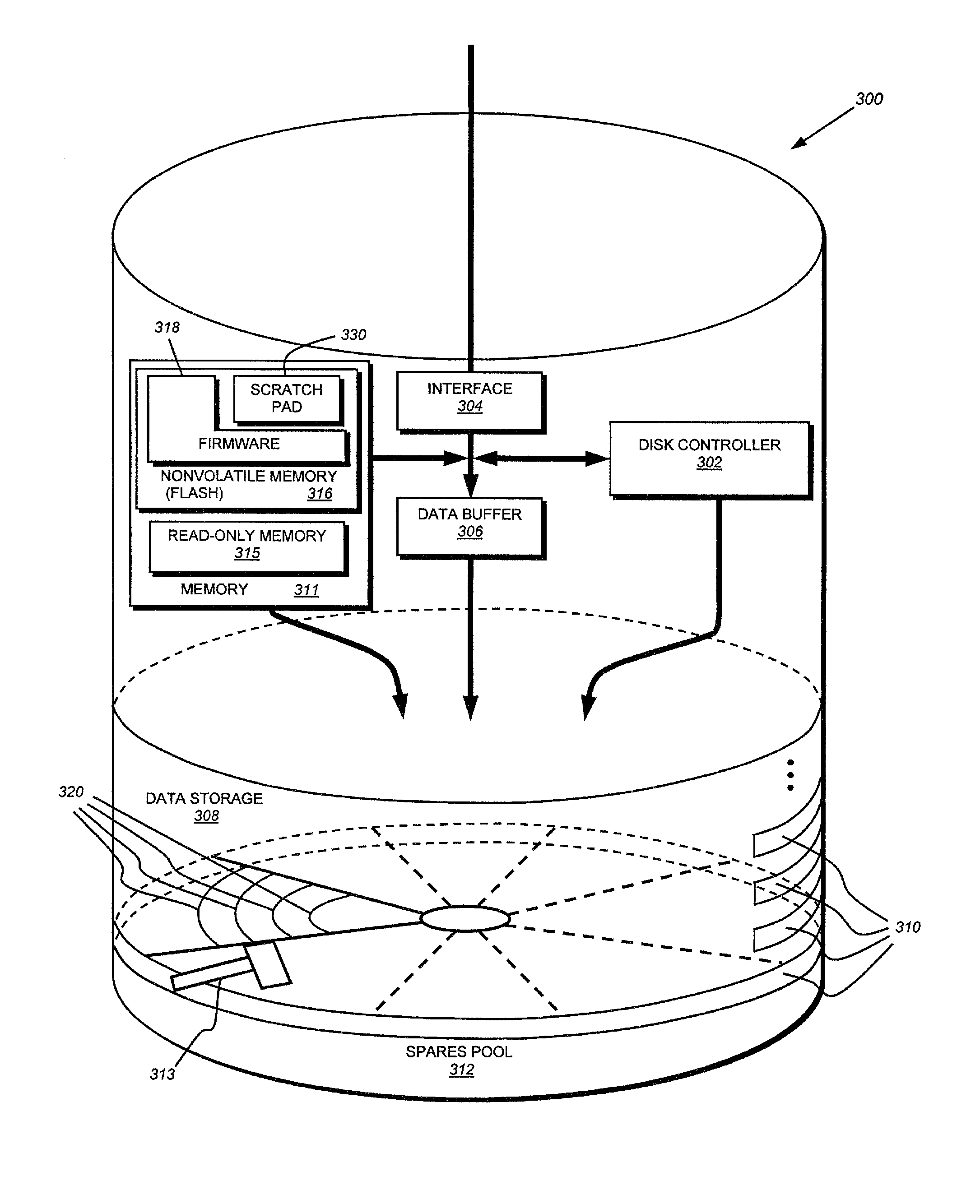

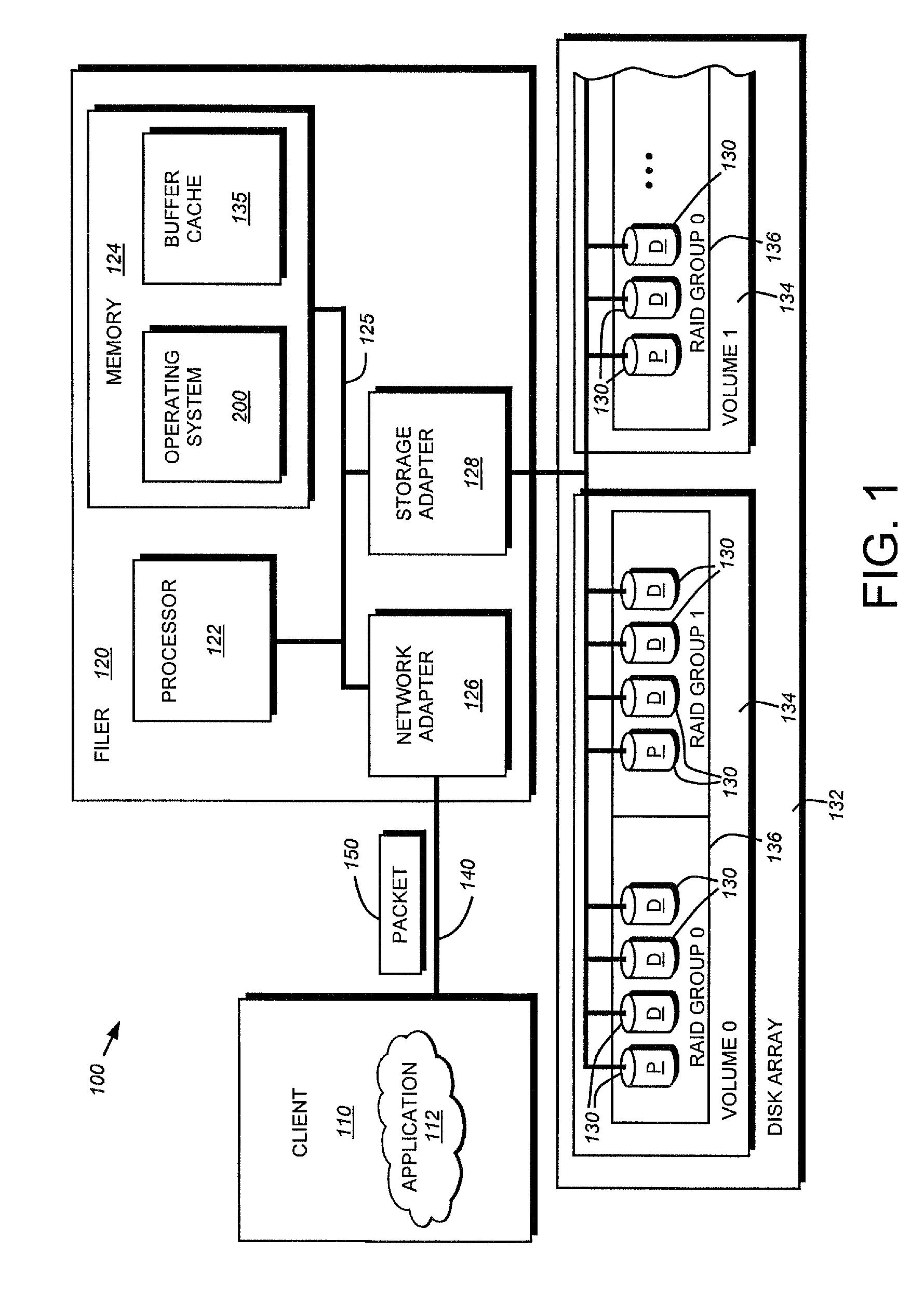

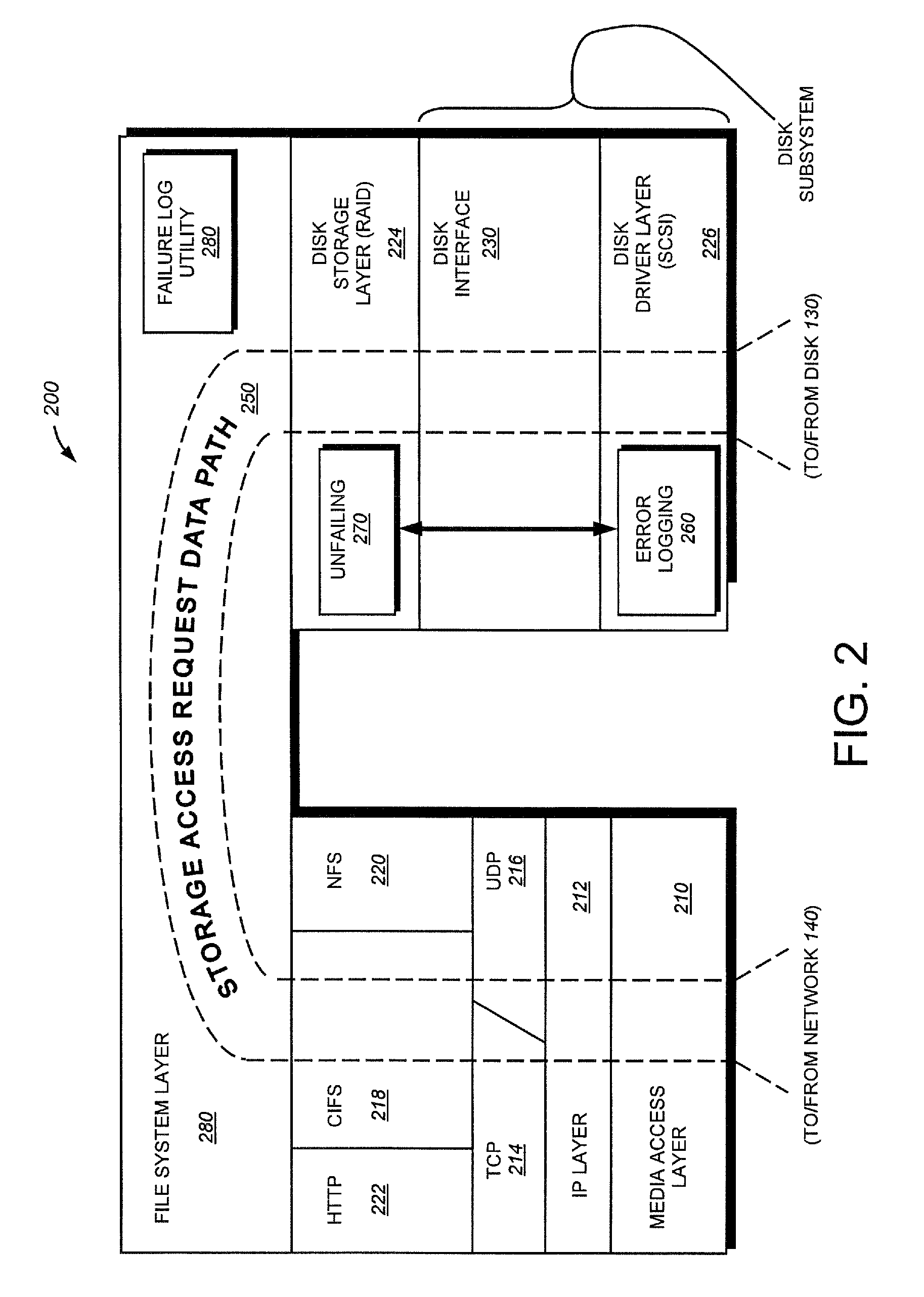

System and method for logging disk failure analysis in disk nonvolatile memory

Active | US7293203B1 | Reduce need | Error detection/correction | Record information storage | Random access memory | Failure analysis

A system and method for logging and storing failure analysis information on a disk drive, so that the information is readily and reliably available to vendor customer service and other interested parties, is provided. The information, in an illustrative embodiment, is stored on a nonvolatile (flash) random access memory (RAM), found generally in most types of disk drives for storage of updateable disk drive firmware. A known location of limited size is defined in the flash RAM to form a scratchpad. This scratchpad is a blank area of known addresses, formed during the original firmware download onto the memory, and is itself free of firmware code. The scratchpad is large enough to hold a series of failure codes, written as a non-erasable list as failures/errors (and user/administrator attempts to unfail the disk) are logged. The log of failures always travels with the drive and can be read by the vendor to determine the general nature of the most recent failure(s) and whether these failures represent a problematic history for the drive.

Owner:NETWORK APPLIANCE INC
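
The append-only behaviour described in the abstract above can be sketched in Python. This is an illustration under stated assumptions, not the patented implementation: the one-byte failure codes, the `0xFF` erased-cell marker (assumed here, as is common for erased flash), and the names `FailureLog`, `append`, and `history` are all hypothetical.

```python
ERASED = 0xFF  # assumed value of an unwritten flash cell


class FailureLog:
    """Fixed-size scratchpad region holding an append-only list of failure codes."""

    def __init__(self, size):
        # The region starts blank, as if carved out during firmware download.
        self.cells = bytearray([ERASED] * size)

    def append(self, code):
        """Write a code into the first blank cell; existing entries are never erased."""
        if not 0 <= code < ERASED:
            raise ValueError("code must fit in one byte and differ from ERASED")
        for i, cell in enumerate(self.cells):
            if cell == ERASED:
                self.cells[i] = code
                return i
        raise OverflowError("scratchpad full")

    def history(self):
        """Return all logged codes, oldest first."""
        return [c for c in self.cells if c != ERASED]


log = FailureLog(size=4)
log.append(0x12)   # hypothetical code: a media error
log.append(0x34)   # hypothetical code: an administrator "unfail" attempt
codes = log.history()
```

Since the region lives on the drive itself, whoever later reads it sees the full sequence in order, which is the property the abstract emphasizes.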

System and method for logging disk failure analysis in disk nonvolatile memory

Inactive | US7451355B1 | Error detection/correction | Record information storage | Random access memory | Failure analysis

A system and method for logging and storing failure analysis information on a disk drive, so that the information is readily and reliably available to vendor customer service and other interested parties, is provided. The information, in an illustrative embodiment, is stored on a nonvolatile (flash) random access memory (RAM), found generally in most types of disk drives for storage of updateable disk drive firmware. A known location of limited size is defined in the flash RAM to form a scratchpad. This scratchpad is a blank area of known addresses, formed during the original firmware download onto the memory, and is itself free of firmware code. The scratchpad is large enough to hold a series of failure codes, written as a non-erasable list as failures/errors (and user/administrator attempts to unfail the disk) are logged. The log of failures always travels with the drive and can be read by the vendor to determine the general nature of the most recent failure(s) and whether these failures represent a problematic history for the drive.

Owner:NETWORK APPLIANCE INC

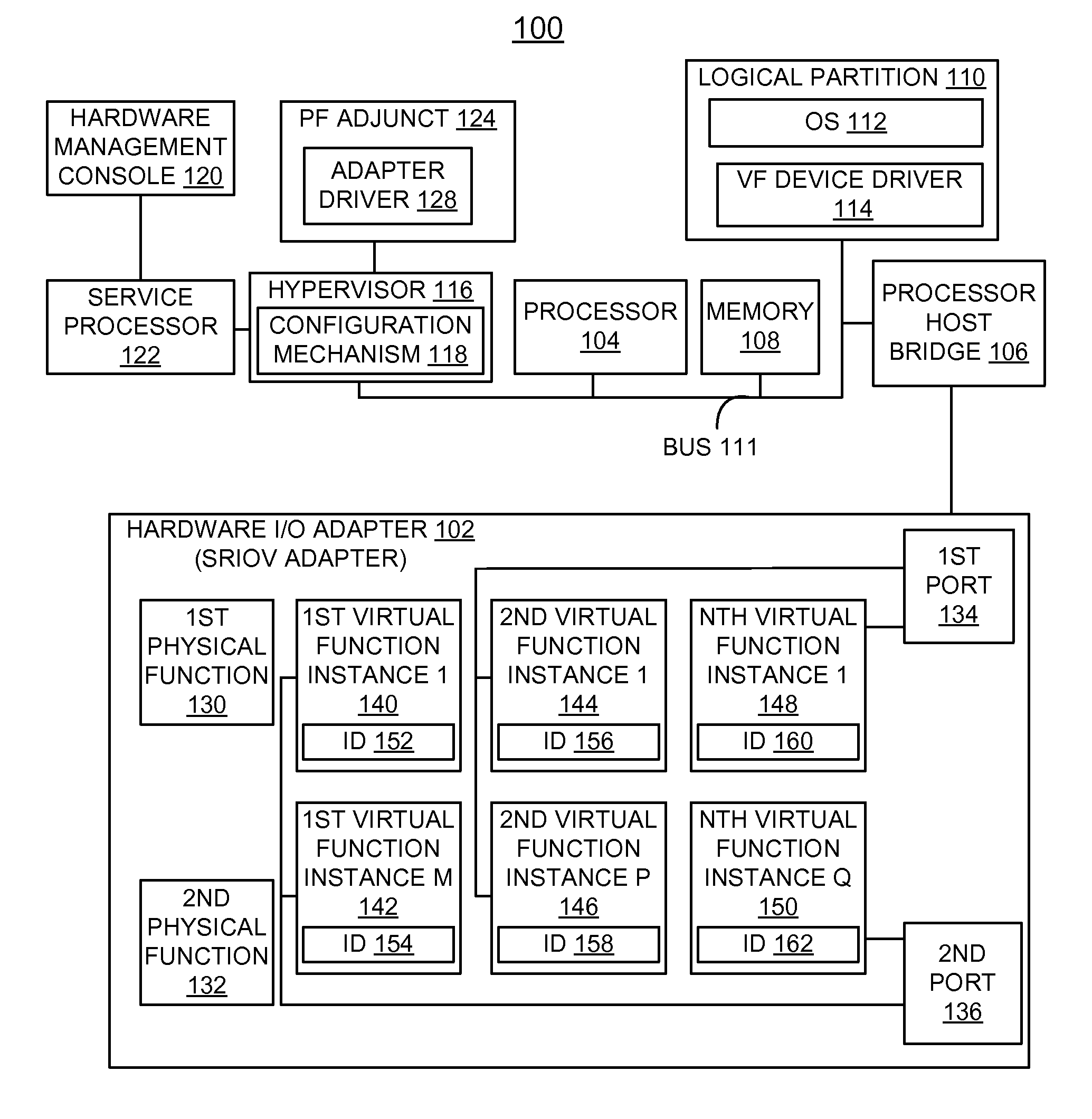

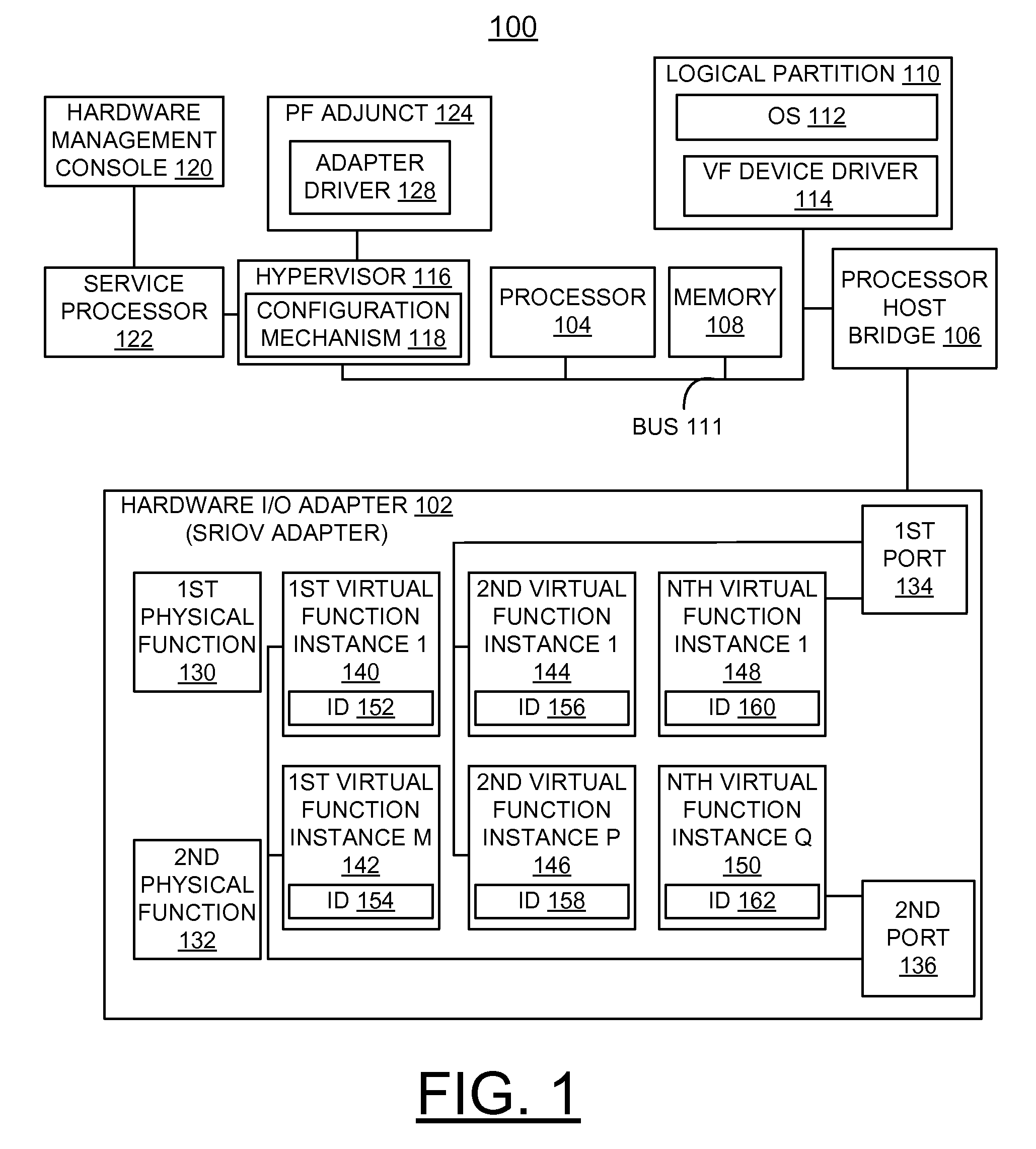

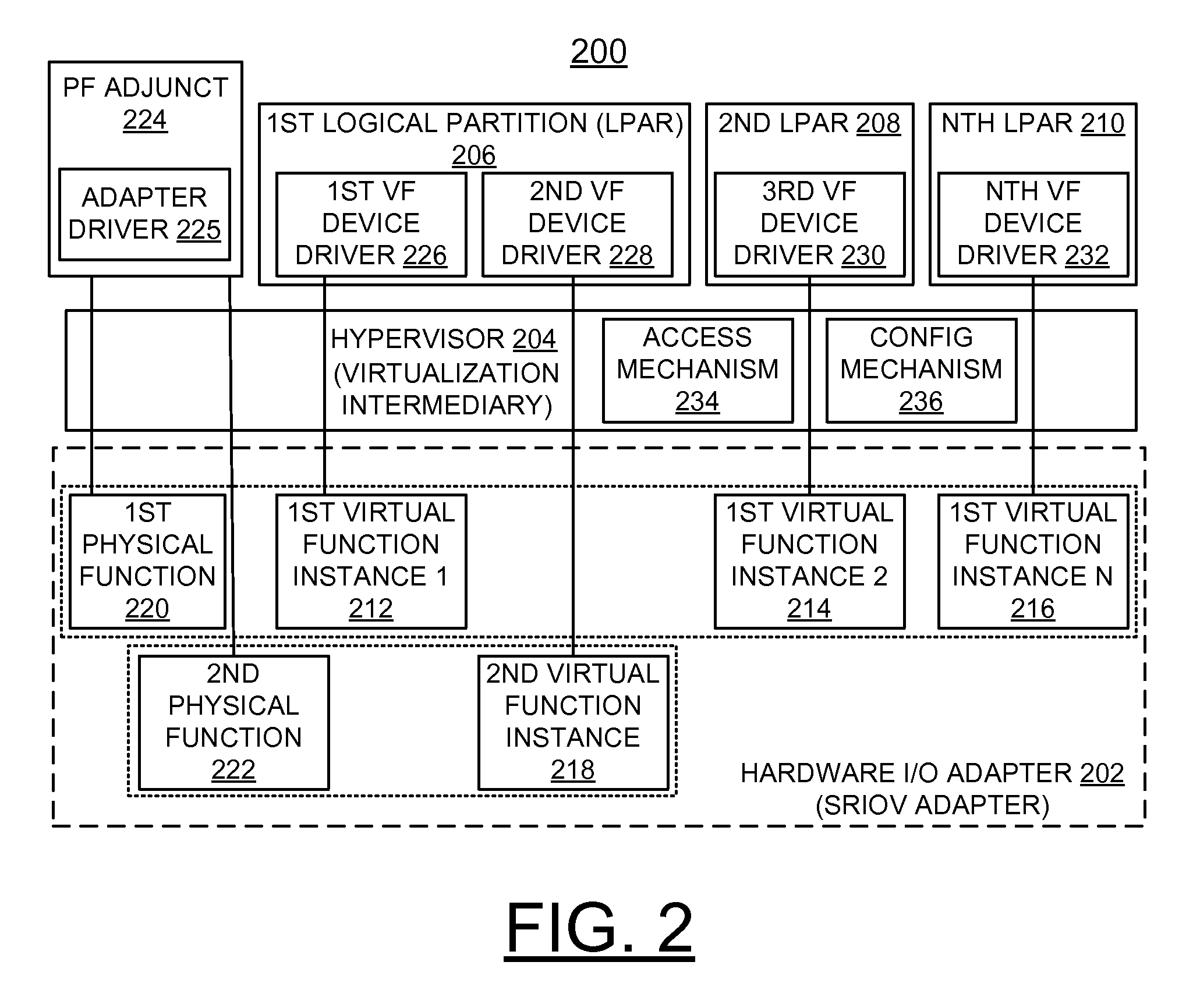

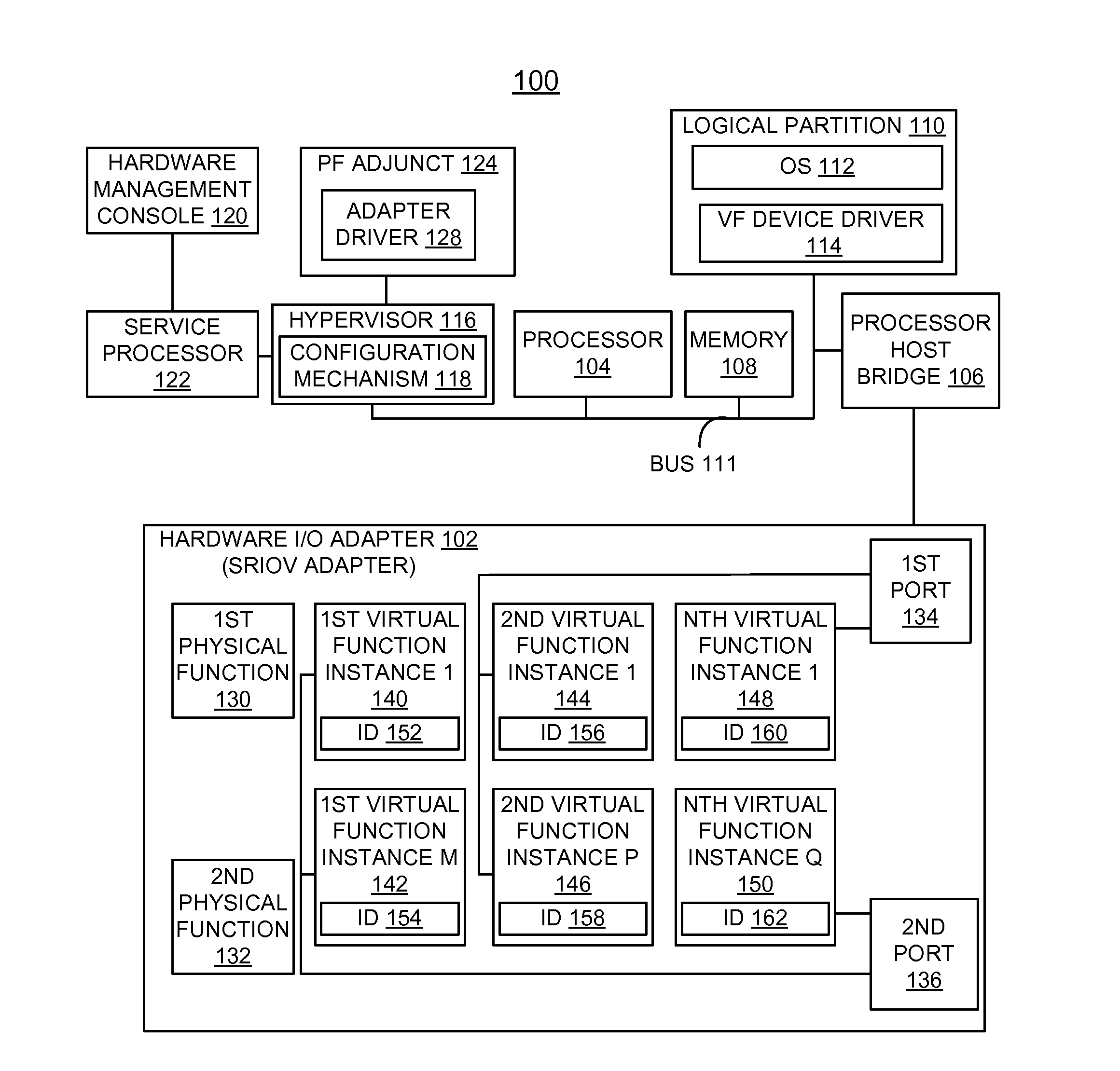

Implementing concurrent device driver maintenance and recovery for an SRIOV adapter in a virtualized system

Inactive | US9317317B2 | Without negative effect | Resource allocation | Software simulation/interpretation/emulation | Start up time | Computerized system

A method, system and computer program product are provided for implementing concurrent adapter driver maintenance and recovery for a Single Root Input/Output Virtualization (SRIOV) adapter in a computer system. At start-up time, an adapter driver configures the adapter and each of a set of virtual functions (VFs). The adapter driver writes critical adapter and VF configuration data to a scratchpad buffer. When device driver maintenance is needed, such as to load updated adapter driver firmware, all VF drivers are held off temporarily, the current adapter driver is detached, and the adapter driver is reloaded with the updated driver firmware. The adapter driver is then restarted with the updated firmware and performs a reinitialization process, restoring the existing adapter and VF configuration using values read from the scratchpad buffer.

Owner:IBM CORP

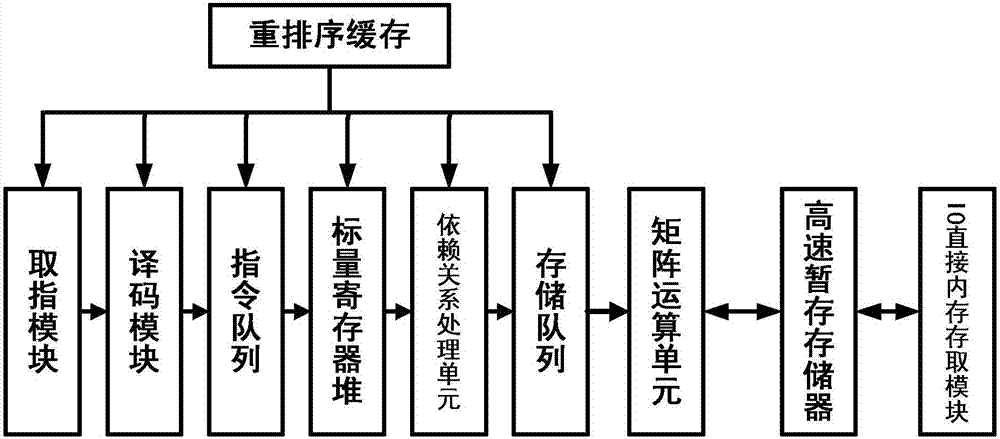

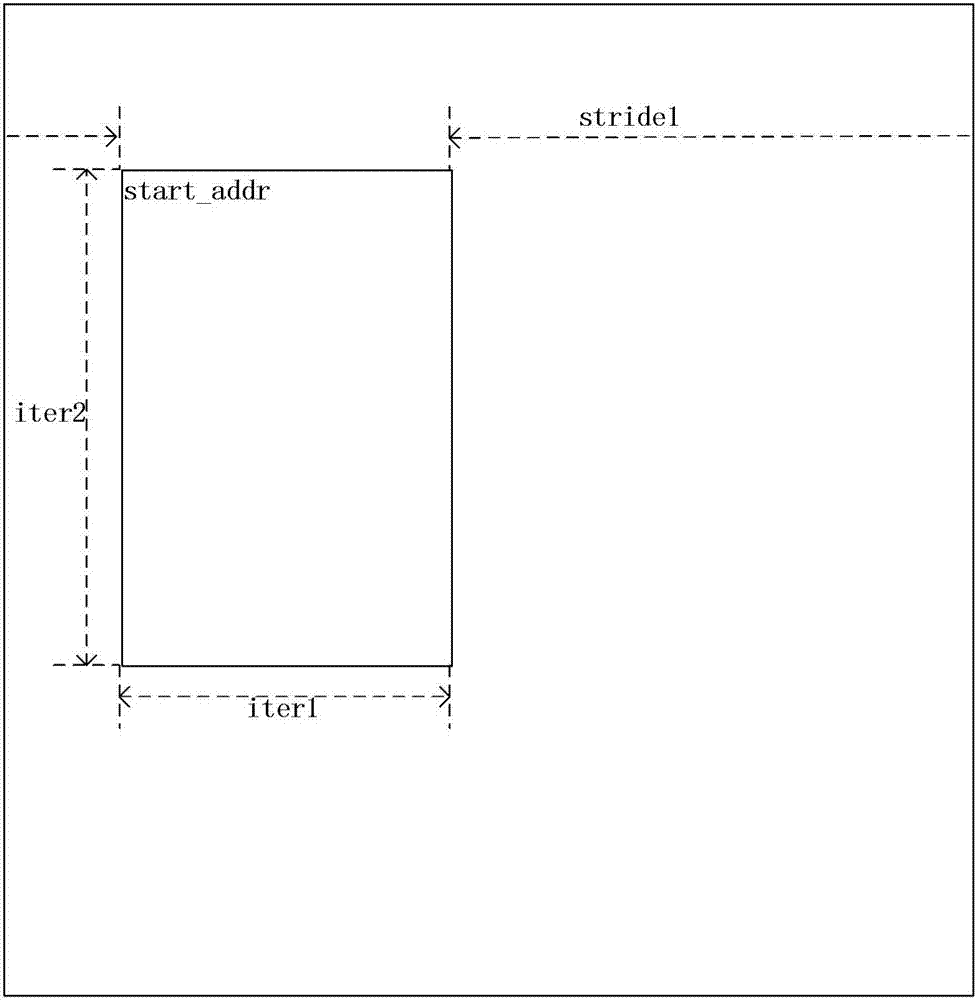

Sub-matrix operation device and method

Active | CN107305538A | Flexible length | Simple format | Complex mathematical operations | Processor register | Parallel computing

The invention provides a sub-matrix operation device and method. The device includes a storage unit, a register unit and a sub-matrix operation unit. Sub-matrix data are stored in the storage unit. Sub-matrix information is stored in the register unit. The sub-matrix operation unit acquires the sub-matrix information in the register unit according to a sub-matrix operation instruction, acquires the corresponding sub-matrix data in the storage unit according to the sub-matrix information, and then carries out sub-matrix operations according to the acquired sub-matrix data to obtain a sub-matrix operation result. According to the device and method, the sub-matrix data involved in computation is temporarily stored on a high-speed scratchpad memory, the device can be enabled to more flexibly and effectively support data with different widths in sub-matrix operation processes, and the execution performance of a computation task including a large number of sub-matrices is improved.

Owner:CAMBRICON TECH CO LTD

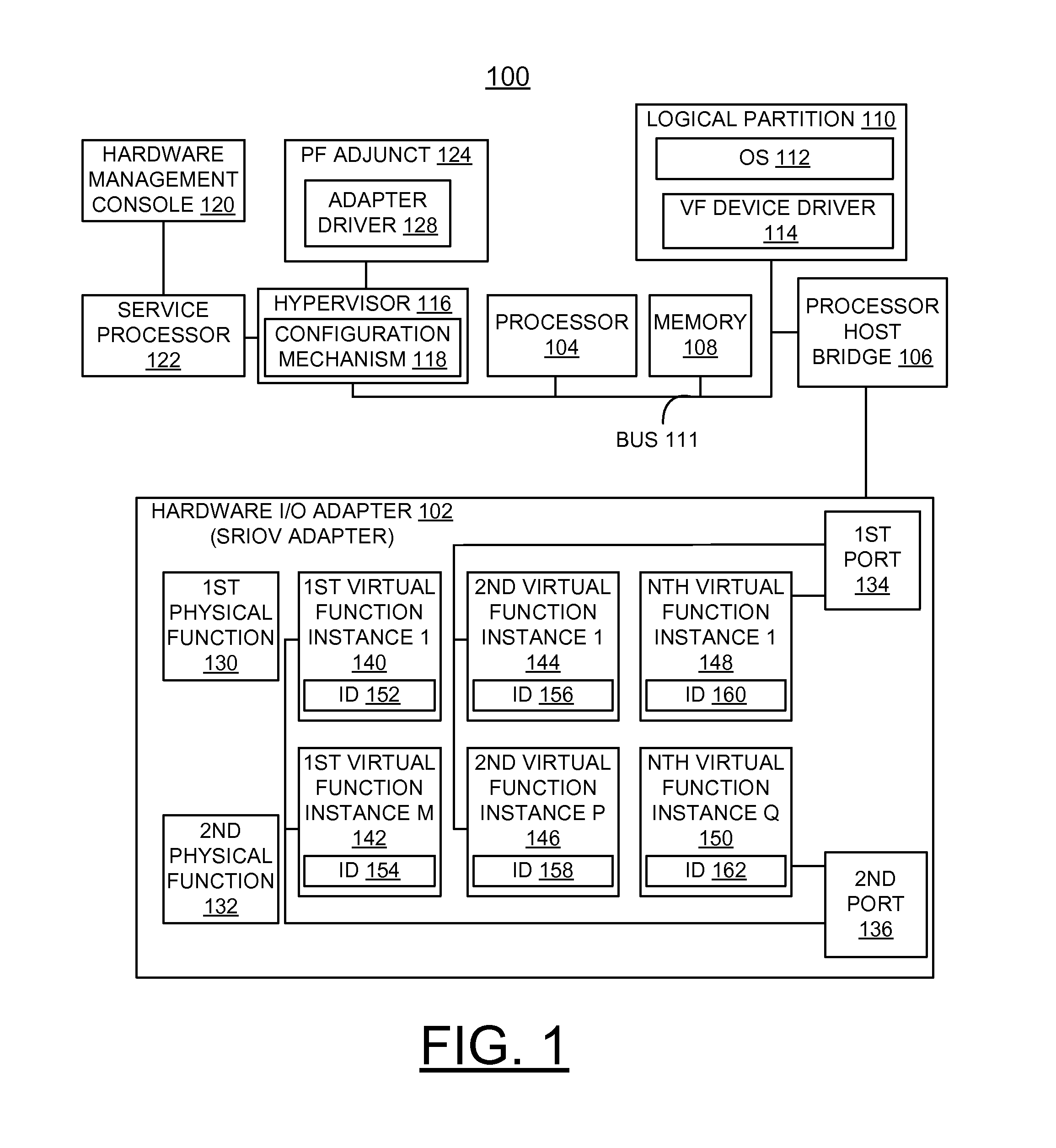

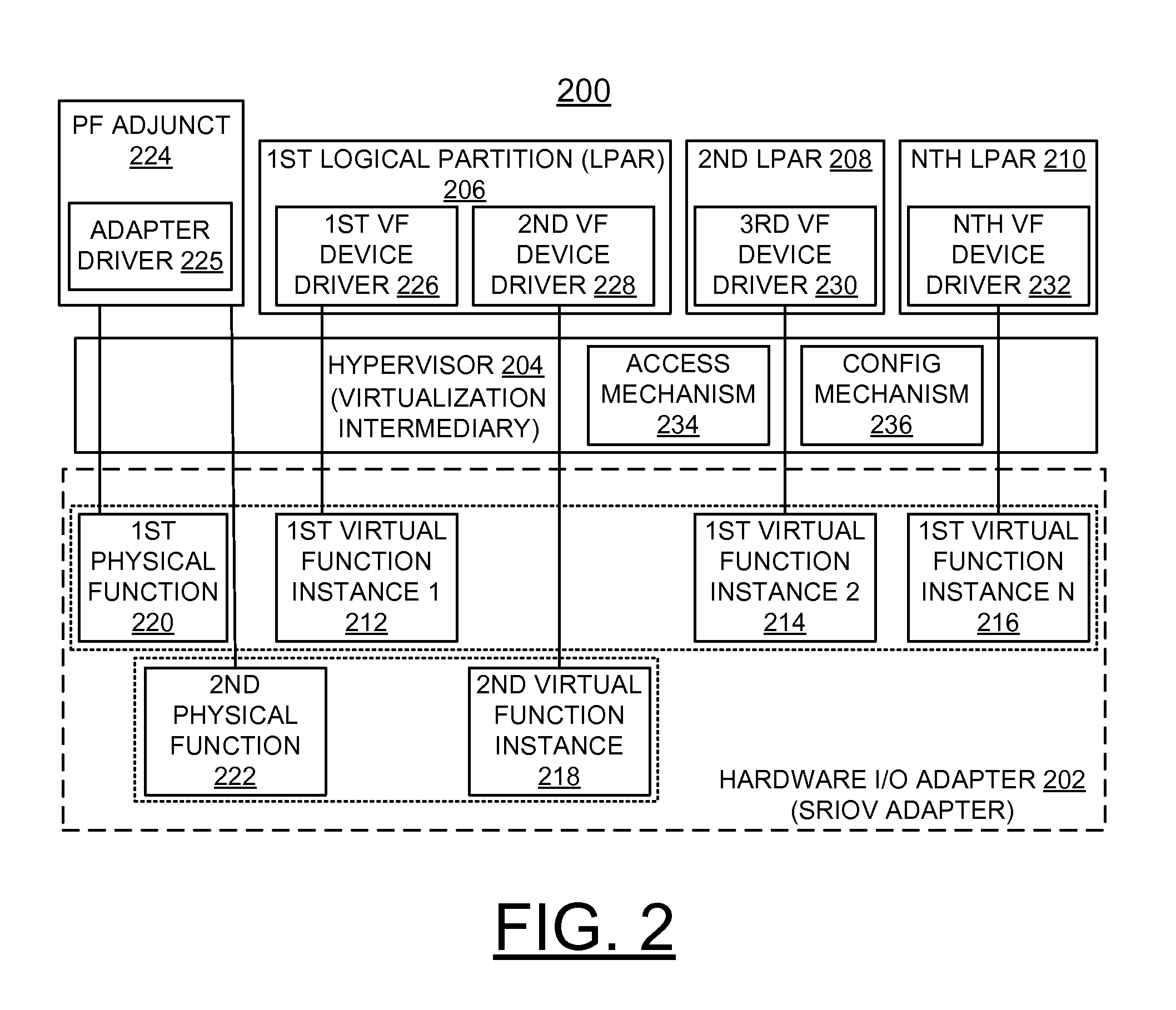

Implementing concurrent adapter firmware update for an sriov adapter in a virtualized system

Active | US20140372739A1 | Without negative effect | Maintenance free | Software engineering | Error detection/correction | Engineering | Scratchpad memory

A method, system and computer program product are provided for implementing concurrent adapter firmware update of a Single Root Input/Output Virtualization (SRIOV) adapter in a virtualized system. An adapter driver is used to update adapter firmware concurrently with normal I/O operations. When configuration is stored in a scratchpad buffer, the adapter driver detects virtual functions (VFs) configured and operating. An enhanced error handling (EEH) process is initiated, freezing the VFs, and an updated adapter firmware image is loaded to the adapter. The adapter driver completes the EEH recovery, and the adapter is restarted using the updated adapter firmware. The VF device drivers then unfreeze the VFs and complete the EEH recovery.

Owner:IBM CORP

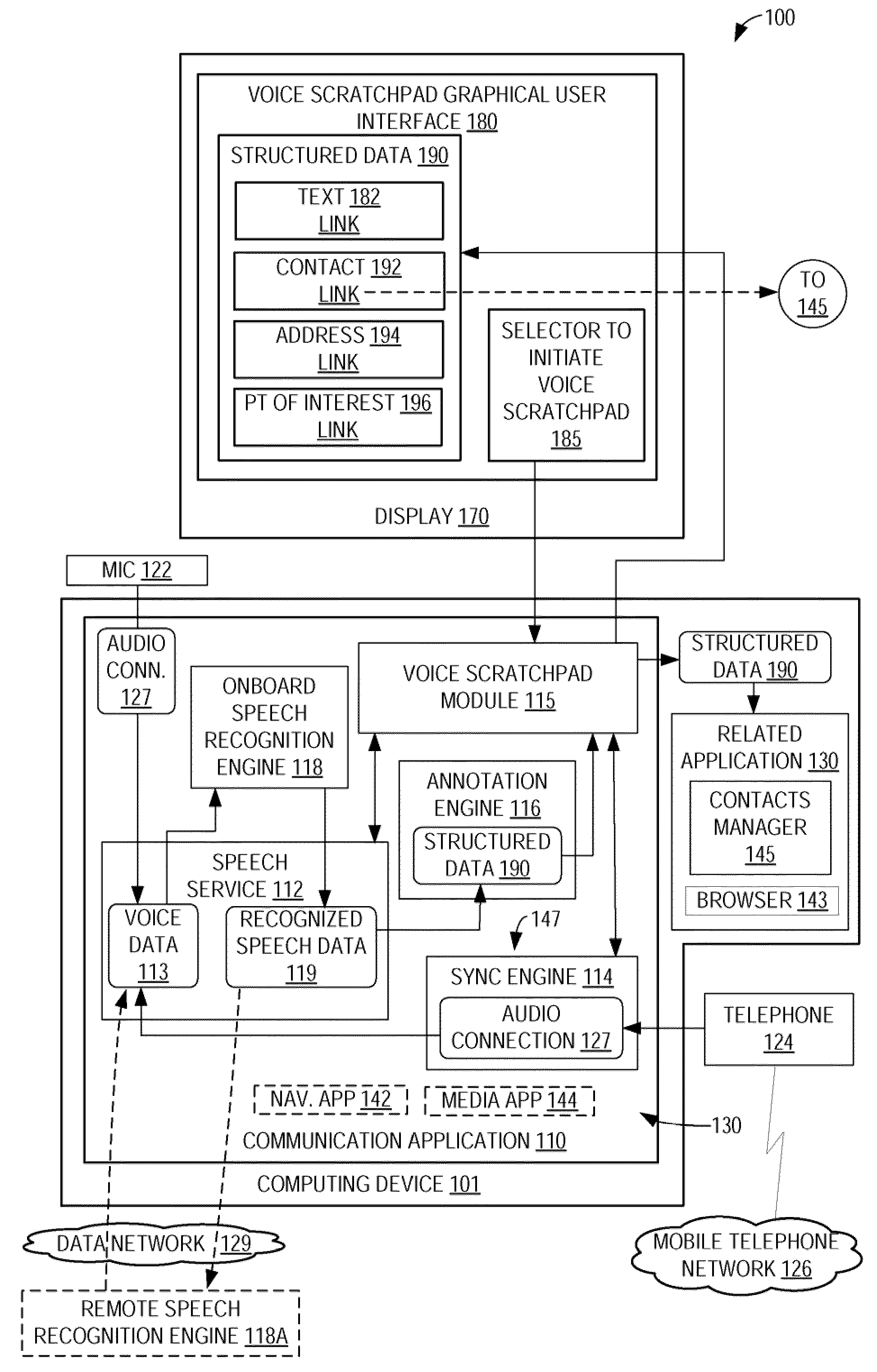

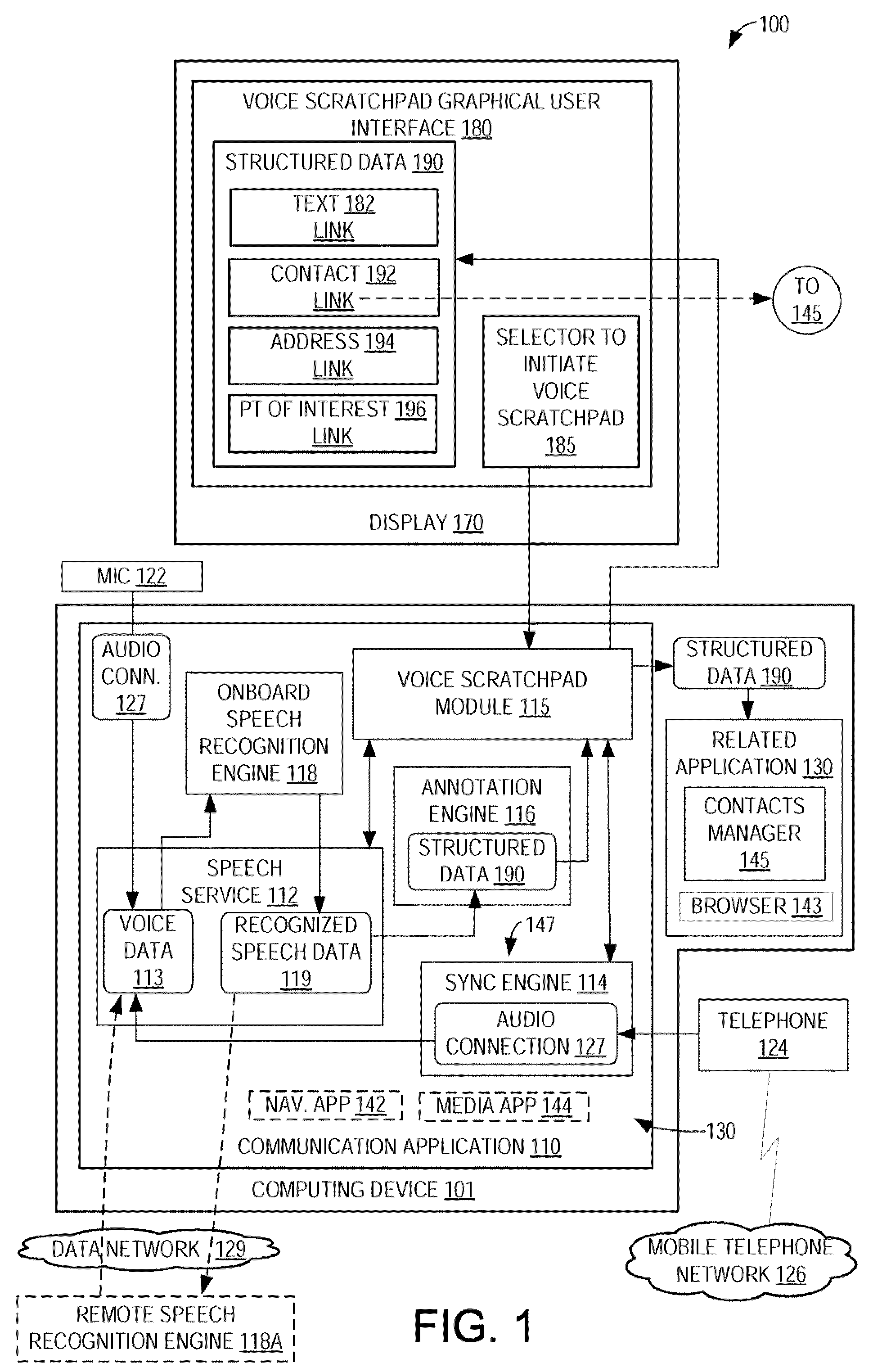

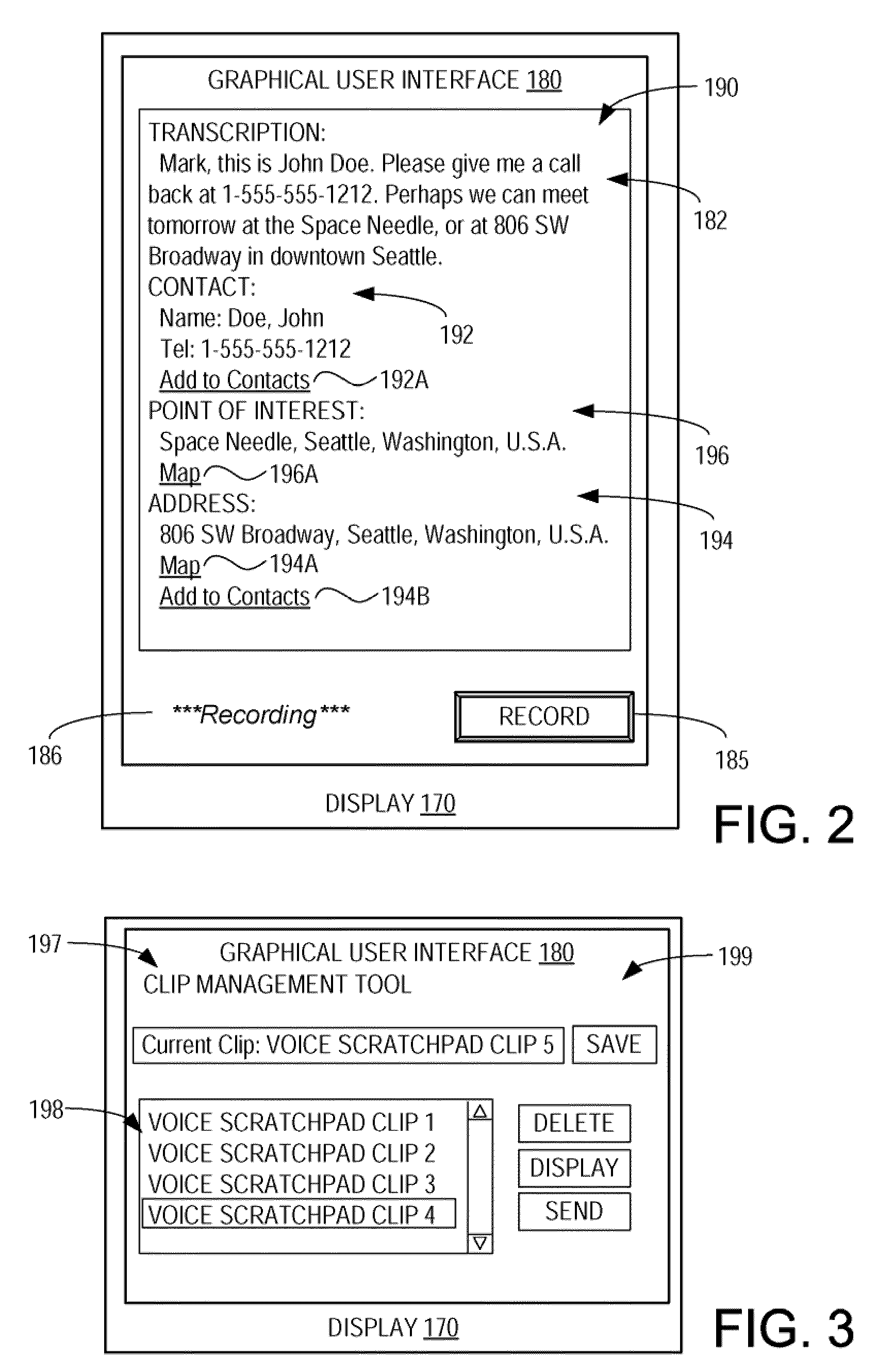

Voice scratchpad

Active | US20100254521A1 | Well formed | Instruments for road network navigation | Road vehicles traffic control | Voice communication | On board

Various embodiments of systems and methods to implement a voice scratchpad for deriving data from a voice communication are provided. The system may include a voice scratchpad module configured to detect an audio connection between an on-board vehicle computing device and a communications device, and to present a selector on a voice scratchpad graphical user interface which, upon selection by a user, causes the voice scratchpad module to process voice data received via the audio connection to obtain recognized speech data. The system may further include an annotation engine configured to annotate the recognized speech data to produce structured data in one of a plurality of predetermined formats. The voice scratchpad module may be configured to receive the structured data from the annotation engine and display it on the voice scratchpad graphical user interface.

Owner:MICROSOFT TECH LICENSING LLC

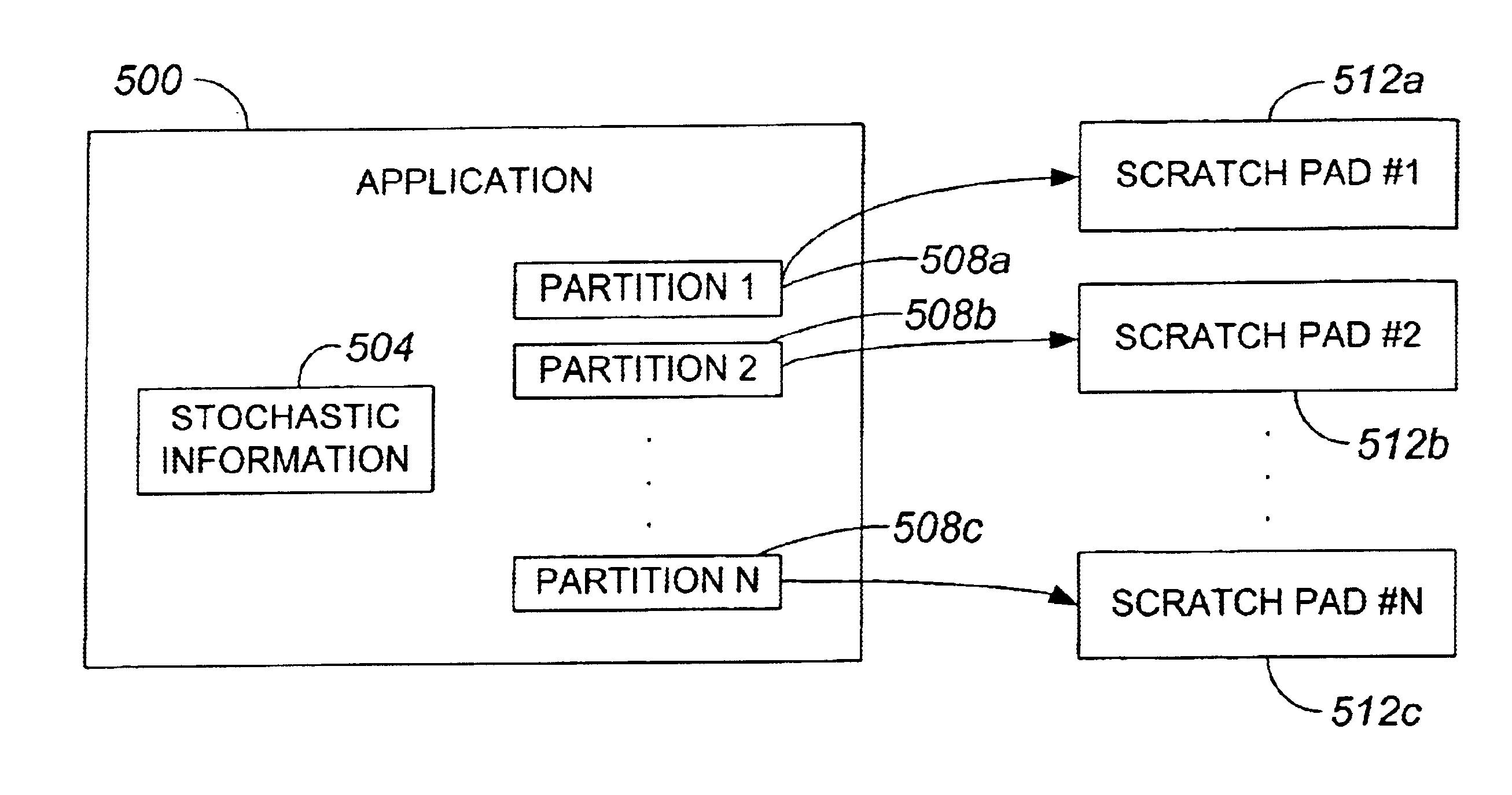

Stochastic scratchpad storage management technique

Inactive | US6799253B1 | Data processing applications | Memory addressing/allocation/relocation | Virtual memory | Path length

Methods and apparatus for dynamically allocating space within virtual memory at run-time while substantially minimizing an associated path length are disclosed. According to one aspect of the present invention, a method for allocating virtual storage associated with a computer system includes creating a scratchpad, allocating a unit of storage space at a current location within the scratchpad, and writing a set of information into the unit of storage space such that the set of information is substantially not tracked. The scratchpad supports allocation of storage space therein, and includes a first pointer that identifies a current location within the scratchpad. Finally, the method includes moving the first pointer in the scratchpad to identify a second location within the scratchpad. The first pointer moves in the first linear space in substantially only a top-to-bottom direction.

Owner:ORACLE INT CORP
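
The allocation scheme in the abstract above (a pointer that only advances, with no per-block tracking) resembles what is commonly called a bump-pointer allocator. The following Python sketch shows that general technique under stated assumptions; the names `Scratchpad`, `alloc`, and `write` are hypothetical, and the sketch does not model virtual memory or any detail of the patent beyond the one-directional pointer.

```python
class Scratchpad:
    """Linear region where allocation just advances a single pointer."""

    def __init__(self, size):
        self.buf = bytearray(size)
        self.top = 0  # current location; it only ever moves in one direction

    def alloc(self, nbytes):
        """Reserve nbytes at the current location and advance the pointer."""
        if self.top + nbytes > len(self.buf):
            raise MemoryError("scratchpad exhausted")
        start = self.top
        self.top += nbytes  # no free list, no header: nothing is tracked per block
        return start

    def write(self, data):
        """Allocate space for data, copy it in, and return its offset."""
        start = self.alloc(len(data))
        self.buf[start:start + len(data)] = data
        return start


pad = Scratchpad(16)
first = pad.write(b"abcd")
second = pad.write(b"xyz")
```

Each allocation costs one bounds check and one addition, which is the short path length the abstract refers to; the trade-off is that individual blocks cannot be freed.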

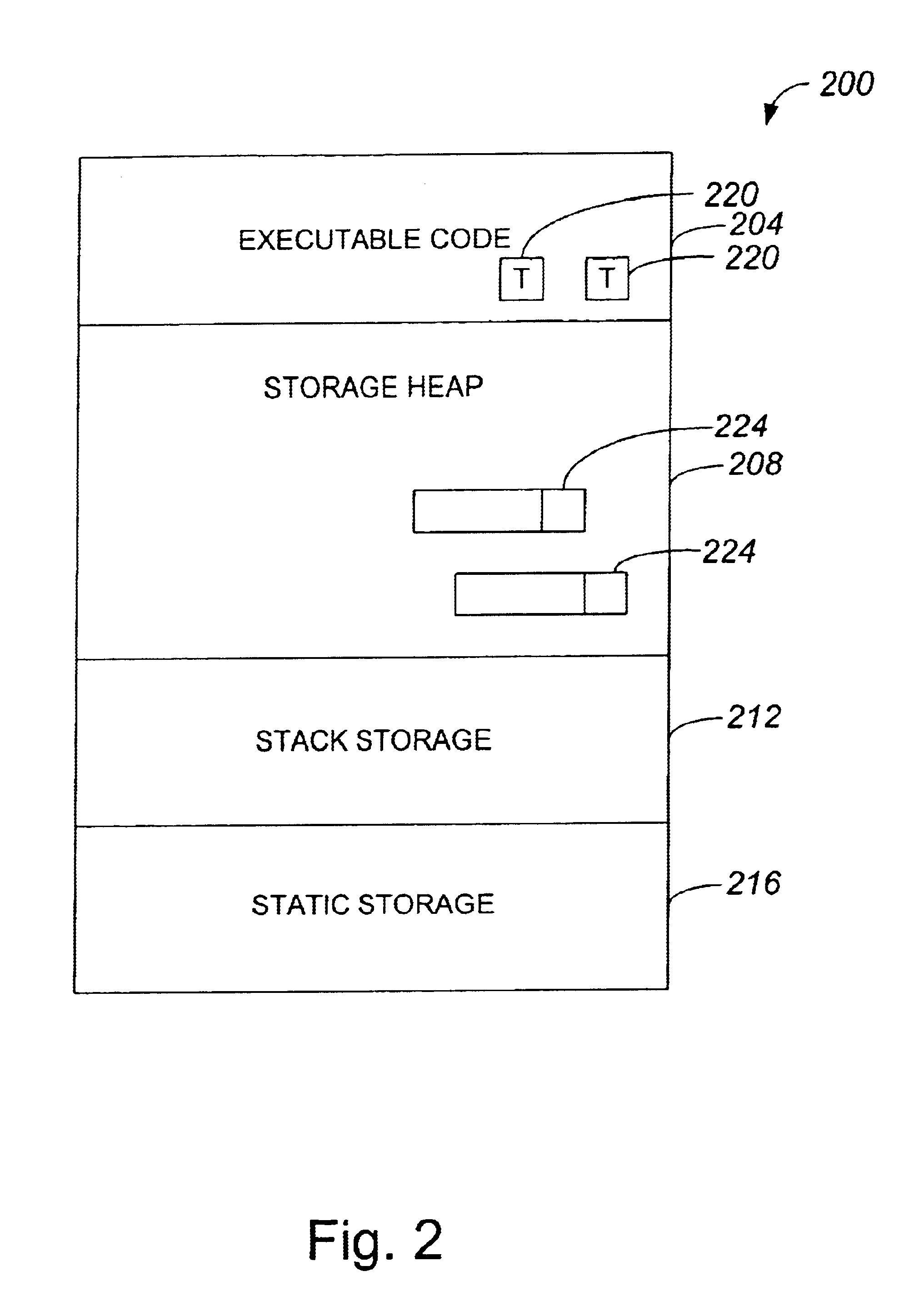

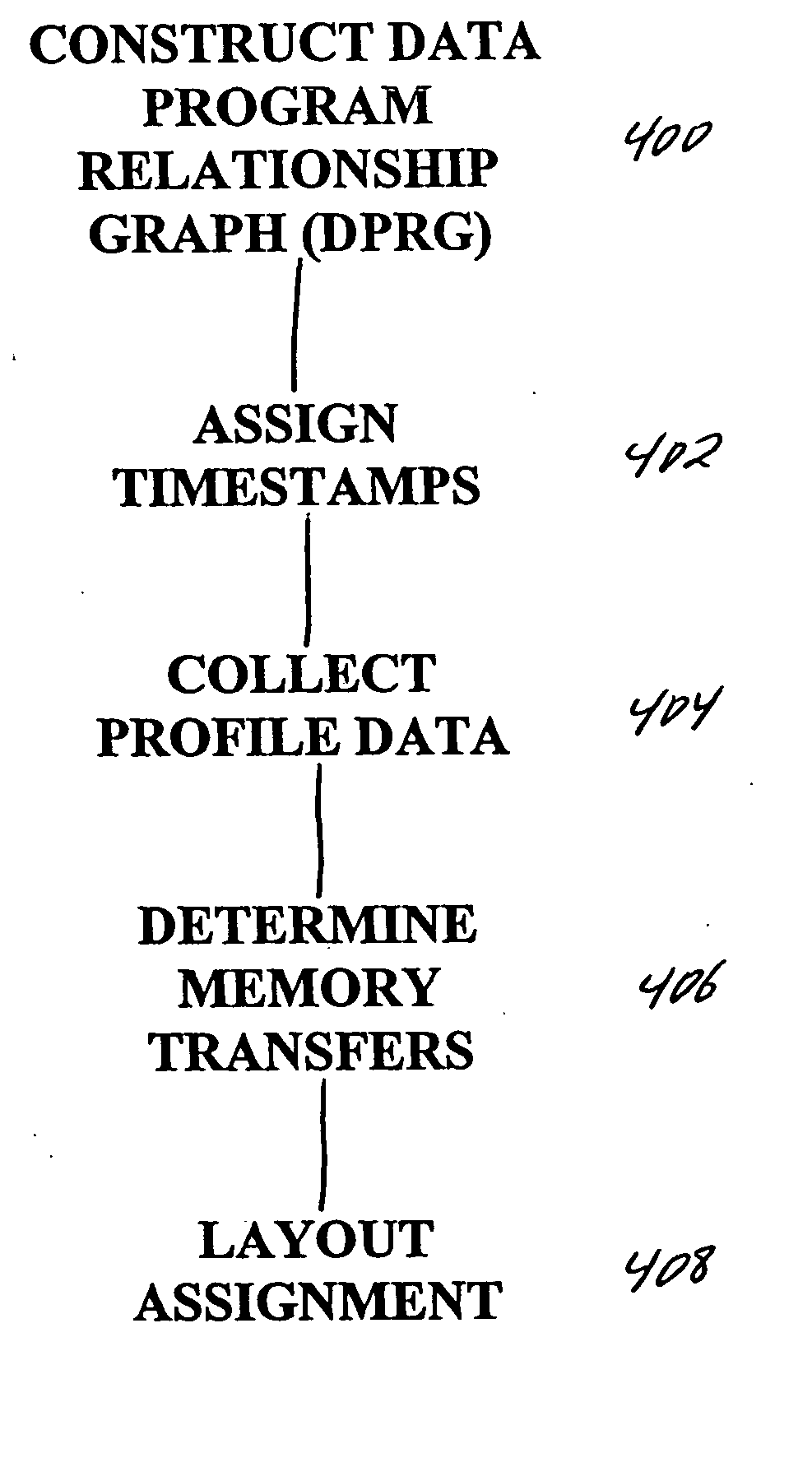

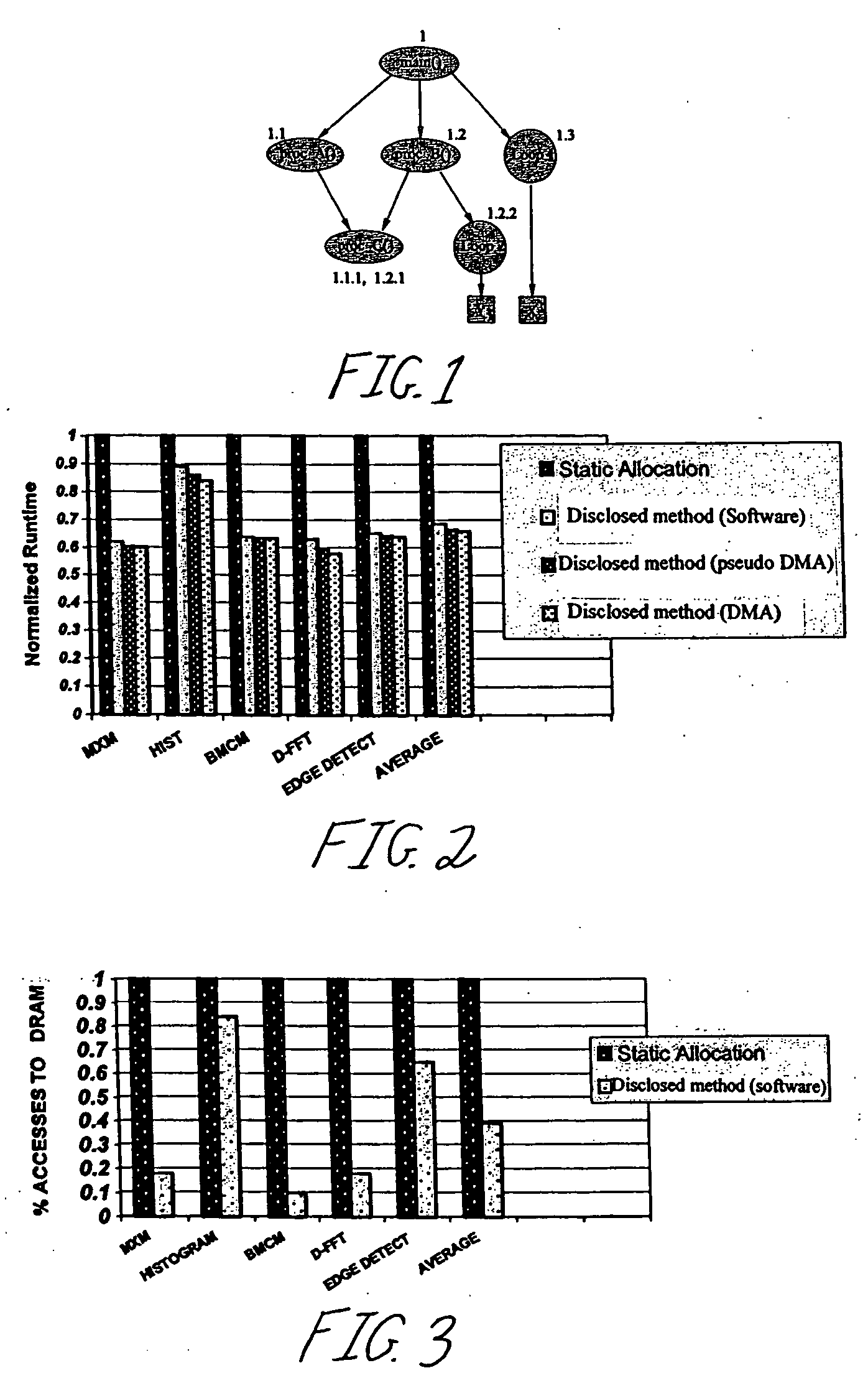

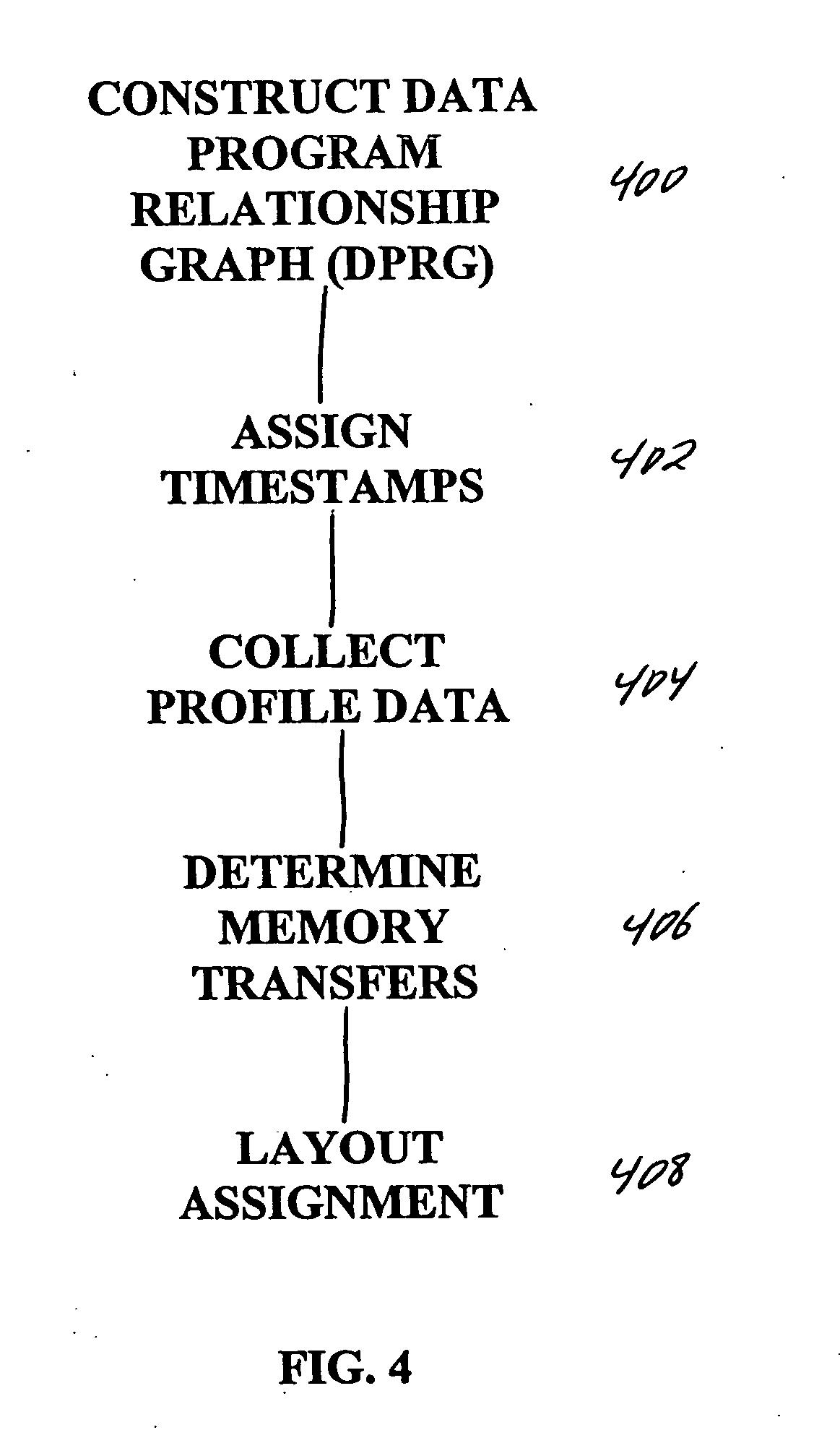

Compiler-driven dynamic memory allocation methodology for scratch-pad based embedded systems

Inactive | US20060080372A1 | Avoid overhead | Reduce overhead | Energy efficient ICT | Program control | Distribution method | Access time

A highly predictable, low-overhead, yet dynamic memory allocation methodology for embedded systems with scratch-pad memory is presented. The dynamic memory allocation methodology for global and stack data (i) accounts for changing program requirements at runtime; (ii) has no software-caching tags; (iii) requires no run-time checks; (iv) has extremely low overheads; and (v) yields 100% predictable memory access times. Under the methodology, data that is about to be accessed frequently is copied into the SRAM using compiler-inserted code at fixed and infrequent points in the program. Earlier data is evicted if necessary.

Owner:UNIV OF MARYLAND

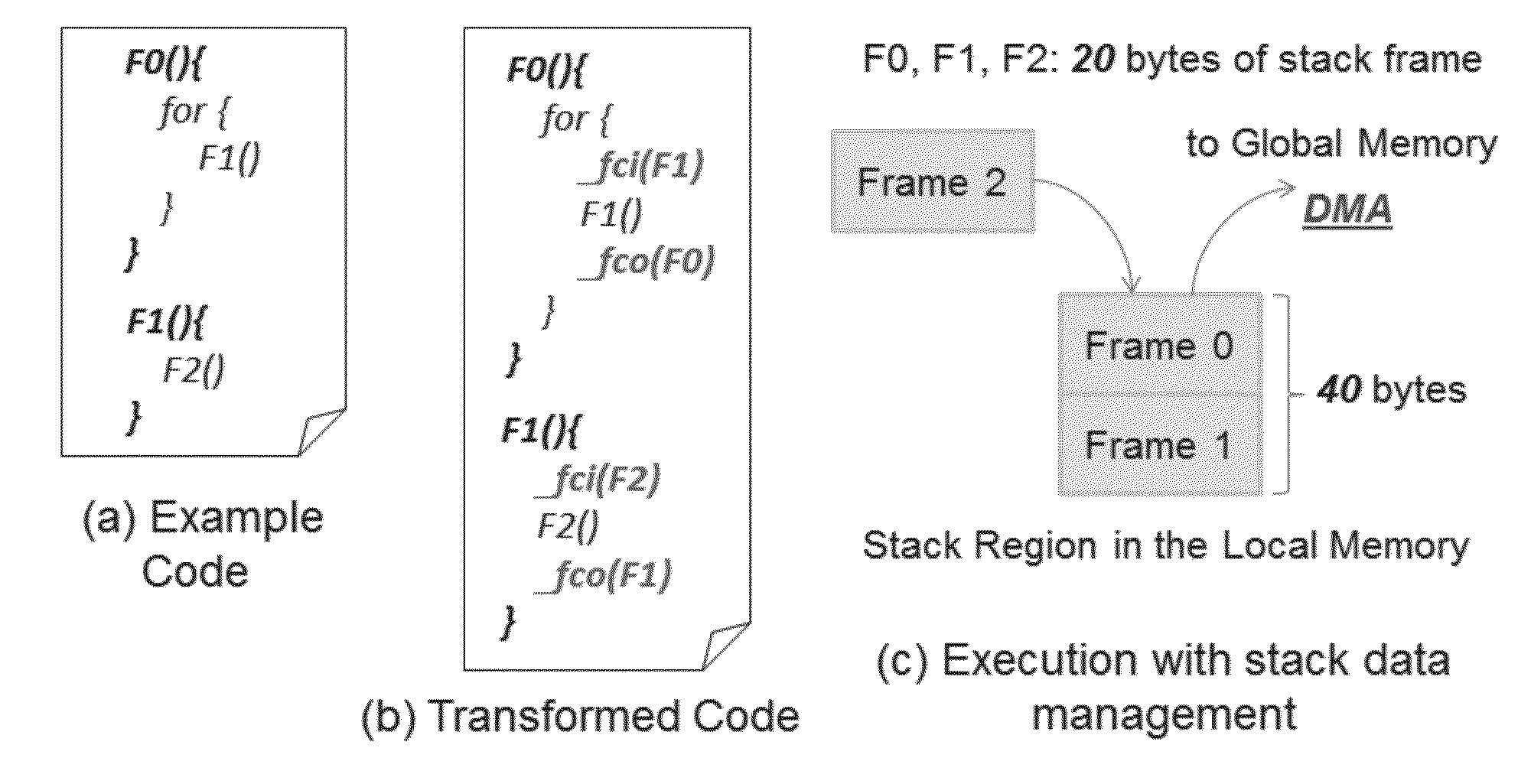

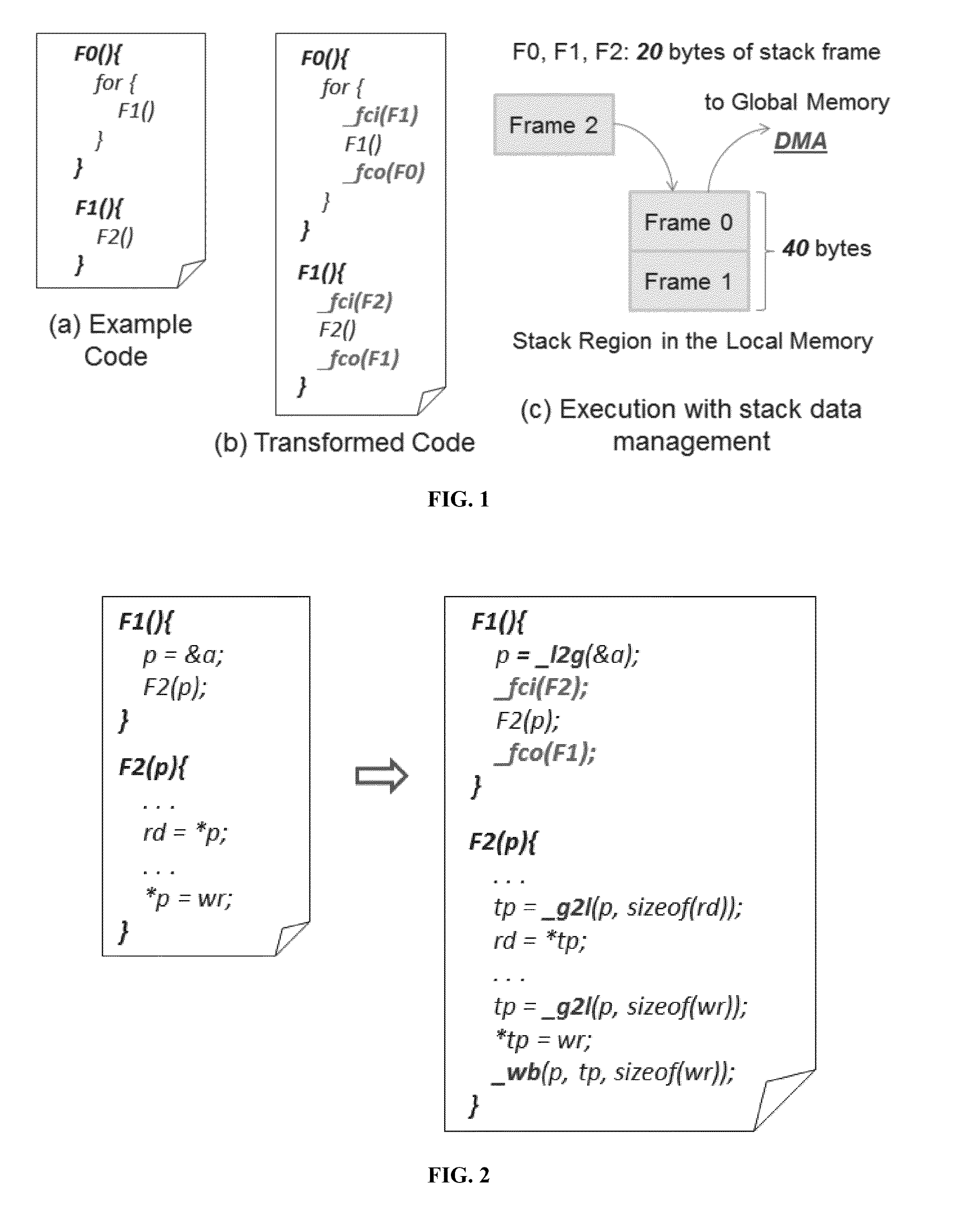

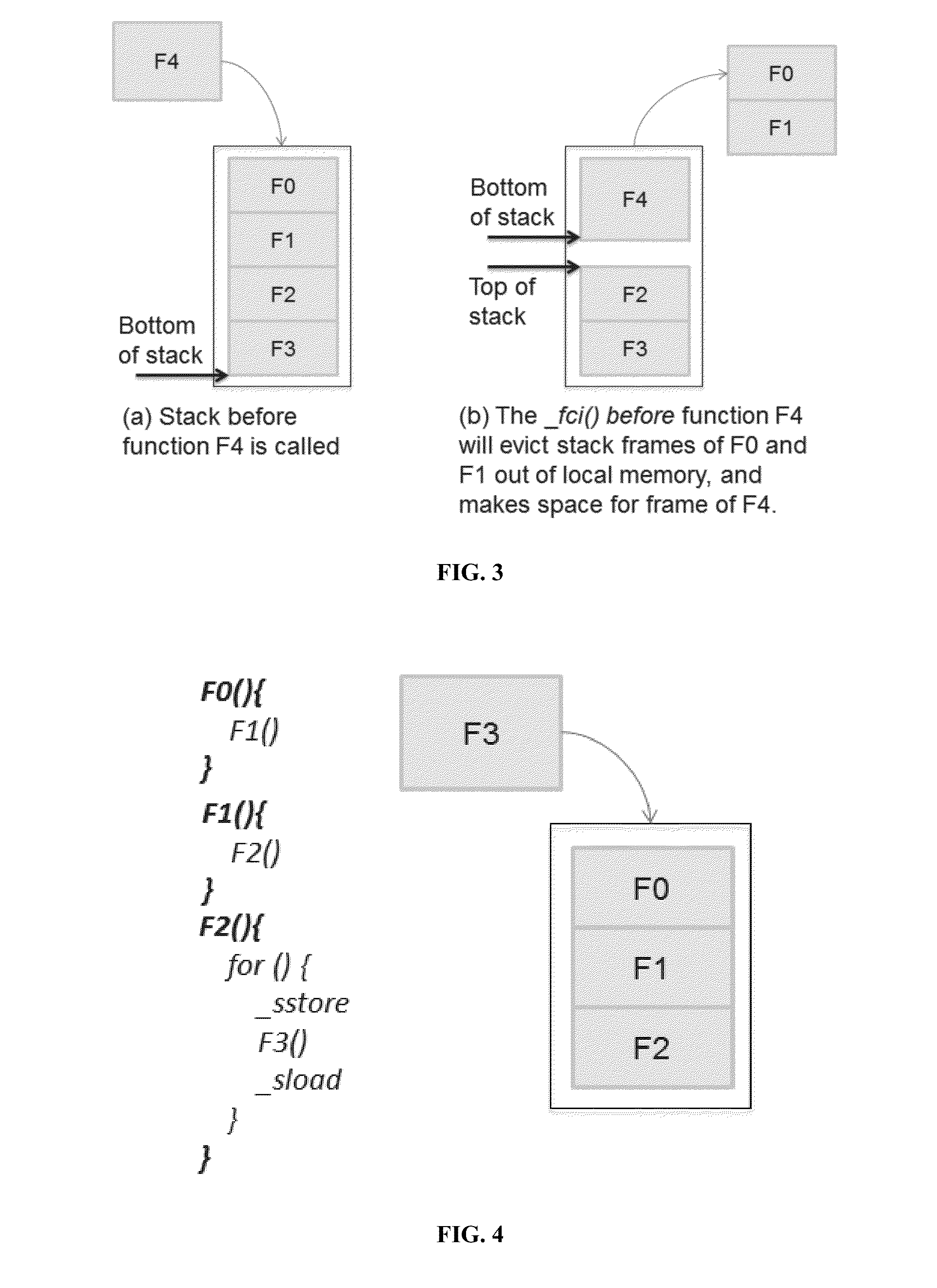

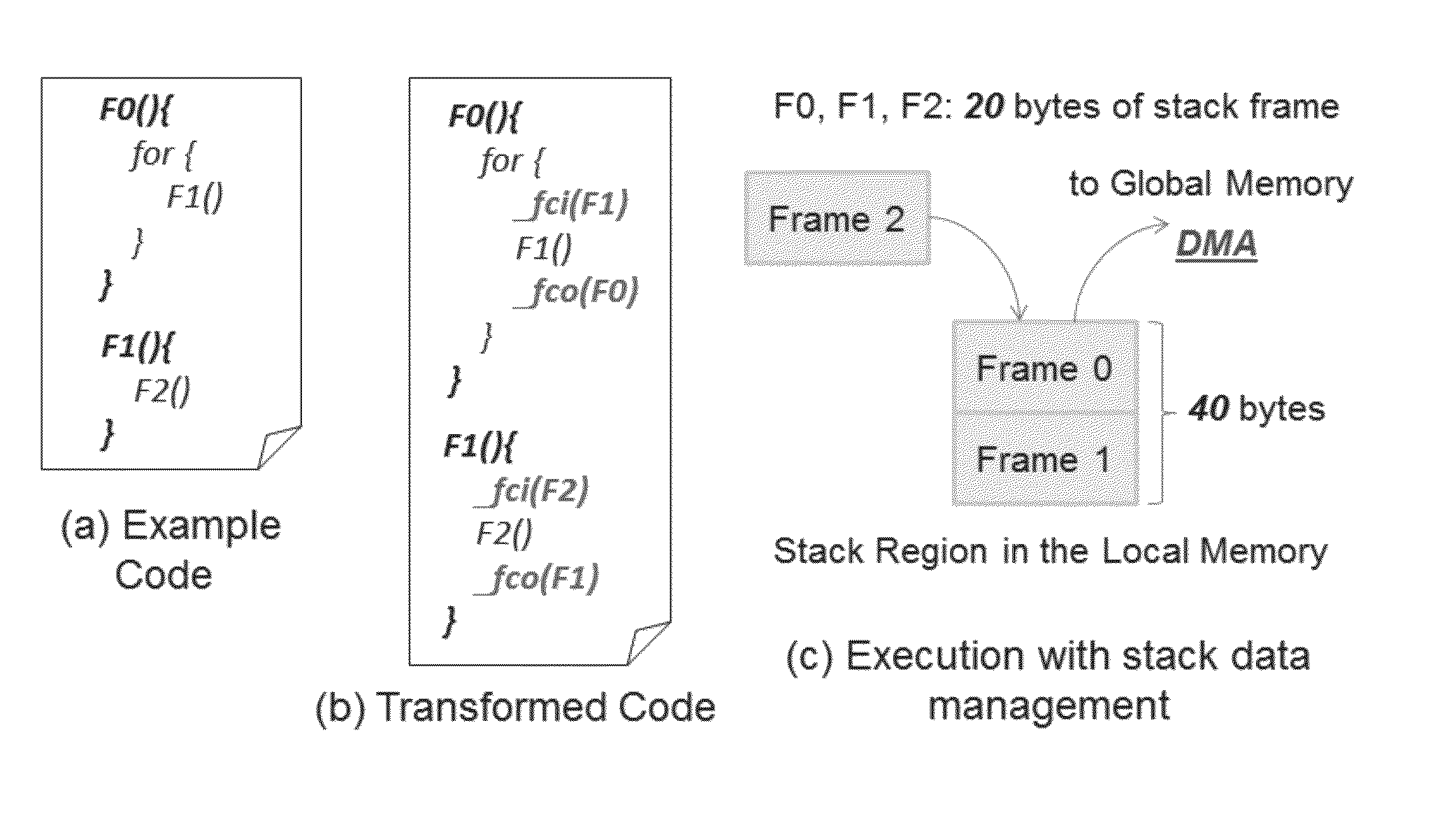

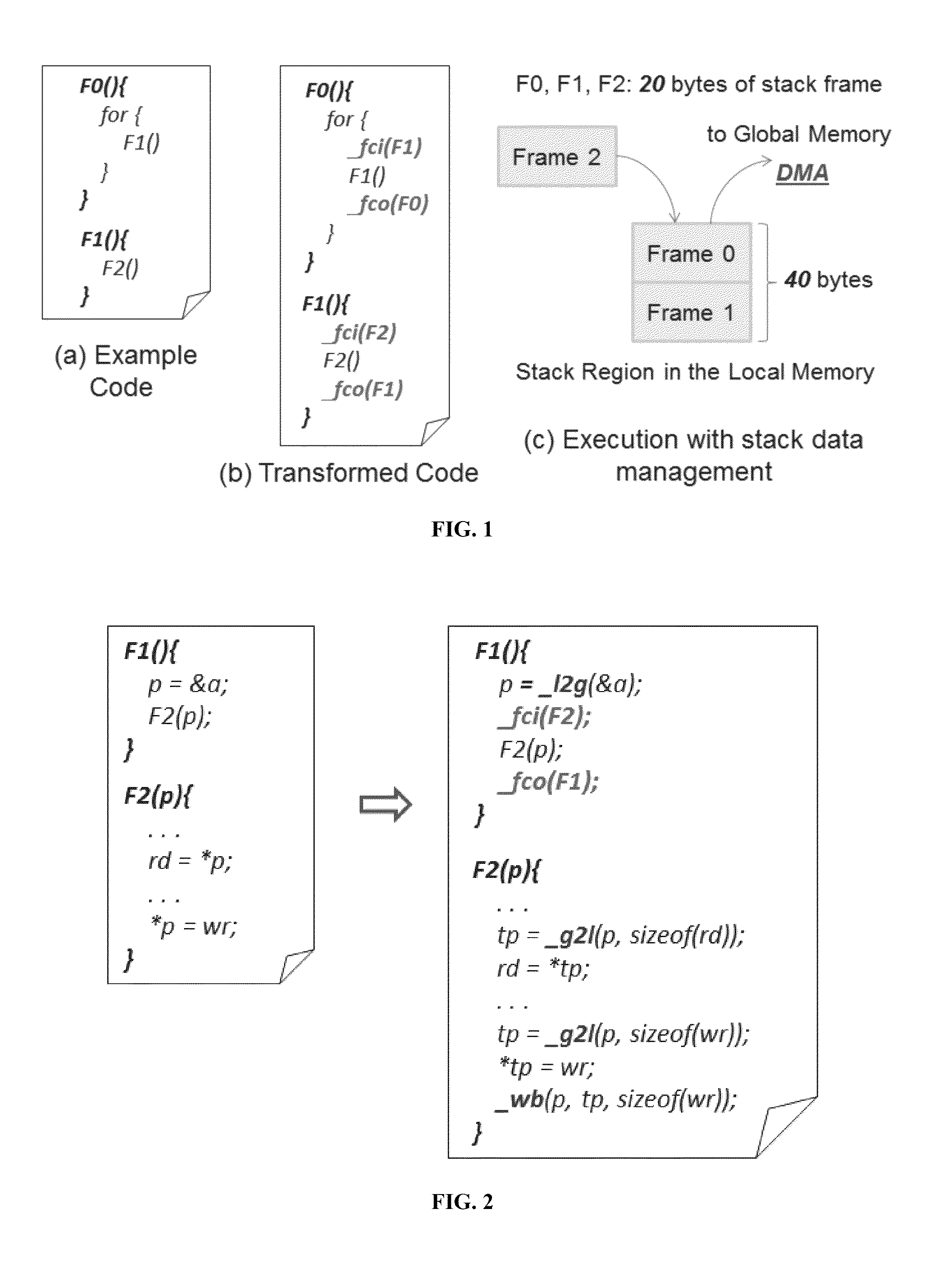

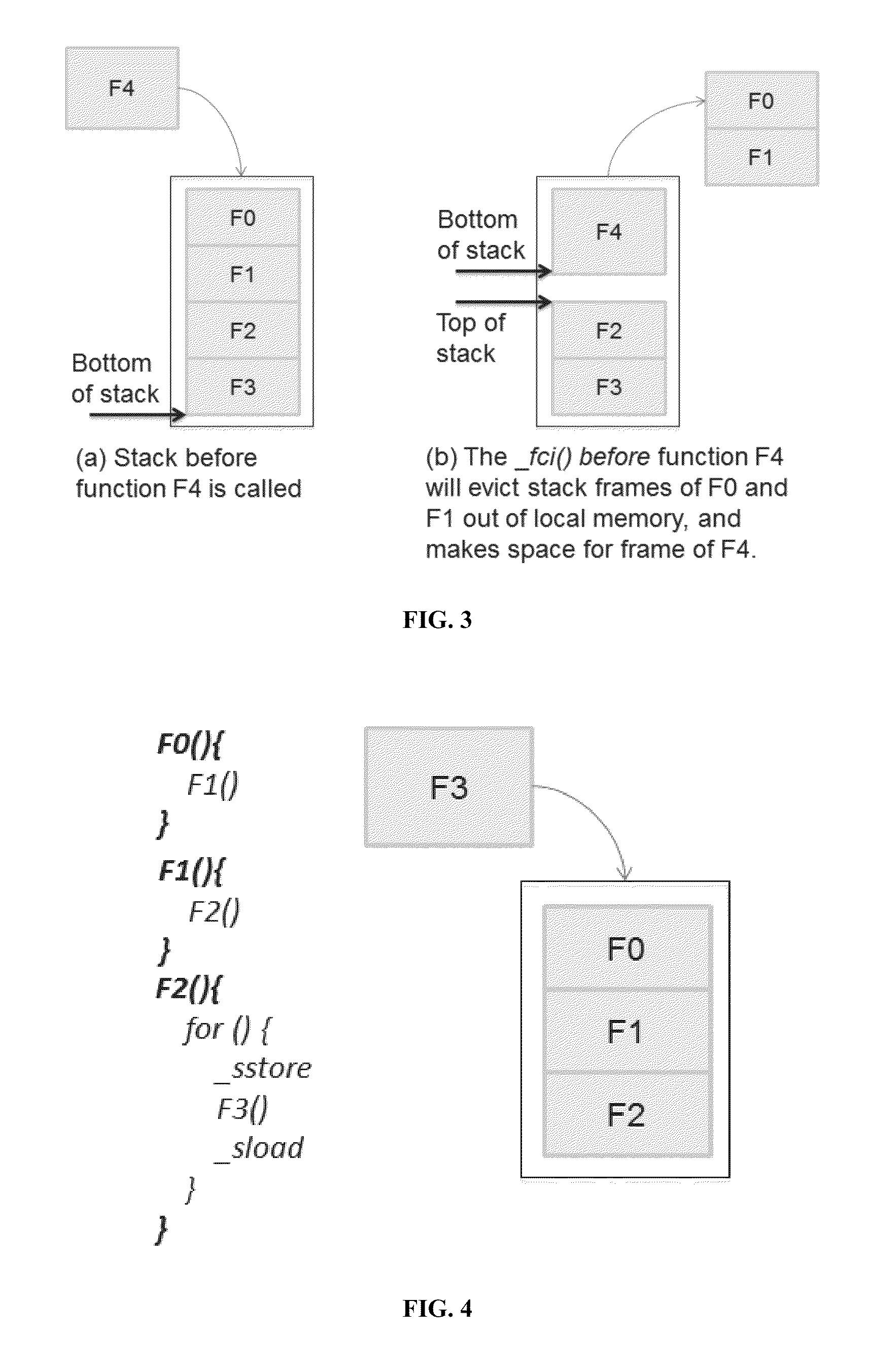

Stack Data Management for Software Managed Multi-Core Processors

Inactive | US20140282454A1 | Minimal cost | Easy to cut | Resource allocation | Software engineering | Integer linear programming formulation | Data management

Methods and apparatus are described for managing stack data in multi-core processors having scratchpad memory or limited local memory. In one embodiment, stack data management calls are inserted into software in accordance with an integer linear programming formulation and a smart stack data management heuristic. In another embodiment, stack management and pointer management functions are inserted before and after function calls and pointer references, respectively. The calls may be inserted in an automated fashion by a compiler utilizing an optimized stack data management runtime library.

Owner:ARIZONA STATE UNIVERSITY

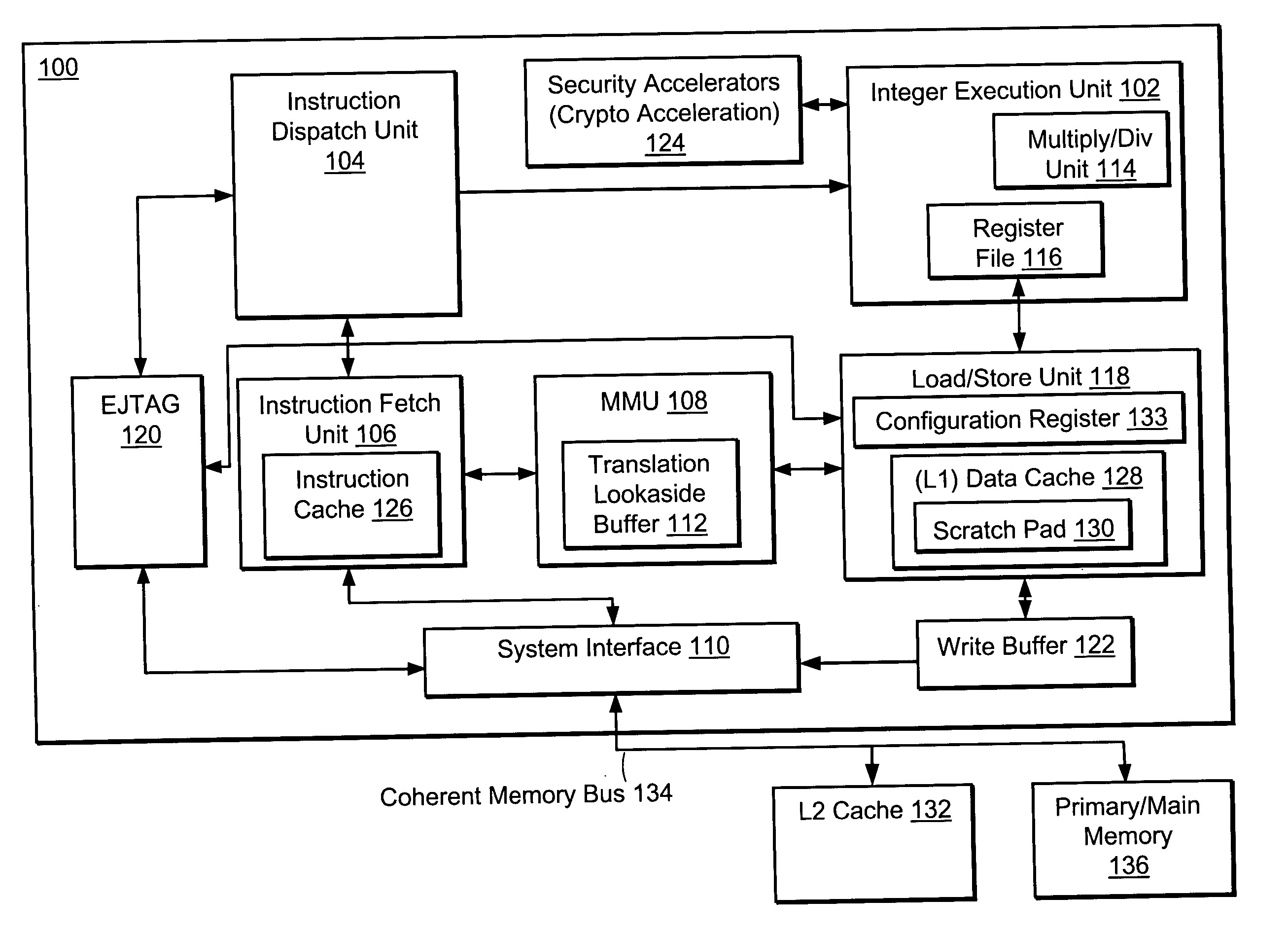

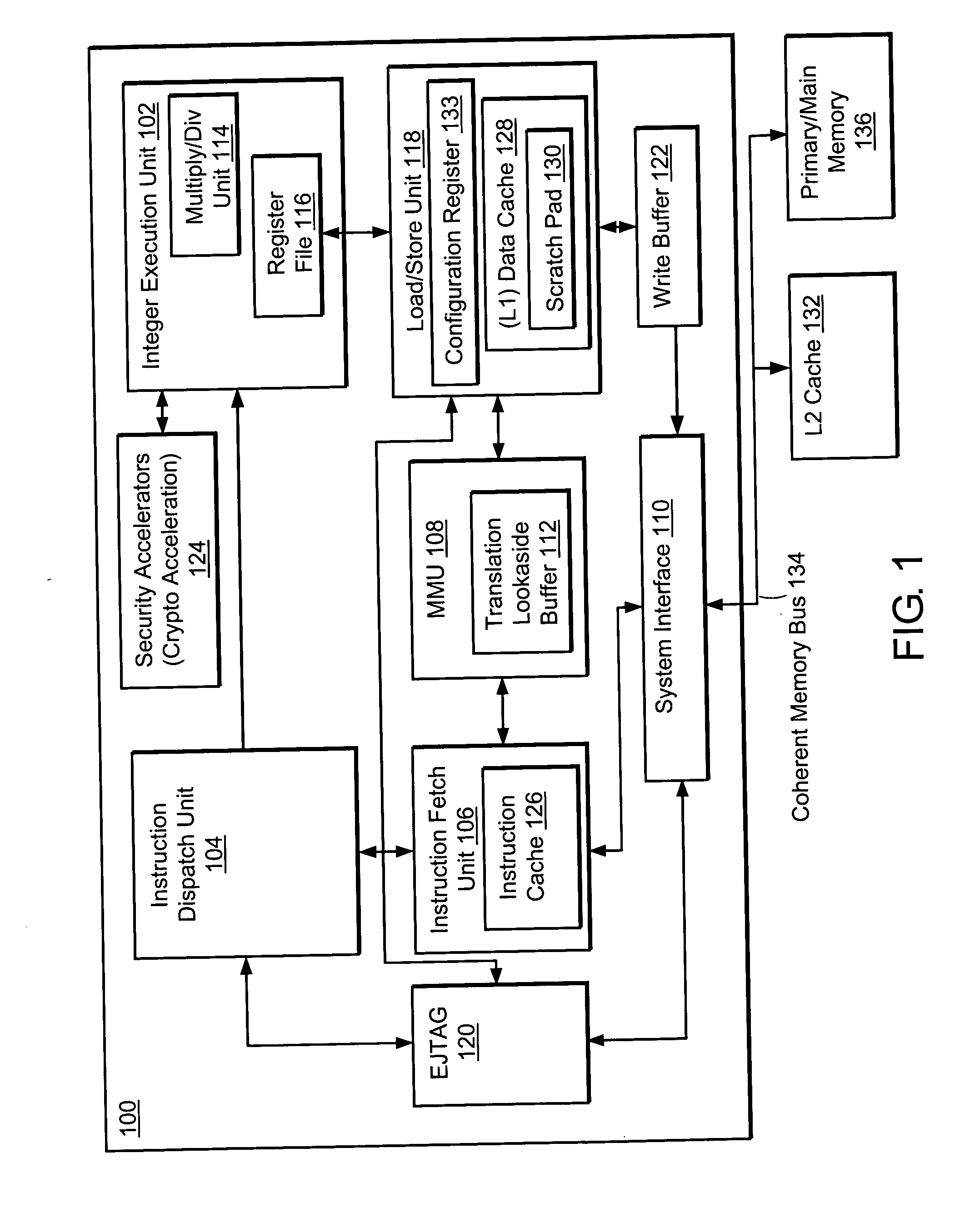

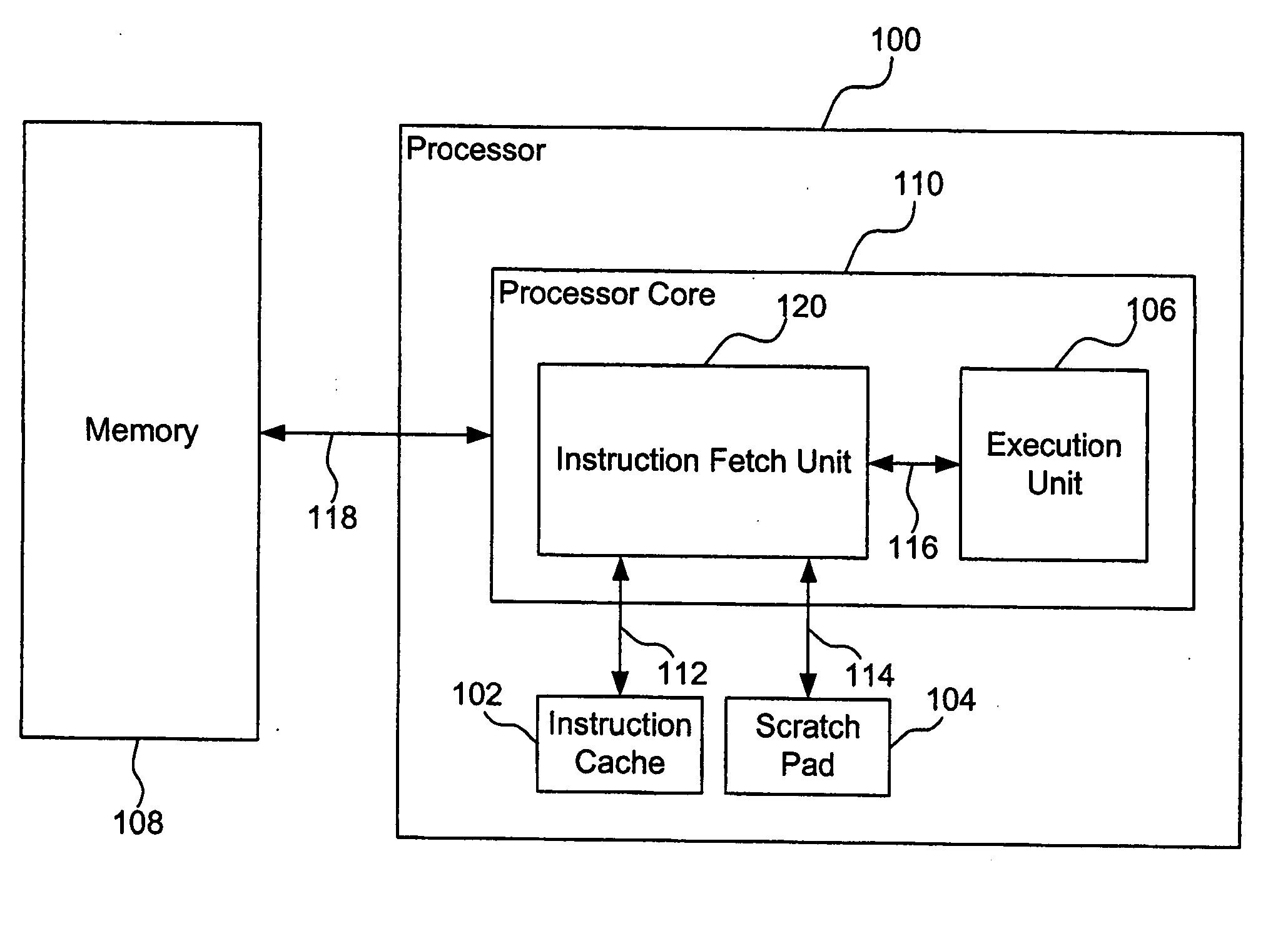

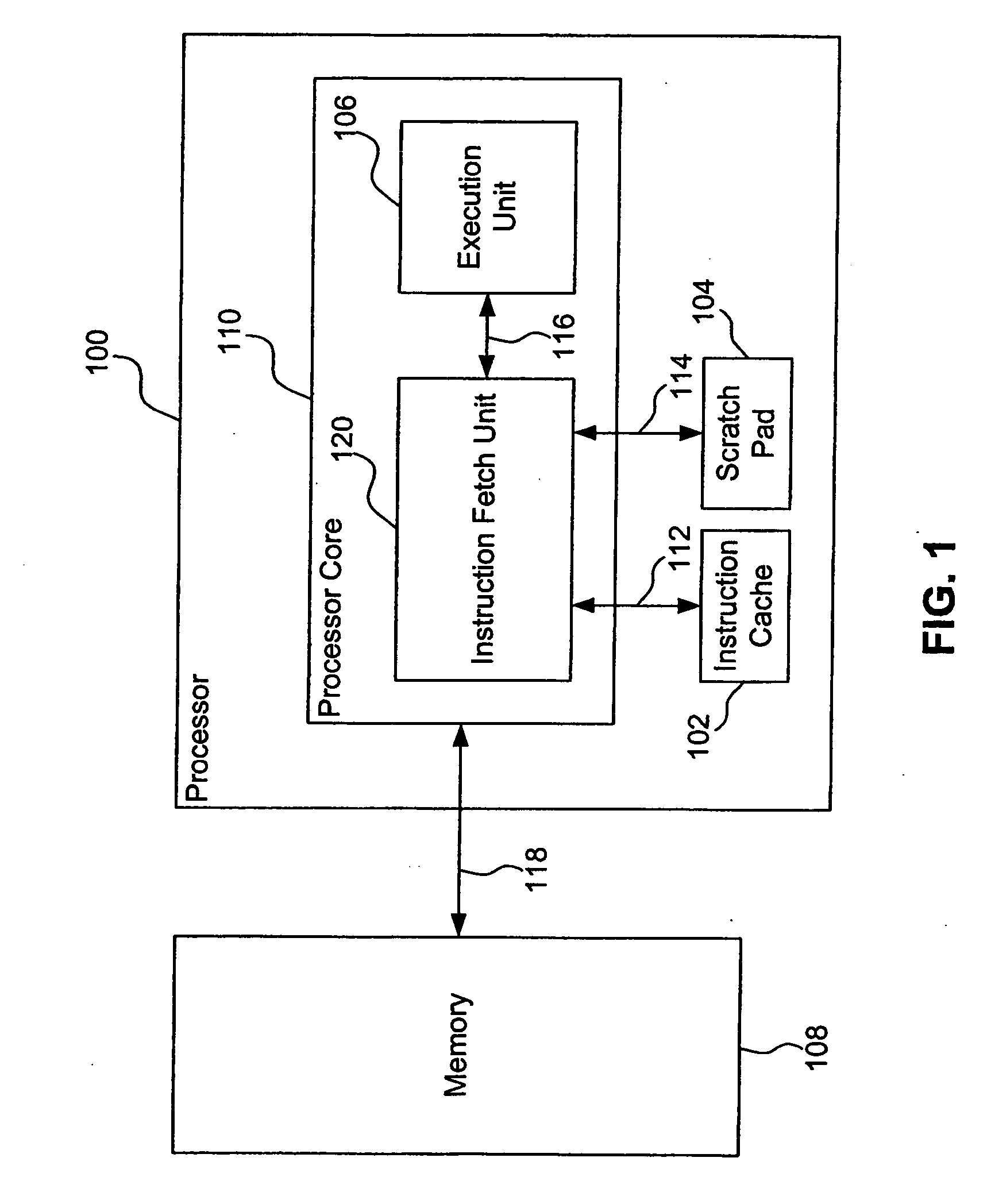

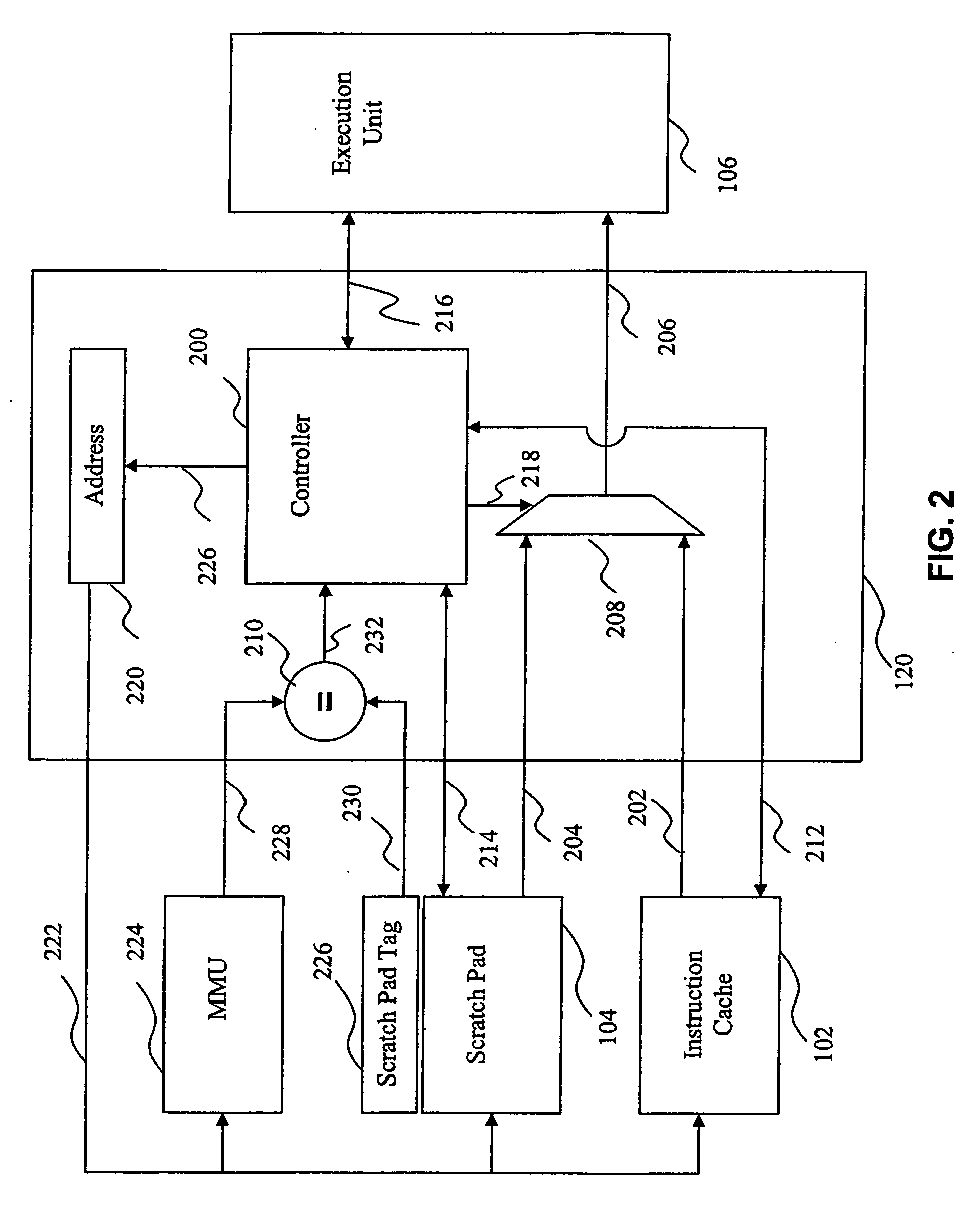

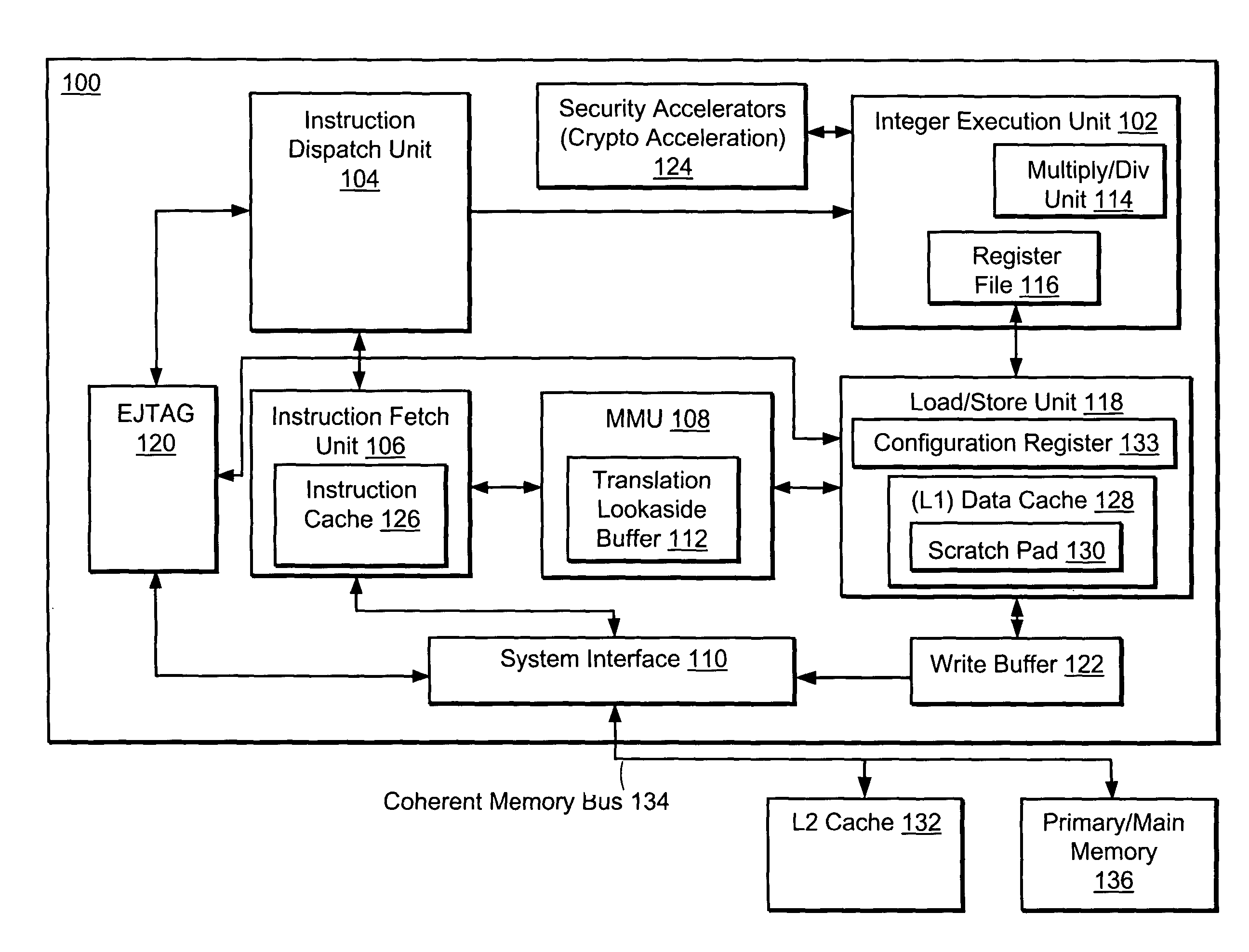

Processor accessing a scratch pad on-demand to reduce power consumption

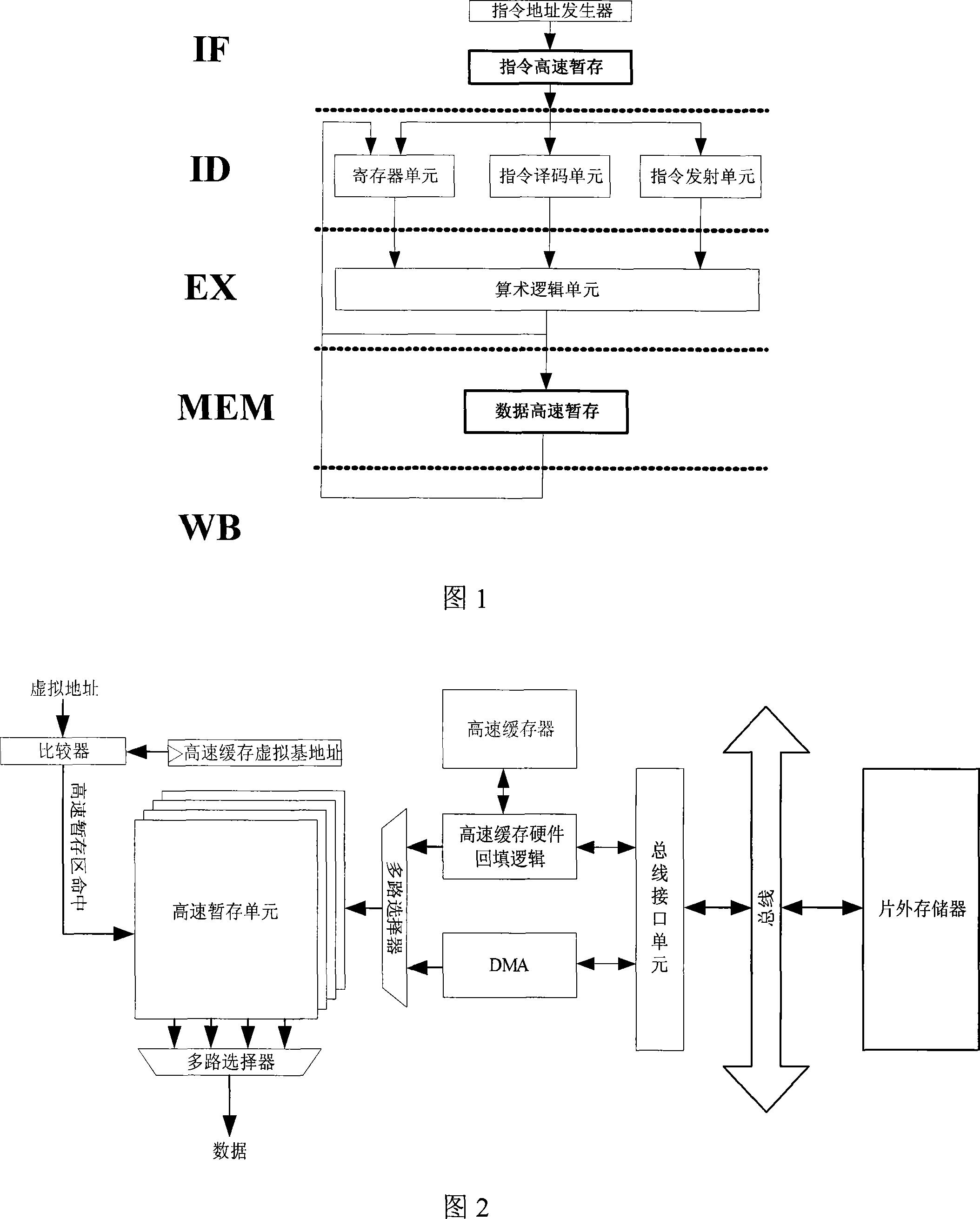

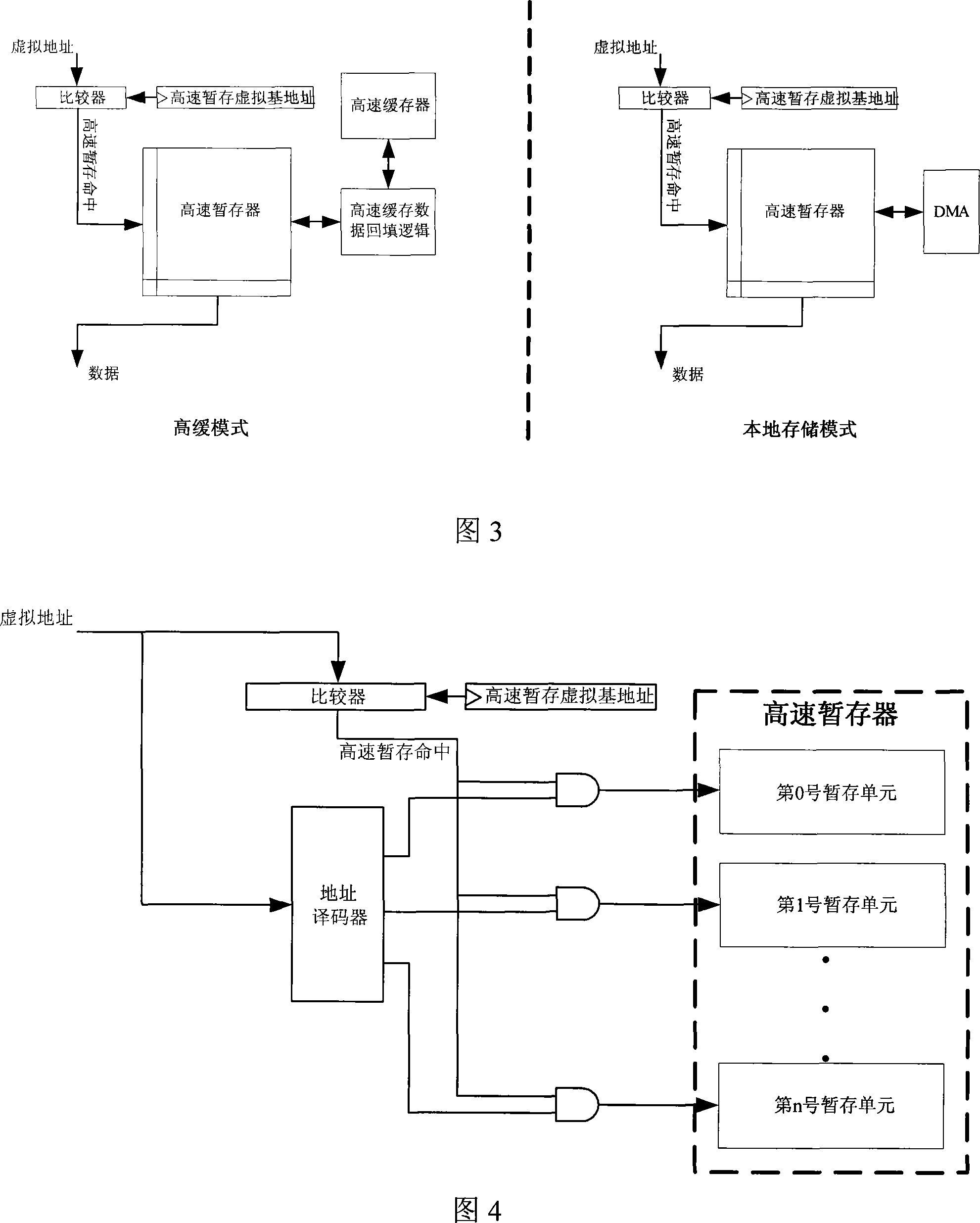

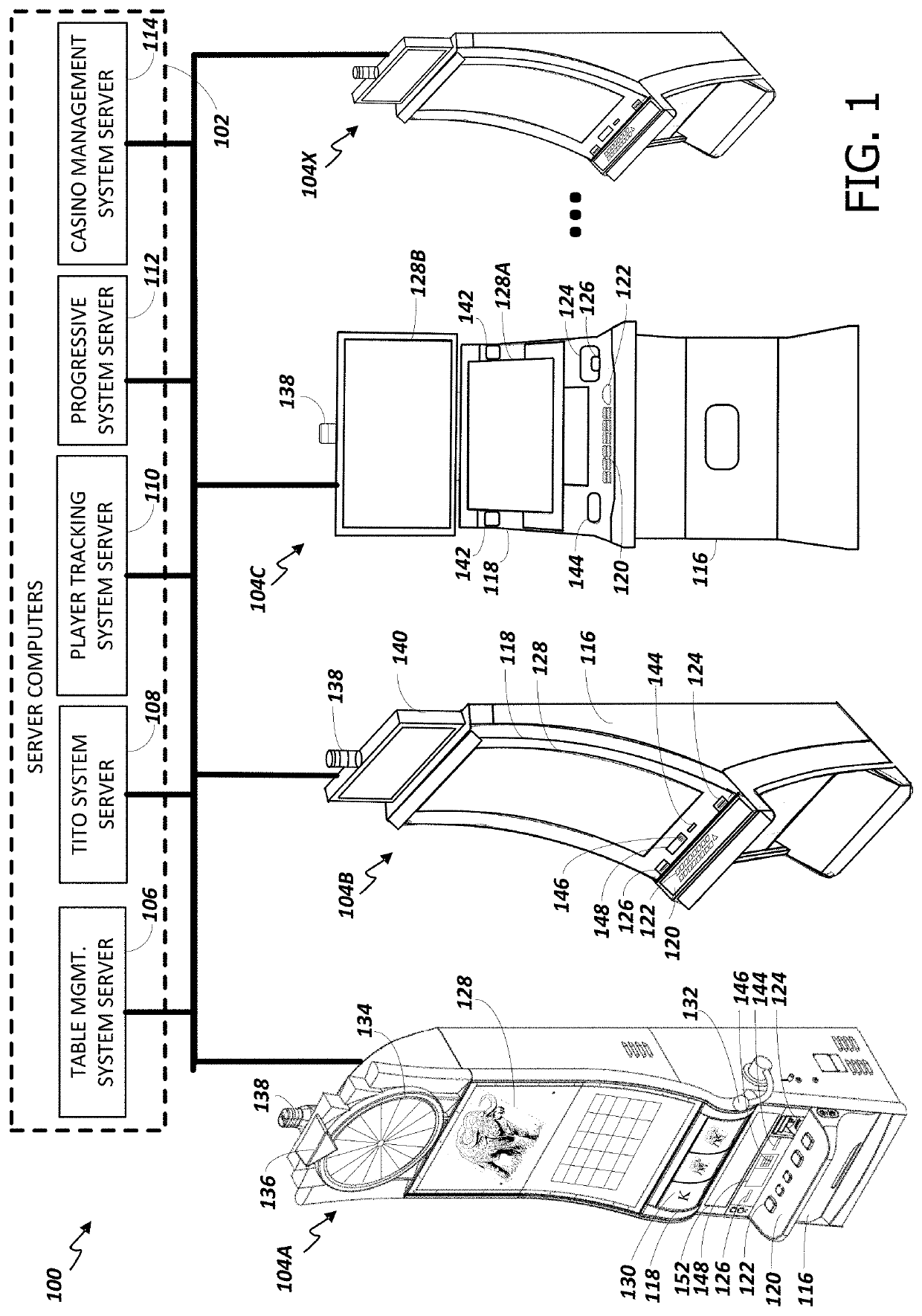

Active | US20070113050A1 | Reduce power consumption | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Physical address

The present invention provides processing systems, apparatuses, and methods that access a scratch pad on-demand to reduce power consumption. In an embodiment, an instruction fetch unit initiates an instruction fetch. When a scratch pad is enabled, an instruction is retrieved from the scratch pad in parallel with a translation of a virtual address to a physical address. If the physical address is associated with the scratch pad, the retrieved instruction is provided to an execution unit. Otherwise, the scratch pad is disabled to reduce power consumption and the instruction fetch is re-initiated. When the scratch pad is disabled, an instruction is retrieved from another instruction source, such as an instruction cache, in parallel with the translation of the virtual address to the physical address. If the physical address is associated with the scratch pad, the scratch pad is enabled and the instruction fetch is re-initiated.

Owner:ARM FINANCE OVERSEAS LTD
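
The enable/disable behaviour in the abstract above can be read as a two-state machine. The Python sketch below is one possible interpretation, not the patented design: address translation is assumed to be the identity, the counter `spad_reads` stands in for scratch pad power use, and the names `FetchUnit`, `in_spad`, and `fetch` are hypothetical.

```python
class FetchUnit:
    """Toy model of a fetch unit that powers the scratch pad only when needed."""

    def __init__(self, spad_base, spad, icache):
        self.spad_base = spad_base  # first physical address mapped to the scratch pad
        self.spad = spad            # list of instructions held in the scratch pad
        self.icache = icache        # dict: physical address -> instruction
        self.enabled = True         # scratch pad starts enabled
        self.spad_reads = 0         # proxy for scratch pad power consumption

    def in_spad(self, paddr):
        return self.spad_base <= paddr < self.spad_base + len(self.spad)

    def fetch(self, paddr):
        while True:
            if self.enabled:
                # Speculative scratch pad access, in parallel with translation.
                self.spad_reads += 1
                if self.in_spad(paddr):
                    return self.spad[paddr - self.spad_base]
                self.enabled = False   # wrong guess: disable and re-initiate
            else:
                # Scratch pad is off; use the other instruction source.
                if not self.in_spad(paddr):
                    return self.icache[paddr]
                self.enabled = True    # address belongs to the pad: enable, retry


unit = FetchUnit(spad_base=0x100, spad=["a", "b"], icache={0x0: "x", 0x4: "y"})
trace = [unit.fetch(0x100), unit.fetch(0x0), unit.fetch(0x4), unit.fetch(0x101)]
```

In this trace the scratch pad is read three times for four fetches: the fetch of `0x4` never touches it, because the earlier miss at `0x0` left it disabled.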

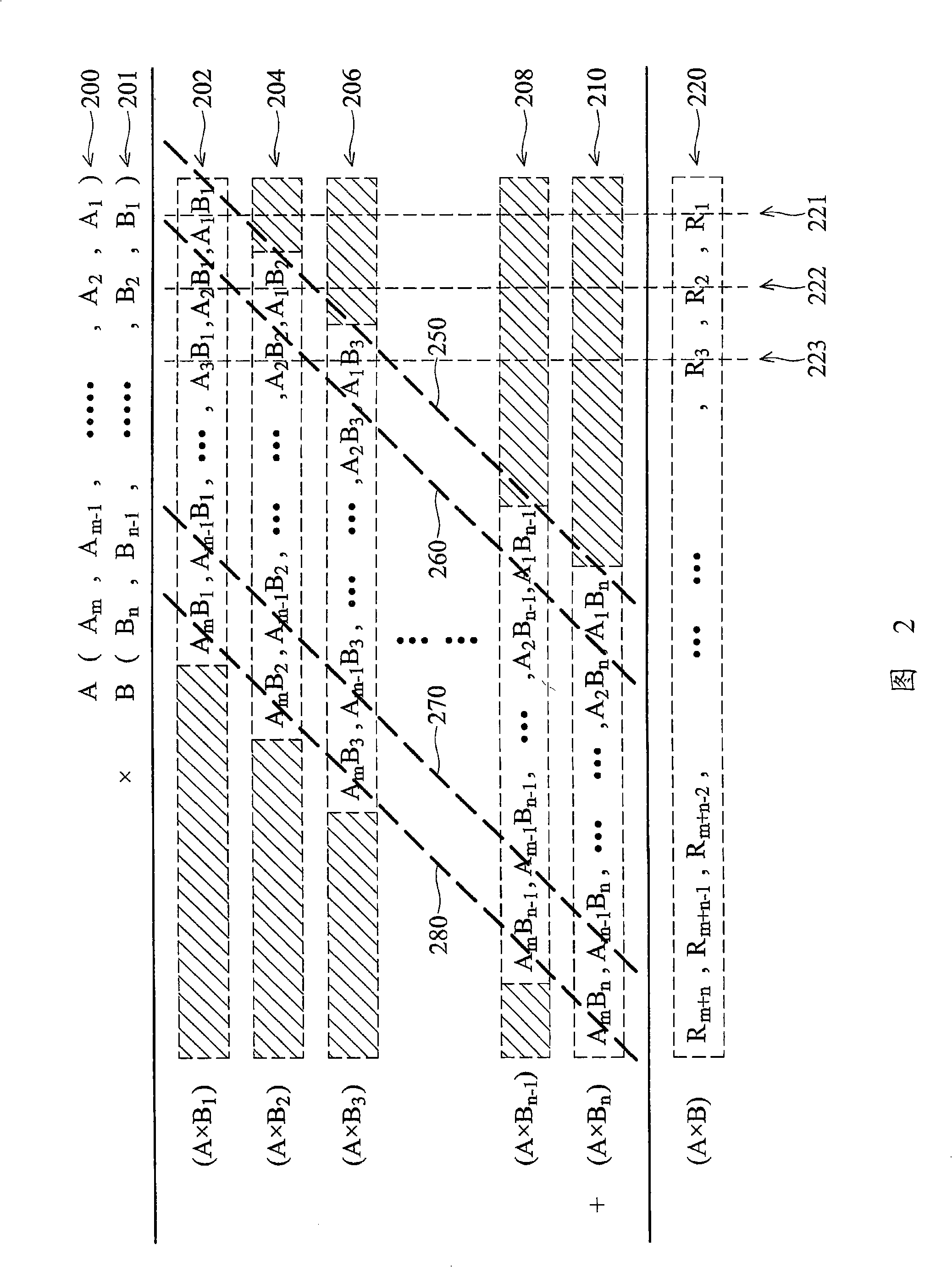

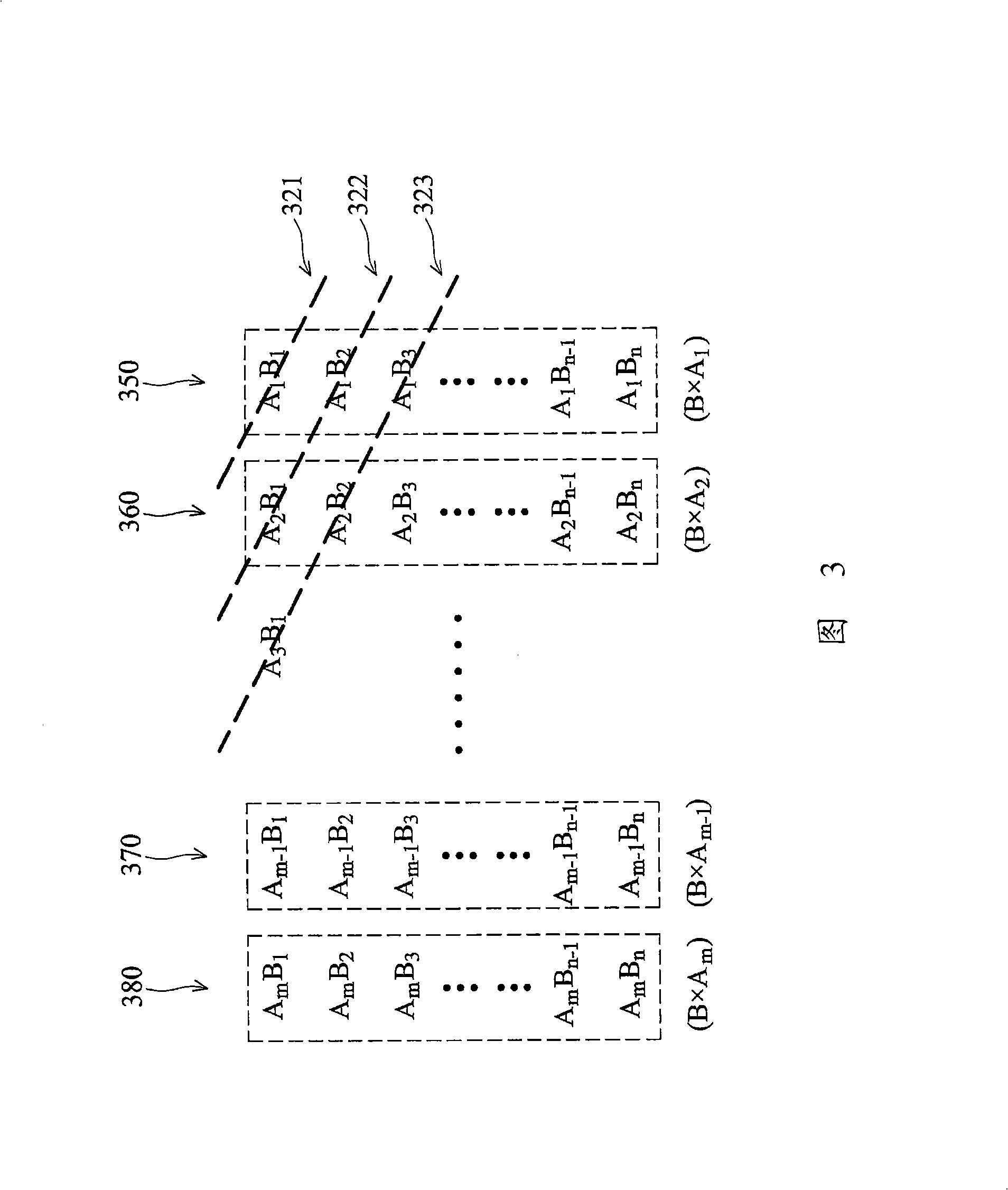

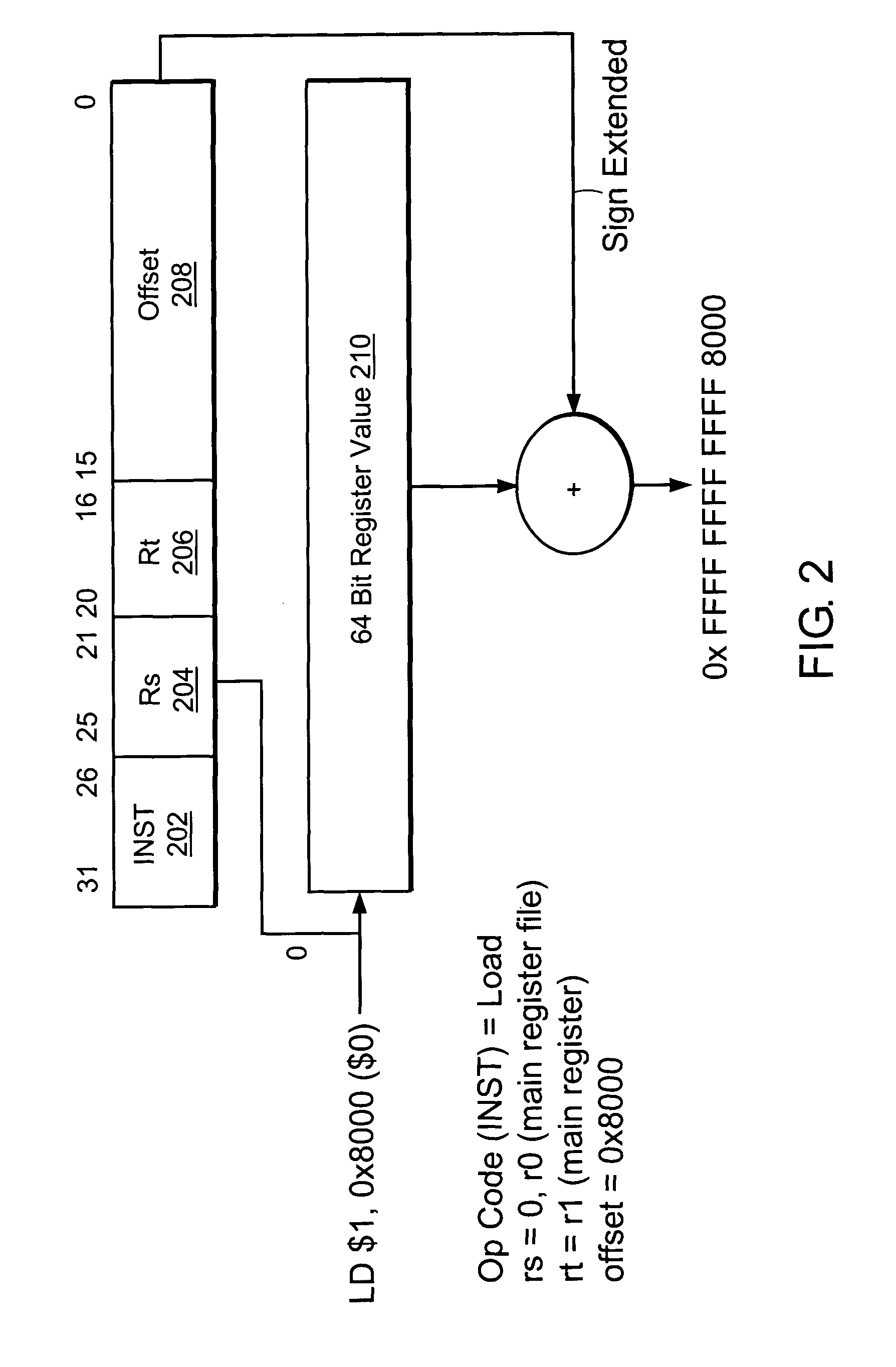

Apparatus and method for large integer multiplication operation

Active | CN101271570A | Improve computing power | Fast Data Processing Efficiency | Processor architectures/configuration | Scratchpad memory | Product value

The invention relates to an apparatus and a method for large integer multiplication. The apparatus consists of a multiplicand temporary memory, a multiplier temporary memory, a multiplication module, an addition module, a carry-value temporary memory, and a multiplication-result temporary memory. The multiplicand temporary memory stores multiplicand data comprising m multiplicand elements $A_k$, where k is the index of the element. The multiplier temporary memory stores multiplier data comprising n multiplier elements $B_j$, where j is the index of the element. The multiplication module multiplies the multiplicand element $A_k$ by the multiplier element $B_j$ to produce the product value $A_k B_j$. The addition module adds the product value $A_k B_j$, the multiplication result value $R_{k-1,j+1}$ from the lower right, and the carry value $C_{k-1,j}$ from the right to obtain the multiplication result value $R_{k,j}$ and the carry value $C_{k,j}$. The carry-value temporary memory stores the carry value $C_{k,j}$ and supplies the carry value $C_{k-1,j}$ to the right side of the addition module. The multiplication-result temporary memory stores the multiplication result value $R_{k,j}$ and supplies the multiplication result value $R_{k-1,j+1}$ to the lower right of the addition module.

Owner:威盛电子(上海)有限公司
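
As a software analogue of the multiply-add-carry structure in the abstract above, here is a sketch of ordinary schoolbook multiplication over fixed-width elements ("limbs"). It is the textbook algorithm, not the patent's exact dataflow or indexing: the 32-bit limb size and the helper names `to_limbs`, `from_limbs`, and `multiply` are assumptions made for illustration.

```python
BASE = 1 << 32  # assumed element width: 32 bits


def to_limbs(n):
    """Split a non-negative integer into little-endian base-2**32 elements."""
    limbs = []
    while True:
        limbs.append(n % BASE)
        n //= BASE
        if n == 0:
            return limbs


def from_limbs(limbs):
    """Recombine little-endian elements into a Python integer."""
    return sum(limb * BASE**i for i, limb in enumerate(limbs))


def multiply(a, b):
    """Schoolbook product of two limb lists, keeping a running result and carry."""
    result = [0] * (len(a) + len(b))   # plays the role of the result temporary memory
    for j, bj in enumerate(b):
        carry = 0                      # plays the role of the carry temporary memory
        for k, ak in enumerate(a):
            # product + previously stored partial result + incoming carry
            total = ak * bj + result[k + j] + carry
            result[k + j] = total % BASE
            carry = total // BASE
        result[j + len(a)] = carry     # final carry of this row
    return result


x = 2**100 + 12345
y = 2**70 + 987
product = from_limbs(multiply(to_limbs(x), to_limbs(y)))
```

Each inner step combines three inputs (a product, a stored partial result, and a carry) and produces a new partial result and carry, which mirrors the roles of the multiplication module, addition module, and two temporary memories.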

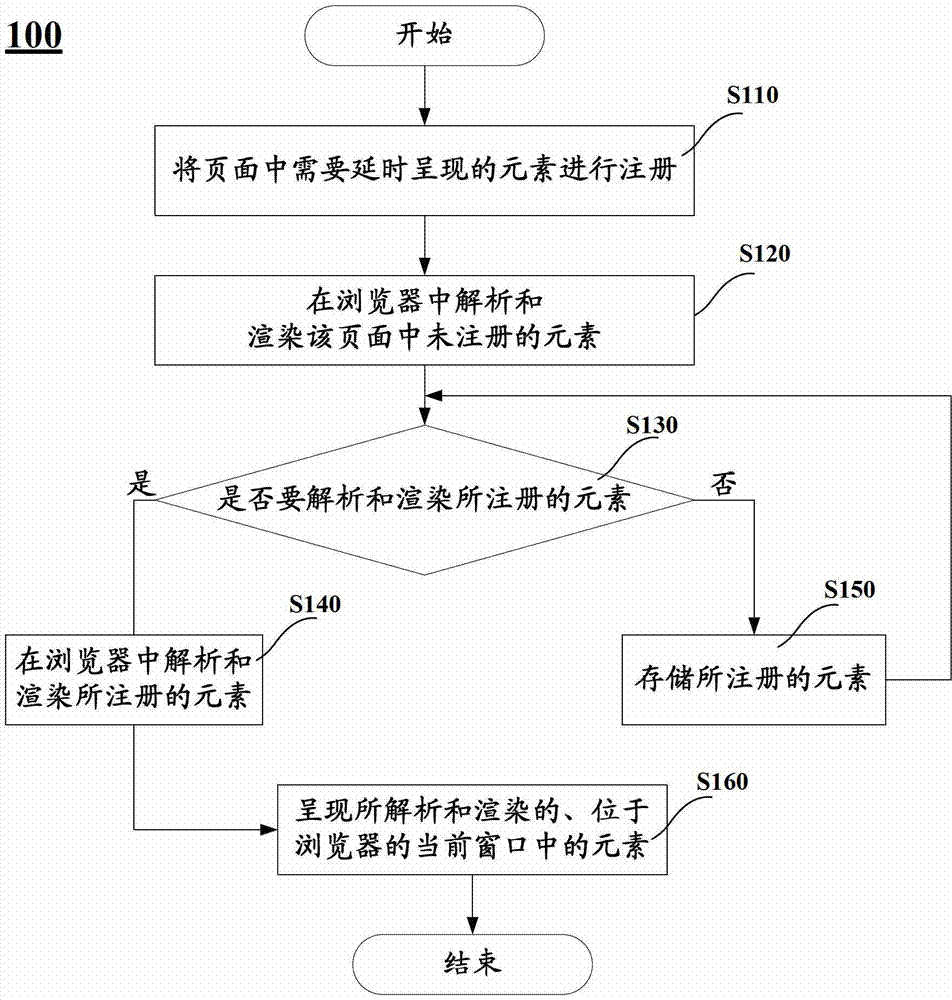

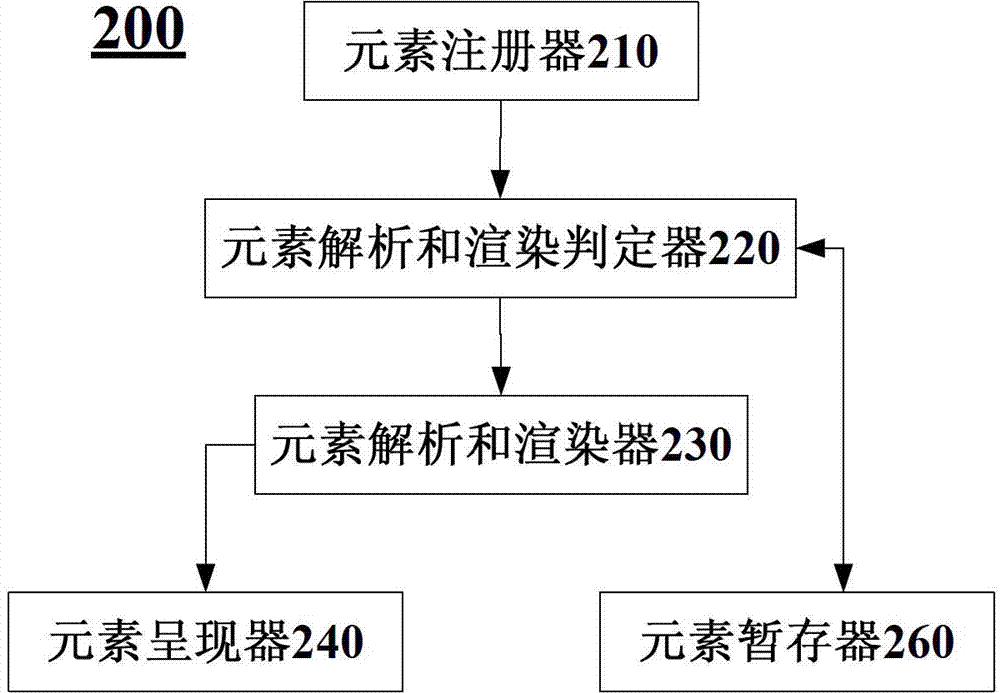

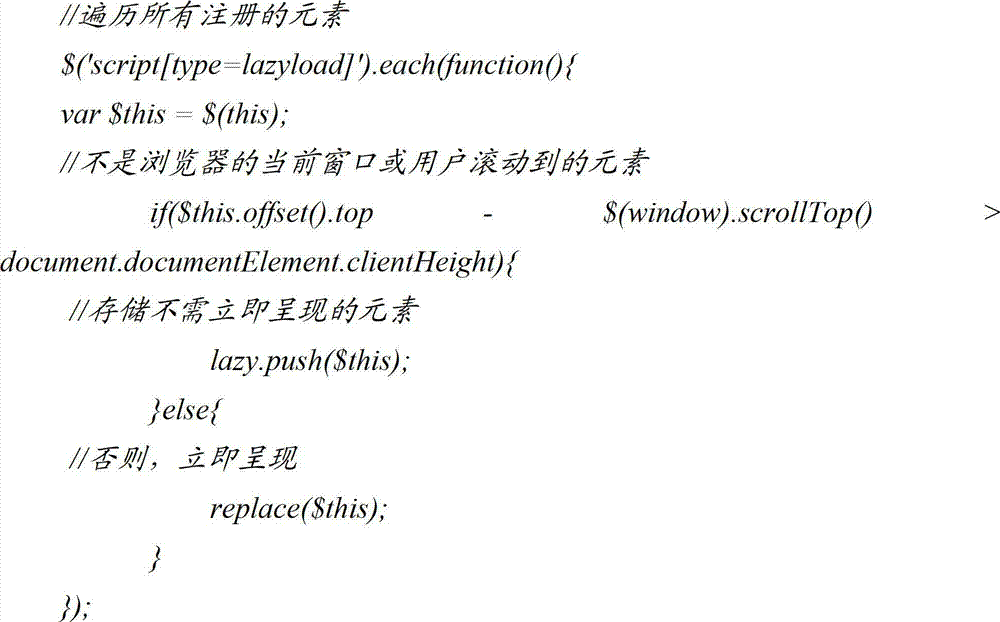

Page presenting method and equipment

Inactive | CN103034723A | Improve display speed | Program loading/initiating | Special data processing applications | Present method | Computer graphics (images)

Owner:BEIJING QIHOO TECH CO LTD +1

Design method of low-power consumption high performance high speed scratch memory

Inactive | CN101178690A | Reduce overhead | Cutting costs | Energy efficient ICT | Memory addressing/allocation/relocation | Memory address | Switching time

The invention discloses a design method for a low-power, high-performance scratchpad memory. First, it determines in advance whether an access hits the scratchpad, which avoids useless accesses to the scratchpad and reduces dynamic power consumption. Second, it divides the storage area into a number of physical blocks, so that on a scratchpad hit only 1/n of the total storage is accessed and the dynamic power consumption falls to 1/n of the original. By looking up the scratchpad with the virtual address, the invention saves the time needed to translate the virtual address into a physical address, reduces the extra hardware cost of address translation, and lowers the overall power consumption of the system. The invention can greatly reduce the power consumption of the scratchpad and greatly improve the overall performance of the embedded processors that use it, with low hardware cost and a simple design.

Owner:ZHEJIANG UNIV +1

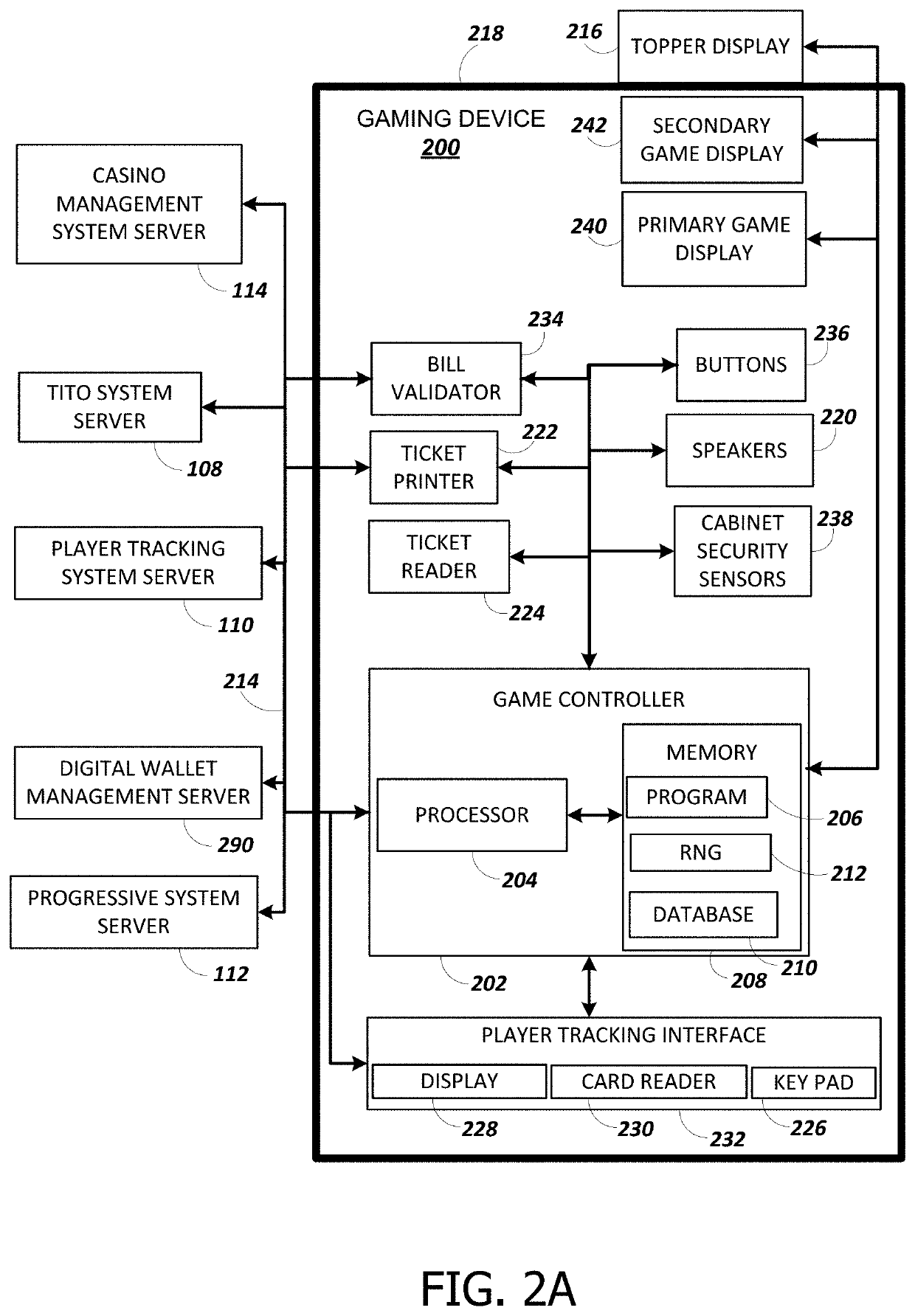

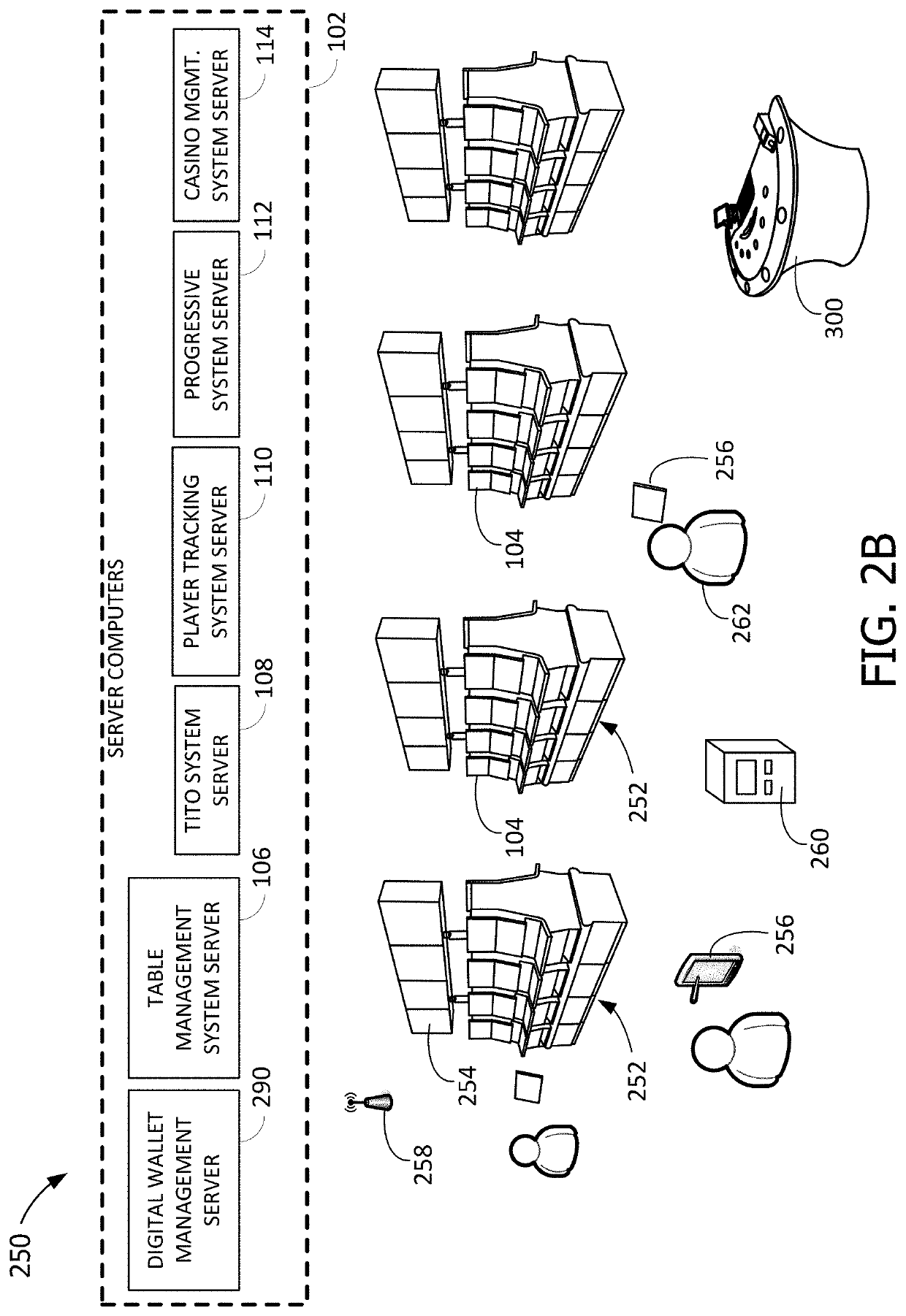

System and method for ticketing at a gaming table

A system includes a smart table at which a wagering game is provided, RFID-enabled chips, and RFID sensors configured to detect the presence of RFID-enabled chips. The smart table includes a dealer scratchpad for detecting a value of RFID-enabled chips within a scratchpad area on the smart table. The smart table also includes a ticket reader and a processor configured to: scan, with the ticket reader, a voucher presented by a player, the voucher being redeemable for a voucher value; determine the voucher value in response to the scanning; display, to a dealer of the wagering game at the smart table, a confirmation that the player is entitled to receive the voucher value; detect, from the RFID sensor, a chip value within the scratchpad area; compare the chip value to the voucher value; and display, to the dealer, a confirmation that the chip value matches the voucher value.

Owner:ARISTOCRAT TECH AUSTRALIA PTY LTD

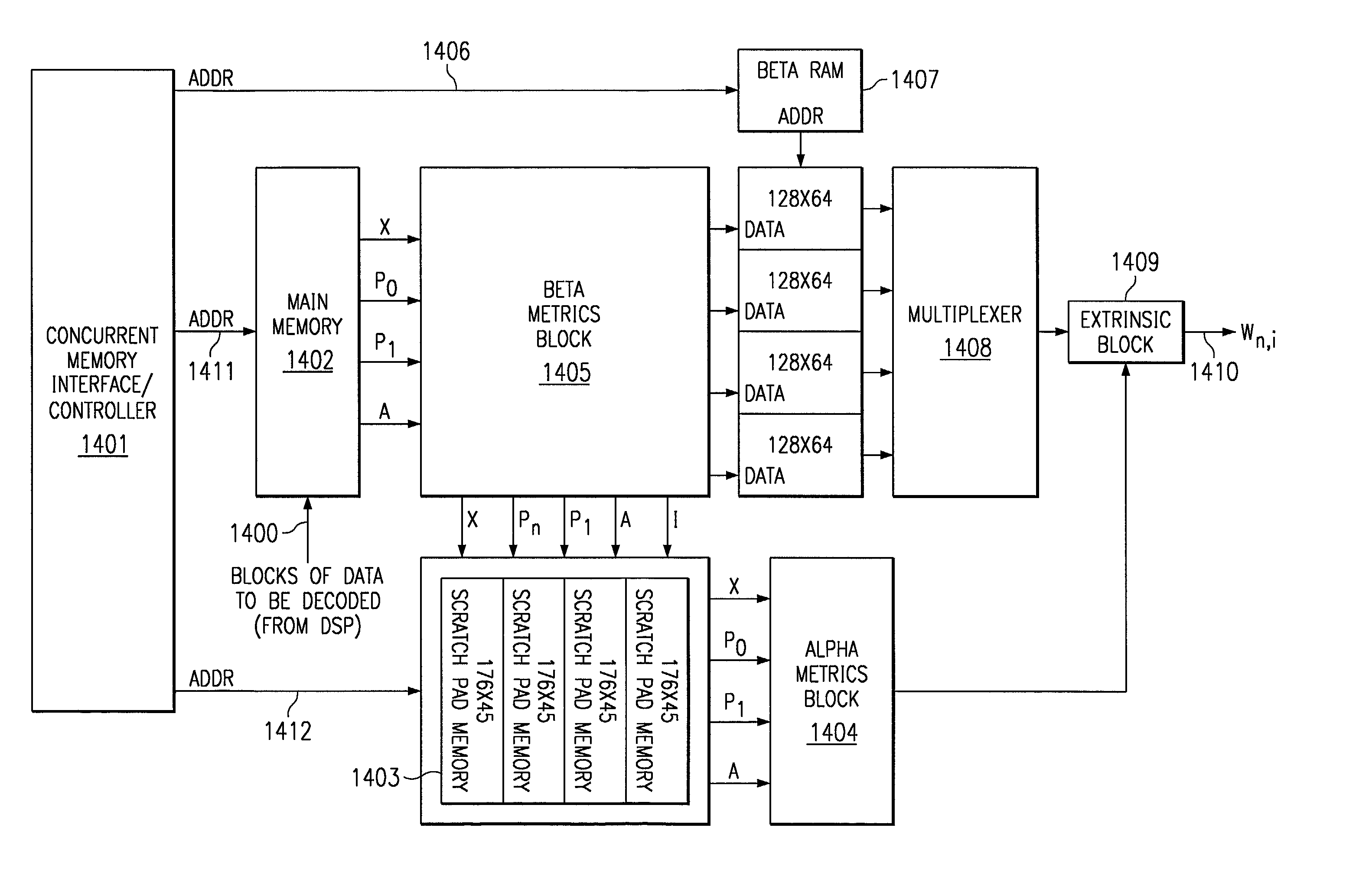

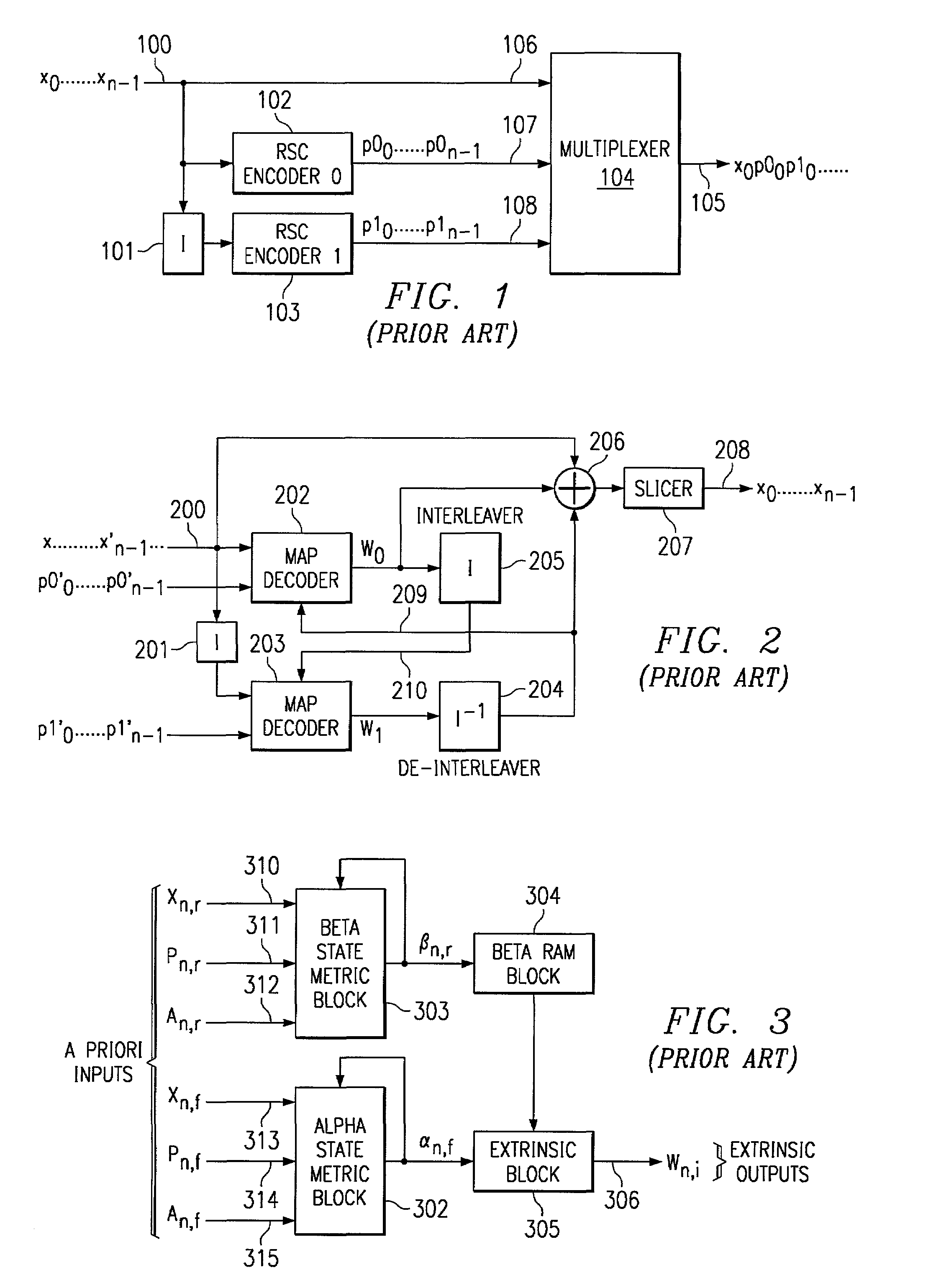

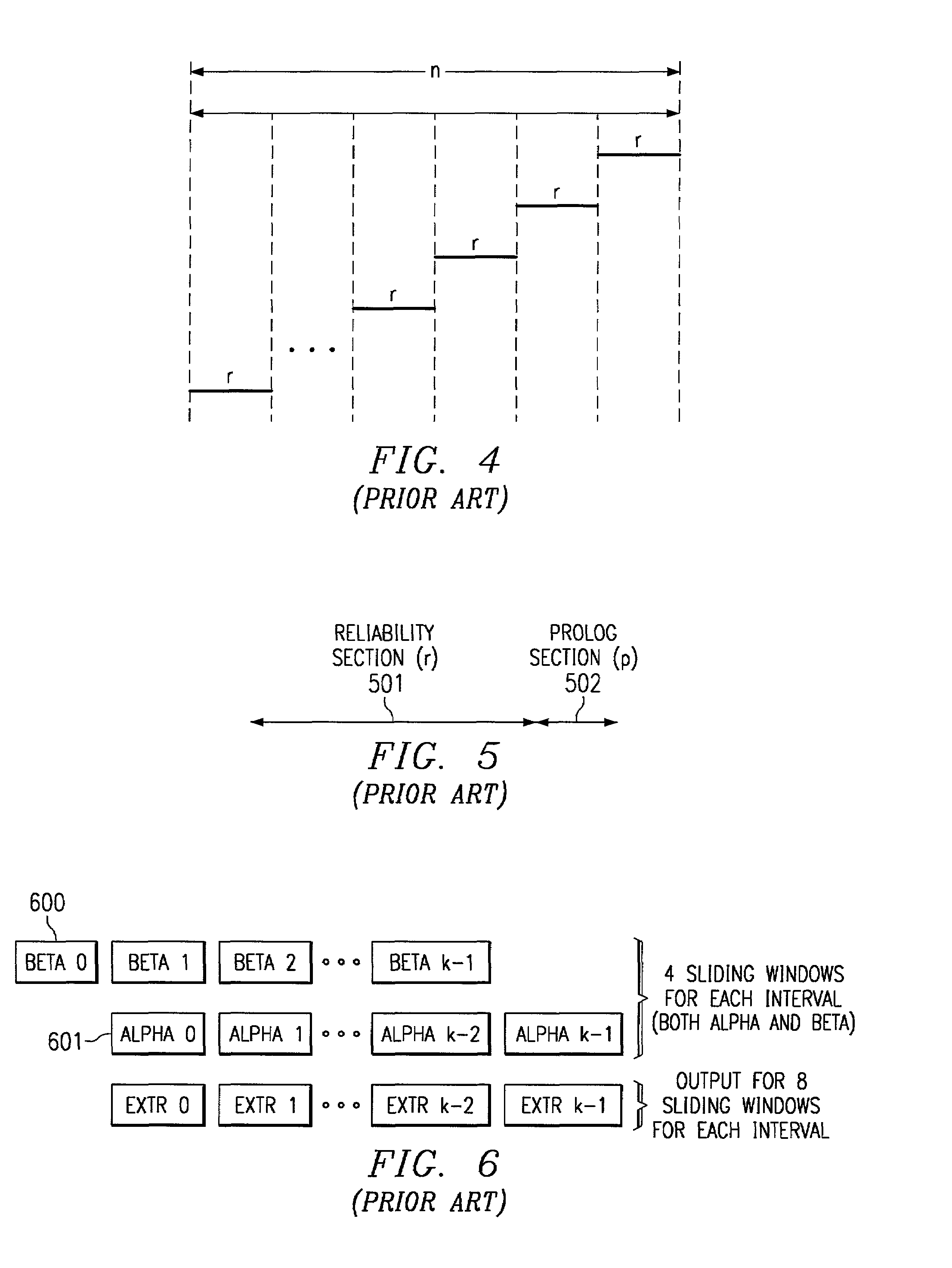

Concurrent memory control for turbo decoders

Inactive | US6993704B2 | Data representation error detection/correction | Other decoding techniques | Computer architecture | Scratchpad memory

The concurrent memory control turbo decoder solution of this invention uses a single port main memory and a simplified scratch memory. This approach uses interleaved forward-reverse addressing, which greatly reduces the amount of memory required. It is in marked contrast to conventional turbo decoders, which employ either a dual port main memory or a single port main memory in conjunction with a complex ping-ponged scratch memory. In the system of this invention, each cycle accomplishes one read and one write operation in the scratch memories. If a particular location in memory has been read, then that location is free, and the next write cycle can use it to store its data. Similarly, a simplified beta RAM is implemented using a unique addressing scheme, which also obviates the need for a complex ping-ponged beta RAM.

Owner:TEXAS INSTR INC
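
One way to picture the "read a location, then immediately reuse it for the next write" idea in the abstract above is a single buffer whose address order alternates between ascending and descending from block to block. The Python sketch below is an interpretation for illustration only; the function name `reverse_stream` and the block-reversal use case are assumptions, and the patent's actual addressing scheme may differ.

```python
def reverse_stream(blocks):
    """Emit each block in reverse order using one block-sized buffer.

    A ping-pong design would need two buffers. Here, each cycle reads one
    location (draining the previous block) and then writes the next block's
    element into that same, now free, location.
    """
    n = len(blocks[0])
    mem = [None] * n
    out = []
    # One extra phase with no input drains the final block.
    for phase, block in enumerate(list(blocks) + [None]):
        # Alternate address direction: forward on even phases, reverse on odd.
        addrs = range(n) if phase % 2 == 0 else range(n - 1, -1, -1)
        drained = []
        for i, addr in enumerate(addrs):
            if phase > 0:
                drained.append(mem[addr])   # read: frees this location
            if block is not None:
                mem[addr] = block[i]        # write: reuse the freed location
        if phase > 0:
            out.append(drained)
    return out


reversed_blocks = reverse_stream([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
```

The buffer never holds more than one block's worth of data, which is the memory saving over a ping-ponged pair of buffers.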

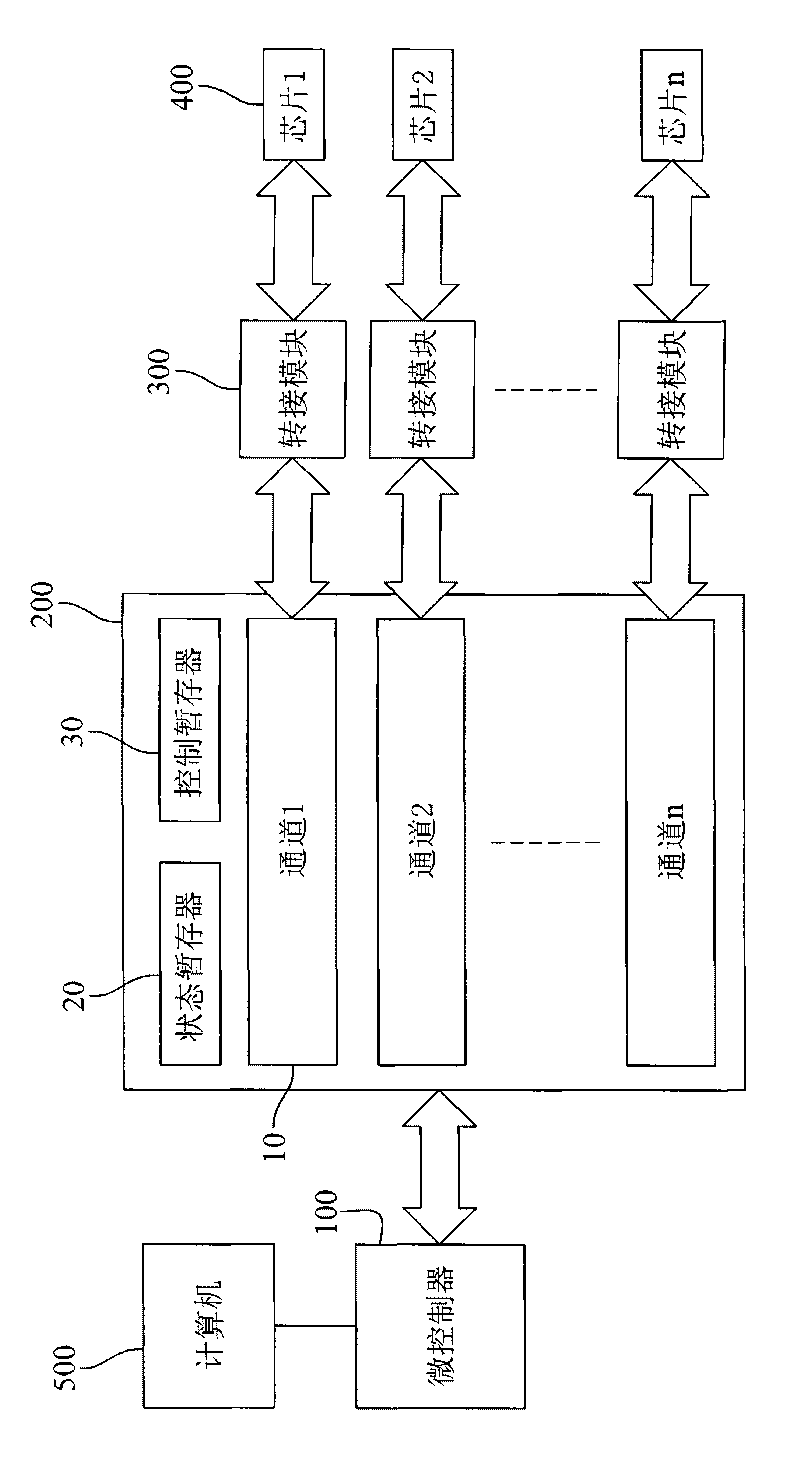

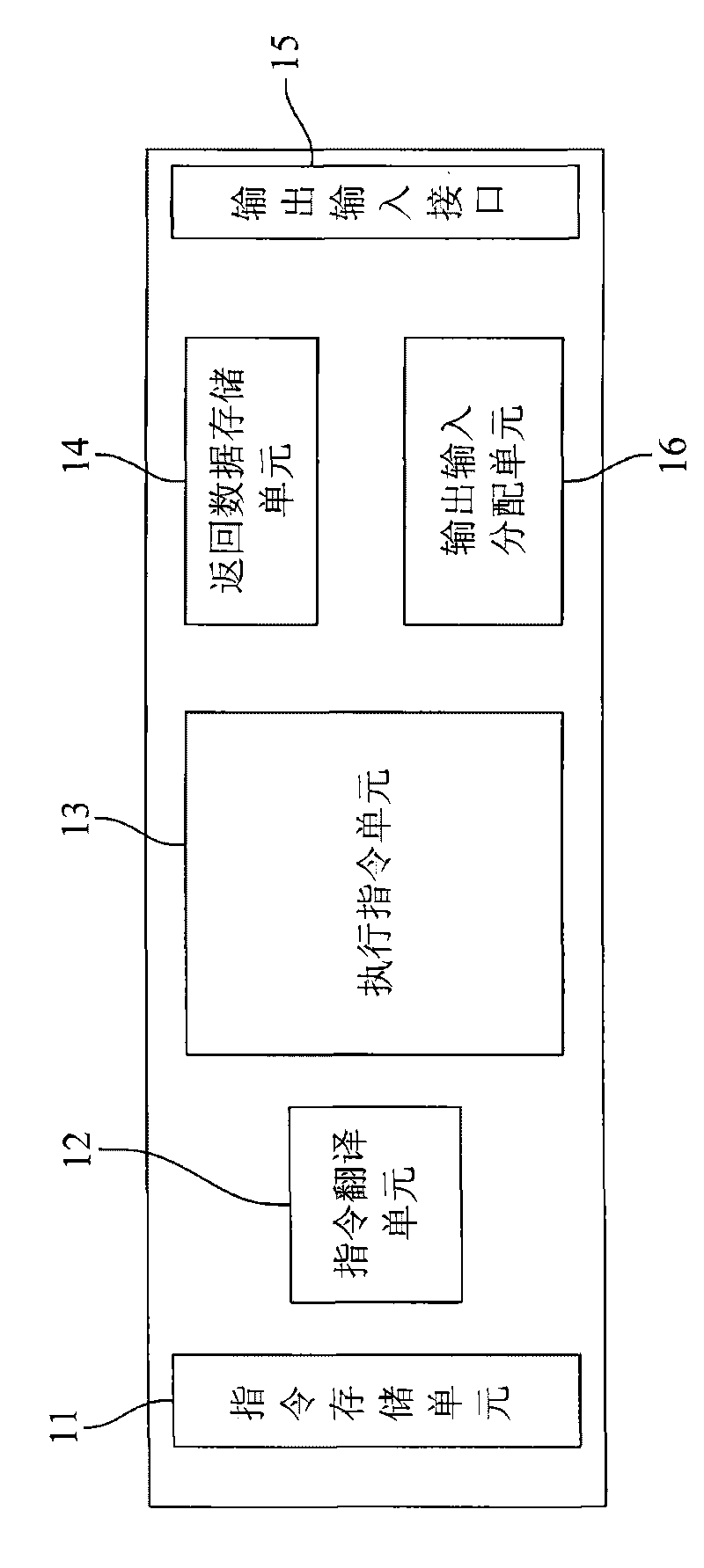

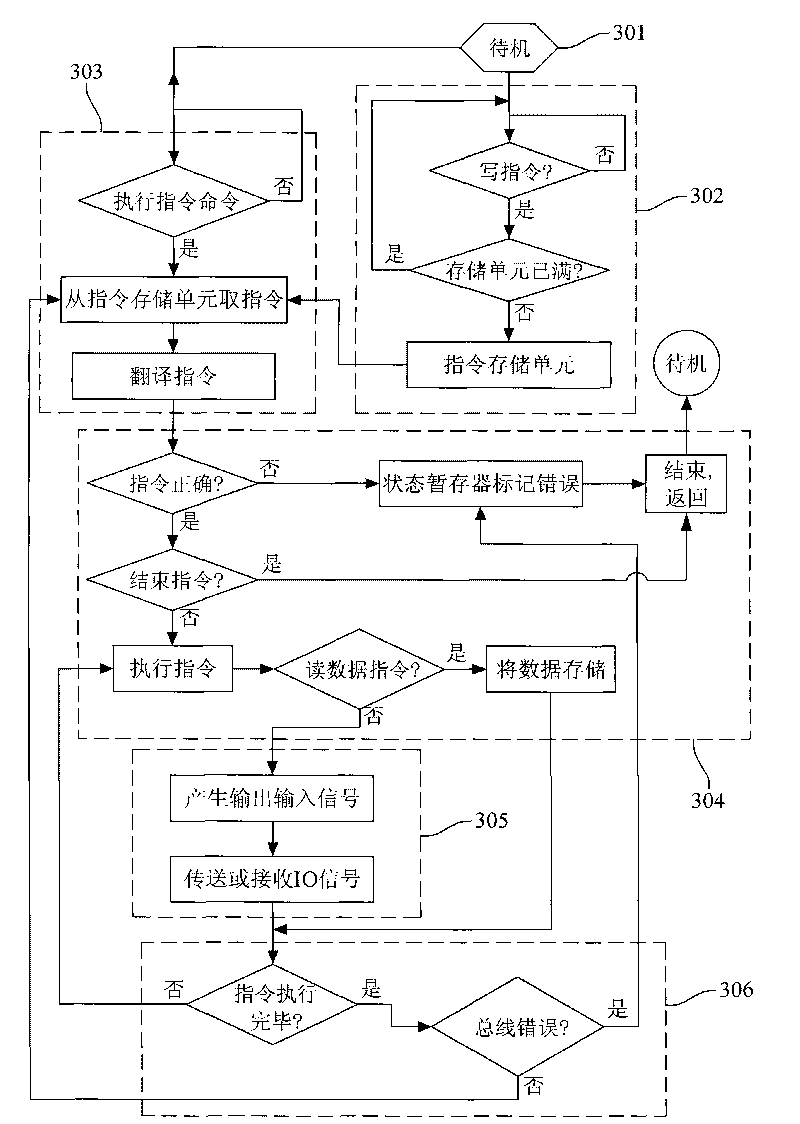

Multichip parallel program burning system

Inactive | CN101727416A | Low cost | Save programming time | Read-only memories | Electric digital data processing | Microcontroller | Gate array

A multichip parallel program burning system is connected with a computer and is used to support the action of parallel program burning of various chips. The burning system comprises a microcontroller and a programmable gate array, wherein the microcontroller is in a communications linkage with the computer; the programmable gate array is in a communications linkage with the microcontroller; the programmable gate array comprises a plurality of channels, status registers and control registers; each channel corresponds to and is electrically connected with one kind of chip; and the microcontroller receives the parallel burning command and transmits the parallel burning command to the programmable gate array, the programmable gate array translates the parallel burning command to ensure that the translated parallel burning command corresponds to each chip and the motion of the parallel program burning can be performed simultaneously to various chips, thus saving the cost and reducing the recording time.

Owner:INVENTEC CORP

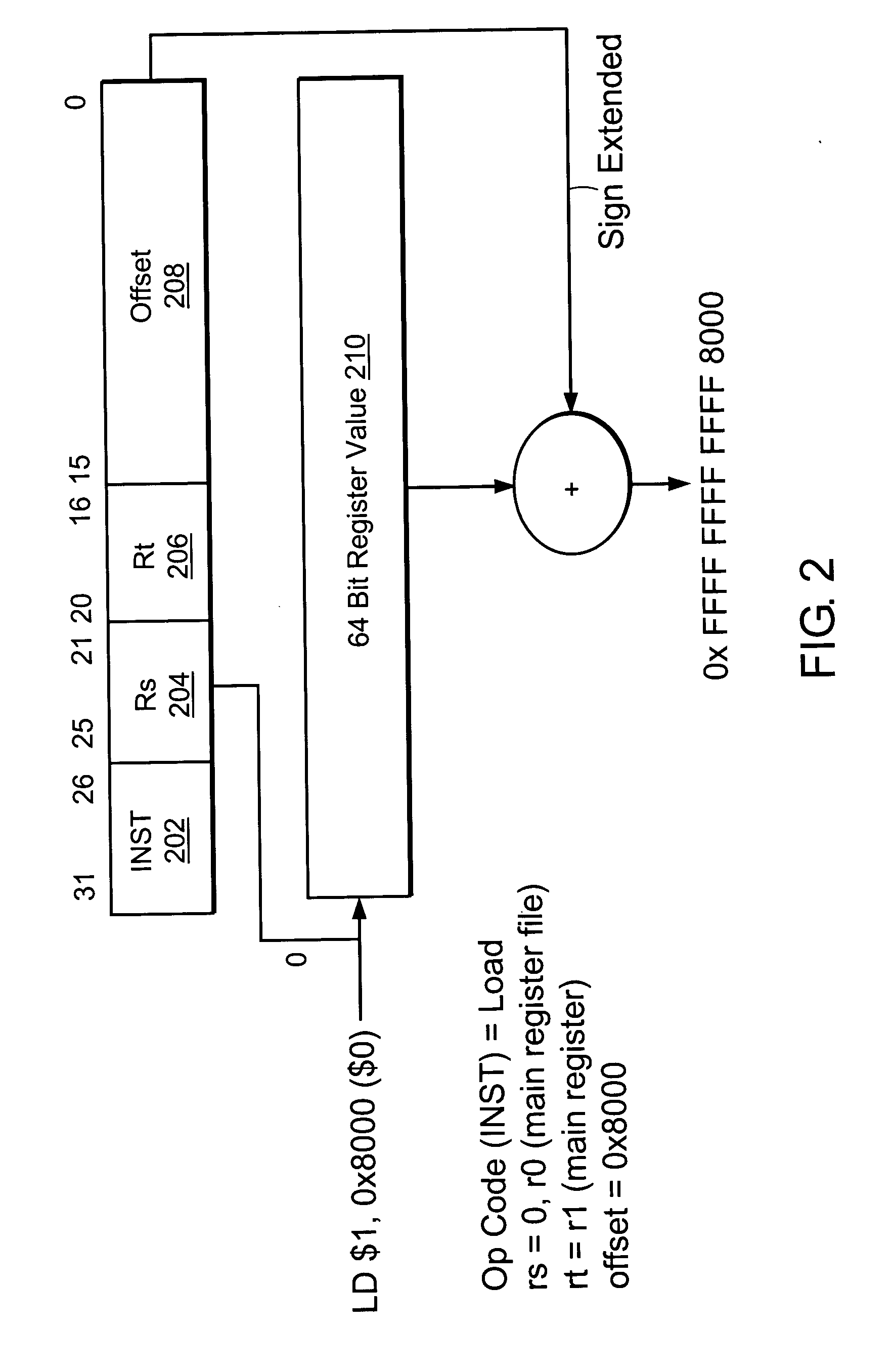

Local scratchpad and data caching system

Inactive | US7941585B2 | Memory architecture accessing/allocation | Error detection/correction | Processor register | Scratchpad memory

A RISC-type processor includes a main register file and a data cache. The data cache can be partitioned to include a local memory, the size of which can be changed dynamically, on a cache-block basis, while the processor is executing instructions that use the main register file. The local memory can act as an additional register file for the processor and can reside at a virtual address. The local memory can be further partitioned for prefetching data from a non-cacheable address to be stored/loaded into the main register file.

Owner:MARVELL ASIA PTE LTD

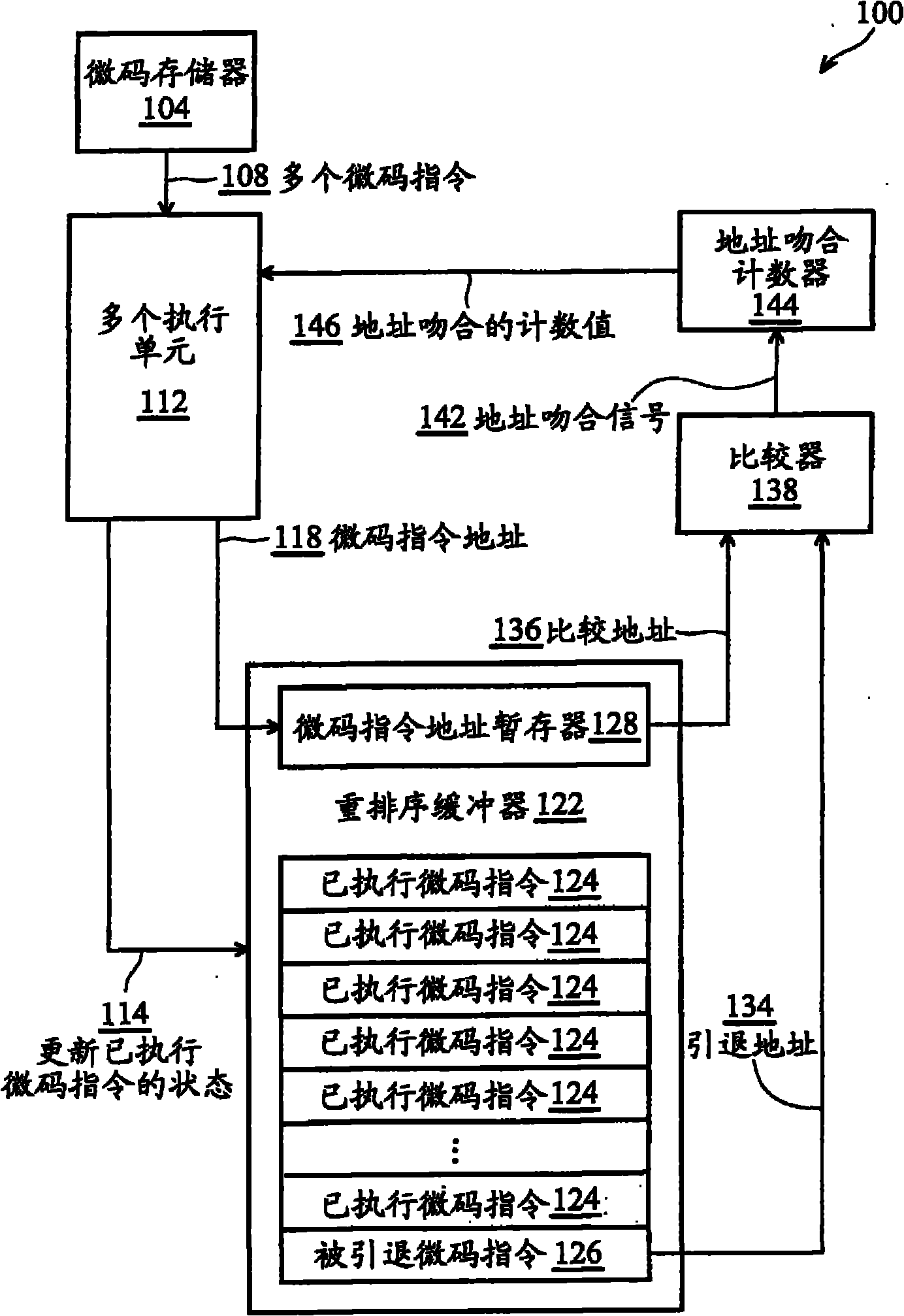

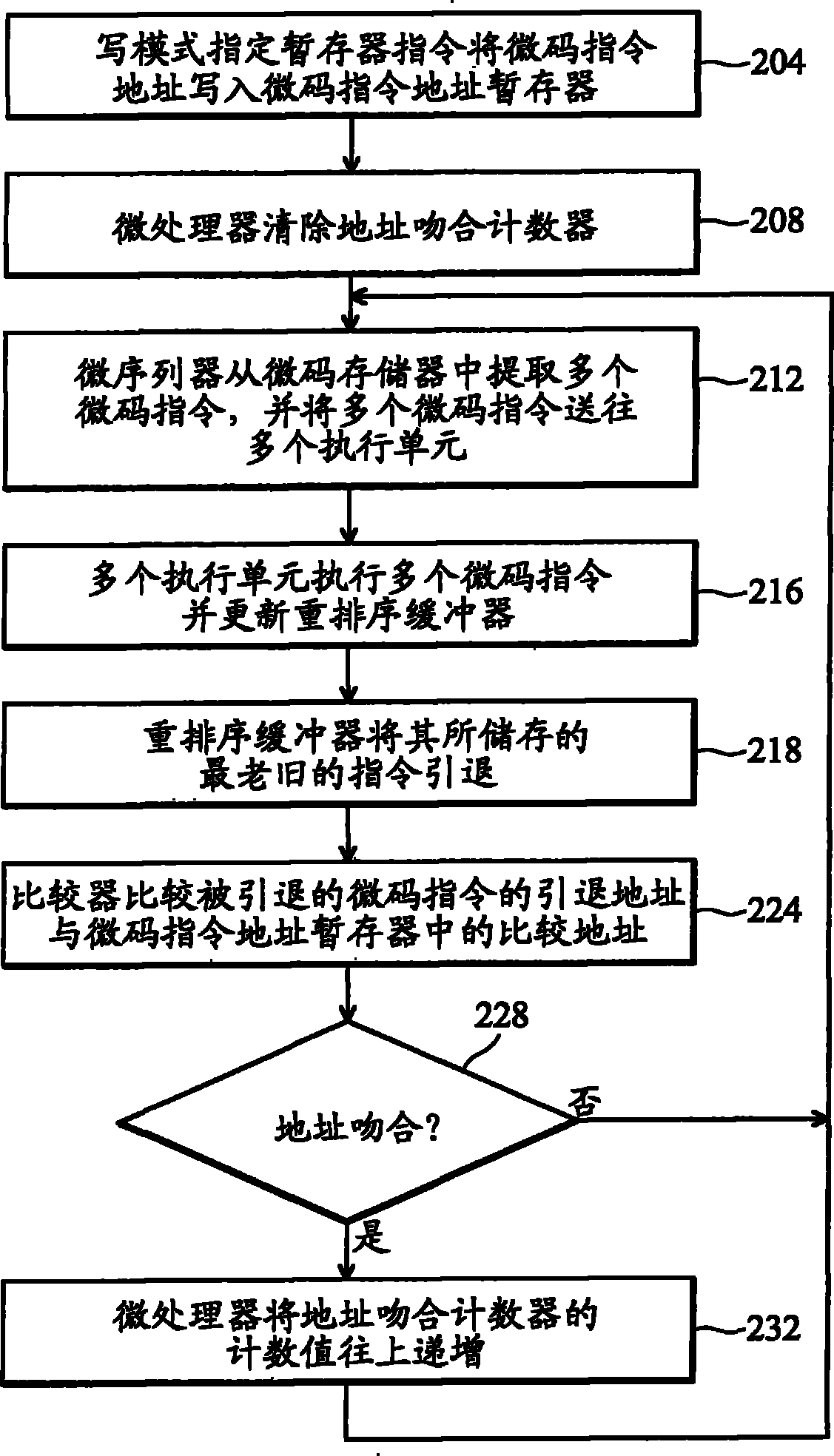

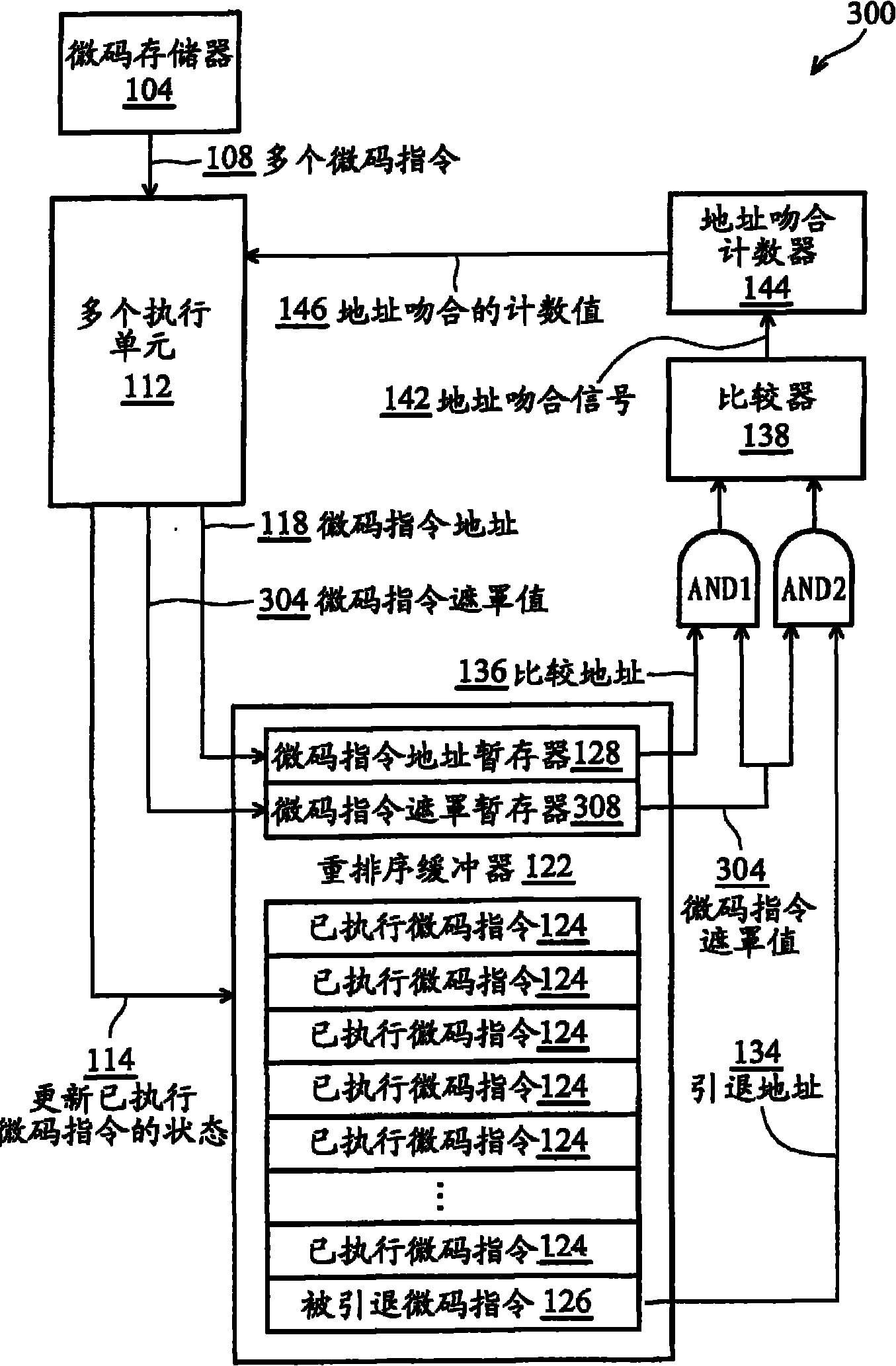

Performance counter for microcode instruction execution and counting method

The invention provides a performance counter for microcode instruction execution and a counting method. An apparatus for counting microcode instruction execution in a microprocessor includes a first register, a second register, a comparator, and a counter. The first register stores an address of a microcode instruction. The microcode instruction is stored in a microcode memory of the microprocessor. The second register stores an address of the next microcode instruction to be retired by a retire unit of the microprocessor. The comparator compares the addresses stored in the first and second registers to indicate a match between them. The counter counts the number of times the comparator indicates a match between the addresses stored in the first register and the second register. The overhead required to commence measuring microcode execution and to subsequently obtain the measurements is a small number of writes to and reads from control registers, with no need for specialized external tools or for probing inside the microprocessor.

Owner:VIA TECH INC
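
The register, comparator, and counter arrangement in the abstract above can be modelled in a few lines of Python. This is a behavioural sketch only; the class `MicrocodeCounter`, its method `retire`, and the example addresses are hypothetical.

```python
class MicrocodeCounter:
    """Counts how often a watched microcode address is retired."""

    def __init__(self, watch_address):
        self.watch_address = watch_address  # first register: address to watch
        self.next_retire = None             # second register: next address to retire
        self.count = 0                      # the counter

    def retire(self, address):
        """Model the retire unit presenting the next microcode address."""
        self.next_retire = address
        if self.next_retire == self.watch_address:  # the comparator
            self.count += 1


counter = MicrocodeCounter(watch_address=0x10)
for addr in [0x10, 0x20, 0x10, 0x30, 0x10]:   # hypothetical retirement stream
    counter.retire(addr)
matches = counter.count
```

Starting a measurement amounts to writing the watch address, and reading the result amounts to reading `count`, which corresponds to the small number of control-register accesses the abstract mentions.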

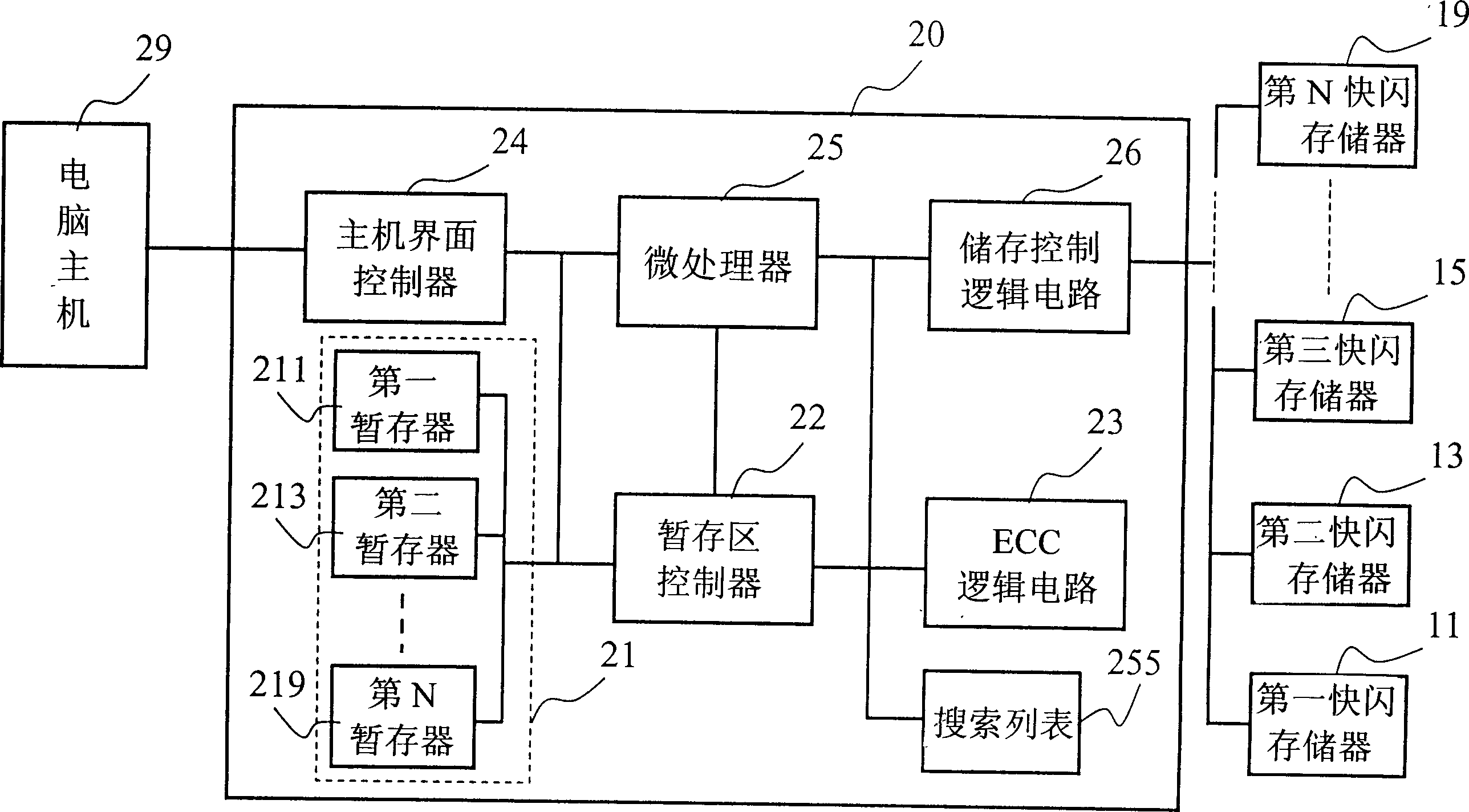

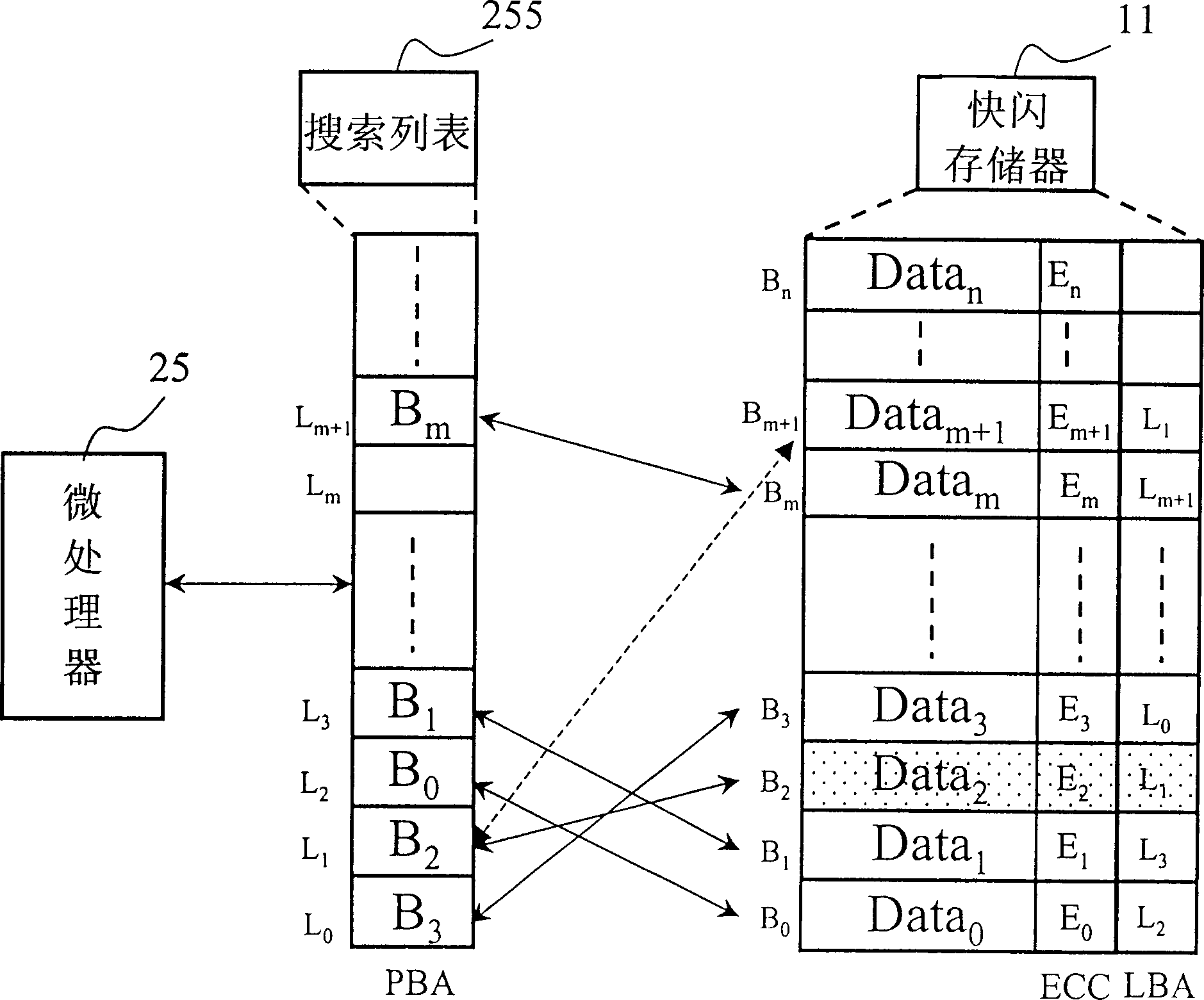

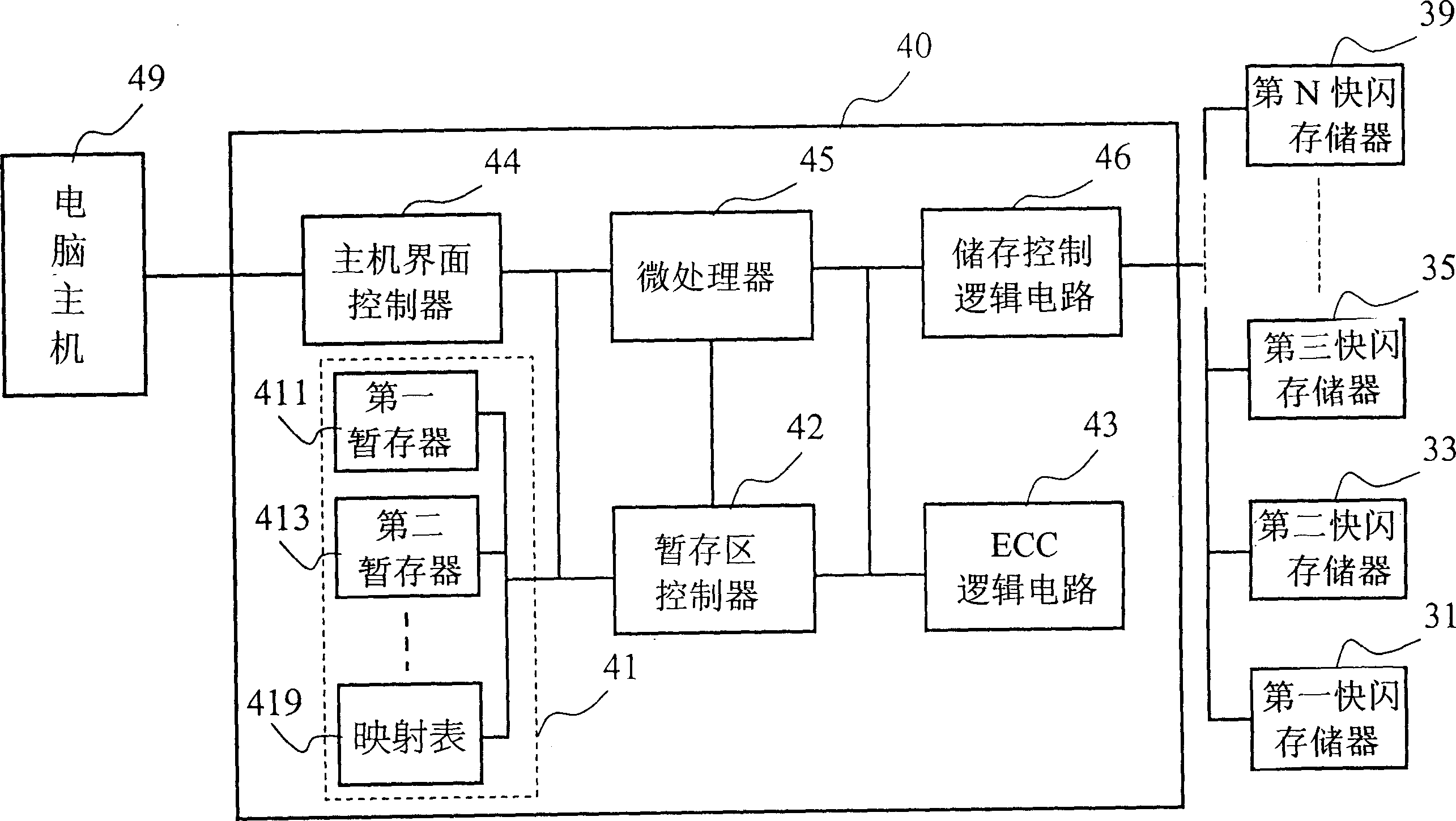

Fast algorithm for flash memory that protects against abnormal power loss, and control system thereof

Inactive | CN1447243A | Simplify work time | Shorten working time | Memory addressing/allocation/relocation | Control system | Paging

A fast algorithm that protects flash memory against abnormal power loss, and a control system for it, are disclosed. The mapping-table information that links logical block addresses (LBAs) to physical block addresses (PBAs) is stored mainly in a page of a physical block of the memory, protected by an ECC. When the host is operating, the controller loads the mapping-table information directly into a temporary memory of the control device for access, so the mapping table is set up quickly without a scanning procedure. If the mapping table is wrong because of abnormal system operation, the system reverts to the previous mapping table to recover the normal linkage.

Owner:KII TEKU INC

Stack data management for software managed multi-core processors

Inactive | US9015689B2 | Minimal cost | Easy to cut | Resource allocation | Software engineering | Integer linear programming formulation | Data management

Methods and apparatus are described for managing stack data in multi-core processors having scratchpad memory or limited local memory. In one embodiment, stack data management calls are inserted into software in accordance with an integer linear programming formulation and a smart stack data management heuristic. In another embodiment, stack management and pointer management functions are inserted before and after function calls and pointer references, respectively. The calls may be inserted in an automated fashion by a compiler utilizing an optimized stack data management runtime library.

Owner:ARIZONA STATE UNIVERSITY

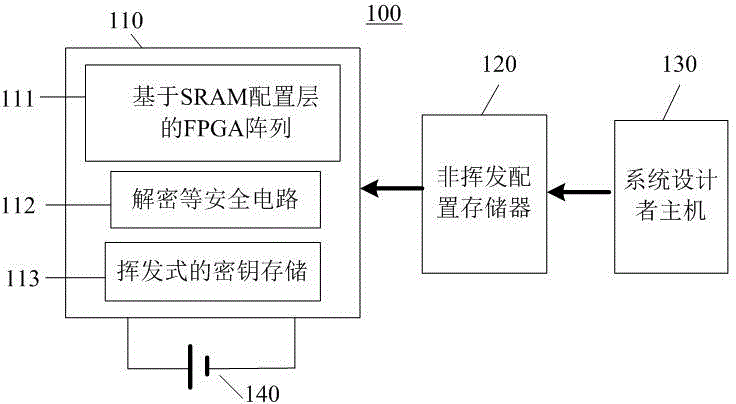

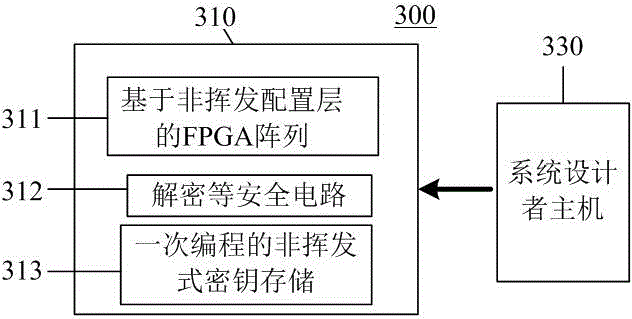

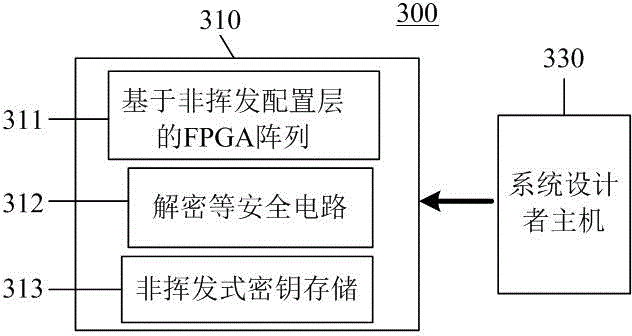

Programmable logic device enabling configuration data flows to be high in safety

Active | CN104615953A | Avoid attacks such as interception and stealing | Avoid attacks such as reverse engineering | Internal/peripheral component protection | Data stream | Random access memory

The invention belongs to the technical field of programmable logic devices, and particularly relates to a programmable logic device enabling configuration data flows to be high in safety. The programmable logic device comprises a programmable logic array based on an SRAM configuration layer, a non-volatile scratchpad memory, a security mechanism control circuit, a configuration control circuit, an interface module and other modules; the non-volatile scratchpad memory can comprise multiple partitions for storing different data, such as configuration data, secret keys and authentication tags, the non-volatile scratchpad memory further comprises a write-protect circuit, and the write-protect circuit can provide different write-protect control strategies according to the different partitions on the non-volatile scratchpad memory; the non-volatile scratchpad memory specially comprises a resistance type random access memory and other non-volatile memories capable of being compatible with logic processes. The programmable logic device enabling the configuration data flows to be high in safety can prevent the configuration data flows from being stolen and loaded maliciously and enable the configuration data flows to have other security features in the storage and transmitting processes.

Owner:FUDAN UNIV

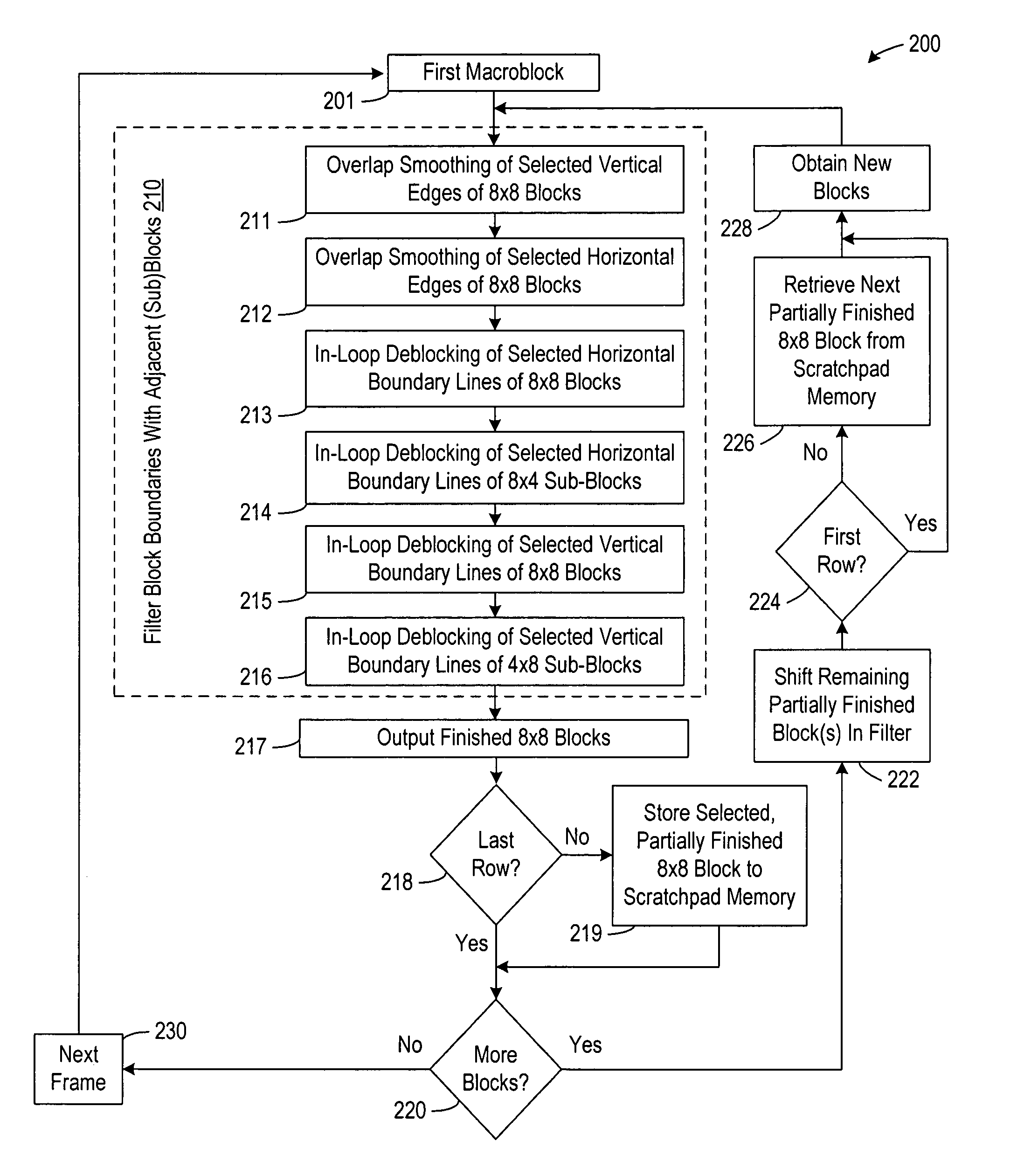

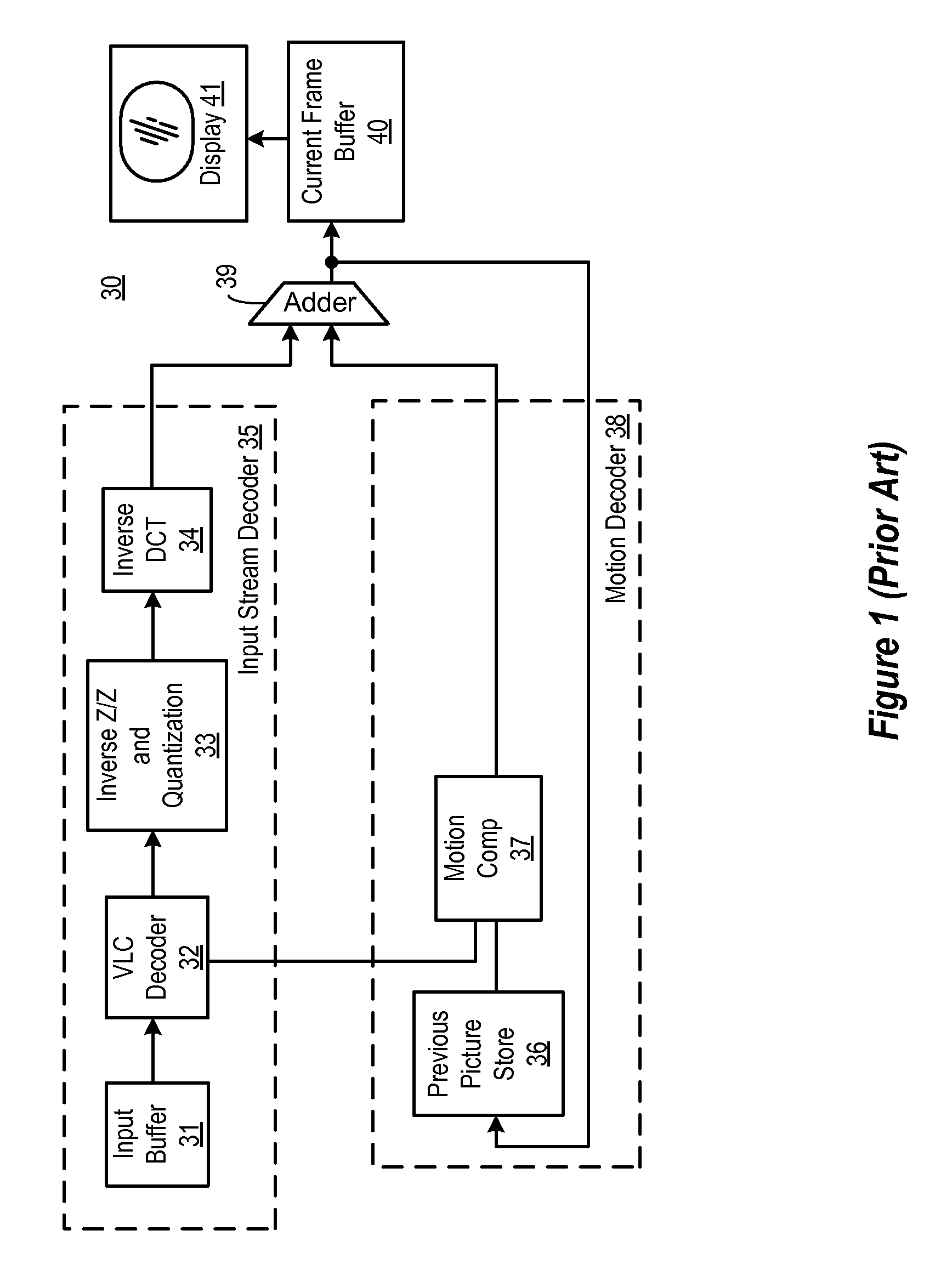

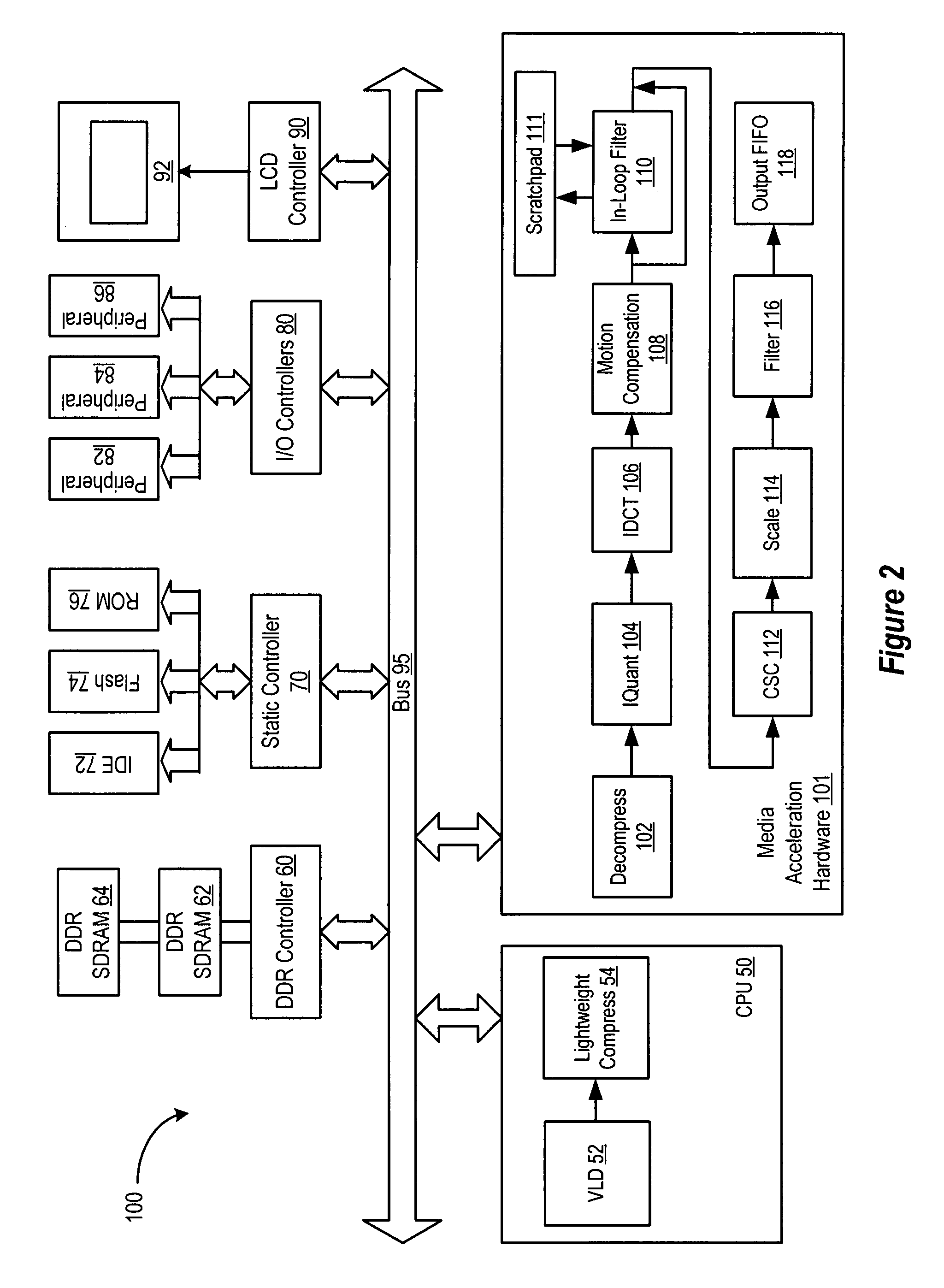

Scratch pad for storing intermediate loop filter data

Active | US7792385B2 | Quickly and efficiently process | Meet cutting requirements | Color television with pulse code modulation | Color television with bandwidth reduction | Loop filter | Frame based

A video processing apparatus and methodology are implemented as a combination of a processor and a video decoding hardware block to decode video data by providing the video decoding block with an in-loop filter and a scratch pad memory, so that the in-loop filter may efficiently perform piecewise processing of overlap smoothing and in-loop deblocking in a macroblock-based fashion which is a much more efficient algorithm than the frame-based method.

Owner:MEDIATEK INC +1
