286 results about how to "Improve execution performance" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

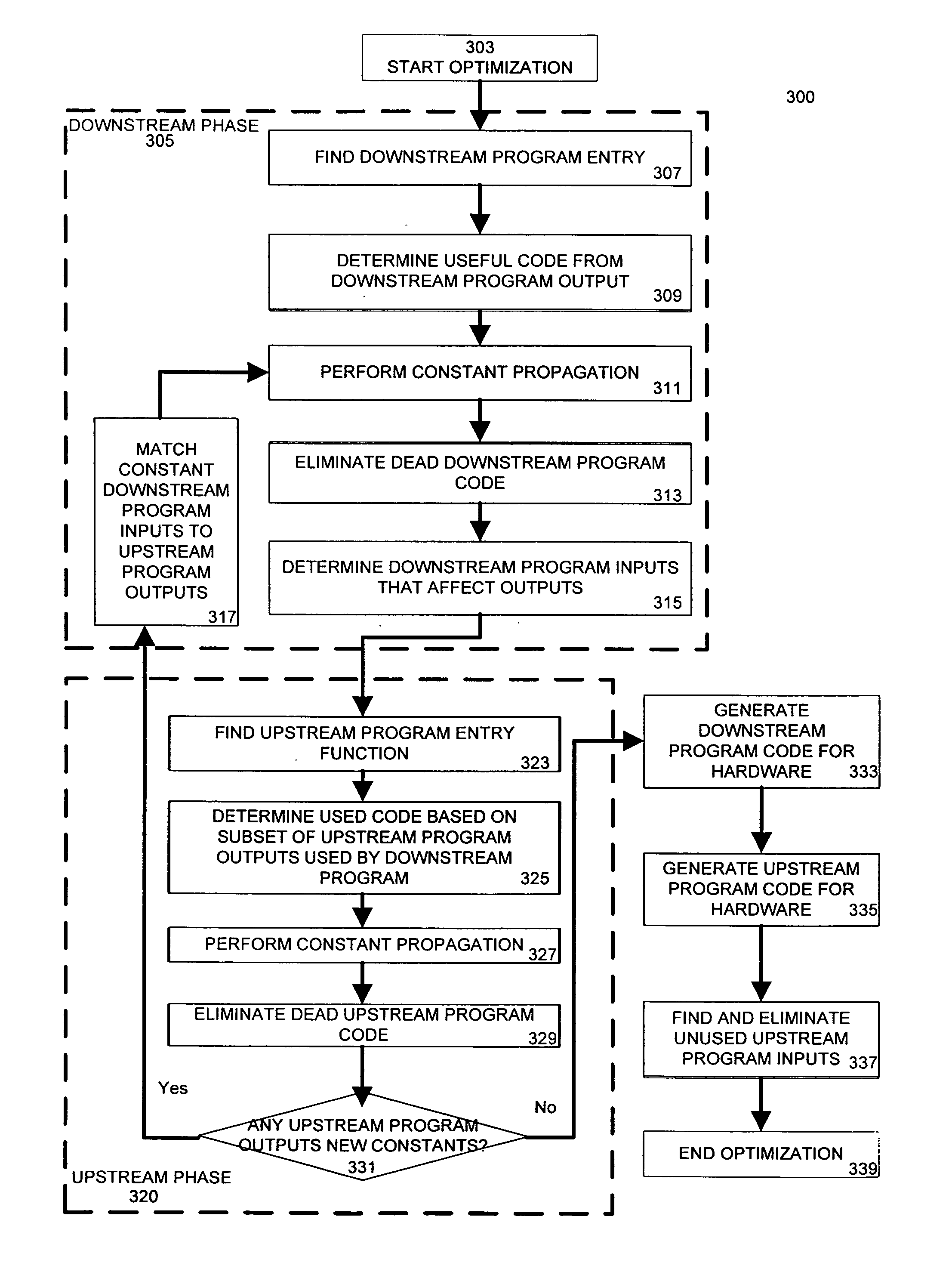

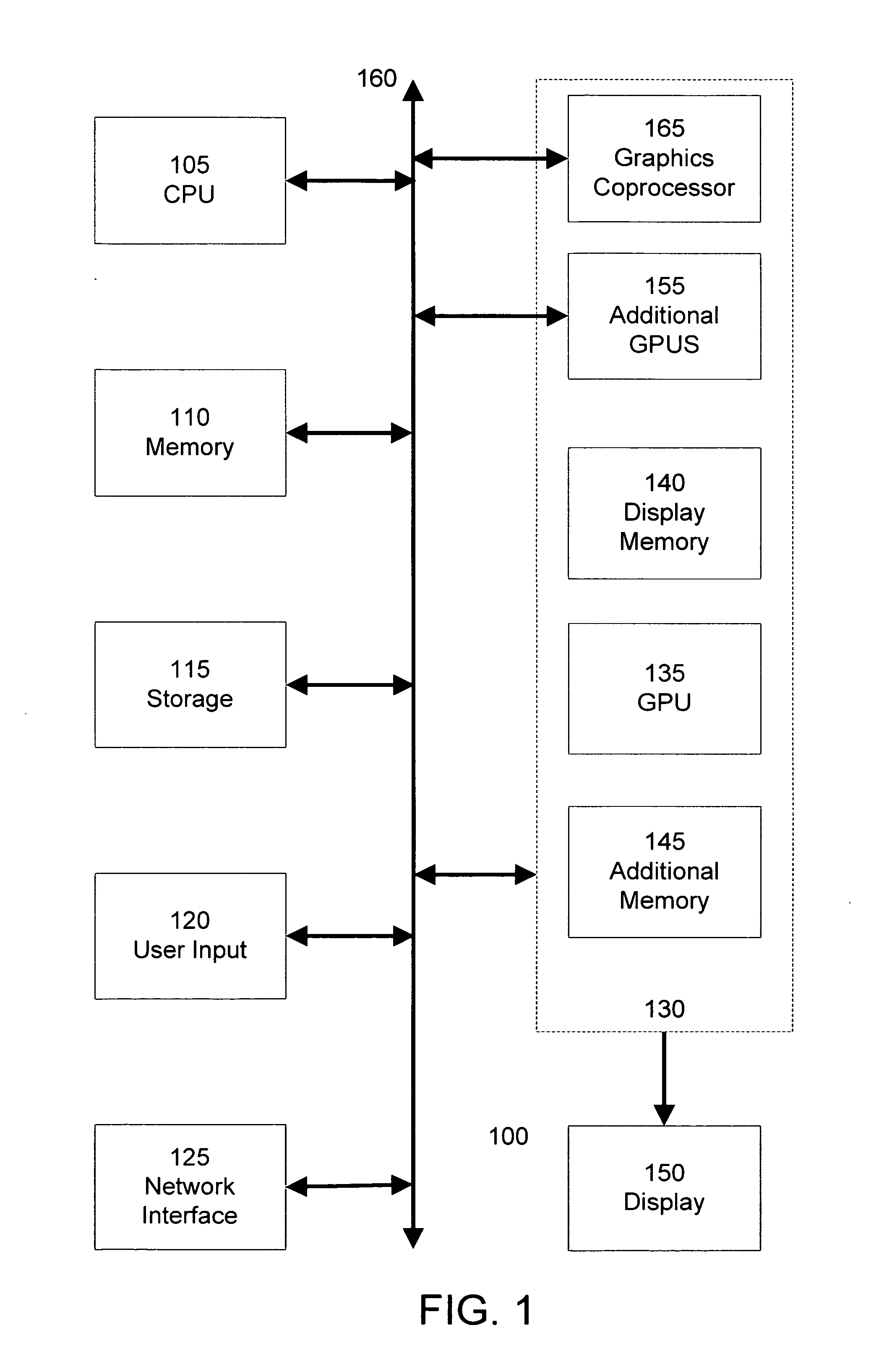

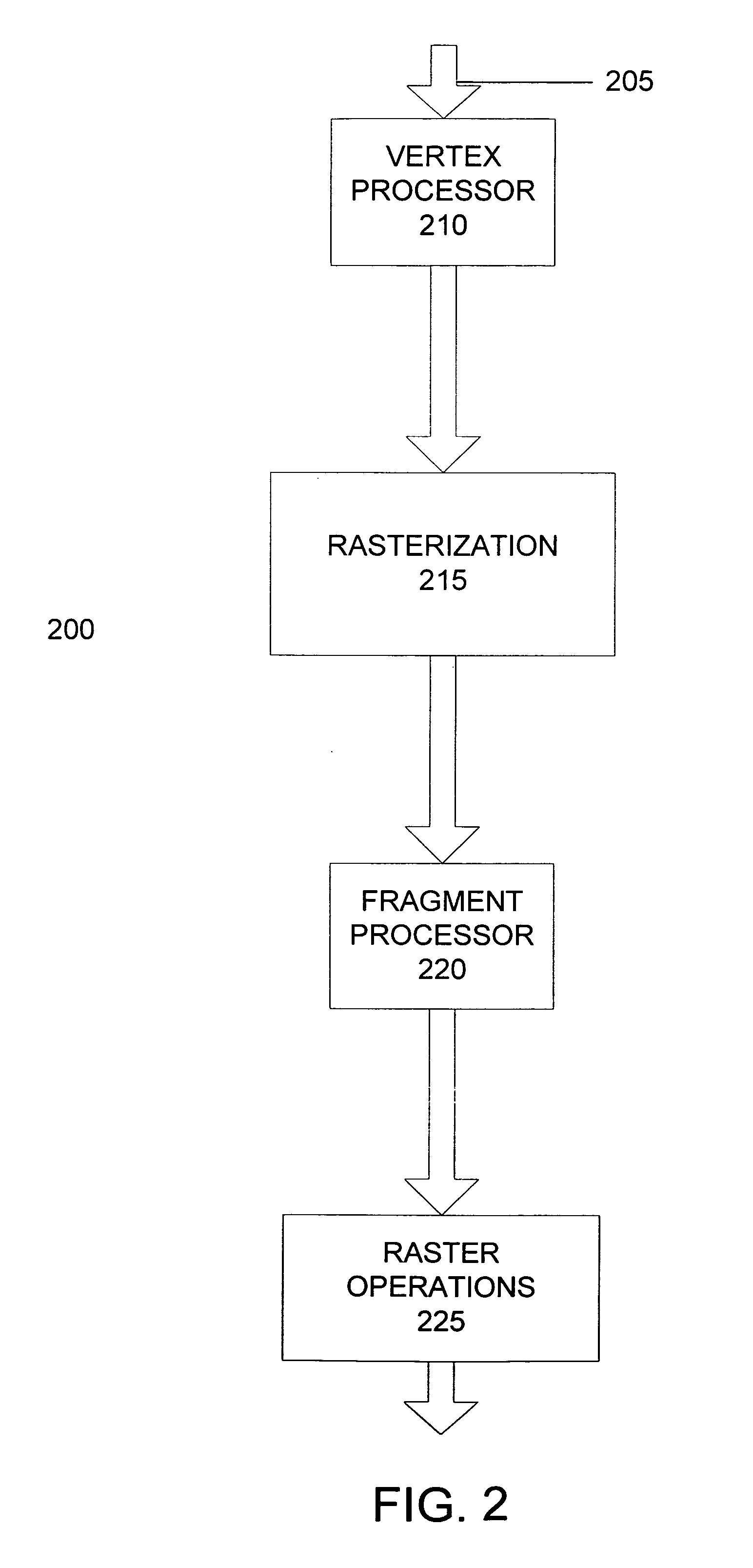

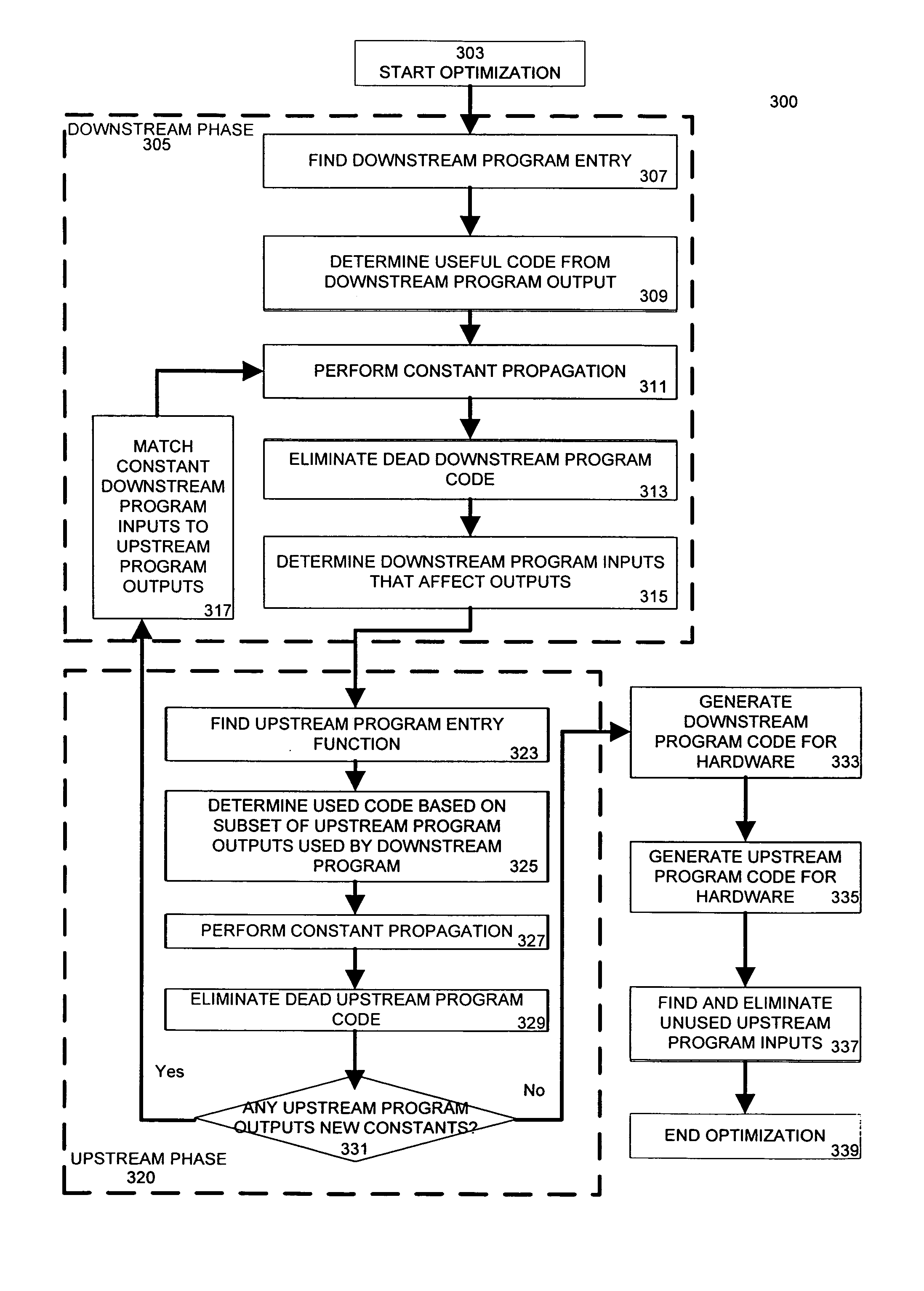

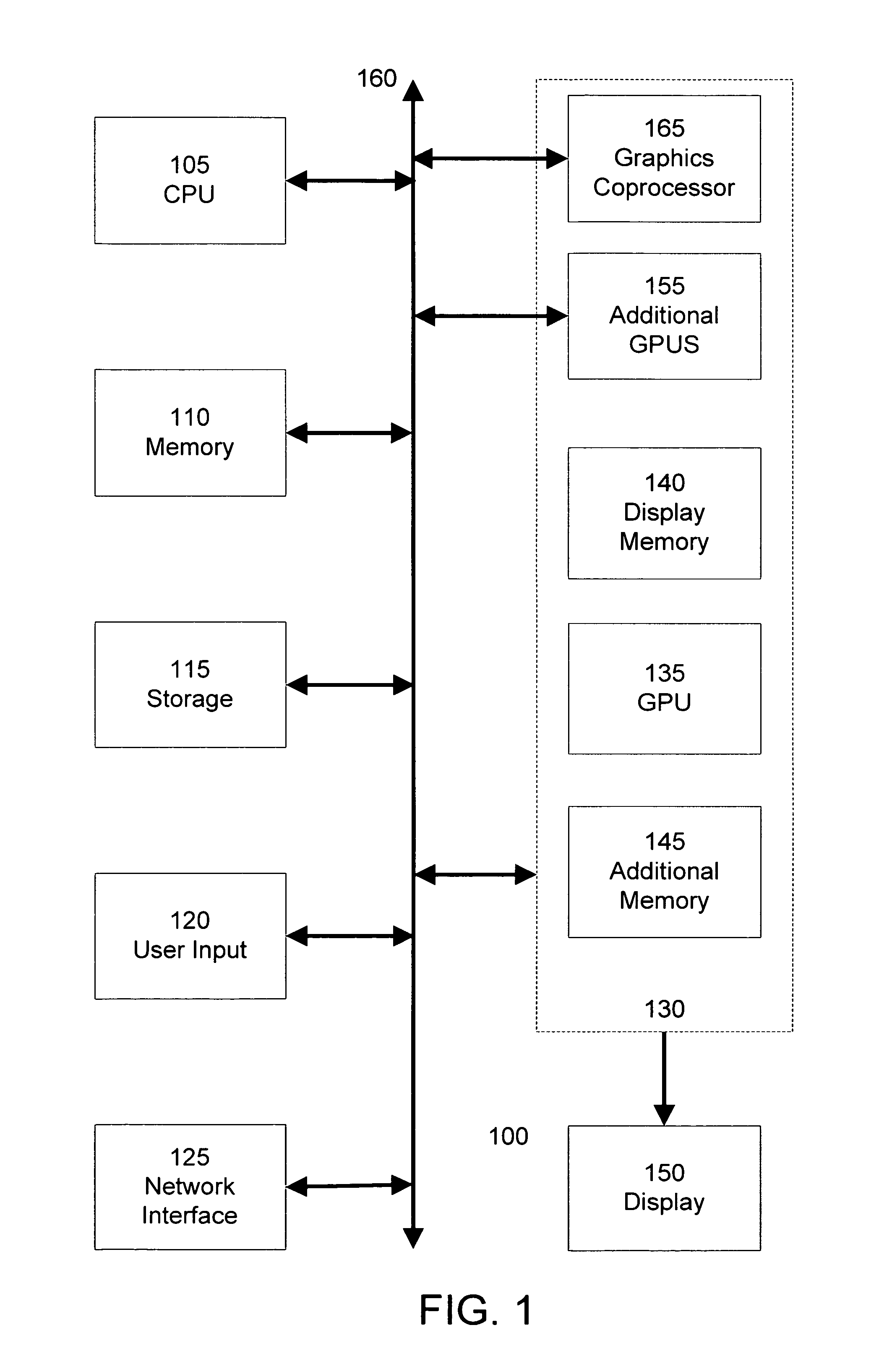

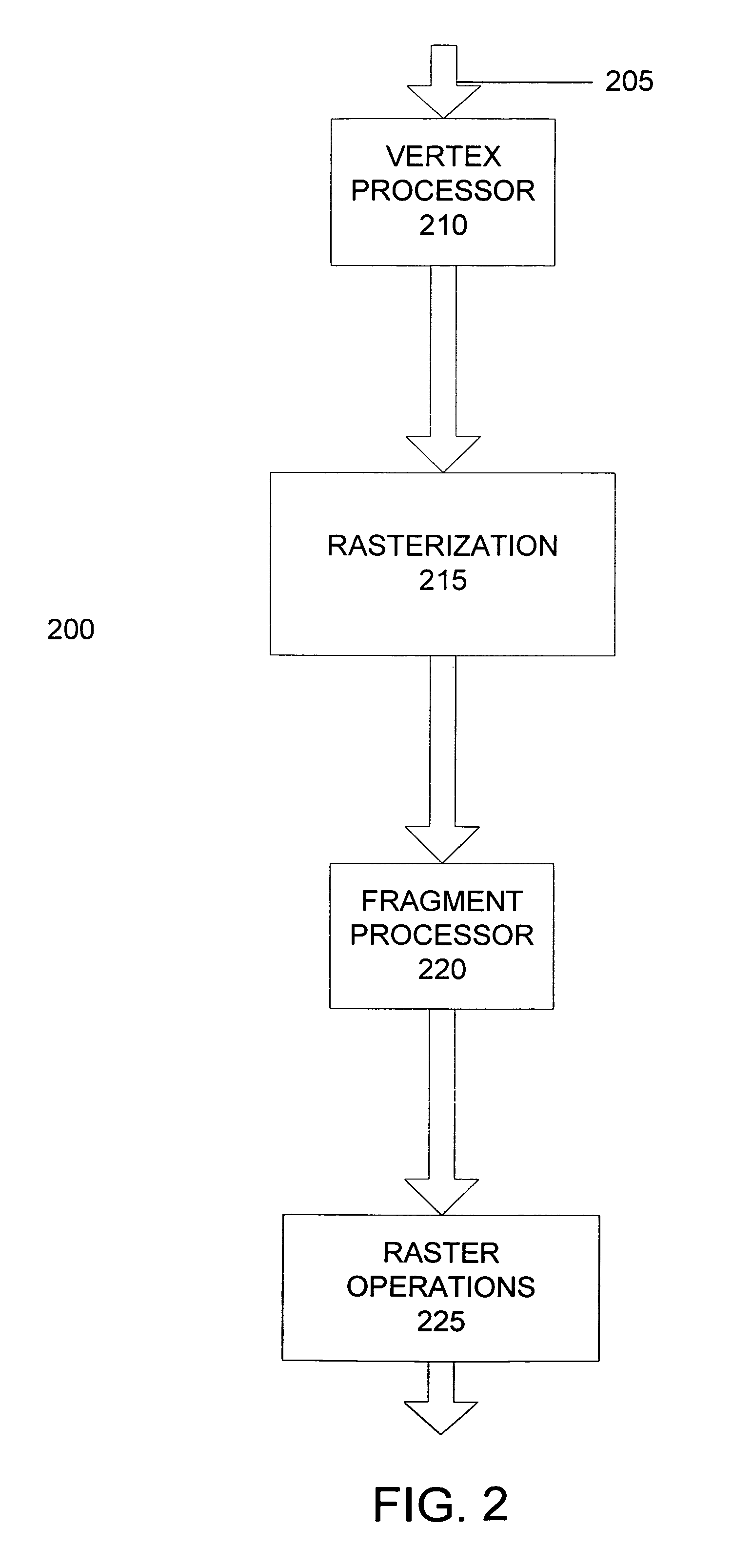

Optimized chaining of vertex and fragment programs

Active | US20060005178A1 | Improve execution performance, Transformation of program code, General purpose stored program computer, Data optimization, Program code

A system optimizes two or more stream processing programs based upon the data exchanged between the stream processing programs. The system alternately processes each stream processing program to identify and remove dead program code, thereby improving execution performance. Dead program code is identified by propagating constants received as inputs from other stream processing programs and by analyzing a first stream processing program and determining the outputs of a second stream processing program that are unused by the first stream processing program. The system may perform multiple iterations of this optimization if previous iterations introduce additional constants used as inputs to a stream processing program. Following optimization of the stream processing programs, the optimized stream processing programs are compiled to a format adapted to be executed by a stream processing system.

Owner:NVIDIA CORP
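
The alternating optimization described above can be illustrated with a small Python sketch. It assumes a hypothetical tuple-based expression IR (`const`, `in`, `add`, `mul`) in place of real vertex and fragment shader code. The names `fold`, `inputs_used` and `optimize_chain` are illustrative only and do not describe NVIDIA's implementation. Each pass propagates the vertex program's constant outputs into the fragment program and folds the result. It then drops any vertex outputs that the fragment program no longer reads, and it repeats until nothing changes.

```python
# Hypothetical expression IR: ("const", v), ("in", name), ("add", a, b), ("mul", a, b)

def fold(e, consts):
    """Substitute known constant inputs and simplify constant arithmetic."""
    kind = e[0]
    if kind == "const":
        return e
    if kind == "in":
        return ("const", consts[e[1]]) if e[1] in consts else e
    a, b = fold(e[1], consts), fold(e[2], consts)
    if a[0] == "const" and b[0] == "const":
        return ("const", a[1] + b[1] if kind == "add" else a[1] * b[1])
    if kind == "mul" and ("const", 0) in (a, b):
        return ("const", 0)          # x * 0 no longer depends on x
    if kind == "add" and a == ("const", 0):
        return b                     # 0 + x -> x
    if kind == "add" and b == ("const", 0):
        return a                     # x + 0 -> x
    return (kind, a, b)


def inputs_used(e, acc):
    """Collect the names of all inputs an expression reads."""
    if e[0] == "in":
        acc.add(e[1])
    elif e[0] in ("add", "mul"):
        inputs_used(e[1], acc)
        inputs_used(e[2], acc)
    return acc


def optimize_chain(vertex, fragment):
    """Alternately simplify the fragment program and prune the vertex program."""
    while True:
        # Constant vertex outputs become constants inside the fragment program.
        consts = {k: v[1] for k, v in vertex.items() if v[0] == "const"}
        new_fragment = {k: fold(v, consts) for k, v in fragment.items()}
        # Vertex outputs the fragment program never reads are dead code.
        used = set()
        for e in new_fragment.values():
            inputs_used(e, used)
        new_vertex = {k: fold(v, {}) for k, v in vertex.items() if k in used}
        if new_fragment == fragment and new_vertex == vertex:
            return vertex, fragment
        vertex, fragment = new_vertex, new_fragment
```

Consider a vertex program whose `fog` output is the constant 0. The fragment term `uv * fog` then folds away, which leaves `uv` unread, so both `fog` and `uv` are removed from the vertex program.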

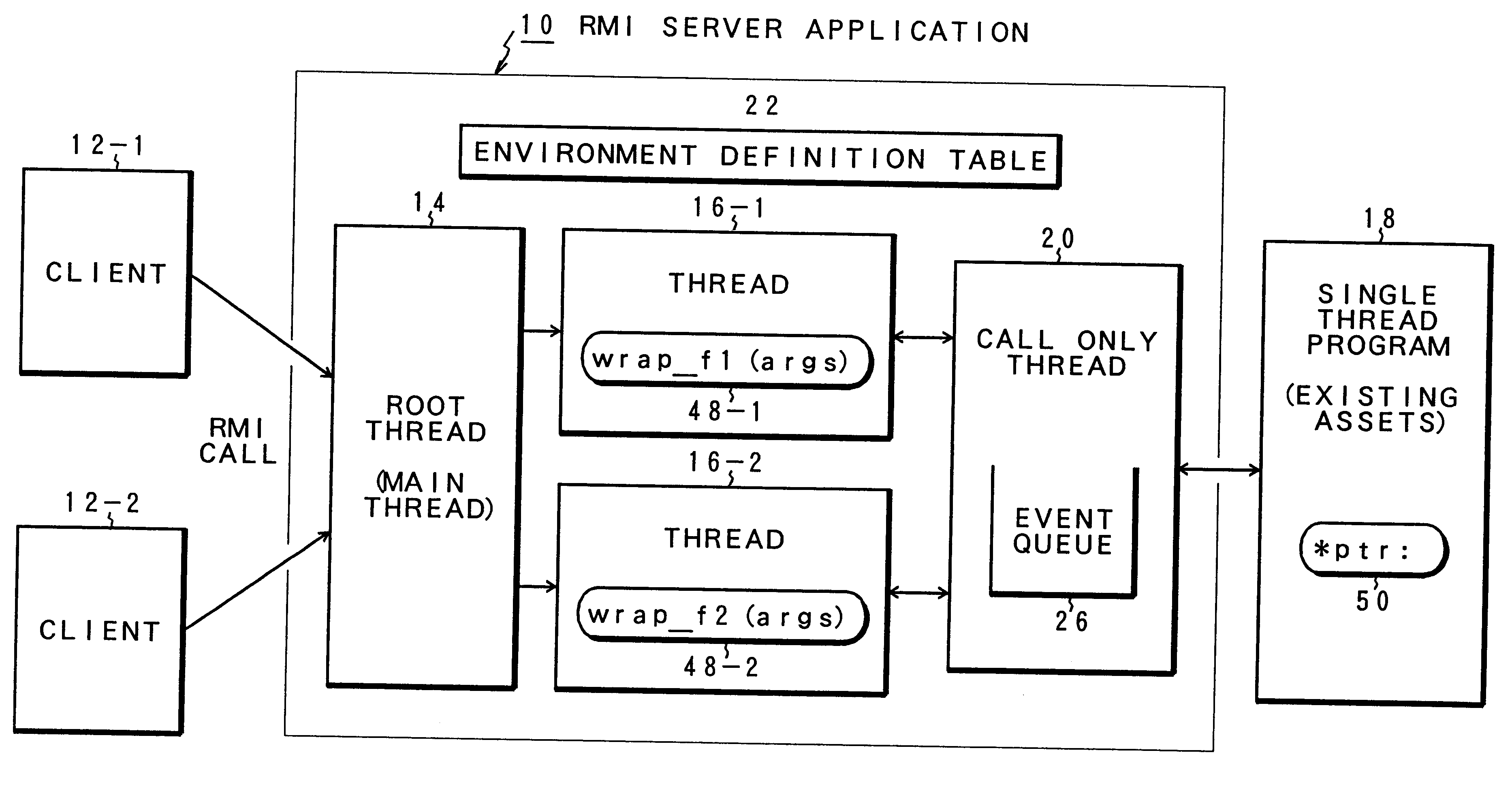

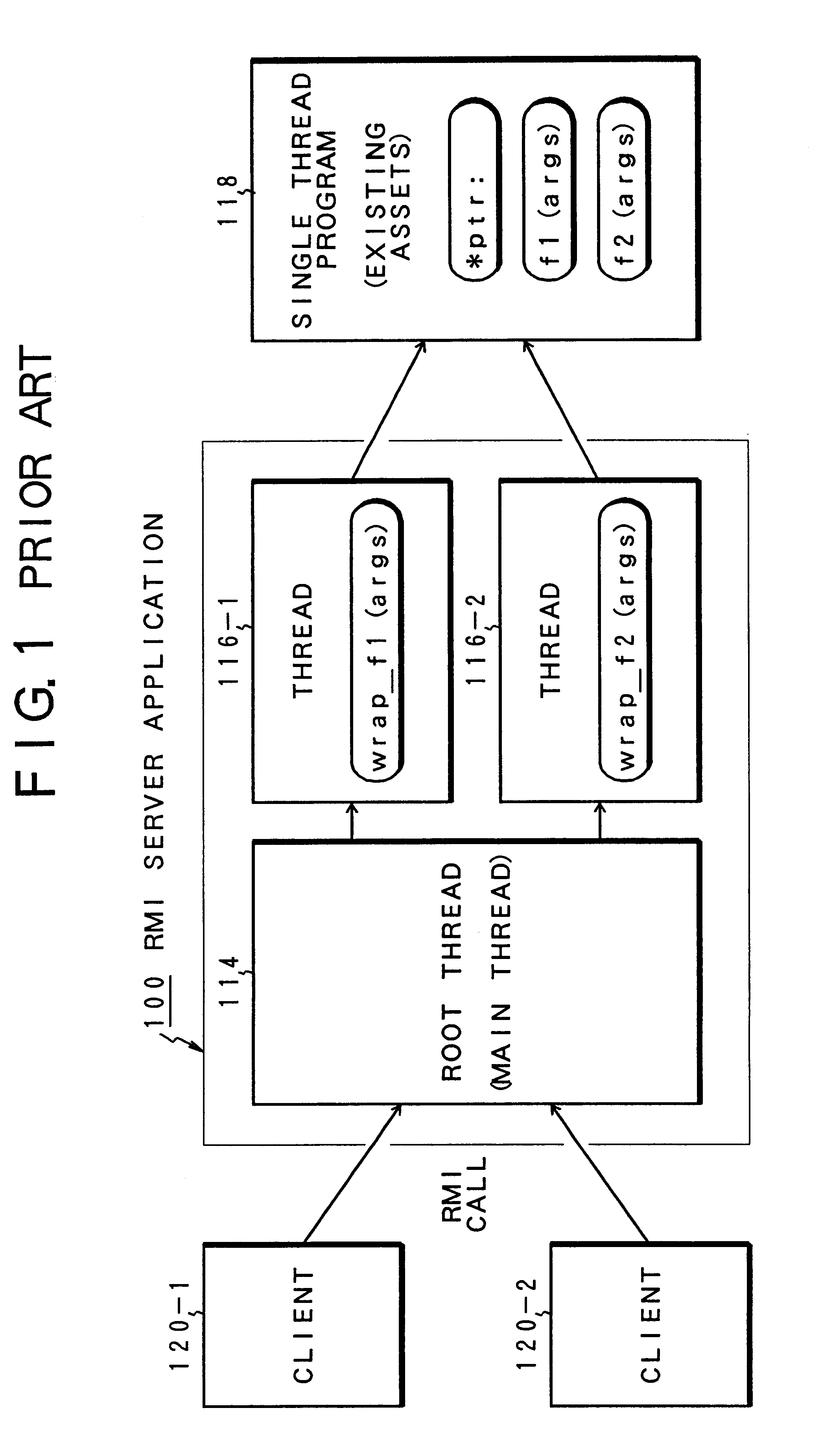

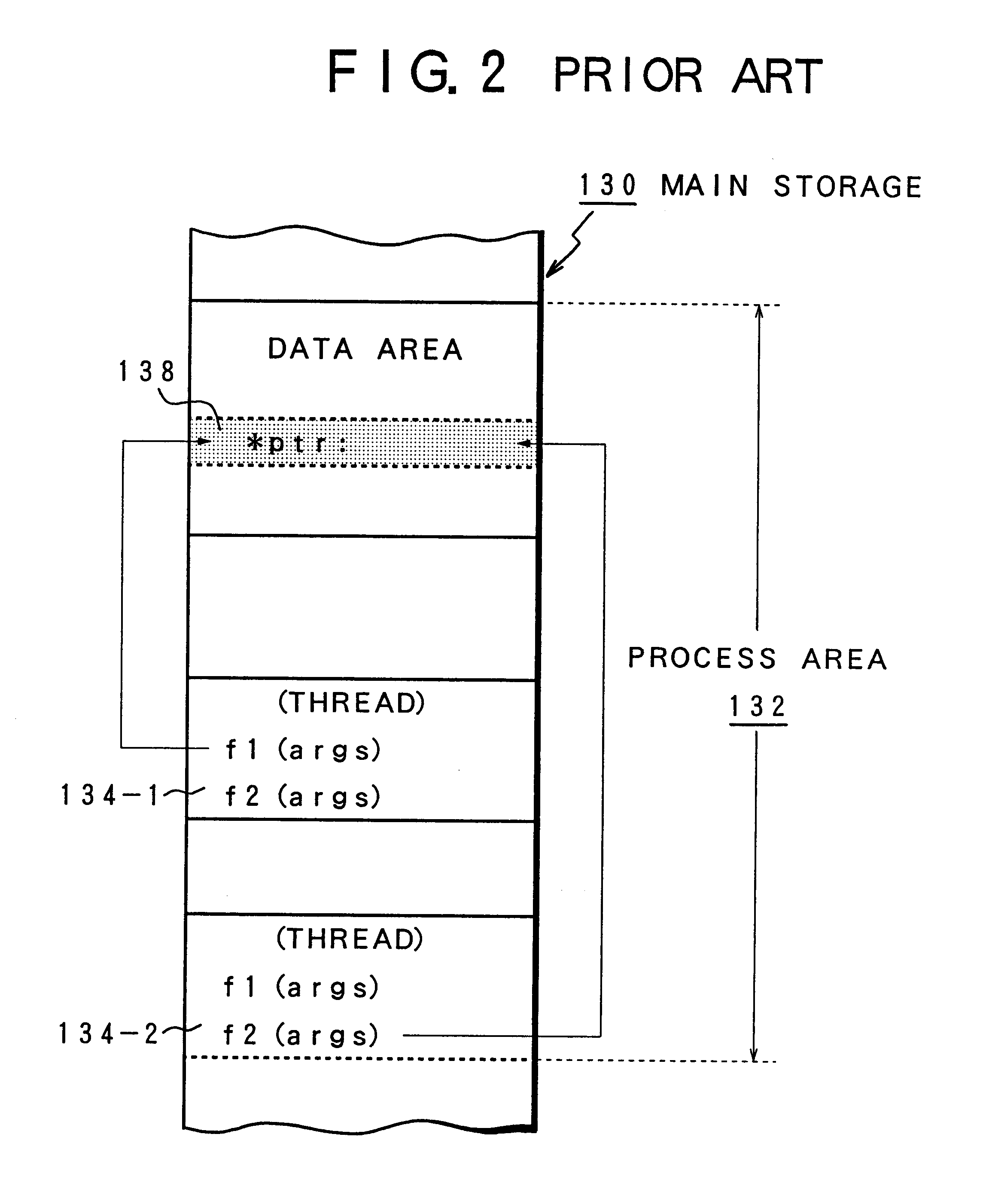

Multi-thread processing apparatus, processing method and record medium having multi-thread processing program stored thereon

Inactive | US6857122B1 | Efficient use of, Improve execution performance, Program initiation/switching, Interprogram communication, Parallel computing, Recording media

Owner:FUJITSU LTD

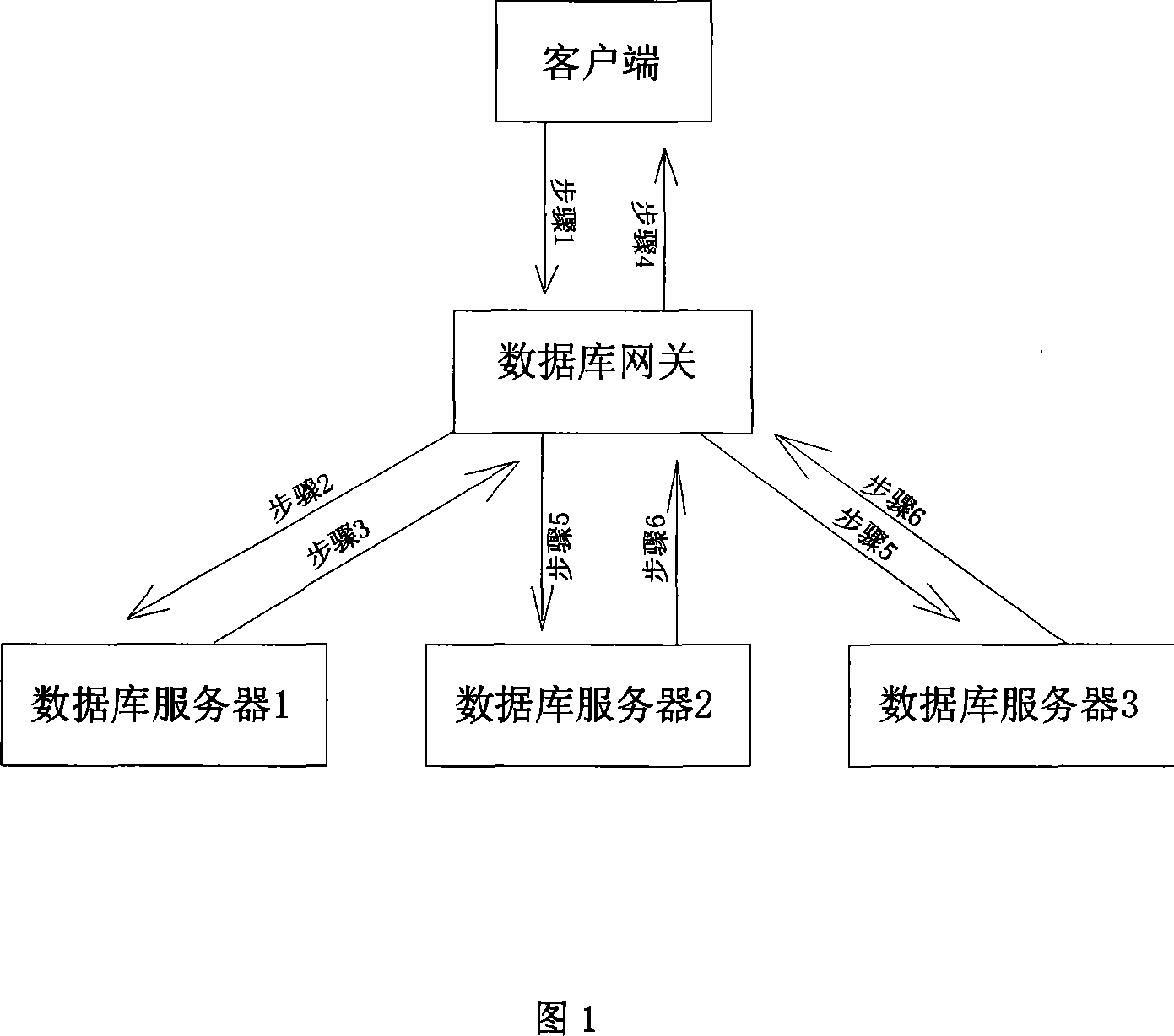

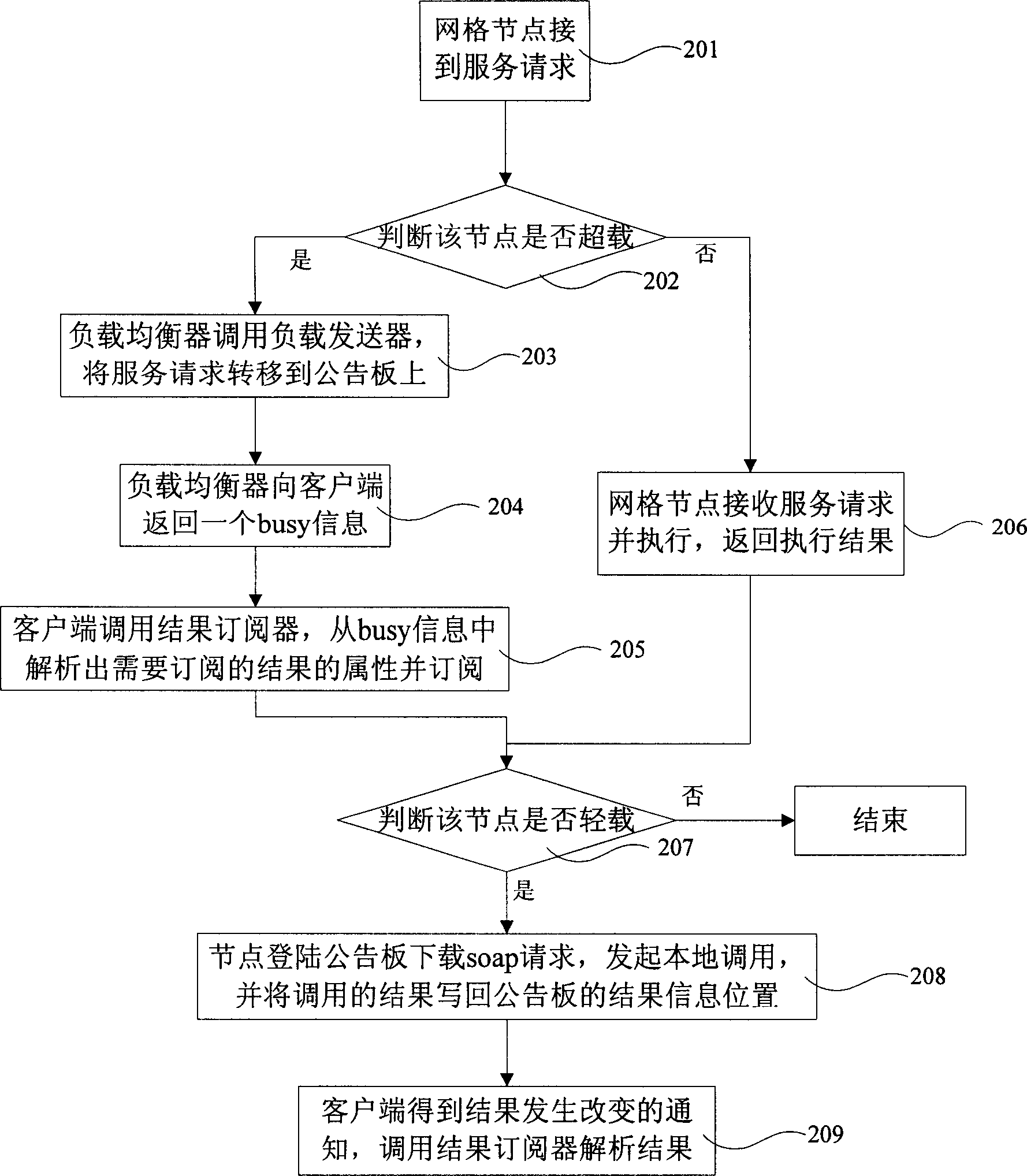

Clustered database system dynamic loading balancing method

Inactive | CN101169785A | Improve overall resource utilization, Improve overall execution performance, Store-and-forward switching systems, Special data processing applications, Cluster based, Resource utilization

The invention provides a dynamic load balancing method for a cluster database system. The method determines the load state of each database server with a dynamic load balancing algorithm, forwards each database statement through a database gateway system to the server with the lowest load, and returns the result to the client. Different data synchronization mechanisms are applied to different kinds of statements. For a query statement, the database gateway returns the result directly to the client. For an update statement, the gateway returns the result to the client, records the state of the updated table, and then forwards the update statement to the other database servers, keeping the data consistent across all databases. The dynamic load balancing mechanism preserves the performance and availability of a cluster built on a database gateway (middleware). It also improves the overall resource utilization of the database cluster, and with it the overall execution performance of the database.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
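
Here is a minimal Python sketch of the gateway behaviour described above. It makes two assumptions: load is approximated by a counter of dispatched statements, and an update is recognized by its leading SQL keyword. The `DatabaseGateway` class and its methods are hypothetical. The per-server statement lists stand in for real databases, and result handling and failure recovery are omitted.

```python
class DatabaseGateway:
    """Hypothetical middleware that load-balances statements across replicas."""

    UPDATE_KEYWORDS = ("INSERT", "UPDATE", "DELETE")

    def __init__(self, server_names):
        self.log = {name: [] for name in server_names}   # statements each server ran
        self.load = {name: 0 for name in server_names}   # assumed load metric
        self.updated_tables = set()

    def execute(self, sql, table=None):
        # Route the statement to the server with the lowest current load.
        target = min(self.load, key=self.load.get)
        self.load[target] += 1
        self.log[target].append(sql)
        if sql.lstrip().upper().startswith(self.UPDATE_KEYWORDS):
            # Record the updated table, then replay the update on the other
            # servers so that every replica stays consistent.
            self.updated_tables.add(table)
            for name in self.log:
                if name != target:
                    self.log[name].append(sql)
        return target   # the client receives the result from this server
```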

Optimized chaining of vertex and fragment programs

Active | US7426724B2 | Improve execution performance, Transformation of program code, General purpose stored program computer, Program code, Stream processing

A system optimizes two or more stream processing programs based upon the data exchanged between the stream processing programs. The system alternately processes each stream processing program to identify and remove dead program code, thereby improving execution performance. Dead program code is identified by propagating constants received as inputs from other stream processing programs and by analyzing a first stream processing program and determining the outputs of a second stream processing program that are unused by the first stream processing program. The system may perform multiple iterations of this optimization if previous iterations introduce additional constants used as inputs to a stream processing program. Following optimization of the stream processing programs, the optimized stream processing programs are compiled to a format adapted to be executed by a stream processing system.

Owner:NVIDIA CORP

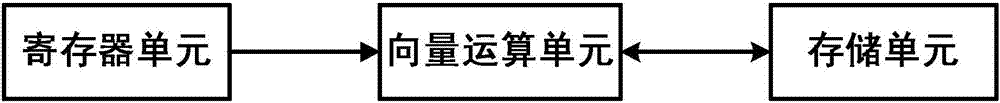

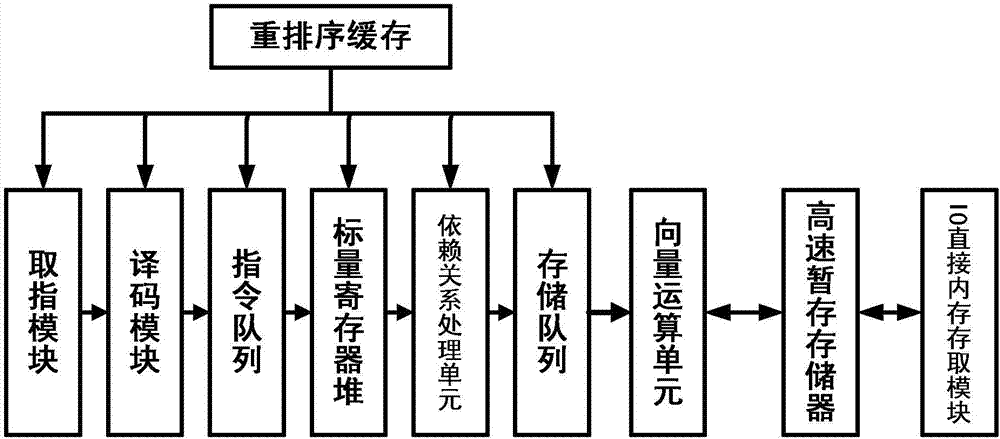

Vector calculating device

Active | CN106990940A | Improve execution performance, Simple format, Register arrangements, Complex mathematical operations, Processor register, Scratchpad memory

The invention provides a vector calculating device comprising a memory unit, a register unit and a vector operation unit. Vectors are stored in the memory unit, and the addresses at which they are stored are held in the register unit. Following a vector operation instruction, the vector operation unit first obtains a vector address from the register unit. It then uses that address to fetch the corresponding vector from the memory unit and performs the vector operation on it, producing a vector operation result. Because the vector data involved in the calculation is temporarily stored in a scratchpad memory, data of different widths can be supported flexibly and efficiently during vector operations. This improves the execution performance of tasks that involve many vector calculations.

Owner:CAMBRICON TECH CO LTD
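
The register-indirect data flow described above can be modelled in a few lines of Python. This is a behavioural sketch, not Cambricon's hardware design. The `VectorUnit` class, the `VADD`/`VMUL` opcodes, and the choice to keep an (address, length) pair in each register are all assumptions made for illustration.

```python
class VectorUnit:
    """Behavioural model: vectors live in a scratchpad, registers hold
    (address, length) pairs, and instructions name registers rather than data."""

    OPS = {"VADD": lambda x, y: x + y, "VMUL": lambda x, y: x * y}  # hypothetical opcodes

    def __init__(self, scratchpad_words):
        self.scratchpad = [0] * scratchpad_words
        self.registers = {}

    def store(self, reg, addr, values):
        if addr + len(values) > len(self.scratchpad):
            raise IndexError("vector does not fit in the scratchpad")
        self.scratchpad[addr:addr + len(values)] = values
        self.registers[reg] = (addr, len(values))   # any vector length is allowed

    def load(self, reg):
        addr, length = self.registers[reg]          # register -> address
        return self.scratchpad[addr:addr + length]  # address -> vector data

    def execute(self, opcode, dst, src1, src2, dst_addr):
        a, b = self.load(src1), self.load(src2)
        result = [self.OPS[opcode](x, y) for x, y in zip(a, b)]
        self.store(dst, dst_addr, result)
        return result
```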

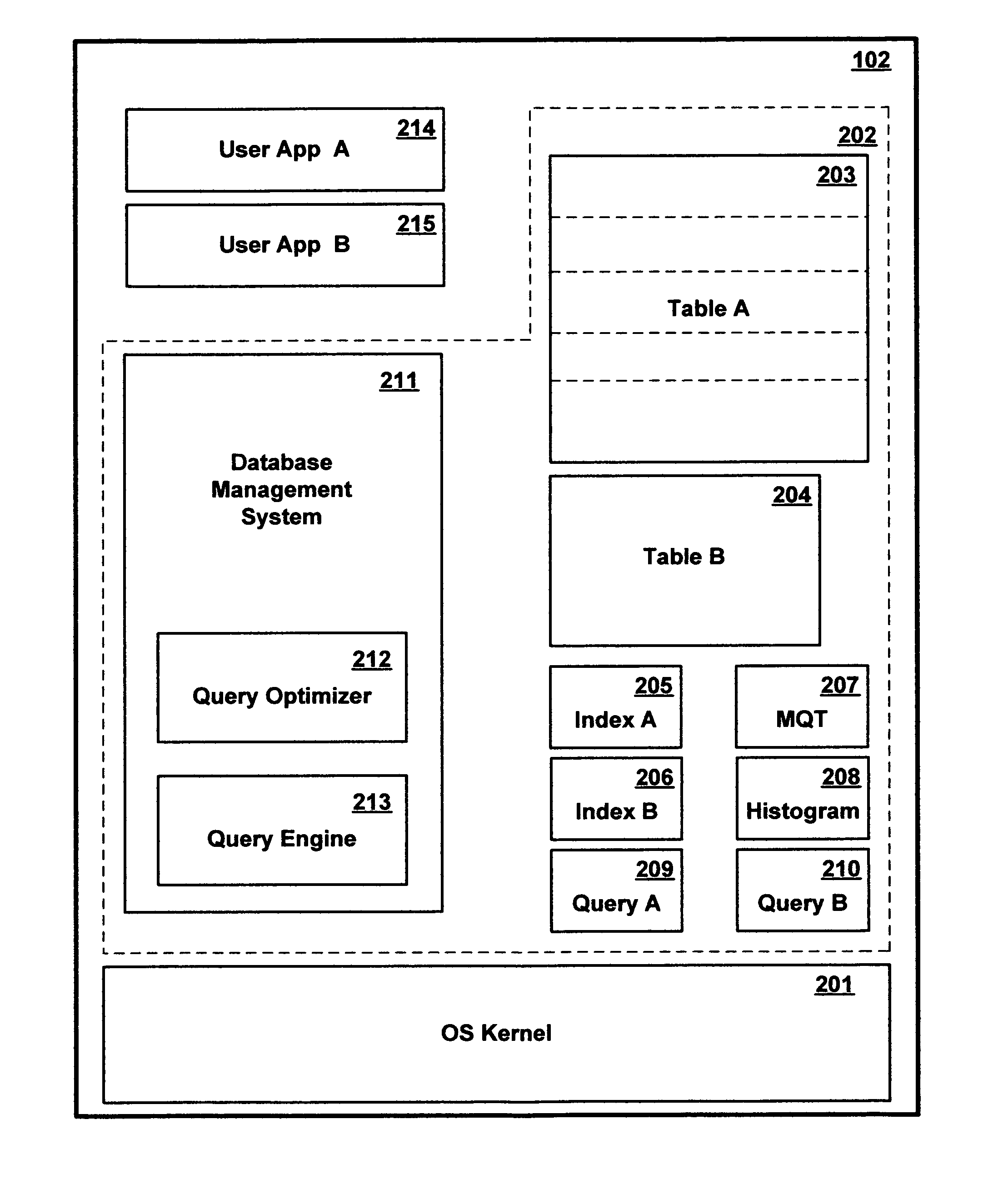

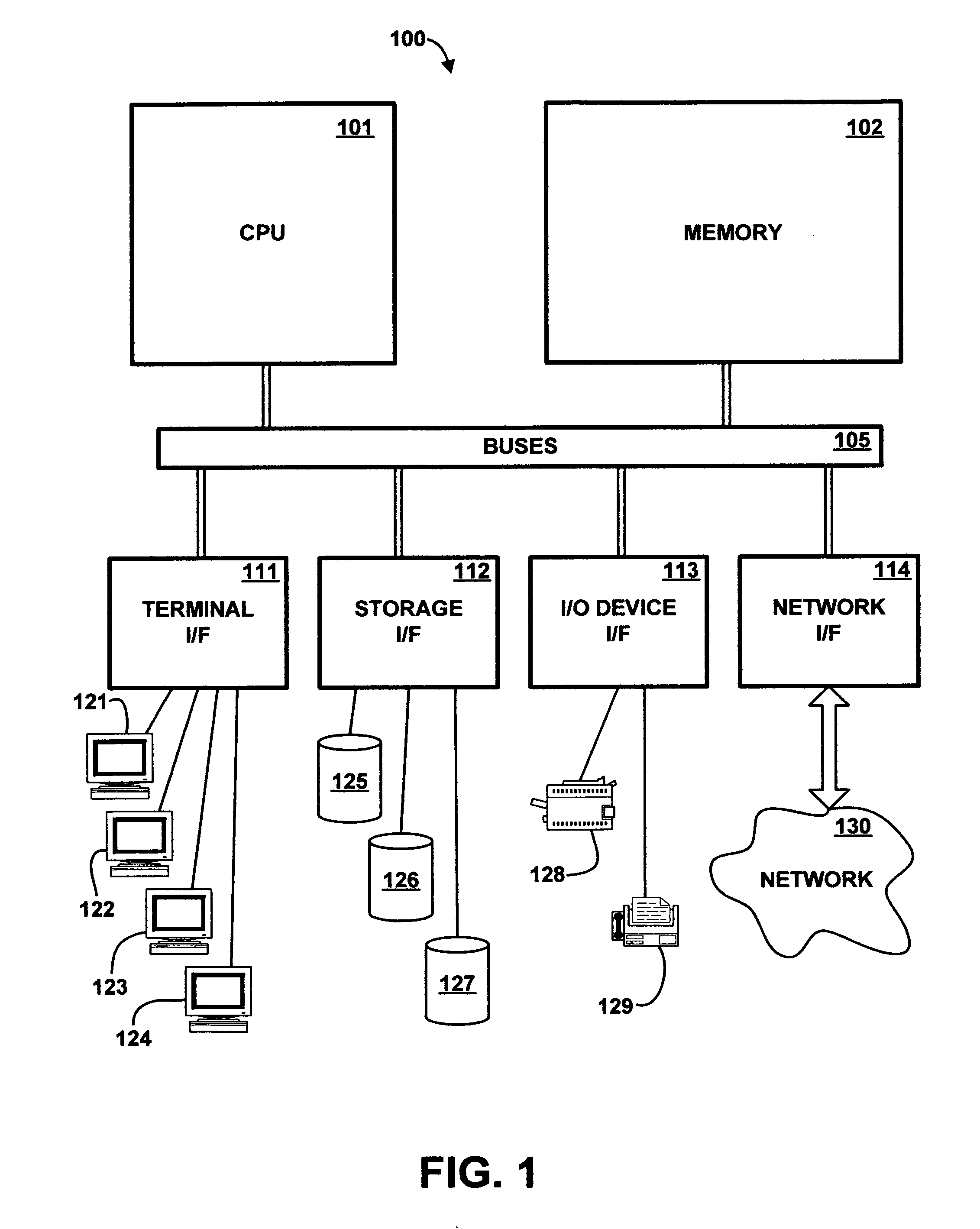

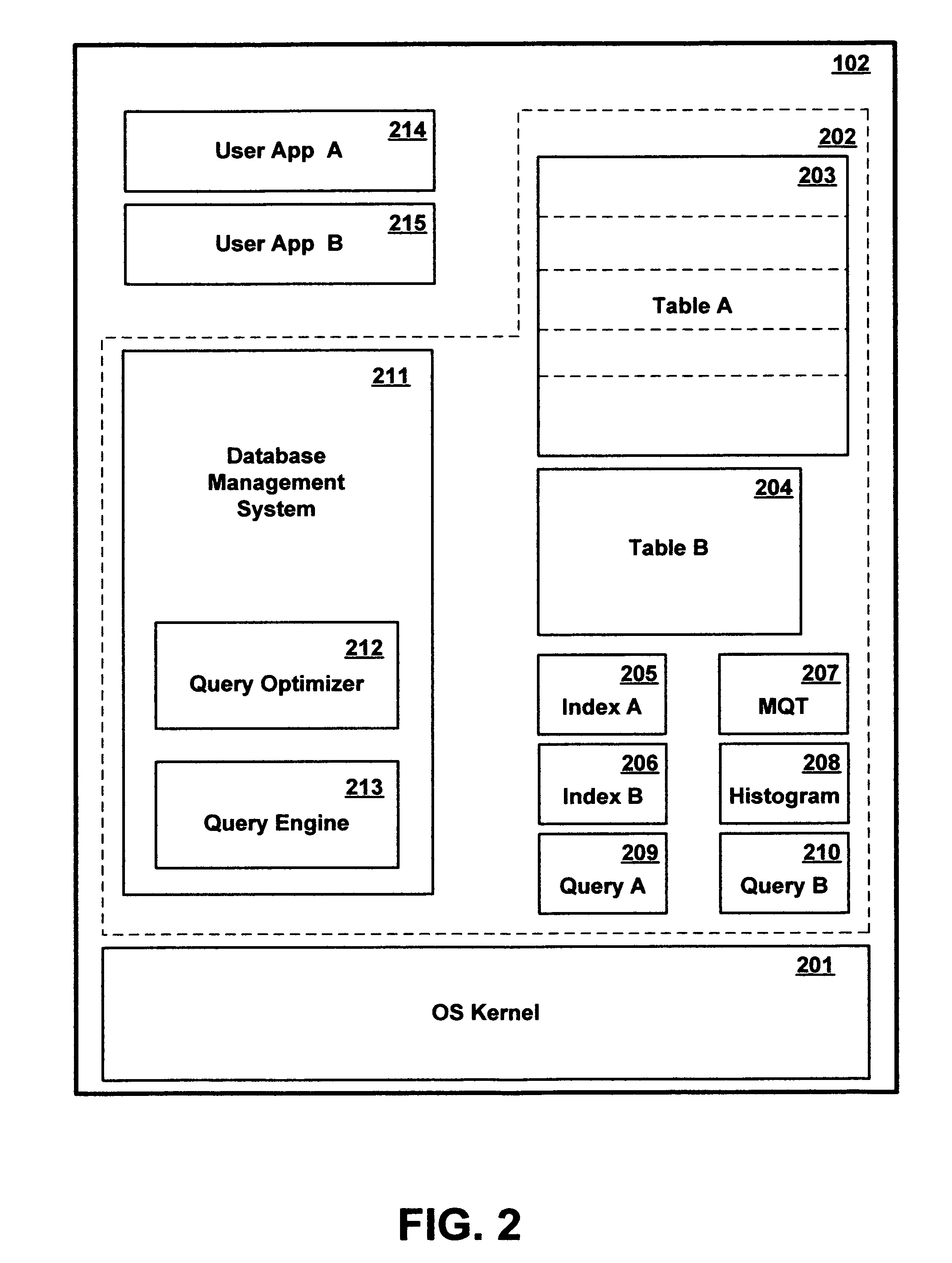

Method and apparatus for dynamically associating different query execution strategies with selective portions of a database table

Inactive | US20070016558A1 | Little overhead, Avoid overhead, Digital data information retrieval, Digital data processing details, Database query, Metadata

A query facility for database queries dynamically determines whether selective portions of a database table are likely to benefit from separate query execution strategies, and constructs appropriate separate execution strategies accordingly. Preferably, the database contains at least one relatively large table comprising multiple partitions, each sharing the definitional structure of the table and containing a different respective discrete subset of the table records. The query facility compares metadata for different partitions to determine whether sufficiently large differences exist among the partitions, and in appropriate cases selects one or more partitions for separate execution strategies. Preferably, partitions are ranked for separate evaluation using a weighting formula which takes into account: (a) the number of indexes for the partition, (b) recency of change activity, and (c) the size of the partition.

Owner:IBM CORP
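
One way to read the weighting formula is as a linear score over the three factors the abstract lists. The sketch below rests on three assumptions, none of which come from the patent: equal default weights, a recency term of `1 / (1 + days_since_change)`, and size normalized by the largest partition. `PartitionStats` and `rank_partitions` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PartitionStats:
    name: str
    num_indexes: int          # (a) number of indexes on the partition
    days_since_change: float  # (b) recency of change activity
    row_count: int            # (c) size of the partition


def rank_partitions(parts, w_idx=1.0, w_recent=1.0, w_size=1.0):
    """Rank partitions for separate query planning using assumed weights."""
    max_rows = max(p.row_count for p in parts) or 1

    def score(p):
        recency = 1.0 / (1.0 + p.days_since_change)   # recent changes score higher
        return (w_idx * p.num_indexes
                + w_recent * recency
                + w_size * p.row_count / max_rows)

    return sorted(parts, key=score, reverse=True)
```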

Dimension context propagation techniques for optimizing SQL query plans

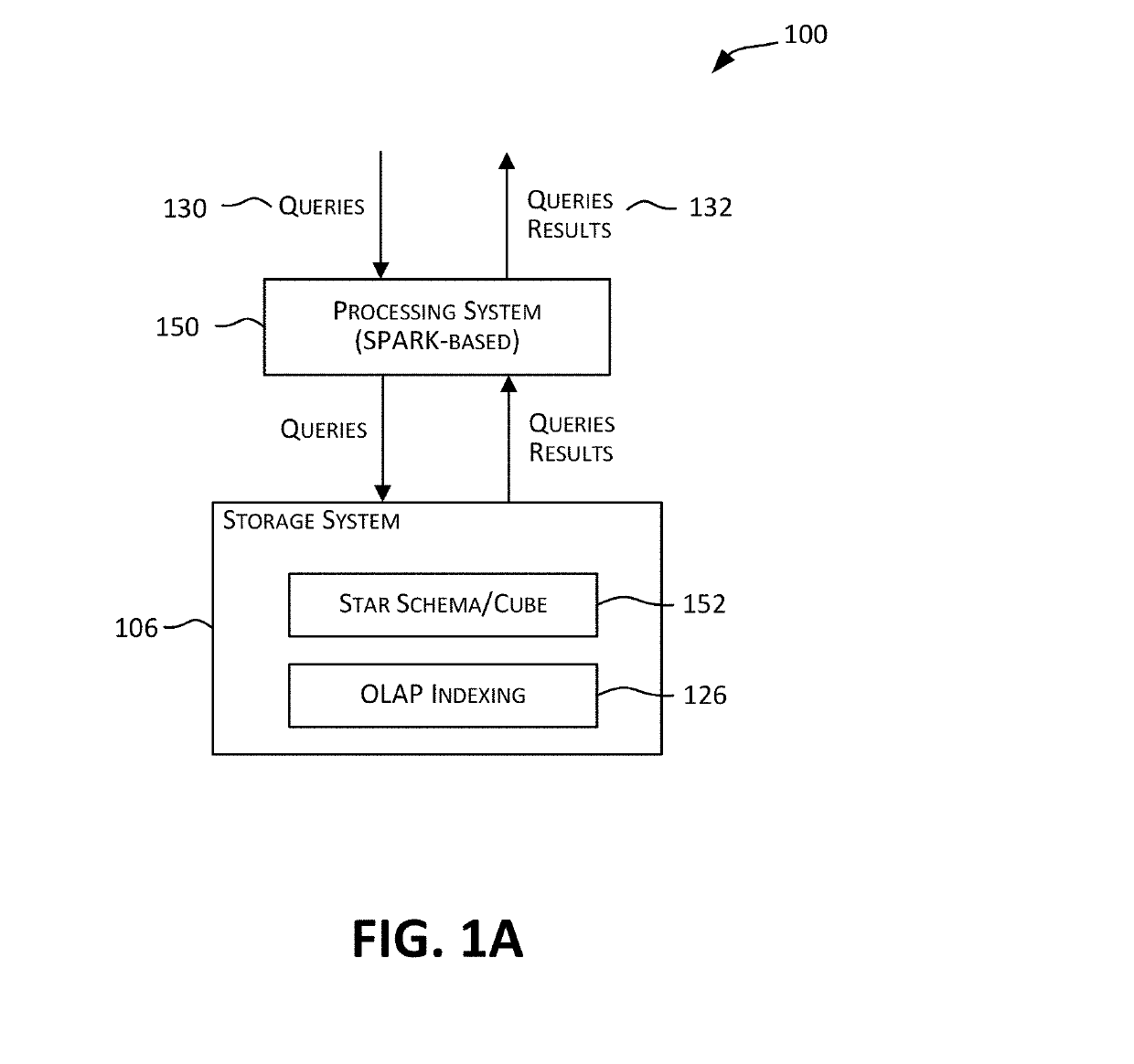

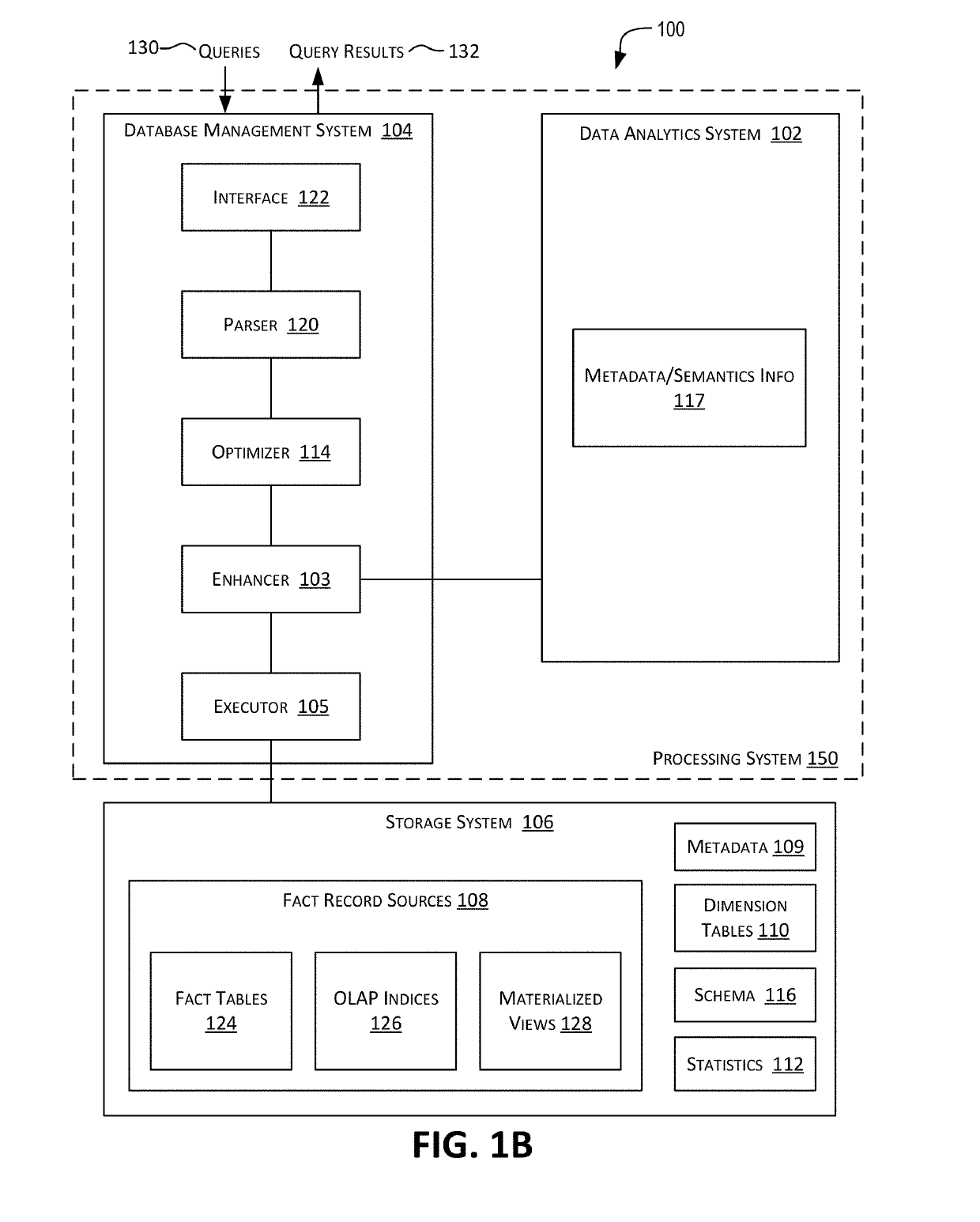

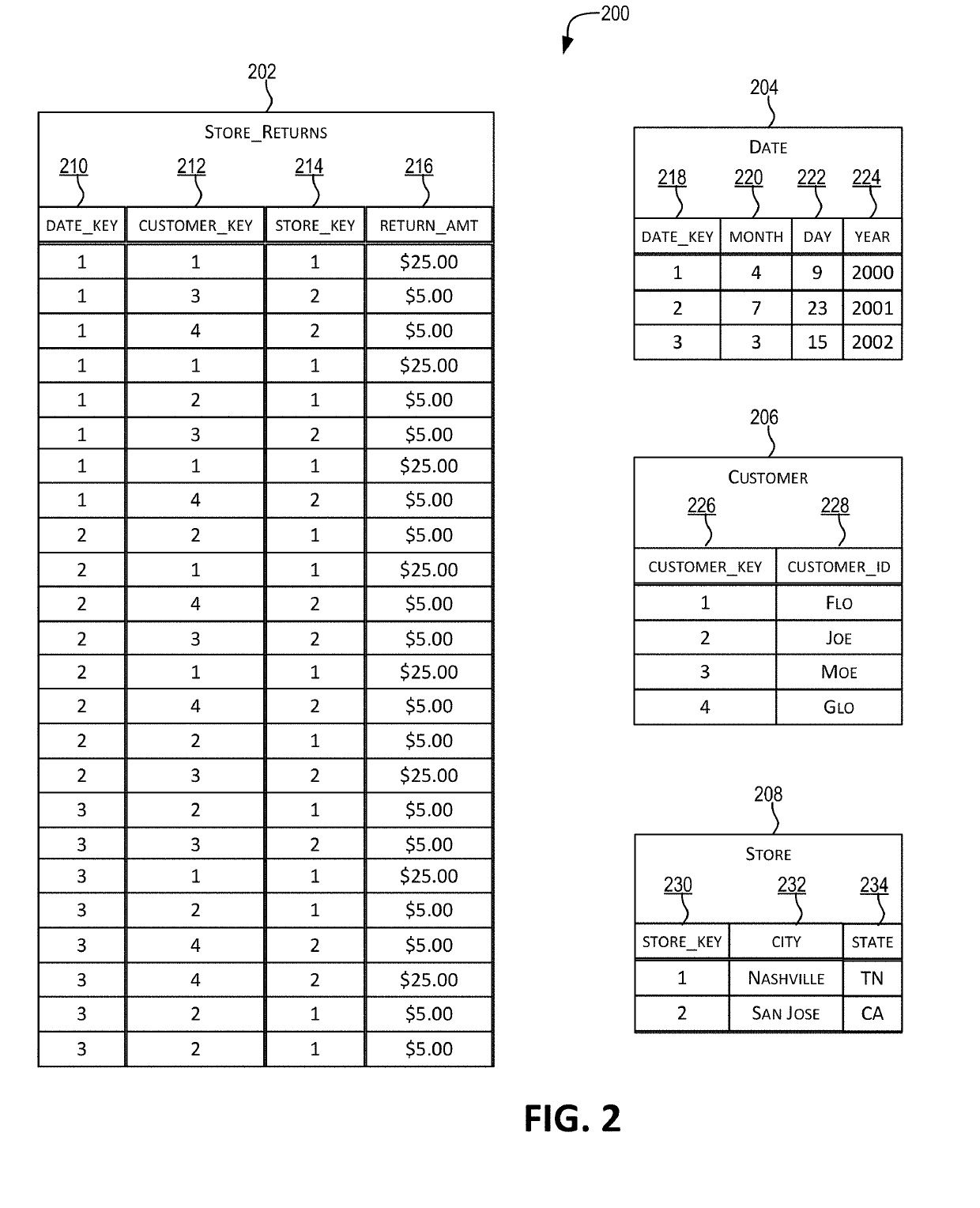

Active | US20190220464A1 | Enhance performance, Improve execution performance, Multi-dimensional databases, Special data processing applications, Program planning, SQL

Techniques for efficient execution of queries. A query plan generated for the query is optimized and rewritten as an enhanced query plan, which when executed, uses fewer CPU cycles and thus executes faster than the original query plan. The query for which the enhanced query plan is generated thus executes faster without compromising the results obtained or the data being queried. Optimization includes identifying a set of one or more fact scan operations in the original query plan and then, in the rewritten enhanced query plan, associating one or more dimension context predicate conditions with one or more of the set of fact scan operations. This reduces the overall cost of scanning and/or processing fact records in the enhanced query plan compared to the original query plan and makes the enhanced query plan execute faster than the original query plan.

Owner:ORACLE INT CORP
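
The effect of attaching a dimension context predicate to a fact scan can be shown with plain Python lists standing in for tables. This is a simplified, semi-join-style illustration and is not Oracle's rewrite rules. The function names and the `id`/`dim_id`/`amount` columns are hypothetical. Both plans return the same total, but the enhanced plan passes fewer fact rows to downstream processing.

```python
def original_plan(fact, dim, dim_pred):
    """Scan every fact row, join it to its dimension row, then filter."""
    dim_by_key = {d["id"]: d for d in dim}
    joined = [(f, dim_by_key[f["dim_id"]]) for f in fact]
    total = sum(f["amount"] for f, d in joined if dim_pred(d))
    return total, len(joined)            # rows flowing out of the fact scan


def enhanced_plan(fact, dim, dim_pred):
    """Evaluate the dimension predicate first and push the qualifying keys
    into the fact scan as an extra predicate (the dimension context)."""
    qualifying_keys = {d["id"] for d in dim if dim_pred(d)}
    scanned = [f for f in fact if f["dim_id"] in qualifying_keys]
    return sum(f["amount"] for f in scanned), len(scanned)
```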

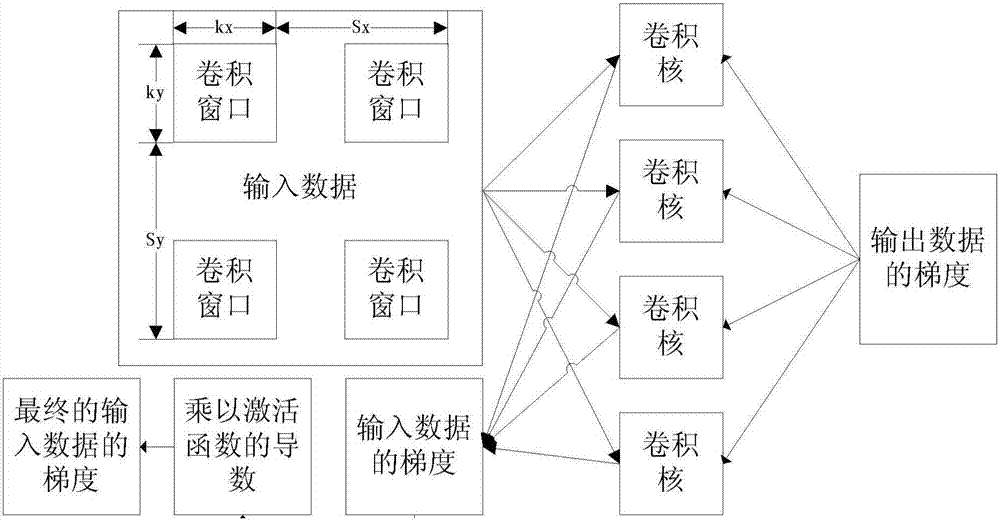

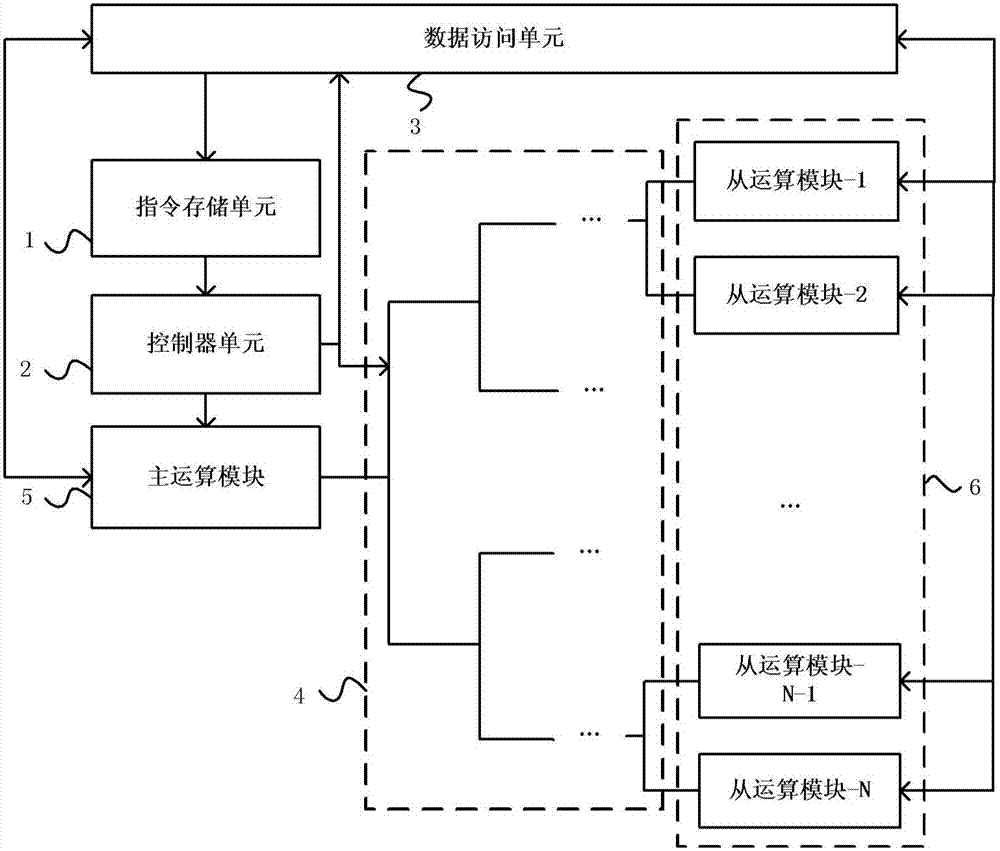

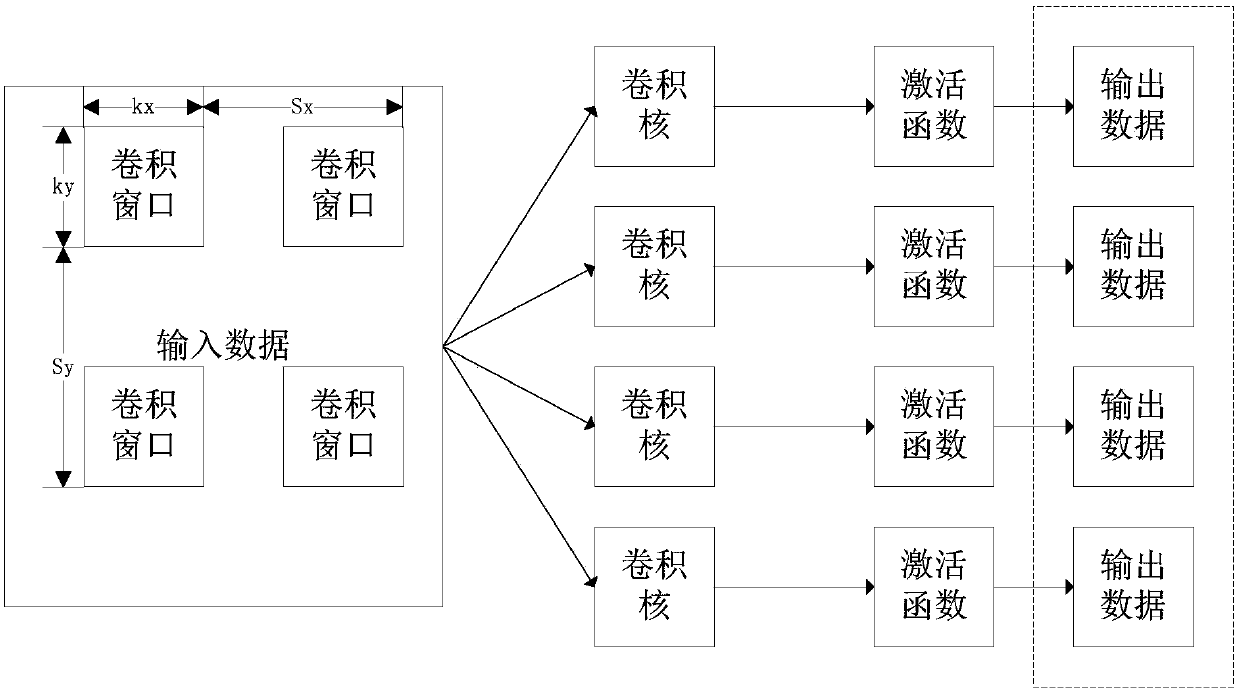

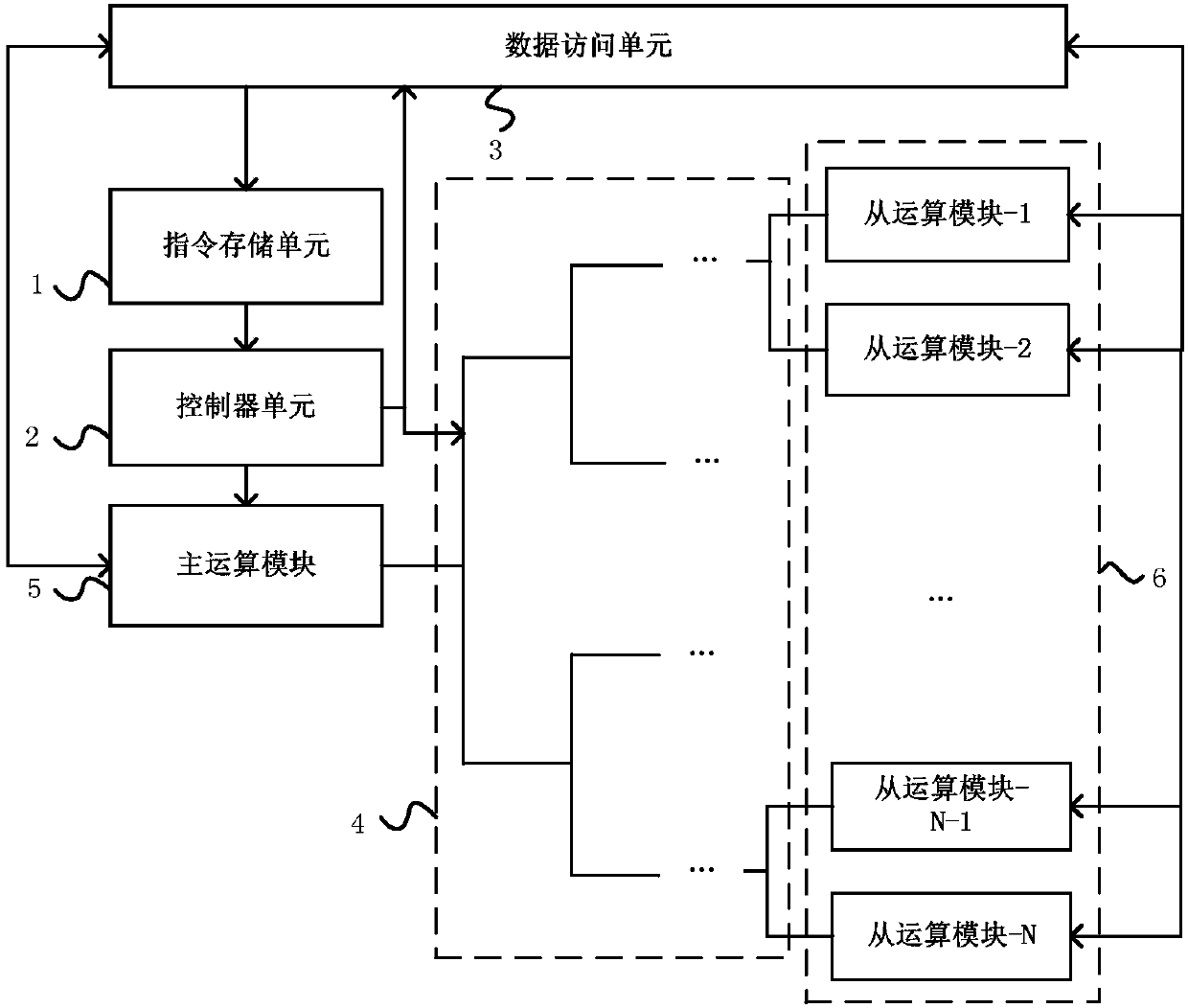

Apparatus and method for performing convolutional neural network training

Active | CN107341547A | Simple format, Flexible vector length, Digital data processing details, Digital computer details, Activation function, Algorithm

The present invention provides an apparatus and a method for performing backward training of a convolutional neural network. The apparatus comprises an instruction storage unit, a controller unit, a data access unit, an interconnection module, a master computing module, and a plurality of slave computing modules. The method performs the following for each layer:
1. Select data from the input neuron vector according to the convolution window.
2. Feed the selected data from the previous layer, together with the data gradient from the subsequent layer, to the computing units of the apparatus.
3. Compute and update the convolution kernel.
4. Compute the data gradient output by the apparatus from the convolution kernel, the data gradient, and the derivative of the activation function.
5. Store that gradient to memory so that it can be passed back to the previous layer for backpropagation.

Because the data and weight parameters involved in the calculation are temporarily stored in a high-speed cache, backward training of convolutional neural networks can be supported more flexibly and efficiently. This improves the execution performance of applications that involve a large number of memory accesses.

Owner:CAMBRICON TECH CO LTD

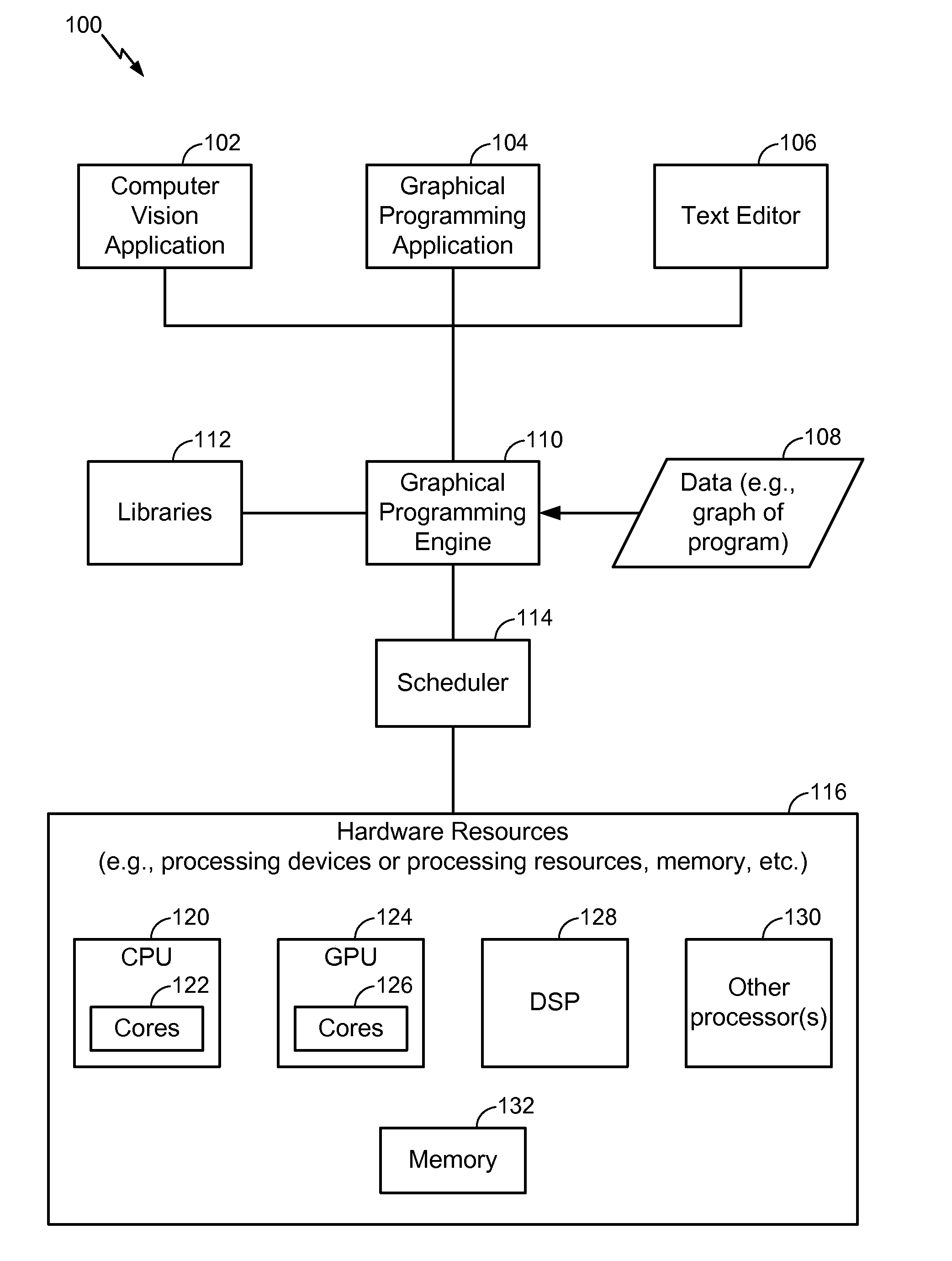

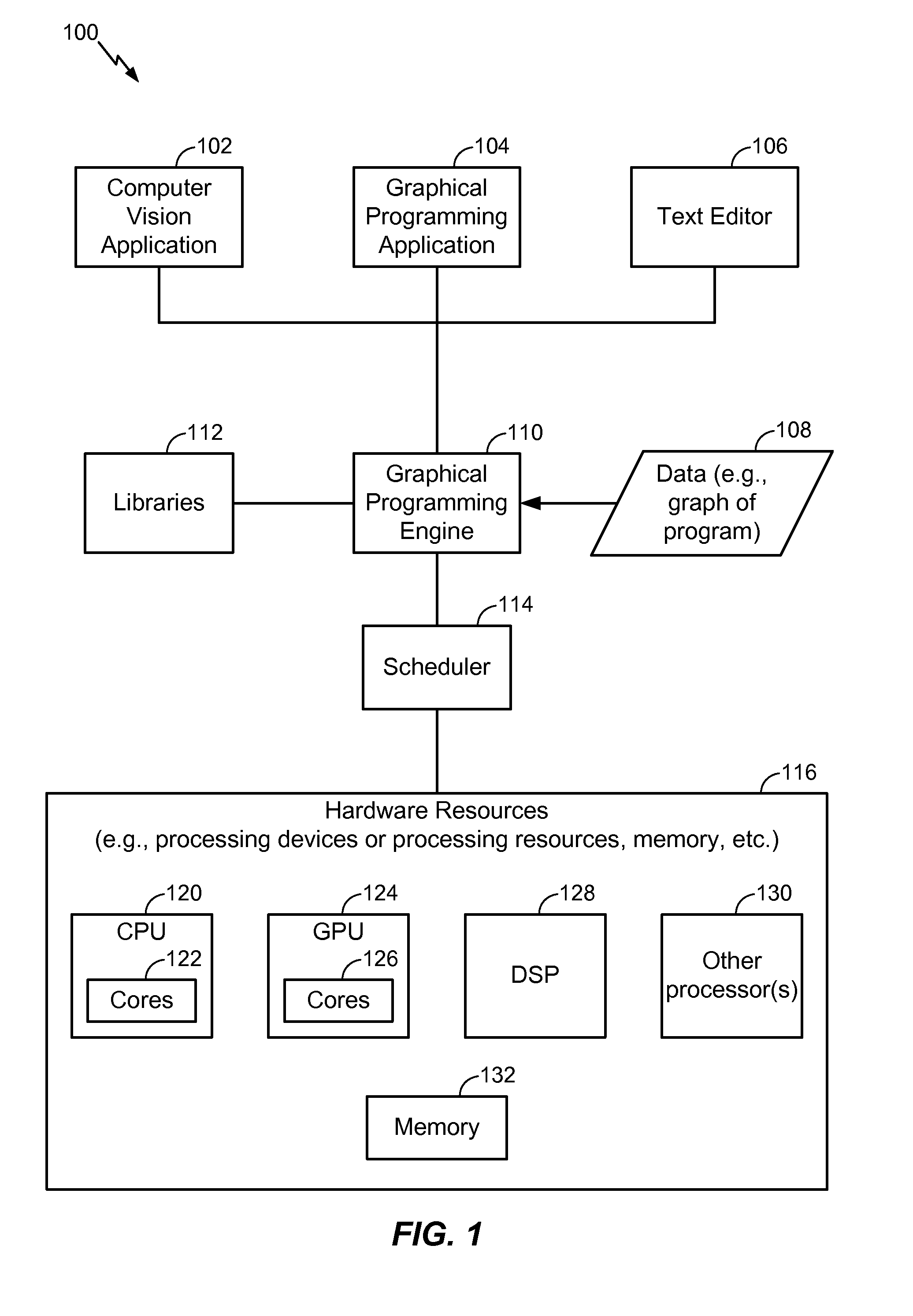

Efficient execution of graph-based programs

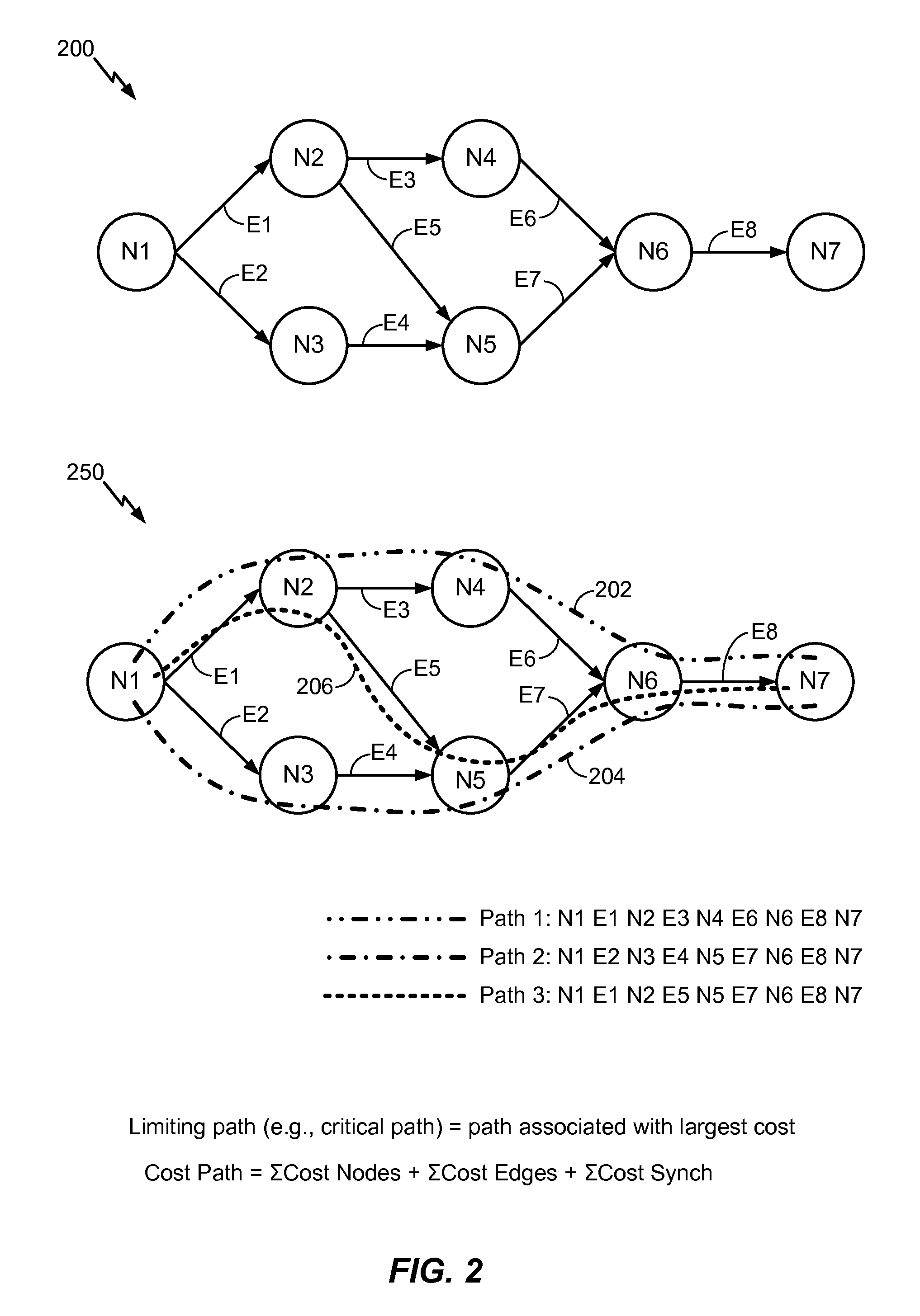

Inactive | US20140359563A1 | Efficient and quick assembly, Efficient execution, Resource allocation, Visual/graphical programming, Multiple edges, Graphics

A method includes accessing, at a computing device, data descriptive of a graph representing a program. The graph includes multiple nodes representing execution steps of the program and includes multiple edges representing data transfer steps. The method also includes determining at least two heterogeneous hardware resources of the computing device that are available to execute code represented by one or more of the nodes, and determining one or more paths from a source node to a sink node based on a topology of the graph. The method further includes scheduling execution of code at the at least two heterogeneous hardware resources. The code is represented by at least one of the multiple nodes, and the execution of the code is scheduled based on the one or more paths.

Owner:QUALCOMM INC
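
The abstract says only that scheduling is "based on the one or more paths". A simple illustration of that idea is to enumerate all source-to-sink paths and then place the nodes on the most expensive (critical) path on the faster resource. That critical-path heuristic, the per-node `cost` table, and the `gpu`/`cpu` labels are assumptions for this sketch, which also assumes the graph is acyclic. `all_paths` and `schedule_by_critical_path` are hypothetical names.

```python
def all_paths(graph, node, sink, prefix=()):
    """Enumerate every path from node to sink in a DAG given as an adjacency dict."""
    prefix = prefix + (node,)
    if node == sink:
        return [prefix]
    paths = []
    for nxt in graph.get(node, []):
        paths.extend(all_paths(graph, nxt, sink, prefix))
    return paths


def schedule_by_critical_path(graph, cost, source, sink, fast="gpu", slow="cpu"):
    """Place nodes on the most expensive source-to-sink path on the fast resource."""
    paths = all_paths(graph, source, sink)
    critical = max(paths, key=lambda p: sum(cost[n] for n in p))
    nodes = {n for p in paths for n in p}
    return {n: (fast if n in critical else slow) for n in nodes}
```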

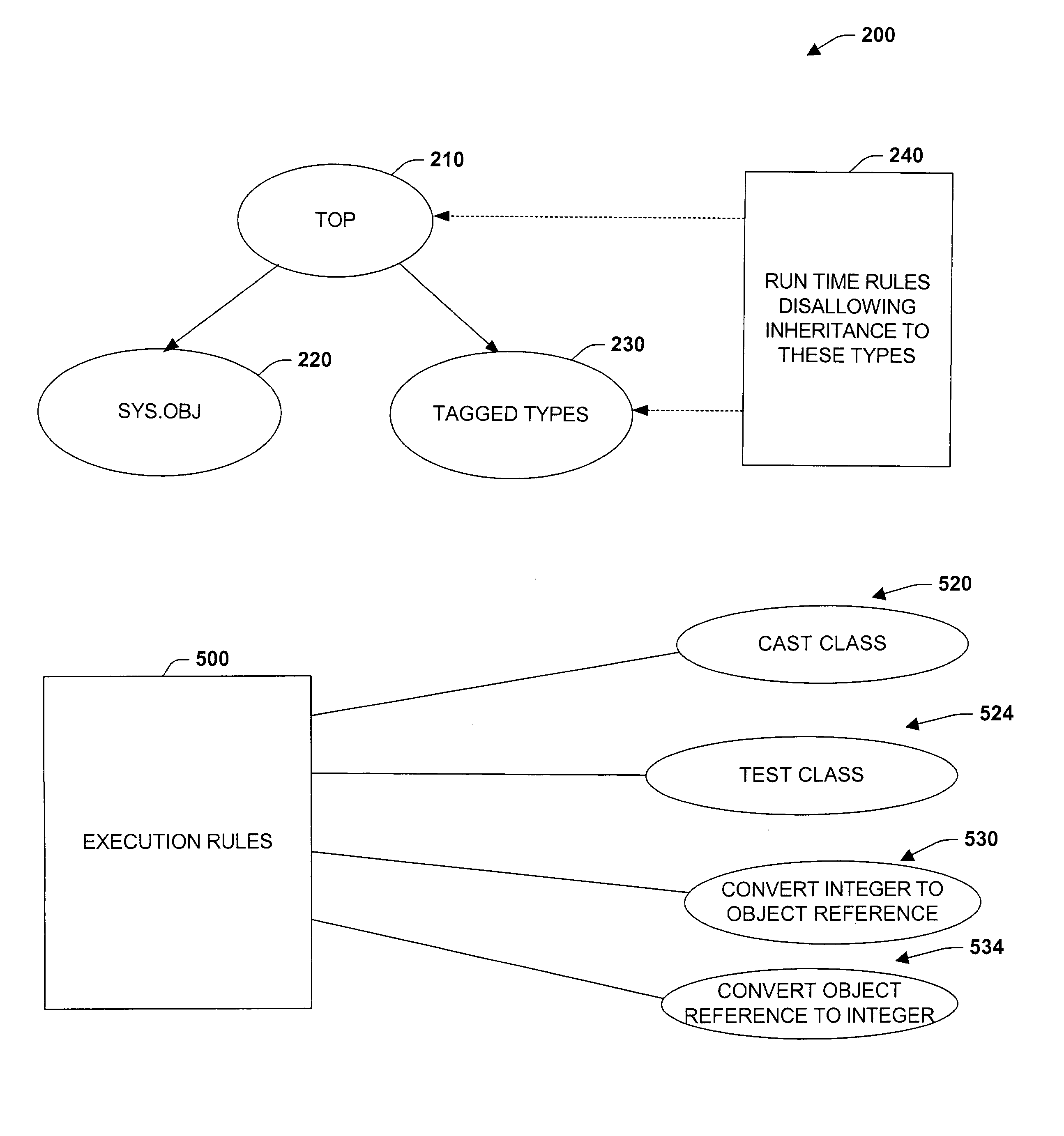

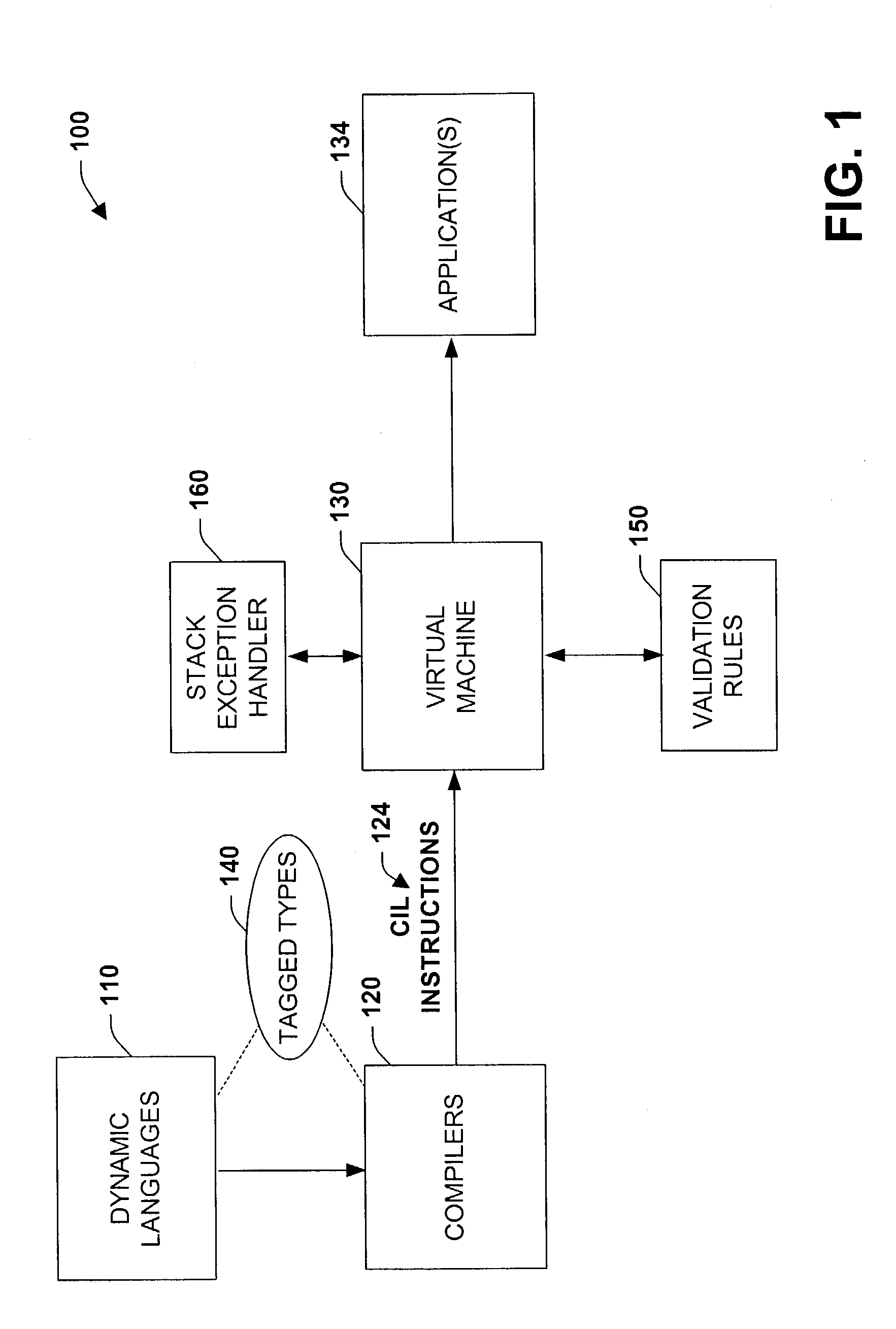

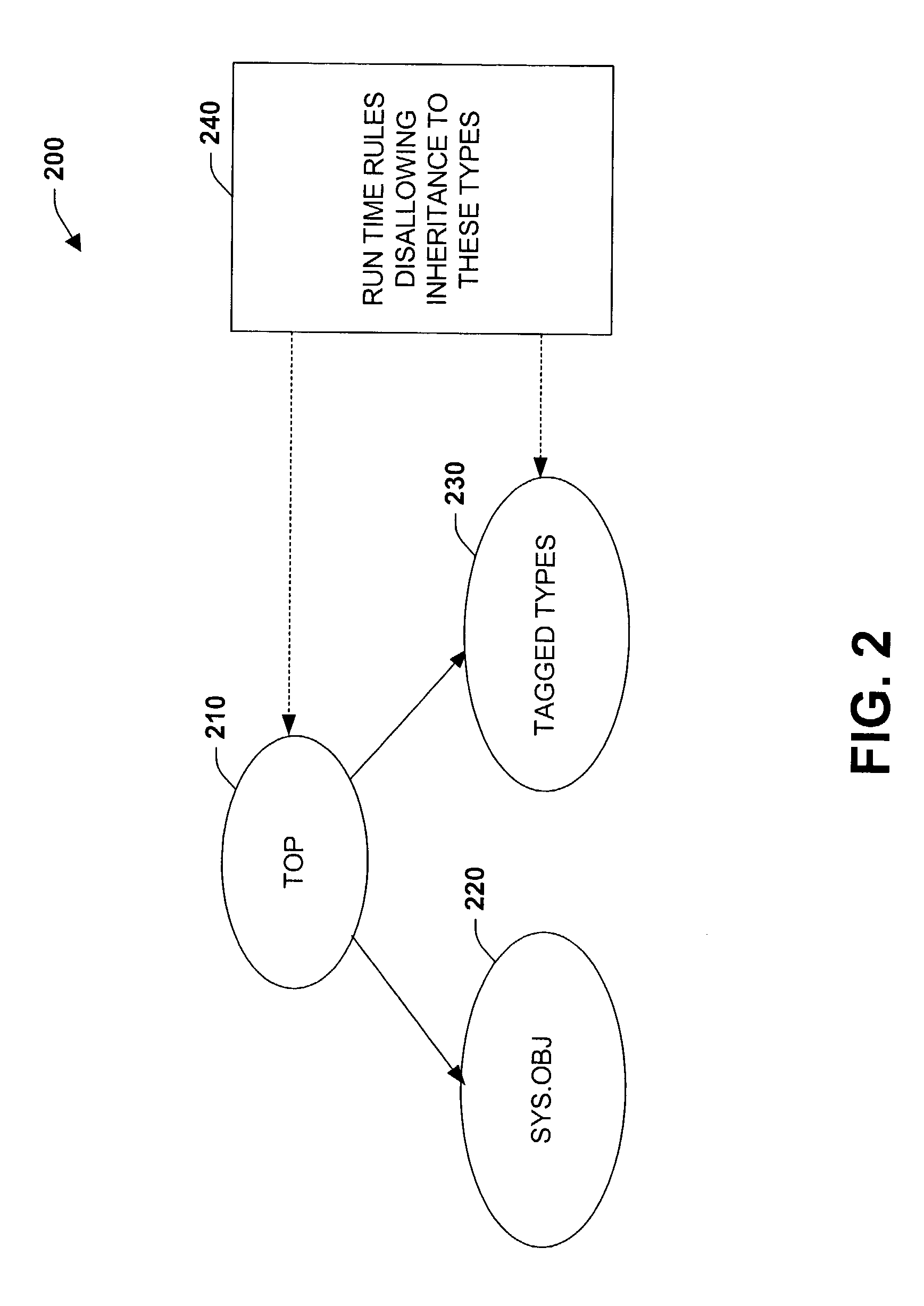

Systems and methods for employing tagged types in a dynamic runtime environment

Active | US7168063B2 | Facilitate proper execution, Reduce data, Transformation of program code, Software simulation/interpretation/emulation, Managed code, Type safety

The present invention relates to systems and methods that facilitate dynamic programming language execution in a managed code environment. A class component is provided that declares an inheritance hierarchy for one or more tagged values associated with a dynamic programming language. During execution involving the tagged values, a rules component prevents user-defined types from inheriting or deriving properties from the tagged values, in order to support a type-safe runtime environment. A bifurcated class tree is provided that defines non-tagged type elements on one side of the tree and tagged type element values on an alternate branch of the tree. The rules component analyzes runtime extensions that help to prevent data in one component of the tree from deriving or inheriting properties from another component of the tree. The runtime extensions include such aspects as cast class extensions, test class extensions, and conversion class extensions for converting data types from one class subtype to another.

Owner:MICROSOFT TECH LICENSING LLC

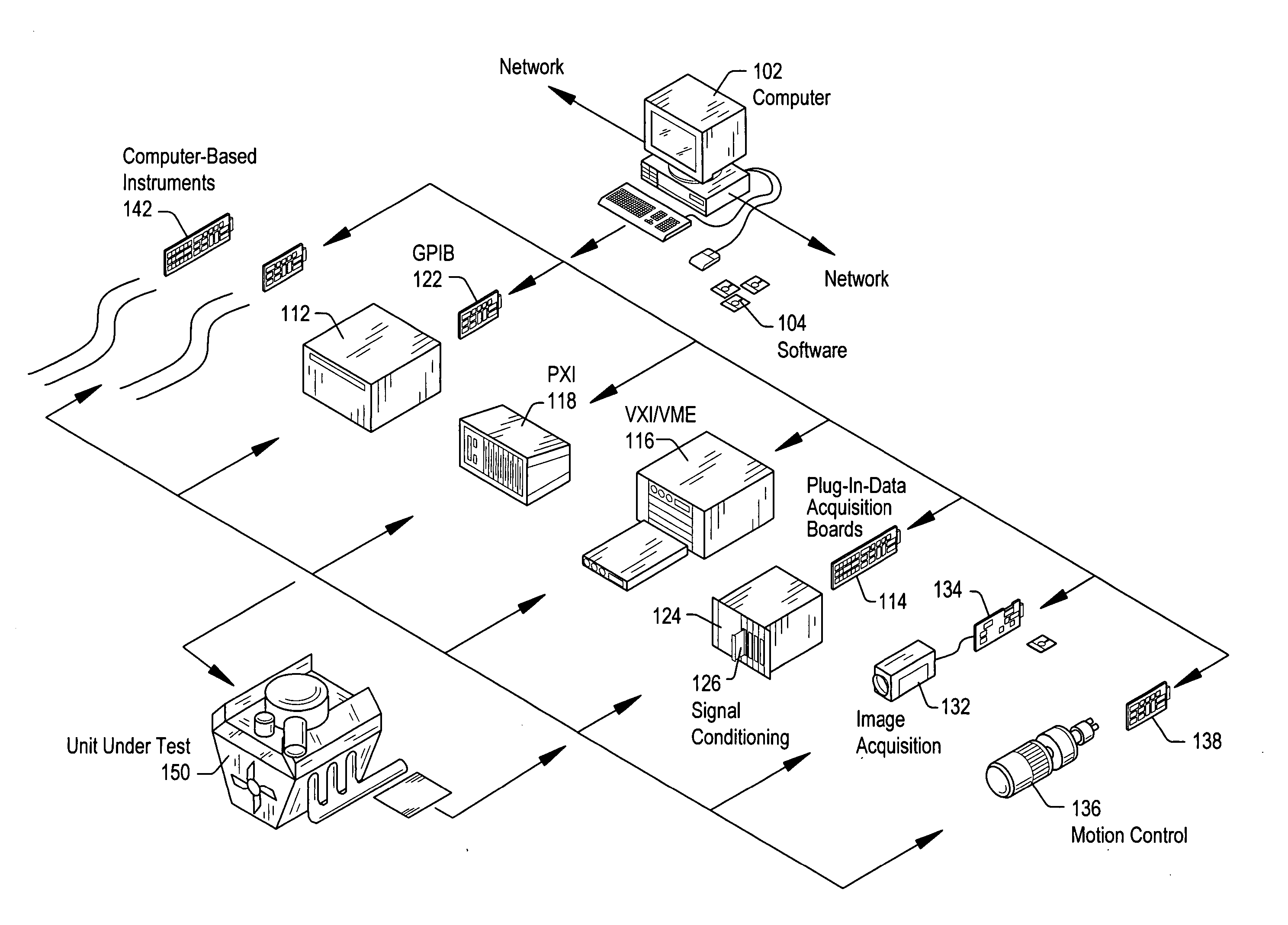

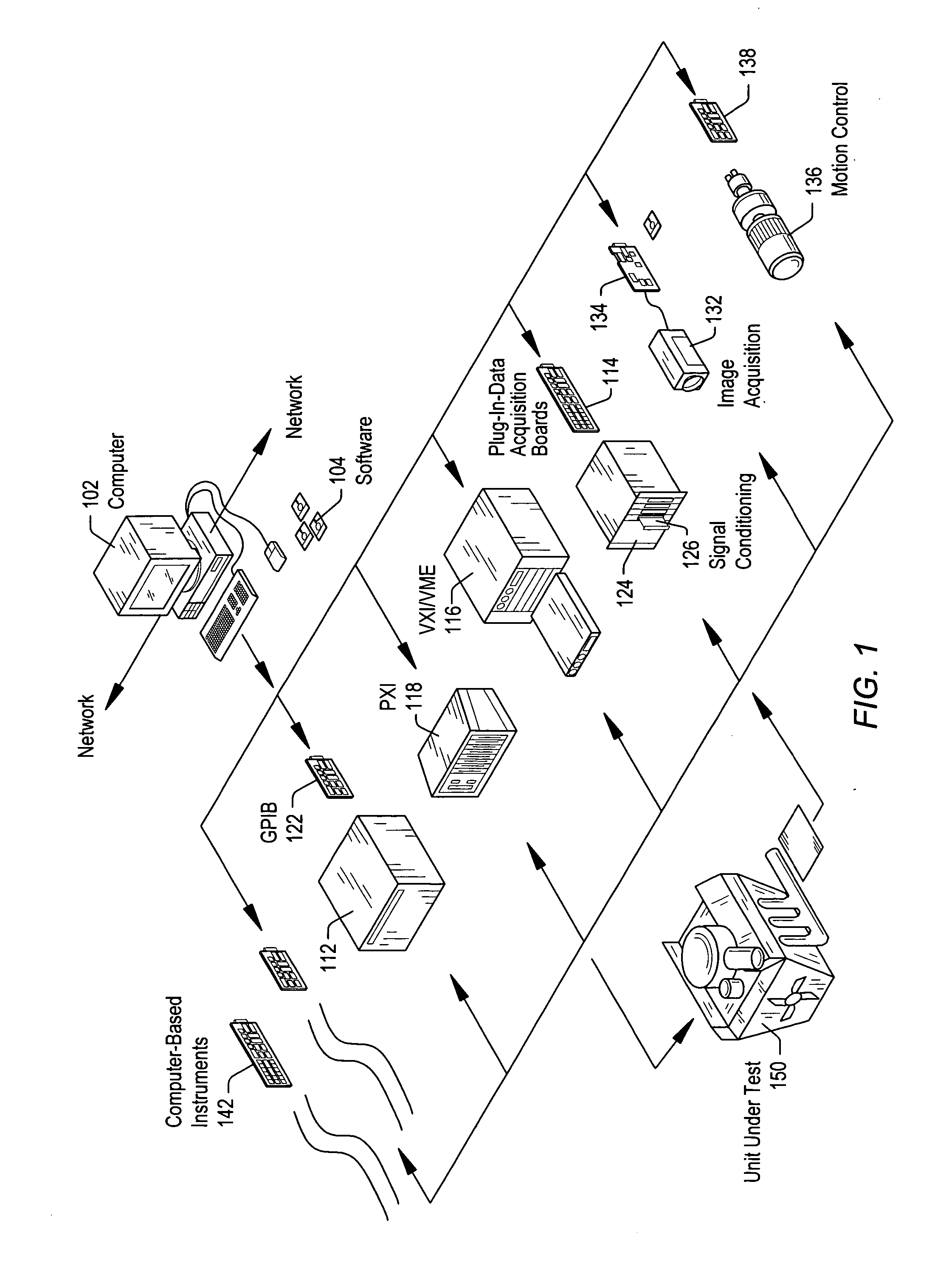

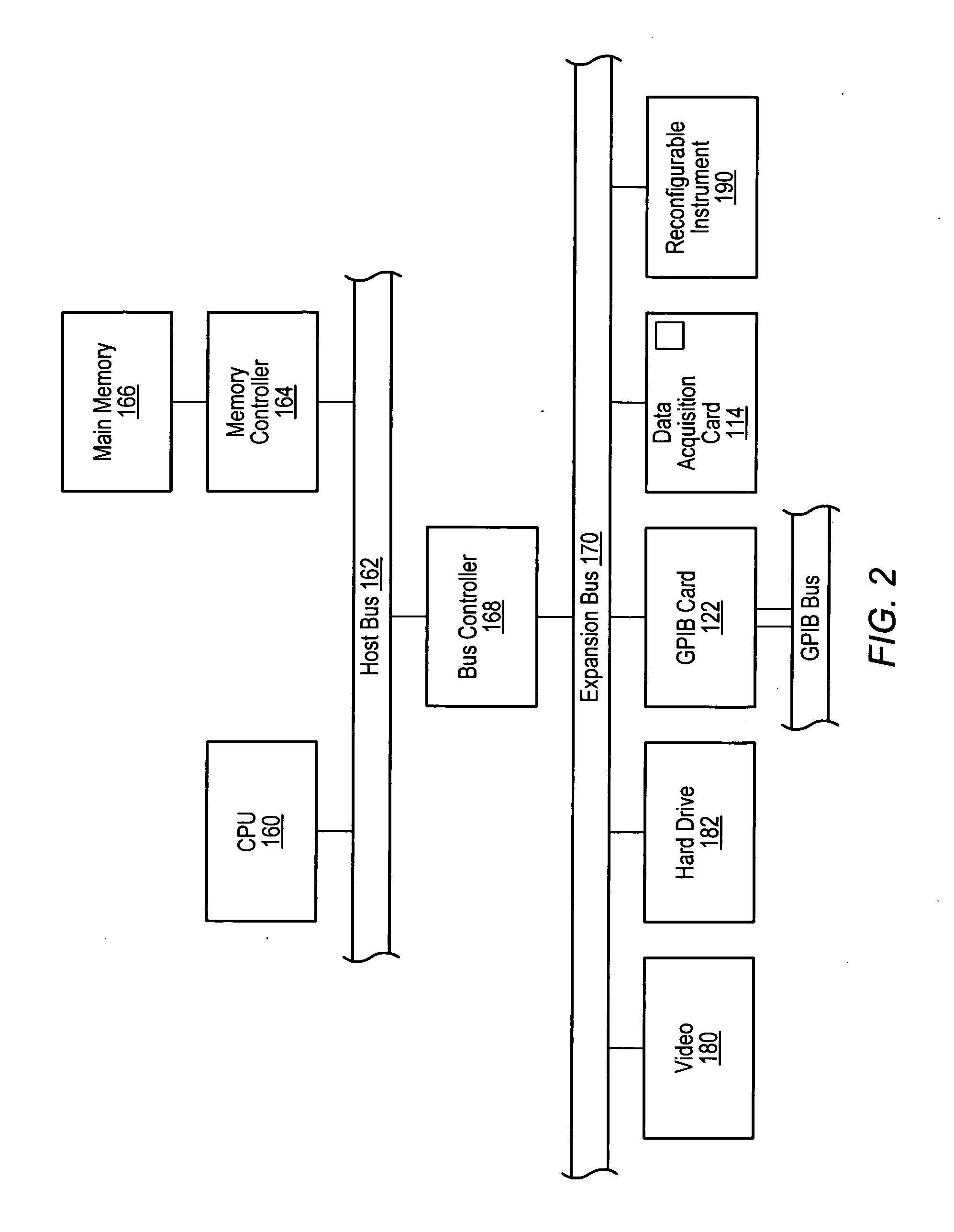

Test executive which provides heap validity checking and memory leak detection for user code modules

Active | US20060143537A1 | Improve execution, Improve execution performance, Error detection/correction, Code module, Test execution

A system and method for automatically detecting heap corruption errors and memory leak errors caused by user-supplied code modules that are called by steps of a test executive sequence. The test executive sequence may first be created by including a plurality of test executive steps in the test executive sequence and configuring at least a subset of the steps to call user-supplied code modules. The test executive sequence may then be executed on a host computer under control of a test executive engine. For each step that calls a user-supplied code module, the test executive engine may perform certain actions to automatically detect whether the user-supplied code module causes a heap corruption error and/or automatically detect whether the user-supplied code module causes a memory leak error.

Owner:NATIONAL INSTRUMENTS
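
The leak-detection half of the per-step check can be approximated in Python with the standard `tracemalloc` module. The check takes a snapshot before and after the user-supplied callable runs and reports the net growth. This only illustrates the idea and is not how the test executive engine instruments native heaps. Heap-corruption detection has no direct Python equivalent and is not shown. `run_step_with_leak_check` and its `threshold_bytes` parameter are hypothetical.

```python
import tracemalloc

# Ignore allocations made by tracemalloc itself (e.g. the snapshots).
_IGNORE = (tracemalloc.Filter(False, tracemalloc.__file__),)


def run_step_with_leak_check(code_module, *args, threshold_bytes=0):
    """Call a user-supplied code module and report memory it left allocated."""
    tracemalloc.start()
    try:
        before = tracemalloc.take_snapshot().filter_traces(_IGNORE)
        result = code_module(*args)
        after = tracemalloc.take_snapshot().filter_traces(_IGNORE)
    finally:
        tracemalloc.stop()
    stats = after.compare_to(before, "filename")
    leaked = sum(s.size_diff for s in stats if s.size_diff > 0)
    return result, leaked > threshold_bytes, leaked
```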

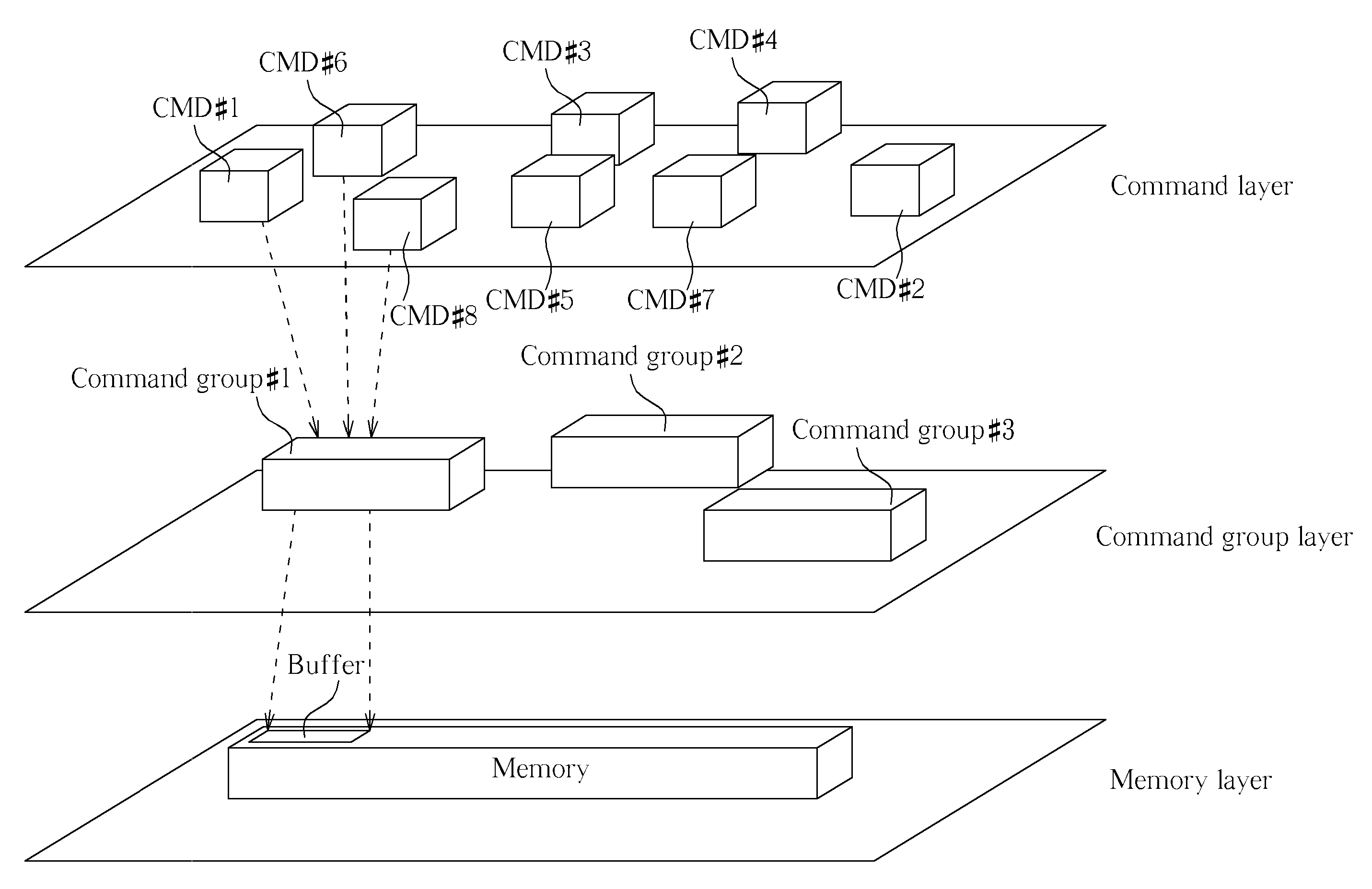

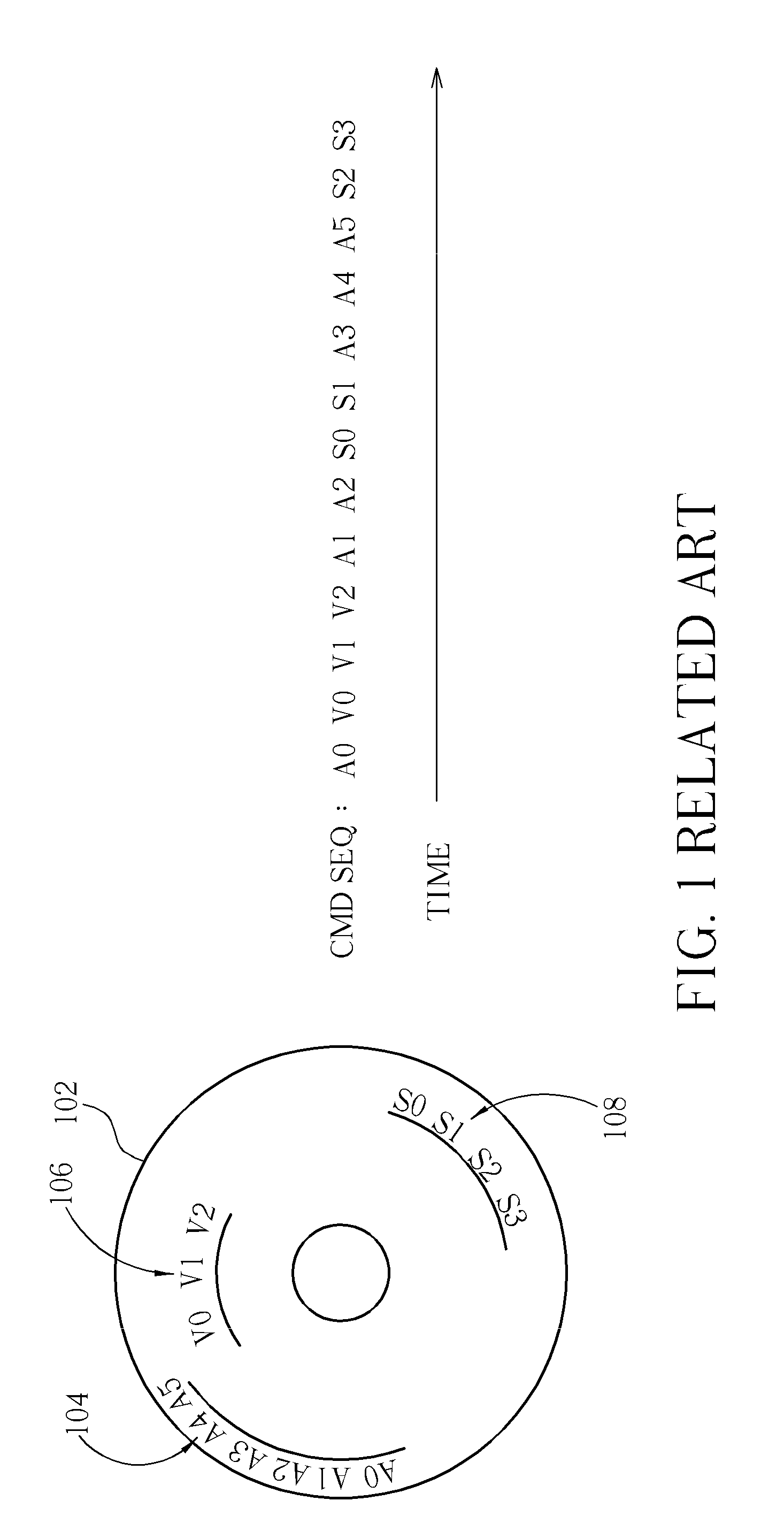

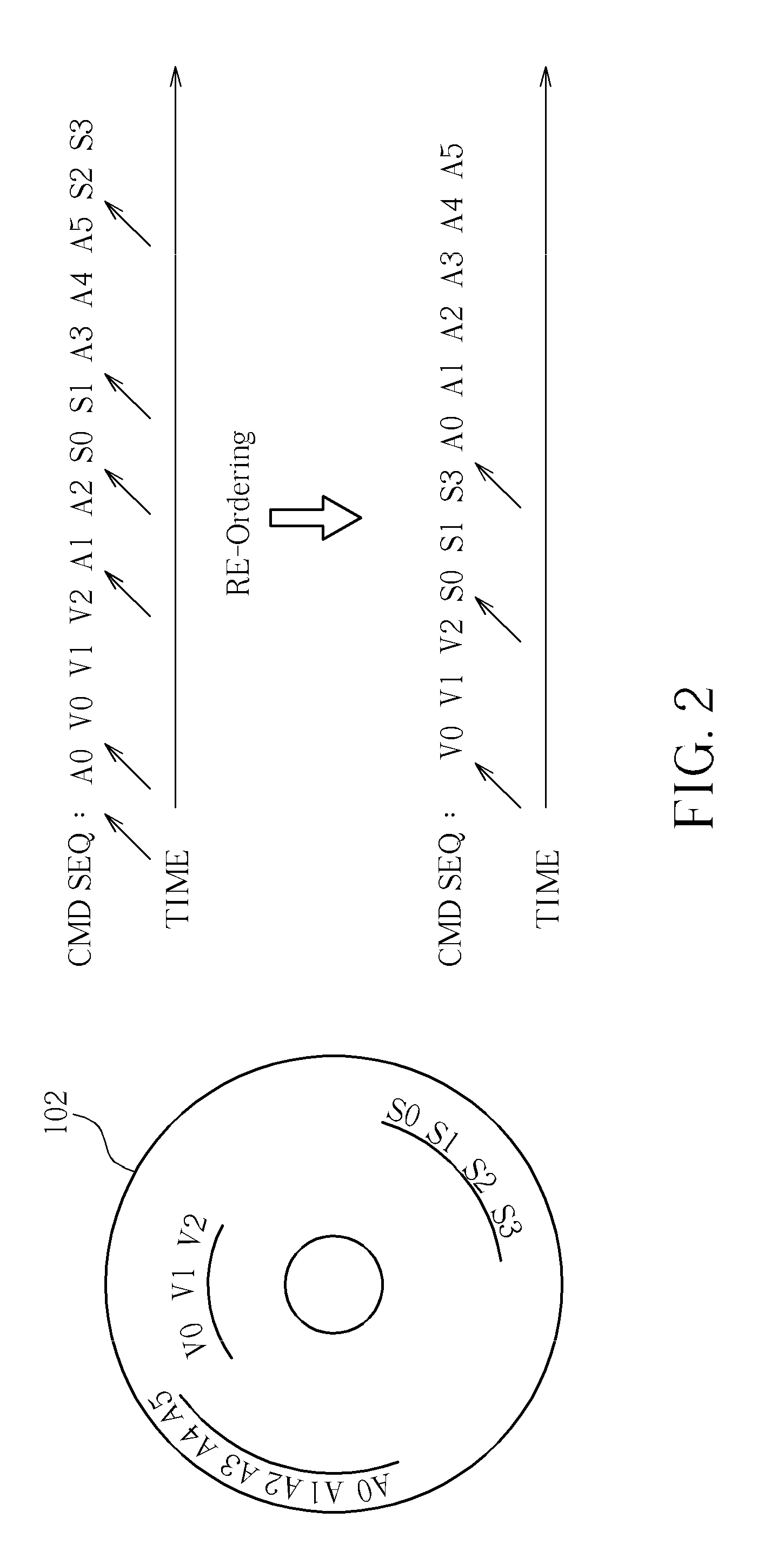

Method of Enhancing Command Executing Performance of Disc Drive

Active | US20100077175A1 | Improve execution performance, Reduce the amount required, Memory systems, Input/output processes for data processing, Computer hardware, Physical address

The goal is to reduce the seeks generated when execution switches between commands, and so to enhance the read and write performance of a disc drive. To do this, each command is implemented with a specifically designed data structure. Commands with neighboring physical addresses and the same operation type (read or write) are grouped and linked together. Command groups significantly decrease seeks between commands, although they may cause starvation. Several techniques are further provided to prevent starvation of command groups while preserving the benefit of fewer seeks.

Owner:MEDIATEK INC
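
The grouping step can be sketched as a sort followed by a single linear pass. The sketch assumes two things: commands are described by an operation type, a starting block address and a length, and "neighboring" means the next command starts within `max_gap` blocks of the previous one's end. `Command` and `group_commands` are hypothetical names. The patent's starvation-prevention techniques are not modelled here.

```python
from dataclasses import dataclass


@dataclass
class Command:
    op: str        # "R" (read) or "W" (write)
    lba: int       # starting physical block address
    length: int    # number of blocks


def group_commands(queue, max_gap=8):
    """Link same-type commands whose addresses are within max_gap blocks."""
    groups = []
    for cmd in sorted(queue, key=lambda c: (c.op, c.lba)):
        last = groups[-1][-1] if groups else None
        if (last is not None and last.op == cmd.op
                and cmd.lba - (last.lba + last.length) <= max_gap):
            groups[-1].append(cmd)       # extend the current group
        else:
            groups.append([cmd])         # start a new group
    return groups
```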

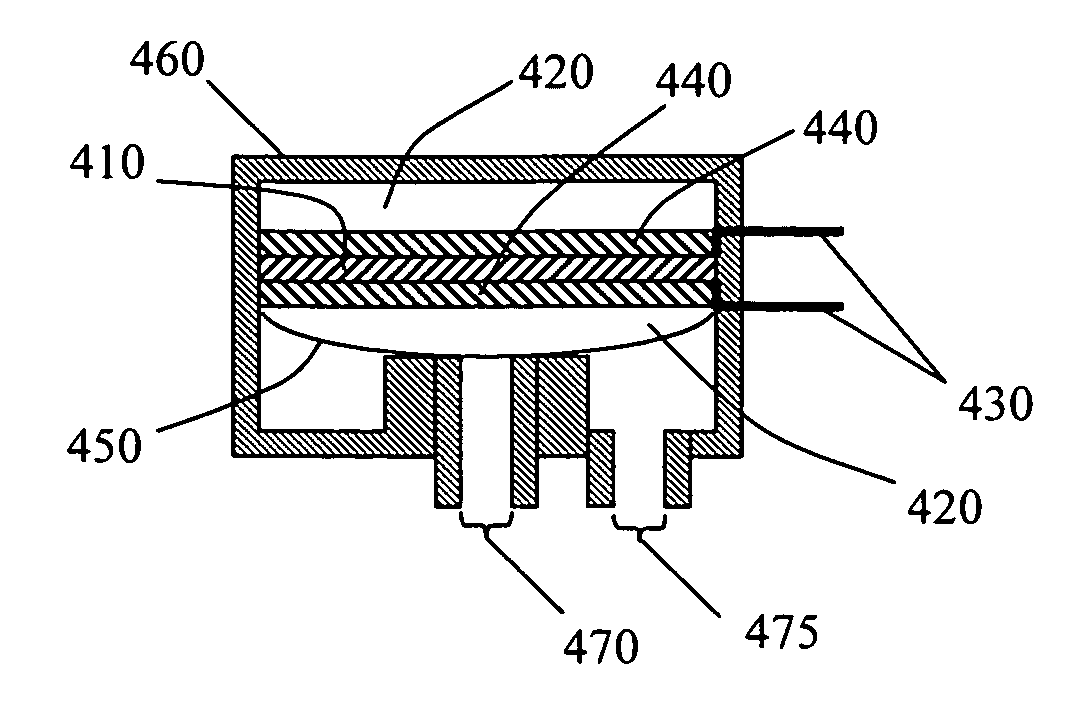

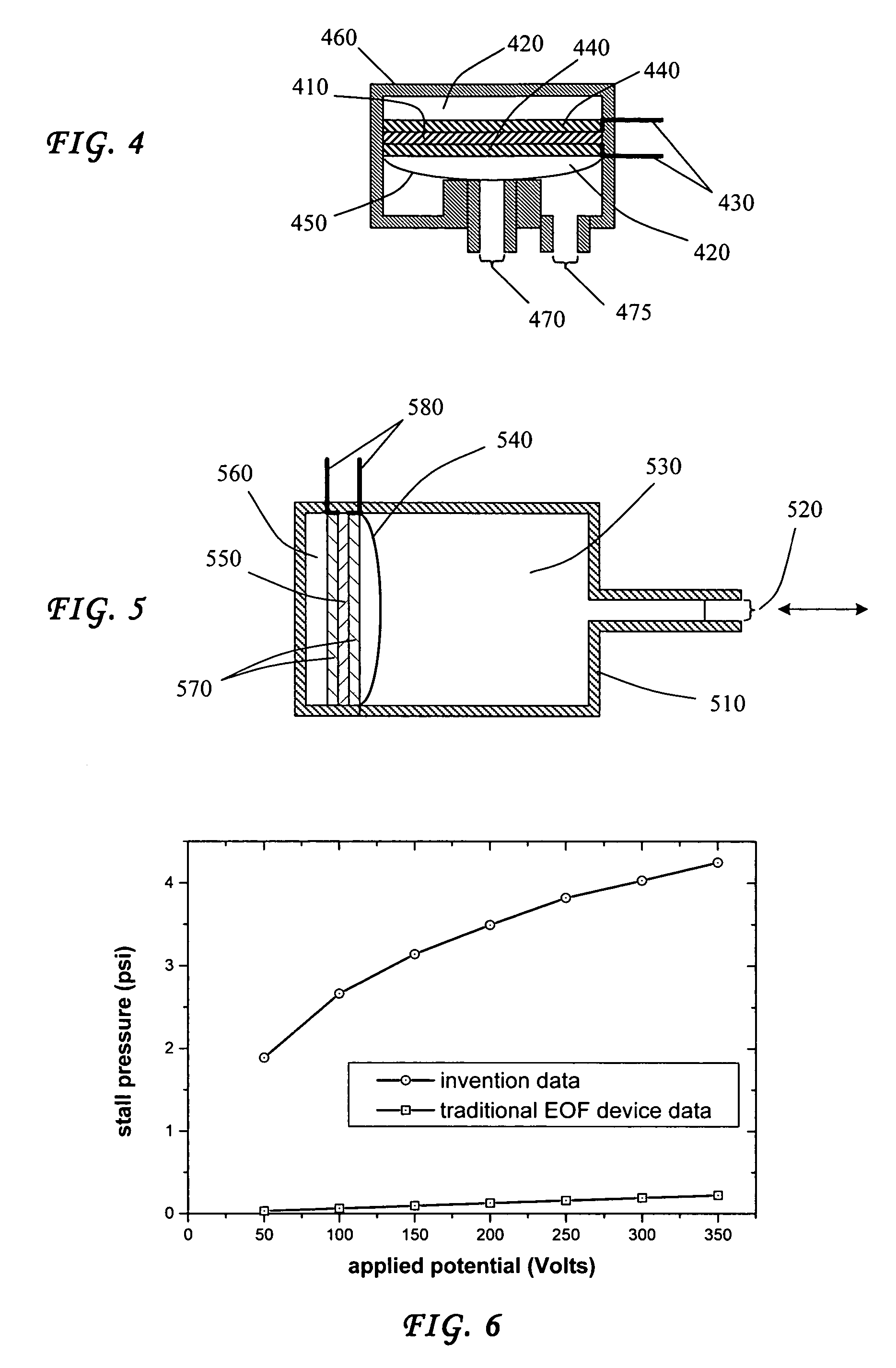

Electrokinetic device employing a non-newtonian liquid

Inactive | US7429317B2 | Improve execution performance, Overcome limitations, Sludge treatment, Engine diaphragms, Diaphragm valve, Engineering

A non-Newtonian fluid is used in an electrokinetic device to produce electroosmotic flow therethrough. The nonlinear viscosity of the non-Newtonian fluid allows the electrokinetic device to behave differently under different operating conditions, such as externally applied pressures and electric potentials. Electrokinetic devices can be used with a non-Newtonian fluid in a number of applications, including but not limited to electrokinetic pumps, flow controllers, diaphragm valves, and displacement systems.

Owner:TELEFLEX LIFE SCI PTE LTD

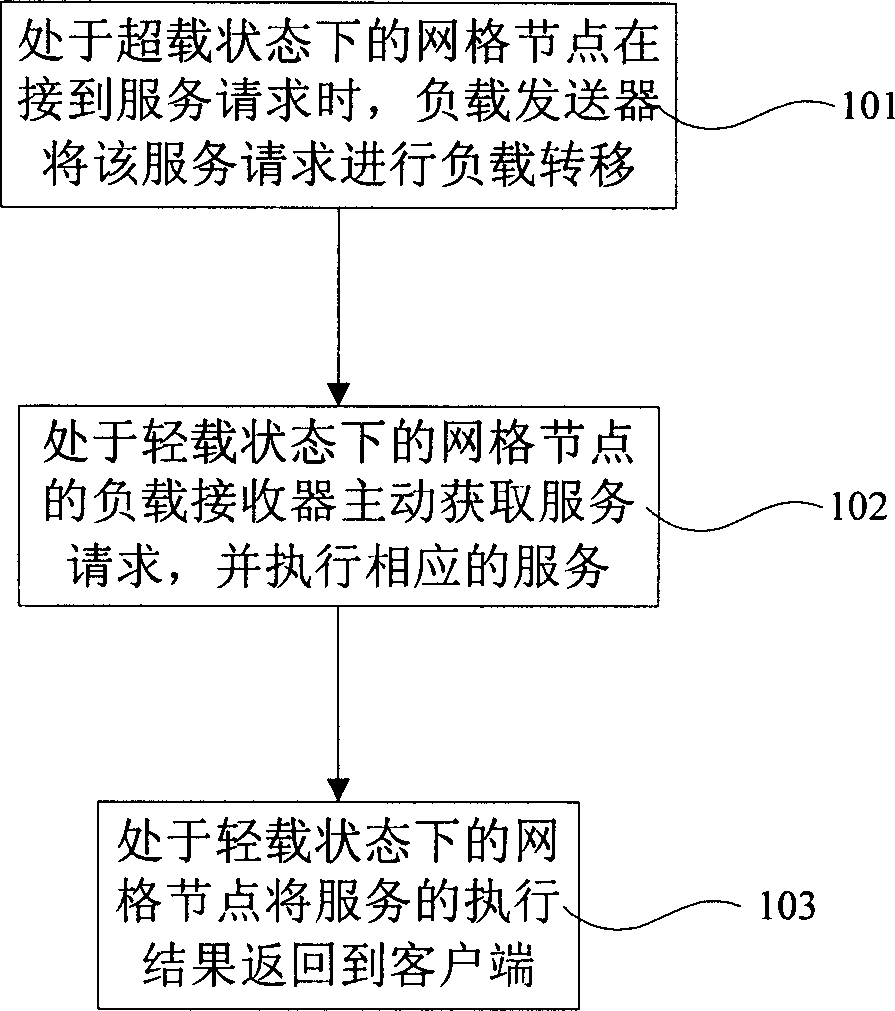

Method for balancing gridding load

Inactive | CN1791113A | High transparency, Improve execution performance, Transmission, Operational system, Client-side

The invention relates to a method for balancing grid load, comprising the following steps:
1. An overloaded grid node receives a user's SOAPS service request and has its load transmitter send out the load.
2. The load receiver of a lightly loaded grid node actively acquires the service request and executes the service.
3. The result is returned to the client.

The method suits heterogeneous scientific research networks, offers good transparency, and improves system performance.

Owner:BEIHANG UNIV
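
The pull-based hand-off in the three steps above can be sketched in Python. In this model, "overloaded" means a node has reached a fixed capacity of concurrently running requests, which is an assumption. The outbox deque plays the role of the load transmitter, and `pull_from` plays the role of the light node's load receiver. `GridNode` and its methods are hypothetical, and the SOAP transport is omitted.

```python
from collections import deque


class GridNode:
    """Hypothetical grid node with a fixed capacity of running requests."""

    def __init__(self, name, capacity):
        self.name, self.capacity = name, capacity
        self.running = []
        self.outbox = deque()            # "load transmitter": requests offered out

    def receive(self, request):
        if len(self.running) < self.capacity:
            self.running.append(request)
        else:
            self.outbox.append(request)  # overloaded: offer the request to others

    def pull_from(self, other):
        """A lightly loaded node actively acquires requests another node offers."""
        while other.outbox and len(self.running) < self.capacity:
            self.running.append(other.outbox.popleft())
```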

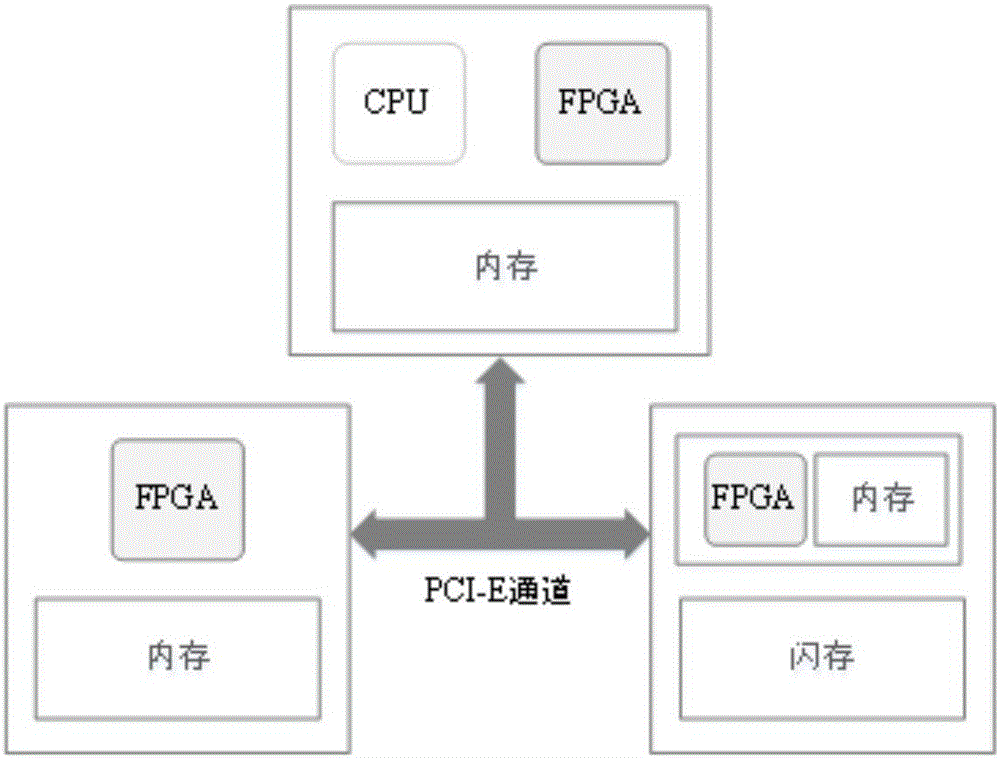

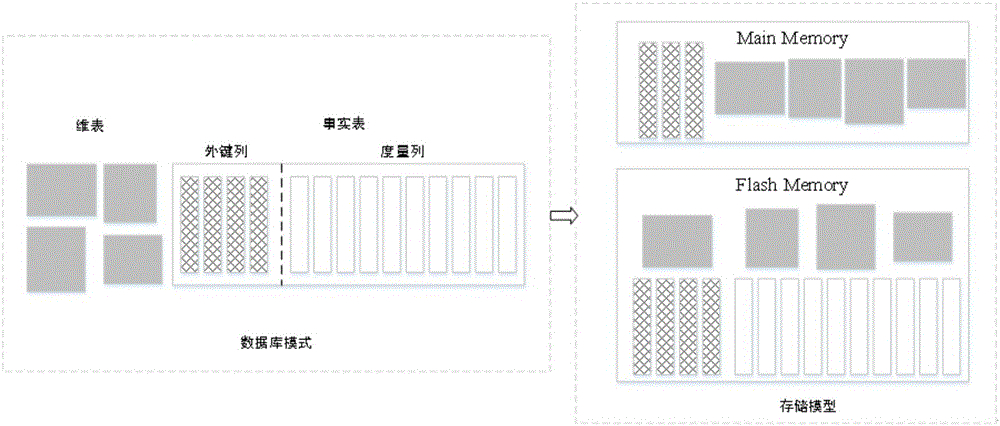

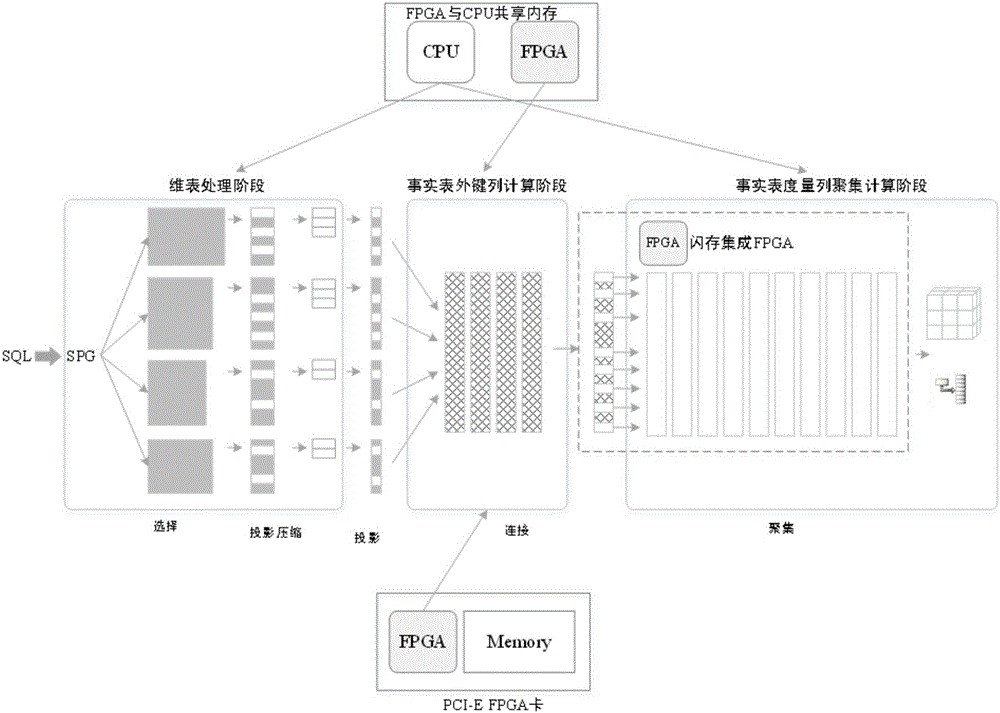

Method for memory on-line analytical processing (OLAP) query optimization based on field programmable gate array (FPGA)

Active | CN105868388A | Reduce memory storage costs, Reduce Computational Cost and Power Consumption, Multi-dimensional databases, Special data processing applications, Query optimization, Storage model

The invention relates to a method for in-memory on-line analytical processing (OLAP) query optimization based on a field programmable gate array (FPGA). The method first constructs a memory-oriented heterogeneous storage model for the data warehouse.

Based on this storage model, it performs query optimization for a CPU-FPGA heterogeneous processor:
- generating a grouping projection vector through a subquery;
- applying dictionary-table compression to the grouping projection vector;
- re-encoding the grouping projection with the projection dictionary table;
- joining the grouping projection vector with the foreign key of the fact table;
- generating a measure vector by aggregating the measure column;
- performing measure aggregation on the measure vector.

Based on the same storage model, it also performs query optimization for a CPU-FPGA heterogeneous computing platform:
- the FPGA and the CPU share access to the same memory address space;
- when the FPGA is configured as a PCI-E accelerator card, it accelerates join processing and accesses the flash card directly over the PCI-E channel to process data;
- when the FPGA is integrated into the flash memory, it accelerates the data access and aggregation performance of the flash card.

Owner:RENMIN UNIVERSITY OF CHINA
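
The CPU-side vector pipeline described above can be sketched in Python. It rests on two assumptions that the abstract does not state: dimension keys are dense positions 0..n-1, so the join reduces to an array lookup on the foreign key, and only a single sum aggregate is needed. `olap_group_sum` is a hypothetical name, and the FPGA offload is not modelled.

```python
def olap_group_sum(dim_attr, dim_pred, fact_fk, fact_measure):
    """dim_attr[i] / dim_pred[i]: group-by value and filter result of dimension
    row i. Returns {group value: summed measure}."""
    dictionary = {}                      # group value -> compact dictionary code
    proj = []                            # grouping projection vector
    for value, keep in zip(dim_attr, dim_pred):
        if keep:
            proj.append(dictionary.setdefault(value, len(dictionary)))
        else:
            proj.append(None)            # row filtered out by the subquery
    sums = [0] * len(dictionary)         # measure vector indexed by group code
    for fk, m in zip(fact_fk, fact_measure):
        code = proj[fk]                  # join = positional lookup on the FK
        if code is not None:
            sums[code] += m
    decode = {c: v for v, c in dictionary.items()}
    return {decode[c]: s for c, s in enumerate(sums)}
```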

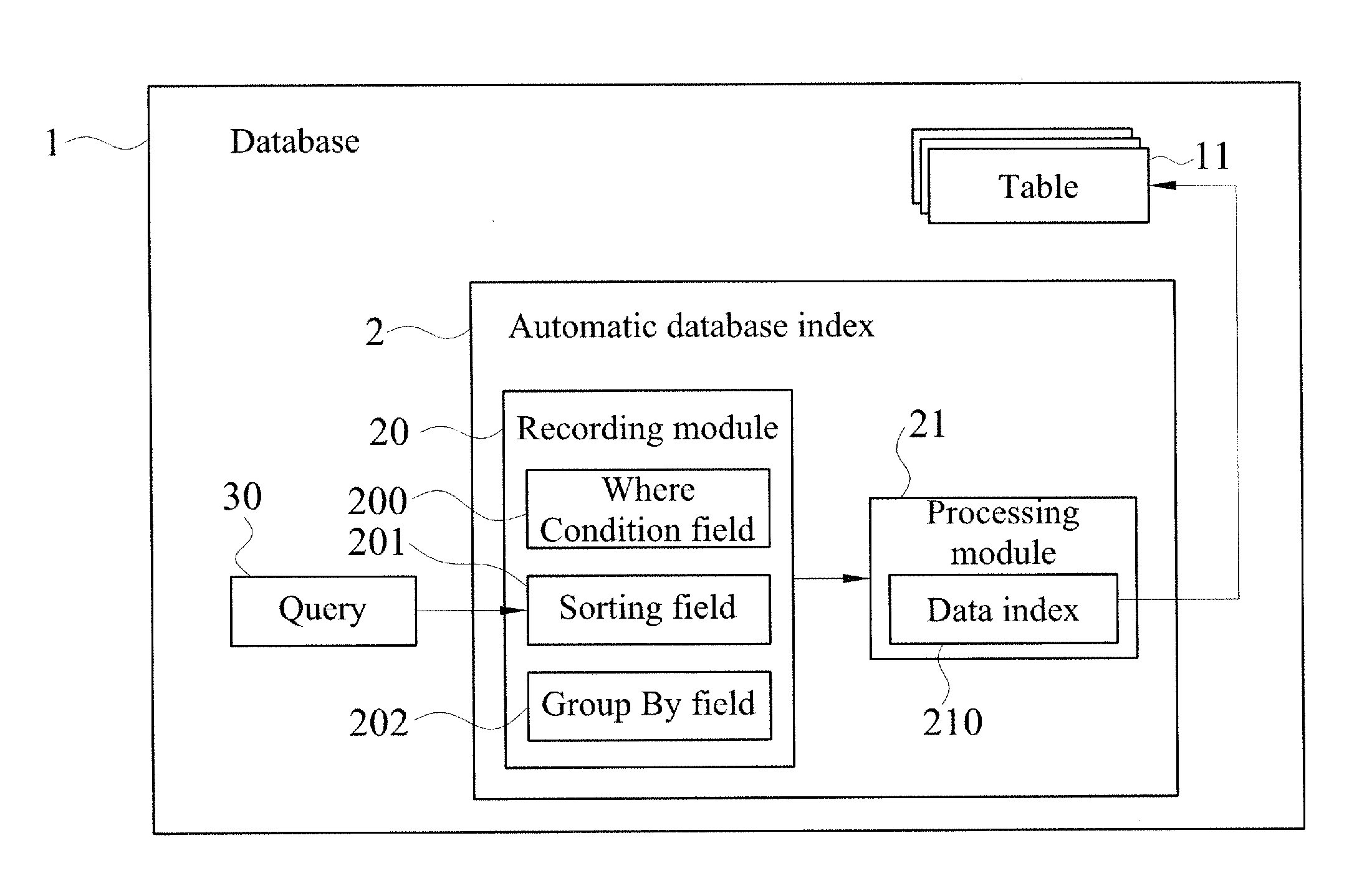

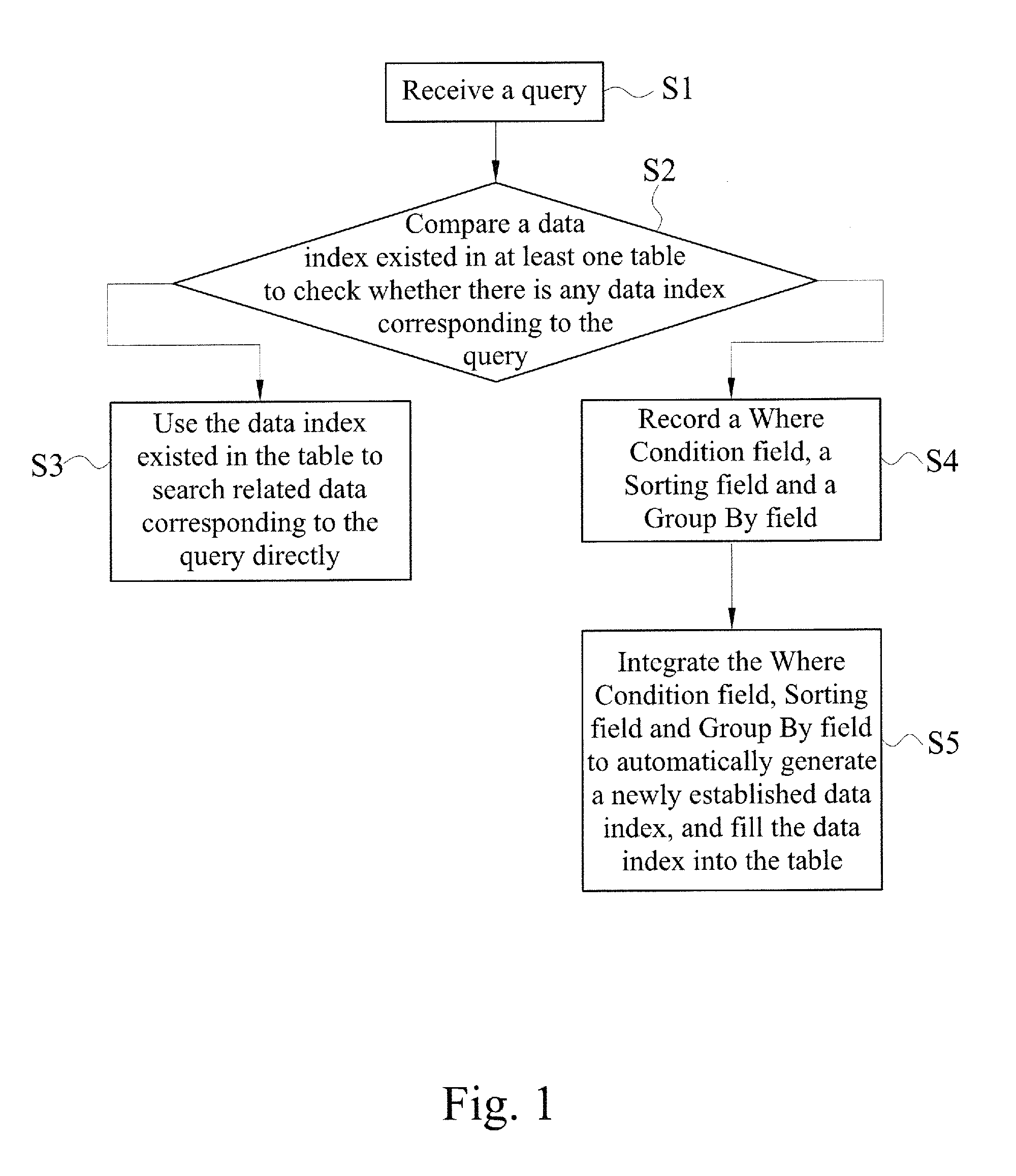

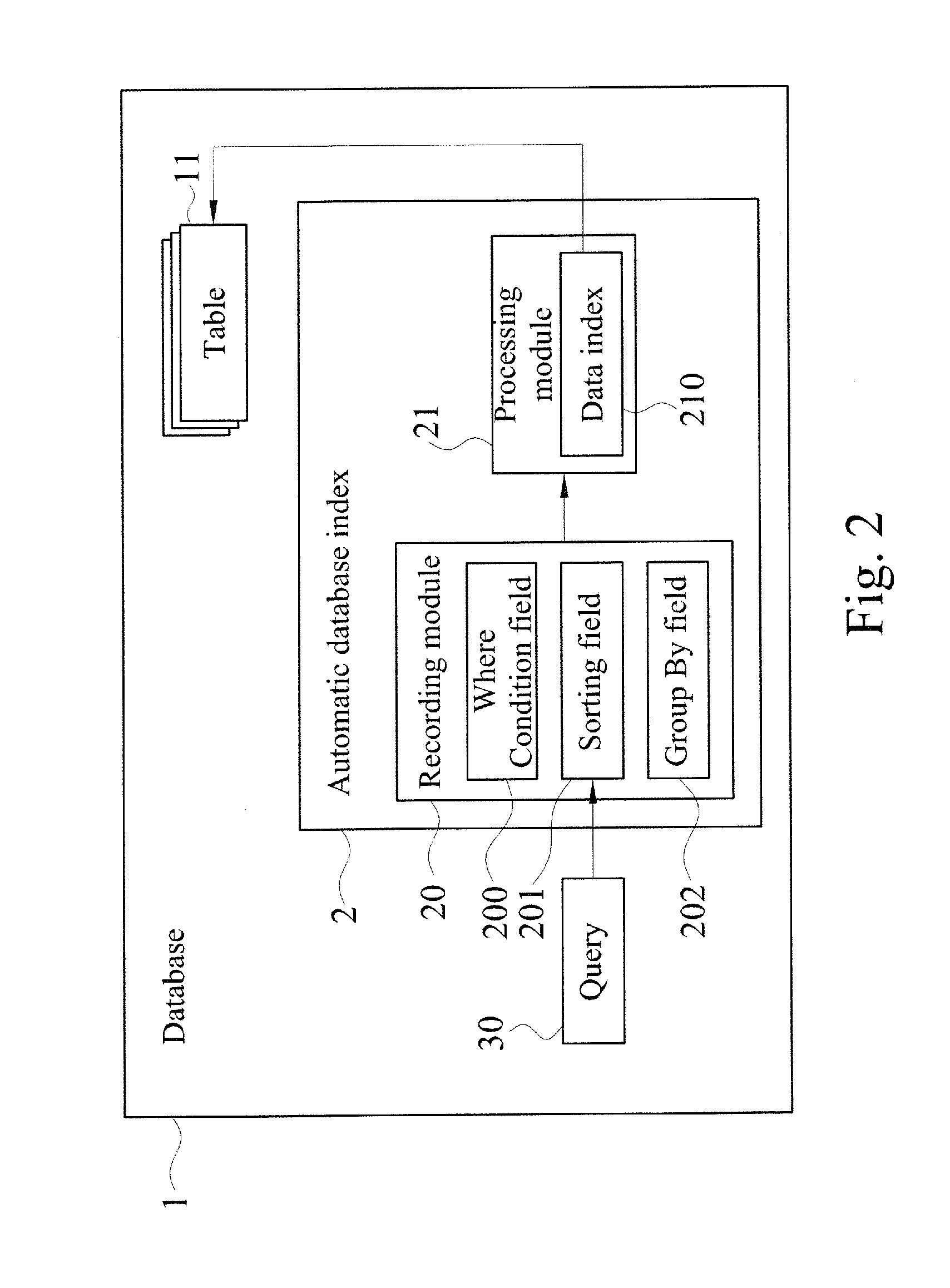

Automatic data index establishment method

Inactive | US20140201192A1 | Improve practicality, Reduce data management costs, Digital data information retrieval, Digital data processing details, Data management

In this automatic data index establishment method, when the database processes a query, it checks whether a data index for that query already exists in the relevant table. If one does, the index is used directly to search for the related data. If the query has no corresponding data index, the query's WHERE-condition fields, sorting fields and GROUP BY fields are recorded and combined to generate a new data index automatically, which is then added to the corresponding table. The database thereby gains the ability to analyze queries and to generate, update, and delete data indexes automatically. This improves the accuracy and execution efficiency of data management and reduces the workload of data administrators.

Owner:SYSCOM COMP ENG
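
Here is a minimal sketch of the record-and-create loop described above. It assumes that SQL parsing has already extracted the WHERE, sort and GROUP BY columns, and that an existing index "matches" when the query's columns form its leading prefix. The `IndexAdvisor` class is hypothetical, and index updates and deletion are omitted.

```python
class IndexAdvisor:
    """Hypothetical: reuse a matching index, otherwise create one from the
    query's WHERE, ORDER BY and GROUP BY columns."""

    def __init__(self):
        self.indexes = {}                        # table -> list of column tuples

    def handle(self, table, where=(), order_by=(), group_by=()):
        # Deduplicate while keeping the WHERE, ORDER BY, GROUP BY order.
        cols = tuple(dict.fromkeys([*where, *order_by, *group_by]))
        if not cols:
            return "scan", None                  # nothing worth indexing
        existing = self.indexes.setdefault(table, [])
        for idx in existing:
            if idx[:len(cols)] == cols:          # index covers the query's columns
                return "use", idx
        existing.append(cols)                    # no match: create a new index
        return "create", cols
```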

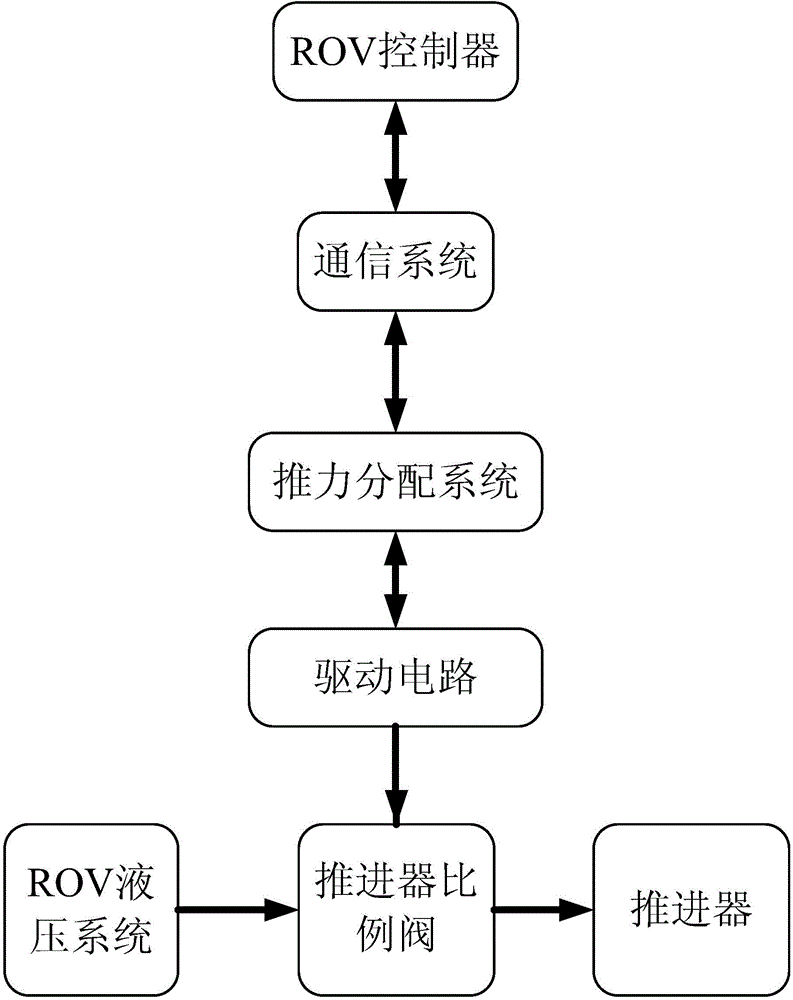

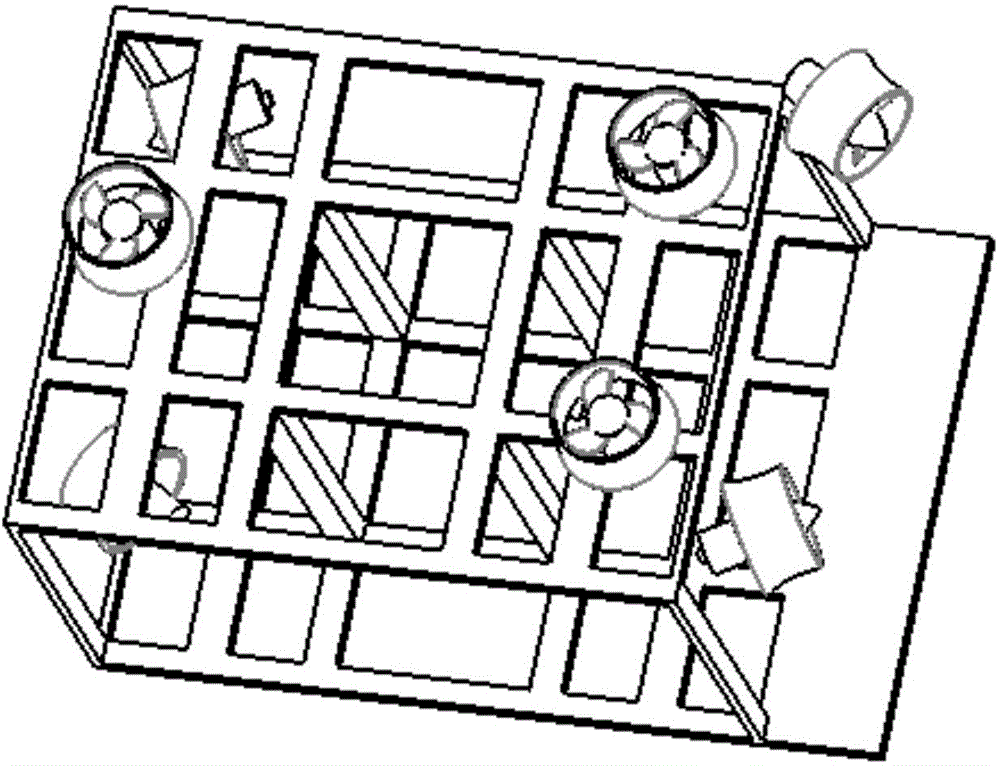

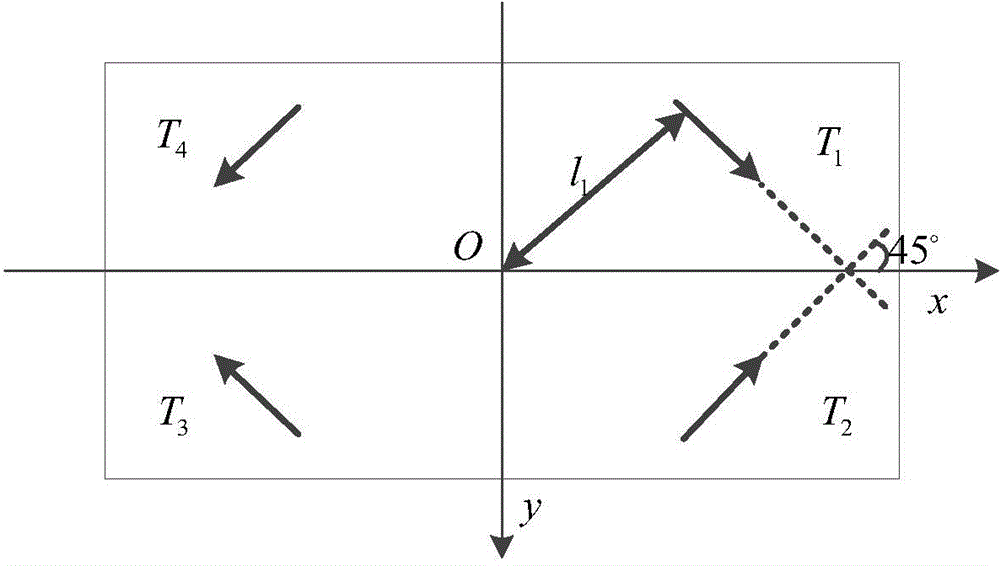

Deep-sea working ROV (Remotely Operated Vehicle) propeller system

Active | CN104802971A | Receive quickly, Receive data quickly, Underwater vessels, Underwater equipment, Six degrees of freedom, Voltage

The invention discloses a deep-sea working ROV (Remotely Operated Vehicle) propeller system with the following components:
- An ROV controller generates a six-degree-of-freedom speed control instruction according to the current motion state of the ROV.
- A communication unit transmits that instruction over TCP/IP (Transmission Control Protocol/Internet Protocol) to a thrust distribution unit.
- A propeller unit comprises four horizontal propellers and three vertical propellers.
- The thrust distribution unit decomposes the received speed control instruction and sends the resulting thrust value for each propeller to a driving unit.
- The driving unit outputs voltage signals corresponding to the received thrust values to a propeller proportional valve, adjusting how far the valve opens.
- A hydraulic unit supplies hydraulic oil to the propeller unit through the propeller proportional valve.

The system can improve the execution capability and efficiency of the propulsion system.

Owner:HARBIN ENG UNIV

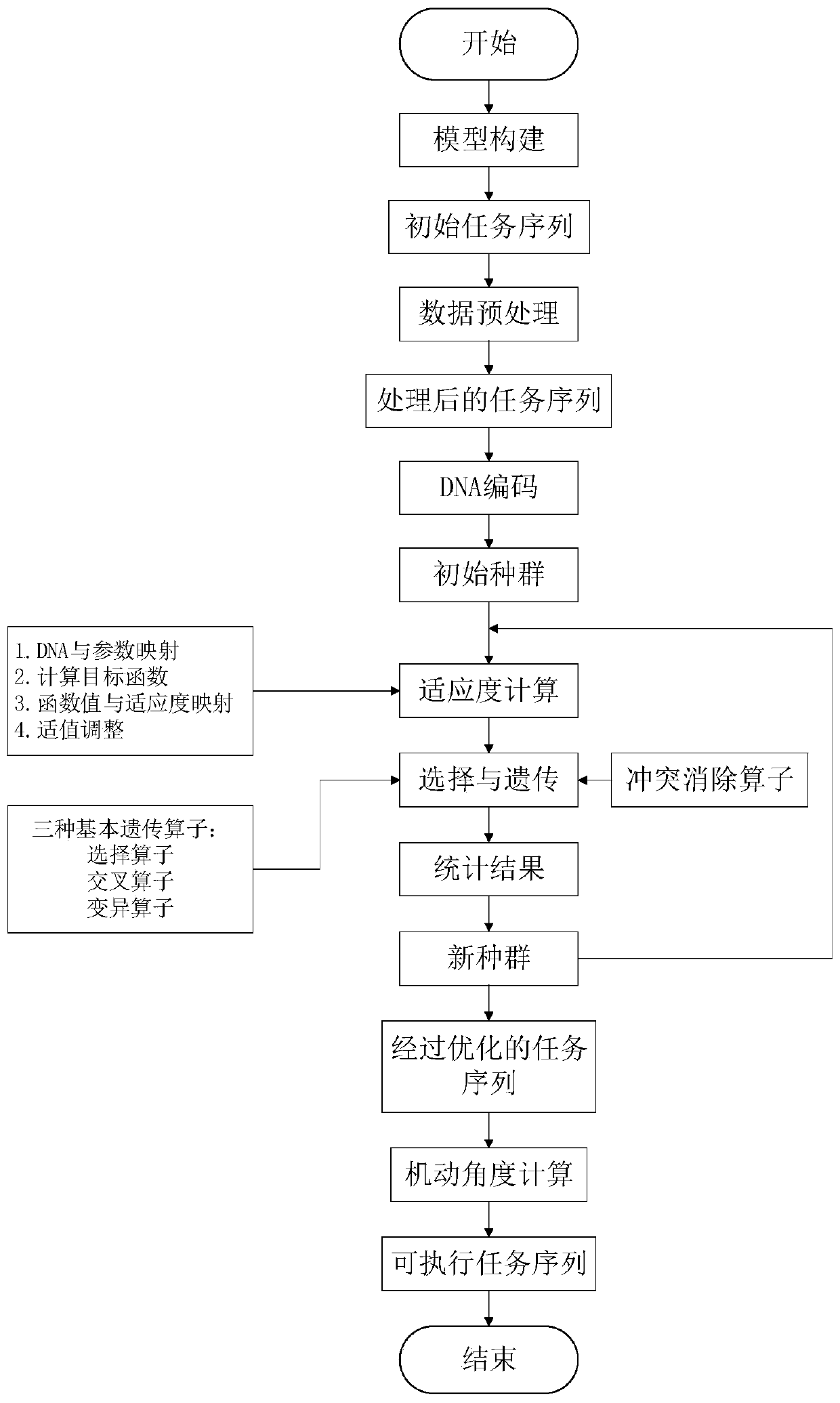

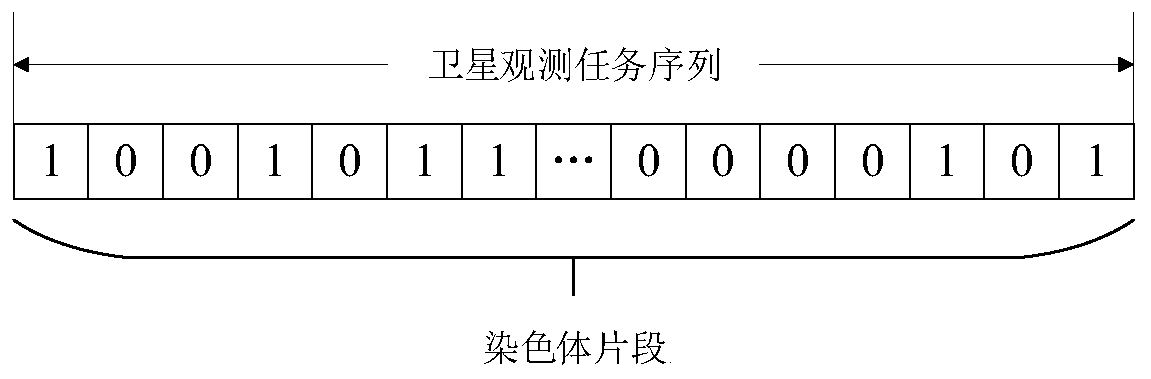

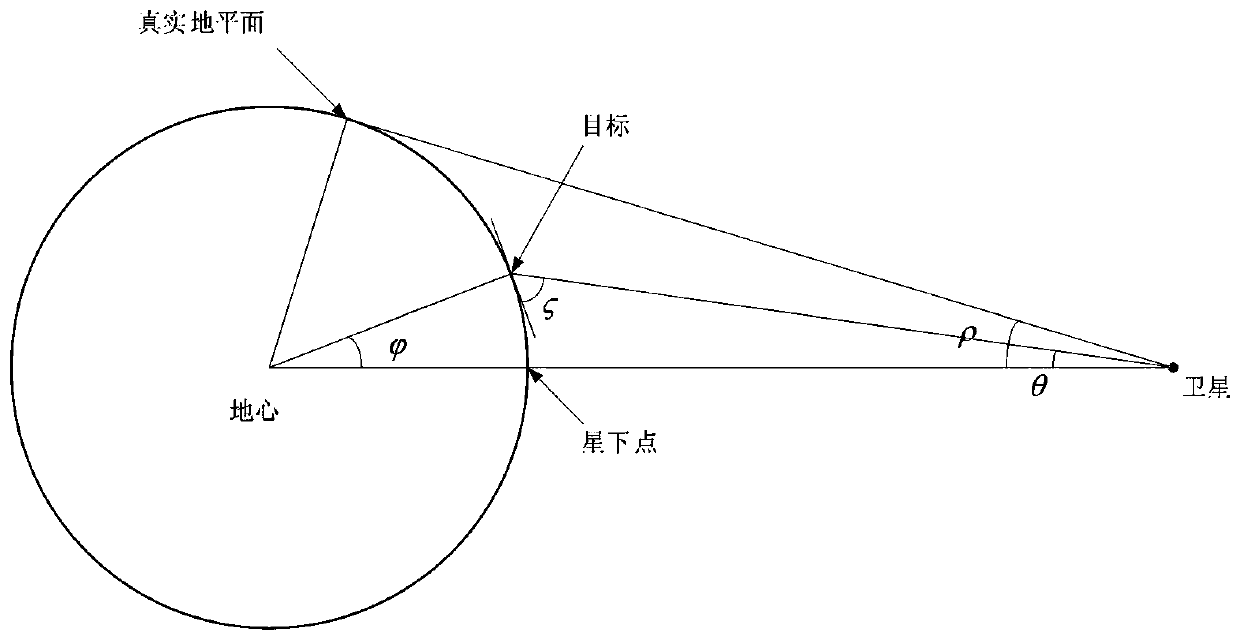

A moving target single-satellite task planning method based on a constraint satisfaction genetic algorithm

Active | CN109933842A | Meet needs, Meet observation needs, Genetic models, Special data processing applications, Earth observation, Genetic algorithm

The invention discloses a moving-target single-satellite task planning method based on a constraint satisfaction genetic algorithm, comprising the following steps:
1. Calculate visible time windows from the model characteristics and constraints of the satellite and the observed target, forming an initial task sequence.
2. Construct an objective function and a genetic algorithm model, and introduce a conflict elimination operator to calculate a conflict sequence.
3. Optimize and solve the task sequence to obtain a conflict-free task sequence that satisfies the constraints.
4. Calculate the satellite's task observation scheme and maneuvering angle.

The method can improve satellite Earth-observation efficiency, make reasonable use of limited satellite resources, and complete the capture and observation of moving targets.

Owner:BEIHANG UNIV

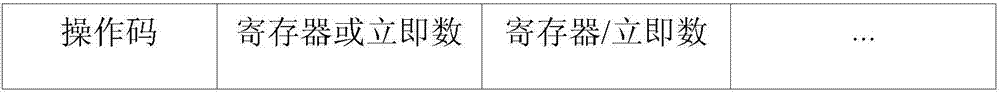

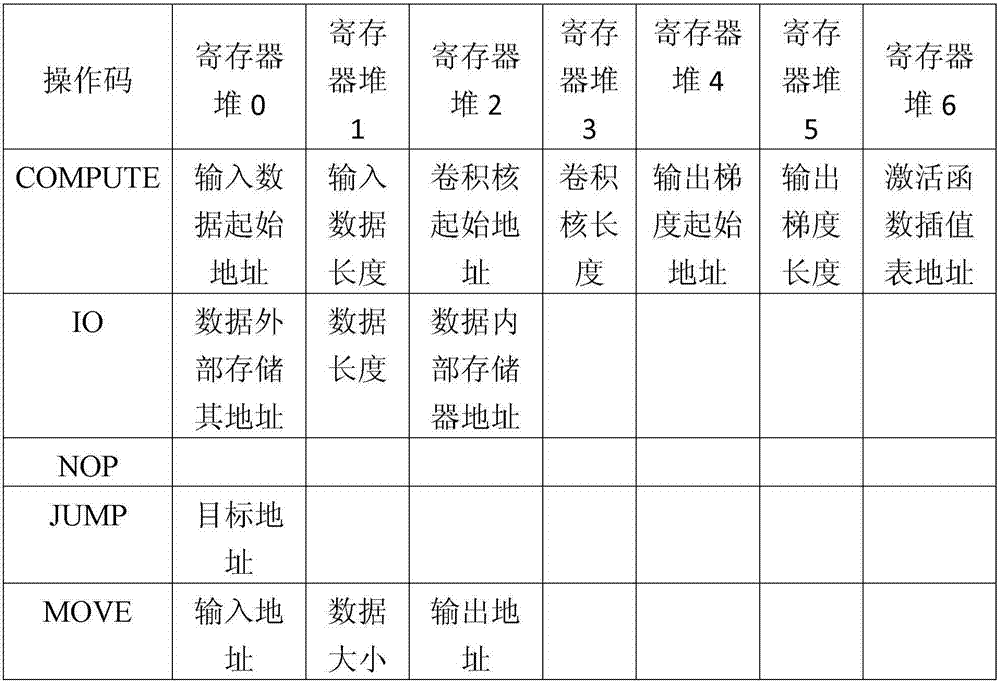

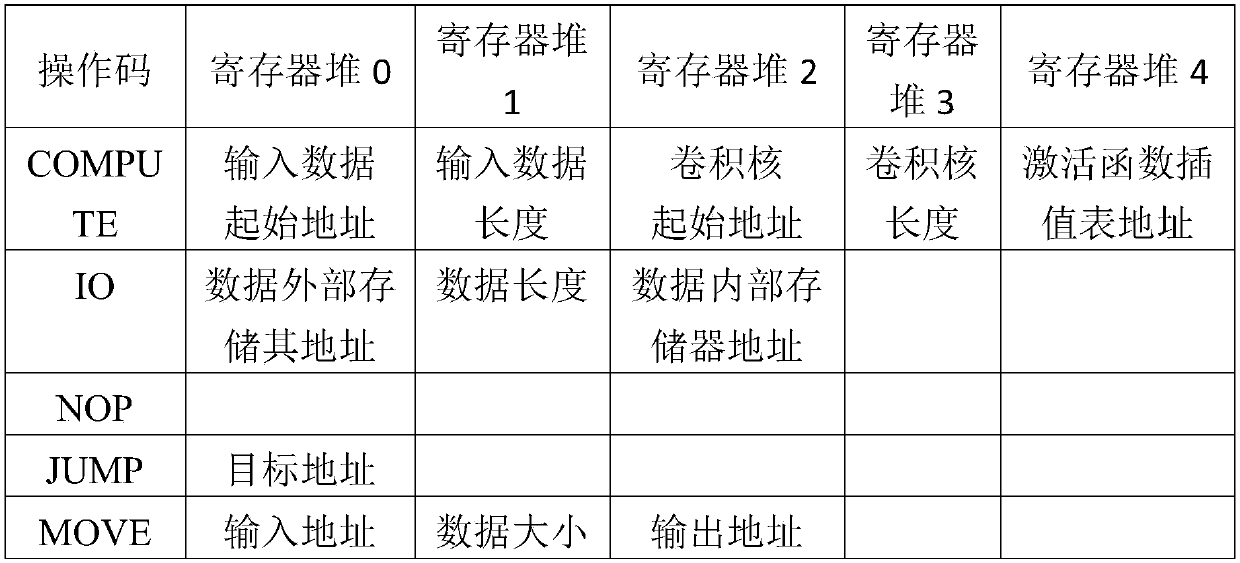

Convolutional neural network calculation instruction and method thereof

Active | CN107704267A | Flexible support, Improve execution performance, Concurrent instruction execution, Neural architectures, Activation function, Algorithm

The invention provides a convolutional neural network calculation instruction and a method thereof. The instruction comprises at least one operation code and at least one operation field. The operation code indicates the function of the instruction, and the operation field indicates the instruction's data information. The data information is an immediate operand or a register number. It specifically comprises the start address and data length of the input data, the start address and data length of the convolution kernel, and the type of activation function. The output data serves as the input data of the next layer.

Owner:CAMBRICON TECH CO LTD
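
The instruction format can be sketched as a fixed binary layout packed with Python's `struct` module. The 20-byte little-endian layout, the opcode value and the activation codes below are invented for illustration and are not Cambricon's encoding. Every operand field is also treated as an immediate, although the abstract allows register numbers too.

```python
import struct
from dataclasses import dataclass

OPCODES = {"CONV": 0x01}                                         # hypothetical opcode
ACTIVATIONS = {"none": 0, "relu": 1, "sigmoid": 2, "tanh": 3}    # hypothetical codes
# opcode (u16), activation (u16), input addr, input len, kernel addr, kernel len (u32 each)
LAYOUT = struct.Struct("<HHIIII")


@dataclass
class ConvInstruction:
    in_addr: int
    in_len: int
    kernel_addr: int
    kernel_len: int
    activation: str = "relu"

    def encode(self) -> bytes:
        return LAYOUT.pack(OPCODES["CONV"], ACTIVATIONS[self.activation],
                           self.in_addr, self.in_len,
                           self.kernel_addr, self.kernel_len)

    @classmethod
    def decode(cls, raw: bytes) -> "ConvInstruction":
        op, act, ia, il, ka, kl = LAYOUT.unpack(raw)
        if op != OPCODES["CONV"]:
            raise ValueError("not a convolution instruction")
        name = {v: k for k, v in ACTIVATIONS.items()}[act]
        return cls(ia, il, ka, kl, name)
```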

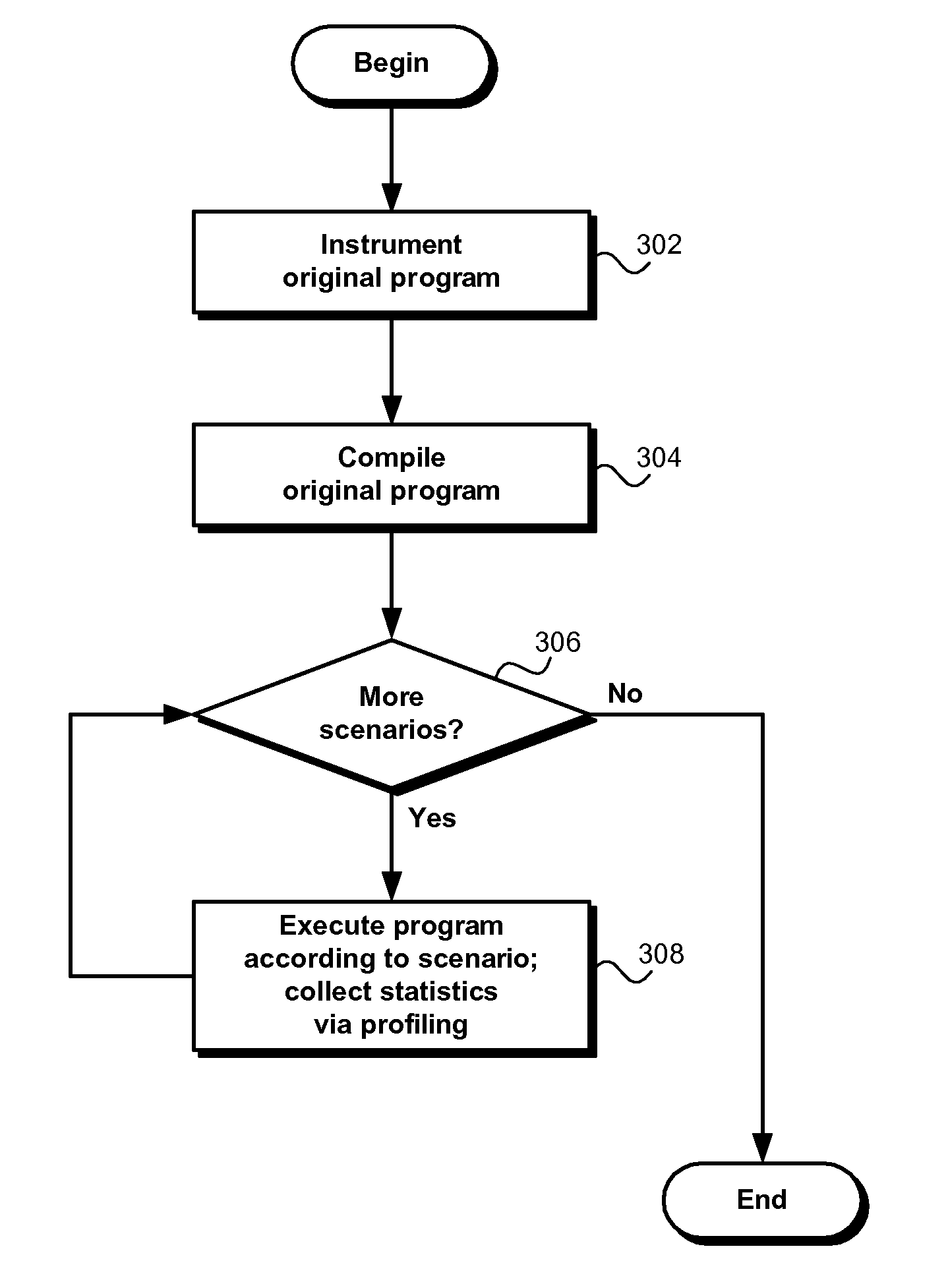

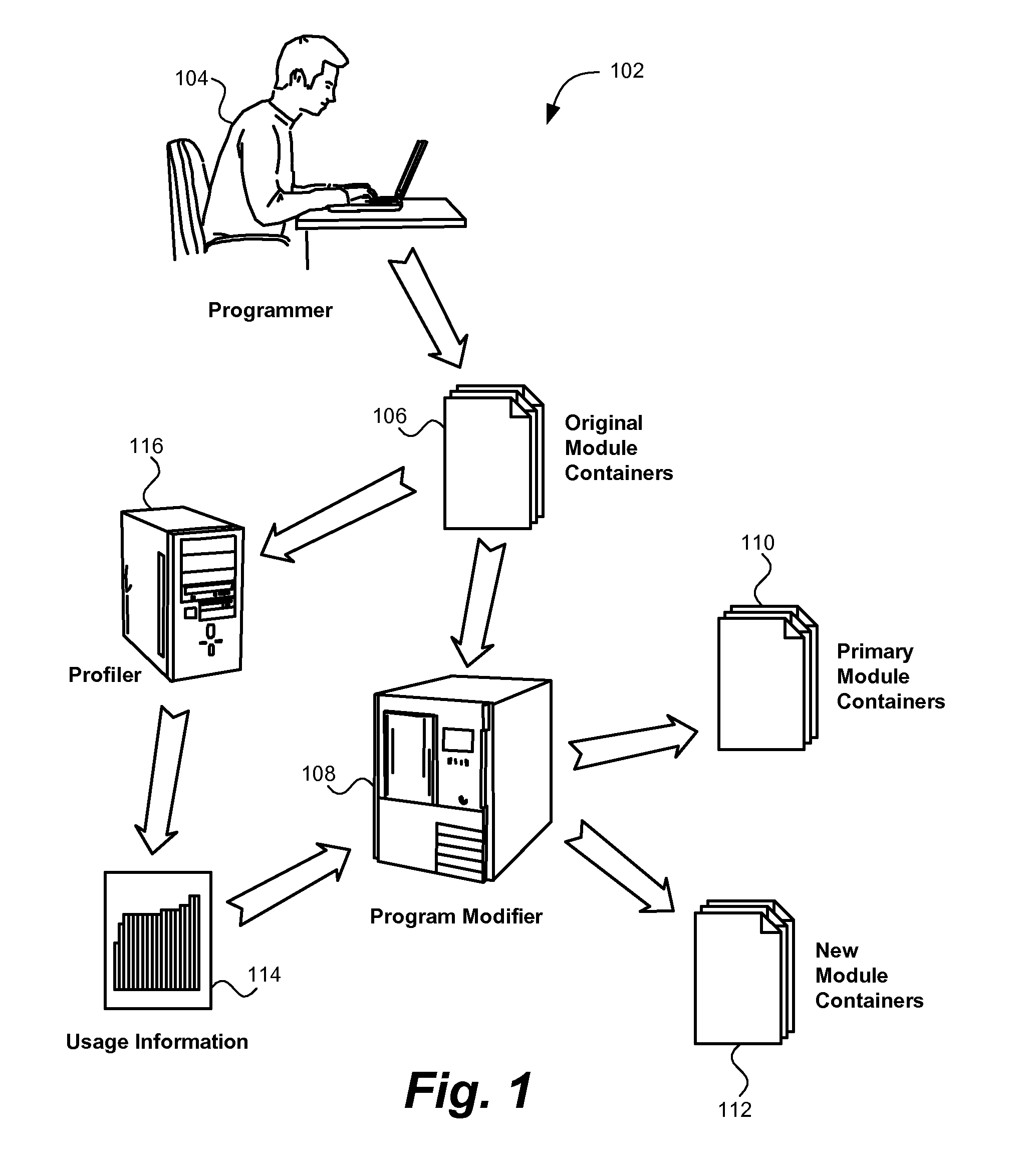

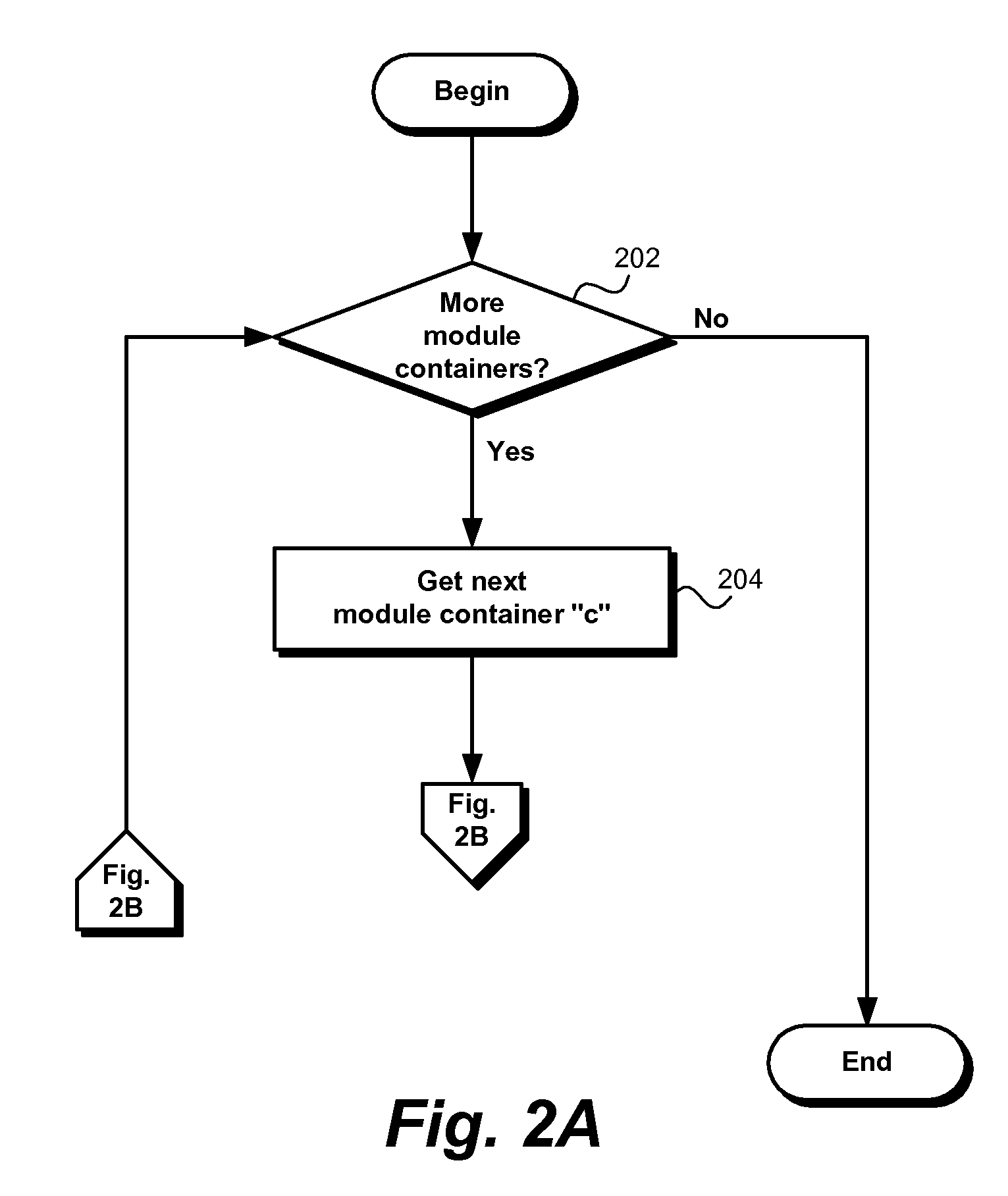

Computer code partitioning for enhanced performance

Inactive | US20070283328A1 | Improve execution performance, Error detection/correction, Software engineering, Programming language, Code module

A method and system for enhancing the execution performance of program code. An analysis of the program code is used to generate code usage information for each code module. For each module, the code usage information is used to determine whether the code module should be separated from its original module container. If so, the code module is migrated to a new module container, and the code module in the original module container is replaced with a reference to the code module in the new module container.

Owner:AIRBNB
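
The migrate-and-replace step can be sketched with dictionaries standing in for module containers. The separation criterion is an assumption: the abstract does not say how code usage information decides the split, so this sketch moves modules called fewer than `min_calls` times into a new container. `partition_modules` and the `("ref", container, module)` stub format are hypothetical.

```python
def partition_modules(containers, usage, min_calls, new_container="cold"):
    """containers: container name -> {module name: code};
    usage: module name -> observed call count from profiling."""
    result = {name: dict(modules) for name, modules in containers.items()}
    moved = {}
    for cname, modules in containers.items():
        for mod, code in modules.items():
            if usage.get(mod, 0) < min_calls:
                moved[mod] = code                                 # migrate the module
                result[cname][mod] = ("ref", new_container, mod)  # leave a reference
    result[new_container] = moved
    return result
```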

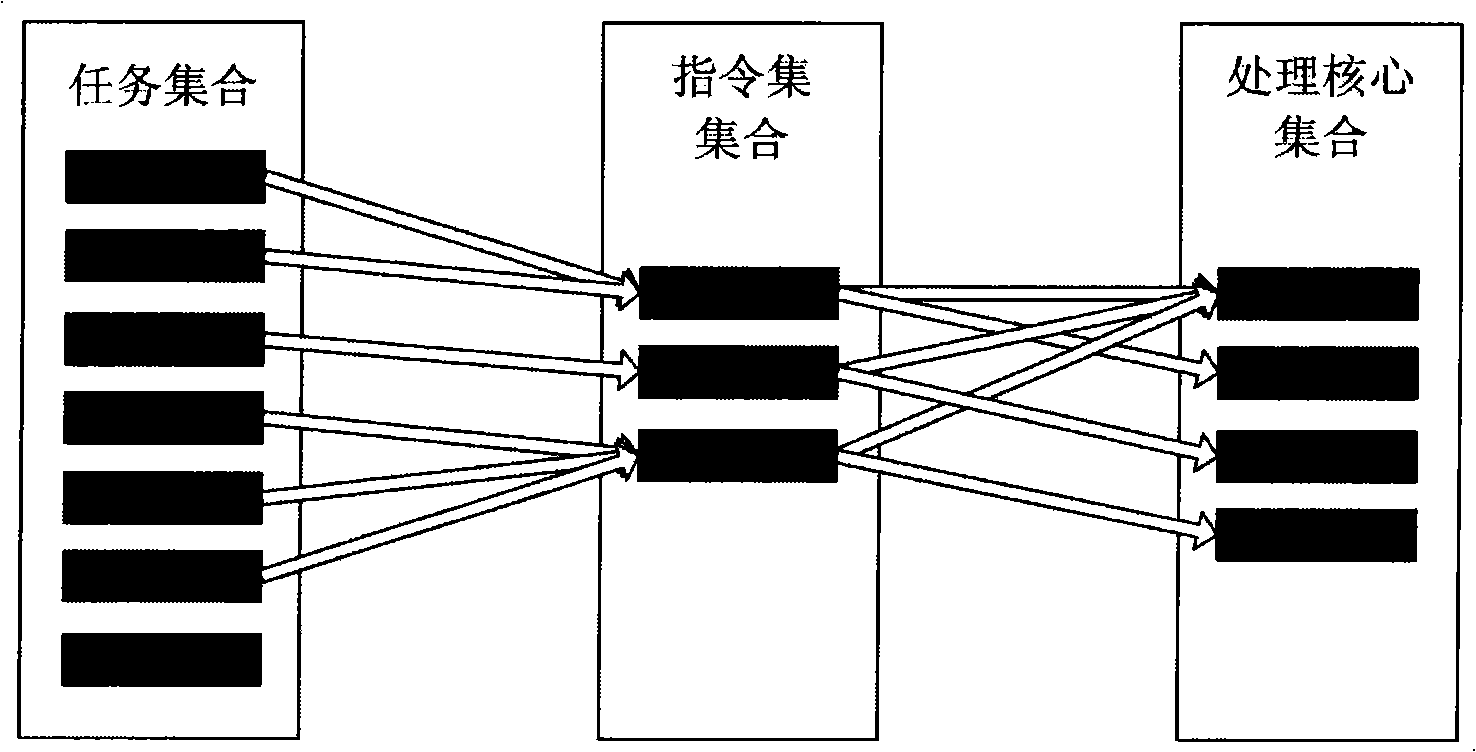

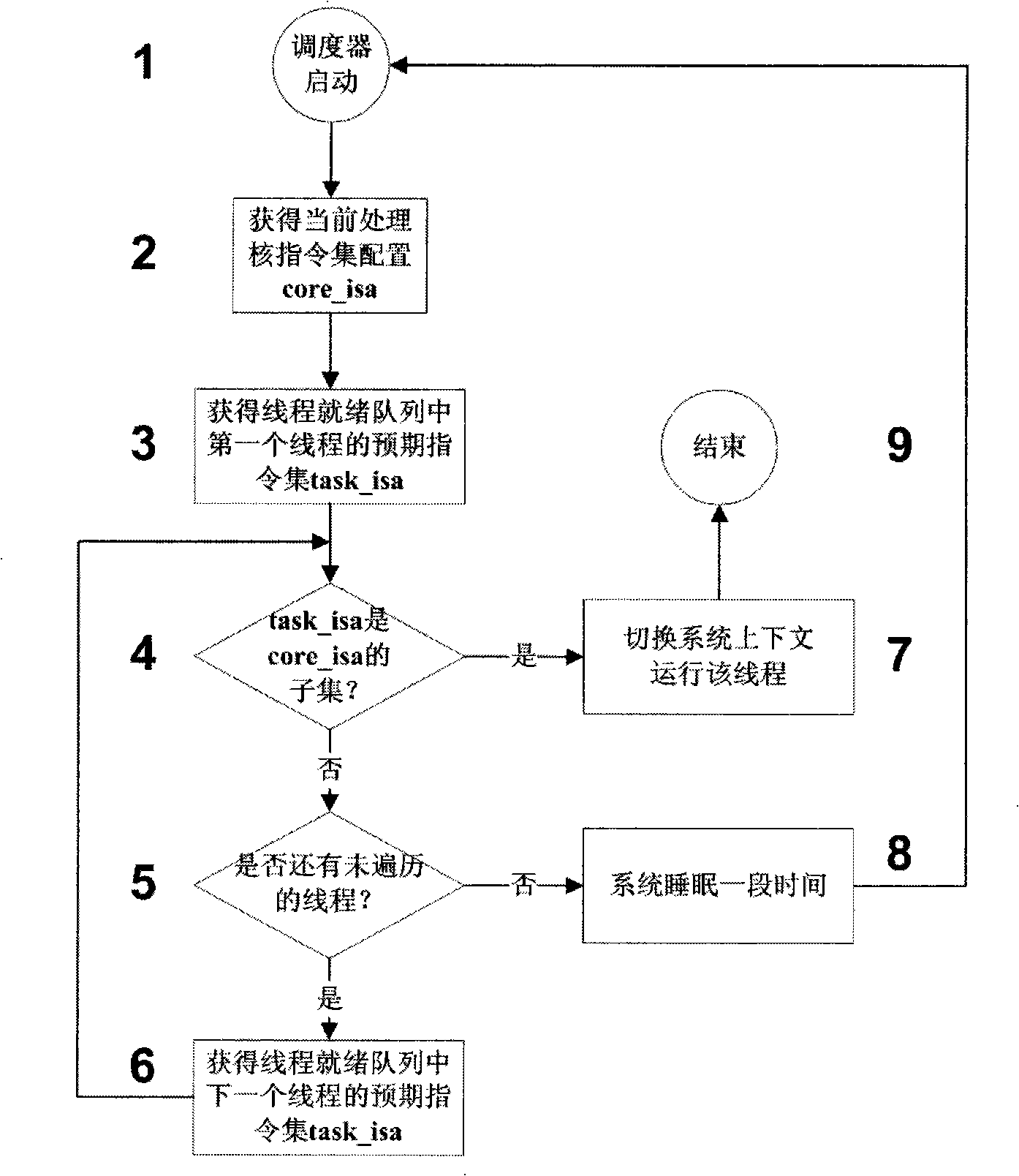

Heterogeneous multi-core system thread-level dynamic dispatching method based on configurable processor

Inactive | CN101299194A | Reduce complexity, Shorten study time, Program initiation/switching, Operational system, Isomerization

A thread-level dynamic scheduling method for a heterogeneous multi-core system based on configurable processors comprises the following steps:
1. The heterogeneous multi-core structure is composed of a set of configurable processor cores. All cores share a common instruction set, and each core has an instruction set configuration core_isa indicating which instruction sets it can execute.
2. The application program is divided into multiple threads with only data dependences between them. Each thread has an expected instruction property thread_isa indicating the instruction set it mainly uses.
3. The operating system and the applications running on it are compiled together into one binary executable file, and all processor cores share the same operating system data area.
4. Once the above steps are complete, thread-level dynamic scheduling is carried out.

The invention overcomes the low allocation efficiency of static scheduling, thereby reducing learning time and simplifying the programming model.

Owner:SHANGHAI JIAO TONG UNIV
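
The matching of `thread_isa` against `core_isa` can be sketched as a greedy assignment. The sketch assumes that ISAs are sets of extension names and that a core is eligible when it supports every extension the thread expects. Among eligible cores it picks the least loaded one and breaks ties toward the core with the smaller ISA. That tie-breaking policy and the name `schedule_threads` are assumptions, and inter-thread data dependences are ignored.

```python
def schedule_threads(threads, cores):
    """threads: name -> required extensions (thread_isa);
    cores: name -> supported extensions (core_isa)."""
    busy = {c: 0 for c in cores}
    plan = {}
    for t, need in threads.items():
        capable = [c for c, isa in cores.items() if need <= isa]
        if not capable:
            raise ValueError(f"no core supports {sorted(need)} for thread {t}")
        # Least-loaded capable core; on ties, keep richer cores free.
        core = min(capable, key=lambda c: (busy[c], len(cores[c])))
        plan[t] = core
        busy[core] += 1
    return plan
```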

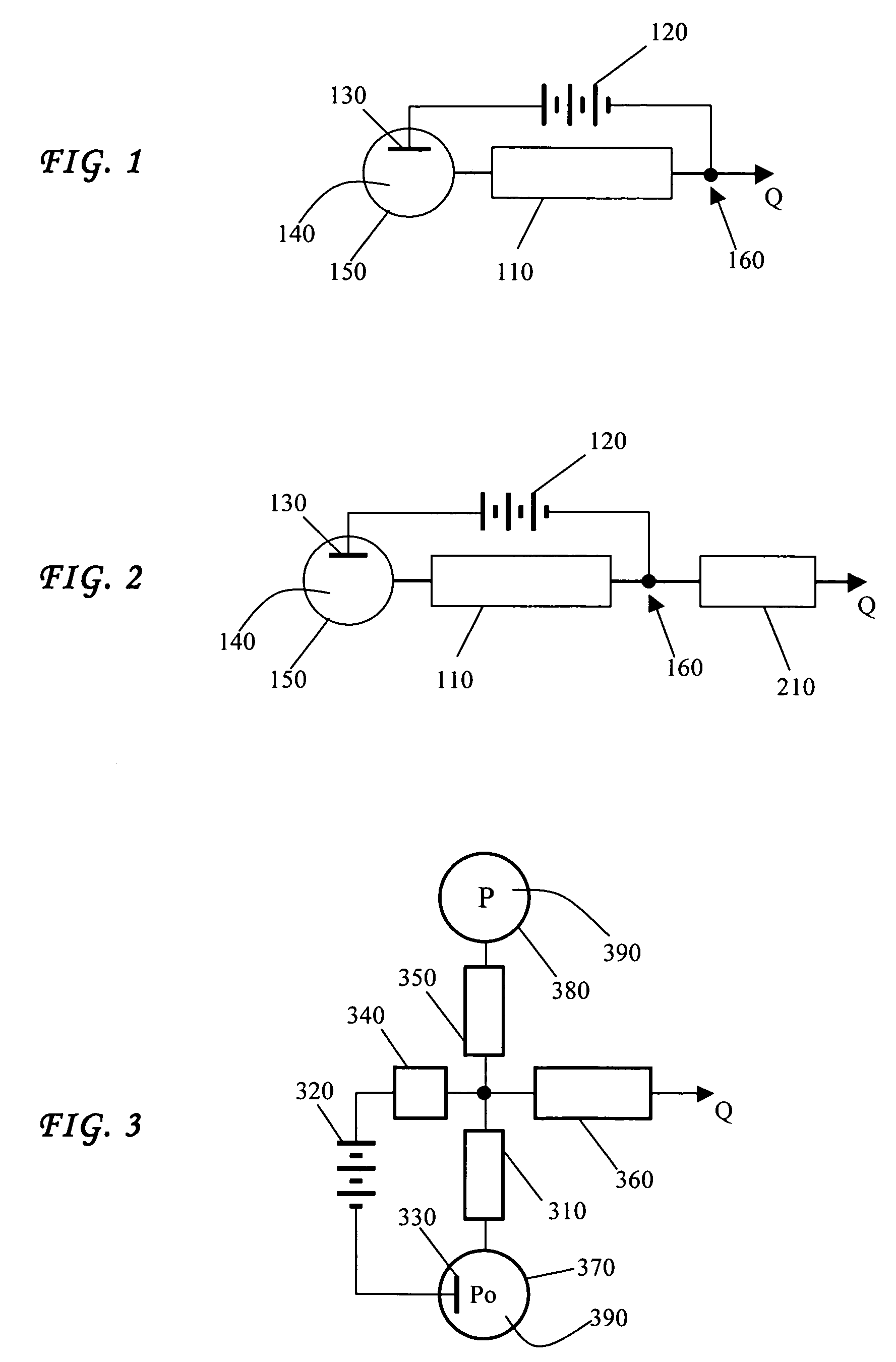

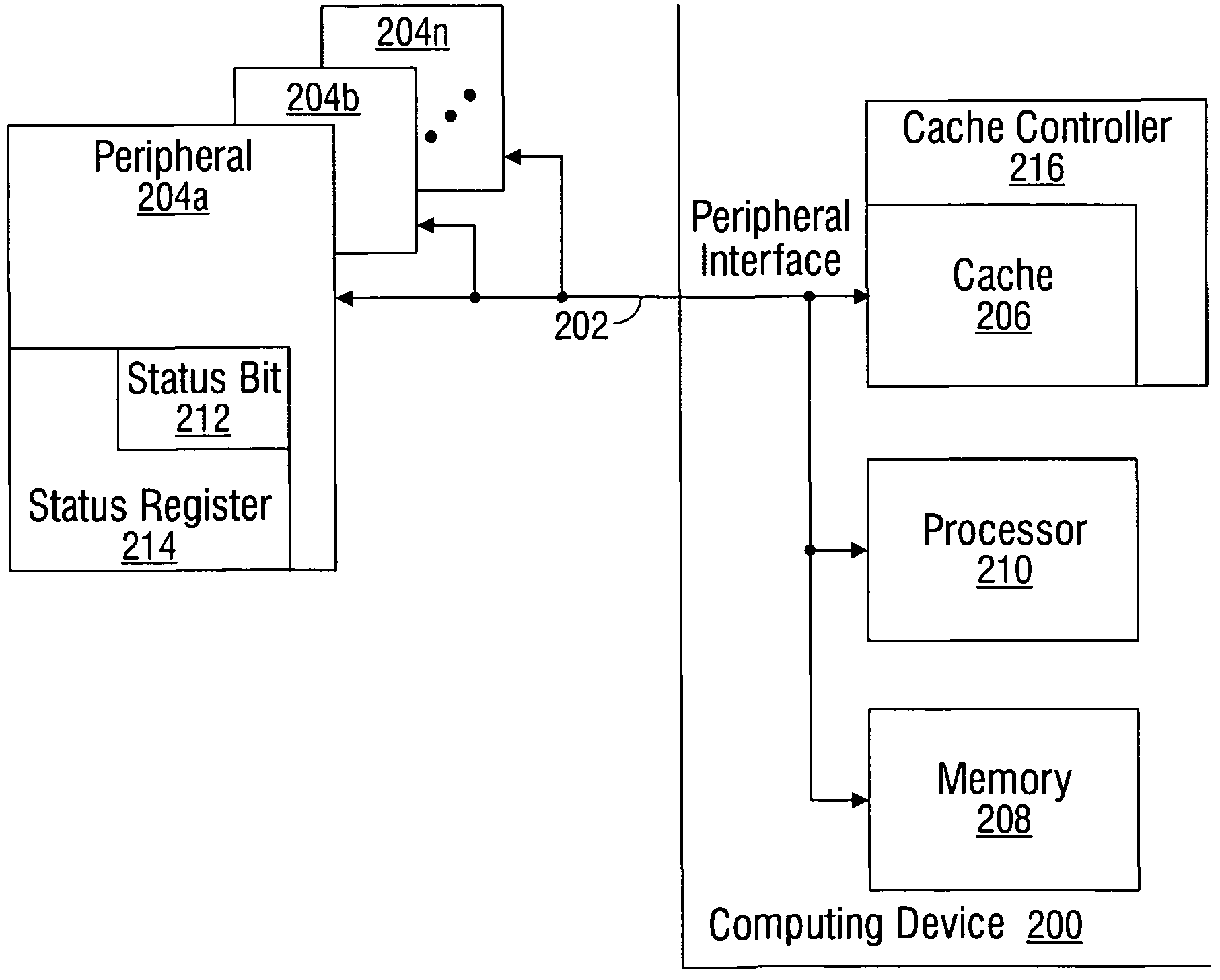

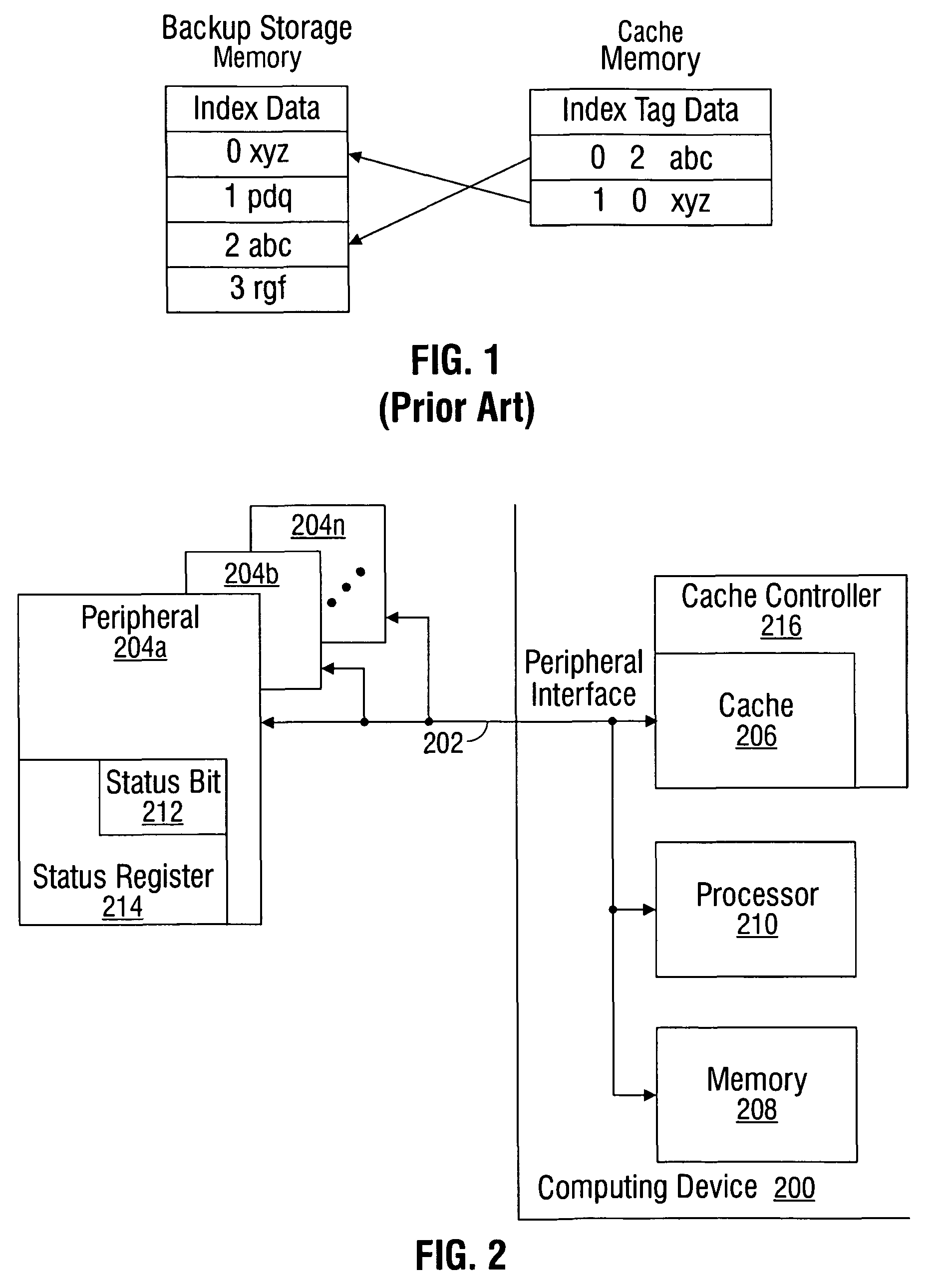

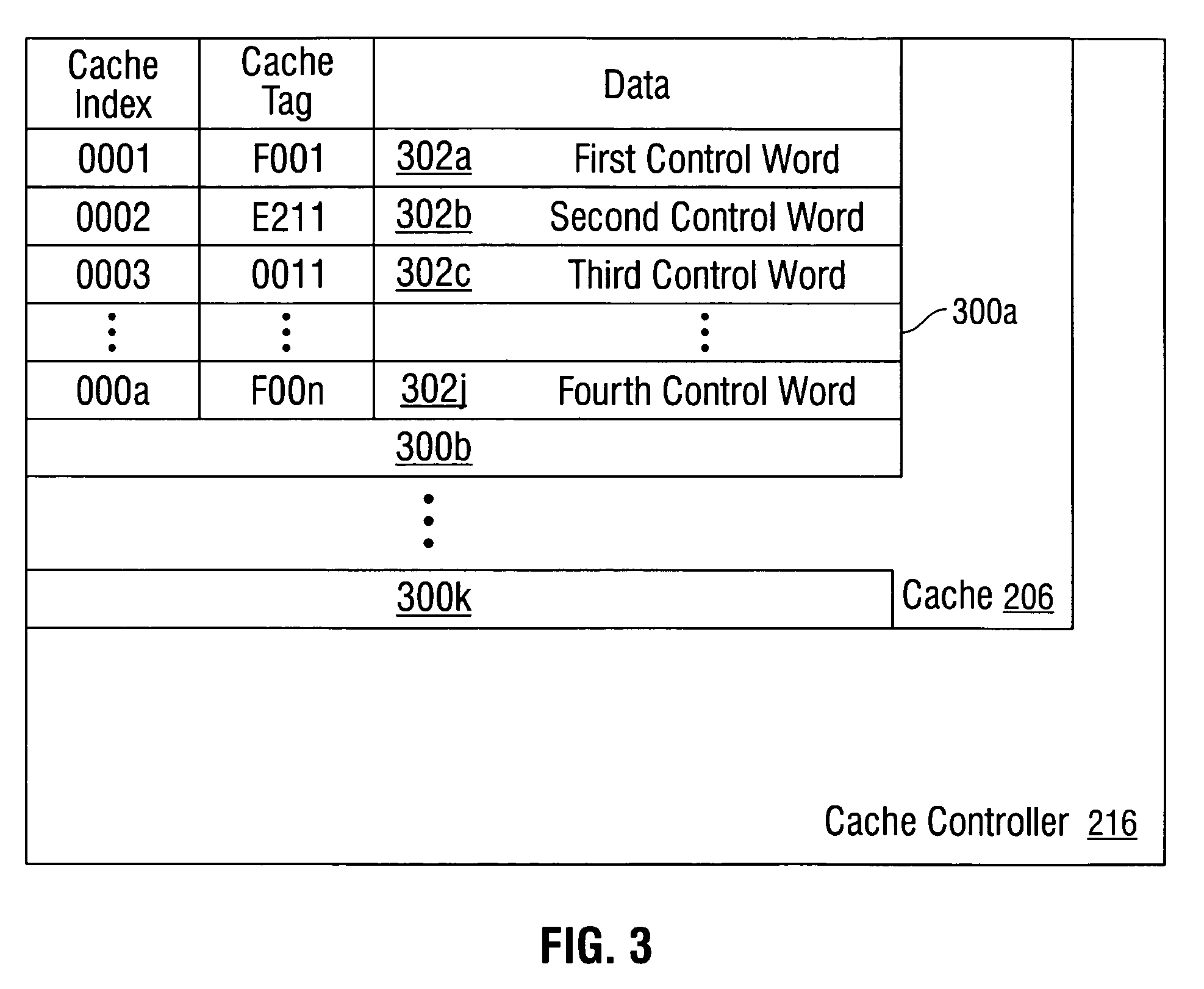

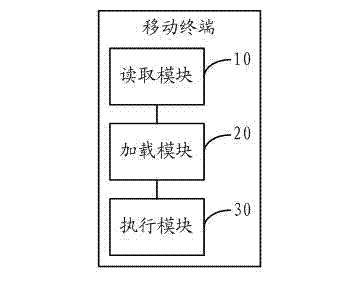

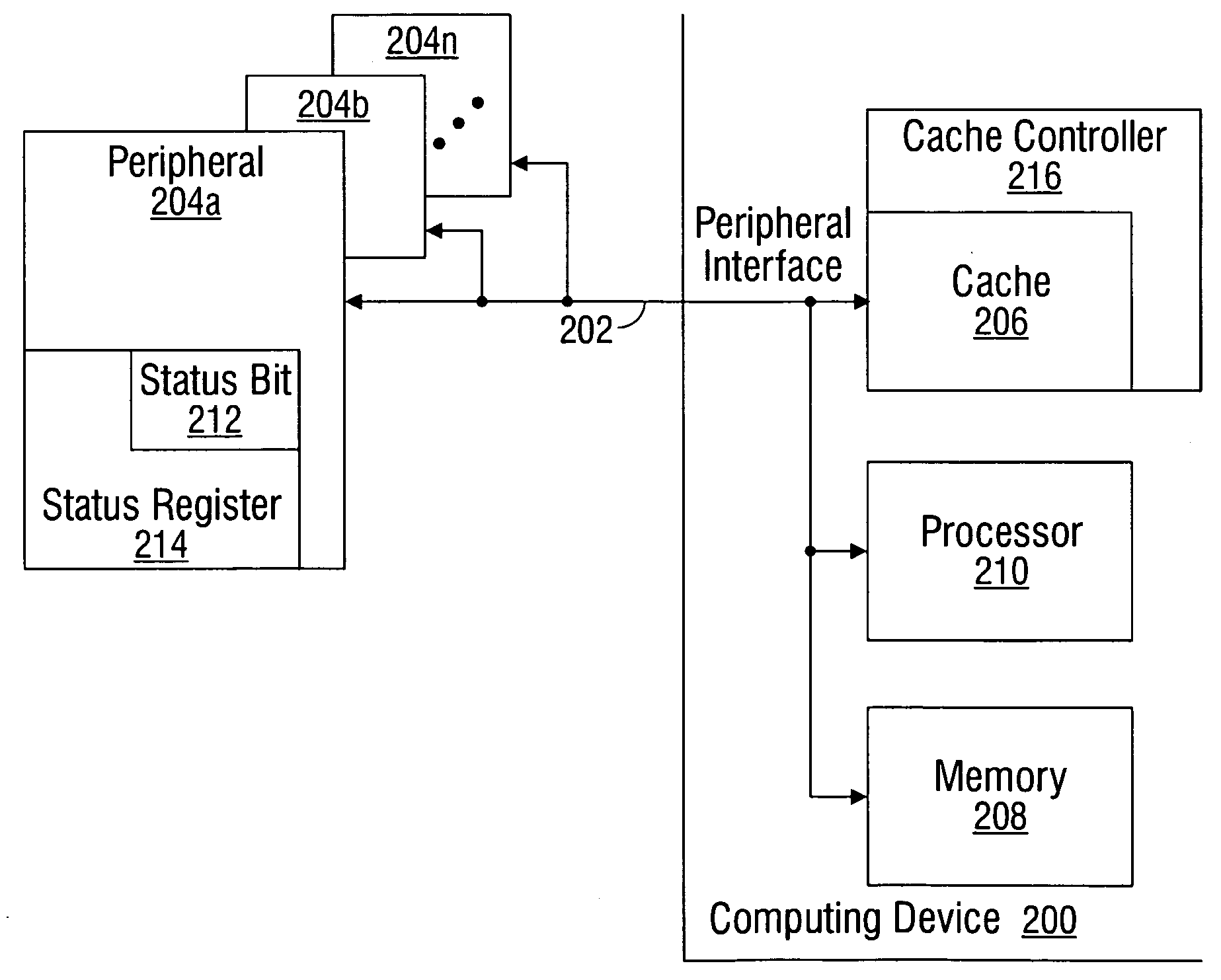

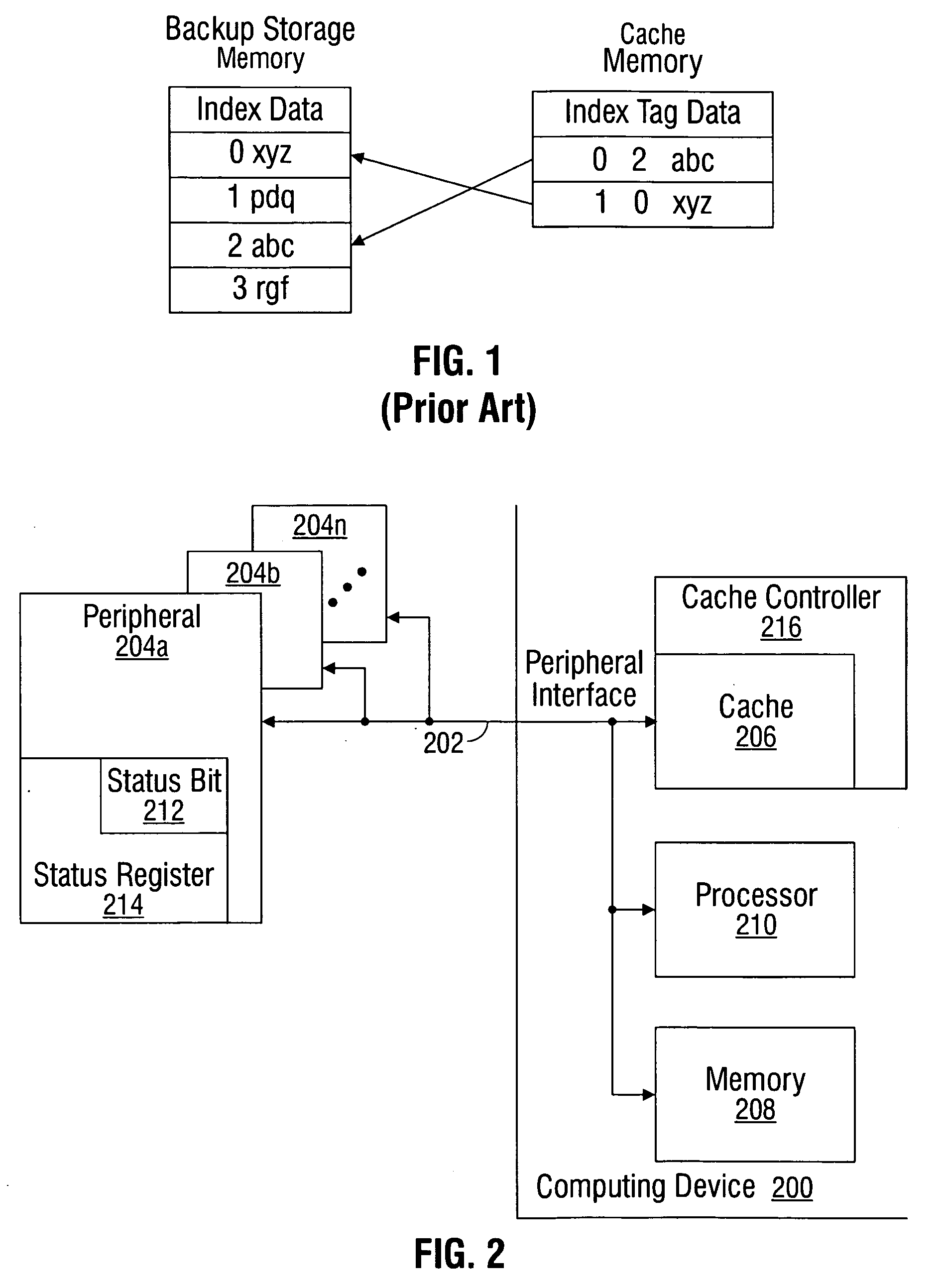

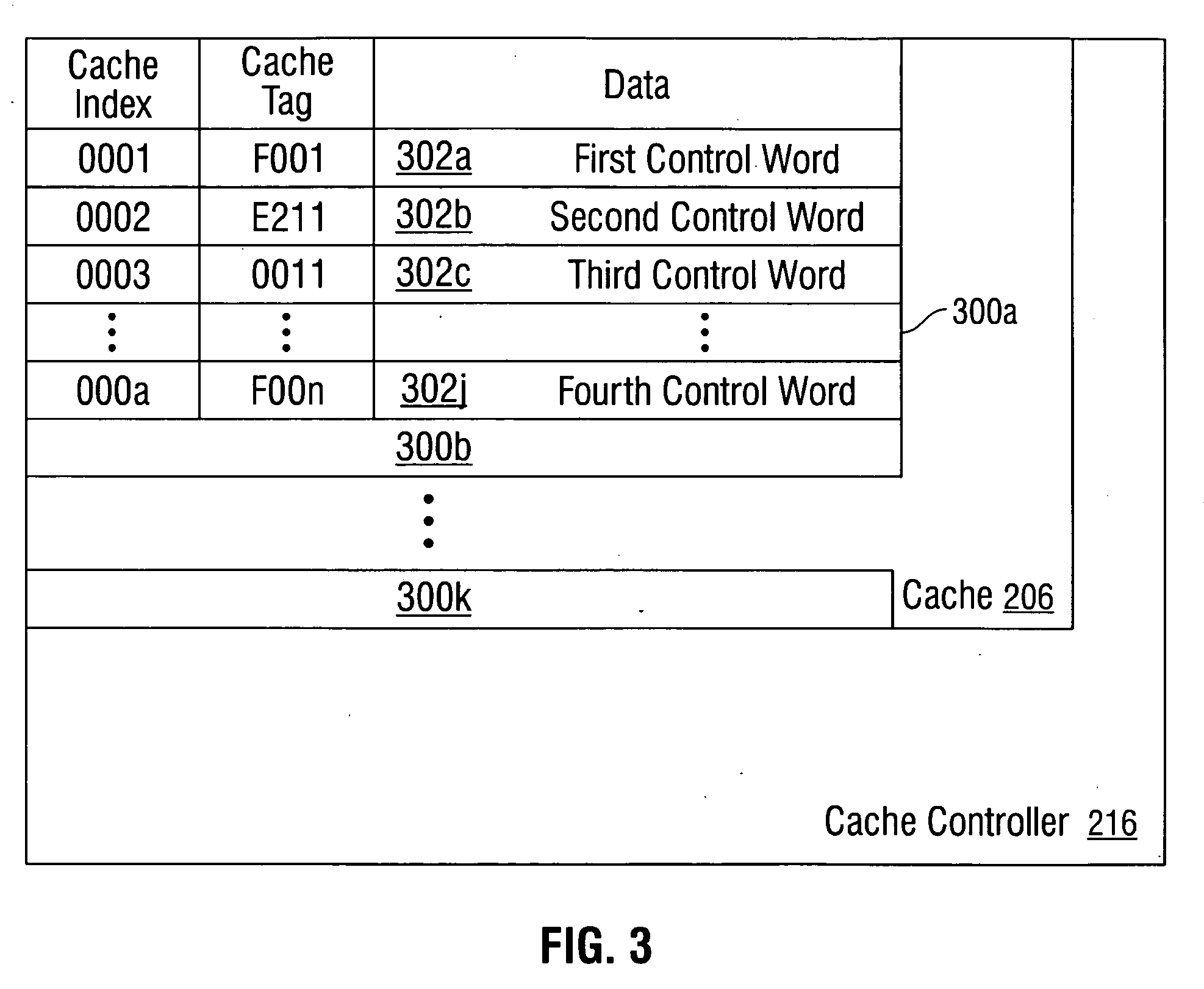

Cache stashing processor control messages

Inactive | US7774522B2 | Extra memory access, Improve execution performance, Memory systems, Input/output processes for data processing, Embedded system, Peripheral

A system and method have been provided for pushing cacheable control messages to a processor. The method accepts a first control message, identified as cacheable and addressed to a processor, from a peripheral device. The first control message is allocated into a cache that is associated with the processor, but not associated with the peripheral device. In response to a read-prompt the processor reads the first control message directly from the cache. The read-prompt can be a hardware interrupt generated by the peripheral device referencing the first control message. For example, the peripheral may determine that the first control message has been allocated into the cache and generate a hardware interrupt associated with the first control message. Then, the processor reads the first control message in response to the hardware interrupt read-prompt. Alternatively, the read-prompt can be the processor polling the cache for pending control messages.

Owner:MACOM CONNECTIVITY SOLUTIONS LLC

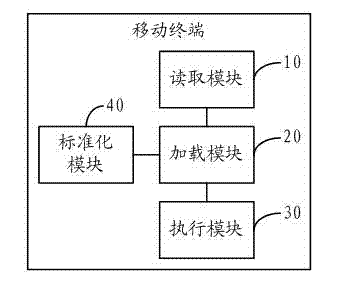

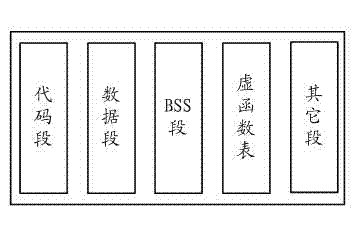

Cross-platform implementation method for executable programs and mobile terminal

Active | CN102681893A | Improve execution efficiency, Improve execution performance, Program initiation/switching, Internal memory, Standard form

An embodiment of the invention discloses a cross-platform implementation method for executable programs, comprising the following steps: reading a file in a standard format; selecting from that file the executable program that matches the current platform environment and loading it into the internal memory of the current platform; and running the loaded executable program. An embodiment also discloses a mobile terminal. The invention enables executable programs to be loaded and run across platforms on a mobile terminal with high execution efficiency, high performance, and low cost.

Owner:TENCENT TECH (SHENZHEN) CO LTD

Cache Stashing Processor Control Messages

Inactive | US20100125677A1 | Improves processor instruction execution performance, Reduce latency overhead, Memory addressing/allocation/relocation, Input/output processes for data processing, Embedded system, Peripheral

A system and method have been provided for pushing cacheable control messages to a processor. The method accepts a first control message, identified as cacheable and addressed to a processor, from a peripheral device. The first control message is allocated into a cache that is associated with the processor, but not associated with the peripheral device. In response to a read-prompt the processor reads the first control message directly from the cache. The read-prompt can be a hardware interrupt generated by the peripheral device referencing the first control message. For example, the peripheral may determine that the first control message has been allocated into the cache and generate a hardware interrupt associated with the first control message. Then, the processor reads the first control message in response to the hardware interrupt read-prompt. Alternatively, the read-prompt can be the processor polling the cache for pending control messages.

Owner:MACOM CONNECTIVITY SOLUTIONS LLC

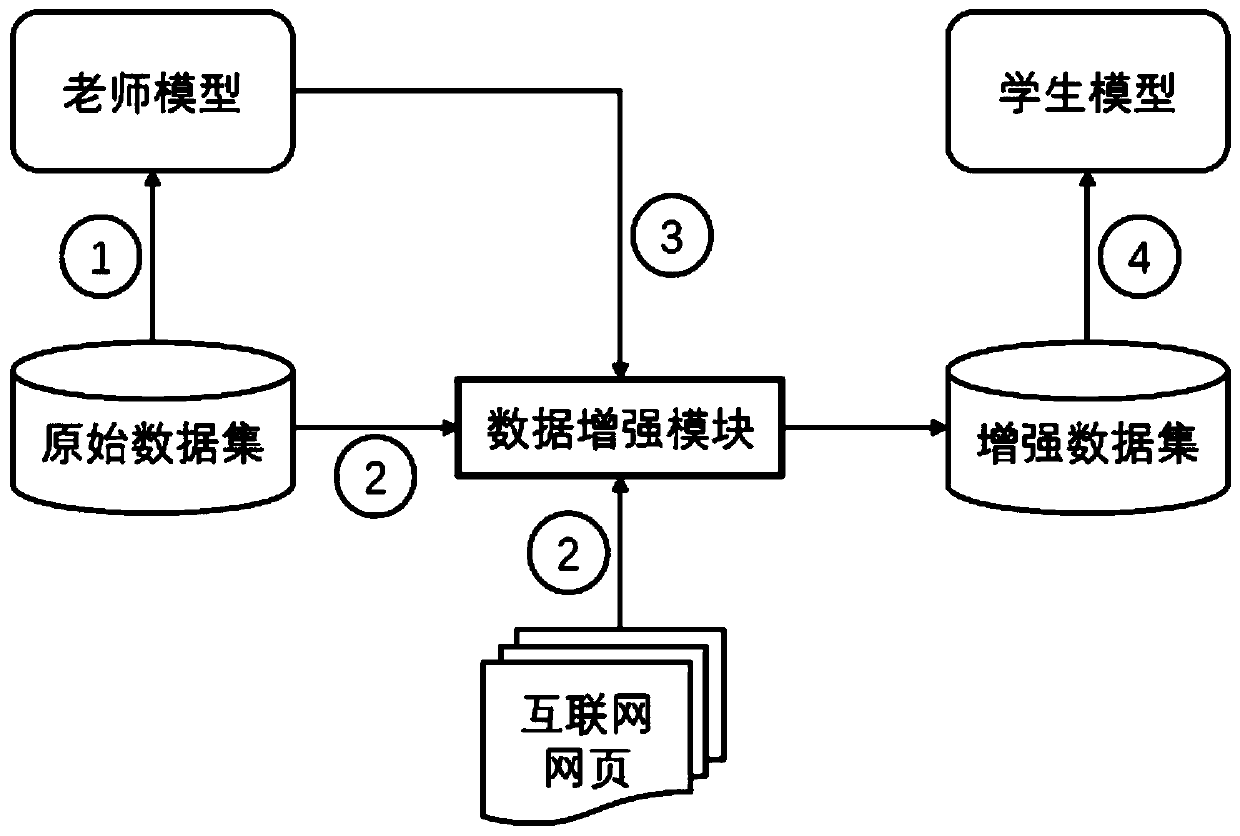

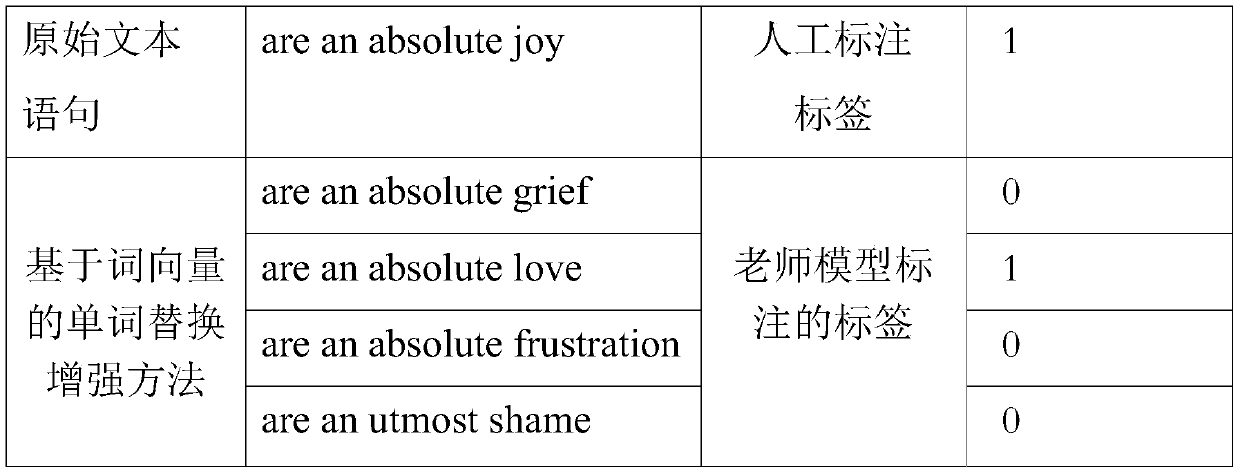

Natural language processing model training method, task execution method, equipment and system

Active | CN111079406A | Solve deployment difficulties, Enhanced natural language processing capabilities, Semantic analysis, Machine learning, Data set, Original data

The invention, in the field of natural language processing, discloses a natural language processing model training method, a natural language processing method, and corresponding equipment and system. The method comprises the following steps:
1. Train a teacher model on a labeled original data set.
2. Augment the text sentences in the original data set, then label the augmented sentences with the trained teacher model to obtain a labeled augmented data set.
3. Train a student model on the original and augmented data sets together, and use the trained student model as the natural language processing model.

The teacher and student models are both deep learning models that perform the same natural language processing task, and the teacher model is larger and more complex. The invention effectively augments the data set of a natural language processing task in a knowledge distillation setting. This improves the capability of the natural language processing model and thus the results of the task.

Owner:HUAZHONG UNIV OF SCI & TECH

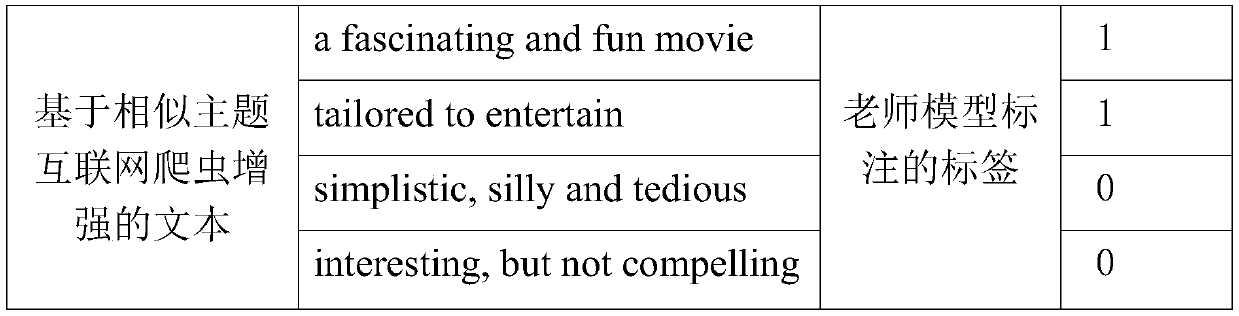

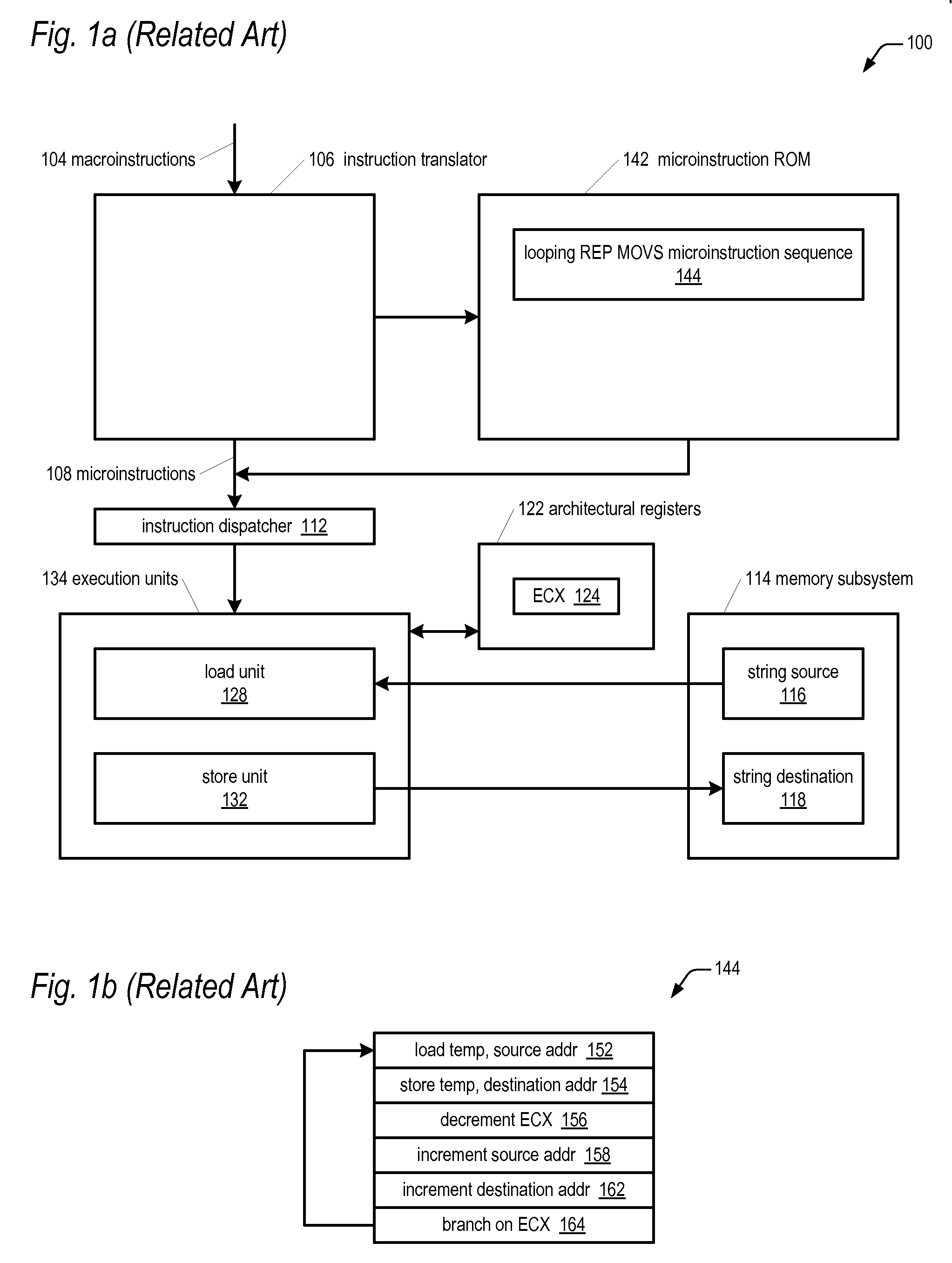

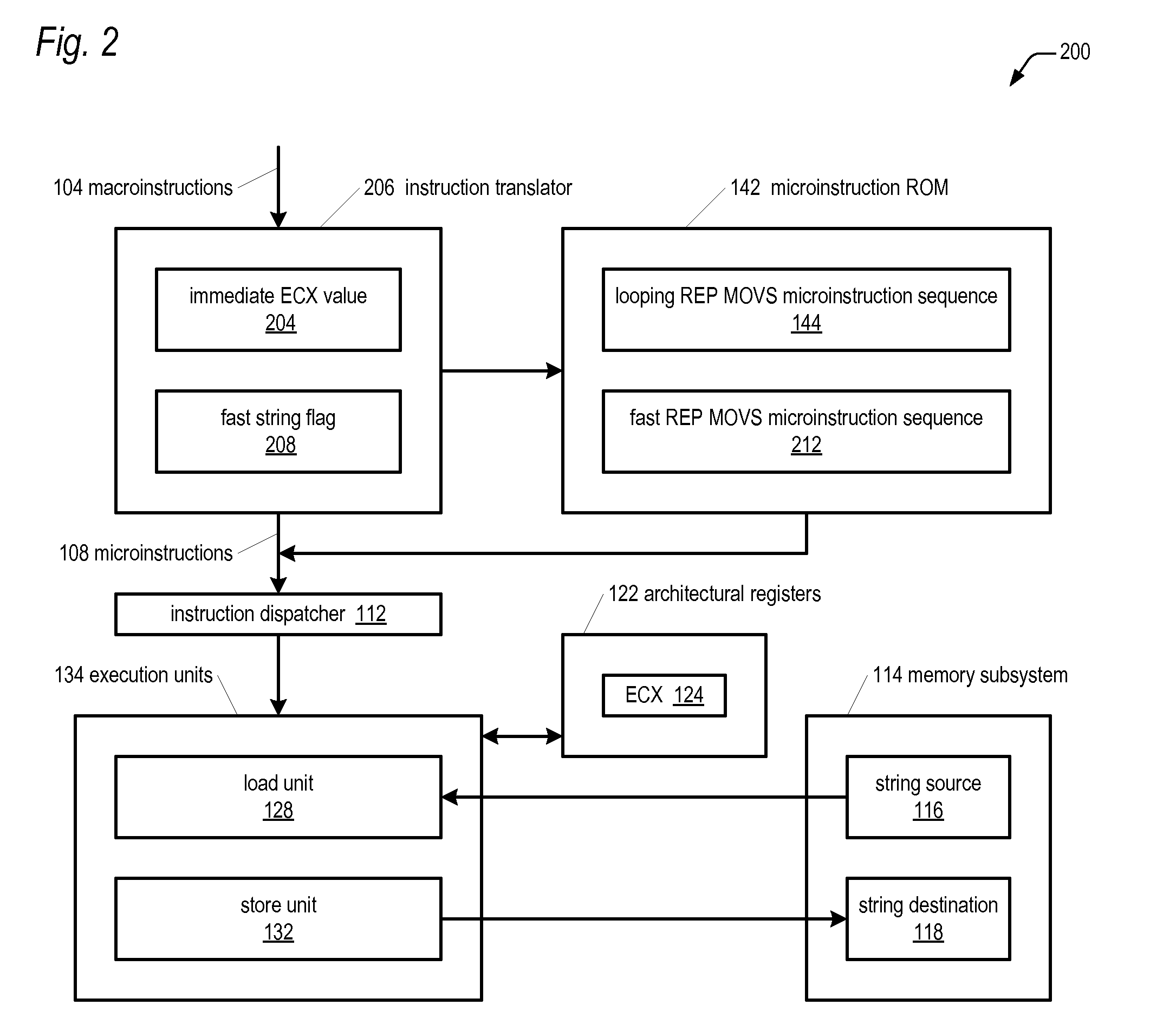

REP MOVE string instruction execution by selecting loop microinstruction sequence or unrolled sequence based on flag state indicative of low count repeat

Active | US7802078B2 | Improve execution performance, Runtime instruction translation, Digital computer details, Microprocessor, Word length

A microprocessor REP MOVS macroinstruction specifies the word length of the string in the IA-32 ECX register. The microprocessor includes a memory, configured to store a first and second sequence of microinstructions. The first sequence conditionally transfers control to a microinstruction within the first sequence based on the ECX register. The second sequence does not conditionally transfer control based on the ECX register. The microprocessor includes an instruction translator, coupled to the memory. In response to a macroinstruction that moves an immediate value into the ECX register, the instruction translator sets a flag and saves the immediate value. In response to a macroinstruction that modifies the ECX register in a different manner, the translator clears the flag. In response to a REP MOVS macroinstruction, the instruction translator transfers control to the first sequence if the flag is clear; and transfers control to the second sequence if the flag is set.

Owner:VIA TECH INC
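
The translator's flag logic described above can be modelled as a small state machine in Python. The macroinstruction names (`MOV_ECX_IMM`, `WRITE_ECX`, `REP_MOVS`) and the micro-op labels are hypothetical stand-ins, not VIA microcode. Following the title, the first sequence is a loop that branches on ECX, and the second is unrolled using the saved immediate count.

```python
class InstructionTranslator:
    """Toy model of the flag-based choice between two REP MOVS sequences."""

    def __init__(self):
        self.flag = False        # set when ECX was last loaded with an immediate
        self.saved_count = None  # the immediate value written to ECX

    def translate(self, macro, operand=None):
        if macro == "MOV_ECX_IMM":
            self.flag, self.saved_count = True, operand
        elif macro == "WRITE_ECX":
            # ECX changed some other way, so its value is unknown at translate time.
            self.flag, self.saved_count = False, None
        elif macro == "REP_MOVS":
            if self.flag:
                # Second sequence: no ECX-based branch, one move per element.
                return ["movs_uop"] * self.saved_count
            # First sequence: a loop that branches on ECX at run time.
            return ["loop_uop"]
        return []
```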

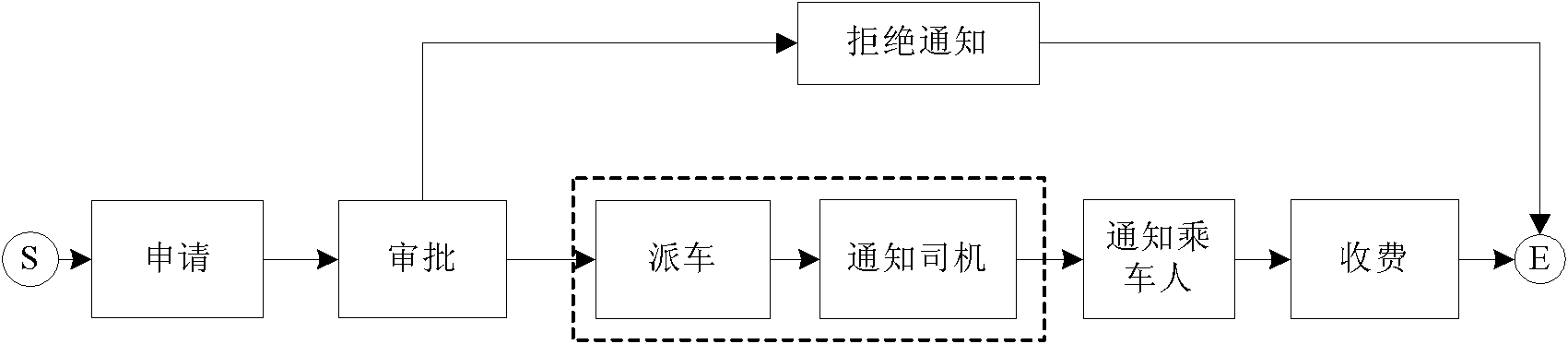

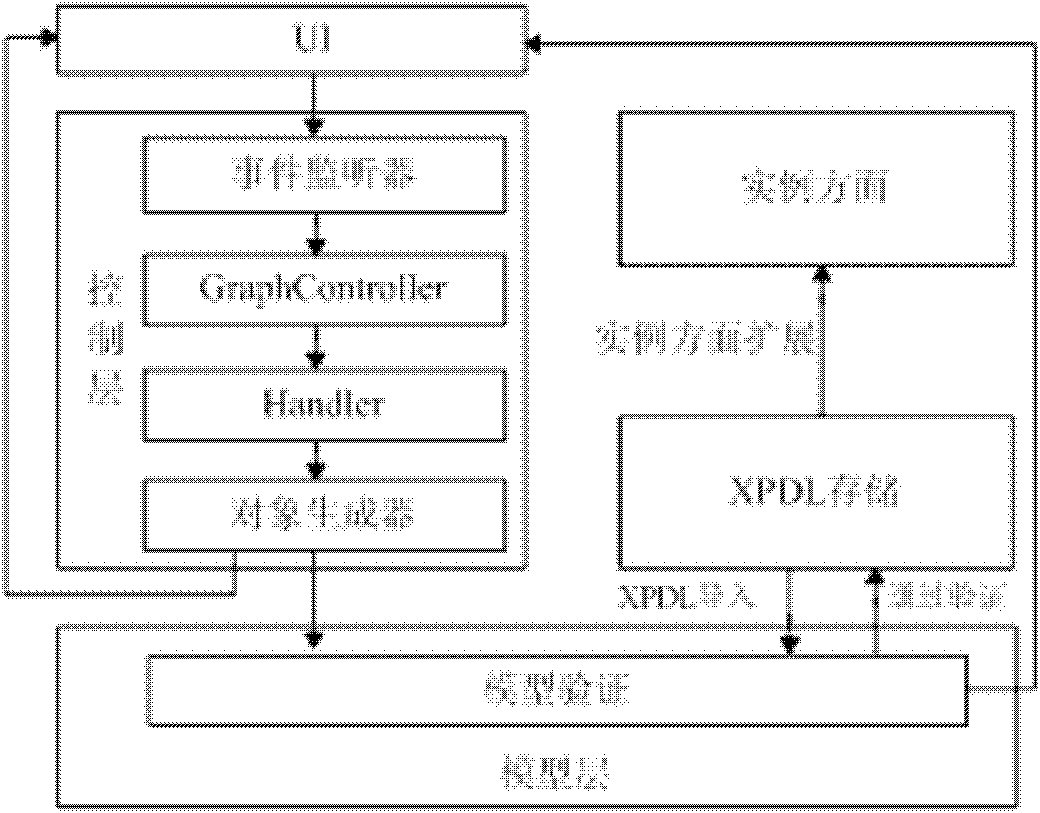

Method for supporting batch processing execution on workflow activity instances

Inactive | CN101833712A | Batch processing is convenient, Improve execution performance, Logistics, Batch processing, Operational costs

The invention provides a method for supporting batch processing execution on workflow activity instances. A workflow service module with a workflow engine is built on a workflow model that supports batch processing. The method is characterized in that the workflow service module includes a grouped batch-processing dispatching engine; an event-triggering mechanism organizes the execution of batch processing of activity instances; events are sent to an event queue; and an ECA (event-condition-action) rule interpreter in the grouped batch-processing dispatching engine monitors the event queue and, whenever the queue length changes, processes the newly arrived event. The invention enables batch processing of multiple activity instances of the same type in a workflow system, which helps improve the execution performance of the workflow system and the operating efficiency of enterprises, and provides methodological and tool support for lowering production or operating costs.
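
A grouped batch dispatcher driven by an event queue and an event-condition-action rule can be sketched as follows. This is an illustration under assumptions, not the patented engine: the class and field names are hypothetical, and the condition "a batch fires once `batch_size` instances of the same activity type are waiting" is an assumed rule, since the abstract does not say what the condition is.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class Event:
    activity_type: str
    instance_id: int


class GroupedBatchDispatcher:
    """Hypothetical ECA-driven dispatcher that batches same-type activity instances."""

    def __init__(self, batch_size):
        self.batch_size = batch_size       # assumed condition: the batch is full
        self.queue = deque()               # event queue
        self.waiting = defaultdict(list)   # activity type -> waiting instance ids
        self.executed = []                 # (activity type, batch) pairs that ran

    def send(self, event):
        self.queue.append(event)
        self.on_queue_length_changed()     # the interpreter reacts to queue changes

    def on_queue_length_changed(self):
        while self.queue:
            event = self.queue.popleft()                  # Event: newly arrived event
            group = self.waiting[event.activity_type]
            group.append(event.instance_id)
            if len(group) >= self.batch_size:             # Condition: enough of one type
                self.executed.append((event.activity_type, list(group)))  # Action: run batch
                group.clear()
```

Grouping by activity type is what allows several instances of the same activity to be executed together instead of one engine step per instance.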

Owner:HUNAN UNIV OF SCI & TECH

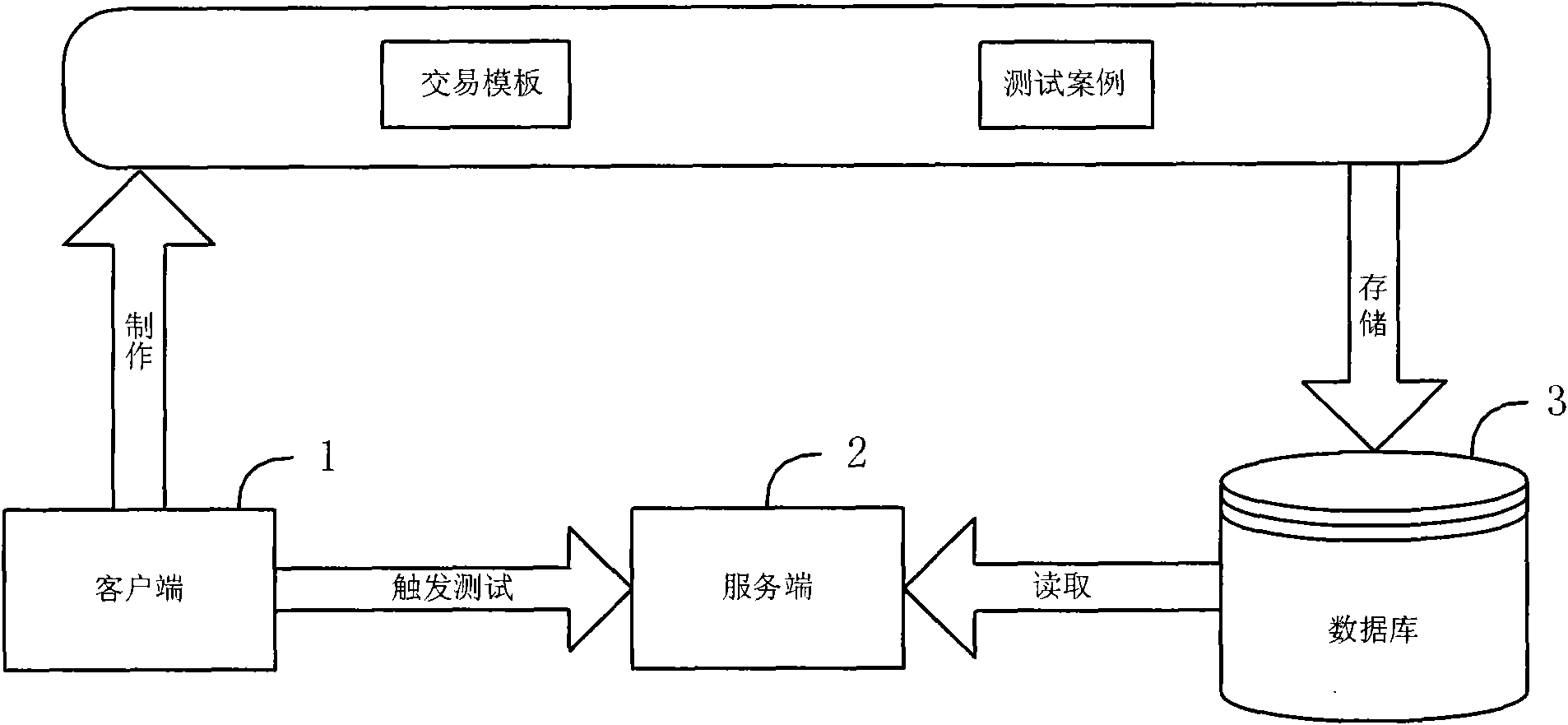

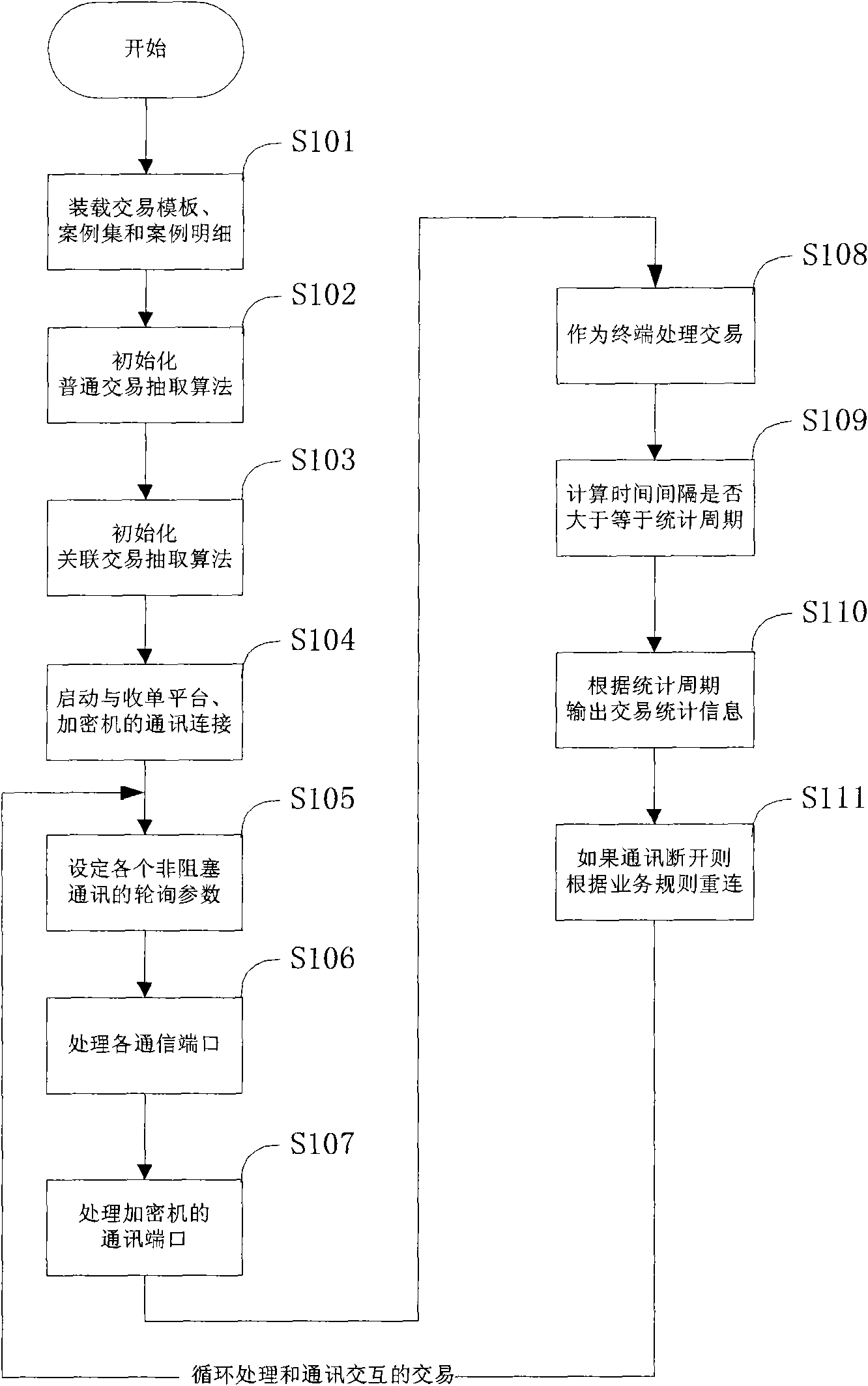

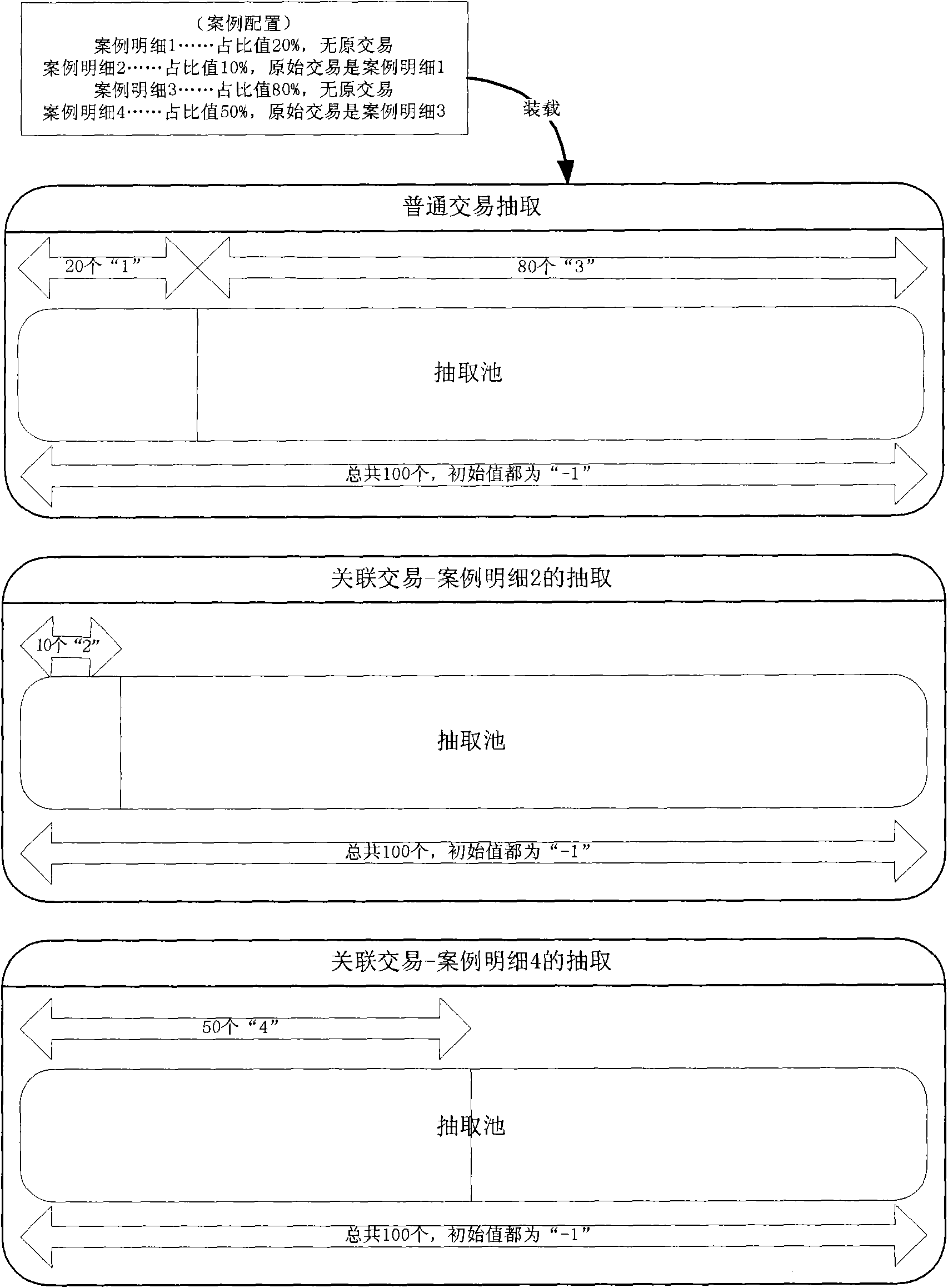

Method for testing transaction performance of terminal

Active | CN102053872A | Improve execution performance | Avoid the disadvantages of losing original transaction information | Finance | Error detection/correction | Communication link | Computer science

The invention discloses a method for testing the transaction performance of a terminal. The test tool comprises a client, a database and a server. At the client, a user creates a transaction template and test cases and stores them in the database. During testing, the server receives a test command and processes it in the following steps: a, loading the transaction template and test cases from the database into a memory pool; b, initializing an extraction algorithm for extracting transactions from the memory pool; c, establishing communication connections between the server, an acquiring platform and an encryptor; d, setting up communication links as required; e, processing transactions in the role of the terminal; f, checking whether the interval between the current time and the last statistics time is greater than or equal to the statistical period and, if so, outputting transaction statistics for that period; and g, returning to step d and cyclically processing the transactions exchanged over the communication links. The method supports multi-level related transactions, realistically simulates actual transaction conditions, and ensures test continuity.
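
Steps a, b and e to g amount to a loop that pulls transactions from an in-memory pool and emits statistics once per period. The sketch below is hypothetical: the function and parameter names are illustrative, round-robin extraction is an assumed choice for the unspecified extraction algorithm, the connection setup of steps c and d is abstracted into the `send` callable, and the clock is injected so the loop can be exercised deterministically.

```python
from collections import deque


def run_load_test(templates, cases, send, clock, period, rounds):
    """Hypothetical sketch of steps a-g; steps c and d are hidden behind `send`."""
    # a: load templates and test cases into an in-memory pool
    pool = deque((templates[case["template"]], case) for case in cases)
    # b: extraction algorithm (round-robin over the pool is an assumption)
    stats = []
    sent = succeeded = 0
    last_stat = clock()
    for _ in range(rounds):                    # g: loop back to step d
        template, case = pool[0]
        pool.rotate(-1)
        if send(template, case):               # e: act as the terminal
            succeeded += 1
        sent += 1
        now = clock()
        if now - last_stat >= period:          # f: statistical period elapsed?
            stats.append({"sent": sent, "succeeded": succeeded})
            sent = succeeded = 0
            last_stat = now
    return stats


ticks = iter(range(100))                       # fake clock: advances one unit per call
result = run_load_test(
    templates={"purchase": {"type": "purchase"}, "refund": {"type": "refund"}},
    cases=[{"template": "purchase", "amount": 10}, {"template": "refund", "amount": -5}],
    send=lambda template, case: case["amount"] > 0,
    clock=lambda: next(ticks),
    period=3,
    rounds=6,
)
```

Here the fake clock reaches the three-unit period after every third transaction, so `result` holds one statistics record per period.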

Owner:CHINA UNIONPAY

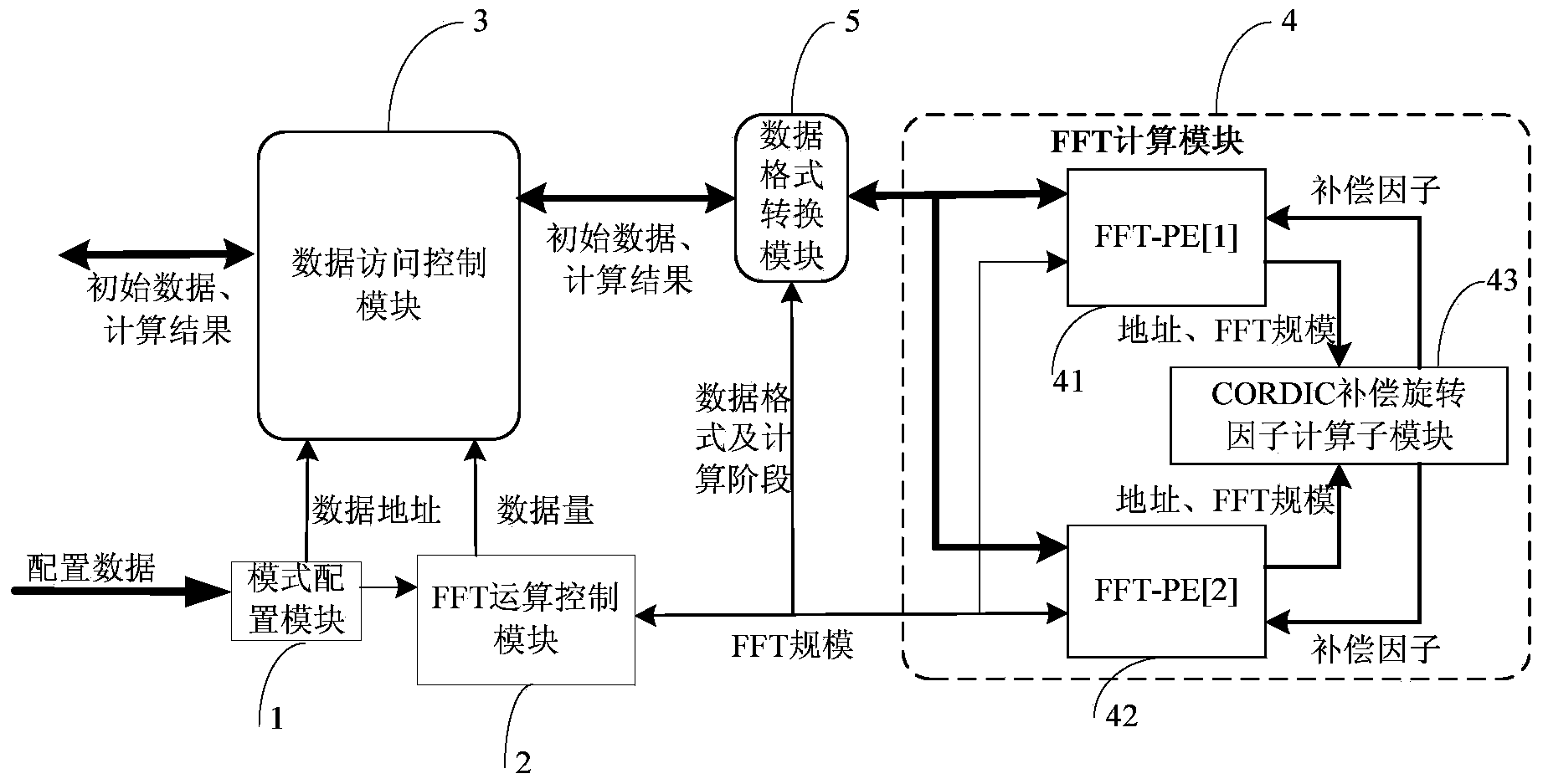

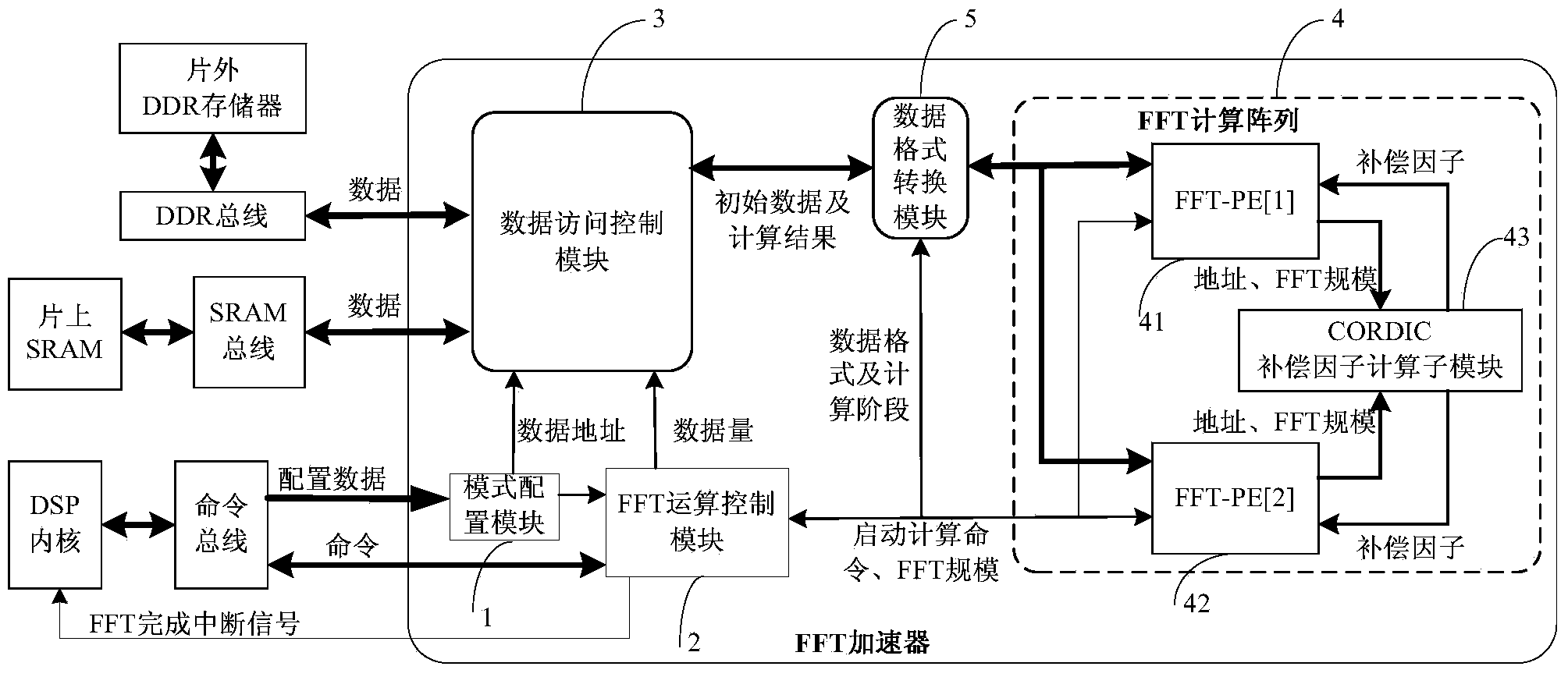

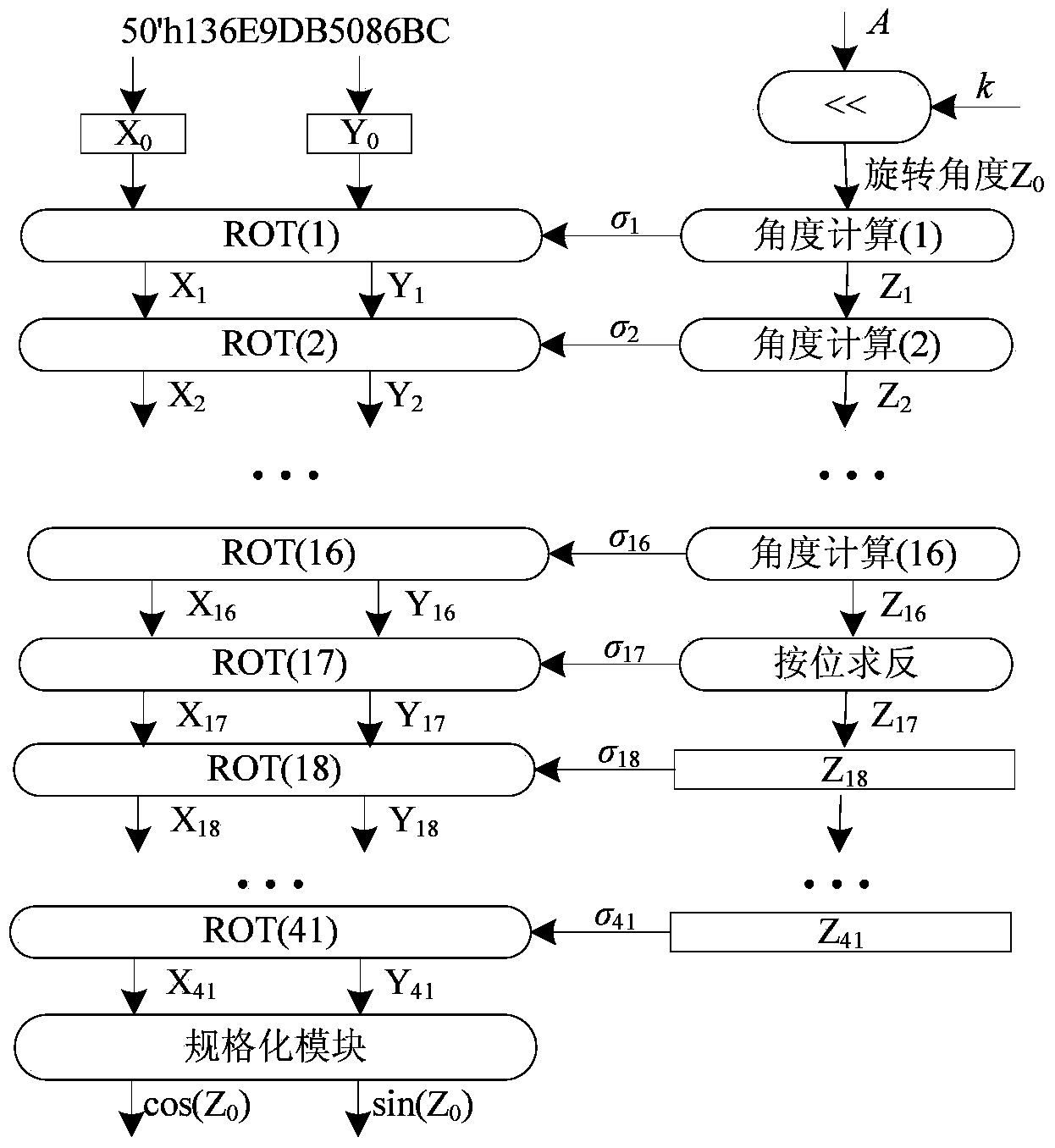

FFT accelerator based on DSP chip

Active | CN103955447A | Increase flexibility | Computational Acceleration Implementation | Complex mathematical operations | Resource utilization | Data access control

The invention discloses an FFT accelerator based on a DSP chip. The accelerator comprises a mode configuration module, an FFT computation control module, a data access control module and an FFT computation module. The mode configuration module receives configuration data specifying the data address, the computation size and the number of computations. When the computation size is smaller than the maximum size the hardware supports directly, the FFT computation control module directs the FFT computation module to perform a one-dimensional FFT; when it is greater than that maximum, the control module directs the FFT computation module to perform a two-dimensional FFT. The data access control module reads the input data from memory by DMA and writes the results back to memory, and the FFT computation module performs the FFT according to the control signals output by the FFT computation control module. The accelerator supports multiple configurations of computation size, number of computations and data format, handles FFTs from small to large sizes, achieves an efficient implementation, and makes high use of hardware resources.
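
One standard way to compute an FFT larger than the directly supported size as a two-dimensional FFT is the four-step decomposition: write $N = N_1 N_2$, run length-$N_1$ FFTs on strided columns, multiply by twiddle factors $W_N^{n_2 k_1}$, then run length-$N_2$ FFTs across the columns. The abstract does not say which decomposition the accelerator uses, so the sketch below is an assumed illustration in software: `MAX_DIRECT` is a hypothetical hardware limit, and sizes are assumed to be powers of two.

```python
import cmath

MAX_DIRECT = 16  # hypothetical largest size the FFT computation module handles in one pass


def fft_1d(x):
    """Recursive radix-2 FFT standing in for the one-dimensional hardware path."""
    n = len(x)
    if n == 1:
        return list(x)
    even, odd = fft_1d(x[0::2]), fft_1d(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def fft(x):
    """Use the 1-D path when small enough, else a four-step (2-D) decomposition."""
    n = len(x)
    if n <= MAX_DIRECT:
        return fft_1d(x)
    n2 = MAX_DIRECT          # number of columns
    n1 = n // n2             # length of each column
    # Step 1: column FFTs over x[n2*i + col], i = 0..n1-1
    cols = [fft(x[col::n2]) for col in range(n2)]
    # Step 2: twiddle factors W_N^(col * k1)
    for col in range(n2):
        for k1 in range(n1):
            cols[col][k1] *= cmath.exp(-2j * cmath.pi * col * k1 / n)
    # Step 3: row FFTs across columns; output index is k1 + n1 * k2
    out = [0j] * n
    for k1 in range(n1):
        row = fft([cols[col][k1] for col in range(n2)])
        for k2 in range(n2):
            out[k1 + n1 * k2] = row[k2]
    return out
```

In hardware, the column and row passes would correspond to repeated invocations of the fixed-size FFT unit with DMA handling the strided reads and write-back.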

Owner:NAT UNIV OF DEFENSE TECH

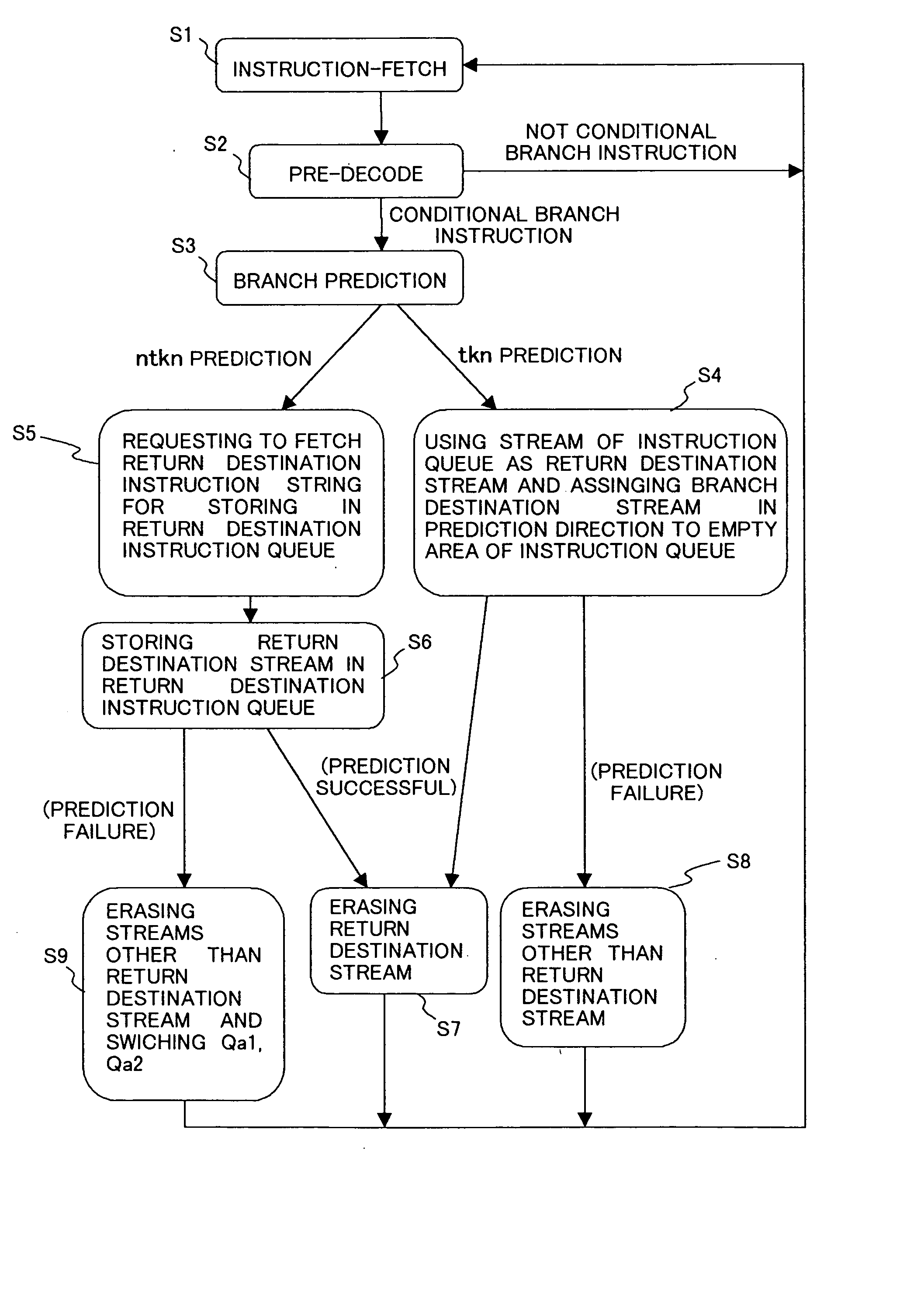

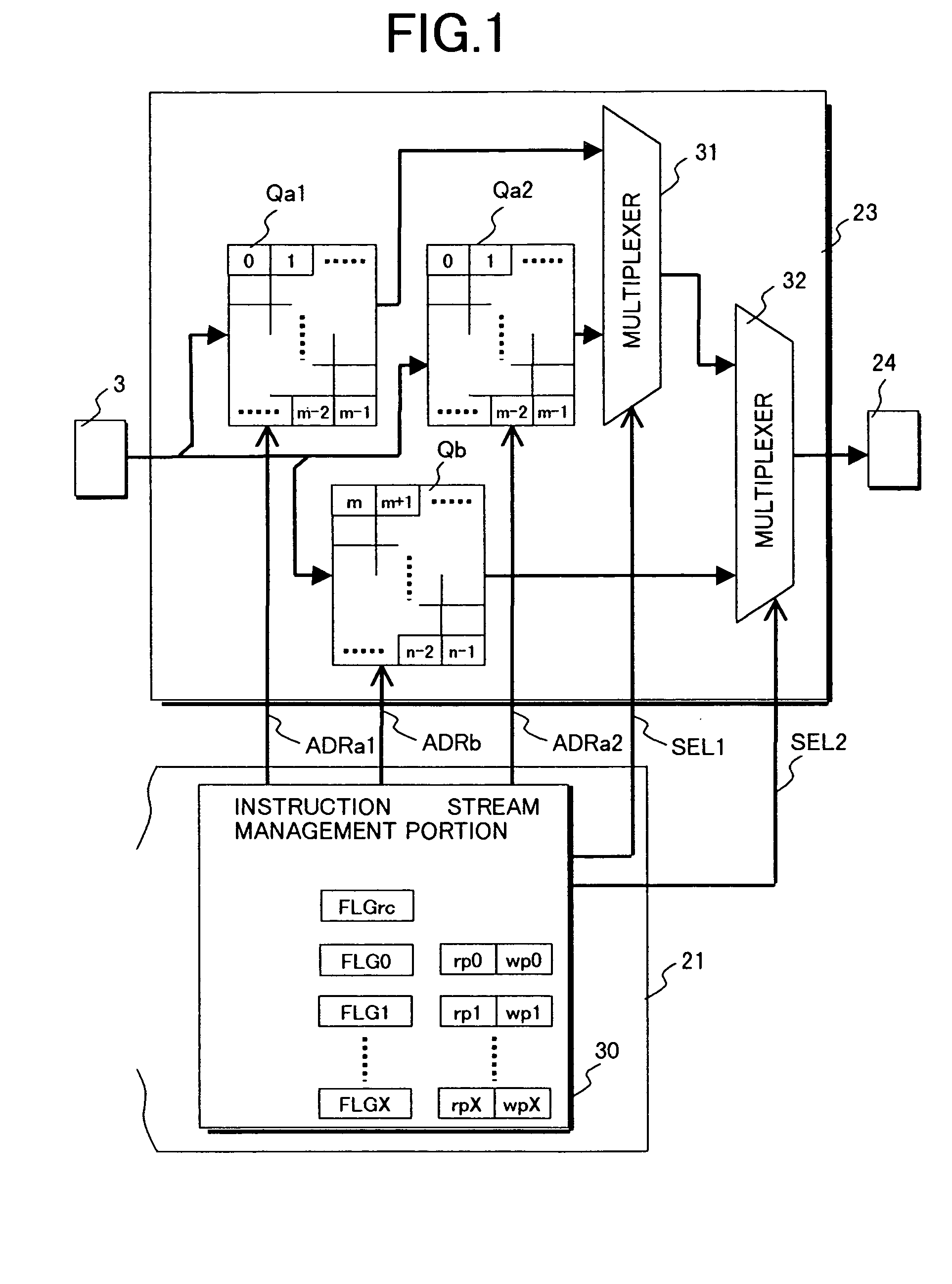

Data processor

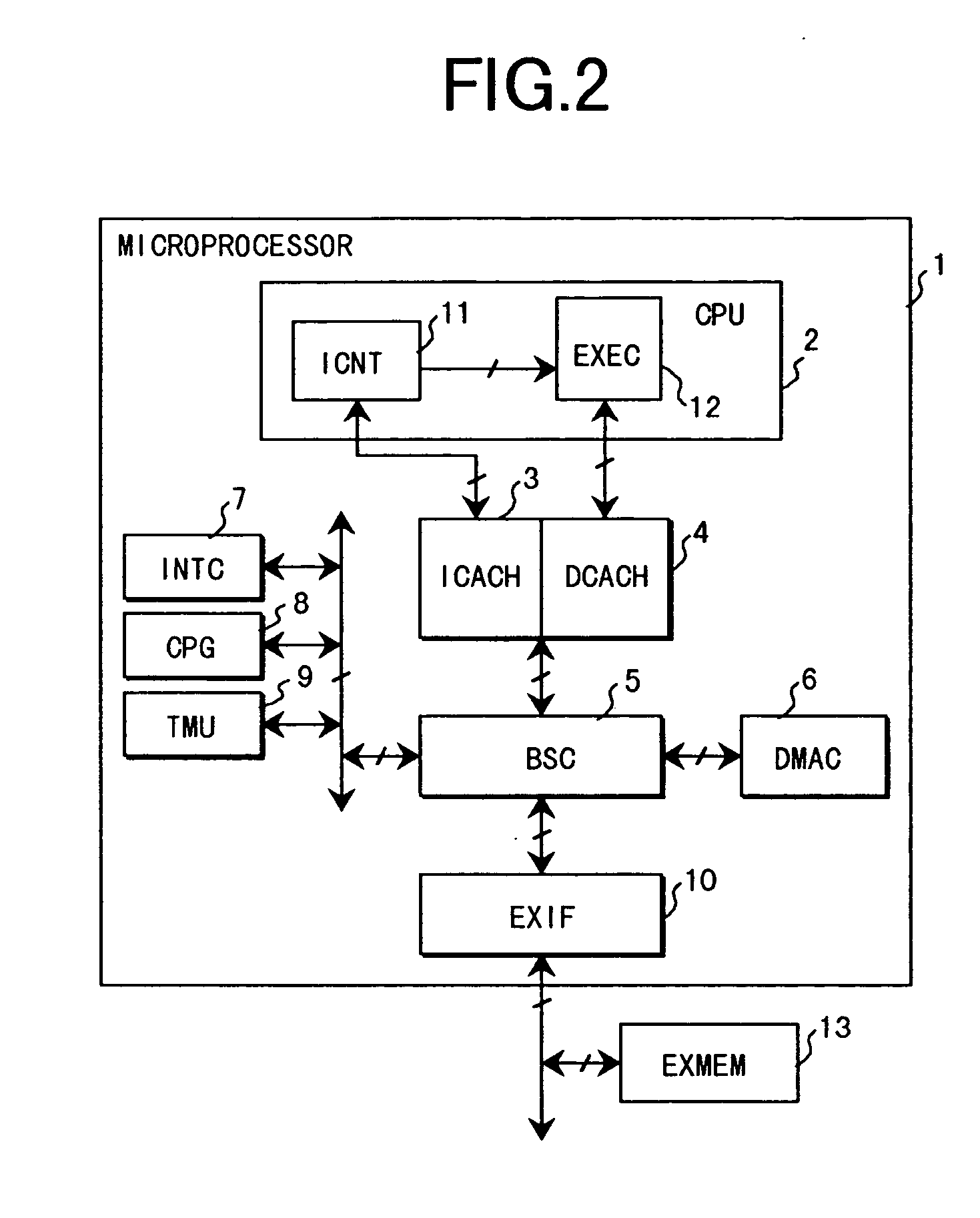

Inactive | US20050050309A1 | Simplify the link | Improve execution performance | Digital computer details | Concurrent instruction execution | Stream management | Data treatment

A data processor that performs branch prediction comprises a queuing buffer (23), allocated to an instruction queue and to a return destination instruction queue with address pointers managed for each instruction stream, and a control portion (21) for the queuing buffer. The control portion stores both the prediction-direction instruction stream and the non-prediction-direction instruction stream in the queuing buffer and, when branch prediction fails, switches the instruction stream being executed from the prediction-direction stream to the non-prediction-direction stream within the queuing buffer. When buffer areas (Qa1, Qb) are used as the instruction queue, buffer area (Qa2) is used as the return destination instruction queue; when buffer areas (Qa2, Qb) are used as the instruction queue, buffer area (Qa1) is used as the return destination instruction queue. Returning to the non-prediction-direction instruction string after a branch misprediction is therefore handled by stream management, without dedicating fixed, separate buffers to the instruction queue and the return destination instruction queue.
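
The benefit of role switching over copying can be shown with a small model in which recovery from a misprediction only swaps which buffer area feeds execution. This is a hypothetical simplification, not Renesas's circuit: the class and method names are illustrative, and the sketch models only the two swappable areas Qa1 and Qa2, leaving out Qb and the per-stream address pointers.

```python
class QueuingBuffer:
    """Hypothetical model: two buffer areas whose queue roles swap on misprediction."""

    def __init__(self):
        self.areas = {"Qa1": [], "Qa2": []}
        self.exec_area = "Qa1"     # currently serves as the instruction queue
        self.return_area = "Qa2"   # currently serves as the return destination queue

    def fill(self, predicted, not_predicted):
        # Both streams are held at once: predicted path for execution,
        # non-predicted path kept for recovery.
        self.areas[self.exec_area] = list(predicted)
        self.areas[self.return_area] = list(not_predicted)

    def instruction_queue(self):
        return self.areas[self.exec_area]

    def mispredict(self):
        # Recovery is a role swap only; no instructions are copied or refetched.
        self.exec_area, self.return_area = self.return_area, self.exec_area
```

Because neither area is permanently tied to one queue, the non-predicted stream is already in place when the branch resolves the other way.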

Owner:RENESAS TECH CORP

© 2025 PatSnap. All rights reserved.