647 results about "Instruction stream" patented technology

Managing VT for reduced power using power setting commands in the instruction stream

Inactive | US6477654B1 | Improve performance | Speed maximization | Energy efficient ICT | Software engineering | Embedded system | Instruction stream

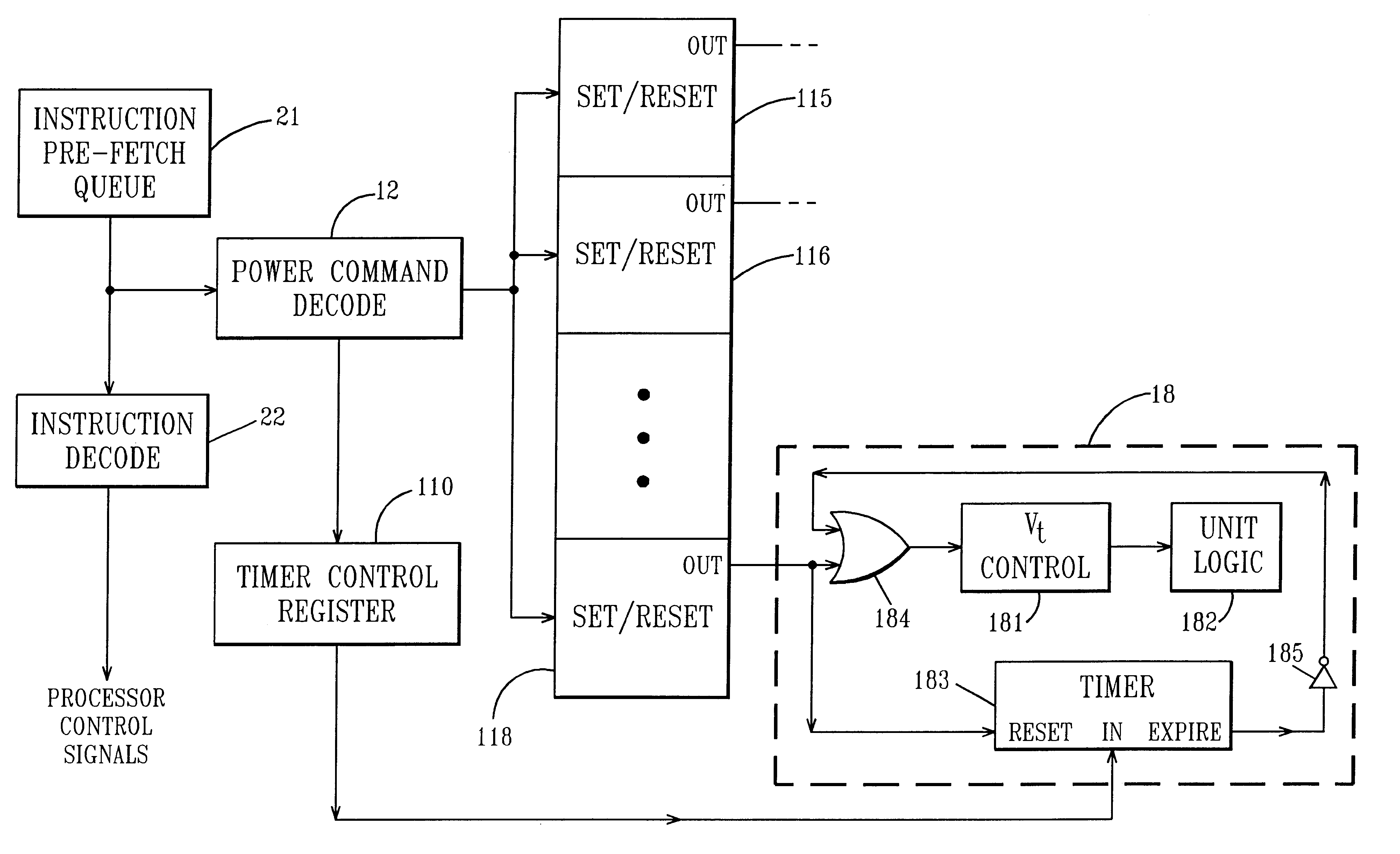

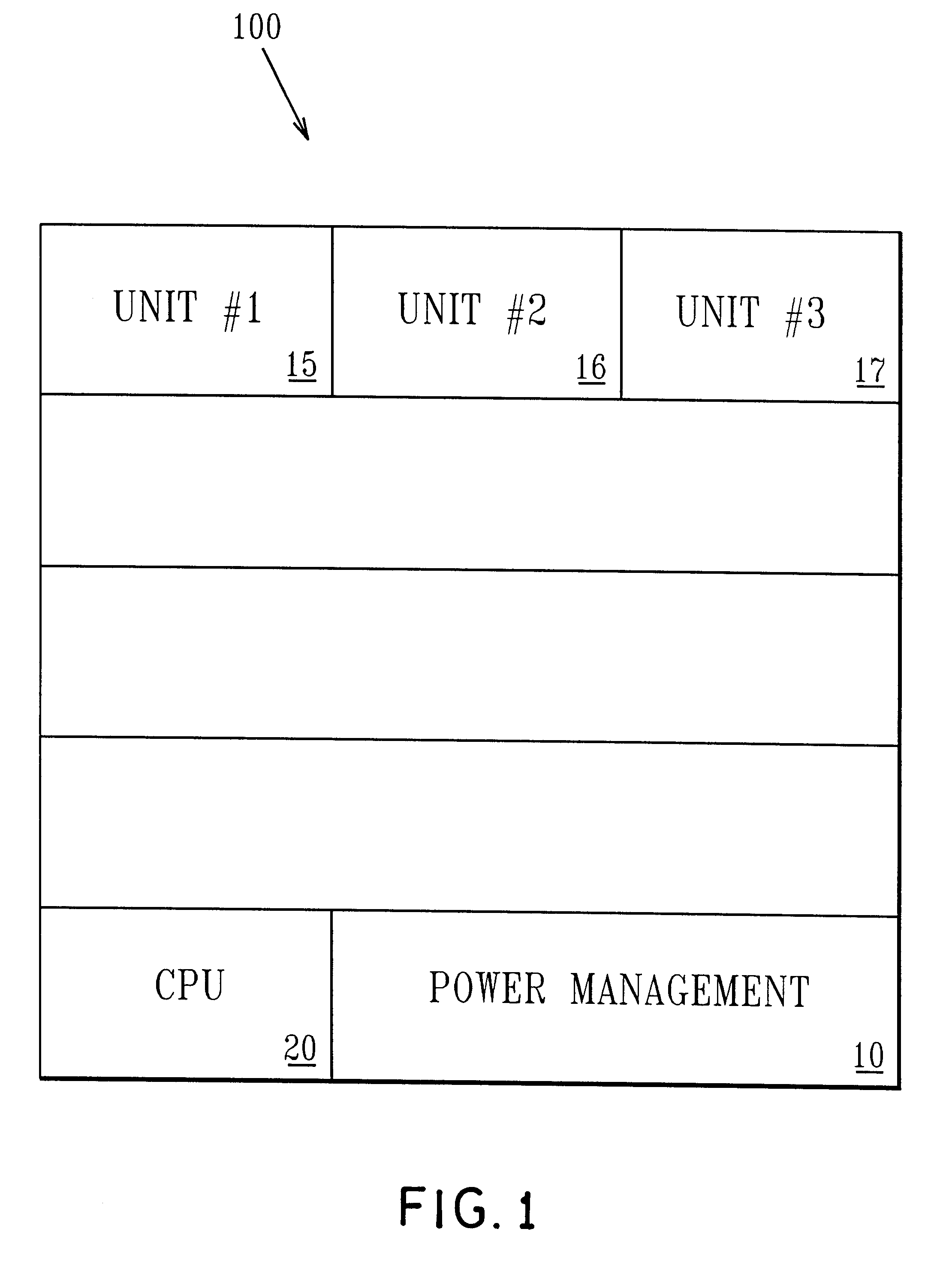

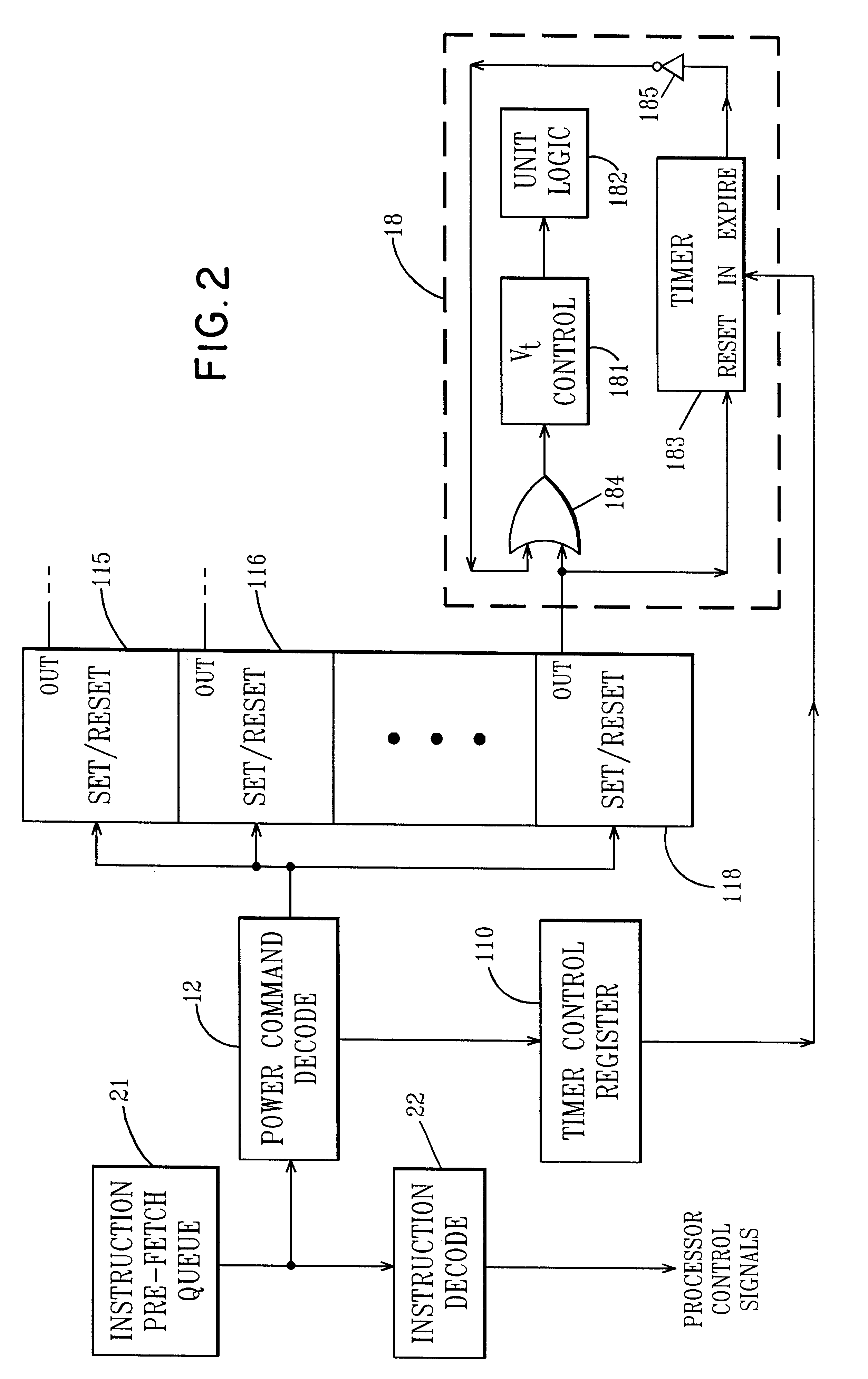

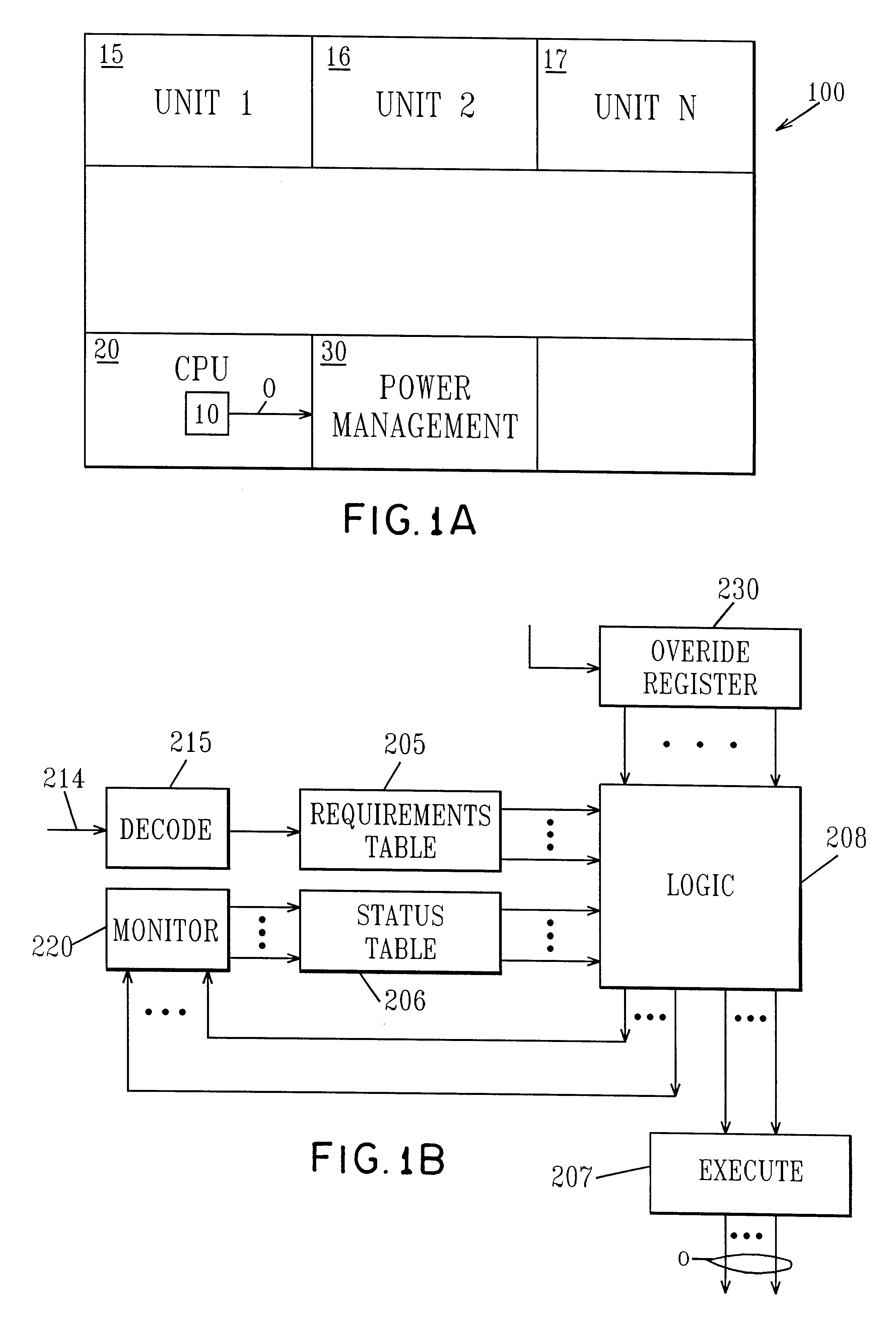

An integrated circuit includes a power control unit and a plurality of functional units, each capable of operating at more than one power / performance level. The power control unit controls the power / performance level of the different functional units to optimize operation of the integrated circuit. Special power control instructions are added to user applications so that they can control, via the power control unit, the power consumption of the different functional units.

Owner:MICROSOFT TECH LICENSING LLC

Managing Vt for reduced power using a status table

Inactive | US6345362B1 | Improve performance | Reduce power consumption | Energy efficient ICT | Volume/mass flow measurement | Hemt circuits | Low power dissipation

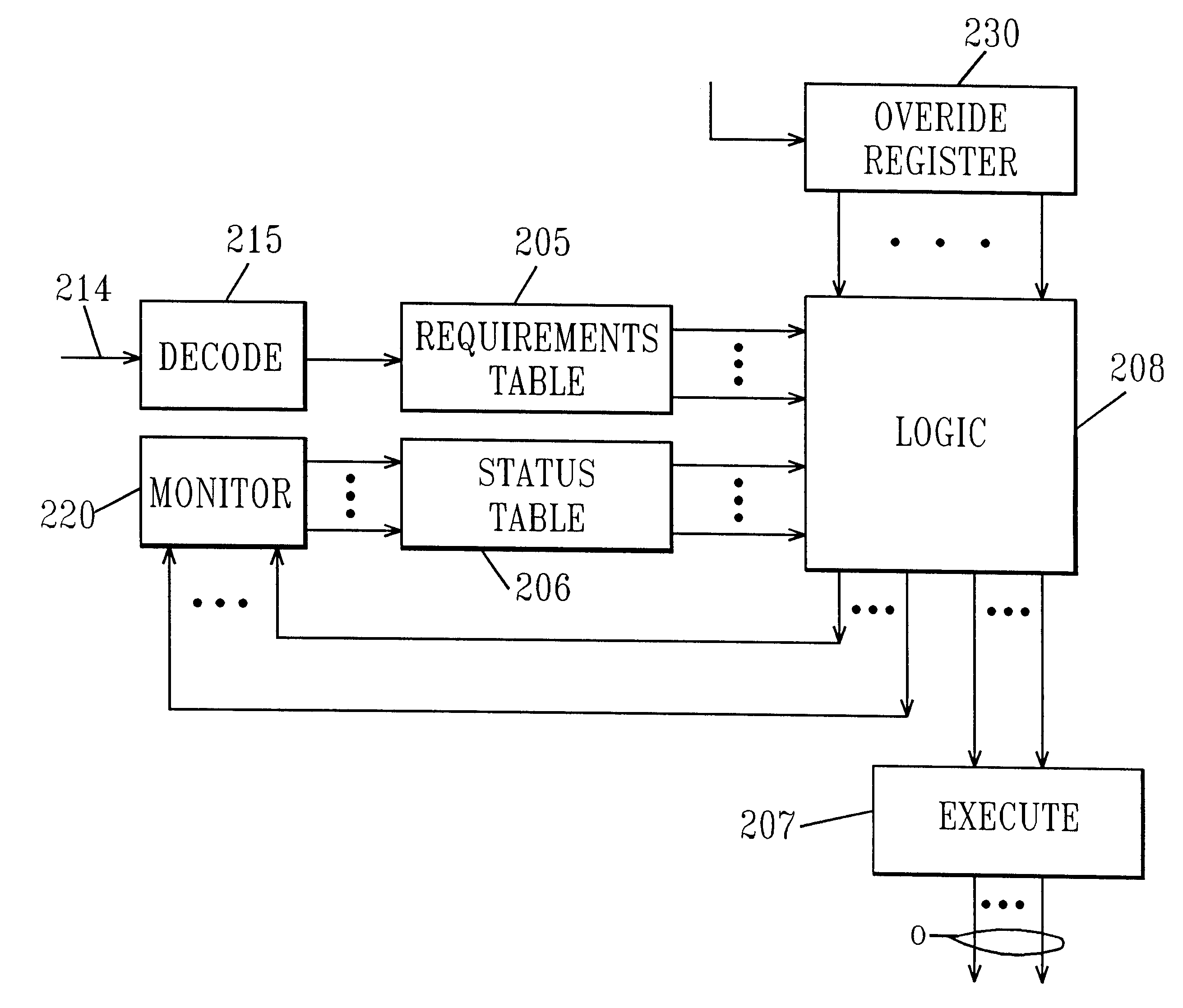

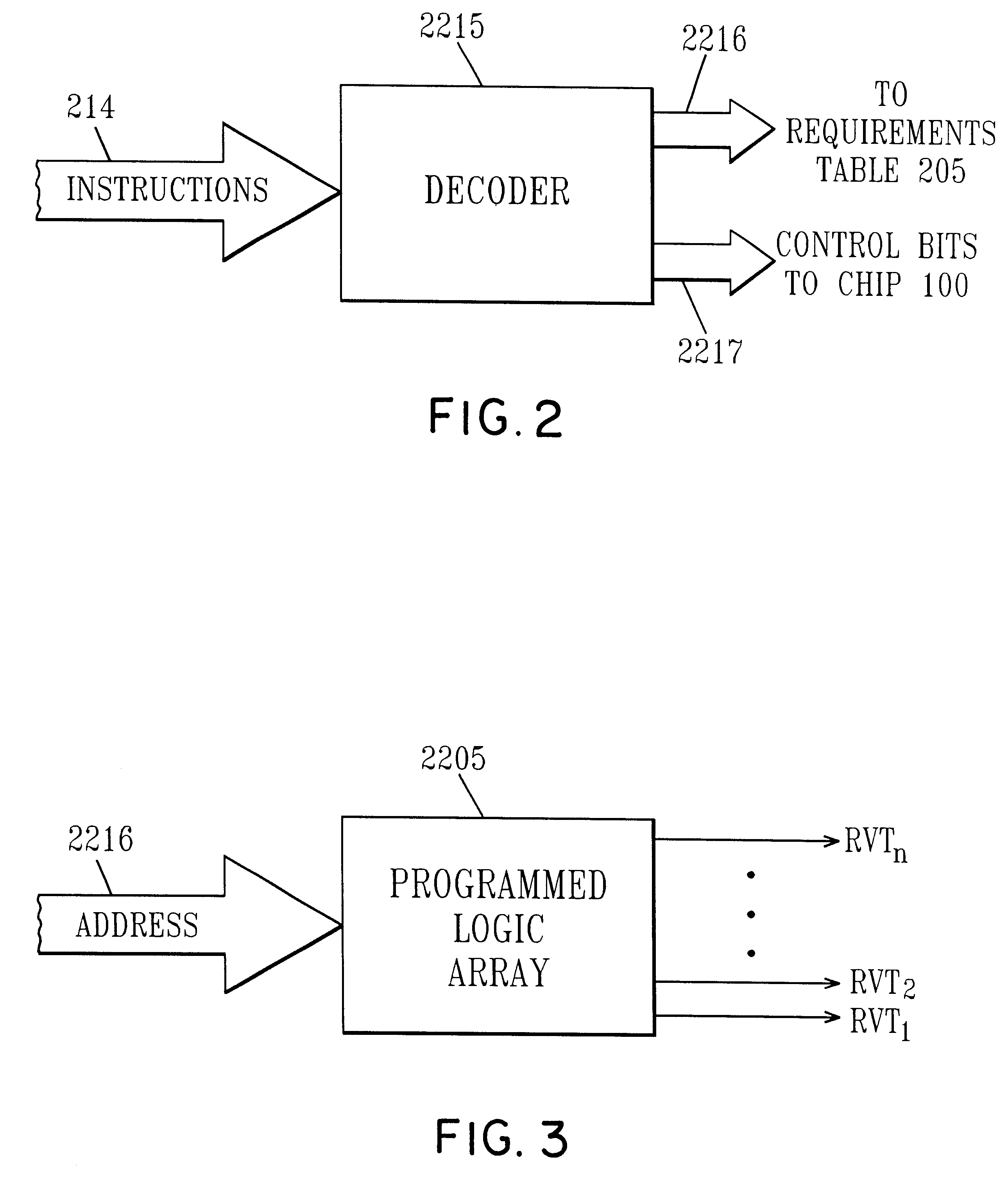

An integrated circuit includes a CPU, a power management unit and plural functional units each dedicated to executing different functions. The power management unit controls the threshold voltage of the different functional units to optimize power / performance operation of the circuit and intelligent power management control responds to the instruction stream and decodes each instruction in turn. This information identifies which of the functional units are required for the particular instruction and by comparing that information to power status, the intelligent power control determines whether the functional units required to execute the command are at the optimum power level. If they are, the command is allowed to proceed, otherwise the intelligent power control either stalls the instruction sequence or modifies process speed.

Owner:GLOBALFOUNDRIES INC
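
The status-table check in the abstract above can be pictured with a small software model. This is only a sketch under stated assumptions: the opcode-to-unit mapping `REQUIRED_UNITS`, the `PowerStatusTable` class, and the `dispatch` function are hypothetical names chosen for illustration, and the model covers only the proceed-or-stall decision, not the alternative of modifying process speed.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from opcode to the functional units it requires.
REQUIRED_UNITS = {
    "add": {"alu"},
    "fadd": {"fpu"},
    "load": {"alu", "lsu"},
}


@dataclass
class PowerStatusTable:
    # Unit name -> True when the unit is at its optimum power level.
    ready: dict = field(default_factory=dict)

    def units_not_ready(self, units):
        """Return the required units that are not yet at the optimum level."""
        return sorted(u for u in units if not self.ready.get(u, False))


def dispatch(opcode, table):
    """Decode one instruction and decide whether it may proceed.

    Returns ("proceed", []) when every required unit is ready,
    otherwise ("stall", [units still not ready]).
    """
    pending = table.units_not_ready(REQUIRED_UNITS[opcode])
    return ("proceed", []) if not pending else ("stall", pending)
```

Calling `dispatch("load", PowerStatusTable({"alu": True, "lsu": False}))` reports a stall on `lsu`, which mirrors the comparison of required units against power status.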

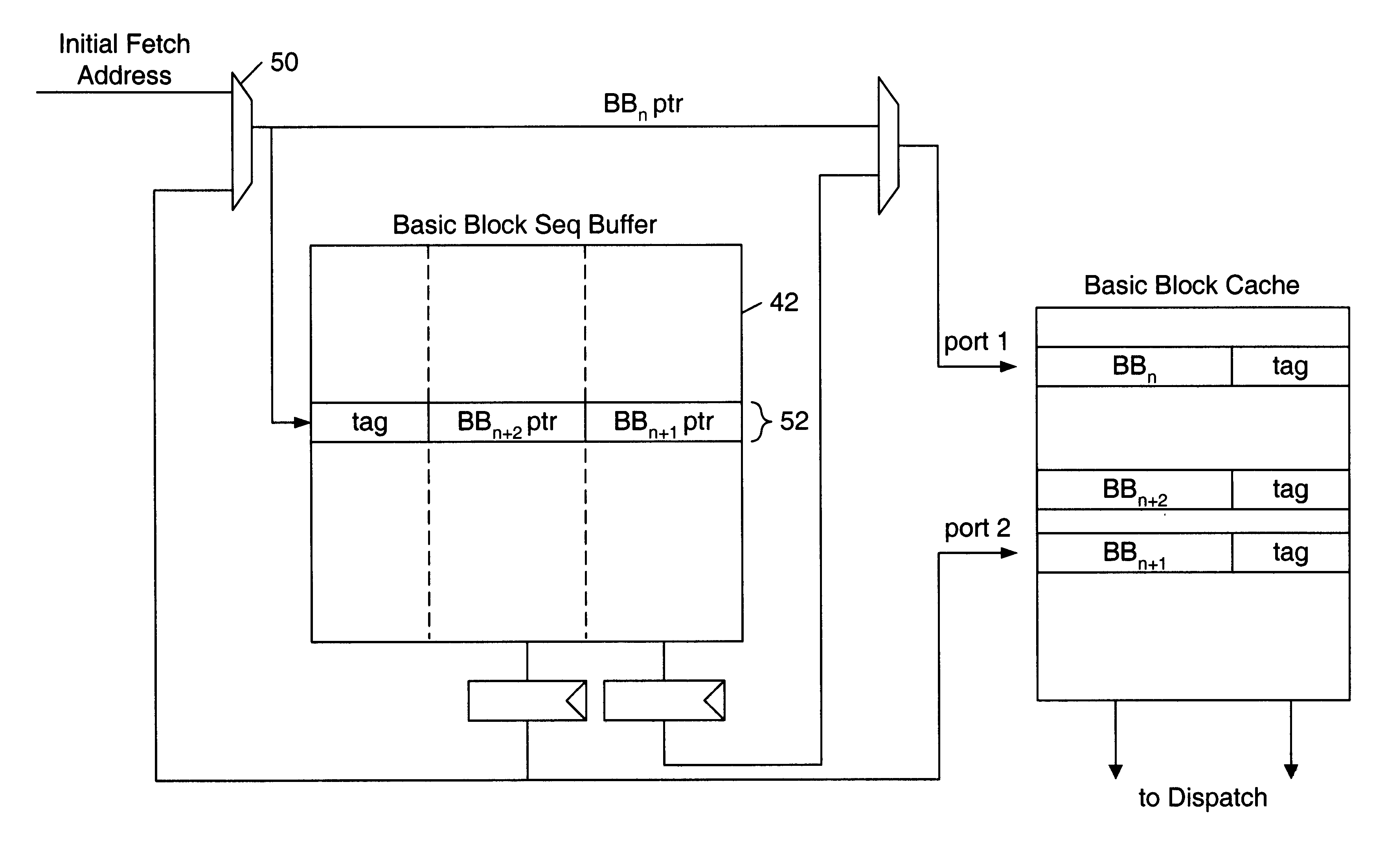

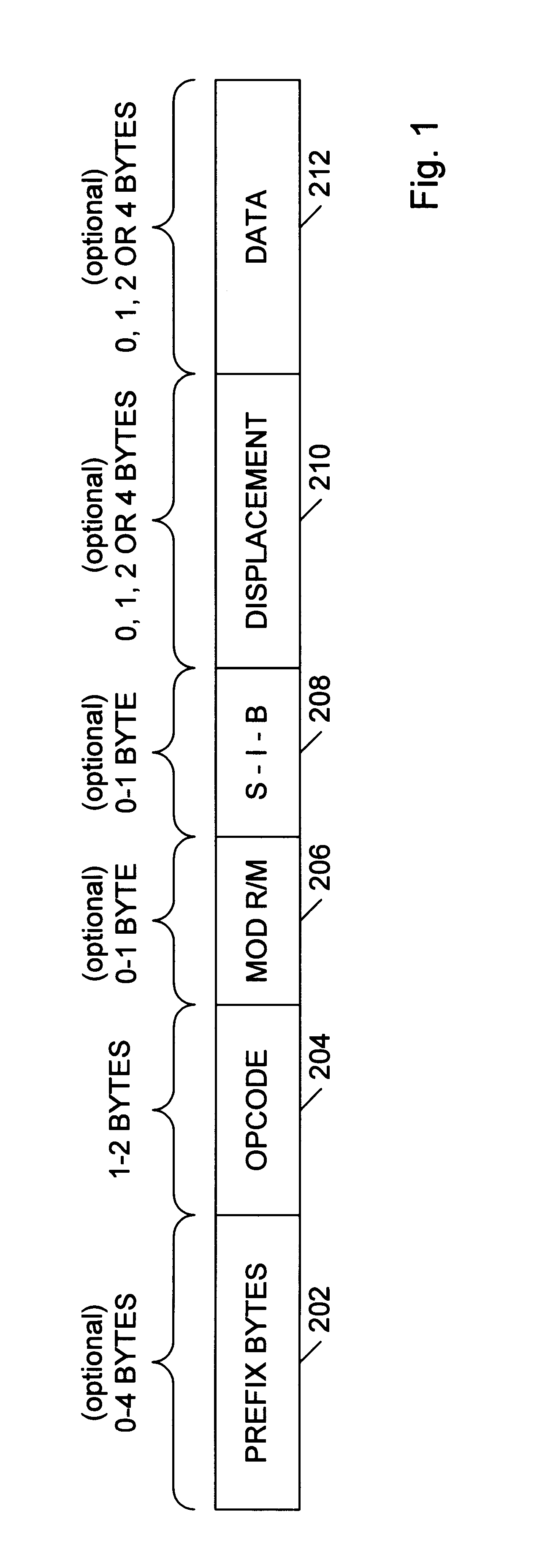

Using padded instructions in a block-oriented cache

Inactive | US6339822B1 | Memory architecture accessing/allocation | Instruction analysis | Computerized system | Variable length

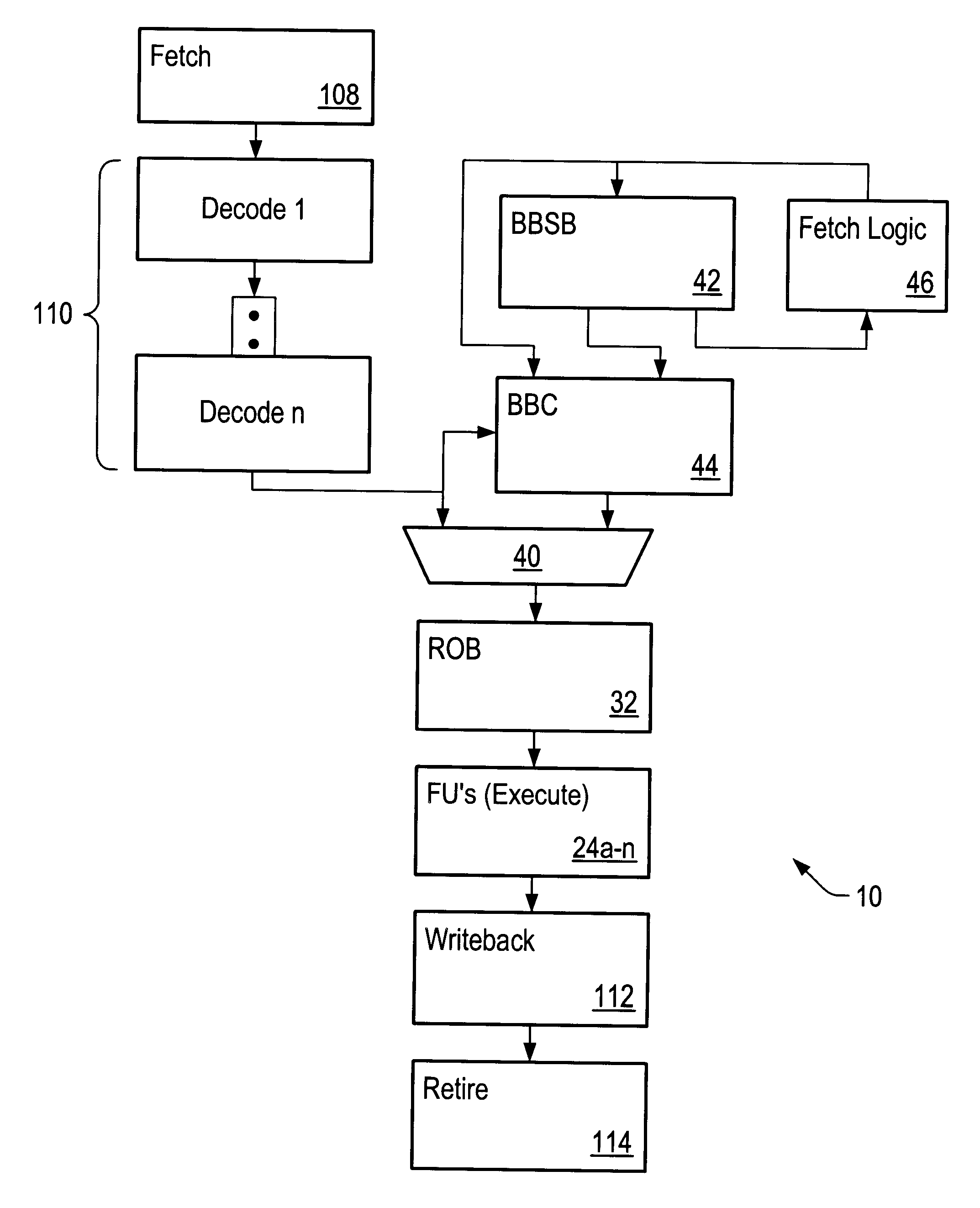

A microprocessor configured to cache basic blocks of instructions is disclosed. The microprocessor may comprise decoding logic, a basic block cache, and a branch prediction unit. The decoding logic is coupled to receive and decode variable-length instructions into padded instructions that have one of a predetermined number of predetermined lengths. The decoding logic is further configured to form basic blocks of instructions from the padded and decoded instructions. Basic blocks are natural divisions in instruction streams resulting from branch instructions. The start of a basic block is a target of a branch, and the end is another branch instruction. The basic block cache is configured to store the basic blocks in a plurality of storage locations, wherein each storage location is configured to store an address tag, a link bit, and at least a portion of one basic block. The link bit indicates whether the basic block stored in said storage location extends into another storage location. The branch prediction unit has a branch prediction array storing branch prediction information corresponding to each storage location within the basic block cache. A computer system and method for operating are also disclosed.

Owner:GLOBALFOUNDRIES INC
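
The definition of a basic block used in the abstract above (it starts at a branch target and ends at a branch instruction) can be sketched in software. The tuple layout `(address, mnemonic, branch_target_or_None)` and the function name `split_basic_blocks` are assumptions made for this illustration; padding of variable-length instructions and the cache itself are not modeled.

```python
def split_basic_blocks(instructions):
    """Split an instruction list into basic blocks.

    instructions: list of (address, mnemonic, branch_target_or_None).
    An instruction with a non-None target is treated as a branch.
    Returns a list of blocks, each a list of instruction addresses.
    """
    # Every address that some branch jumps to starts a new block.
    targets = {t for _, _, t in instructions if t is not None}
    blocks, current = [], []
    for addr, _mnemonic, target in instructions:
        if addr in targets and current:
            blocks.append(current)  # close the block before a branch target
            current = []
        current.append(addr)
        if target is not None:
            blocks.append(current)  # a branch ends the current block
            current = []
    if current:
        blocks.append(current)
    return blocks
```

In this sketch a backward branch to the middle of straight-line code splits that code into two blocks, which is why the set of targets is collected before the walk.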

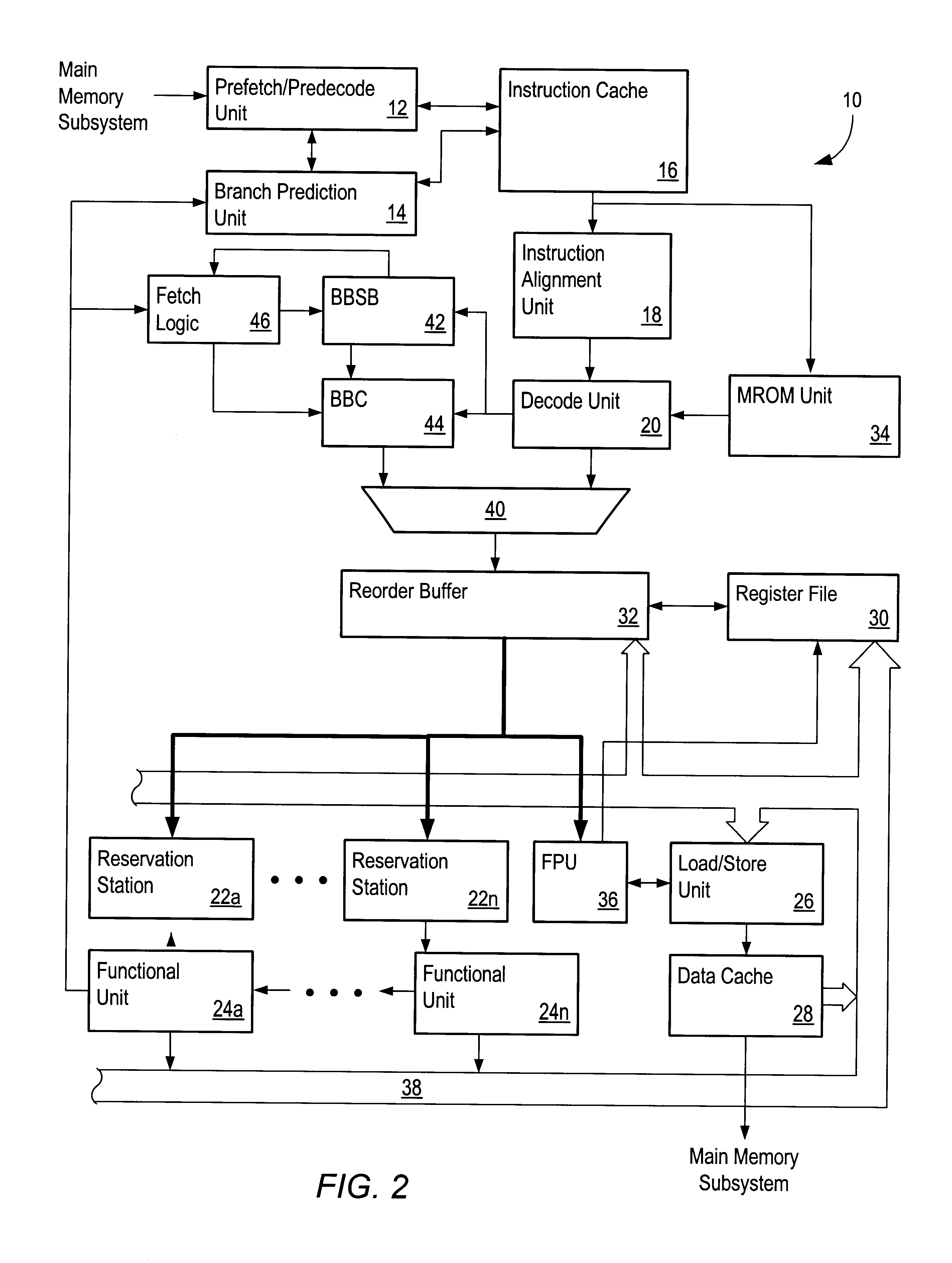

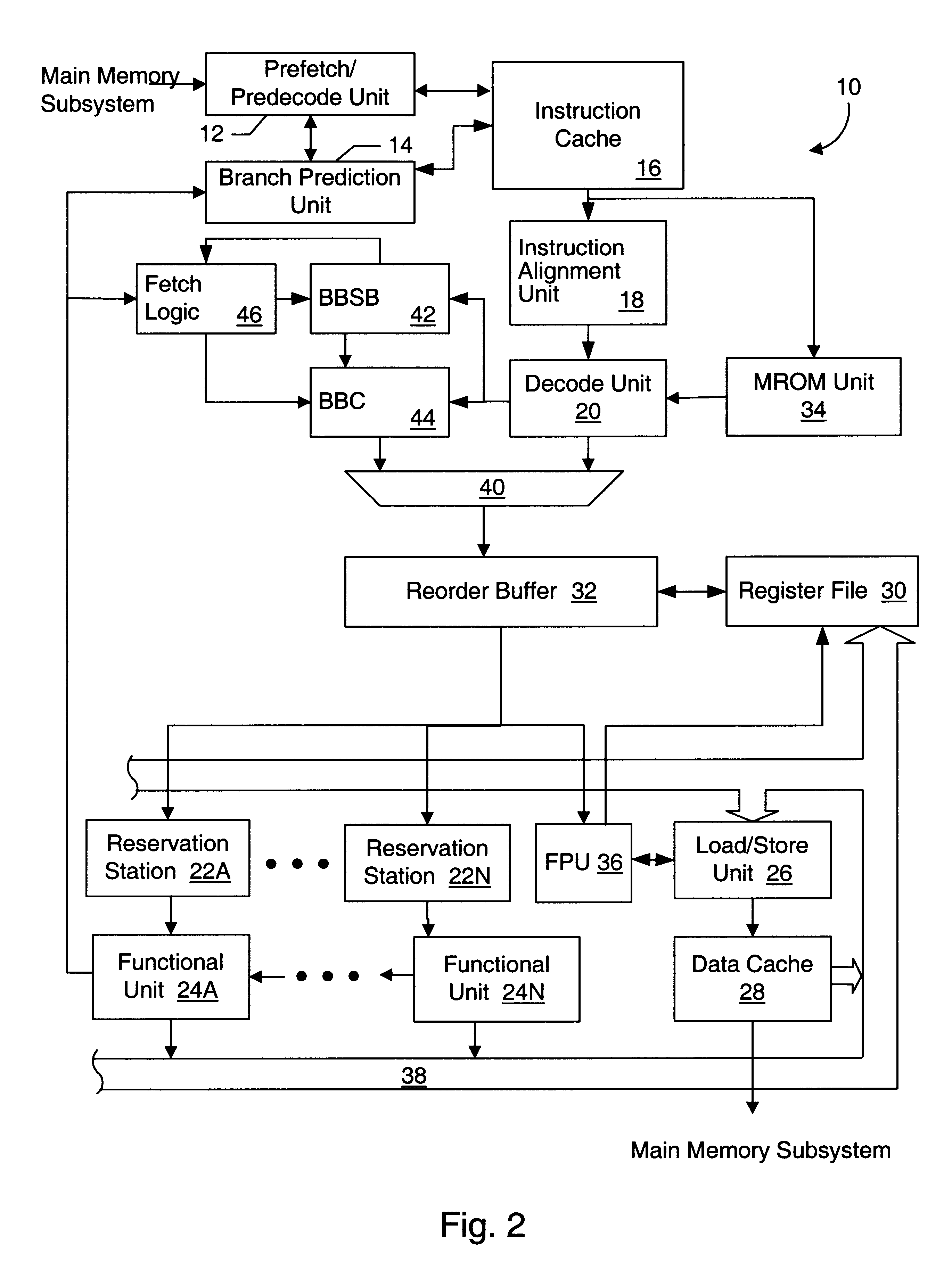

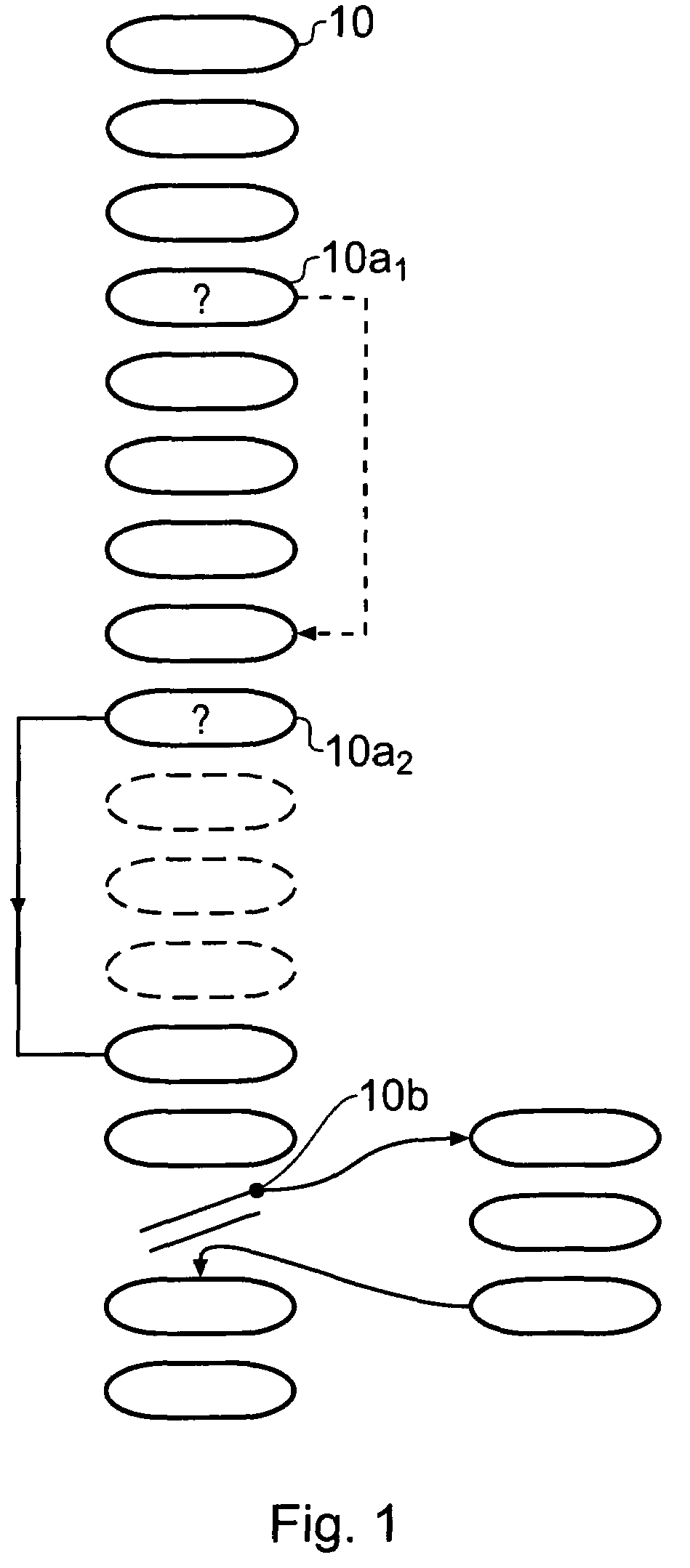

Basic block oriented trace cache utilizing a basic block sequence buffer to indicate program order of cached basic blocks

A cache memory configured to access stored instructions according to basic blocks is disclosed. Basic blocks are natural divisions in instruction streams resulting from branch instructions. The start of a basic block is a target of a branch, and the end is another branch instruction. A microprocessor configured to use a basic block oriented cache may comprise a basic block cache and a basic block sequence buffer. The basic block cache may have a plurality of storage locations configured to store basic blocks. The basic block sequence buffer also has a plurality of storage locations, each configured to store a block sequence entry. The block sequence entry may comprise an address tag and one or more basic block pointers. The address tag corresponds to the fetch address of a particular basic block, and the pointers point to basic blocks that follow the particular basic block in a predicted order. A system using the microprocessor and a method for caching instructions in a block oriented manner rather than conventional power-of-two memory blocks are also disclosed.

Owner:GLOBALFOUNDRIES INC

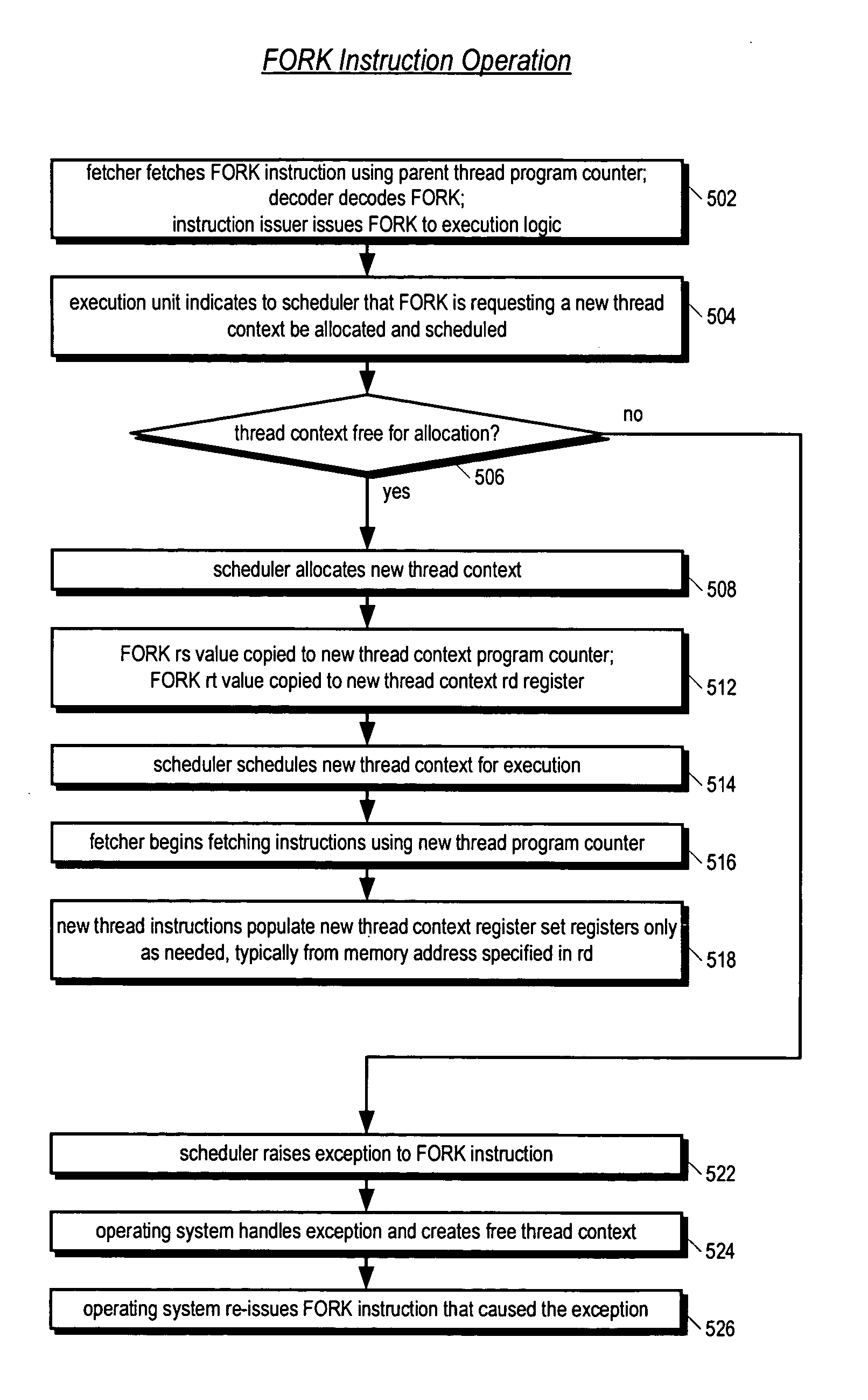

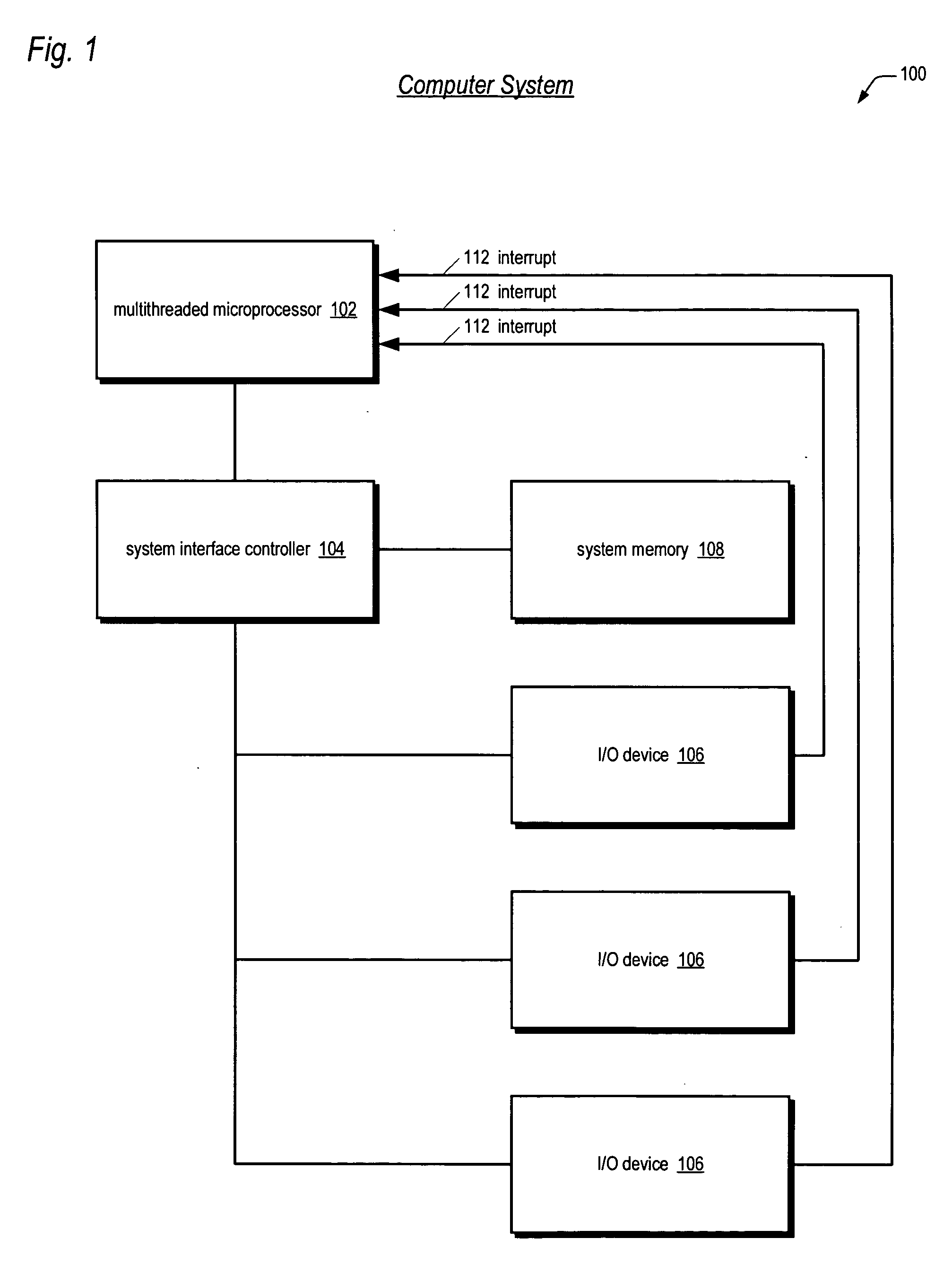

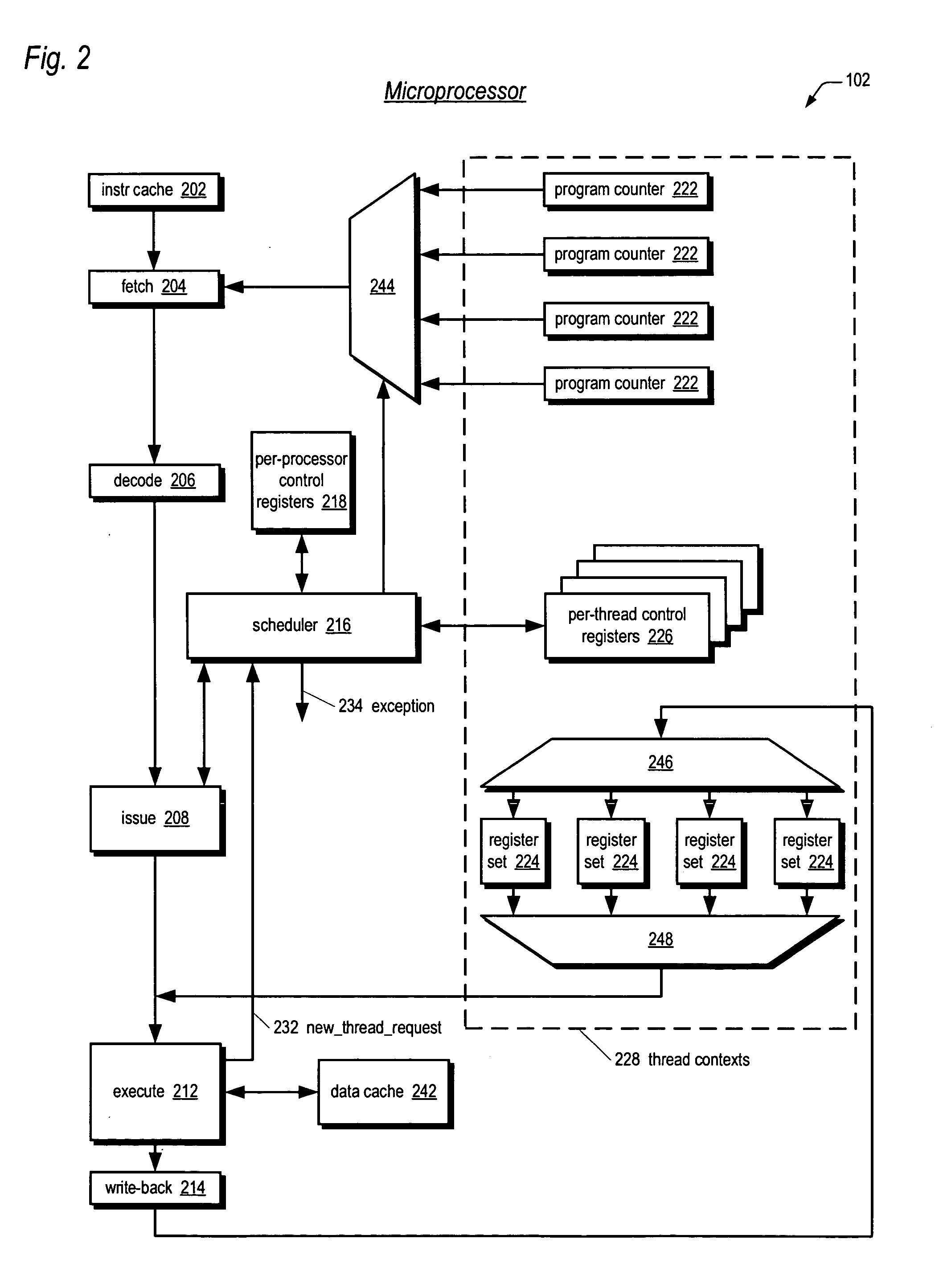

Apparatus, method, and instruction for initiation of concurrent instruction streams in a multithreading microprocessor

Active | US20050120194A1 | Reduce overhead | Avoid wasting energy | Program initiation/switching | Software engineering | General purpose | Computer architecture

A fork instruction for execution on a multithreaded microprocessor and occupying a single instruction issue slot is disclosed. The fork instruction, executing in a parent thread, includes a first operand specifying the initial instruction address of a new thread and a second operand. The microprocessor executes the fork instruction by allocating context for the new thread, copying the first operand to a program counter of the new thread context, copying the second operand to a register of the new thread context, and scheduling the new thread for execution. If no new thread context is free for allocation, the microprocessor raises an exception to the fork instruction. The fork instruction is efficient because it does not copy the parent thread general purpose registers to the new thread. The second operand is typically used as a pointer to a data structure in memory containing initial general purpose register set values for the new thread.

Owner:MIPS TECH INC
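
The fork semantics in the abstract above can be modeled in a few lines. This is a sketch, not the hardware behavior: `ThreadContext`, `NoFreeContext`, the 32-entry register file, and the explicit `dest_reg` parameter are all assumptions introduced for illustration (the abstract only says the second operand is copied to "a register" of the new context).

```python
class NoFreeContext(Exception):
    """Models the exception raised when no thread context is free."""


class ThreadContext:
    def __init__(self):
        self.free = True
        self.pc = 0
        self.regs = [0] * 32  # register count is an assumption


def fork(contexts, start_addr, arg, dest_reg, run_queue):
    """Allocate a context, set its PC and one register, and schedule it.

    The parent's general purpose registers are deliberately not copied;
    only the two operands reach the new thread.
    """
    for ctx in contexts:
        if ctx.free:
            ctx.free = False
            ctx.pc = start_addr        # first operand -> new thread's PC
            ctx.regs[dest_reg] = arg   # second operand -> one register
            run_queue.append(ctx)      # schedule the new thread
            return ctx
    raise NoFreeContext("no thread context free for allocation")
```

Passing a memory address as `arg` matches the typical use named in the abstract, where the second operand points to a structure holding the new thread's initial register values.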

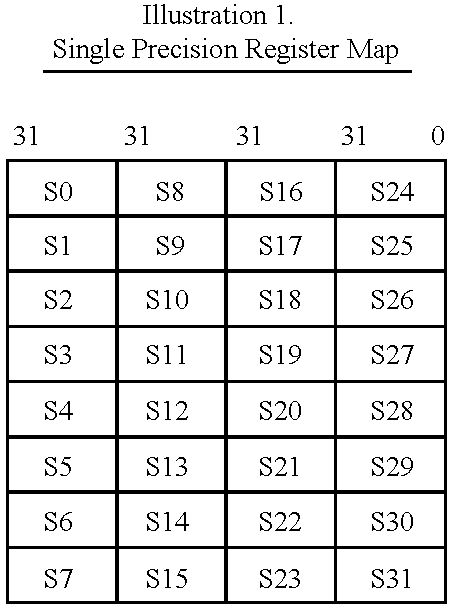

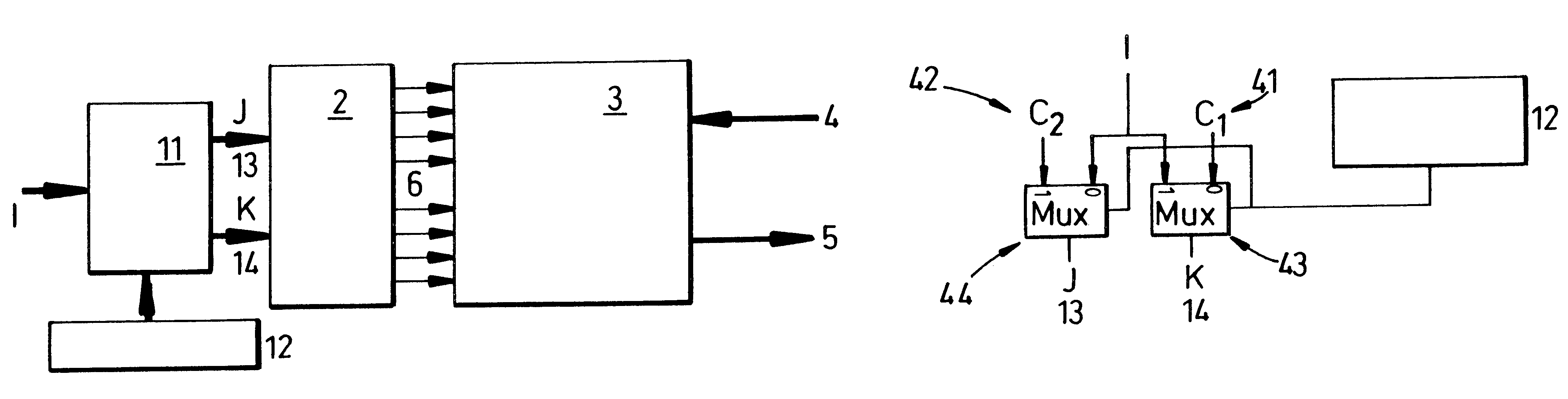

Coprocessor opcode division by data type

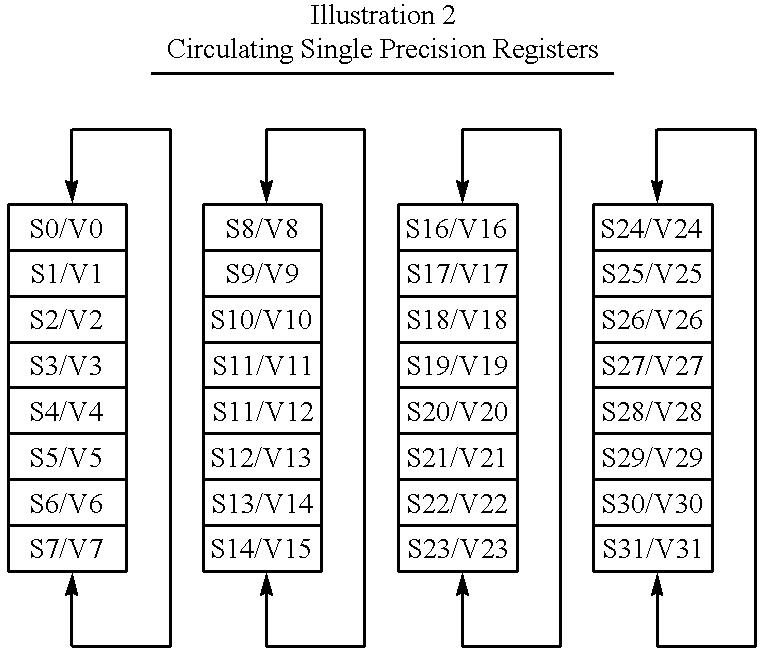

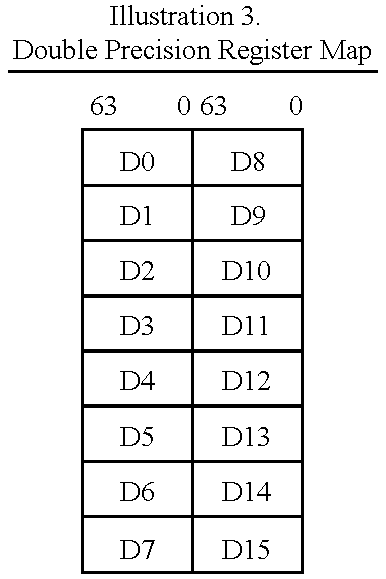

Inactive | US6247113B1 | Easy to scale | Reduced hardware coprocessor | Register arrangements | General purpose stored program computer | Data processing system | Coprocessor

A data processing system having a main processor and a coprocessor. The main processor responds to coprocessor instructions within its instruction stream by issuing the coprocessor instructions upon a coprocessor bus and detecting if the coprocessor accepts them by returning an accept signal. The coprocessor instructions include a coprocessor number and the coprocessor checks this number to see if it matches its own number(s) to determine whether or not it should accept the coprocessor instruction. A data type field within the coprocessor number in the coprocessor instruction also serves to specify one of multiple data types to be used in the coprocessor operation; particular coprocessors can interpret this part of the coprocessor number to determine data type. If the coprocessor supports multiple data types, then it has multiple coprocessor numbers for which it will issue accept signals and then uses the data type field to control the data type used. If a coprocessor does not support a particular data type then it will not issue an accept signal for coprocessor instructions that specify that data type. The main processor can then use emulation code to provide support for that coprocessor instruction.

Owner:ARM LTD
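
The accept-signal handshake in the abstract above can be sketched as follows. The bit position of the data type field (`DATA_TYPE_BIT`), the type names `"single"` and `"double"`, and the `Coprocessor` and `issue` names are assumptions made for this illustration only.

```python
DATA_TYPE_BIT = 0x1  # assumed position of the data type field in the coprocessor number


class Coprocessor:
    def __init__(self, accepted_numbers):
        # A coprocessor supporting several data types owns several numbers.
        self.accepted_numbers = set(accepted_numbers)

    def offer(self, cp_number):
        """Return (accept_signal, data_type); data_type is None if not accepted."""
        if cp_number not in self.accepted_numbers:
            return (False, None)
        data_type = "double" if cp_number & DATA_TYPE_BIT else "single"
        return (True, data_type)


def issue(cp_number, coprocessors):
    """Offer the instruction on the bus; fall back to emulation if nobody accepts."""
    for cp in coprocessors:
        accepted, data_type = cp.offer(cp_number)
        if accepted:
            return ("coprocessor", data_type)
    return ("emulate", None)  # main processor runs emulation code instead
```

A reduced coprocessor that owns only number 10 in this model handles the `"single"` type in hardware and leaves number 11 to emulation, while one that owns both numbers handles both types.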

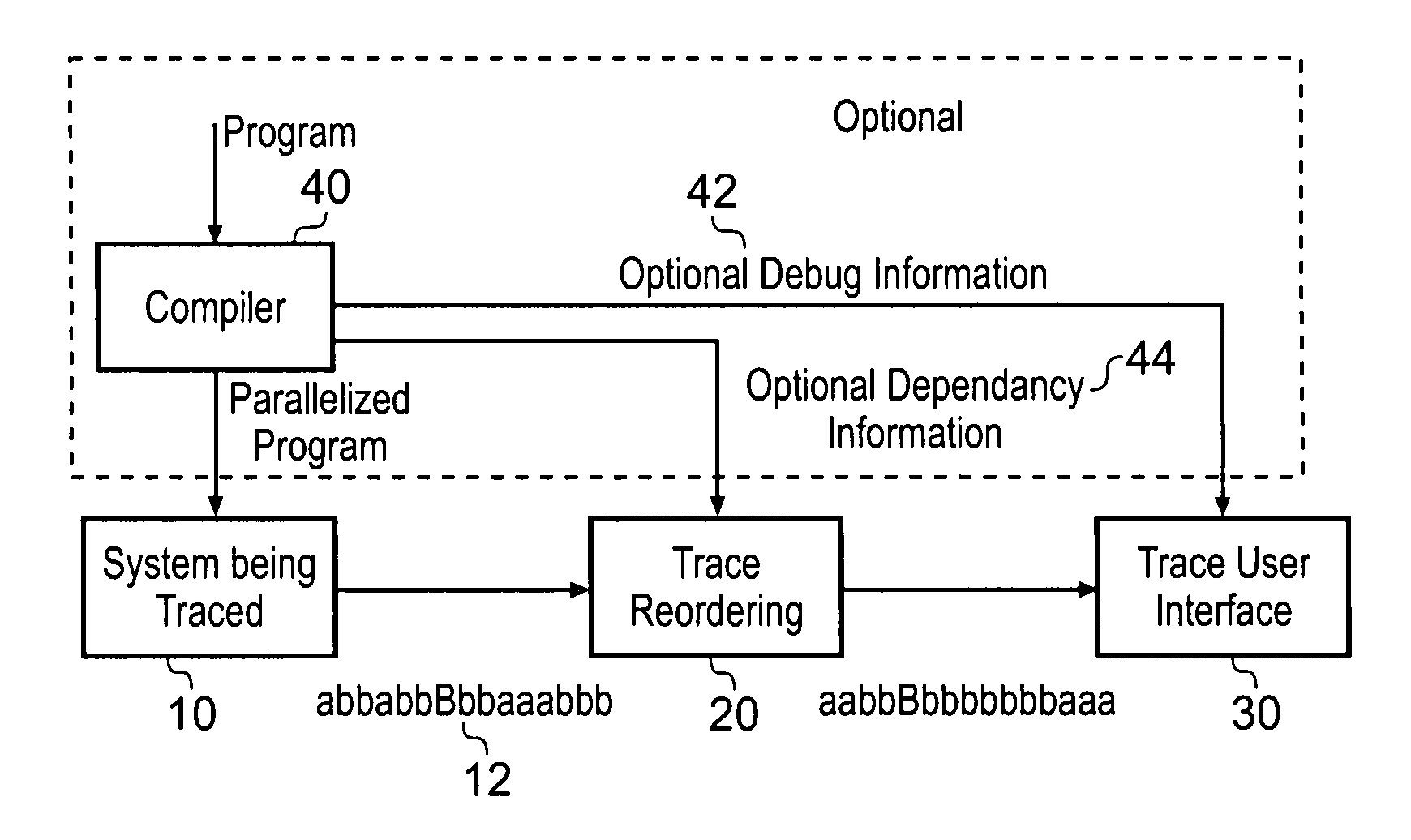

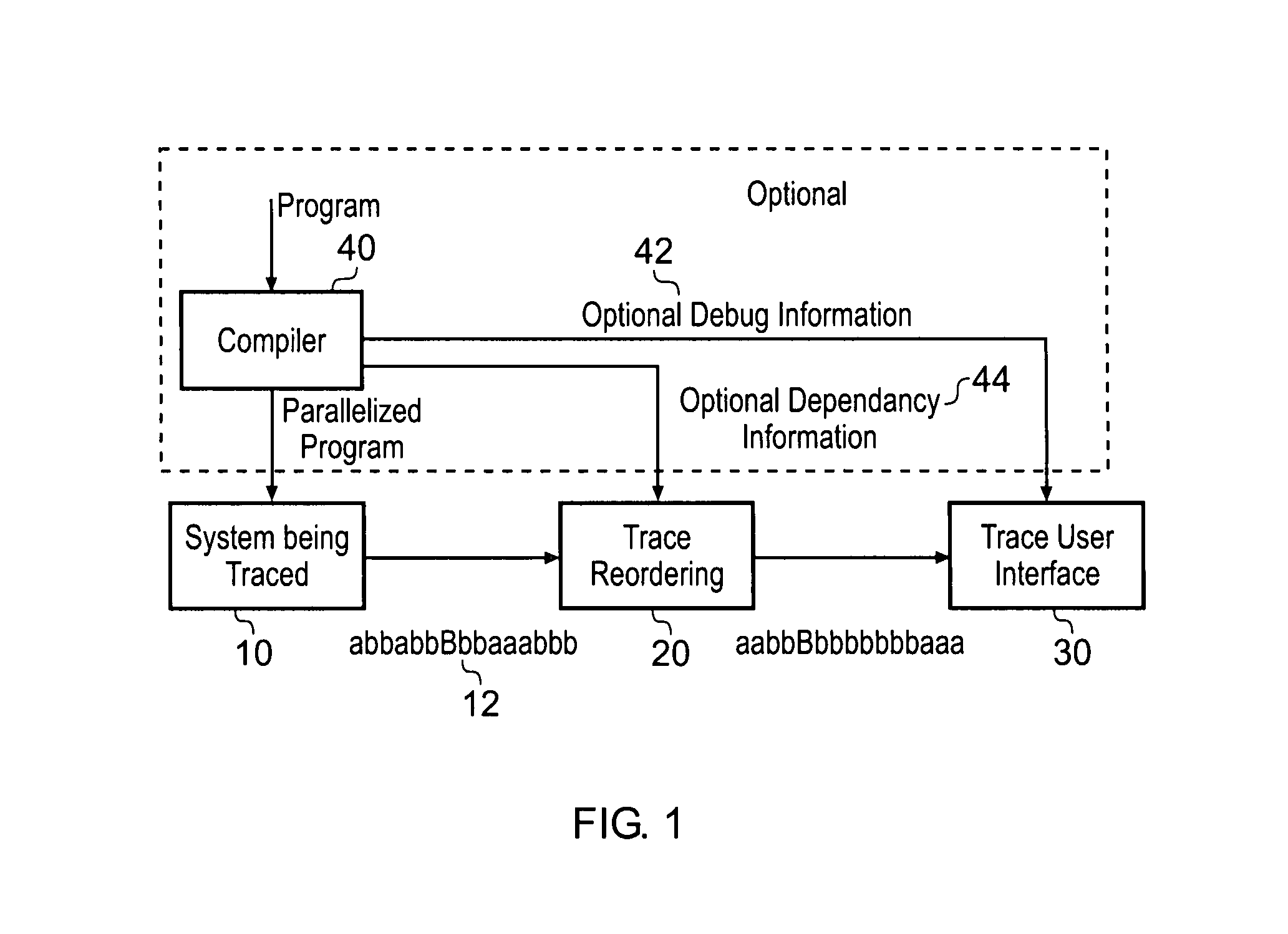

Analyzing diagnostic data generated by multiple threads within an instruction stream

Inactive | US20080098207A1 | Easy to understand | Difficult to analyse | Detecting faulty computer hardware | Digital computer details | Diagnostic data | Parallel processing

A diagnostic method is disclosed for outputting diagnostic data relating to processing of instruction streams stemming from a computer program, at least some of said instruction streams comprising multiple threads. The method comprises the steps of: (i) receiving diagnostic data; (ii) reordering said received diagnostic data in dependence upon reordering data, said reordering data comprising data relating to said computer program; and (iii) outputting said reordered diagnostic data. In general, the instruction streams are processed by a plurality of processing units arranged to process at least some of said instructions in parallel, said diagnostic data being received from said plurality of processing units.

Owner:ARM LTD

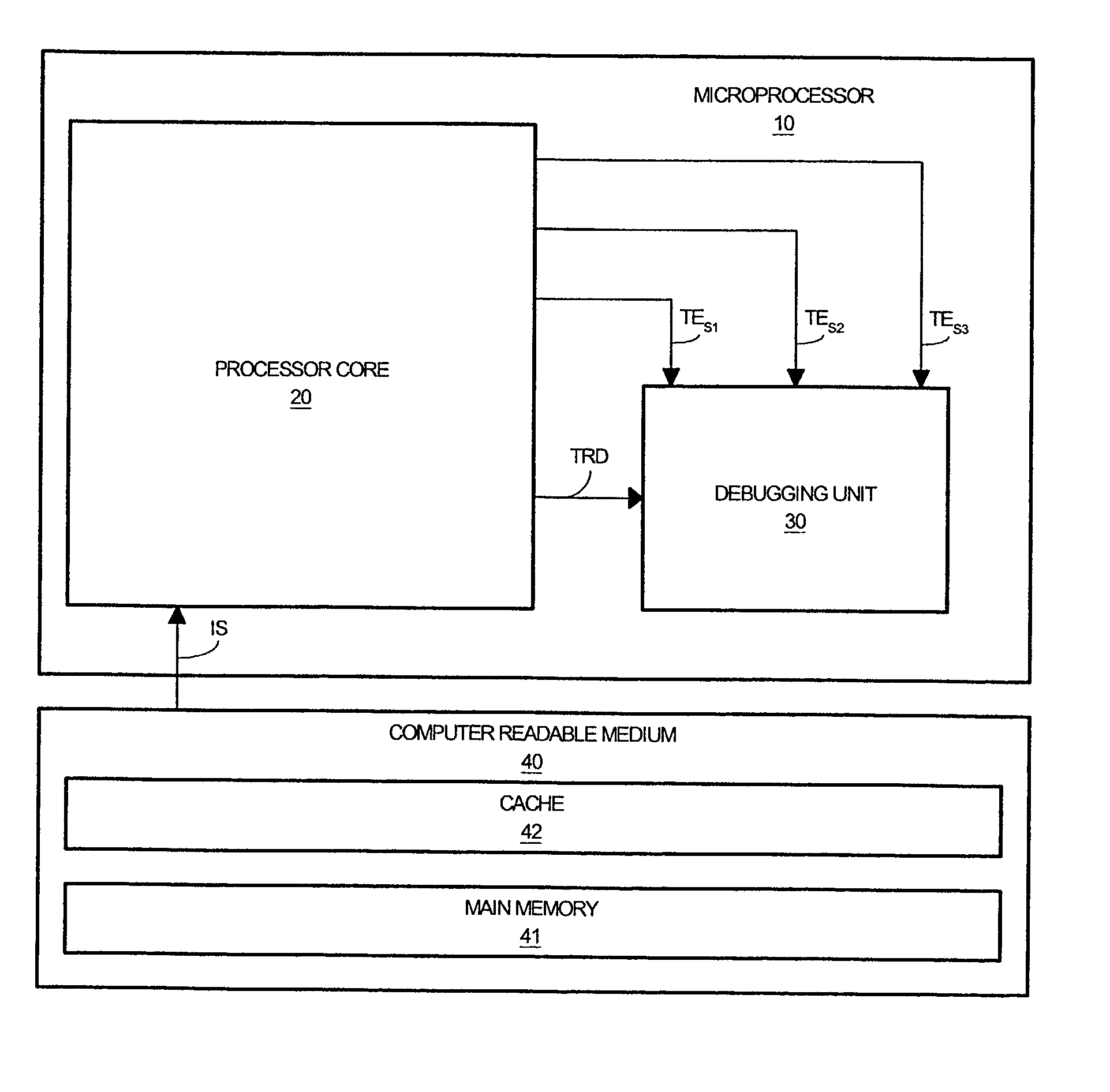

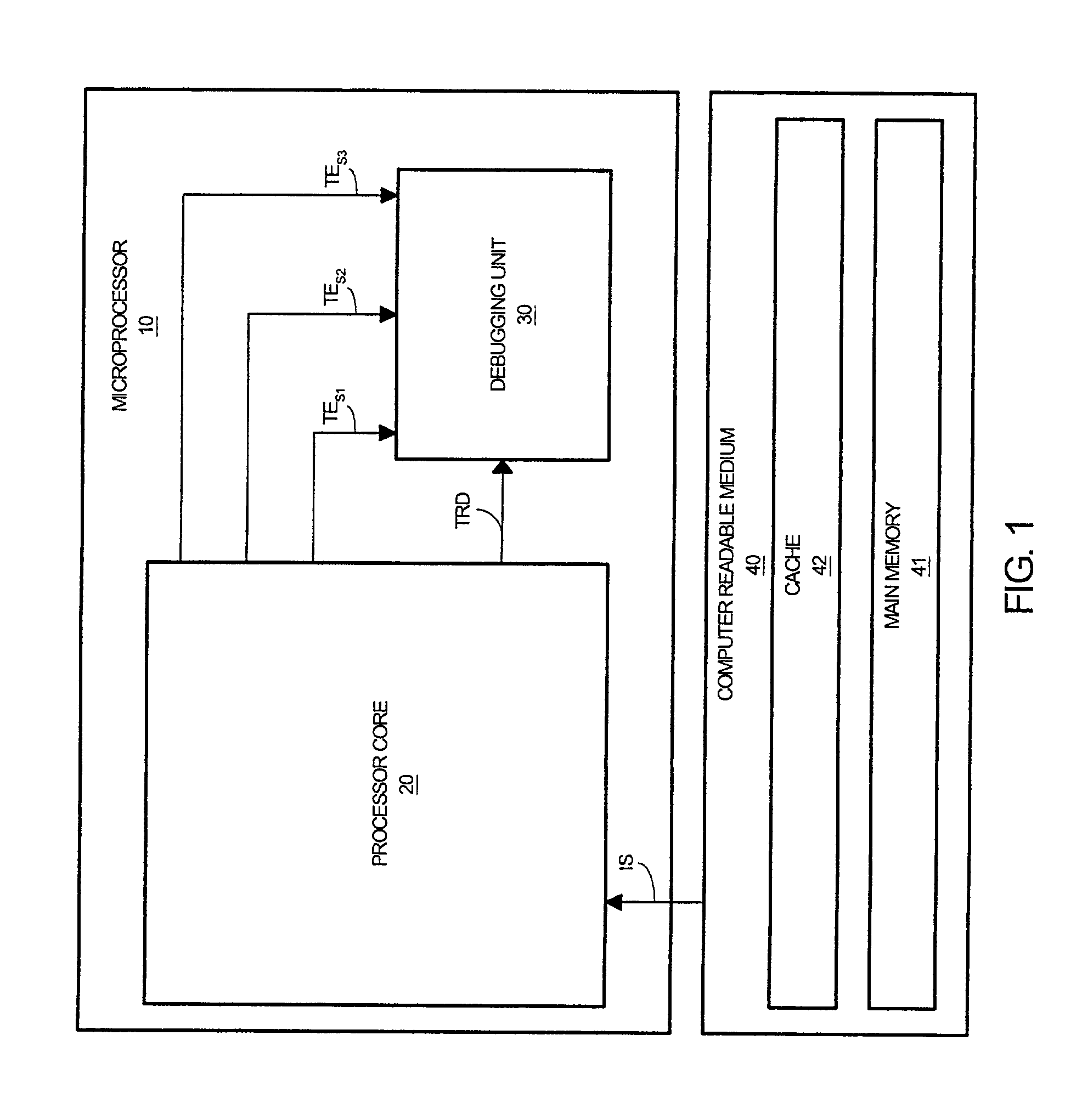

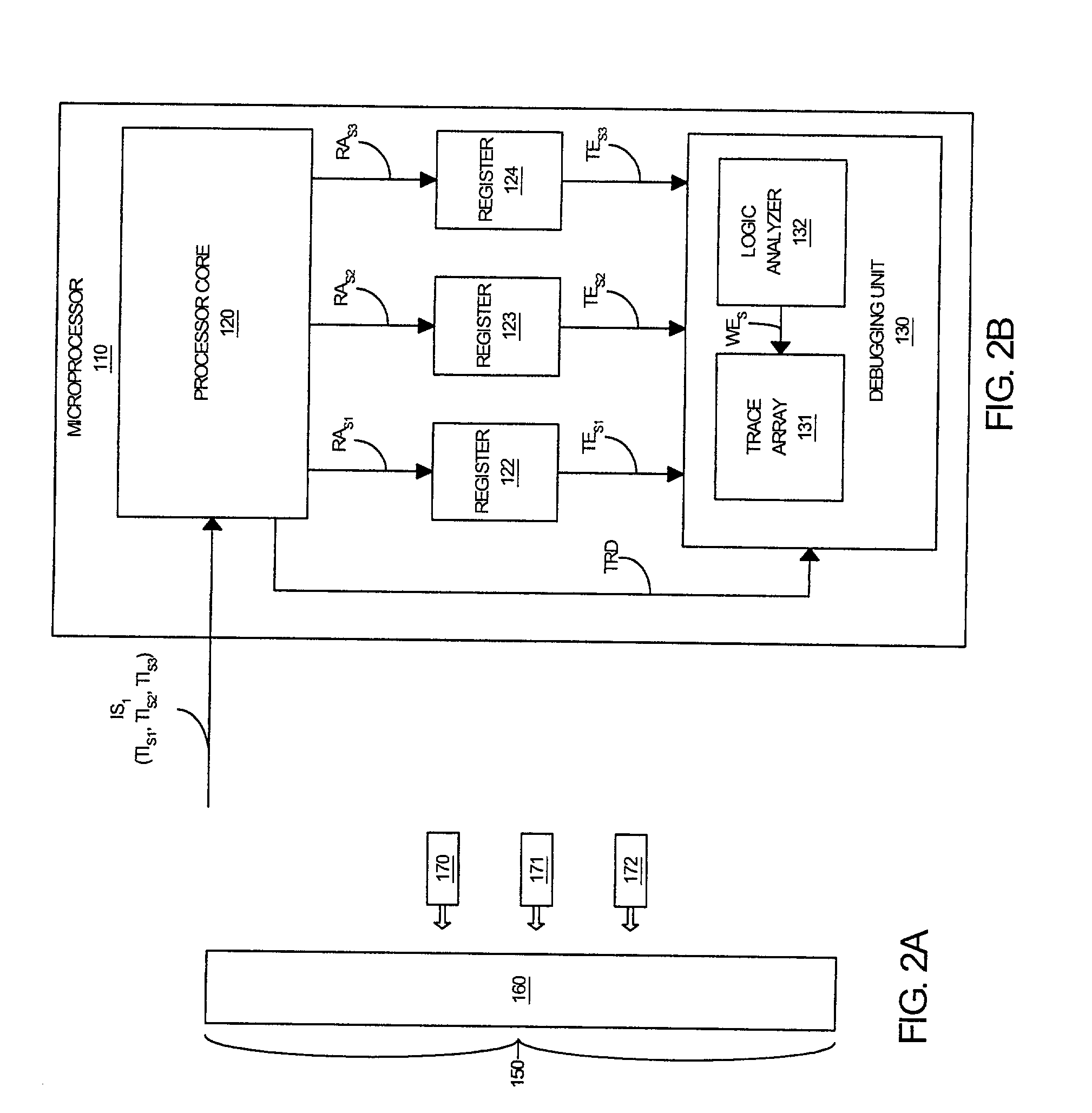

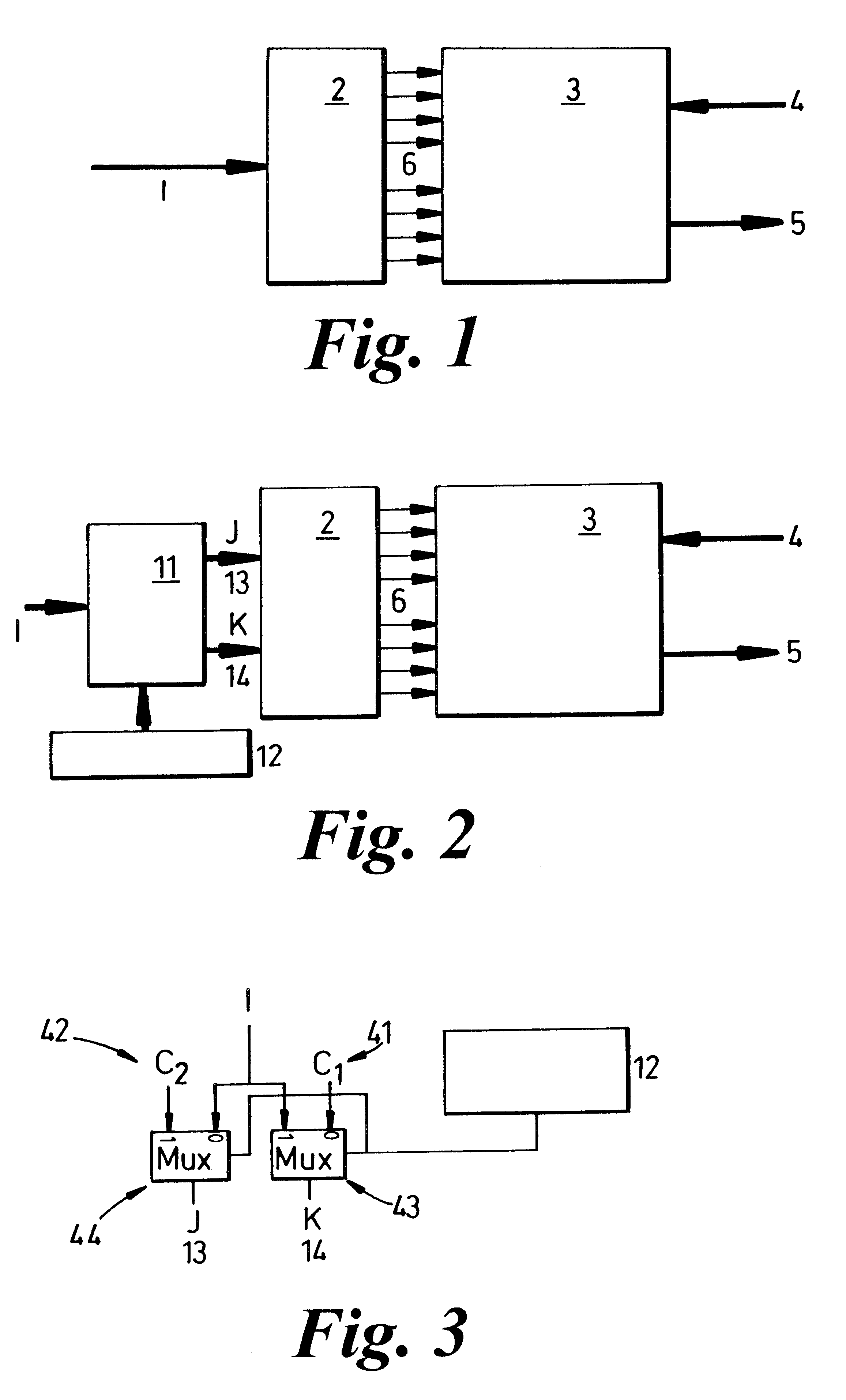

Method and system for triggering a debugging unit

Inactive | US20020129309A1 | Electronic circuit testing | Digital computer details | Processing Instruction | Dynamic storage

A processor core for transitioning a debugging unit between a plurality of operating states in response to an instruction stream is disclosed. The processor core generates trace data as it processes operating signals of the instruction stream. The processor core provides a first trigger event signal to the debugging unit in response to a first trigger instruction signal within the instruction stream that is representative of a triggering instruction to transition the debugging unit to a base operating state. The processor core provides a second trigger event signal to the debugging unit in response to a second trigger instruction signal within the instruction stream that is representative of a triggering instruction to dynamically store trace data within the memory component of the debugging unit. The processor core provides a third trigger event signal to the debugging unit in response to a third trigger instruction signal within the instruction stream that is representative of a triggering instruction to statically store trace data within the memory component of the debugging unit. Concurrently or alternatively, the processor core can provide one or more of the trigger event signals to the debugging unit as a function of a generated trigger data in response to additional operational instructions within the instruction stream.

Owner:IBM CORP

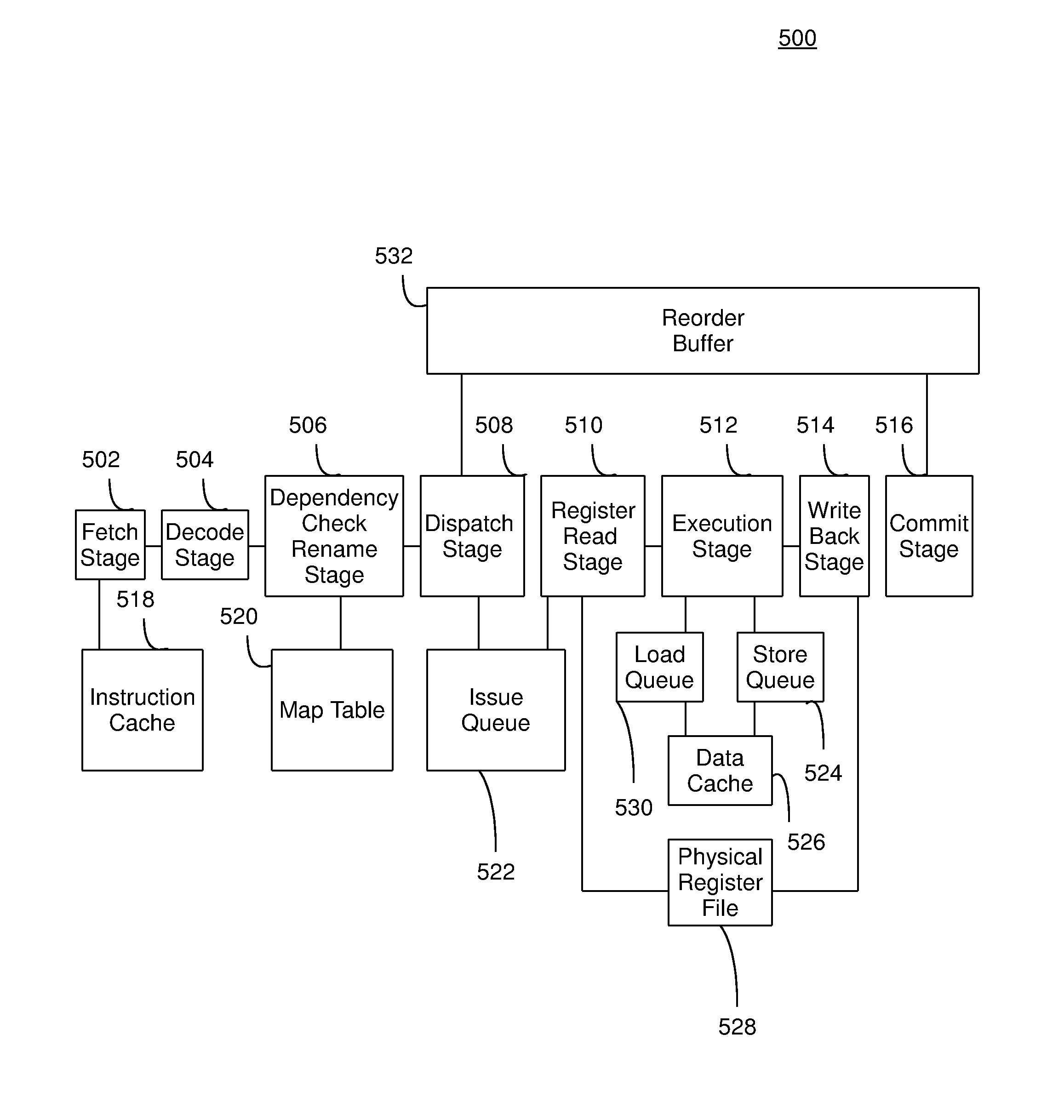

Processor with hybrid pipeline capable of operating in out-of-order and in-order modes

Inactive | US20140281402A1 | Instruction analysis | Digital computer details | Order processing | Instruction stream

A method and circuit arrangement provide support for a hybrid pipeline that dynamically switches between out-of-order and in-order modes. The hybrid pipeline may selectively execute instructions from at least one instruction stream that require the high performance capabilities provided by out-of-order processing in the out-of-order mode. The hybrid pipeline may also execute instructions that have strict power requirements in the in-order mode where the in-order mode conserves more power compared to the out-of-order mode. Each stage in the hybrid pipeline may be activated and fully functional when the hybrid pipeline is in the out-of-order mode. However, stages in the hybrid pipeline not used for the in-order mode may be deactivated and bypassed by the instructions when the hybrid pipeline dynamically switches from the out-of-order mode to the in-order mode. The deactivated stages may then be reactivated when the hybrid pipeline dynamically switches from the in-order mode to the out-of-order mode.

Owner:IBM CORP

Method and apparatus for providing instruction streams to a processing device

Inactive | US6523107B1 | Efficient expansion | Increase profit | Computation using non-contact making devices | Concurrent instruction execution | Instruction stream

A circuit supplies instruction streams to a processing device. Some embodiments of the circuit are appropriate for use with RISC CPUs, whereas others are usable with other processing devices, such as small processing devices used in a field programmable array. The circuit receives an external instruction stream which provides a first set of instruction values, and has a memory which contains a second set of instruction values. Two or more outputs provide instruction streams to the processing device. The circuit has a control input in the form of a mask which causes a selection means to allocate bits from the first and second sets of instruction values to different instruction streams to the processing device.

Owner:PANASONIC CORP
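
One way to read the mask-driven selection in the abstract above is as a per-bit multiplexer between the external and stored instruction values. The sketch below follows that reading; the two-output arrangement, the complementary routing of unselected bits, the 8-bit default width, and the name `route_bits` are all assumptions, since the abstract does not fix these details.

```python
def route_bits(external, stored, mask, width=8):
    """Allocate bits from two instruction values to two output streams.

    Where a mask bit is 1, output A takes the external bit and output B
    takes the stored bit; where it is 0, the roles are swapped.
    """
    limit = (1 << width) - 1
    out_a = ((external & mask) | (stored & ~mask)) & limit
    out_b = ((stored & mask) | (external & ~mask)) & limit
    return out_a, out_b
```

With an all-ones mask the external value passes straight to output A, and with an all-zeros mask the stored value does, so the mask alone decides how the two sources are interleaved.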

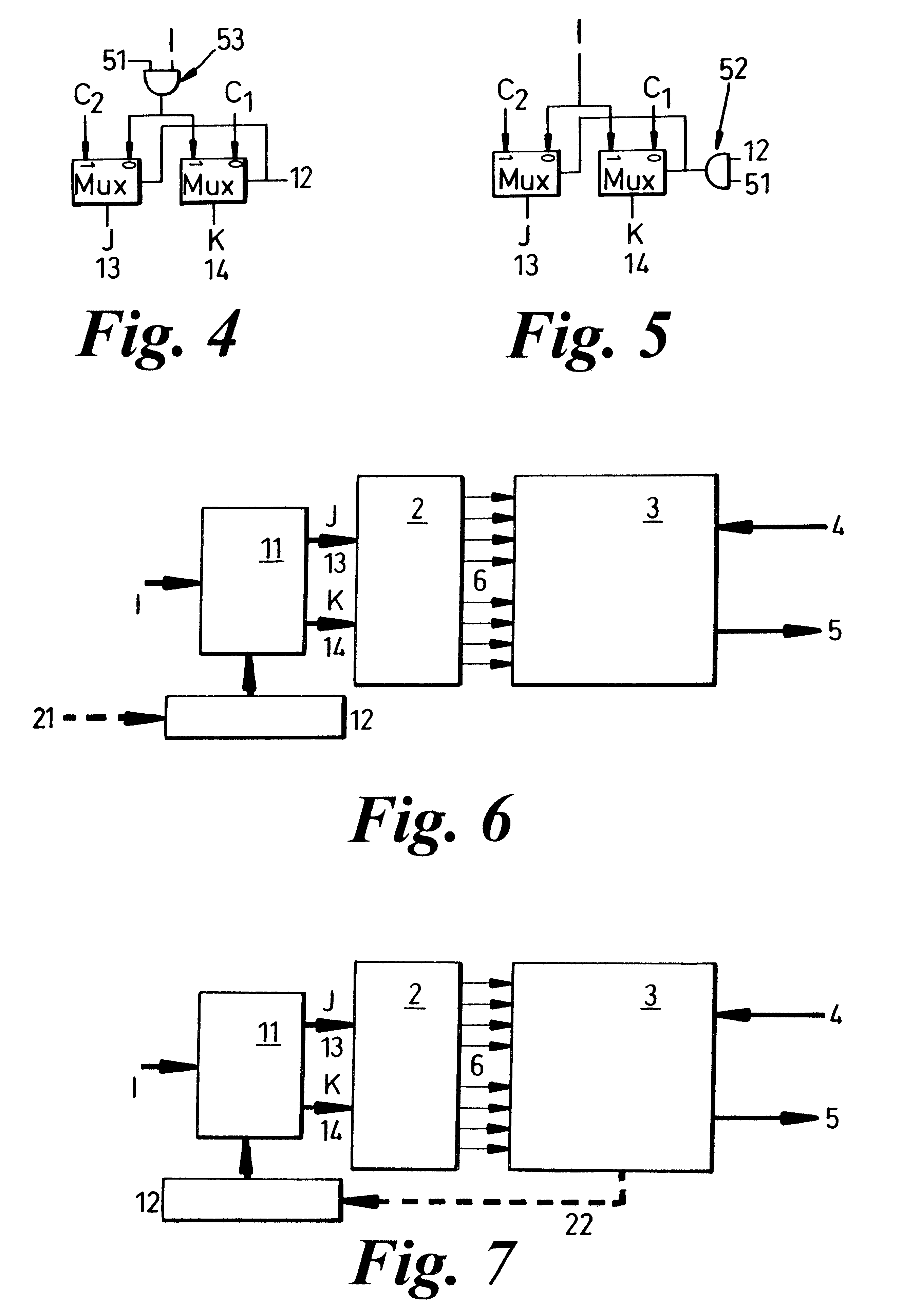

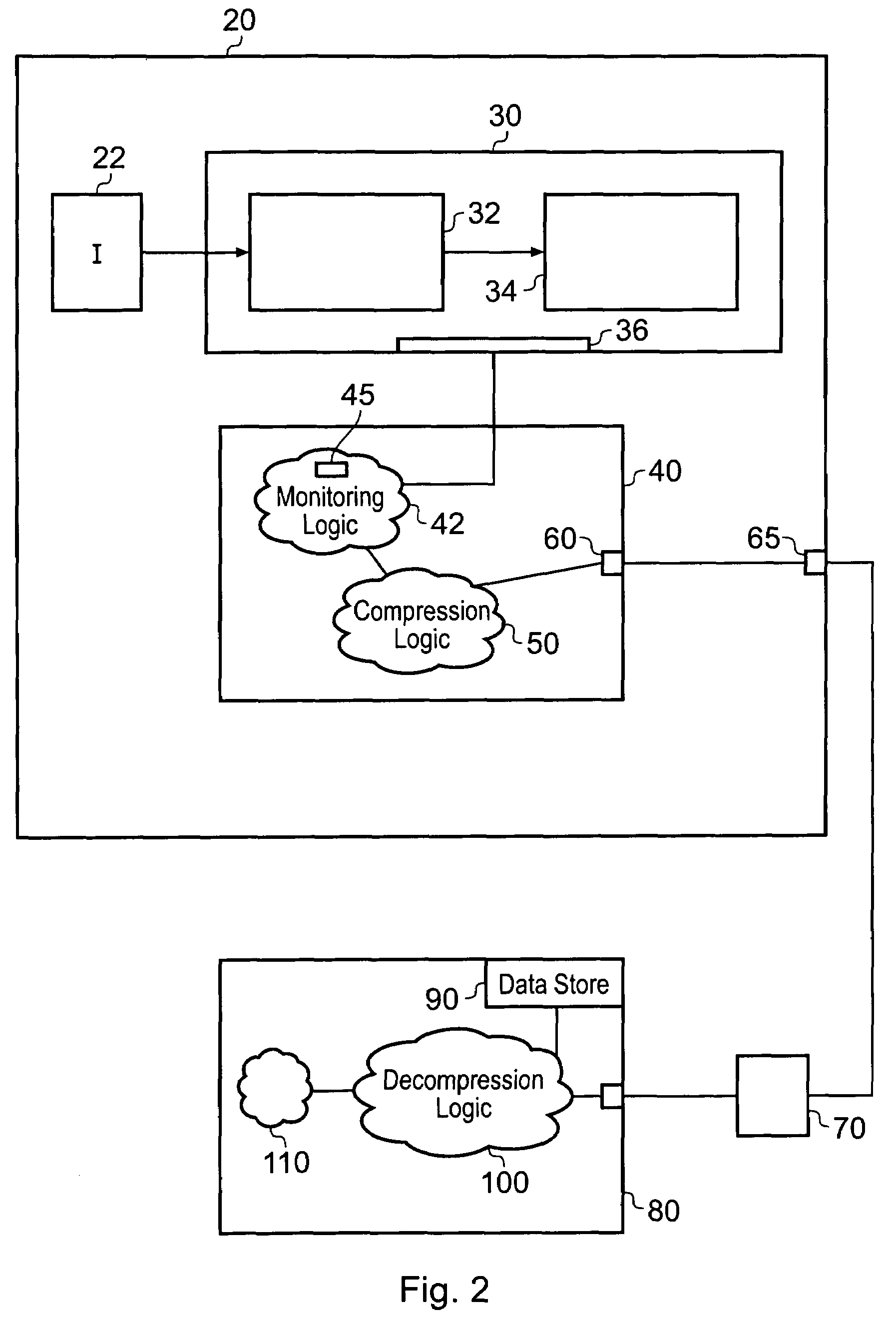

Reducing the size of a data stream produced during instruction tracing

Active | US7707394B2 | Reduce in quantity | Reduce data volume | Energy efficient ICT | Digital computer details | Processing Instruction | Data stream

Tracing logic for monitoring a stream of processing instructions from a program being processed by a data processor is disclosed, said tracing logic comprising monitoring logic operable to: detect processing of said instructions in said instruction stream; detect which of said instructions in said instruction stream are conditional direct branch instructions, which of said instructions in said instruction stream are conditional indirect branch instructions and which of said instructions in said instruction stream are unconditional indirect branch instructions; said tracing logic further comprising compression logic operable to: designate said conditional direct branch instructions, said conditional indirect branch instructions and said unconditional indirect branch instructions as marker instructions; for each marker instruction, output an execution indicator indicating if said marker instruction has executed or a non-execution indicator indicating if said marker instruction has not executed and not output data relating to previously processed instructions that are not marker instructions.

Owner:ARM LTD
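
The compression rule in the abstract above (emit one indicator per marker instruction, nothing for anything else) is simple enough to sketch directly. The instruction-kind strings, the `"E"`/`"N"` encoding of the two indicators, and the name `compress_trace` are assumptions chosen for this illustration.

```python
# The three instruction kinds designated as marker instructions.
MARKER_KINDS = {
    "cond_direct_branch",
    "cond_indirect_branch",
    "uncond_indirect_branch",
}


def compress_trace(events):
    """Reduce a stream of (kind, executed) events to marker indicators.

    Emits "E" for a marker instruction that executed and "N" for one that
    did not; non-marker instructions produce no output at all.
    """
    return "".join(
        "E" if executed else "N"
        for kind, executed in events
        if kind in MARKER_KINDS
    )
```

In this model an unconditional direct branch produces no trace output, since it is not one of the three marker kinds.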

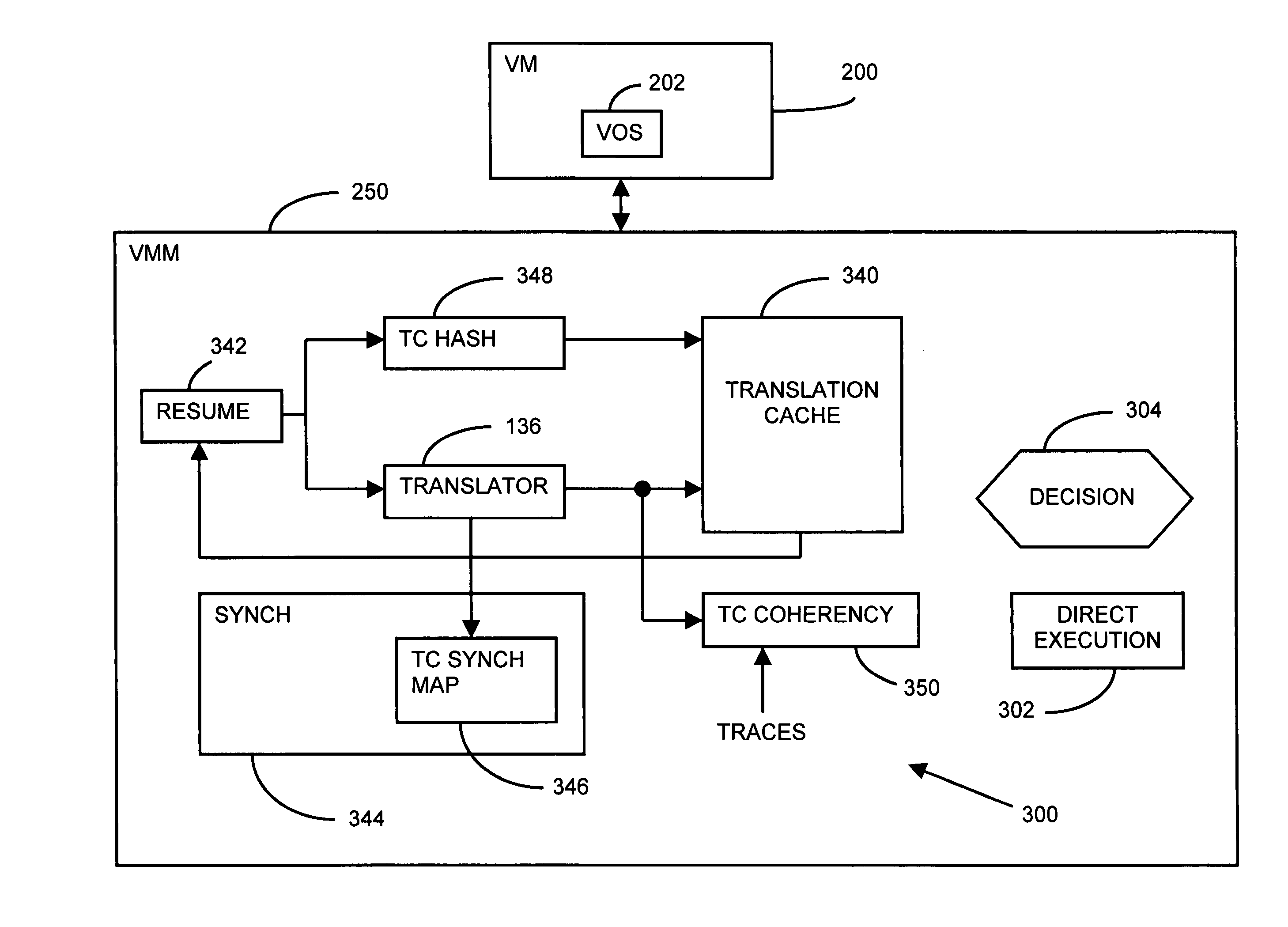

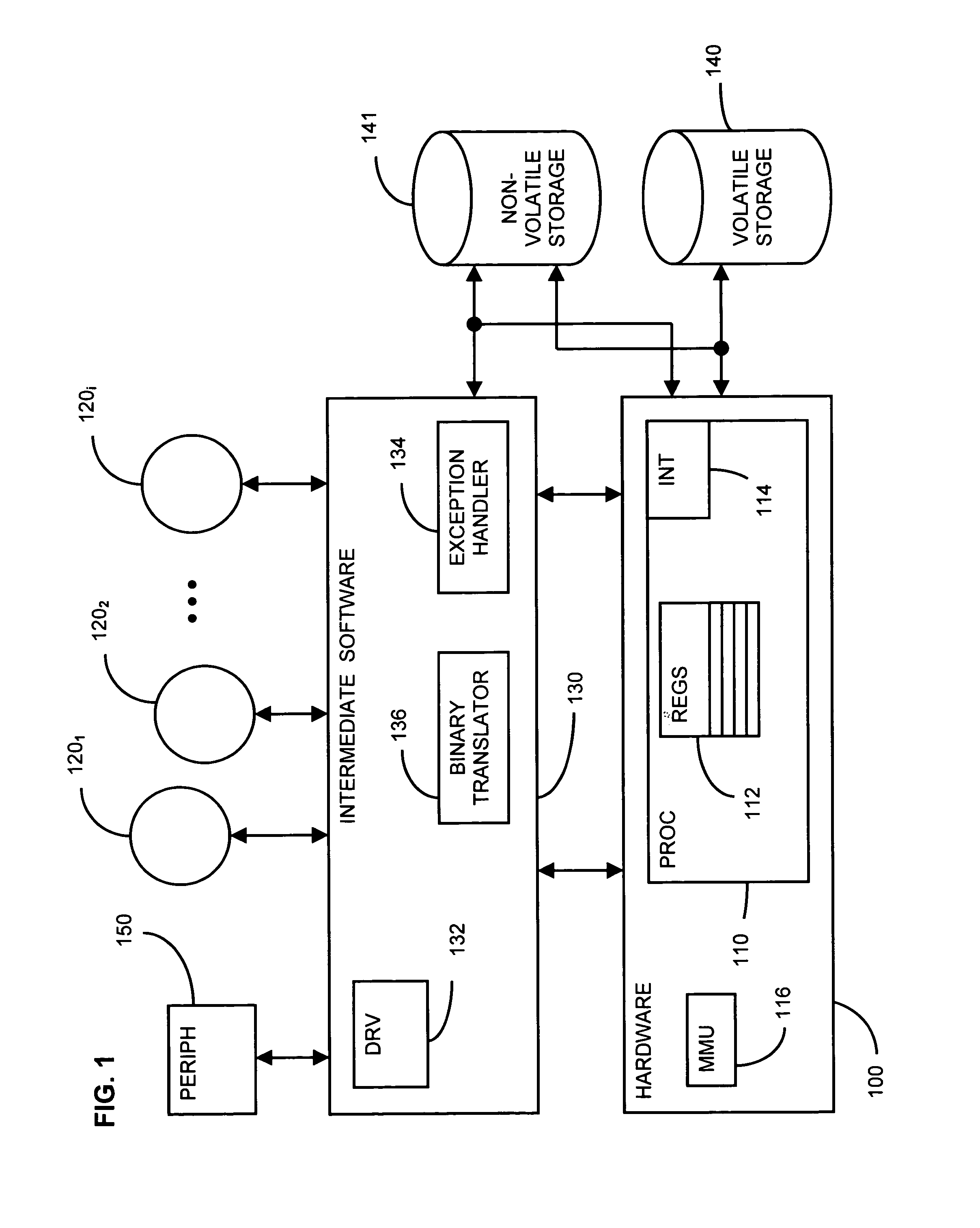

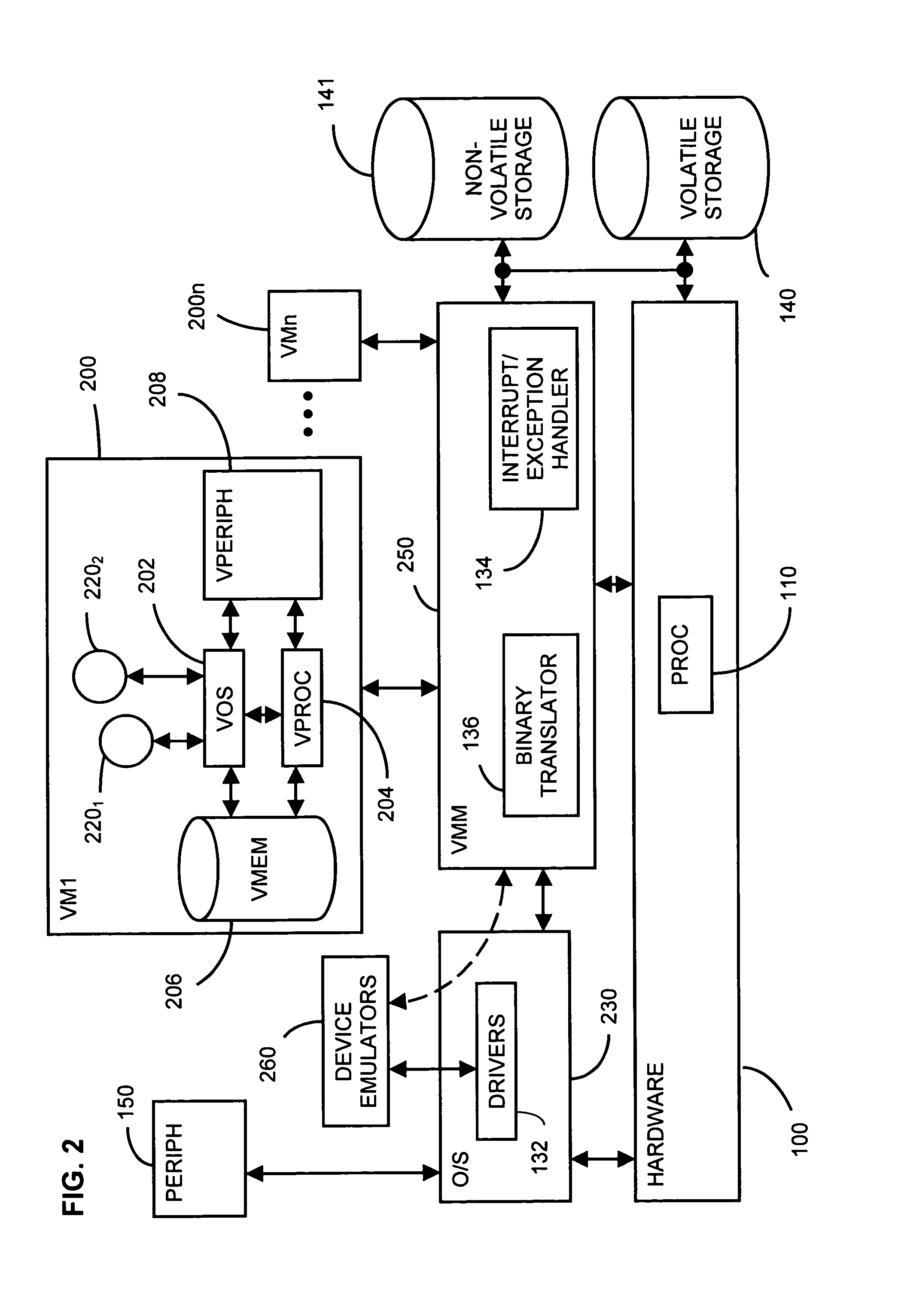

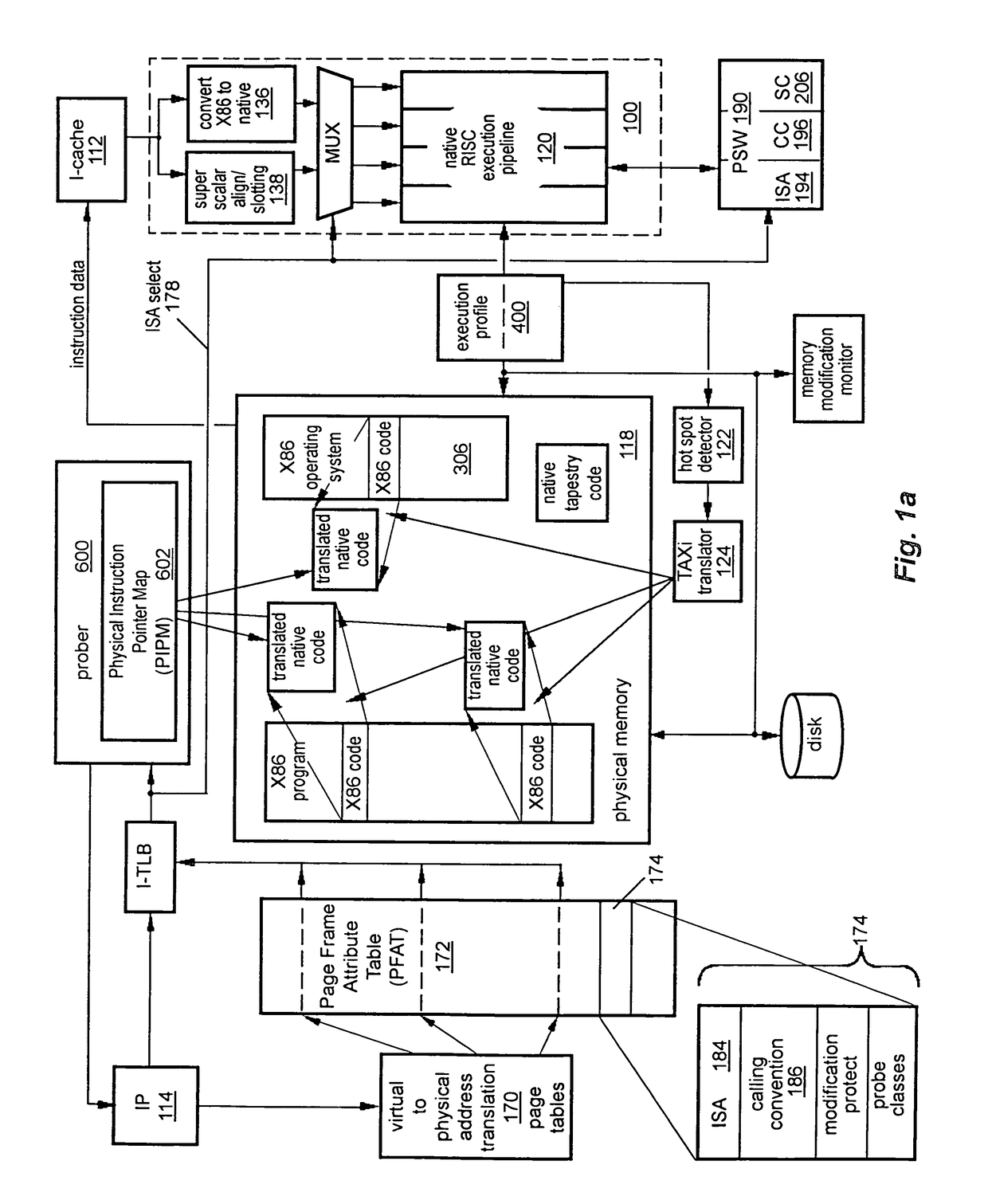

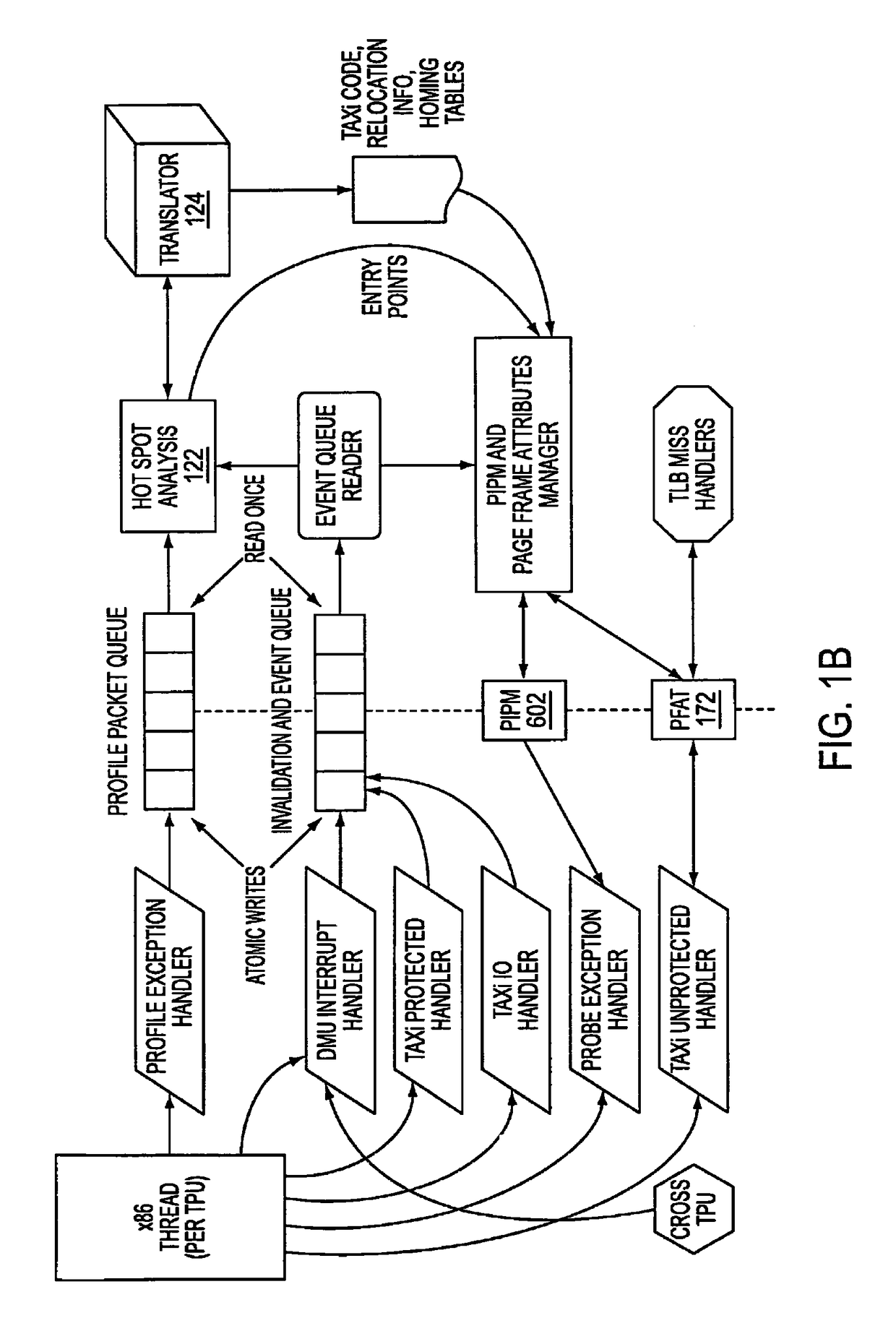

Binary translator with precise exception synchronization mechanism

Inactive | US7516453B1 | Digital computer details | Concurrent instruction execution | Computerized system | Goal system

A source computer system with one instruction set architecture (ISA) is configured to run on a target hardware system that has its own ISA, which may be the same as the source ISA. In cases where the source instructions cannot be executed directly on the target system, the invention provides a binary translation system. During execution from binary translation, however, both synchronous and asynchronous exceptions may arise. Synchronous exceptions may be either transparent (requiring processing action wholly within the target computer system) or non-transparent (requiring processing that alters a visible state of the source system). Asynchronous exceptions may also be either transparent or non-transparent, in which case an action that alters a visible state of the computer system needs to be applied. The invention includes subsystems, and related methods of operation, for detecting the occurrence of all of these types of exceptions, to handle them, and to do so with precise reentry into the interrupted instruction stream; by “precise” is meant that the atomic execution of the source instructions is guaranteed, and that the application of actions, including those that originate from asynchronous exceptions, occurs at the latest at the completion of the current source instruction at the time of the request for the action. The binary translation and exception-handling subsystems are preferably included as components of a virtual machine monitor which is installed between the target hardware system and the source system, which is preferably a virtual machine.

Owner:VMWARE INC

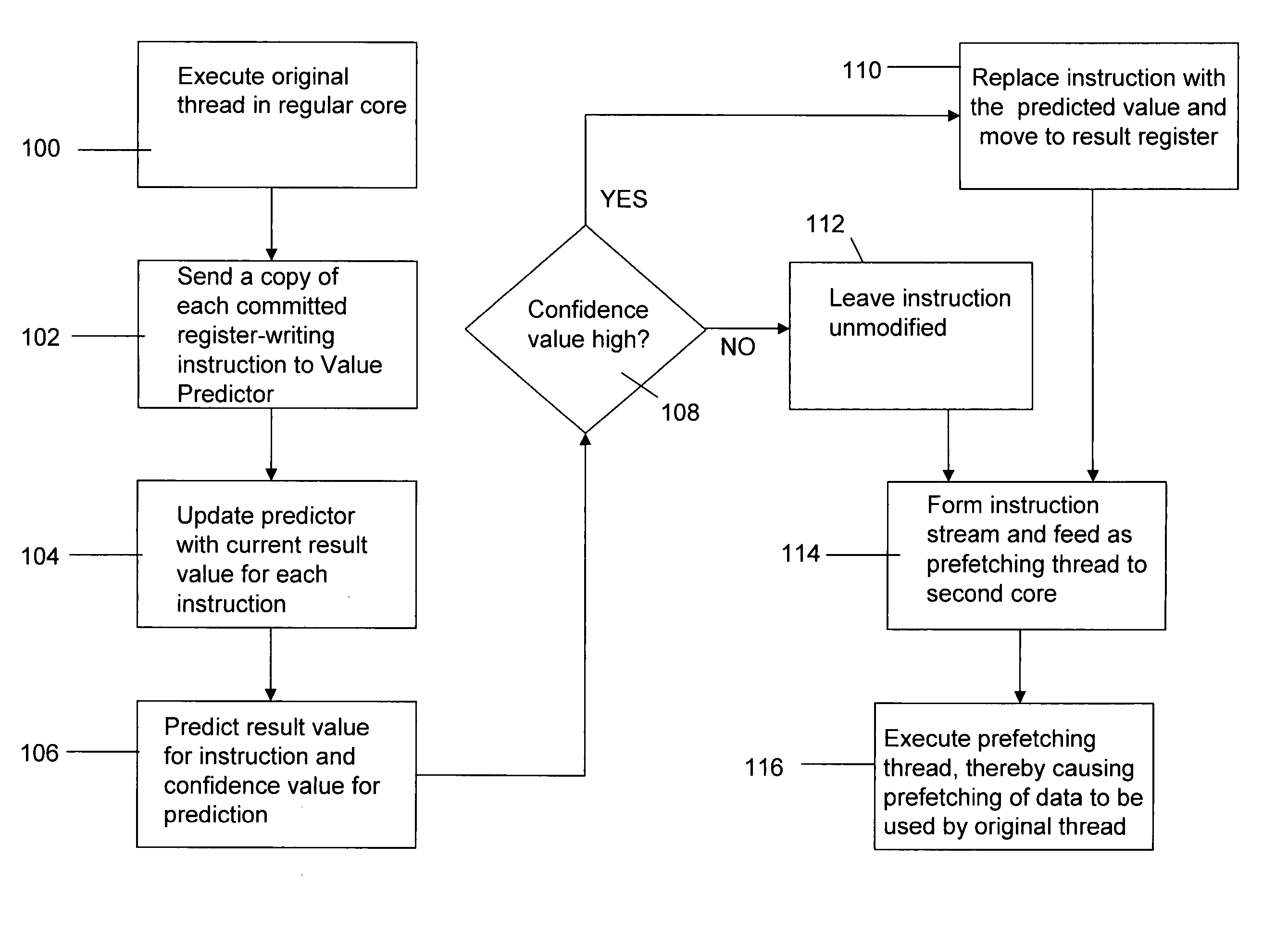

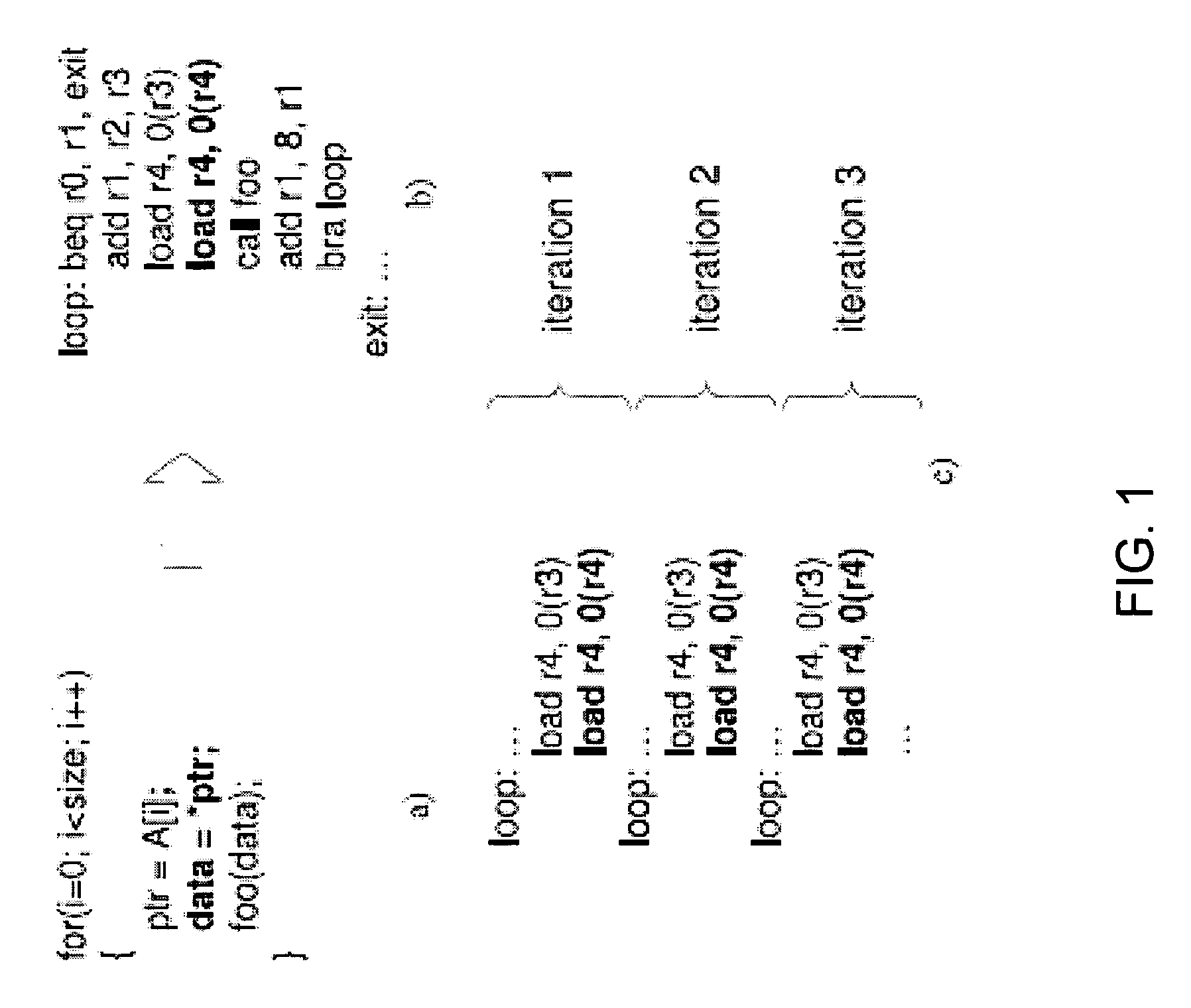

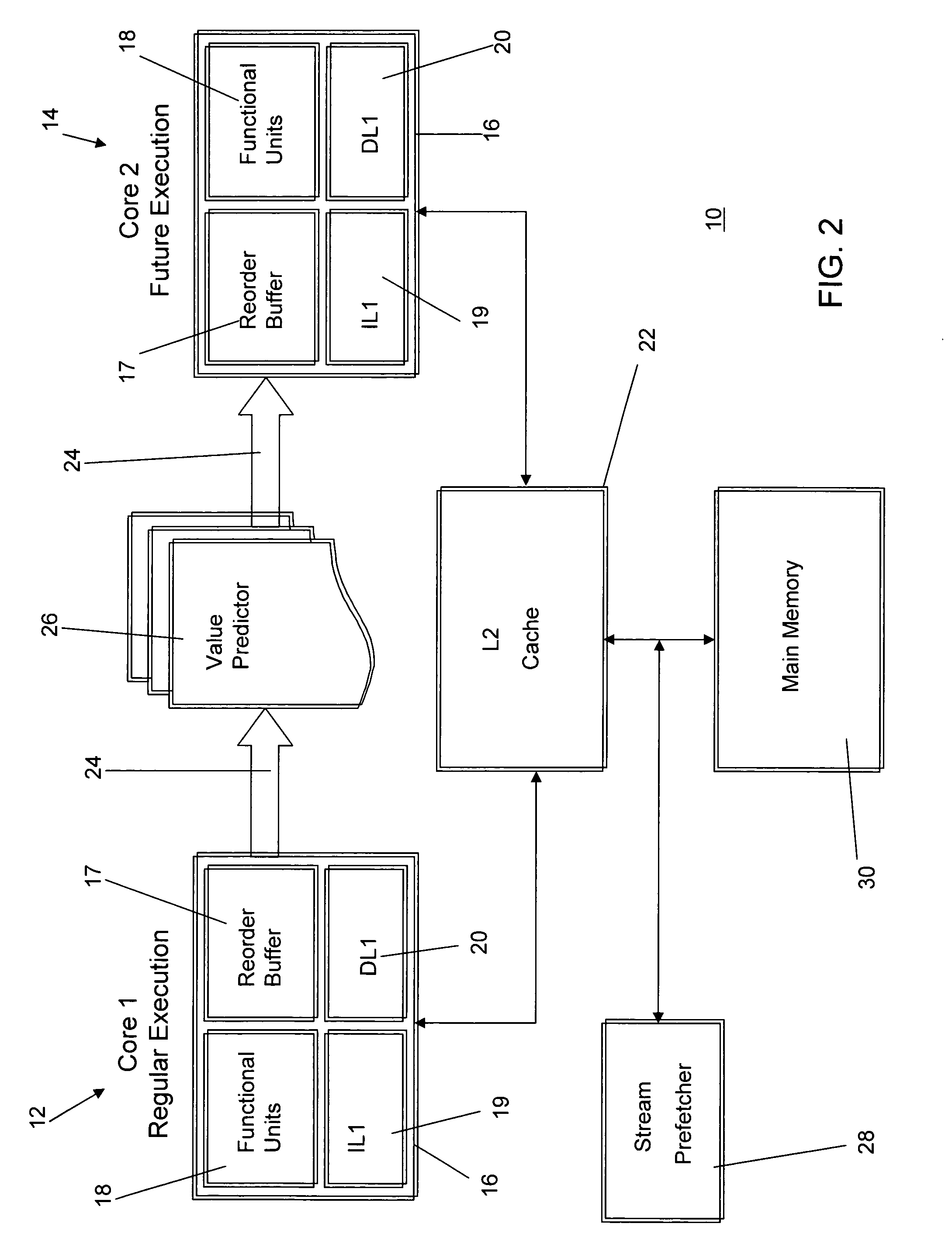

Future execution prefetching technique and architecture

Active | US20070174555A1 | Lower performance requirements | Increased complexity | Runtime instruction translation | Memory systems | Access time | Term memory

A prefetching technique referred to as future execution (FE) dynamically creates a prefetching thread for each active thread in a processor by simply sending a copy of all committed, register-writing instructions in a primary thread to an otherwise idle processor. On the way to the second processor, a value predictor replaces each predictable instruction with a load immediate instruction, where the immediate is the predicted result that the instruction is likely to produce during its nth next dynamic execution. Executing this modified instruction stream (i.e., the prefetching thread) in another processor allows computation of the future results of the instructions that are not directly predictable. This causes the issuance of prefetches into the shared memory hierarchy, thereby reducing the primary thread's memory access time and speeding up the primary thread's execution.

Owner:CORNELL RES FOUNDATION INC

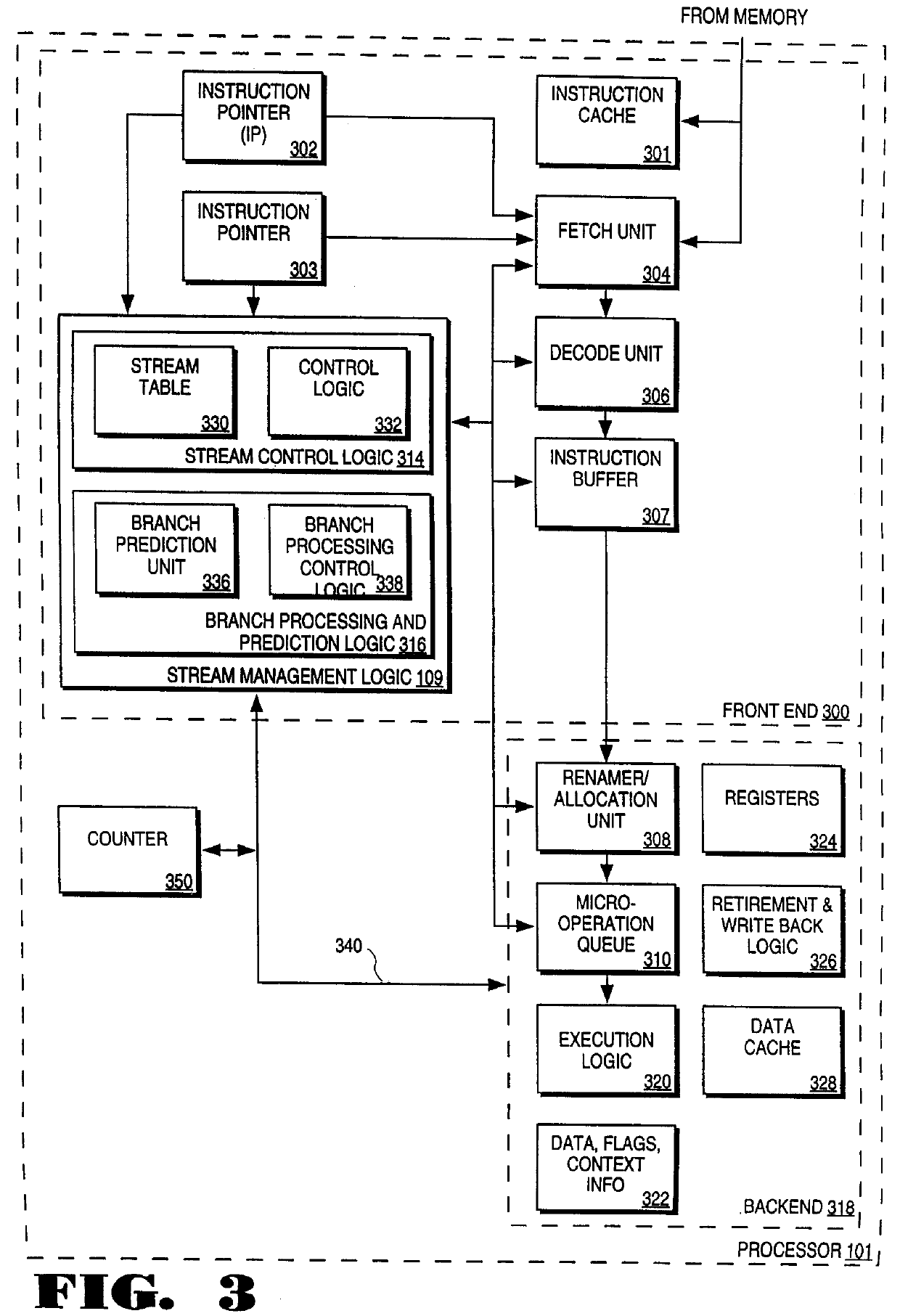

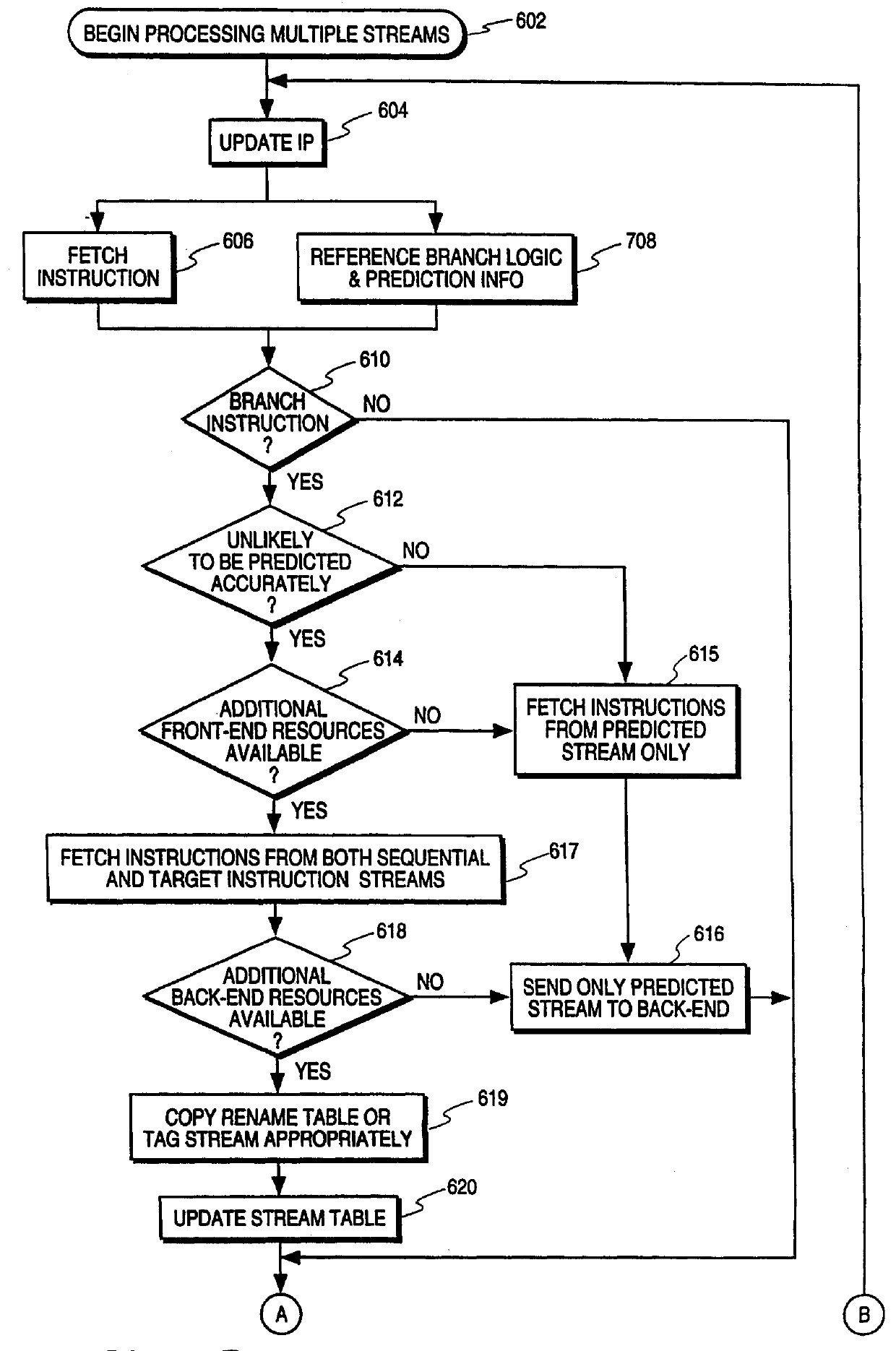

Processor and method for speculatively executing instructions from multiple instruction streams indicated by a branch instruction

Inactive | US6065115A | General purpose stored program computer | Concurrent instruction execution | Image resolution | Instruction stream

Owner:INTEL CORP

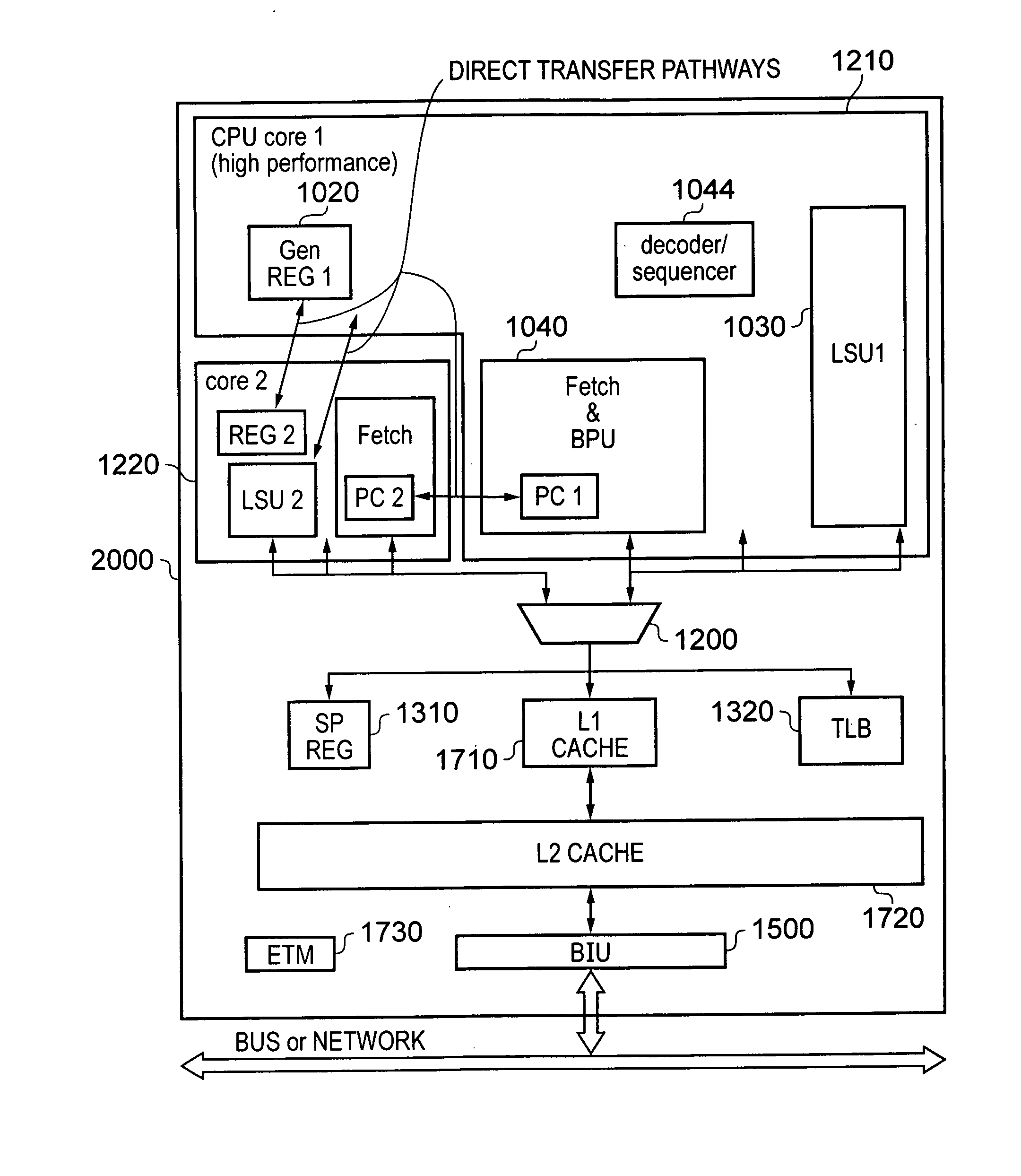

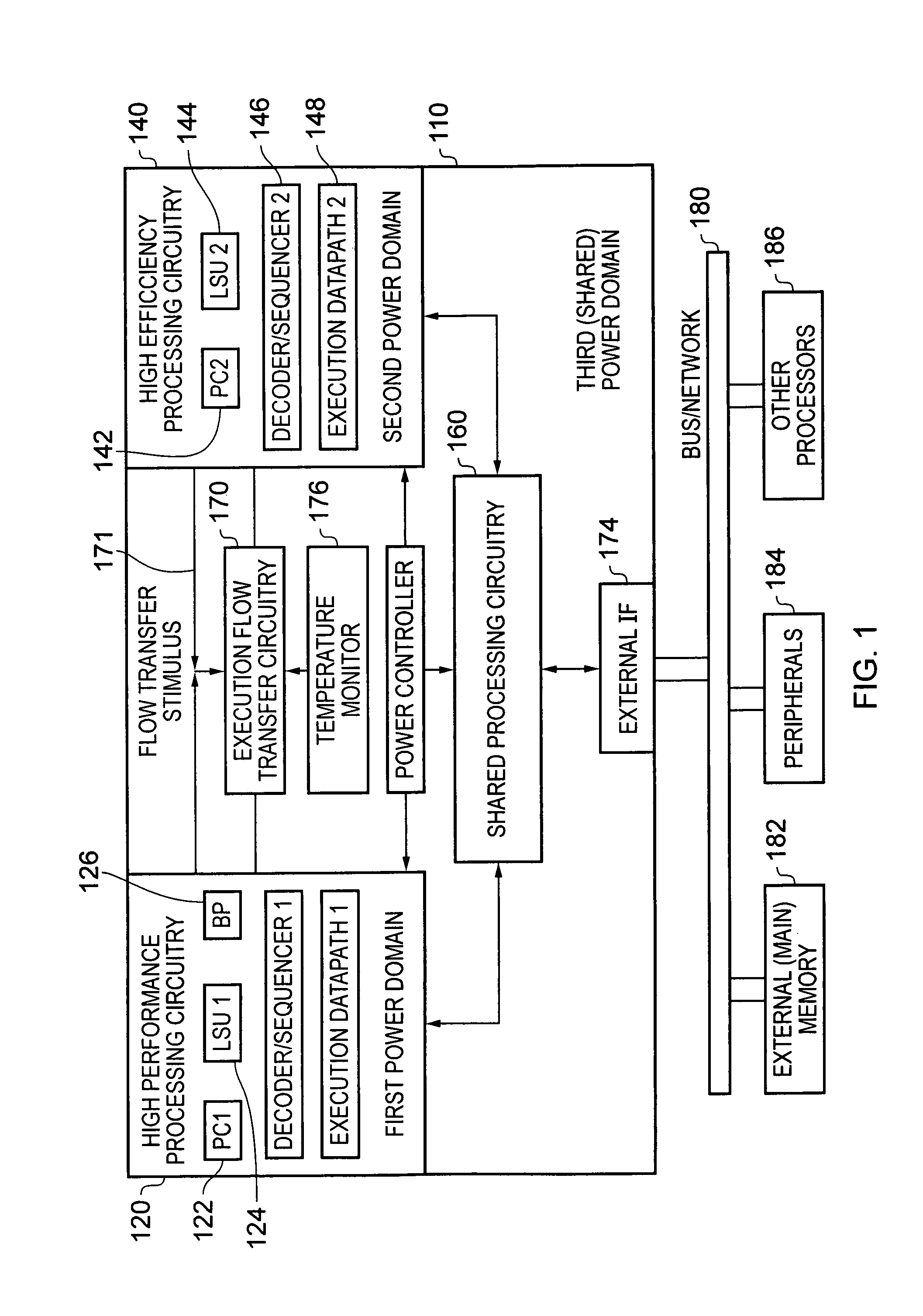

Data processing system

Active | US20110271126A1 | Easy to adapt | Data processing process | Energy efficient ICT | Volume/mass flow measurement | Data processing system | Power domains

A data processing apparatus is provided comprising first processing circuitry, second processing circuitry and shared processing circuitry. The first processing circuitry and second processing circuitry are configured to operate in different first and second power domains respectively and the shared processing circuitry is configured to operate in a shared power domain. The data processing apparatus forms a uni-processing environment for executing a single instruction stream in which either the first processing circuitry and the shared processing circuitry operate together to execute the instruction stream or the second processing circuitry and the shared processing circuitry operate together to execute the single instruction stream. Execution flow transfer circuitry is provided for transferring at least one bit of processing-state restoration information between the two hybrid processing units.

Owner:ARM LTD

Detecting conditions for transfer of execution from one computer instruction stream to another and executing transfer on satisfaction of the conditions

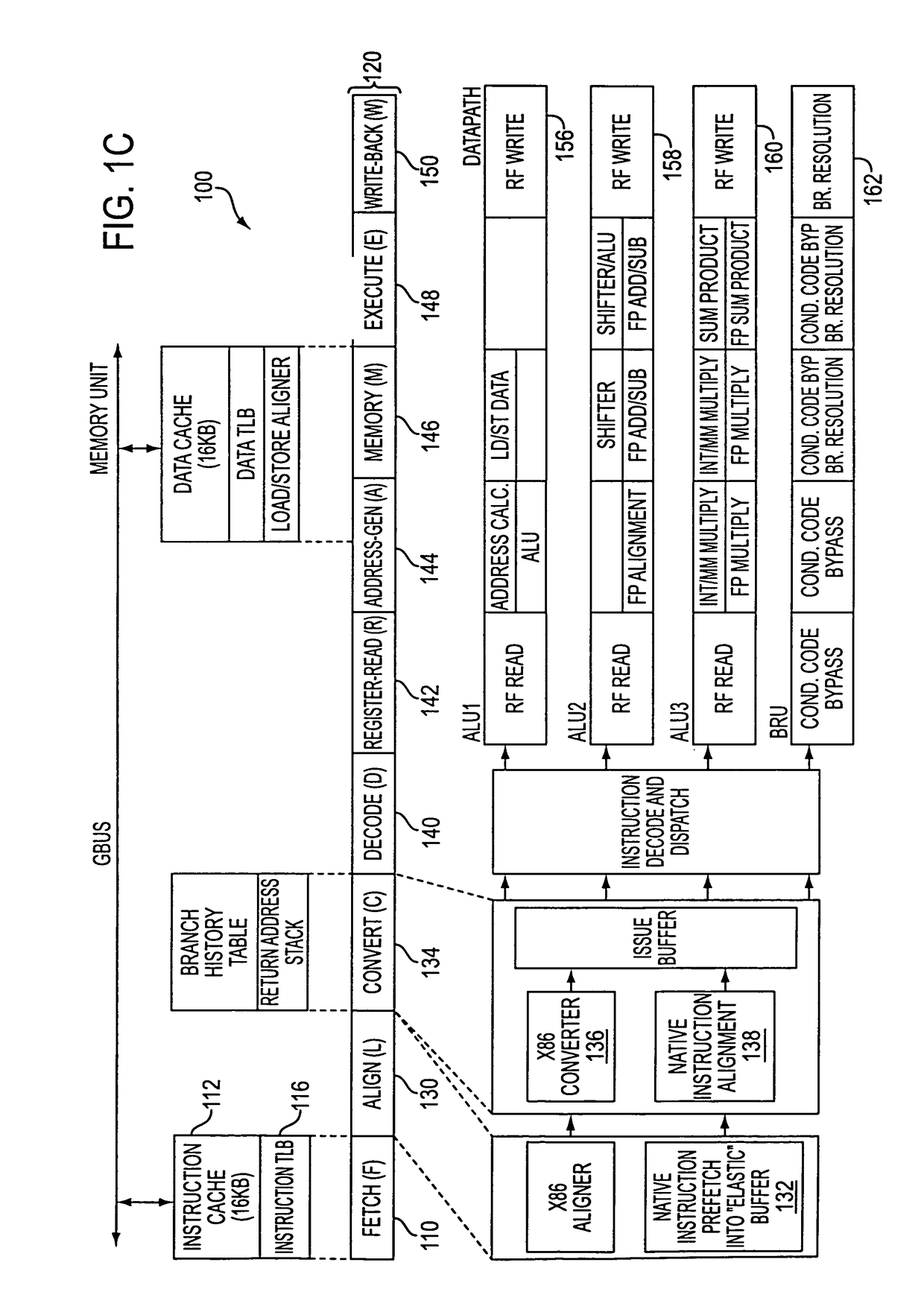

Inactive | US8121828B2 | Efficiently tailored | Easy to operate | General purpose stored program computer | Software simulation/interpretation/emulation | Instruction pipeline | Instruction stream

A computer has instruction pipeline circuitry capable of executing two instruction set architectures (ISA's). A binary translator translates at least a selected portion of a computer program from a lower-performance one of the ISA's to a higher-performance one of the ISA's. Hardware initiates a query when about to execute a program region coded in the lower-performance ISA, to determine whether a higher-performance translation exists. If so, the about-to-be-executed instruction is aborted, and control transfers to the higher-performance translation. After execution of the higher-performance translation, execution of the lower-performance region is reestablished at a point downstream from the aborted instruction, in a context logically equivalent to that which would have prevailed had the code of the lower-performance region been allowed to proceed.

Owner:ADVANCED SILICON TECH

Adaptive dynamic selection and application of multiple virtualization techniques

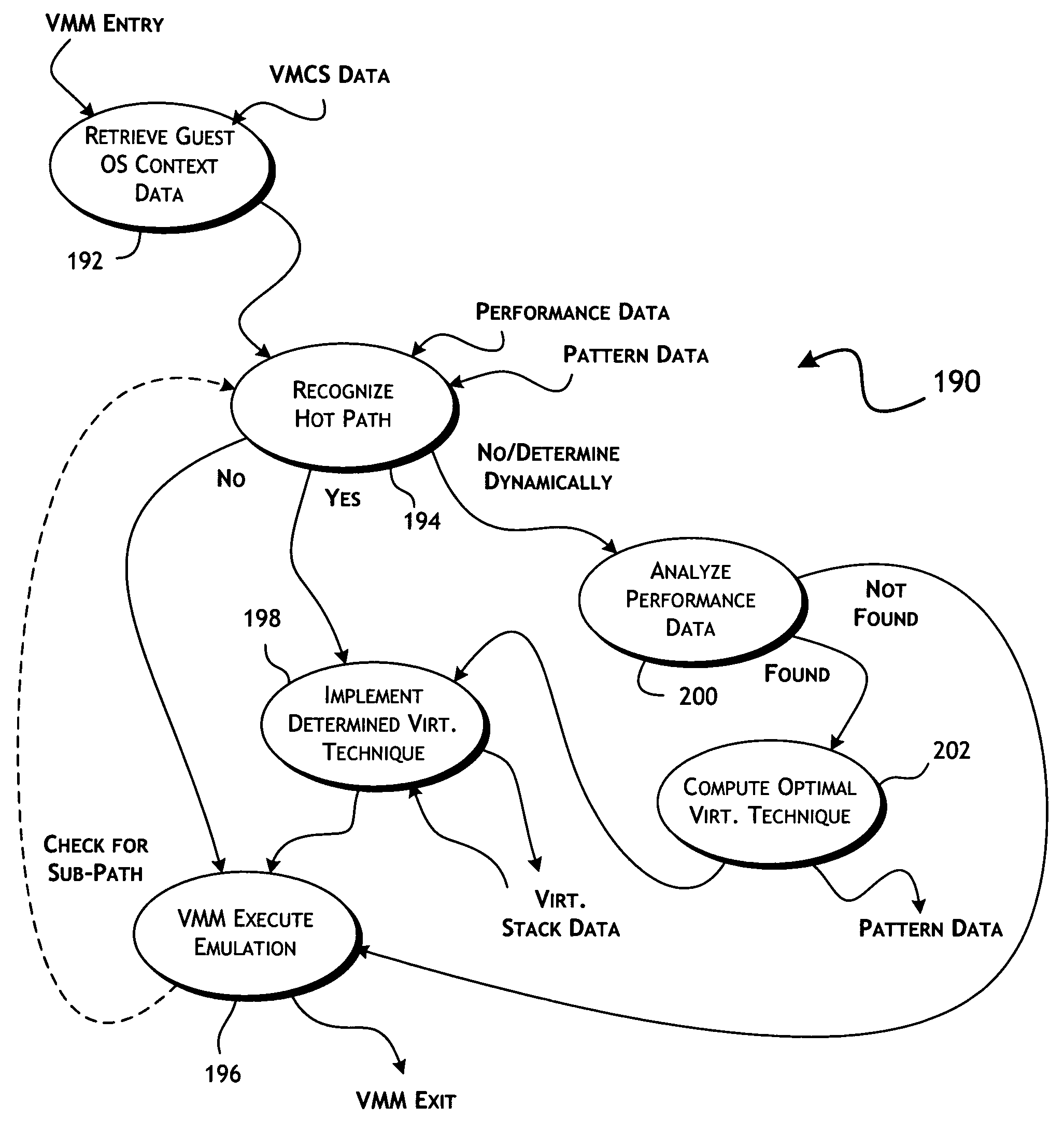

Active | US20080288941A1 | Minimum performance cost | Maximize potential execution performance | Software simulation/interpretation/emulation | Memory systems | Virtualization | Operational system

Autonomous selection between multiple virtualization techniques implemented in a virtualization layer of a virtualized computer system. The virtual machine monitor implements multiple virtualization support processors that each provide for the comprehensive handling of potential virtualization exceptions. A virtual machine monitor resident virtualization selection control is operable to select between use of first and second virtualization support processors dependent on identifying a predetermined pattern of temporally local privilege dependent instructions within a portion of an instruction stream as encountered in the execution of a guest operating system.

Owner:VMWARE INC

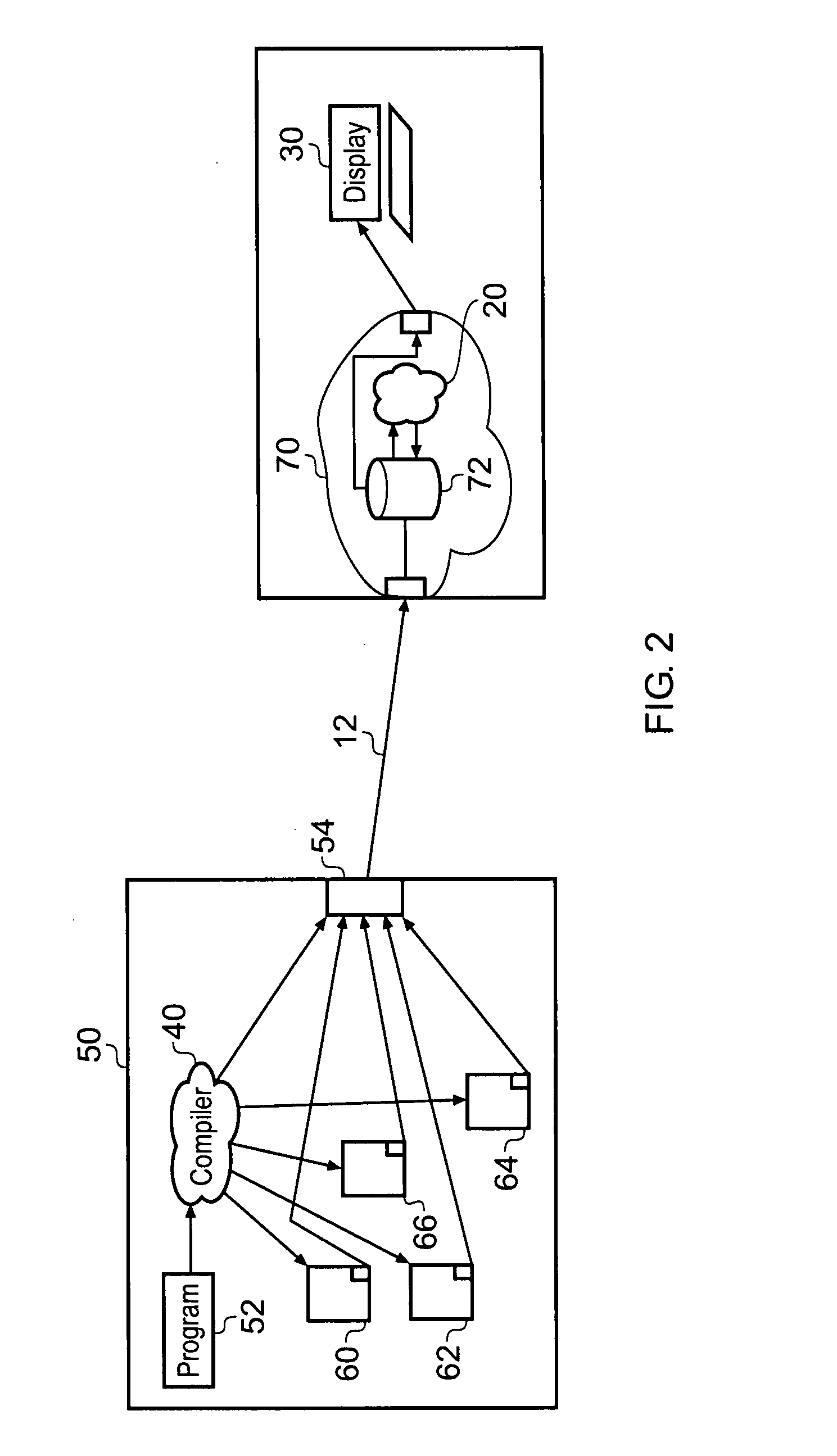

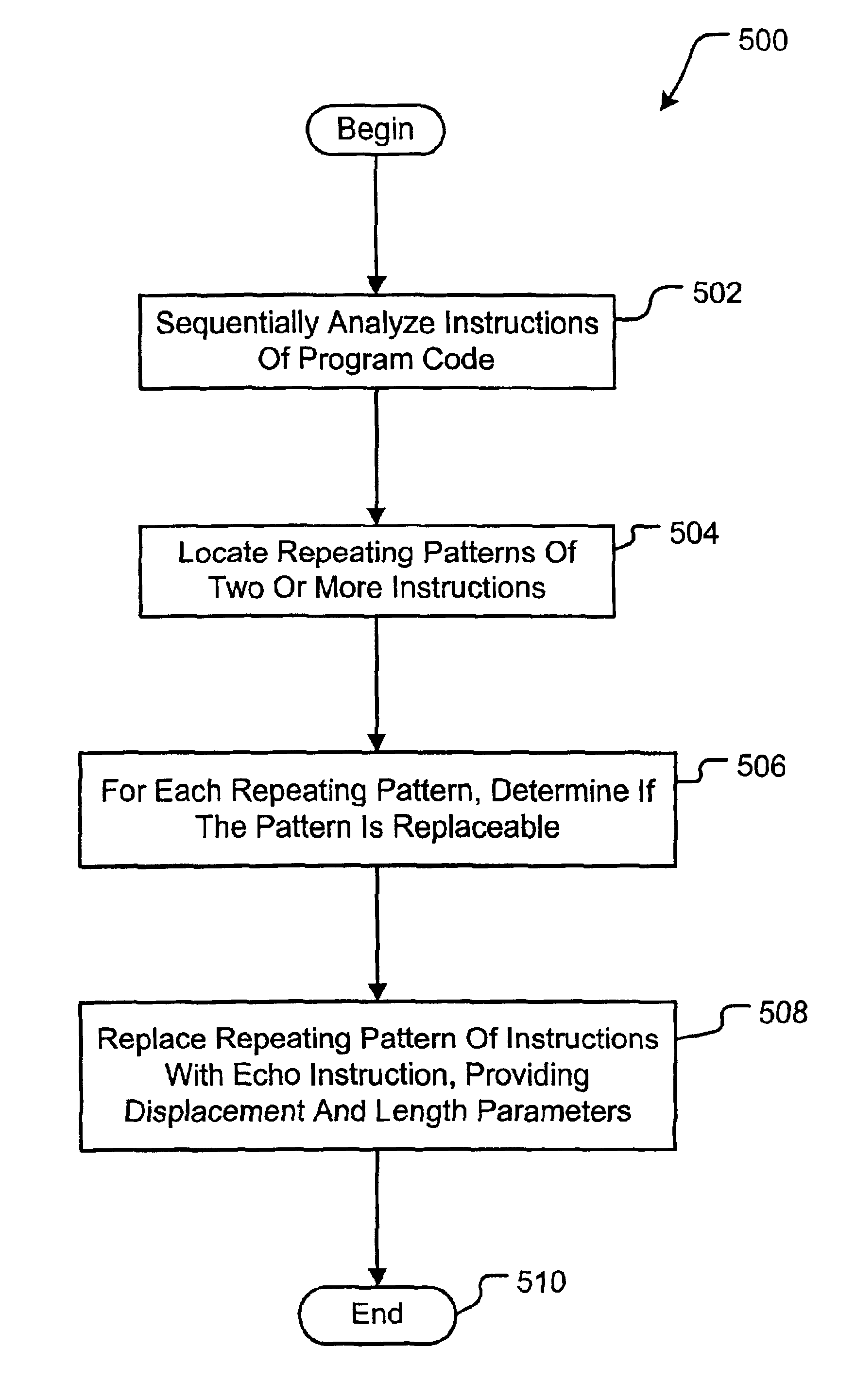

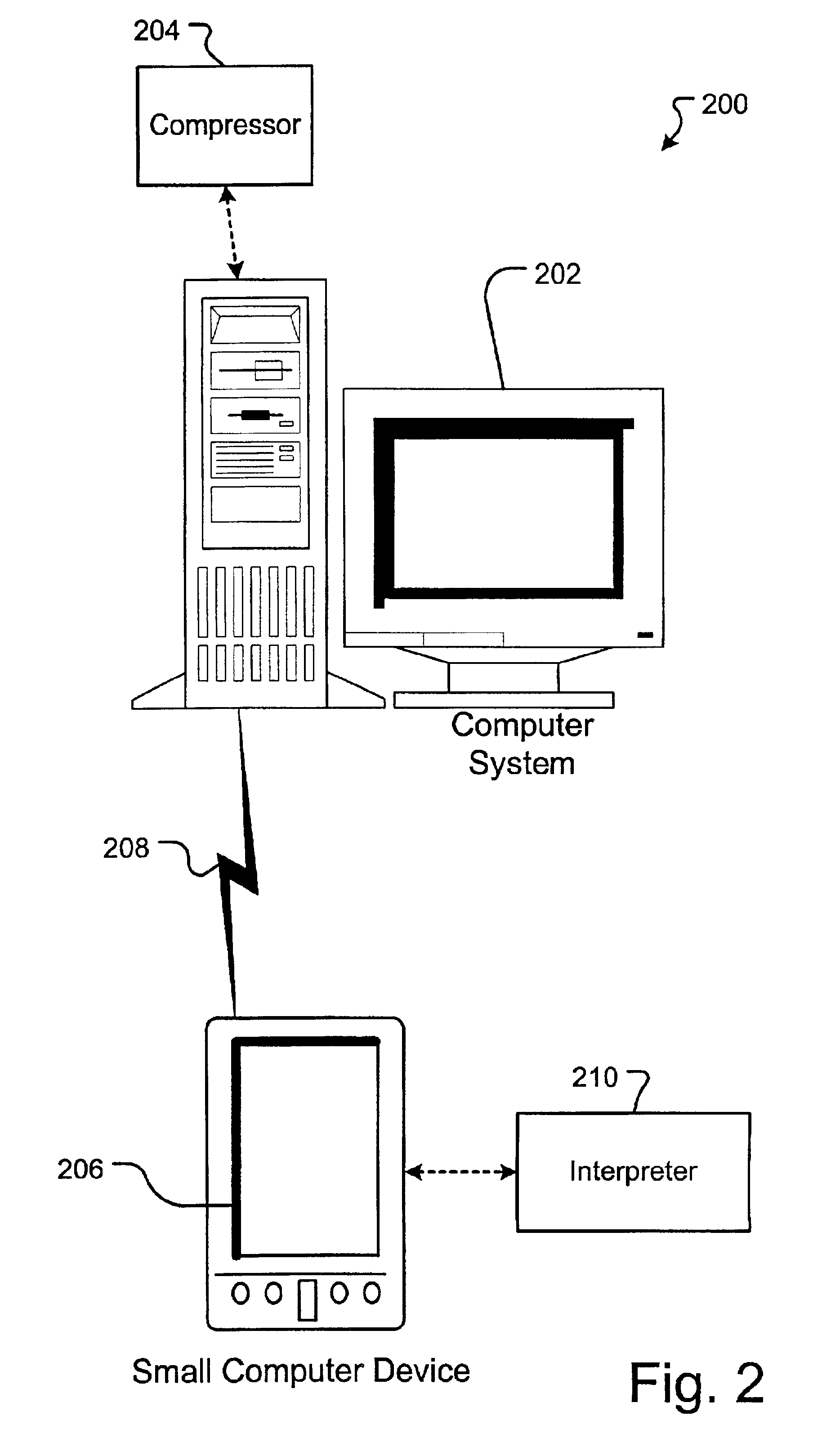

Method and system for compressing program code and interpreting compressed program code

Active | US6907598B2 | Small size | Reduce in quantity | Runtime instruction translation | Multiple digital computer combinations | Program code | Instruction stream

A computer system and method for compressing an instruction stream and executing the compressed instruction stream without decompression. The invention utilizes a new pointer instruction, i.e., an “Echo” instruction that is used to replace repeated instructions or sequences of instructions, also referred to as phrases. Replacing subsequent, repeated phrases with the Echo instruction reduces the size of the instruction stream, i.e., compresses the instruction stream. The Echo instruction generally identifies at least one literal instruction appearing before the Echo instruction and further identifies the number of instructions appearing before the Echo instruction to be repeated. No additional delimiters are necessary, e.g., no End Echo instructions are required. Omitting the End Echo instruction allows for overlapping phrases without the need for two Echo instructions. Reducing the number of instructions used significantly increases compression.

Owner:MICROSOFT TECH LICENSING LLC
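
To make the Echo reference in the abstract above concrete, the sketch below expands a compressed stream back into plain instructions. It is for illustration only: the invention executes the compressed stream without decompressing it, and the `("echo", offset, length)` tuple encoding, the choice to measure `offset` backwards in the expanded stream, and the name `expand` are assumptions, not the patented format.

```python
def expand(stream):
    """Expand Echo references into the literal instructions they denote.

    stream: list of literal instructions (strings) and Echo references of
    the form ("echo", offset, length), where offset counts back from the
    current end of the expanded output (an assumption of this sketch).
    """
    out = []
    for item in stream:
        if isinstance(item, tuple) and item[0] == "echo":
            _, offset, length = item
            start = len(out) - offset
            # Copy one instruction at a time so a phrase may overlap
            # the region that is still being written.
            for i in range(length):
                out.append(out[start + i])
        else:
            out.append(item)
    return out
```

Because the reference carries its own length, no closing delimiter is needed, and a reference whose length exceeds its offset repeats a phrase that overlaps itself.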

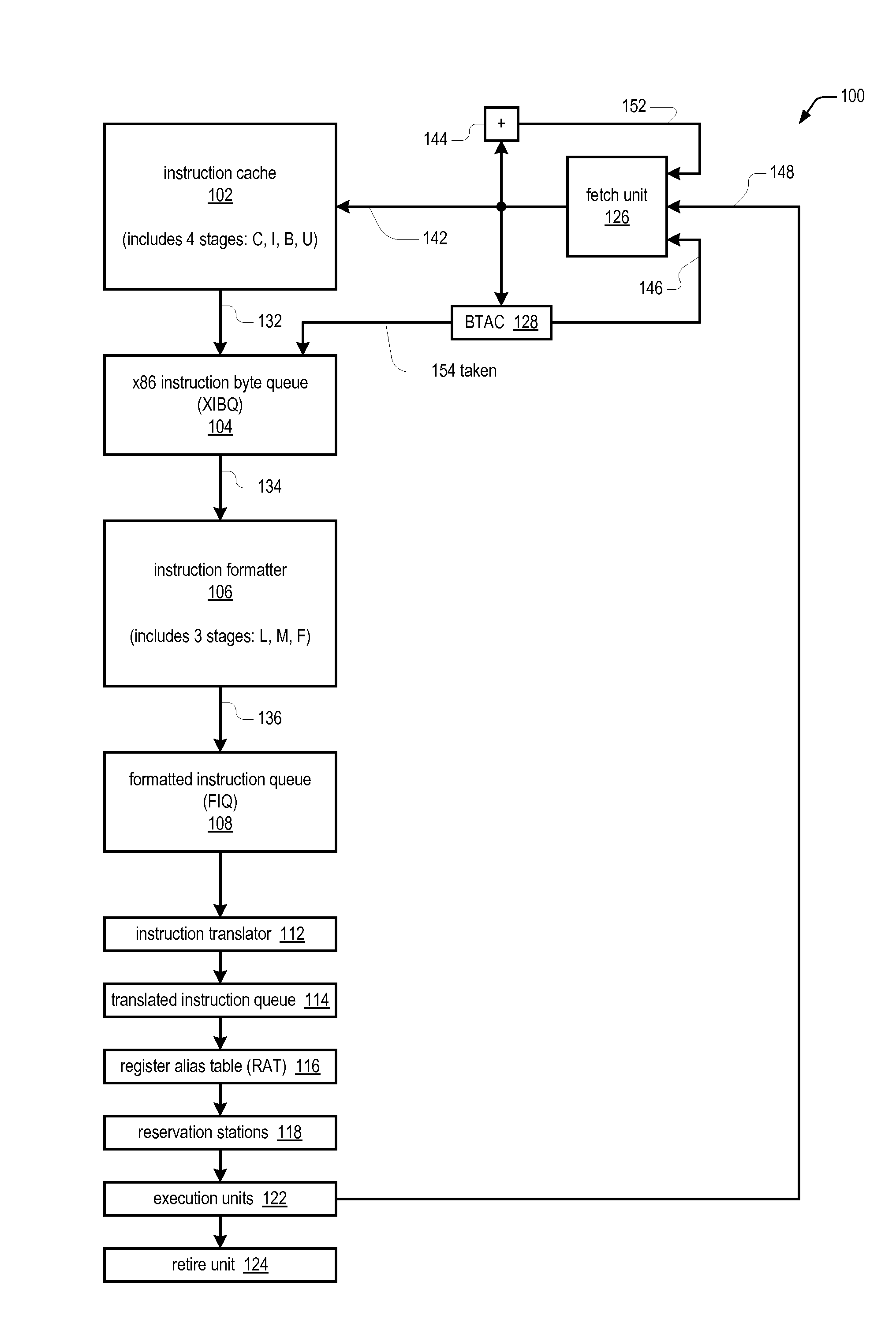

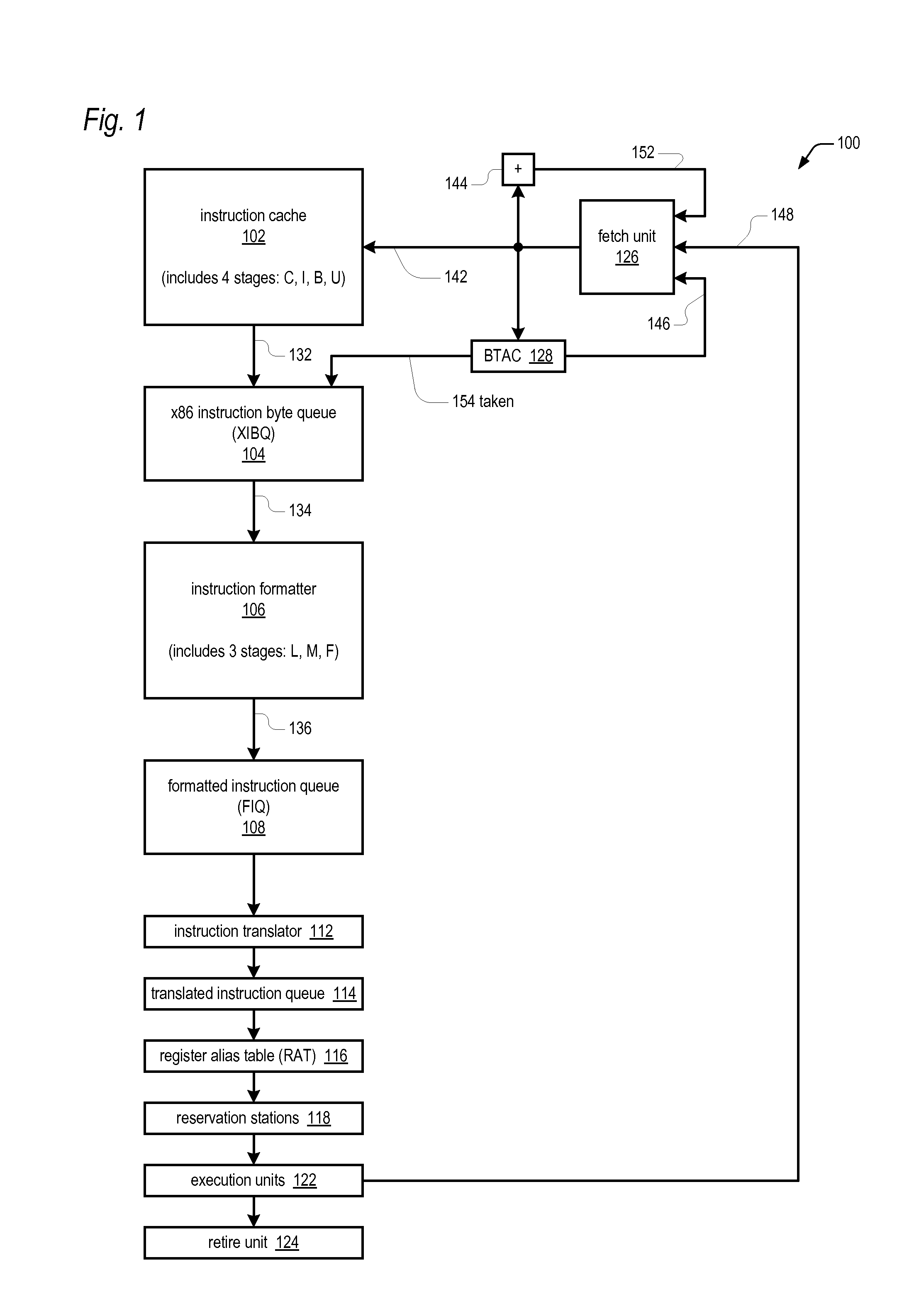

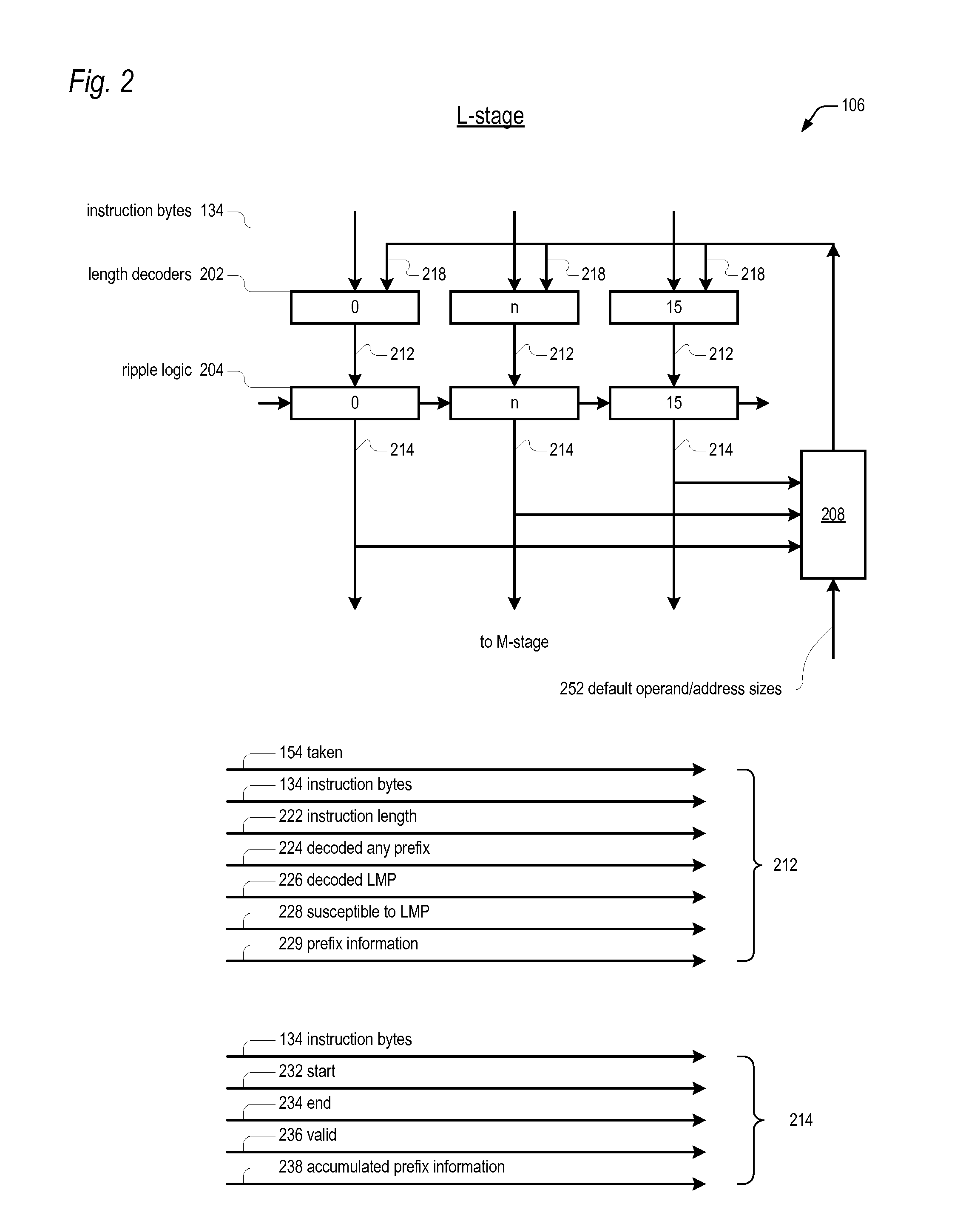

Bad branch prediction detection, marking, and accumulation for faster instruction stream processing

Active | US20100299502A1 | Memory architecture accessing/allocation | Digital computer details | Variable length | Byte

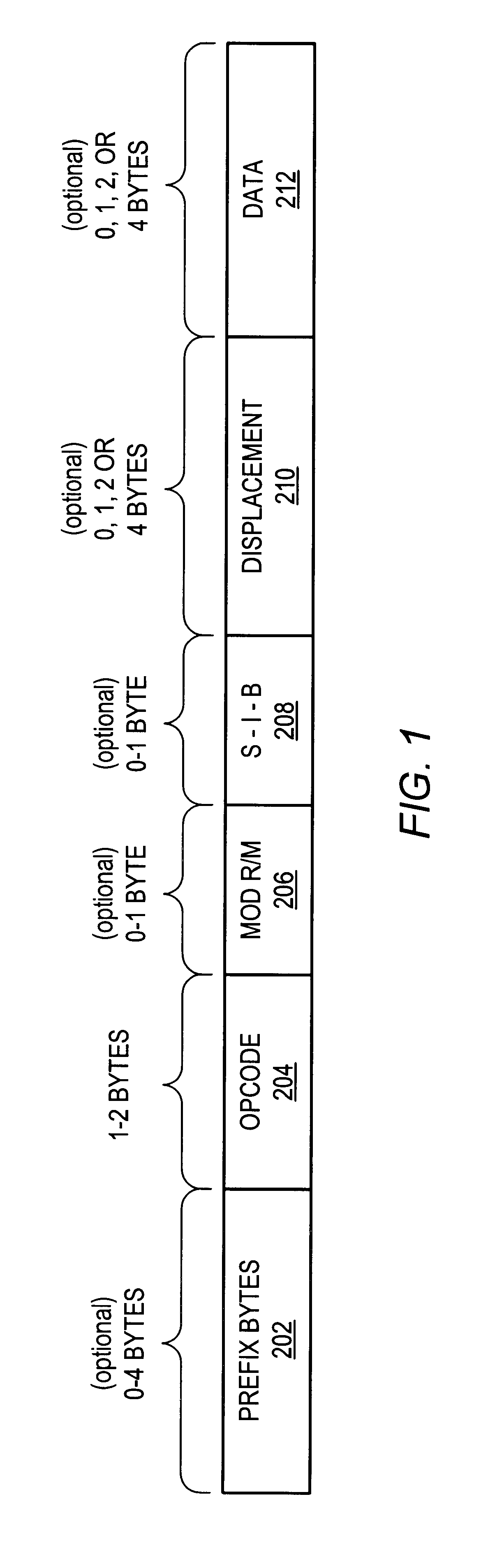

An apparatus for extracting instructions from a stream of undifferentiated instruction bytes in a microprocessor having an instruction set architecture in which the instructions are variable length. Decode logic decodes the instruction bytes of the stream to generate for each a corresponding opcode byte indicator and end byte indicator and receives a corresponding taken indicator for each of the instruction bytes. The taken indicator is true if a branch predictor predicted the instruction byte is the opcode byte of a taken branch instruction. The decode logic generates a corresponding bad prediction indicator for each of the instruction bytes. The bad prediction indicator is true if the corresponding taken indicator is true and the corresponding opcode byte indicator is false. The decode logic sets to true the bad prediction indicator for each remaining byte of an instruction whose opcode byte has a true bad prediction indicator. Control logic extracts instructions from the stream and sends the extracted instructions for further processing by the microprocessor. The control logic foregoes sending an instruction having both a true end byte indicator and a true bad prediction indicator.

Owner:VIA TECH INC

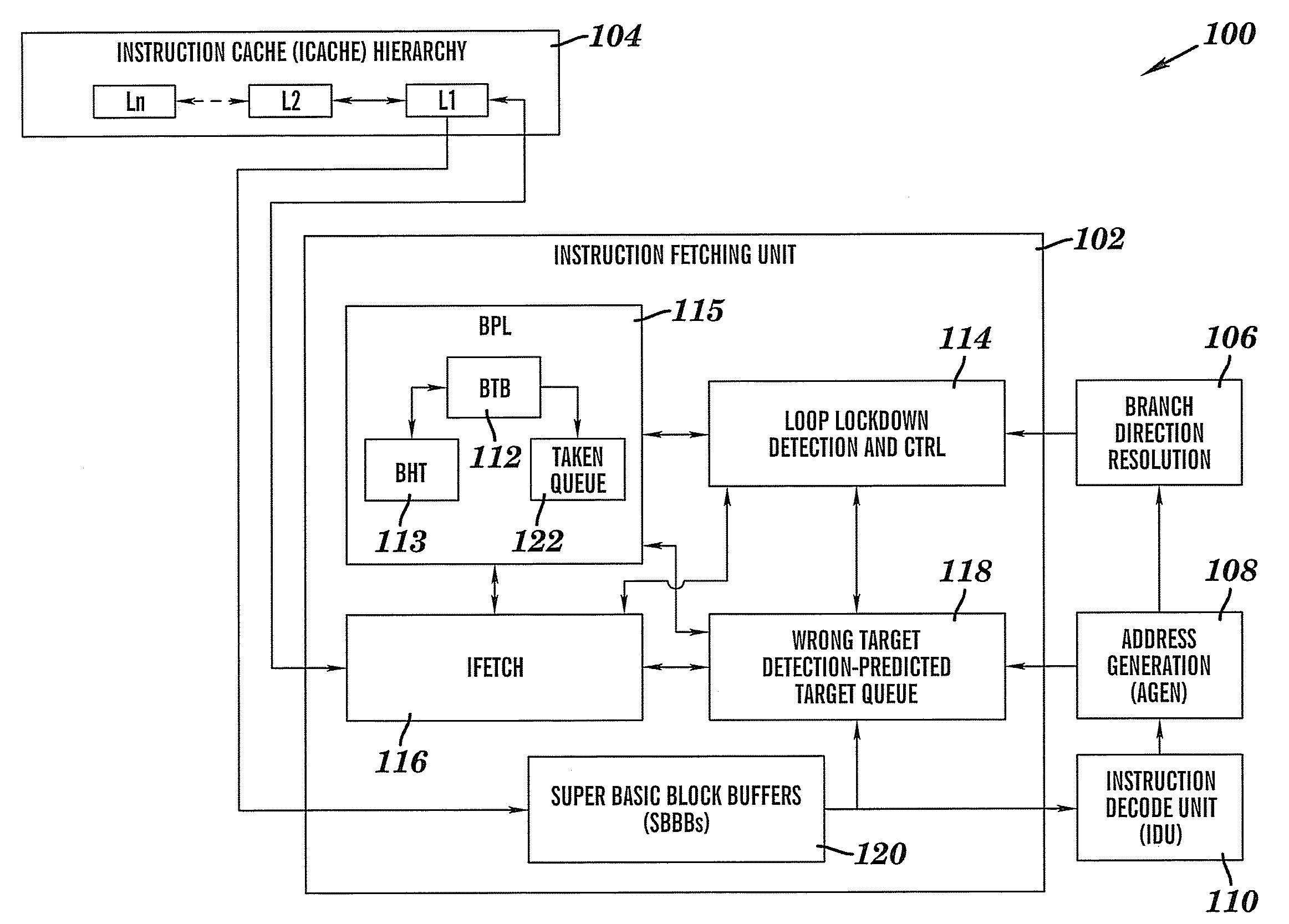

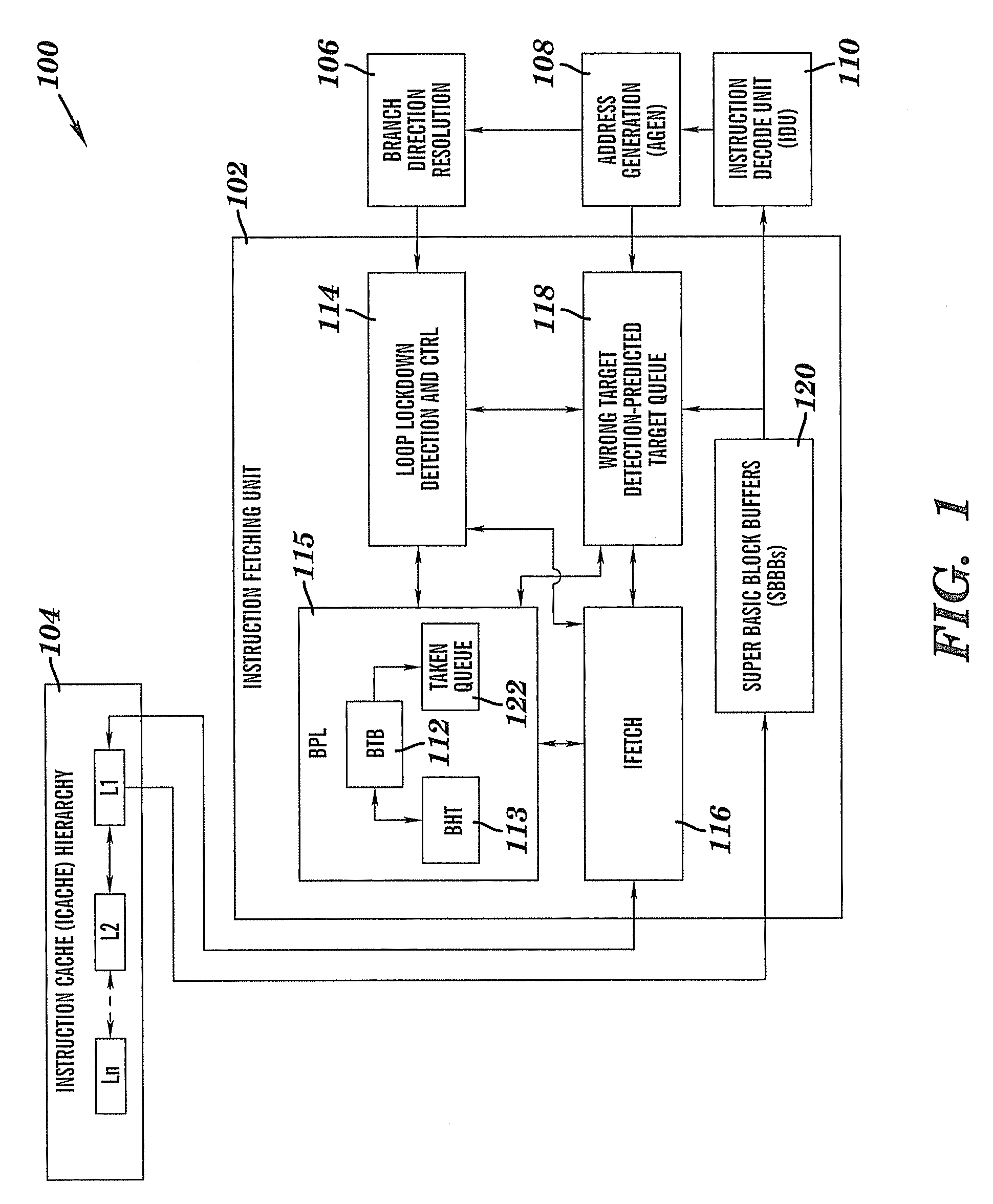

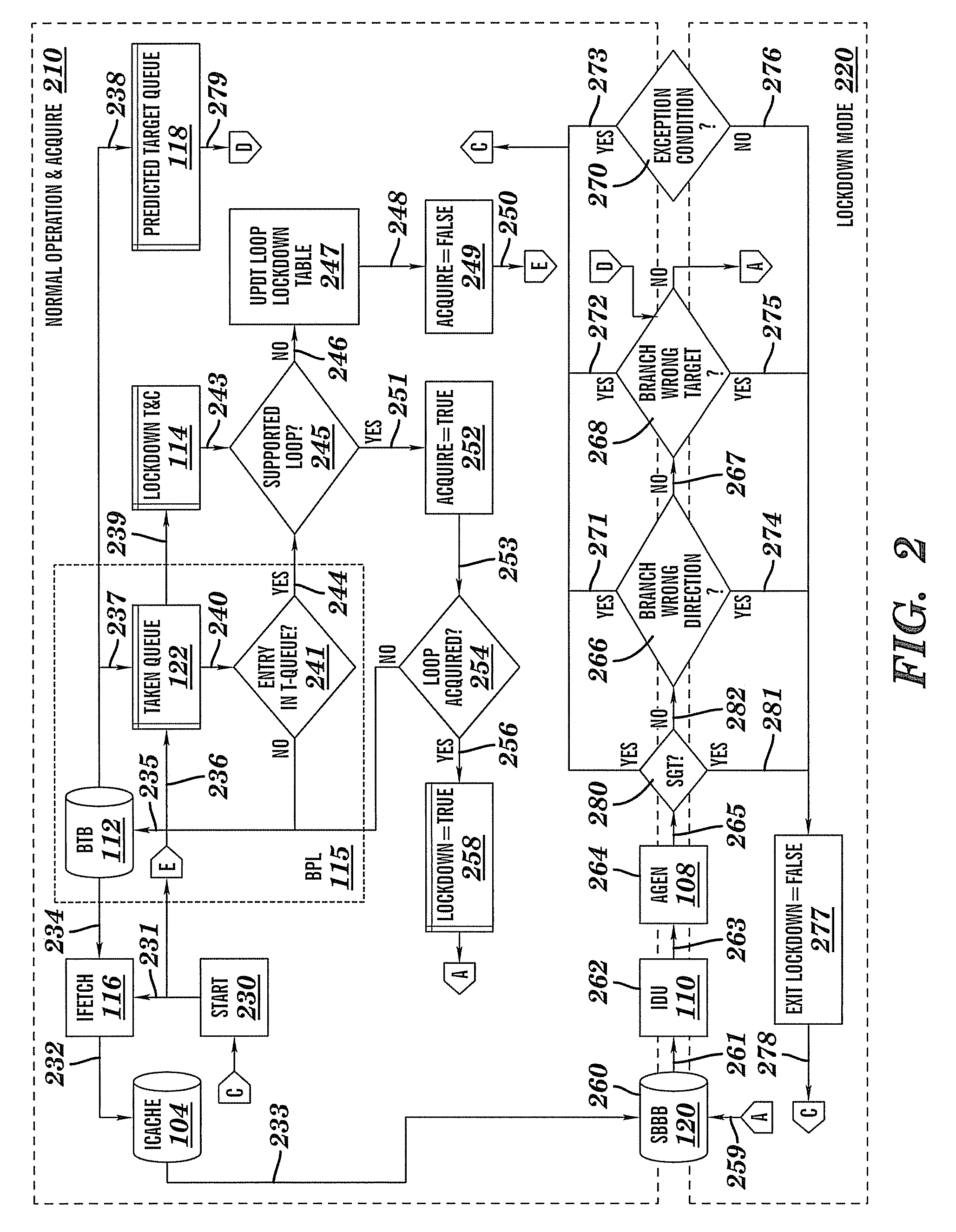

Method, system and computer program product for minimizing branch prediction latency

Inactive | US20090217017A1 | Minimizing branch prediction latency | Delay minimization | Digital computer details | Specific program execution arrangements | Instruction stream | Computer program

A method, system, and computer program product for minimizing branch prediction latency in a pipelined computer processing environment are provided. The method includes detecting a branch loop utilizing branch instruction addresses and corresponding target addresses stored in a branch target buffer (BTB). The method also includes fetching the branch loop into a pre-decode instruction buffer and qualifying the branch loop for loop lockdown. The method further includes locking an instruction stream that forms the branch loop in the pre-decode instruction buffer and processing qualified branch loop instructions from the buffer and powering down instruction fetching and branch prediction logic (BPL) associated with the BTB.

Owner:IBM CORP

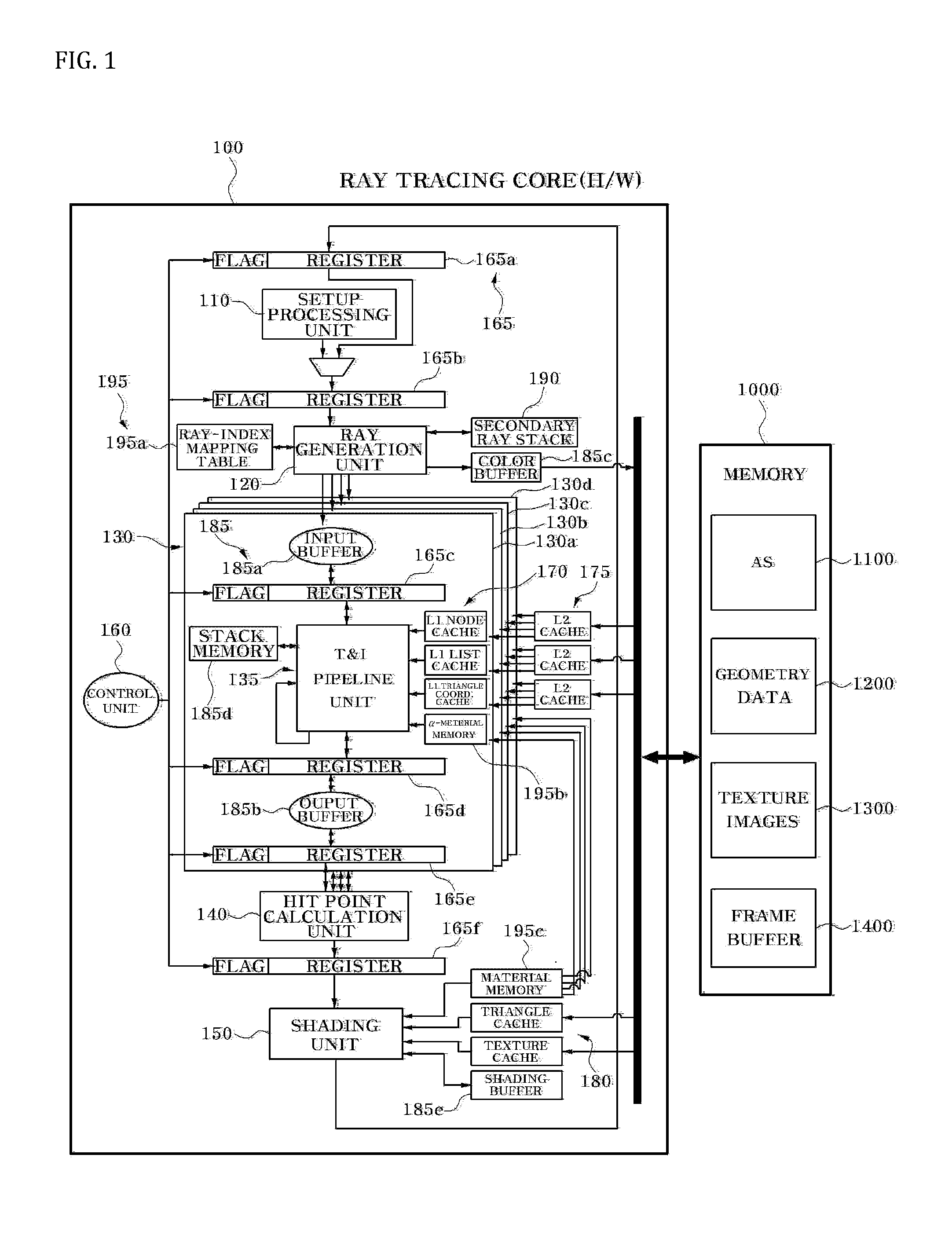

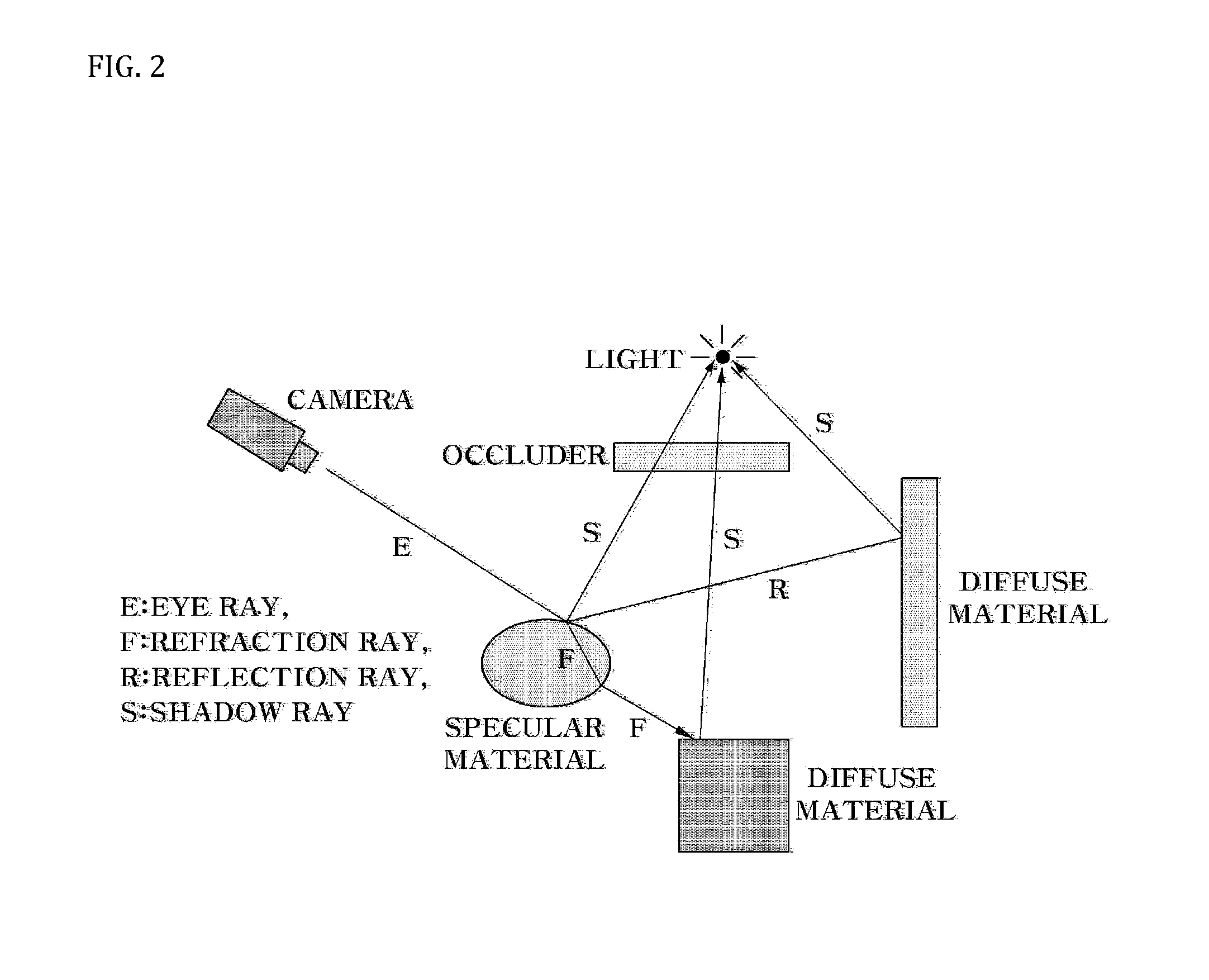

Ray tracing core and ray tracing chip having the same

A ray tracing core comprising a ray generation unit and a plurality of T&I (Traversal & Intersection) units with MIMD (Multiple Instruction stream Multiple Data stream) architecture is disclosed. The ray generation unit generates at least one eye ray based on an eye ray generation information. The eye ray generation information includes a screen coordinate value. Each of the plurality of T&I units receives the at least one eye ray and checks whether there exists a triangle intersected with the received at least one eye ray. The triangle configures a space.

Owner:SILICONARTS

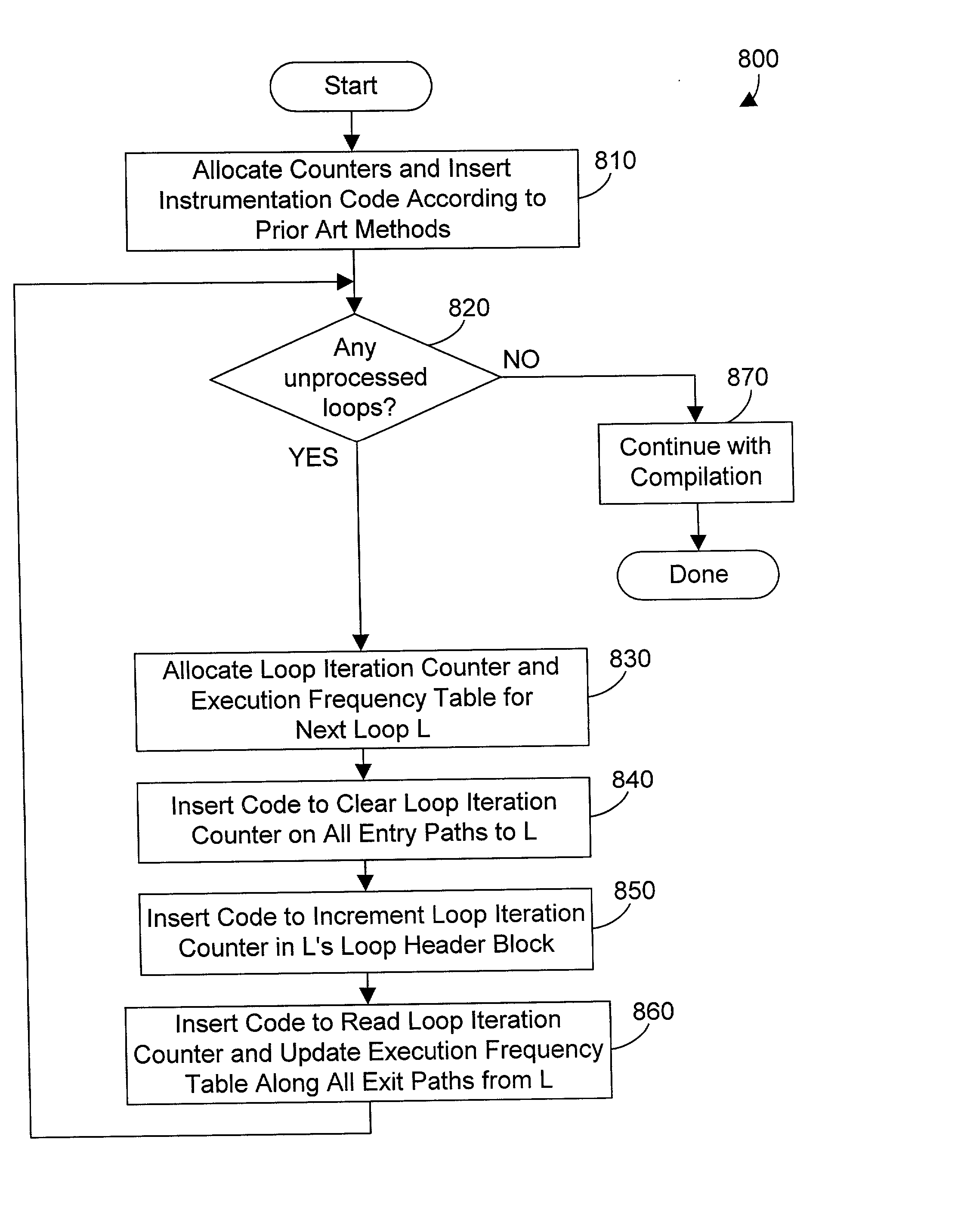

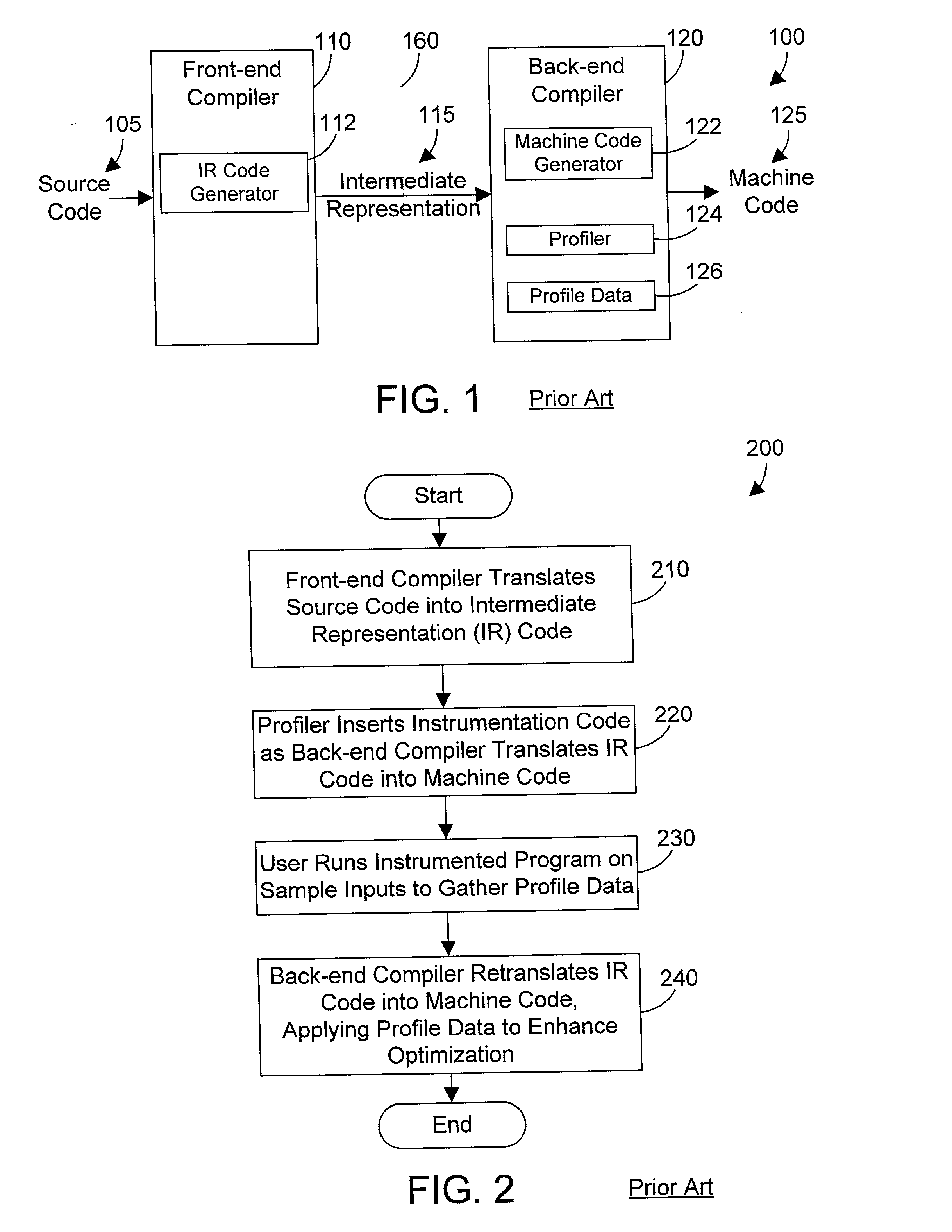

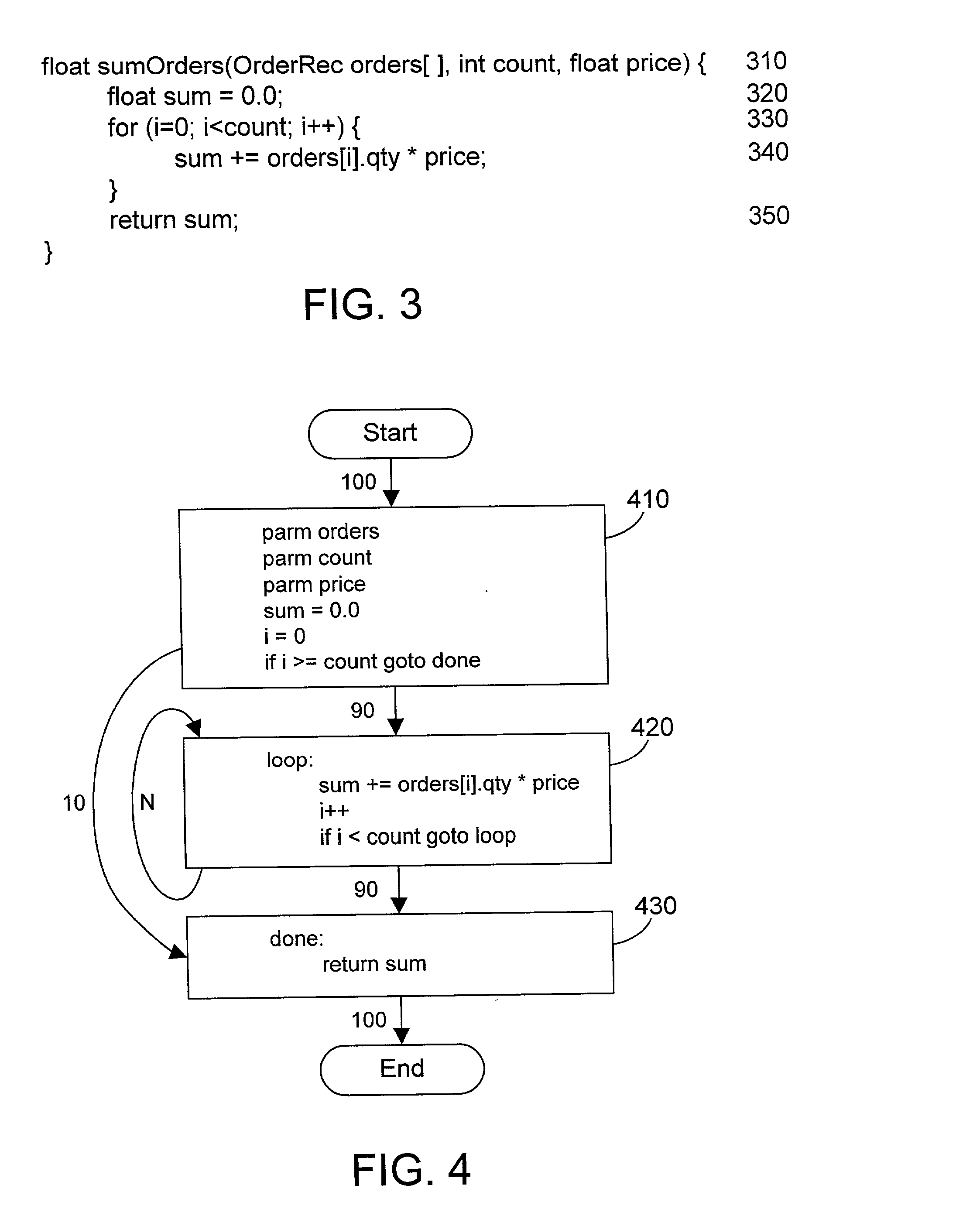

Compiler apparatus and method for optimizing loops in a computer program

Active | US20030097652A1 | Intelligent decision | Minimize total execution time | Software engineering | Hardware monitoring | Theoretical computer science | Loop optimization

A profile-based loop optimizer generates an execution frequency table for each loop that gives more detailed profile data that allows making a more intelligent decision regarding if and how to optimize each loop in the computer program. The execution frequency table contains entries that correlate a number of times a loop is executed each time the loop is entered with a count of the occurrences of each number during the execution of an instrumented instruction stream. The execution frequency table is used to determine whether there is one dominant mode that appears in the profile data, and if so, optimizes the loop according to the dominant mode. The optimizer may perform optimizations by peeling a loop, by unrolling a loop, and by performing both peeling and unrolling on a loop according to the profile data in the execution frequency table for the loop. In this manner the execution time of the resulting code is minimized according to the detailed profile data in the execution frequency tables, resulting in a computer program with loops that are more fully optimized.

Owner:INTELLECTUAL DISCOVERY INC
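
The execution frequency table in the abstract above maps each observed iteration count to the number of loop entries that produced it. A minimal sketch of building the table and testing for a dominant mode follows; the 80% `threshold` and the function names are assumptions, since the abstract does not say how dominance is decided.

```python
from collections import Counter


def execution_frequency_table(trip_counts):
    """Build the table: iterations per loop entry -> number of occurrences.

    trip_counts: one iteration count for every time the loop was entered
    during the instrumented run.
    """
    return Counter(trip_counts)


def dominant_mode(table, threshold=0.8):
    """Return the iteration count that dominates the profile, or None.

    A count is treated as dominant when it accounts for at least
    `threshold` of all loop entries (the threshold is an assumption).
    """
    total = sum(table.values())
    if total == 0:
        return None
    trips, occurrences = table.most_common(1)[0]
    return trips if occurrences / total >= threshold else None
```

An optimizer built on this model could, for example, unroll by the dominant count when one exists and leave the loop untouched when `dominant_mode` returns `None`.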

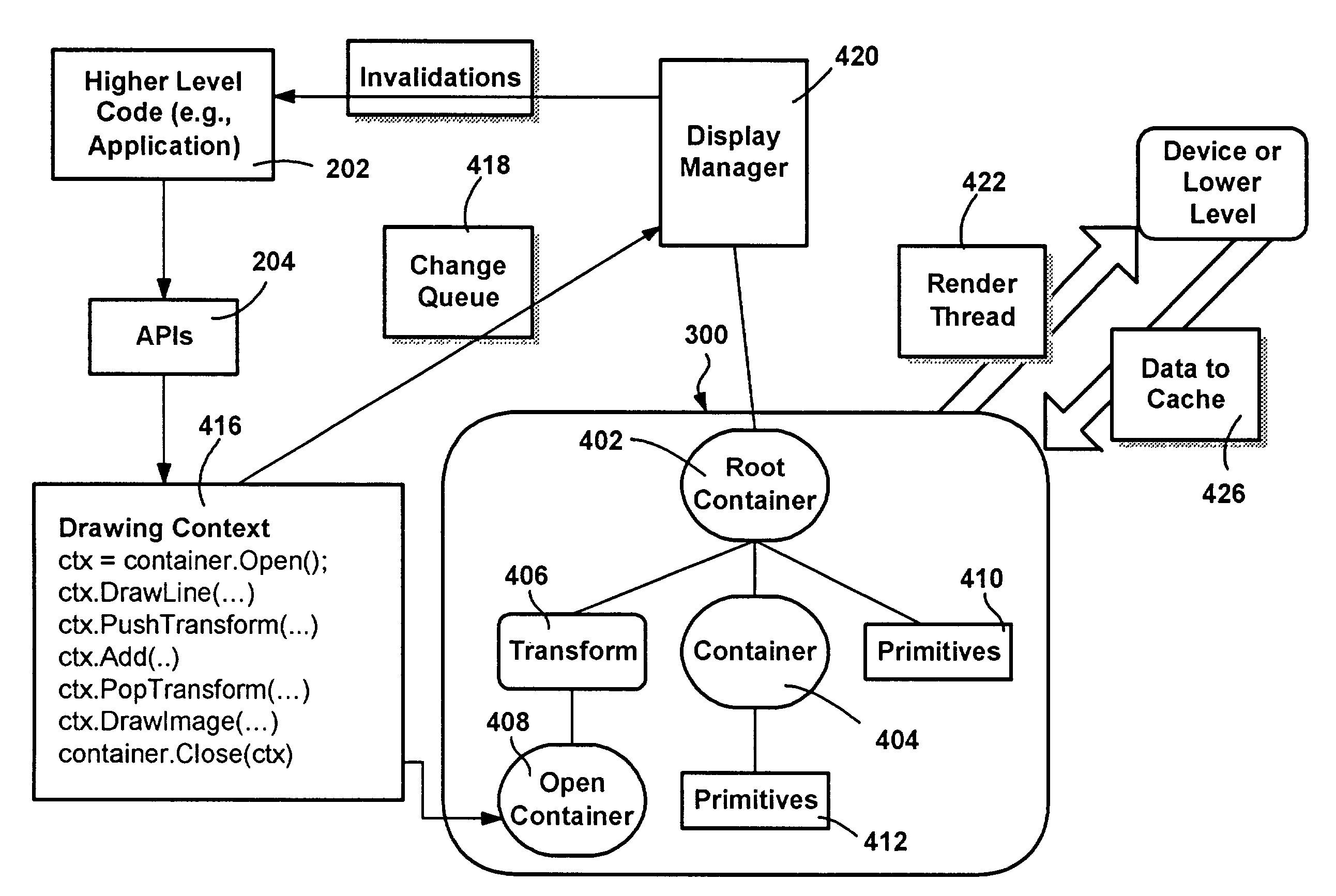

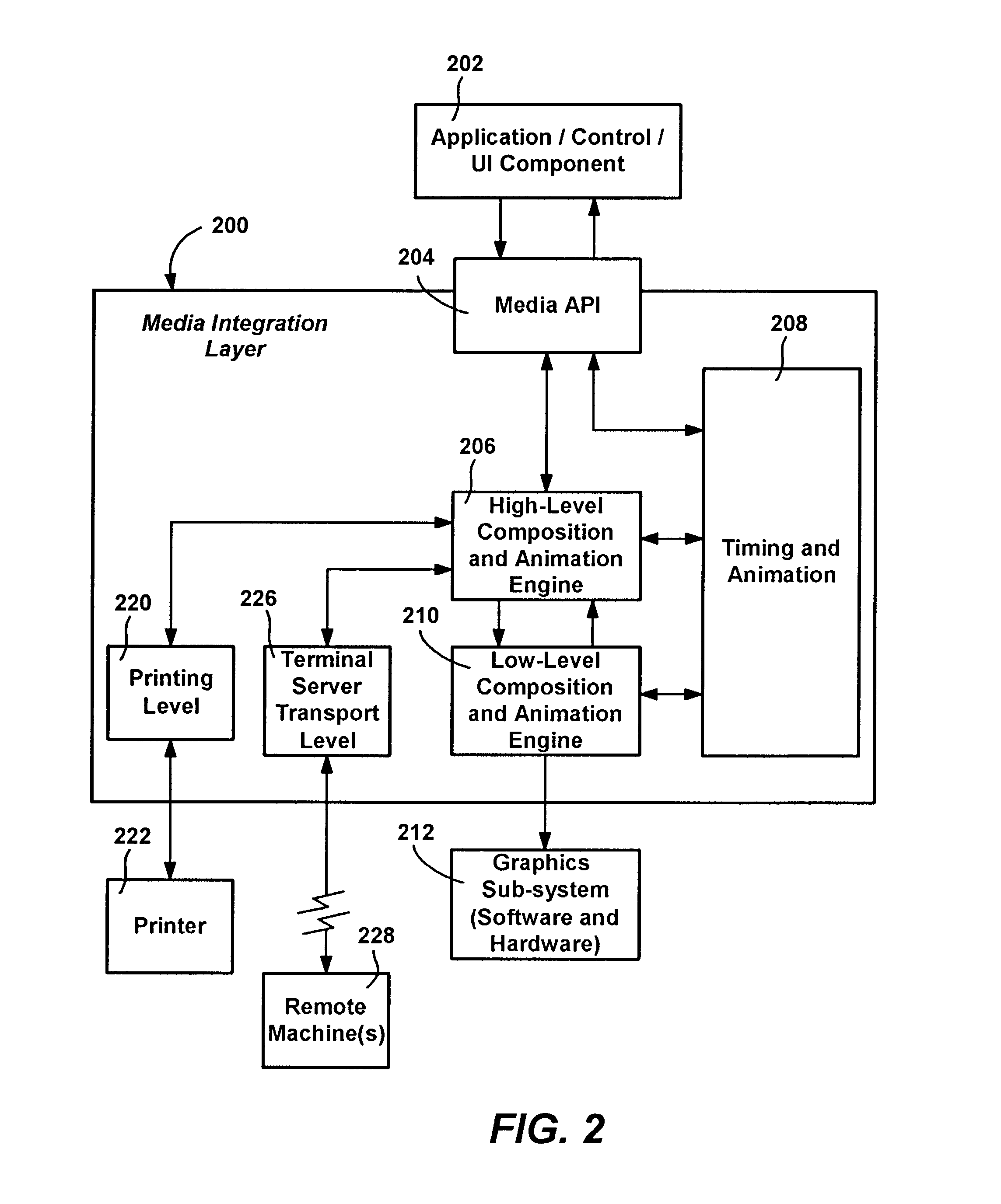

Intelligent caching data structure for immediate mode graphics

Active | US7064766B2 | Save resources | Digital computer details | Cathode-ray tube indicators | Search data structure | Graphics

An intelligent caching data structure and mechanisms for storing visual information via objects and data representing graphics information. The data structure is generally associated with mechanisms that intelligently control how the visual information therein is populated and used. The cache data structure can be traversed for direct rendering, or traversed for pre-processing the visual information into an instruction stream for another entity. Much of the data typically has no external reference to it, thereby enabling more of the information stored in the data structure to be processed to conserve resources. A transaction / batching-like model for updating the data structure enables external modifications to the data structure without interrupting reading from the data structure, and such that changes received are atomically implemented. A method and mechanism are provided to call back to an application program in order to create or re-create portions of the data structure as needed, to conserve resources.

Owner:MICROSOFT TECH LICENSING LLC

Method and apparatus for dynamically managing instruction buffer depths for non-predicted branches

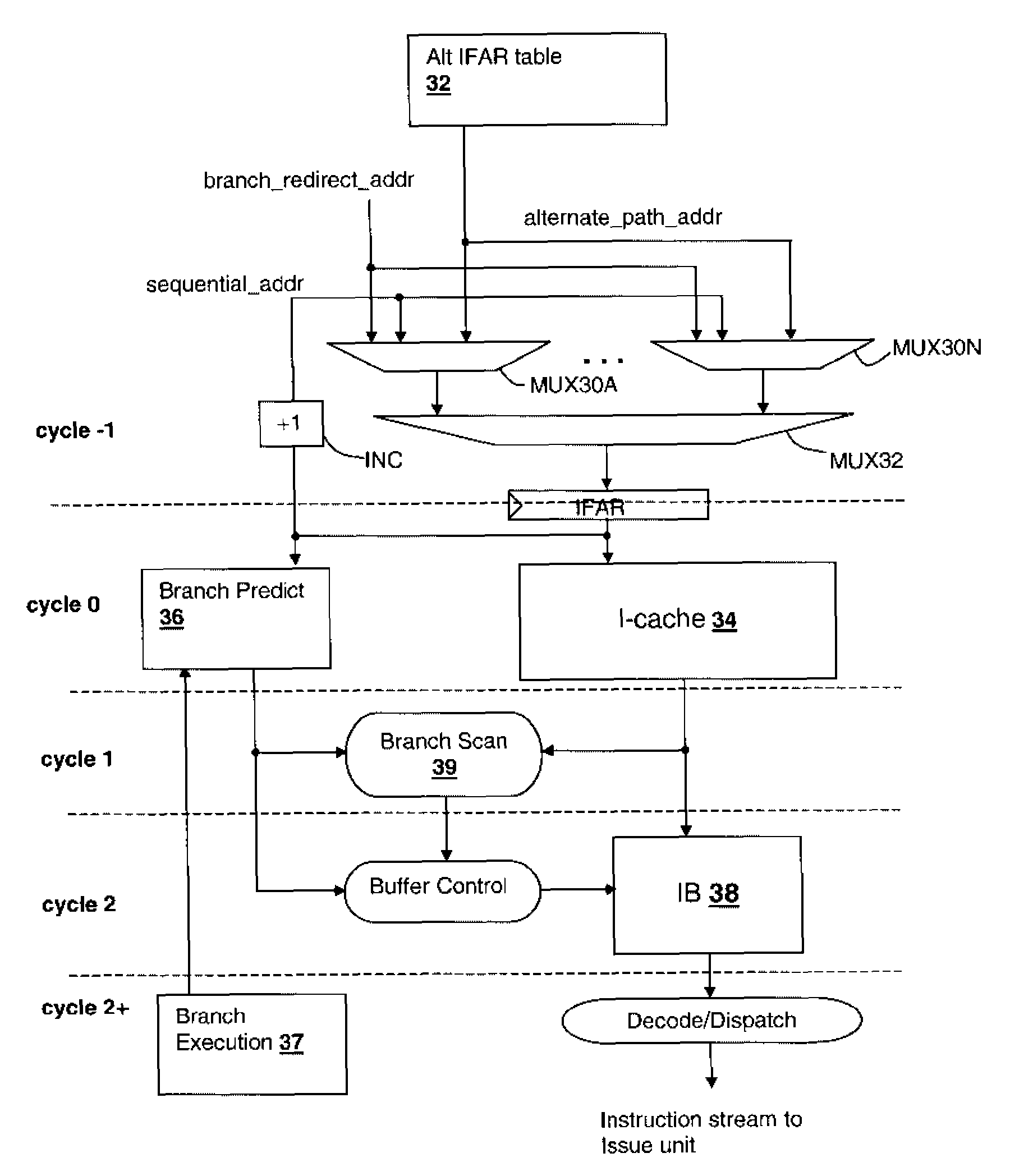

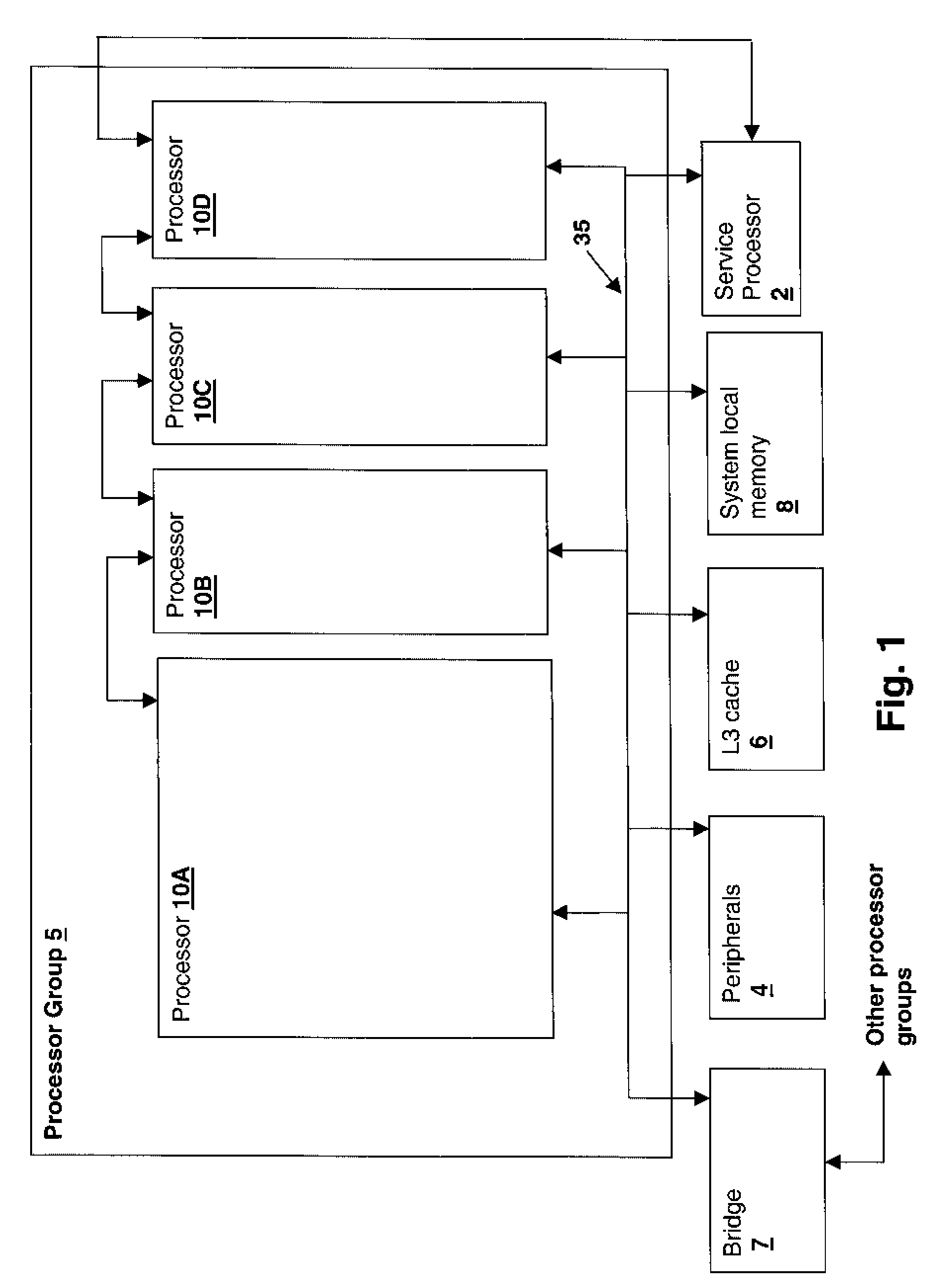

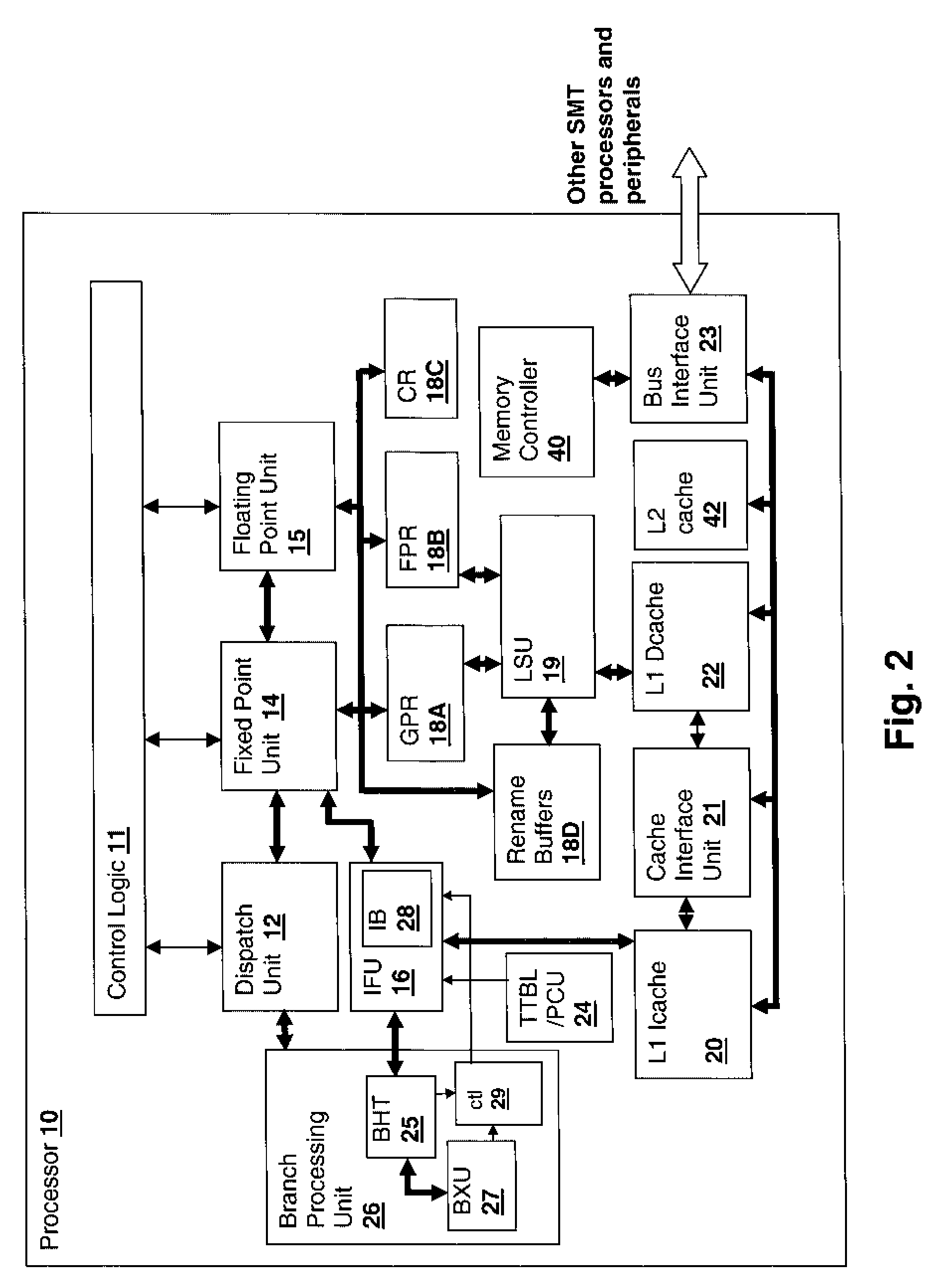

Inactive | US7779232B2 | Reduces resource and energy wasted | Digital computer details | Specific program execution arrangements | Processor register | Dynamic management

A method and apparatus for dynamically managing instruction buffer depths for non-predicted branches reduces wasted energy and resources associated with low confidence branch prediction conditions. A portion of the instruction buffer for an instruction thread is allocated for storing predicted branch instruction streams and another portion, which may be zero-sized during high prediction confidence conditions, is allocated to the non-predicted branch instruction stream. The size of the buffers is adjusted dynamically in conformity with an on-going prediction confidence that provides a measure of how well branch prediction mechanisms are working for a given instruction thread. An alternate instruction fetch address table can be maintained and multiplexed with the main fetch address register for addressing the instruction cache, so that the instruction stream can be quickly shifted to the non-predicted path when a branch instruction is resolved to the non-predicted path.

Owner:INT BUSINESS MASCH CORP

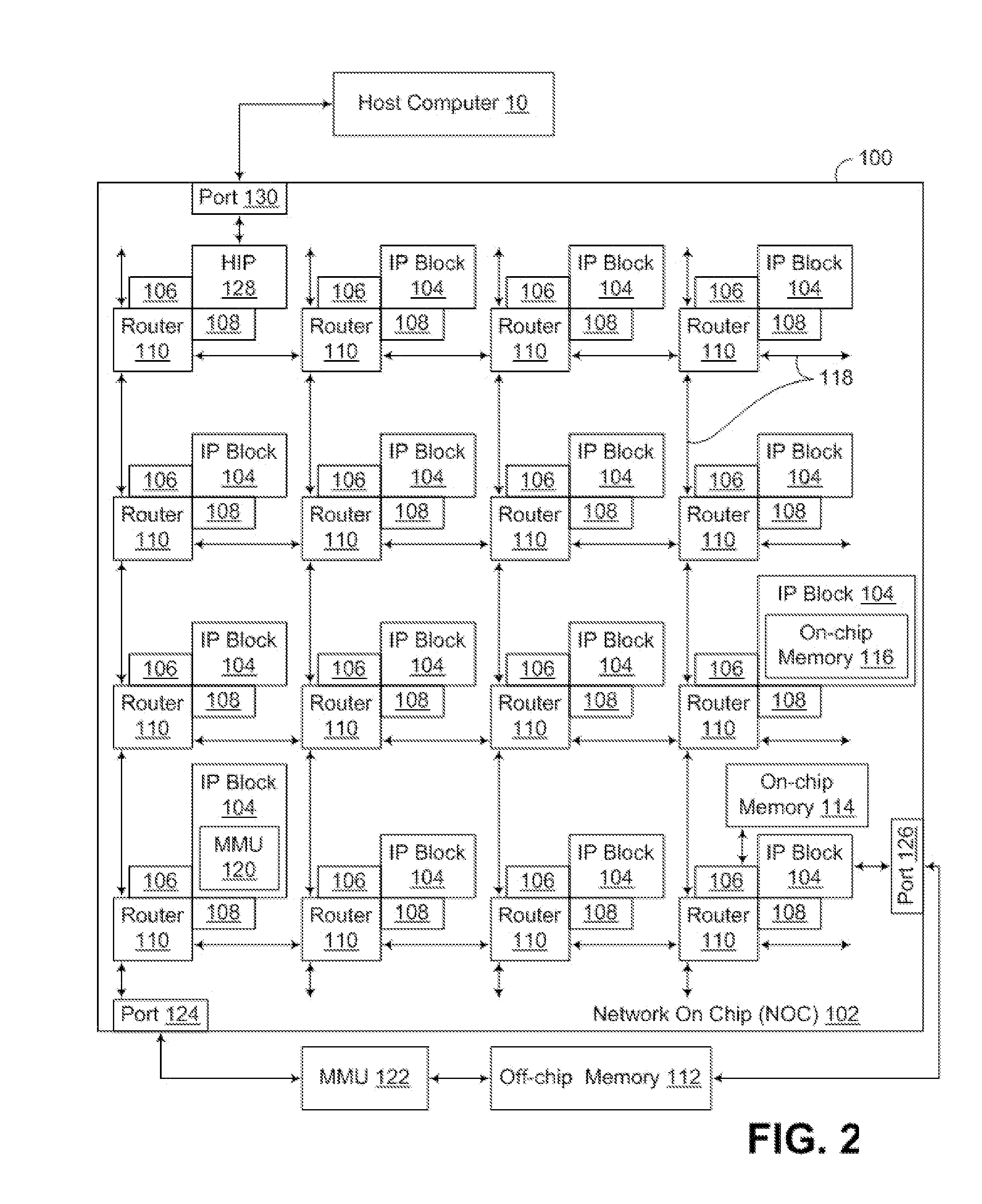

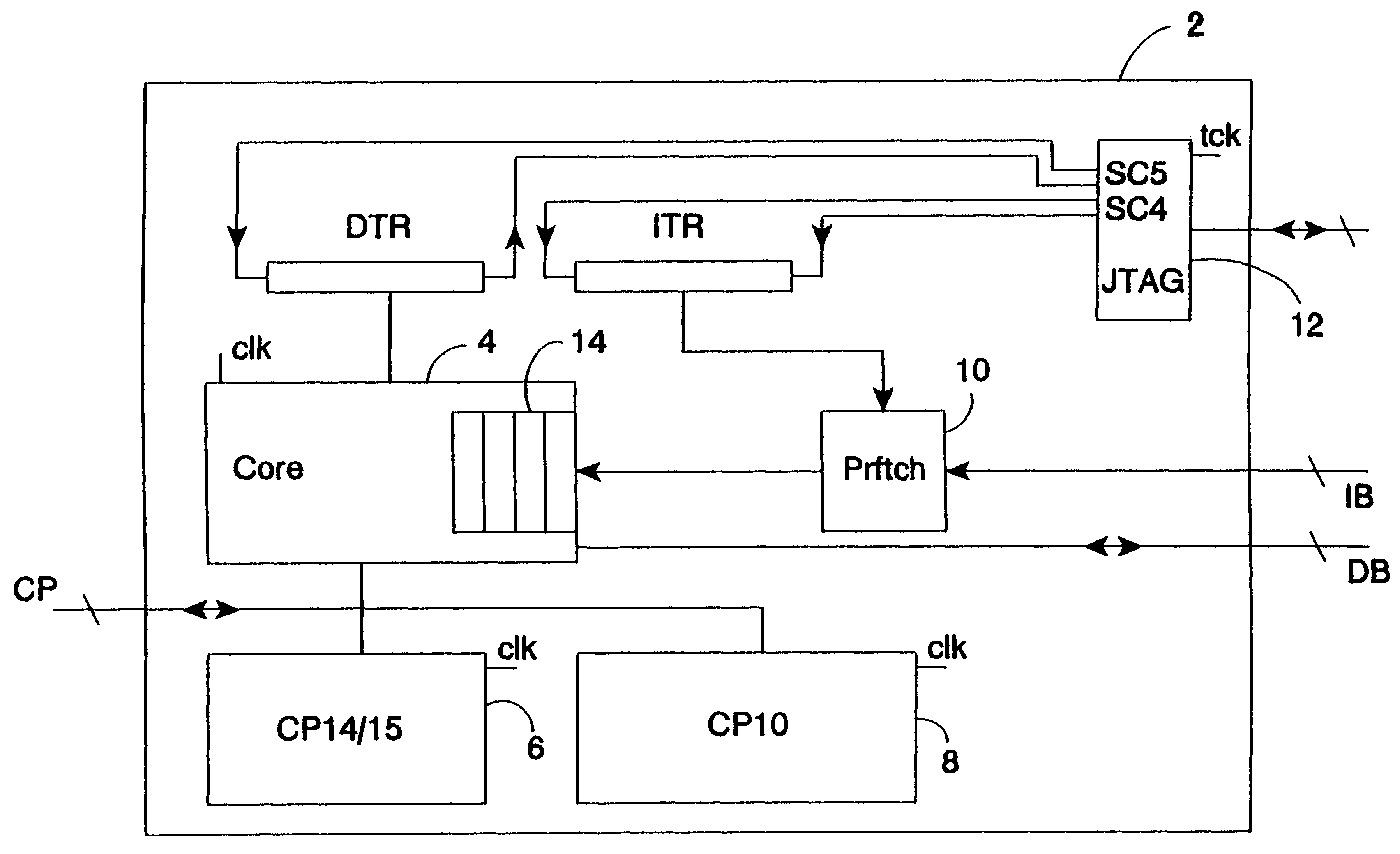

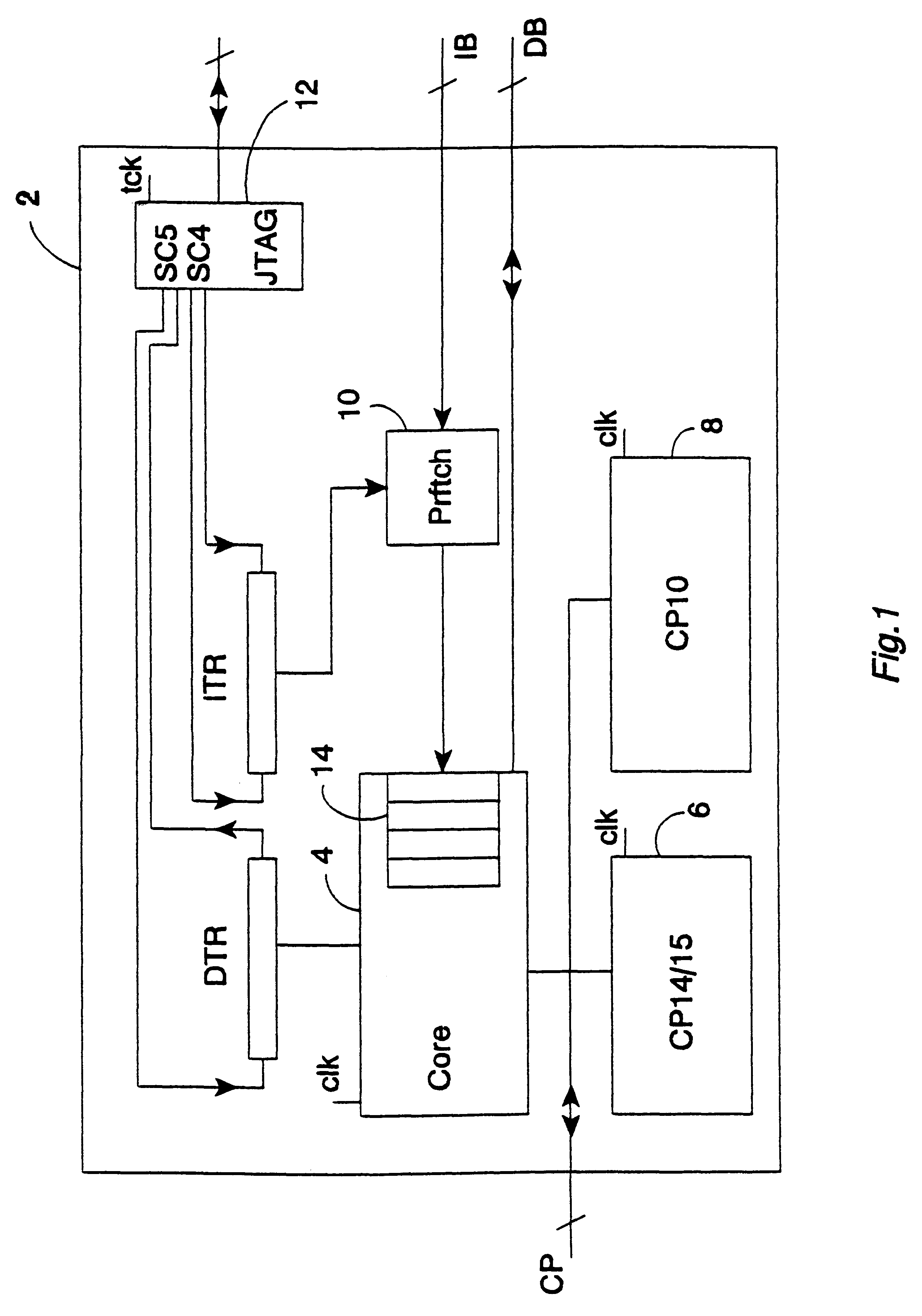

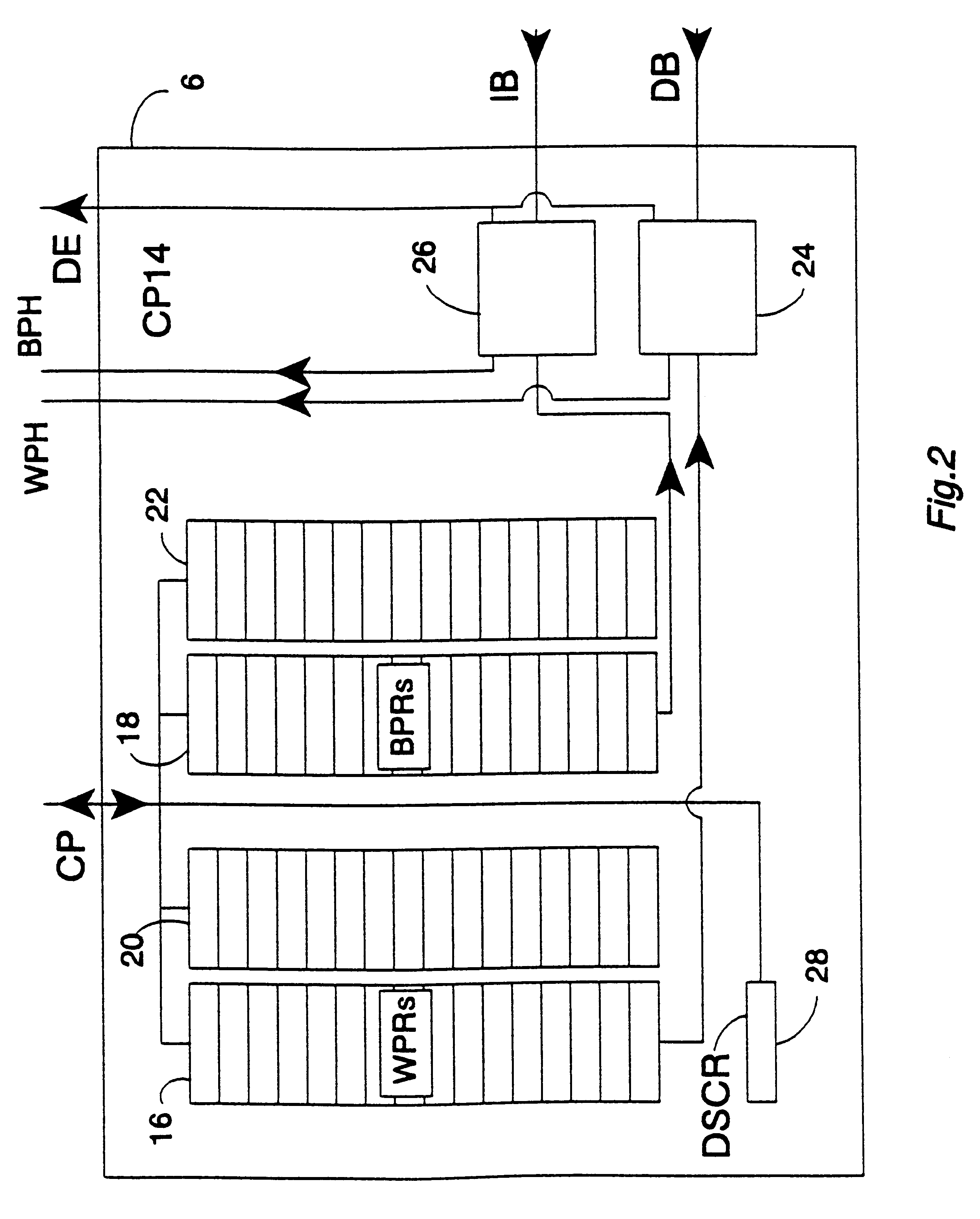

Debug mechanism for data processing systems

Inactive | US6446221B1 | Efficient programming | Avoid Data Conflicts | Program synchronisation | Detecting faulty computer hardware | Diagnostic data | Data processing system

Apparatus for processing data is provided, said apparatus comprising: a main processor 4 responsive to main processor instructions within a stream of instructions input to said main processor 4 to perform main processor operations; a coprocessor 6 coupled to said main processor 4 via a coprocessor interface CP and responsive to coprocessor instructions MCR, MRC within said stream of instructions to perform coprocessor operations; wherein said coprocessor 6 is a debug coprocessor operable to at least partially control generation of diagnostic data for debugging said apparatus for processing data and said coprocessor instructions are debug coprocessor instructions that control operation of said debug coprocessor. Using a debug mechanism in the form of a debug coprocessor reduces the impact of the debug mechanism upon normal operation.

Owner:ARM LTD

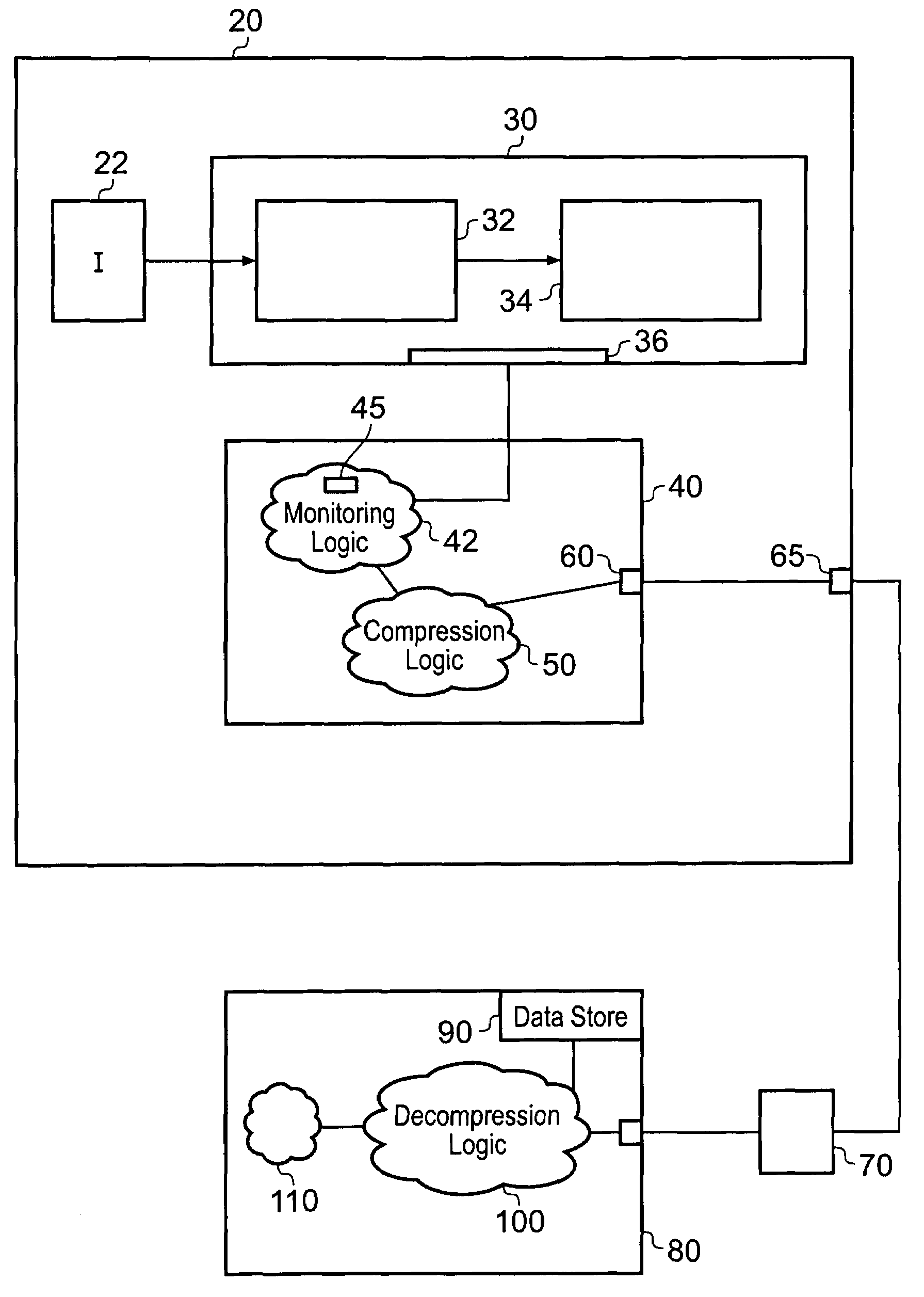

Method and apparatus for accelerating processing of a non-sequential instruction stream on a processor with multiple compute units

Active | US20060174236A1 | Speed up the process | Increase computing speed | Software engineering | Instruction analysis | Data storing | Data store

Accelerating processing of a non-sequential instruction stream on a processor with multiple compute units by broadcasting to a plurality of compute units a generic instruction stream derived from a sequence of instructions; the generic instruction stream including an index section and a compute section; applying the index section to localized data stored in each compute unit to select one of a plurality of stored local parameter sets; and applying in each compute unit the selected set of parameters to the local data according to the compute section to produce each compute unit's localized solution to the generic instruction.

Owner:ANALOG DEVICES INC

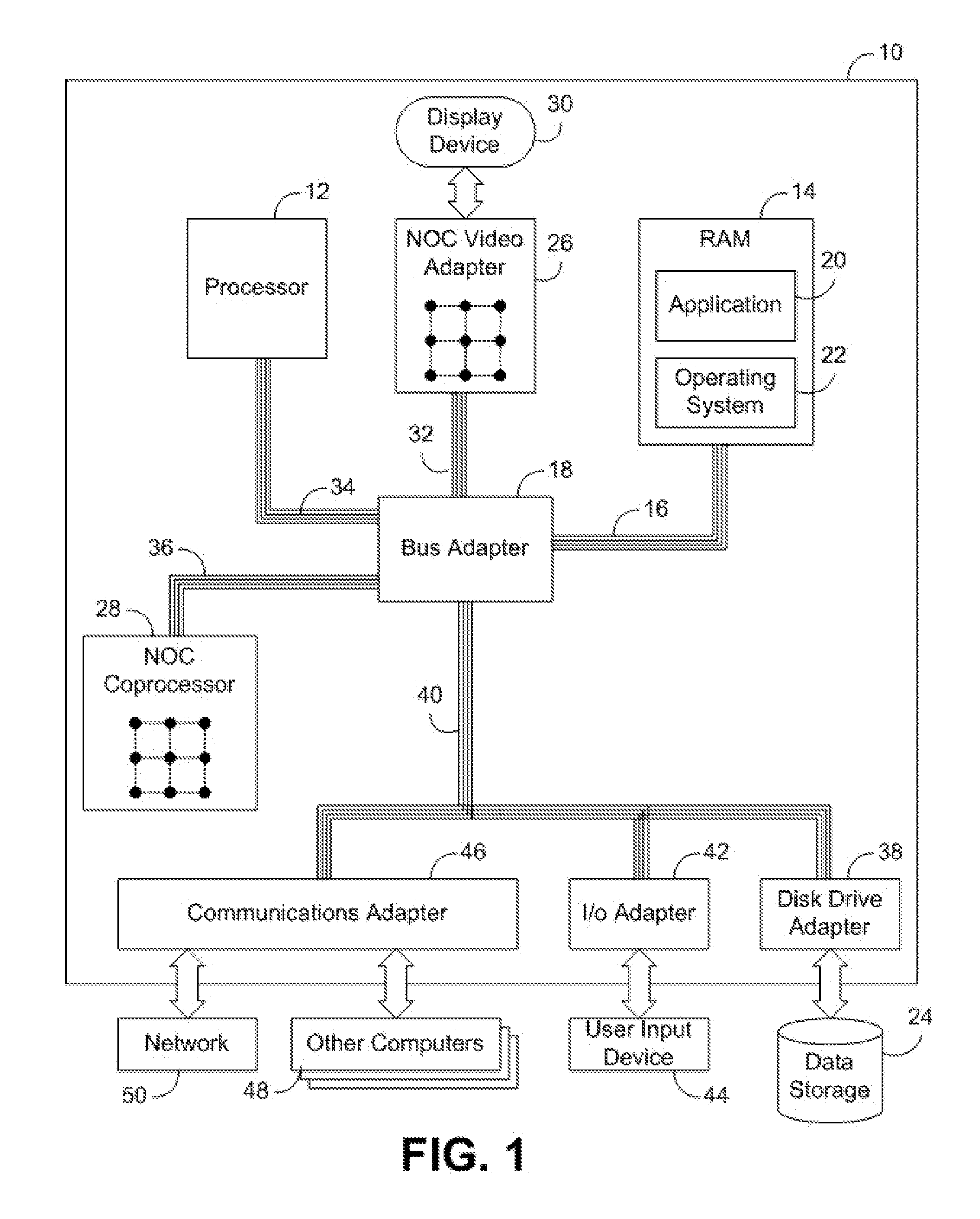

Method and apparatus for efficient utilization for prescient instruction prefetch

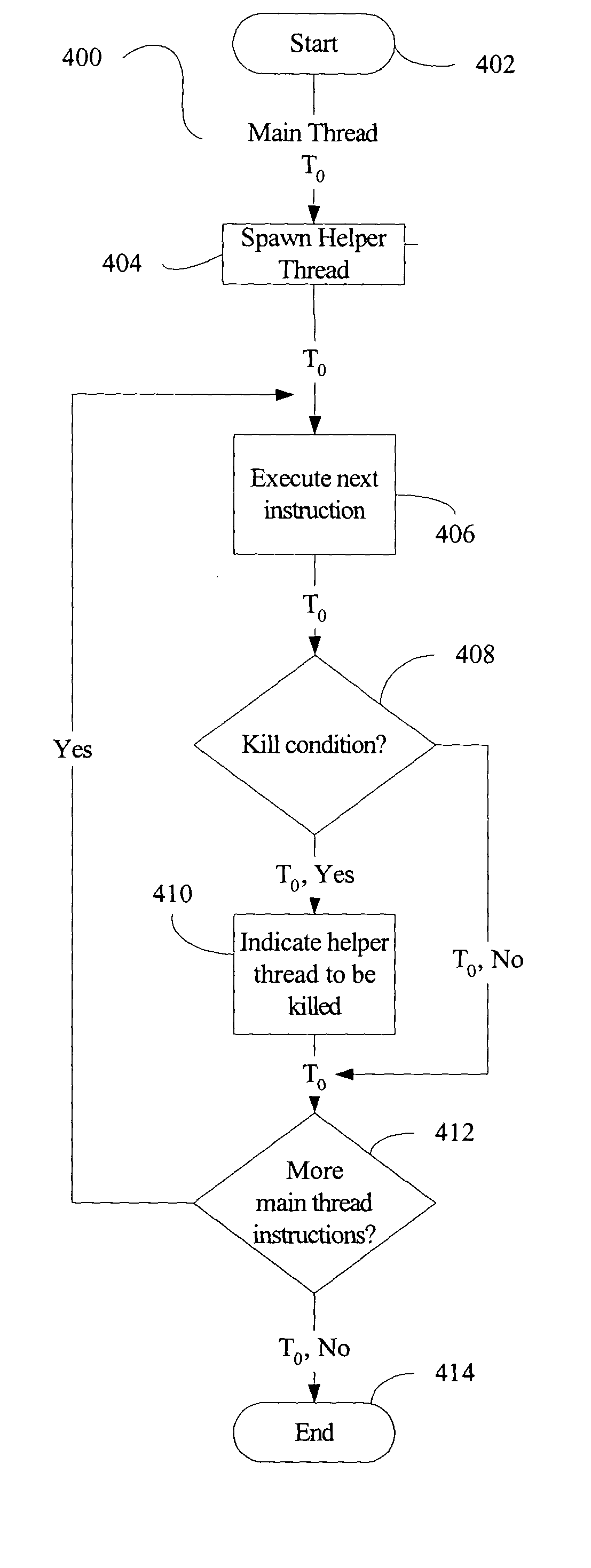

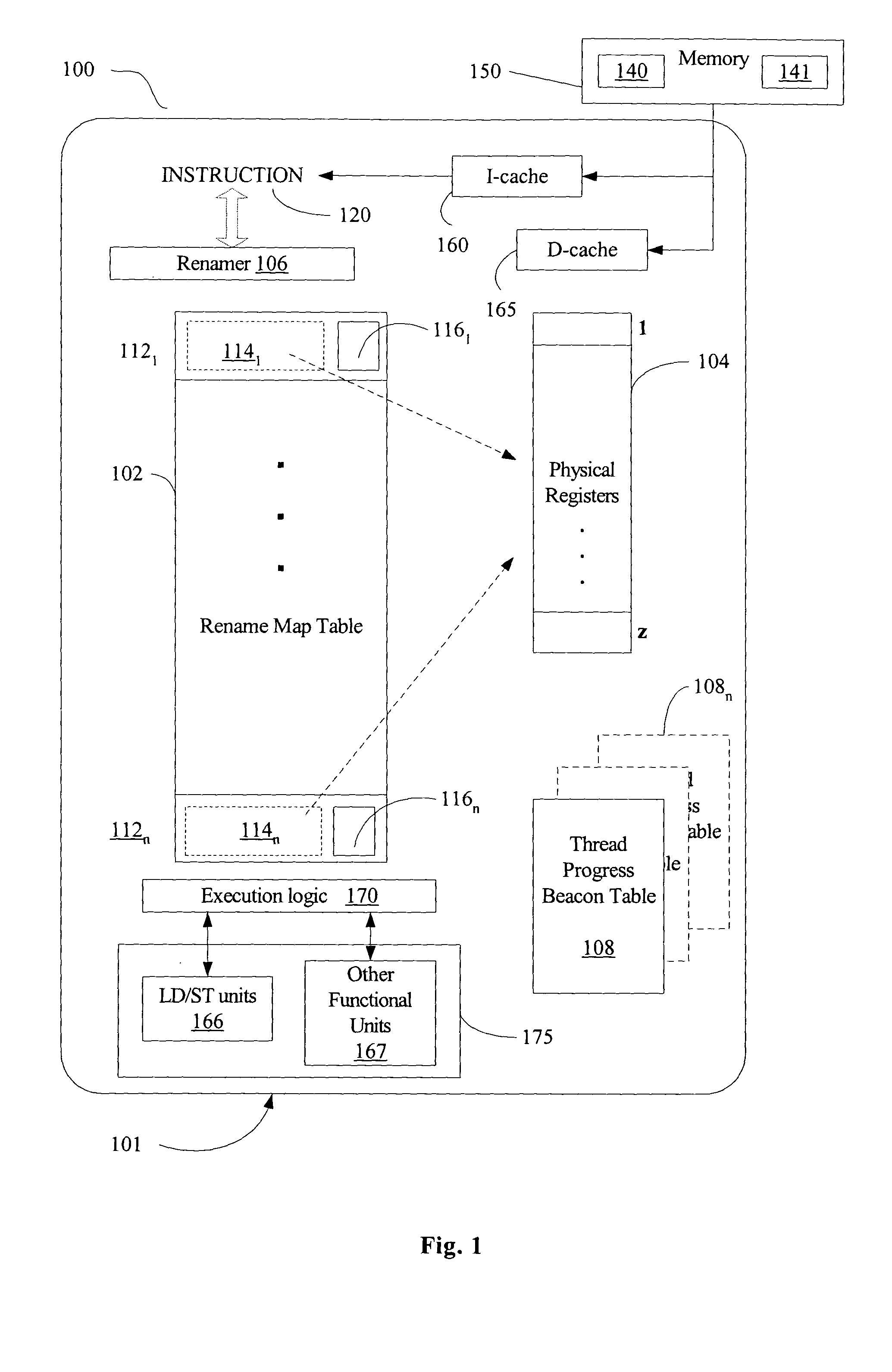

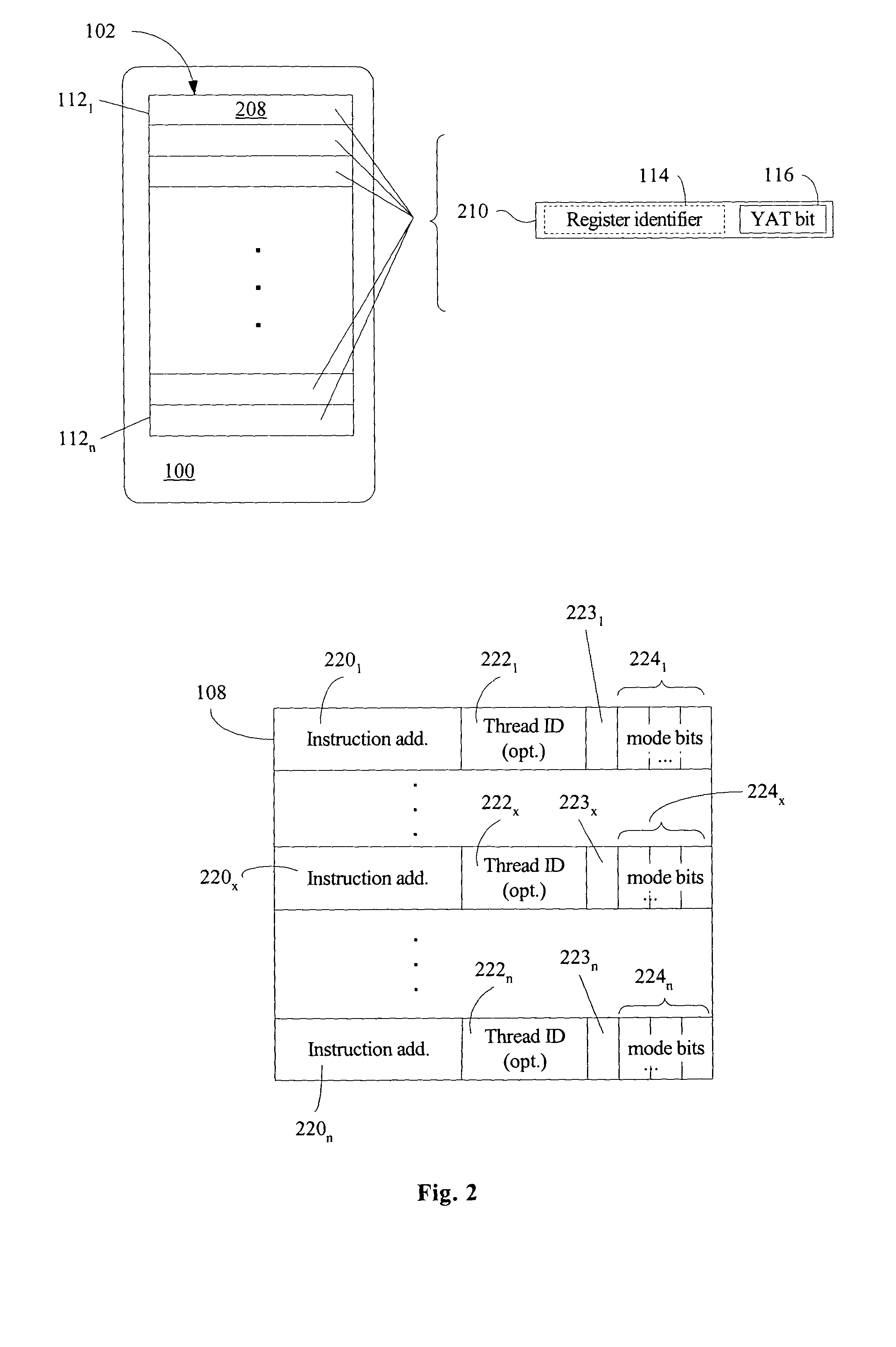

Active | US20050055541A1 | Digital computer details | Concurrent instruction execution | Resource utilization | Operating system

Embodiments of an apparatus, system and method enhance the efficiency of processor resource utilization during instruction prefetching via one or more speculative threads. Renamer logic and a map table are utilized to perform filtering of instructions in a speculative thread instruction stream. The map table includes a yes-a-thing bit to indicate whether the associated physical register's content reflects the value that would be computed by the main thread. A thread progress beacon table is utilized to track relative progress of a main thread and a speculative helper thread. Based upon information in the thread progress beacon table, the main thread may effect termination of a helper thread that is not likely to provide a performance benefit for the main thread.

Owner:TAHOE RES LTD
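The thread progress beacon table described in the abstract above might be modelled roughly as follows. The abstract does not give the table layout or the termination rule, so the `BeaconTable` class, the integer beacon numbers, and the `min_lead` threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class BeaconTable:
    # Hypothetical encoding: latest beacon number posted by each thread.
    progress: Dict[str, int] = field(default_factory=dict)

    def post(self, thread: str, beacon: int) -> None:
        """Record that `thread` has reached `beacon` (non-negative, monotonic)."""
        self.progress[thread] = max(beacon, self.progress.get(thread, 0))

    def helper_is_useful(self, main: str = "main", helper: str = "helper",
                         min_lead: int = 1) -> bool:
        """Assumed rule: the helper only helps while it runs ahead of the main thread."""
        lead = self.progress.get(helper, 0) - self.progress.get(main, 0)
        return lead >= min_lead


table = BeaconTable()
table.post("main", 5)
table.post("helper", 8)
useful_while_ahead = table.helper_is_useful()

# The main thread catches up; under the assumed rule it would now
# terminate the helper thread.
table.post("main", 8)
useful_after_catch_up = table.helper_is_useful()
```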

Suppression of control transfer instructions on incorrect speculative execution paths

Active | US20120290820A1 | Suppresses result | Eliminates spurious flush | Digital computer details | Concurrent instruction execution | Speculative execution | Execution control

Owner:ORACLE INT CORP

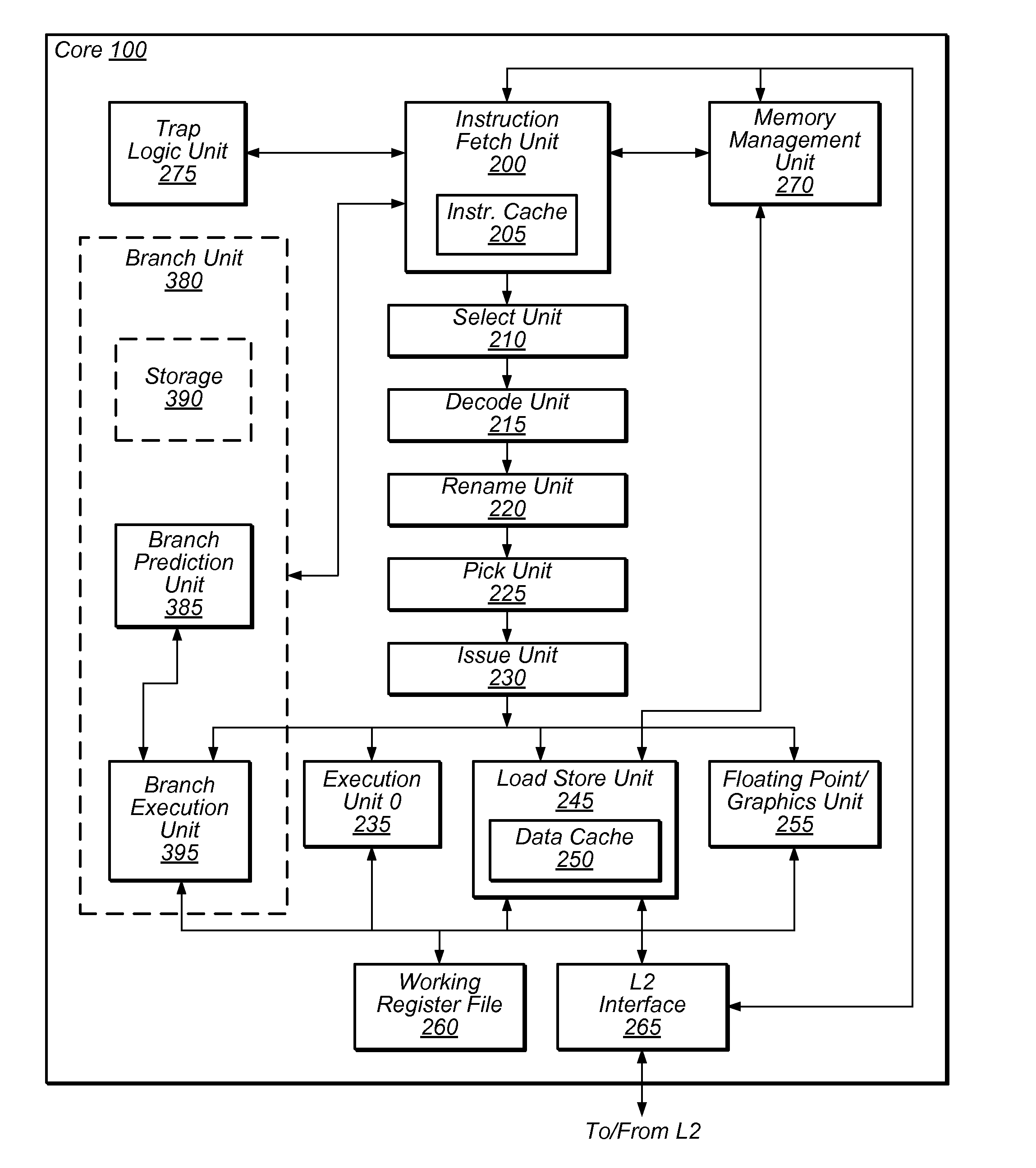

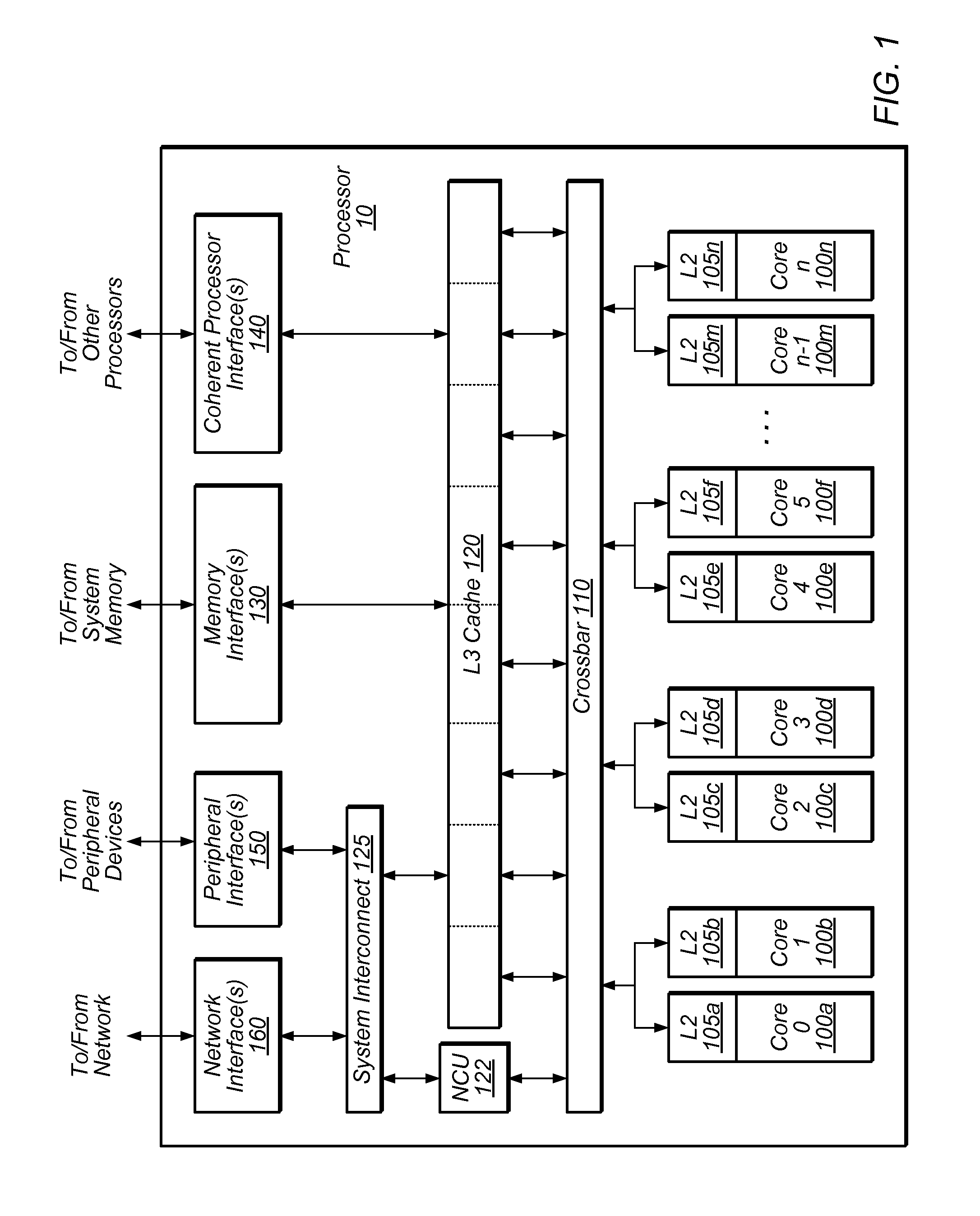

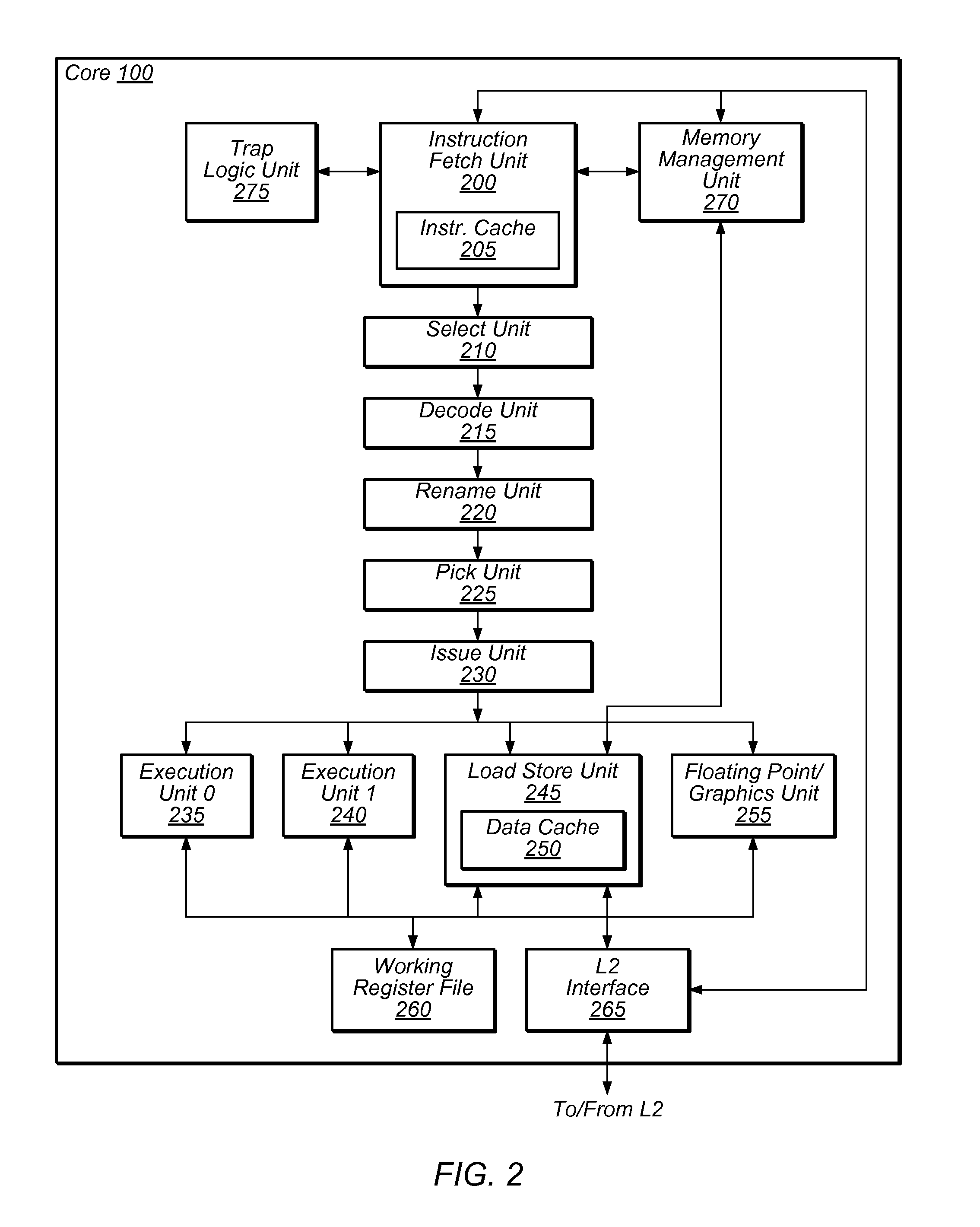

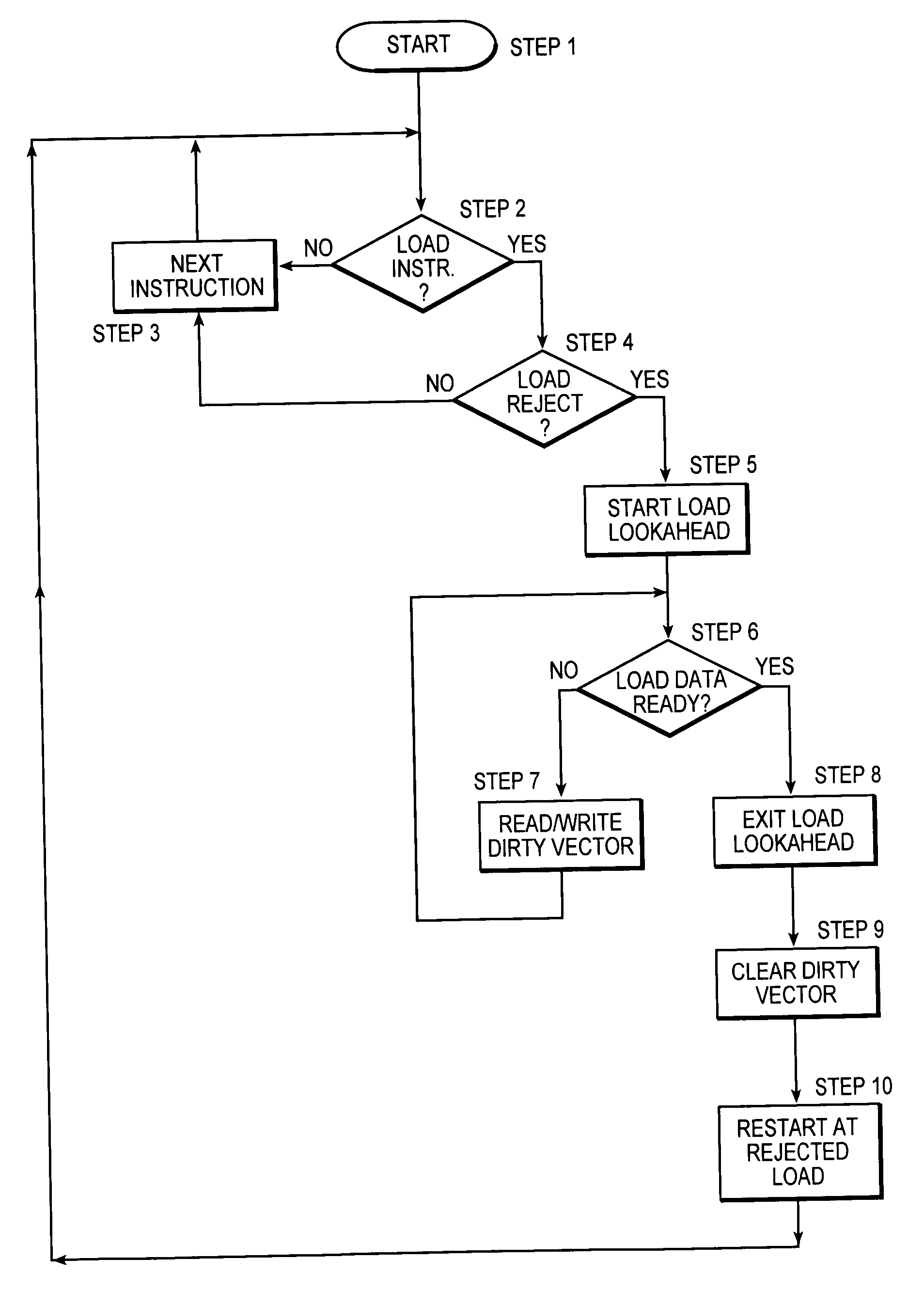

Load lookahead prefetch for microprocessors

Inactive | US20060149935A1 | Reduced performance impact | Lower latency | Digital computer details | Specific program execution arrangements | Speculative execution | Load instruction

The present invention allows a microprocessor to identify and speculatively execute future load instructions during a stall condition. This allows forward progress to be made through the instruction stream during the stall condition, which would otherwise cause the microprocessor or thread of execution to be idle. The data for such future load instructions can be prefetched from a distant cache or main memory so that when the load instruction is re-executed (non-speculatively) after the stall condition expires, its data will either reside in the L1 cache or be en route to the processor, resulting in reduced execution latency. When an extended stall condition is detected, load lookahead prefetch is started, allowing speculative execution of instructions that would normally have been stalled. In this speculative mode, instruction operands may be invalid due to source loads that miss the L1 cache, facilities not available in speculative execution mode, or speculative instruction results that are not available via forwarding and are not written to the architected registers. A set of status bits is used to dynamically keep track of the dependencies between instructions in the pipeline, and a bit vector tracks invalid architected facilities with respect to the speculative instruction stream. Both sources of information are used to identify load instructions with valid operands for calculating the load address. If the operands are valid, a load prefetch operation is started to retrieve data from the cache ahead of time so that it is available for the load instruction when it is non-speculatively executed.

Owner:INTEL CORP
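The operand-validity tracking in the abstract above can be sketched in software. This is a simplified model under stated assumptions: the `Instr` tuple, the `lookahead` function, and the two-opcode instruction set are hypothetical, a single integer stands in for the invalid bit vector, and a dictionary stands in for the L1 cache. Pipeline status bits and forwarding are not modelled.

```python
from typing import Dict, List, NamedTuple, Tuple


class Instr(NamedTuple):
    op: str                 # "load" or "alu" (hypothetical two-op ISA)
    dest: int               # destination register number
    srcs: Tuple[int, ...]   # source register numbers
    imm: int = 0            # address offset or immediate


def lookahead(instrs: List[Instr], regs: Dict[int, int], invalid: int,
              l1: Dict[int, int]) -> Tuple[List[int], int]:
    """Walk the stream speculatively; return (prefetch addresses, invalid bits)."""
    regs = dict(regs)  # speculative copy; architected registers are untouched
    prefetches: List[int] = []
    for ins in instrs:
        # Any invalid source operand poisons the destination register.
        if any((invalid >> r) & 1 for r in ins.srcs):
            invalid |= 1 << ins.dest
            continue
        if ins.op == "load":
            addr = regs[ins.srcs[0]] + ins.imm
            if addr in l1:
                regs[ins.dest] = l1[addr]
                invalid &= ~(1 << ins.dest)
            else:
                # Valid address but L1 miss: prefetch, data not yet usable.
                prefetches.append(addr)
                invalid |= 1 << ins.dest
        else:
            regs[ins.dest] = sum(regs[r] for r in ins.srcs) + ins.imm
            invalid &= ~(1 << ins.dest)
    return prefetches, invalid


# r2 is the target of the load that caused the stall, so it starts invalid.
stream = [
    Instr("load", 3, (1,), 8),    # hits L1
    Instr("load", 4, (1,), 64),   # misses L1: prefetched
    Instr("load", 5, (2,)),       # address depends on r2: skipped
    Instr("alu", 6, (3,), 200),   # valid operands: executes speculatively
    Instr("load", 7, (6,)),       # misses L1: prefetched
]
prefetches, invalid = lookahead(stream, {1: 100}, 1 << 2, {108: 7})
```

Only loads whose address operands are valid generate prefetches; the load that depends on the stalled register is skipped and its destination is marked invalid in turn.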

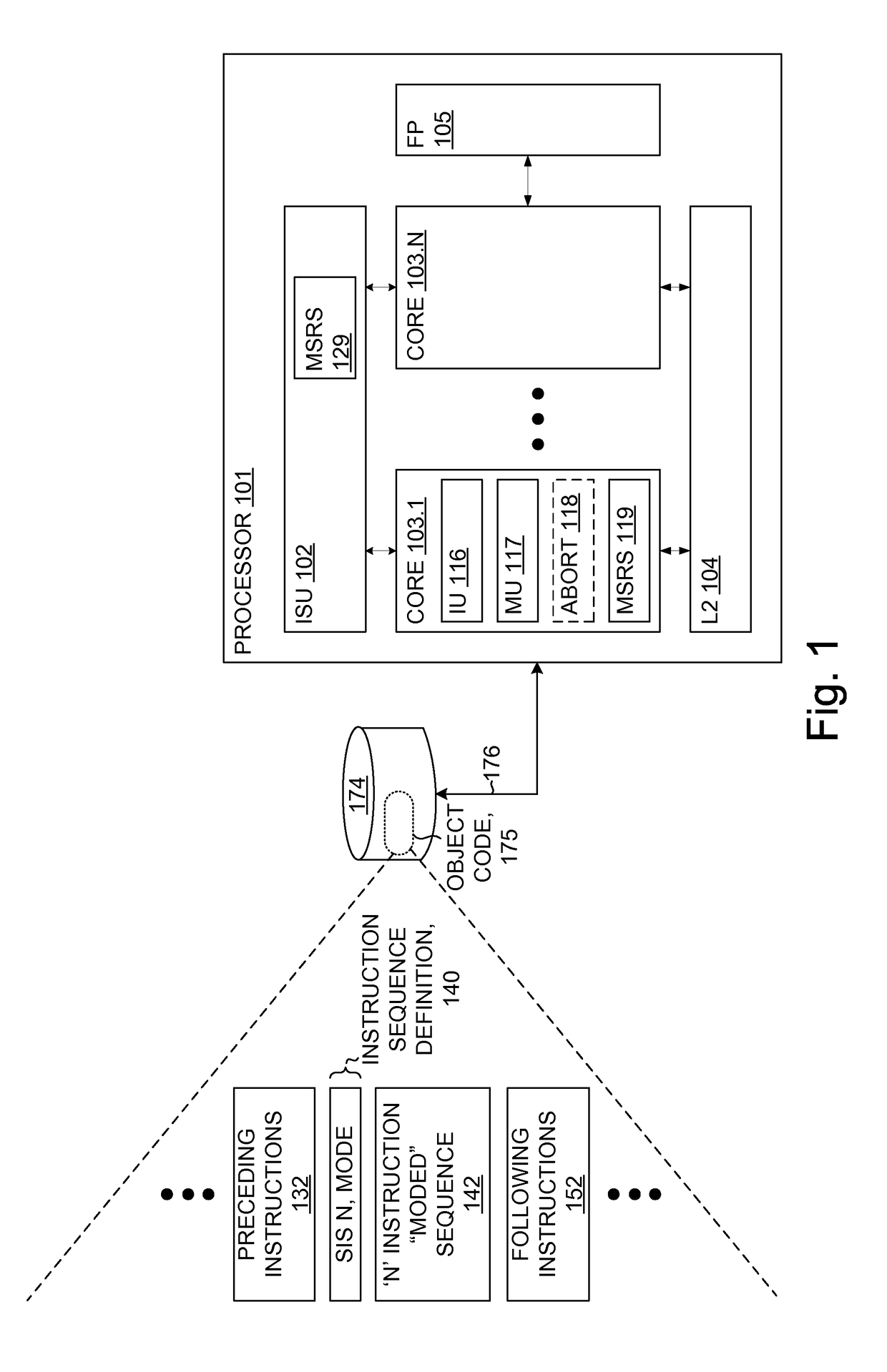

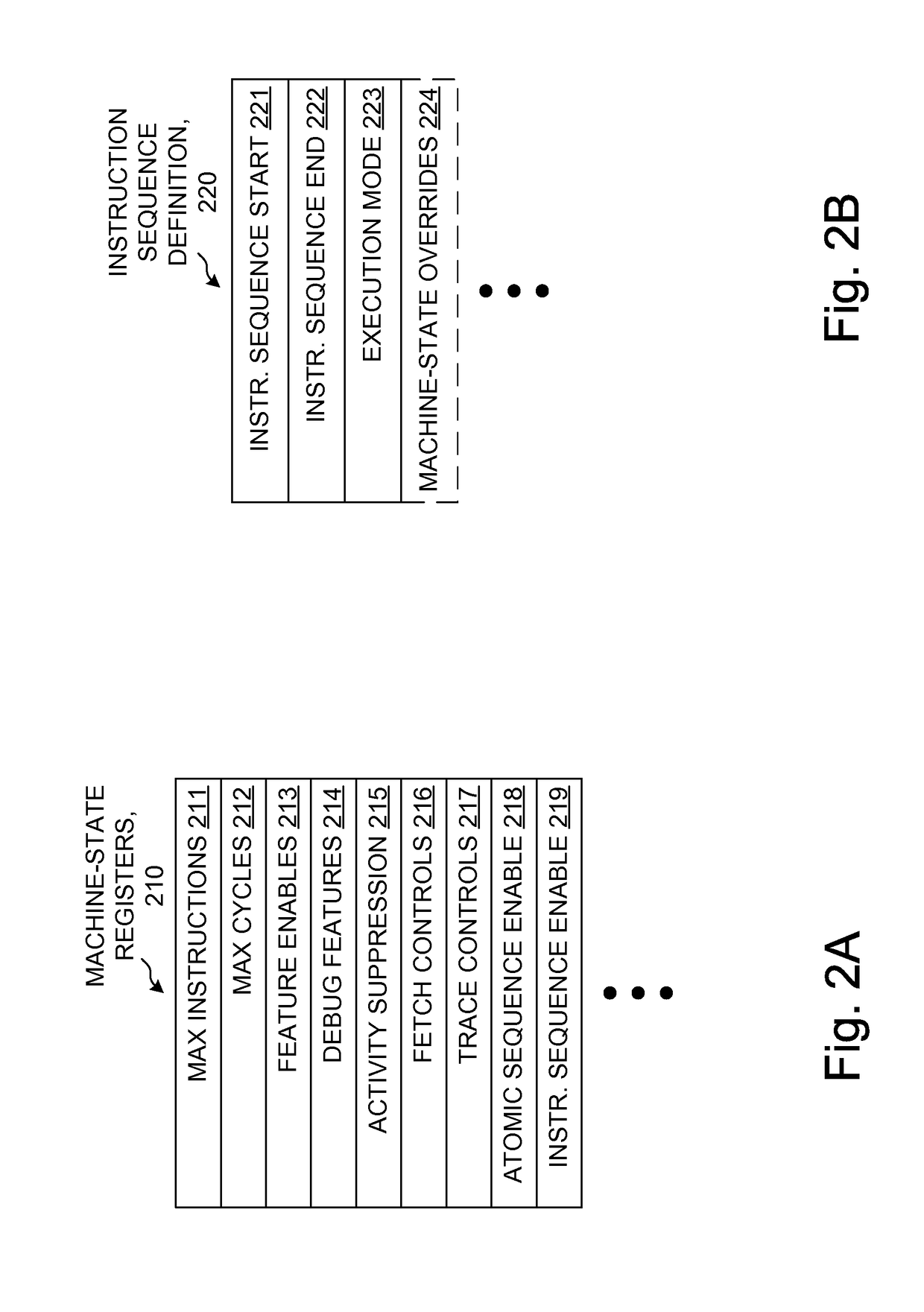

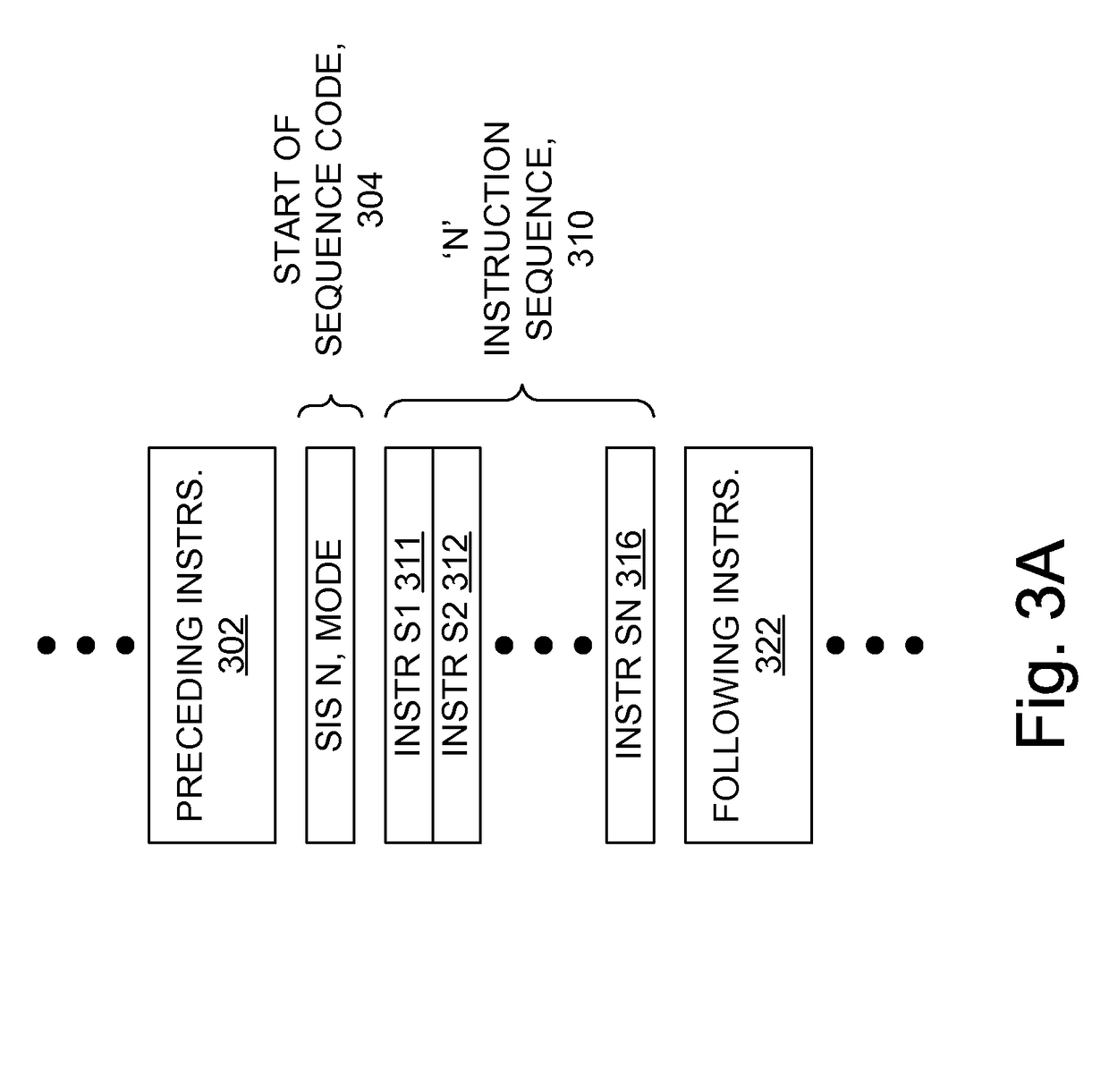

Controlling operation of a processor according to execution mode of an instruction sequence

Active | US8473724B1 | Digital computer details | Specific program execution arrangements | Mode control | Execution unit

In a processor, instructions of an instruction stream are supplied to an execution unit which executes the supplied instructions according to respective execution modes. A control unit recognizes a user-defined instruction sequence (UDIS) in the instruction stream. The UDIS is associated with a UDIS definition provided in-line and/or as contents of machine-state registers (MSRs), and specifying, at least in part, a start, optionally an end, and a particular execution mode for the UDIS. Subsequently, ones of the instructions of the UDIS are executed in accordance with the particular execution mode, such as by optionally altering recognition of asynchronous events. For example, disabling hardware interrupts during the executing results in apparent atomic execution. Fetching, decoding, issuing, and/or caching of the instructions of the UDIS are optionally dependent on the particular execution mode. MSRs optionally specify a maximum length and/or execution time.

Owner:SUN MICROSYSTEMS INC
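The interrupt-deferral example in the abstract above (apparent atomic execution of a UDIS) can be sketched as a toy interpreter. Everything here is an assumption for illustration: the `UDIS_START` and `UDIS_END` marker opcodes, the `run` function, and the idea of logging an `"IRQ"` entry when an interrupt is taken. The abstract only states that the definition may be in-line and/or held in MSRs.

```python
from typing import List, Set


def run(stream: List[str], interrupts_at: Set[int]) -> List[str]:
    """Execute opcodes in order; interrupts arriving inside a UDIS are deferred."""
    log: List[str] = []
    pending = False
    in_udis = False
    for pc, op in enumerate(stream):
        if pc in interrupts_at:
            pending = True            # asynchronous event arrives
        if op == "UDIS_START":        # hypothetical in-line start marker
            in_udis = True
        elif op == "UDIS_END":        # hypothetical in-line end marker
            in_udis = False
        else:
            log.append(op)
        # Interrupts are recognized only outside the user-defined sequence.
        if pending and not in_udis:
            log.append("IRQ")
            pending = False
    return log


# An interrupt arrives while "b" executes but is held until the UDIS ends,
# so "b" and "c" appear to execute atomically.
atomic_log = run(["a", "UDIS_START", "b", "c", "UDIS_END", "d"], {2})

# Without the markers, the same kind of interrupt is taken immediately.
plain_log = run(["a", "b", "c", "d"], {1})
```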

© 2025 PatSnap. All rights reserved.