892 results about "Load instruction" patented technology

Method for data input into a service device and arrangement for the implementation of the method

Inactive | US7103583B1 | Reduce conversion | Minimize time | Computer security arrangements | Franking apparatus | Load instruction | Data center

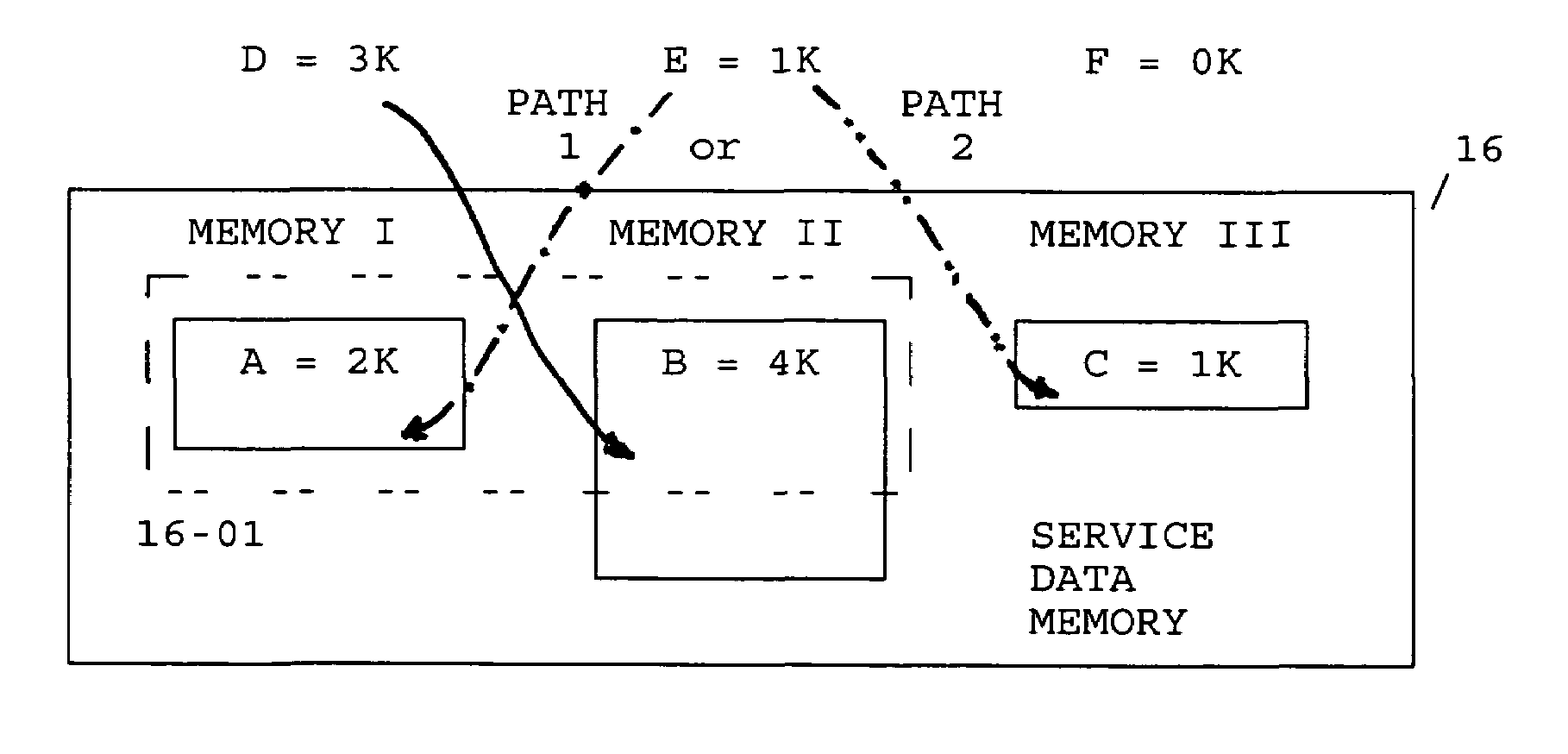

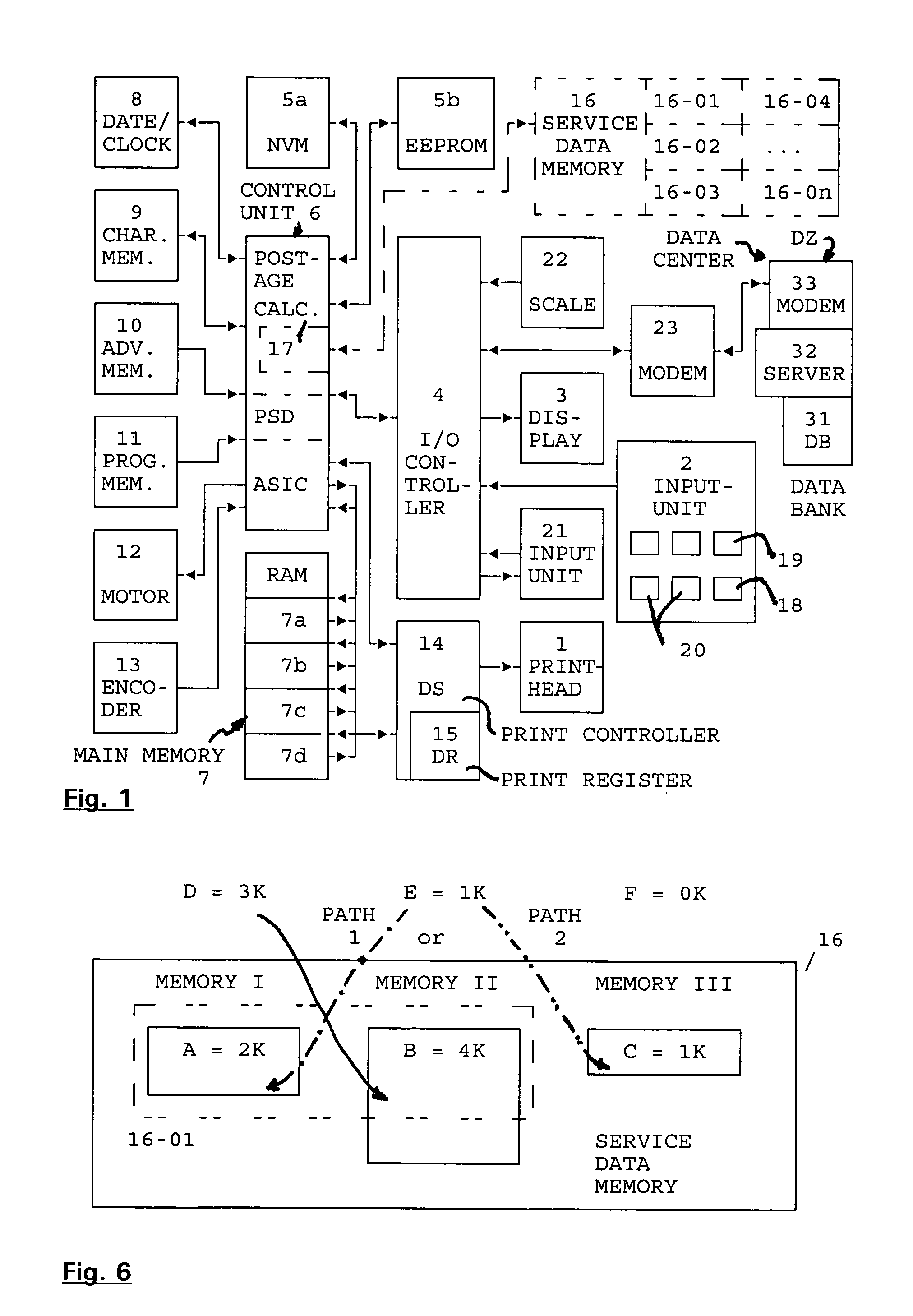

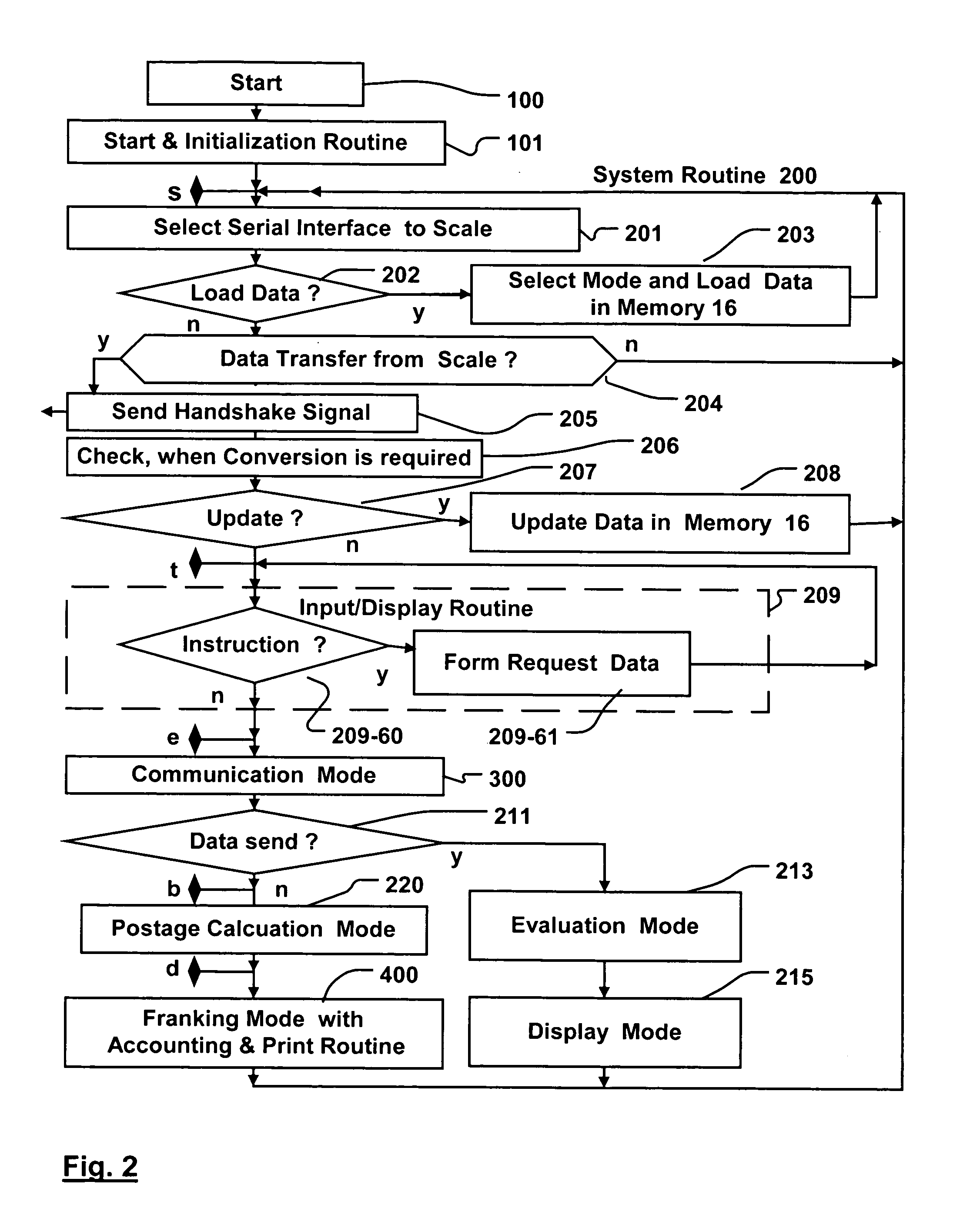

A method and apparatus for data input into a service device allow a loading and updating of service data, particularly postage fee schedule tables, separated from one another in time. The method and apparatus are suitable for postage meter machines as well as for scales containing postage computers or similar devices. The apparatus for implementation of the method contains a processor and a memory with memory areas for service data. After detecting the input and storage of a load requirement, a check for the presence of a load instruction, formation of a status report of the memory occupancy for service data and a transmission of the status report to the data center ensue. The data center forms recommendations for a future status of the memory occupancy in the service device on the basis of an analysis of the status report of the memory occupancy for service data that is implemented in the data center. The data center transmits the recommendations to the service device that, after evaluation thereof, sends corresponding request data before the actual loading.

Owner:FRANCOTYP POSTALIA

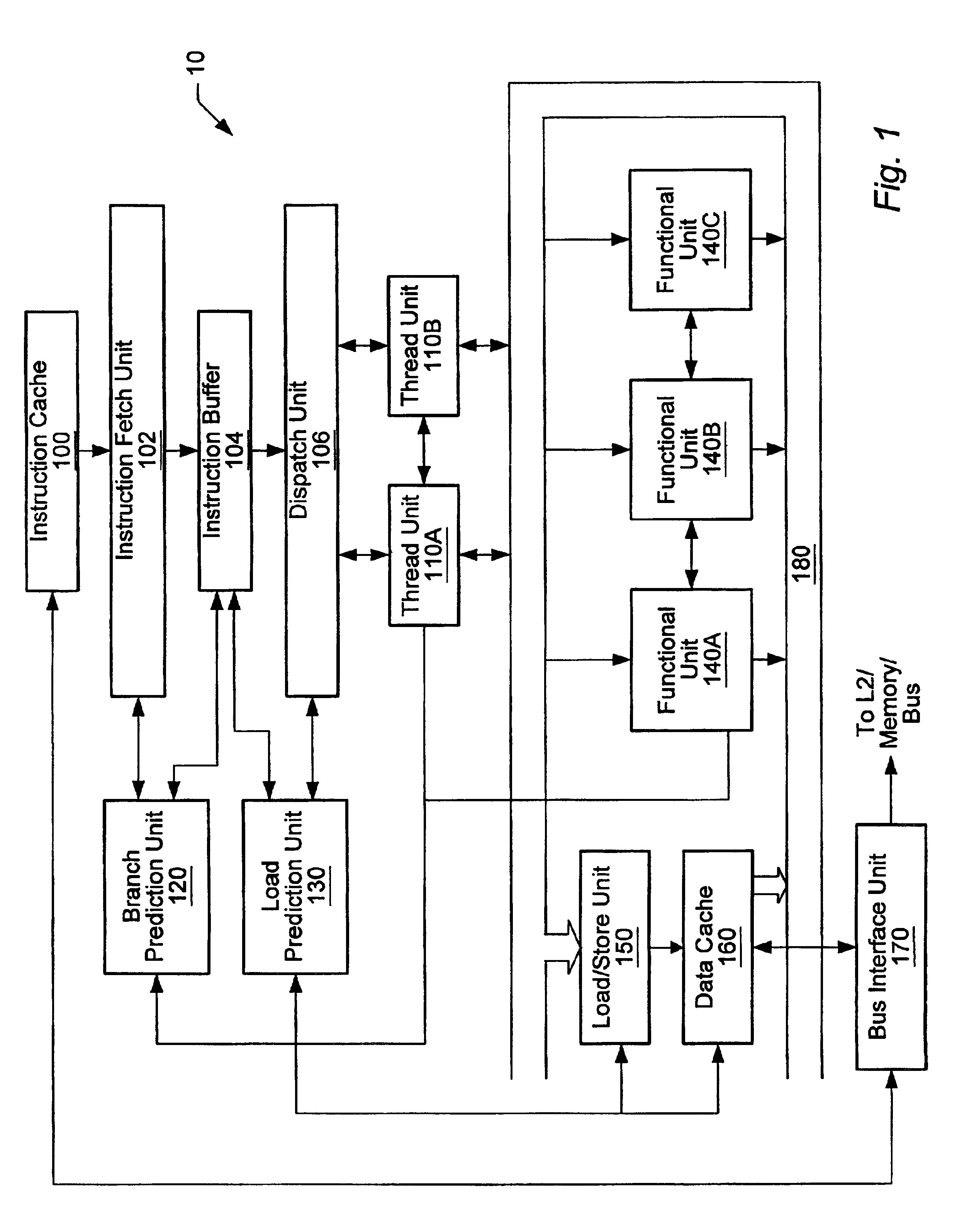

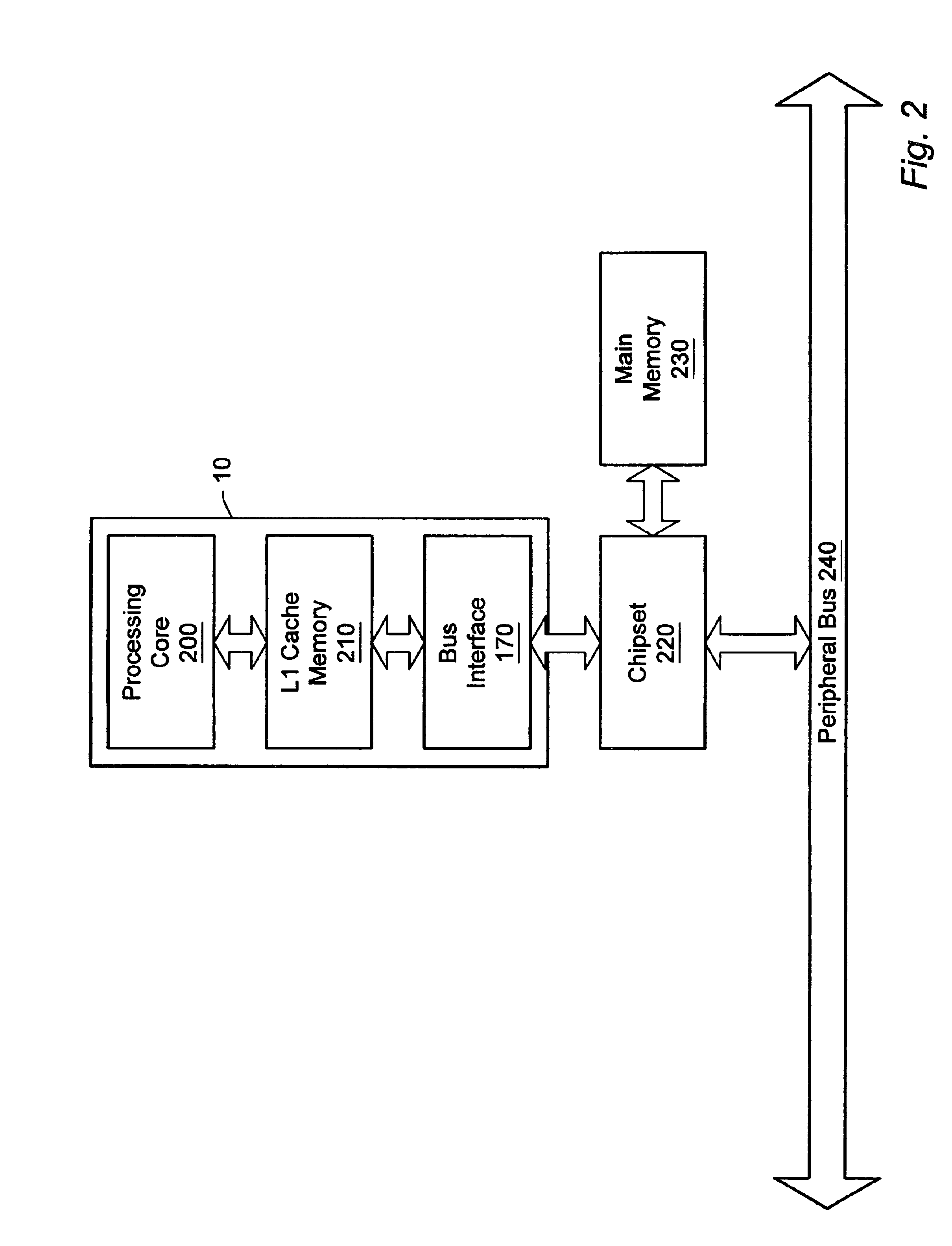

Threshold-based load address prediction and new thread identification in a multithreaded microprocessor

Active | US6907520B2 | Memory access latency | Take advantage of | Memory architecture accessing/allocation | Digital computer details | Load instruction | Instruction window

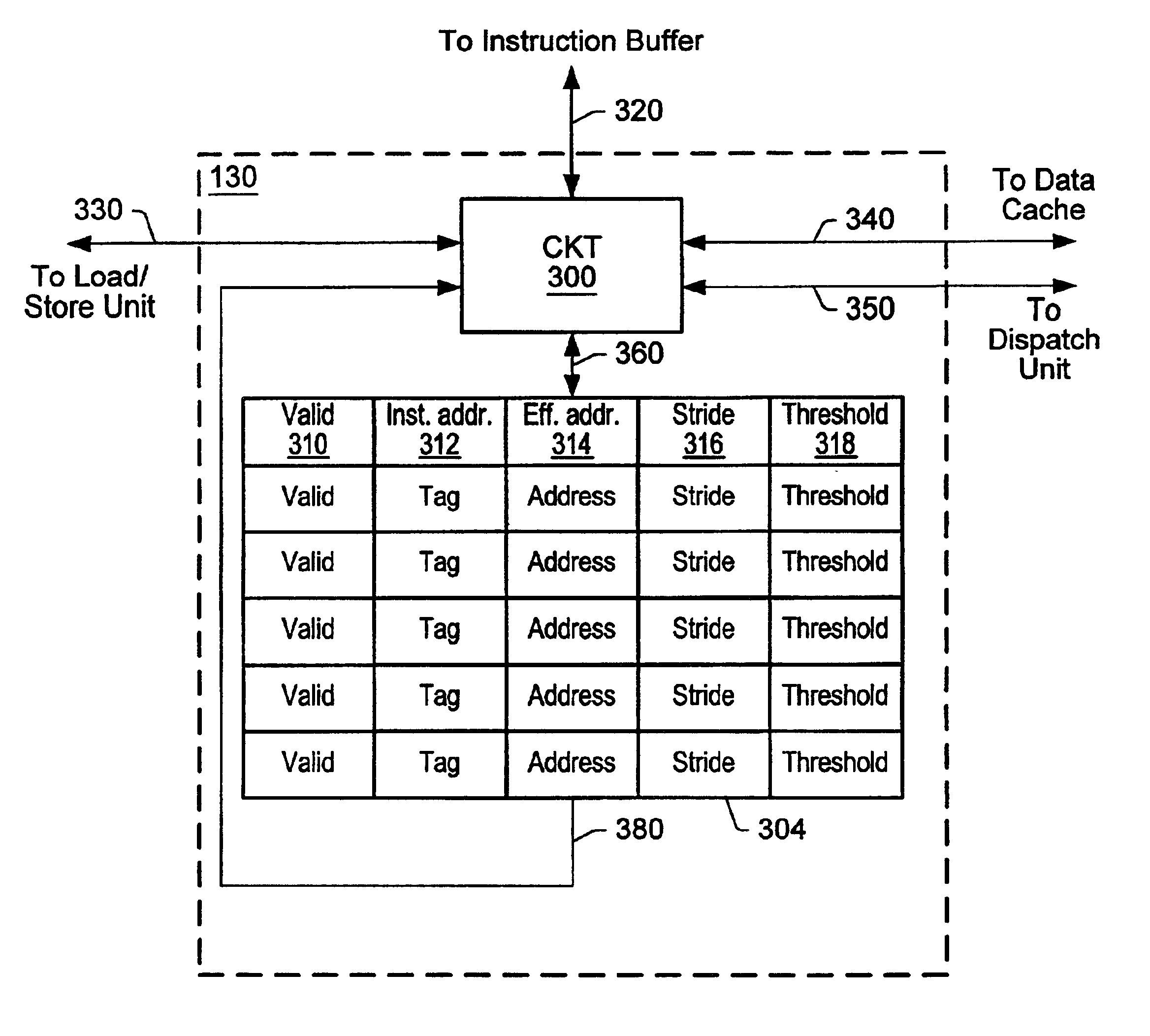

A method and apparatus for predicting load addresses and identifying new threads of instructions for execution in a multithreaded processor. A load prediction unit scans an instruction window for load instructions. A load prediction table is searched for an entry corresponding to a detected load instruction. If an entry is found in the table, a load address prediction is made for the load instruction and conveyed to the data cache. If the load address misses in the cache, the data is prefetched. Subsequently, if it is determined that the load prediction was incorrect, a miss counter in the corresponding entry in the load prediction table is incremented. If on a subsequent detection of the load instruction, the miss counter has reached a threshold, the load instruction is predicted to miss. In response to the predicted miss, a new thread of instructions is identified for execution.

Owner:ORACLE INT CORP
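
The threshold mechanism in the abstract above can be illustrated with a small software model. This is only a sketch under stated assumptions: the class and method names, the choice to index the table by the load's program counter, and the threshold value of 2 are all hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass

MISS_THRESHOLD = 2  # assumed value; the abstract does not state a threshold


@dataclass
class PredictionEntry:
    predicted_address: int
    miss_counter: int = 0


class LoadPredictionTable:
    """Toy model of a load prediction table indexed by load PC (an assumption)."""

    def __init__(self):
        self.entries = {}  # load PC -> PredictionEntry

    def train(self, pc, address):
        # Install or overwrite the entry for this load instruction.
        self.entries[pc] = PredictionEntry(address)

    def predict(self, pc):
        """Return (predicted_address, predicted_to_miss), or None if no entry exists."""
        entry = self.entries.get(pc)
        if entry is None:
            return None
        return entry.predicted_address, entry.miss_counter >= MISS_THRESHOLD

    def report_misprediction(self, pc):
        # Called when the earlier prediction for this load turned out to be wrong.
        self.entries[pc].miss_counter += 1
```

When `predict` reports a predicted miss, a real design would use that signal to identify a new thread for execution; the model only returns the flag.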

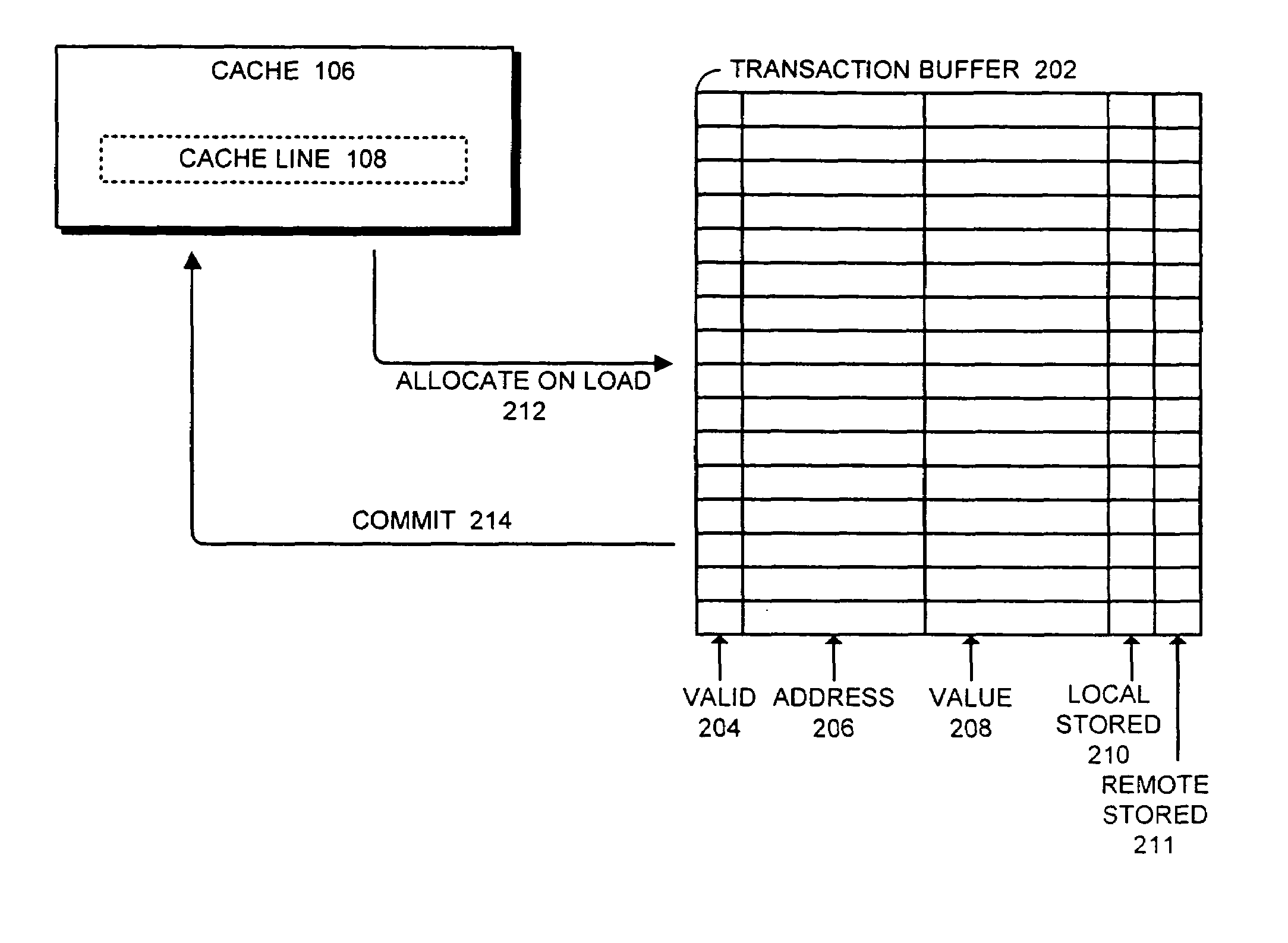

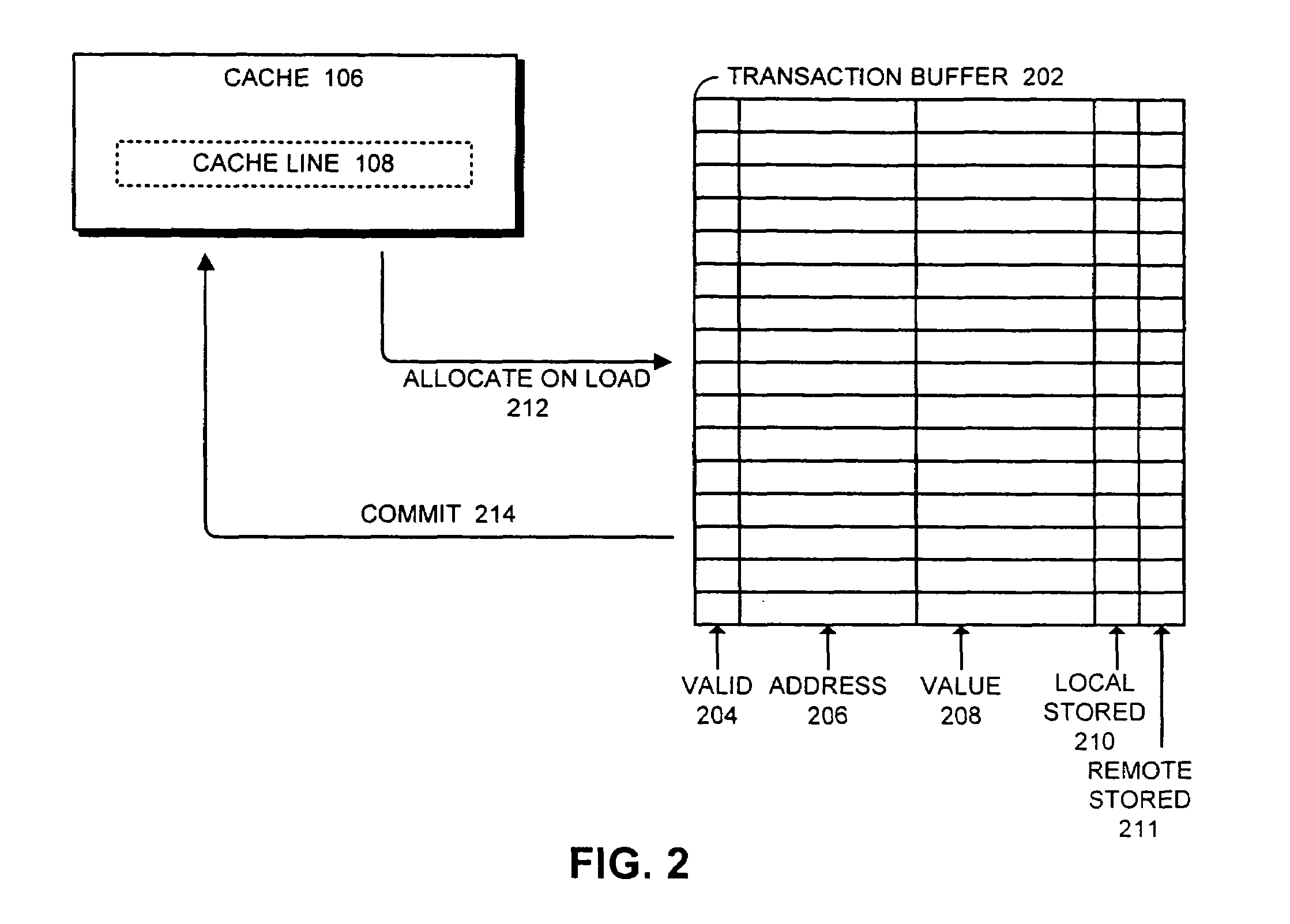

Facilitating concurrent non-transactional execution in a transactional memory system

Active | US7421544B1 | Facilitates concurrent non-transactional operation | Memory loss protection | Transaction processing | Load instruction | Parallel computing

One embodiment of the present invention provides a system that facilitates concurrent non-transactional operations in a transactional memory system. During operation, the system receives a load instruction related to a local transaction. Next, the system determines if an entry for the memory location requested by the load instruction already exists in the transaction buffer. If not, the system allocates an entry for the memory location in the transaction buffer, reads data for the load instruction from the cache, and stores the data in the transaction buffer. Finally, the system returns the data to the processor to complete the load instruction. In this way, if a remote non-transactional store instruction is received during the transaction, the remote non-transactional store proceeds and does not cause the local transaction to abort.

Owner:ORACLE INT CORP
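
A minimal sketch of the load path described in the abstract above, assuming the cache can be modeled as a dictionary and that a repeated load of the same location is served from the transaction buffer. The names `TransactionBuffer` and `remote_non_transactional_store` are hypothetical.

```python
class TransactionBuffer:
    """Toy model: buffers the values a local transaction has loaded."""

    def __init__(self, cache):
        self.cache = cache   # dict: address -> value, standing in for the cache
        self.entries = {}    # address -> value captured for this transaction

    def load(self, address):
        # Allocate an entry on first use, filling it from the cache.
        if address not in self.entries:
            self.entries[address] = self.cache[address]
        # Return the buffered value to complete the load.
        return self.entries[address]


def remote_non_transactional_store(cache, address, value):
    # The remote store simply proceeds; it does not abort the local transaction.
    cache[address] = value
```

In this model the local transaction keeps seeing the value it first loaded, while the remote store updates the cache without interfering.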

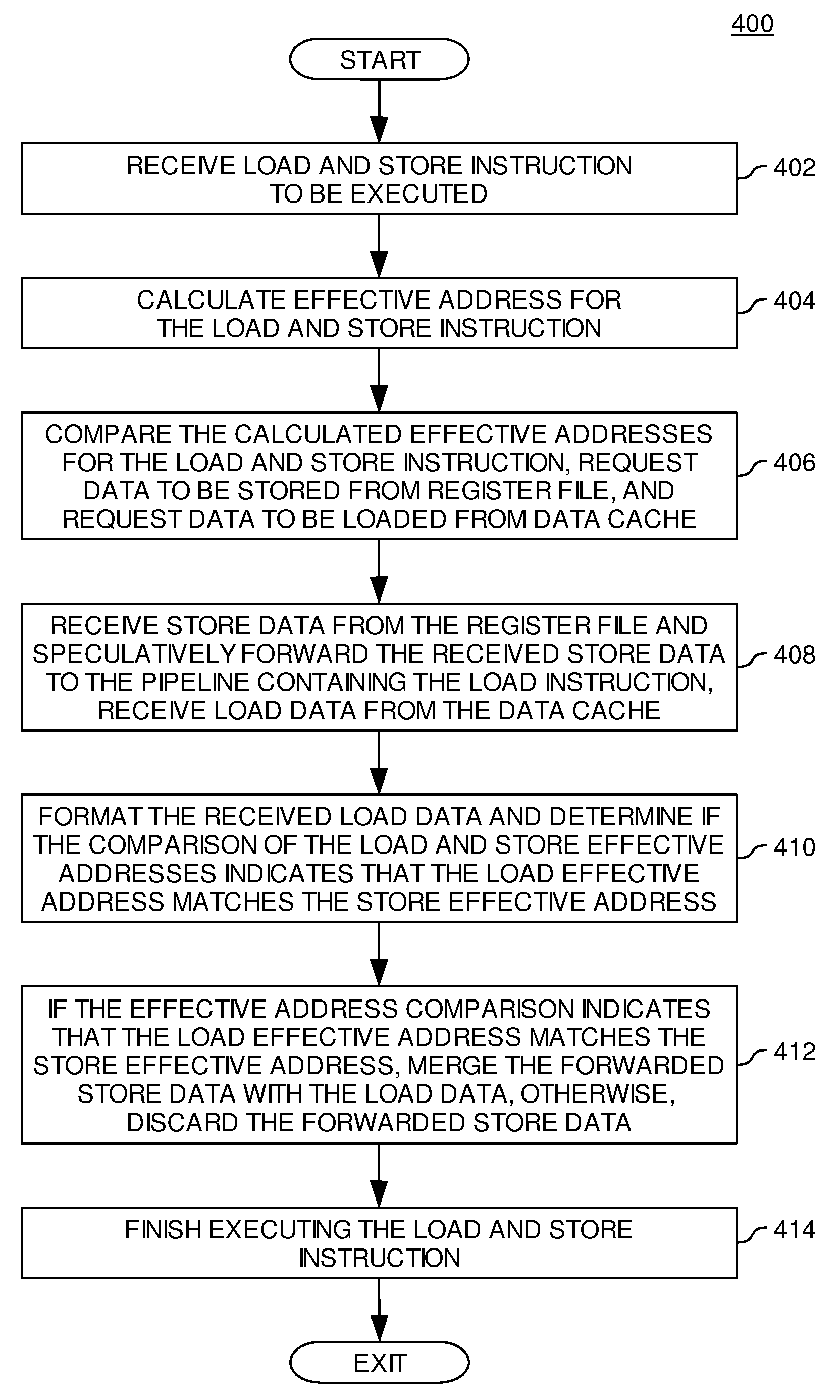

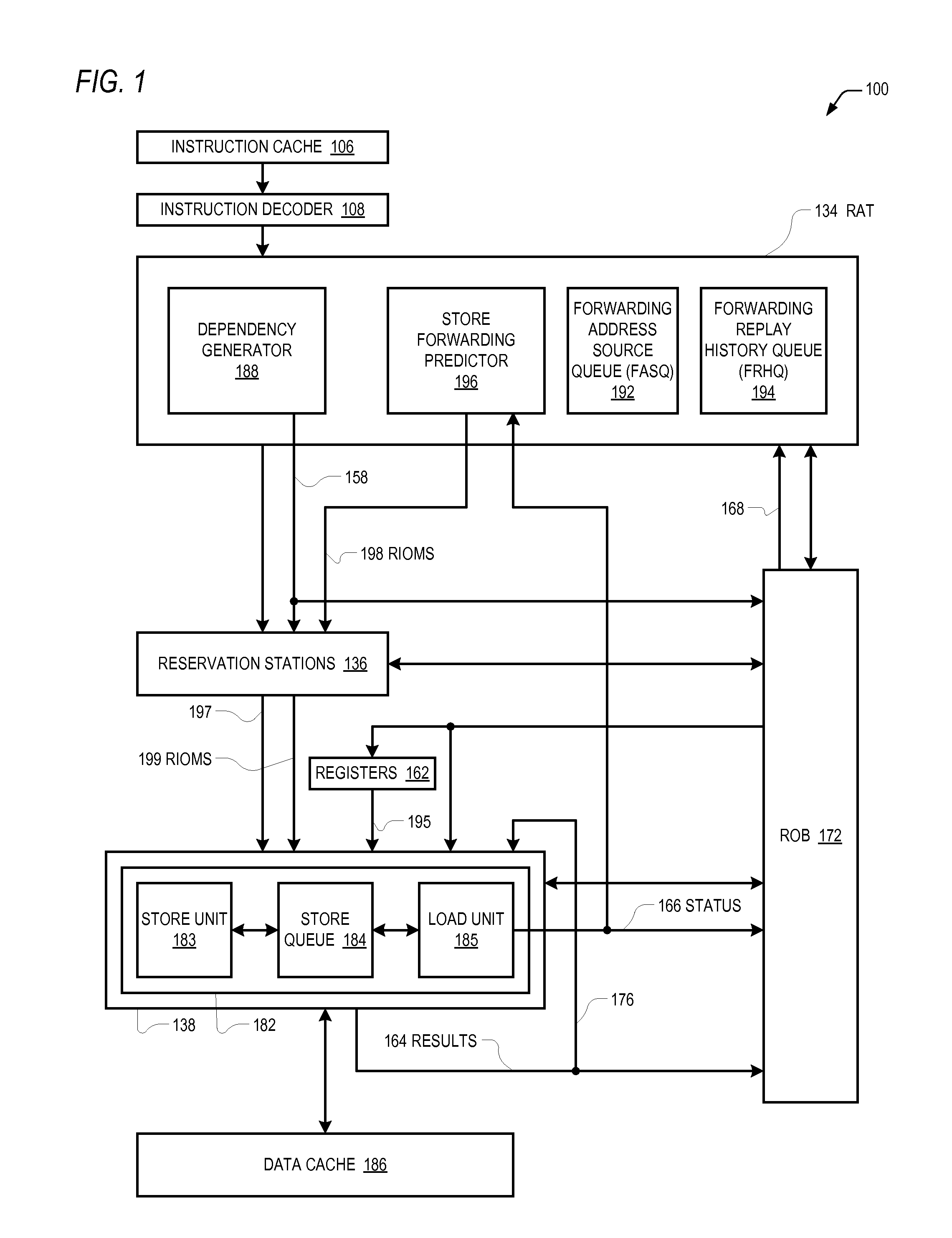

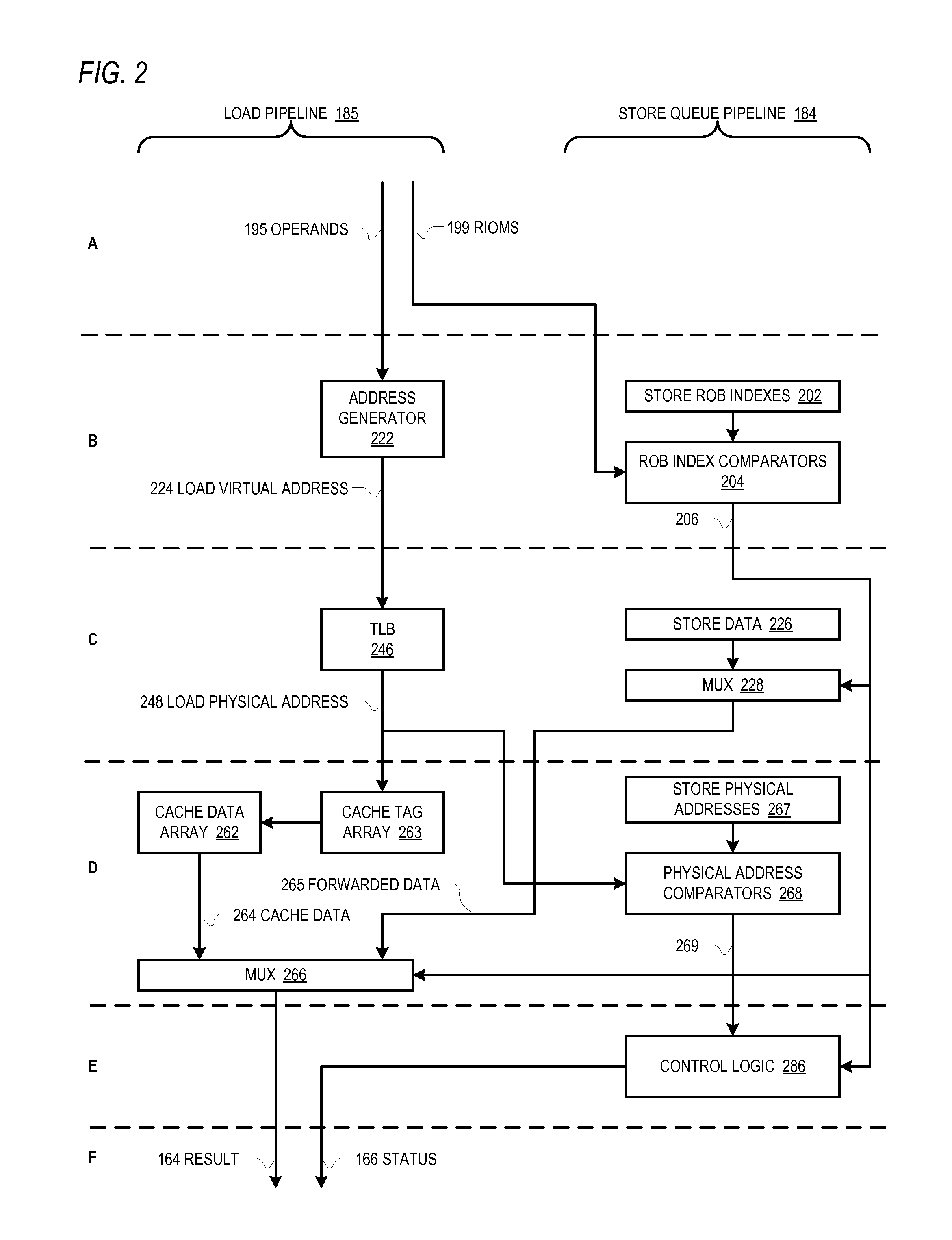

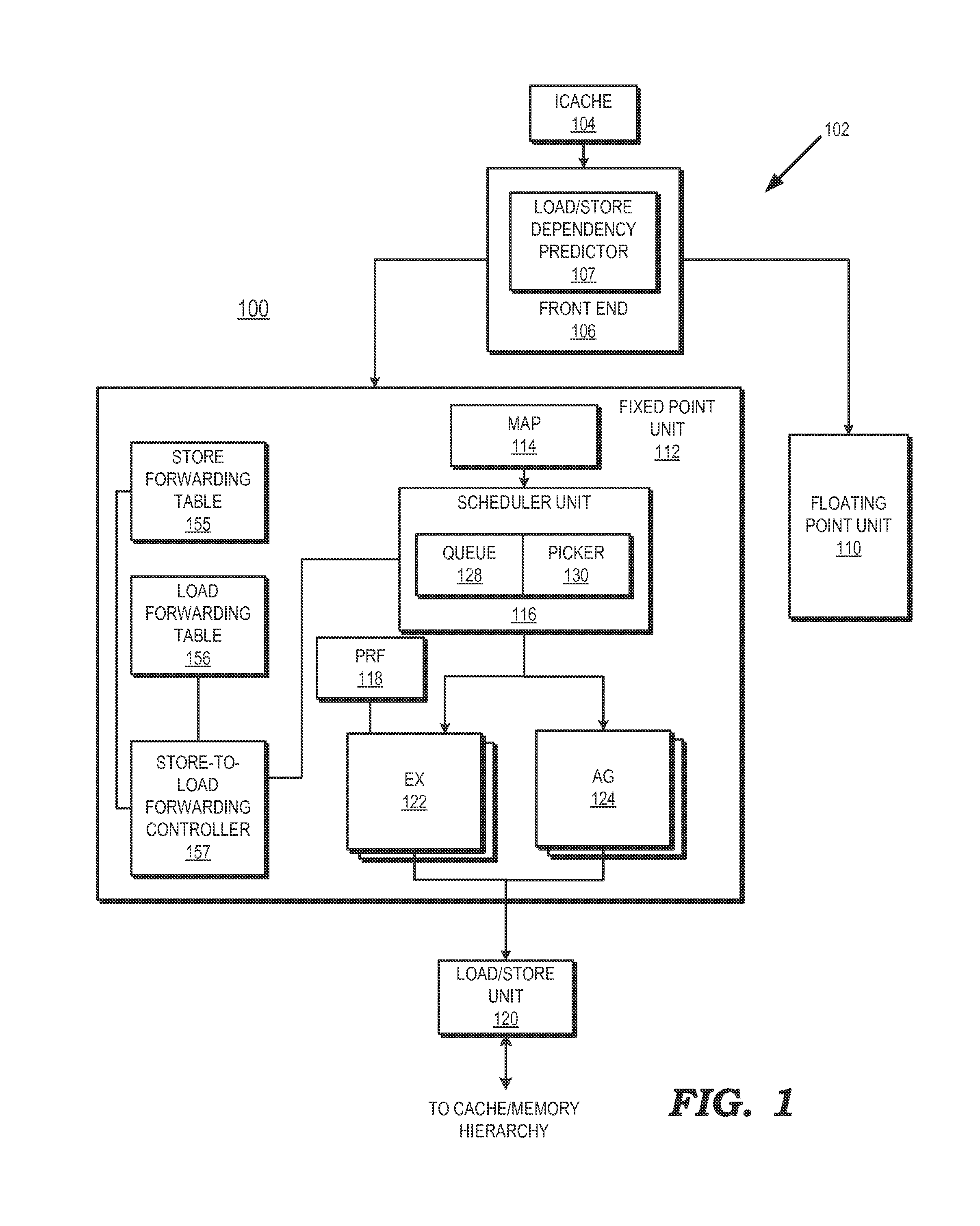

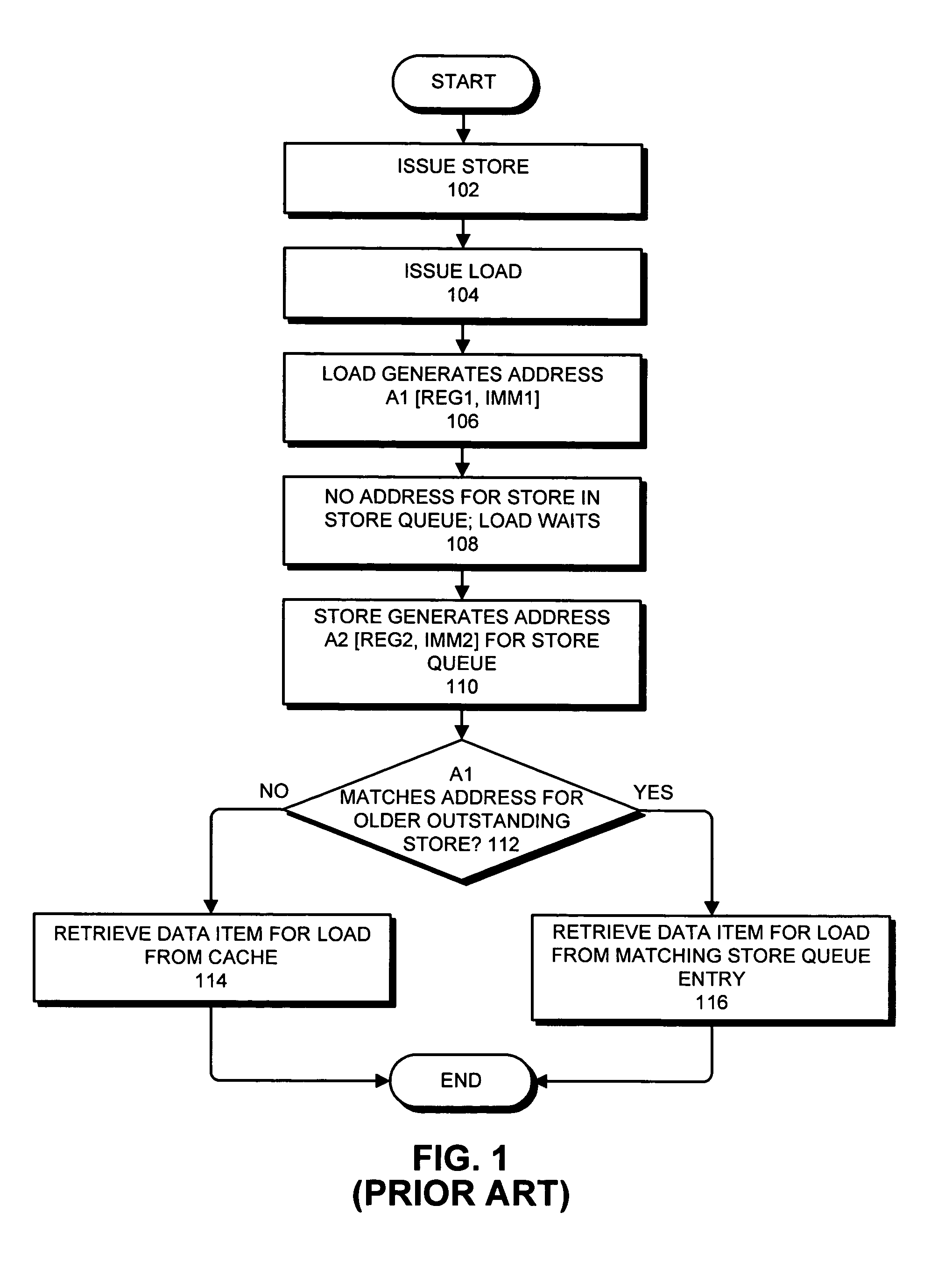

A Fast and Inexpensive Store-Load Conflict Scheduling and Forwarding Mechanism

Inactive | US20070288725A1 | Digital computer details | Specific program execution arrangements | Load instruction | Parallel computing

Embodiments provide a method and apparatus for executing instructions. In one embodiment, the method includes receiving a load instruction and a store instruction and calculating a load effective address of load data for the load instruction and a store effective address of store data for the store instruction. The method further includes comparing the load effective address with the store effective address and speculatively forwarding the store data for the store instruction from a first pipeline in which the store instruction is being executed to a second pipeline in which the load instruction is being executed. The load instruction receives the store data from the first pipeline and requested data from a data cache. If the load effective address matches the store effective address, the speculatively forwarded store data is merged with the load data. If the load effective address does not match the store effective address the requested data from the data cache is merged with the load data.

Owner:IBM CORP
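
The selection step in the abstract above, where the load receives both the speculatively forwarded store data and the data cache result and keeps one of them, might be sketched as follows. The function name and the dictionary standing in for the data cache are assumptions made for illustration.

```python
def execute_load(load_ea, store_ea, store_data, data_cache):
    """Return the value the load ends up with.

    load_ea / store_ea: effective addresses of the load and the store.
    store_data: data speculatively forwarded from the store's pipeline.
    data_cache: dict address -> value, a stand-in for the data cache.
    """
    forwarded = store_data               # always forwarded, speculatively
    requested = data_cache.get(load_ea)  # fetched from the cache in parallel
    if load_ea == store_ea:
        return forwarded                 # addresses match: use the store data
    return requested                     # no match: use the cache data
```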

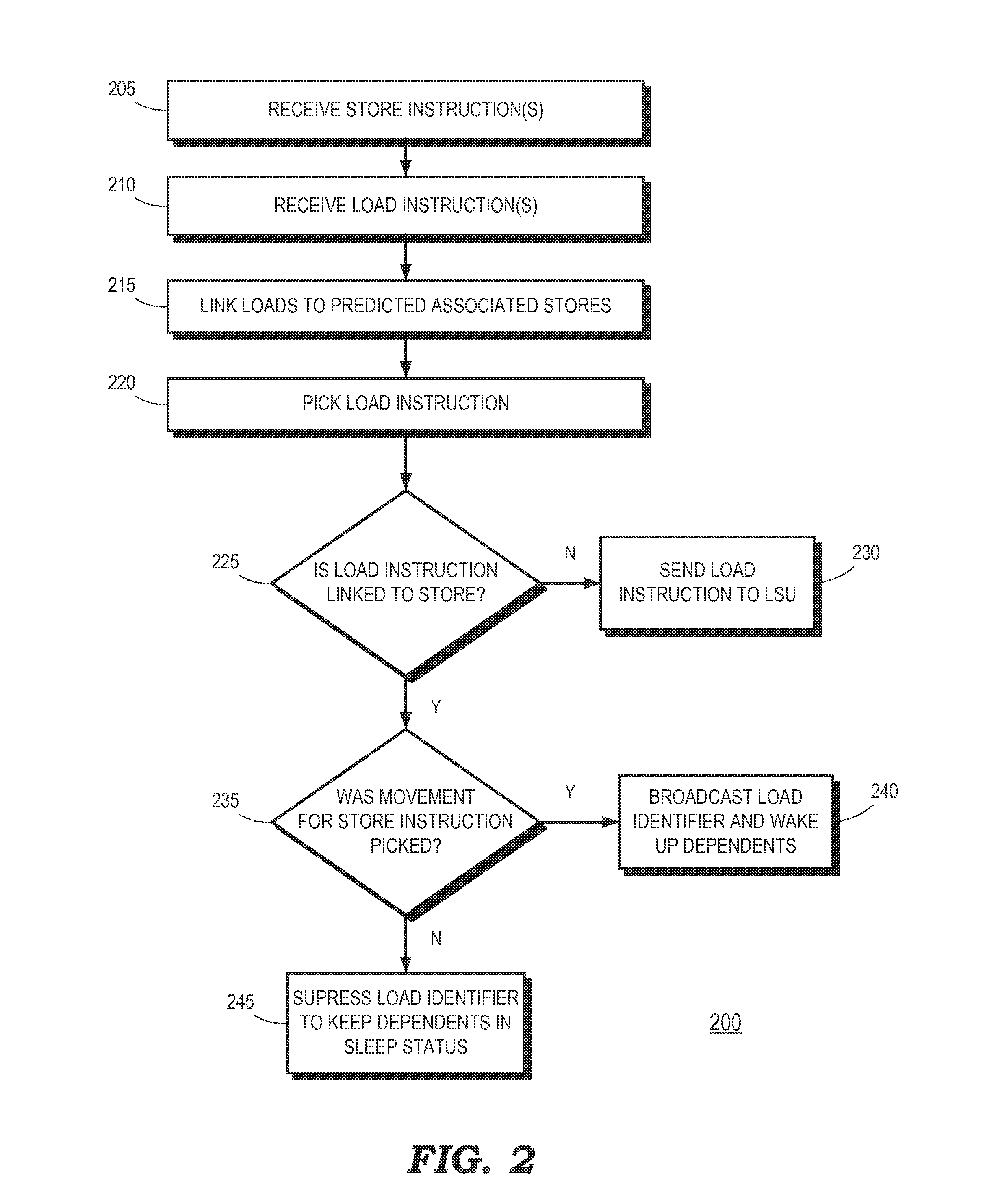

Dependent instruction suppression

Active | US20140380024A1 | Register arrangements | Digital computer details | Dependency information | Load instruction

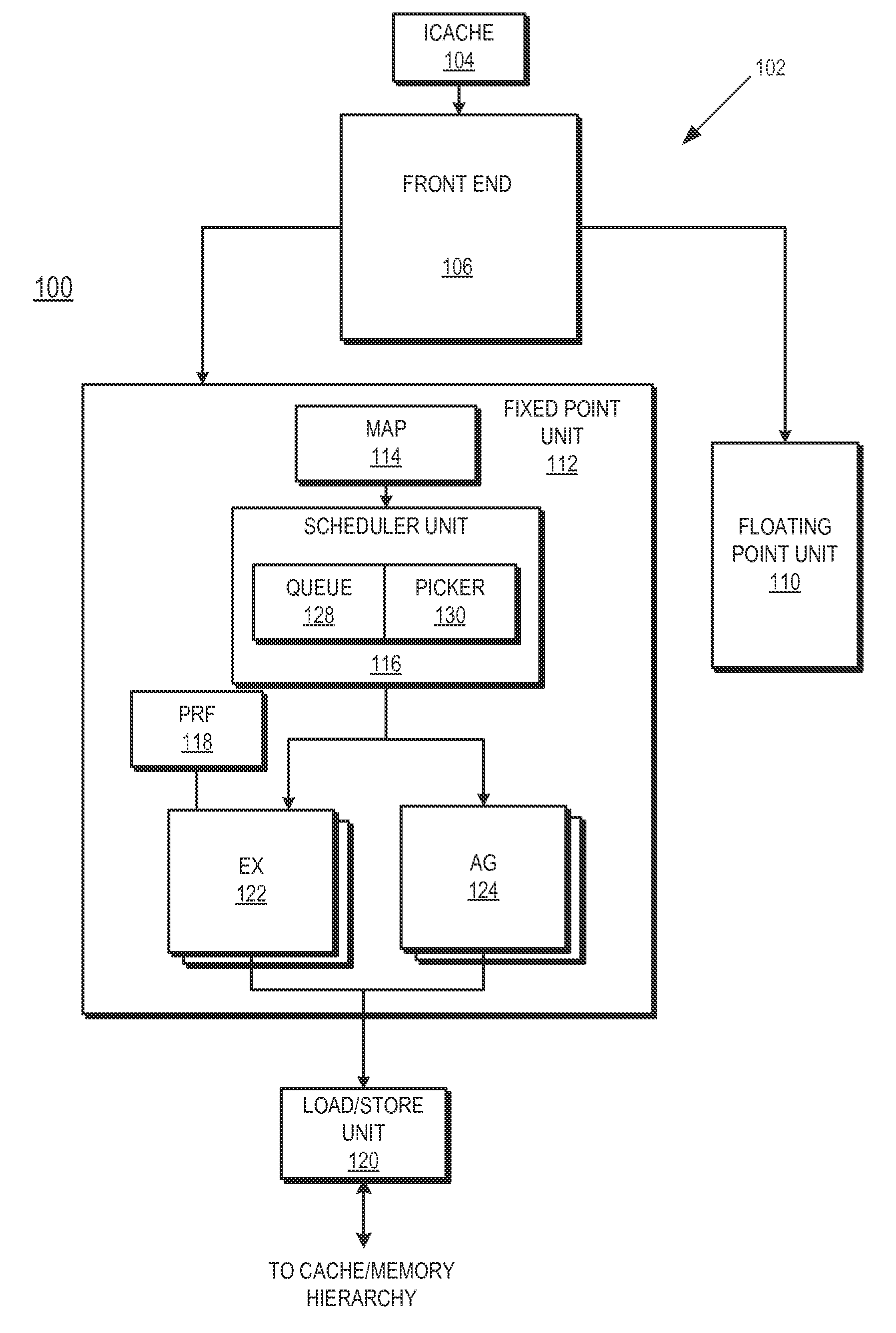

A method includes suppressing execution of at least one dependent instruction of a load instruction by a processor using stored dependency information responsive to an invalid status of the load instruction. A processor includes an execution unit to execute instructions and a scheduler. The scheduler is to select for execution in the execution unit a load instruction having at least one dependent instruction and suppress execution of the at least one dependent instruction using stored dependency information responsive to an invalid status of the load instruction.

Owner:ADVANCED MICRO DEVICES INC

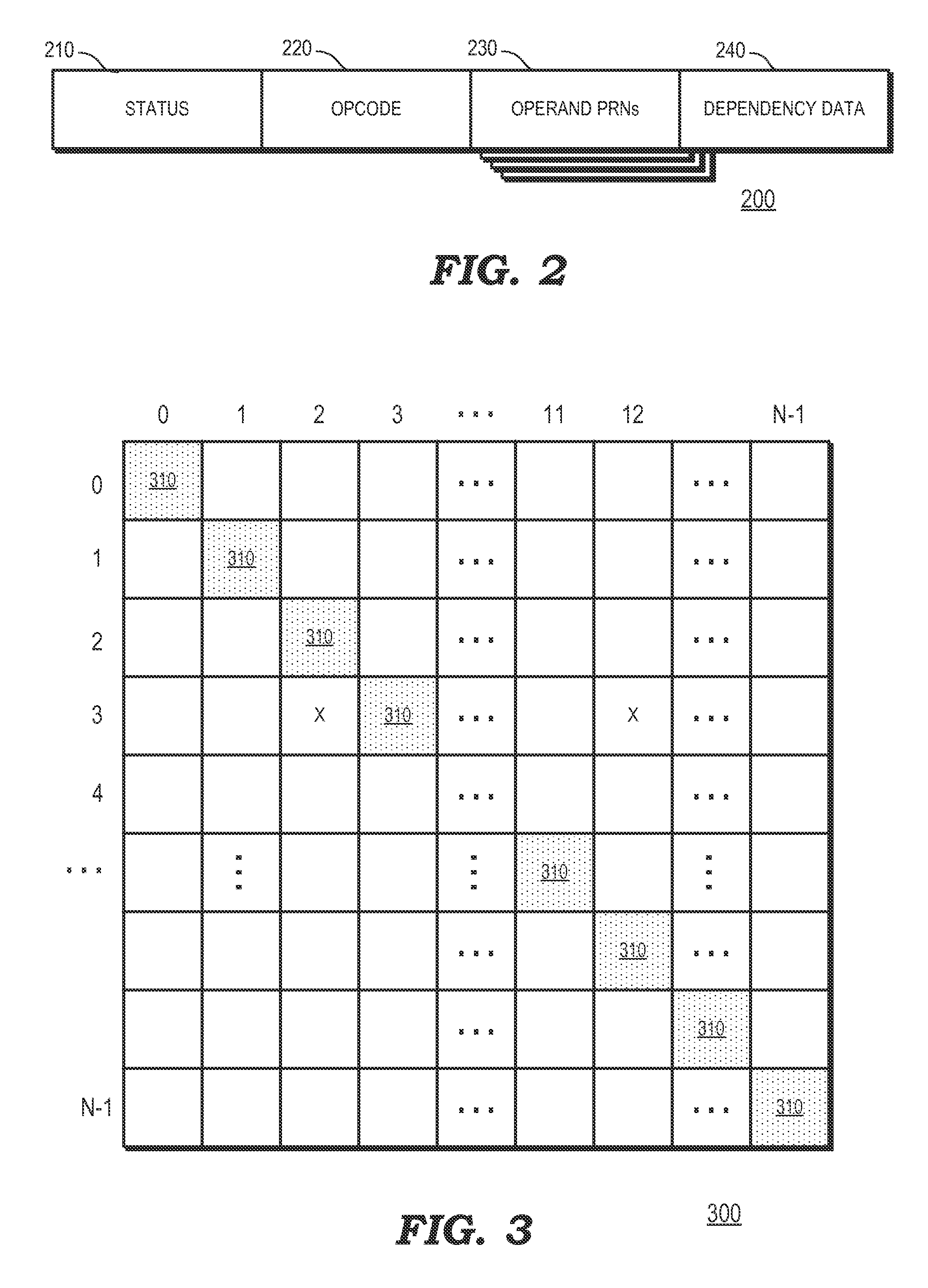

Store-to-load forwarding based on load/store address computation source information comparisons

Active | US20110040955A1 | Digital computer details | Specific program execution arrangements | Load instruction | Operand

A microprocessor includes a queue comprising a plurality of entries each configured to hold store information for a store instruction. The store information specifies sources of operands used to calculate a store address. The store instruction specifies store data to be stored to a memory location identified by the store address. The microprocessor also includes control logic, coupled to the queue, configured to encounter a load instruction. The load instruction includes load information that specifies sources of operands used to calculate a load address. The control logic detects that the load information matches the store information held in a valid one of the plurality of queue entries and responsively predicts that the microprocessor should forward to the load instruction the store data specified by the store instruction whose store information matches the load information.

Owner:VIA TECH INC

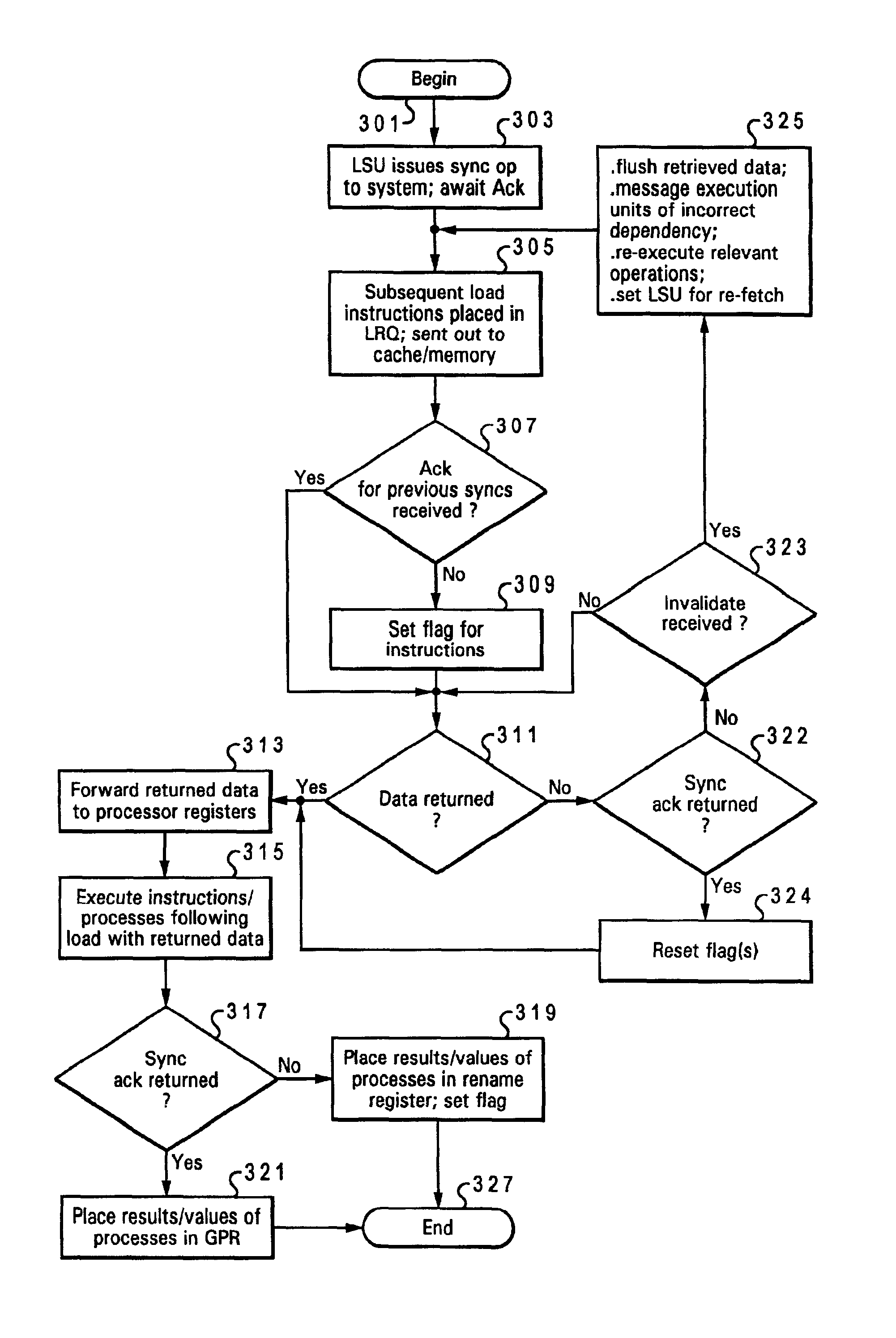

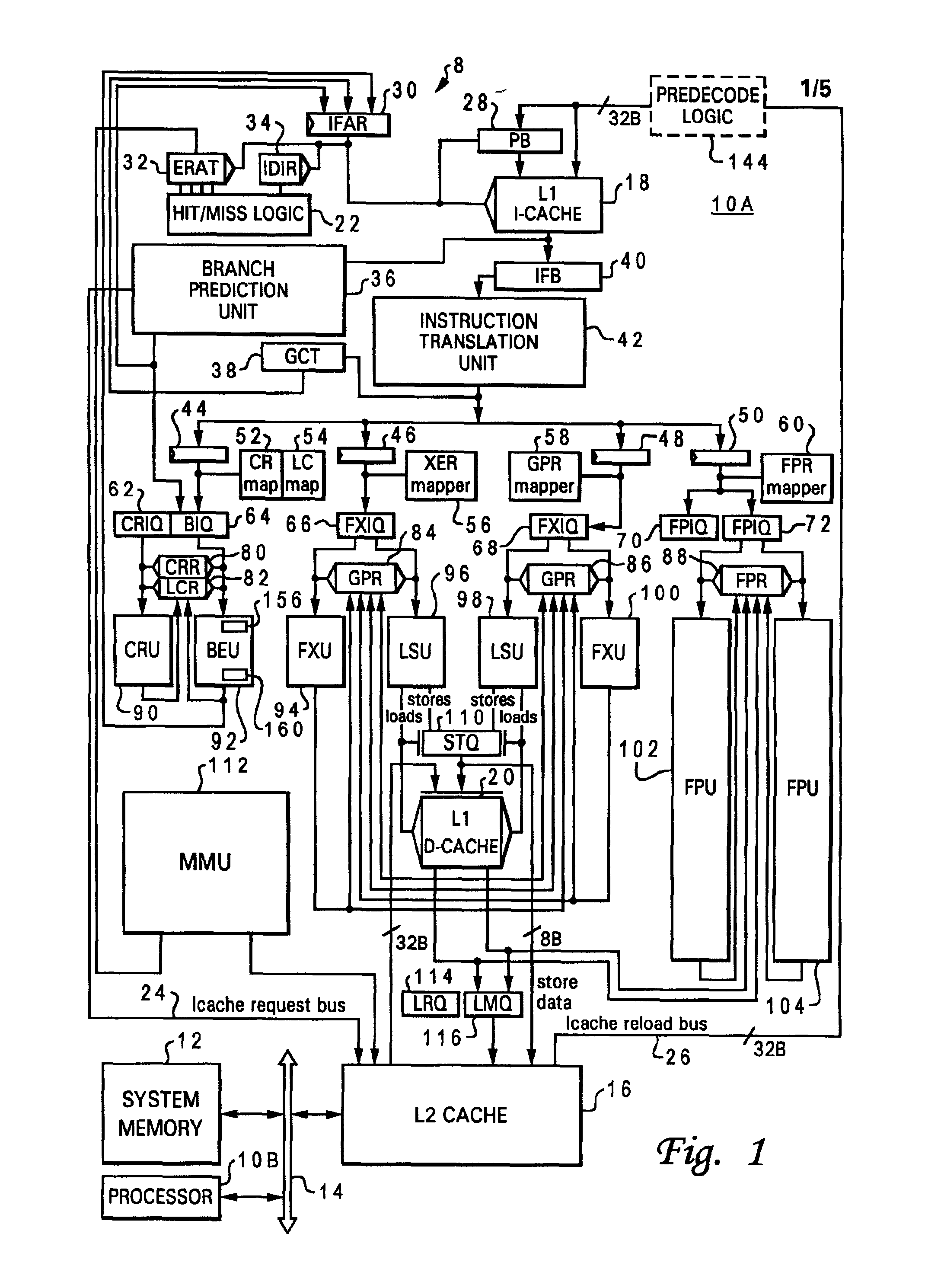

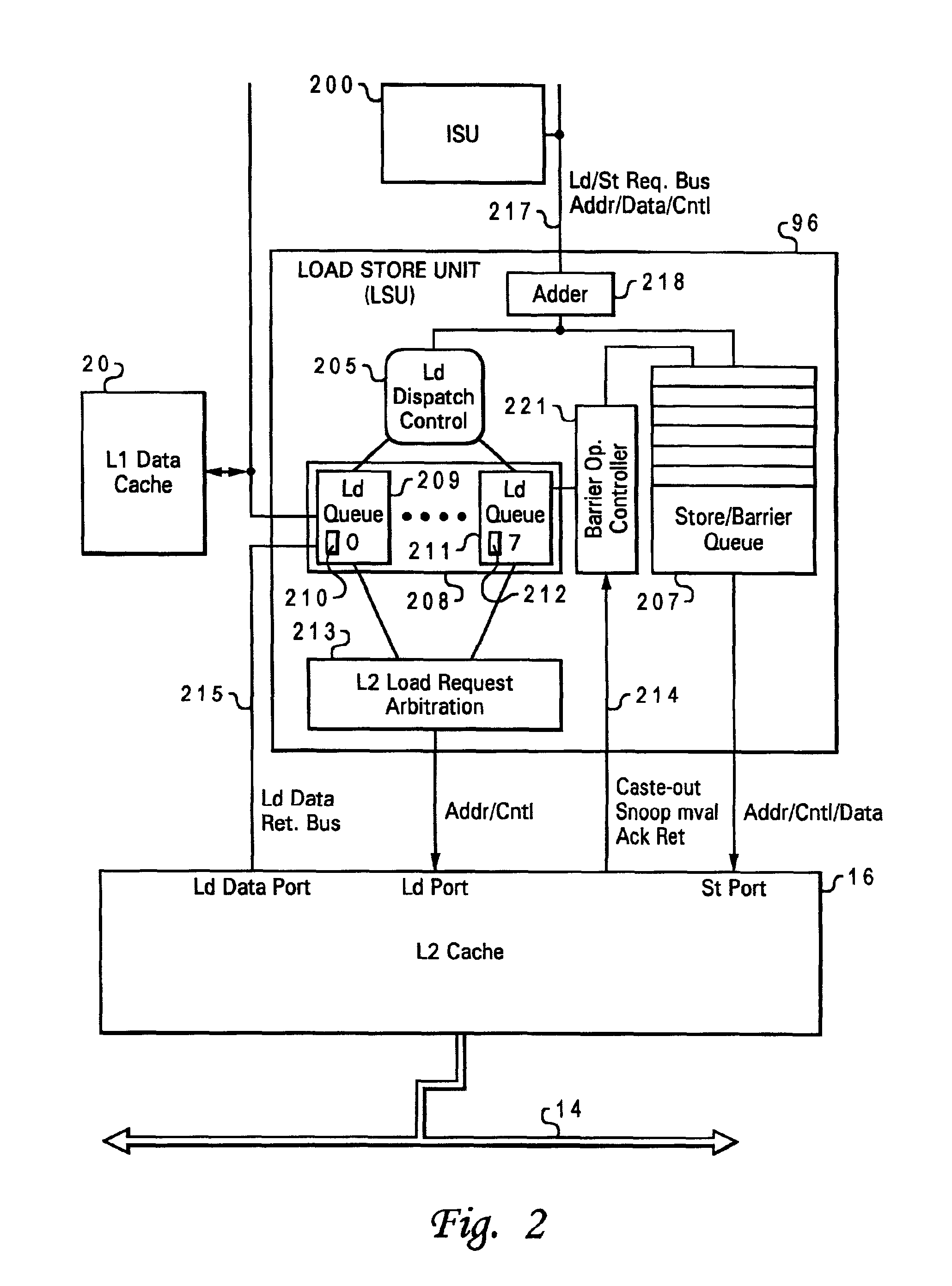

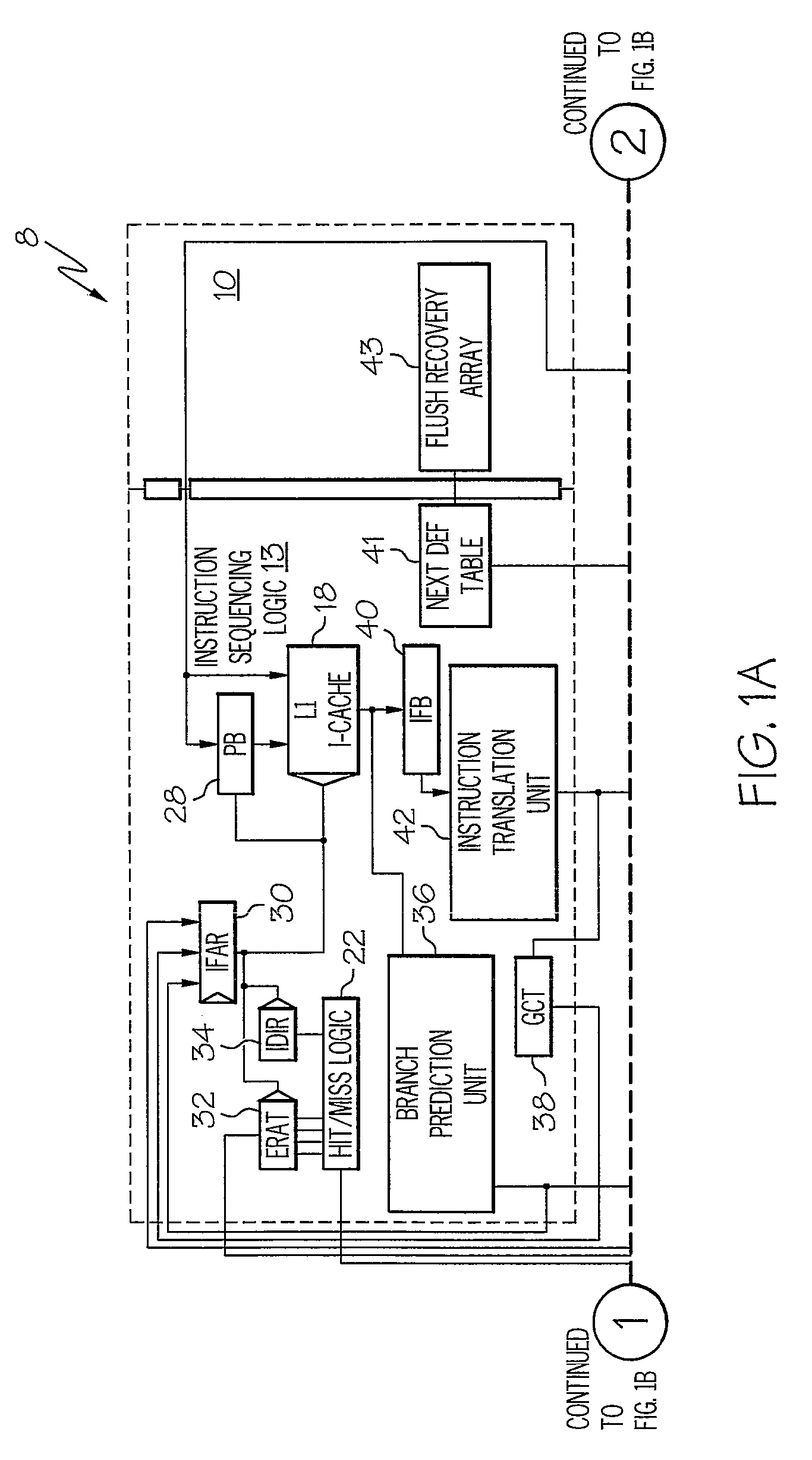

Speculative execution of instructions and processes before completion of preceding barrier operations

Inactive | US6880073B2 | Digital computer details | Concurrent instruction execution | Data processing system | Speculative execution

Described is a data processing system and processor that provides full multiprocessor speculation by which all instructions subsequent to barrier operations in an instruction sequence are speculatively executed before the barrier operation completes on the system bus. The processor comprises a load/store unit (LSU) with a barrier operation (BOP) controller that permits load instructions subsequent to syncs in an instruction sequence to be speculatively issued prior to the return of the sync acknowledgment. Data returned is immediately forwarded to the processor's execution units. The returned data and results of subsequent operations are held temporarily in rename registers. A multiprocessor speculation flag is set in the corresponding rename registers to indicate that the value is “barrier” speculative. When a barrier acknowledge is received by the BOP controller, the flag(s) of the corresponding rename register(s) are reset.

Owner:IBM CORP

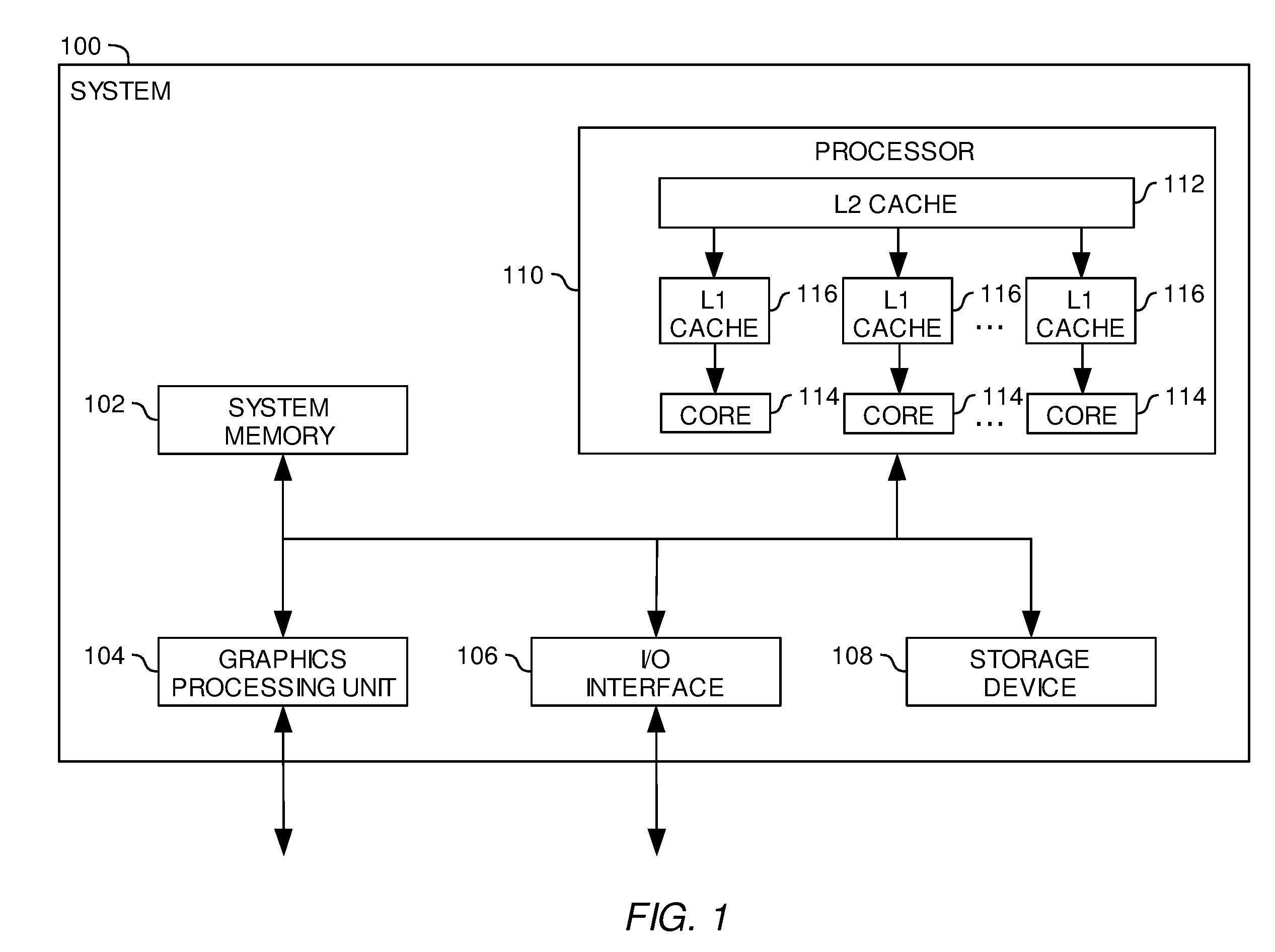

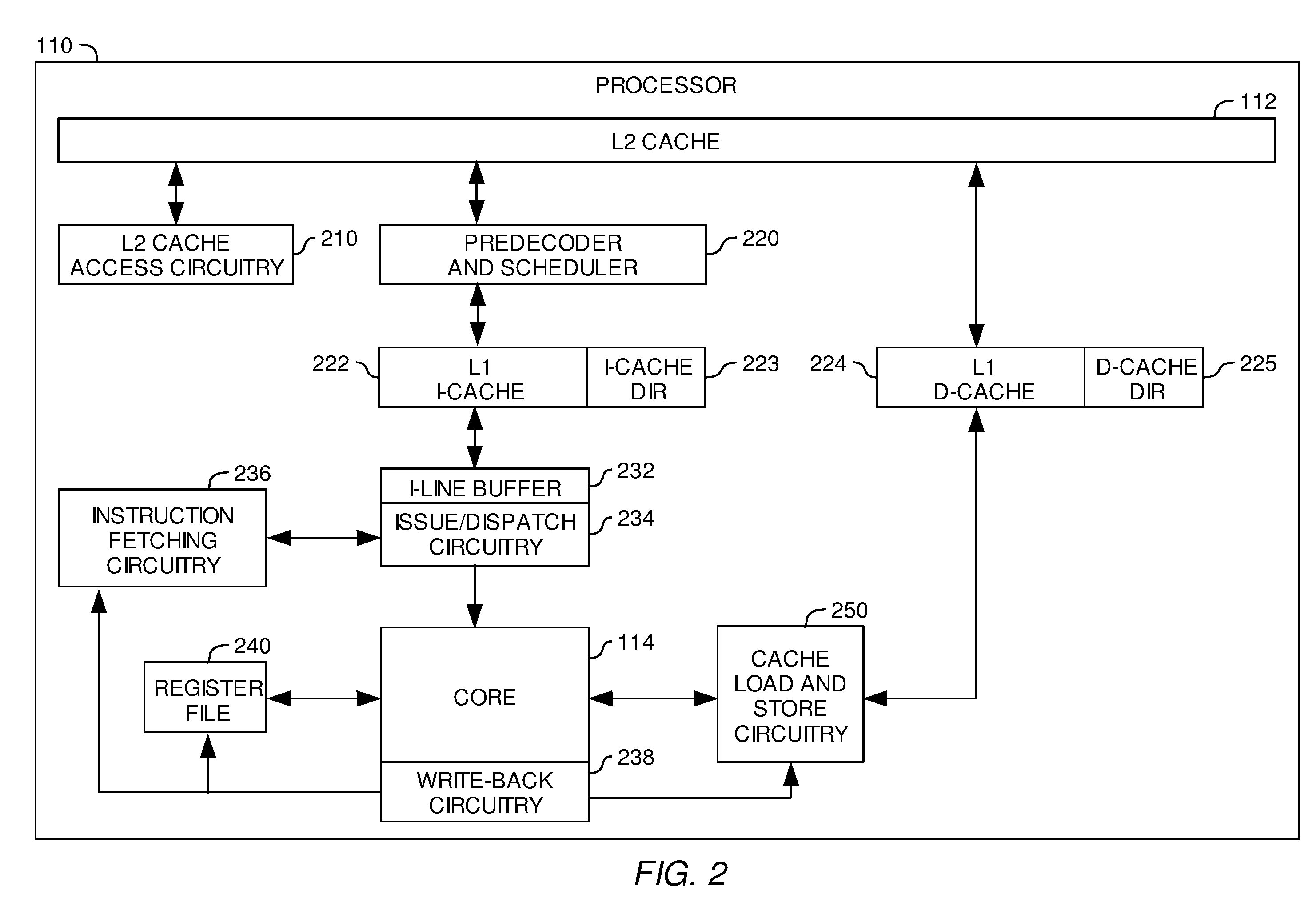

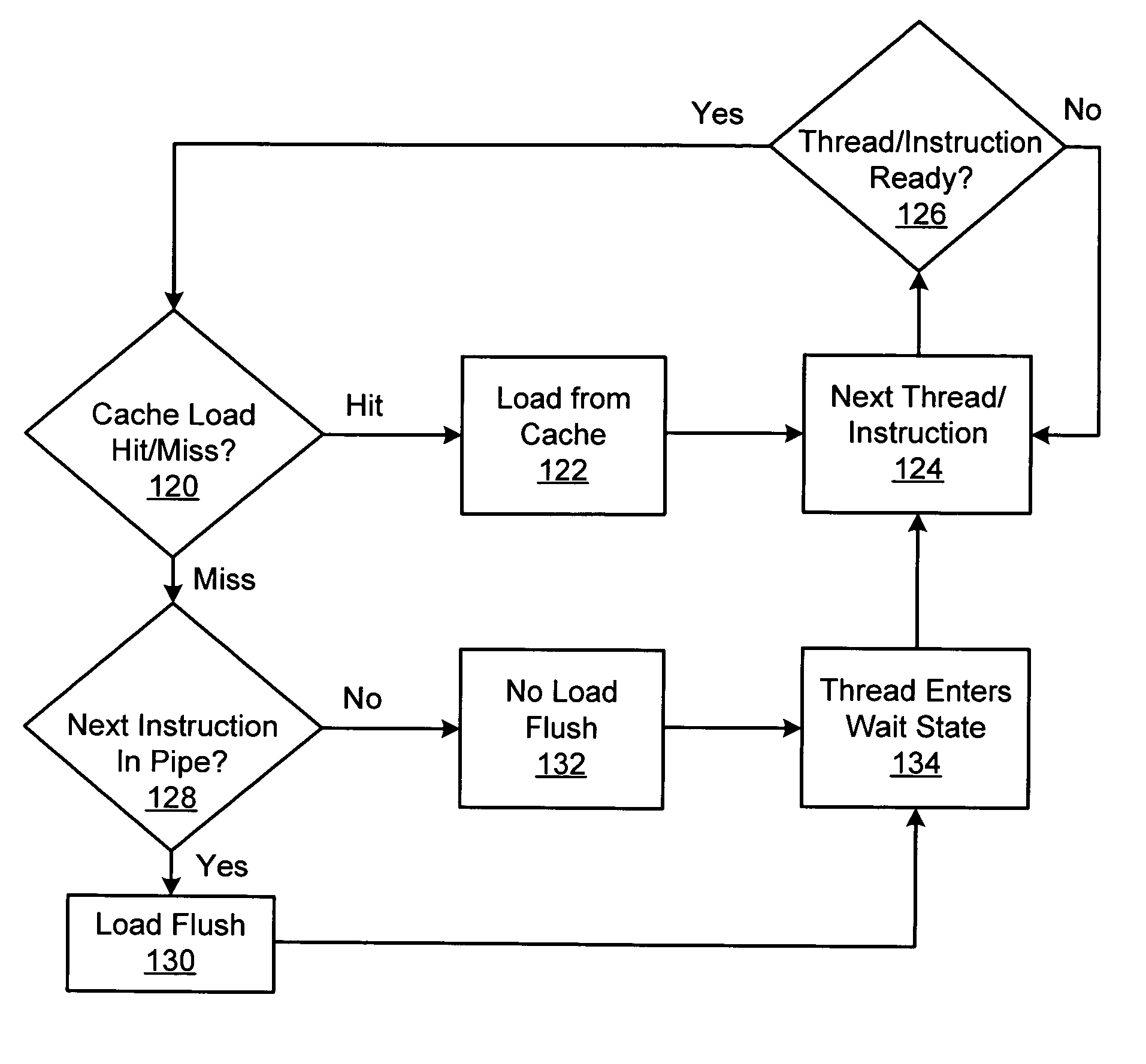

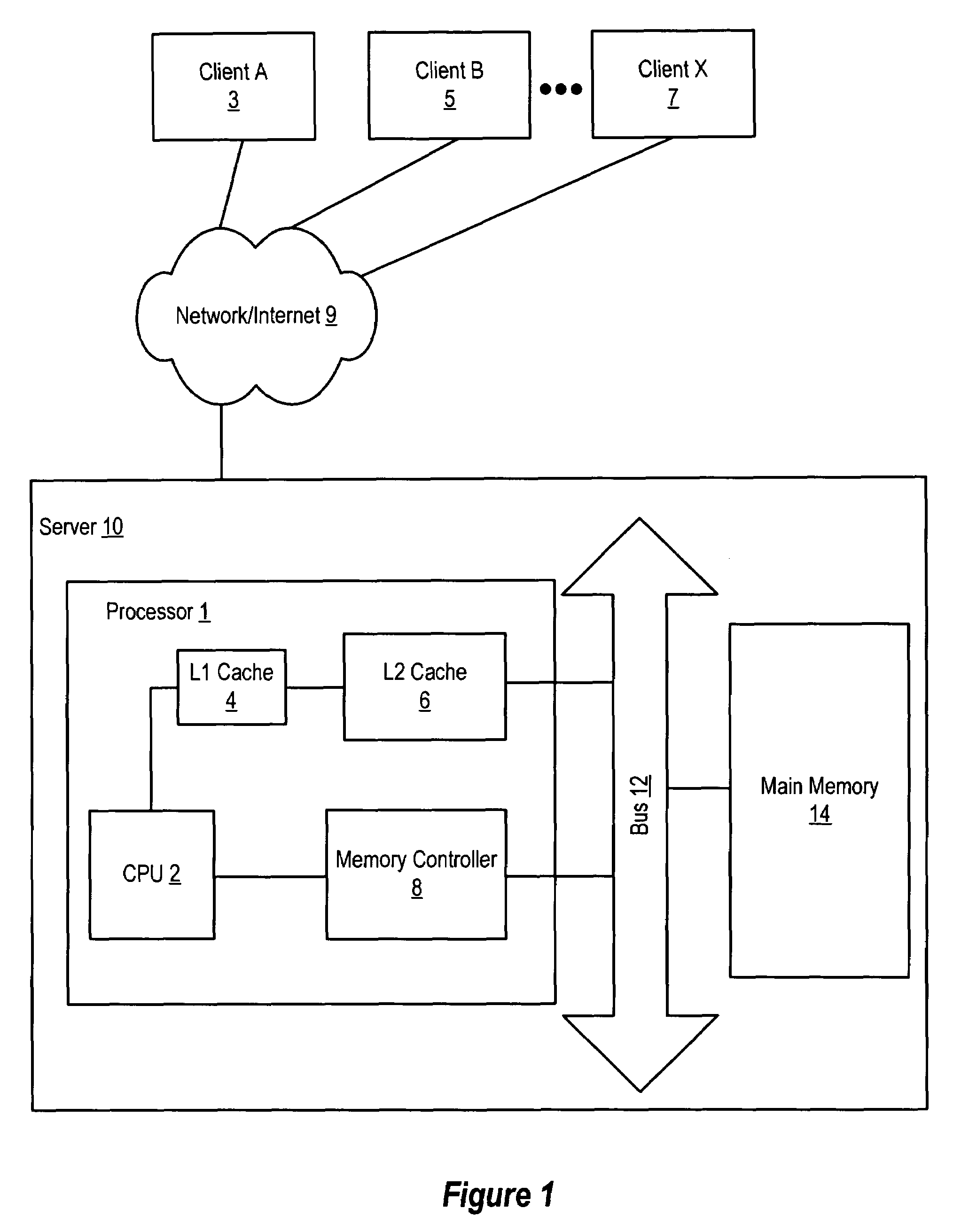

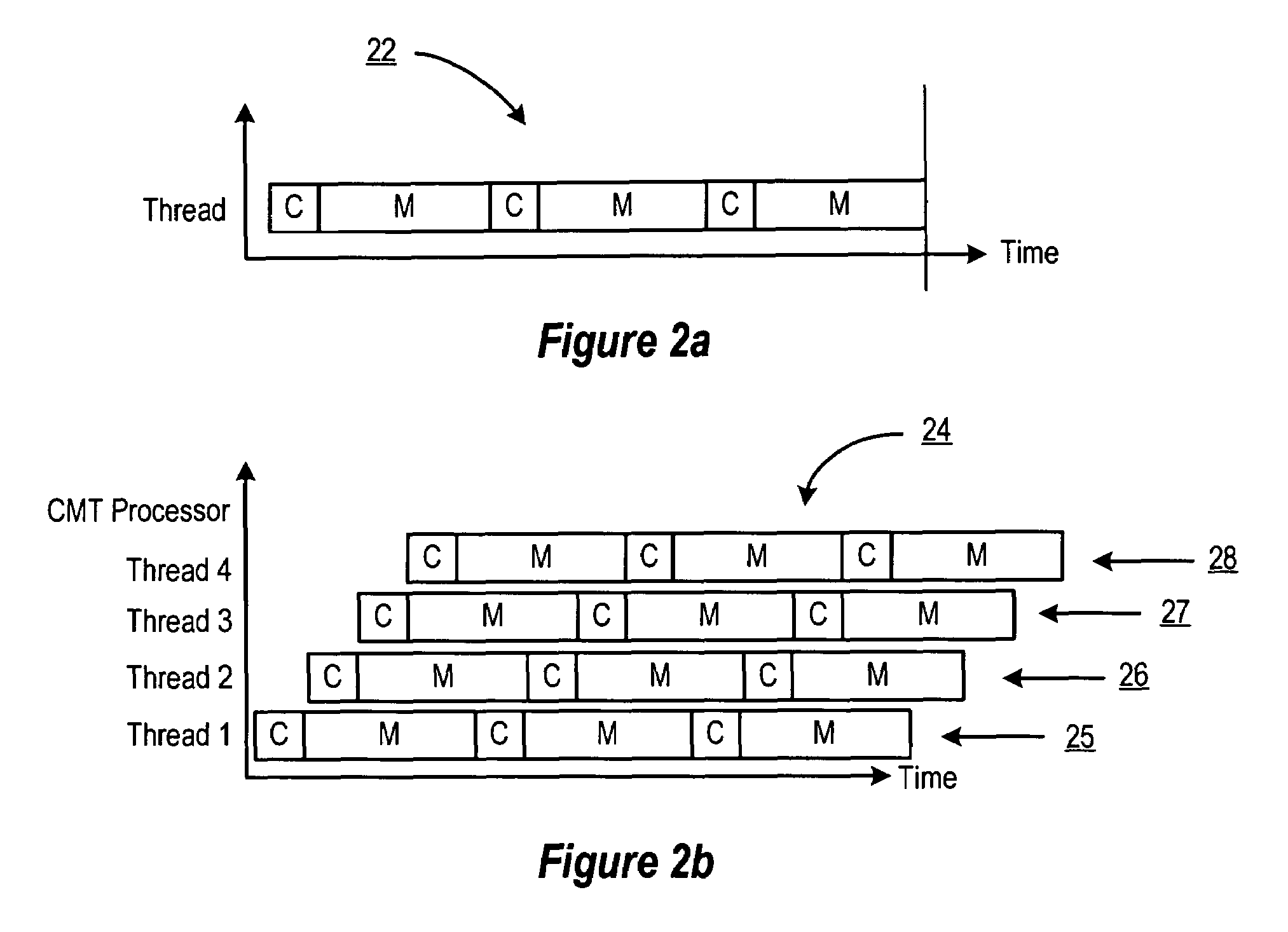

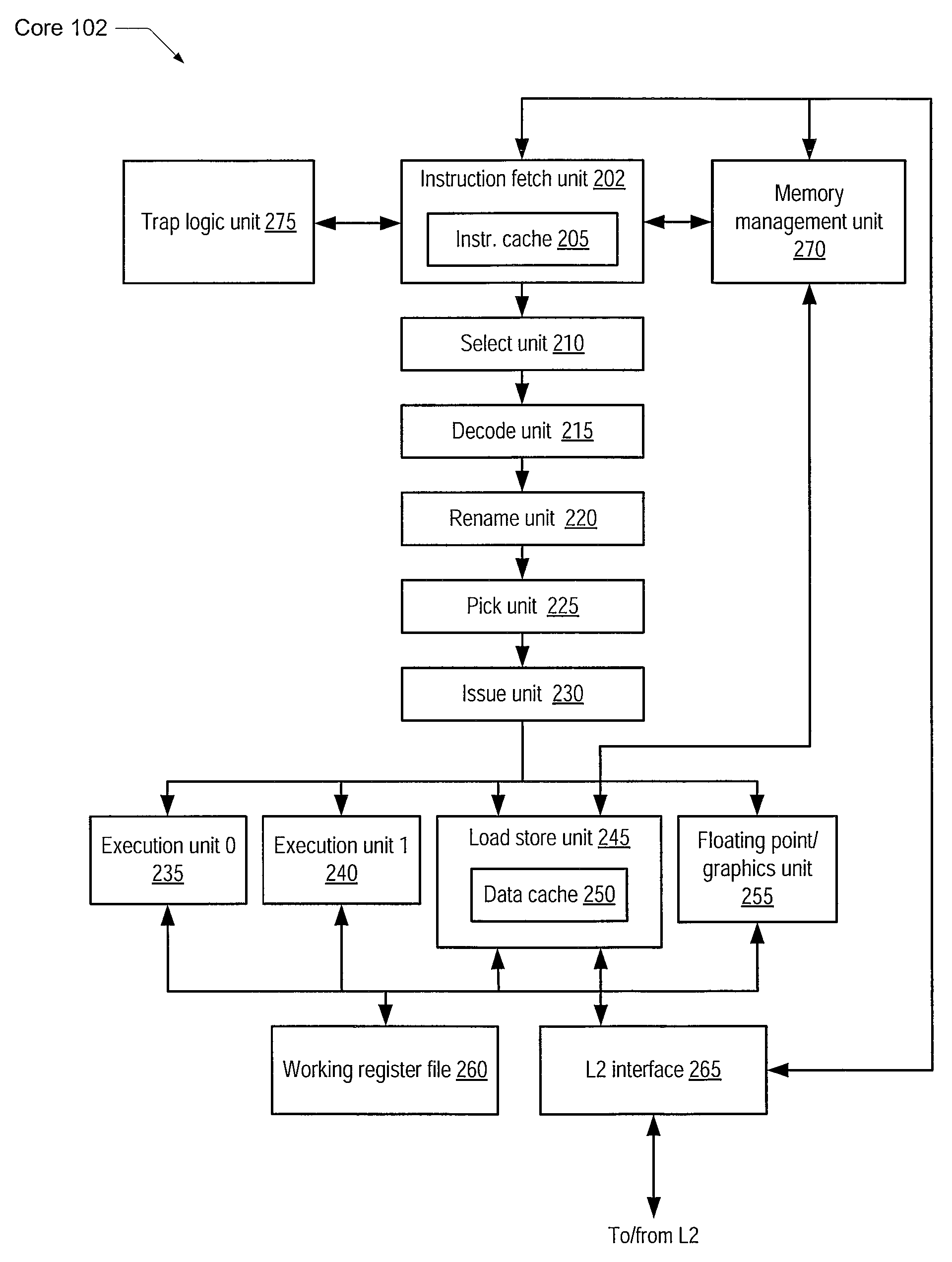

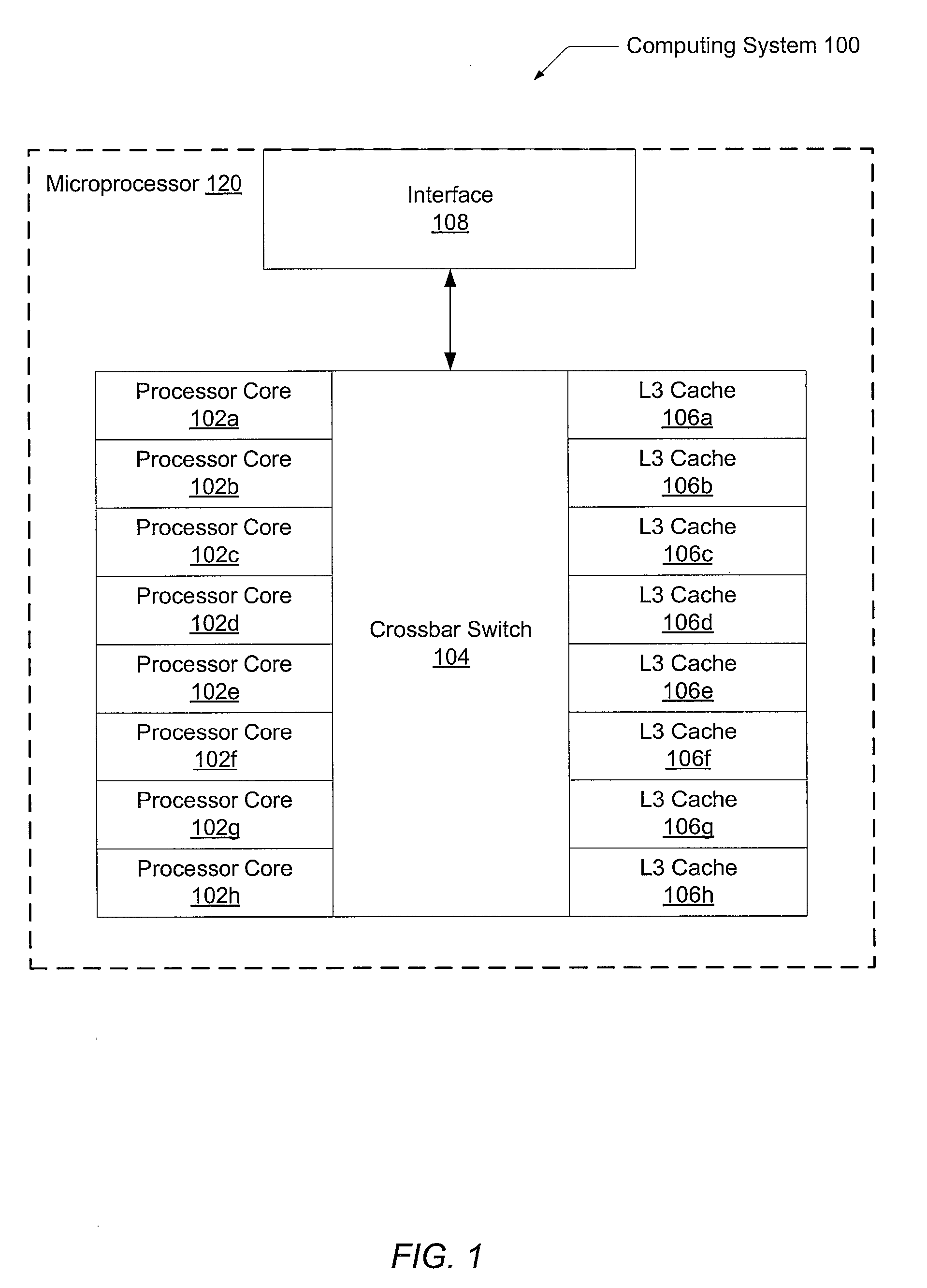

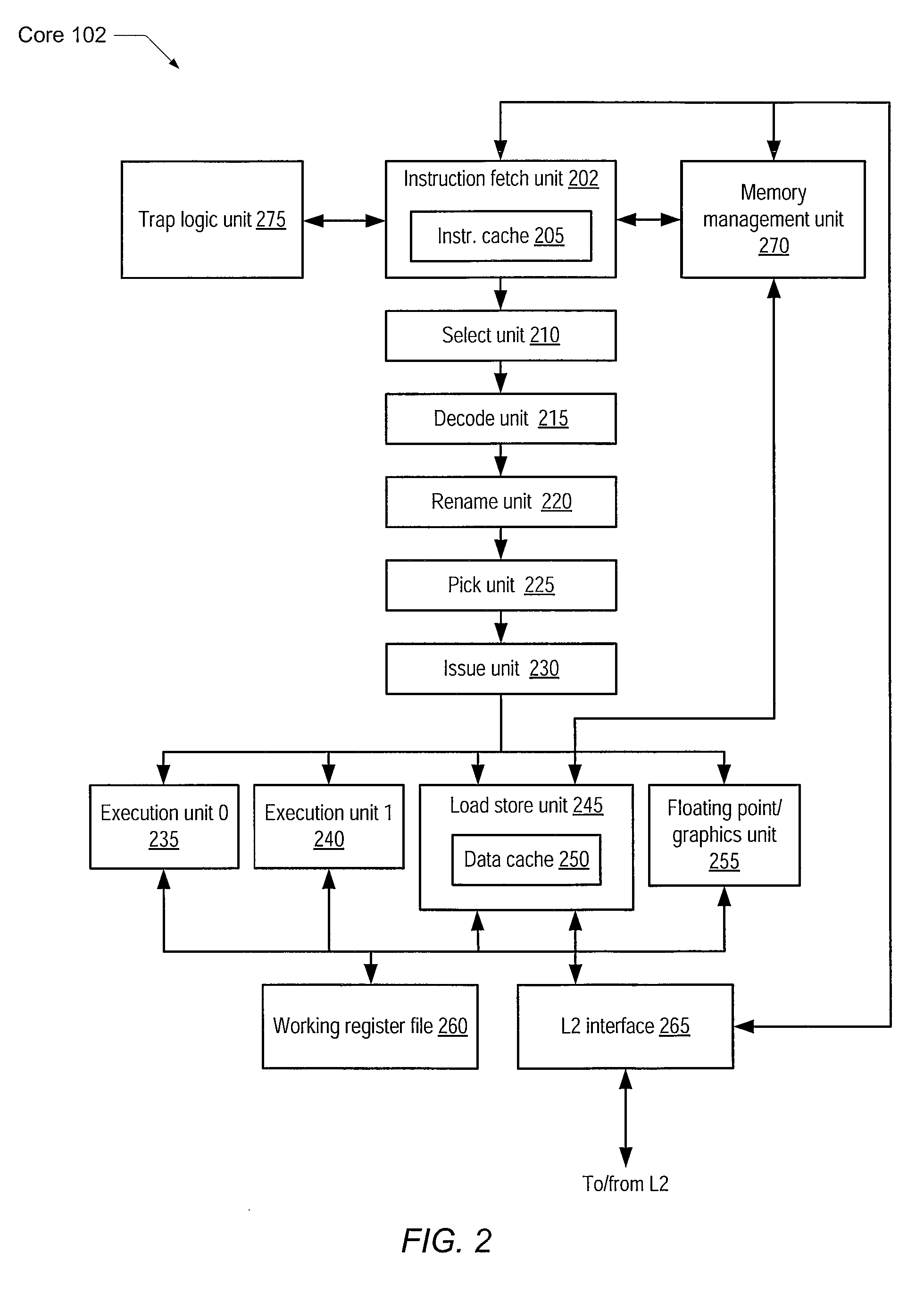

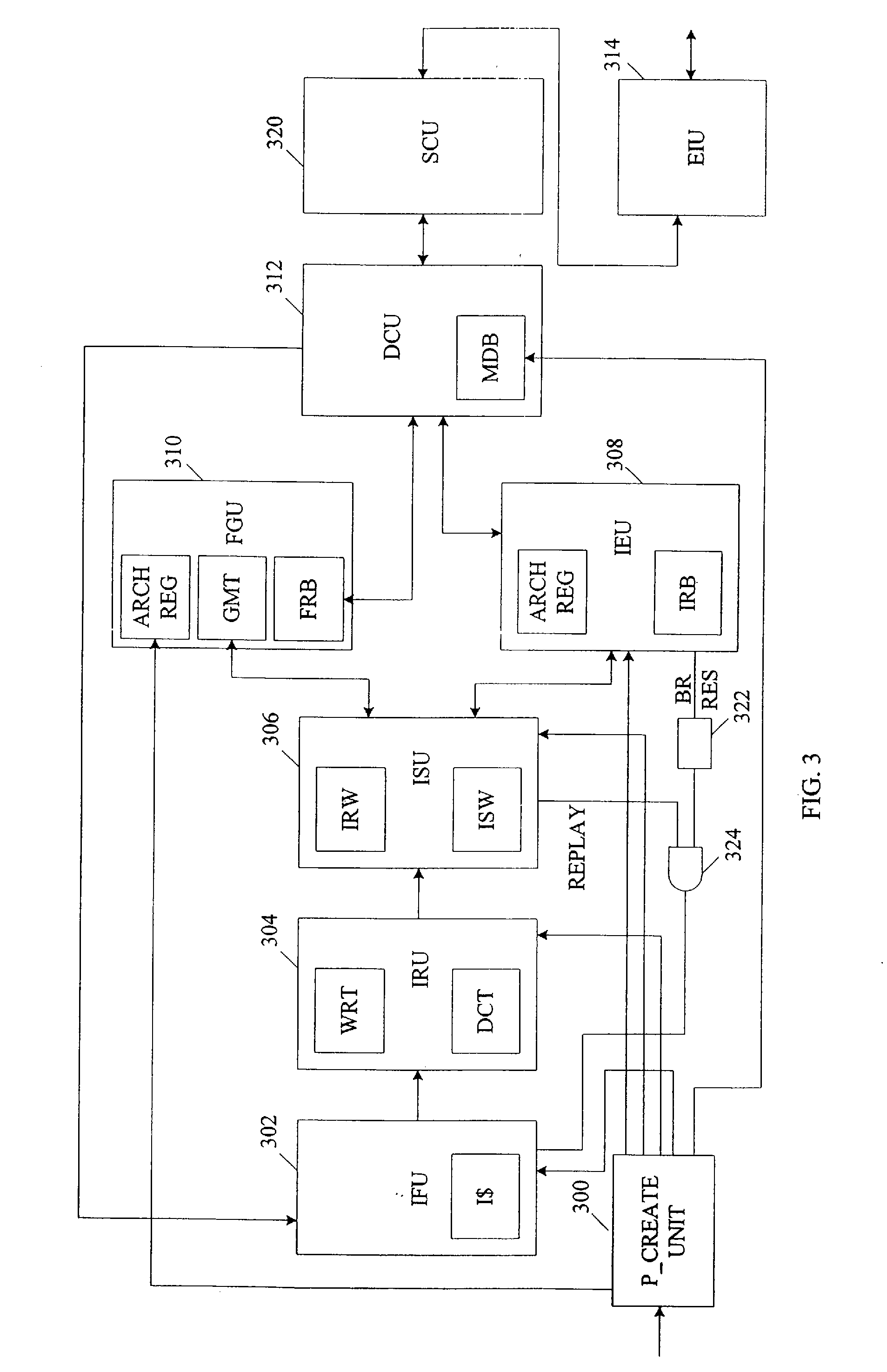

Handling cache misses by selectively flushing the pipeline

Active | US7509484B1 | Eliminate operation | High bandwidth | Digital computer details | Specific program execution arrangements | Load instruction | Parallel computing

An apparatus and method for efficiently managing data cache load misses is described in connection with a multithreaded, pipelined multiprocessor chip. A CMT processor keeps track of load misses for each thread by issuing a load miss signal each time a load instruction to the data cache misses. A detection logic functionality in the IFU responds to the load miss signal to determine if a valid instruction from the thread is at one of the pipeline stages. If no instructions from the thread are detected in the pipeline, then no flush is required and the thread is placed in a wait state until the requested data is returned from higher order memory. If any instruction from the thread is detected in the pipeline, the thread is flushed and the instruction is re-fetched.

Owner:ORACLE INT CORP

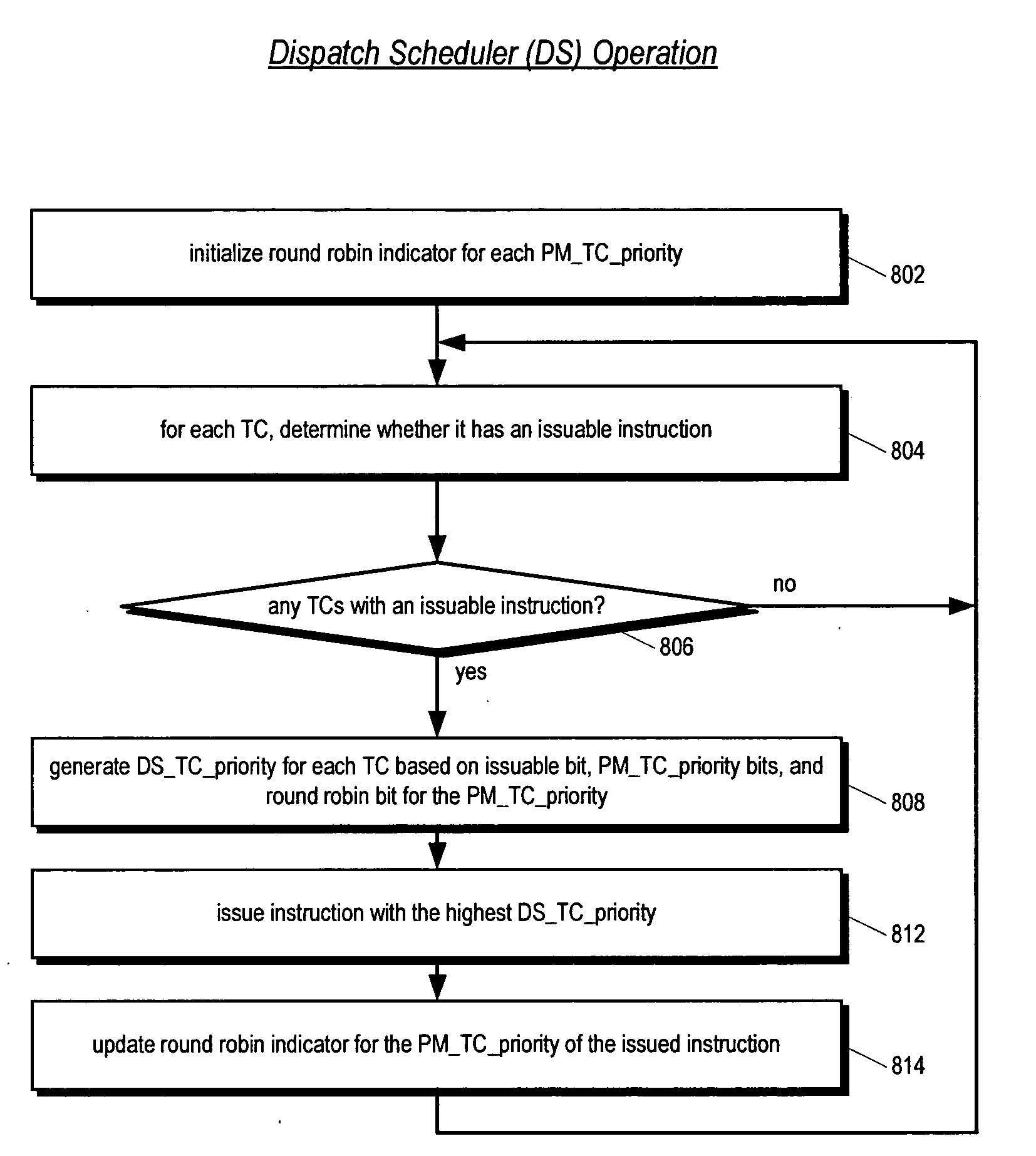

Multithreading processor including thread scheduler based on instruction stall likelihood prediction

Active | US20060179280A1 | Increase processor efficiency | Reduce in quantity | Digital computer details | Multiprogramming arrangements | Load instruction | Scheduling instructions

An apparatus for scheduling dispatch of instructions among a plurality of threads being concurrently executed in a multithreading processor is provided. The apparatus includes an instruction decoder that generates register usage information for an instruction from each of the threads, a priority generator that generates a priority for each instruction based on the register usage information and state information of instructions currently executing in an execution pipeline, and selection logic that dispatches at least one instruction from at least one thread based on the priority of the instructions. The priority indicates the likelihood the instruction will execute in the execution pipeline without stalling. For example, an instruction may have a high priority if it has little or no register dependencies or its data is known to be available; or may have a low priority if it has strong register dependencies or is an uncacheable or synchronized storage space load instruction.

Owner:ARM FINANCE OVERSEAS LTD

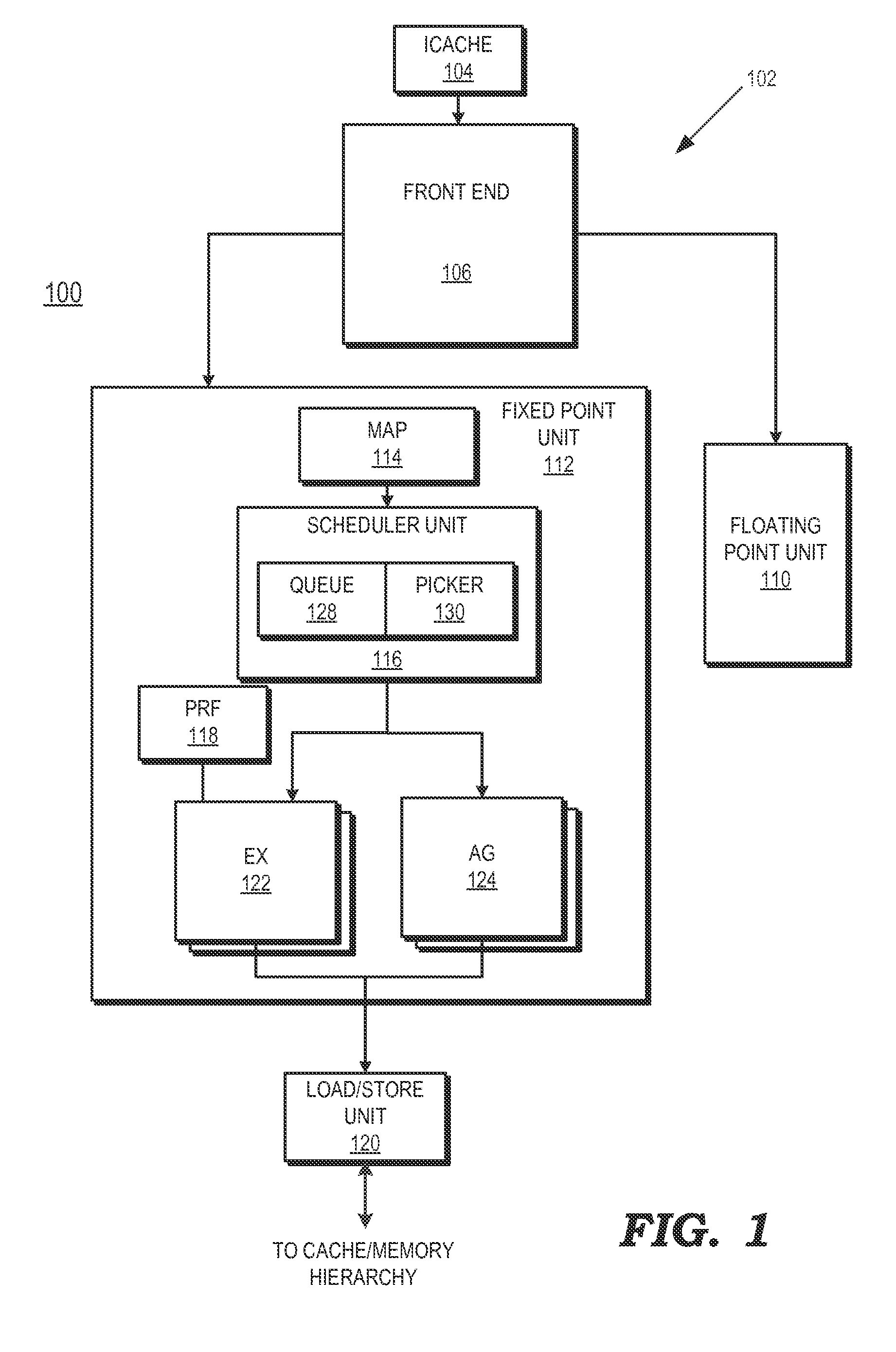

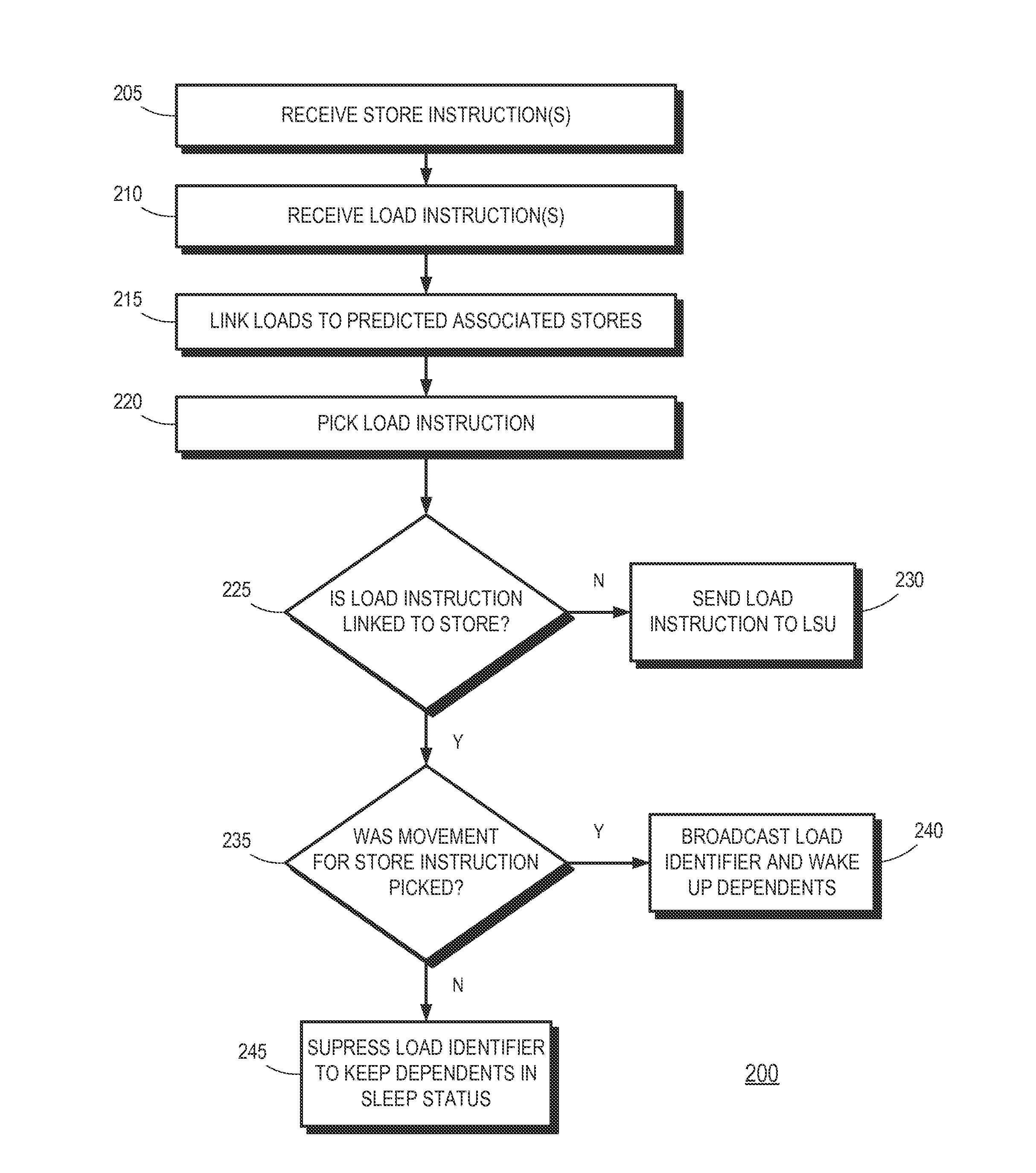

Dependence-based replay suppression

Active | US20140380023A1 | Digital computer details | Concurrent instruction execution | Load instruction | Execution unit

A method includes selecting for execution in a processor a load instruction having at least one dependent instruction. Responsive to selecting the load instruction, the at least one dependent instruction is selectively awakened based on a status of a store instruction associated with the load instruction to indicate that the at least one dependent instruction is eligible for execution. A processor includes an instruction pipeline having an execution unit to execute instructions, a scheduler, and a controller. The scheduler selects for execution in the execution unit a load instruction having at least one dependent instruction. The controller, responsive to the scheduler selecting the load instruction, selectively awakens the at least one dependent instruction based on a status of a store instruction associated with the load instruction to indicate that the at least one dependent instruction is eligible for execution by the execution unit.

Owner:ADVANCED MICRO DEVICES INC

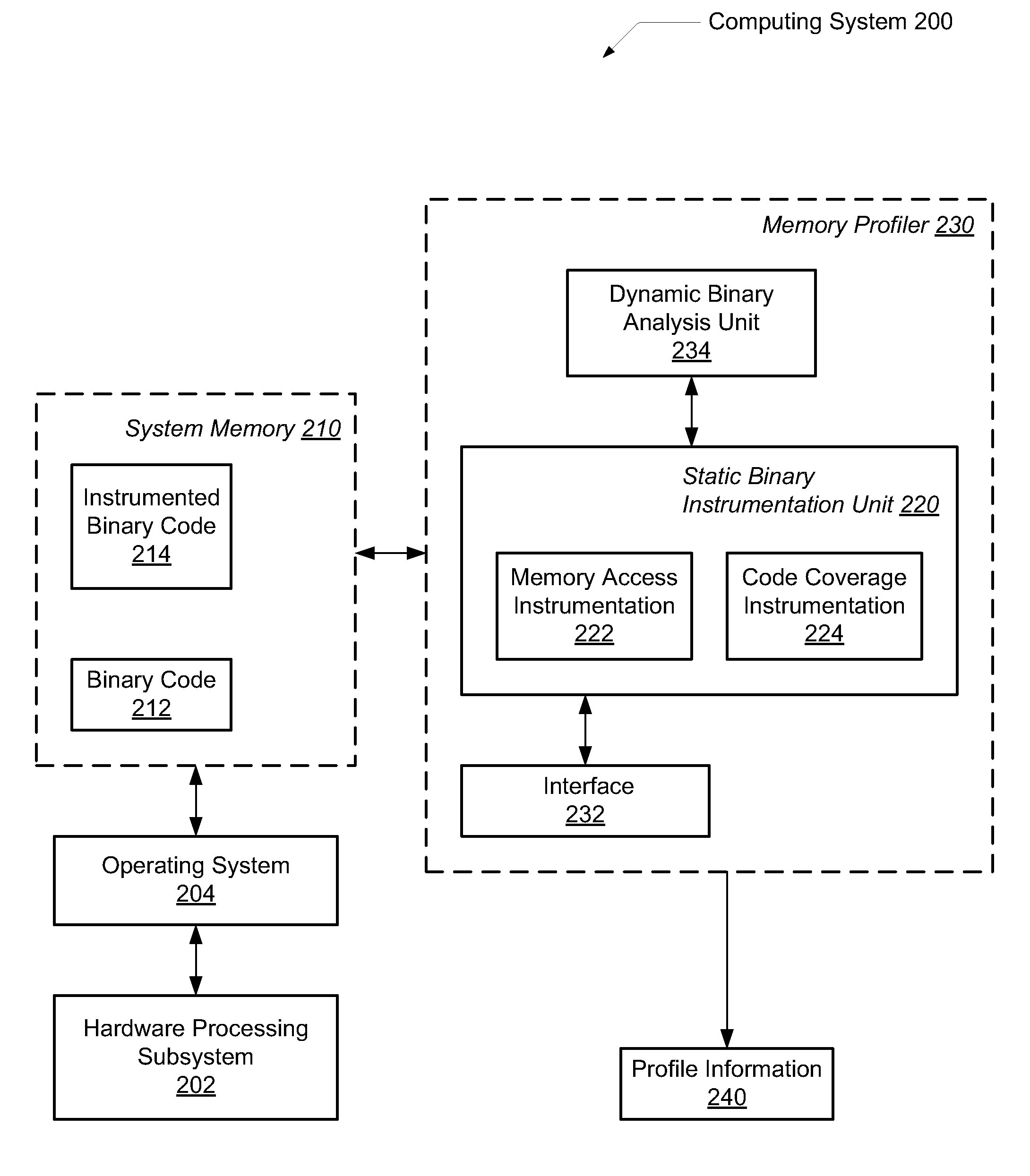

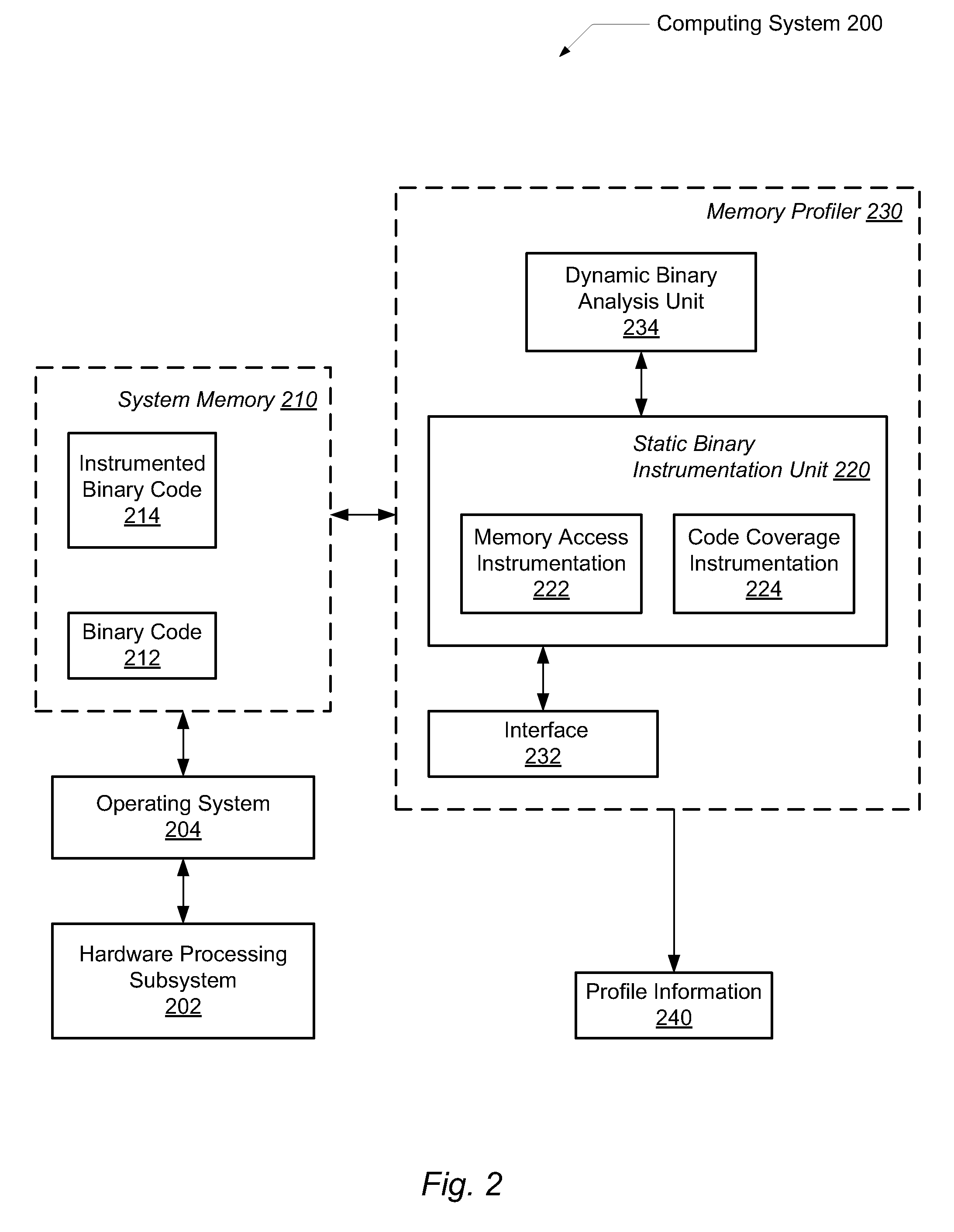

Efficient program instrumentation for memory profiling

Active | US20100146220A1 | Improve system performance | Reduce false positive | Error detection/correction | Specific program execution arrangements | Memory profiling | Load instruction

A system and method for performing efficient program instrumentation for memory profiling. A computing system comprises a memory profiler comprising a static binary instrumentation (SBI) tool and a dynamic binary analysis (DBA) tool. The profiler is configured to selectively instrument memory access operations of a software application. Instrumentation may be bypassed completely for an instruction if the instruction satisfies some predetermined conditions. Sample conditions include: the instruction accesses an address within a predetermined read-only area; the instruction accesses an address within a user-specified address range; and/or the instruction is a load instruction accessing a memory location determined from a data flow graph to store an initialized value. An instrumented memory access instruction may have memory checking analysis performed only upon an initial execution of the instruction in response to determining during initial execution that a read data value of the instruction is initialized. Both unnecessary instrumentation and memory checking analysis may be reduced.

Owner:ORACLE INT CORP

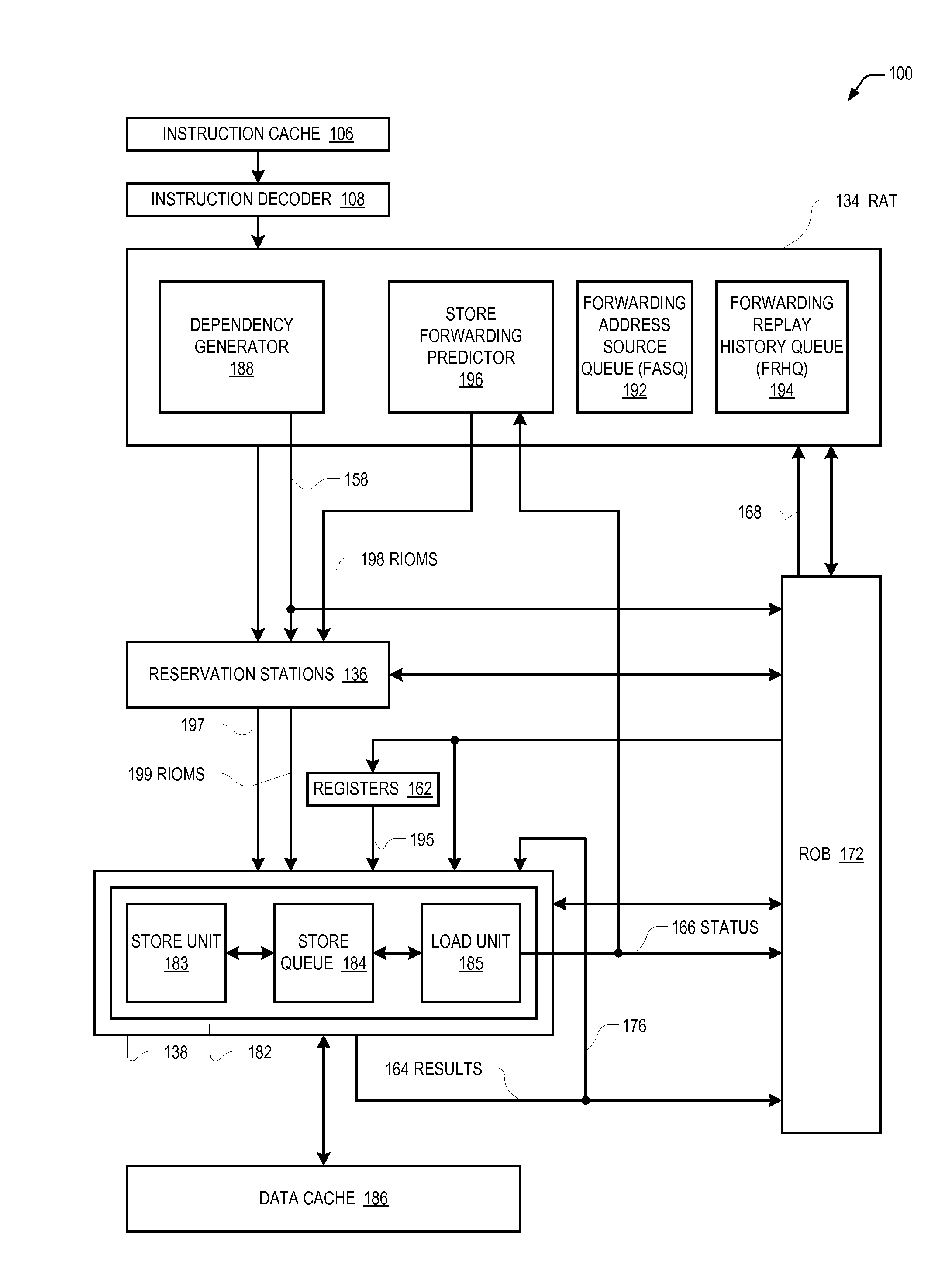

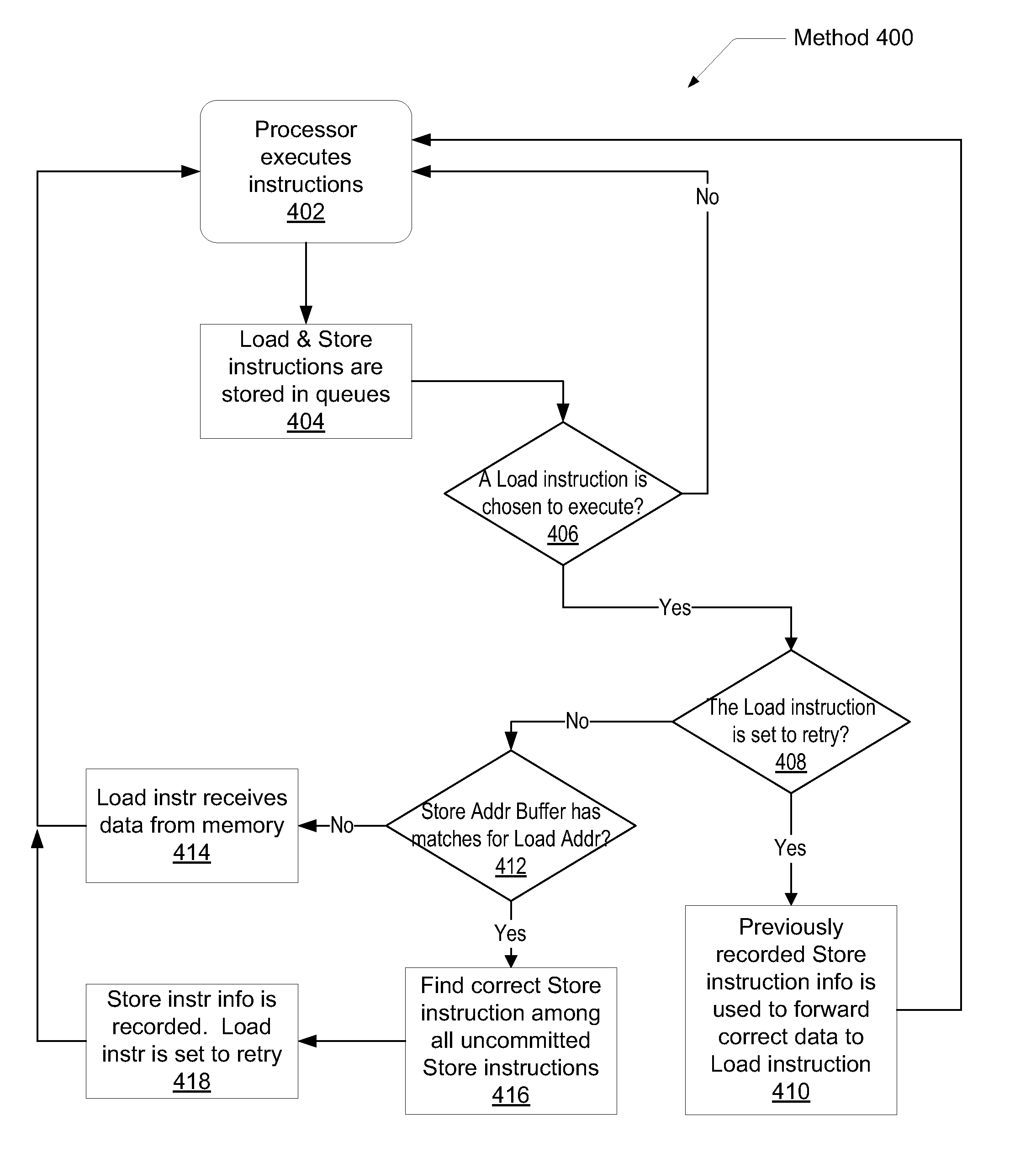

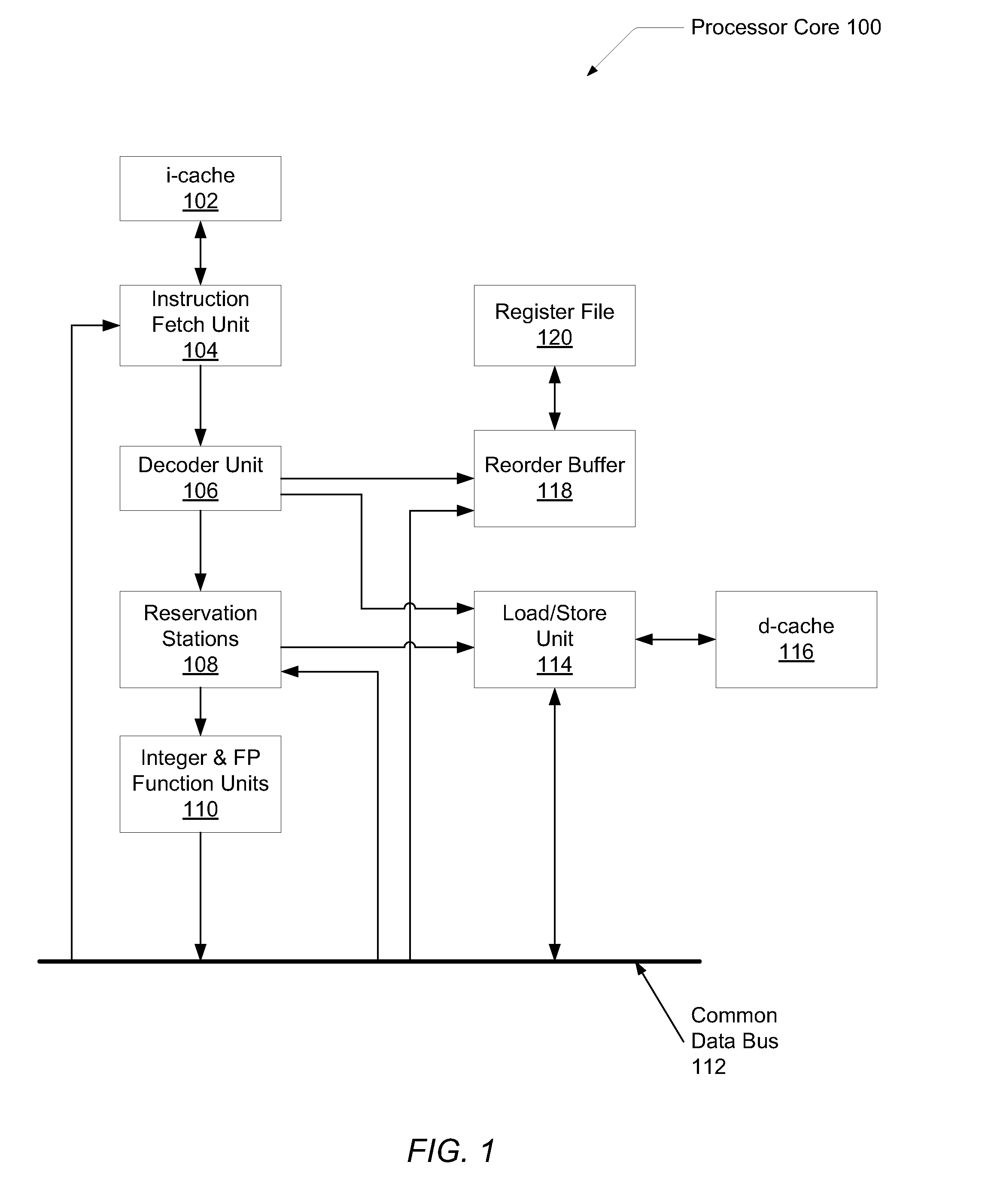

System and method of load-store forwarding

Active | US20090037697A1 | Improve processor performance | Improve performance | Digital computer details | Memory systems | Load instruction | Store and forward

A system and method for data forwarding from a store instruction to a load instruction during out-of-order execution, when the load instruction address matches against multiple older uncommitted store addresses or if the forwarding fails during the first pass due to any other reason. In a first pass, the youngest store instruction in program order of all store instructions older than a load instruction is found and an indication to the store buffer entry holding information of the youngest store instruction is recorded. In a second pass, the recorded indication is used to index the store buffer and the store bypass data is forwarded to the load instruction. Simultaneously, it is verified that no new store, younger than the previously identified store and older than the load, has been issued due to out-of-order execution.

Owner:ADVANCED MICRO DEVICES INC

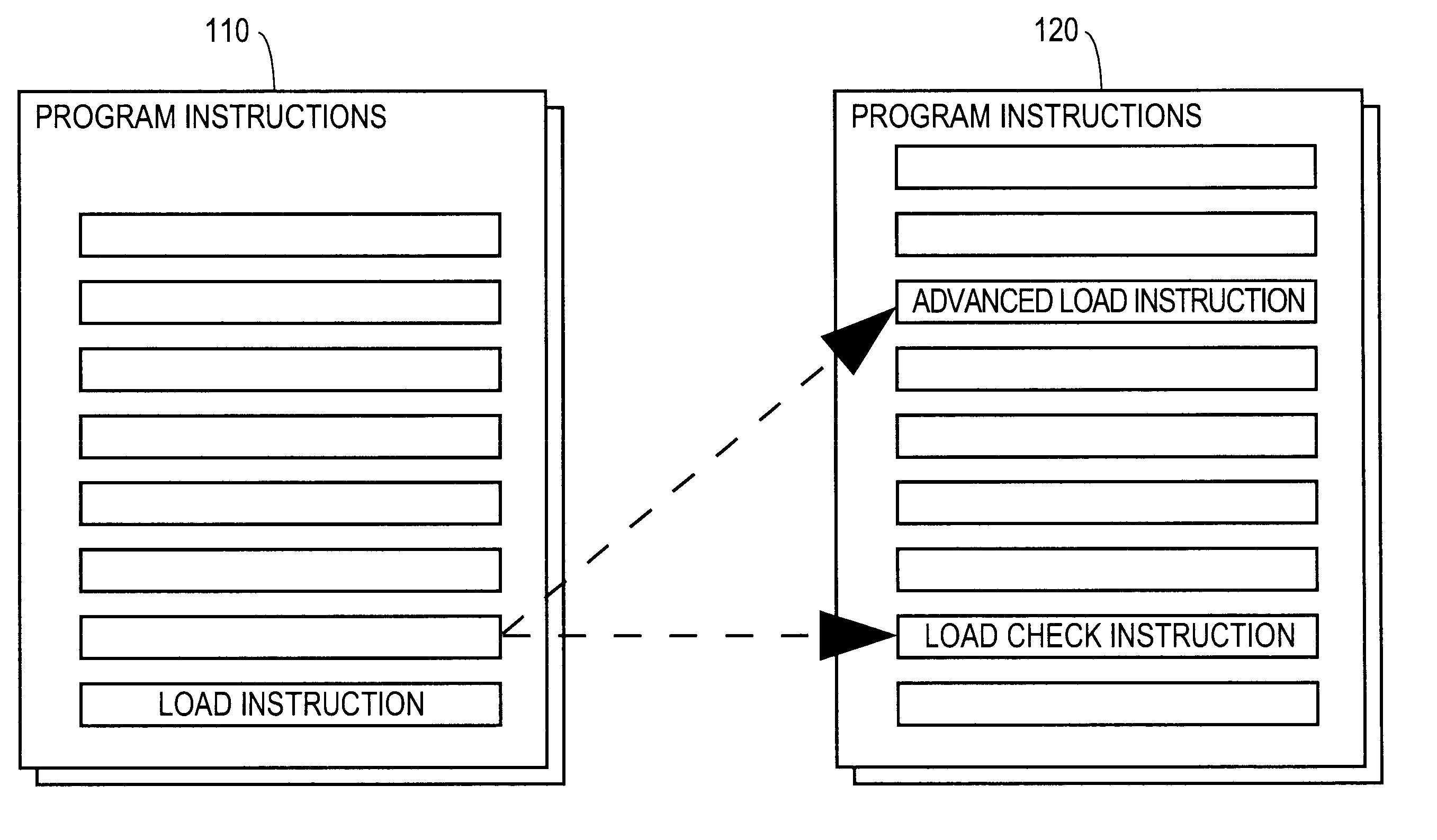

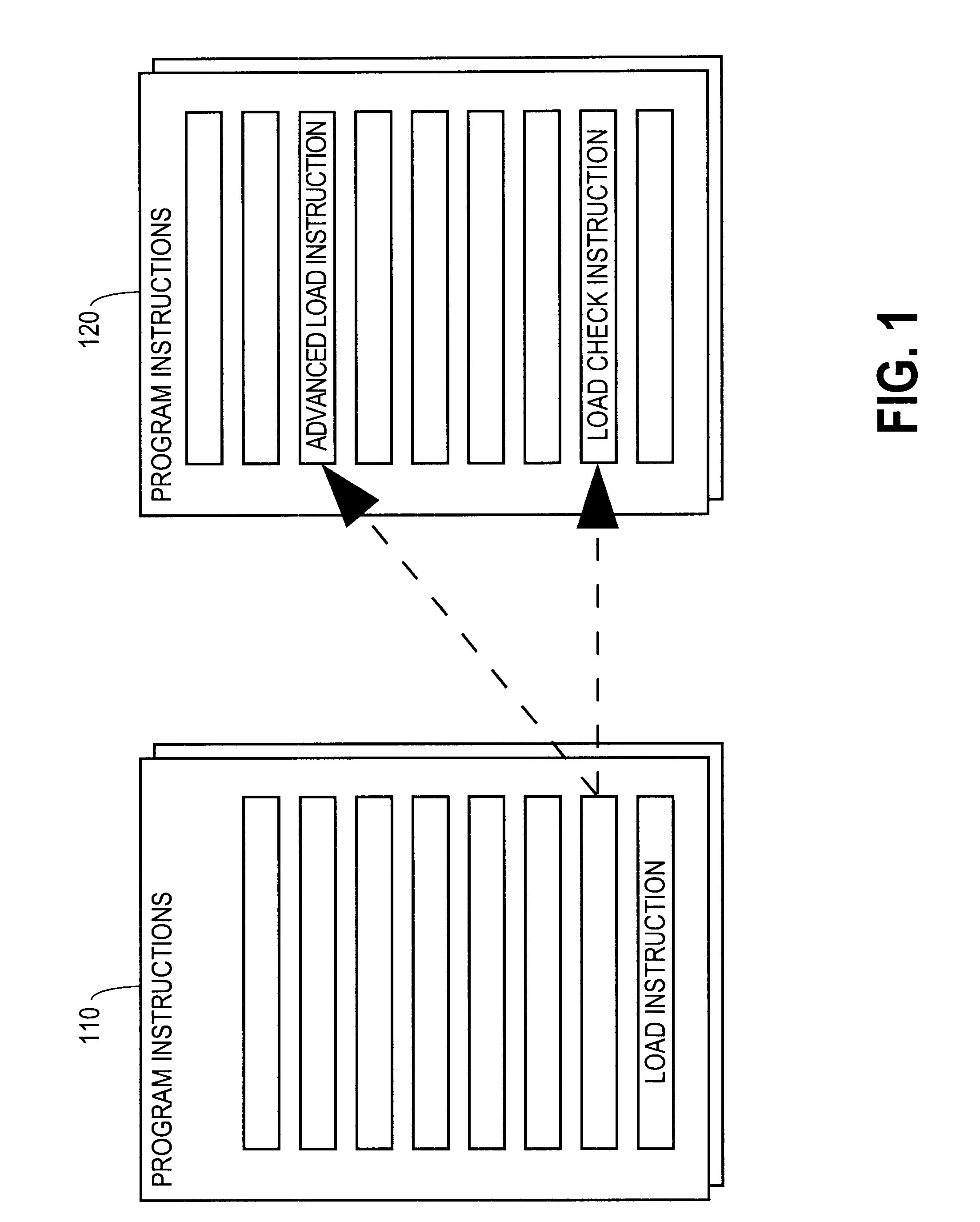

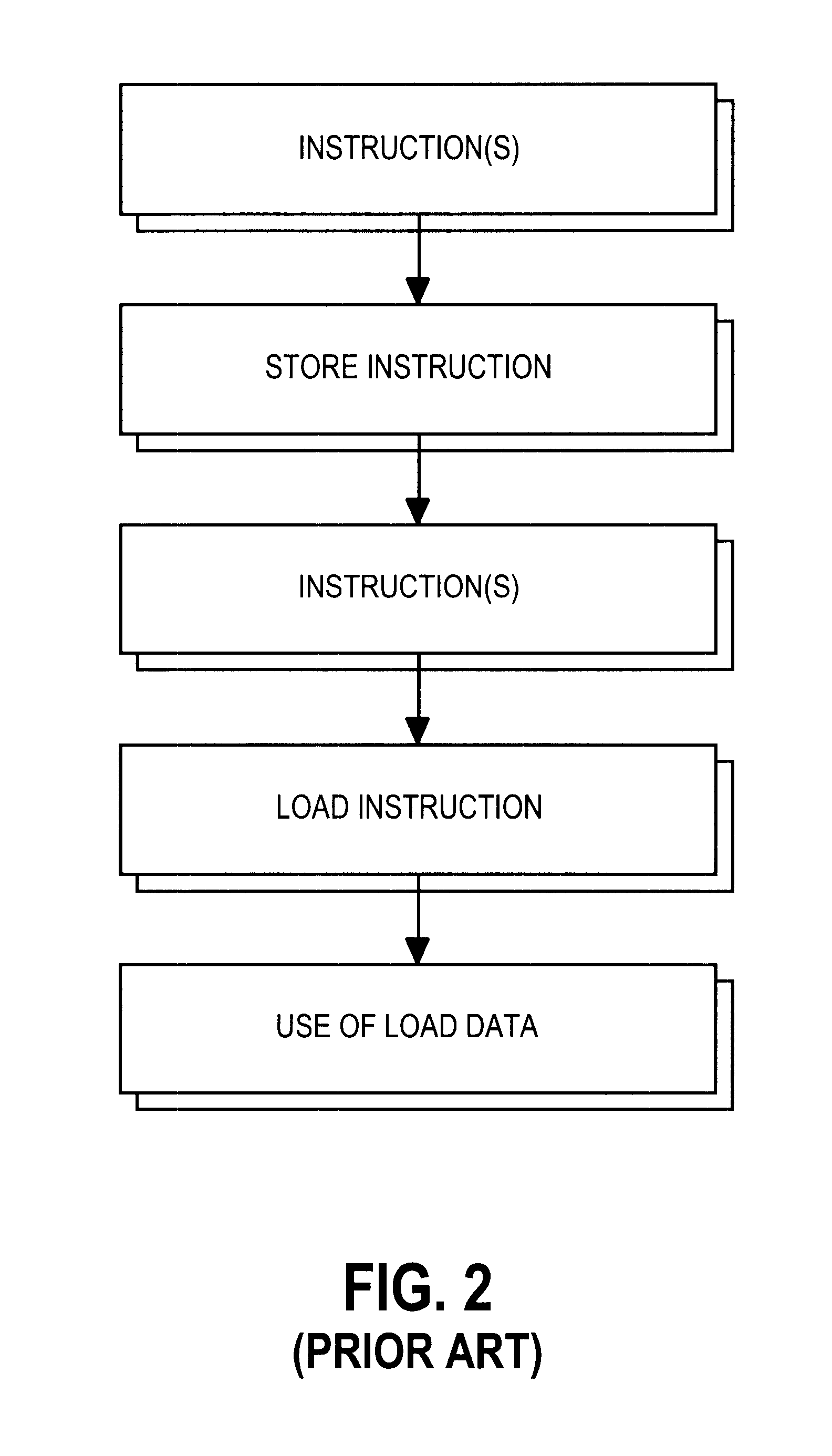

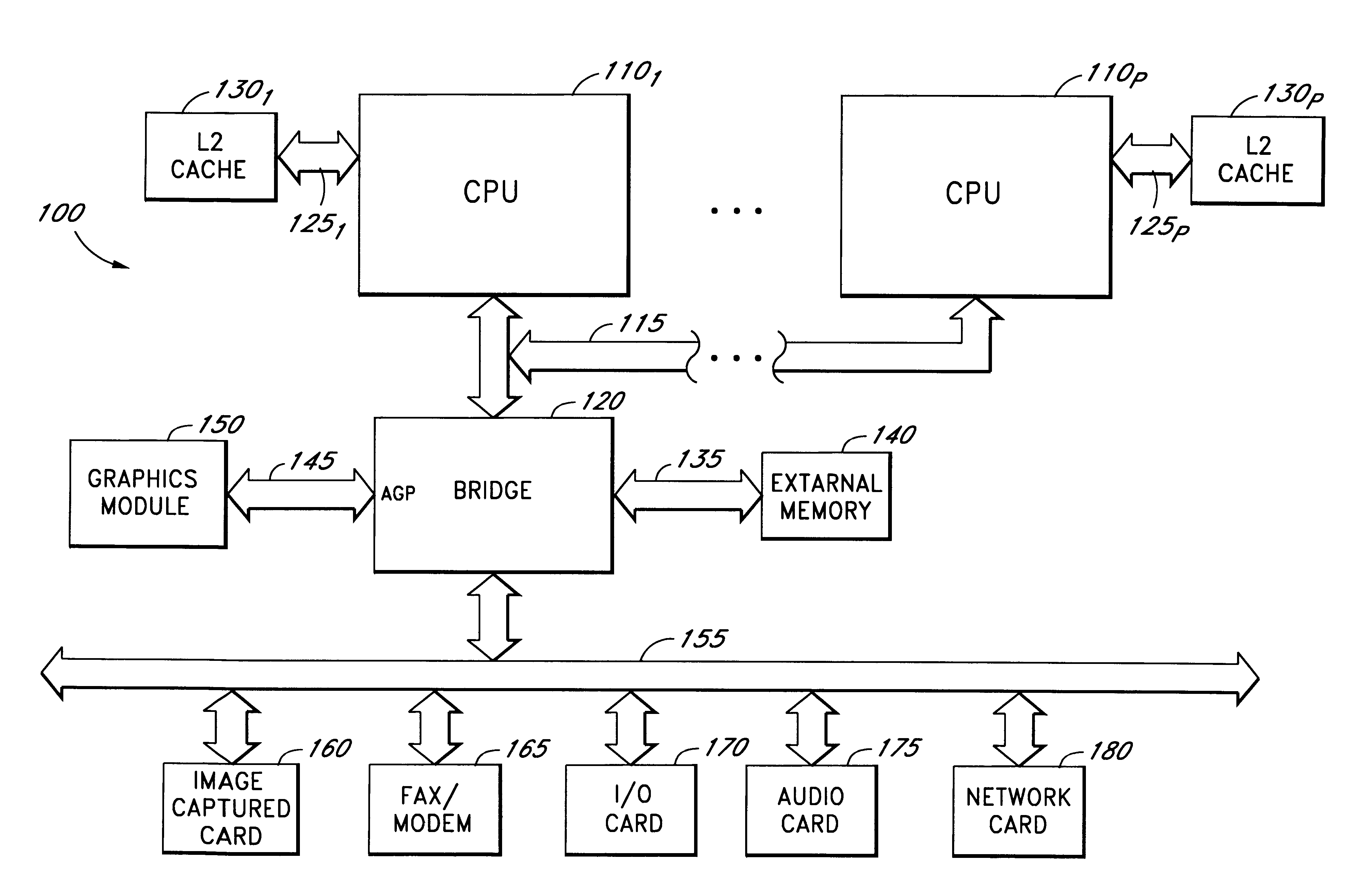

Method and apparatus for advancing load operations

Inactive | US6658559B1 | Digital computer details | Concurrent instruction execution | Load instruction | Physical address

A computer product, method, and apparatus for causing a computer to perform load operations in a particular way are disclosed. The computer is made to replace a load instruction at a particular location in a computer program instruction sequence with two instructions, an advanced load instruction and a load check instruction. The advanced load instruction is inserted into the instruction sequence up-stream from where the original load instruction was located, and may be inserted above store instructions. The load check instruction is inserted into the instruction sequence after the store instructions. An Advanced Load Address Table (ALAT) structure, containing physical address data and validity data for each non-speculative advanced load, is updated with data about each advanced load and each store instruction executed, and queried on execution of each load check instruction about whether or not a particular advanced load is safe to use. An advanced load speculative pipeline and speculative invalidation pipeline are similarly queried regarding speculative advanced loads.

Owner:INTEL CORP
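
The interplay of advanced loads, stores, and load checks in the abstract above can be modeled in a few lines. This is a sketch, not the patented structure: keying the table by target register and treating "valid" as "entry still present" are assumptions, and the speculative pipelines mentioned in the abstract are not modeled.

```python
class ALAT:
    """Toy Advanced Load Address Table keyed by target register (an assumption)."""

    def __init__(self):
        self.entries = {}  # register name -> physical address of the advanced load

    def advanced_load(self, reg, address):
        # Record the advanced load so that later stores can be checked against it.
        self.entries[reg] = address

    def store(self, address):
        # A store to the same address invalidates any matching advanced load.
        self.entries = {r: a for r, a in self.entries.items() if a != address}

    def load_check(self, reg):
        """Return True if the advanced load into `reg` is still safe to use."""
        return reg in self.entries
```

If `load_check` returns False, the program would have to redo the load at the original position; the model leaves that recovery step out.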

Method and apparatus for implementing non-temporal loads

Inactive | US6223258B1 | Memory addressing/allocation/relocation | Concurrent instruction execution | Load instruction | Parallel computing

A processor is described. The processor includes a decoder to decode instructions and a circuit, in response to a decoded instruction, to detect an incoming load instruction that misses a cache, allocate a buffer to service the incoming load instruction, and issue a bus request to load the data in the buffer without accessing said cache.

Owner:INTEL CORP

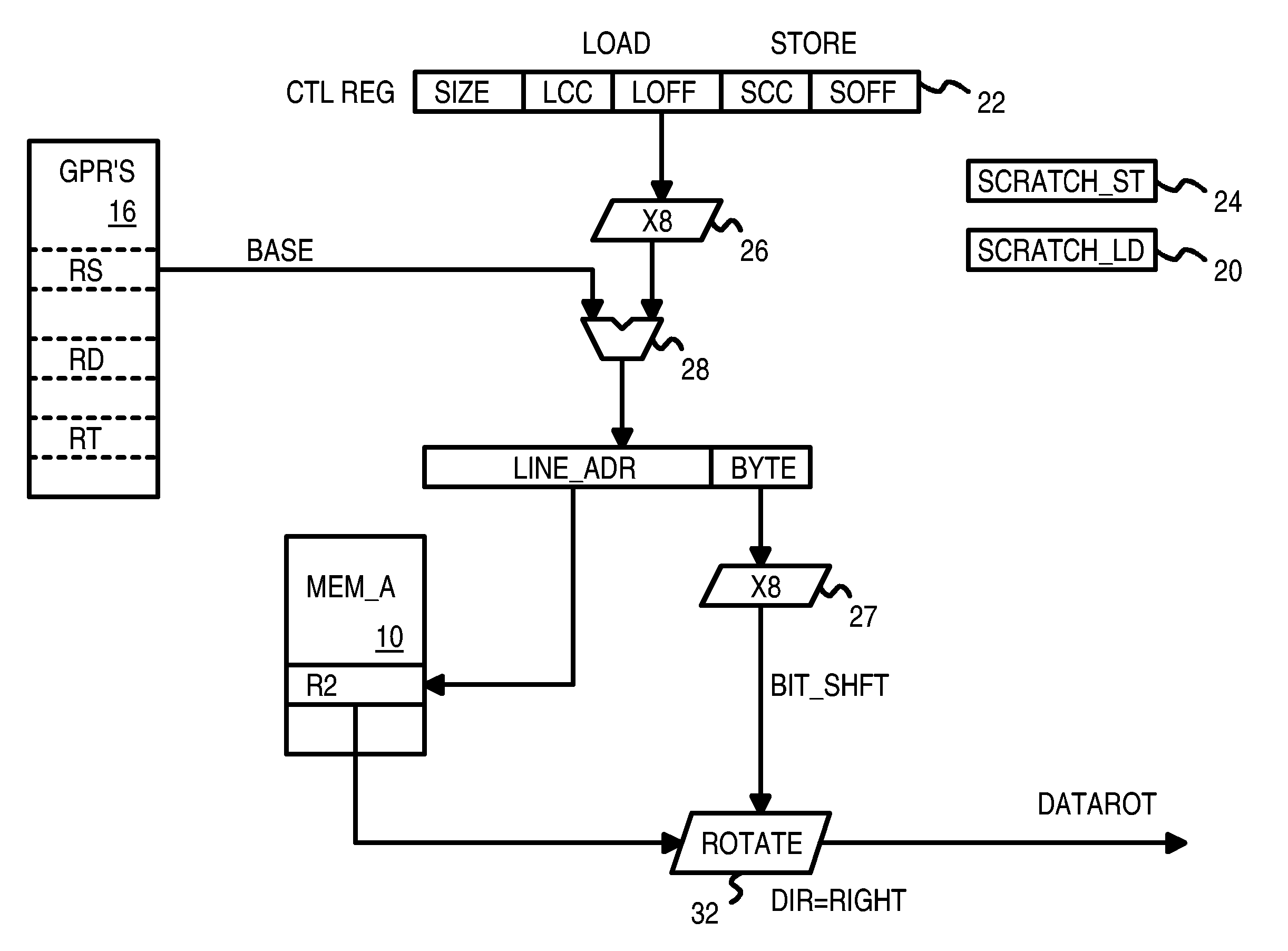

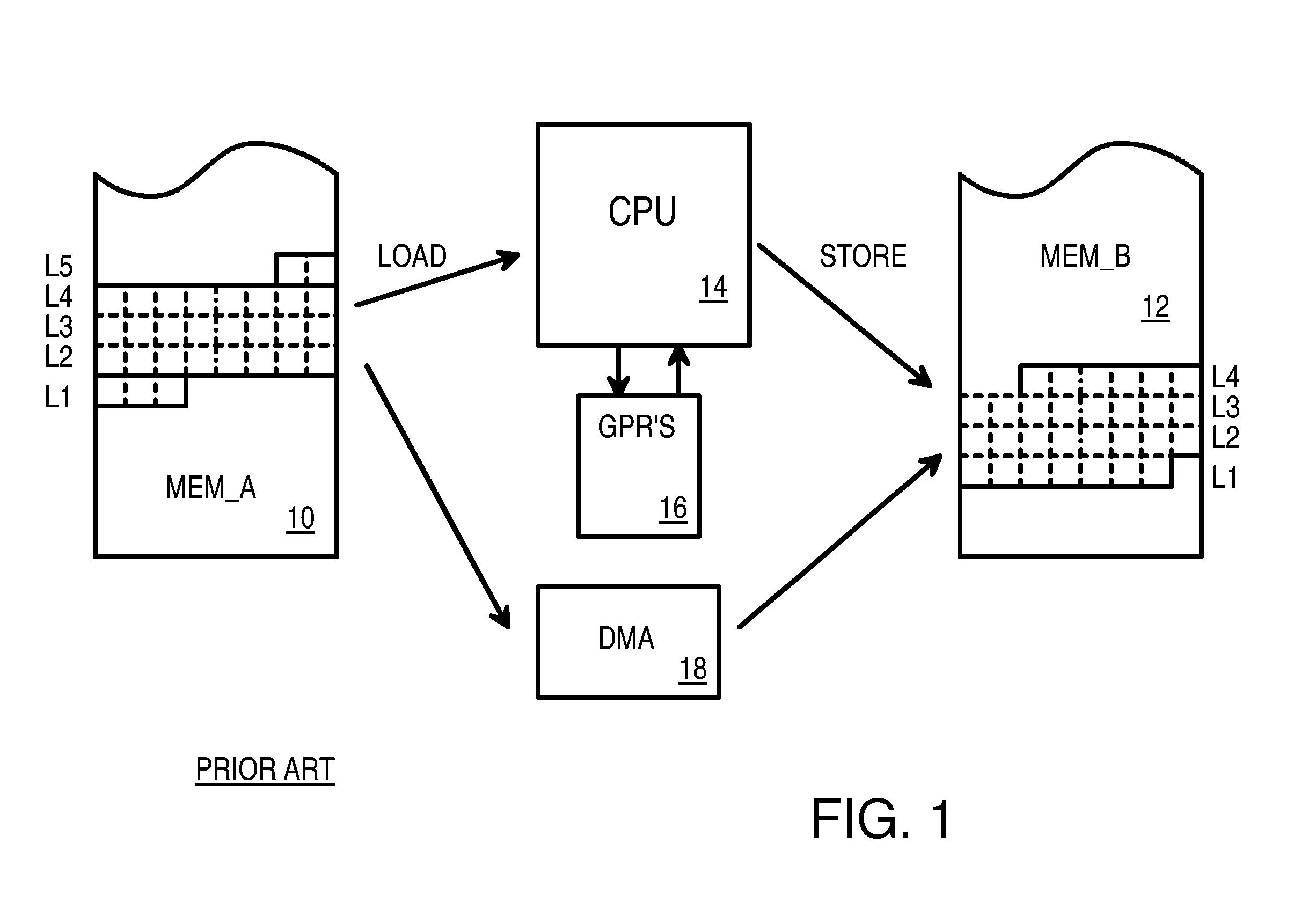

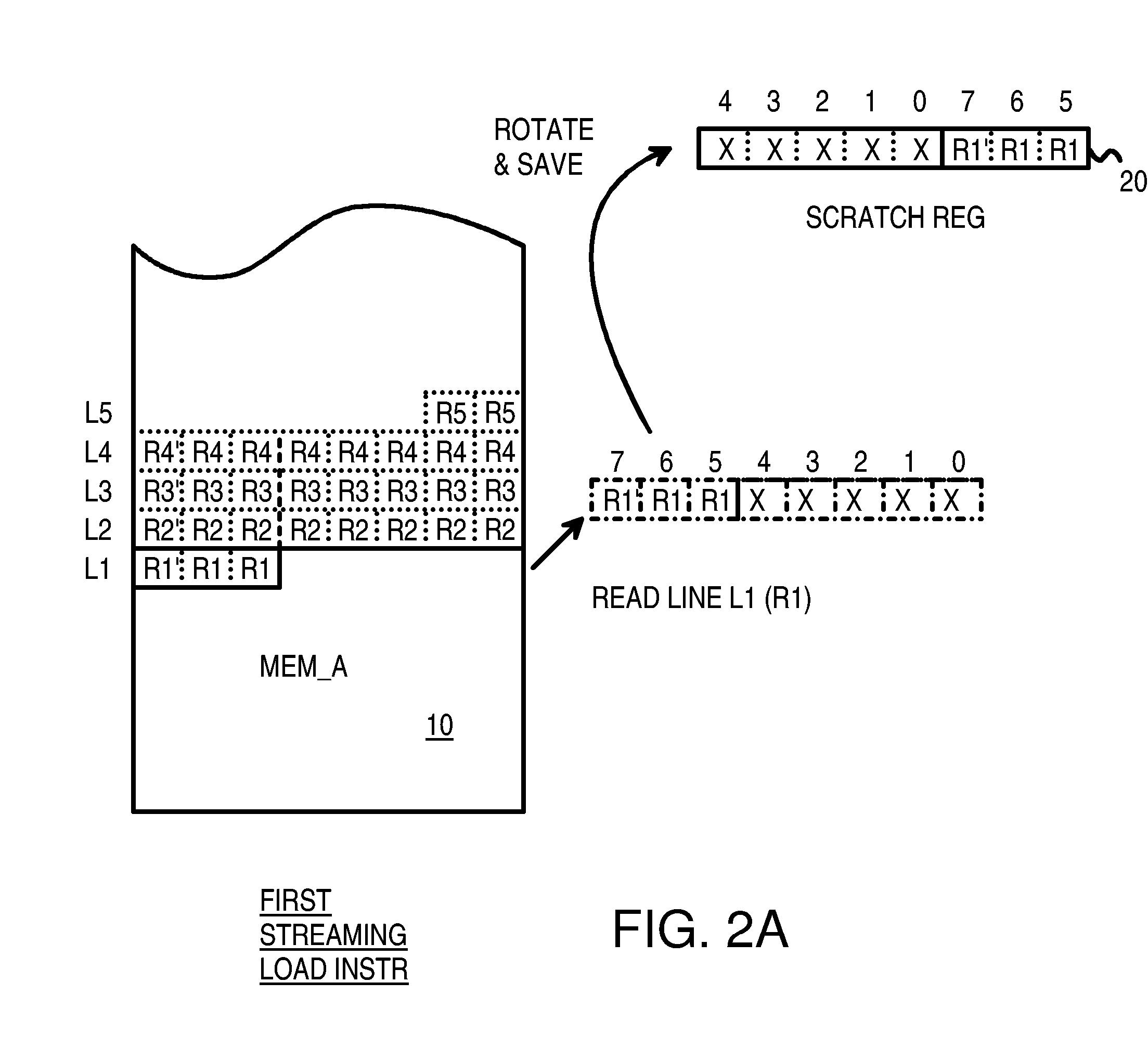

Efficient Streaming of Un-Aligned Load/Store Instructions that Save Unused Non-Aligned Data in a Scratch Register for the Next Instruction

Inactive | US20070106883A1 | Digital computer details | Specific program execution arrangements | General purpose | Load instruction

A memory block with any source alignment is streamed into general-purpose registers (GPRs) as aligned data using a streaming load instruction. A streaming store instruction reads the aligned data from the GPRs and writes the data into memory with any destination alignment. Data is streamed from any source alignment to any destination alignment. Memory accesses are aligned to memory lines. The data is rotated using the offset within a memory line of the base address. The rotated data is stored in a scratch register for use by the next streaming load instruction. Rotated data just read from memory is combined with rotated data in the scratch register read by the last streaming load instruction to generate result data to load into the destination GPR. Streaming condition codes are set when the block's end is detected to disable future streaming instructions. Aligned memory accesses at full bandwidth read the un-aligned block.

Owner:AZUL SYSTEMS
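
The rotate-and-combine idea in the abstract above can be shown with a byte-level model. This sketch assumes an 8-byte memory line, models memory as a `bytes` object, and uses one priming load to fill the scratch register; the function name and these parameters are hypothetical, and the streaming condition codes are not modeled.

```python
LINE = 8  # assumed memory line size in bytes


def stream_unaligned(memory: bytes, base: int, n_lines: int):
    """Read n_lines * LINE bytes starting at an unaligned `base`,
    using only line-aligned memory reads plus a scratch register."""
    k = base % LINE       # offset of the base address within its memory line
    line_addr = base - k  # aligned address of the first line

    def rotated_line(addr):
        line = memory[addr:addr + LINE]  # aligned access
        return line[k:] + line[:k]       # rotate left by the offset

    scratch = rotated_line(line_addr)    # priming load fills the scratch register
    results = []
    for _ in range(n_lines):
        line_addr += LINE
        rotated = rotated_line(line_addr)
        # Front part comes from the previous line (scratch),
        # tail part from the line just read.
        results.append(scratch[:LINE - k] + rotated[LINE - k:])
        scratch = rotated                # keep for the next streaming load
    return results
```

Each aligned line is read exactly once, which is the point of keeping the unused bytes in the scratch register.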

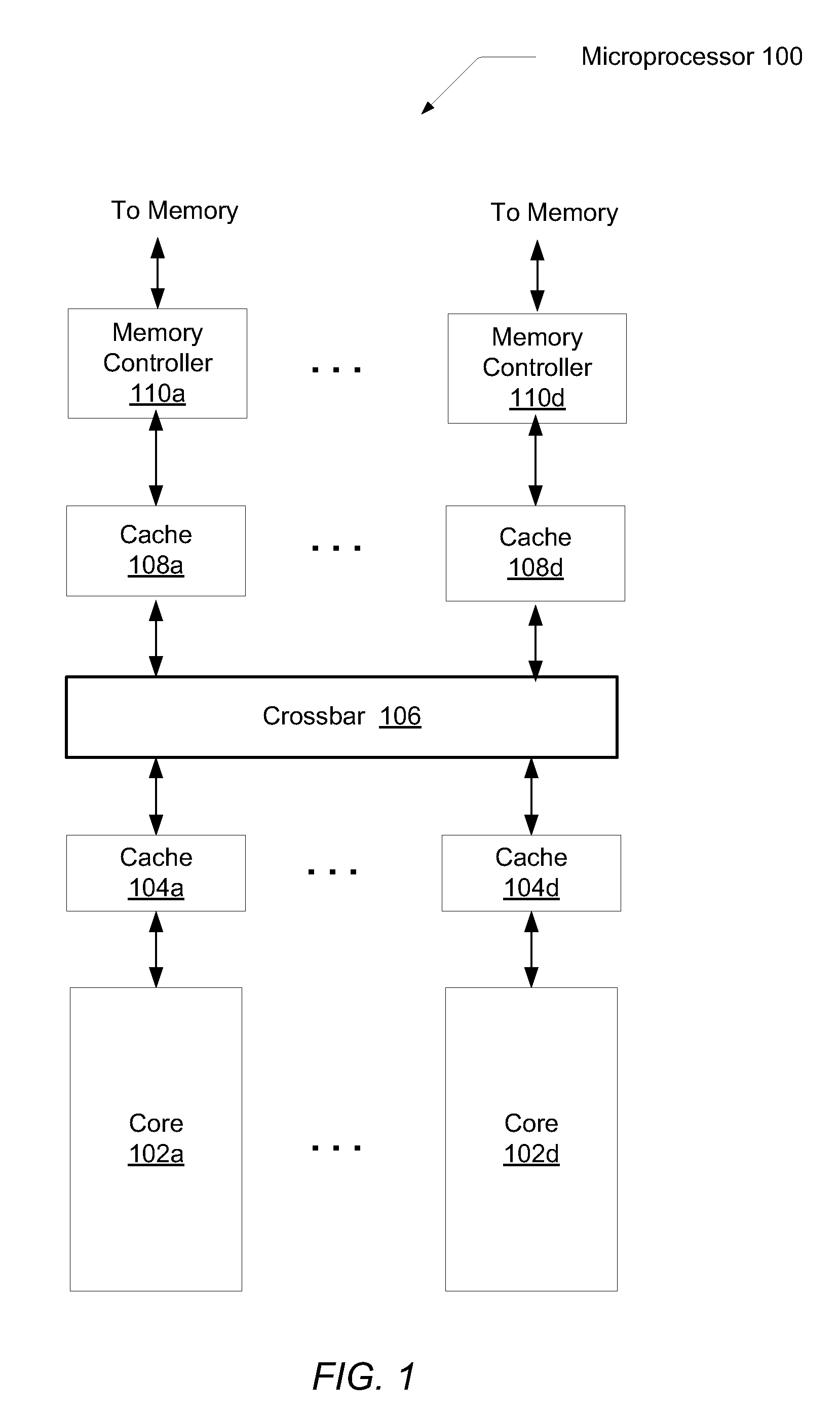

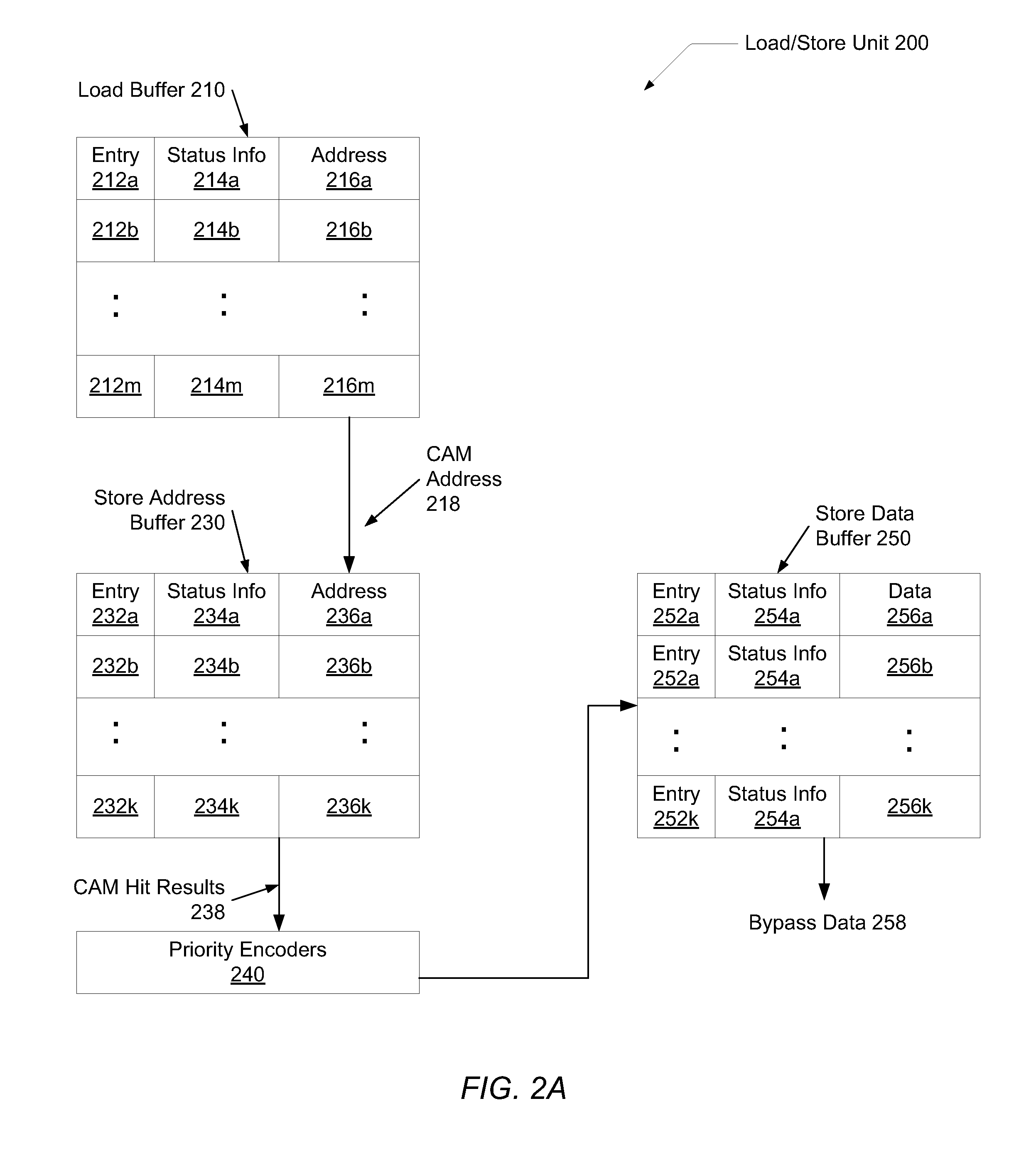

Load/store ordering in a threaded out-of-order processor

Active | US20100293347A1 | Memory addressing/allocation/relocation | Program control | Array data structure | Load instruction

Systems and methods for efficient load-store ordering. A processor comprises a store buffer that includes an array. The store buffer dynamically allocates any entry of the array for an out-of-order (o-o-o) issued store instruction independent of a corresponding thread. Circuitry within the store buffer determines a first set of entries of the array entries that have store instructions older in program order than a particular load instruction, wherein the store instructions have a same thread identifier and address as the load instruction. From the first set, the logic locates a single final match entry of the first set corresponding to the youngest store instruction of the first set, which may be used for read-after-write (RAW) hazard detection.

Owner:ORACLE INT CORP
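
The two-step search in the abstract above (build the set of older matching stores, then pick the youngest) is sketched below. The `age` field, a program-order sequence number where smaller means older, is an assumption introduced for the model, as are the type and function names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StoreEntry:
    age: int        # assumed program-order sequence number; smaller is older
    thread_id: int
    address: int
    data: int


def youngest_older_match(store_buffer: List[StoreEntry], load_age: int,
                         thread_id: int, address: int) -> Optional[StoreEntry]:
    # First set: stores older than the load with the same thread and address.
    first_set = [e for e in store_buffer
                 if e.age < load_age
                 and e.thread_id == thread_id
                 and e.address == address]
    # Final match: the youngest store of the first set, if any.
    return max(first_set, key=lambda e: e.age, default=None)
```

A non-`None` result indicates a read-after-write hazard for the load; entries may sit anywhere in the buffer because allocation is independent of thread.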

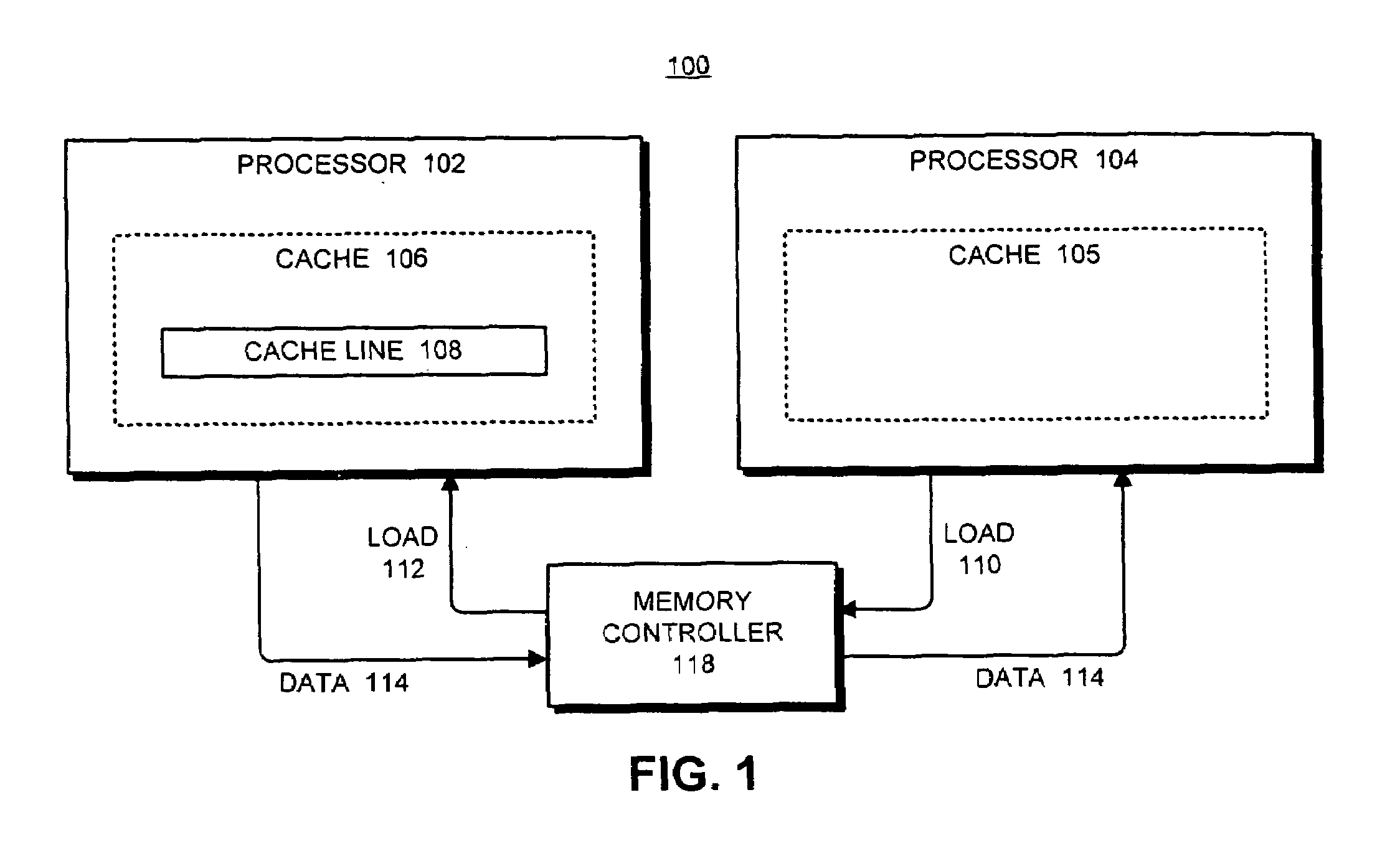

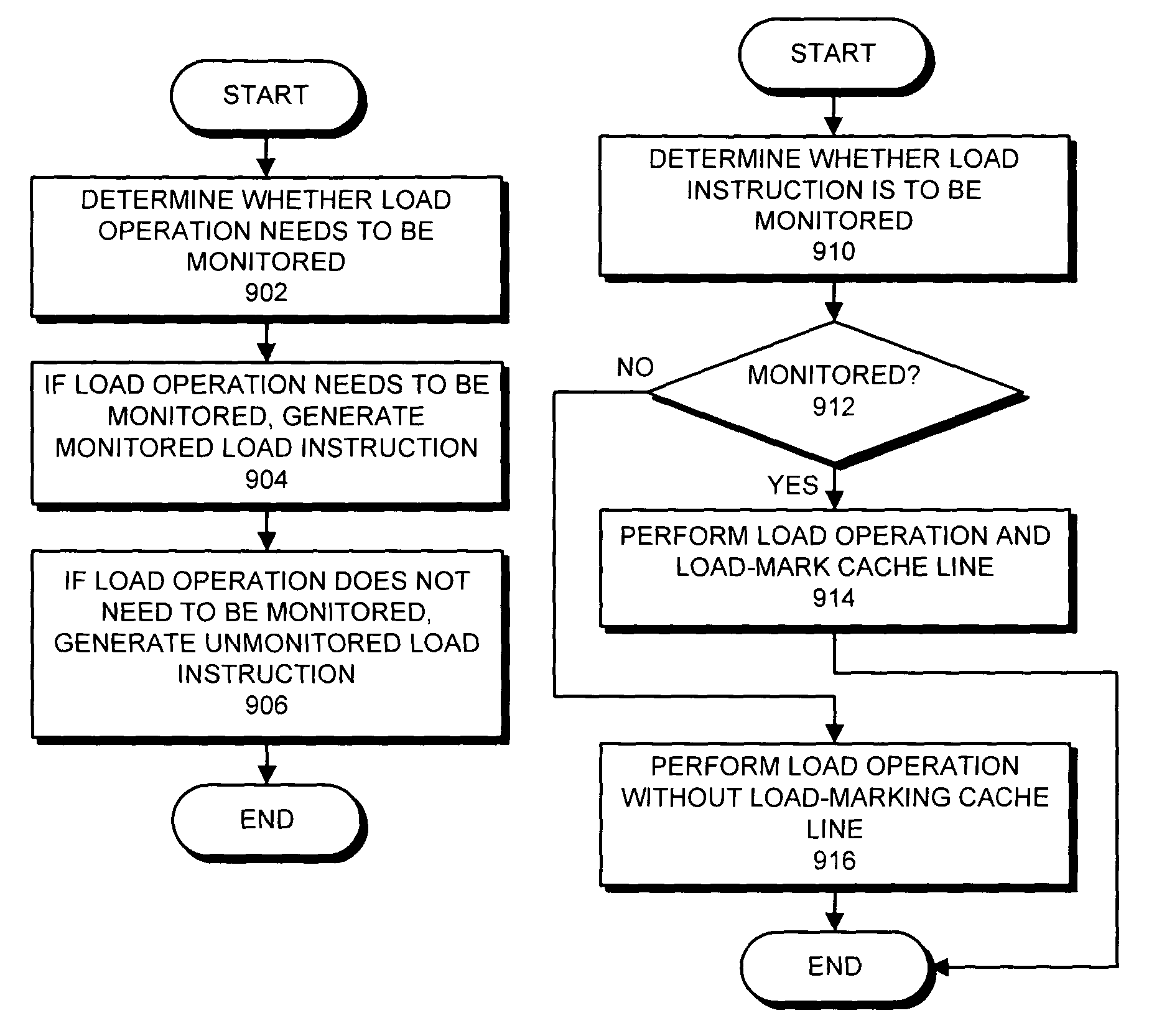

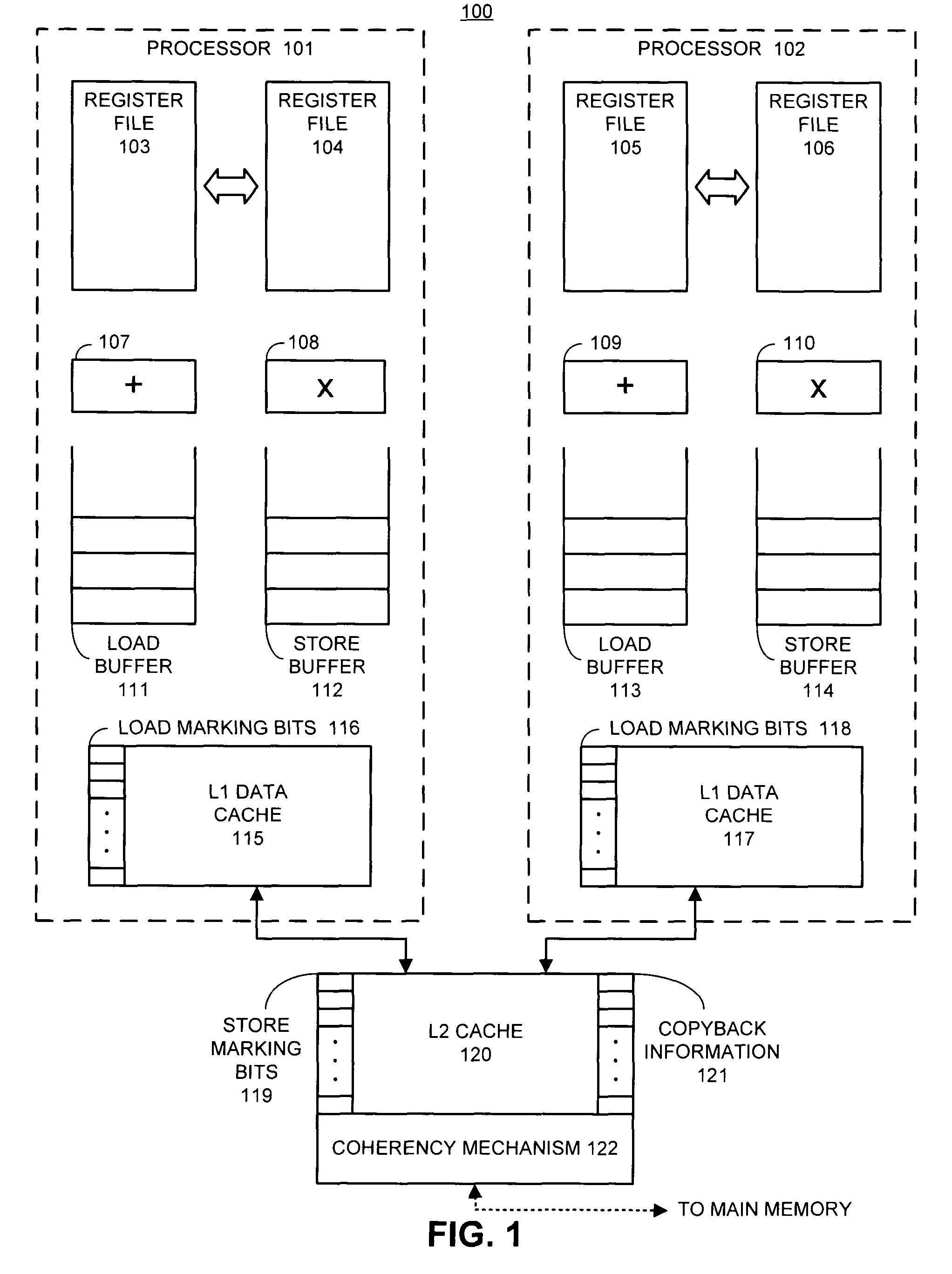

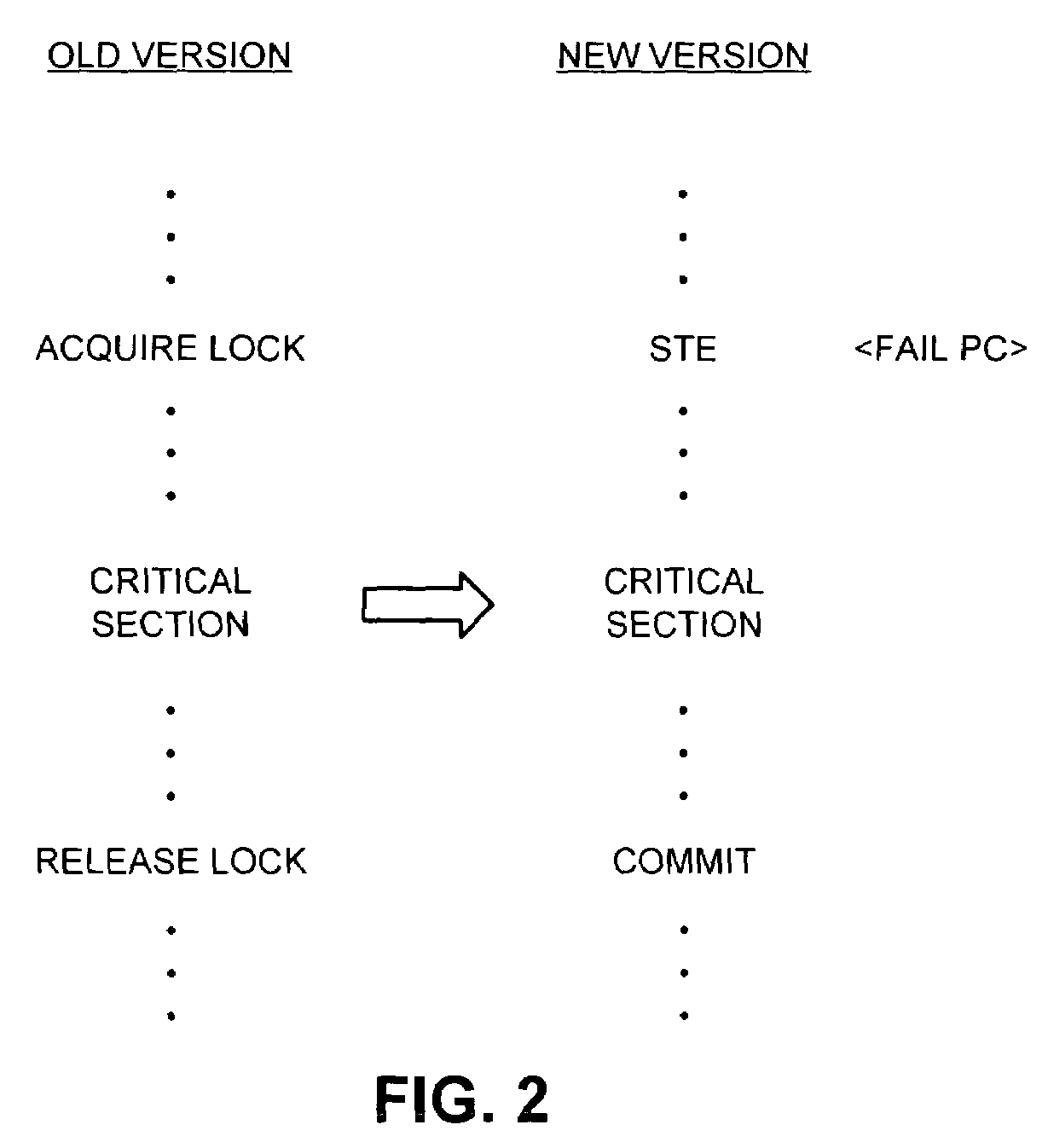

Selectively monitoring loads to support transactional program execution

Active | US7269694B2 | Easy to detect | Memory addressing/allocation/relocation | Unauthorized memory use protection | Load instruction | Parallel computing

One embodiment of the present invention provides a system that selectively monitors load instructions to support transactional execution of a process, wherein changes made during the transactional execution are not committed to the architectural state of a processor until the transactional execution successfully completes. Upon encountering a load instruction during transactional execution of a block of instructions, the system determines whether the load instruction is a monitored load instruction or an unmonitored load instruction. If the load instruction is a monitored load instruction, the system performs the load operation, and load-marks a cache line associated with the load instruction to facilitate subsequent detection of an interfering data access to the cache line from another process. If the load instruction is an unmonitored load instruction, the system performs the load operation without load-marking the cache line.

Owner:ORACLE INT CORP
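
A small model of the monitored versus unmonitored distinction in the abstract above. The 64-byte cache line size, the class name, and the `interferes` helper are assumptions for illustration only.

```python
CACHE_LINE_BYTES = 64  # assumed cache line size


class TransactionalCache:
    """Toy model of load-marking during transactional execution."""

    def __init__(self, memory):
        self.memory = memory      # dict: address -> value
        self.load_marked = set()  # indices of load-marked cache lines

    def load(self, address, monitored):
        if monitored:
            # Monitored load: mark the line so interference can be detected later.
            self.load_marked.add(address // CACHE_LINE_BYTES)
        # Both kinds of load perform the load operation itself.
        return self.memory[address]

    def interferes(self, address):
        """True if an access by another process touches a load-marked line."""
        return address // CACHE_LINE_BYTES in self.load_marked
```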

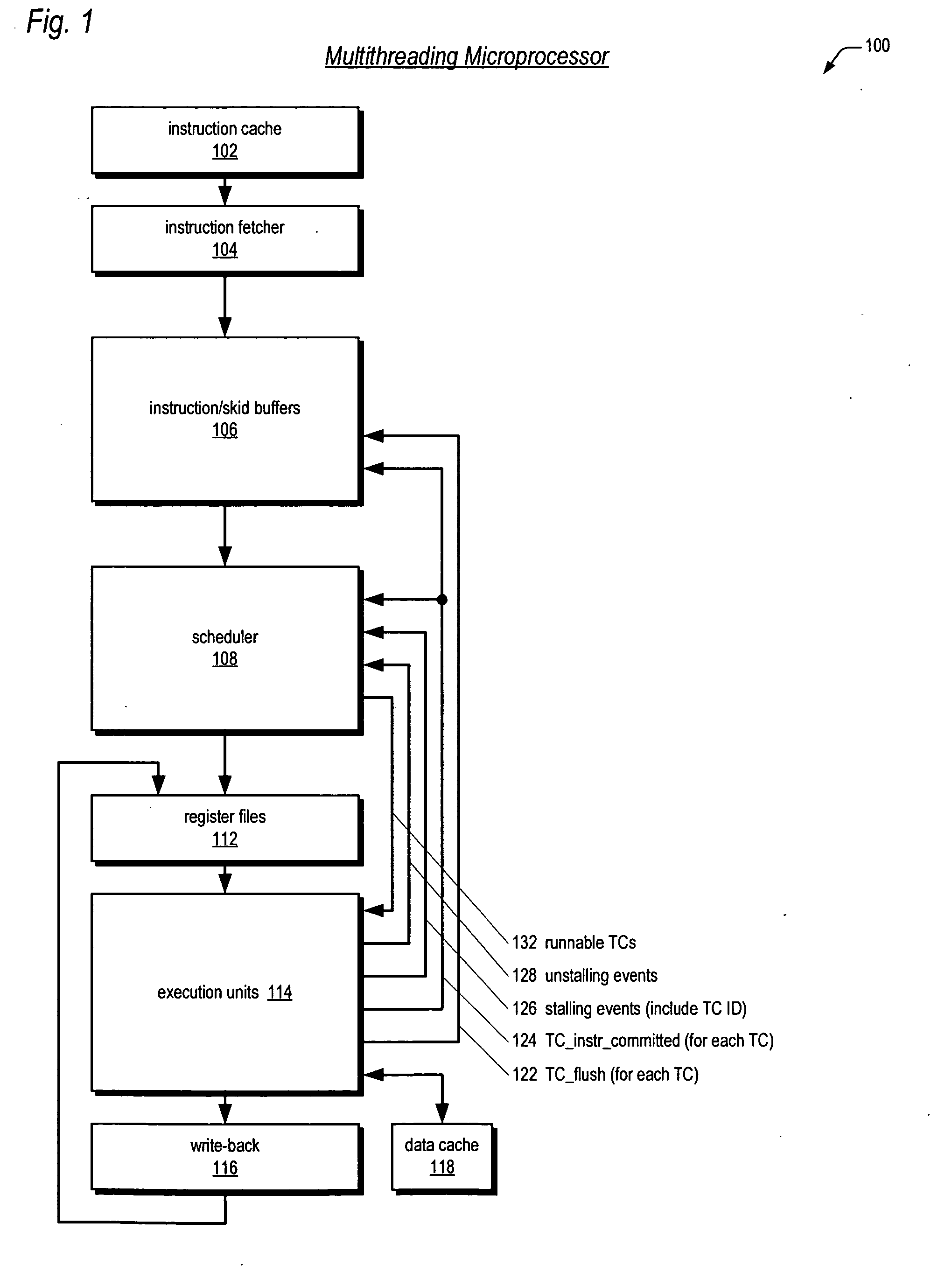

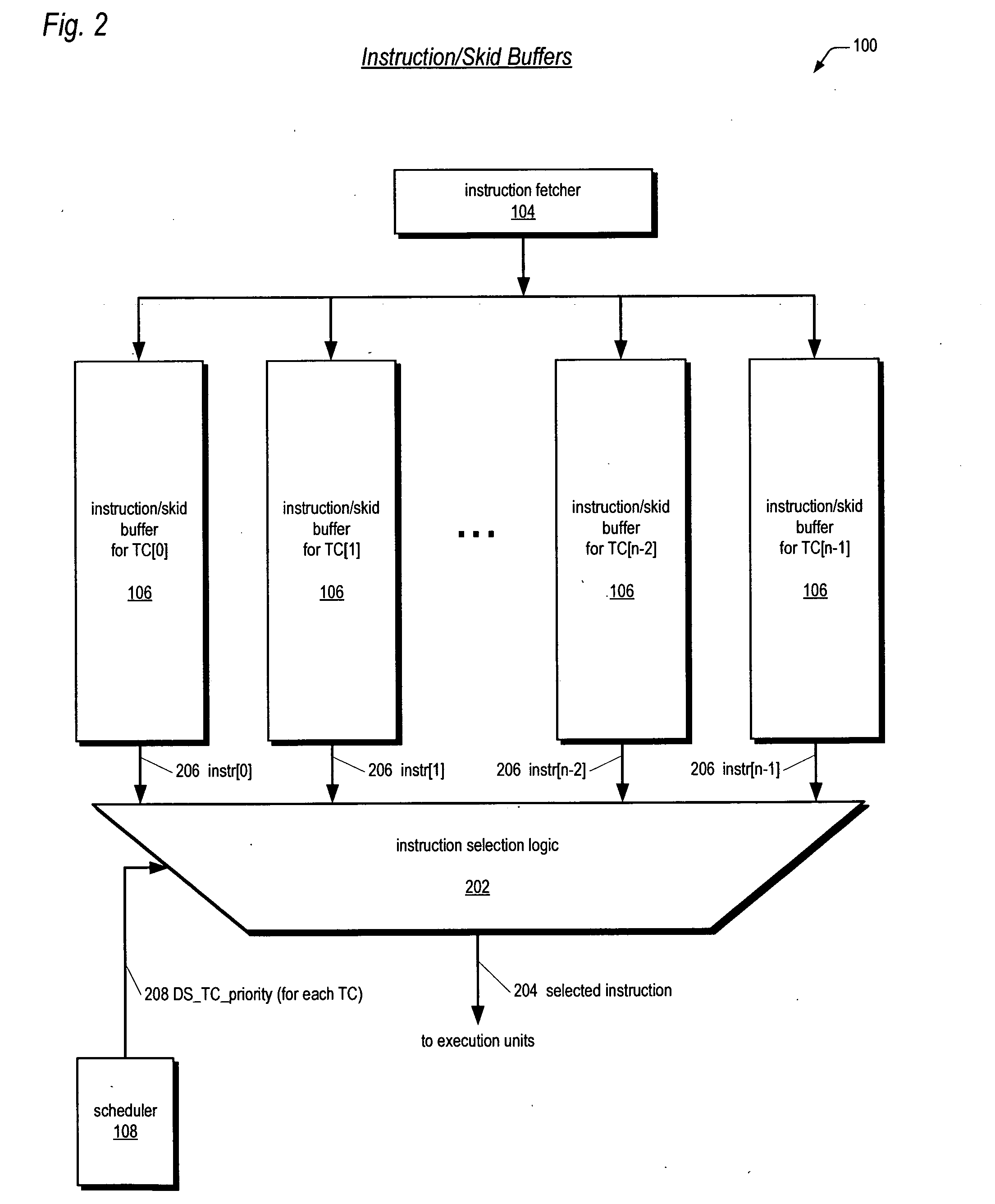

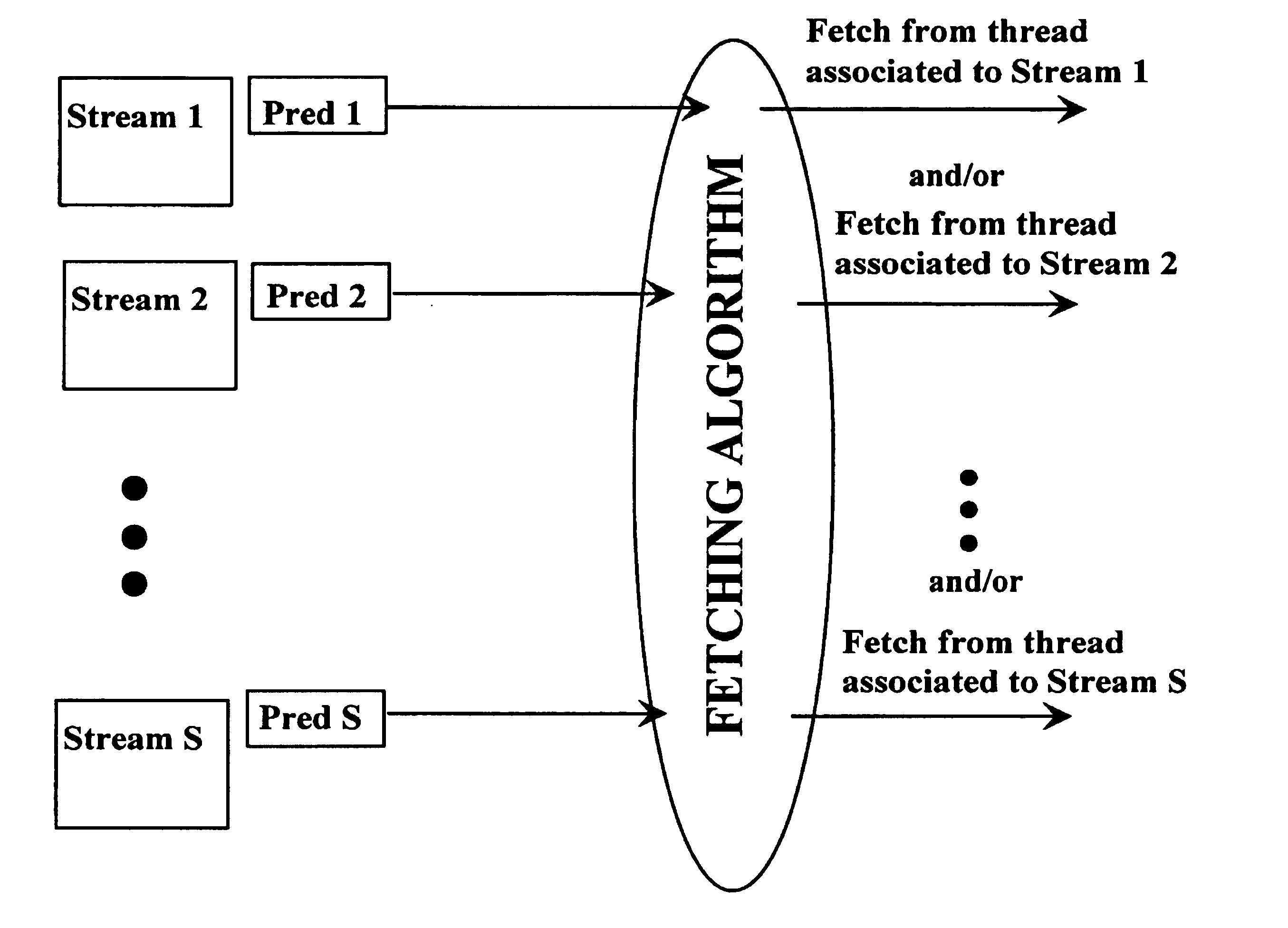

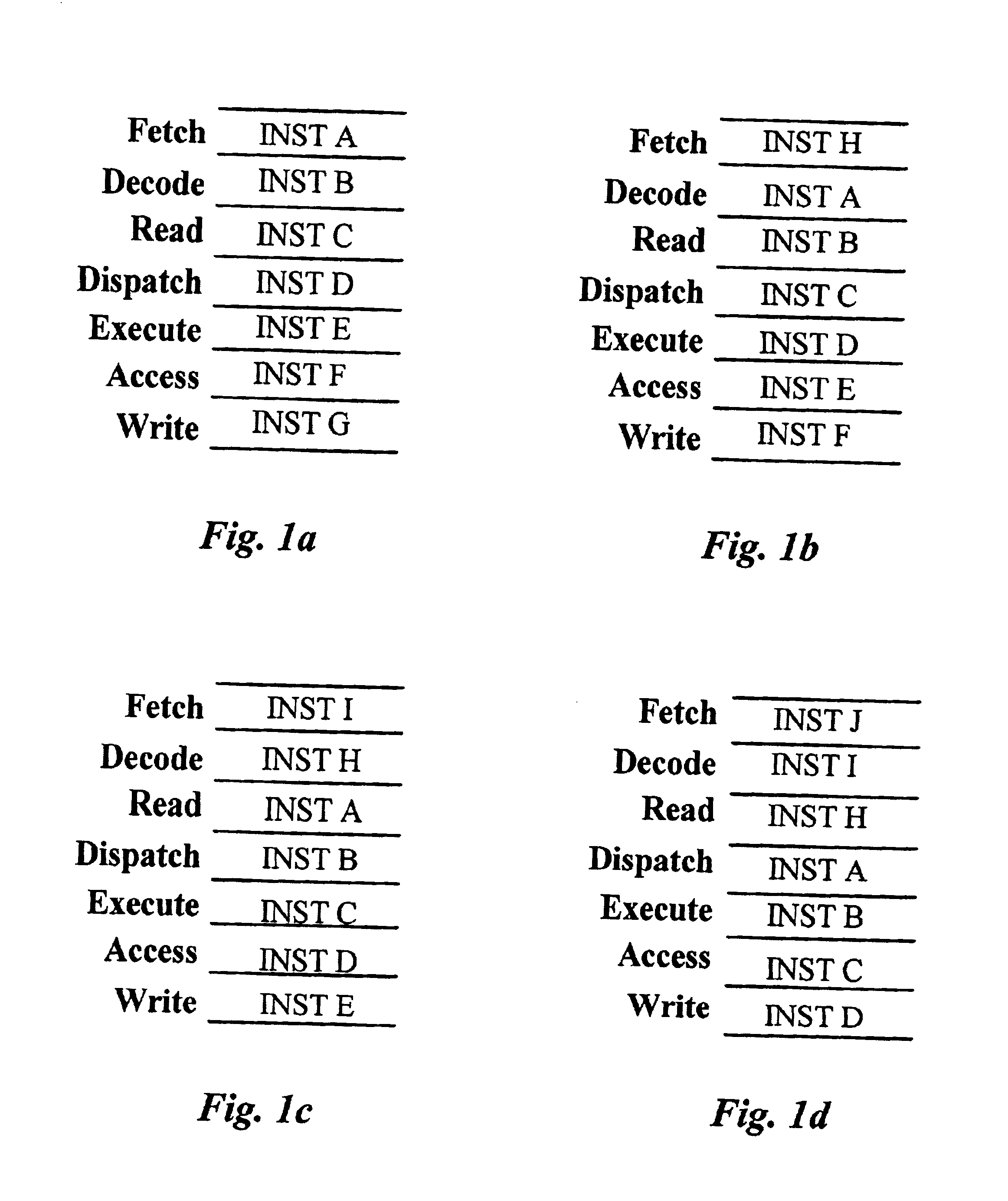

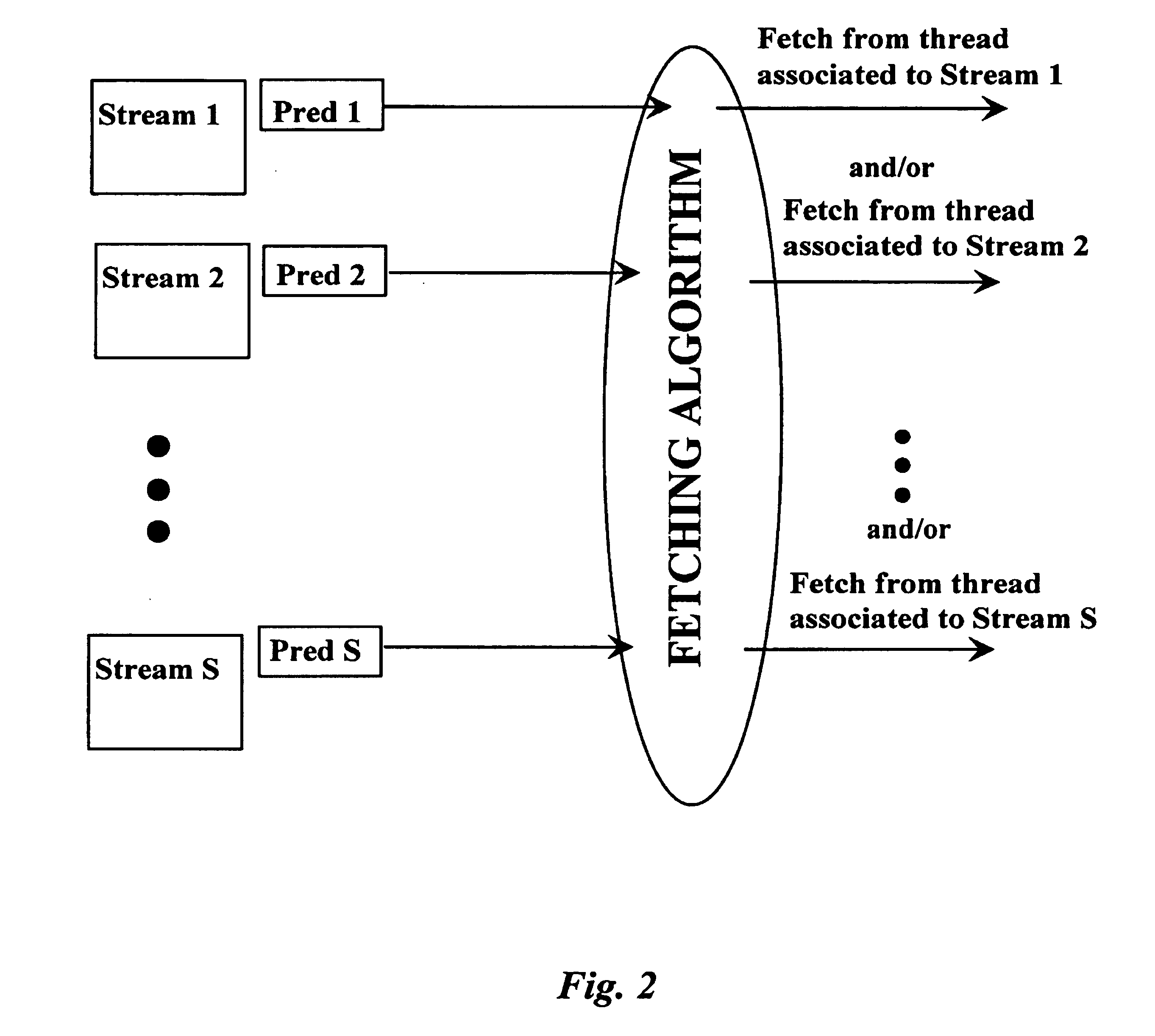

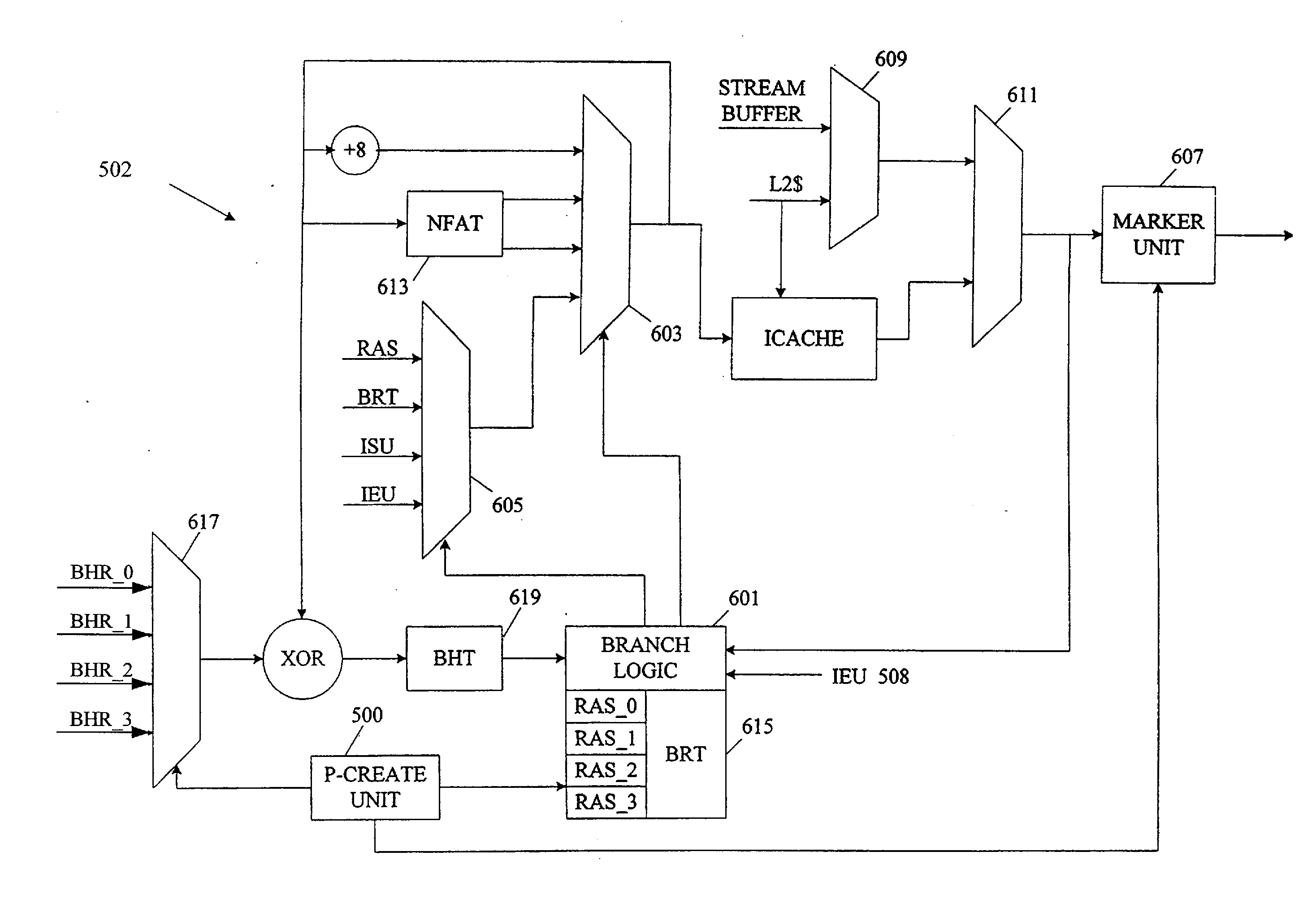

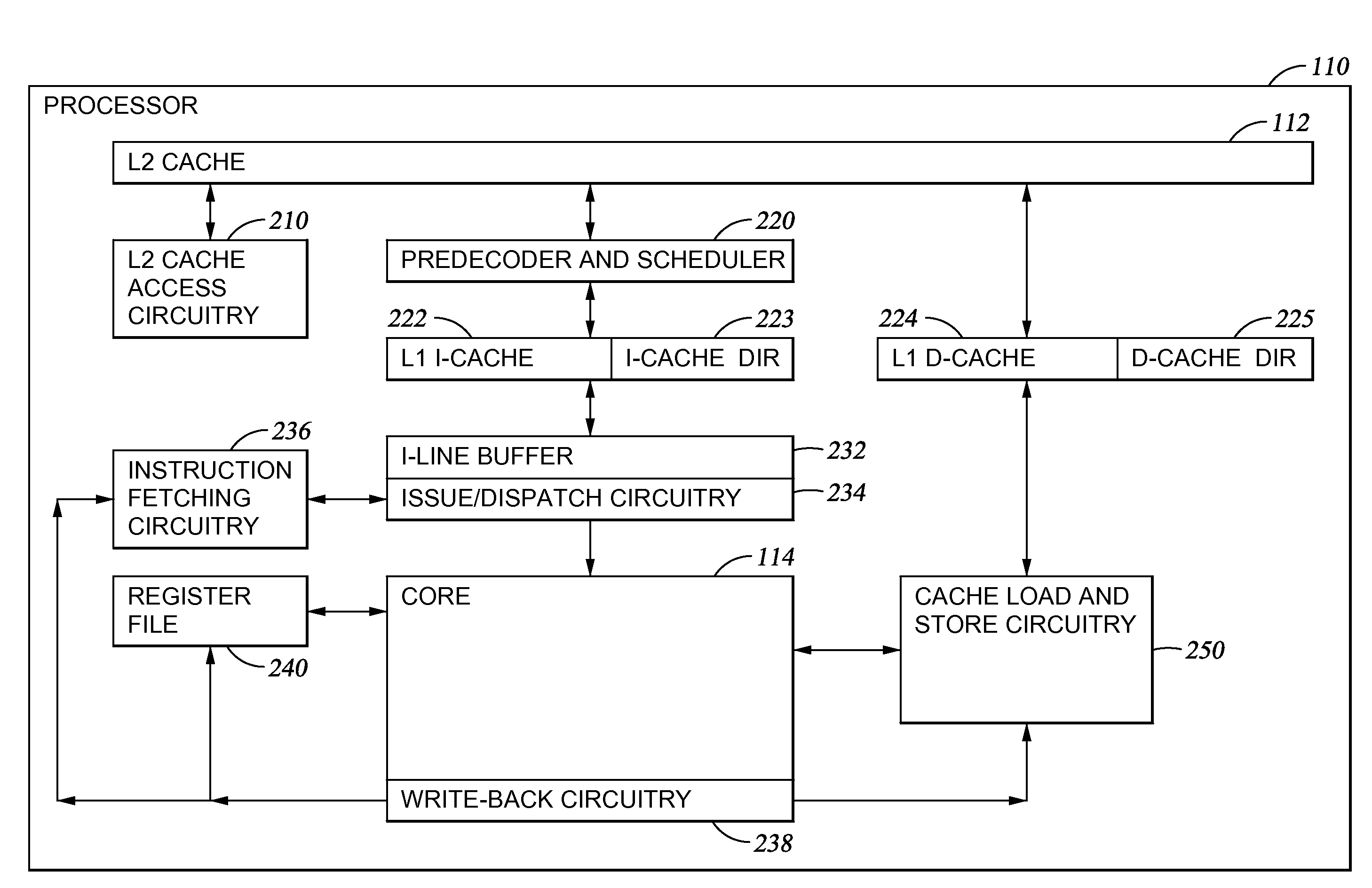

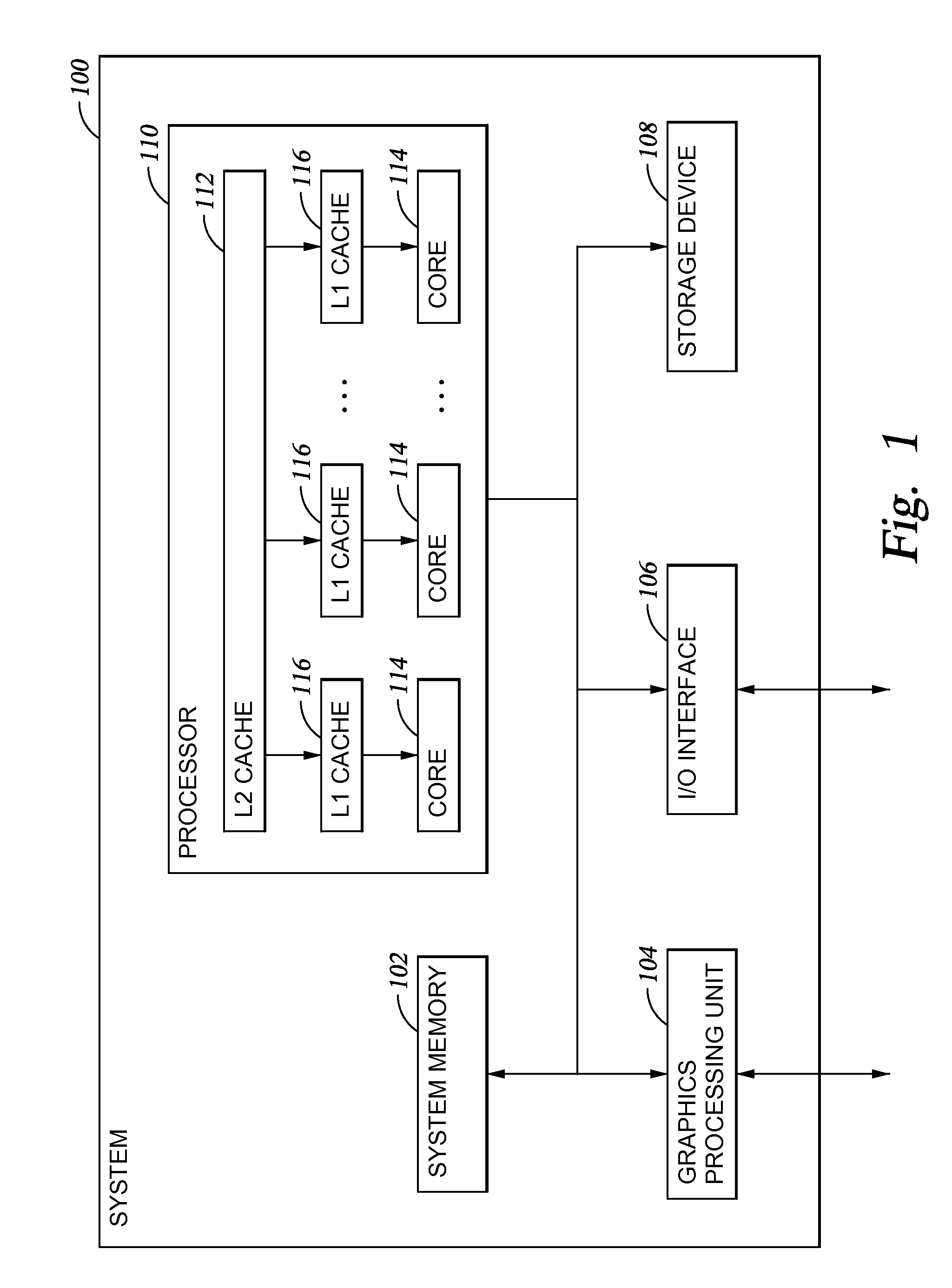

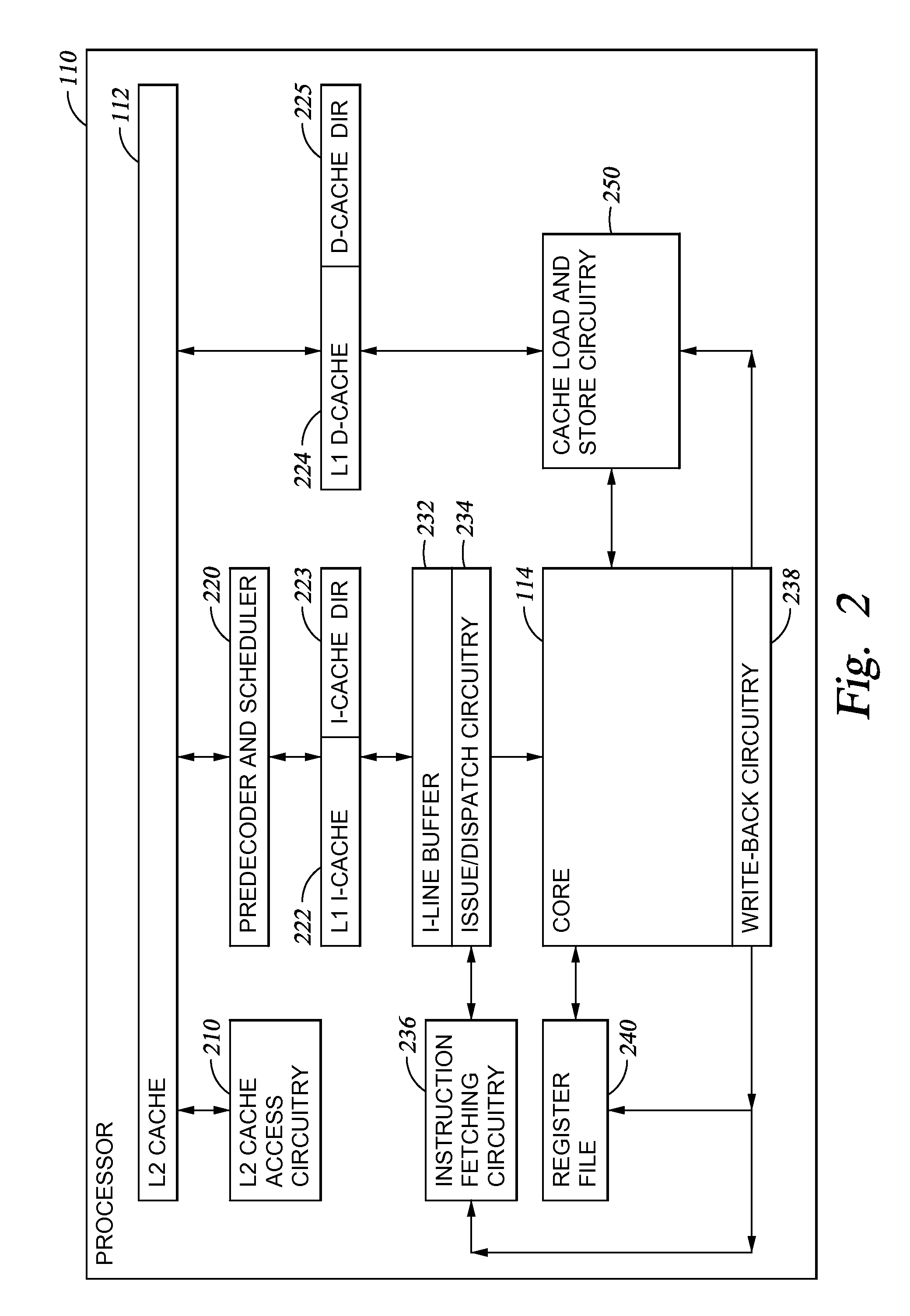

Methods and apparatus for improving fetching and dispatch of instructions in multithreaded processors

Inactive | US7035997B1 | Improve performance | Digital computer details | Memory systems | Load instruction | Instruction pipeline

In a multi-streaming processor, a system for fetching instructions from individual ones of multiple streams to an instruction pipeline is provided, comprising a fetch algorithm for selecting from which stream to fetch an instruction, and one or more predictors for forecasting whether a load instruction will hit or miss the cache or a branch will be taken. The prediction or predictions are used by the fetch algorithm in determining from which stream to fetch. In some cases probabilities are determined and also used in decisions, and predictors may be used at either or both of fetch and dispatch stages.

Owner:ARM FINANCE OVERSEAS LTD

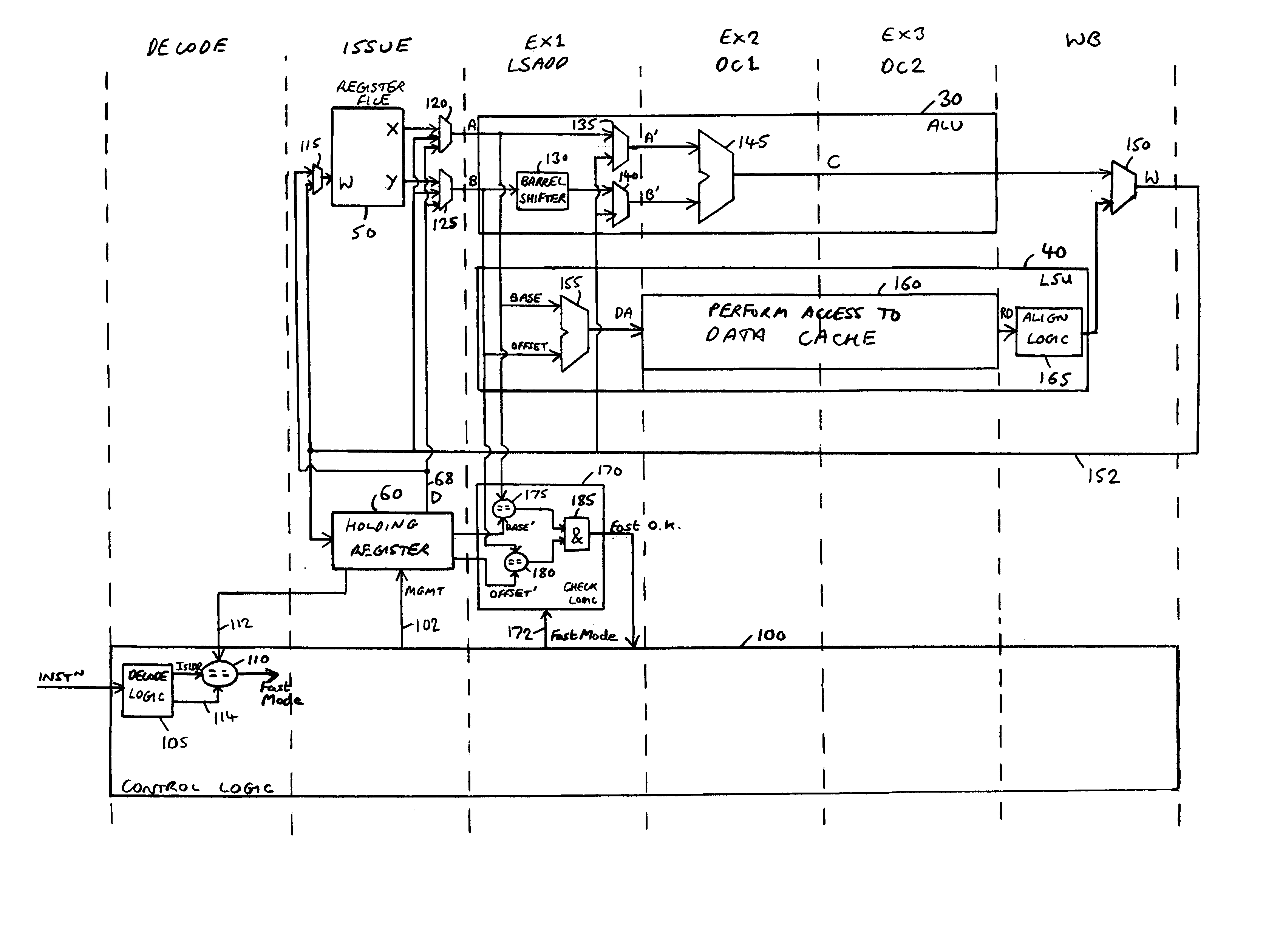

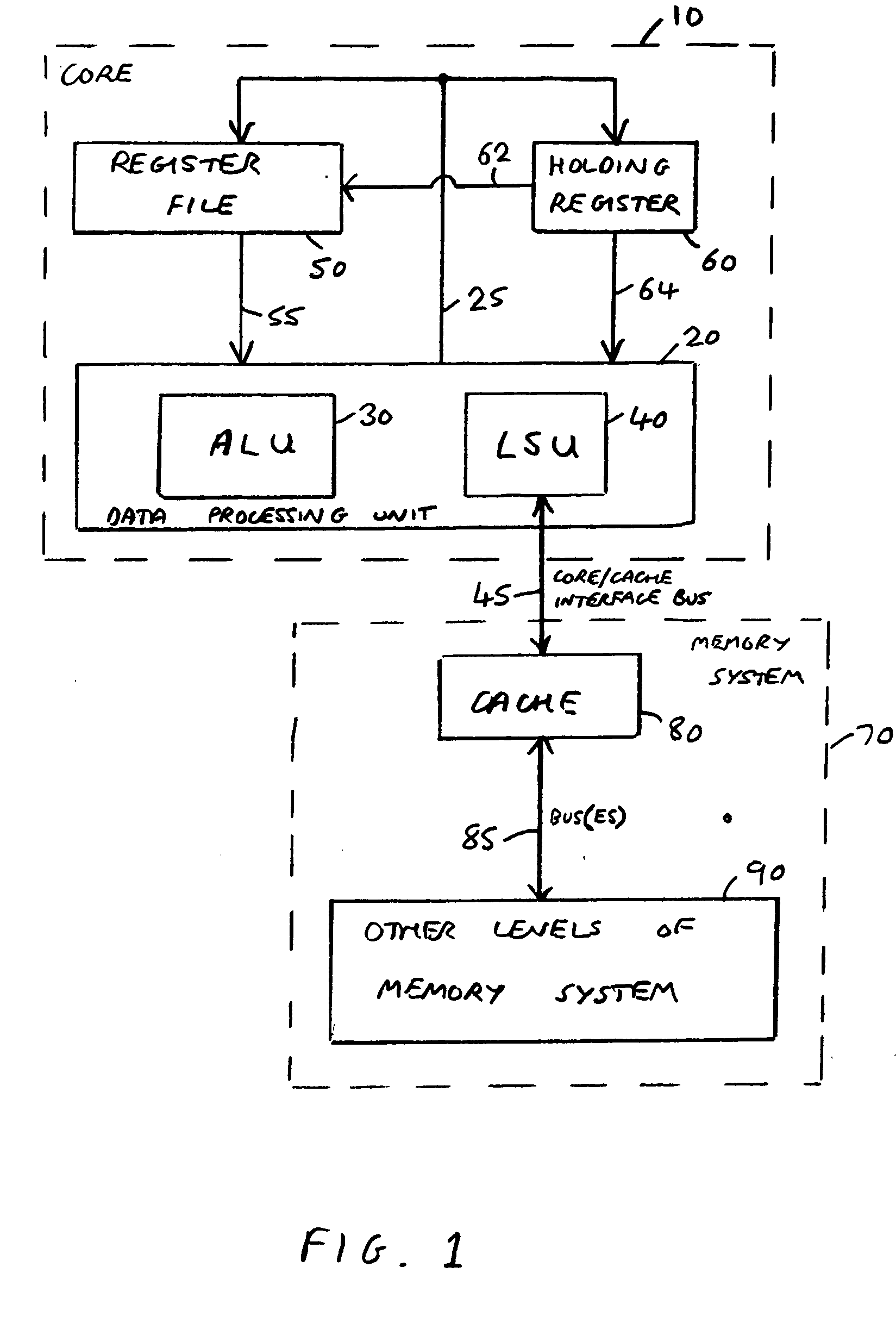

Apparatus and method for loading data values

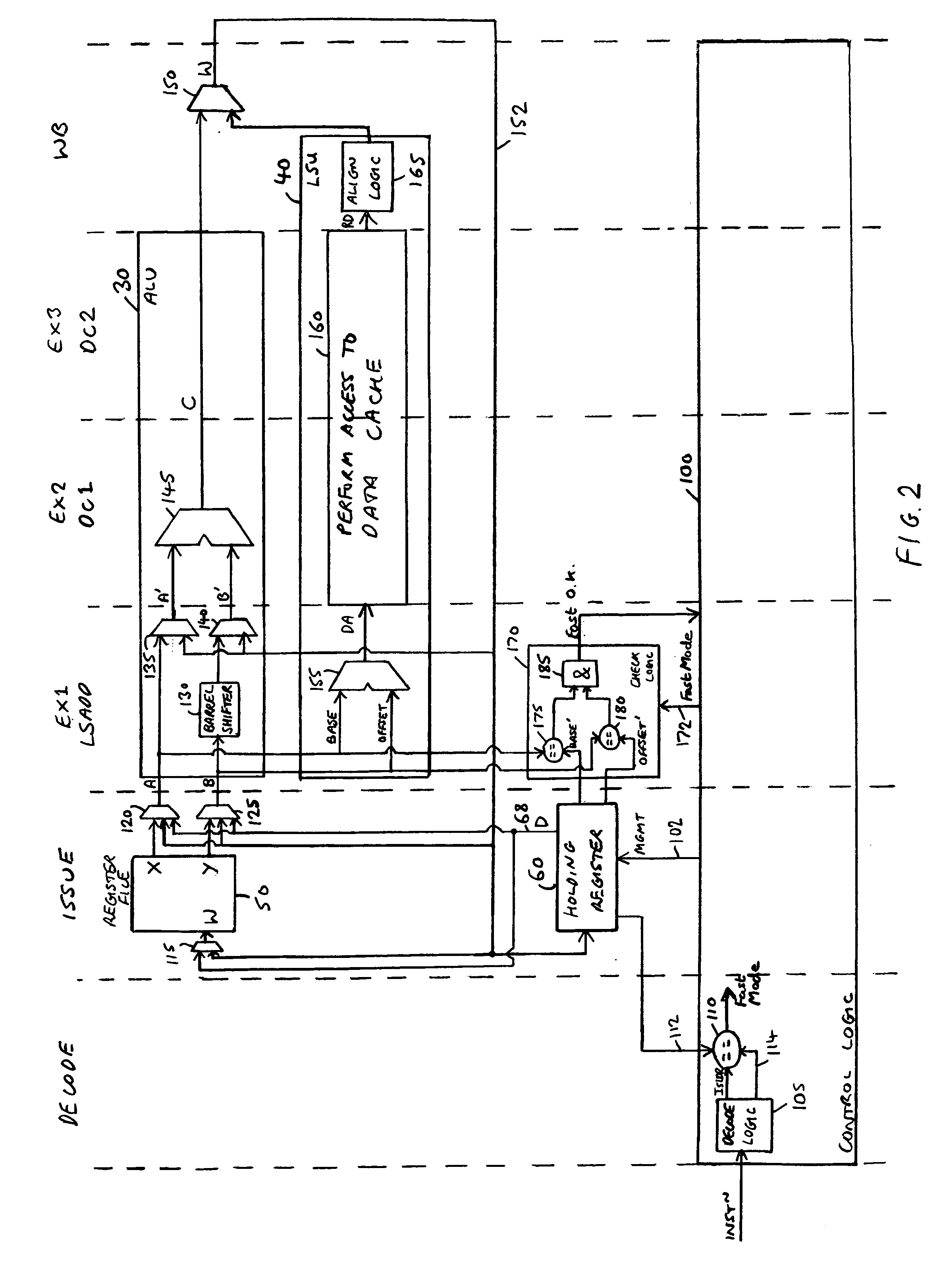

Active | US20050066131A1 | Save power | Avoids time penalty | Memory addressing/allocation/relocation | Concurrent instruction execution | Load instruction | Working set

An apparatus and method for loading data values from a memory system are provided. The data processing apparatus comprises a data processing unit operable to execute instructions, and a register file having a plurality of registers operable to store data values accessible by the data processing unit when executing the instructions. Further, a holding register is provided which does not form one of a working set of registers of the register file, and is operable to temporarily store a data value, the holding register having a data portion for storing the data value, and an identifier portion operable to store identifier data associated with the data value. The data processing unit is then responsive to a preload instruction to issue a preload memory access request to a memory system to cause a data value identified by the preload instruction to be located in the memory system, and dependent on predetermined criteria to cause a copy of that data value along with associated identifier data to be loaded from the memory system into the holding register. Furthermore, the data processing unit is responsive to a load instruction to cause a comparison operation to be performed to determine whether identifier data derived from the load instruction matches the identifier data in the identifier portion of the holding register. If it does, the data value stored in the holding register is made available to the data processing unit without requiring a memory access request to be issued to the memory system. Only in the event of there being no match does the memory access request get issued to the memory system to cause a data value identified by the load instruction to be made available to the data processing unit from the memory system.

Owner:ARM LTD
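
The holding-register comparison in the abstract above might look like this in a toy model. It assumes the identifier is simply the address and that the "predetermined criteria" for filling the holding register are always met; the class name and the request counter are hypothetical.

```python
class HoldingRegisterCore:
    """Toy model of a preload that fills a holding register outside the register file."""

    def __init__(self, memory):
        self.memory = memory      # dict: address -> value
        self.holding = None       # (identifier, data value), or None when empty
        self.memory_requests = 0  # counts requests issued to the memory system

    def preload(self, address):
        # Issue the preload request and copy the value into the holding register.
        self.memory_requests += 1
        self.holding = (address, self.memory[address])

    def load(self, address):
        # Compare the load's identifier with the holding register's identifier.
        if self.holding is not None and self.holding[0] == address:
            return self.holding[1]  # match: no memory access request needed
        self.memory_requests += 1   # no match: go to the memory system
        return self.memory[address]
```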

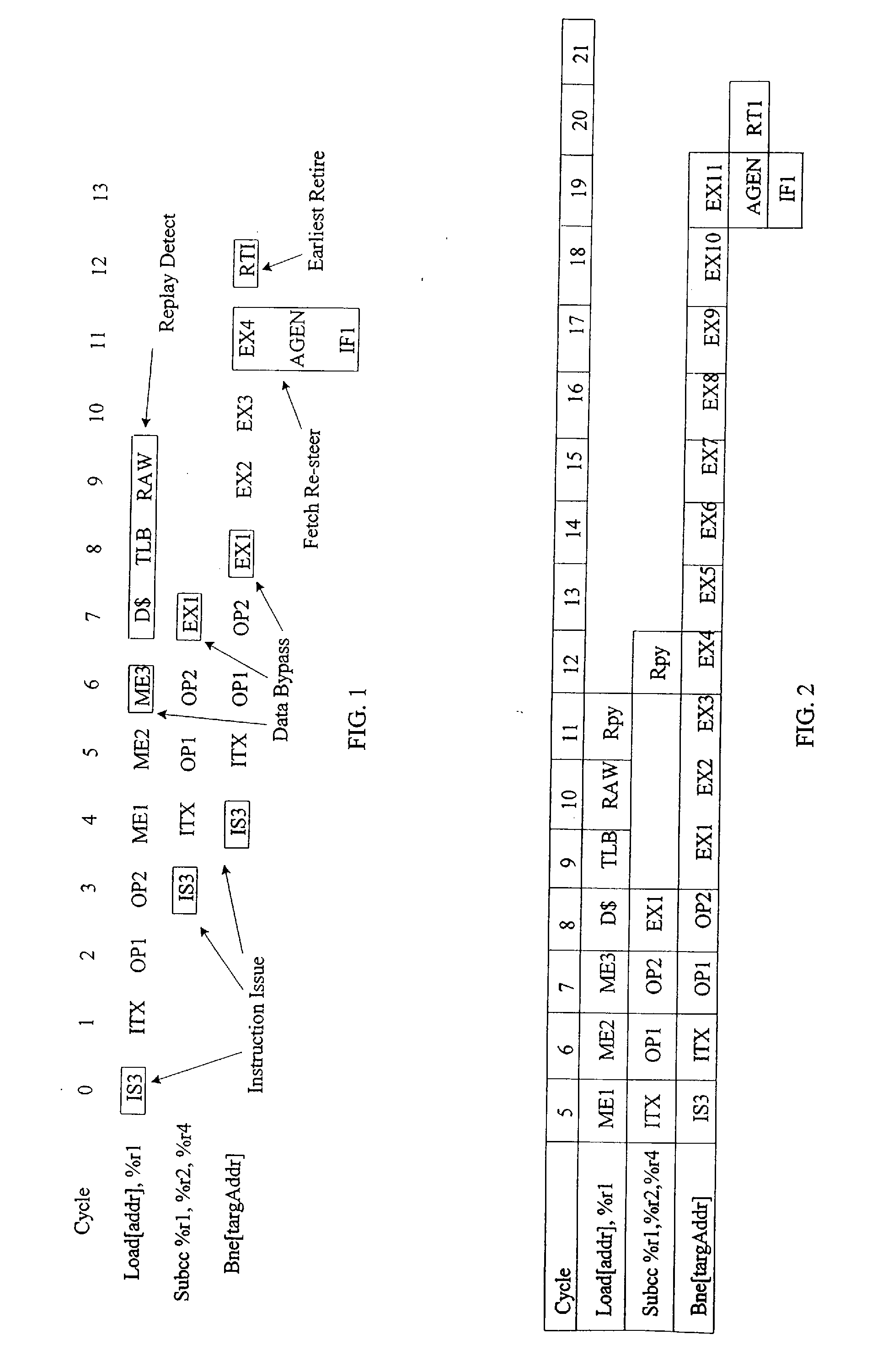

Validating branch resolution to avoid mis-steering instruction fetch

Inactive | US20060248319A1 | Avoids and eliminates repetitive replay condition | Resolve delay | Digital computer details | Specific program execution arrangements | Load instruction | Image resolution

A processor avoids or eliminates repetitive replay conditions and frequent instruction resteering through various techniques including resteering the fetch after the branch instruction retires, and delaying branch resolution. A processor resolves conditional branches and avoids repetitive resteering by delaying branch resolution. The processor has an instruction pipeline with inserted delay in branch condition and replay control pathways. For example, for an instruction sequence that includes a load instruction followed by a subtract instruction and then a conditional branch, the processor delays branch resolution to allow time for analysis to determine whether the conditional branch has resolved correctly. Eliminating incorrect branch resolutions prevents flushing of correctly predicted branches.

Owner:SUN MICROSYSTEMS INC

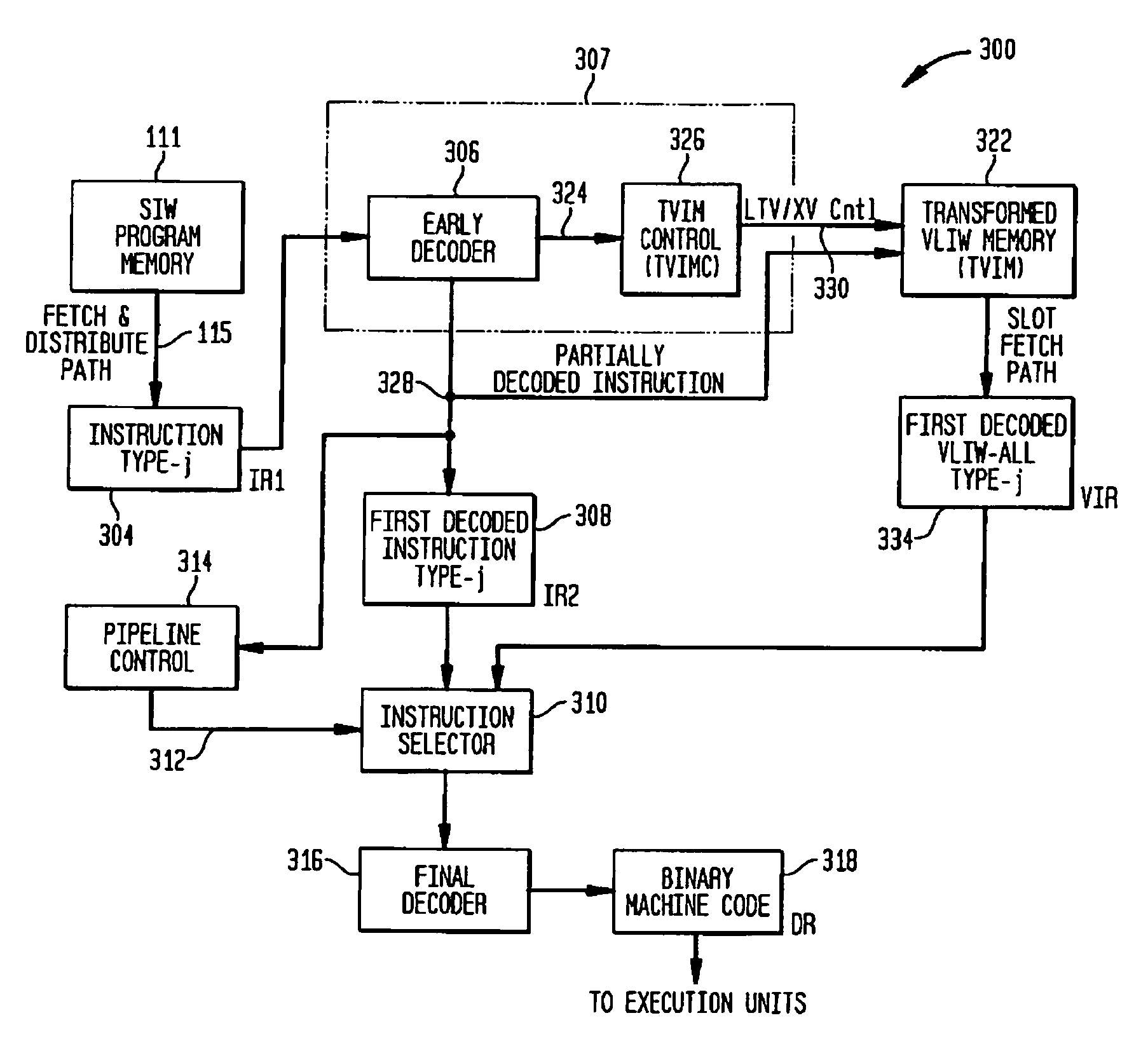

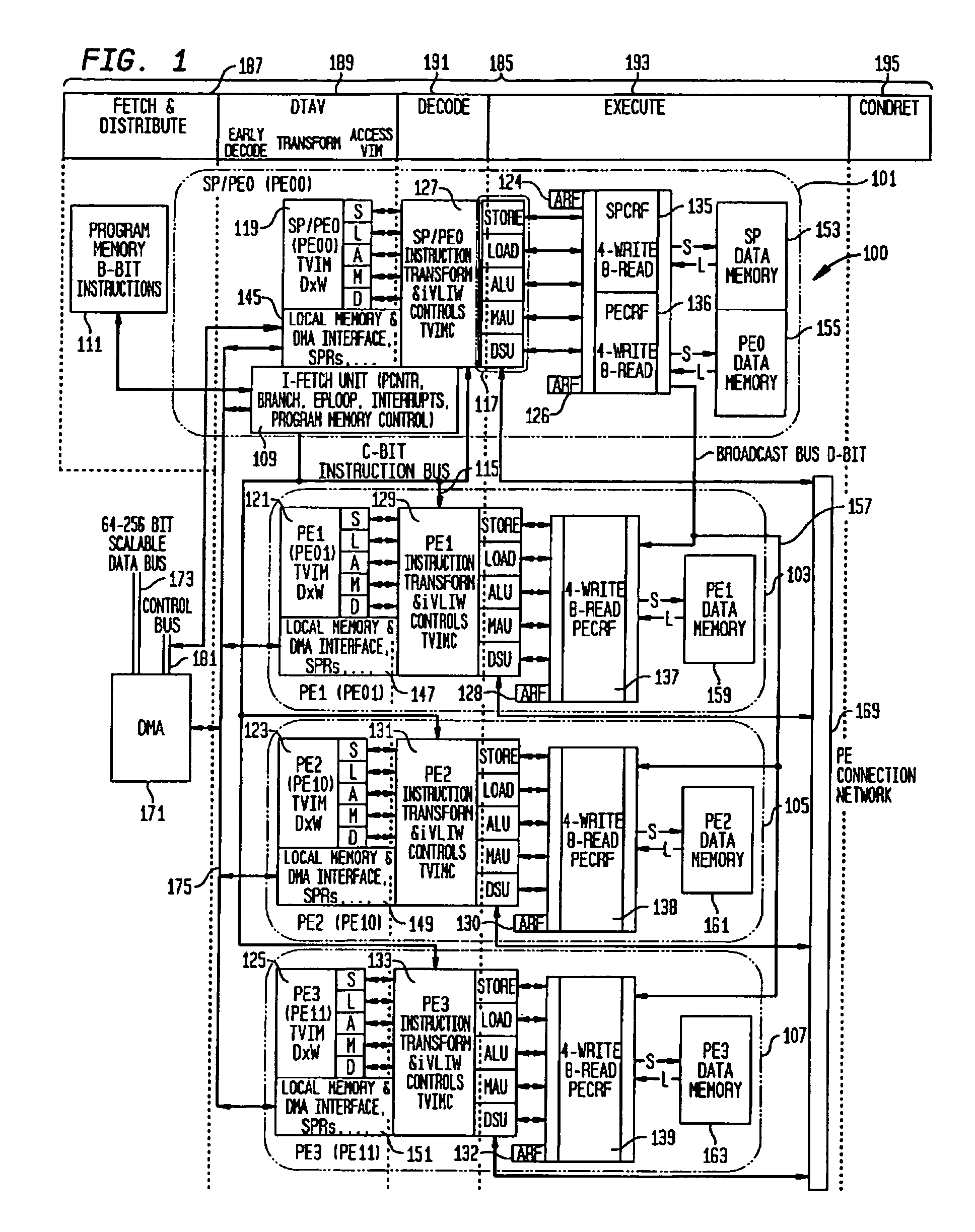

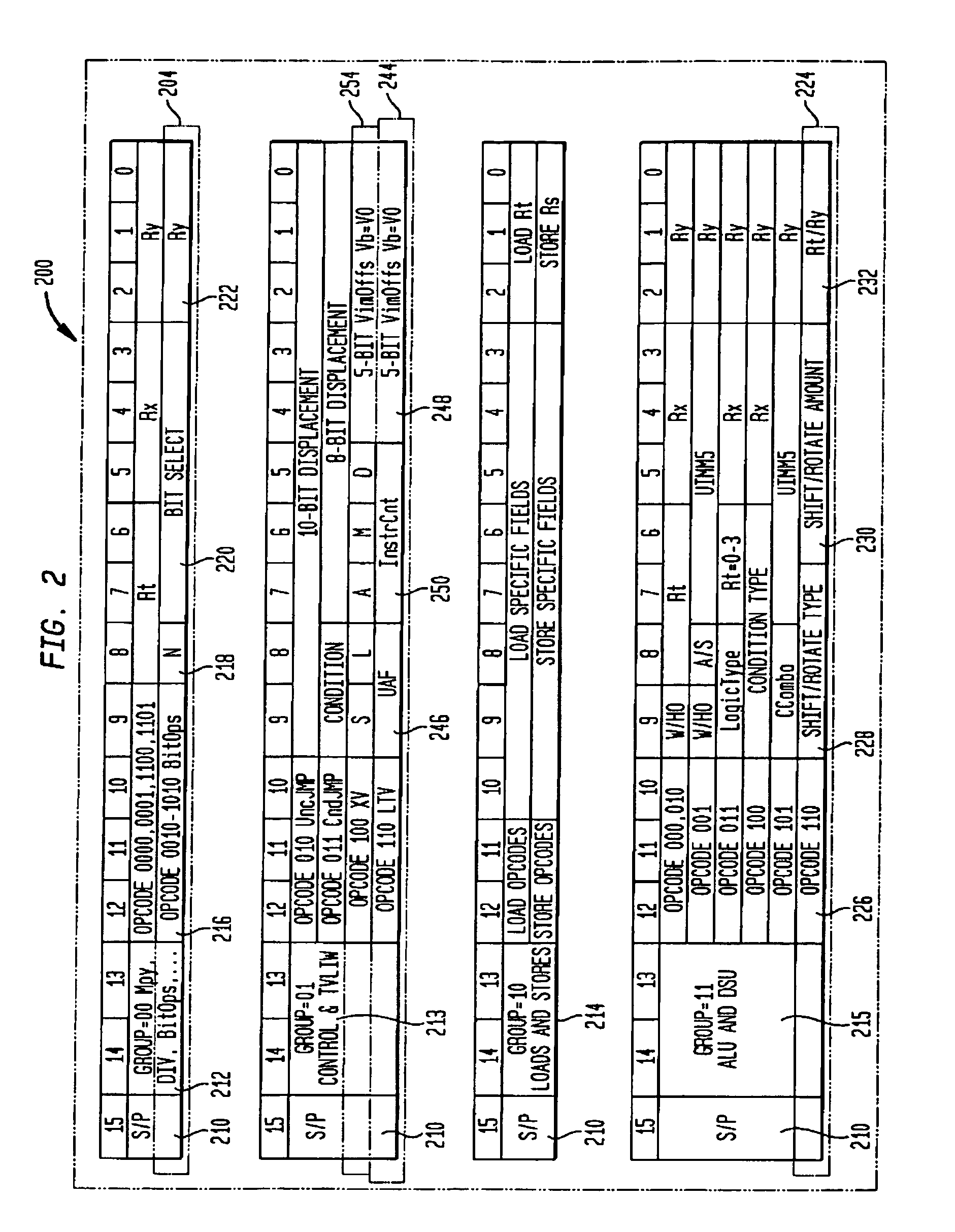

Methods and apparatus for transforming, loading, and executing super-set instructions

Inactive | US7493474B1 | Eliminate decode stage | Minimization of action | Register arrangements | Instruction analysis | Instruction memory | Load instruction

Techniques are described for loading decoded instructions and super-set instructions in a memory for later access. For loading a decoded instruction, the decoded instruction is a transformed form of an original instruction that was stored in the program memory. The transformation is from an encoded assembly level format to a binary machine level format. In one technique, the transformation mechanism is invoked by a transform and load instruction that causes an instruction retrieved from program memory to be transformed into a new language format and then loaded into a transformed instruction memory. The format of the transformed instruction may be optimized to the implementation requirements, such as improving critical path timing. The transformation of instructions may extend to other needs beyond timing path improvement, for example, requiring super-set instructions for increased functionality and improvements to instruction level parallelism. Techniques for transforming, loading, and executing super-set instructions are described.

Owner:ALTERA CORP

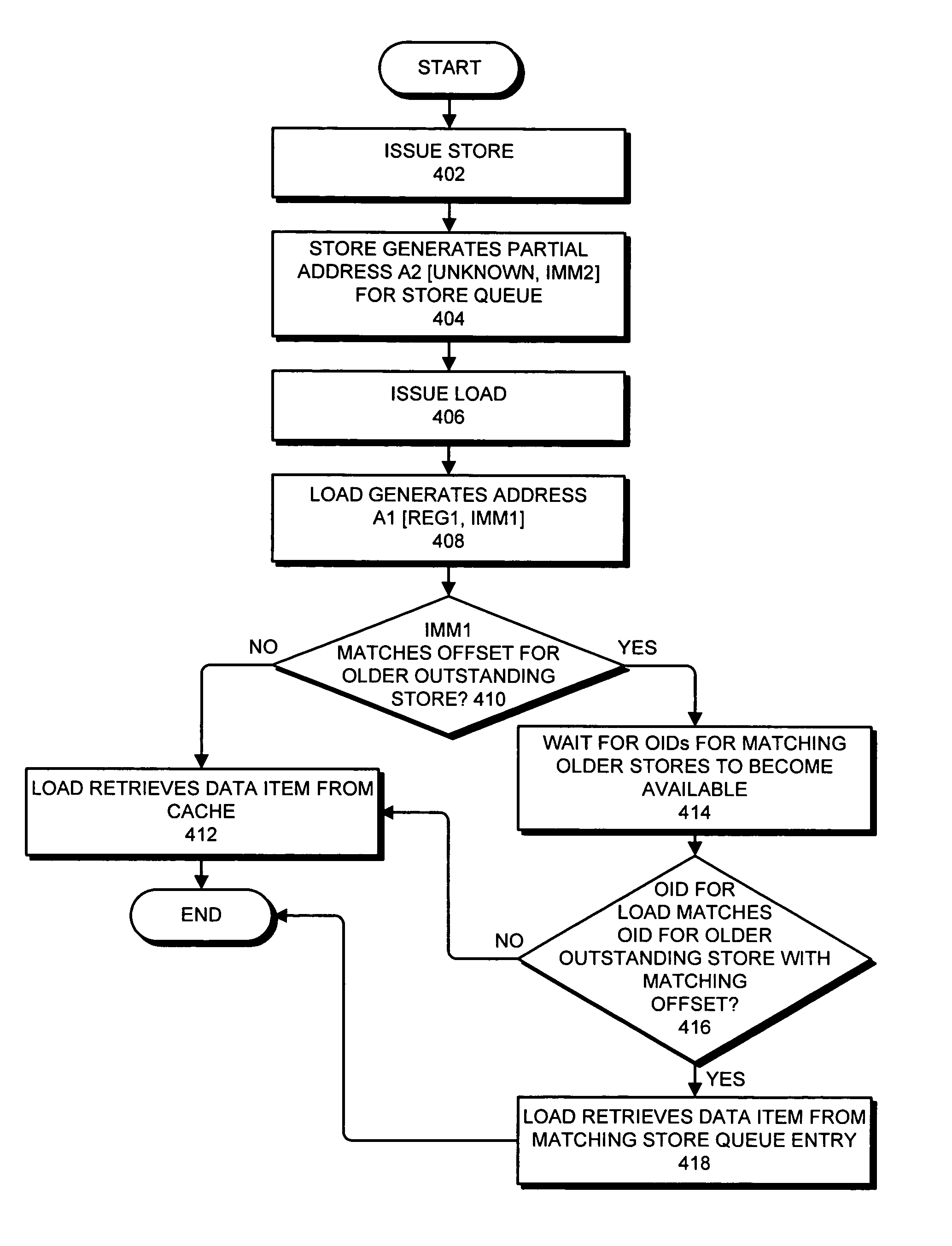

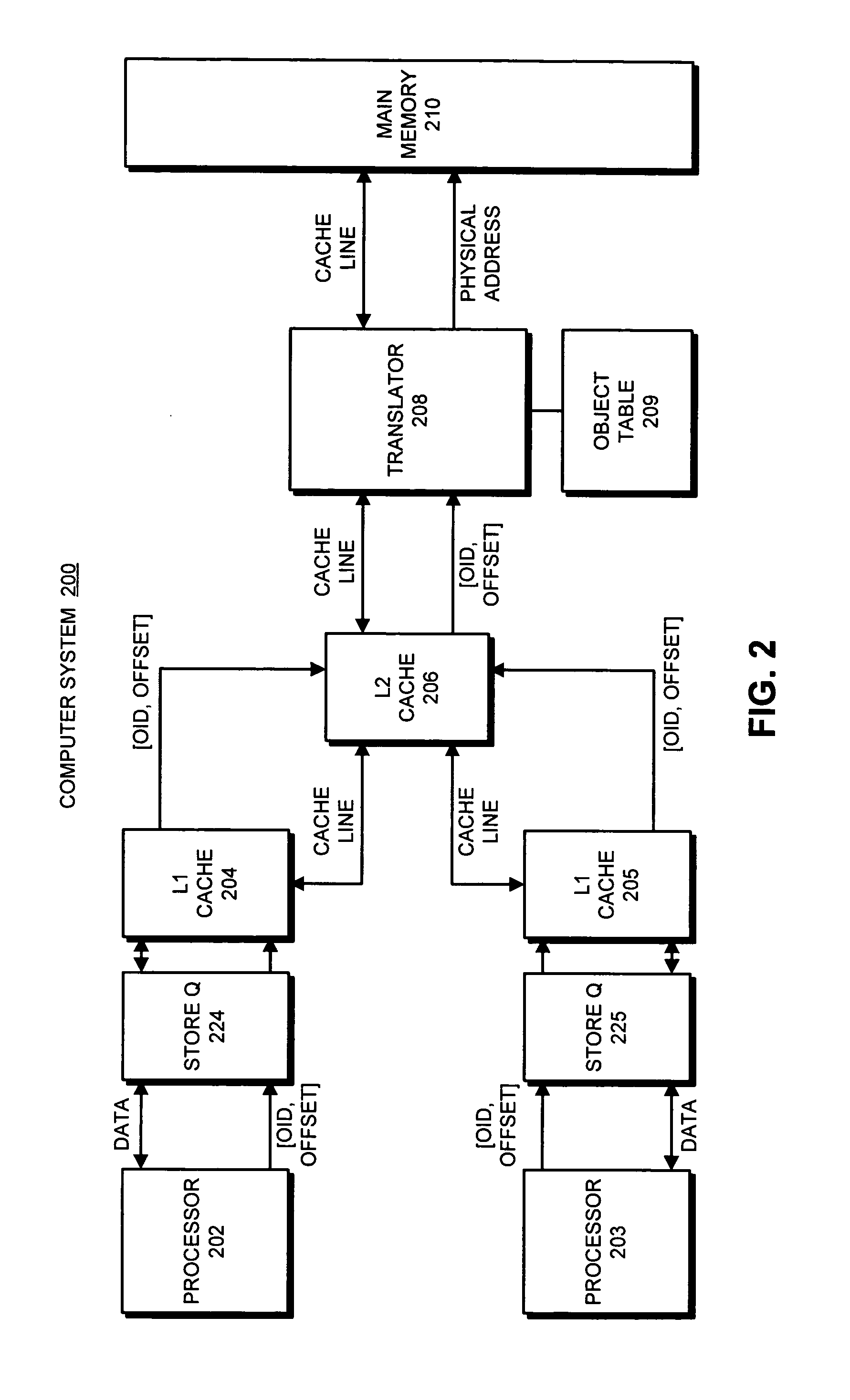

Detecting raw hazards in an object-addressed memory hierarchy by comparing an object identifier and offset for a load instruction to object identifiers and offsets in a store queue

One embodiment of the present invention provides a system that processes memory-access instructions in an object-addressed memory hierarchy. During operation, the system receives a load instruction to be executed, wherein the load instruction loads a data item from an object, and wherein the load instruction specifies an object identifier (OID) for the object and an offset for the data item within the object. Next, the system compares the OID and the offset for the data item against OIDs and offsets for outstanding store instructions in a store queue. If the offset for the data item does not match any of the offsets for the outstanding store instructions in the store queue, and hence no read-after-write (RAW) hazard exists, the system performs a cache access to retrieve the data item for the load instruction.

Owner:ORACLE INT CORP

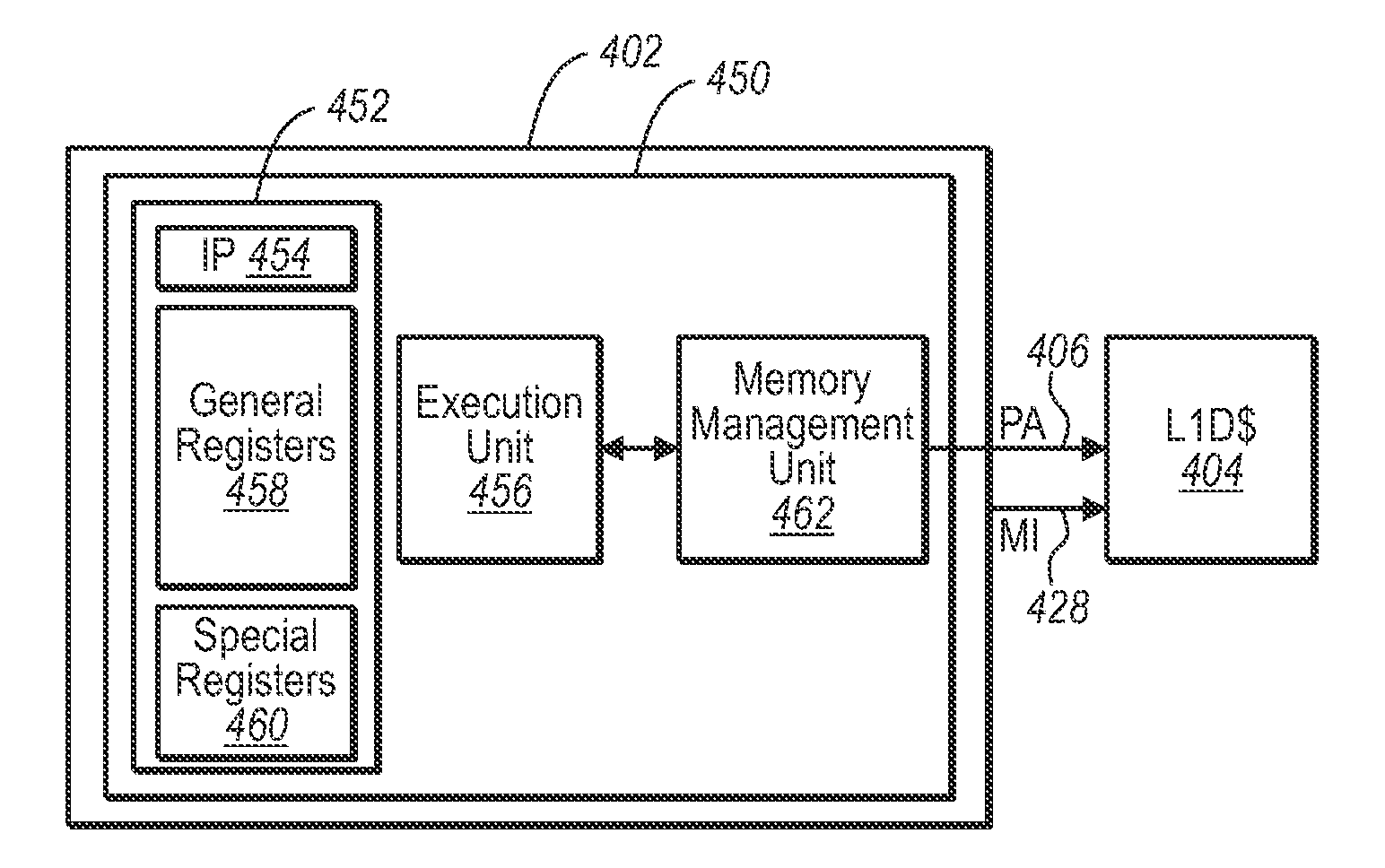

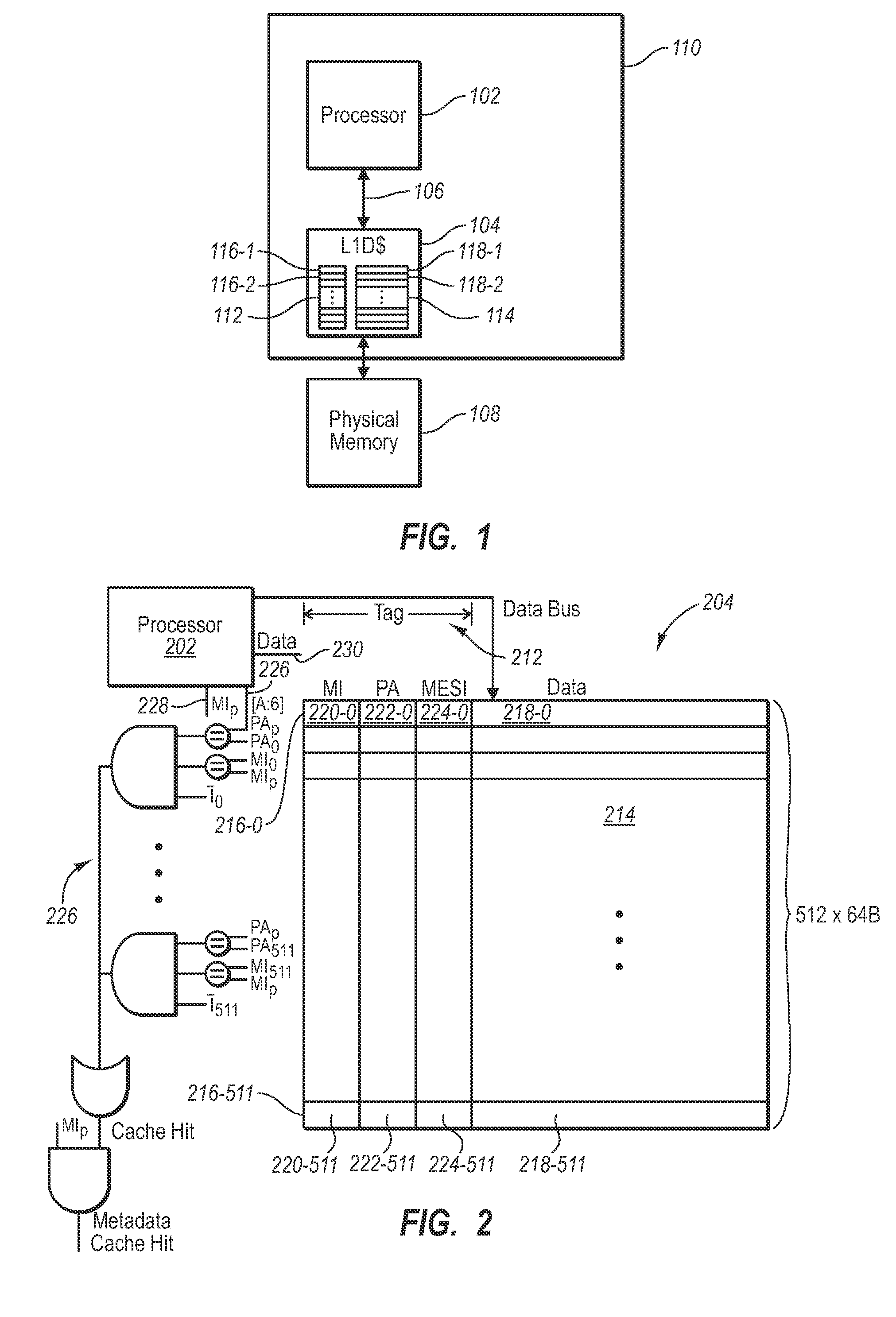

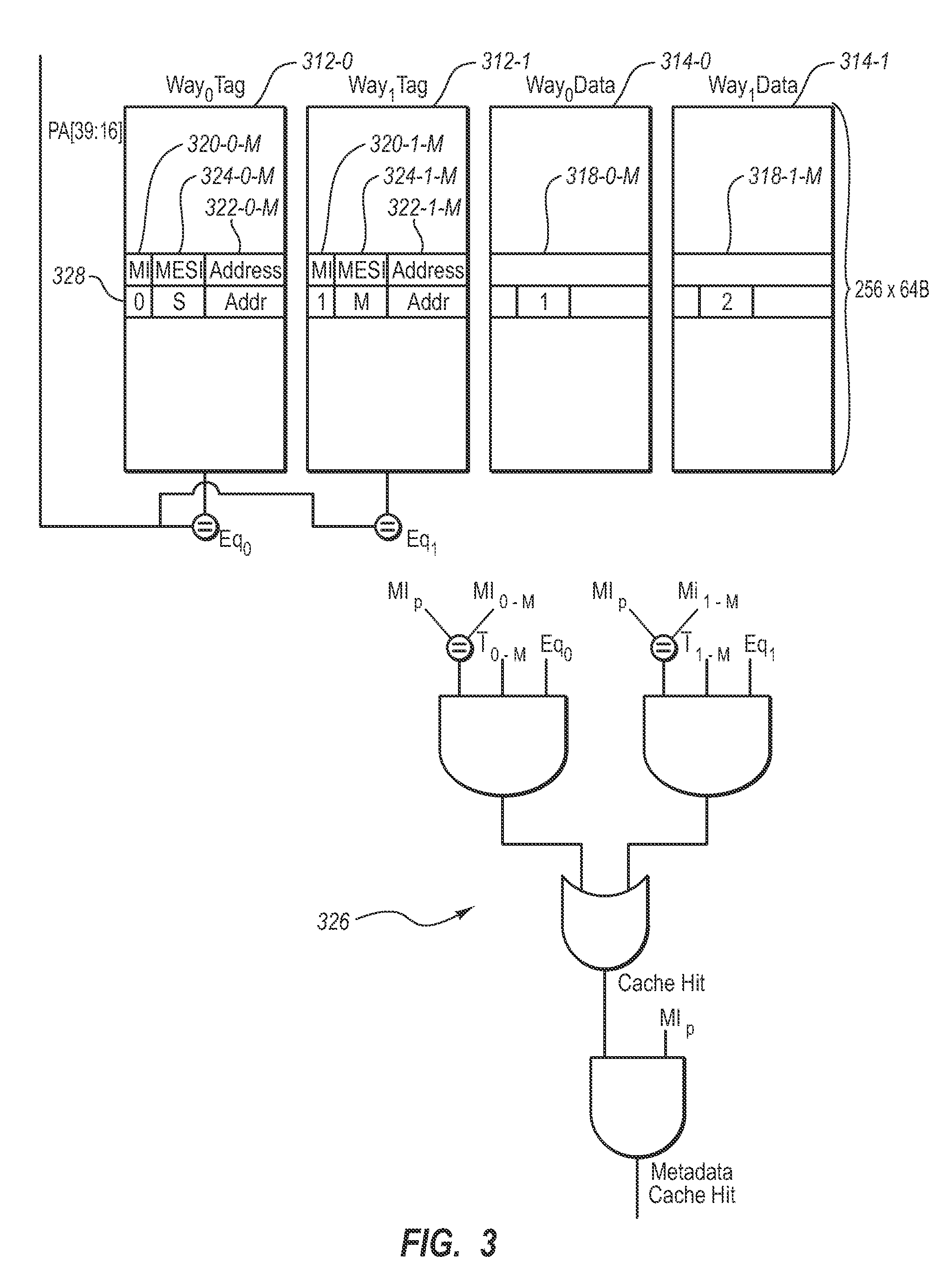

Metaphysically addressed cache metadata

Active | US20100332716A1 | Well formed | Digital data processing details | Memory addressing/allocation/relocation | Load instruction | Data store

Storing metadata that is disjoint from corresponding data by storing the metadata to the same address as the corresponding data but in a different address space. A metadata store instruction includes a storage address for the metadata. The storage address is the same address as that for data corresponding to the metadata, but the storage address when used for the metadata is implemented in a metadata address space while the storage address, when used for the corresponding data is implemented in a different data address space. As a result of executing the metadata store instruction, the metadata is stored at the storage address. A metadata load instruction includes the storage address for the metadata. As a result of executing the metadata load instruction, the metadata stored at the address is received. Some embodiments may further implement a metadata clear instruction which clears any entries in the metadata address space.

Owner:MICROSOFT TECH LICENSING LLC
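
The "same address, different address space" idea in the abstract above can be sketched with two dictionaries. The class and method names are hypothetical, and returning `None` for metadata that was never stored (or was cleared) is an assumption of the model.

```python
class MetadataMemory:
    """Toy model: data and metadata share addresses but live in separate spaces."""

    def __init__(self):
        self.data_space = {}      # address -> data
        self.metadata_space = {}  # address -> metadata

    def store(self, address, value):
        self.data_space[address] = value

    def load(self, address):
        return self.data_space[address]

    def metadata_store(self, address, metadata):
        # Same address as the data, but in the metadata address space.
        self.metadata_space[address] = metadata

    def metadata_load(self, address):
        return self.metadata_space.get(address)

    def metadata_clear(self):
        # Clears every entry in the metadata address space; data is untouched.
        self.metadata_space.clear()
```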

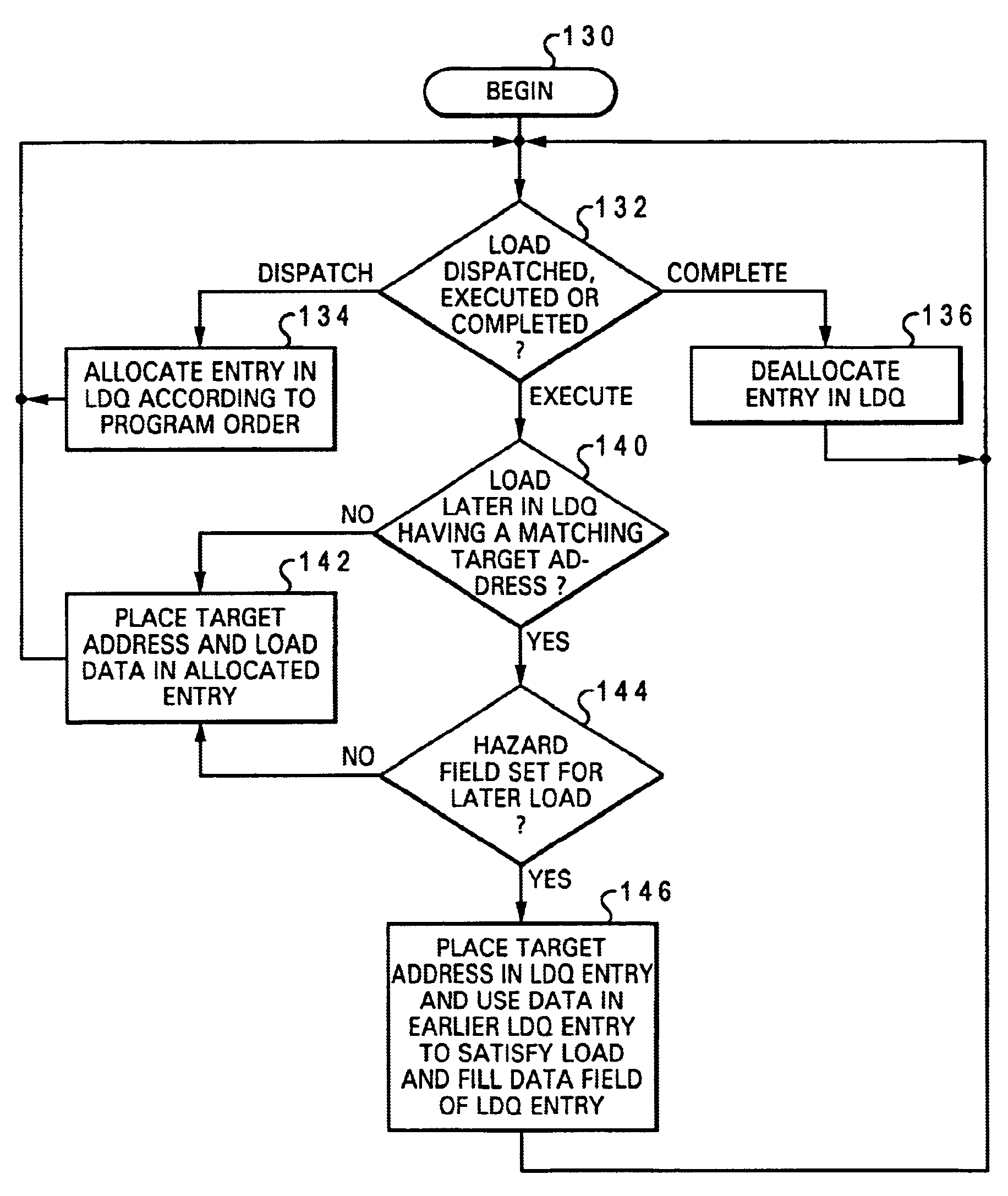

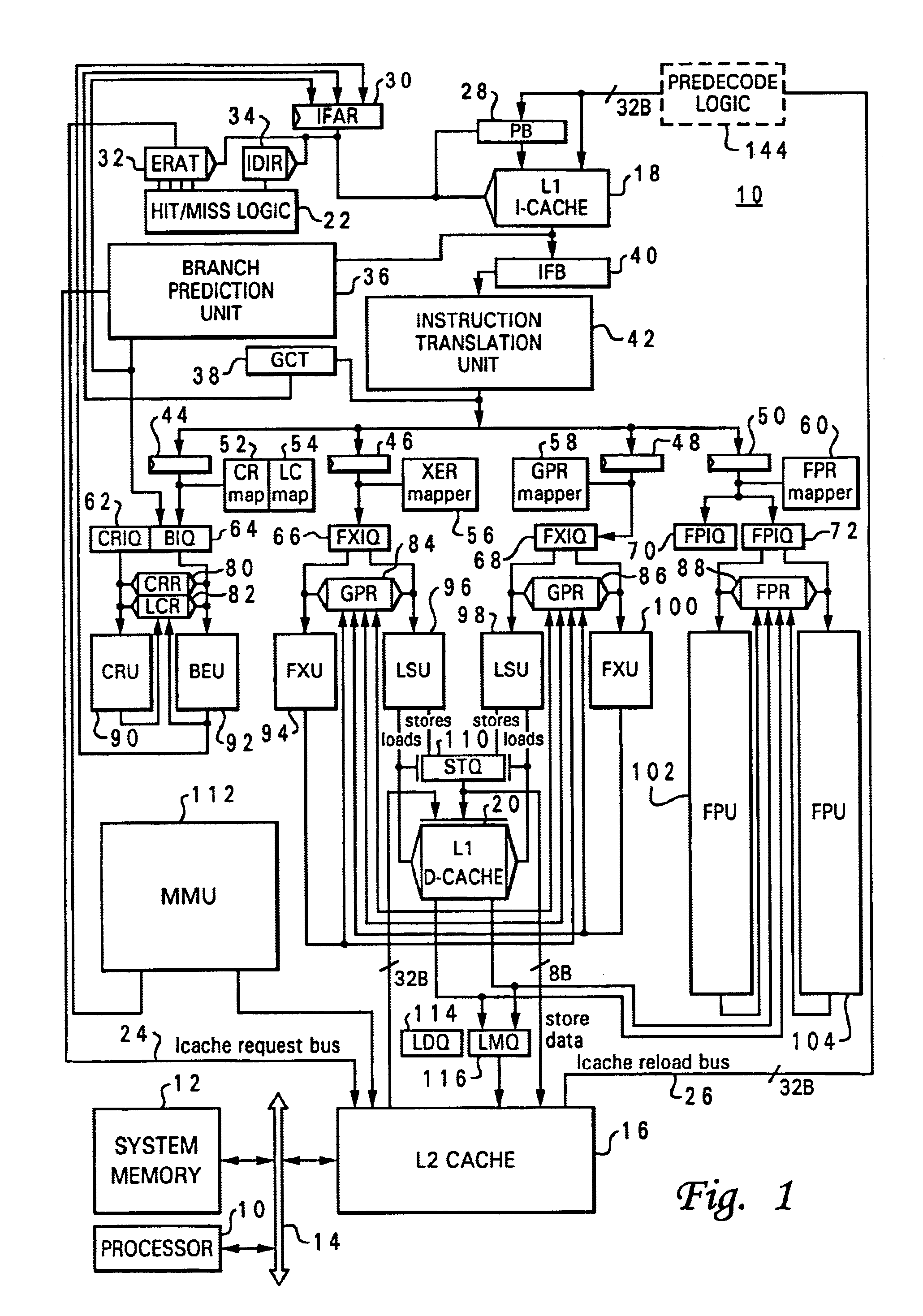

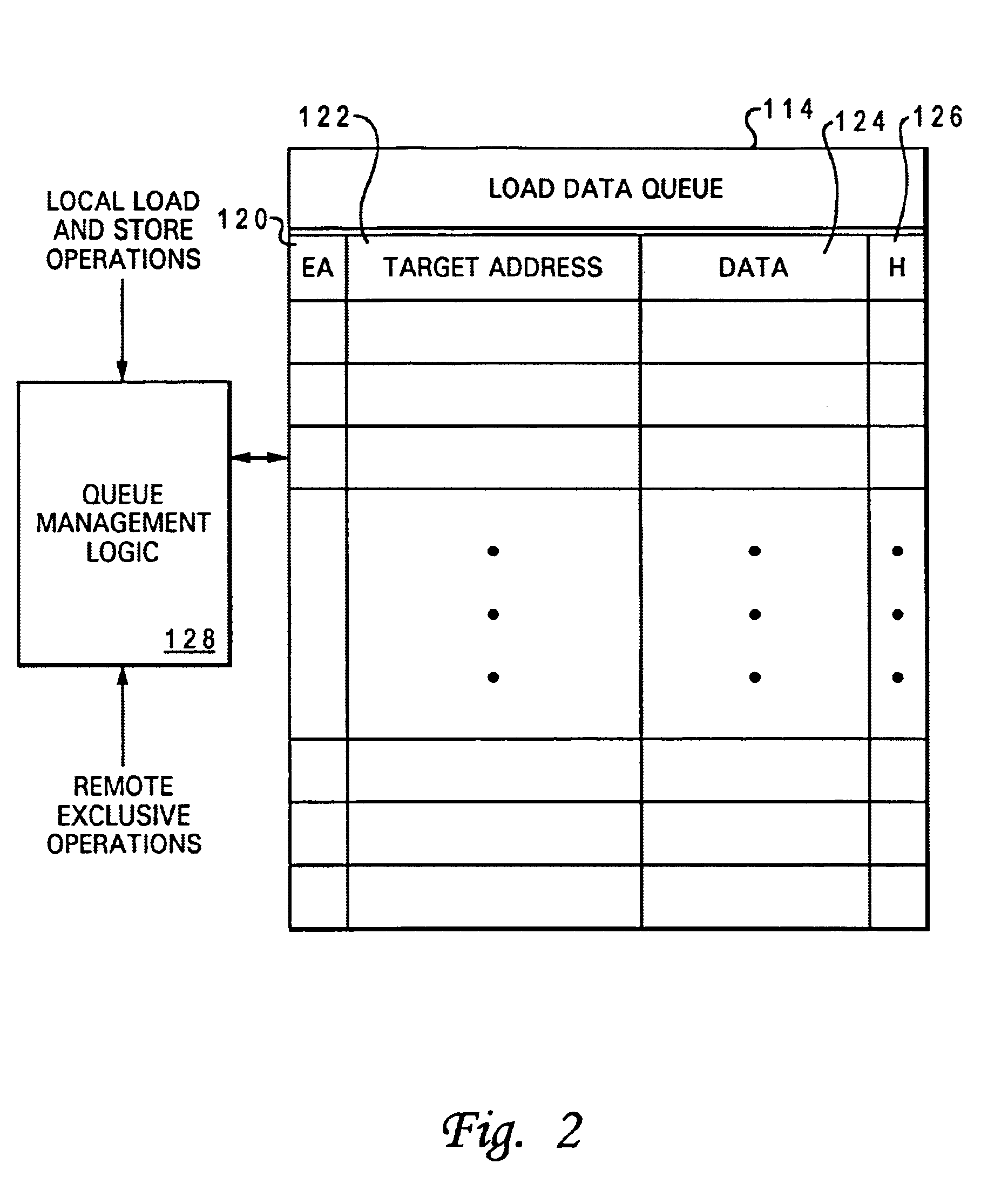

Processor and method of executing load instructions out-of-order having reduced hazard penalty

Inactive | US6868491B1 | Reduce performance loss | Lower performance requirements | Runtime instruction translation | Digital computer details | Load instruction | Processor register

A processor having a reduced data hazard penalty includes a register set, at least one execution unit that executes load instructions to transfer data into the register set, and a load queue. The load queue contains at least one entry, and each occupied entry in the load queue stores load data retrieved by an executed load instruction in association with a target address of the executed load instruction. The load queue has associated queue management logic that, in response to execution by the execution unit of a load instruction, determines by reference to the load queue whether a data hazard exists for the load instruction. If so, the queue management logic outputs load data from the load queue to the register set in accordance with the load instruction, thus eliminating the need to flush and re-execute the load instruction.

Owner:INTEL CORP

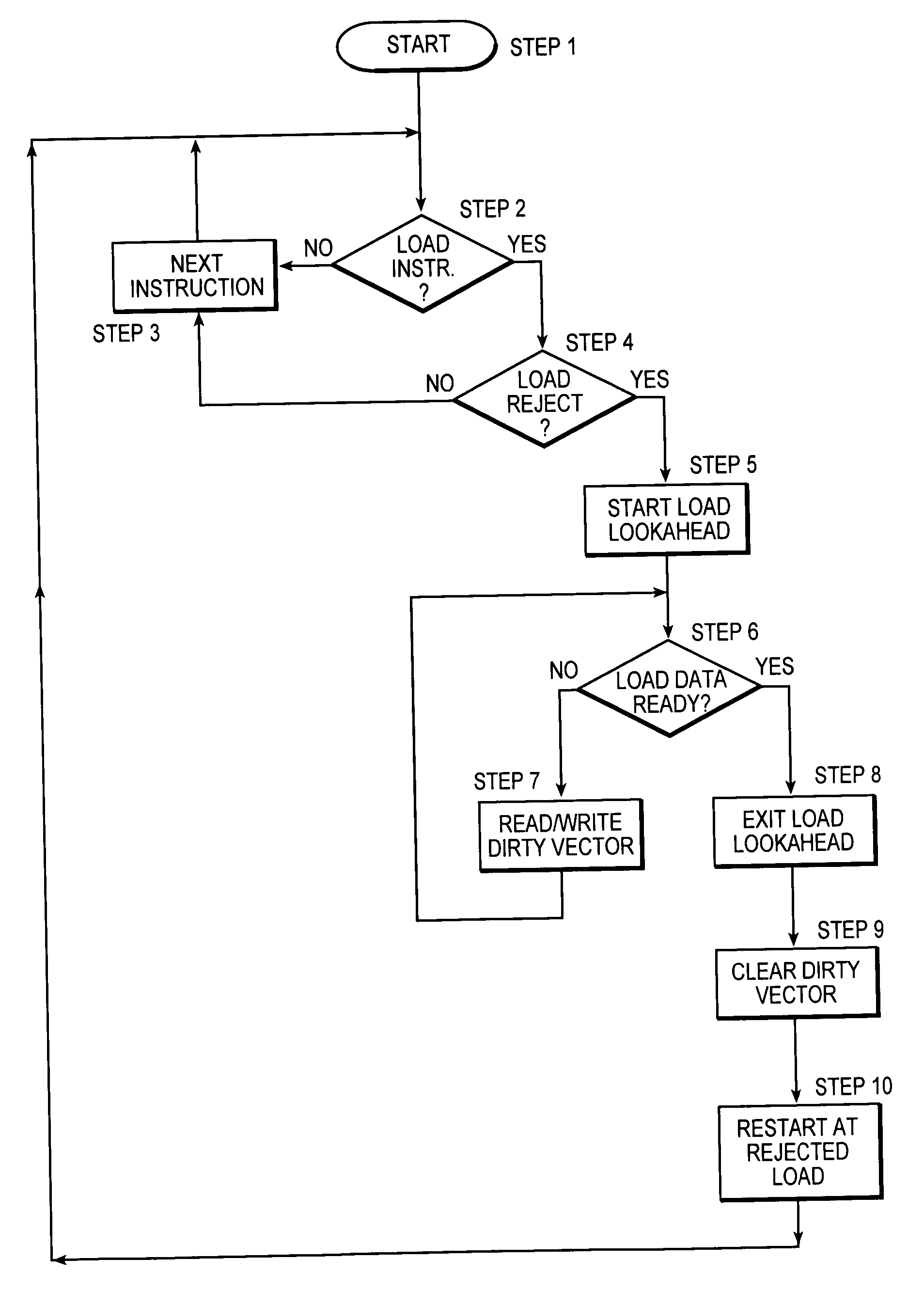

Load lookahead prefetch for microprocessors

Inactive | US20060149935A1 | Reduced performance impact | Lower latency | Digital computer details | Specific program execution arrangements | Speculative execution | Load instruction

The present invention allows a microprocessor to identify and speculatively execute future load instructions during a stall condition. This allows forward progress to be made through the instruction stream during the stall condition which would otherwise cause the microprocessor or thread of execution to be idle. The data for such future load instructions can be prefetched from a distant cache or main memory such that when the load instruction is re-executed (non-speculatively executed) after the stall condition expires, its data will reside either in the L1 cache, or will be en route to the processor, resulting in a reduced execution latency. When an extended stall condition is detected, load lookahead prefetch is started, allowing speculative execution of instructions that would normally have been stalled. In this speculative mode, instruction operands may be invalid due to source loads that miss the L1 cache, facilities not available in speculative execution mode, or due to speculative instruction results that are not available via forwarding and are not written to the architected registers. A set of status bits is used to dynamically keep track of the dependencies between instructions in the pipeline and a bit vector tracks invalid architected facilities with respect to the speculative instruction stream. Both sources of information are used to identify load instructions with valid operands for calculating the load address. If the operands are valid, then a load prefetch operation is started to retrieve data from the cache ahead of time such that it can be available for the load instruction when it is non-speculatively executed.

Owner:INTEL CORP

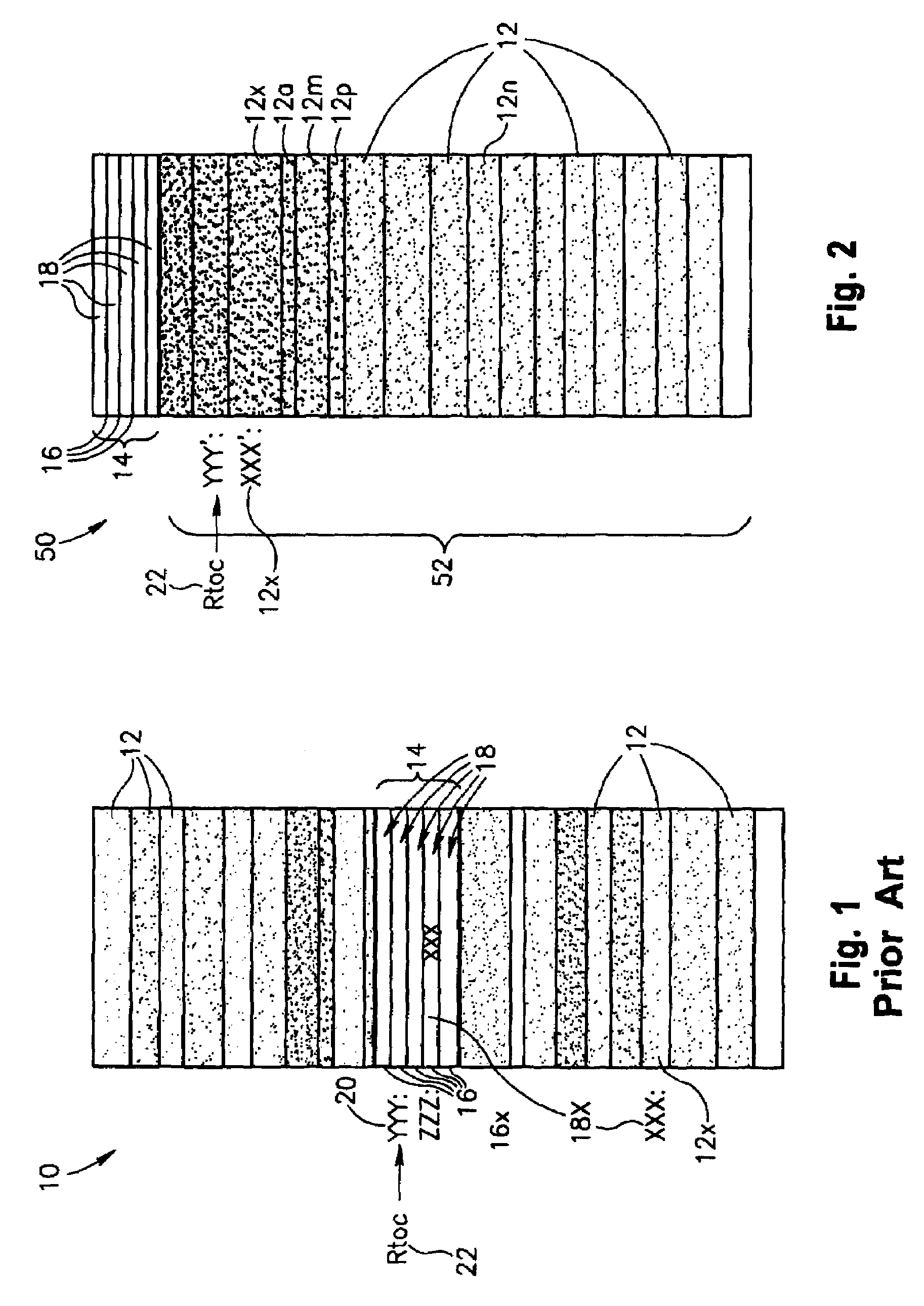

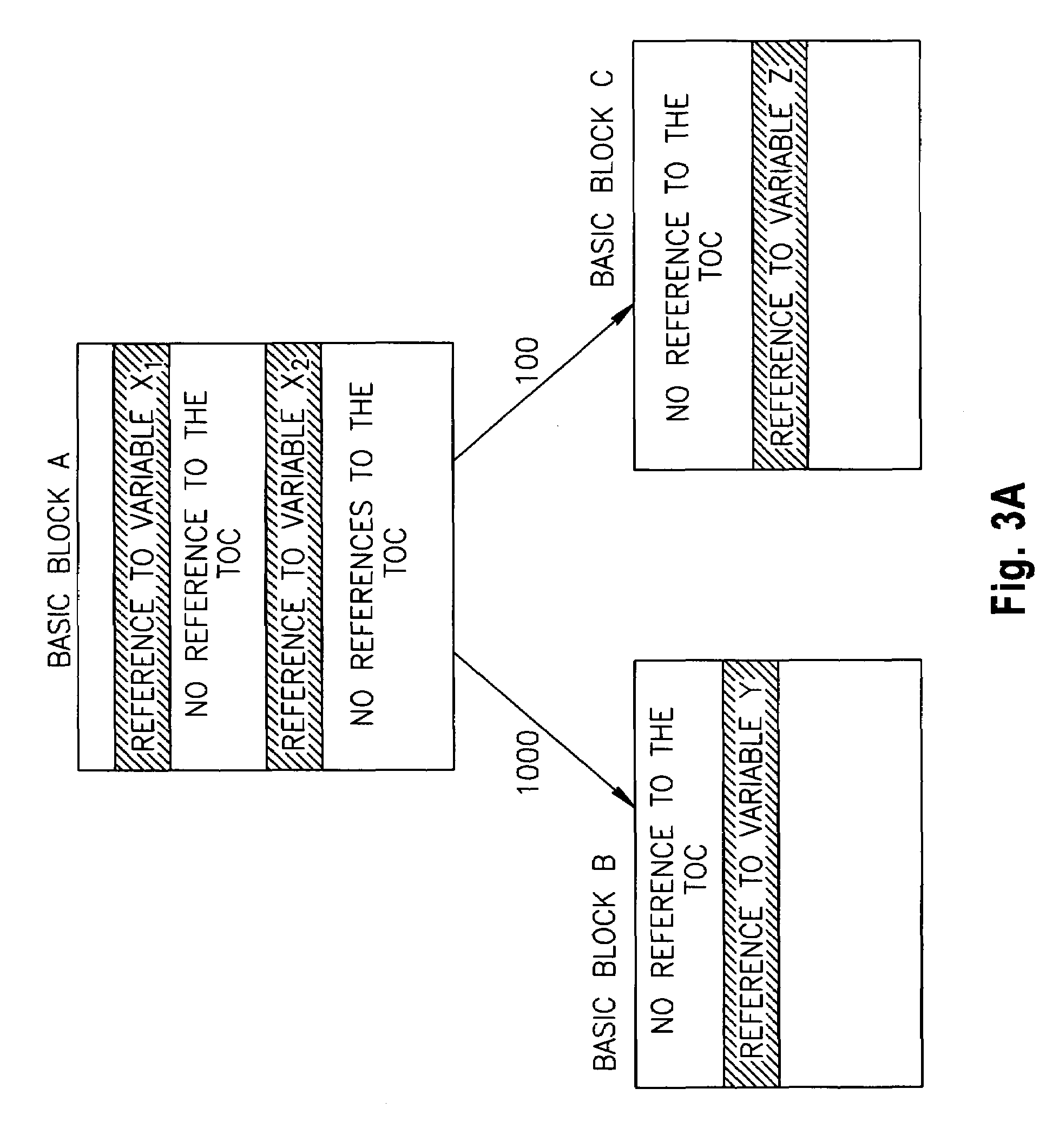

Reducing load instructions via global data reordering

Inactive | US7310799B2 | Improve performance | Software engineering | Program control | Load instruction | Global variable

A method for improving program performance including reordering a global data area of a program and for each load instruction referencing global variables within range of the immediate part of an add immediate instruction from a TOC anchor, replacing the load instruction with an add immediate instruction. The method may further include placing a TOC at the top, or within a predetermined distance from the top, of the global data area. The method may also include placing the global variables after the TOC, wherein more frequently referenced global variables are closer to the TOC than less frequently referenced global variables. Also, the method may further include placing in run-time order, groups of the global variables that frequently follow each other in run-time.

Owner:IBM CORP
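
The layout step in this abstract can be sketched in a few lines of Python. The sketch rests on assumptions not stated in the abstract: the TOC anchor sits at offset 0 of the global data area, the add immediate instruction has a 16-bit signed immediate, and alignment is ignored. The function name `layout_globals` and its parameters are hypothetical.

```python
def layout_globals(var_sizes, ref_counts, toc_size, imm_bits=16):
    """Place globals after the TOC, most-referenced first.

    Returns (offsets, replaceable): the offset of each variable from the TOC
    anchor, and the set of variables whose address fits in the immediate field,
    so a load of their address could be replaced by an add immediate.
    """
    max_offset = (1 << (imm_bits - 1)) - 1  # largest positive signed immediate (assumed)
    # Sort by descending reference count; sorted() is stable, so ties keep input order.
    order = sorted(var_sizes, key=lambda v: -ref_counts.get(v, 0))
    offsets = {}
    cursor = toc_size  # the TOC occupies the top of the global data area
    for v in order:
        offsets[v] = cursor
        cursor += var_sizes[v]
    replaceable = {v for v, off in offsets.items() if off <= max_offset}
    return offsets, replaceable
```

Placing the hottest variables nearest the TOC maximizes the number of dynamic references that fall within the immediate range, which is the stated motivation for ordering by reference frequency.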

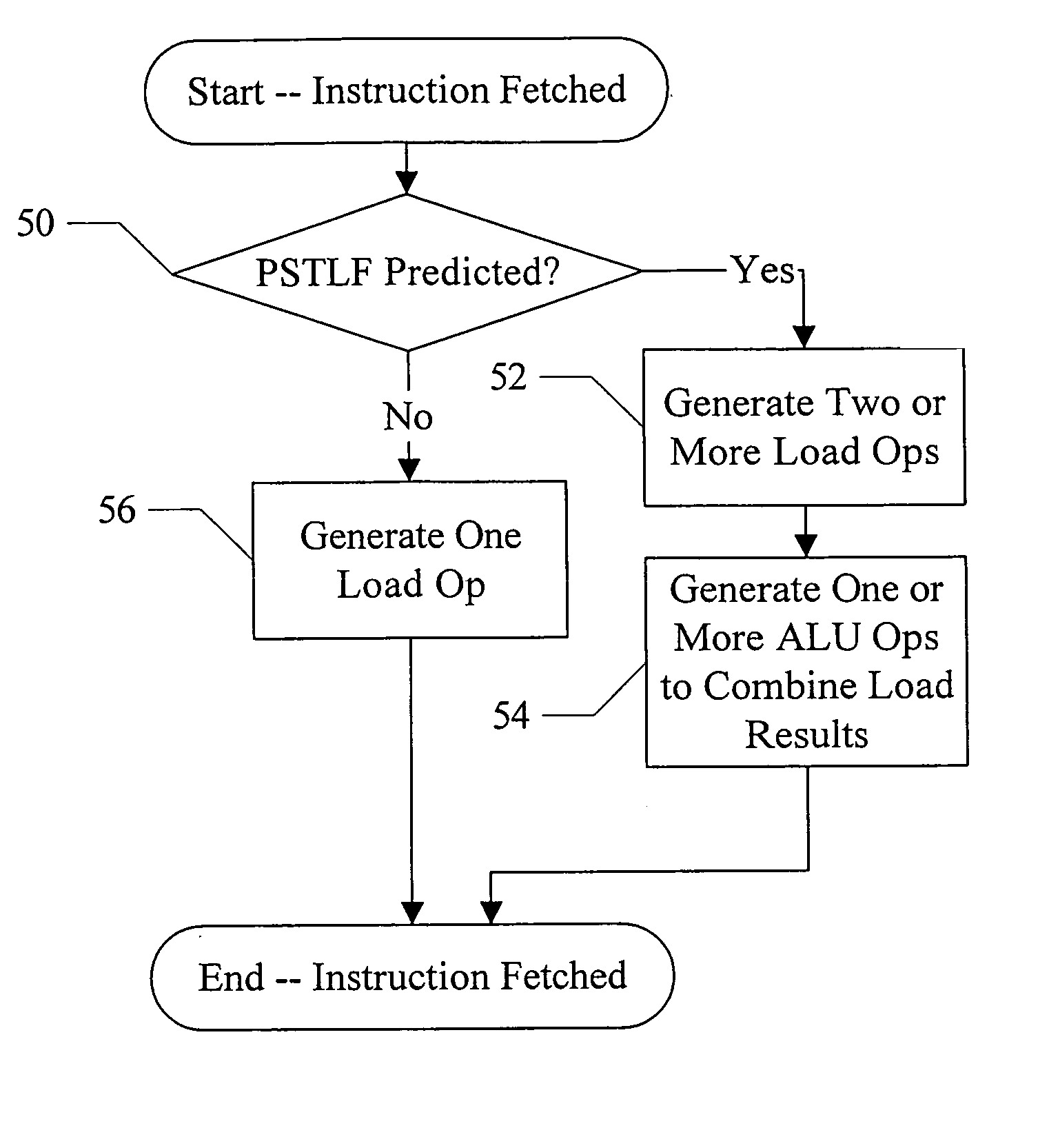

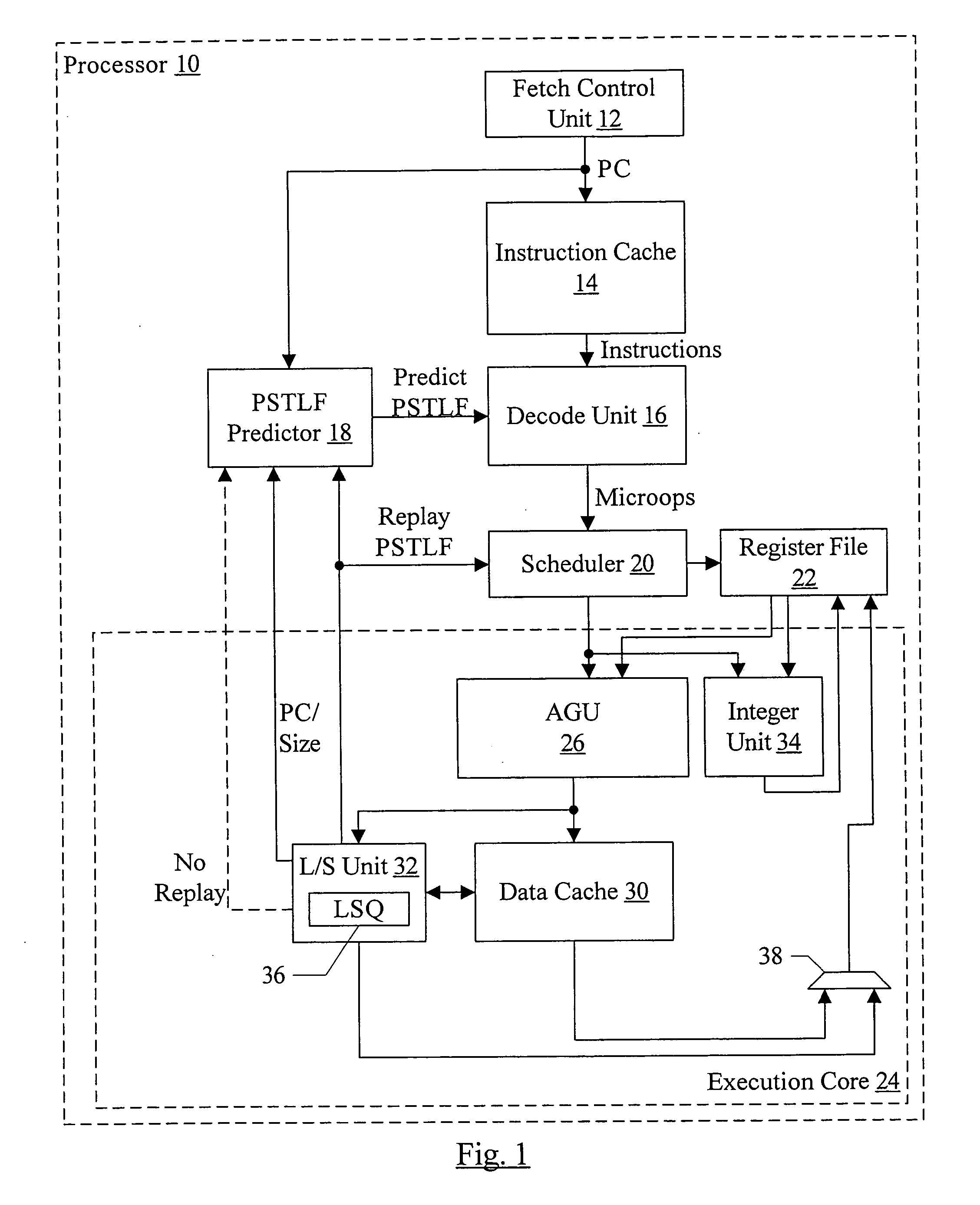

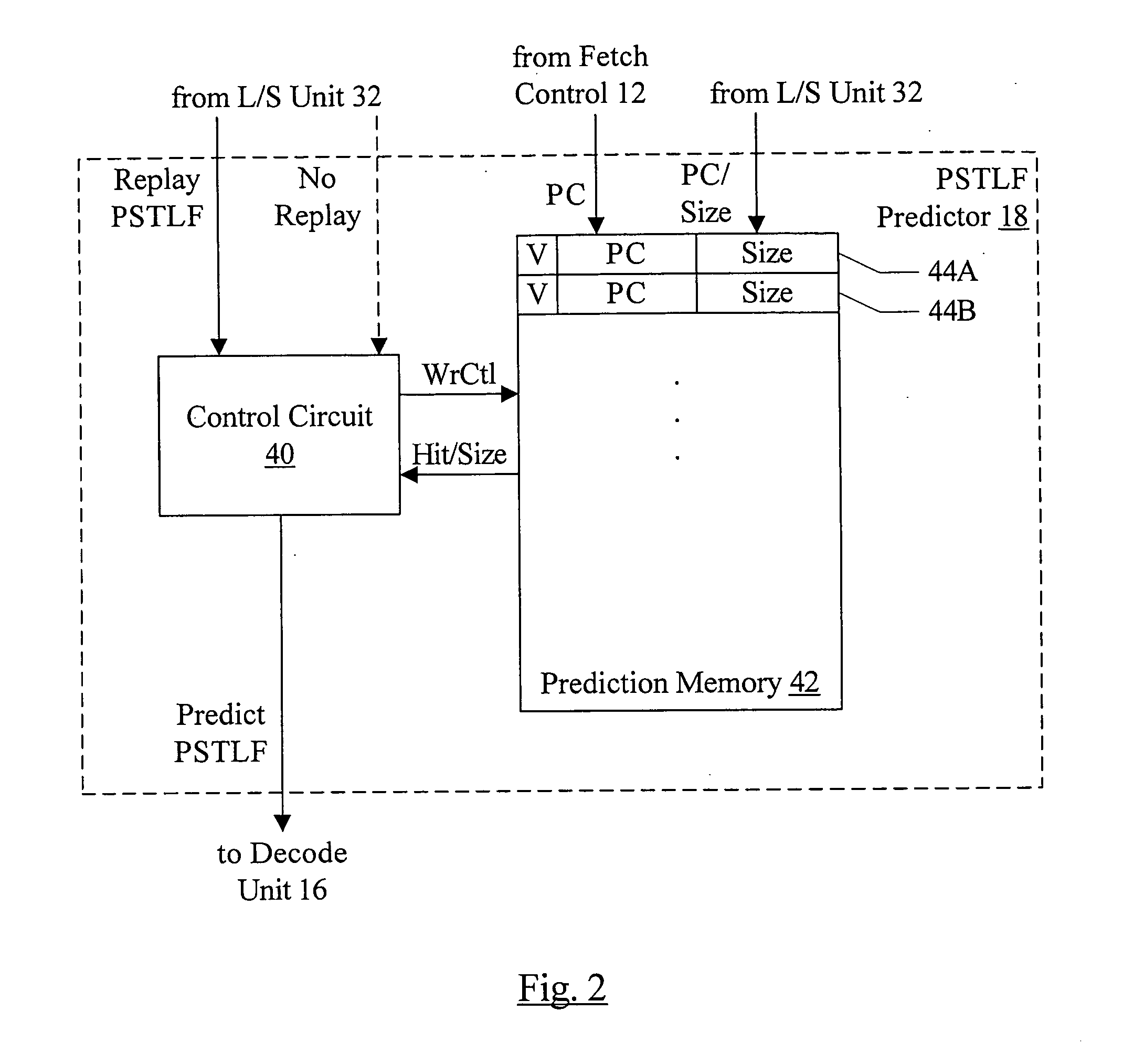

Partial load/store forward prediction

Active | US20070038846A1 | Instruction analysis | Runtime instruction translation | Load instruction | Parallel computing

In one embodiment, a processor comprises a prediction circuit and another circuit coupled to the prediction circuit. The prediction circuit is configured to predict whether or not a first load instruction will experience a partial store to load forward (PSTLF) event during execution. A PSTLF event occurs if a plurality of bytes, accessed responsive to the first load instruction during execution, include at least a first byte updated responsive to a previous uncommitted store operation and also include at least a second byte not updated responsive to the previous uncommitted store operation. Coupled to receive the first load instruction, the circuit is configured to generate one or more load operations responsive to the first load instruction. The load operations are to be executed in the processor to execute the first load instruction, and a number of the load operations is dependent on the prediction by the prediction circuit.

Owner:APPLE INC
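
The PSTLF condition defined in this abstract is a statement about byte ranges, and a short Python check makes it concrete. This is only a sketch of the definition, not of the prediction circuit; the function name `is_pstlf` and the address/size parameters are hypothetical.

```python
def is_pstlf(load_addr, load_size, store_addr, store_size):
    """True if the load reads some bytes written by the uncommitted store and some not."""
    load_bytes = set(range(load_addr, load_addr + load_size))
    store_bytes = set(range(store_addr, store_addr + store_size))
    overlap = load_bytes & store_bytes
    # Partial forwarding: at least one byte comes from the store, but not all of them.
    return bool(overlap) and overlap != load_bytes
```

A load fully covered by the store can be forwarded whole, and a load that does not touch the store needs no forwarding; only the mixed case counts as a PSTLF event.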

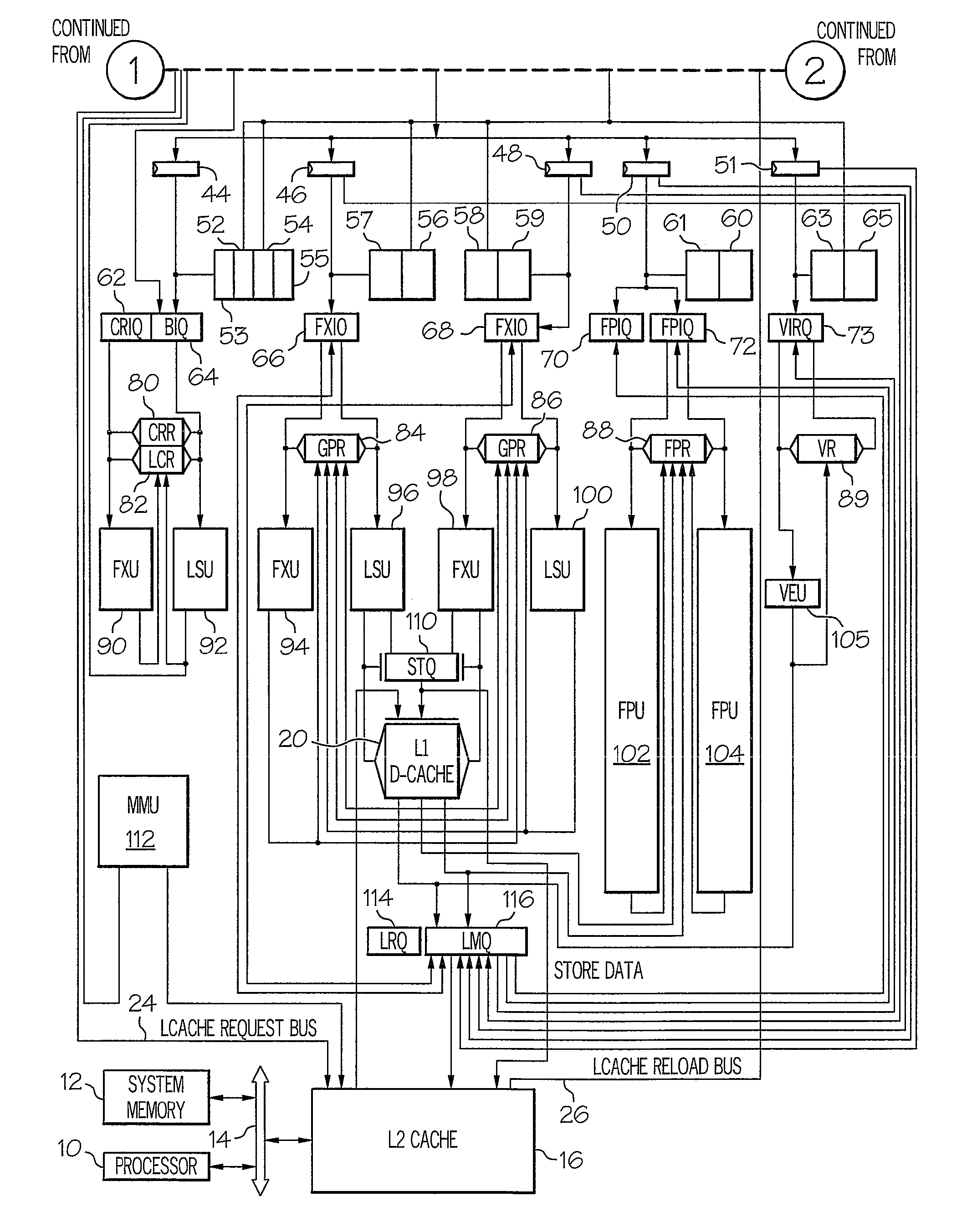

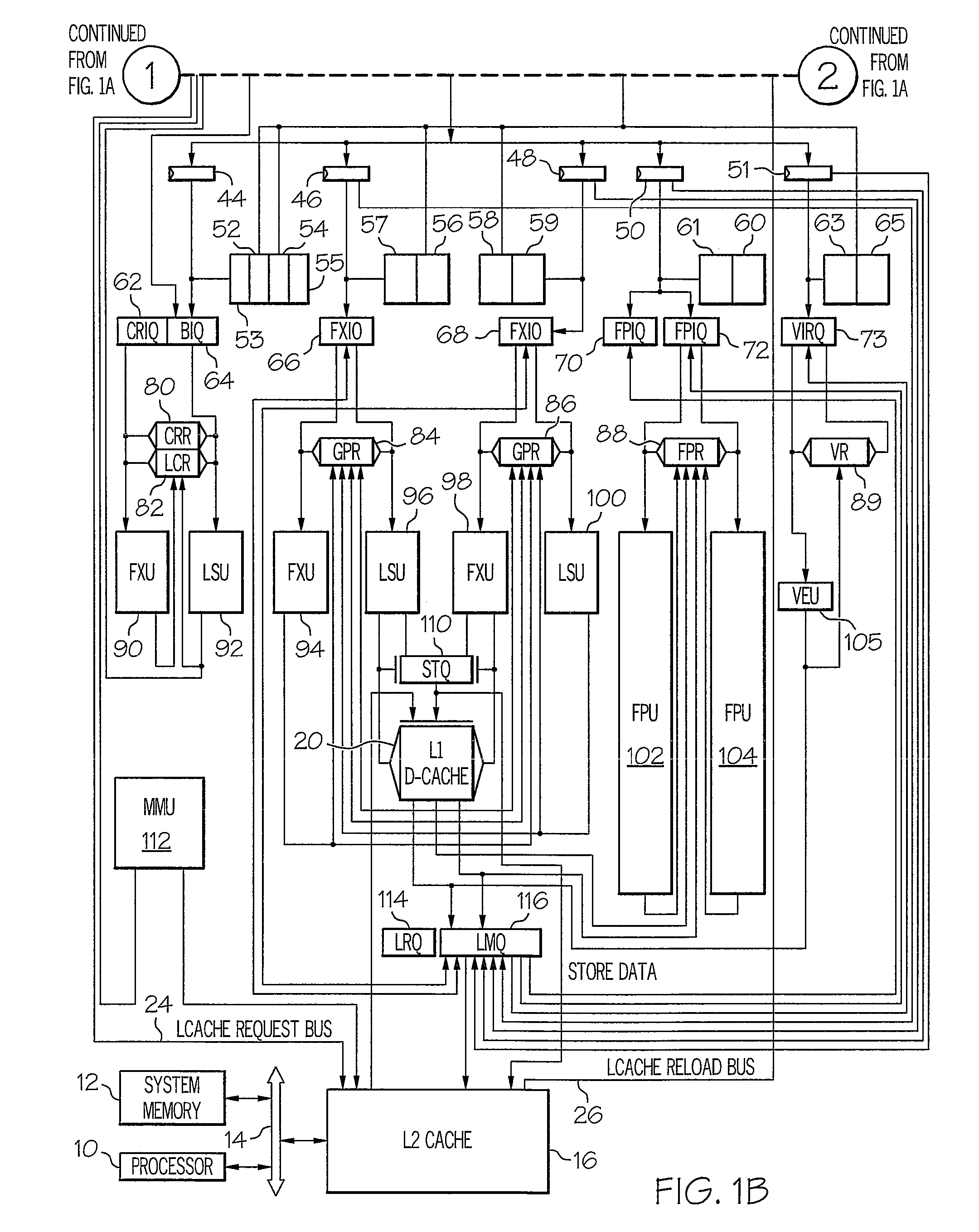

Design structure for a mechanism to minimize unscheduled d-cache miss pipeline stalls

Active | US20080162895A1 | Digital computer details | Specific program execution arrangements | Load instruction | Parallel computing

A design structure embodied in a machine-readable storage medium for designing, manufacturing, and/or testing a design for minimizing unscheduled D-cache miss pipeline stalls is provided. The design structure includes an integrated circuit device, which includes circuitry and a cascaded delayed execution pipeline unit having two or more execution pipelines that begin execution of instructions in a common issue group in a delayed manner relative to each other. The circuitry is configured to receive an issue group of instructions, determine whether the issue group includes a load instruction, and if so, schedule the load instruction in a first pipeline of the two or more execution pipelines and schedule each remaining instruction in the issue group to be executed in the remaining pipelines, wherein execution of the load instruction in the first pipeline begins before execution of the remaining instructions in the remaining pipelines.

Owner:IBM CORP
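
The scheduling rule in this abstract can be sketched as a small Python function. It assumes pipeline 0 is the least-delayed pipeline and that later pipelines start progressively later; the function name `schedule_issue_group` and the `(name, is_load)` tuple format are hypothetical.

```python
def schedule_issue_group(group, num_pipelines):
    """Assign an issue group to pipelines; list index = pipeline number.

    `group` is a list of (name, is_load) tuples. Pipeline 0 is assumed to
    begin execution earliest, so a load placed there starts before the rest.
    """
    if len(group) > num_pipelines:
        raise ValueError("issue group is larger than the number of pipelines")
    # Find the first load in the issue group, if any.
    load_idx = next((i for i, (_, is_load) in enumerate(group) if is_load), None)
    if load_idx is None:
        return [name for name, _ in group]
    # Move the load to the first pipeline and keep the other instructions in order.
    ordered = [group[load_idx]] + group[:load_idx] + group[load_idx + 1:]
    return [name for name, _ in ordered]
```

Starting the load earliest gives a D-cache miss the most time to resolve before the instructions in the more delayed pipelines need its result.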

System and Method for Issuing Load-Dependent Instructions from an Issue Queue in a Processing Unit

Inactive | US20090113182A1 | Conditional code generation | Register arrangements | Data processing system | Memory hierarchy

A system and method for issuing load-dependent instructions from an issue queue in a processing unit in a data processing system. In response to a load-store unit (LSU) determining that a load request from a load instruction missed a first level in a memory hierarchy, a load-miss queue (LMQ) allocates a load-miss queue entry corresponding to the load instruction. The LMQ associates at least one instruction dependent on the load request with the load-miss queue entry. Once data associated with the load request is retrieved, the LMQ selects at least one instruction dependent on the load request for execution on the next cycle. At least one instruction dependent on the load request is executed and a result is output.

Owner:IBM CORP
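
The allocate / associate / select sequence in this abstract can be modelled with a small Python class. This is a simplified sketch: the class `LoadMissQueue` and its method names are hypothetical, and cycle timing and issue-queue details are omitted.

```python
class LoadMissQueue:
    """Toy model of a load-miss queue that wakes dependents when data returns."""

    def __init__(self):
        self.entries = {}  # load id -> list of dependent instruction ids

    def allocate(self, load_id):
        # Called when the load request misses the first level of the memory hierarchy.
        self.entries[load_id] = []

    def add_dependent(self, load_id, instr_id):
        # Associate an instruction that consumes the load's result with its entry.
        self.entries[load_id].append(instr_id)

    def data_returned(self, load_id):
        # Free the entry and return the dependents selected for the next cycle.
        return self.entries.pop(load_id)
```

For example, after `allocate("ld1")` and two `add_dependent` calls, `data_returned("ld1")` returns the two dependents in the order they were associated and frees the entry.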

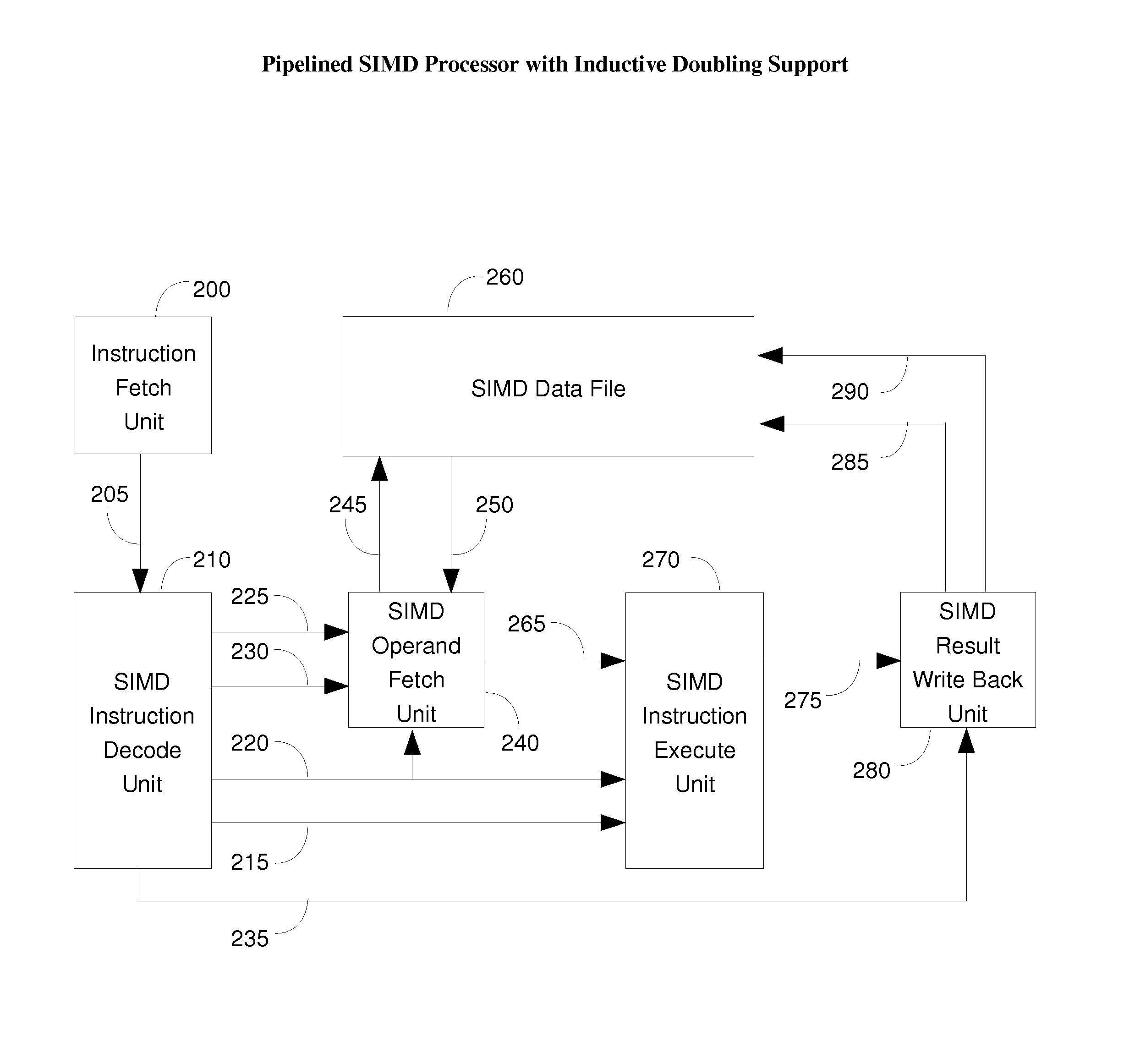

Method and Apparatus for an Inductive Doubling Architecture

Inactive | US20080046686A1 | Runtime instruction translation | Architecture with multiple processing units | Computer architecture | Load instruction

One embodiment of the present invention is a processor that processes inductive doubling SIMD instructions, which processor comprises: an Instruction Fetch Unit that loads a SIMD instruction and applies it as input to a SIMD Instruction Decode Unit; wherein the SIMD Instruction Decode Unit decodes the applied SIMD instruction and produces output signals including SIMD field width identification signals and one or more SIMD half-operand modifier signals.

Owner:INT CHARACTERS INC


© 2025 PatSnap. All rights reserved.