112 results about How to "Improve processor performance" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

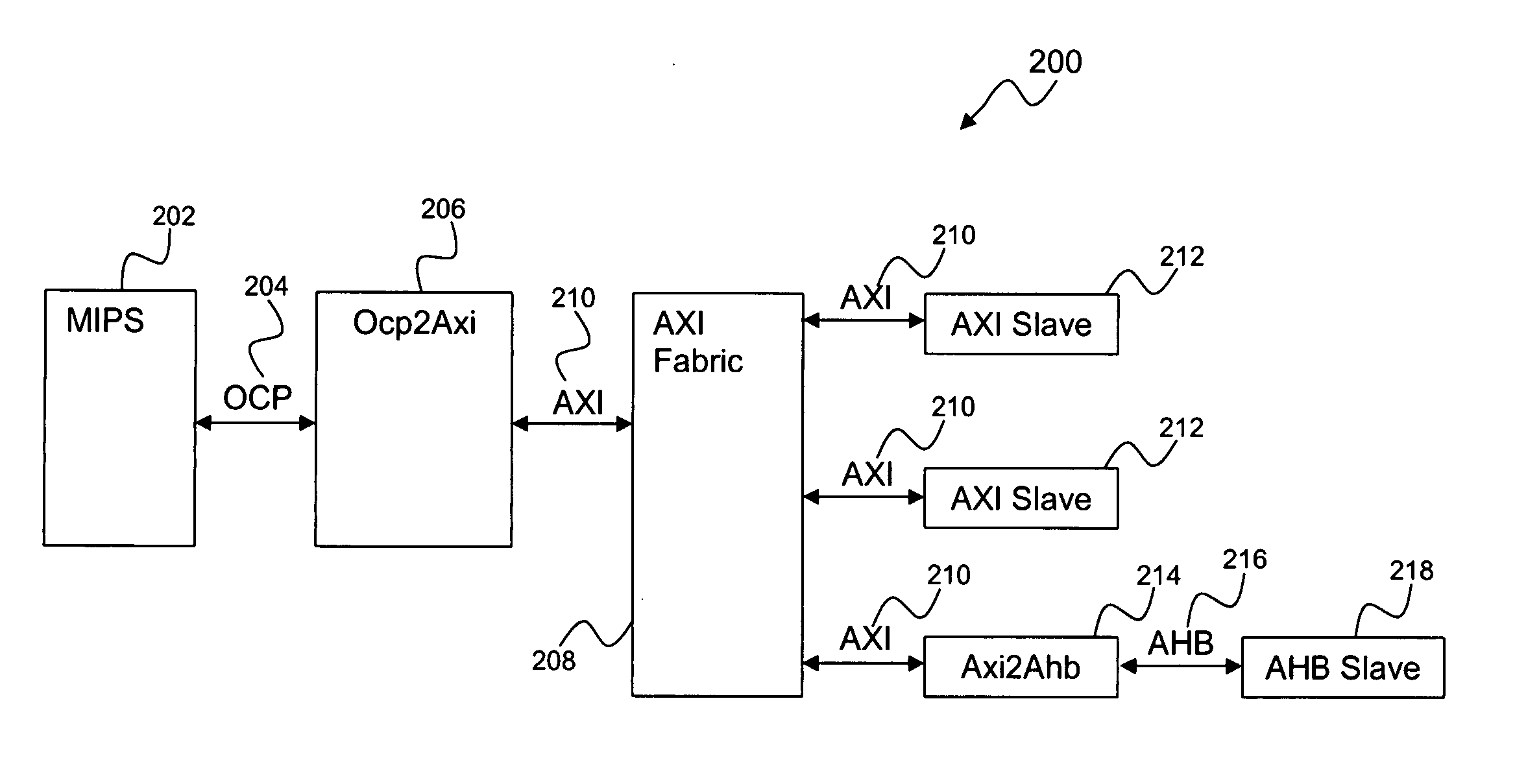

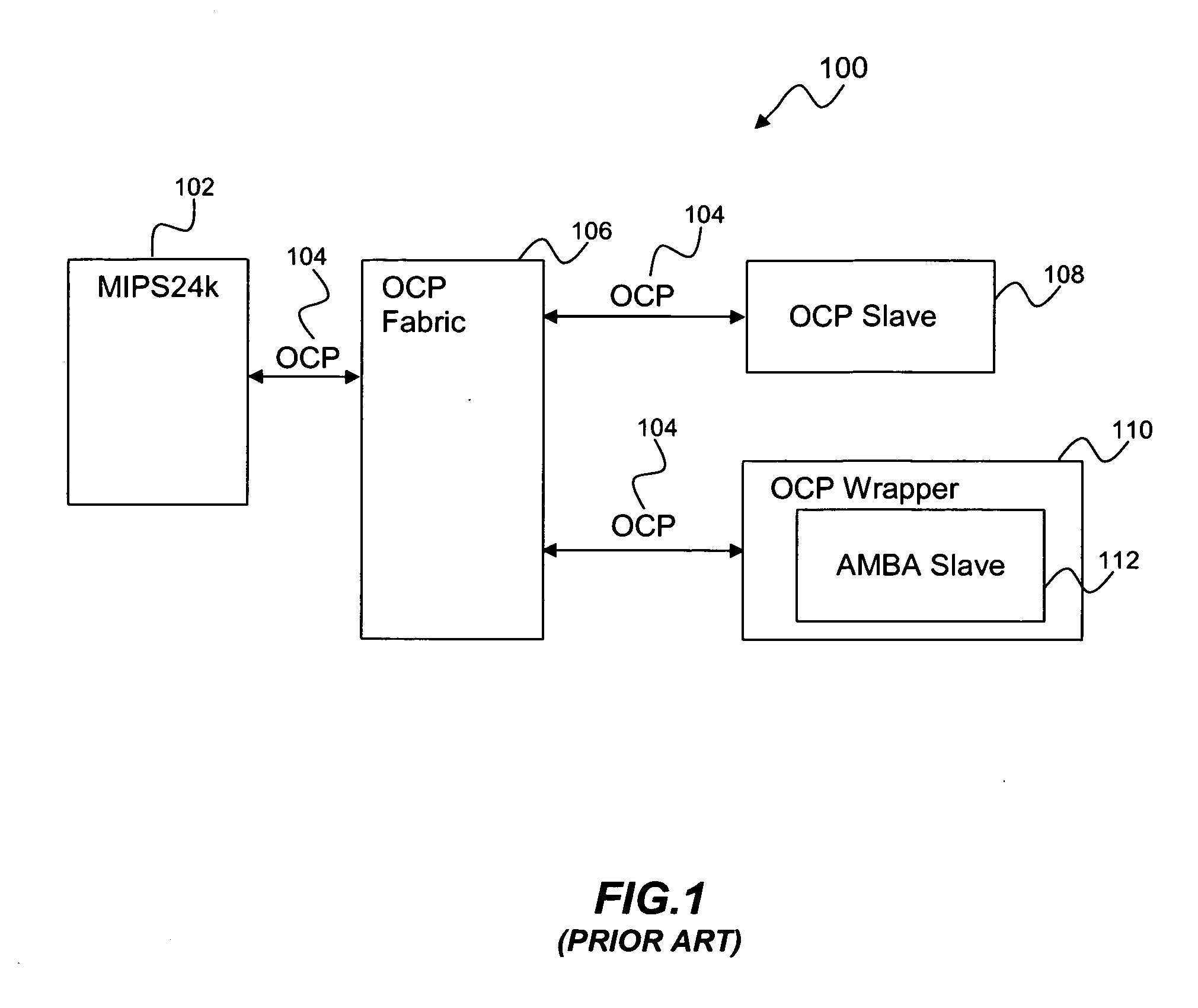

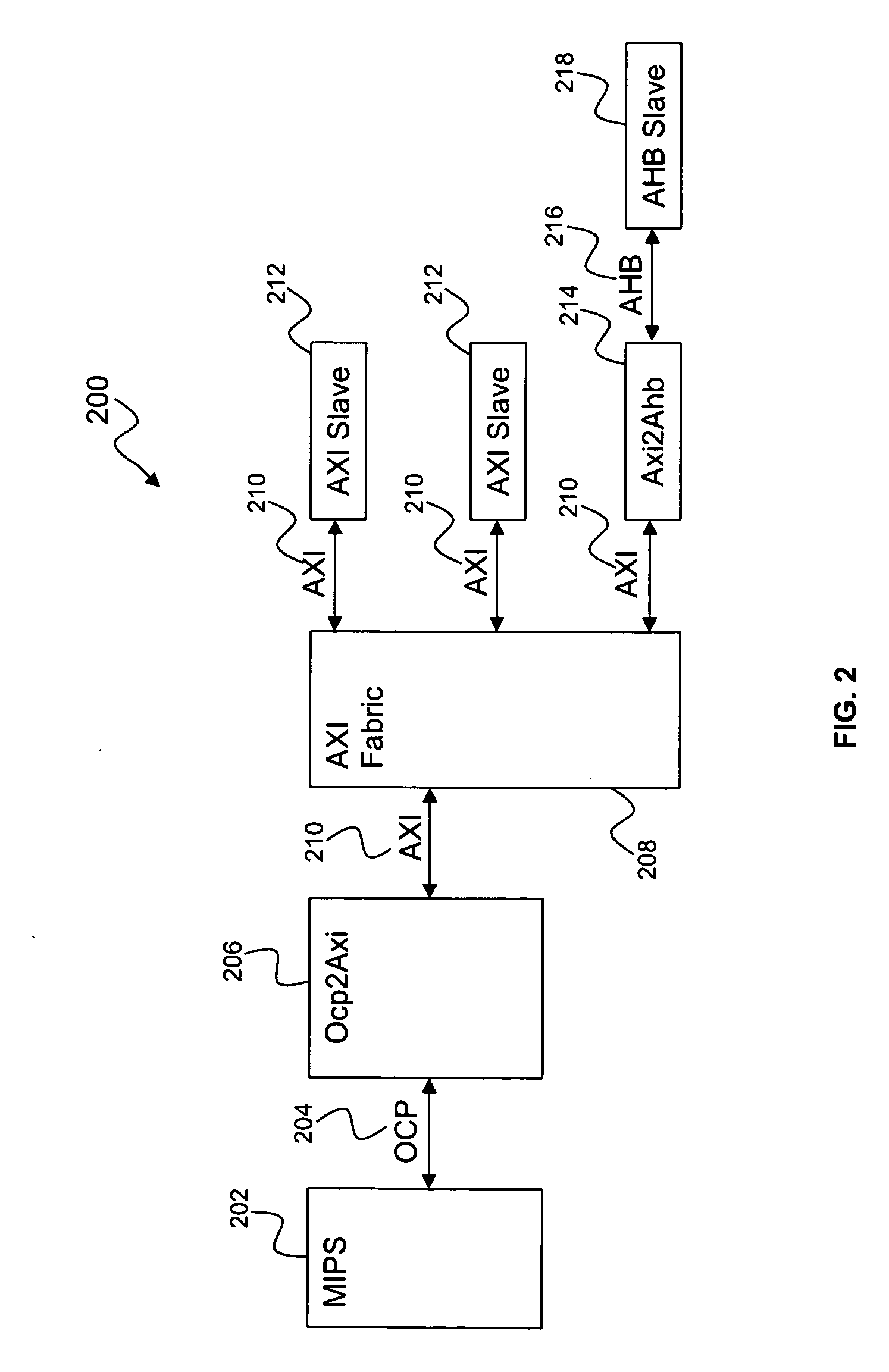

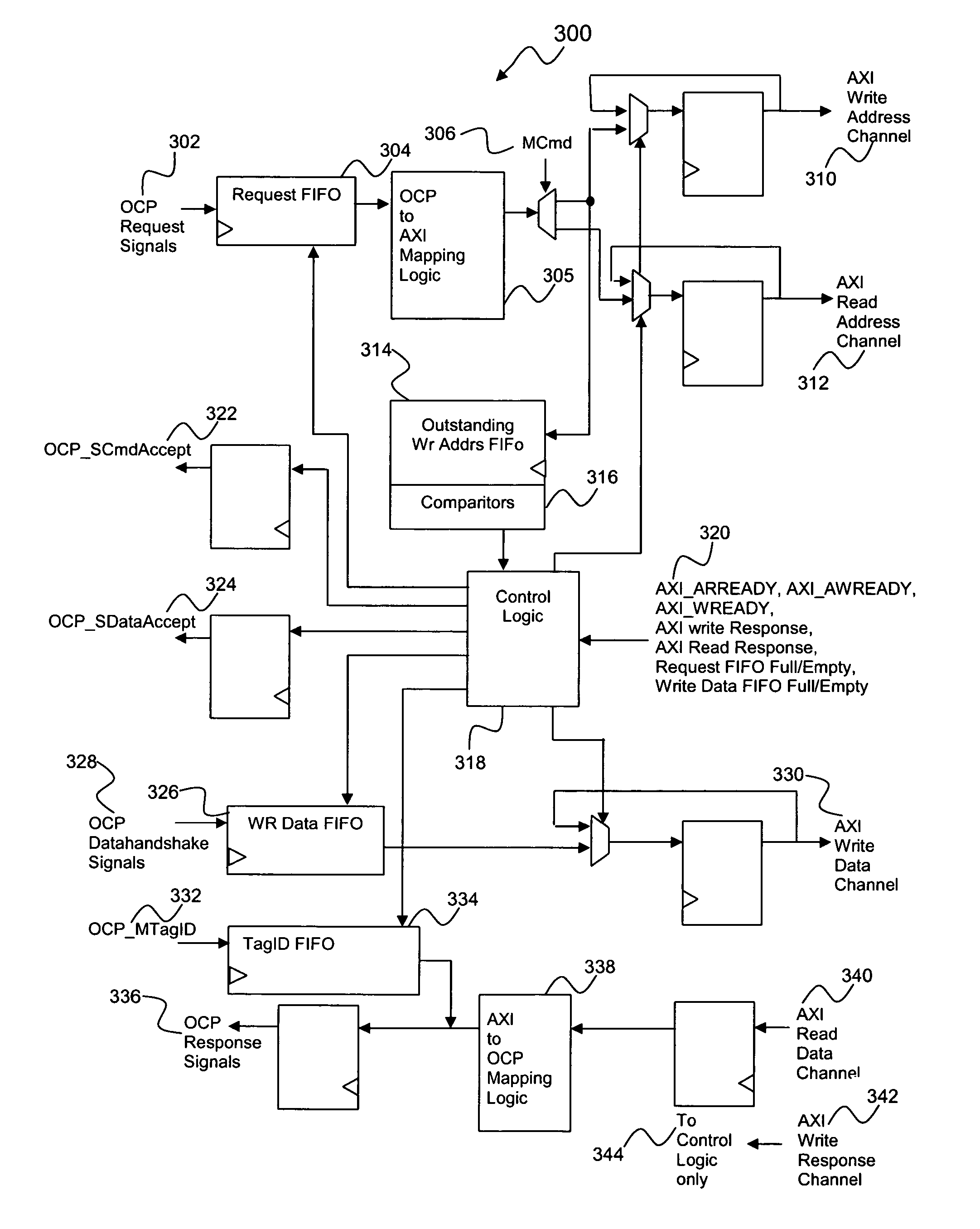

Method for request transaction ordering in OCP bus to AXI bus bridge design

Active | US20070067549A1 | Improve processor performance, Improve performance, Data switching networks, Electric digital data processing, Embedded system, Comparator

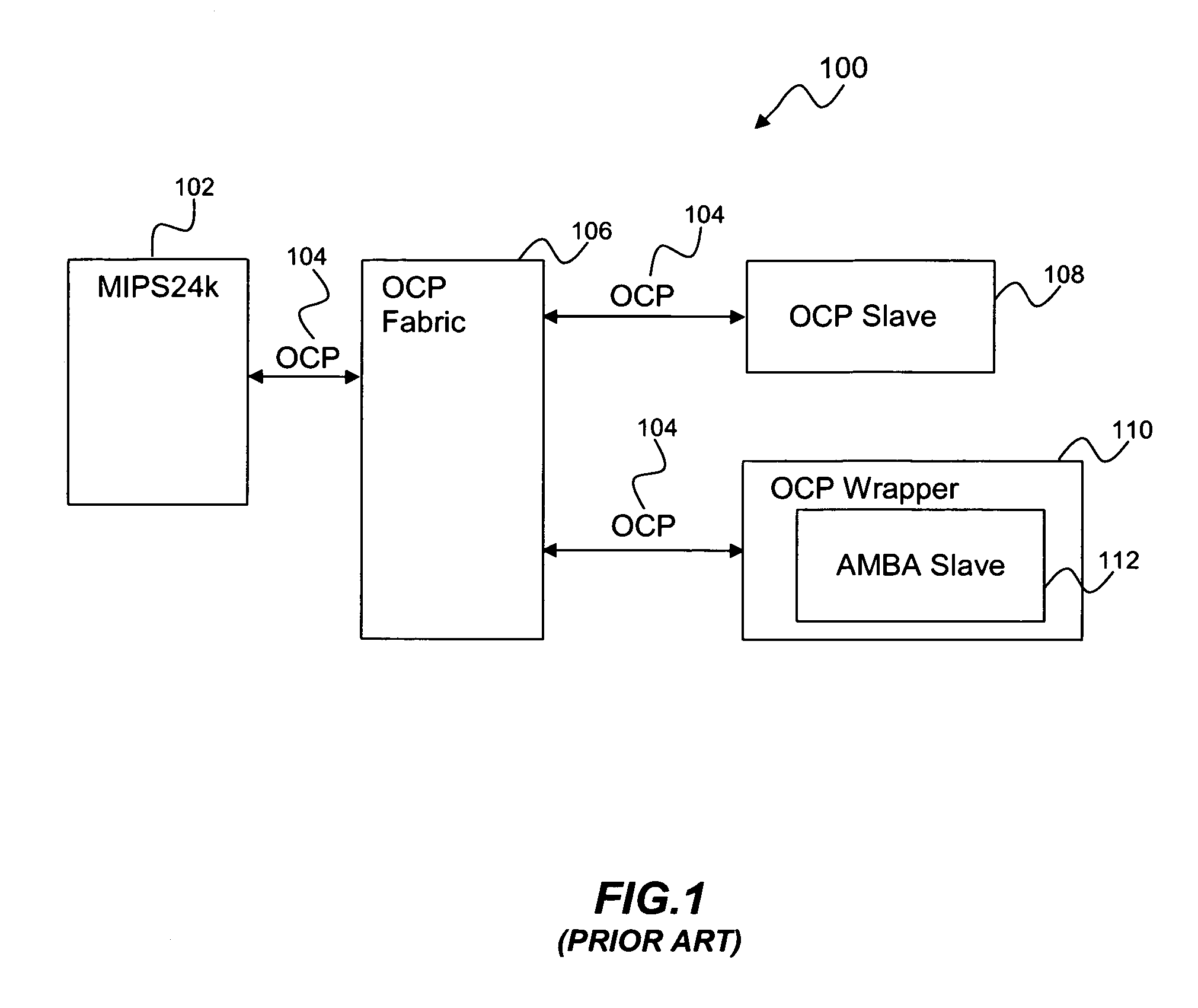

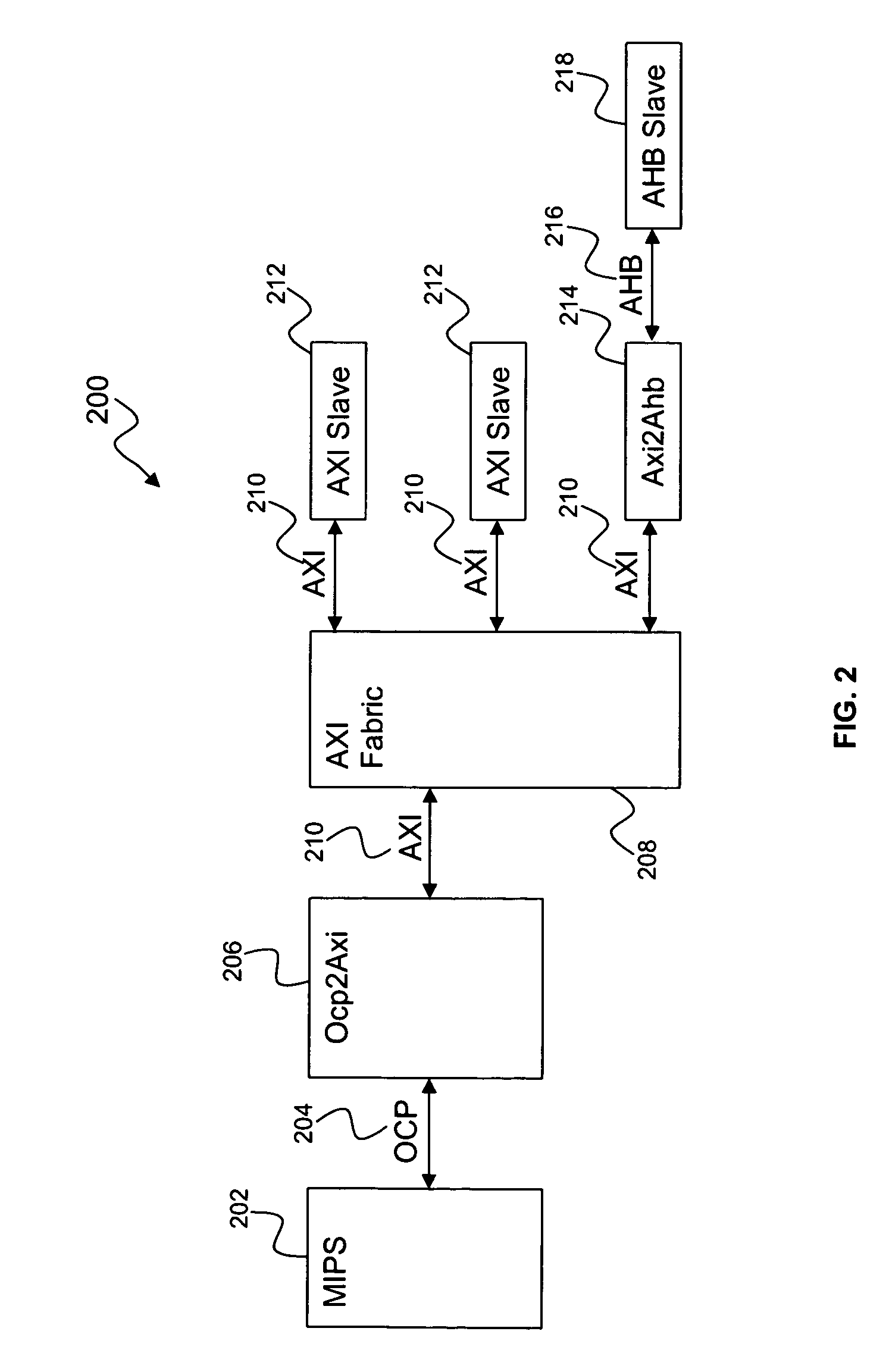

A request transaction ordering method and system involves the design of an Open Core Protocol (OCP) bus to Advanced eXtensible Interface (AXI) bus bridge. The general flow of the bridge is to accept a plurality of read and write requests from the OCP bus and convert them into a plurality of AXI read and write requests. Control logic is provided for the push and pop control of each first-in first-out (FIFO) queue and for a plurality of handshake signals in both OCP and AXI. The request ordering part of the bridge performs hazard checking to preserve the ordering policies required by both the OCP and AXI bus protocols, using a FIFO to hold the outstanding writes, a plurality of comparators, and a FIFO to hold OCP identities for a plurality of read requests.

Owner: AVAGO TECH INT SALES PTE LTD
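
The hazard check in this abstract can be illustrated with a minimal Python sketch. It assumes, for illustration only, that a read must wait while any outstanding write targets exactly the same address and that AXI write responses return in issue order; the class and method names are hypothetical and do not come from the patent.

```python
from collections import deque
from typing import Deque


class WriteHazardChecker:
    """Hypothetical model of a bridge's read-after-write hazard check."""

    def __init__(self) -> None:
        # FIFO of addresses for writes issued to AXI but not yet acknowledged.
        self.outstanding_writes: Deque[int] = deque()

    def issue_write(self, addr: int) -> None:
        self.outstanding_writes.append(addr)

    def complete_write(self) -> int:
        # Assumption: write responses come back in the order the writes were issued.
        return self.outstanding_writes.popleft()

    def read_may_issue(self, addr: int) -> bool:
        # One "comparator" per FIFO entry: hold the read on any address match.
        return all(w != addr for w in self.outstanding_writes)
```

A read to an address with a pending write stays blocked until that write's response pops it from the FIFO.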

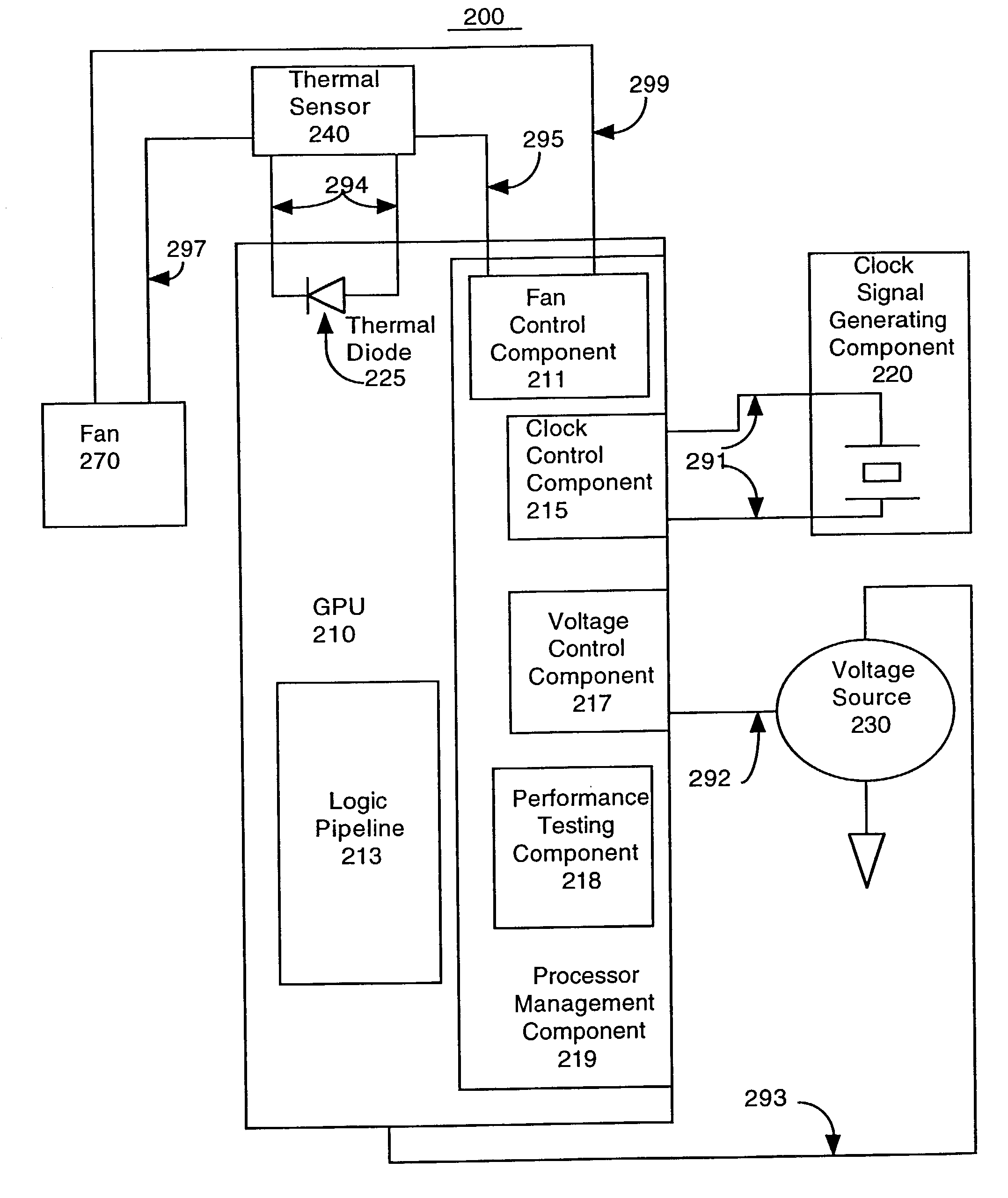

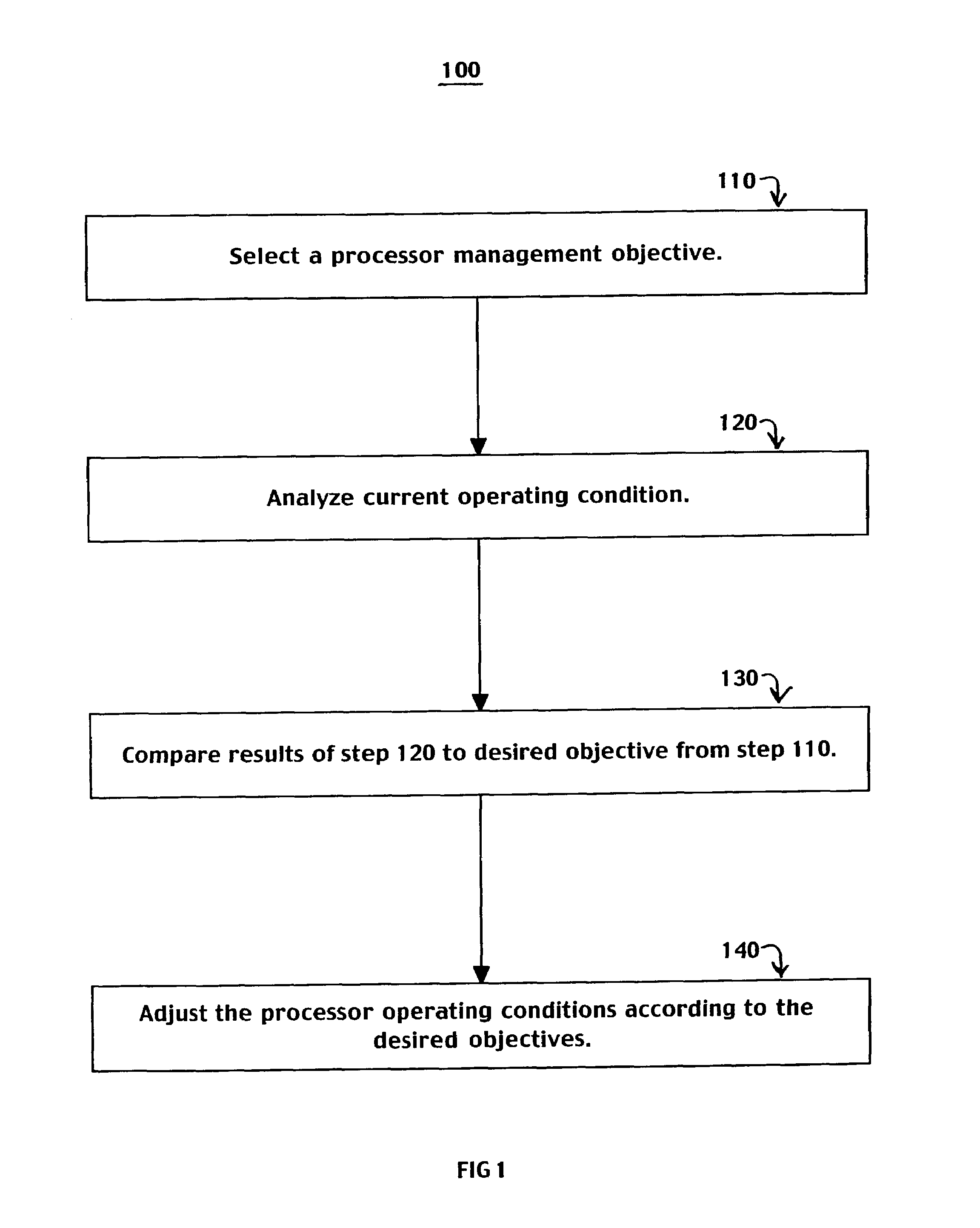

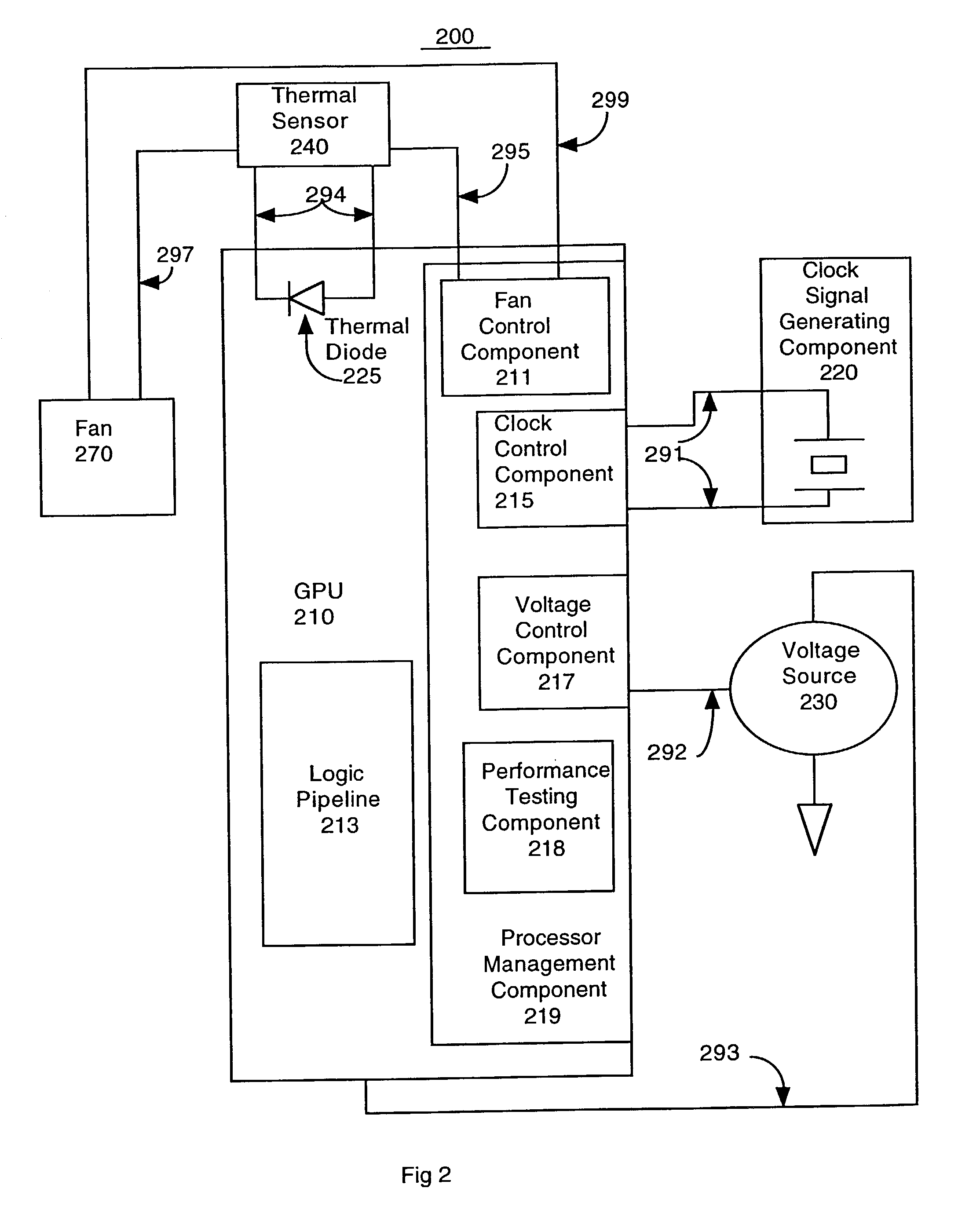

Processor performance adjustment system and method

Active | US7882369B1 | Enhance performance of processor, Minimal impact, Energy efficient ICT, Volume/mass flow measurement, Embedded system, Error ratio

The performance enhancement and reliability maintenance system and method of the present invention push a processor to its maximum performance capabilities when performing processing-intensive tasks (e.g., 3D graphics). For example, the clock speed and voltage are increased until an unacceptable error rate begins to appear in the processing results, and then the clock speed and voltage are backed off to the last setting at which excessive errors did not occur, enabling the processor to run at its full performance potential. The present invention also includes the ability to throttle back settings, which facilitates the maintenance of desired reliability standards. The present invention is readily expandable to provide adjustment of a variety of characteristics in response to task performance requirements. For example, a software-controlled variable-speed fan can be adjusted to alter the temperature of the processor in addition to the clock frequency and voltage.

Owner: NVIDIA CORP
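
The tune-up loop described above (raise clock and voltage until errors become unacceptable, then fall back to the last good setting) might be sketched as follows. The `measure_error_rate` callback, the ordered list of (clock, voltage) steps, and the error threshold are hypothetical stand-ins for whatever test workload and hardware controls a real system would use.

```python
from typing import Callable, Optional, Sequence, Tuple

# (clock in MHz, core voltage in volts); values used with this are illustrative only.
Setting = Tuple[int, float]


def find_max_stable_setting(
    settings: Sequence[Setting],
    measure_error_rate: Callable[[Setting], float],
    max_error_rate: float,
) -> Optional[Setting]:
    """Walk settings from least to most aggressive; return the last acceptable one."""
    last_good: Optional[Setting] = None
    for setting in settings:
        if measure_error_rate(setting) > max_error_rate:
            break  # unacceptable error rate: back off to the previous setting
        last_good = setting
    return last_good
```

Returning `None` signals that even the most conservative step failed, in which case a caller would keep its default settings.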

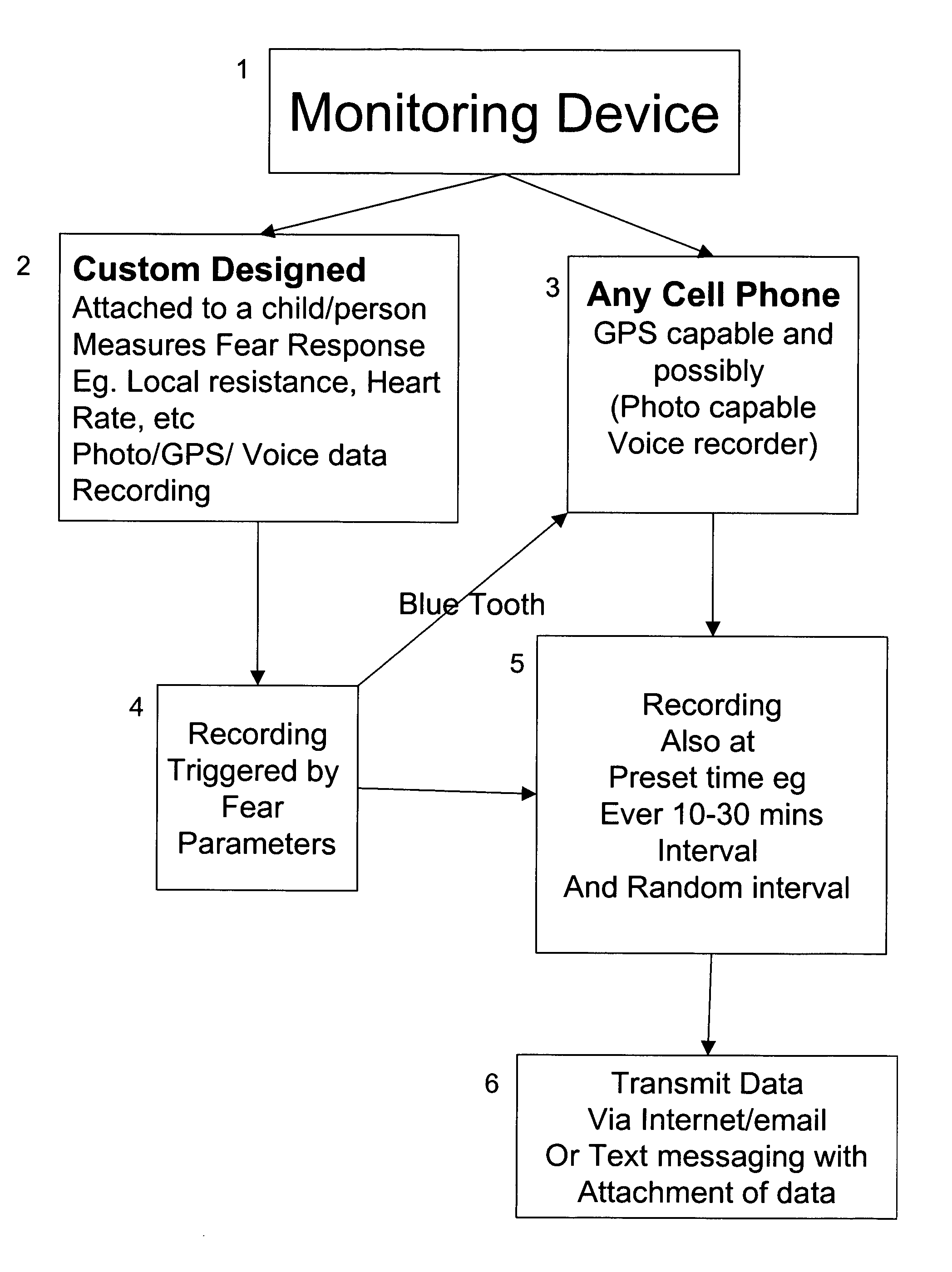

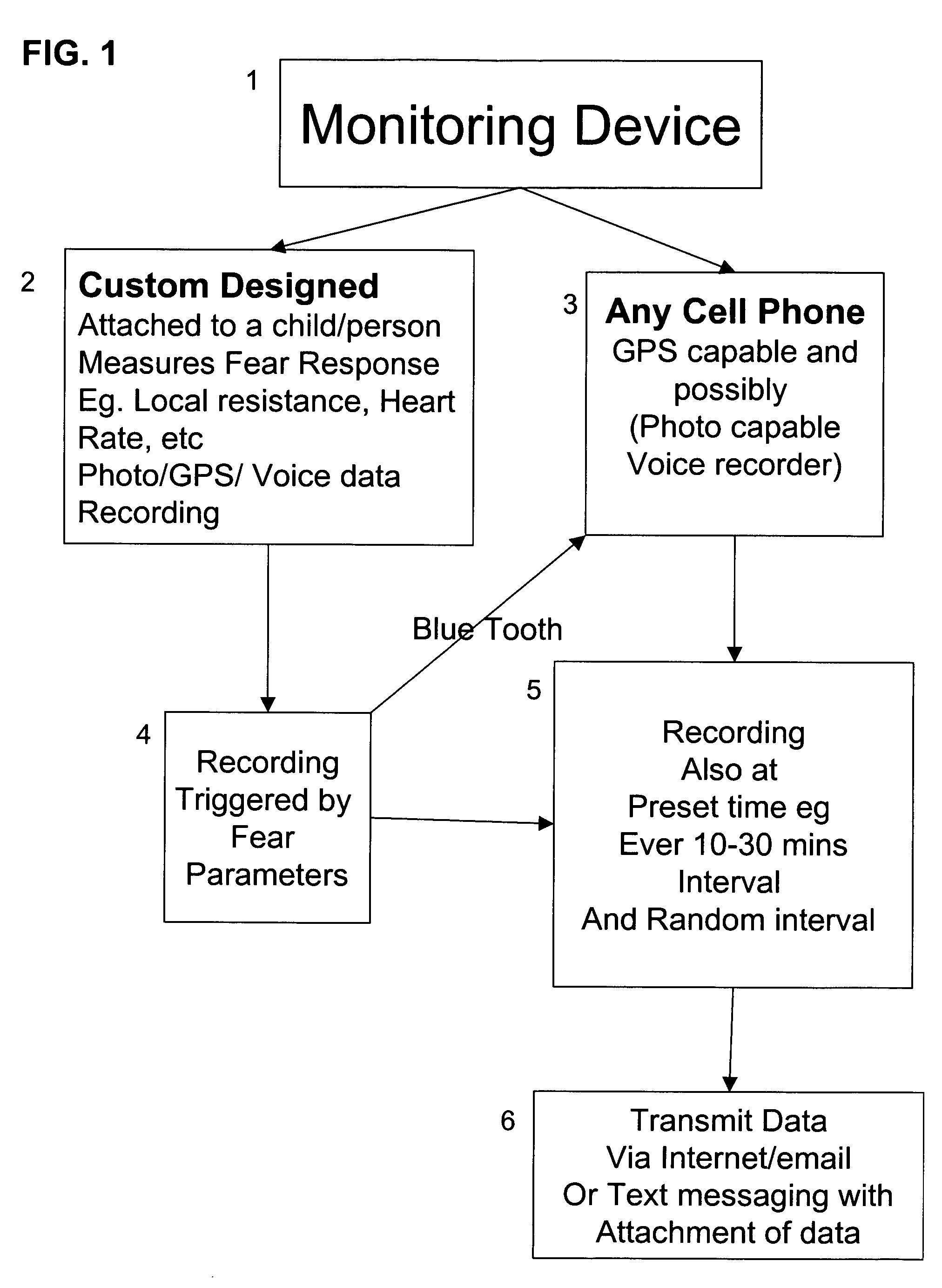

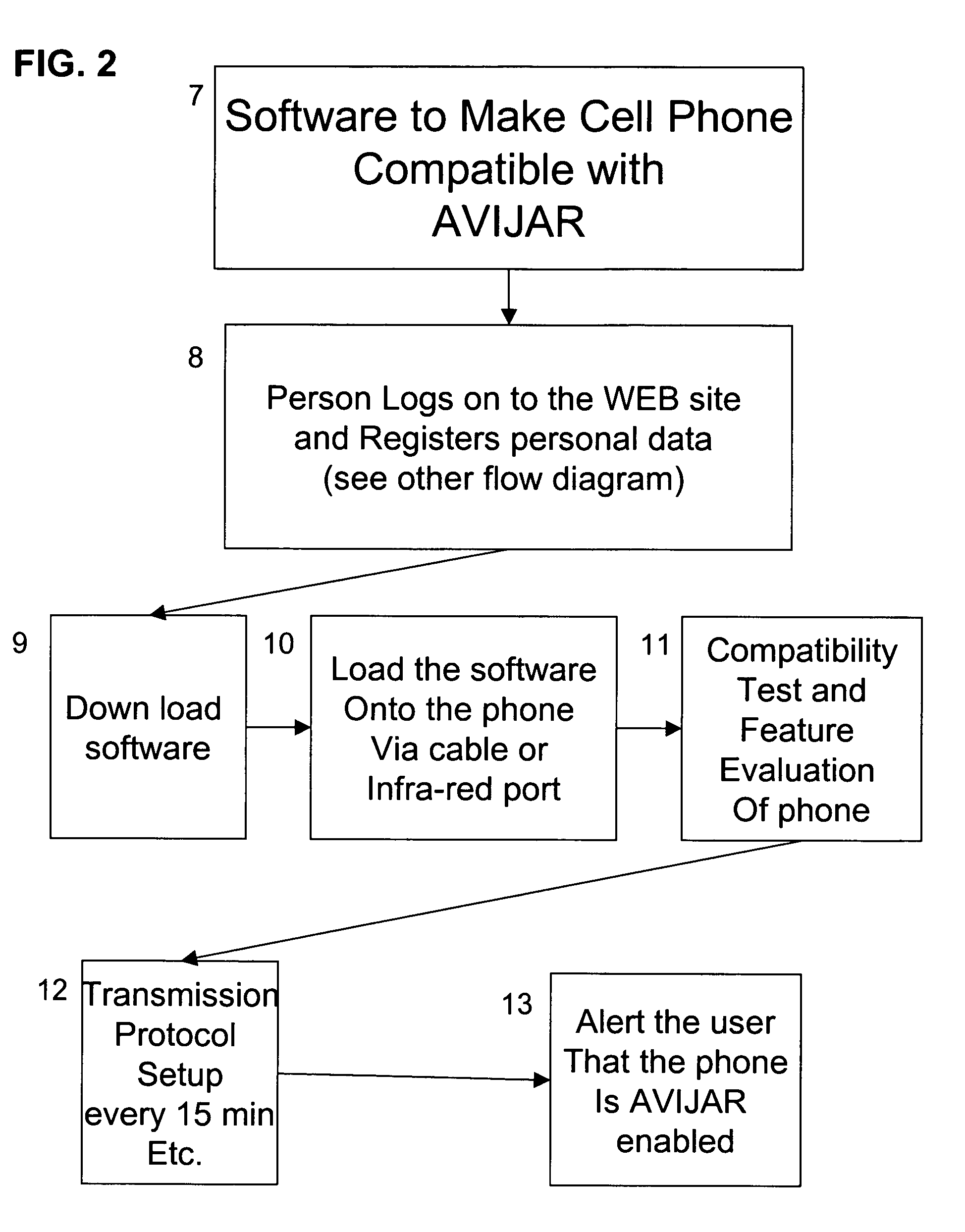

Systems and methods for remote monitoring of fear and distress responses

Inactive | US20060047187A1 | Limited access, Improve processor performance, Surgery, Diagnostic recording/measuring, Remote monitoring and control, Real-time computing

Owner: GOYAL MUNA C +1

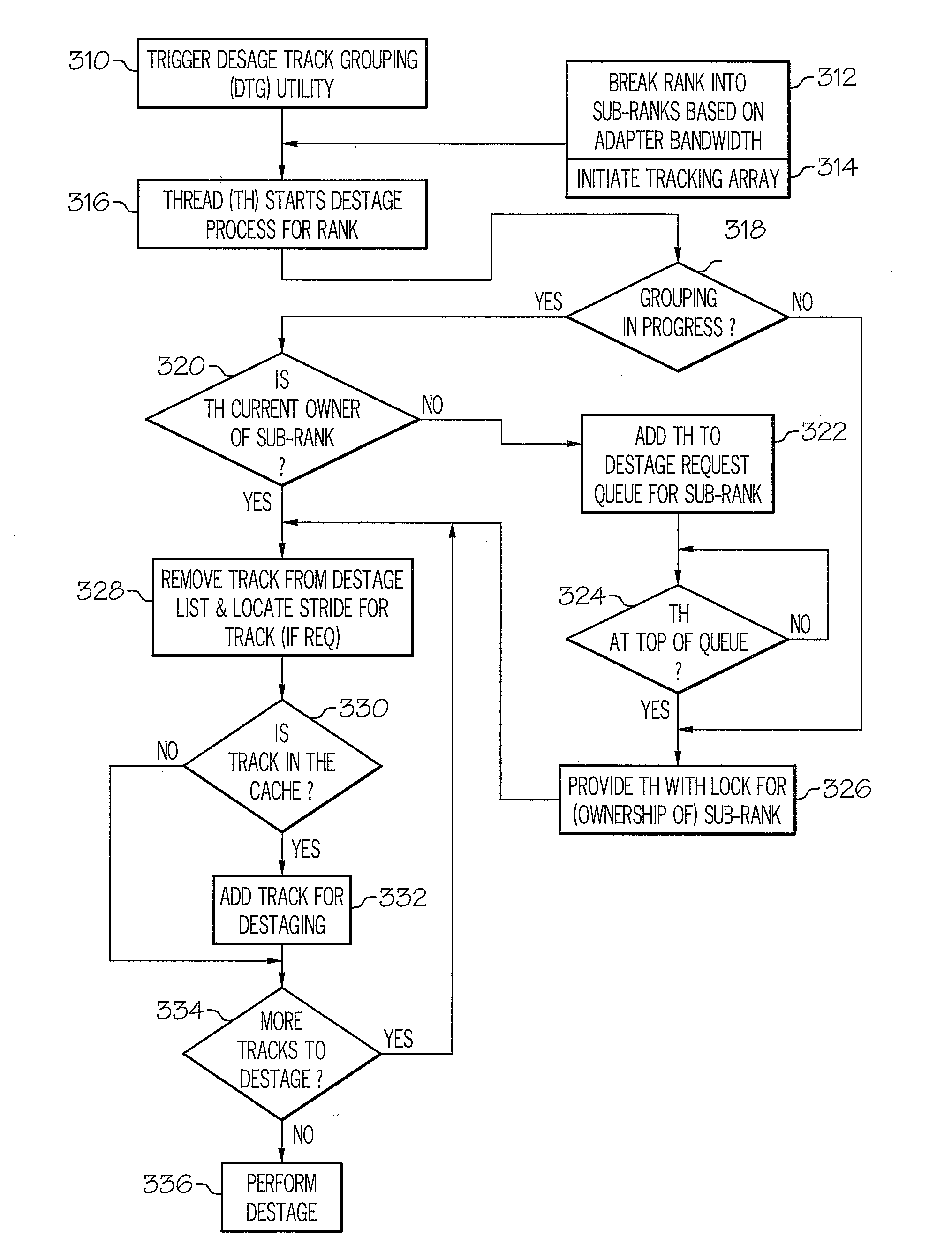

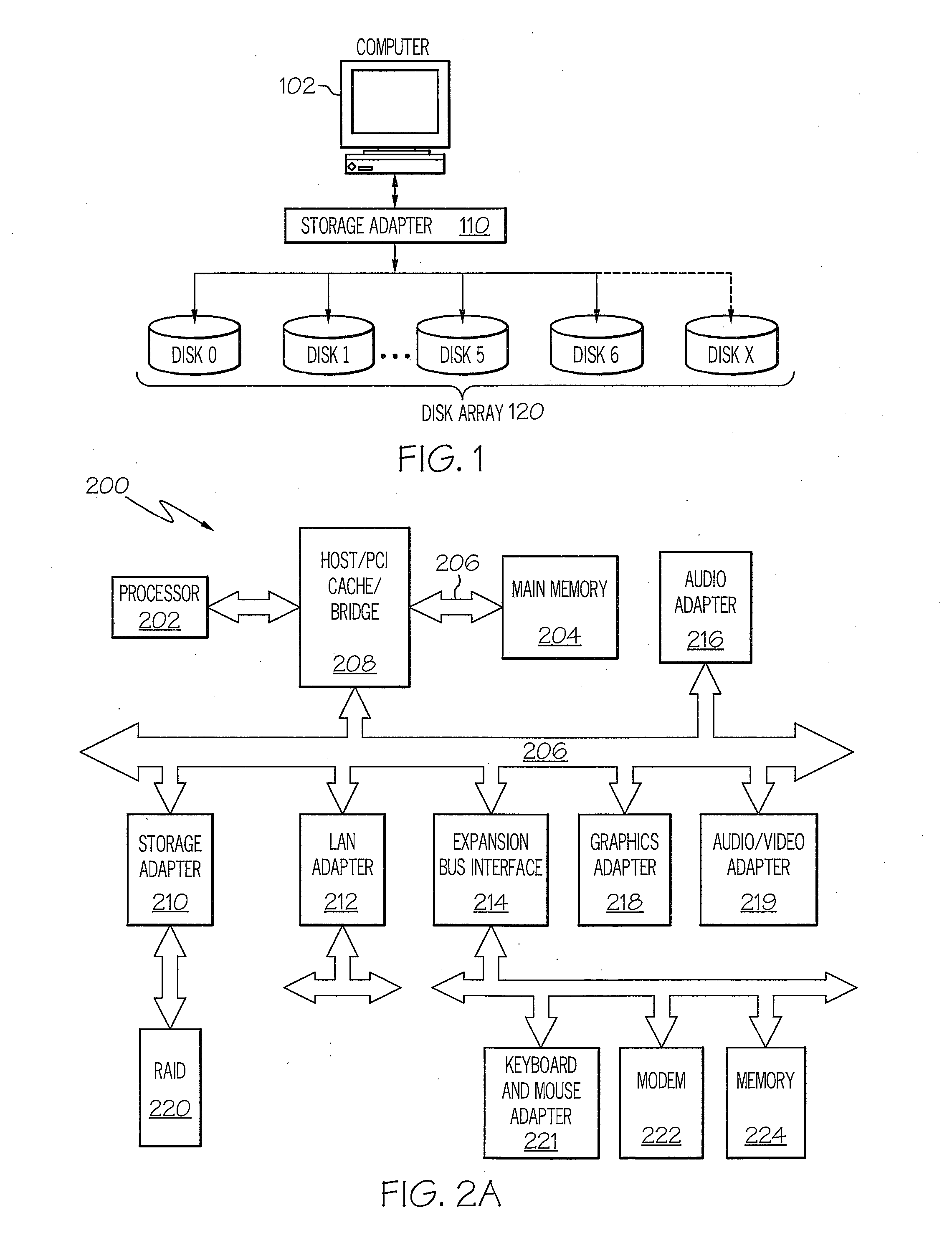

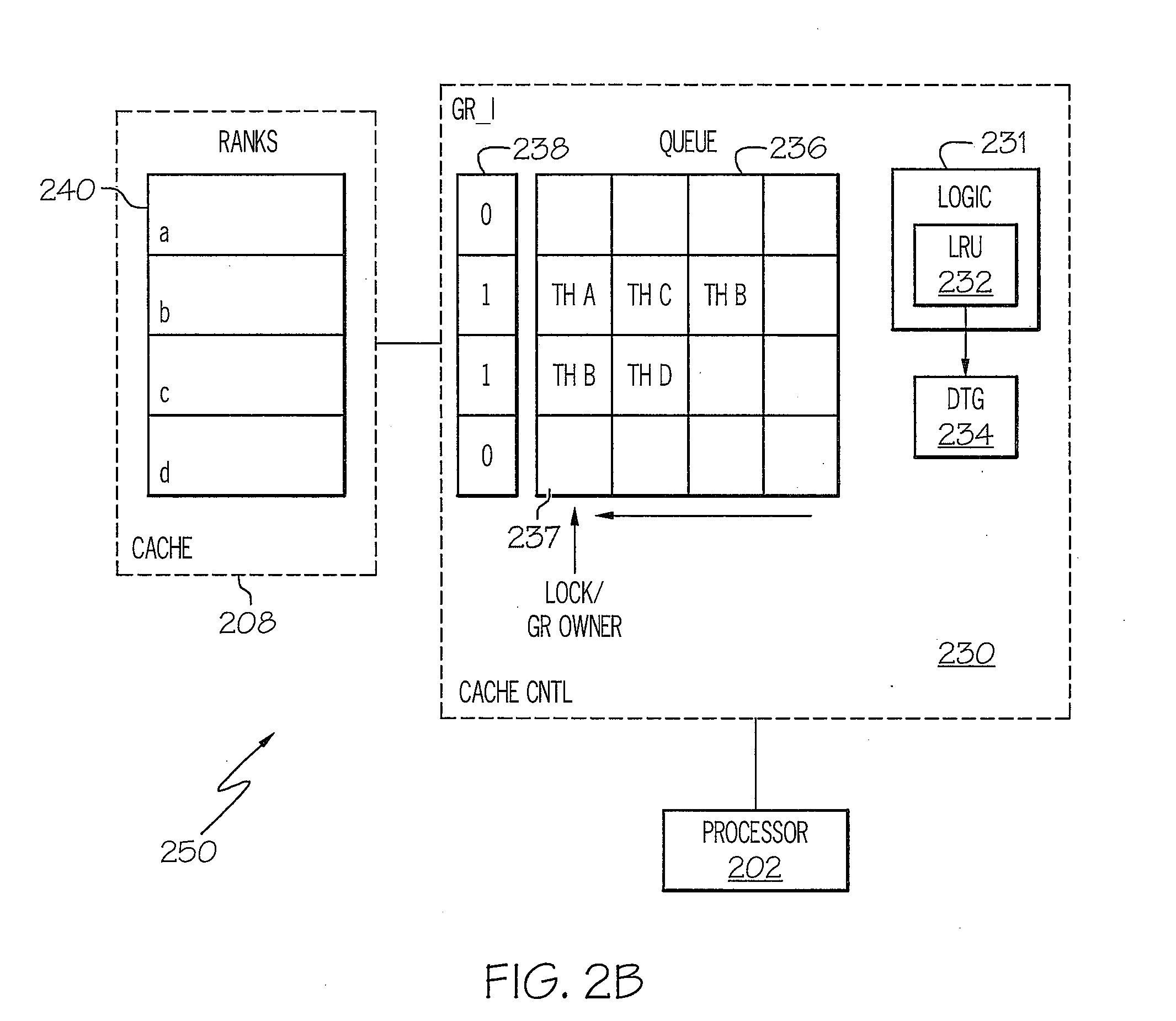

Method and system for grouping tracks for destaging on RAID arrays

Inactive | US20080040553A1 | Maximize full stripe writes, Lower latency, Error detection/correction, Memory systems, RAID, Data selection

A method, system and processor for substantially reducing the write penalty (or latency) associated with writes and/or destaging operations within a RAID 5 and/or RAID 6 array. When a write or destaging operation is initiated, i.e., when modified data is to be evicted from the cache, an existing data selection mechanism first selects the track of data to be evicted from the cache. The data selection mechanism then triggers a data track grouping (DTG) utility, which executes a thread to group data tracks in order to maximize full-stripe writes. Once the DTG algorithm completes the grouping of data tracks to complete a full stripe, a full-stripe write is performed, and parity is generated without requiring a read from the disk(s). In this manner, the write penalty is substantially reduced, and the overall write performance of the processor is significantly improved.

Owner: IBM CORP
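
A minimal Python sketch of why full-stripe writes avoid the read penalty: once dirty tracks are grouped so that every data strip of a stripe is present, RAID 5 parity is just the XOR of the new data, so nothing has to be read back from disk. The track-to-stripe mapping (`track // tracks_per_stripe`) and the helper names are assumptions for illustration; RAID 6 would additionally need a second (Q) syndrome, which is not shown.

```python
from collections import defaultdict
from functools import reduce
from typing import Dict, Iterable, List, Set


def group_tracks_by_stripe(dirty_tracks: Iterable[int], tracks_per_stripe: int) -> Dict[int, Set[int]]:
    """Map stripe number -> dirty tracks that fall in that stripe (hypothetical layout)."""
    groups: Dict[int, Set[int]] = defaultdict(set)
    for track in dirty_tracks:
        groups[track // tracks_per_stripe].add(track)
    return dict(groups)


def full_stripes(groups: Dict[int, Set[int]], tracks_per_stripe: int) -> List[int]:
    """Stripes whose every data track is dirty can be written without a read."""
    return sorted(s for s, tracks in groups.items() if len(tracks) == tracks_per_stripe)


def raid5_parity(strips: List[bytes]) -> bytes:
    """Byte-wise XOR of all new data strips (equal lengths assumed)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*strips))
```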

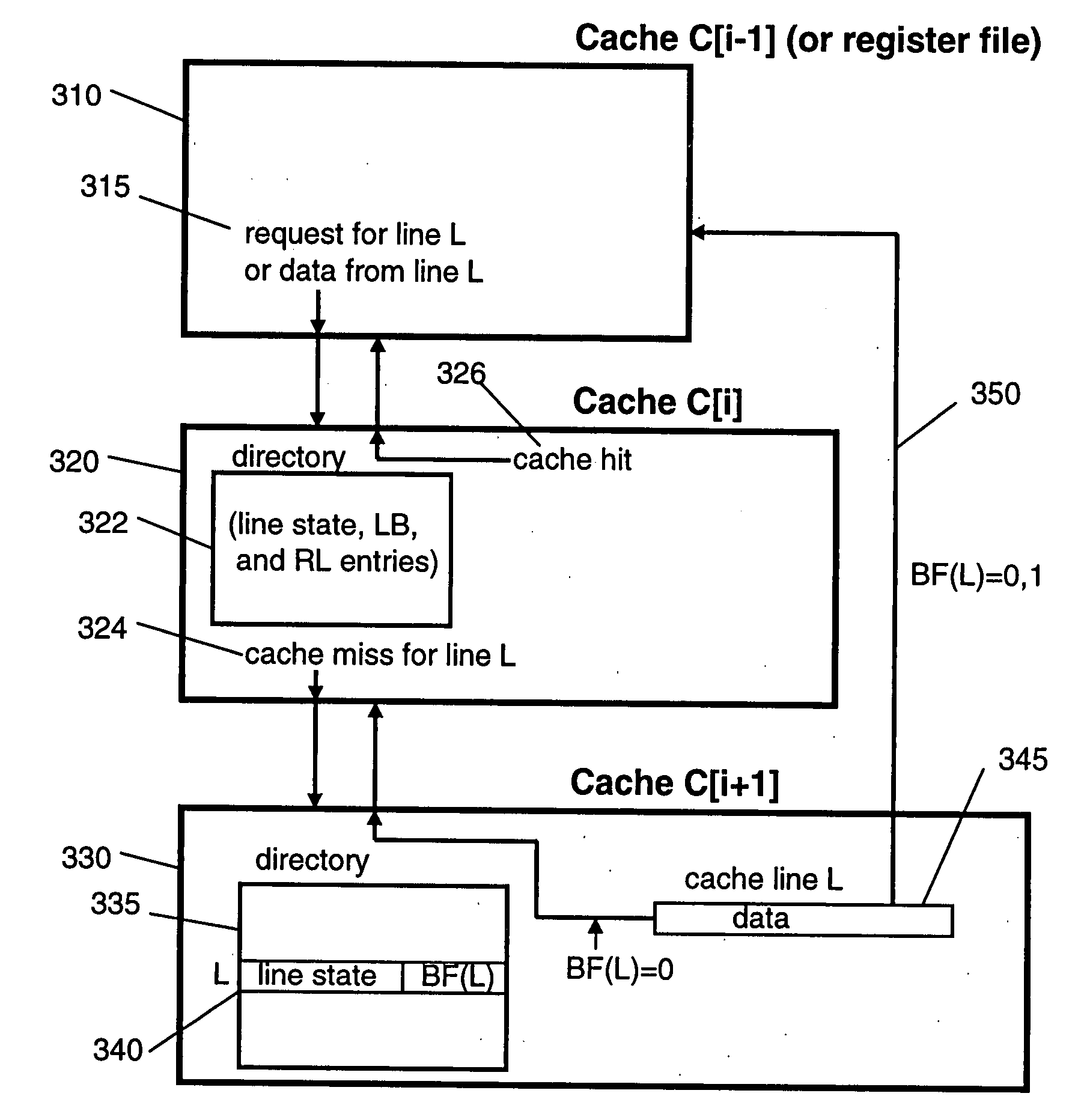

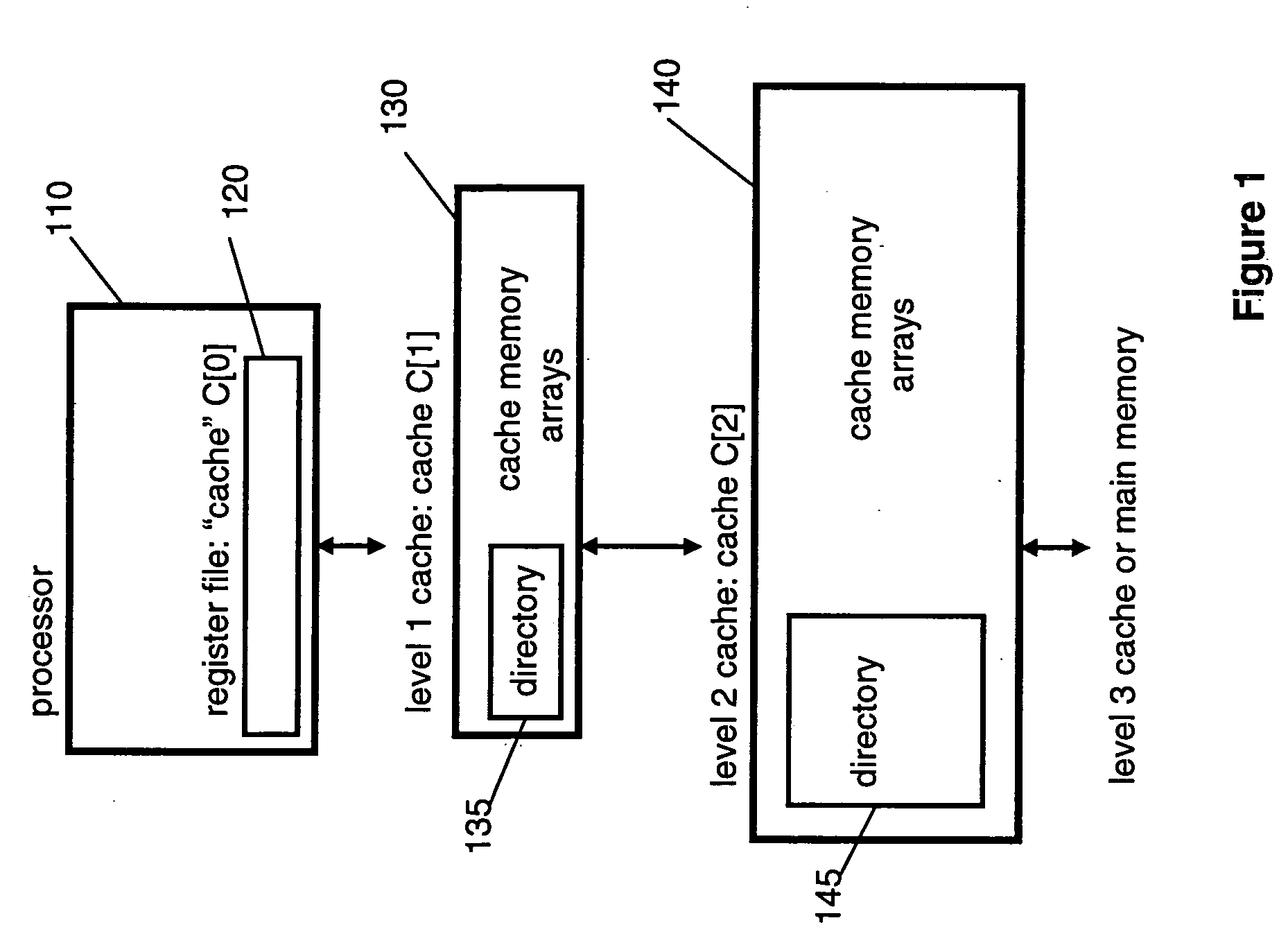

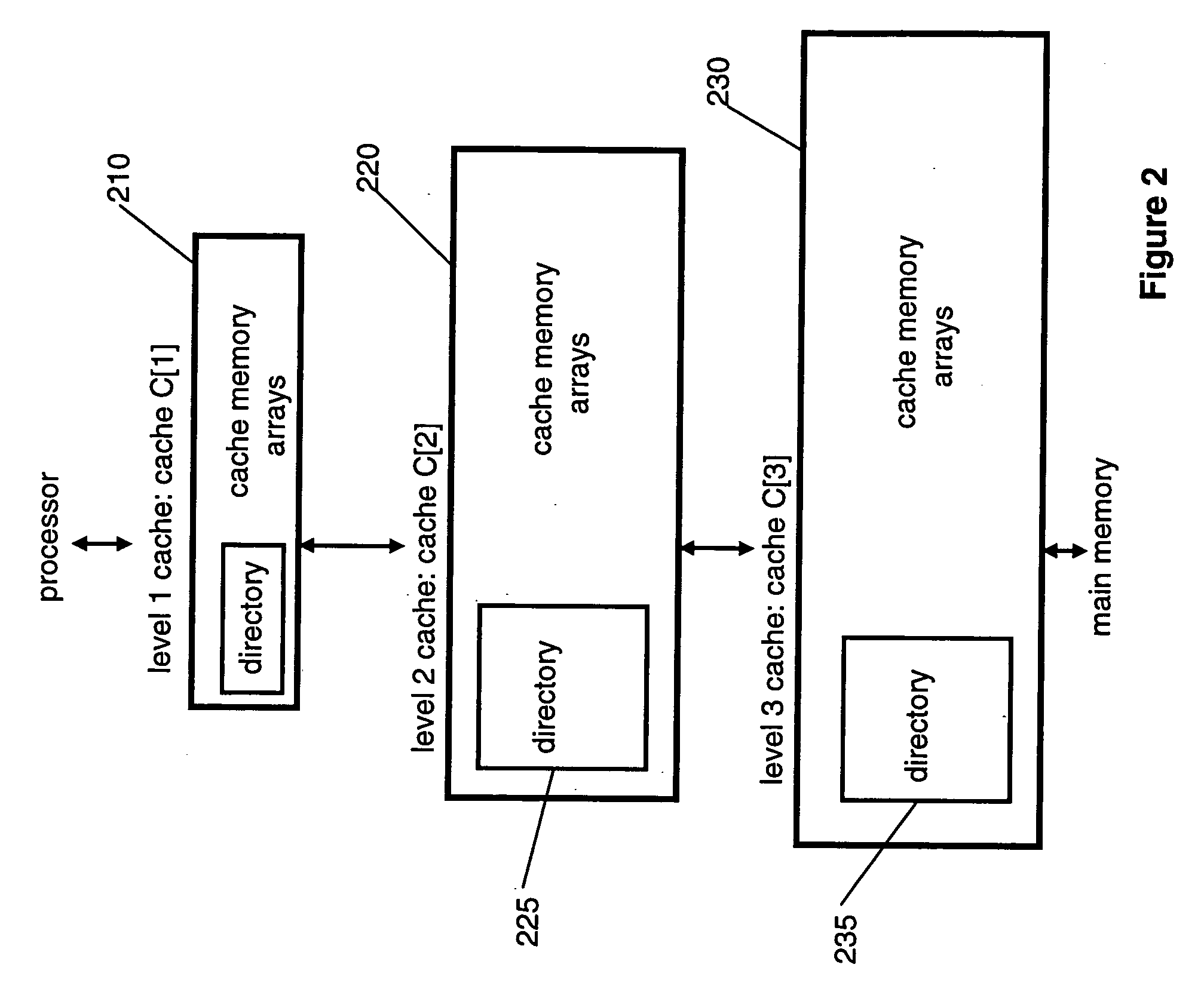

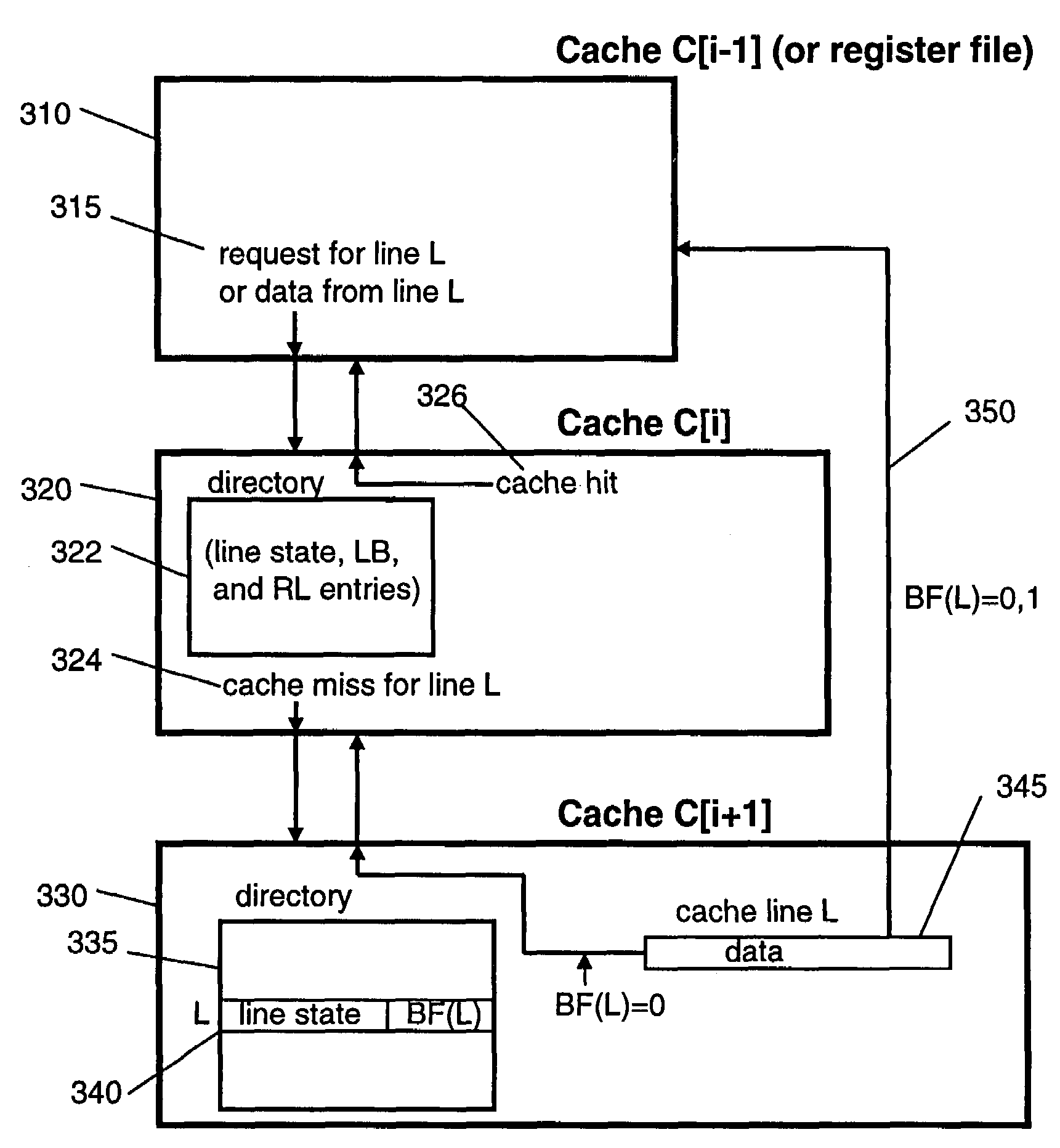

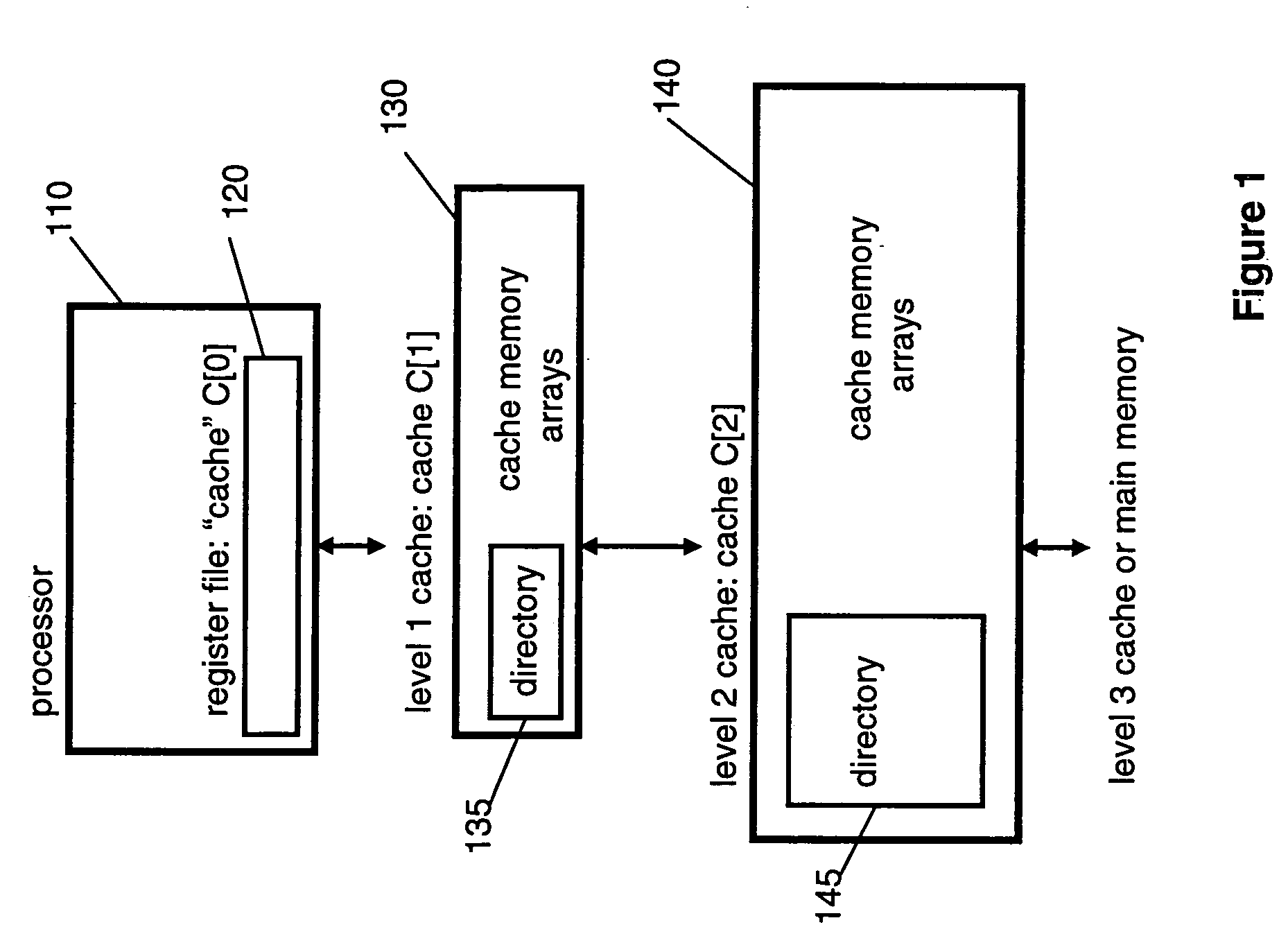

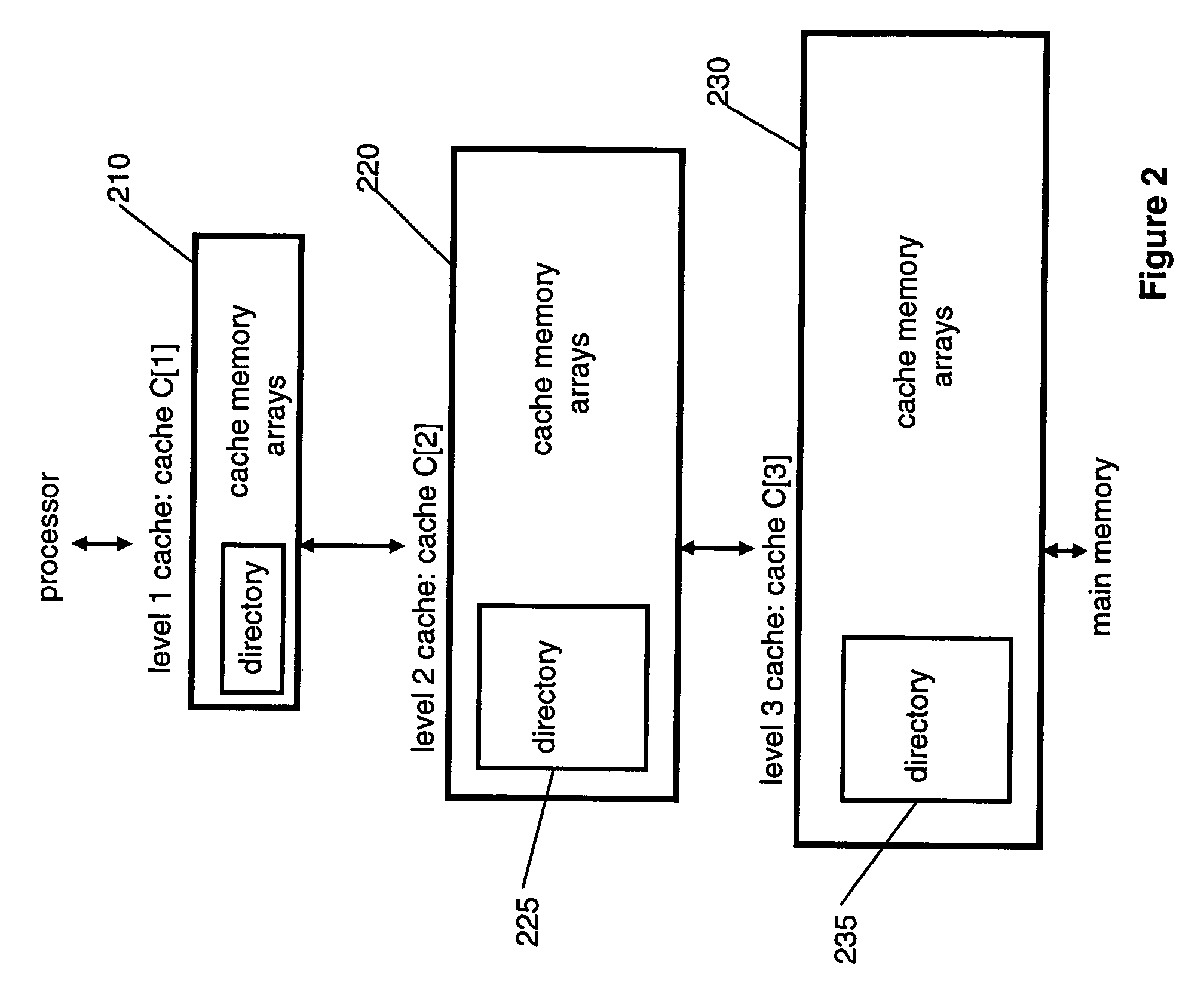

Enabling and disabling cache bypass using predicted cache line usage

Inactive | US20060112233A1 | Improve system performance, Improve hit rate, Memory systems, Cache hierarchy, Cache access

Arrangements and a method for enabling and disabling cache bypass in a computer system with a cache hierarchy. Cache bypass status is identified with respect to at least one cache line. A cache line identified as cache bypass enabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is bypassed, while a cache line identified as cache bypass disabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is not bypassed. Included is an arrangement for selectively enabling or disabling cache bypass with respect to at least one cache line based on historical cache access information.

Owner: IBM CORP
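
One plausible way to base the bypass decision on historical access information is a small saturating counter per cache line, sketched below in Python. The two-bit counter, the 64-byte line size, and the rule "bypass when the counter drops below 2" are assumptions chosen for illustration; the abstract does not specify the predictor's structure.

```python
from typing import Dict


class BypassPredictor:
    """Hypothetical per-line 2-bit saturating counter for cache-bypass decisions."""

    def __init__(self, line_size: int = 64) -> None:
        self.line_size = line_size
        self.counters: Dict[int, int] = {}

    def _line(self, addr: int) -> int:
        return addr // self.line_size

    def should_bypass(self, addr: int) -> bool:
        # Lines with no history start at 2, i.e. bypass disabled.
        return self.counters.get(self._line(addr), 2) < 2

    def record(self, addr: int, was_reused: bool) -> None:
        # Reuse in the next-level cache strengthens "keep"; an unused eviction weakens it.
        line = self._line(addr)
        c = self.counters.get(line, 2)
        self.counters[line] = min(c + 1, 3) if was_reused else max(c - 1, 0)
```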

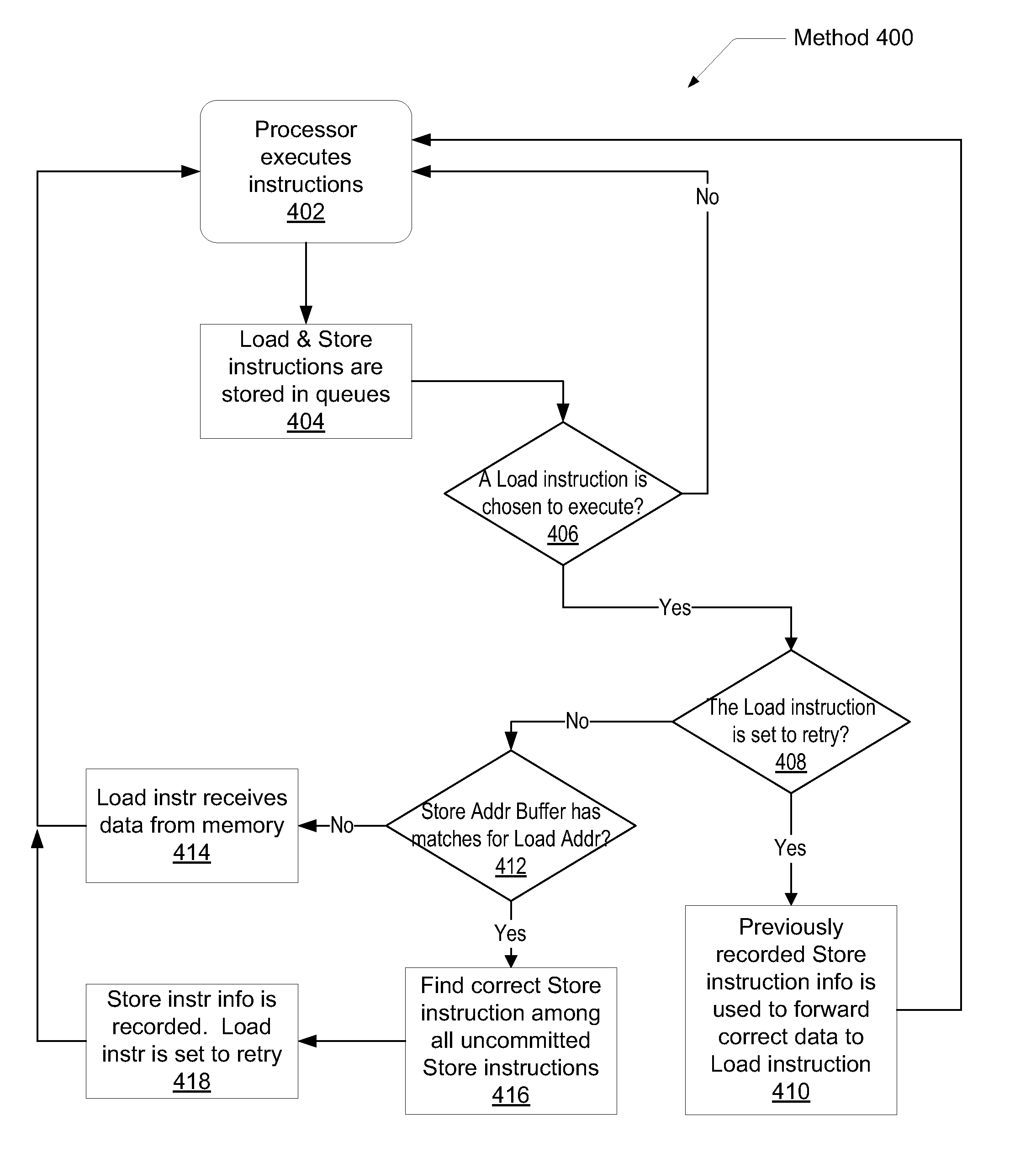

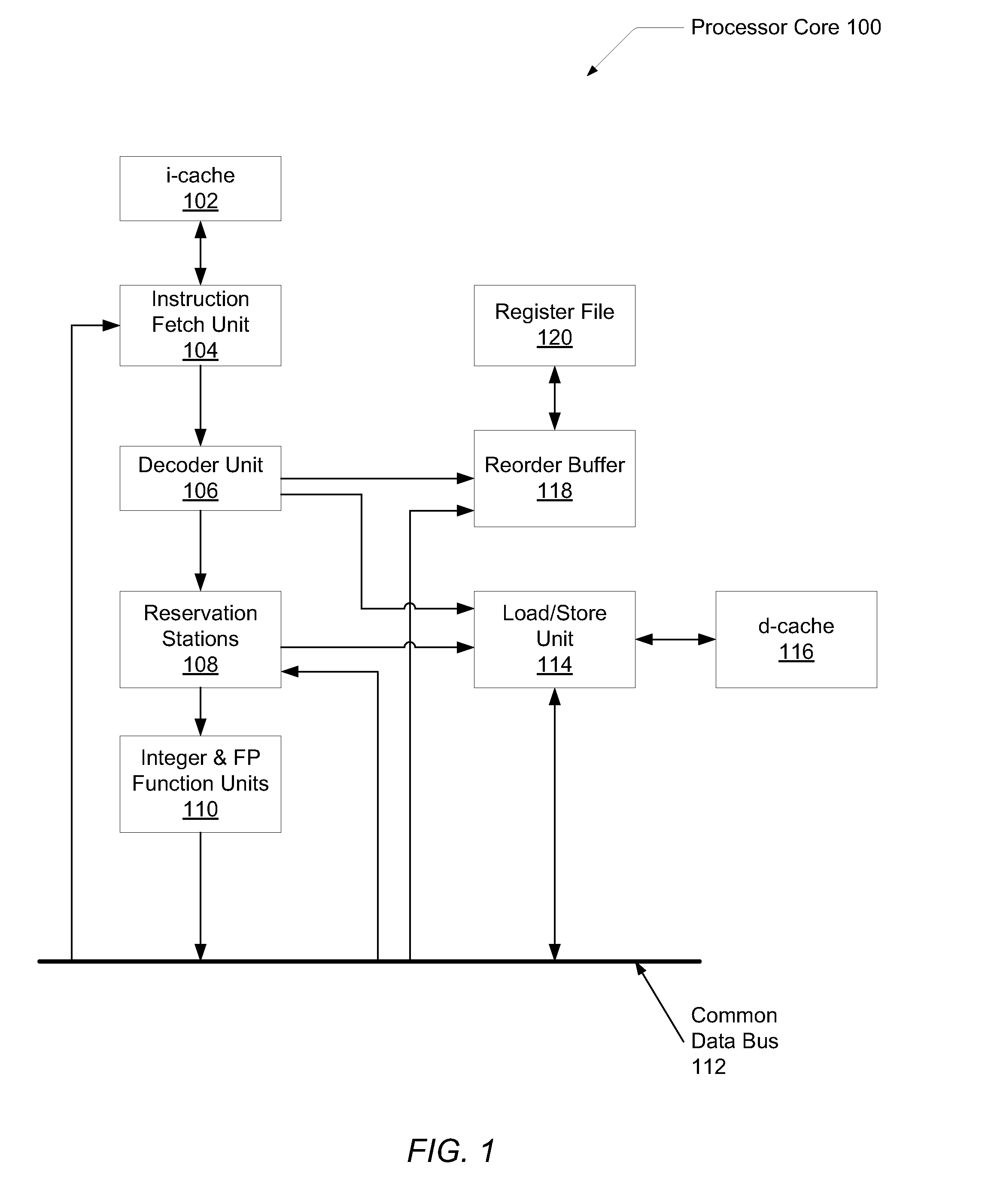

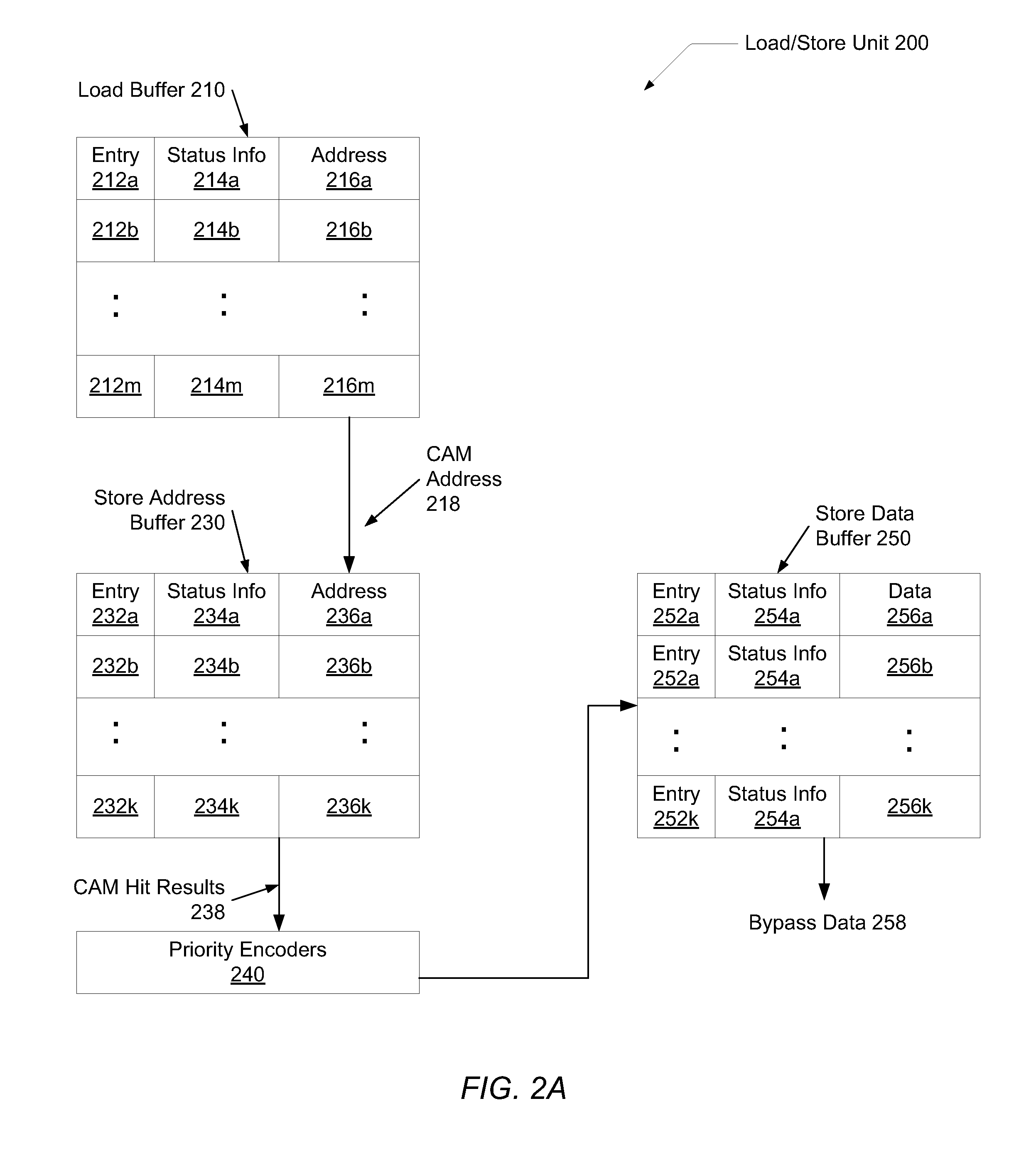

System and method of load-store forwarding

Active | US20090037697A1 | Improve processor performance, Improve performance, Digital computer details, Memory systems, Load instruction, Store and forward

A system and method for forwarding data from a store instruction to a load instruction during out-of-order execution, when the load instruction address matches multiple older uncommitted store addresses or when forwarding fails during the first pass for any other reason. In a first pass, the youngest store instruction, in program order, of all store instructions older than the load instruction is found, and an indication of the store buffer entry holding information about that youngest store instruction is recorded. In a second pass, the recorded indication is used to index the store buffer, and the store bypass data is forwarded to the load instruction. Simultaneously, it is verified that no new store, younger than the previously identified store and older than the load, has been issued due to out-of-order execution.

Owner: ADVANCED MICRO DEVICES INC
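
The two-pass scheme can be modelled in a few lines of Python. This sketch assumes exact address matching and integer sequence numbers for program order (larger means younger); real store buffers also compare access sizes and partial overlaps, which is omitted here. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StoreEntry:
    seq: int   # program-order sequence number (larger = younger)
    addr: int
    data: int


def first_pass(store_buffer: List[StoreEntry], load_seq: int, load_addr: int) -> Optional[int]:
    """Record the index of the youngest store older than the load to the same address."""
    best: Optional[int] = None
    for i, st in enumerate(store_buffer):
        if st.seq < load_seq and st.addr == load_addr:
            if best is None or st.seq > store_buffer[best].seq:
                best = i
    return best


def second_pass(store_buffer: List[StoreEntry], recorded: int, load_seq: int, load_addr: int) -> Optional[int]:
    """Forward via the recorded index unless a store between it and the load has appeared."""
    src = store_buffer[recorded]
    for st in store_buffer:
        if src.seq < st.seq < load_seq and st.addr == load_addr:
            return None  # a newer matching store was issued out of order: replay the load
    return src.data
```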

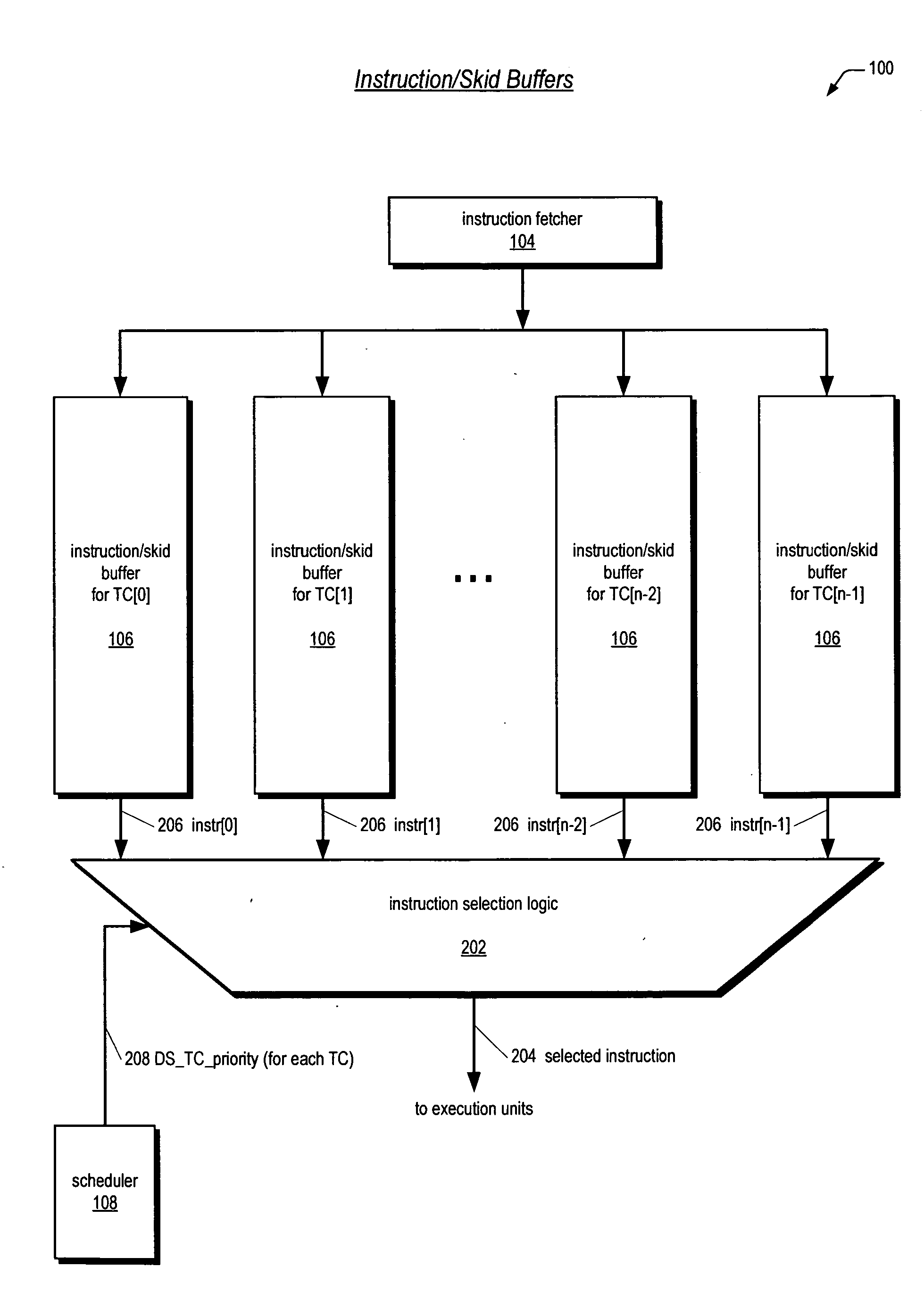

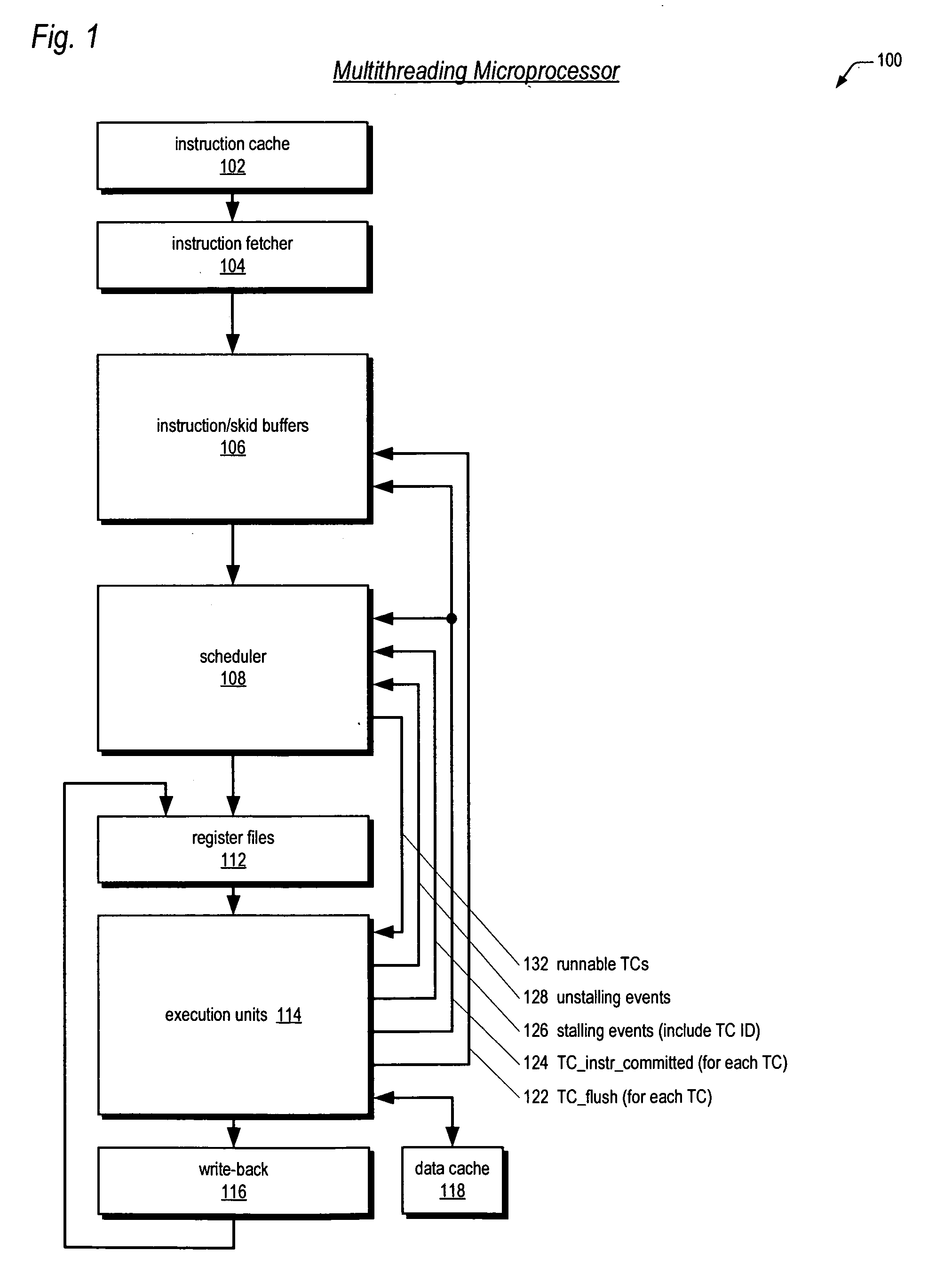

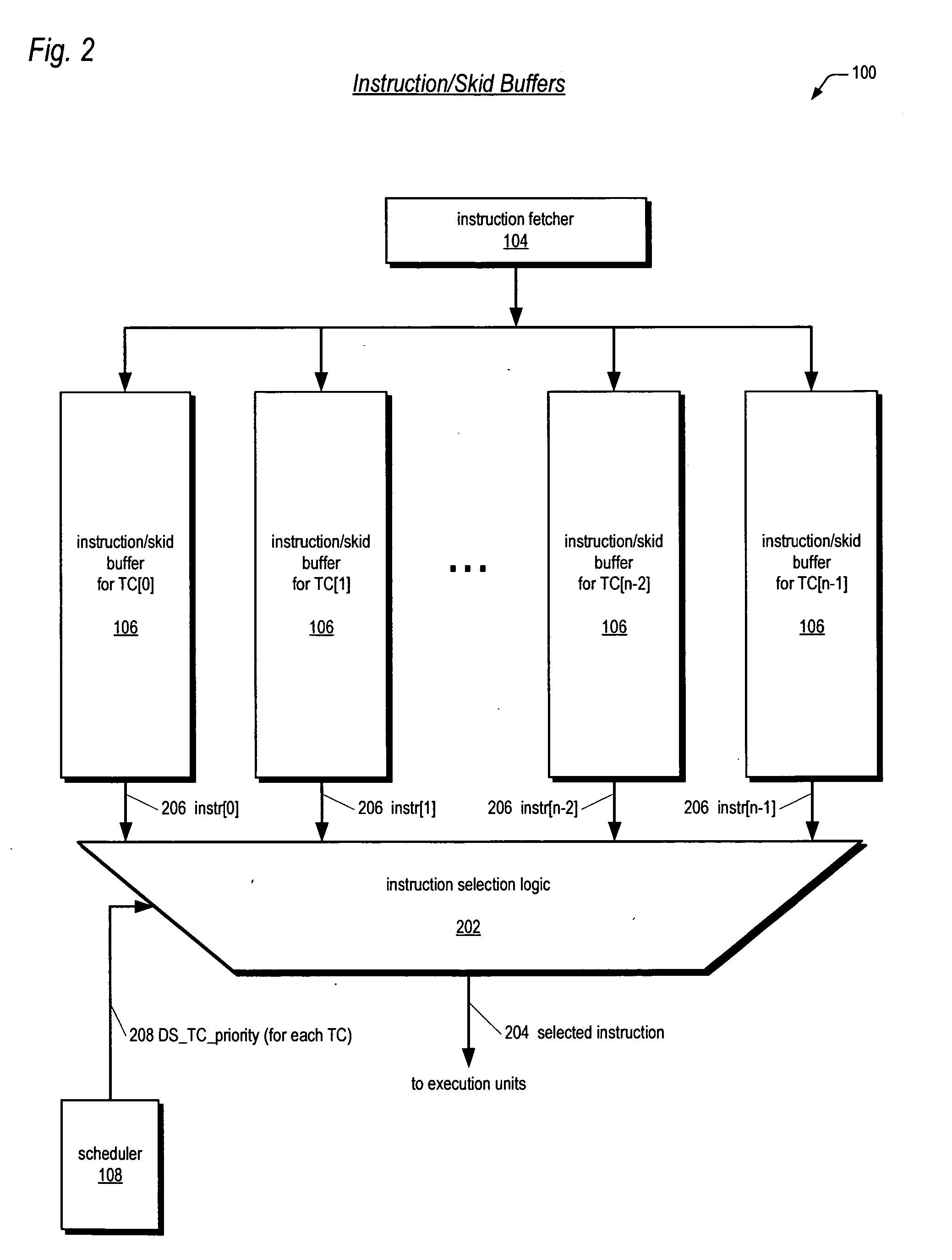

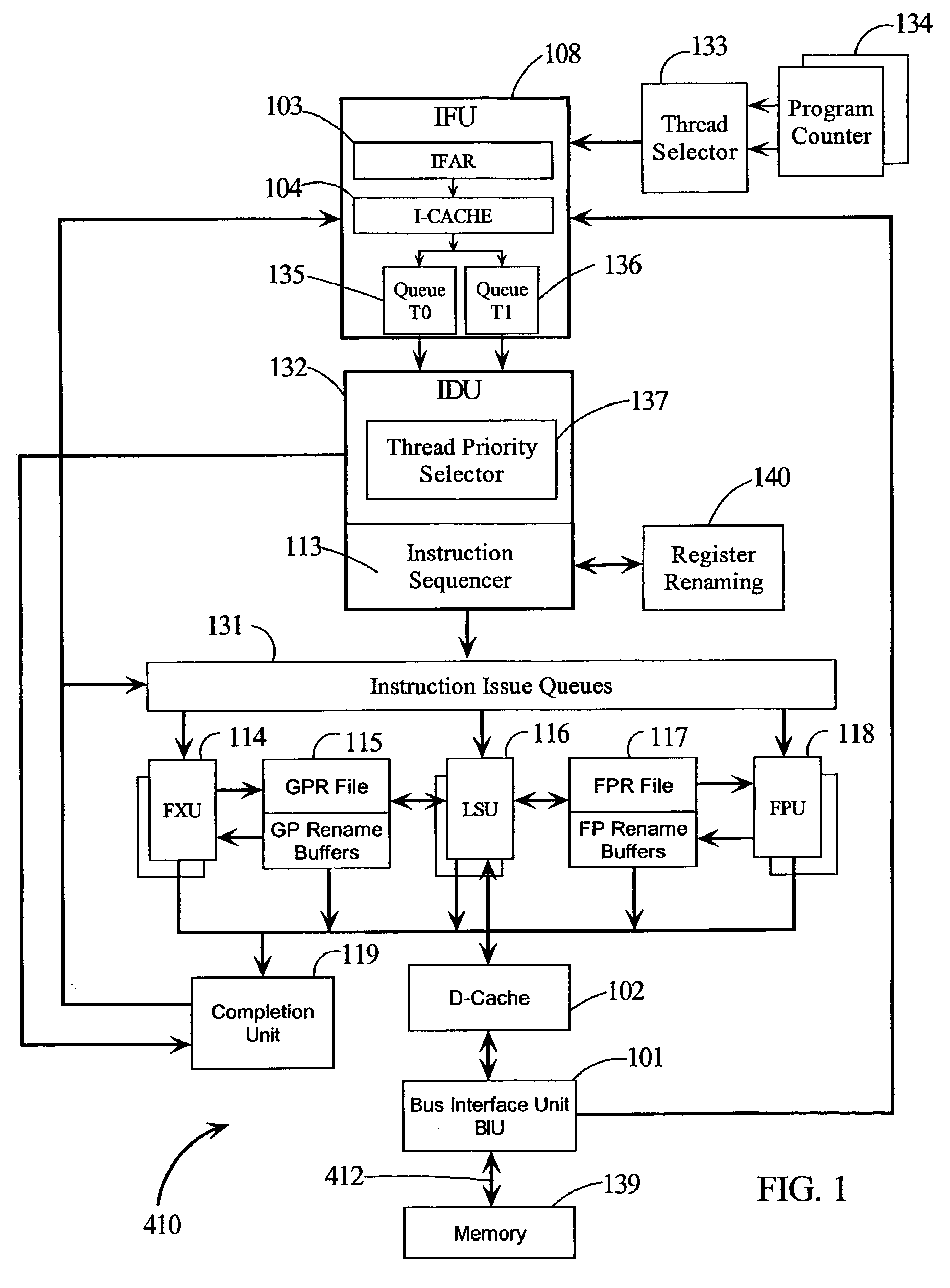

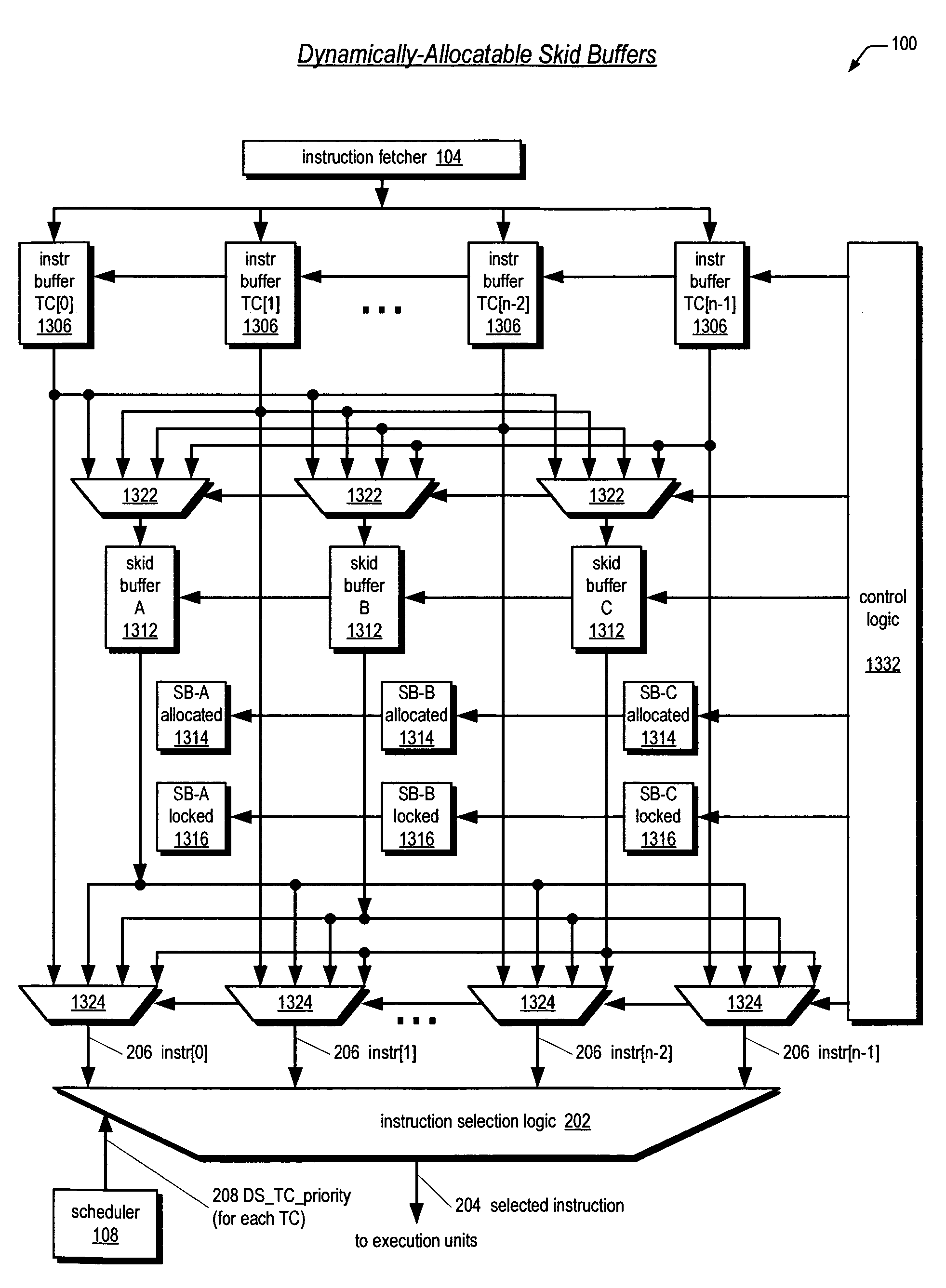

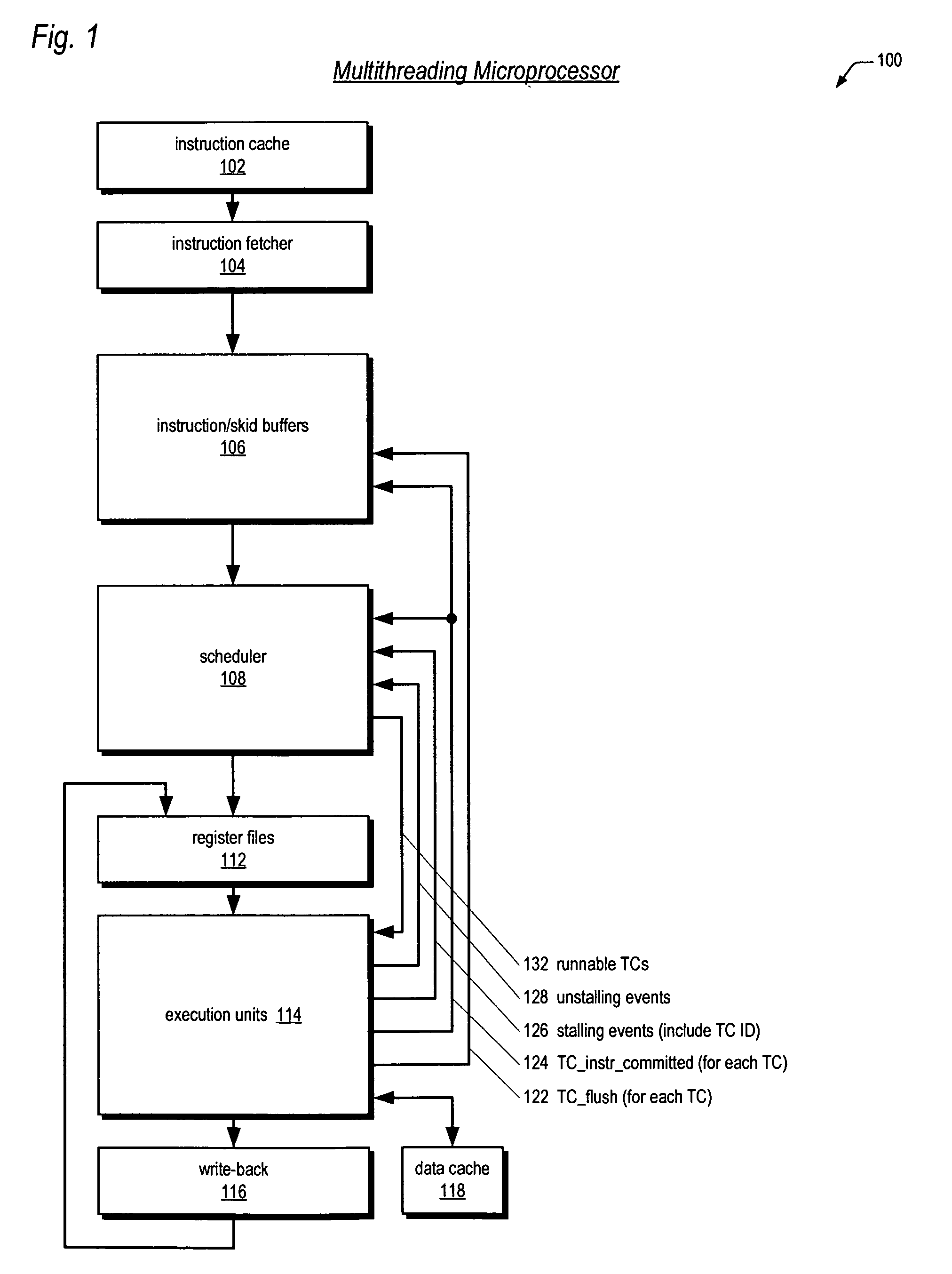

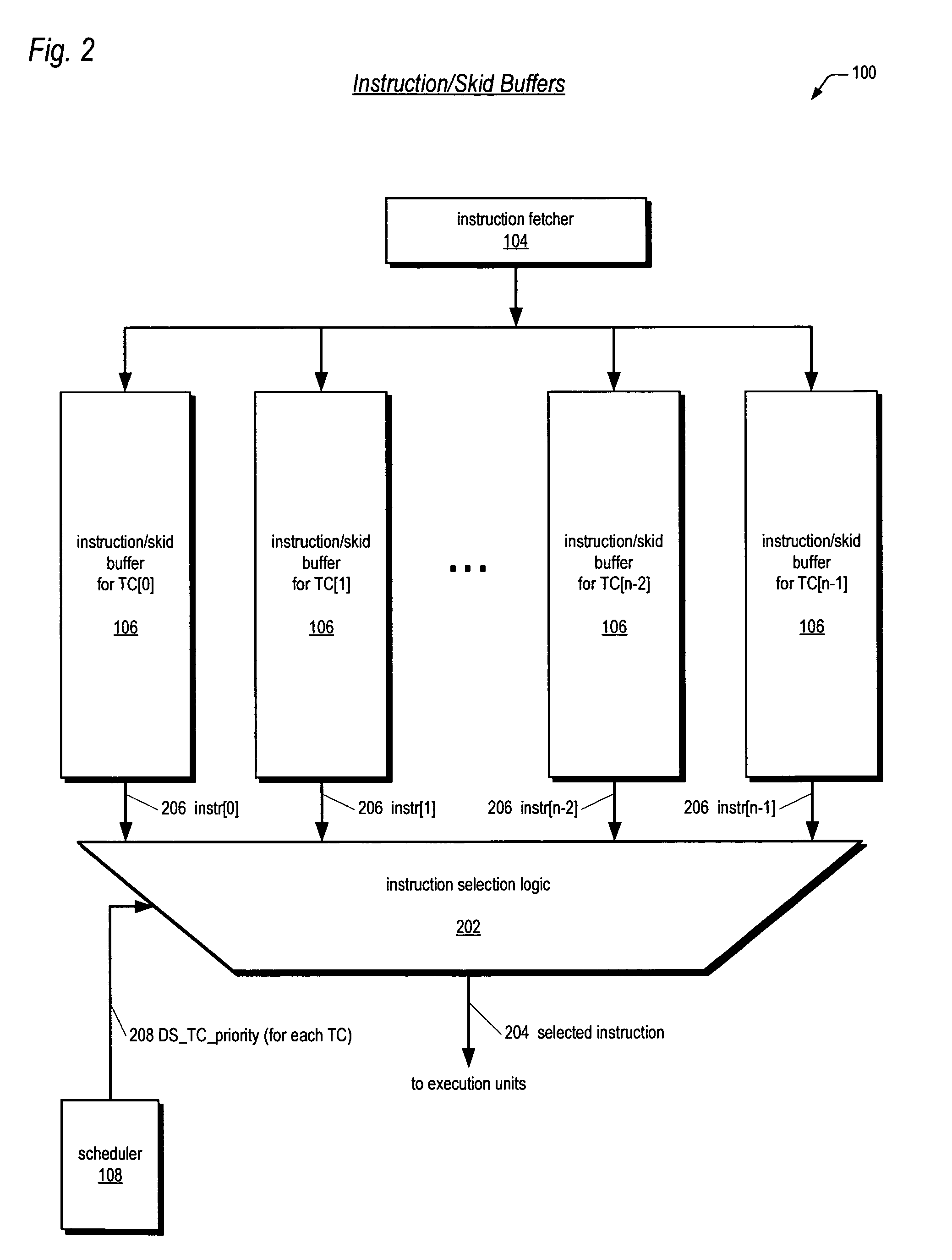

Instruction/skid buffers in a multithreading microprocessor

Active | US20060179274A1 | Reduce the amount required, Improve processor performance, Digital computer details, Memory systems, Parallel computing, Control logic

An apparatus for reducing instruction re-fetching in a multithreading processor configured to concurrently execute a plurality of threads is disclosed. The apparatus includes a buffer for each thread that stores fetched instructions of the thread, having an indicator for indicating which of the fetched instructions in the buffer have already been dispatched for execution. An input for each thread indicates that one or more of the already-dispatched instructions in the buffer has been flushed from execution. Control logic for each thread updates the indicator to indicate the flushed instructions are no longer already-dispatched, in response to the input. This enables the processor to re-dispatch the flushed instructions from the buffer to avoid re-fetching the flushed instructions. In one embodiment, there are fewer buffers than threads, and they are dynamically allocatable by the threads. In one embodiment, a single integrated buffer is shared by all the threads.

Owner: MIPS TECH INC
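
A simplified Python model of a single thread's skid buffer follows. It assumes that instructions dispatch in order, so the "already dispatched" indicator can be a single count rather than a per-entry bit; the `SkidBuffer` name and its methods are illustrative, not taken from the patent.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SkidBuffer:
    """Hypothetical per-thread instruction buffer with an 'already dispatched' mark."""
    instrs: List[str] = field(default_factory=list)
    dispatched: int = 0  # instrs[:dispatched] have been dispatched

    def fetch(self, instr: str) -> None:
        self.instrs.append(instr)

    def dispatch(self) -> str:
        instr = self.instrs[self.dispatched]
        self.dispatched += 1
        return instr

    def commit(self, n: int) -> None:
        # Committed instructions can no longer be flushed, so their slots are freed.
        del self.instrs[:n]
        self.dispatched -= n

    def flush(self, n: int) -> None:
        # The n youngest dispatched instructions were flushed: mark them undispatched
        # so they are re-dispatched from the buffer instead of being re-fetched.
        self.dispatched -= n
```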

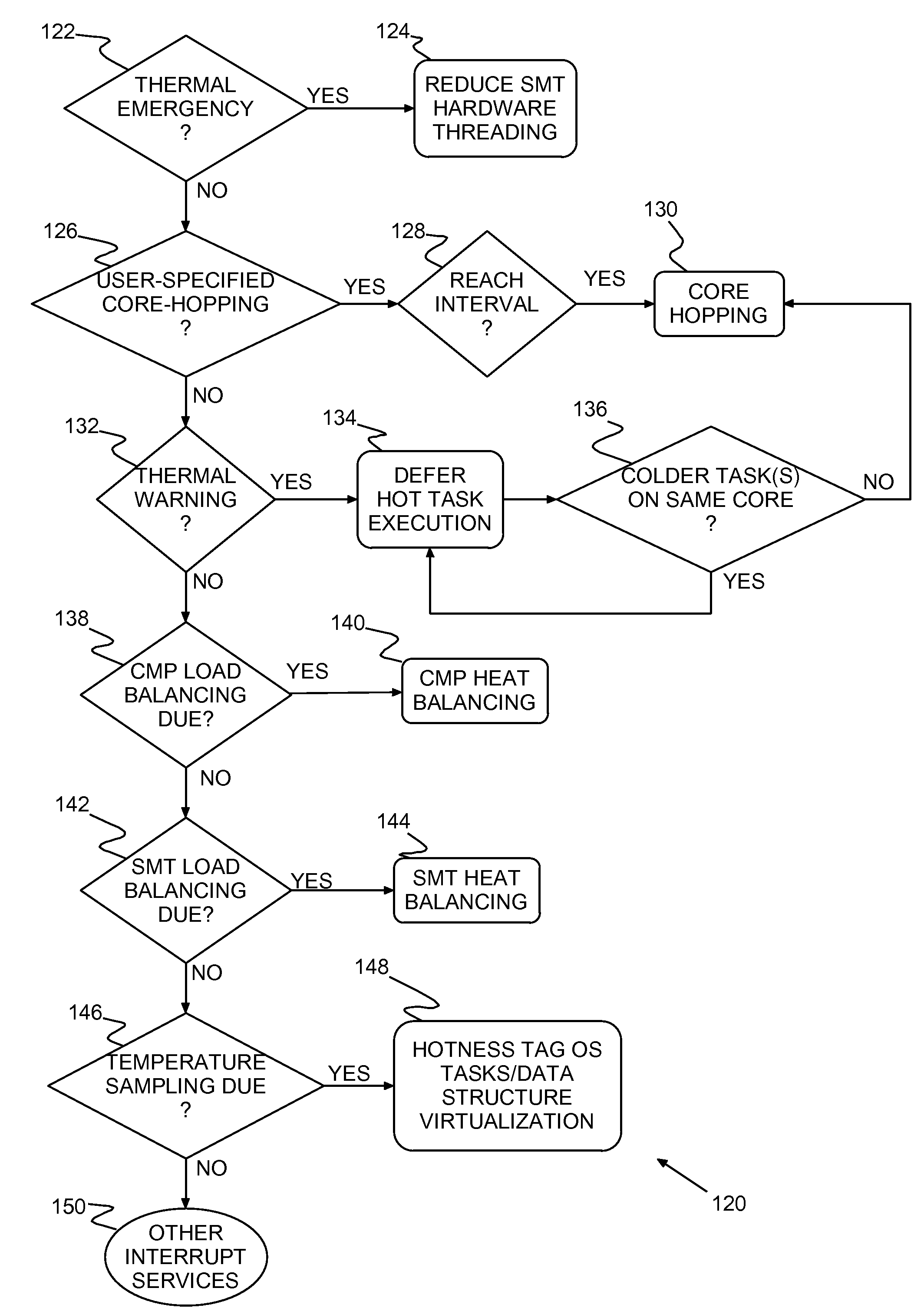

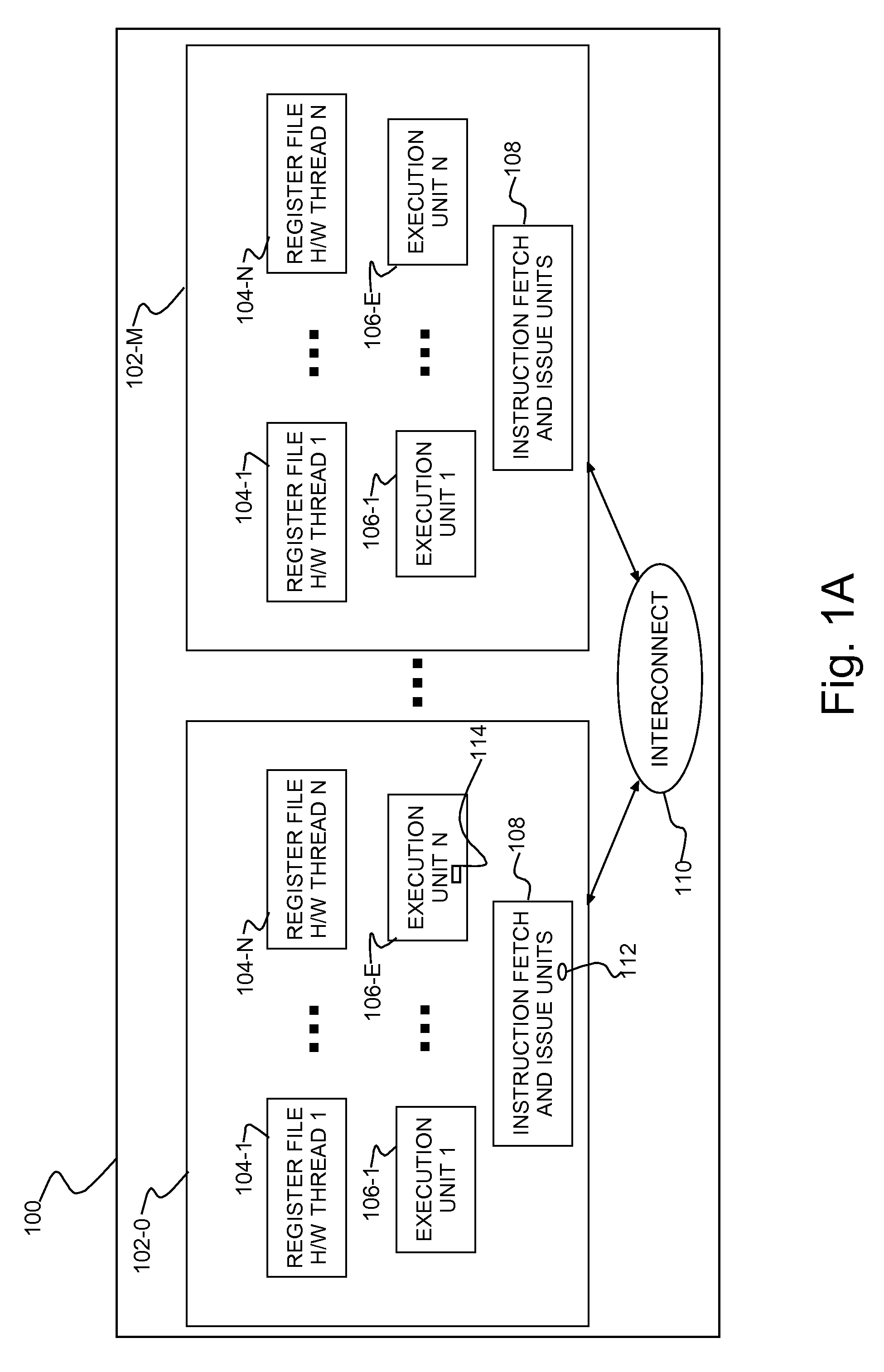

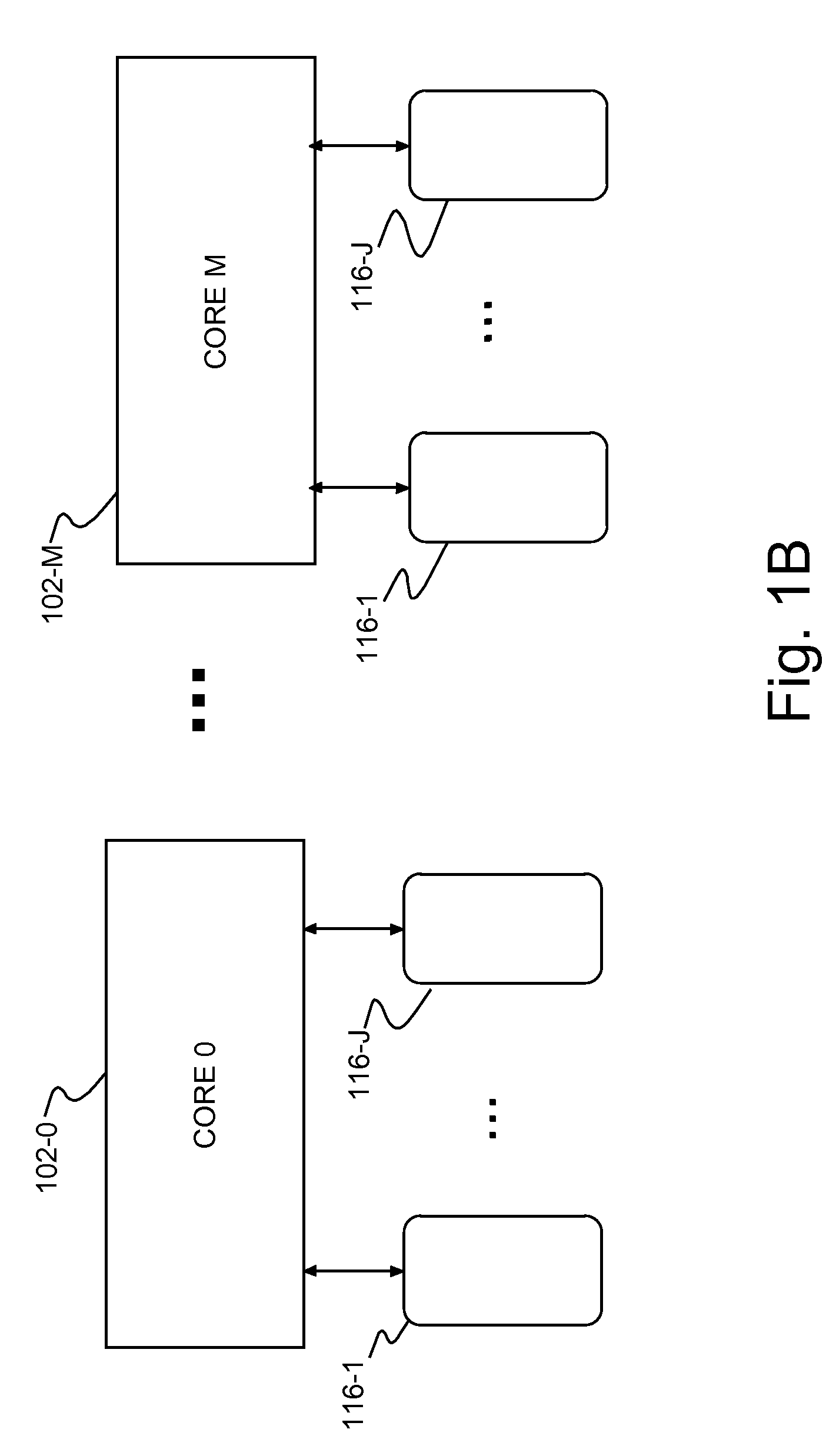

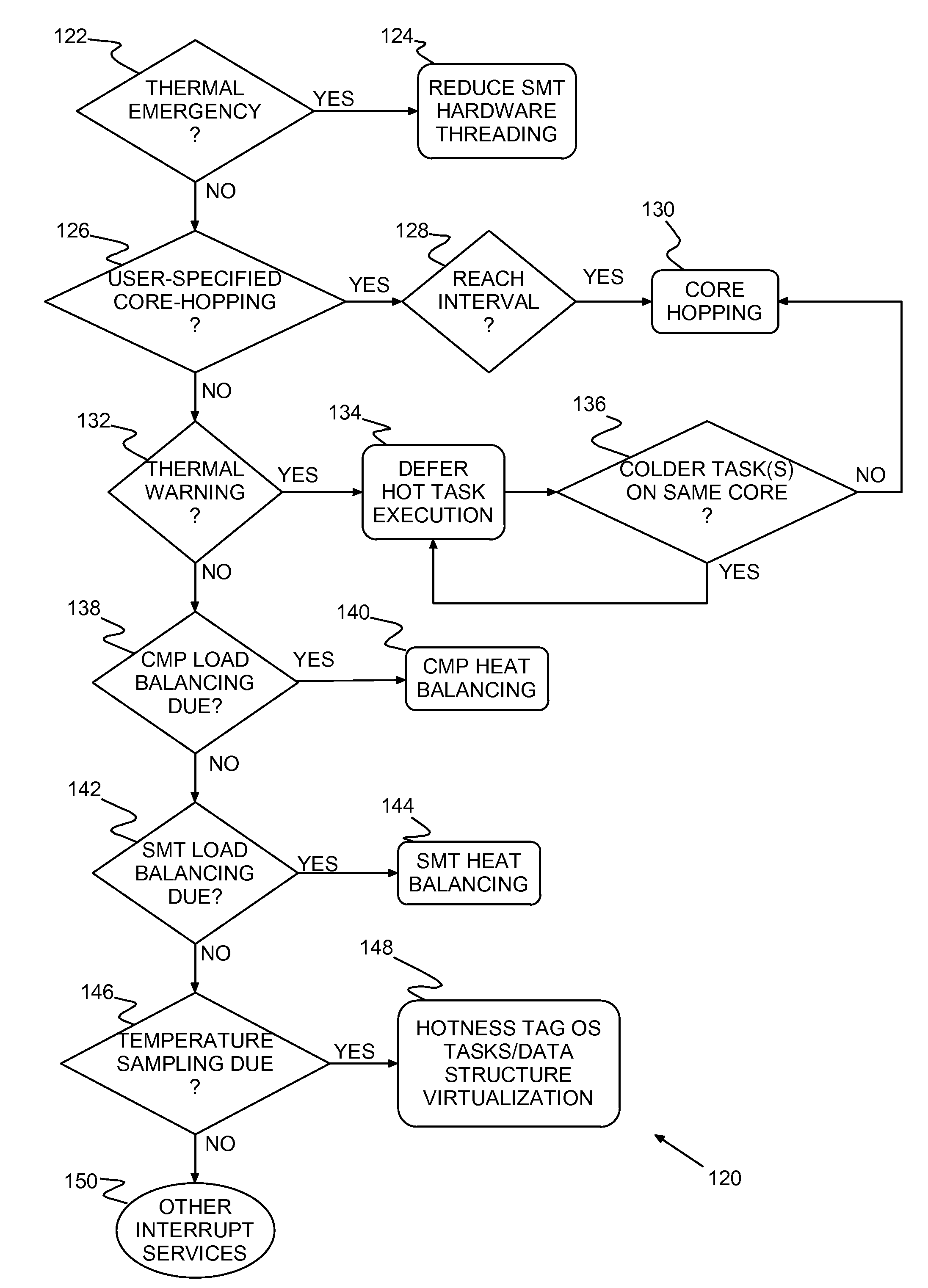

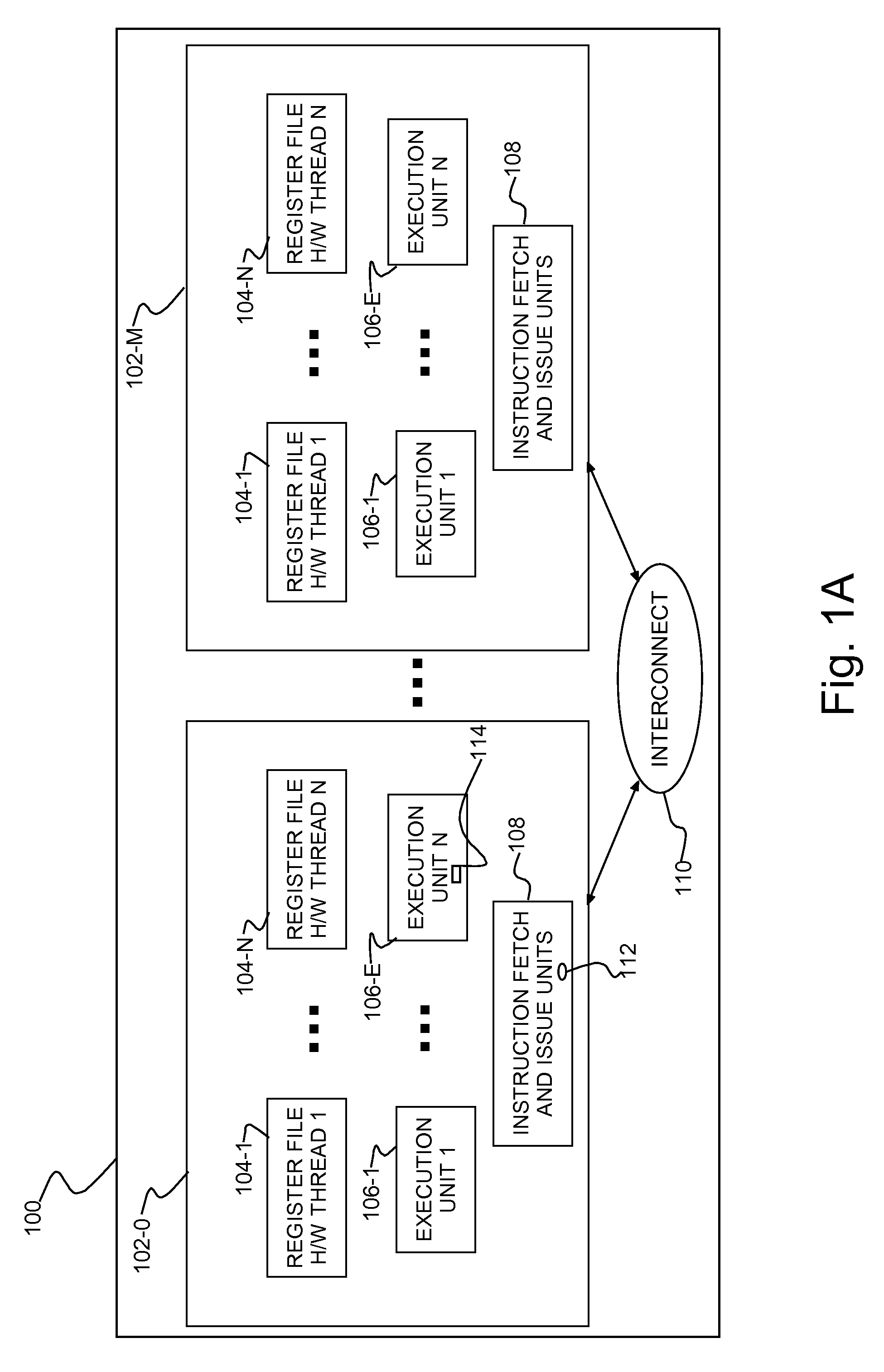

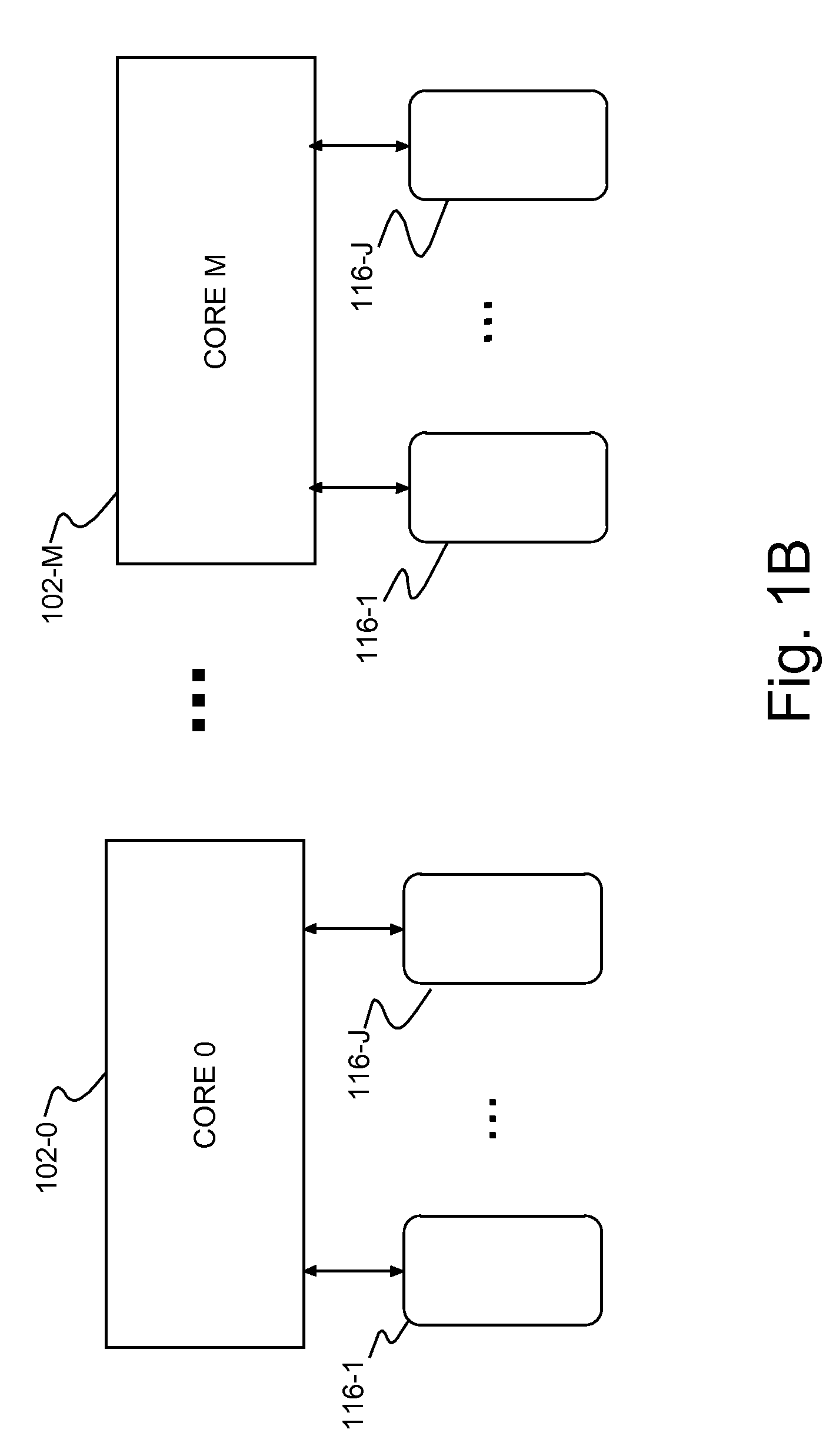

Method of virtualization and OS-level thermal management and multithreaded processor with virtualization and OS-level thermal management

Inactive | US20090064164A1 | Improve performance, Improve processor performance, Energy efficient ICT, Volume/mass flow measurement, Virtualization, Integrated circuit

A program product and method of managing task execution on an integrated circuit chip such as a chip-level multiprocessor (CMP) with Simultaneous MultiThreading (SMT). Multiple chip operating units or cores have chip sensors (temperature sensors or counters) for monitoring temperature in units. Task execution is monitored for hot tasks and especially for hotspots. Task execution is balanced, thermally, to minimize hot spots. Thermal balancing may include Simultaneous MultiThreading (SMT) heat balancing, chip-level multiprocessors (CMP) heat balancing, deferring execution of identified hot tasks, migrating identified hot tasks from a current core to a colder core, User-specified Core-hopping, and SMT hardware threading.

Owner: IBM CORP

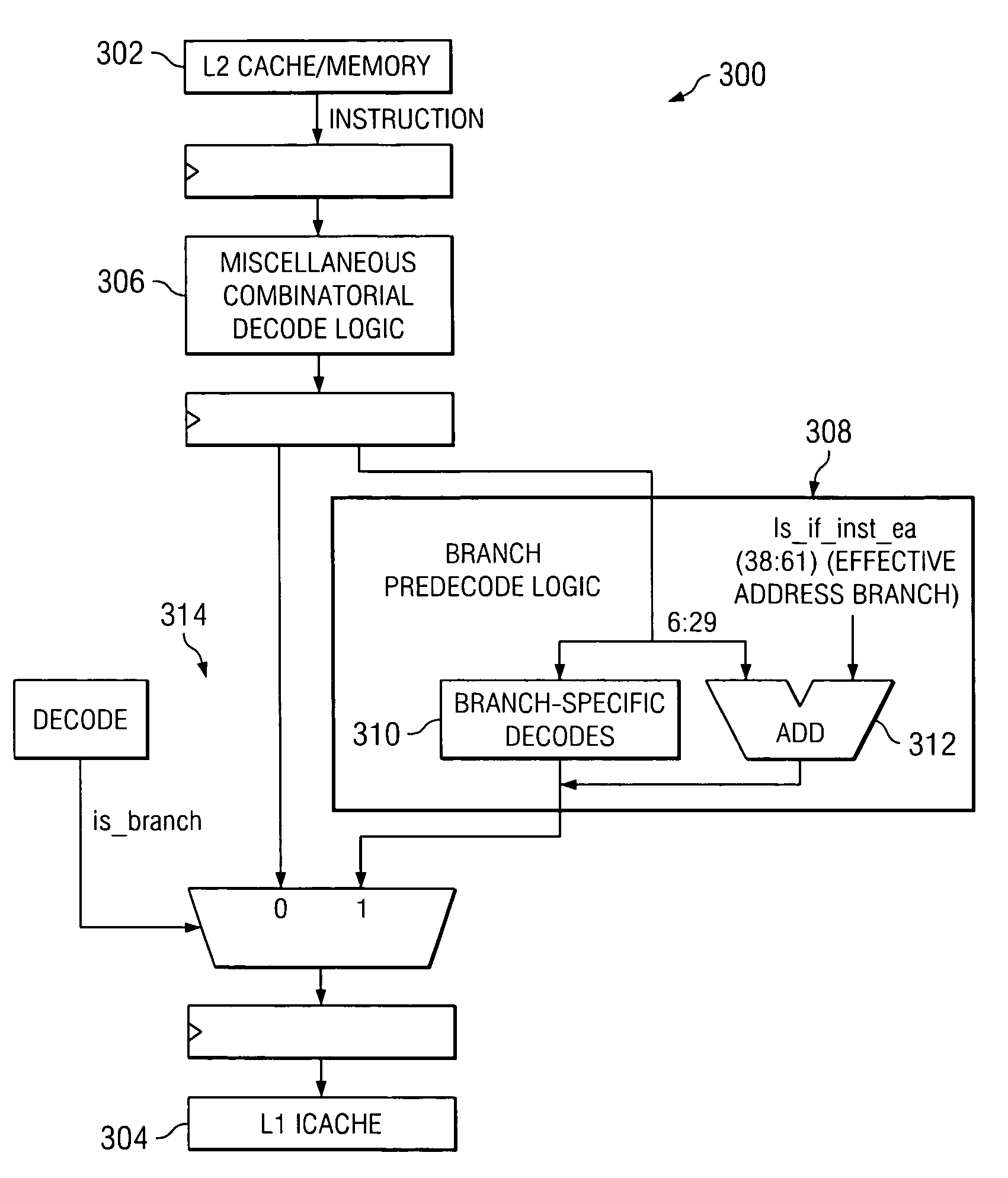

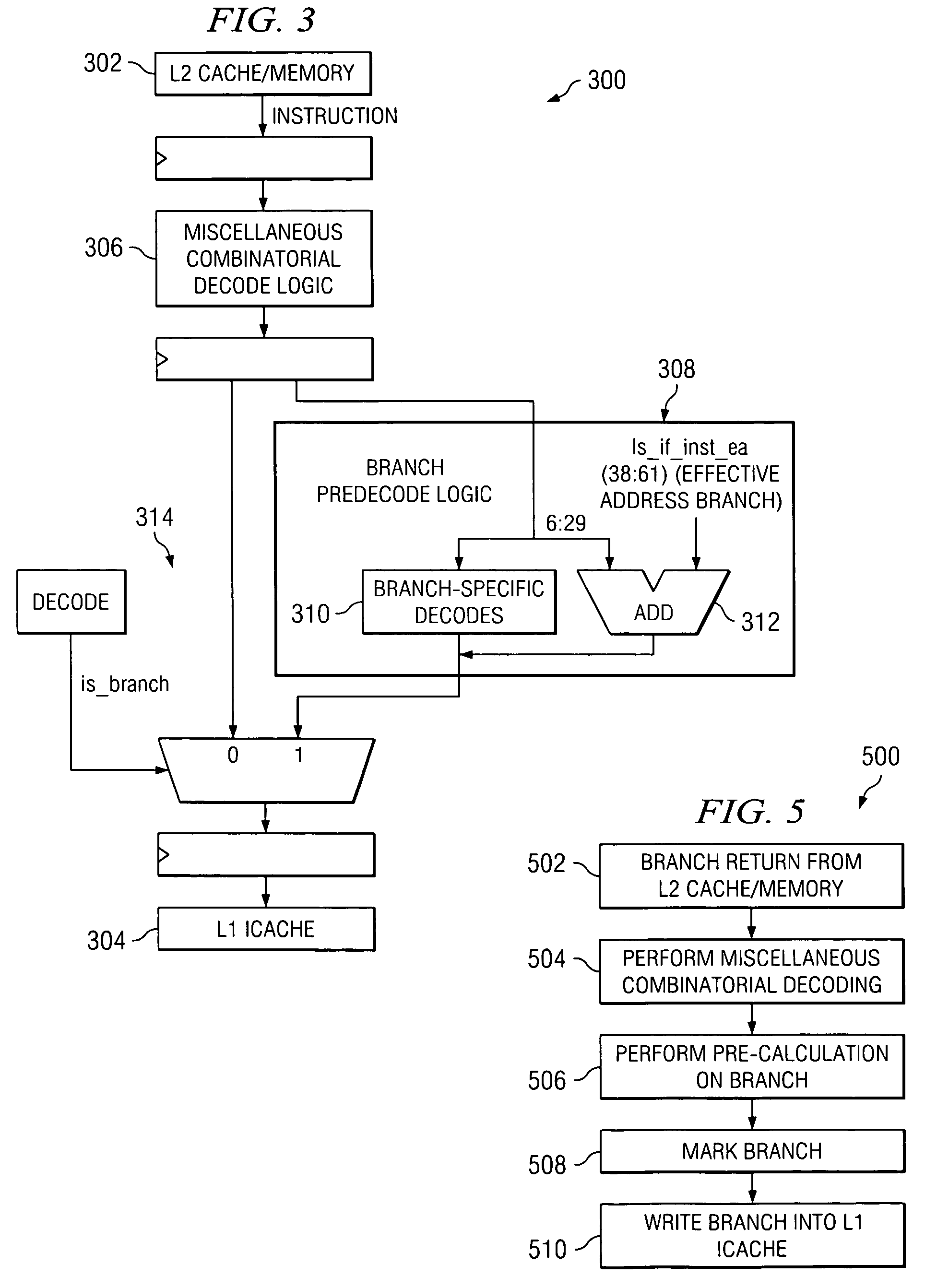

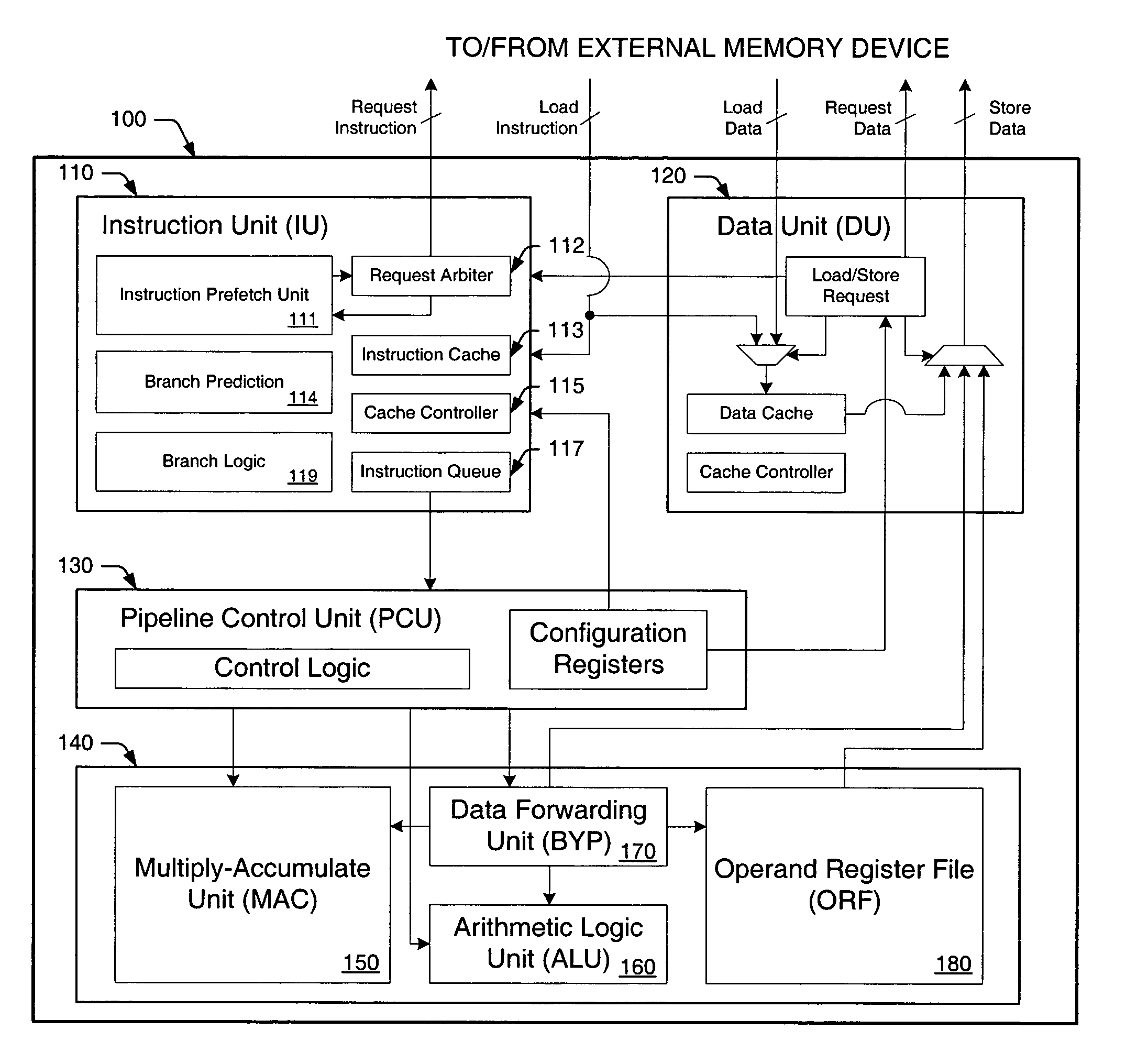

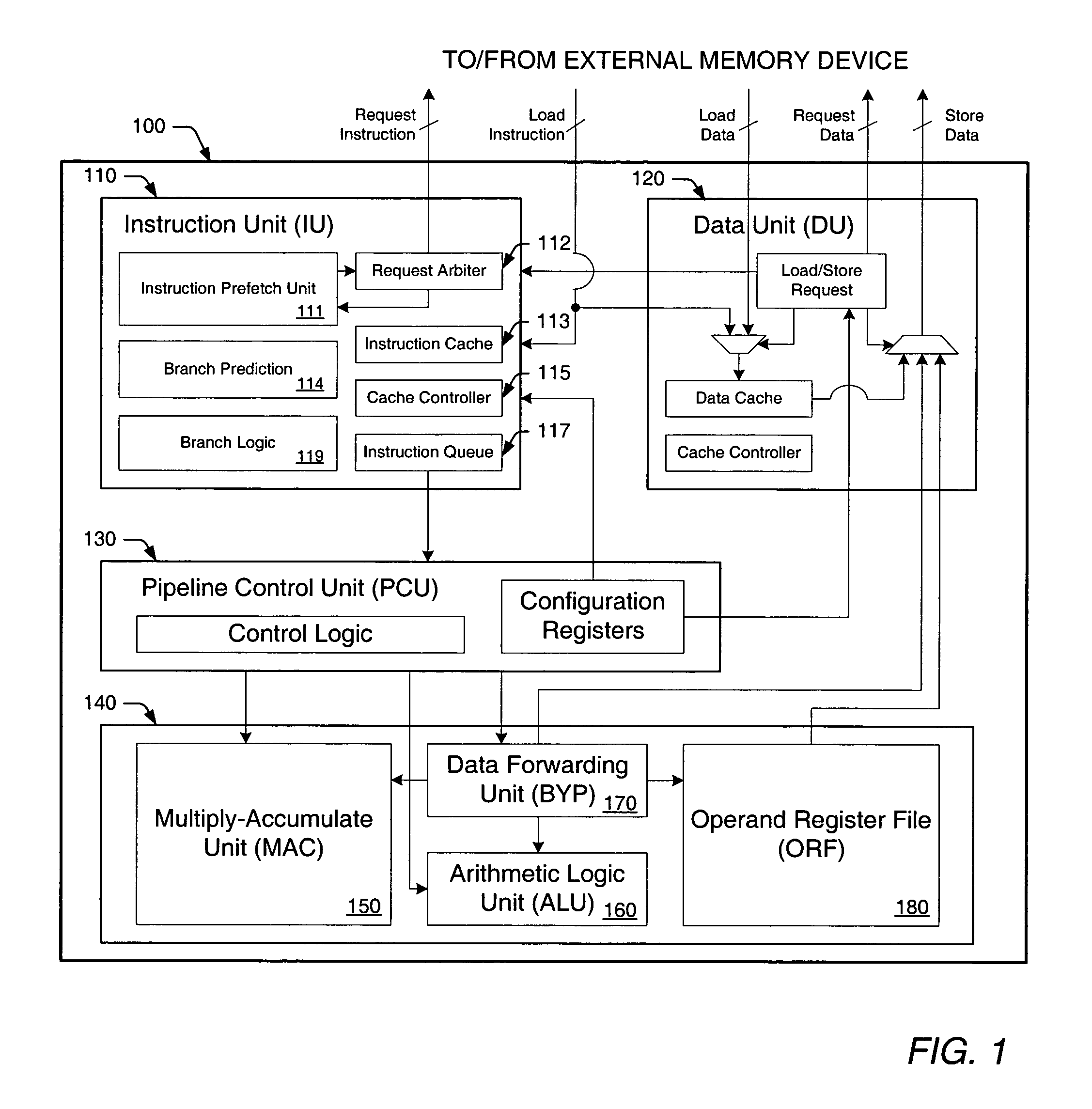

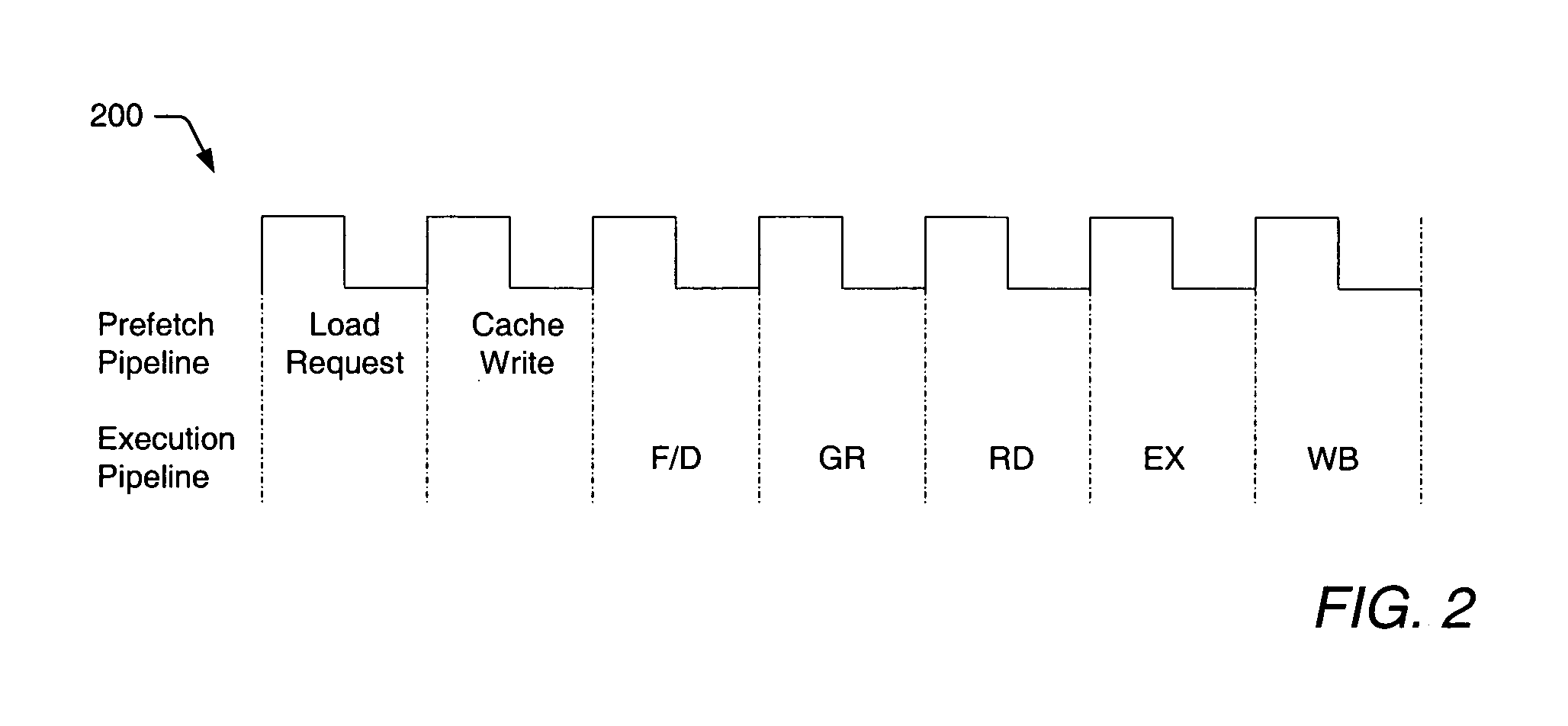

Branch encoding before instruction cache write

Inactive | US7487334B2 | Reduce delays, Improve processor performance, Digital computer details, Specific program execution arrangements, Data processing system, Parallel computing

Method, system and computer program product for determining the targets of branches in a data processing system. A method for determining the target of a branch in a data processing system includes performing at least one pre-calculation relating to determining the target of the branch prior to writing the branch into a Level 1 (L1) cache to provide a pre-decoded branch, and then writing the pre-decoded branch into the L1 cache. By pre-calculating matters relating to the targets of branches before the branches are written into the L1 cache, for example, by re-encoding relative branches as absolute branches, a reduction in branch redirect delay can be achieved, thus providing a substantial improvement in overall processor performance.

Owner: INT BUSINESS MASCH CORP
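
Re-encoding a relative branch as an absolute one before the L1 fill can be sketched as below. The two-opcode instruction format is hypothetical, and the sketch ignores the limited width of a real instruction's target field, which a hardware implementation would have to handle.

```python
from typing import NamedTuple


class Branch(NamedTuple):
    opcode: str   # hypothetical: "b_rel" (PC-relative) or "b_abs" (absolute)
    operand: int  # signed offset for b_rel, target address for b_abs


def predecode(branch: Branch, branch_addr: int) -> Branch:
    """Resolve a PC-relative target once, before the branch is written into L1."""
    if branch.opcode == "b_rel":
        return Branch("b_abs", branch_addr + branch.operand)
    return branch  # already absolute: nothing to pre-calculate
```

Because the addition happens once at cache-fill time, the fetch path no longer needs an adder on the critical path when the branch is later read from L1.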

Method for request transaction ordering in OCP bus to AXI bus bridge design

Active | US7457905B2 | Improve processor performance, Improve performance, Data switching networks, Electric digital data processing, Control logic, Comparator

A request transaction ordering method and system involves the design of an Open Core Protocol (OCP) bus to Advanced eXtensible Interface (AXI) bus bridge. The general flow of the bridge is to accept a plurality of read and write requests from the OCP bus and convert them into a plurality of AXI read and write requests. Control logic is provided for the push and pop control of each first-in first-out (FIFO) queue and for a plurality of handshake signals in both OCP and AXI. The request ordering part of the bridge performs hazard checking to preserve the ordering policies required by both the OCP and AXI bus protocols, using a FIFO to hold the outstanding writes, a plurality of comparators, and a FIFO to hold OCP identities for a plurality of read requests.

Owner: AVAGO TECH INT SALES PTE LTD

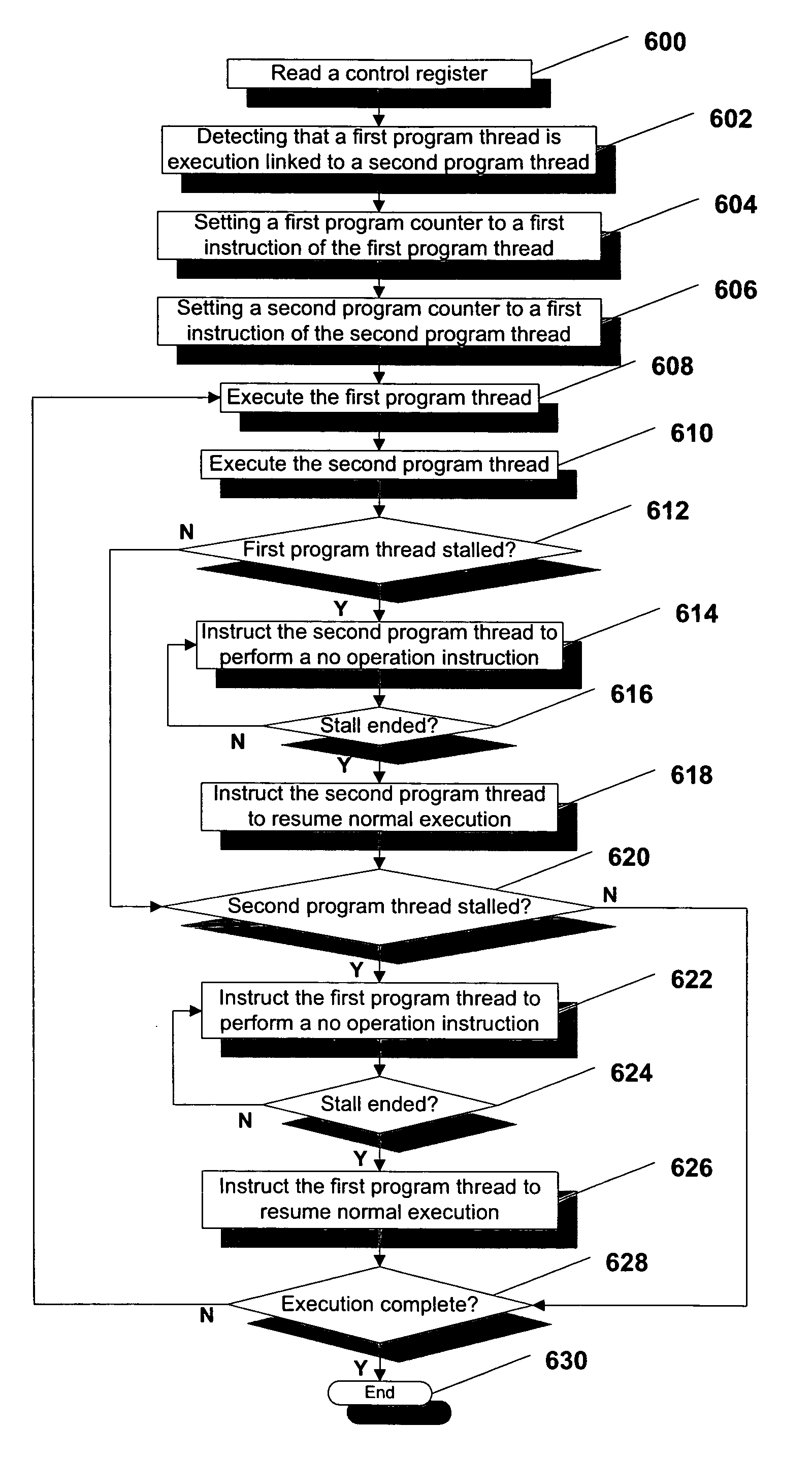

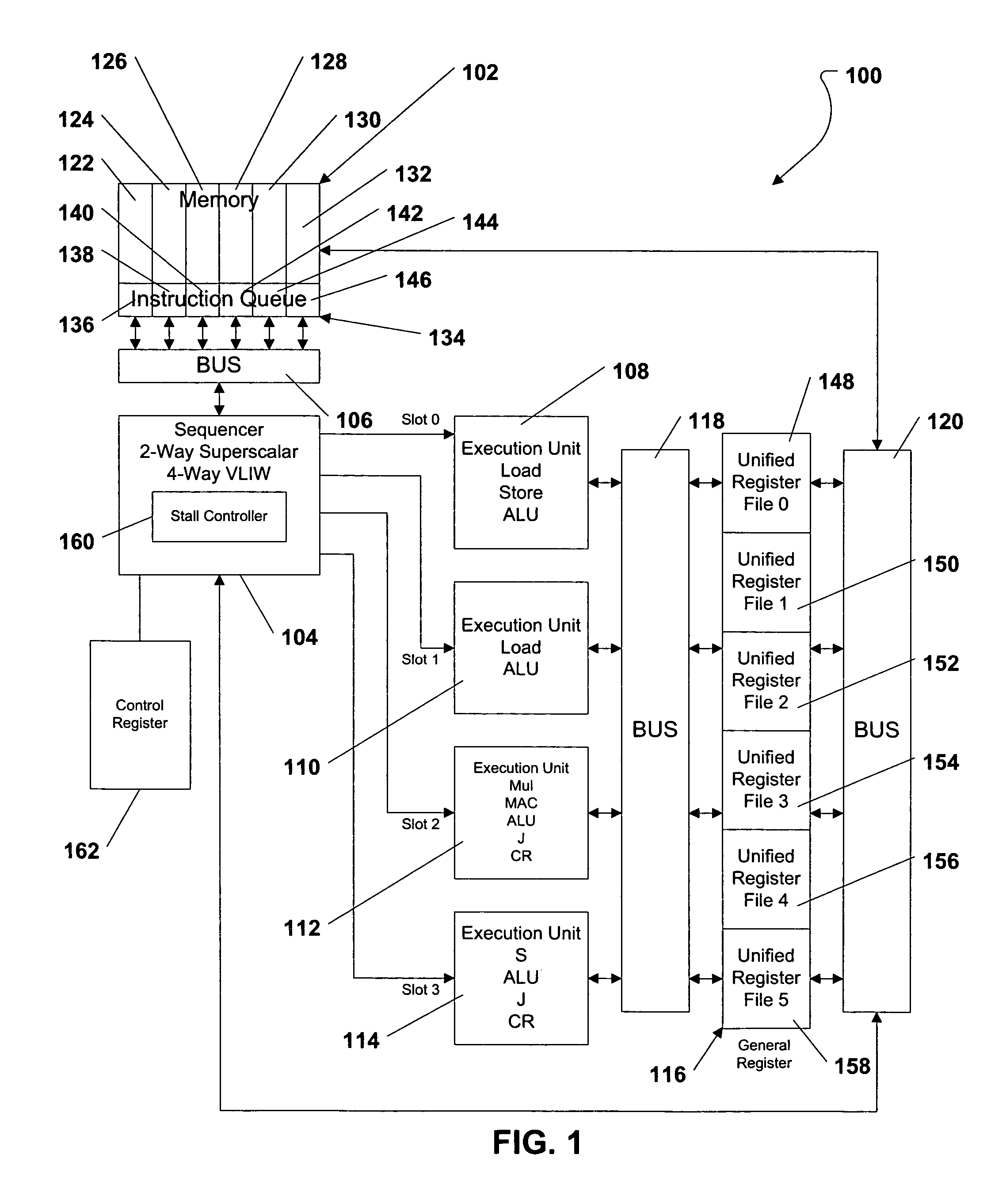

System and method of executing program threads in a multi-threaded processor

Active | US20060242645A1 | Reduces parallel programming complexity, Improve processor performance, Digital computer details, Multiprogramming arrangements, Program Thread, Operating system

A multithreaded processor device is disclosed and includes a first program thread and a second program thread. The second program thread is execution-linked to the first program thread in lockstep. As such, when the first program thread experiences a stall event, the second program thread is instructed to perform a no-operation instruction in order to keep it execution-linked to the first program thread. The second program thread performs a no-operation instruction during each clock cycle that the first program thread is stalled due to the stall event. When the first program thread performs its first successful operation after the stall event, the second program thread resumes normal execution.

Owner: QUALCOMM INC

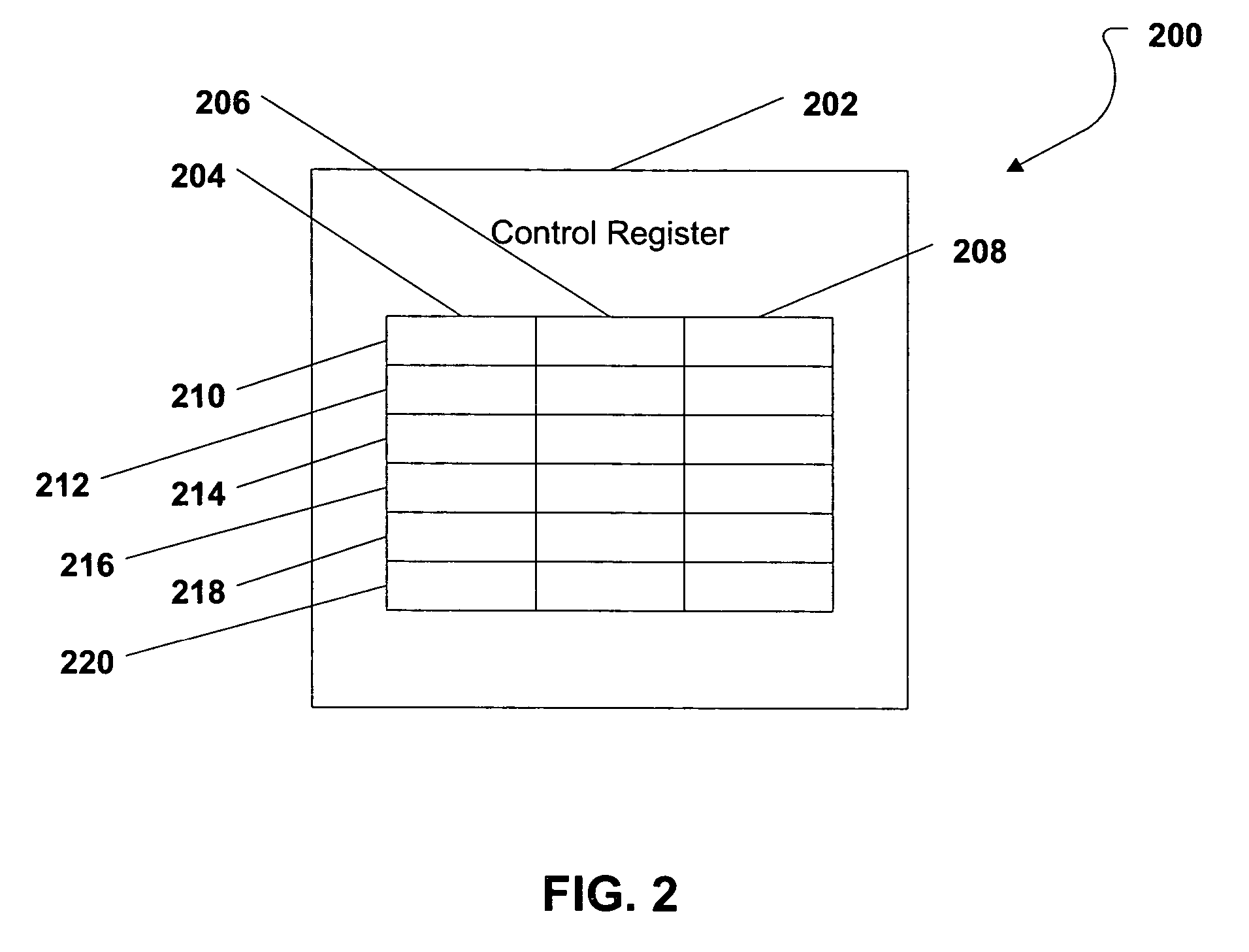

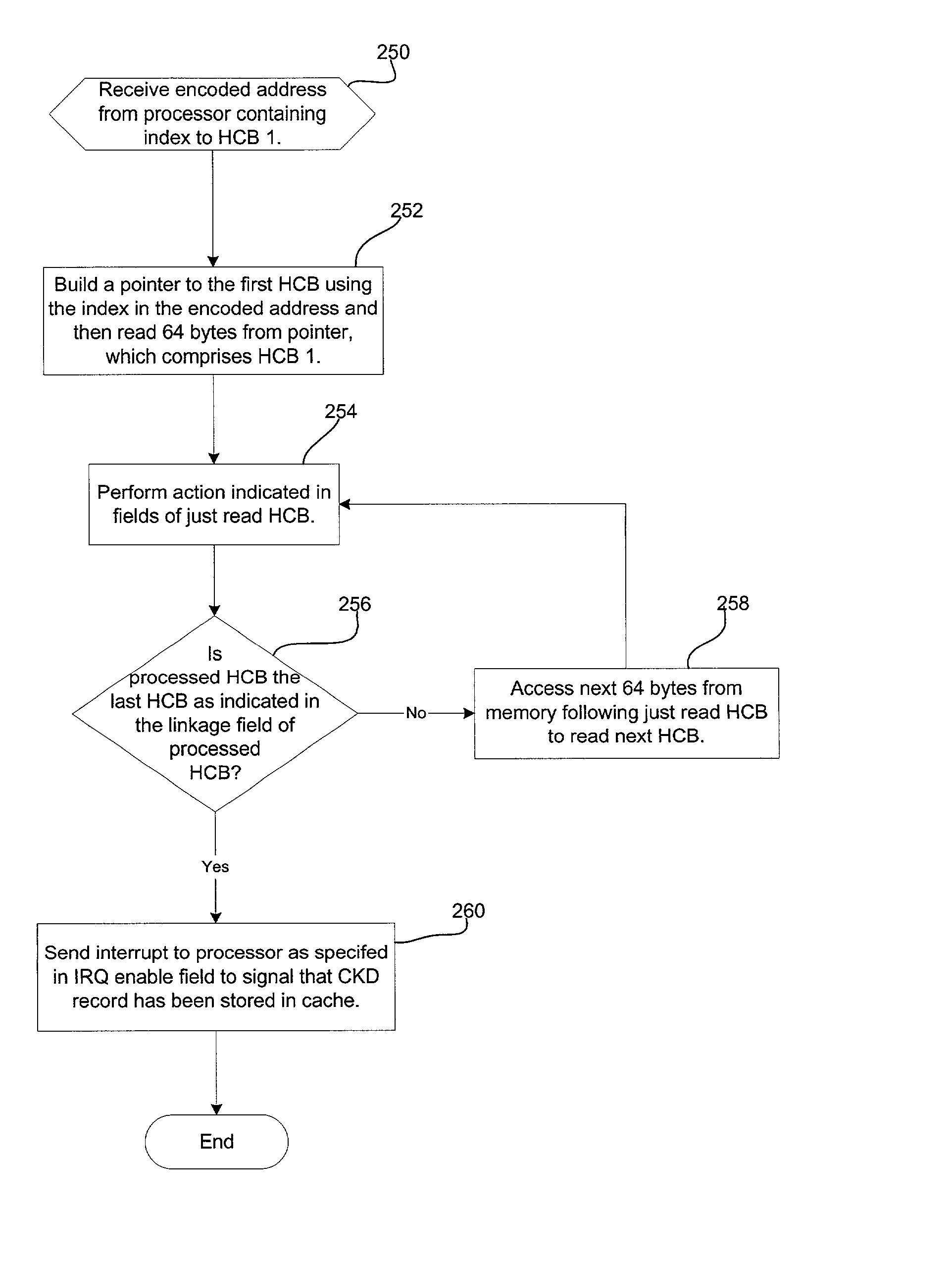

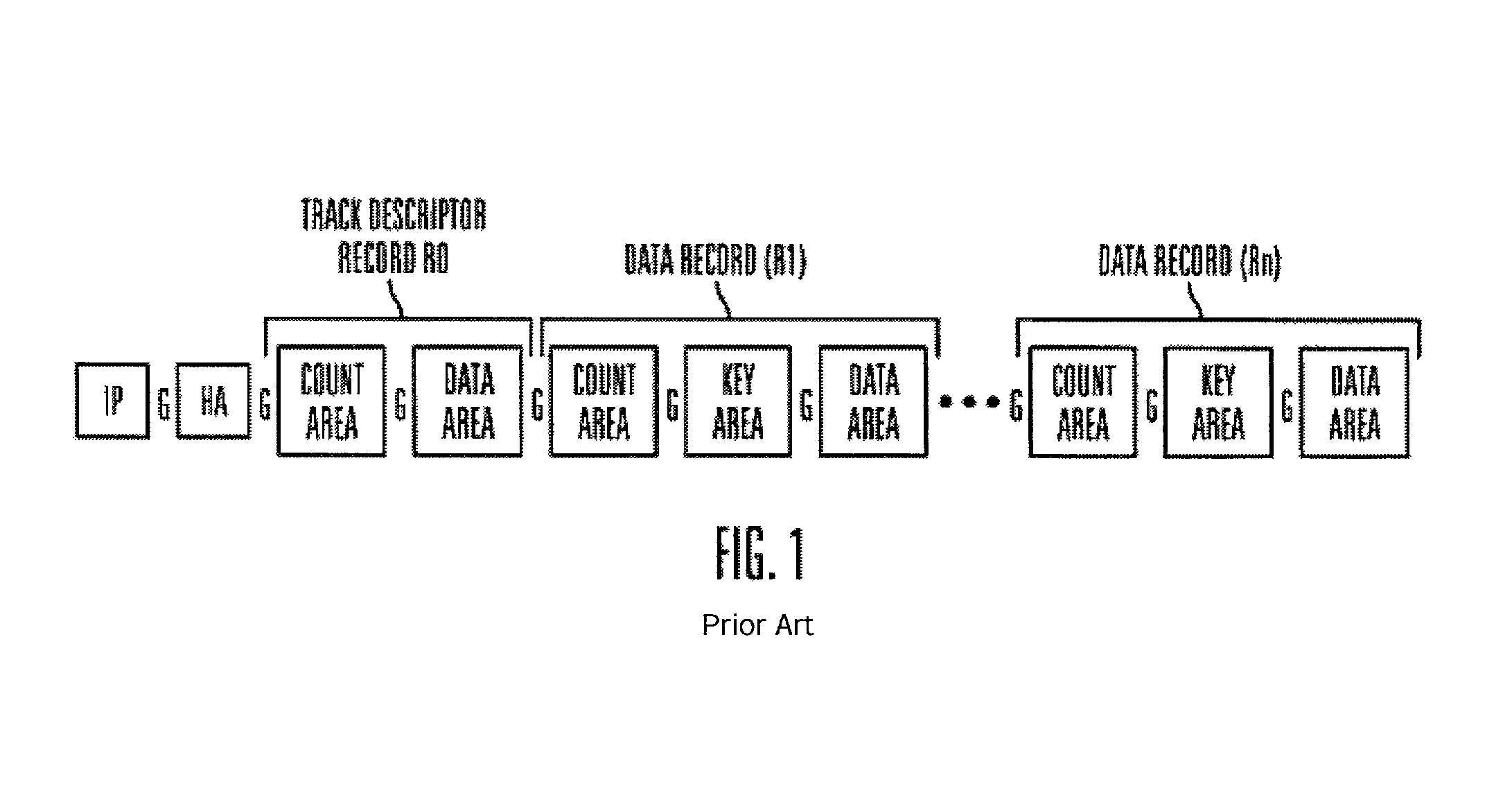

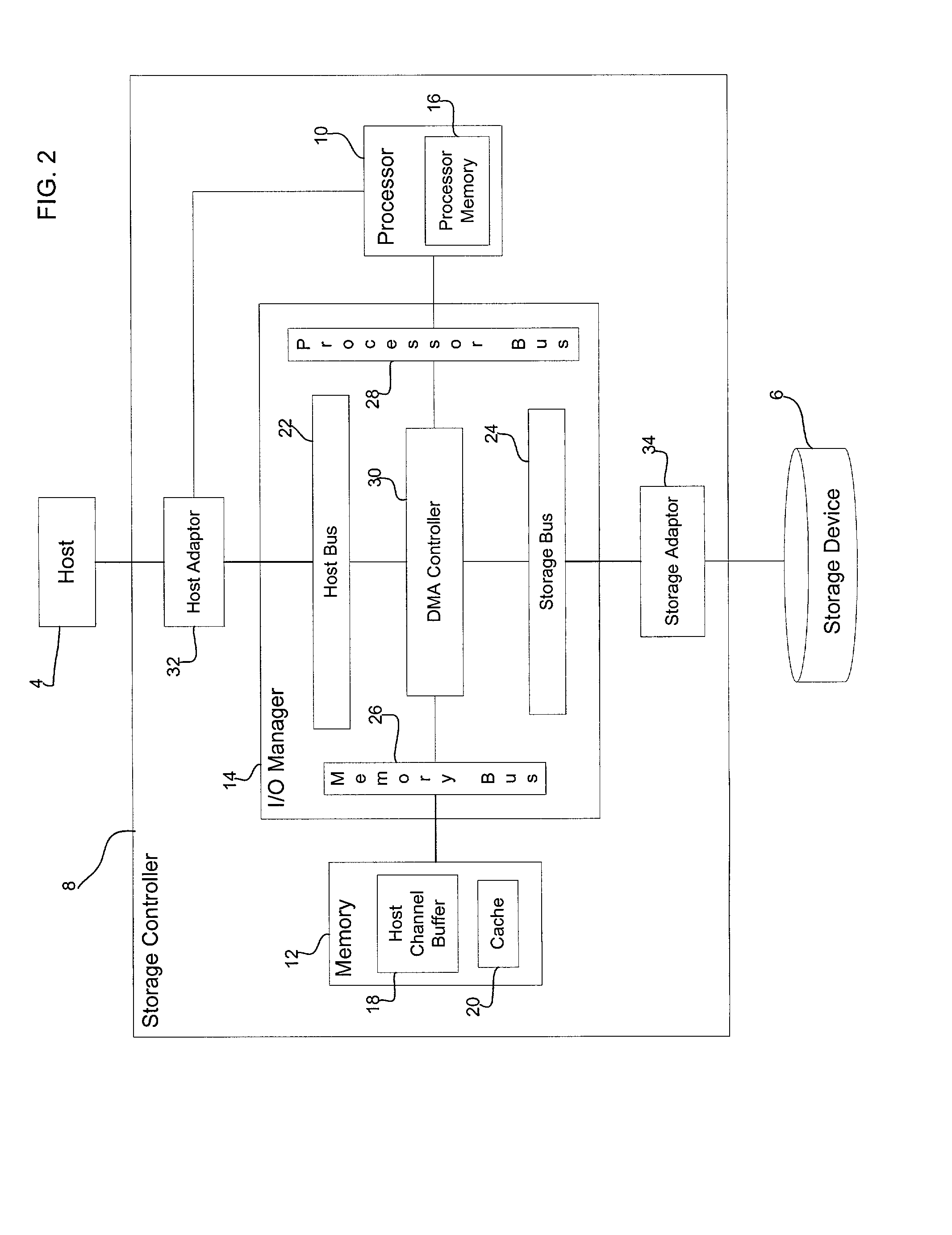

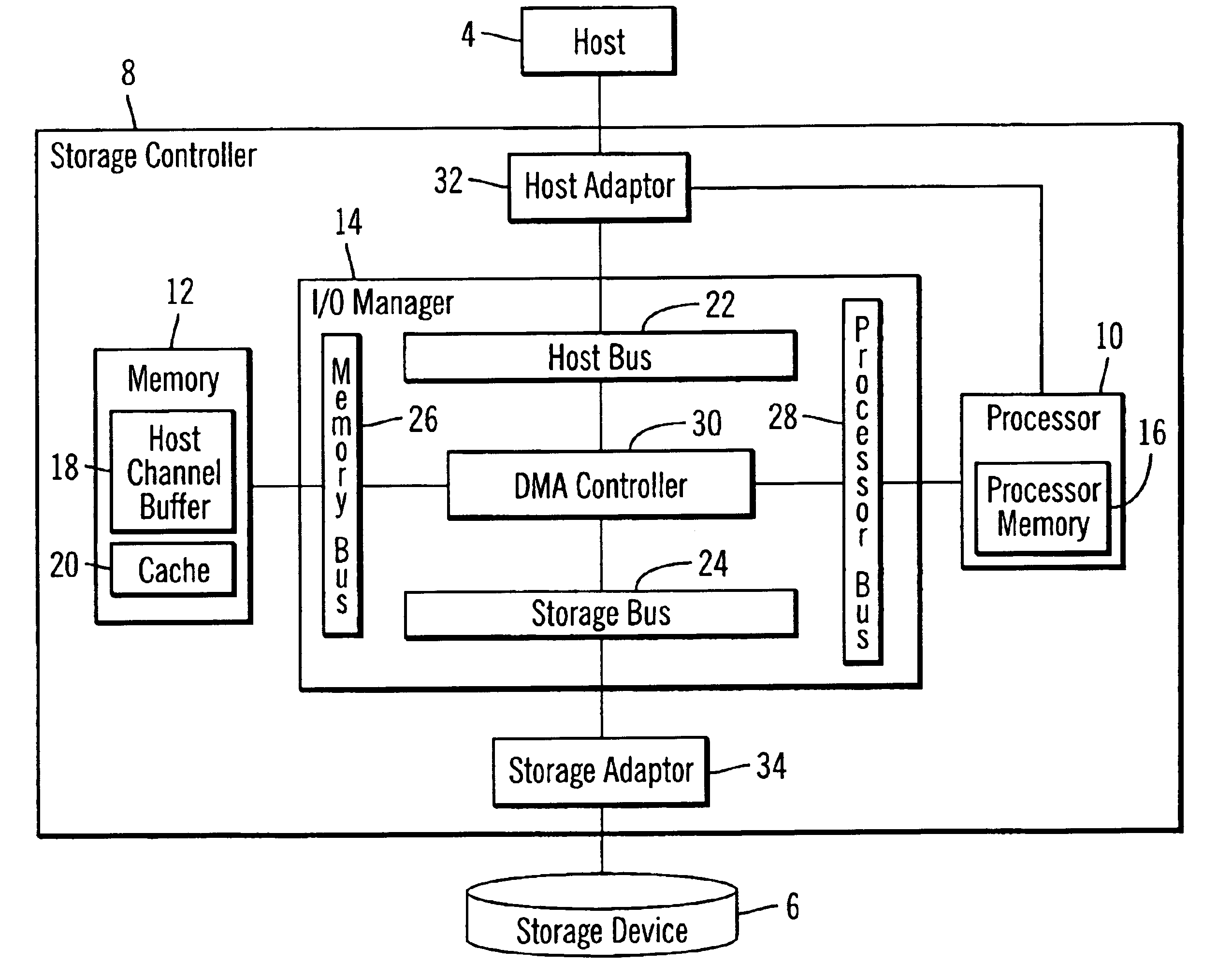

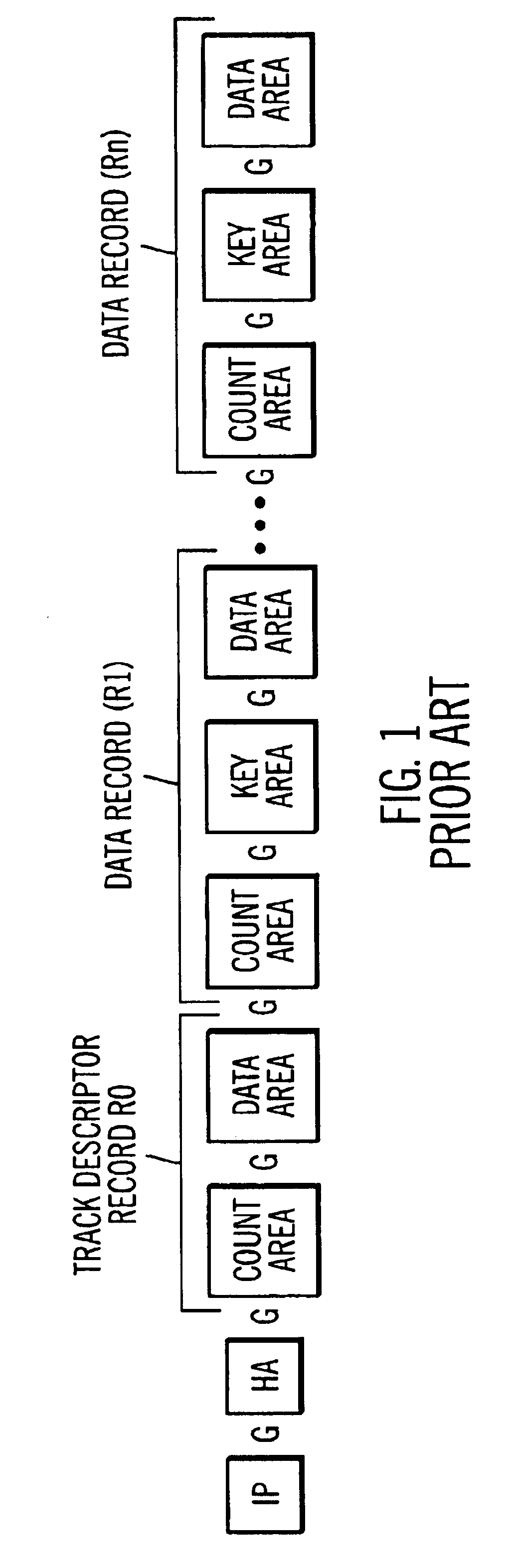

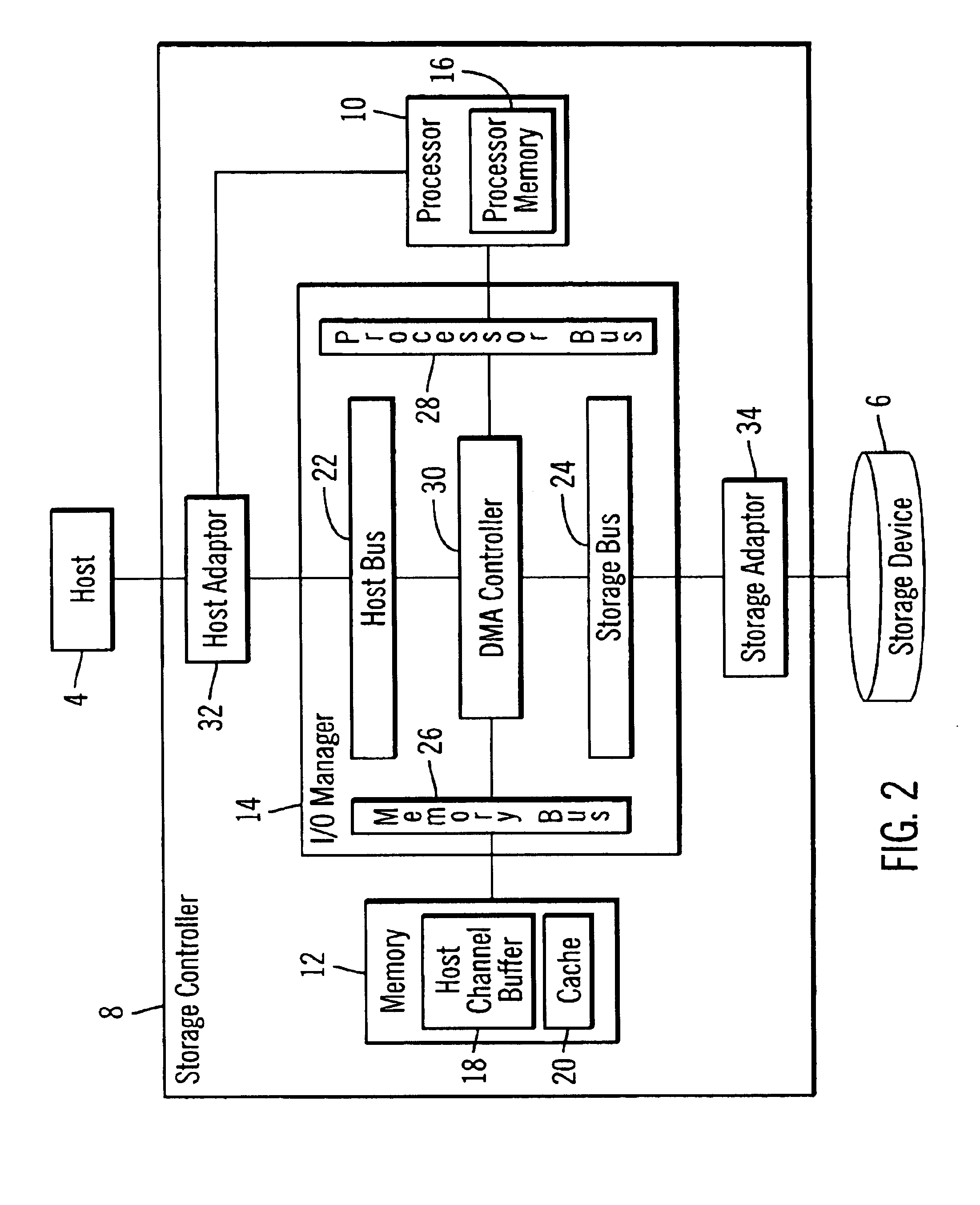

Method, system, and data structures for superimposing data records in a first data format to memory in a second data format

Inactive | US20020087786A1 | Improve processor performance, Improve performance, Input/output to record carriers, Memory addressing/allocation/relocation, Data recording, Data shipping

Provided is a method, system, and program for superimposing a data record in a first data format onto a storage space in a second data format. A plurality of control blocks are built in memory indicating operations to perform to transfer components of the data record in the first data format to locations in memory in the second data format. A data transfer device is signaled to access the control blocks built in the memory. The data transfer device accesses the control blocks in the memory and then transfers components of the data record in the first data format to the memory to be stored in the second data format according to the operations indicated in the control blocks.

Owner: IBM CORP

Method, system, and data structures for superimposing data records in a first data format to memory in a second data format

Inactive | US6748486B2 | Improve performance, Improve processor performance, Input/output to record carriers, Memory addressing/allocation/relocation, Data recording, Data shipping

Provided is a method, system, and program for superimposing a data record in a first data format onto a storage space in a second data format. A plurality of control blocks are built in memory indicating operations to perform to transfer components of the data record in the first data format to locations in memory in the second data format. A data transfer device is signaled to access the control blocks built in the memory. The data transfer device accesses the control blocks in the memory and then transfers components of the data record in the first data format to the memory to be stored in the second data format according to the operations indicated in the control blocks.

Owner: IBM CORP

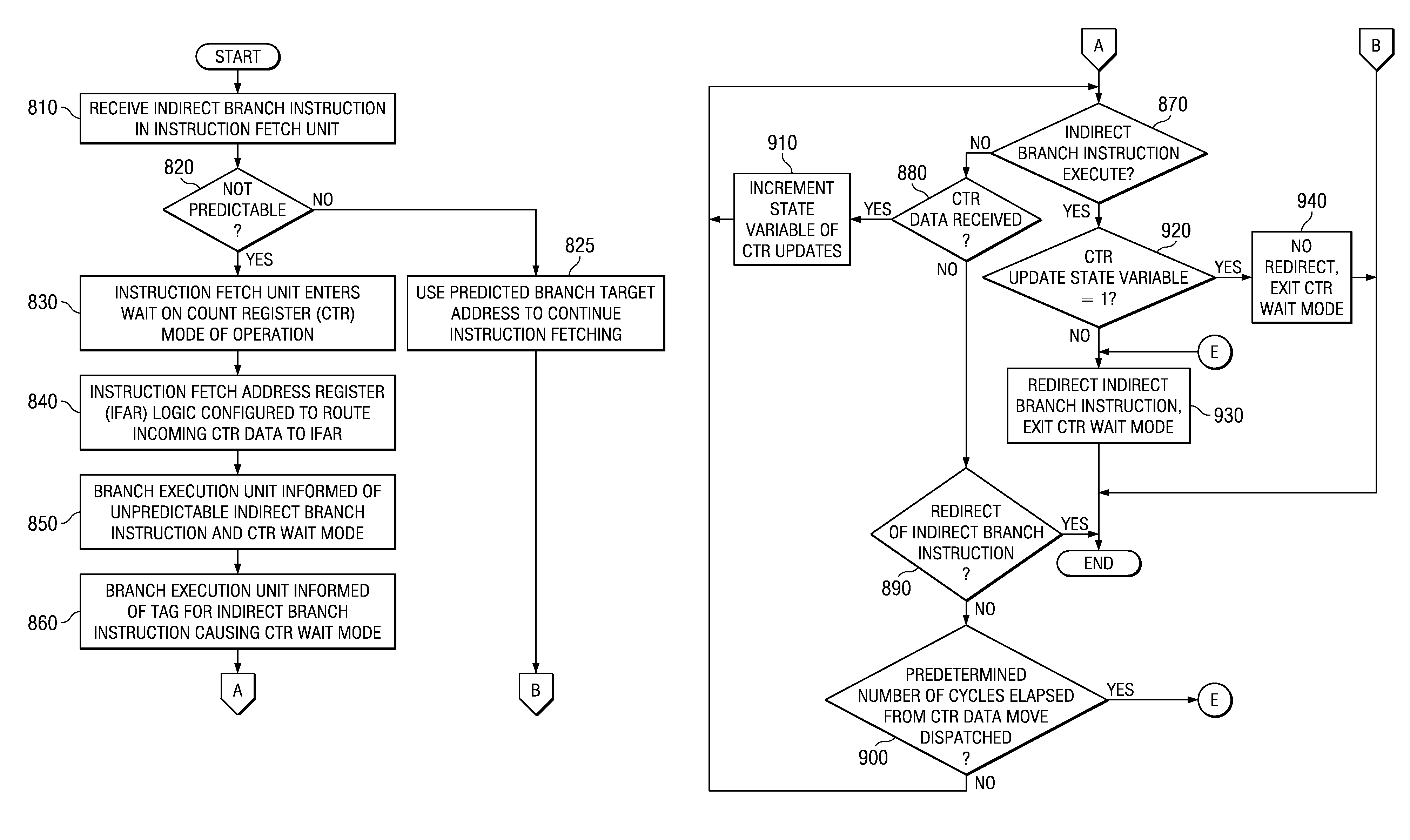

System and method for optimizing branch logic for handling hard to predict indirect branches

Active | US7809933B2 | Easy to handle, Improve processor performance, Digital computer details, Specific program execution arrangements, Parallel computing, Indirect branch

A system and method for optimizing the branch logic of a processor to improve handling of hard-to-predict indirect branches are provided. The system and method leverage the observation that there will generally be only one move to count register (mtctr) instruction executed while a branch on count register (bcctr) instruction has been fetched but not executed. With the mechanisms of the illustrative embodiments, fetch logic detects that it has encountered a hard-to-predict bcctr instruction and, in response to this detection, blocks the target fetch from entering the instruction buffer of the processor. At this point, the fetch logic has fetched all the instructions up to and including the bcctr instruction but no target instructions. When the next mtctr instruction is executed, the branch logic of the processor grabs the data and starts fetching using that target address. Since no other target instructions were fetched, no flush is needed if that target address is the correct address, i.e., the branch prediction is correct.

Owner: INT BUSINESS MASCH CORP

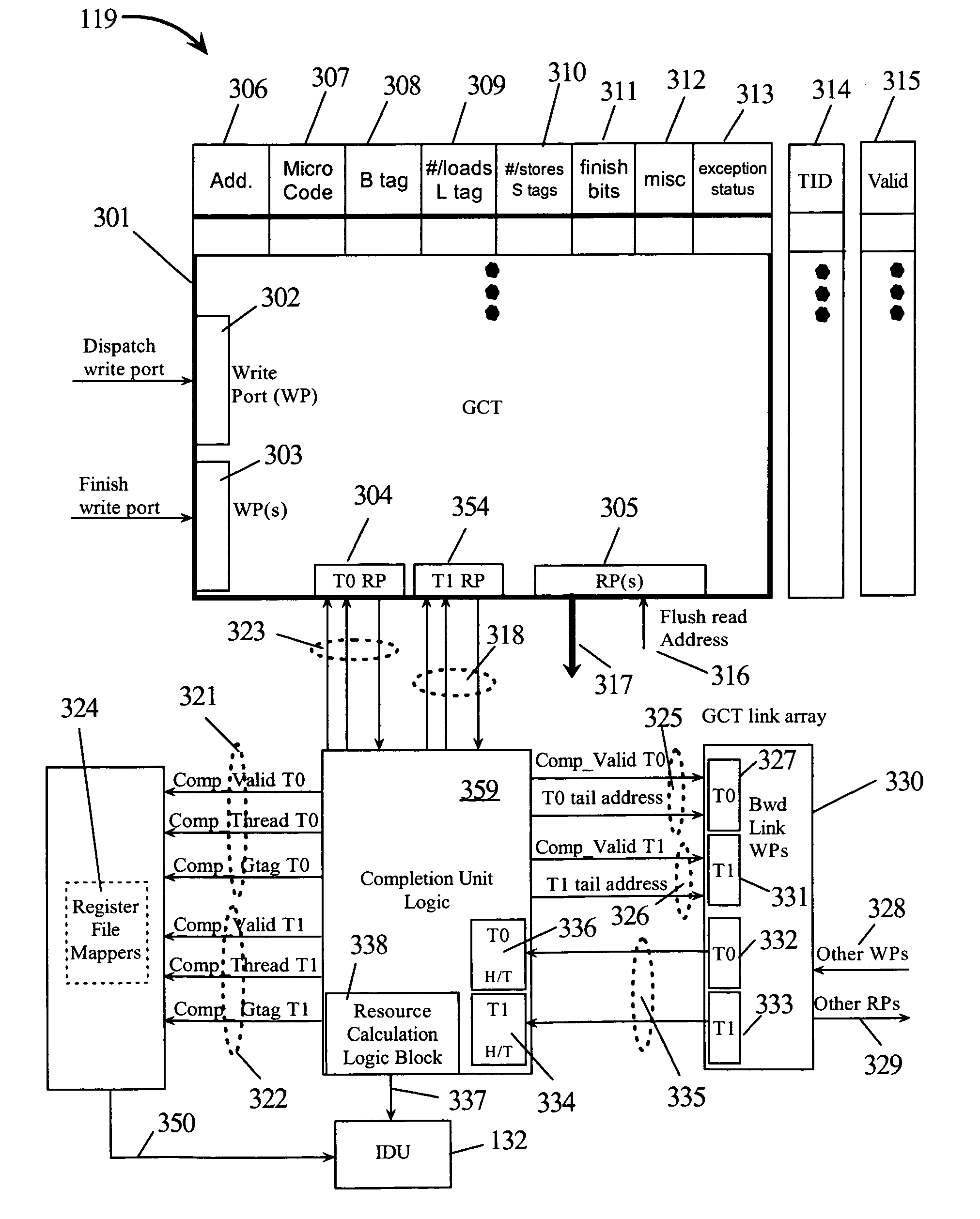

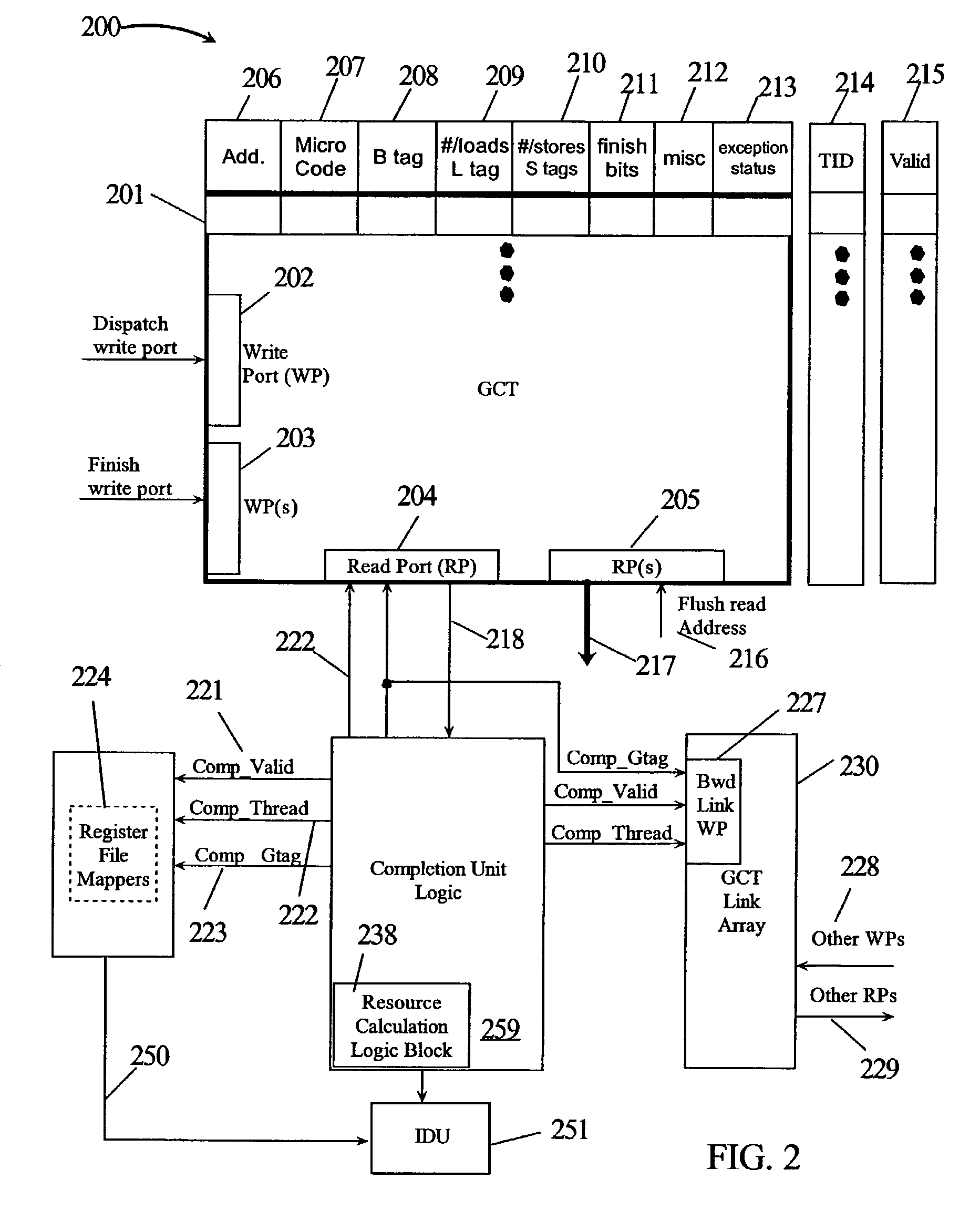

Dynamically shared group completion table between multiple threads

Inactive | US7472258B2 | Improving SMT processor performance, Improve processor performance, Digital computer details, Multiprogramming arrangements, Array data structure, Processor register

An SMT system has a dynamically shared GCT. Performance for the SMT is improved by configuring the GCT to allow an instruction group from each thread to complete simultaneously. The GCT has a read port for each thread corresponding to the completion table instruction / address array for simultaneous updating on completion. The forward link array also has a read port for each thread to find the next instruction group for each thread upon completion. The backward link array has a backward link write port for each thread in order to update the backward links for each thread simultaneously. The GCT has independent pointer management for each thread. Each of the threads has simultaneous commit of their renamed result registers and simultaneous updating of outstanding load and store tag usage.

Owner: IBM CORP

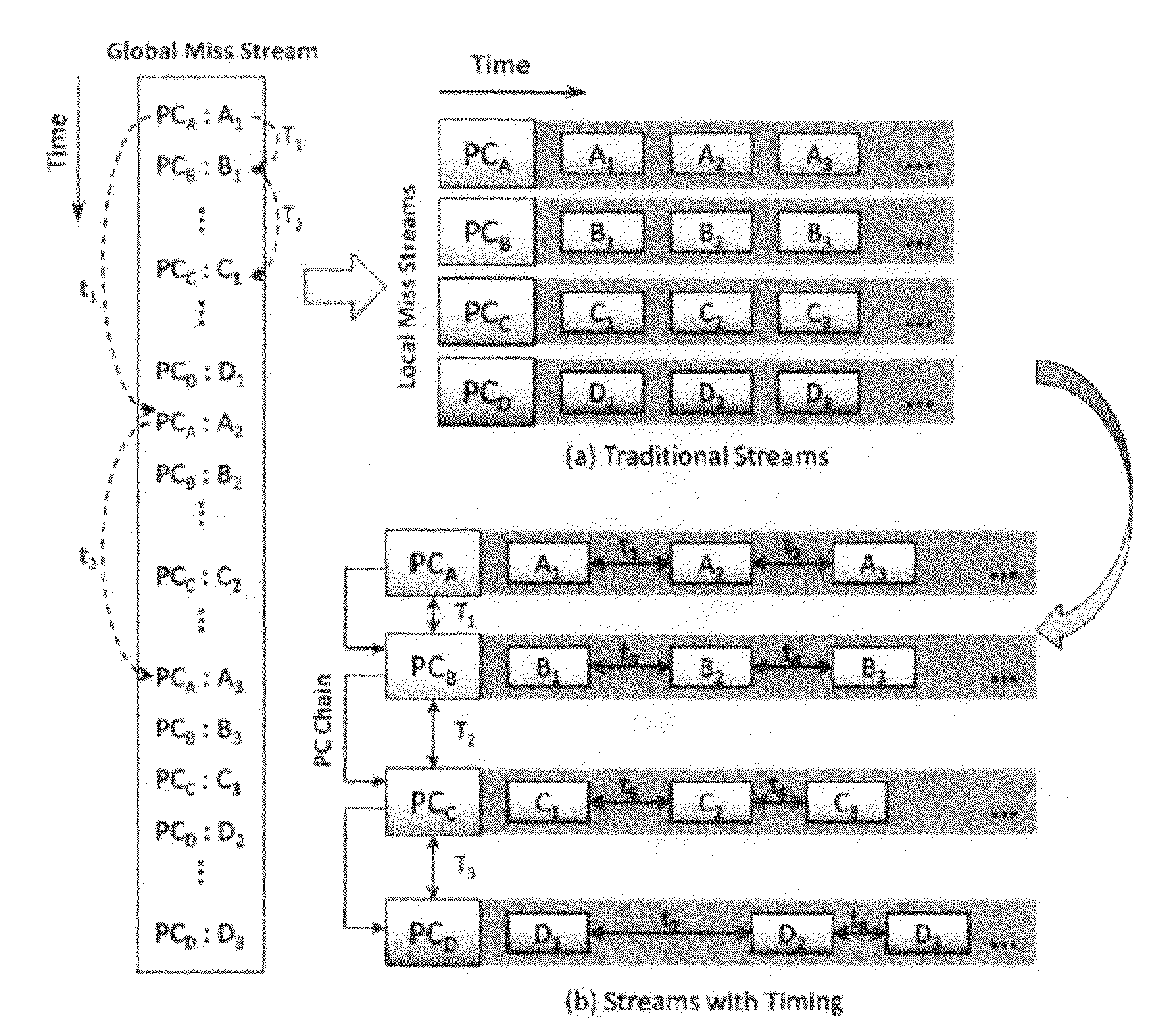

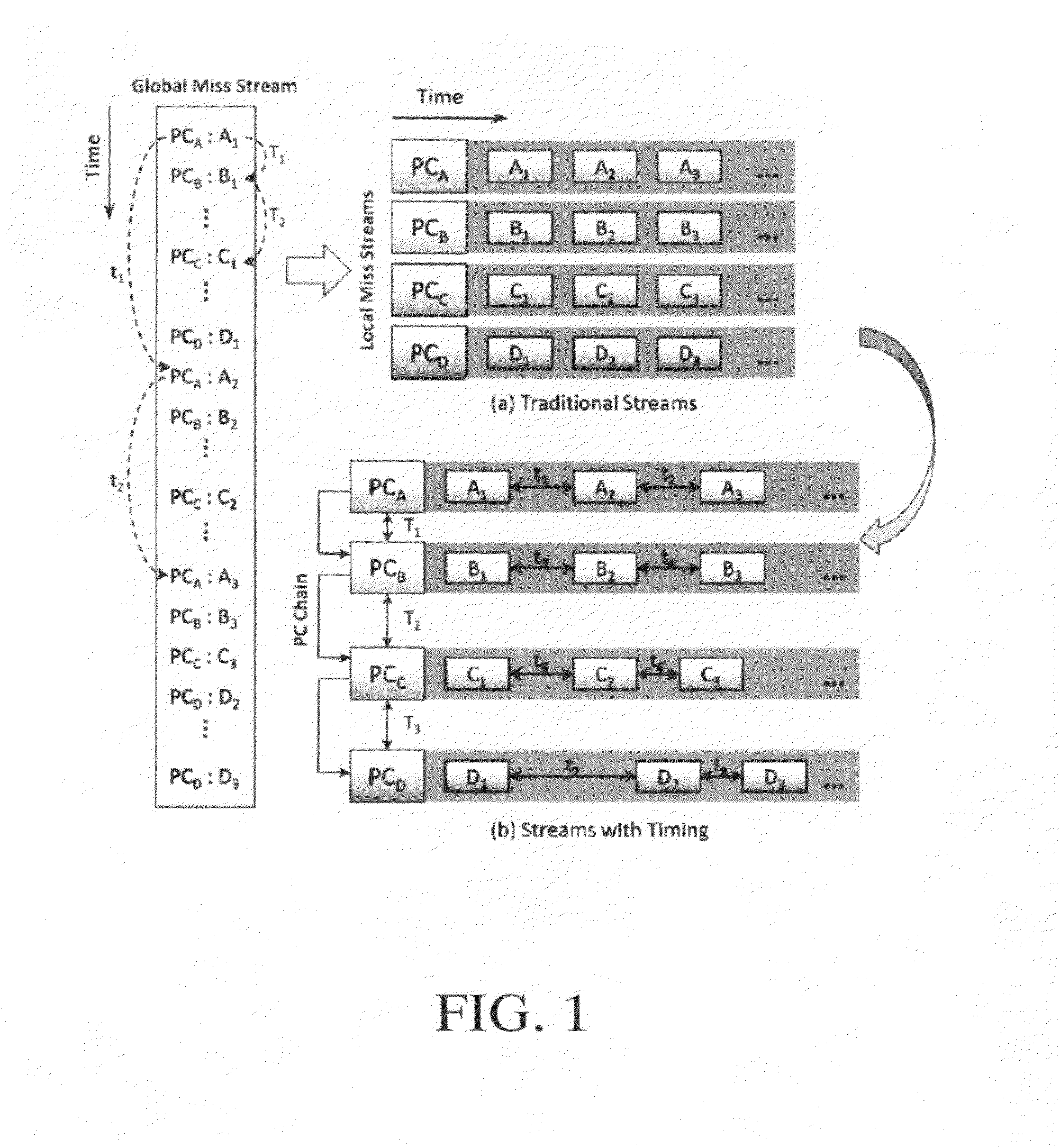

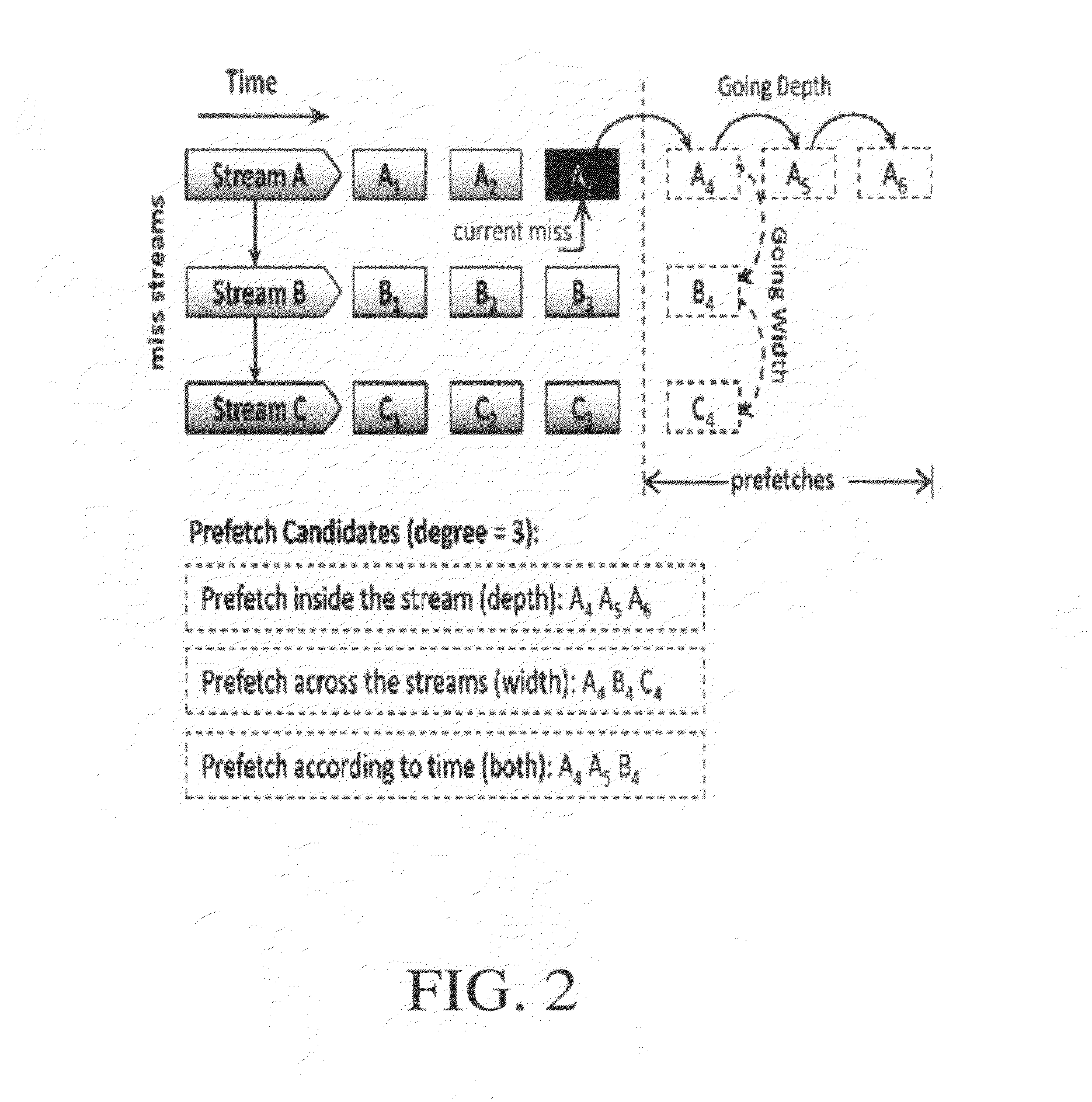

Timing-aware data prefetching for microprocessors

Active | US20120311270A1 | Reduce untimely prefetches, Increase performance of processor, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Cache miss, Parallel computing

Owner: ILLINOIS INSTITUTE OF TECHNOLOGY

Hardware looping mechanism and method for efficient execution of discontinuity instructions

Active | US7272704B1 | Reduce number, Improved processor performance, Digital computer details, Specific program execution arrangements, Program instruction, Single loop

A hardware looping mechanism and method is described herein for handling any number and/or type of discontinuity instruction that may arise when executing program instructions within a scalar or superscalar processor. For example, the hardware looping mechanism may provide zero-overhead looping for branch instructions, in addition to single loop constructs and multiple loop constructs (which may or may not be nested). Zero-overhead looping may also be provided in special cases, e.g., when servicing an interrupt or executing a branch-out-of-loop instruction. In addition to reducing the number of instructions required to execute a program, as well as the overall time and power consumed during program execution, the hardware looping mechanism described herein may be integrated within any processor architecture without modifying existing program code.

Owner: VERISILICON HLDGCO LTD

Method of virtualization and OS-level thermal management and multithreaded processor with virtualization and OS-level thermal management

Inactive | US7886172B2 | Improve processor performance, Minimize SMT processor performance loss, Energy efficient ICT, Volume/mass flow measurement, Virtualization, Monitoring temperature

A program product and method of managing task execution on an integrated circuit chip such as a chip-level multiprocessor (CMP) with Simultaneous MultiThreading (SMT). Multiple chip operating units or cores have chip sensors (temperature sensors or counters) for monitoring temperature in units. Task execution is monitored for hot tasks and especially for hotspots. Task execution is balanced, thermally, to minimize hot spots. Thermal balancing may include Simultaneous MultiThreading (SMT) heat balancing, chip-level multiprocessors (CMP) heat balancing, deferring execution of identified hot tasks, migrating identified hot tasks from a current core to a colder core, User-specified Core-hopping, and SMT hardware threading.

Owner: INT BUSINESS MASCH CORP

Instruction/skid buffers in a multithreading microprocessor that store dispatched instructions to avoid re-fetching flushed instructions

Active | US7853777B2 | Reduce the amount required, Penalty associated with flushing instructions is reduced, Digital computer details, Memory systems, Operating system, Control logic

An apparatus for reducing instruction re-fetching in a multithreading processor configured to concurrently execute a plurality of threads is disclosed. The apparatus includes a buffer for each thread that stores fetched instructions of the thread, having an indicator for indicating which of the fetched instructions in the buffer have already been dispatched for execution. An input for each thread indicates that one or more of the already-dispatched instructions in the buffer has been flushed from execution. Control logic for each thread updates the indicator to indicate the flushed instructions are no longer already-dispatched, in response to the input. This enables the processor to re-dispatch the flushed instructions from the buffer to avoid re-fetching the flushed instructions. In one embodiment, there are fewer buffers than threads, and they are dynamically allocatable by the threads. In one embodiment, a single integrated buffer is shared by all the threads.

Owner: MIPS TECH INC

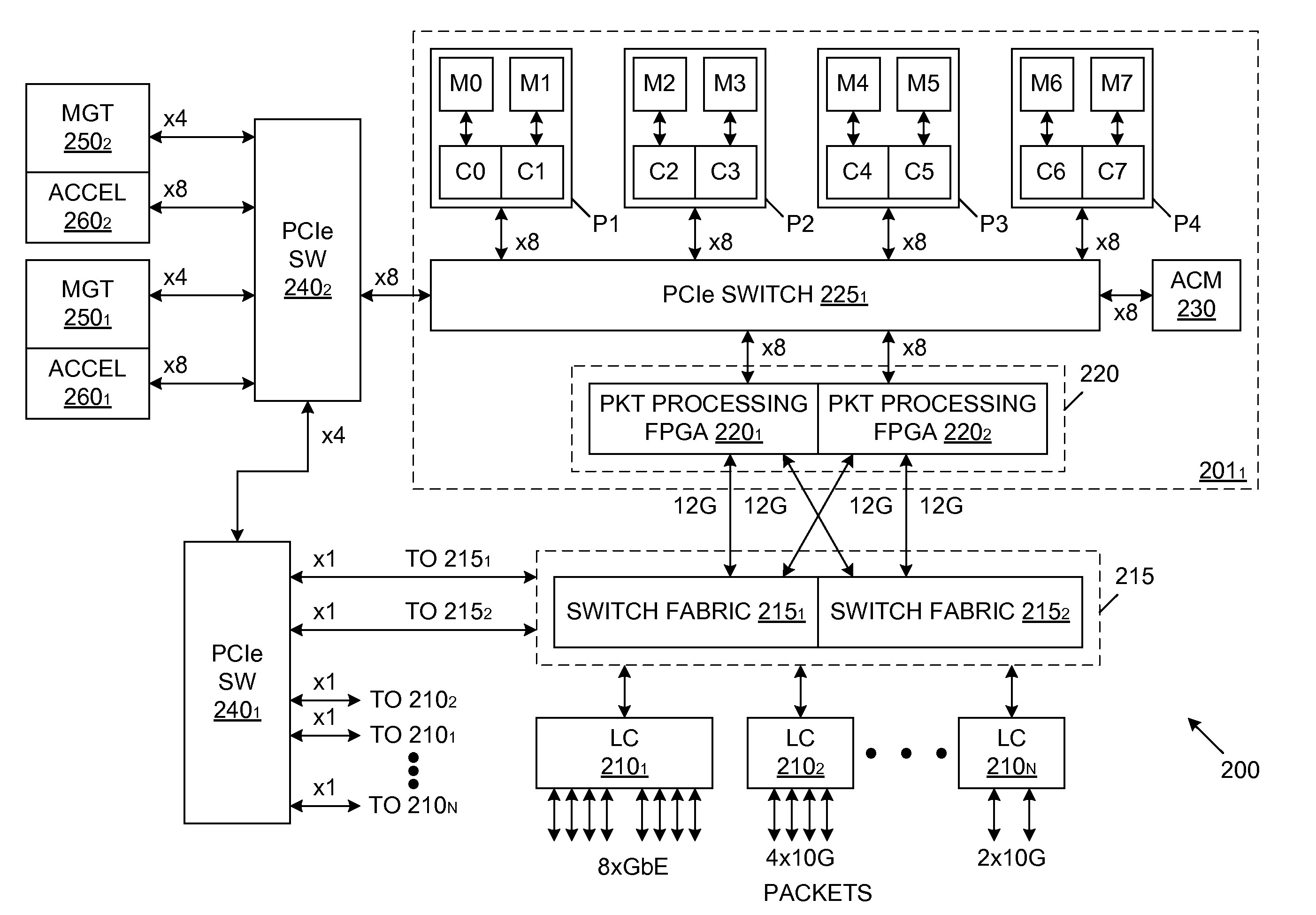

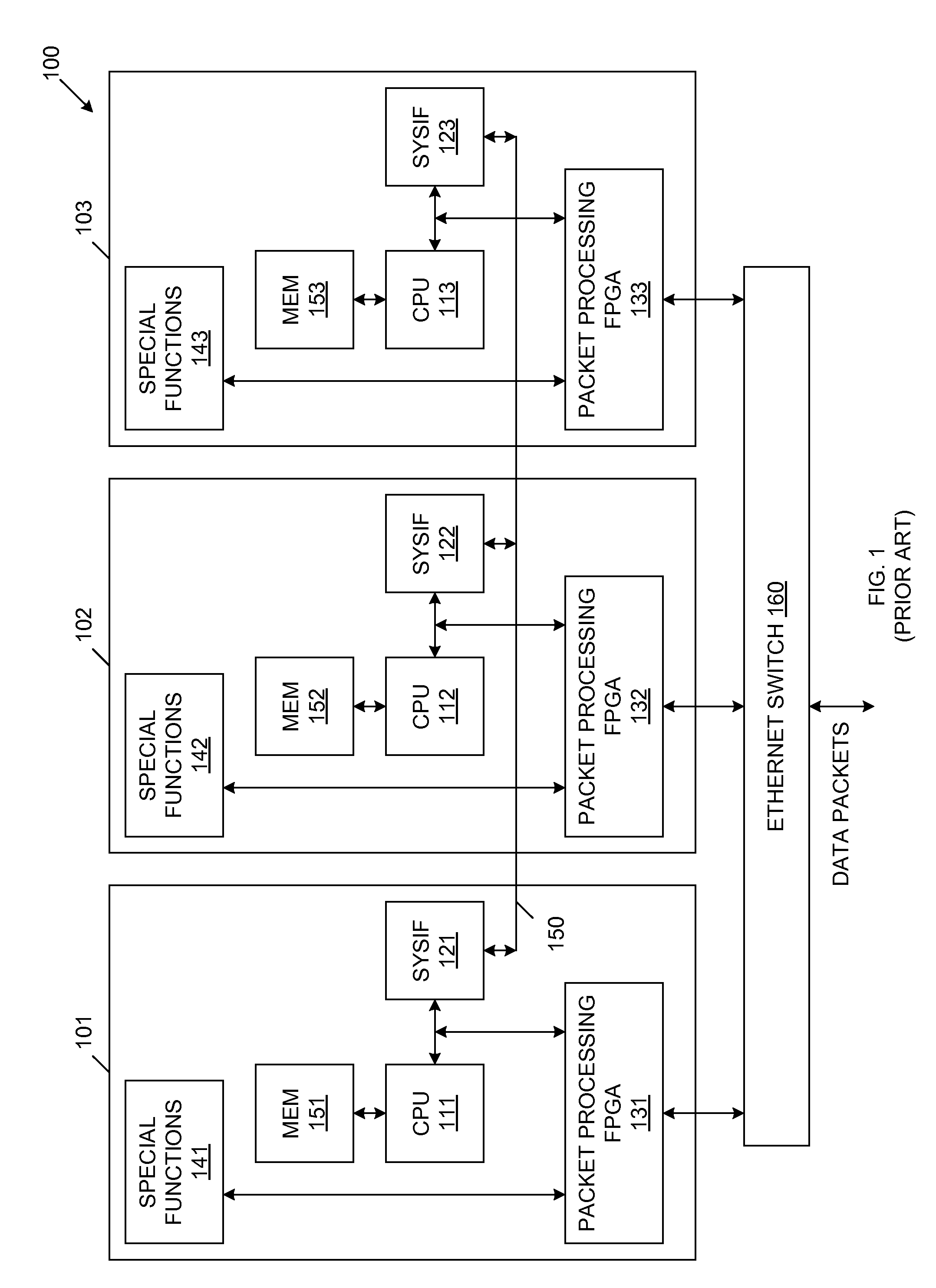

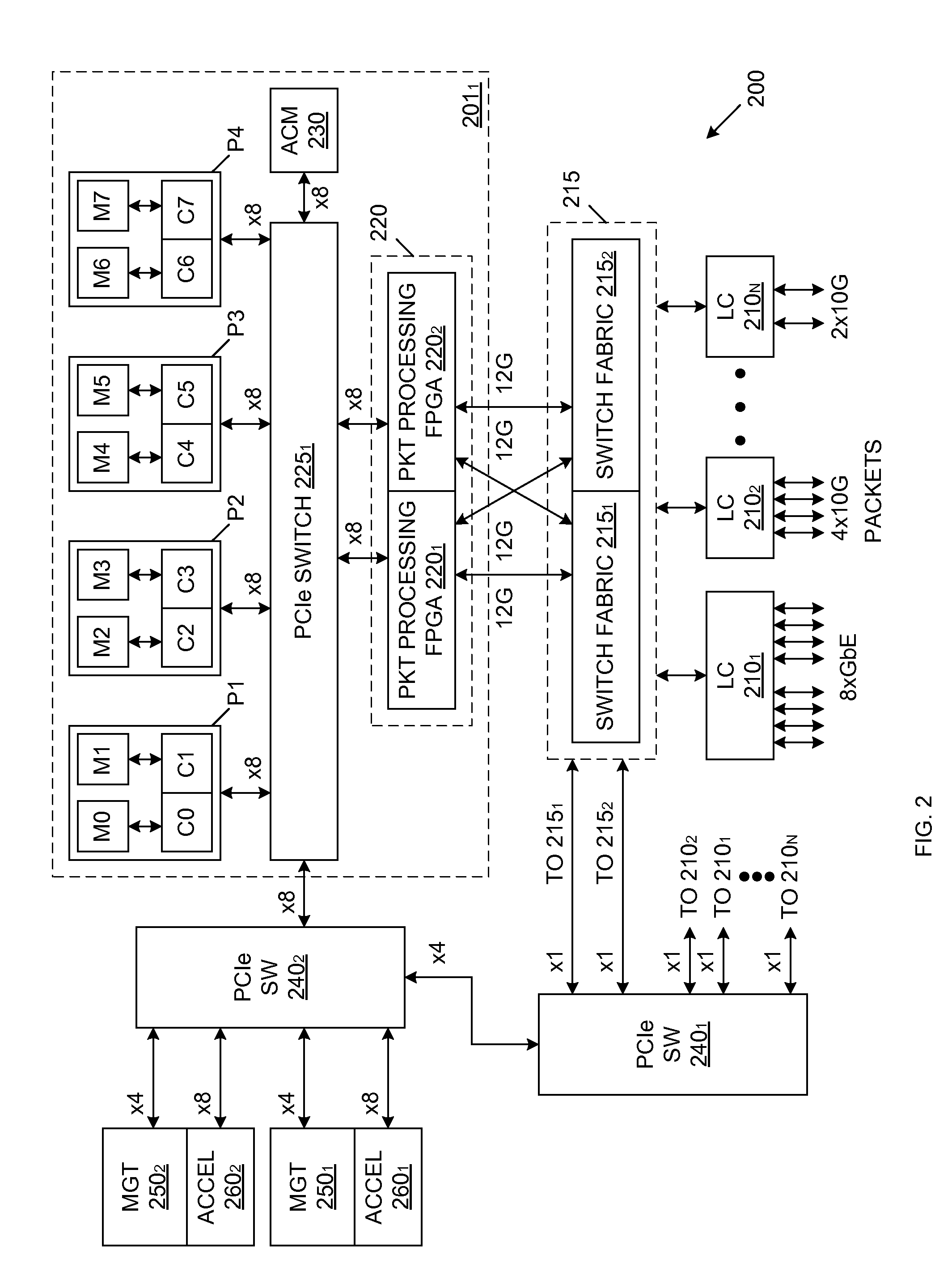

Massive multi-core processor built with serial switching

Active | US20110010481A1 | Improve processor performance, Easy to upgrade, Energy efficient ICT, Energy efficient computing, Multi-core processor, Packet processing

A multi-processor architecture for a network device that includes a plurality of barrel cards, each including: a plurality of processors, a PCIe switch coupled to each of the plurality of processors, and packet processing logic coupled to the PCIe switch. The PCIe switch on each barrel card provides high speed flexible data paths for the transmission of incoming/outgoing packets to/from the processors on the barrel card. An external PCIe switch is commonly coupled to the PCIe switches on the barrel cards, as well as to a management processor, thereby providing high speed connections between processors on separate barrel cards, and between the management processor and the processors on the barrel cards.

Owner: AVAGO TECH INT SALES PTE LTD

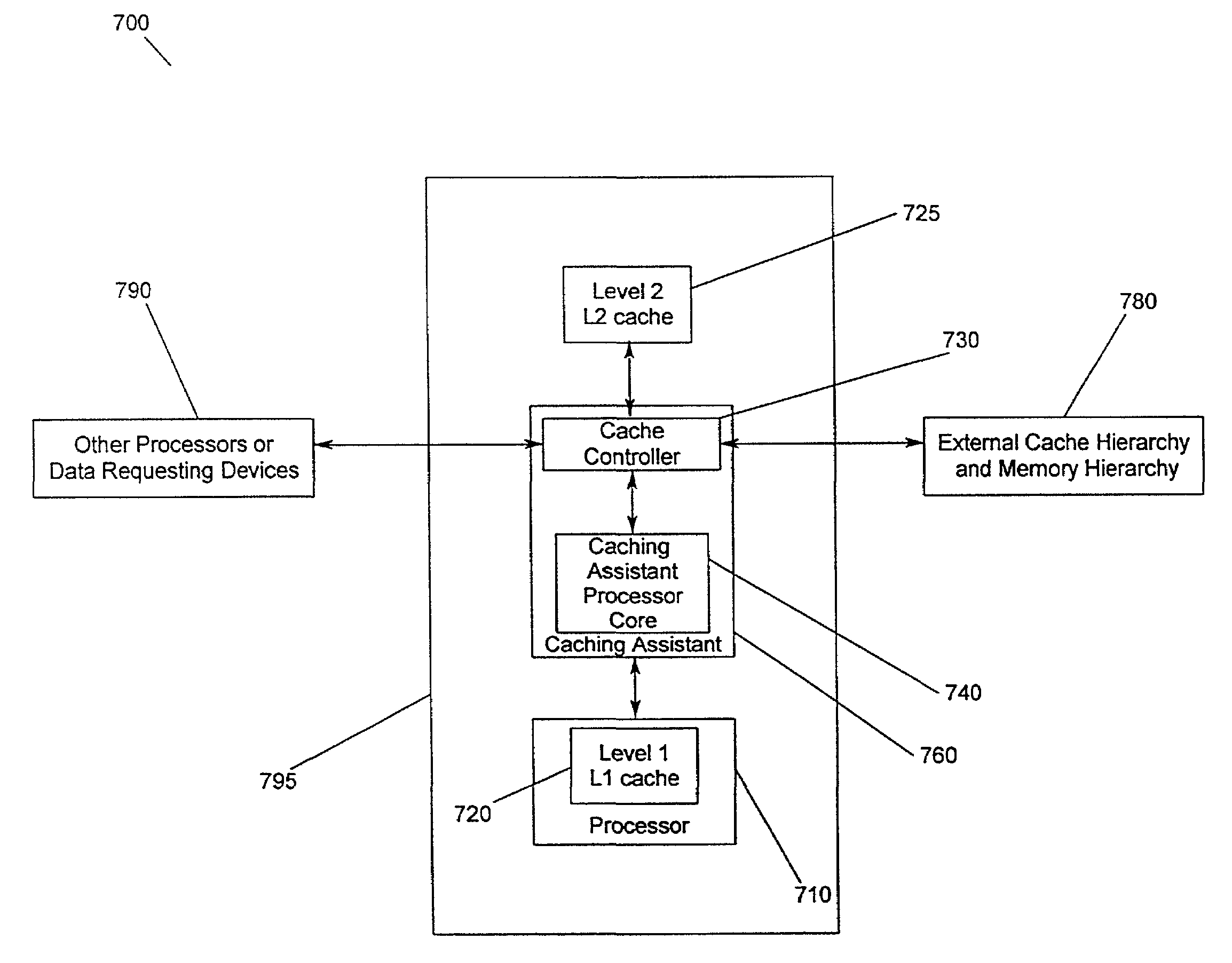

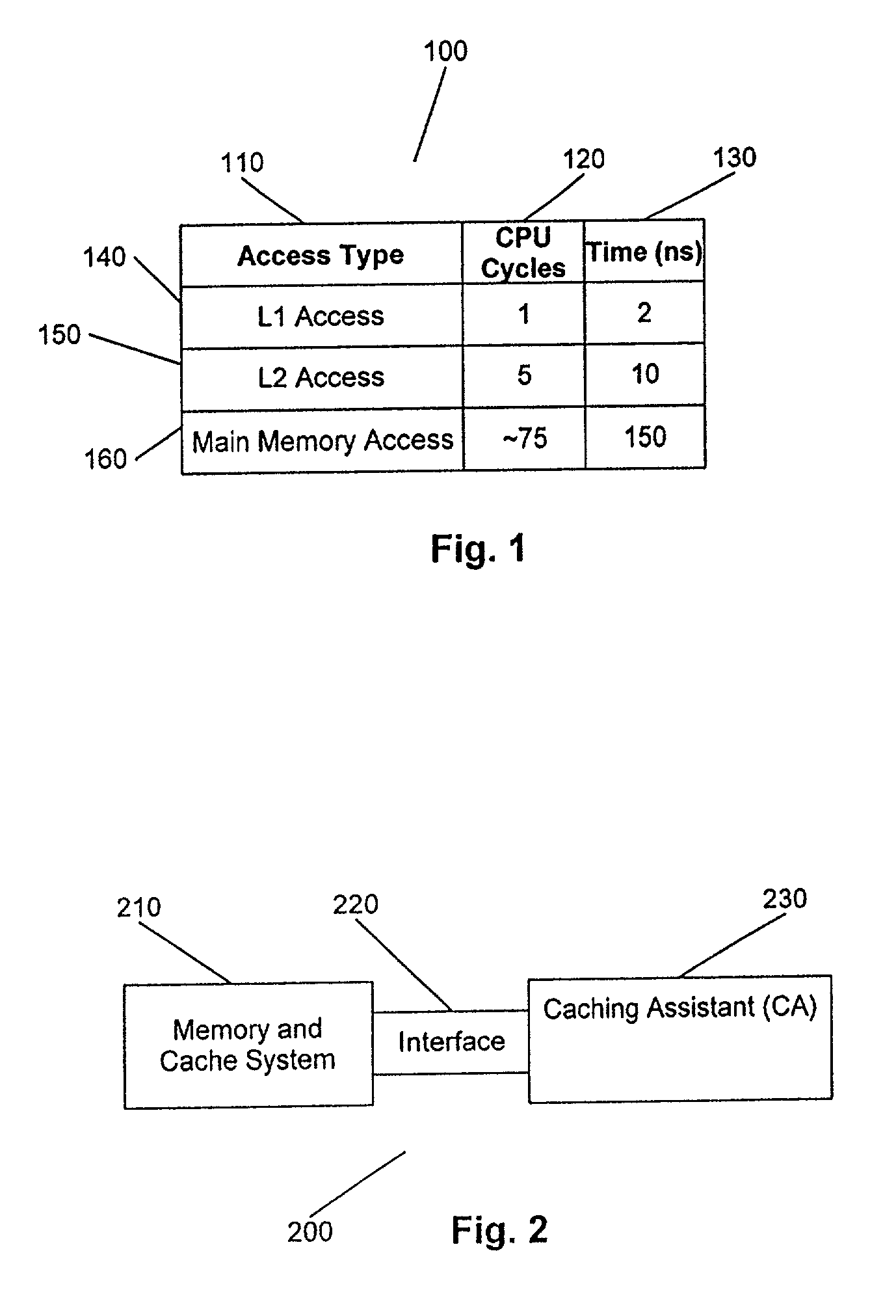

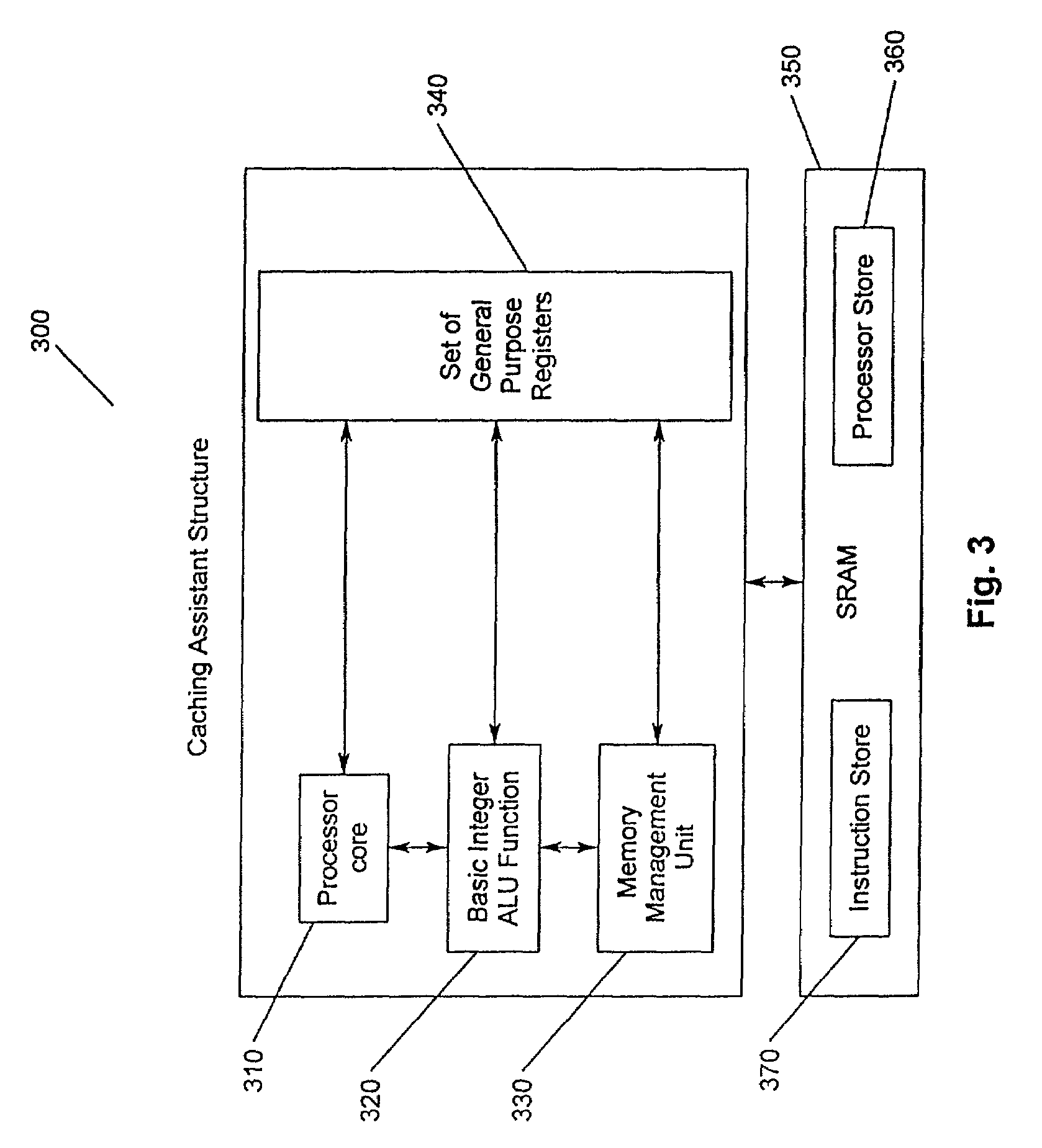

Method and apparatus for optimizing cache hit ratio in non L1 caches

Active | US7035979B2 | Improve processor performance, Memory architecture accessing/allocation, Energy efficient ICT, Cache hierarchy, Monitoring data

A method and apparatus for increasing the performance of a computing system and increasing the hit ratio in at least one non-L1 cache. A caching assistant and a processor are embedded in a processing system. The caching assistant analyzes system activity, monitors and coordinates data requests from the processor, processors, and other data-accessing devices, and monitors and analyzes data accesses throughout the cache hierarchy. The caching assistant is provided with a dedicated cache for storing fetched and prefetched data. The caching assistant improves the performance of the computing system by anticipating which data is likely to be requested for processing next, accessing and storing that data in an appropriate non-L1 cache prior to the data being requested by processors or data-accessing devices. A method for increasing processor performance includes analyzing system activity and optimizing a hit ratio in at least one non-L1 cache. The caching assistant performs processor data requests by accessing caches and monitors the data requests to gain knowledge of the program code currently being processed and to determine whether patterns of data access exist. Based upon the knowledge gained through monitoring data access, the caching assistant anticipates future data requests.

Owner: IBM CORP

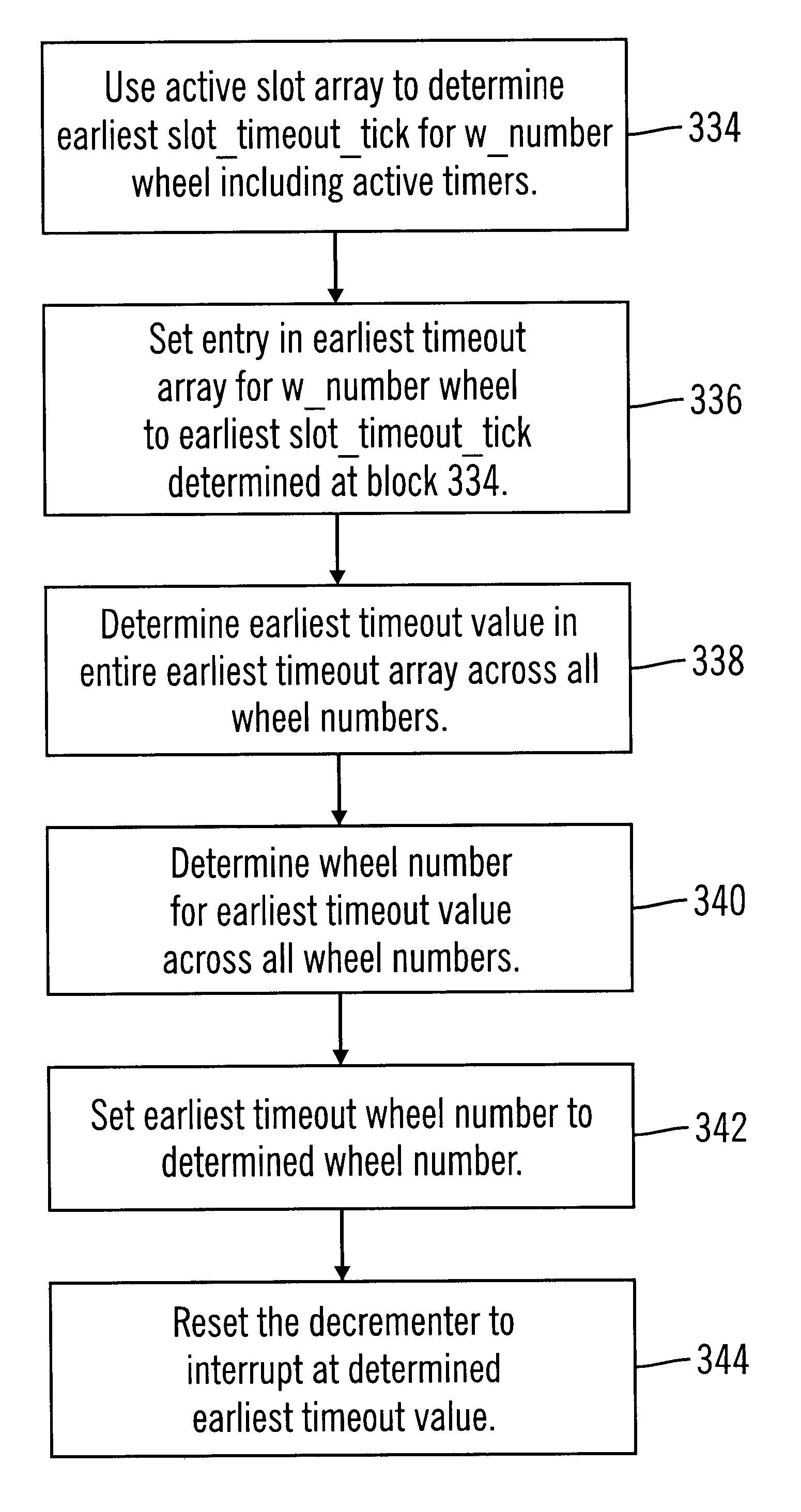

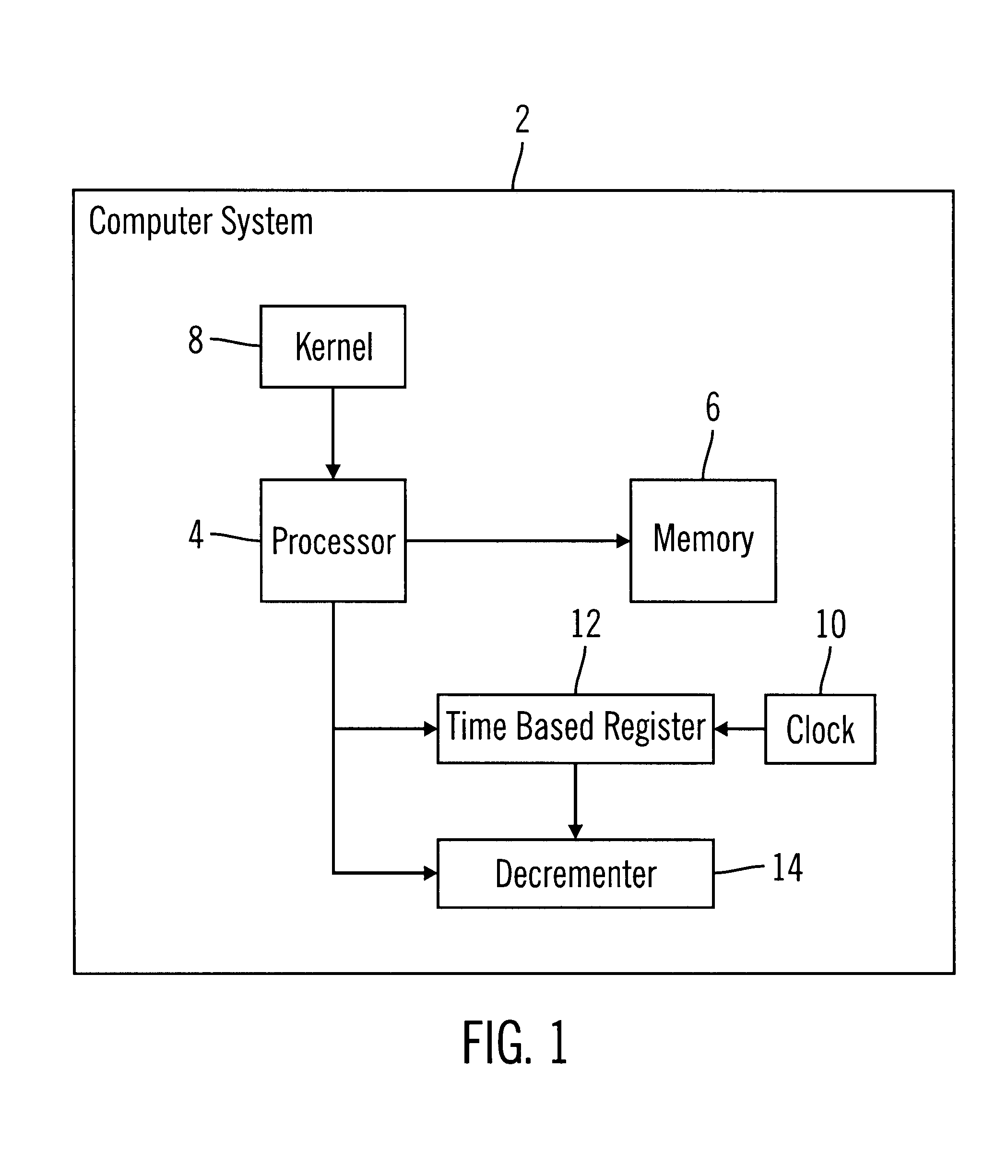

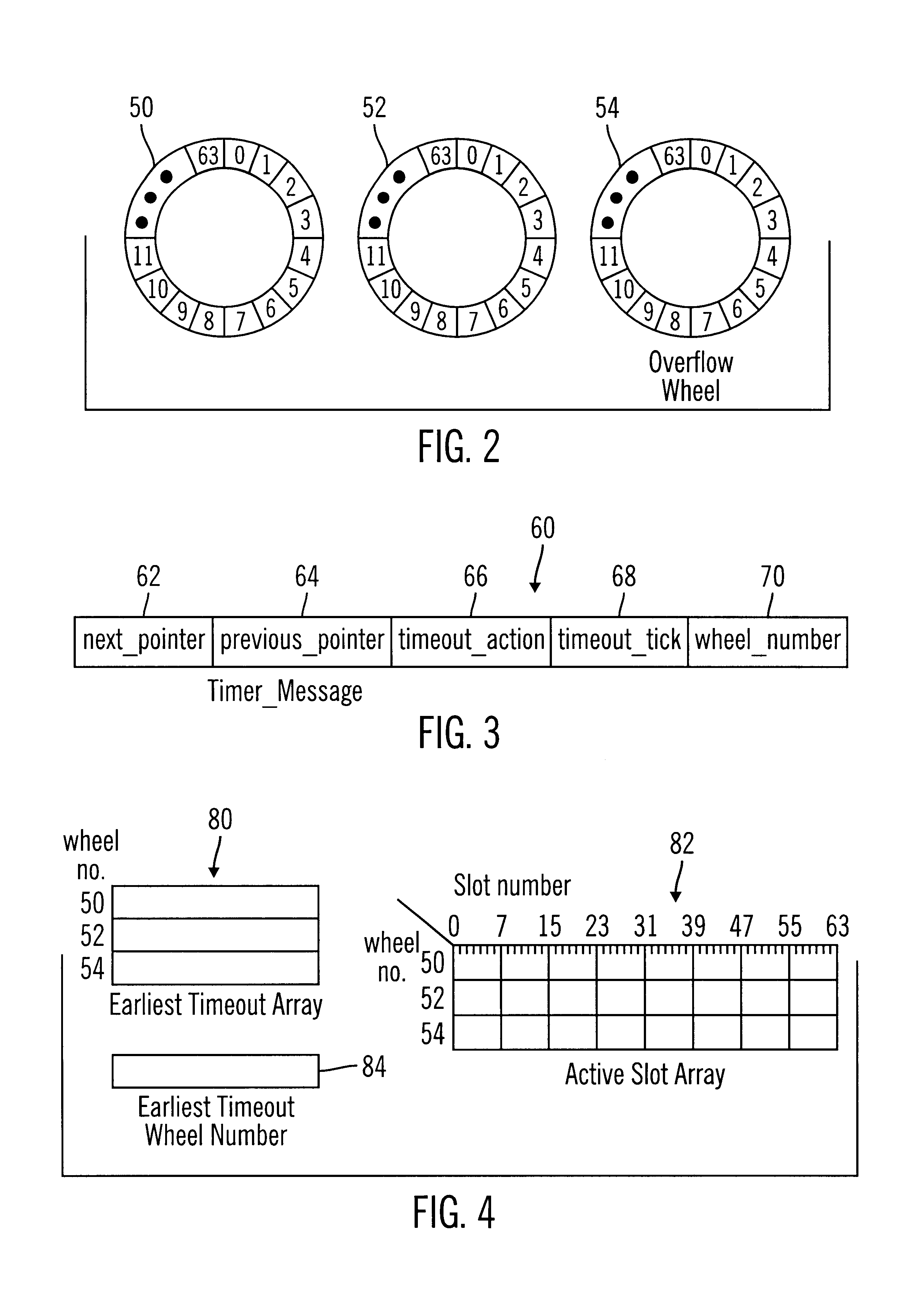

Method, system, program, and data structures for managing hierarchical timing wheels

Inactive | US6718479B1 | Reduce the burden on, Improve processor performance, Program initiation/switching, Generating/distributing signals, Array data structure, Processor register

Disclosed is a method, system, program, and data structures for managing timers in timing wheel data structures in a computer readable medium. Each timer is enqueued in one slot in one of multiple timing wheels. Each timing wheel includes multiple slots and each slot is associated with a time value. Each slot is capable of queuing one or more timers. Each timer indicates a timeout value at which the timer expires. A register is decremented to zero and a determination is made of a current time. A determination is further made, in response to decrementing the register to zero, of a slot having a time value that expires at the determined current time. All timers in the determined slot having a timeout value expiring at the current time are then dequeued.

Owner: IBM CORP
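
A compact Python sketch of multiple timing wheels of increasing granularity follows. Each call to `tick()` stands in for the hardware register counting down to zero; the slot of each wheel that corresponds to the current time is scanned, and only timers whose timeout equals the current time are dequeued, as the abstract describes. The wheel count, slot count, and placement rule (the finest wheel whose span covers the delay) are assumptions for illustration.

```python
from typing import List, Tuple


class HierarchicalTimingWheels:
    """Hypothetical multi-wheel timer store: wheel i's slots each span slots**i ticks."""

    def __init__(self, levels: int = 3, slots: int = 8) -> None:
        self.slots = slots
        self.now = 0
        # wheels[level][slot] holds (timeout, timer_id) pairs.
        self.wheels: List[List[List[Tuple[int, str]]]] = [
            [[] for _ in range(slots)] for _ in range(levels)
        ]

    def _resolution(self, level: int) -> int:
        return self.slots ** level

    def _slot(self, level: int, time: int) -> int:
        return (time // self._resolution(level)) % self.slots

    def add(self, timer_id: str, timeout: int) -> None:
        delay = timeout - self.now
        if delay <= 0:
            raise ValueError("timeout must be in the future")
        # Use the finest wheel whose full span covers the delay, else the coarsest.
        level = len(self.wheels) - 1
        for candidate in range(len(self.wheels)):
            if delay < self.slots * self._resolution(candidate):
                level = candidate
                break
        self.wheels[level][self._slot(level, timeout)].append((timeout, timer_id))

    def tick(self) -> List[str]:
        """Advance one tick (the register reaching zero) and dequeue expired timers."""
        self.now += 1
        expired: List[str] = []
        for level, wheel in enumerate(self.wheels):
            slot = self._slot(level, self.now)
            remaining = []
            for timeout, timer_id in wheel[slot]:
                if timeout == self.now:
                    expired.append(timer_id)
                else:
                    remaining.append((timeout, timer_id))  # not due yet; stays queued
            wheel[slot] = remaining
        return expired
```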

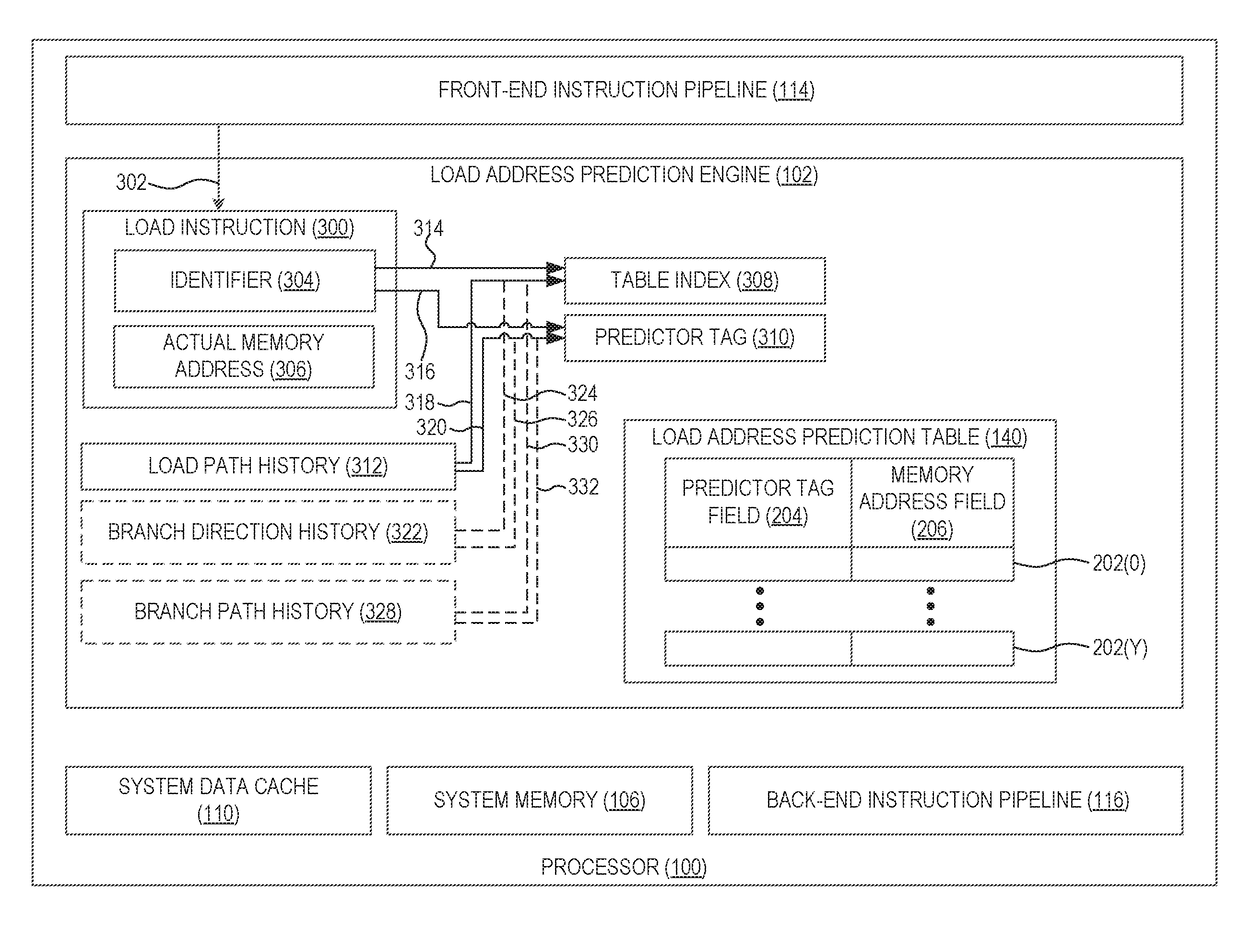

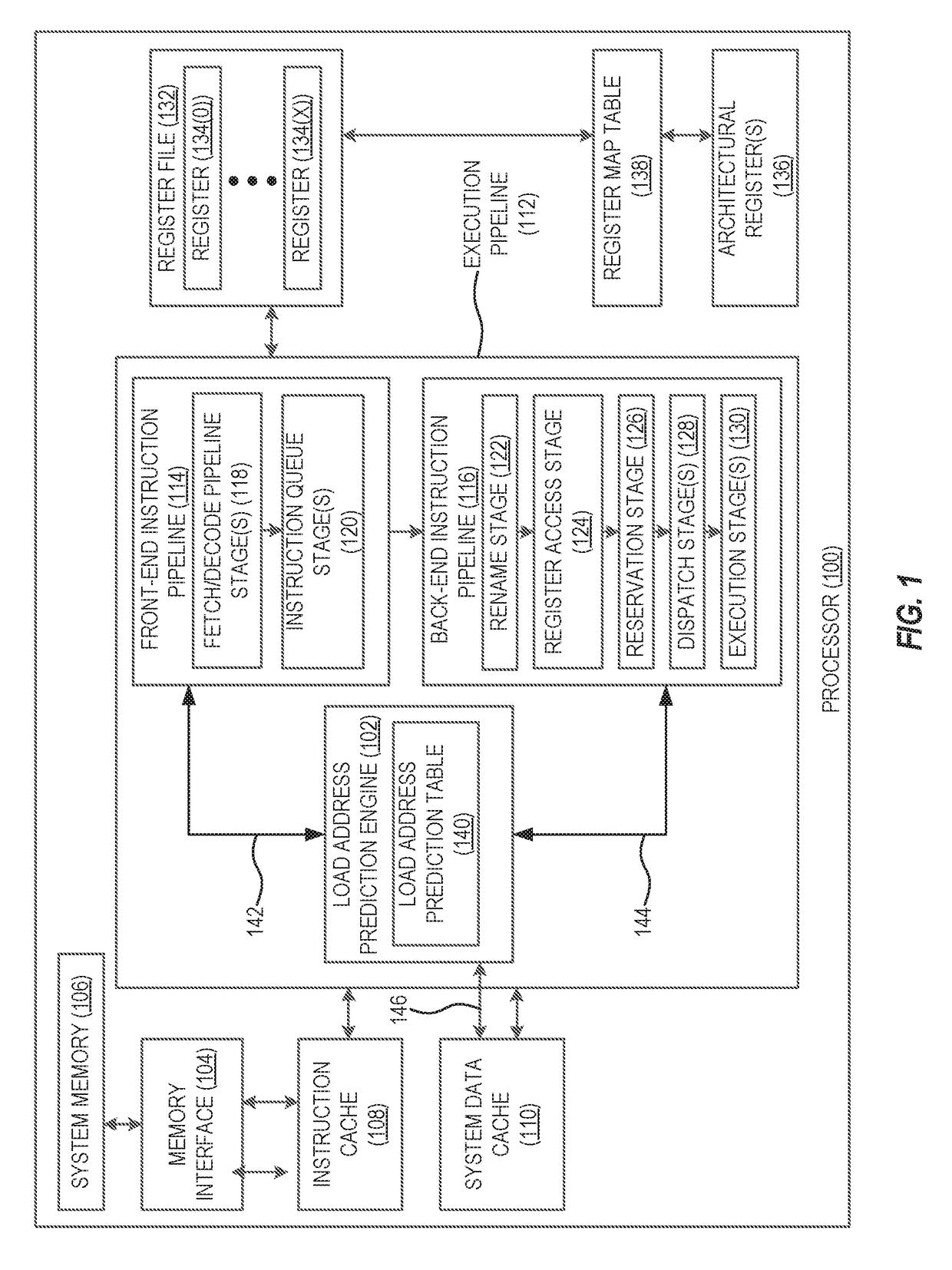

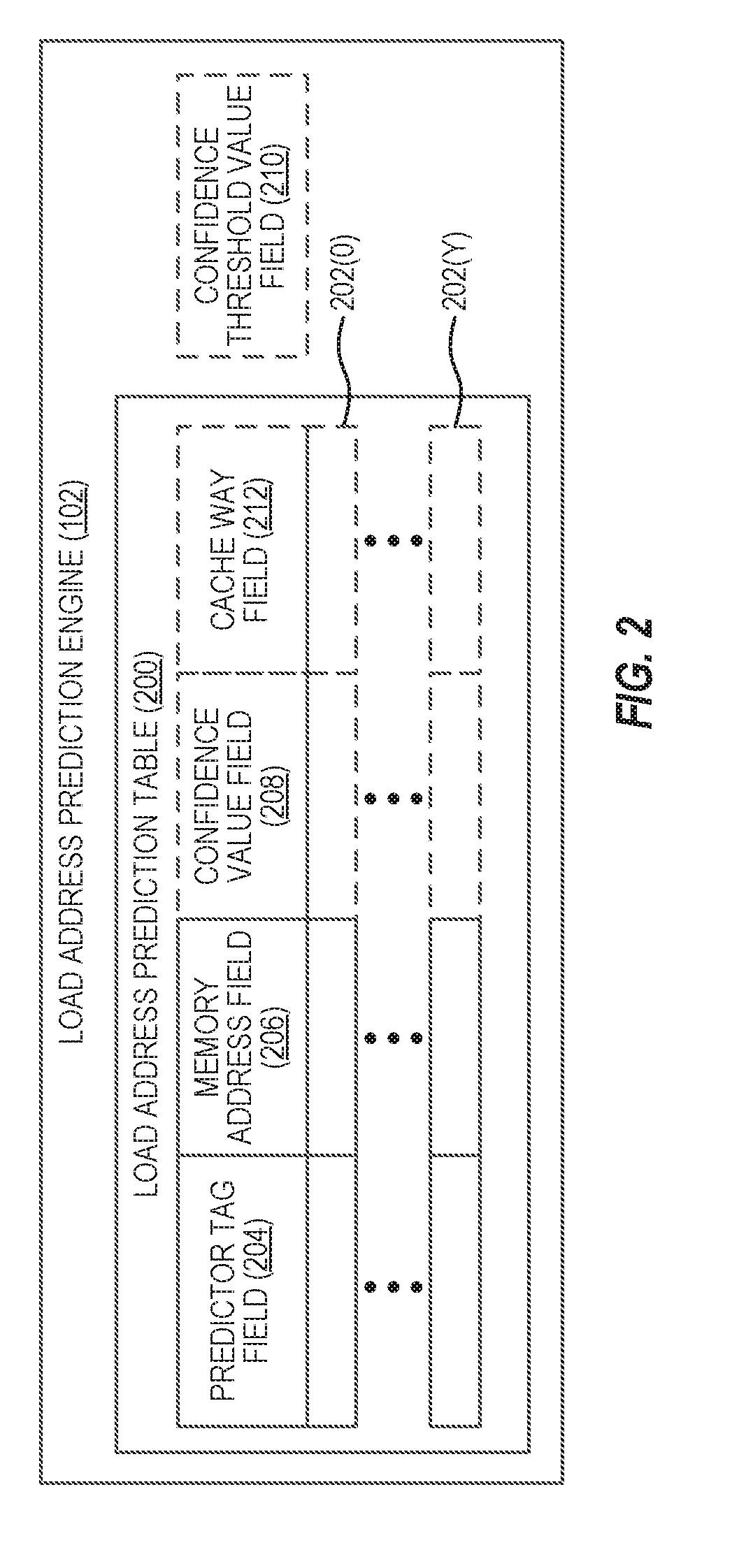

Providing load address predictions using address prediction tables based on load path history in processor-based systems

Active | US20170286119A1 | Improve efficiency, Improve processor performance, Memory architecture accessing/allocation, Memory addressing/allocation/relocation, Memory address, Predictor variable

Aspects disclosed in the detailed description include providing load address predictions using address prediction tables based on load path history in processor-based systems. In one aspect, a load address prediction engine provides a load address prediction table containing multiple load address prediction table entries. Each load address prediction table entry includes a predictor tag field and a memory address field for a load instruction. The load address prediction engine generates a table index and a predictor tag based on an identifier and a load path history for a detected load instruction. The table index is used to look up a corresponding load address prediction table entry. If the predictor tag matches the predictor tag field of the load address prediction table entry corresponding to the table index, the memory address field of the load address prediction table entry is provided as a predicted memory address for the load instruction.

Owner: QUALCOMM INC
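
The table lookup described above might look like the following Python sketch. The hash that folds the path history into the load's PC, the history update rule, and the field widths are all hypothetical; the abstract only says that the index and tag are derived from an identifier and the load path history.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Entry:
    tag: int = -1     # -1 marks an empty entry
    address: int = 0


class LoadAddressPredictor:
    """Sketch of a tagged table indexed by a load's PC hashed with load path history."""

    def __init__(self, index_bits: int = 6, tag_bits: int = 8, history_bits: int = 16) -> None:
        self.index_bits = index_bits
        self.tag_bits = tag_bits
        self.history_mask = (1 << history_bits) - 1
        self.history = 0
        self.table = [Entry() for _ in range(1 << index_bits)]

    def _index_and_tag(self, pc: int) -> Tuple[int, int]:
        h = pc ^ (self.history * 0x9E37)  # hypothetical hash of PC and path history
        index = h & ((1 << self.index_bits) - 1)
        tag = (h >> self.index_bits) & ((1 << self.tag_bits) - 1)
        return index, tag

    def predict(self, pc: int) -> Optional[int]:
        index, tag = self._index_and_tag(pc)
        entry = self.table[index]
        return entry.address if entry.tag == tag else None  # tag match required

    def train(self, pc: int, actual_address: int) -> None:
        index, tag = self._index_and_tag(pc)
        self.table[index] = Entry(tag, actual_address)

    def update_history(self, pc: int) -> None:
        # Shift bits of each executed load's PC into the path history register.
        self.history = ((self.history << 2) ^ (pc >> 2)) & self.history_mask
```

Because the tag also depends on path history, the same load reached along a different path misses instead of returning a stale prediction.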

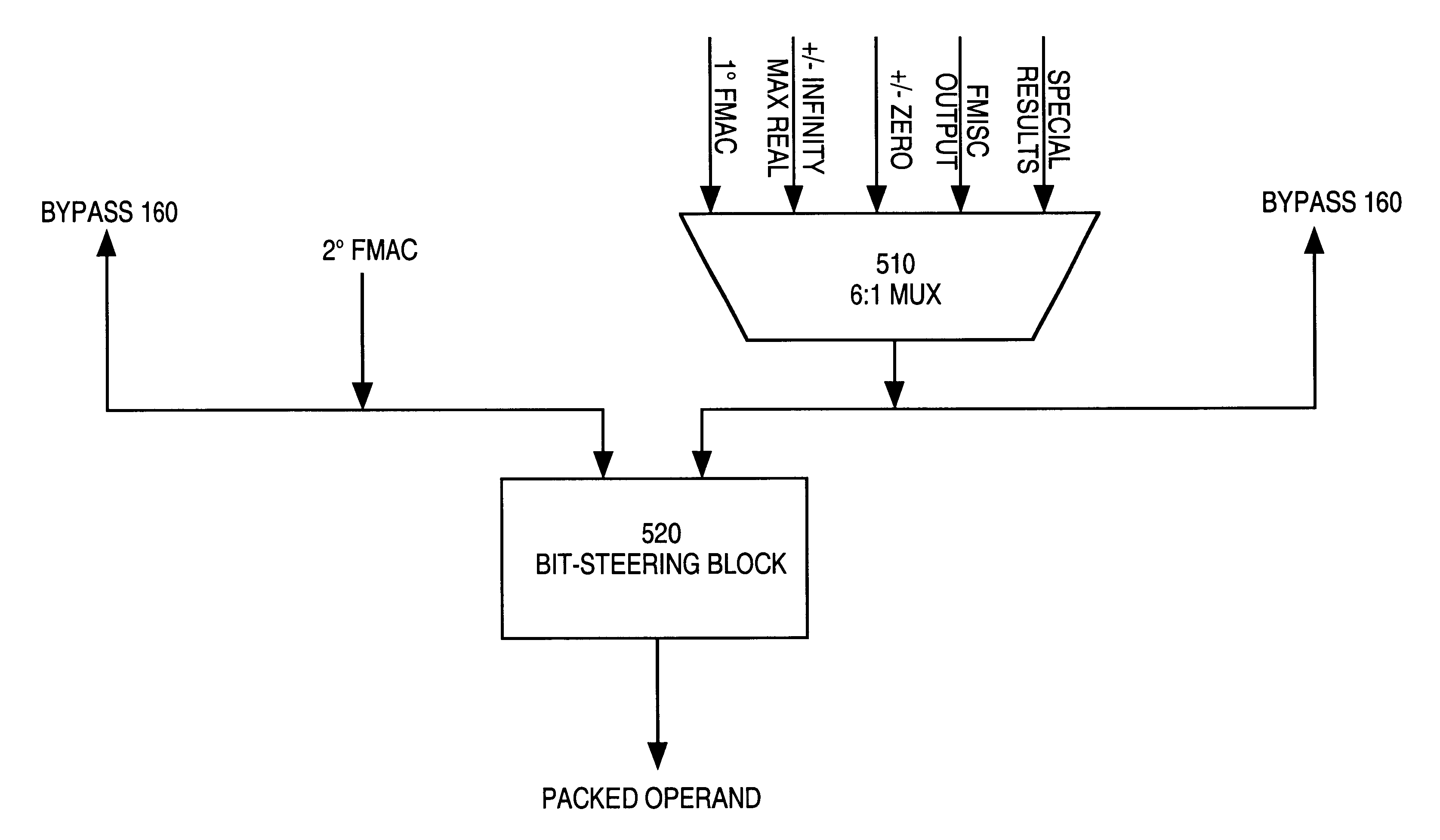

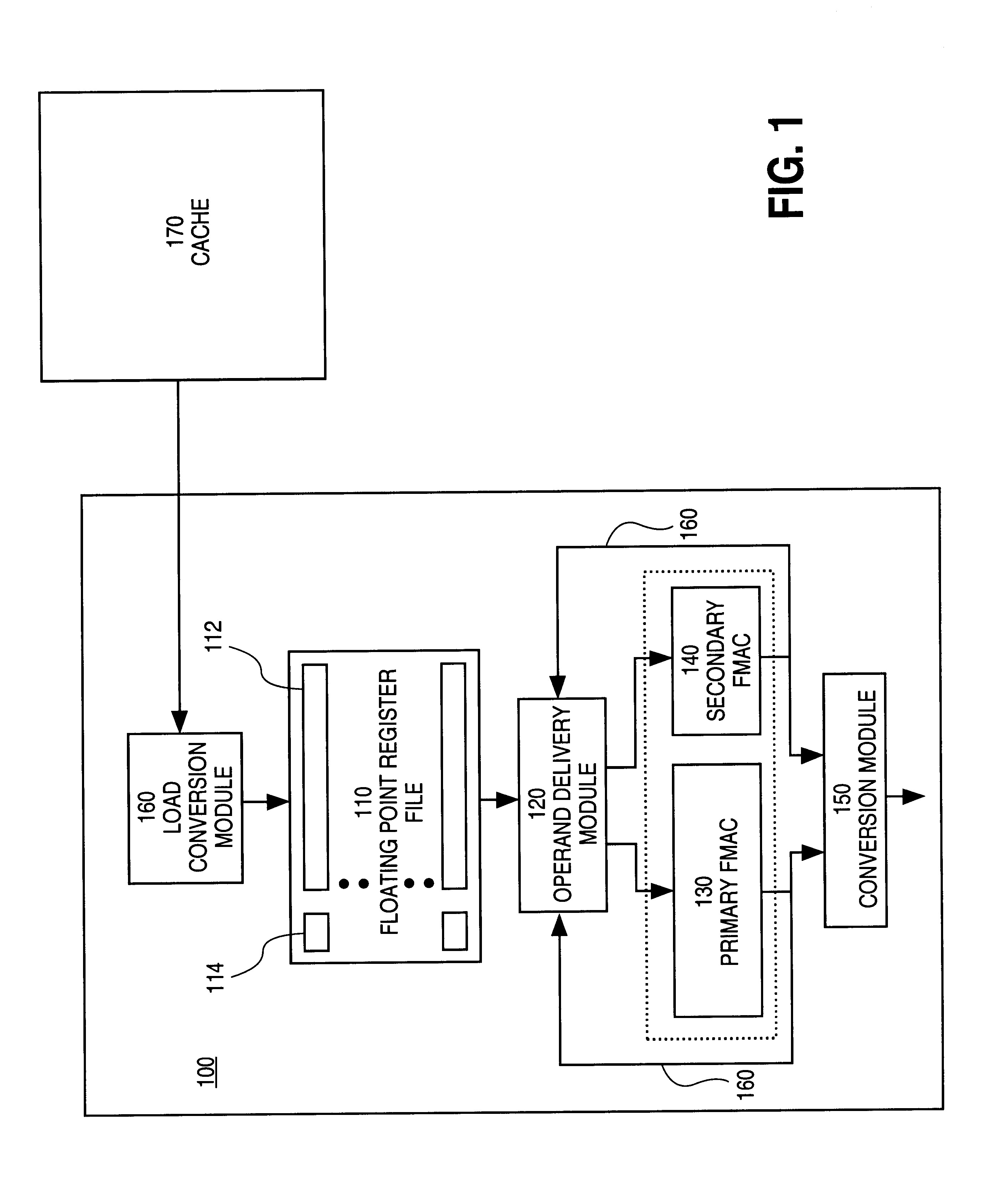

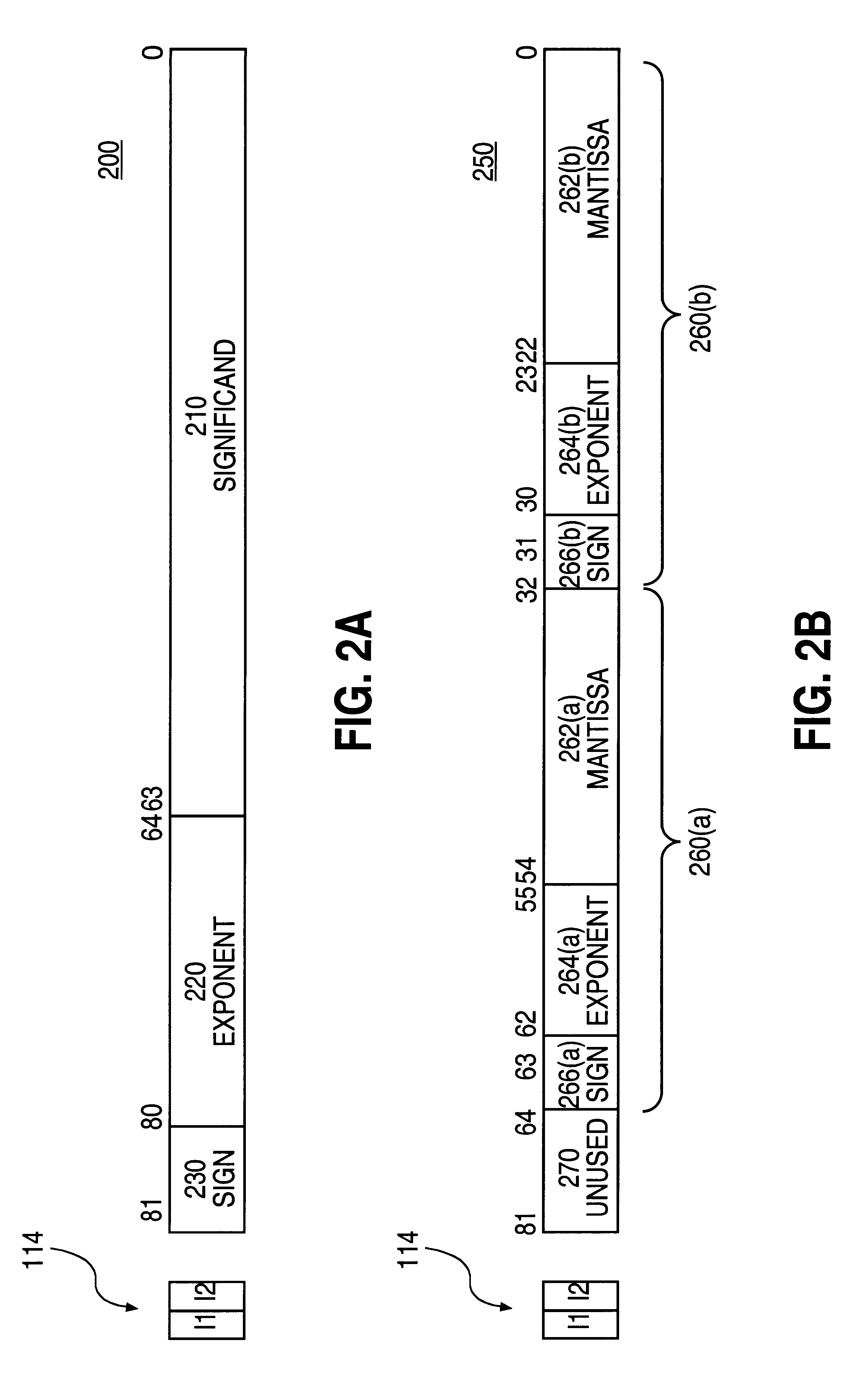

Method for setting a bit associated with each component of packed floating-point operand that is normalized in SIMD operations

Inactive | US6321327B1 | Improve processor performance, Eliminate need, General purpose stored program computer, Specific program execution arrangements, Processor register, Operand

A method is provided for loading a packed floating-point operand into a register file entry having one or more associated implicit bits. The packed floating-point operand includes multiple component operands. Significand and exponent bits for each component operand are copied to corresponding fields of the register entry, and the exponent bits are tested to determine whether the component operand is normalized. An implicit bit corresponding to the component operand is set when the component operand is normalized.

Owner: INTEL CORP
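
For the two-lane single-precision case, the per-component test reduces to checking the biased exponent field, as in this Python sketch. It assumes IEEE 754 binary32 lanes packed low-to-high in a 64-bit word and sets the implicit bit for any nonzero biased exponent (IEEE 754 encodes the leading significand bit as 1 exactly when the biased exponent is nonzero); the `RegComponent` layout is illustrative.

```python
from typing import List, NamedTuple


class RegComponent(NamedTuple):
    sign: int
    exponent: int     # 8-bit biased exponent
    significand: int  # 23 stored fraction bits
    implicit: int     # 1 when the component is normalized


def load_packed_single(packed: int, lanes: int = 2) -> List[RegComponent]:
    """Split a packed word of binary32 lanes and derive each lane's implicit bit."""
    out = []
    for lane in range(lanes):
        bits = (packed >> (32 * lane)) & 0xFFFF_FFFF
        exponent = (bits >> 23) & 0xFF
        out.append(RegComponent(
            sign=bits >> 31,
            exponent=exponent,
            significand=bits & 0x7F_FFFF,
            # Exponent field of zero means zero or subnormal: no hidden leading 1.
            implicit=1 if exponent != 0 else 0,
        ))
    return out
```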

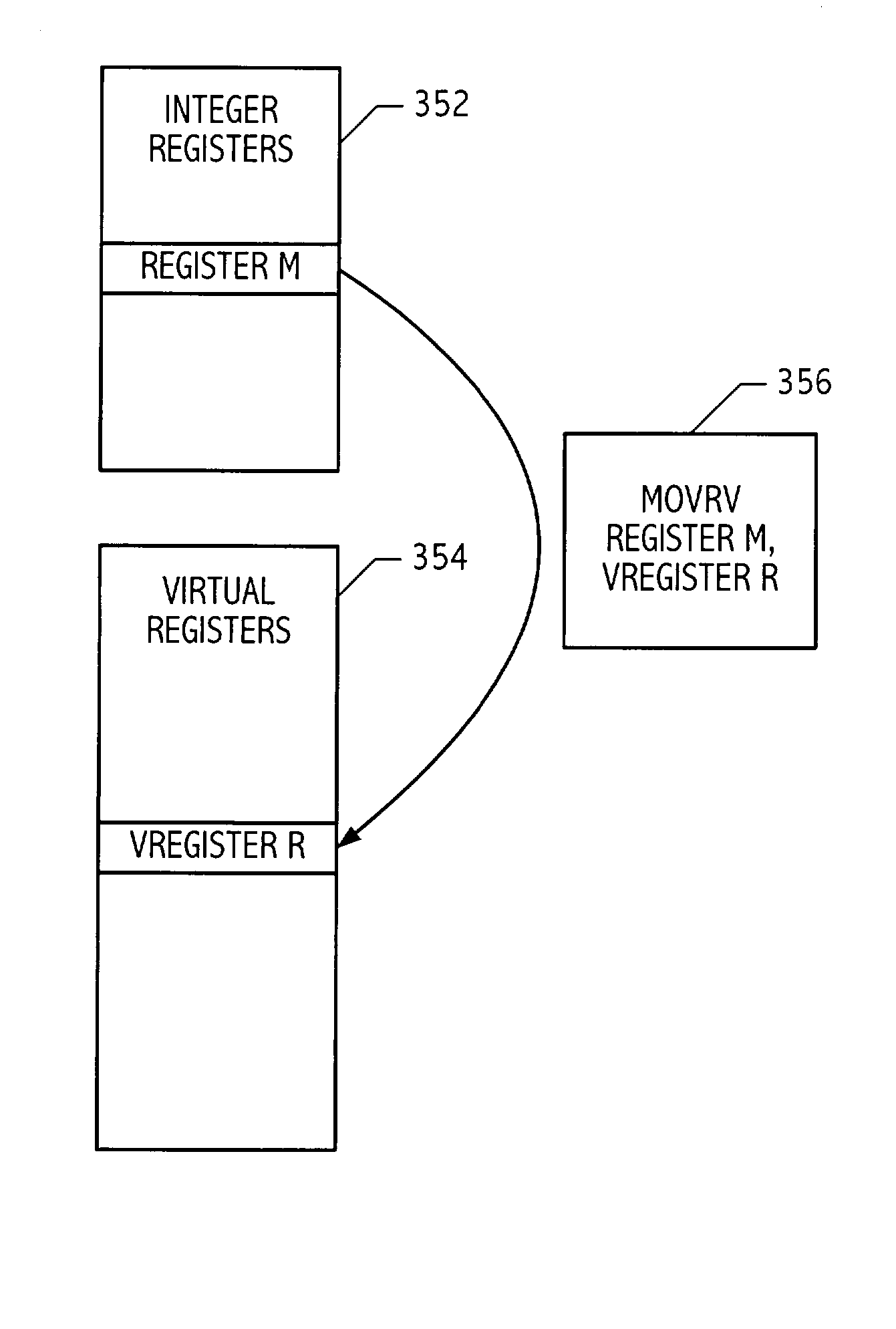

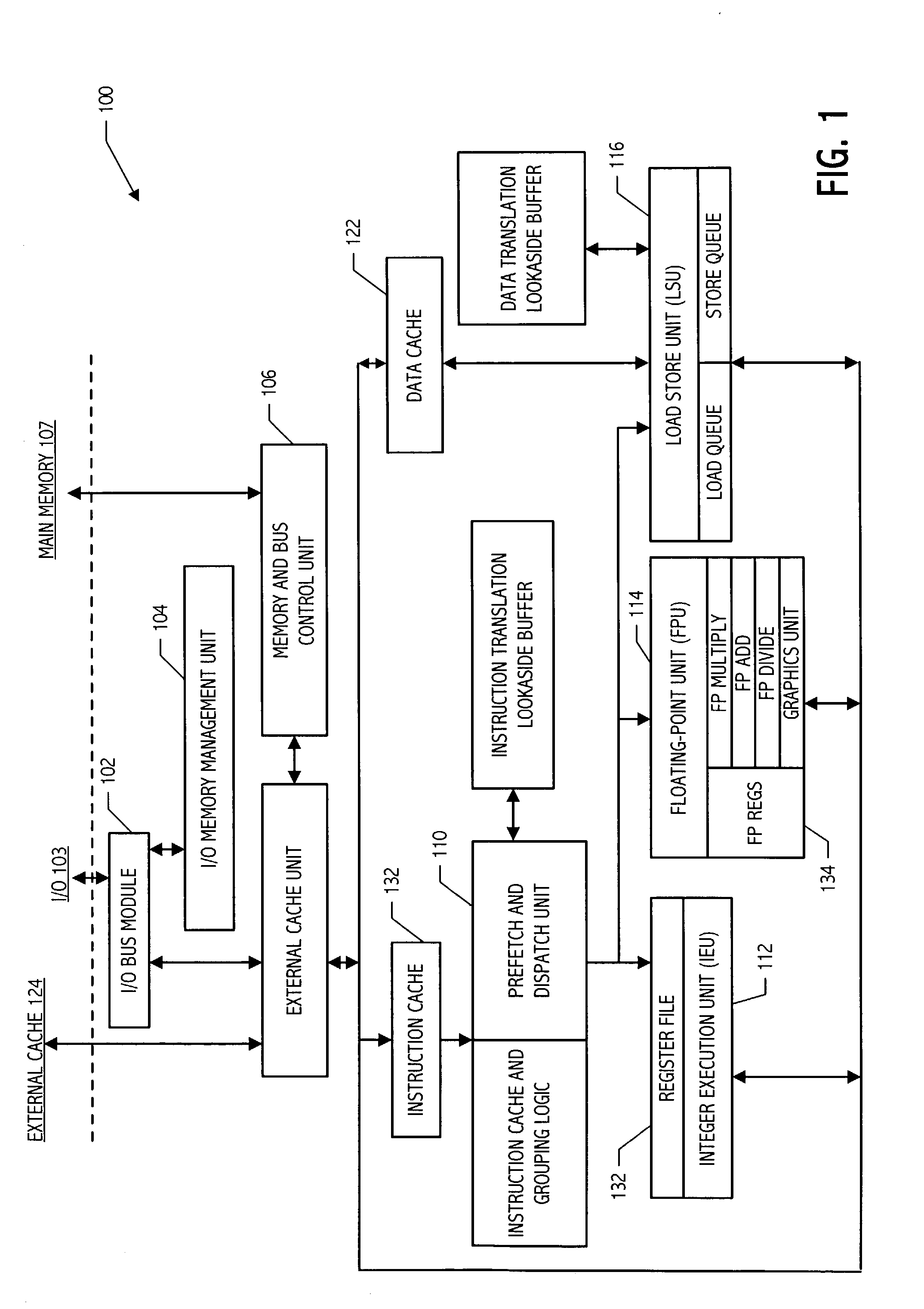

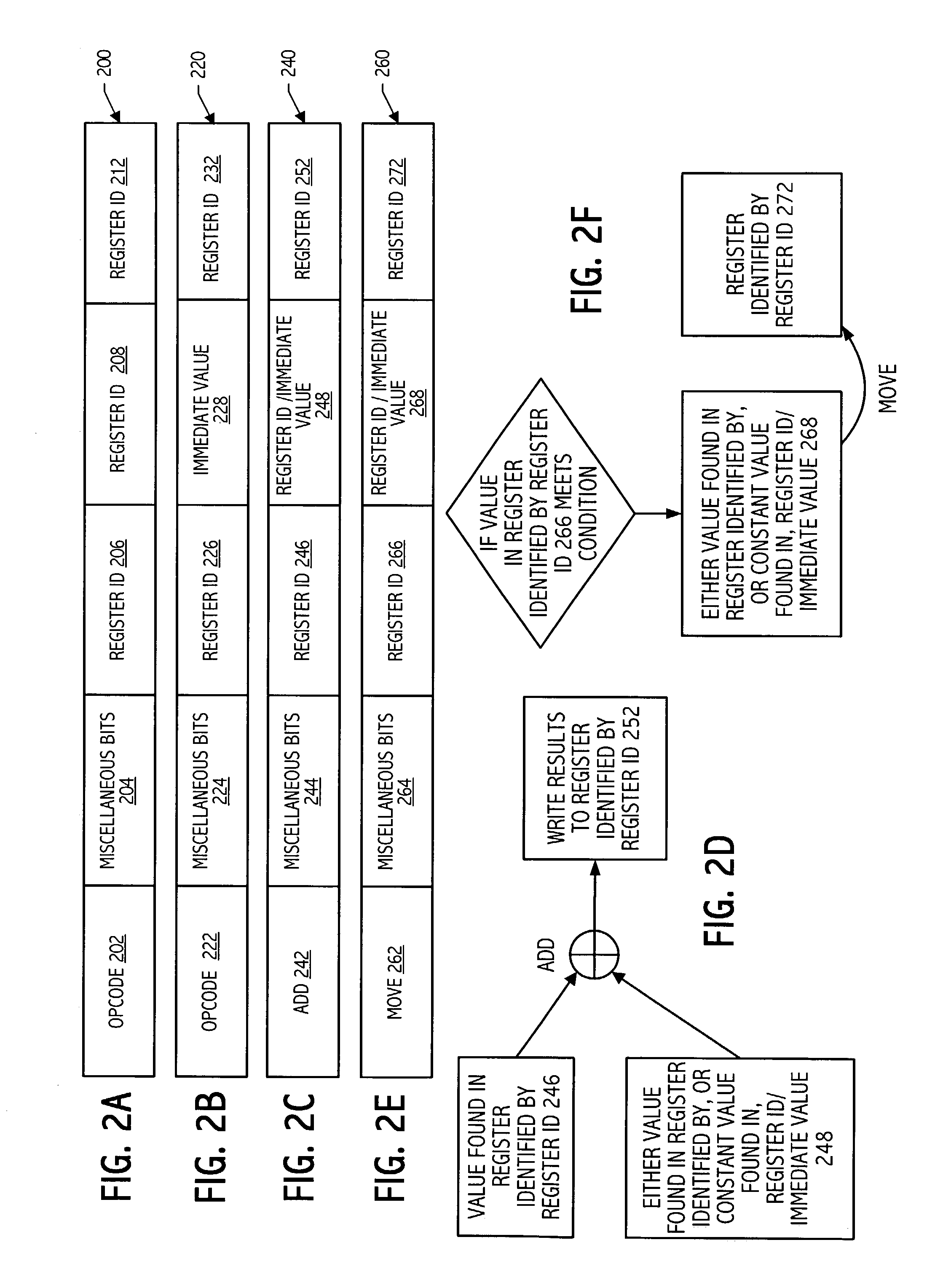

Virtual register set expanding processor internal storage

Active | US20040003208A1 | Improve processor performance, Digital computer details, Specific program execution arrangements, Processor register, Parallel computing

A processor includes a set of registers, each individually addressable using a corresponding register identification, and plural virtual registers, each individually addressable using a corresponding virtual register identification. The processor transfers values between the set of registers and the plural virtual registers under control of a transfer operation. The processor can include a virtual register cache configured to store multiple sets of virtual register values, such that each of the multiple sets of virtual register values corresponds to a different context. Each of the plural virtual registers can include a valid bit that is reset on a context switch and set when a value is loaded from the virtual register cache. The processor can include a virtual register translation look-aside buffer for tracking the location of each set of virtual register values associated with each context.

Owner: ORACLE INT CORP

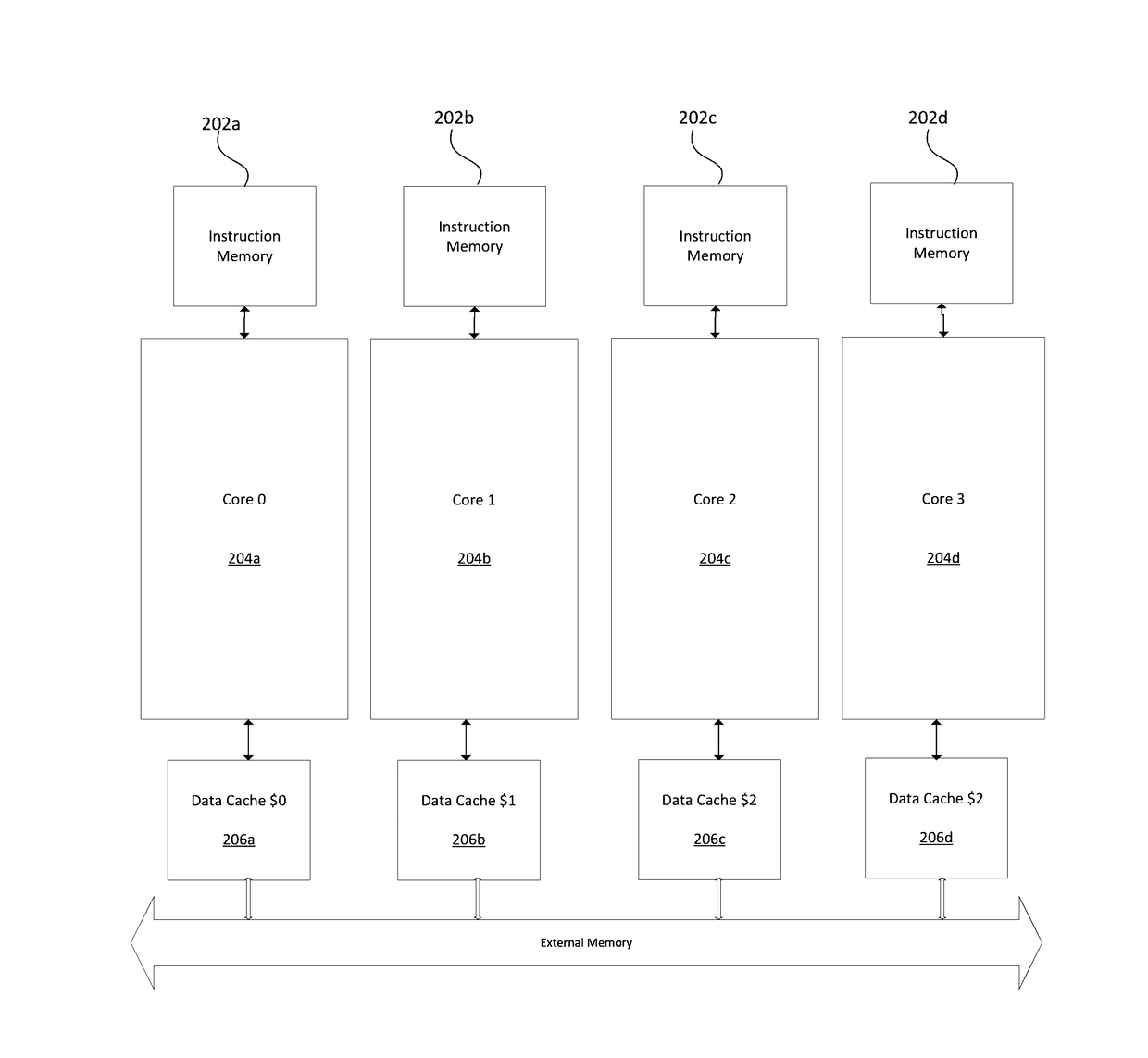

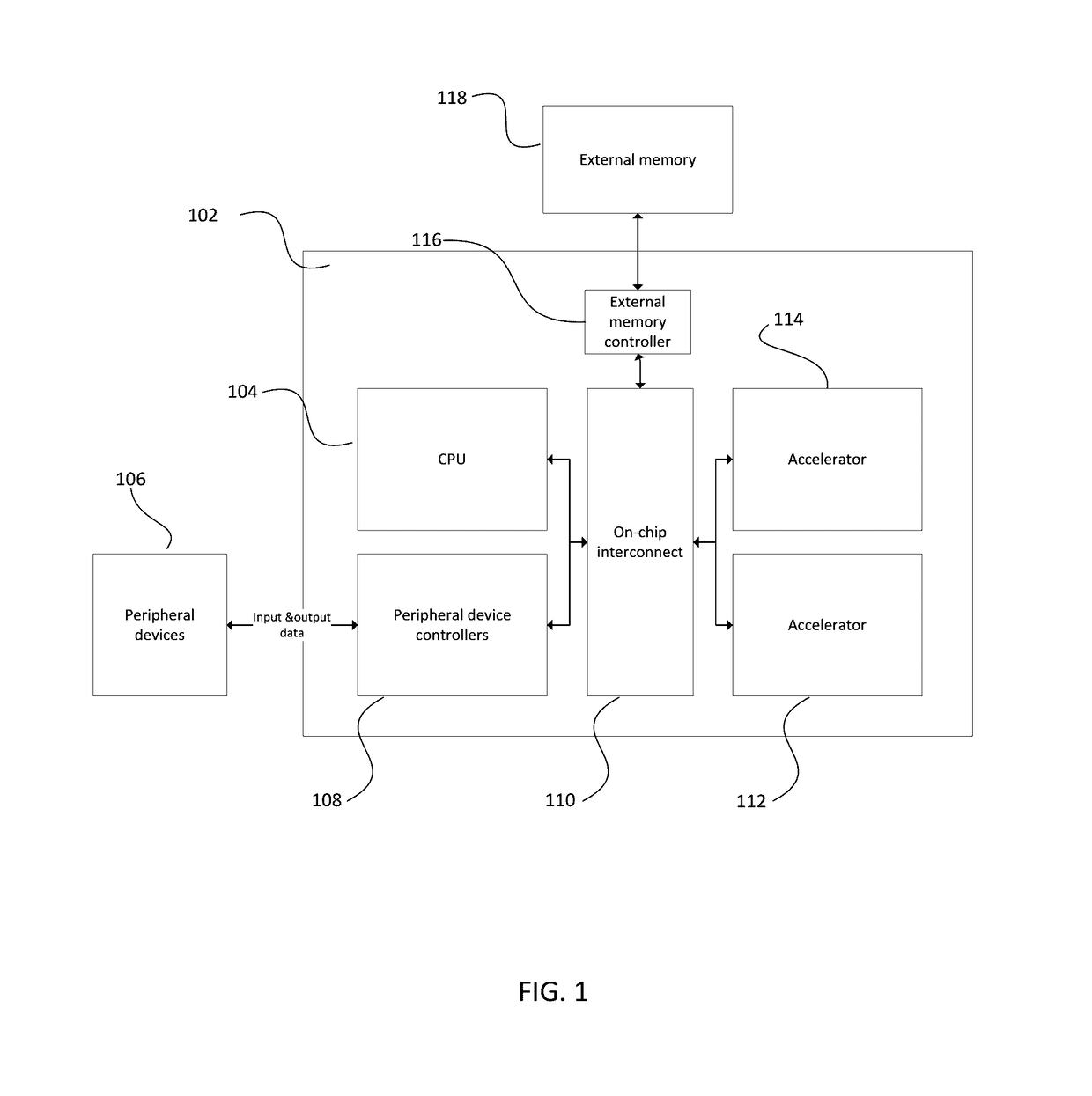

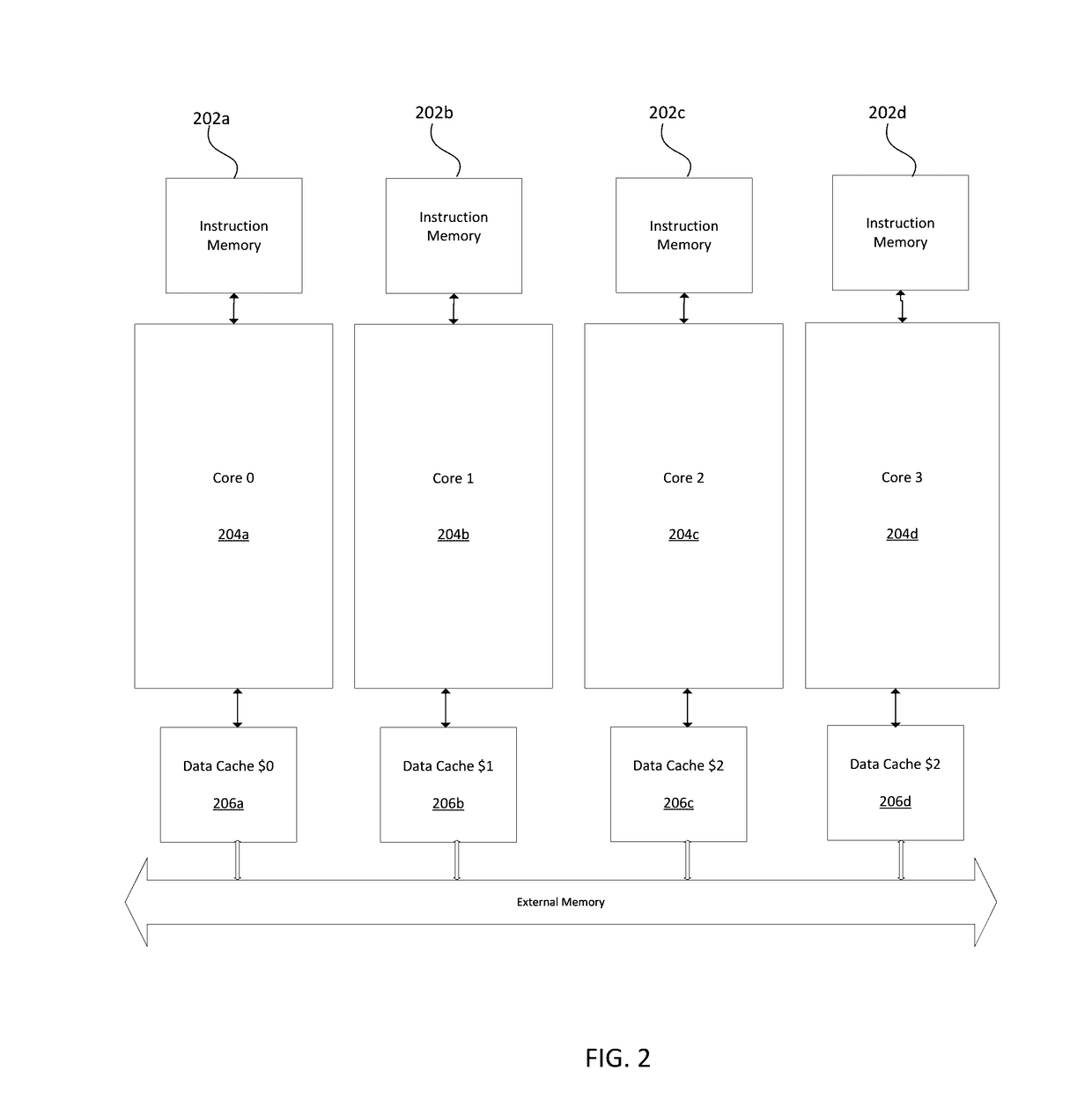

System on chip with image processing capabilities

Active | US20170103022A1 | Faster processing time, Reduce in quantity, Memory architecture accessing/allocation, Register arrangements, Processing core, Imaging processing

A multi-core processor configured to improve processing performance in certain computing contexts is provided. The multi-core processor includes multiple processing cores that implement barrel threading to execute multiple instruction threads in parallel while ensuring that the effects of an idle instruction or thread on the performance of the processor are minimized. The multiple cores can also share a common data cache, thereby minimizing the need for expensive and complex mechanisms to mitigate inter-cache coherency issues. The barrel threading can minimize the latency impacts associated with a shared data cache. In some examples, the multi-core processor can also include a serial processor configured to execute single-threaded programming code that may not yield satisfactory performance in a processing environment that employs barrel threading.

Owner: MOBILEYE VISION TECH LTD

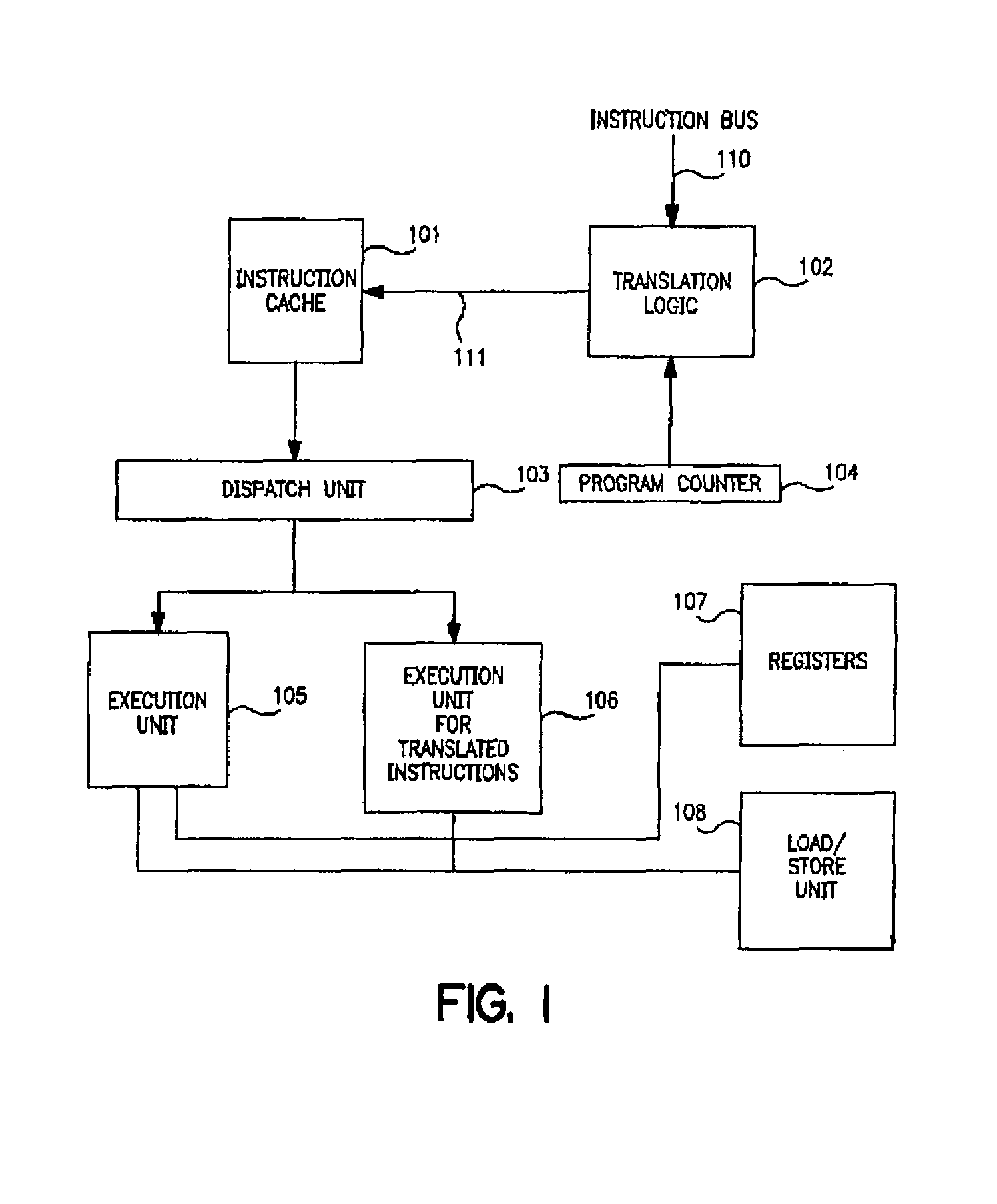

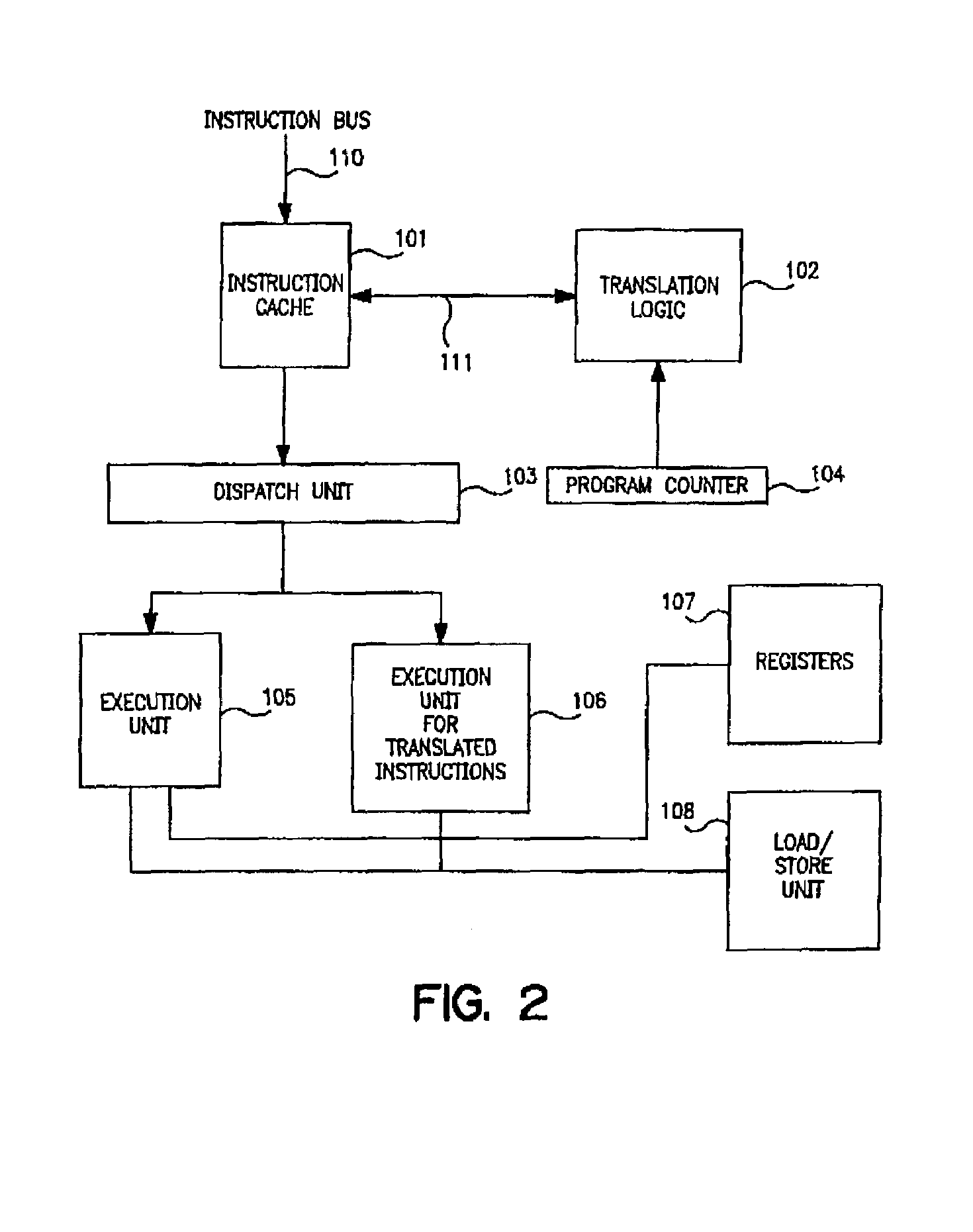

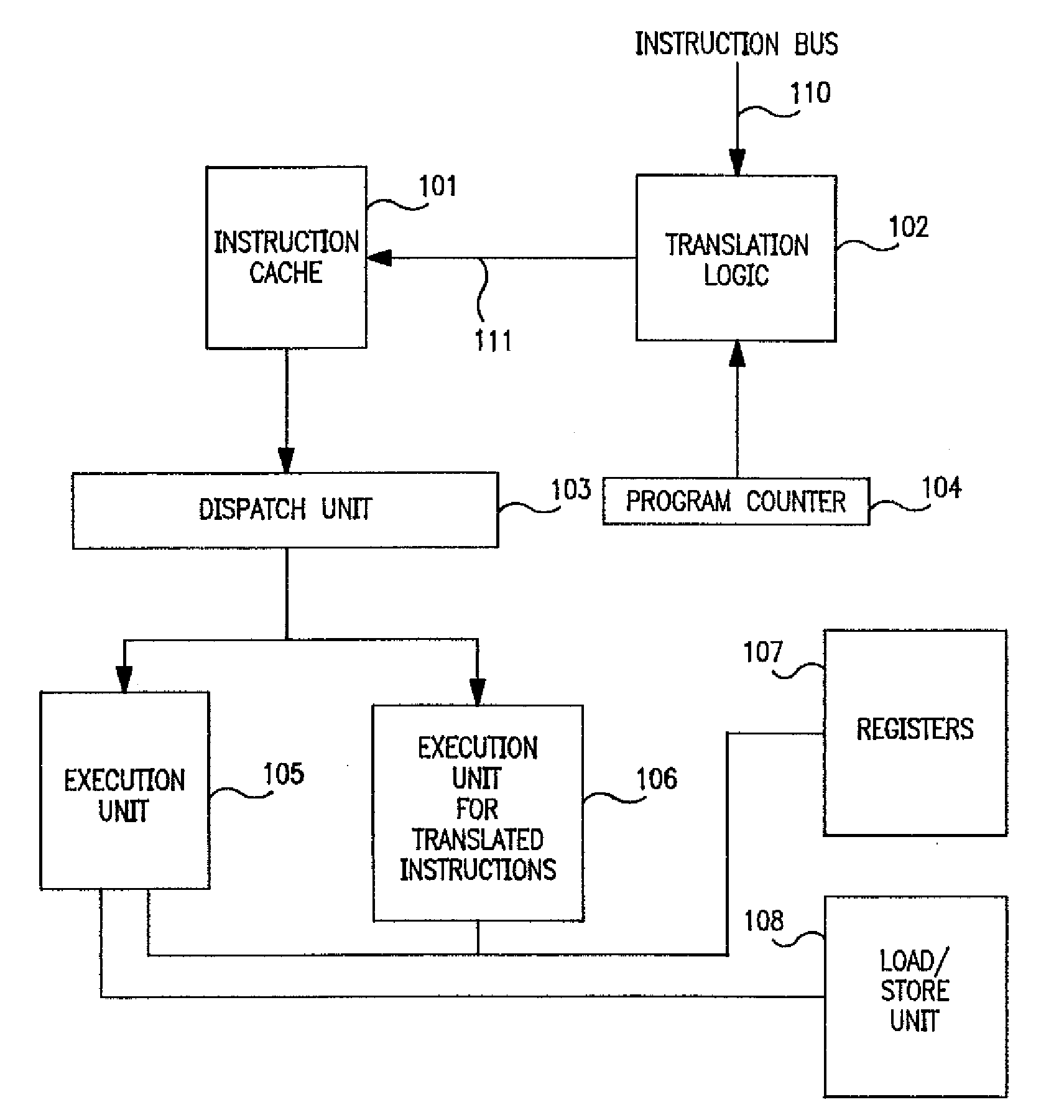

Dynamic object-level code transaction for improved performance of a computer

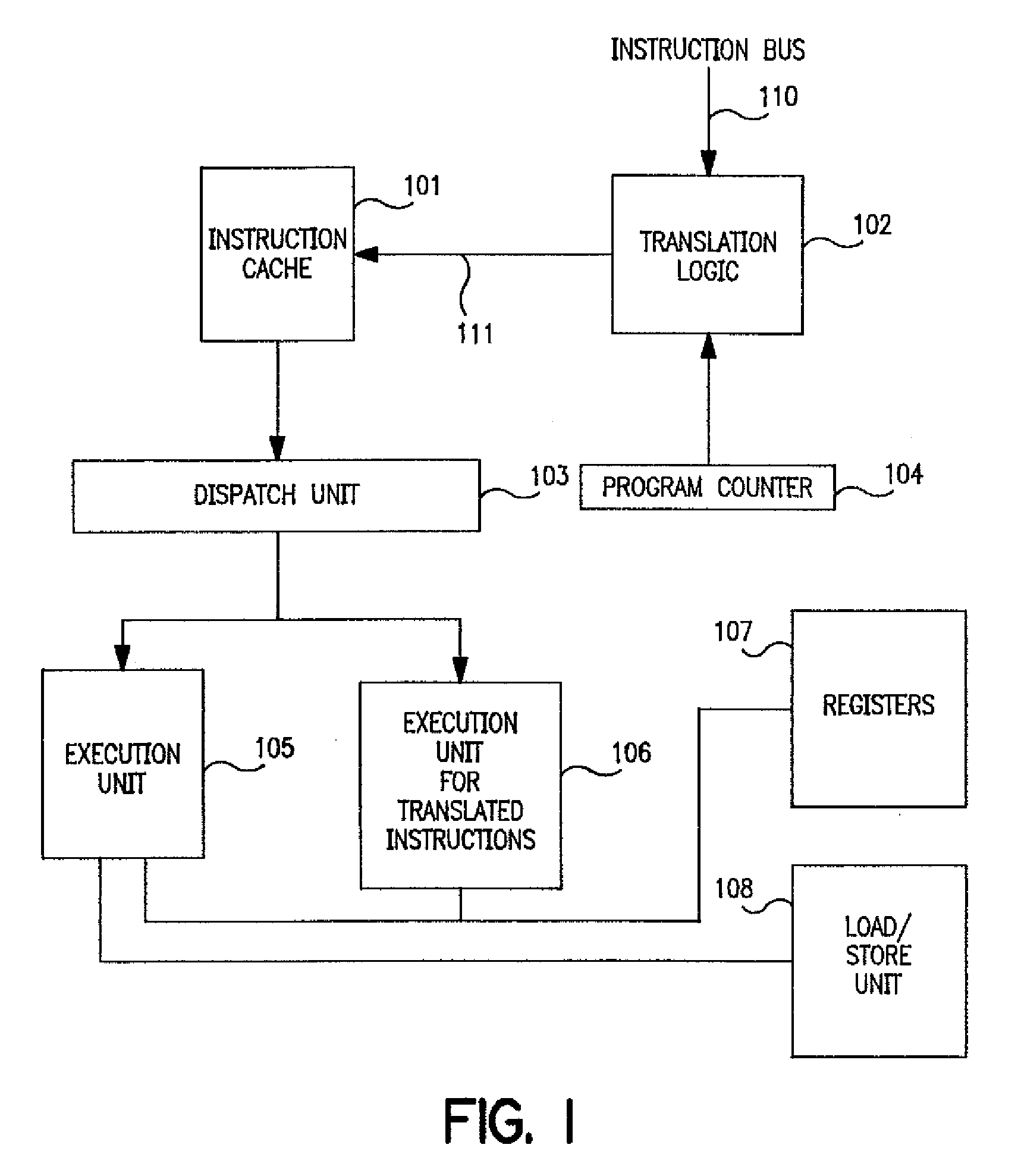

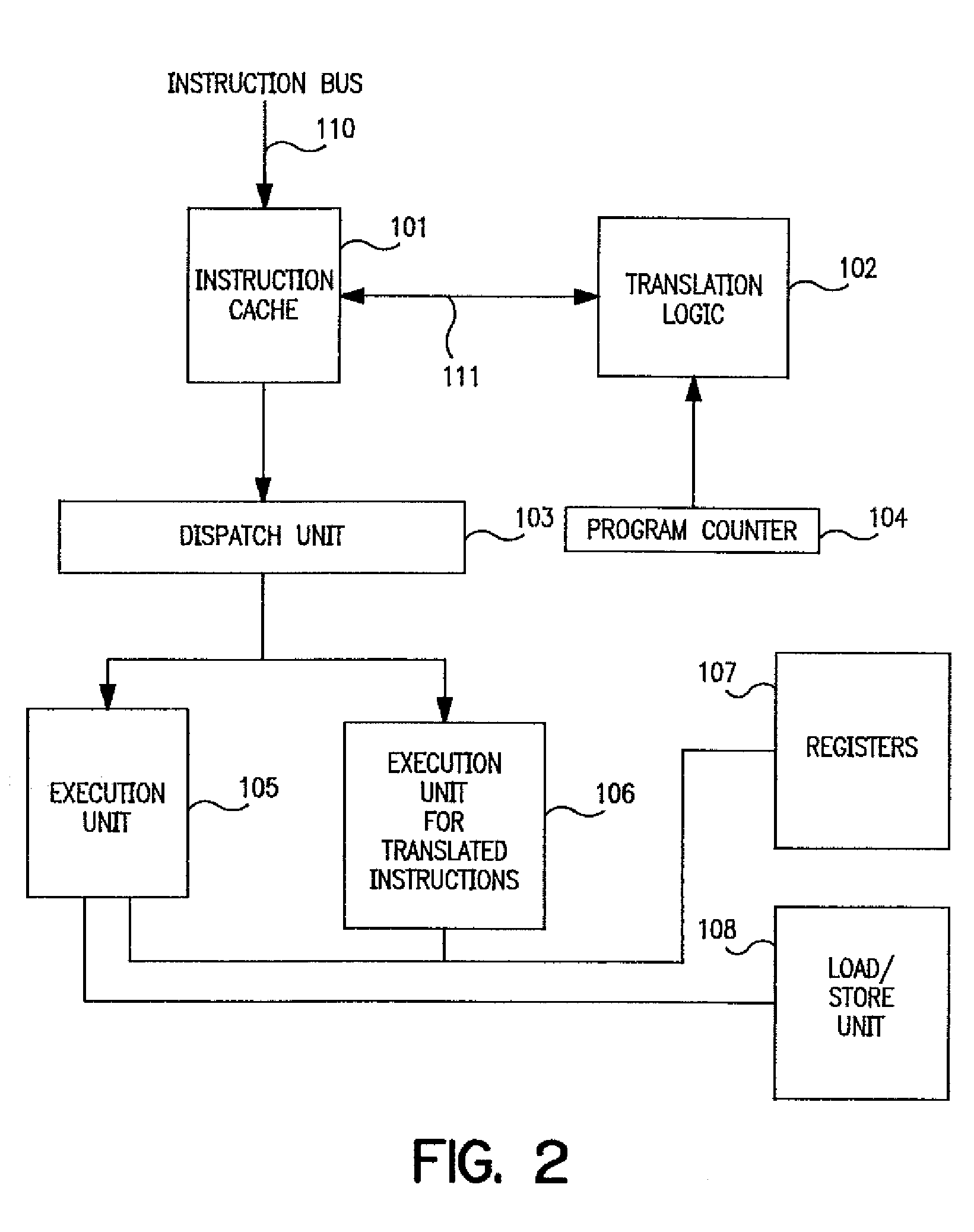

Inactive | US7418580B1 | Improve processor performance, Increase the number of, Runtime instruction translation, Digital computer details, Execution unit, Object level

A system and method for improving the efficiency of an object-level instruction stream in a computer processor. Translation logic for generating translated instructions from an object-level instruction stream in a RISC-architected computer processor, and an execution unit which executes the translated instructions, are integrated into the processor. The translation logic combines the functions of a plurality of the object-level instructions into a single translated instruction which can be dispatched to a single execution unit as compared with the untranslated instructions, which would otherwise be serially dispatched to separate execution units. Processor throughput is thereby increased since the number of instructions which can be dispatched per cycle is extended.

Owner: IBM CORP

Enabling and disabling cache bypass using predicted cache line usage

Inactive | US7228388B2 | Improve system performance, Improve hit rate, Memory systems, Cache hierarchy, Cache access

Arrangements and a method for enabling and disabling cache bypass in a computer system with a cache hierarchy. Cache bypass status is identified with respect to at least one cache line. A cache line identified as cache bypass enabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is bypassed, while a cache line identified as cache bypass disabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is not bypassed. Included is an arrangement for selectively enabling or disabling cache bypass with respect to at least one cache line based on historical cache access information.

Owner: INT BUSINESS MASCH CORP

Address generation unit for a processor

Active | US20060010255A1 | Good suit, Improve processor performance, Memory addressing/allocation/relocation, Image memory management, Address generation unit, Address control

A processor includes a memory port for accessing a physical memory under control of an address. A processing unit executes instructions stored in the memory and/or operates on data stored in the memory. An address generation unit (“AGU”) generates addresses for controlling access to the memory; the AGU is associated with a plurality of N registers that enable it to generate the address under control of an address generation mechanism. A memory unit is operative to save/load k of the N registers, where 2 ≤ k ≤ N, triggered by one operation. To this end, the memory unit includes a concatenator for concatenating the k registers into one memory word to be written to the memory through the memory port, and a splitter for separating a word read from the memory through the memory port into the k registers.

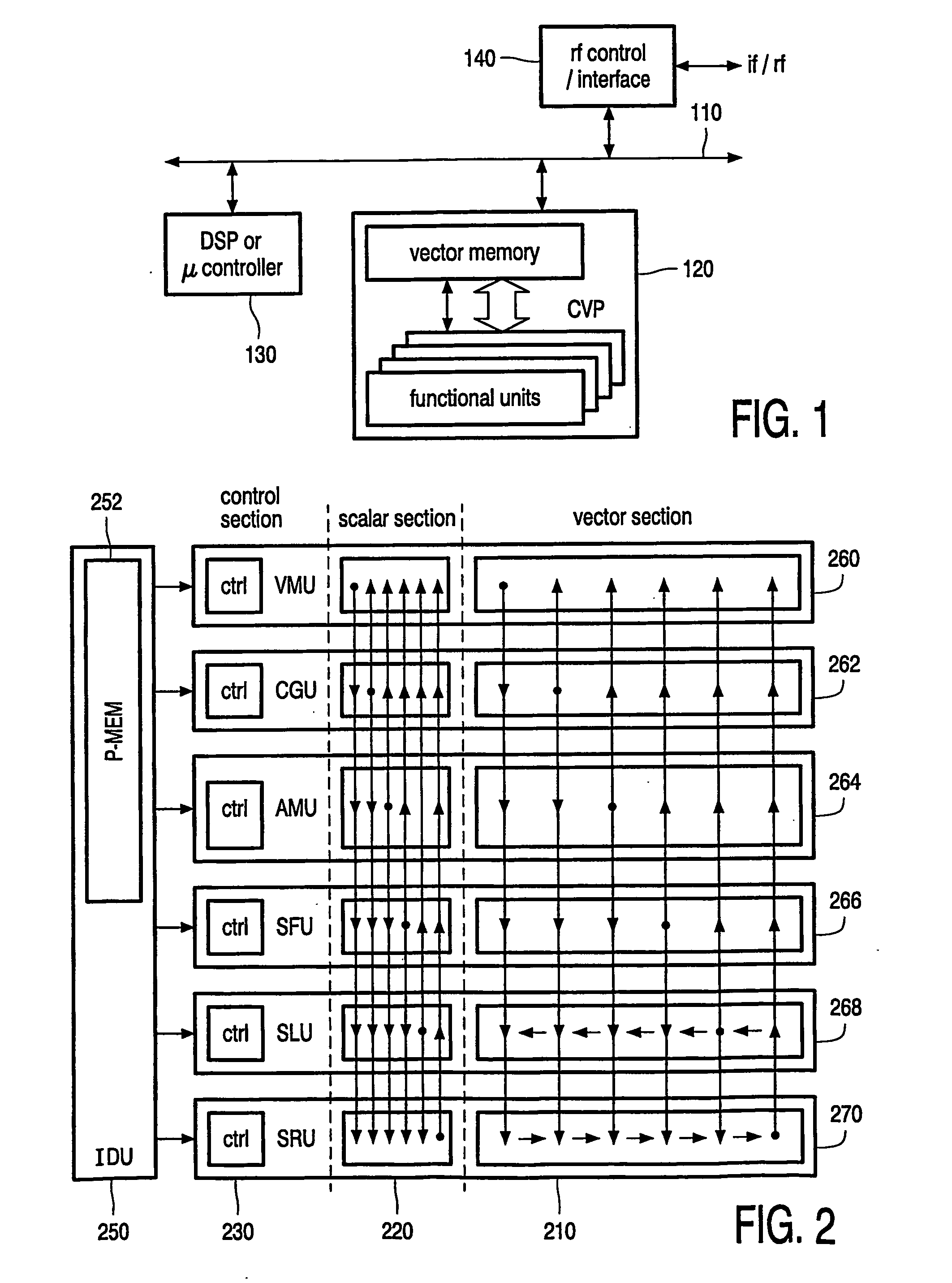

Owner: TELEFON AB LM ERICSSON (PUBL)
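
The concatenator and splitter amount to packing and unpacking register fields in one wide memory word. A minimal Python sketch follows, assuming `reg_bits`-wide registers placed low-to-high and a memory word at least `k * reg_bits` bits wide (both assumptions, not details from the abstract).

```python
from typing import List


def concatenate(registers: List[int], reg_bits: int) -> int:
    """Pack k registers into one memory word; registers[0] occupies the low bits."""
    mask = (1 << reg_bits) - 1
    word = 0
    for i, value in enumerate(registers):
        word |= (value & mask) << (i * reg_bits)
    return word


def split(word: int, k: int, reg_bits: int) -> List[int]:
    """Inverse of concatenate: separate one memory word back into k registers."""
    mask = (1 << reg_bits) - 1
    return [(word >> (i * reg_bits)) & mask for i in range(k)]
```

With this layout, one memory operation saves or restores all k registers, which is the single-operation behaviour the abstract describes.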

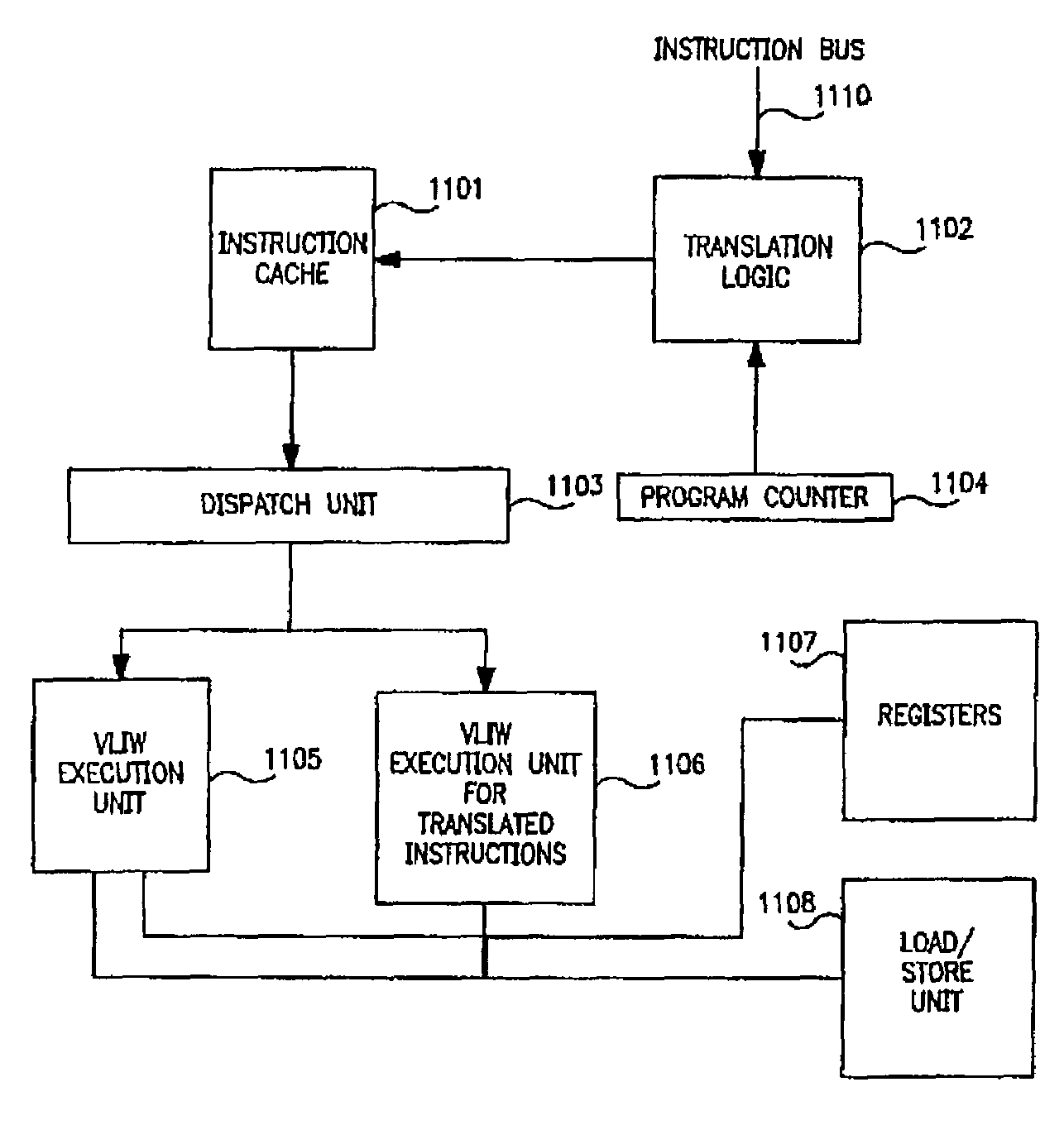

Dynamic object-level code translation for improved performance of a computer processor

Inactive | US20080320286A1 | Increase the number of, Improve processor performance, Runtime instruction translation, Digital computer details, Code Translation, Execution unit

A system and method for improving the efficiency of an object-level instruction stream in a computer processor. Translation logic for generating translated instructions from an object-level instruction stream in a RISC-architected computer processor, and an execution unit which executes the translated instructions, are integrated into the processor. The translation logic combines the functions of a plurality of the object-level instructions into a single translated instruction which can be dispatched to a single execution unit as compared with the untranslated instructions, which would otherwise be serially dispatched to separate execution units. Processor throughput is thereby increased since the number of instructions which can be dispatched per cycle is extended.

Owner: INT BUSINESS MASCH CORP

© 2025 PatSnap. All rights reserved.