1778 results about "Multi-core processor" patented technology

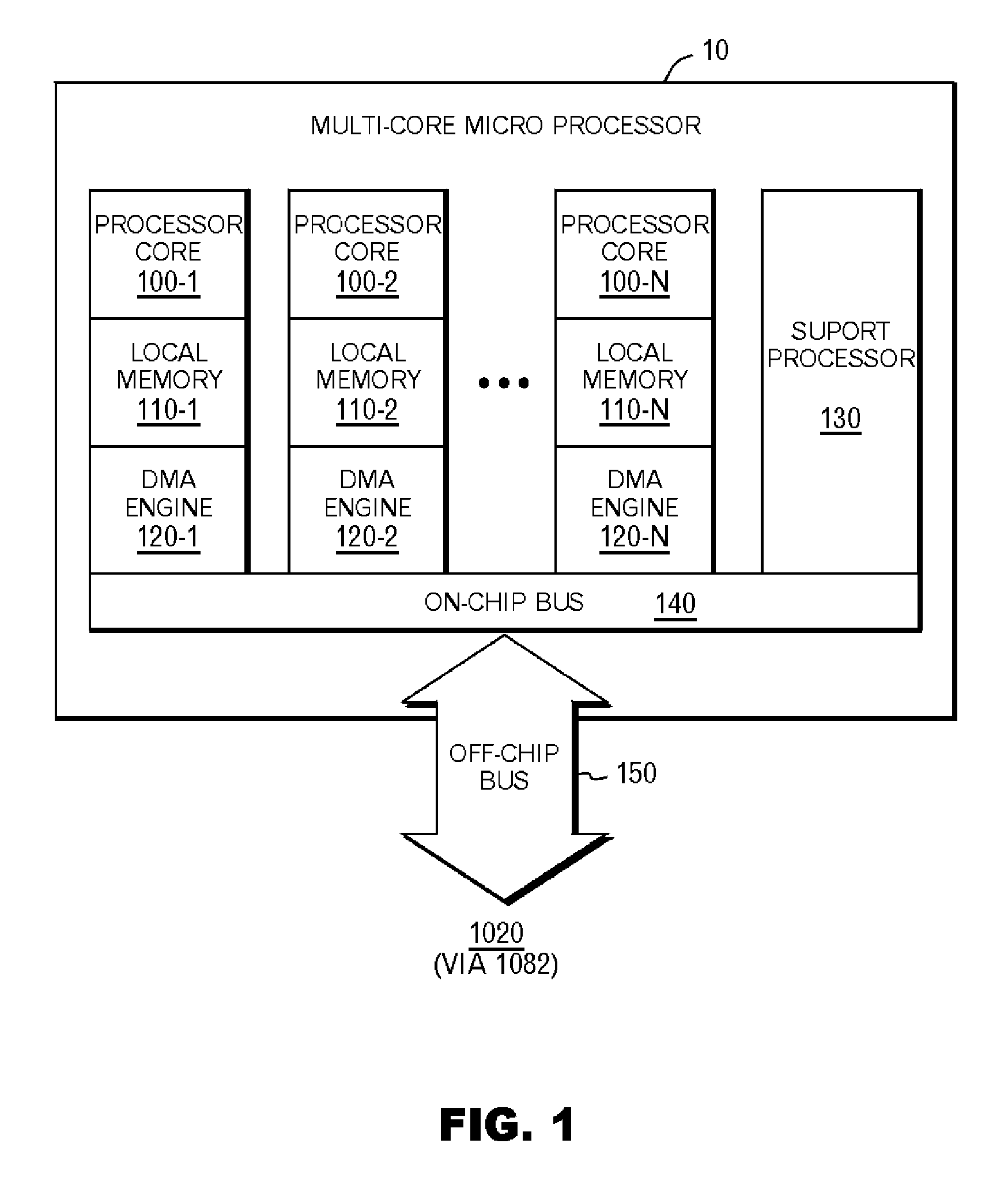

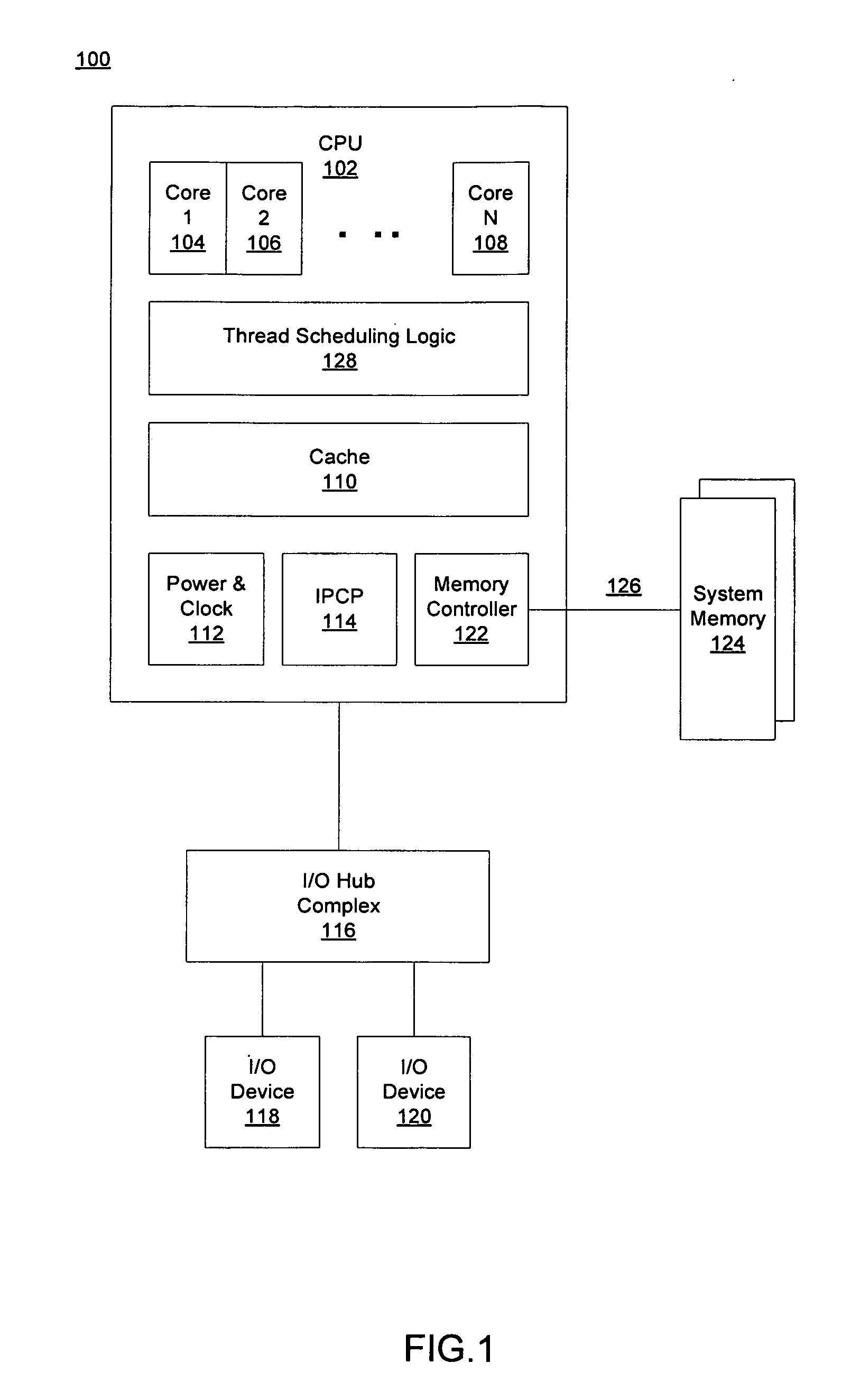

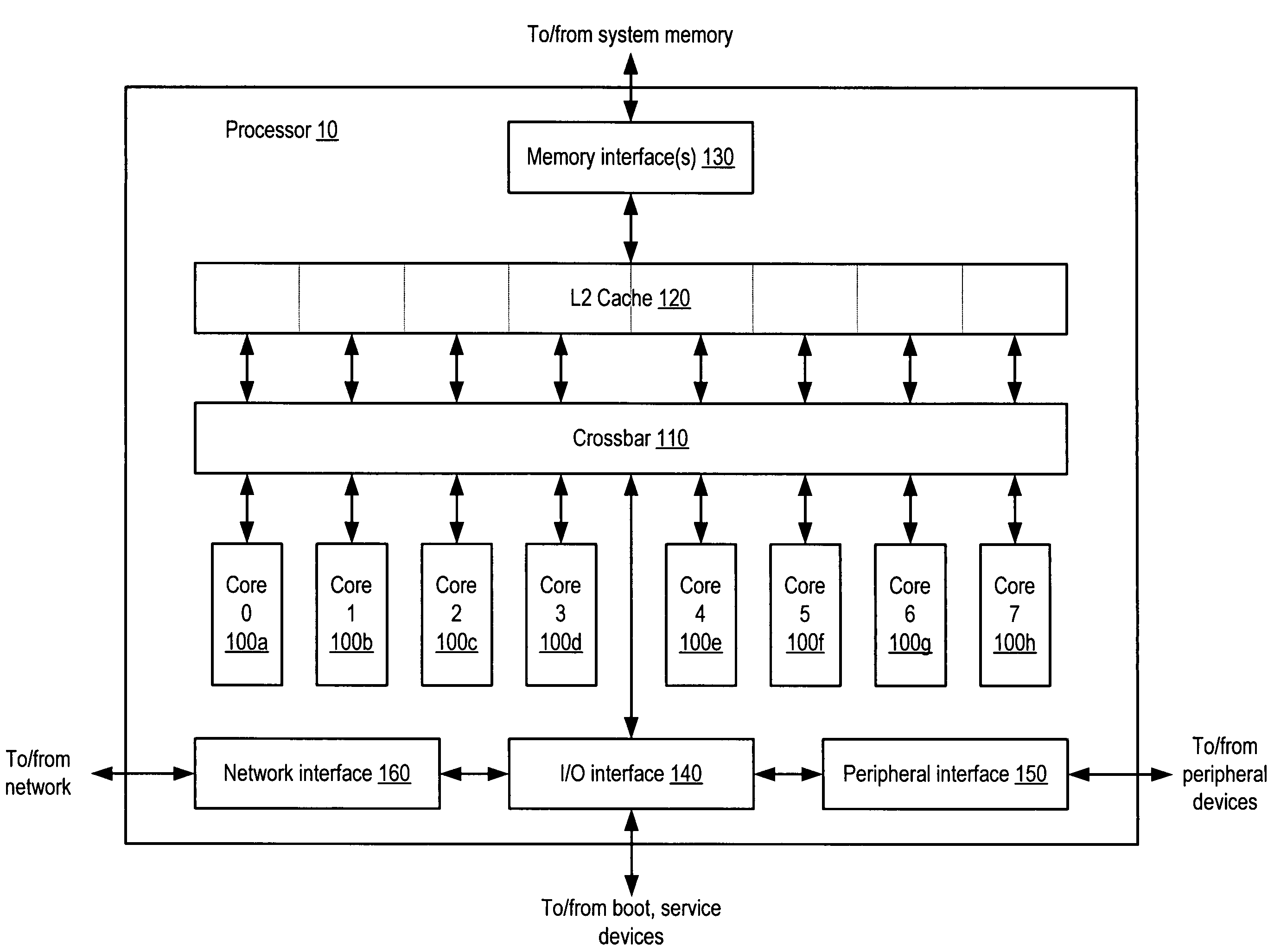

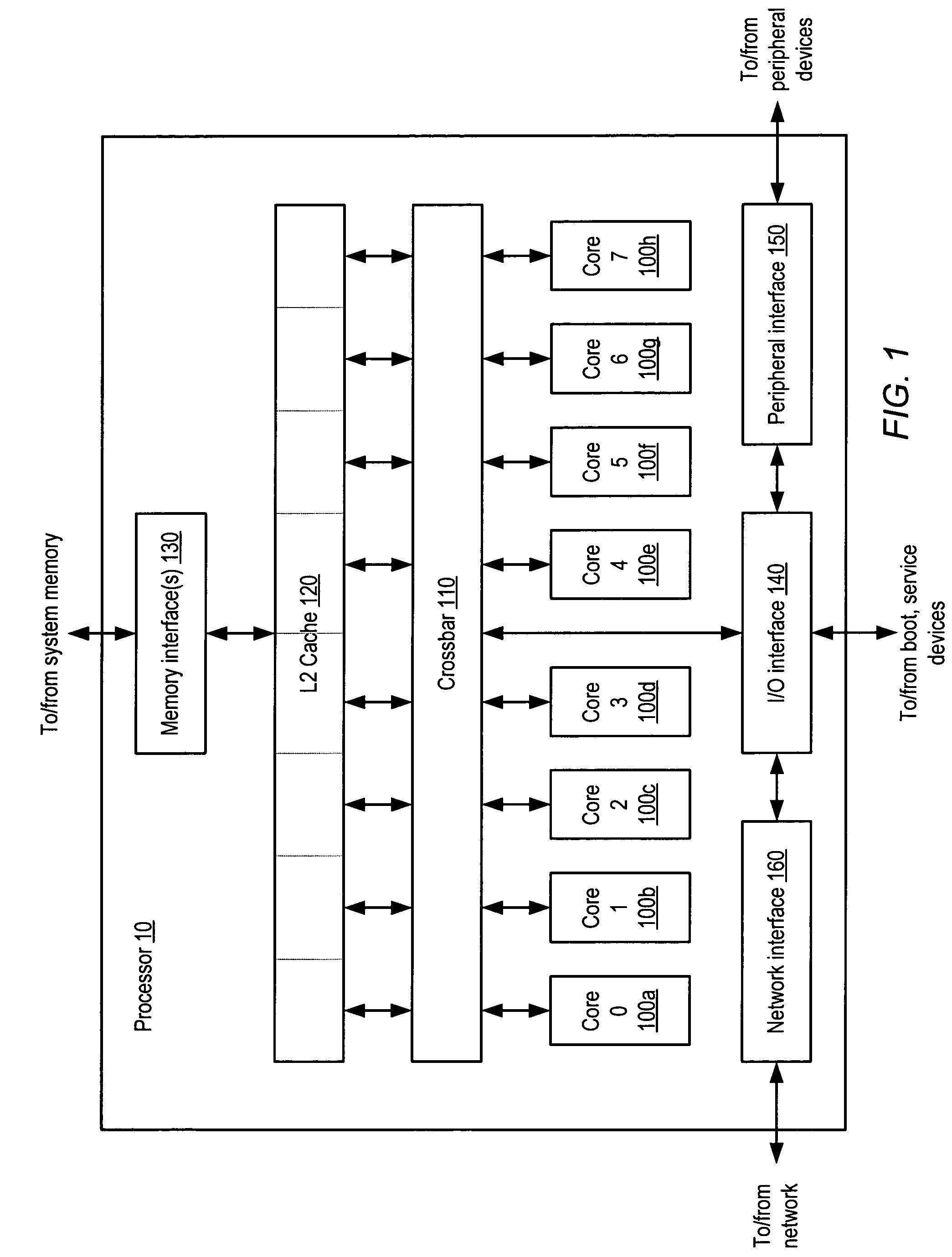

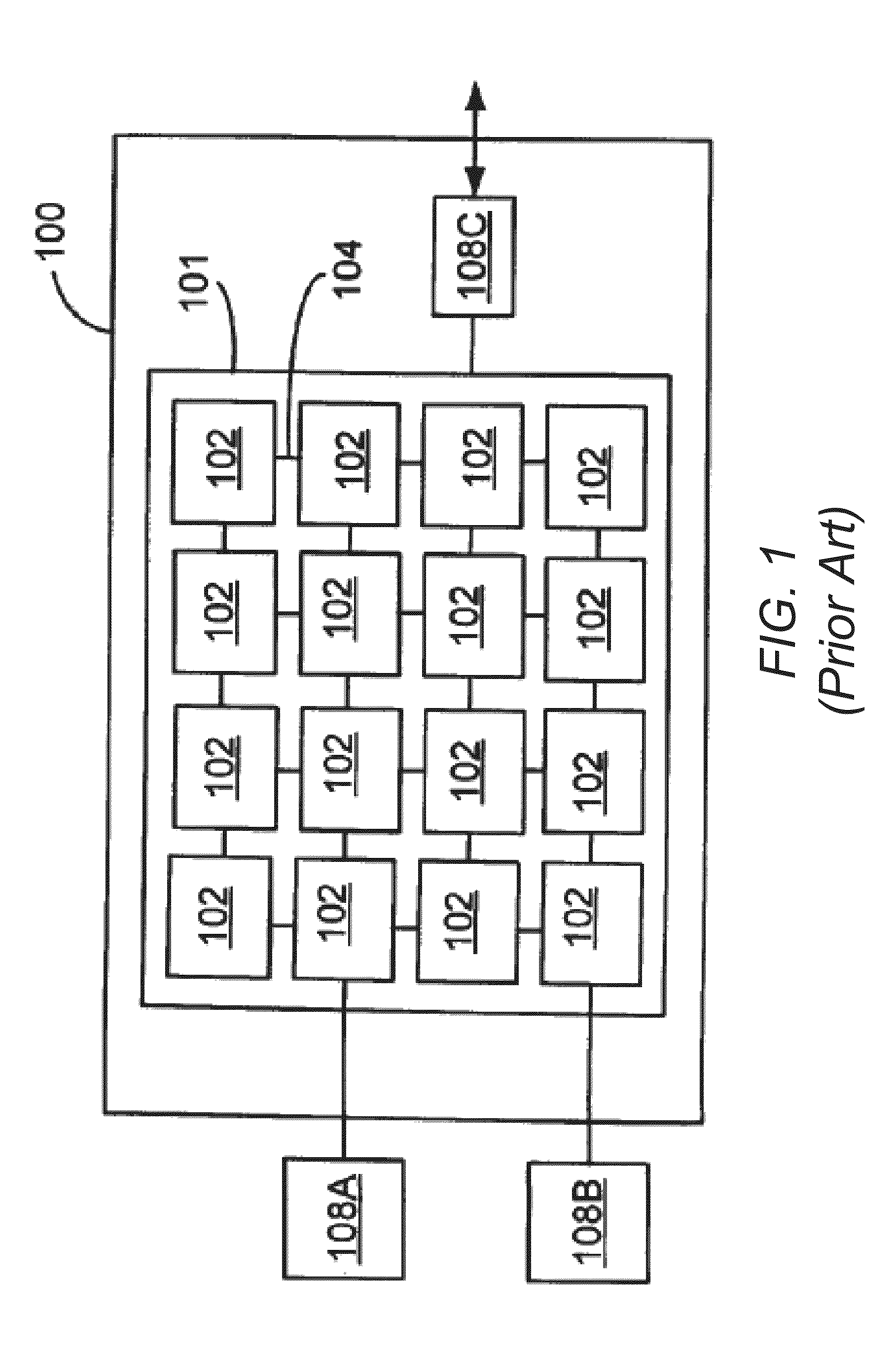

A multi-core processor is a computer processor integrated circuit with two or more separate processing units, called cores, each of which reads and executes program instructions, as if the computer had several processors. The instructions are ordinary CPU instructions (such as add, move data, and branch), but the single processor can run instructions on separate cores at the same time, increasing overall speed for programs that support multithreading or other parallel computing techniques.

Manufacturers typically integrate the cores onto a single integrated circuit die (known as a chip multiprocessor or CMP) or onto multiple dies in a single chip package. The microprocessors currently used in almost all personal computers are multi-core. A multi-core processor implements multiprocessing in a single physical package.

Designers may couple cores in a multi-core device tightly or loosely. For example, cores may or may not share caches, and they may implement message passing or shared-memory inter-core communication methods. Common network topologies to interconnect cores include bus, ring, two-dimensional mesh, and crossbar.

Homogeneous multi-core systems include only identical cores; heterogeneous multi-core systems have cores that are not identical (e.g. big.LITTLE has heterogeneous cores that share the same instruction set, while AMD Accelerated Processing Units have cores that don't even share the same instruction set). Just as with single-processor systems, cores in multi-core systems may implement architectures such as VLIW, superscalar, vector, or multithreading.

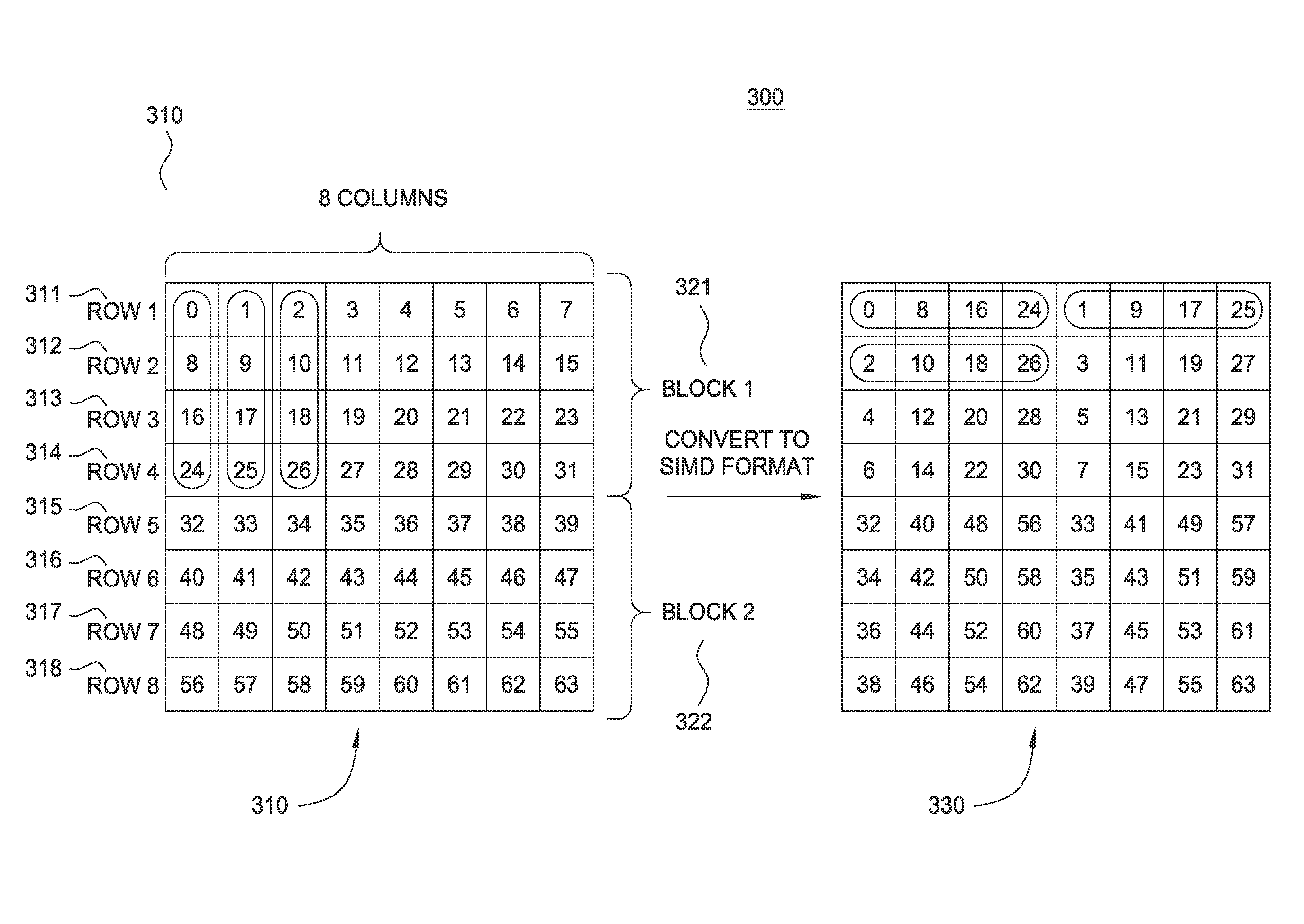

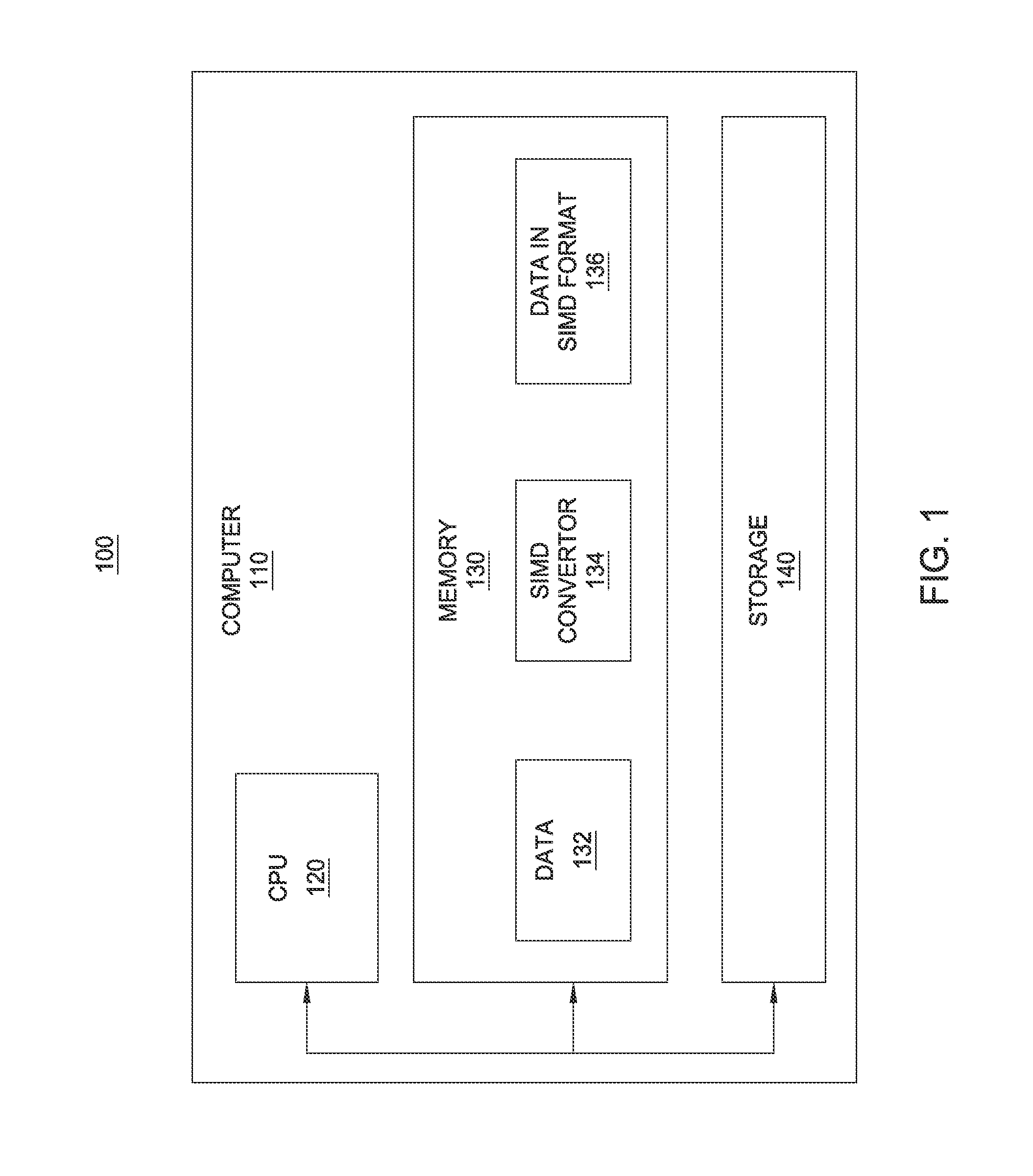

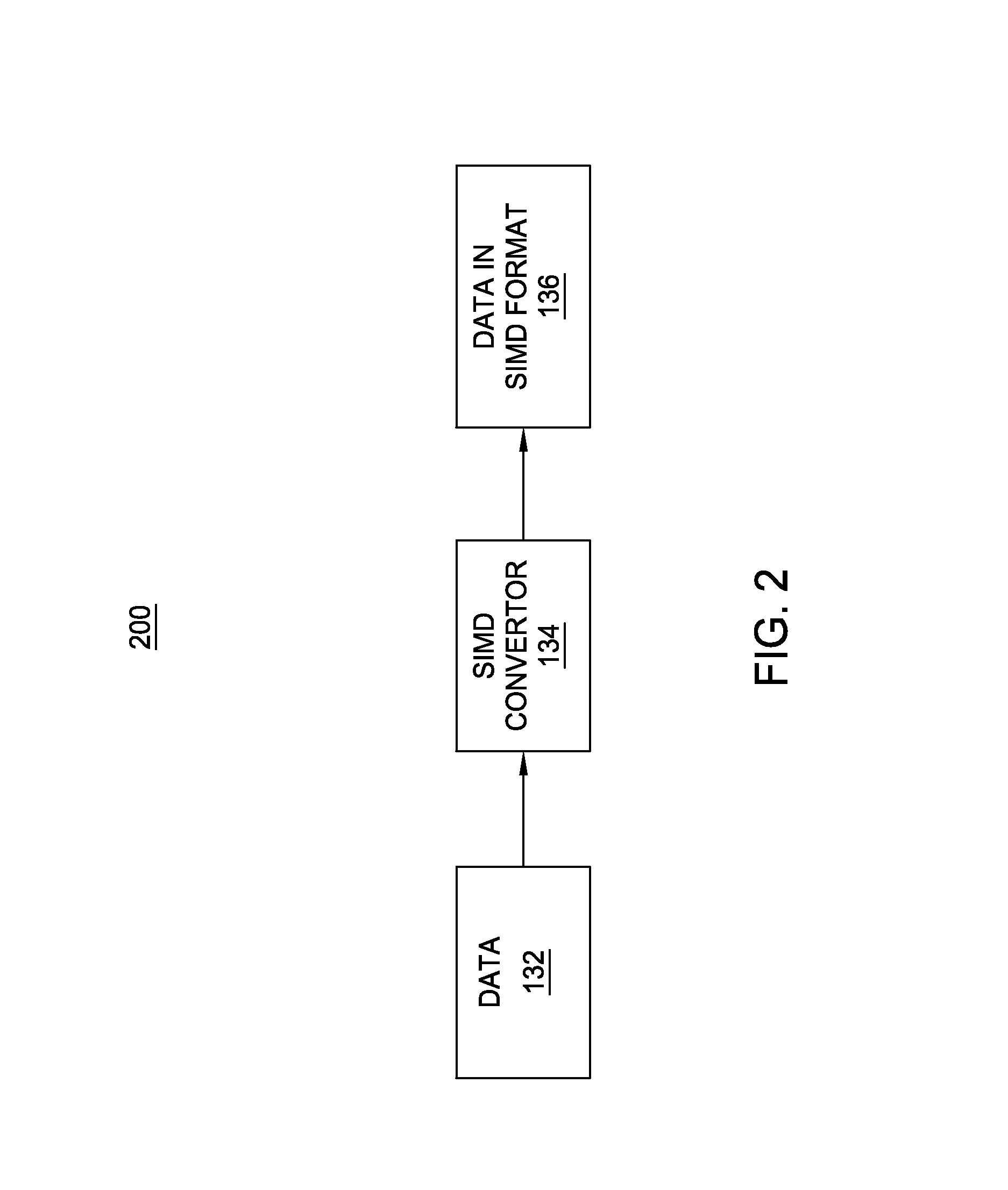

Processing array data on SIMD multi-core processor architectures

Inactive; US8484276B2; Program control using stored programs; General purpose stored program computer; Fast Fourier transform; Fourier transform on finite groups

Owner:INT BUSINESS MASCH CORP

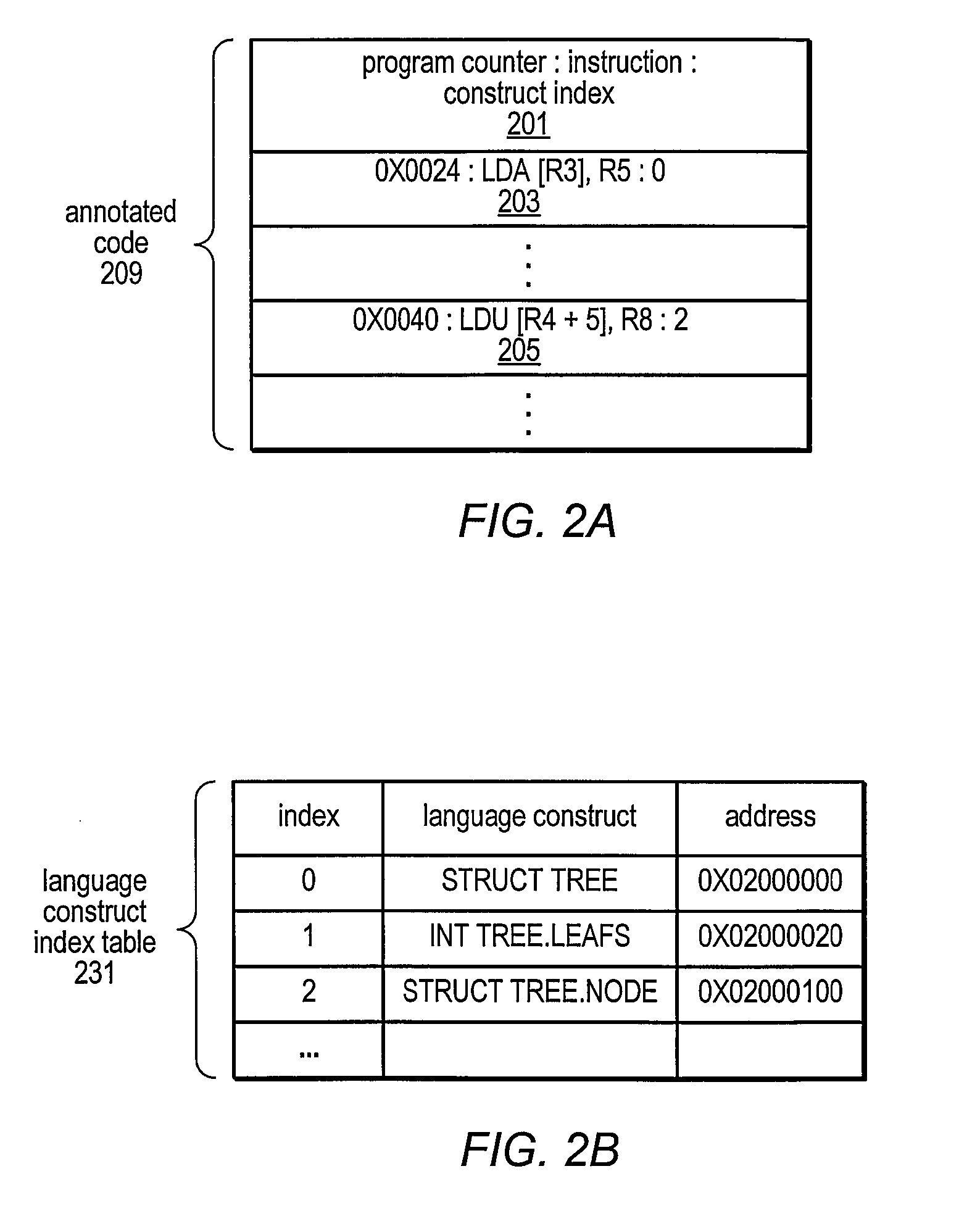

Multi-core debugger

Inactive; US20060059286A1; Avoid interference; Memory architecture accessing/allocation; Error detection/correction; Multi-core processor; Embedded system

In a multi-core processor, a high-speed interrupt-signal interconnect allows more than one of the processors to be interrupted at substantially the same time. For example, a global signal interconnect is coupled to each of the multiple processors, each processor being configured to selectively provide an interrupt signal, or pulse thereon. Preferably, each of the processor cores is capable of pulsing the global signal interconnect during every clock cycle to minimize delay between a triggering event and its respective interrupt signal. Each of the multiple processors also senses, or samples the global signal interconnect, preferably during the same cycle within which the pulse was provided, to determine the existence of an interrupt signal. Upon sensing an interrupt signal, each of the multiple processors responds to it substantially simultaneously. For example, an interrupt signal sampled by each of the multiple processors causes each processor to invoke a debug handler routine.

Owner:CAVIUM NETWORKS

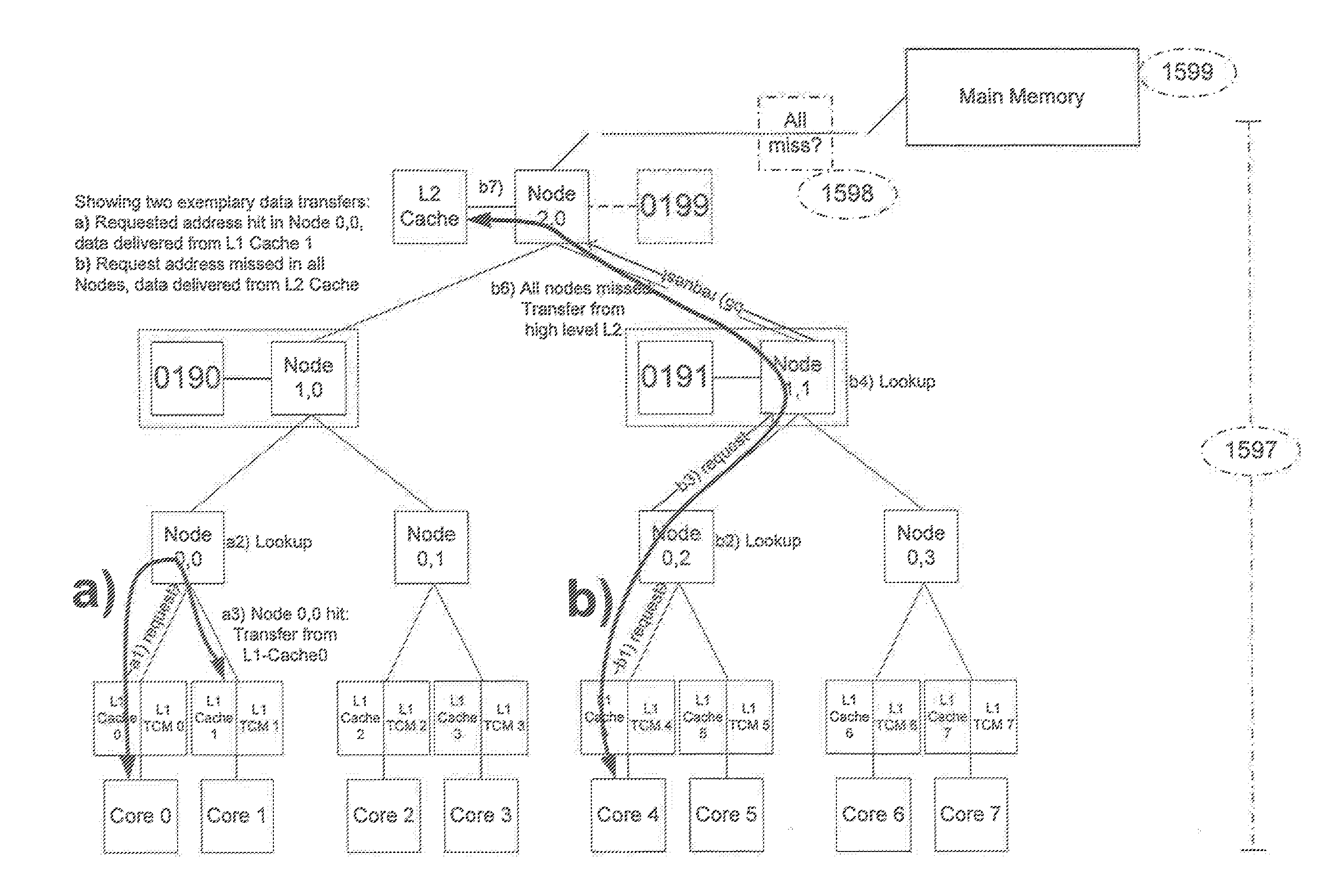

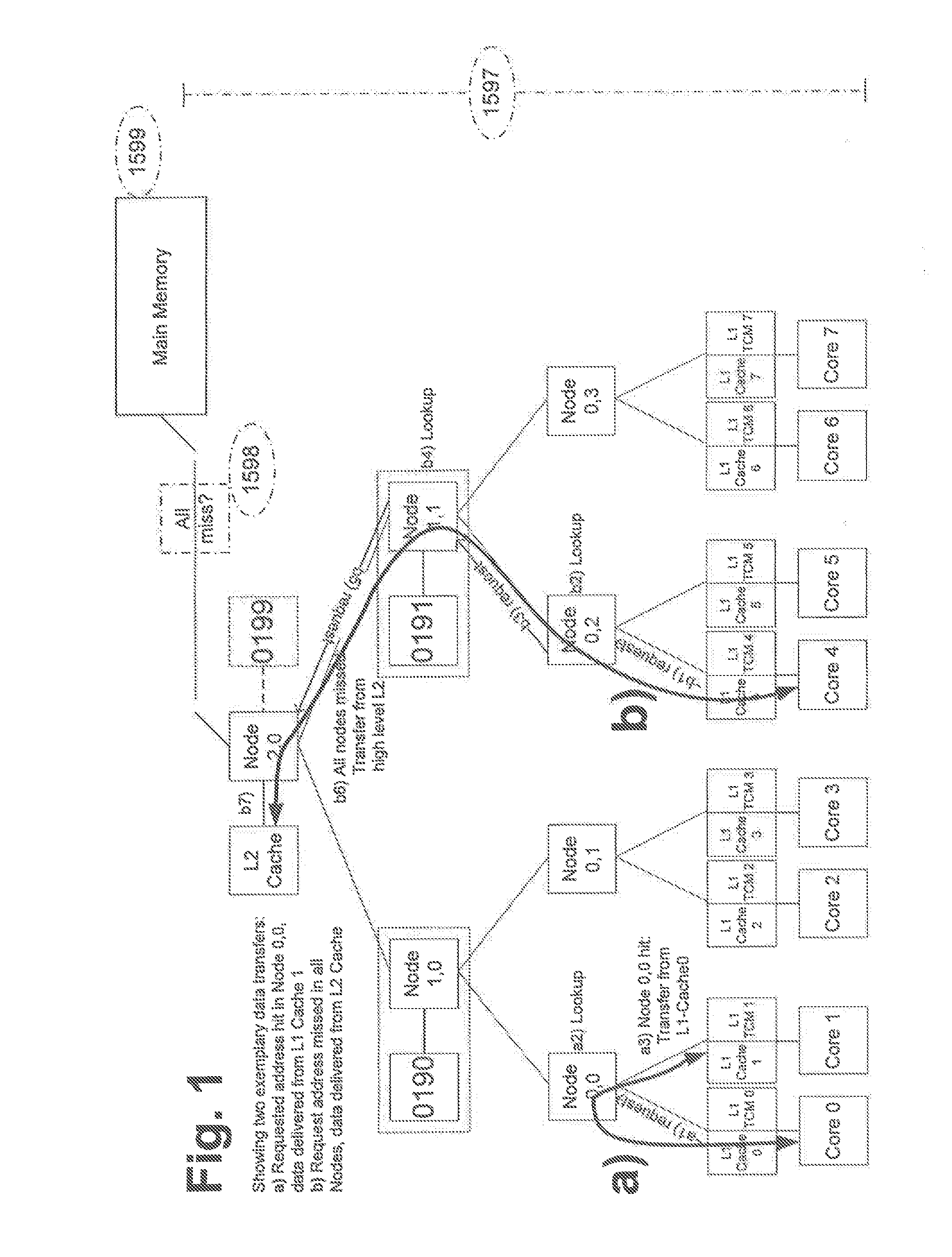

System and Method for a Cache in a Multi-Core Processor

Active; US20120137075A1; Memory architecture accessing/allocation; Energy efficient ICT; Multi-core processor

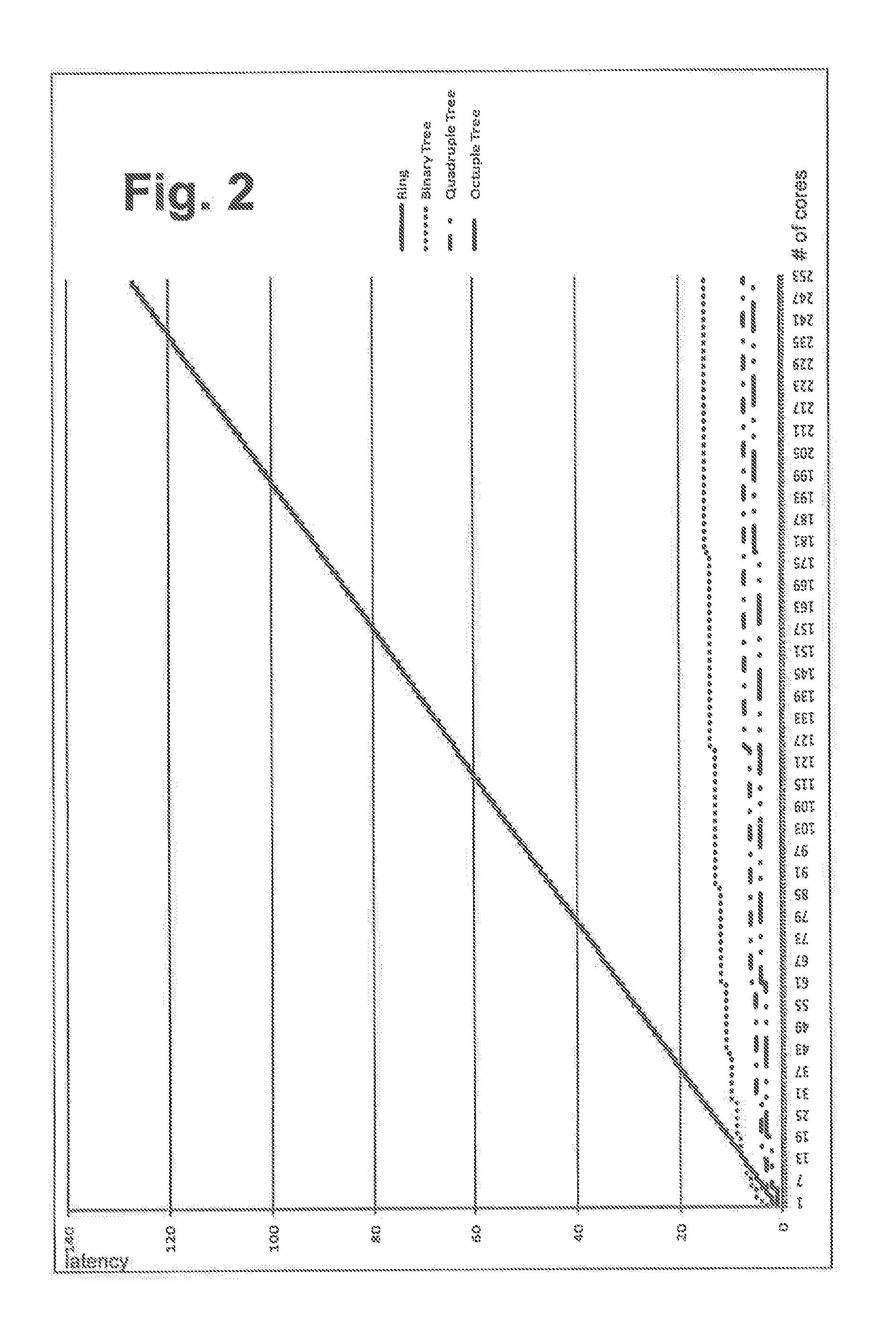

The invention relates to a multi-core processor system, in particular a single-package multi-core processor system, comprising at least two processor cores, preferably at least four processor cores, each of said at least two cores, preferably at least four processor cores, having a local LEVEL-1 cache, a tree communication structure combining the multiple LEVEL-1 caches, the tree having at least one node, preferably at least three nodes for a four processor core multi-core processor, and TAG information is associated to data managed within the tree, usable in the treatment of the data.

Owner:HYPERION CORE

Preprocessor to improve the performance of message-passing-based parallel programs on virtualized multi-core processors

Inactive; US20070038987A1; Reduced execution time; Easy to implement; Multiprogramming arrangements; Memory systems; Cluster based; Parallel processing

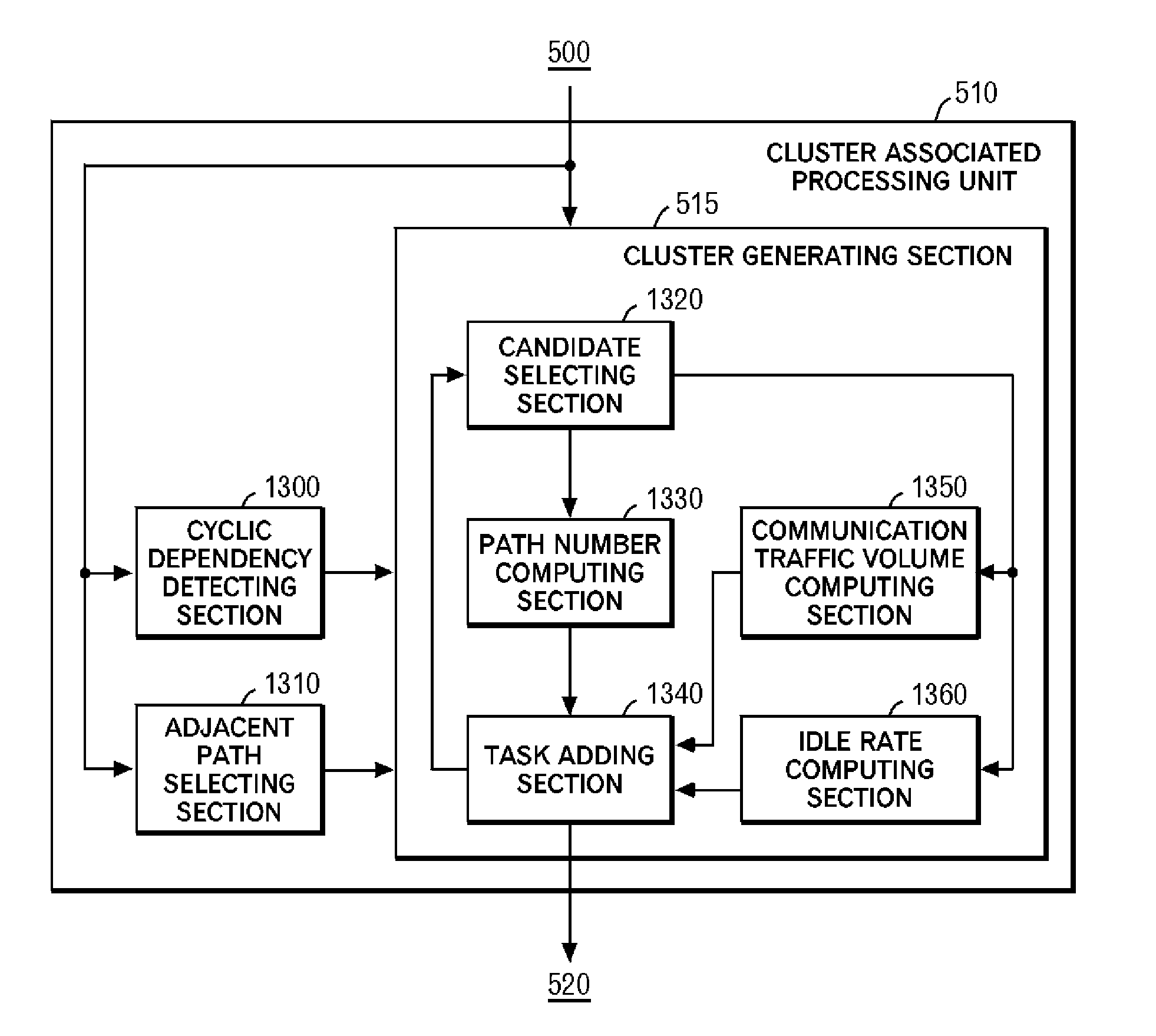

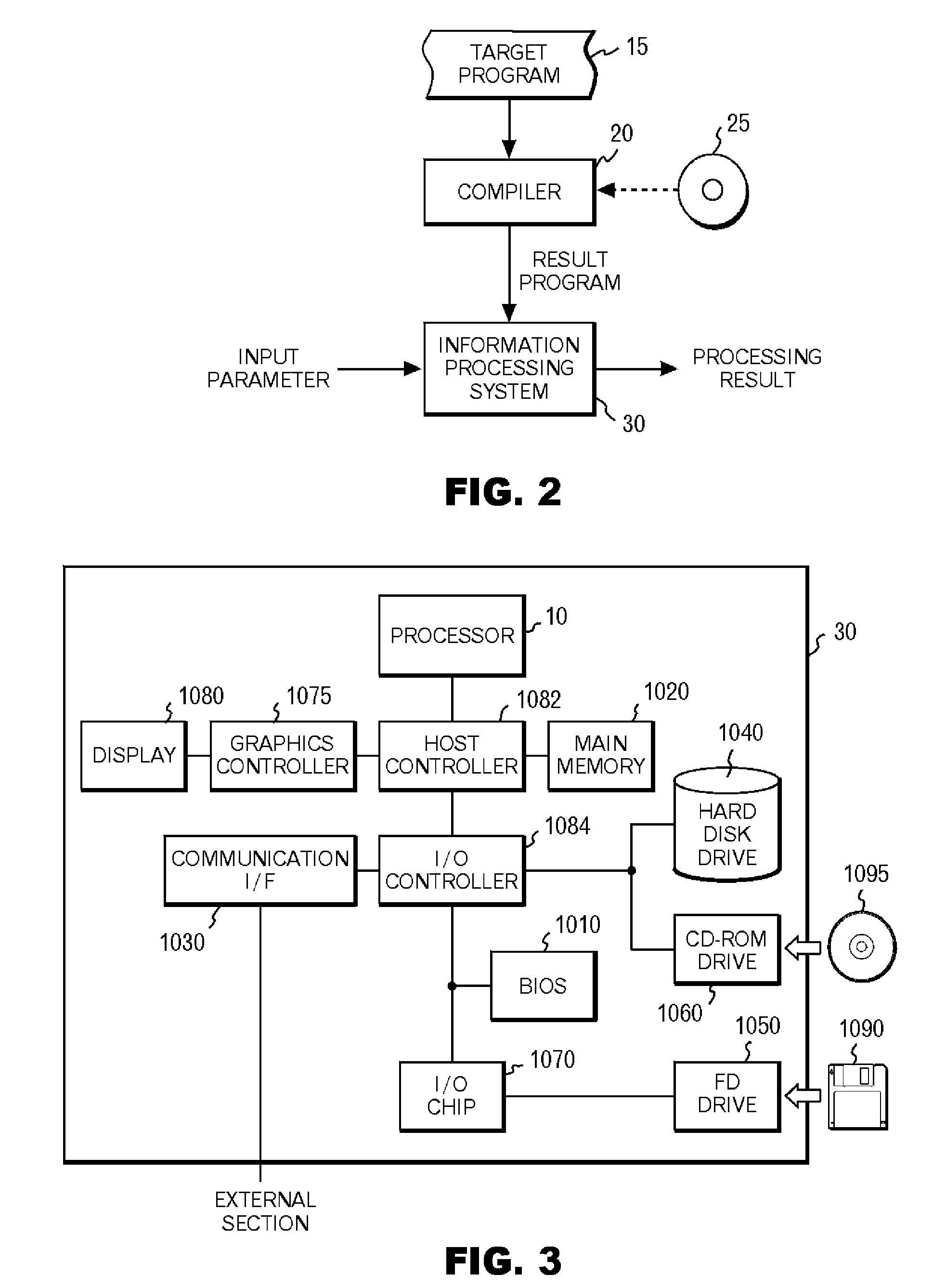

Provided is a compiler which optimizes parallel processing. The compiler records the number of execution cores, which is the number of processor cores that execute a target program. First, the compiler detects a dominant path, which is a candidate of an execution path to be consecutively executed by a single processor core, from a target program. Subsequently, the compiler selects dominant paths with the number not larger than the number of execution cores, and generates clusters of tasks to be executed by a multi-core processor in parallel or consecutively. After that, the compiler computes an execution time for which each of the generated clusters is executed by the processor cores with the number equal to one or each of a plurality of natural numbers selected from the natural numbers not larger than the number of execution cores. Then, the compiler selects the number of processor cores to be assigned for execution of each of the clusters based on the computed execution time.

Owner:IBM CORP

Increasing workload performance of one or more cores on multiple core processors

Active; US20070033425A1; Performance state can be reduced; High performance state; Energy efficient ICT; Volume/mass flow measurement; Operational system; Workload

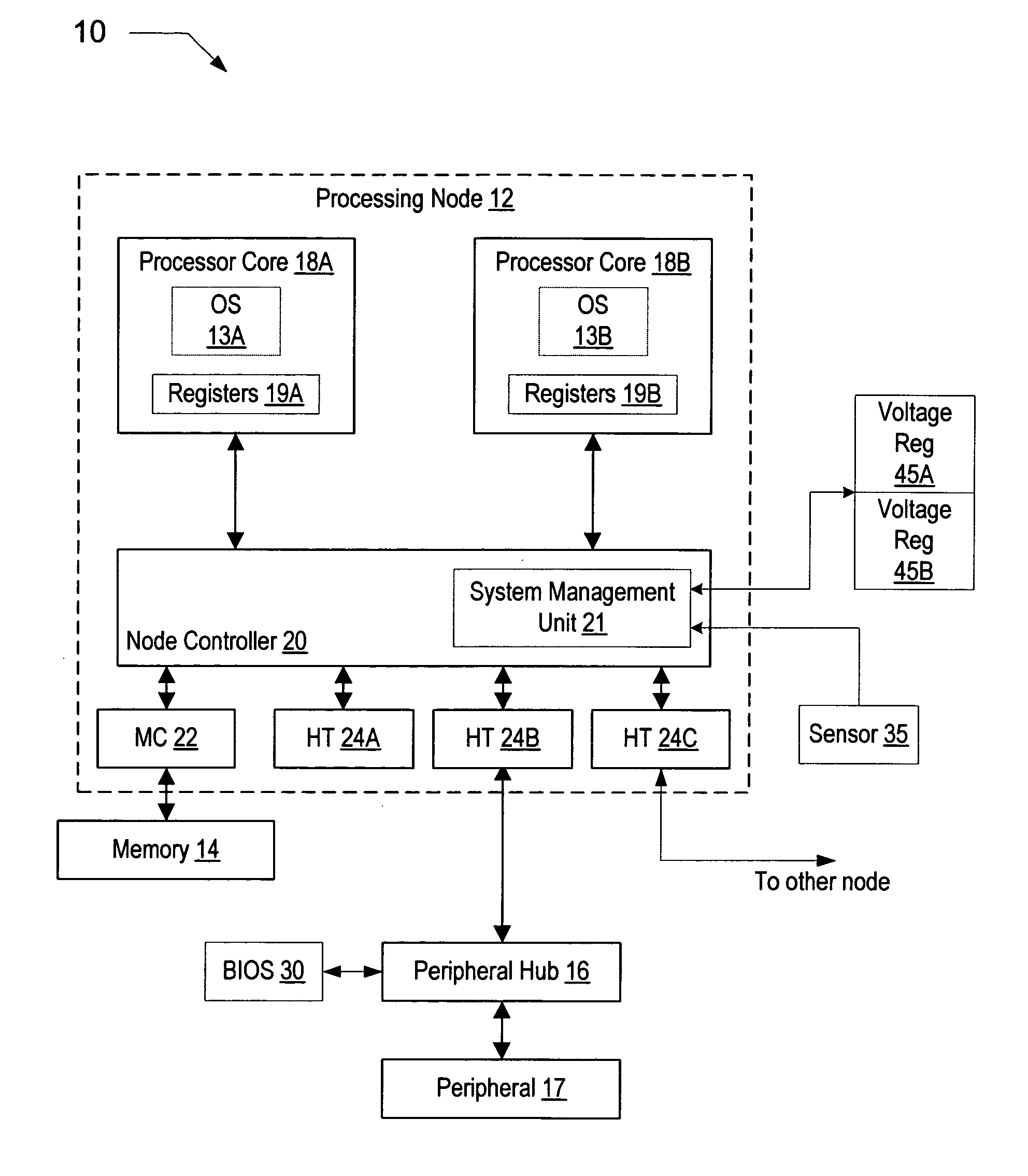

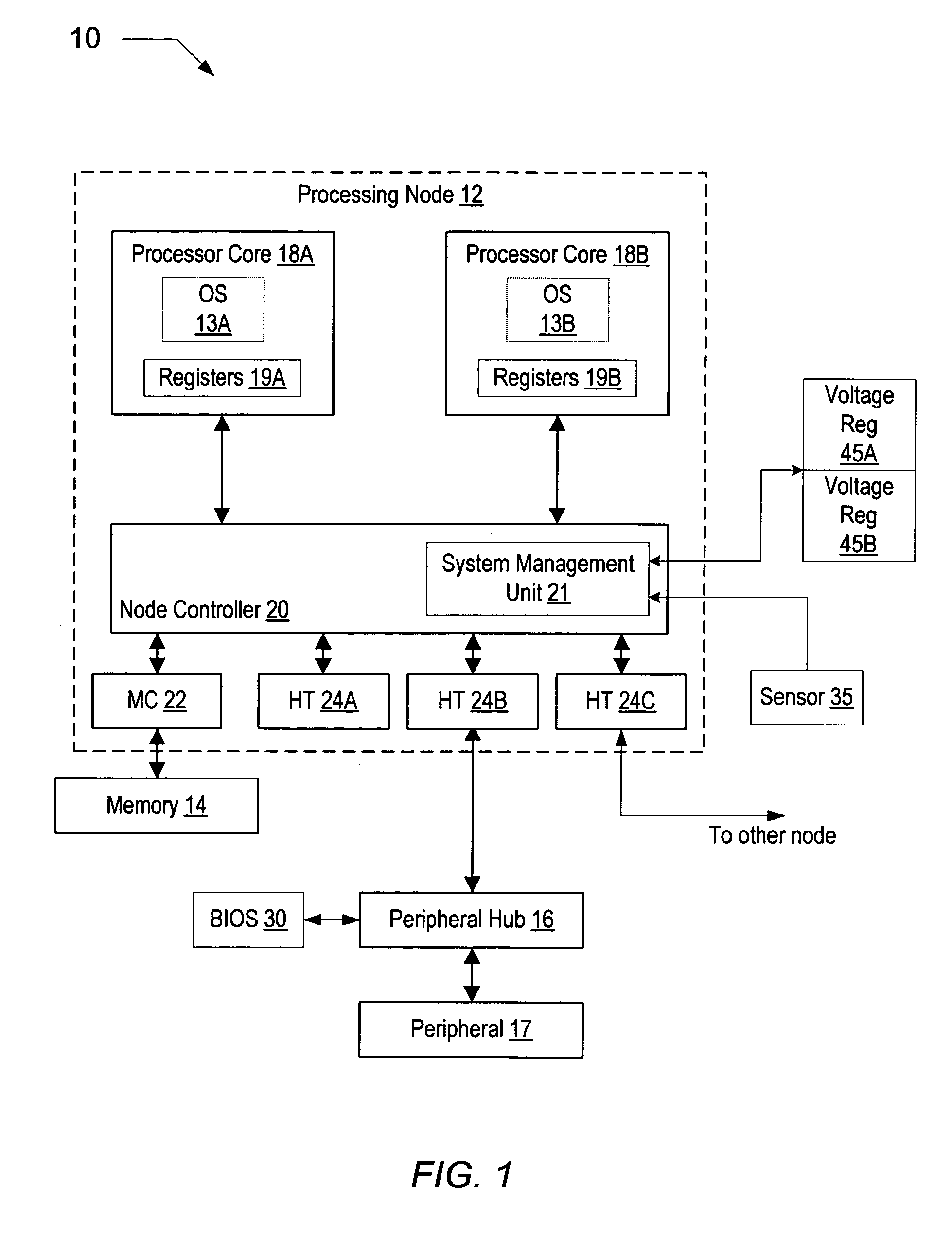

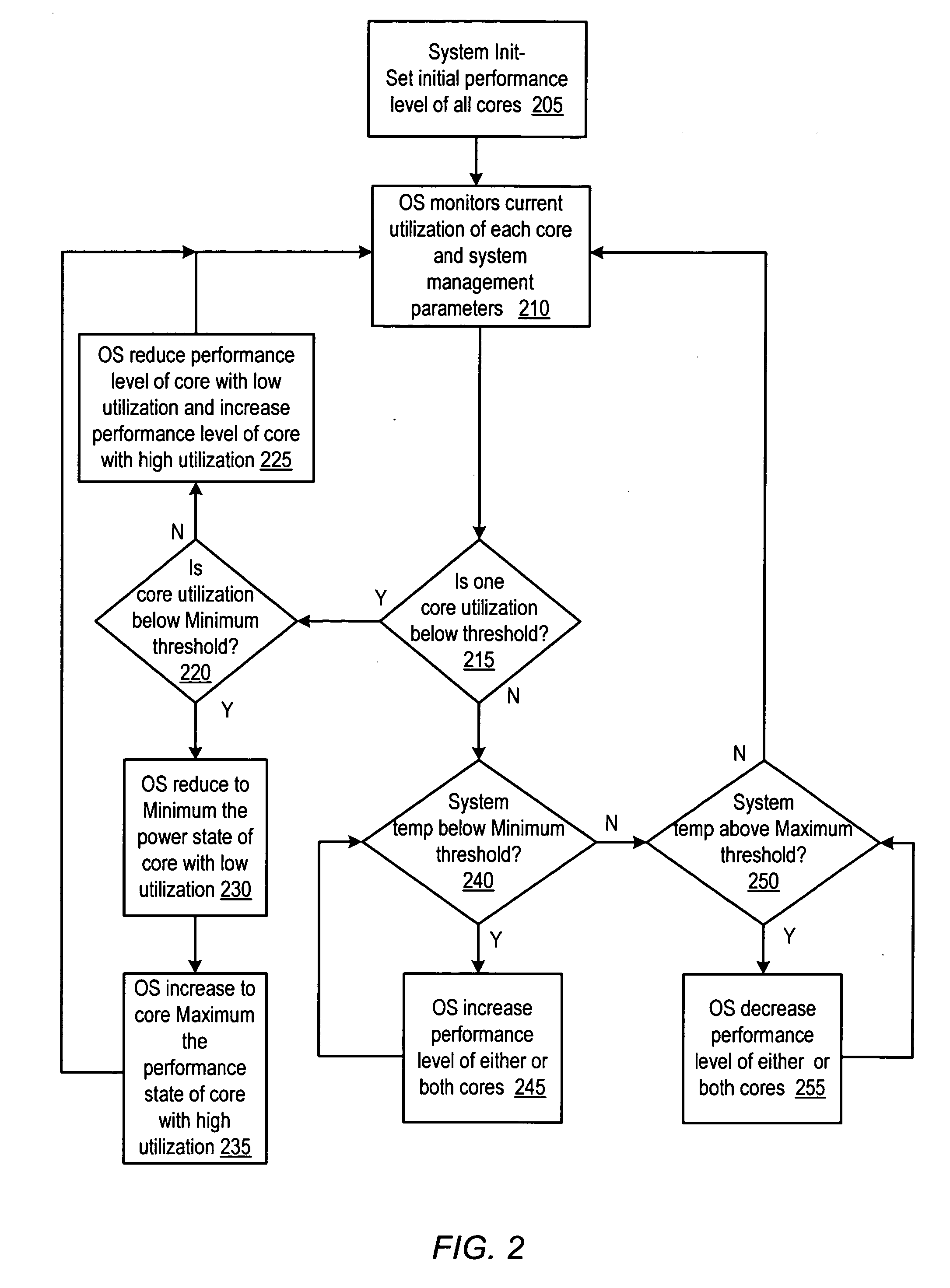

A processing node that is integrated onto a single integrated circuit chip includes a first processor core and a second processor core. The processing node also includes an operating system executing on either of the first processor core and the second processor core. The operating system may monitor a current utilization of the first processor core and the second processor core. In response to detecting the first processor core operating below a utilization threshold, the operating system may cause the first processor core to operate at a performance level that is lower than a system maximum performance level and the second processor core to operate at a performance level that is higher than the system maximum performance level.

Owner:ADVANCED MICRO DEVICES INC
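
The utilization-based policy in the abstract above can be illustrated with a minimal Python sketch. The `Core` class, the `rebalance` function, the integer performance levels, and the default `threshold` and `boost` values are all hypothetical names and numbers chosen for illustration; the abstract does not specify them.

```python
from dataclasses import dataclass


@dataclass
class Core:
    name: str
    utilization: float  # fraction of time busy, 0.0 to 1.0
    level: int          # current performance level (hypothetical integer units)


def rebalance(first: Core, second: Core, system_max: int,
              threshold: float = 0.2, boost: int = 1) -> None:
    """Shift performance headroom from an under-utilized core to the other core.

    If `first` runs below `threshold`, it is set below the system maximum
    level and `second` is allowed to run above it. Otherwise nothing changes.
    """
    if first.utilization < threshold:
        first.level = system_max - boost
        second.level = system_max + boost


# Usage: core c0 is nearly idle, so c1 is allowed to exceed the system maximum.
c0 = Core("c0", utilization=0.05, level=3)
c1 = Core("c1", utilization=0.90, level=3)
rebalance(c0, c1, system_max=3)
```

In this sketch the amount taken from one core equals the amount granted to the other, which is only one possible design choice.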

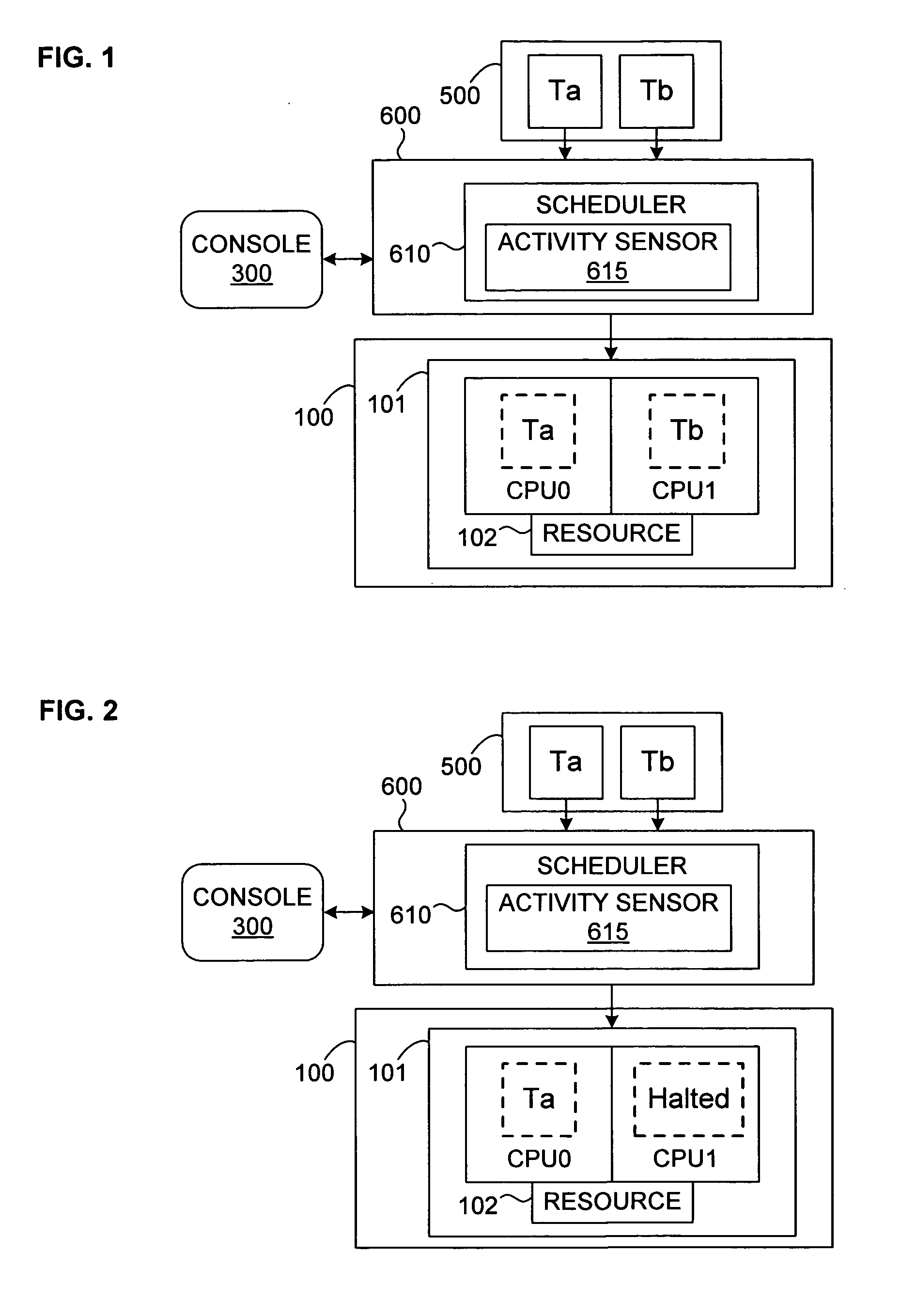

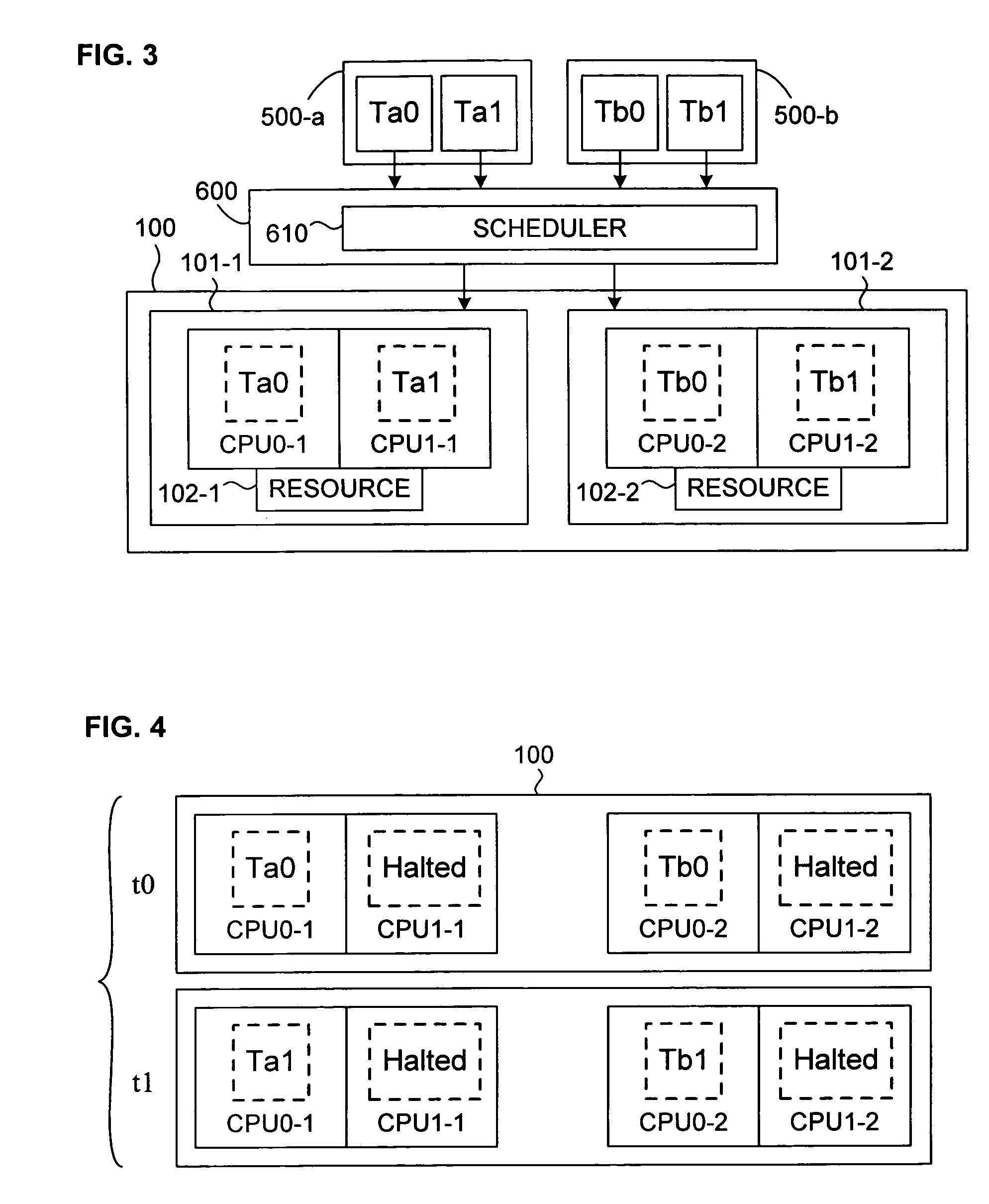

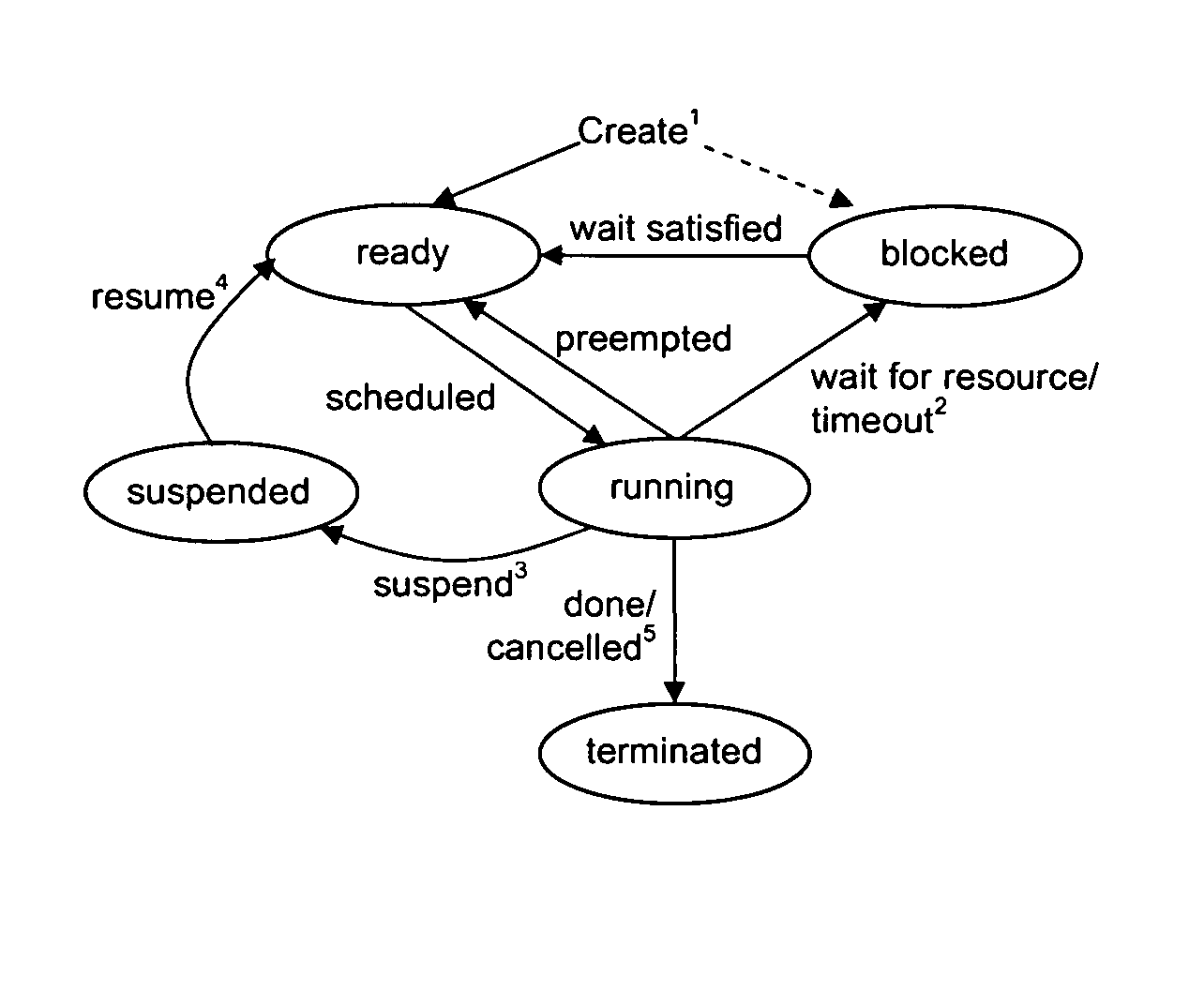

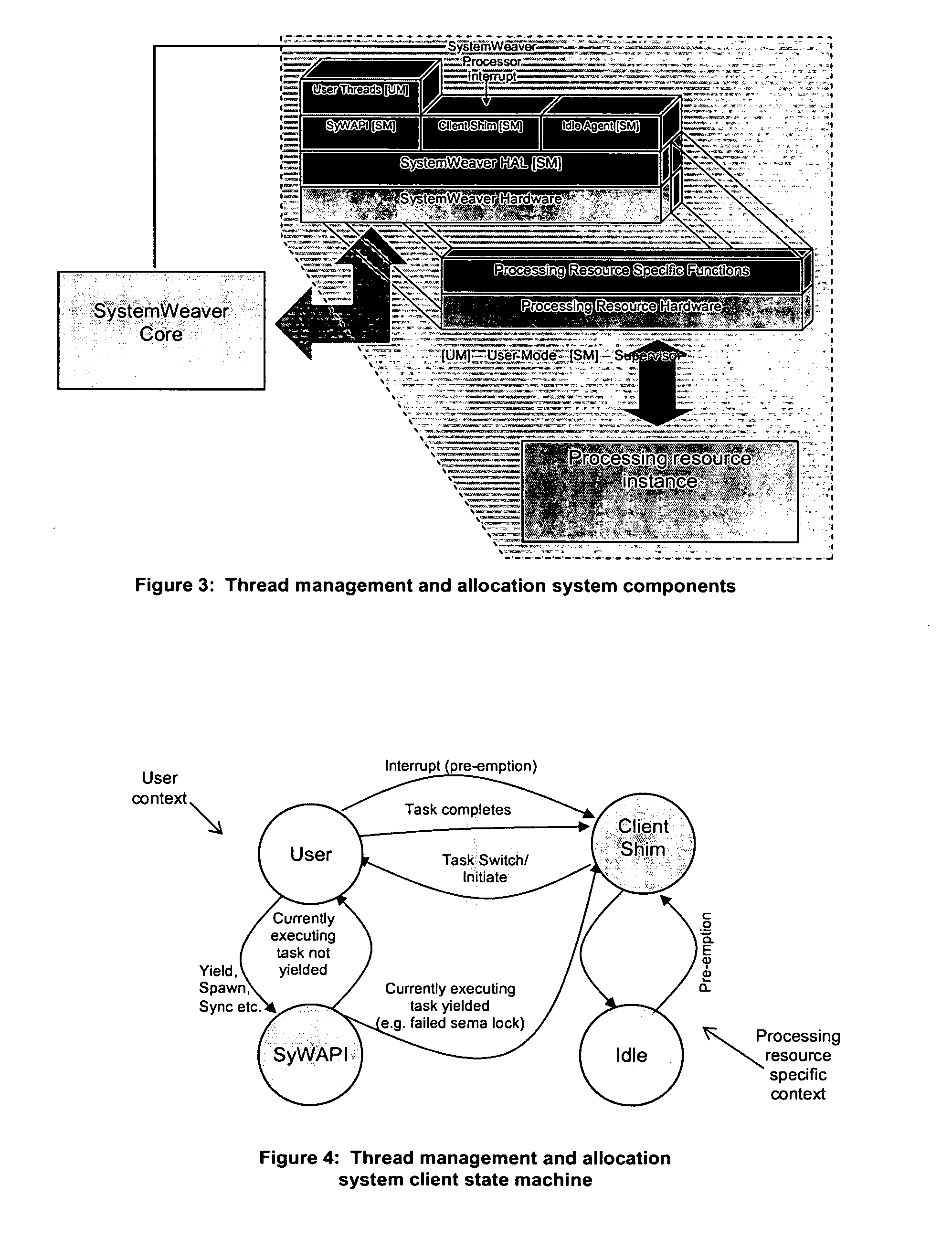

Mechanism for scheduling execution of threads for fair resource allocation in a multi-threaded and/or multi-core processing system

Active; US7707578B1; Digital computer details; Multiprogramming arrangements; Thread scheduling; Shared resource

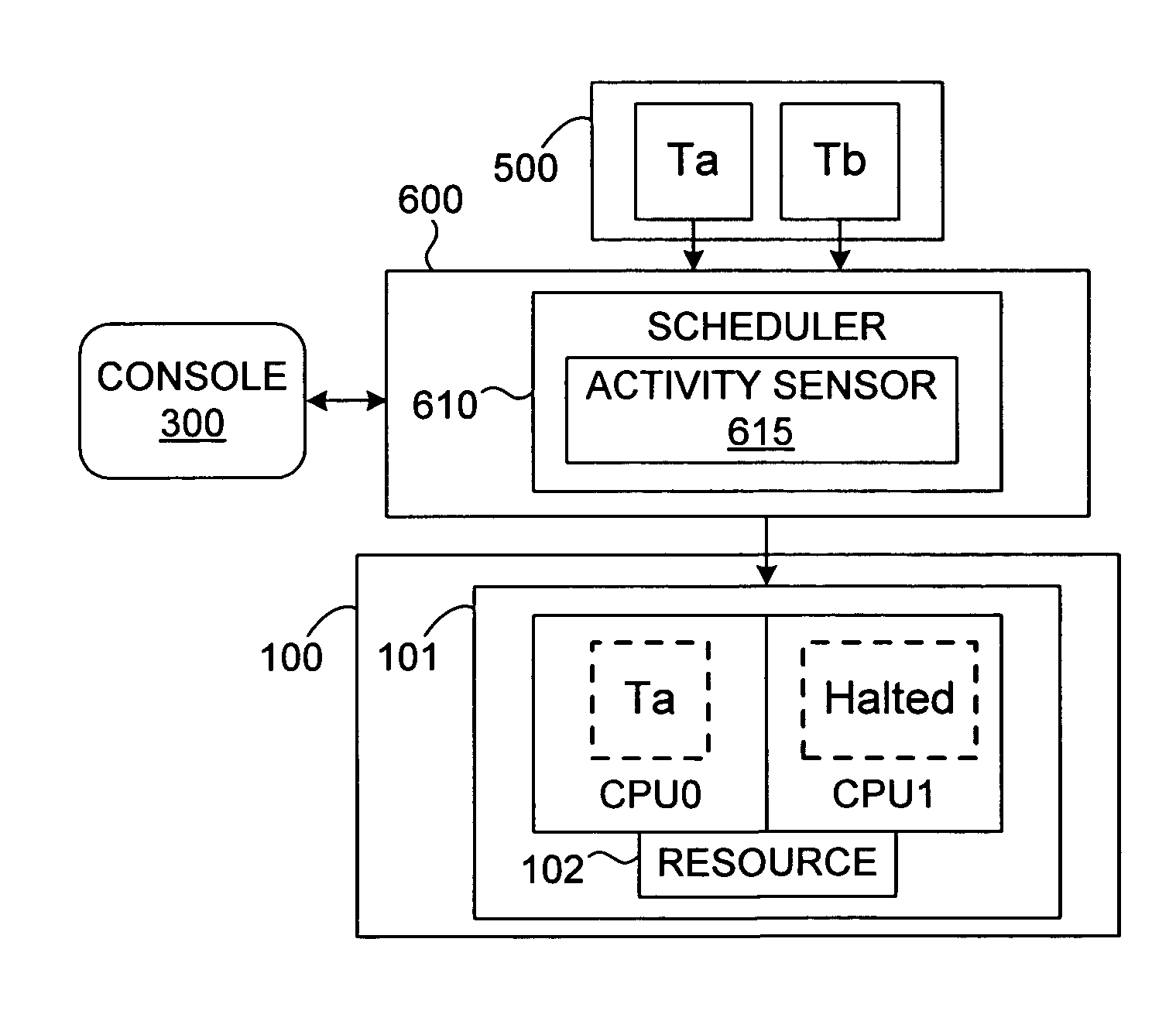

A thread scheduling mechanism is provided that flexibly enforces performance isolation of multiple threads to alleviate the effect of anti-cooperative execution behavior with respect to a shared resource, for example, hoarding a cache or pipeline, using the hardware capabilities of simultaneous multi-threaded (SMT) or multi-core processors. Given a plurality of threads running on at least two processors in at least one functional processor group, the occurrence of a rescheduling condition indicating anti-cooperative execution behavior is sensed, and, if present, at least one of the threads is rescheduled such that the first and second threads no longer execute in the same functional processor group at the same time.

Owner:VMWARE INC
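
As a rough illustration of the rescheduling idea in the abstract above, the following Python sketch moves co-running threads away from a thread that hoards a shared resource. The function name `separate_hoarders`, the `cache_share` measurements, the `limit` value, and the "least loaded other group" rule are assumptions made for this example, not details from the patent.

```python
from typing import Dict


def separate_hoarders(placement: Dict[str, int], cache_share: Dict[str, float],
                      num_groups: int, limit: float = 0.6) -> Dict[str, int]:
    """Return a new thread -> group placement.

    A thread whose share of the shared resource exceeds `limit` is treated as
    anti-cooperative (the rescheduling condition). Every thread sharing its
    functional processor group is moved to the least loaded other group.
    """
    new_placement = dict(placement)
    for thread in placement:
        if cache_share.get(thread, 0.0) <= limit:
            continue
        group = new_placement[thread]
        co_runners = [t for t, g in new_placement.items()
                      if g == group and t != thread]
        other_groups = [i for i in range(num_groups) if i != group]
        if not other_groups:
            break  # a single group leaves nowhere to move threads
        for victim in co_runners:
            loads = [sum(1 for g in new_placement.values() if g == i)
                     for i in range(num_groups)]
            new_placement[victim] = min(other_groups, key=lambda i: loads[i])
    return new_placement


# Usage: thread "a" hoards the cache of group 0, so "b" is moved to group 1.
result = separate_hoarders({"a": 0, "b": 0, "c": 1},
                           {"a": 0.9, "b": 0.1, "c": 0.2}, num_groups=2)
```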

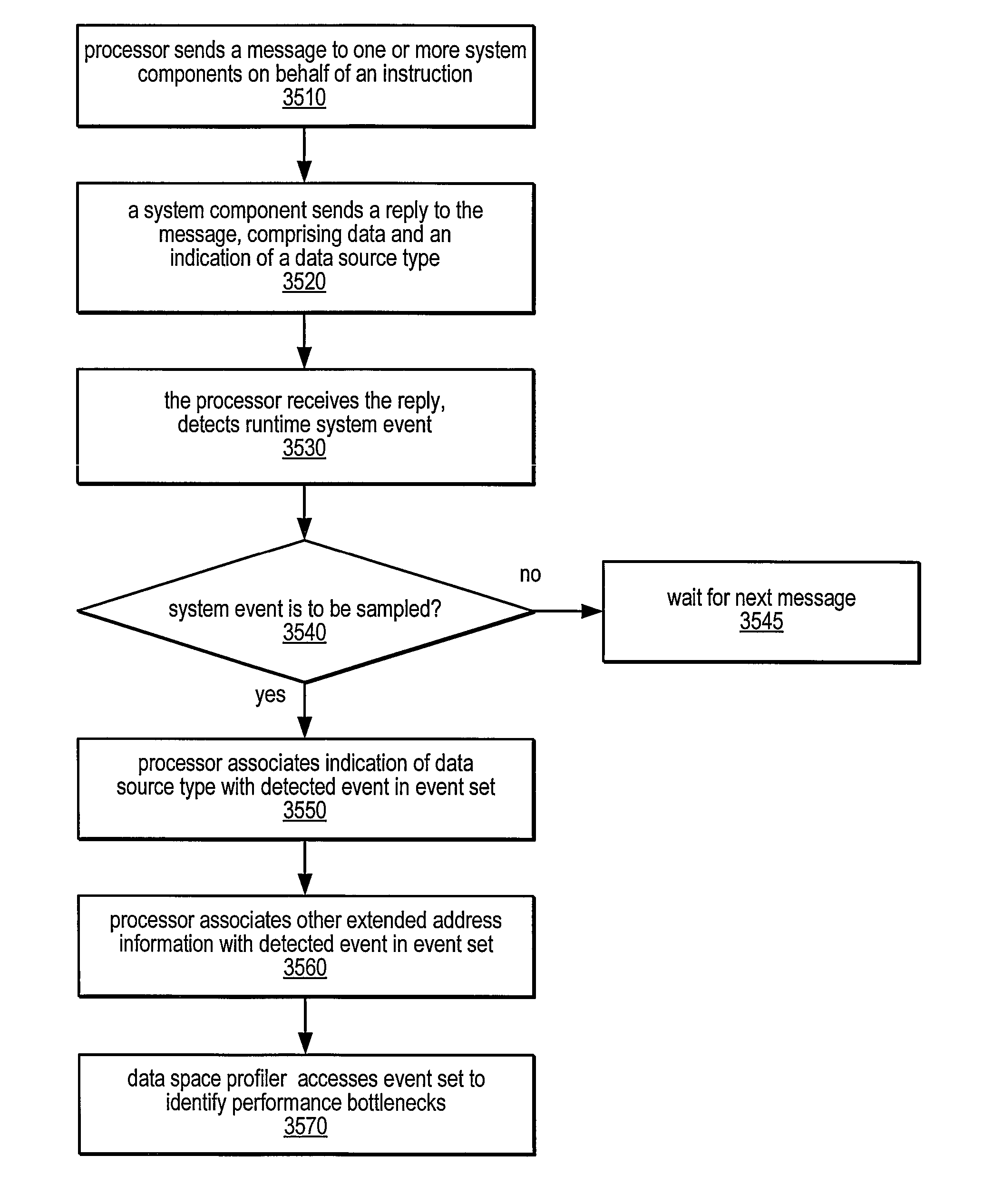

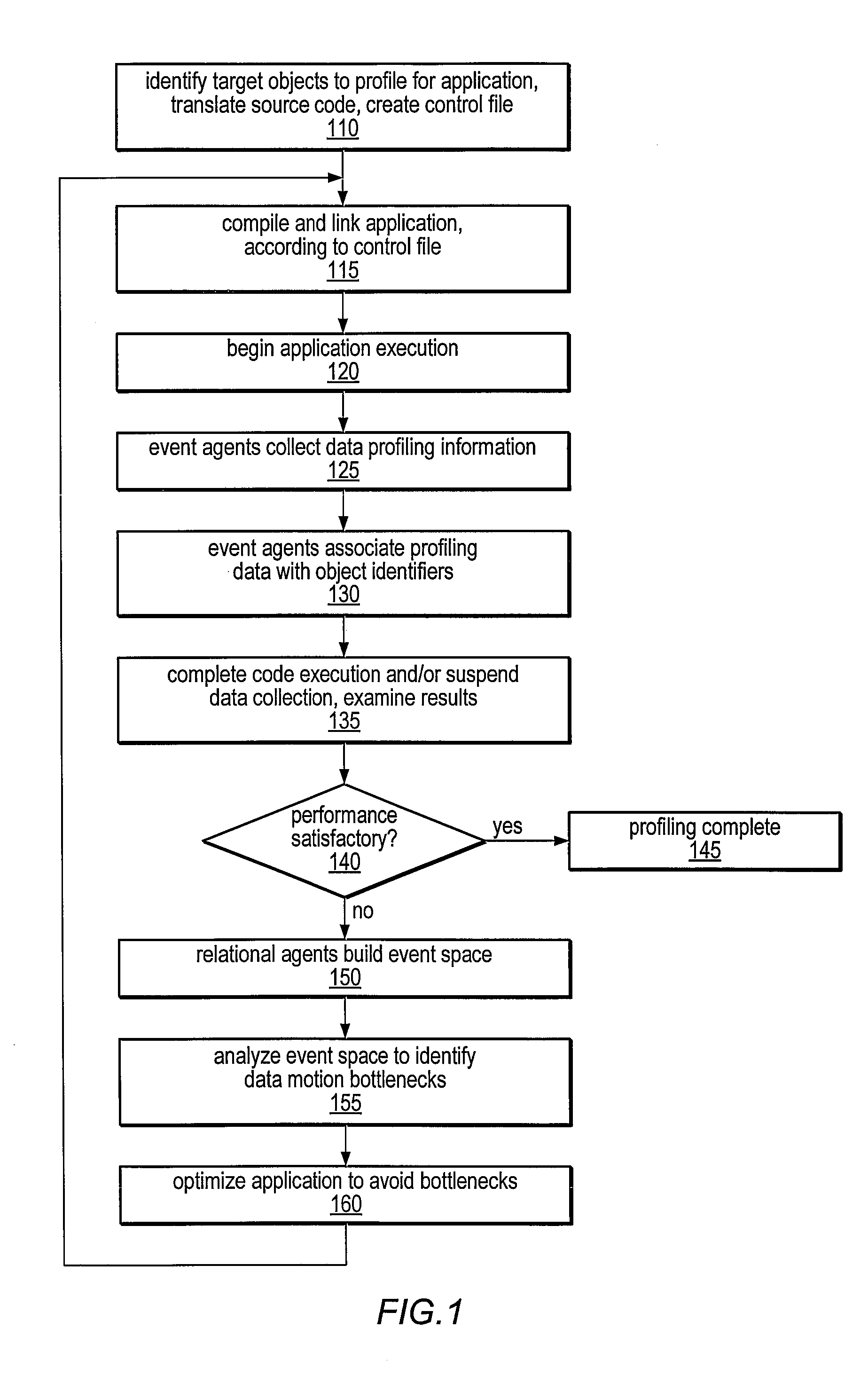

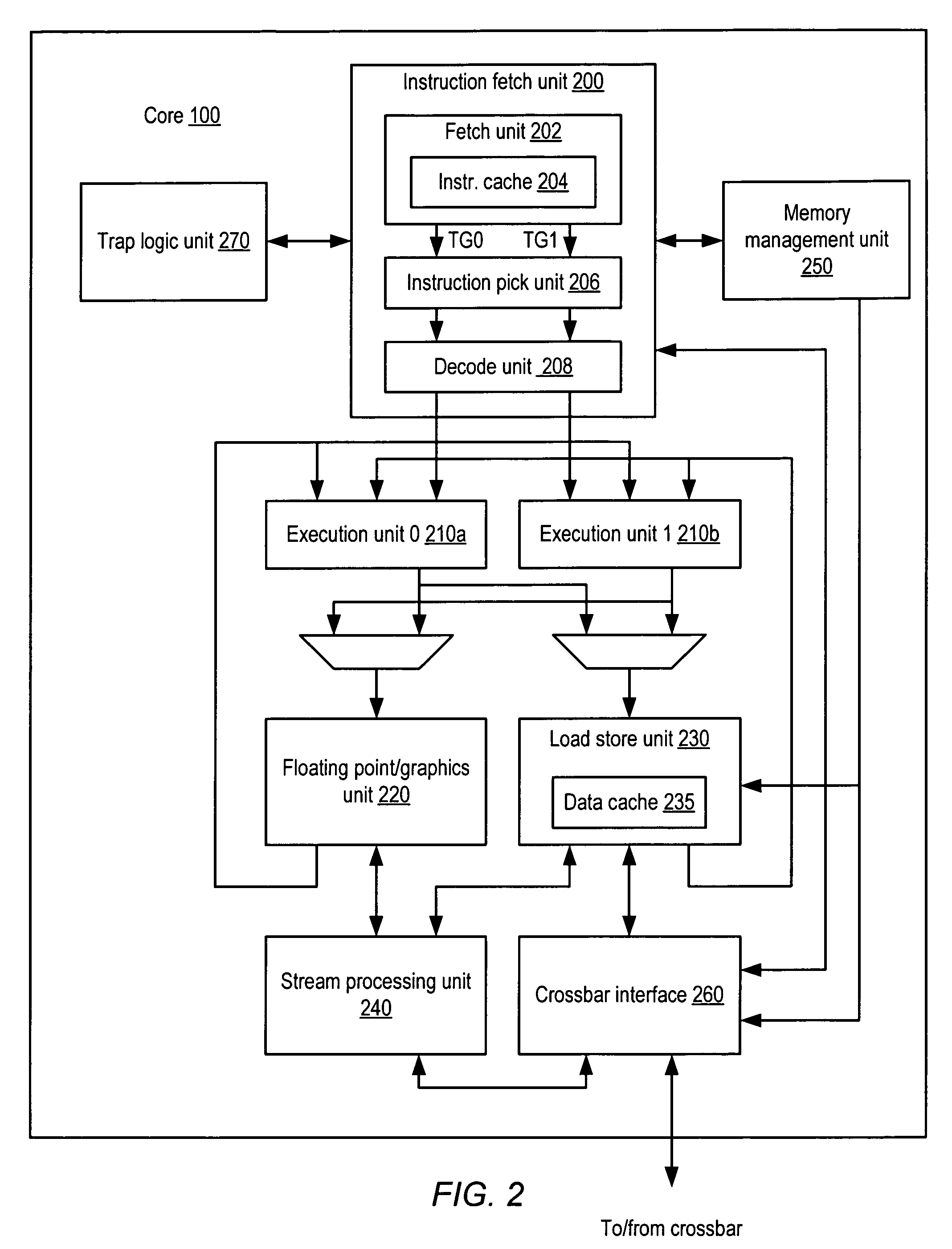

Apparatus and method for profiling system events in a fine grain multi-threaded multi-core processor

A system and method for profiling runtime system events of a computer system may include associating a data source type with detected system events. The system events may be detected dependent on information included in a reply message received by a processor in response to a data request or other transaction request message. The reply message may include information characterizing a source type of a source of data included in the reply message. The source type information may indicate that the source is remote or local; that it is a shared or a private storage location; that the data is supplied via a cache-to-cache transfer; or that the data is sourced from a coherency domain other than that of the requesting process. Instructions, events, messages, and replies may be sampled, and extended address information corresponding to the samples may be stored in an event set database for performance analysis.

Owner:SUN MICROSYSTEMS INC

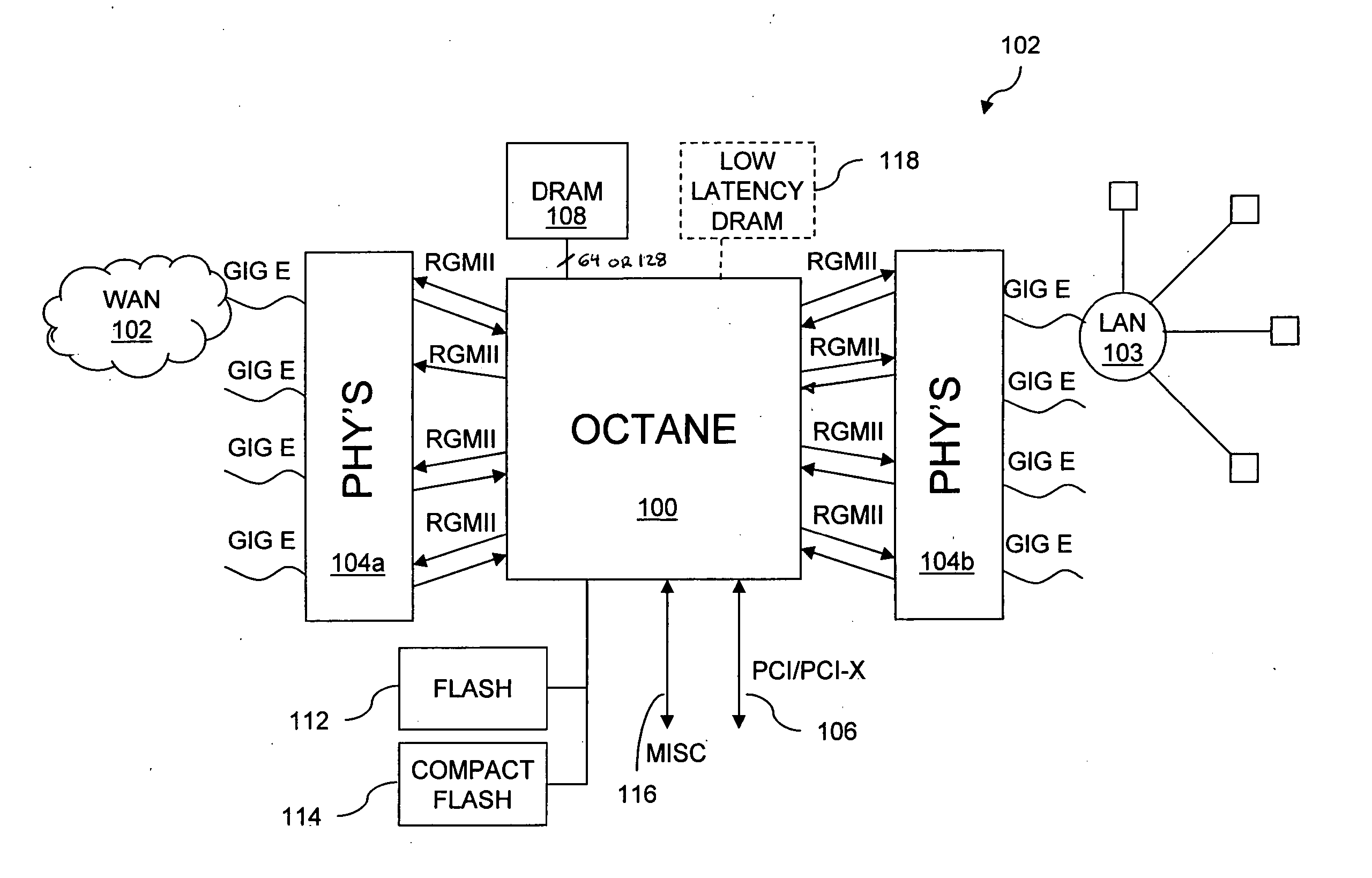

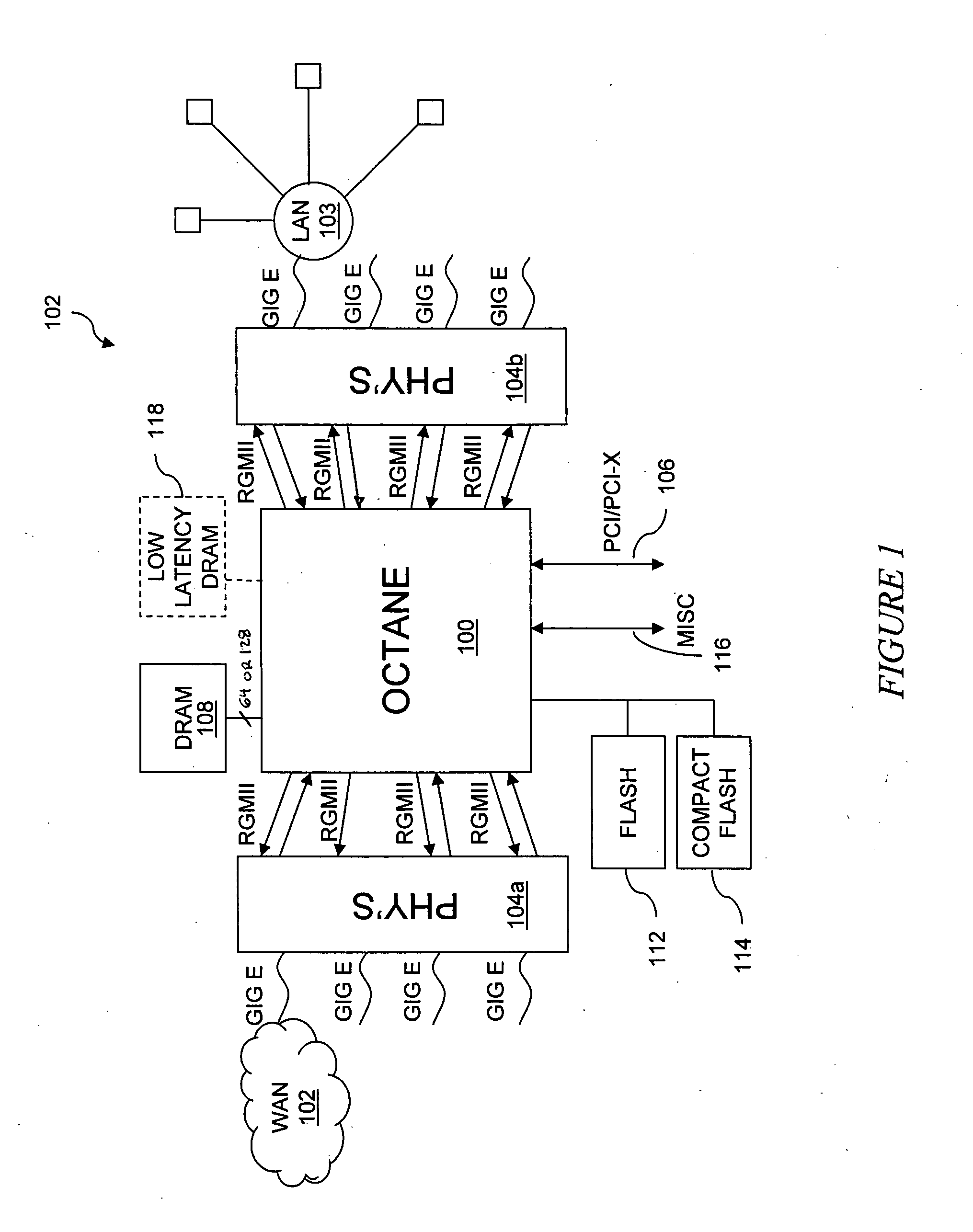

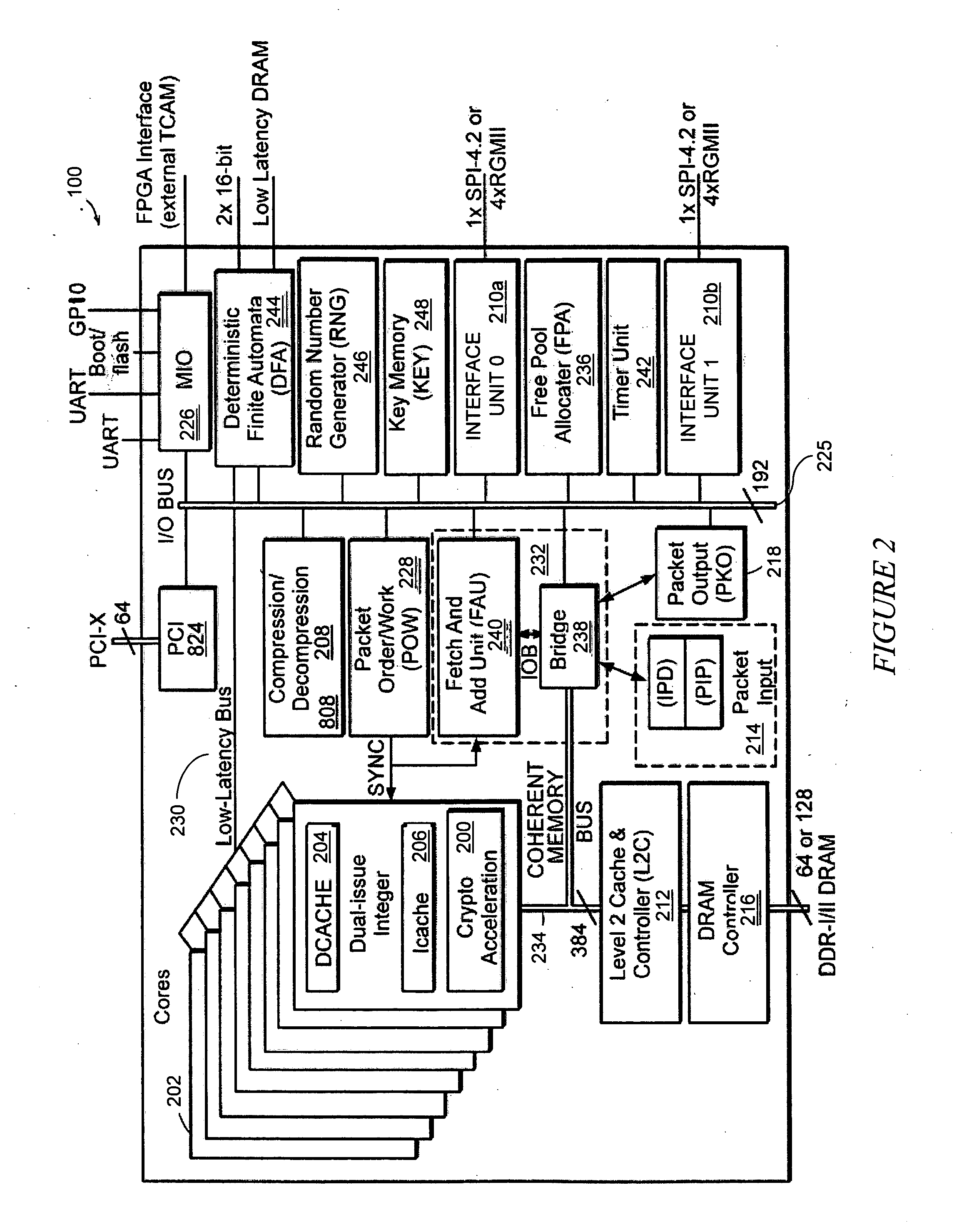

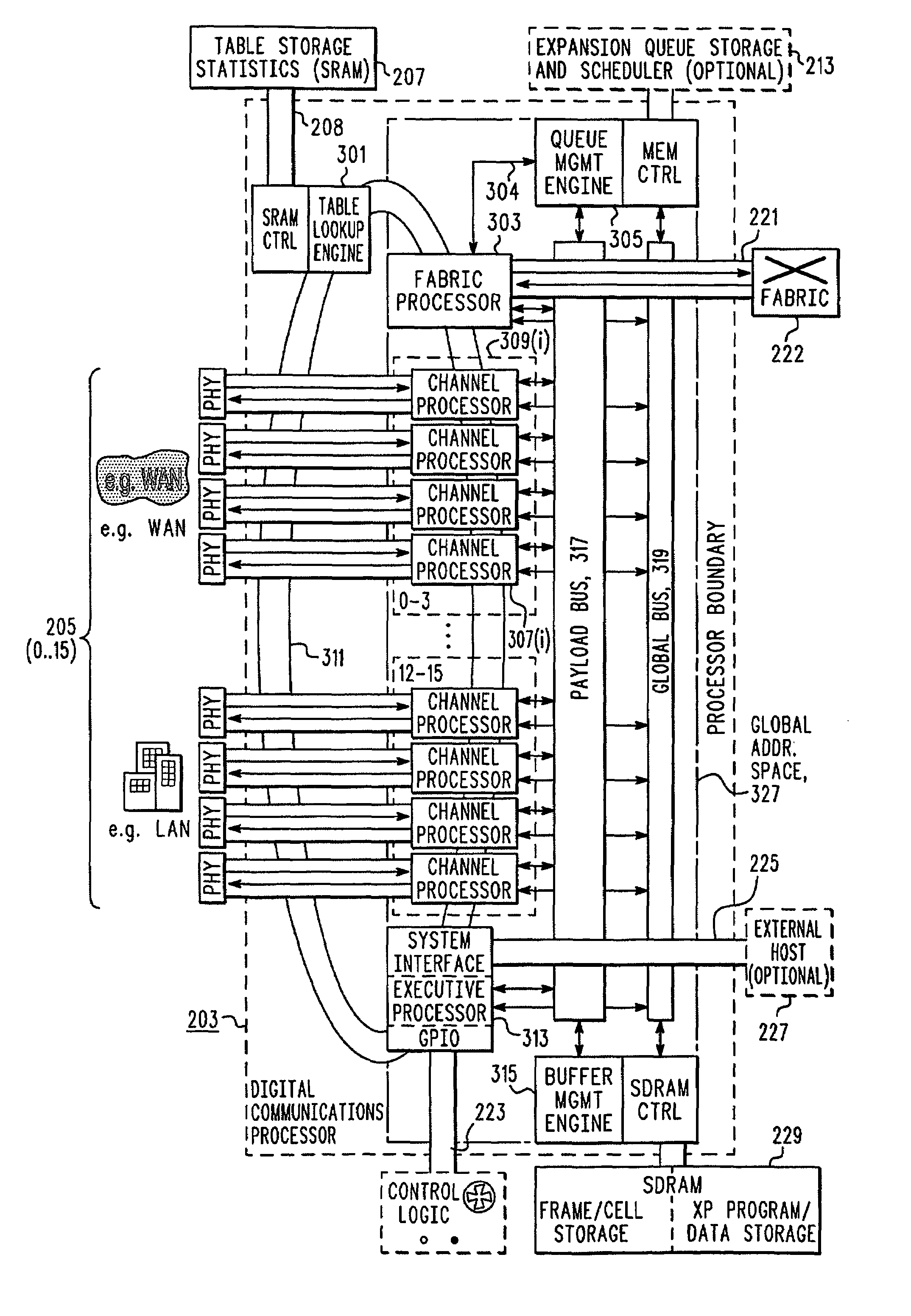

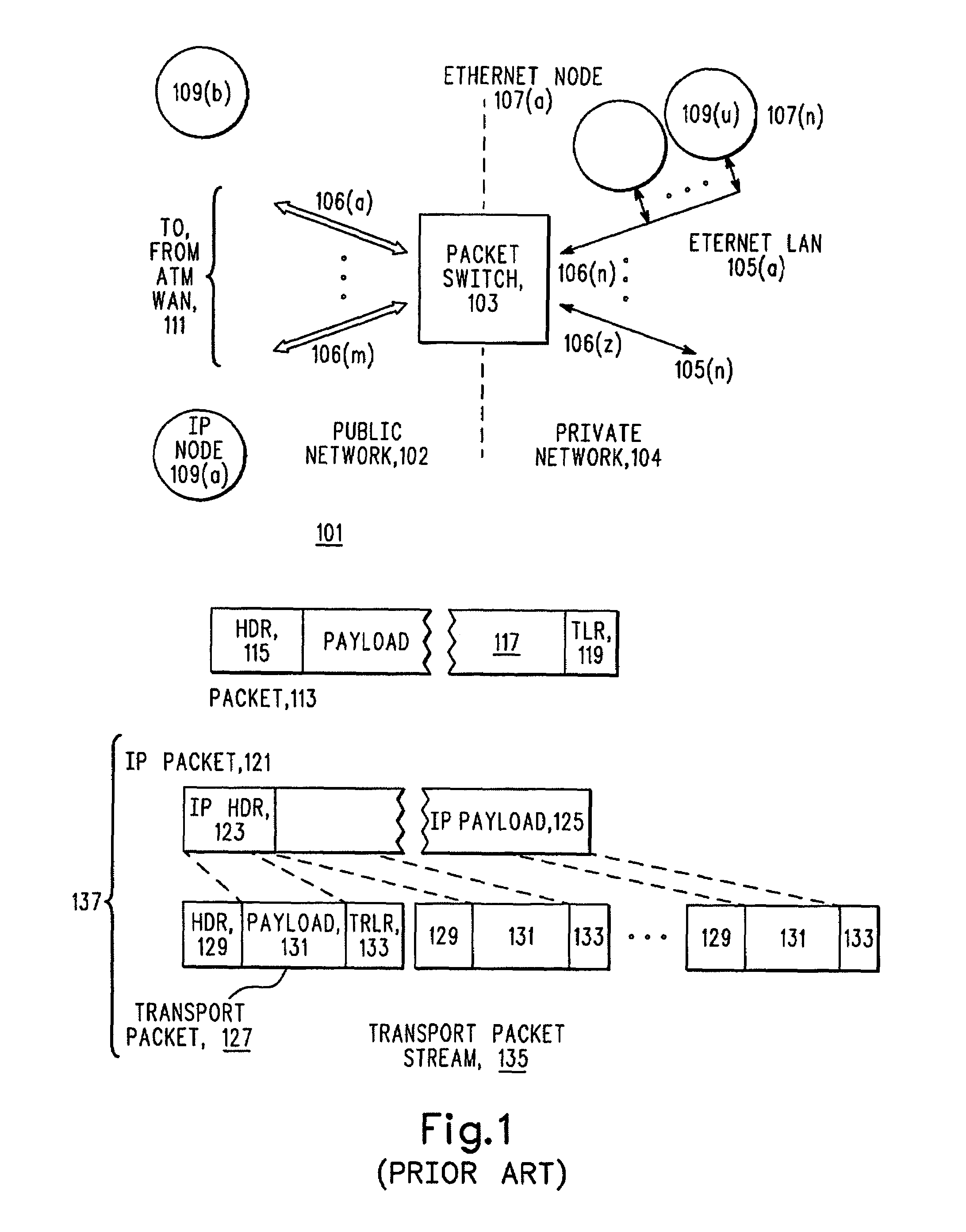

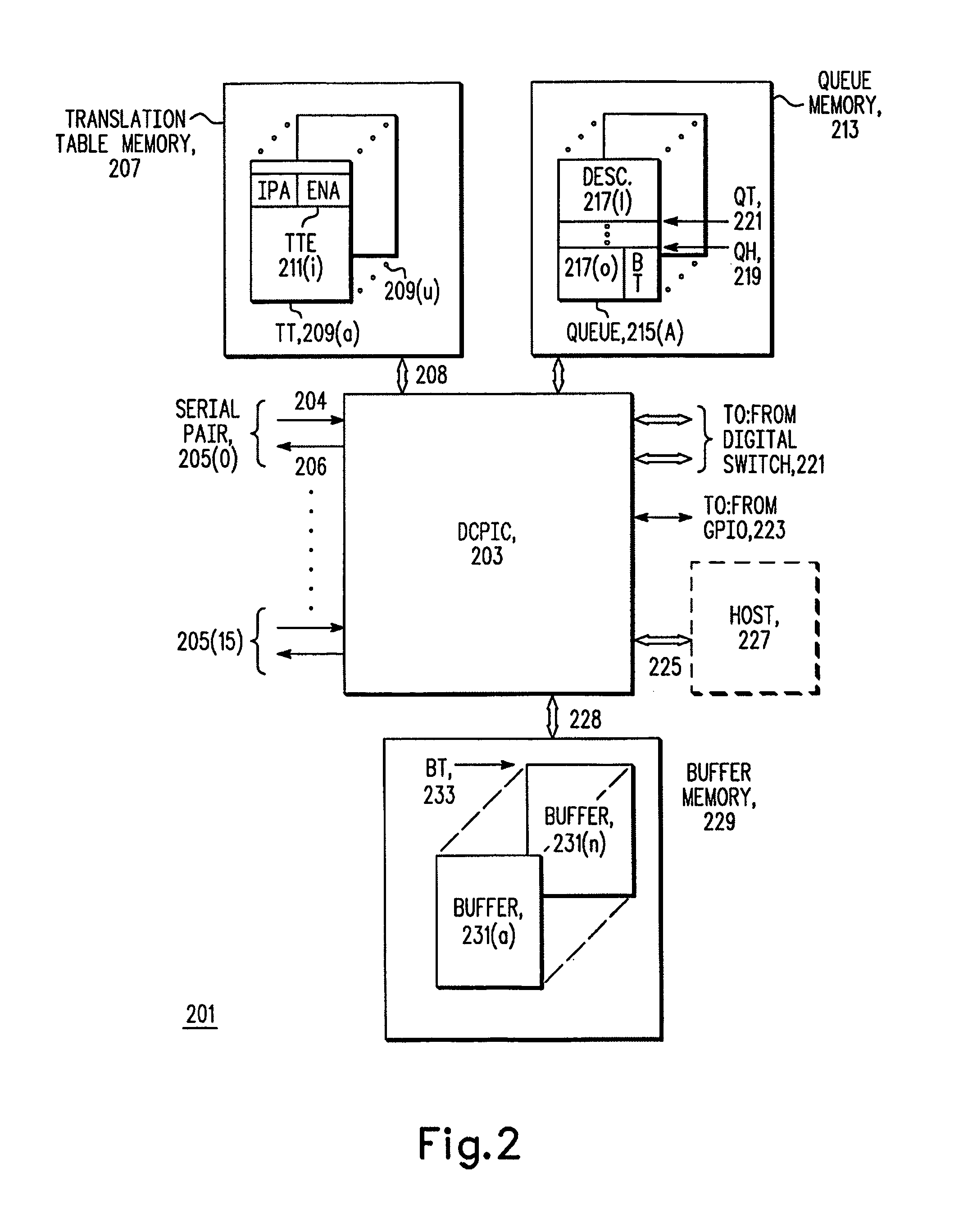

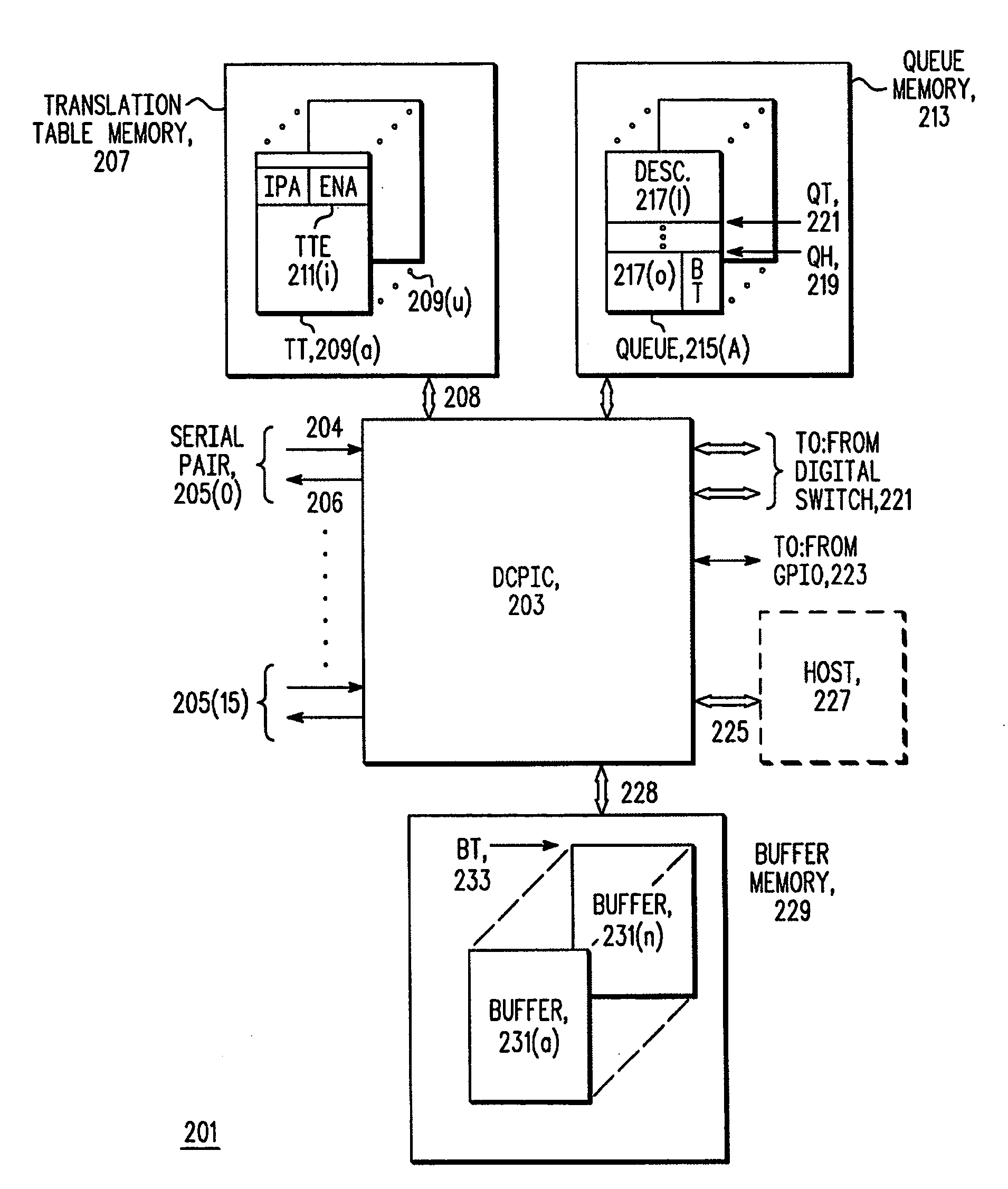

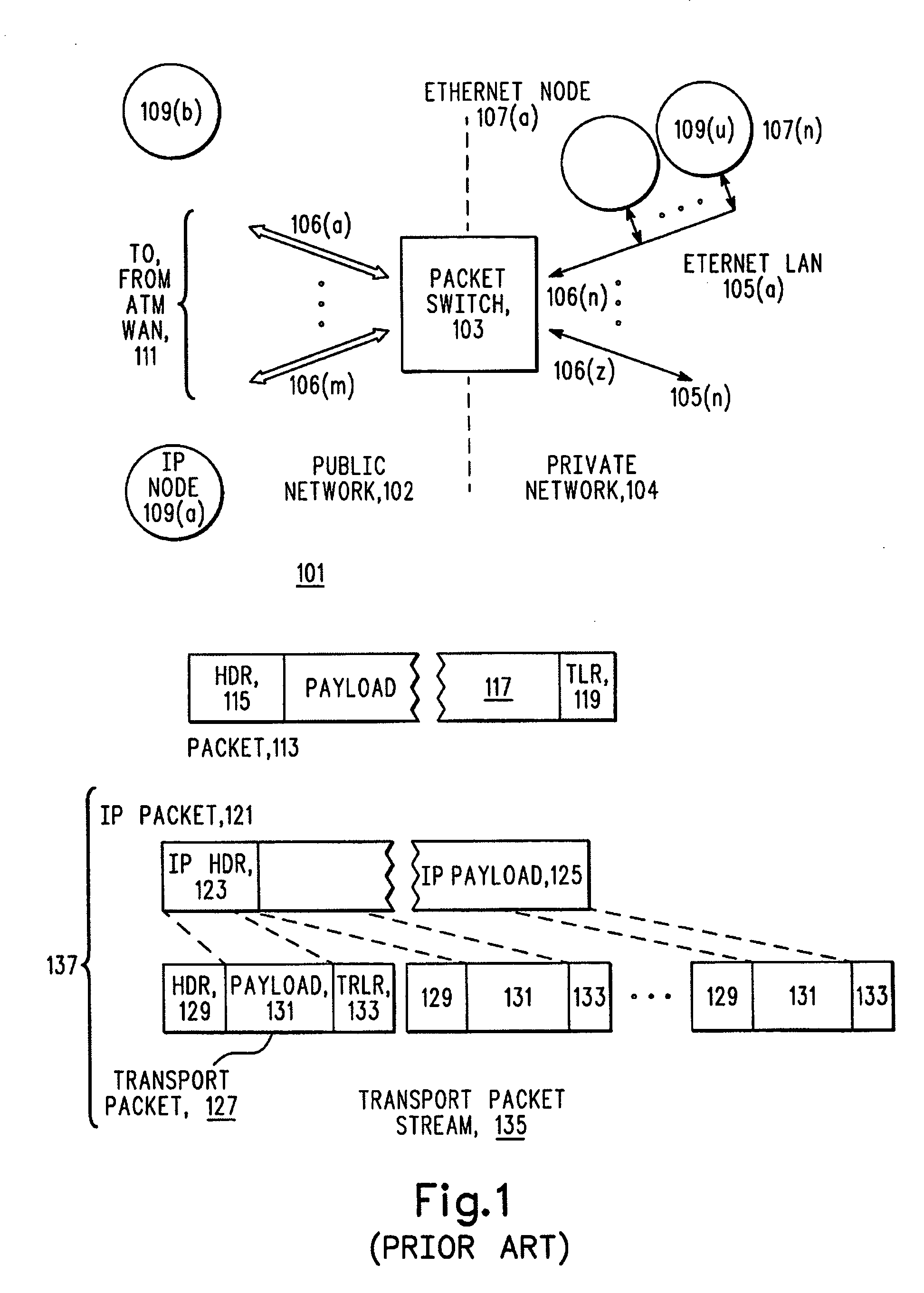

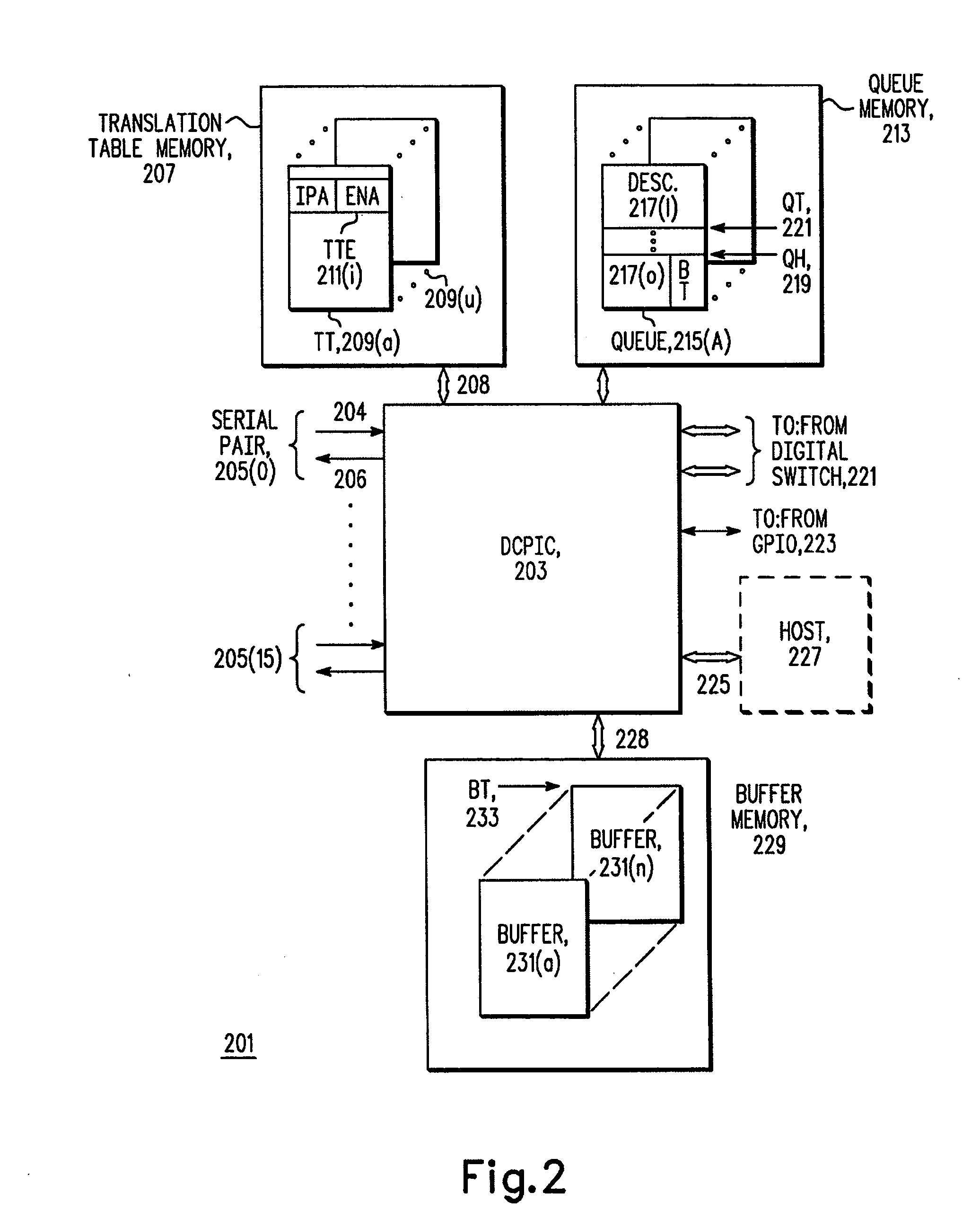

Digital communications processor

Inactive; US7100020B1; Promote exchange; General purpose stored program computer; Multiple digital computer combinations; Multi-core processor; Integrated circuit

An integrated circuit (203) for use in processing streams of data generally and streams of packets in particular. The integrated circuit (203) includes a number of packet processors (307, 313, 303), a table look up engine (301), a queue management engine (305) and a buffer management engine (315). The packet processors (307, 313, 303) include a receive processor (421), a transmit processor (427) and a RISC core processor (401), all of which are programmable. The receive processor (421) and the core processor (401) cooperate to receive and route packets being received, and the core processor (401) and the transmit processor (427) cooperate to transmit packets. Routing is done by using information from the table look up engine (301) to determine a queue (215) in the queue management engine (305) which is to receive a descriptor (217) describing the received packet's payload.

Owner:NORTH STAR INNOVATIONS

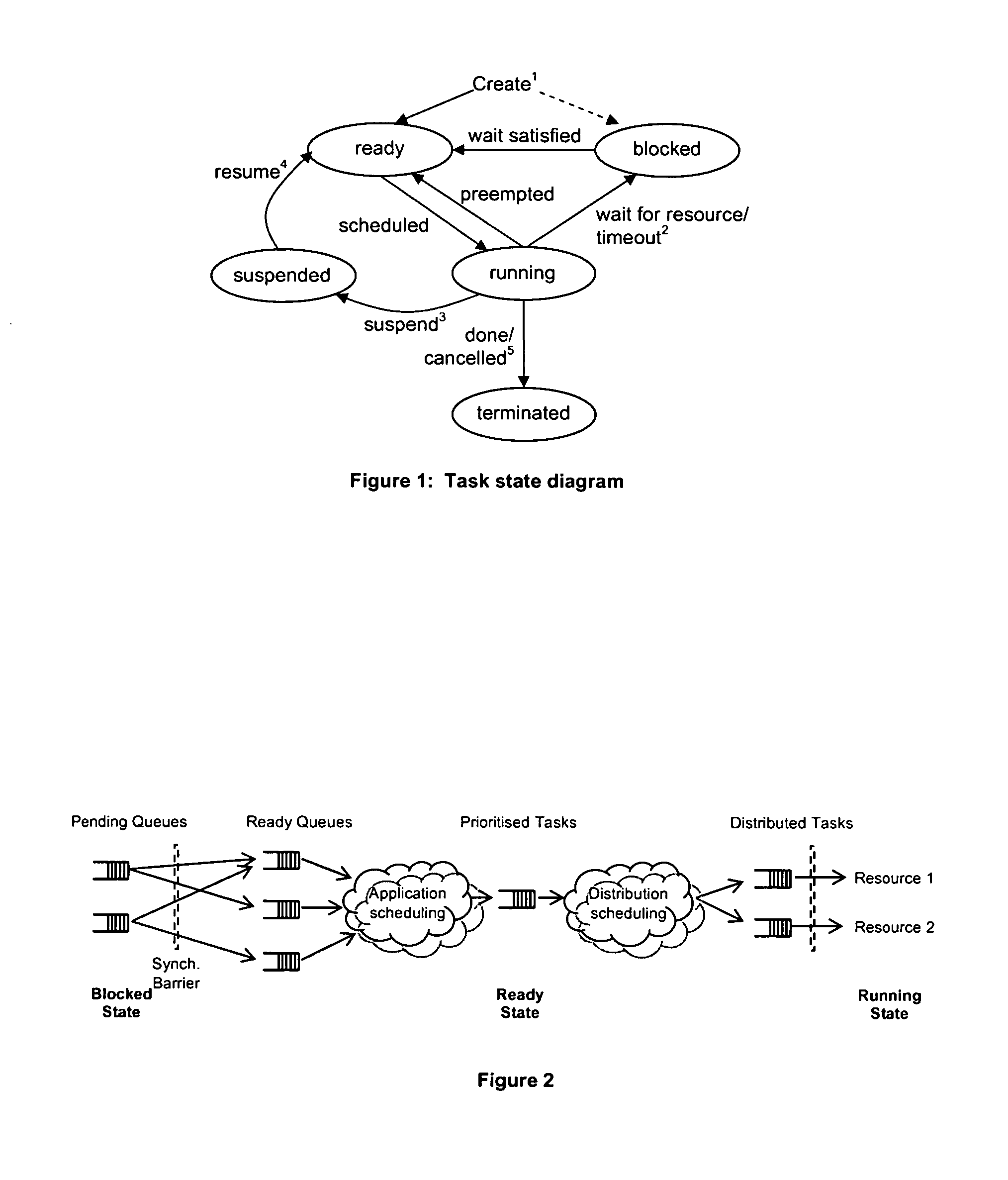

Scheduling in a multicore processor

Active; US20070220517A1; Improve application performance; Increase speed; Energy efficient ICT; Concurrent instruction execution; Processor element; Multi-core processor

A method and computer-usable medium including instructions for performing a method for scheduling executable transactions within a multicore processor comprising a plurality of processor elements. The method includes listing, using at least one distribution queue, a portion of the executable transactions in order of eligibility for execution. A plurality of executable transaction schedulers are provided, wherein each executable transaction scheduler includes a scheduling process for determining a most eligible executable transaction for execution from at least one candidate executable transaction ready for execution. The executable transaction schedulers are linked together to provide a multilevel scheduler. The most eligible executable transaction is output from the multilevel scheduler to the at least one distribution queue.

Owner:SYNOPSYS INC +1

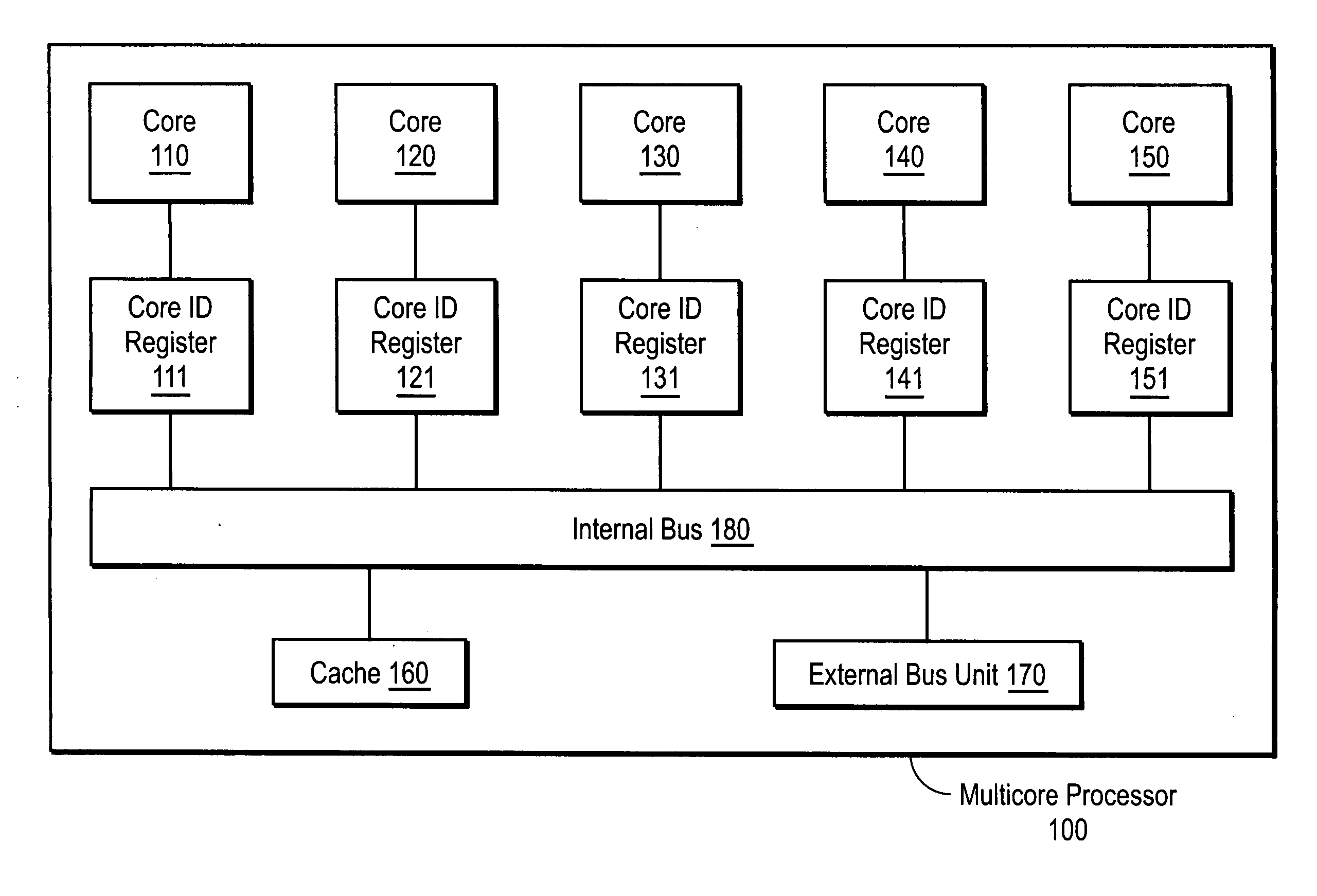

Multicore processor having active and inactive execution cores

Inactive; US20060212677A1; Energy efficient ICT; Error detection/correction; Multi-core processor; Integrated circuit

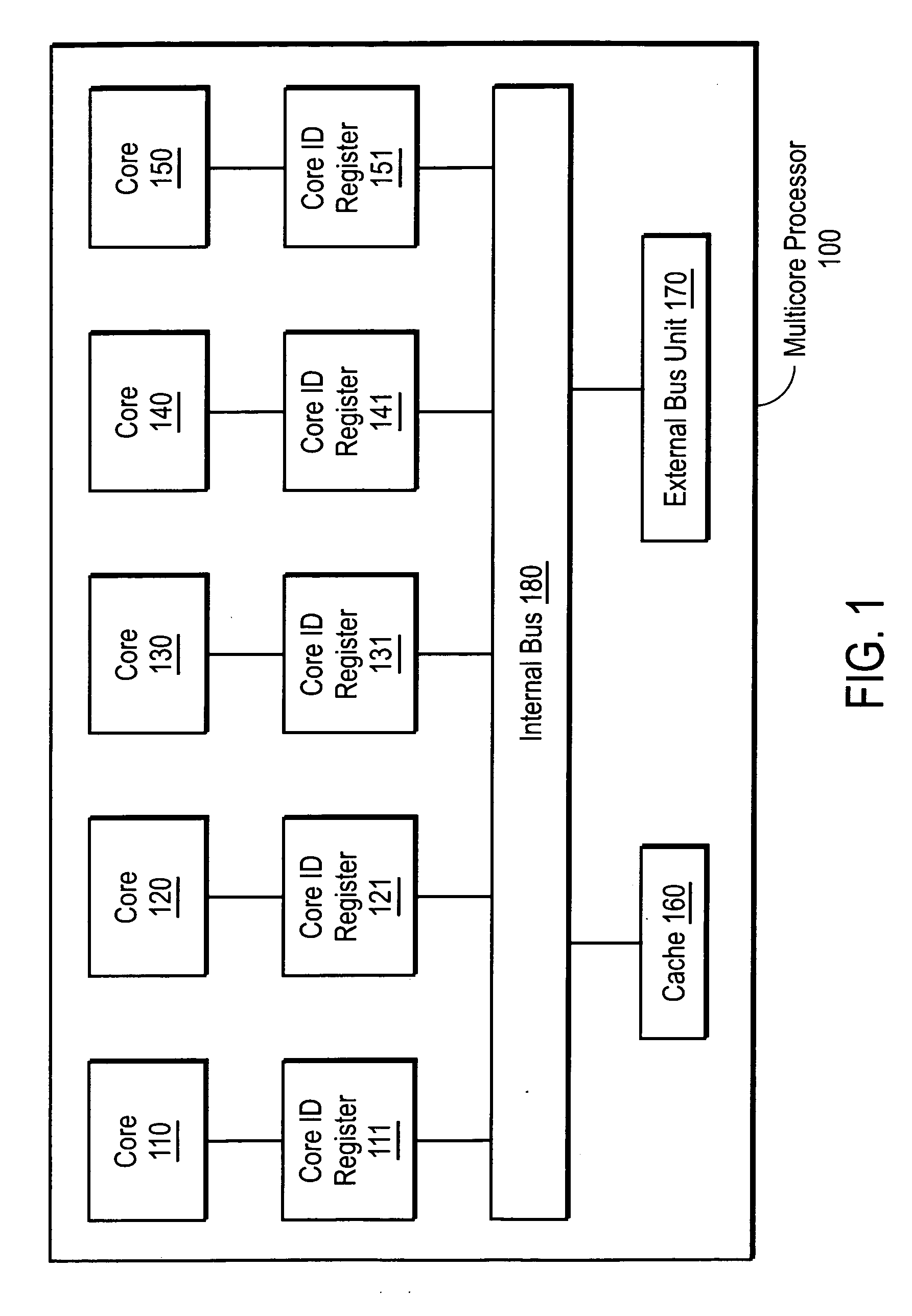

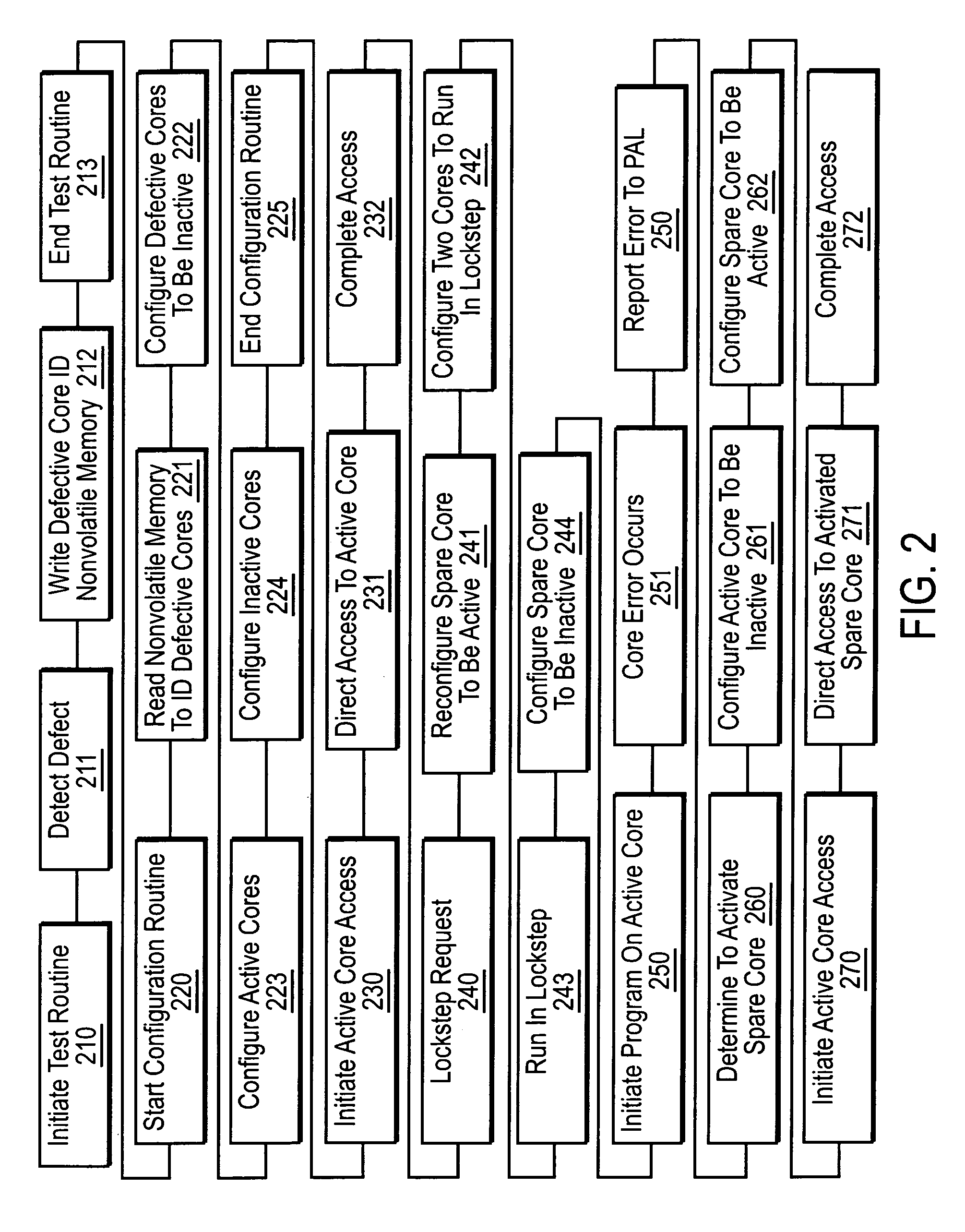

Embodiments of a multicore processor having active and inactive execution cores are disclosed. In one embodiment, an apparatus includes a processor having a plurality of execution cores on a single integrated circuit, and a plurality of core identification registers. Each of the plurality of core identification registers corresponds to one of the execution cores to identify whether the execution core is active.

Owner:INTEL CORP

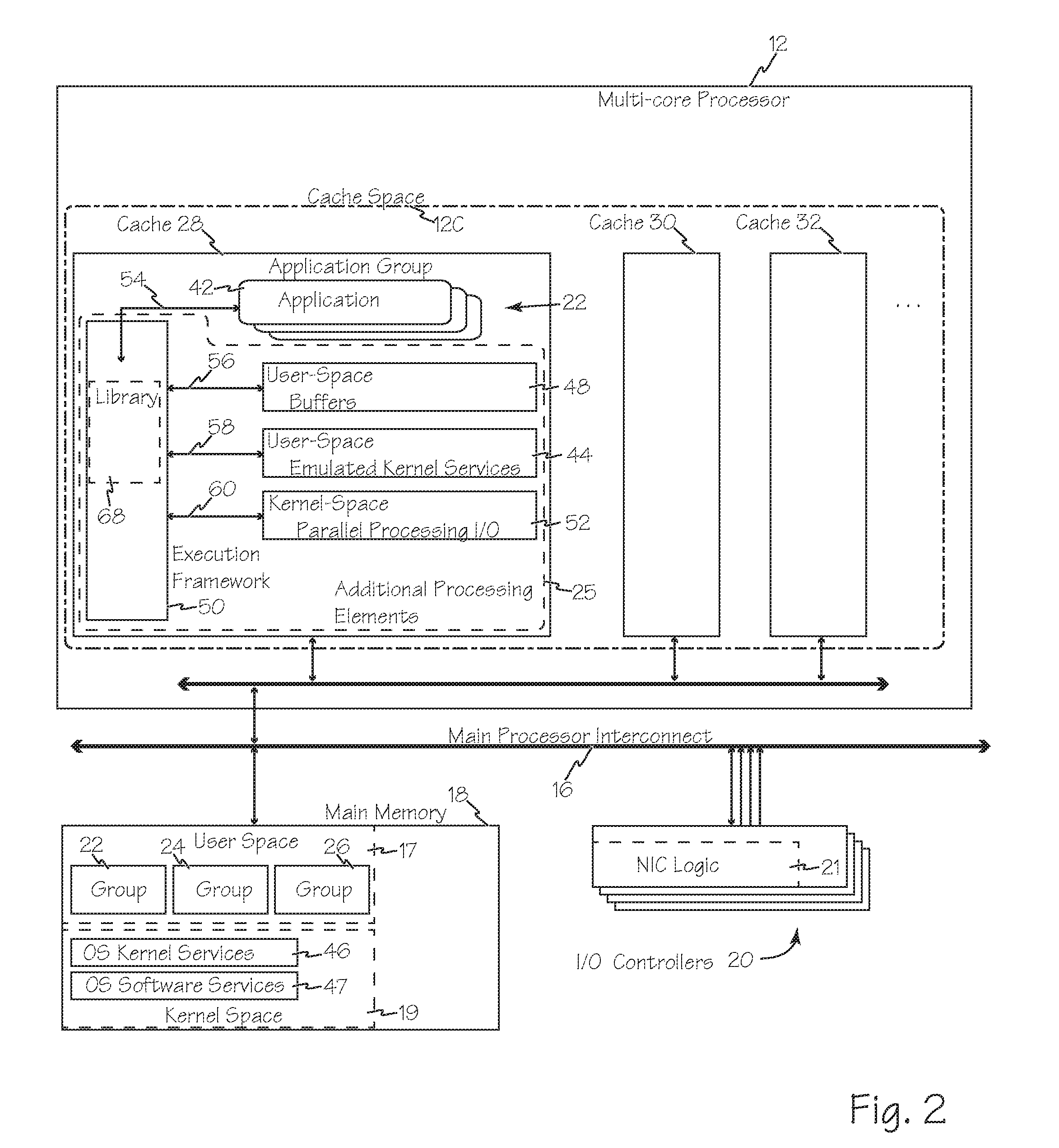

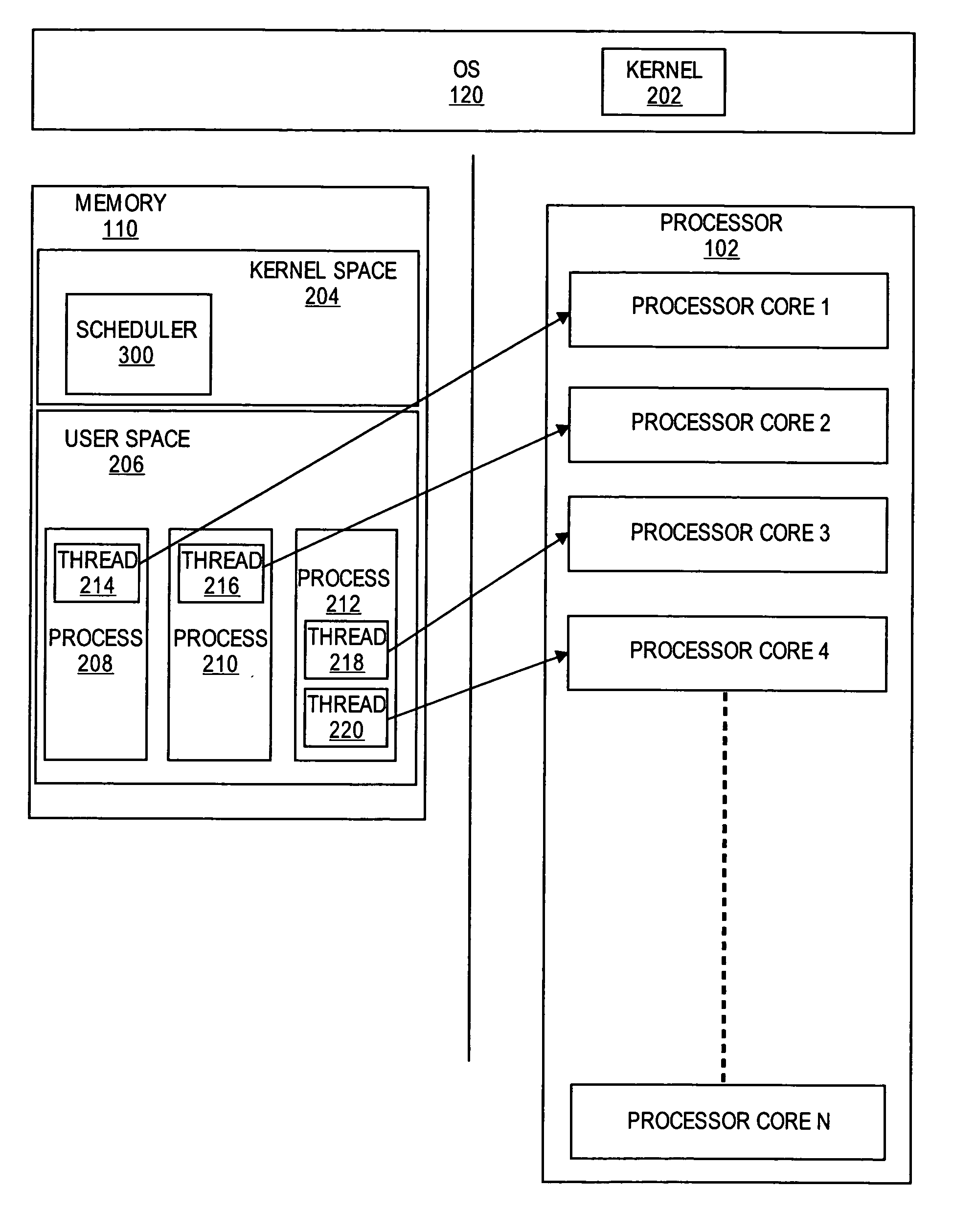

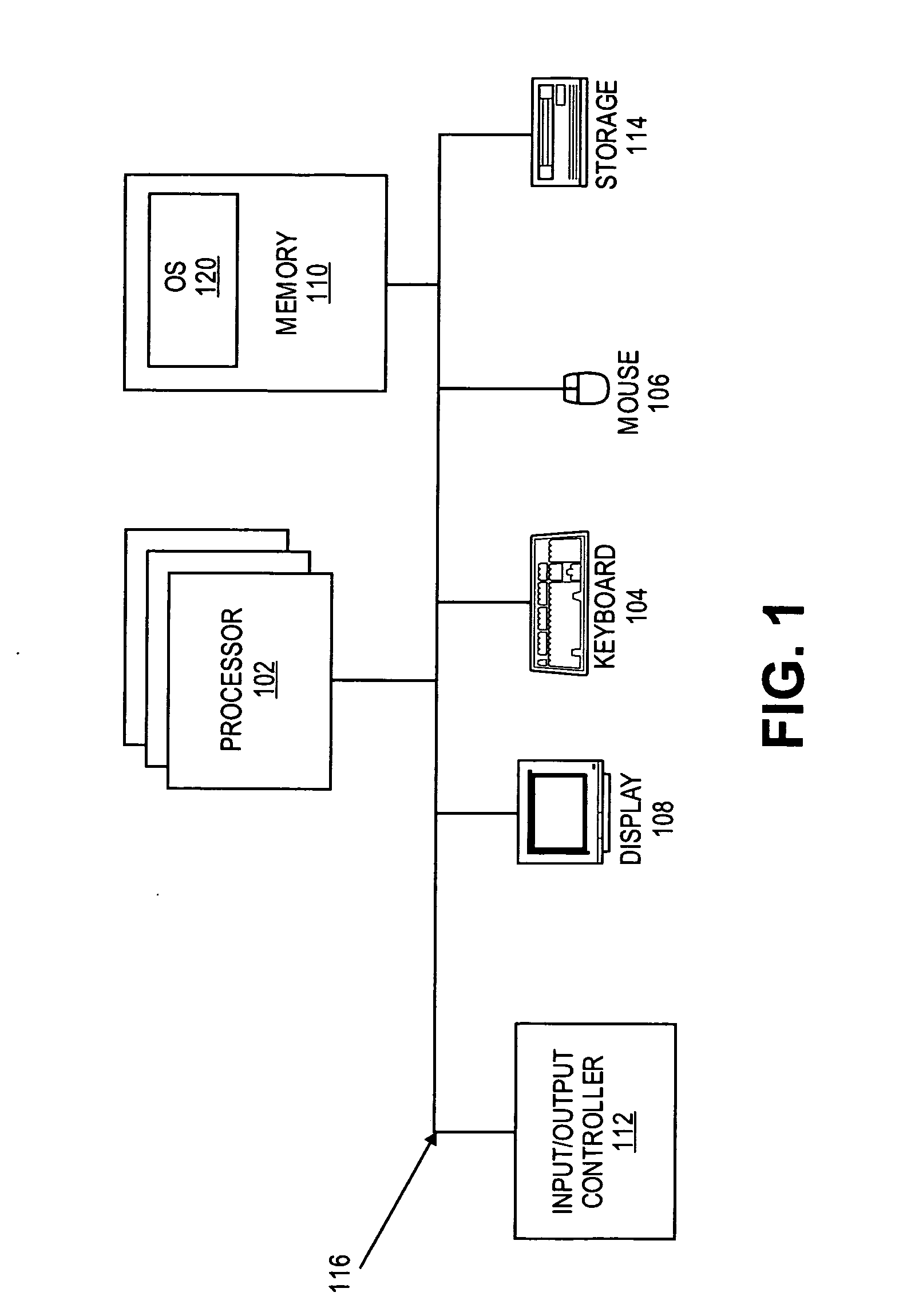

Methods and architecture for enhanced computer performance

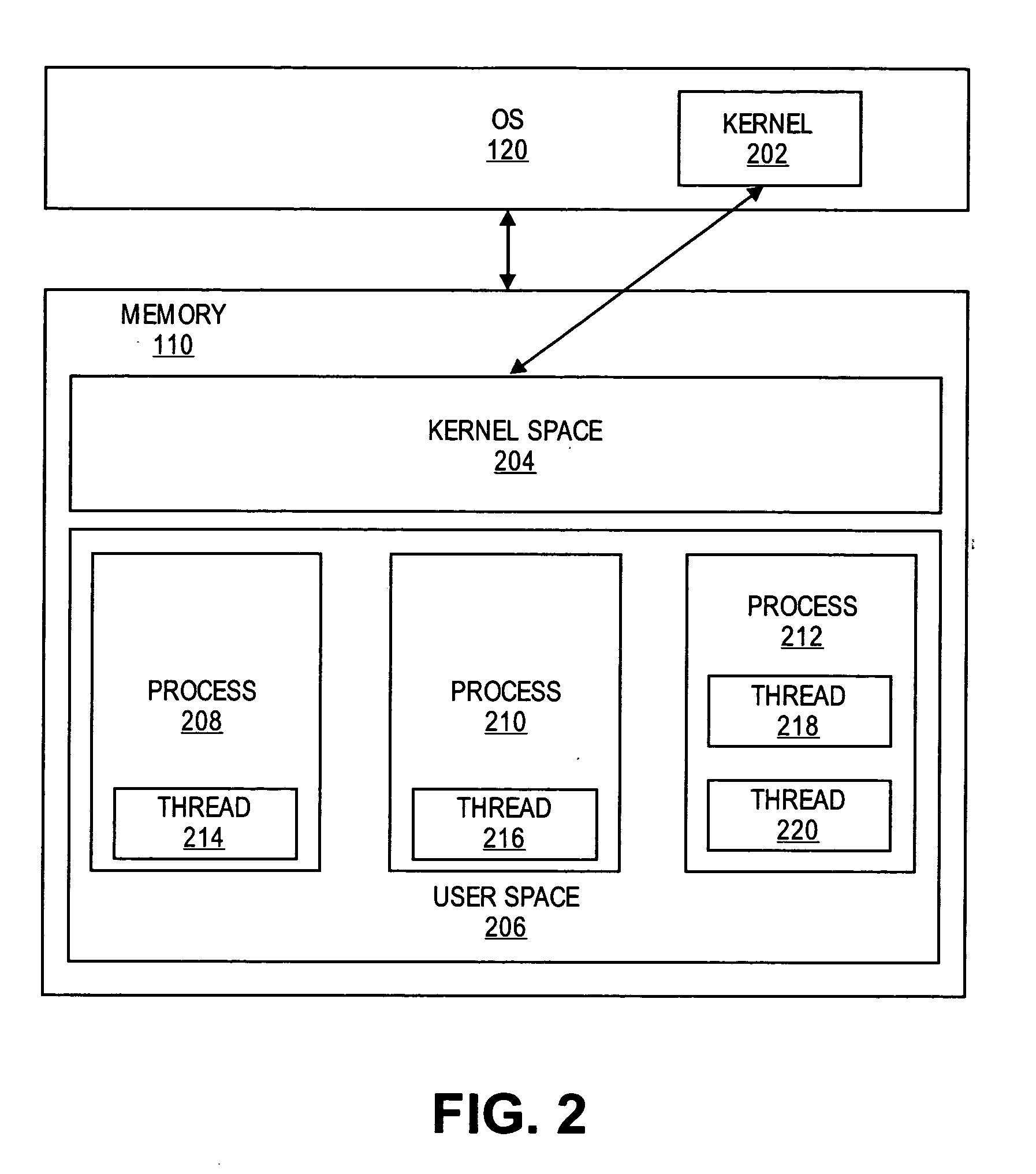

Inactive; US20160378545A1; Reducing mode switching; Reduce limitations; Program initiation/switching; Hardware monitoring; Operational system; Computer performance

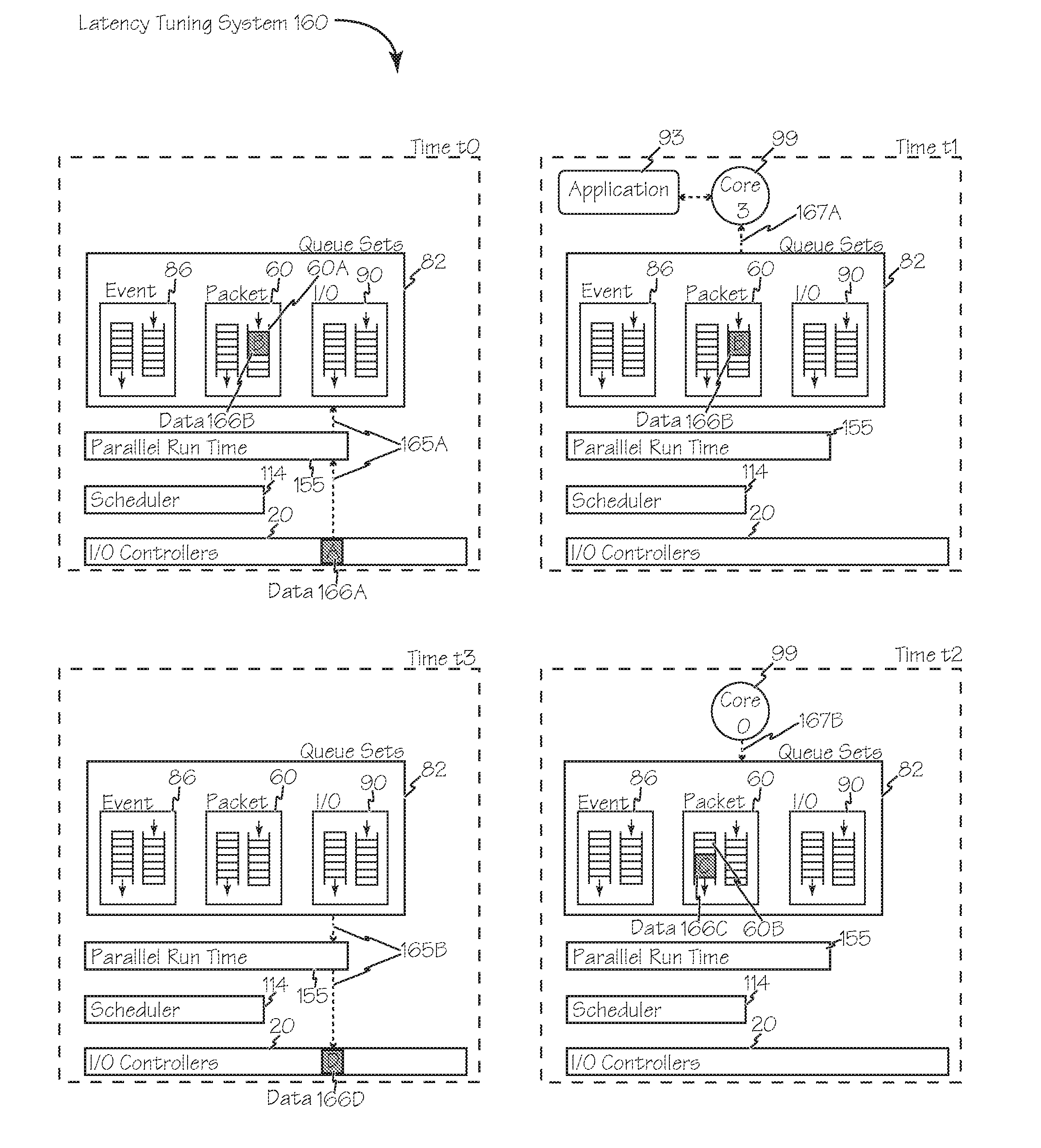

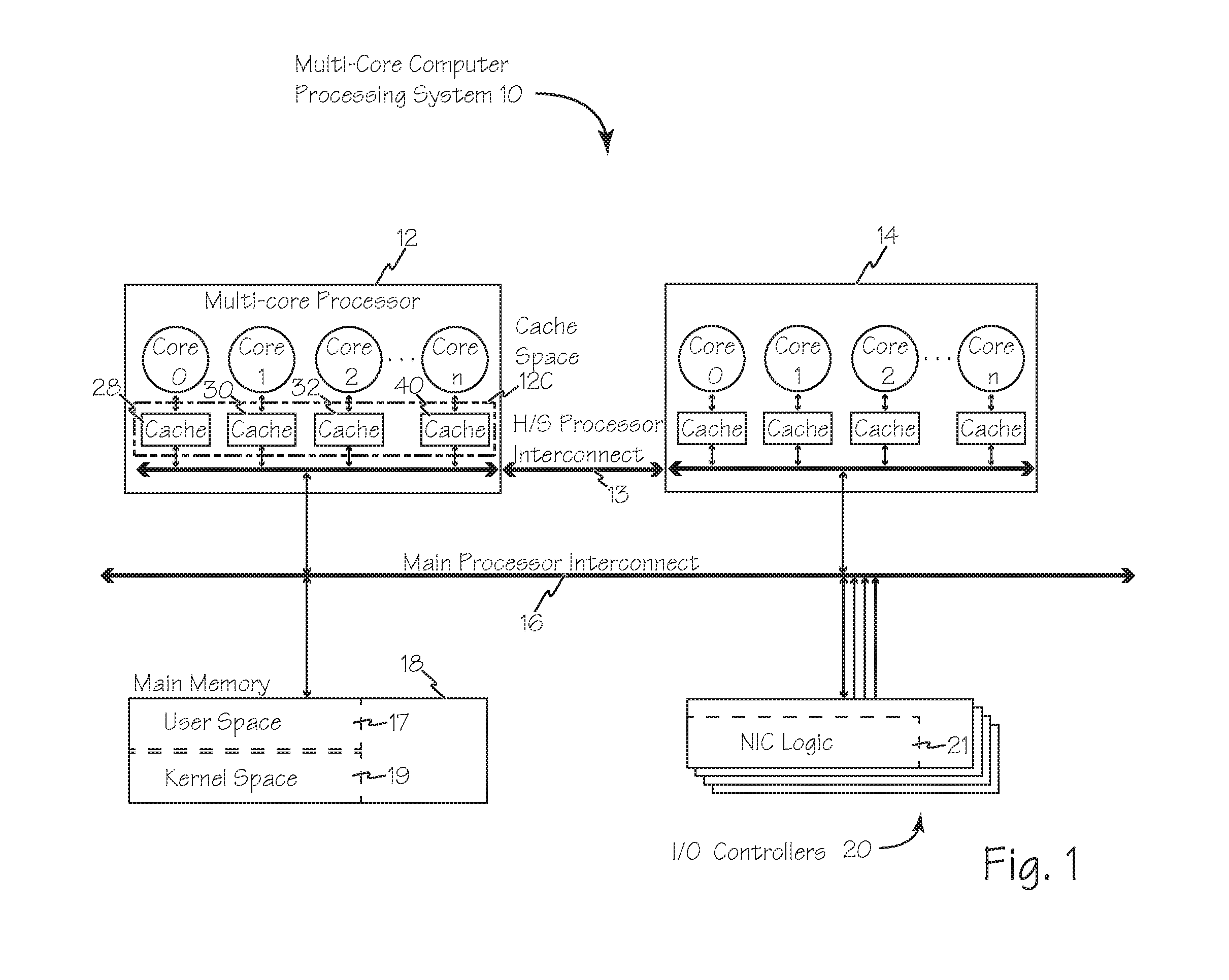

Methods and systems for enhanced computer performance improve software application execution in a computer system that uses, for example, a symmetrical multi-processing operating system including OS kernel services in kernel space of main memory. Groups of related applications are placed in isolated areas in user space, such as containers, and a reduced, application-group-specific set of resource management services stored with each application group in user space, rather than the OS kernel facilities in kernel space, is used to manage shared resources during execution of an application, process or thread from that group. The reduced sets of resource management services may be optimized for the group stored therewith. Execution of each group may be exclusive to a different core of a multi-core processor, and multiple groups may therefore execute separately and simultaneously on the different cores.

Owner:APL SOFTWARE INC


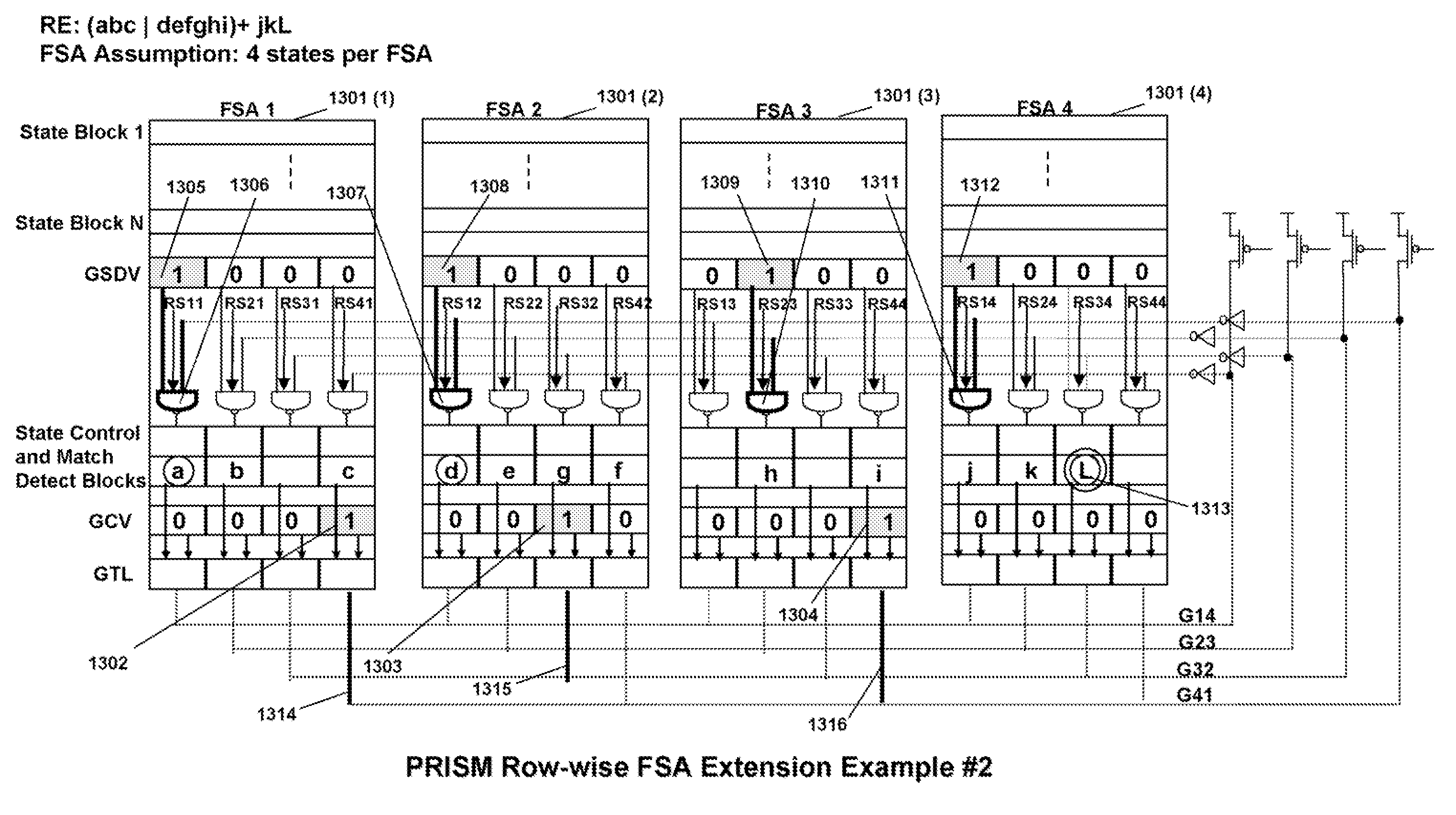

Embedded Programmable Intelligent Search Memory

Active; US20080140661A1; Reduce classification overhead; Improve performance; Digital data information retrieval; Digital data processing details; Multi processor; Memory architecture

Memory architecture provides capabilities for high performance content search. The architecture creates an innovative memory that can be programmed with content search rules which are used by the memory to evaluate presented content for matching with the programmed rules. When the content being searched matches any of the rules programmed in the Programmable Intelligent Search Memory (PRISM), action(s) associated with the matched rule(s) are taken. The PRISM content search memory is embedded in single-core or multi-core processors or in multi-processor systems to perform content search. PRISM accelerates content search by offloading the content search tasks from the processors. Content search rules comprise regular expressions which are converted to finite state automata and then programmed in PRISM for evaluating content with the search rules.

Owner:INFOSIL INC

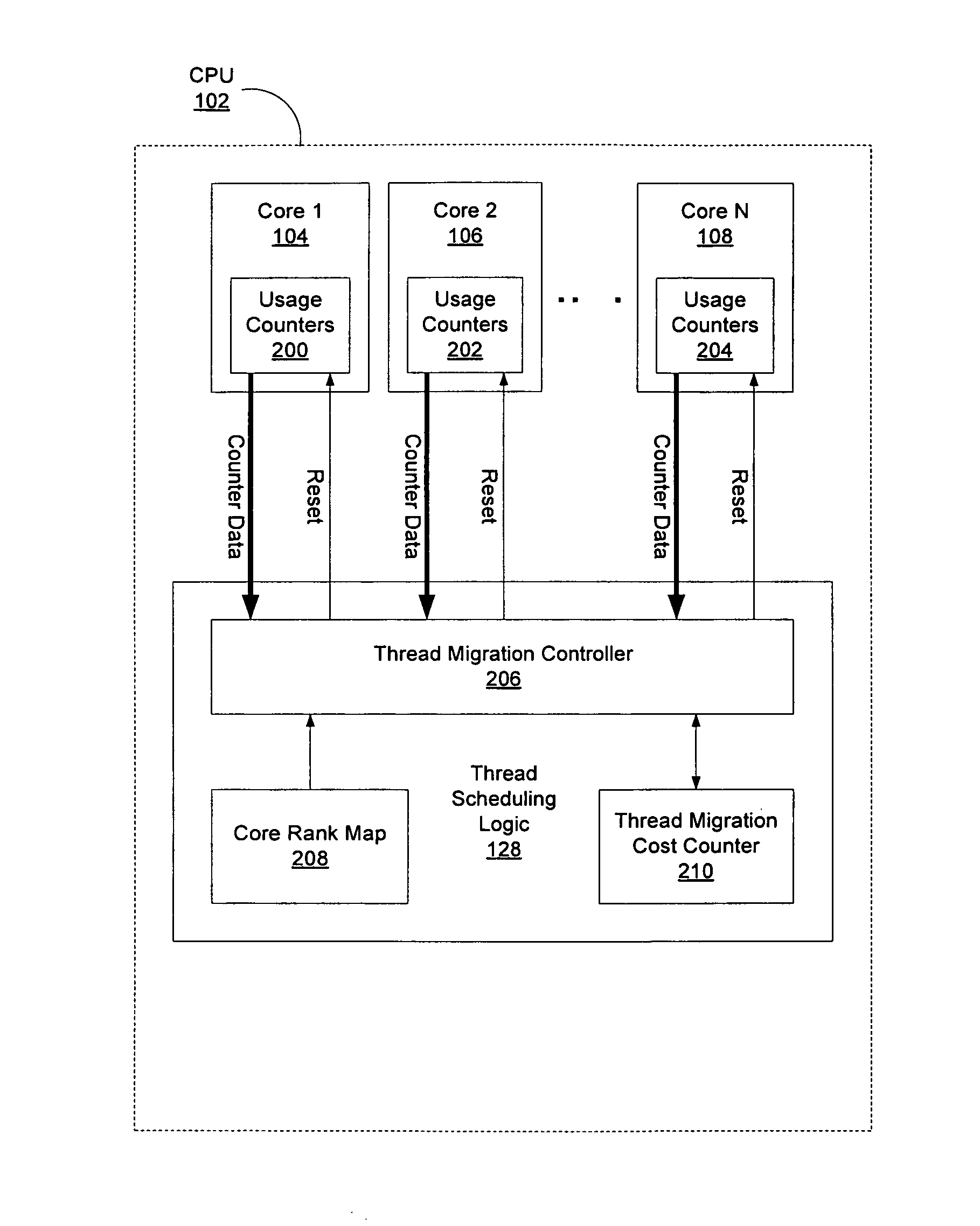

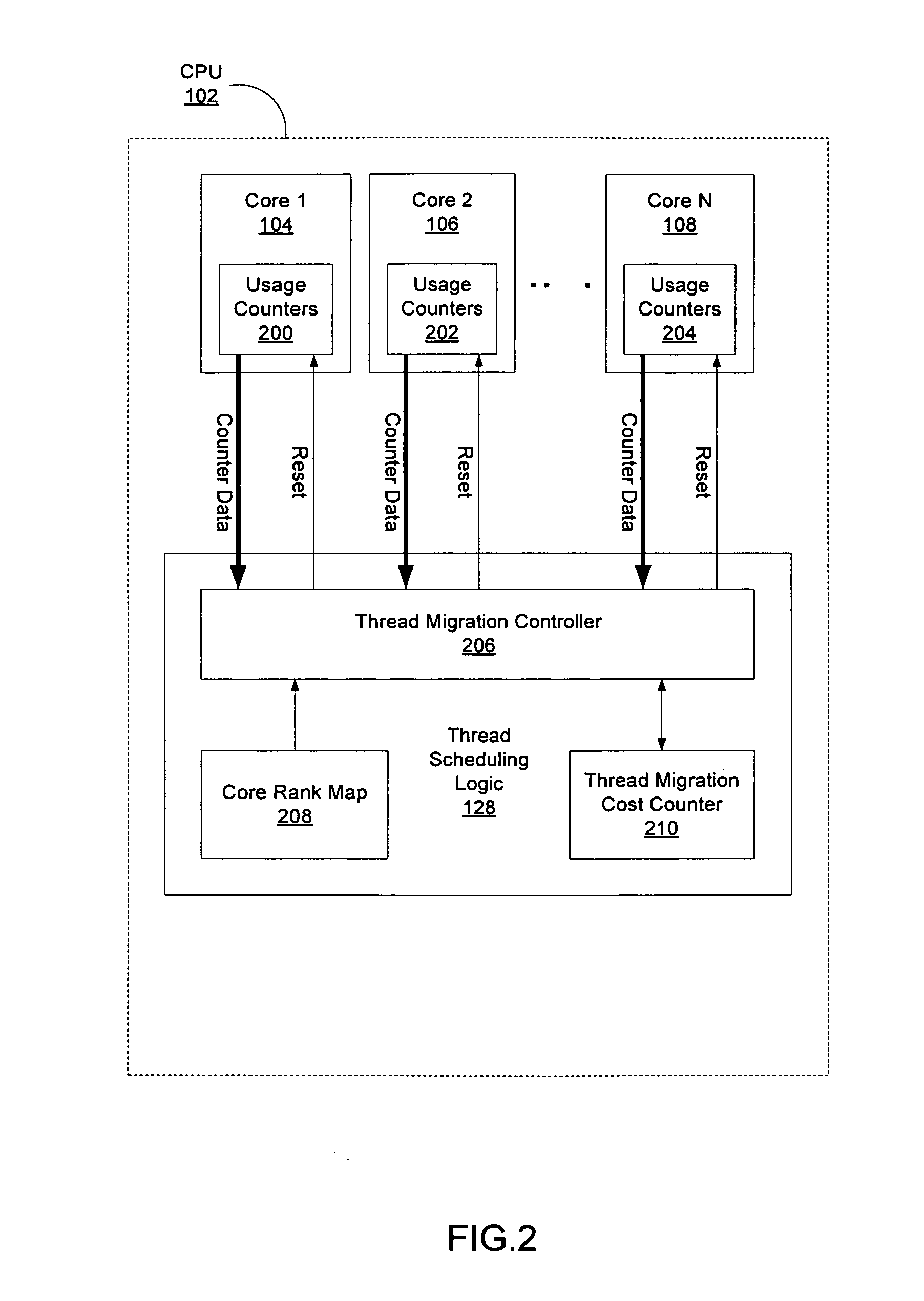

Hardware support for thread scheduling on multi-core processors

Active; US20110088041A1; Multiprogramming arrangements; Software simulation/interpretation/emulation; Thread scheduling; Workload

A method, device, and system are disclosed. In one embodiment the method includes scheduling a thread to run on first core of a multi-core processor. The determination as to which core the thread is scheduled on uses one or more processes. These processes may include ranking all of the cores specific to a workload of the thread, establishing a current utilization of each core of the multi-core processor, and calculating an inter-core migration cost for the thread.

Owner:TAHOE RES LTD
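
The abstract above names three inputs to the core-selection decision: a workload-specific ranking of cores, current utilization, and inter-core migration cost. One way to combine them is a weighted sum, sketched below in Python. The function `pick_core`, the weights, and the convention that lower values are better are assumptions for illustration only; the abstract does not say how the inputs are combined.

```python
from typing import Sequence


def pick_core(workload_rank: Sequence[float], utilization: Sequence[float],
              migration_cost: Sequence[float], w_rank: float = 1.0,
              w_util: float = 1.0, w_mig: float = 1.0) -> int:
    """Return the index of the core with the lowest combined cost.

    All three sequences are indexed by core id. A lower rank value means a
    better fit for the thread's workload (assumed convention).
    """
    scores = [w_rank * r + w_util * u + w_mig * m
              for r, u, m in zip(workload_rank, utilization, migration_cost)]
    return min(range(len(scores)), key=scores.__getitem__)


# Usage: core 0 fits the workload best, but it is busy. With a heavier
# utilization weight the lightly loaded core 1 wins instead.
ranks = [0, 1, 2]
util = [0.9, 0.1, 0.5]
migrate = [0.0, 0.2, 0.2]
default_choice = pick_core(ranks, util, migrate)
load_sensitive_choice = pick_core(ranks, util, migrate, w_util=5.0)
```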

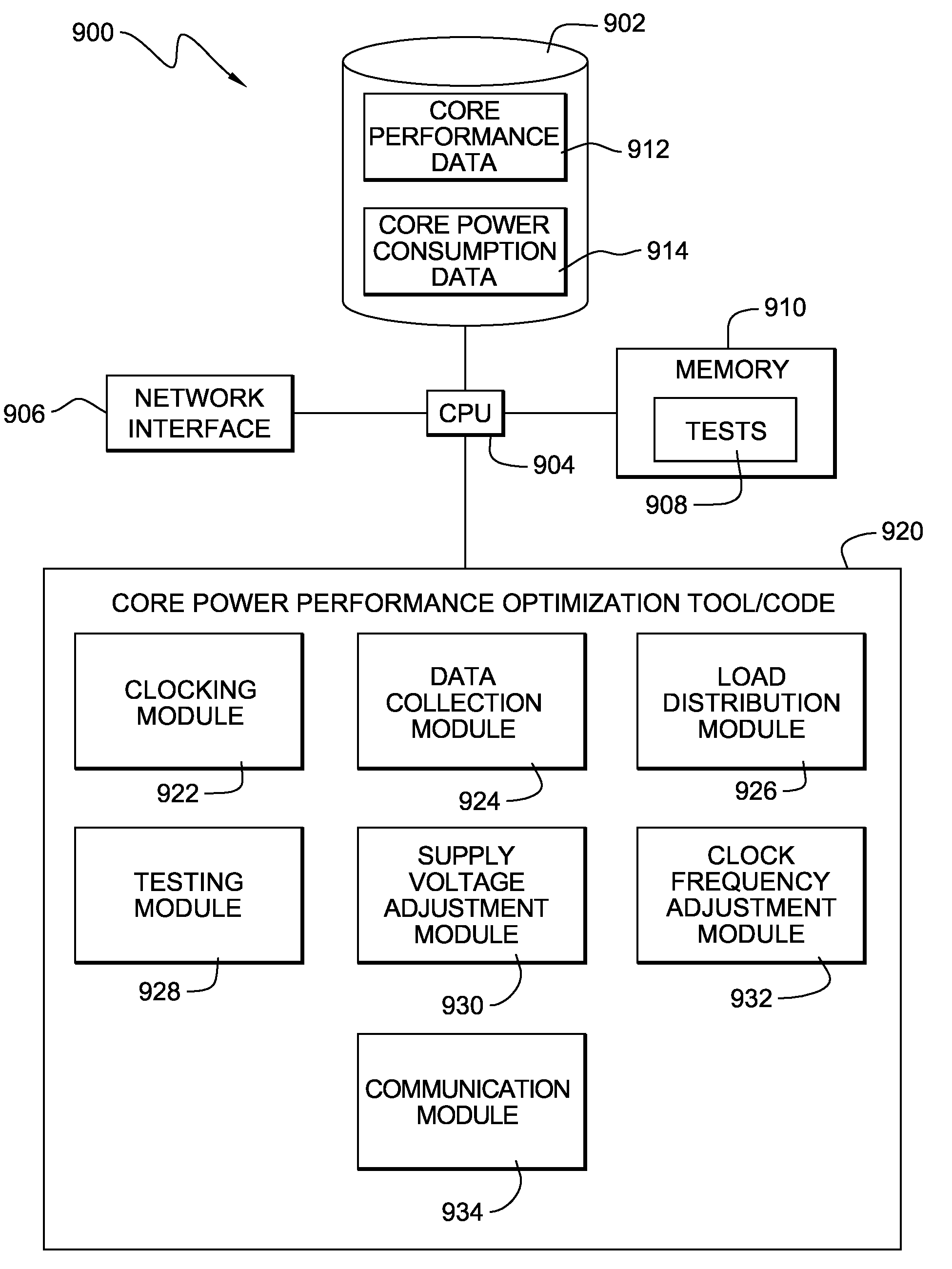

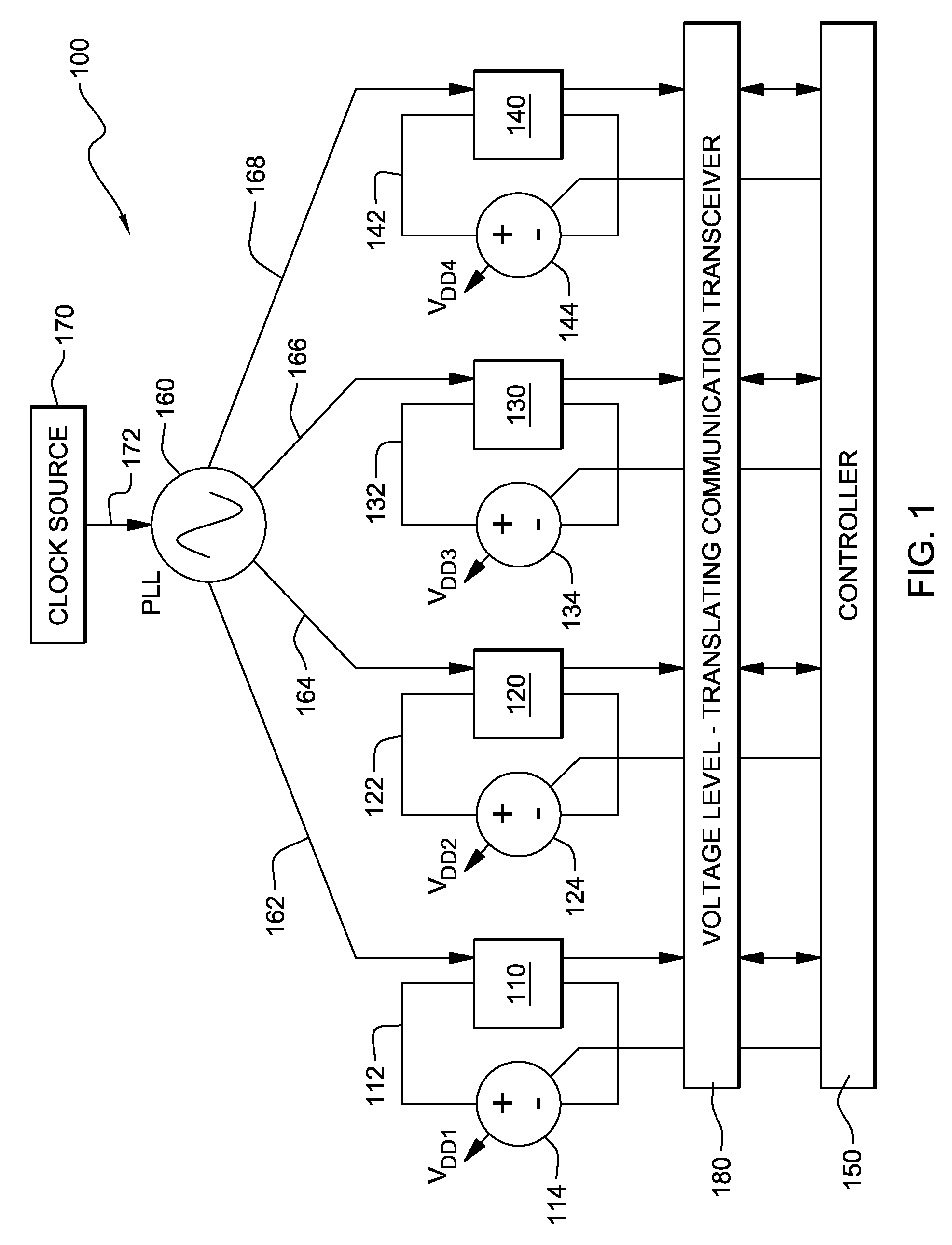

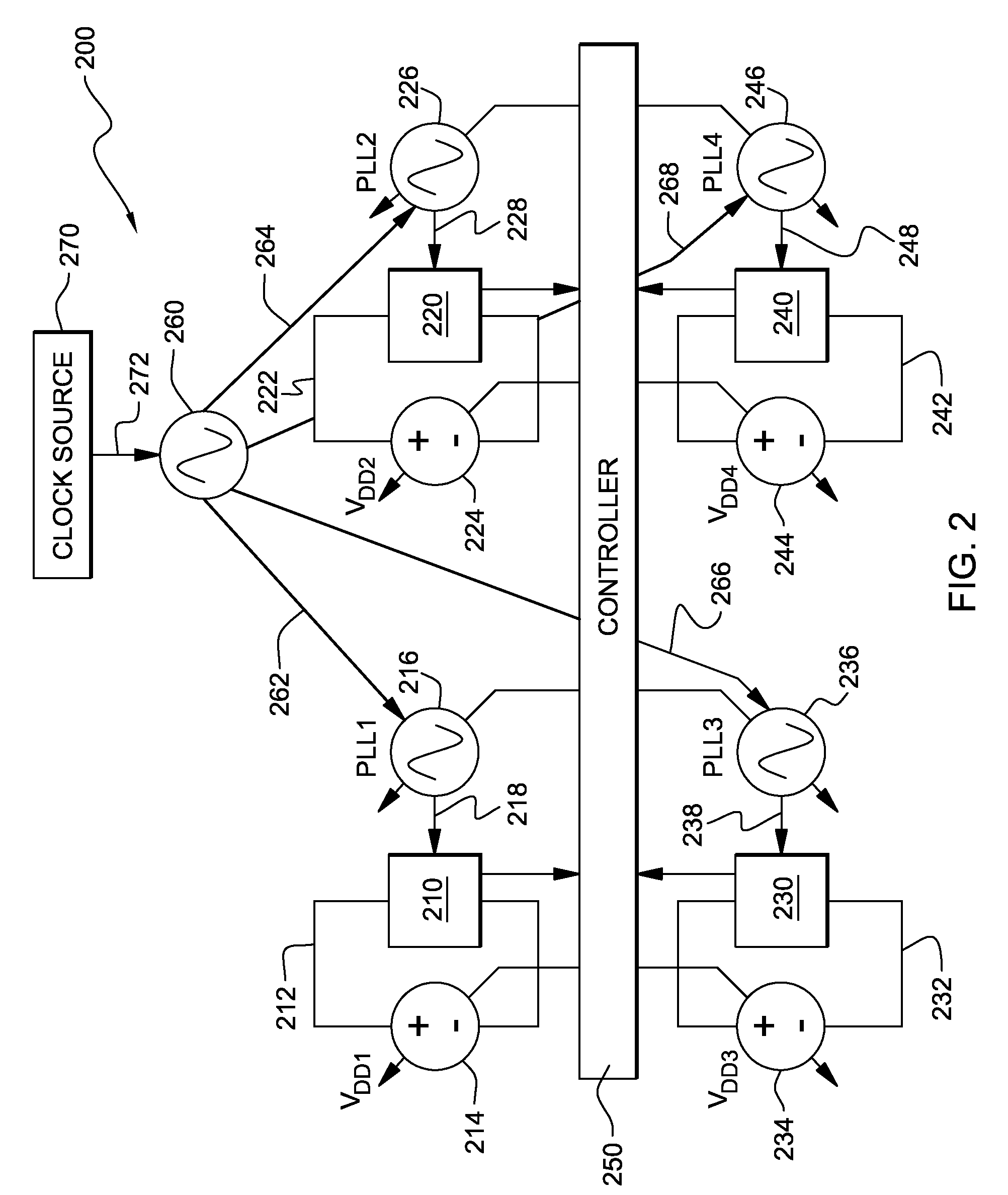

Apparatus, method and program product for adaptive real-time power and performance optimization of multi-core processors

Inactive; US20090138737A1; Improve performance; Increase power consumption; Volume/mass flow measurement; Power supply for data processing; Clock rate; Engineering

An apparatus, method and program product for optimizing core performance and power of a multi-core processor. The apparatus includes a multi-core processor coupled to a clock source providing a clock frequency to one or more cores, an independent power supply coupled to each core for providing a supply voltage to each core, and a Phase-Locked Loop (PLL) circuit coupled to each core for dynamically adjusting the clock frequency provided to each core. The apparatus further includes a controller coupled to each core and configured to collect performance data and power consumption data measured for each core and to adjust, using the PLL circuit, a supply voltage provided to a core, such that the operational core frequency of the core is greater than a specification core frequency preset for the core and such that core performance and power consumption are optimized.

Owner:KYNDRYL INC

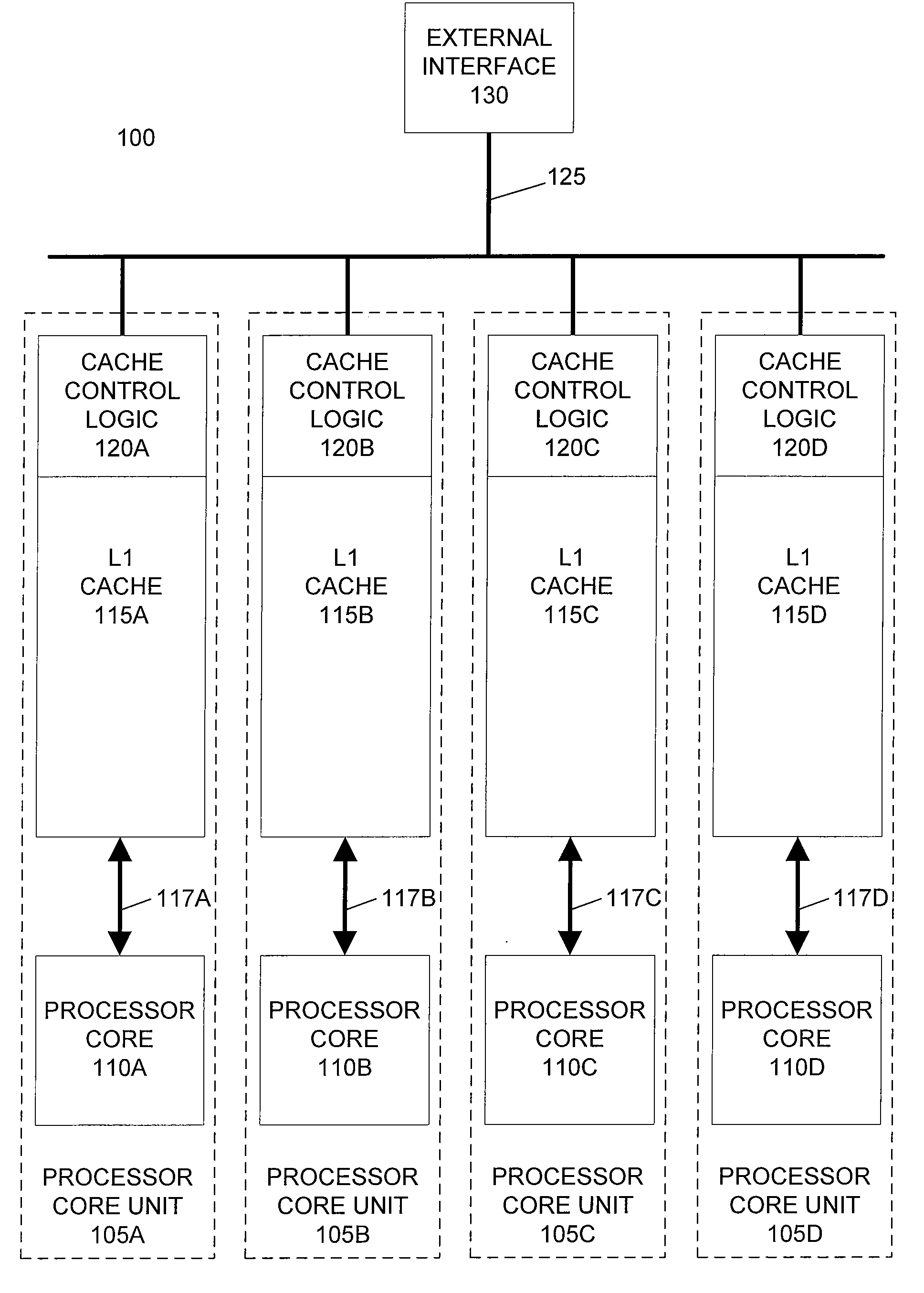

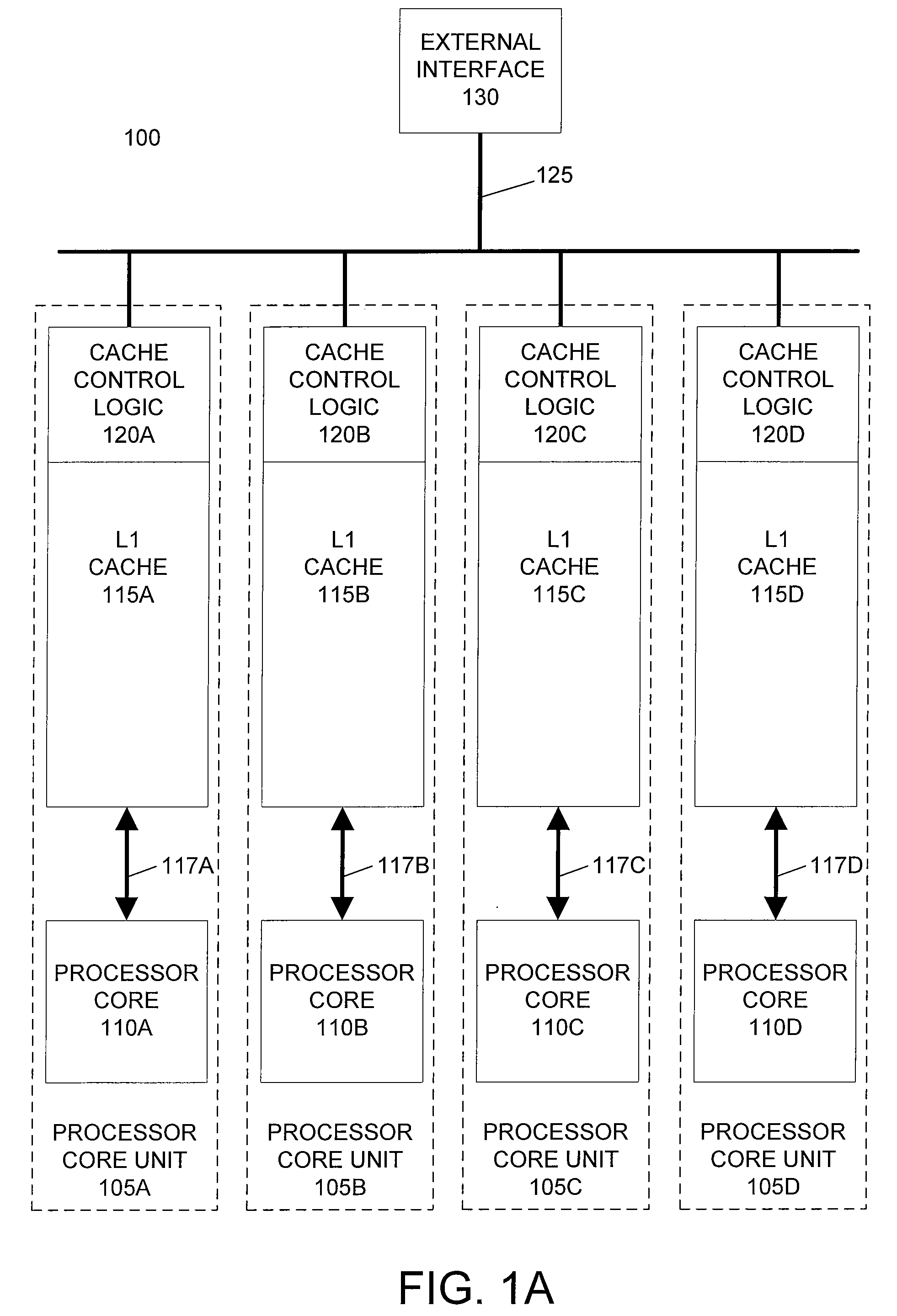

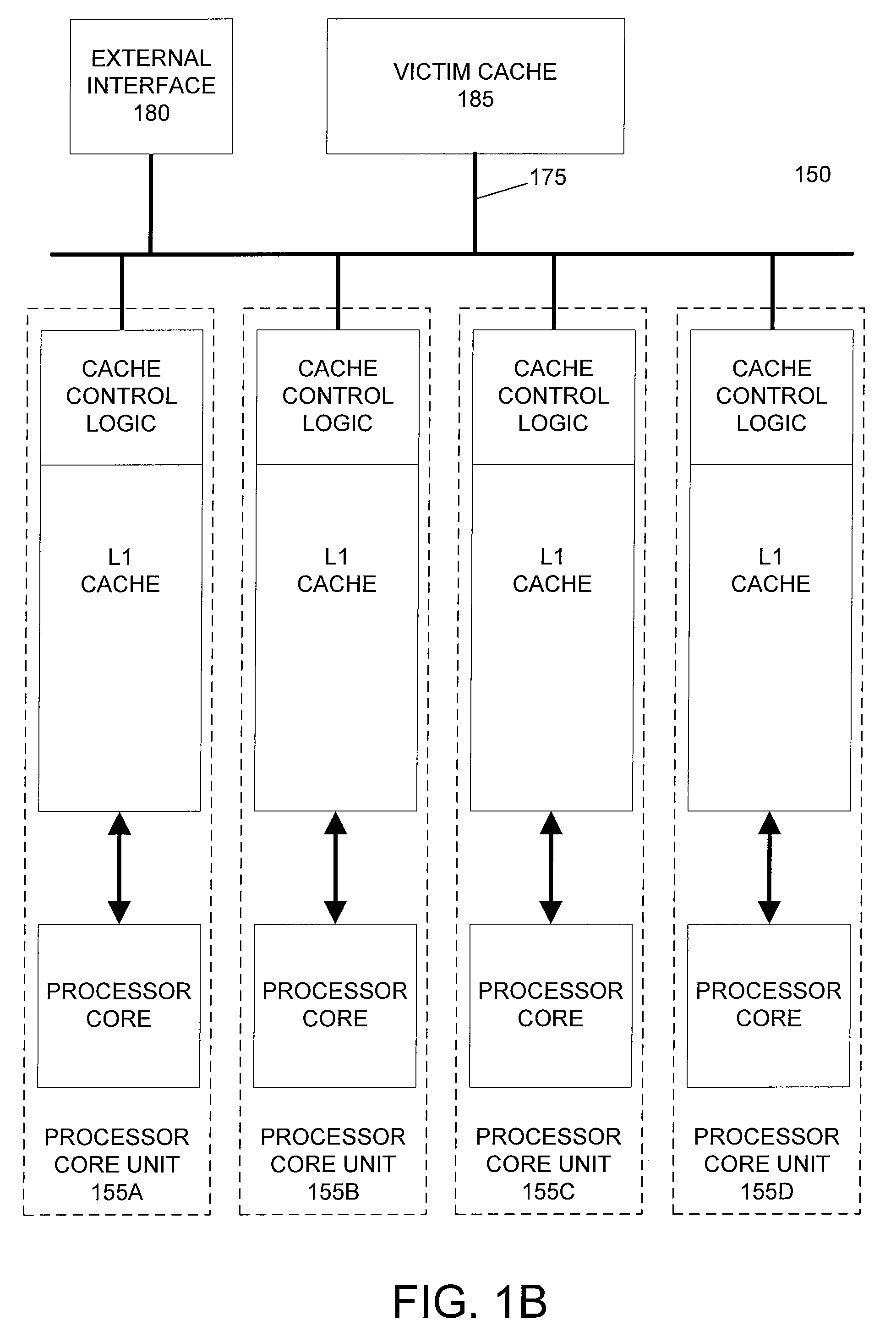

Horizontally-shared cache victims in multiple core processors

Active; US20080091880A1; Lower latency; Improve performance; Energy efficient ICT; Memory addressing/allocation/relocation; Latency (engineering); Multi-core processor

A processor includes multiple processor core units, each including a processor core and a cache memory. Victim lines evicted from a first processor core unit's cache may be stored in another processor core unit's cache, rather than written back to system memory. If the victim line is later requested by the first processor core unit, the victim line is retrieved from the other processor core unit's cache. The processor has low latency data transfers between processor core units. The processor transfers victim lines directly between processor core units' caches or utilizes a victim cache to temporarily store victim lines while searching for their destinations. The processor evaluates cache priority rules to determine whether victim lines are discarded, written back to system memory, or stored in other processor core units' caches. Cache priority rules can be based on cache coherency data, load balancing schemes, and architectural characteristics of the processor.

Owner:MIPS TECH INC

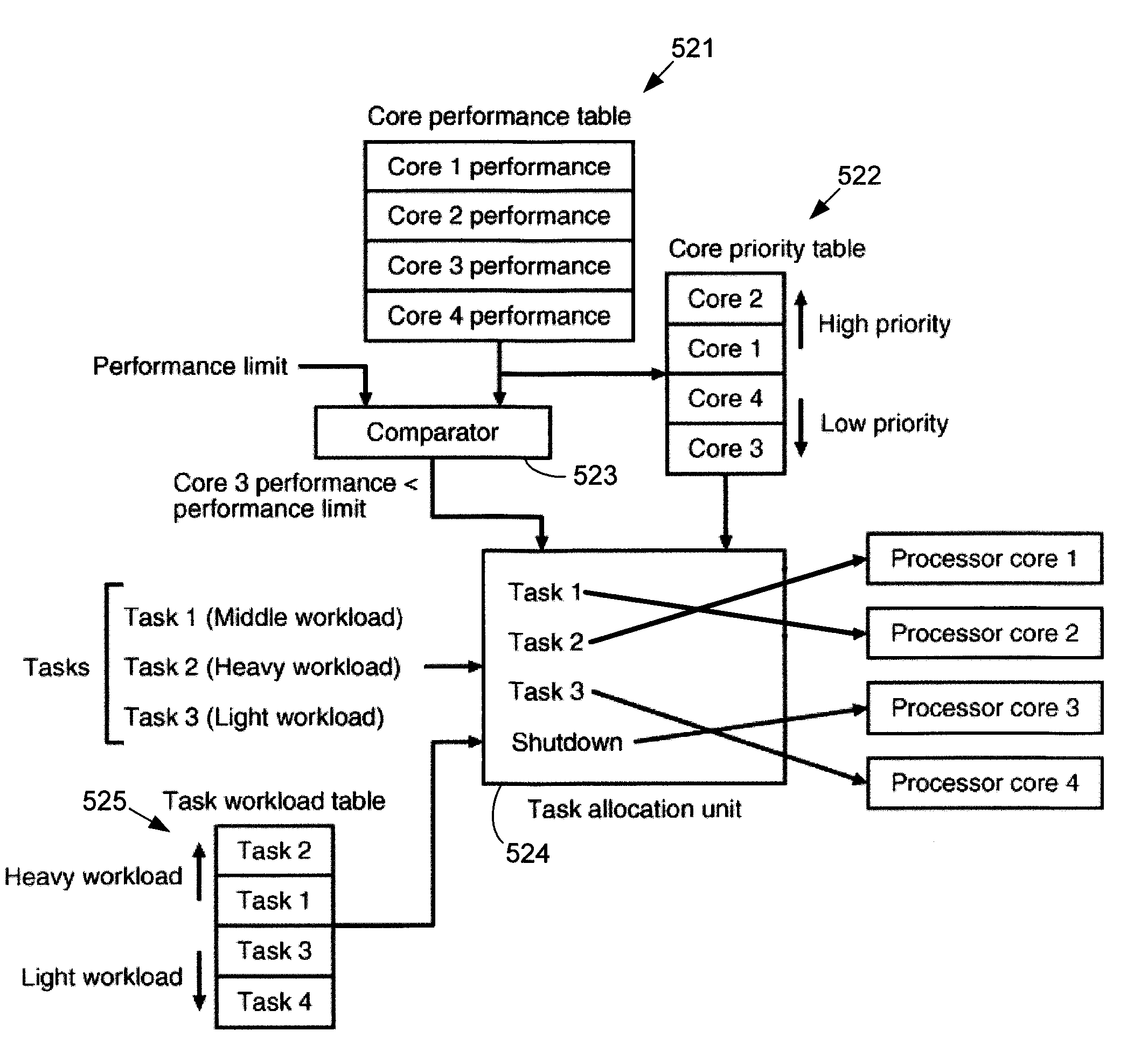

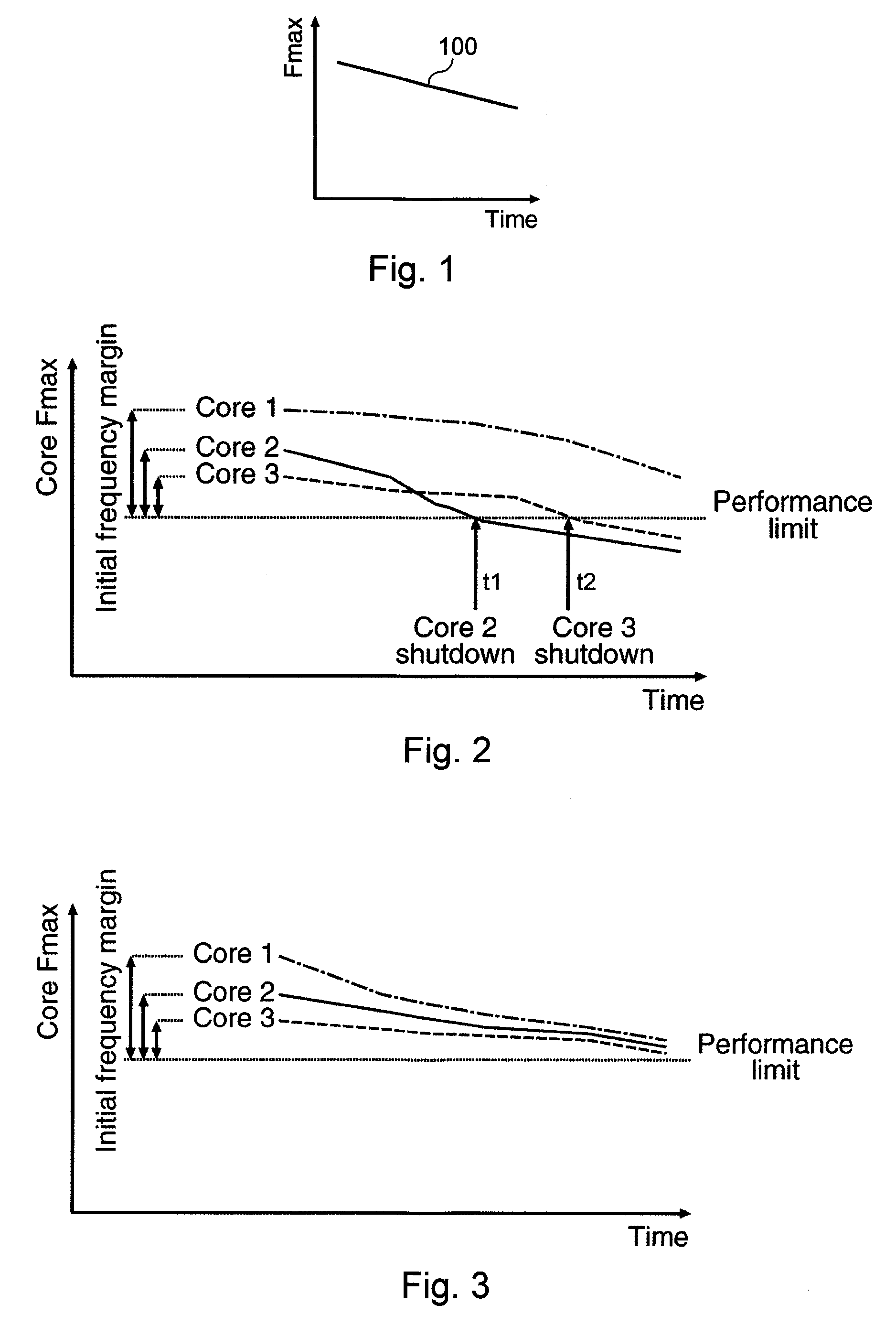

Systems and Methods for Improving the Reliability of a Multi-Core Processor

Inactive; US20090288092A1; Improve reliability; Delay aging; Resource allocation; Memory systems; Multi processor; Below threshold level

Systems and methods for improving the reliability of multiprocessors by reducing the aging of processor cores that have lower performance. One embodiment comprises a method implemented in a multiprocessor system having a plurality of processor cores. The method includes determining performance levels for each of the processor cores and determining an allocation of the tasks to the processor cores that substantially minimizes aging of a lowest-performing one of the operating processor cores. The allocation may be based on task priority, task weight, heat generated, or combinations of these factors. The method may also include identifying processor cores whose performance levels are below a threshold level and shutting down these processor cores. If the number of processor cores that are still active is less than a threshold number, the multiprocessor system may be shut down, or a warning may be provided to a user.

Owner:KK TOSHIBA

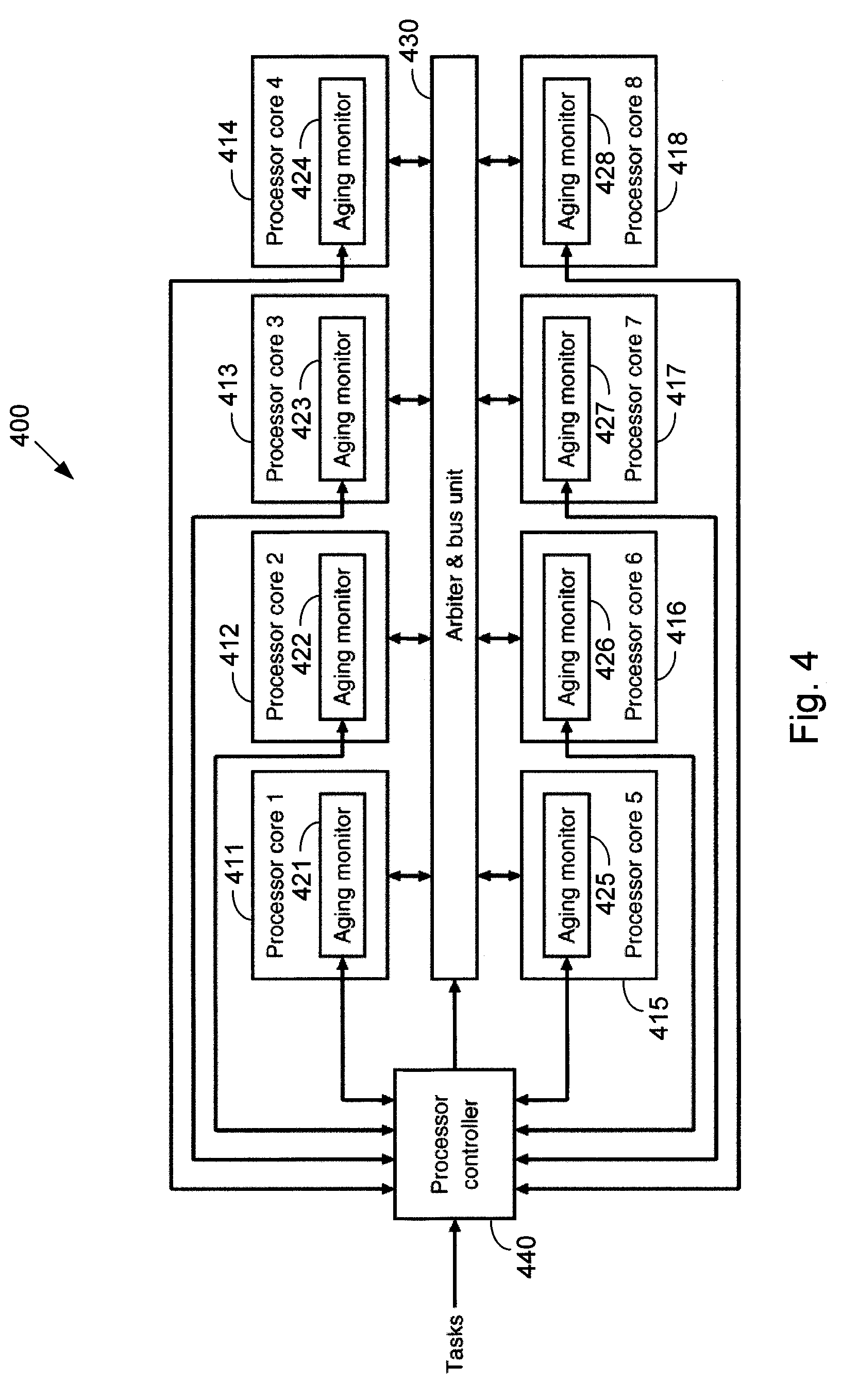

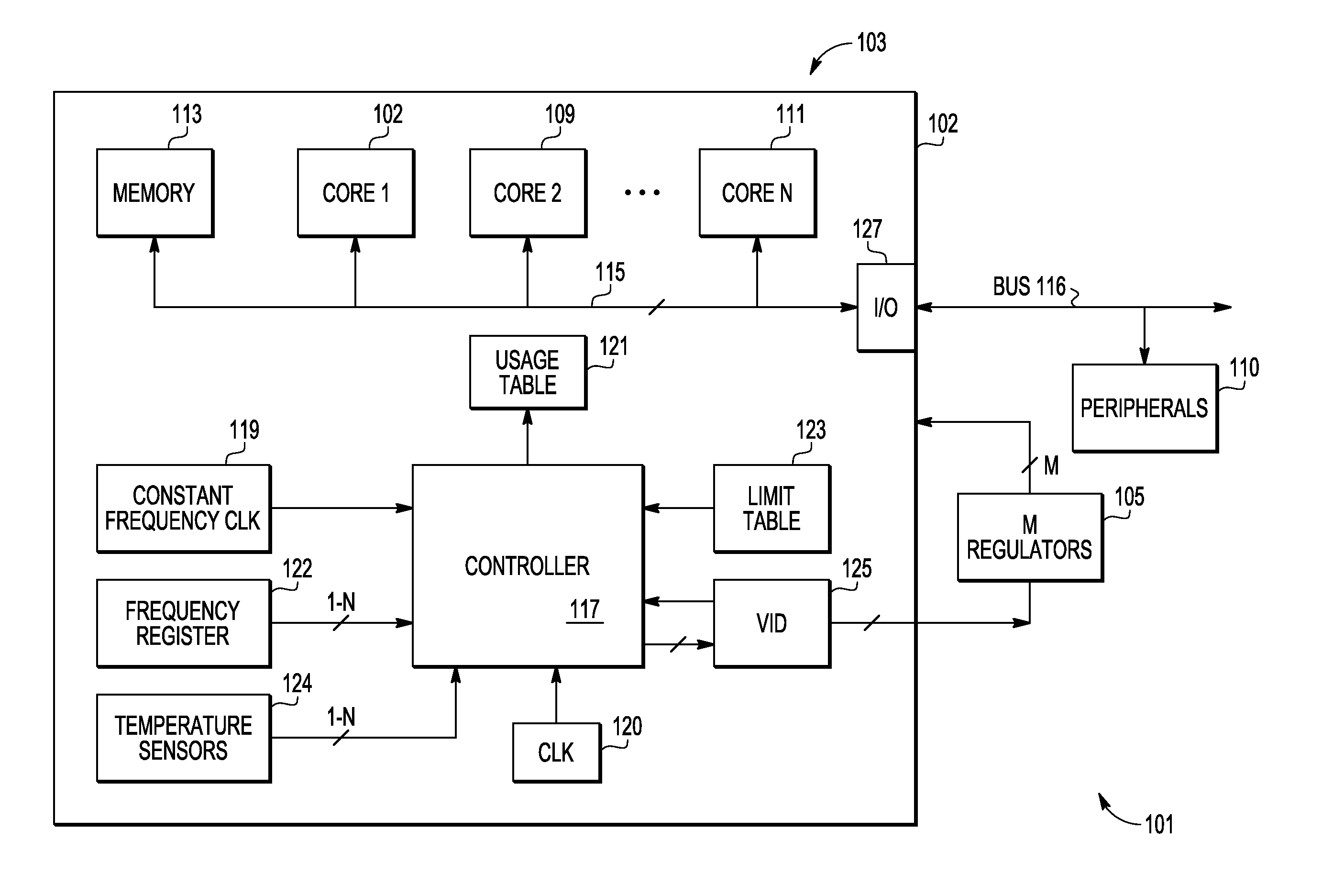

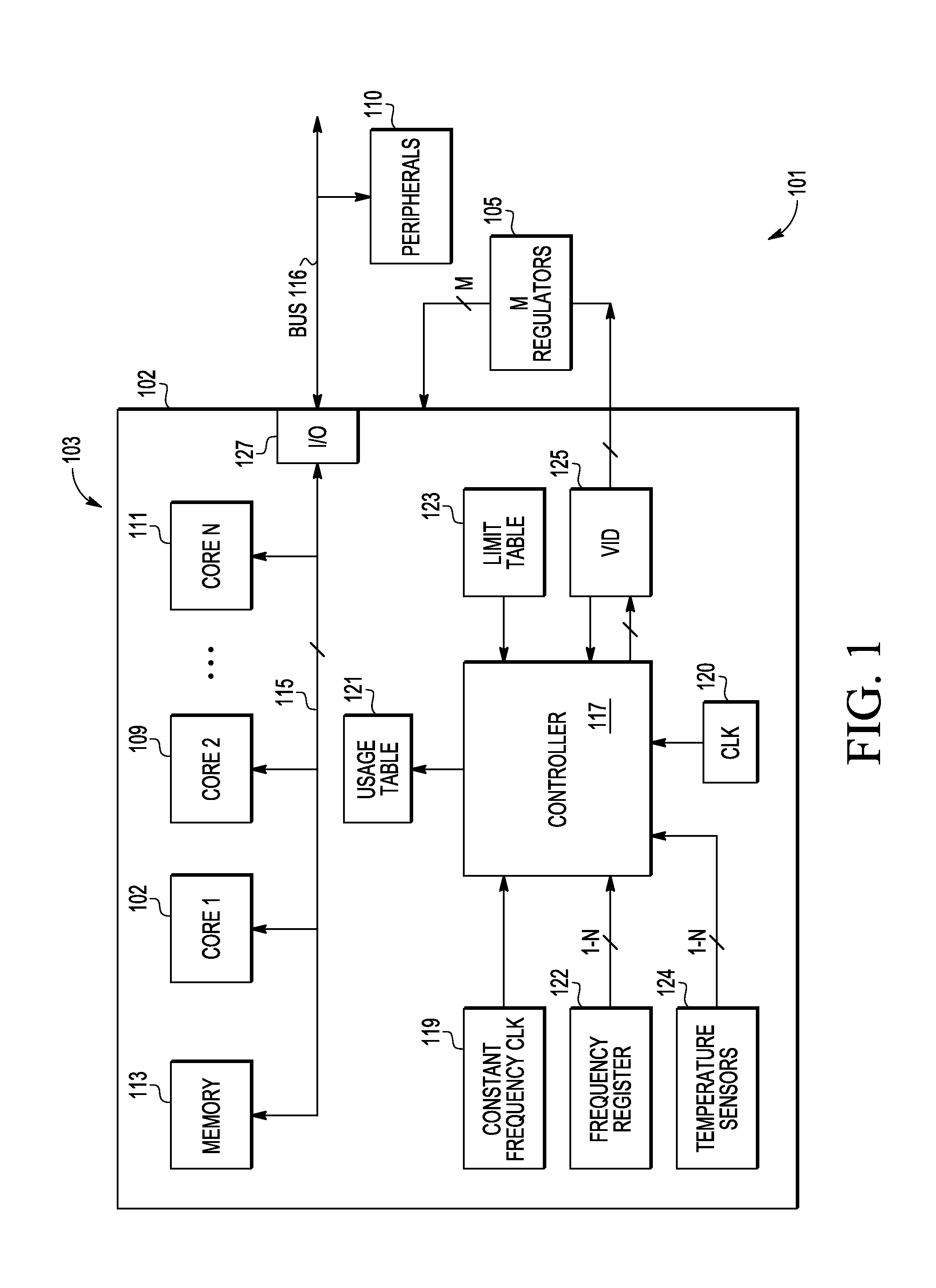

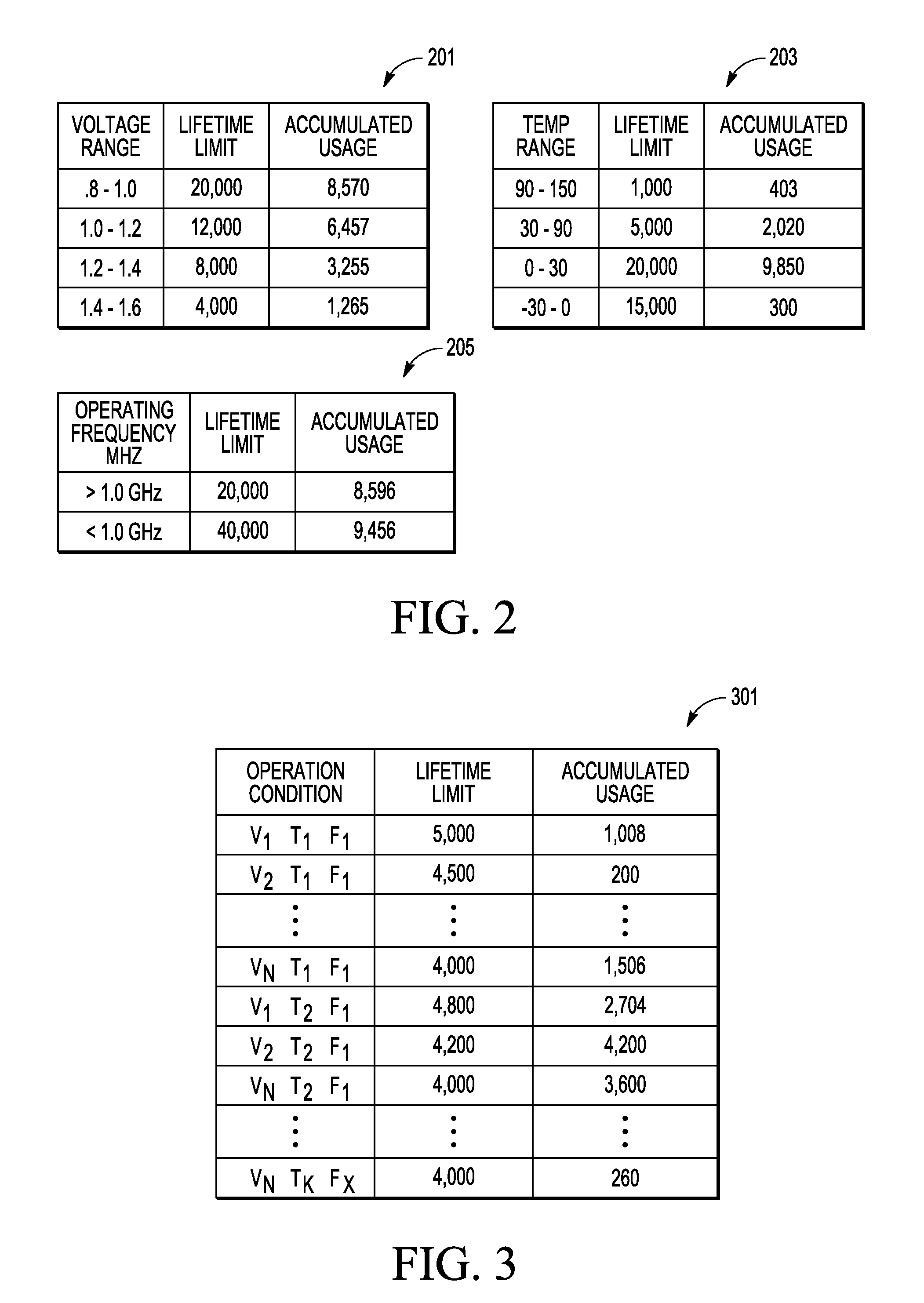

Multiple core data processor with usage monitoring

Inactive; US20110265090A1; Digital data information retrieval; Error detection/correction; Multi-core processor; Information storage

Owner:FREESCALE SEMICON INC

Methods and systems for scheduling processes in a multi-core processor environment

Inactive; US20070204268A1; Energy efficient ICT; Energy efficient computing; Operational system; Multi-core processor

Embodiments of the present invention provide efficient scheduling in a multi-core processor environment. In some embodiments, each core is assigned, at most, one execution context. Each execution context may then asynchronously run on its assigned core. If execution context is blocked, then its dedicated core may be suspended or powered down until the execution context resumes operation. The processor core may remain dedicated to a particular thread, and thus, avoid the costly operations of a process or context switch, such as clearing register contents. In other embodiments, execution contexts are partitioned into two groups. The execution contexts may be partitioned based on various factors, such as their relative priority. One group of the execution contexts may be assigned their own dedicated core and allowed to run asynchronously. The other group of execution contexts, such as those with a lower priority, are co-scheduled among the remaining cores by the scheduler of the operating system.

Owner:RED HAT
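
The two-group partitioning described in the abstract above can be sketched as follows. The function `partition_contexts`, the `priority_cutoff` parameter, and the rule of always keeping at least one core for the co-scheduled group are assumptions introduced for this example.

```python
from typing import Dict, List, Tuple


def partition_contexts(contexts: List[Tuple[str, int]], num_cores: int,
                       priority_cutoff: int
                       ) -> Tuple[Dict[str, int], List[str], List[int]]:
    """Split execution contexts into a dedicated group and a shared group.

    contexts: (name, priority) pairs, where a higher number is more important.
    Returns (dedicated name -> core id, shared context names, shared core ids).
    At least one core is always left for the shared group (an assumption).
    """
    ordered = sorted(contexts, key=lambda c: c[1], reverse=True)
    dedicated: Dict[str, int] = {}
    shared: List[str] = []
    next_core = 0
    for name, priority in ordered:
        if priority >= priority_cutoff and next_core < num_cores - 1:
            dedicated[name] = next_core  # this context runs alone on its core
            next_core += 1
        else:
            shared.append(name)          # left to the OS scheduler
    return dedicated, shared, list(range(next_core, num_cores))


# Usage: two high-priority contexts get their own cores; the rest share cores 2 and 3.
dedicated, shared, shared_cores = partition_contexts(
    [("net", 9), ("db", 8), ("log", 2), ("cron", 1)],
    num_cores=4, priority_cutoff=5)
```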

Multiple-core processor with flexible mapping of processor cores to cache banks

Active; US7685354B1; Memory architecture accessing/allocation; Input/output to record carriers; Memory address; Multi-core processor

A multiple-core processor providing flexible mapping of processor cores to cache banks. In one embodiment, a processor may include a cache including a number of cache banks. The processor may further include a number of processor cores configured to access the cache banks, as well as core / bank mapping logic coupled to the cache banks and processor cores. The core / bank mapping logic may be configurable to map a cache bank select portion of a memory address specified by a given one of the processor cores to any one of the cache banks.

Owner:ORACLE INT CORP
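
A small Python model may help picture the configurable core/bank mapping described in the abstract above. The `BankMapper` class, the 64-byte cache line (`line_bits=6`), the power-of-two bank count, and the per-core lookup table are all assumptions for illustration; the actual mapping logic is hardware and is not detailed in the abstract.

```python
from typing import Dict, List, Sequence


class BankMapper:
    """Toy model of core/bank mapping logic (num_banks assumed a power of two)."""

    def __init__(self, num_banks: int, line_bits: int = 6) -> None:
        self.num_banks = num_banks
        self.line_bits = line_bits                       # offset bits within a line
        self.select_bits = (num_banks - 1).bit_length()  # width of bank select field
        self.table: Dict[int, List[int]] = {}            # core -> select -> bank

    def configure(self, core: int, mapping: Sequence[int]) -> None:
        """Install a per-core table with one target bank per bank-select value."""
        if len(mapping) != 1 << self.select_bits:
            raise ValueError("mapping needs one entry per bank-select value")
        self.table[core] = list(mapping)

    def bank_for(self, core: int, address: int) -> int:
        """Extract the bank-select portion of the address and map it to a bank."""
        select = (address >> self.line_bits) & ((1 << self.select_bits) - 1)
        mapping = self.table.get(core)
        return mapping[select] if mapping is not None else select


# Usage: core 0 is restricted to banks 0 and 1; core 1 keeps the identity mapping.
mapper = BankMapper(num_banks=4)
mapper.configure(0, [0, 0, 1, 1])
bank_core0 = mapper.bank_for(0, 0xC0)
bank_core1 = mapper.bank_for(1, 0xC0)
```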

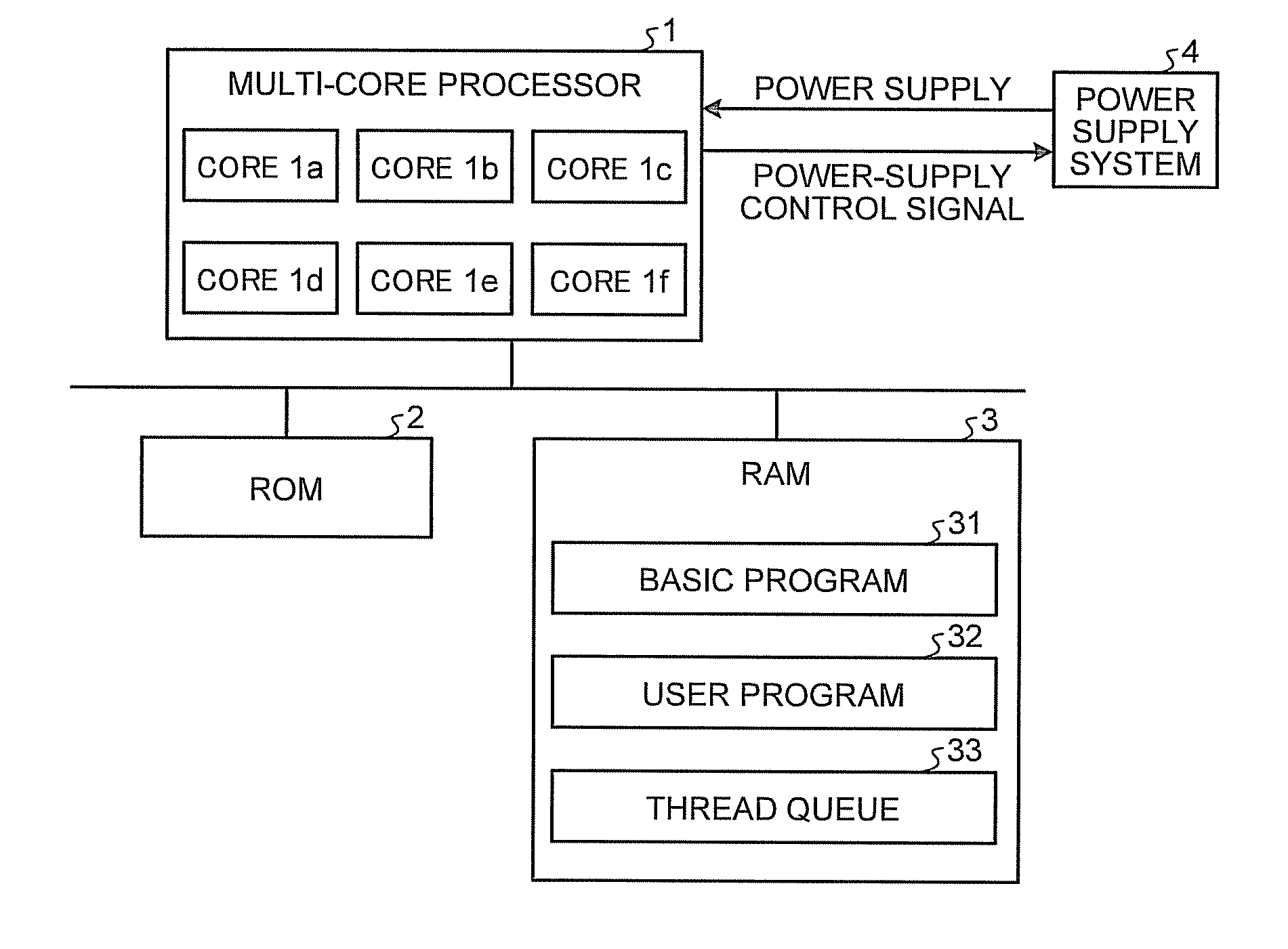

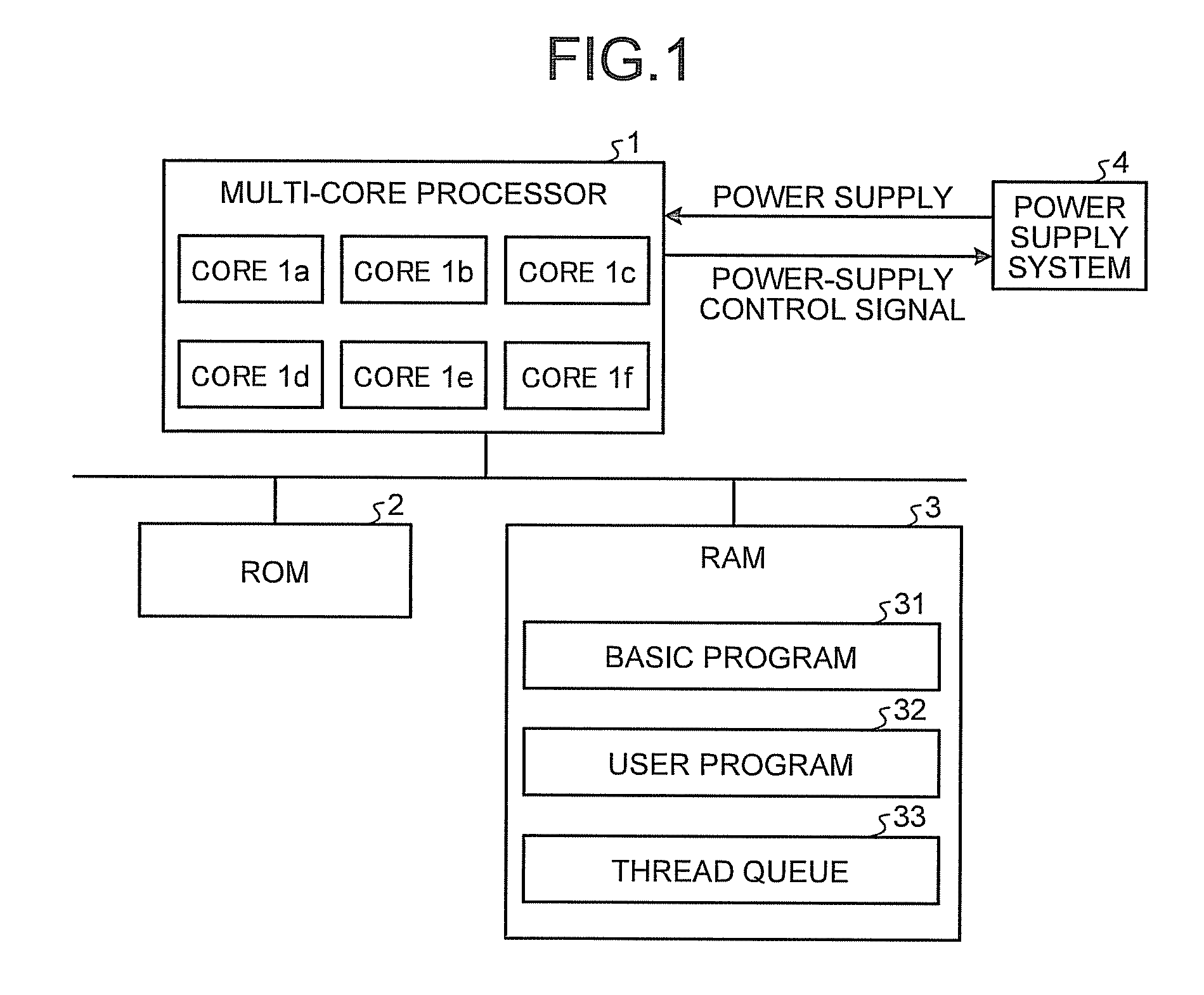

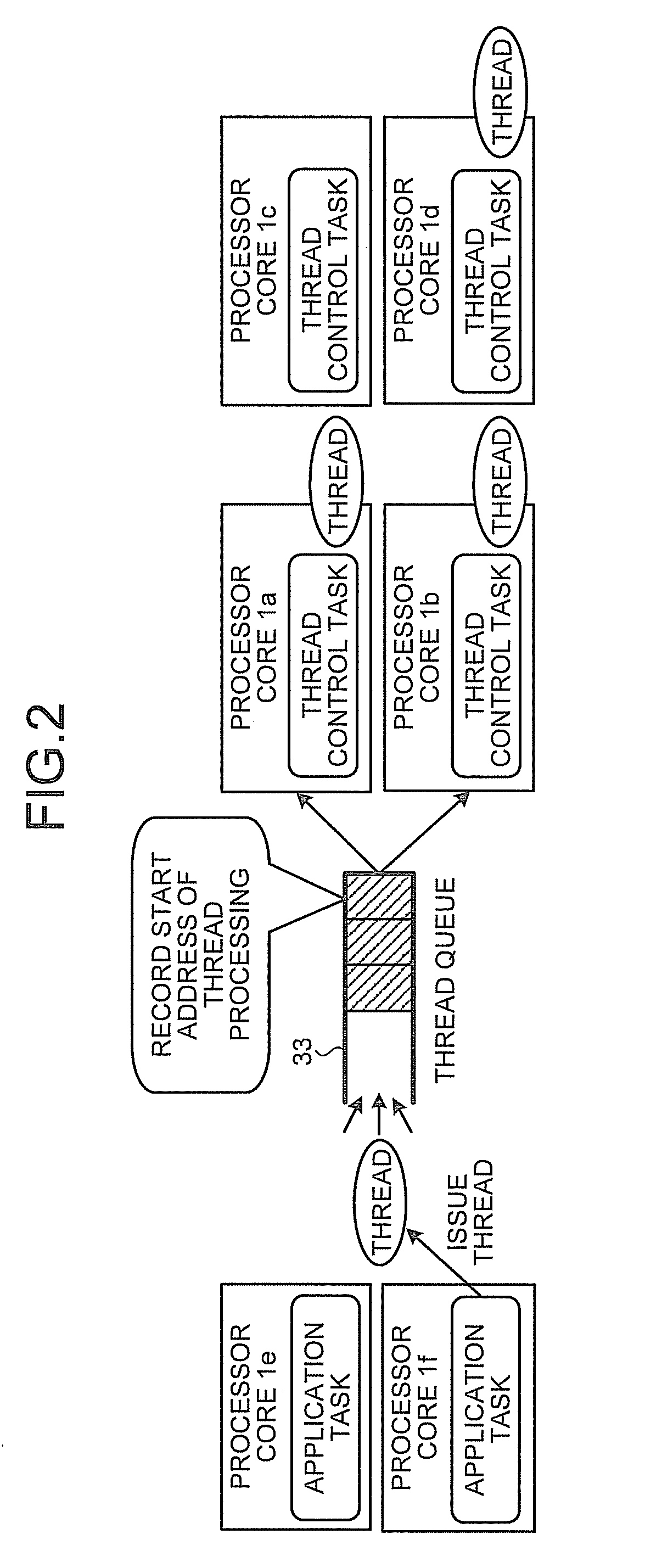

Multi-core processor system

Inactive; US20100299541A1; Energy efficient ICT; Digital data processing details; Multi-core processor; Thread count

A multi-core processor system includes: a plurality of processor cores; a power supply unit that stops supplying or supplies power to each of the processor cores individually; and a thread queue that stores threads that the multi-core processor system causes the processor cores to execute. Each of the processor cores includes: a power-supply stopping unit that causes the power supply unit to stop power supply to its own processor core when a number of threads stored in the thread queue is equal to or smaller than a first threshold; and a power-supply resuming unit that causes the power supply unit to resume power supply to the other stopped processor cores when the number of threads stored in the thread queue exceeds a second value equal to or larger than the first threshold.

Owner:KK TOSHIBA
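
The two-threshold behavior in the abstract above acts as a hysteresis band on the thread queue length. The Python sketch below simulates one decision step. The function name `step`, the choice to stop or wake one core per step, and the rule that the last powered core never stops itself are assumptions made for this example.

```python
from typing import List


def step(powered: List[bool], queue_len: int, low: int, high: int) -> List[bool]:
    """Return the new per-core power state after one decision step.

    low:  first threshold; at or below it, one running core stops its own supply.
    high: second value (must be >= low); above it, one stopped core is resumed.
    """
    if high < low:
        raise ValueError("second value must be >= first threshold")
    state = list(powered)
    active = [i for i, on in enumerate(state) if on]
    if queue_len <= low and len(active) > 1:
        state[active[-1]] = False          # a core powers itself down
    elif queue_len > high:
        for i, on in enumerate(state):
            if not on:
                state[i] = True            # a running core wakes a stopped one
                break
    return state


# Usage: a short queue stops core 3, a medium queue changes nothing,
# and a long queue brings core 3 back.
s1 = step([True, True, True, True], queue_len=1, low=2, high=6)
s2 = step(s1, queue_len=4, low=2, high=6)
s3 = step(s2, queue_len=7, low=2, high=6)
```

Keeping `high` above `low` leaves a band of queue lengths in which no core changes state, which avoids rapid on/off toggling.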

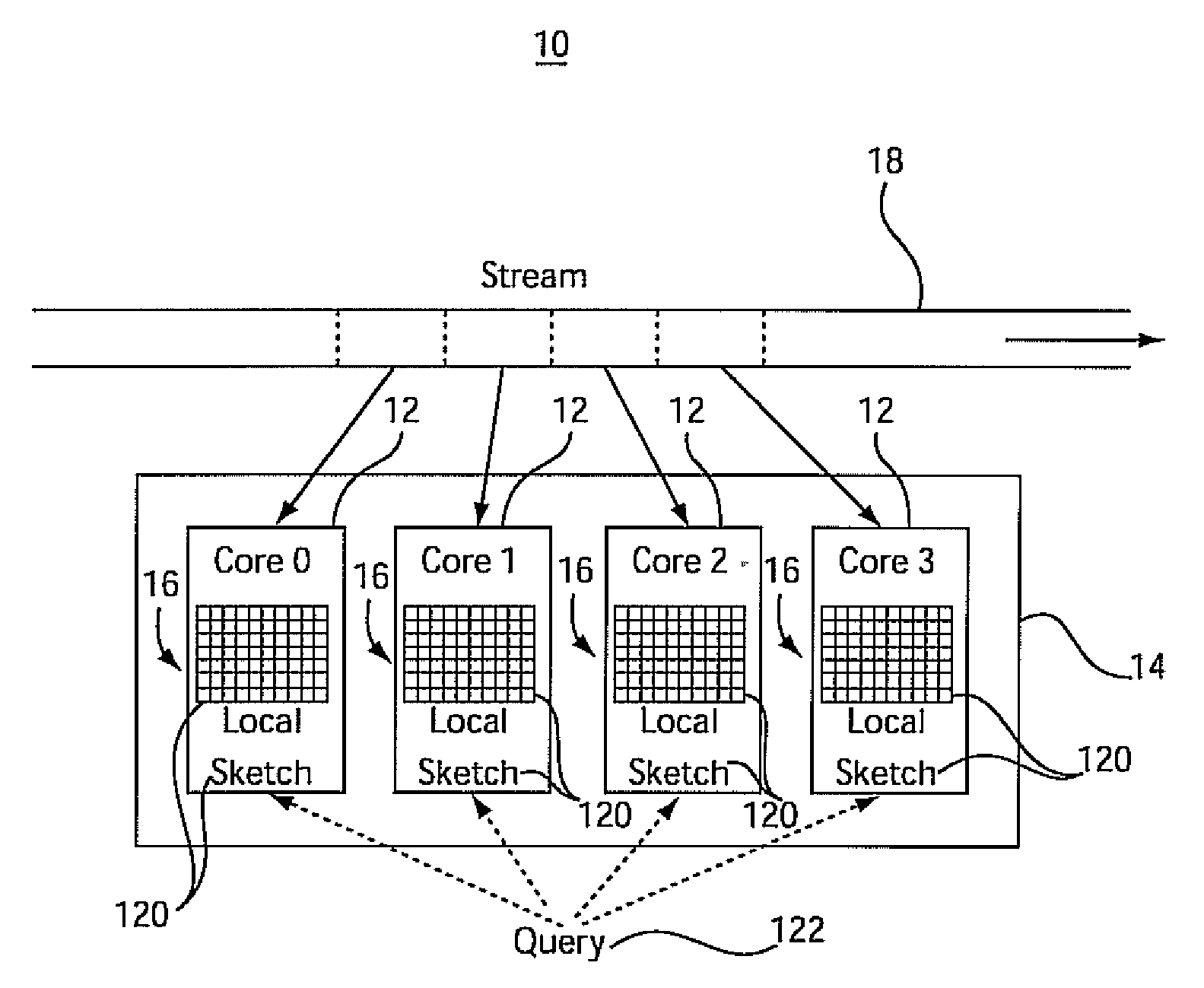

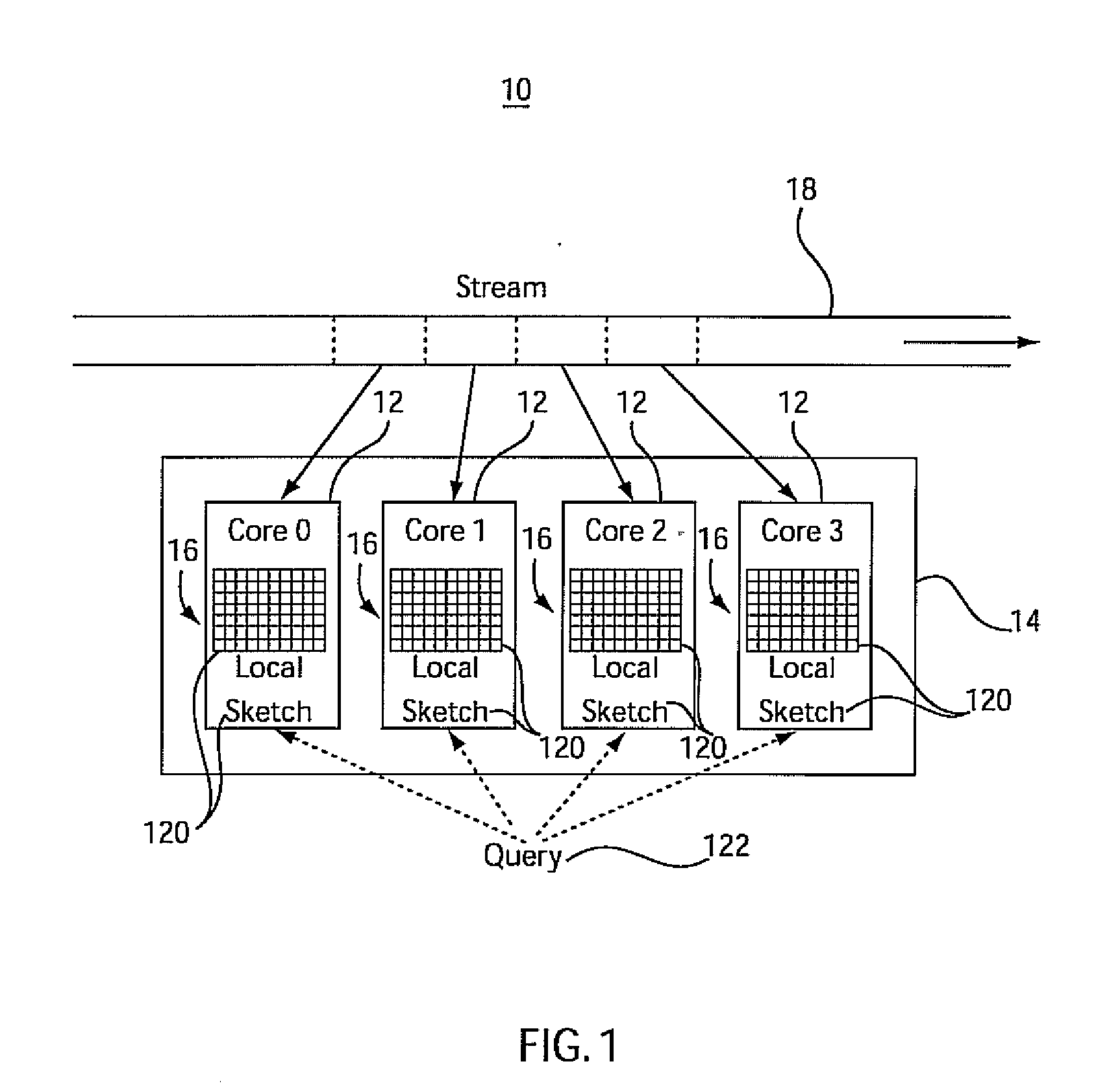

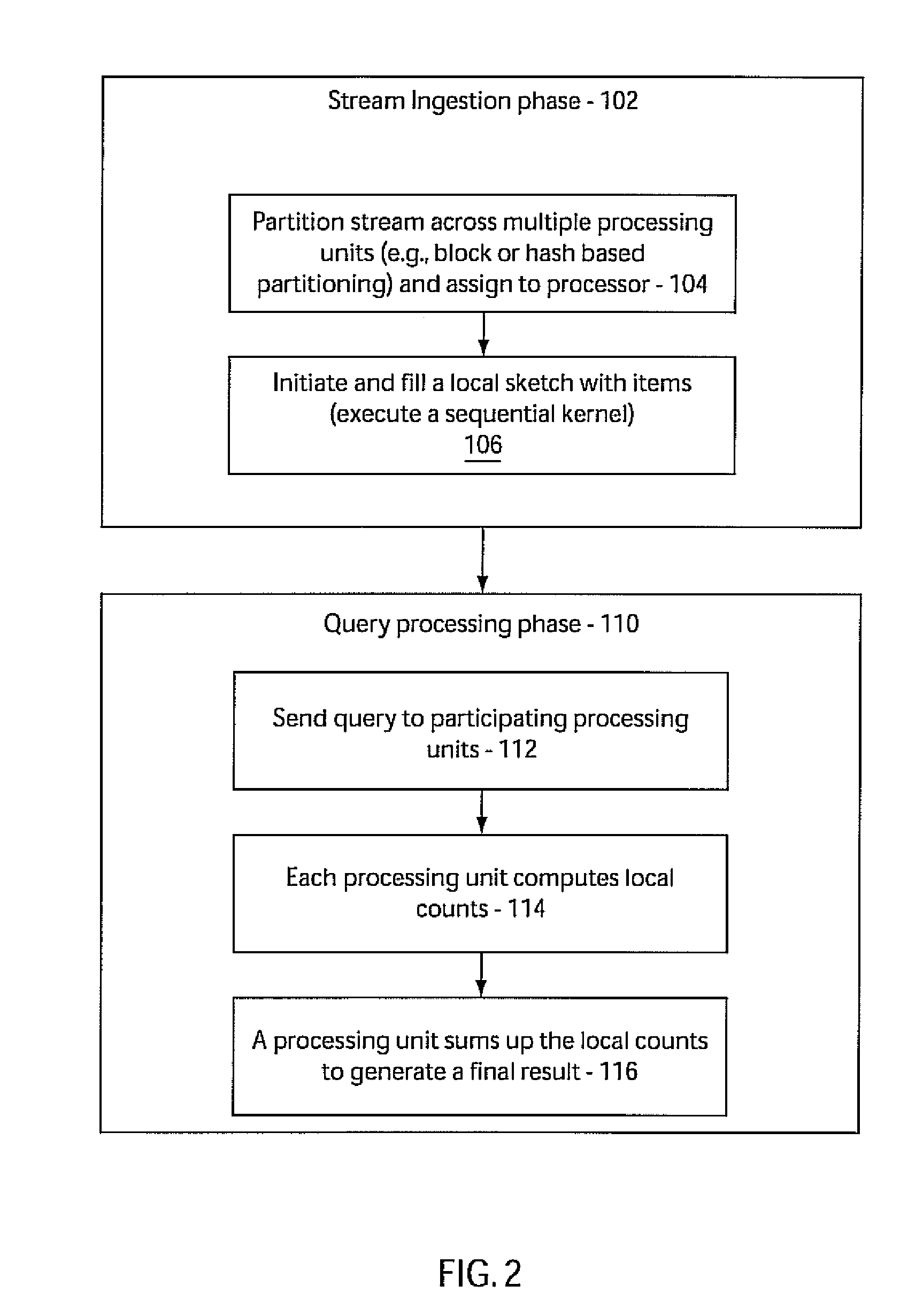

System and method for analyzing streams and counting stream items on multi-core processors

Inactive; US20090031175A1; Improve computing power; Improve performance; Program control using stored programs; Error detection/correction; Multi-core processor; Zero frequency

Systems and methods for parallel stream item counting are disclosed. A data stream is partitioned into portions and the portions are assigned to a plurality of processing cores. A sequential kernel is executed at each processing core to compute a local count for items in an assigned portion of the data stream for that processing core. The counts are aggregated for all the processing cores to determine a final count for the items in the data stream. A frequency-aware counting method (FCM) for data streams includes dynamically capturing relative frequency phases of items from a data stream and placing the items in a sketch structure using a plurality of hash functions where a number of hash functions is based on the frequency phase of the item. A zero-frequency table is provided to reduce errors due to absent items.

Owner:IBM CORP
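
The partition, local count, and aggregate steps in the abstract above can be sketched in Python. This example uses exact `collections.Counter` objects instead of the frequency-aware sketch structure (FCM) the abstract describes, and it uses a thread pool as a stand-in for processing cores; both are simplifying assumptions, and `count_stream` is a hypothetical name.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Hashable, Iterable


def count_stream(items: Iterable[Hashable], num_workers: int = 4) -> Counter:
    """Count items by partitioning the stream and merging per-worker counts."""
    data = list(items)
    # Ceiling division so that at most num_workers portions are produced.
    chunk = max(1, -(-len(data) // num_workers))
    portions = [data[i:i + chunk] for i in range(0, len(data), chunk)]
    total: Counter = Counter()
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Each worker runs a sequential kernel (Counter) over its own portion.
        for local_count in pool.map(Counter, portions):
            total.update(local_count)  # aggregate the local counts
    return total


# Usage: count letters of a short stream with three workers.
letter_counts = count_stream("abracadabra", num_workers=3)
```

In CPython, threads do not run pure-Python code in parallel, so a process pool would be the closer analogue of separate cores; threads keep the sketch short and self-contained.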

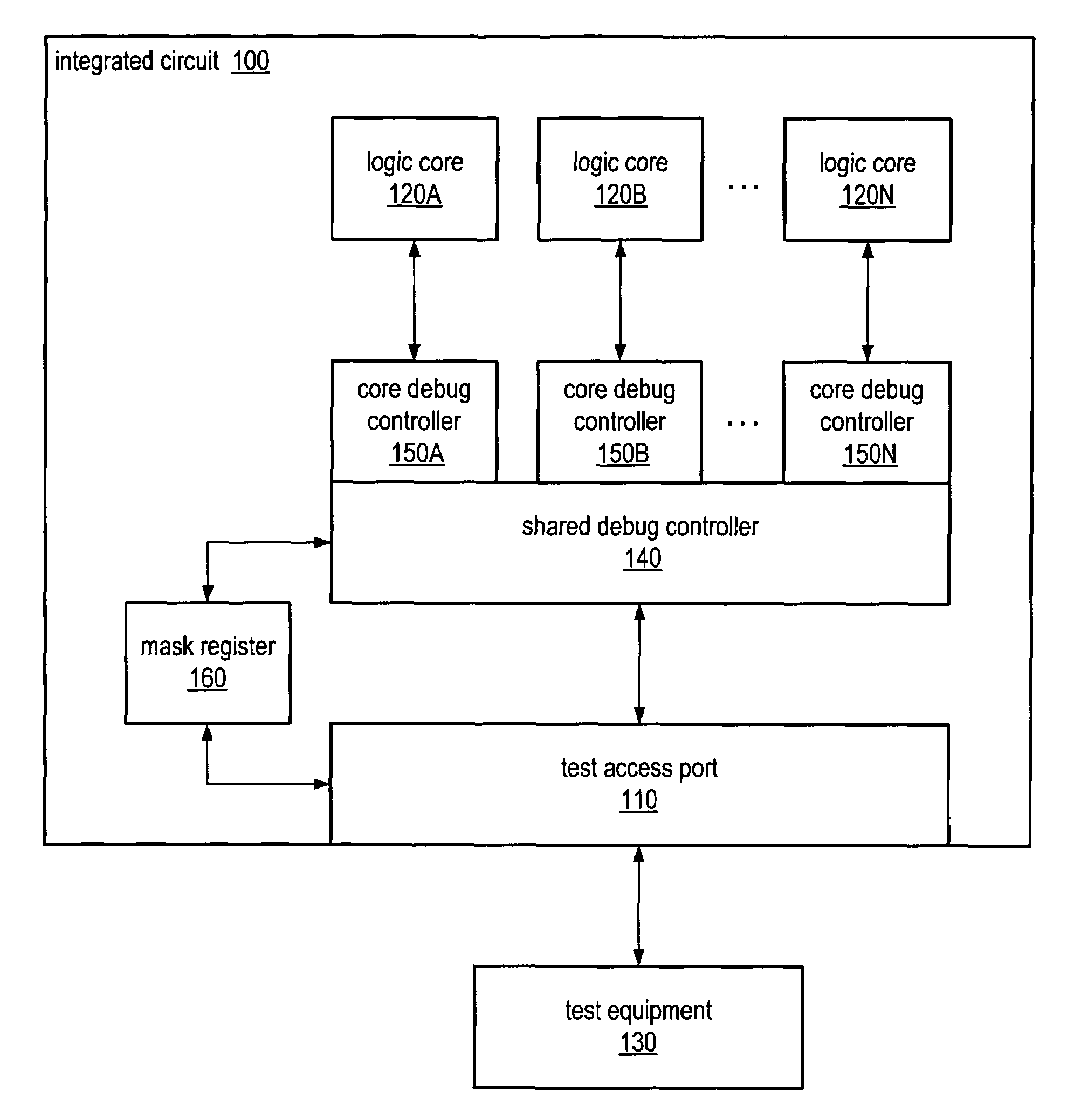

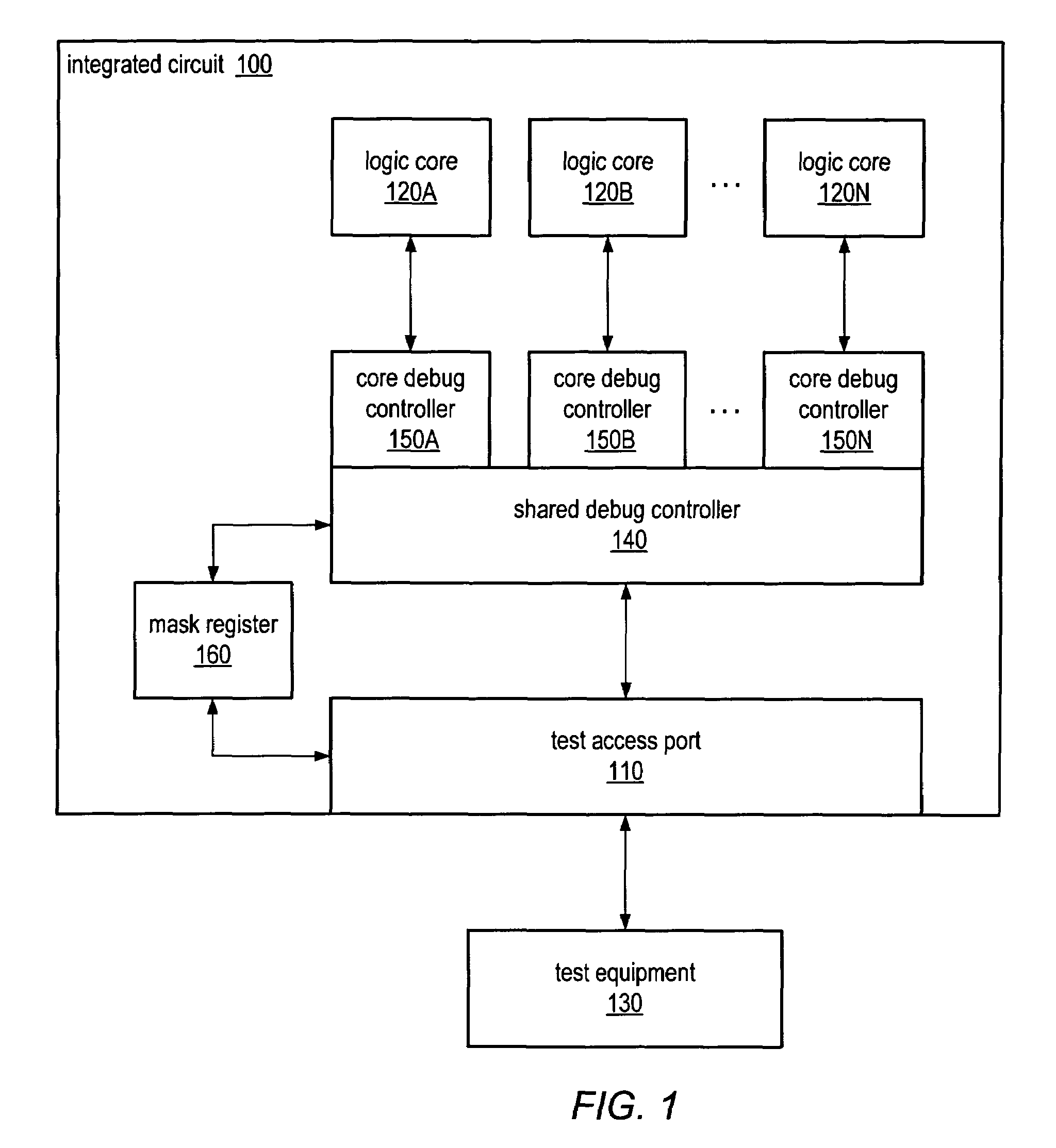

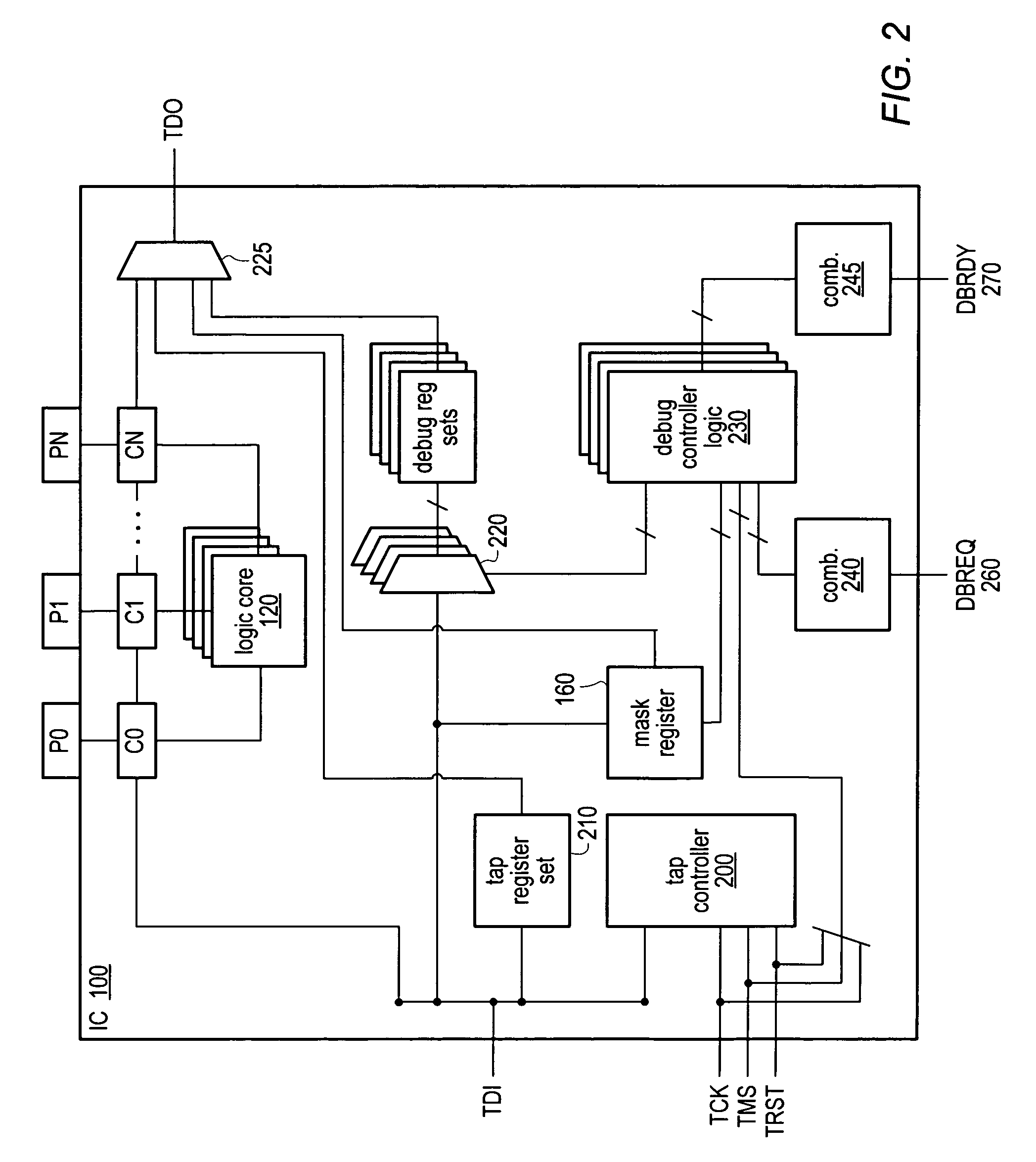

Multi-core integrated circuit with shared debug port

Active; US7665002B1; Electronic circuit testing; Error detection/correction; Computer architecture; Joint Test Action Group

A single test access port, such as a JTAG-based debug port, may be utilized to perform debug operations on logic cores of a multi-core integrated circuit, such as a multi-core processor. The shared debug port may respond to a particular command to enter a debugging mode and may be configured to forward all commands and data to a debugging controller of the integrated circuit during debugging. A mask register may be used to indicate which logic cores of the multi-core integrated circuit should be debugged. Additionally, custom debugging commands may include mask or core select fields to indicate which logic cores should be affected by the particular command. Debugging mode may be initialized for one or more logic cores either externally, such as by asserting a DBREQ signal, or internally, such as by configuring one or more breakpoints.

Owner:ADVANCED MICRO DEVICES INC
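
The mask register idea in the abstract above amounts to one bit per logic core. The Python sketch below decodes such a mask. The function `selected_cores` is hypothetical, and the rule that a per-command mask field replaces the register value for that command is an assumption; the abstract does not say how the two interact.

```python
from typing import List, Optional


def selected_cores(mask_register: int, num_cores: int,
                   command_mask: Optional[int] = None) -> List[int]:
    """Return the core ids affected by a debug command.

    Bit i of the mask selects core i. If the command carries its own mask
    field, that field is used instead of the mask register (assumption).
    """
    mask = mask_register if command_mask is None else command_mask
    return [core for core in range(num_cores) if (mask >> core) & 1]


# Usage: the register selects cores 0 and 2; one command targets core 3 only.
default_targets = selected_cores(0b0101, num_cores=4)
command_targets = selected_cores(0b0101, num_cores=4, command_mask=0b1000)
```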

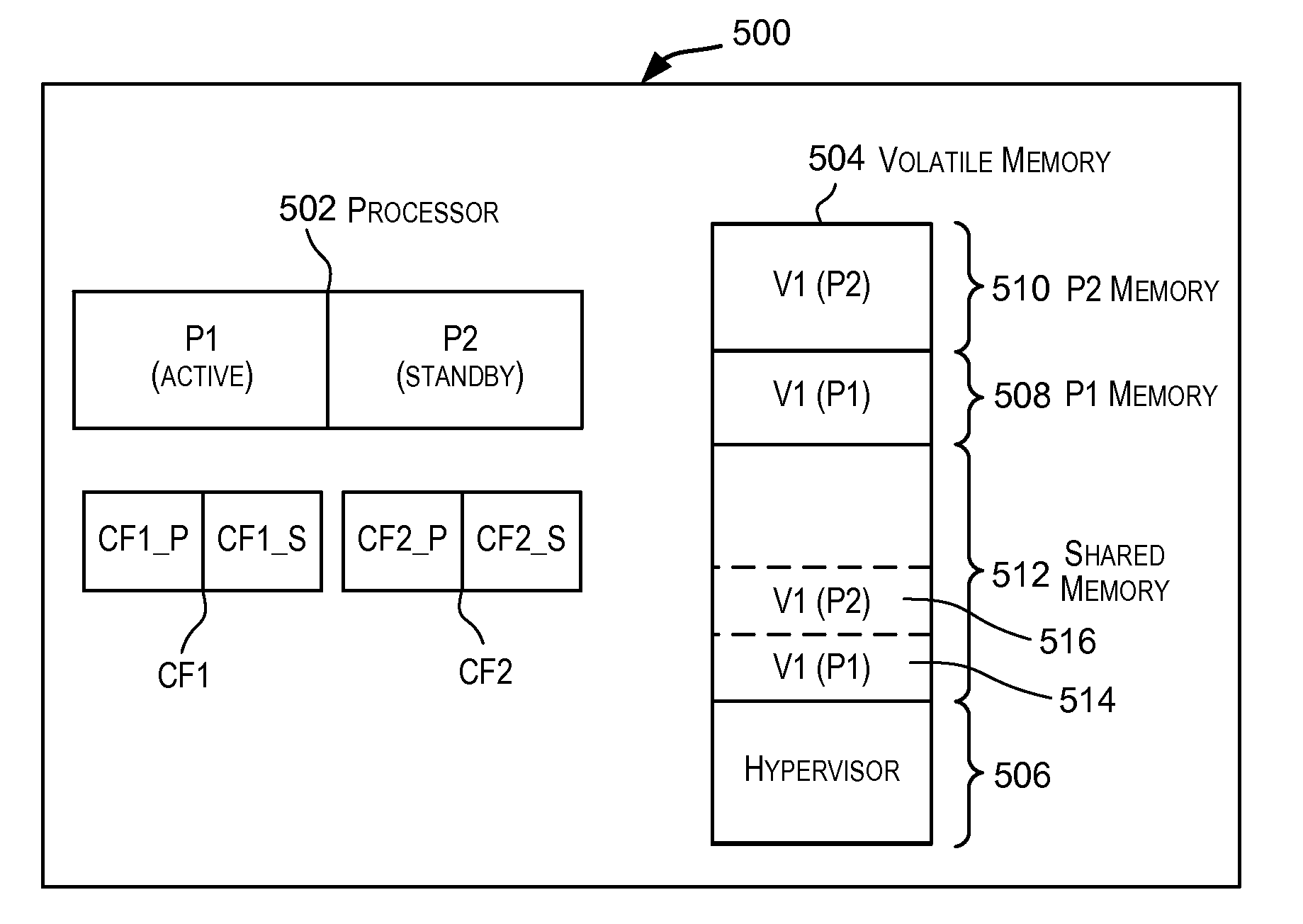

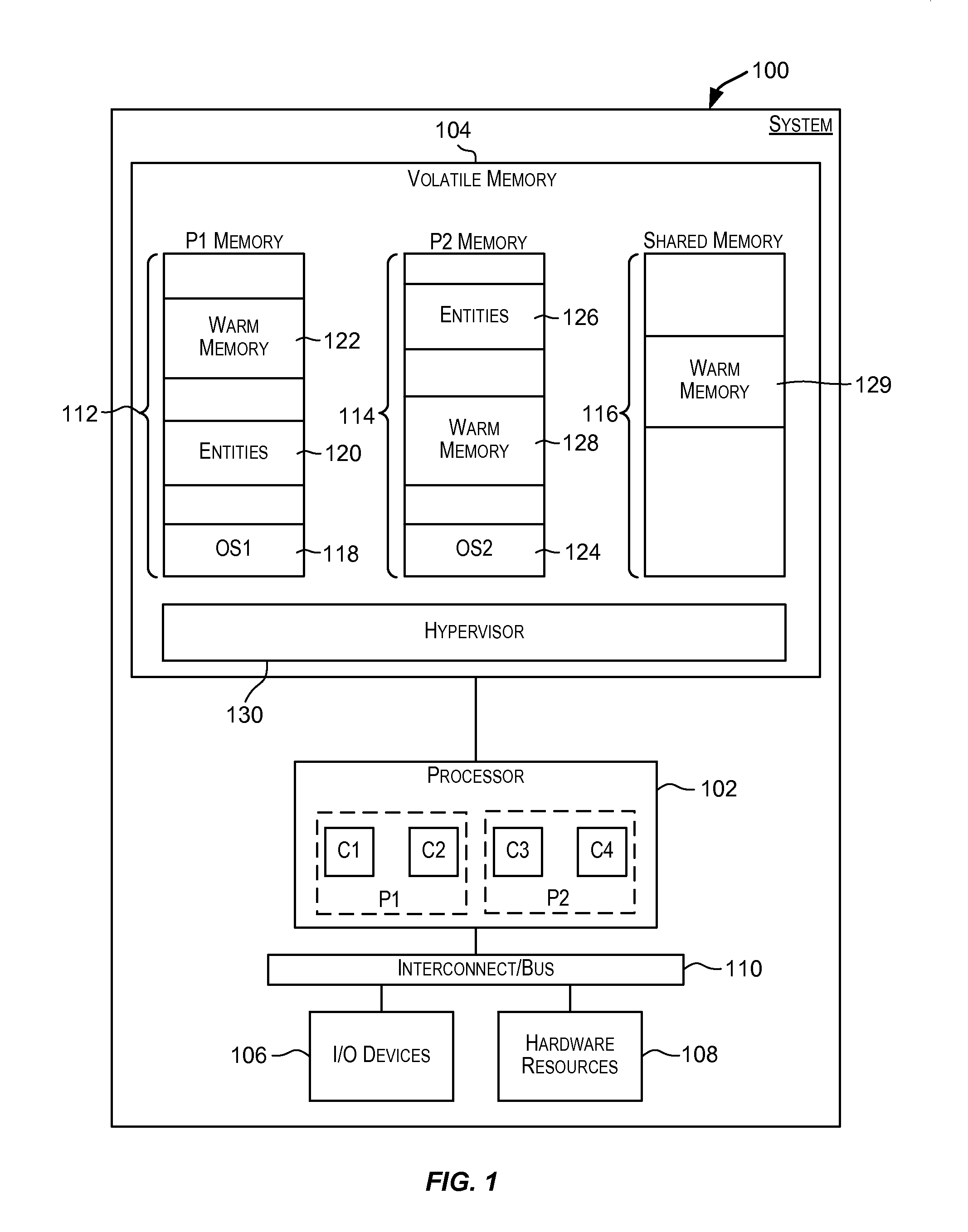

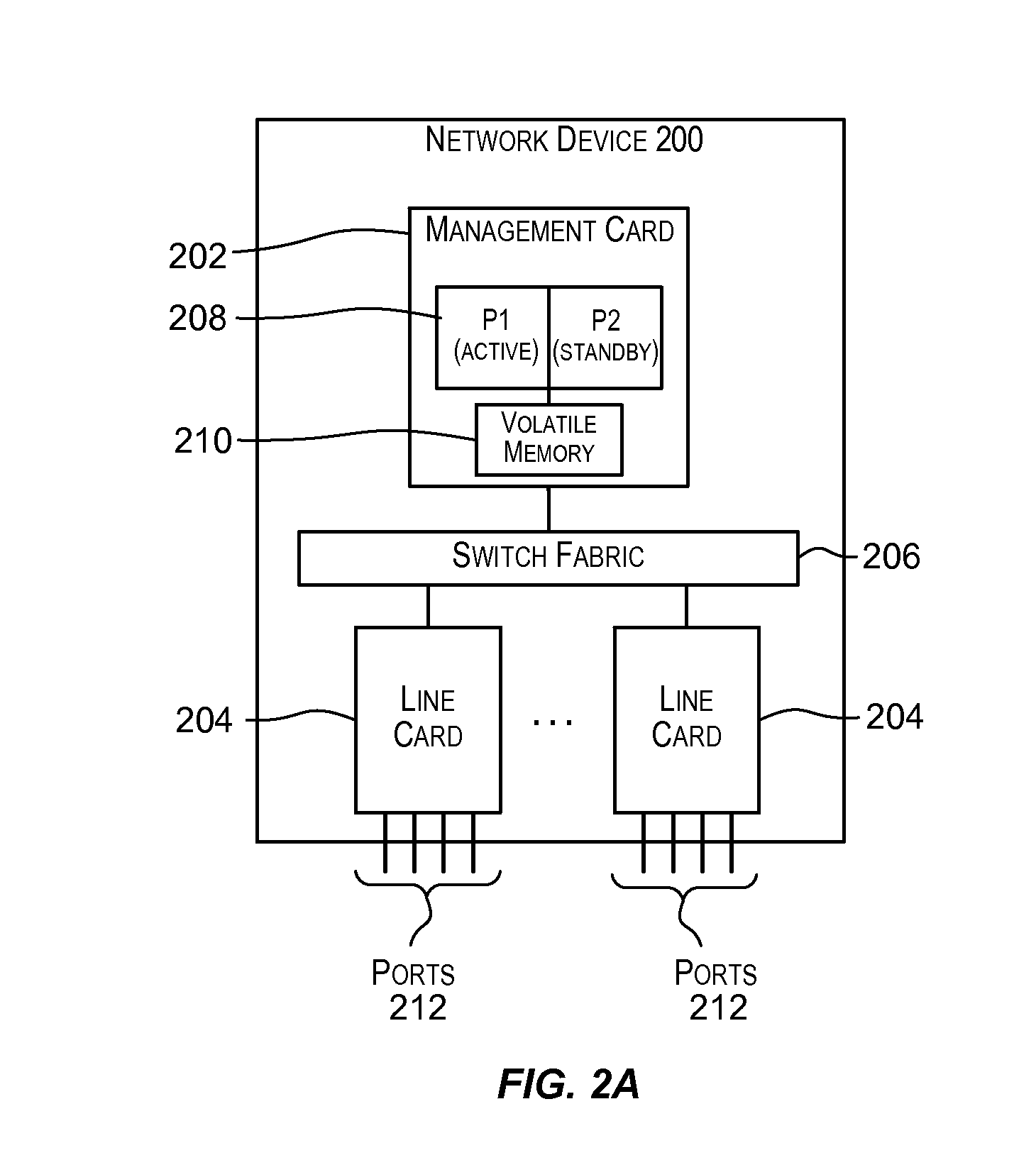

Achieving ultra-high availability using a single CPU

Active; US20120023309A1; Improve usability; Program control using wired connections; General purpose stored program computer; Computer architecture; High availability

Techniques for achieving high-availability using a single processor (CPU). In a system comprising a multi-core processor, at least two partitions may be configured with each partition being allocated one or more cores of the multiple cores. The partitions may be configured such that one partition operates in active mode while another partition operates in standby mode. In this manner, a single processor is able to provide active-standby functionality, thereby enhancing the availability of the system comprising the processor.

Owner:AVAGO TECH INT SALES PTE LTD

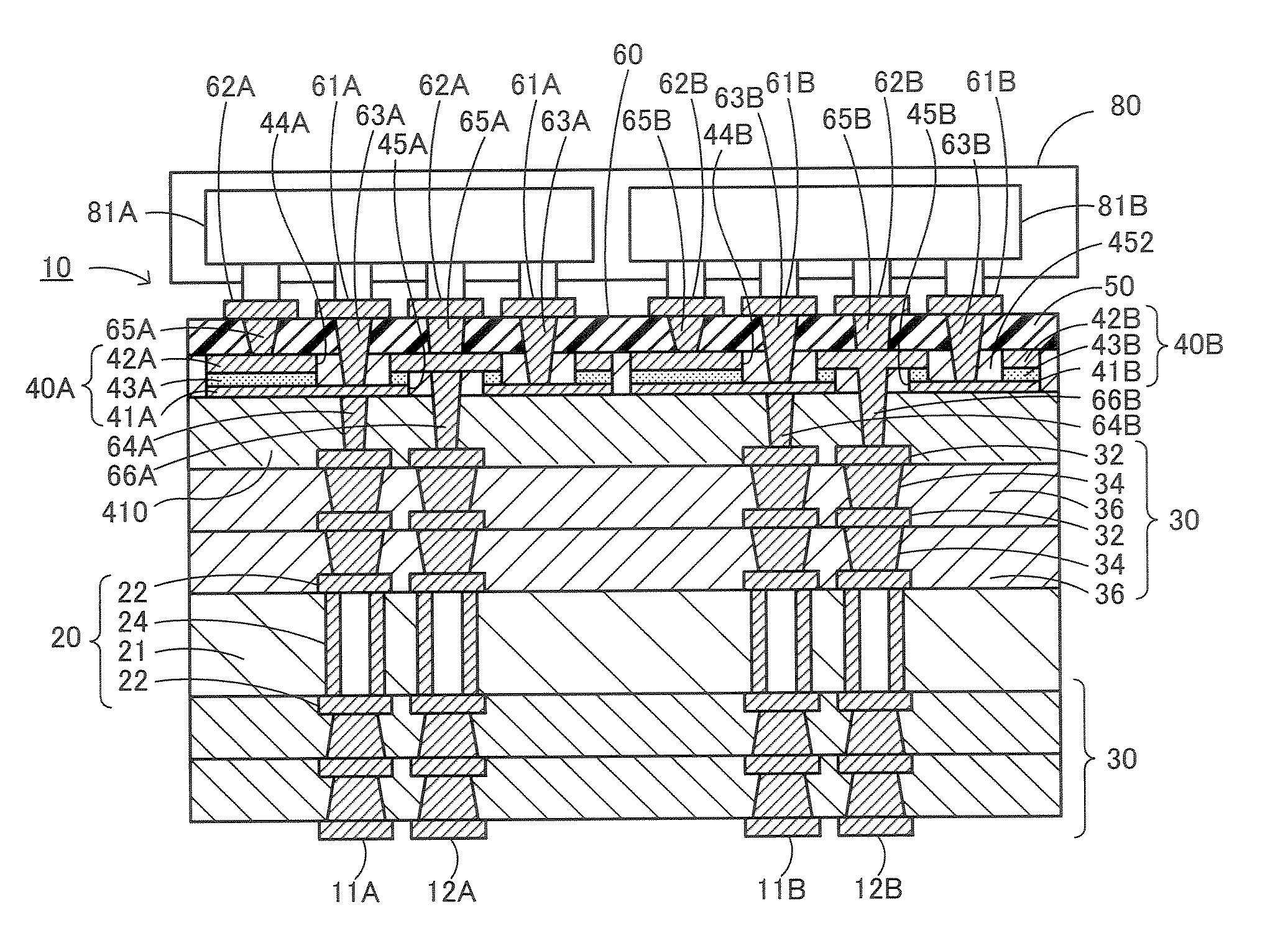

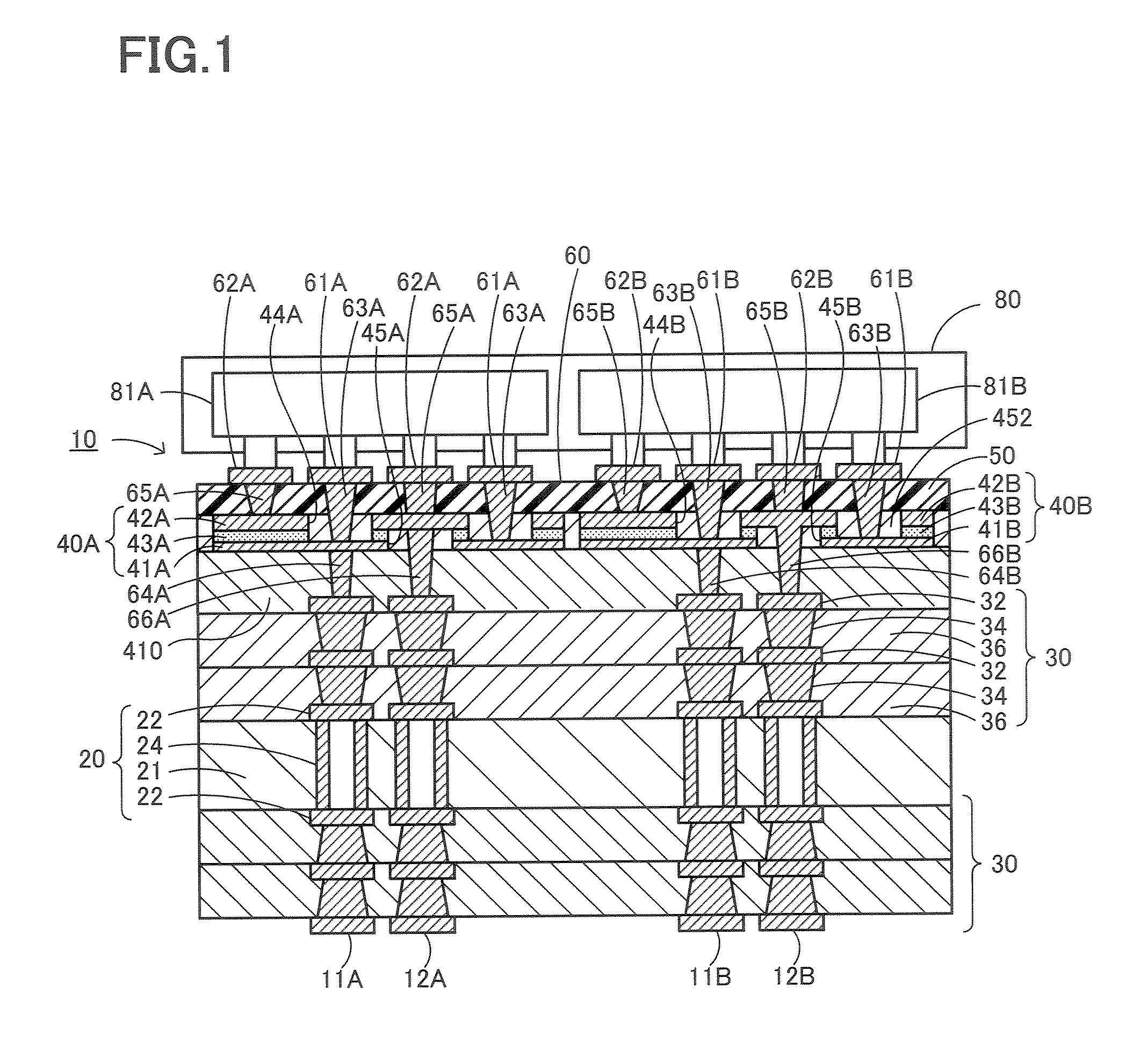

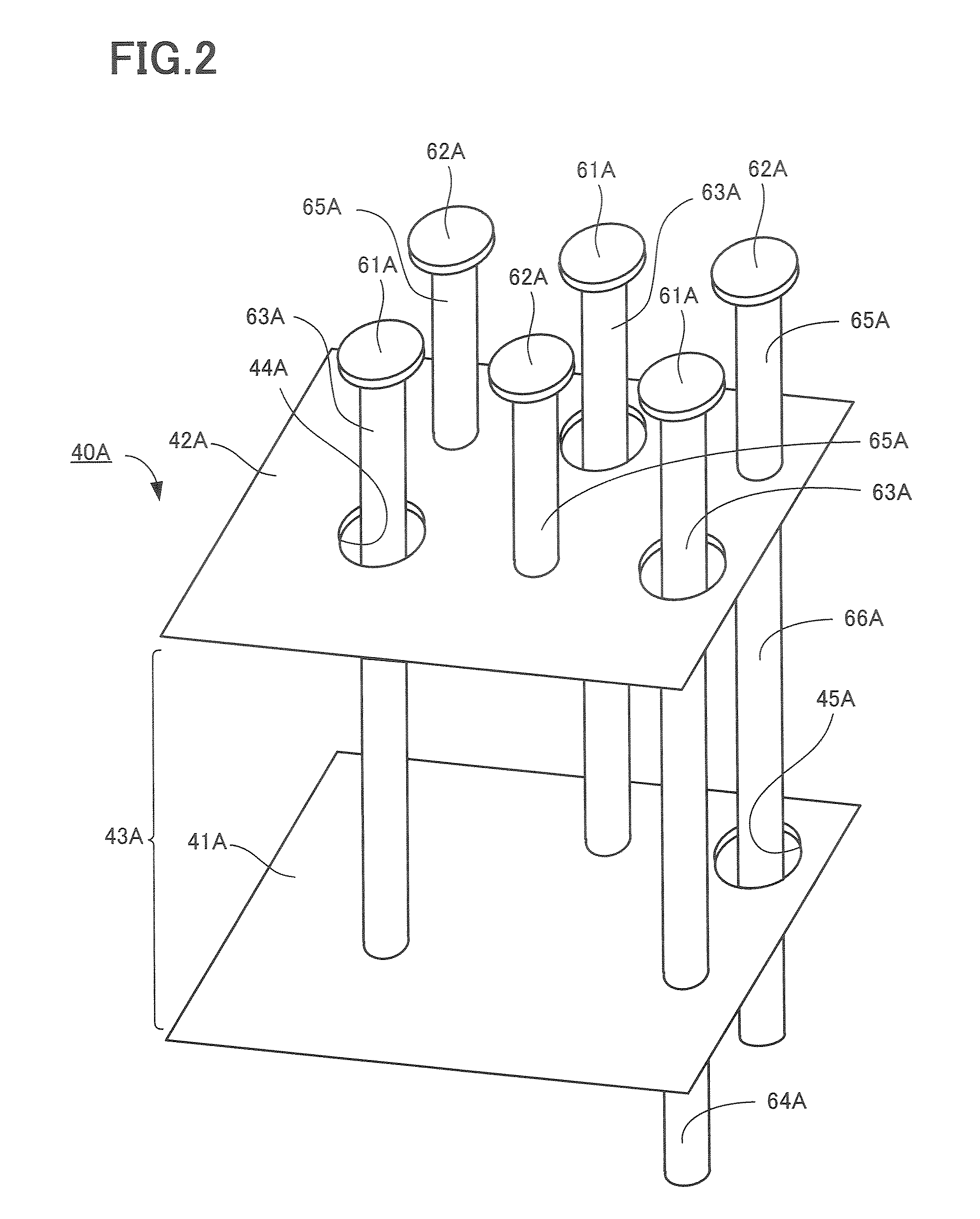

Printed wiring board

Active; US20090290316A1; Decoupling effect decrease; Good decoupling effect; Semiconductor/solid-state device details; Printed circuit aspects; Dual core; Voltage variation

A printed wiring board includes a mounting portion on which a dual core processor including two processor cores in a single chip can be mounted, power supply lines, ground lines, and a first layered capacitor and a second layered capacitor that are independently provided for each of the processor cores, respectively. Accordingly, even when the electric potentials of the processor cores instantaneously drop, an instantaneous drop of the electric potential can be suppressed by action of the layered capacitors corresponding to the processor cores, respectively. In addition, even when the voltage of one of the processor cores varies, the variation in the voltage does not affect the other processor core, and thus malfunctioning does not occur.

Owner:IBIDEN CO LTD

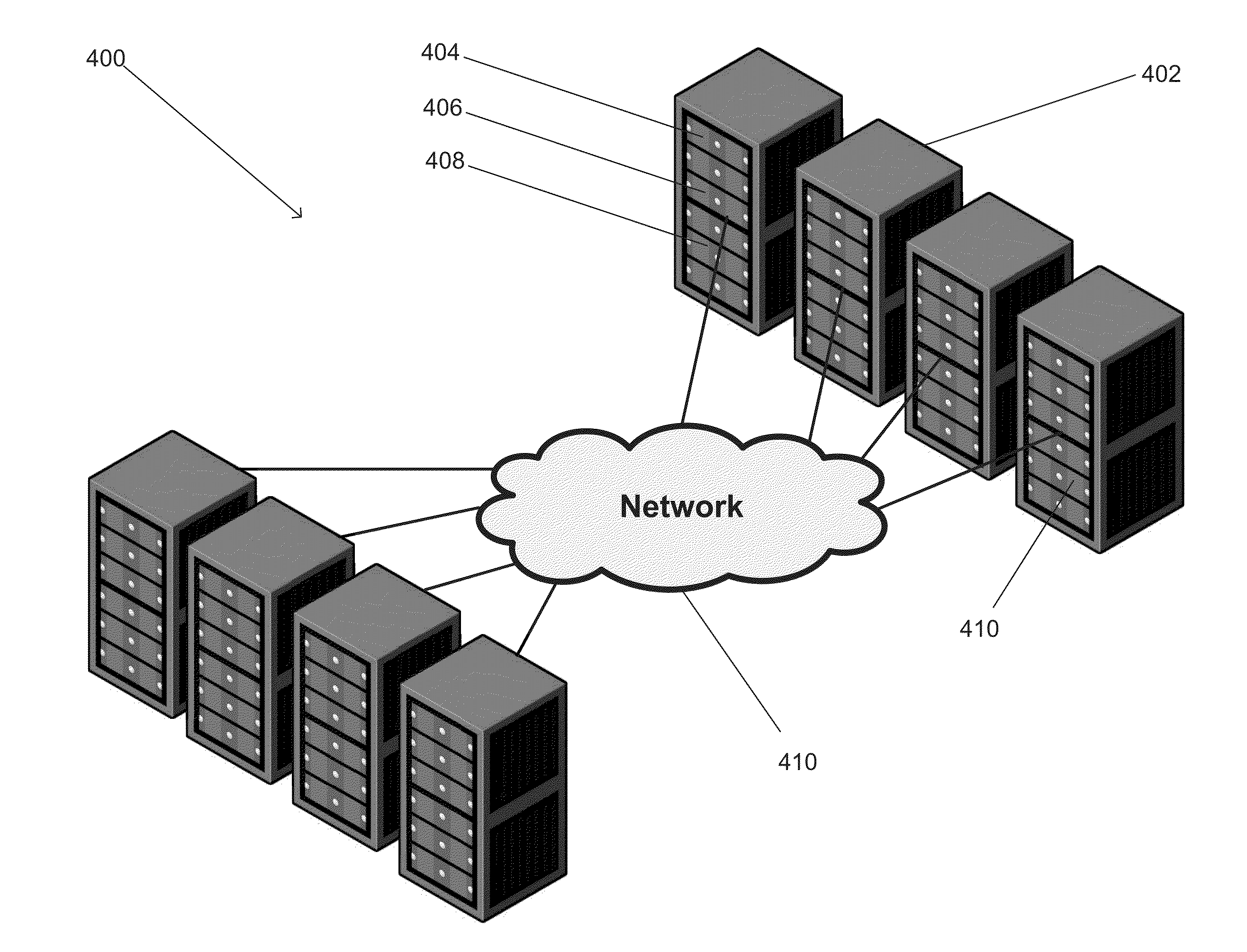

Systems and methods for implementing distributed databases using many-core processors

Inactive; US20140280375A1; Rapid and low power retrieval; Digital data processing details; Special data processing applications; Solid-state drive; Distributed database

A distributed database, comprising a plurality of server racks, and one or more many-core processor servers in each of the plurality of server racks, wherein each of the one or more many-core processor servers comprises a many-core processor configured to store and access data on one or more solid state drives in the distributed database, where the one or more solid state drives are configured to enable retrieval of data through one or more text-searchable indexes. The one or more many-core processor servers are configured to communicate within the plurality of server racks via a network, and the data is configured as one or more tables distributed to the one or more many-core processor servers for storage in the one or more solid state drives.

Owner:WANDISCO

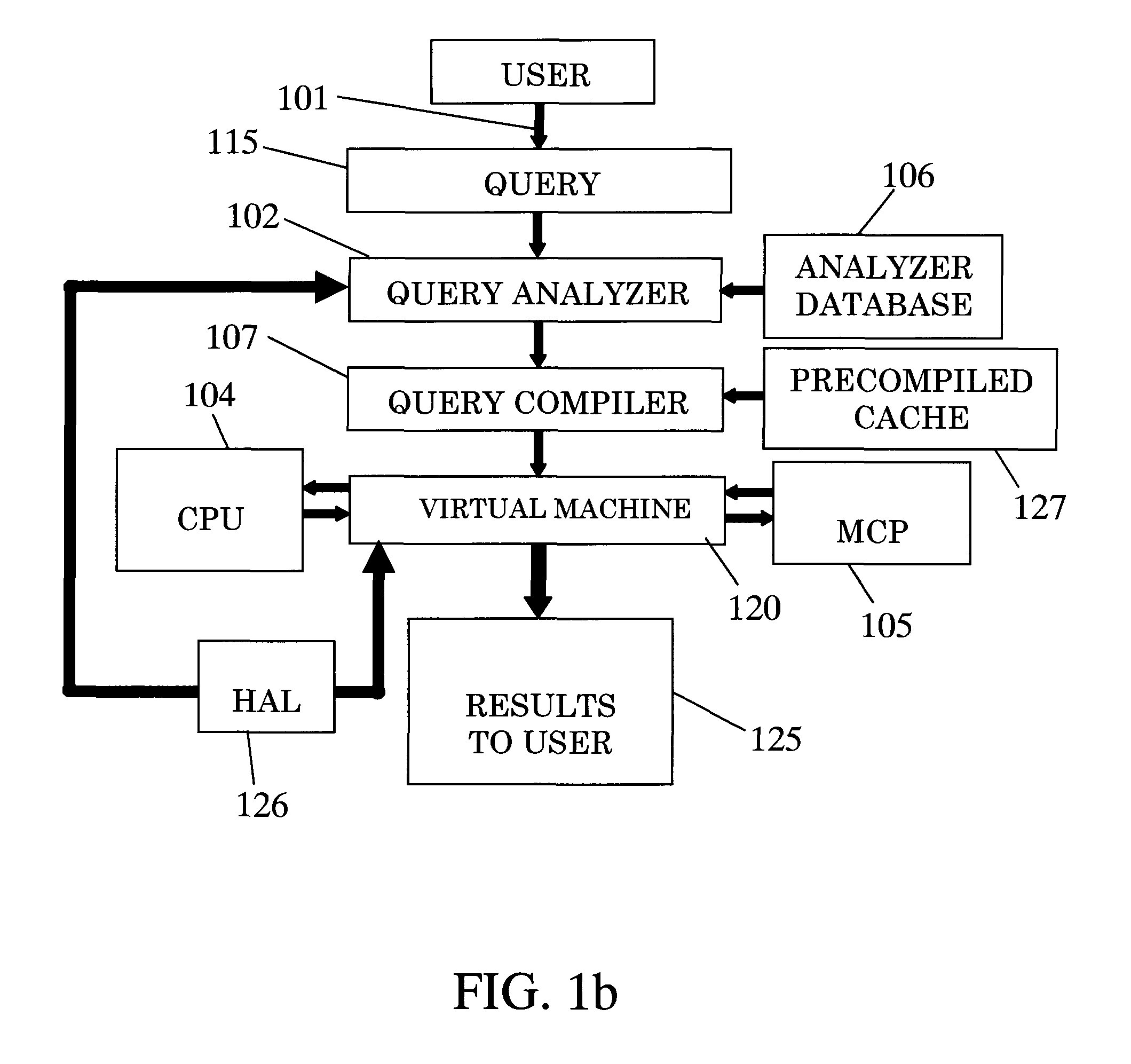

System and method for the parallel execution of database queries over CPUs and multi core processors

Active; US9298768B2; Disadvantageous; Digital data information retrieval; Resource allocation; Database query; Computer architecture

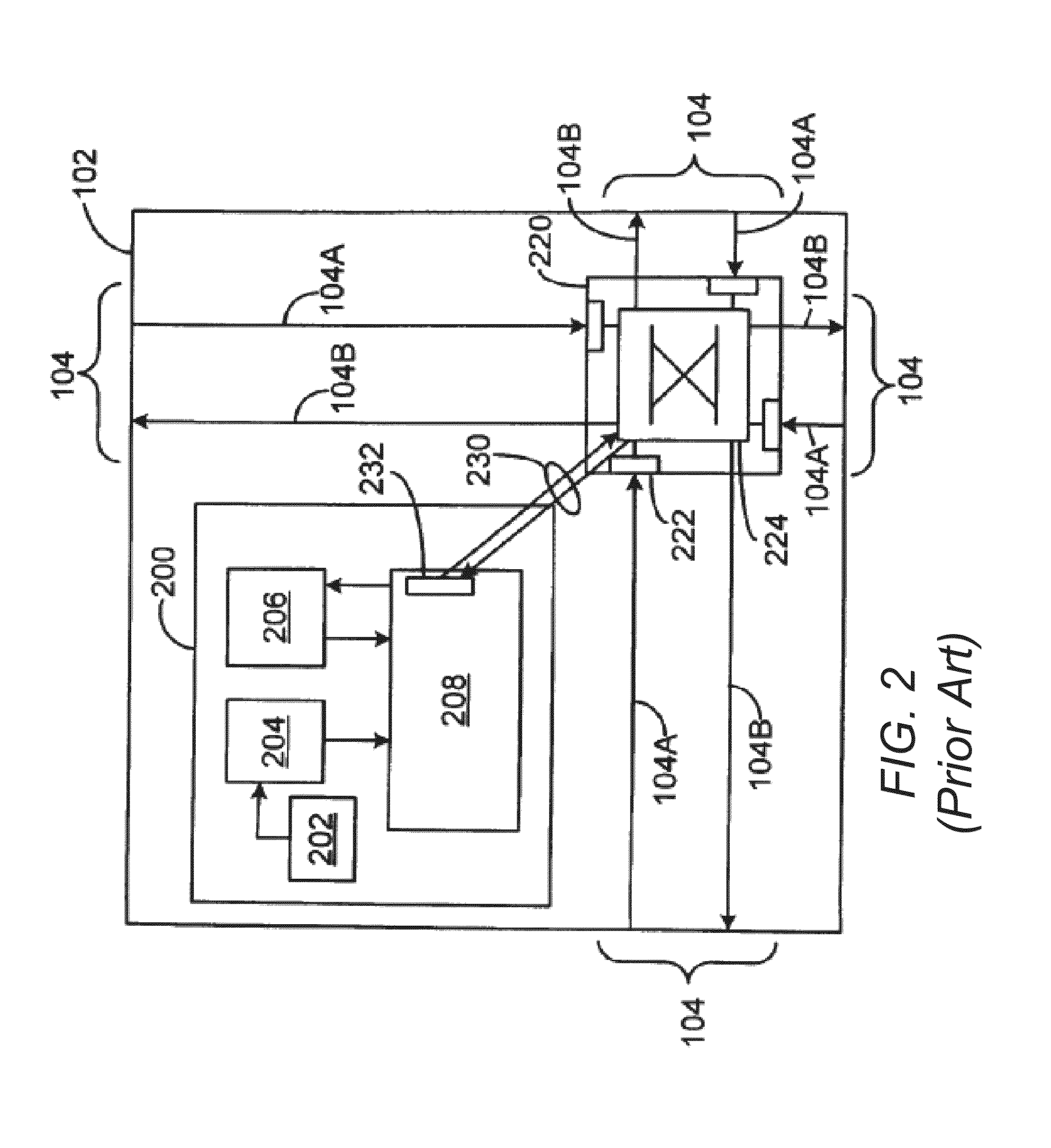

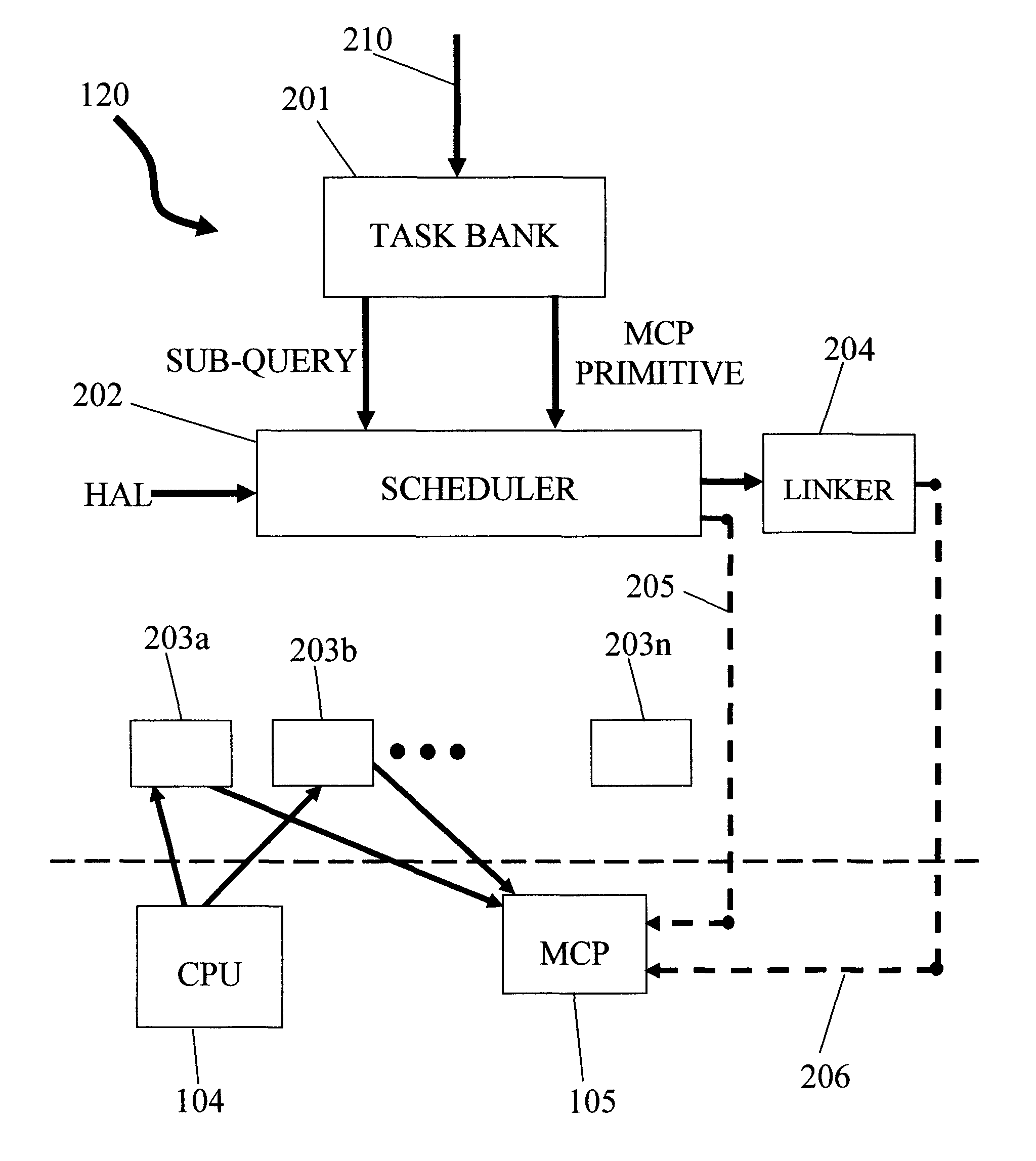

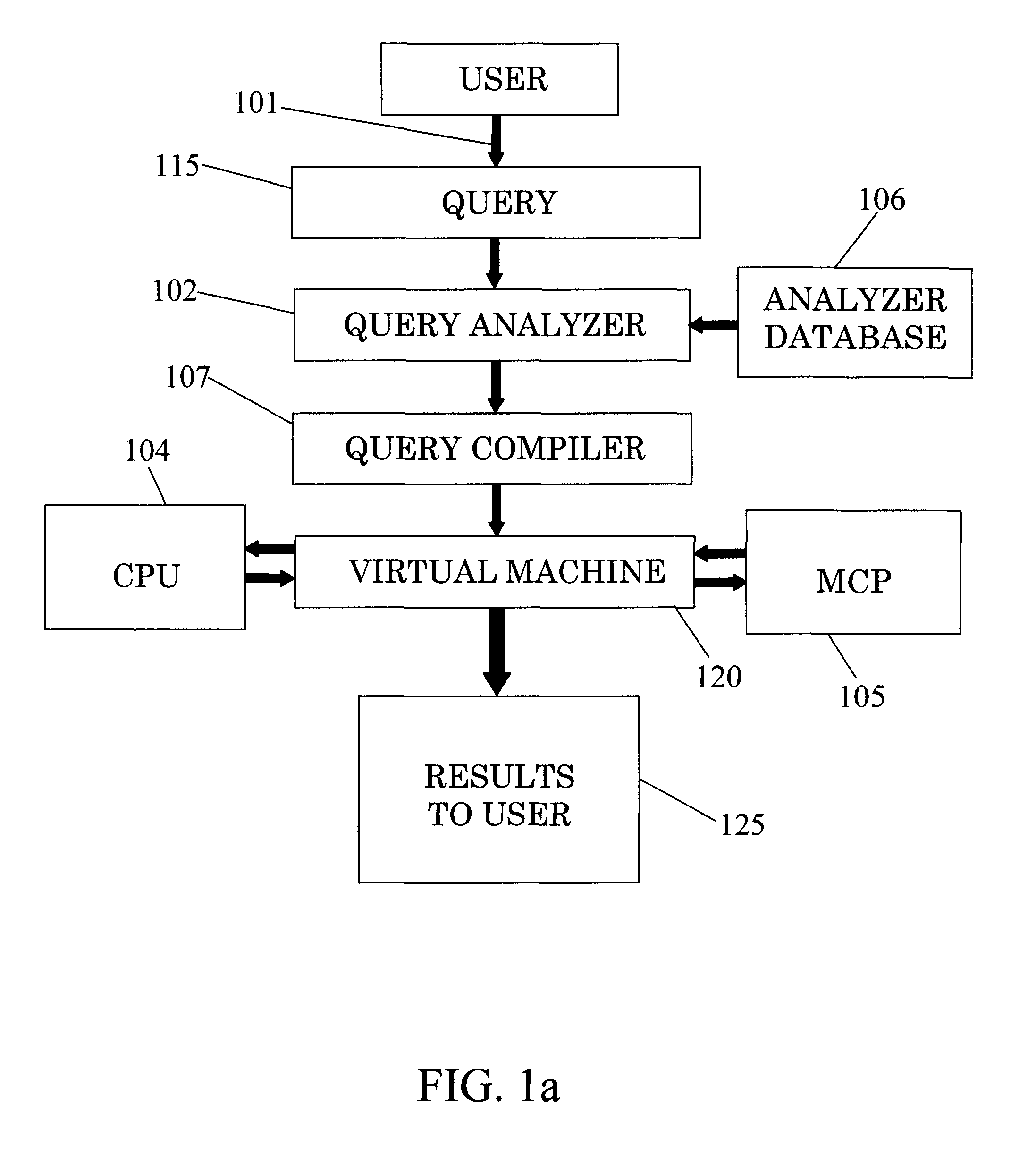

The invention relates to a system for parallel execution of database queries over one or more Central Processing Units (CPUs) and one or more Multi Core Processors (MCPs). The system comprises (a) a query analyzer for dividing the query into a plurality of sub-queries, and for computing and assigning to each sub-query a target address of either a CPU or an MCP; (b) a query compiler for creating an Abstract Syntax Tree (AST) and OpenCL primitives only for those sub-queries that are targeted to an MCP, and for conveying both the remaining sub-queries, and the AST and the OpenCL code, to a virtual machine; and (c) a Virtual Machine (VM) which comprises a task bank, buffers, and a scheduler. The virtual machine combines said sub-query results by the CPUs and said primitive results by said MCPs into a final query result.

Owner:SQREAM TECH

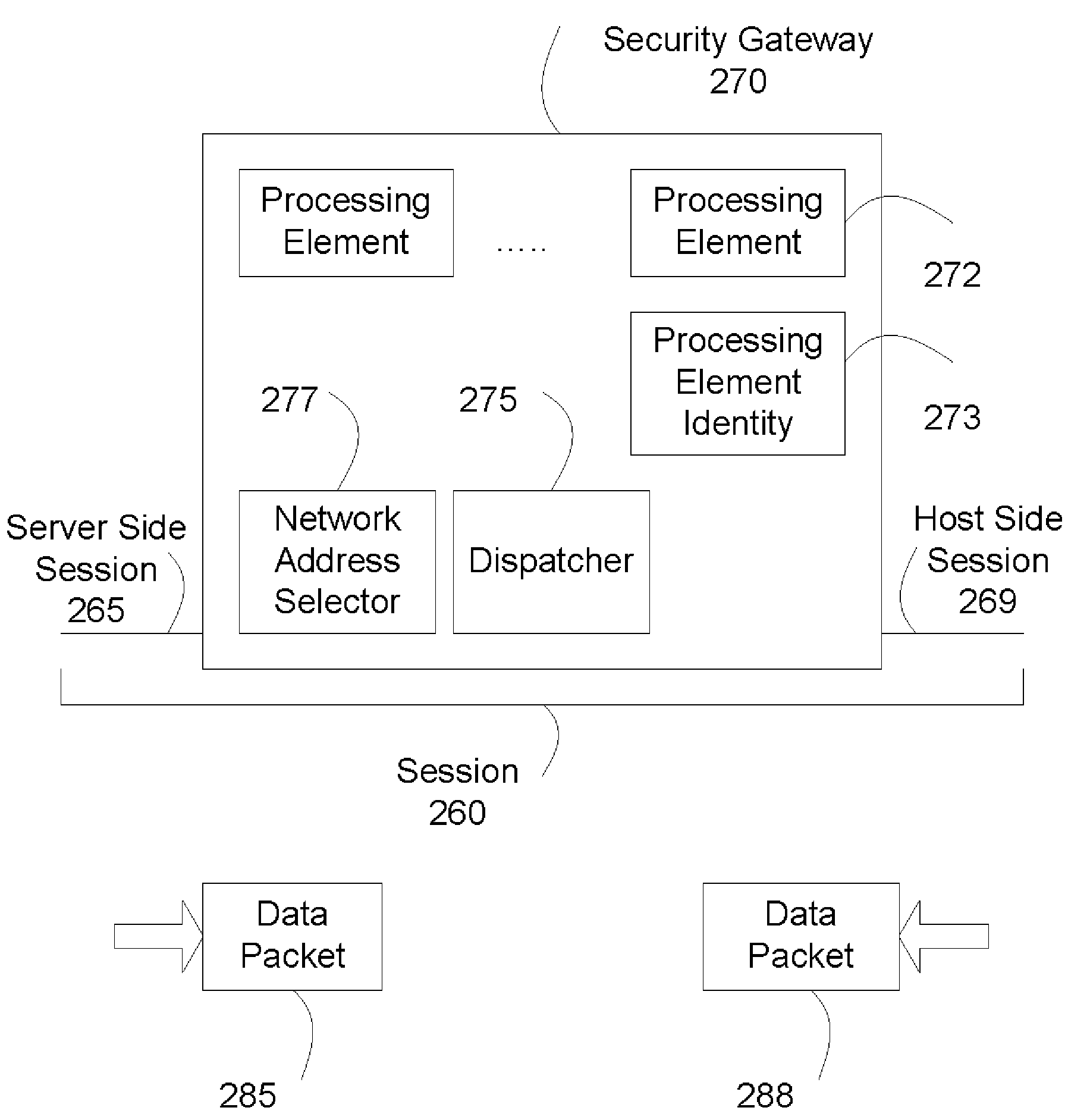

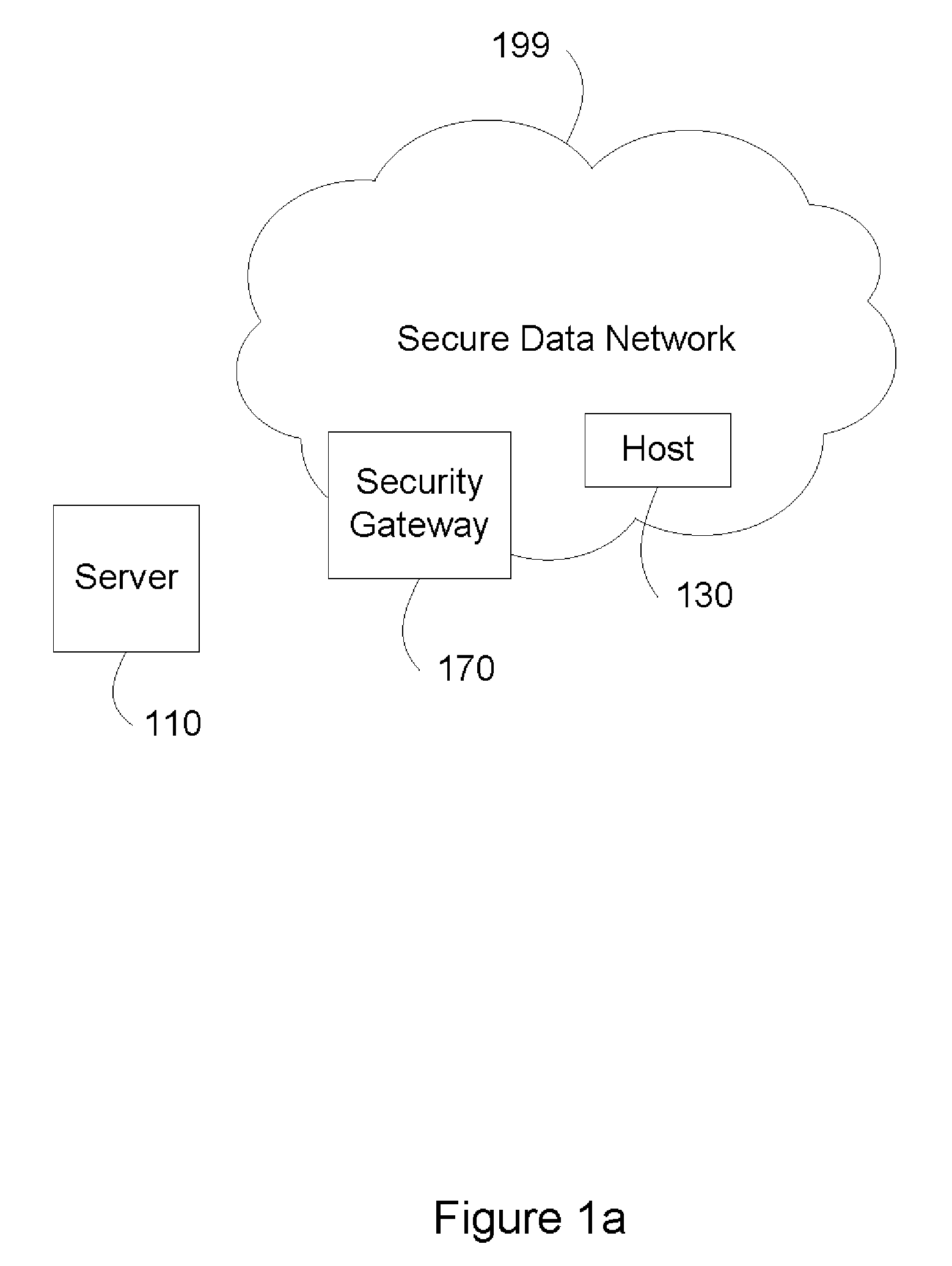

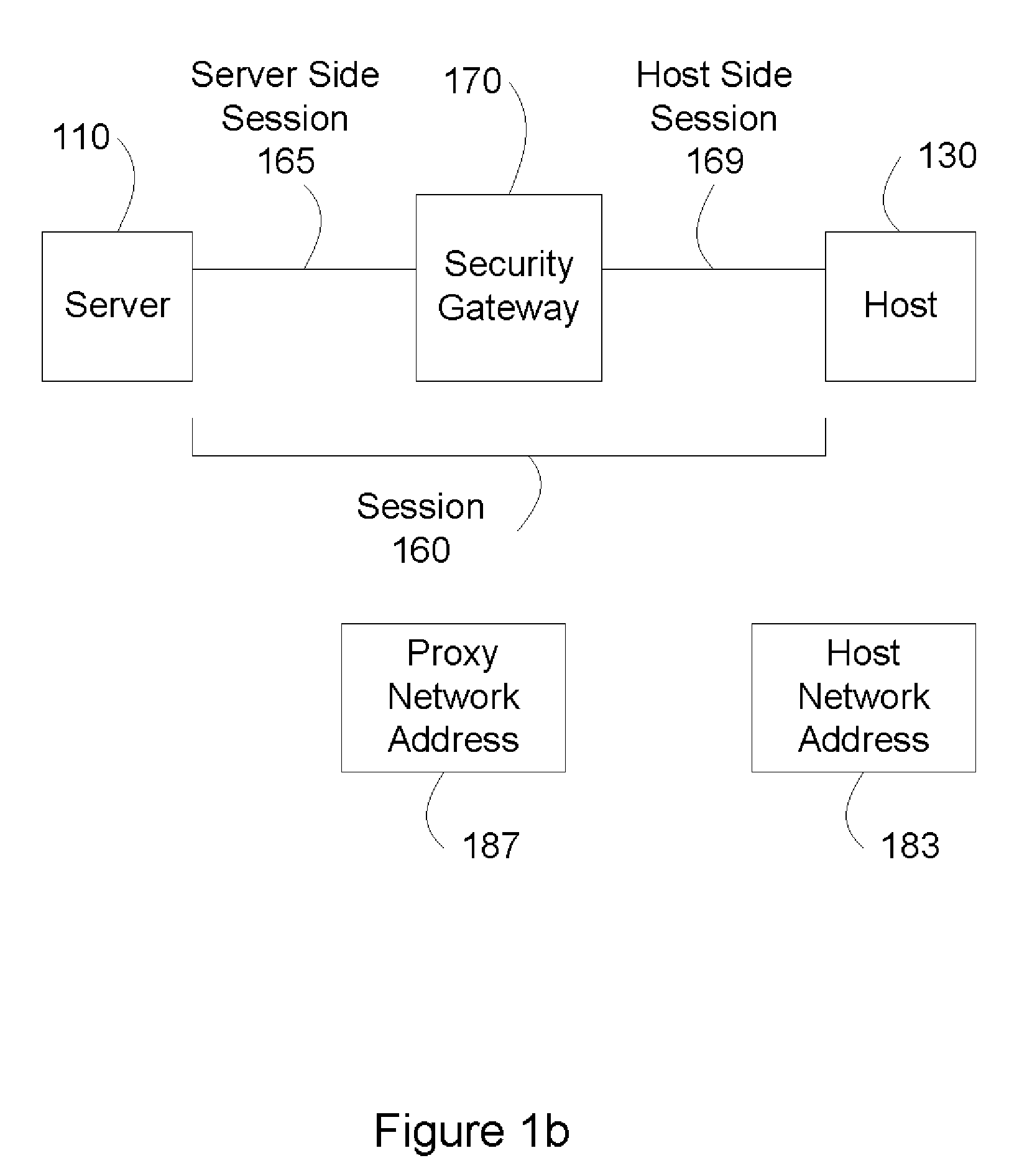

System and Method for Distributed Multi-Processing Security Gateway

Active; US20090049537A1; Digital data processing details; Unauthorized memory use protection; Network packet; Network addressing

A system and method for a distributed multi-processing security gateway establishes a host side session, selects a proxy network address for a server, uses the proxy network address to establish a server side session, receives a data packet, assigns a central processing unit core from a plurality of central processing unit cores in a multi-core processor of the security gateway to process the data packet, processes the data packet according to security policies, and sends the processed data packet. The proxy network address is selected such that a same central processing unit core is assigned to process data packets from the server side session and the host side session. By assigning central processing unit cores in this manner, higher capable security gateways are provided.

Owner:A10 NETWORKS
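
The key step in the abstract above is choosing a proxy network address so that both sessions land on the same CPU core. A minimal Python sketch of that selection follows. The hash in `core_for` (address plus port, modulo the core count), the function `pick_proxy_address`, and the example addresses and ports are hypothetical; the abstract does not specify how packets are mapped to cores.

```python
import ipaddress
from typing import Iterable, Optional


def core_for(address: str, port: int, num_cores: int) -> int:
    """Hypothetical dispatch rule: hash an (address, port) pair to a core id."""
    return (int(ipaddress.ip_address(address)) + port) % num_cores


def pick_proxy_address(host_addr: str, host_port: int,
                       proxy_pool: Iterable[str], proxy_port: int,
                       num_cores: int) -> Optional[str]:
    """Pick the first proxy address that maps to the host side session's core."""
    target = core_for(host_addr, host_port, num_cores)
    for candidate in proxy_pool:
        if core_for(candidate, proxy_port, num_cores) == target:
            return candidate
    return None  # no address in the pool yields the same core


# Usage: with 4 cores, the host side session maps to core 2, and 10.0.0.2
# is the first pool address whose server side session also maps to core 2.
pool = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]
proxy = pick_proxy_address("192.168.1.10", 5000, pool, 6000, num_cores=4)
```

Keeping both directions of a connection on one core means the session state does not have to be shared between cores, which is one plausible reading of why the abstract selects the address this way.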

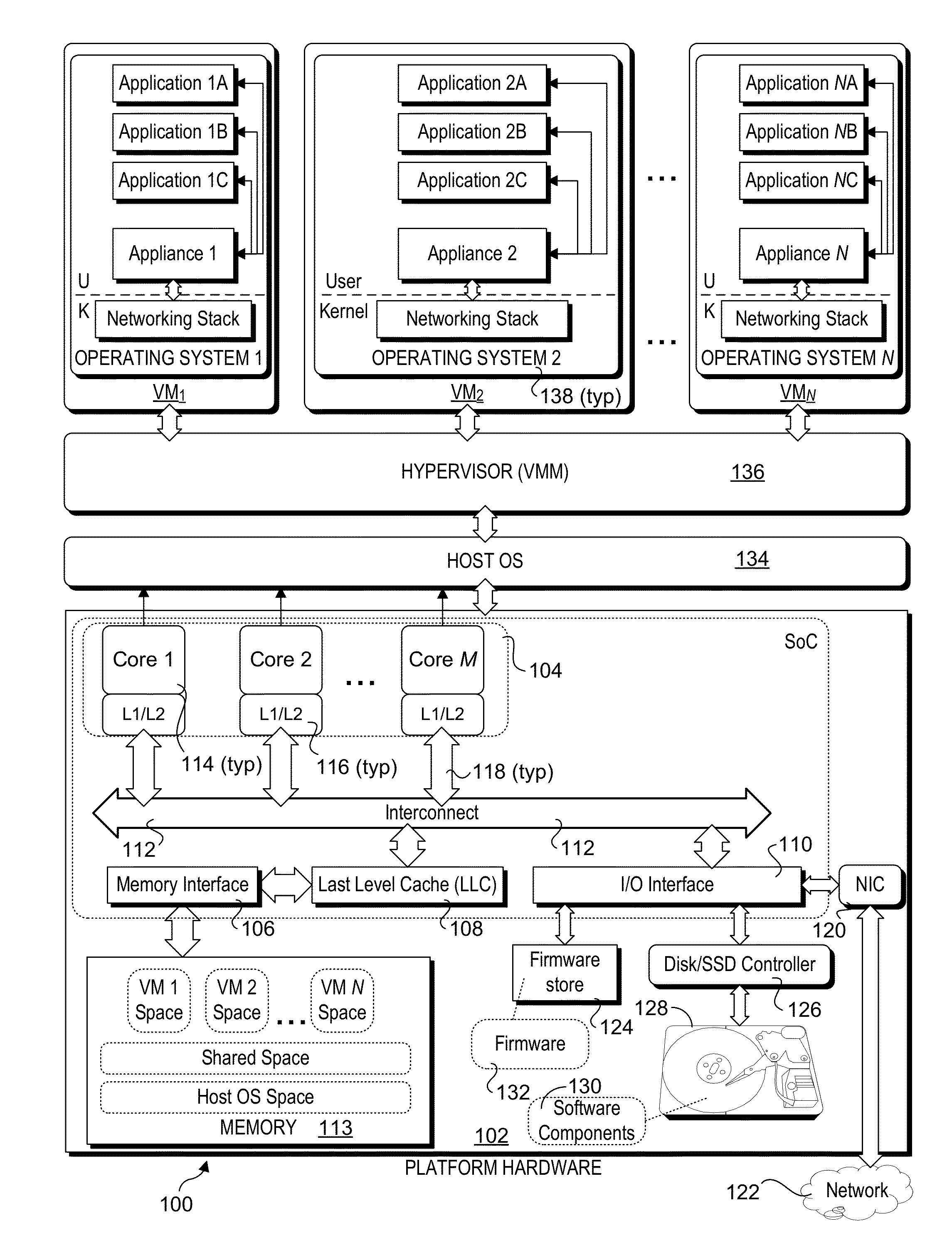

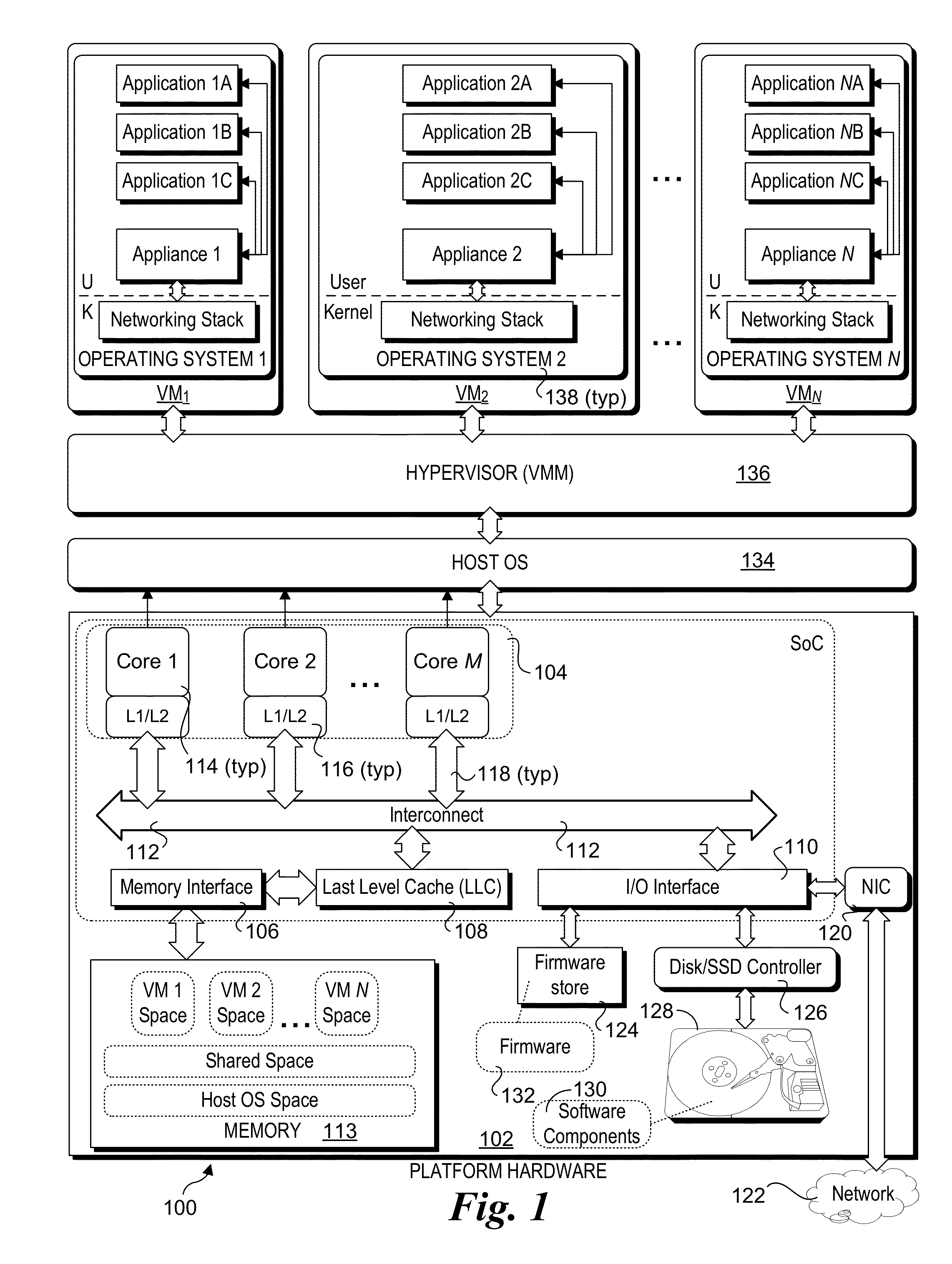

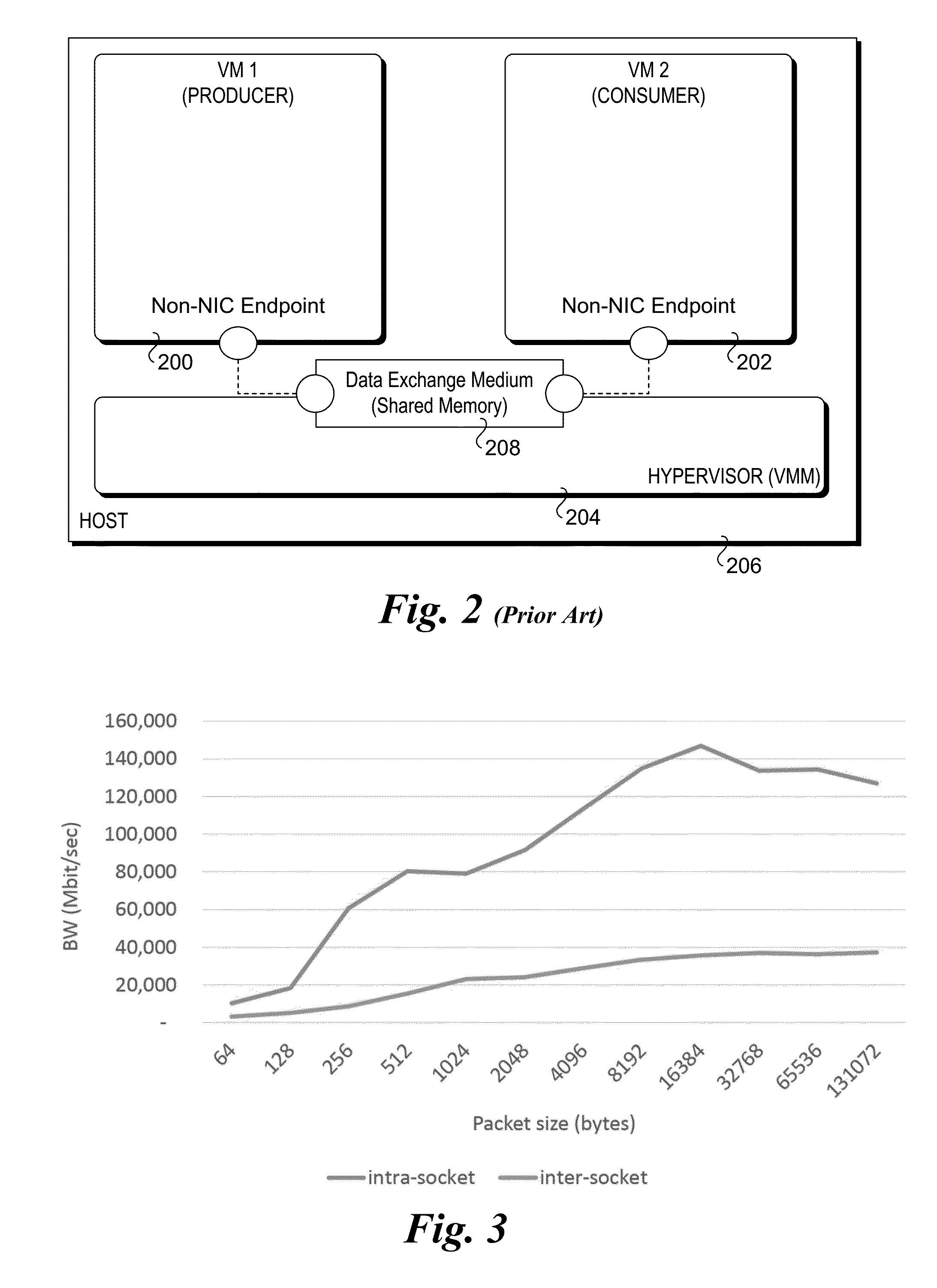

Hardware/software co-optimization to improve performance and energy for inter-VM communication for NFVs and other producer-consumer workloads

Active; US20160188474A1; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Cache hierarchy; Structure of Management Information

Methods and apparatus implementing hardware/software co-optimization to improve performance and energy for inter-VM communication for NFVs and other producer-consumer workloads. The apparatus include multi-core processors with multi-level cache hierarchies including an L1 and L2 cache for each core and a shared last-level cache (LLC). One or more machine-level instructions are provided for proactively demoting cachelines from lower cache levels to higher cache levels, including demoting cachelines from L1/L2 caches to an LLC. Techniques are also provided for implementing hardware/software co-optimization in multi-socket NUMA architecture systems, wherein cachelines may be selectively demoted and pushed to an LLC in a remote socket. In addition, techniques are disclosed for implementing early snooping in multi-socket systems to reduce latency when accessing cachelines on remote sockets.

Owner:INTEL CORP

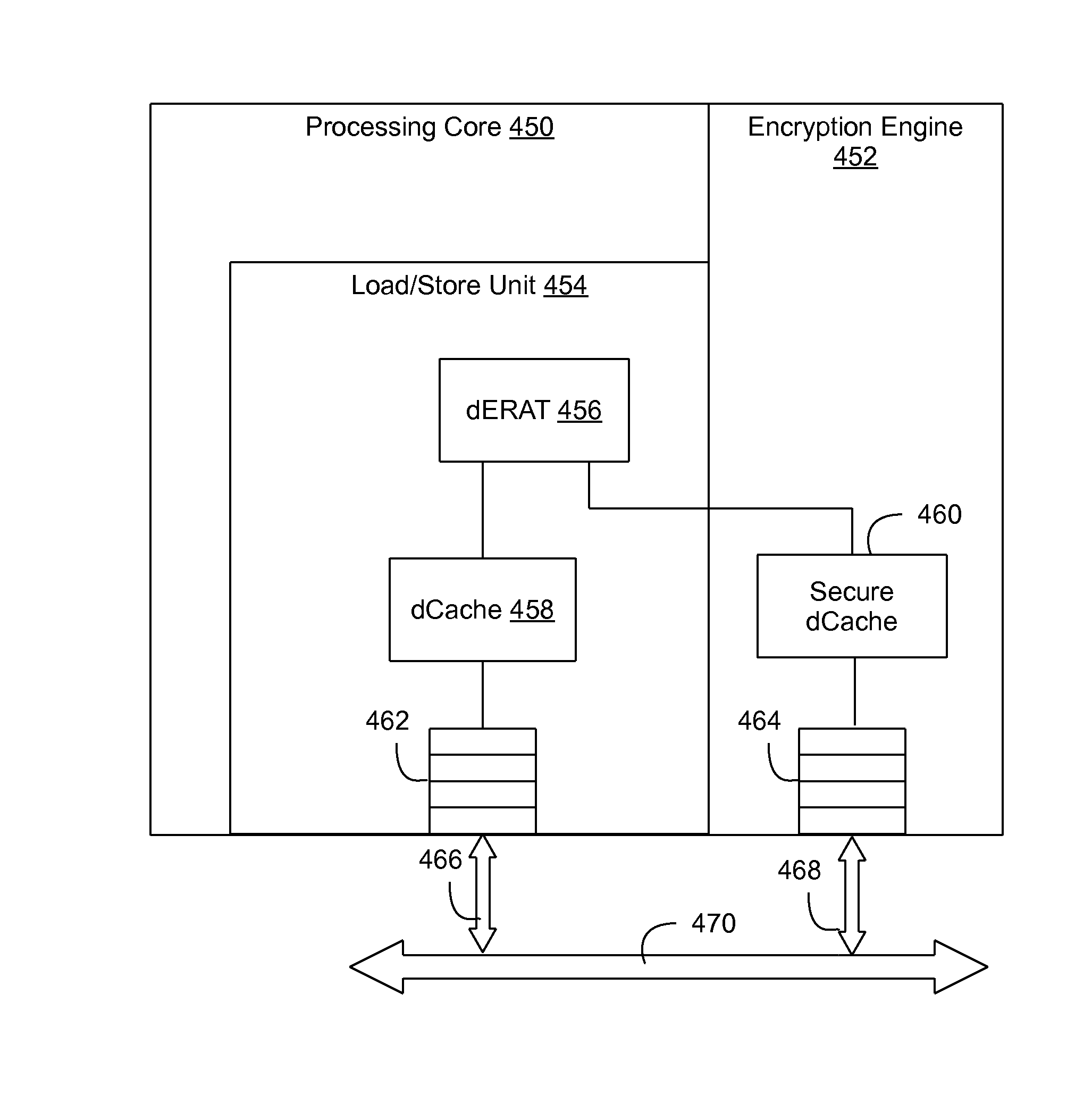

Memory address translation-based data encryption with integrated encryption engine

Active; US20130191651A1; Memory architecture accessing/allocation; Unauthorized memory use protection; Memory address; Processing core

A method and circuit arrangement utilize an integrated encryption engine within a processing core of a multi-core processor to perform encryption operations, i.e., encryption and decryption of secure data, in connection with memory access requests that access such data. The integrated encryption engine is utilized in combination with a memory address translation data structure such as an Effective To Real Translation (ERAT) or Translation Lookaside Buffer (TLB) that is augmented with encryption-related page attributes to indicate whether pages of memory identified in the data structure are encrypted such that secure data associated with a memory access request in the processing core may be selectively streamed to the integrated encryption engine based upon the encryption-related page attribute for the memory page associated with the memory access request.

Owner:IBM CORP
