476 results about "Thread count" patented technology

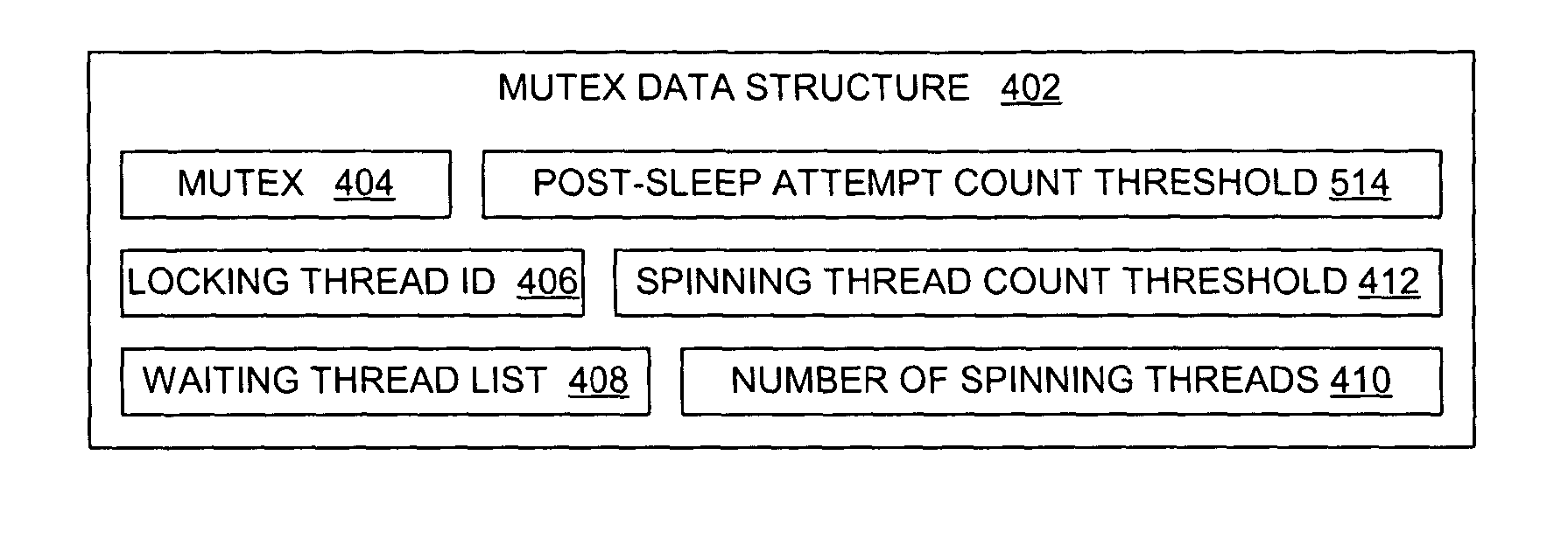

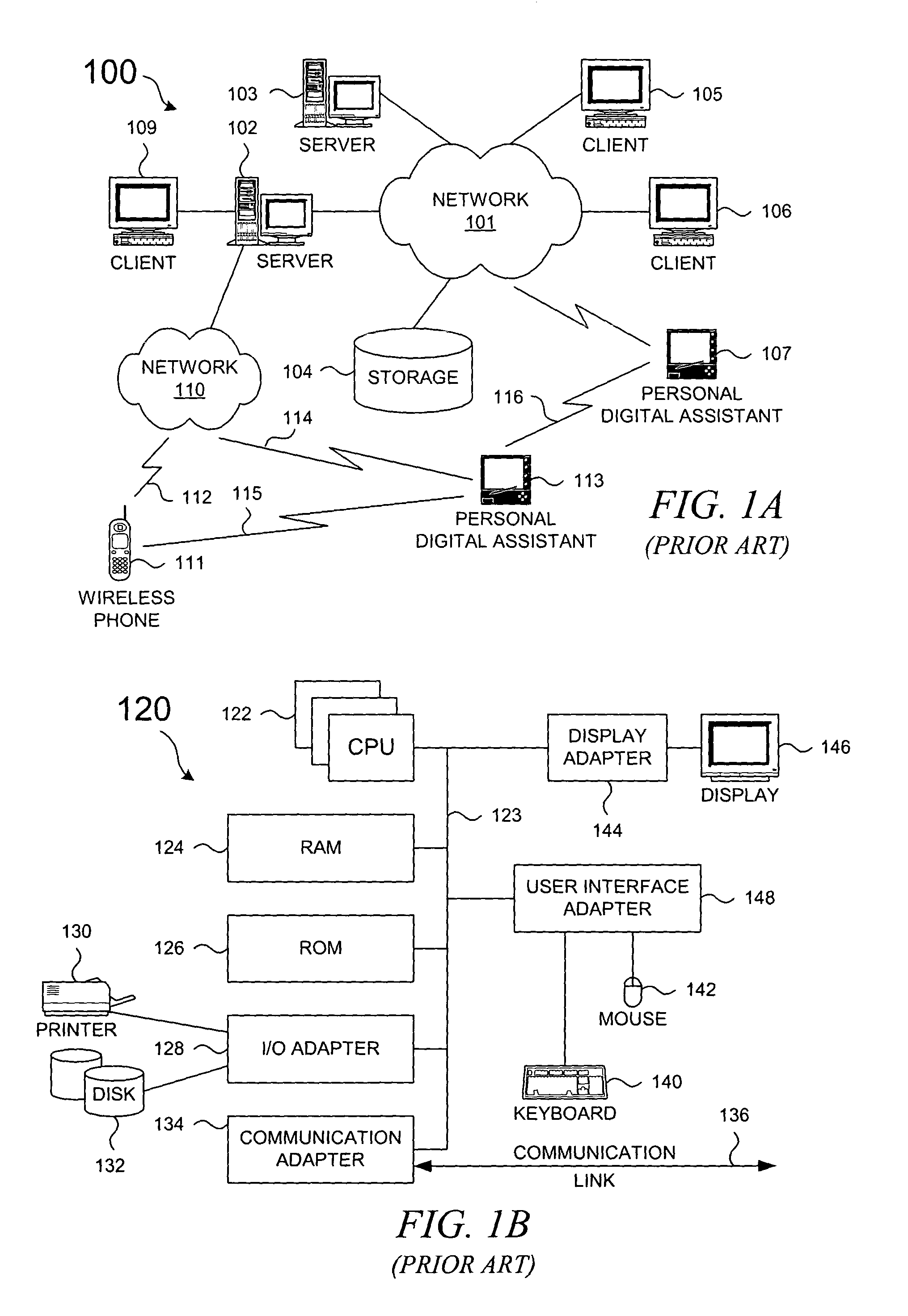

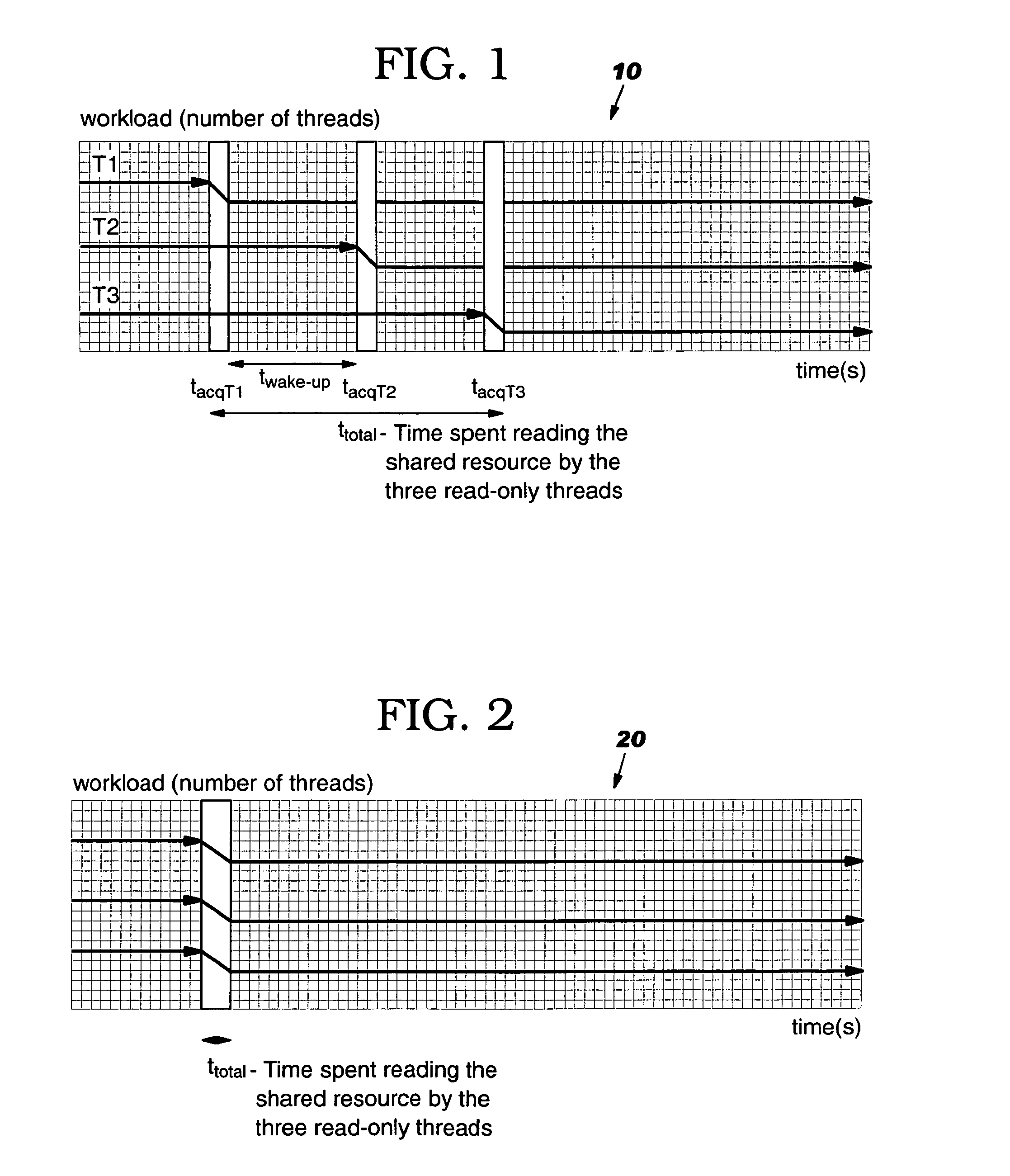

Method and system for dynamically bounded spinning threads on a contested mutex

A method for managing a mutex in a data processing system is presented. For each mutex, a count is maintained of the number of threads that are spinning while waiting to acquire a mutex. If a thread attempts to acquire a locked mutex, then the thread enters a spin state or a sleep state based on restrictive conditions and the number of threads that are spinning during the attempted acquisition. In addition, the relative length of time that is required by a thread to spin on a mutex after already sleeping on the mutex may be used to regulate the number of threads that are allowed to spin on the mutex.

Owner:IBM CORP
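The spin-or-sleep decision described in this abstract can be sketched roughly as follows. This is a minimal illustration and not the patented implementation: the class name `BoundedSpinMutex`, the `max_spinners` and `spin_iterations` parameters, and the timed sleep are all assumptions, and the adaptive adjustment of the spinner limit mentioned in the abstract is left out.

```python
import threading


class BoundedSpinMutex:
    """Mutex where at most `max_spinners` waiters busy-wait; the rest sleep."""

    def __init__(self, max_spinners=2, spin_iterations=100):
        self._lock = threading.Lock()      # the mutex itself
        self._meta = threading.Lock()      # protects the spinner count
        self._wakeup = threading.Condition(threading.Lock())
        self.max_spinners = max_spinners   # hypothetical fixed bound
        self.spin_iterations = spin_iterations
        self.spinners = 0                  # threads currently spinning

    def _try_spin(self):
        # Enter the spin state only if the spinner bound is not yet reached.
        with self._meta:
            if self.spinners >= self.max_spinners:
                return False
            self.spinners += 1
        try:
            for _ in range(self.spin_iterations):
                if self._lock.acquire(blocking=False):
                    return True
            return False
        finally:
            with self._meta:
                self.spinners -= 1

    def acquire(self):
        while True:
            if self._lock.acquire(blocking=False):
                return
            if self._try_spin():
                return
            # Sleep state: wait for a release, with a timeout as a safety net.
            with self._wakeup:
                self._wakeup.wait(timeout=0.01)

    def release(self):
        self._lock.release()
        with self._wakeup:
            self._wakeup.notify()
```

A fixed bound keeps the sketch short; a closer match to the abstract would raise or lower `max_spinners` based on how long a thread still has to spin after it has already slept.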

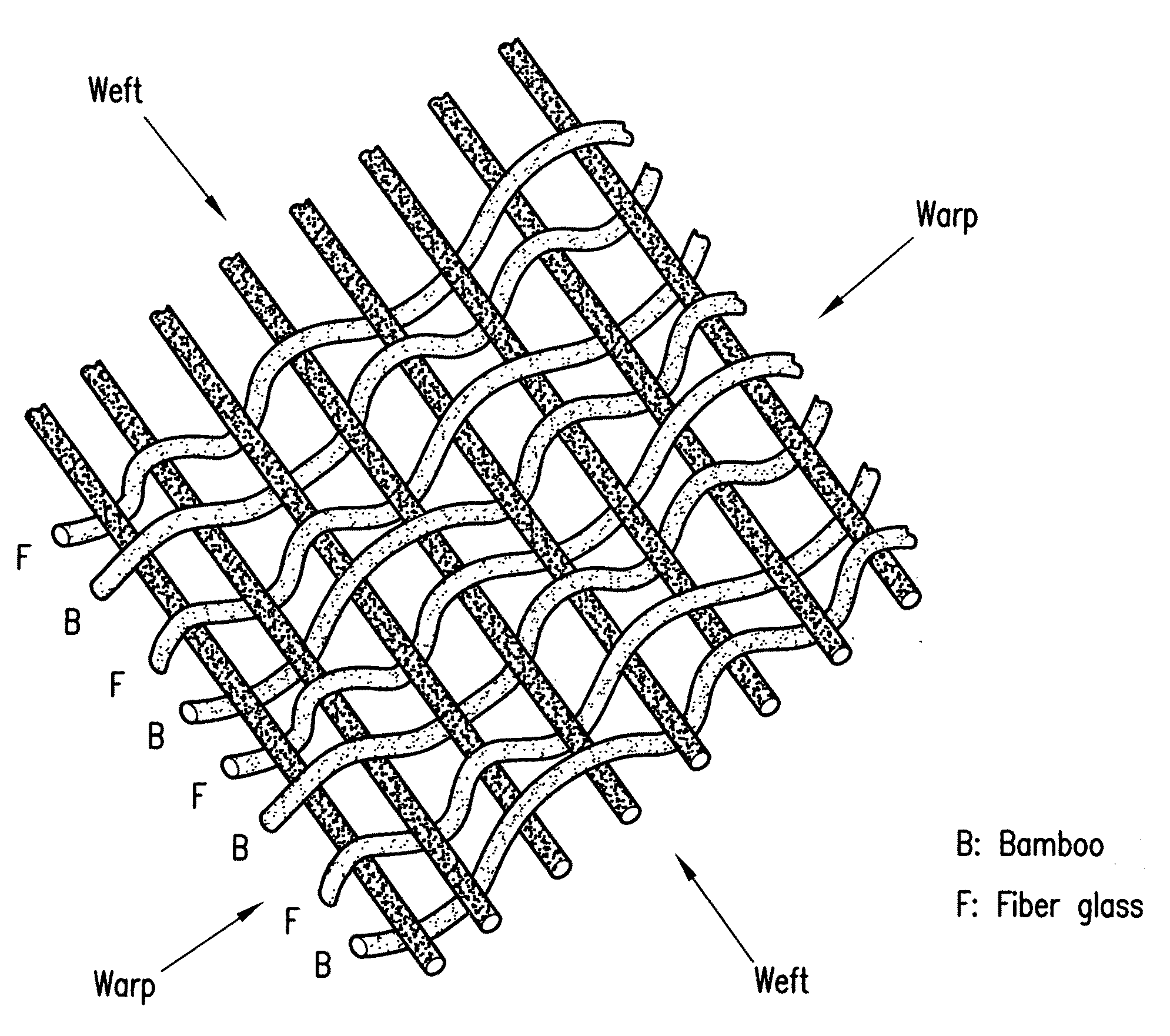

Hemostatic woven fabric

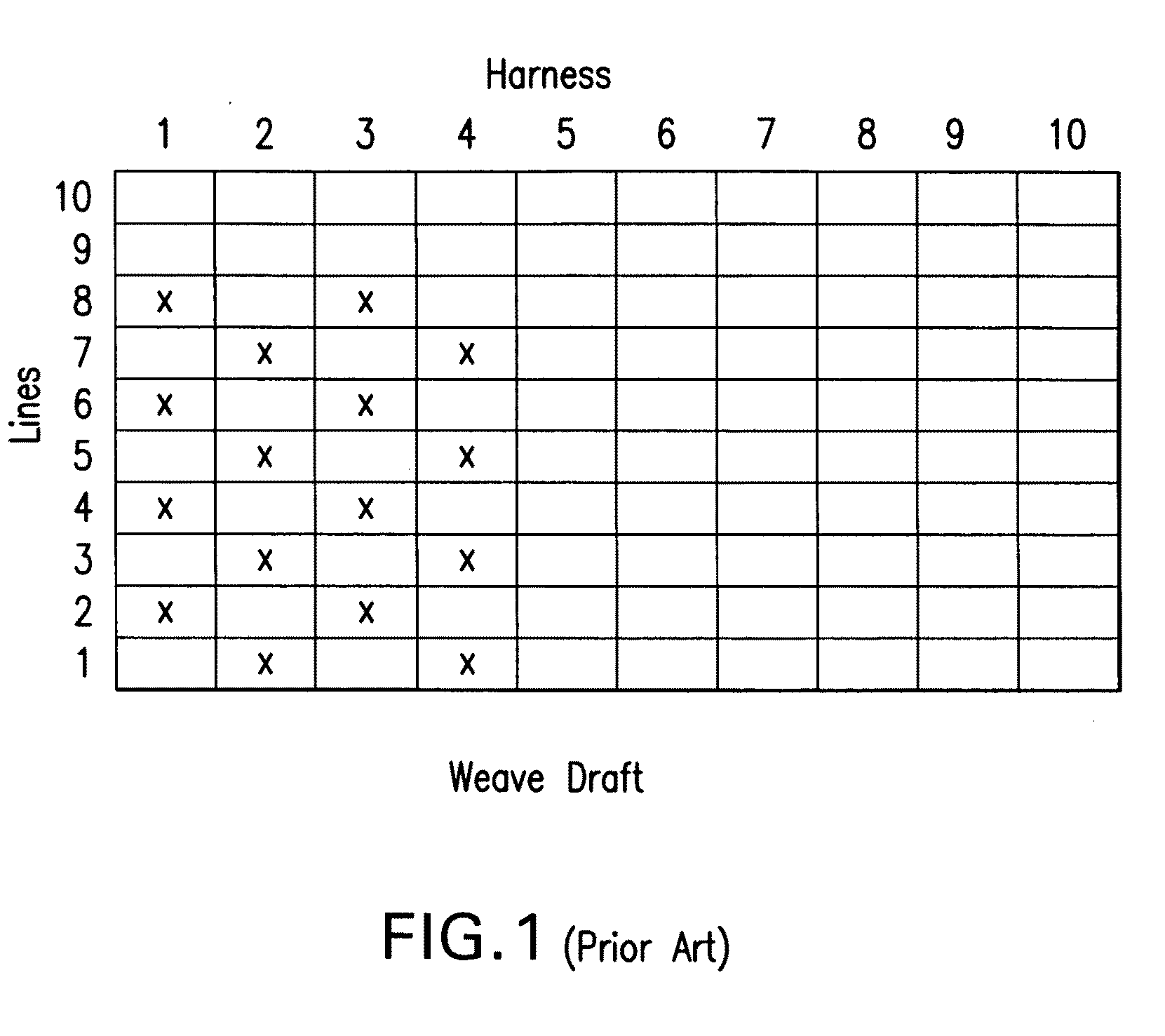

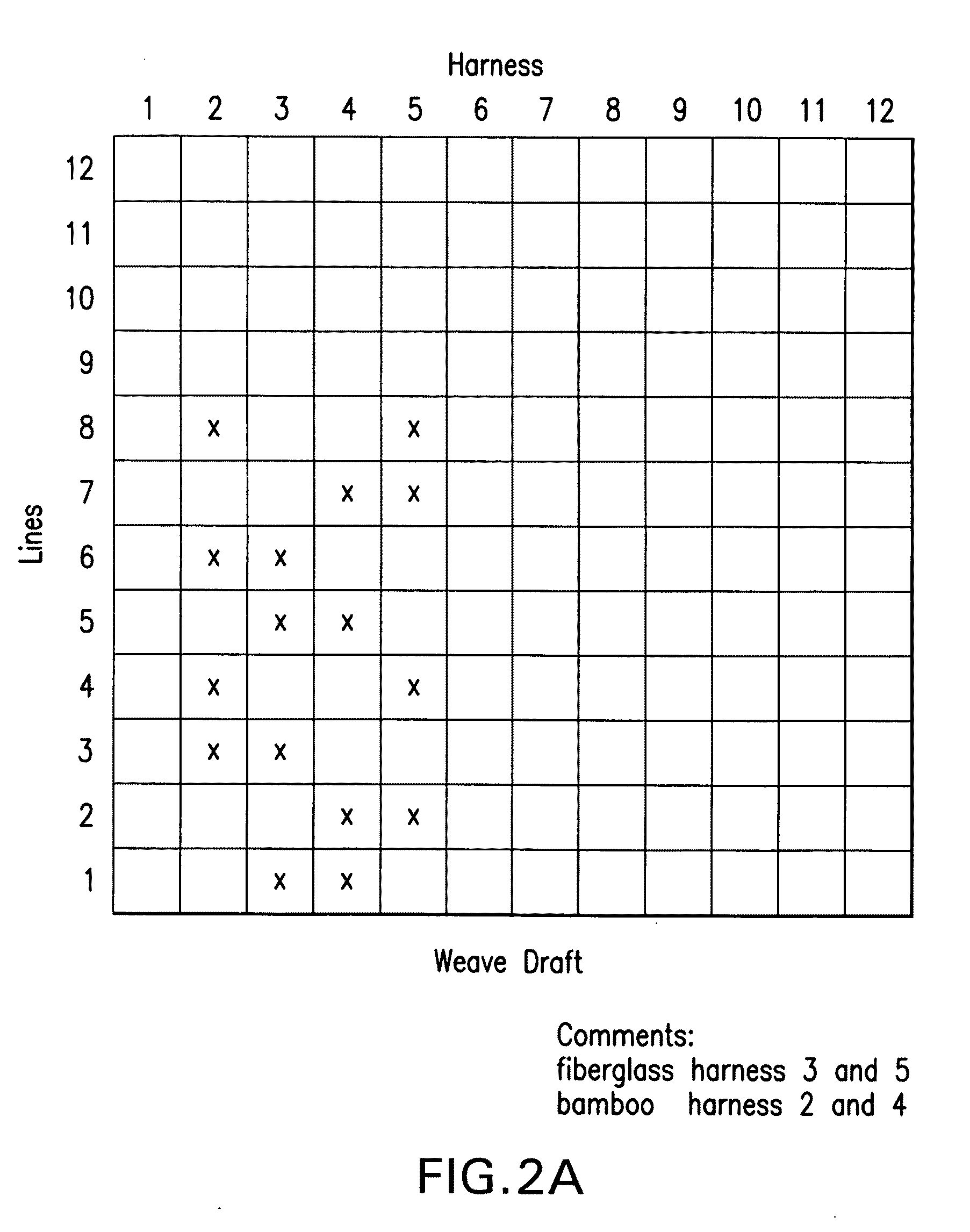

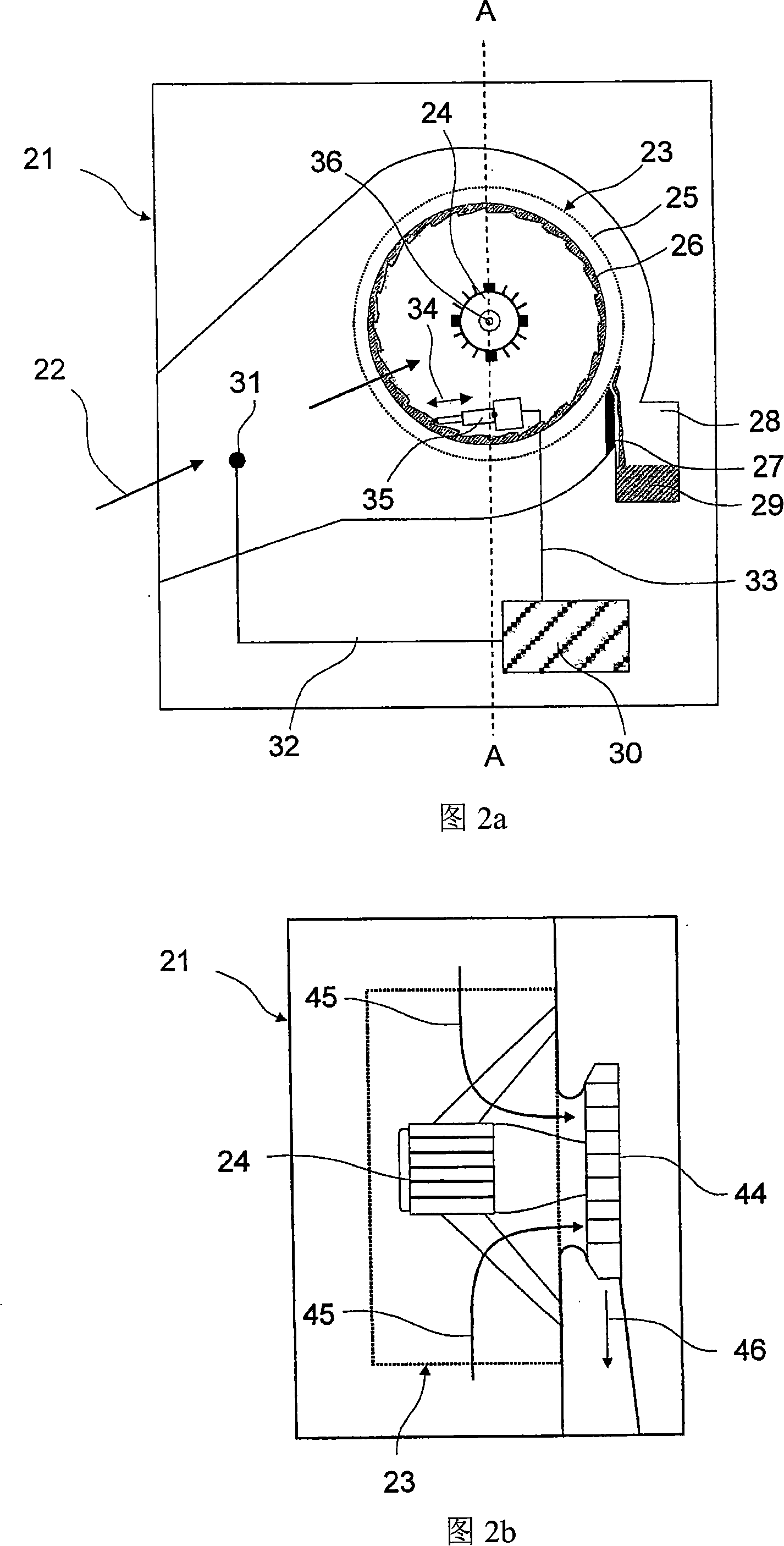

The present invention is directed to a woven fabric having the modified crowsfoot weave pattern shown in FIGS. 2A and 2B. The present invention is directed to a woven fabric comprising about 65 wt % fiberglass yarn and about 35 wt % bamboo yarn, the woven fabric (1) being about 15.0 ounces per square yard (OSY); (2) having a thread count of about 760; and (3) having the modified crowsfoot weave pattern shown in FIGS. 2A and 2B. Additional ingredients may also be added to the woven fabrics of the invention to enhance the hemostatic properties.

Owner:ENTEGRION INC

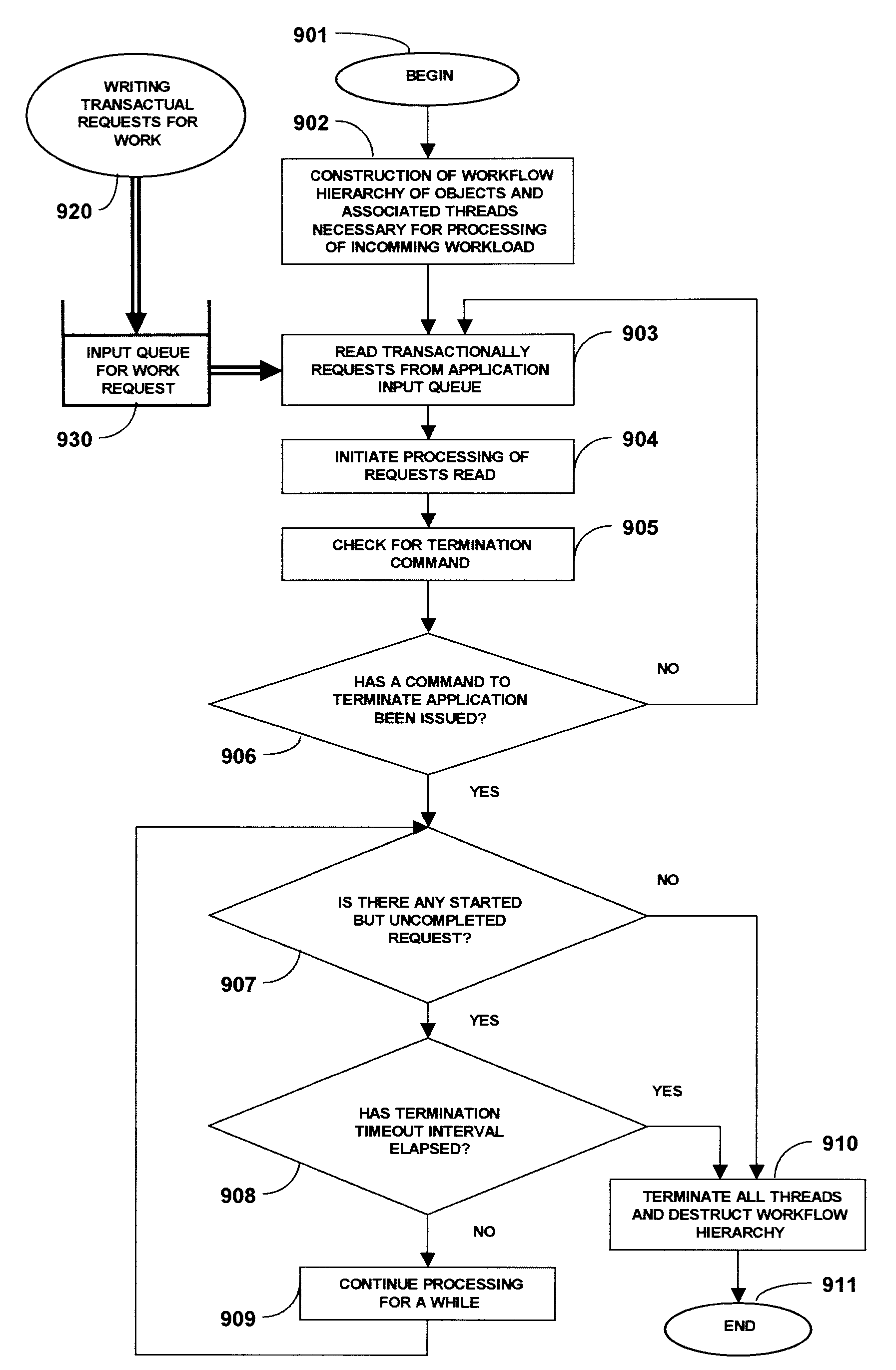

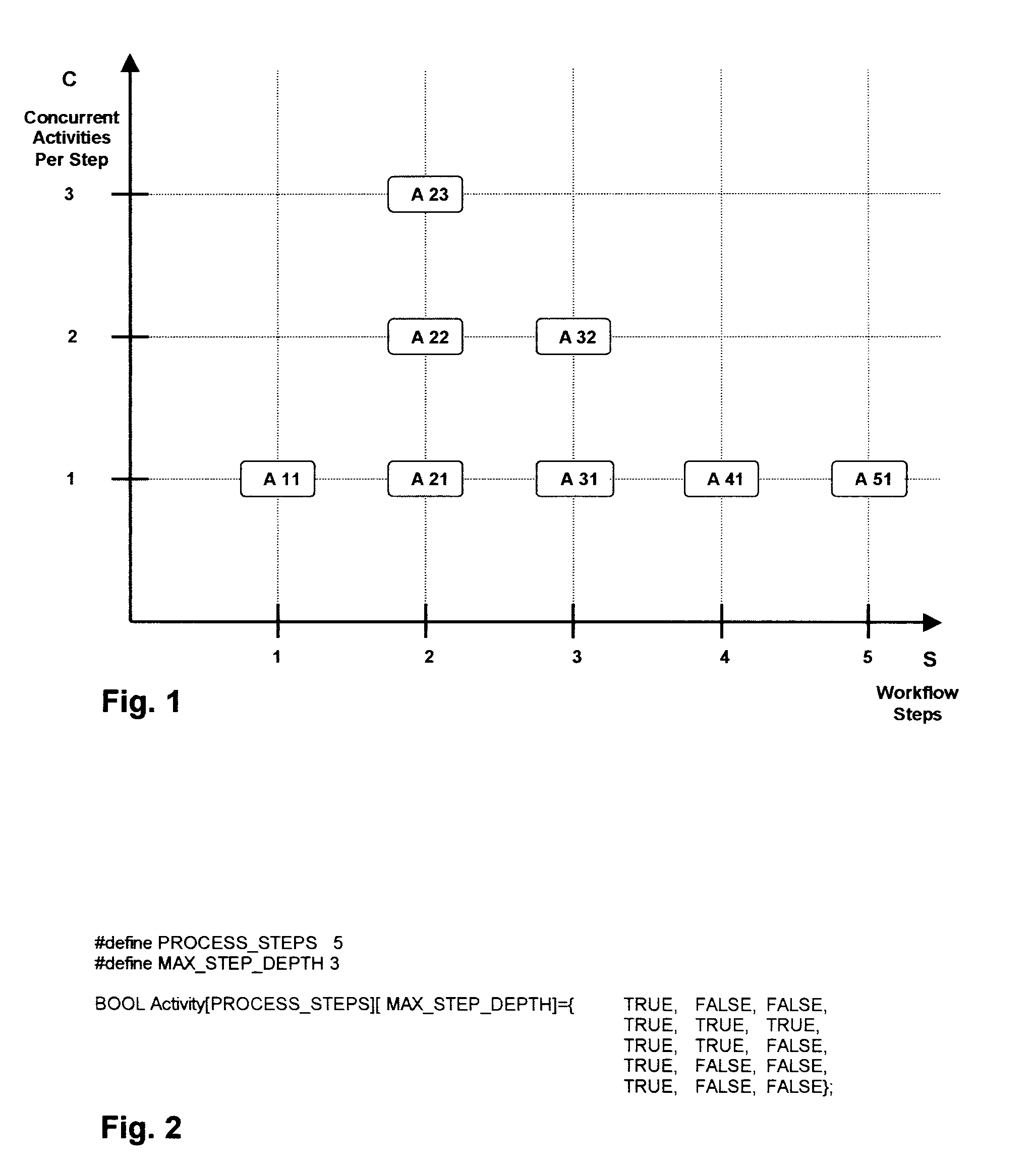

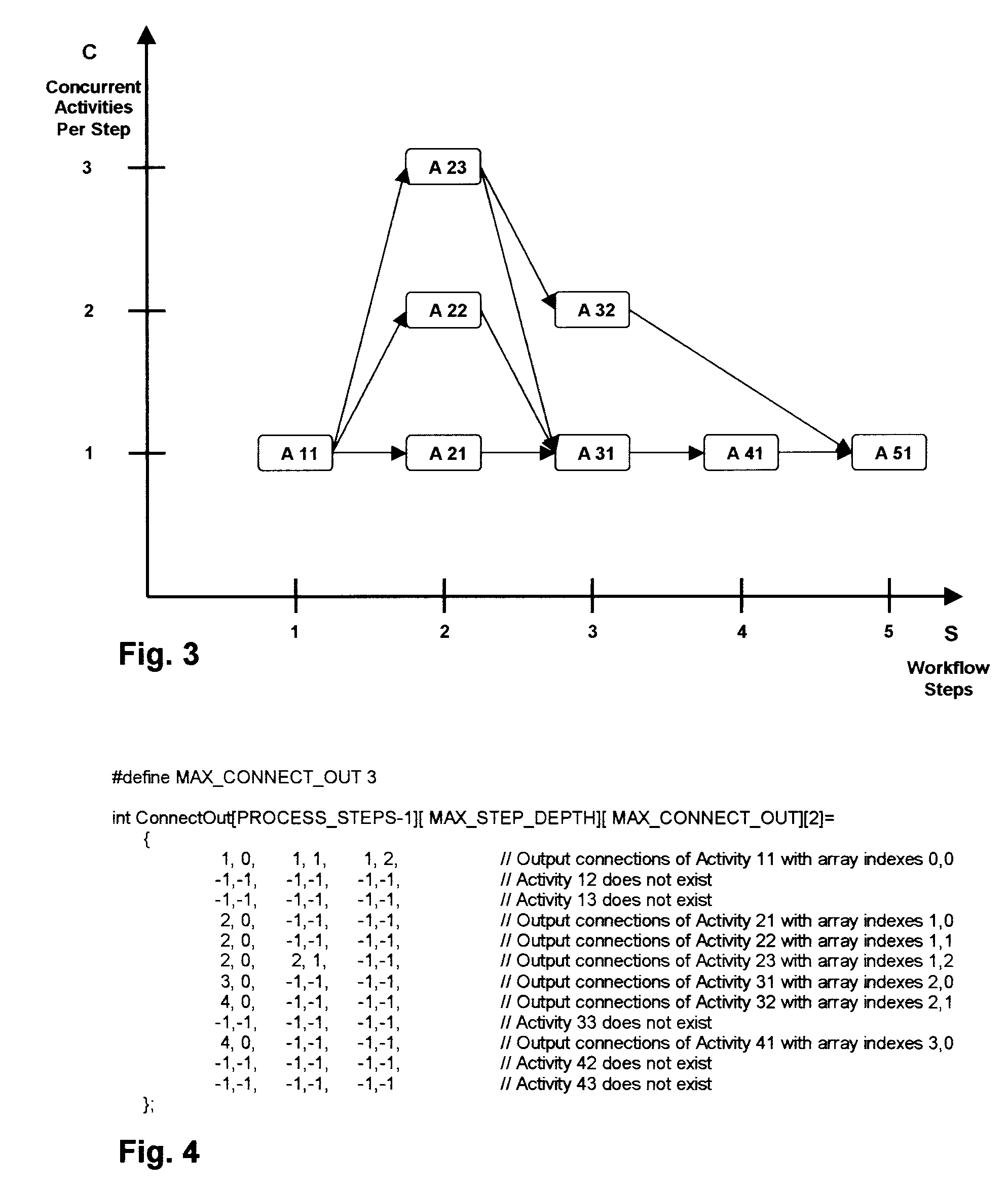

Graphical development of fully executable transactional workflow applications with adaptive high-performance capacity

Inactive | US7272820B2 | Overcome limitations | Resource allocation | Visual/graphical programming | Graphics | Transactional workflows

This invention concerns an engineering approach to developing transactional workflow applications, and the ability of applications produced this way to concurrently process a large number of workflow requests of identical type at high speed. It provides methods and articles of manufacture for: graphical development of a fully executable workflow application; producing a configuration of class objects and threads with the capacity to concurrently process a multitude of requests of identical type for a transactional workflow, and to concurrently execute and synchronize parallel workflow-activity sequences within the processing of a workflow request; self-scaling the application's processing capacity up and down; and real-time visualization of the application's thread structures, thread quantity, thread usage, and scaling-enacted changes in thread structure and quantity.

Owner:EXTRAPOLES

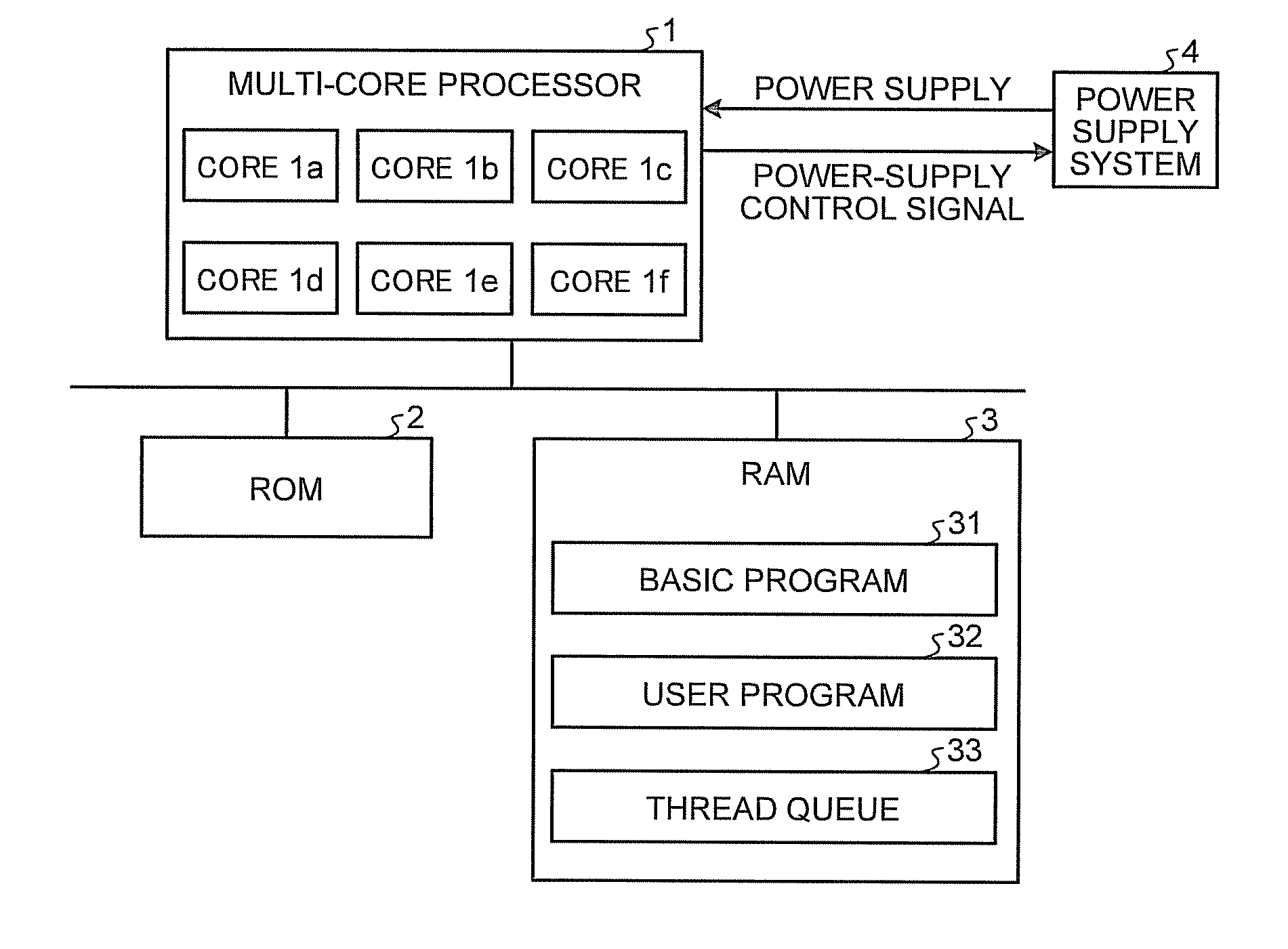

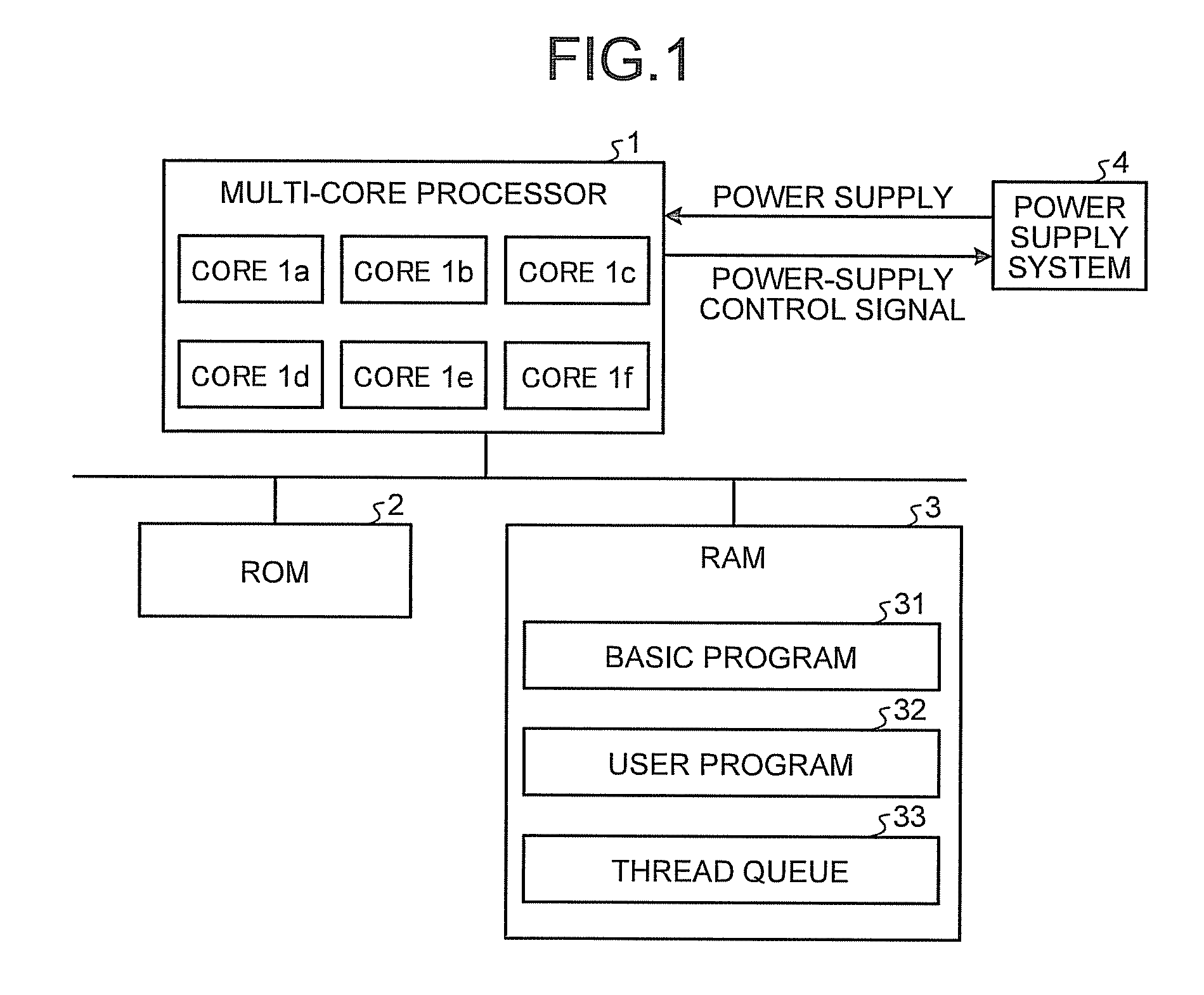

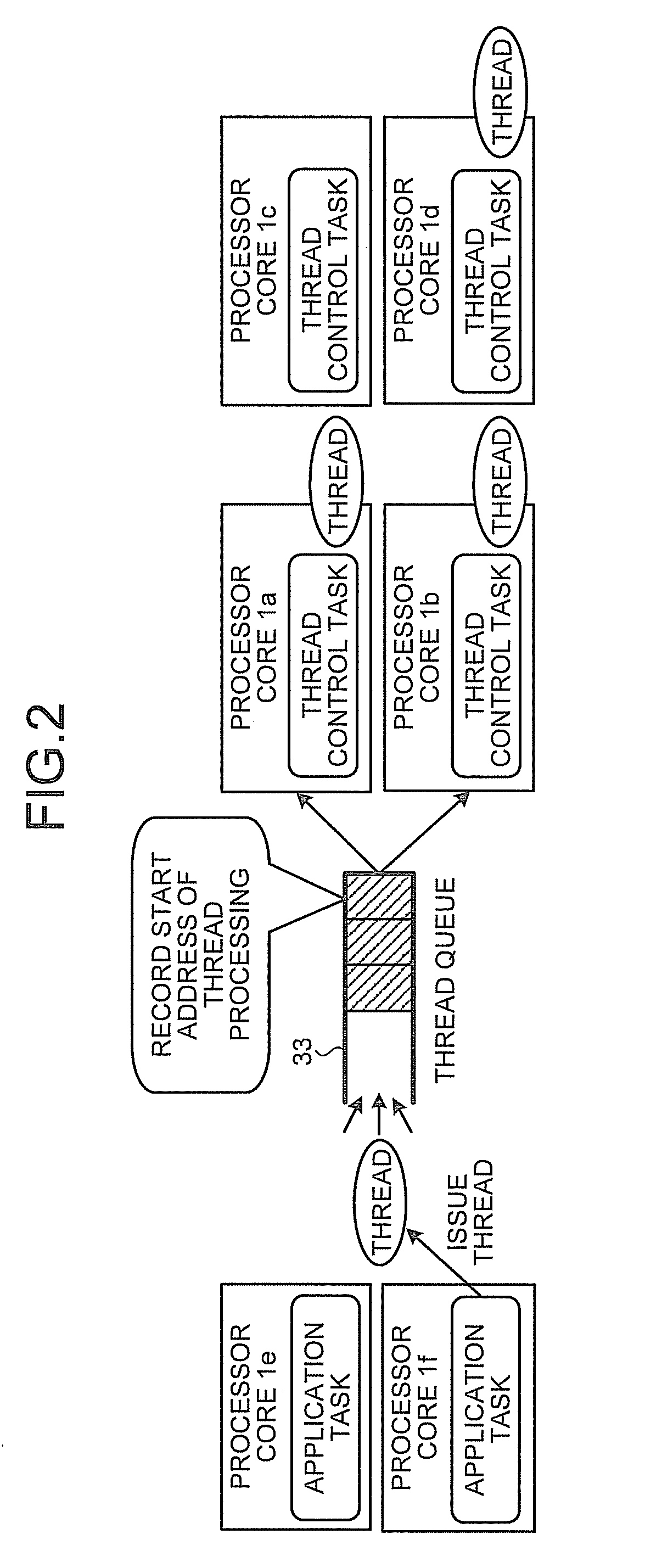

Multi-core processor system

Inactive | US20100299541A1 | Energy efficient ICT | Digital data processing details | Multi-core processor | Thread count

A multi-core processor system includes: a plurality of processor cores; a power supply unit that stops supplying or supplies power to each of the processor cores individually; and a thread queue that stores threads that the multi-core processor system causes the processor cores to execute. Each of the processor cores includes: a power-supply stopping unit that causes the power supply unit to stop power supply to its own processor core when the number of threads stored in the thread queue is equal to or smaller than a first threshold; and a power-supply resuming unit that causes the power supply unit to resume power supply to the other stopped processor cores when the number of threads stored in the thread queue exceeds a second value equal to or larger than the first threshold.

Owner:KK TOSHIBA
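The two-threshold rule in this abstract behaves like a hysteresis band, which a short sketch may make clearer. The function name, its parameters, and the choices to stop one core per call and to resume all stopped cores at once are assumptions made for illustration.

```python
def update_powered_cores(powered, total, queued, low, high):
    """Return the number of powered cores after one scheduling decision.

    low  -- first threshold: at or below it, a core powers itself down
    high -- second value (>= low): above it, stopped cores are powered up
    """
    if high < low:
        raise ValueError("the second value must be >= the first threshold")
    if queued <= low and powered > 1:
        return powered - 1          # the deciding core stops its own supply
    if queued > high and powered < total:
        return total                # resume supply to the stopped cores
    return powered                  # inside the band: leave things as they are
```

Keeping `high` at or above `low` leaves a band of queue lengths in which nothing changes, so cores do not toggle on and off when the queue length hovers near a single threshold.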

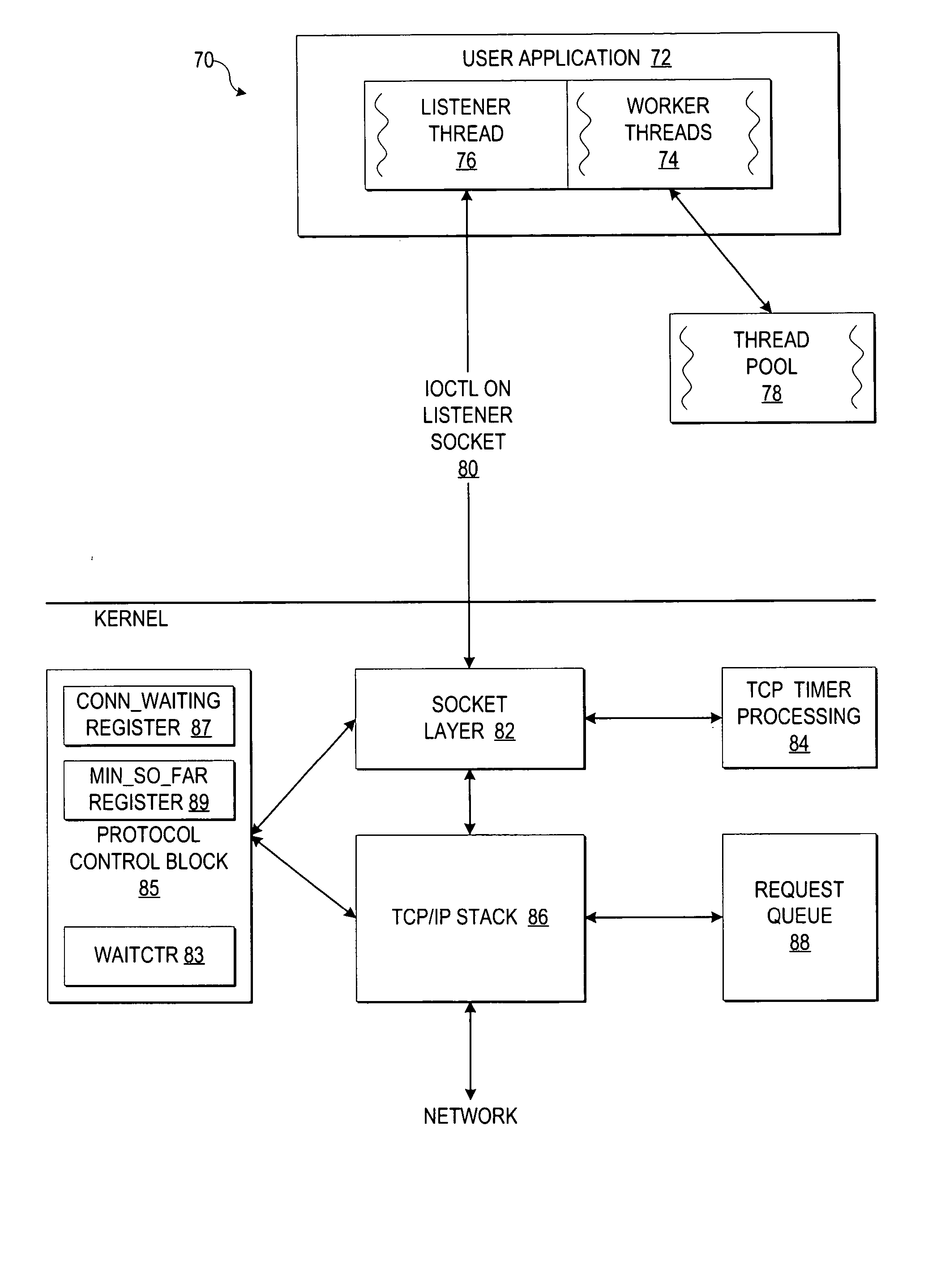

Monitoring thread usage to dynamically control a thread pool

Inactive | US20050086359A1 | Efficient loading | Resource allocation | Multiple digital computer combinations | Client-side | Thread pool

A method, system, and program for monitoring thread usage to dynamically control a thread pool are provided. An application running on the server system invokes a listener thread on a listener socket for receiving client requests at the server system and passing the client requests to one of multiple threads waiting in a thread pool. Additionally, the application sends an ioctl call in blocking mode on the listener thread. A TCP layer within the server system detects the listener thread in blocking mode and monitors a thread count of at least one of a number of incoming requests waiting to be processed and a number of said plurality of threads remaining idle in the thread pool over a sample period. Once the TCP layer detects a thread usage event, the ioctl call is returned indicating the thread usage event with the thread count, such that a number of threads in the thread pool may be dynamically adjusted to handle the thread count.

Owner:IBM CORP

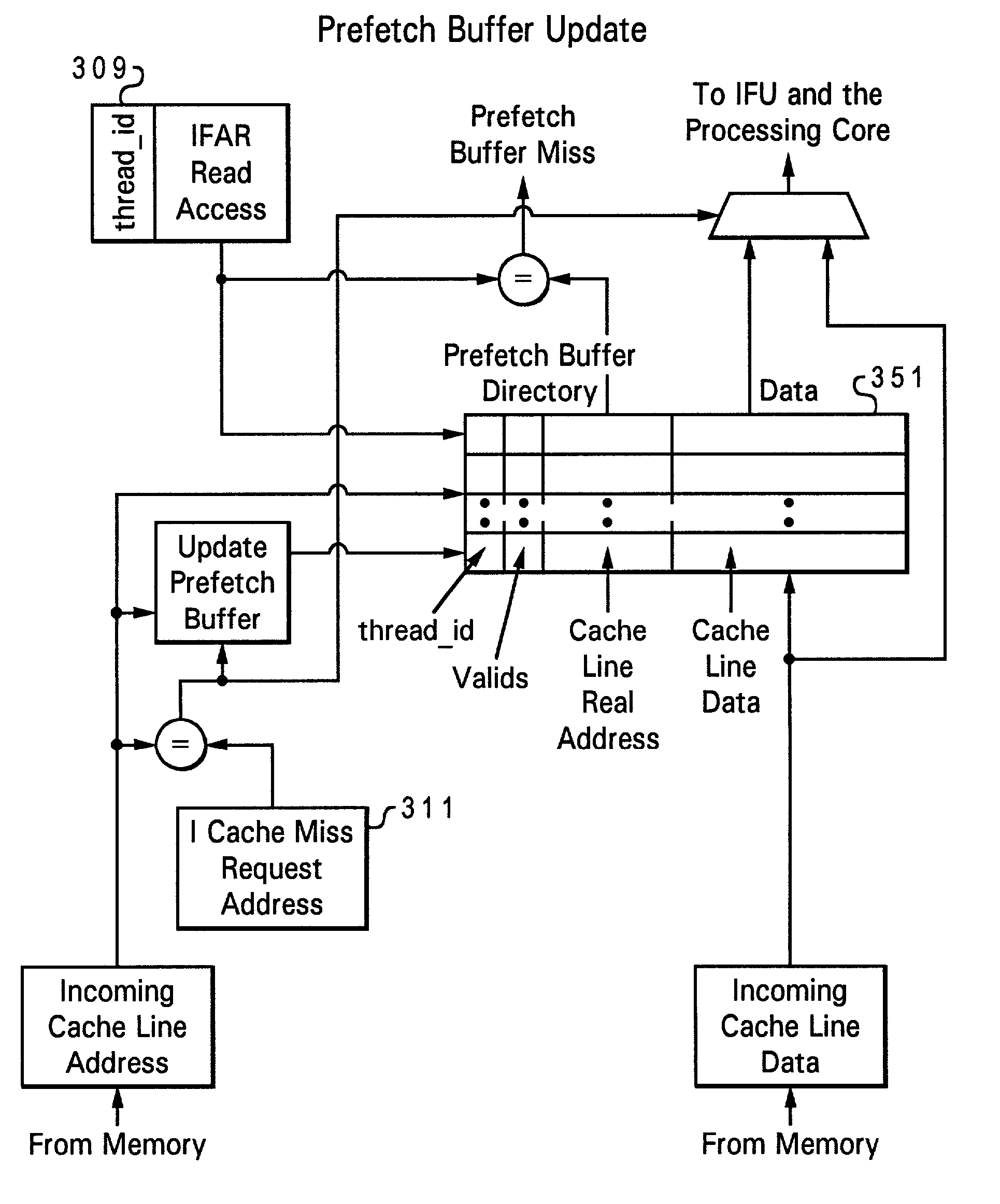

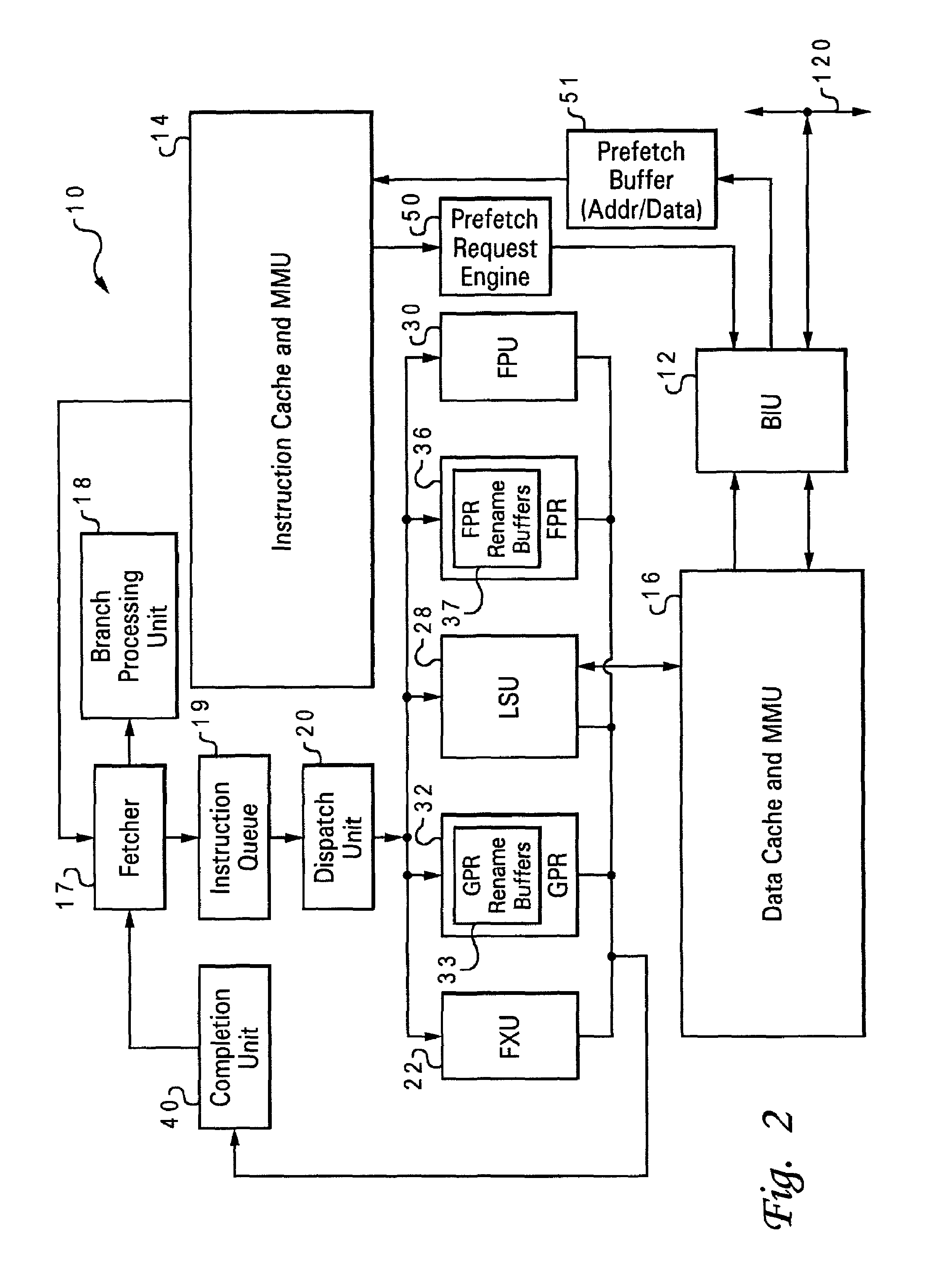

Multithreaded processor efficiency by pre-fetching instructions for a scheduled thread

Inactive | US6965982B2 | Ensure proper implementation | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Computer architecture | Flip-flop

Owner:INTEL CORP

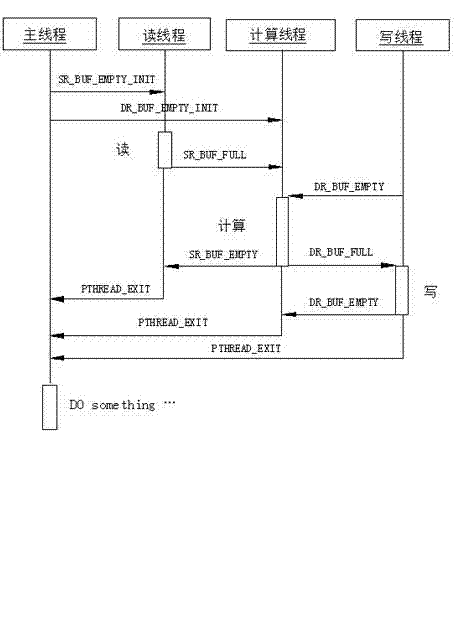

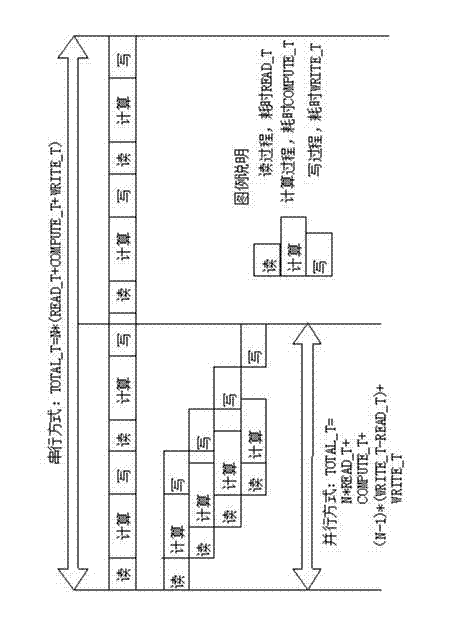

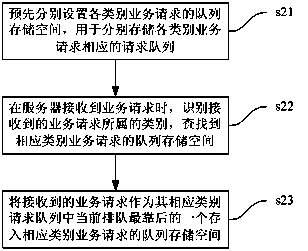

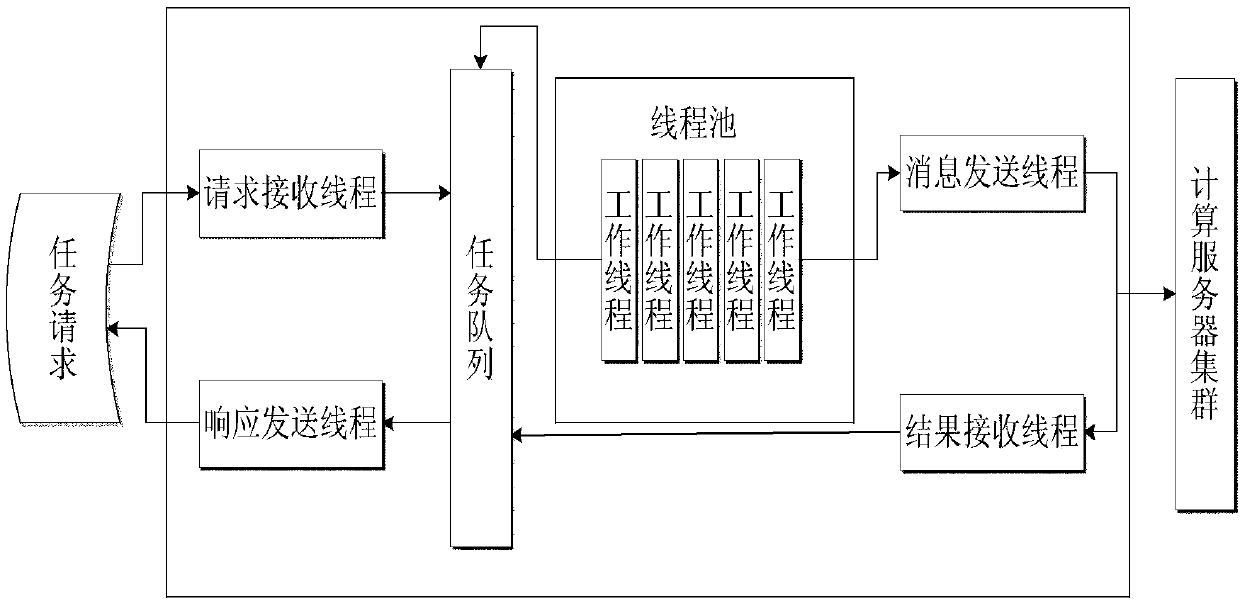

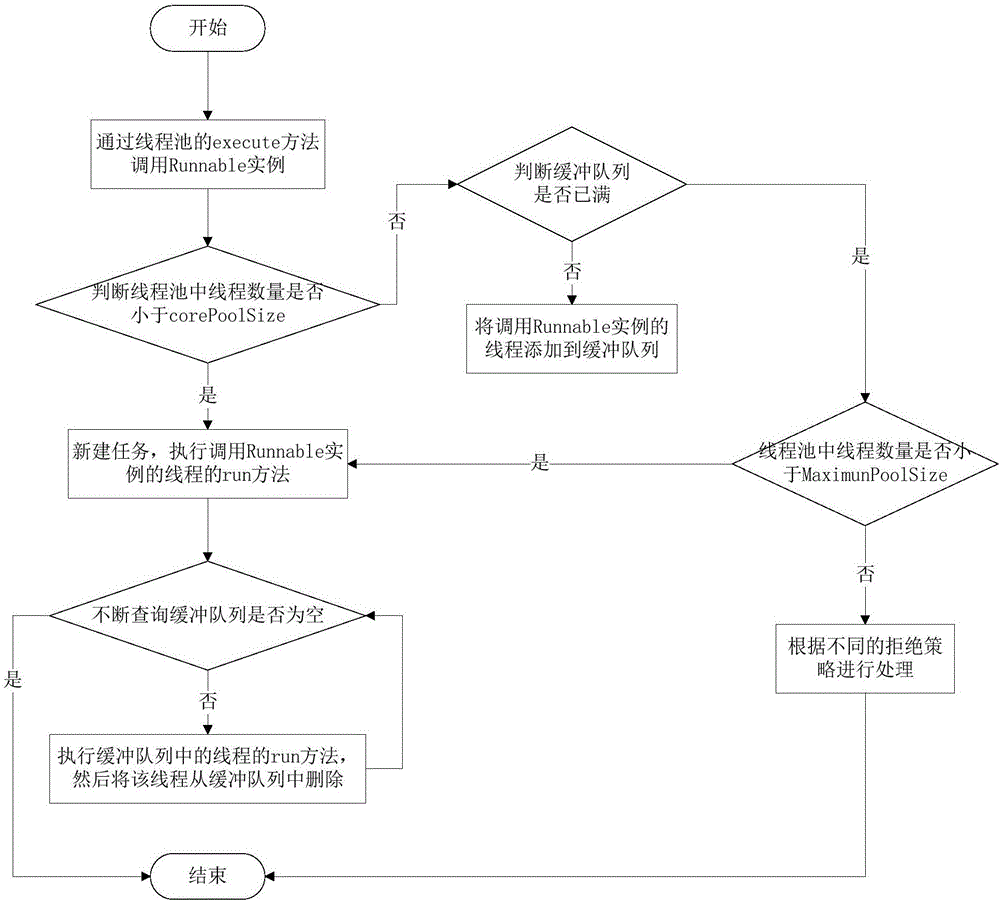

Multi-thread parallel processing method based on multi-thread programming and message queue

Active | CN102902512A | Fast and efficient multi-threaded transformation | Reduce running time | Concurrent instruction execution | Computer architecture | Concurrent computation

The invention provides a multi-thread parallel processing method based on multi-thread programming and a message queue, belonging to the field of high-performance computing. Traditional single-threaded serial software is parallelized using modern multi-core CPU (Central Processing Unit) hardware, pthread multi-thread parallel computing, and a message queue for inter-thread communication. The method comprises the following steps: within a single node, establishing three types of pthread threads (reading threads, computing threads, and writing threads), where the number of threads of each type is configurable; using multi-buffering and establishing four queues for inter-thread communication; and allocating computing tasks and managing buffer space. The method applies broadly to fields with multi-thread parallel processing requirements. It guides software developers in converting existing software to multiple threads so that system resources are better used, and it improves hardware resource utilization, the computational efficiency of the software, and its overall performance.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
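A read/compute/write pipeline with four queues might look like the sketch below. The patent uses pthreads; Python's `threading` and `queue` modules stand in here. The roles given to the four queues (free and full input buffers, free and full output buffers), the `None` end-of-stream marker, and all names are assumptions, since the abstract does not spell them out.

```python
import queue
import threading


def square(x):
    return x * x


def run_pipeline(items, n_buffers=4, compute=square):
    """Run one reader, one compute, and one writer thread over `items`."""
    free_in, full_in = queue.Queue(), queue.Queue()
    free_out, full_out = queue.Queue(), queue.Queue()
    for _ in range(n_buffers):          # pre-allocate reusable buffers
        free_in.put([None])
        free_out.put([None])
    results = []

    def reader():
        for item in items:
            buf = free_in.get()         # wait for an empty input buffer
            buf[0] = item
            full_in.put(buf)
        full_in.put(None)               # end-of-stream marker

    def computer():
        while True:
            buf = full_in.get()
            if buf is None:
                full_out.put(None)
                return
            out = free_out.get()        # wait for an empty output buffer
            out[0] = compute(buf[0])
            free_in.put(buf)            # recycle the input buffer
            full_out.put(out)

    def writer():
        while True:
            out = full_out.get()
            if out is None:
                return
            results.append(out[0])
            free_out.put(out)           # recycle the output buffer

    threads = [threading.Thread(target=f) for f in (reader, computer, writer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

With one thread per stage the output order matches the input order. Running several threads of a type, as the abstract allows, would need one end-of-stream marker per consumer and some way to restore ordering.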

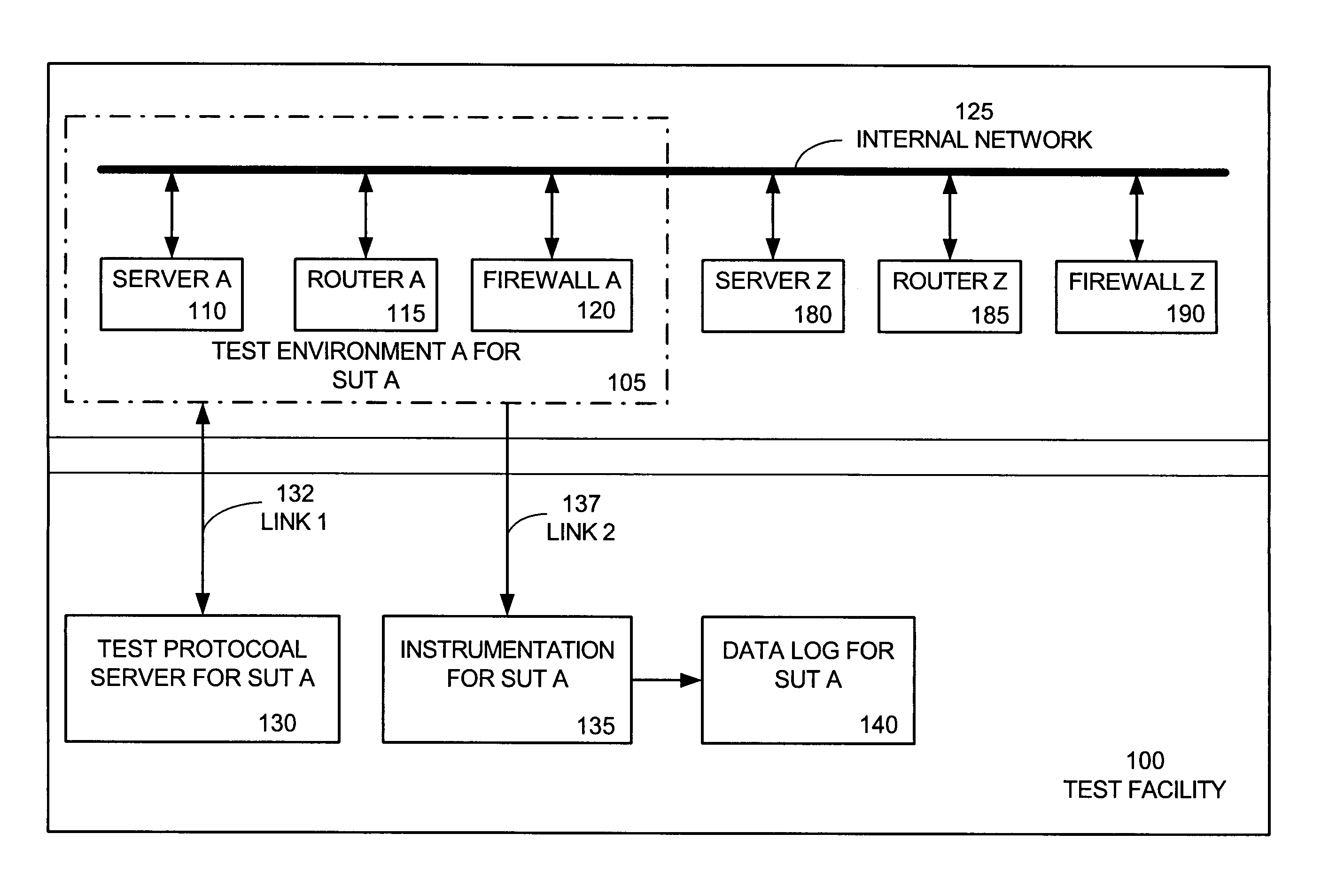

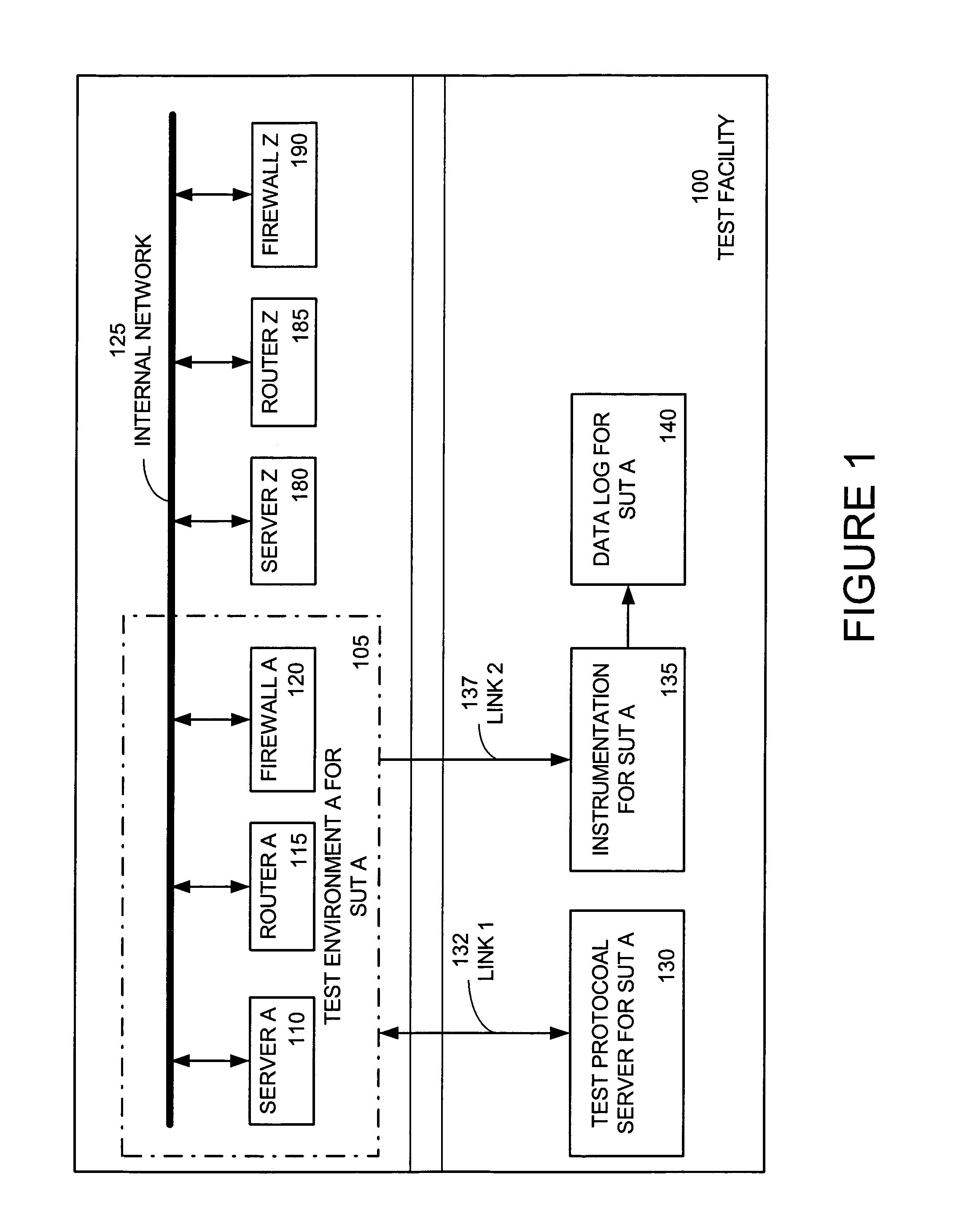

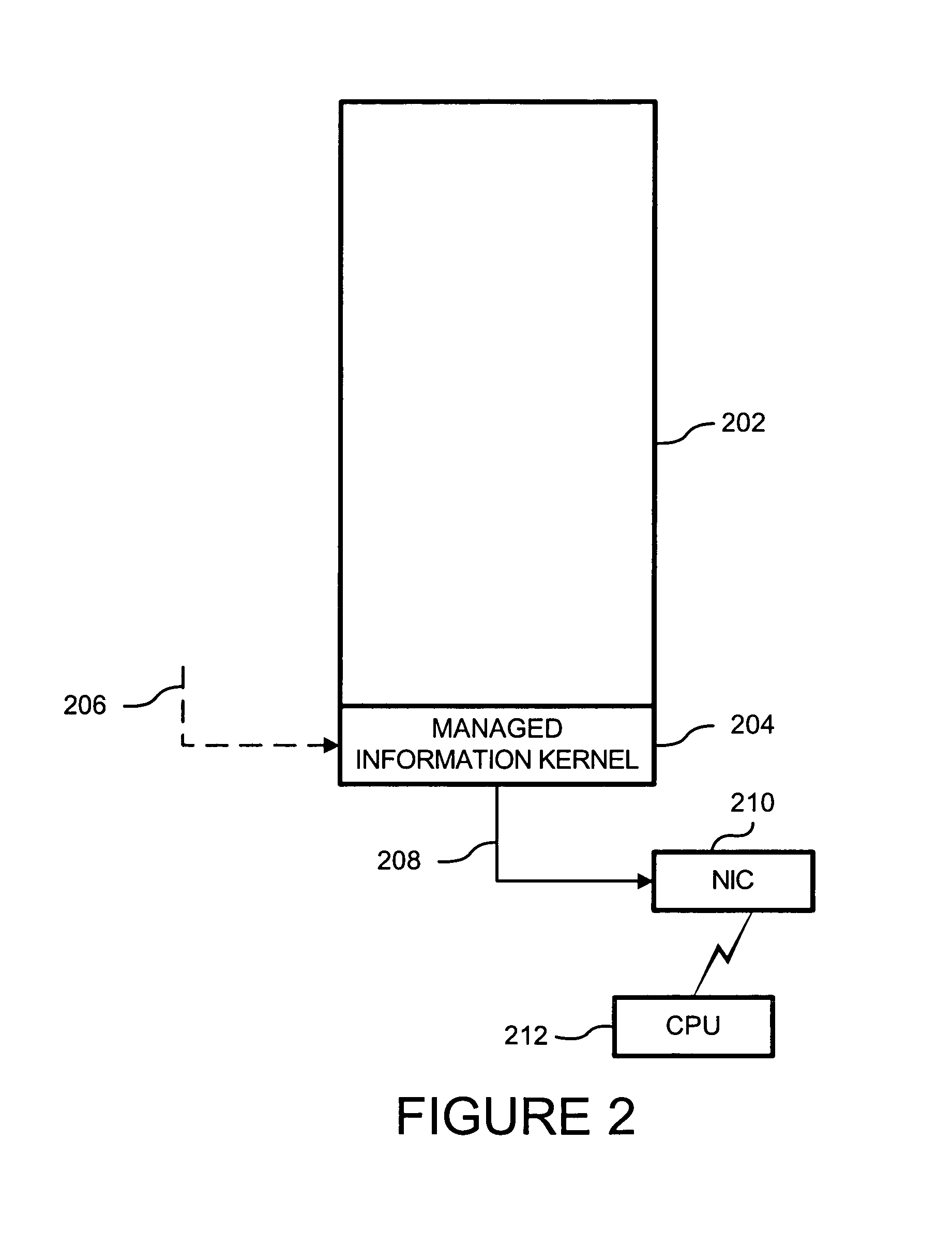

System and method of testing software and hardware in a reconfigurable instrumented network

A method of testing a computer system in a testing environment formed of a network of routers, servers, and firewalls. Performance of the computer system is monitored. A log is made of the monitored performance of the computer system. The computer system is subjected to hostile conditions until it no longer functions. The state of the computer system at failure point is recorded. The performance monitoring is done with substantially no interference with the testing environment. The performance monitoring includes monitoring, over a sampling period, of packet flow, hardware resource utilization, memory utilization, data access time, or thread count. A business method entails providing a testing environment formed of a network of network devices including routers, servers, and firewalls, while selling test time to a customer on one or more of the network devices during purchased tests that test the security of the customer's computer system. The purchased tests are conducted simultaneously with other tests for other customers within the testing environment. Customer security performance data based on the purchased tests is provided without loss of privacy by taking security measures to ensure that none of the other customers can access the security performance data. The tests may also be directed to scalability or reliability of the customer's computer system. Data about a device under test is gathered using a managed information kernel that is loaded into the device's operating memory before its operating system. The gathered data is prepared as managed information items.

Owner:AVANZA TECH

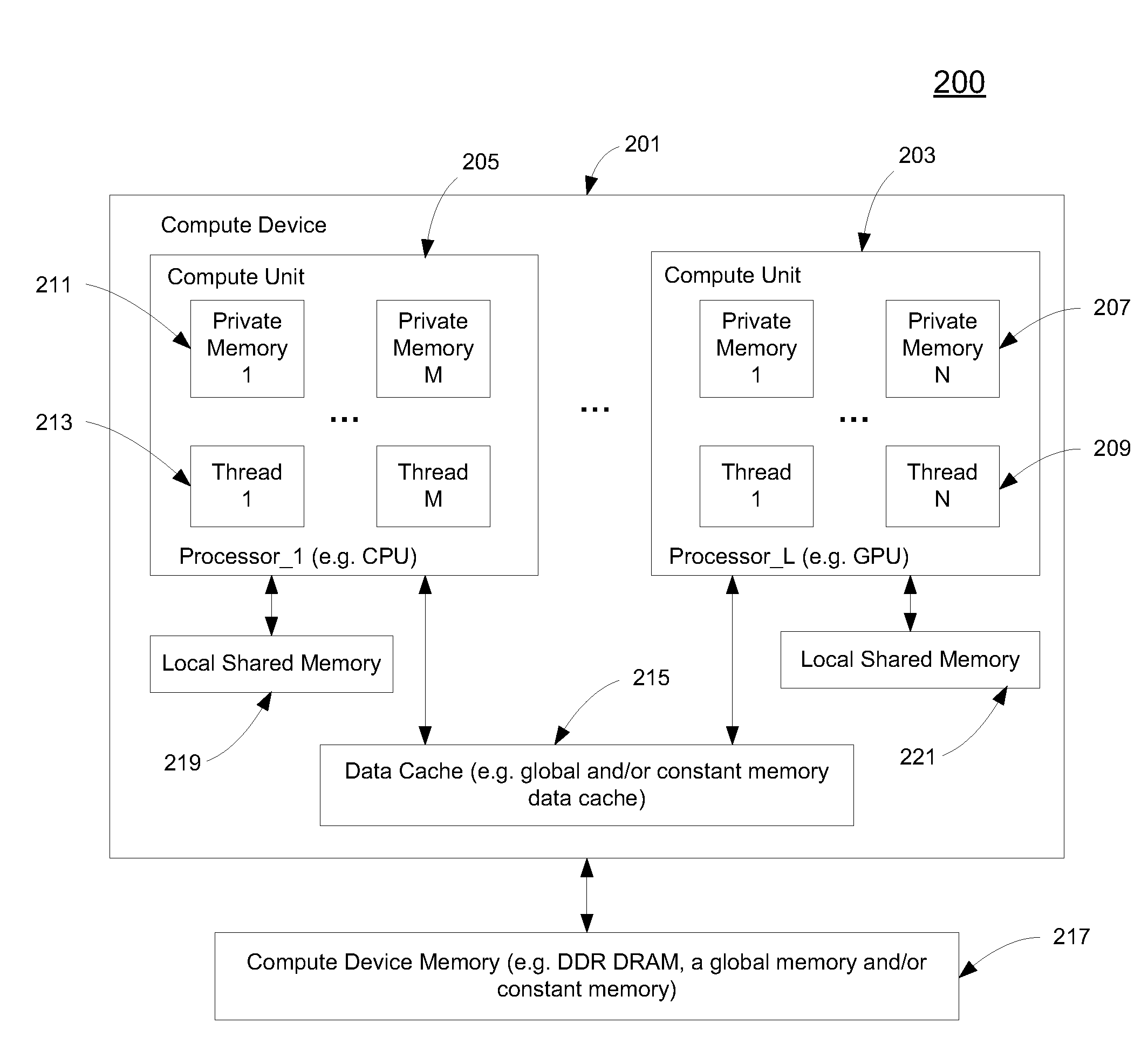

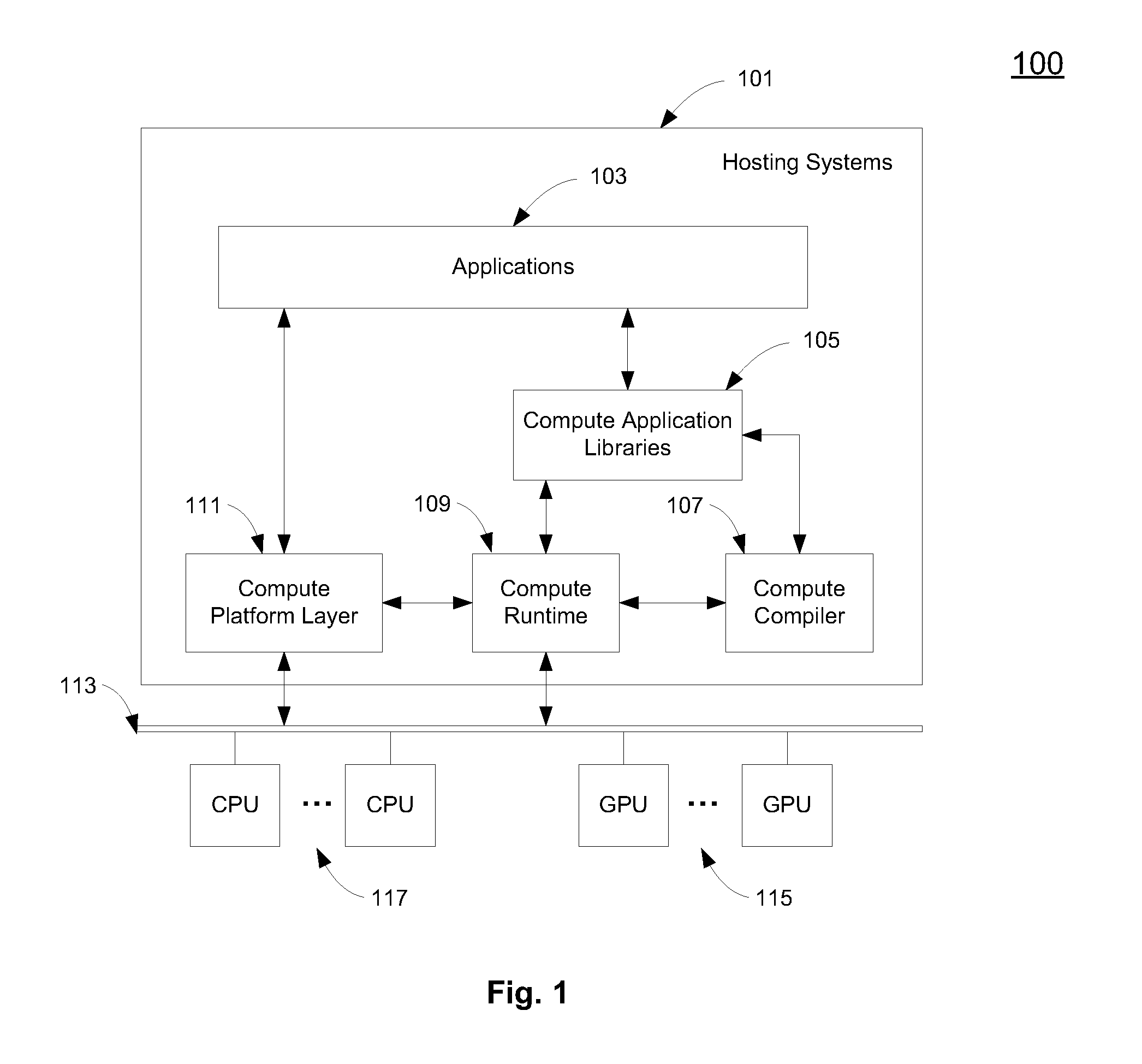

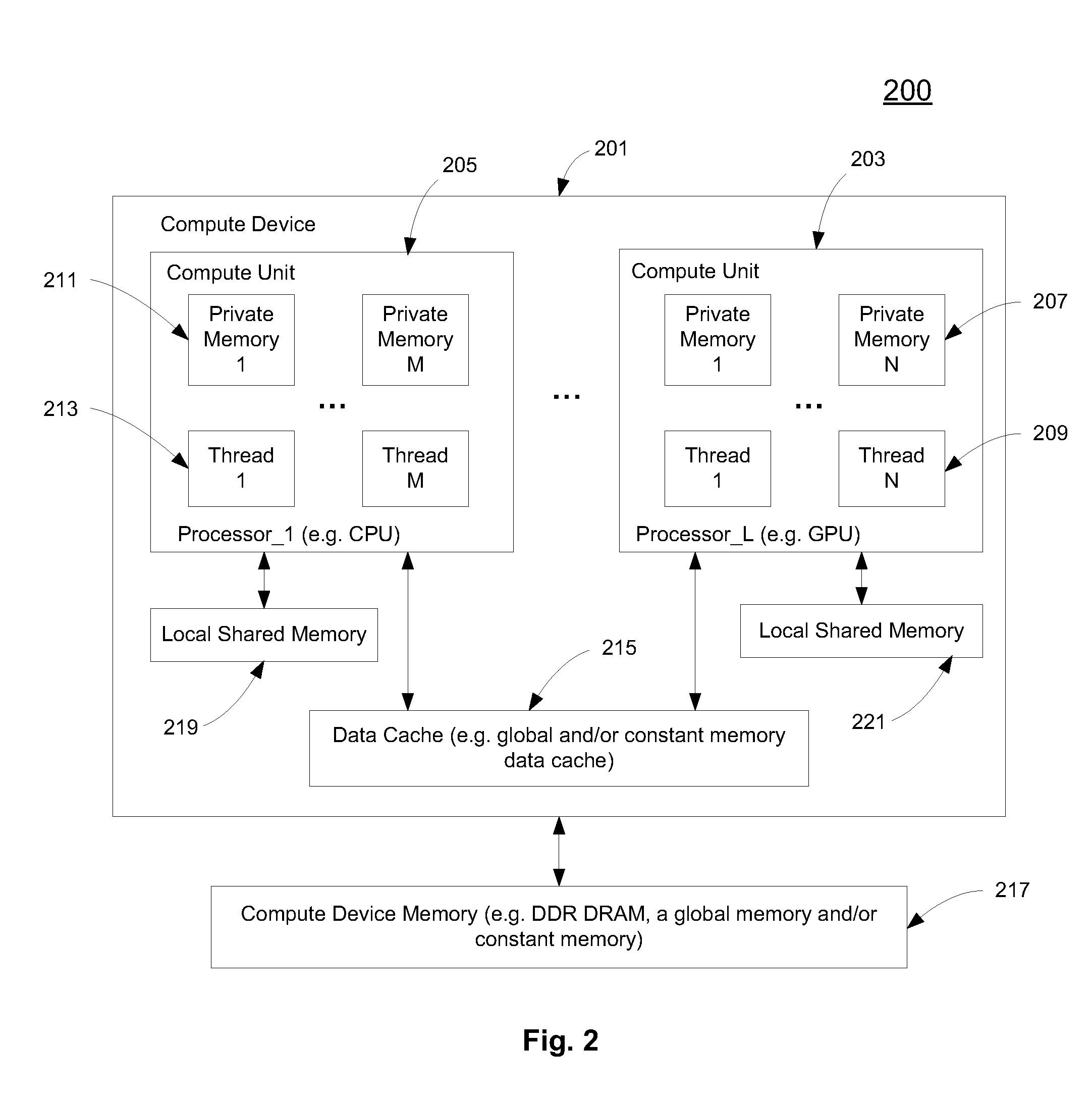

Multi-dimensional thread grouping for multiple processors

Active | US20090307704A1 | Optimize runtime resource usage | Resource allocation | Program control using stored programs | Graphics | Multi processor

A method and an apparatus that determine a total number of threads to concurrently execute executable codes compiled from a single source for target processing units in response to an API (Application Programming Interface) request from an application running in a host processing unit are described. The target processing units include GPUs (Graphics Processing Unit) and CPUs (Central Processing Unit). Thread group sizes for the target processing units are determined to partition the total number of threads according to a multi-dimensional global thread number included in the API request. The executable codes are loaded to be executed in thread groups with the determined thread group sizes concurrently in the target processing units.

Owner:APPLE INC

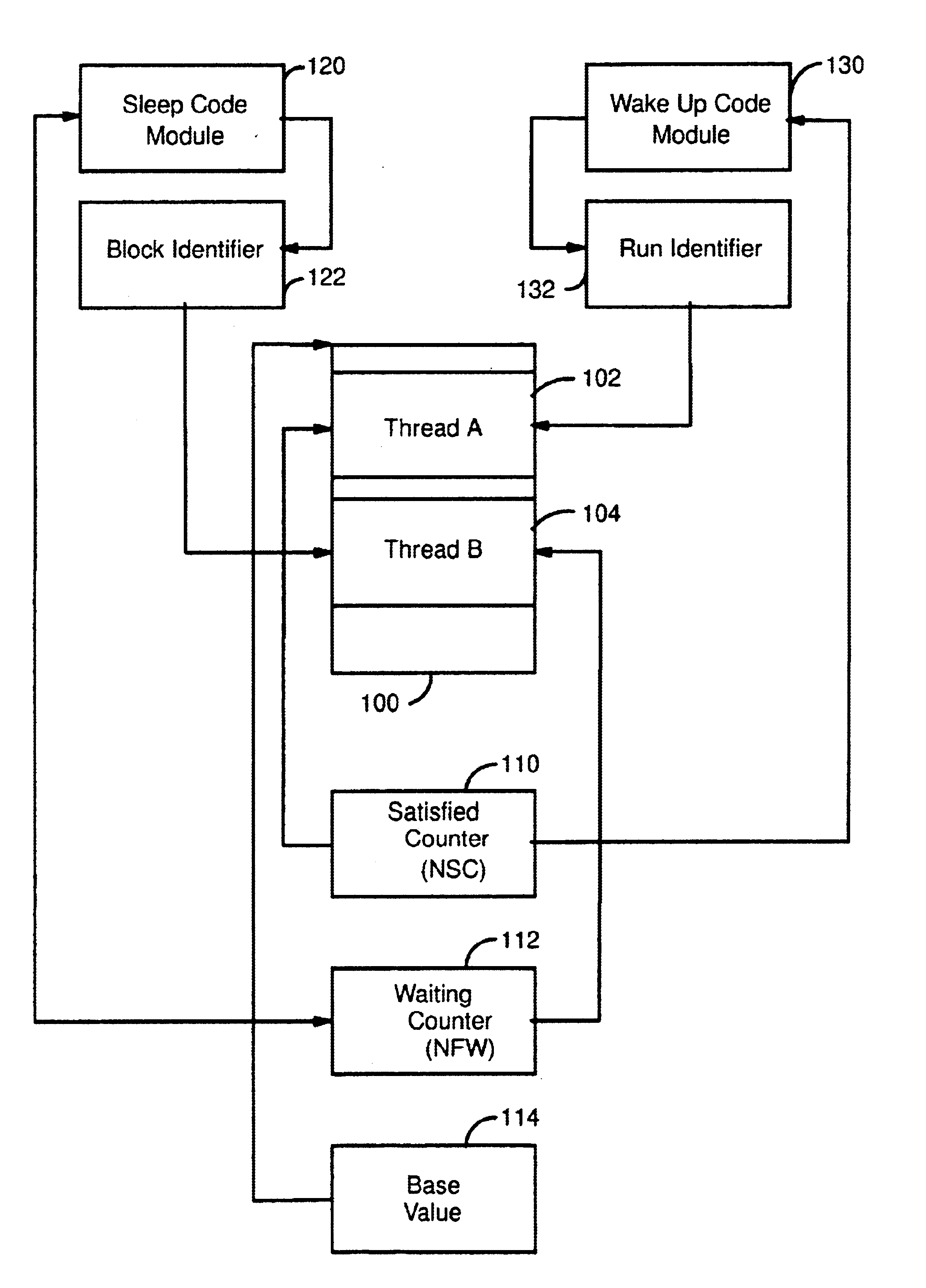

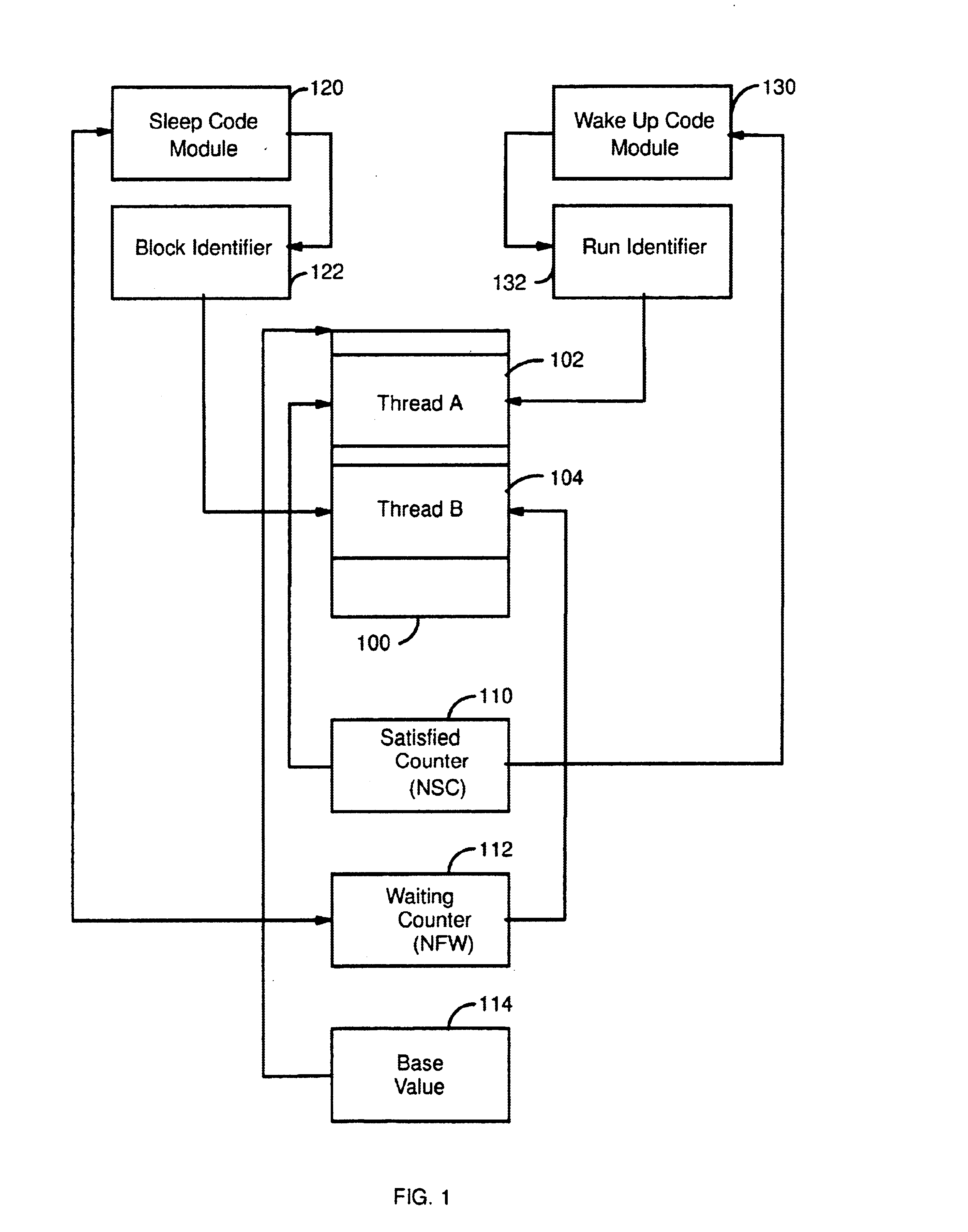

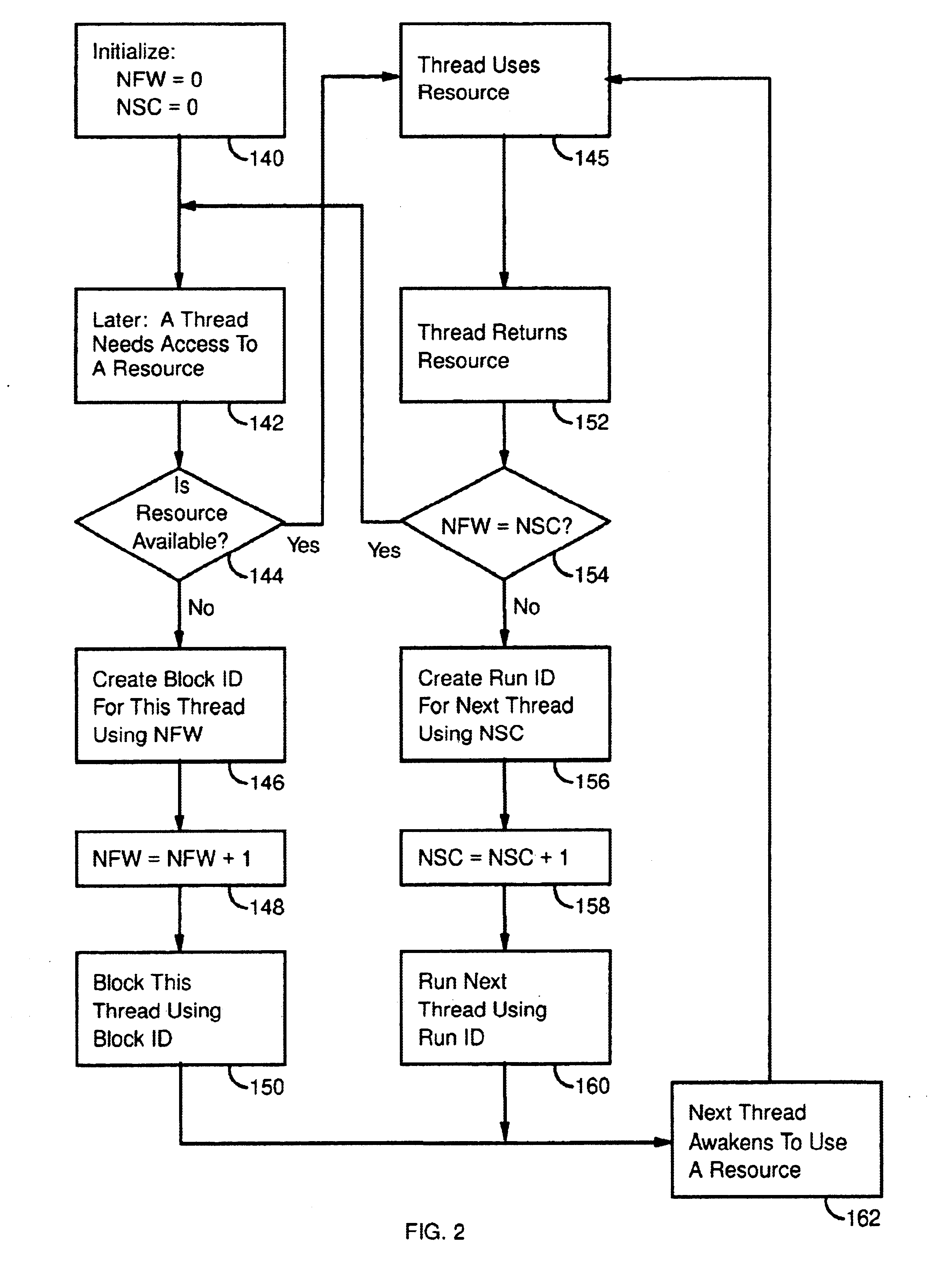

System and method for queue-less enforcement of queue-like behavior on multiple threads accessing a scarce source

Inactive | US6910211B1 | Minimizing system churn | Program synchronisation | Memory systems | Operating system | Thread count

A system and method for managing simultaneous access to a scarce or serially re-usable resource by multiple process threads. A stationary queue is provided, including a wait counter for counting the cumulative number of threads that have been temporarily denied the resource; a satisfied counter for counting the cumulative number of threads that have been denied access and subsequently granted access to said resource; a sleep code routine responsive to the wait counter for generating a run identifier; and a wakeup code routine responsive to the satisfied counter for generating the run identifier.

Owner:IBM CORP
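One way to picture the two counters is as a ticket scheme: a denied thread takes the next wait count as its run identifier, and each release advances the satisfied count to wake exactly that waiter. The sketch below follows that reading. The class and method names are hypothetical, and the direct hand-over of the resource on release is an assumption, not something the abstract states.

```python
import threading


class StationaryQueue:
    """FIFO access to one resource using two cumulative counters, no queue."""

    def __init__(self):
        self._cond = threading.Condition()
        self._busy = False
        self.wait_count = 0        # cumulative threads denied the resource
        self.satisfied_count = 0   # cumulative denied threads later granted it

    def acquire(self):
        with self._cond:
            if not self._busy:
                self._busy = True
                return
            self.wait_count += 1
            run_id = self.wait_count            # identifier this thread sleeps on
            while self.satisfied_count < run_id:
                self._cond.wait()
            # The resource was handed over directly; _busy stays True.

    def release(self):
        with self._cond:
            if self.wait_count > self.satisfied_count:
                self.satisfied_count += 1       # run id of the next thread to wake
                self._cond.notify_all()
            else:
                self._busy = False
```

Waiters are served in the order they were denied, yet no list of waiting threads is ever stored. Only the two counters are kept.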

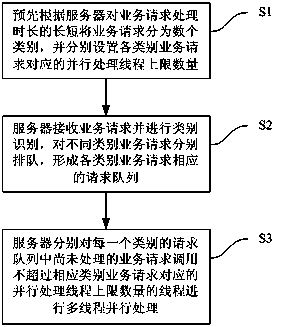

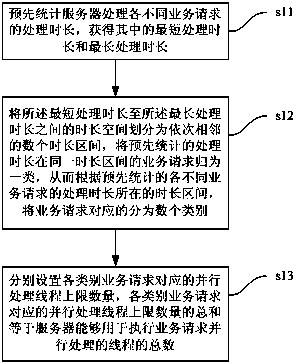

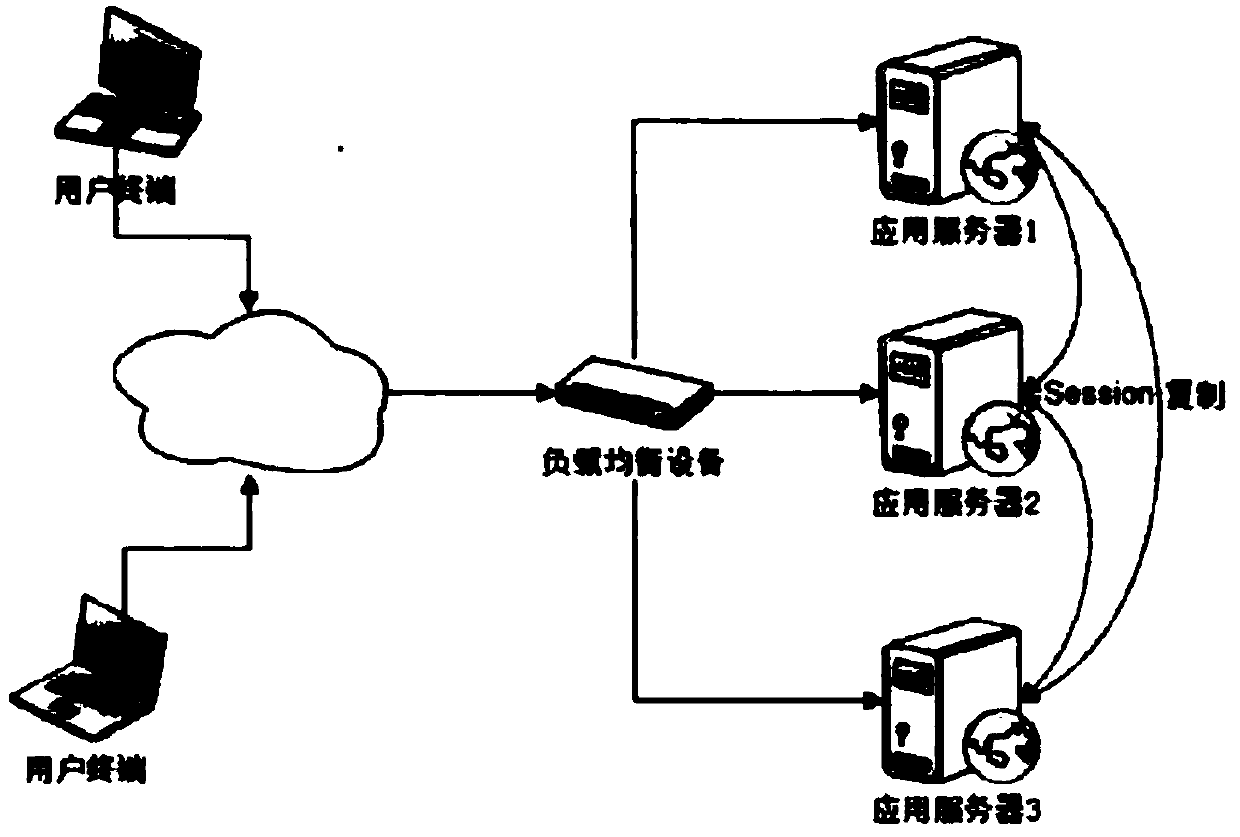

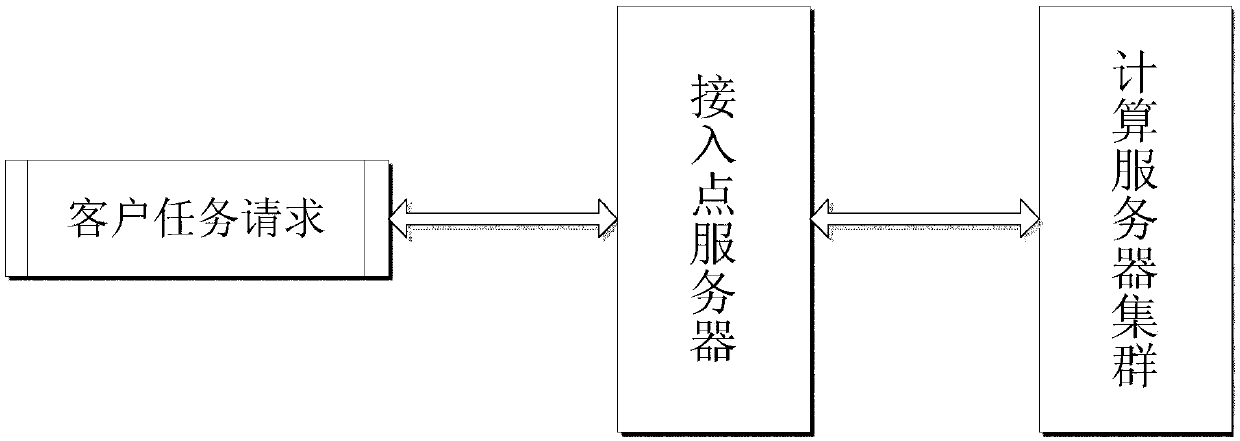

Server service request parallel processing method based on thread number limit and system thereof

Active | CN103516536A | Avoid "monopoly" situations | Increased distribution balance | Data switching networks | Engineering | Service efficiency

The present invention provides a server service-request parallel processing method based on limiting the number of threads, and a system thereof. The method and system classify service requests by processing time and set an upper limit on the number of threads the server may use to process each class of request in parallel. This prevents requests with long processing times from monopolizing the server's threads and ensures that some threads remain available for requests with short processing times. The distribution of threads across service requests becomes more balanced, which improves the server's overall request-processing efficiency and user service efficiency. It also reduces the chance that a large number of complex, long-running requests processed in parallel will monopolize server system resources for long periods, improving the server's system resource allocation.

Owner:NEW SINGULARITY INT TECHN DEV
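A per-class cap on concurrent threads can be sketched with one semaphore per request class. The class names `"long"` and `"short"`, the `ClassLimiter` name, and the non-blocking admission check are illustrative assumptions.

```python
import threading


class ClassLimiter:
    """Caps how many threads may serve each class of request at once."""

    def __init__(self, limits):
        # limits: mapping from request class to its thread upper limit
        self._slots = {
            kind: threading.BoundedSemaphore(n) for kind, n in limits.items()
        }

    def try_start(self, kind):
        """Claim a thread slot for this class; False if the class is at its cap."""
        return self._slots[kind].acquire(blocking=False)

    def finish(self, kind):
        """Give the slot back once the request has been processed."""
        self._slots[kind].release()
```

For example, with `ClassLimiter({"long": 1, "short": 2})` a second long-running request is refused while the first is in flight, but short requests are still admitted.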

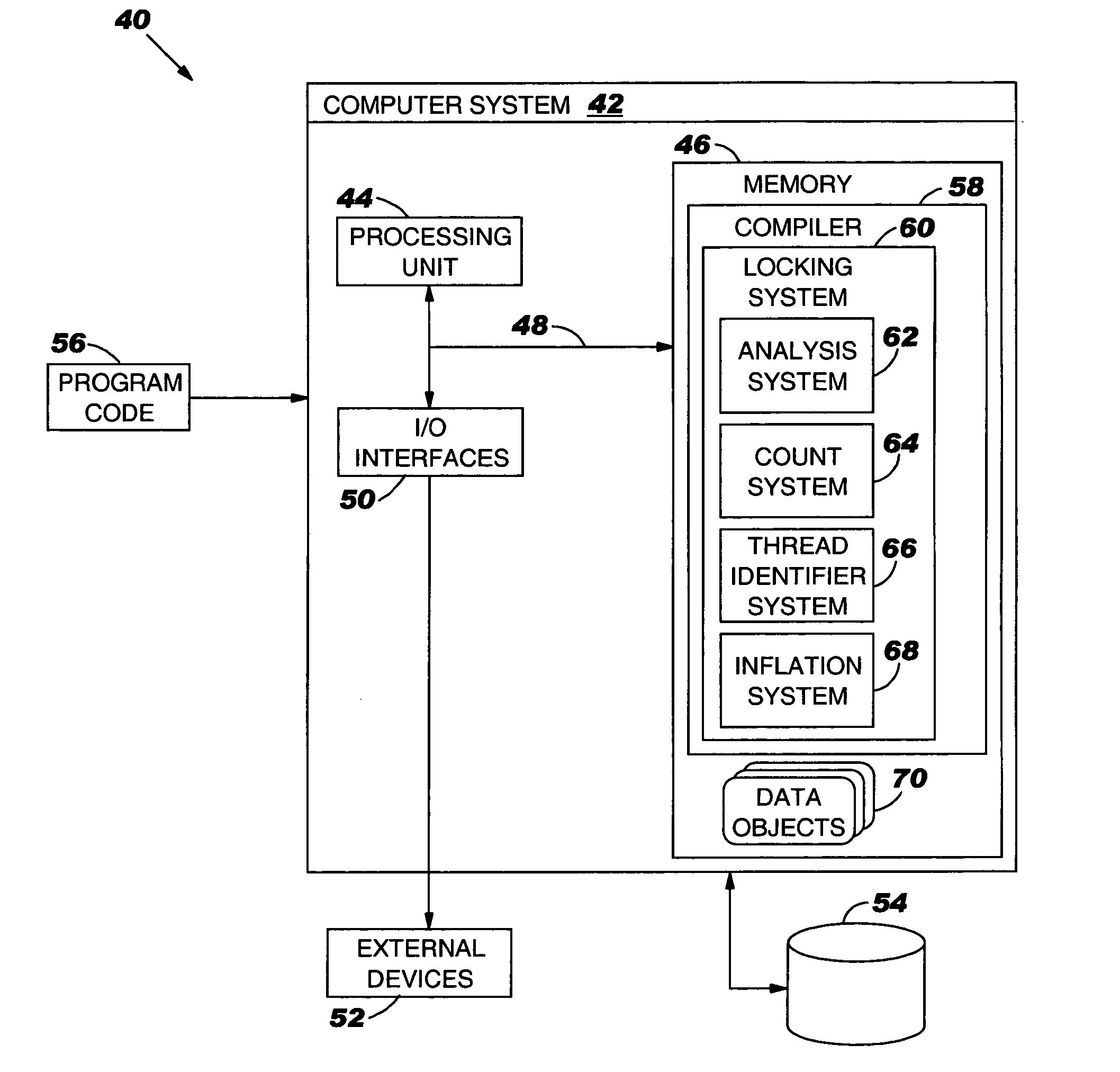

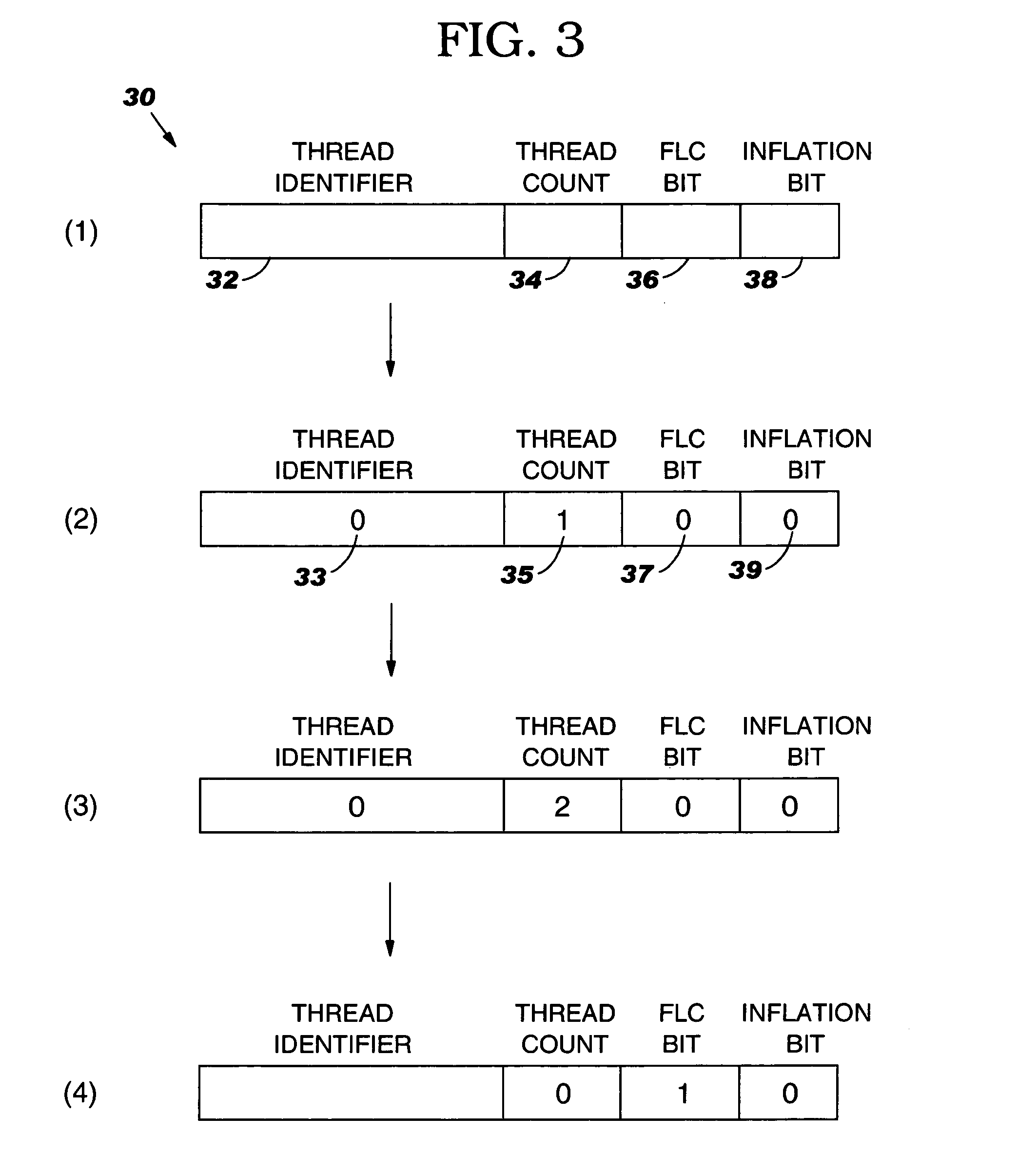

Computer-implemented method, system and program product for establishing multiple read-only locks on a shared data object

Inactive | US20060168585A1 | Multiprogramming arrangements | Software simulation/interpretation/emulation | Operating system | Thread count

Under the present invention, a locking primitive associated with a shared data object is automatically transformed to allow multiple read-only locks if certain conditions are met. To this extent, when a read-only lock on a shared data object is desired, a thread identifier of an object header lock word (hereinafter “lock word”) associated with the shared data object is examined to determine if a read-write lock on the shared data object already exists. If not, then the thread identifier is set to a predetermined value indicative of read-only locks, and a thread count in the lock word is incremented. If another thread attempts a read-only lock, the thread identifier will be examined for the predetermined value. If it is present, the thread count will be incremented again, and a second read-only lock will be simultaneously established.

Owner:IBM CORP
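The lock-word transitions in this abstract can be illustrated with a small single-threaded model. The sentinel value `READ_ONLY`, the `LockWord` layout, and the `read_unlock` helper are assumptions. A real implementation would update the lock word atomically (for instance with compare-and-swap), which this sketch does not attempt.

```python
from dataclasses import dataclass
from typing import Optional

READ_ONLY = -1  # hypothetical predetermined value meaning "held by readers"


@dataclass
class LockWord:
    thread_id: Optional[int] = None  # None: unlocked; READ_ONLY: readers; else writer id
    thread_count: int = 0


def try_read_lock(word):
    """Take a read-only lock unless a read-write lock already exists."""
    if word.thread_id is None:
        word.thread_id = READ_ONLY
        word.thread_count = 1
        return True
    if word.thread_id == READ_ONLY:
        word.thread_count += 1       # another simultaneous read-only lock
        return True
    return False                     # a writer holds the object


def read_unlock(word):
    """Drop one read-only lock (this helper is not described in the abstract)."""
    if word.thread_id != READ_ONLY or word.thread_count == 0:
        raise RuntimeError("no read-only lock is held")
    word.thread_count -= 1
    if word.thread_count == 0:
        word.thread_id = None
```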

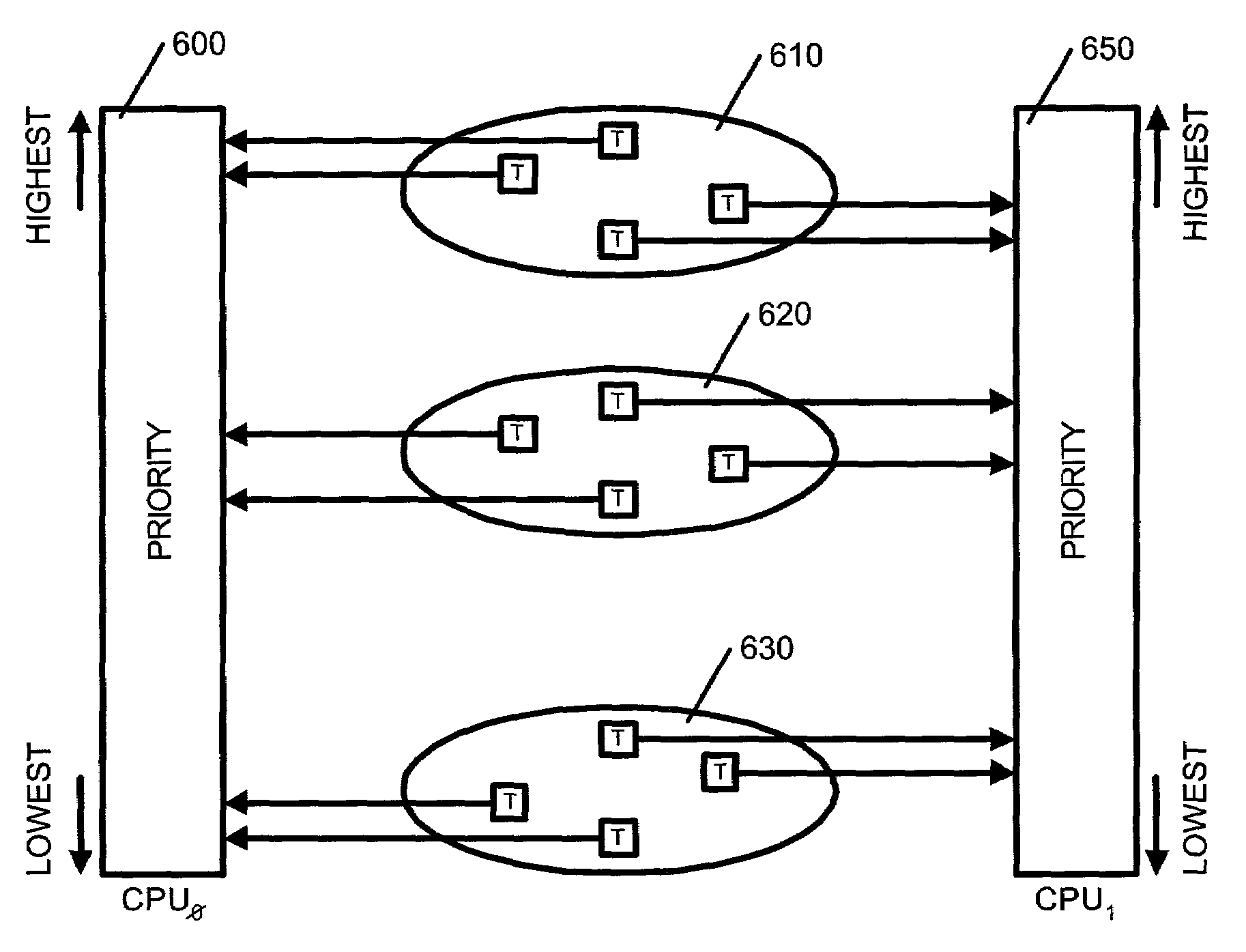

Multiprocessor load balancing system for prioritizing threads and assigning threads into one of a plurality of run queues based on a priority band and a current load of the run queue

Inactive | US7080379B2 | Resource allocation | Multiple digital computer combinations | Current load | Multi processor

A method, system and apparatus for integrating a system task scheduler with a workload manager are provided. The scheduler is used to assign default priorities to threads and to place the threads into run queues and the workload manager is used to implement policies set by a system administrator. One of the policies may be to have different classes of threads get different percentages of a system's CPU time. This policy can be reliably achieved if threads from a plurality of classes are spread as uniformly as possible among the run queues. To do so, the threads are organized in classes. Each class is associated with a priority as per a use-policy. This priority is used to modify the scheduling priority assigned to each thread in the class as well as to determine in which band or range of priority the threads fall. Then periodically, it is determined whether the number of threads in a band in a run queue exceeds the number of threads in the band in another run queue by more than a pre-determined number. If so, the system is deemed to be load-imbalanced, and it is load-balanced by moving one thread in the band from the run queue with the greater number of threads to the run queue with the lower number of threads.

Owner:IBM CORP
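The periodic imbalance check for one priority band can be sketched as below. The data layout (a list of run queues, each a dictionary from band name to a list of threads) and the function name are assumptions chosen for brevity.

```python
def rebalance_band(run_queues, band, max_gap):
    """Move one thread in `band` if two run queues differ by more than `max_gap`.

    Returns True if a thread was moved (the system was load-imbalanced).
    """
    counts = [len(q.get(band, [])) for q in run_queues]
    busiest = counts.index(max(counts))
    idlest = counts.index(min(counts))
    if counts[busiest] - counts[idlest] <= max_gap:
        return False                              # balanced enough
    thread = run_queues[busiest][band].pop()
    run_queues[idlest].setdefault(band, []).append(thread)
    return True
```

Moving a single thread per check keeps each step cheap. Repeated periodic checks bring the queues to within `max_gap` of each other.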

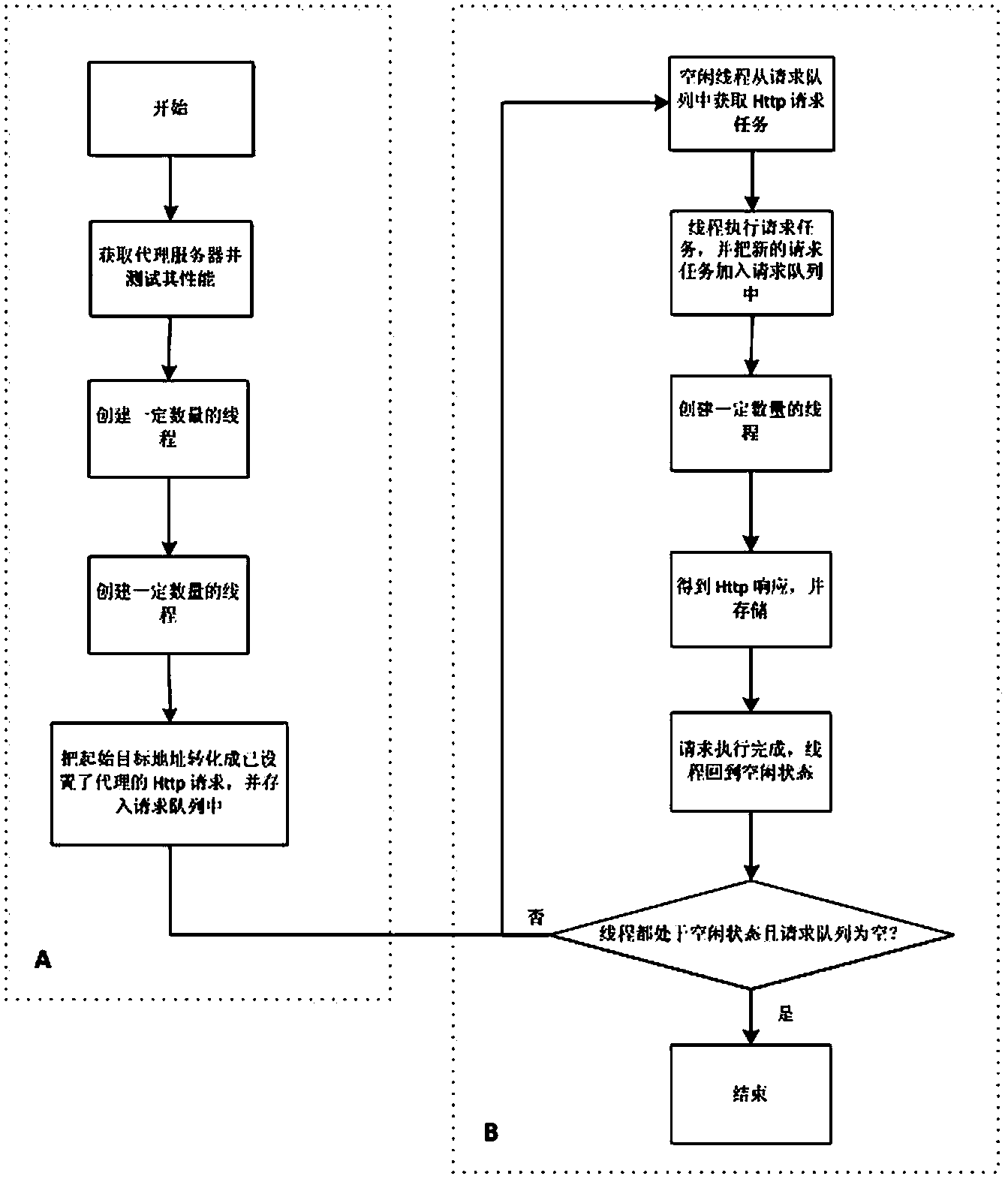

Multi-thread network crawler processing method based on connection proxy optimal management

Inactive | CN103902386A | Balanced usage | Affect normal use | Resource allocation | Transmission | Engineering | Server-side

The invention belongs to the technical field of information processing, and particularly relates to a multi-thread network crawler processing method based on connection proxy optimal management. The multi-thread network crawler processing method comprises the steps of firstly, obtaining a public proxy server on a network, testing the network connection performance of the proxy server, obtaining the optimal number of threads according to the performance of the proxy server, then managing a proxy server pool, setting a valid proxy server for each Http request, and finally executing an access request for a Web page. The multi-thread network crawler processing method has the advantages that the number of the threads is obtained through calculation, resources can be effectively utilized to the maximum extent, resource waste cannot be caused, the number of use of each usable proxy server is balanced, and the phenomenon that frequent access is detected by a server terminal is effectively avoided.

Owner:FUDAN UNIV

Instruction level execution preemption

Inactive | US20130124838A1 | Quantity minimization | Short amount of time | Digital computer details | Concurrent instruction execution | Granularity | Parallel computing

One embodiment of the present invention sets forth a technique for execution preemption at instruction-level and compute thread array granularity. Preempting at the instruction level does not require any draining of the processing pipeline. No new instructions are issued and the context state is unloaded from the processing pipeline. When preemption is performed at a compute thread array boundary, the amount of context state to be stored is reduced because execution units within the processing pipeline complete execution of in-flight instructions and become idle. If the amount of time needed to complete execution of the in-flight instructions exceeds a threshold, then the preemption may dynamically change to be performed at the instruction level instead of at compute thread array granularity.

Owner:NVIDIA CORP

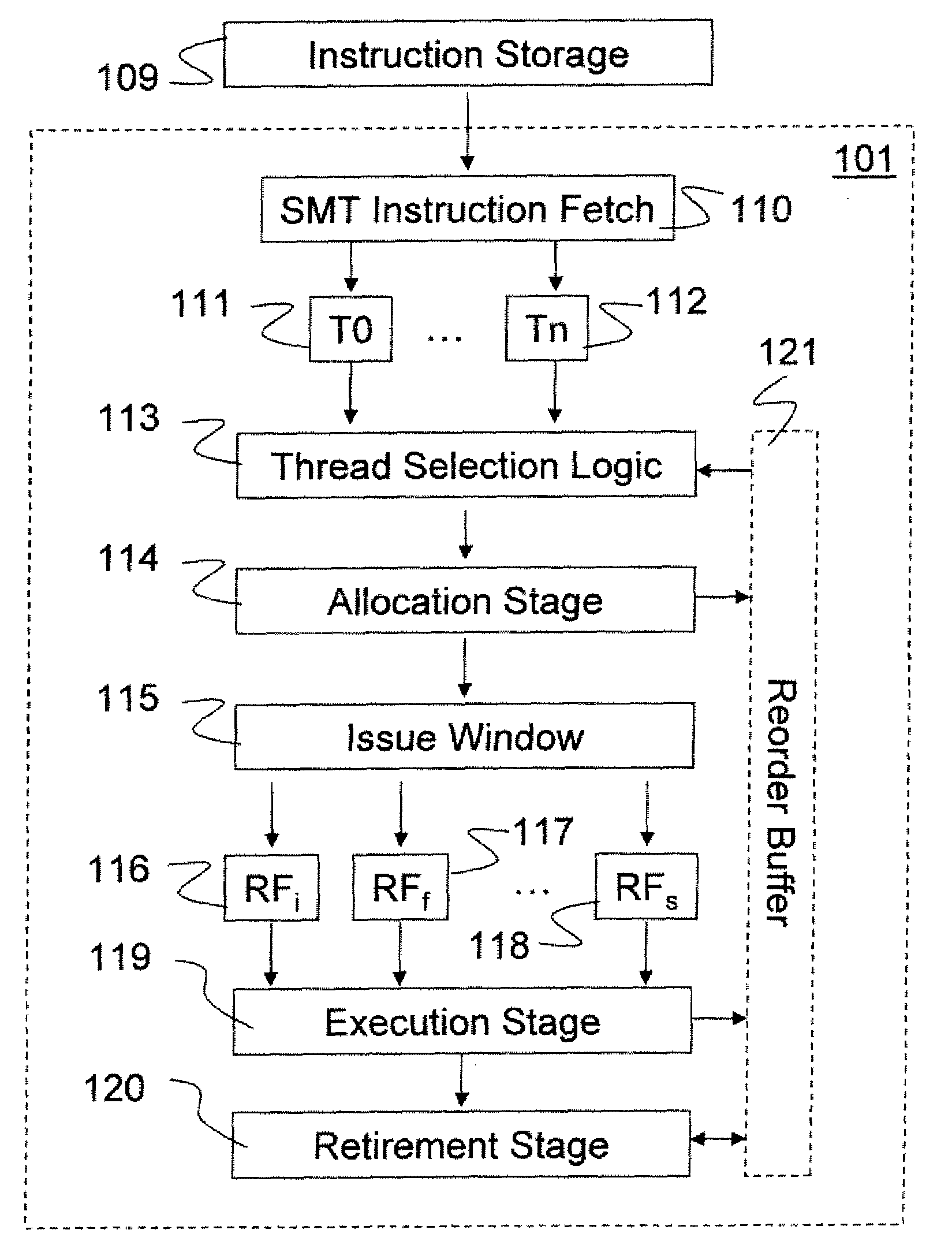

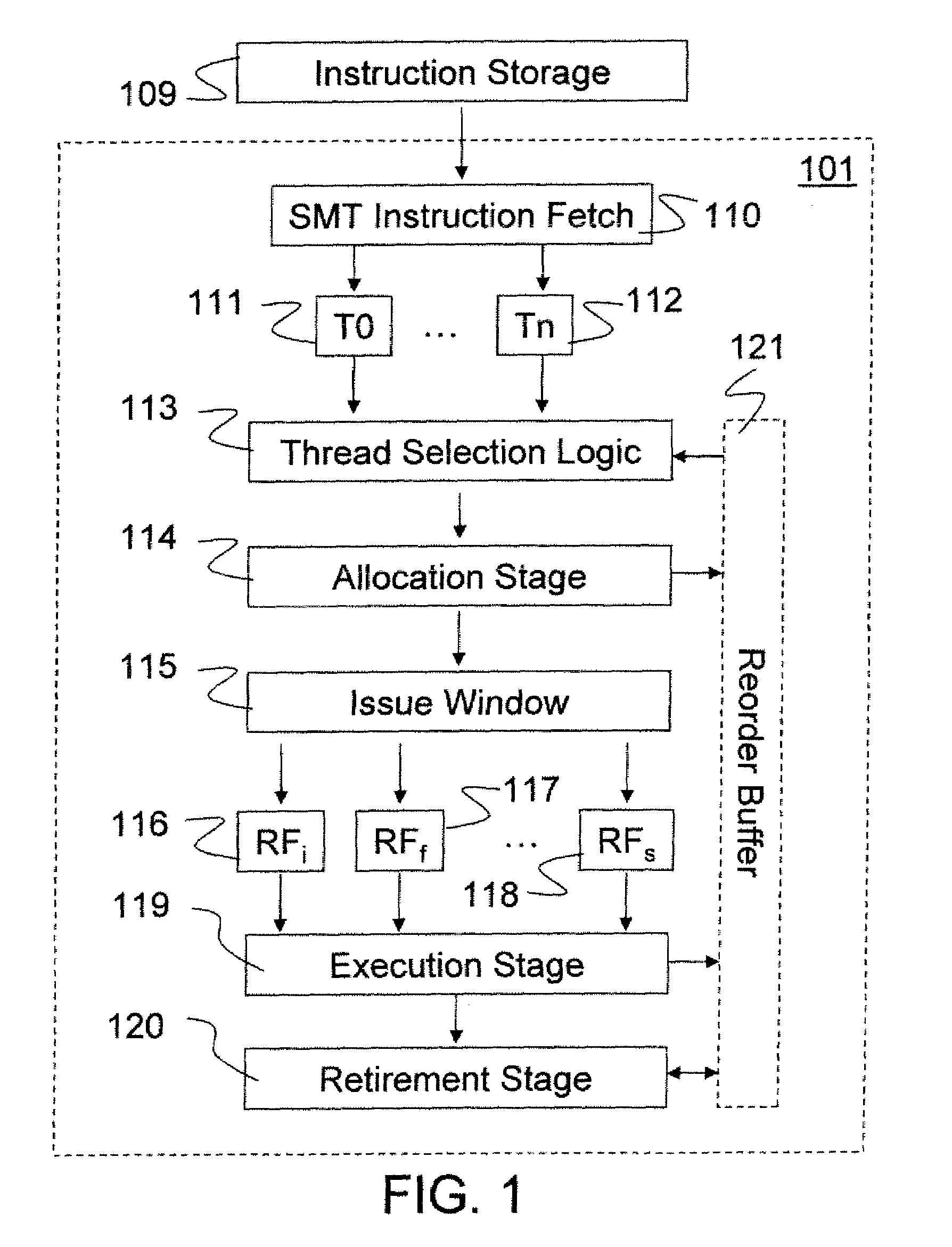

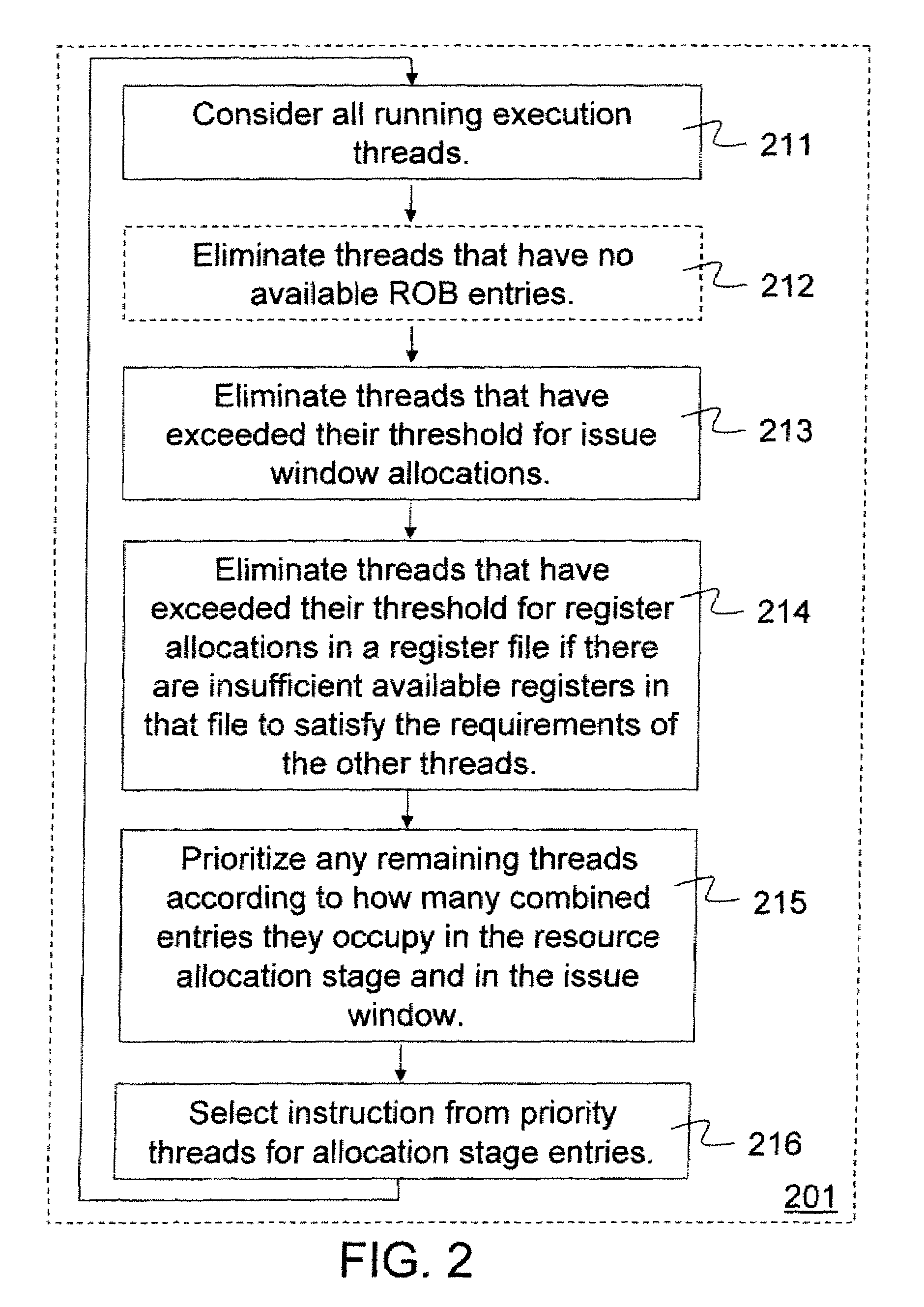

Method and apparatus for selection among multiple execution threads

Methods and apparatus for selecting and prioritizing execution threads for consideration of resource allocation include eliminating threads for consideration from all the running execution threads: if they have no available entries in their associated reorder buffers, or if they have exceeded their threshold for entry allocations in the issue window, or if they have exceeded their threshold for register allocations in some register file and if that register file also has an insufficient number of available registers to satisfy the requirements of the other running execution threads. Issue window thresholds may be dynamically computed by dividing the current number of entries by the number of threads under consideration. Register thresholds may also be dynamically computed and associated with a thread and a register file. Execution threads remaining under consideration can be prioritized according to how many combined entries the thread occupies in the resource allocation stage and the issue window.

Owner:INTEL CORP
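The elimination rules and the dynamically computed issue-window threshold can be sketched as a filter followed by a sort. All names here are hypothetical. Treating "current number of entries" as the occupied entries of the threads still under consideration, and ranking threads with fewer combined entries first, are assumptions, since the abstract leaves both open.

```python
from dataclasses import dataclass


@dataclass
class ThreadState:
    name: str
    rob_free: int        # free entries in the thread's reorder buffer
    window_entries: int  # entries the thread holds in the issue window
    alloc_entries: int   # entries the thread holds in the allocation stage
    regs_used: int       # registers the thread holds in one register file


def select_threads(threads, reg_threshold, regs_free, regs_needed_by_others):
    # Rule 1: drop threads with no free reorder-buffer entries.
    candidates = [t for t in threads if t.rob_free > 0]
    if not candidates:
        return []
    # Issue-window threshold: occupied entries / threads under consideration.
    window_threshold = sum(t.window_entries for t in candidates) / len(candidates)
    registers_scarce = regs_free < regs_needed_by_others
    kept = [
        t for t in candidates
        if t.window_entries <= window_threshold                      # Rule 2
        and not (t.regs_used > reg_threshold and registers_scarce)   # Rule 3
    ]
    # Assumed ordering: fewest combined entries first.
    return sorted(kept, key=lambda t: t.alloc_entries + t.window_entries)
```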

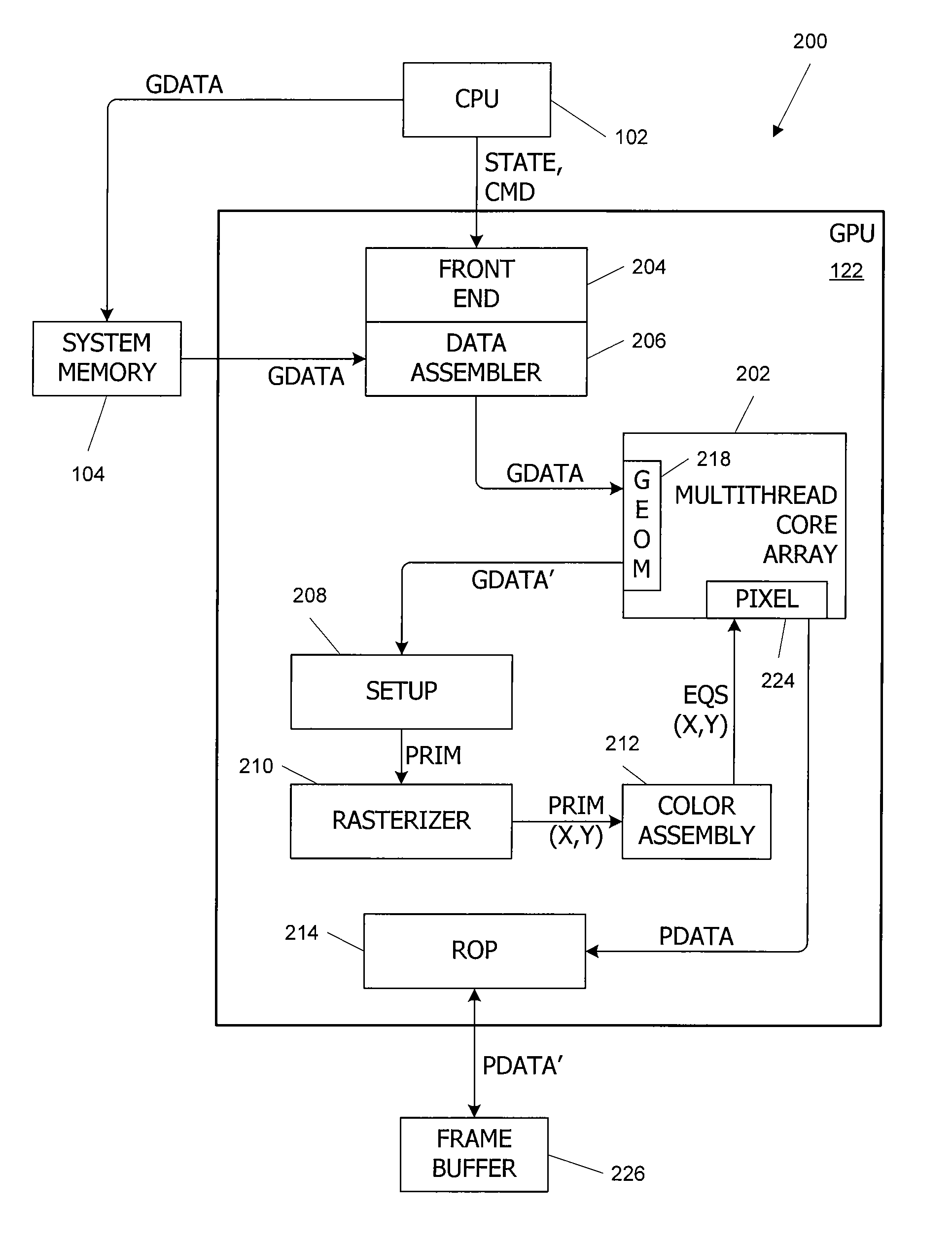

Thread count throttling for efficient resource utilization

Active | US8429656B1 | Reduce in quantity | Energy efficient ICT | General purpose stored program computer | Graphics | Resource utilization

Methods and apparatuses are presented for graphics operations with thread count throttling, involving operating a processor to carry out multiple threads of execution, wherein the processor comprises at least one execution unit capable of supporting up to a maximum number of threads, obtaining a defined memory allocation size for allocating, in at least one memory device, a thread-specific memory space for the multiple threads, obtaining a per thread memory requirement corresponding to the thread-specific memory space, determining a thread count limit based on the defined memory allocation size and the per thread memory requirement, and sending a command to the processor to cause the processor to limit the number of threads carried out by the at least one execution unit to a reduced number of threads, the reduced number of threads being less than the maximum number of threads.

Owner:NVIDIA CORP
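The limit calculation reduces to simple arithmetic, shown here as a hedged sketch. The function name and the use of integer division are assumptions. The abstract only says the limit is determined from the allocation size and the per-thread requirement.

```python
def thread_count_limit(allocation_bytes, per_thread_bytes, max_threads):
    """Largest thread count whose thread-specific memory fits the allocation."""
    if per_thread_bytes <= 0:
        raise ValueError("per-thread memory requirement must be positive")
    return min(max_threads, allocation_bytes // per_thread_bytes)
```

For example, a 1 MiB allocation with 4 KiB needed per thread supports 256 threads, so an execution unit able to run 768 threads would be throttled to 256.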

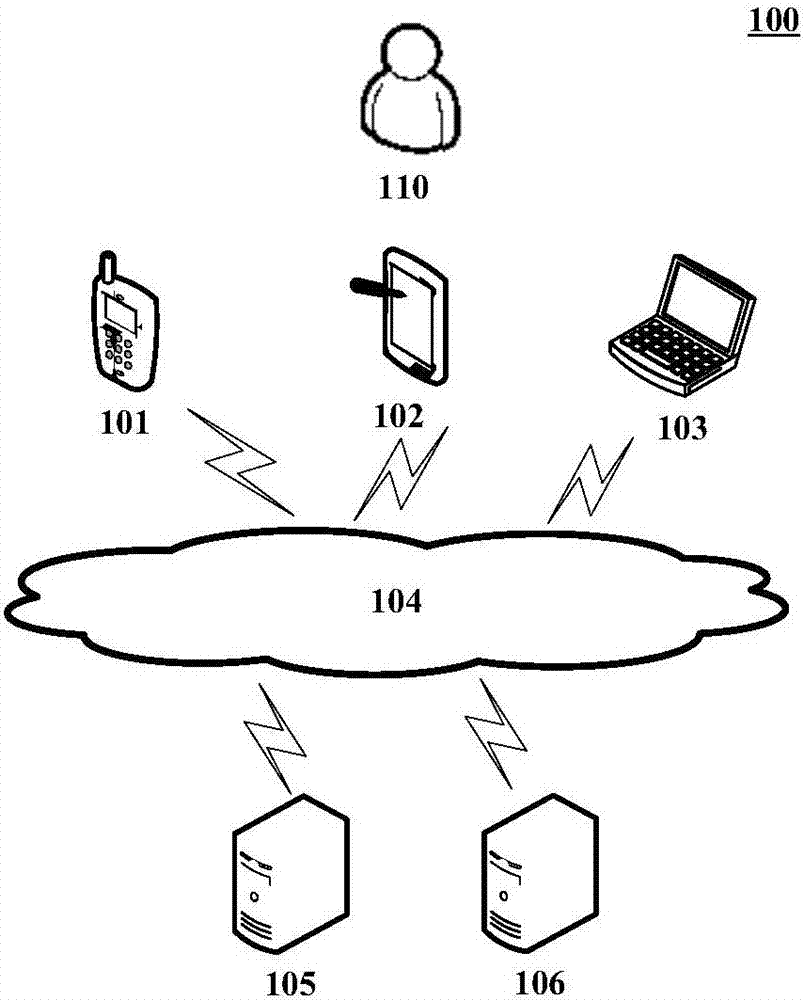

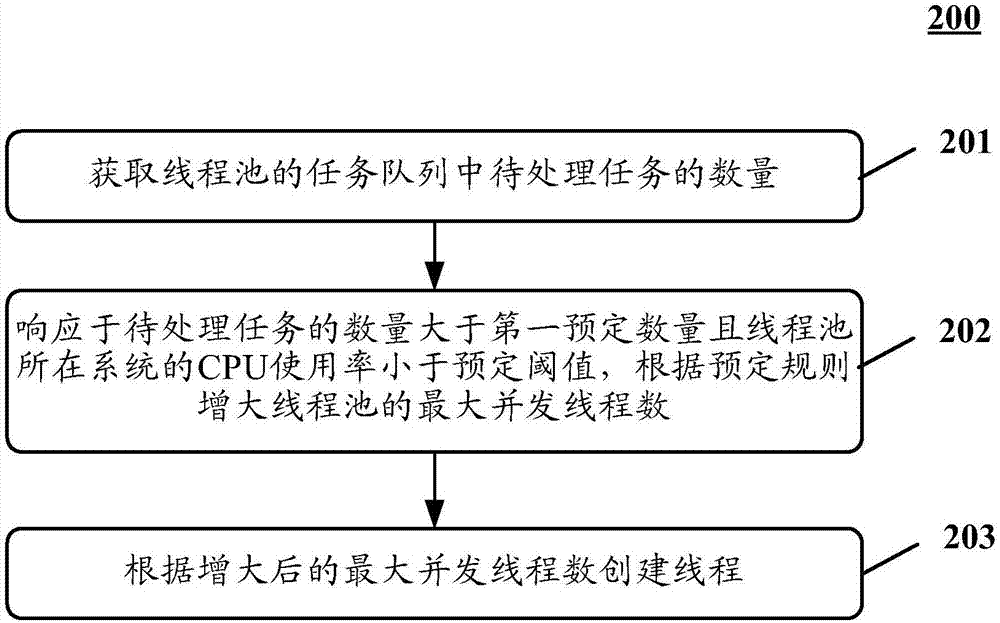

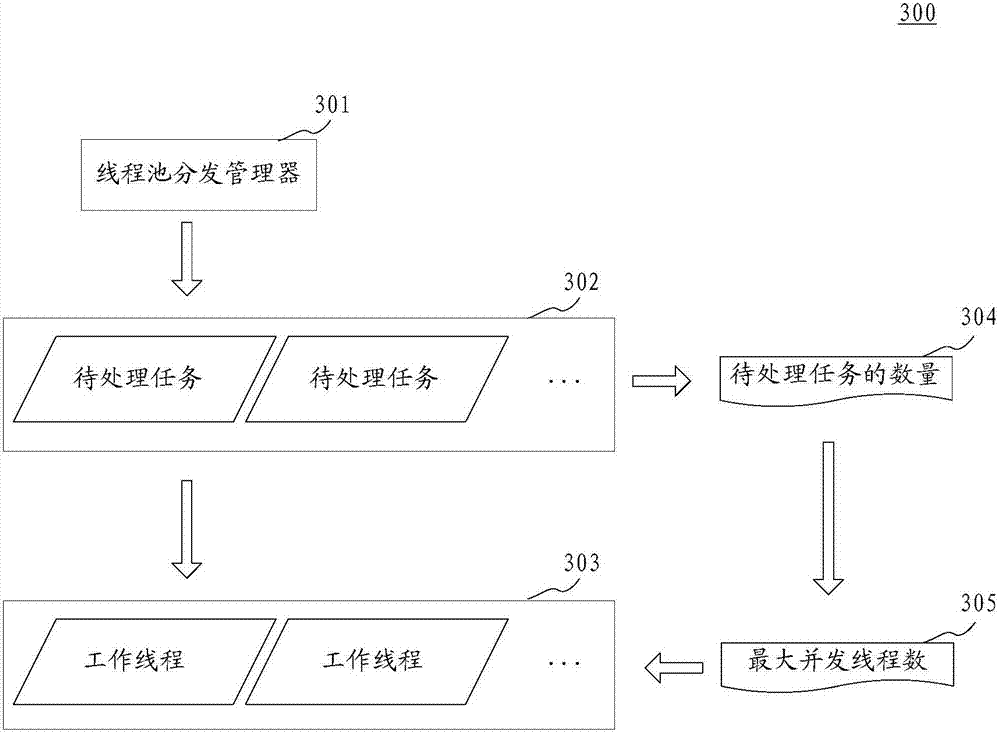

Method and device for controlling thread quantity in thread pool

Inactive | CN107220033A | Improve operational efficiency | Concurrent instruction execution | Operating system | Thread pool

The application discloses a method and a device for controlling the number of threads in a thread pool. An embodiment of the method includes: acquiring the number of pending tasks in a task queue of the thread pool; increasing the maximum concurrent thread number of the thread pool according to a predetermined rule in response to the number of pending tasks exceeding a first predetermined quantity and the CPU usage of the system hosting the thread pool being below a predetermined threshold; and creating threads according to the increased maximum concurrent thread number. The method improves the running efficiency of the thread pool.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD +1
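The growth condition can be written as a single function. The additive `step` rule and the `hard_cap` ceiling are assumptions standing in for the "predetermined rule", which the abstract does not define.

```python
def next_max_threads(pending, current_max, cpu_usage,
                     pending_limit, cpu_limit, step=2, hard_cap=64):
    """Return the new maximum concurrent thread number for the pool.

    The maximum grows only when the backlog is large AND the CPU has headroom.
    """
    if pending > pending_limit and cpu_usage < cpu_limit:
        return min(hard_cap, current_max + step)
    return current_max
```

Checking CPU usage as well as backlog avoids adding threads when the tasks are already CPU-bound, where more threads would mostly add contention.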

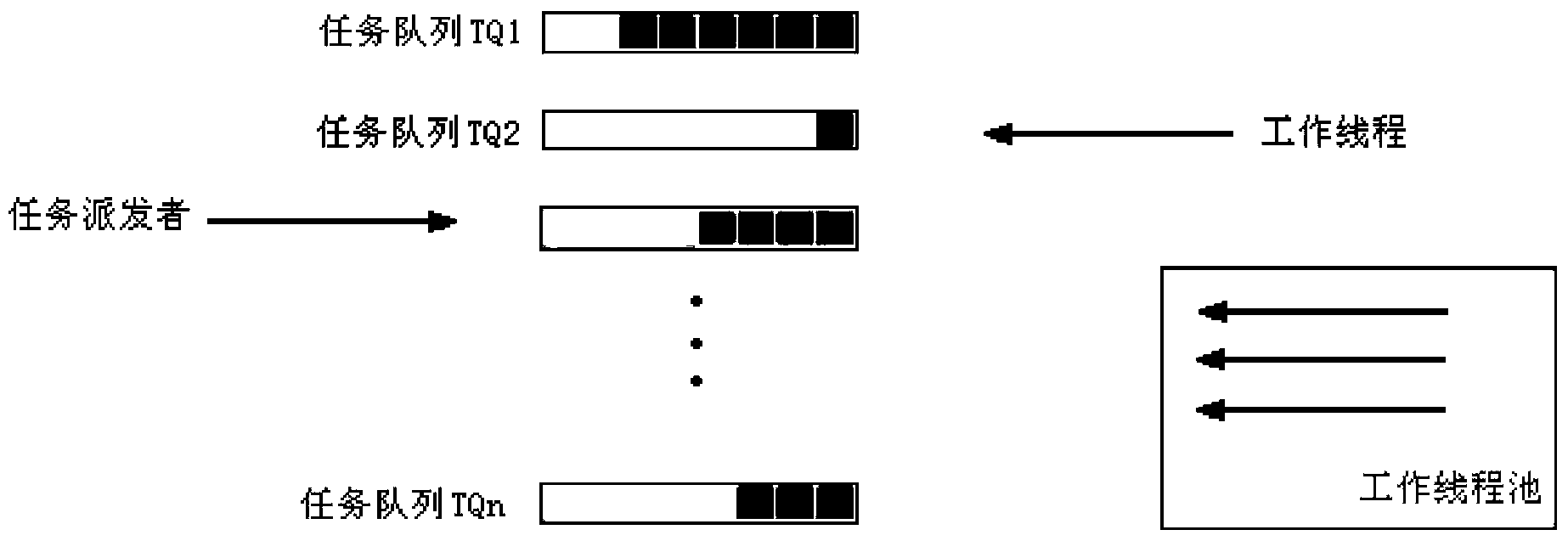

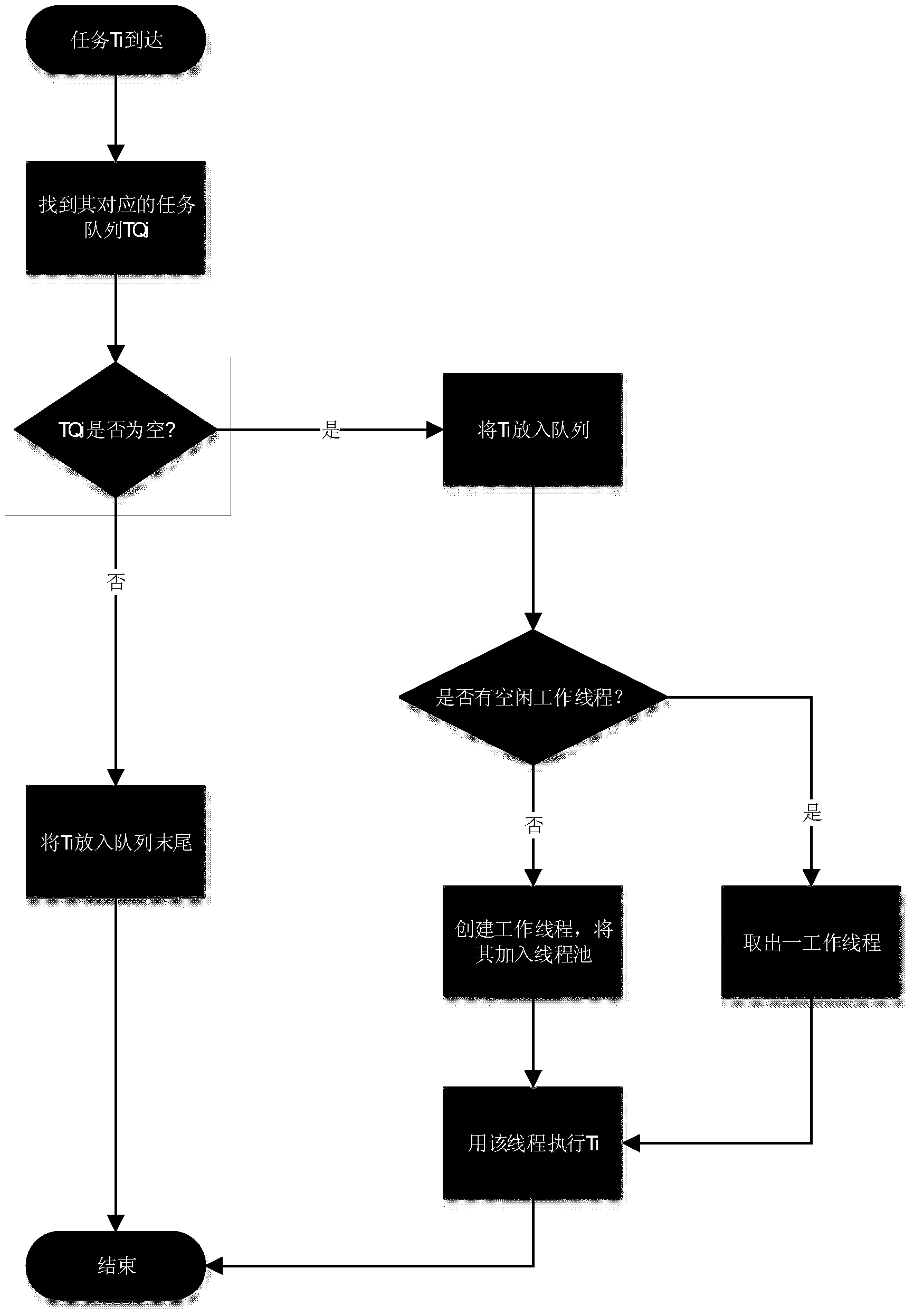

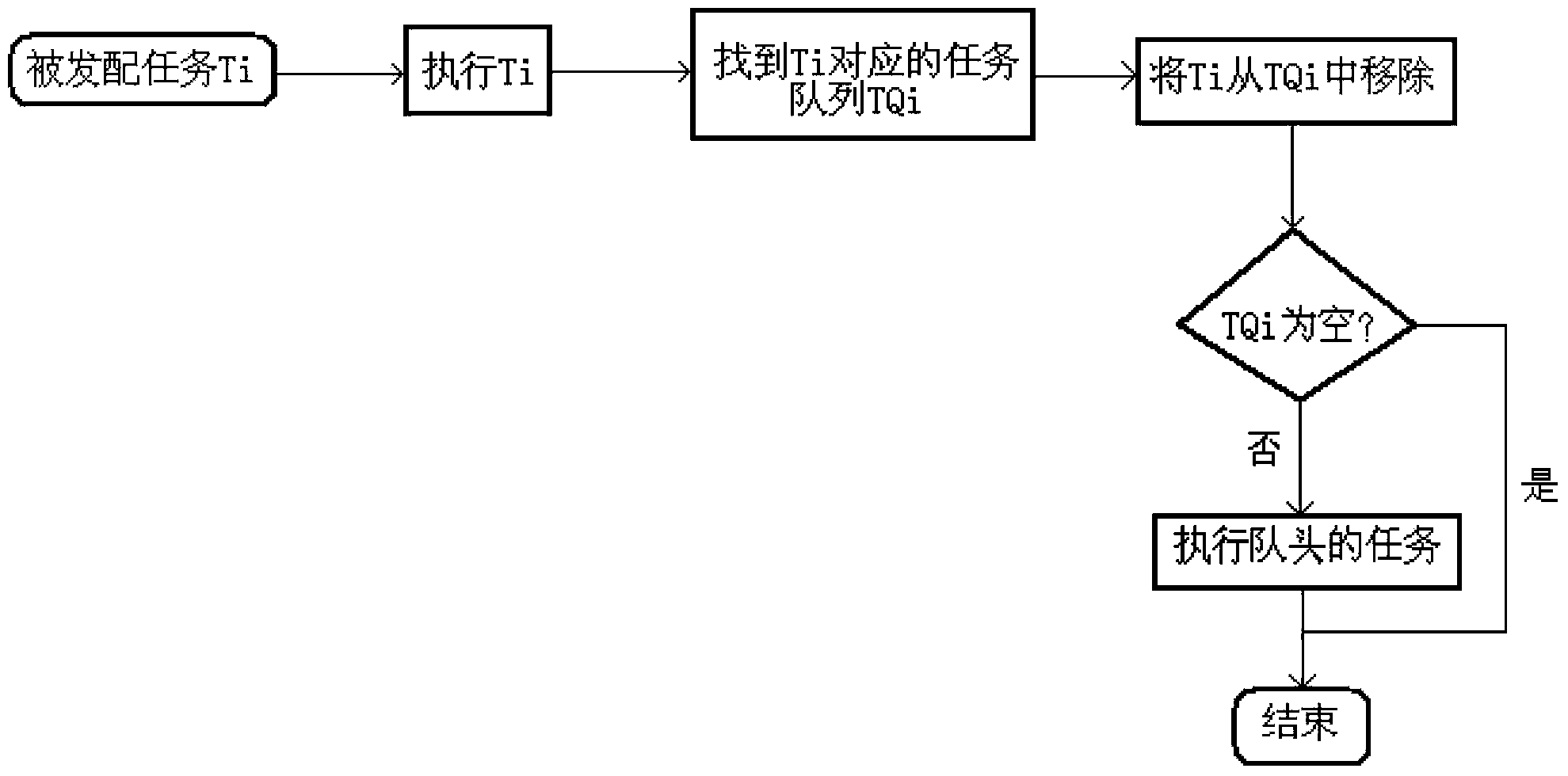

Multi-tasking queue scheduling method based on thread pool

Inactive | CN103473138A | Immediate and orderly processing | Save resources | Resource allocation | Thread pool | Thread count

The invention discloses a multi-tasking queue scheduling method based on a thread pool. First, a corresponding task queue is created according to task types, and the task queue is not directly associated with work threads in a work thread pool. Then, a task dispatcher is created for receiving a task and dispatching the task to the corresponding task queue according to the type of the task. Finally, when the task is dispatched to the task queue by the task dispatcher, an idle work thread is invoked from the work thread pool to execute the task. After the task is executed, the work thread returns to the work thread pool. The method can adapt to conditions that a large quantity of tasks belonging to different types exist and that all the tasks need to be instantly and orderly processed, one independent work process or thread does not need to be created for each task type, system resources can be greatly and effectively saved, and the work threads in the work thread pool can be created or deleted according to actual conditions, so that the scheduling system adopting the method has great thread count scalability.

Owner:柳州市博源环科科技有限公司
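The dispatcher, per-type queues, and shared worker pool could be arranged roughly as follows. `TaskDispatcher` and its methods are hypothetical names, and `concurrent.futures.ThreadPoolExecutor` stands in for the work thread pool.

```python
import queue
from concurrent.futures import ThreadPoolExecutor


class TaskDispatcher:
    """Routes tasks into per-type queues served by one shared worker pool."""

    def __init__(self, task_types, workers=4):
        # One queue per task type; queues are not bound to any worker thread.
        self._queues = {t: queue.Queue() for t in task_types}
        self._pool = ThreadPoolExecutor(max_workers=workers)

    def dispatch(self, task_type, func, *args):
        """Enqueue a task by type, then ask an idle worker to serve that queue."""
        self._queues[task_type].put((func, args))
        return self._pool.submit(self._run_next, task_type)

    def _run_next(self, task_type):
        # Each put() is paired with exactly one submit(), so the queue is
        # never empty here. The worker runs the oldest task of this type,
        # which may differ from the task whose dispatch() created this job.
        func, args = self._queues[task_type].get_nowait()
        return func(*args)

    def shutdown(self):
        self._pool.shutdown(wait=True)
```

Because workers belong to the pool and not to a queue, adding a task type costs one queue and no extra threads.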

MIL-STD-1553B bus monitoring and data analysis system

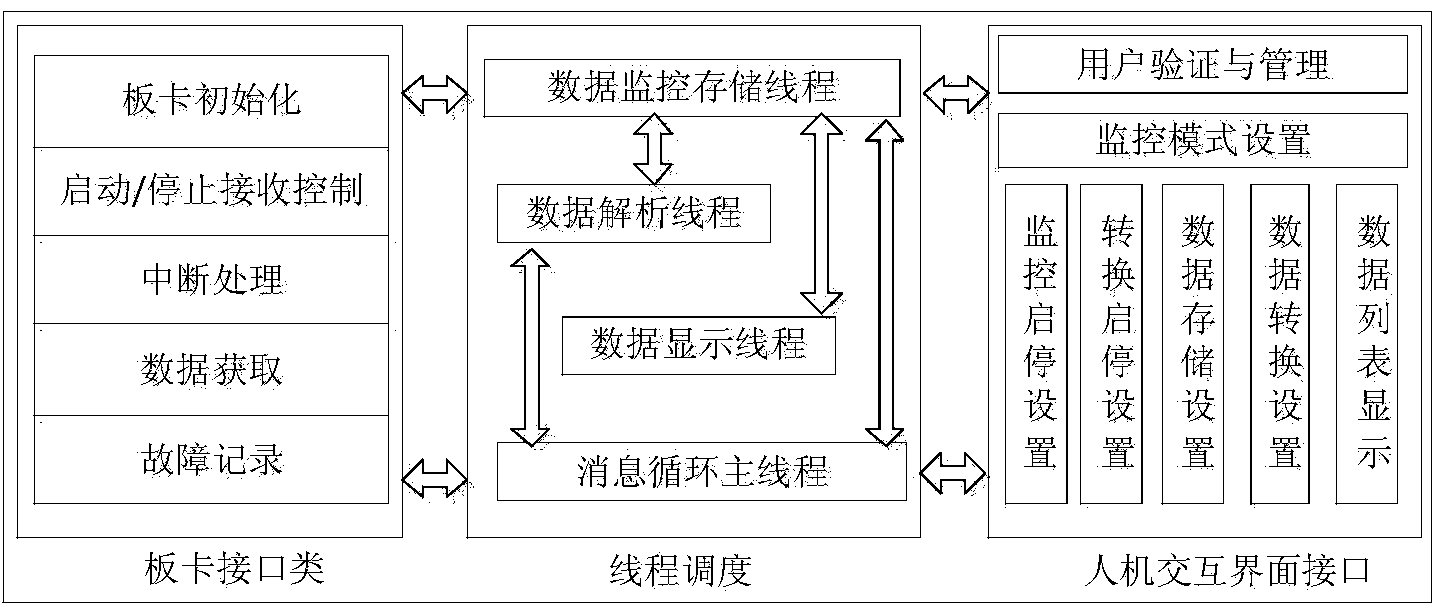

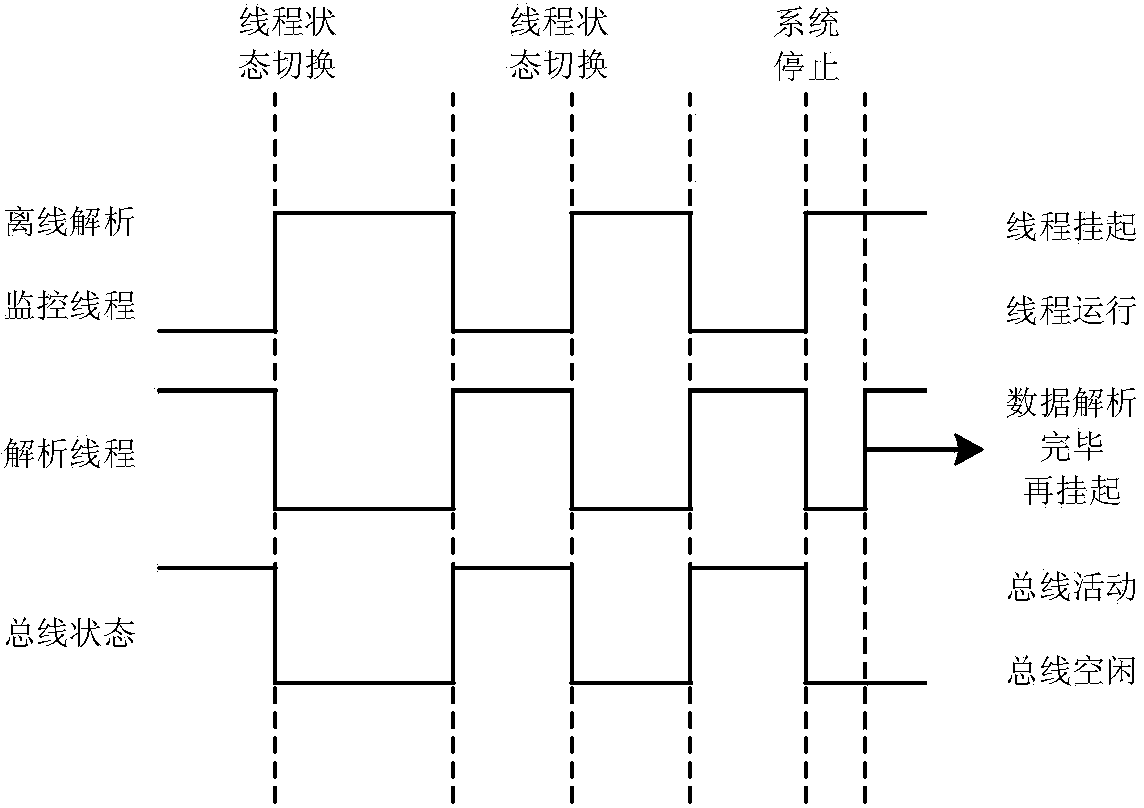

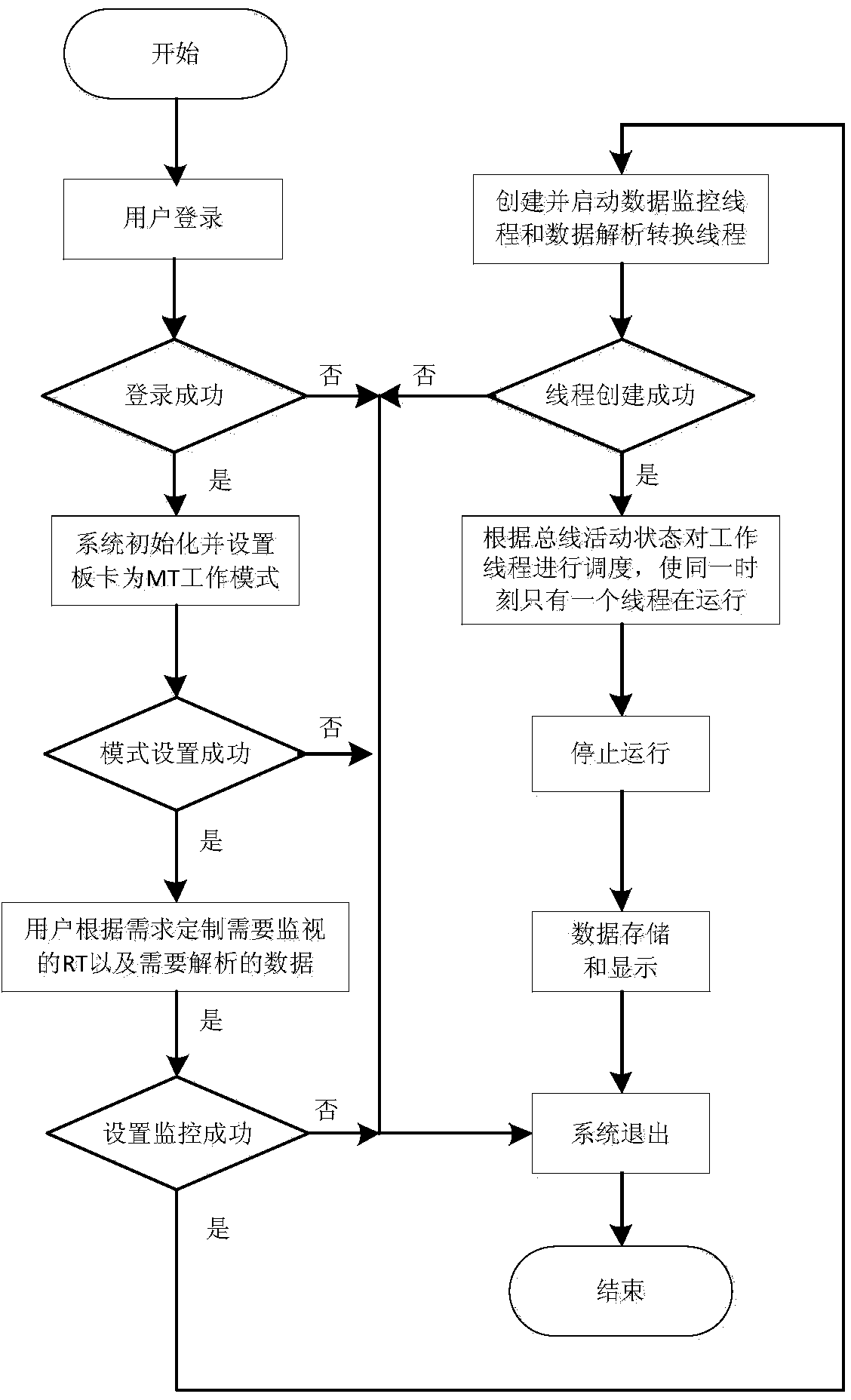

Active | CN103645947A | Guaranteed resource exclusivity | Improve data processing capabilities | Program initiation/switching | Special data processing applications | Data conversion | Monitoring data

The invention discloses a 1553B bus monitoring and data analysis system. The 1553B bus monitoring and data analysis system comprises a board card interface module, a thread scheduling module and a man-machine interaction interface module. The board card interface module comprises a board card initialization module, a starting / stopping receiving control module, an interrupt processing module, a data acquisition module and a failure recording module. The thread scheduling module is provided with a data monitoring storage thread, a data analysis thread, a data display thread and a message loop main thread. The man-machine interaction interface module is provided with a user authentication and management module, a monitoring mode setting module, a monitoring starting and stopping setting module, a data conversion setting module, a conversion starting and stopping setting module, a data memory module and a data list display module. According to the 1553B bus monitoring and data analysis system, a thread dynamic scheduling algorithm is adopted, comprehensive monitoring of bus data and real-time storage, display and analysis conversion of MT monitoring data can be achieved, and display of bus monitoring information stored in a specific format and conversion processing of stored data can also be achieved.

Owner:BEIHANG UNIV

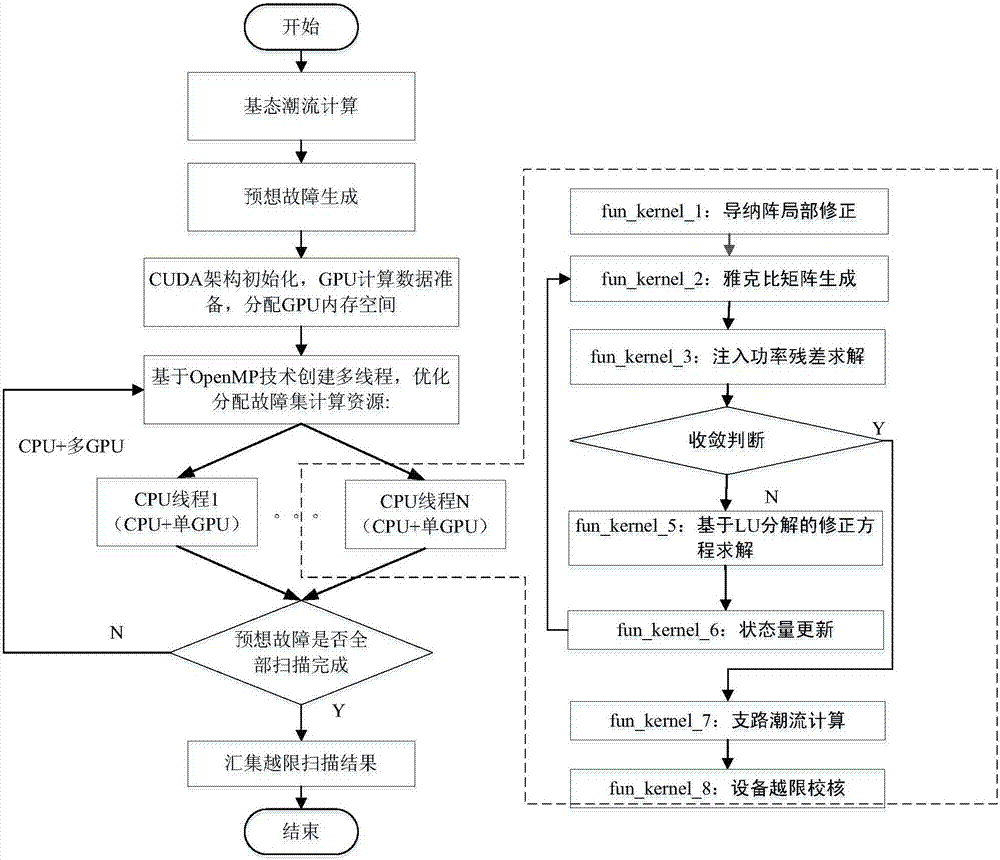

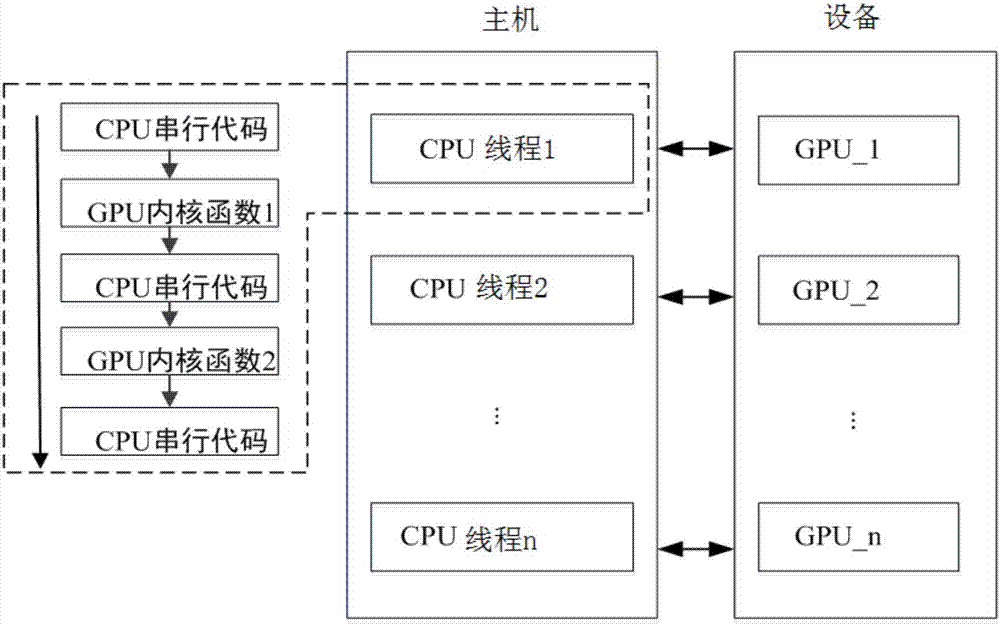

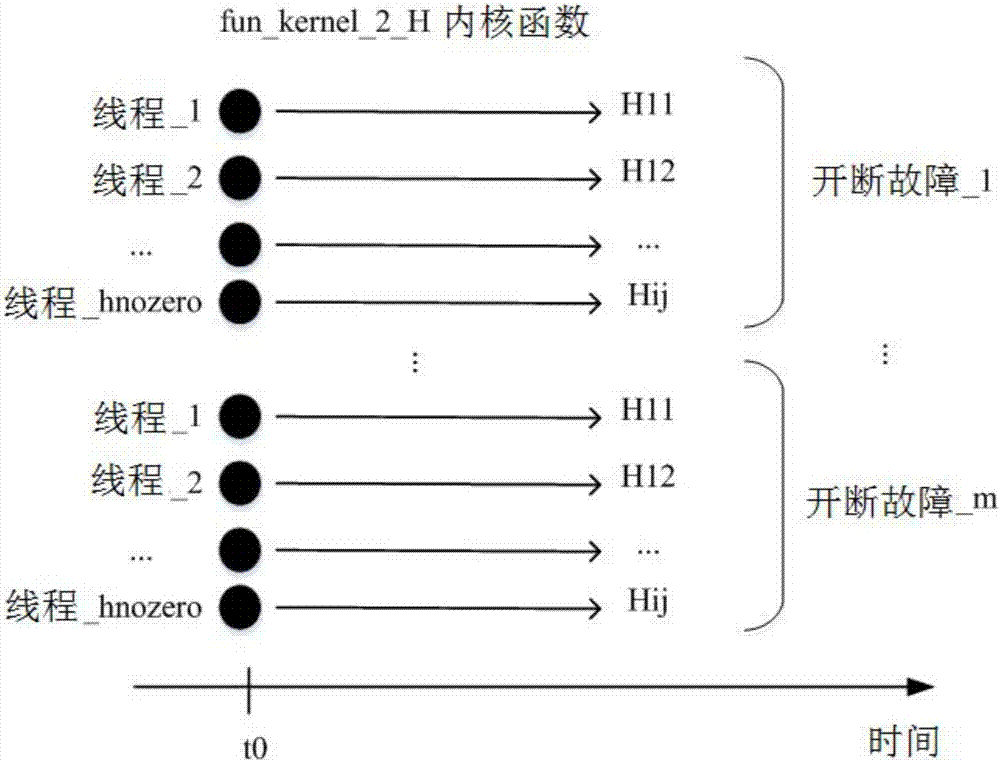

CPU+multi-GPU heterogeneous mode static safety analysis calculation method

Inactive | CN106874113A | Improve static security analysis scanning calculation efficiency | Data processing applications | Resource allocation | Hybrid programming | Multi gpu

The invention discloses a static safety analysis calculation method for a CPU+multi-GPU heterogeneous mode. To meet the need for fast static safety analysis scanning of large power grids in practical engineering applications, OpenMP multithreading is used on the CUDA unified computing platform to allocate a thread count according to the system's GPU configuration and the calculation requirements, with each thread corresponding to exactly one GPU. Based on hybrid CPU and GPU programming, a CPU+multi-GPU heterogeneous computing mode is constructed to cooperatively complete the parallel calculation of preconceived faults. Building on the load flow calculation for a single preconceived fault, the load flow iterations for multiple cut-offs run in parallel with a high degree of synchronization. Element-level fine-grained parallelism greatly improves the parallel processing capability of preconceived-fault scanning in static safety analysis, providing strong technical support for online safety analysis and early-warning scanning in the integrated dispatching system of a large interconnected power grid.

Owner:NARI TECH CO LTD +2

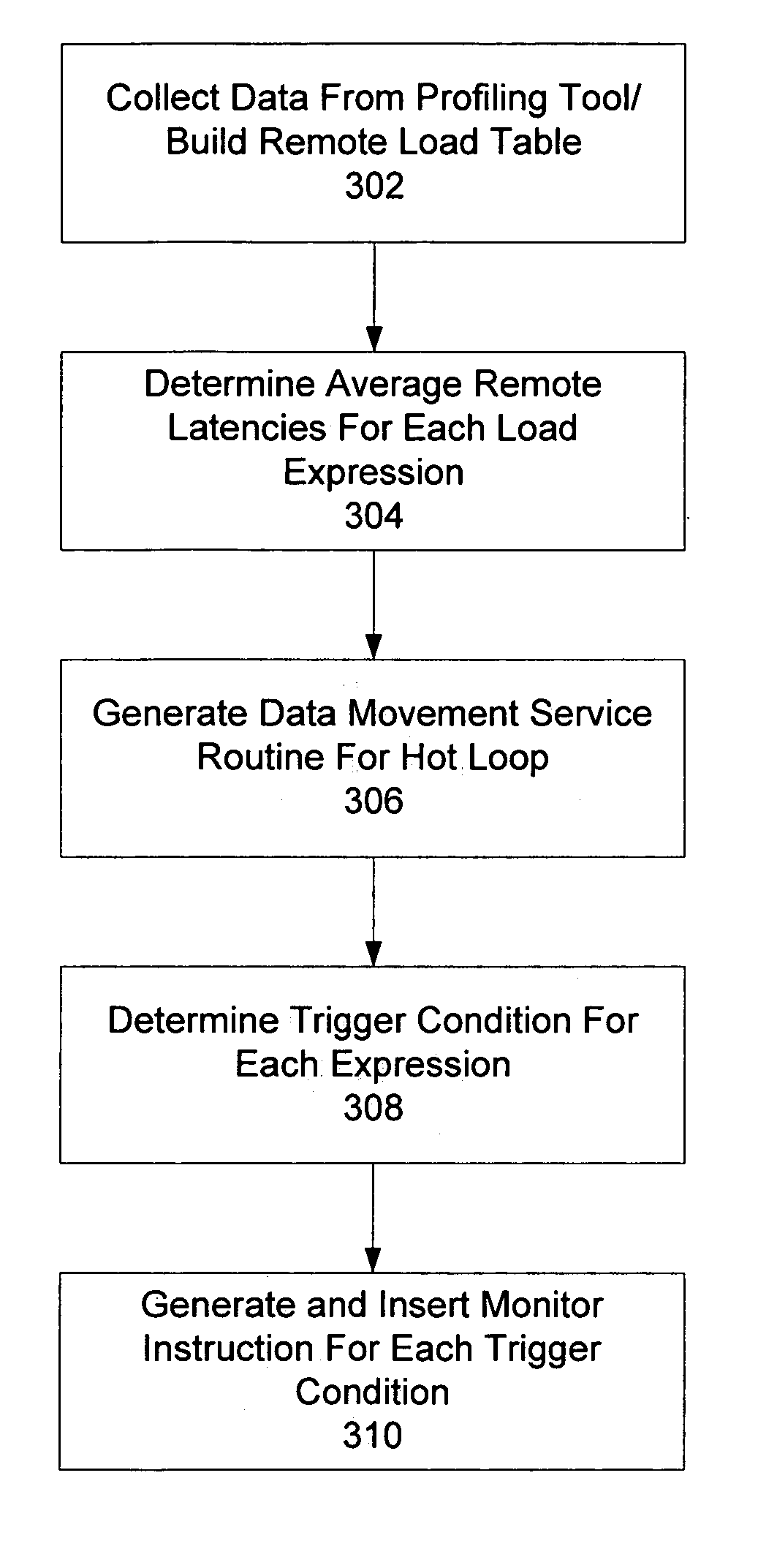

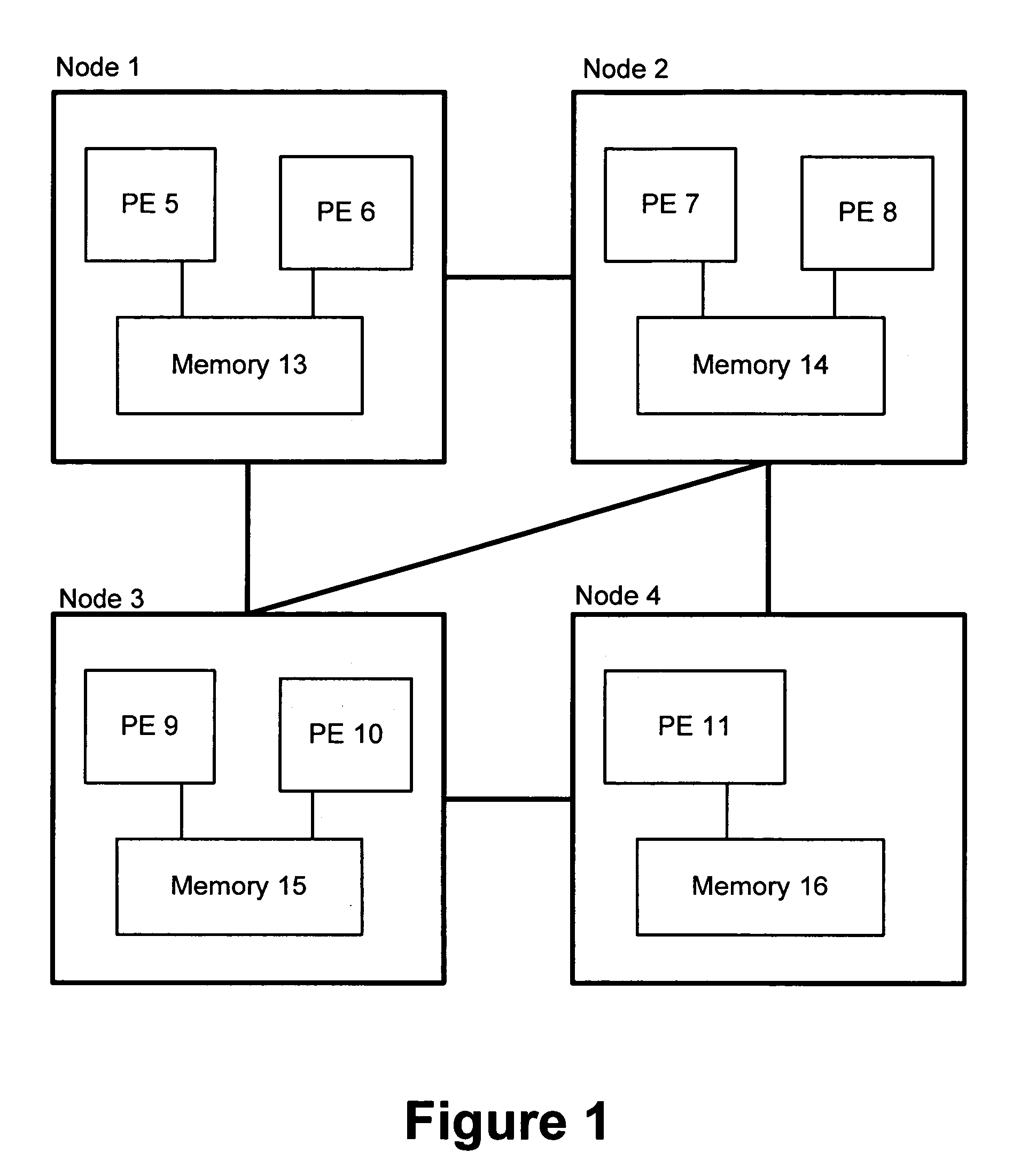

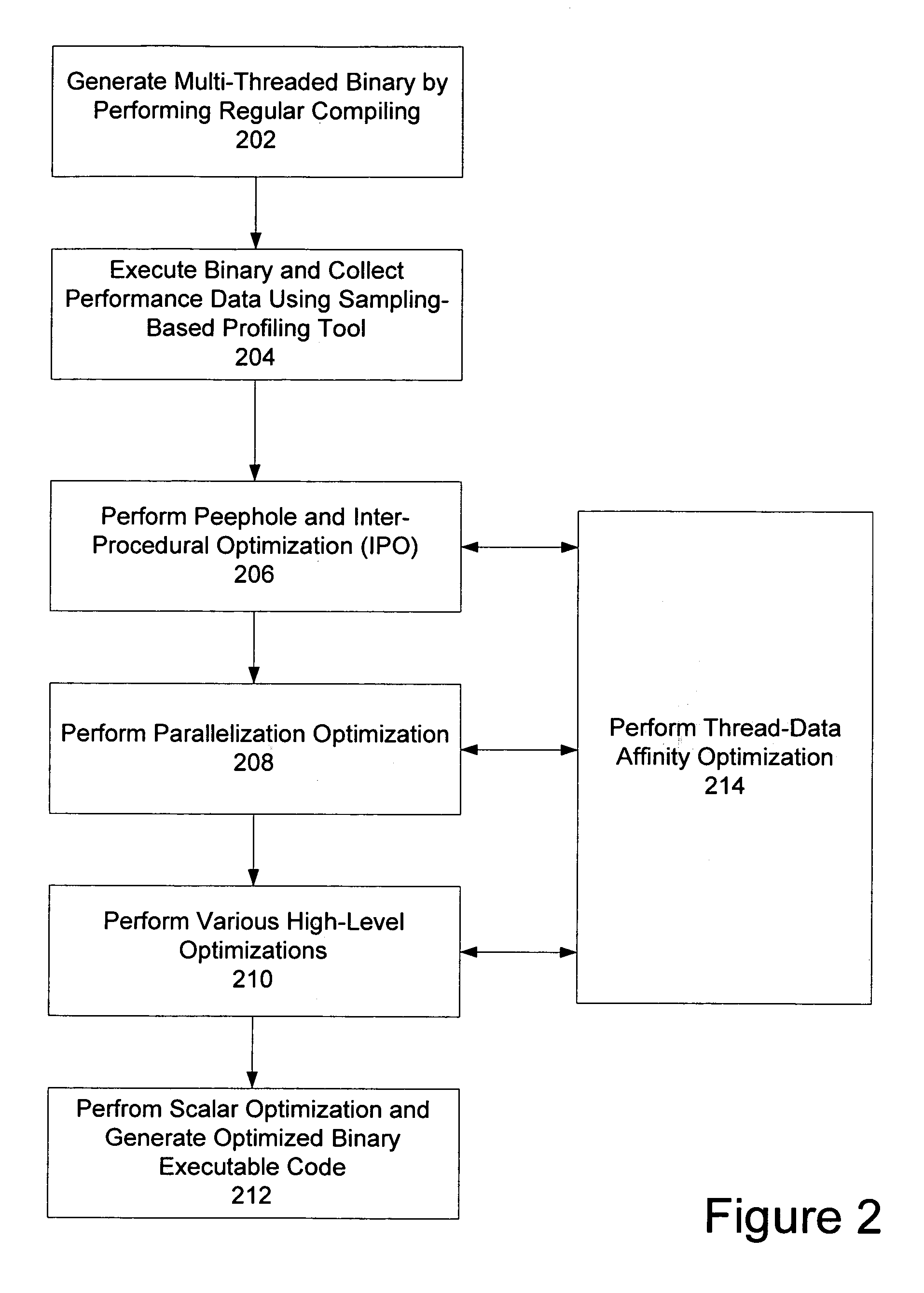

Thread-data affinity optimization using compiler

Inactive | US8037465B2 | Compact integration | Specific program execution arrangements | Memory systems | Uniform memory access | Parallel computing

Thread-data affinity optimization can be performed by a compiler during the compiling of a computer program to be executed on a cache coherent non-uniform memory access (cc-NUMA) platform. In one embodiment, the present invention includes receiving a program to be compiled. The received program is then compiled in a first pass and executed. During execution, the compiler collects profiling data using a profiling tool. Then, in a second pass, the compiler performs thread-data affinity optimization on the program using the collected profiling data.

Owner:INTEL CORP

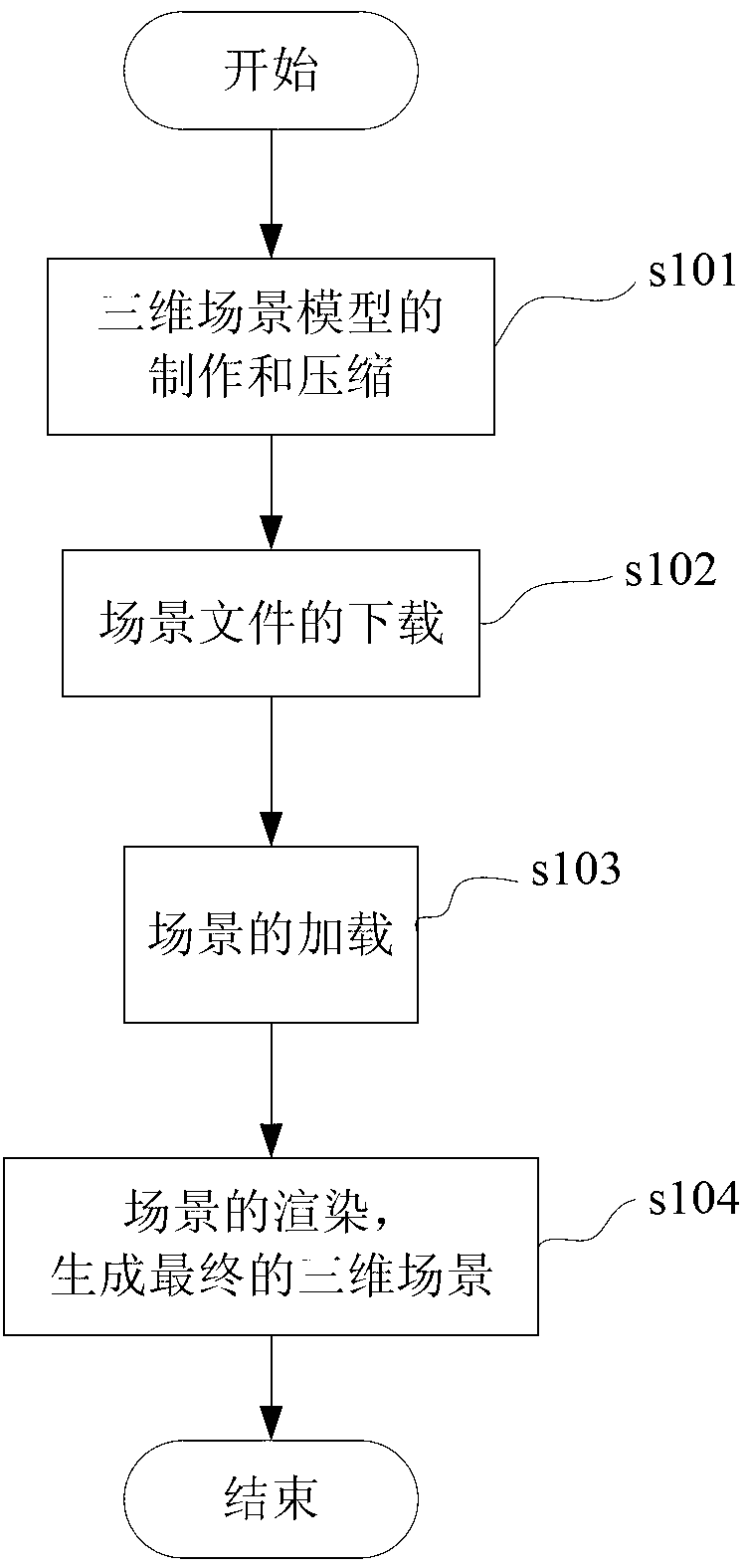

Three-dimensional scene construction method based on browser

Inactive | CN103021023A | Reduce the amount of data transferred | Guaranteed uptime | Transmission | 3D-image rendering | Computer graphics (images) | Resource management

The invention relates to a three-dimensional scene construction method based on a browser. The method includes producing an original three-dimensional scene model, compressing the original three-dimensional scene model into a required three-dimensional scene file through a three-dimensional compression algorithm, and storing the file in a server; downloading the three-dimensional scene file on the server through the browser, setting a download thread count according to the size of the file, and setting the download priority of the three-dimensional scene file simultaneously; loading the downloaded three-dimensional scene file in a resource manager of a client; and rendering a three-dimensional scene in the resource manager to generate a final three-dimensional scene. Compared with the prior art, the construction method has the advantages of being rapid in scene download speed, high in rendering efficiency, capable of improving operating speed and the like.

Owner:上海创图网络科技股份有限公司

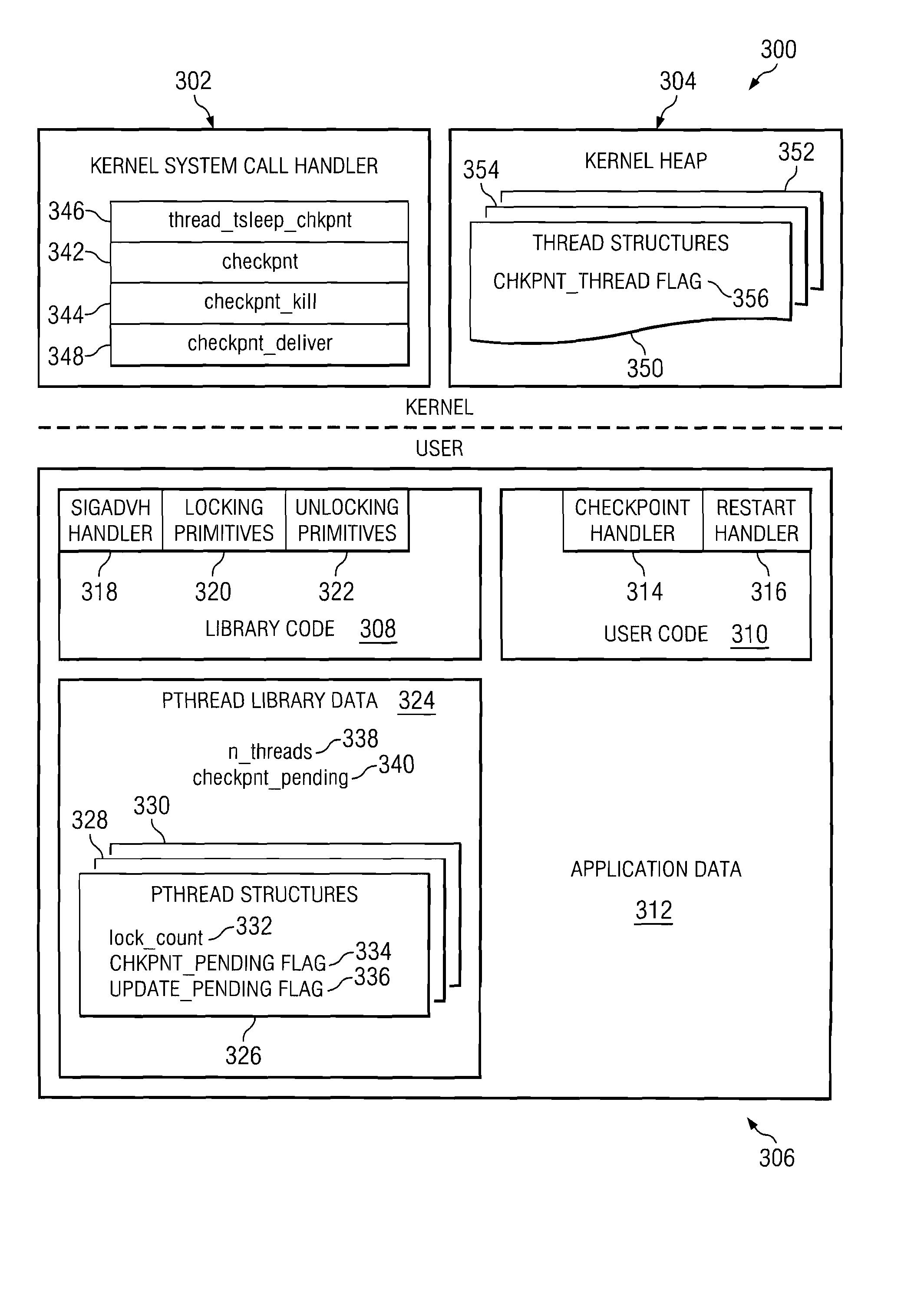

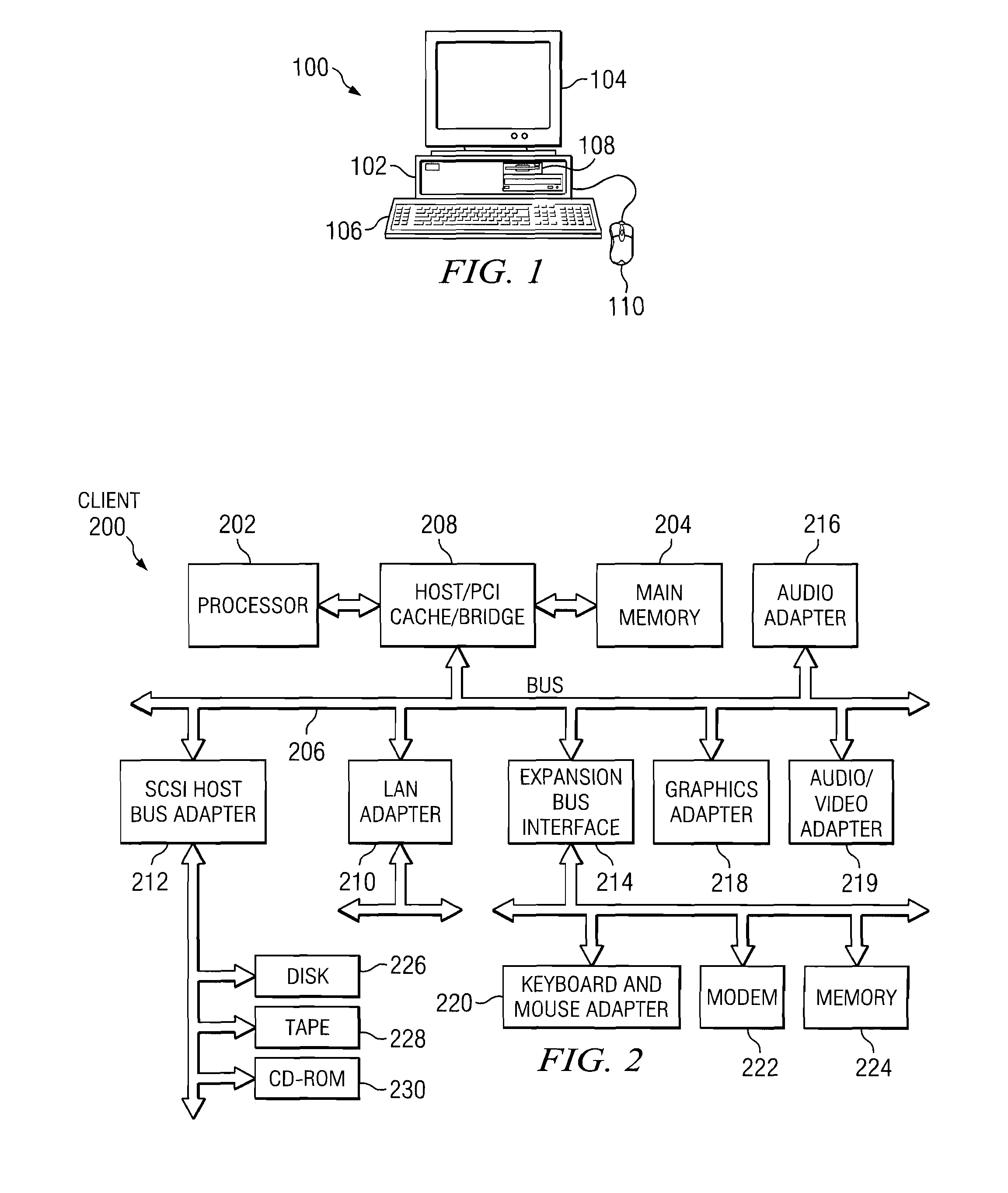

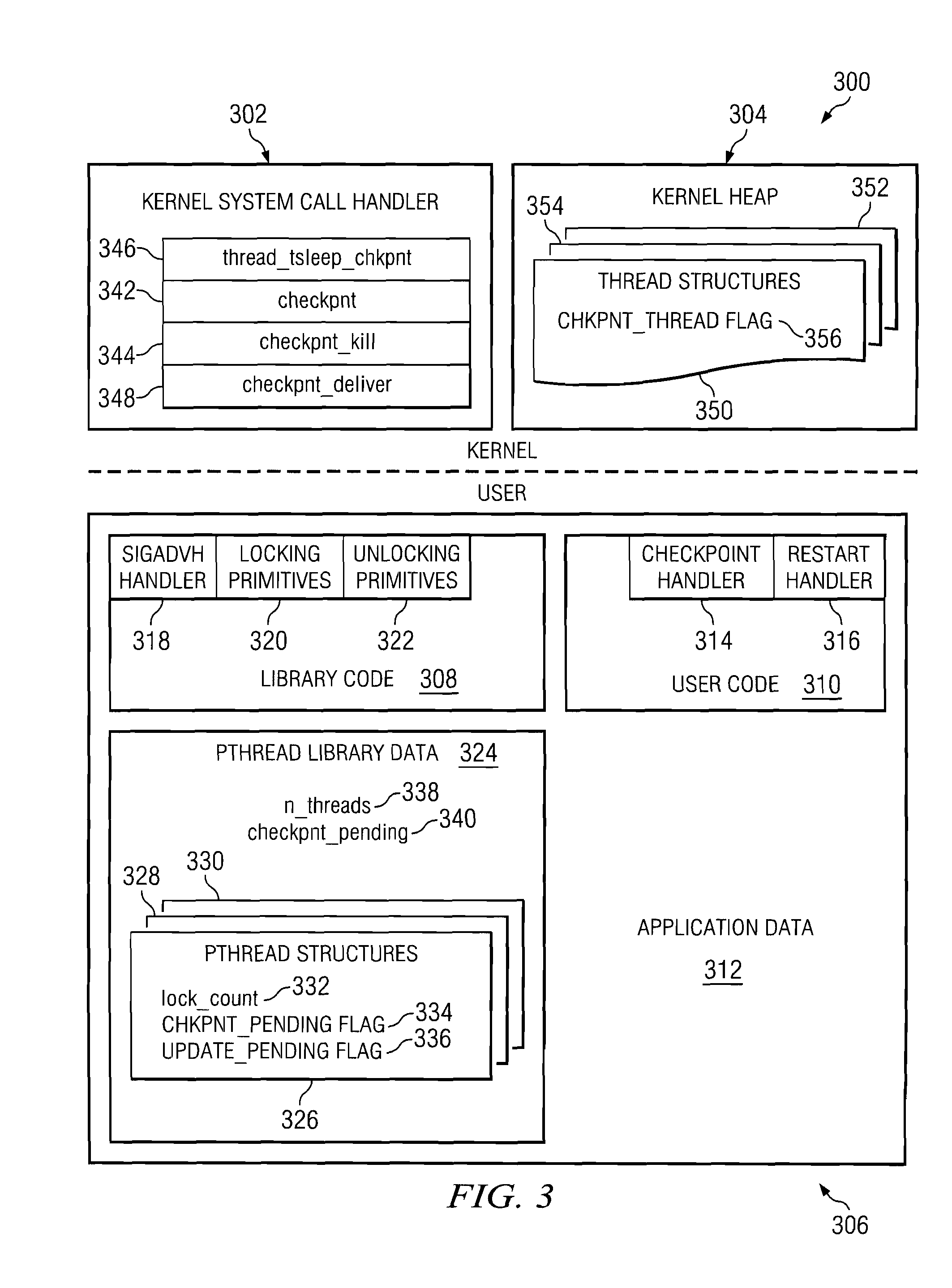

Method and apparatus for thread-safe handlers for checkpoints and restarts

Inactive | US20080077934A1 | Unauthorized memory use protection | Multiprogramming arrangements | Data processing system | Parallel computing

A method, apparatus, and computer instructions for executing a handler in a multi-threaded process handling a number of threads in a manner that avoids deadlocks. A value equal to the number of threads executing in the data processing system is set. The value is decremented each time a lock count for a thread within the number of threads is zero. A thread within the number of threads is suspended if the thread requests a lock and has a lock count of zero. A procedure, such as a handler, is executed in response to all of the threads within the number of threads having no locks.

Owner:INT BUSINESS MASCH CORP

Thread pool task processing method in high-availability cluster system

Inactive | CN107832146A | Improve real-time data transmission efficiency | Load balancing | Program initiation/switching | Resource allocation | Thread pool | Thread count

The invention discloses a thread pool task processing method in a high-availability cluster system. The method includes the steps of: first creating a certain number of unoccupied working threads in advance, all of which are in a condition-blocked state at initialization; forming a working task array; having the main thread of the thread pool loop over searching for working tasks, examining the state of the thread pool, and assigning working threads to working tasks; obtaining a working task to be processed from the head of the working task array; if the working task is obtained successfully, proceeding to the next step, and otherwise continuing to try to obtain a working task; when the number of currently occupied threads in the thread pool exceeds a certain proportion of the total thread count, not processing the current working task; checking the state of the thread pool and, when the number of currently unoccupied threads is smaller than a minimum unoccupied value, creating a certain number of unoccupied threads to keep the thread pool balanced; when the number of currently unoccupied threads is greater than a maximum unoccupied value, releasing a certain number of unoccupied threads; and assigning a working thread to the working task to be processed.

Owner:BEIJING INST OF COMP TECH & APPL
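The two checks the main thread makes on each cycle (whether to accept the task, and whether to grow or shrink the idle set) can be sketched as pure functions. The names, the `batch` size, and the rule that shrinking never drops below the minimum are assumptions.

```python
def accepts_task(occupied, total, max_occupied_ratio):
    """False when occupied threads exceed the allowed share of the pool."""
    return occupied <= total * max_occupied_ratio


def idle_adjustment(idle, min_idle, max_idle, batch):
    """Threads to create (positive) or release (negative) to rebalance the pool."""
    if idle < min_idle:
        return batch                           # create unoccupied threads
    if idle > max_idle:
        return -min(batch, idle - min_idle)    # release, but keep the minimum
    return 0
```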

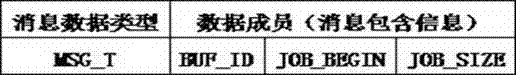

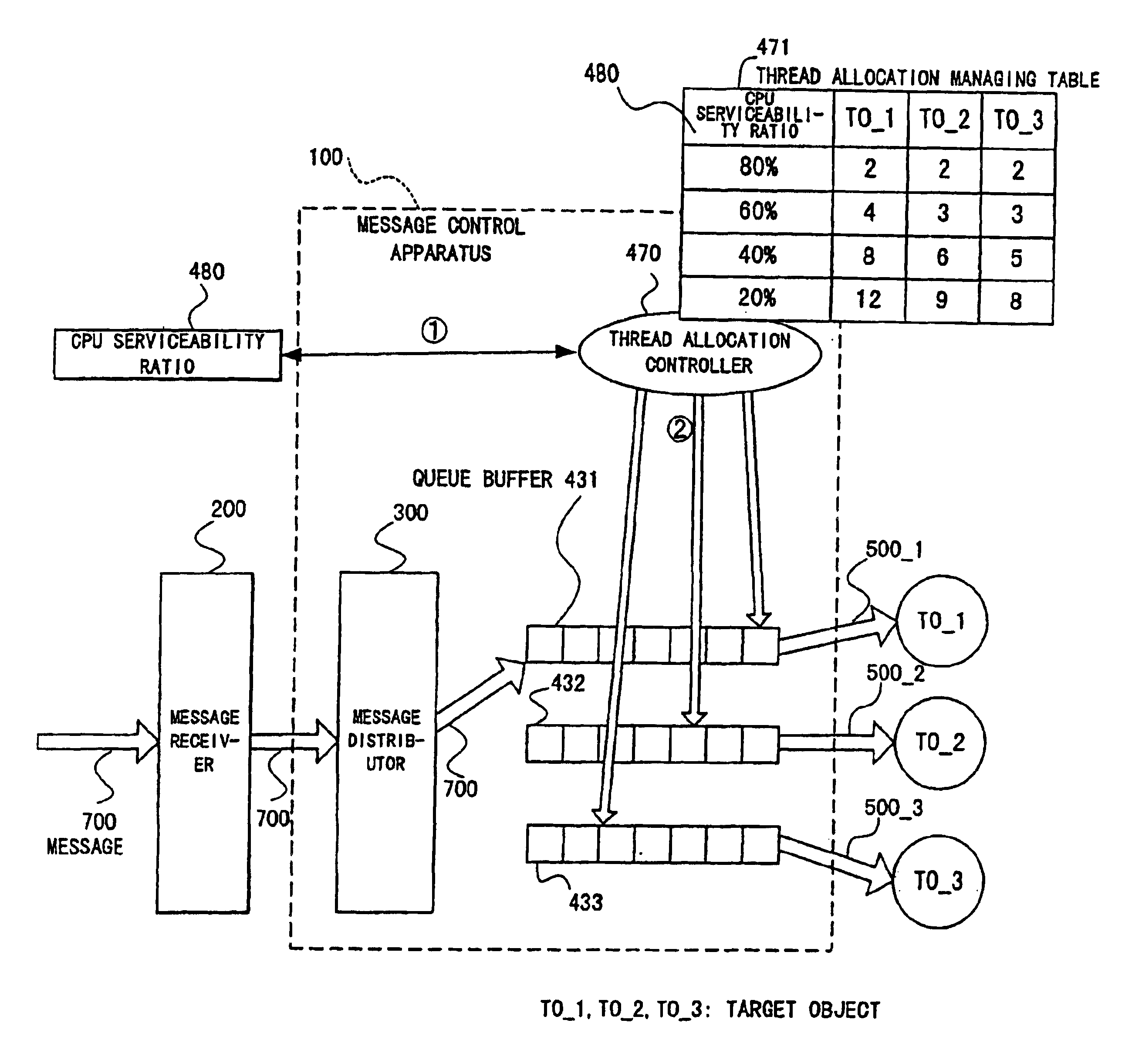

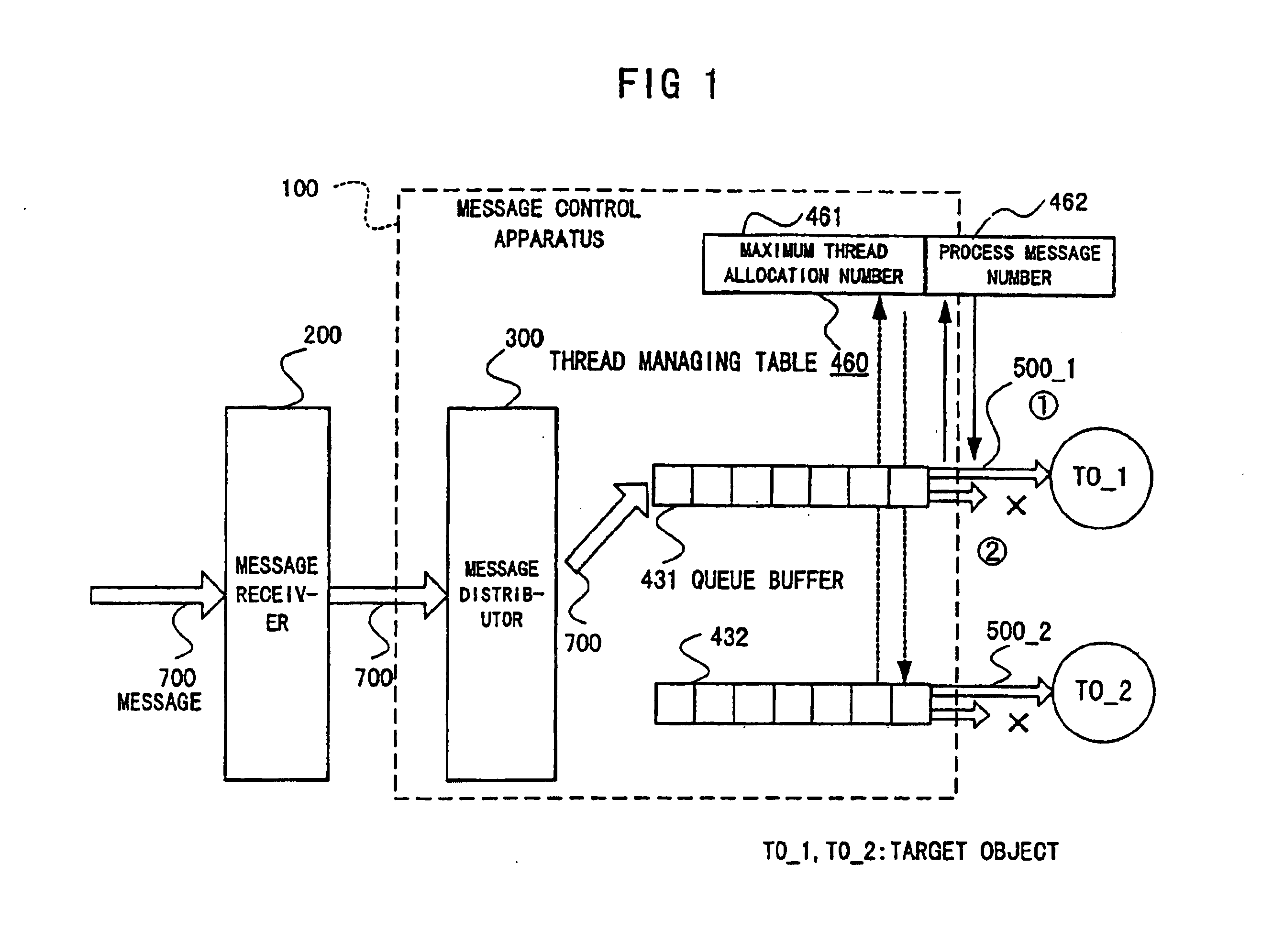

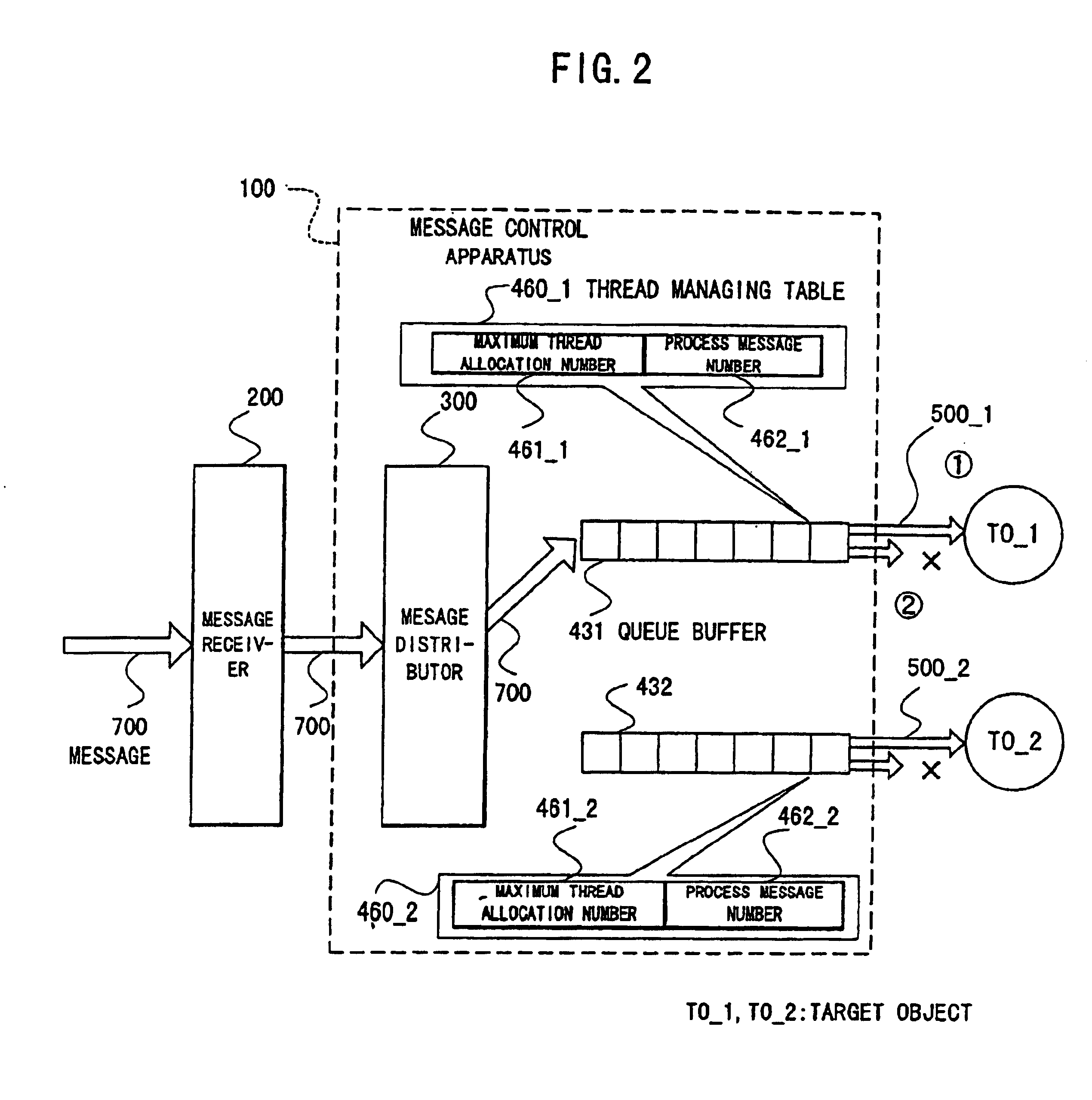

System for controlling message transfer between objects having a controller that assumes standby state and without taking out excess messages from a queue

Inactive | US6848107B1 | Improve processing speed | Interprogram communication | Specific program execution arrangements | Parallel computing | Distributor

In a message control apparatus for transferring messages between objects which belong to different processes, a message distributor distributes messages to queue buffers provided for each of the target objects, and a thread controller simultaneously prepares a plurality of threads, fewer than a maximum thread allocation number predetermined for each of the processes and the target objects, and takes out the messages to be given to a corresponding target object. Also, the maximum thread allocation number can be designated according to a CPU serviceability ratio in the processes, and part of the thread allocation of target objects that use few threads is reallocated to the maximum thread allocation number of target objects that use many threads.

Owner:FUJITSU LTD

Full-automatic firing method for wideband individual line subscriber based on flowpath optimization

Inactive | CN101510831A | Improve accuracy | Improve human resources | Data switching networks | Asynchronous communication | System configuration

The invention relates to a fully automatic subscribing method for broadband special-line users based on process optimization, which comprises the following steps: a special-line automatic subscribing system receives a work order from BSS, parses the work order, obtains information such as the equipment to be configured, looks up the related upstream and downstream equipment information, login method, and login password according to the existing equipment information, and then logs in to the equipment according to that information to configure the relevant data commands; the special-line automatic subscribing system is configured with a thread count for processing work orders, starts a work order receiving module, and selects a system operation mode by reading configuration files, namely a parent-child process mode or an automatic reset mode; socket communication is used to simulate telnet to log in to the equipment and issue commands, and during this process the port information of the equipment and the execution result of each issued command are validated; if other socket requests connect at the same time, other threads are assigned to handle them, achieving long-connection asynchronous communication between the automatic subscribing system and the BSS socket.

Owner:LINKAGE SYST INTEGRATION

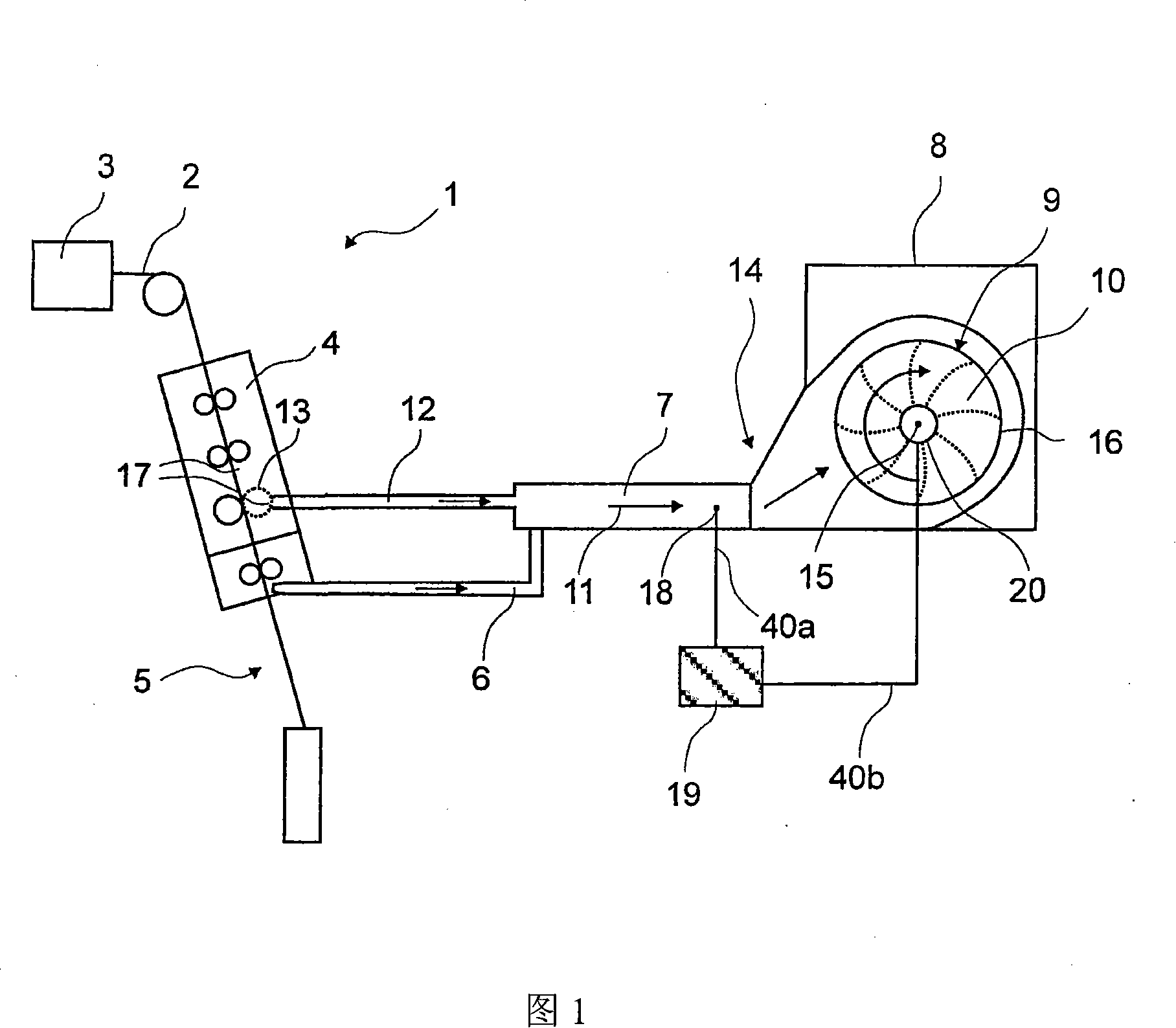

Device and method for pumping and filtering air containing dust and/or fibre on the spinning machine

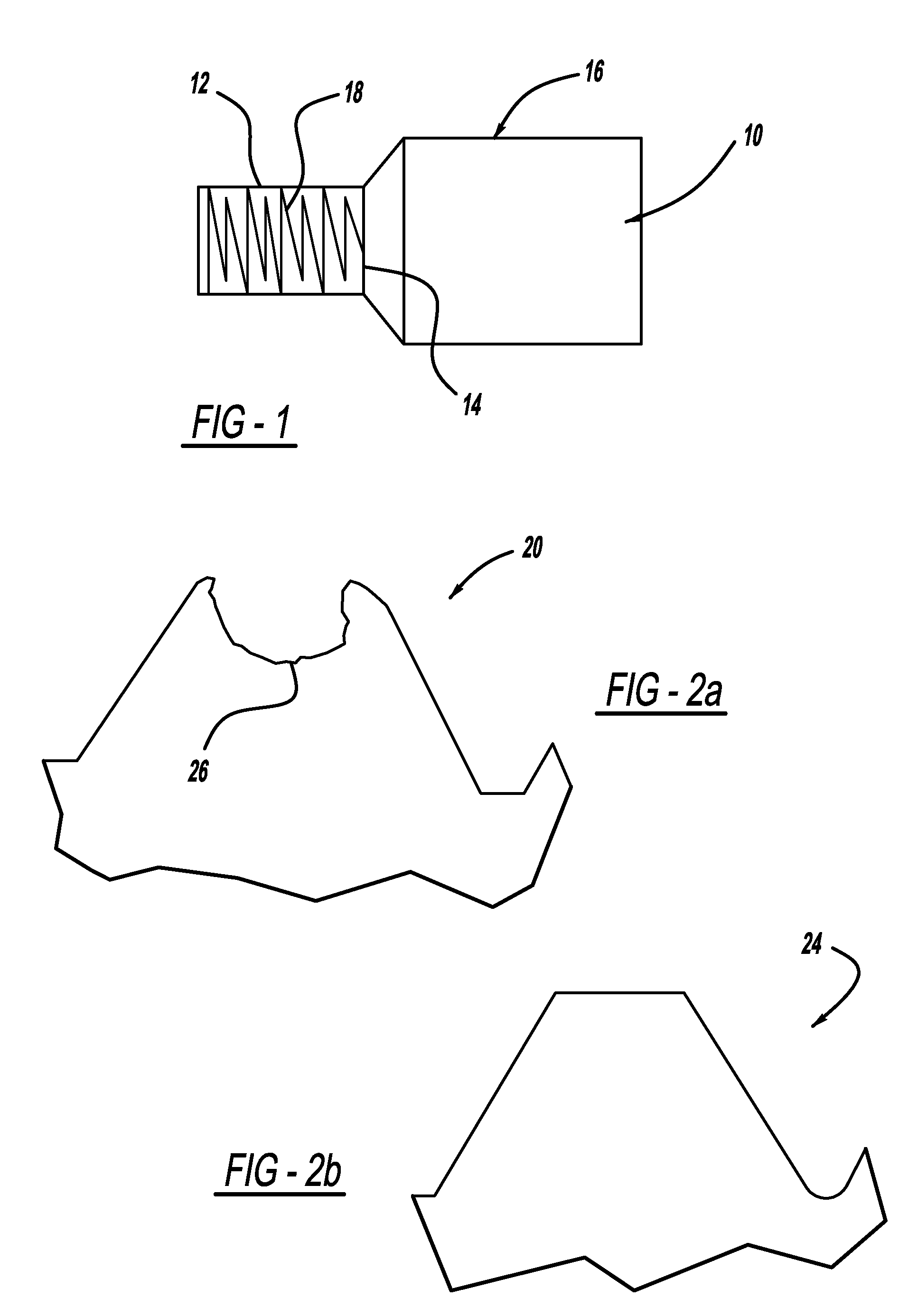

InactiveCN101113537AEasy to storeEffectively fixedDispersed particle filtrationTransportation and packagingFiberMotor drive

The device for the suction and filtration of dust- and/or fiber-loaded air on spinning machines (1) comprises working places consisting of a suction channel (7), a filtering device (8) with a filter forming a filtering surface (16), a system for removing the filter outflow from the filtering surface, a vacuum source (10) for generating an induced draft, an operating means for carrying out a filter cleaning process using a removal system, and a vacuum sensor arranged in the main suction channel for measuring the vacuum in a vacuum zone before and/or after the filtering. The device contains a controller or regulator for the vacuum in the vacuum zone before and/or after the filtering surface, based on the vacuum values measured by the vacuum sensor and on vacuum target values or vacuum target value ranges. The controller or regulator is connected with a driving mechanism for filter cleaning. The vacuum is controlled or regulated by operating the driving mechanism. The spinning place contains suction places (17), via which polluted air is sucked out and supplied via a central suction channel or channels of the filter arrangement. The vacuum source contains an axial or radial ventilator. The filter outflow can be lifted or removed from the filtering surface using a removal or lifting device and is fed to a collecting or disposal device.
The controller contains a signal converter and a control device, by which the measured vacuum values received from the vacuum sensor are compared with the vacuum target values and vacuum target value ranges. Controlling or regulating signals are generated to correct the deviation of the actual value from the target value by acting on an actuator and/or a final control element containing driving means. The filtering device contains a filter drum with a cylinder-shaped, fixed or flexible filter surface, which is arranged on the removal system. The driving mechanism comprises a drive system for turning the filter drum around the drum axis. The filter cleaning is carried out by a continuous or sequential turning of the filter drum, by which the filter surface is directed to the removal device and the filter outflow is removed. The driving mechanism contains a hydraulic or pneumatic piston drive, and a linear motor or electric cylinder, which is connected with a gear between the driving mechanism and the filter. A control is provided by which the pressure ratio in the channels and/or pipes is adjustable according to thread count and/or equipment parameters. The control consists of a means for changing the vacuum over the filter device and/or over the ventilator output. The filter device contains a continuous filter band forming a space, and an electric-motor driving mechanism for a circulatory movement of the filter band, which forms a layered filter surface. Independent claims are included for: (1) a spinning machine; and (2) a method for the suction and filtration of dust- and/or fiber-loaded air on spinning machines.

Owner:MASCHINENFABRIK RIETER AG

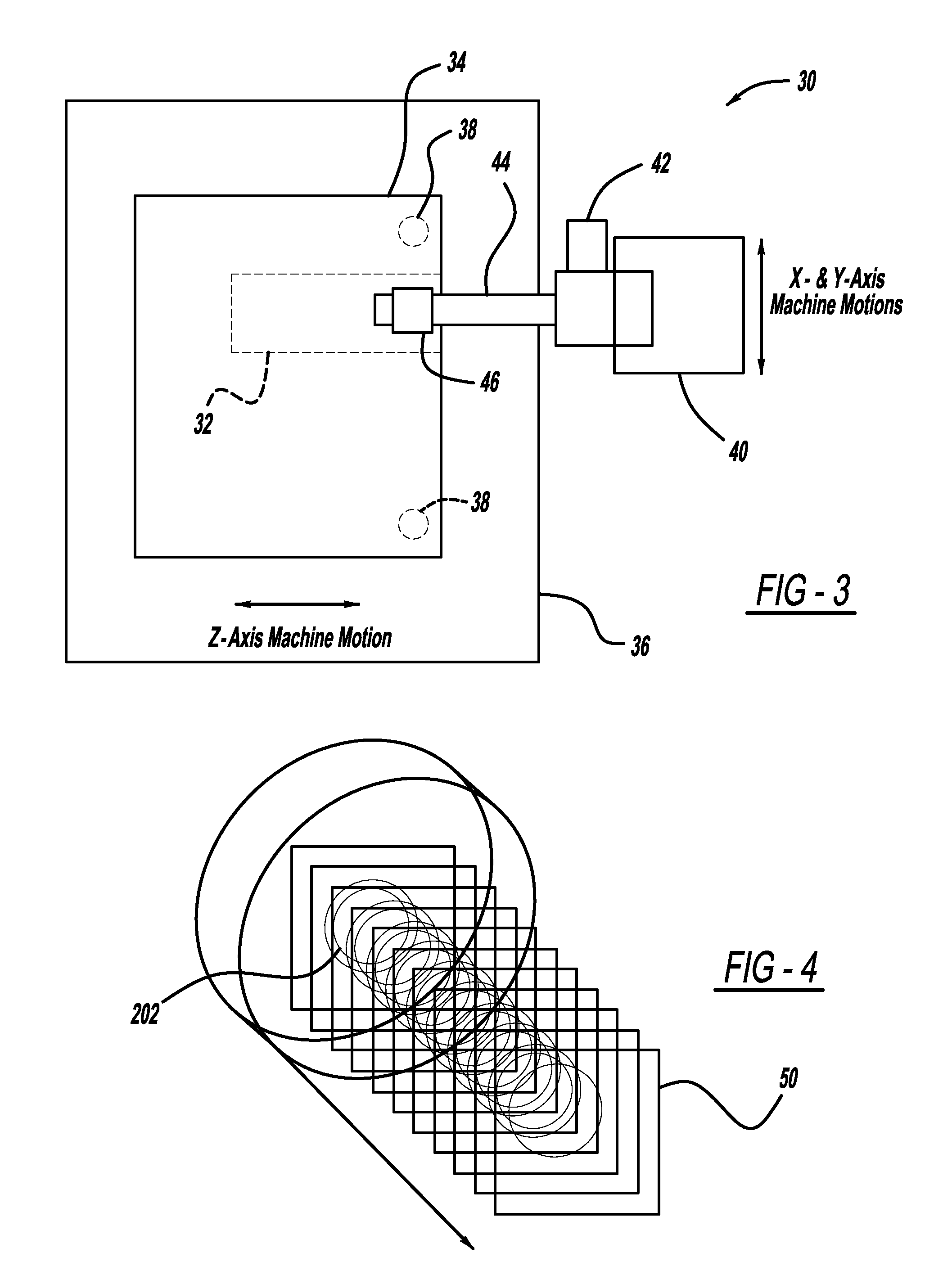

Methodology for evaluating the start and profile of a thread with a vision-based system

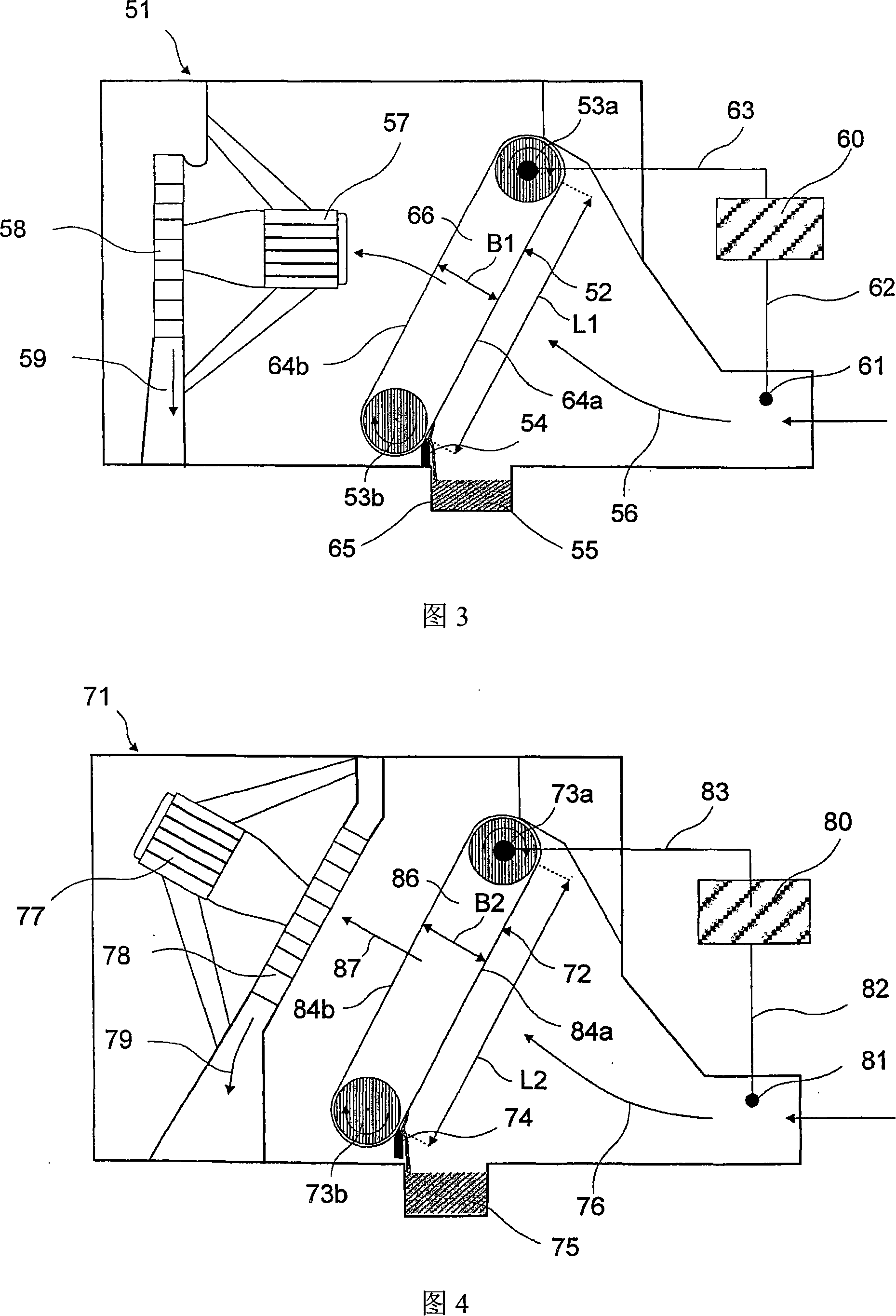

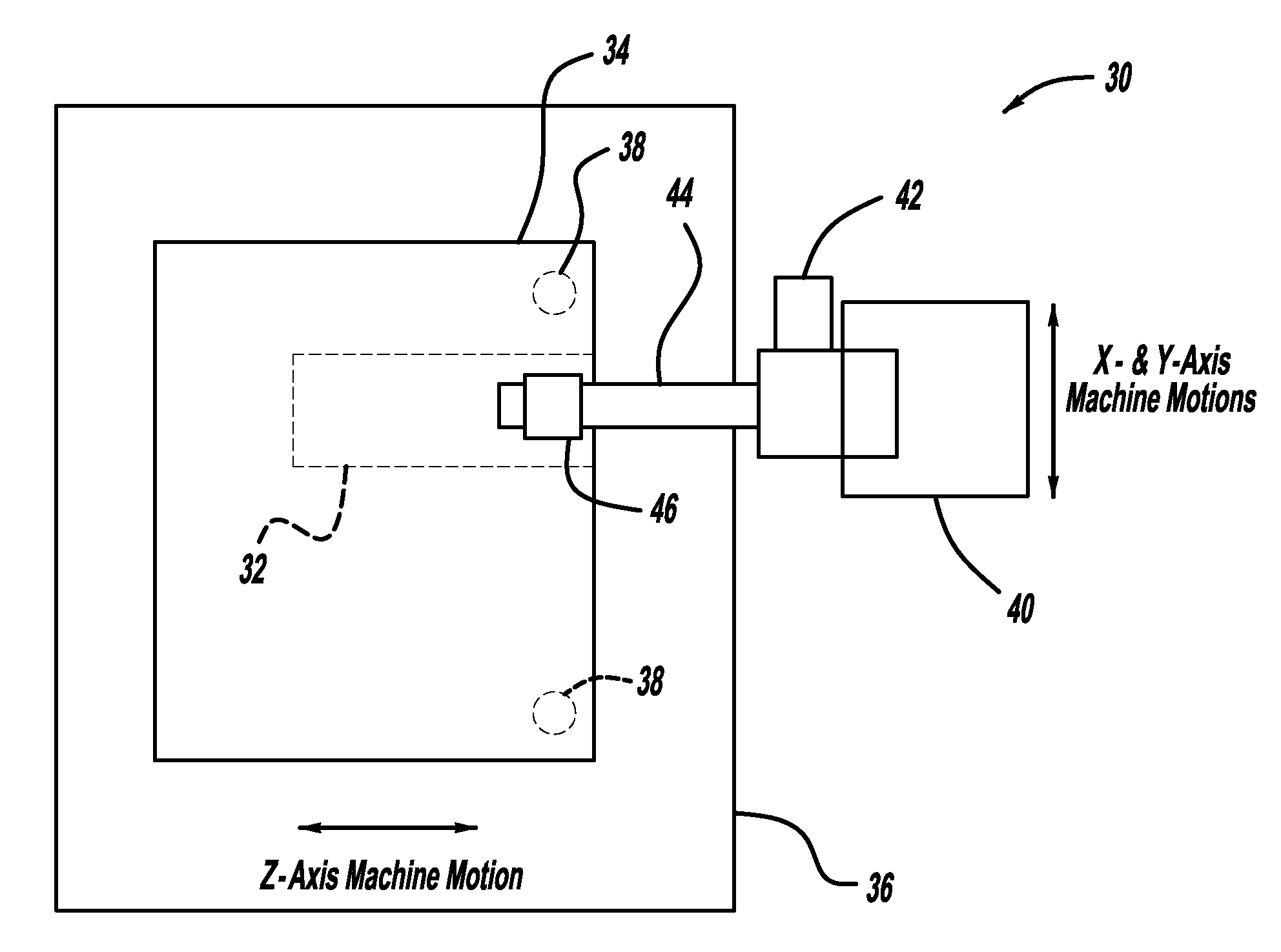

A system and method for identifying the start location of the lead thread and the thread profile and quality in a threaded bore in a part, where the system and method can be used for determining the location of the lead thread in a threaded bore in a cylinder head for a spark plug in one non-limiting embodiment. The system includes a moveable table on which the part is mounted. The system also includes a probe having an optical assembly that is inserted in the threaded bore. A camera uses the optical assembly to generate images of the threaded bore, where the images are image slices taken as the probe moves through the threaded bore. The image slices are unwrapped and then joined together to form a planar image to determine a start location of the lead thread, thread count, defects in the threaded bore and thread profile parameters.

Owner:GM GLOBAL TECH OPERATIONS LLC

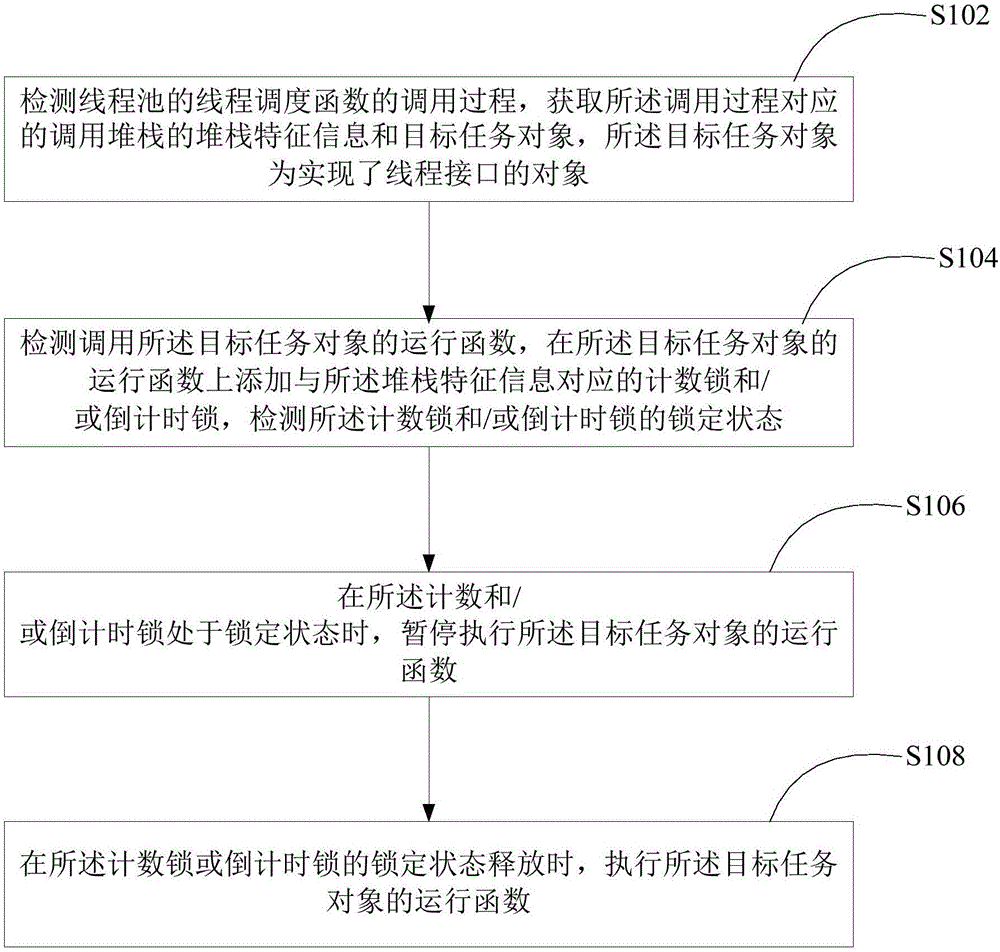

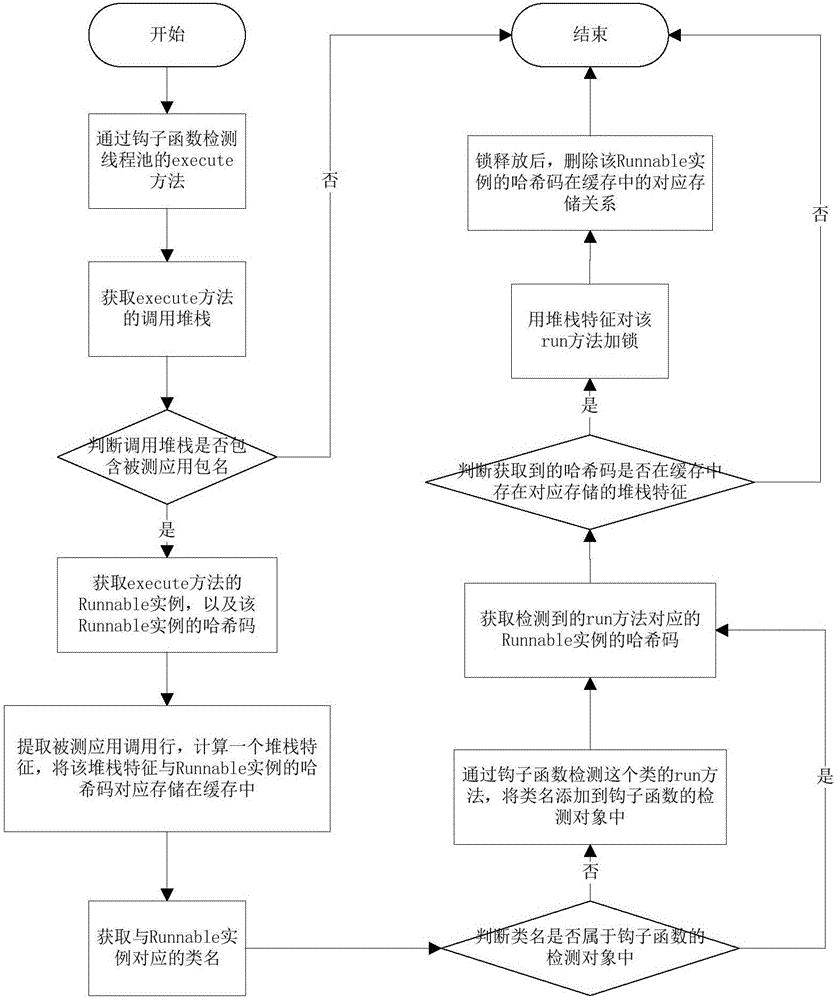

Multi-thread scheduling method and device based on thread pool

Active · CN106681811A · Improve crash · Improve test efficiency · Program initiation/switching · Resource allocation · Call stack · Thread scheduling

The embodiment of the invention discloses a multi-thread scheduling method based on a thread pool. The method comprises the following steps: a call to the thread scheduling function of the thread pool is detected, and the stack characteristic information of the corresponding call stack and a target task object are obtained; a call to the operation function of the target task object is detected, and a counting lock and/or a countdown lock corresponding to the stack characteristic information is added to that operation function; the locking state of the counting lock and/or the countdown lock is detected, the counting lock is released when the thread number corresponding to the target task object is greater than or equal to the threshold value of the counting lock, and the countdown lock is released after a preset period of time has elapsed; while the counting lock and/or the countdown lock is in the locked state, execution of the operation function of the target task object is suspended. When the locked state of the counting lock or the countdown lock is released, the operation function of the target task object is executed. By adopting the multi-thread scheduling method, the program crash recurrence rate can be increased.

Owner:TENCENT TECH (SHENZHEN) CO LTD
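
The counting-lock and countdown-lock gating described in the Tencent abstract above can be sketched roughly as follows. This is only an illustration under stated assumptions: `CountingGate` and `run_gated` are hypothetical names, the countdown is modelled as a per-thread timeout on the same gate, and the stack-matching step that decides which tasks get a gate is omitted.

```python
import threading


class CountingGate:
    """Holds arriving threads until `threshold` of them have arrived
    (counting lock) or until `timeout` seconds have passed since a
    thread arrived (a stand-in for the countdown lock)."""

    def __init__(self, threshold, timeout=None):
        self._threshold = threshold
        self._timeout = timeout
        self._arrived = 0
        self._cond = threading.Condition()

    def wait(self):
        """Block at the gate. Returns True if the thread count reached
        the threshold, False if the timeout released the thread first."""
        with self._cond:
            self._arrived += 1
            # Wake waiting threads so they re-check the arrival count.
            self._cond.notify_all()
            return self._cond.wait_for(
                lambda: self._arrived >= self._threshold,
                self._timeout,
            )


def run_gated(gate, task):
    """Run the task's operation function only after the gate opens."""
    gate.wait()
    return task()
```

Releasing several threads at the same moment makes them enter the task body together, which is the kind of contention that helps a rare concurrency crash recur during testing.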
