200 results about "CPU time" patented technology

CPU time (or process time) is the amount of time for which a central processing unit (CPU) was used to process the instructions of a computer program or operating system, as opposed to elapsed time, which also includes, for example, time spent waiting for input/output (I/O) operations or in low-power (idle) mode. CPU time is measured in clock ticks or seconds. It is often useful to express CPU time as a percentage of the CPU's capacity, which is called CPU usage.
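The difference between CPU time and elapsed time can be seen with a short Python sketch. It uses the standard-library calls `time.process_time()` (CPU time of the current process) and `time.perf_counter()` (elapsed time); the helper names `measure` and `cpu_usage_percent` are illustrative and not part of any patent listed here.

```python
import time


def measure(fn):
    """Run fn once and return (cpu_seconds, elapsed_seconds)."""
    cpu_start = time.process_time()    # user + system CPU time of this process
    wall_start = time.perf_counter()   # elapsed (wall-clock) time
    fn()
    cpu = time.process_time() - cpu_start
    elapsed = time.perf_counter() - wall_start
    return cpu, elapsed


def cpu_usage_percent(cpu, elapsed):
    """CPU time expressed as a percentage of one CPU's capacity."""
    return 100.0 * cpu / elapsed if elapsed > 0 else 0.0


if __name__ == "__main__":
    # Sleeping is waiting, so it adds elapsed time but almost no CPU time.
    cpu, elapsed = measure(lambda: time.sleep(0.2))
    print(f"sleep: cpu={cpu:.3f}s elapsed={elapsed:.3f}s "
          f"usage={cpu_usage_percent(cpu, elapsed):.1f}%")
    # A busy loop keeps the CPU working, so CPU time tracks elapsed time.
    cpu, elapsed = measure(lambda: sum(i * i for i in range(10**6)))
    print(f"loop:  cpu={cpu:.3f}s elapsed={elapsed:.3f}s "
          f"usage={cpu_usage_percent(cpu, elapsed):.1f}%")
```

On a multi-threaded process the CPU time can exceed the elapsed time, so the percentage here is relative to a single CPU.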

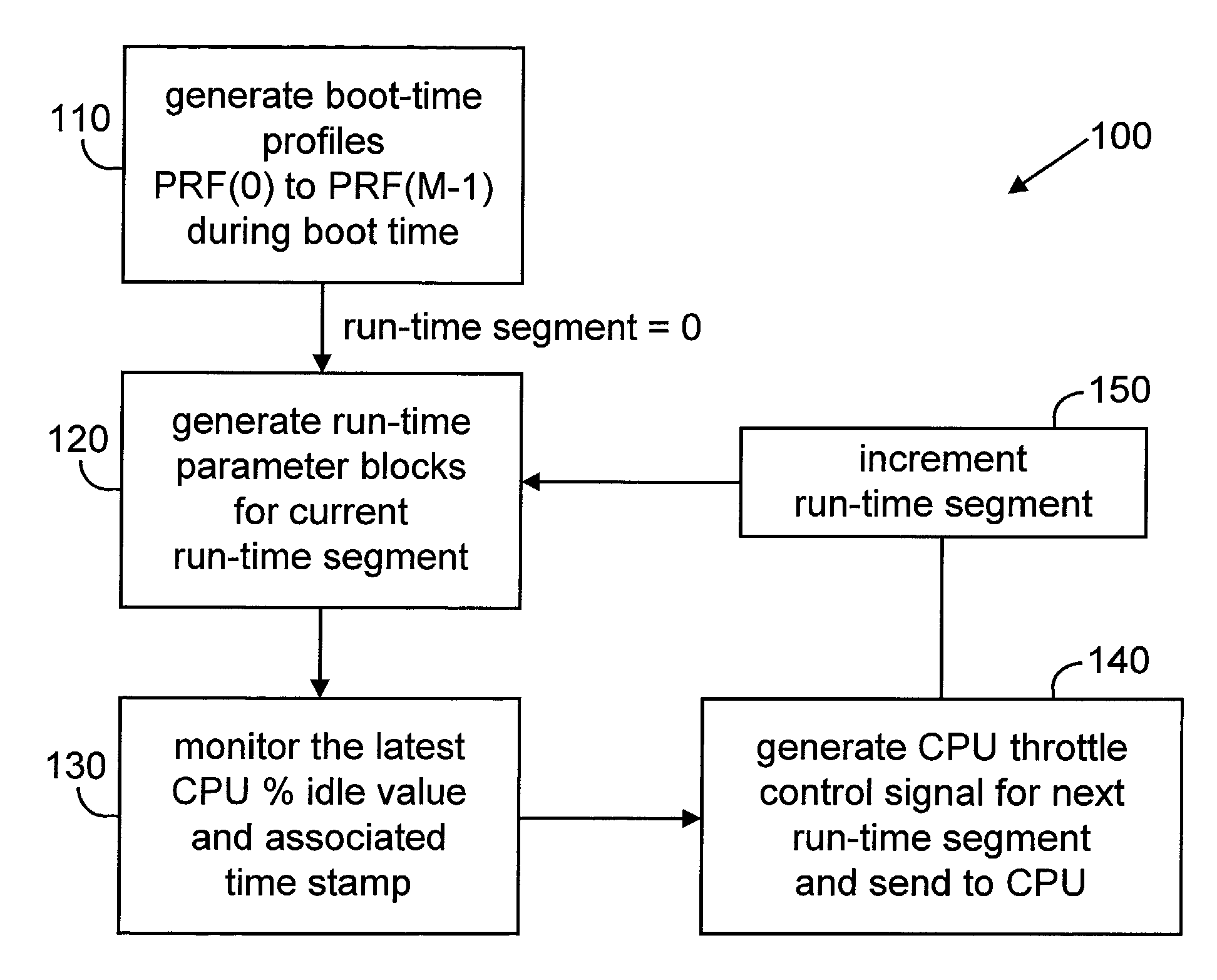

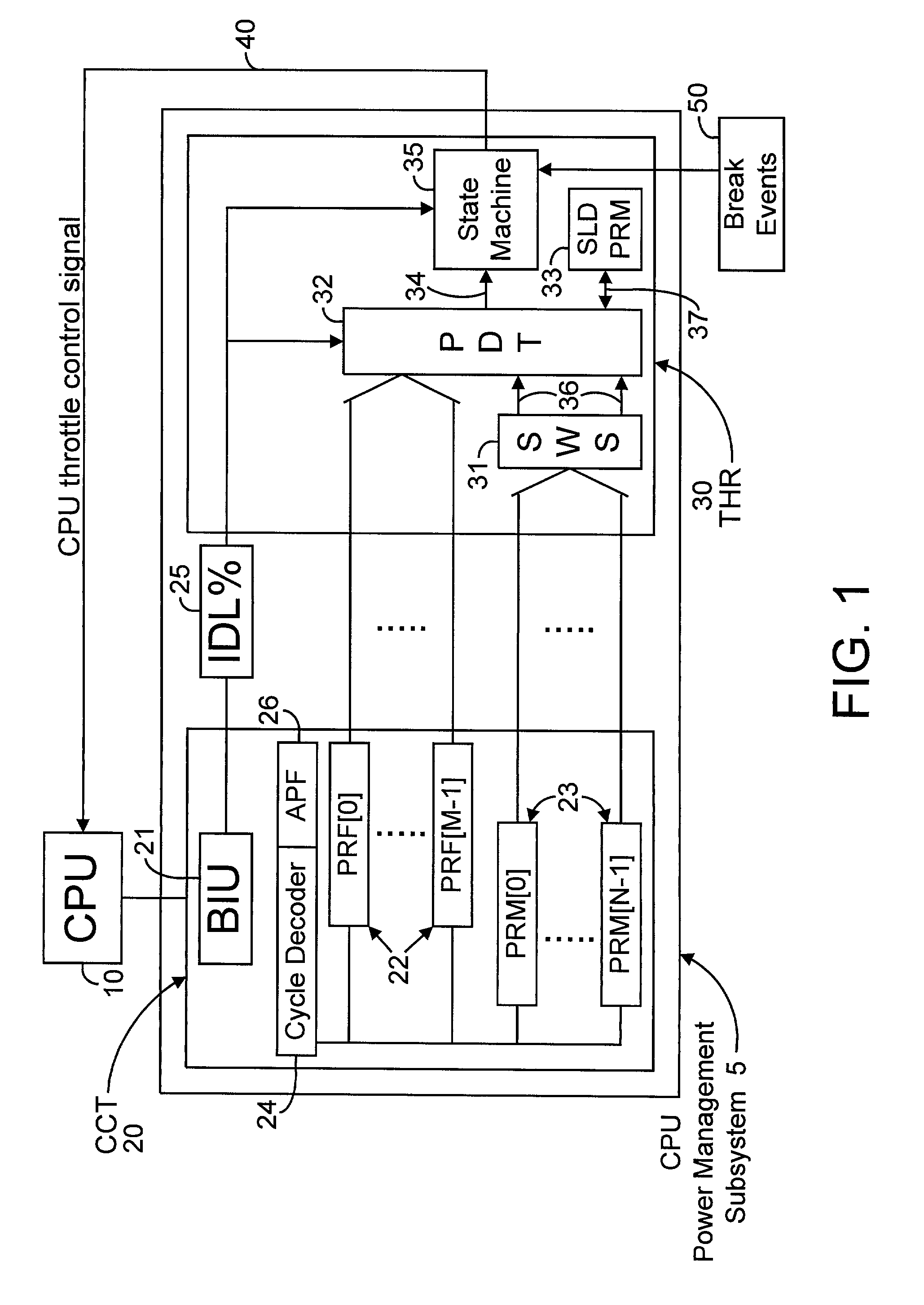

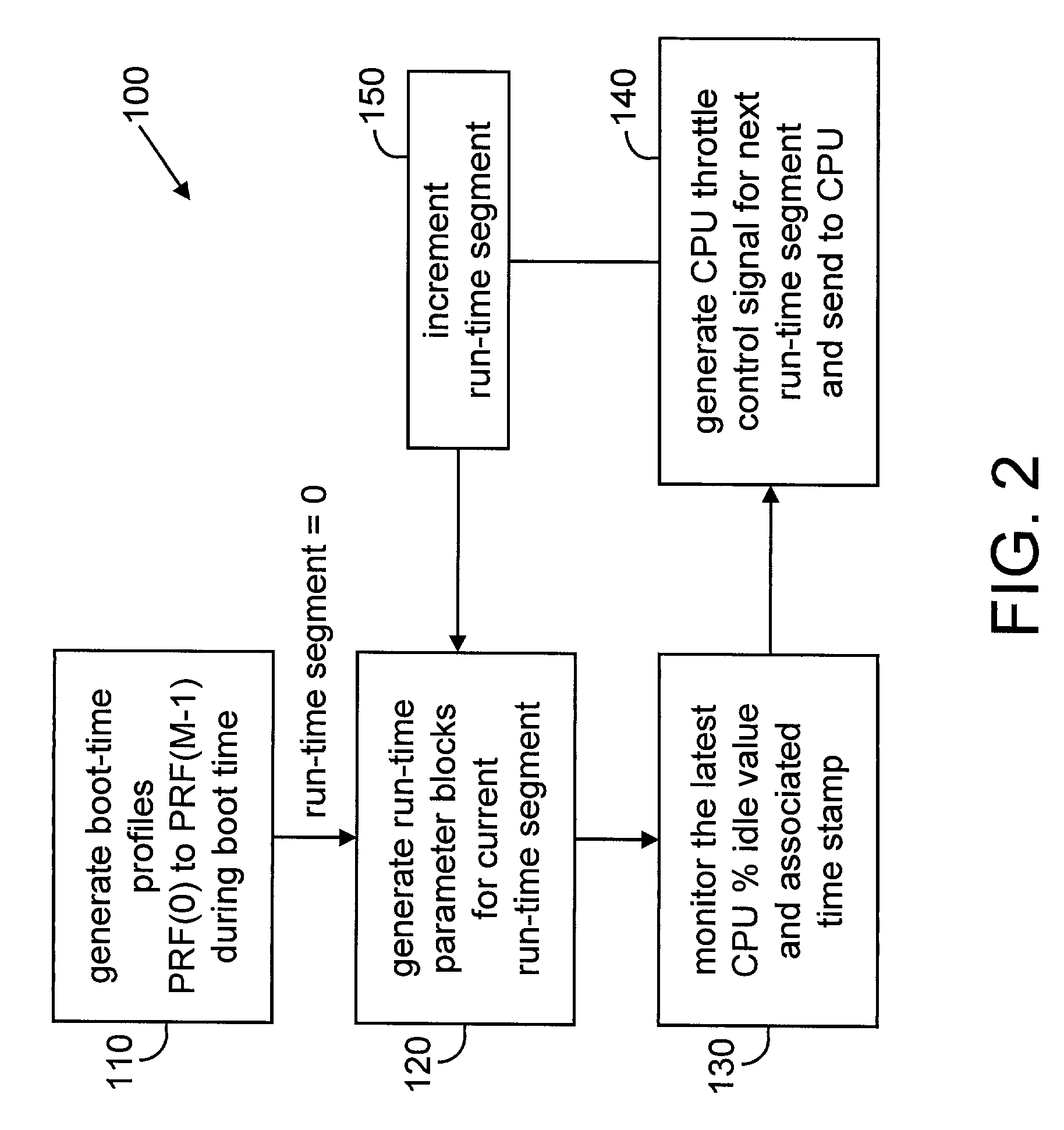

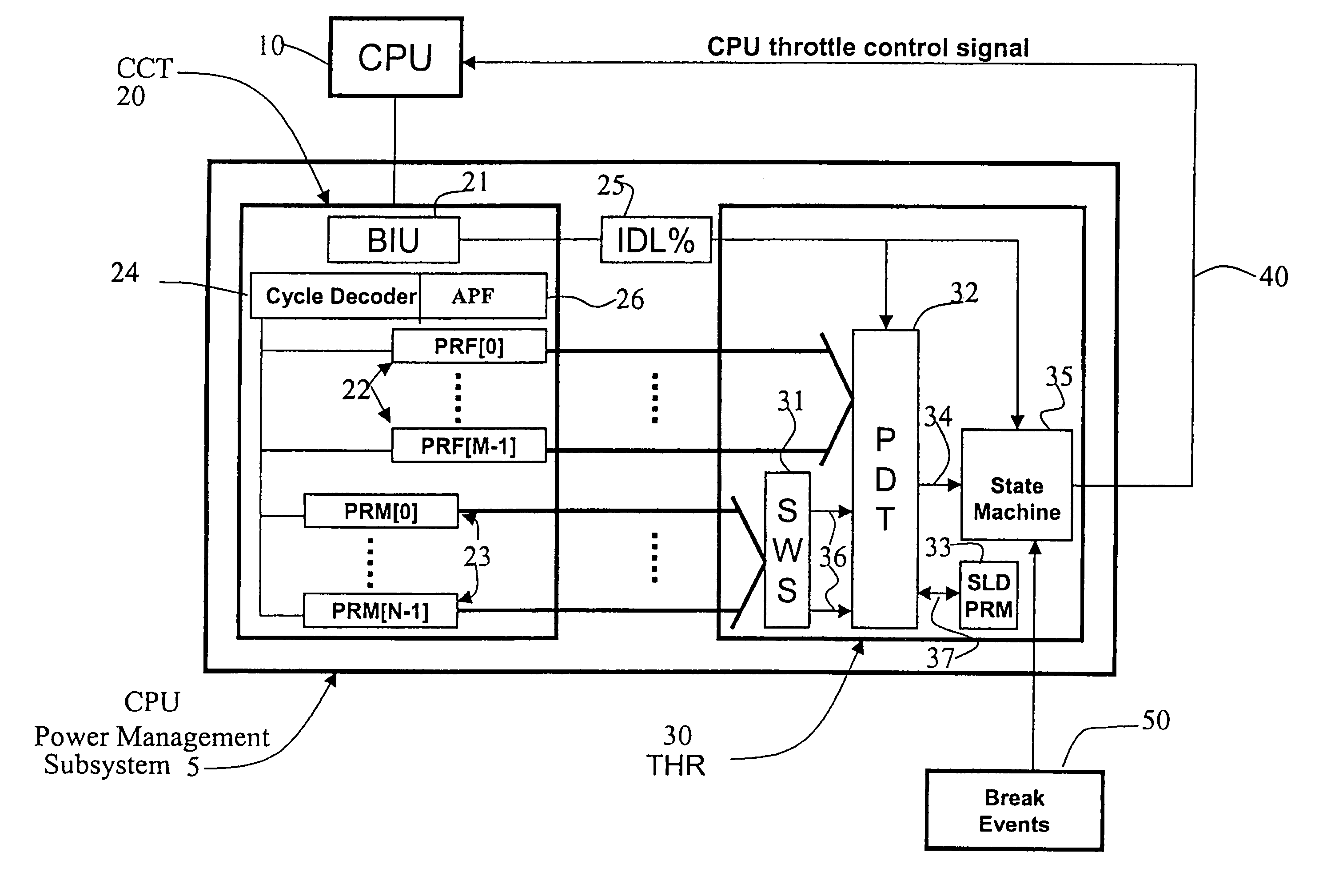

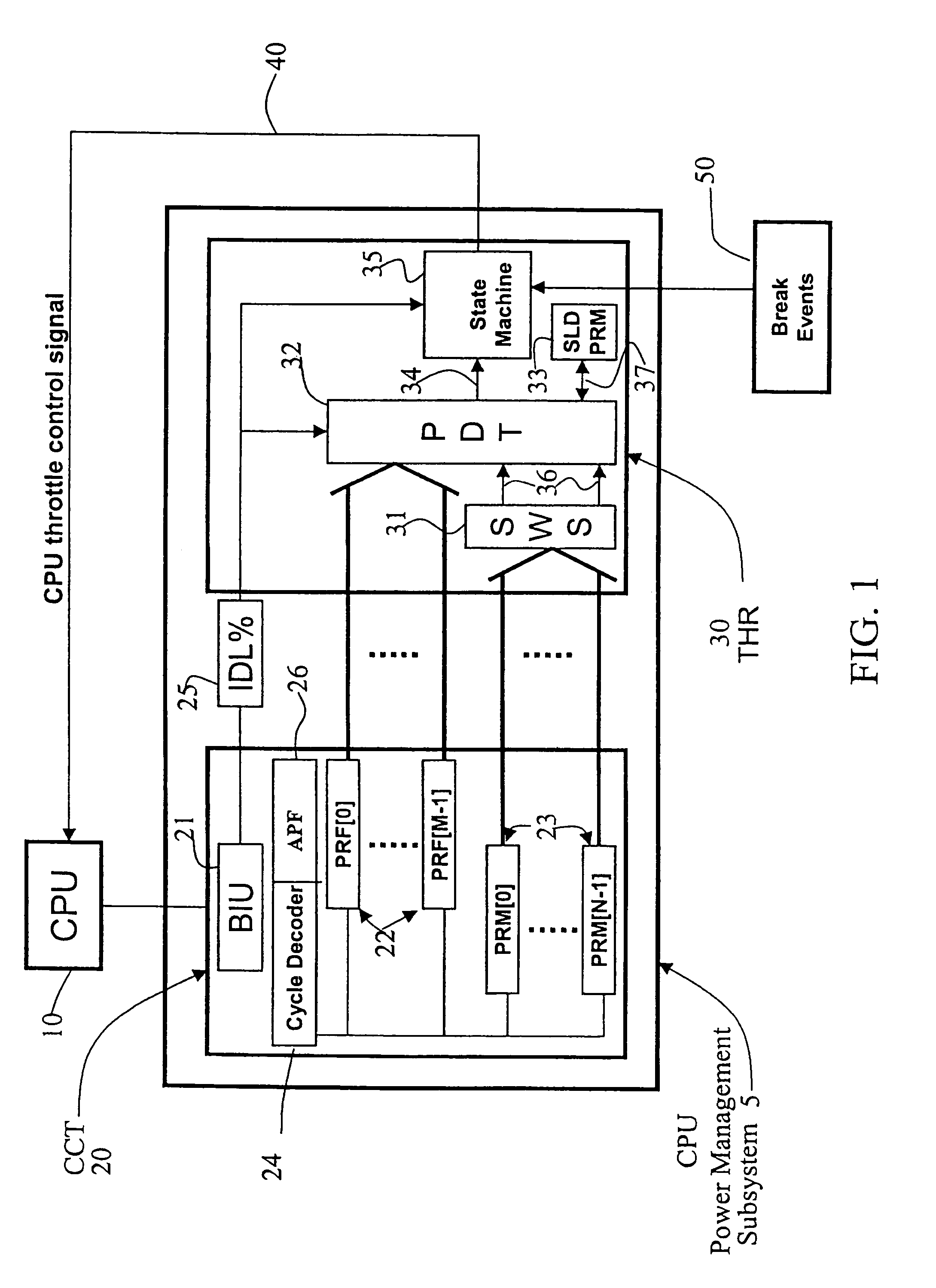

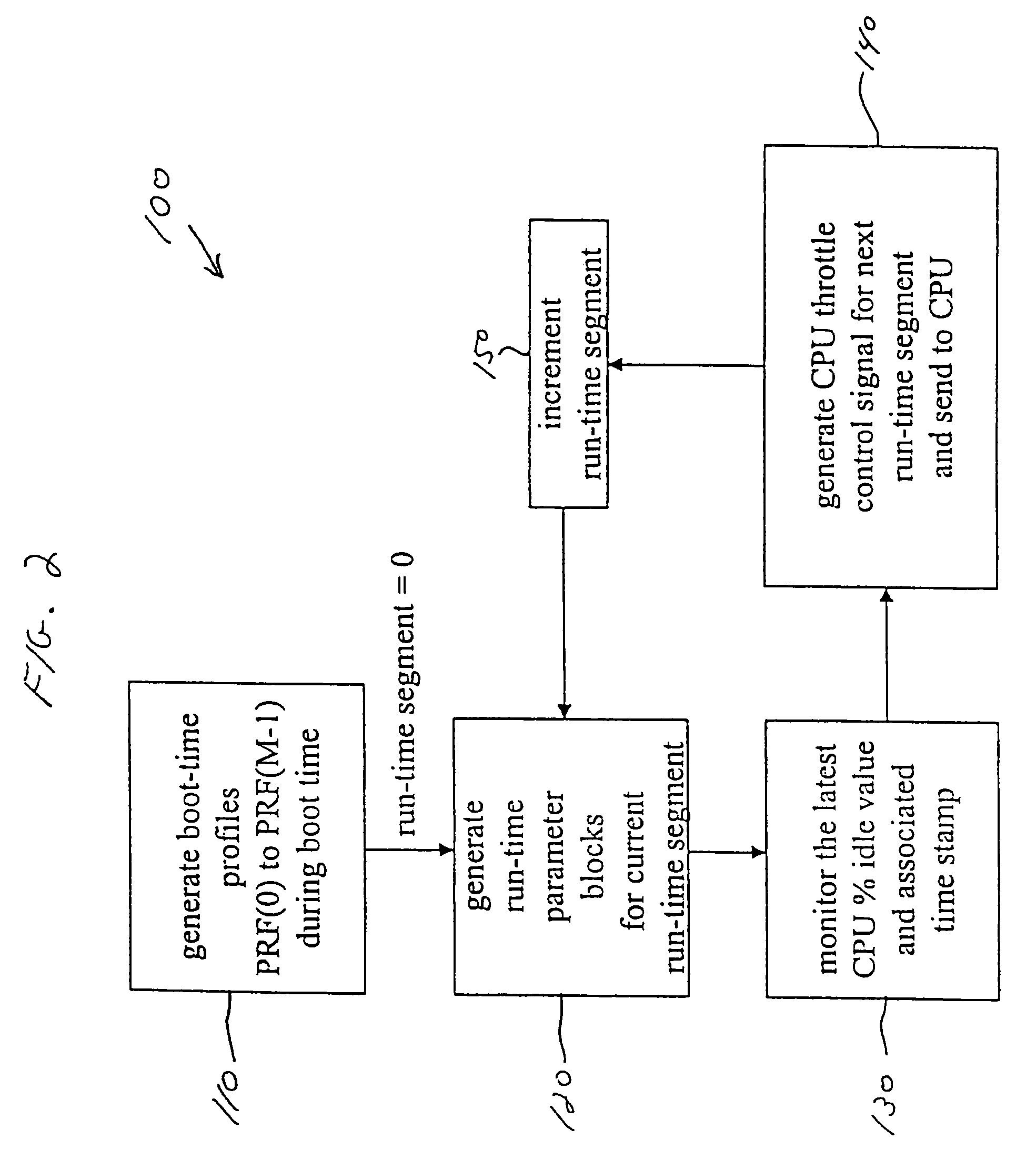

Method and apparatus for adaptive CPU power management

A method and apparatus are disclosed for performing adaptive run-time power management in a system employing a CPU and an operating system. A CPU cycle tracker (CCT) module monitors critical CPU signals and generates CPU performance data based on the critical CPU signals. An adaptive CPU throttler (THR) module uses the CPU performance data, along with a CPU percent idle value fed back from the operating system, to generate a CPU throttle control signal during predefined run-time segments of the CPU run time. The CPU throttle control signal links back to the CPU and adaptively adjusts CPU throttling and, therefore, power usage of the CPU during each of the run-time segments.

Owner:AVAGO TECH INT SALES PTE LTD

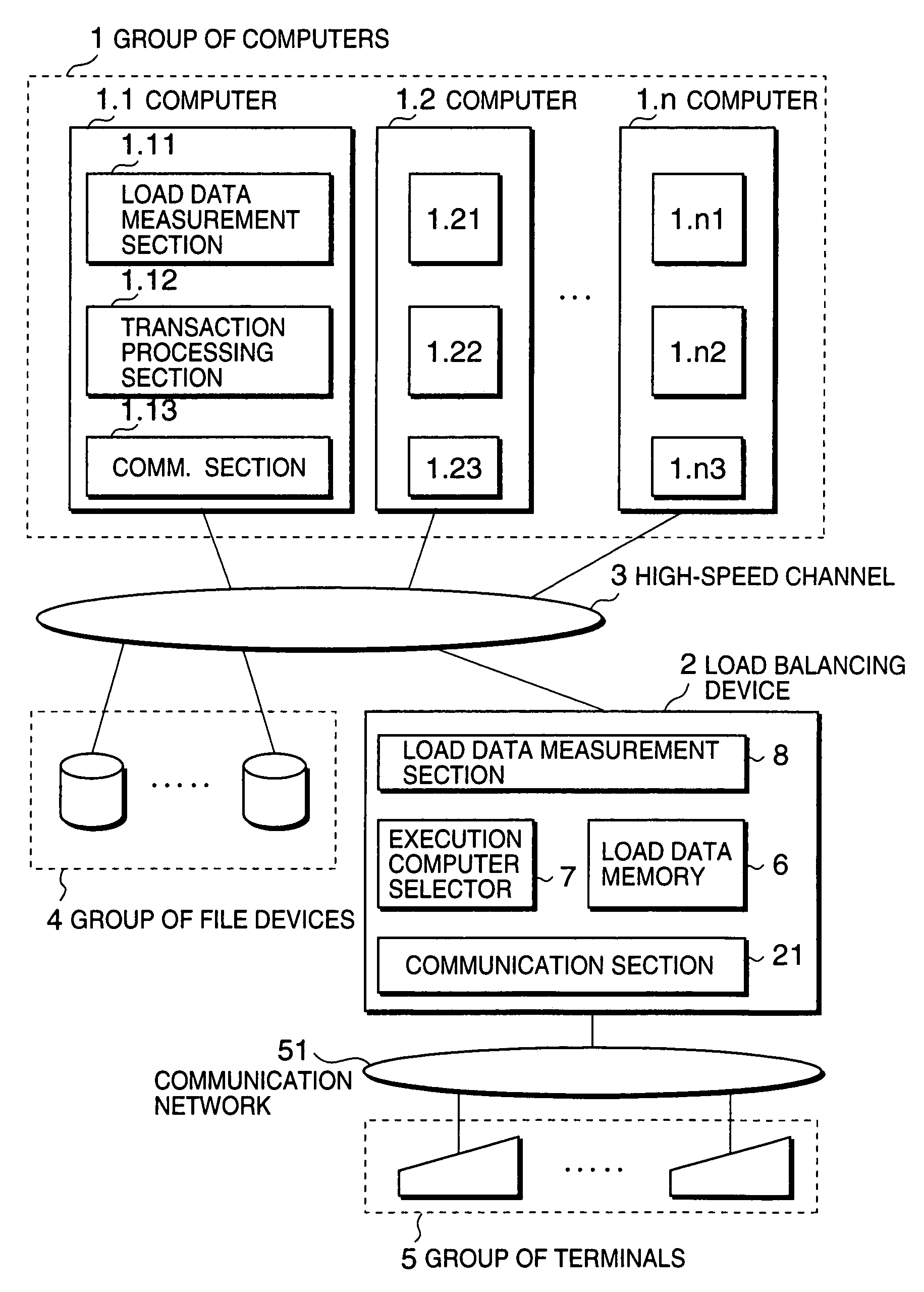

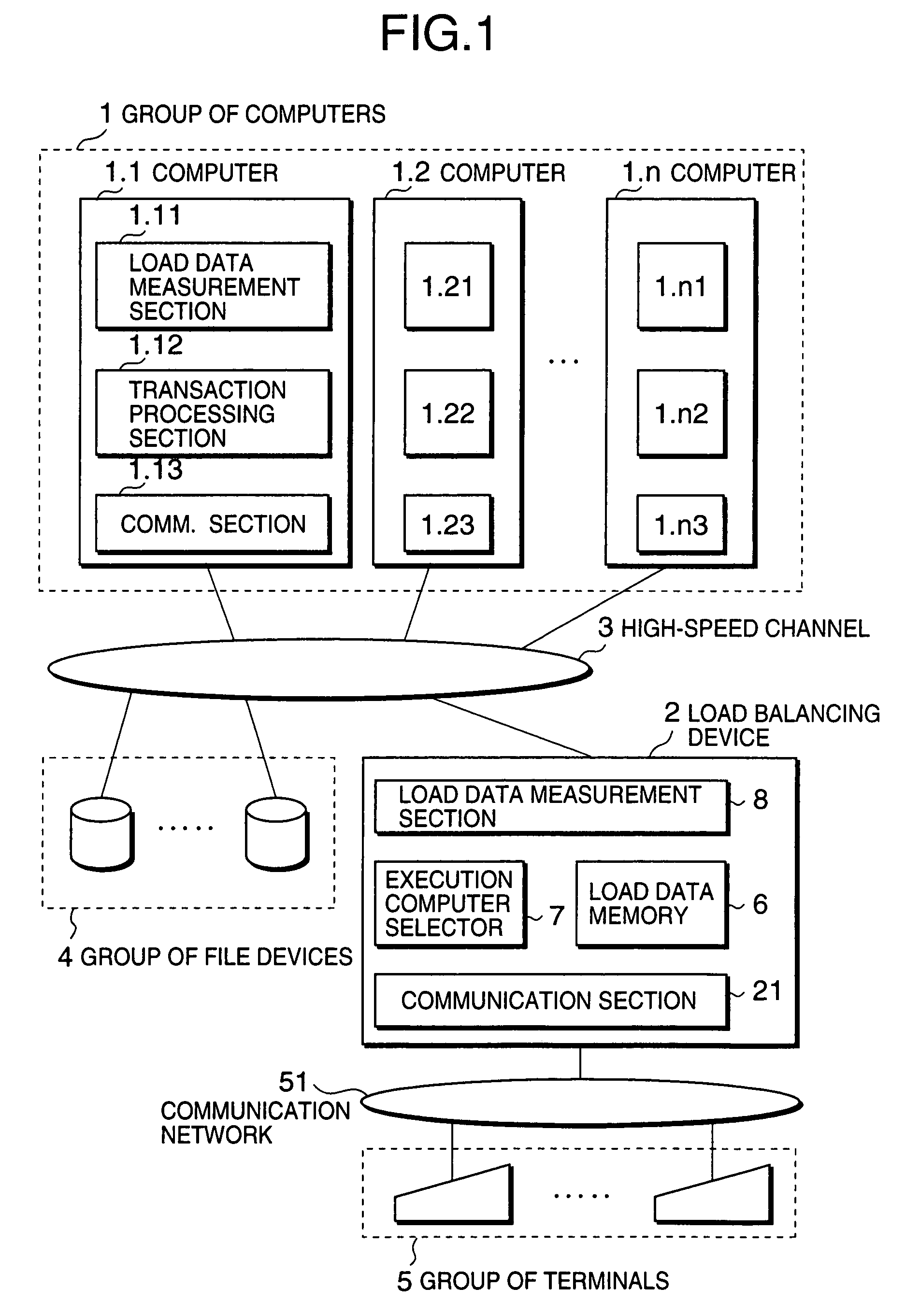

Load balancing method and system based on estimated elongation rates

Inactive | US6986139B1 | Little change | Reduce overhead | Resource allocation | Digital computer details | Load Shedding | Dynamic load balancing

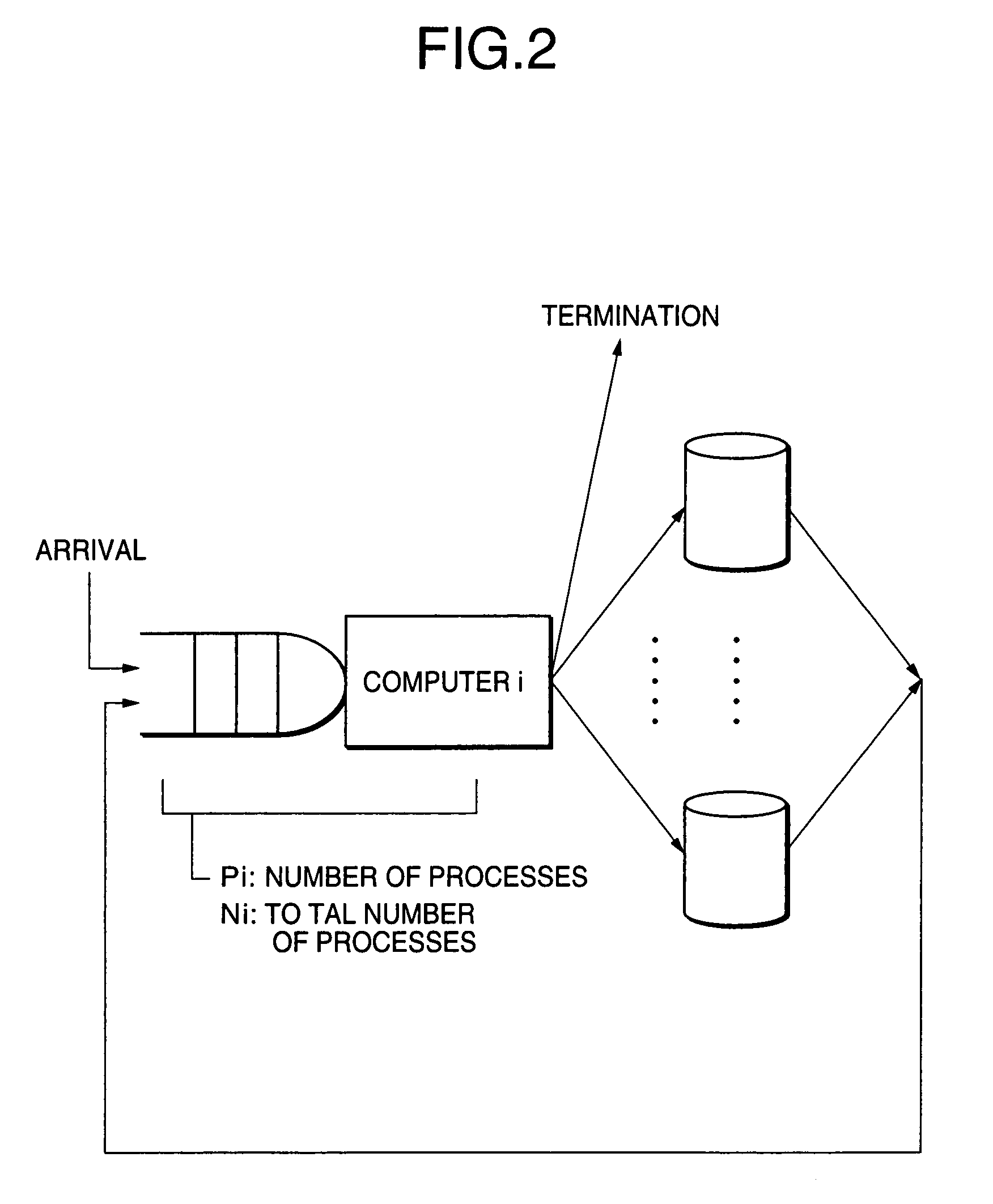

A dynamic load balancing method is disclosed that balances a transaction processing load among computers by using estimated elongation rates. In a system composed of a plurality of computers that process transaction processing requests originating from a plurality of terminals, an elongation rate of processing time is estimated for each computer, where an elongation rate is the ratio of the processing time required to process a transaction to the net processing time, which is the sum of the CPU time and the input/output time for processing the transaction. Load indexes are then calculated for the computers from the estimated elongation rates, and the computer with the minimum load index is selected as the destination computer.

Owner:NEC CORP
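The elongation rate defined in the abstract above can be illustrated with a minimal sketch. The abstract does not say how the load index is derived from the elongation rate, so this example assumes the simplest case, in which the load index is the elongation rate itself; `ComputerStats`, `elongation_rate`, and `pick_destination` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ComputerStats:
    name: str
    processing_time: float  # observed time to process a transaction (seconds)
    cpu_time: float         # CPU time used by the transaction (seconds)
    io_time: float          # input/output time of the transaction (seconds)


def elongation_rate(stats):
    """Processing time divided by net processing time (CPU time + I/O time)."""
    net = stats.cpu_time + stats.io_time
    return stats.processing_time / net


def pick_destination(computers):
    """Select the computer with the minimum load index.

    Assumption: the load index is taken to be the elongation rate itself.
    """
    return min(computers, key=elongation_rate)
```

A rate close to 1 means transactions spend little time queued behind other work; a larger rate means the computer is more heavily loaded.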

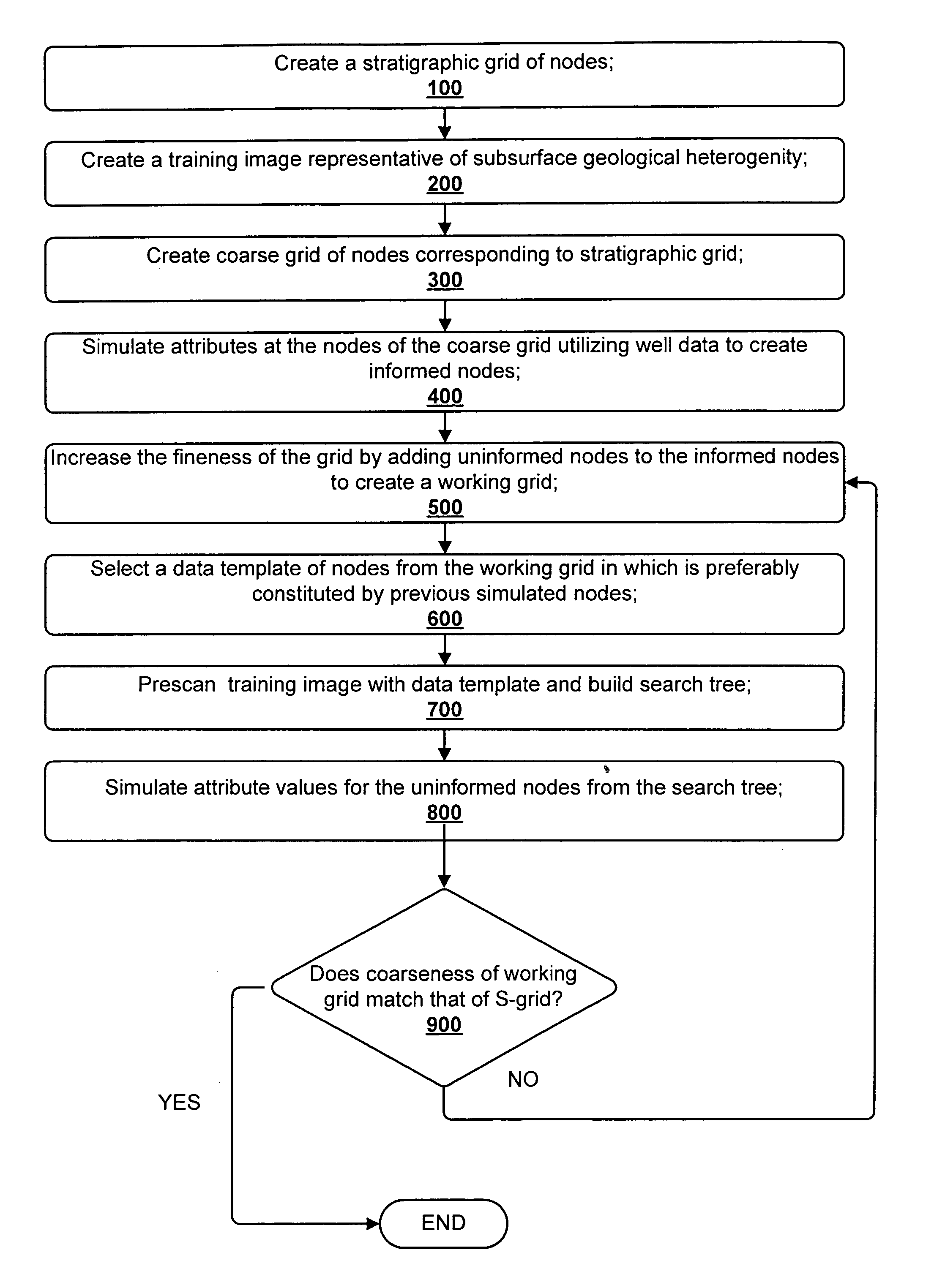

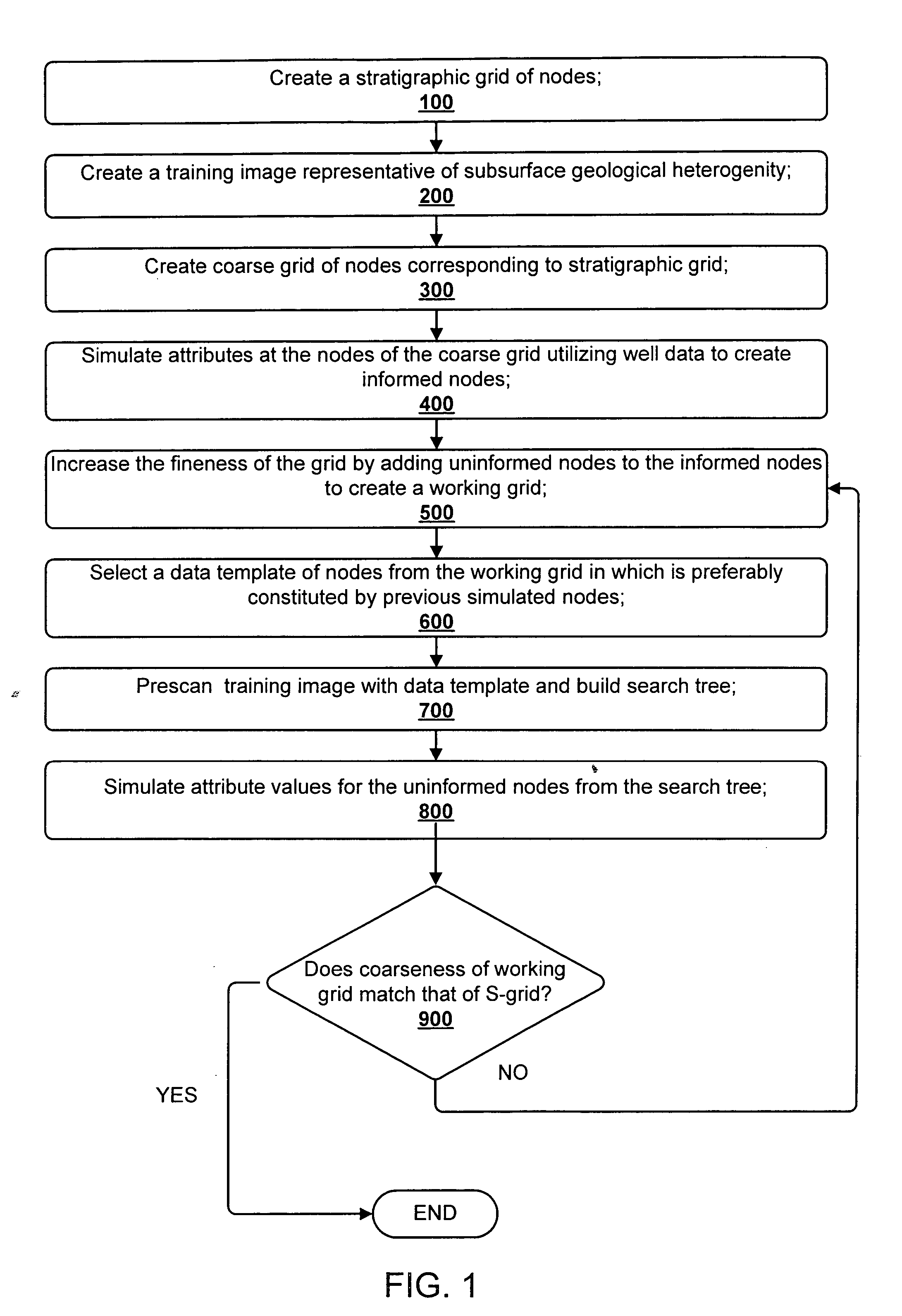

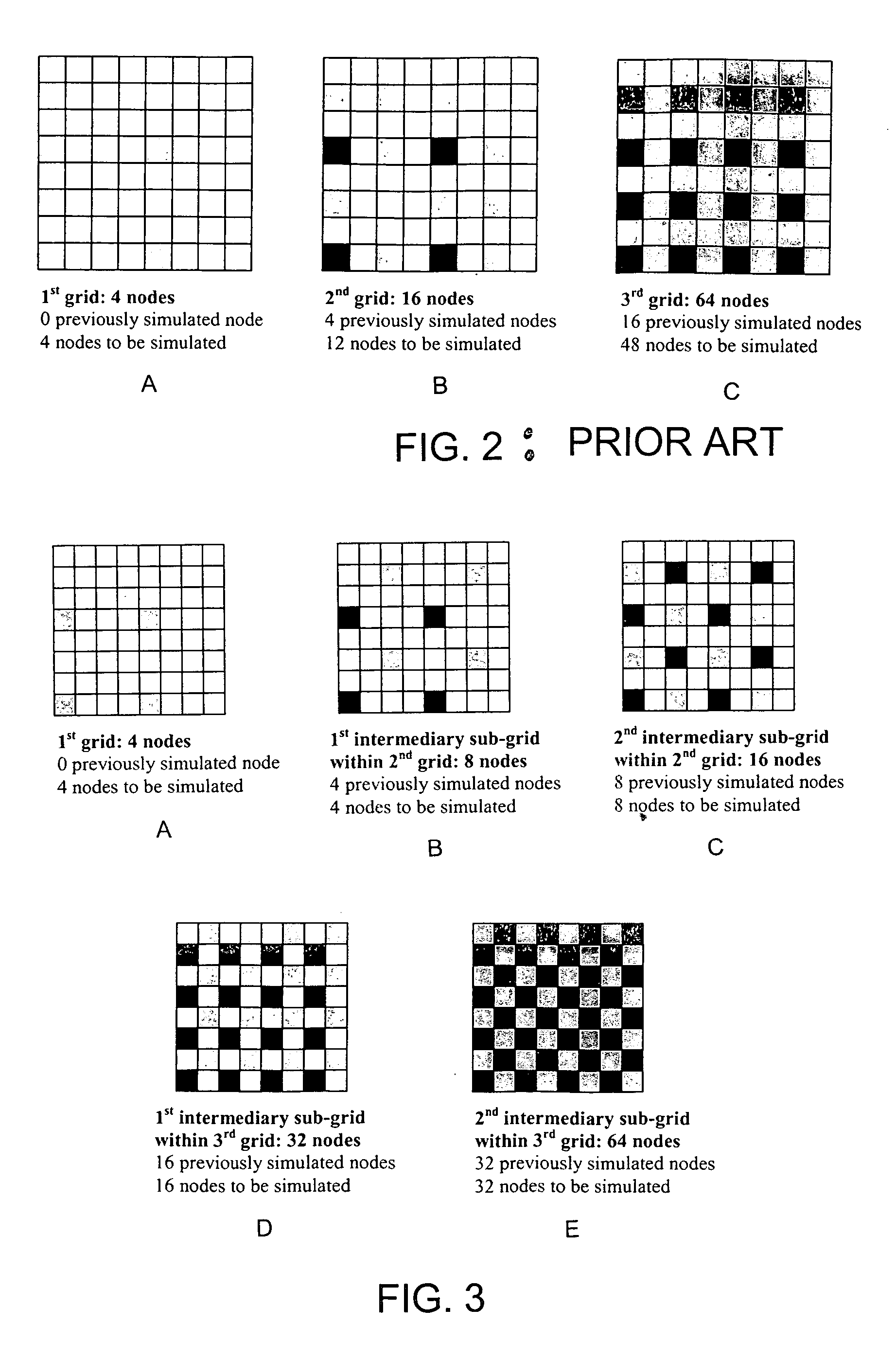

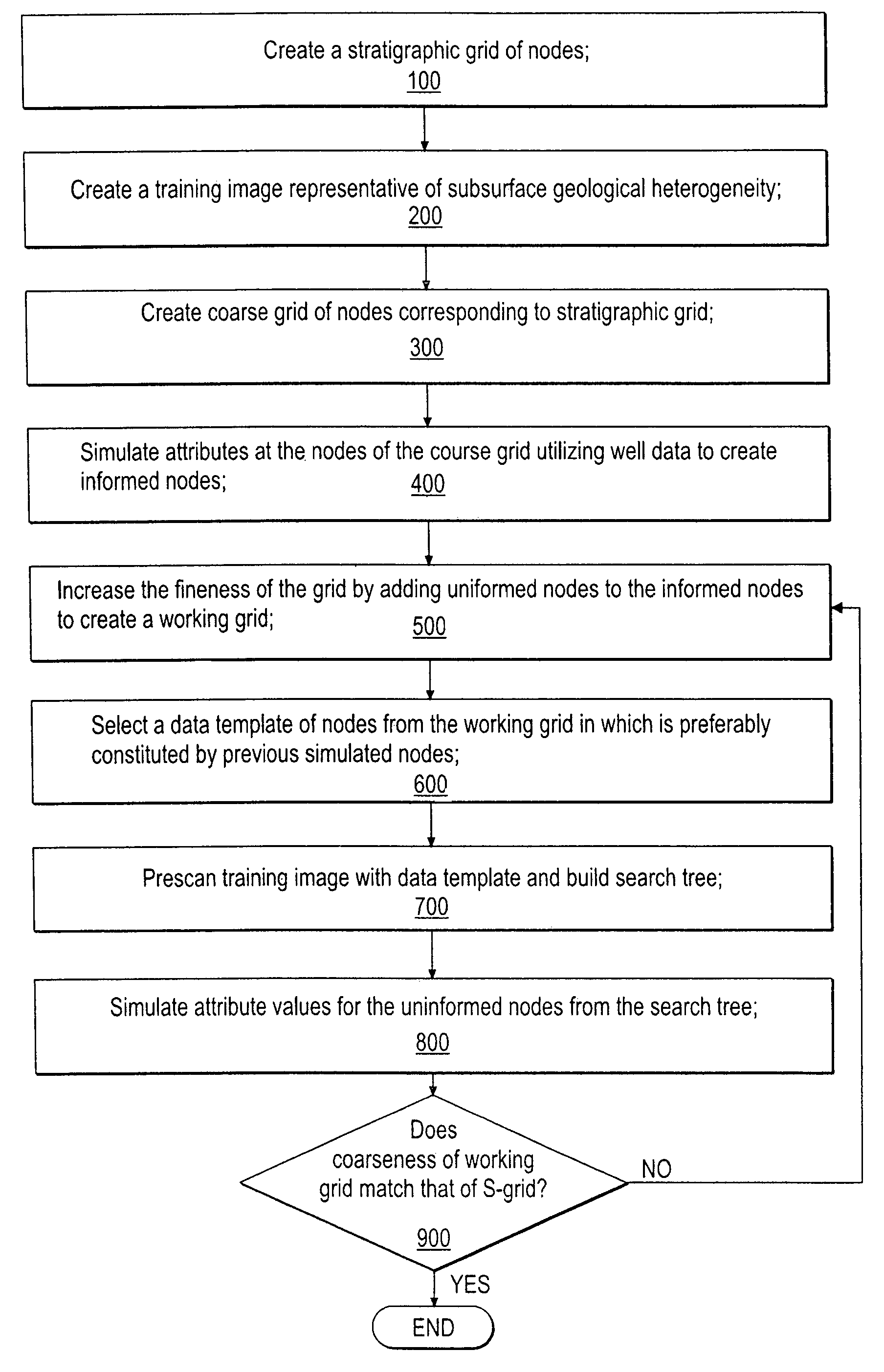

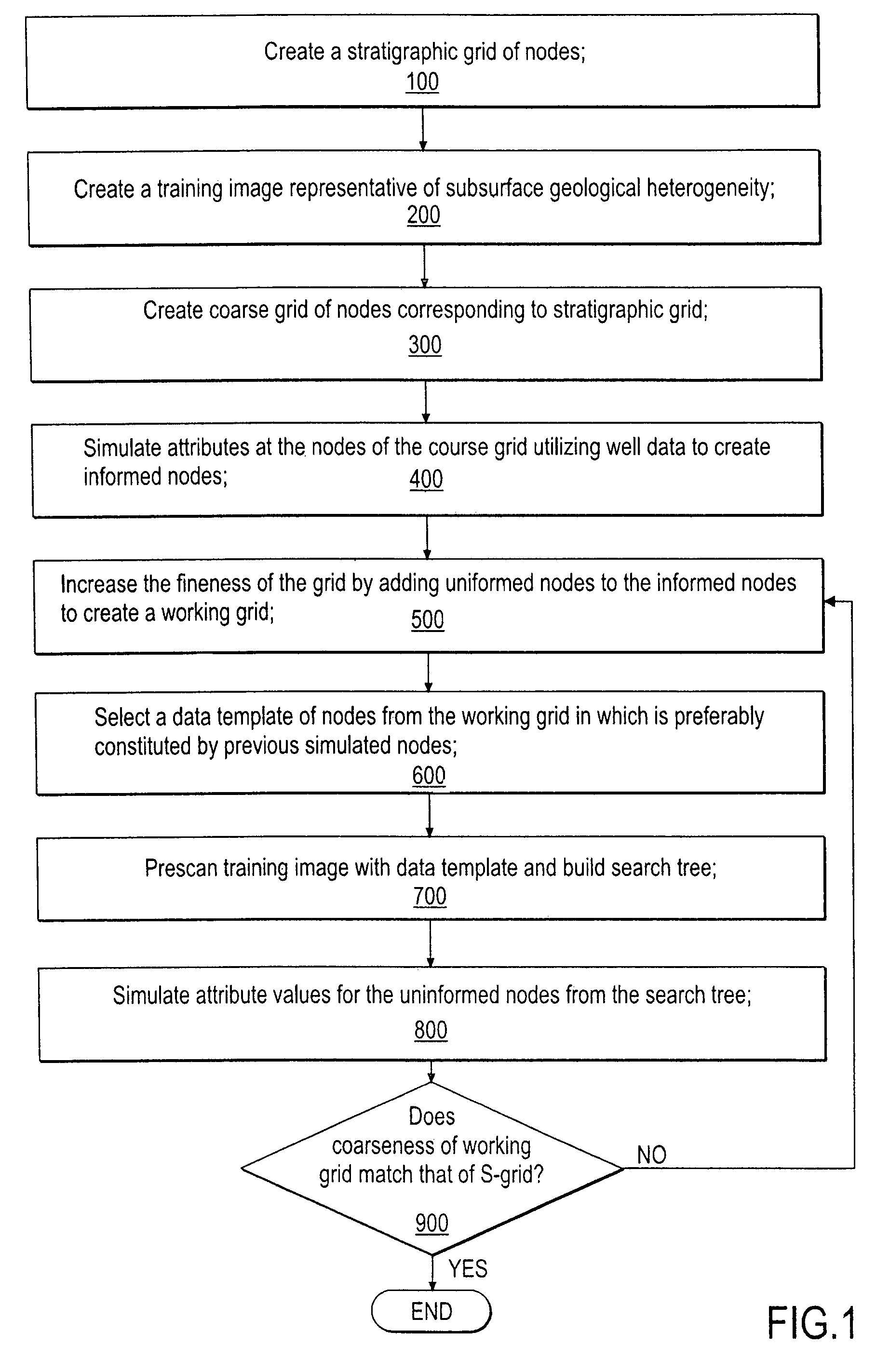

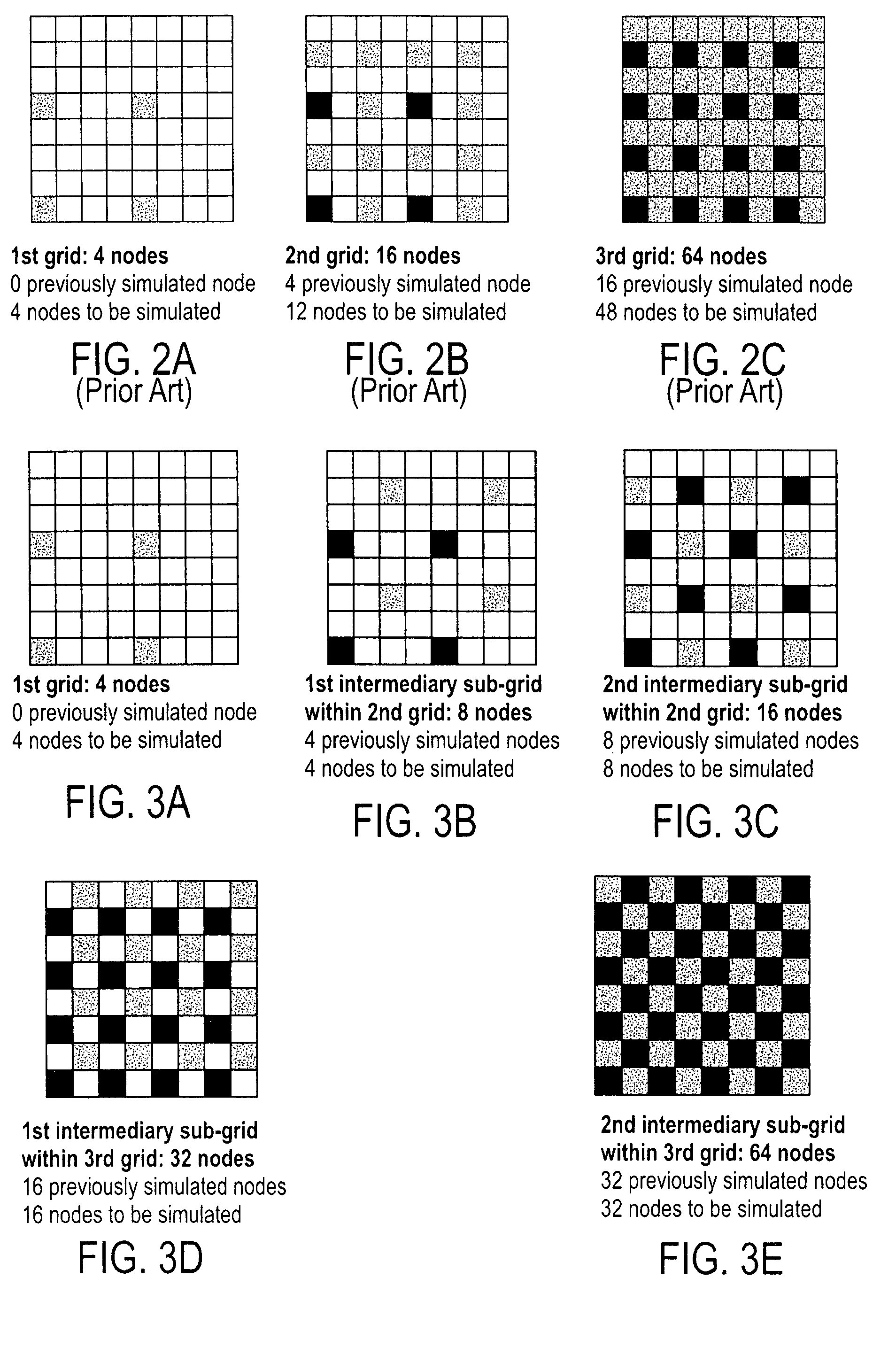

Multiple-point statistics (MPS) simulation with enhanced computational efficiency

Active | US20060041410A1 | Reduce data template size | Improve computing efficiency | Seismic signal processing | Analogue processes for specific applications | Theoretical computer science | Term memory

An enhanced multi-point statistical (MPS) simulation is disclosed. A multiple-grid simulation approach is used which has been modified from a conventional MPS approach to decrease the size of a data search template, saving a significant amount of memory and cpu-time during the simulation. Features used to decrease the size of the data search template include: (1) using intermediary sub-grids in the multiple-grid simulation approach, and (2) selecting a data template that is preferentially constituted by previously simulated nodes. The combination of these features allows saving a significant amount of memory and cpu-time over previous MPS algorithms, yet ensures that large-scale training structures are captured and exported to the simulation exercise.

Owner:CHEVRON USA INC

Multiple-point statistics (MPS) simulation with enhanced computational efficiency

Active | US7516055B2 | Small size | A large amount | Seismic signal processing | Analogue processes for specific applications | Theoretical computer science | CPU time

An enhanced multi-point statistical (MPS) simulation is disclosed. A multiple-grid simulation approach is used which has been modified from a conventional MPS approach to decrease the size of a data search template, saving a significant amount of memory and cpu-time during the simulation. Features used to decrease the size of the data search template include: (1) using intermediary sub-grids in the multiple-grid simulation approach, and (2) selecting a data template that is preferentially constituted by previously simulated nodes. The combination of these features allows saving a significant amount of memory and cpu-time over previous MPS algorithms, yet ensures that large-scale training structures are captured and exported to the simulation exercise.

Owner:CHEVRON USA INC

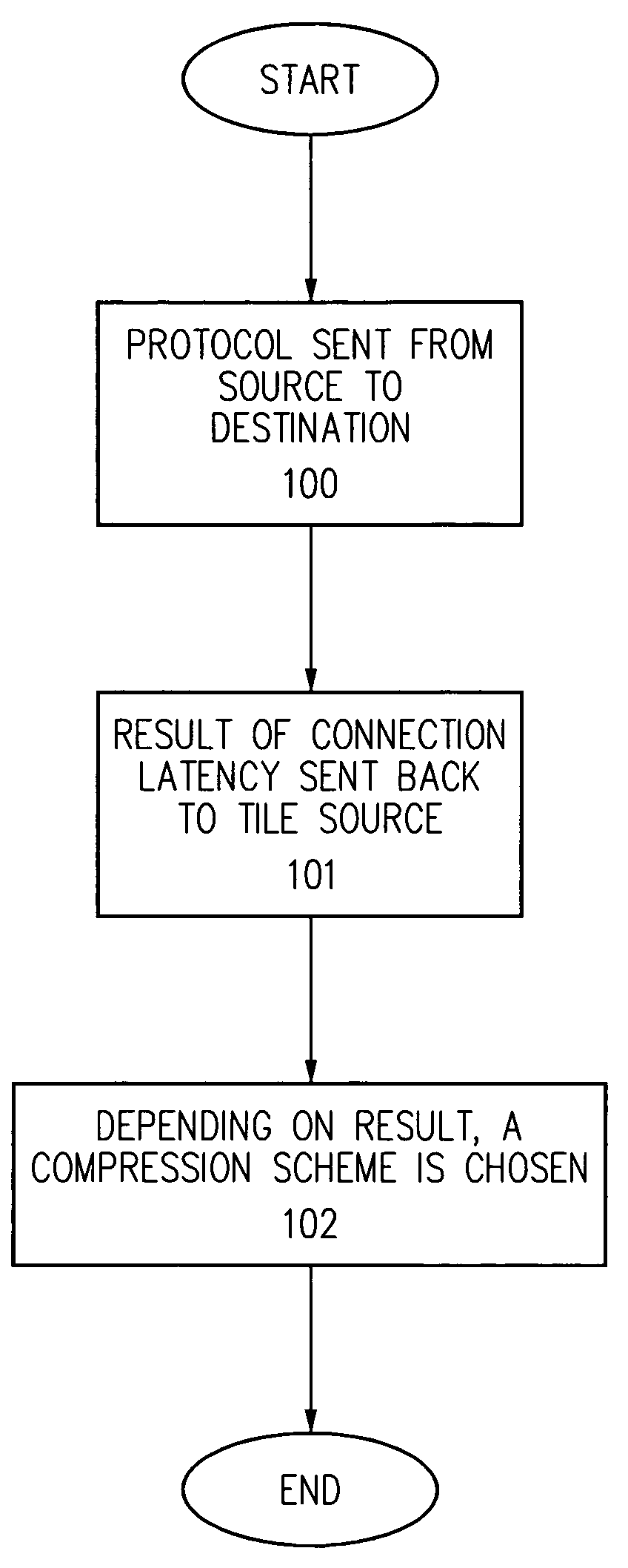

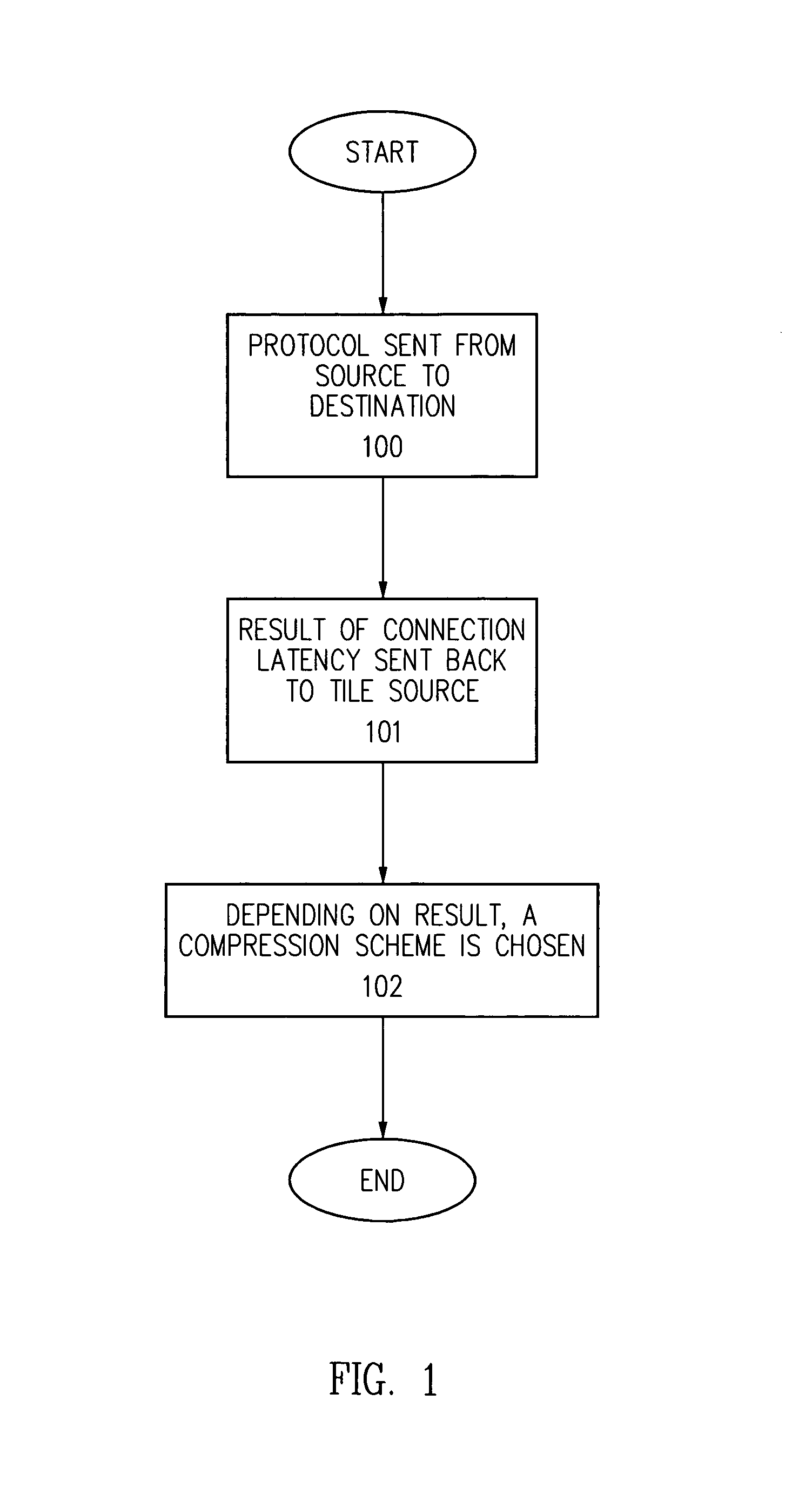

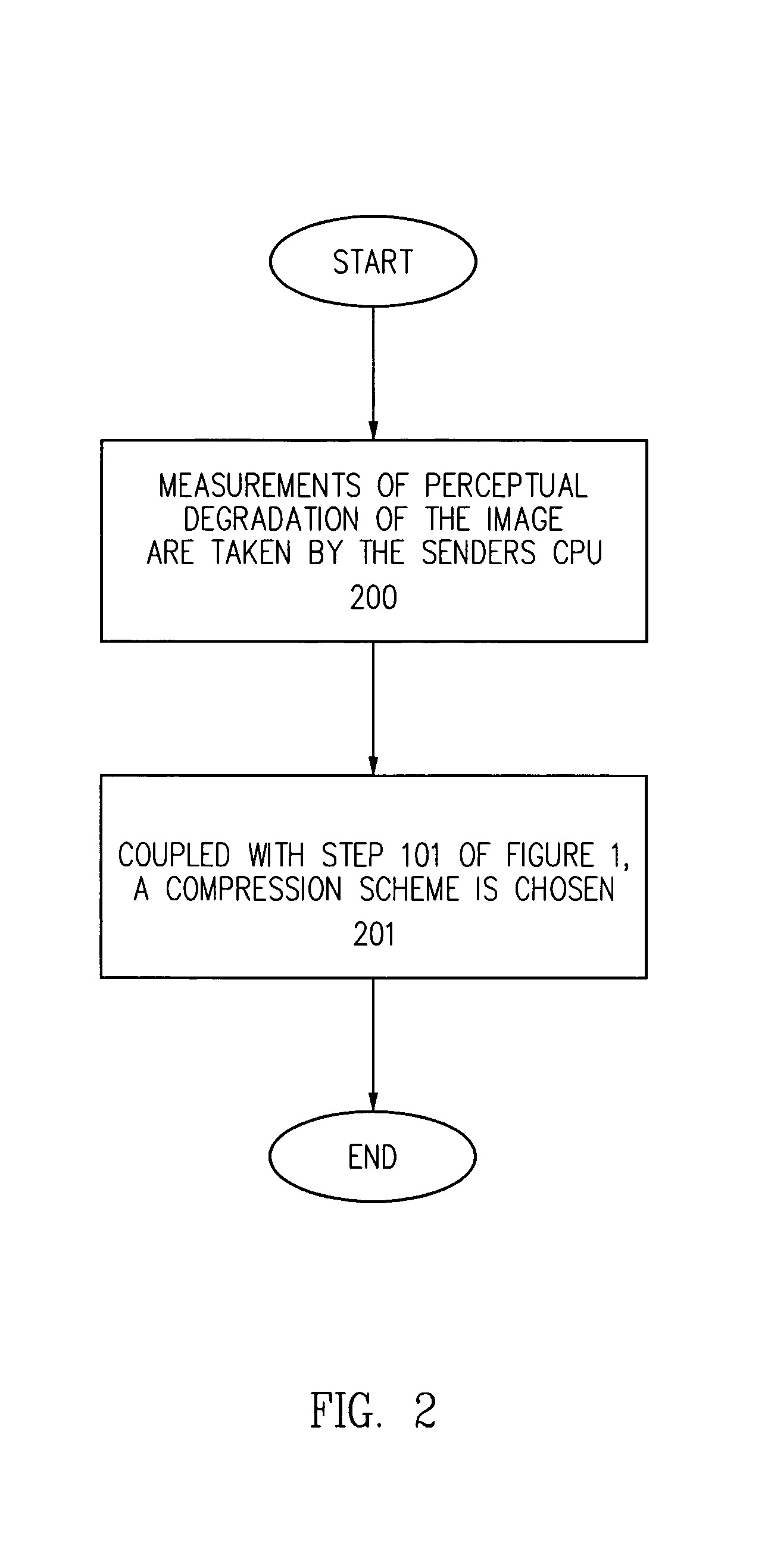

Dynamic bandwidth adaptive image compression/decompression scheme

Inactive | US7024045B2 | Save CPU time | Character and pattern recognition | Image coding | Image compression | CPU time

The present invention provides a method and apparatus for a bandwidth adaptive image compression / decompression scheme. In one embodiment, the present invention uses a special protocol between the sender and the receiver to measure the latency of the connection. This protocol and its result are sent and received at an interval based on a dynamic feedback loop algorithm. Based on the results of the protocol, a compression scheme is chosen. This scheme uses CPU time conservatively, and also transmits the most interesting data first. In another embodiment, the present invention throws away data that is repetitious, especially when the connection is down for a short period of time. In yet another embodiment of the present invention, measurements are taken for the perceptual degradation of the image for various compression schemes, and the results are supplemented with the results of the protocol to choose a viable compression / decompression scheme.

Owner:ORACLE INT CORP

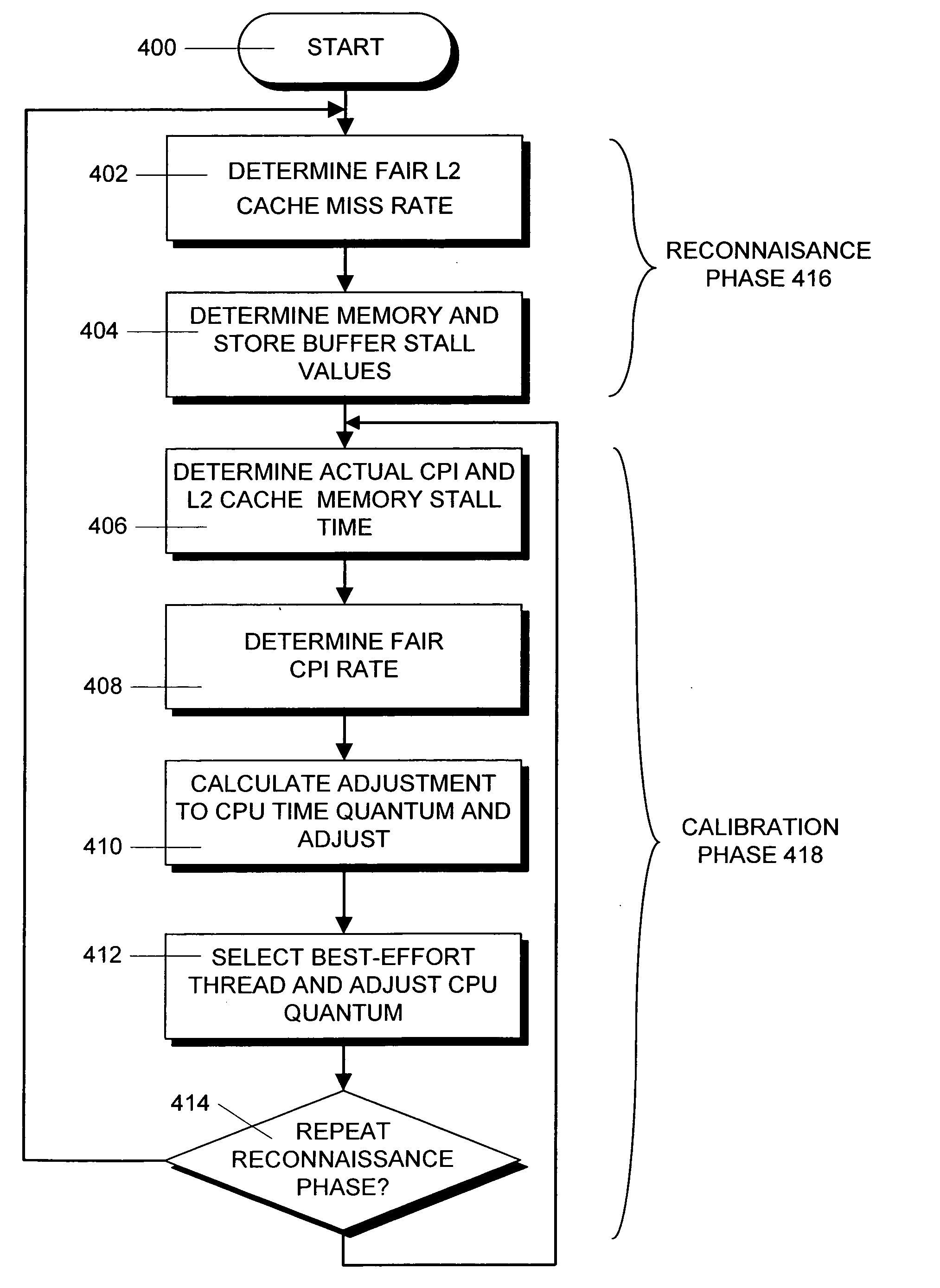

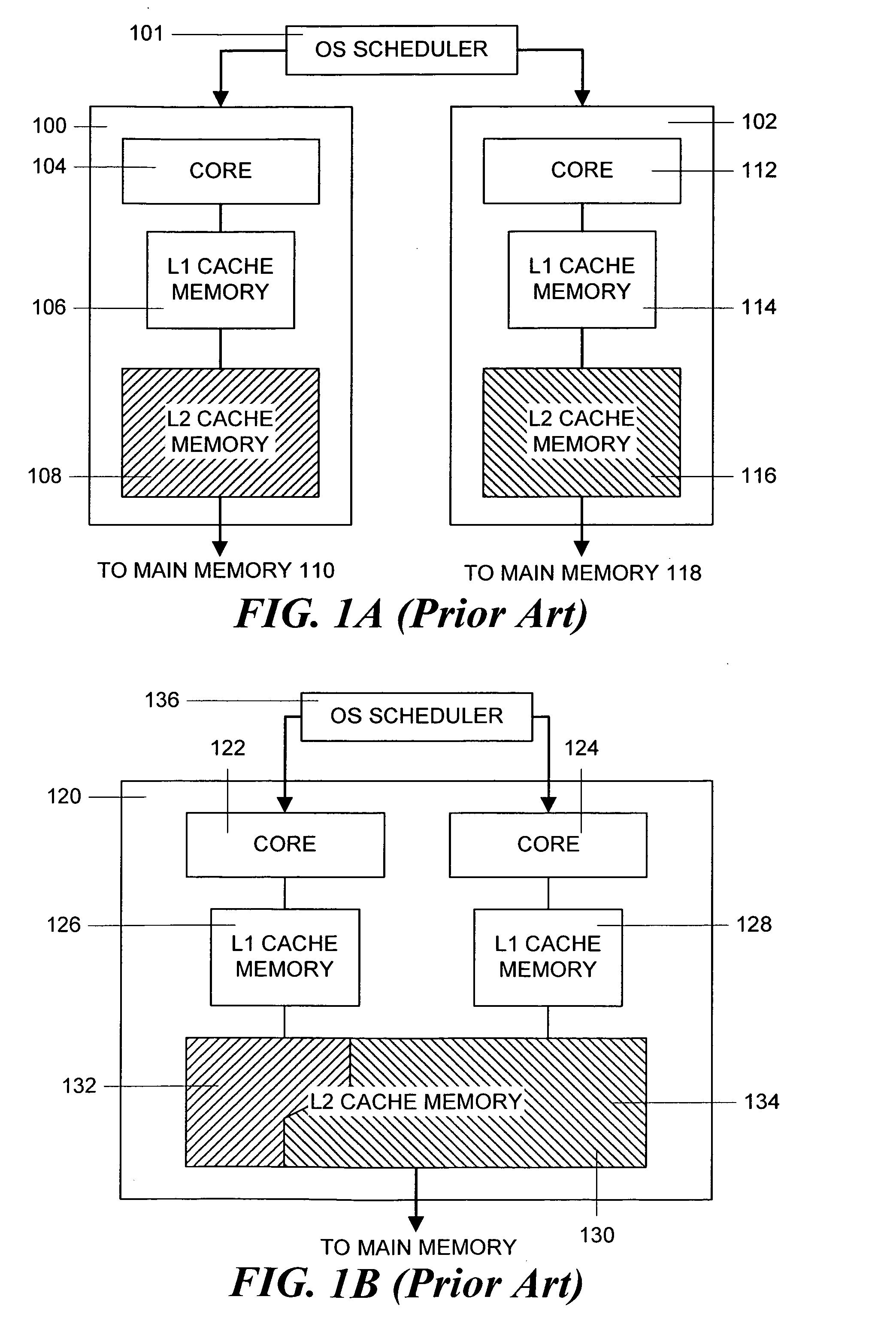

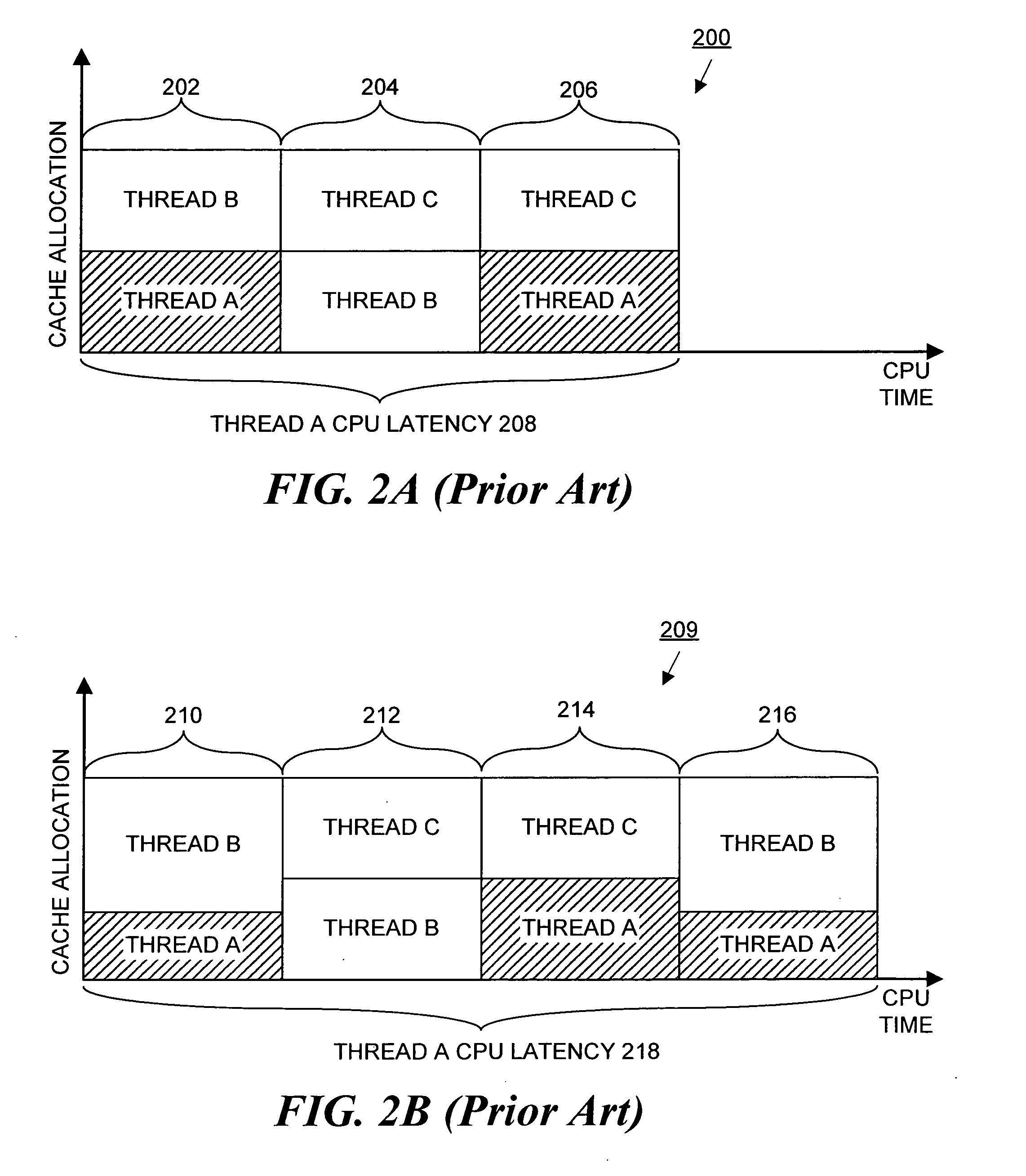

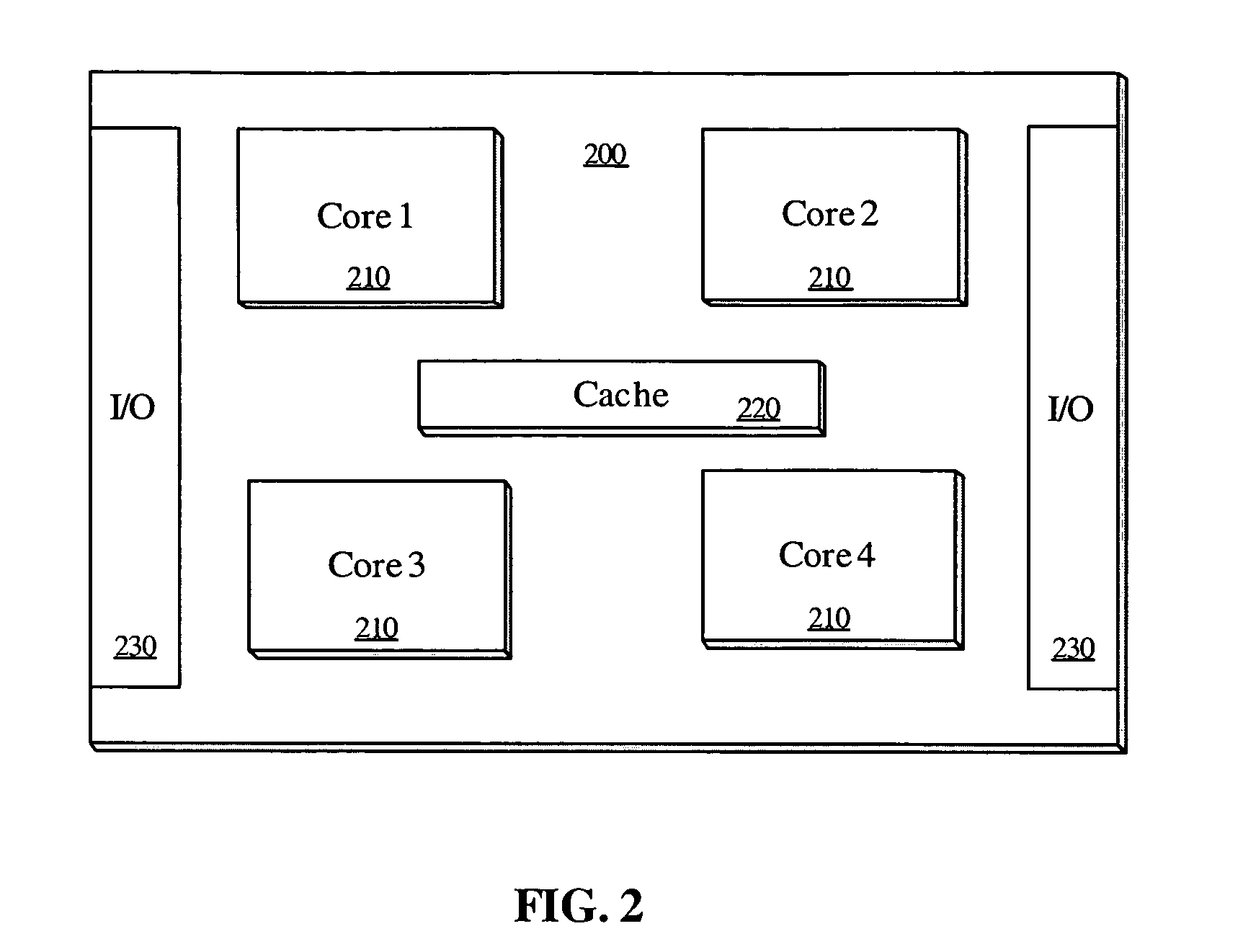

Method and apparatus for achieving fair cache sharing on multi-threaded chip multiprocessors

Active | US20080059712A1 | Fair cache miss rate | Memory addressing/allocation/relocation | Multiprogramming arrangements | Operational system | Multi processor

In a computer system with a multi-core processor having a shared cache memory level, an operating system scheduler adjusts the CPU time quantum of a thread running on one of the cores so that the thread's CPU latency equals the fair CPU latency that the thread would experience if the cache memory were equally shared. In particular, during a reconnaissance time period, the operating system scheduler gathers information regarding the threads via conventional hardware counters and uses an analytical model to estimate a fair cache miss rate that the thread would experience if the cache memory were equally shared. During a subsequent calibration period, the operating system scheduler computes the fair CPU latency using runtime statistics and the previously computed fair cache miss rate value to determine the fair CPI value.

Owner:ORACLE INT CORP

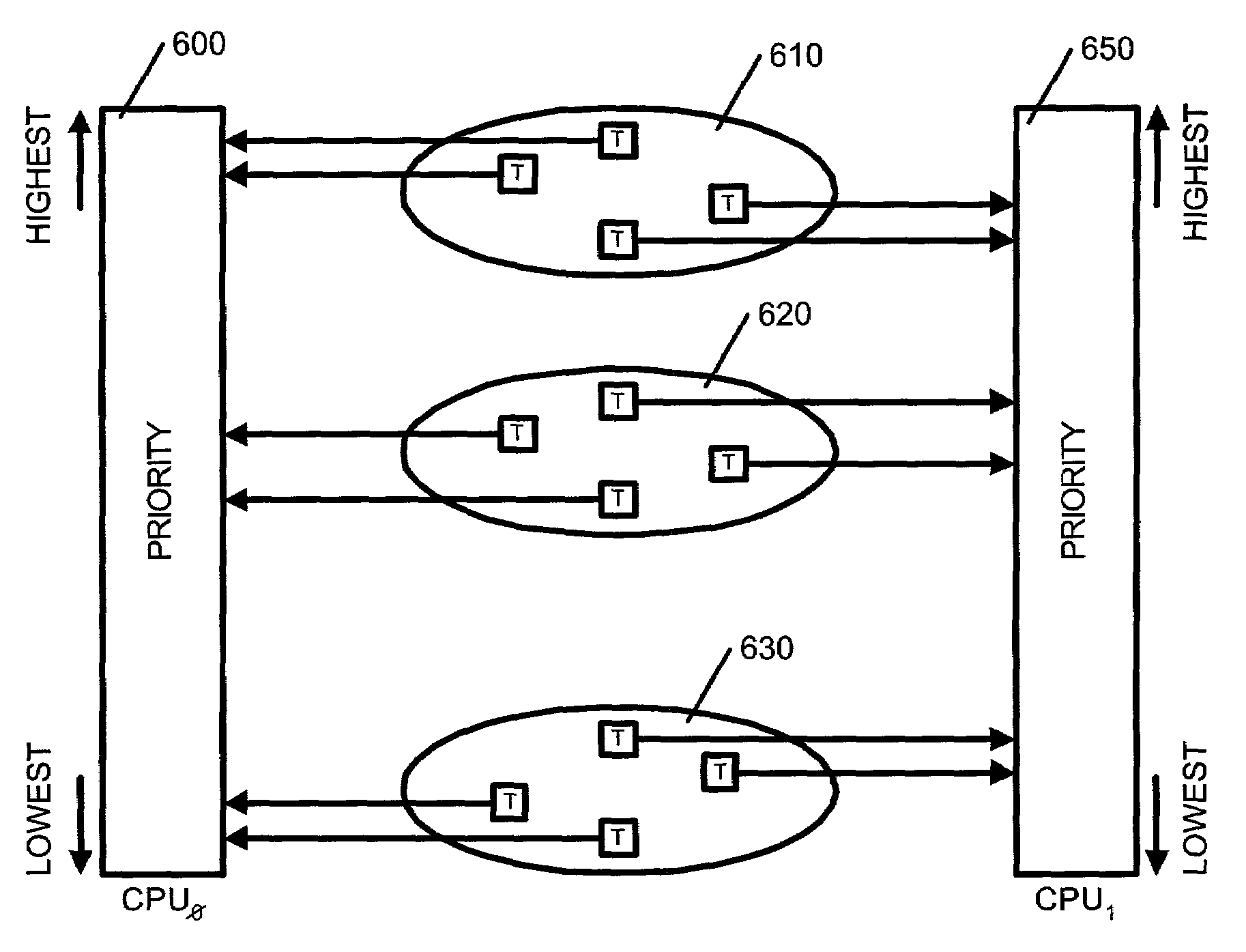

Multiprocessor load balancing system for prioritizing threads and assigning threads into one of a plurality of run queues based on a priority band and a current load of the run queue

Inactive | US7080379B2 | Resource allocation | Multiple digital computer combinations | Current load | Multi processor

A method, system and apparatus for integrating a system task scheduler with a workload manager are provided. The scheduler is used to assign default priorities to threads and to place the threads into run queues, and the workload manager is used to implement policies set by a system administrator. One of the policies may be to have different classes of threads get different percentages of a system's CPU time. This policy can be reliably achieved if threads from a plurality of classes are spread as uniformly as possible among the run queues. To do so, the threads are organized in classes. Each class is associated with a priority as per a use-policy. This priority is used to modify the scheduling priority assigned to each thread in the class as well as to determine in which band or range of priority the threads fall. Then, periodically, it is determined whether the number of threads in a band in a run queue exceeds the number of threads in the band in another run queue by more than a pre-determined number. If so, the system is deemed to be load-imbalanced and is rebalanced by moving one thread in the band from the run queue with the greater number of threads to the run queue with the lower number of threads.

Owner:IBM CORP
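The periodic band check described in the abstract above can be sketched as follows. The data layout (each run queue as a list of `(thread_id, band)` pairs) and the choice to compare the fullest queue with the emptiest one are assumptions made for illustration; the abstract only says that one run queue is compared with another.

```python
def rebalance_band(run_queues, band, threshold):
    """Move one thread of `band` if the queues are imbalanced for that band.

    run_queues: list of lists of (thread_id, band) tuples (hypothetical layout).
    Returns True if a thread was moved, False if the band was already balanced.
    """
    counts = [sum(1 for _, b in queue if b == band) for queue in run_queues]
    src = counts.index(max(counts))   # queue with the most threads in the band
    dst = counts.index(min(counts))   # queue with the fewest threads in the band
    if counts[src] - counts[dst] <= threshold:
        return False                  # difference within the allowed number
    for i, (_, b) in enumerate(run_queues[src]):
        if b == band:
            run_queues[dst].append(run_queues[src].pop(i))
            return True
    return False
```

Calling the function once per band on each scheduling interval moves at most one thread per band per interval, which matches the one-thread-at-a-time correction in the abstract.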

Multiple-mode-string matching method and device

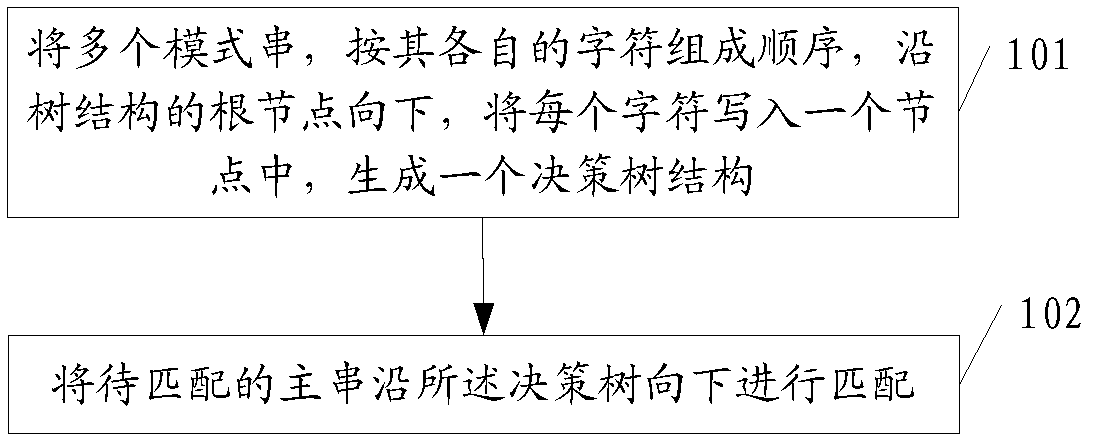

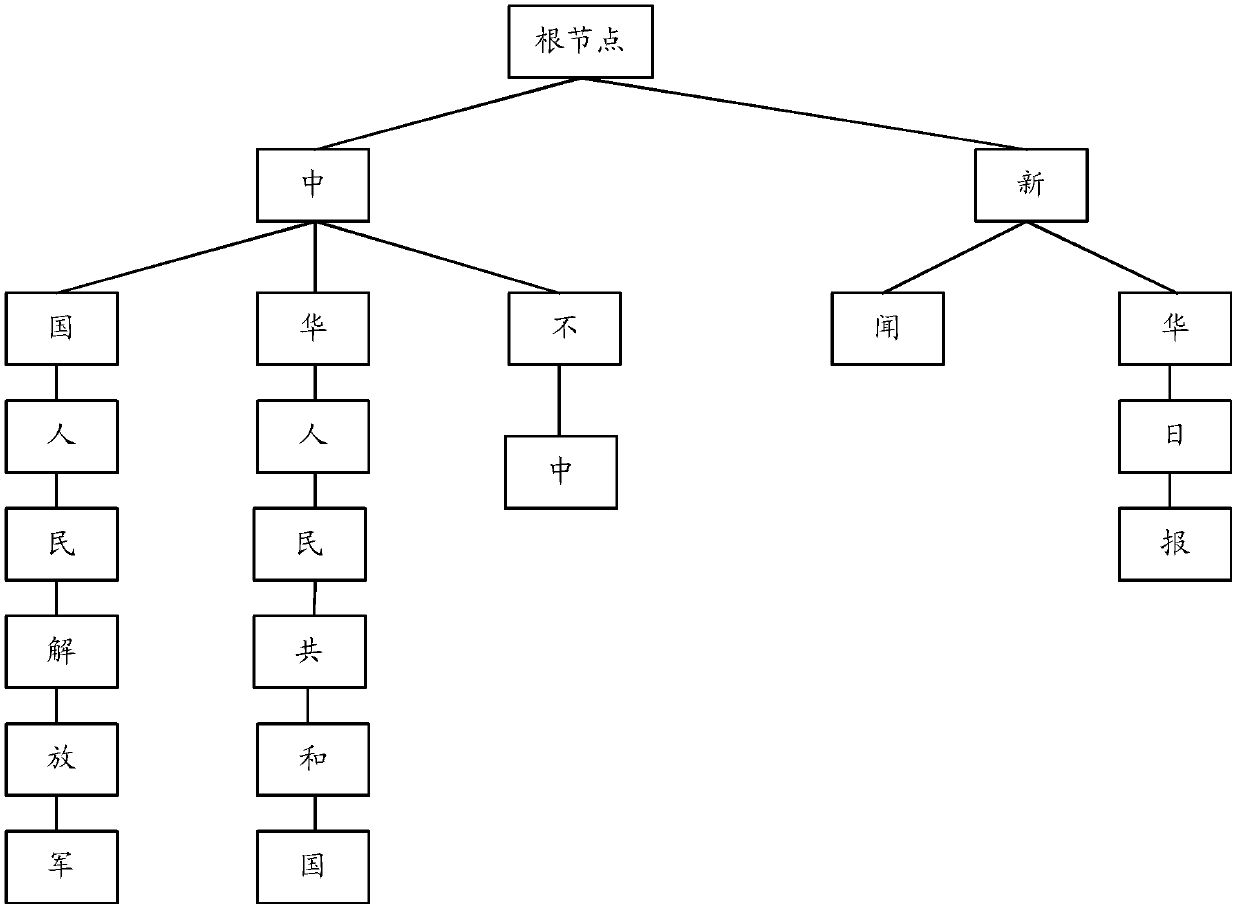

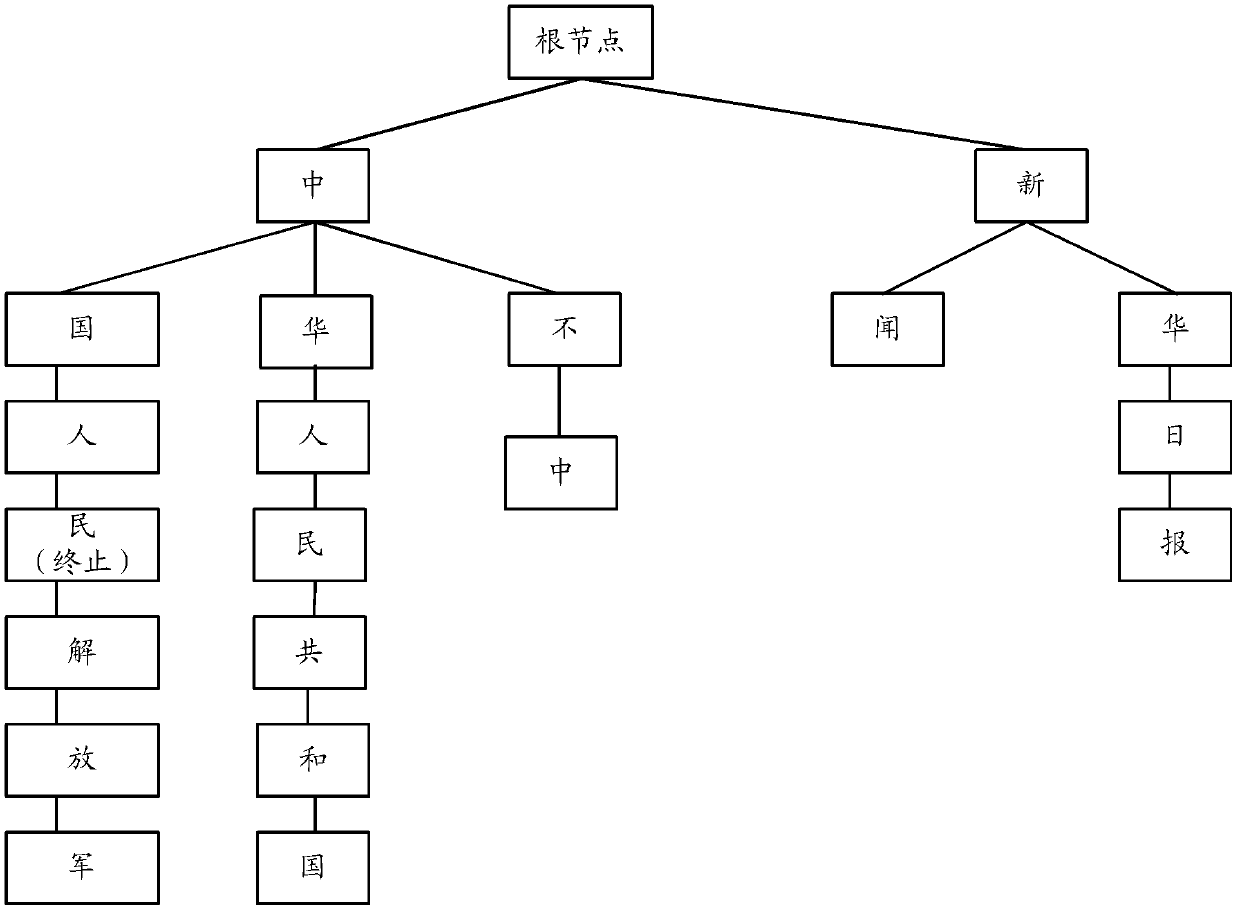

Active | CN103377259A | Achieve matching | Reduce time overhead | Special data processing applications | Exact match | Theoretical computer science

The invention discloses a multiple-pattern string matching method and device. The pattern strings are sorted by their characters, and each character is written into a node, working downward from the root node of a tree structure, to generate a decision tree; the main (text) string to be matched is then matched downward along the decision tree. The method and device achieve exact matching of multiple pattern strings. Because child nodes are looked up by their hash values, changes in the width of the decision tree do not affect the CPU time spent on string matching; the time cost of the algorithm depends on the average depth of the decision tree and is independent of the number of pattern strings. For string matching against a large number of pattern strings, the algorithm can greatly reduce CPU time and improve application response speed.

Owner:BEIJING FEINNO COMM TECH
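A character tree whose child nodes are found by hash lookup, as the abstract above describes, can be sketched in Python with a dictionary per node. This is a generic trie, not the patented implementation; `Node`, `build_tree`, and `find_all` are hypothetical names, and the sketch assumes every pattern is non-empty.

```python
class Node:
    __slots__ = ("children", "pattern")

    def __init__(self):
        self.children = {}   # hash lookup: character -> child node
        self.pattern = None  # set when a pattern string ends at this node


def build_tree(patterns):
    """Insert the sorted pattern strings character by character from the root."""
    root = Node()
    for pattern in sorted(patterns):
        node = root
        for ch in pattern:
            node = node.children.setdefault(ch, Node())
        node.pattern = pattern
    return root


def find_all(root, text):
    """Return (start_index, pattern) for every exact occurrence in text."""
    hits = []
    for start in range(len(text)):
        node = root
        for i in range(start, len(text)):
            node = node.children.get(text[i])
            if node is None:
                break                # no pattern continues with this character
            if node.pattern is not None:
                hits.append((start, node.pattern))
    return hits
```

Each step down the tree costs one dictionary lookup regardless of how many children a node has, so the work per starting position is bounded by the tree depth rather than by the number of patterns.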

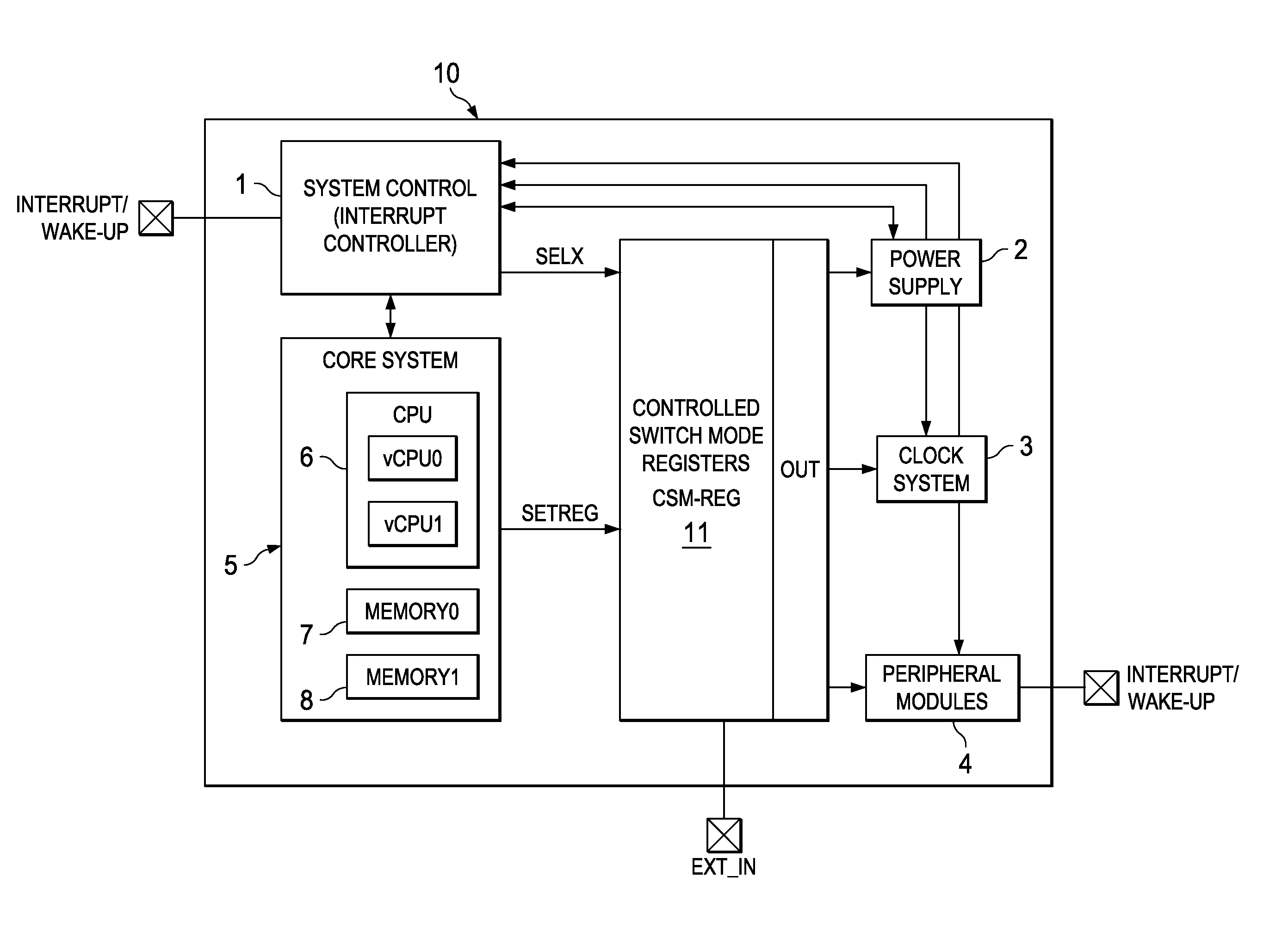

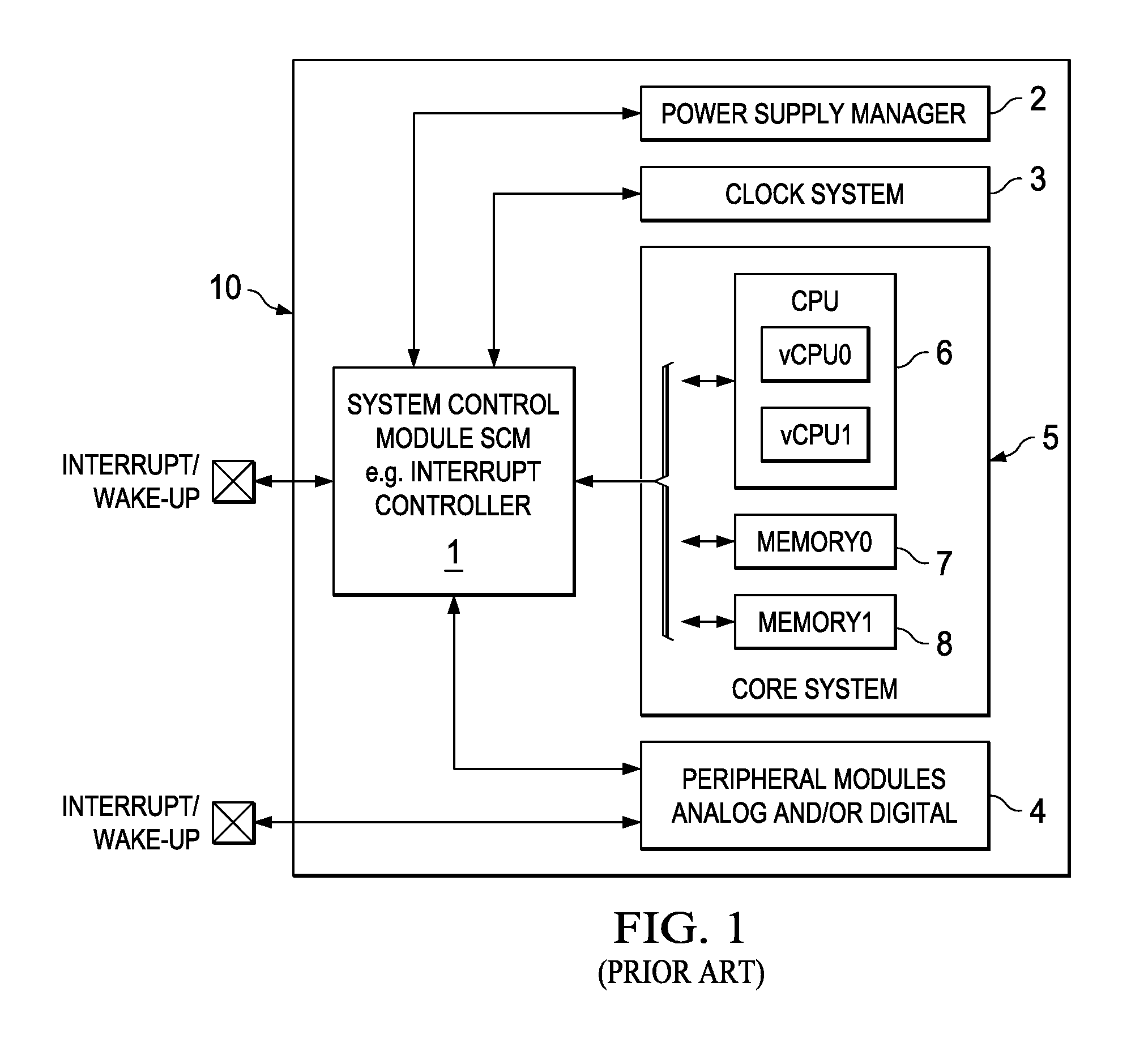

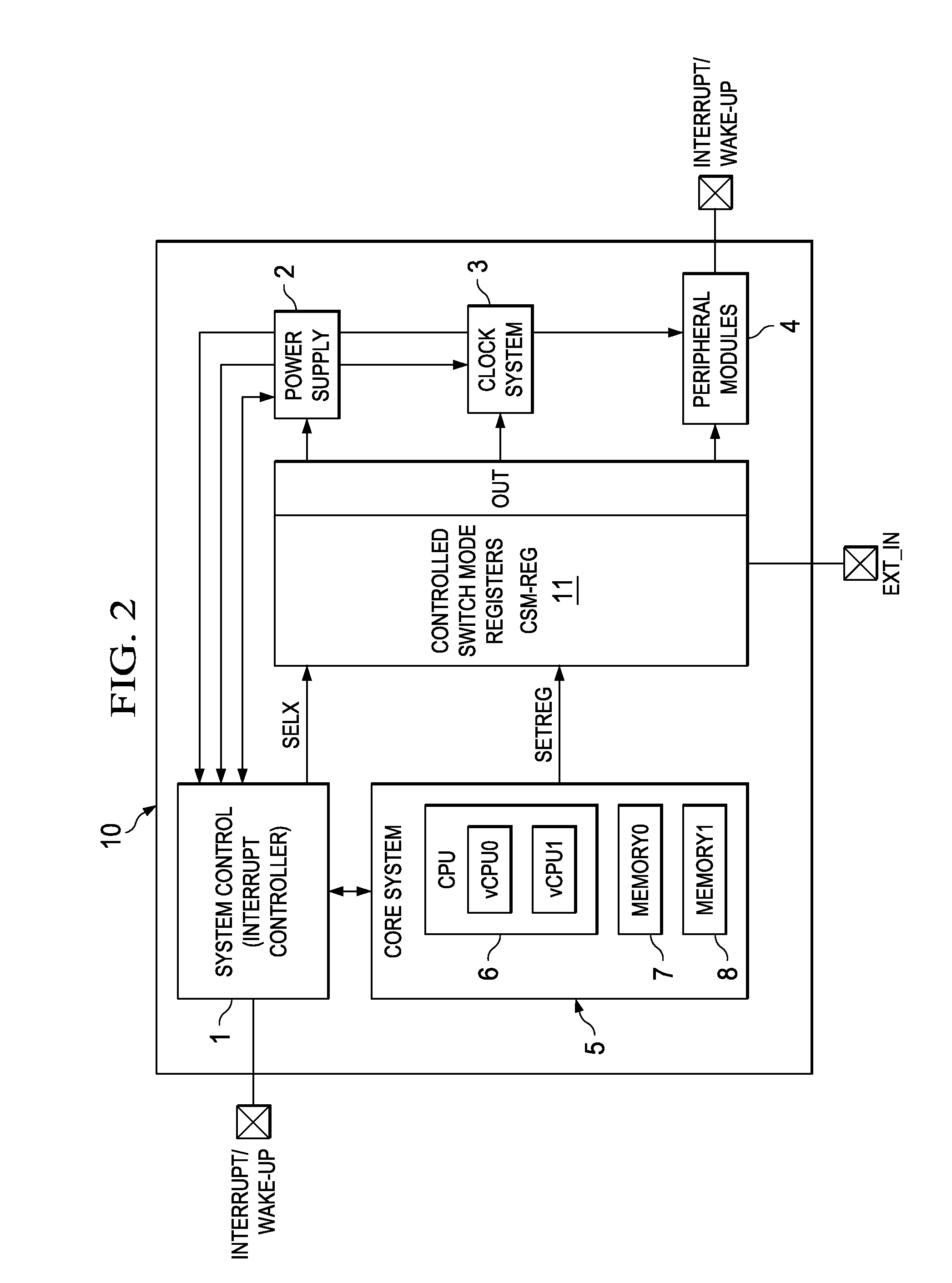

Apparatus and Method with Controlled Switch Method

Active | US20100191979A1 | Solve excessive overhead | Less power | Energy efficient ICT | Resource allocation | Microcontroller | Processor register

An embedded microcontroller system comprises a central processing unit, a system controller for receiving and handling an interrupt, a register having storage locations containing sets of predefined system data for different operating conditions of the system assigned to the interrupts coupled to set a system configuration. The system data in the register is defined and stored before receipt of an interrupt. On receipt of an interrupt the system controller transmits a selection signal to the register. The register selects a predefined storage location assigned to the received interrupt. The corresponding system configuration data is used to control system configuration of the embedded microcontroller system, such as allocation of CPU time to virtual CPUs and selection of clock frequency or power voltage for modules.

Owner:TEXAS INSTR INC

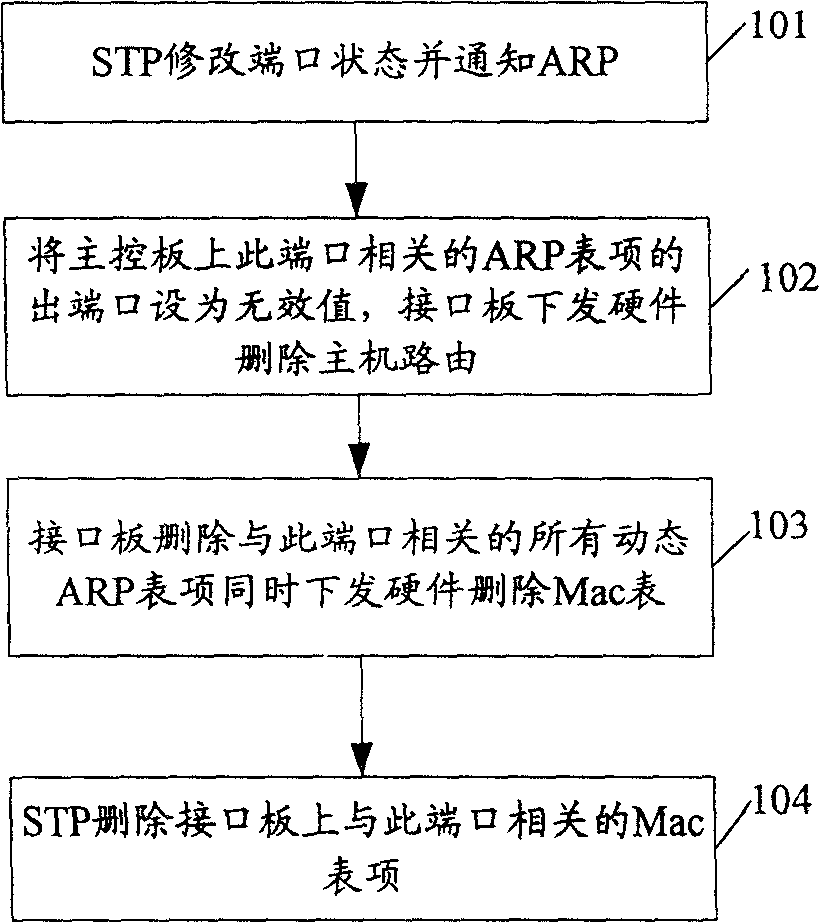

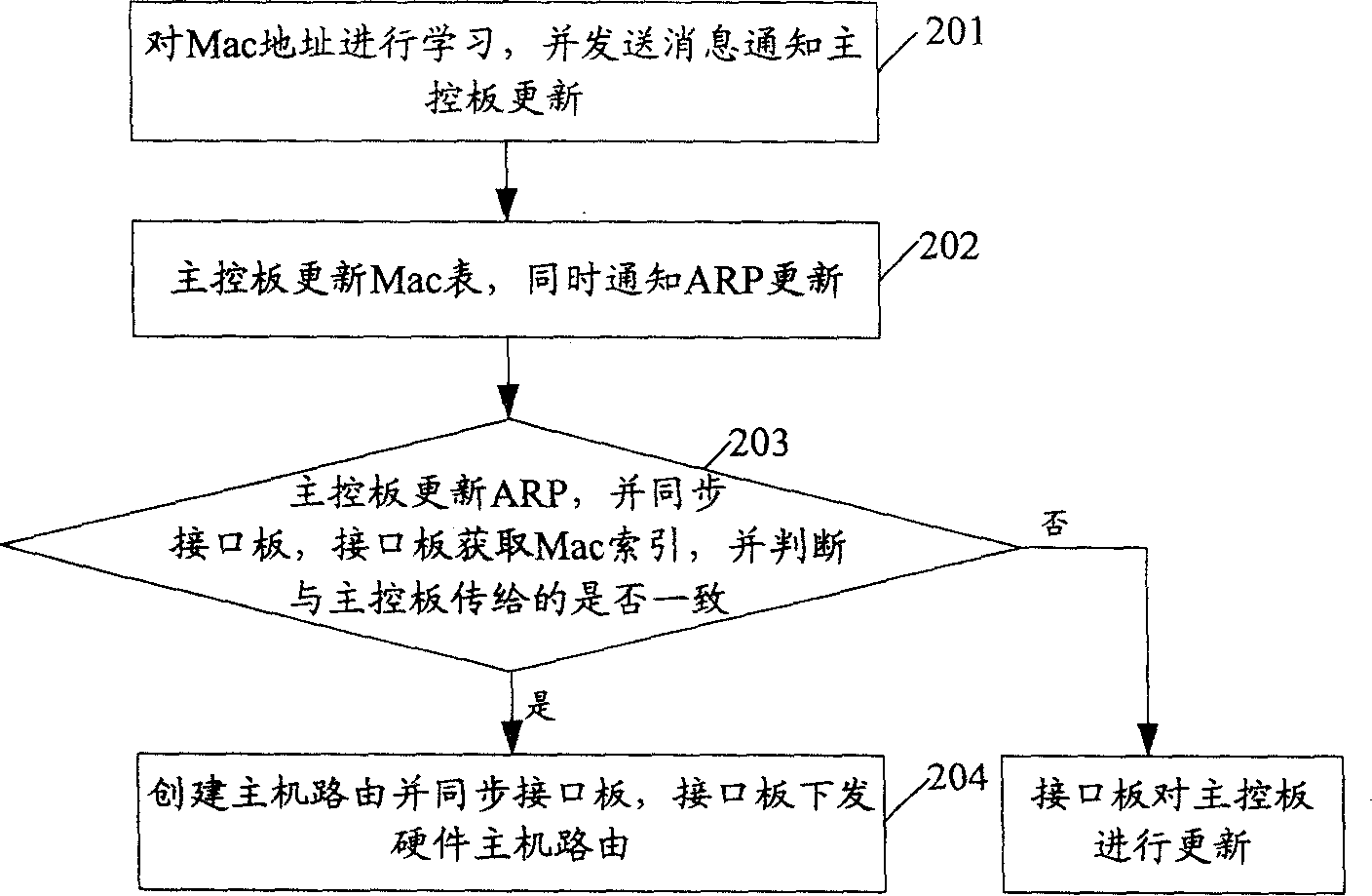

Method for renewing address analysis protocol rapidly

Inactive | CN1764193A | Prevent flooding | Shorten the time | Data switching networks | Address Resolution Protocol | CPU time

The invention discloses a method for rapidly updating ARP: when a switch port changes, the switch retains its ARP entries; the switch then obtains the peer MAC address and the associated port information, finds the ARP entry with the same MAC address, and updates the port information in that entry. This saves CPU time, improves convergence and efficiency, and avoids the message flooding caused by actively triggered ARP requests.

Owner:NEW H3C TECH CO LTD

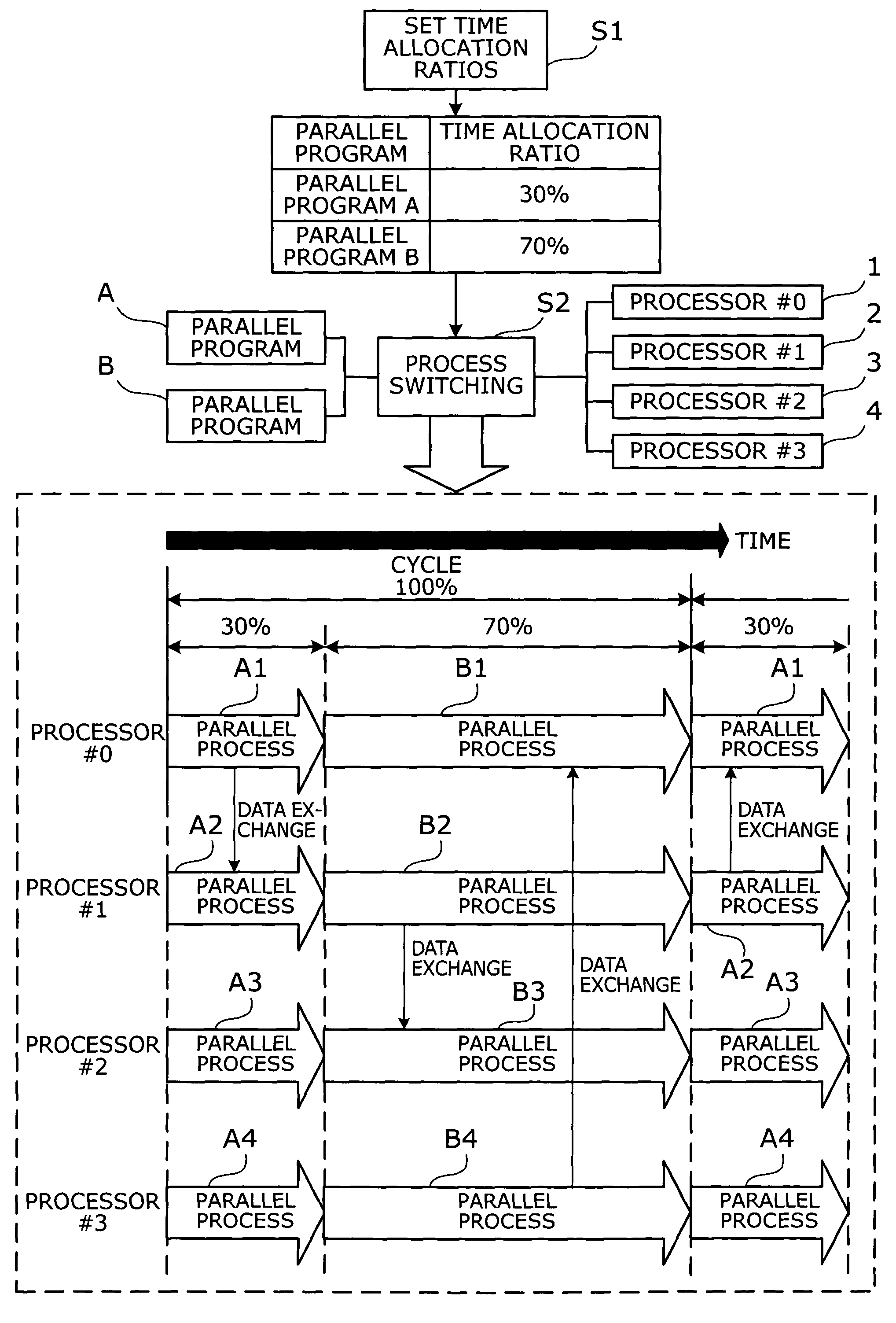

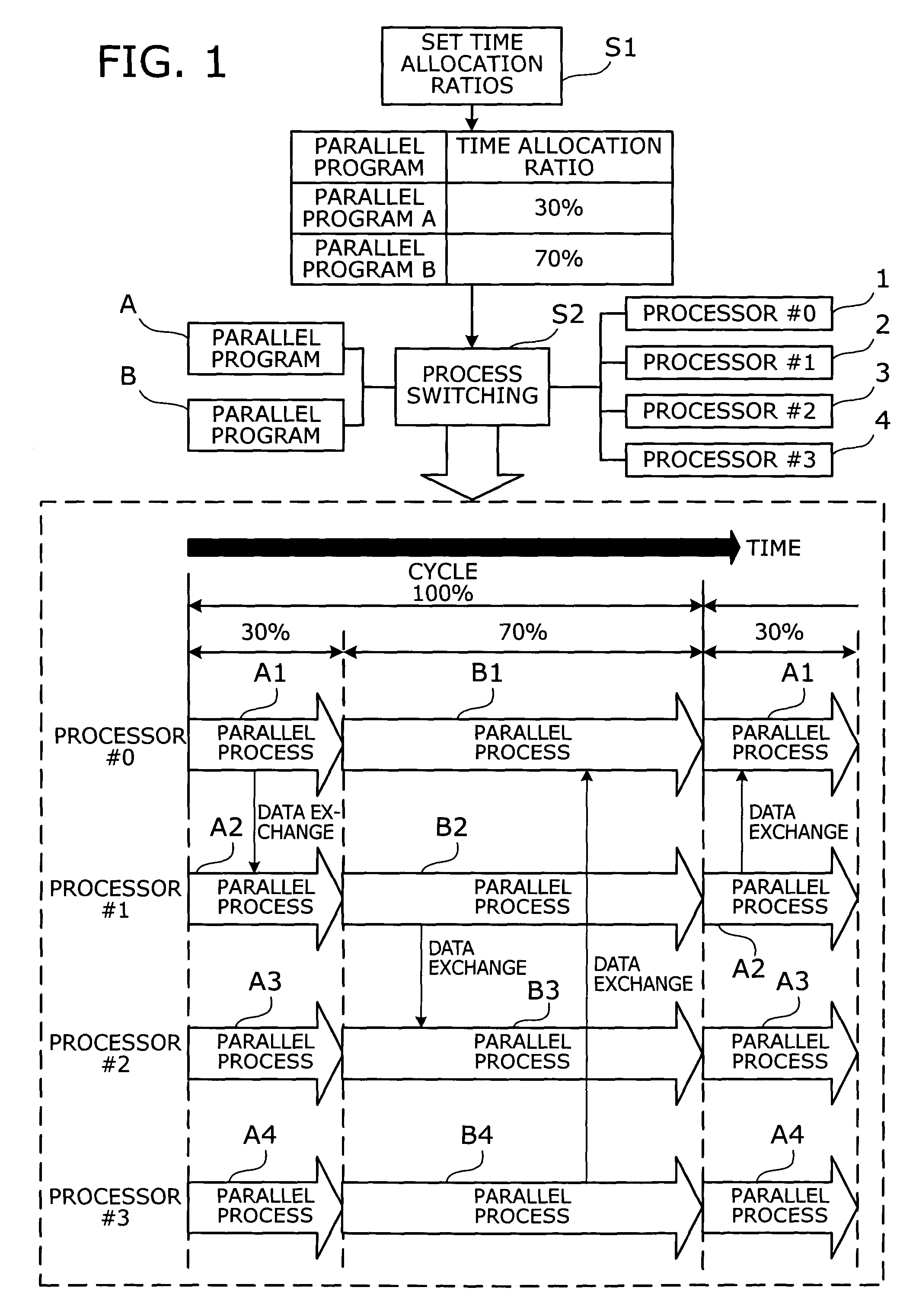

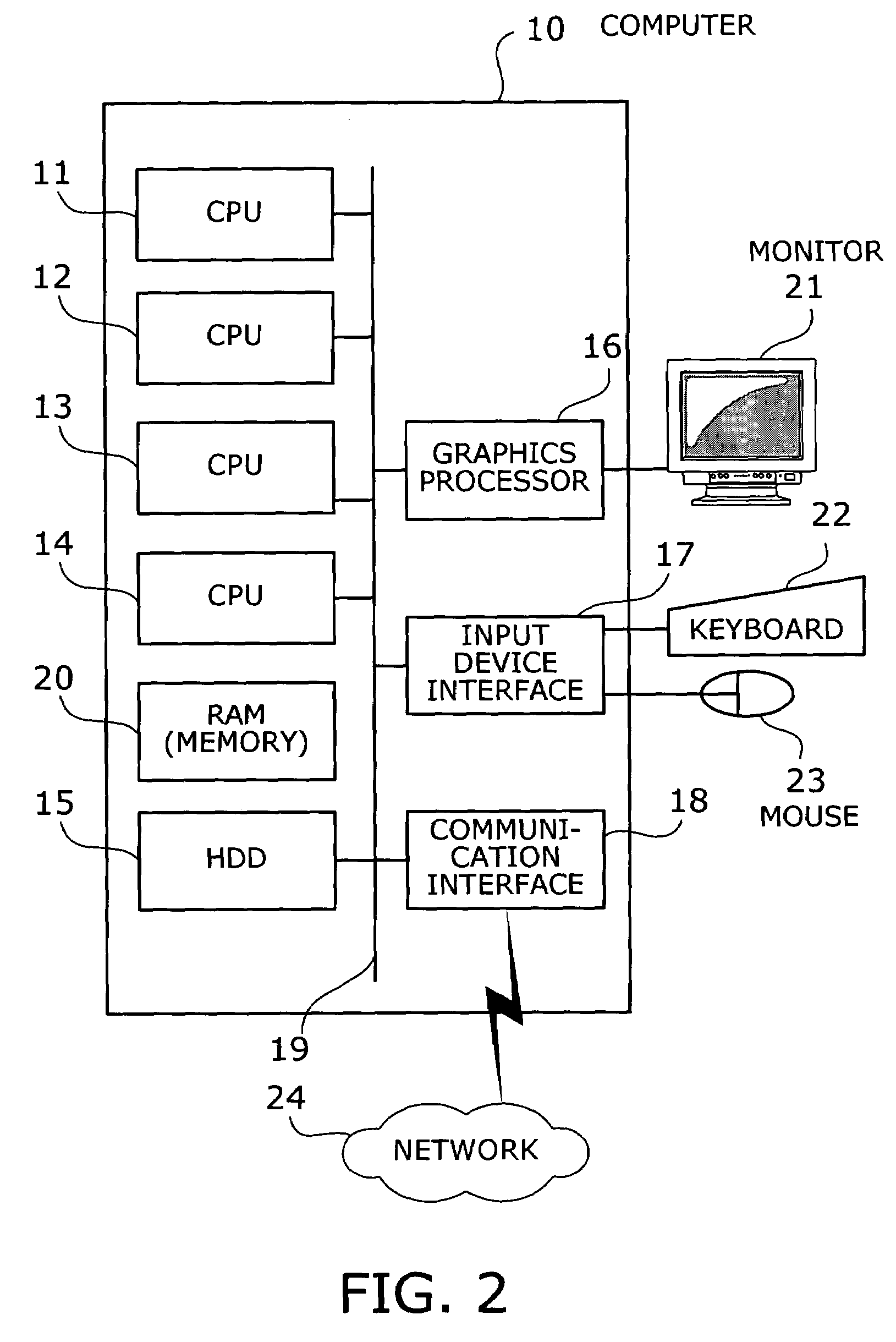

Parallel process execution method and multiprocessor computer

A parallel process execution method that allocates CPU time to parallel processes at any desired ratios. The method sets a time allocation ratio to determine how much of a given cycle period should be allocated for execution of a parallel program. Process switching is then performed in accordance with the time allocation ratio set to the parallel program. More specifically, parallel processes produced from a parallel program are each assigned to a plurality of processors, and those parallel processes are started simultaneously on the processors. When the time elapsed since the start of the parallel processes has reached a point that corresponds to the time allocation ratio that has been set to the parallel program, the execution of the assigned parallel processes is stopped simultaneously on the plurality of processors.

Owner:FUJITSU LTD
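The stopping rule in the abstract above reduces to simple arithmetic on the cycle period and the time allocation ratio. The sketch below assumes the ratio is a fraction between 0 and 1 and that times share one unit; `run_window` and `should_stop` are hypothetical helper names.

```python
def run_window(cycle_period, ratio):
    """Portion of one cycle period allocated to the parallel program."""
    if not 0.0 <= ratio <= 1.0:
        raise ValueError("ratio must be between 0 and 1")
    return cycle_period * ratio


def should_stop(elapsed_since_start, cycle_period, ratio):
    """True once the parallel processes have used up their share of the cycle."""
    return elapsed_since_start >= run_window(cycle_period, ratio)
```

For example, with a 100 ms cycle and a ratio of 0.25, all processors would stop the program's processes together once 25 ms have elapsed since their simultaneous start.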

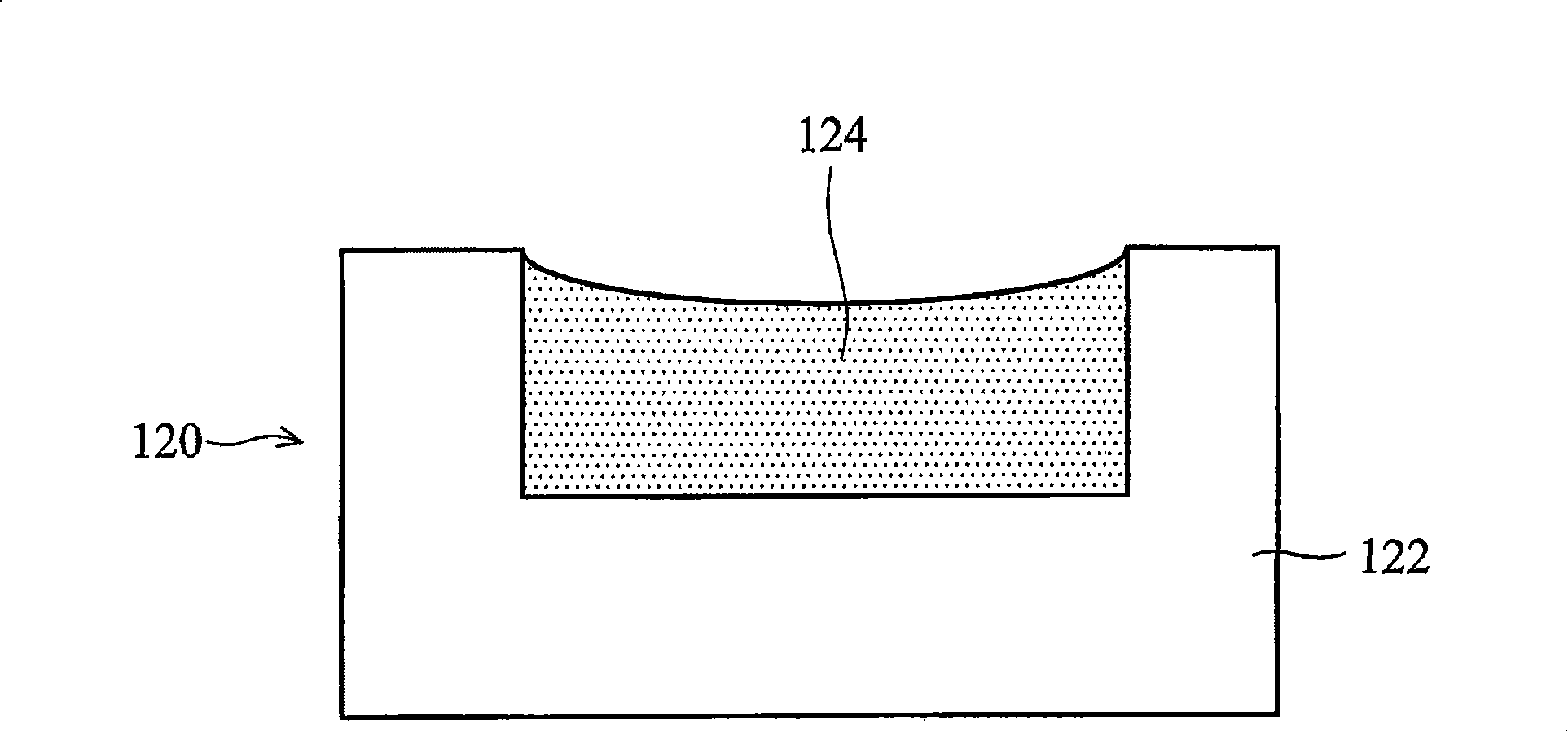

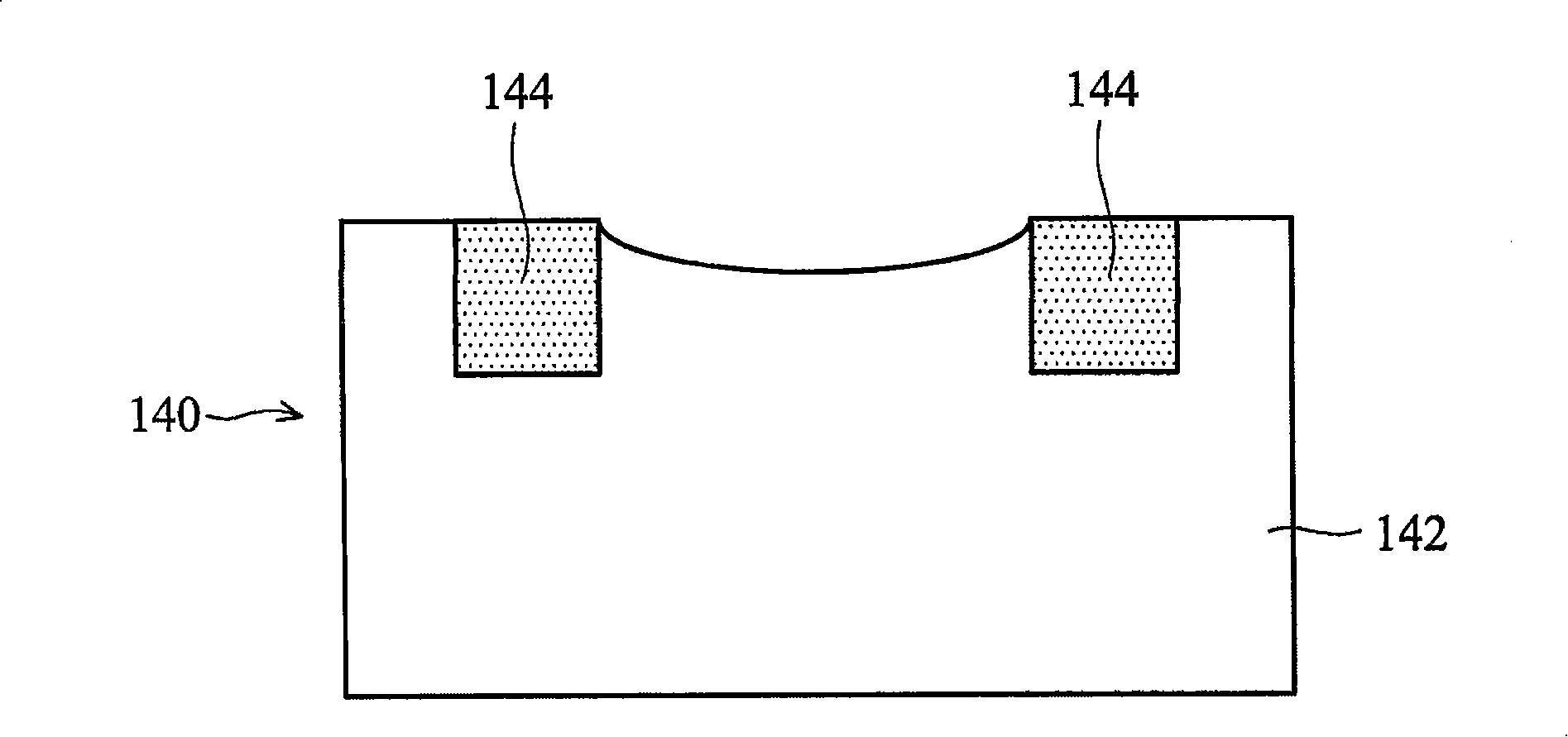

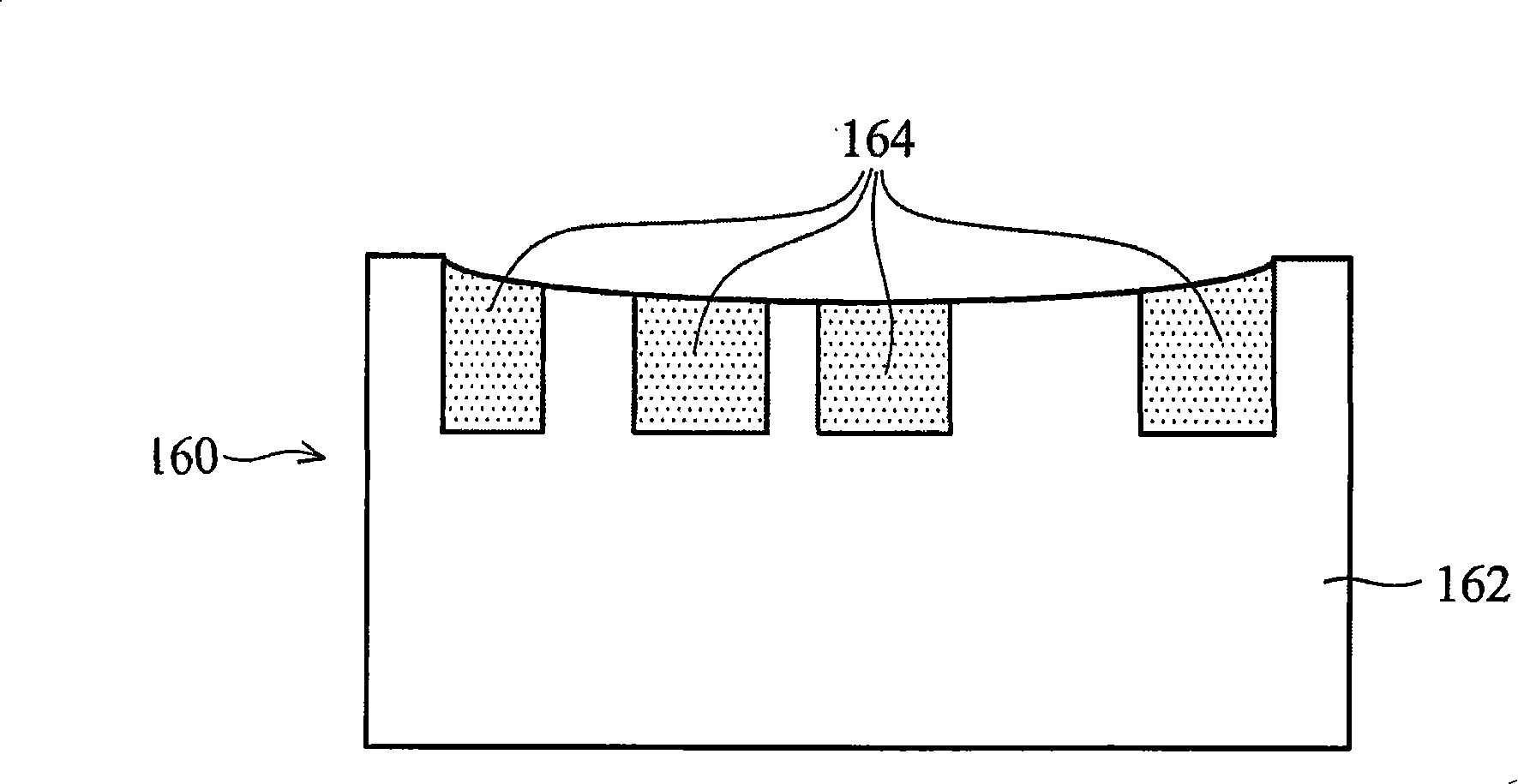

Method of filling redundancy for semiconductor manufacturing process and semiconductor device

Active | CN101231667A | Reduce in quantity | Reduce consumption | Semiconductor/solid-state device details | Solid-state devices | Graphics | CPU time

A dummy filling method for a semiconductor manufacturing process provides a circuit pattern and generates a density report of the circuit pattern to identify a feasible area for dummy insertion. The method also includes using the density report to simulate a planarization manufacturing process and identifying hot spots on the circuit pattern, filling virtual redundant patterns in the feasible area, and then adjusting the density report. The method simulates the planarization process using the adjusted density report until the hot spot is removed. The invention can reduce the amount of redundant metal in circuit design and save photomask time, CPU time, and signal storage memory. This will help design timing closure (time closure) faster and easier.

Owner:TAIWAN SEMICON MFG CO LTD

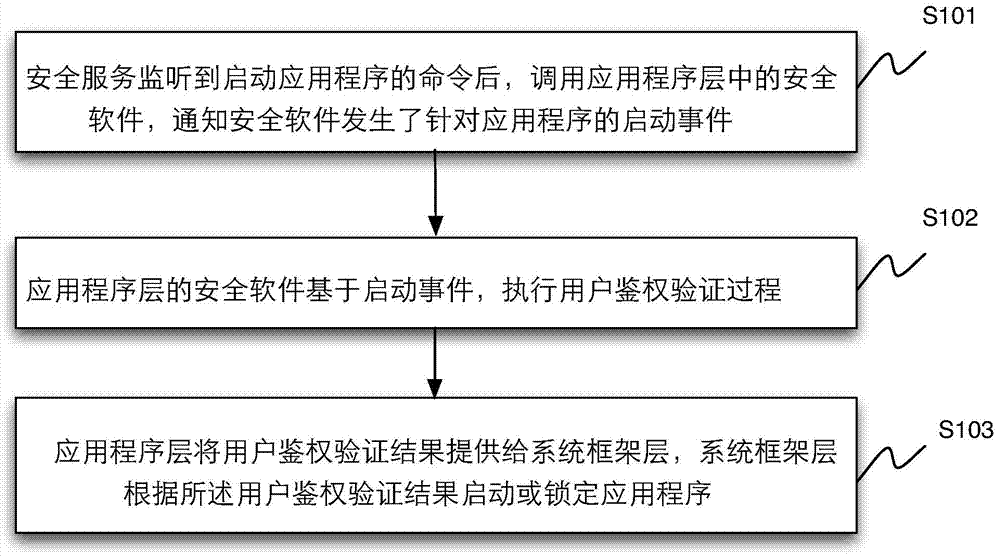

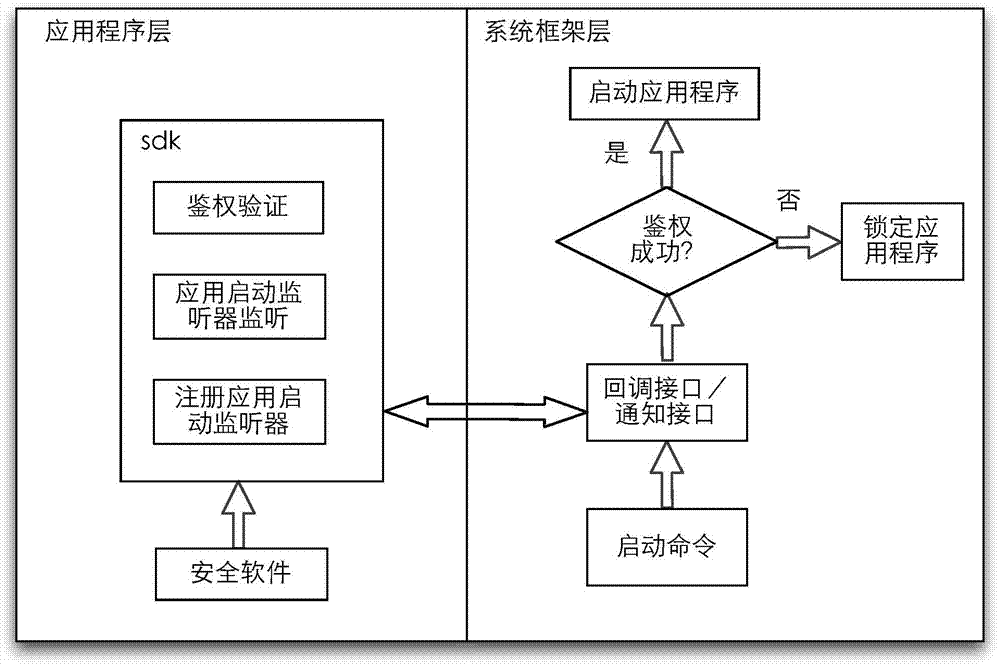

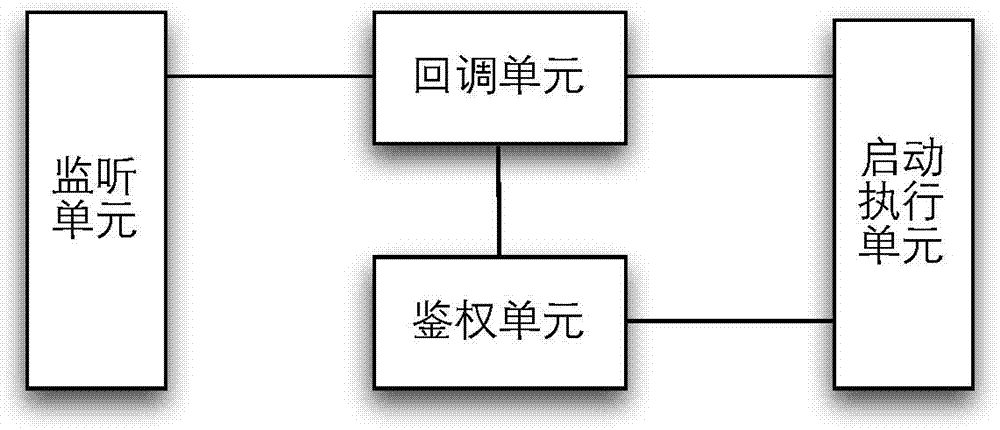

Application program starting control method and device

Active | CN103577237A | Avoid flash | Avoid wasting timed polling issues | Program loading/initiating | Transmission | Security software | Application procedure

The invention discloses a method and device for controlling application program startup. Startup of an application program locked by security software is controlled by a system service, located in the system framework layer, that handles security services. The method includes the following steps: after the security service detects a command to start the application program, it calls the security software in the application layer; the security software runs a user authentication process based on the start event; and the system framework layer decides whether to start or lock the application program according to the authentication result. Because the application program is not started directly and the security software is called back first, the application program starts only when user authentication succeeds and is locked when authentication fails. This avoids the screen flash that occurs when the application program is started first and then locked by the security software, and it avoids wasting CPU time on timed polling.

Owner:BEIJING QIHOO TECH CO LTD

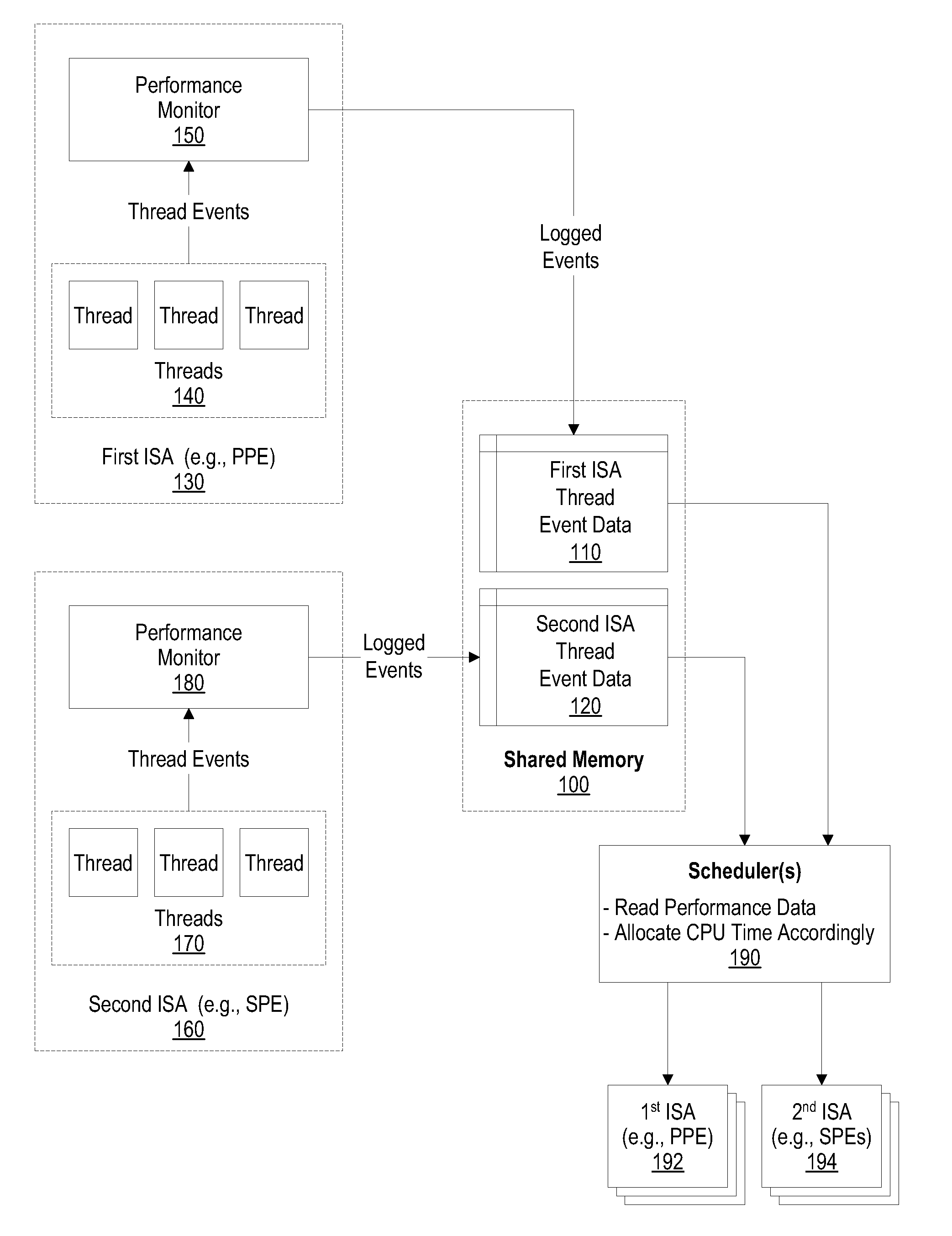

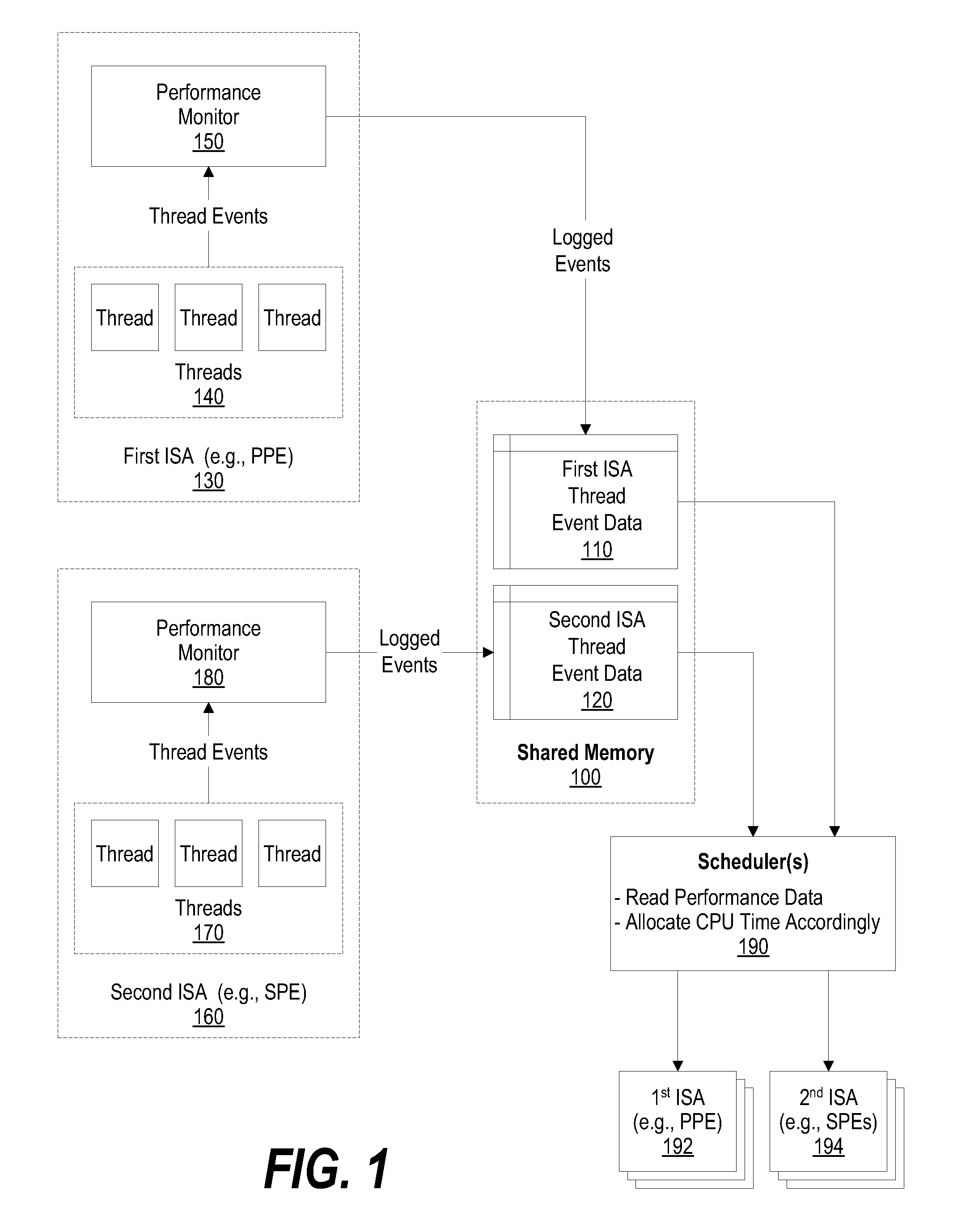

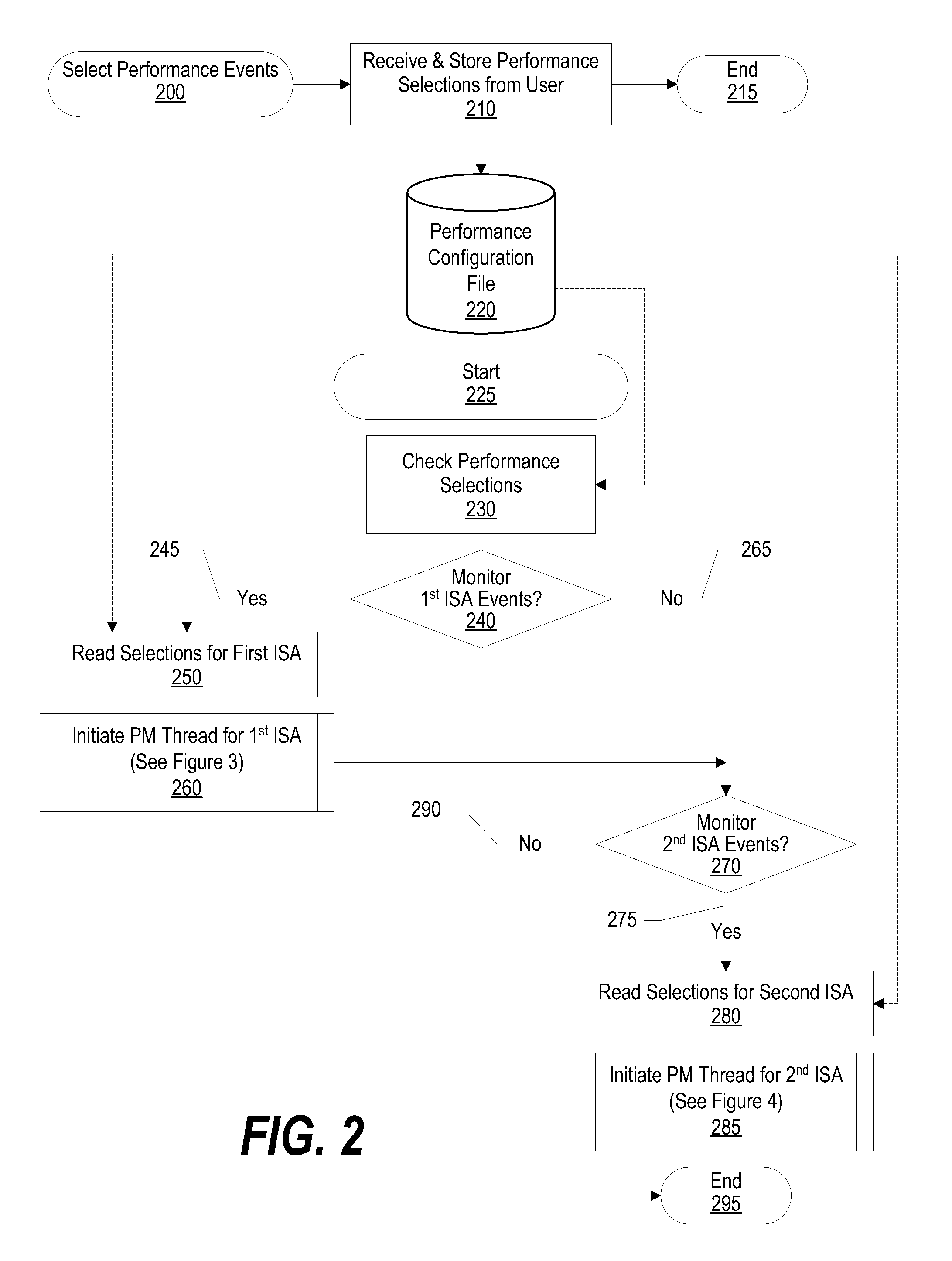

System and method for using performance monitor to optimize system performance

Inactive | US20070300231A1 | System can be configured | Small need | Error detection/correction | Multiprogramming arrangements | Computerized system | CPU time

A system, method, and program product that optimizes system performance using performance monitors is presented. The system gathers thread performance data using performance monitors for threads running on either a first ISA processor or a second ISA processor. Multiple first processors and multiple second processors may be included in a single computer system. The first processors and second processors can each access data stored in a common shared memory. The gathered thread performance data is analyzed to determine whether the corresponding thread needs additional CPU time in order to optimize system performance. If additional CPU time is needed, the amount of CPU time that the thread receives is altered (increased) so that the thread receives the additional time when it is scheduled by the scheduler. In one embodiment, the increased CPU time is accomplished by altering a priority value that corresponds to the thread.

Owner:GLOBALFOUNDRIES INC

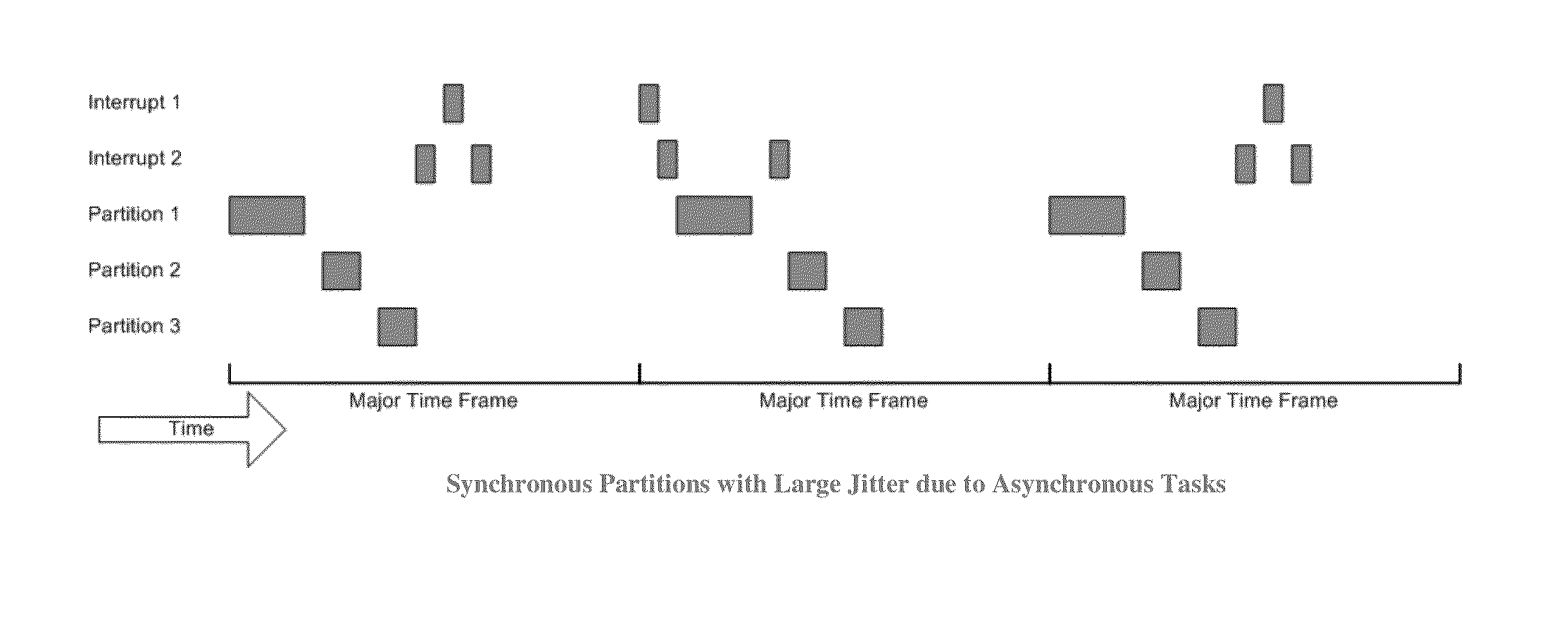

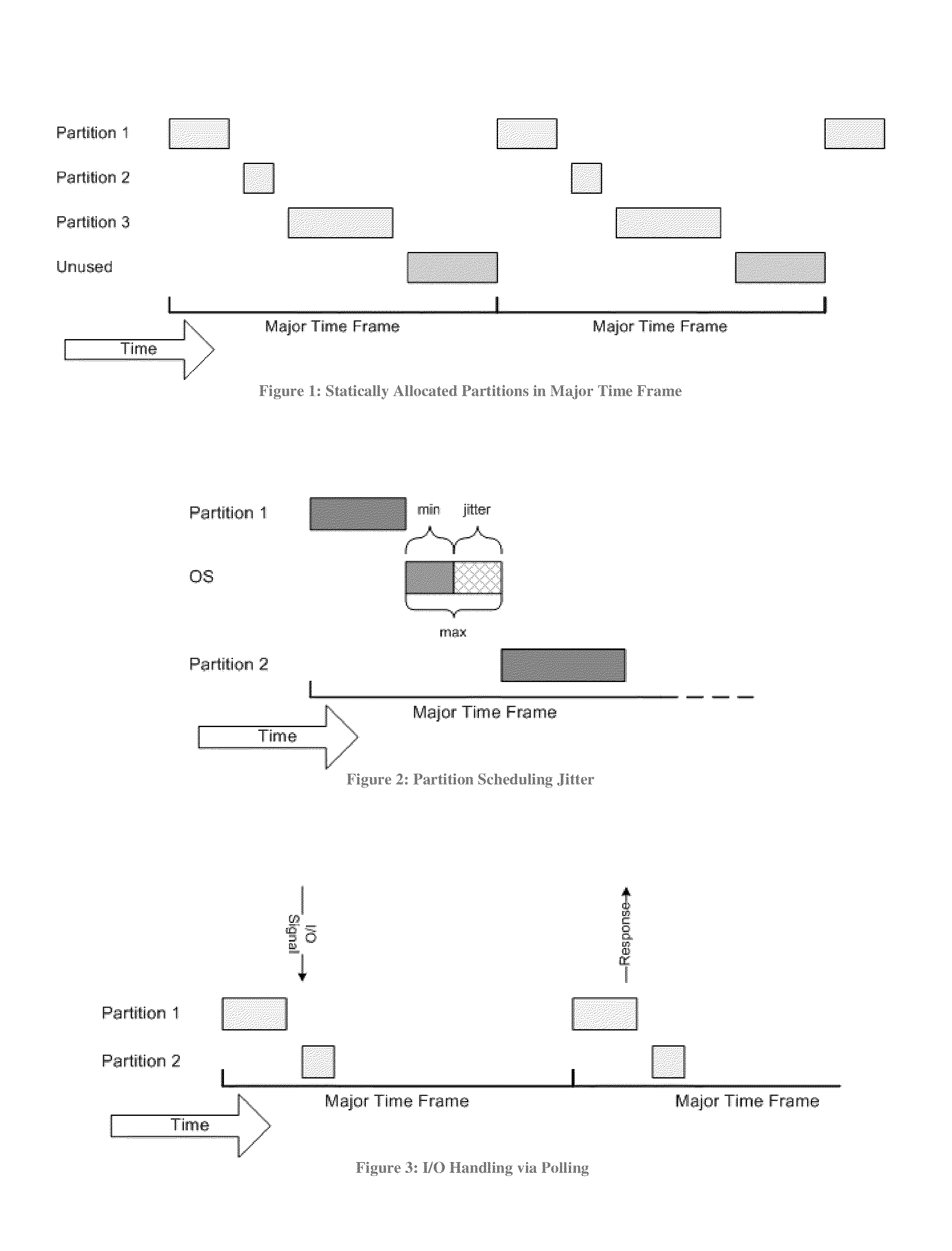

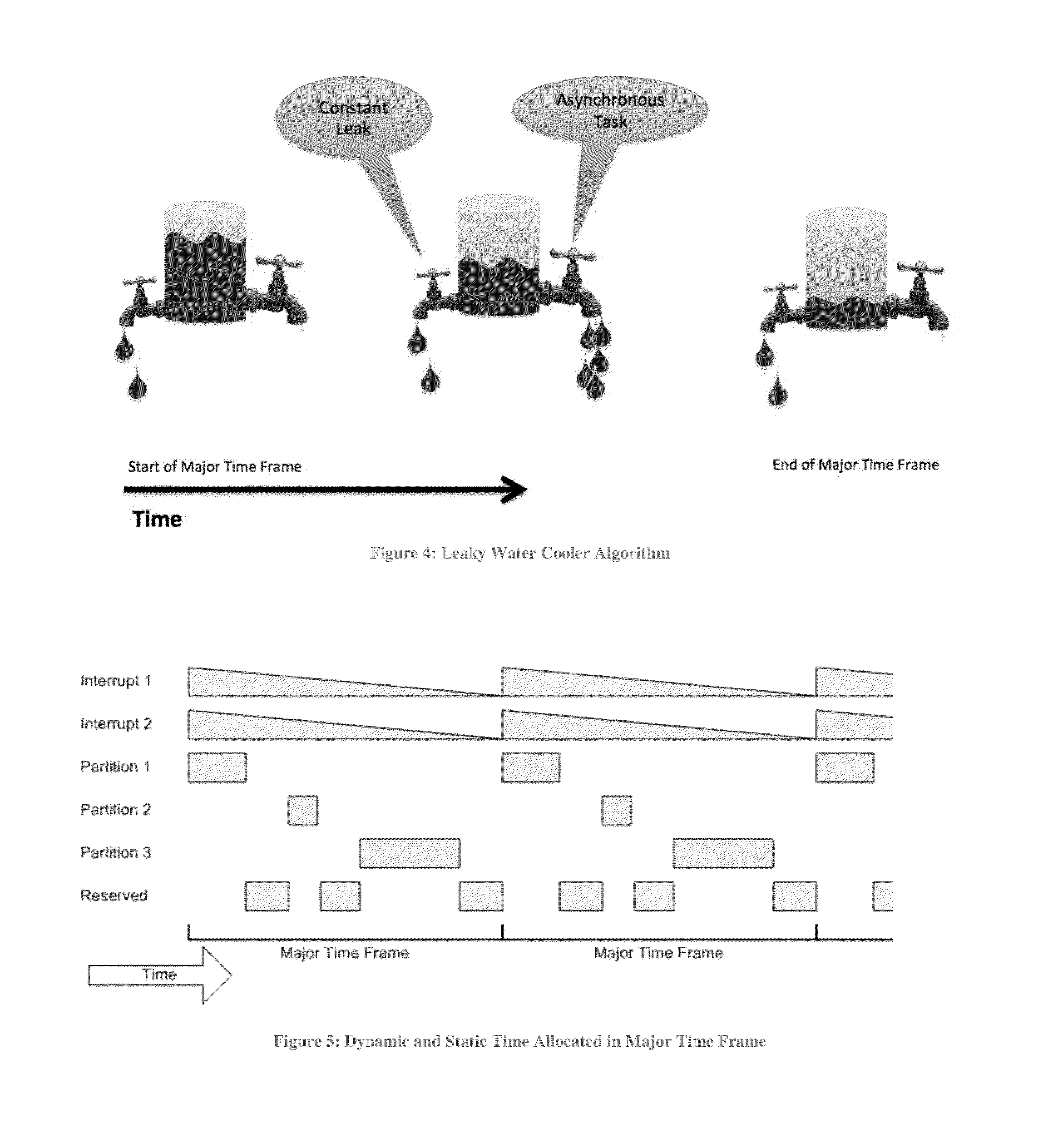

System and method for deterministic time partitioning of asynchronous tasks in a computing environment

A method of scheduling and controlling asynchronous tasks to provide deterministic behavior in time-partitioned operating systems, such as an ARINC 653 partitioned operating environment. The asynchronous tasks are allocated CPU time in a deterministic but dynamically decreasing manner. In one embodiment, the asynchronous tasks may occur in any order within a major time frame (that is, their sequencing is not statically deterministic); however, the dynamic time allotment prevents any task from overrunning its allotment and prevents any task from interfering with other tasks (whether synchronous or asynchronous).

Owner:DORNERWORKS

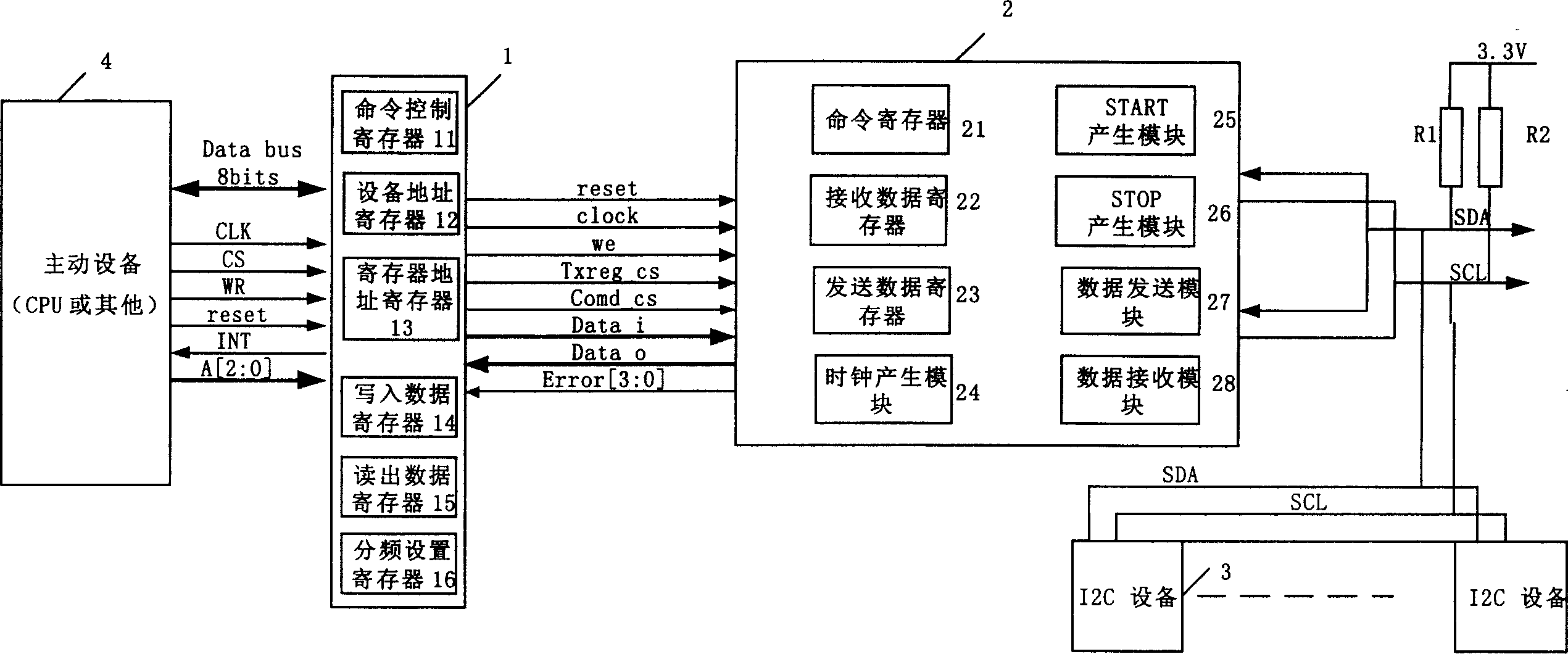

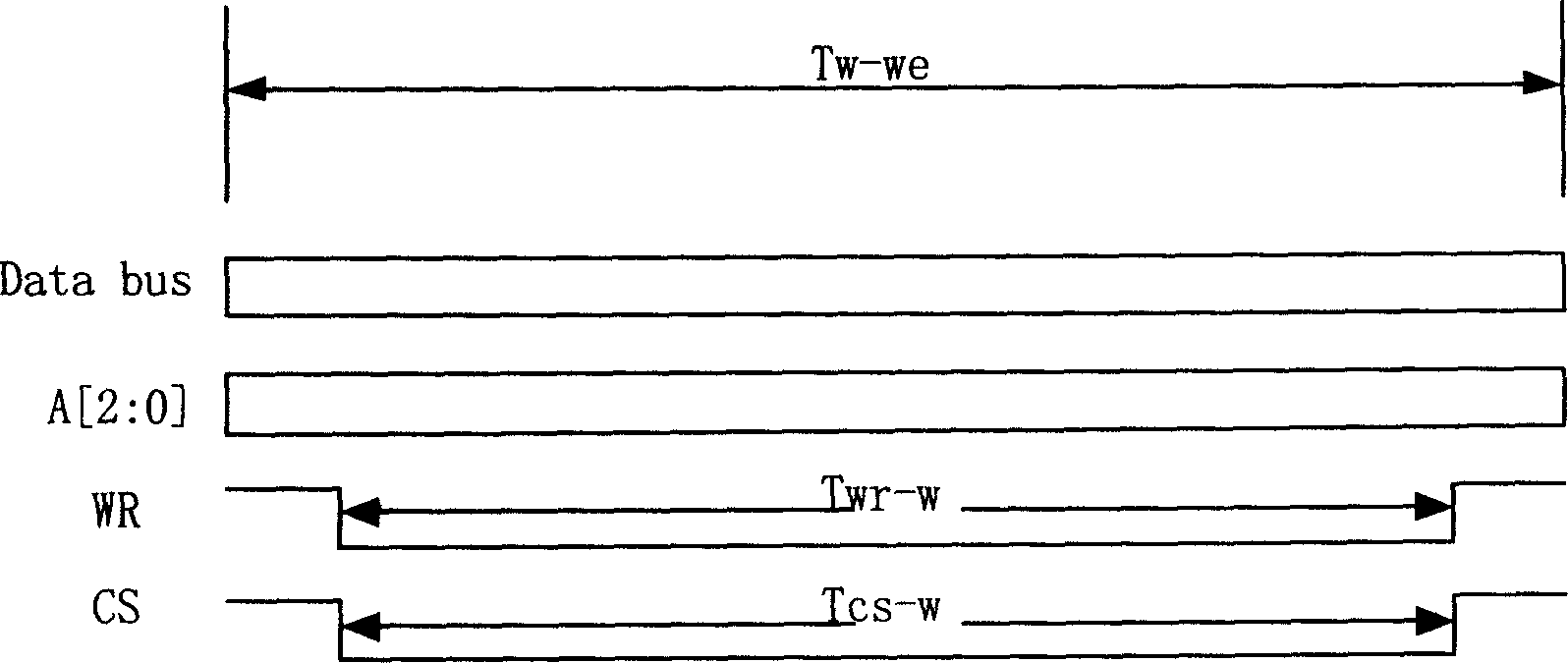

Device and method for implementing automatically reading and writing internal integrated circuit equipment

Inactive | CN1558332A | Simplify workload | Change this phenomenon of relying on CPU | Electric digital data processing | Processor register | ACCESS.bus

The present invention discloses equipment and method of automatically reading / writing I2C device. The equipment consists of hardware realized command generating module and write / read operation executing module, the command generating module is connected via the asynchronous access bus with active equipment and the write / read operation executing module, and the write / read operation executing module is connected via the I2C bus with I2C equipment. During write / read, the active equipment provides the starting signal, write / read signal and the address and register address of the I2C equipment and the data to be written; the command generating module generates automatically the commands essential for access of the I2C equipment and sends to write / read operation executing module; and the write / read operation executing module generates the signal time sequence for access of the I2C equipment based on I2C bus protocol and executes the write / read operation to the I2C equipment.

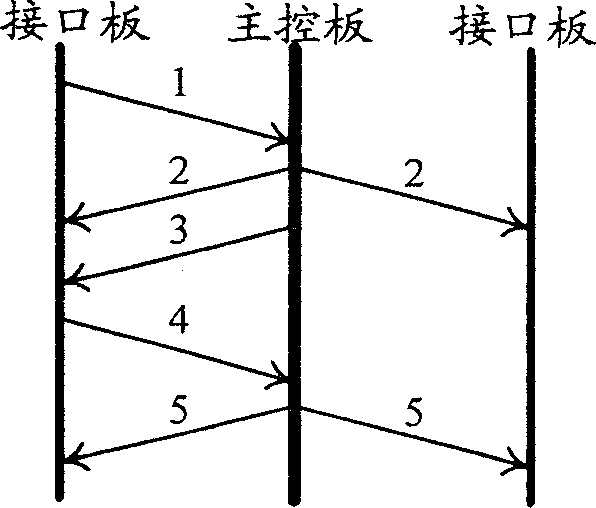

Owner:ZTE CORP

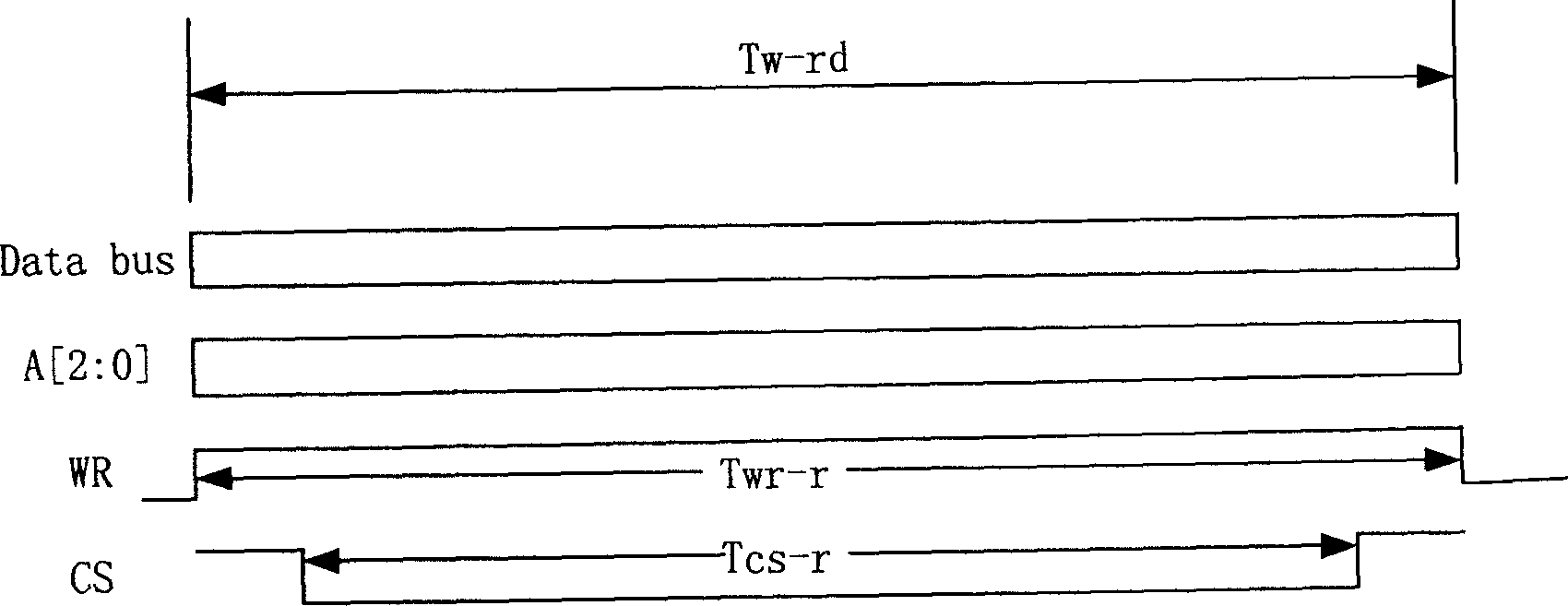

Target selecting method based on transient visual evoked electroencephalogram

Inactive | CN101515200A | Improve signal-to-noise ratio | Easy to identify | Input/output for user-computer interaction | Biological neural network models | Timestamp | CPU time

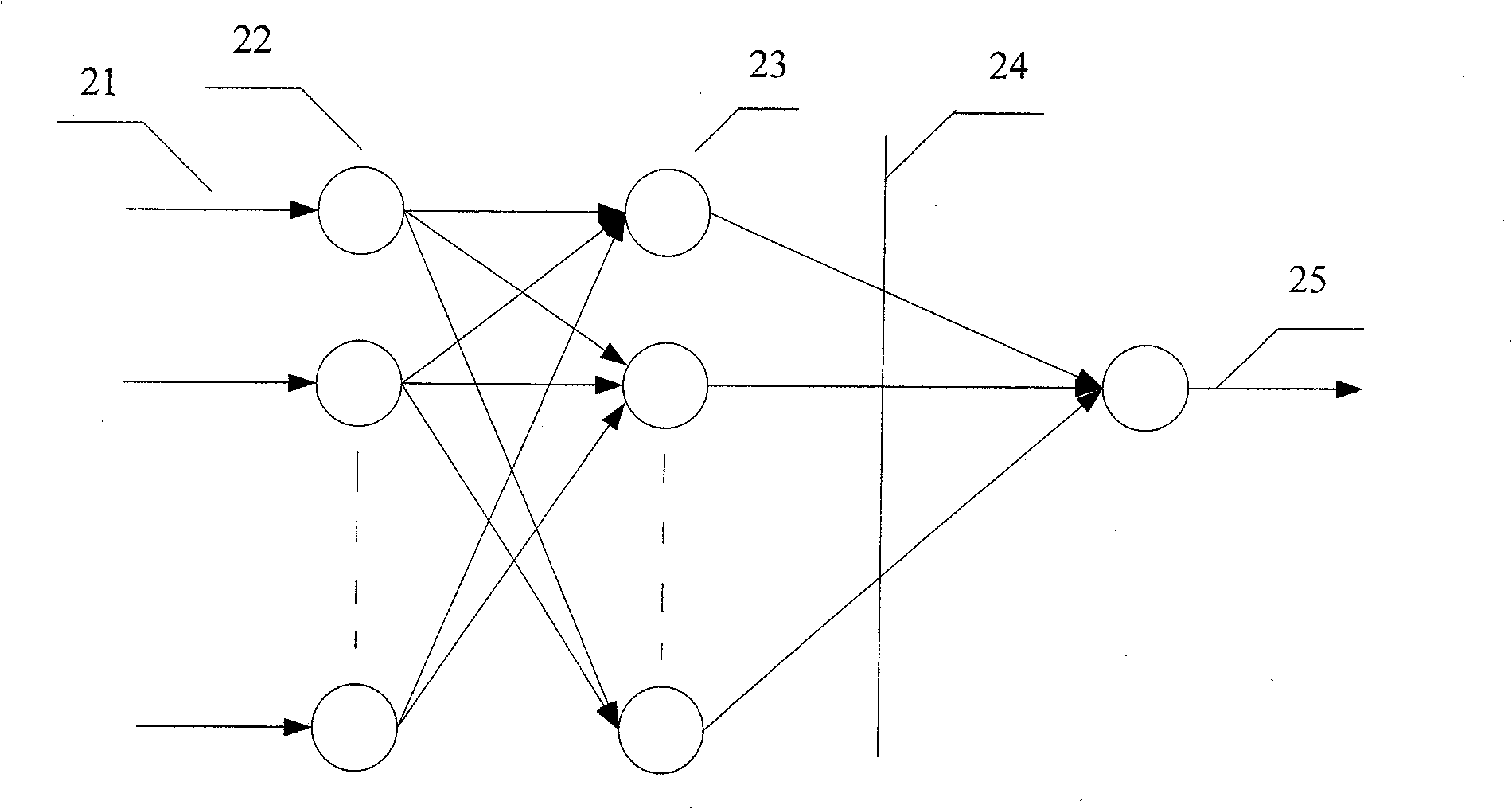

The invention relates to a target selecting method based on transient visual evoked electroencephalogram (EEG) signals. A visual stimulator written in VC++ evokes an EEG signal; a 16-lead acquisition device collects the visual evoked potential (VEP) signal, which is amplified by an EEG amplifier and A/D converted, then input into a computer and stored in memory as signal voltage amplitudes. A B-spline biorthogonal wavelet method is used to extract EEG feature signals, and the results are classified, identified, and output using the self-learning ability of a BP neural network. The method comprises the following steps: designing a precisely timed visual stimulator using the CPU timestamp; responding to the output pulse of the parallel port; collecting the VEP signal with the acquisition device; preprocessing the collected signal; extracting the EEG signal by the B-spline biorthogonal wavelet method; and classifying the feature quantities with the BP neural network. The method has the advantage that the BP neural network effectively improves the signal-to-noise ratio and the recognition rate of the visual evoked potential (VEP).

Owner:BEIJING UNIV OF TECH

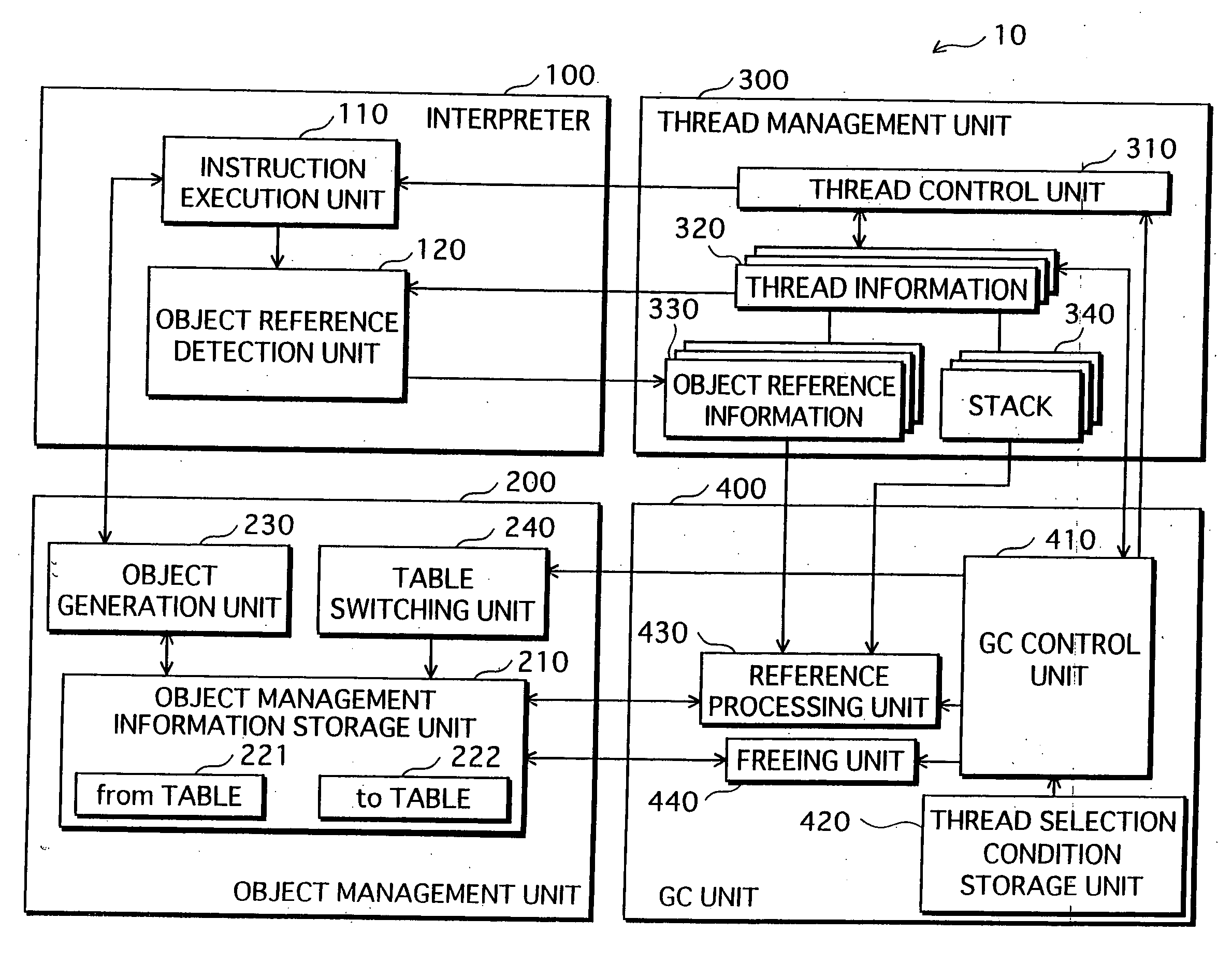

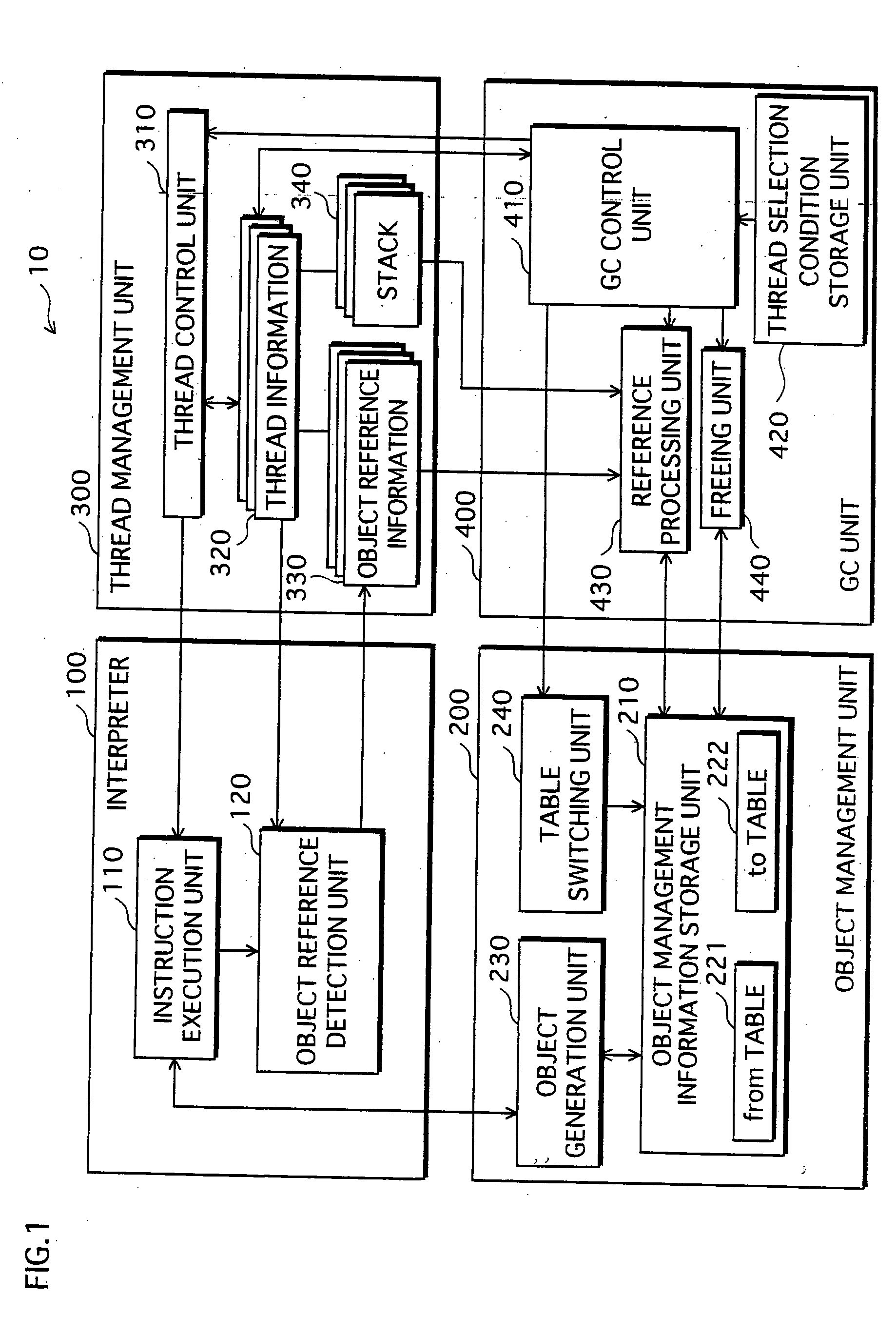

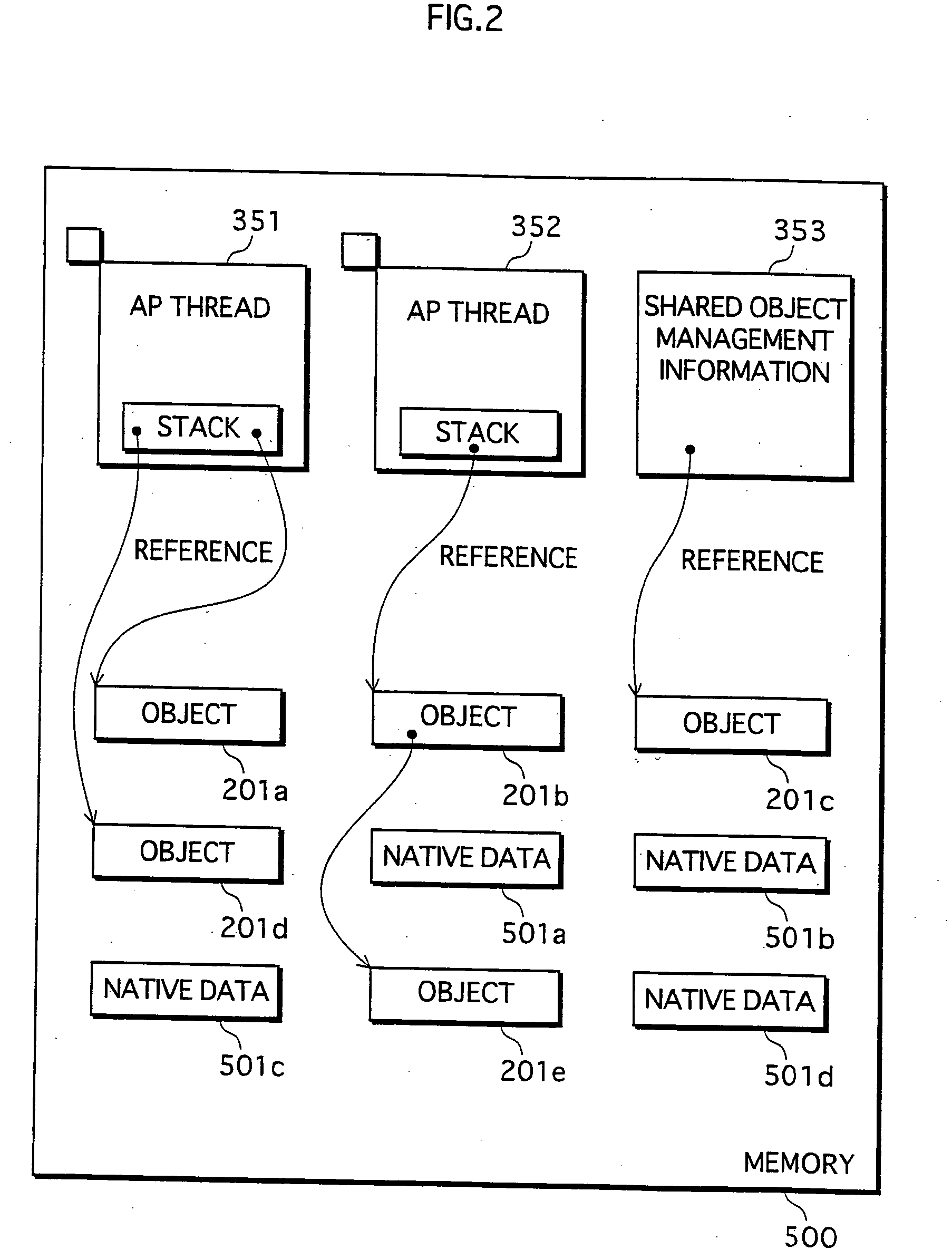

Garbage collection system

Inactive | US20060074988A1 | Avoid time | Extension of time | Data processing applications | Memory addressing/allocation/relocation | Refuse collection | Collection system

The object of the present invention is to provide a garbage collection (GC) system that suppresses wasteful increase in CPU time required for GC, without stopping all AP threads for an excessively long amount of time. The garbage collection system frees memory areas corresponding to objects that are no longer required in an execution procedure of an object-oriented program composed of a plurality of threads, and includes: a selection unit operable to select the threads one at a time; an examination unit operable to execute examination processing with respect to the selected thread, the examination processing including procedures of stopping execution of the thread, finding an object that is accessible from the thread by referring to an object pointer, managing the found object as a non-freeing target, and resuming execution of the thread; a detection unit operable to, when having detected, after the selection unit has commenced selecting, that an object pointer has been processed as a processing target by a currently-executed thread, manage an object indicated by the processing target object pointer, as a non-freeing target; and a freeing unit operable to, after the examination processing has been completed with respect to all of the threads, free memory areas that correspond to objects other than the objects that are managed as non-freeing targets.

Owner:PANASONIC CORP

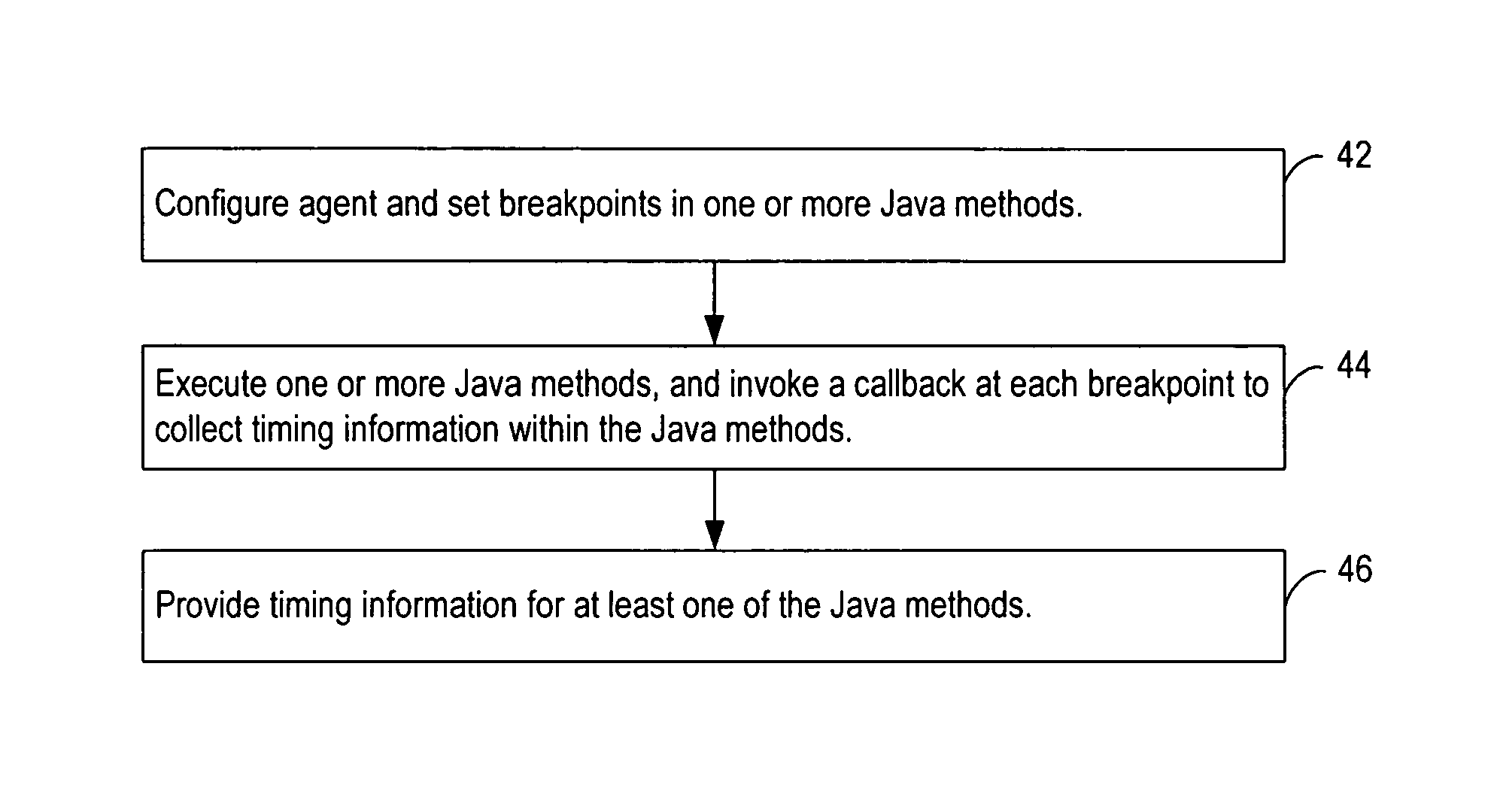

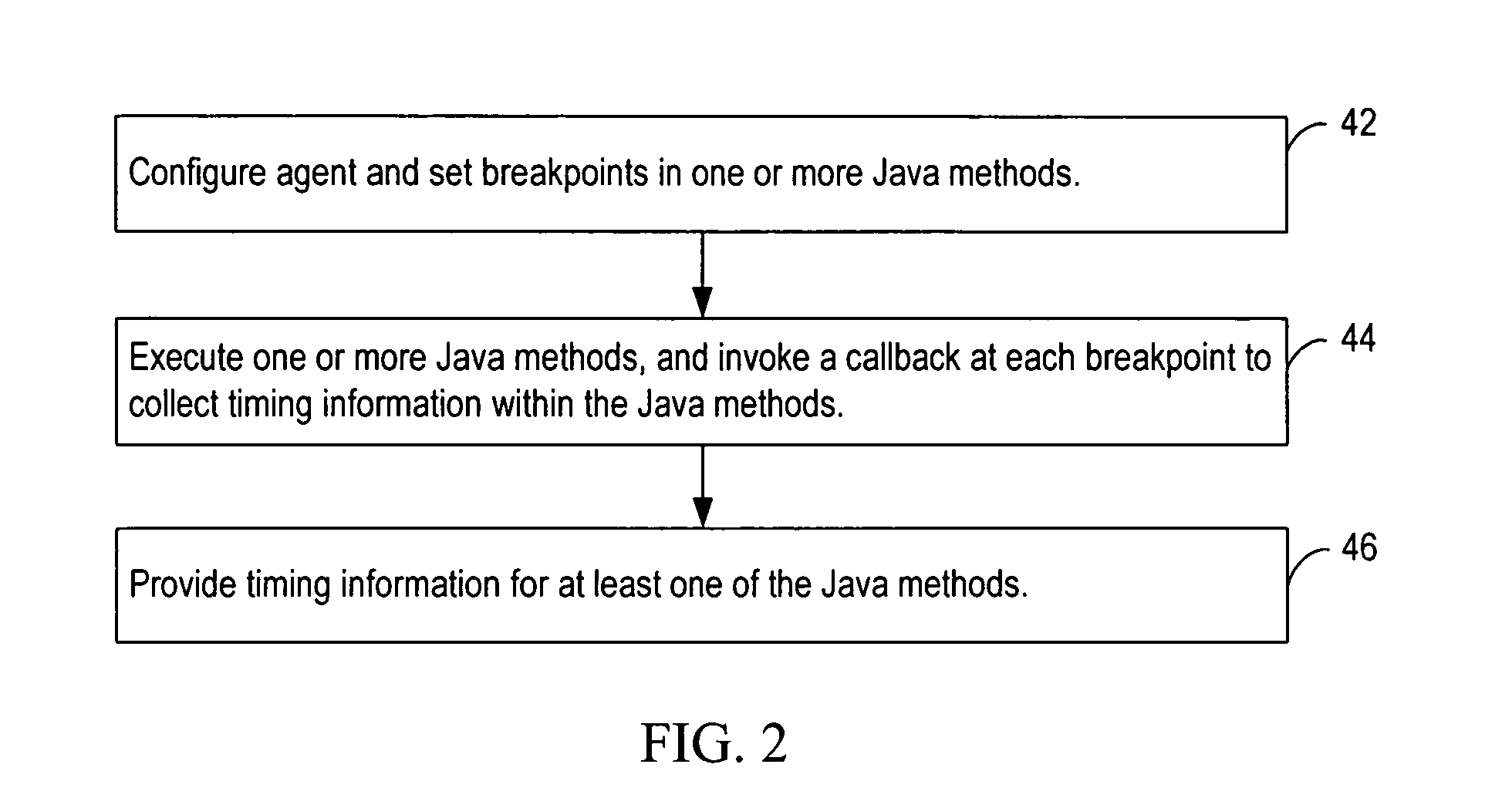

Dynamically profiling consumption of CPU time in Java methods with respect to method line numbers while executing in a Java virtual machine

Inactive | US20070168996A1 | Error detection/correction | Specific program execution arrangements | CPU time | Analysis method

Various embodiments of a computer-implemented method, system and computer program product monitor the performance of a program component executing in a virtual machine. Breakpoints associated with position indicators within the program component are set. In response to reaching one of the breakpoints, an amount of time consumed between the breakpoint and a previous breakpoint is determined. The amount of time associated with the position indicators is accumulated. The amount of time is associated with a position indicator that is associated with the previous breakpoint.

Owner:IBM CORP
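The accumulation rule in the abstract above (time between two breakpoints is charged to the position of the earlier one) can be sketched as a pure function over a recorded sequence of breakpoint hits. The event format `(line_number, timestamp)` and the name `accumulate` are assumptions for illustration; a real profiler would obtain these events from the virtual machine's debugging interface.

```python
from collections import defaultdict


def accumulate(events):
    """Sum time per line from ordered (line_number, timestamp) breakpoint hits.

    The interval between two consecutive hits is attributed to the line of
    the previous breakpoint, as in the abstract.
    """
    totals = defaultdict(float)
    prev_line = None
    prev_ts = None
    for line, ts in events:
        if prev_line is not None:
            totals[prev_line] += ts - prev_ts
        prev_line, prev_ts = line, ts
    return dict(totals)
```

The last breakpoint in the sequence receives no time because no later breakpoint closes its interval.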

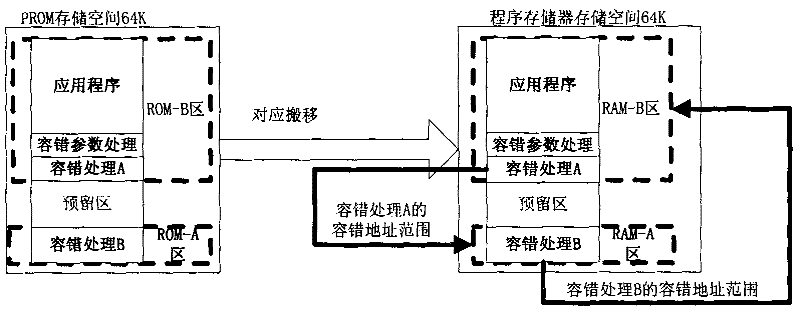

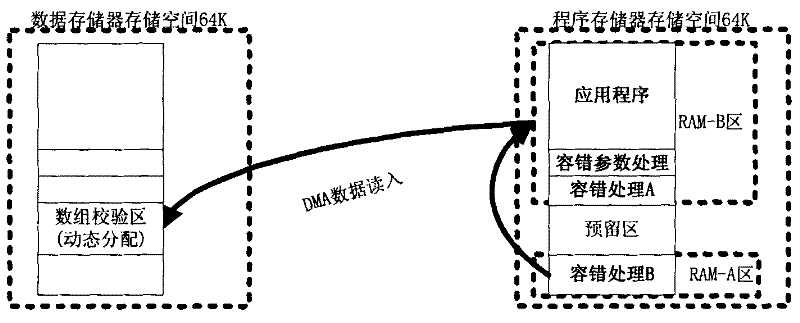

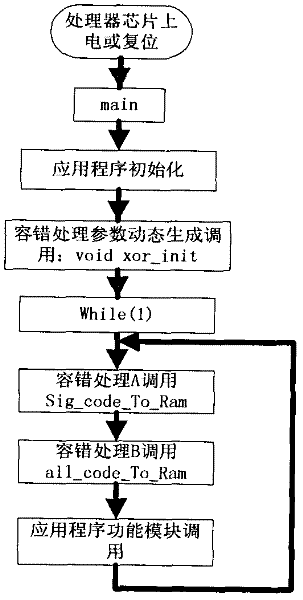

Software fault-tolerant method capable of comprehensively on-line self-detection single event upset

Active | CN102521062A | Guaranteed to run in real time | Use less CPU time | Fault response | Software fault | CPU time

A software fault-tolerant method capable of comprehensive on-line self-detection of single event upsets comprises the steps of performing storage address interlinking configuration; executing a fault-tolerant processing parameter generation module, a fault-tolerant processing A module, and a fault-tolerant processing B module; reading stored program data in segmented direct memory access (DMA) mode; dynamically generating fault-tolerant processing parameters through a verification algorithm; and storing them redundantly. The fault-tolerant processing B module autonomously monitors, in real time, the application programs and the operation of the fault-tolerant processing A module, which in turn monitors the operation of the fault-tolerant processing B module in real time. Once a single event upset occurs in the programs, the corresponding code segment is reloaded from read-only memory (ROM), thereby correcting the application program code. The whole process is carried out in DMA mode and occupies no central processing unit (CPU) time, so the programs keep running in real time while errors are corrected. This improves the reliability and safety of on-orbit software operation, saves substantial hardware and time costs, and improves efficiency.

Owner:XIAN INSTITUE OF SPACE RADIO TECH

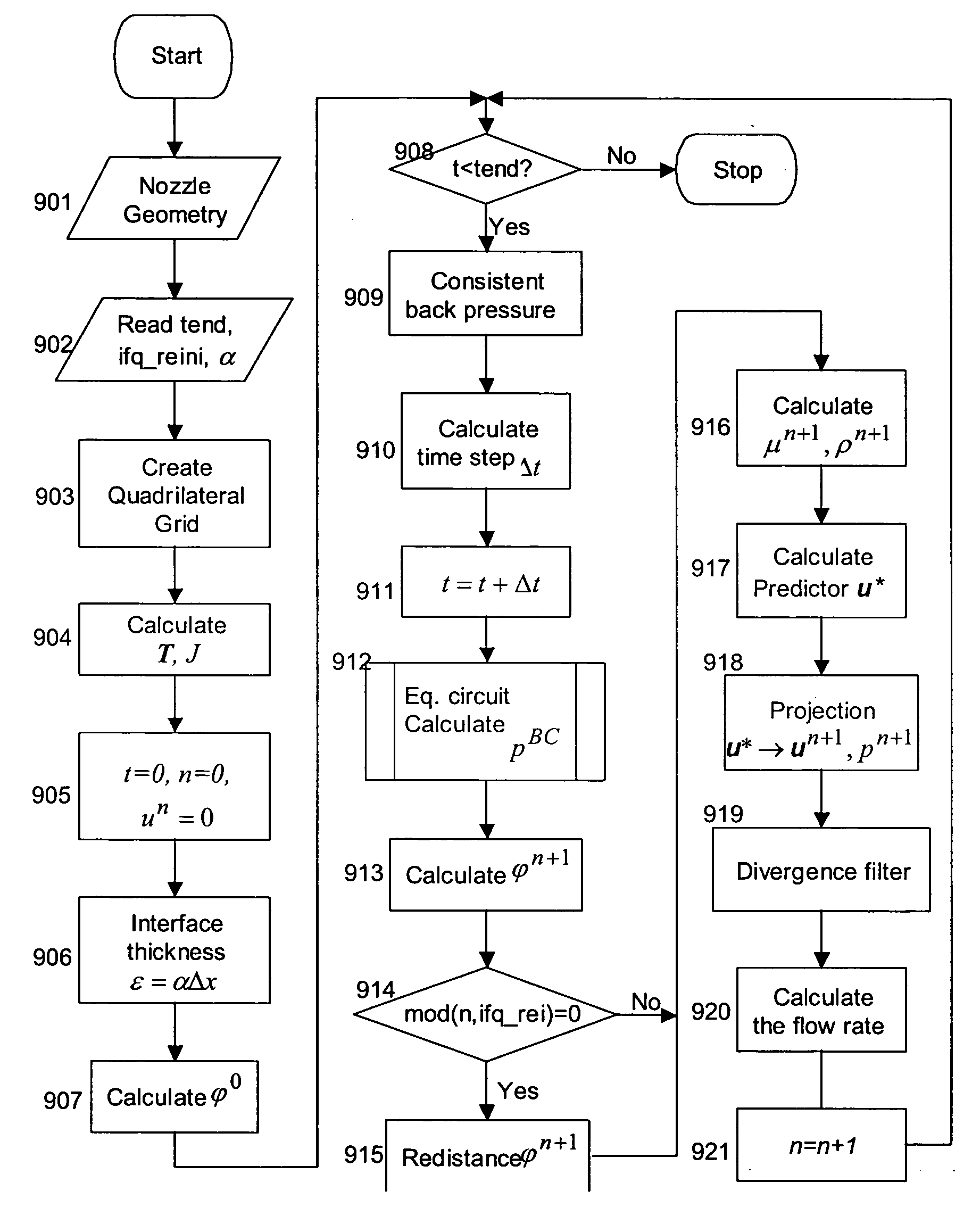

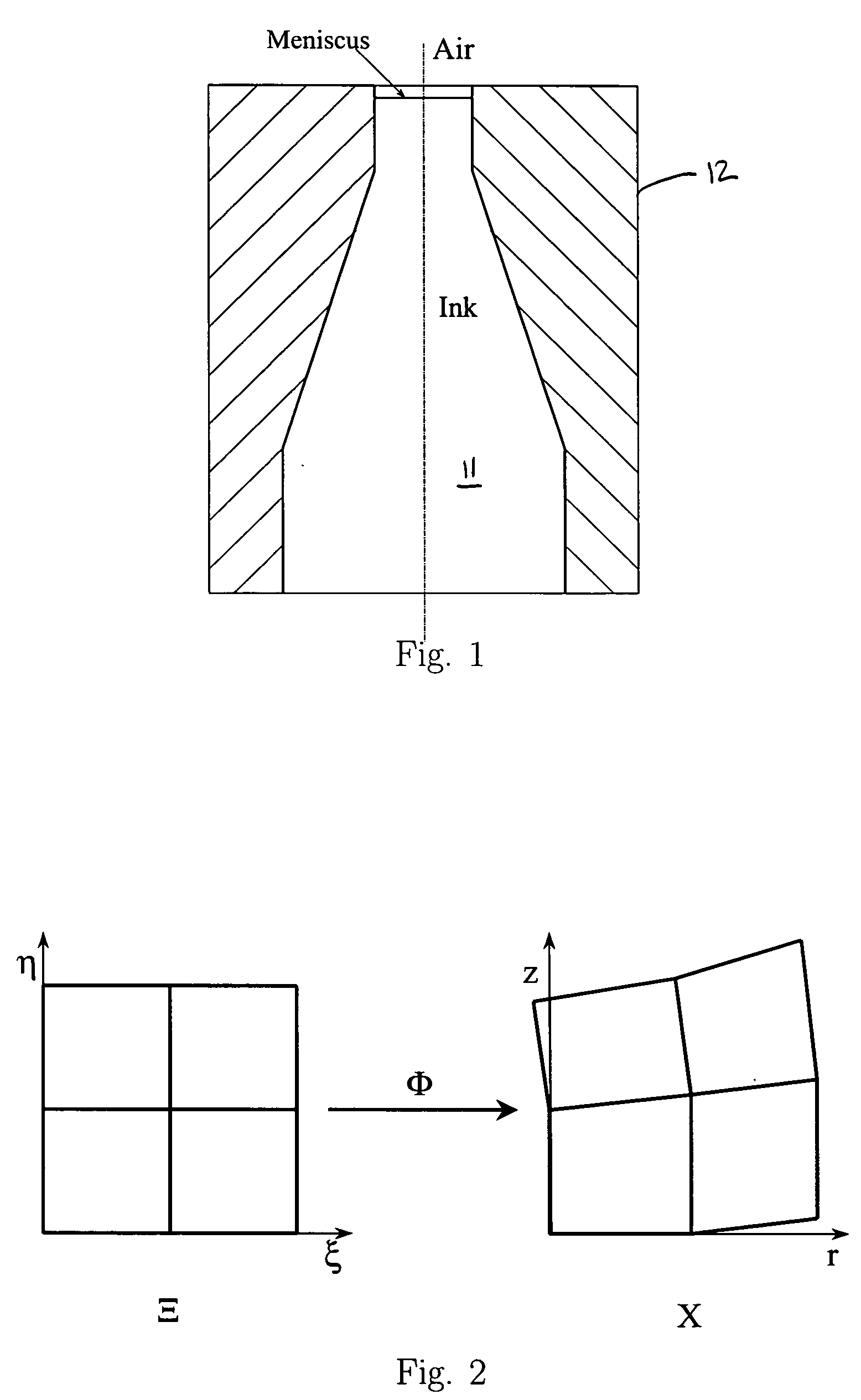

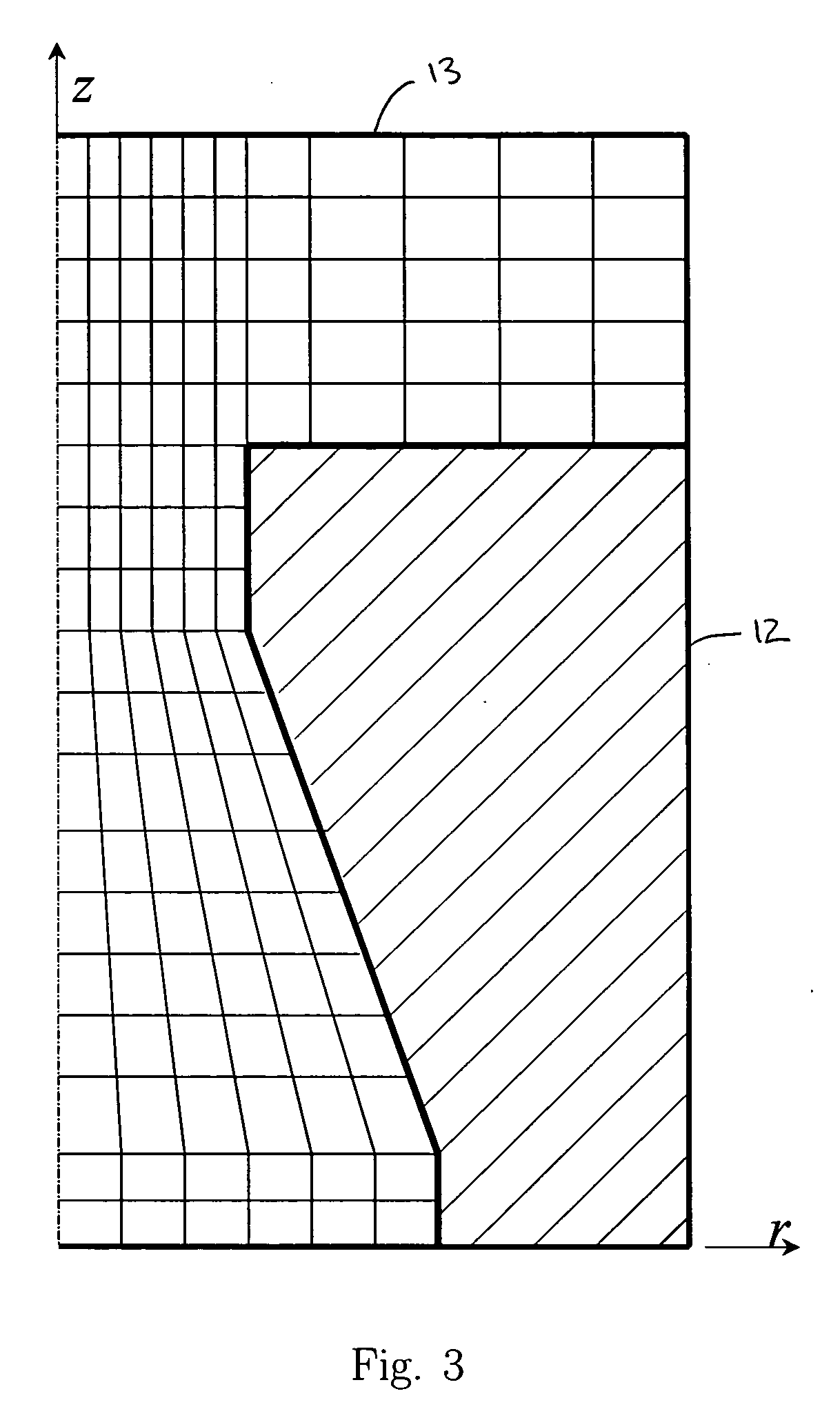

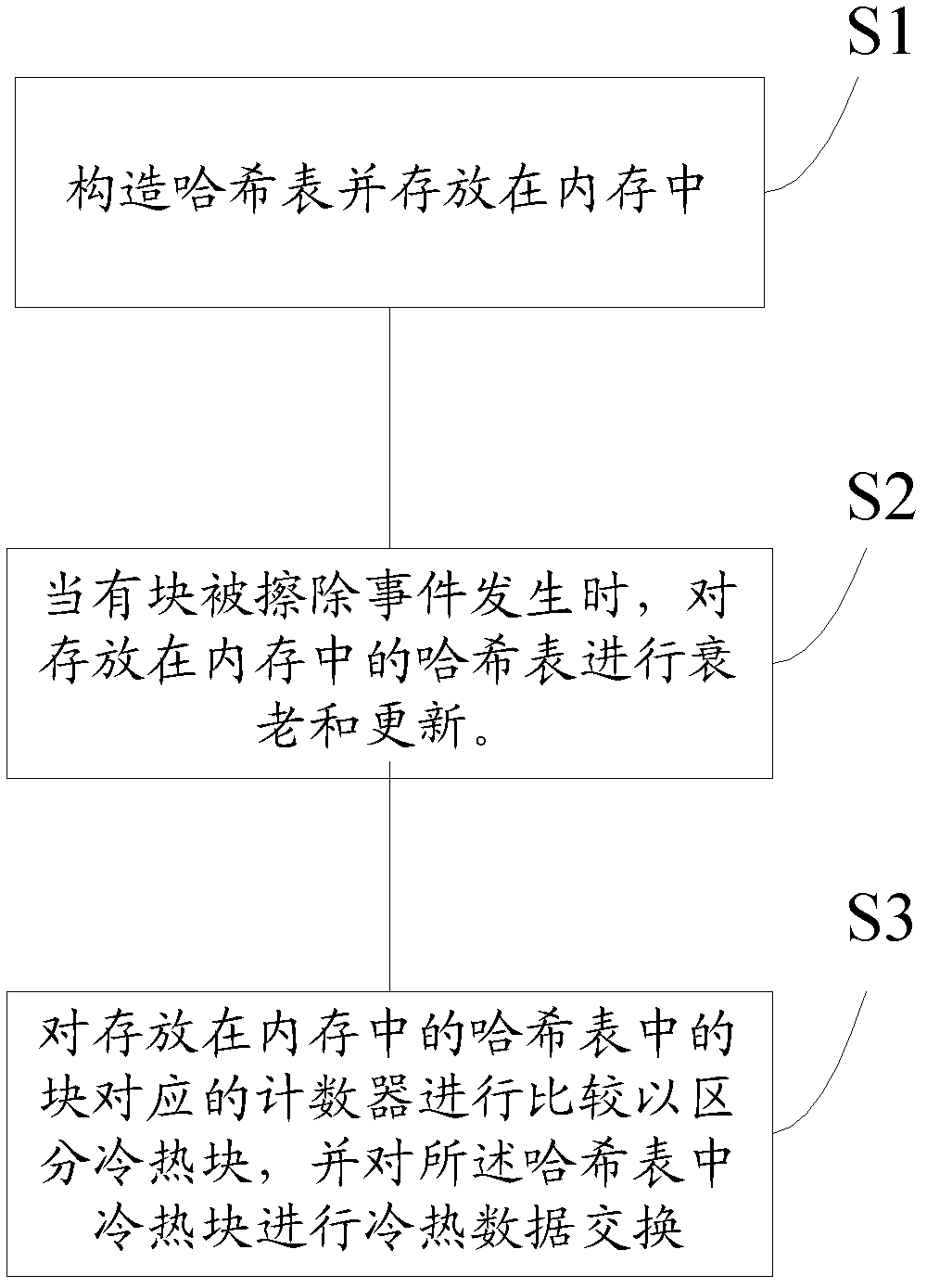

Divergence filters on quadrilateral grids for ink-jet simulations

Inactive | US20050243117A1 | Improve stability | Increase in size | Design optimisation/simulation | Other printing apparatus | Quadrilateral grid | CPU time

The development and use of divergence filters on quadrilateral grids in connection with a finite-difference-based ink-jet simulation model improves the stability of the code in the model, and allows the use of larger time step size and hence reduces the CPU time. The filters are employed after the finite element projection in each time step and function as additional finite difference projections that are enforced at edge mid-points and at cell centers. The improved model and accompanying algorithm enable more precise control of ink droplet size and shape.

Owner:SEIKO EPSON CORP

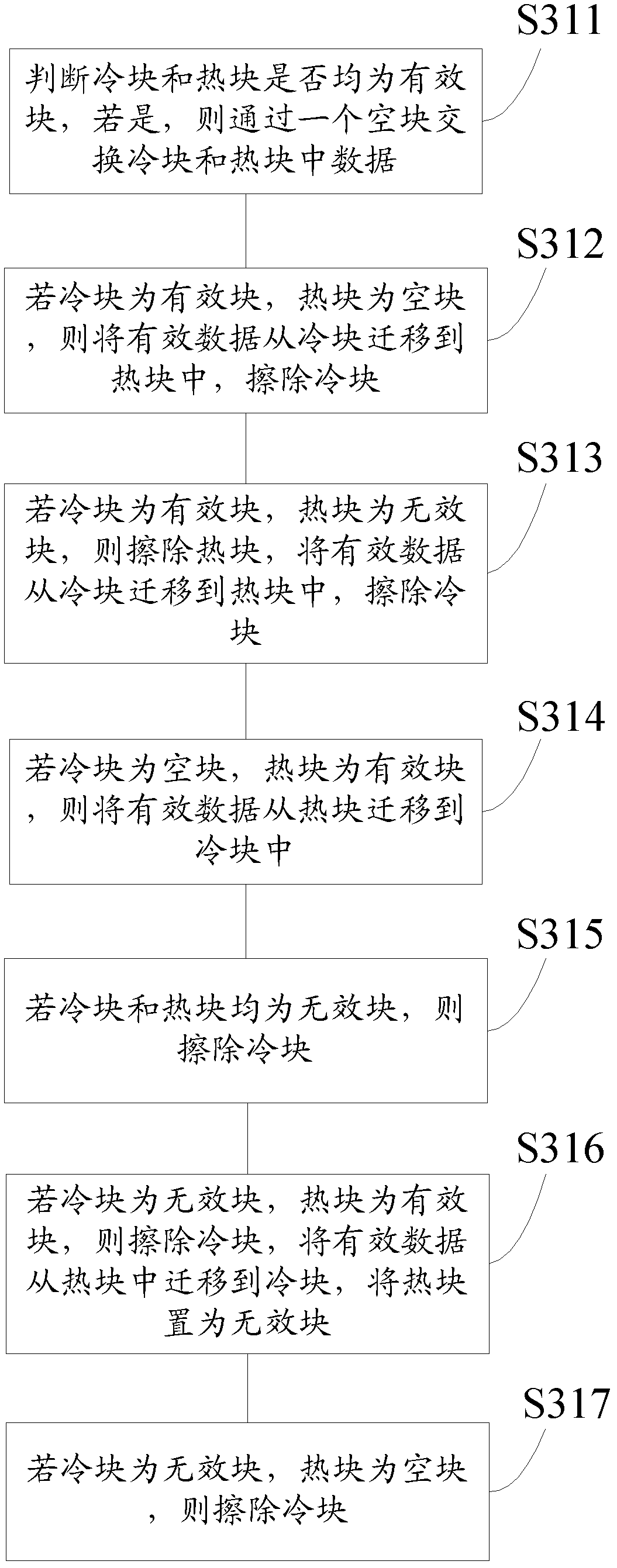

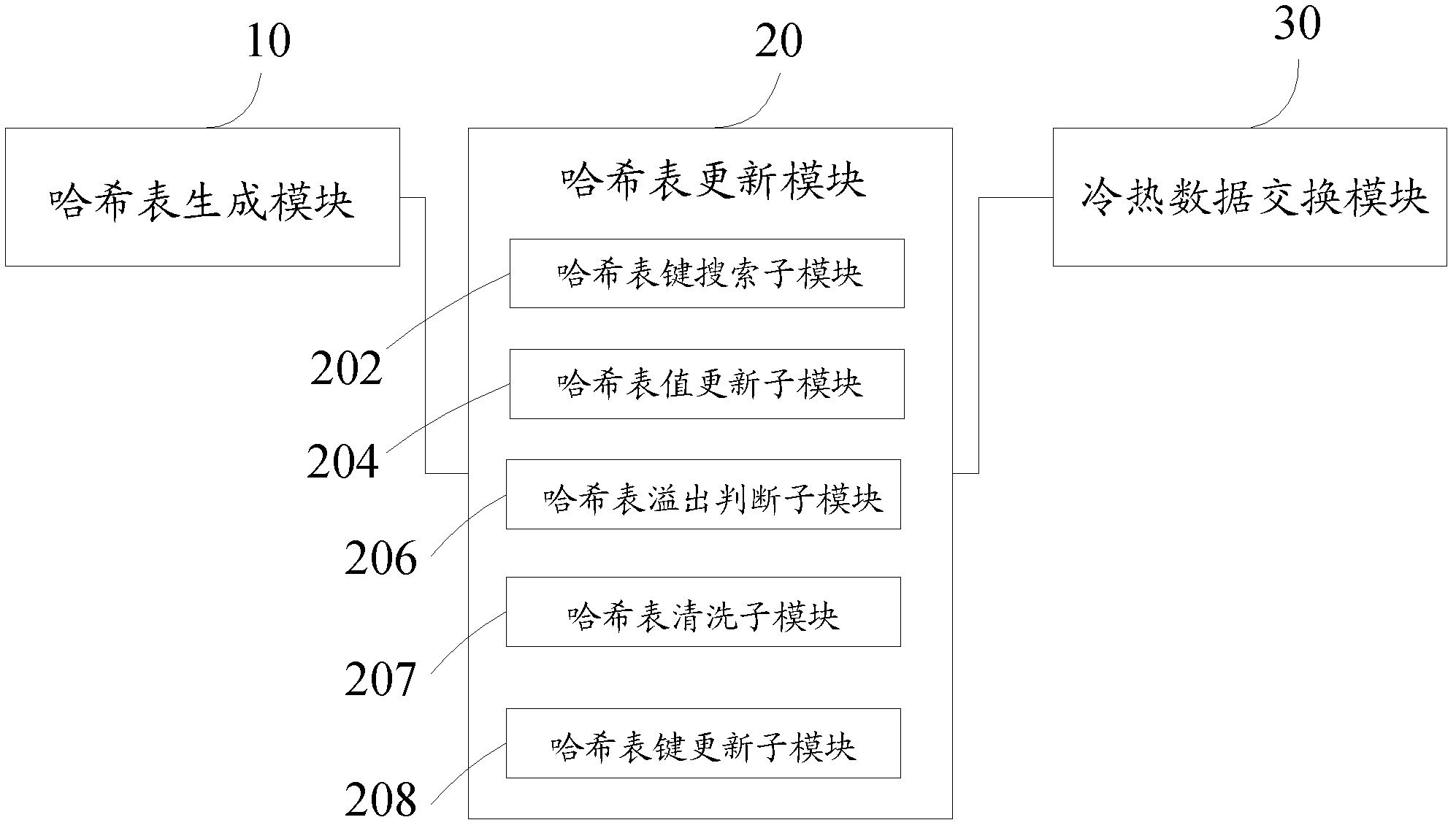

Static Wear Leveling Method and System for Solid State Disk

Inactive | CN102289412A | Guaranteed performance | Reduce occupancy | Memory addressing/allocation/relocation | Internal memory | 16-bit

The invention provides a static wear leveling method for a solid-state disk, comprising the following steps: S1, constructing a hash table that uses the physical addresses of erased blocks as keys and 16-bit counters as values, and storing the hash table in internal memory; S2, aging and updating the hash table in internal memory when a block erase event occurs; and S3, comparing the counters of the blocks in the hash table to distinguish cold blocks from hot blocks, and exchanging data between the cold and hot blocks. The invention also provides a static wear leveling system for a solid-state disk. The cold and hot attributes of the blocks are tracked with a least recently used (LRU) algorithm based on an aging mechanism over the hash table, and the corresponding operations are carried out, so that the performance of the wear leveling algorithm is preserved while less internal memory is occupied and less CPU (central processing unit) time is consumed.

Owner:SHANGHAI JIAO TONG UNIV +1
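Steps S1 to S3 of the abstract above can be sketched with a dictionary of 16-bit counters. The abstract does not give the aging rule, so this example assumes the textbook aging approximation of LRU: on each erase event every counter is shifted right by one bit and the erased block's top bit is set. `EraseTracker` and its methods are hypothetical names.

```python
TOP_BIT = 0x8000  # most significant bit of a 16-bit counter


class EraseTracker:
    def __init__(self, blocks):
        # S1: hash table keyed by physical block address, 16-bit counter values
        self.counters = {block: 0 for block in blocks}

    def on_erase(self, block):
        # S2 (assumed aging rule): age every counter, then mark the erased block
        for b in self.counters:
            self.counters[b] >>= 1
        self.counters[block] = self.counters.get(block, 0) | TOP_BIT

    def hot_and_cold(self):
        # S3: largest counter = hottest block, smallest counter = coldest block
        hot = max(self.counters, key=self.counters.get)
        cold = min(self.counters, key=self.counters.get)
        return hot, cold
```

A wear-leveling pass would then swap the data of the hot and cold blocks returned by `hot_and_cold()`; the swap itself depends on the flash translation layer and is not shown.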

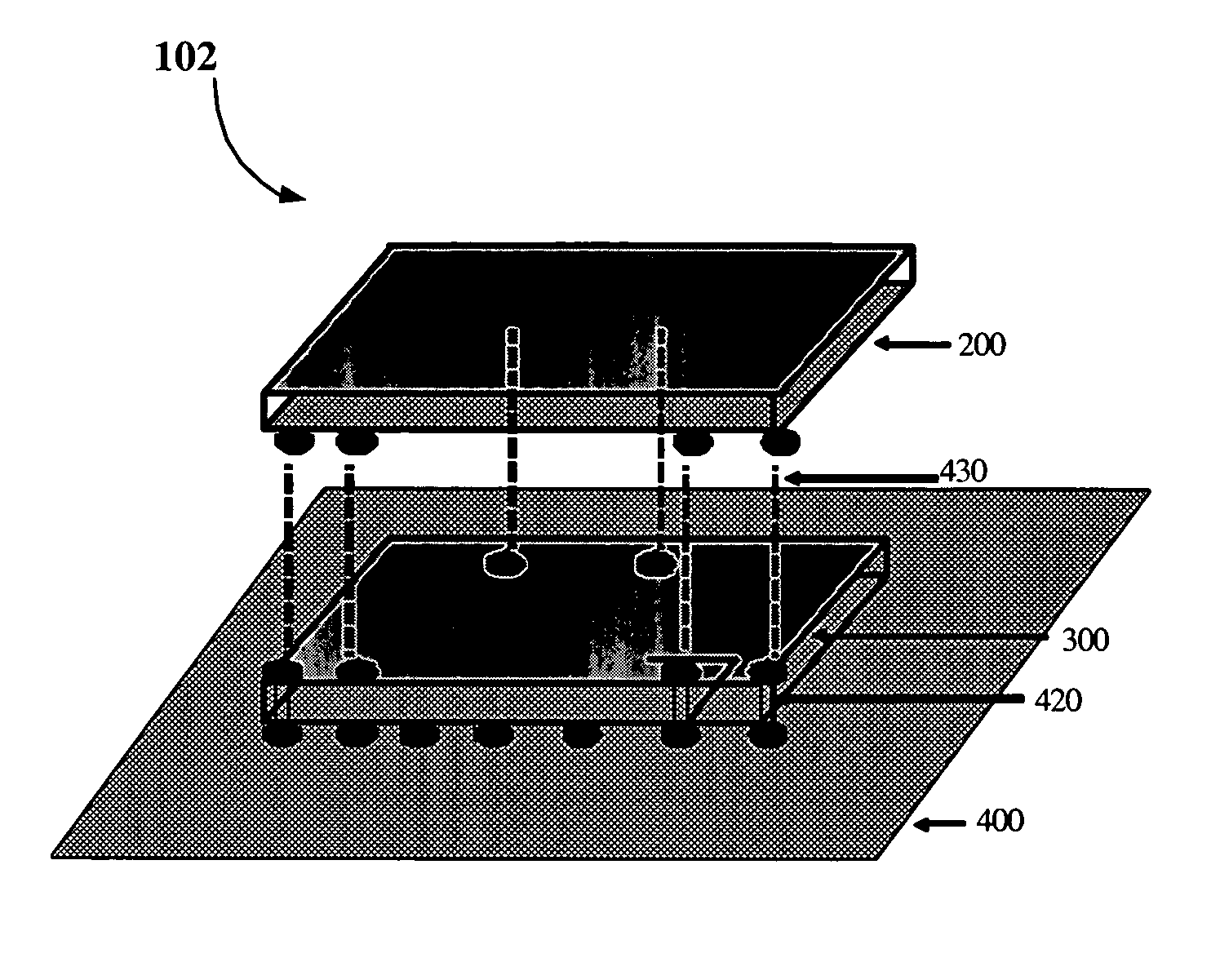

Power management integrated circuit

Inactive | US7247930B2 | Semiconductor/solid-state device details | Load balancing in dc network | Engineering | CPU time

A central processing unit (CPU) is disclosed. The CPU includes a CPU die; and a power management die bonded to the CPU die in a three dimensional packaging layout.

Owner:INTEL CORP

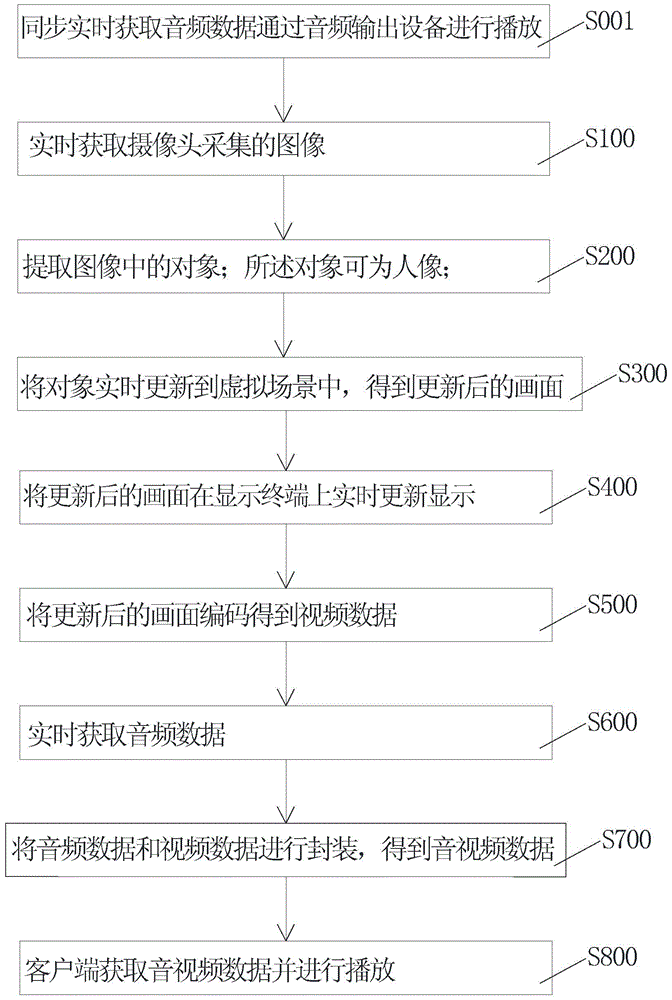

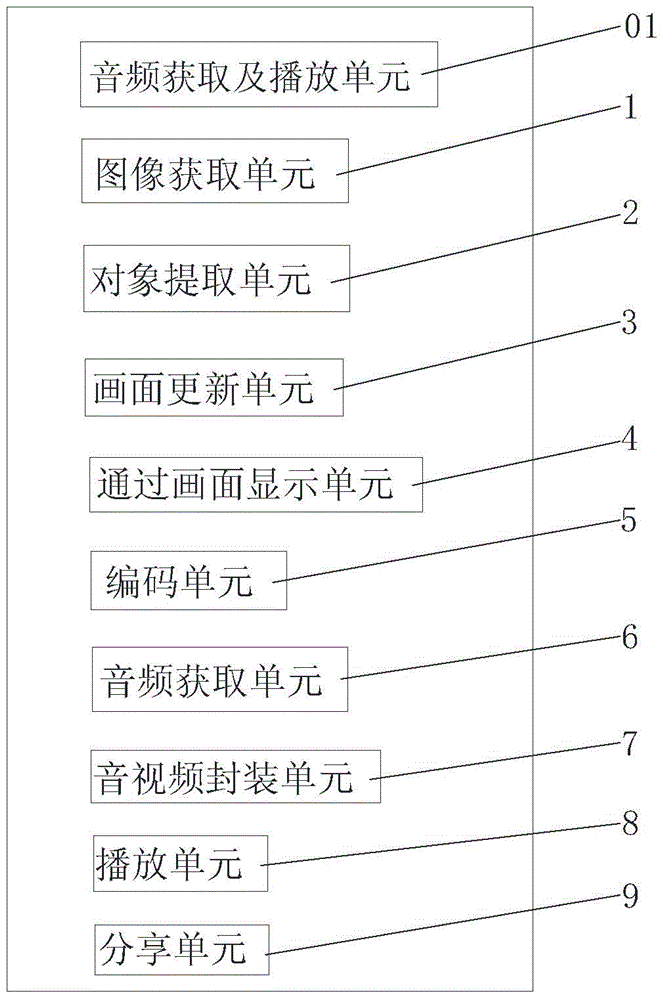

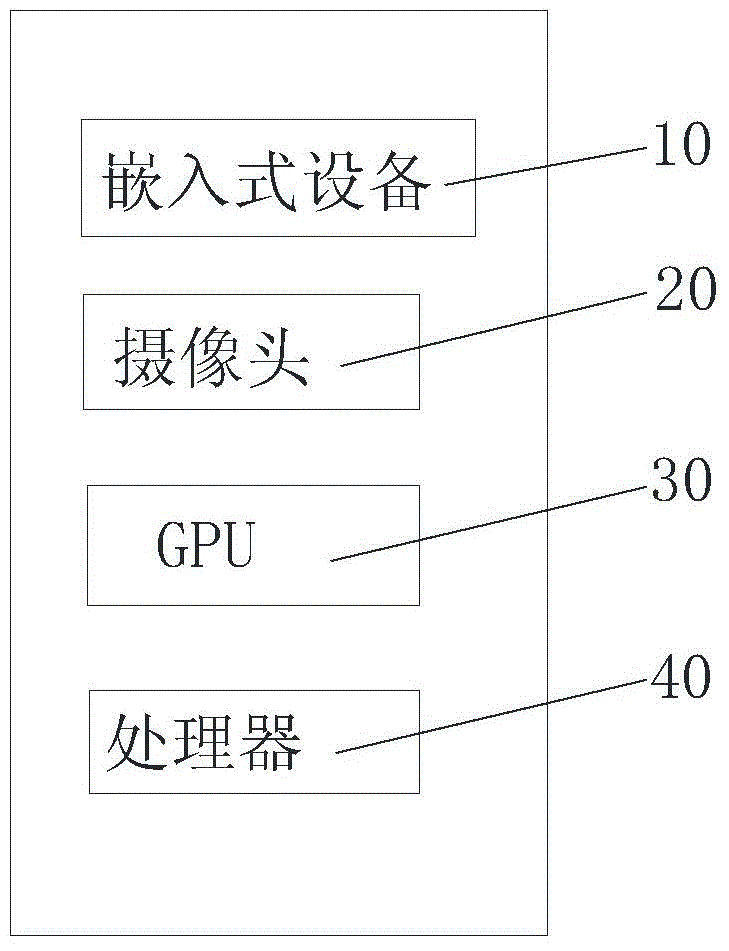

Method, device and system for fusion display of real object and virtual scene

Active | CN106303289A | Reduce the burden on | Operation impact | Television system details | Color television details | Computer graphics (images) | CPU time

The invention relates to a method, device and system for the fusion display of a real object and a virtual scene, and the method comprises the steps: obtaining an image collected by a camera in real time; extracting an object in the image; carrying out the updating of the object in the virtual scene, and obtaining an updated image. The method employs a built-in GPU of embedded equipment for matting operation, does not occupy the time of a CPU, and increases the system speed. Meanwhile, the method employs a processor in the embedded equipment for the coding of a synthesis image of a human image and the virtual scene, obtains video data, greatly reduces the size of video data through coding, facilitates the smooth network transmission of video data, and carries out the real-time and smooth display at other clients.

Owner:福建凯米网络科技有限公司

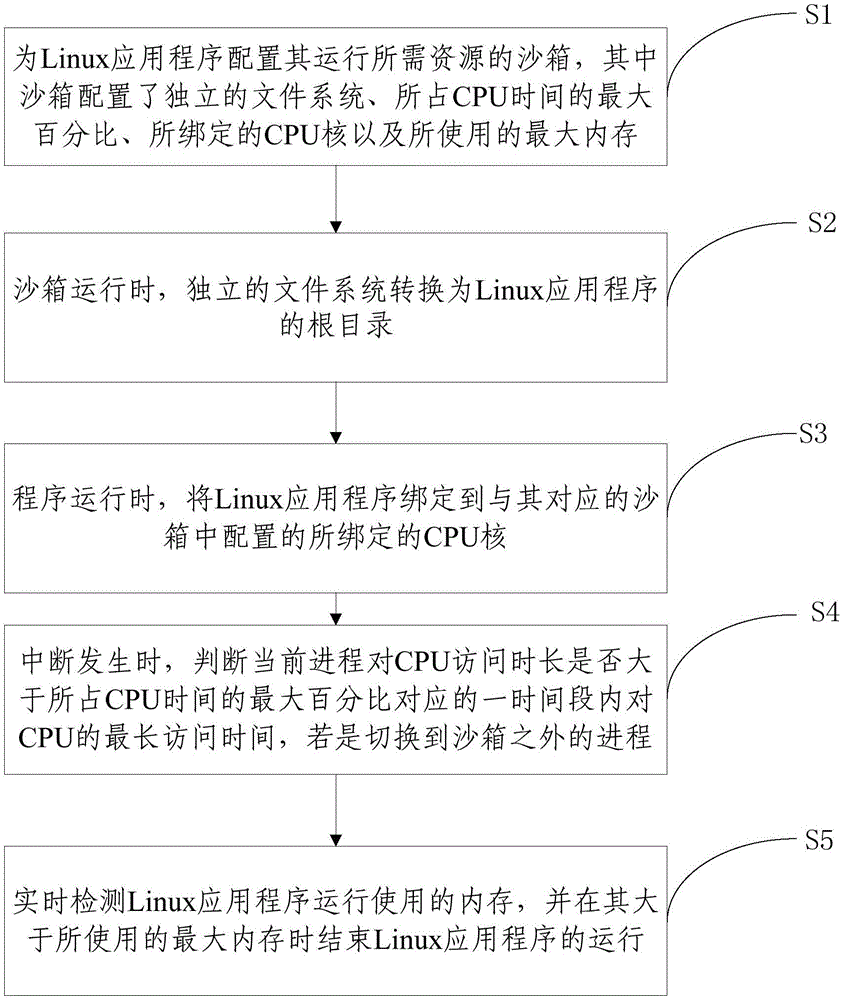

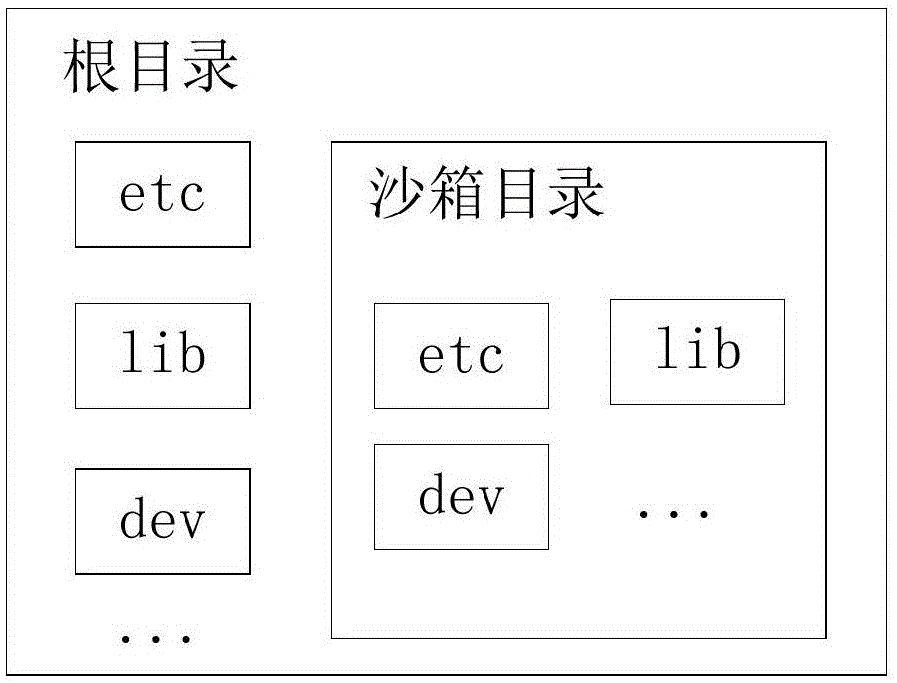

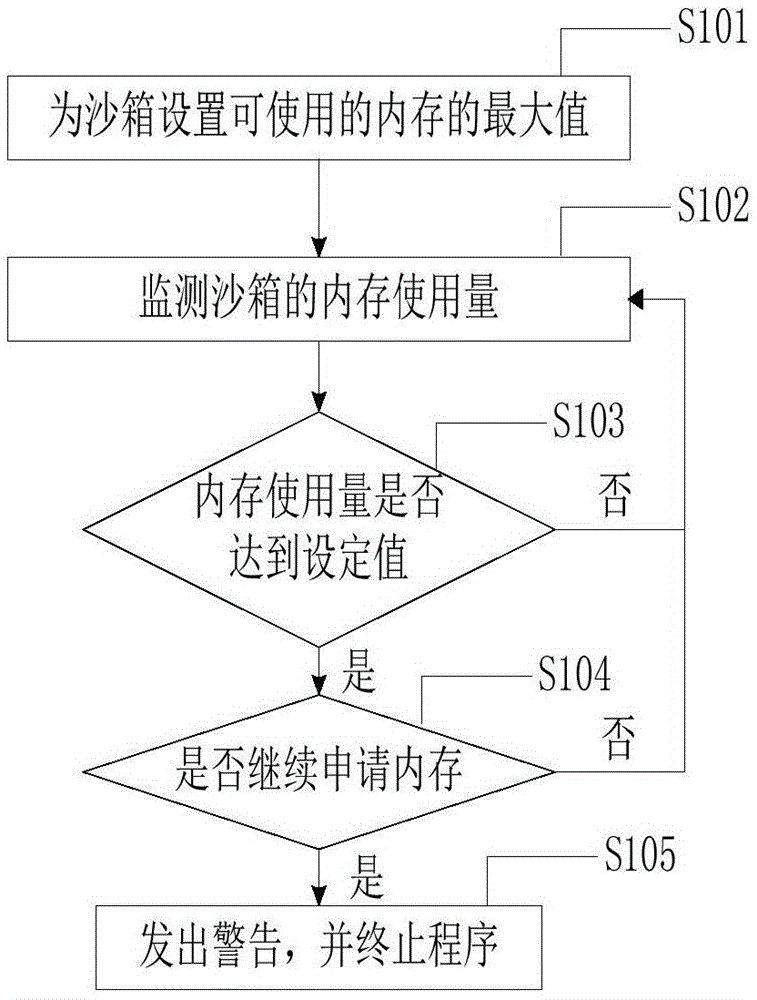

Isolation operation method for Linux application program

Inactive | CN105138905A | No threat | Achieve isolation | Platform integrity maintenance | Operational system | Access time

The invention provides an isolated execution method for a Linux application program. The method includes the following steps: a sandbox holding the resources needed to run the Linux application program is configured for it, and the sandbox is configured with an independent file system, a maximum percentage of CPU time, a bound CPU core, and a maximum memory size; the independent file system is made the root directory of the Linux application program; the Linux application program is bound to the CPU core configured in its sandbox; when an interrupt occurs, it is determined whether the time the current process has spent on the CPU exceeds the maximum CPU time allowed, within the time period, by the maximum percentage of CPU time, and if so, execution switches to a process outside the sandbox; the memory used by the Linux application program is monitored in real time, and the program is terminated when its memory use exceeds the maximum. The method isolates application programs from one another and from the operating system, ensuring that malicious application programs cannot threaten the operating system.

Owner:INST OF INFORMATION ENG CAS
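The two limit checks in the abstract above (CPU time share per period, and maximum memory) can be sketched as plain decision functions. This is only the arithmetic of the checks, not a working sandbox; `SandboxLimits`, `on_interrupt`, and `check_memory` are hypothetical names, and the returned strings stand in for the scheduler and kill actions.

```python
from dataclasses import dataclass


@dataclass
class SandboxLimits:
    max_cpu_percent: float   # maximum share of CPU time, in percent
    period_ms: float         # accounting period the percentage applies to
    max_memory_bytes: int    # maximum memory the program may use


def on_interrupt(limits, cpu_ms_used_in_period):
    """Decide whether the sandboxed process must yield the CPU."""
    allowed_ms = limits.period_ms * limits.max_cpu_percent / 100.0
    if cpu_ms_used_in_period > allowed_ms:
        return "switch_out"  # run a process outside the sandbox instead
    return "continue"


def check_memory(limits, memory_bytes_in_use):
    """Decide whether the sandboxed program must be terminated."""
    if memory_bytes_in_use > limits.max_memory_bytes:
        return "terminate"
    return "continue"
```

With a 100 ms period and a 20 percent limit, the process may use at most 20 ms of CPU time per period before it is switched out.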

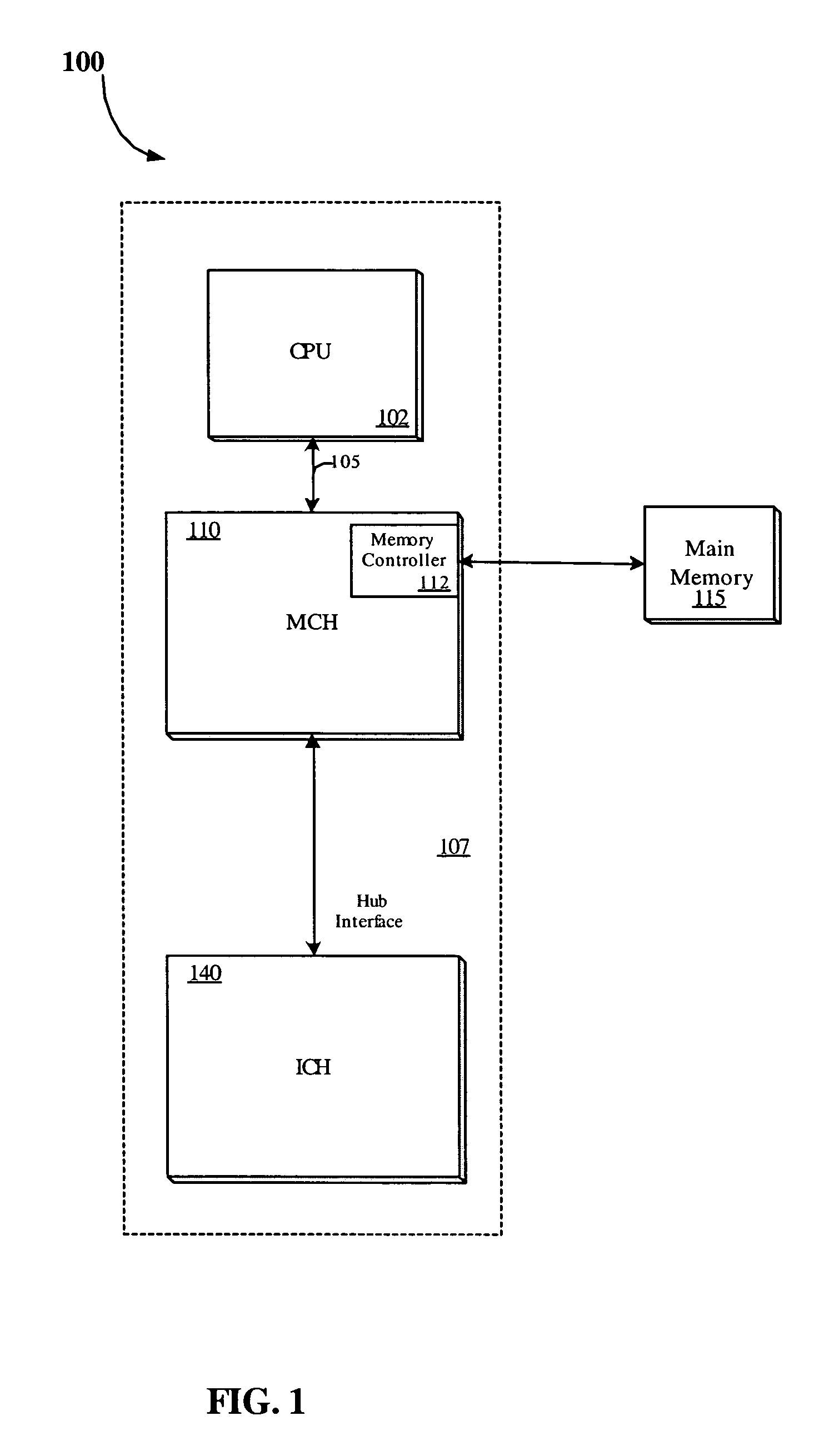

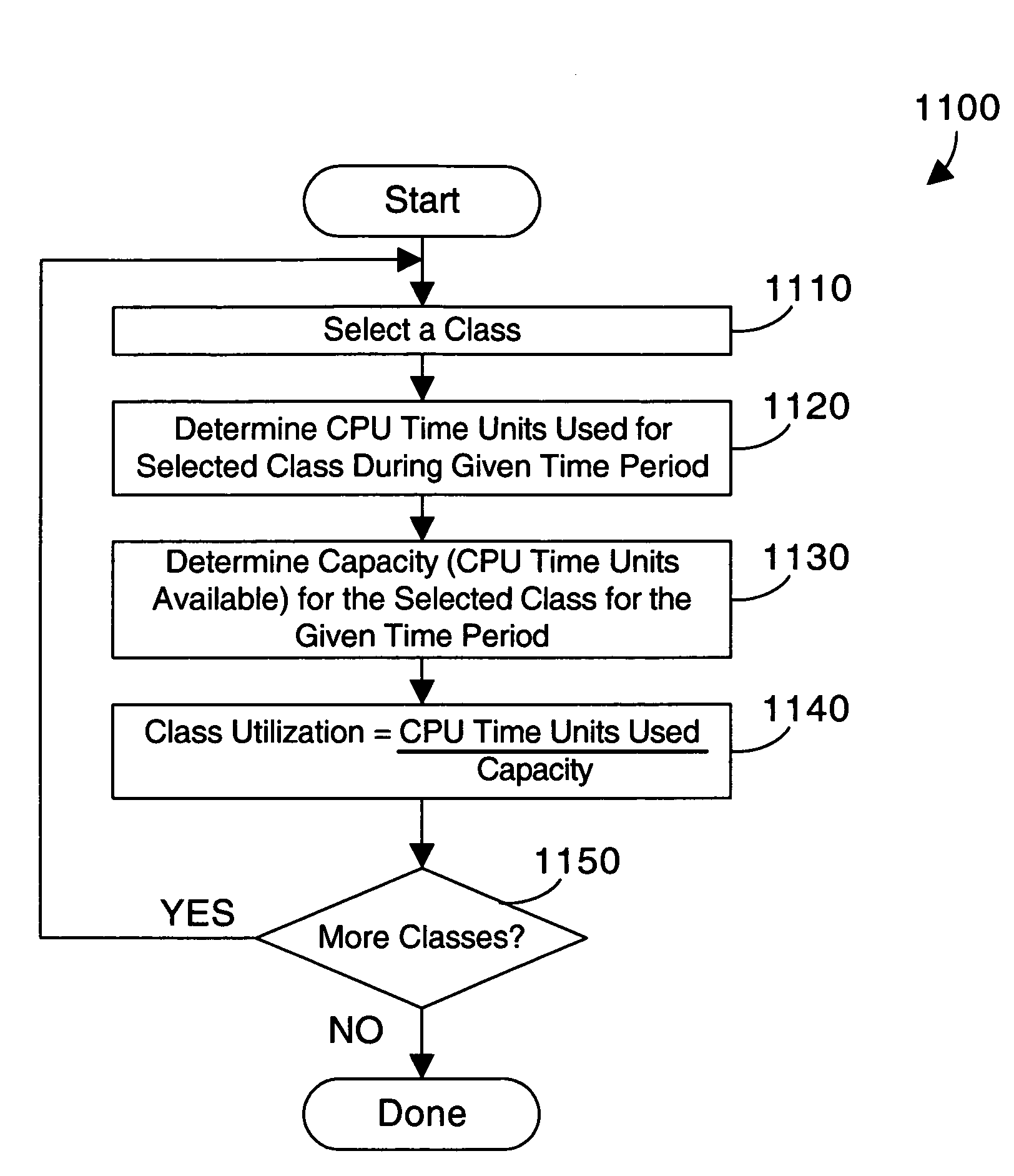

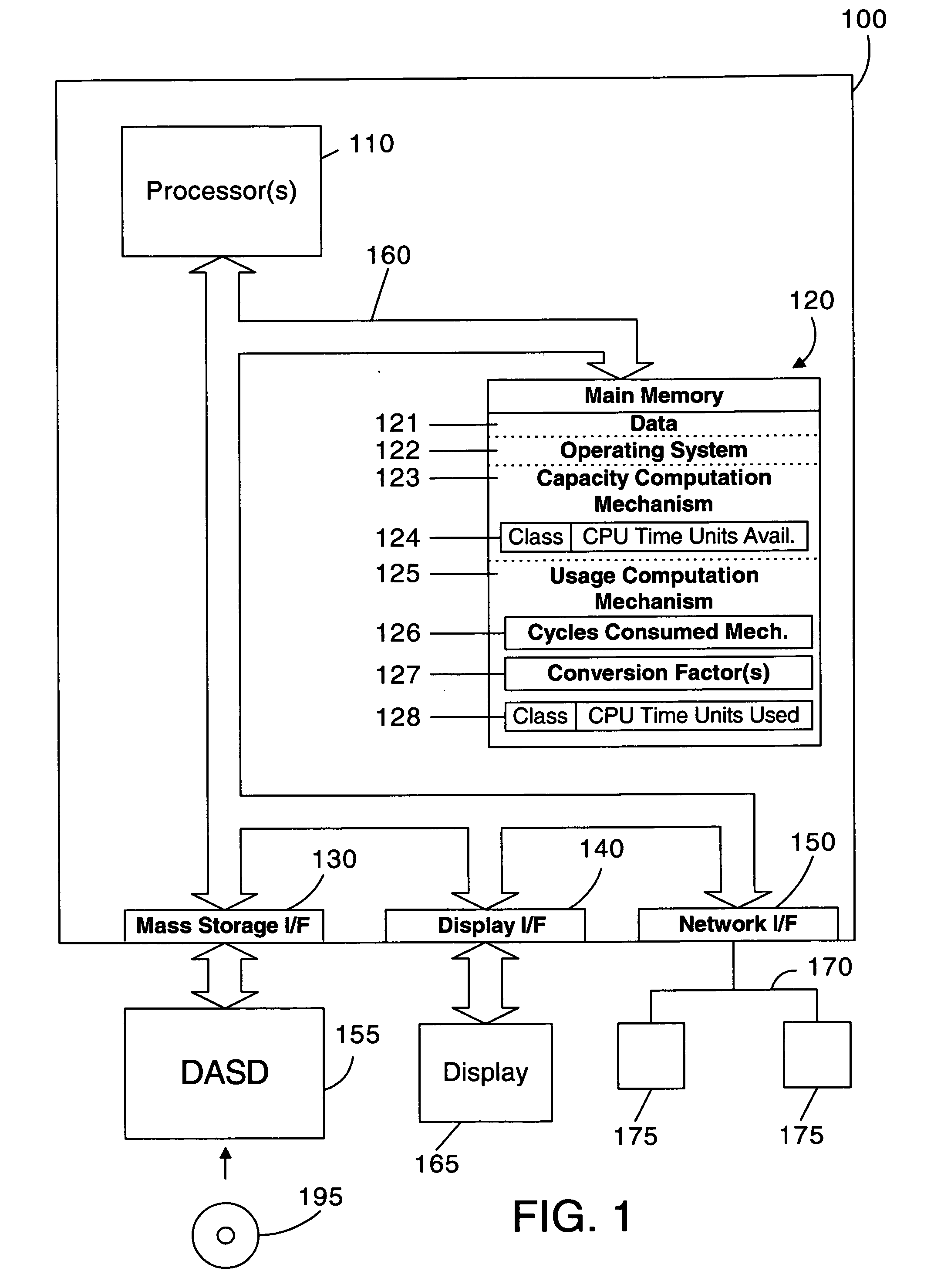

Apparatus and method for measuring and reporting processor capacity and processor usage in a computer system with processors of different speed and/or architecture

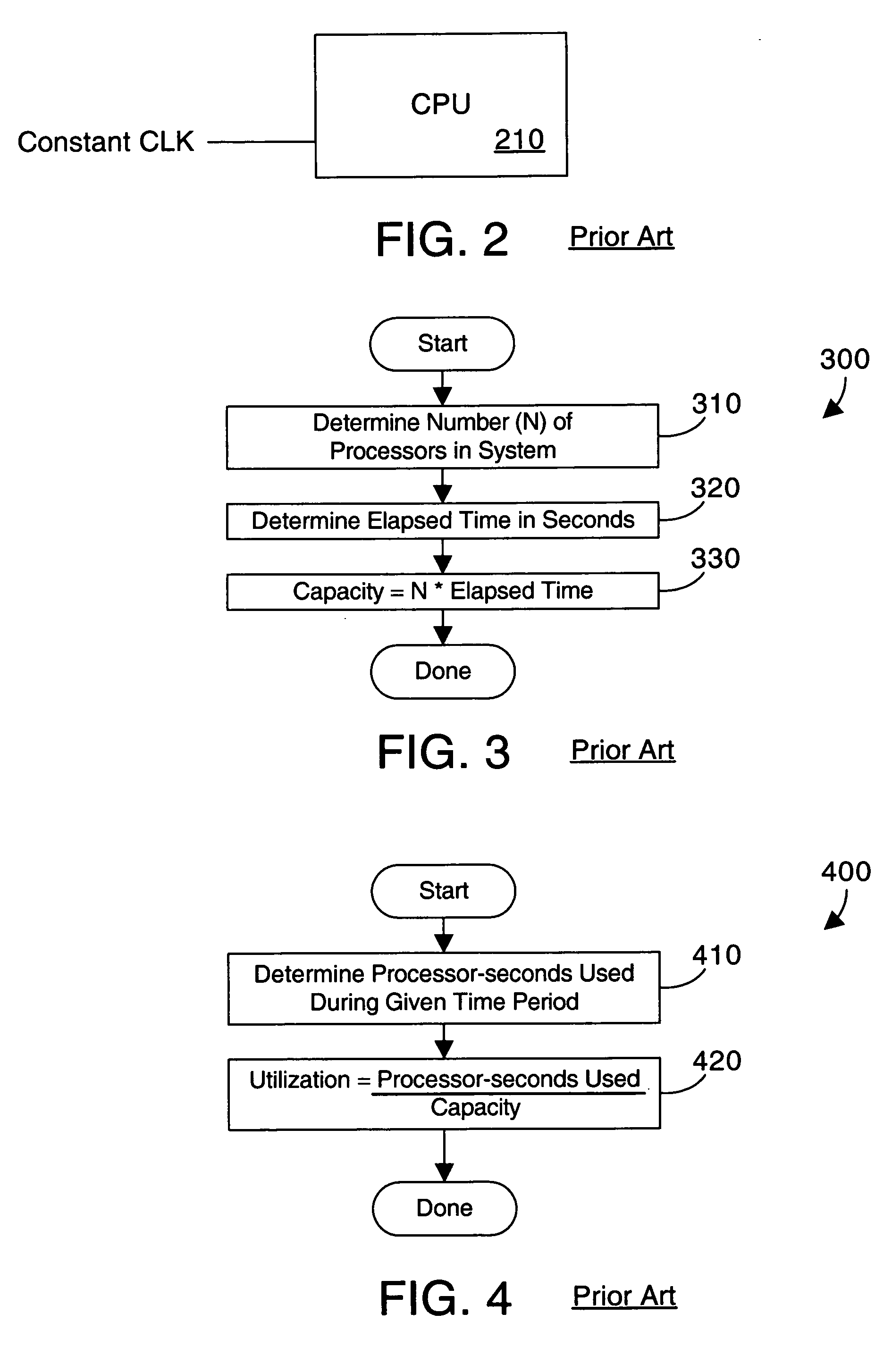

Active | US20070124730A1 | Error detection/correction | Multiprogramming arrangements | Conversion factor | Parallel computing

In a computer system that includes multiple processors, each processor in a computer system is assigned a processor class. Processor capacity and usage are monitored according to the class assigned to the processor. Capacity and usage are reported on a class-by-class basis so that the capacity and performance of different classes of processors are not erroneously compared or summed. The capacity and usage are monitored and reported in an abstract unit of measurement referred to as a “CPU time unit”. Processors of the same type that run at different clock speeds or that have different internal circuitry enabled are preferably assigned the same class, with one or more conversion factors being used to appropriately scale the performance of the processors to the common CPU time unit for this class.

Owner:IBM CORP
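As a rough illustration of the class-by-class reporting described above, the following Python sketch scales each processor's busy time by a conversion factor and sums it only within its own class. The function name `usage_by_class`, the tuple layout of the samples and the conversion factors are hypothetical; the patent does not specify how factors are derived.

```python
from collections import defaultdict


def usage_by_class(samples, conversion_factor):
    """Sum usage per processor class in abstract "CPU time units".

    samples: iterable of (processor_class, variant, busy_seconds)
    conversion_factor: maps (processor_class, variant) to a multiplier
        that scales busy seconds to the class's common unit (assumed).
    """
    totals = defaultdict(float)
    for proc_class, variant, busy_seconds in samples:
        factor = conversion_factor[(proc_class, variant)]
        # Usage is accumulated per class and never summed across classes.
        totals[proc_class] += busy_seconds * factor
    return dict(totals)


# Illustrative data: two speeds of class "A", one processor of class "B".
factors = {("A", "fast"): 1.0, ("A", "slow"): 0.5, ("B", "std"): 2.0}
samples = [("A", "fast", 10.0), ("A", "slow", 20.0), ("B", "std", 4.0)]
print(usage_by_class(samples, factors))
```

Keeping one total per class mirrors the abstract's point that figures from different processor classes should not be compared or added together.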

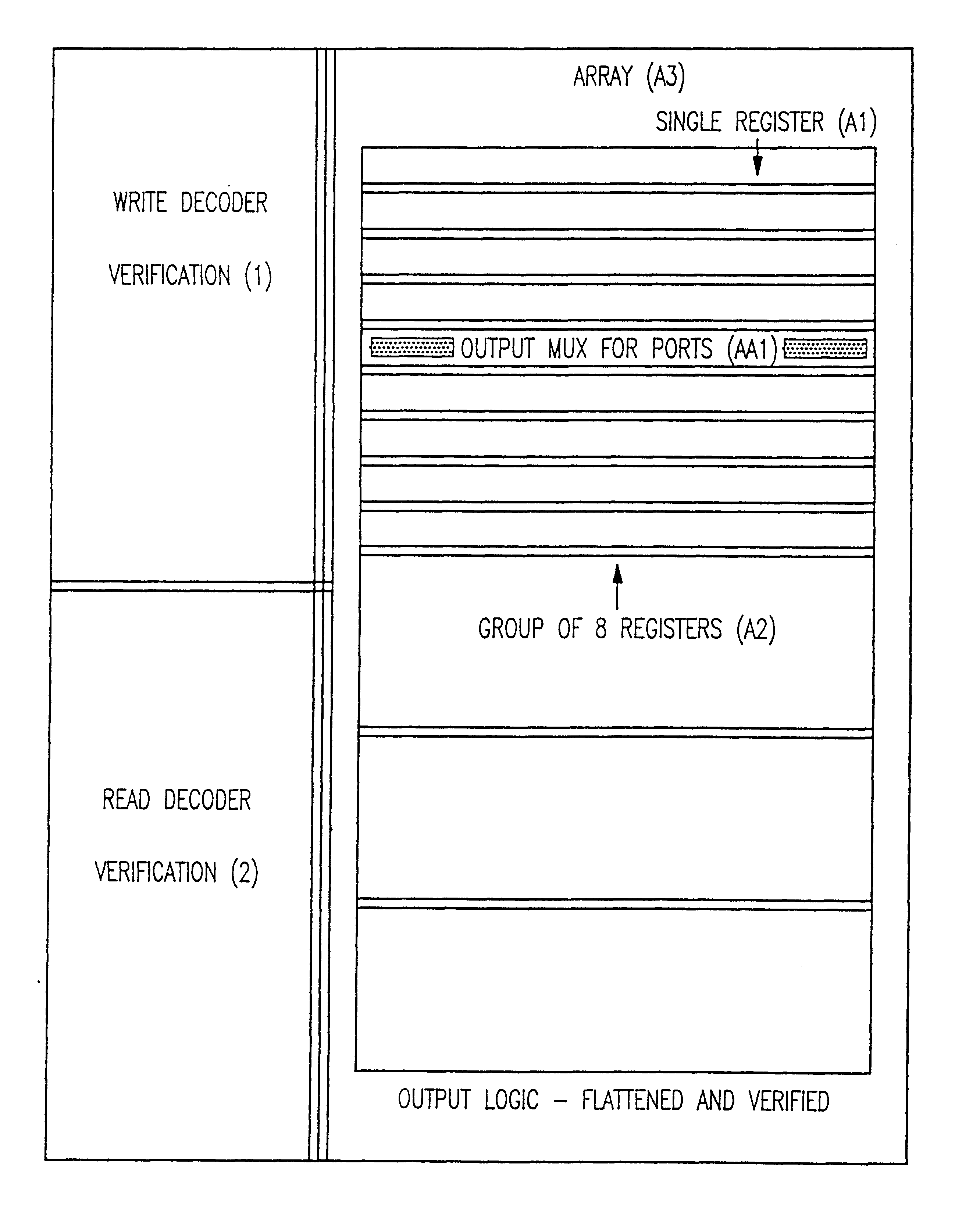

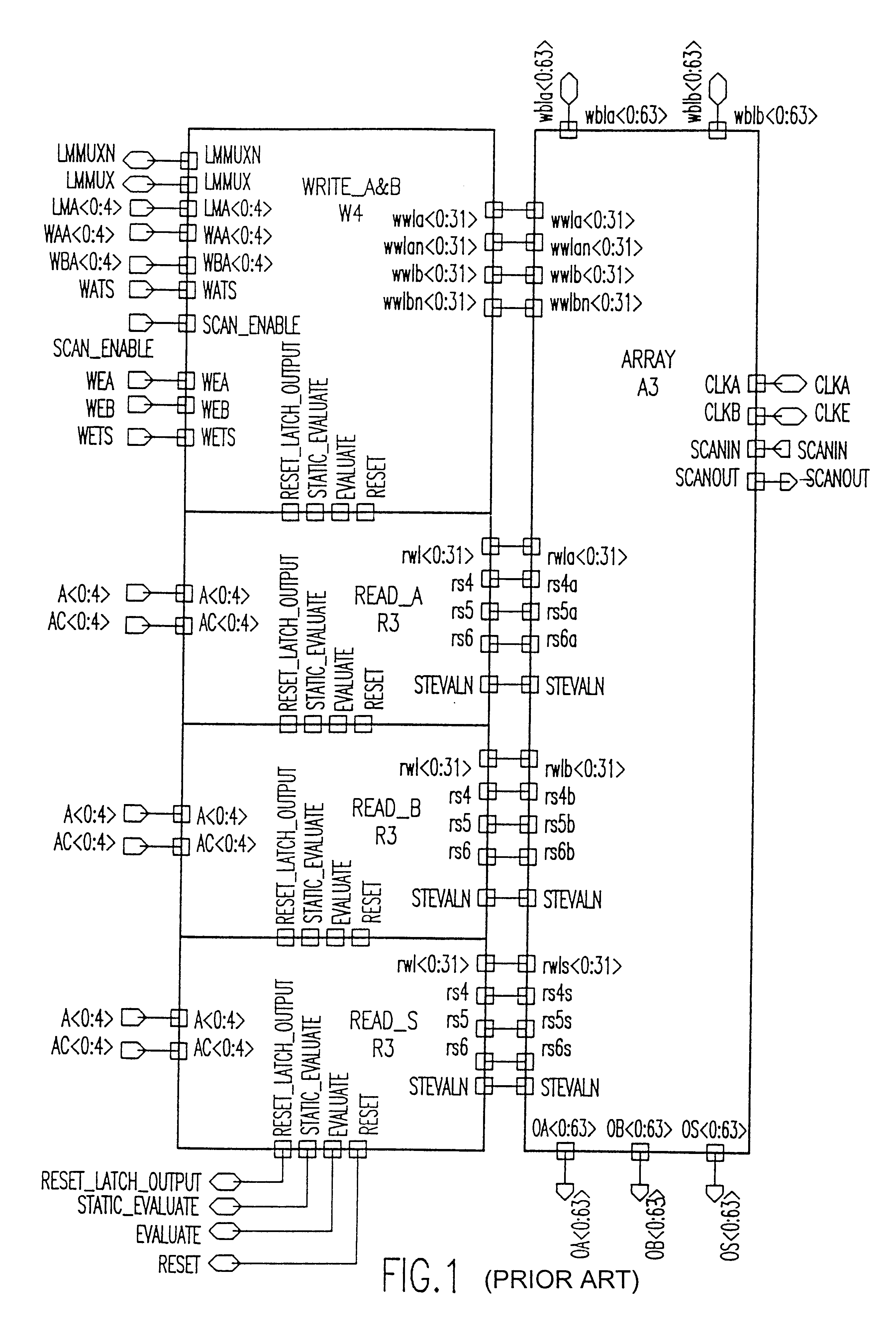

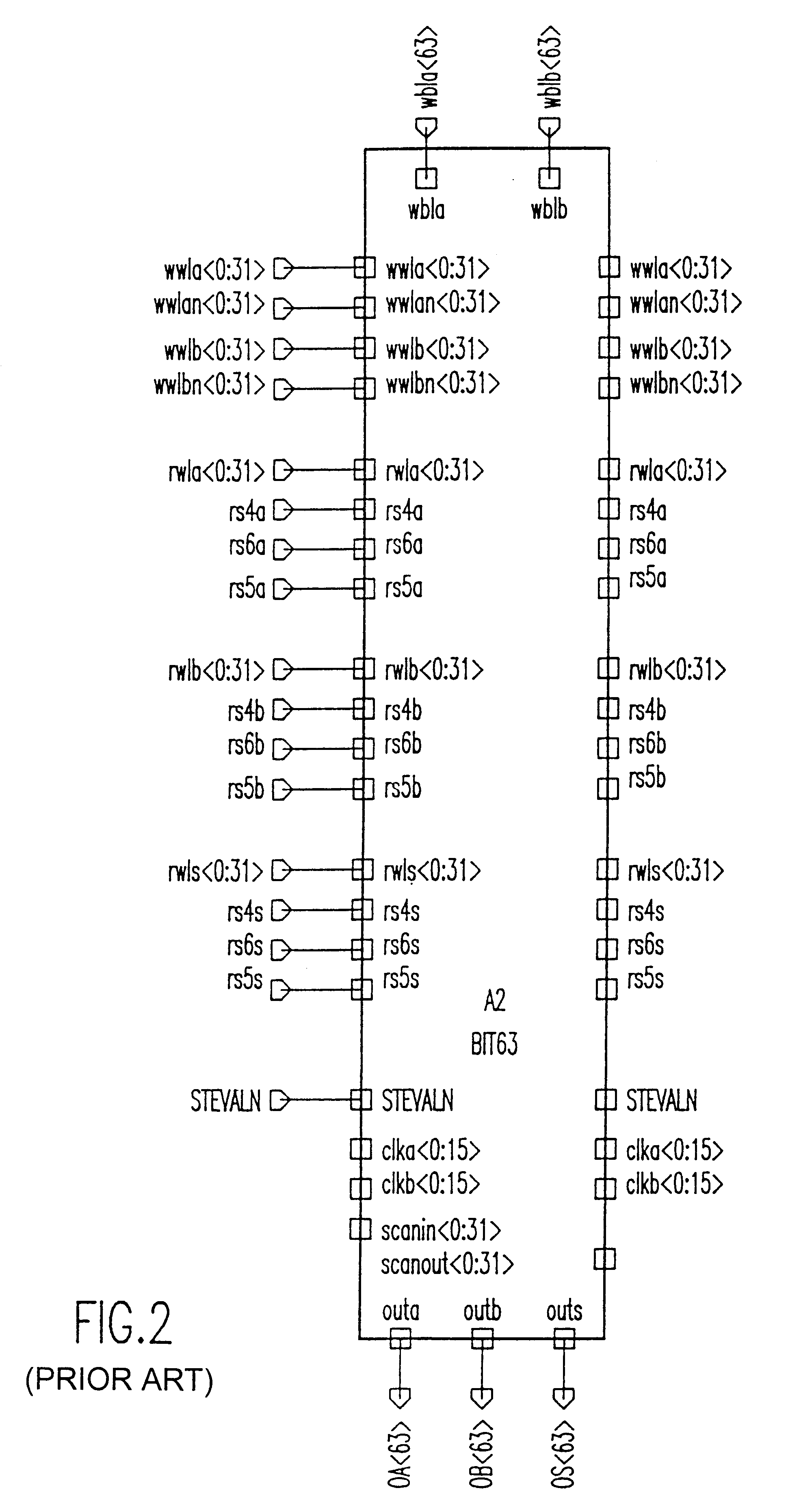

Provably correct storage arrays

A hardware design technique allows checking of a design system language (DSL) specification of an element against schematics of large macros with embedded arrays and registers. The hardware organization reduces CPU time for logical verification by exponential order of magnitude without blowing up a verification process or logic simulation. The hardware is organized horizontally at the word level rather than at the bit level. A memory array cell comprises a pair of cross-coupled inverters forming a first latch for storing data. The first latch has an output connected to a read bit line. True and complement write word and bit lines are input to the first latch. A first set of pass gates connects between the true and complement write word and bit line inputs via gates and the input of said first latch. The first set of pass gates is responsive to a first clock via a second pass gate. A pair of cross-coupled inverters forms a second latch of a Level Sensitive Scan Design (LSSD). The second latch has an output connected to an LSSD output for design verification. A second pass gate connects between the output of the first set of pass gates and the input of said first latch. The second pass gate is responsive to said first clock. A third pass gate connects between the output of said first latch and the input of said second latch. The third pass gate is responsive to a second clock. The first and second clocks are responsive to a black boxing process for incremental verification.

Owner:IBM CORP

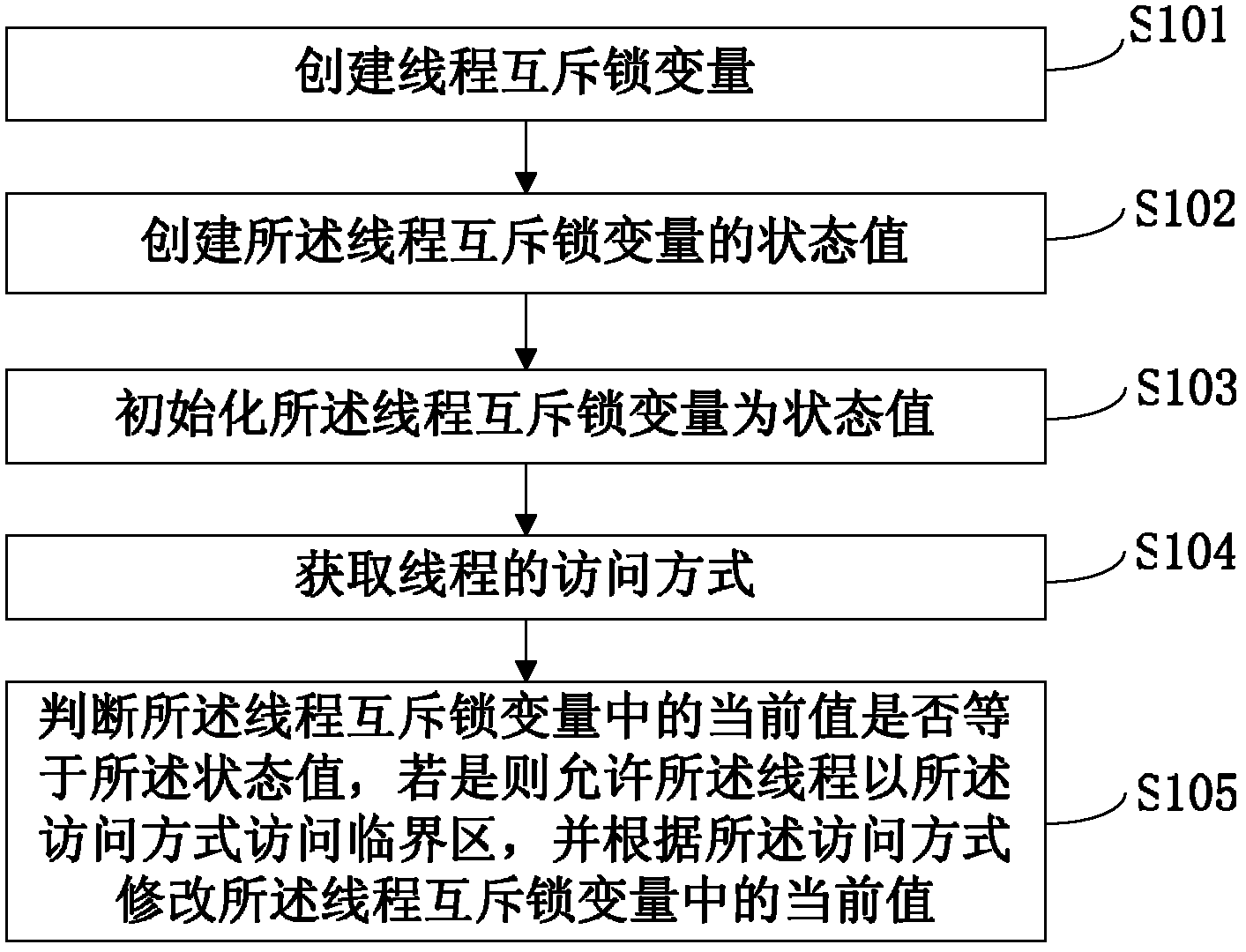

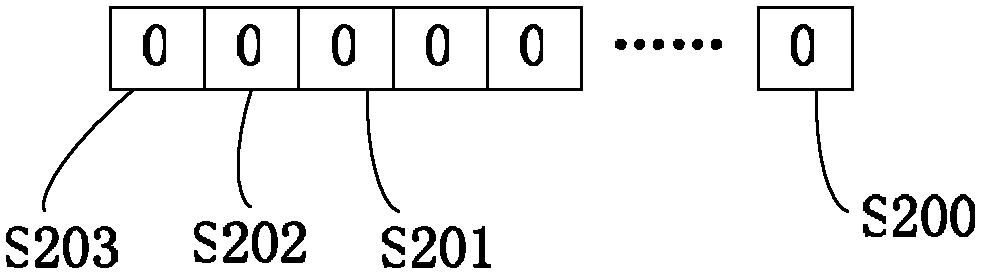

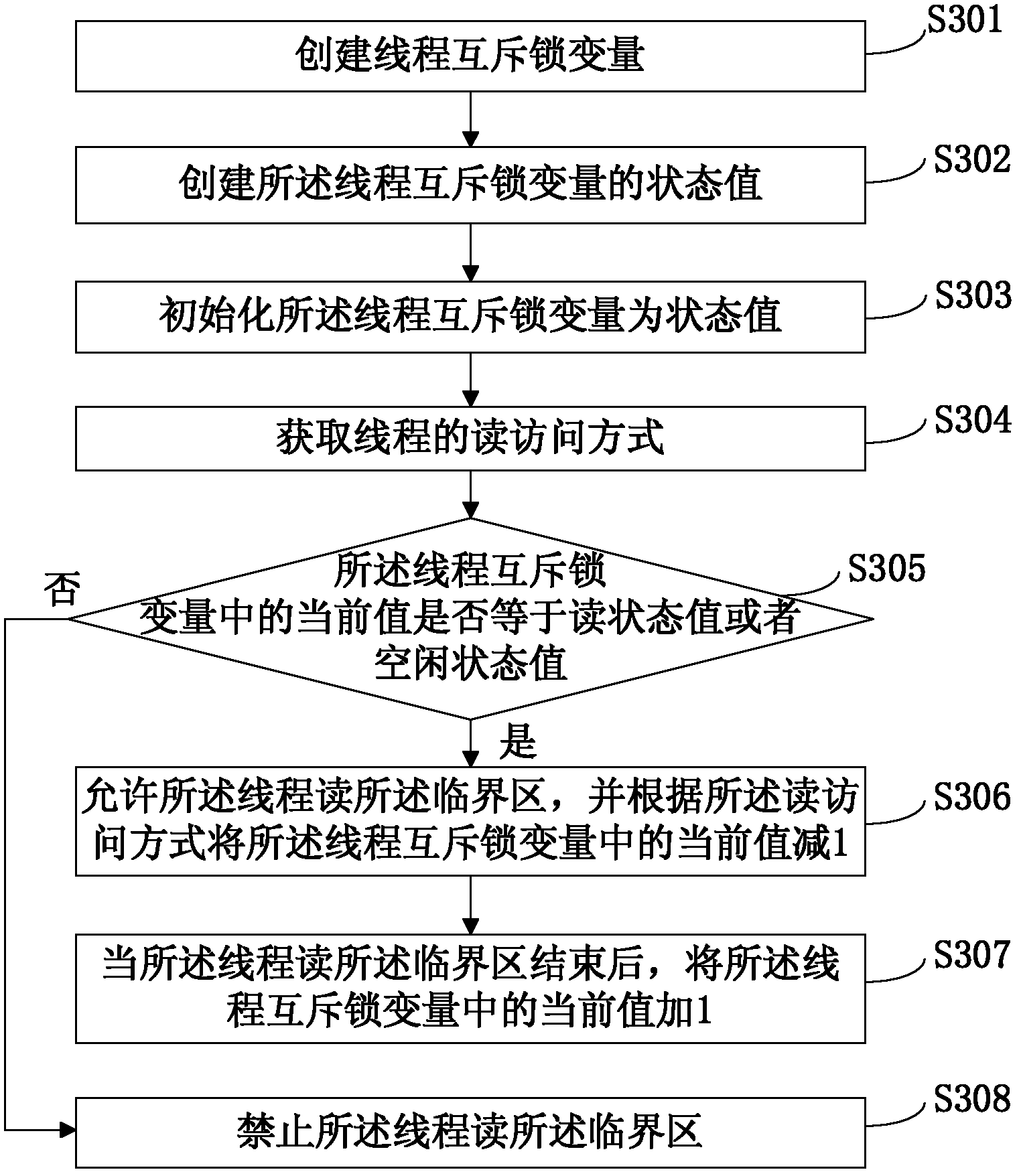

Method, system and terminal device for threads to access critical zones

Inactive | CN102662747A | Accurate and effective access | Improve access efficiency | Multiprogramming arrangements | Terminal equipment | Computer terminal

The invention discloses a method, a system and a terminal device for threads to access critical zones. The method establishes a thread mutual exclusion lock variable and a status value for that variable, initializes the lock variable to the status value, obtains the access mode of a thread, and judges whether the current value of the lock variable is equal to the status value. If it is, the thread is allowed to access the critical zone in that access mode, and the current value of the lock variable is modified according to the access mode. Whether a critical zone can be used is thus determined rapidly before a thread accesses it. If the critical zone cannot be used, the thread voluntarily gives up its central processing unit (CPU) time slice, so a heavy and time-consuming system lock for thread access is avoided; the access efficiency and multi-thread mutual exclusion access efficiency of the critical zones are improved, CPU consumption is reduced, and the market competitiveness of software products is improved. Because the lock variable is modified by atomic increment or decrement, the critical zones can be accessed accurately and effectively.

Owner:融创天下(上海)科技发展有限公司
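The check-then-yield idea in the abstract above can be sketched in Python as follows. This is a simplified illustration for exclusive access only, not the patented implementation: the class name `YieldingLock` and the constant `FREE` are hypothetical, Python has no user-level atomic increment, so a small internal `threading.Lock` stands in for the atomic compare-and-modify step, and `time.sleep(0)` stands in for giving up the CPU time slice.

```python
import threading
import time

FREE = 0  # hypothetical status value meaning "critical zone is free"


class YieldingLock:
    """Lock variable that is tested first; the thread yields if busy."""

    def __init__(self):
        self._value = FREE
        # Stand-in for an atomic instruction (assumption of this sketch).
        self._guard = threading.Lock()

    def _try_enter(self) -> bool:
        with self._guard:
            if self._value == FREE:
                self._value += 1  # emulated atomic increment
                return True
            return False

    def acquire(self) -> None:
        while not self._try_enter():
            time.sleep(0)  # give up the rest of the time slice

    def release(self) -> None:
        with self._guard:
            self._value -= 1  # emulated atomic decrement


def run_demo(n_threads: int = 4, rounds: int = 200) -> int:
    lock = YieldingLock()
    counter = {"n": 0}

    def worker():
        for _ in range(rounds):
            lock.acquire()
            try:
                counter["n"] += 1  # the critical zone
            finally:
                lock.release()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter["n"]


print(run_demo())
```

The abstract also mentions access modes; distinguishing, for example, shared and exclusive access would need a richer encoding of the lock variable, which is left out here.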

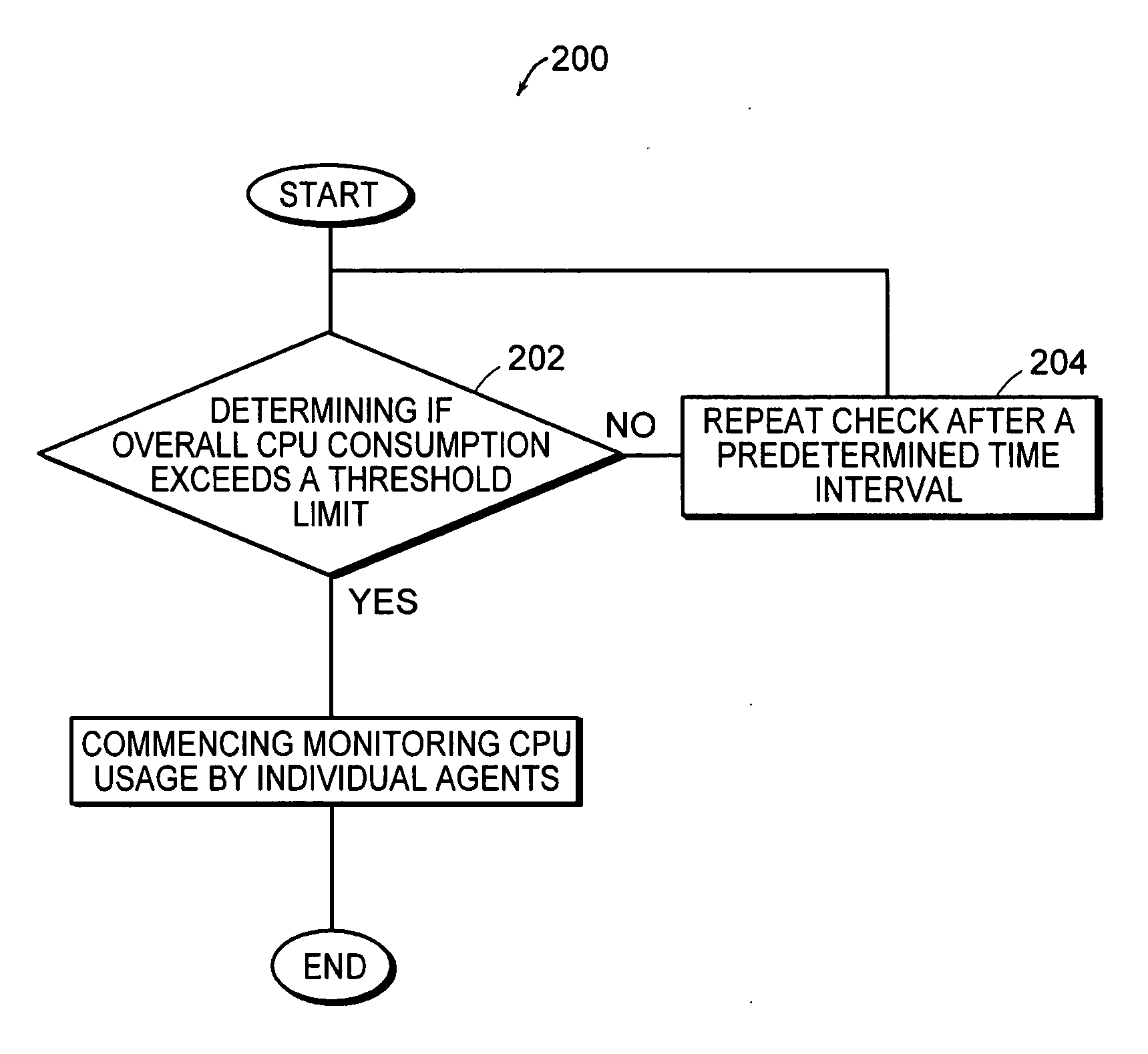

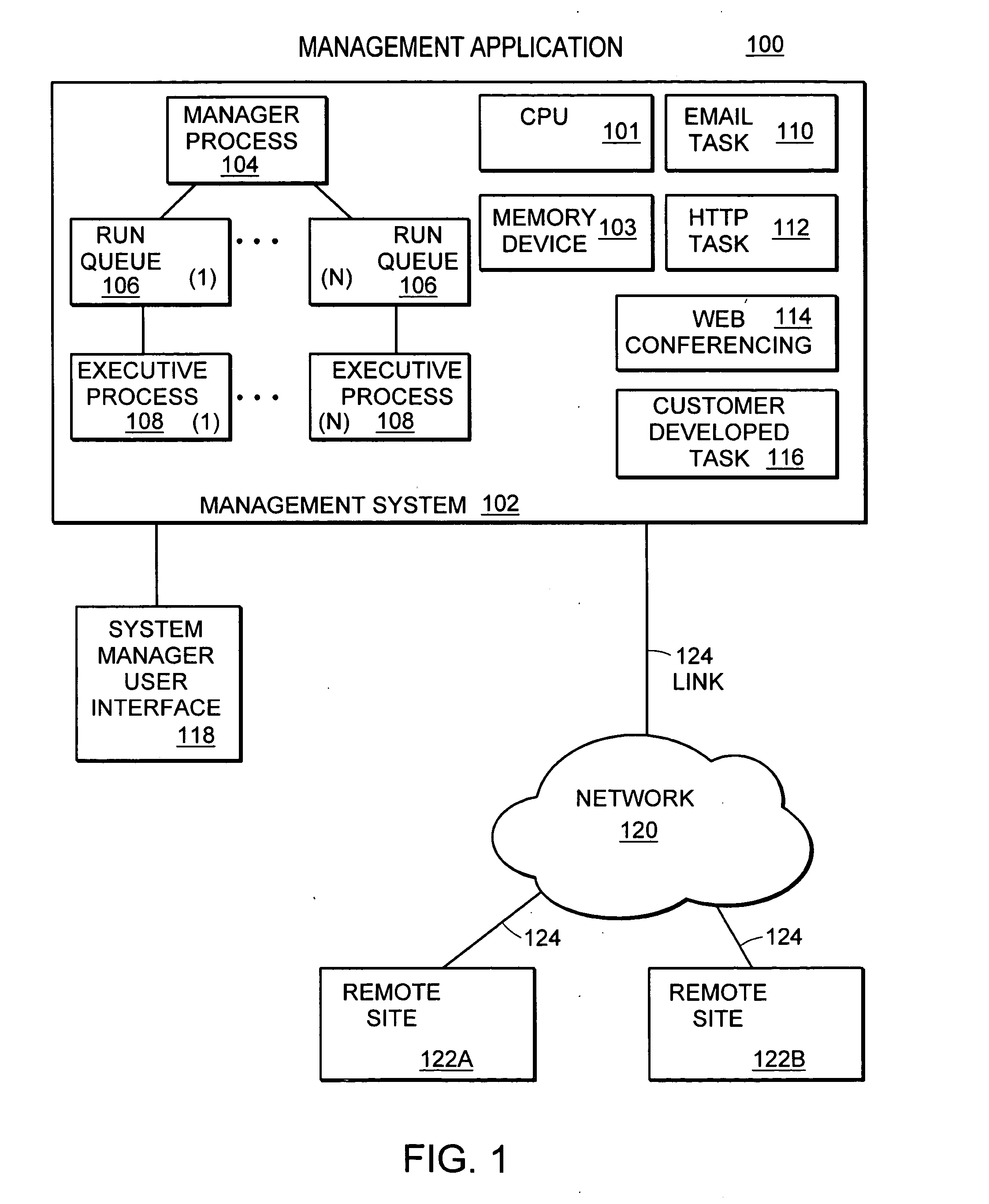

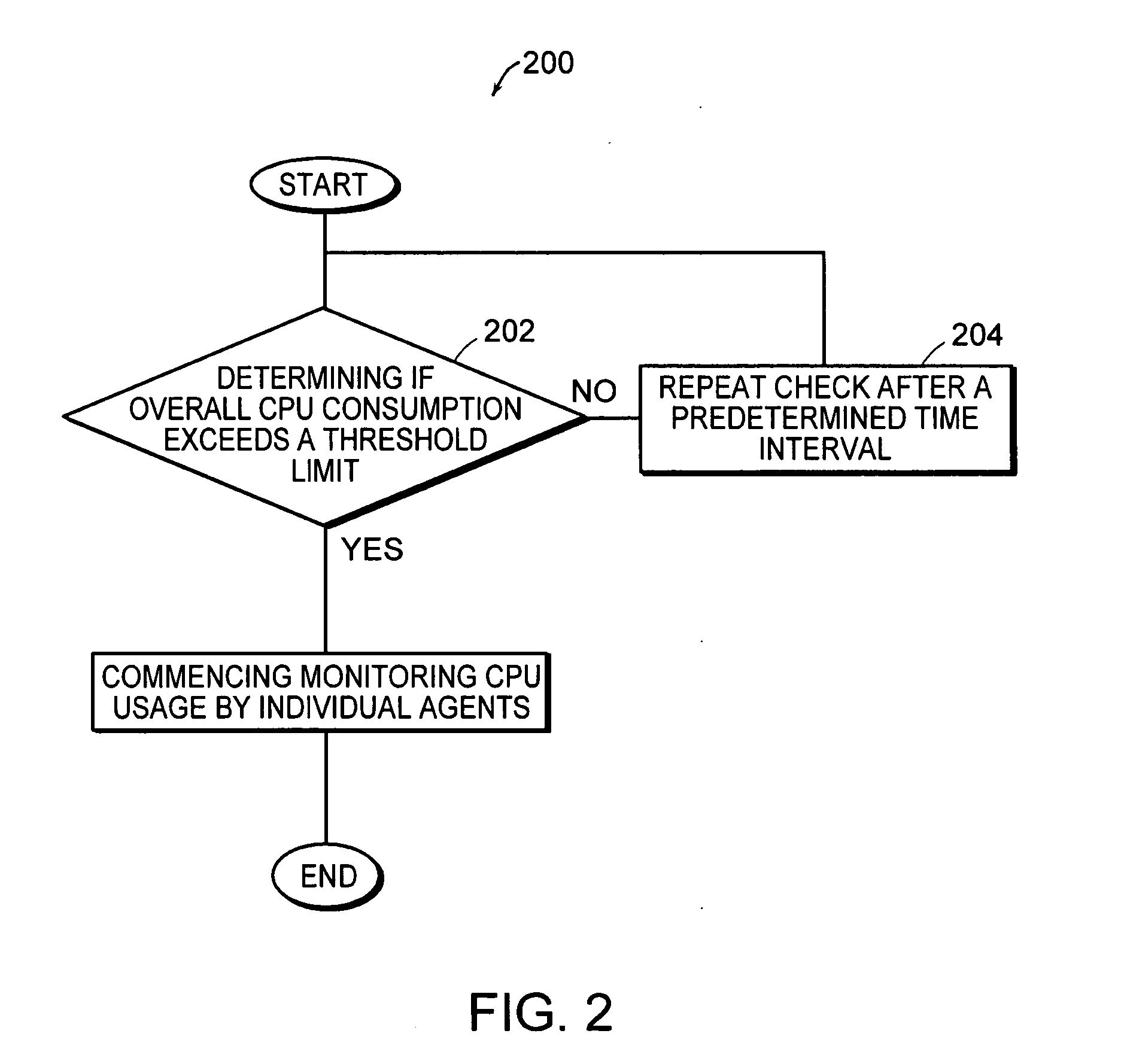

Systems and methods for tracking processing unit usage

A method and system for monitoring the CPU time consumed by a software agent operating in a computer system is disclosed. A resource tracking process is executed on the system. When an operating agent is detected, an agent lifetime timer is initialized. Then, CPU resources for the agent are identified and stored. Checks are made at predetermined intervals to determine if the agent is still alive. When the agent terminates, a measurement is made of the CPU time utilized by the agent. The measurement is then stored in memory.

Owner:IBM CORP
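The tracking steps described above (start a lifetime timer, record a CPU baseline, poll at intervals until the agent ends, then store the measurement) can be sketched in Python. The agent is modelled as a thread, and CPU time is read with `time.process_time()`, which covers the whole process rather than the single agent; both are simplifying assumptions, and the names `track_agent` and `busy_agent` are hypothetical.

```python
import threading
import time


def track_agent(agent, poll_interval: float = 0.01) -> dict:
    """Run `agent` and report its lifetime and the CPU time consumed."""
    start_wall = time.monotonic()    # agent lifetime timer
    start_cpu = time.process_time()  # stored CPU baseline

    worker = threading.Thread(target=agent)
    worker.start()

    # Check at predetermined intervals whether the agent is still alive.
    while worker.is_alive():
        worker.join(timeout=poll_interval)

    # Agent has terminated: take and keep the measurement.
    return {
        "cpu_seconds": time.process_time() - start_cpu,
        "lifetime_seconds": time.monotonic() - start_wall,
    }


def busy_agent():
    # Illustrative CPU-bound workload.
    total = 0
    for i in range(200_000):
        total += i * i


record = track_agent(busy_agent)
print(sorted(record))
```

Comparing `cpu_seconds` with `lifetime_seconds` shows the distinction between CPU time and elapsed time: an agent that mostly sleeps or waits on I/O has a long lifetime but little CPU time.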

© 2025 PatSnap. All rights reserved.