Method, device and system for fusion display of real object and virtual scene

A technology for fusing real objects with virtual scenes, applied in the multimedia field. It addresses two problems: fused pictures cannot be displayed on terminals in real time, and the CPU carries a heavy load. It saves computing time, reduces the CPU burden, and improves system speed.

Examples

Embodiment 1

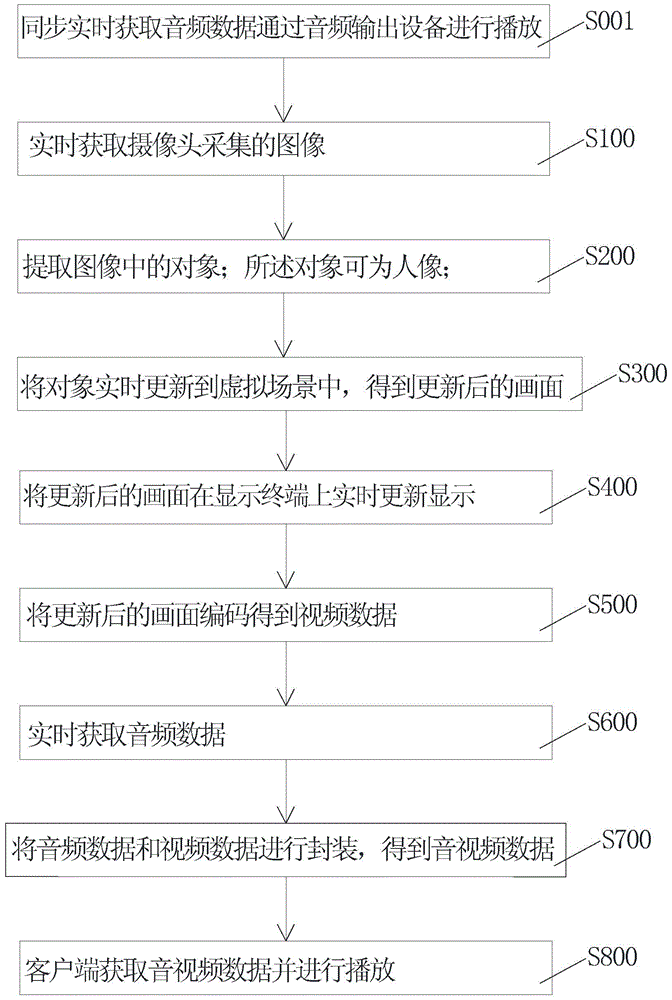

[0058] As shown in Figure 1, the present invention provides a method for the fused display of real objects and virtual scenes, which includes:

[0059] S100: acquiring images captured by the camera in real time;

[0060] S200: extracting an object from the image, where the object may be a portrait;

[0061] S300: updating the object into the virtual scene in real time to obtain an updated picture.
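The three steps can be sketched as a per-frame loop. This is a minimal sketch, not the patented implementation: frames are plain nested lists of RGB tuples, and the object in S200 is extracted by chroma keying against a green backdrop, which is an assumption (the text only says the object may be a portrait). The names `extract_object`, `update_scene`, and `fuse_stream` and the `key`/`tol` parameters are hypothetical.

```python
from typing import Iterable, Iterator, List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]     # rows of pixels
Mask = List[List[bool]]       # True where the object is


def extract_object(frame: Image, key: Pixel = (0, 255, 0), tol: int = 60) -> Mask:
    """S200 (assumed technique: chroma keying).

    A pixel is background if its colour is within `tol` (sum of absolute
    channel differences) of the key colour; every other pixel is the object.
    """
    def is_background(p: Pixel) -> bool:
        return sum(abs(a - b) for a, b in zip(p, key)) <= tol

    return [[not is_background(p) for p in row] for row in frame]


def update_scene(scene: Image, frame: Image, mask: Mask) -> Image:
    """S300: copy object pixels over the virtual scene to form the updated picture."""
    return [
        [f if m else s for s, f, m in zip(s_row, f_row, m_row)]
        for s_row, f_row, m_row in zip(scene, frame, mask)
    ]


def fuse_stream(frames: Iterable[Image], scene: Image) -> Iterator[Image]:
    """S100 to S300 for every frame delivered by the camera."""
    for frame in frames:                        # S100: next camera frame
        mask = extract_object(frame)            # S200: locate the object
        yield update_scene(scene, frame, mask)  # S300: updated picture


if __name__ == "__main__":
    G, P, S = (0, 255, 0), (200, 150, 120), (10, 10, 10)  # backdrop, person, scene
    frame = [[G, P], [P, G]]
    scene = [[S, S], [S, S]]
    print(next(fuse_stream([frame], scene)))
```

Because `fuse_stream` is a generator, each updated picture is produced as soon as its camera frame arrives, which fits the real-time requirement of S300.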

[0062] Through steps S100 to S300, the present invention achieves real-time compositing of objects with the virtual scene. In the present invention, the virtual scene includes a 3D virtual stage, a 3D virtual reality scene, or a 3D video.
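Compositing the extracted object into the scene can use a hard mask, as in S300, or a soft matte that avoids jagged edges around hair and clothing. The sketch below is an assumption rather than the method stated in the text: it applies the standard "over" operator C = a*F + (1 - a)*B per pixel and channel, where F is the object pixel, B is the scene pixel, and a is the object's opacity. When the virtual scene is a 3D video or a rendered 3D stage, B would be the current decoded or rendered scene frame. `composite_over` is a hypothetical name.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Matte = List[List[float]]  # per-pixel object opacity, 0.0 (scene) to 1.0 (object)


def composite_over(obj: Image, alpha: Matte, scene: Image) -> Image:
    """Blend the object over one scene frame: C = a*F + (1 - a)*B per channel."""
    out: Image = []
    for o_row, a_row, s_row in zip(obj, alpha, scene):
        row: List[Pixel] = []
        for obj_px, a, scene_px in zip(o_row, a_row, s_row):
            a = min(max(a, 0.0), 1.0)  # clamp the matte value to [0, 1]
            r, g, b = (round(a * f + (1.0 - a) * s) for f, s in zip(obj_px, scene_px))
            row.append((r, g, b))
        out.append(row)
    return out
```

With a matte containing only 0.0 and 1.0, this reduces to the hard-mask replacement of S300.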

[0063] The 3D virtual stage is a special case of the 3D virtual reality scene: a real stage is simulated by computer technology to achieve a three-dimensional, realistic stage effect.

[0064] 3D virtual reality scene technology is a computer simulation system with which a virtual world can be created and experienced. It uses a computer to generate a 3D simu...

Embodiment 2

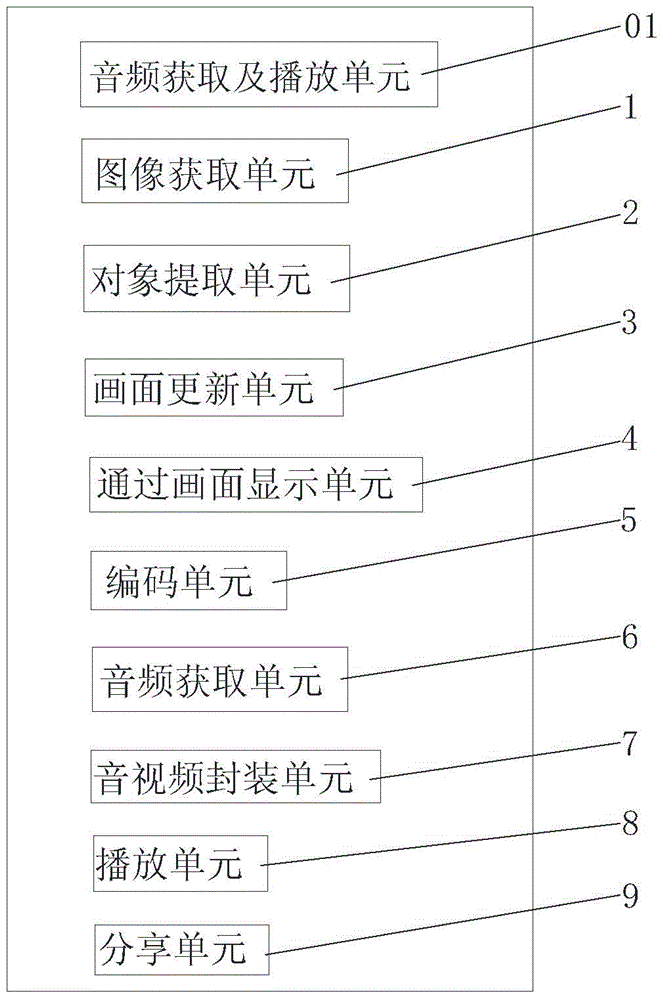

[0084] As shown in Figure 2, the present invention also provides a device for the fused display of real objects and virtual scenes, including:

[0085] Image acquisition unit 1: configured to acquire images captured by the camera in real time;

[0086] Object extraction unit 2: configured to extract objects from the image;

[0087] Picture updating unit 3: configured to update the object into the virtual scene in real time to obtain the updated picture.

[0088] With the above device, the object is extracted from the image and composited with the virtual scene.
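The unit structure of the device maps naturally onto one component per unit. The following is a minimal sketch, assuming grayscale frames in which any value above a threshold belongs to the object; this thresholding is a stand-in for a real segmentation method, which the text does not specify. All class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterator, List

Frame = List[List[int]]  # grayscale frame; in this toy example 0 means backdrop
Mask = List[List[bool]]


@dataclass
class ImageAcquisitionUnit:  # unit 1
    source: Iterator[Frame]  # hypothetical iterator over camera frames

    def acquire(self) -> Frame:
        return next(self.source)


@dataclass
class ObjectExtractionUnit:  # unit 2
    threshold: int = 0       # assumption: pixels above the threshold are the object

    def extract(self, frame: Frame) -> Mask:
        return [[v > self.threshold for v in row] for row in frame]


@dataclass
class PictureUpdatingUnit:   # unit 3
    scene: Frame             # current frame of the virtual scene

    def update(self, frame: Frame, mask: Mask) -> Frame:
        return [[f if m else s for s, f, m in zip(s_row, f_row, m_row)]
                for s_row, f_row, m_row in zip(self.scene, frame, mask)]


@dataclass
class FusionDevice:
    acquisition: ImageAcquisitionUnit
    extraction: ObjectExtractionUnit
    updating: PictureUpdatingUnit

    def step(self) -> Frame:
        """Run units 1 to 3 once and return the updated picture."""
        frame = self.acquisition.acquire()
        mask = self.extraction.extract(frame)
        return self.updating.update(frame, mask)
```

Keeping each unit behind its own small interface means a different extraction method can be swapped in without touching acquisition or updating.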

[0089] The device for fusing and displaying a real object and a virtual scene according to the present invention further includes a picture display unit 4, configured to update and display the updated picture on a display terminal in real time. Through the picture display unit 4, the picture can be viewed on the display terminal as it is updated in real time.
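One way the picture display unit 4 could keep the display real-time is a single-slot "latest picture" buffer: the updating side overwrites the slot, and the display always takes the newest picture, so a slow display drops stale pictures instead of accumulating latency. This buffering policy is an assumption, not something the text specifies, and `LatestPictureSlot` is a hypothetical name.

```python
import threading
from typing import Generic, Optional, Tuple, TypeVar

T = TypeVar("T")


class LatestPictureSlot(Generic[T]):
    """Single-slot hand-off from the picture updating side to the display.

    publish() overwrites the slot; wait_newer() returns the newest picture,
    so pictures the display had no time to show are simply skipped.
    """

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._picture: Optional[T] = None
        self._seq = 0  # count of pictures published so far

    def publish(self, picture: T) -> None:
        with self._cond:
            self._picture = picture
            self._seq += 1
            self._cond.notify_all()

    def wait_newer(self, last_seq: int,
                   timeout: Optional[float] = None) -> Tuple[int, Optional[T]]:
        """Wait until a picture newer than `last_seq` exists or `timeout` expires.

        Returns (sequence number, picture). On timeout the returned sequence
        number is not greater than `last_seq`, so the caller can tell that
        nothing new arrived.
        """
        with self._cond:
            self._cond.wait_for(lambda: self._seq > last_seq, timeout=timeout)
            return self._seq, self._picture
```

A display loop would keep the last sequence number it showed, call `wait_newer` with it, and render only when the returned number is larger.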

[0090] The device for fusing and display...

Embodiment 3

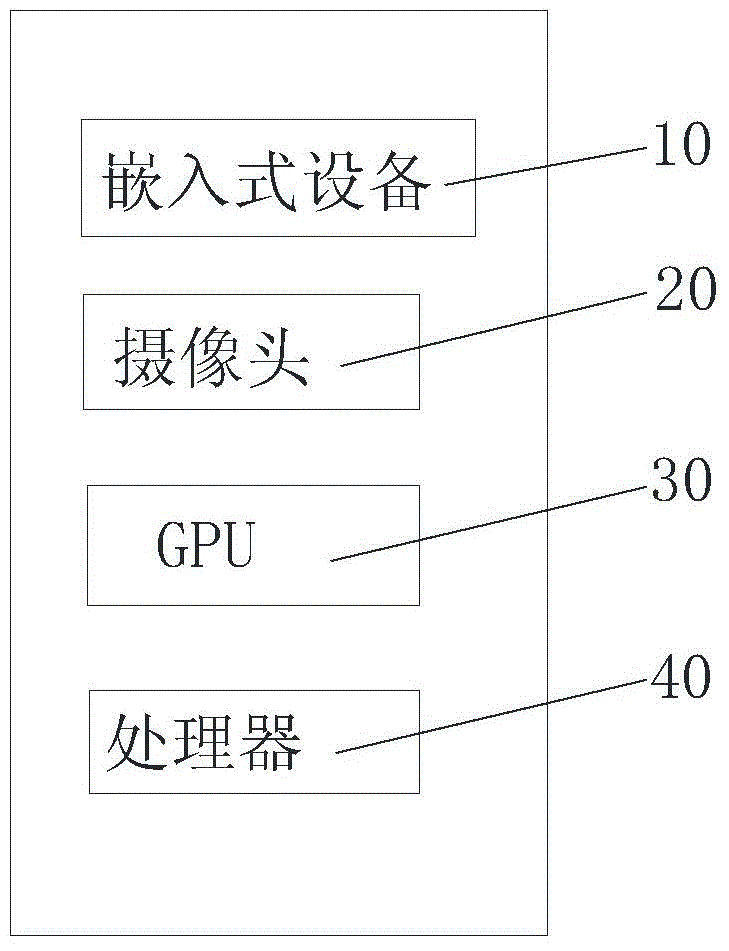

[0100] As shown in Figure 3, the present invention also provides an embedded device 10 for the fused display of real objects and virtual scenes, including a camera 20, a GPU 30, and a processor 40;

[0101] The camera 20 is used to collect images in real time;

[0102] The GPU 30 is used to extract objects from the image;

[0103] The processor 40 is used to update the object into the virtual scene in real time to obtain an updated picture, and to update and display the updated picture on the display terminal in real time.
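Splitting extraction (GPU 30) from updating (processor 40) lets the two stages overlap: while the processor composites frame n, the GPU can already extract the object from frame n+1. The sketch below models that hand-off with a bounded queue. A Python thread stands in for the GPU purely for illustration (real GPU work would be dispatched through a graphics or compute API), and `run_pipeline` and its parameters are hypothetical.

```python
import queue
import threading
from typing import Callable, Iterable, List, TypeVar

Fr = TypeVar("Fr")   # camera frame
Obj = TypeVar("Obj")  # extracted object
Pic = TypeVar("Pic")  # updated picture

_DONE = object()  # end-of-stream marker


def run_pipeline(frames: Iterable[Fr],
                 extract: Callable[[Fr], Obj],
                 update: Callable[[Fr, Obj], Pic],
                 depth: int = 2) -> List[Pic]:
    """Overlap extraction (GPU role) with updating (processor role).

    A queue holding at most `depth` items connects the stages, which bounds
    how far extraction can run ahead of updating. Frame order is preserved.
    """
    handoff: "queue.Queue[object]" = queue.Queue(maxsize=depth)

    def extraction_stage() -> None:  # stands in for work done on the GPU 30
        try:
            for frame in frames:
                handoff.put((frame, extract(frame)))
        finally:
            handoff.put(_DONE)       # always unblock the consumer

    worker = threading.Thread(target=extraction_stage, daemon=True)
    worker.start()

    pictures: List[Pic] = []
    while True:                      # processor 40: update the scene per frame
        item = handoff.get()
        if item is _DONE:
            break
        frame, obj = item
        pictures.append(update(frame, obj))
    worker.join()
    return pictures
```

The bounded queue is the design choice that matters here: if updating falls behind, extraction blocks instead of piling up frames, which keeps memory use and end-to-end latency bounded.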

[0104] The processor 40 is further configured to encode the picture to obtain video data, obtain audio data, and package the audio data and video data to obtain audio-video data.
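Packaging audio and video data amounts to interleaving the two encoded streams in timestamp order and writing them into a container. The text names no codec or container, so the sketch below assumes the encoded payloads already exist, merges the streams by presentation timestamp, and writes them in a hypothetical length-prefixed layout (a 1-byte stream id, an 8-byte timestamp, a 4-byte length, then the payload). A real device would typically use a standard container such as MP4 or MPEG-TS instead; `Packet`, `package`, `serialize`, and `STREAM_IDS` are illustrative names.

```python
import heapq
import struct
from dataclasses import dataclass
from typing import Iterable, List

STREAM_IDS = {"video": 0, "audio": 1}  # hypothetical stream numbering


@dataclass(frozen=True)
class Packet:
    pts_ms: int     # presentation timestamp in milliseconds
    stream: str     # "video" (an encoded picture) or "audio"
    payload: bytes  # already-encoded data; encoding itself is out of scope here


def package(video: Iterable[Packet], audio: Iterable[Packet]) -> List[Packet]:
    """Merge two timestamp-ordered streams into one interleaved sequence.

    heapq.merge is stable, so on equal timestamps the video packet comes first.
    """
    return list(heapq.merge(video, audio, key=lambda p: p.pts_ms))


def serialize(packets: Iterable[Packet]) -> bytes:
    """Hypothetical container: for each packet a big-endian header
    (stream id: 1 byte, pts: 8 bytes, payload length: 4 bytes), then the payload."""
    out = bytearray()
    for p in packets:
        out += struct.pack(">BqI", STREAM_IDS[p.stream], p.pts_ms, len(p.payload))
        out += p.payload
    return bytes(out)
```

Interleaving by timestamp lets a player read audio and video for the same moment from nearby positions in the stream, which is what keeps them in sync during playback.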

[0105] The embedded device of the present invention can run the Android operating system and use the image processing functions of the Android system to encode pictures and package audio data and video data, which greatly reduces the cost of the device.

[0106] In the em...
