93 results about "Run queue" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

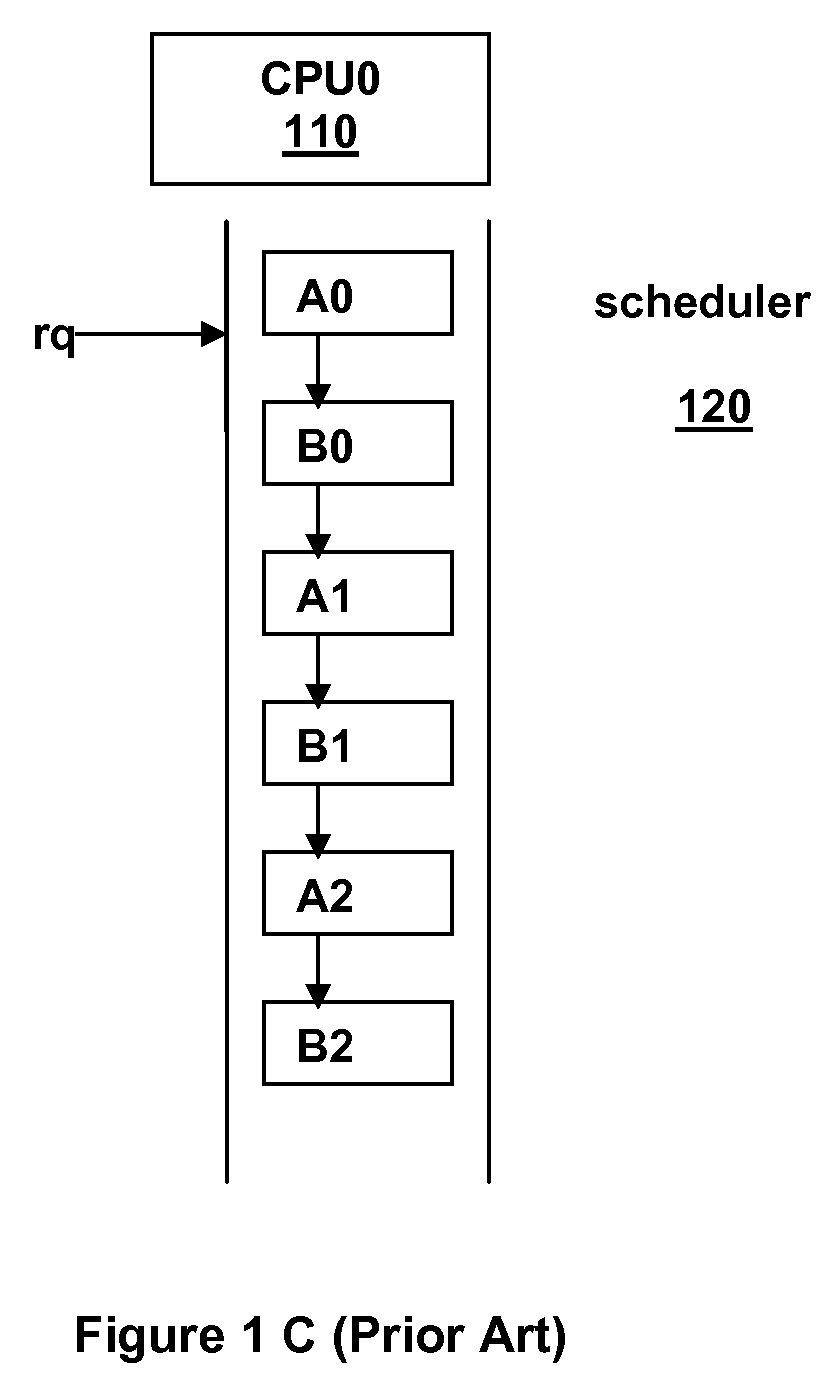

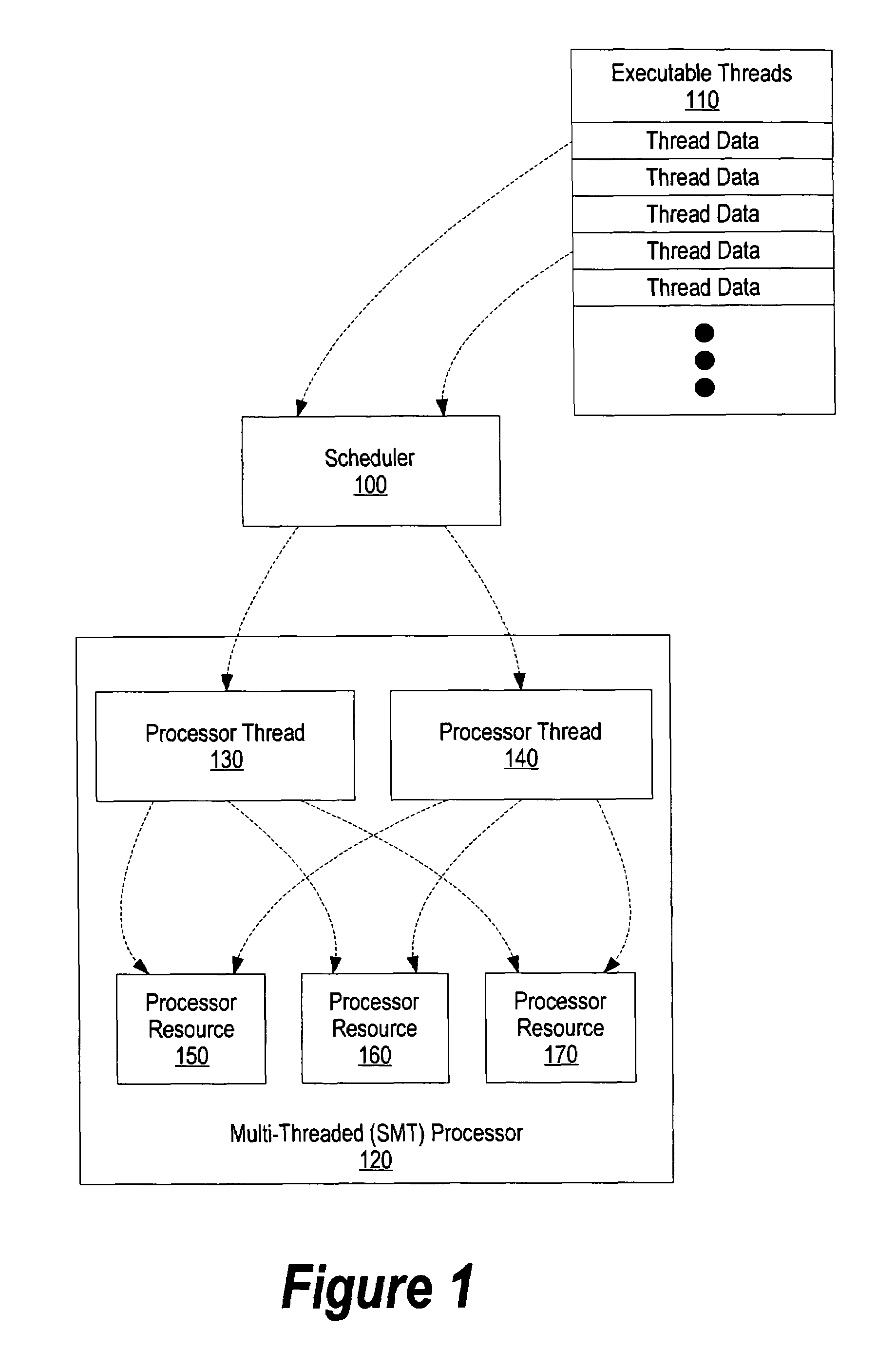

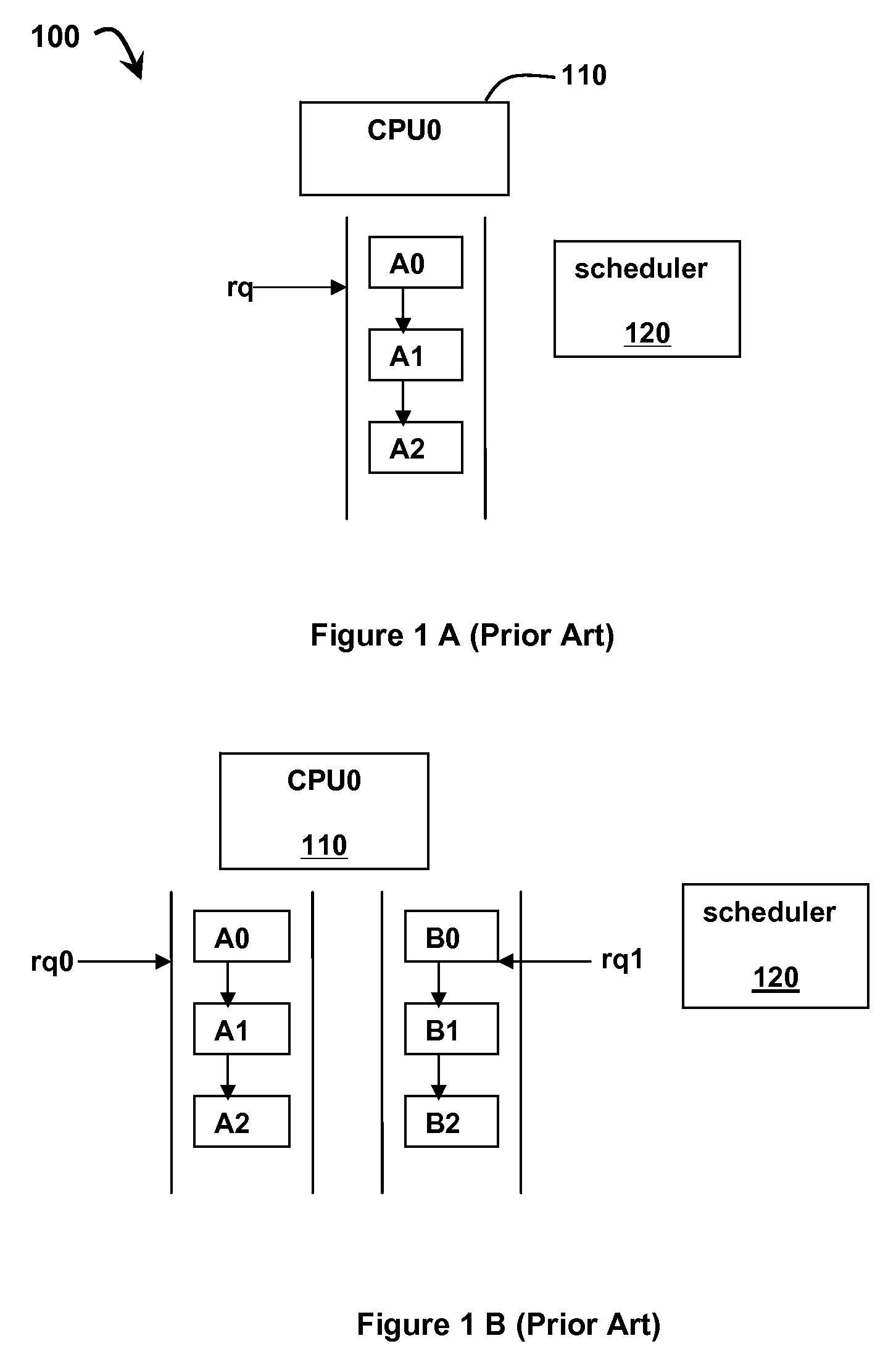

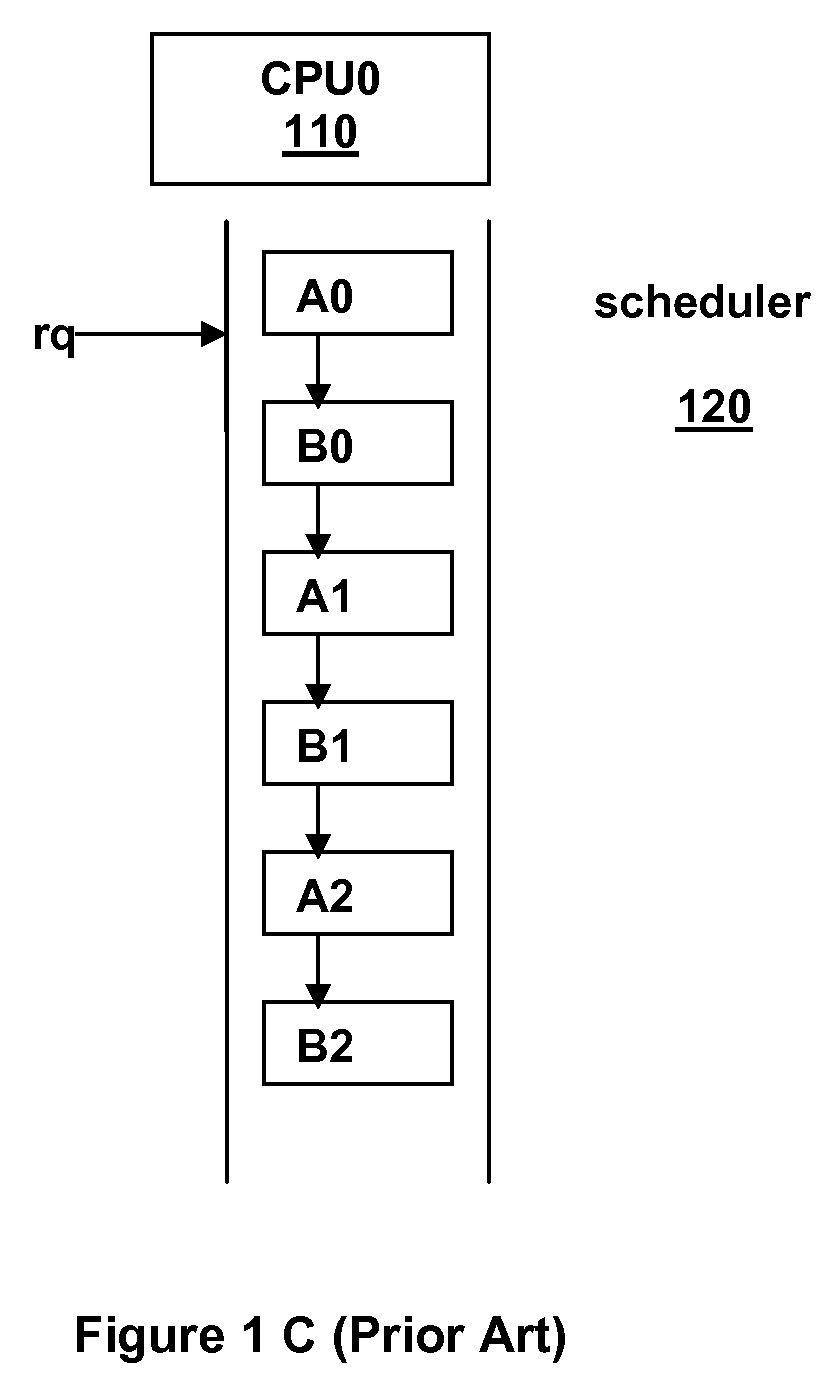

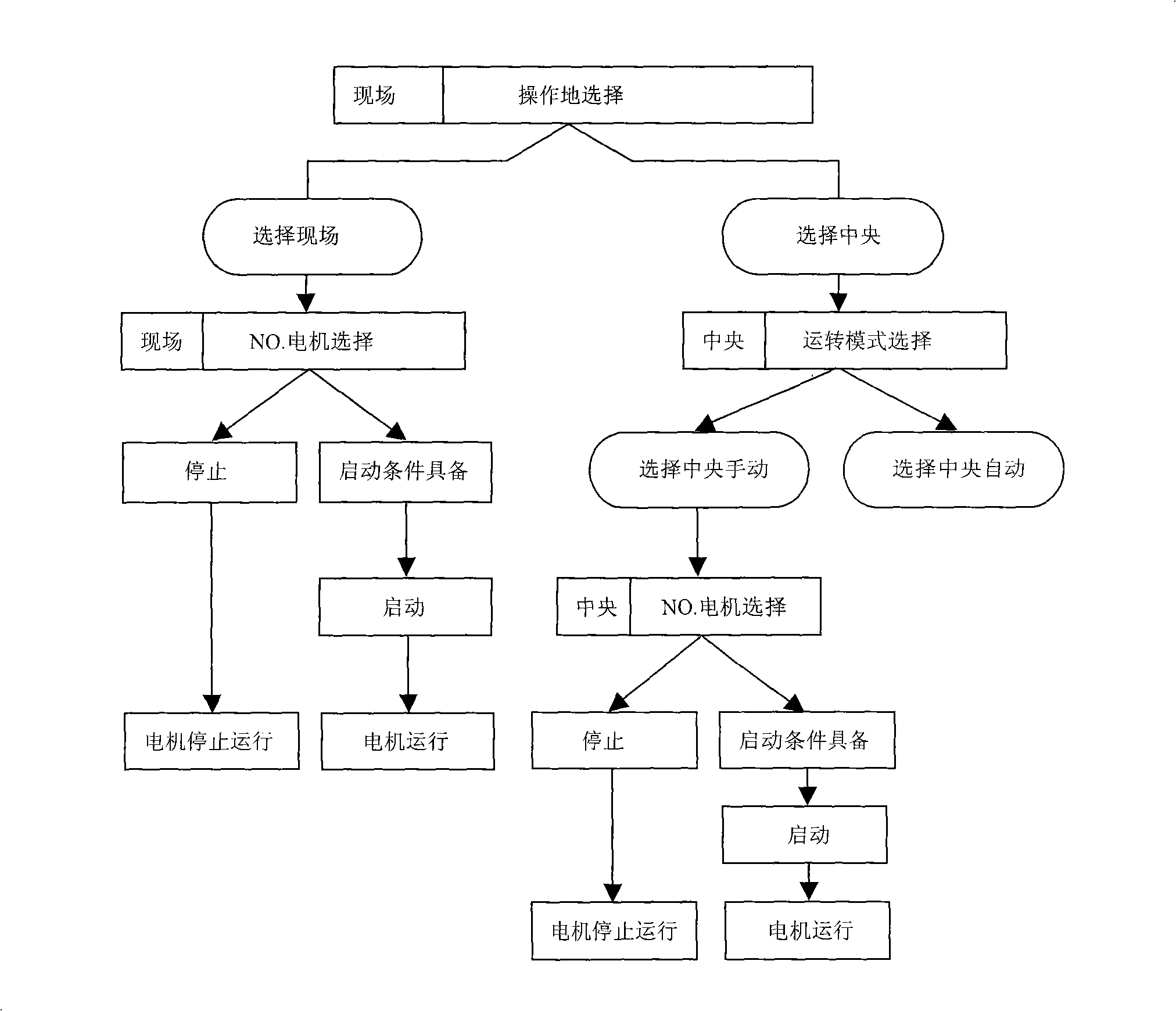

Modern computers run many processes at once. Active processes are placed in an array called a run queue, or runqueue. The run queue may contain a priority value for each process, which the scheduler uses to determine which process to run next. To give each program a fair share of resources, each one runs for a fixed time period (a quantum) before it is paused and placed back into the run queue. When a program is paused to let another run, the program with the highest priority in the run queue is allowed to execute next.
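The behaviour described above can be sketched in a few lines. This is a minimal illustration, not any particular kernel's scheduler: the function name `run_until_done`, the `(priority, required_time)` tuples, and the rule that a higher number means higher priority are all assumptions made for the example.

```python
import heapq
from itertools import count


def run_until_done(processes, quantum):
    """Simulate a priority run queue with a fixed quantum.

    processes: dict mapping name -> (priority, required_time); a higher
    priority number runs first (an assumption of this sketch).
    Returns the list of (name, time_run) slices in execution order.
    """
    seq = count()  # tie-breaker: equal priorities are served in FIFO order
    queue = [(-prio, next(seq), name, need)
             for name, (prio, need) in processes.items()]
    heapq.heapify(queue)
    trace = []
    while queue:
        neg_prio, _, name, need = heapq.heappop(queue)  # highest priority
        used = min(quantum, need)
        trace.append((name, used))
        if need > used:
            # Quantum expired: pause the process and put it back in the queue.
            heapq.heappush(queue, (neg_prio, next(seq), name, need - used))
    return trace
```

With strict priorities a lower-priority process waits until all higher-priority work is done; processes of equal priority alternate one quantum at a time.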

Computer system with dual operating modes

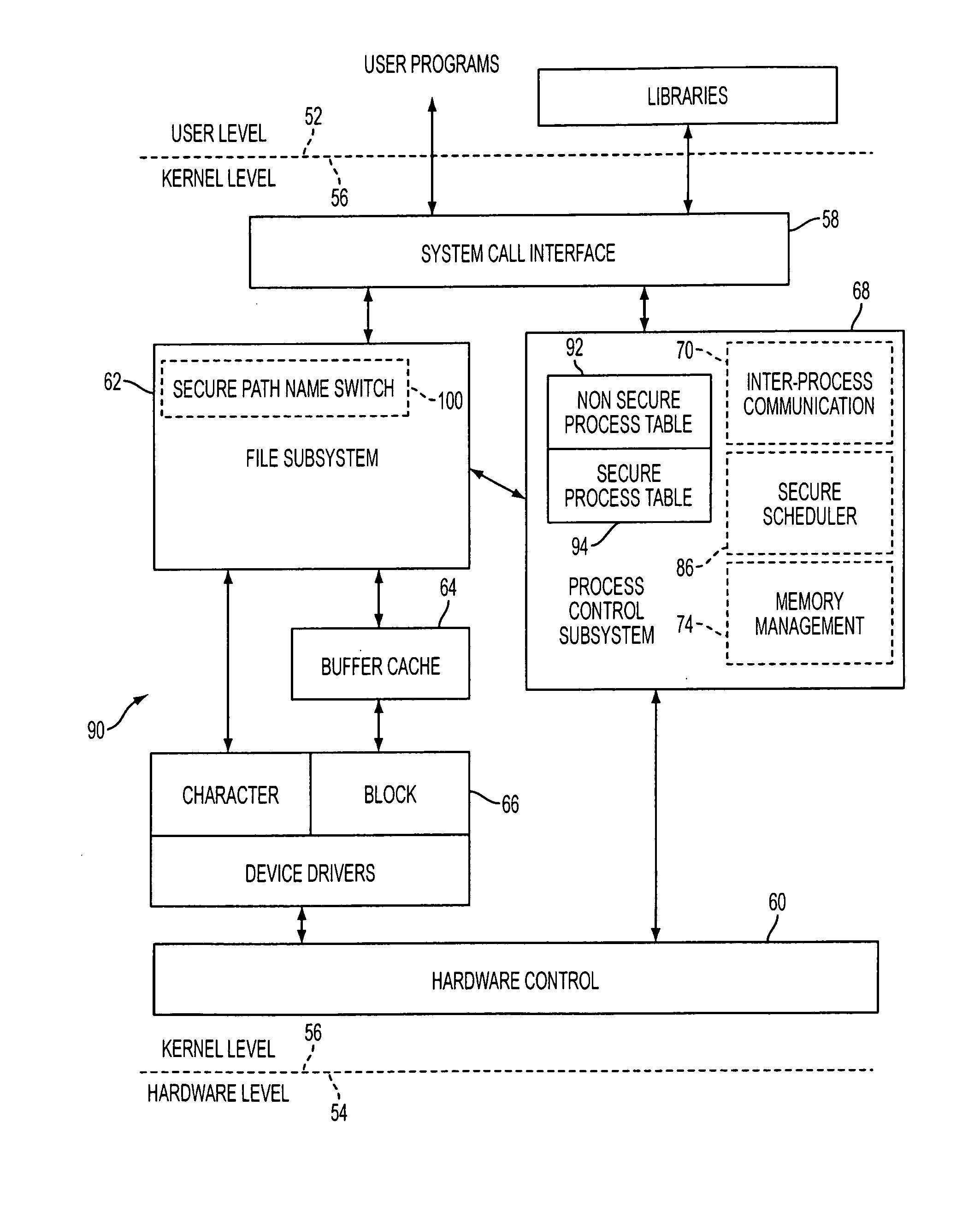

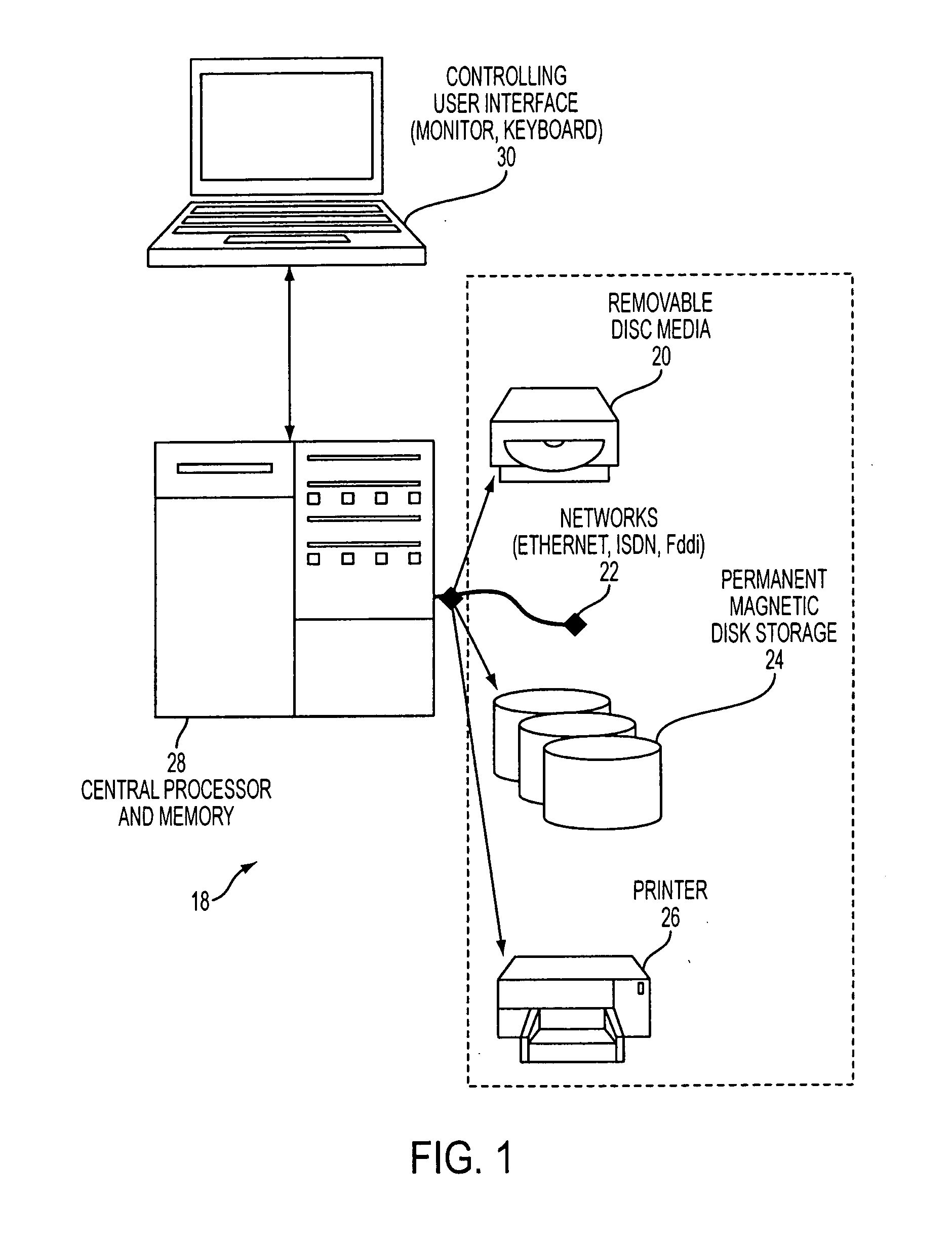

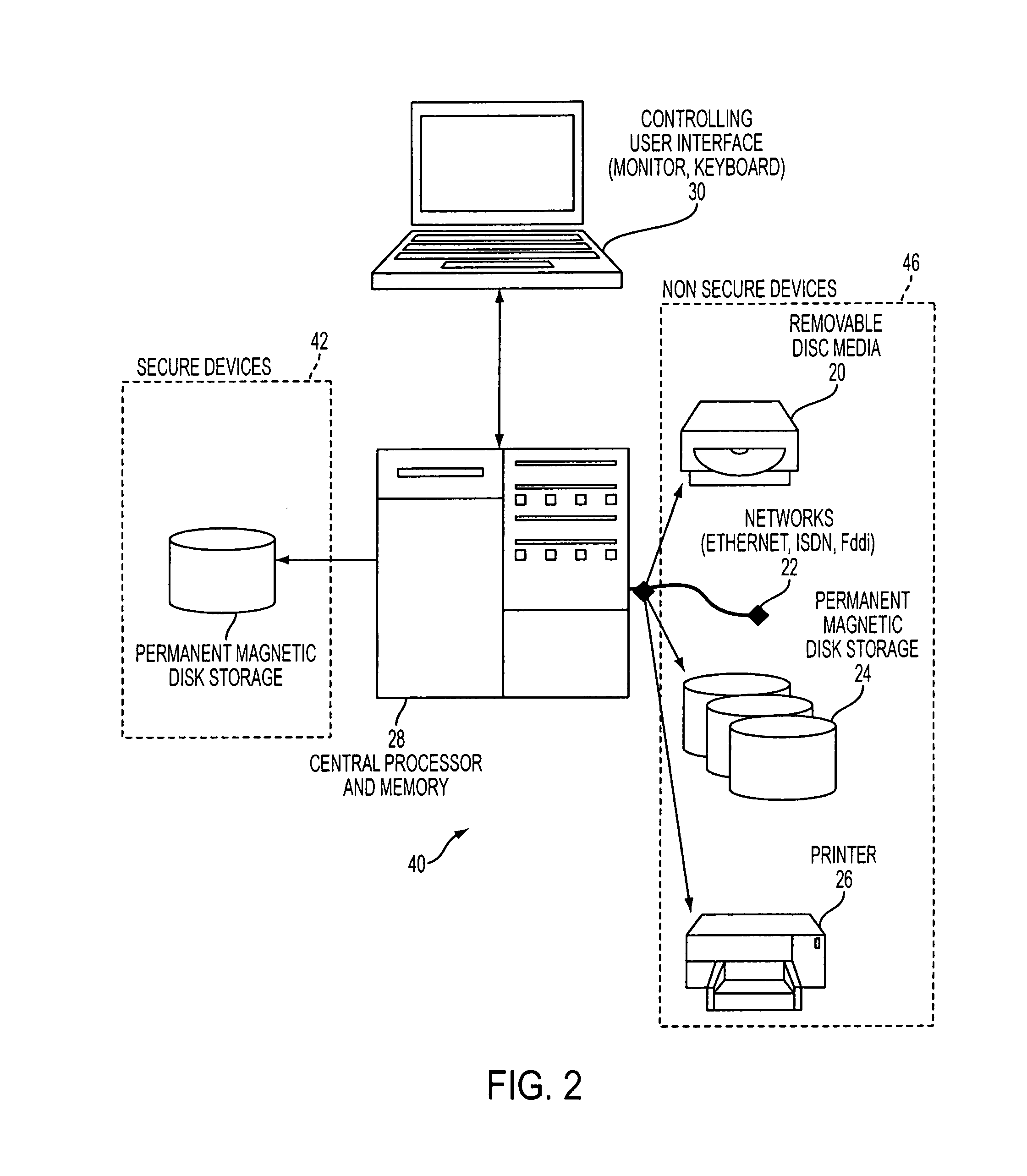

Active | US20060212945A1 | Safely and securely co-exist | Digital data processing details | Analogue secrecy/subscription systems | Operation mode | Current mode

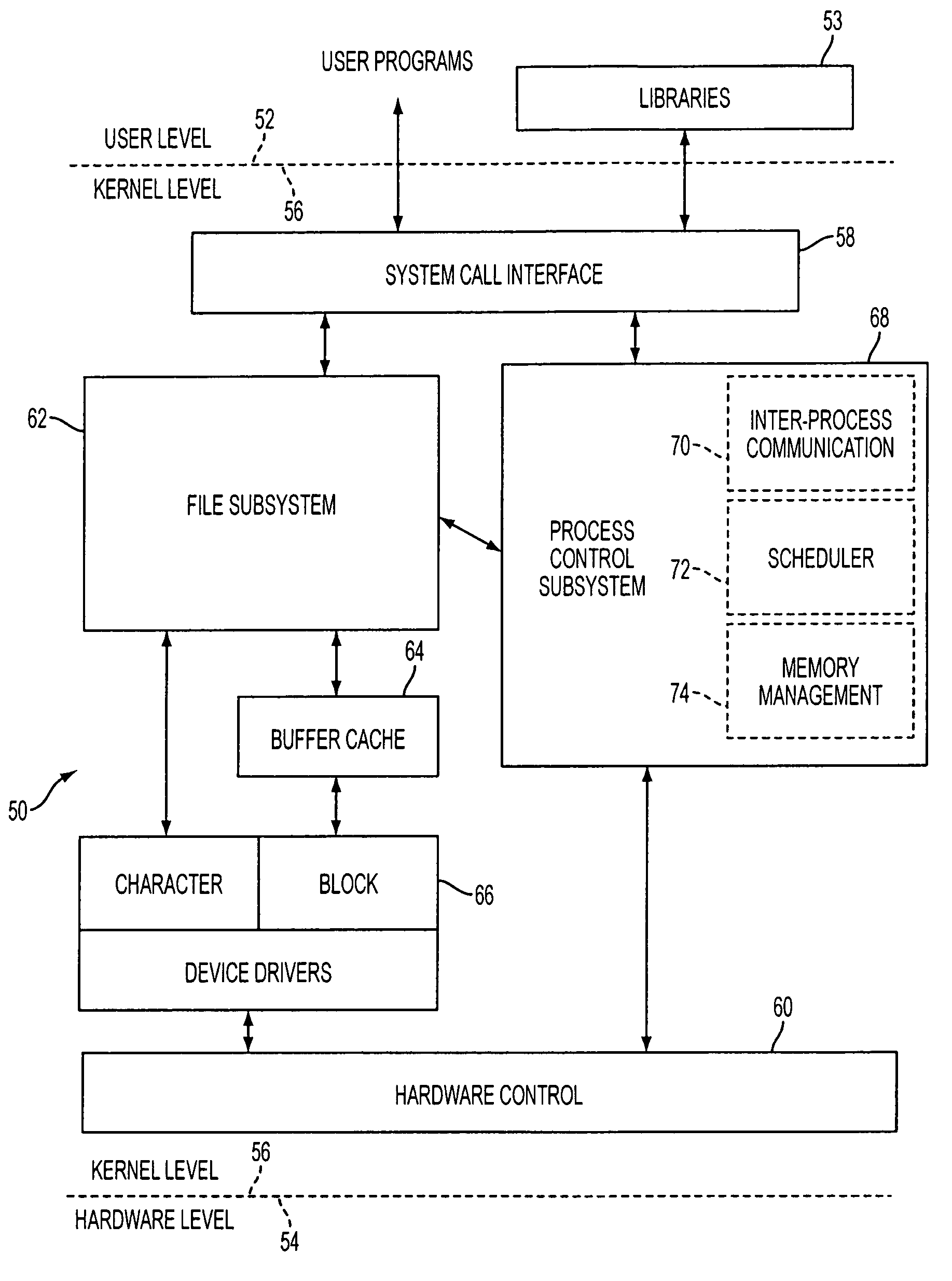

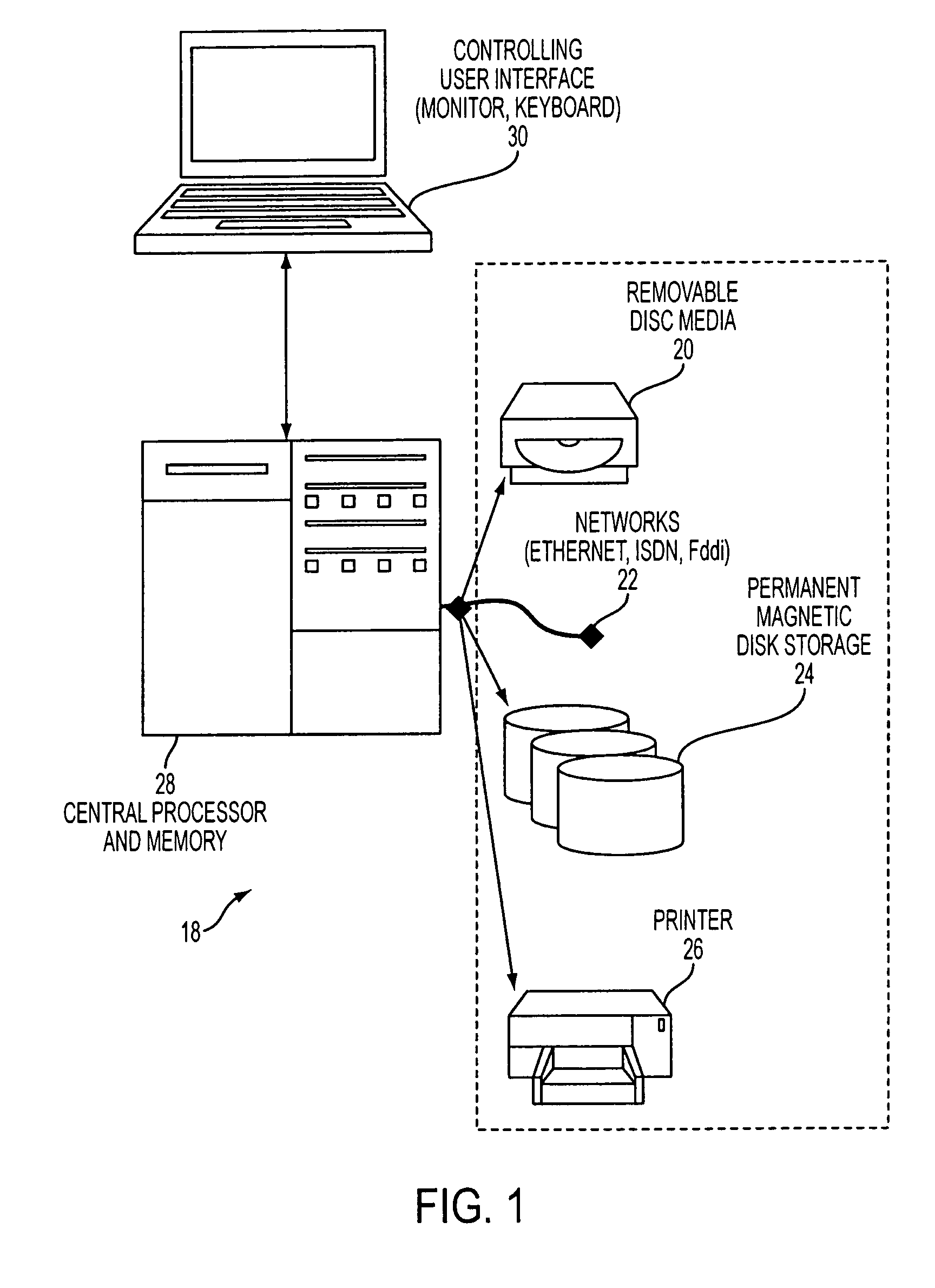

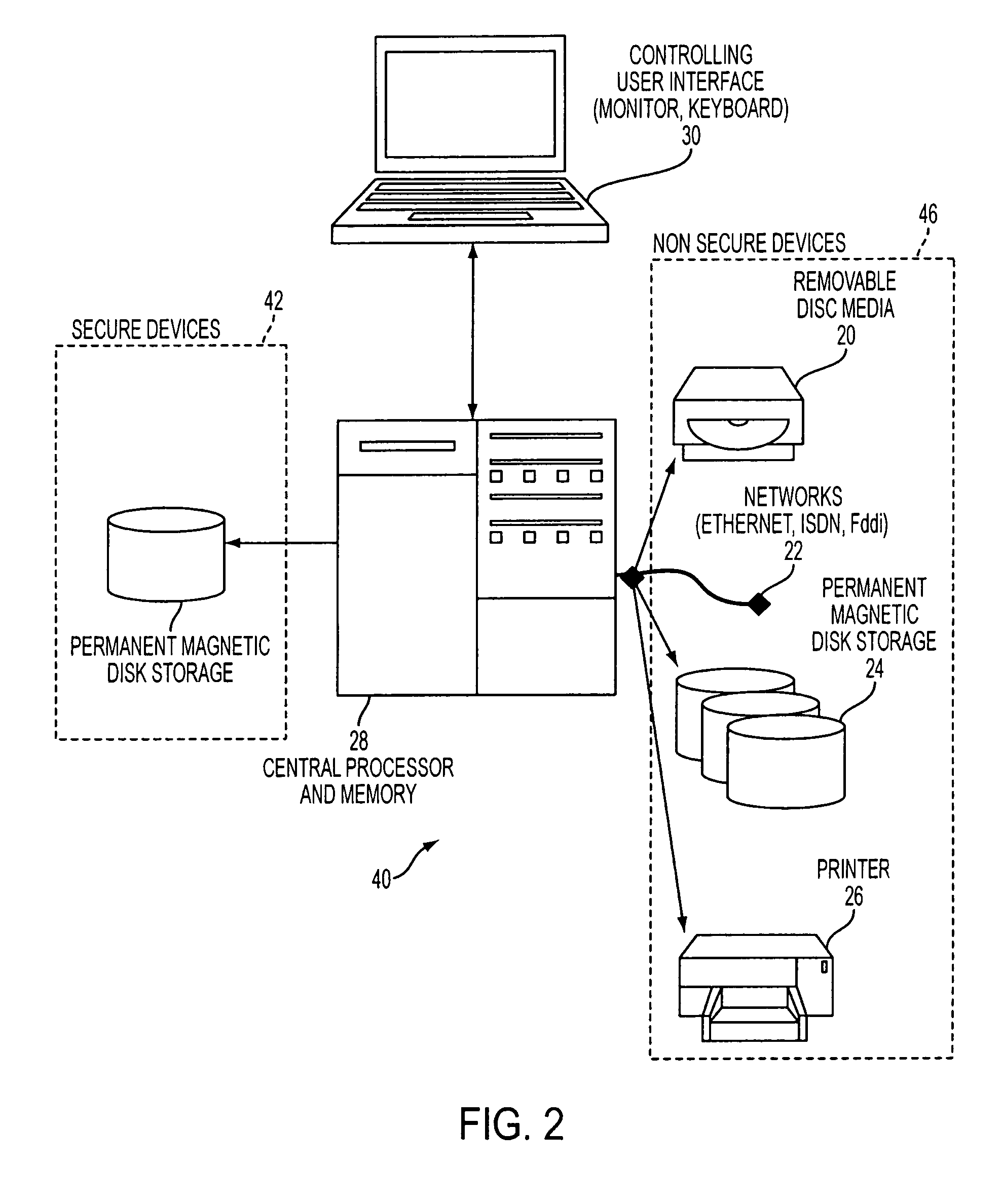

The present invention is a system that switches between non-secure and secure modes by making processes, applications and data for the non-active mode unavailable to the active mode. That is, non-secure processes, applications and data are not accessible when in the secure mode and vice versa. This is accomplished by creating dual hash tables where one table is used for secure processes and one for non-secure processes. A hash table pointer is changed to point to the table corresponding to the mode. The path-name look-up function that traverses the path name tree to obtain a device or file pointer is also restricted to allow traversal to only secure devices and file pointers when in the secure mode and only to non-secure devices and files in the non-secure mode. The process thread run queue is modified to include a state flag for each process that indicates whether the process is a secure or non-secure process. A process scheduler traverses the queue and only allocates time to processes that have a state flag that matches the current mode. Running processes are marked to be idled and are flagged as unrunnable, depending on the security mode, when the process reaches an intercept point. The switch operation validates the switch process and pauses the system for a period of time to allow all running processes to reach an intercept point and be marked as unrunnable. After all the processes are idled, the hash table pointer is changed, the look-up control is changed to allow traversal of the corresponding security mode branch of the file name path tree, and the scheduler is switched to allow only threads that have a flag that corresponds to the security mode to run. The switch process is then put to sleep and a master process, either secure or non-secure, depending on the mode, is then awakened.
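A rough sketch of the flag-matching scheduler and the mode switch described in this abstract follows. It is illustrative only: `Proc`, `pick_next`, `switch_mode` and the dictionary standing in for the dual hash tables are hypothetical names, and the intercept-point and path-name look-up logic is left out.

```python
from dataclasses import dataclass


@dataclass
class Proc:
    name: str
    secure: bool           # state flag stored with the run queue entry
    runnable: bool = True


def pick_next(run_queue, secure_mode):
    """Return the first runnable process whose flag matches the current mode."""
    for proc in run_queue:
        if proc.runnable and proc.secure == secure_mode:
            return proc
    return None


def switch_mode(run_queue, tables, secure_mode):
    """Idle processes of the old mode, then flip the mode and table pointer.

    tables is a dict with "secure" and "non_secure" entries standing in
    for the two hash tables (an assumption of this sketch).
    """
    new_mode = not secure_mode
    for proc in run_queue:
        # Only processes belonging to the new mode stay runnable.
        proc.runnable = (proc.secure == new_mode)
    active_table = tables["secure" if new_mode else "non_secure"]
    return new_mode, active_table
```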

Owner:MORGAN STANLEY +1

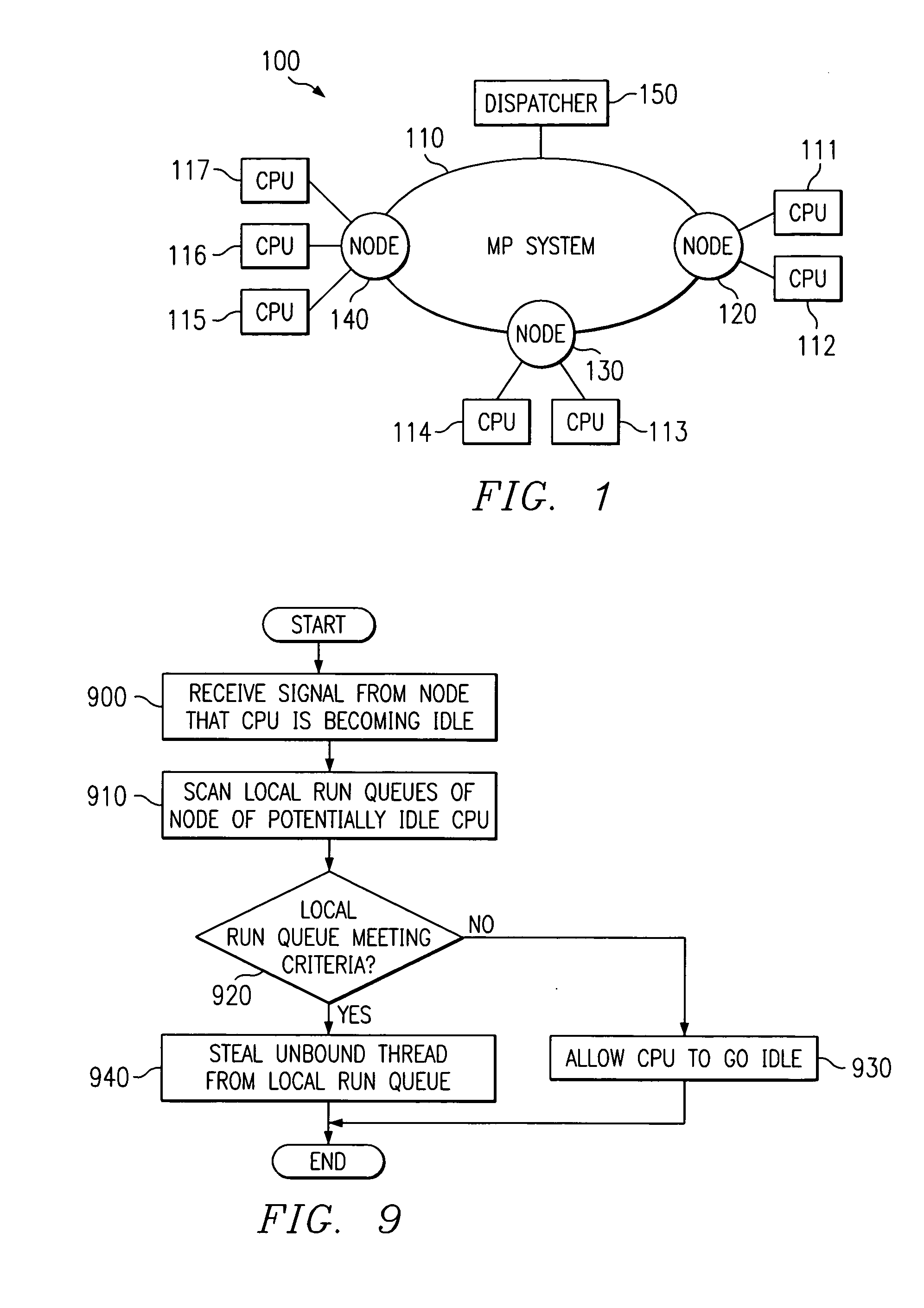

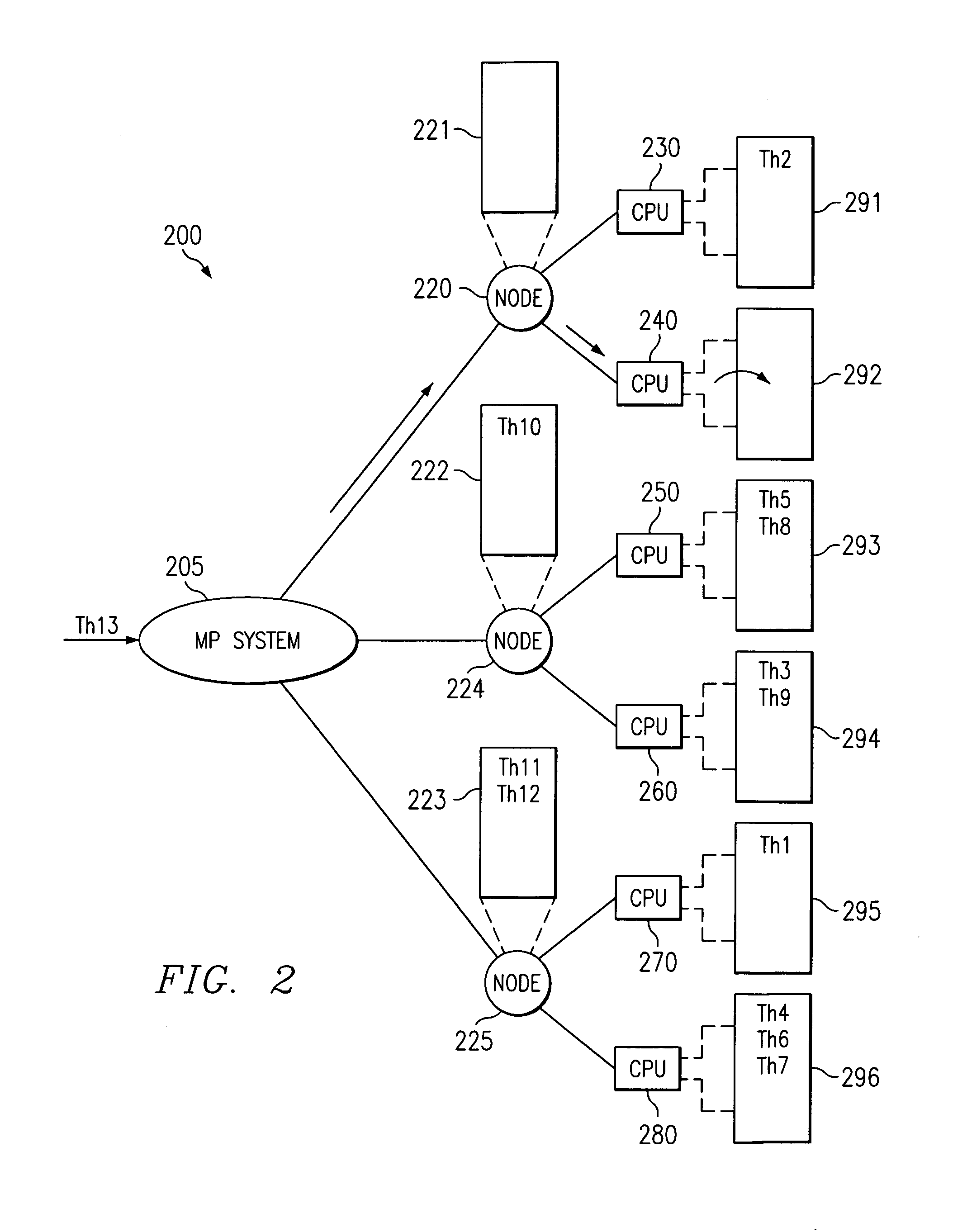

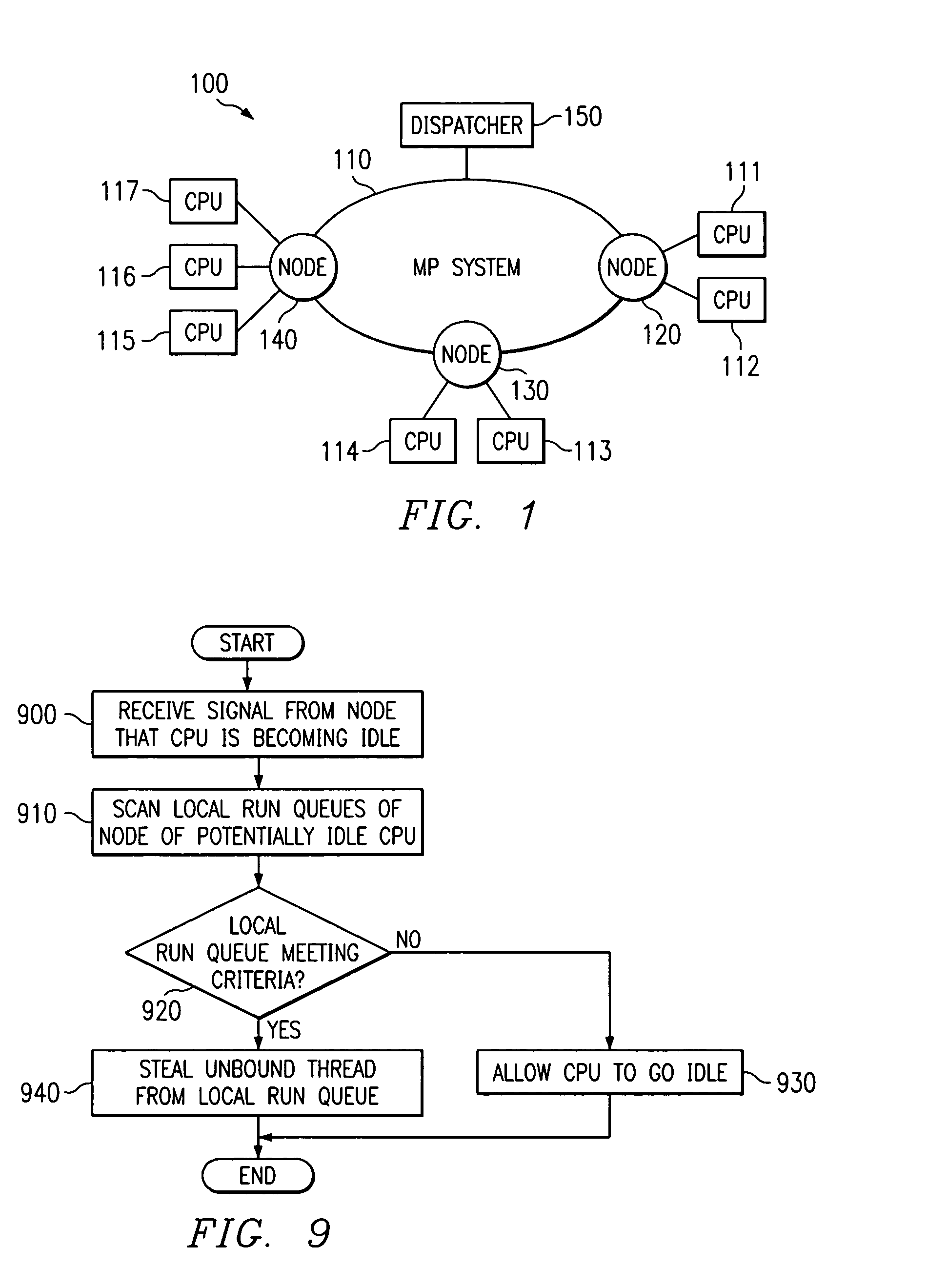

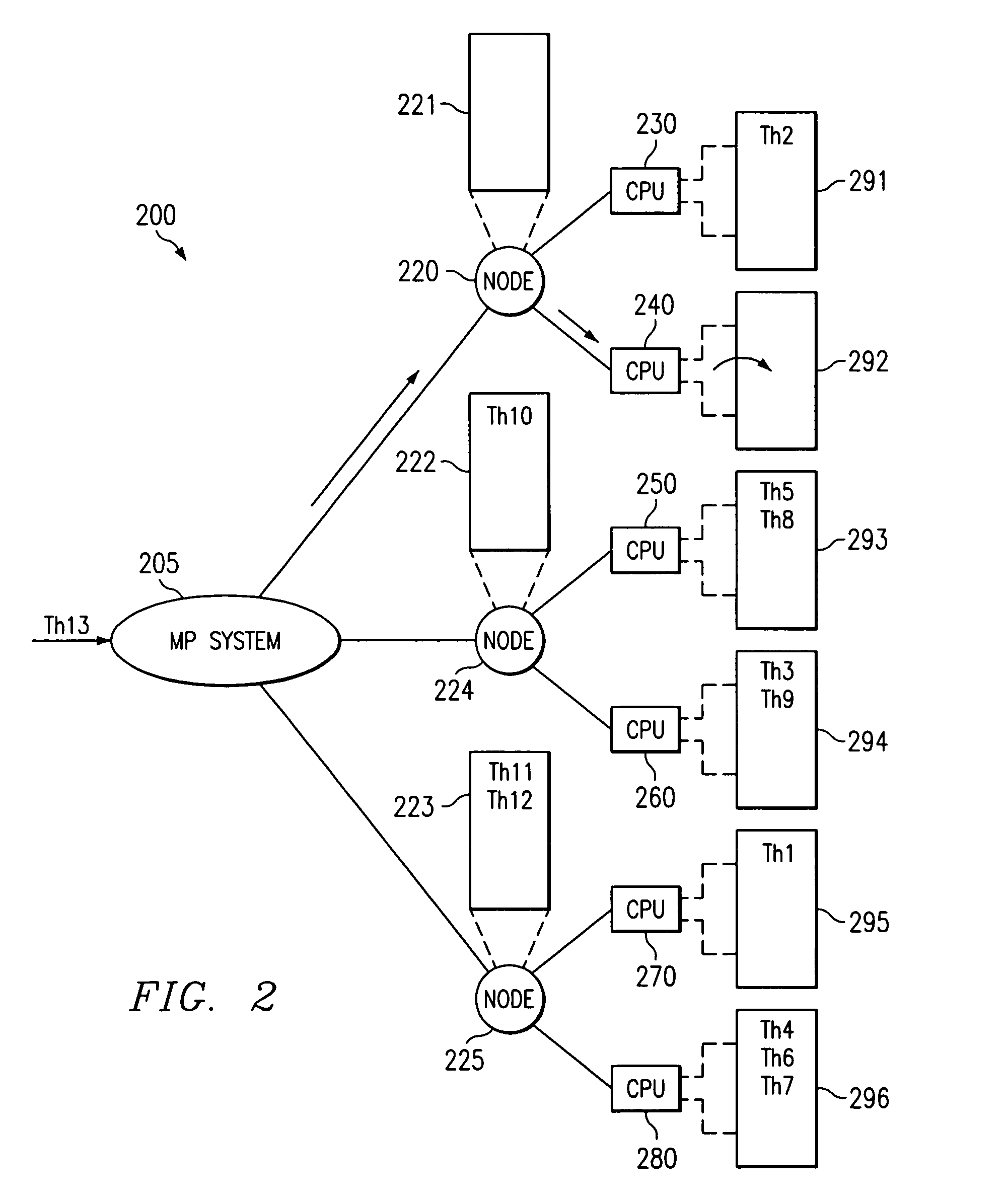

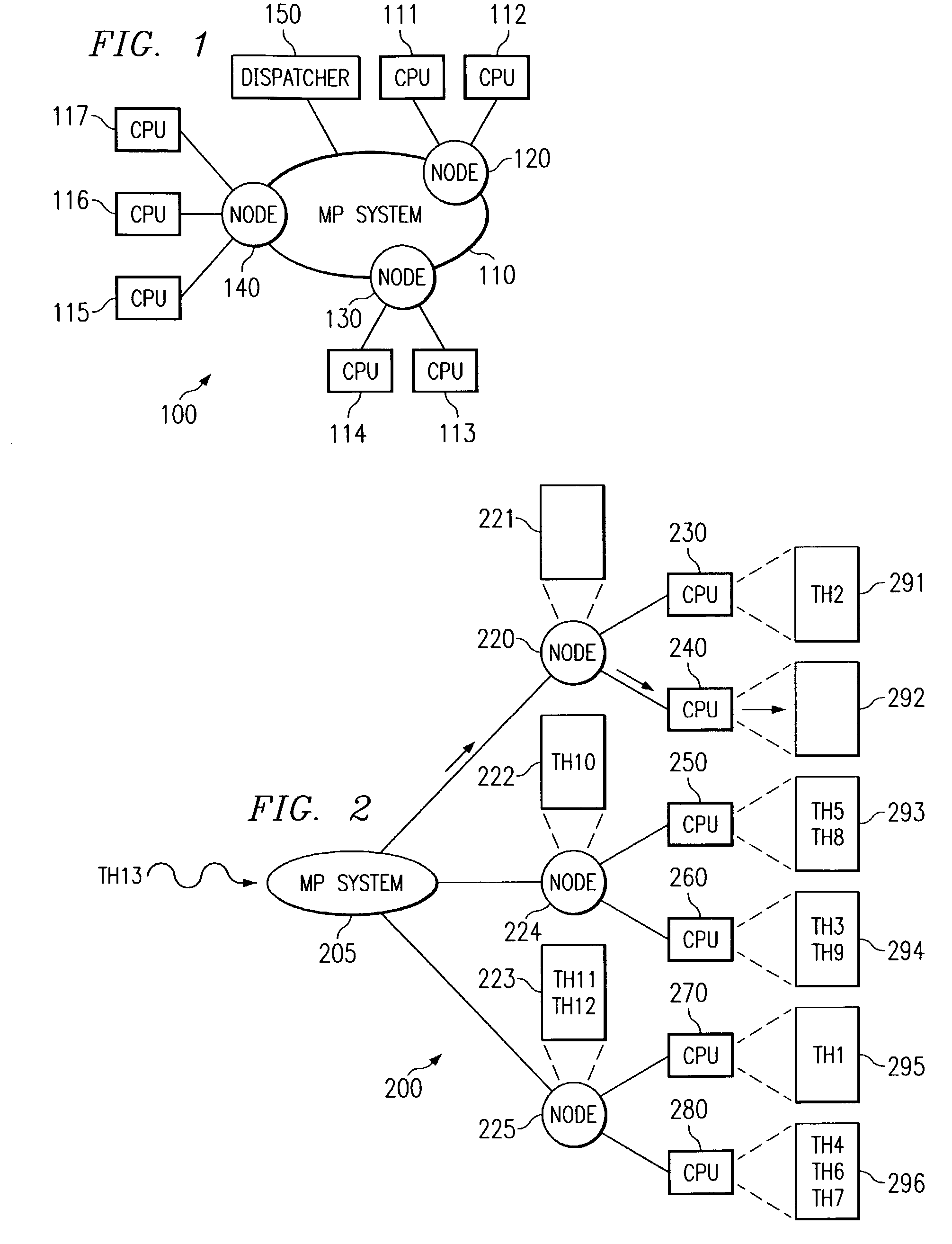

Method for determining idle processor load balancing in a multiple processors system

Inactive | US6986140B2 | Great likelihood | Resource allocation | Memory systems | Dynamic load balancing | Multi processor

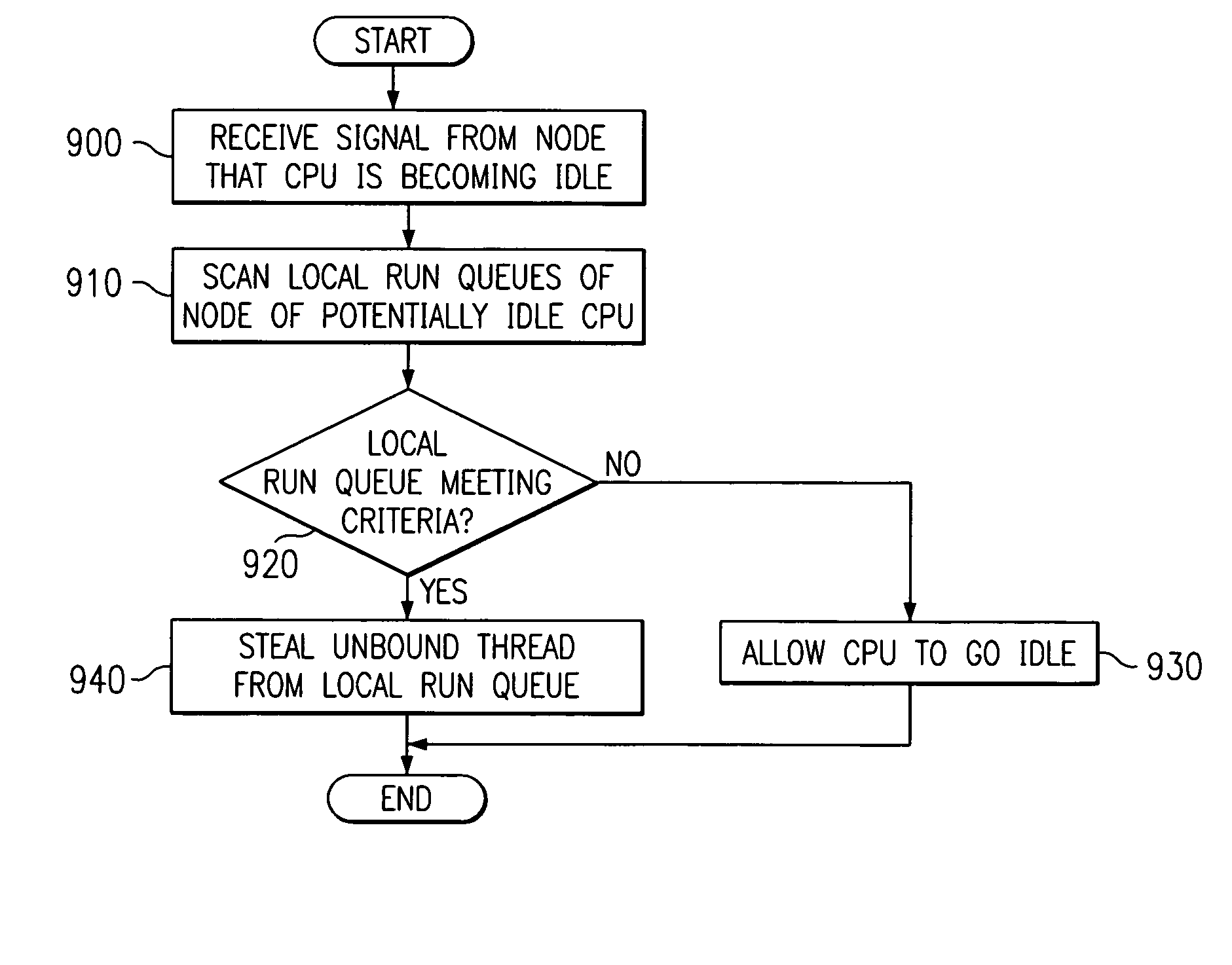

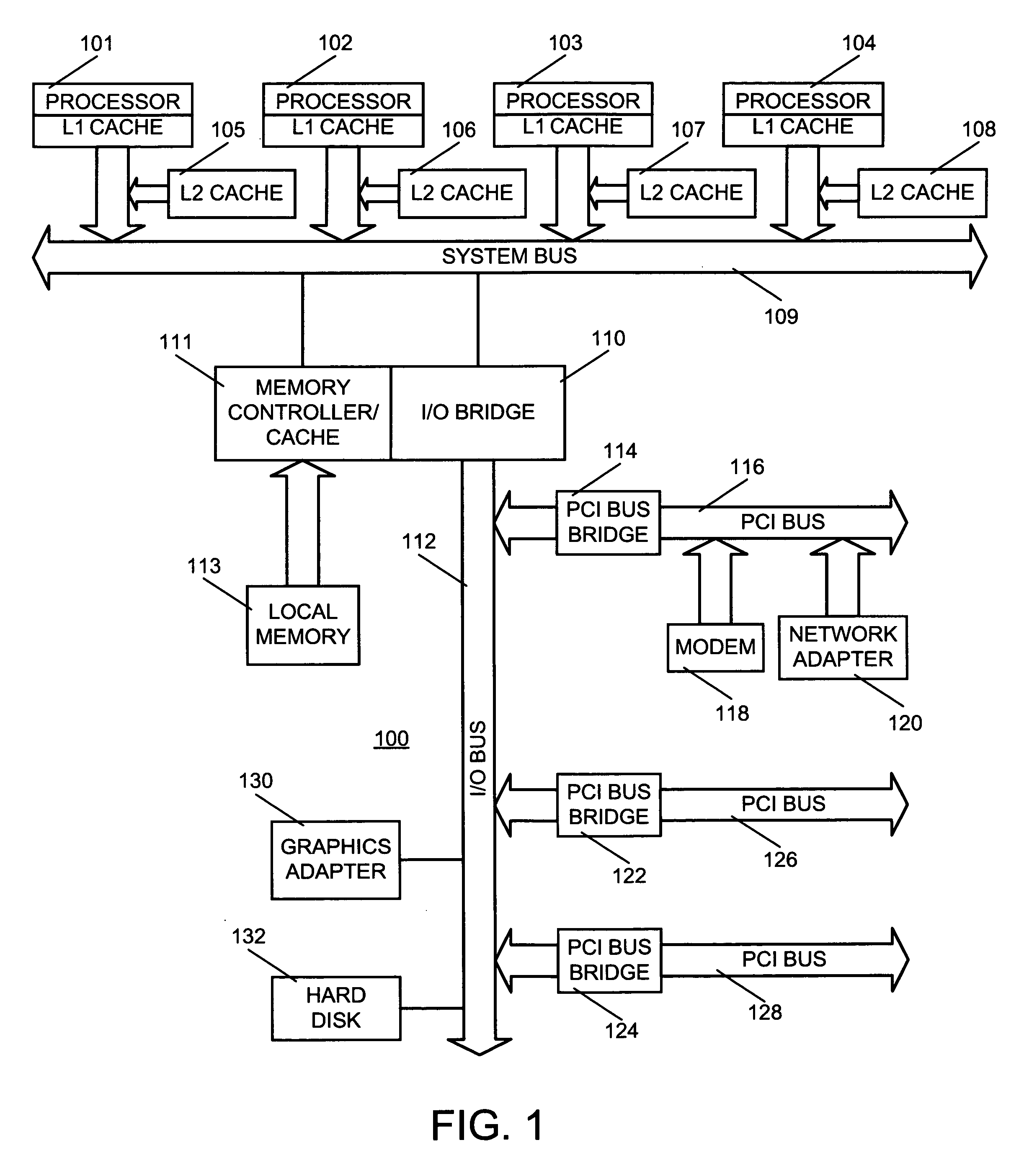

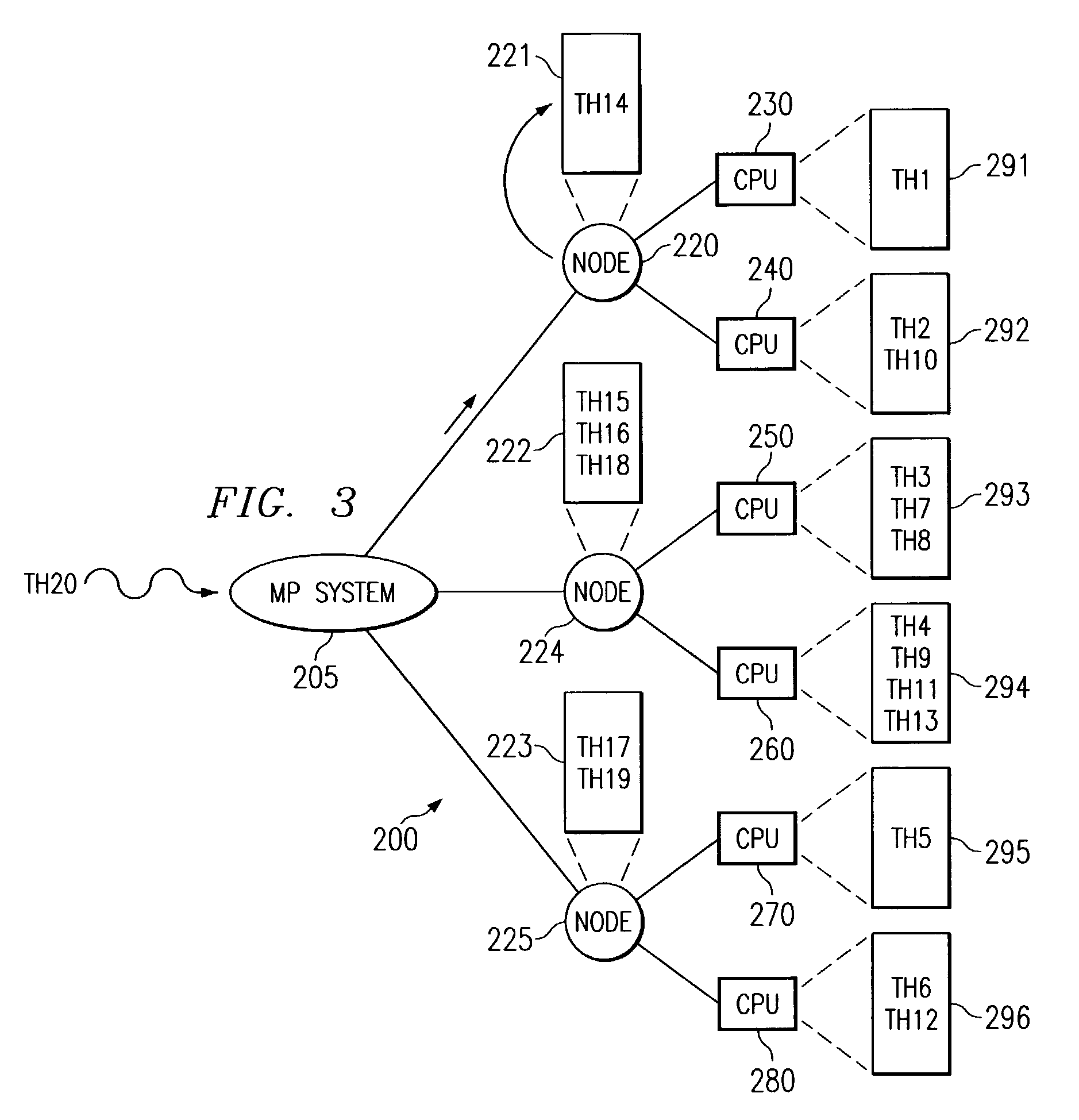

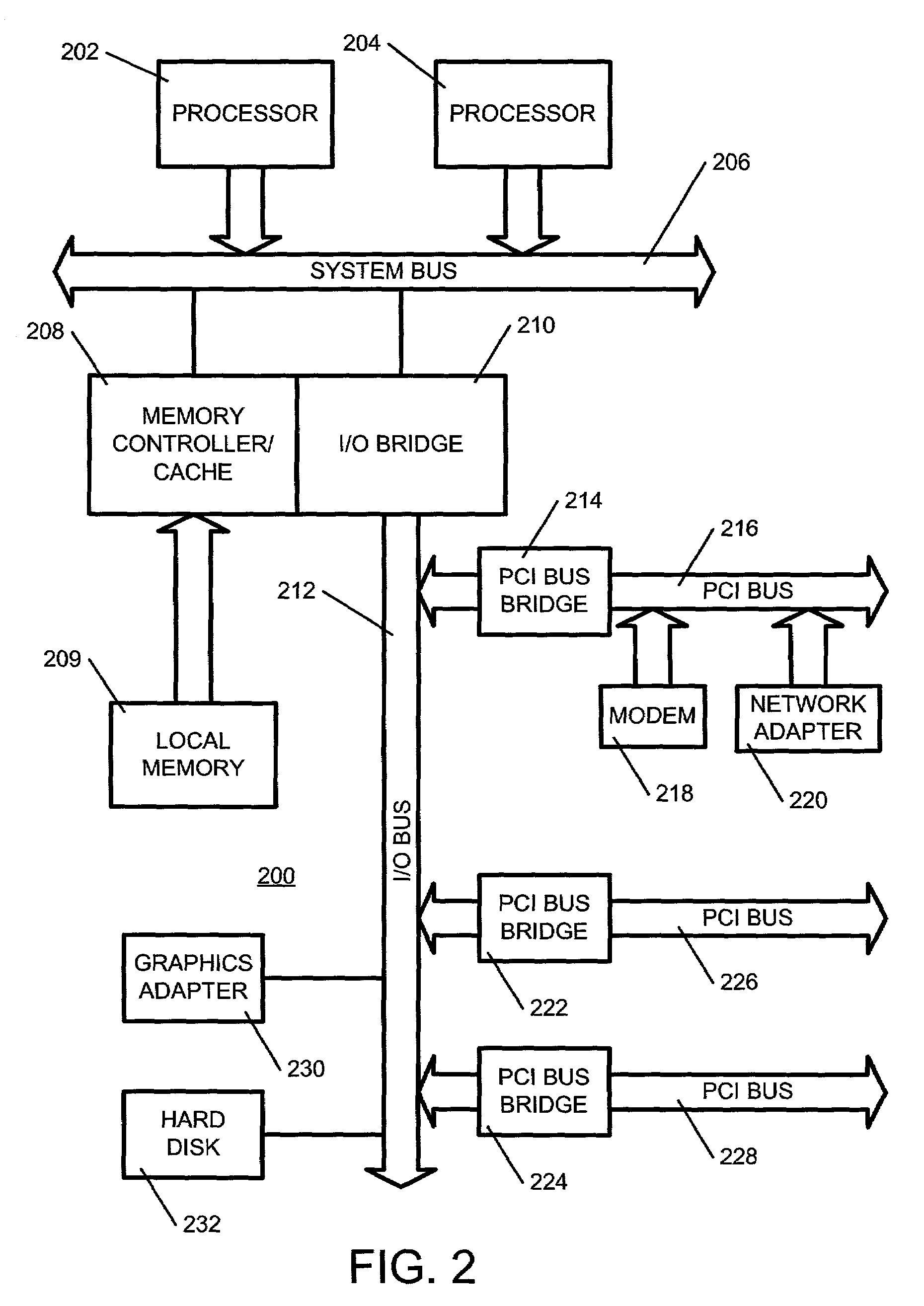

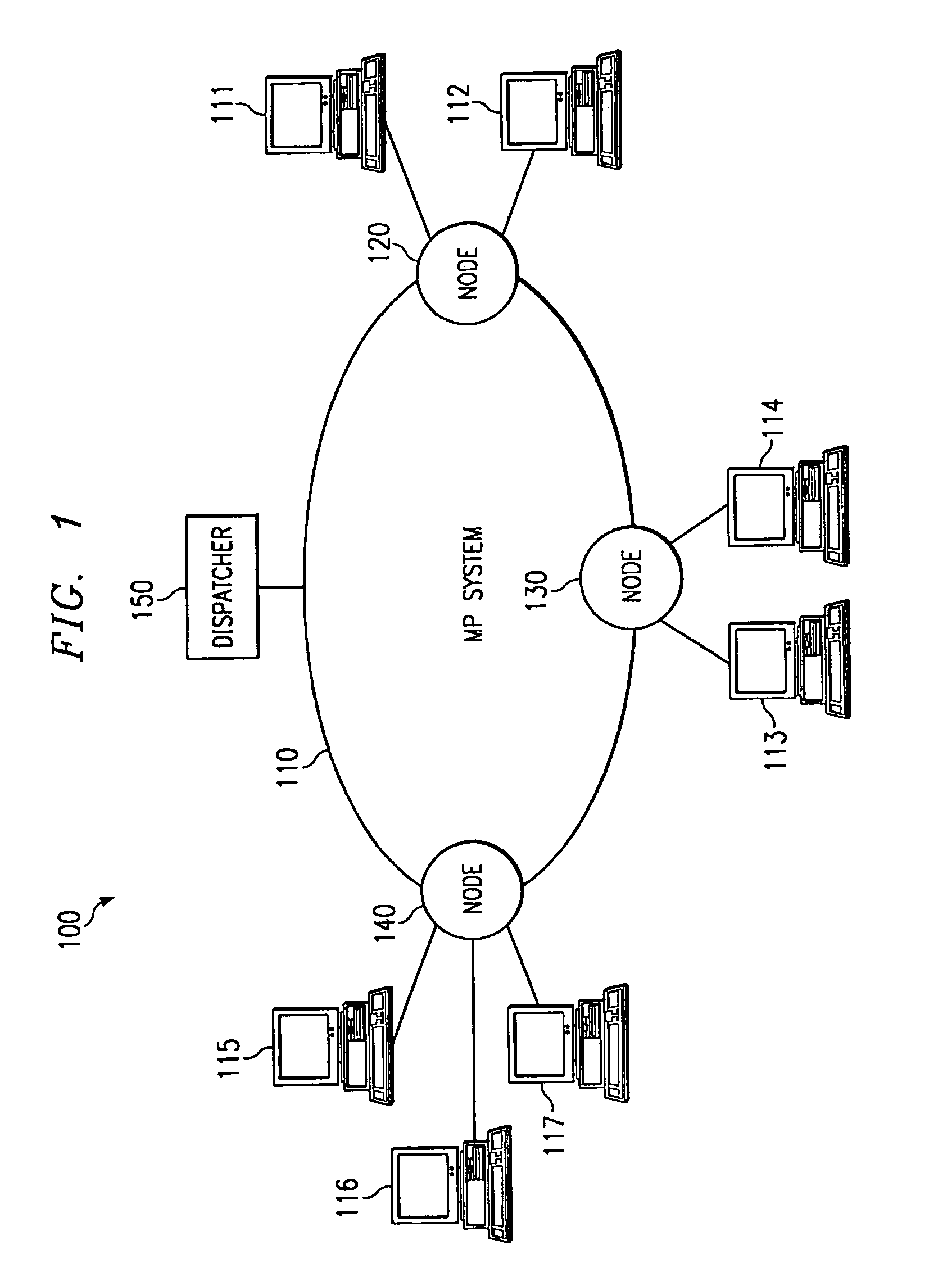

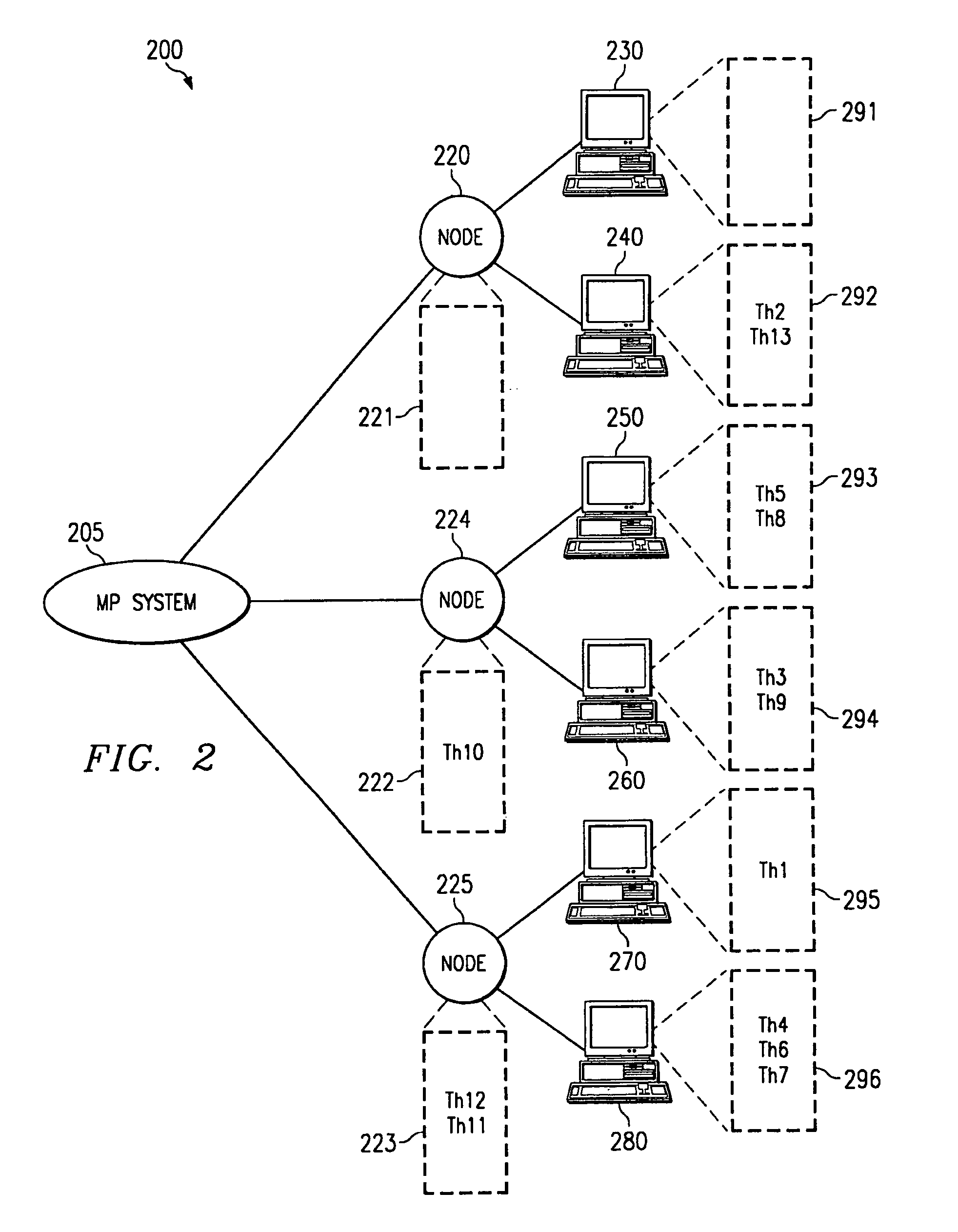

An apparatus and methods for periodic load balancing in a multiple run queue system are provided. The apparatus includes a controller, memory, initial load balancing device, idle load balancing device, periodic load balancing device, and starvation load balancing device. The apparatus performs initial load balancing, idle load balancing, periodic load balancing and starvation load balancing to ensure that the workloads for the processors of the system are optimally balanced.

Owner:IBM CORP

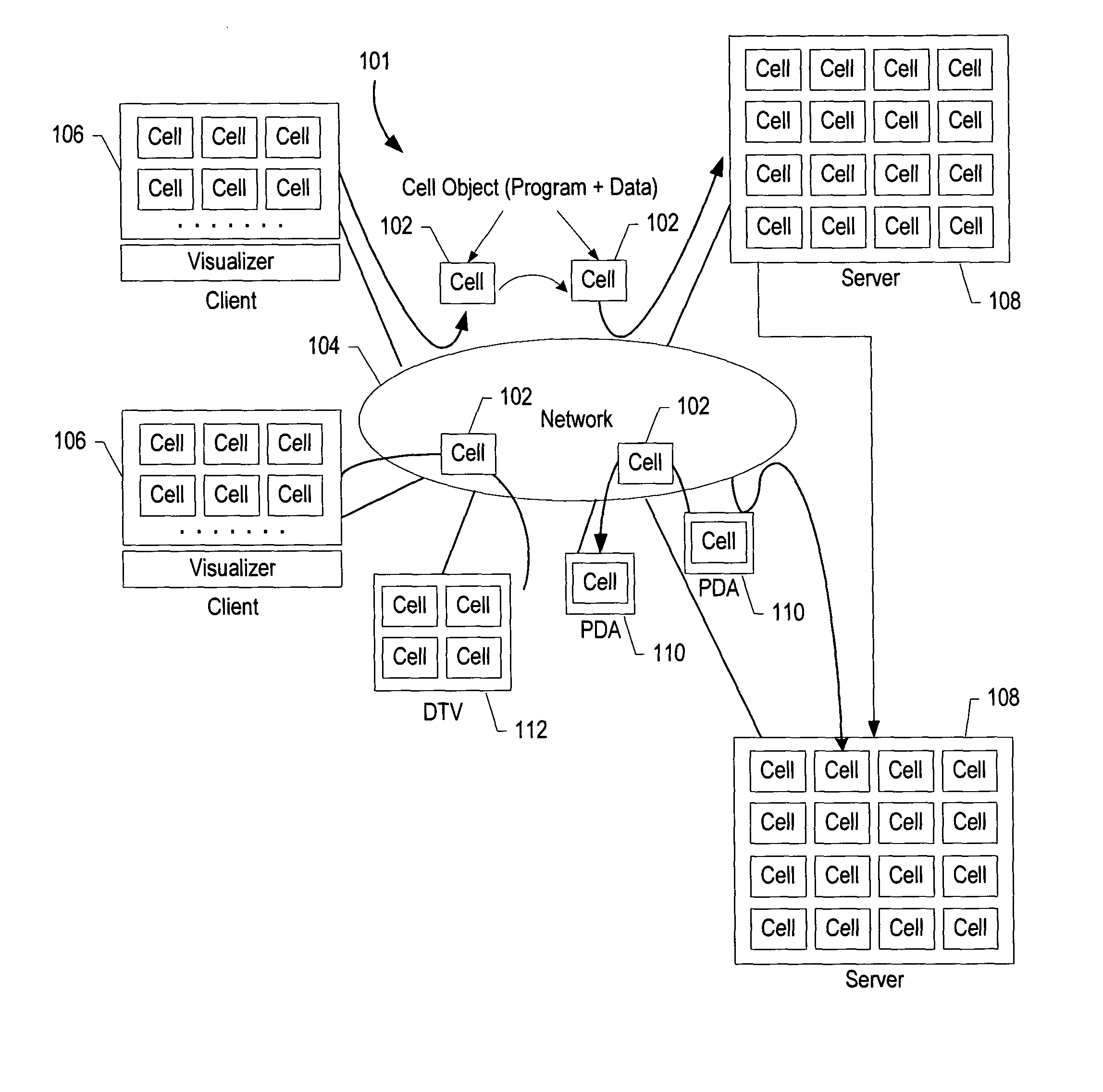

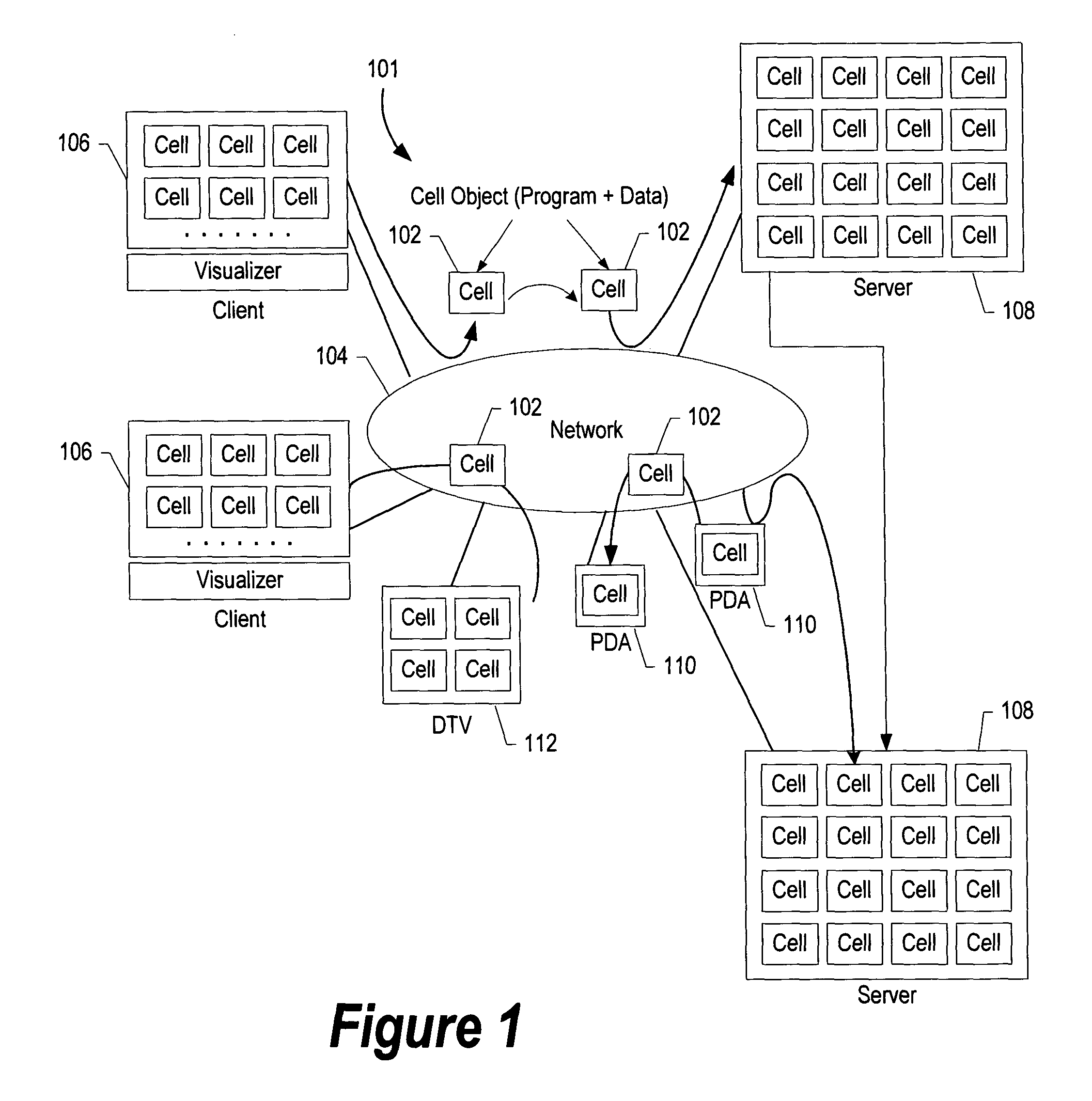

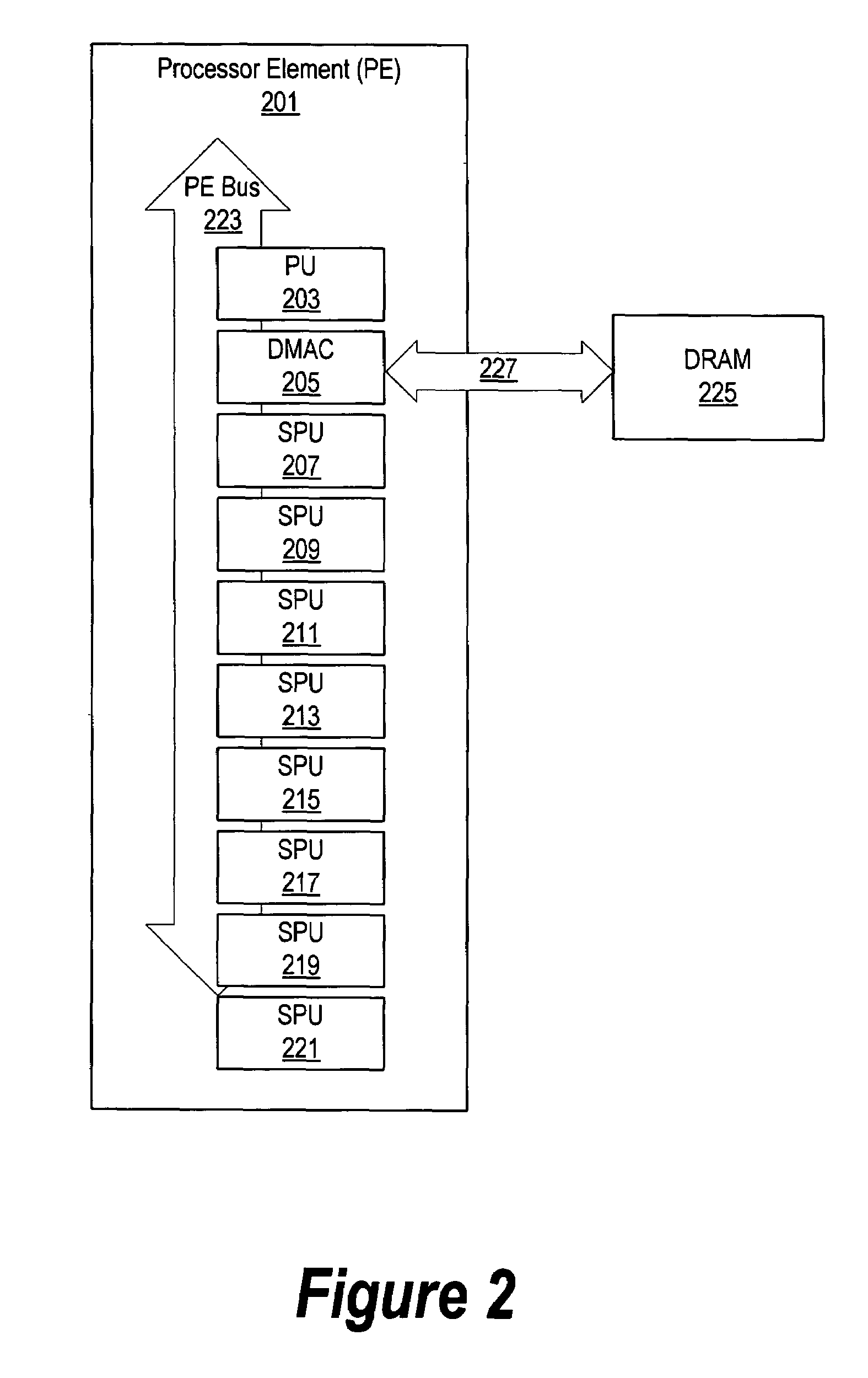

System and method for asymmetric heterogeneous multi-threaded operating system

Inactive | US20050081203A1 | Multiprogramming arrangements | Memory systems | Operational system | Operating system

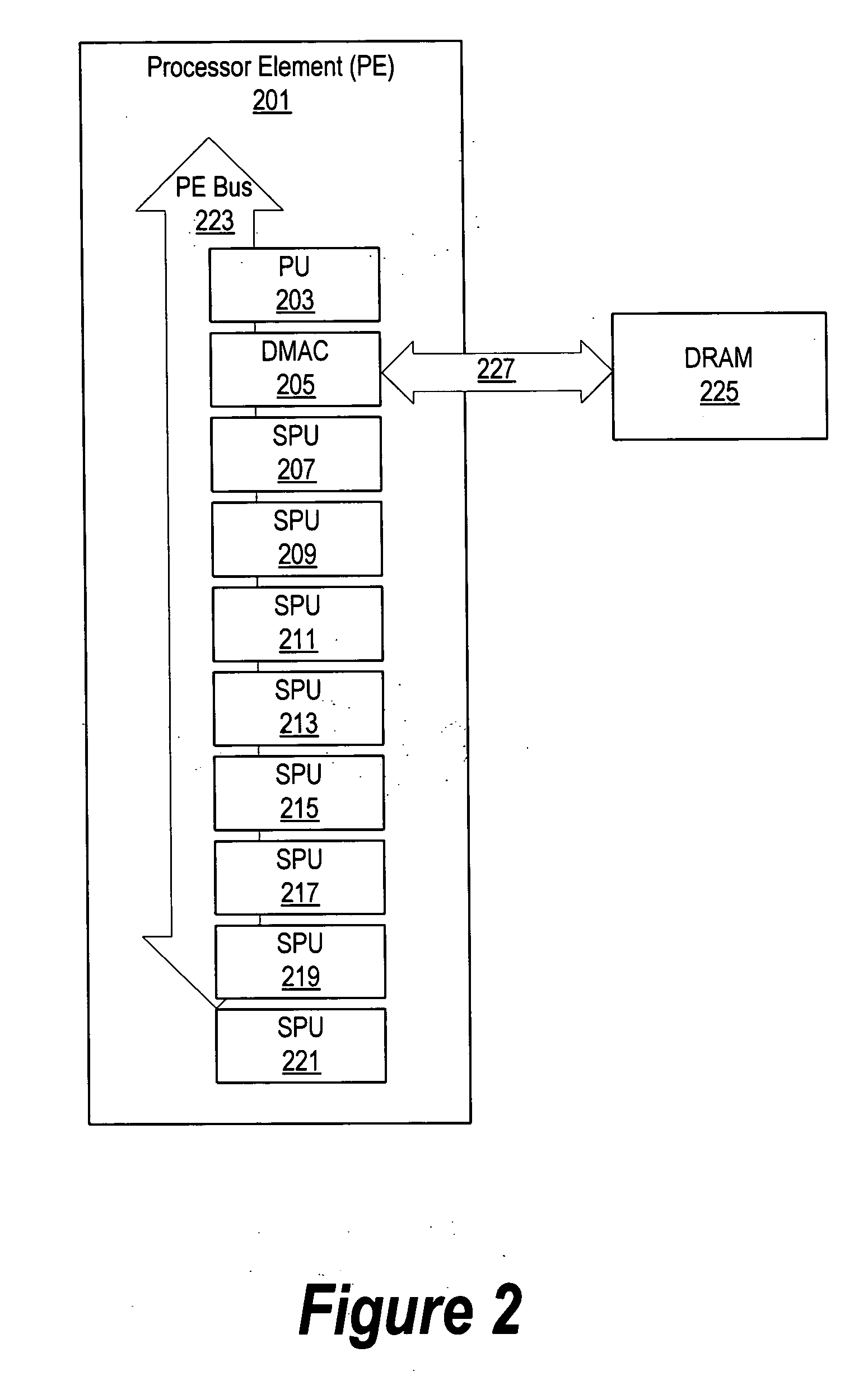

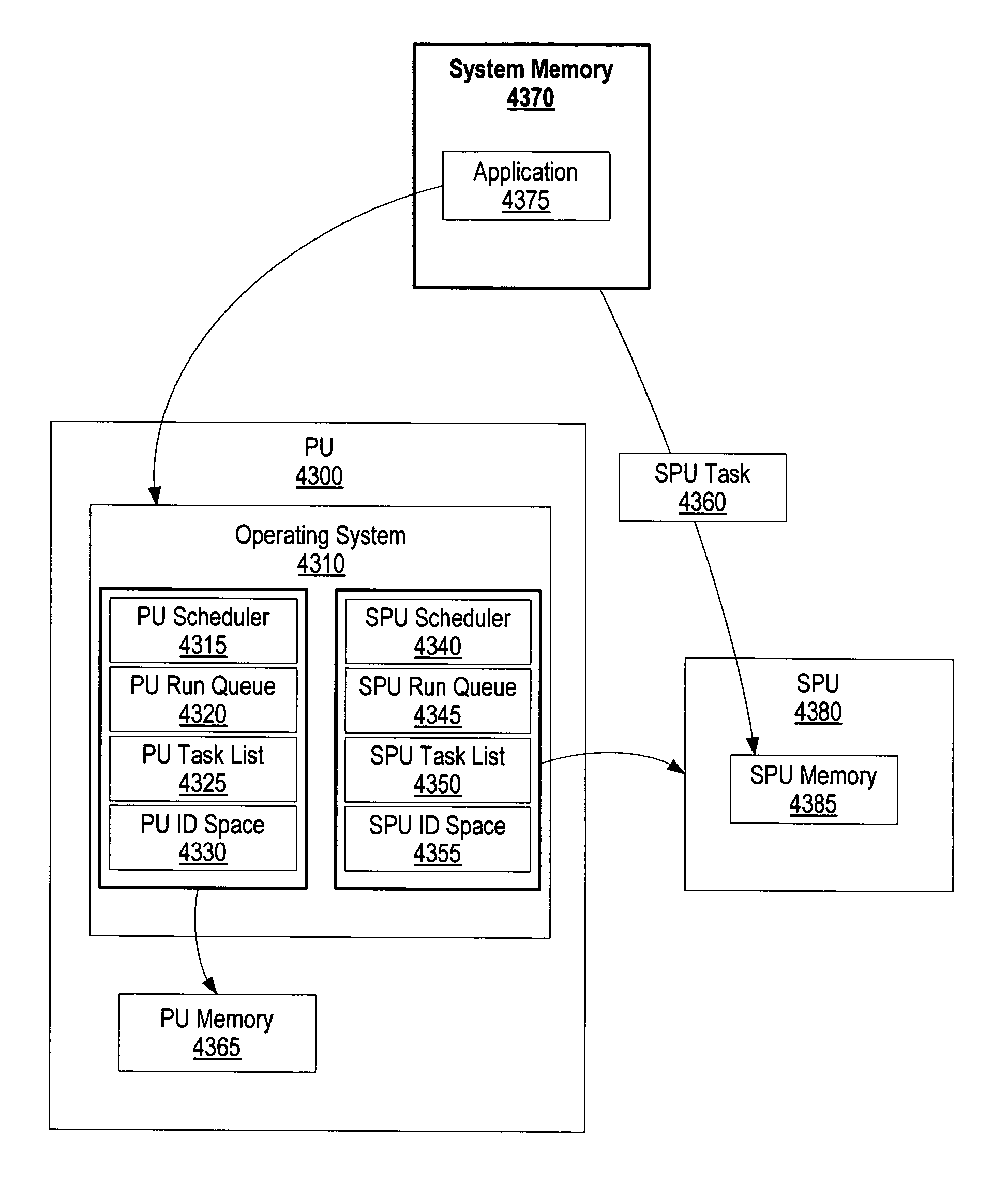

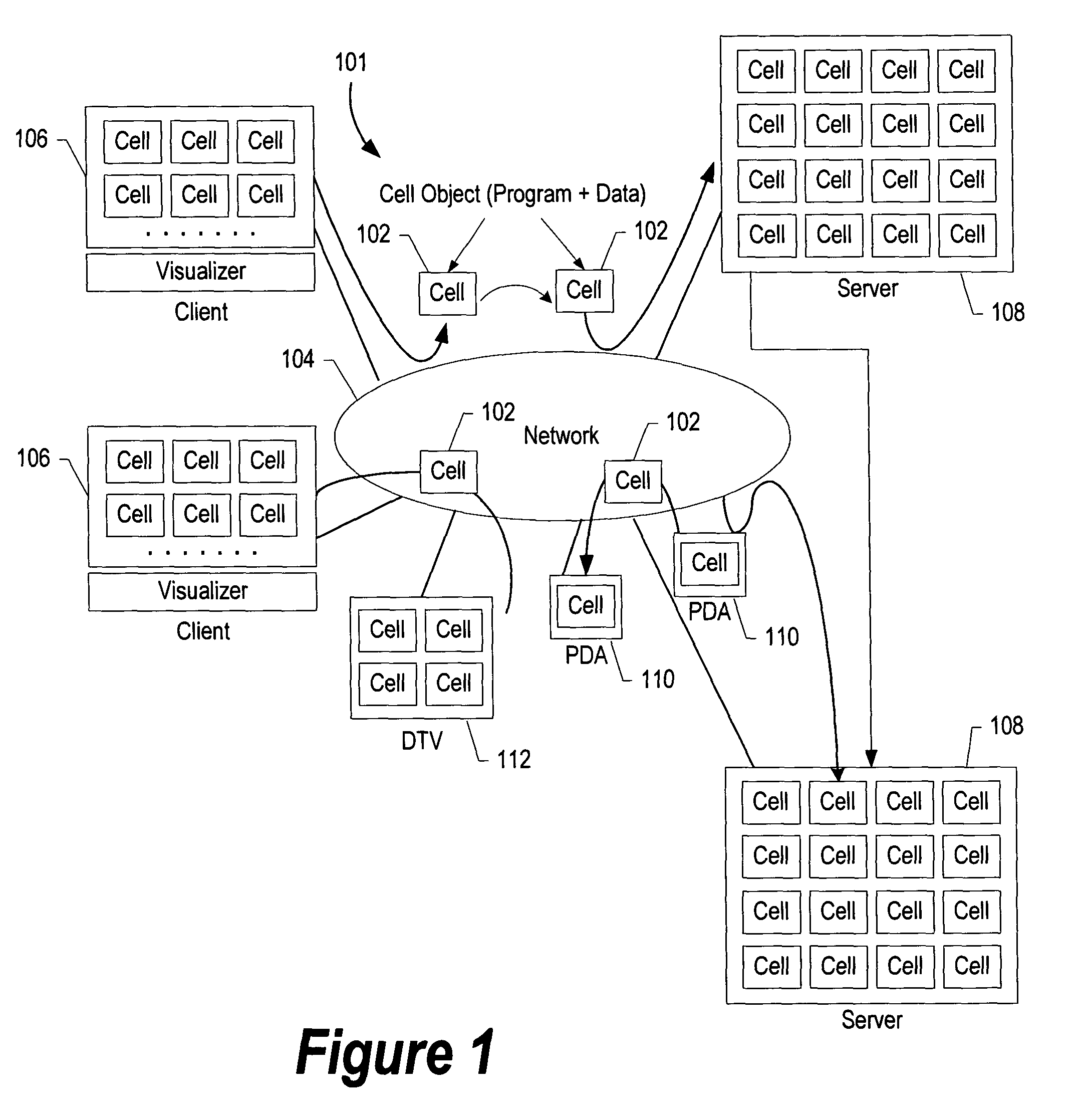

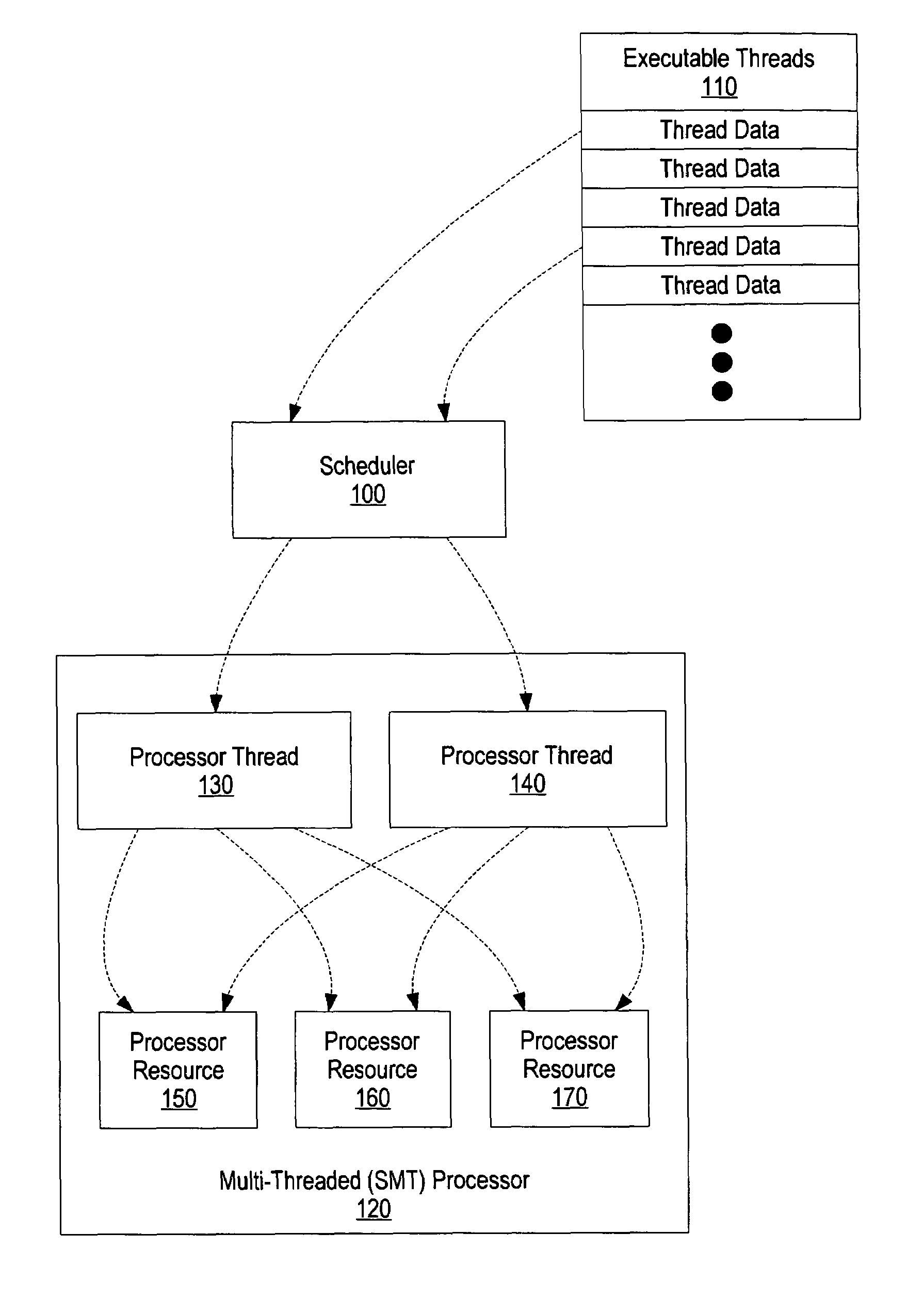

A system and method for an asymmetric heterogeneous multi-threaded operating system are presented. A processing unit (PU) provides a trusted mode environment in which an operating system executes. A heterogeneous processor environment includes a synergistic processing unit (SPU) that does not provide trusted mode capabilities. The PU operating system uses two separate and distinct schedulers, a PU scheduler and an SPU scheduler, to schedule tasks on a PU and an SPU, respectively. In one embodiment, the heterogeneous processor environment includes a plurality of SPUs. In this embodiment, the SPU scheduler may use a single SPU run queue to schedule tasks for the plurality of SPUs, or it may use a plurality of run queues to schedule SPU tasks, whereby each of the run queues corresponds to a particular SPU.

Owner:GLOBALFOUNDRIES INC

System, apparatus and method of reducing adverse performance impact due to migration of processes from one CPU to another

Inactive | US20060037017A1 | Adverse performance impact | Multiprogramming arrangements | Memory systems | Multi processor | Operating system

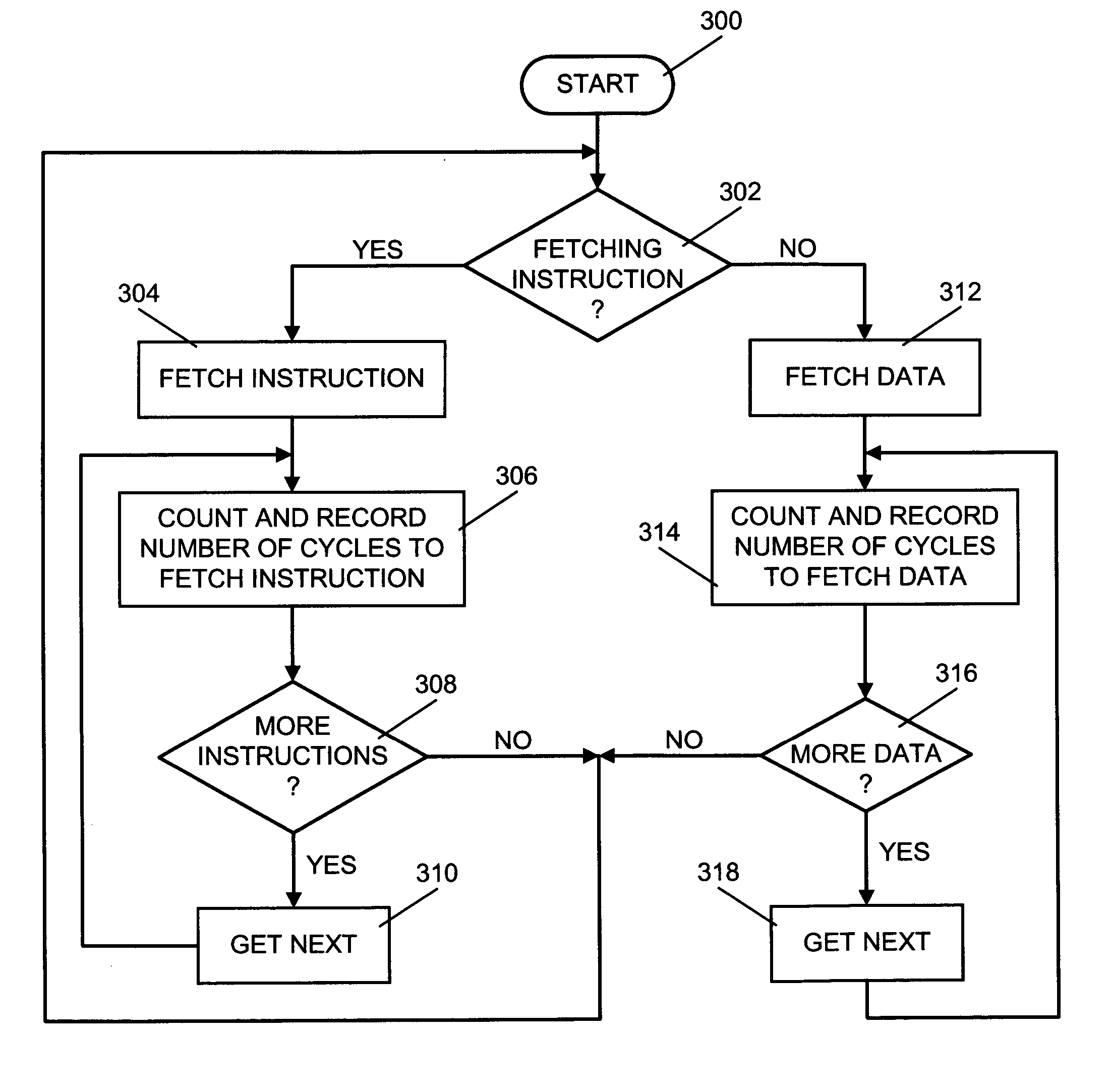

A system, apparatus and method of reducing adverse performance impact due to migration of processes from one processor to another in a multi-processor system are provided. When a process is executing, the number of cycles it takes to fetch each instruction (CPI) of the process is stored. After execution of the process, an average CPI is computed and stored in a storage device that is associated with the process. When a run queue of the multi-processor system is empty, a process may be chosen from the run queue that has the most processes awaiting execution to migrate to the empty run queue. The chosen process is the process that has the highest average number of CPIs.
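The selection rule in this abstract (take from the busiest run queue the process with the highest average CPI and move it to an empty queue) can be sketched as below. The function name `pick_migration` and the dictionary layout are assumptions for illustration; how CPI is actually measured and stored is not modelled.

```python
def pick_migration(run_queues, avg_cpi):
    """Choose a process to migrate to an empty run queue.

    run_queues: dict mapping cpu -> list of process names awaiting execution.
    avg_cpi:    dict mapping process name -> stored average CPI.
    Returns (process, source_cpu, target_cpu), or None if no move applies.
    """
    empty = [cpu for cpu, queue in run_queues.items() if not queue]
    if not empty:
        return None
    # Source is the run queue with the most processes awaiting execution.
    source = max(run_queues, key=lambda cpu: len(run_queues[cpu]))
    if not run_queues[source]:
        return None  # every queue is empty, nothing to migrate
    # Pick the process with the highest average CPI.
    proc = max(run_queues[source], key=lambda name: avg_cpi[name])
    return proc, source, empty[0]
```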

Owner:IBM CORP

Webpage-crawling-based crawler technology

Inactive | CN103970788A | Realize acquisition | Realize multi-machine parallel crawling | Web data indexing | Special data processing applications | Multi machine | The Internet

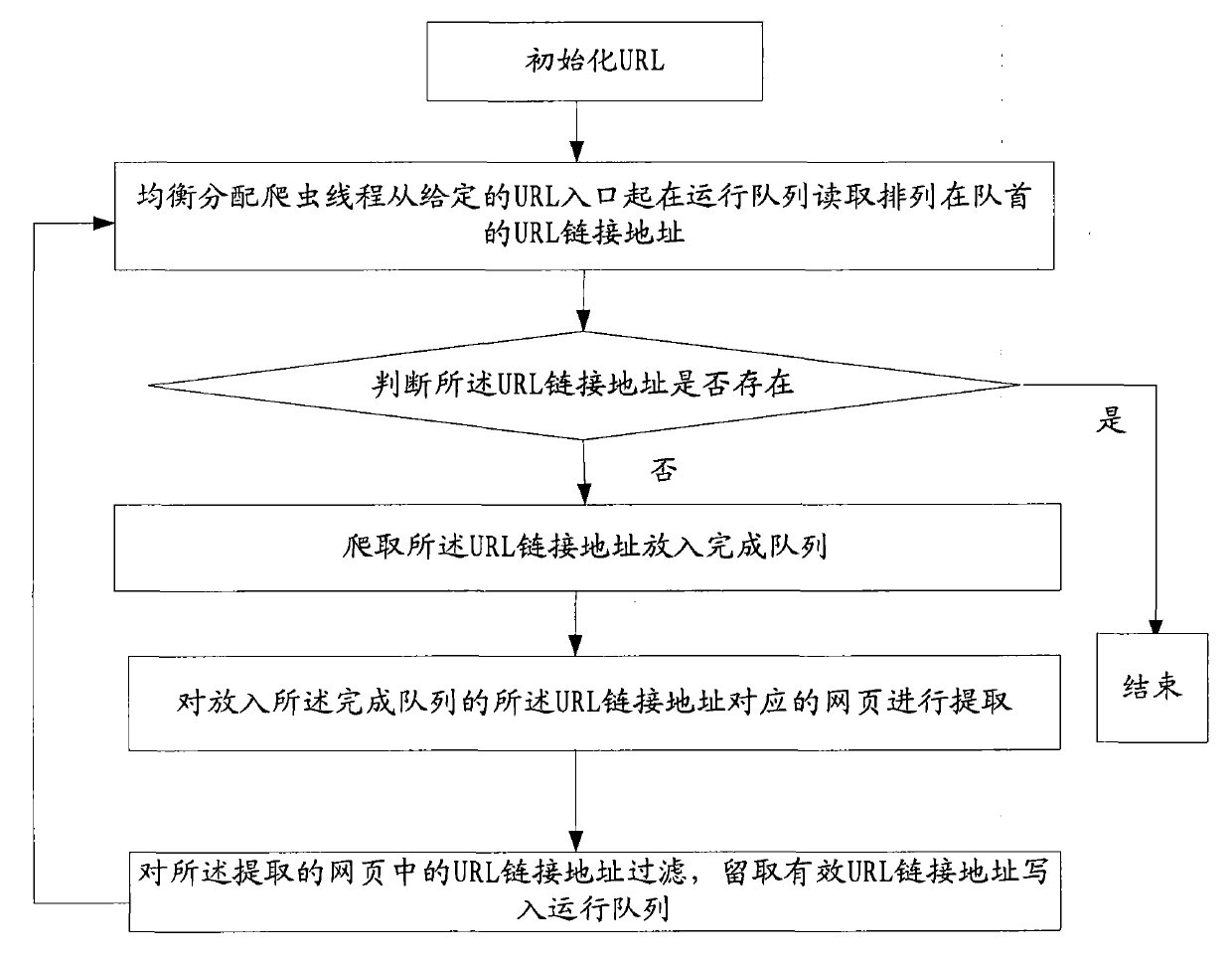

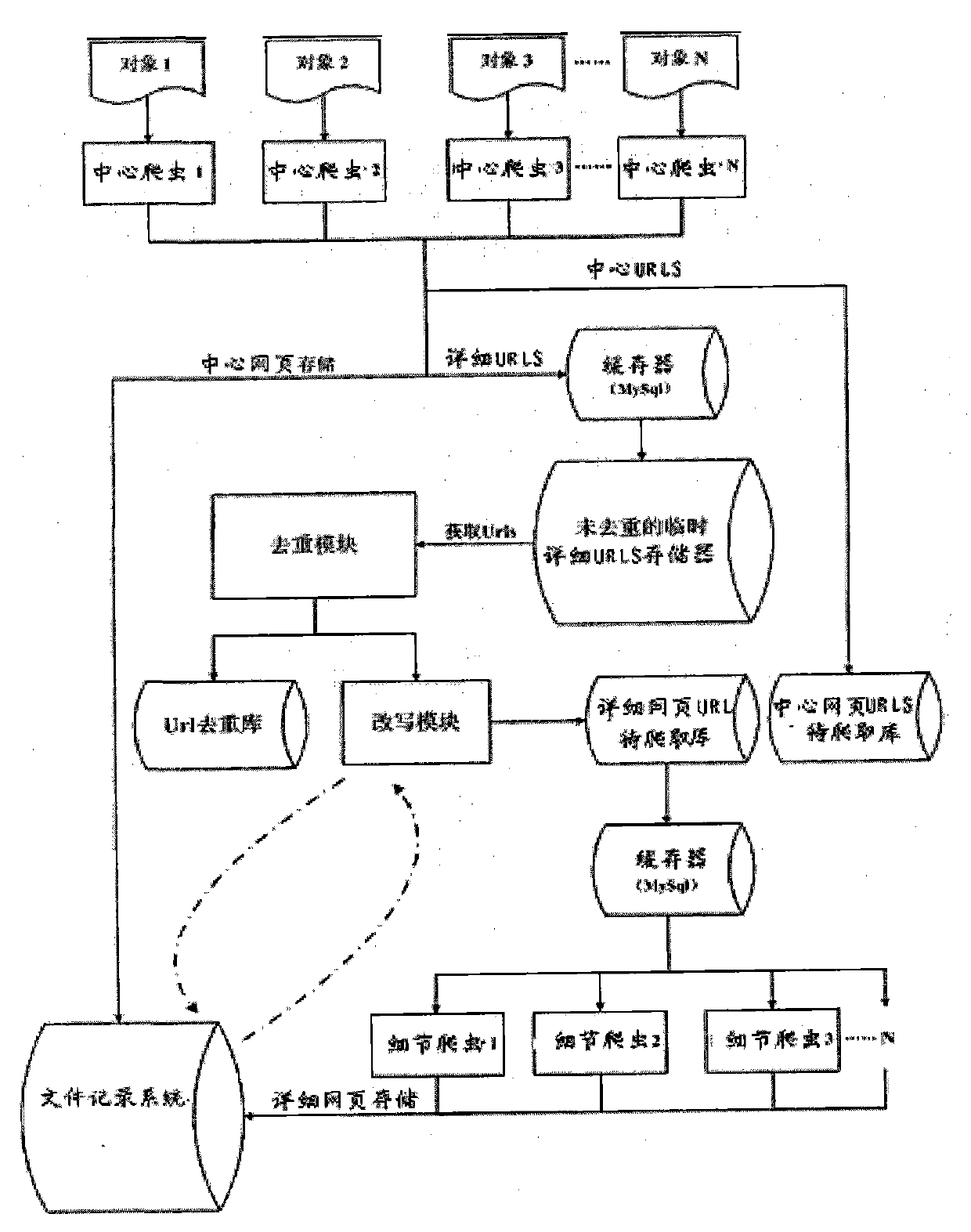

The invention relates to a webpage-crawling-based crawler technology. After the URL (uniform resource locator) link addresses are initialized, the technology comprises the following steps: (1) a crawler thread, assigned by balanced allocation, reads the URL link address at the head of a running queue, starting from a given entry; (2) it is judged whether the URL link address already exists; if it does, the address is not crawled, otherwise it is crawled and placed in a completion queue; (3) the webpages corresponding to the URL link addresses placed in the completion queue are extracted; (4) the URL link addresses in the extracted webpages are filtered, the valid addresses are written into the running queue, and the process returns to step (1). According to tasks created by users, the technology crawls the corresponding resources from the Internet and rewrites and stores the URL link addresses, so that Internet information is acquired in a targeted way for the objects the users set. In addition, multi-machine parallel crawling, multi-task scheduling, resuming a crawl from a breakpoint, distributed crawler management and crawler control can be implemented.
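The four steps can be sketched as a single-threaded loop over a running queue and a completion queue. This is a simplified sketch under stated assumptions: `fetch` and `is_valid` are caller-supplied hypothetical callbacks, links are pulled out with a naive `href` regular expression, and the "already exists" check in step (2) is interpreted as "already crawled".

```python
import re
from collections import deque


def crawl(seeds, fetch, is_valid):
    """Crawl from the seed URLs; return (completion queue, fetched pages).

    fetch(url) -> HTML string and is_valid(url) -> bool are supplied by
    the caller (both are placeholders for this sketch).
    """
    running = deque(seeds)   # URLs waiting to be crawled
    completed = []           # URLs already crawled, in crawl order
    seen = set()
    pages = {}
    while running:
        url = running.popleft()            # step 1: head of the running queue
        if url in seen:                    # step 2: skip known addresses
            continue
        seen.add(url)
        completed.append(url)
        html = fetch(url)                  # step 3: extract the page
        pages[url] = html
        for link in re.findall(r'href="([^"]+)"', html):
            if is_valid(link) and link not in seen:
                running.append(link)       # step 4: keep valid links
    return completed, pages
```

In a test, `fetch` can simply read from an in-memory dictionary of pages, which keeps the sketch free of network access.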

Owner:BEIJING INFCN INFORMATION TECH

Asymmetric heterogeneous multi-threaded operating system

A method for an asymmetric heterogeneous multi-threaded operating system is presented. A processing unit (PU) provides a trusted mode environment in which an operating system executes. A heterogeneous processor environment includes a synergistic processing unit (SPU) that does not provide trusted mode capabilities. The PU operating system uses two separate and distinct schedulers, a PU scheduler and an SPU scheduler, to schedule tasks on a PU and an SPU, respectively. In one embodiment, the heterogeneous processor environment includes a plurality of SPUs. In this embodiment, the SPU scheduler may use a single SPU run queue to schedule tasks for the plurality of SPUs, or it may use a plurality of run queues to schedule SPU tasks, whereby each of the run queues corresponds to a particular SPU.

Owner:GLOBALFOUNDRIES INC

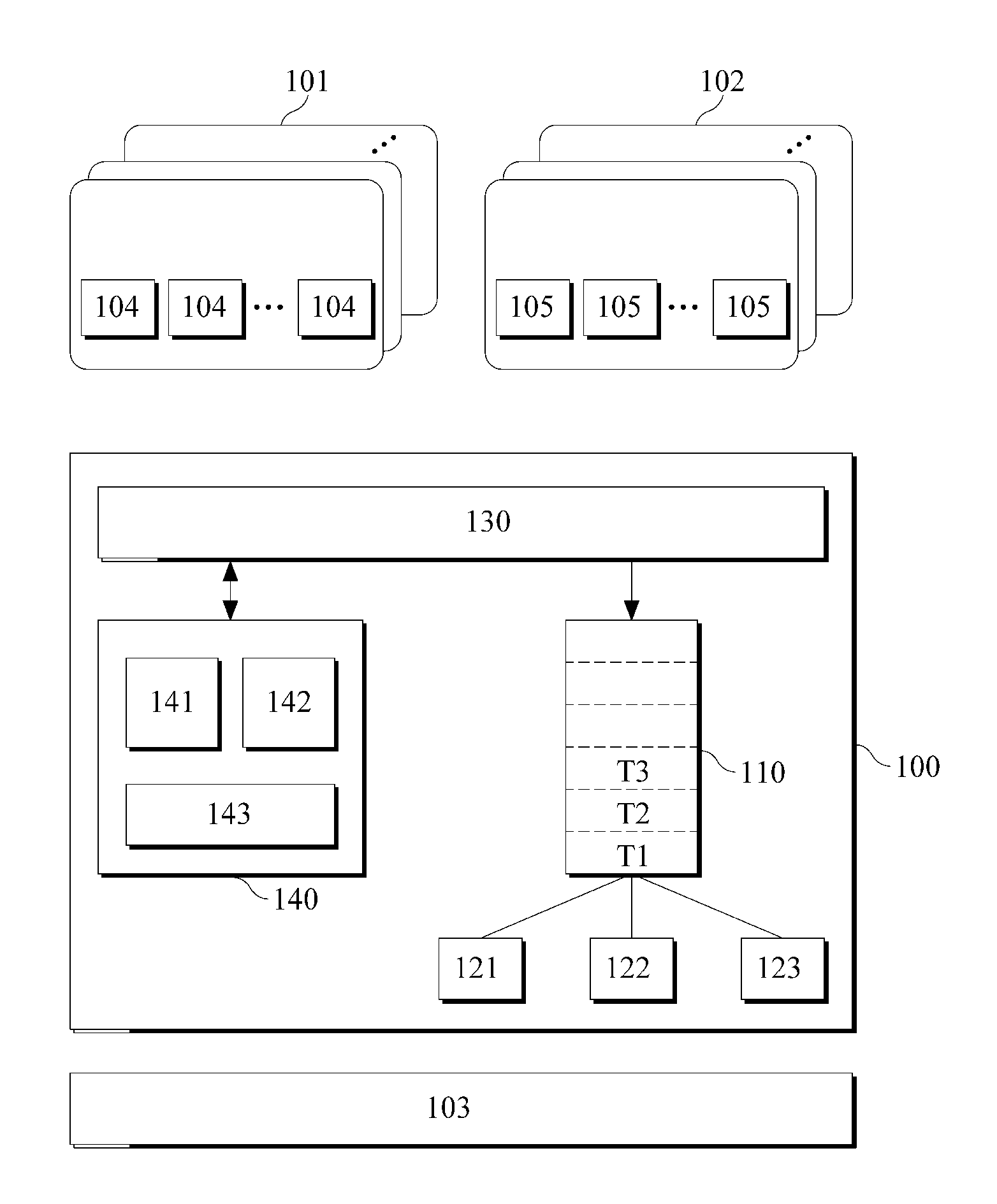

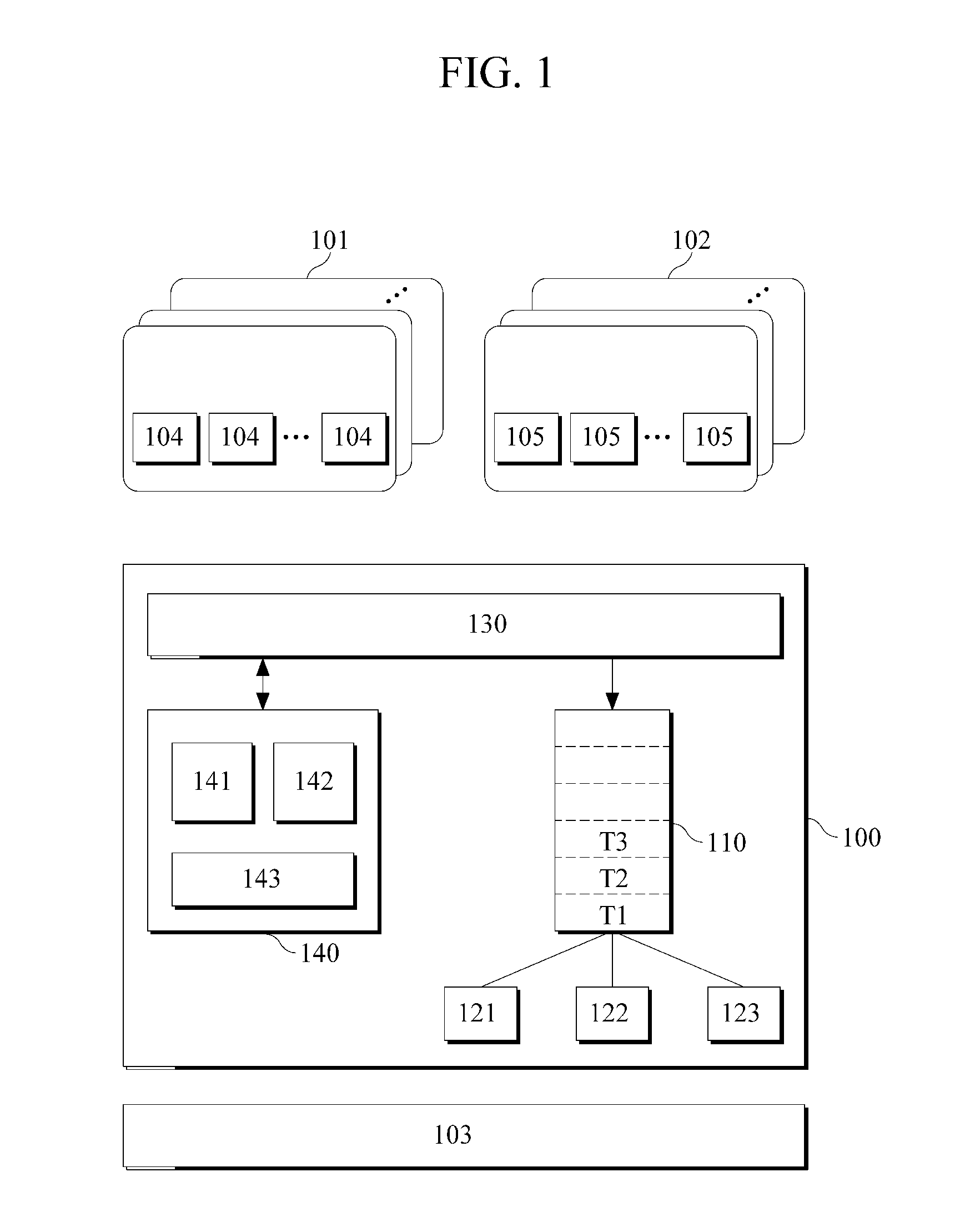

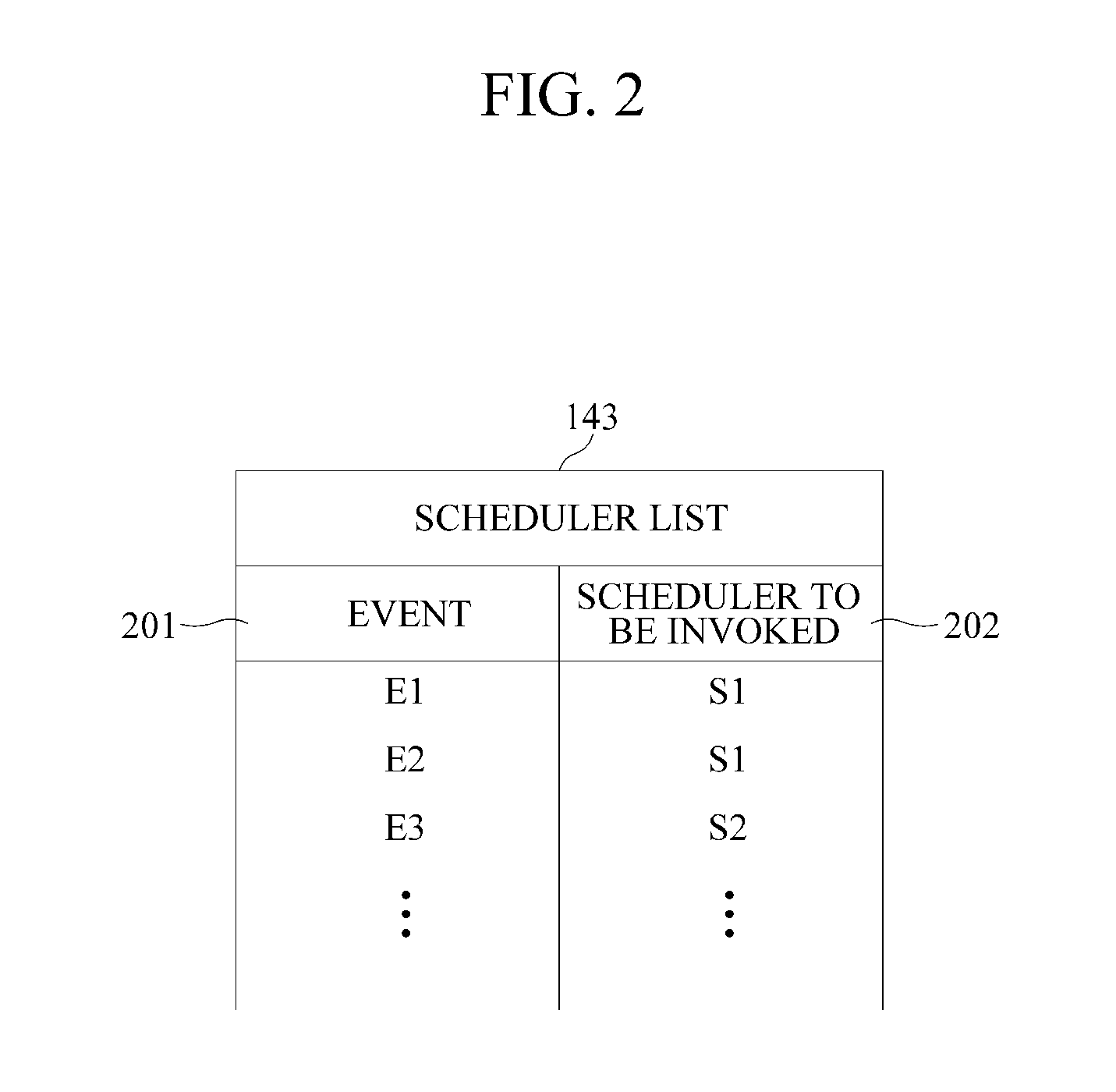

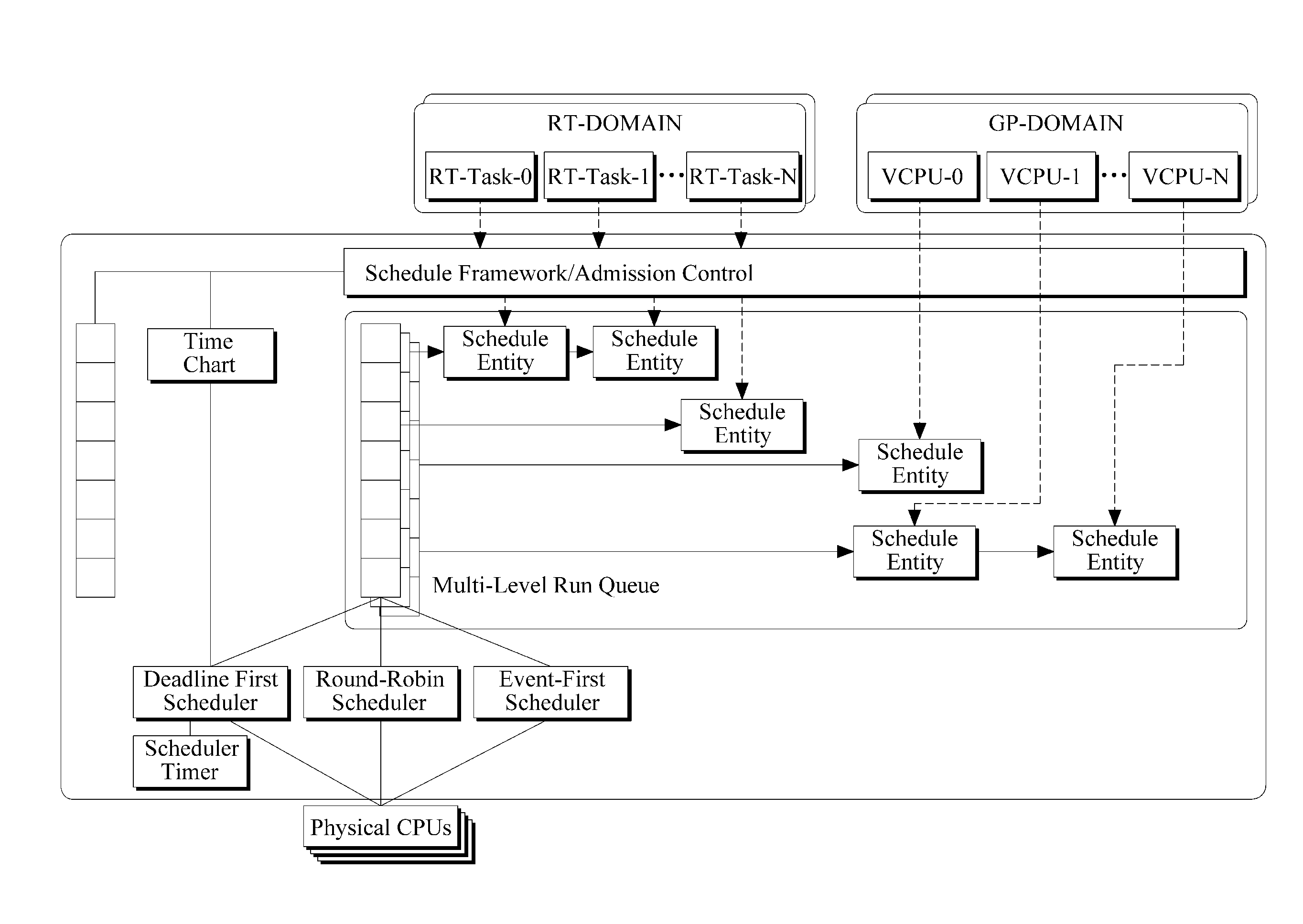

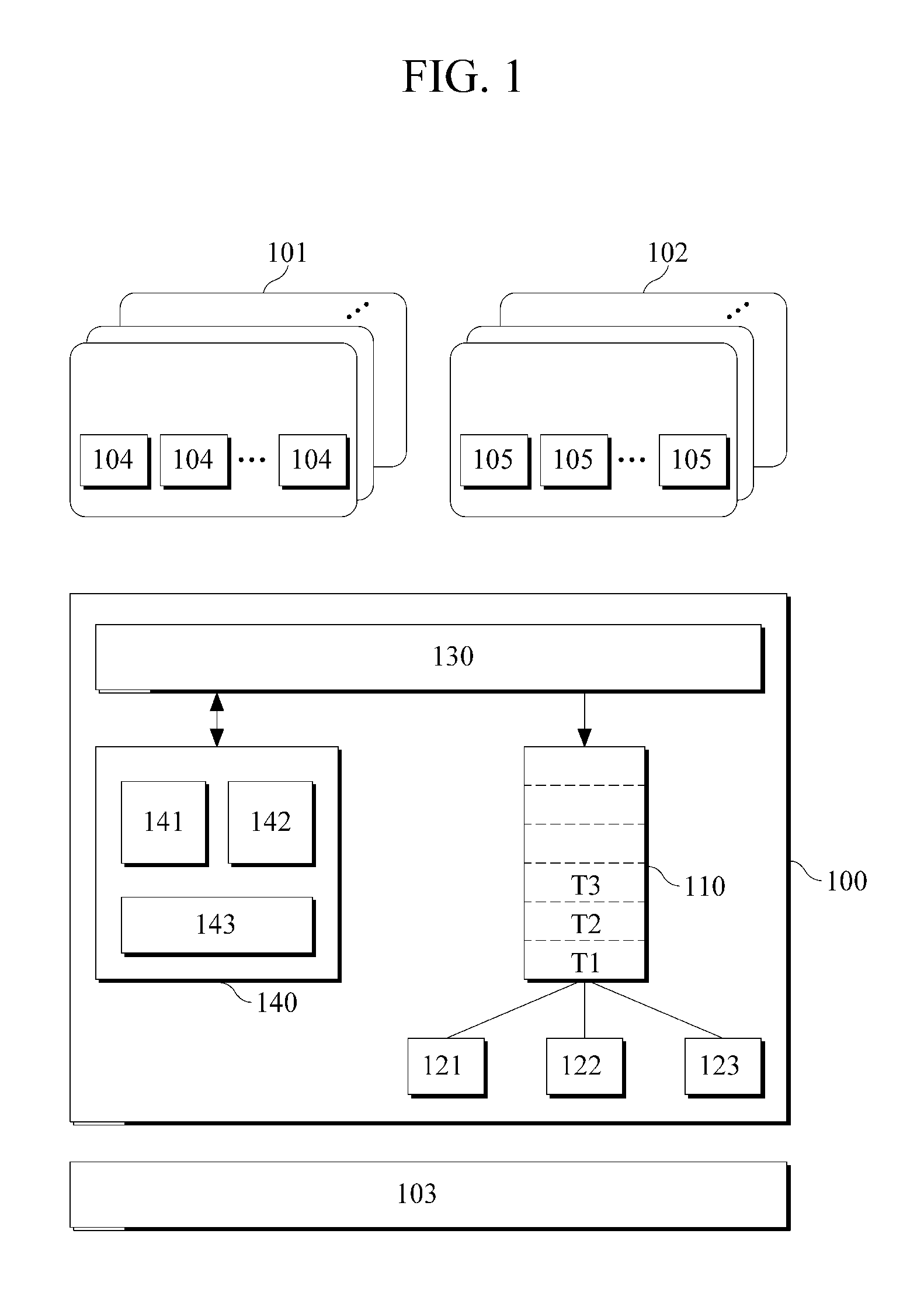

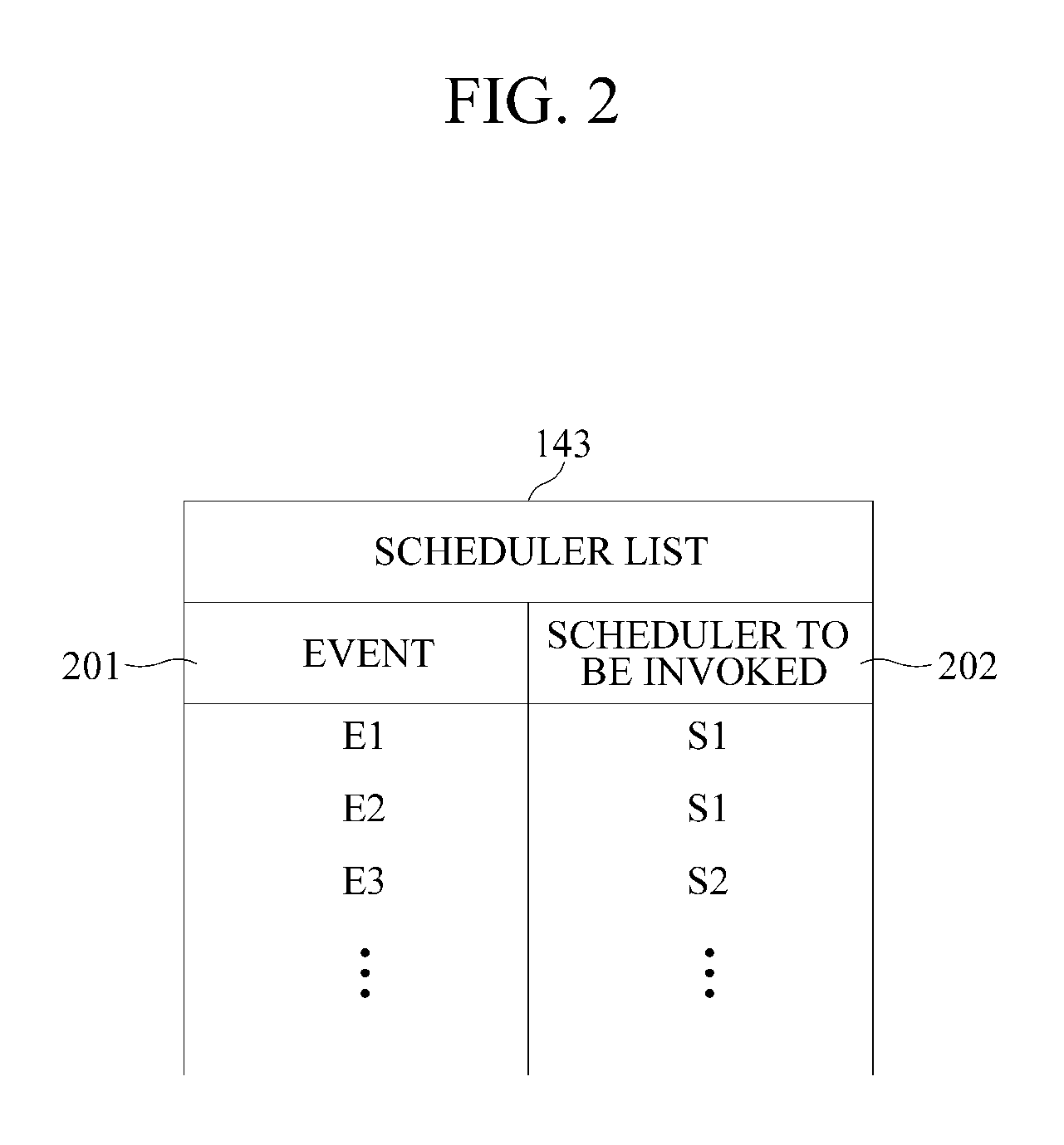

Virtual machine monitor and scheduling method thereof

Active | US20110225583A1 | Multiprogramming arrangements | Software simulation/interpretation/emulation | Event type | Run queue

A virtual machine monitor and a scheduling method thereof are provided. The virtual machine monitor may operate at least two domains. The virtual machine monitor may include at least one run queue and a plurality of schedulers, at least two of which have different scheduling characteristics. The virtual machine monitor may insert a task received from a domain into the run queue and, according to an event type, may select from the schedulers the one that schedules the inserted task.

Owner:SAMSUNG ELECTRONICS CO LTD

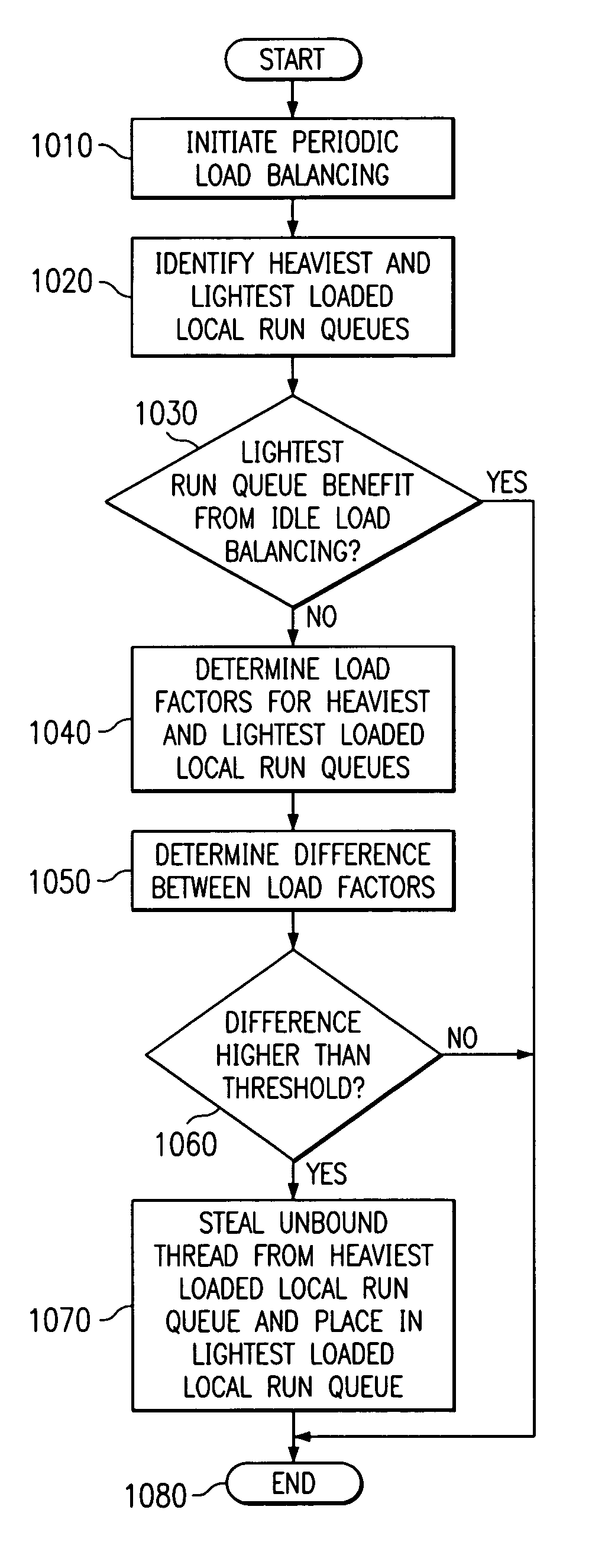

System for preventing periodic load balancing if processor associated with lightest local run queue has benefited from idle processor load balancing within a determined time period

An apparatus and methods for periodic load balancing in a multiple run queue system are provided. The apparatus includes a controller, memory, initial load balancing device, idle load balancing device, periodic load balancing device, and starvation load balancing device. The apparatus performs initial load balancing, idle load balancing, periodic load balancing and starvation load balancing to ensure that the workloads for the processors of the system are optimally balanced.

Owner:INT BUSINESS MASCH CORP

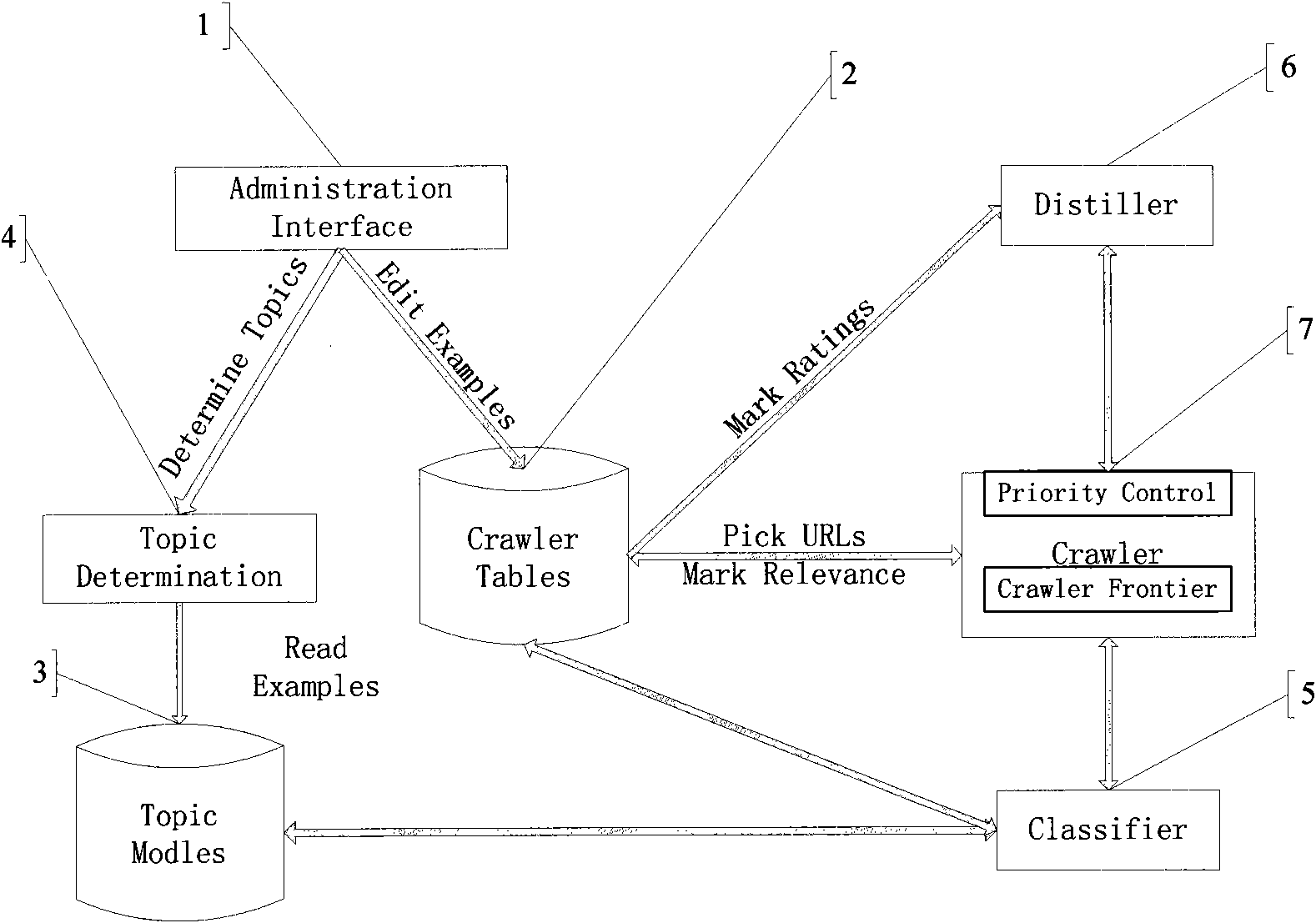

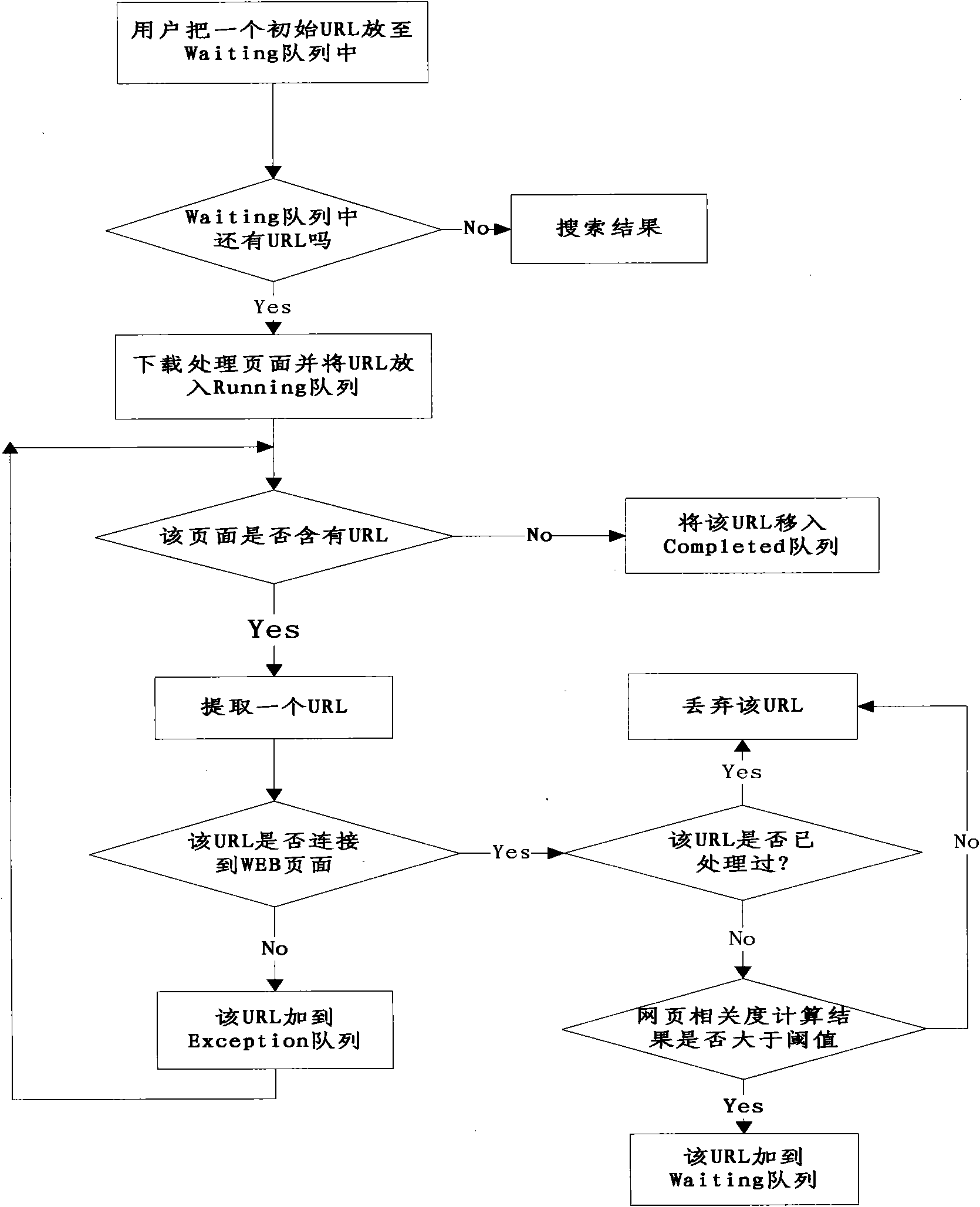

Design method of theme network crawler system

Inactive | CN101630327A | Reduce workload | Crawl faster | Special data processing applications | Workload | Data mining

The invention discloses a design method for a theme network crawler system, which is based on a best-first search strategy and mainly comprises the following steps: 1. establishing a theme word stock; 2. filtering crawled web pages and eliminating those whose theme association degree is below a set threshold; 3. computing the significance of the web pages and determining the order in which they are accessed; and 4. establishing four URL queues: a waiting queue, a running queue, a completed queue and an exceptions queue. With this design method, the workload of the crawler is greatly reduced, and the accuracy and comprehensiveness of the crawling results are improved.

Owner:KUNMING UNIV OF SCI & TECH

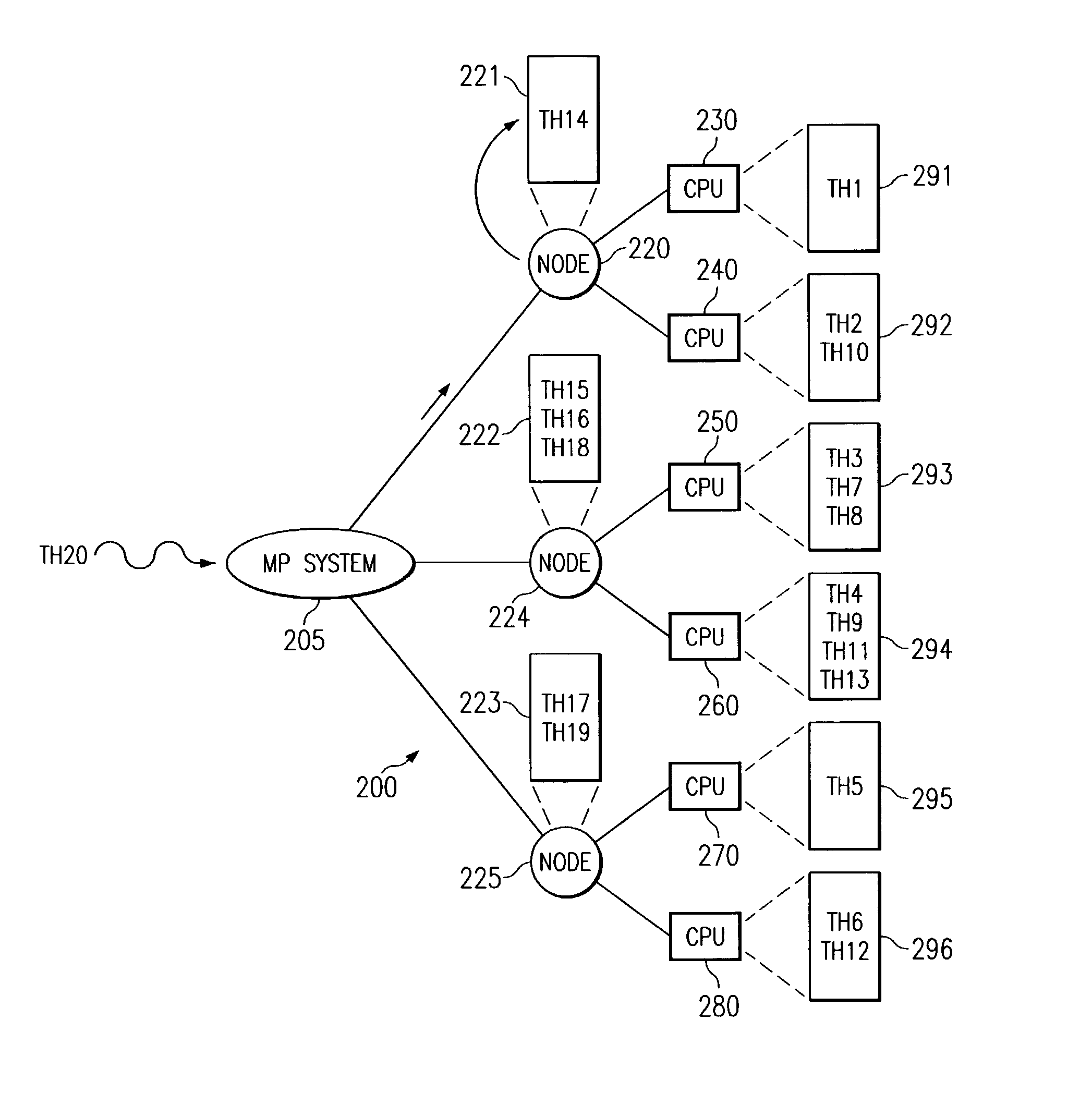

Apparatus and method for load balancing of fixed priority threads in a multiple run queue environment

Apparatus and methods for load balancing fixed priority threads in a multiprocessor system are provided. The apparatus and methods of the present invention identify unbound fixed priority threads at the top of local run queues. A best fixed priority thread is then identified and its priority checked against the priorities of threads executing on processors of the node. A set of executing threads that may be displaced by the best fixed priority thread is identified. The lowest priority executing thread from the set is then identified and the best fixed priority thread is moved to displace this lowest priority executing thread.
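The displacement decision described here can be sketched as a pure function. The names `place_fixed_priority`, `queue_heads` and `executing` are hypothetical, and the sketch assumes a larger number means a more important thread, which the abstract does not state.

```python
def place_fixed_priority(queue_heads, executing):
    """Decide which executing thread the best fixed-priority thread displaces.

    queue_heads: list of (thread, priority) for the unbound fixed-priority
                 threads at the top of each local run queue.
    executing:   dict mapping cpu -> (thread, priority) currently running.
    Returns (best_thread, cpu_to_preempt), or None if nothing can be displaced.
    """
    if not queue_heads:
        return None
    best, best_prio = max(queue_heads, key=lambda item: item[1])
    # Set of executing threads the best thread is allowed to displace.
    displaceable = {cpu: entry for cpu, entry in executing.items()
                    if entry[1] < best_prio}
    if not displaceable:
        return None
    # Displace the lowest-priority thread in that set.
    victim_cpu = min(displaceable, key=lambda cpu: displaceable[cpu][1])
    return best, victim_cpu
```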

Owner:IBM CORP

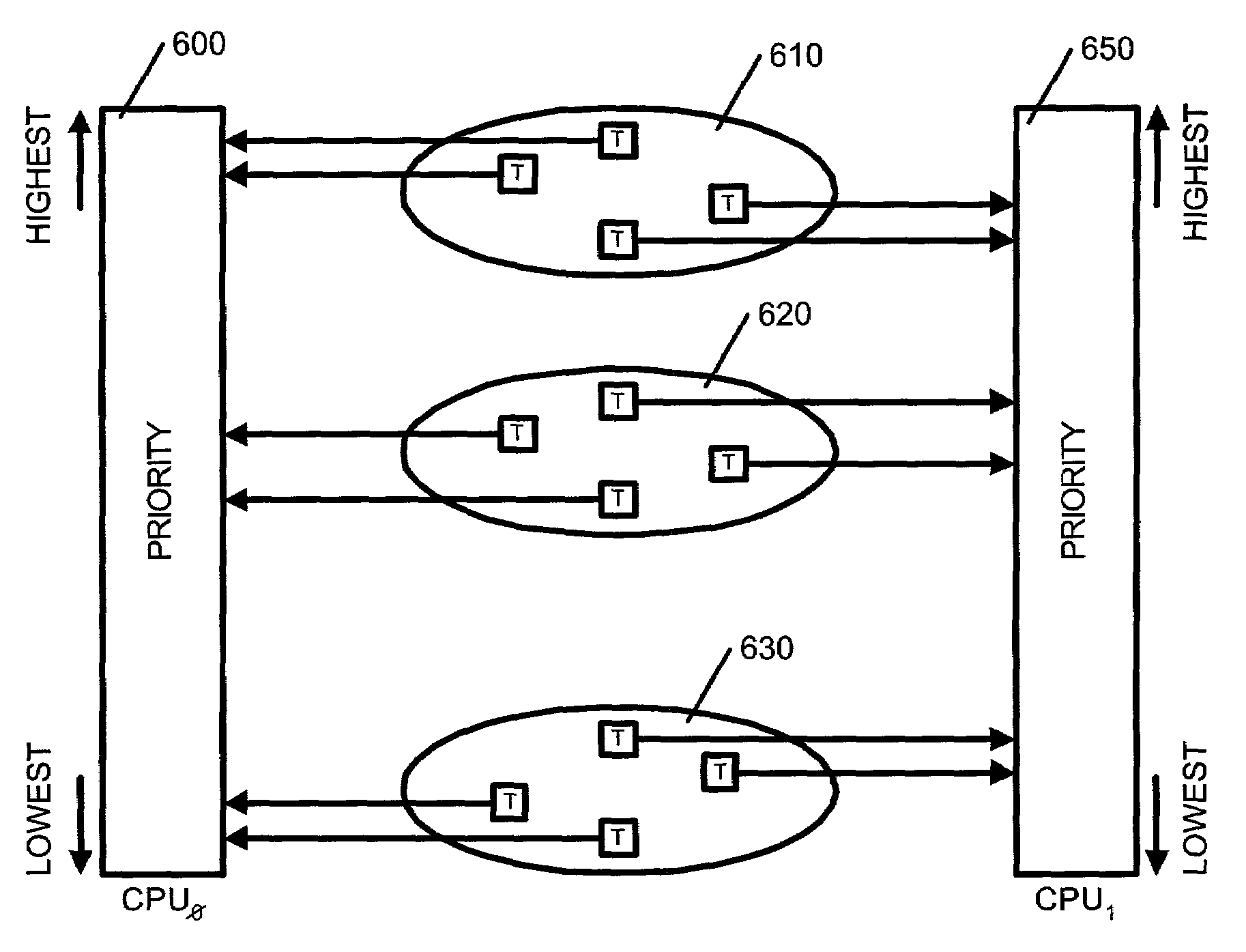

Multiprocessor load balancing system for prioritizing threads and assigning threads into one of a plurality of run queues based on a priority band and a current load of the run queue

Inactive | US7080379B2 | Resource allocation | Multiple digital computer combinations | Current load | Multi processor

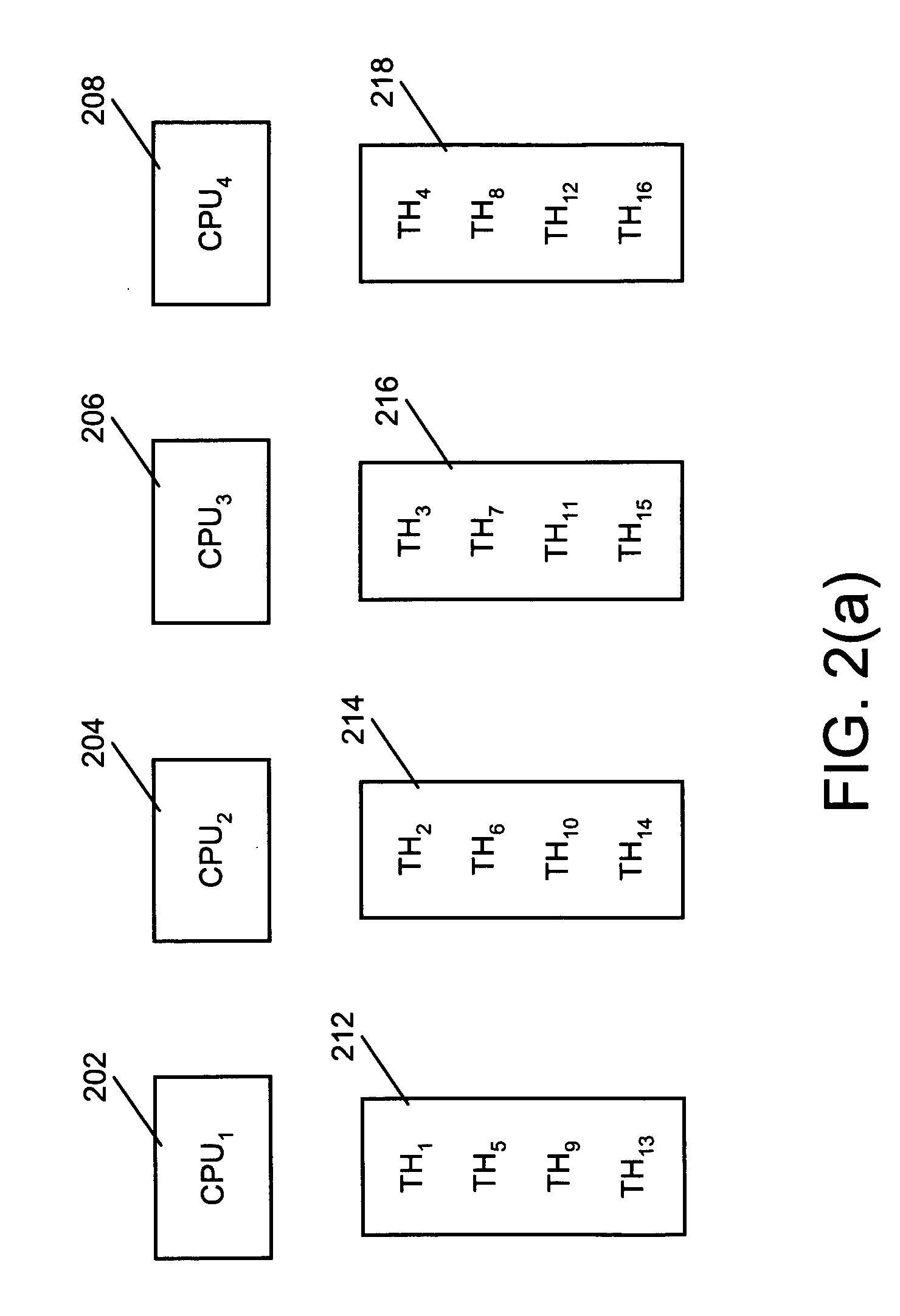

A method, system and apparatus for integrating a system task scheduler with a workload manager are provided. The scheduler is used to assign default priorities to threads and to place the threads into run queues, and the workload manager is used to implement policies set by a system administrator. One of the policies may be to have different classes of threads get different percentages of a system's CPU time. This policy can be reliably achieved if threads from a plurality of classes are spread as uniformly as possible among the run queues. To do so, the threads are organized in classes. Each class is associated with a priority as per a use-policy. This priority is used to modify the scheduling priority assigned to each thread in the class as well as to determine in which band or range of priority the threads fall. Then, periodically, it is determined whether the number of threads in a band in a run queue exceeds the number of threads in the band in another run queue by more than a pre-determined number. If so, the system is deemed to be load-imbalanced, and it is load-balanced by moving one thread in the band from the run queue with the greater number of threads to the run queue with the lower number of threads.
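A small sketch of the periodic band check follows, reading the abstract as "move one thread only when the difference in a band exceeds the pre-determined number". The function name `rebalance_band`, the nested-dictionary layout and the band labels are assumptions made for illustration.

```python
def rebalance_band(queues, band, threshold):
    """Move one thread of a priority band if two run queues are imbalanced.

    queues: dict mapping queue_id -> dict mapping band -> list of threads.
    Returns the thread that was moved, or None if the band is balanced.
    """
    counts = {qid: len(bands.get(band, [])) for qid, bands in queues.items()}
    busiest = max(counts, key=counts.get)
    lightest = min(counts, key=counts.get)
    if counts[busiest] - counts[lightest] <= threshold:
        return None  # within tolerance: deemed load-balanced
    thread = queues[busiest][band].pop()
    queues[lightest].setdefault(band, []).append(thread)
    return thread
```

Calling the function once per band on each periodic tick moves at most one thread per band per tick, which matches the one-thread-at-a-time wording of the abstract.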

Owner:IBM CORP

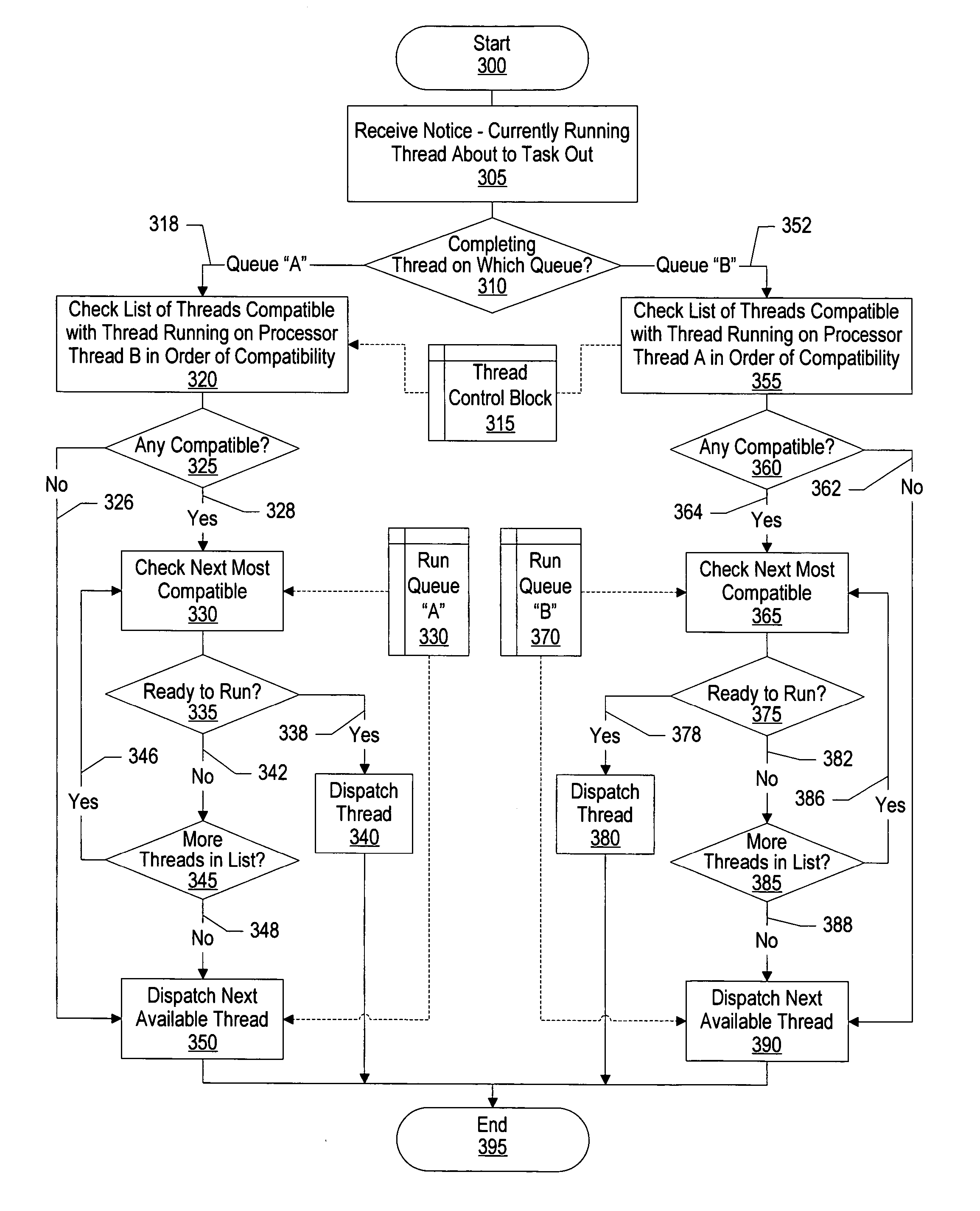

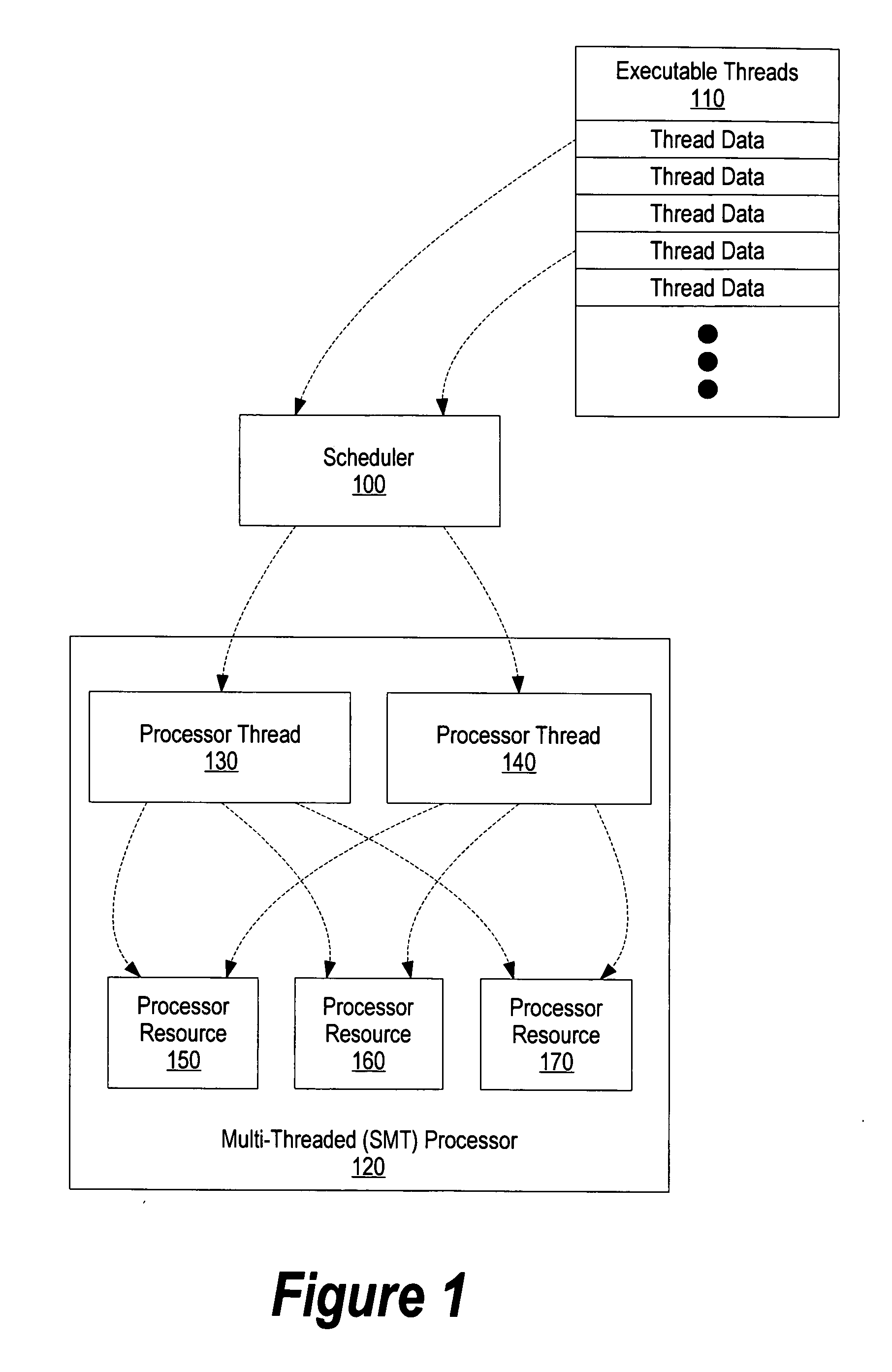

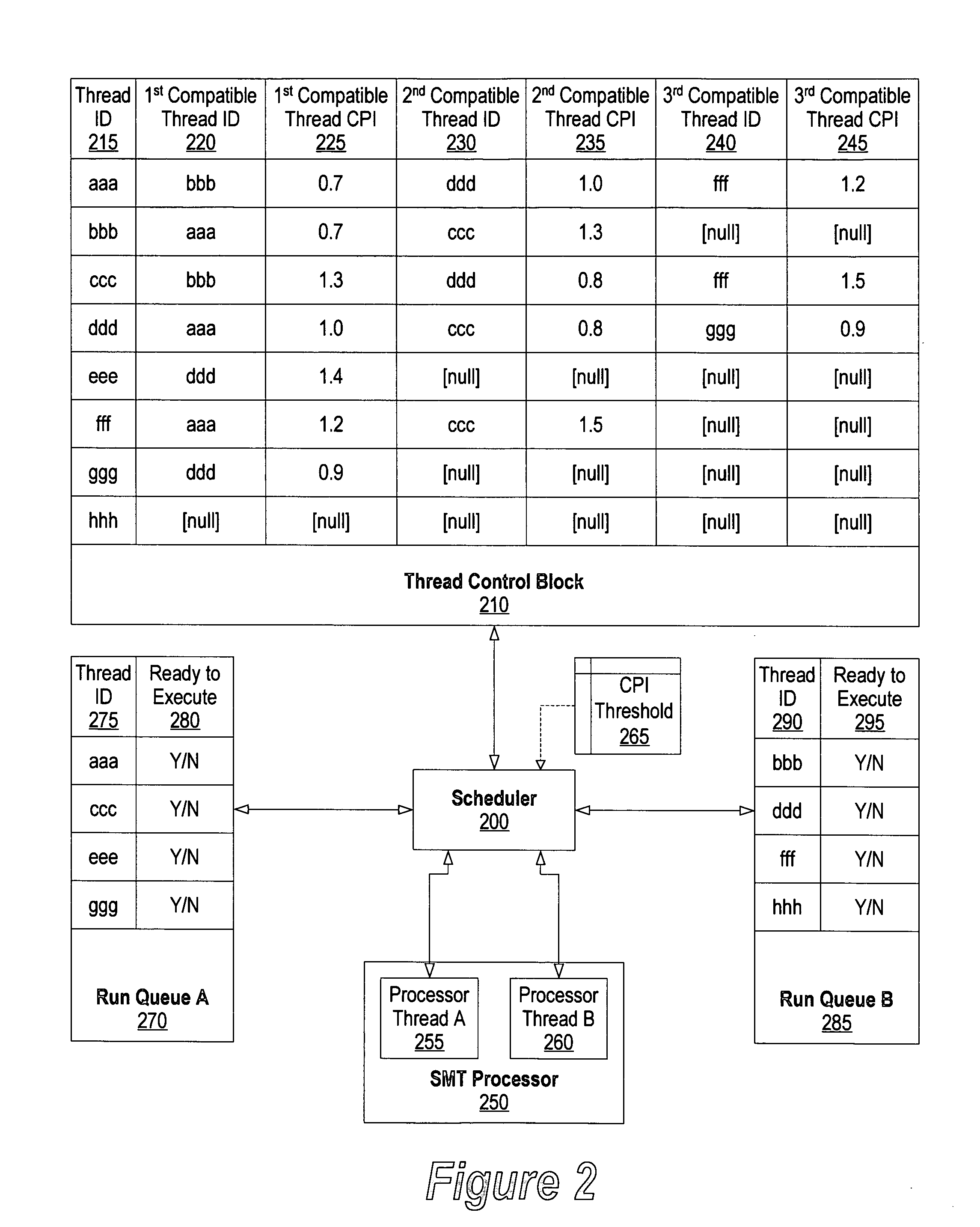

System and method for CPI scheduling on SMT processors

Active | US20050086660A1 | Improve performance | Digital computer details | Multiprogramming arrangements | Thread scheduling | Parallel computing

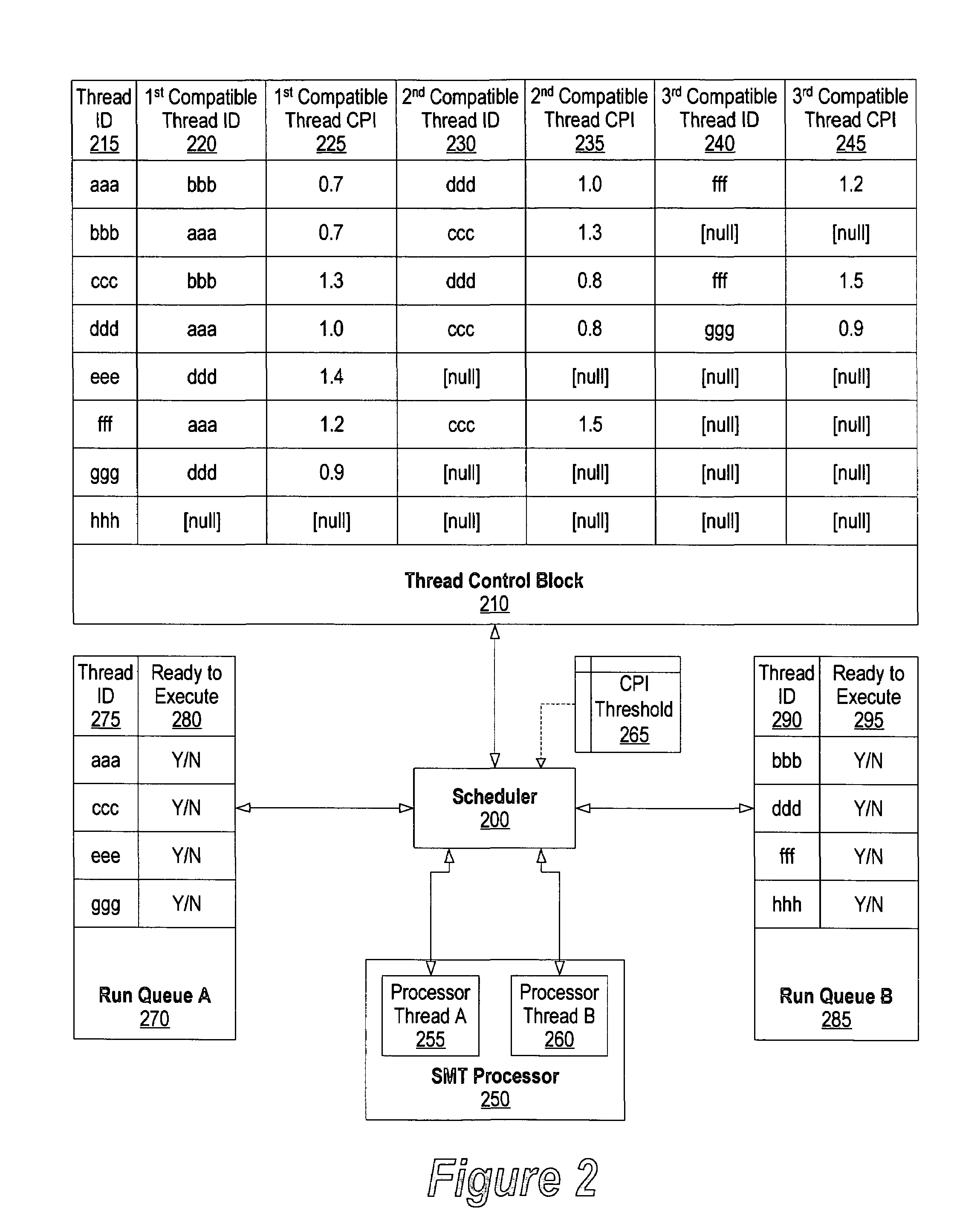

A system and method for identifying compatible threads in a Simultaneous Multithreading (SMT) processor environment is provided by calculating a performance metric, such as cycles per instruction (CPI), that occurs when two threads are running on the SMT processor. The CPI that is achieved while both threads are executing on the SMT processor is determined. If the CPI that was achieved is better than the compatibility threshold, then information indicating the compatibility is recorded. When a thread is about to complete, the scheduler looks at the run queue to which the completing thread belongs to dispatch another thread. The scheduler identifies a thread that is (1) compatible with the thread that is still running on the SMT processor (i.e., the thread that is not about to complete), and (2) ready to execute. The CPI data is continually updated so that threads that are compatible with one another are continually identified.
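The bookkeeping and selection described in this abstract might look like the sketch below. It assumes that a lower CPI is "better" than the threshold, and it falls back to the first ready thread when no compatible one exists; that fallback and the names `record_pair` and `pick_partner` are assumptions, not part of the abstract.

```python
def record_pair(compat, a, b, cpi, threshold):
    """Record or clear compatibility of threads a and b from their joint CPI."""
    key = frozenset((a, b))
    if cpi < threshold:      # lower CPI than the threshold counts as better
        compat.add(key)
    else:
        compat.discard(key)  # data is continually updated, so pairs can lapse


def pick_partner(run_queue, still_running, compat):
    """Pick the next thread to dispatch alongside still_running.

    run_queue: list of (thread, ready) in queue order.
    Prefers a ready thread recorded as compatible; otherwise returns the
    first ready thread (an assumption of this sketch), or None.
    """
    fallback = None
    for thread, ready in run_queue:
        if not ready:
            continue
        if frozenset((thread, still_running)) in compat:
            return thread
        if fallback is None:
            fallback = thread
    return fallback
```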

Owner:META PLATFORMS INC

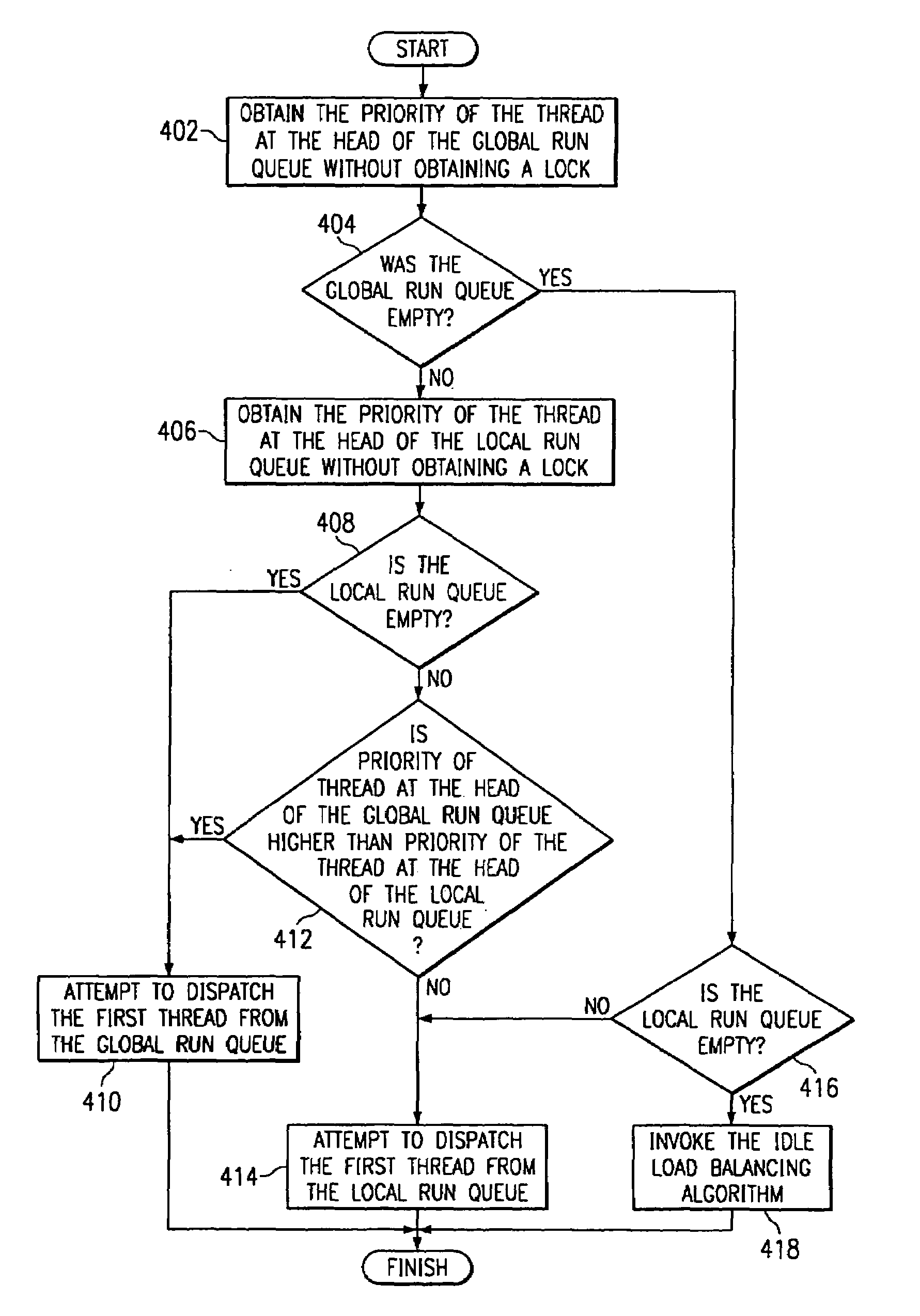

Apparatus for minimizing lock contention in a multiple processor system with multiple run queues when determining the threads priorities

Apparatus and methods are provided for selecting a thread to dispatch in a multiple processor system having a global run queue associated with each multiple processor node and having a local run queue associated with each processor. If the global run queue and the local run queue associated with the processor performing the dispatch are both not empty, then the highest priority queue is selected for dispatch, as determined by examining the queues without obtaining a lock. If one of the two queues is empty and the other queue is not empty, then the non-empty queue is selected for dispatch. If the global queue is selected for dispatch but a lock on the global queue cannot be obtained immediately, then the local queue is selected for dispatch. If both queues are empty, then an idle load balancing operation is performed. Local run queues for other processors at the same node are examined without obtaining a lock. If a candidate thread is found that satisfies a set of shift conditions, and if a lock can be obtained on both the non-local run queue and the candidate thread, then the thread is shifted for dispatch by the processor that is about to become idle.
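The queue-selection part of this abstract (peek without a lock, try the global lock, fall back to the local queue) can be sketched with Python's `threading.Lock`. The `RunQueue` class and `dispatch` function are hypothetical; ties go to the local queue, which the abstract does not specify, and the idle load balancing path is reduced to returning `None`.

```python
import threading


class RunQueue:
    def __init__(self, threads=()):
        self.lock = threading.Lock()
        # Entries are (priority, name), kept with the highest priority first.
        self.threads = sorted(threads, reverse=True)

    def top_priority(self):
        # Peek without taking the lock; the value may be stale.
        return self.threads[0][0] if self.threads else None


def dispatch(global_q, local_q):
    """Return the name of the thread to run, or None if both queues are empty."""
    g, l = global_q.top_priority(), local_q.top_priority()
    if g is None and l is None:
        return None  # the caller would start idle load balancing here
    if l is None or (g is not None and g > l):
        # Global queue selected: take it only if the lock is free right now.
        if global_q.lock.acquire(blocking=False):
            try:
                if global_q.threads:
                    return global_q.threads.pop(0)[1]
            finally:
                global_q.lock.release()
    # Local queue selected, or the global lock was busy.
    with local_q.lock:
        return local_q.threads.pop(0)[1] if local_q.threads else None
```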

Owner:IBM CORP

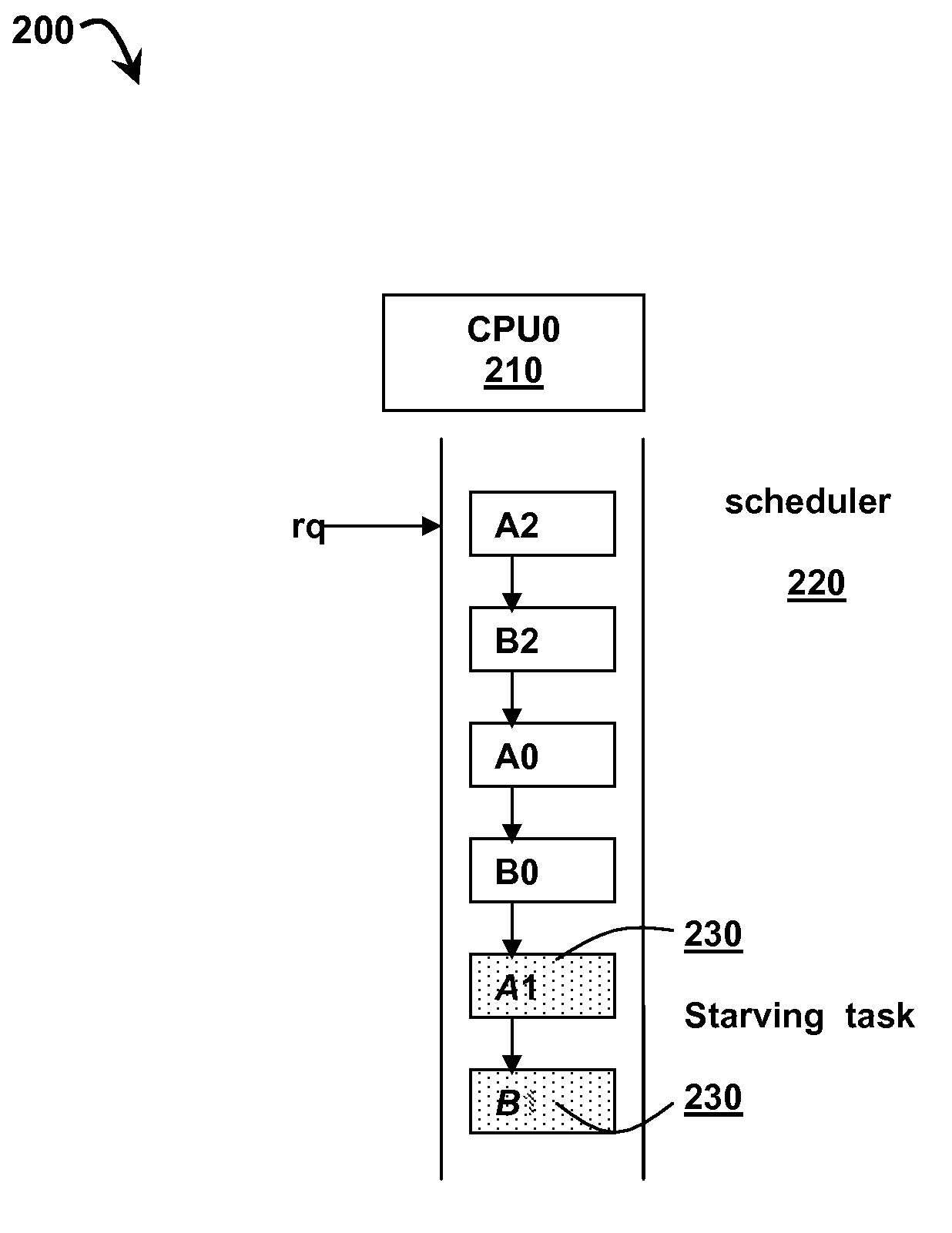

Method and system for simulating a multi-queue scheduler using a single queue on a processor

Inactive | US20090113432A1 | Multiprogramming arrangements | Memory systems | Operational system | Operating system

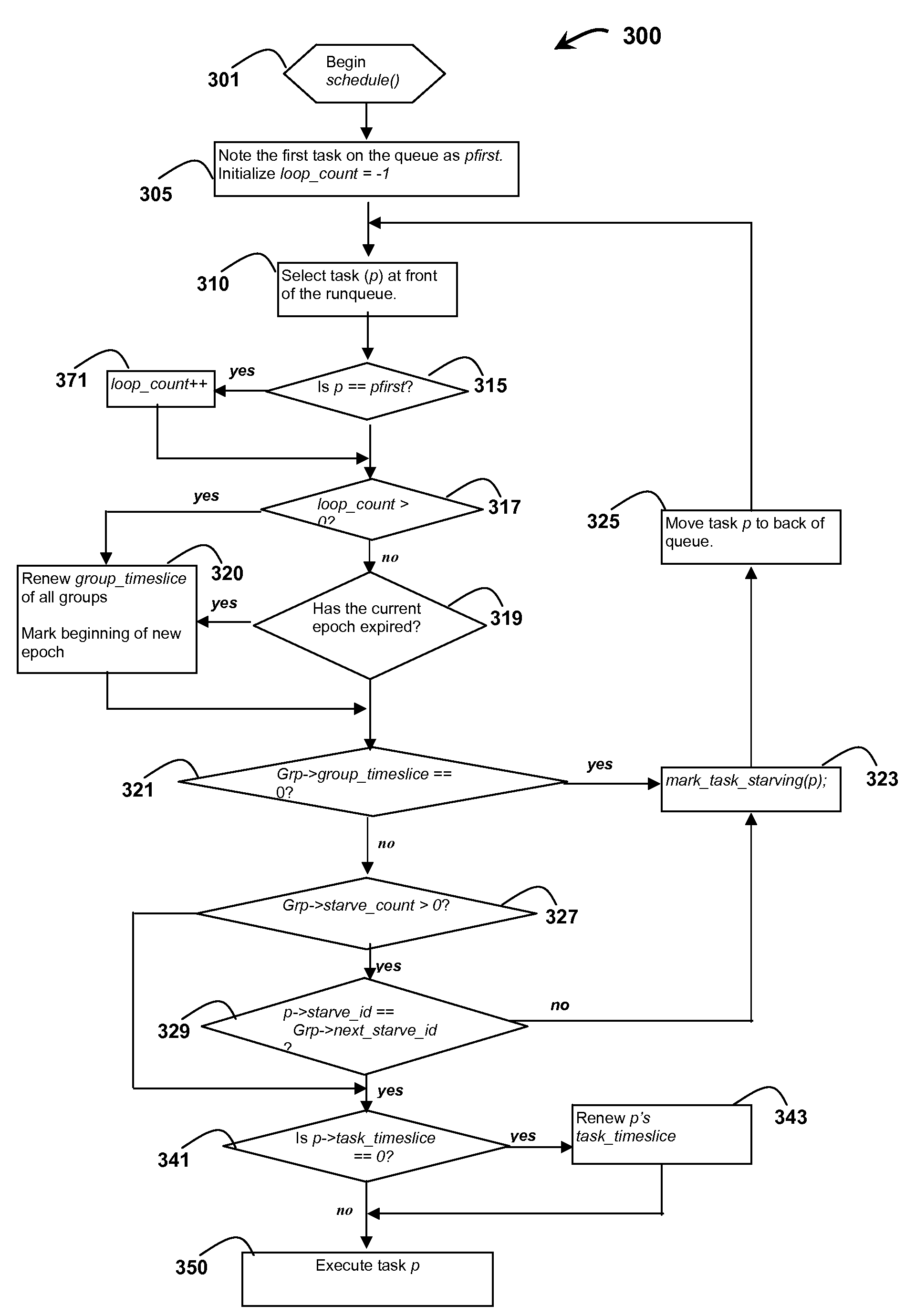

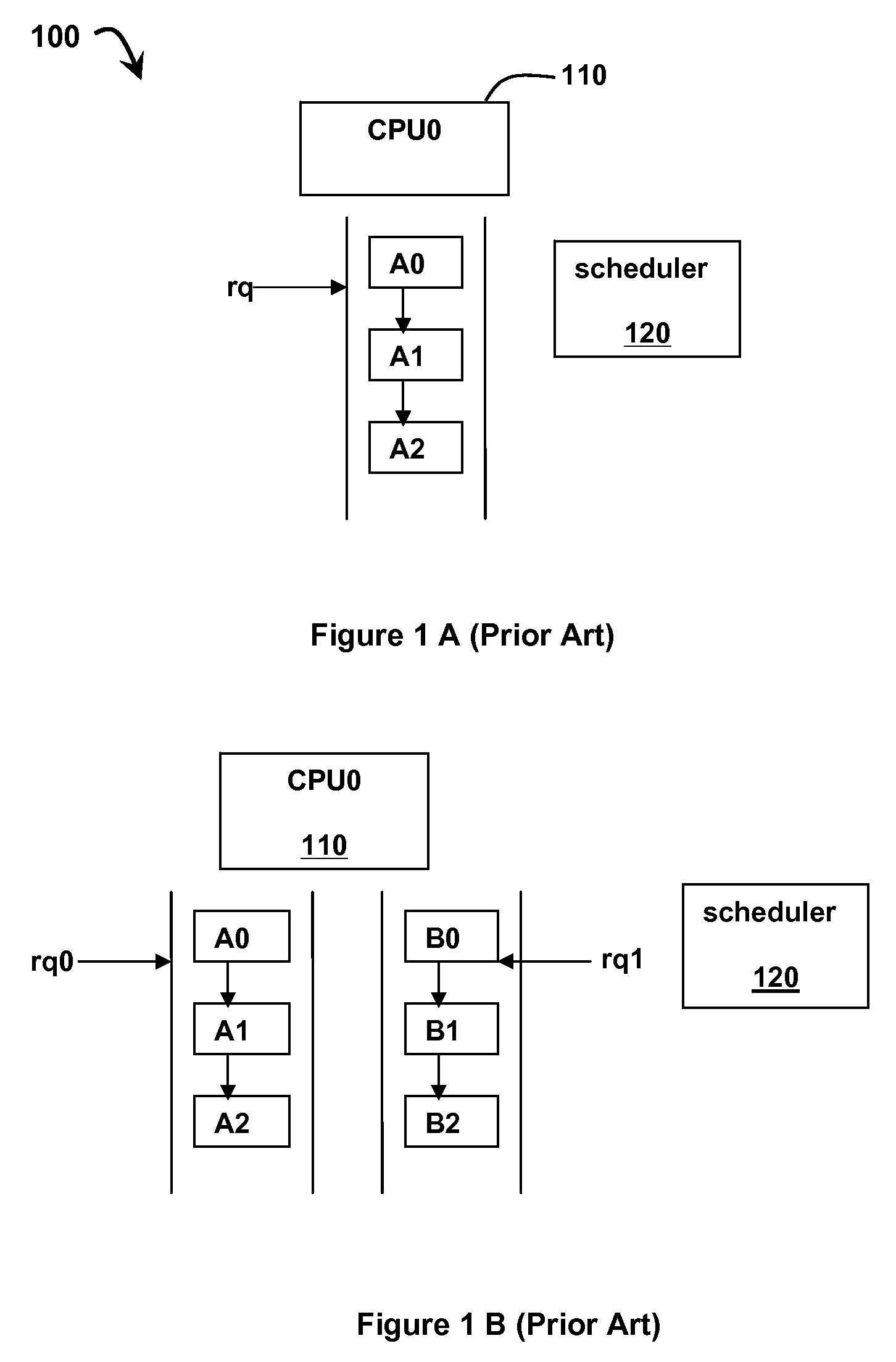

A method and system for scheduling tasks on a processor, the tasks being scheduled by an operating system to run on the processor in a predetermined order, the method comprising identifying and creating task groups of all related tasks; assigning the tasks in the task groups to a single common run queue; selecting a task at the start of the run queue; determining whether the task at the start of the run queue is eligible to run, based on a pre-defined time slice allocated to it and on the presence of older starving tasks in the run queue; executing the task in the pre-defined time slice; and associating a starving status with all unexecuted tasks and continuing to run tasks until all tasks in the run queue complete execution and the run queue becomes empty.

Owner:IBM CORP

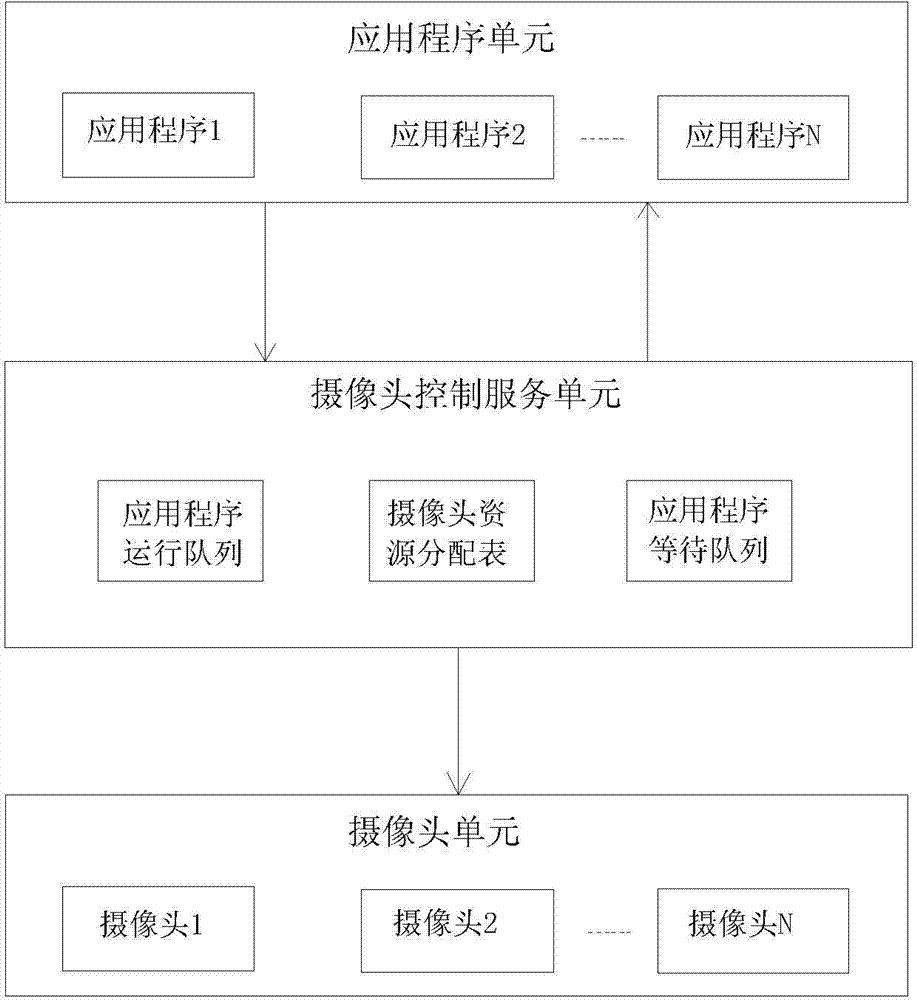

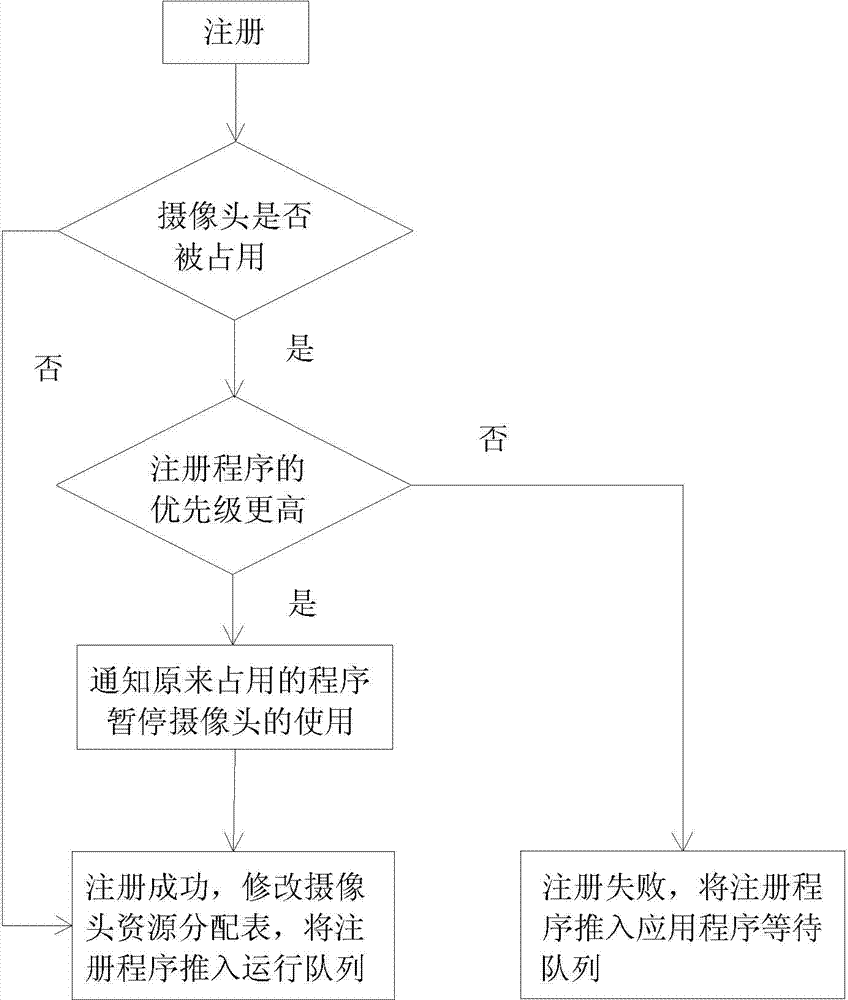

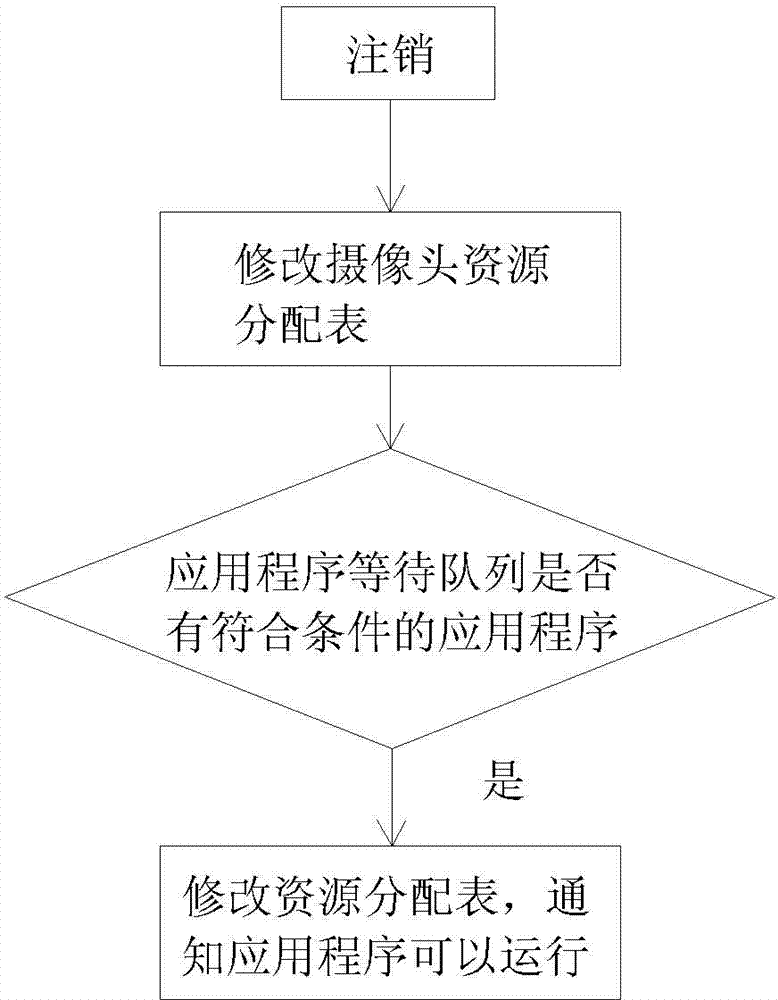

Camera control method based on Android

Active | CN103677848A | Address Control Management | Easy to operate | Specific program execution arrangements | Camera control | Application software

The invention discloses a camera control method based on Android. The method includes the following steps. First, a camera resource allocation table, an application running queue and an application waiting queue are established. The camera resource allocation table records the name of each camera, a flag showing whether it is in use, the names of the applications using it and the priority of those applications; the application running queue is a list of the applications using the camera, and the application waiting queue is a list of the applications waiting to use it. Second, the application running queue is updated in real time: if a new application A needs to call the camera, the camera resource allocation table is scanned and it is determined whether the call succeeds. The method solves the control and management problem that arises in the Android system when multiple applications compete for the same camera, and the user no longer has to manually release the applications occupying the camera resources.

Owner:XIAMEN YAXON NETWORKS CO LTD

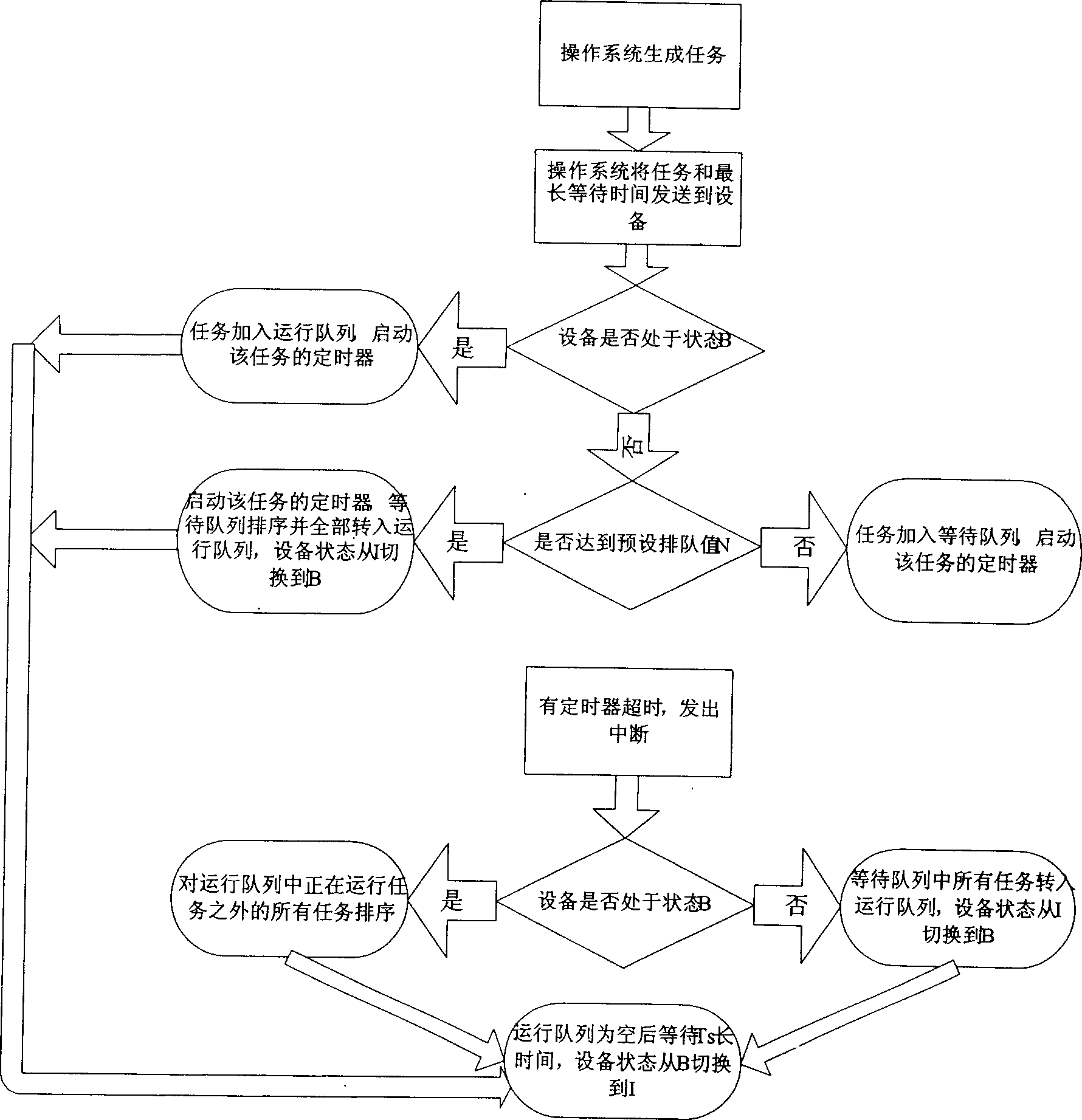

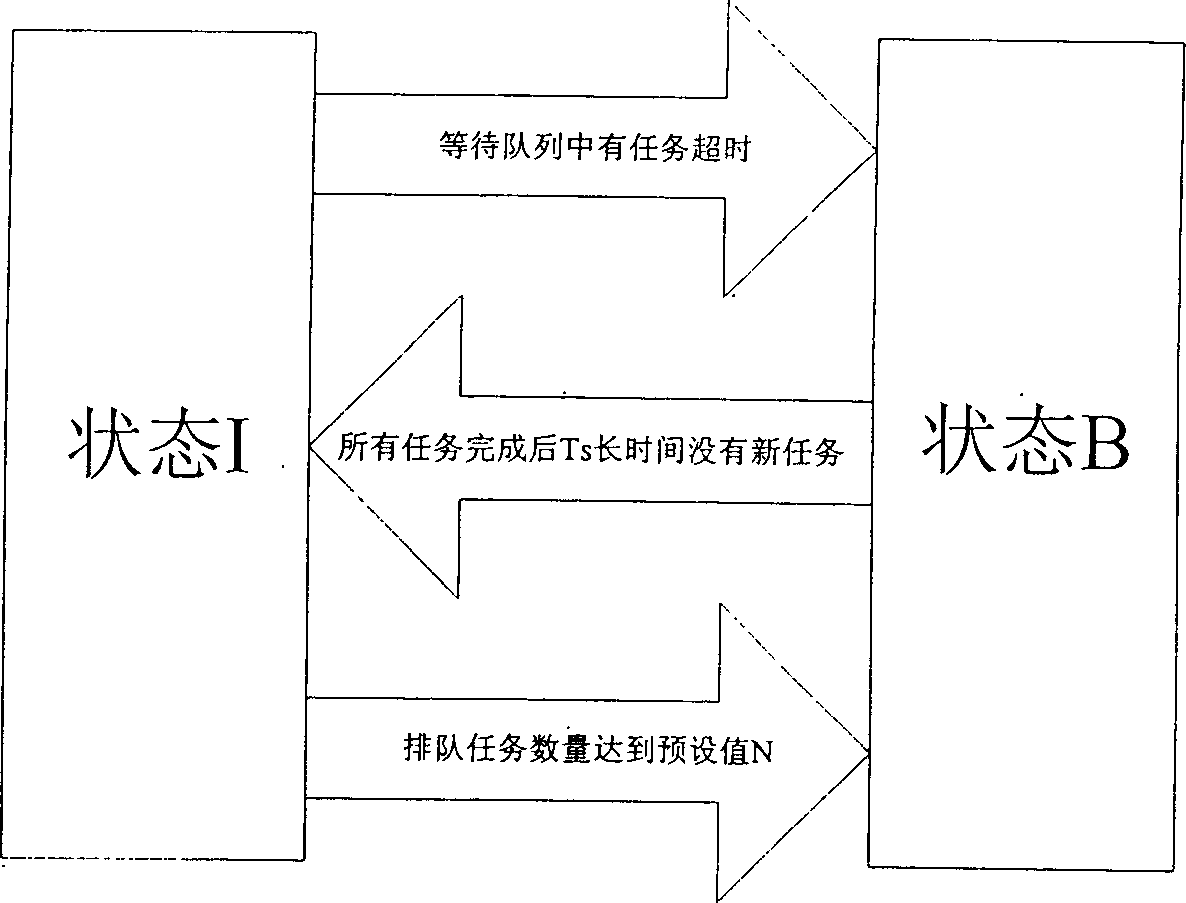

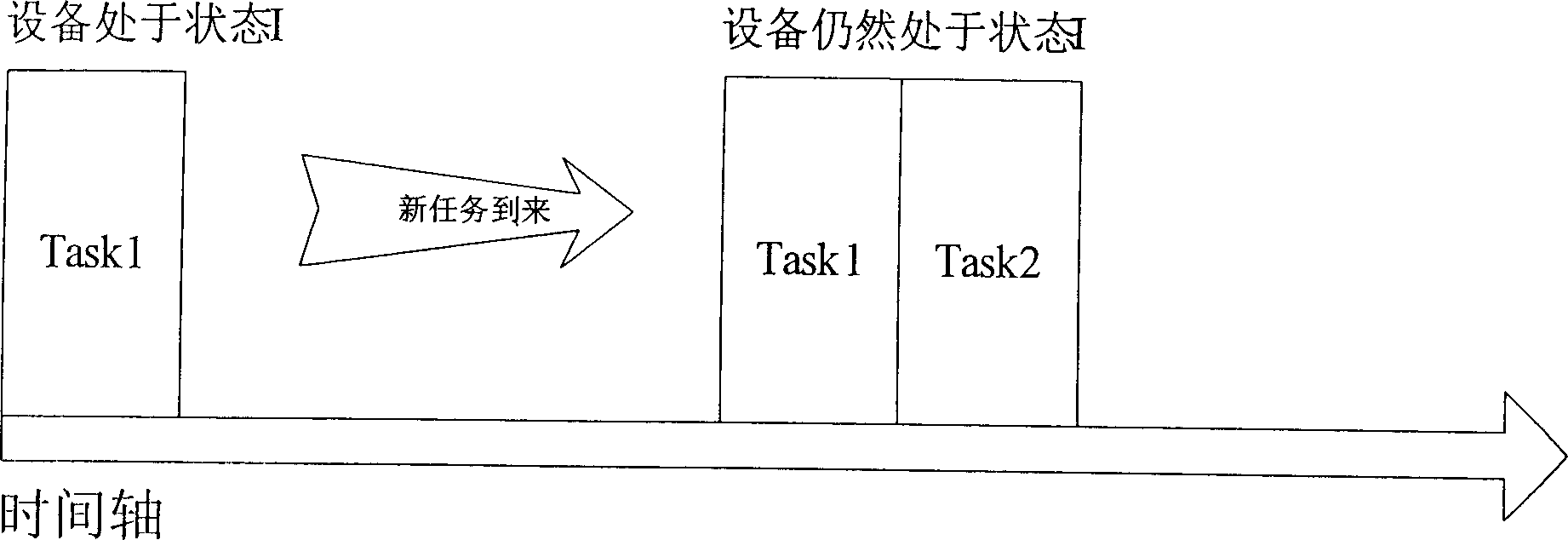

Energy-saving switching method with wait-timeout judgment for peripheral devices of embedded systems

Inactive | CN1828481A | High energy consumption | Preserve Threshold Shutdown Method | Energy efficient ICT | Power supply for data processing | Operational system | Energy expenditure

The disclosed method comprises: the operating system sending the task with the longest waiting time, and the task itself, to the corresponding device according to the current waiting queue and running condition; and an arbitration component in the device driver determining the next state to enter. The invention effectively consolidates the computer's idle time, reduces the energy lost to frequent switching, and uses a timeout decision mechanism to prevent the drop in system performance caused by excessive waiting.

Owner:ZHEJIANG UNIV

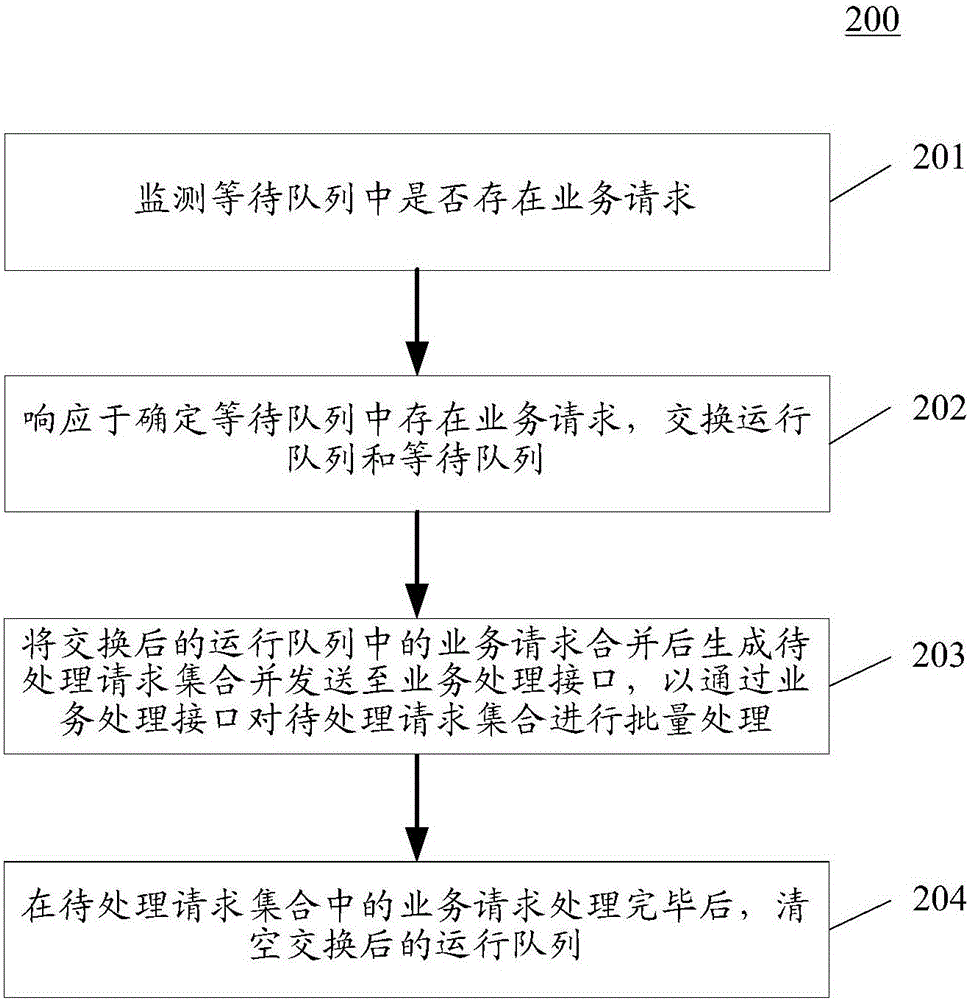

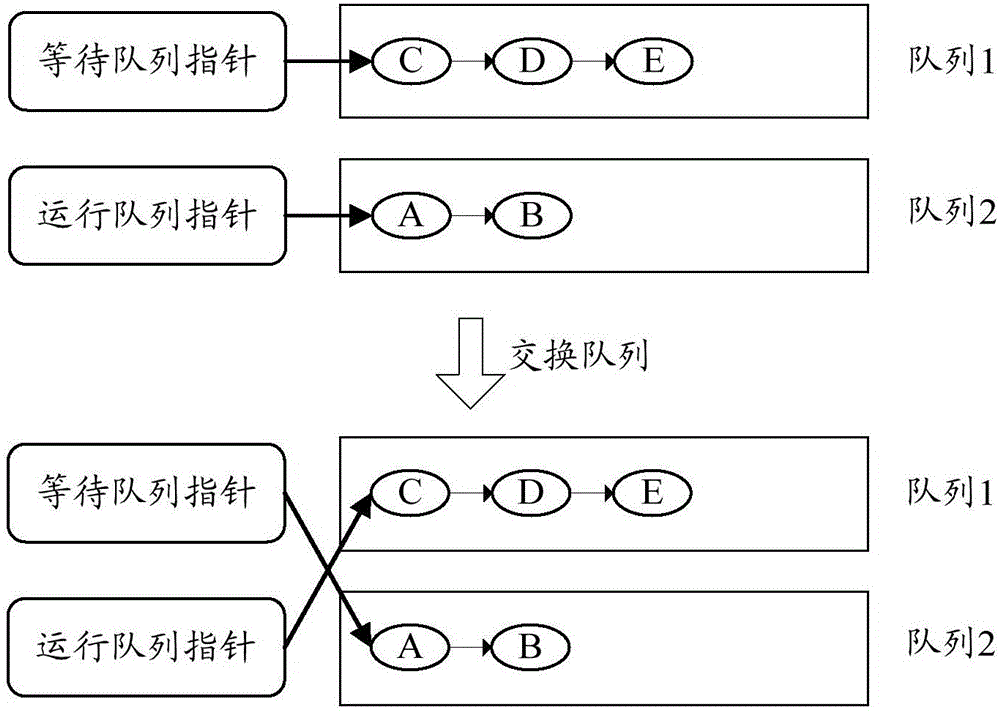

Service scheduling method, device and system

The invention discloses a service scheduling method, device and system. A specific embodiment of the method comprises the steps of: monitoring whether a waiting queue contains service requests, wherein the waiting queue stores service requests to be executed; in response to determining that the waiting queue contains service requests, exchanging a running queue with the waiting queue, wherein the running queue stores the service requests currently being executed; combining the service requests in the exchanged running queue into a to-be-processed request set and sending the set to a service processing interface, so that the interface processes the set as a batch; and, after the service requests in the set have been processed, emptying the exchanged running queue. The embodiment adaptively adjusts the batch size according to service demand and hardware processing capability, thereby improving service processing efficiency.
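The queue exchange in this abstract is essentially double buffering, sketched below. `BatchScheduler`, `submit` and `tick` are hypothetical names, `process_batch` stands in for the service processing interface, and the sketch is single-threaded, so it omits whatever synchronization a real implementation would need.

```python
class BatchScheduler:
    def __init__(self, process_batch):
        self.waiting = []   # service requests to be executed
        self.running = []   # service requests currently being executed
        self.process_batch = process_batch  # stand-in for the service interface

    def submit(self, request):
        self.waiting.append(request)

    def tick(self):
        """One monitoring pass; returns the number of requests processed."""
        if not self.waiting:
            return 0
        # Exchange the two queues: new arrivals now land in the empty list.
        self.running, self.waiting = self.waiting, self.running
        batch = list(self.running)   # the to-be-processed request set
        self.process_batch(batch)
        self.running.clear()         # empty the exchanged running queue
        return len(batch)
```

Because every pass takes whatever has accumulated since the previous one, the batch grows under heavy load and shrinks when requests are sparse, which is the adaptive behaviour the abstract mentions.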

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

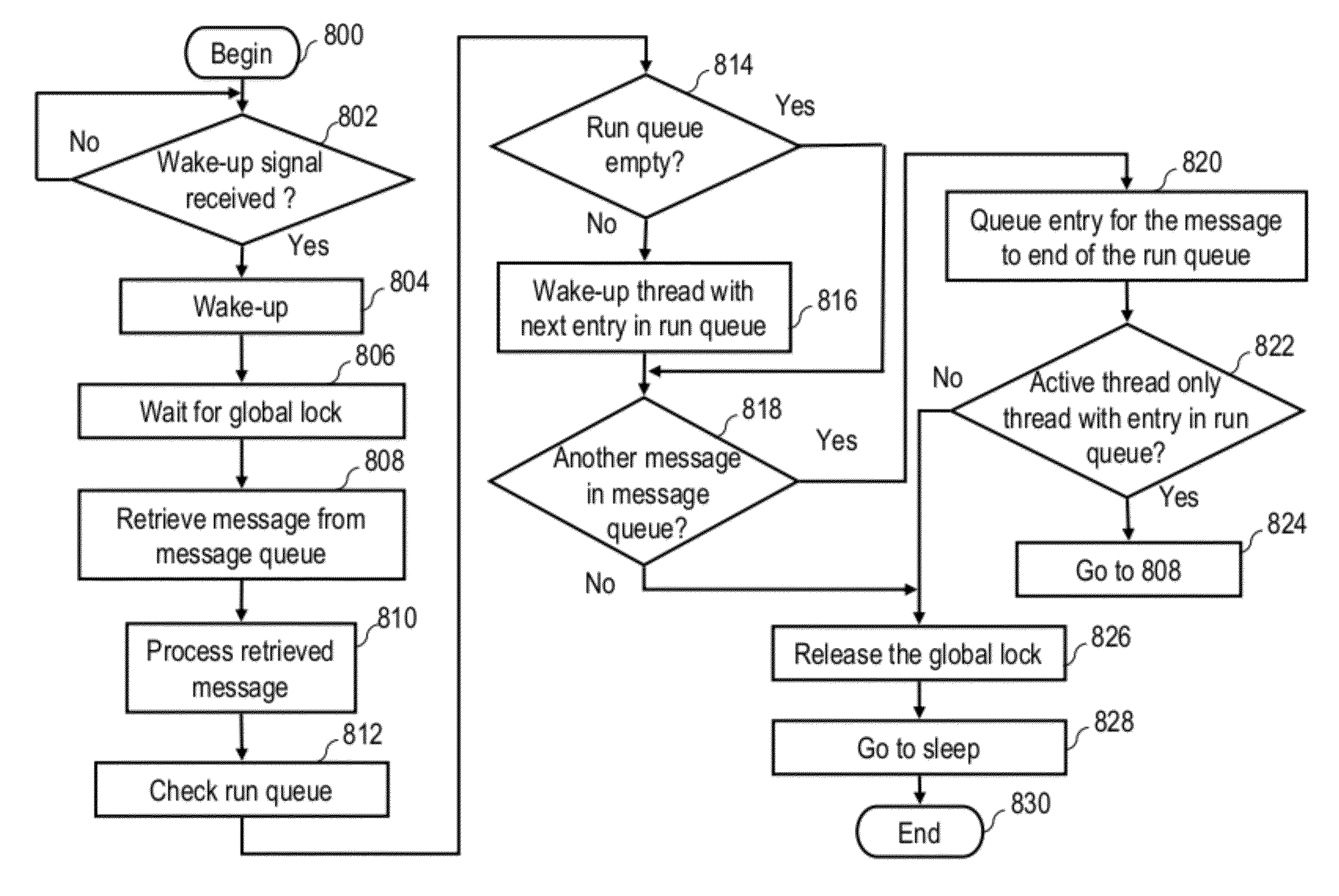

Techniques for executing threads in a computing environment

A technique for executing normally interruptible threads of a process in a non-preemptive manner includes, in response to a first entry associated with a first message for a first thread reaching a head of a run queue, receiving, by the first thread, a first wake-up signal. In response to receiving the first wake-up signal, the first thread waits for a global lock. In response to the first thread receiving the global lock, the first thread retrieves the first message from an associated message queue and processes the retrieved first message. In response to completing the processing of the first message, the first thread transmits a second wake-up signal to a second thread whose associated entry is next in the run queue. Finally, following the transmitting of the second wake-up signal, the first thread releases the global lock.
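The wake-up chain can be sketched with standard `threading` primitives. This is an illustration under assumptions: `run_in_order` is a hypothetical helper, the order of the `messages` list plays the role of the run queue, each thread handles exactly one message, and an `Event` per entry stands in for the wake-up signal.

```python
import threading


def run_in_order(messages):
    """Process (thread_name, message) entries one at a time, in list order."""
    global_lock = threading.Lock()
    wake = [threading.Event() for _ in messages]
    log = []

    def worker(i):
        wake[i].wait()                # wake-up: our entry reached the head
        with global_lock:             # wait for, then hold, the global lock
            log.append(messages[i])   # retrieve and process the message
            if i + 1 < len(messages):
                wake[i + 1].set()     # signal the next entry's thread
        # Leaving the with-block releases the lock after the signal is sent.

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(len(messages))]
    for t in threads:
        t.start()
    if wake:
        wake[0].set()                 # the first entry is at the head
    for t in threads:
        t.join()
    return log
```

Although every worker is an ordinary preemptible thread, only the one that has been signalled and holds the global lock does any work, so messages are handled strictly in queue order.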

Owner:IBM CORP

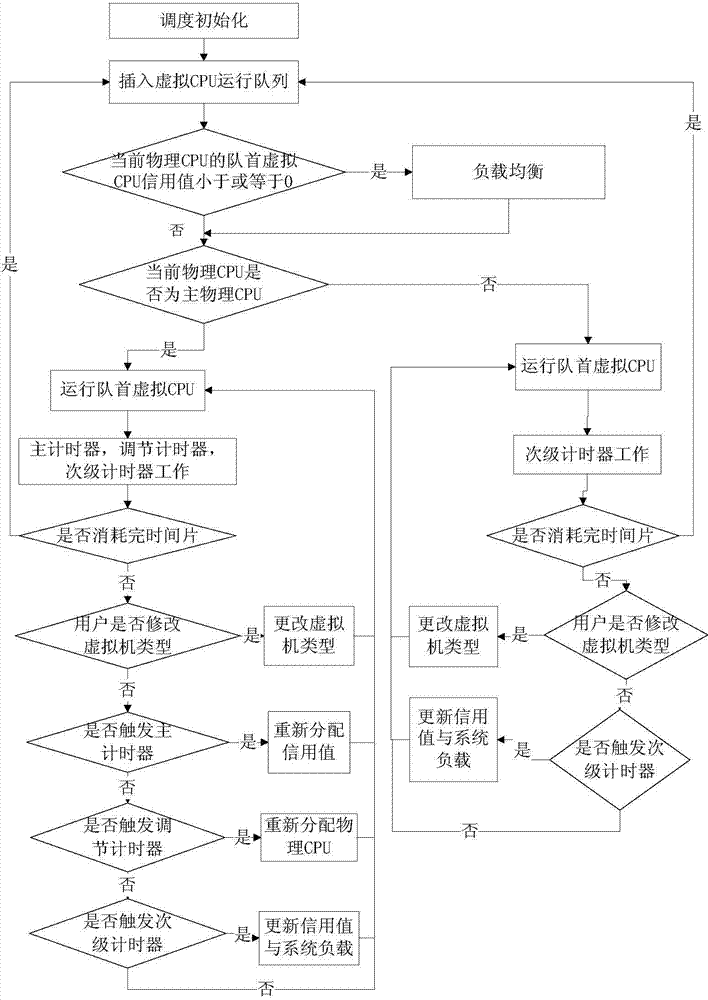

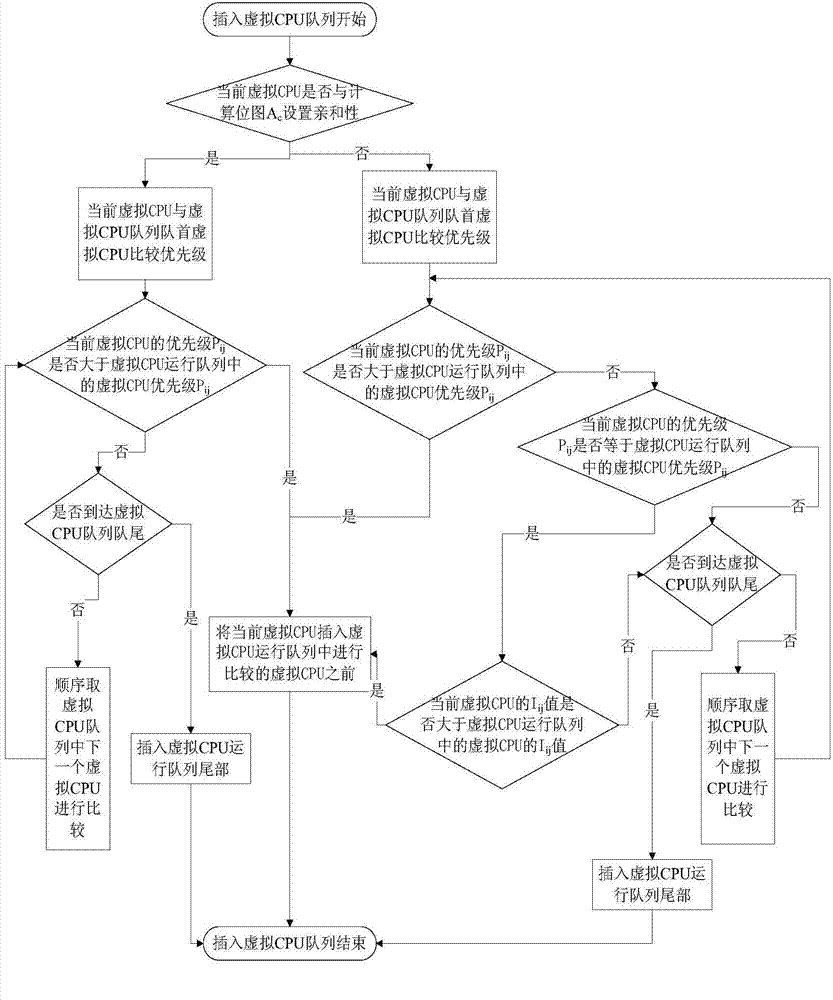

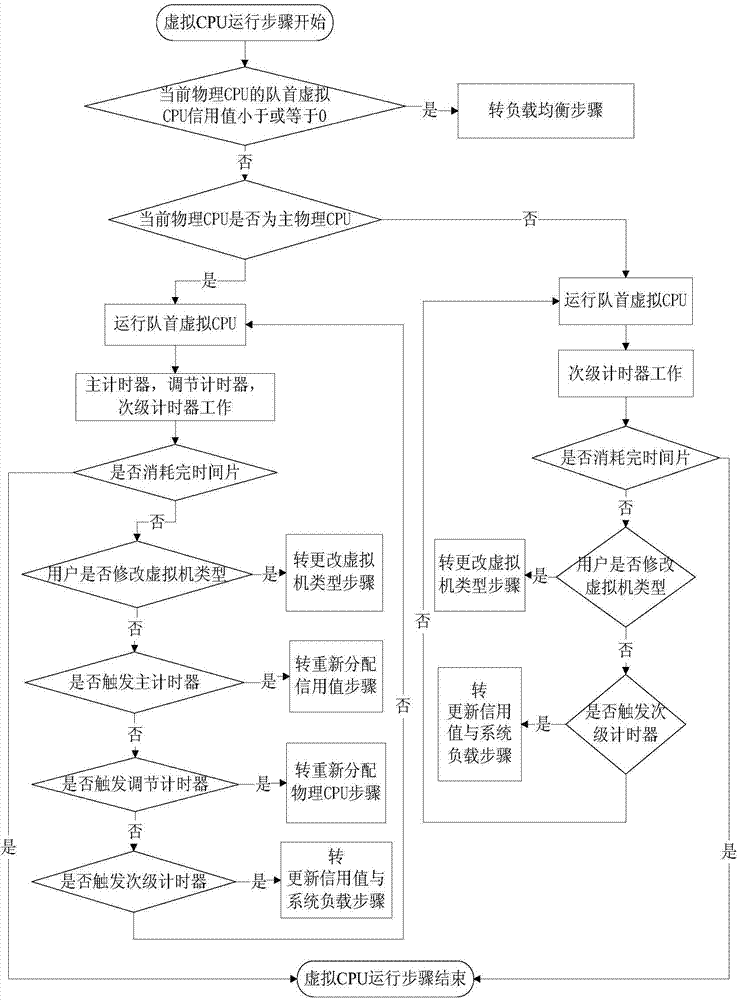

Method for scheduling virtual CPU (Central Processing Unit)

Active | CN104503838A | Reduce overhead | Add Urgency Indicator | Resource allocation | Software simulation/interpretation/emulation | Virtualization | Distributed computing

The invention discloses a method for scheduling virtual CPUs (Central Processing Units), belongs to the technical field of computer virtualization, and addresses three problems of traditional scheduling algorithms: I/O processing is not responded to in time, load characteristics are not satisfied, and the load balancing strategy is too simple. The method comprises the following steps: performing scheduling initialization, inserting virtual CPUs into a running queue, running the virtual CPUs, performing load balancing, updating the credit value and system load, reassigning the credit value, reassigning physical CPUs and revising the type of a virtual machine. Virtual machines are divided into three classes so that different types of load are dynamically isolated; they are bound to two groups of physical CPUs that run different types of load, and virtual CPUs running different types of load are given different time slices to improve operating efficiency and guarantee I/O performance. The method also redesigns the load balancing strategy: while guaranteeing the isolation of different types of load, it selects the strategy with the minimum migration cost.

Owner:HUAZHONG UNIV OF SCI & TECH

Computer system with dual operating modes

Active | US7849311B2 | Digital data processing details | Analogue secrecy/subscription systems | Computerized system | Operation mode

The present invention is a system that switches between non-secure and secure modes by making processes, applications and data for the non-active mode unavailable to the active mode. That is, non-secure processes, applications and data are not accessible when in the secure mode and vice versa. This is accomplished by creating dual hash tables where one table is used for secure processes and one for non-secure processes. A hash table pointer is changed to point to the table corresponding to the mode. The path-name look-up function that traverses the path name tree to obtain a device or file pointer is also restricted to allow traversal to only secure devices and file pointers when in the secure mode and only to non-secure devices and files in the non-secure mode. The process thread run queue is modified to include a state flag for each process that indicates whether the process is a secure or non-secure process. A process scheduler traverses the queue and only allocates time to processes that have a state flag that matches the current mode. Running processes are marked to be idled and are flagged as unrunnable, depending on the security mode, when the process reaches an intercept point. The switch operation validates the switch process and pauses the system for a period of time to allow all running processes to reach an intercept point and be marked as unrunnable. After all the processes are idled, the hash table pointer is changed, the look-up control is changed to allow traversal of the corresponding security mode branch of the file name path tree, and the scheduler is switched to allow only threads that have a flag that corresponds to the security mode to run. The switch process is then put to sleep and a master process, either secure or non-secure, depending on the mode, is then awakened.

Owner:MORGAN STANLEY +1

System and method for scheduling compatible threads in a simultaneous multi-threading processor using cycle per instruction value occurred during identified time interval

Active | US7360218B2 | Improve performance | Digital computer details | Multiprogramming arrangements | Computer compatibility | Instruction cycle

A system and method for identifying compatible threads in a Simultaneous Multithreading (SMT) processor environment is provided by calculating a performance metric, such as cycles per instruction (CPI), that occurs when two threads are running on the SMT processor. The CPI that is achieved while both threads are executing on the SMT processor is determined. If the CPI that was achieved is better than the compatibility threshold, then information indicating the compatibility is recorded. When a thread is about to complete, the scheduler looks at the run queue to which the completing thread belongs to dispatch another thread. The scheduler identifies a thread that is (1) compatible with the thread that is still running on the SMT processor (i.e., the thread that is not about to complete), and (2) ready to execute. The CPI data is continually updated so that threads that are compatible with one another are continually identified.

Owner:META PLATFORMS INC

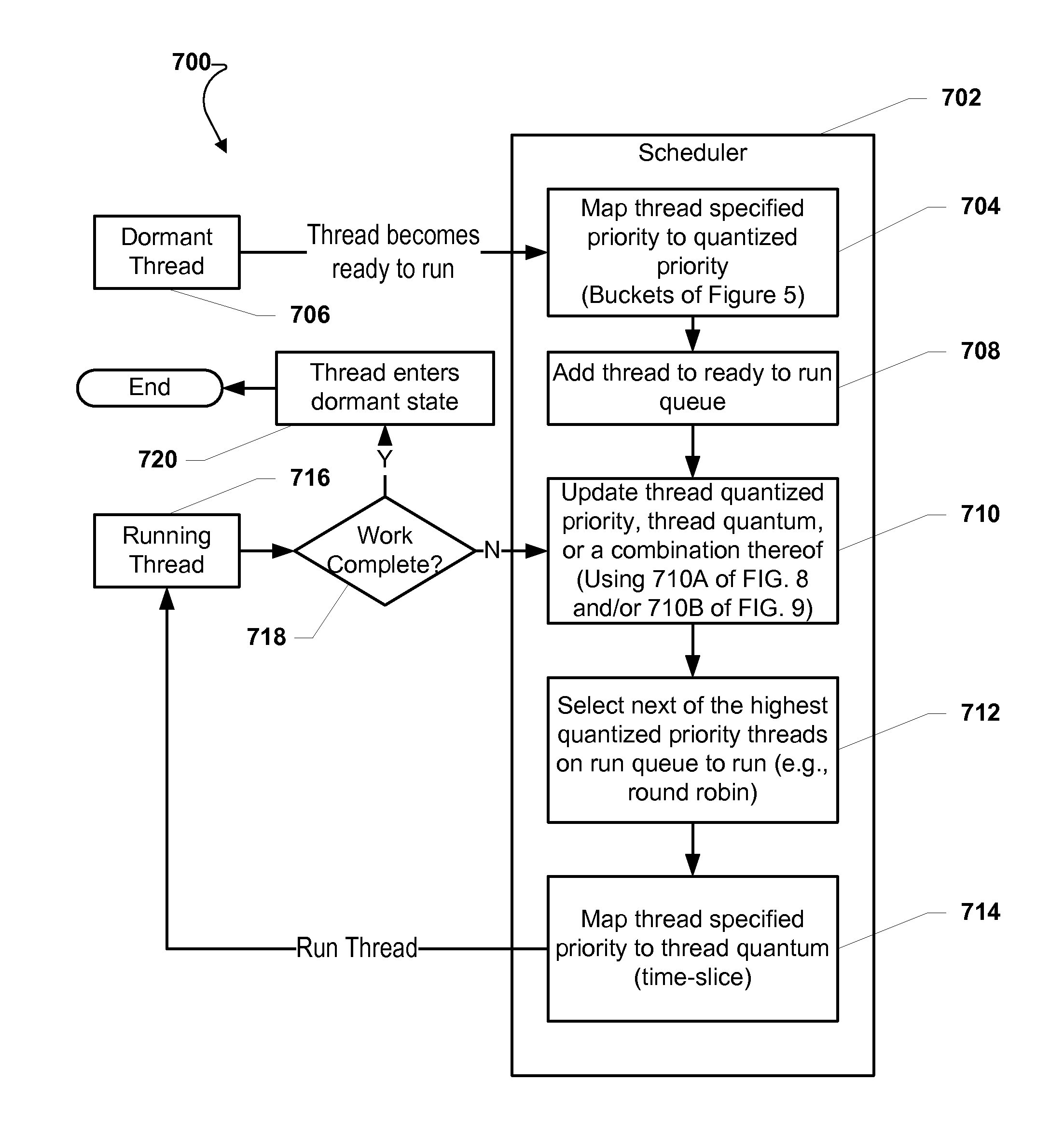

System and method of executing threads at a processor

A method and system for executing a plurality of threads are described. The method may include mapping a thread specified priority value associated with a dormant thread to a thread quantized priority value associated with the dormant thread if the dormant thread becomes ready to run. The method may further include adding the dormant thread to a ready-to-run queue and updating the thread quantized priority value. A thread quantum value associated with the dormant thread may also be updated, or the quantum value and the quantized priority value may both be updated.

Owner:QUALCOMM INC

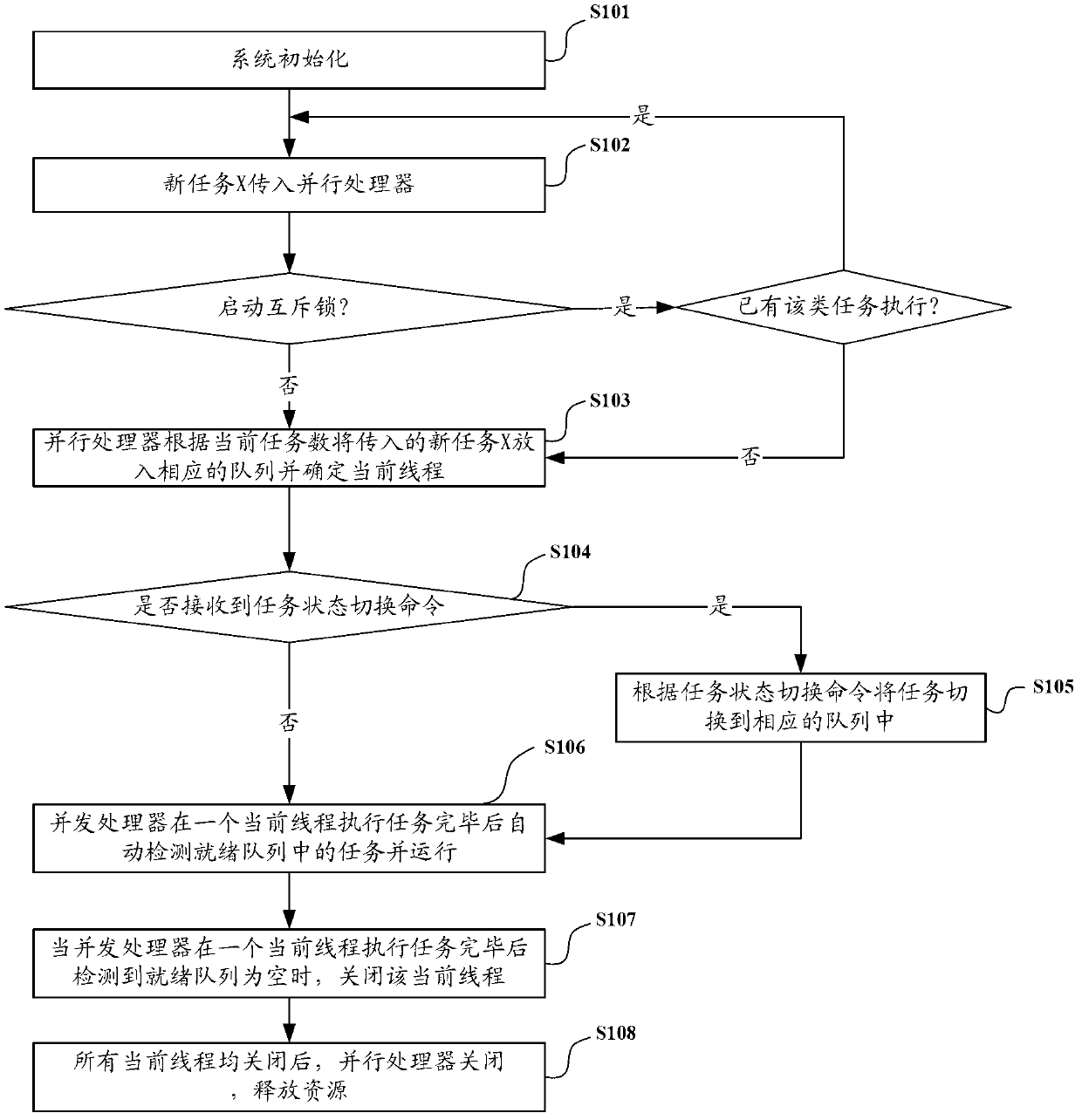

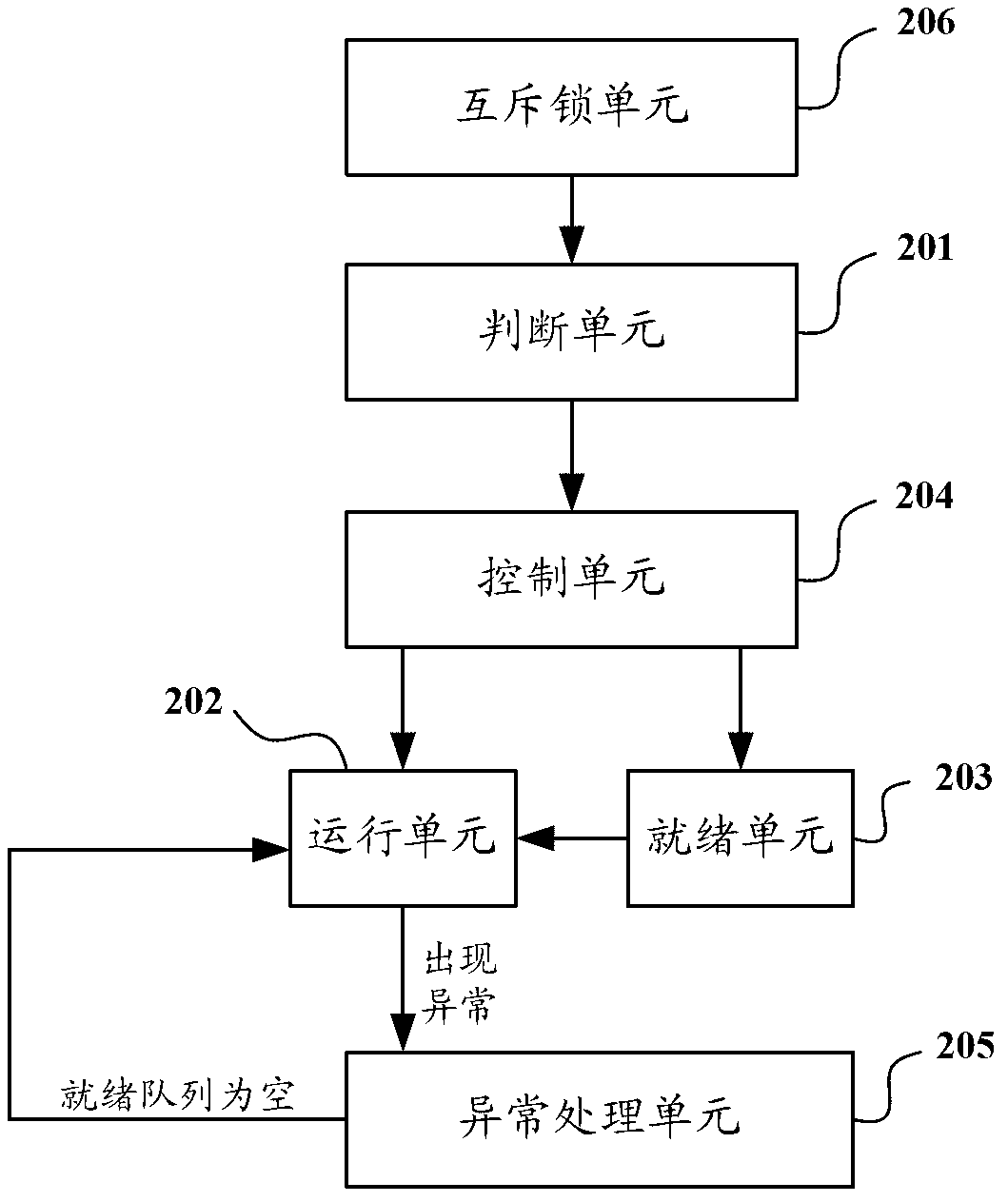

Multi-task concurrent processing method and device for Android system

Inactive | CN103279331A | Avoid exception | Prevent crash | Concurrent instruction execution | Distributed computing | Run queue

The invention discloses a multi-task concurrent processing method and device for an Android system. The method includes the following. After receiving a new incoming task, a concurrent processor judges whether the number of current threads is smaller than a set maximum thread number. If it is, the new task is put in a running queue and a thread is created to execute it; if not, the new task is put in a ready queue. When a thread created by the concurrent processor finishes executing, the concurrent processor moves the task at the head of the ready queue to the running queue and creates a thread to execute it. If a thread created by the concurrent processor is suspended or fails during execution, it is moved to a suspension and anomaly queue, and it is moved to the ready queue when an onRestart command is received.
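The queue transitions in this abstract can be sketched as plain bookkeeping. No real threads are created here: `ConcurrentProcessor` and its methods are hypothetical names, a task in `running` stands for a task that has a thread, and `restart` models the arrival of the onRestart command.

```python
from collections import deque


class ConcurrentProcessor:
    def __init__(self, max_threads):
        self.max_threads = max_threads
        self.running = []       # tasks that currently have a thread
        self.ready = deque()    # tasks waiting for a free thread slot
        self.suspended = []     # suspension and anomaly queue

    def submit(self, task):
        if len(self.running) < self.max_threads:
            self.running.append(task)   # a thread would be created here
        else:
            self.ready.append(task)

    def finish(self, task):
        """A thread finished: promote the task at the head of the ready queue."""
        self.running.remove(task)
        if self.ready:
            self.running.append(self.ready.popleft())

    def suspend(self, task):
        """A thread was suspended or failed during execution."""
        self.running.remove(task)
        self.suspended.append(task)

    def restart(self, task):
        """onRestart received: the task goes back to the ready queue."""
        self.suspended.remove(task)
        self.ready.append(task)
```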

Owner:NO 15 INST OF CHINA ELECTRONICS TECH GRP

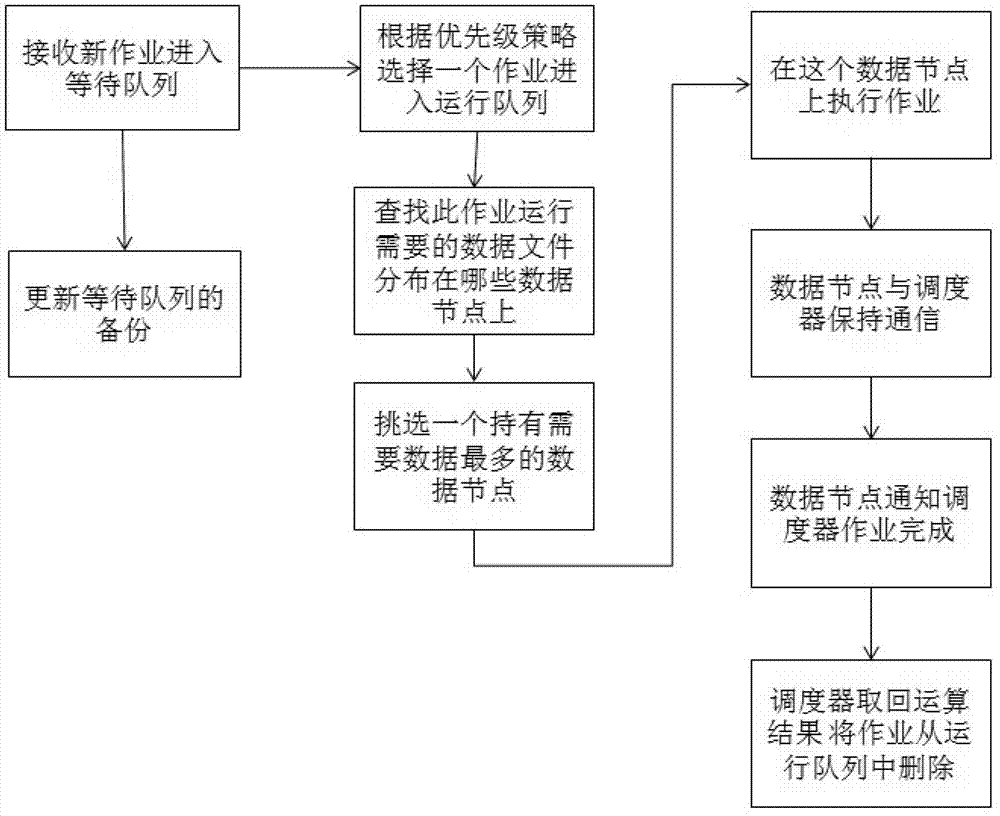

Massive geoscientific data parallel processing method based on distributed file system

The invention discloses a massive geoscientific data parallel processing method based on a distributed file system. The method comprises the following steps: 1) using the distributed file system, which has a unified name space, as the storage system for geoscientific data and deploying it on a computing cluster; 2) the task scheduling system of the computing cluster storing received calculating tasks in a waiting queue; 3) the scheduling system selecting one calculating task from the waiting queue and moving it into a running queue; 4) according to the information of the calculating task, the scheduling system searching the metadata of the distributed file system for the computing nodes that hold the data files the task requires; and 5) the task scheduling system selecting the computing node that holds the most of the required data, remotely acquiring the required data files that the node does not hold, executing the calculating task at that node, and returning the execution result. The method achieves computation localization to the maximum extent.
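Steps 4) and 5) amount to a data-locality choice, sketched below. The function `pick_node`, the `file_locations` metadata dictionary and the use of file sizes to measure "most data" are assumptions for illustration; ties are broken by node name only to keep the result deterministic.

```python
def pick_node(required_files, file_locations, file_sizes):
    """Choose the node holding the most required data.

    required_files: list of file names the task needs.
    file_locations: dict mapping file -> set of nodes holding a copy
                    (stands in for the file system metadata).
    file_sizes:     dict mapping file -> size in bytes.
    Returns (node, files_to_fetch_remotely); node is None if no node
    holds any of the required files.
    """
    local_bytes = {}
    for name in required_files:
        for node in file_locations.get(name, ()):
            local_bytes[node] = local_bytes.get(node, 0) + file_sizes[name]
    if not local_bytes:
        return None, list(required_files)
    best = max(sorted(local_bytes), key=local_bytes.get)
    missing = [name for name in required_files
               if best not in file_locations.get(name, ())]
    return best, missing
```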

Owner:COMP NETWORK INFORMATION CENT CHINESE ACADEMY OF SCI

Ordering tasks scheduled for execution based on priority and event type triggering the task, selecting schedulers for tasks using a weight table and scheduler priority

Active | US9417912B2 | Program initiation/switching | Software simulation/interpretation/emulation | Event type | Run queue

A virtual machine monitor and a scheduling method thereof are provided. The virtual machine monitor may operate at least two domains. The virtual machine monitor may include at least one run queue and a plurality of schedulers, at least two of which have different scheduling characteristics. The virtual machine monitor may insert a task received from a domain into the run queue and, according to an event type, may select from the schedulers the one that schedules the inserted task.

Owner:SAMSUNG ELECTRONICS CO LTD

Simulating a multi-queue scheduler using a single queue on a processor

A method and system for scheduling tasks on a processor, the tasks being scheduled by an operating system to run on the processor in a predetermined order, the method comprising identifying and creating task groups of all related tasks; assigning the tasks in the task groups to a single common run queue; selecting a task at the start of the run queue; determining whether the task at the start of the run queue is eligible to run, based on a pre-defined time slice allocated to it and on the presence of older starving tasks in the run queue; executing the task in the pre-defined time slice; and associating a starving status with all unexecuted tasks and continuing to run tasks until all tasks in the run queue complete execution and the run queue becomes empty.

Owner:INT BUSINESS MASCH CORP

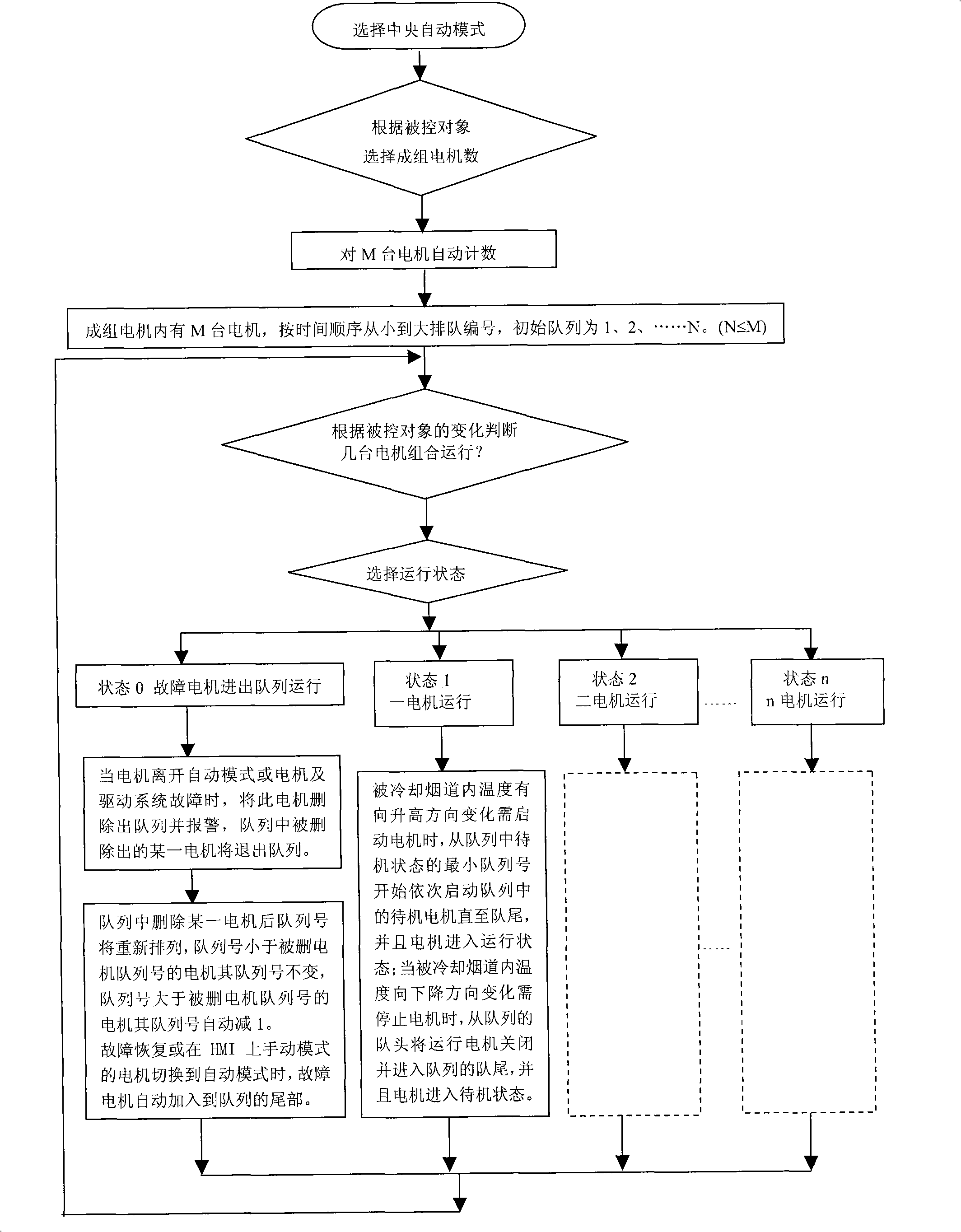

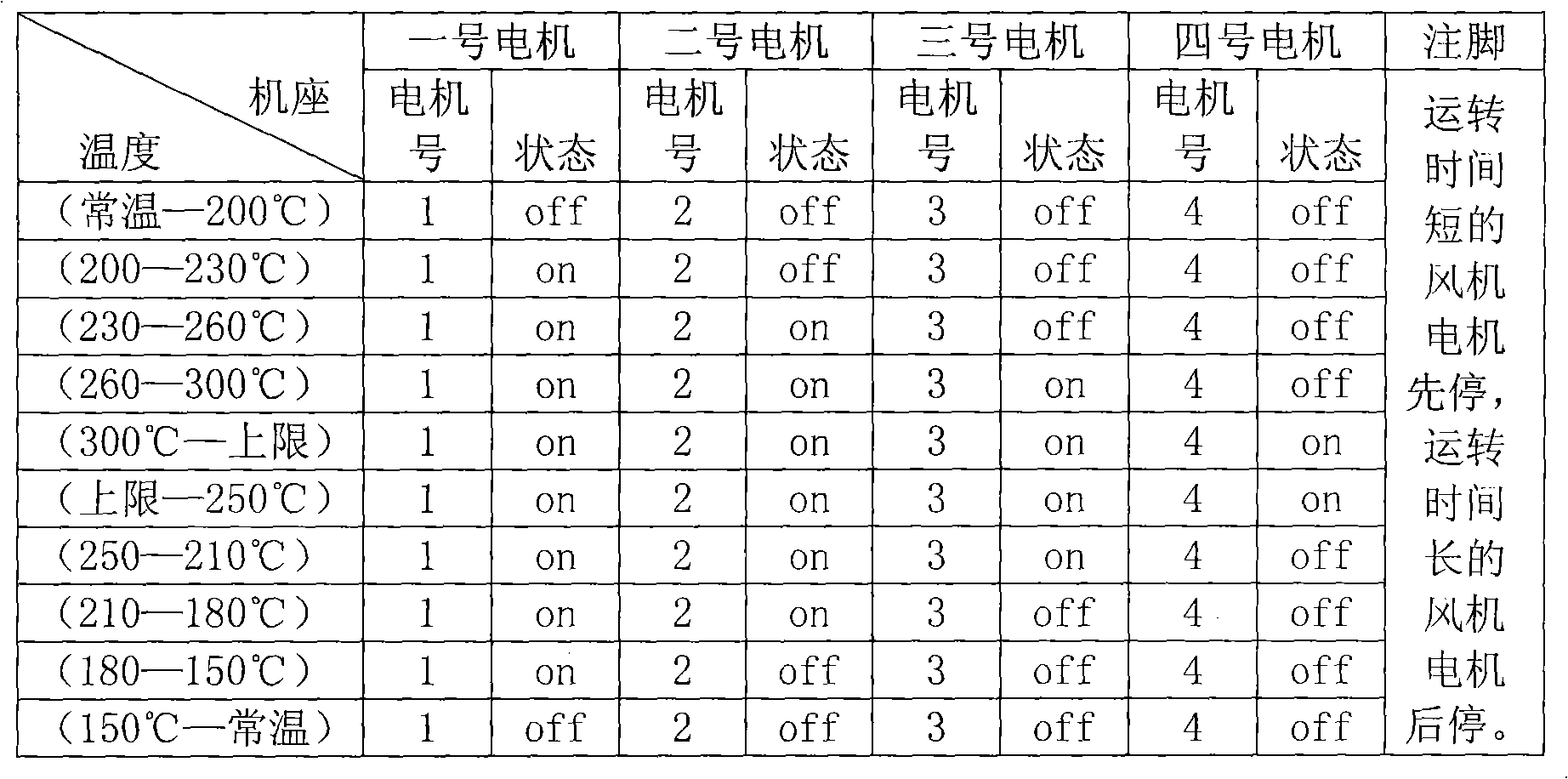

Control method for equilibrium operation of converter dust-removing flue cooling fan

Inactive · CN101275173A · Overcome the defect of last start first stop · Guaranteed uptime · Manufacturing converters · Temperature control using electric means · Queue number · Automatic control

The invention discloses a method for balancing the operation of the cooling fans in a converter dust-removal flue. The control method comprises the steps of: when the temperature in the cooling flue rises and engines need to be started, starting the standby engines in order from the lowest queue number toward the end of the queue and moving them to the running state; and, when the temperature in the cooling flue drops and engines need to be shut down, shutting down running engines from the head of the running queue, each stopped engine joining the end of the standby queue as its new tail. The invention controls the engines automatically according to the principle of first-startup-first-shutdown: the engine that has been running longest is shut down first, so that all engines run evenly. When the temperature fluctuates within a range, the engines share the startups and shutdowns evenly, which can prolong the service life of the equipment and improve its reliability. The invention is also suitable for other controlled objects that require adjustment and use a plurality of engines.

Owner:BAOSHAN IRON & STEEL CO LTD
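
The first-startup-first-shutdown rotation described for the cooling fans amounts to two FIFO queues. The sketch below is a minimal model of that idea; the class name `FanRotation`, the fan identifiers, and the absence of any temperature logic are assumptions for illustration only.

```python
from collections import deque


class FanRotation:
    """Two FIFO queues: standby units waiting to start, running units waiting to stop."""

    def __init__(self, fan_ids):
        self.standby = deque(fan_ids)  # head = lowest queue number, next to start
        self.running = deque()         # head = longest-running unit, next to stop

    def start_one(self):
        """Start the unit at the head of the standby queue, if any."""
        if not self.standby:
            return None
        fan = self.standby.popleft()
        self.running.append(fan)
        return fan

    def stop_one(self):
        """Stop the longest-running unit and put it at the tail of the standby queue."""
        if not self.running:
            return None
        fan = self.running.popleft()
        self.standby.append(fan)
        return fan


rotation = FanRotation(["F1", "F2", "F3"])
events = [
    rotation.start_one(),  # temperature rises
    rotation.start_one(),  # temperature rises again
    rotation.stop_one(),   # temperature drops: longest-running unit stops
    rotation.start_one(),  # temperature rises: the unit that has rested longest starts
]
```

After these four events the sequence is `F1`, `F2`, `F1`, `F3`: the stopped unit `F1` goes to the back of the standby queue, so `F3` is started before `F1` runs again.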

Apparatus and method for conducting load balance to multi-processor system

A method, system and apparatus for integrating a system task scheduler with a workload manager are provided. The scheduler is used to assign default priorities to threads and to place the threads into run queues, and the workload manager is used to implement policies set by a system administrator. One of the policies may be to have different classes of threads get different percentages of a system's CPU time. This policy can be reliably achieved if threads from a plurality of classes are spread as uniformly as possible among the run queues. To do so, the threads are organized in classes. Each class is associated with a priority as per a use-policy. This priority is used to modify the scheduling priority assigned to each thread in the class as well as to determine in which band or range of priority the threads fall. Then, periodically, it is determined whether the number of threads in a band in one run queue exceeds the number of threads in the same band in another run queue by more than a pre-determined number. If so, the system is deemed to be load-imbalanced and is rebalanced by moving one thread in the band from the run queue with the greater number of threads to the run queue with the lower number of threads.

Owner:INT BUSINESS MASCH CORP
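
The periodic per-band check in the load-balancing abstract above can be sketched as follows. The data layout (each run queue as a dictionary from band name to a list of thread ids), the function name `rebalance_band`, and the choice of which thread to move are hypothetical; the abstract only states that one thread in the band is moved.

```python
def rebalance_band(run_queues, band, threshold):
    """Move one thread of `band` from the fullest to the emptiest run queue.

    run_queues is a list of dicts mapping band name -> list of thread ids.
    Returns the moved thread id, or None when the band is considered balanced.
    """
    counts = [len(q.get(band, [])) for q in run_queues]
    busiest = counts.index(max(counts))
    idlest = counts.index(min(counts))
    # Imbalanced only if the difference exceeds the pre-determined number.
    if counts[busiest] - counts[idlest] <= threshold:
        return None
    thread = run_queues[busiest][band].pop()  # arbitrary choice: last thread in the band
    run_queues[idlest].setdefault(band, []).append(thread)
    return thread


queues = [{"high": ["t1", "t2", "t3", "t4"]}, {"high": ["t5"]}]
first = rebalance_band(queues, "high", threshold=2)   # 4 vs 1 threads: moves one
second = rebalance_band(queues, "high", threshold=2)  # 3 vs 2 threads: balanced
```

A single call moves at most one thread, which matches the periodic, incremental nature of the check described in the abstract.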

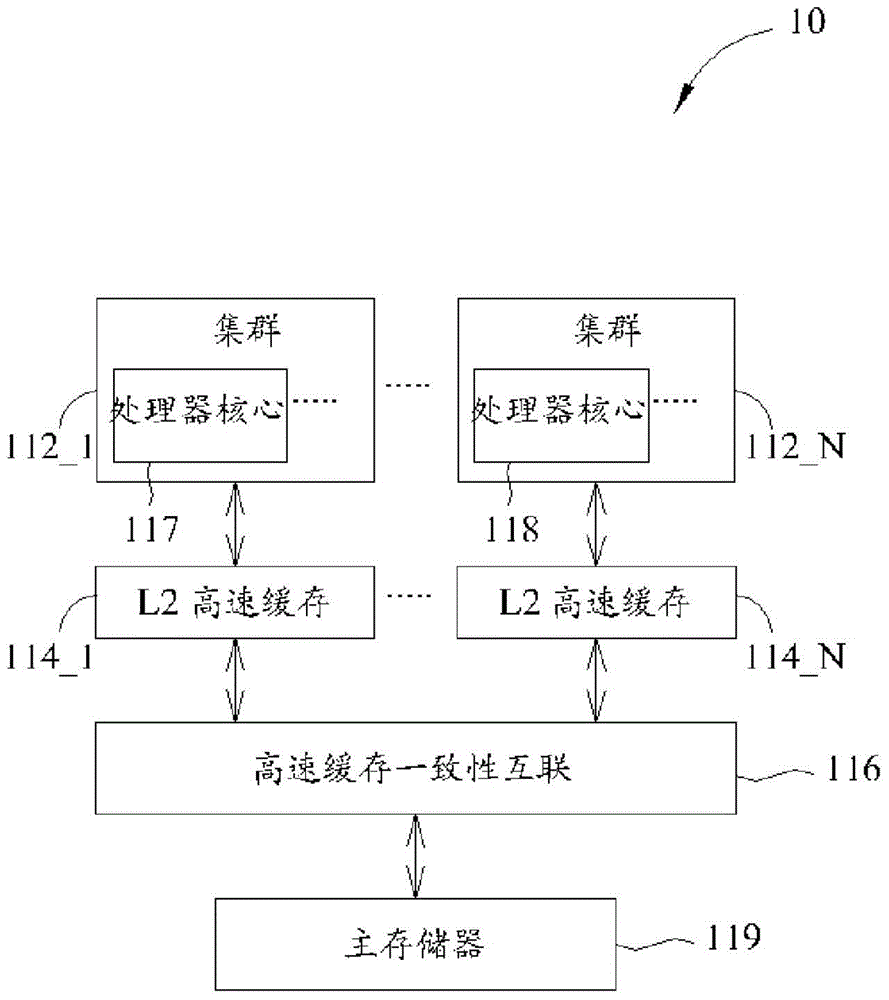

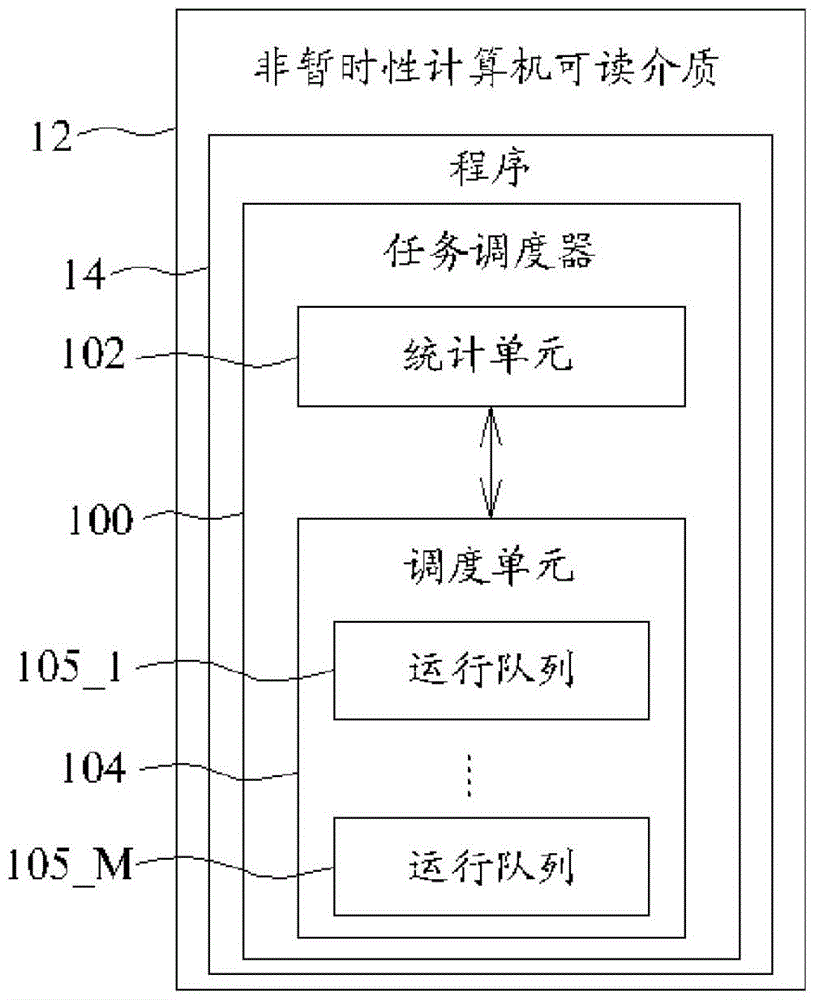

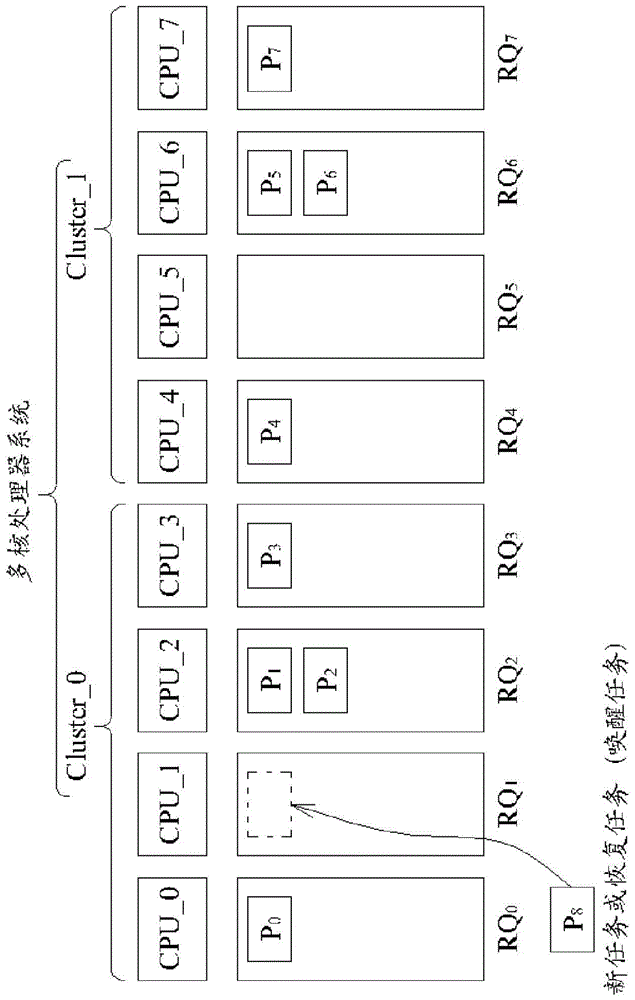

Task scheduling method and related non-transitory computer readable medium for dispatching task in multi-core processor system based at least partly on distribution of tasks sharing same data and/or accessing same memory address(es)

A task scheduling method for a multi-core processor system includes at least the following steps: when a first task belongs to a thread group currently in the multi-core processor system, where the thread group has a plurality of tasks sharing same specific data and/or accessing same specific memory address(es), and the tasks comprise the first task and at least one second task, determining a target processor core in the multi-core processor system based at least partly on distribution of the at least one second task in at least one run queue of at least one processor core in the multi-core processor system, and dispatching the first task to a run queue of the target processor core.

Owner:MEDIATEK INC
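
One simple way to use the distribution of sibling tasks when dispatching is to prefer the core whose run queue already holds the most tasks from the same thread group. The abstract does not state the exact rule, so the policy below (most siblings, then shortest queue, then lowest core id) and all names are assumptions for illustration.

```python
def dispatch(run_queues, group_of, task):
    """Append `task` to the run queue of a target core and return that core id.

    run_queues maps core id -> list of task names; group_of maps task -> group.
    """
    group = group_of[task]

    def siblings(core):
        # Count tasks on this core that belong to the same thread group.
        return sum(1 for t in run_queues[core] if group_of.get(t) == group)

    # Assumed policy: most siblings first, then shortest queue, then lowest core id.
    target = min(run_queues, key=lambda c: (-siblings(c), len(run_queues[c]), c))
    run_queues[target].append(task)
    return target


run_queues = {0: ["a", "x"], 1: ["b", "c"], 2: []}
group_of = {"a": "g1", "b": "g1", "c": "g1", "x": "g2", "new": "g1"}
target = dispatch(run_queues, group_of, "new")
```

Core 1 already runs two tasks of group `g1`, so the new task lands there, where shared data is more likely to be in cache, even though core 2 is idle.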

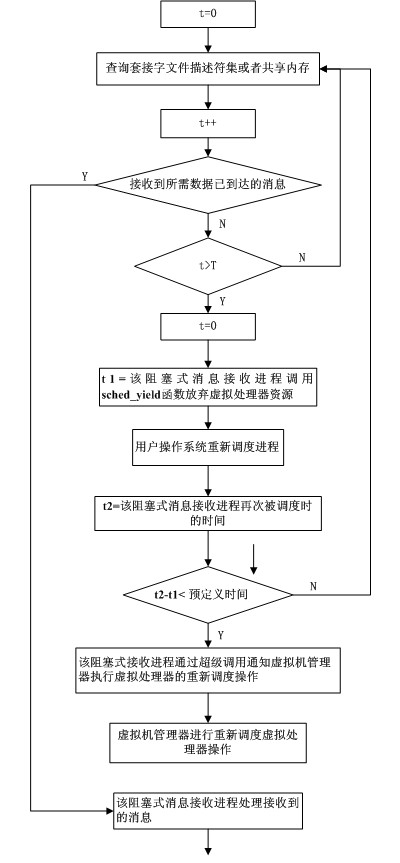

Method for receiving message passing interface (MPI) information under circumstance of over-allocation of virtual machine

Inactive · CN101968749A · Improve communication performance · Improve performance · Multiprogramming arrangements · Software simulation/interpretation/emulation · Operational system · Message Passing Interface

The invention discloses a method for receiving Message Passing Interface (MPI) messages when a virtual machine is over-allocated, comprising the following steps: in the blocking message-receiving process, polling a socket file descriptor set or shared memory, invoking the sched_yield function, and releasing the virtual processor resource currently occupied by the process; querying, by the guest operating system of the virtual machine that contains the virtual processor, the run queue of the virtual processor and selecting a schedulable process to run; when the blocking message-receiving process is rescheduled to run, judging whether the virtual machine manager needs to be notified to reschedule the virtual processor; and having the virtual machine manager reschedule the virtual processor through a hypercall issued in the blocking message-receiving process, and processing the received message in that process. The invention can reduce the performance loss caused by the 'busy waiting' that the message-receiving mechanism of an MPI library produces.

Owner:HUAZHONG UNIV OF SCI & TECH
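
The user-space part of the MPI receive method, polling and then yielding the processor instead of spinning, can be illustrated with standard library calls. This sketch covers only the poll-and-yield loop; the hypervisor notification and hypercall steps are not modeled. The function name `recv_with_yield` and the spin limit are hypothetical, and `os.sched_yield` is assumed to be available (it is on Linux and most Unix platforms).

```python
import os
import select
import socket


def recv_with_yield(sock, nbytes, max_spins=100000):
    """Poll `sock` without blocking; yield the CPU whenever no data is ready."""
    for _spin in range(max_spins):
        # Zero timeout: select returns immediately with the readable sockets.
        readable, _, _ = select.select([sock], [], [], 0)
        if readable:
            return sock.recv(nbytes)
        # Nothing to read yet: give other runnable processes a turn.
        os.sched_yield()
    raise TimeoutError("no data arrived within the spin limit")


# Usage with a connected local socket pair standing in for an MPI channel.
receiver, sender = socket.socketpair()
sender.sendall(b"hello")
message = recv_with_yield(receiver, 5)
receiver.close()
sender.close()
```

Yielding lets the guest scheduler pick another process from the run queue while the receiver waits, which is the behavior the abstract relies on to reduce busy waiting.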

© 2025 PatSnap. All rights reserved.