186 results about "Processor node" patented technology

Processor nodes. The processor nodes drive all functions in the storage system. Each node consists of a Power® server that contains POWER7® or POWER7+™ processors and memory.

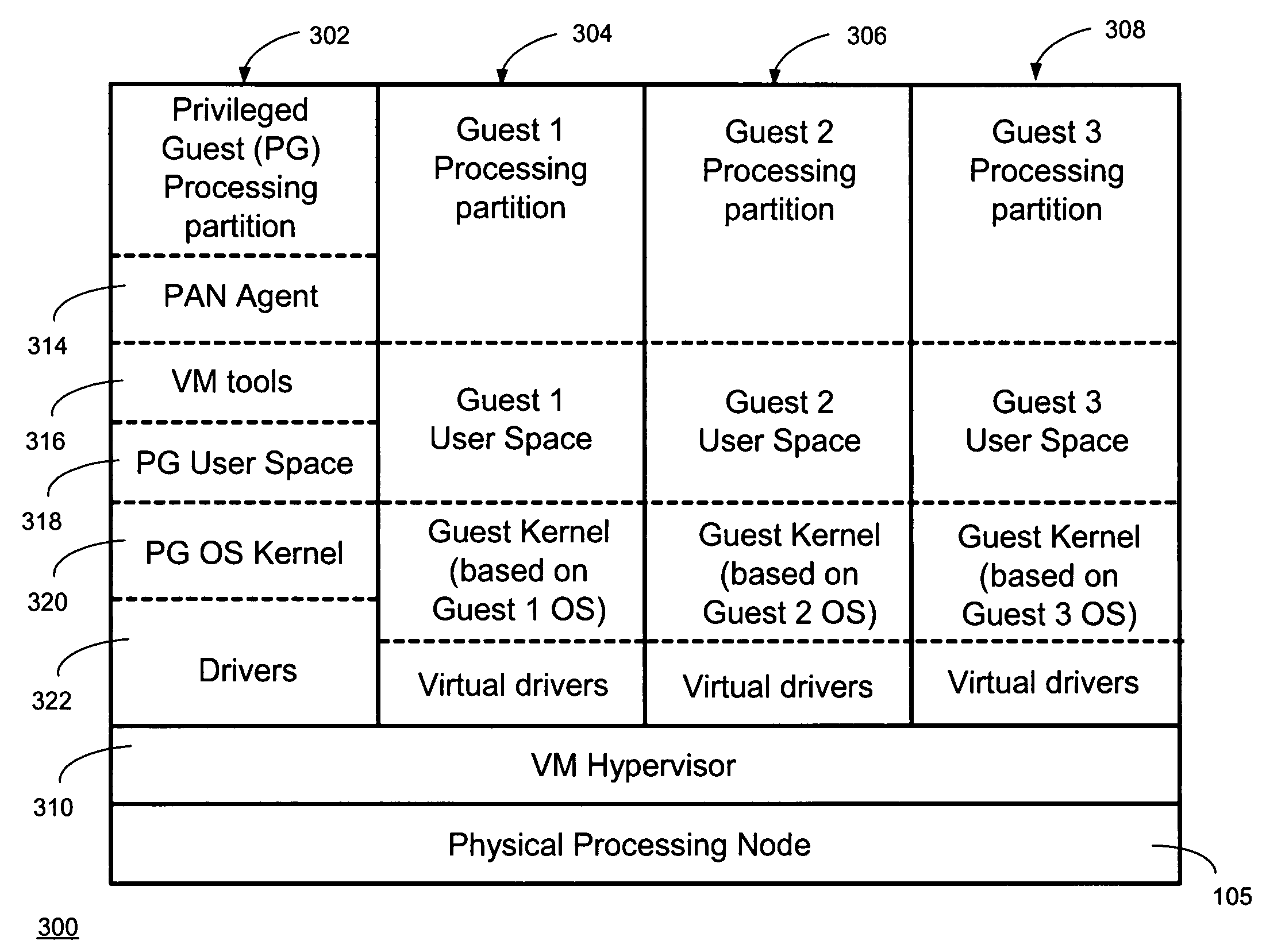

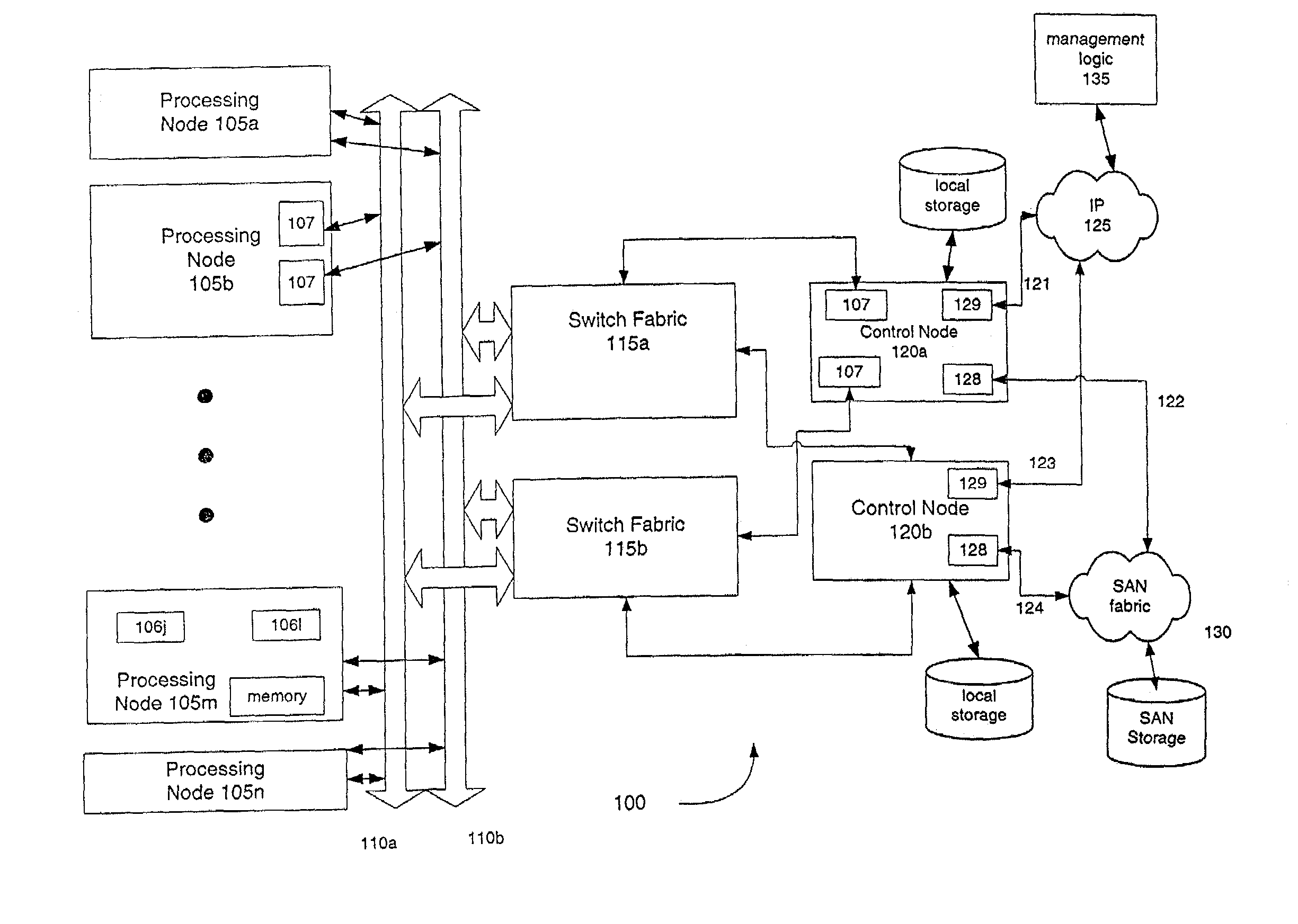

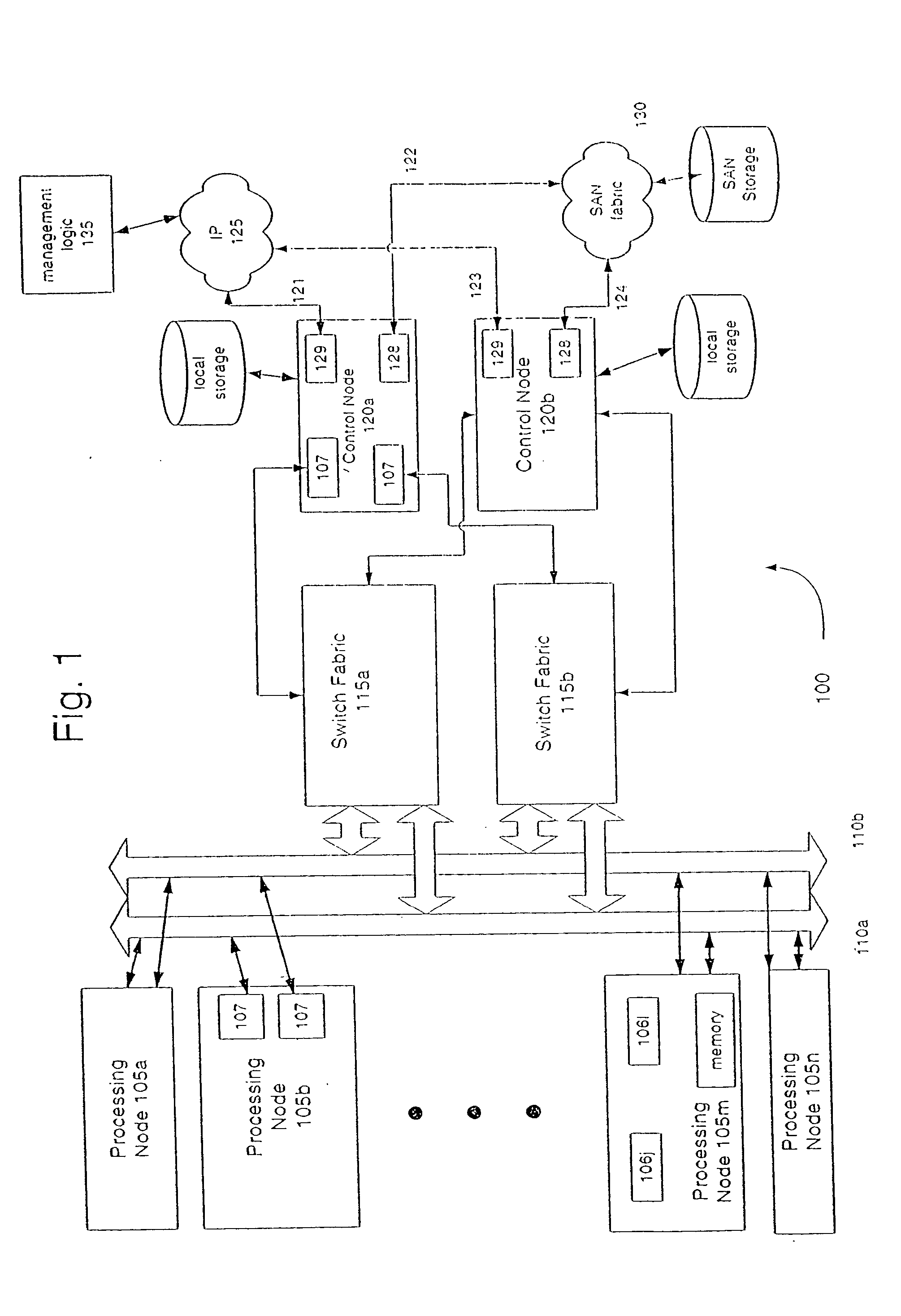

Providing virtual machine technology as an embedded layer within a processing platform

Status: Inactive | Publication: US20080059556A1 | Tags: Satisfies specification; Multiple digital computer combinations; Program control; Auto-configuration; Processor node

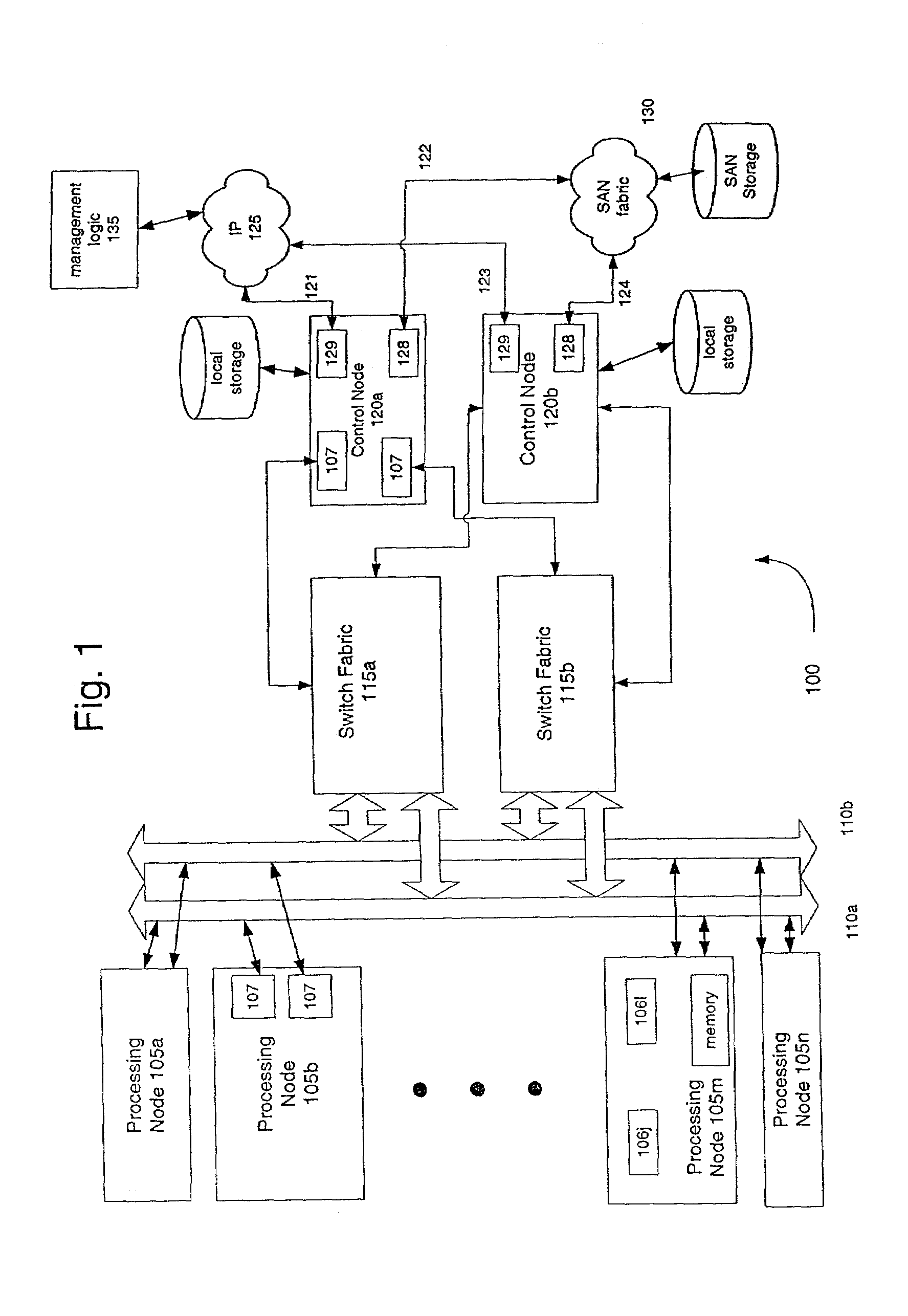

A platform, method, and computer program product provide virtual machine technology within a processing platform. A computing platform automatically deploys one or more servers in response to receiving corresponding server specifications. Each server specification identifies a server application that the corresponding server should execute and defines communication network and storage network connectivity for the server. The platform includes a plurality of processor nodes and virtual machine hypervisor logic, which can instantiate and control the execution of one or more guest virtual machines on a computer processor. After interpreting a server specification, control software deploys computer processors or guest virtual machines to execute the identified server application and automatically configures the defined communication network and storage network connectivity for the selected computer processors or guest virtual machines, thereby deploying the server defined in the server specification.

Owner:EGENERA

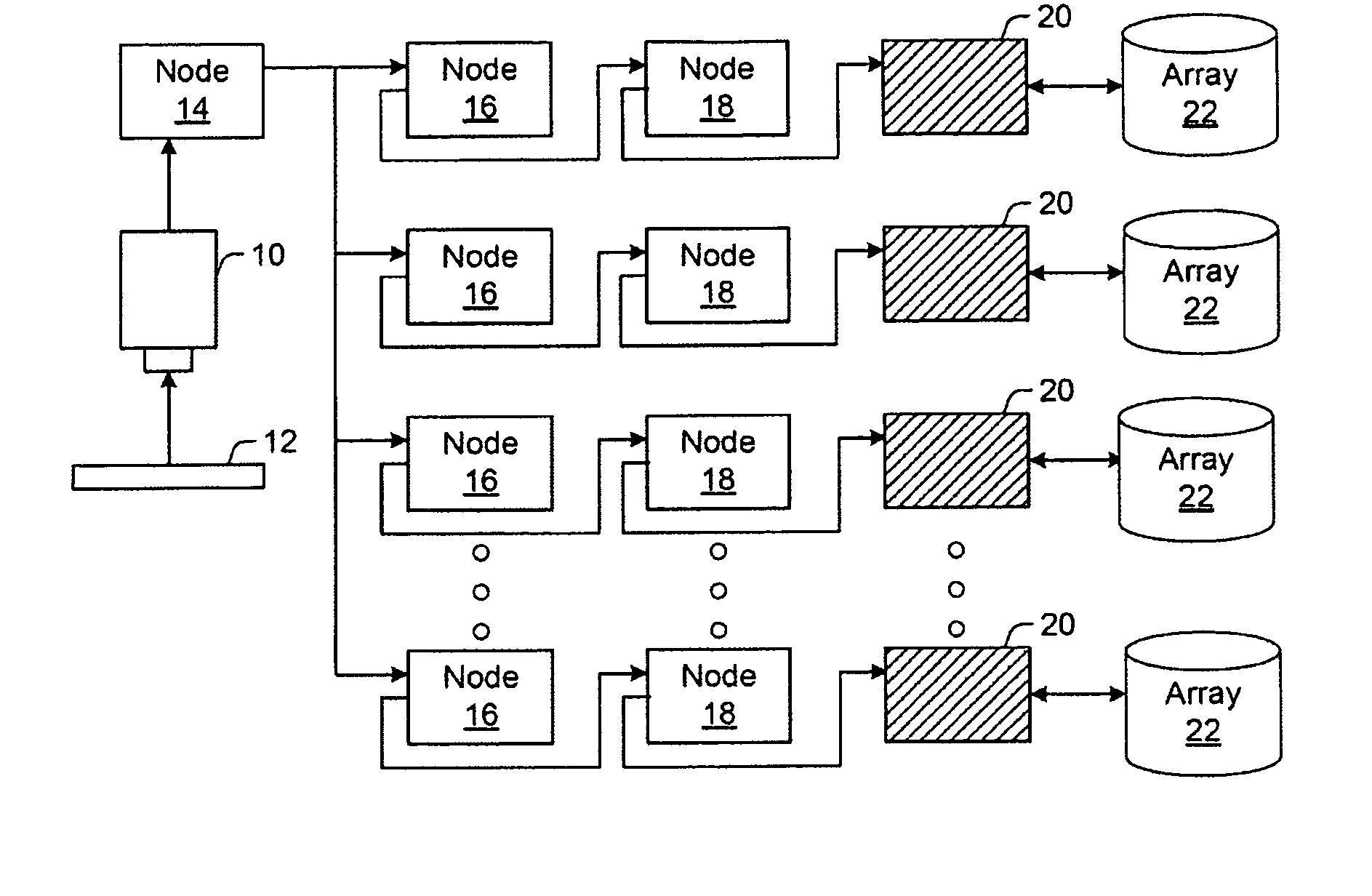

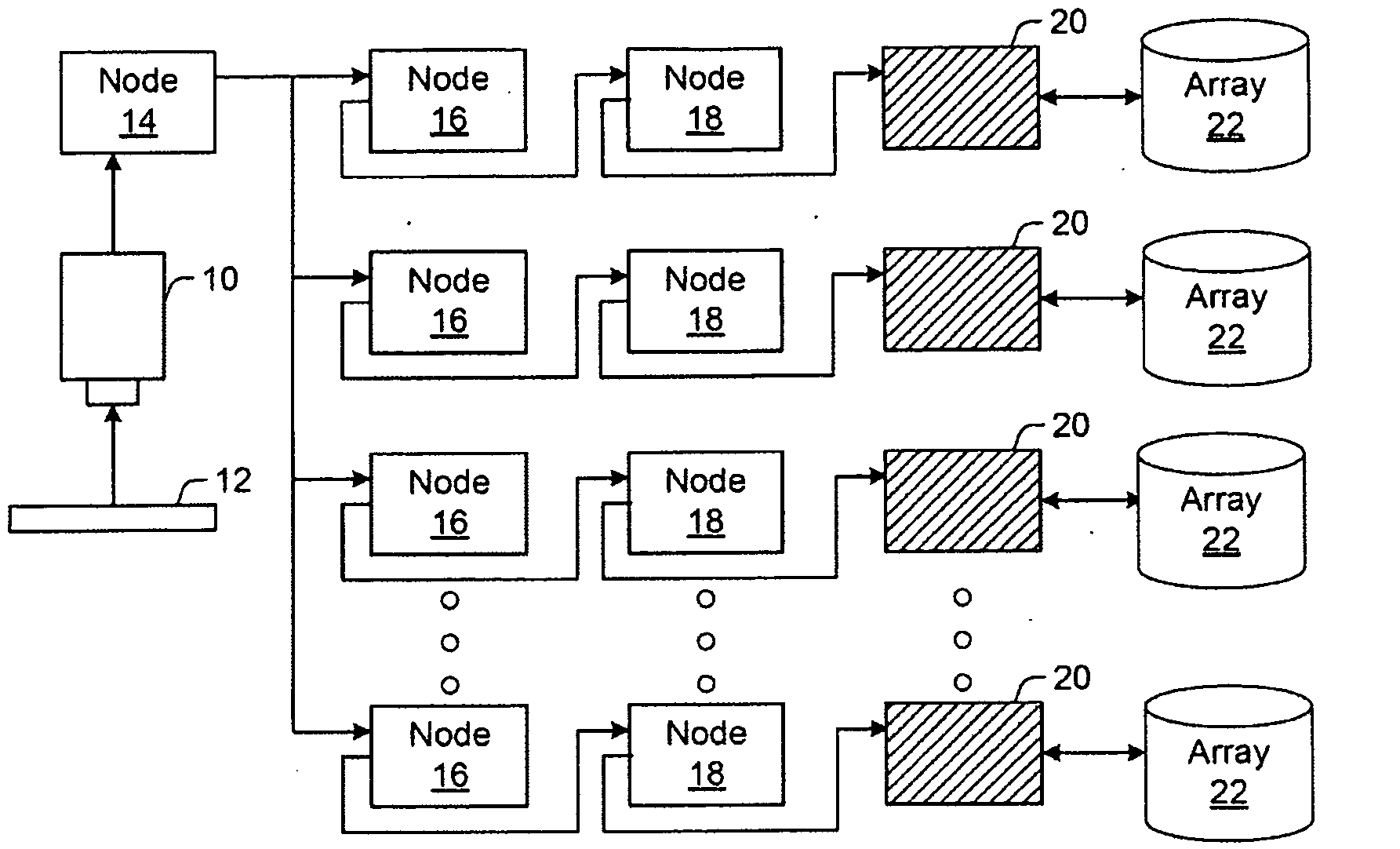

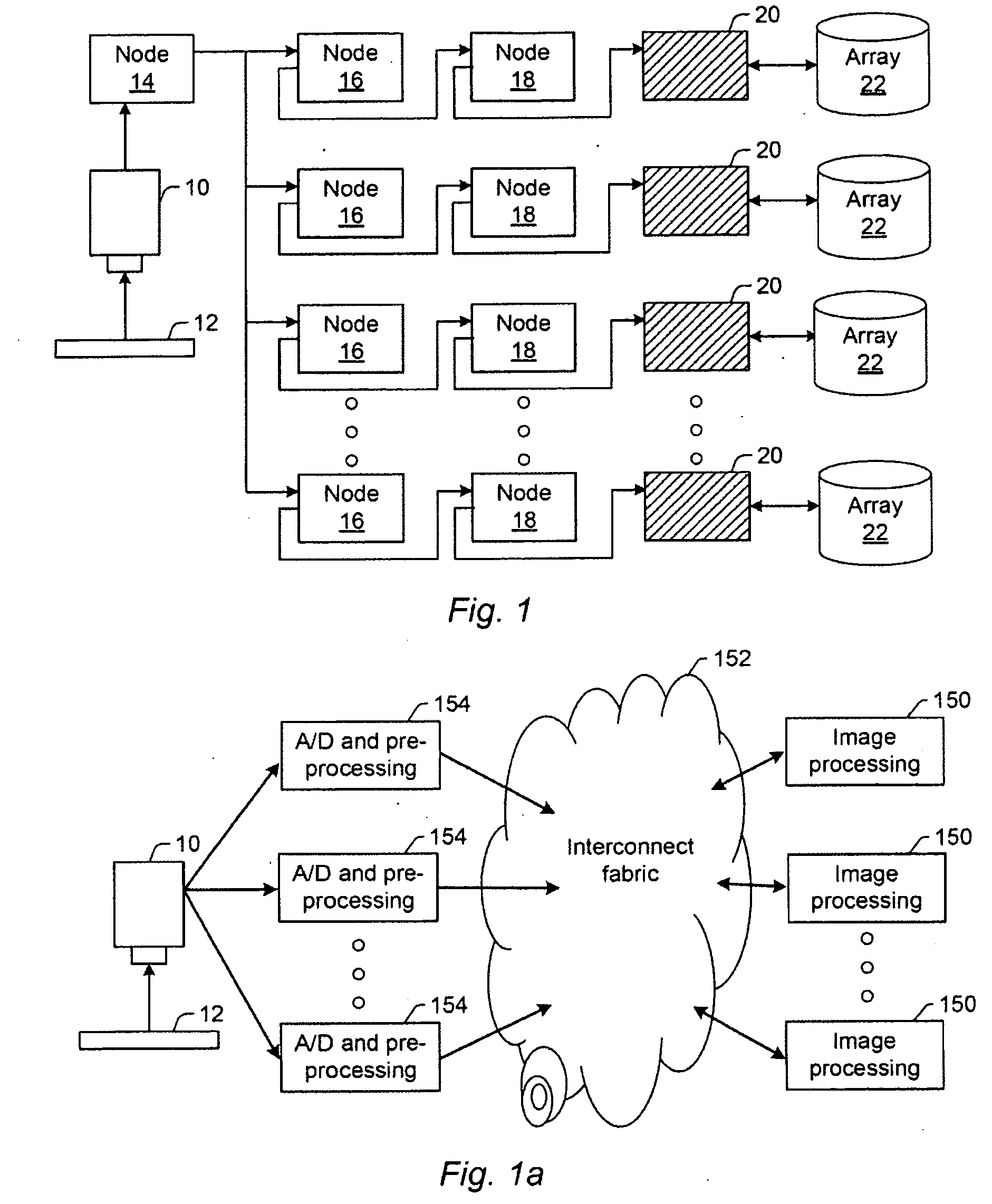

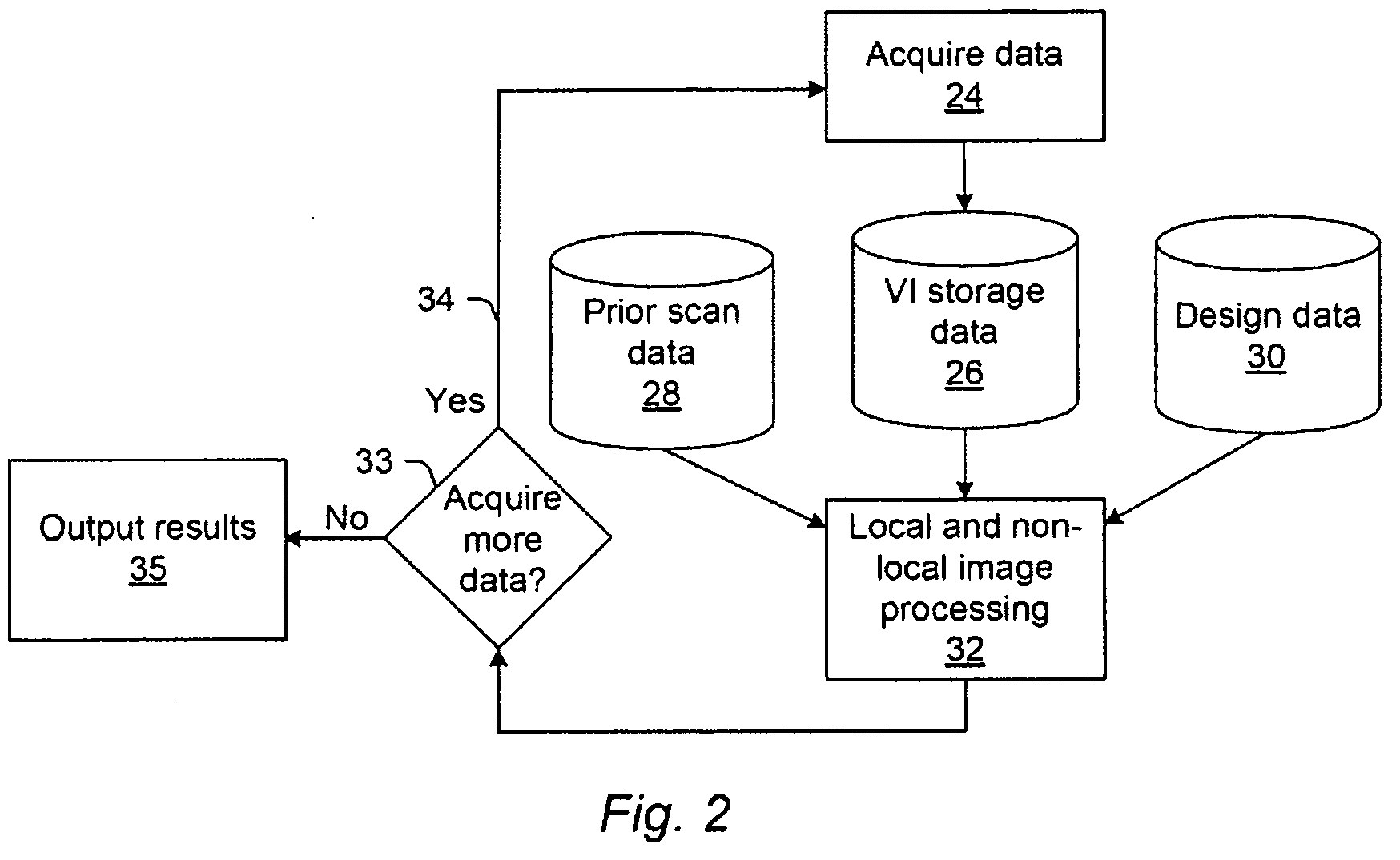

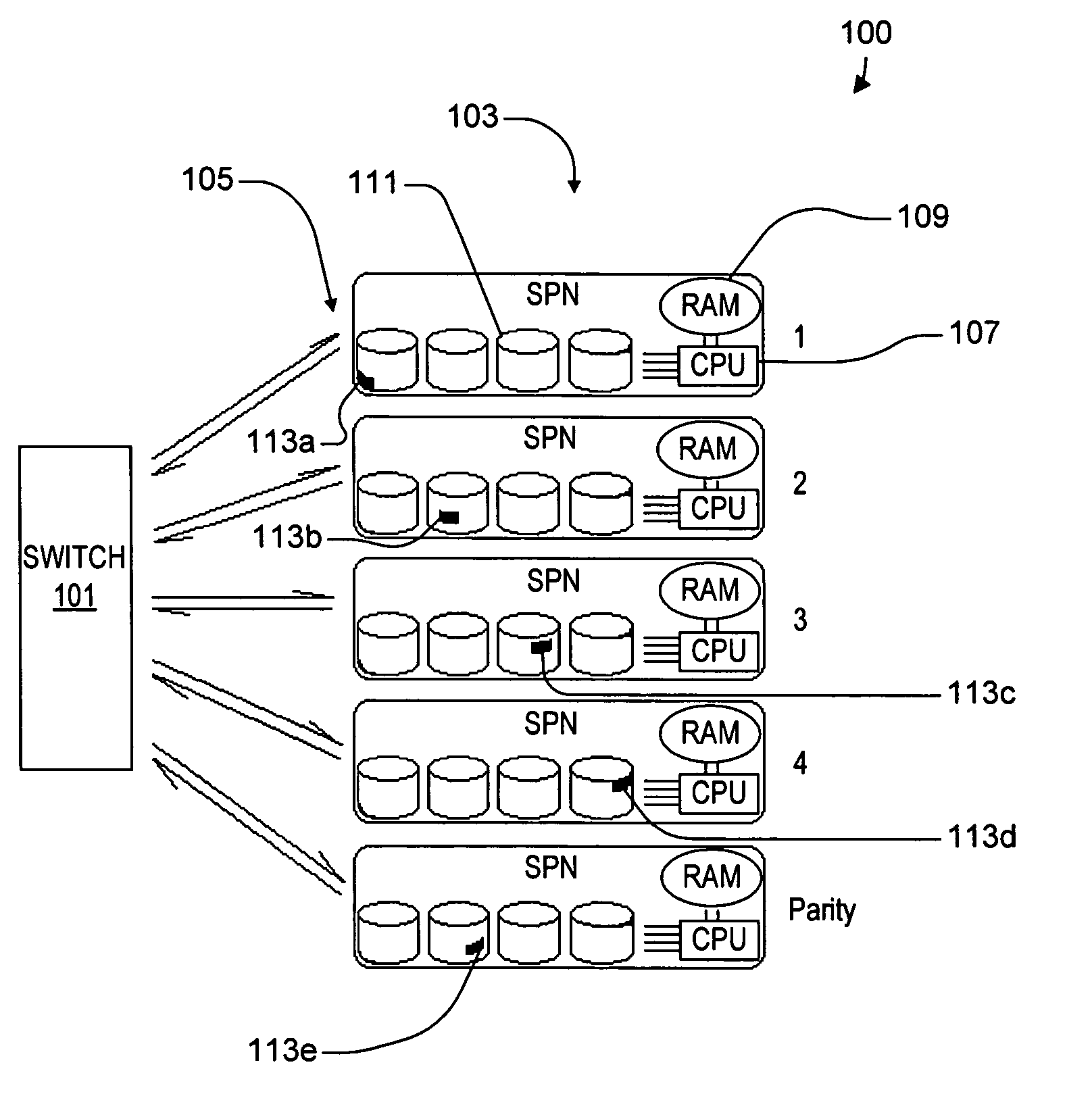

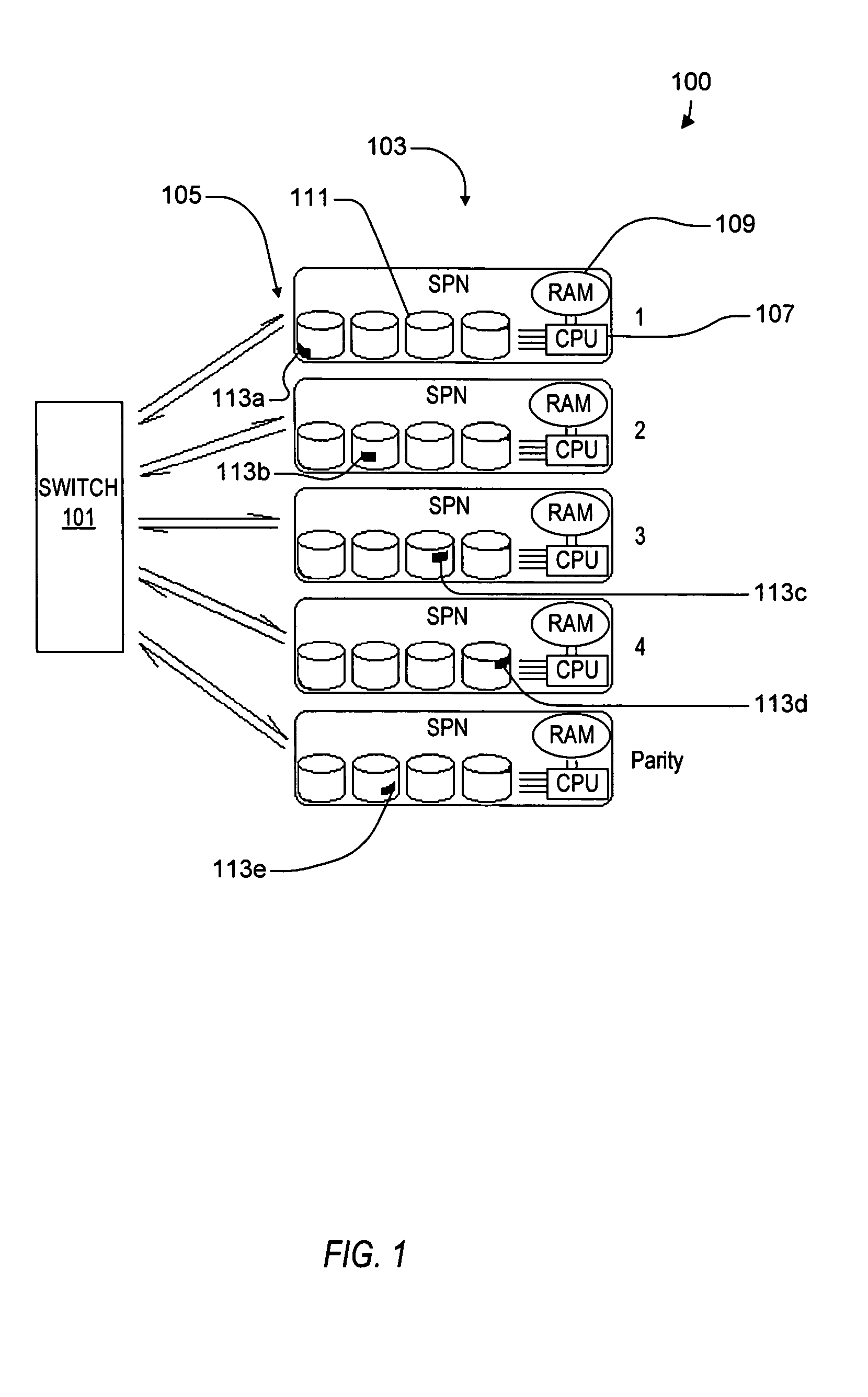

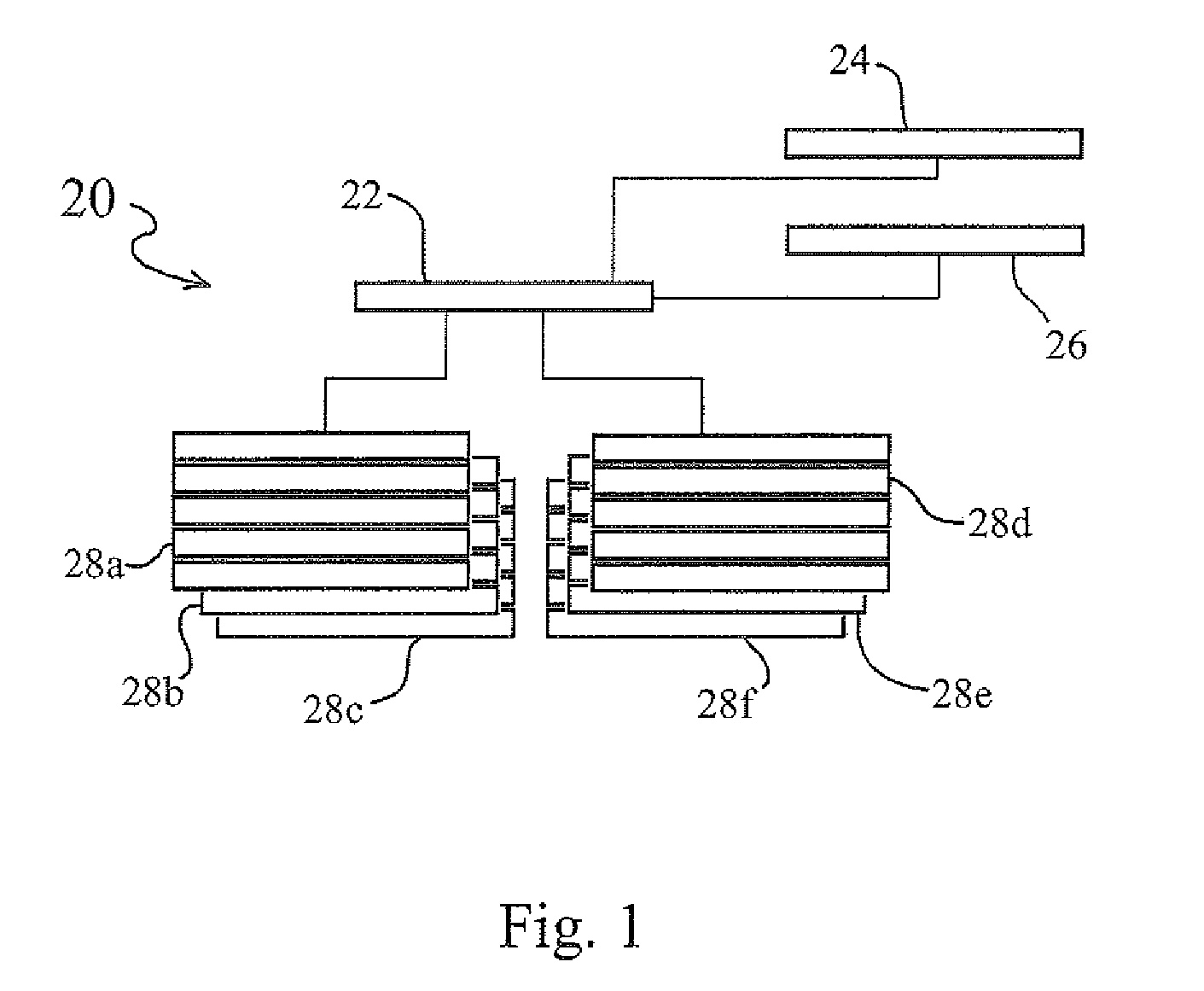

Systems and methods for creating persistent data for a wafer and for using persistent data for inspection-related functions

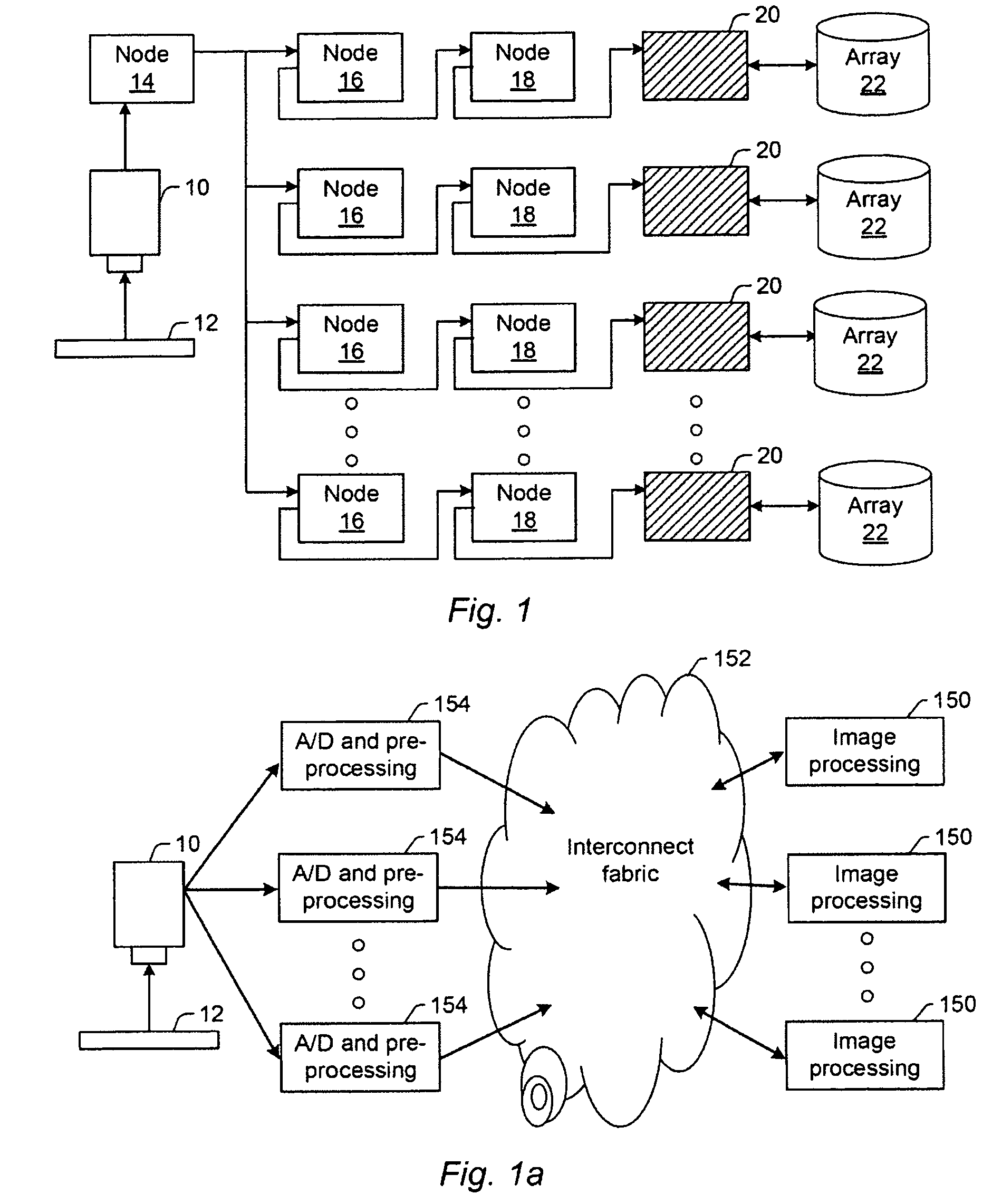

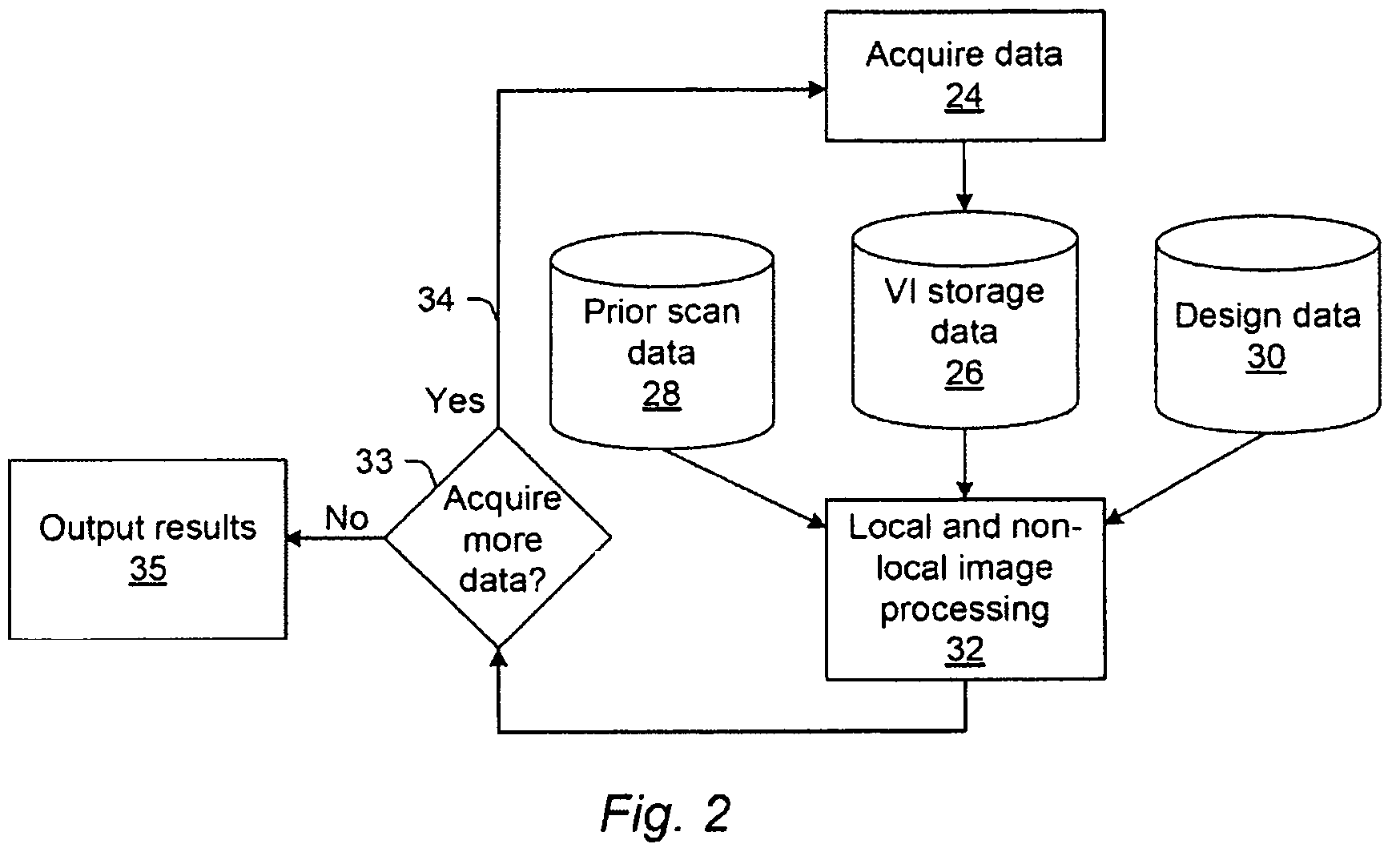

Various systems and methods for creating persistent data for a wafer and using persistent data for inspection-related functions are provided. One system includes a set of processor nodes coupled to a detector of an inspection system. Each of the processor nodes is configured to receive a portion of image data generated by the detector during scanning of a wafer. The system also includes an array of storage media separately coupled to each of the processor nodes. The processor nodes are configured to send all of the image data or a selected portion of the image data received by the processor nodes to the arrays of storage media such that all of the image data or the selected portion of the image data generated by the detector during the scanning of the wafer is stored in the arrays of the storage media.

Owner:KLA CORP

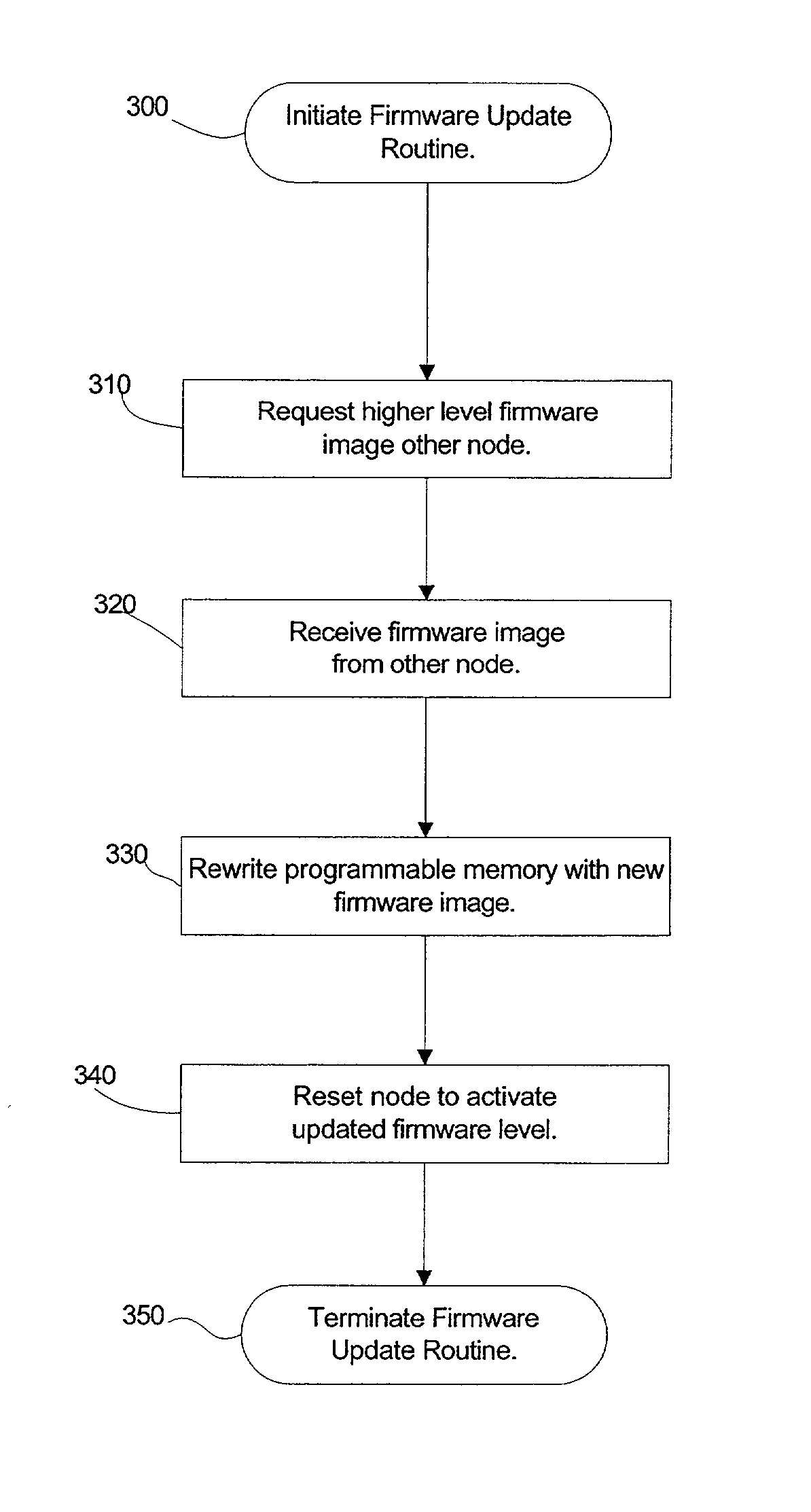

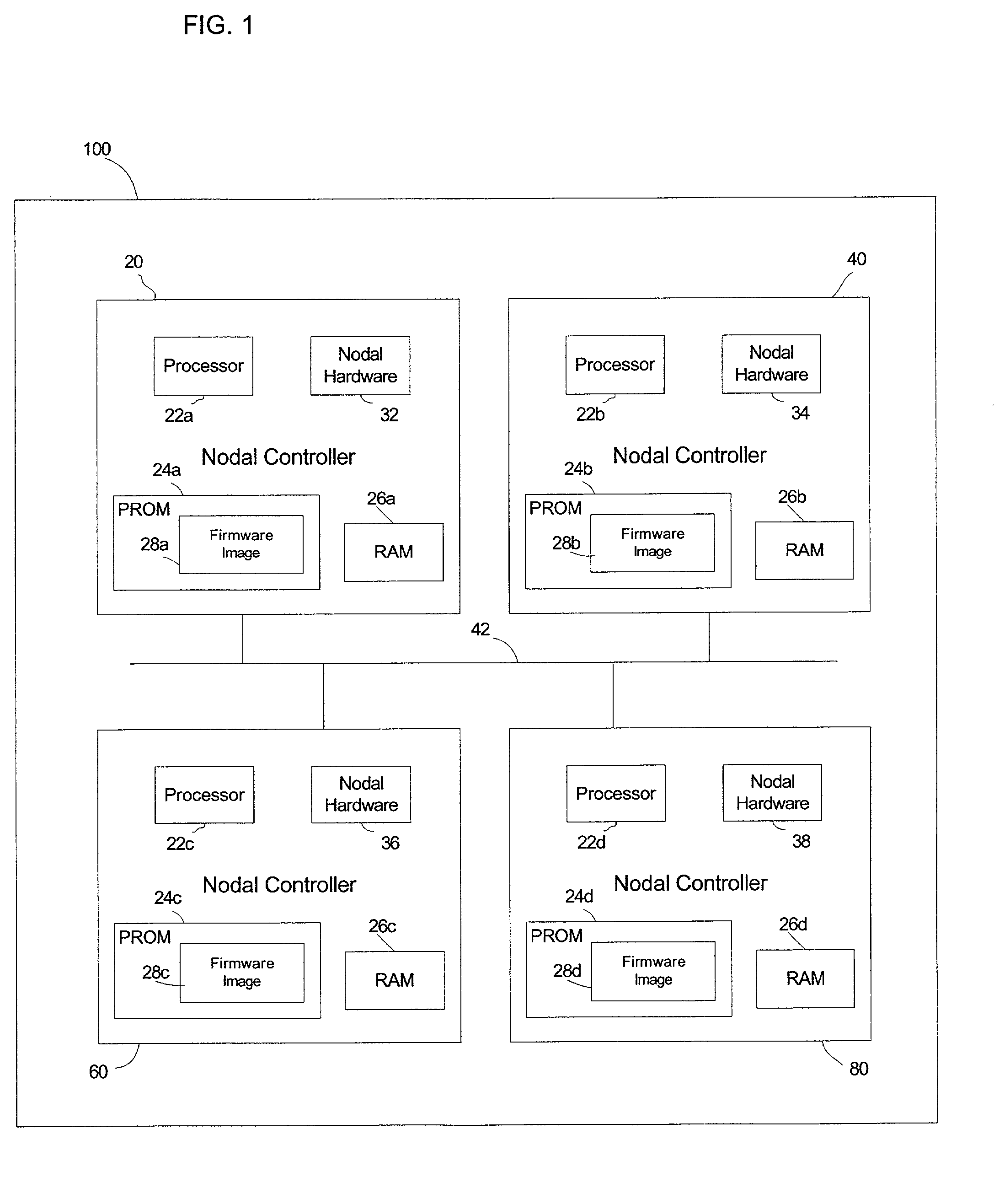

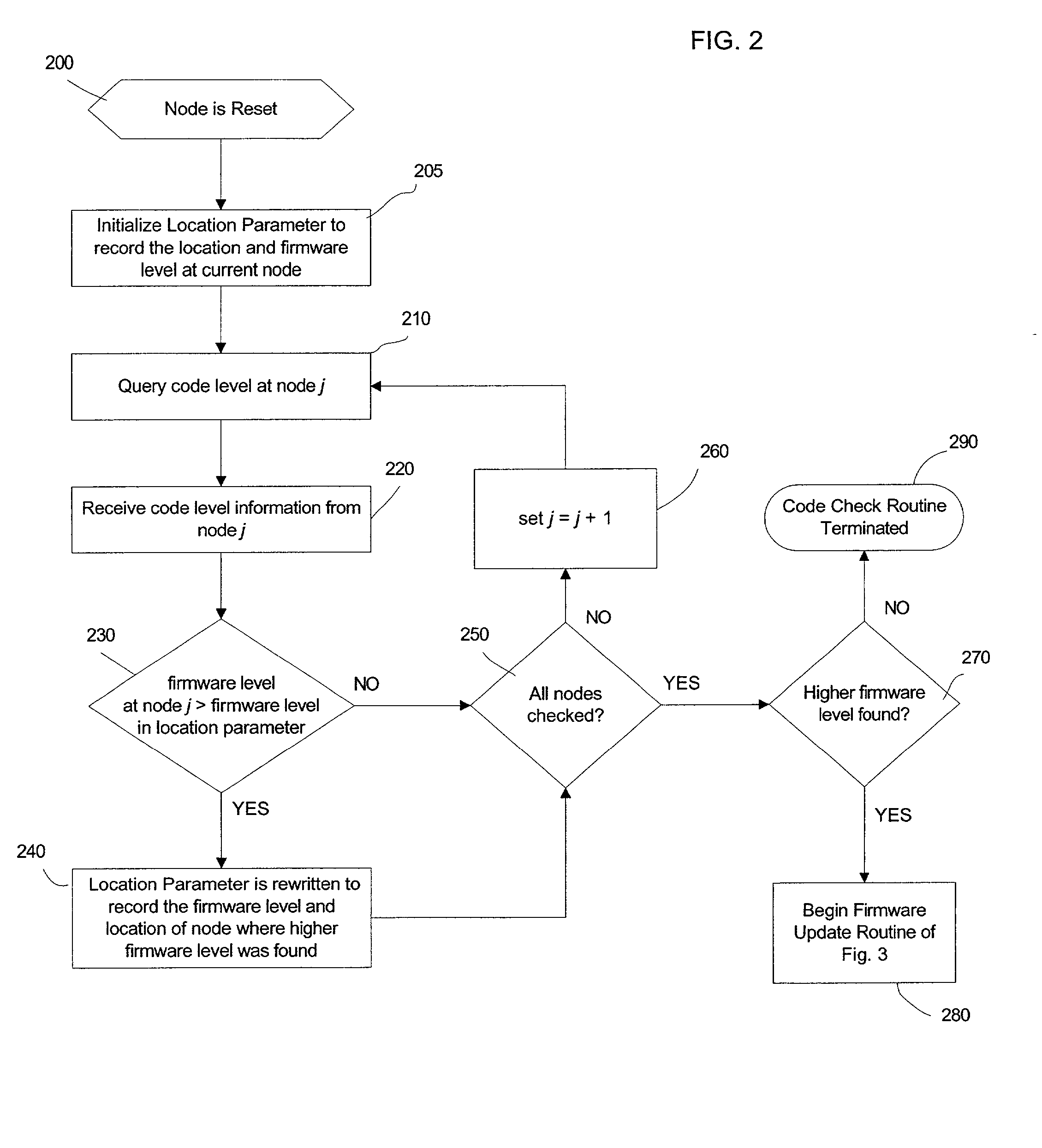

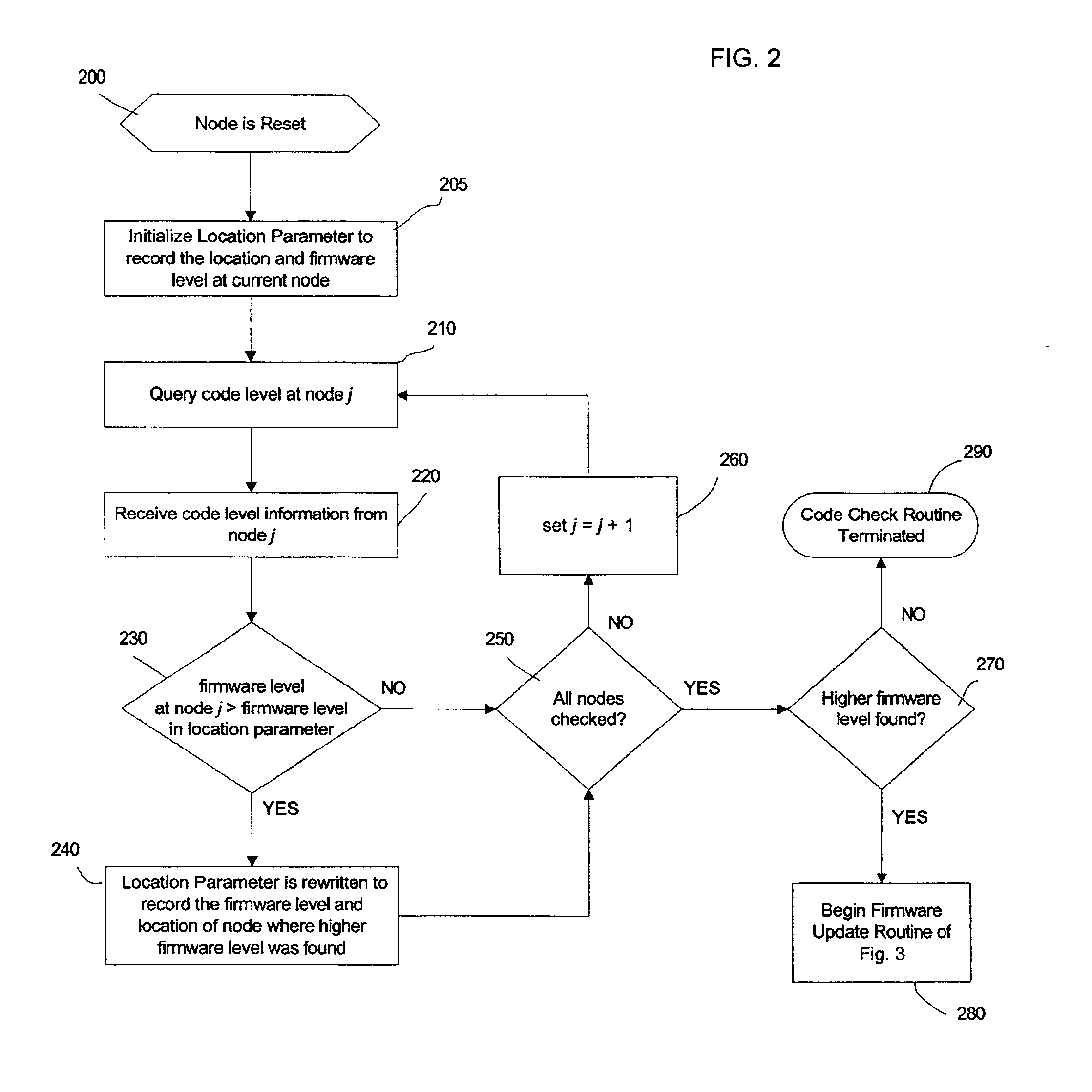

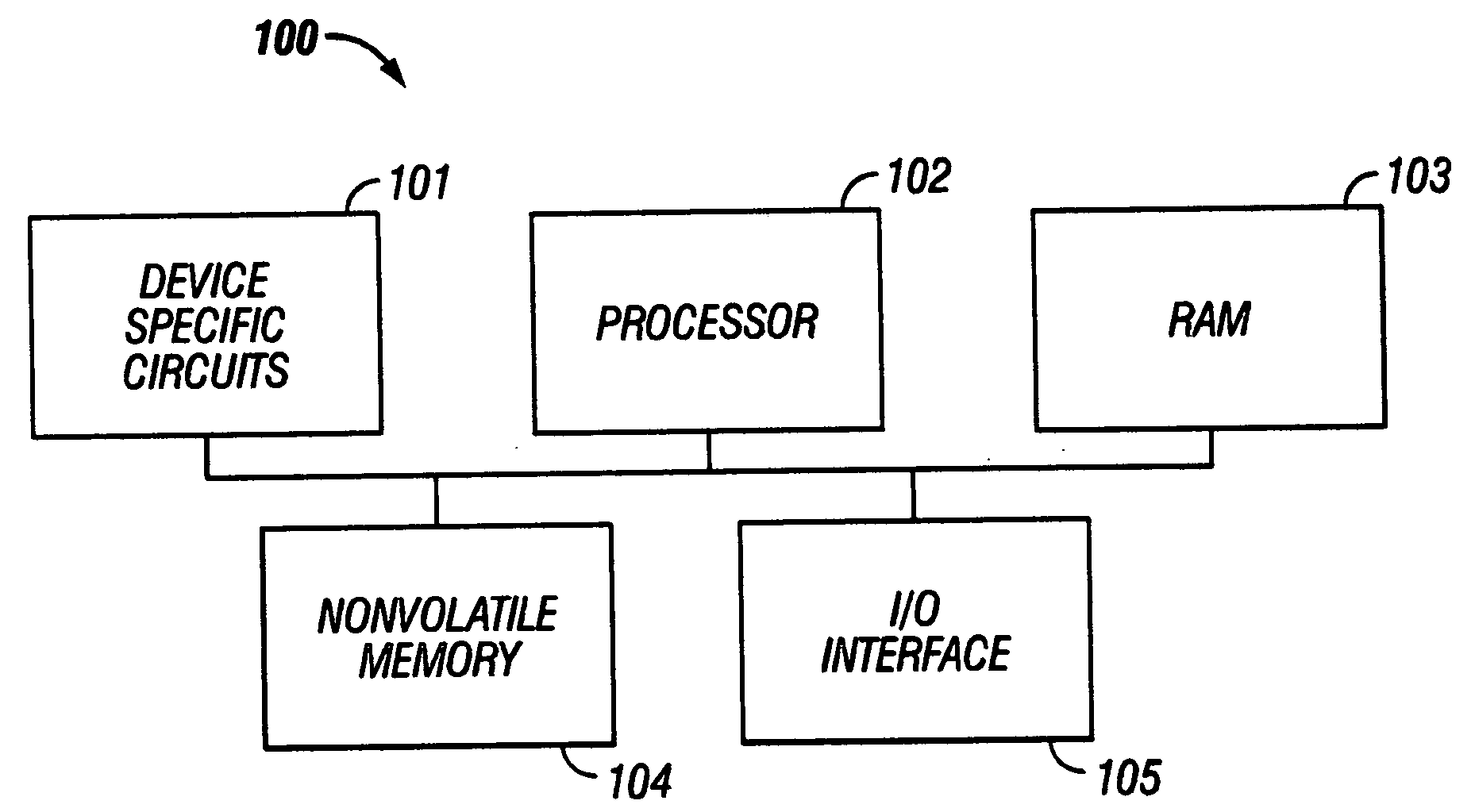

Automatic firmware update of processor nodes

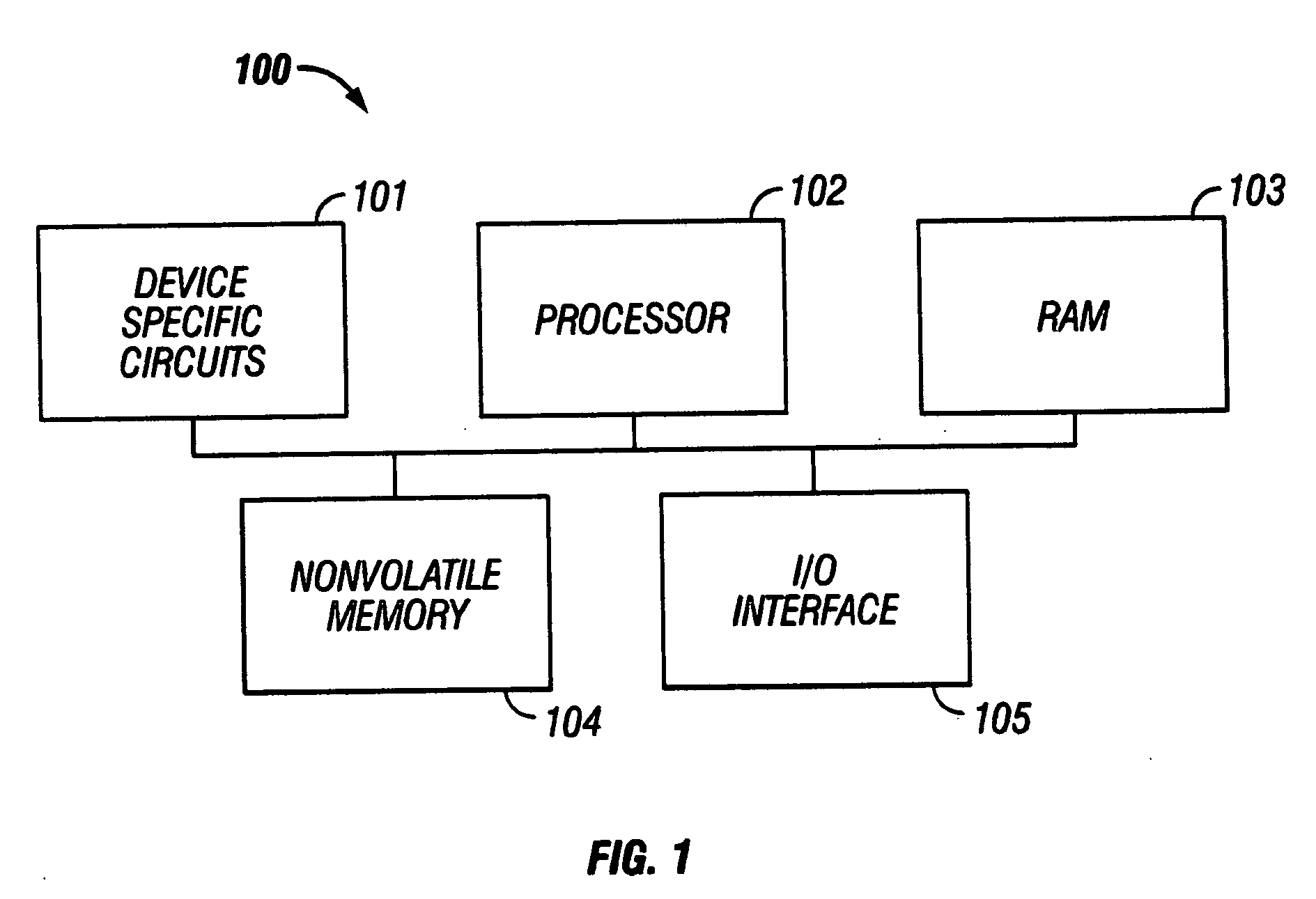

Status: Inactive | Publication: US20020091807A1 | Tags: Raise the possibility; Avoid incompatibilities; Digital computer details; Data resetting; Communication interface; Processor node

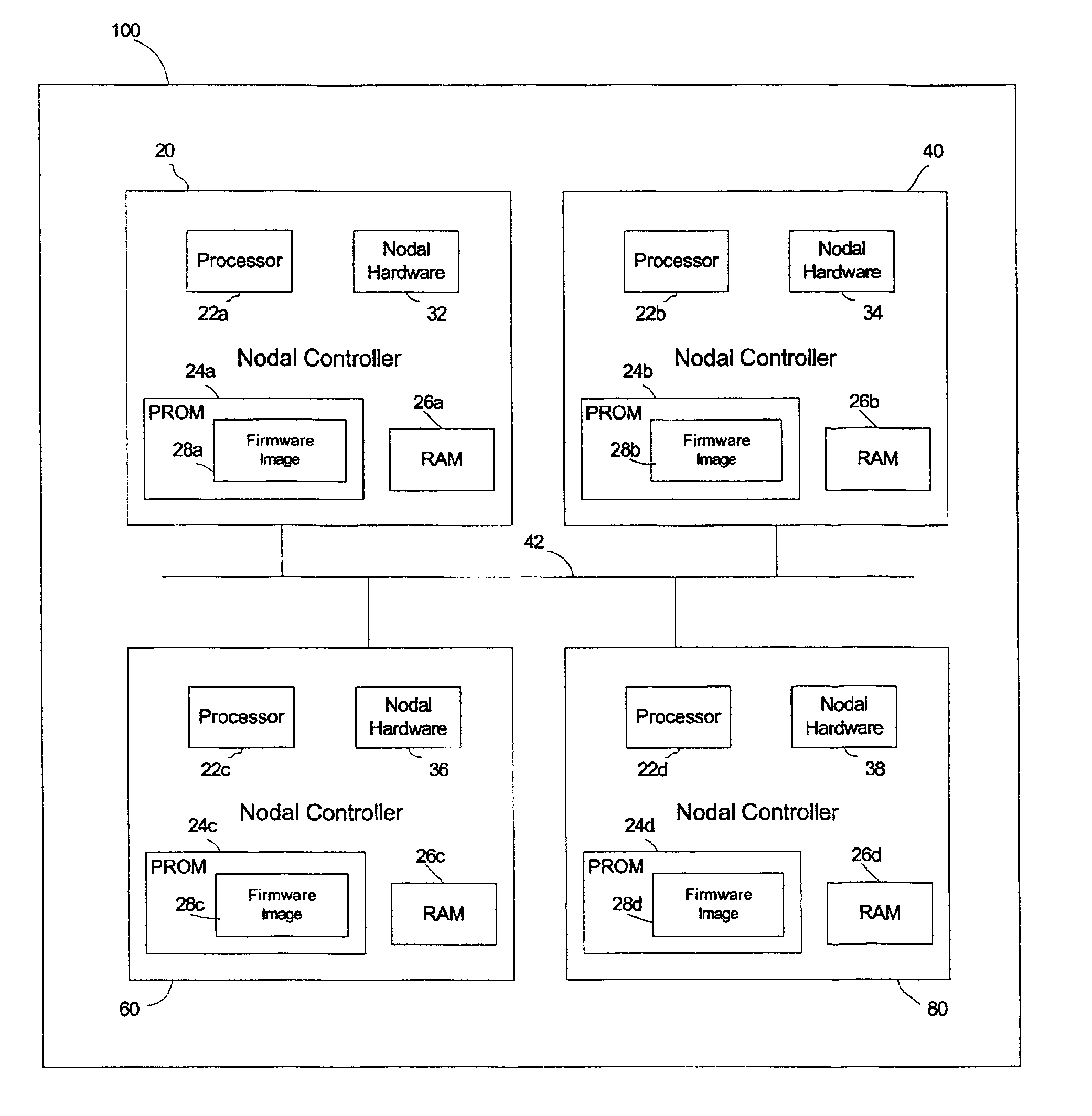

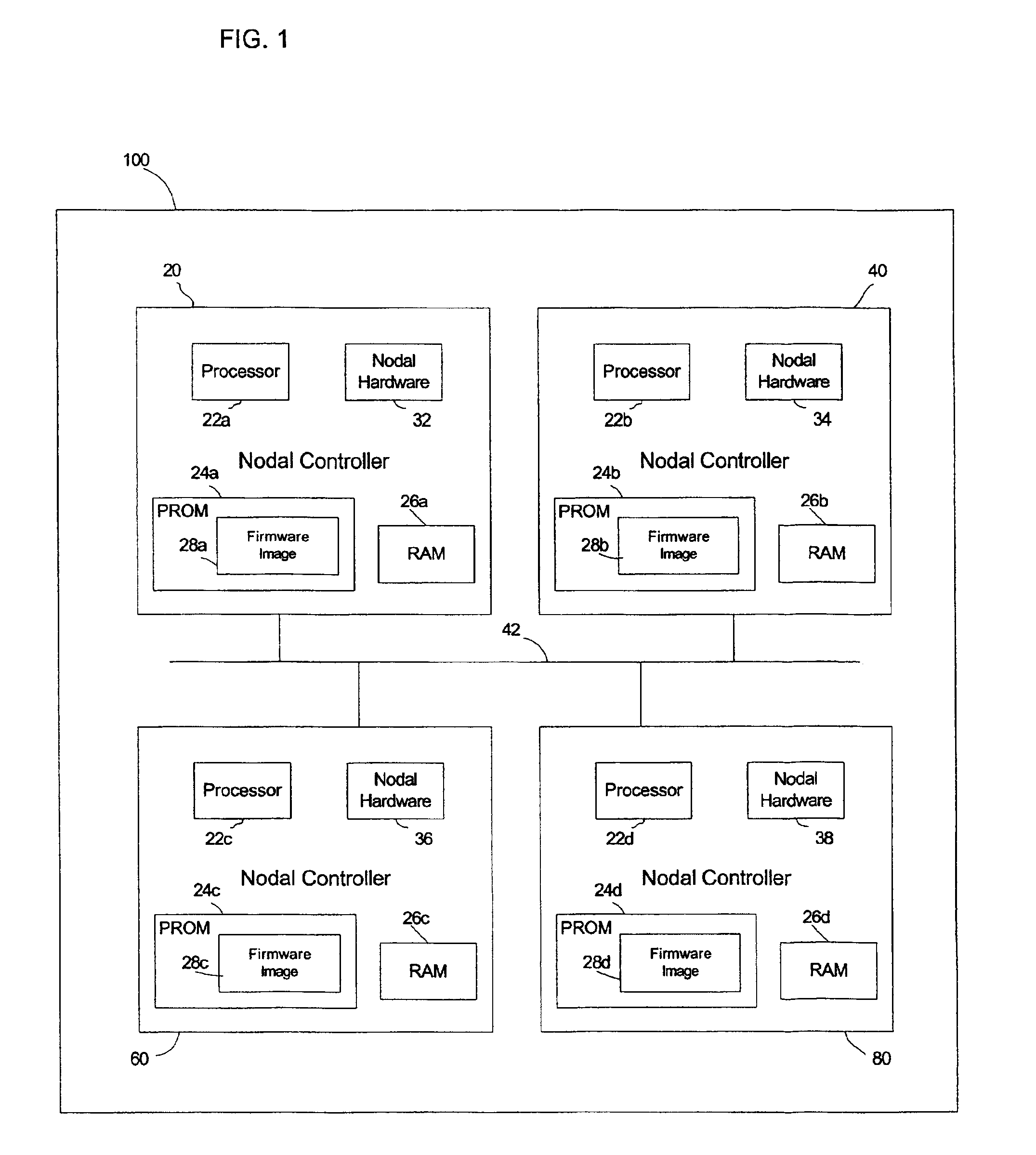

Provided is a method, system, and program for updating the firmware in a nodal system. The nodal system includes at least two nodes, each of which includes a processing unit and a memory including code. The nodes communicate over a communication interface. At least one querying node transmits a request over the communication interface to at least one queried node in the nodal system, asking for the level of the code at that node. At least one node receives, over the communication interface, a response from the queried node indicating the level of code at the queried node. The node receiving the response determines whether at least one queried node has a higher code level.

Owner:IBM CORP
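
The querying step can be illustrated with a minimal Python sketch. It assumes code levels are plain integers and that the querying node treats the peer reporting the highest level above its own as the update source; the `Node` class and `find_newer_peer` function are hypothetical, and the direct method call stands in for the request/response exchange over the communication interface.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical node holding firmware code at an integer code level."""
    name: str
    code_level: int

    def query_code_level(self) -> int:
        # Stands in for a request/response over the communication interface.
        return self.code_level


def find_newer_peer(querying: Node, peers: list[Node]) -> Optional[Node]:
    """Return the peer with the highest code level above the querying node's, if any."""
    newest: Optional[Node] = None
    for peer in peers:
        level = peer.query_code_level()
        if level > querying.code_level and (newest is None or level > newest.code_level):
            newest = peer
    return newest


local = Node("node-a", 3)
peers = [Node("node-b", 5), Node("node-c", 4), Node("node-d", 2)]
source = find_newer_peer(local, peers)
print(source.name if source else "up to date")  # node-b
```

Returning `None` signals that no queried node has a higher code level, so no update is needed.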

Systems and methods for creating persistent data for a wafer and for using persistent data for inspection-related functions

Various systems and methods for creating persistent data for a wafer and using persistent data for inspection-related functions are provided. One system includes a set of processor nodes coupled to a detector of an inspection system. Each of the processor nodes is configured to receive a portion of image data generated by the detector during scanning of a wafer. The system also includes an array of storage media separately coupled to each of the processor nodes. The processor nodes are configured to send all of the image data or a selected portion of the image data received by the processor nodes to the arrays of storage media such that all of the image data or the selected portion of the image data generated by the detector during the scanning of the wafer is stored in the arrays of the storage media.

Owner:KLA TENCOR CORP

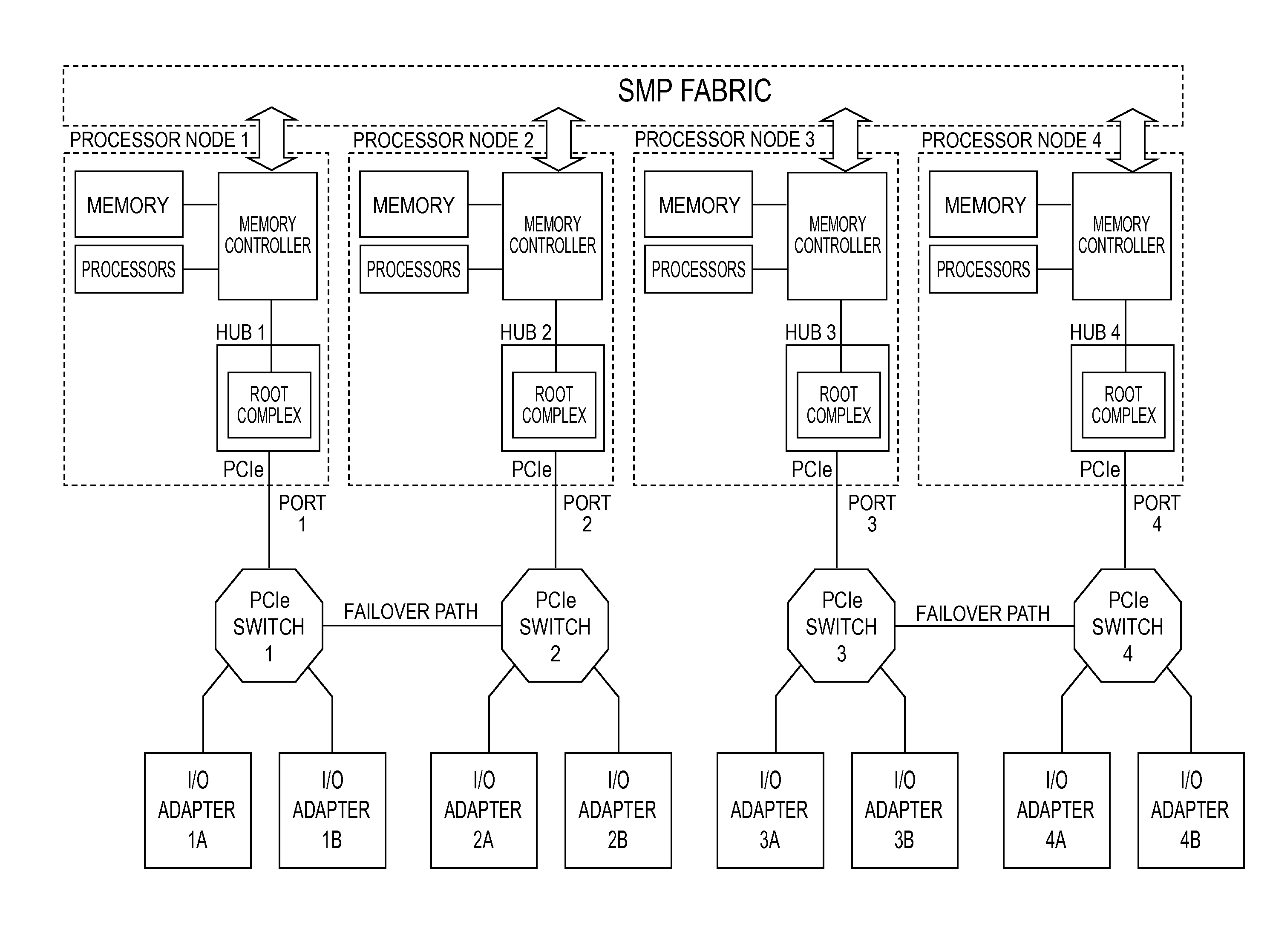

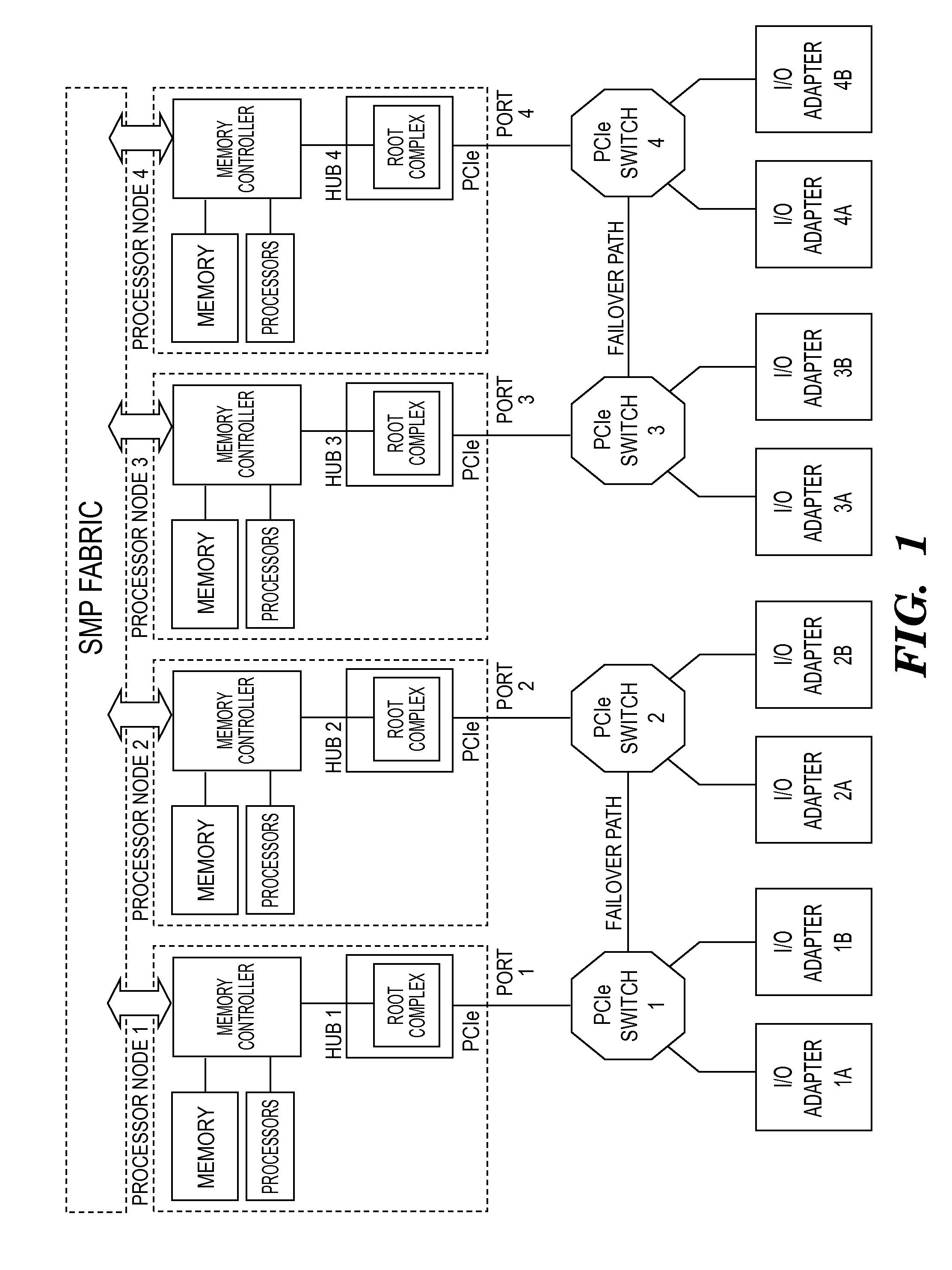

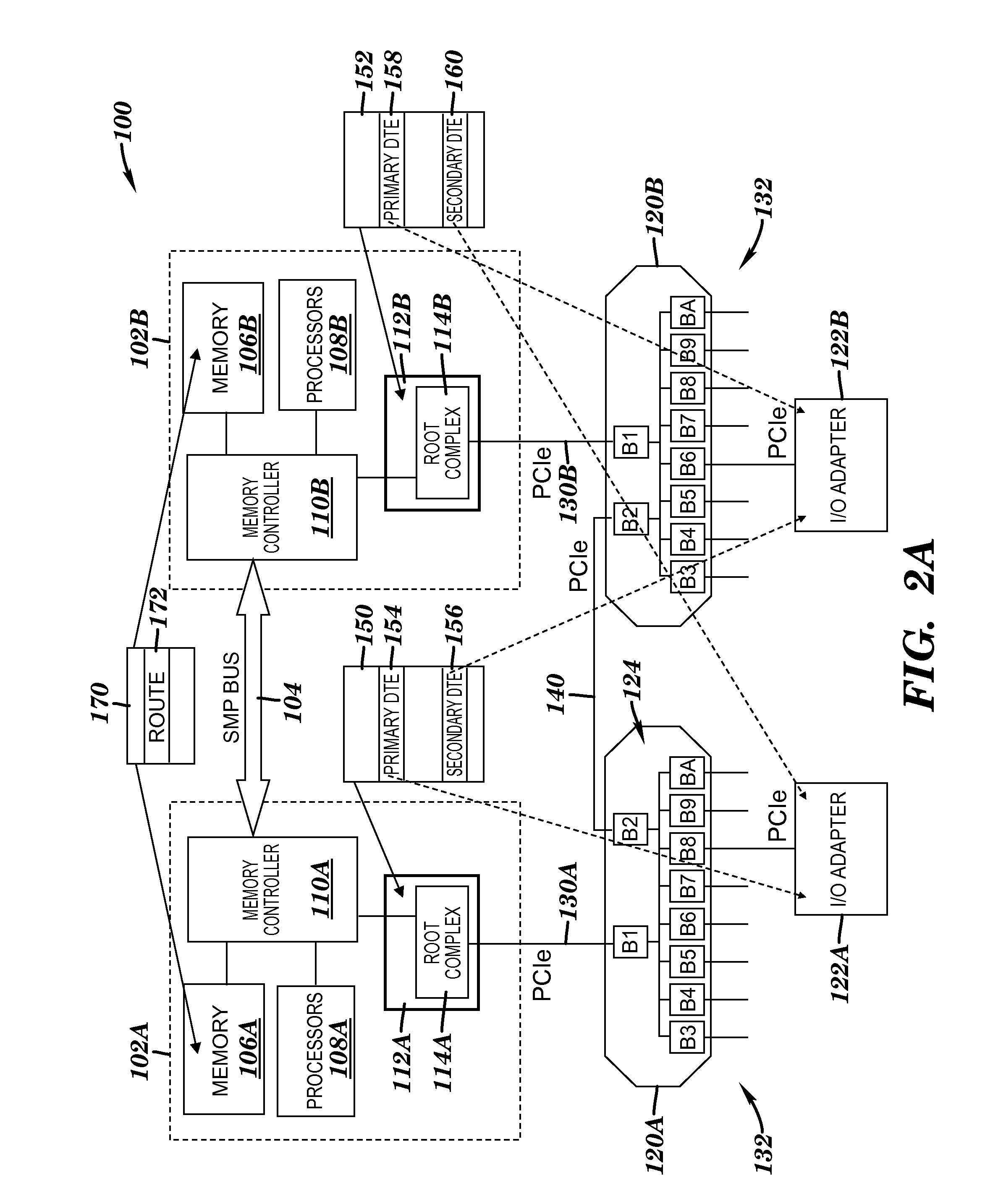

Switch failover control in a multiprocessor computer system

A system and a method for failover control comprising: maintaining a primary device table entry (DTE) in a first table activated for a first adapter in communication with a first processor node having a first root complex via a first switch assembly and maintaining a secondary DTE in standby for a second adapter in communication with a second processor node having a second root complex via a second switch assembly; maintaining a primary DTE in a second table activated for the second adapter and maintaining a secondary DTE in standby for the first adapter; and upon a failover, updating the secondary DTE in the first table as an active entry for the second adapter and forming a path to enable traffic to route from the second adapter through the second switch assembly over to the first switch assembly and up to the first root complex of the first processor node.

Owner:IBM CORP
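
A minimal Python sketch of the two-table failover arrangement, assuming each DTE can be modelled as an adapter name, a route of hops, and an active flag. The `DTE`, `DeviceTable`, and `fail_over` names and the switch/root-complex labels are hypothetical; a real implementation would program the switch assemblies and root complexes rather than flip a Python attribute.

```python
from dataclasses import dataclass


@dataclass
class DTE:
    """Hypothetical device table entry: the adapter it serves, its path, and whether it is active."""
    adapter: str
    route: tuple[str, ...]
    active: bool


@dataclass
class DeviceTable:
    """Table for one processor node: an active primary DTE plus a standby secondary DTE."""
    primary: DTE
    secondary: DTE


def fail_over(table: DeviceTable) -> DTE:
    """Activate the standby entry so the other adapter's traffic reaches this root complex."""
    table.secondary.active = True
    return table.secondary


# First table: processor node 1 (root complex rc1) behind switch assembly sw1.
table1 = DeviceTable(
    primary=DTE("adapter1", ("sw1", "rc1"), active=True),
    secondary=DTE("adapter2", ("sw2", "sw1", "rc1"), active=False),
)
# Second table: processor node 2 (root complex rc2) behind switch assembly sw2.
table2 = DeviceTable(
    primary=DTE("adapter2", ("sw2", "rc2"), active=True),
    secondary=DTE("adapter1", ("sw1", "sw2", "rc2"), active=False),
)

# Failover: adapter2 traffic now routes sw2 -> sw1 -> rc1 through the first table.
entry = fail_over(table1)
print(entry.adapter, " -> ".join(entry.route))
```

Keeping the secondary entry pre-built in standby means failover only flips it to active instead of constructing a new path at failure time.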

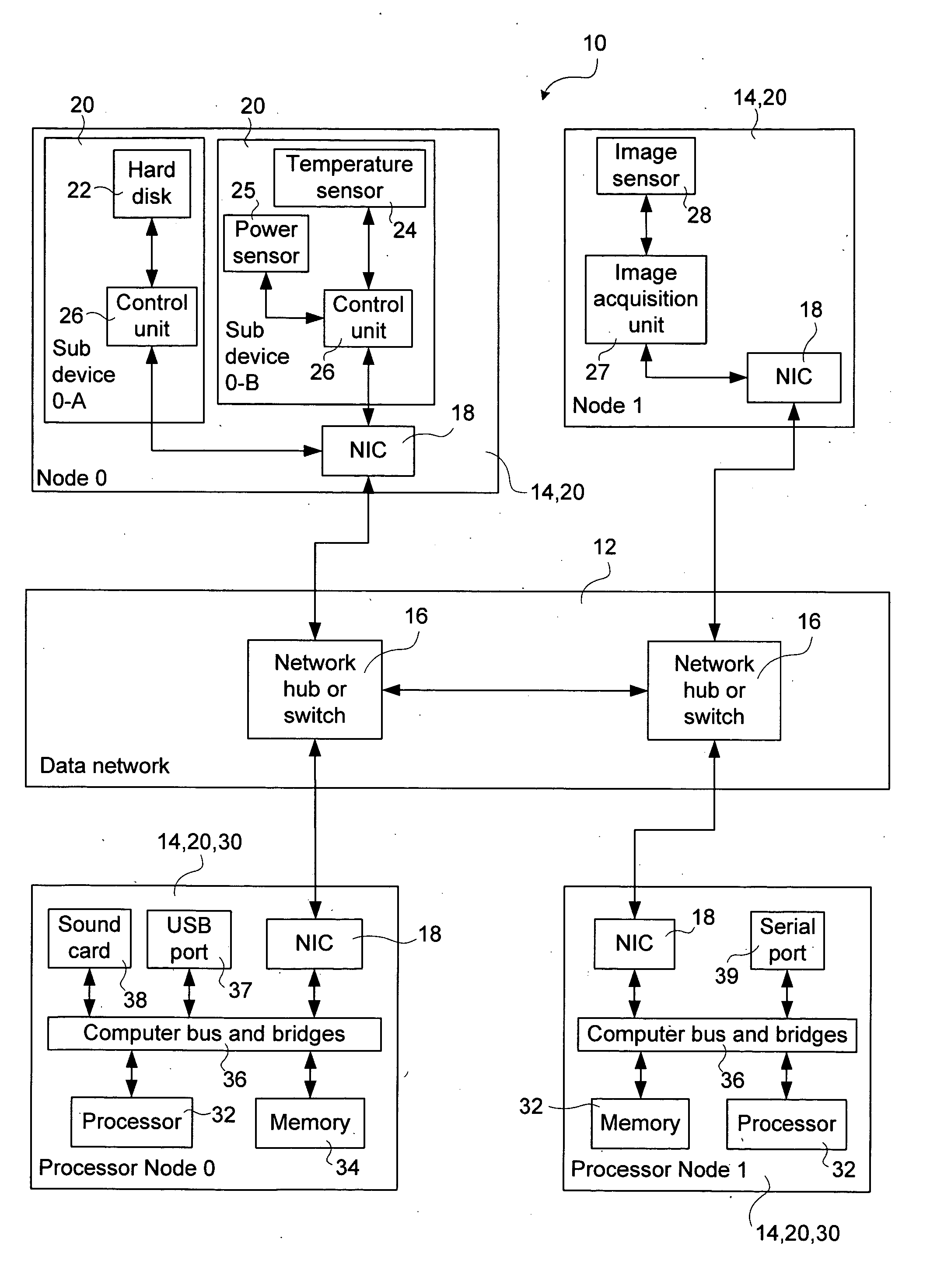

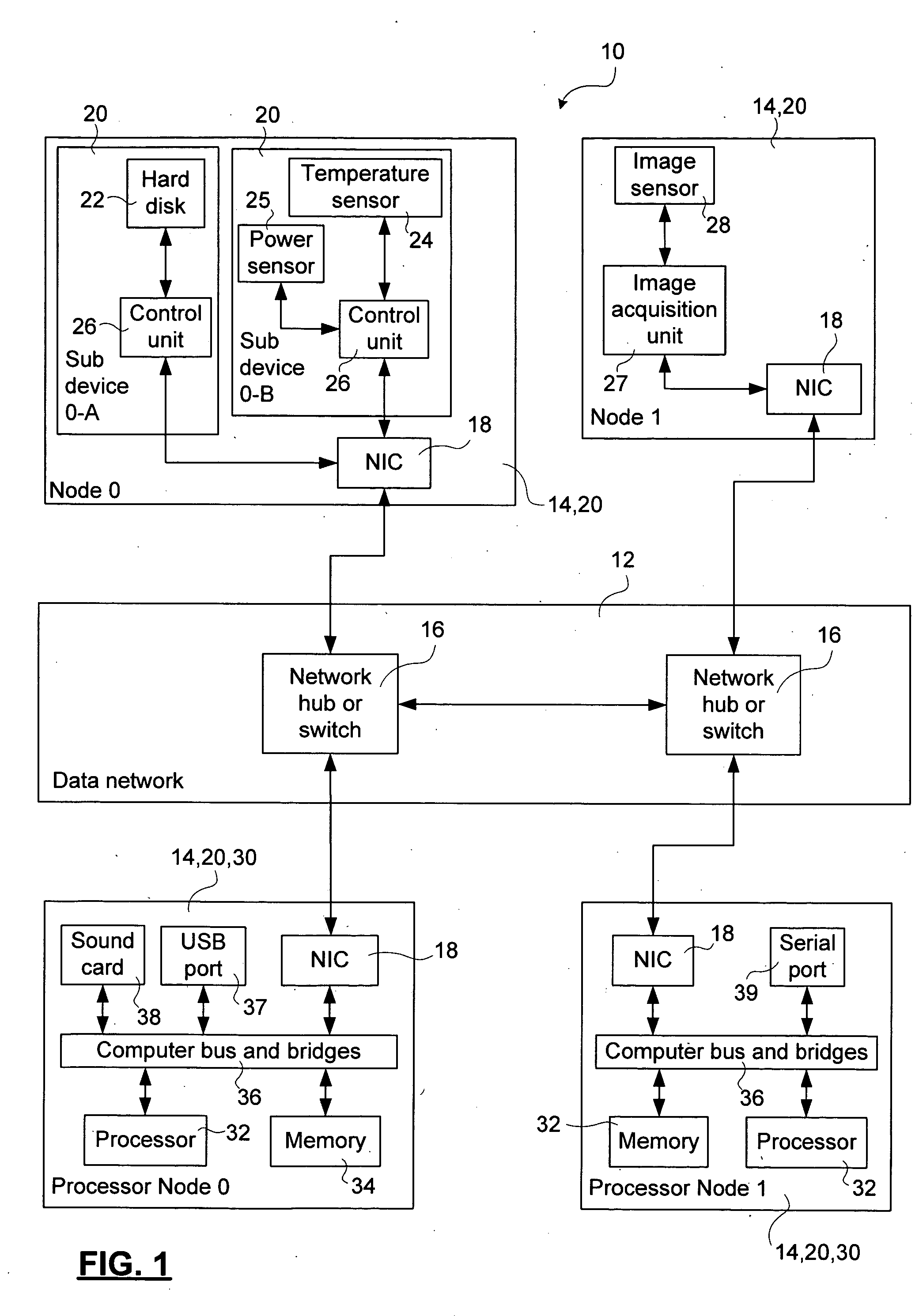

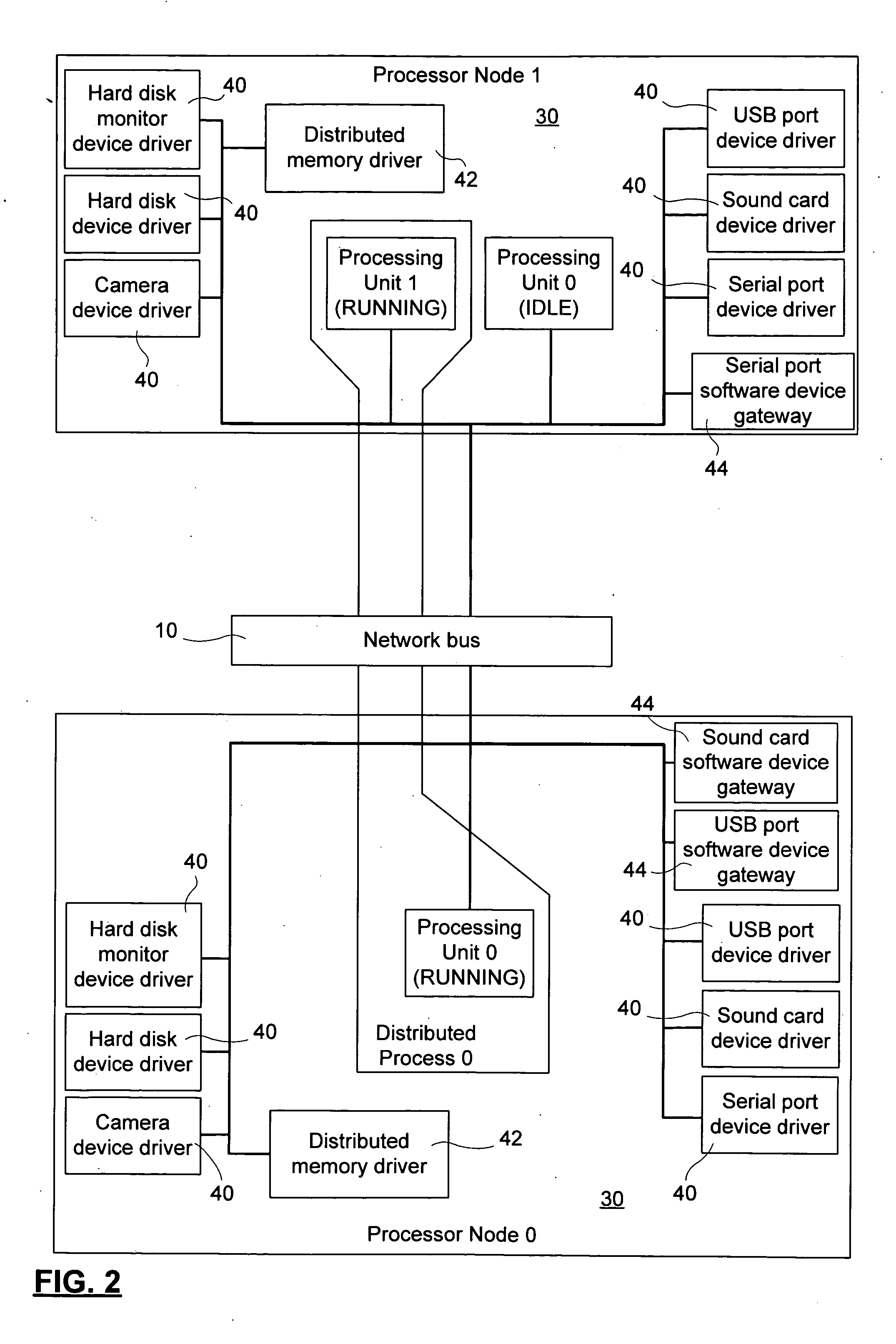

Methods and apparatus for enabling bus connectivity over a data network

Status: Active | Publication: US20060059287A1 | Tags: Facilitate communication; Selectively leverage operating system capabilities; Program synchronisation; Memory address; Abstraction layer

A method and system for interconnecting peripherals, processor nodes, and hardware devices to a data network to produce a network bus that provides OS functionality for managing hardware devices connected to the network bus involves defining, at each of the processor nodes, a network bus driver that couples hardware device drivers to the processor node's network hardware abstraction layer. The network bus can be constructed to account for the hot-swappable nature of the hardware devices, using a device monitoring function and plug-and-play functionality for adding and removing device driver instances. The network bus can be used to provide a distributed processing system by defining a shared memory space at each processor node. Distributed memory pages are given bus-network-wide unique memory addresses, and a distributed memory manager is added to ensure the consistency of the distributed memory pages and to provide a library of functions for user-mode applications.

Owner:PLEORA TECH

Virtual file system

Status: Active | Publication: US20050114350A1 | Tags: Input/output to record carriers; Recording by optical means; Virtual file system; Processor node

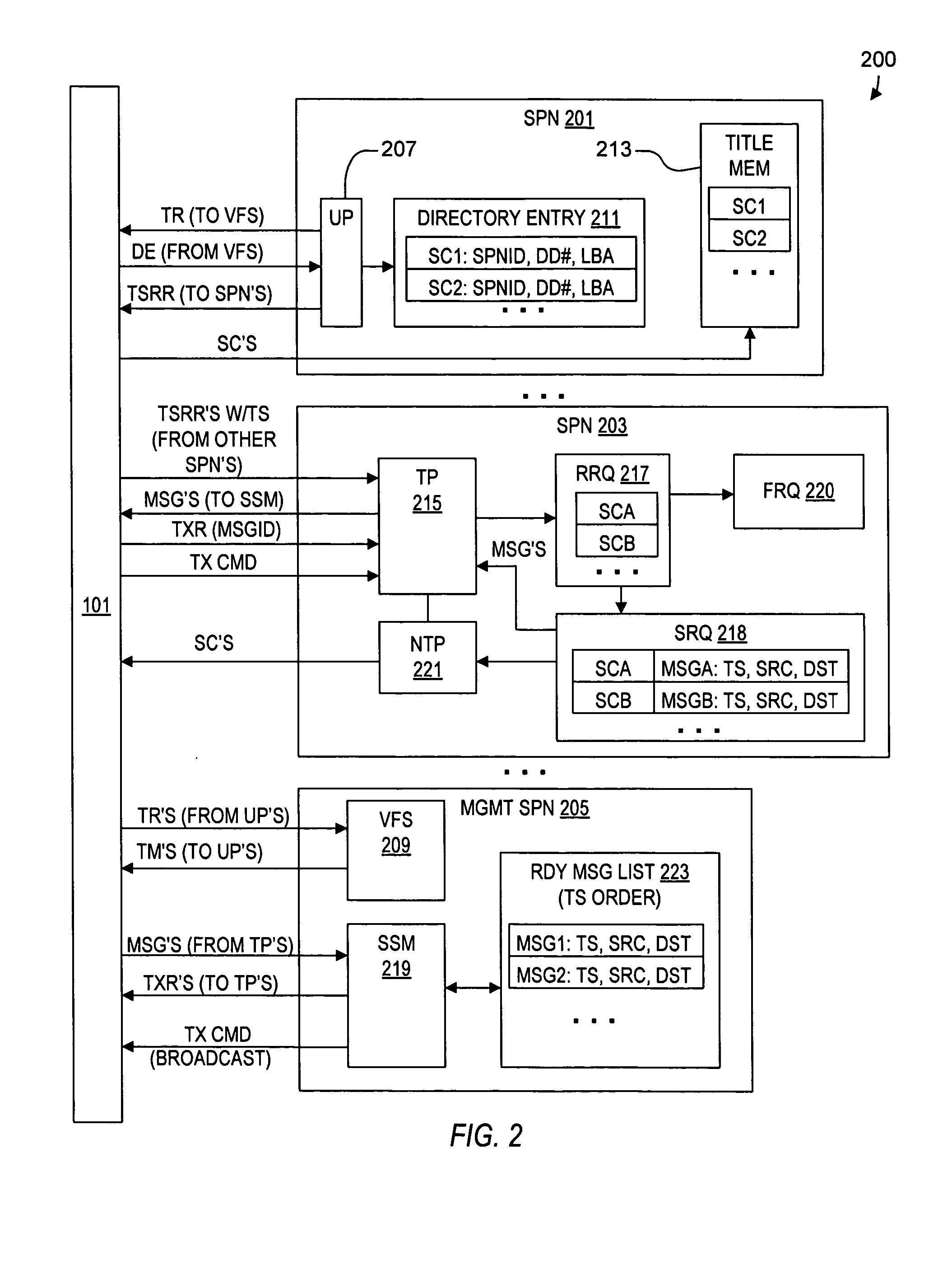

A virtual file system including multiple storage processor nodes including a management node, a backbone switch, a disk drive array, and a virtual file manager executing on the management node. The backbone switch enables communication between the storage processor nodes. The disk drive array is coupled to and distributed across the storage processor nodes and stores multiple titles. Each title is divided into data subchunks which are distributed across the disk drive array in which each subchunk is stored on a disk drive of the disk drive array. The virtual file manager manages storage and access of each subchunk, and manages multiple directory entries including a directory entry for each title. Each directory entry is a list of subchunk location entries in which each subchunk location entry includes a storage processor node identifier, a disk drive identifier, and a logical address for locating and accessing each subchunk of each title.

Owner:INTERACTIVE CONTENT ENGINES LLC
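
The directory entry described above is essentially a list of (node, disk, logical address) triples per title. A minimal Python sketch follows; the round-robin striping policy, the fixed subchunk size in blocks, and the names `SubchunkLocation` and `build_directory_entry` are assumptions for illustration, since the abstract only says that subchunks are distributed across the disk drive array.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SubchunkLocation:
    """One subchunk location entry, with the three fields named in the abstract."""
    node_id: int       # storage processor node identifier
    disk_id: int       # disk drive identifier (within that node)
    logical_addr: int  # logical address of the subchunk on that drive


def build_directory_entry(num_subchunks: int, num_nodes: int, disks_per_node: int,
                          subchunk_blocks: int) -> list[SubchunkLocation]:
    """Place a title's subchunks round-robin over every disk in the array (assumed policy)."""
    total_disks = num_nodes * disks_per_node
    entry = []
    for i in range(num_subchunks):
        disk_index = i % total_disks   # which disk in the whole array
        stripe = i // total_disks      # how many full passes over the array so far
        entry.append(SubchunkLocation(
            node_id=disk_index % num_nodes,  # alternate nodes first
            disk_id=disk_index // num_nodes,
            logical_addr=stripe * subchunk_blocks,
        ))
    return entry


# Hypothetical directory: one entry (a list of subchunk locations) per title.
directory = {"title-001": build_directory_entry(6, num_nodes=2, disks_per_node=2,
                                                subchunk_blocks=128)}
for loc in directory["title-001"]:
    print(loc)
```

Alternating nodes before disks spreads consecutive subchunks of a title over different storage processor nodes, which is one plausible way to balance load during sequential playback.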

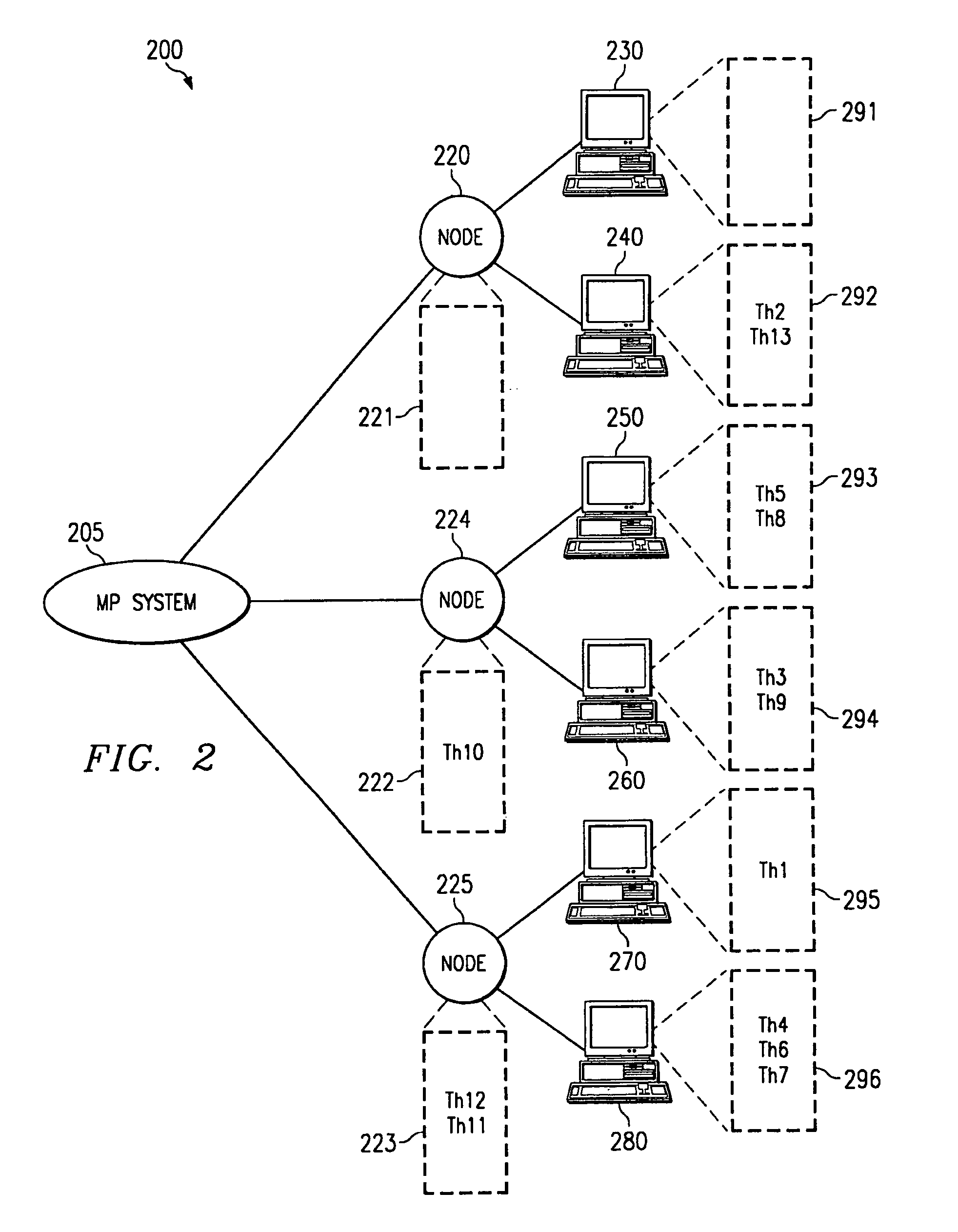

High speed methods for maintaining a summary of thread activity for multiprocessor computer systems

Status: Inactive | Publication: US6886162B1 | Tags: Reduce in quantity; Multiprogramming arrangements; Memory systems; Quiescent state; Processor node

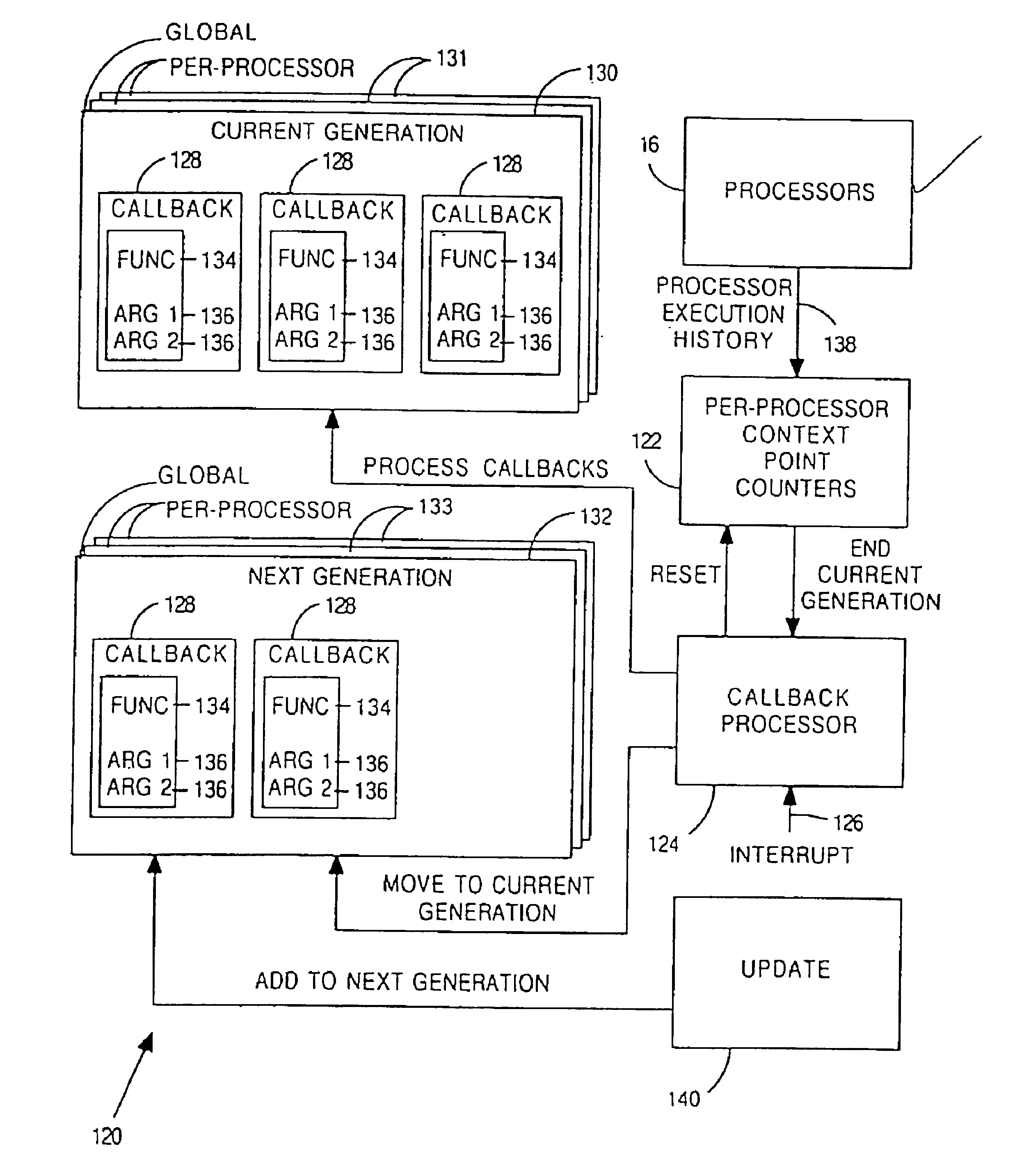

A high-speed method for maintaining a summary of thread activity reduces the number of remote-memory operations for an n-processor, multiple-node computer system from n² to 2n − 1. The method uses a hierarchical summary-of-thread-activity data structure that includes structures such as first- and second-level bit masks. The first-level bit mask is accessible to all nodes and contains one bit per node, indicating whether the corresponding node contains a processor that has not yet passed through a quiescent state. The second-level bit mask is local to each node and contains one bit per processor on that node, indicating whether the corresponding processor has not yet passed through a quiescent state. The method includes determining from a data structure on the processor's node (such as the second-level bit mask) whether the processor has passed through a quiescent state. If so, it is then determined from that data structure whether all other processors on its node have passed through a quiescent state. If so, it is then indicated in a data structure accessible to all nodes (such as the first-level bit mask) that all processors on the processor's node have passed through a quiescent state. The local generation number can also be stored in the data structure accessible to all nodes. If a processor determines from this data structure that it is the last processor to pass through a quiescent state, it updates the current generation number stored in the memory of each node.

Owner:SEQUENT COMPUTER SYSTEMS
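
The two-level bit-mask bookkeeping can be sketched in single-threaded Python. This is an illustration under stated assumptions: a real multiprocessor kernel would update the masks with atomic operations in node-local and shared memory, and the `QuiescentTracker` class and its method names are hypothetical. The point it shows is that each processor touches only its own node's mask, and only the last processor on a node touches the shared mask.

```python
class QuiescentTracker:
    """Two-level summary of processors that have not yet passed through a quiescent state.

    global_mask: one bit per node, shared by all nodes.
    local_masks[node]: one bit per processor on that node, local to the node.
    """

    def __init__(self, num_nodes: int, cpus_per_node: int) -> None:
        self.num_nodes = num_nodes
        self.cpus_per_node = cpus_per_node
        self.generation = 0
        self._start_generation()

    def _start_generation(self) -> None:
        # Every processor on every node must pass through a quiescent state again.
        self.global_mask = (1 << self.num_nodes) - 1
        self.local_masks = [(1 << self.cpus_per_node) - 1 for _ in range(self.num_nodes)]

    def report_quiescent(self, node: int, cpu: int) -> bool:
        """Record that `cpu` on `node` quiesced; return True if this ended the generation."""
        bit = 1 << cpu
        if not self.local_masks[node] & bit:
            return False                      # already recorded this generation
        self.local_masks[node] &= ~bit        # node-local update only
        if self.local_masks[node]:
            return False                      # other processors on this node still pending
        self.global_mask &= ~(1 << node)      # one shared update per node
        if self.global_mask:
            return False                      # other nodes still pending
        self.generation += 1                  # last processor advances the generation
        self._start_generation()
        return True


t = QuiescentTracker(num_nodes=2, cpus_per_node=2)
ended = [t.report_quiescent(n, c) for n in range(2) for c in range(2)]
print(ended, t.generation)  # [False, False, False, True] 1
```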

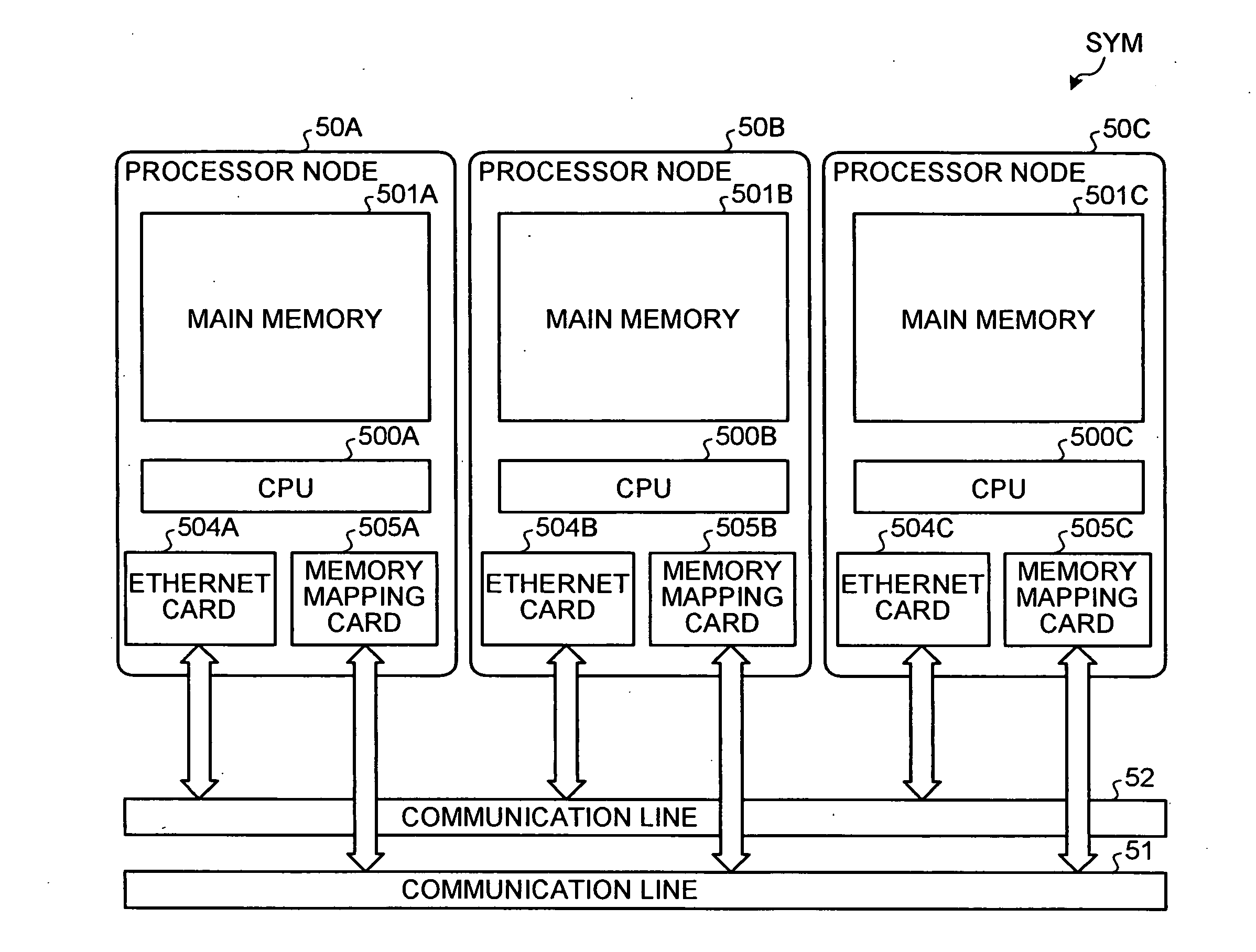

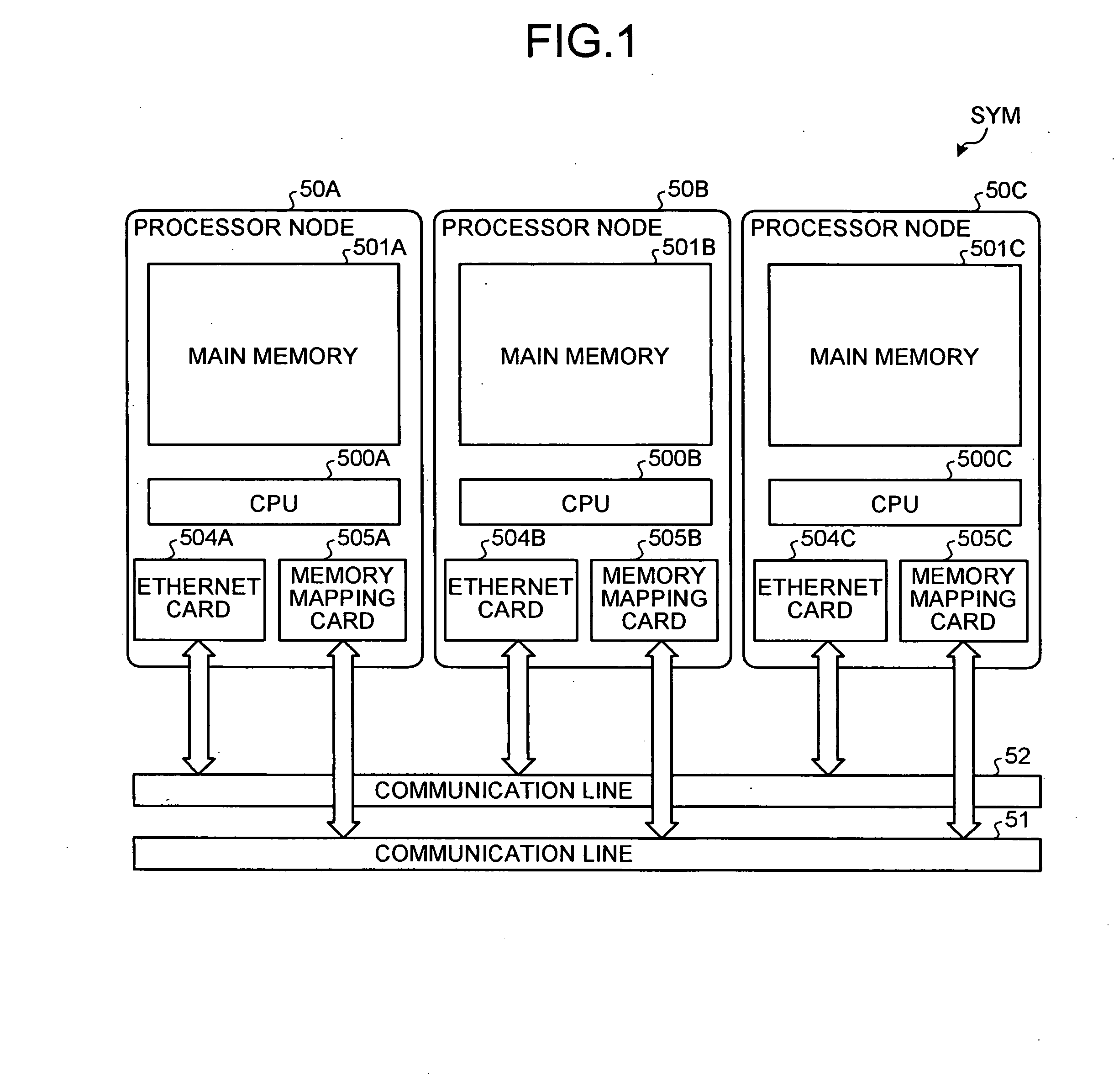

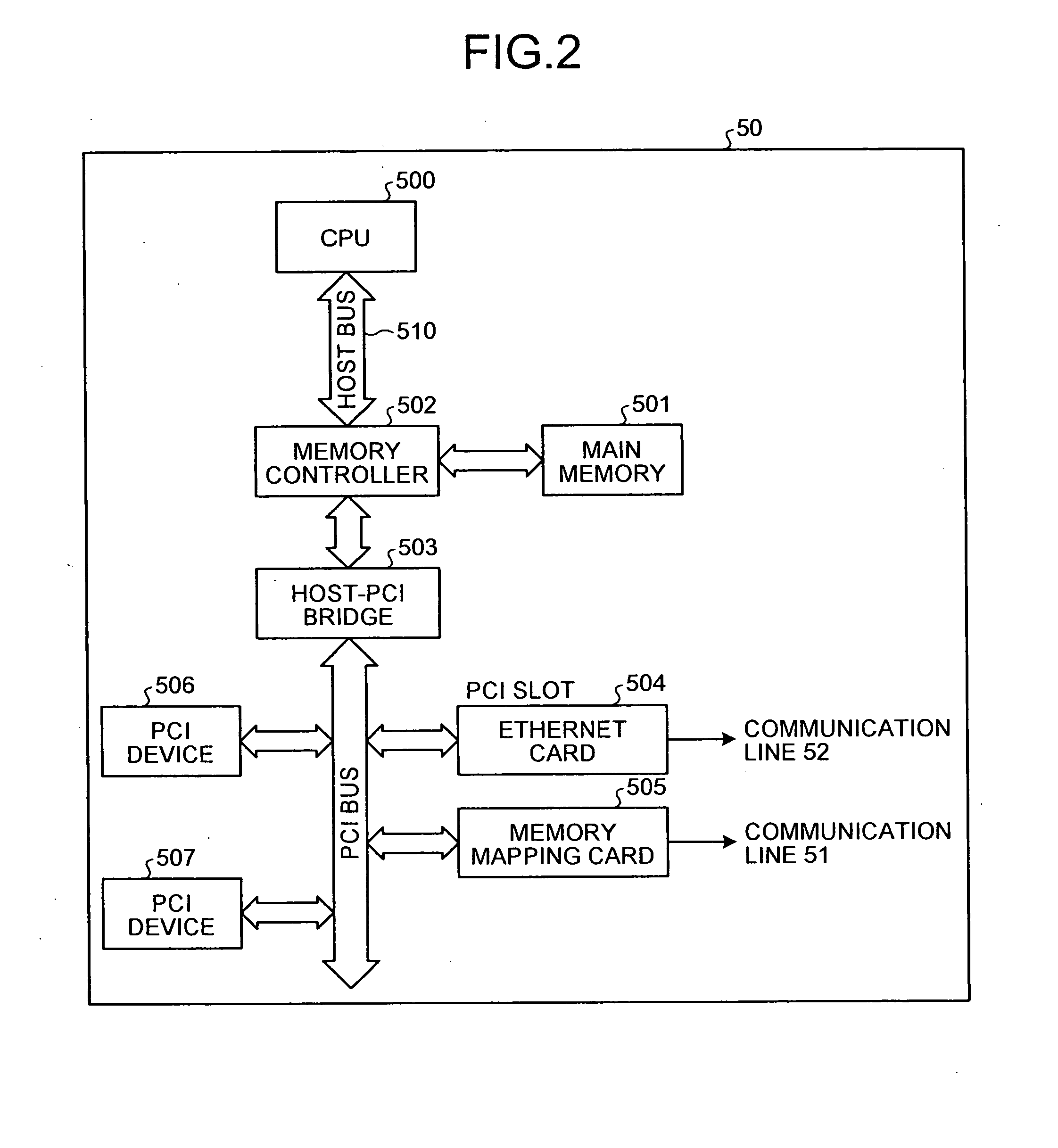

Multiprocessor system

Status: Inactive | Publication: US20090077326A1 | Tags: Solve problems; Memory addressing/allocation/relocation; General purpose stored program computer; Communication unit; Management unit

A memory mapping unit requests allocation of a remote memory to memory mapping units of other processor nodes via a second communication unit, and requests creation of a mapping connection to a memory-mapping managing unit of a first processor node via the second communication unit. The memory-mapping managing unit creates the mapping connection between a processor node and other processor nodes according to a connection creation request from the memory mapping unit, and then transmits a memory mapping instruction for instructing execution of a memory mapping to the memory mapping unit via a first communication unit of the first processor node.

Owner:RICOH KK

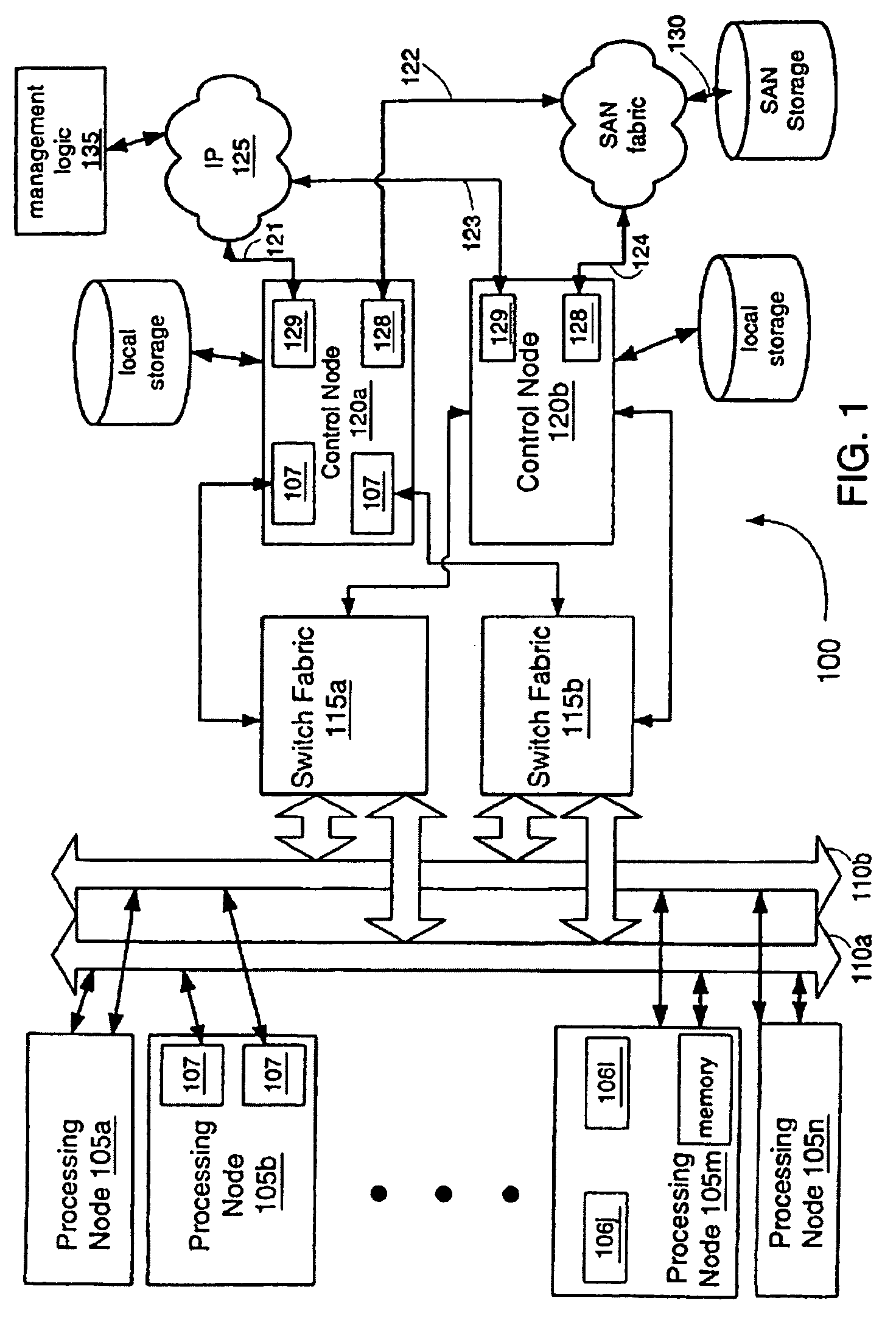

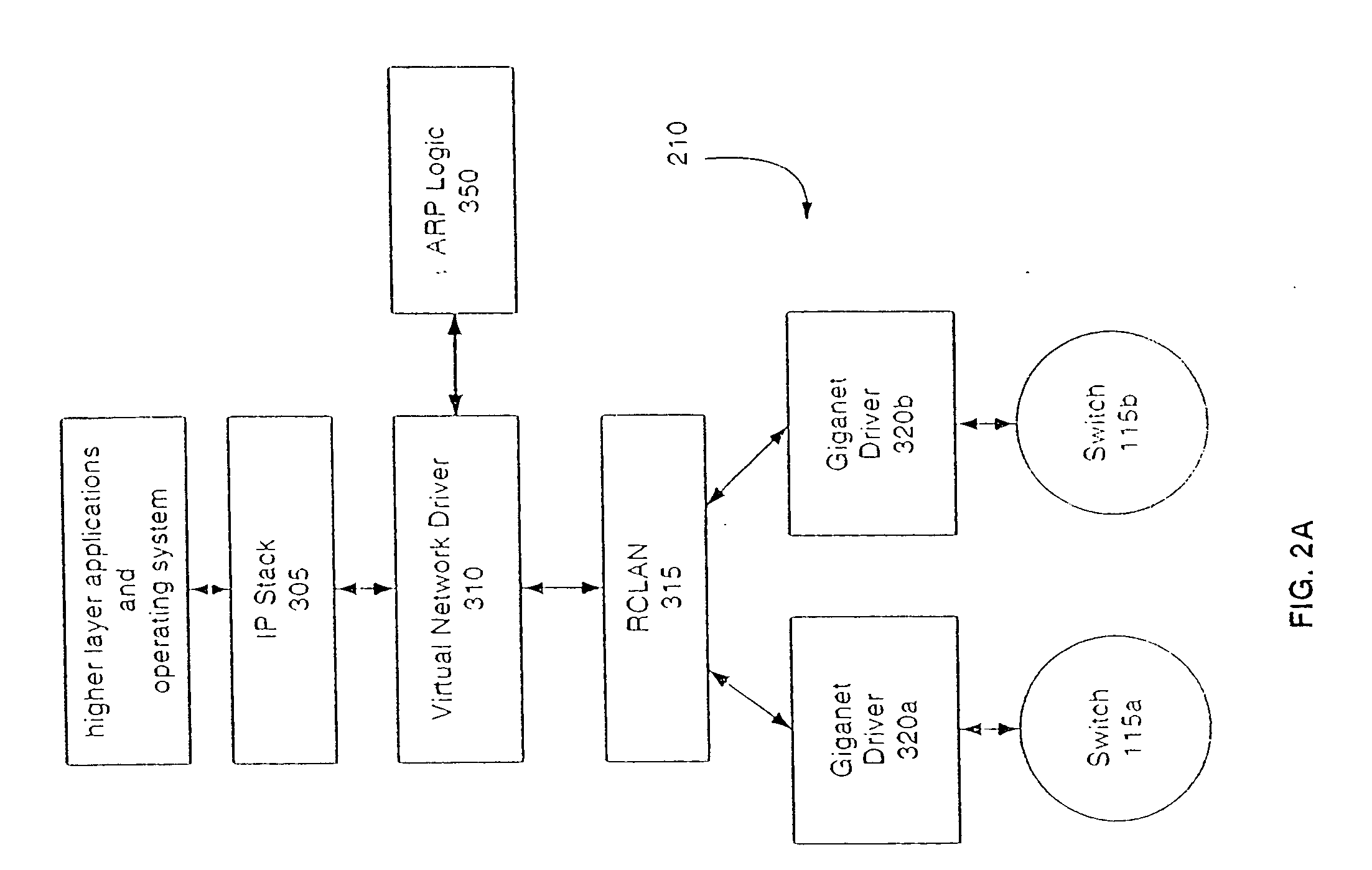

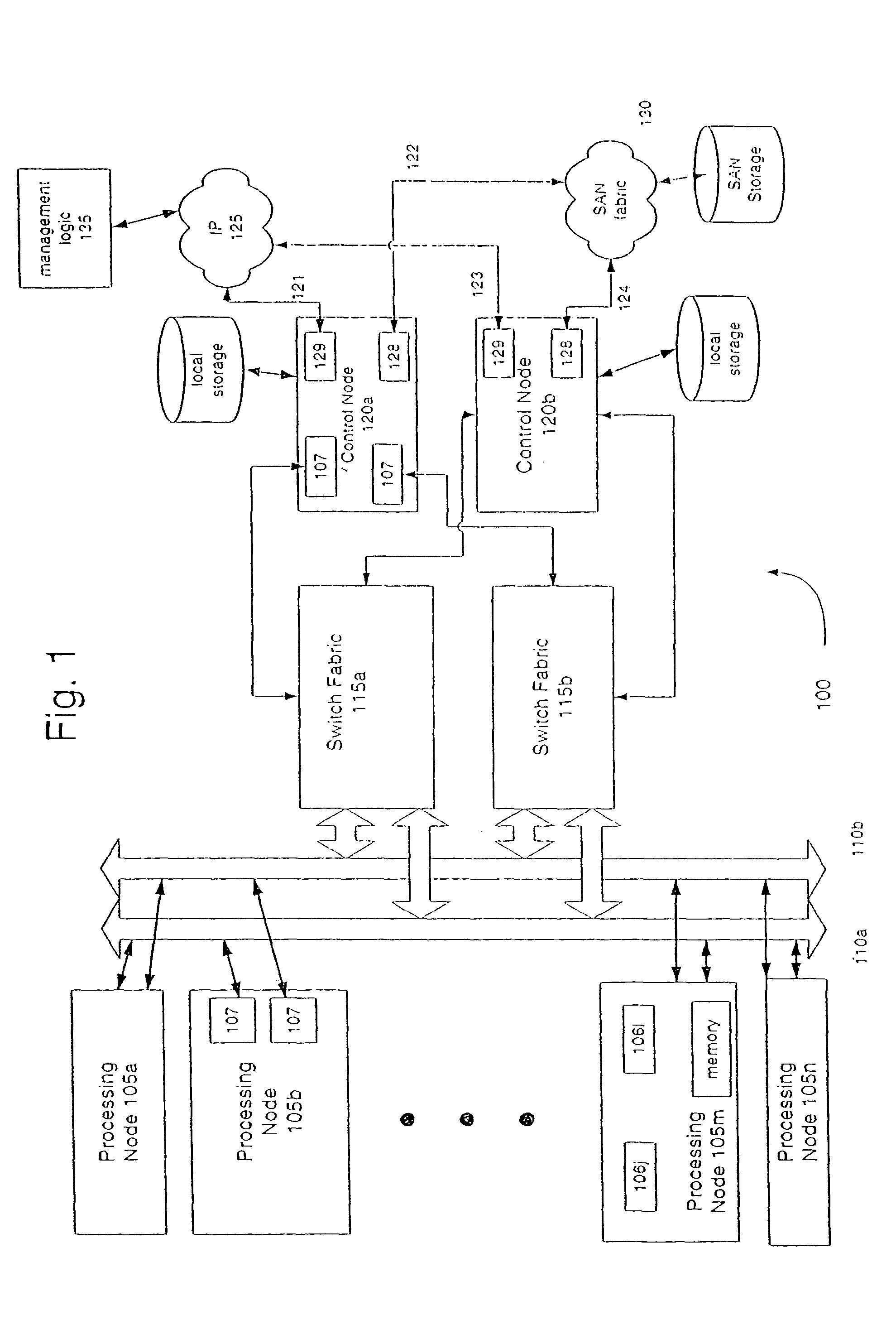

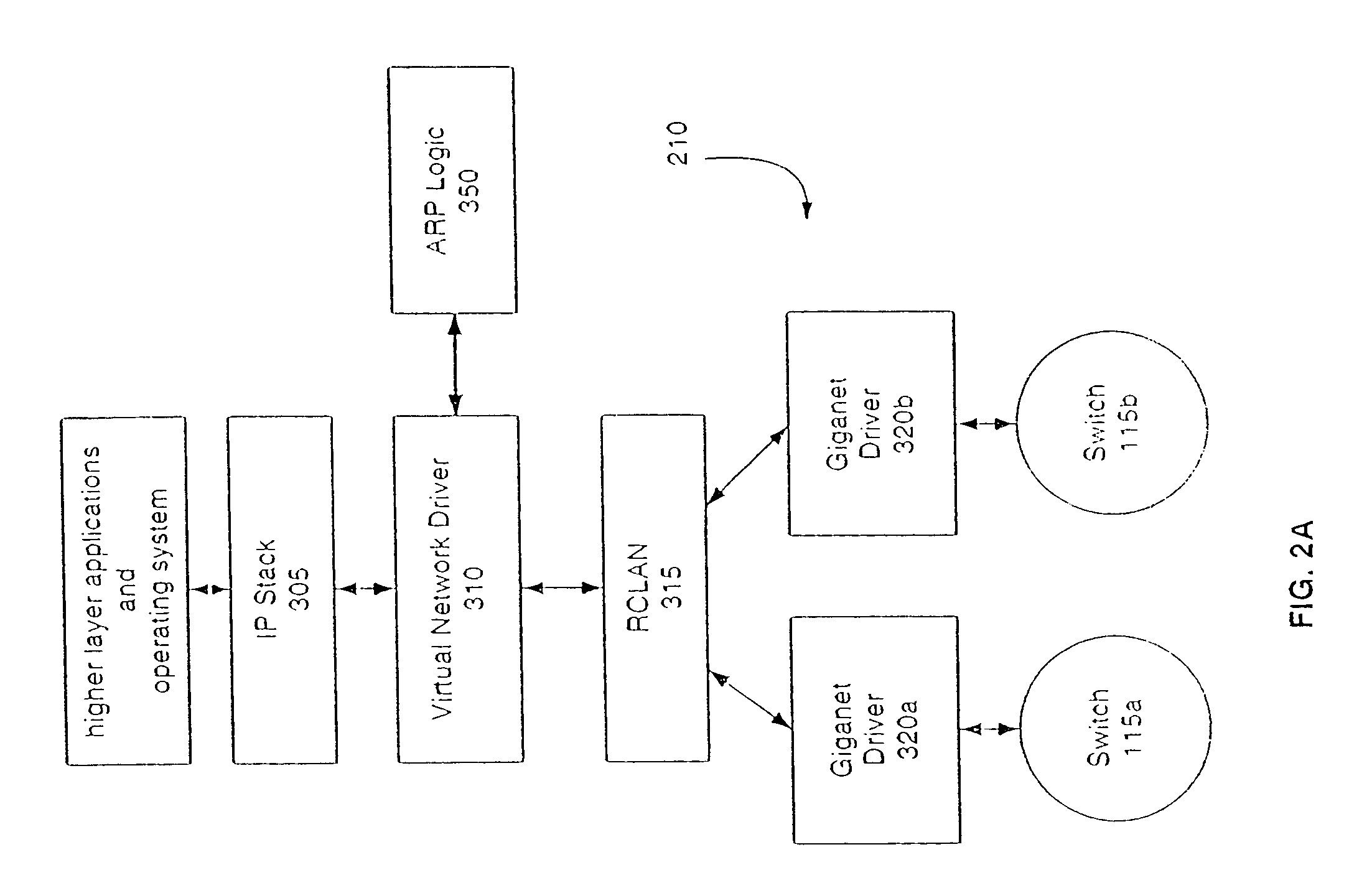

Address resolution protocol system and method in a virtual network

Status: Inactive | Publication: US7174390B2 | Tags: Special service provision for substation; Data switching by path configuration; Address Resolution Protocol; IP address

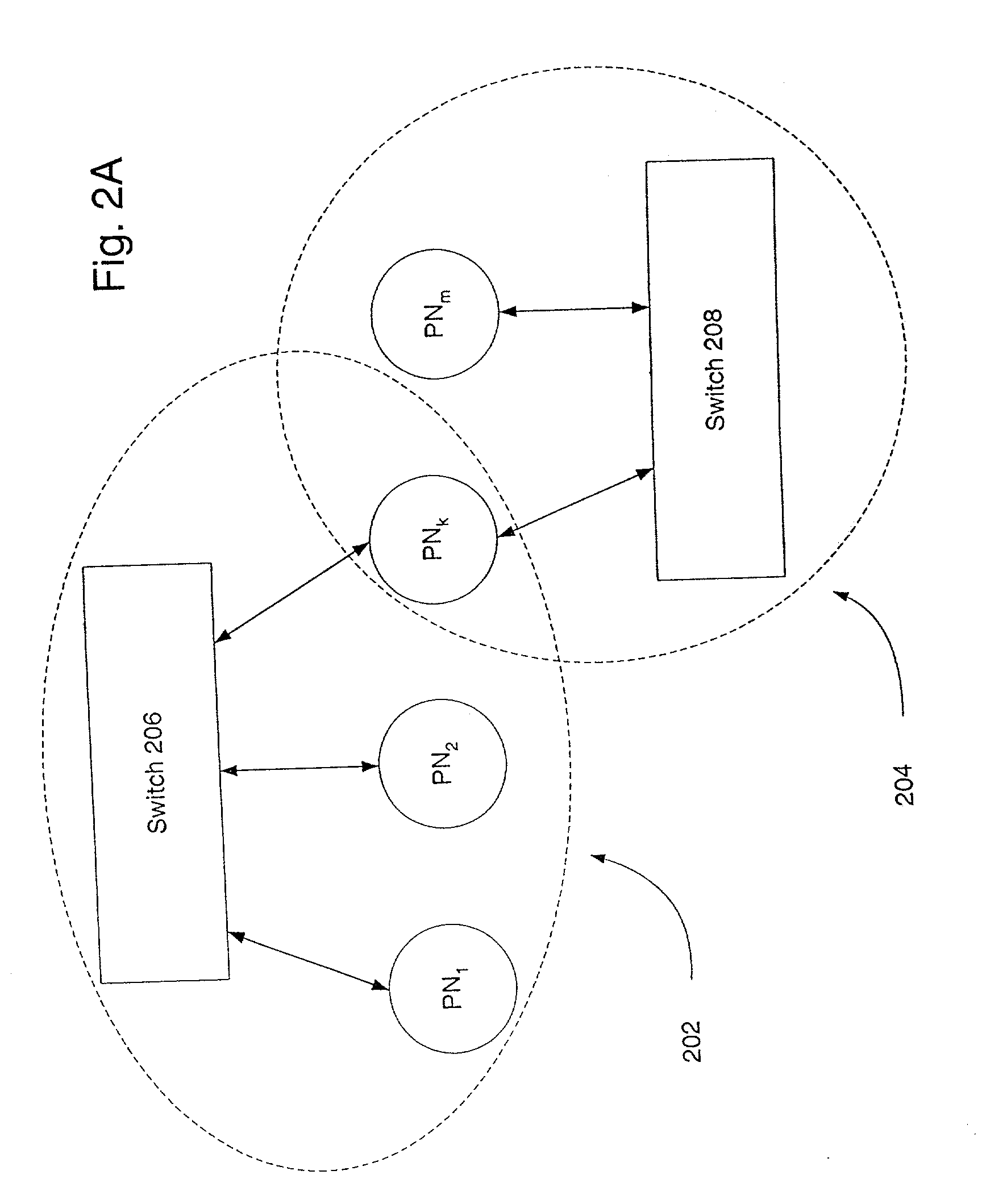

A virtual networking system and method are disclosed. Switched Ethernet local area network semantics are provided over an underlying point-to-point mesh. Computer processor nodes may communicate directly via virtual interfaces over a switch fabric, or they may communicate via an Ethernet switch emulation. Address Resolution Protocol (ARP) logic helps associate IP addresses with virtual interfaces while allowing computer processors to reply to ARP requests with virtual MAC addresses.

Owner:EGENERA

Automatic firmware update of processor nodes

Status: Inactive | Publication: US6904457B2 | Tags: Raise the possibility; Avoid incompatibilities; Digital computer details; Data resetting; Communication interface; Processor node

Provided is a method, system, and program for updating the firmware in a nodal system. The nodal system includes at least two nodes, each of which includes a processing unit and a memory including code. The nodes communicate over a communication interface. At least one querying node transmits a request over the communication interface to at least one queried node in the nodal system, asking for the level of the code at that node. At least one node receives, over the communication interface, a response from the queried node indicating the level of code at the queried node. The node receiving the response determines whether at least one queried node has a higher code level.

Owner:IBM CORP

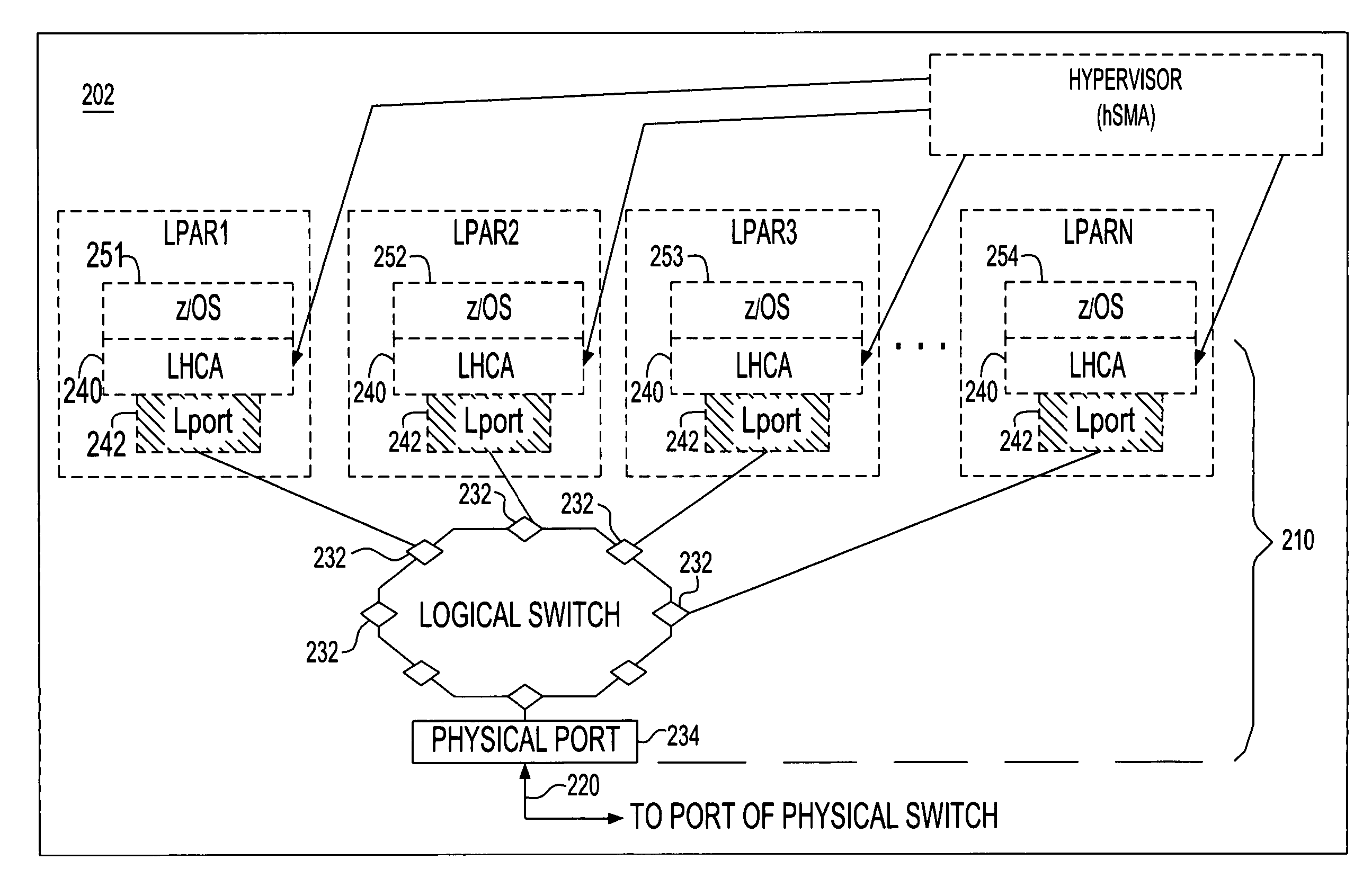

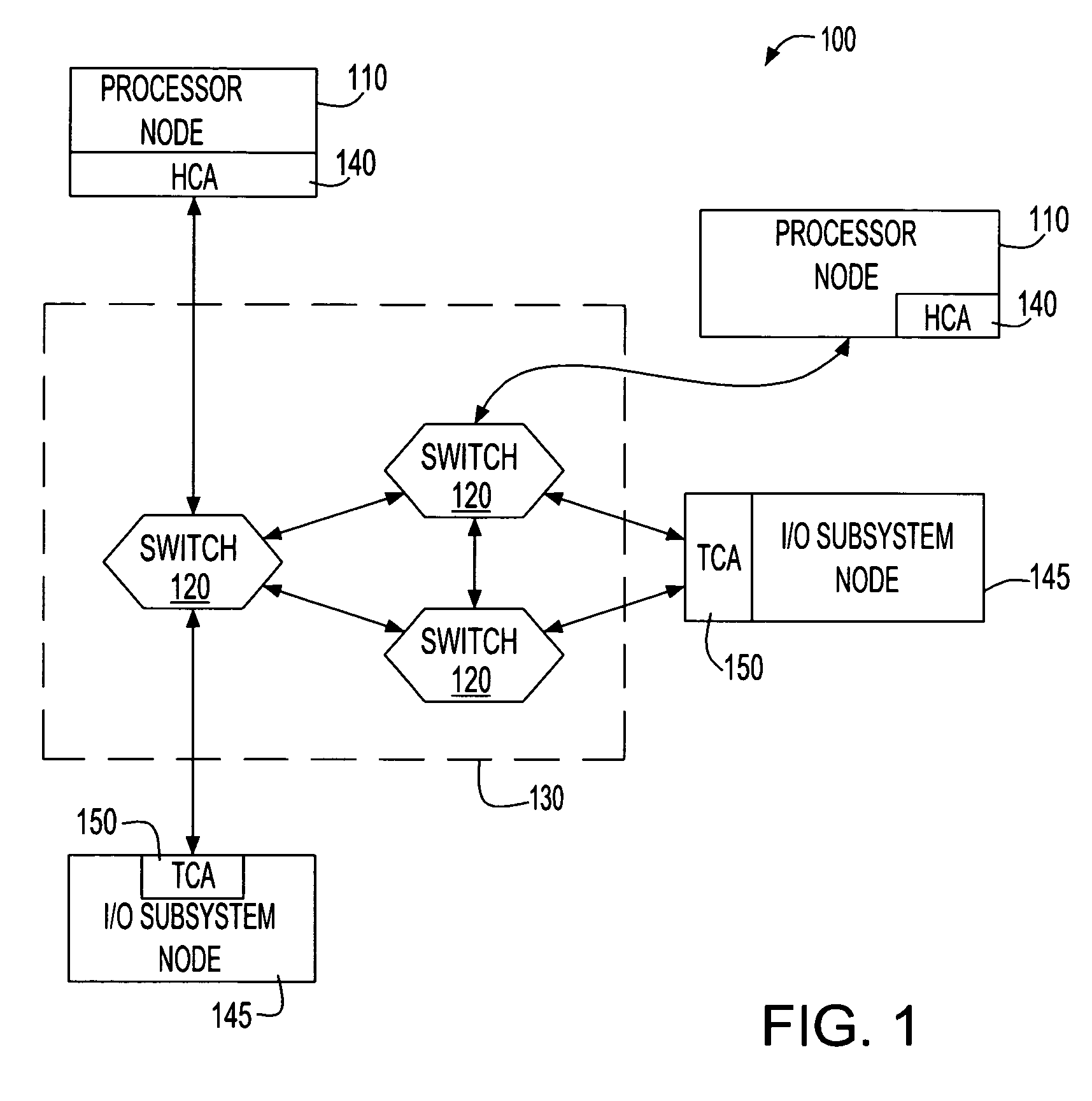

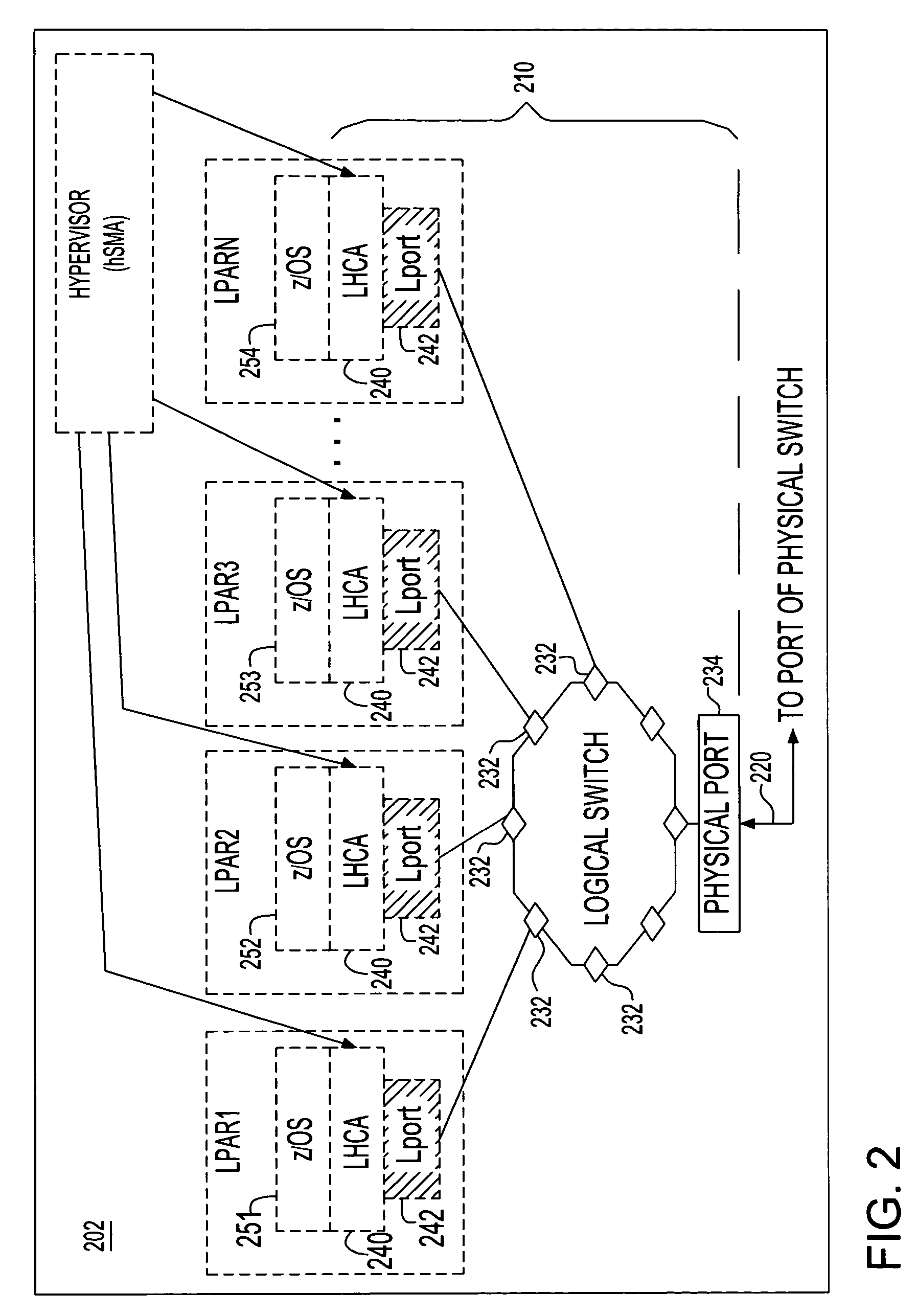

System and method for providing multiple virtual host channel adapters using virtual switches

Status: Inactive | Publication: US20060230185A1 | Tags: Multiple digital computer combinations; Transmission; Virtualization; Processor node

A processor node of a network is provided which includes one or more processors and a virtualized channel adapter. The virtualized channel adapter is operable to reference a table to determine whether a destination of the communication is supported by the virtualized channel adapter. When the destination is supported for routing via hardware, the virtualized channel adapter is operable to route the communication via hardware to at least one of a physical port and a logical port of the virtualized channel adapter. Otherwise, when the destination is not supported for routing via hardware, the virtualized channel adapter is operable to route the communication via firmware to a virtual port of the virtualized channel adapter. A corresponding method and a recording medium having information recorded thereon for performing such method are also provided herein.

Owner:IBM CORP
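
The hardware-or-firmware routing decision reduces to a table lookup with a fallback. In this minimal Python sketch, the table contents, the integer destination identifiers, and the names `Route`, `HW_ROUTING_TABLE`, and `route_communication` are hypothetical.

```python
from enum import Enum


class Route(Enum):
    HARDWARE_PHYSICAL = "hardware: physical port"
    HARDWARE_LOGICAL = "hardware: logical port"
    FIRMWARE_VIRTUAL = "firmware: virtual port"


# Hypothetical table of destinations the adapter hardware can route directly.
HW_ROUTING_TABLE = {
    0x10: Route.HARDWARE_PHYSICAL,
    0x11: Route.HARDWARE_LOGICAL,
}


def route_communication(destination: int) -> Route:
    """Use the hardware route if the table supports the destination, else fall back to firmware."""
    return HW_ROUTING_TABLE.get(destination, Route.FIRMWARE_VIRTUAL)


for dest in (0x10, 0x11, 0x42):
    print(hex(dest), "->", route_communication(dest).value)
```

The design keeps the common case (destinations the hardware knows) on the fast path and sends everything else to the slower but more flexible firmware-handled virtual port.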

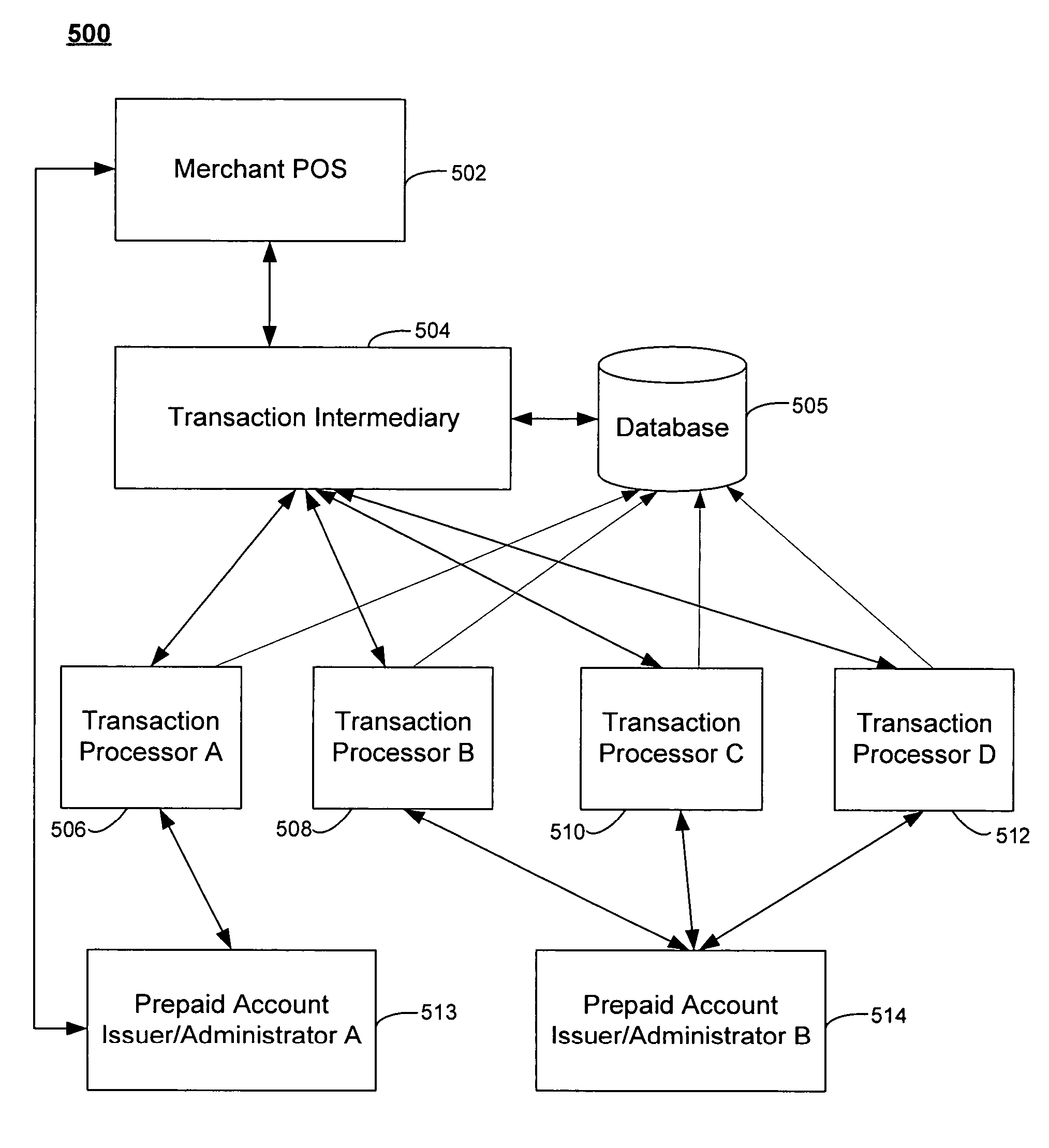

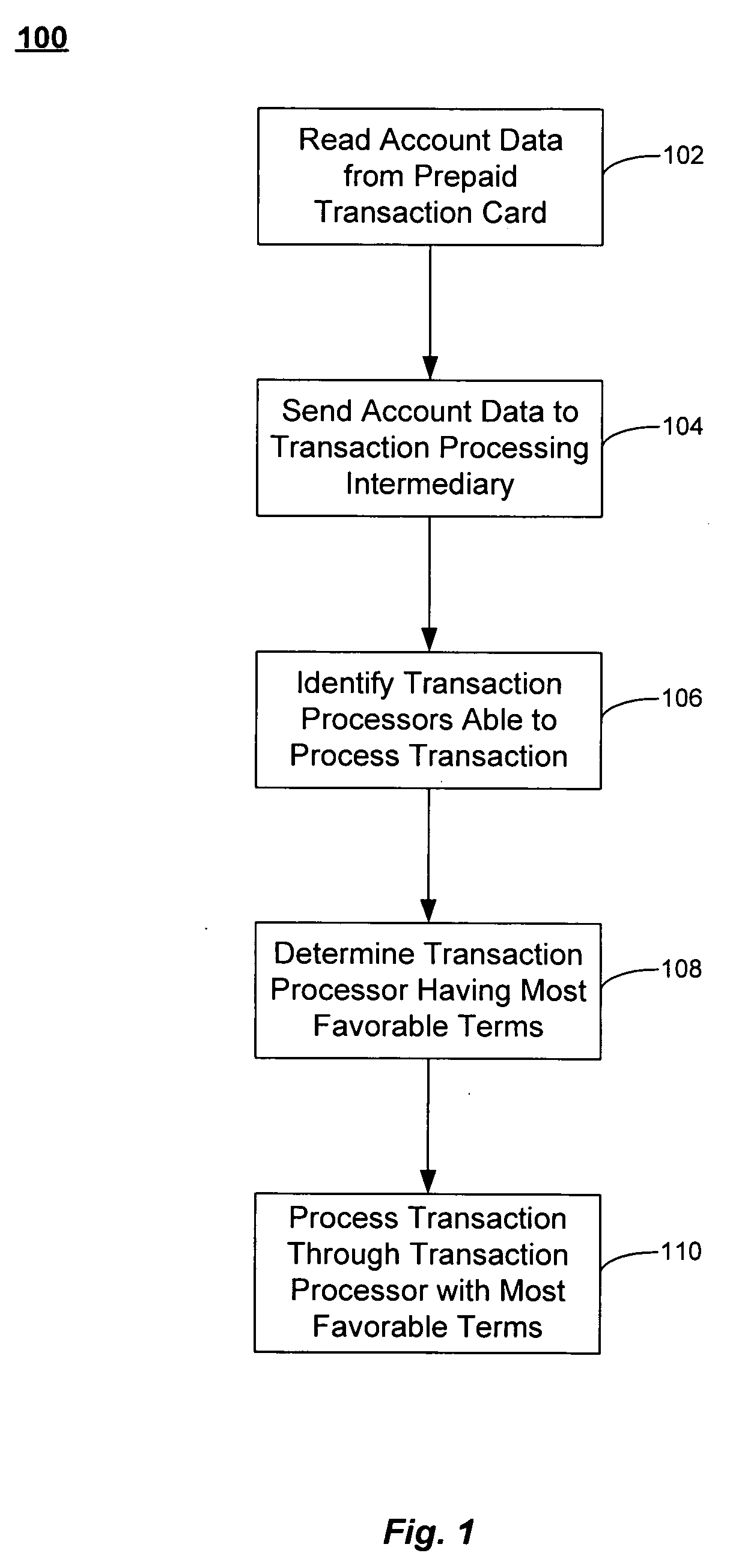

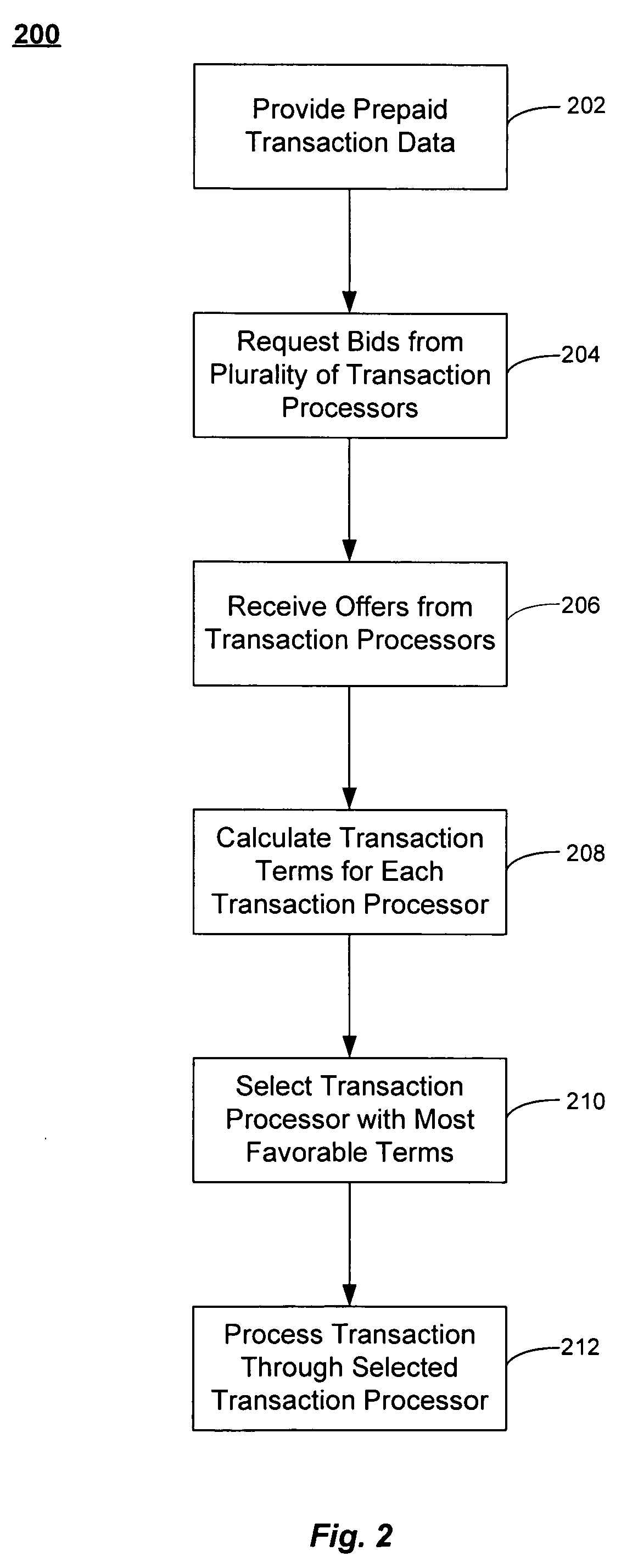

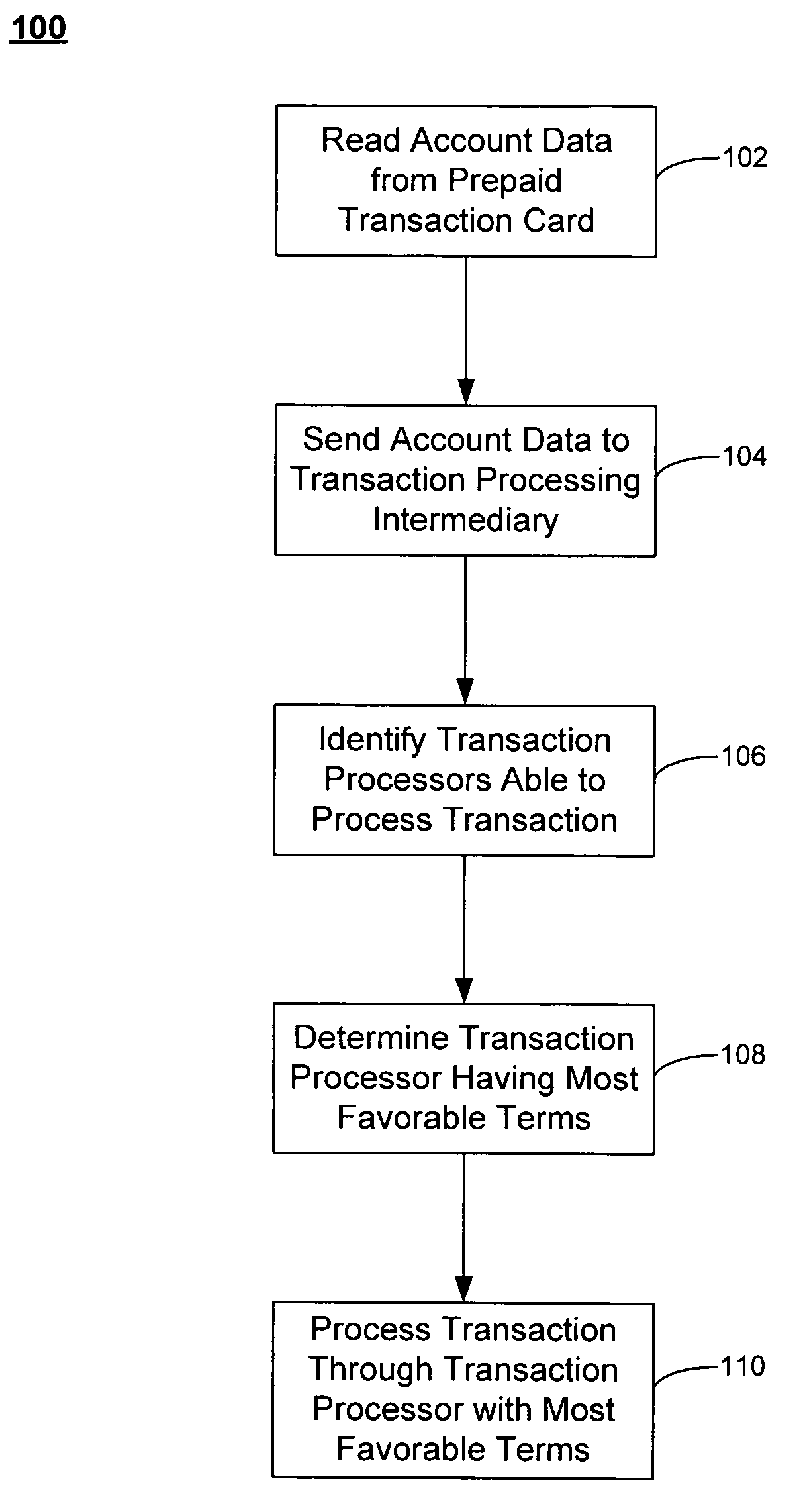

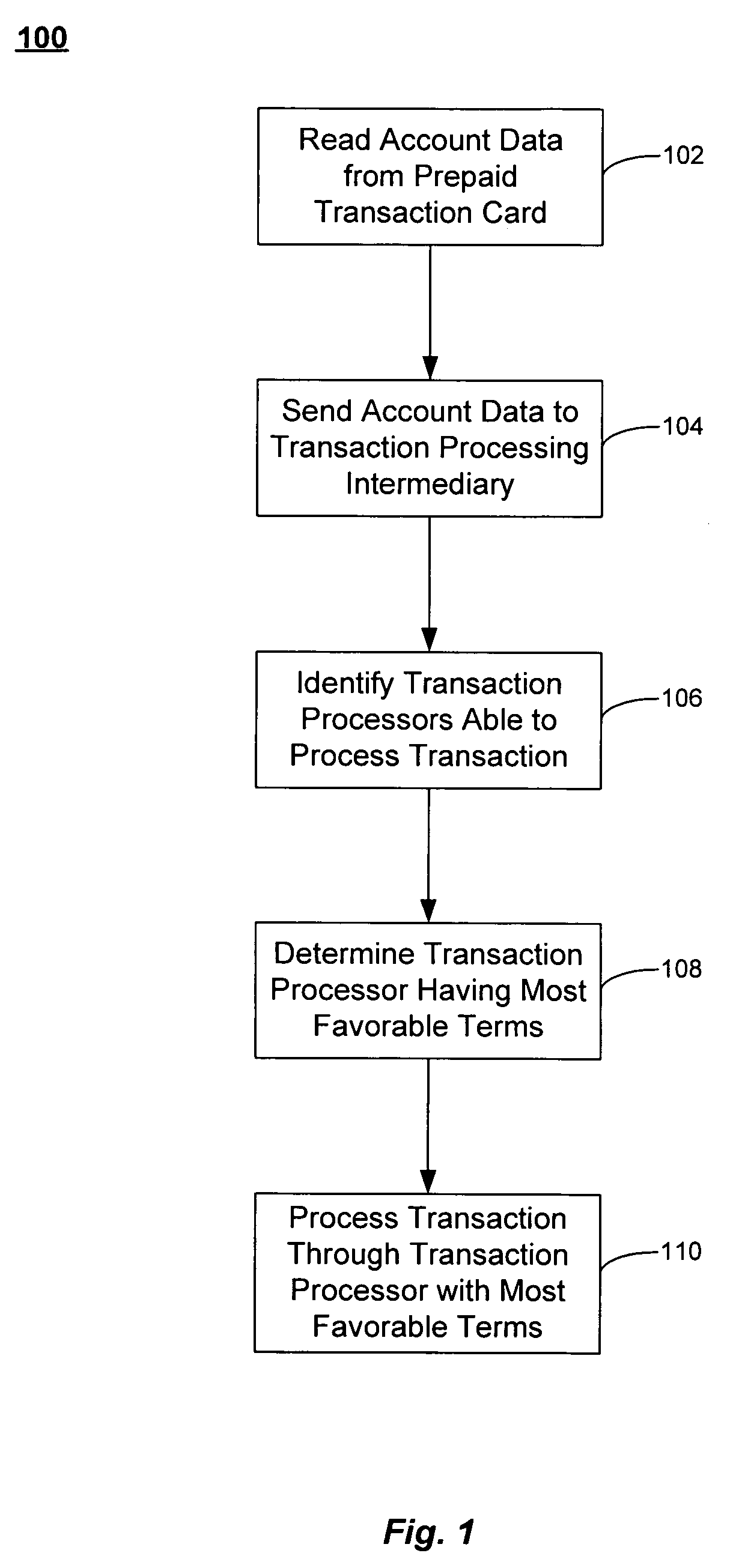

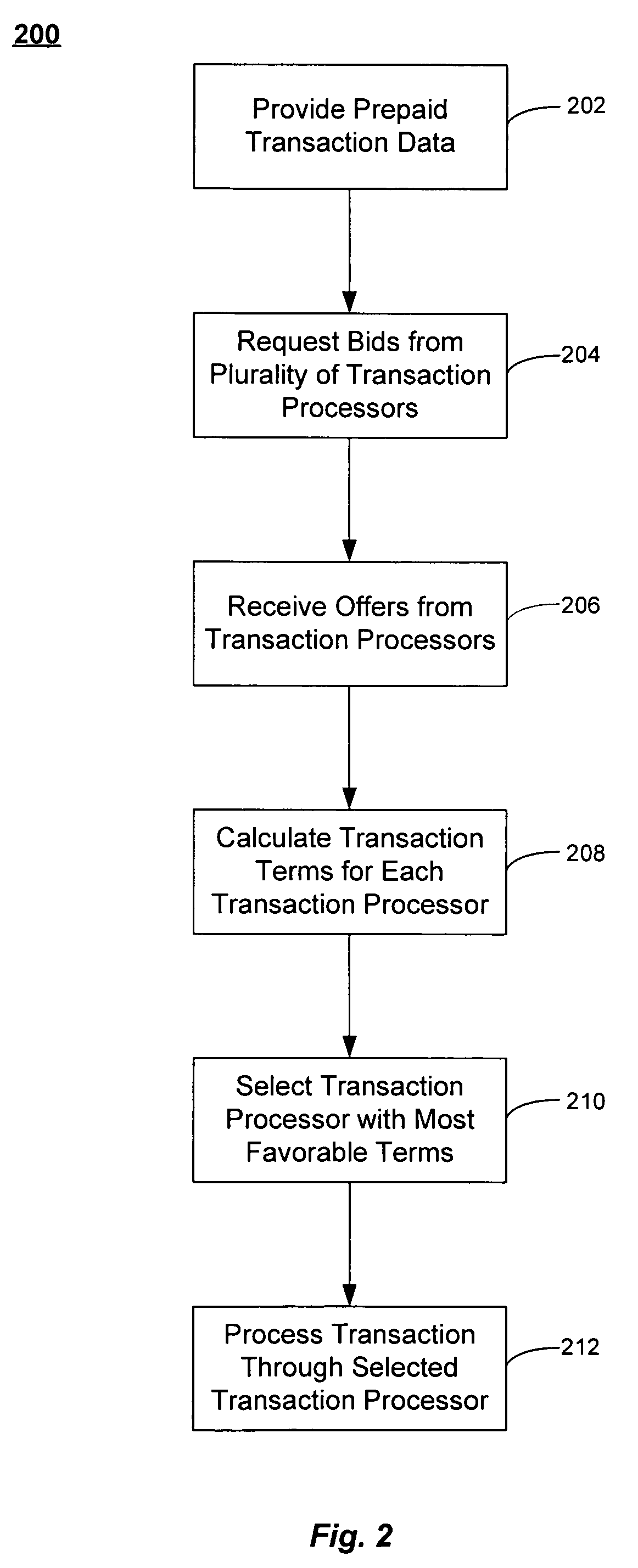

Real time prepaid transaction bidding

Status: Active | Publication: US20070090183A1 | Tags: High commission; Complete banking machines; Finance; Processor node; Electronic communication

Electronic transaction networks are described that are operable to find a pathway to complete an electronic data exchange for a prepaid transaction account. The networks may include an intermediary node, in electronic communication with a transaction point node where transaction information is input, and a plurality of processing nodes that can communicate with an account provider node that administers the prepaid transaction account. The intermediary node receives transaction data, which may include an account identifier, from the transaction point node and identifies one or more of the processing nodes that can form part of the pathway. The pathway may include the transaction point node, the intermediary node, at least one of the processing nodes, and the account provider node. In addition, the intermediary node may find the processing node that forms the pathway with the highest transaction commission when more than one of the processor nodes can form part of the pathway.

Owner:FIRST DATA RESOURCES LLC
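
The intermediary node's selection step can be sketched as filtering processing nodes by reachability to the account provider and taking the highest commission. In this minimal Python sketch, the `ProcessingNode` fields, the tie-breaking (Python's `max` keeps the first of equal commissions), and the node names are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProcessingNode:
    name: str
    reachable_providers: set[str]  # account provider nodes this processor can reach
    commission: float              # commission offered for completing the transaction


def choose_pathway(transaction_point: str, intermediary: str, account_provider: str,
                   processors: list[ProcessingNode]) -> Optional[list[str]]:
    """Return the pathway through the eligible processing node offering the highest commission."""
    eligible = [p for p in processors if account_provider in p.reachable_providers]
    if not eligible:
        return None  # no processing node can complete the exchange
    best = max(eligible, key=lambda p: p.commission)
    return [transaction_point, intermediary, best.name, account_provider]


processors = [
    ProcessingNode("proc-a", {"issuer-1"}, 0.50),
    ProcessingNode("proc-b", {"issuer-1", "issuer-2"}, 0.75),
    ProcessingNode("proc-c", {"issuer-2"}, 0.90),
]
print(choose_pathway("pos-7", "hub", "issuer-1", processors))
# ['pos-7', 'hub', 'proc-b', 'issuer-1']  (proc-c cannot reach issuer-1)
```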

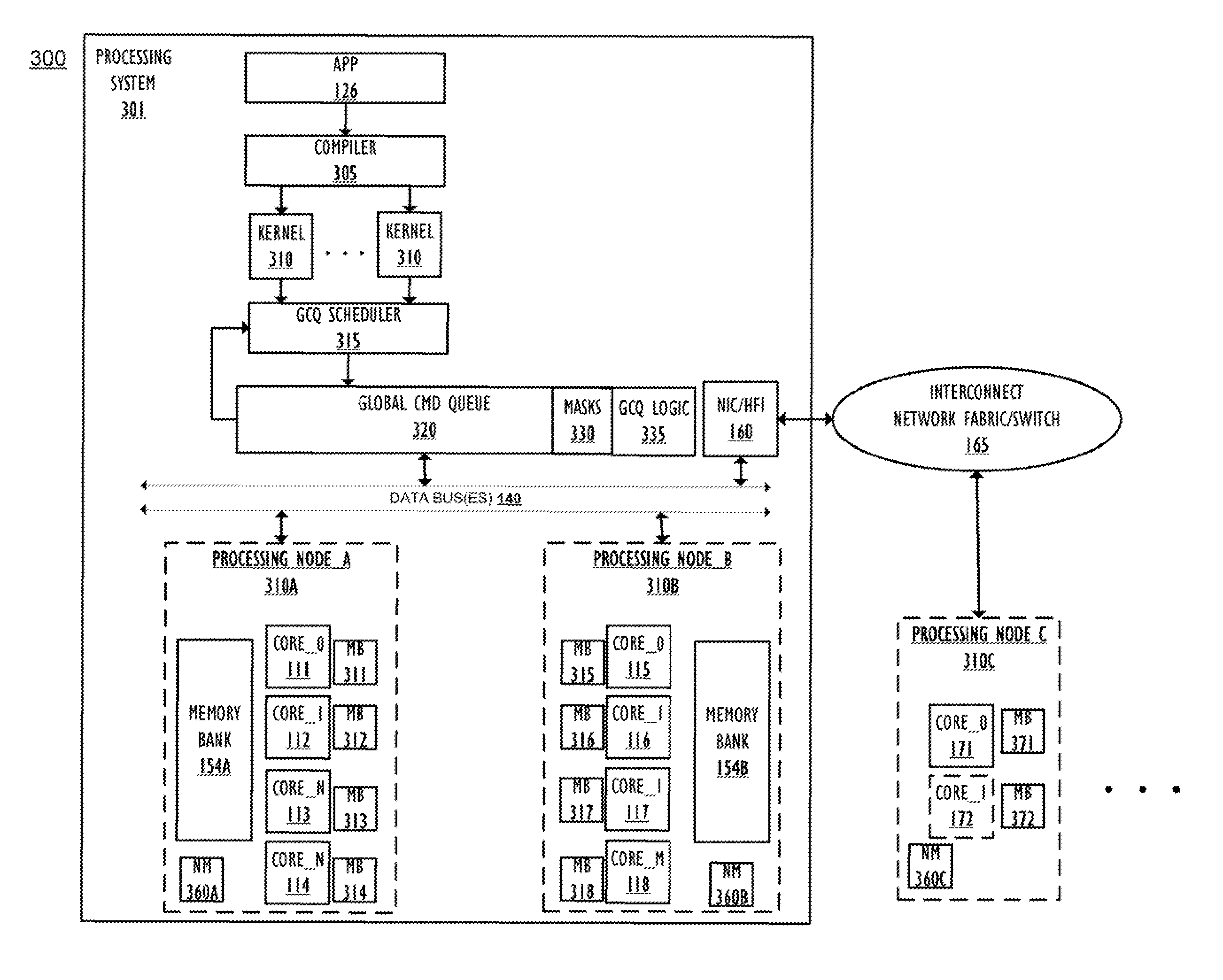

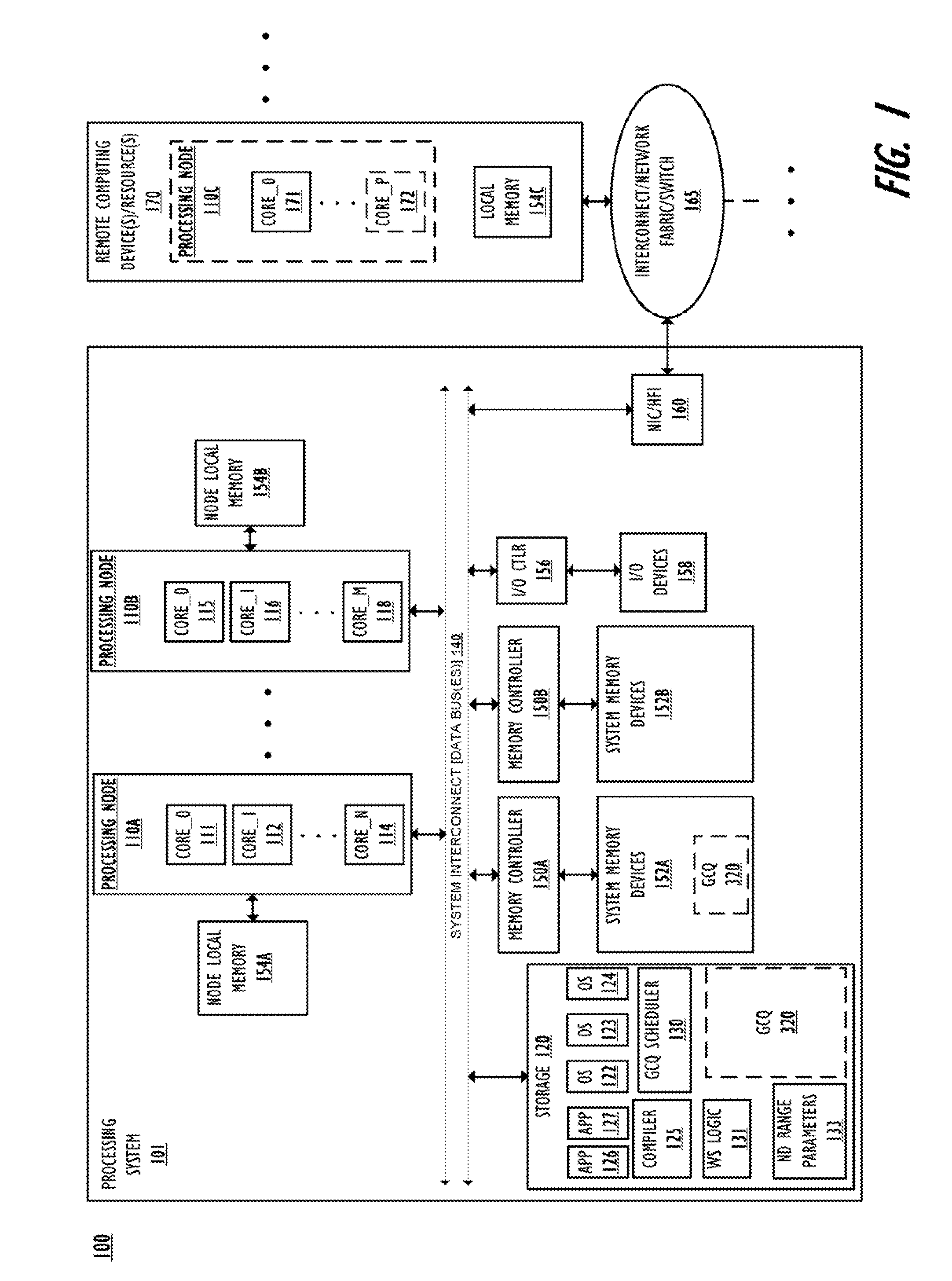

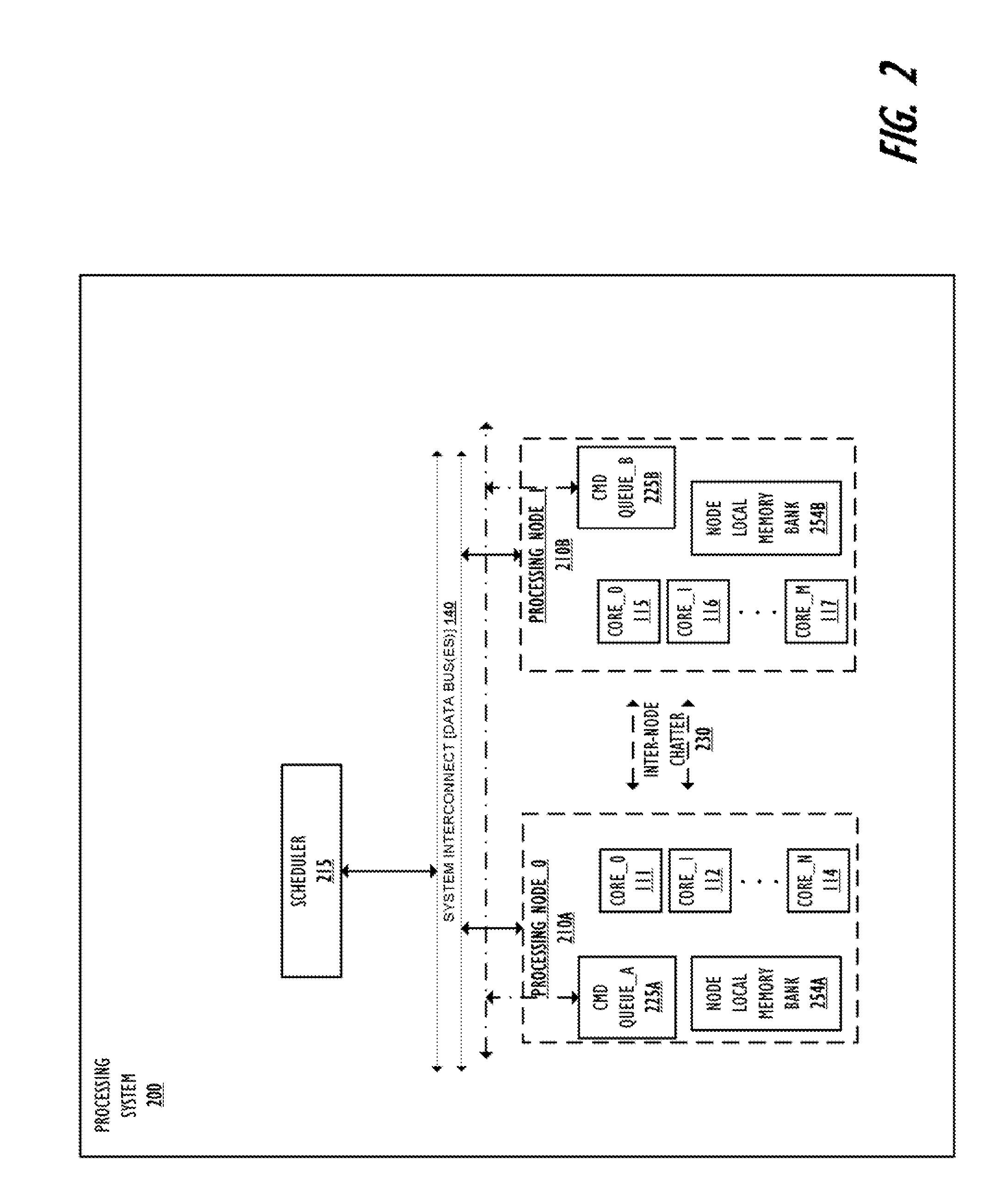

Method to reduce queue synchronization of multiple work items in a system with high memory latency between processing nodes

Status: Inactive | Publication: US20110161976A1 | Tags: High access latency; Efficient dispatch/completion; Resource allocation; Program synchronisation; Parallel computing; Engineering

A method efficiently dispatches/completes a work element within a multi-node data processing system that has a global command queue (GCQ) and at least one high-latency node. The method comprises: at the high-latency processor node, work scheduling logic establishing a local command/work queue (LCQ) in which multiple work items for execution by local processing units can be staged prior to execution; a first local processing unit retrieving, via a work request, a larger chunk of work than the local processing unit can complete in a normal work completion/execution cycle; storing the retrieved larger chunk of work in the LCQ; enabling the first local processing unit to locally schedule and complete portions of the work stored within the LCQ; and transmitting a next work request to the GCQ only when all the work within the LCQ has been dispatched by the local processing units.

Owner:IBM CORP
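
A minimal, single-threaded Python sketch of the local command queue idea, assuming that each GCQ fetch is the expensive high-latency operation worth minimising and that local processing units take work from the LCQ in turn. `GlobalCommandQueue` and `run_high_latency_node` are hypothetical names; the sketch models the request count, not real concurrency.

```python
from collections import deque


class GlobalCommandQueue:
    """Shared work queue; each fetch() models one expensive high-latency remote access."""

    def __init__(self, items):
        self._items = deque(items)
        self.remote_requests = 0

    def fetch(self, chunk_size: int) -> list:
        self.remote_requests += 1
        count = min(chunk_size, len(self._items))
        return [self._items.popleft() for _ in range(count)]


def run_high_latency_node(gcq: GlobalCommandQueue, chunk_size: int,
                          num_local_units: int) -> list:
    """Stage chunks in a local command queue (LCQ); refill it only once it is drained."""
    completed = []
    lcq = deque()
    while True:
        if not lcq:
            # The next GCQ request is sent only after all LCQ work has been dispatched.
            lcq.extend(gcq.fetch(chunk_size))
            if not lcq:
                break  # the GCQ is empty too
        # Local processing units take turns pulling work from the LCQ, not the GCQ.
        for unit in range(num_local_units):
            if not lcq:
                break
            completed.append((unit, lcq.popleft()))
    return completed


gcq = GlobalCommandQueue(range(10))
done = run_high_latency_node(gcq, chunk_size=4, num_local_units=2)
print(len(done), gcq.remote_requests)  # 10 4 (the fourth request finds the GCQ empty)
```

With `chunk_size=1` the same ten items would need eleven remote requests, which is the cost the larger chunk size is meant to avoid.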

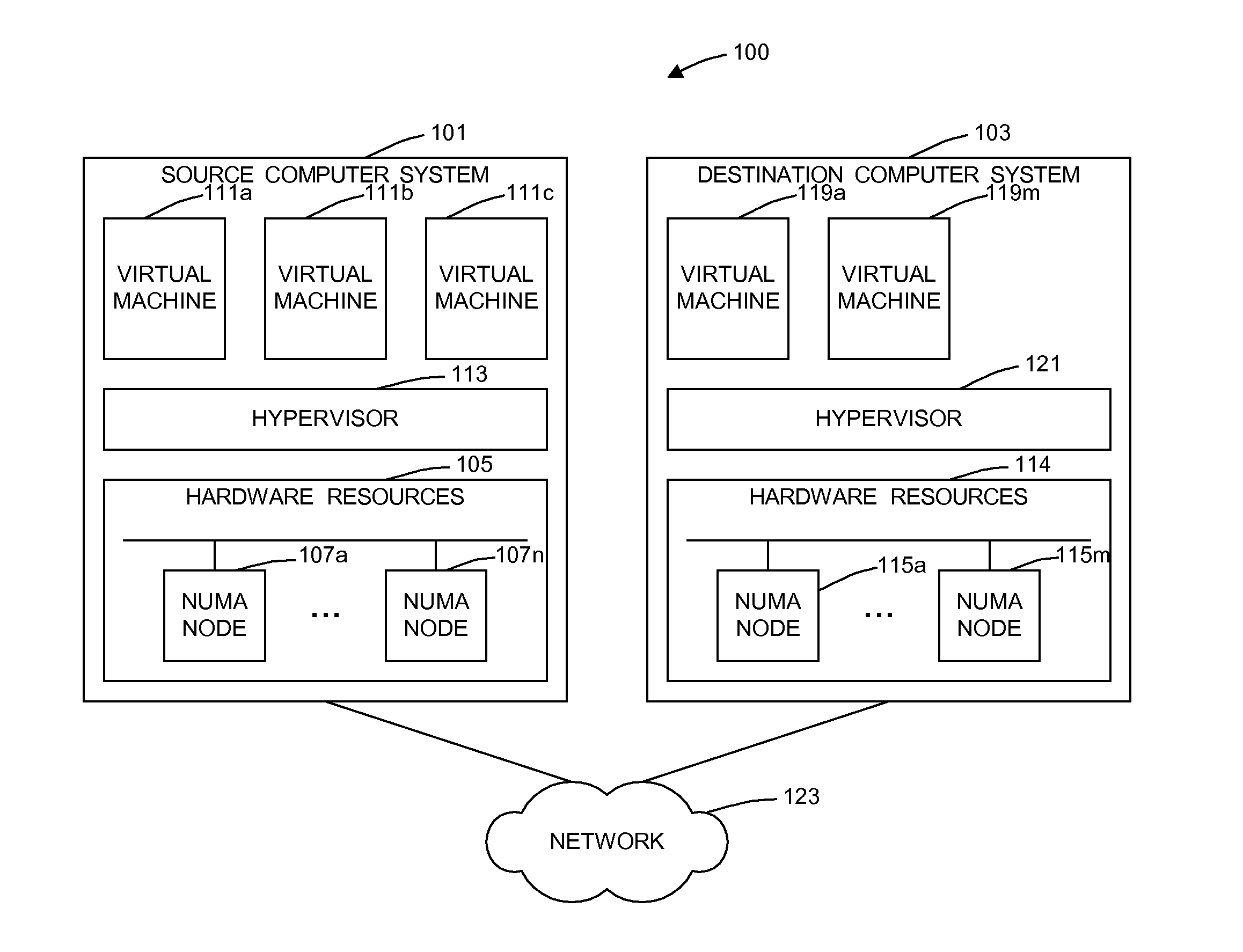

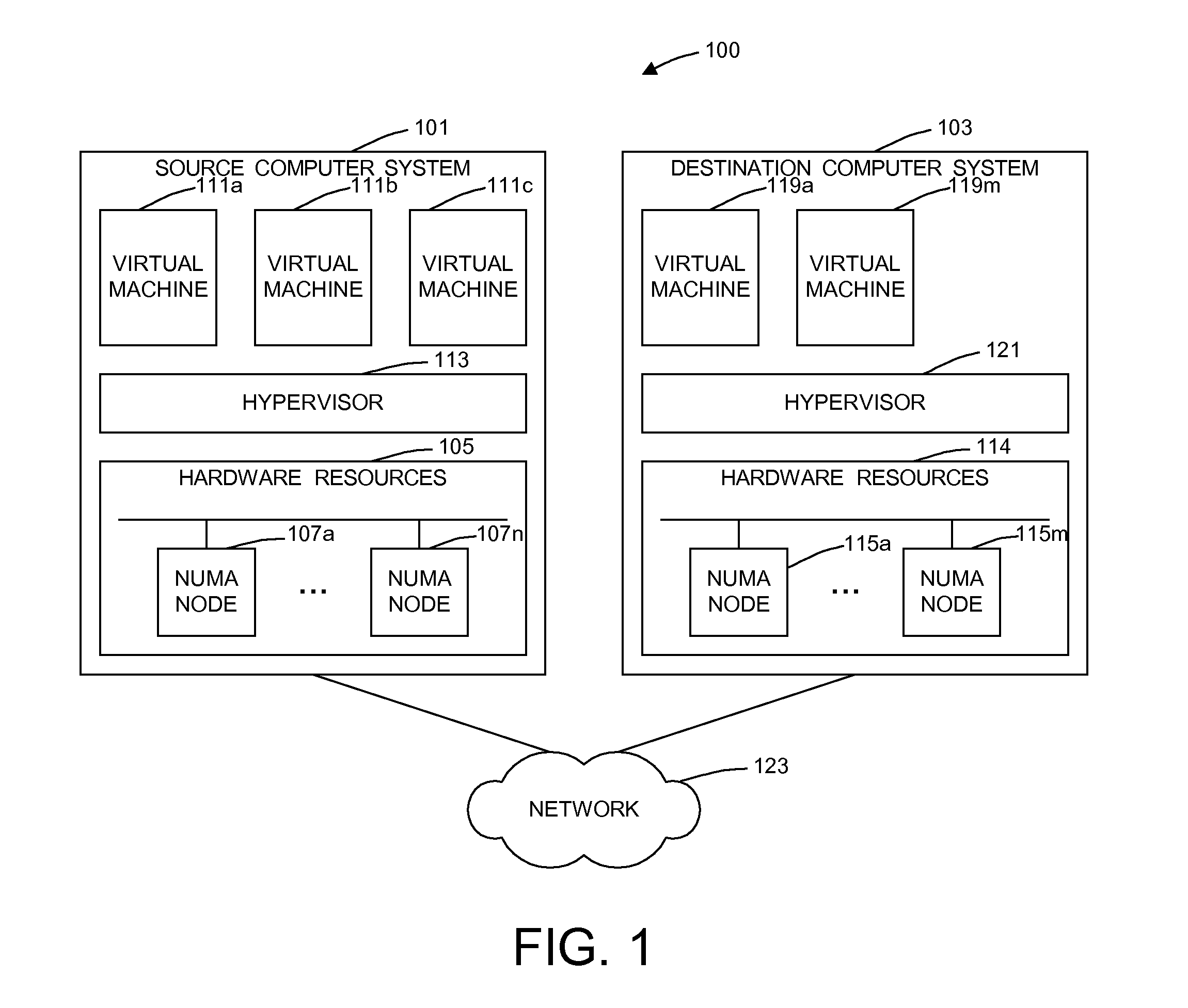

Dynamic memory affinity reallocation after partition migration

Status: Active | Publication: US20120102258A1 | Tags: Memory addressing/allocation/relocation; Computer security arrangements; Processor node; Computerized system

A method of dynamically reallocating memory affinity in a virtual machine after migrating the virtual machine from a source computer system to a destination computer system migrates processor states and resources used by the virtual machine from the source computer system to the destination computer system. The method maps memory of the virtual machine to processor nodes of the destination computer system. The method deletes memory mappings in processor hardware, such as translation lookaside buffers and effective-to-real address tables, for the virtual machine on the destination computer system. The method starts the virtual machine on the destination computer system in virtual real memory mode. A hypervisor running on the destination computer system receives a page fault and the virtual address of a page for the virtual machine from a processor of the destination computer system and determines whether the page is in local memory of the processor. If the hypervisor determines that the page is in the local memory of the processor, the hypervisor returns a physical address mapping for the page to the processor. If the hypervisor determines that the page is not in the local memory of the processor, the hypervisor moves the page to local memory of the processor and returns a physical address mapping for the page to the processor.

Owner:IBM CORP
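
The hypervisor's fault-handling decision can be sketched in Python. This is a simplified model under explicit assumptions: physical frames are handed out from a per-node bump allocator, the page copy itself and the freeing of the old frame are not modelled, and the `Hypervisor` class and its methods are hypothetical names rather than any real hypervisor interface.

```python
class Hypervisor:
    """Simplified model of post-migration page-fault handling on the destination system."""

    def __init__(self, node_of_cpu: dict[int, int], frames_per_node: int):
        self.node_of_cpu = node_of_cpu          # processor -> processor node
        self.frames_per_node = frames_per_node
        self.next_free = {n: 0 for n in set(node_of_cpu.values())}
        self.mapping: dict[int, tuple[int, int]] = {}  # virtual page -> (node, frame)
        self.pages_moved = 0

    def _allocate(self, node: int) -> int:
        # Bump allocator per node; running out of frames is not handled in this sketch.
        frame = node * self.frames_per_node + self.next_free[node]
        self.next_free[node] += 1
        return frame

    def place(self, vpage: int, node: int) -> None:
        """Initial placement of a migrated page in some node's memory."""
        self.mapping[vpage] = (node, self._allocate(node))

    def handle_page_fault(self, cpu: int, vpage: int) -> int:
        """Return a physical frame for vpage that lies in the faulting CPU's local memory."""
        local = self.node_of_cpu[cpu]
        node, frame = self.mapping[vpage]
        if node != local:
            # Remote page: move it into local memory (the data copy is not modelled).
            frame = self._allocate(local)
            self.mapping[vpage] = (local, frame)
            self.pages_moved += 1
        return frame


hv = Hypervisor(node_of_cpu={0: 0, 1: 0, 2: 1, 3: 1}, frames_per_node=1024)
hv.place(vpage=7, node=0)
print(hv.handle_page_fault(cpu=1, vpage=7))  # 0: already local to node 0
print(hv.handle_page_fault(cpu=2, vpage=7))  # 1024: moved into node 1's memory
```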

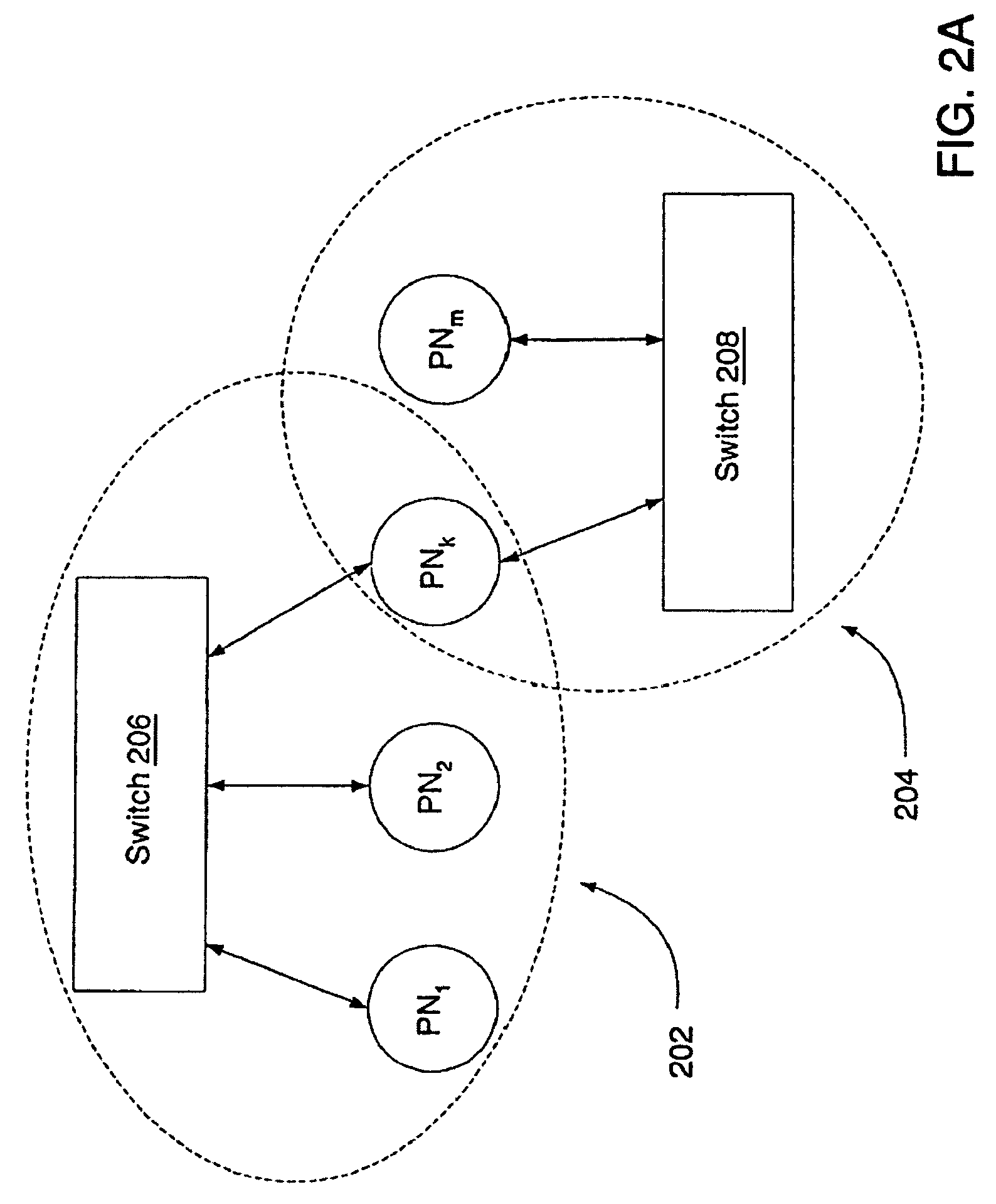

Proximity-based memory allocation in a distributed memory system

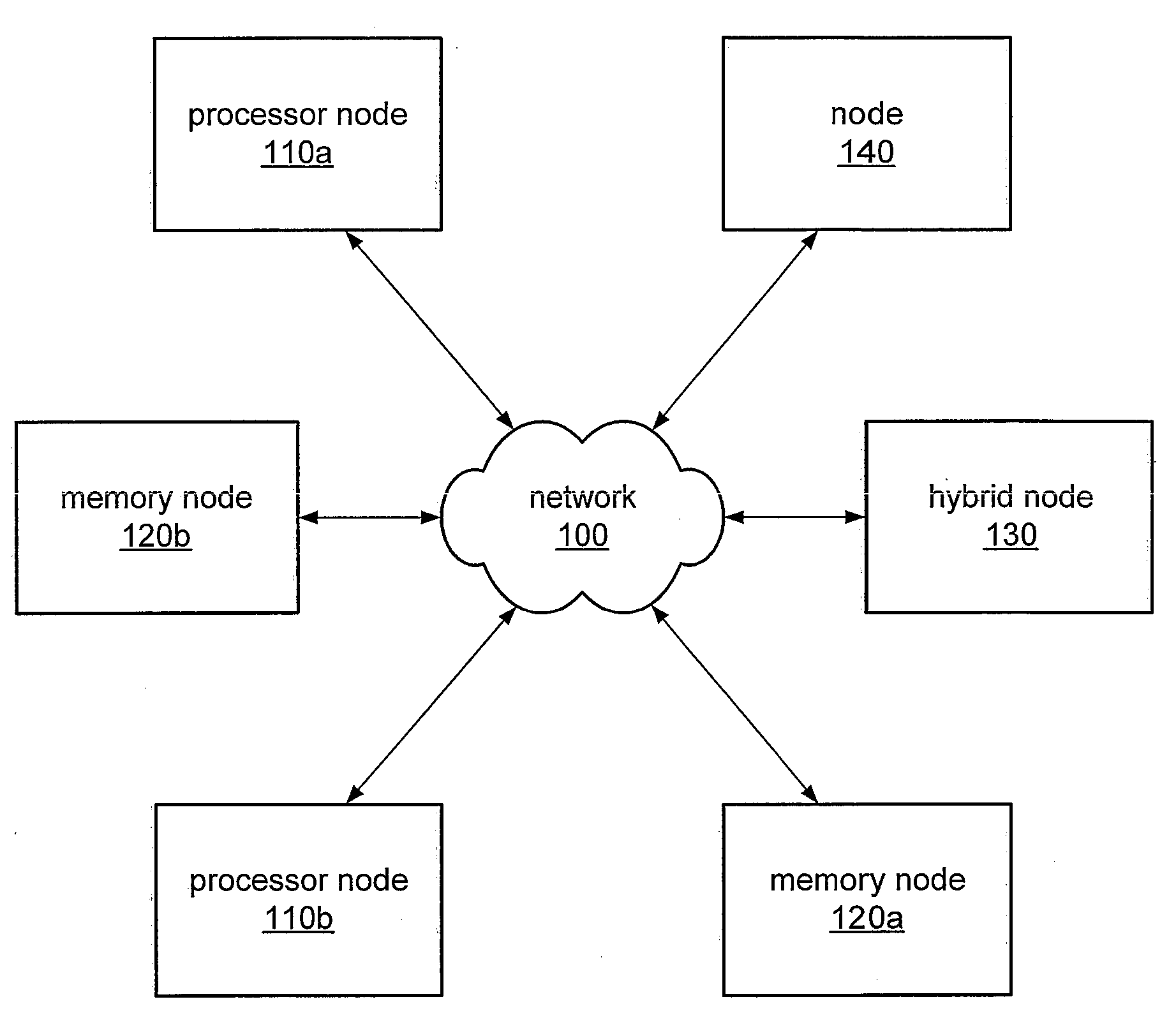

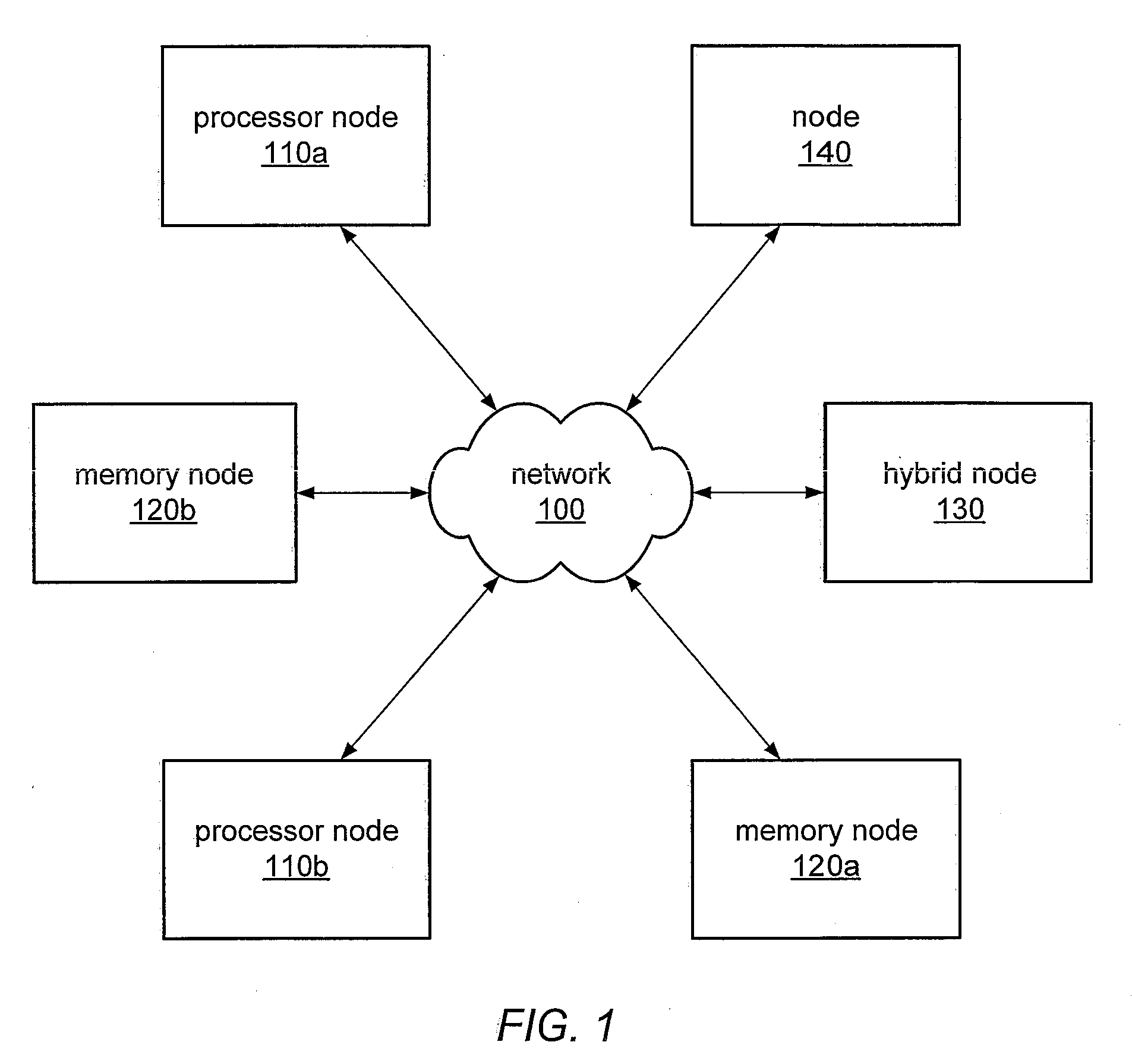

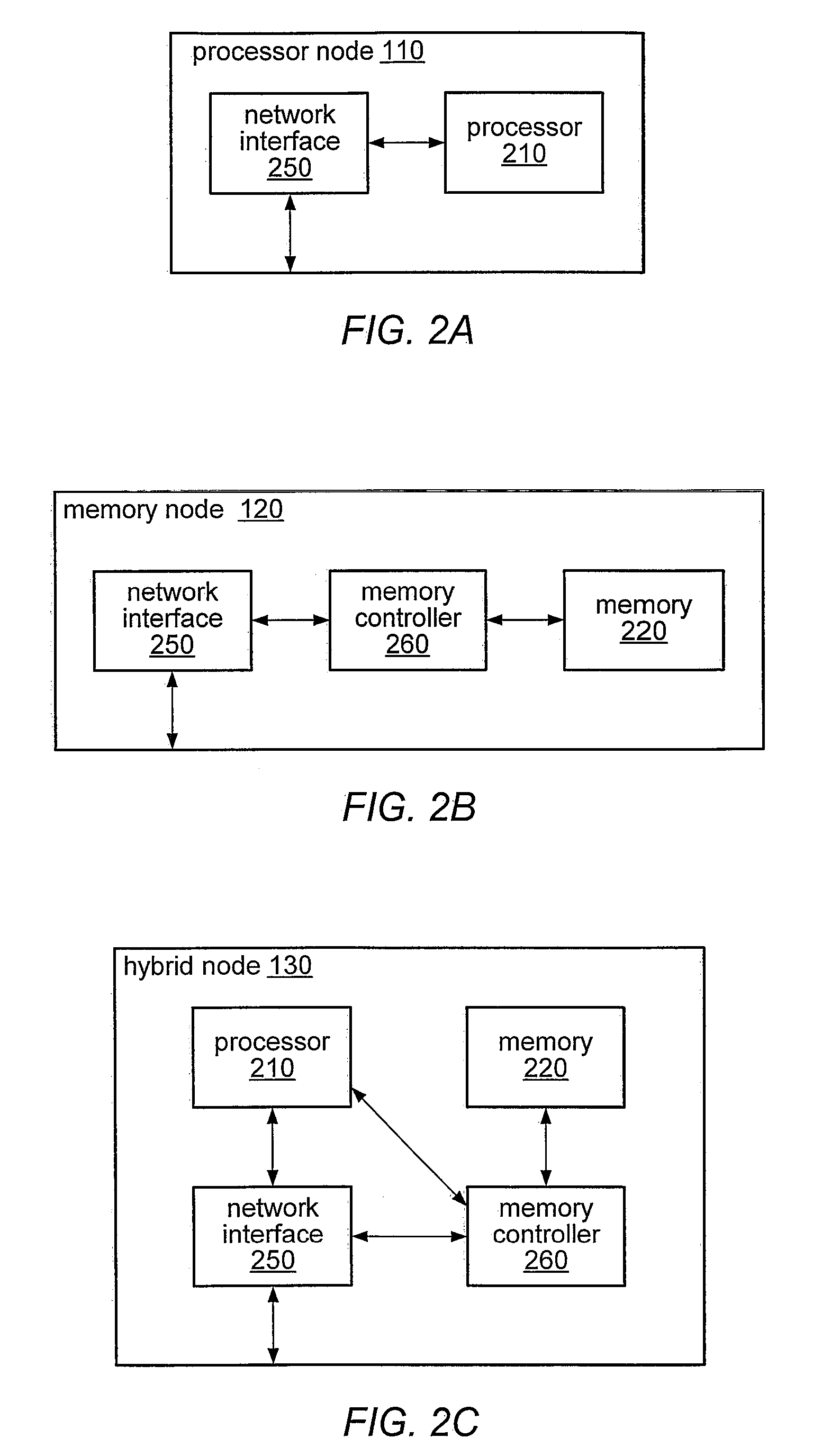

Status: Active | Publication: US20070250604A1 | Tags: Increase the number of; Digital computer details; Data switching by path configuration; Processor node; Network connection

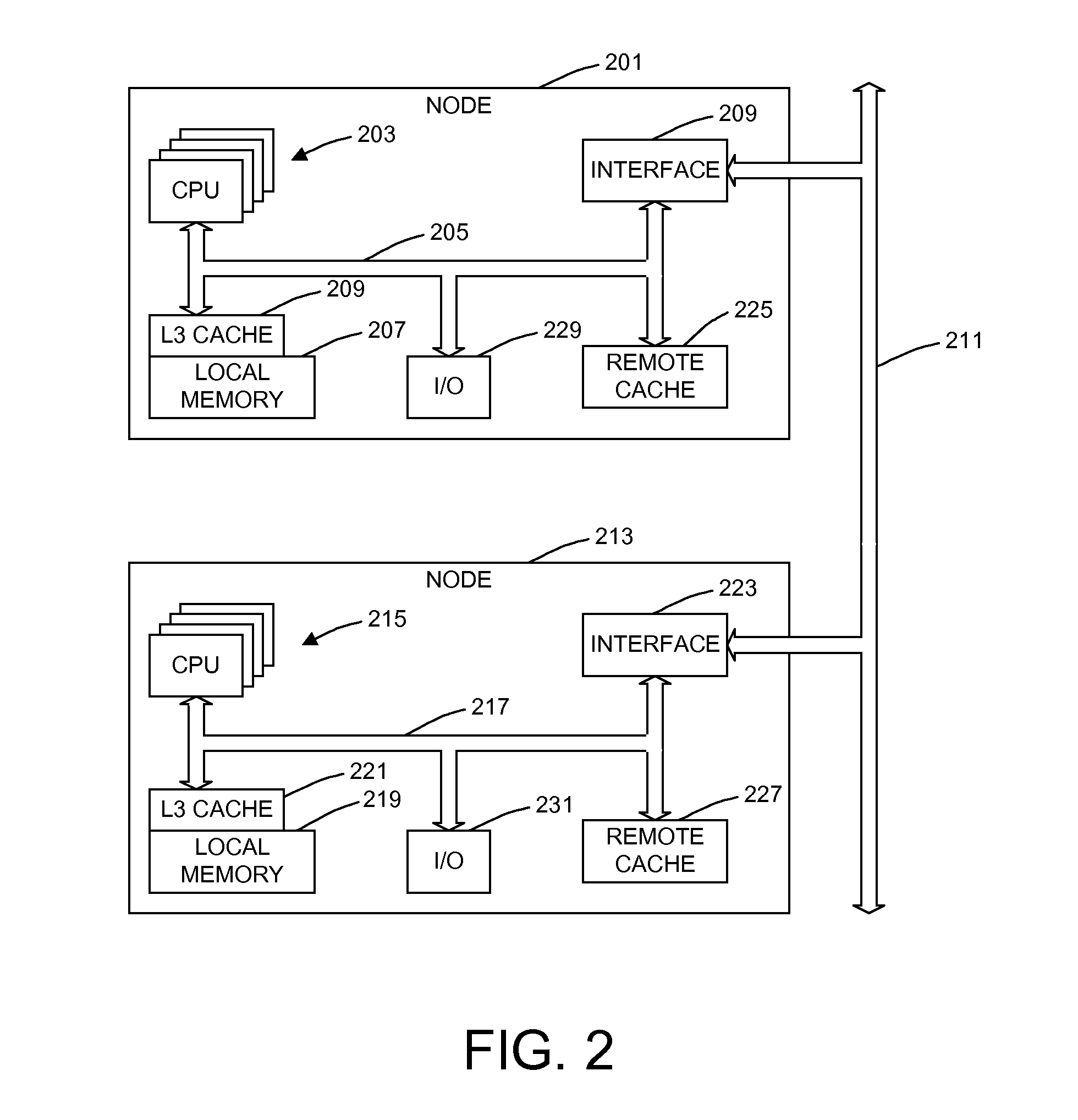

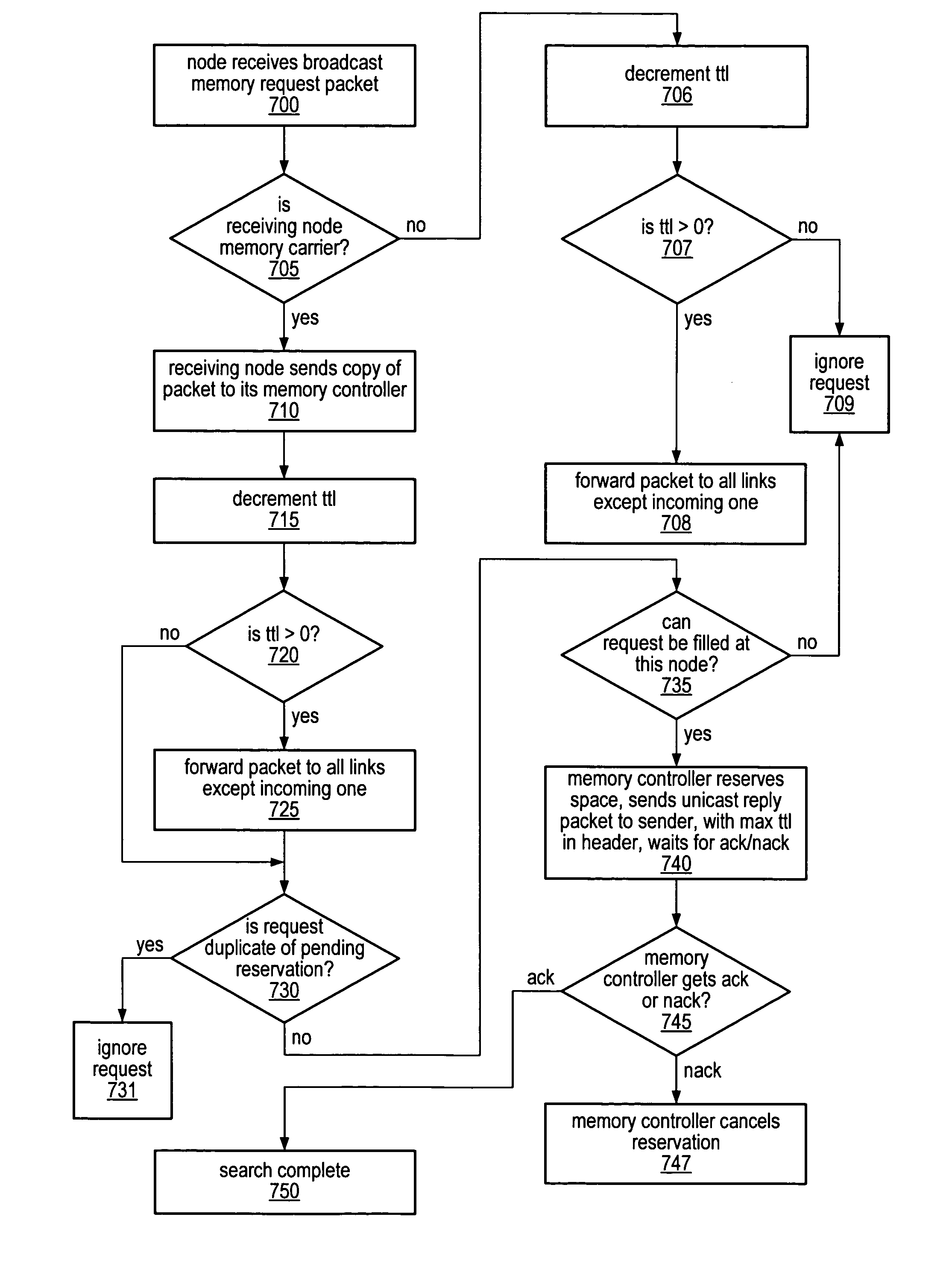

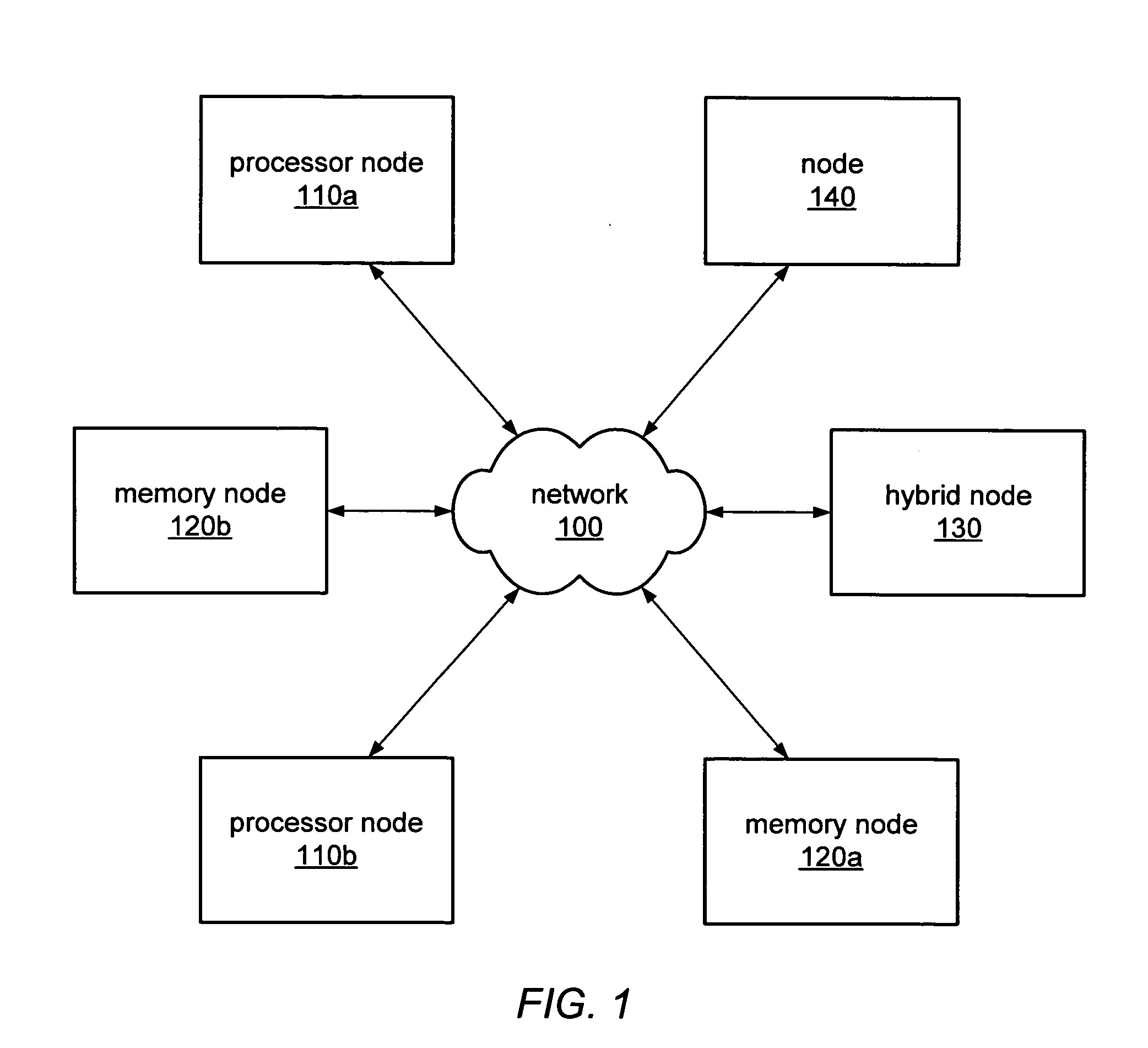

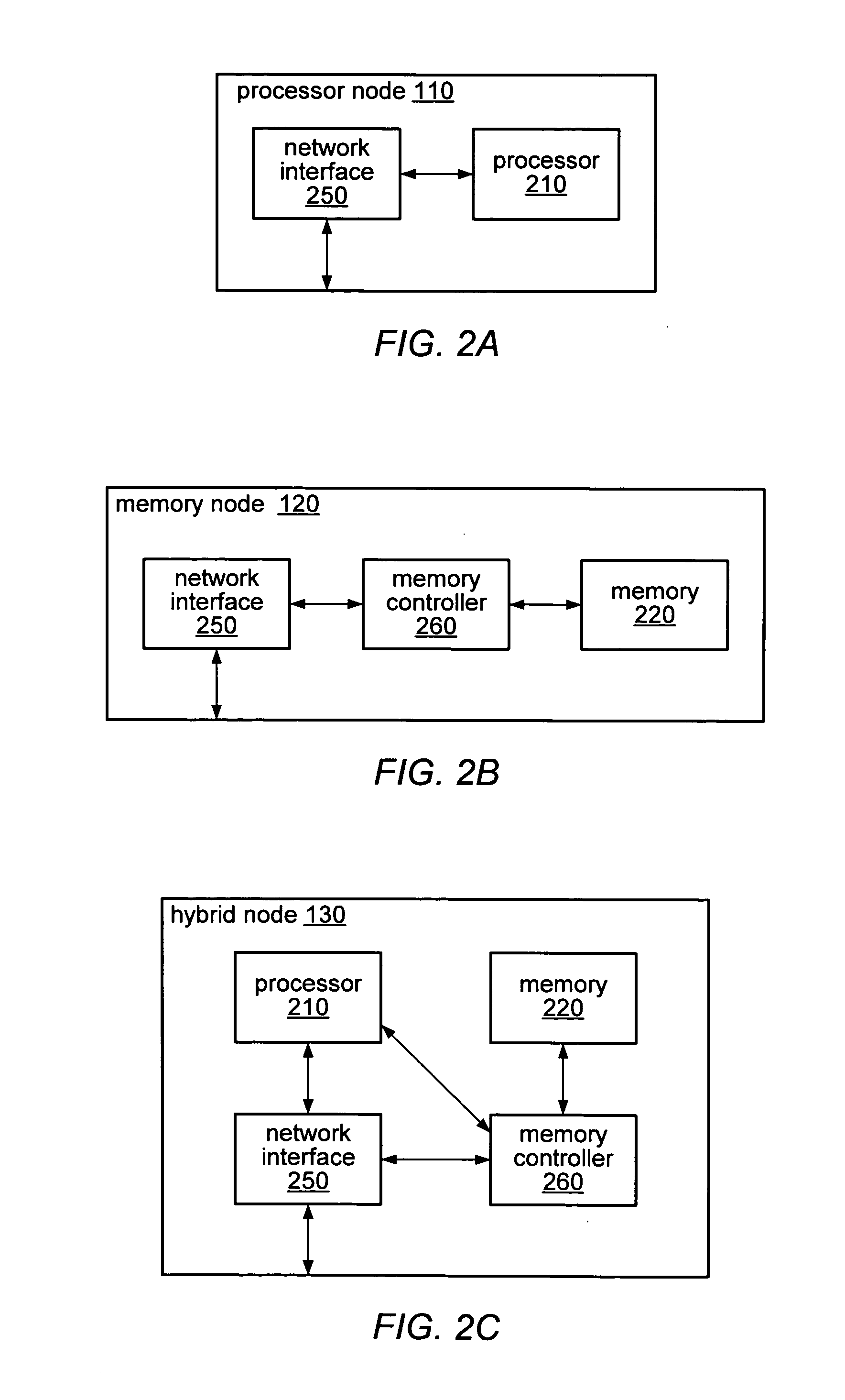

A system and method for allocating the nearest available physical memory in a distributed, shared memory system. In various embodiments, a processor node may broadcast a memory request to a first subset of nodes connected to it via a communication network. In some embodiments, if none of these nodes is able to satisfy the request, the processor node may broadcast the request to additional subsets of nodes. In some embodiments, each node of the first subset of nodes may be removed from the processor node by one network hop and each node of the additional subsets of nodes may be removed from the processor node by no more than an iteratively increasing number of network hops. In some embodiments, the processor node may send an acknowledgment to one node that can fulfill the request and a negative acknowledgement to other nodes that can fulfill the request.

Owner:ORACLE INT CORP
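
The expanding-ring search can be sketched in Python with a breadth-first traversal that groups nodes by hop count. Assumptions: the network is given as an adjacency dictionary, each node's free memory is a simple page count, the first capable responder in a ring receives the acknowledgment, and each widened broadcast only needs to reach the newly added ring because nearer nodes have already declined. `hop_rings` and `allocate_nearest` are hypothetical names.

```python
from collections import deque
from typing import Optional


def hop_rings(adjacency: dict[int, list[int]], origin: int) -> list[list[int]]:
    """Group nodes by hop distance from origin: rings[0] is 1 hop away, rings[1] is 2 hops."""
    dist = {origin: 0}
    rings: list[list[int]] = []
    queue = deque([origin])
    while queue:
        node = queue.popleft()
        for nbr in adjacency[node]:
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                if len(rings) < dist[nbr]:
                    rings.append([])
                rings[dist[nbr] - 1].append(nbr)
                queue.append(nbr)
    return rings


def allocate_nearest(adjacency: dict[int, list[int]], free_pages: dict[int, int],
                     origin: int, pages_needed: int) -> tuple[Optional[int], list[int]]:
    """Query ever-wider hop rings; ACK the first capable node and NACK the other capable ones."""
    for ring in hop_rings(adjacency, origin):
        able = [n for n in ring if free_pages[n] >= pages_needed]
        if able:
            chosen, nacked = able[0], able[1:]
            free_pages[chosen] -= pages_needed  # the ACKed node reserves the memory
            return chosen, nacked
    return None, []


# Square topology: 0-1, 0-2, 1-3, 2-3.
adjacency = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2]}
free = {0: 0, 1: 8, 2: 8, 3: 8}
print(allocate_nearest(adjacency, free, origin=0, pages_needed=4))  # (1, [2])
```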

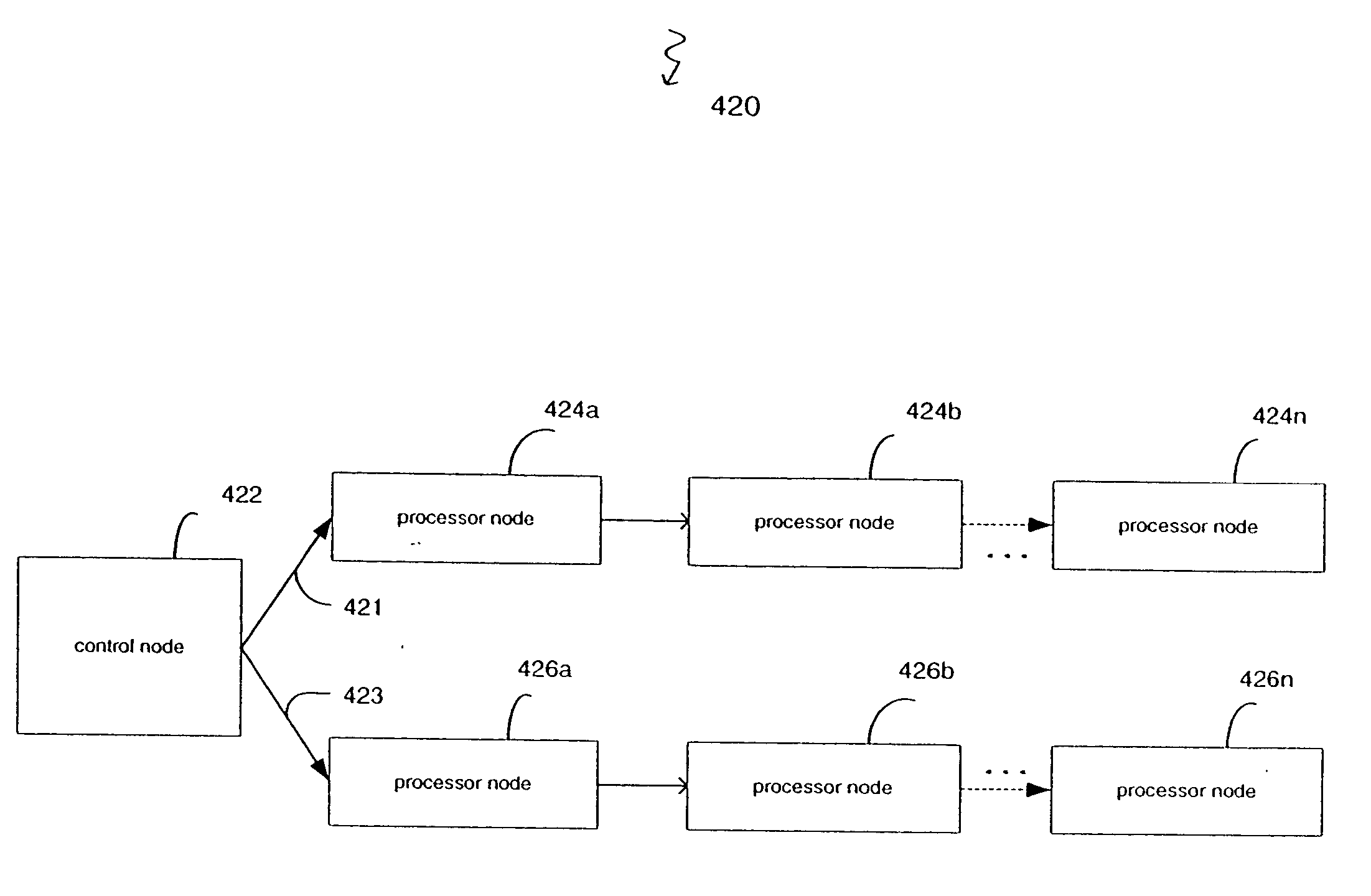

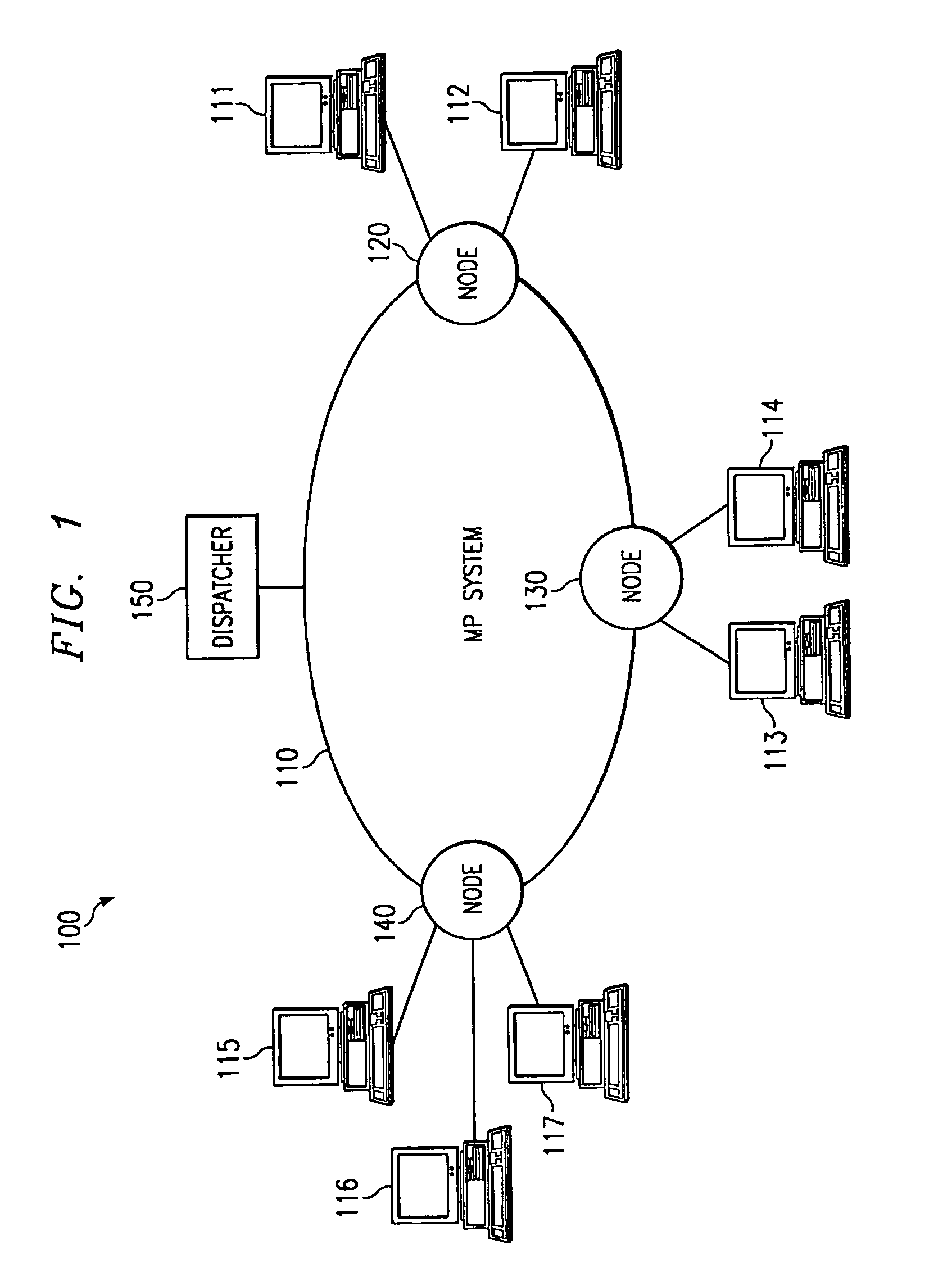

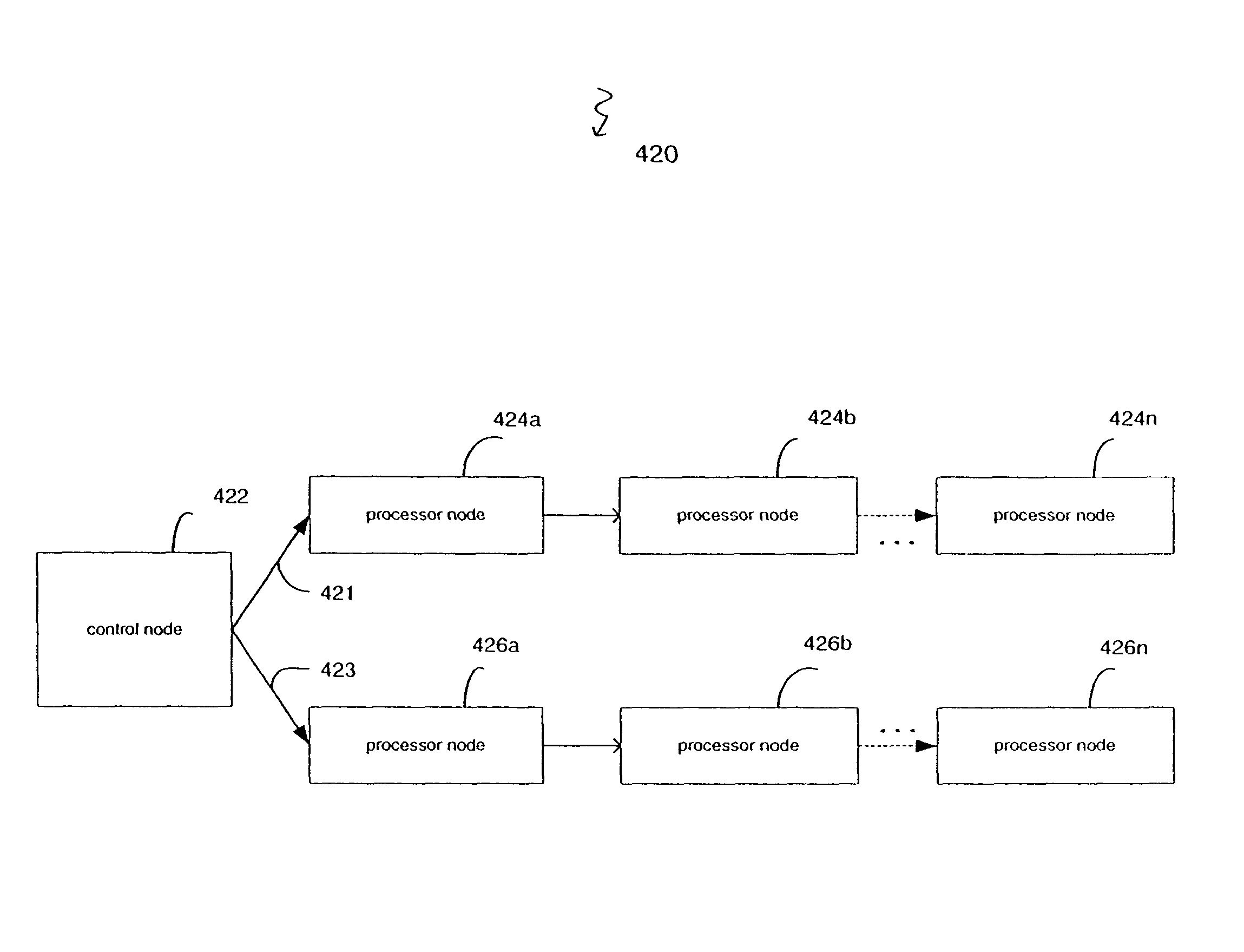

Distributed multicast system and method in a network

Status: Inactive | Publication: US20060114903A1 | Tags: Time-division multiplex; Frequency-division multiplex; Processor node; Multicast packets

The invention provides multicast communication using distributed topologies in a network. The control nodes in the network build a distributed topology of processor nodes for providing multicast packet distribution. Multiple processor nodes in the network participate in the decisions regarding the forwarding of multicast packets as opposed to multicast communications being centralized in the control nodes.

Owner:EGENERA

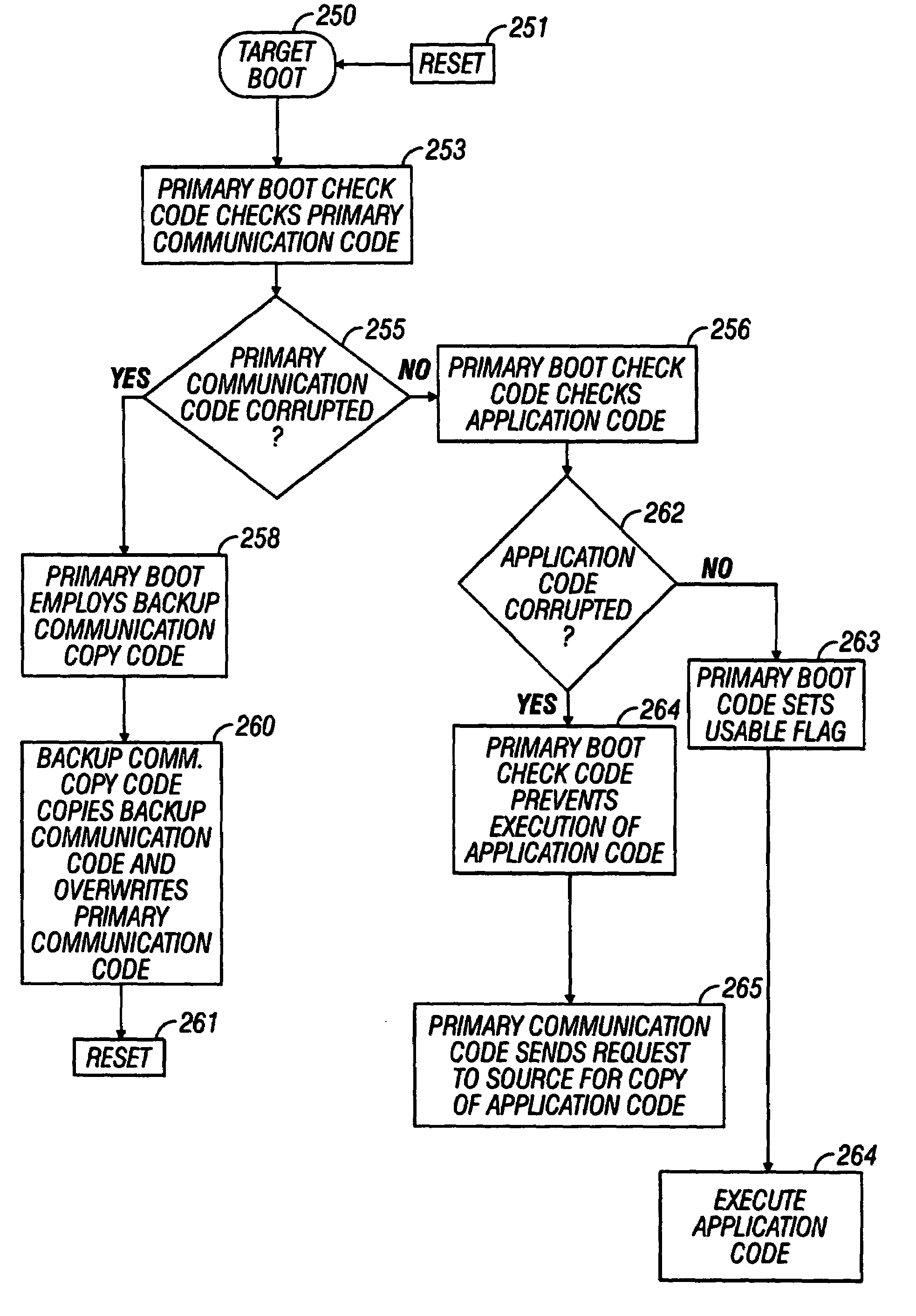

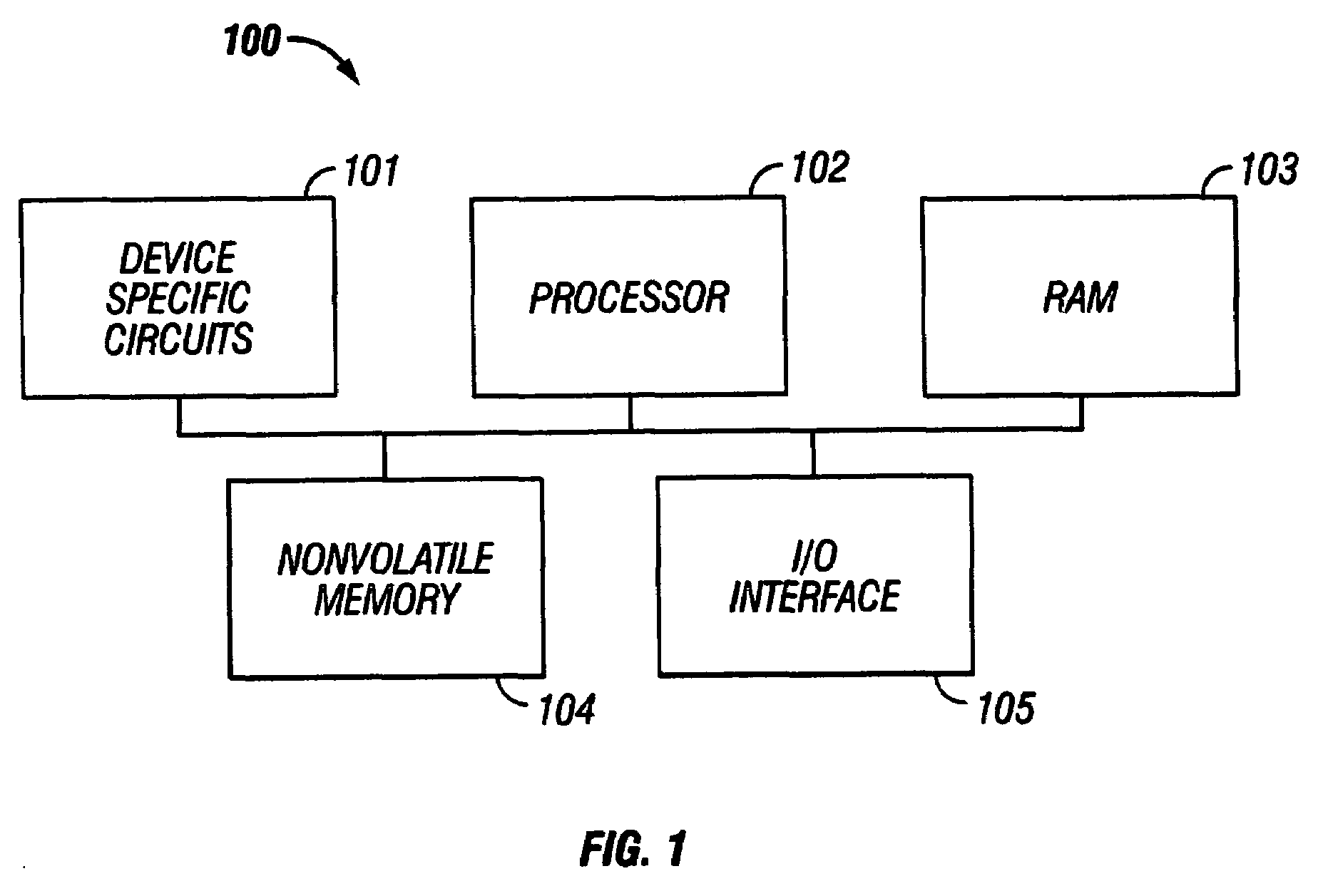

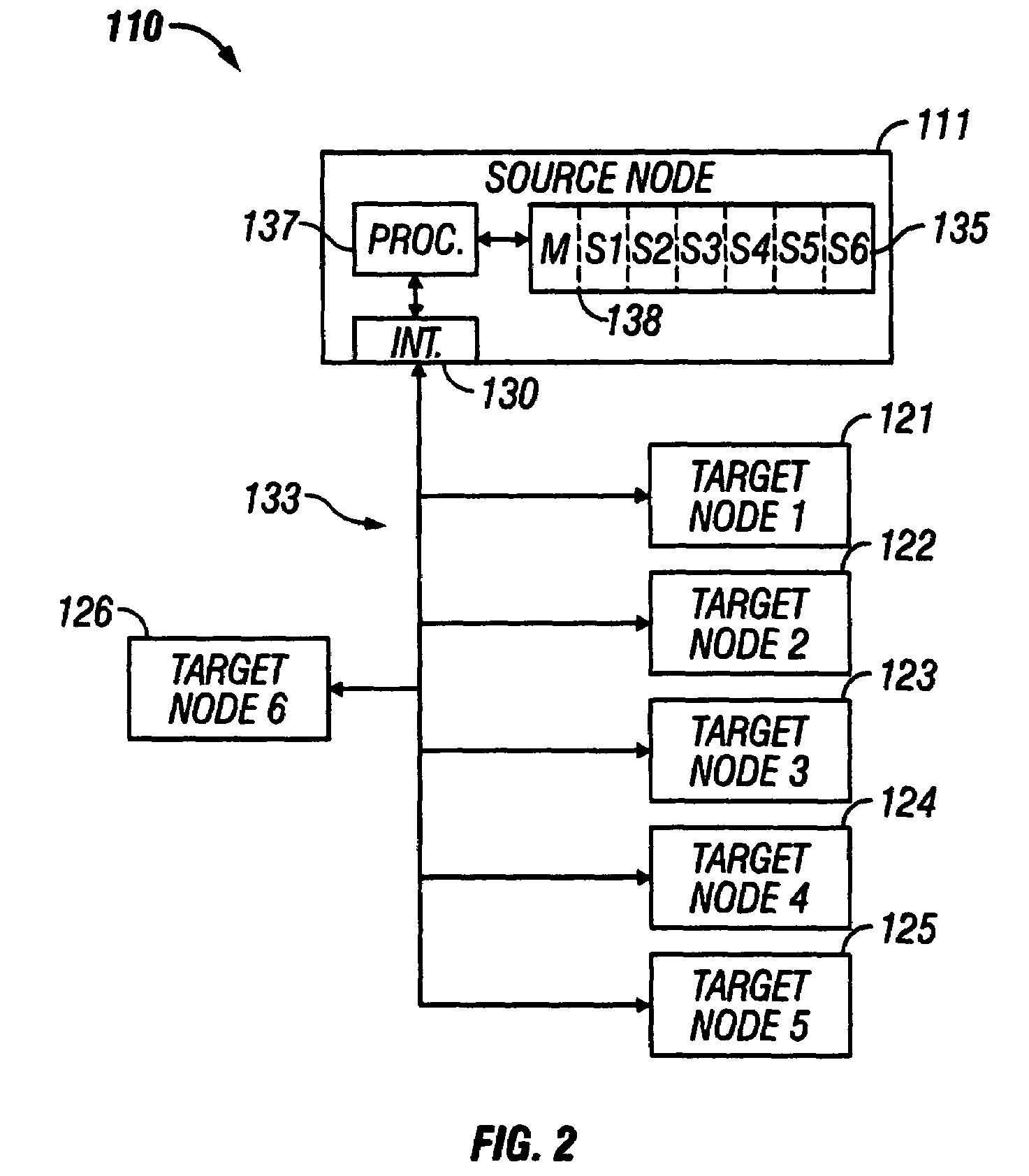

Updatable firmware having boot and/or communication redundancy

Status: Inactive | Publication: US20050251673A1 | Tags: Preventing execution; Error detection/correction; Digital computer details; Object code; Processor node

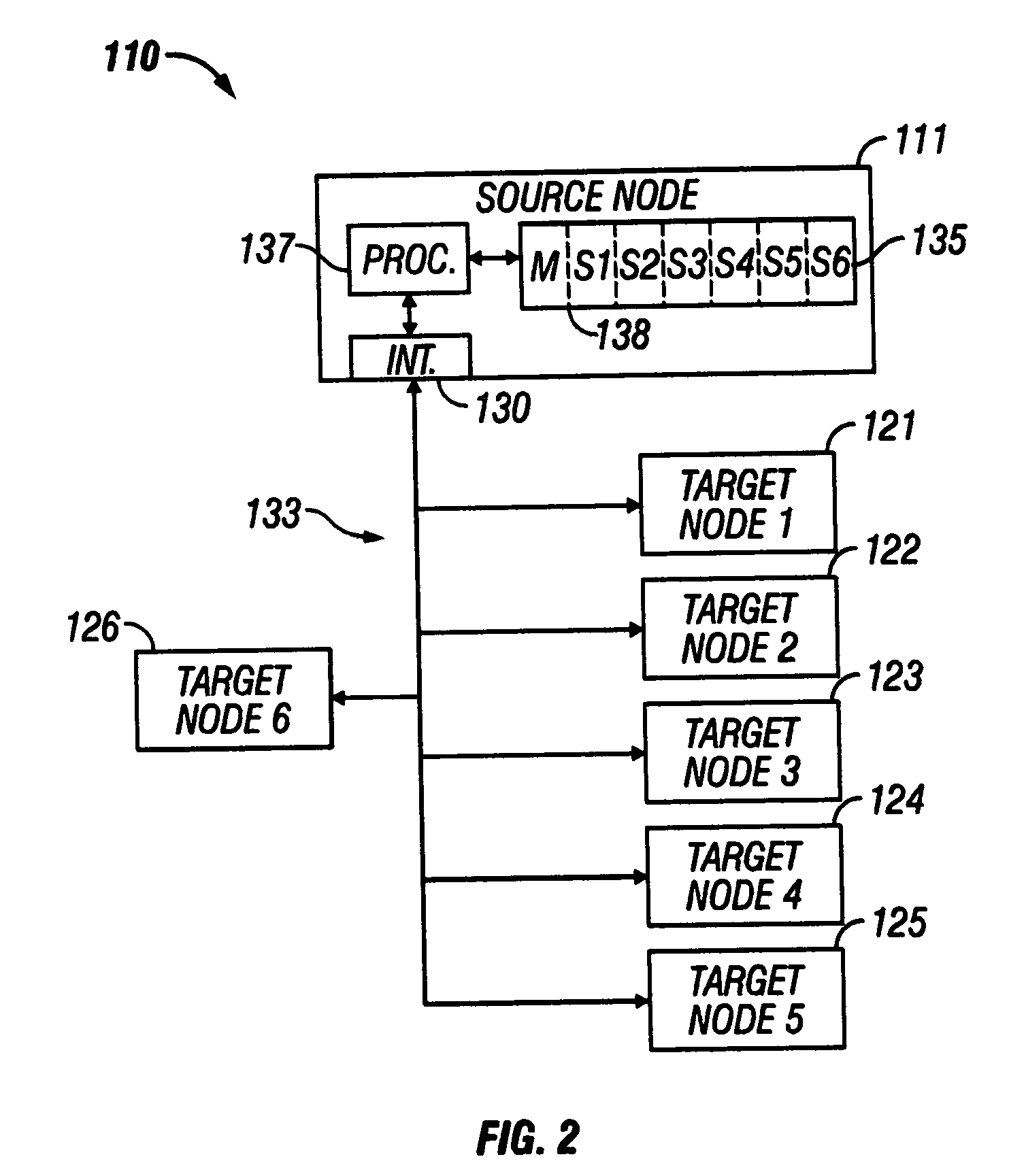

A distributed nodal system with a source computer processor node storing program code for target node(s). A target node has an updatable firmware memory storing program code for operating a target processor. The target code comprises application code for controlling an embedded device, primary communication code for communicating with a network, backup communication code also having copy code for copying code between portions of the firmware memory, and primary boot code for booting the target processor and having check code. The check code determines whether the primary communication code is corrupted, and if it is corrupted, employs the copy code to overwrite the primary communication code with the backup code. If uncorrupted, the check code determines whether the application code is corrupted, and if corrupted, prevents execution of the code.

Owner:IBM CORP
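
The check-code sequence can be sketched in Python. The abstract does not say how corruption is detected, so this sketch assumes each firmware region stores a CRC-32 (via the standard-library `zlib.crc32`) computed when the region was written; the region names and the `Region` and `boot_check` helpers are hypothetical.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Region:
    """A firmware-memory region plus the CRC-32 recorded when it was written (assumed)."""
    data: bytes
    crc: int

    @classmethod
    def written(cls, data: bytes) -> "Region":
        return cls(data, zlib.crc32(data))

    def corrupted(self) -> bool:
        return zlib.crc32(self.data) != self.crc


def boot_check(firmware: dict[str, Region]) -> str:
    """Check primary communication code first; only if it is intact, check the application."""
    if firmware["primary_comm"].corrupted():
        backup = firmware["backup_comm"]
        # Copy code: overwrite the primary communication code with the backup copy.
        firmware["primary_comm"] = Region(backup.data, backup.crc)
        return "primary communication code restored from backup"
    if firmware["application"].corrupted():
        return "application code corrupted: execution prevented"
    return "boot application"


fw = {
    "primary_comm": Region.written(b"comm-v2"),
    "backup_comm": Region.written(b"comm-v2"),
    "application": Region.written(b"app-v7"),
}
fw["primary_comm"].data = b"comm-v?"  # simulate corruption of the primary copy
print(boot_check(fw))  # primary communication code restored from backup
print(boot_check(fw))  # boot application
```

Keeping the communication code recoverable matters because it is what lets the node receive a fresh image from the source node, even when the application code itself is unusable.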

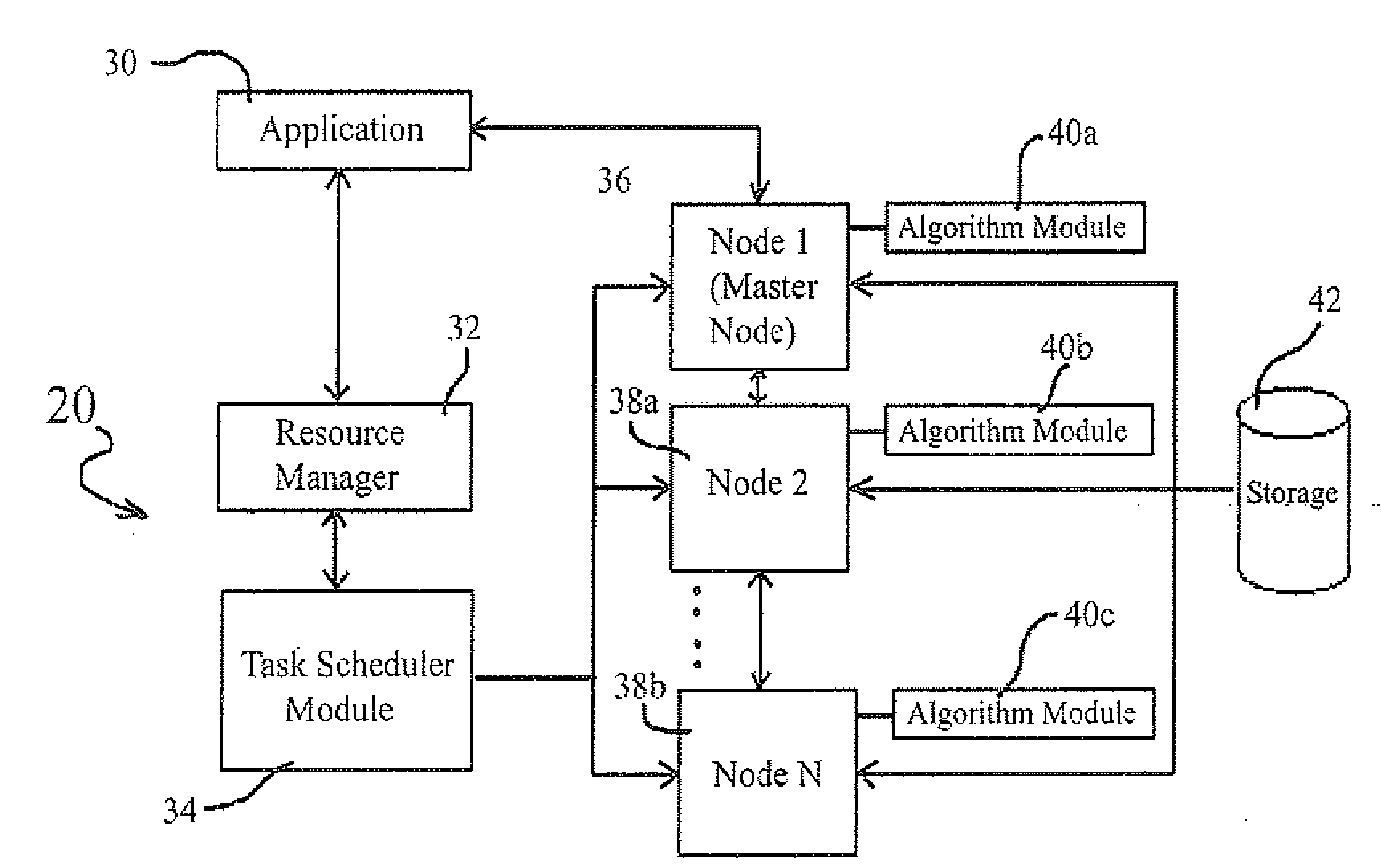

Method and system for processing a volume visualization dataset

Status: Inactive | Publication: US20080195843A1 | Tags: Direct access; Medical simulation; General purpose stored program computer; Data set; Processor node

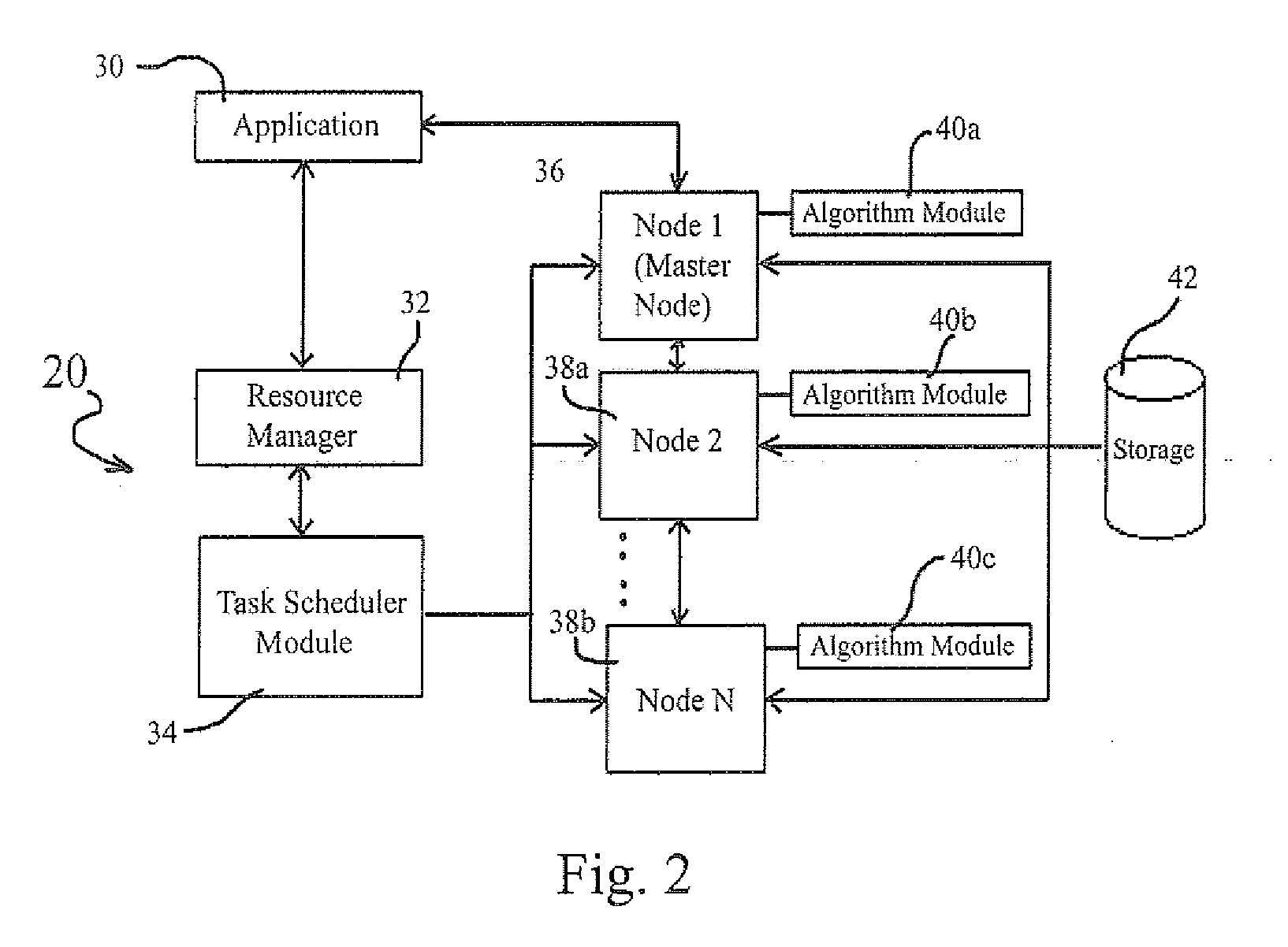

A method of processing a volume visualization dataset. A resource manager sends a task scheduling module information on the number of processor nodes and the amount of storage available in the associated storage devices, and the task scheduling module sends sub-task instructions, including algorithm modules, to a master processor node and multiple slave processor nodes. Portions of the volume visualization dataset are transferred from data storage devices to RAM accessed directly by the master and slave processor nodes. Each master and slave processor node executes its sub-task instructions and algorithm modules by directly accessing its portion of the dataset in its own RAM. The results of each slave processor node's execution of the sub-tasks and algorithm modules assigned to it are transmitted to the master processor node, where all results are combined and transmitted to the volume visualization application.

Owner:KJAYA

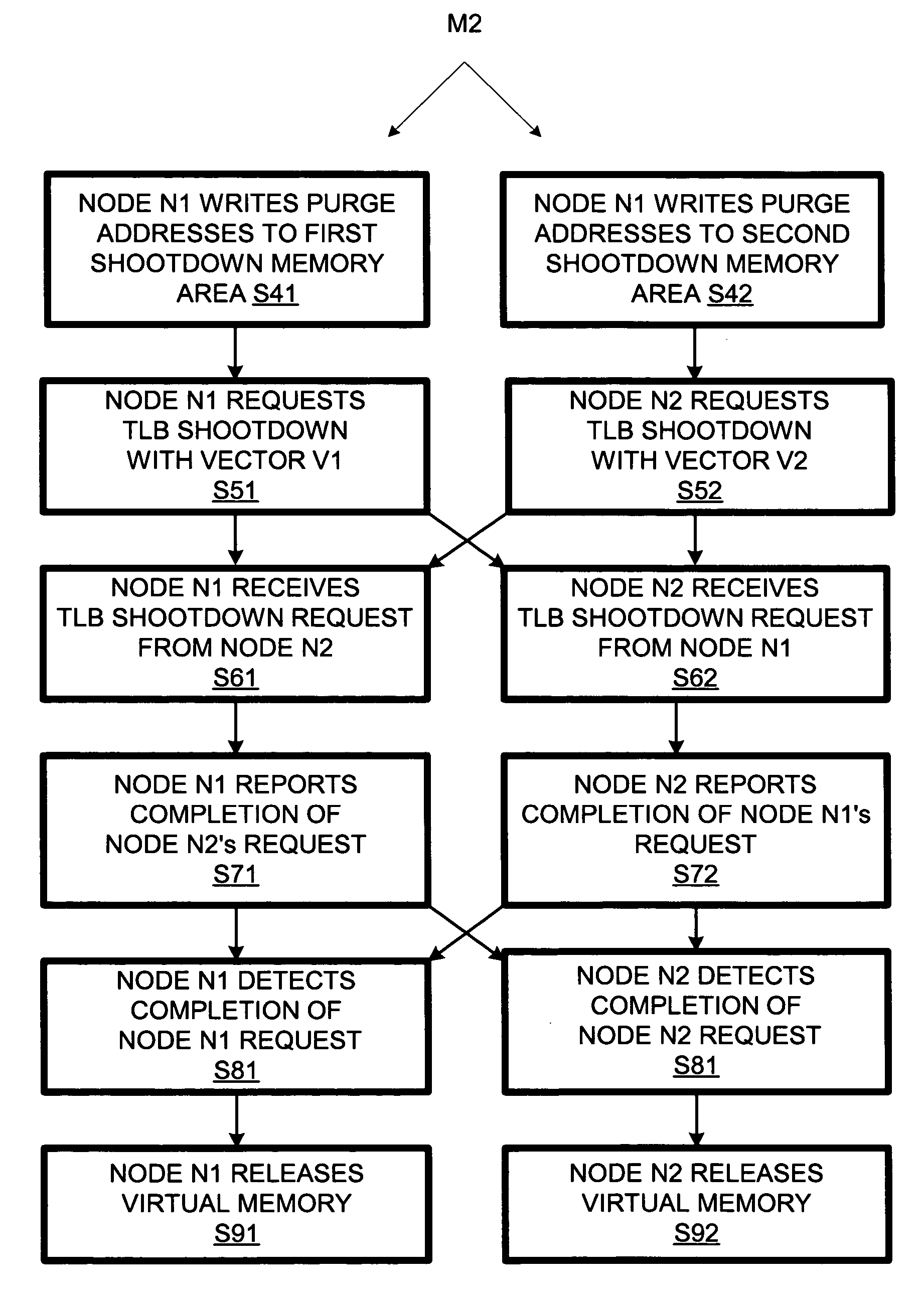

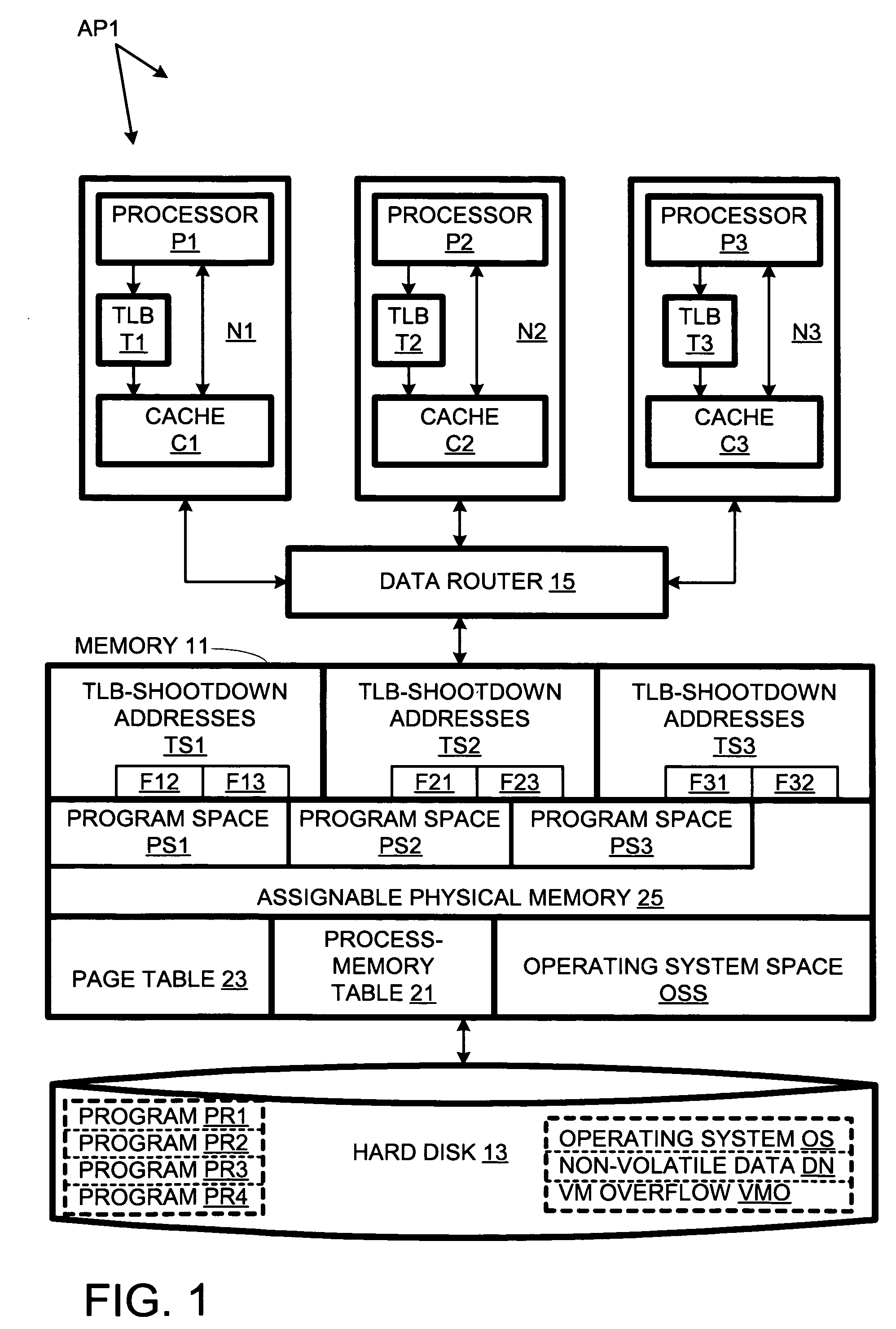

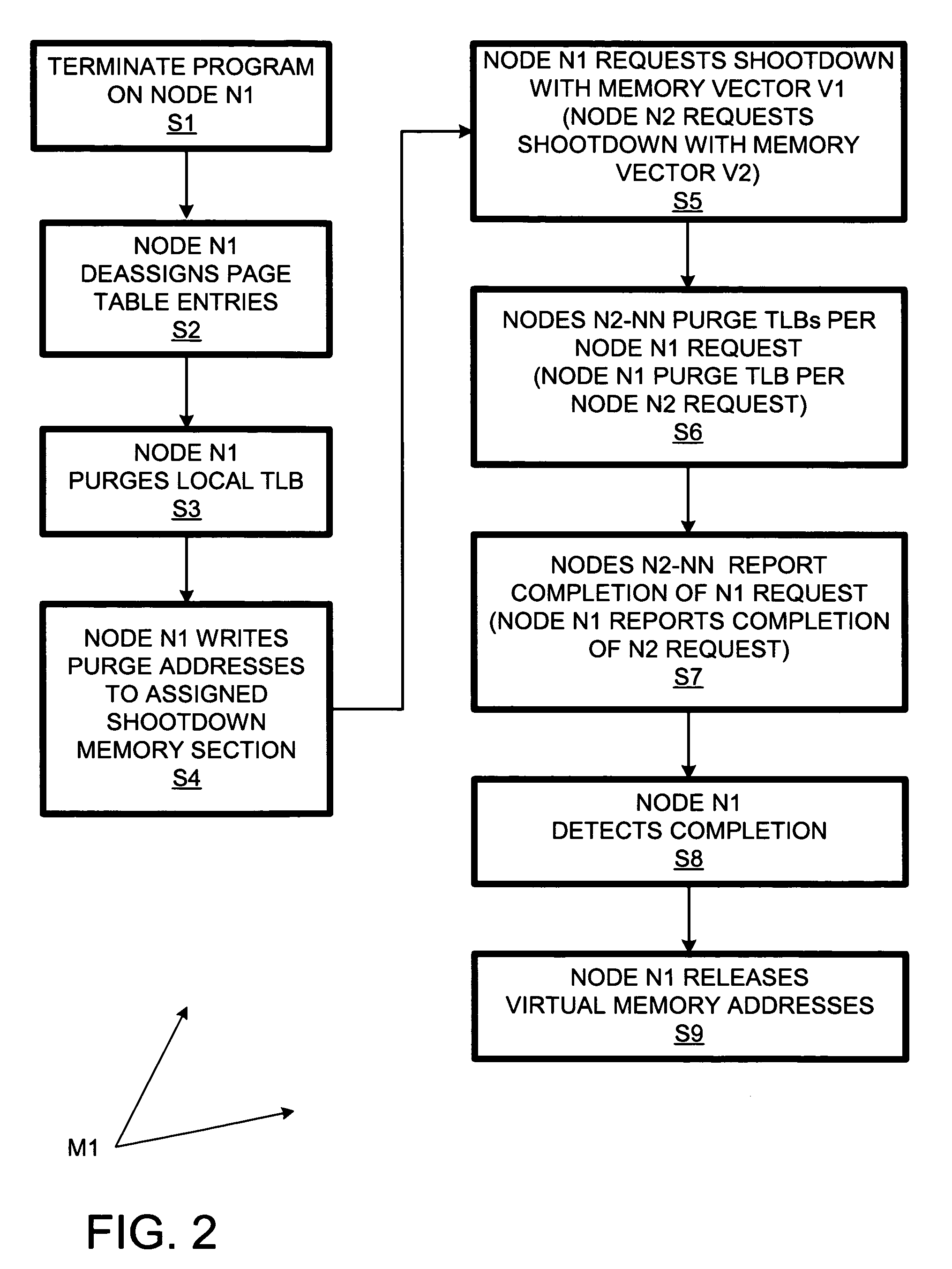

Multiprocessor system having plural memory locations for respectively storing TLB-shootdown data for plural processor nodes

Status: Inactive | Publication: US20060026359A1 | Tags: Raise the possibility; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Extensibility; Multi processor

The present invention provides a multiprocessor system and method in which plural memory locations are used for storing TLB-shootdown data respectively for plural processors. In contrast to systems in which a single area of memory serves for all processors' TLB-shootdown data, different processors can describe the memory they want to free concurrently. Thus, concurrent TLB-shootdown requests are less likely to result in the performance-limiting TLB-shootdown contention that has previously constrained the scalability of multiprocessor systems.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Resource Reservation Protocol over Unreliable Packet Transport

Status: Active | Publication: US20080195719A1 | Tags: Increase the number of; Digital computer details; Transmission; Transport layer; Processor node

A system and method for allocating physical memory in a distributed, shared memory system and for maintaining interaction with the memory using a reservation protocol is disclosed. In various embodiments, a processor node may broadcast a memory request message to a first subset of nodes connected to it via a communication network. If none of these nodes is able to satisfy the request, the processor node may broadcast the request message to additional subsets of nodes until a positive response is received. The reservation protocol may include a four-way handshake between the requesting processor node and a memory node that can fulfill the request. The method may include creation of a reservation structure on the requesting processor and on one or more responding memory nodes. The reservation protocol may facilitate the use of a proximity-based search methodology for memory allocation in a system having an unreliable underlying transport layer.

Owner:SUN MICROSYSTEMS INC

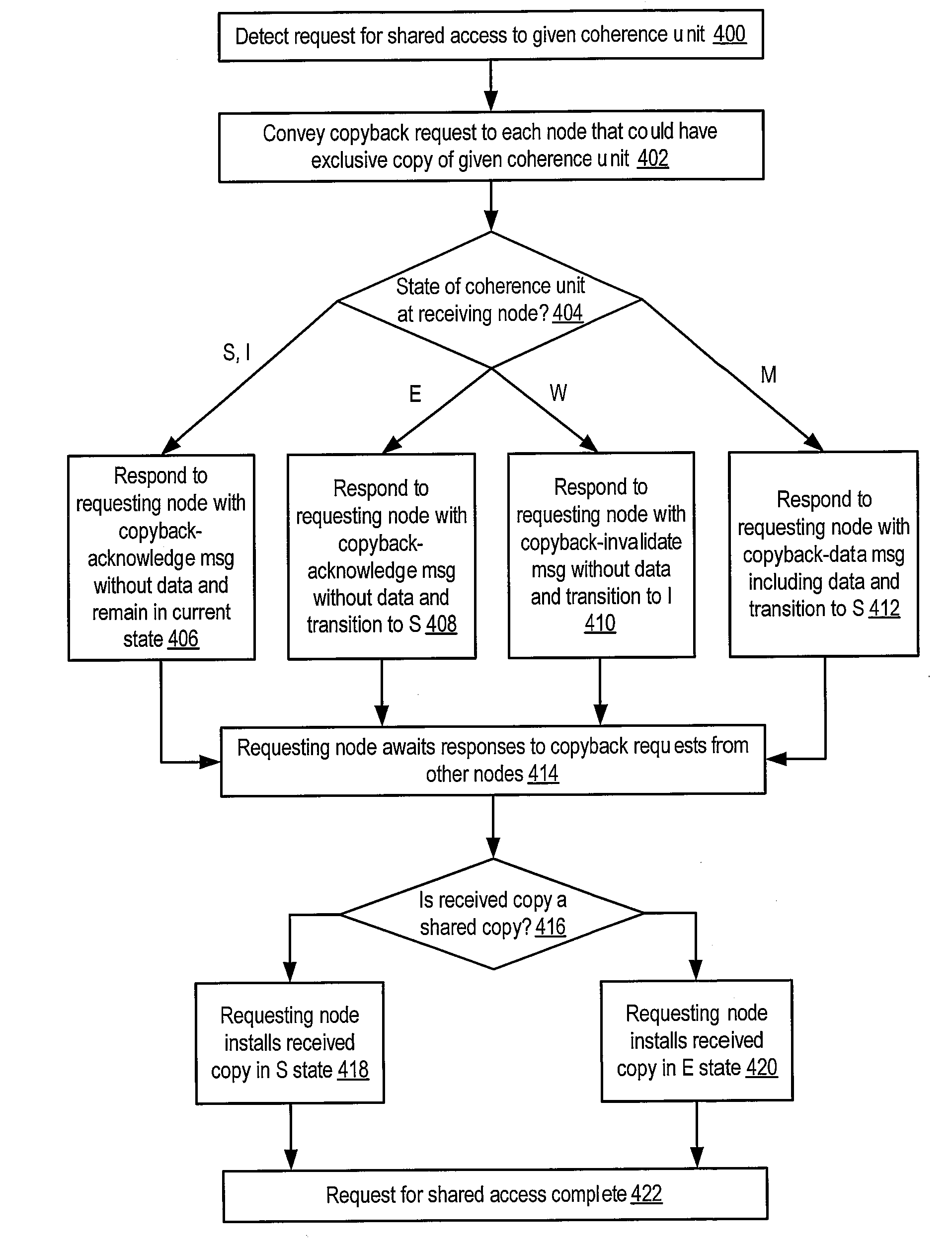

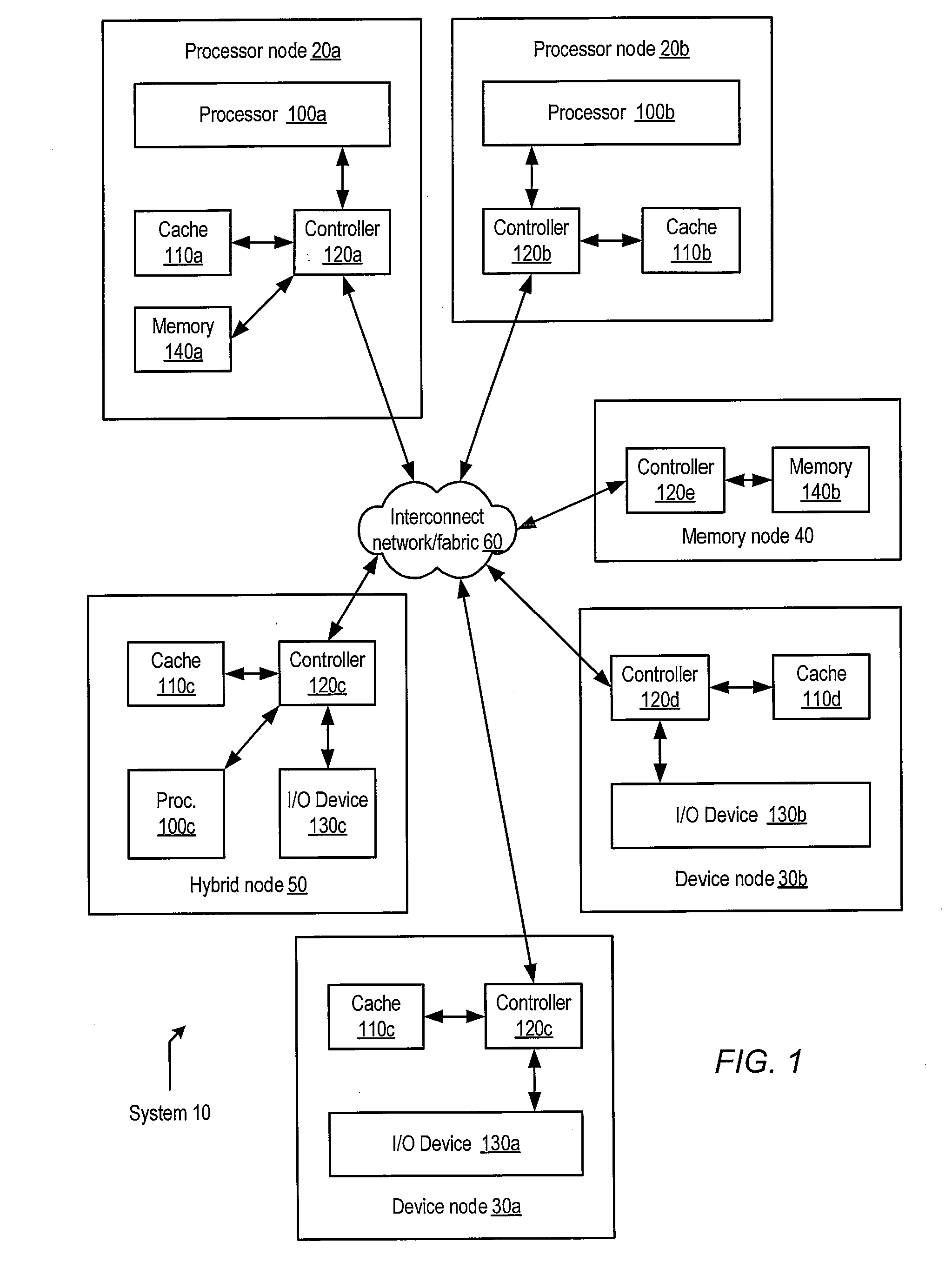

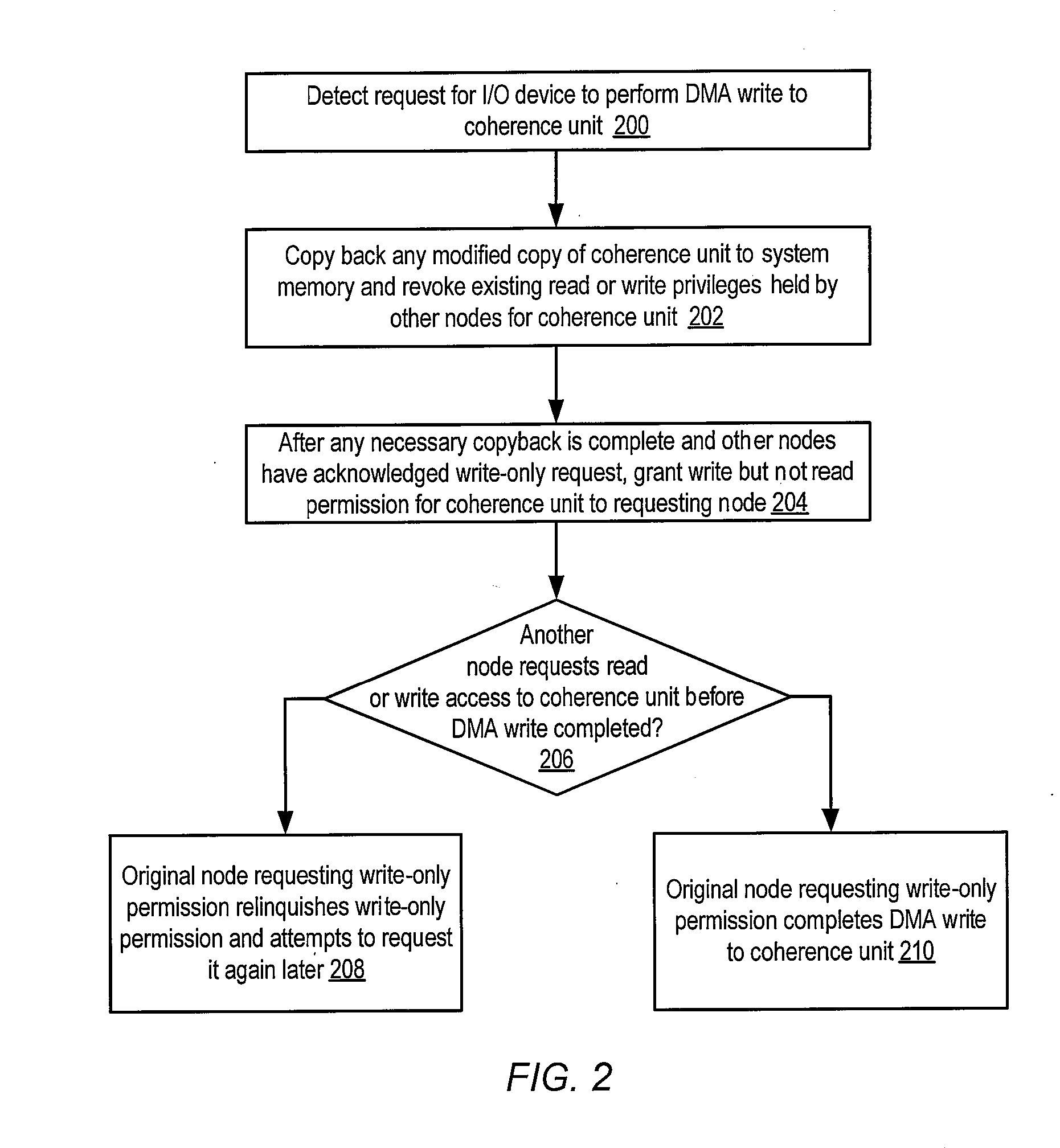

Cache coherence protocol with write-only permission

Status: Active | Publication: US20080120441A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Processor node; Consistency control

A system may include a processor node, and may also include an input/output (I/O) node including a processor and an I/O device. The processor and I/O nodes may each include a respective cache memory configured to cache a system memory and a respective cache coherence controller. The system may further include an interconnect through which the nodes may communicate. In response to detecting a request for the I/O device to perform a DMA write operation to a coherence unit of the I/O node's respective cache memory, and in response to determining that the coherence unit is not modified with respect to the system memory and that no other cache memory within the system has read or write permission corresponding to a copy of the coherence unit, the I/O node's respective cache coherence controller may grant write permission but not read permission for the coherence unit to the I/O node's respective cache memory.

Owner:ORACLE INT CORP
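
The grant condition can be written as a small Python predicate. The `Perm` flag type and `grant_for_dma_write` are hypothetical names, and the fallback branch (requesting ordinary read/write permission when the condition fails) is an assumption, since the abstract only describes the write-only case.

```python
from enum import Flag, auto


class Perm(Flag):
    NONE = 0
    READ = auto()
    WRITE = auto()


def grant_for_dma_write(unit_modified: bool, other_caches: list[Perm]) -> Perm:
    """Permission granted to the I/O node's cache for a DMA write to one coherence unit."""
    others_hold_permission = any(bool(p & (Perm.READ | Perm.WRITE)) for p in other_caches)
    if not unit_modified and not others_hold_permission:
        # Write permission only: no read permission is granted for the unit.
        return Perm.WRITE
    # Assumed fallback: request ordinary read/write permission via the usual protocol.
    return Perm.READ | Perm.WRITE


print(grant_for_dma_write(False, [Perm.NONE, Perm.NONE]) == Perm.WRITE)  # True
print(grant_for_dma_write(False, [Perm.READ]) == Perm.WRITE)             # False
```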

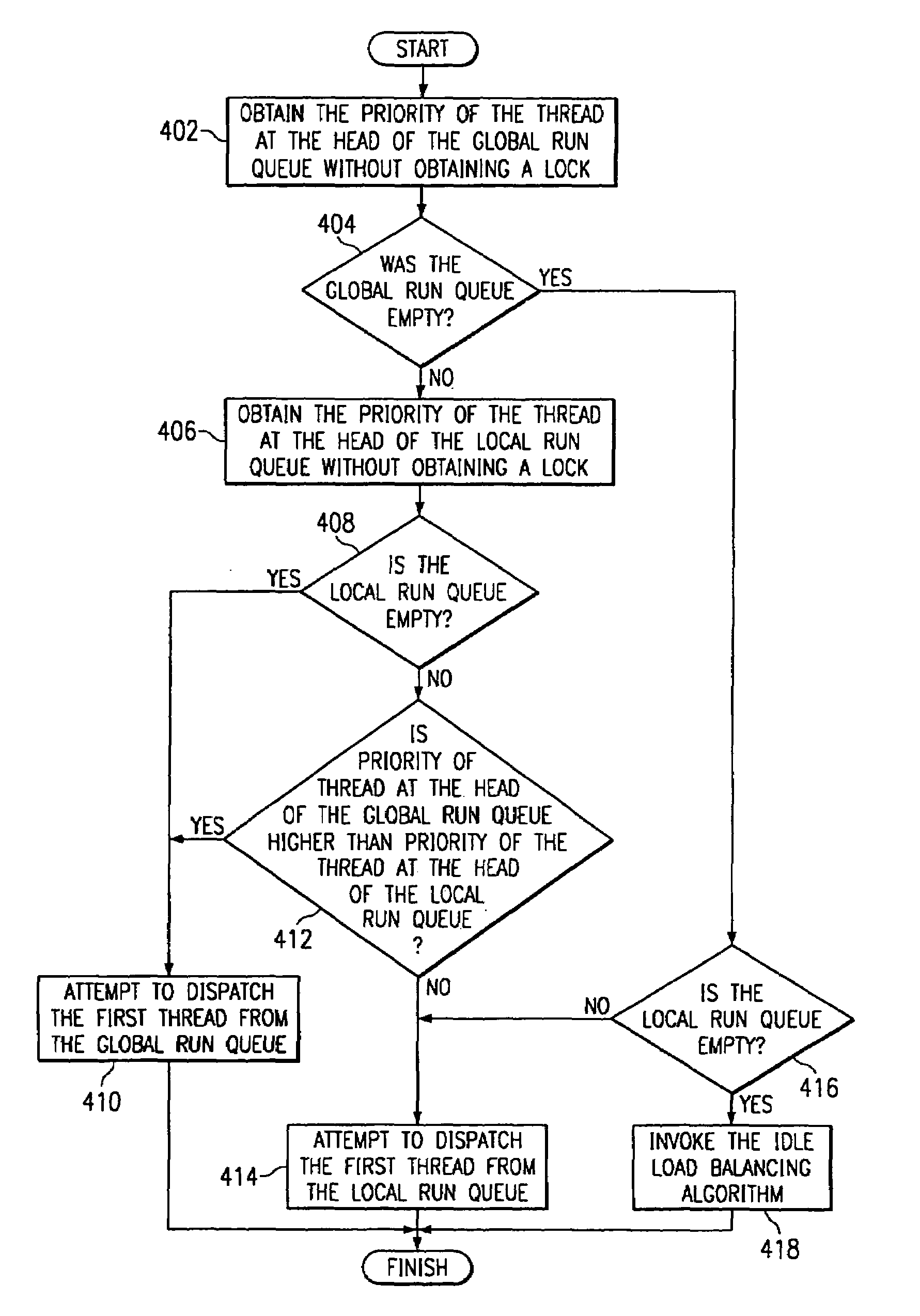

Apparatus for minimizing lock contention in a multiple processor system with multiple run queues when determining the threads priorities

Apparatus and methods are provided for selecting a thread to dispatch in a multiple processor system having a global run queue associated with each multiple processor node and a local run queue associated with each processor. If the global run queue and the local run queue associated with the processor performing the dispatch are both non-empty, the higher-priority queue is selected for dispatch, as determined by examining the queues without obtaining a lock. If one of the two queues is empty and the other is not, the non-empty queue is selected for dispatch. If the global queue is selected for dispatch but a lock on the global queue cannot be obtained immediately, the local queue is selected for dispatch. If both queues are empty, an idle load balancing operation is performed. Local run queues for other processors at the same node are examined without obtaining a lock. If a candidate thread is found that satisfies a set of shift conditions, and if locks can be obtained on both the non-local run queue and the candidate thread, the thread is shifted for dispatch by the processor that is about to become idle.

Owner:IBM CORP
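
The dispatch rules can be sketched in Python using `threading.Lock.acquire(blocking=False)` for the "cannot be obtained immediately" case. Assumptions: lower numbers mean higher priority, ties go to the local queue, the unlocked peek may be stale and is re-checked under the lock, and the idle load-balancing step is left to the caller (signalled by returning `None`). `RunQueue` and `select_thread` are hypothetical names.

```python
import threading
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RunQueue:
    """Run queue of (priority, thread name) pairs; a lower number means higher priority."""
    threads: list[tuple[int, str]] = field(default_factory=list)
    lock: threading.Lock = field(default_factory=threading.Lock)

    def peek_priority(self) -> Optional[int]:
        # Deliberately unlocked, as in the abstract; the answer may be stale.
        return min(self.threads)[0] if self.threads else None

    def pop_best(self) -> str:
        best = min(self.threads)
        self.threads.remove(best)
        return best[1]


def select_thread(global_q: RunQueue, local_q: RunQueue) -> Optional[str]:
    """Pick the next thread for this processor; None means run idle load balancing instead."""
    g_pri, l_pri = global_q.peek_priority(), local_q.peek_priority()
    if g_pri is None and l_pri is None:
        return None
    use_global = l_pri is None or (g_pri is not None and g_pri < l_pri)
    if use_global and global_q.lock.acquire(blocking=False):
        try:
            if global_q.threads:  # re-check under the lock
                return global_q.pop_best()
        finally:
            global_q.lock.release()
    # Local queue chosen, or the global lock was busy: dispatch locally if possible.
    with local_q.lock:
        return local_q.pop_best() if local_q.threads else None


gq = RunQueue([(5, "g-batch")])
lq = RunQueue([(2, "l-interactive"), (9, "l-background")])
print(select_thread(gq, lq))  # l-interactive (local priority 2 beats global 5)
print(select_thread(gq, lq))  # g-batch (global 5 beats local 9)
```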

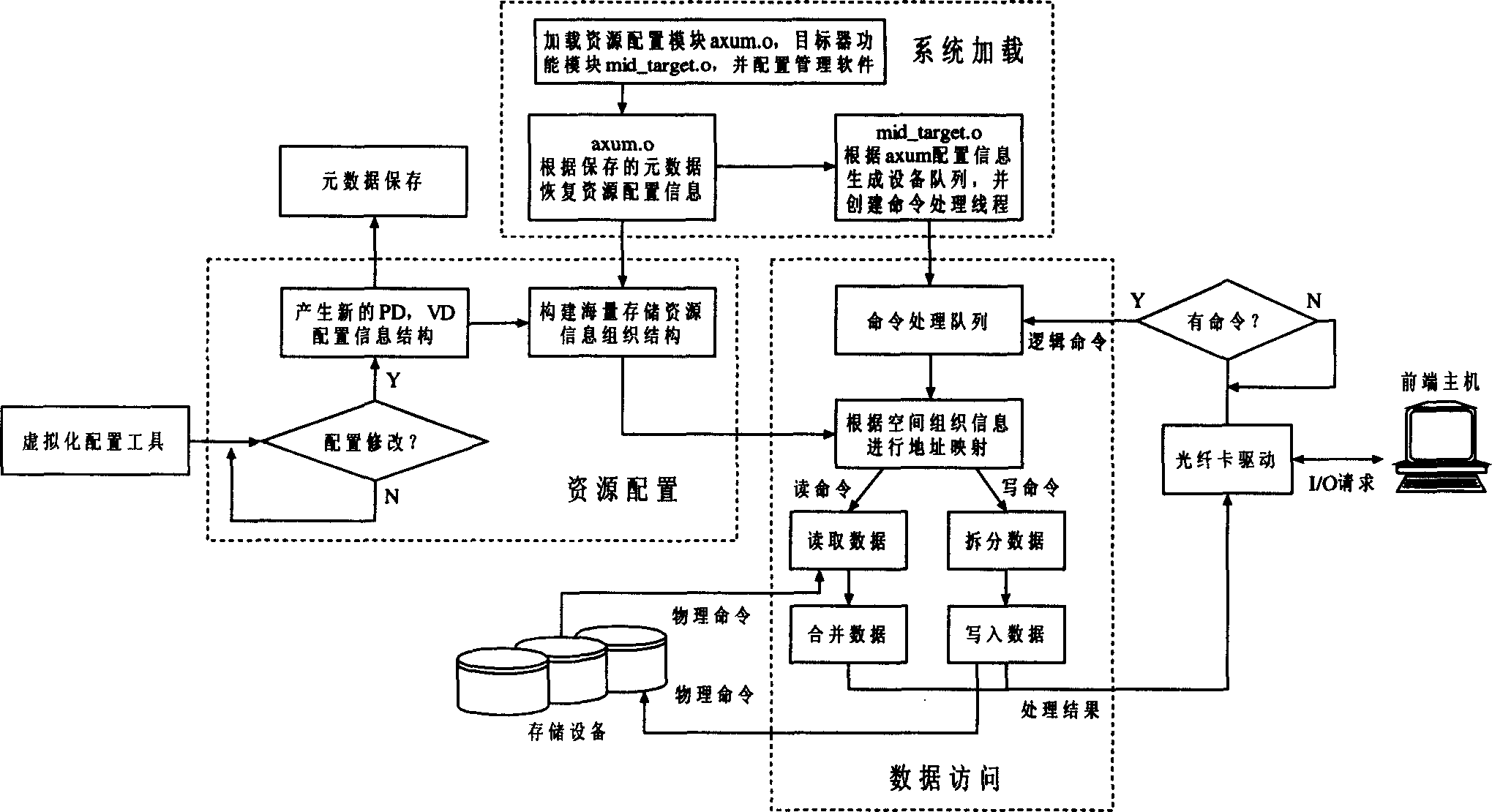

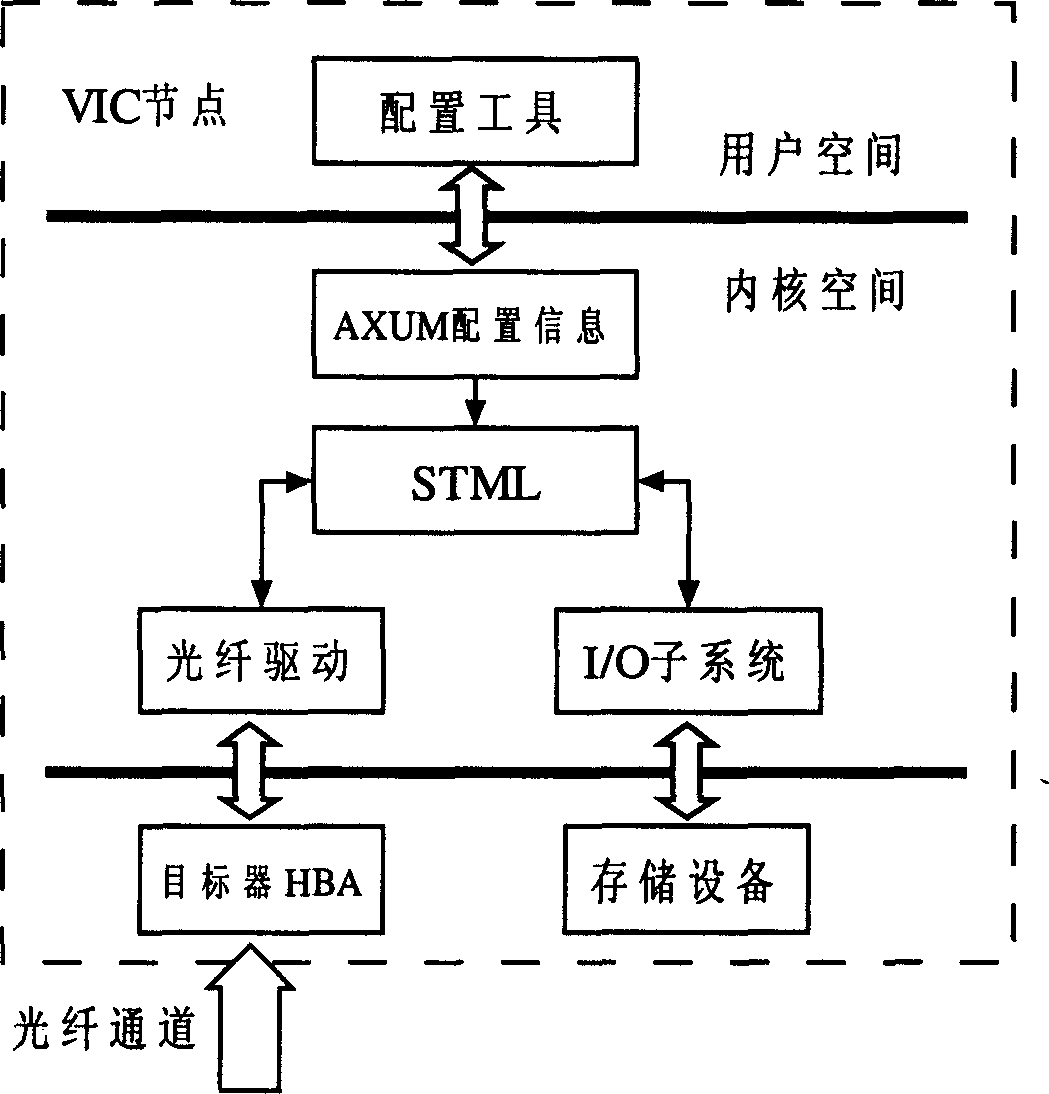

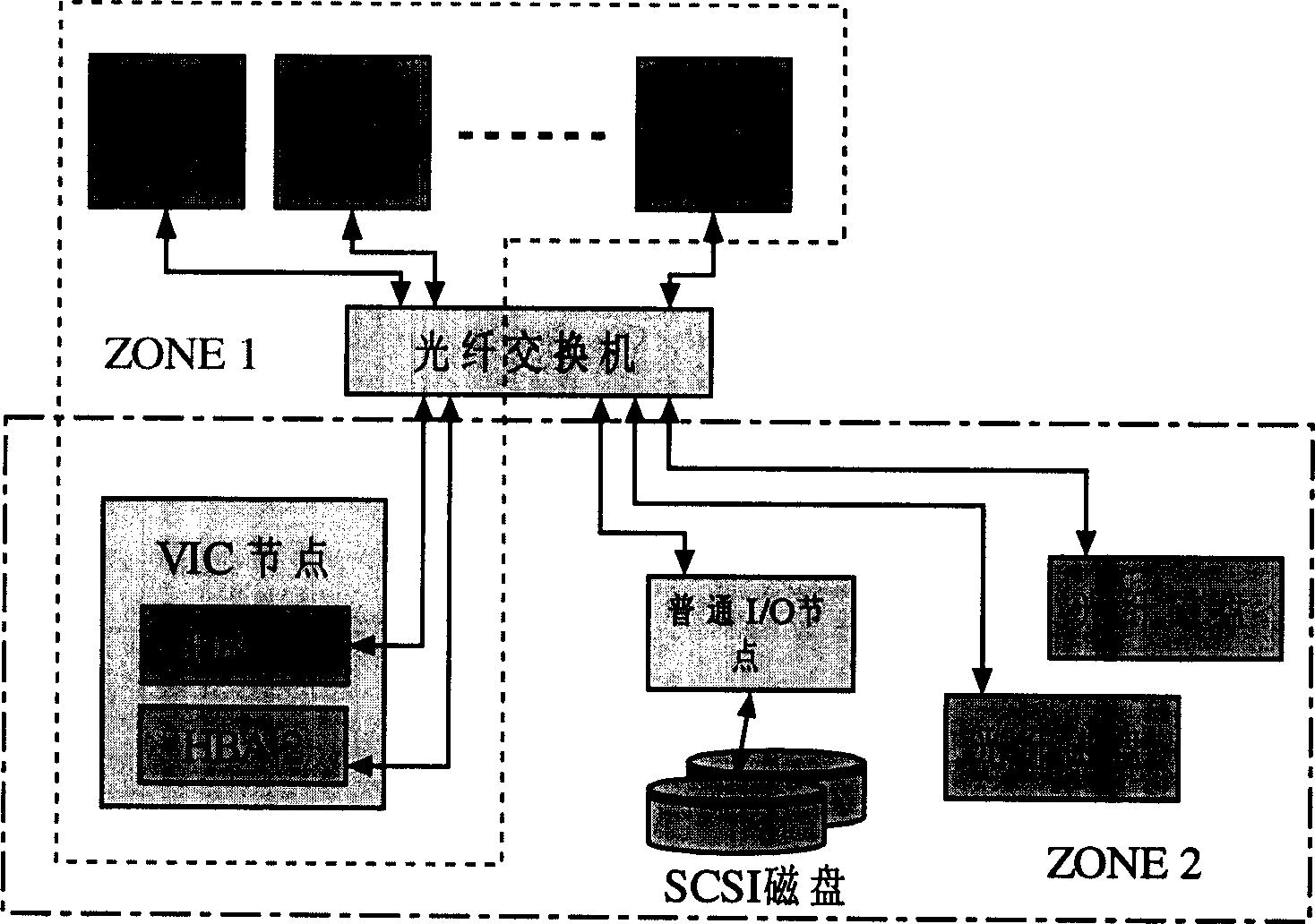

Network-based method for managing large-scale storage resources in a SAN environment

Status: Inactive | Publication: CN1645342A | Tags: Easy to manage; Small space requirement; Metering/charging/billing arrangements; Memory addressing/allocation/relocation; Virtualization; SCSI

A method for managing large-scale storage resources in which a dedicated processor node maintains a set of storage resource management information to manage the different storage device resources in a storage network, and provides virtual storage services to front-end hosts by parsing commands in the SCSI (Small Computer System Interface) middle layer. Using its information table and a software target, the node converts virtual accesses into accesses to physical devices.

Owner:TSINGHUA UNIV

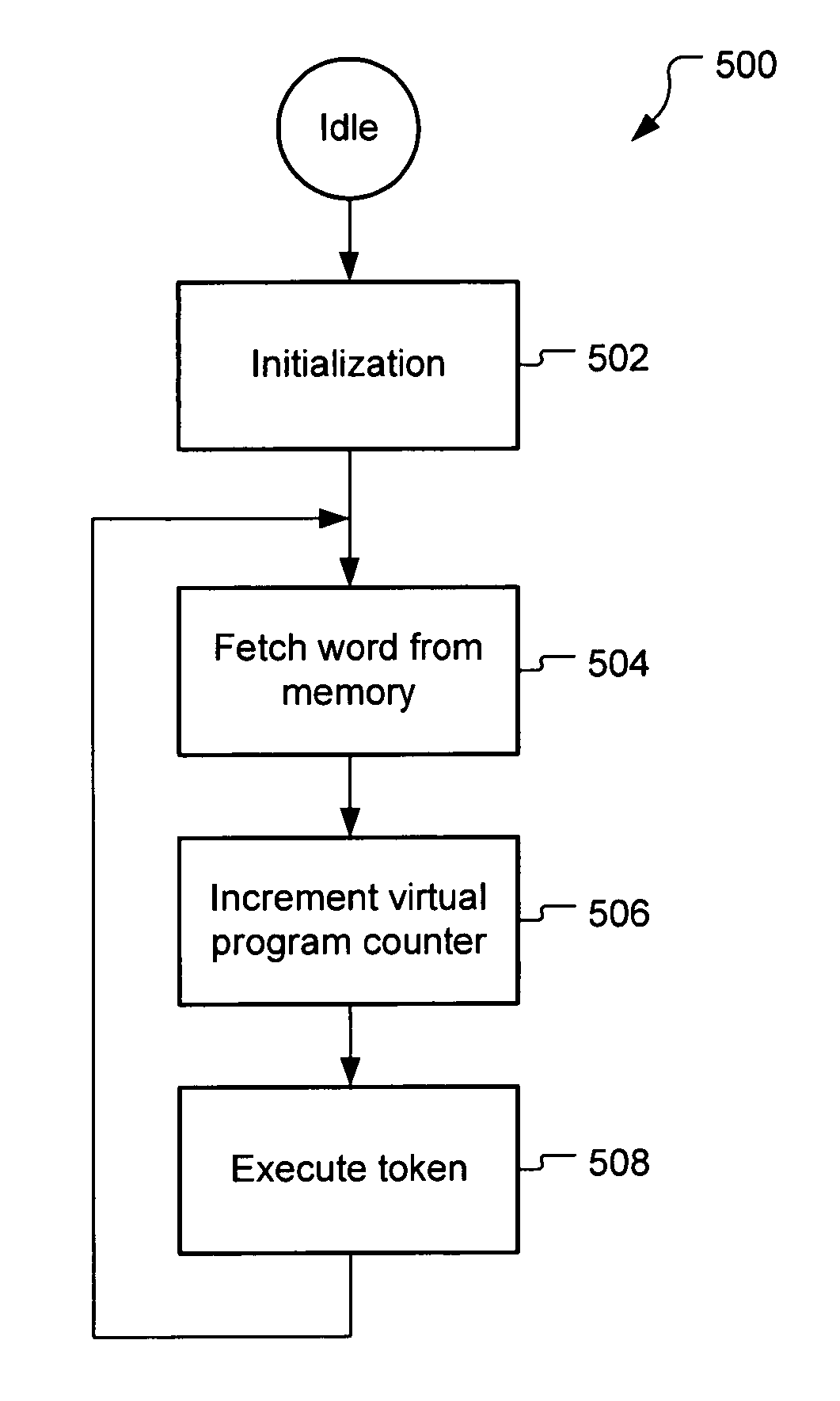

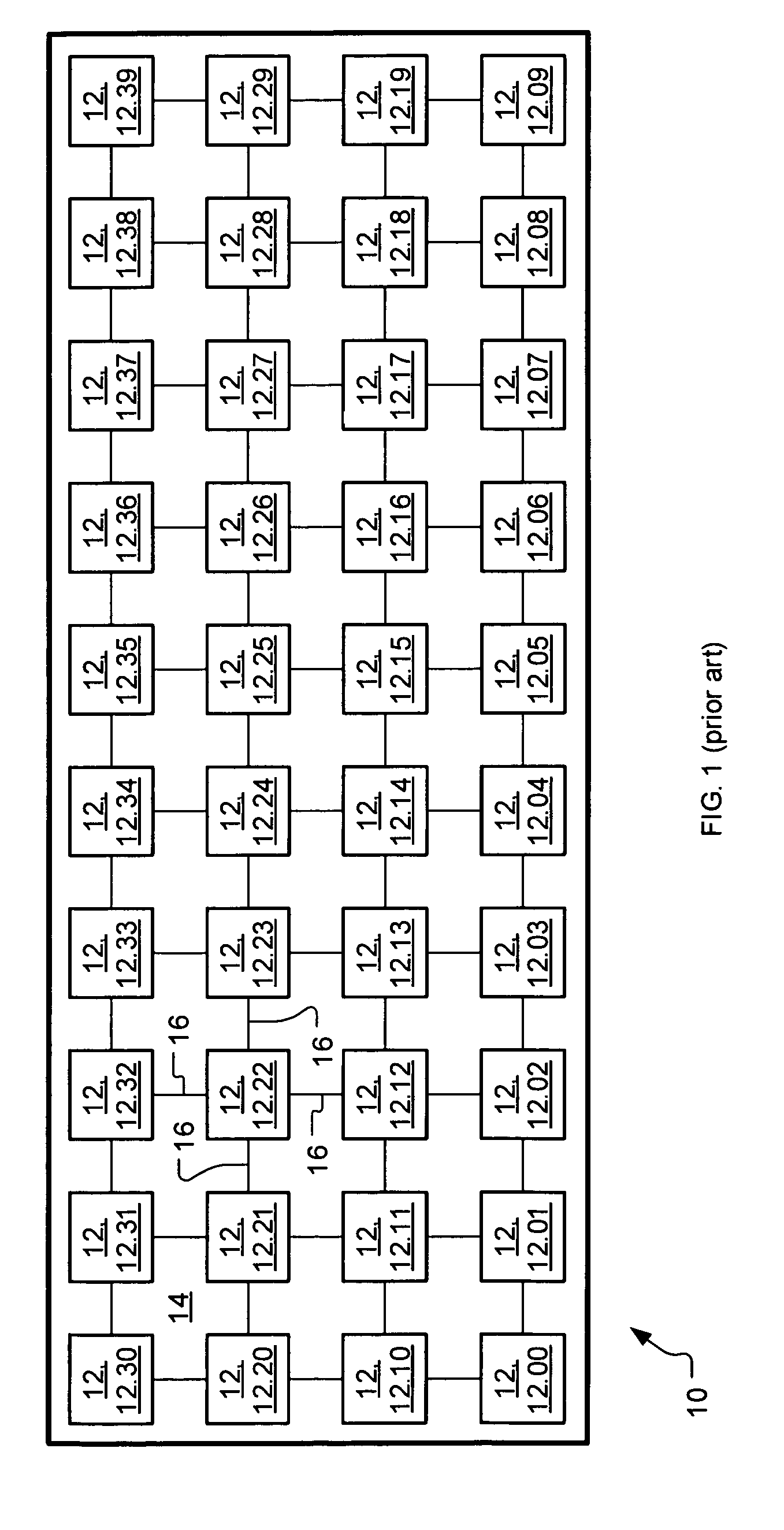

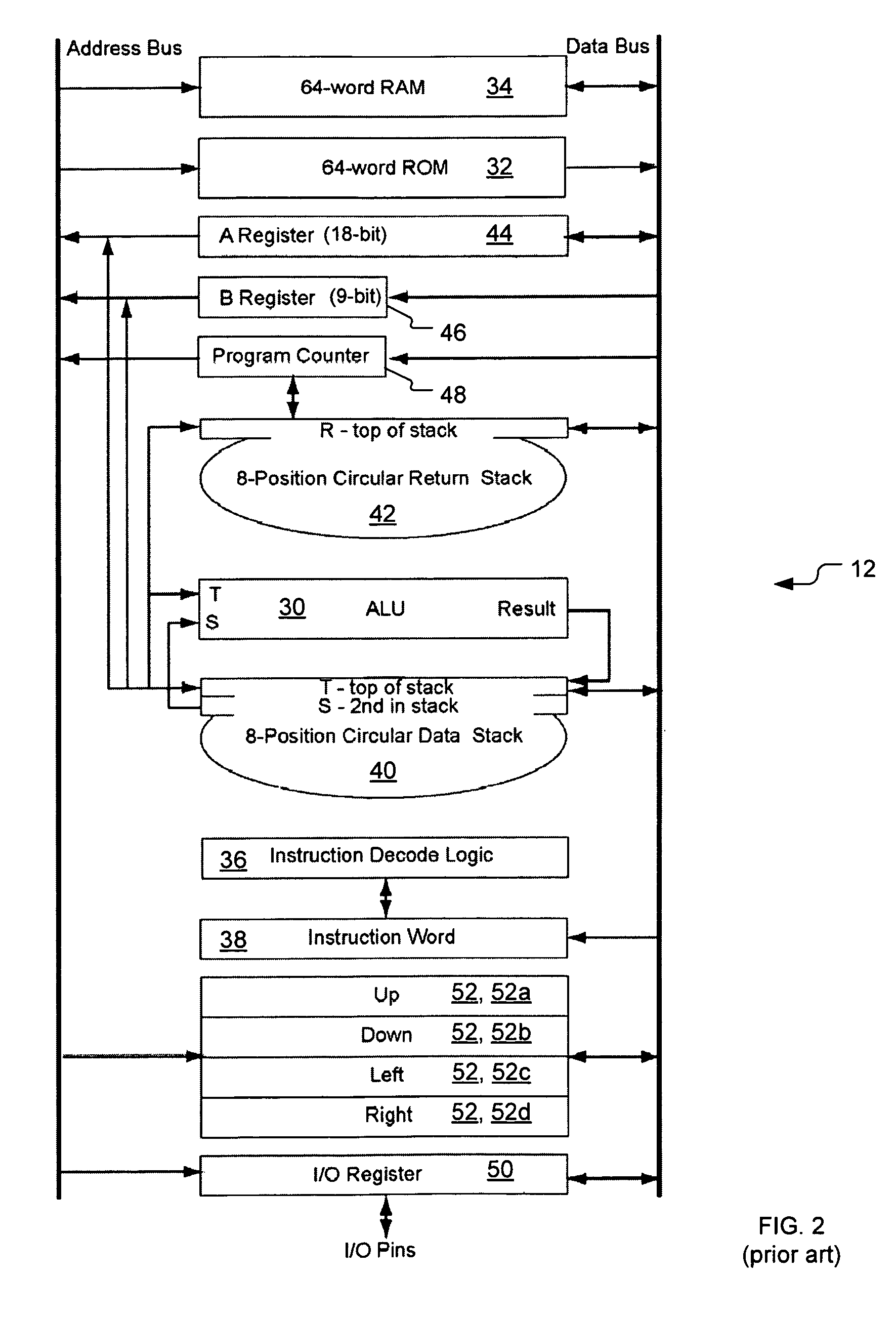

System for native code execution

A process, apparatus, and system to execute a program in an array of processor nodes that include an agent node and an executor node. A virtual program of tokens of different types represents the program and is provided in a memory. The types include a run type that includes native code instructions of the executor node. A token is loaded from the memory and executed in the agent node based on its type. In particular, if the token is an optional stop type, execution ends, and if the token is a run type, the native code instructions in the token are sent to the executor node. The native code instructions are executed in the executor node as received from the agent node. Loading and execution continue in this manner indefinitely or until a stop type token is executed.

Owner:ARRAY PORTFOLIO
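
The agent node's load-and-dispatch loop can be sketched in Python. Only the two token types named in the abstract (stop and run) are modelled; the `Token` and `TokenType` classes, the tuple stand-in for native code, and passing the executor as a callable are assumptions for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable


class TokenType(Enum):
    STOP = auto()
    RUN = auto()


@dataclass
class Token:
    kind: TokenType
    native_code: tuple = ()  # stand-in for the executor node's native instructions


def agent_loop(program: list[Token], send_to_executor: Callable[[tuple], None]) -> int:
    """Load tokens in order and act on each by type; return how many RUN tokens were sent."""
    sent = 0
    for token in program:  # a finite list here; the abstract also allows running indefinitely
        if token.kind is TokenType.STOP:
            break
        if token.kind is TokenType.RUN:
            send_to_executor(token.native_code)
            sent += 1
    return sent


log: list[tuple] = []  # the "executor" here just records what it receives
program = [
    Token(TokenType.RUN, ("add", 1, 2)),
    Token(TokenType.RUN, ("mul", 3, 4)),
    Token(TokenType.STOP),
    Token(TokenType.RUN, ("add", 9, 9)),  # never reached
]
count = agent_loop(program, log.append)
print(count, log)  # 2 [('add', 1, 2), ('mul', 3, 4)]
```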

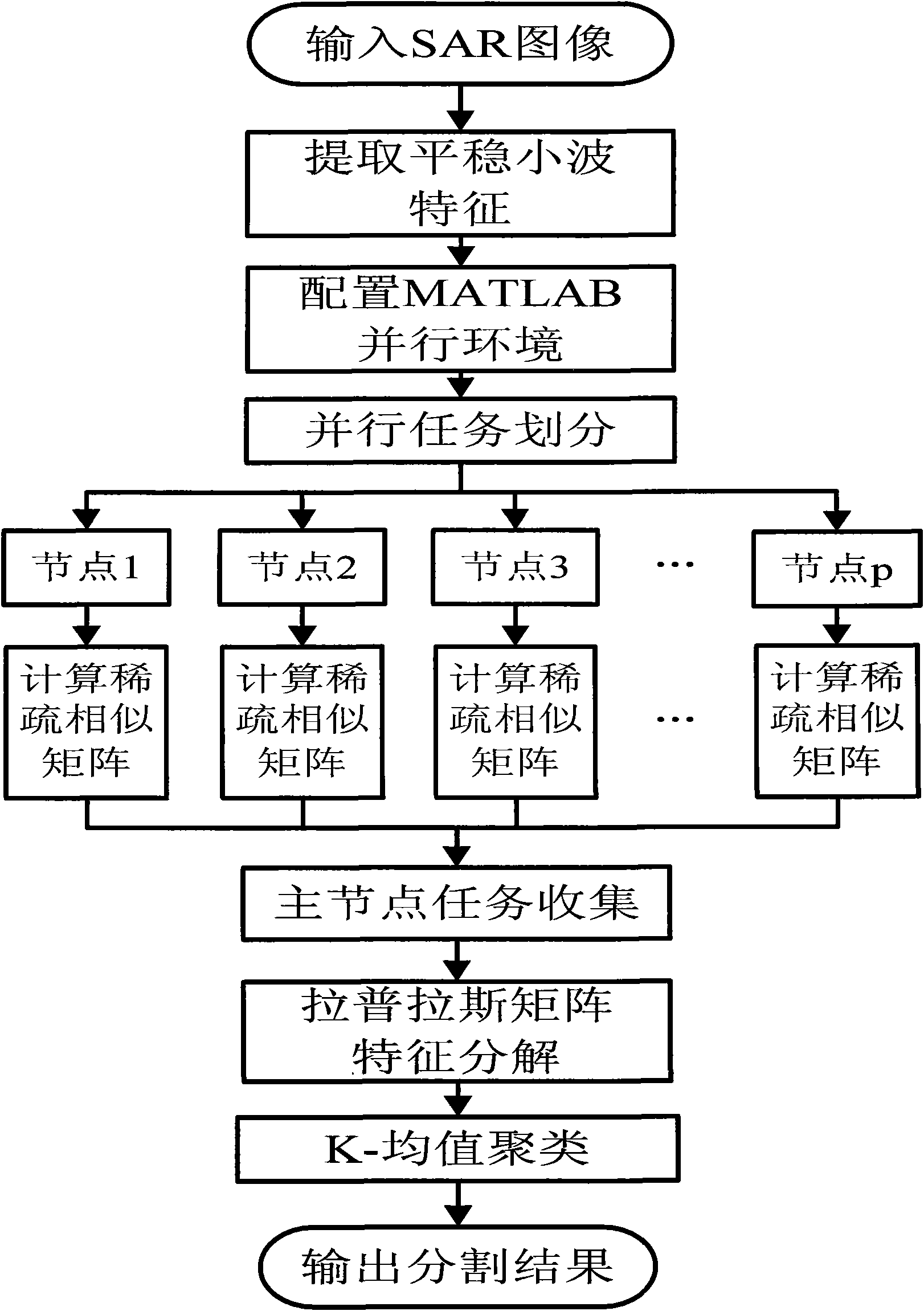

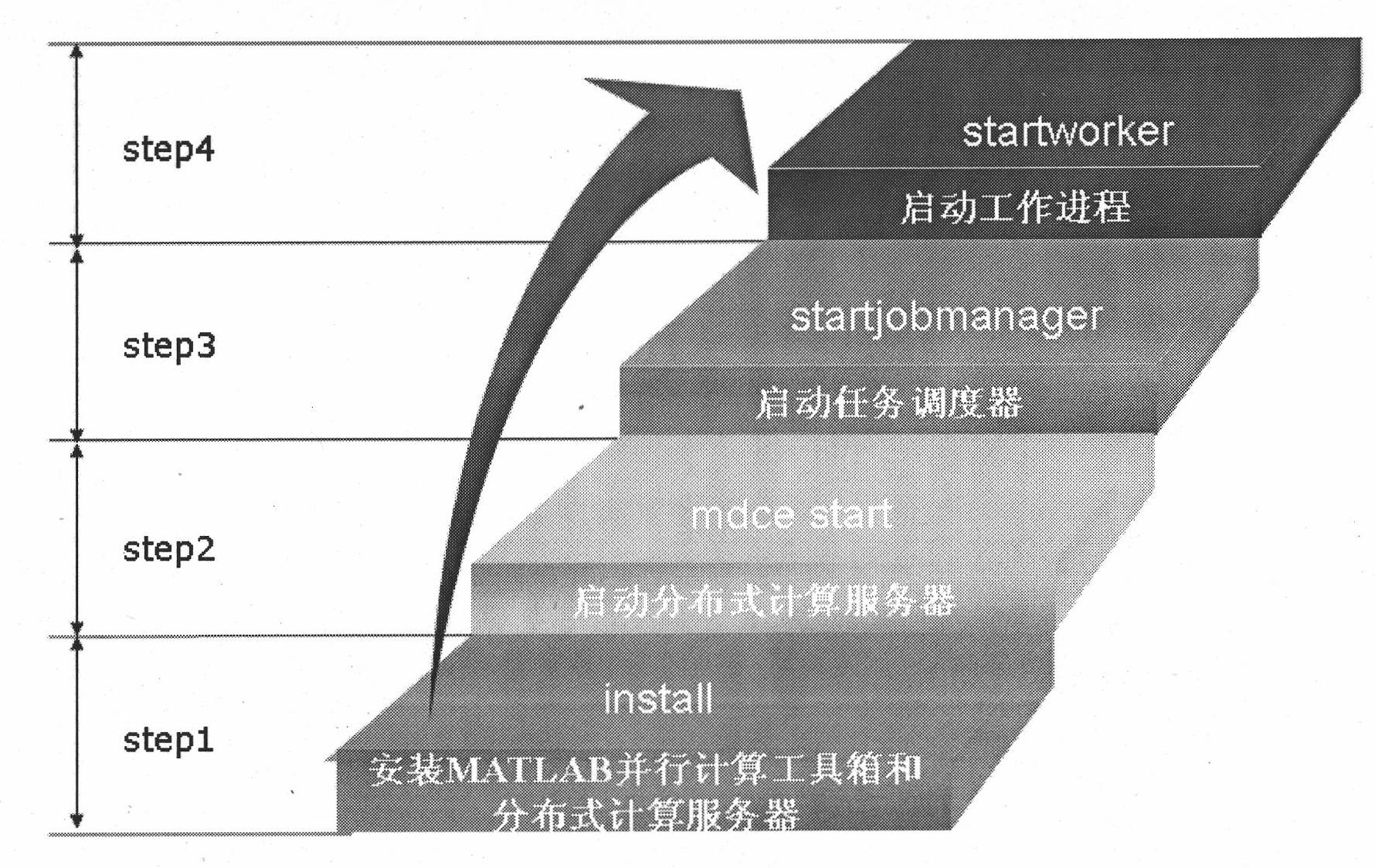

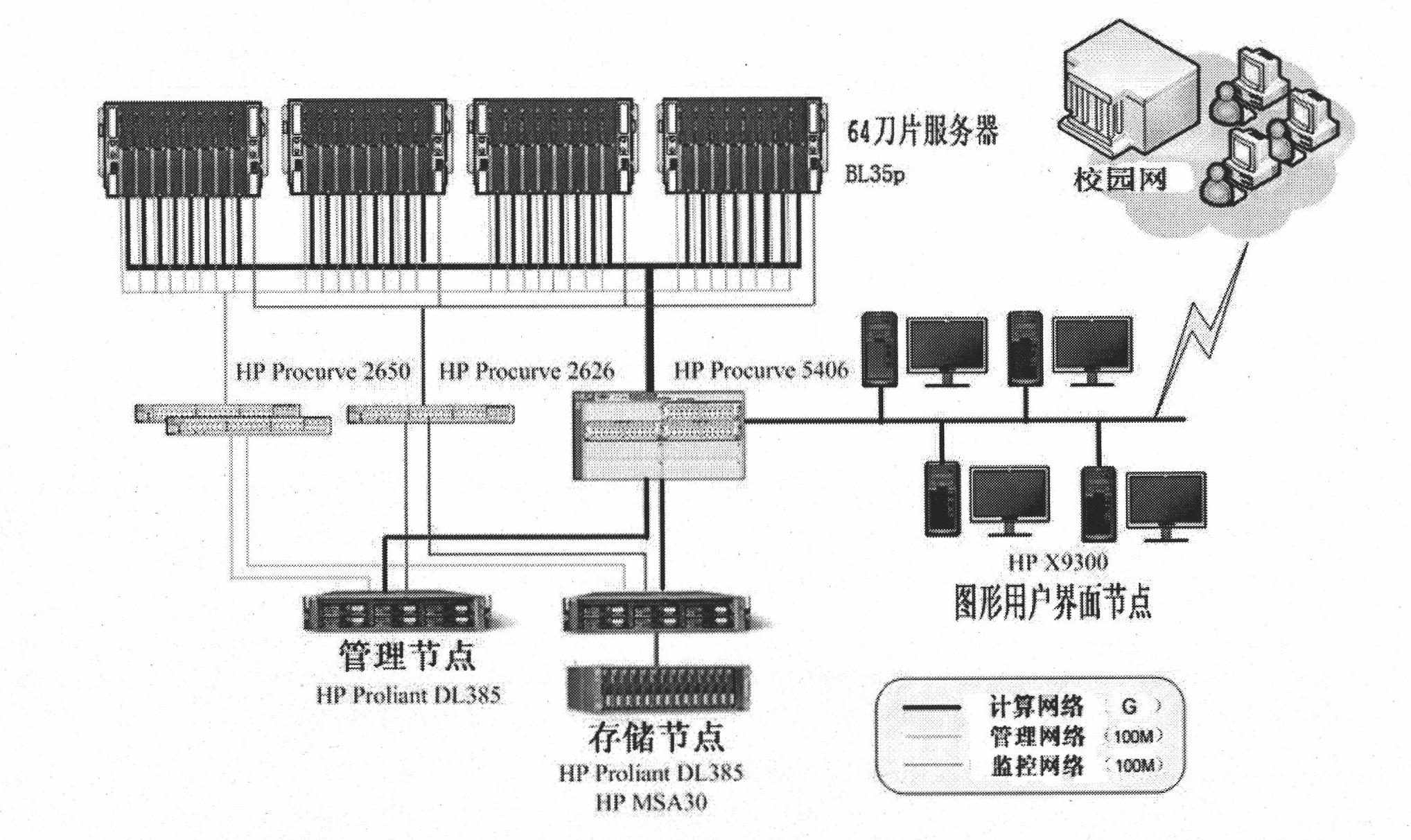

SAR (Synthetic Aperture Radar) image segmentation method based on parallel sparse spectral clustering

Status: Inactive | Publication: CN101853491A | Tags: Solve the problem of excessive calculation; Overcome limitations; Image enhancement; Scene recognition; Decomposition; Synthetic aperture radar

The invention discloses an SAR (Synthetic Aperture Radar) image segmentation method based on parallel sparse spectral clustering. It relates to the technical field of image processing and mainly addresses the limitation that traditional spectral clustering cannot be applied to the segmentation of large-scale SAR images. The method comprises the steps of: 1, extracting features of the SAR image to be segmented; 2, configuring a MATLAB (matrix laboratory) parallel computing environment; 3, allocating tasks to all processor nodes and computing partitioned sparse similarity matrices; 4, collecting the computed results with a parallel task dispatcher and merging them into a complete sparse similarity matrix; 5, computing the Laplacian matrix and performing eigendecomposition; 6, performing K-means clustering on the normalized eigenvector matrix; and 7, outputting the segmentation result of the SAR image. The invention effectively overcomes the computation and storage bottlenecks of traditional spectral clustering, achieves notable segmentation results on large-scale SAR images, and is suitable for SAR image target detection and target recognition.

Owner:XIDIAN UNIV
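
Steps 3 and 4 (partitioned similarity computation and merging) can be sketched in Python using the standard-library `concurrent.futures` in place of the MATLAB parallel environment the abstract names. Assumptions: a Gaussian kernel with width `sigma`, sparsification by keeping each row's `k` nearest neighbours, and rows dealt to workers round-robin. Threads illustrate the partition-and-merge structure only; for CPU-bound work in CPython a process pool would be needed for real speedup. Steps 5 to 7 (Laplacian, eigendecomposition, K-means) are not shown.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def similarity_block(features, rows, sigma, k):
    """Sparse Gaussian similarities for one block of rows, keeping each row's k nearest."""
    entries = {}
    for i in rows:
        sims = []
        for j, fj in enumerate(features):
            if j != i:
                d2 = sum((a - b) ** 2 for a, b in zip(features[i], fj))
                sims.append((math.exp(-d2 / (2 * sigma ** 2)), j))
        for s, j in sorted(sims, reverse=True)[:k]:
            entries[(i, j)] = s
    return entries


def parallel_sparse_similarity(features, workers=2, sigma=1.0, k=2):
    """Deal rows to workers, compute blocks concurrently, then merge into one sparse matrix."""
    n = len(features)
    blocks = [range(w, n, workers) for w in range(workers)]
    merged = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda rows: similarity_block(features, rows, sigma, k), blocks)
        for part in results:
            for (i, j), s in part.items():
                merged[(i, j)] = merged[(j, i)] = s  # symmetrize the k-NN graph
    return merged


feats = [(0.0,), (0.1,), (5.0,), (5.2,)]  # toy 1-D "pixel features"
W = parallel_sparse_similarity(feats, workers=2, k=1)
print(sorted(W))  # [(0, 1), (1, 0), (2, 3), (3, 2)]
```

Storing only `k` entries per row keeps memory roughly linear in the number of pixels, which is the storage bottleneck the sparse formulation targets.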

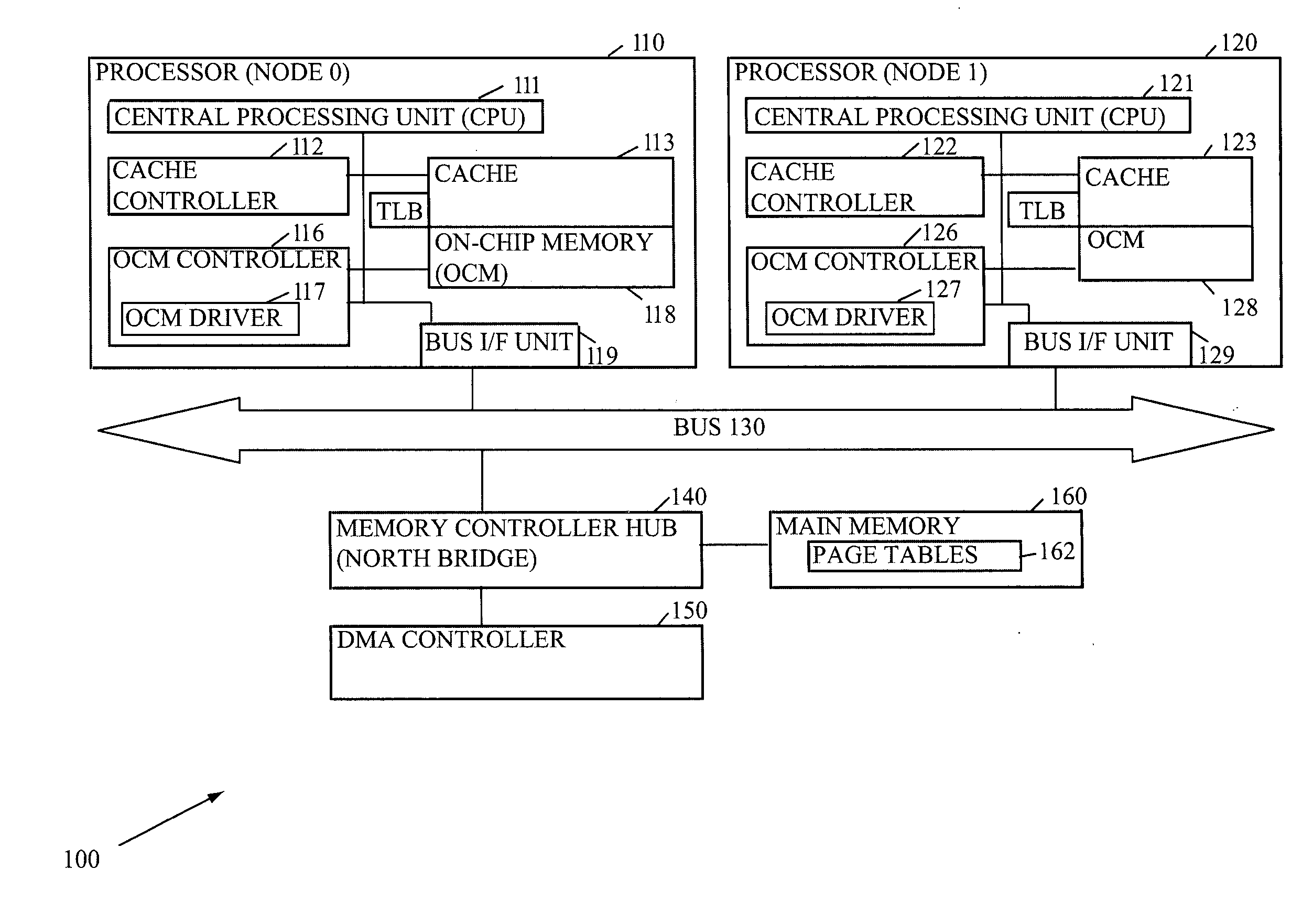

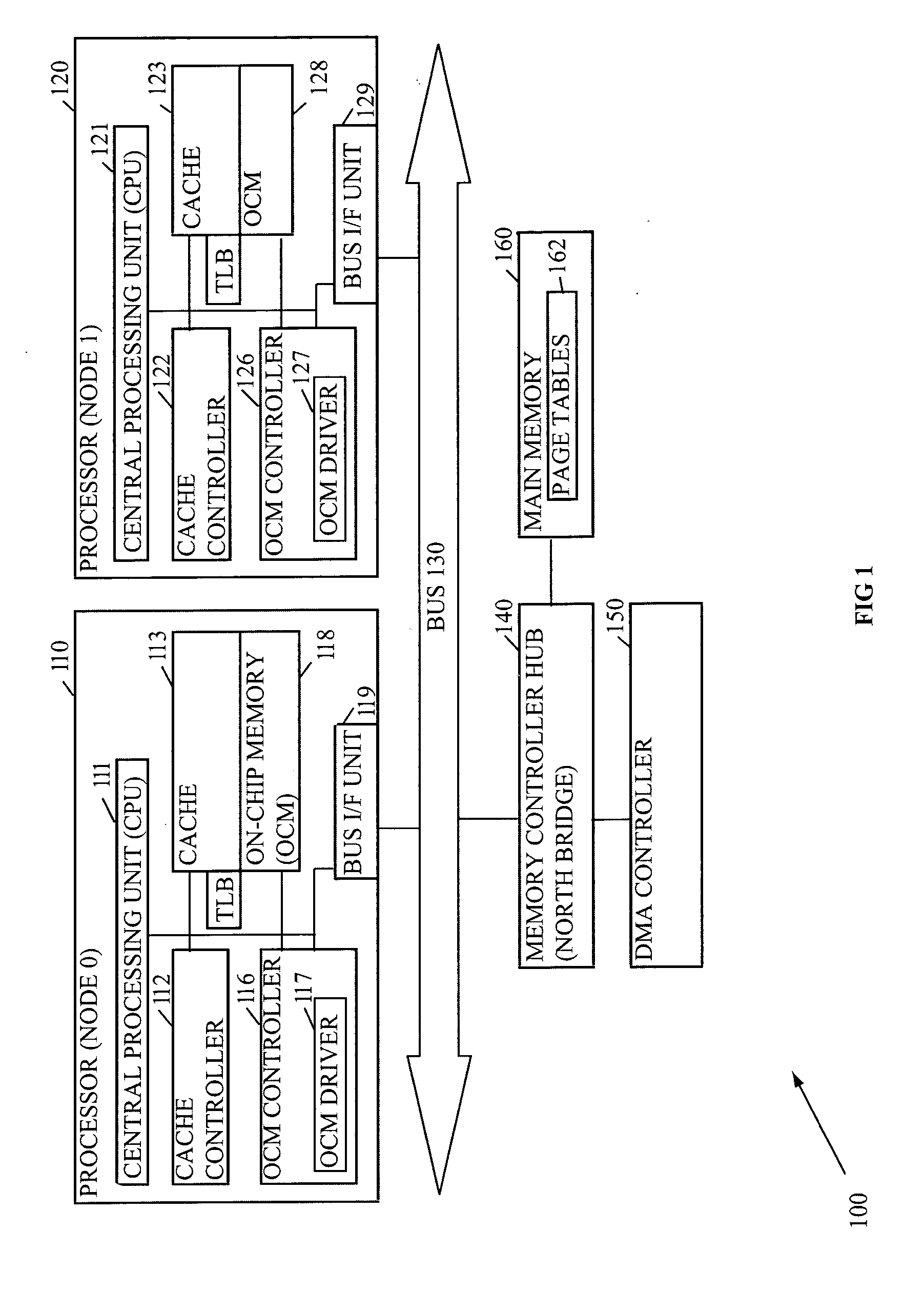

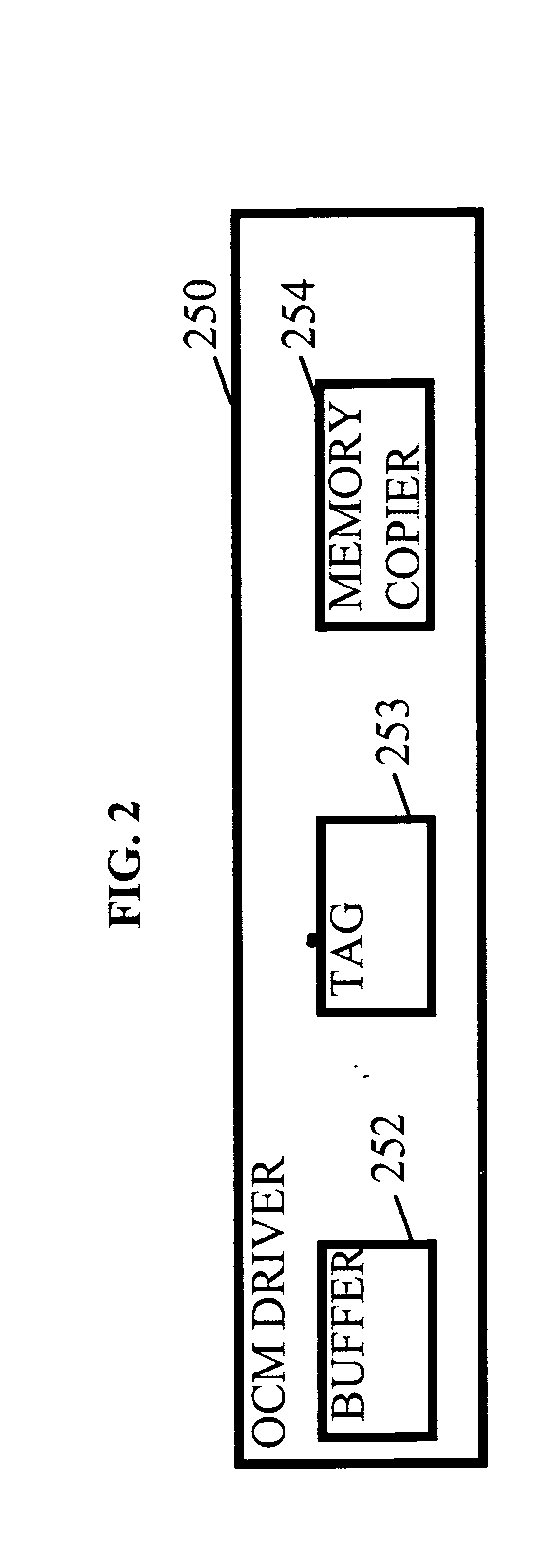

Methods and arrangements to manage on-chip memory to reduce memory latency

Status: Inactive | Publication: US20060155886A1 | Tags: Improve performance; Lower requirement; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Program management; Operational system

Methods, systems, and media for reducing memory latency seen by processors by providing a measure of control over on-chip memory (OCM) management to software applications, implicitly and / or explicitly, via an operating system are contemplated. Many embodiments allow part of the OCM to be managed by software applications via an application program interface (API), and part managed by hardware. Thus, the software applications can provide guidance regarding address ranges to maintain close to the processor to reduce unnecessary latencies typically encountered when dependent upon cache controller policies. Several embodiments utilize a memory internal to the processor or on a processor node so the memory block used for this technique is referred to as OCM.

Owner:IBM CORP

Distributed multicast system and method in a network

Status: Inactive | Publication: US8086755B2 | Tags: Time-division multiplex; Frequency-division multiplex; Processor node; Multicast packets

The invention provides multicast communication using distributed topologies in a network. The control nodes in the network build a distributed topology of processor nodes for providing multicast packet distribution. Multiple processor nodes in the network participate in the decisions regarding the forwarding of multicast packets as opposed to multicast communications being centralized in the control nodes.

Owner:EGENERA

Updatable firmware having boot and/or communication redundancy

A distributed nodal system with a source computer processor node storing program code for target node(s). A target node has an updatable firmware memory storing program code for operating a target processor. The target code comprises application code for controlling an embedded device, primary communication code for communicating with a network, backup communication code also having copy code for copying code between portions of the firmware memory, and primary boot code for booting the target processor and having check code. The check code determines whether the primary communication code is corrupted, and if it is corrupted, employs the copy code to overwrite the primary communication code with the backup code. If uncorrupted, the check code determines whether the application code is corrupted, and if corrupted, prevents execution of the code.

Owner:INT BUSINESS MASCH CORP

Real time prepaid transaction bidding

Electronic transaction networks are described that are operable to find a pathway to complete an electronic data exchange for a prepaid transaction account. The networks may include an intermediary node, in electronic communication with a transaction point node where transaction information is input, and a plurality of processing nodes that can communicate with an account provider node that administers the prepaid transaction account. The intermediary node receives transaction data, which may include an account identifier, from the transaction point node and identifies one or more of the processing nodes that can form part of the pathway. The pathway may include the transaction point node, the intermediary node, at least one of the processing nodes, and the account provider node. In addition, the intermediary node may find the processing node that forms the pathway with the highest transaction commission when more than one of the processor nodes can form part of the pathway.

Owner:FIRST DATA RESOURCES LLC

© 2025 PatSnap. All rights reserved.