10601 results about "Image segmentation" patented technology

In computer vision, image segmentation is the process of partitioning a digital image into multiple segments (sets of pixels, also known as image objects). The goal of segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. Image segmentation is typically used to locate objects and boundaries (lines, curves, etc.) in images. More precisely, image segmentation is the process of assigning a label to every pixel in an image such that pixels with the same label share certain characteristics.
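The definition above (one label per pixel, where pixels with the same label share a characteristic) can be made concrete with a minimal sketch. It assumes a grayscale image stored as a list of rows and uses "intensity at or above a fixed threshold" as the shared characteristic. Each 4-connected group of such pixels gets its own label. `label_regions` is a hypothetical helper name, not a library API.

```python
from collections import deque


# Illustrative sketch; label_regions is a hypothetical helper.
def label_regions(image, threshold):
    """Label every pixel: 0 for background (value < threshold) and
    1..n for each 4-connected region of pixels with value >= threshold."""
    rows, cols = len(image), len(image[0])
    labels = [[0] * cols for _ in range(rows)]
    n = 0
    for r in range(rows):
        for c in range(cols):
            if image[r][c] < threshold or labels[r][c]:
                continue  # background, or already part of a region
            n += 1
            labels[r][c] = n
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill of the new region
                y, x = queue.popleft()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and image[ny][nx] >= threshold and not labels[ny][nx]):
                        labels[ny][nx] = n
                        queue.append((ny, nx))
    return labels, n


if __name__ == "__main__":
    img = [[9, 9, 0, 0],
           [9, 0, 0, 7],
           [0, 0, 7, 7]]
    print(label_regions(img, 5))
```

On the small example the three bright pixels in the top-left corner become region 1 and the three in the bottom-right become region 2, while every other pixel is labeled 0.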

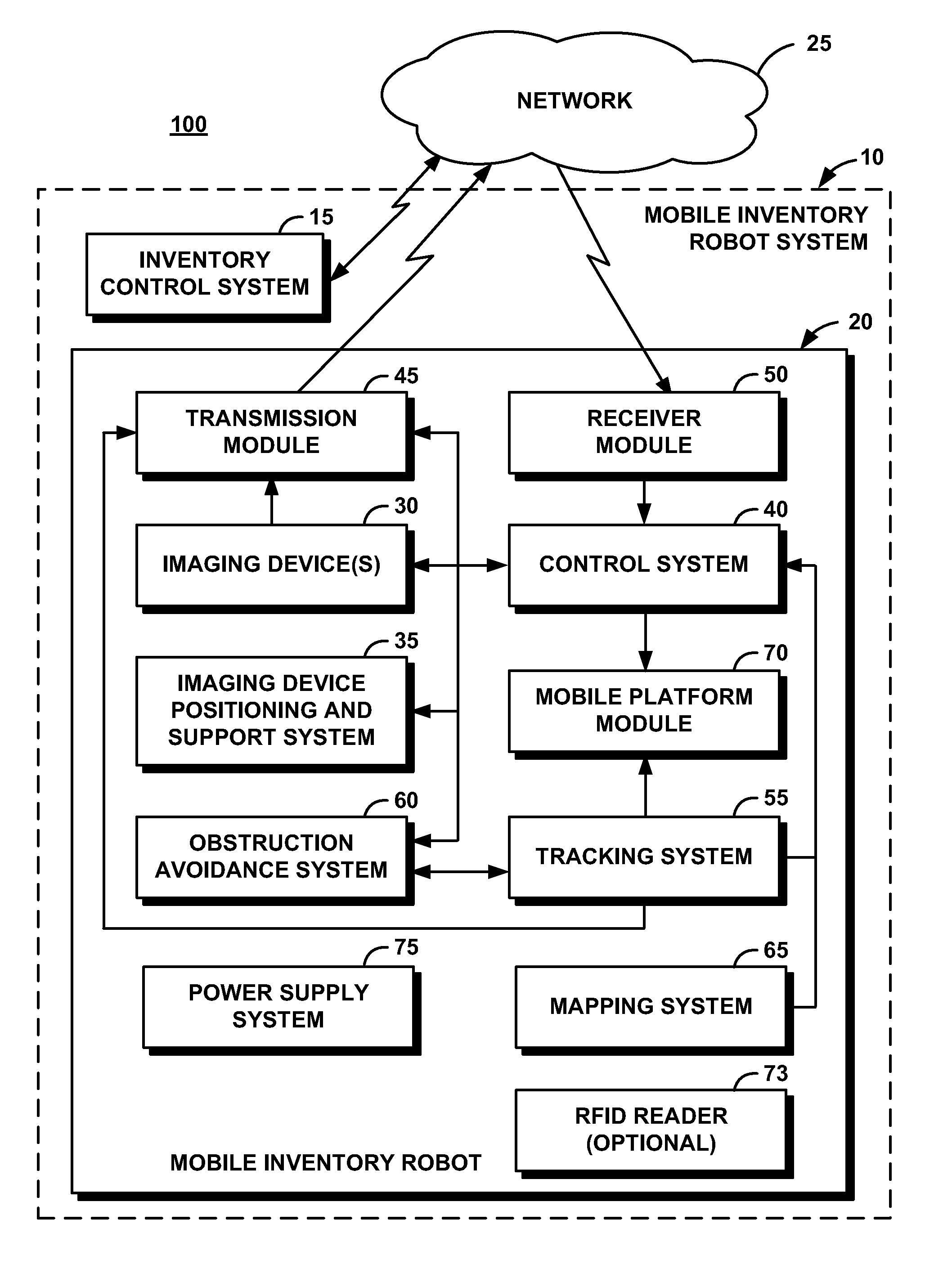

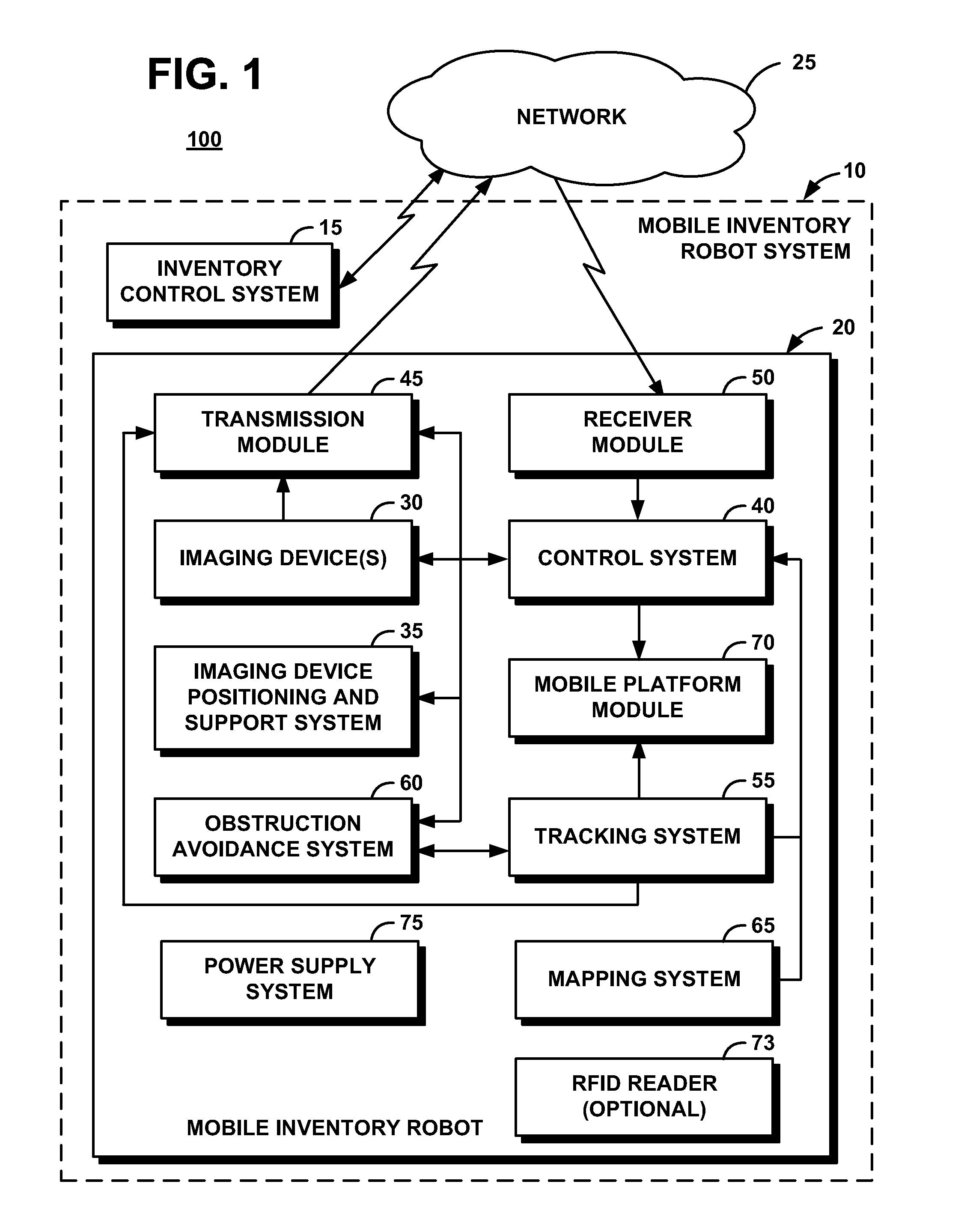

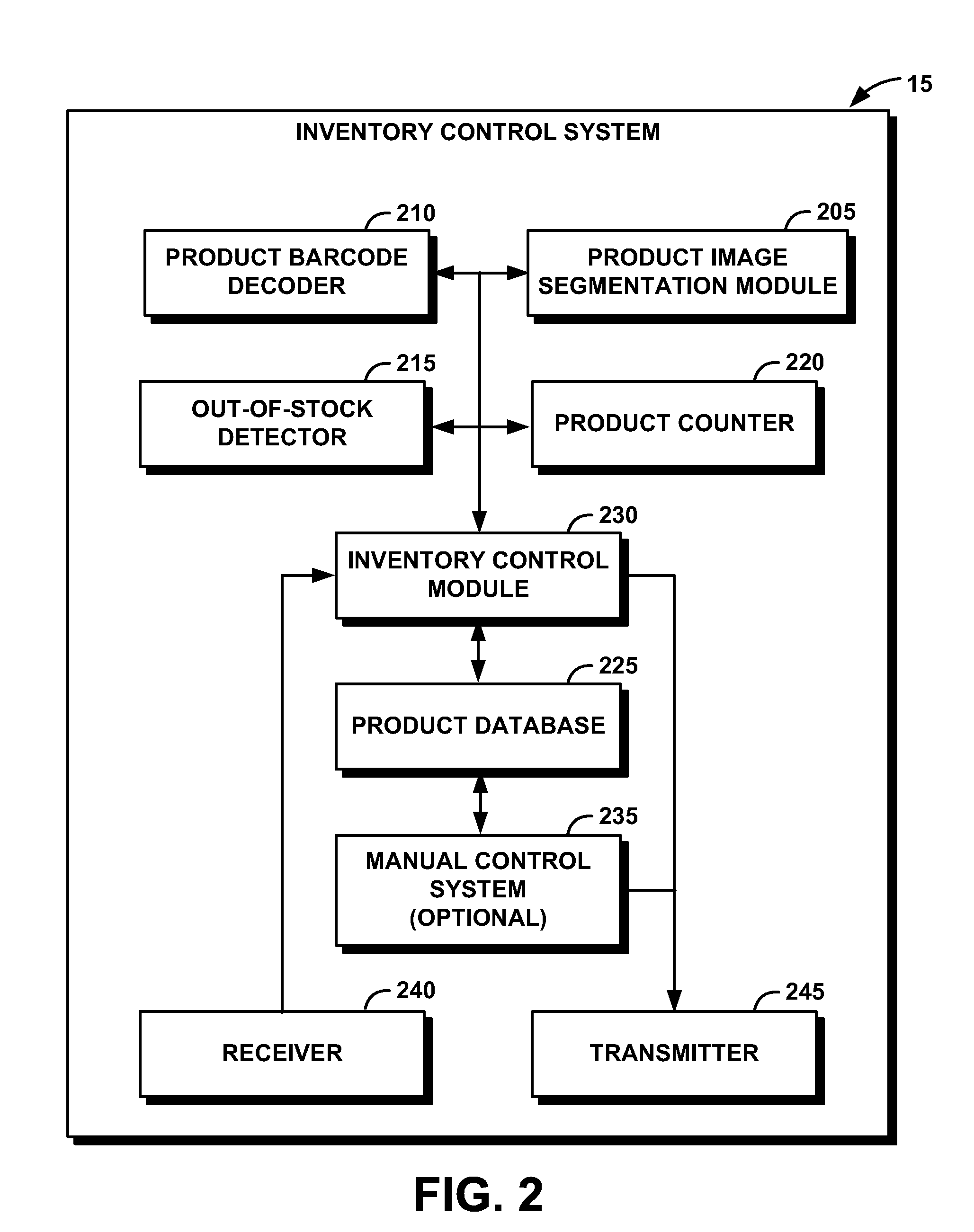

System and Method for Performing Inventory Using a Mobile Inventory Robot

Inactive | US20080077511A1 | Still image data retrieval; Vehicle position/course/altitude control; Robotic systems; Barcode

A mobile inventory robot system generates an inventory map of a store and a product database when a mobile inventory robot is manually navigated through the store to identify items on shelves, a location for each of the items on the shelves, and a barcode for each of the items. The system performs inventory of the items by navigating through the store via the inventory map, capturing a shelf image, decoding a product barcode from the captured shelf image, retrieving a product image for the decoded product barcode from the product database, segmenting the captured shelf image to detect an image of an item on the shelves, determining whether the detected image matches the retrieved image and, if not, setting an out-of-stock flag for the item.

Owner:IBM CORP

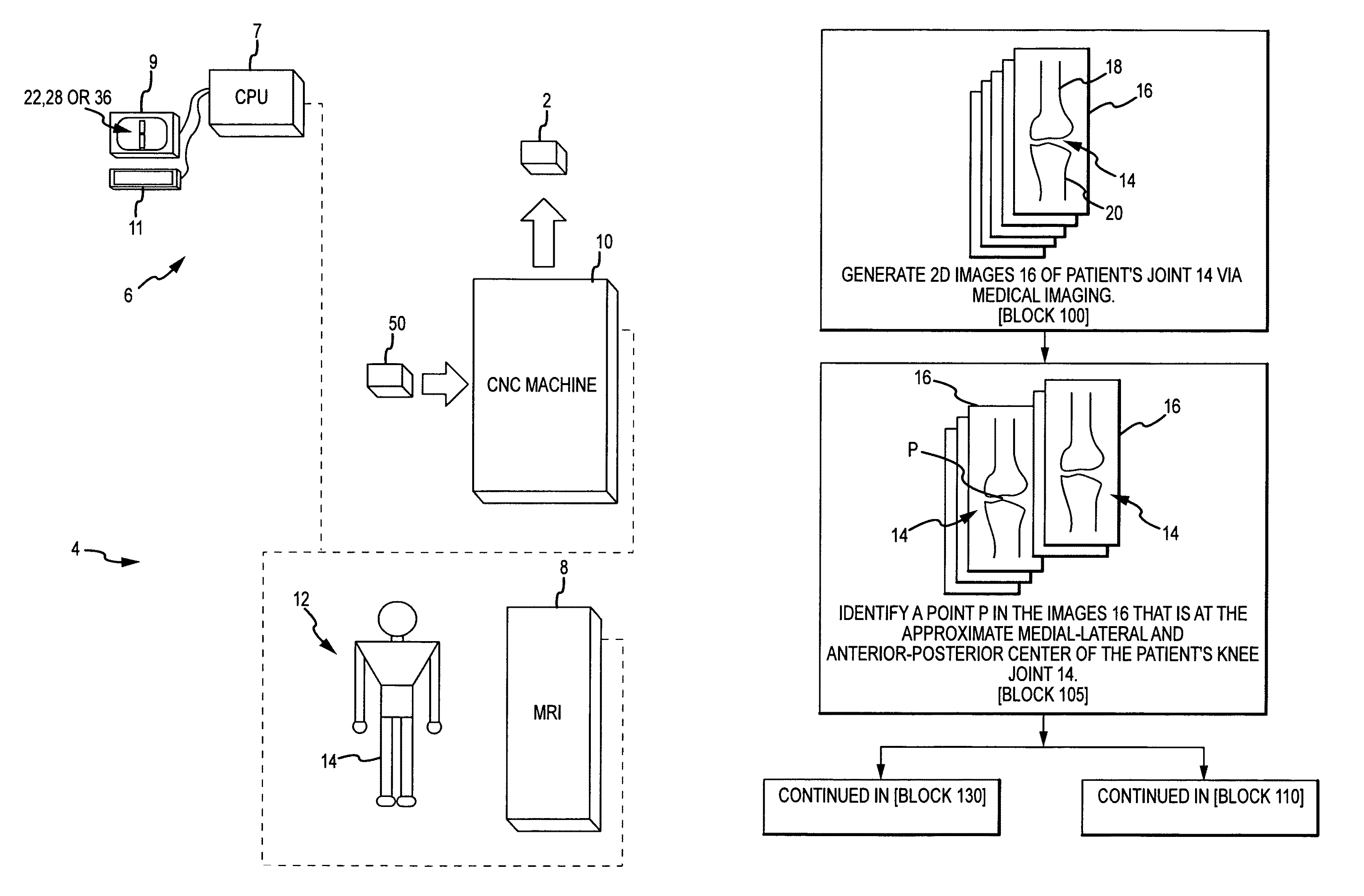

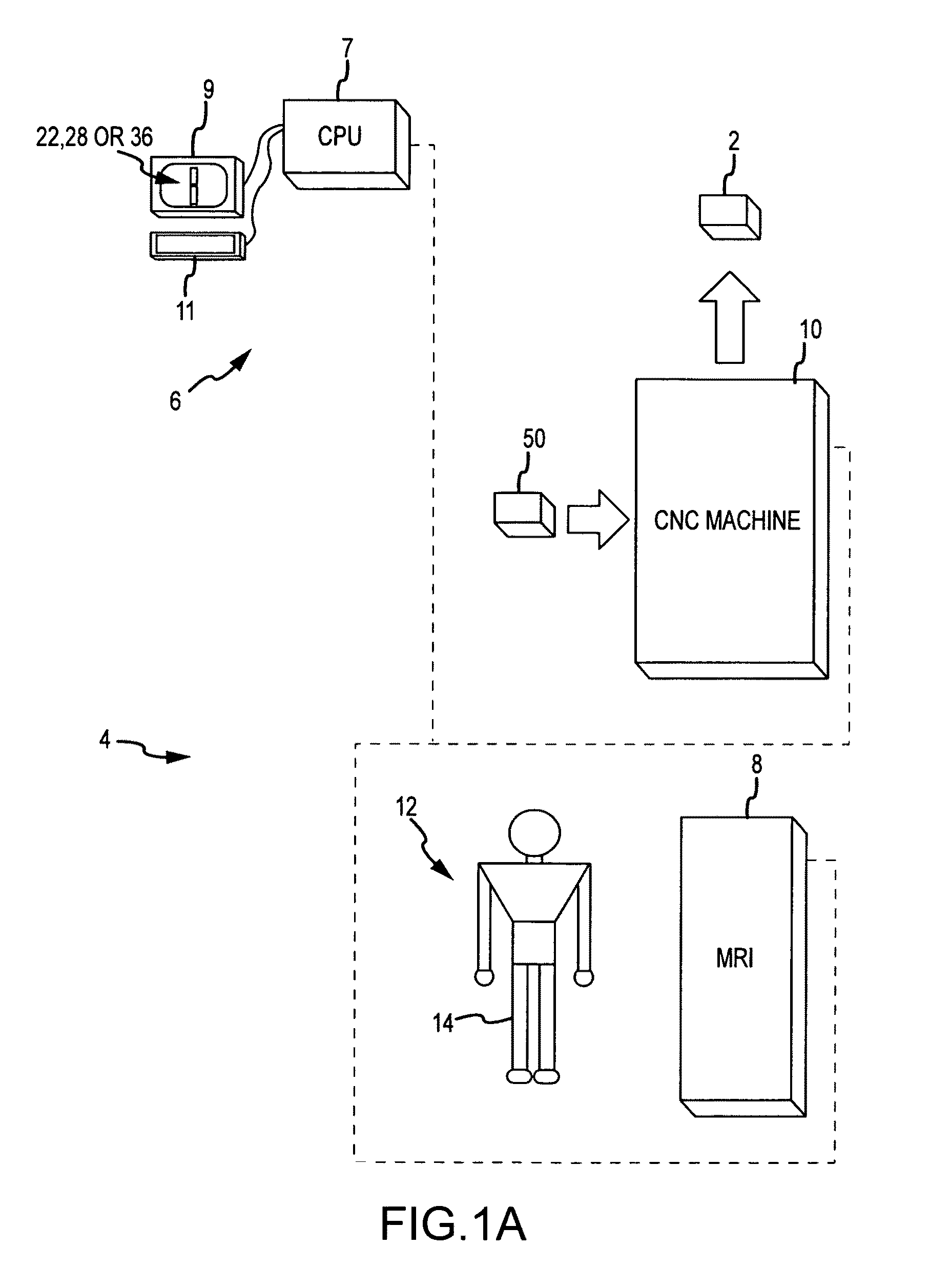

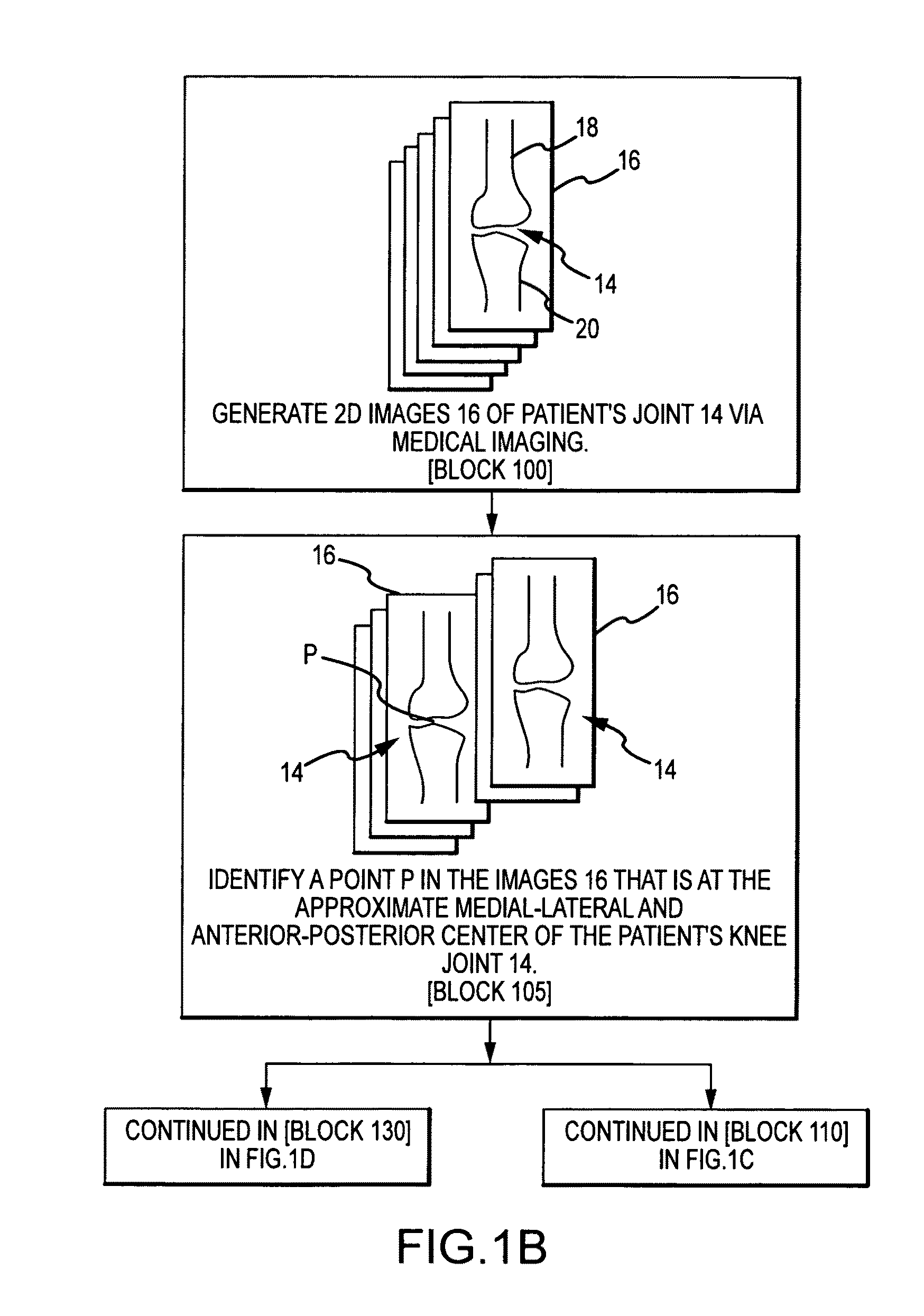

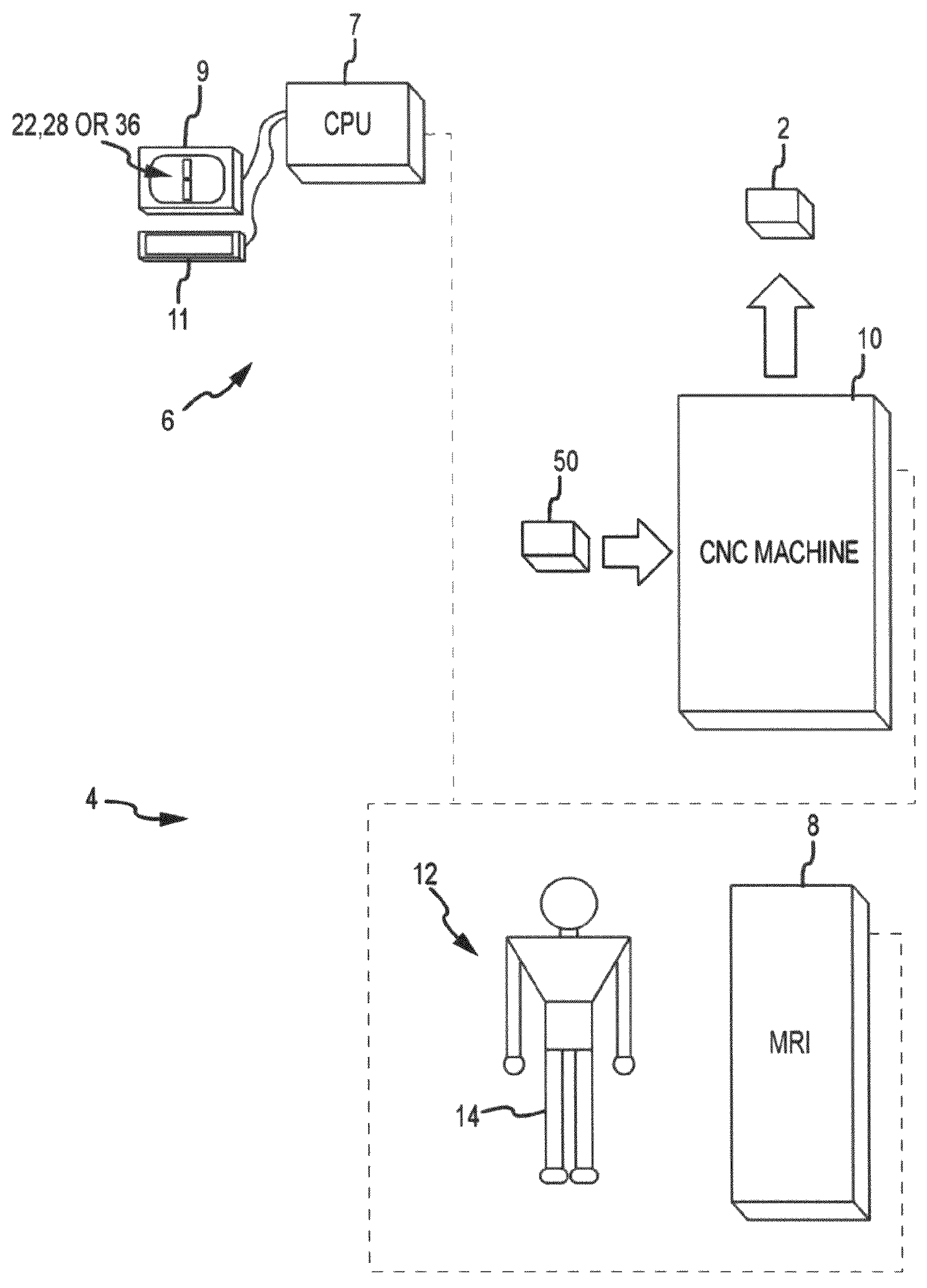

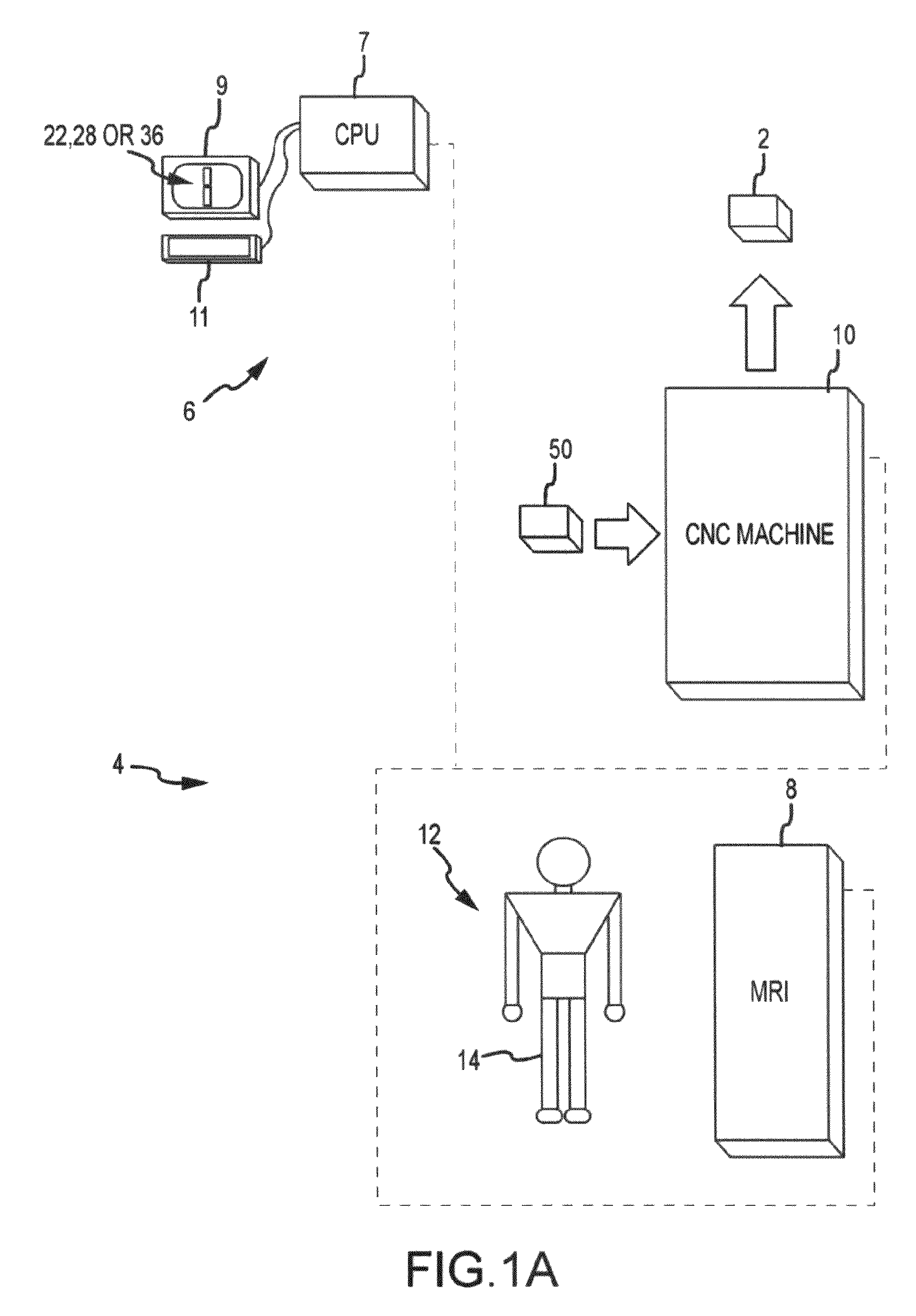

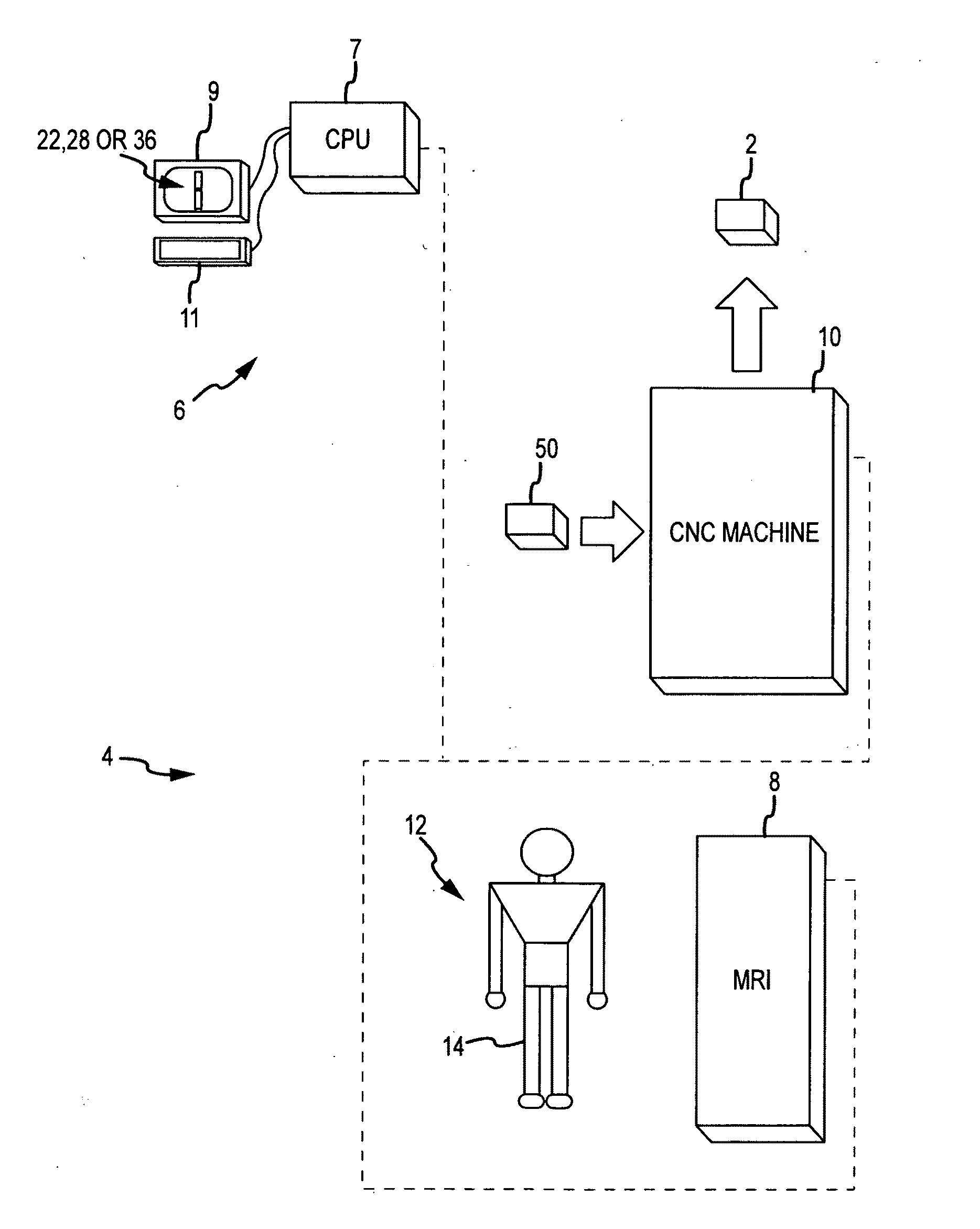

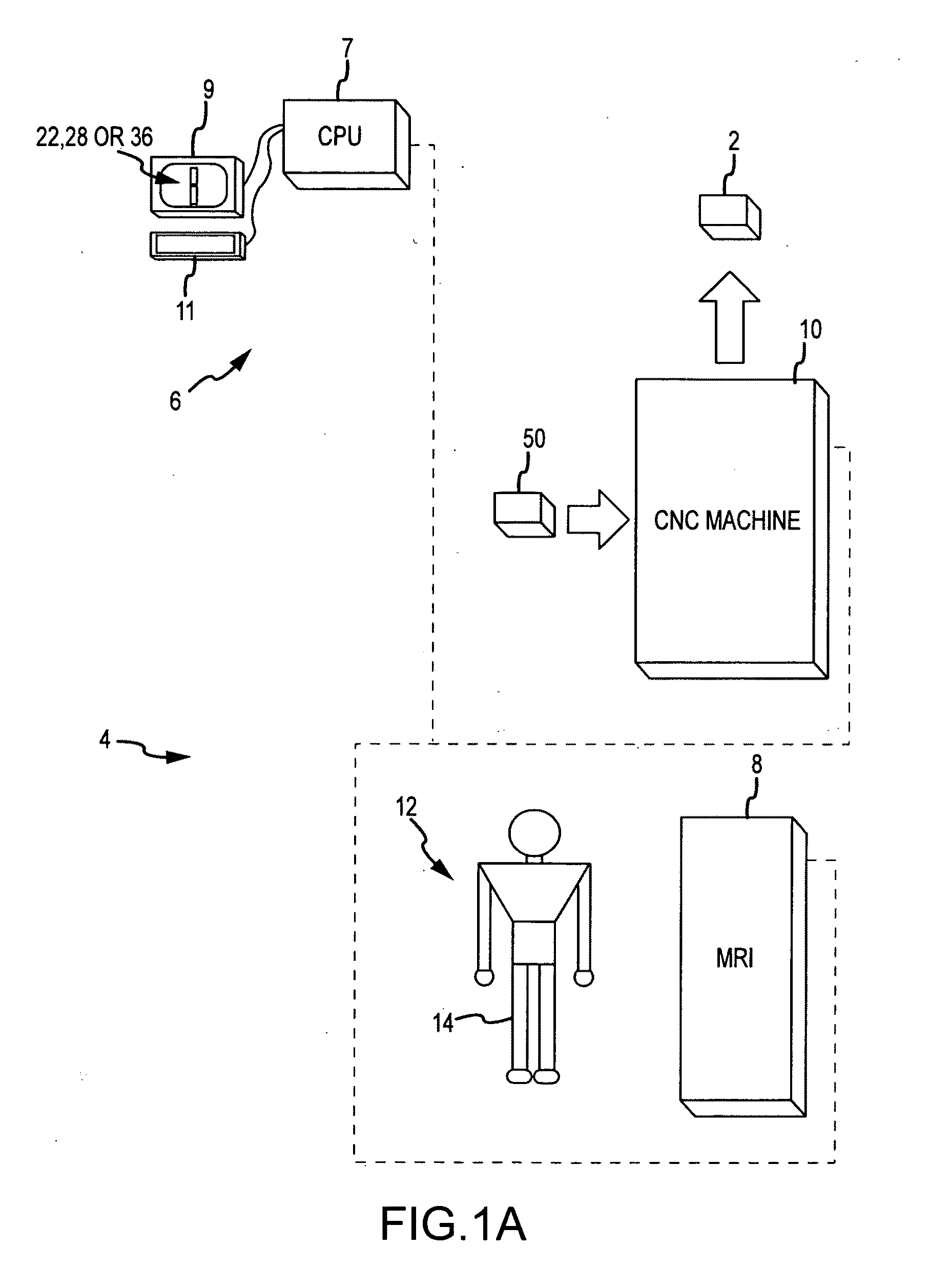

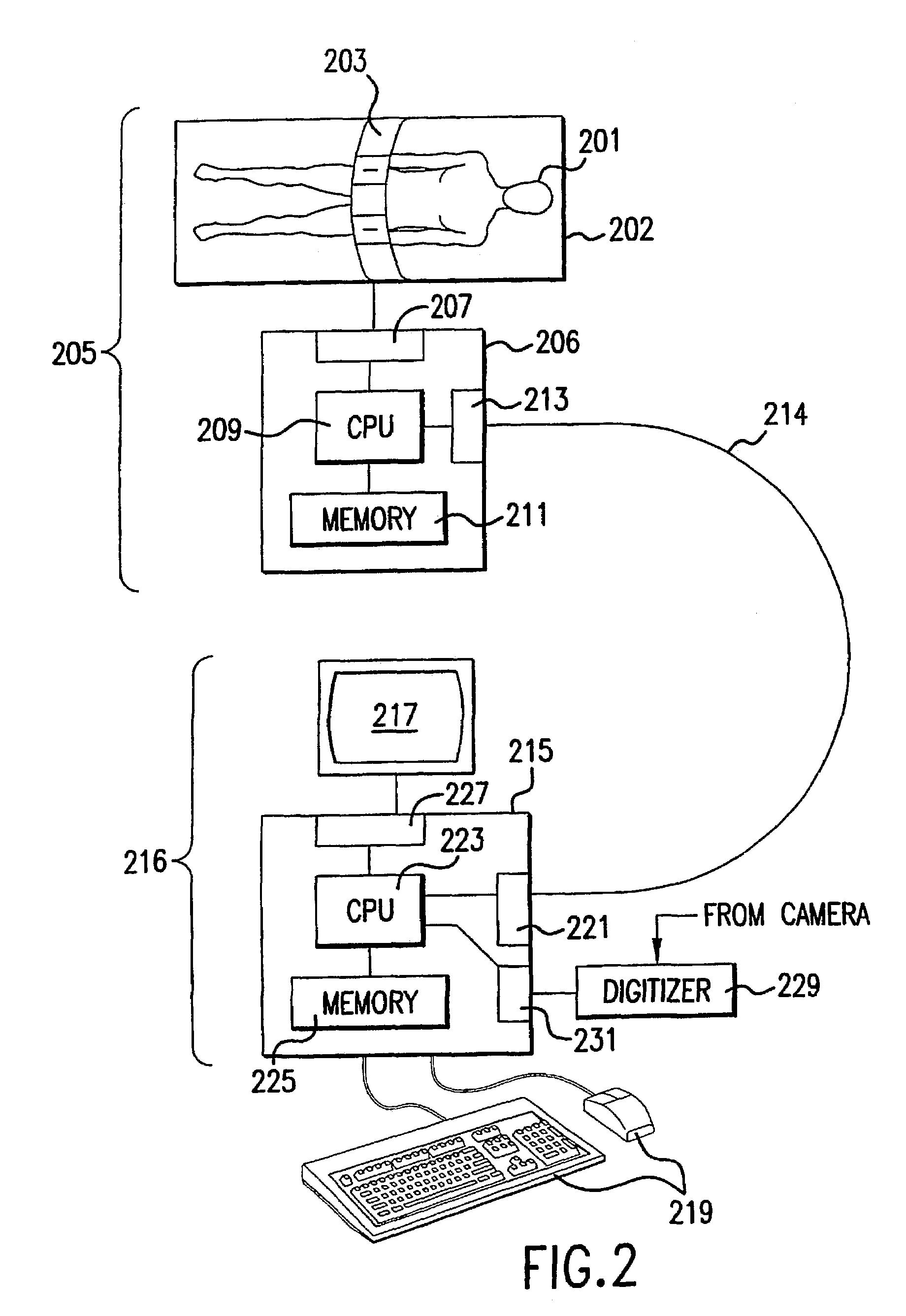

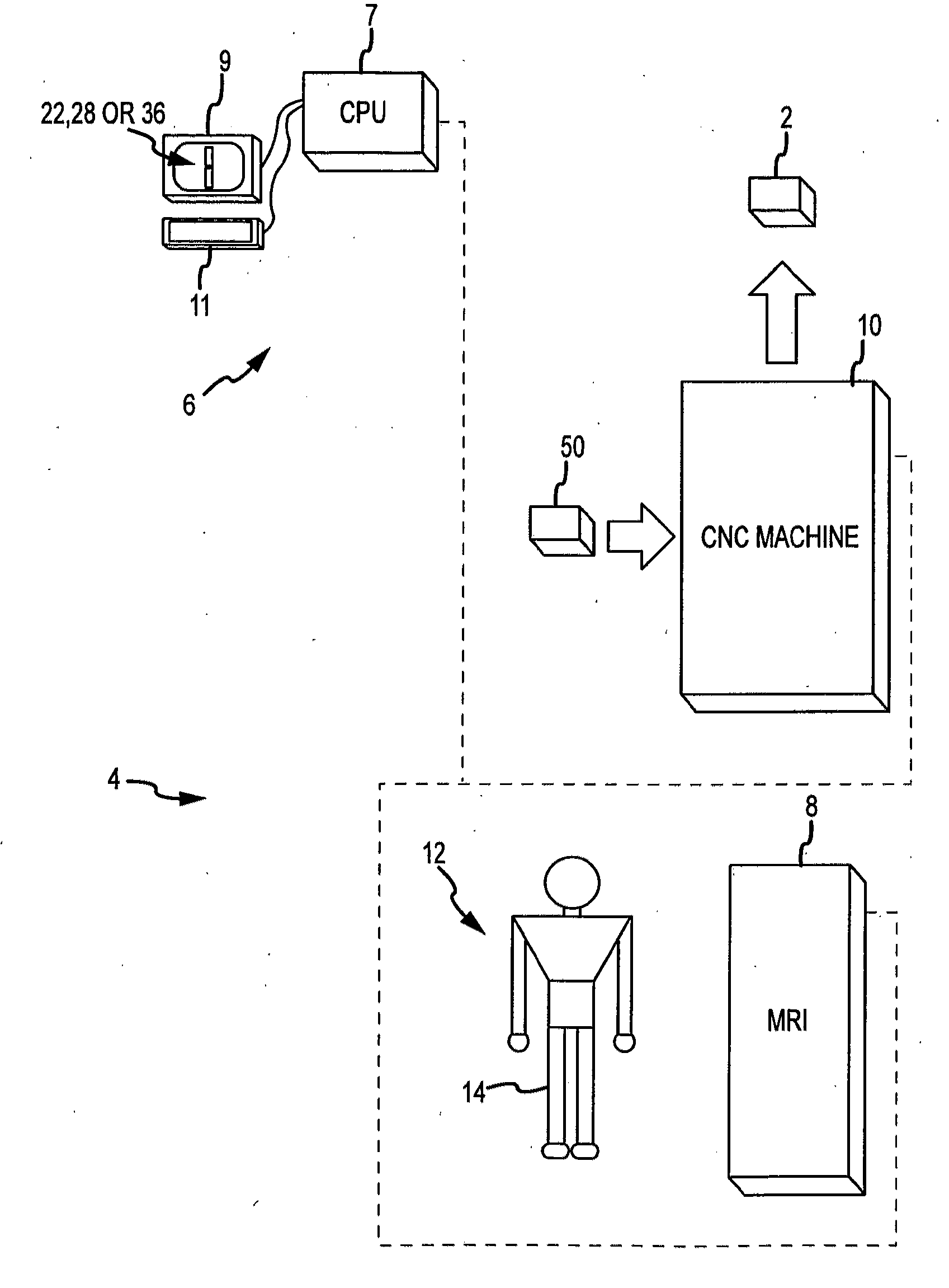

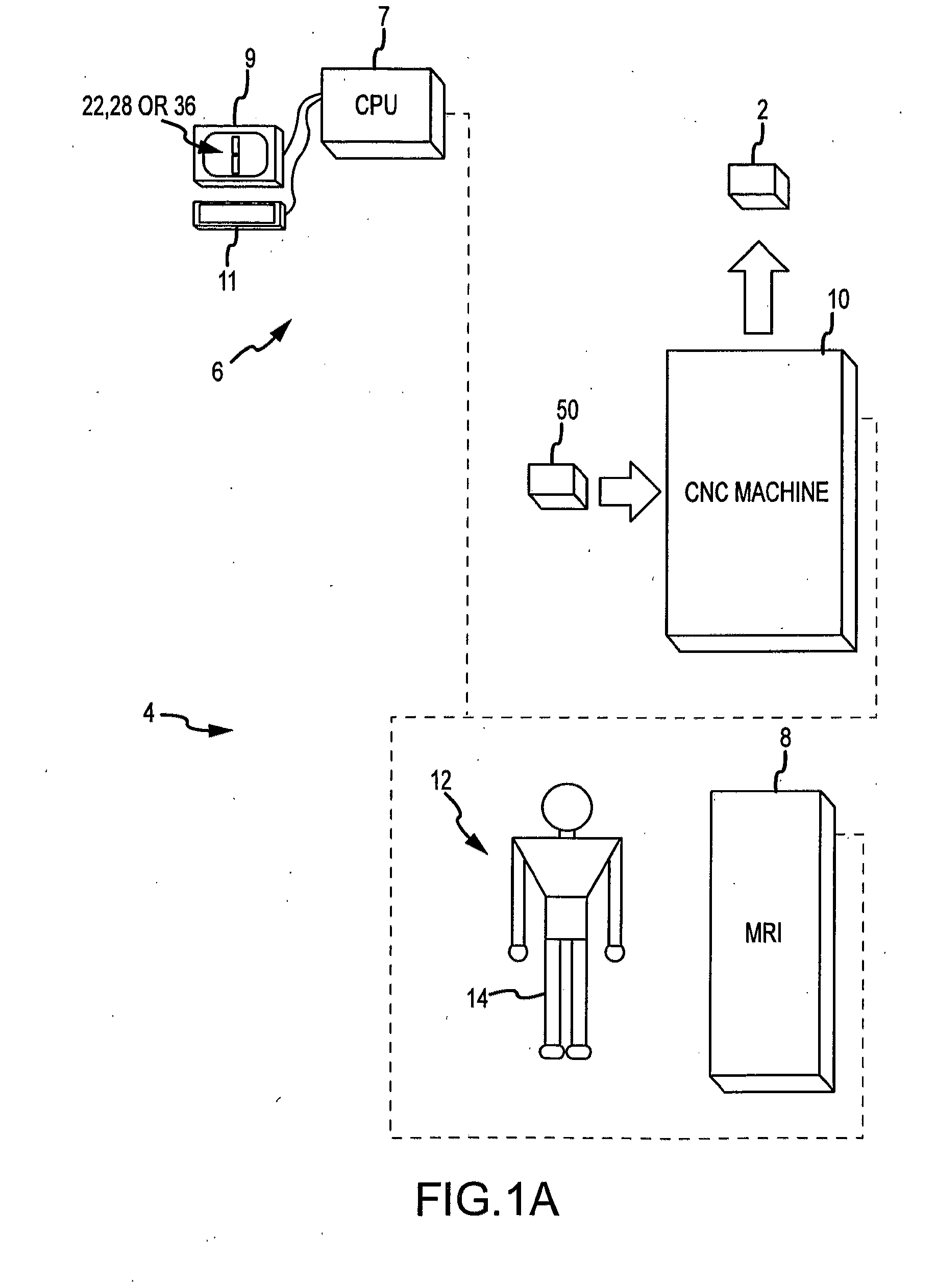

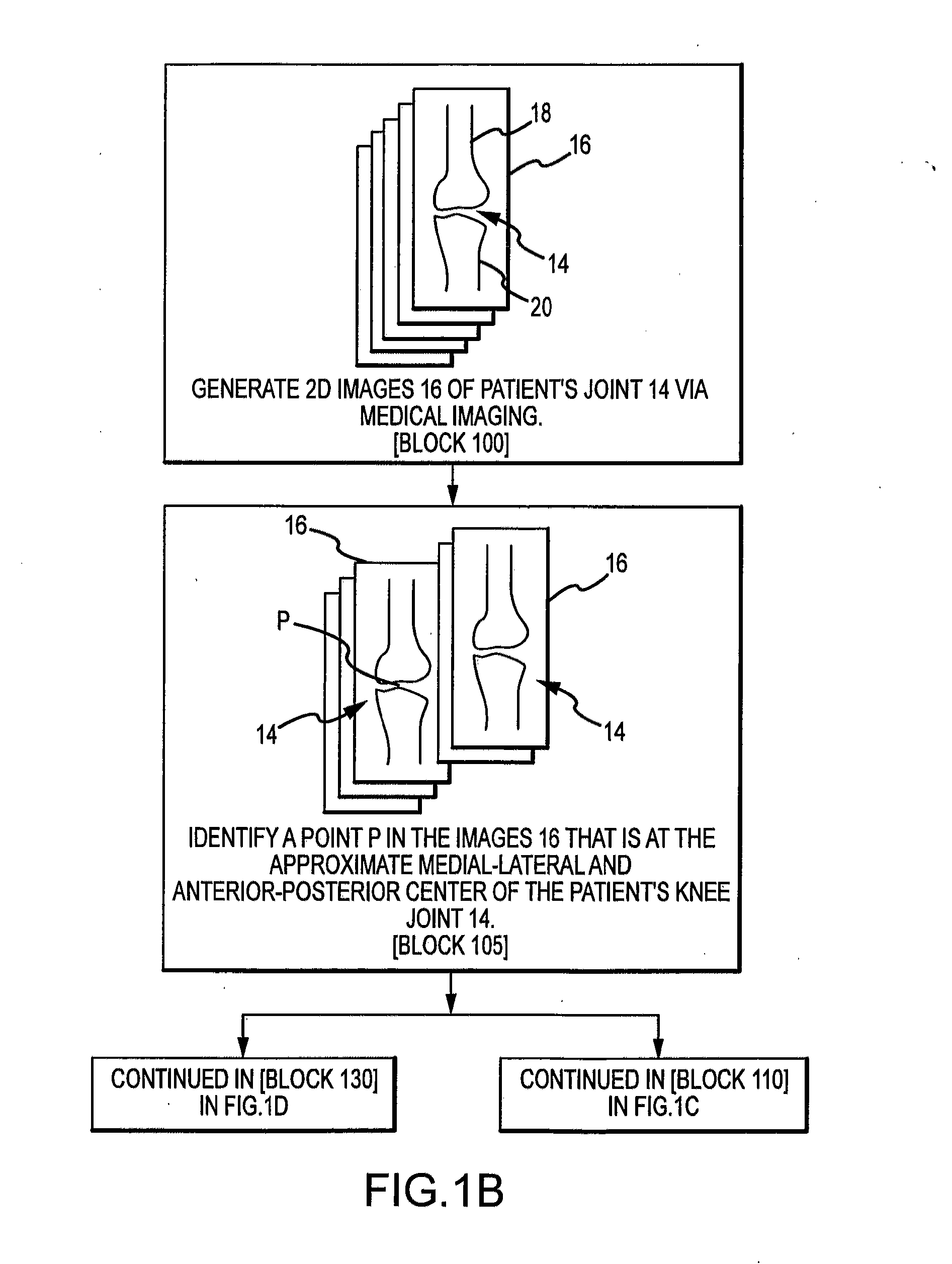

System and method for image segmentation in generating computer models of a joint to undergo arthroplasty

Active | US8160345B2 | Details involving processing steps; Image enhancement; Medical imaging; Image segmentation

A custom arthroplasty guide and a method of manufacturing such a guide are disclosed herein. The guide manufactured includes a mating region configured to matingly receive a portion of a patient bone associated with an arthroplasty procedure for which the custom arthroplasty guide is to be employed. The mating region includes a surface contour that is generally a negative of a surface contour of the portion of the patient bone. The surface contour of the mating region is configured to mate with the surface contour of the portion of the patient bone in a generally matching or interdigitating manner when the portion of the patient bone is matingly received by the mating region. The method of manufacturing the custom arthroplasty guide includes: a) generating medical imaging slices of the portion of the patient bone; b) identifying landmarks on bone boundaries in the medical imaging slices; c) providing model data including image data associated with a bone other than the patient bone; d) adjusting the model data to match the landmarks; e) using the adjusted model data to generate a three dimensional computer model of the portion of the patient bone; f) using the three dimensional computer model to generate design data associated with the custom arthroplasty guide; and g) using the design data in manufacturing the custom arthroplasty guide.

Owner:HOWMEDICA OSTEONICS CORP

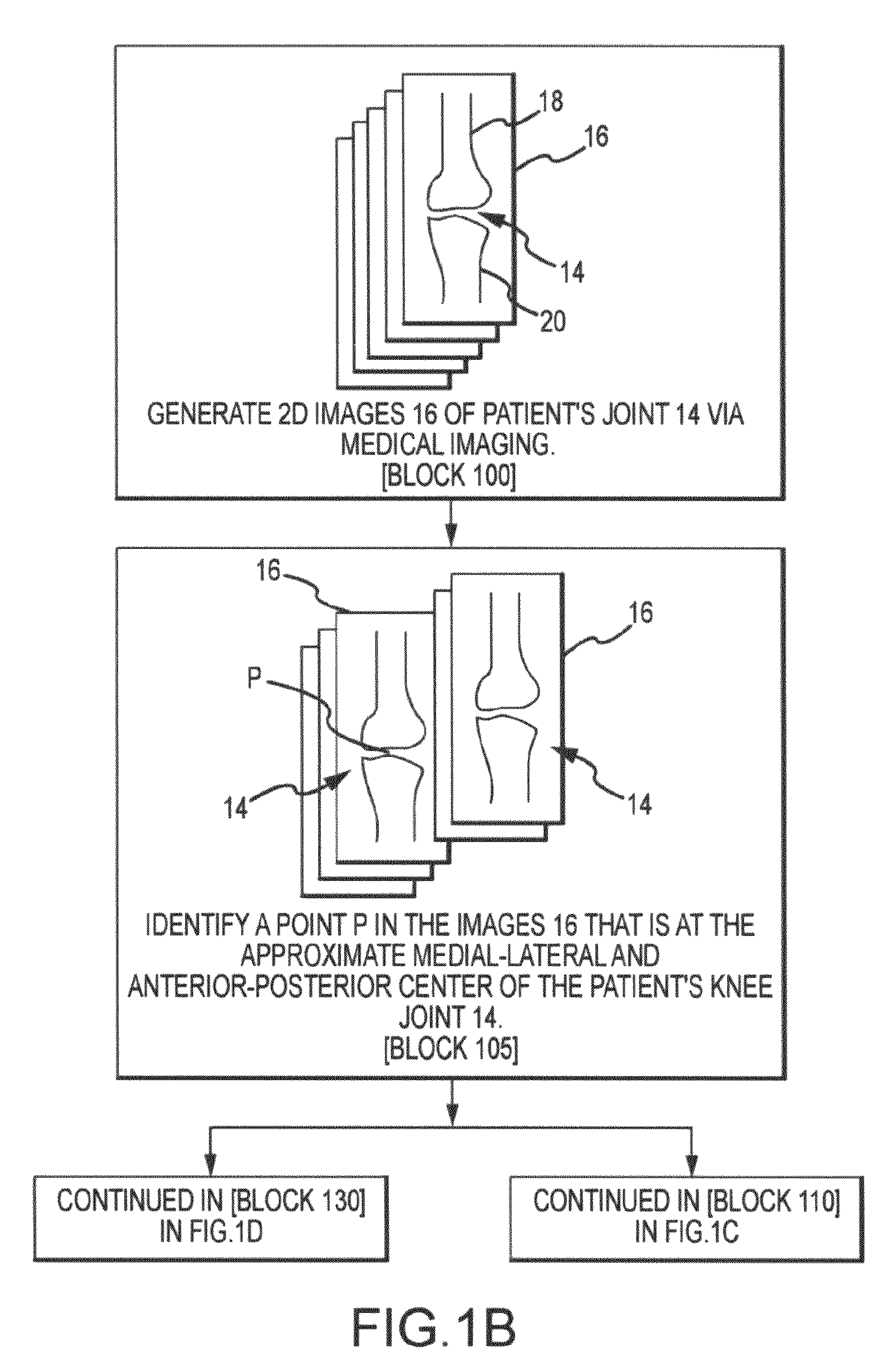

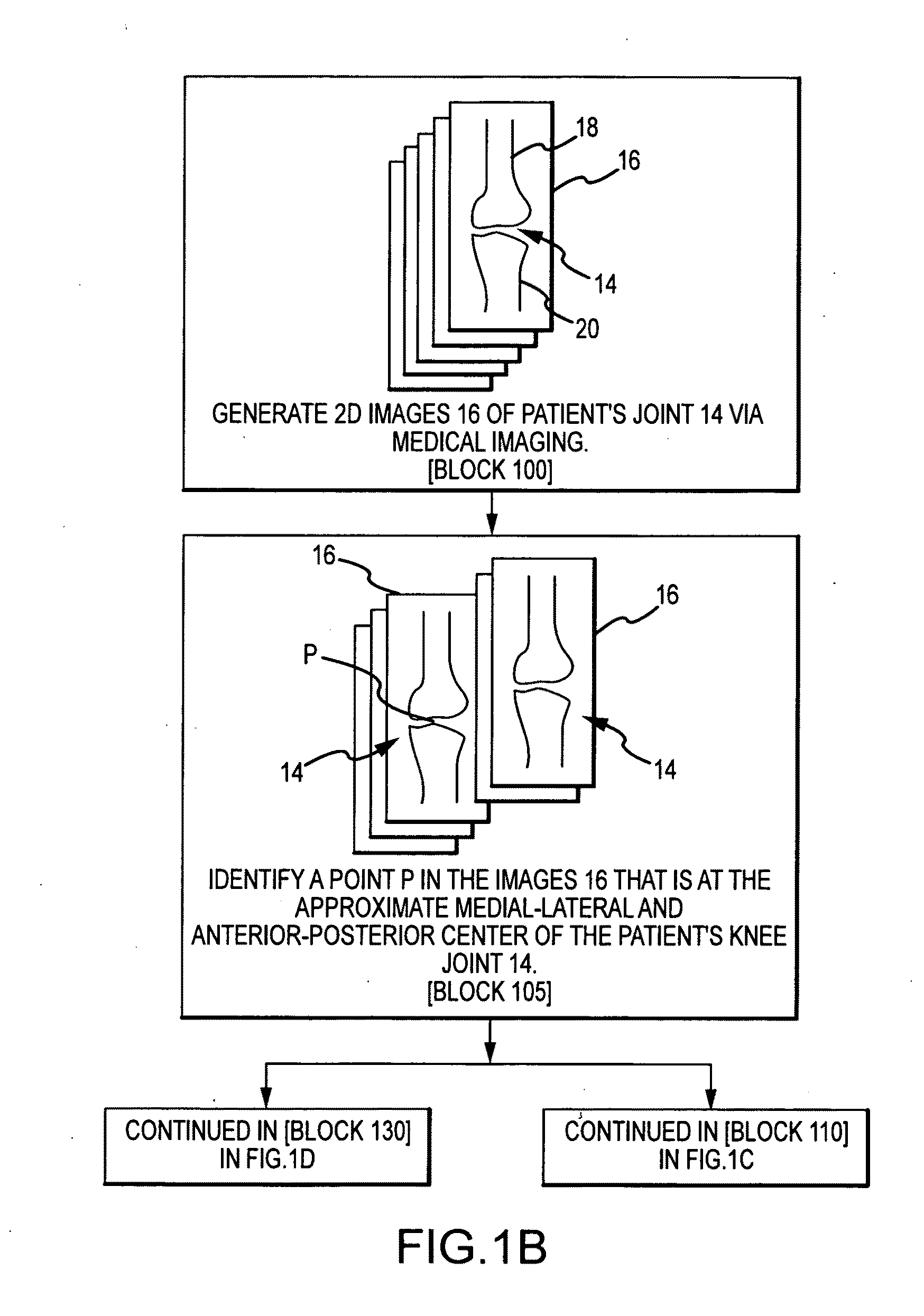

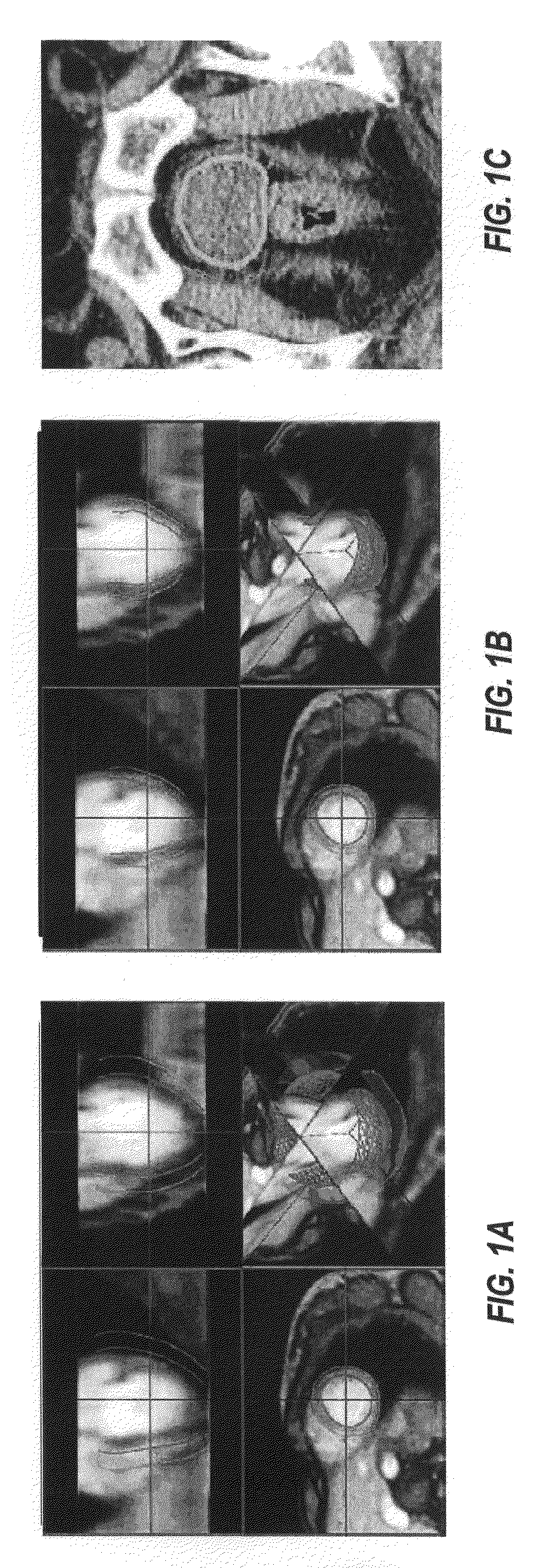

System and method for image segmentation in generating computer models of a joint to undergo arthroplasty

Systems and methods for image segmentation in generating computer models of a joint to undergo arthroplasty are disclosed. Some embodiments may include a method of partitioning an image of a bone into a plurality of regions, where the method may include obtaining a plurality of volumetric image slices of the bone, generating a plurality of spline curves associated with the bone, verifying that at least one of the plurality of spline curves follows a surface of the bone, and creating a 3D mesh representation based upon the at least one of the plurality of spline curves.

Owner:HOWMEDICA OSTEONICS CORP

System and method for image segmentation in generating computer models of a joint to undergo arthroplasty

Active | US20110282473A1 | Image enhancement; Details involving processing steps; Image segmentation; Medical imaging

A custom arthroplasty guide and a method of manufacturing such a guide are disclosed herein. The guide manufactured includes a mating region configured to matingly receive a portion of a patient bone associated with an arthroplasty procedure for which the custom arthroplasty guide is to be employed. The mating region includes a surface contour that is generally a negative of a surface contour of the portion of the patient bone. The surface contour of the mating region is configured to mate with the surface contour of the portion of the patient bone in a generally matching or interdigitating manner when the portion of the patient bone is matingly received by the mating region. The method of manufacturing the custom arthroplasty guide includes: a) generating medical imaging slices of the portion of the patient bone; b) identifying landmarks on bone boundaries in the medical imaging slices; c) providing model data including image data associated with a bone other than the patient bone; d) adjusting the model data to match the landmarks; e) using the adjusted model data to generate a three dimensional computer model of the portion of the patient bone; f) using the three dimensional computer model to generate design data associated with the custom arthroplasty guide; and g) using the design data in manufacturing the custom arthroplasty guide.

Owner:HOWMEDICA OSTEONICS CORP

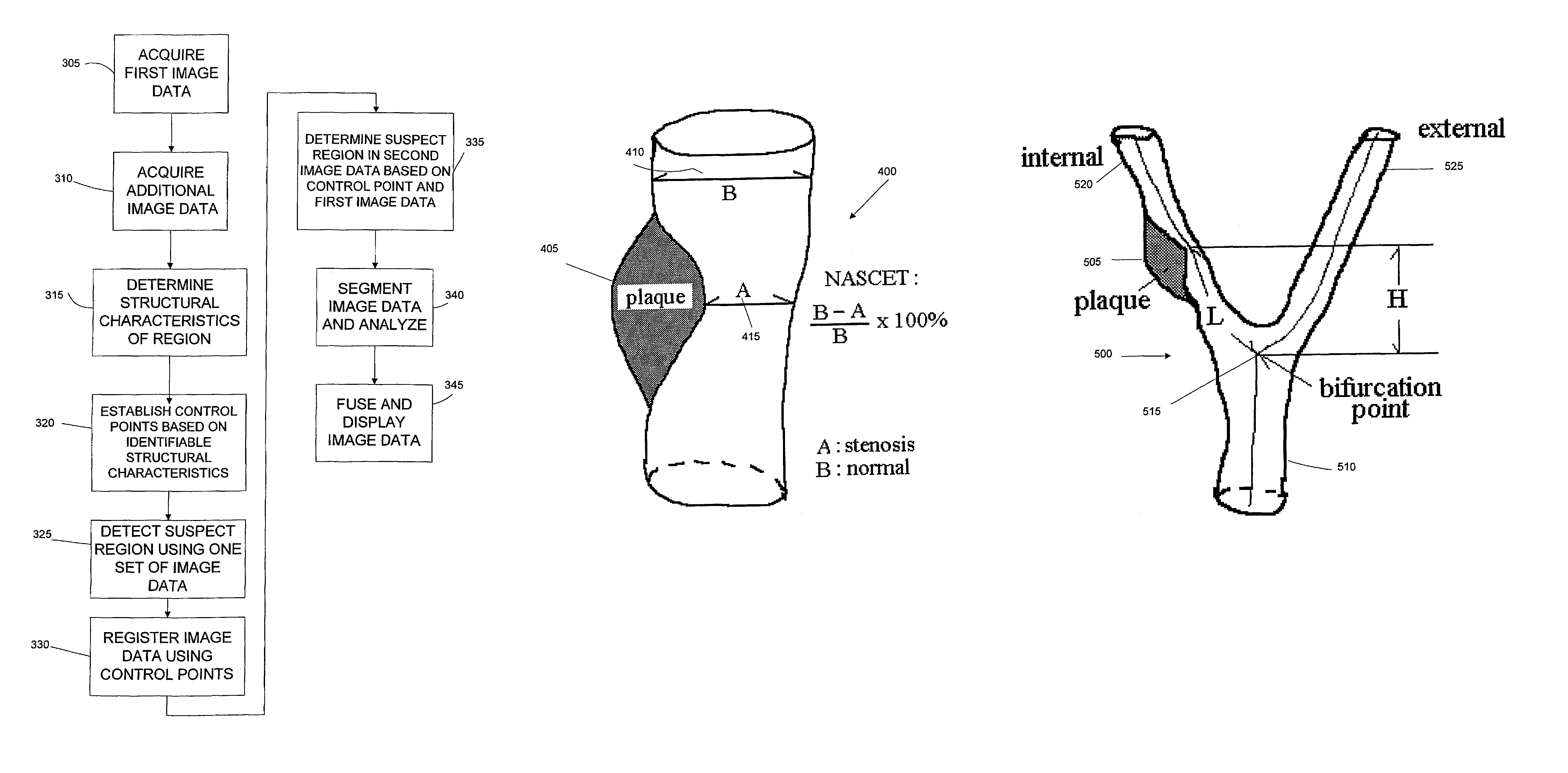

Computer aided treatment planning and visualization with image registration and fusion

A computer-based system and method of visualizing a region using multiple image data sets is provided. The method includes acquiring first volumetric image data of a region and acquiring at least second volumetric image data of the region. The first image data is generally selected such that the structural features of the region are readily visualized. At least one control point is determined in the region using an identifiable structural characteristic discernible in the first volumetric image data. The at least one control point is also located in the at least second image data of the region, such that the first image data and the at least second image data can be registered to one another using the at least one control point. Once the image data sets are registered, the registered first image data and at least second image data can be fused into a common display data set. The multiple image data sets carry different and complementary information that differentiates the structures and the functions in the region, such that image segmentation algorithms and user interactive editing tools can be applied to obtain 3D spatial relations of the components in the region. Methods to correct spatial inhomogeneity in MR image data are also provided.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

System and method for image segmentation in generating computer models of a joint to undergo arthroplasty

Active | US20120192401A1 | Image enhancement; Details involving processing steps; Image segmentation; Medical imaging

A custom arthroplasty guide and a method of manufacturing such a guide are disclosed herein. The method of manufacturing the custom arthroplasty guide includes: a) generating medical imaging slices of the portion of the patient bone; b) identifying landmarks on bone boundaries in the medical imaging slices; c) providing model data including image data associated with a bone other than the patient bone; d) adjusting the model data to match the landmarks; e) using the adjusted model data to generate a three dimensional computer model of the portion of the patient bone; f) using the three dimensional computer model to generate design data associated with the custom arthroplasty guide; and g) using the design data in manufacturing the custom arthroplasty guide.

Owner:HOWMEDICA OSTEONICS CORP

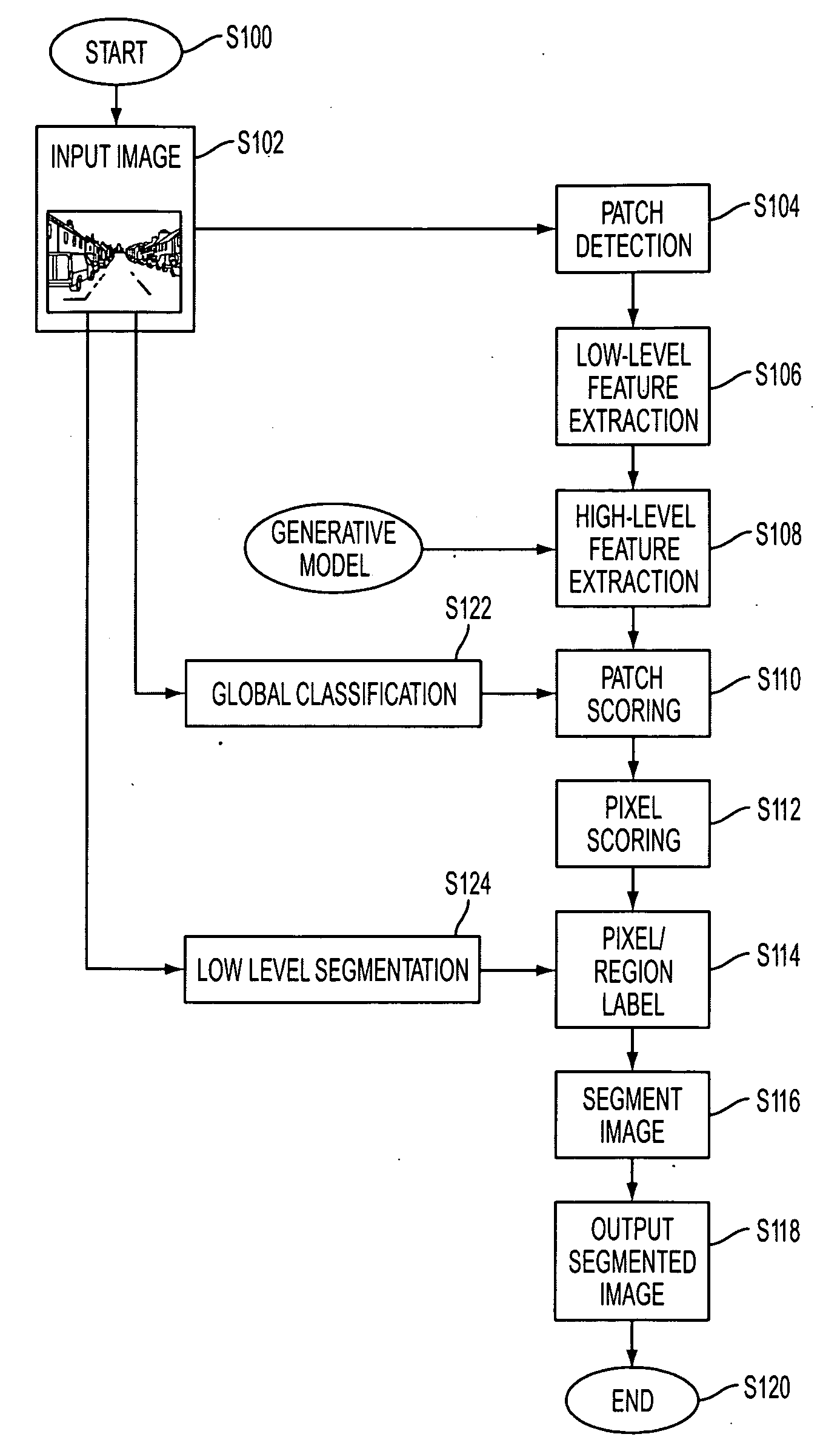

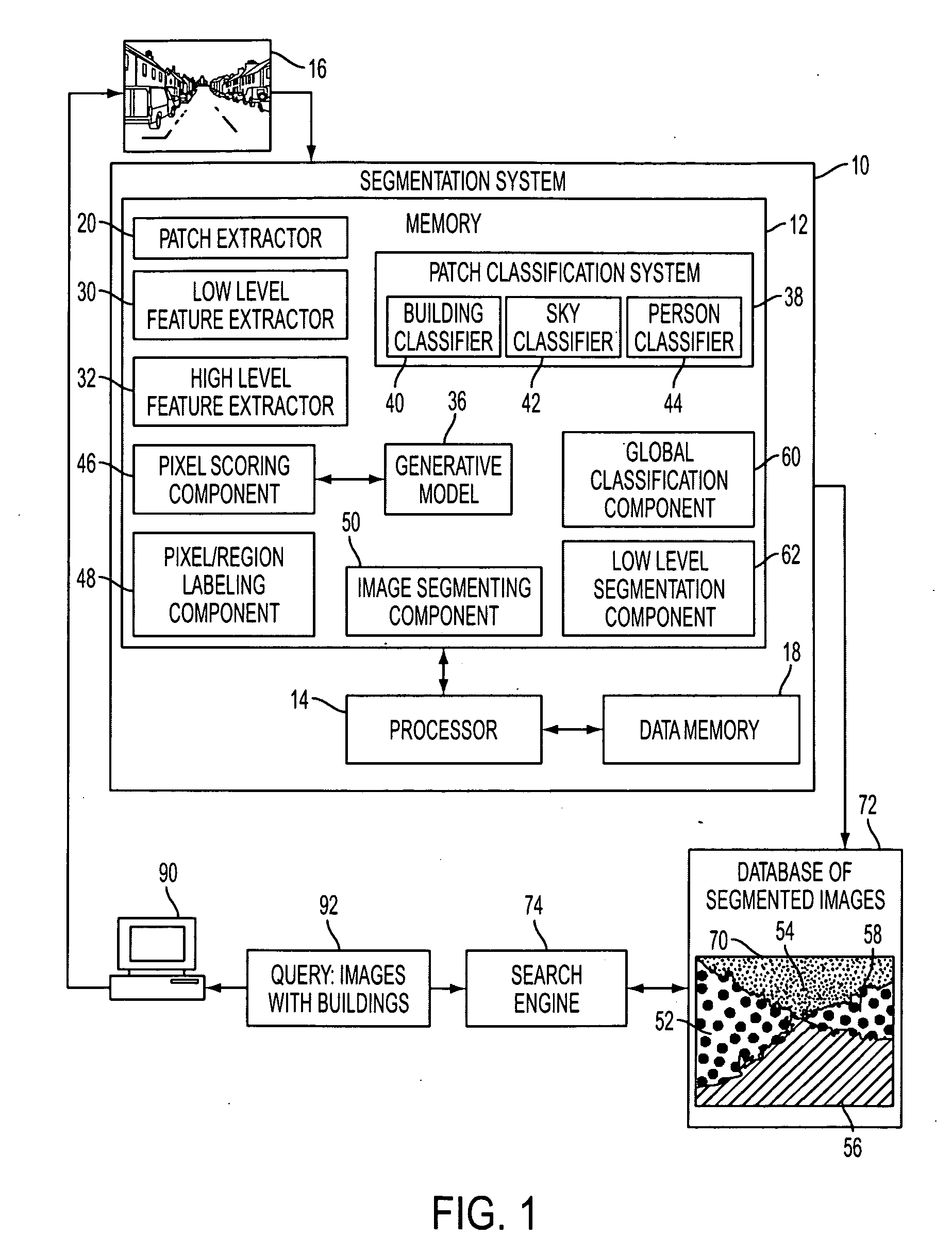

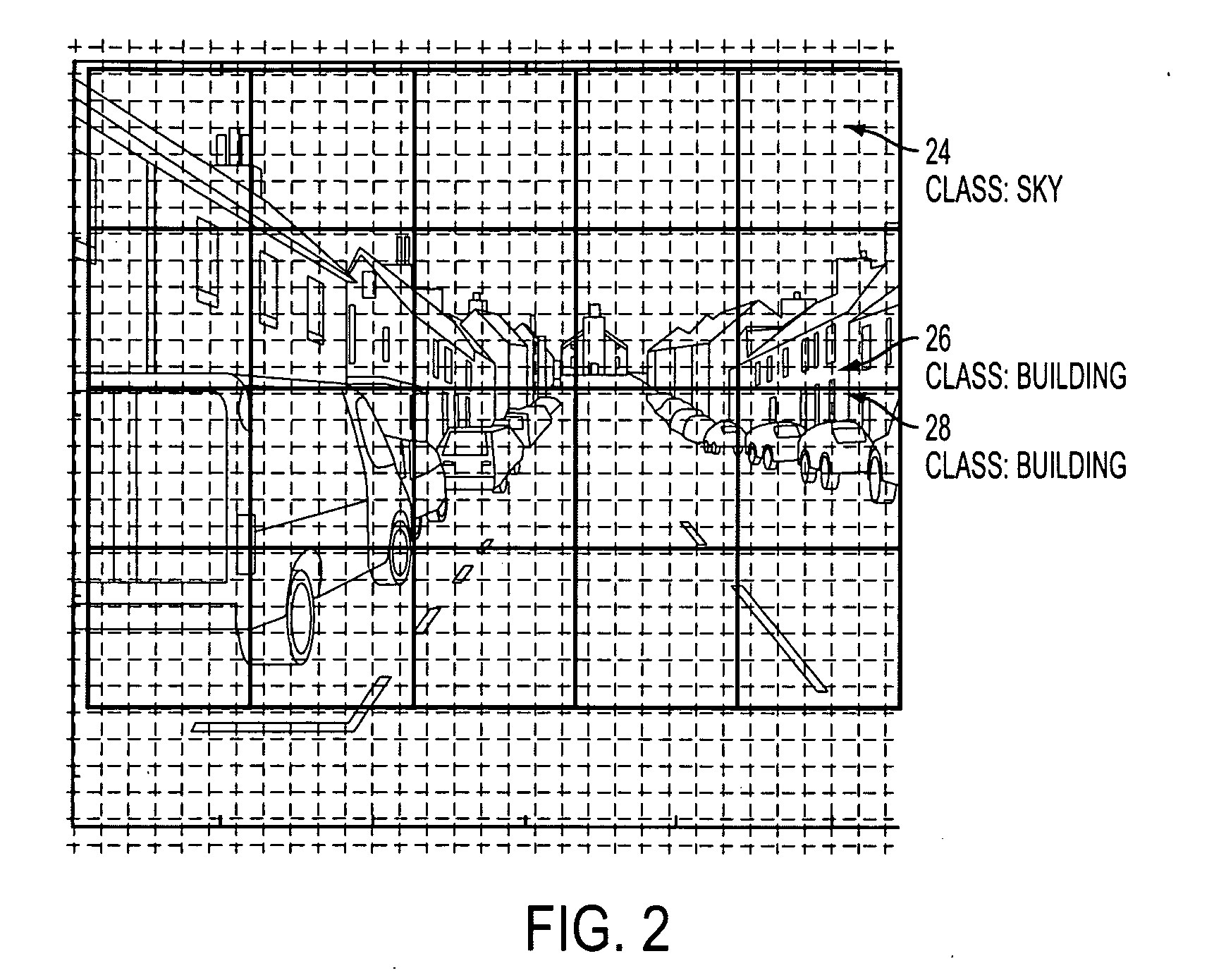

System and method for object class localization and semantic class based image segmentation

An automated image processing system and method are provided for class-based segmentation of a digital image. The method includes extracting a plurality of patches of an input image. For each patch, at least one feature is extracted. The feature may be a high level feature which is derived from the application of a generative model to a representation of low level feature(s) of the patch. For each patch, and for at least one object class from a set of object classes, a relevance score for the patch, based on the at least one feature, is computed. For at least some or all of the pixels of the image, a relevance score for the at least one object class based on the patch scores is computed. An object class is assigned to each of the pixels based on the computed relevance score for the at least one object class, allowing the image to be segmented and the segments labeled, based on object class.

Owner:XEROX CORP
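The final step of the Xerox abstract above (turning per-patch class relevance scores into a class label for every pixel) can be sketched as follows. This assumes square patches whose per-class scores have already been computed; feature extraction and the generative model are out of scope. Averaging the scores of overlapping patches is an illustrative choice, since the abstract only says the pixel scores are computed from the patch scores. `label_pixels` is a hypothetical name.

```python
# Illustrative sketch; label_pixels is a hypothetical helper, and averaging
# overlapping patch scores is an assumed aggregation rule.
def label_pixels(height, width, patches, num_classes):
    """Assign each pixel the object class with the highest average
    relevance score over the square patches that cover it.

    patches: list of (top, left, size, scores), where scores[k] is the
    patch's relevance score for object class k. Pixels covered by no
    patch are labeled -1 (unlabeled).
    """
    sums = [[[0.0] * num_classes for _ in range(width)] for _ in range(height)]
    counts = [[0] * width for _ in range(height)]
    for top, left, size, scores in patches:
        for y in range(max(top, 0), min(top + size, height)):
            for x in range(max(left, 0), min(left + size, width)):
                counts[y][x] += 1
                for k in range(num_classes):
                    sums[y][x][k] += scores[k]
    labels = [[-1] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if counts[y][x]:
                avg = [s / counts[y][x] for s in sums[y][x]]
                labels[y][x] = max(range(num_classes), key=avg.__getitem__)
    return labels
```

Labeling every pixel with its best-scoring class is what makes the result a segmentation whose segments are already named by object class.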

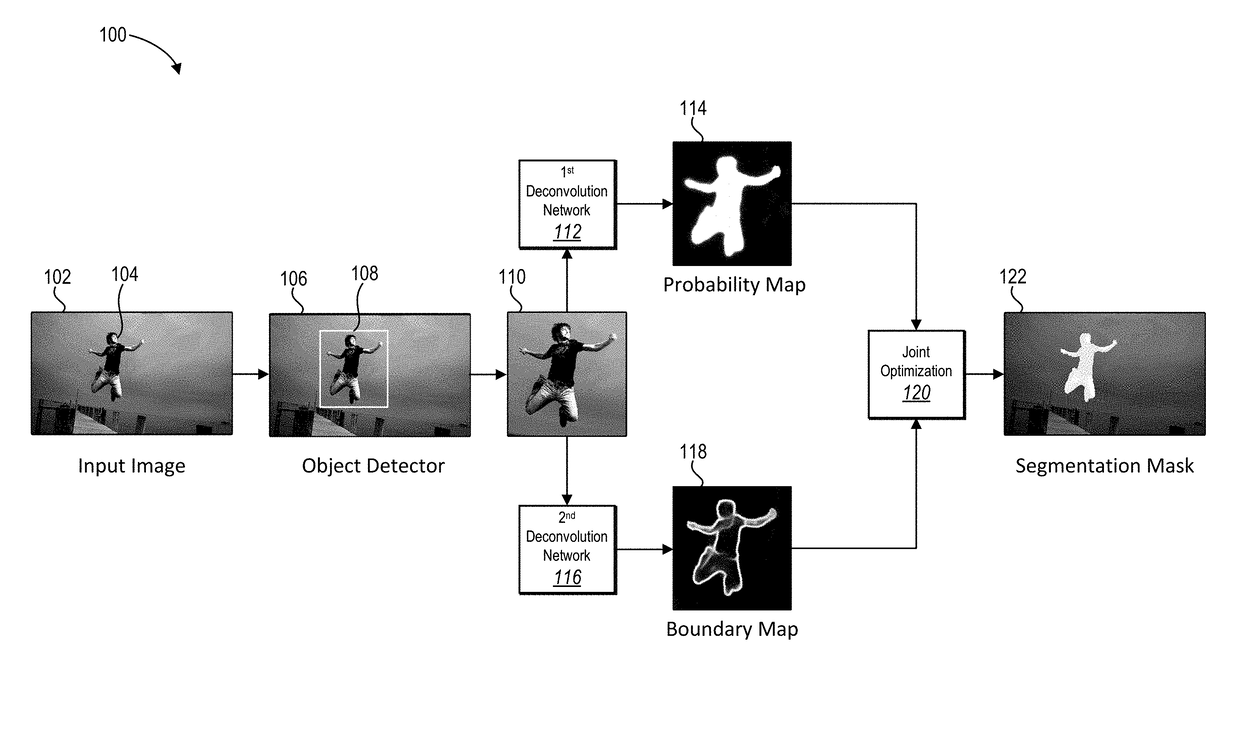

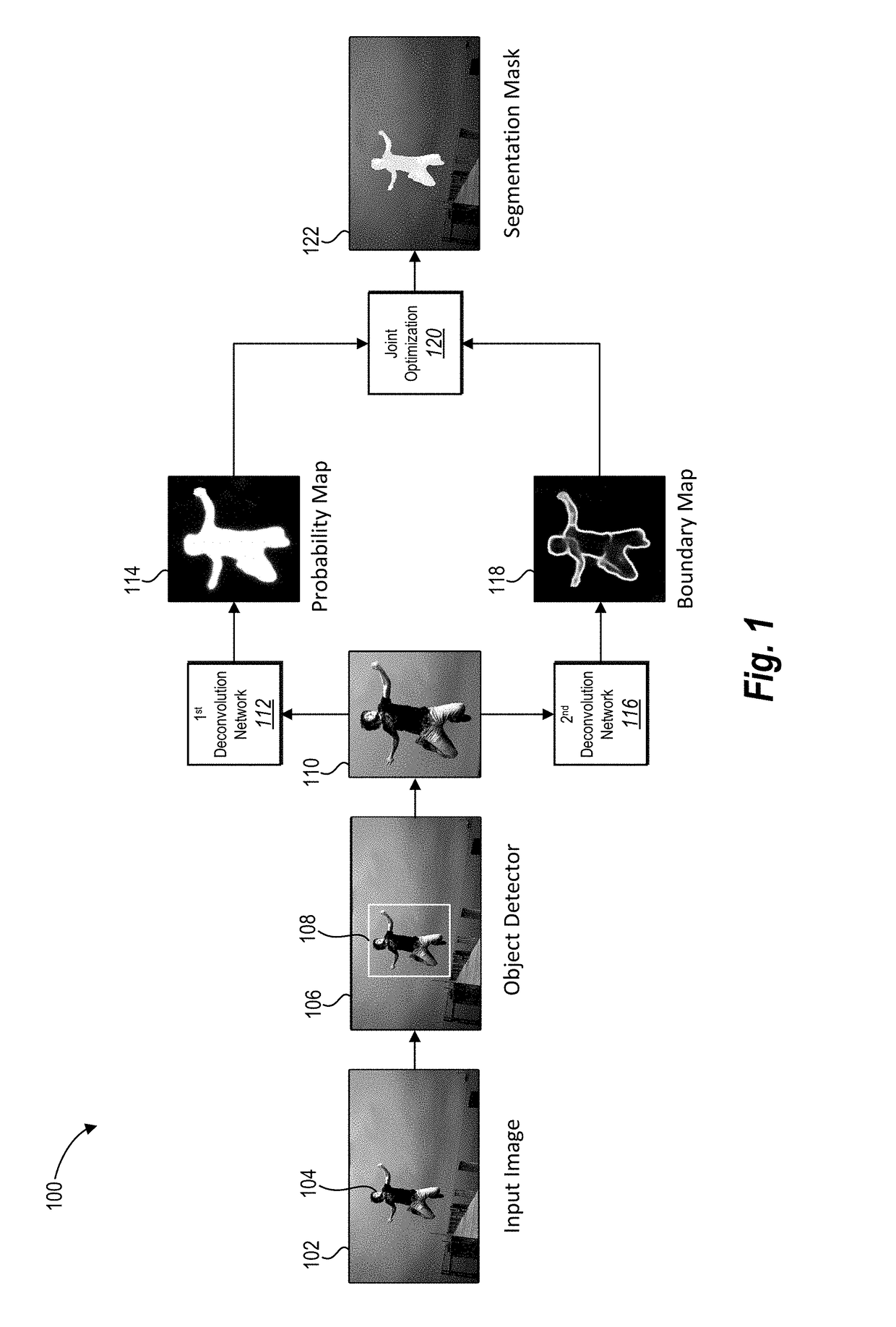

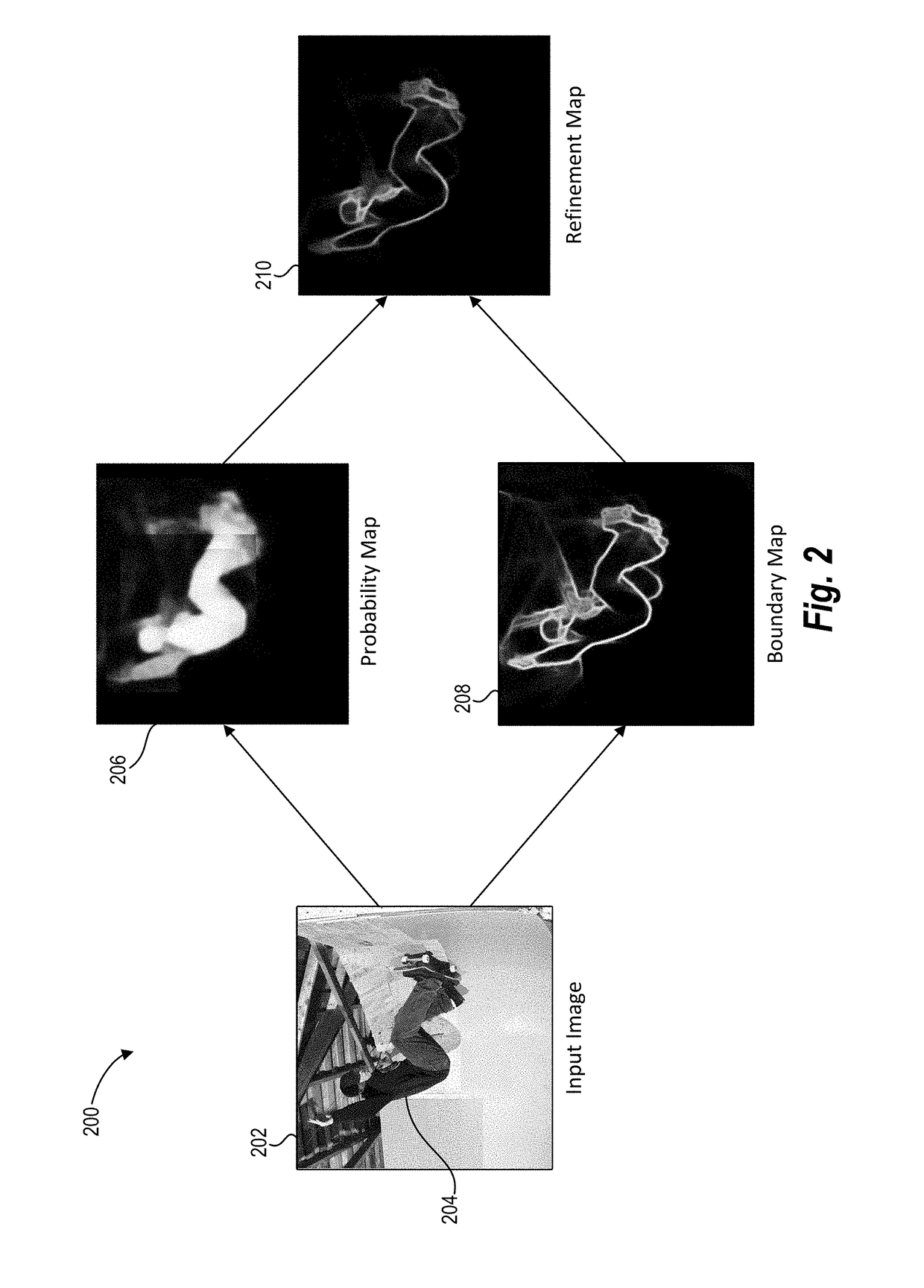

Utilizing deep learning for boundary-aware image segmentation

Active | US20170287137A1 | Accurate segmentation; Accurate identification; Image enhancement; Mathematical models; Image segmentation; Computer vision

Systems and methods are disclosed for segmenting a digital image to identify an object portrayed in the digital image from background pixels in the digital image. In particular, in one or more embodiments, the disclosed systems and methods use a first neural network and a second neural network to generate image information used to generate a segmentation mask that corresponds to the object portrayed in the digital image. Specifically, in one or more embodiments, the disclosed systems and methods optimize a fit between a mask boundary of the segmentation mask and edges of the object portrayed in the digital image to accurately segment the object within the digital image.

Owner:ADOBE INC

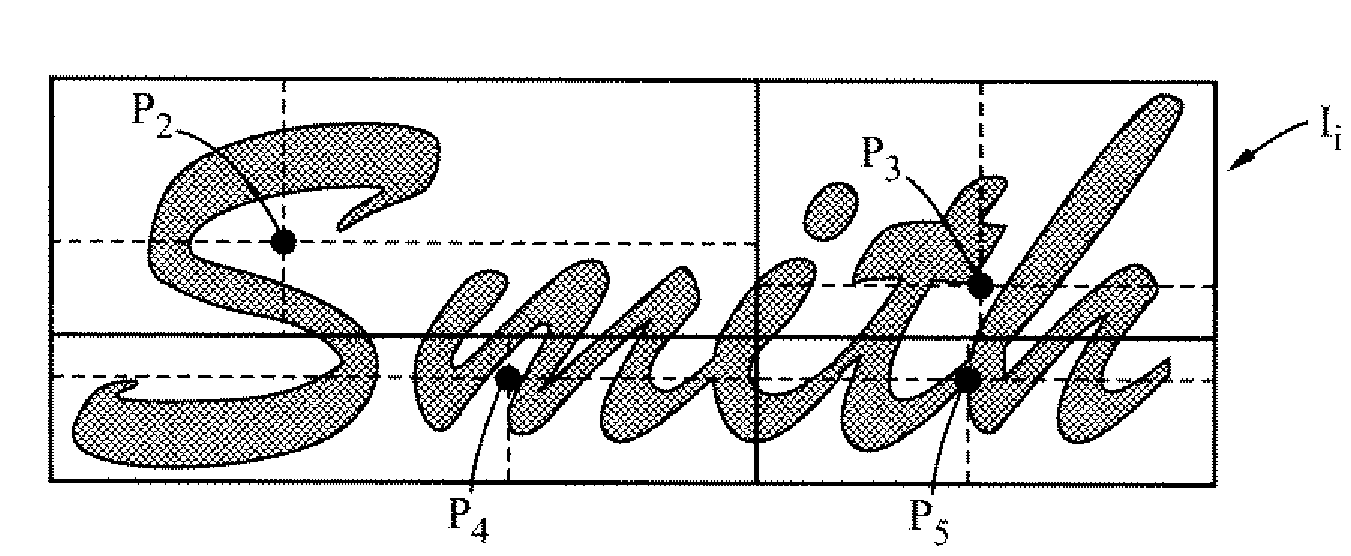

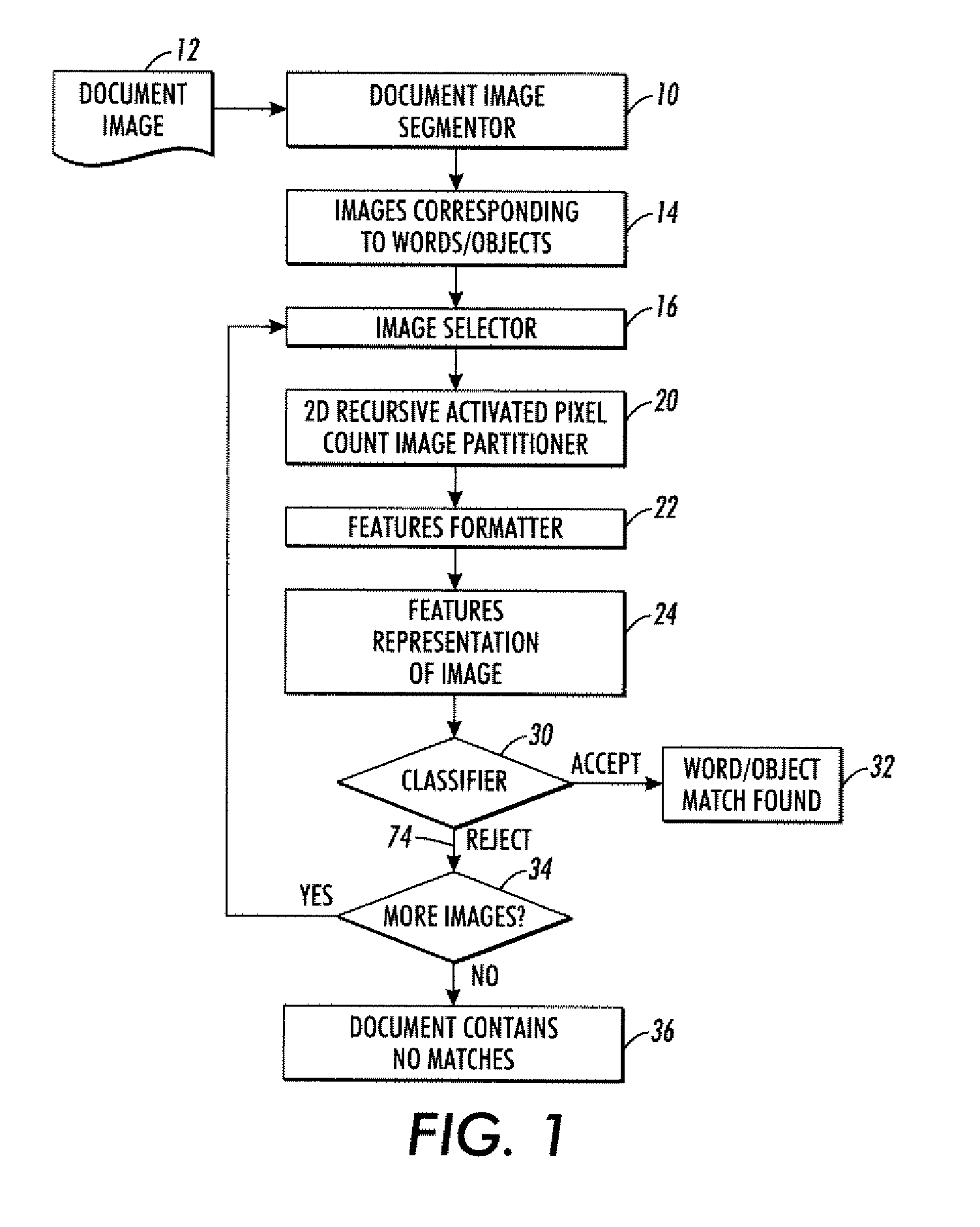

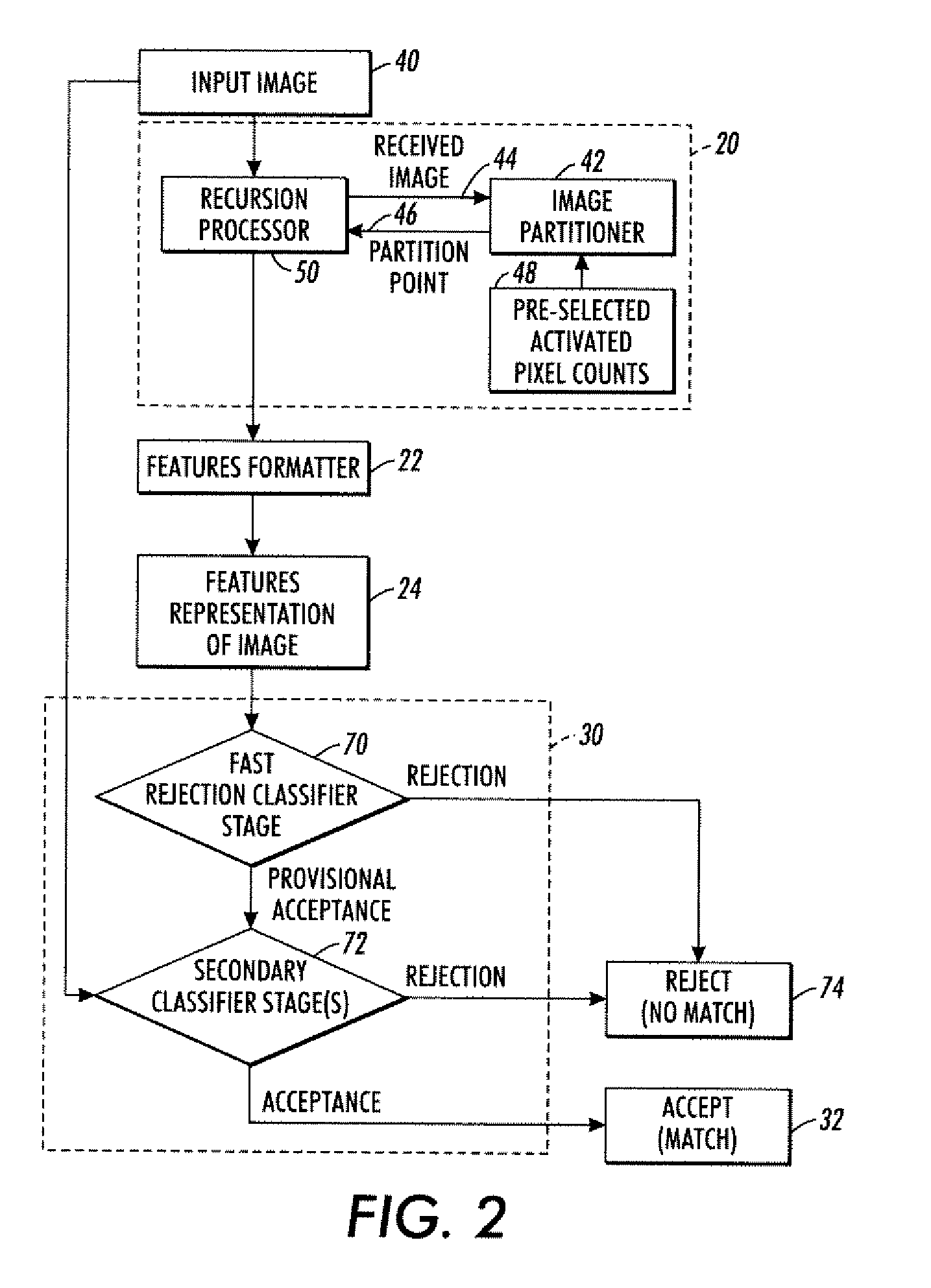

Features generation and spotting methods and systems using same

An image partitioner is configured to find a partition point that divides a received image into four sub-images each having a pre-selected activated pixel count. A recursion processor is configured to (i) apply the image partitioner to an input image to generate a first partition point and four sub-images and to (ii) recursively apply the image partitioner to at least one of the four sub-images for at least one recursion iteration to generate at least one additional partition point. A formatter is configured to generate a features representation of the input image in a selected format. The features representation is based at least in part on the partition points. The features representation can be used in various ways, such as by a classifier configured to classify the input image based on the features representation.

Owner:XEROX CORP
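The abstract above does not say how the partition point is found. A minimal sketch of the recursive partitioning, assuming the point is placed at the median x and median y of the activated pixels (this balances each split line but does not guarantee exactly equal counts in all four quadrants), with hypothetical helper names:

```python
# Illustrative sketch; all function names are hypothetical, and the
# median-based split point is an assumption, not the patented rule.
def partition_point(pixels):
    """Split point at the median x and median y of the activated pixels,
    so each side of each split line holds roughly half of them."""
    xs = sorted(x for x, _ in pixels)
    ys = sorted(y for _, y in pixels)
    return xs[len(xs) // 2], ys[len(ys) // 2]


def quadrants(pixels, point):
    """Distribute pixels into four sub-images around the split point."""
    px, py = point
    quads = ([], [], [], [])
    for x, y in pixels:
        quads[(x >= px) + 2 * (y >= py)].append((x, y))
    return quads


def partition_features(pixels, depth):
    """Collect partition points depth-first, recursing into each quadrant
    until `depth` levels are used or a quadrant has fewer than 2 pixels."""
    if depth == 0 or len(pixels) < 2:
        return []
    point = partition_point(pixels)
    features = [point]
    for quad in quadrants(pixels, point):
        features.extend(partition_features(quad, depth - 1))
    return features
```

The returned list of points plays the role of the features representation. A formatter that needs a fixed-length vector for a classifier would also have to pad or truncate it, which this sketch does not do.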

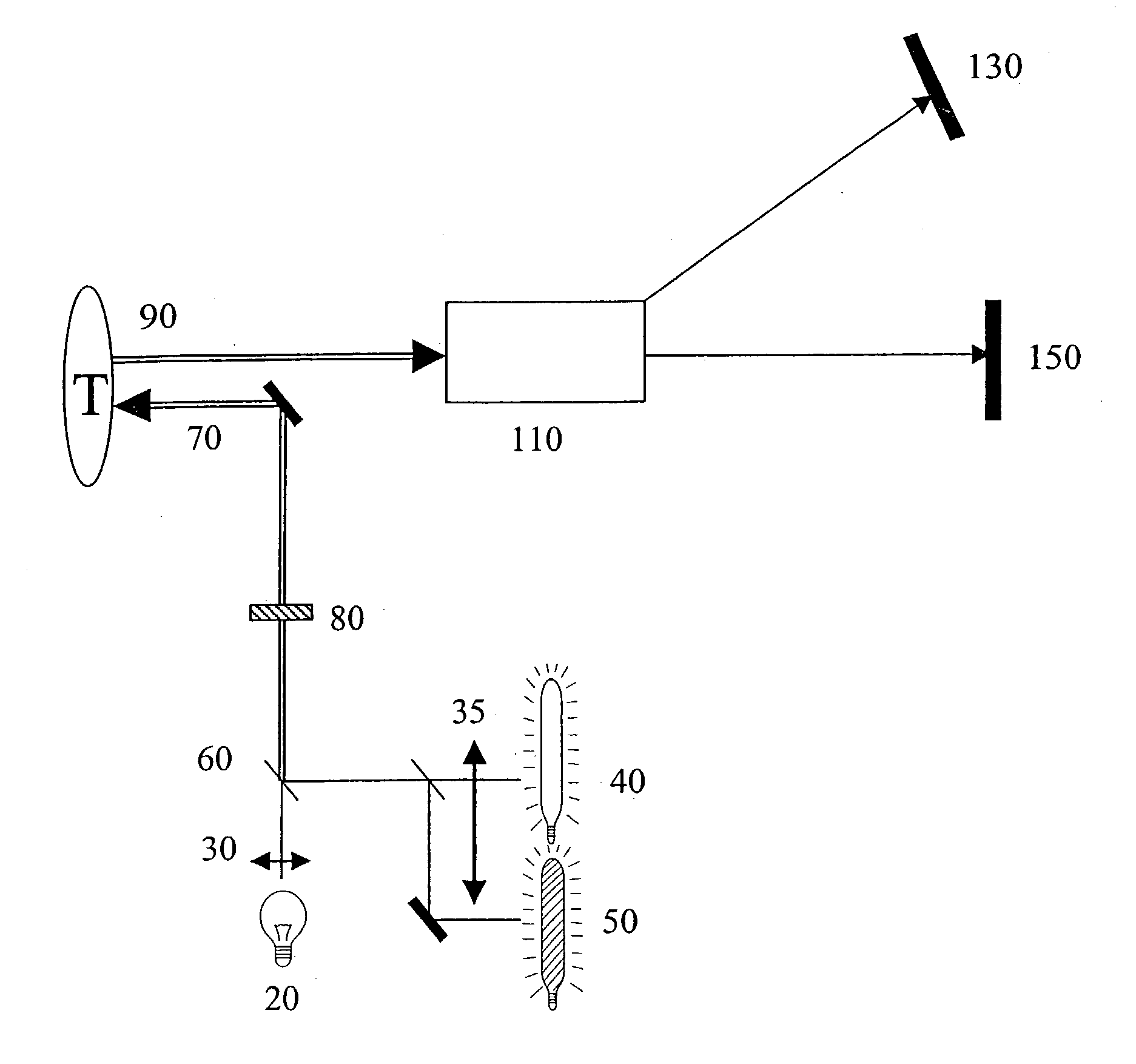

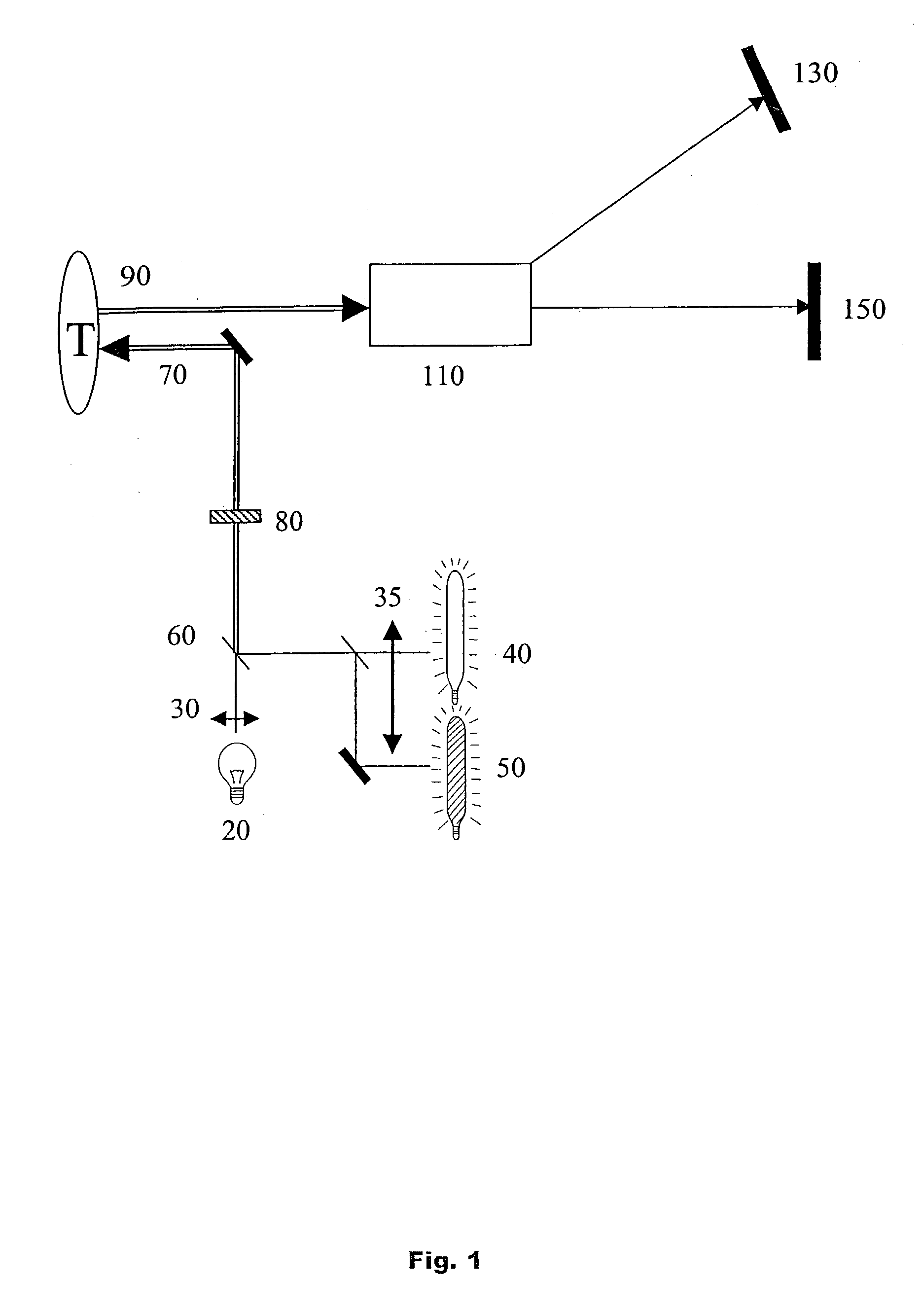

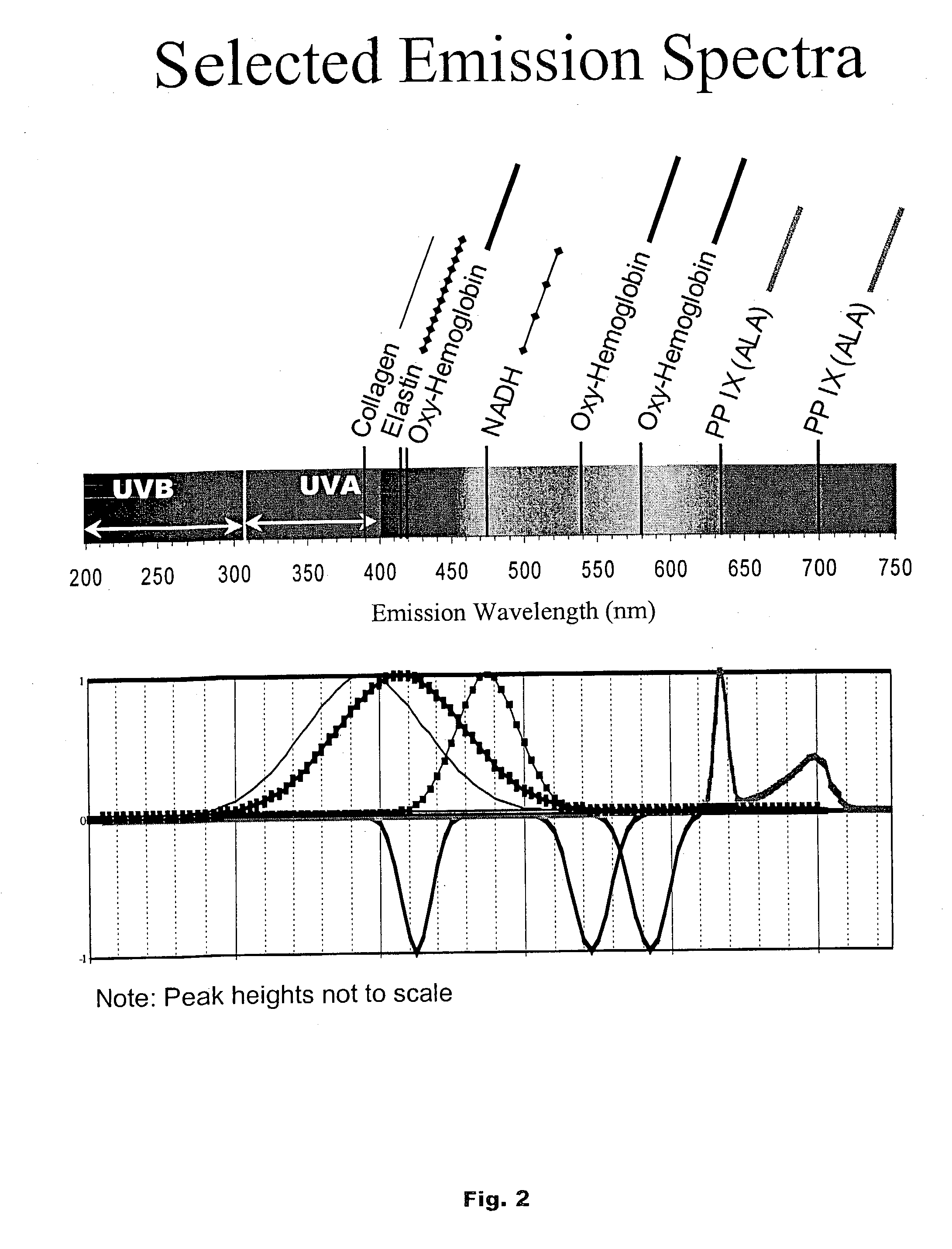

Dual mode real-time screening and rapid full-area, selective-spectral, remote imaging and analysis device and process

Inactive | US20030158470A1 | Quick scan; Avoid delay; Diagnostics using spectroscopy; Endoscopes; Spectral bands; Imaging processing

The invention provides a device and process for real-time screening of areas that can be identified as suspicious either through image segmentation utilizing image processing techniques or through treatment with an exogenous fluorescent marker that selectively localizes in abnormal areas. If screening detects a suspicious area, the invention allows autofluorescence images to be acquired at multiple selected narrow differentiating spectral bands, so that a "virtual biopsy" can be obtained to differentiate abnormal areas from normal areas based on differentiating portions of the autofluorescence spectra. Full spatial information is collected, but autofluorescence data is collected only at the selected narrow spectral bands, avoiding the collection of full spectral data, so that the speed of analysis is increased.

Owner:STI MEDICAL SYST

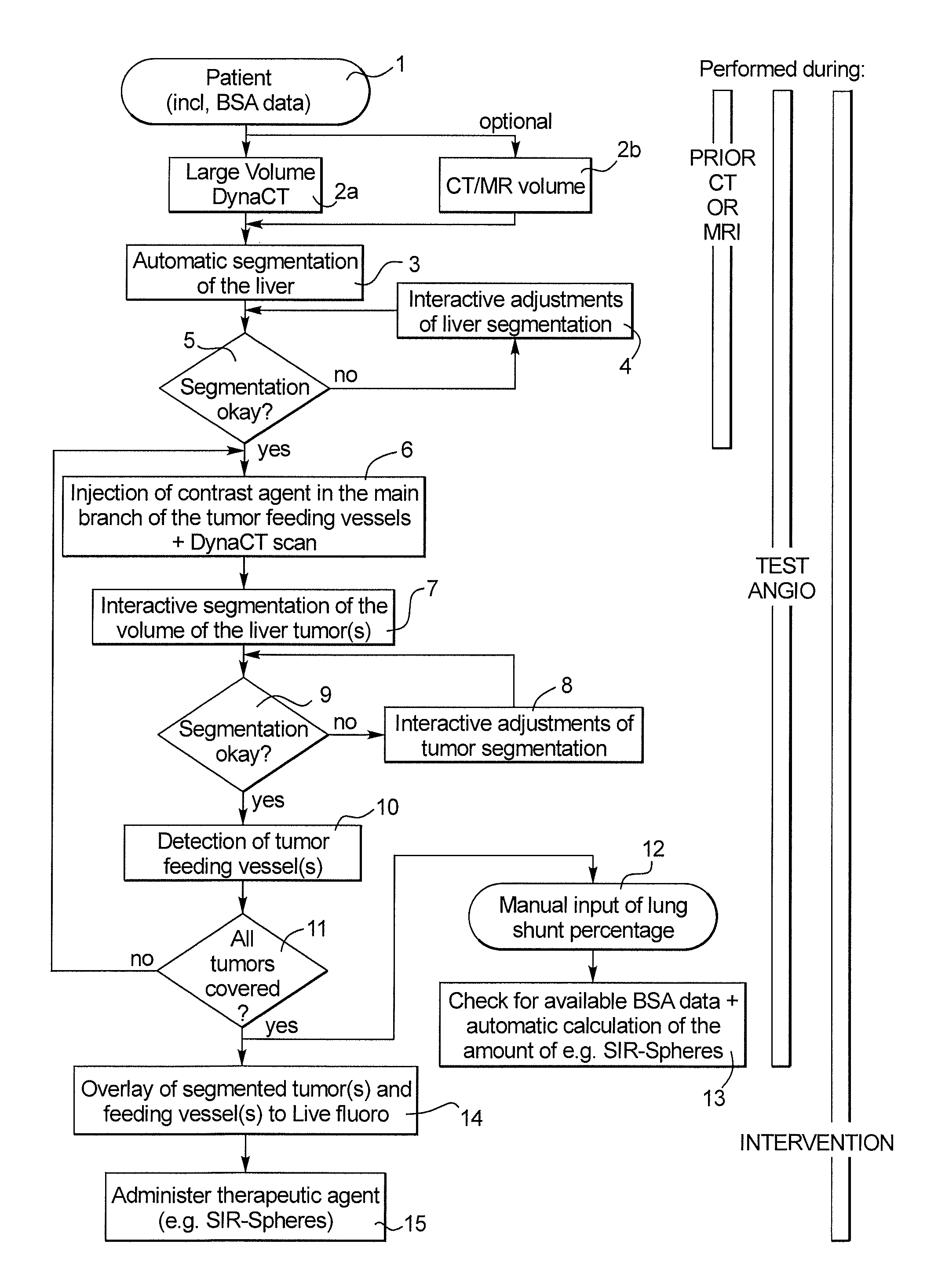

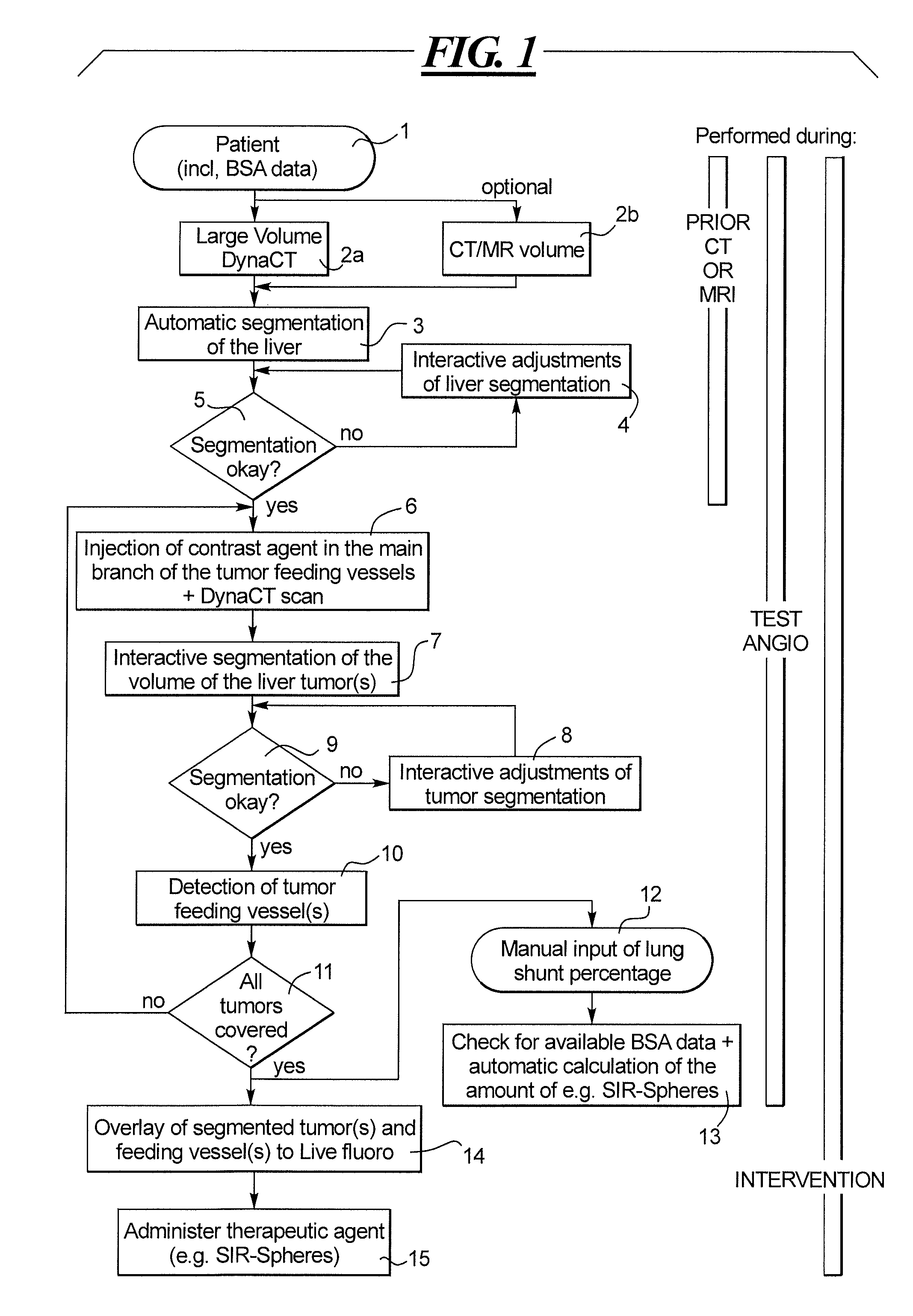

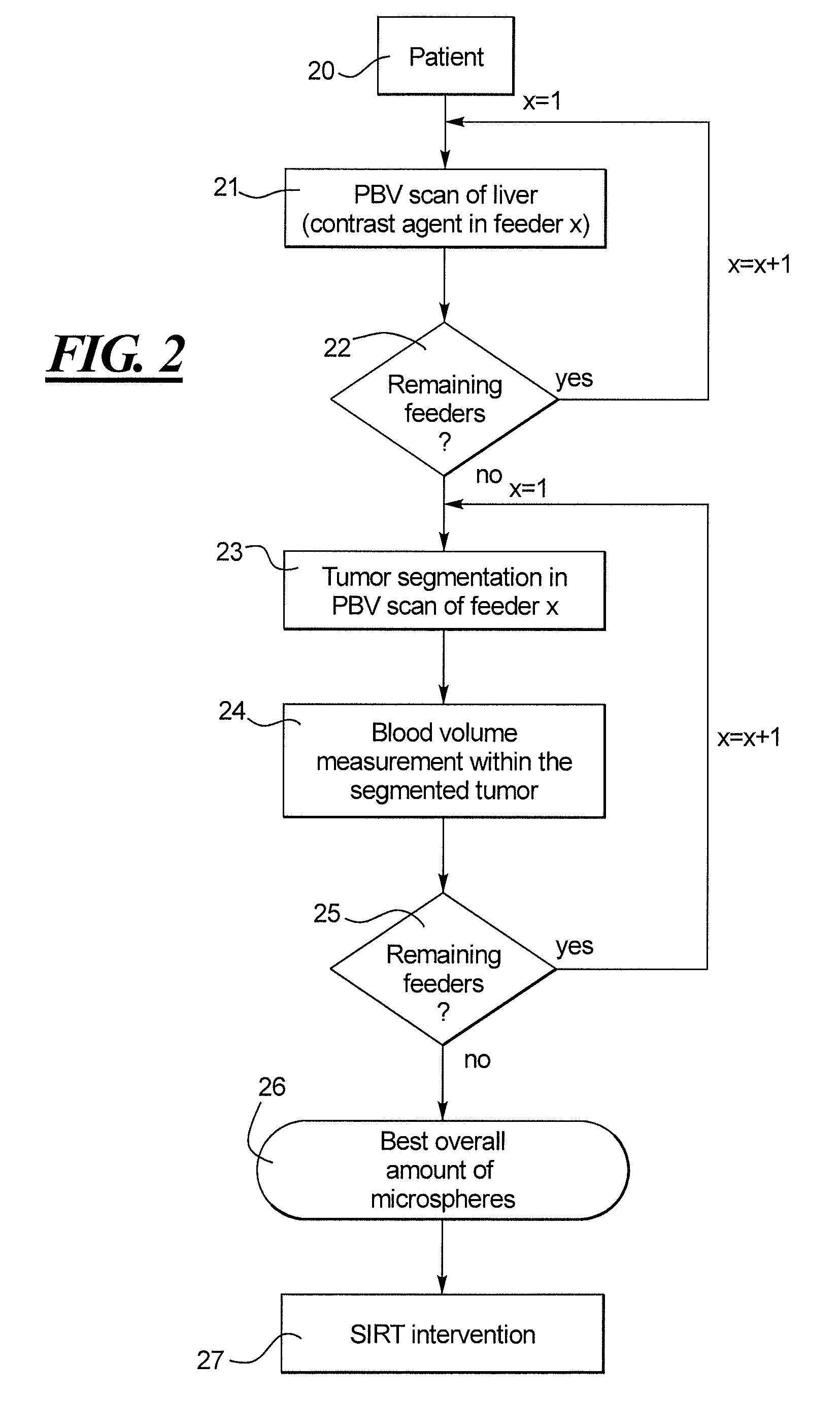

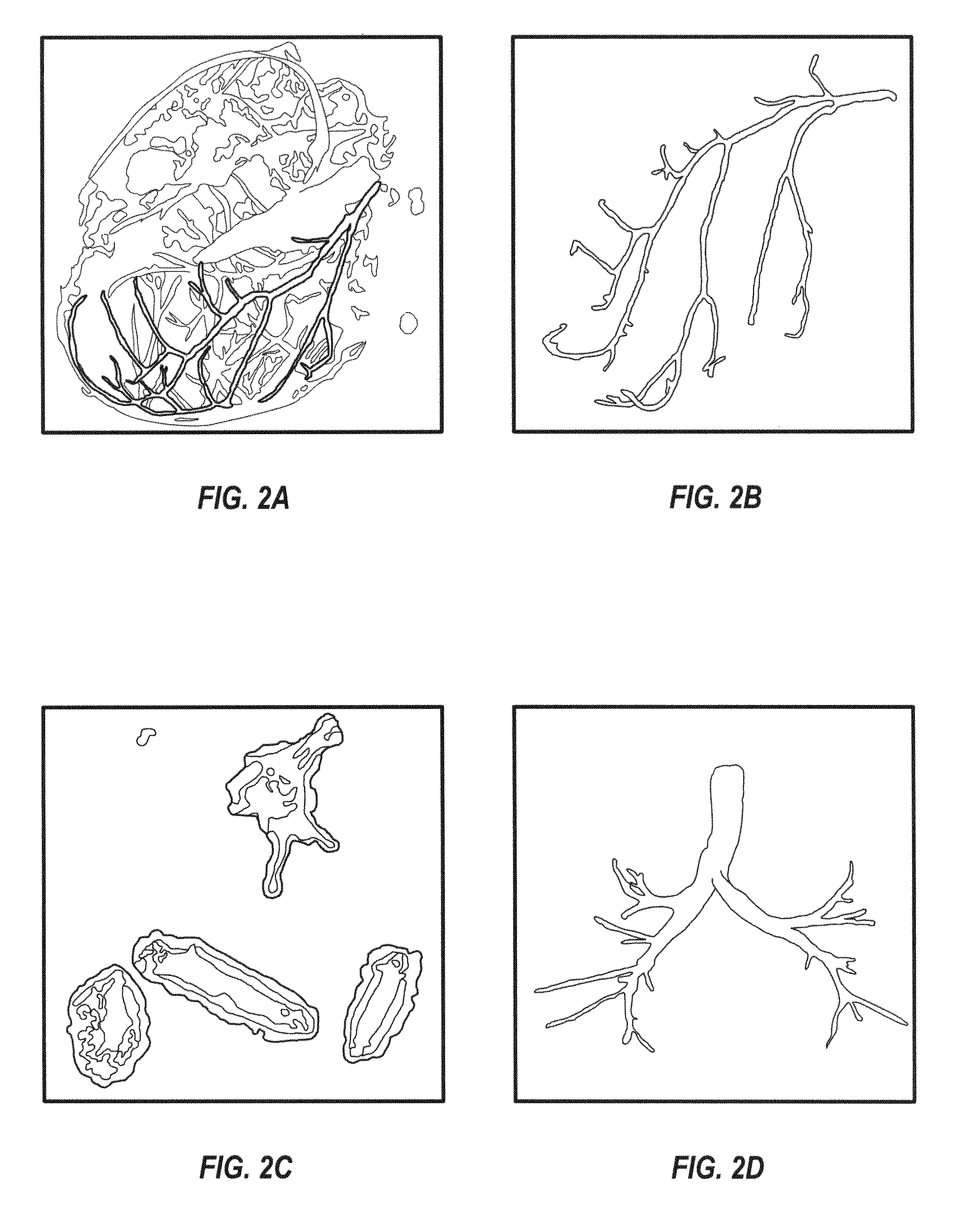

Method and apparatus for selective internal radiation therapy planning and implementation

Active | US8738115B2 | Easy and fast and reliable; Optimize success; Mechanical/radiation/invasive therapies; Drug and medications; Diagnostic Radiology Modality; Tumour volume

In a method and system for planning and implementing a selective internal radiation therapy (SIRT), the liver volume and the tumor volume are automatically calculated in a processor by analysis of items segmented from images obtained from the patient using one or more imaging modalities, with the administration of a contrast agent. The volume of therapeutic agent that is necessary to treat the tumor is automatically calculated from the liver volume, the tumor volume, the body surface area of the patient, and the lung shunt percentage for the patient. The therapeutic agent can be administered via the respective feeder vessels in different amounts that correspond to the percentage of the tumor's blood supply coming from each feeder vessel; this distribution is also calculated automatically by analysis of one or more parenchymal blood volume (PBV) images.

Owner:SIEMENS HEALTHCARE GMBH
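The last step of the Siemens abstract above (distributing the therapeutic agent across feeder vessels in proportion to each vessel's share of the tumor blood supply) reduces to a proportional split. The sketch below covers only that step: the total amount is taken as an input, it is not a clinical dosing method, and the vessel names in the test are hypothetical.

```python
# Illustrative sketch of a proportional split; split_activity is a
# hypothetical helper and computes no clinical dose by itself.
def split_activity(total_activity, supply_share):
    """Split a total amount of therapeutic agent across feeder vessels in
    proportion to each vessel's share of the tumor blood supply.

    supply_share: mapping vessel name -> relative blood supply (any units,
    e.g. percentages estimated from PBV images). Shares are normalized, so
    they need not sum to 100.
    """
    total_share = sum(supply_share.values())
    if total_share <= 0:
        raise ValueError("blood-supply shares must sum to a positive value")
    return {vessel: total_activity * share / total_share
            for vessel, share in supply_share.items()}
```

Normalizing the shares inside the function means the estimates taken from the PBV images do not have to add up to exactly 100 percent.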

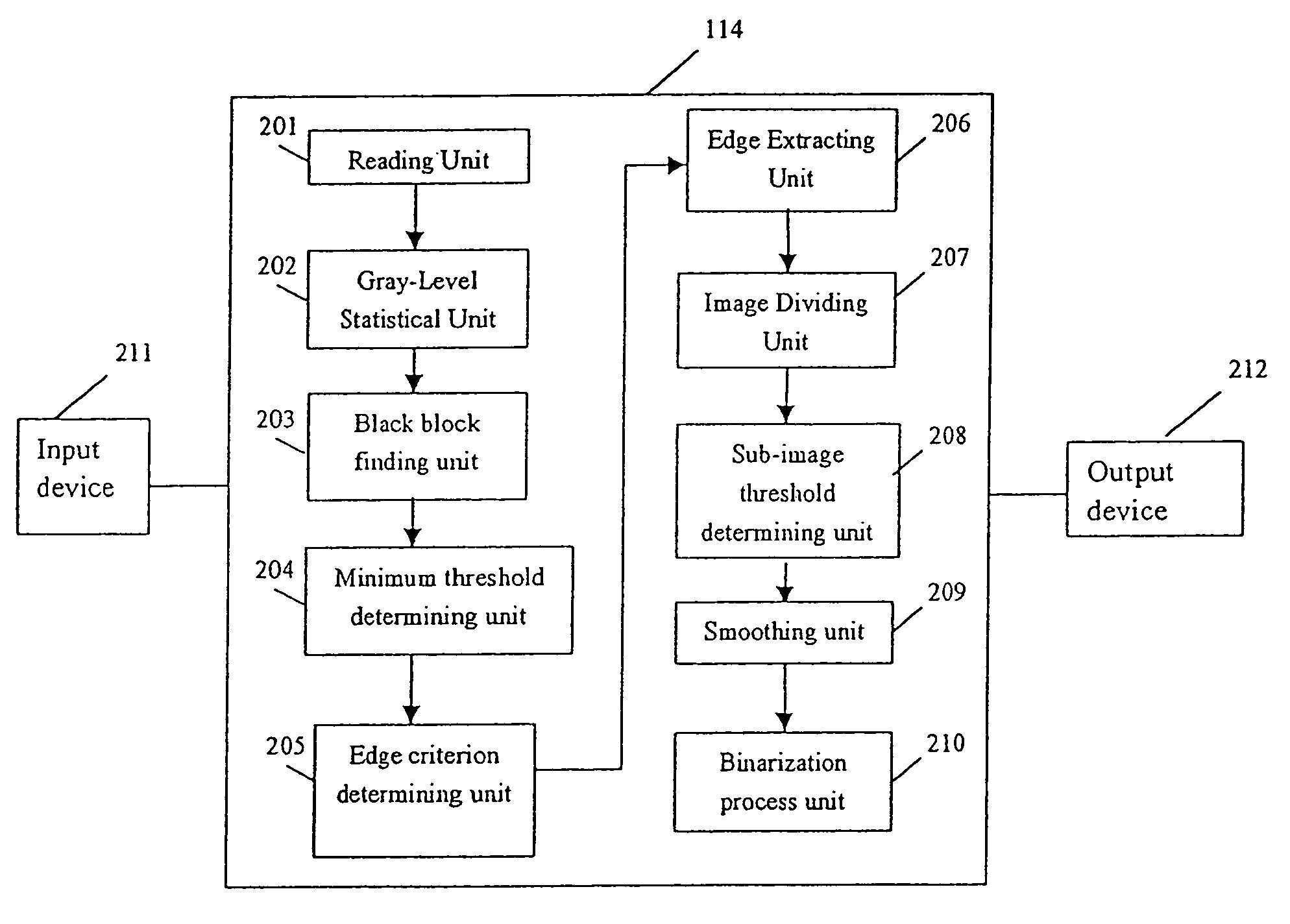

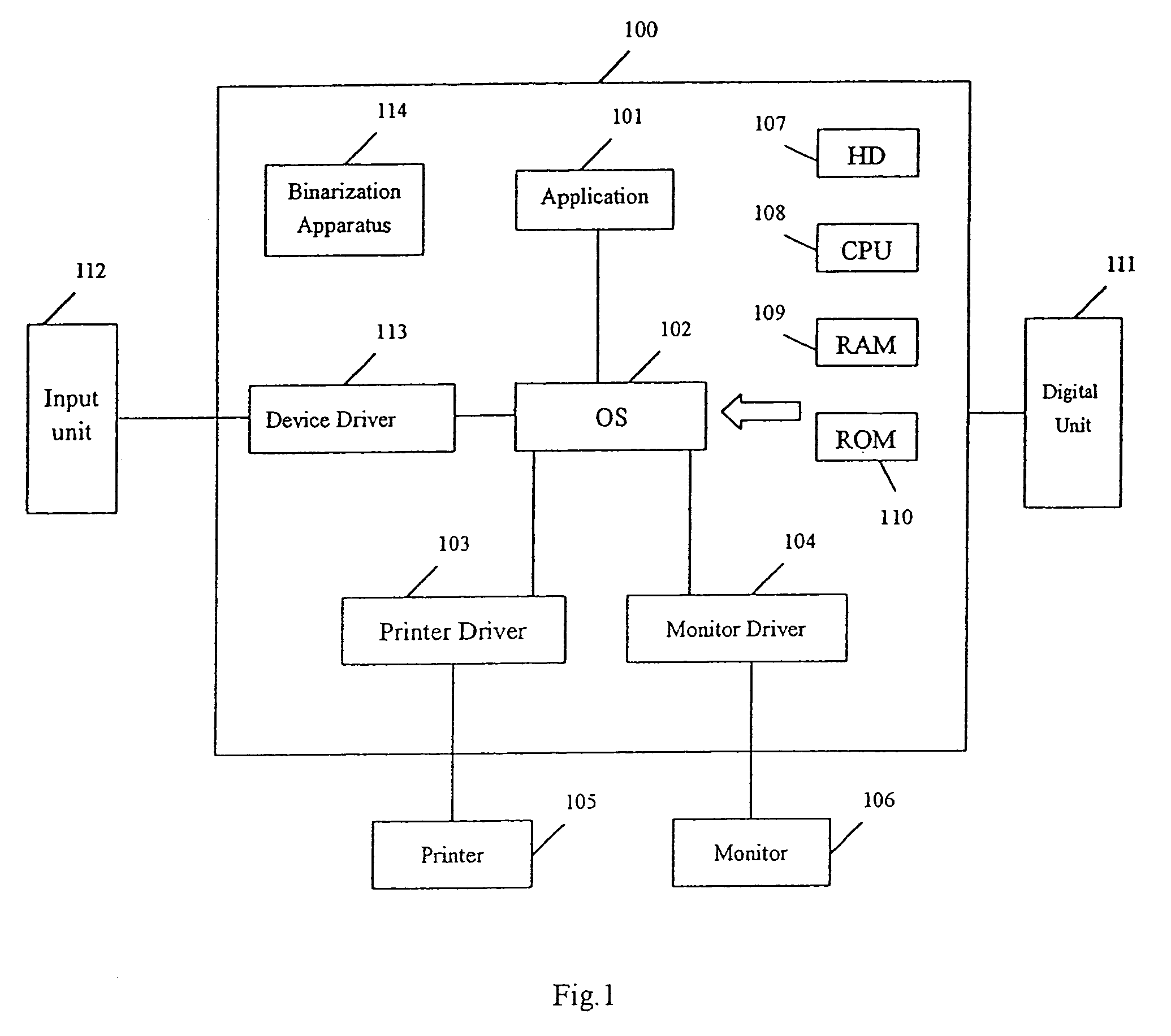

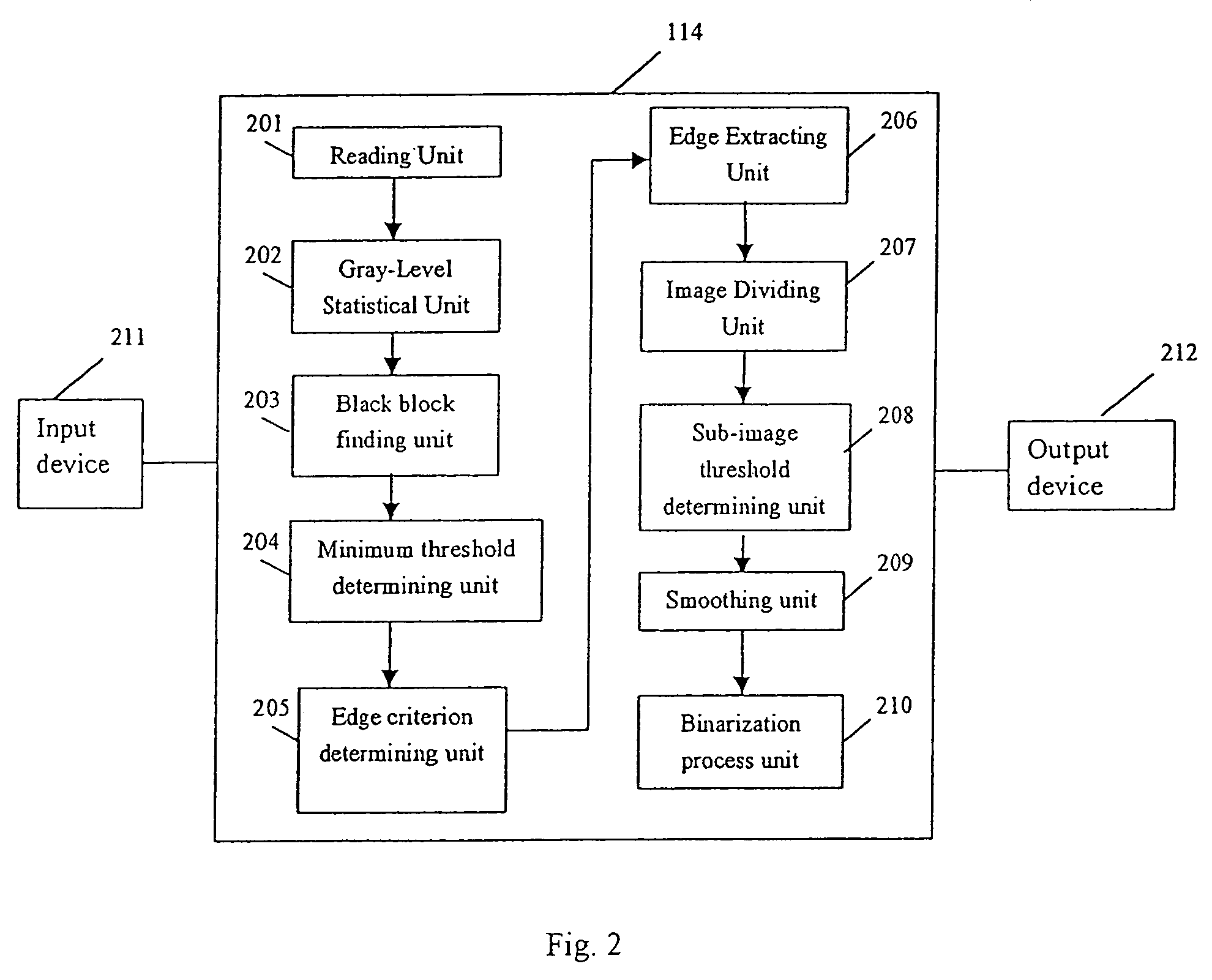

Image processing method and apparatus using self-adaptive binarization

Active | US7062099B2 | Fast and effective binarization process; Image enhancement; Image analysis; Pattern recognition; Imaging processing

The present invention provides a unique method, apparatus, system and storage medium for an image binarization process, wherein an image to be processed is divided into a plurality of sub-images and a binarization threshold for each of the sub-images is determined based on the gray levels of the edge pixels detected in the respective sub-image. The image processing method of the present invention comprises: calculating a gray-level statistical distribution of the pixels of an image; detecting edge pixels in the image based on an edge criterion corresponding to the gray-level statistical distribution; dividing the image into a plurality of sub-images; determining a binarization threshold for each of the sub-images based on the gray levels of the edge pixels detected in that sub-image; and performing a binarization process on each of the sub-images based on the binarization threshold of that sub-image.

Owner:CANON KK
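The steps listed in the Canon abstract above can be sketched on a grayscale image stored as a list of rows. Two details are assumptions, since the abstract leaves them open. The edge criterion is "differs from some 4-neighbor by more than k population standard deviations of all gray levels". A sub-image with no edge pixels falls back to the global mean. `adaptive_binarize` is a hypothetical name.

```python
from statistics import mean, pstdev


# Illustrative sketch; adaptive_binarize is hypothetical and both the edge
# criterion and the no-edge fallback are assumptions.
def adaptive_binarize(img, block, k=1.0):
    """Binarize a grayscale image (list of rows) with one threshold per
    block x block sub-image, taken as the mean gray level of the edge
    pixels found in that sub-image."""
    h, w = len(img), len(img[0])
    flat = [v for row in img for v in row]
    global_mean = mean(flat)
    # Edge criterion tied to the global gray-level distribution.
    edge_thresh = k * pstdev(flat)

    def is_edge(y, x):
        v = img[y][x]
        for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
            if 0 <= ny < h and 0 <= nx < w and abs(img[ny][nx] - v) > edge_thresh:
                return True
        return False

    out = [[0] * w for _ in range(h)]
    for by in range(0, h, block):
        for bx in range(0, w, block):
            cells = [(y, x) for y in range(by, min(by + block, h))
                     for x in range(bx, min(bx + block, w))]
            edge_levels = [img[y][x] for y, x in cells if is_edge(y, x)]
            # Fall back to the global mean when a sub-image has no edges.
            t = mean(edge_levels) if edge_levels else global_mean
            for y, x in cells:
                out[y][x] = 1 if img[y][x] > t else 0
    return out
```

For the image `[[0, 100, 150, 250], [0, 100, 150, 250]]` with `block=2`, the left and right sub-images get thresholds of 50 and 200. This yields `[[0, 1, 0, 1], [0, 1, 0, 1]]`. A single global threshold at the mean (125) would instead split the image into a dark left half and a bright right half.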

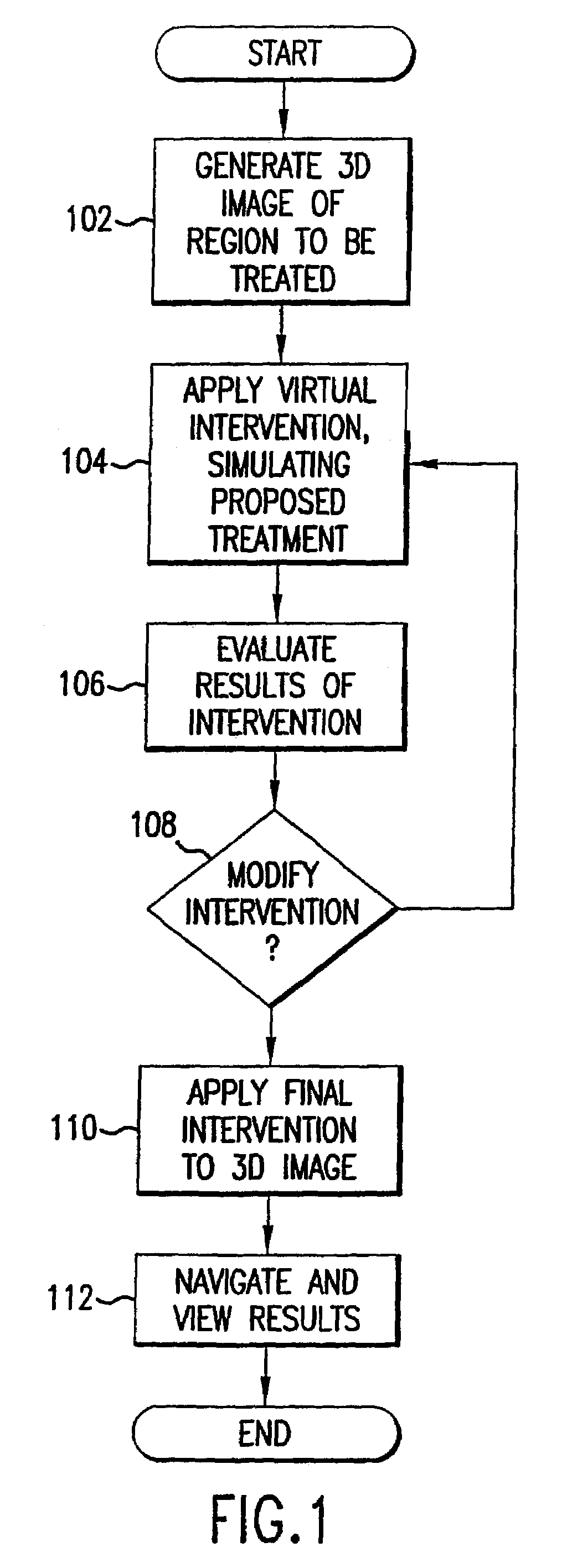

Virtual endoscopy with improved image segmentation and lesion detection

Inactive | US7747055B1 | Exact match; Accurate representation; Ultrasonic/sonic/infrasonic diagnostics; Image enhancement; Body organs; Lesion detection

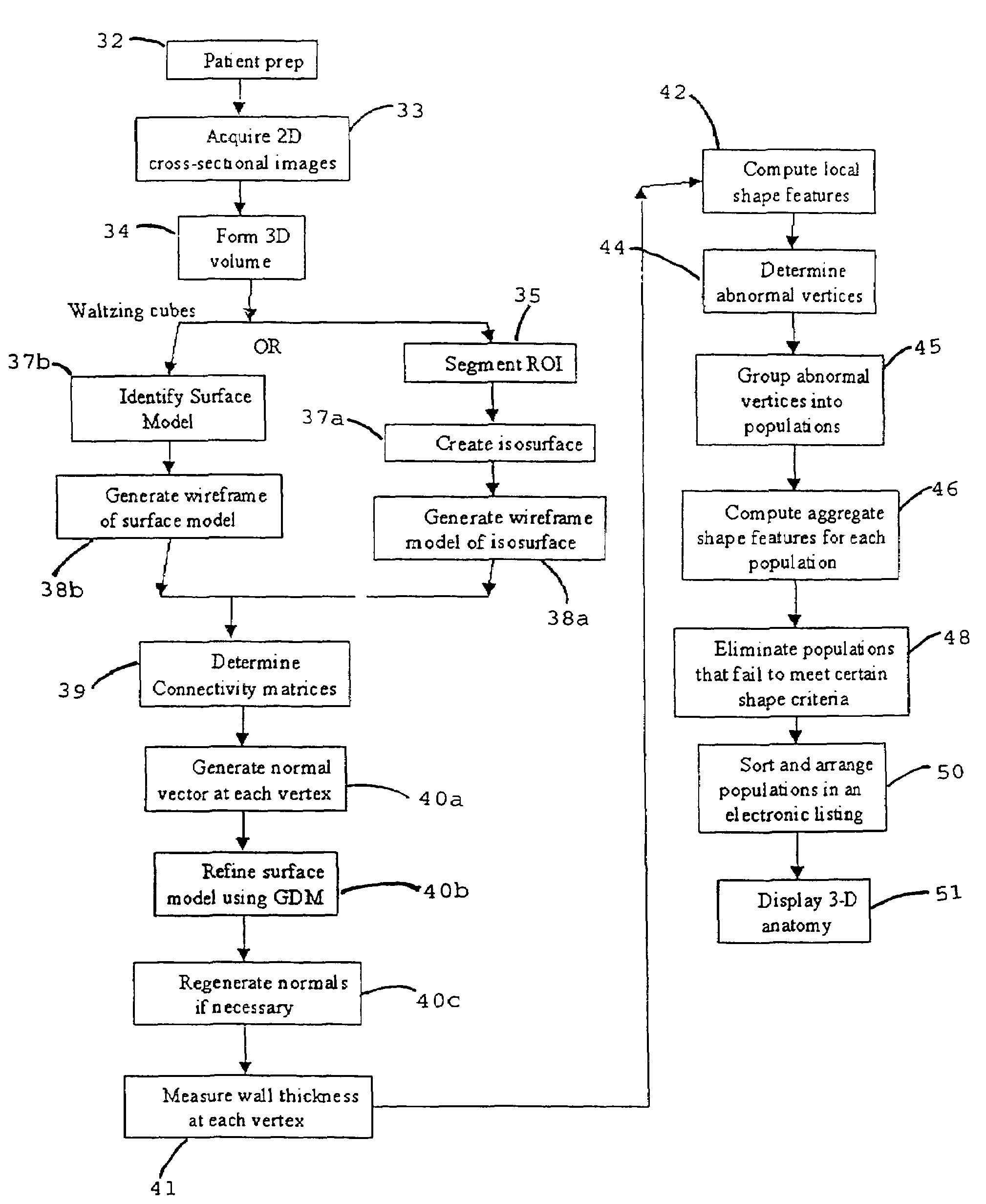

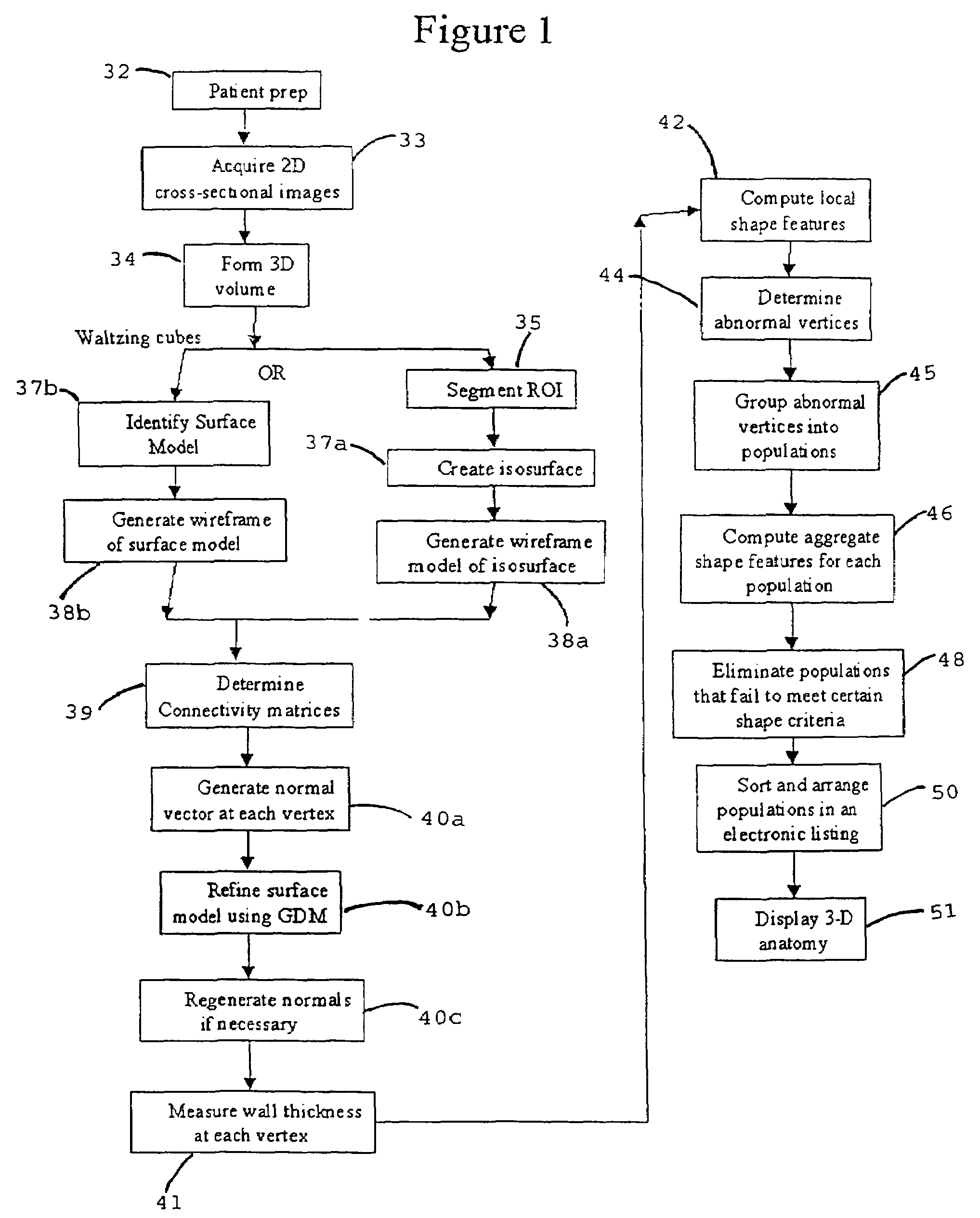

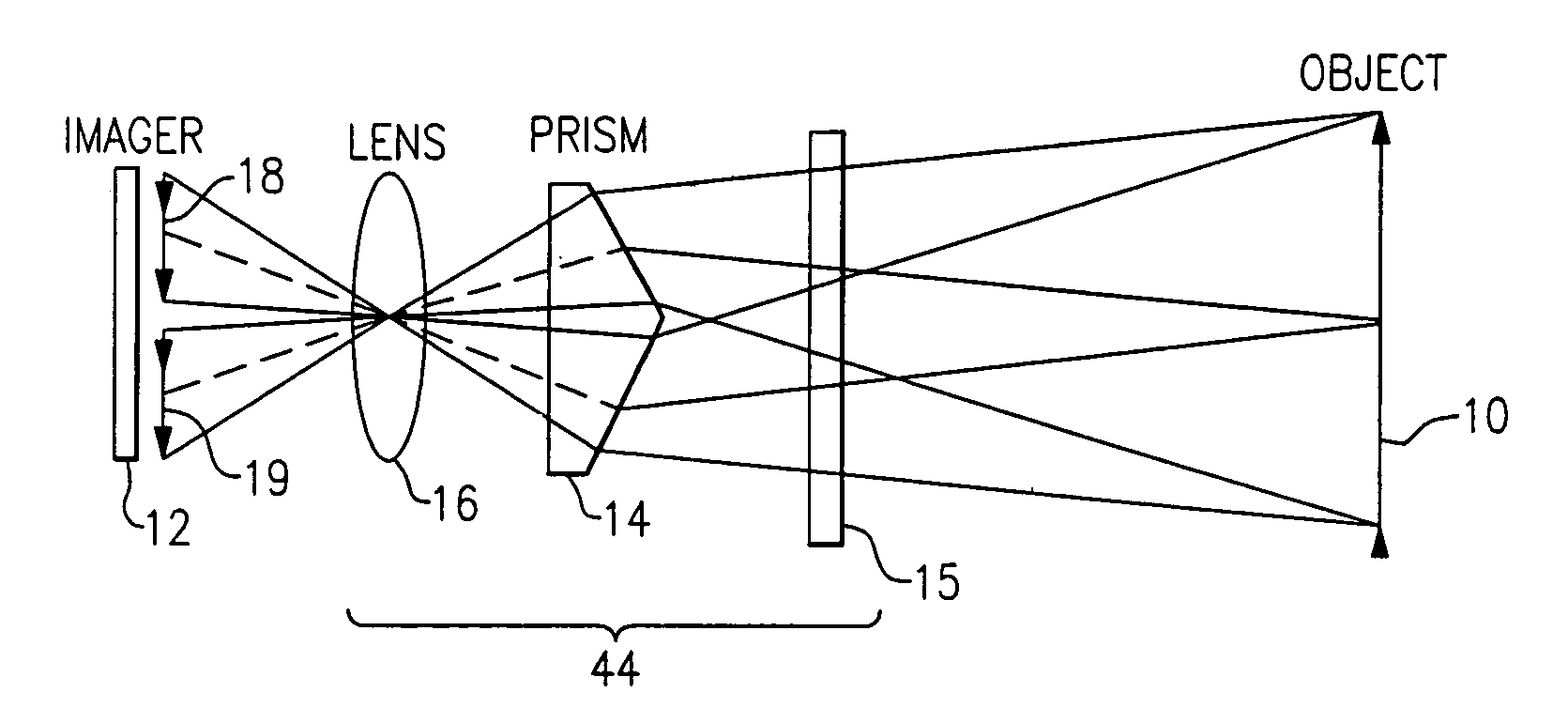

A system and computer-implemented method are provided for interactively displaying three-dimensional structures. Three-dimensional volume data (34) is formed from a series of two-dimensional images (33) representing at least one physical property associated with the three-dimensional structure, such as a body organ having a lumen. A wireframe model of a selected region of interest is generated (38b). The wireframe model is then deformed or reshaped to more accurately represent the region of interest (40b). Vertices of the wireframe model may be grouped into regions having a characteristic indicating abnormal structure, such as a lesion. Finally, the deformed wireframe model may be rendered in an interactive three-dimensional display.

Owner:WAKE FOREST UNIV HEALTH SCI INC

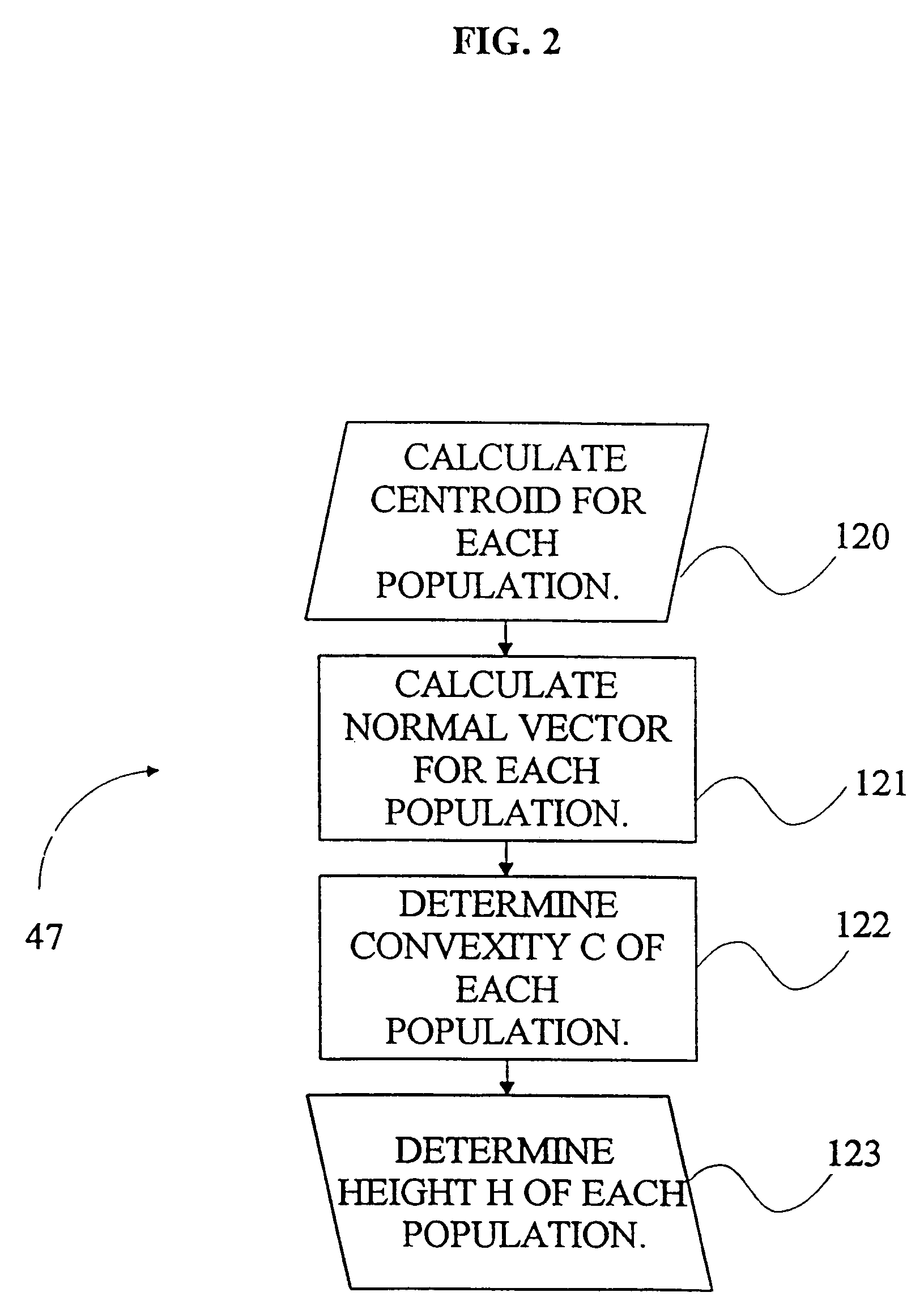

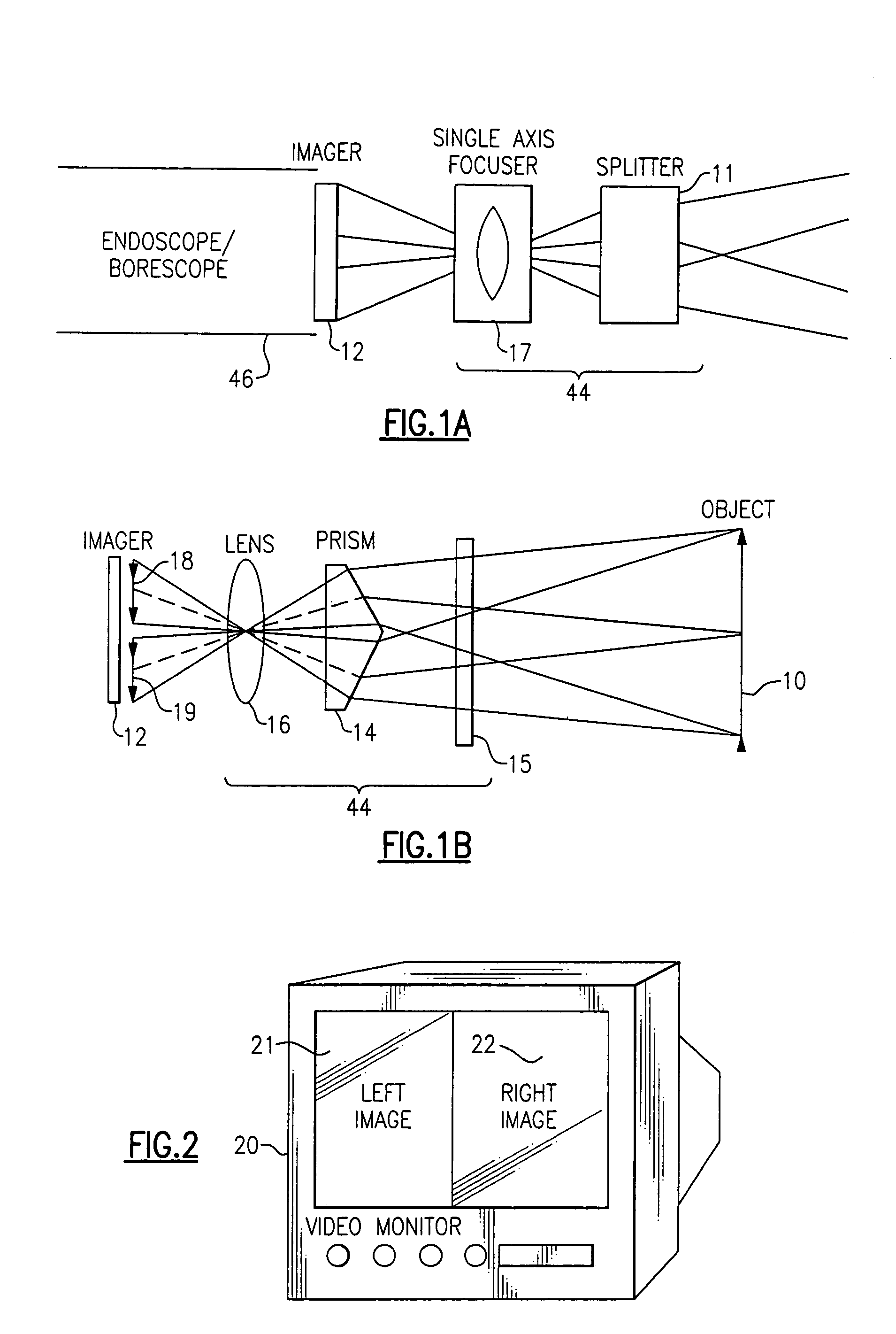

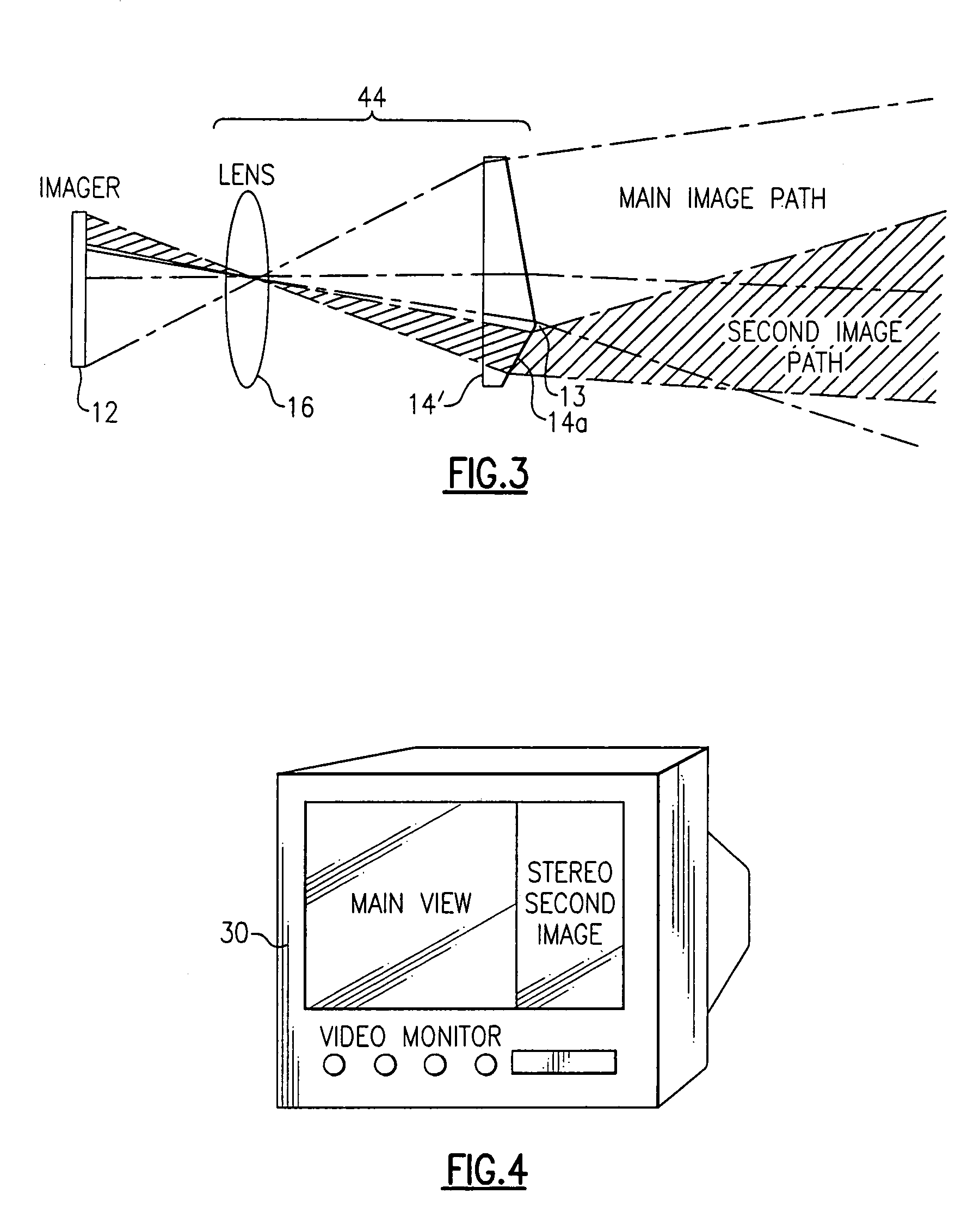

Stereo-measurement borescope with 3-D viewing

Two stereo images are created by splitting a single image into two images using a field of view dividing splitter. The two images can be displayed side by side so that they can be viewed directly using stereopticon technology, heads-up display, or other 3-D display technology, or they can be separated for individual eye viewing. The two images focus on one imager such that the right image appears on the right side of the monitor and the left image appears on the left side of the monitor. The view of the images is aimed to converge at a given object distance such that the views overlap 100% at the object distance. Measurement is done with at least one onscreen cursor.

Owner:GE INSPECTION TECH LP

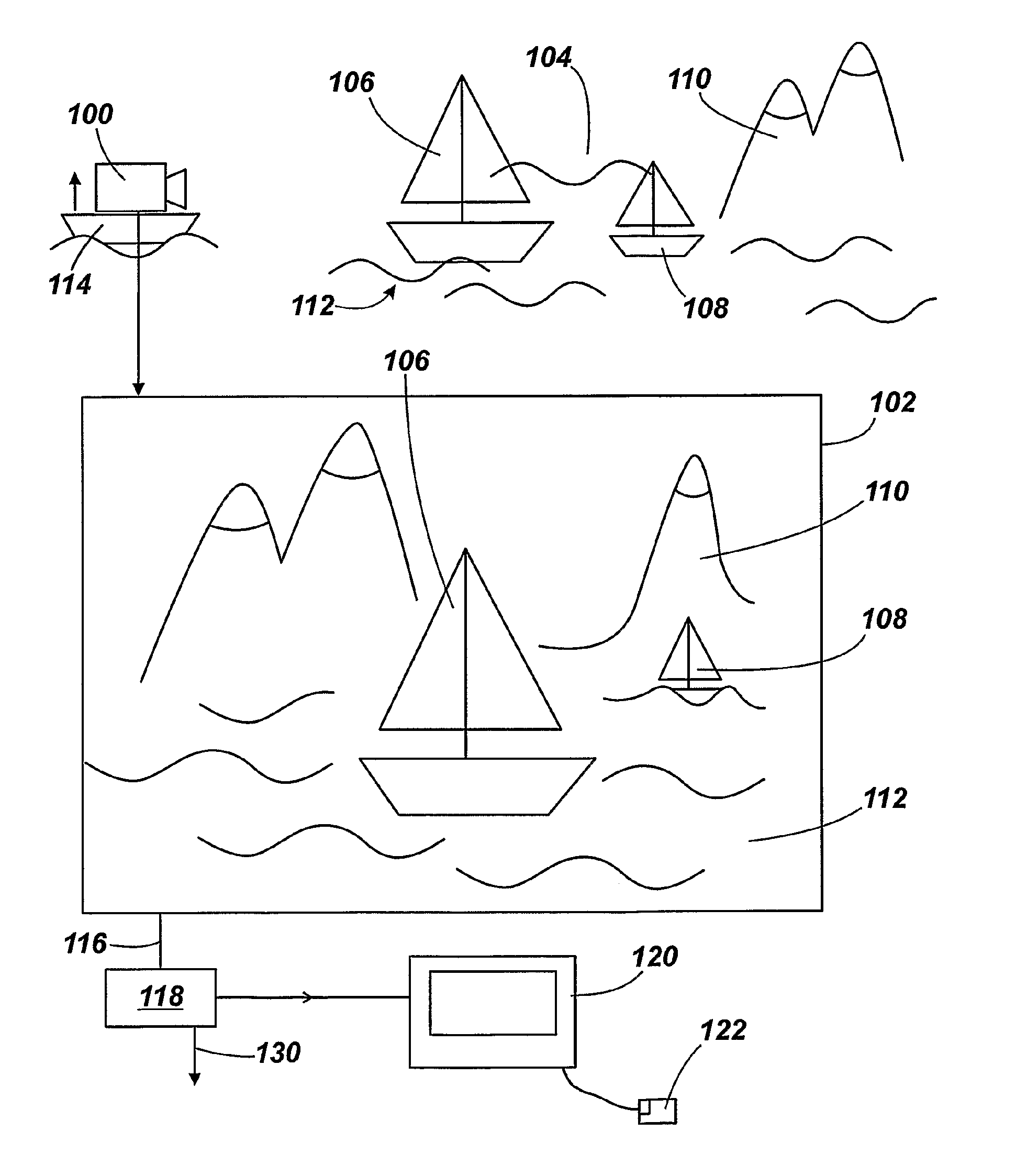

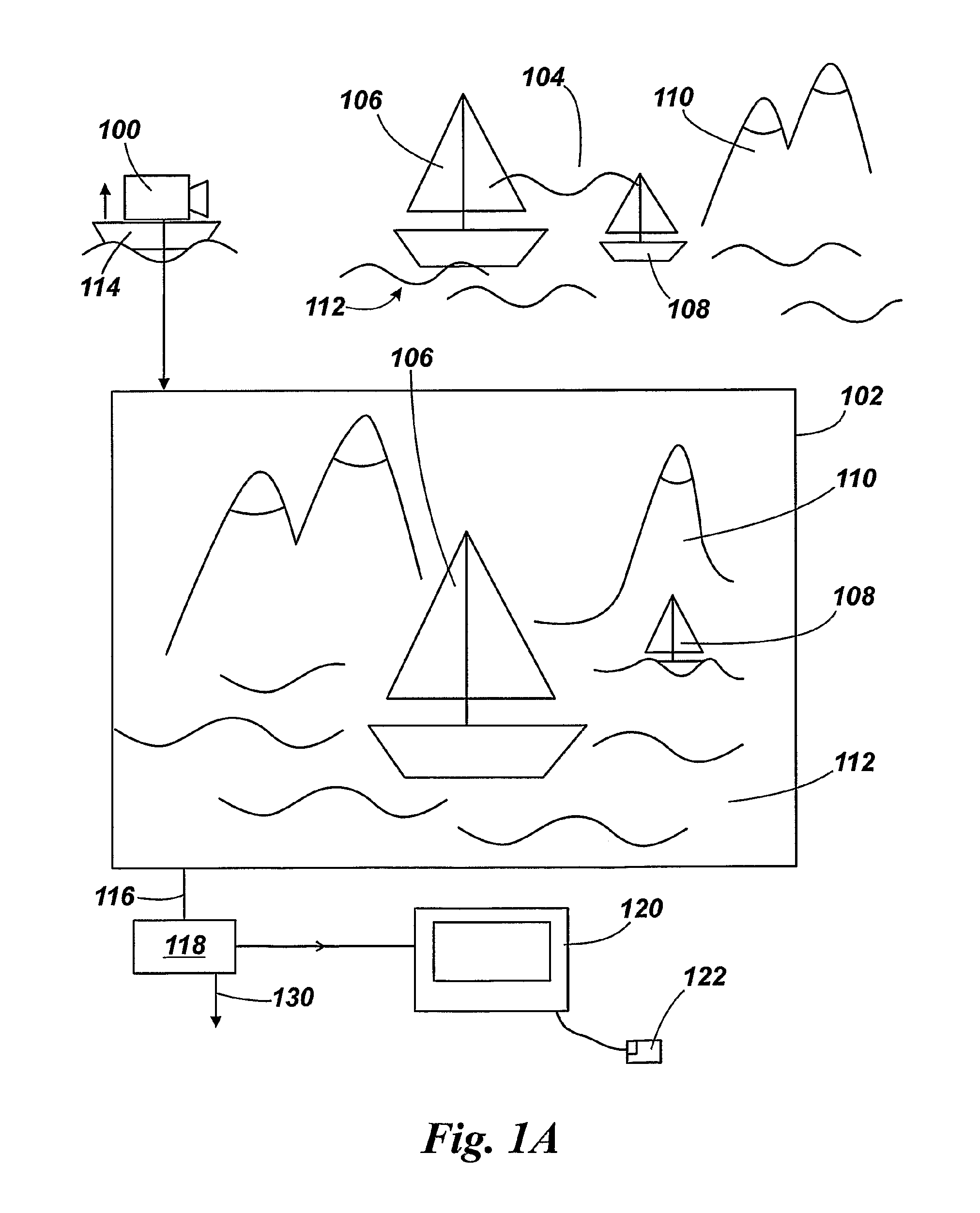

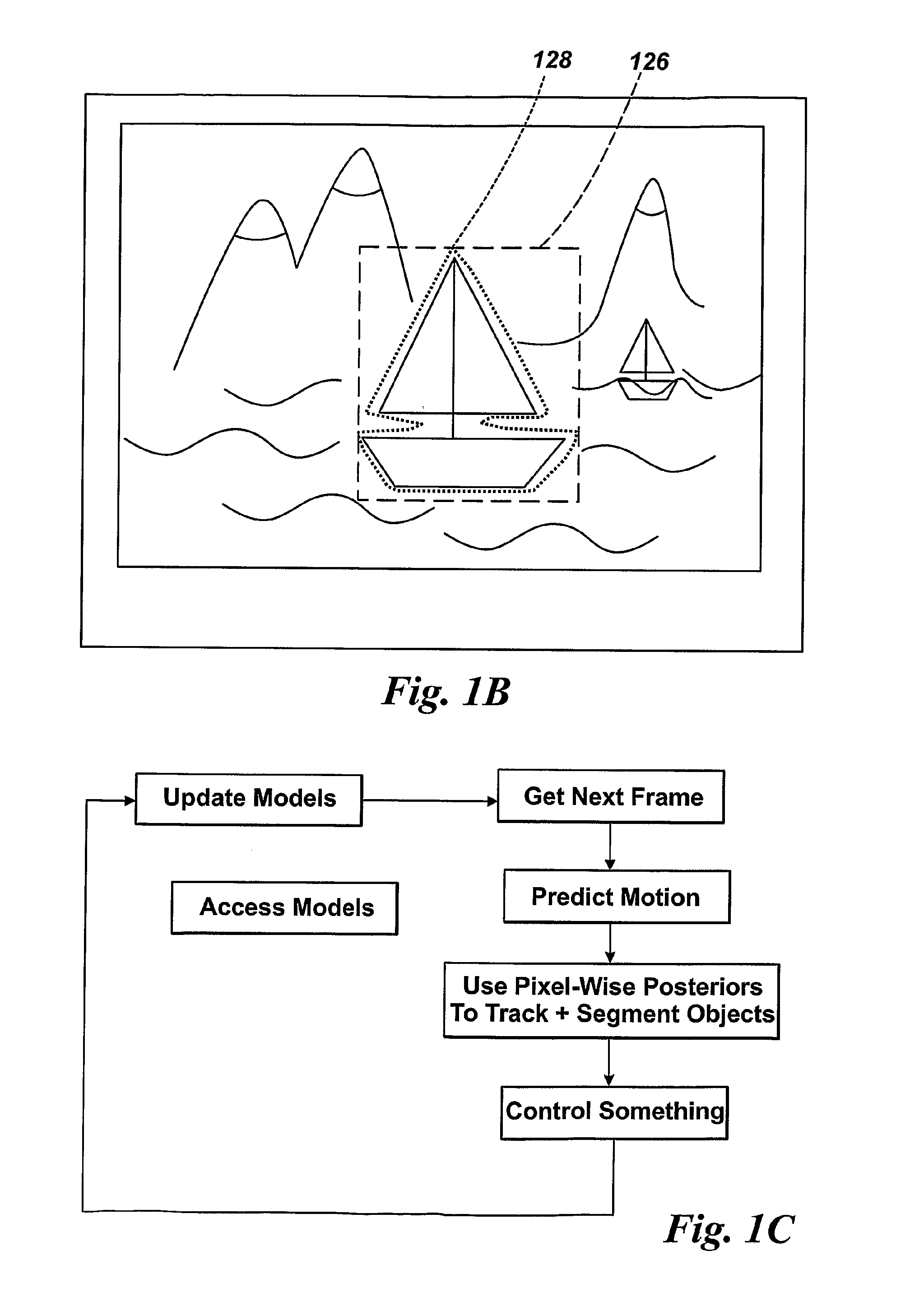

Visual tracking of objects in images, and segmentation of images

Inactive | US20110254950A1 | Easy to implement; Promote results; Image enhancement; Image analysis; Computer graphics (images); Radiology

A method is discussed for tracking objects (106, 108) that appear against a background (110, 112) in a series of n-D images (102). The method uses a probabilistic model of the appearance of the objects and of the appearance of the background in the images, and evaluates whether particular pixels in the images (102) are part of an object (106, 108) or part of the background (110, 112). That evaluation comprises determining the posterior model probabilities that a particular pixel (x) or group of pixels belongs to an object or to the background, and further comprises marginalising over these object/background membership probabilities to yield a function of the pose parameters of the objects, where at least the object/background membership is treated as a nuisance parameter and marginalised out.

Owner:OXFORD UNIV INNOVATION LTD
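The per-pixel part of the Oxford abstract above (the posterior probability that a pixel belongs to the object rather than the background) follows from Bayes' rule once appearance models are fixed. A minimal sketch, assuming the appearance models are lookup tables from quantized pixel values to likelihoods and the foreground prior is given. The pose parameters and the marginalisation over them are not shown, and `pixel_posteriors` is a hypothetical name.

```python
# Illustrative sketch; pixel_posteriors is hypothetical, and histogram-style
# appearance models are an assumption.
def pixel_posteriors(values, p_fg, p_bg, prior_fg):
    """Posterior probability that each pixel belongs to the foreground object.

    values:   pixel appearance values (e.g. quantized colour bins)
    p_fg:     mapping value -> P(value | foreground)
    p_bg:     mapping value -> P(value | background)
    prior_fg: prior probability of foreground, e.g. its area fraction
    """
    posts = []
    for v in values:
        f = p_fg.get(v, 0.0) * prior_fg
        b = p_bg.get(v, 0.0) * (1.0 - prior_fg)
        # Bayes' rule; values unseen by both models keep the prior.
        posts.append(f / (f + b) if f + b > 0 else prior_fg)
    return posts
```

With foreground likelihoods `{"red": 0.8, "green": 0.2}`, background likelihoods `{"red": 0.1, "green": 0.9}` and a prior of 0.5, a red pixel gets posterior 8/9 and a green pixel 2/11. These soft memberships are the quantities the abstract marginalises over.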

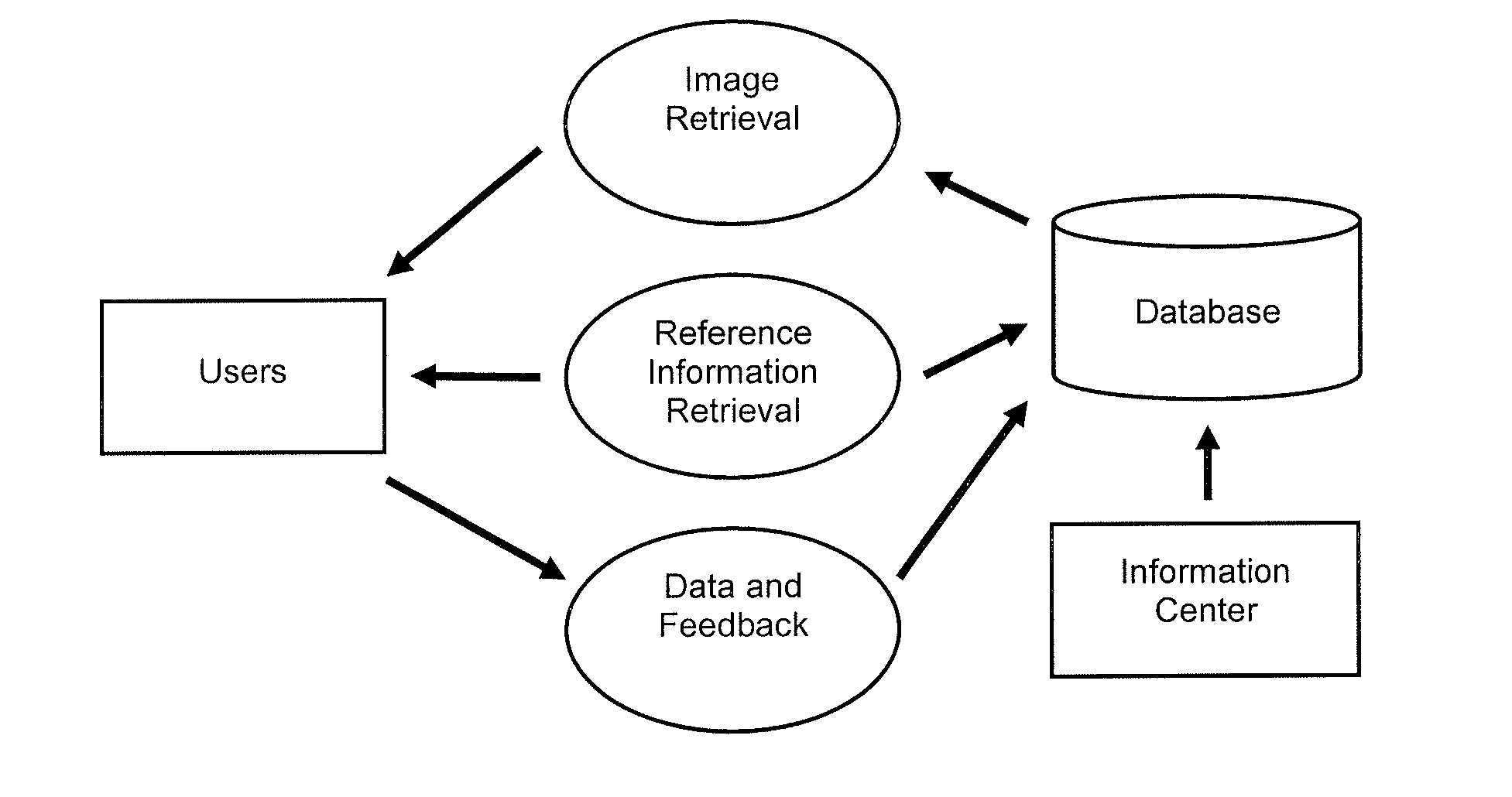

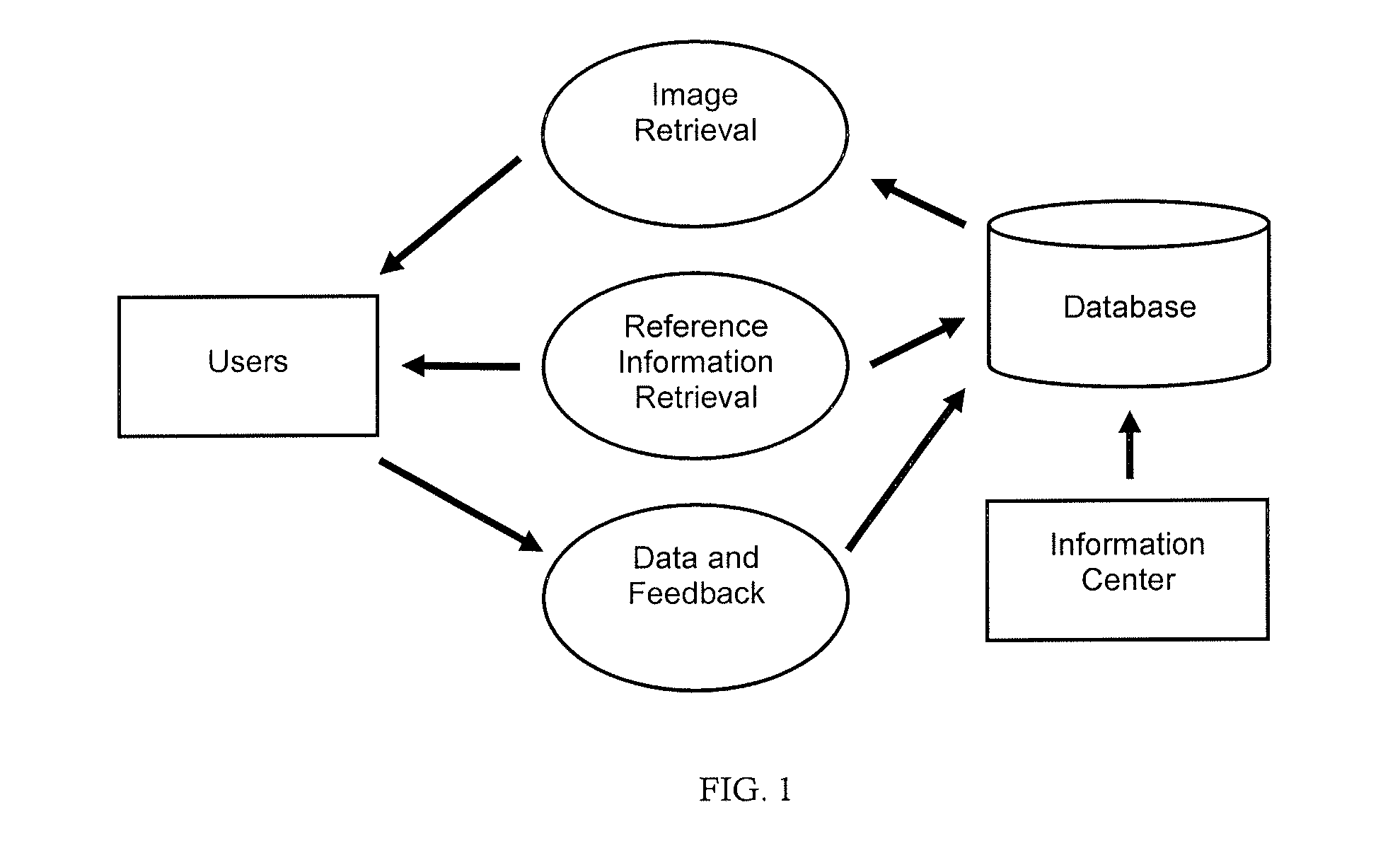

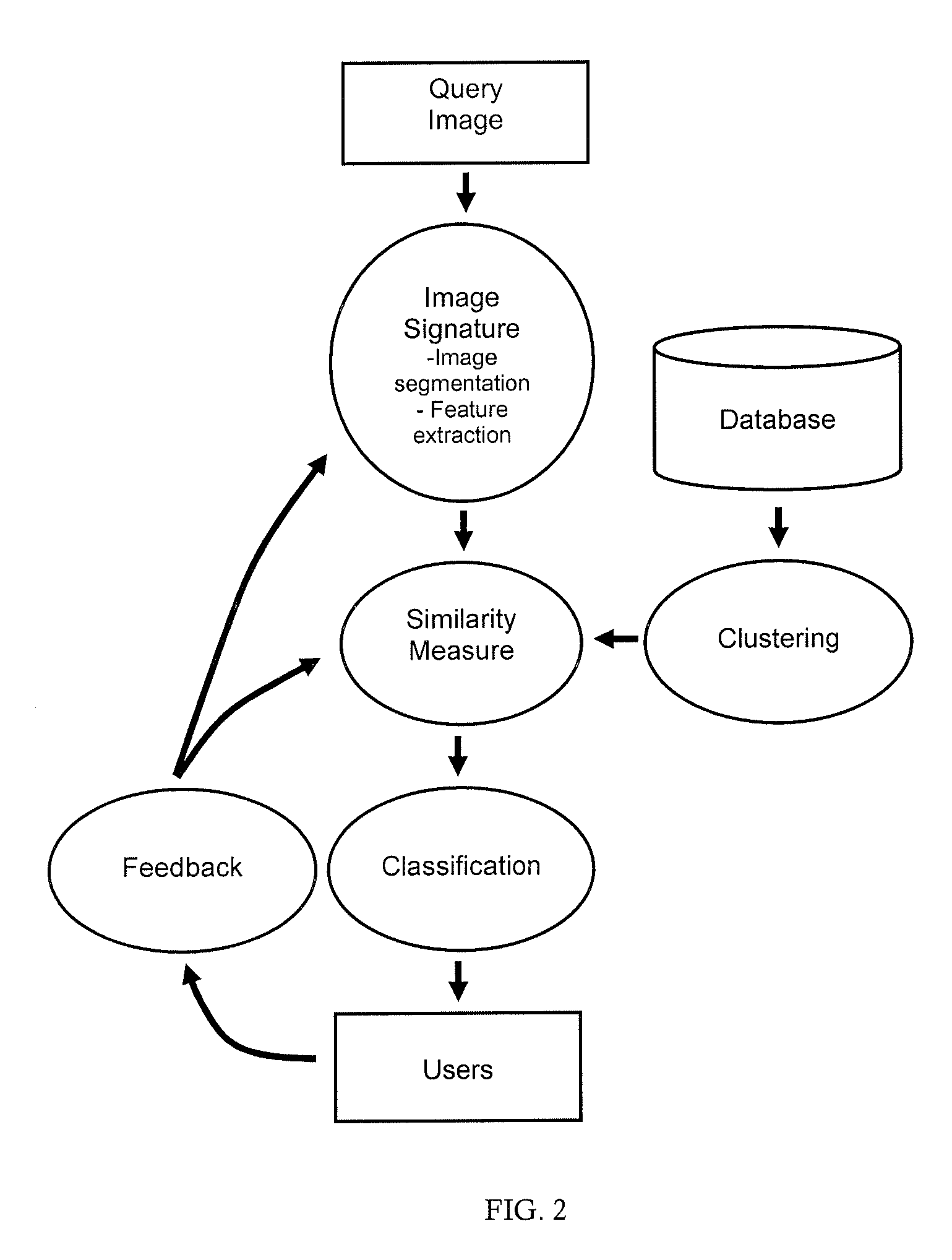

Diagnosis Support System Providing Guidance to a User by Automated Retrieval of Similar Cancer Images with User Feedback

Inactive | US20120283574A1 | Improve proficiency; Increase diagnostic power; Digital data information retrieval; Image analysis; Disease; Image retrieval

The present invention is a diagnosis support system providing automated guidance to a user by automated retrieval of similar disease images and user feedback. High resolution standardized labeled and unlabeled, annotated and non-annotated images of diseased tissue in a database are clustered, preferably with expert feedback. An image retrieval application automatically computes image signatures for a query image and a representative image from each cluster, by segmenting the images into regions and extracting image features in the regions to produce feature vectors, and then comparing the feature vectors using a similarity measure. Preferably the features of the image signatures are extended beyond shape, color and texture of regions, by features specific to the disease. Optionally, the most discriminative features are used in creating the image signatures. A list of the most similar images is returned in response to a query. Keyword query is also supported.

Owner:STI MEDICAL SYST
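The retrieval step in the STI abstract above compares feature vectors using a similarity measure and returns the most similar images. A minimal sketch, assuming cosine similarity as that measure and one stored signature per cluster representative. Segmentation and feature extraction are out of scope, and the function names are hypothetical.

```python
import math


# Illustrative sketch; cosine similarity is an assumed choice of measure and
# both function names are hypothetical.
def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def most_similar(query, signatures, top_k=3):
    """Return ids of the top_k stored signatures most similar to the query.

    signatures: mapping image id -> feature vector (e.g. one representative
    image per cluster).
    """
    ranked = sorted(signatures,
                    key=lambda img_id: cosine_similarity(query, signatures[img_id]),
                    reverse=True)
    return ranked[:top_k]
```

Cosine similarity ignores the overall magnitude of a signature. Whether that is desirable depends on how the region features are scaled, so a Euclidean or learned metric could be substituted without changing `most_similar`.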

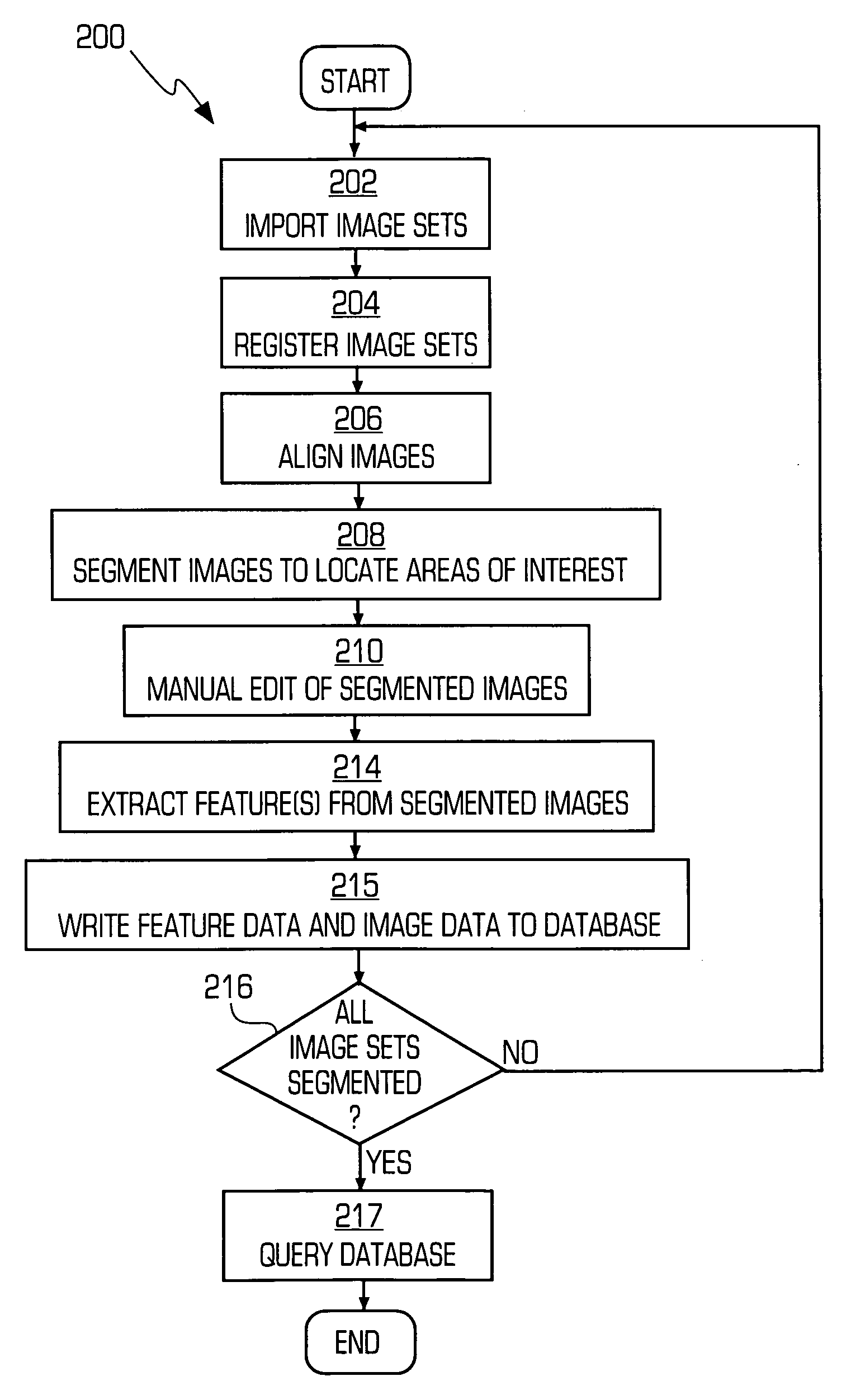

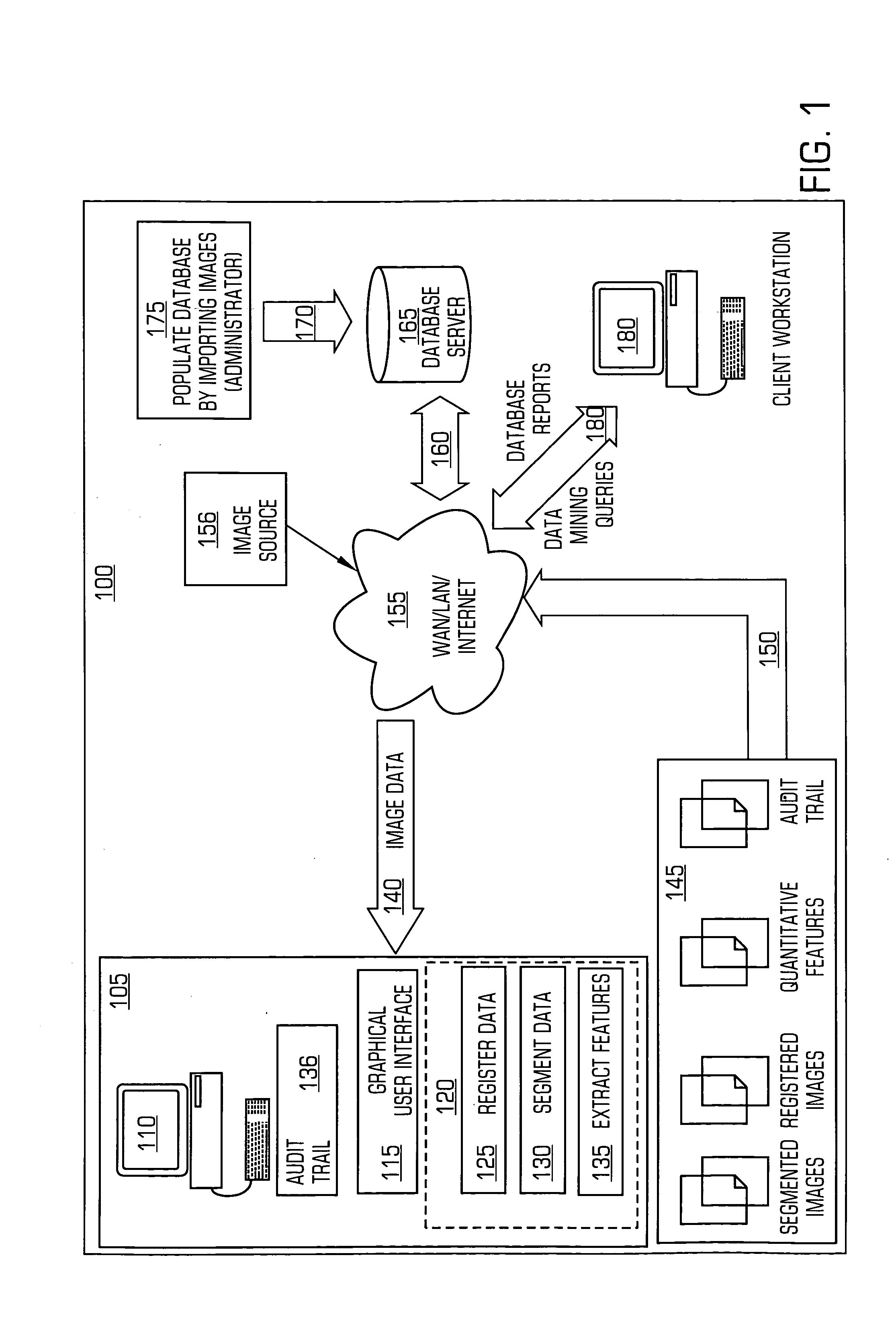

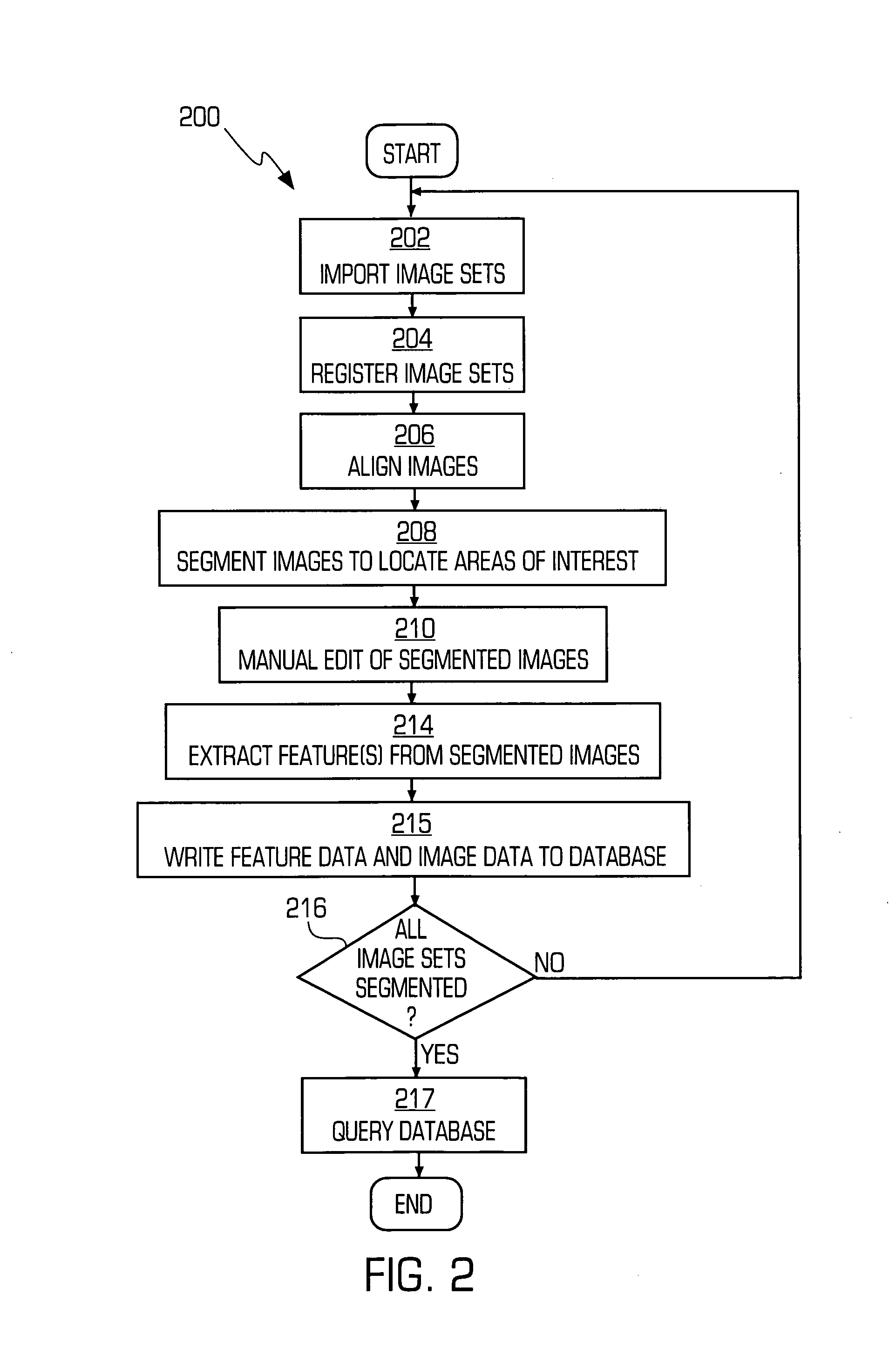

System and method for mining quantitive information from medical images

Active | US7158692B2 | Enhancing clinical diagnosis; Improve research; Medical data mining; Recognition of medical/anatomical patterns; Graphics; Feature extraction

A system for image registration and quantitative feature extraction for multiple image sets. The system includes an imaging workstation having a data processor and memory, in communication with a database server. The data processor is capable of inputting and outputting data and instructions to peripheral devices, operates pursuant to a software product, and accepts instructions from a graphical user interface capable of interfacing with and navigating the imaging software product. The imaging software product is capable of instructing the data processor to register images, segment images, and extract features from images, and to provide instructions to store one or more registered images, segmented images, quantitative image features, and quantitative image data in the database server and to retrieve them from it.

Owner:CLOUD SOFTWARE GRP INC

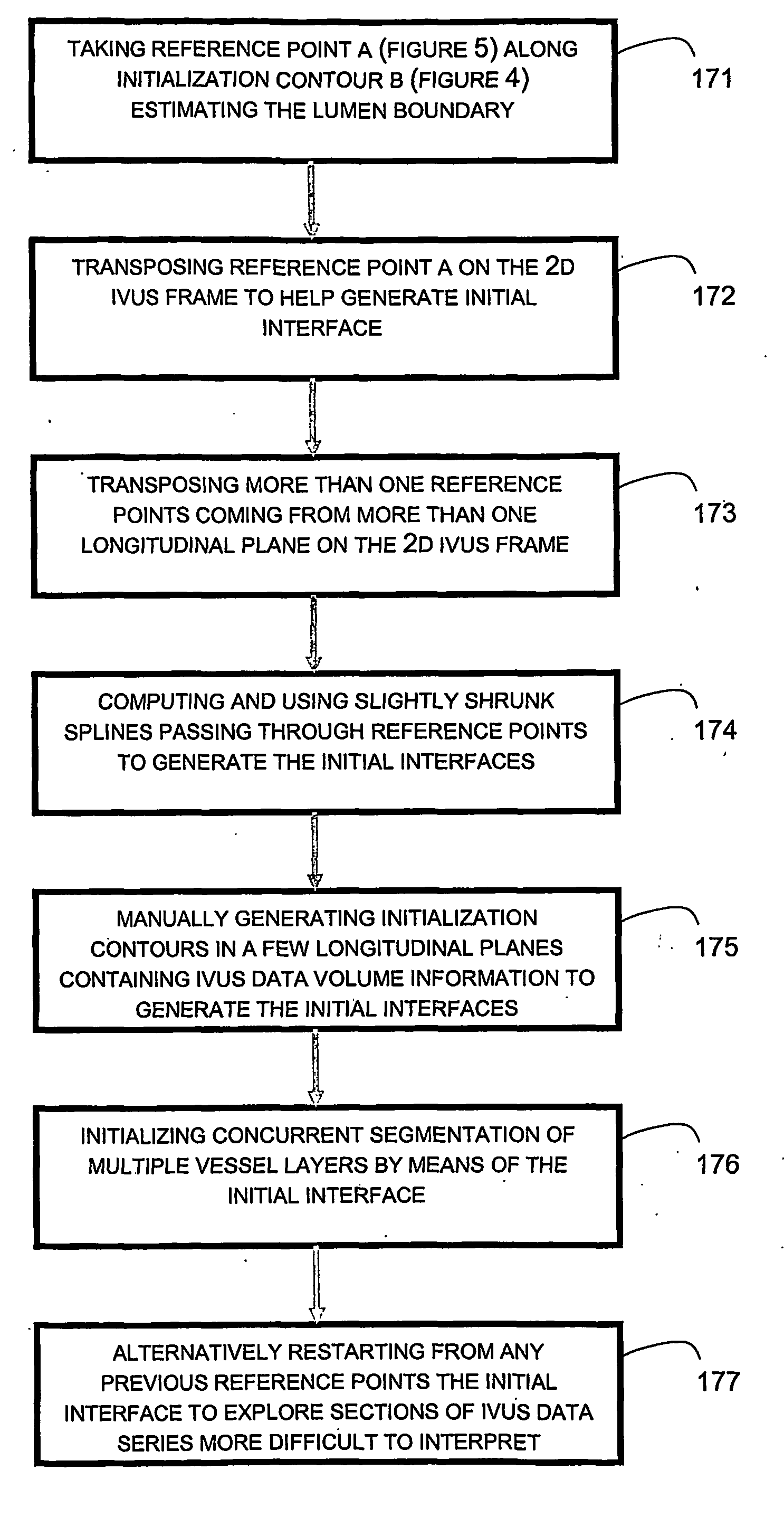

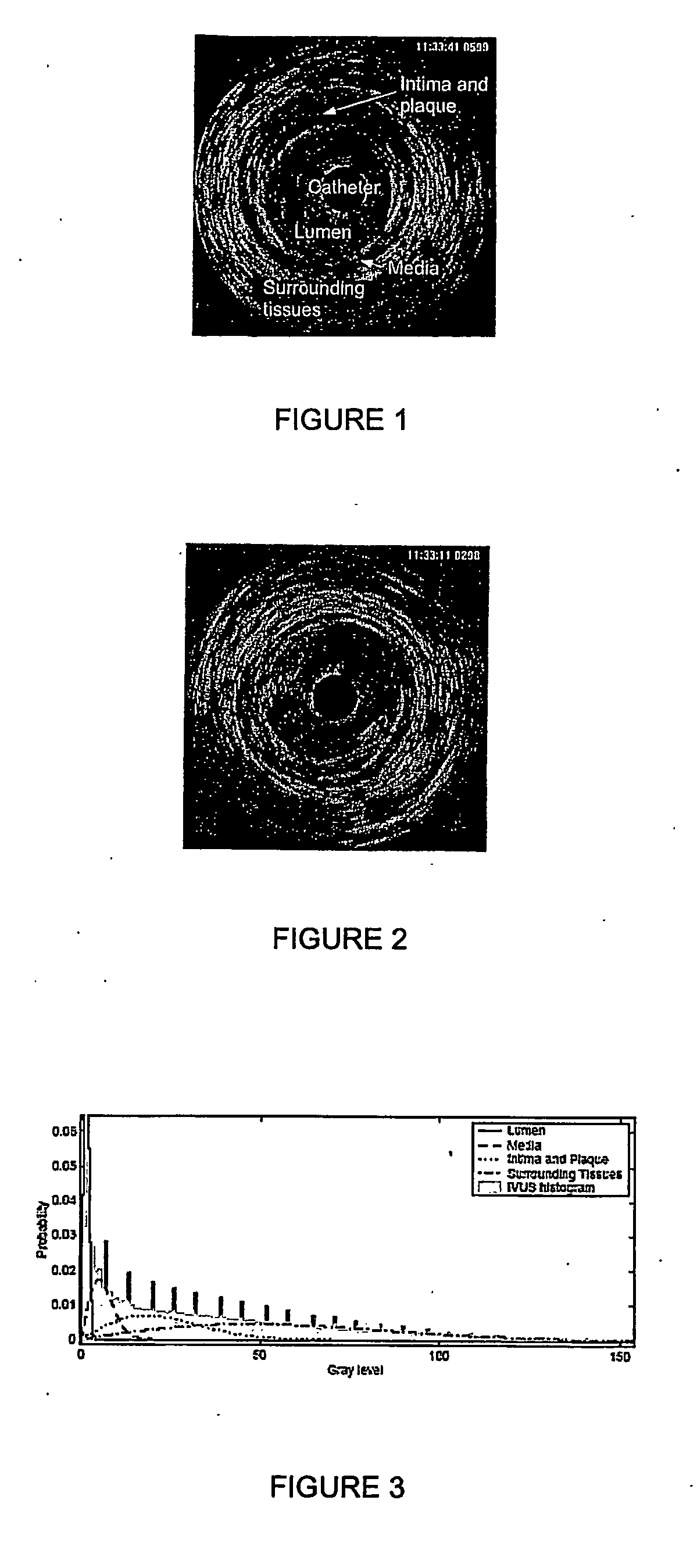

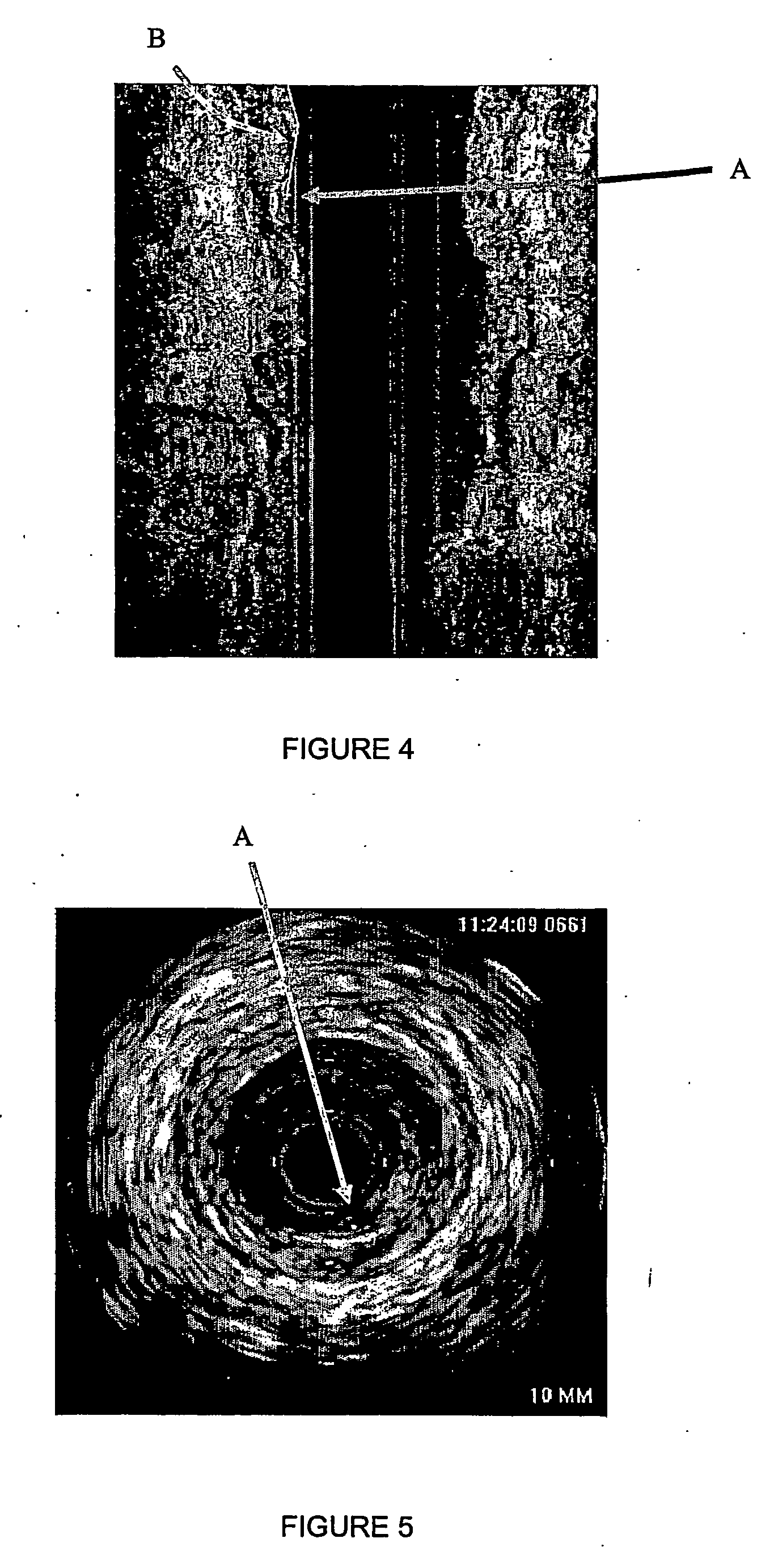

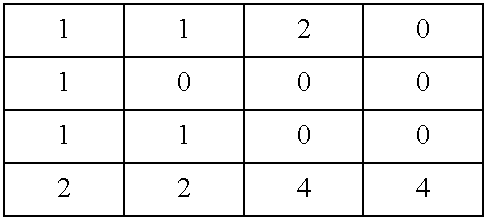

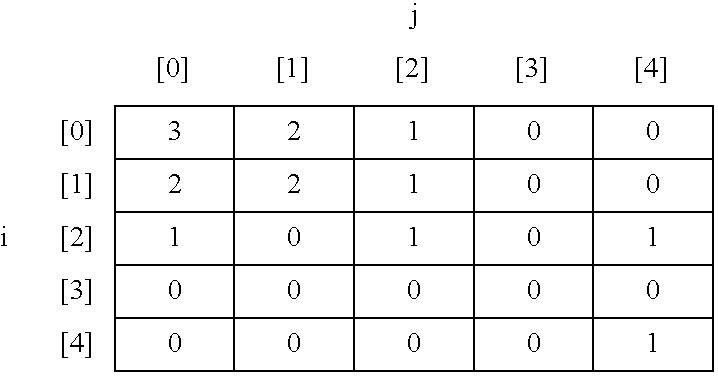

Automatic multi-dimensional intravascular ultrasound image segmentation method

The present invention generally relates to intravascular ultrasound (IVUS) image segmentation methods, and is more specifically concerned with an intravascular ultrasound image segmentation method for characterizing blood vessel vascular layers. The proposed image segmentation method for estimating boundaries of layers in a multi-layered vessel provides image data that represent a plurality of image elements of the multi-layered vessel. The method also determines a plurality of initial interfaces corresponding to regions of the image data to segment, and concurrently propagates the initial interfaces corresponding to those regions. The method thereby allows the boundaries of the layers of the multi-layered vessel to be estimated by propagating the initial interfaces using a fast marching model based on a probability function that describes at least one characteristic of the image elements.

Owner:VAL CHUM PARTNERSHIP +1

Apparatus and method for statistical image analysis

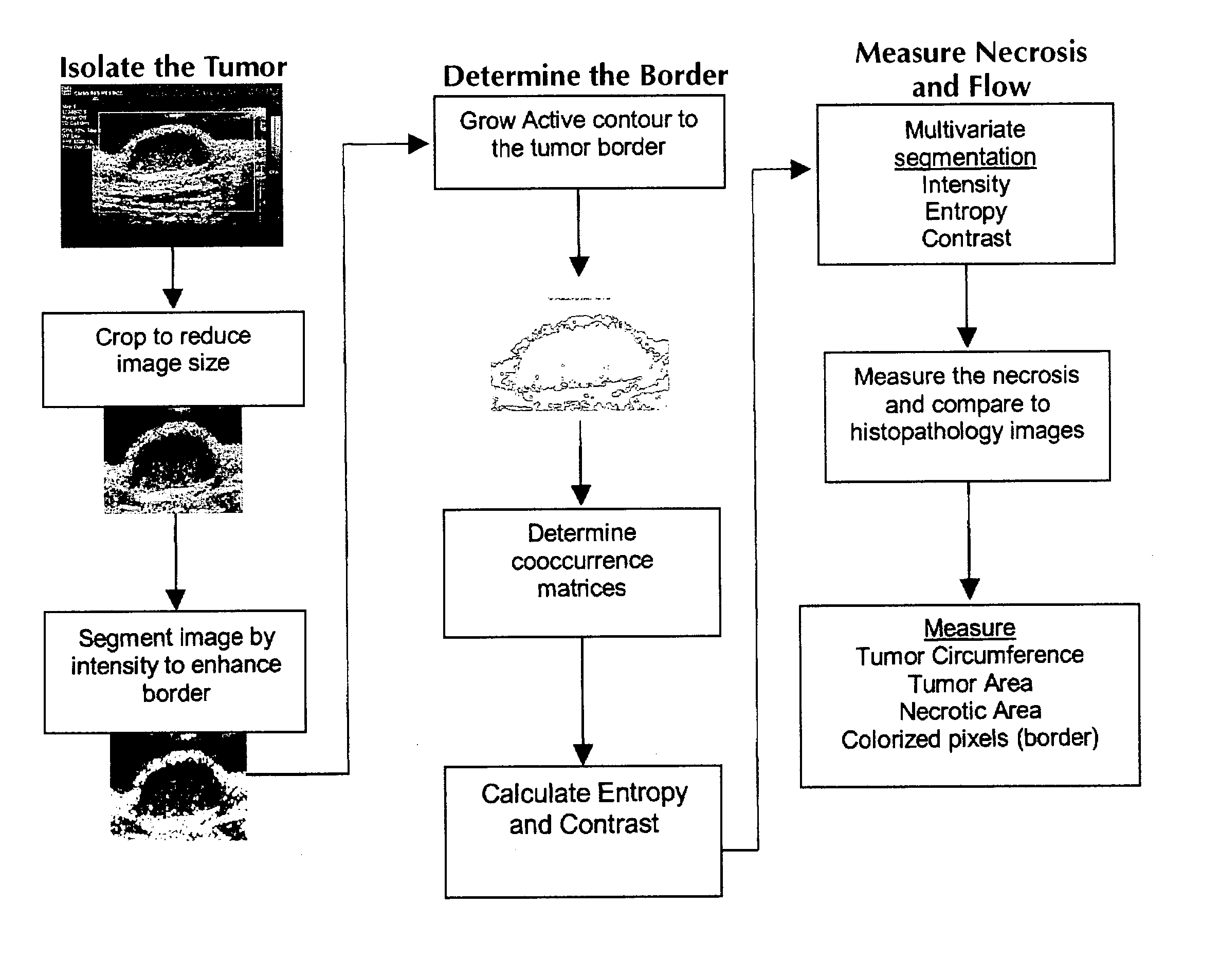

An apparatus, system, method, and computer-readable medium containing computer-executable code for implementing image analysis uses multivariate statistical analysis of sample images, and allows segmentation of the image into different groups or classes depending on a correlation to one or more sample textures or sample surface features. In one embodiment, the invention performs multivariate statistical analysis of ultrasound images, wherein a tumor may be characterized by segmenting viable tissue from necrotic tissue, allowing for more detailed in vivo analysis of tumor growth beyond simple dimensional measurements or univariate statistical analysis. The apparatus and method may also be applied to characterizing other types of samples having textured features including, for example, tumor angiogenesis biomarkers from Power Doppler.

Owner:PFIZER INC

Method and apparatus for matching portions of input images

Inactive | US20070185946A1 | Overcome difficulties; Linear complexity; Image enhancement; Television system details; Ambiguity; Image segmentation

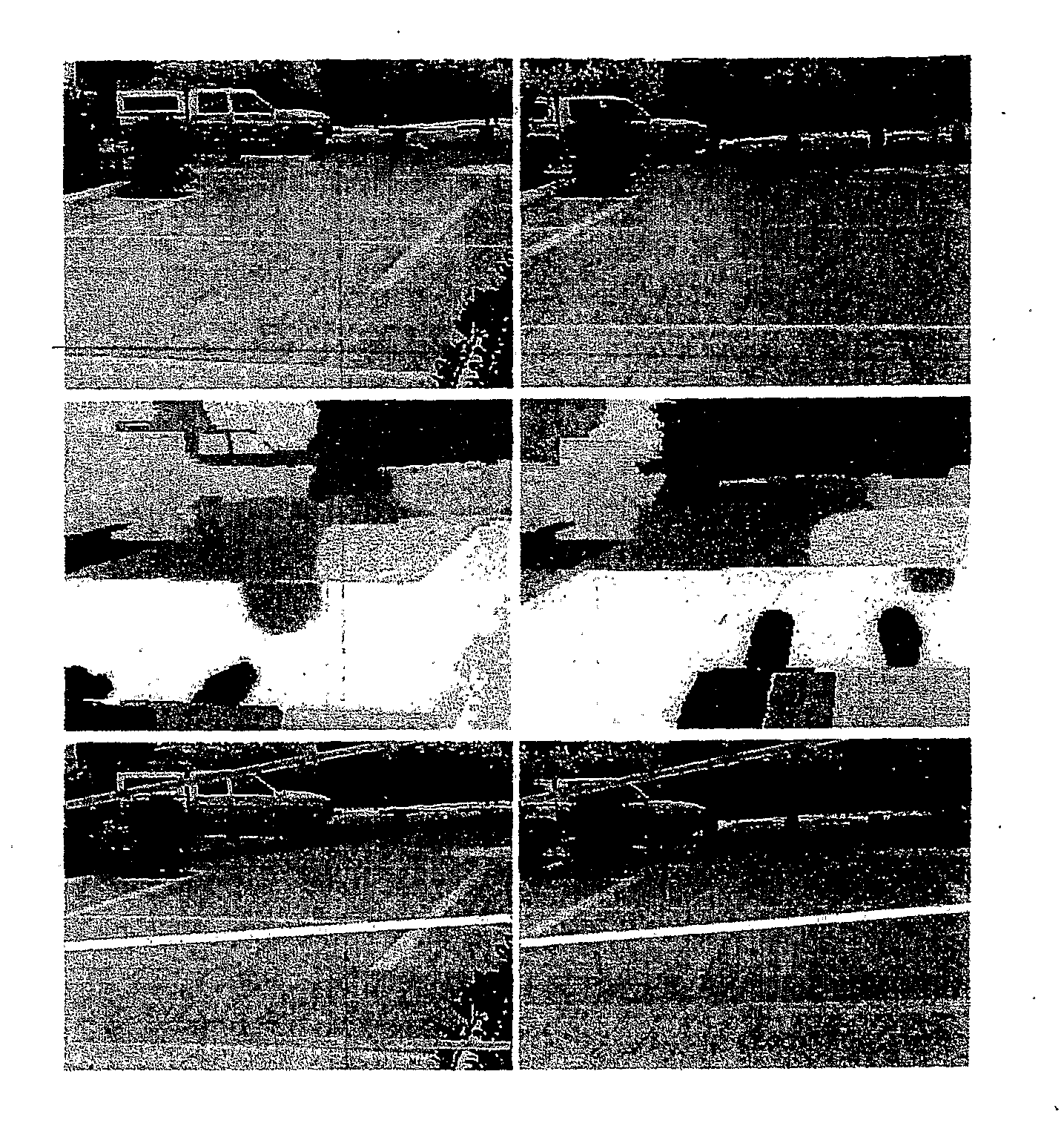

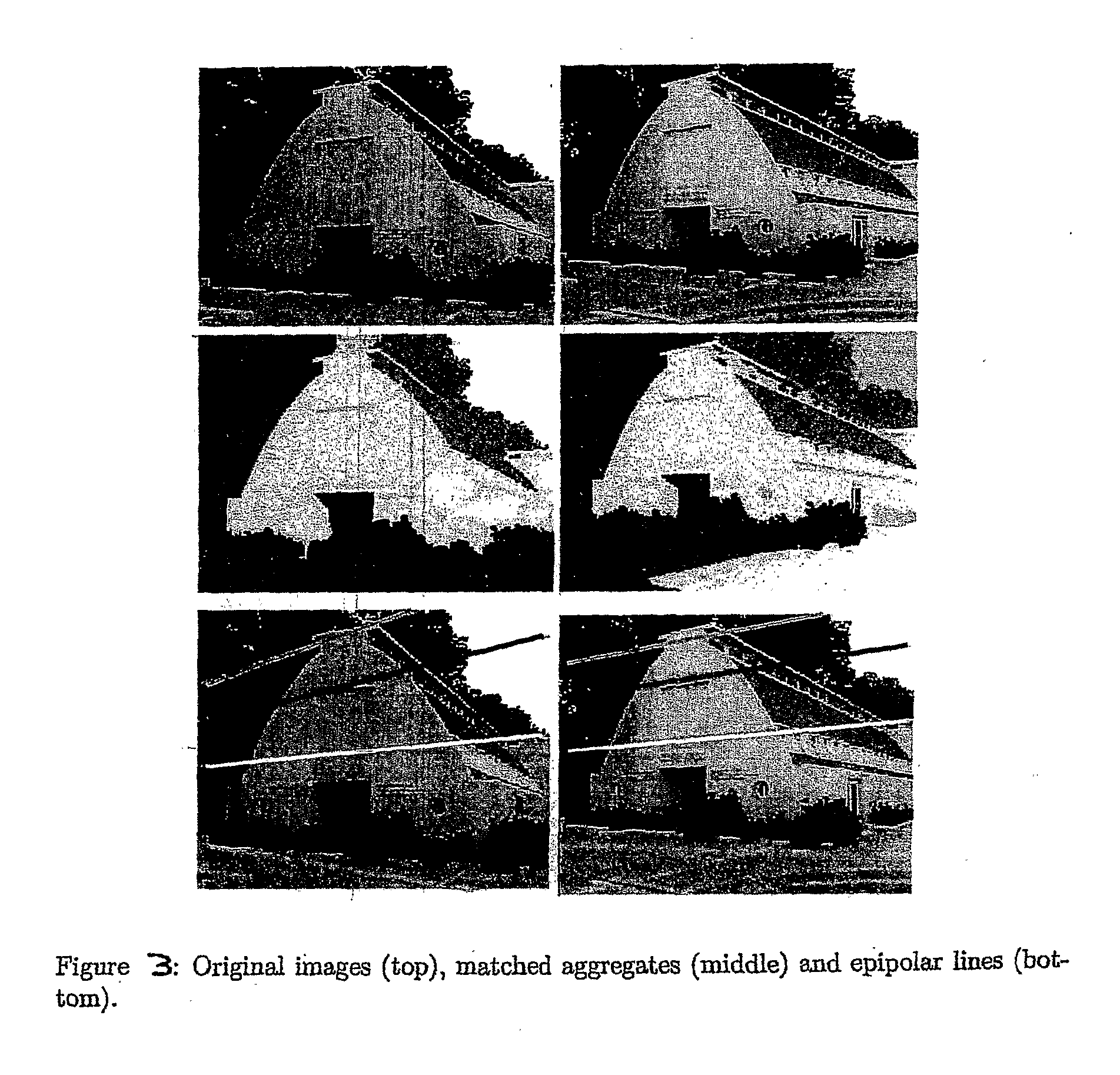

A method and apparatus for finding correspondence between portions of two images that first subjects the two images to segmentation by weighted aggregation (10), then constructs directed acyclic graphs (16, 18) from the output of the segmentation by weighted aggregation to obtain hierarchical graphs of aggregates (20, 22), and finally applies a maximally weighted subgraph isomorphism to the hierarchical graphs of aggregates to find matches between them (24). Two algorithms are described: one seeks a one-to-one matching between regions, and the other computes a soft matching, in which an aggregate may have more than one corresponding aggregate. A method and apparatus for image segmentation based on motion cues are also described. Motion provides a strong cue for segmentation. The method begins with local, ambiguous optical flow measurements. It uses a process of aggregation to resolve the ambiguities and reach reliable estimates of the motion. In addition, as the process of aggregation proceeds and larger aggregates are identified, it employs a progressively more complex model to describe the motion. In particular, the method proceeds by recovering translational motion at fine levels, through affine transformation at intermediate levels, to 3D motion (described by a fundamental matrix) at the coarsest levels. Finally, the method is integrated with a segmentation method that uses intensity cues. The utility of the method is demonstrated on both random dot and real motion sequences.

Owner:YEDA RES & DEV CO LTD

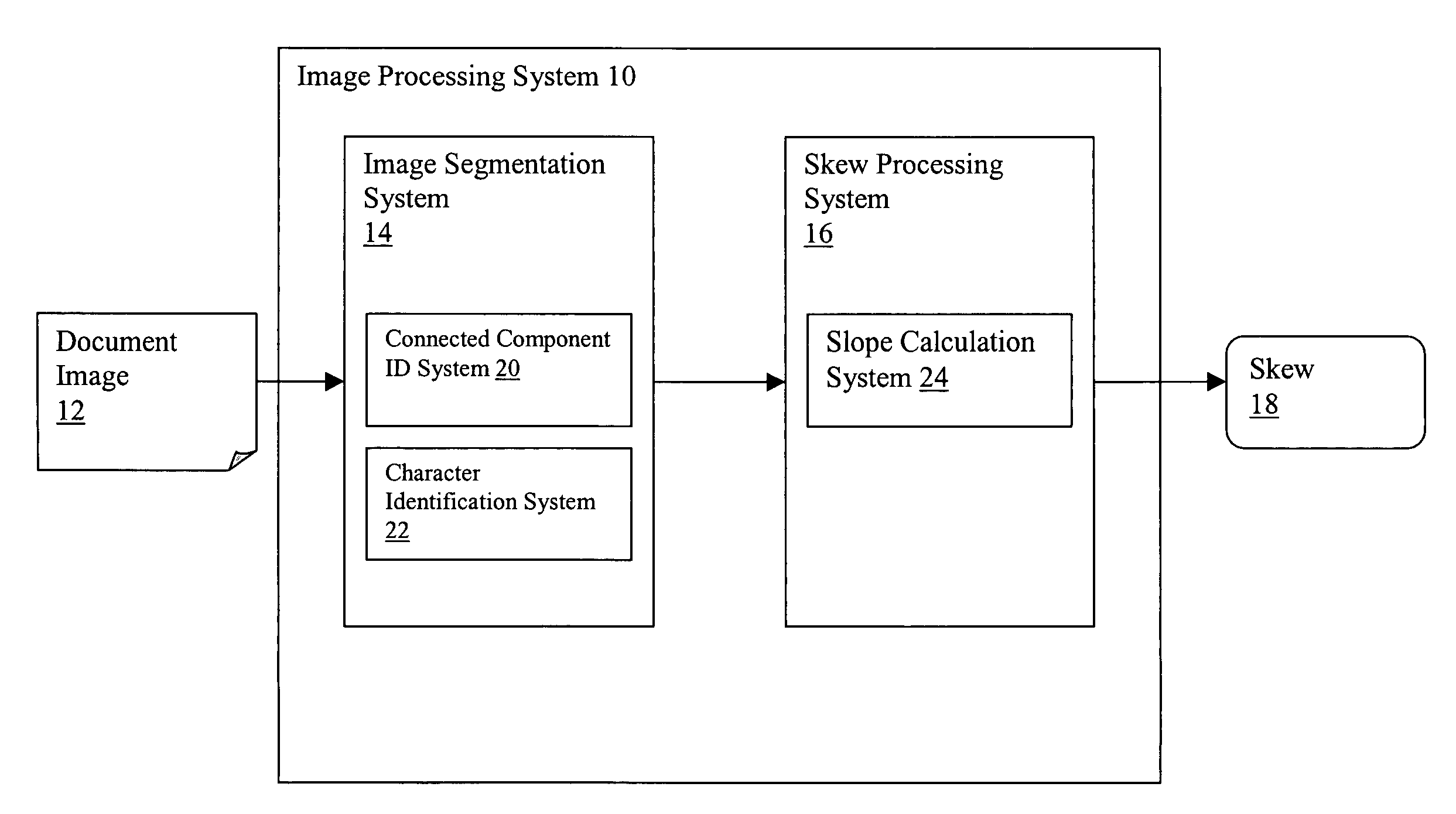

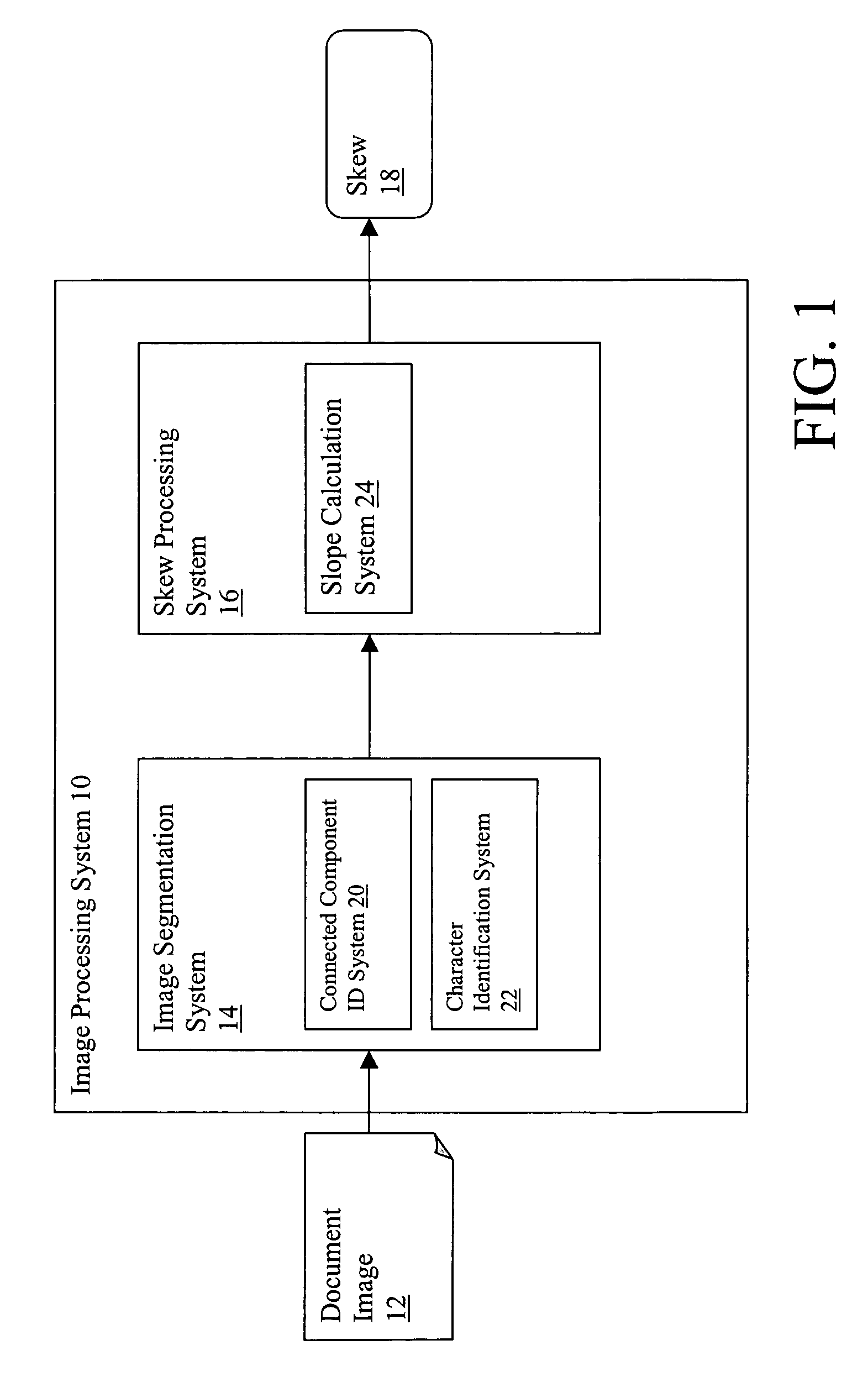

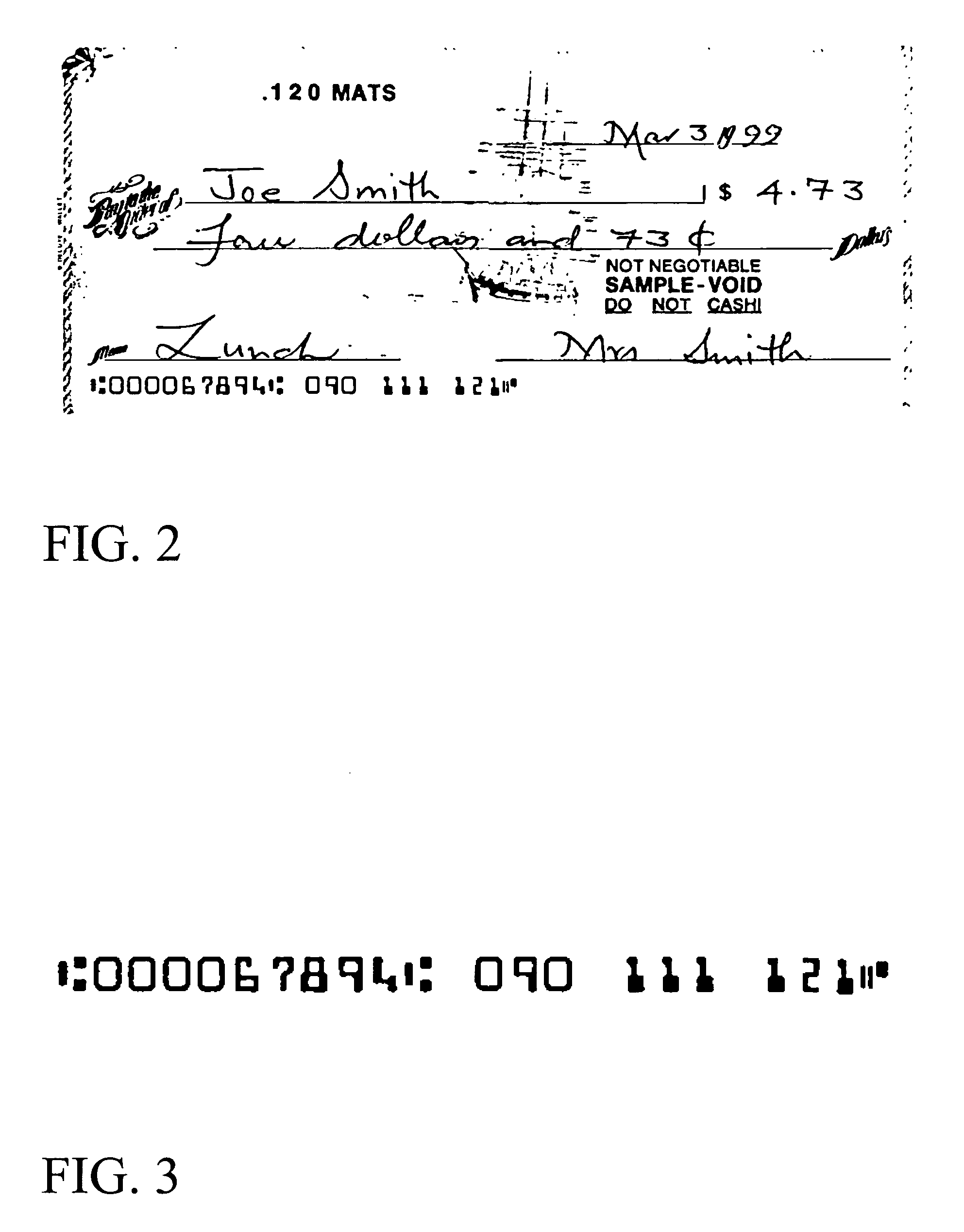

System and method of determining image skew using connected components

A system and method for determining skew of a document image. An image processing system is provided, comprising: an image segmentation system that identifies and segments a line of printed characters; and a skew processing system that determines the skew by calculating slope values for pairs of characters in the line.

Owner:TRUIST BANK
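The skew step in the abstract above (slope values computed for pairs of characters in one segmented line) can be sketched from character centroids. This assumes the centroids of the connected components in a single line are already available and that adjacent pairs are used. Taking the median slope is also an assumption, made so that one misplaced component does not dominate. `estimate_skew_degrees` is a hypothetical name.

```python
import math
from statistics import median


# Illustrative sketch; estimate_skew_degrees is hypothetical, and using
# adjacent pairs plus the median slope are assumptions.
def estimate_skew_degrees(centroids):
    """Estimate the skew of one text line from its character centroids.

    centroids: (x, y) centres of the connected components (characters) in
    a single segmented line. Returns the angle in degrees; its sign follows
    the direction of the input y axis.
    """
    pts = sorted(centroids)  # left-to-right order
    slopes = [(y2 - y1) / (x2 - x1)
              for (x1, y1), (x2, y2) in zip(pts, pts[1:]) if x2 != x1]
    if not slopes:
        raise ValueError("need at least two horizontally separated characters")
    # The median keeps one misplaced component (e.g. a descender or
    # punctuation mark) from dominating the estimate.
    return math.degrees(math.atan(median(slopes)))
```

For centroids `(0, 0), (10, 1), (20, 9), (30, 3)` the pairwise slopes are 0.1, 0.8 and -0.6. The median of 0.1 gives the same angle as a perfectly straight line of slope 0.1, about 5.7 degrees.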

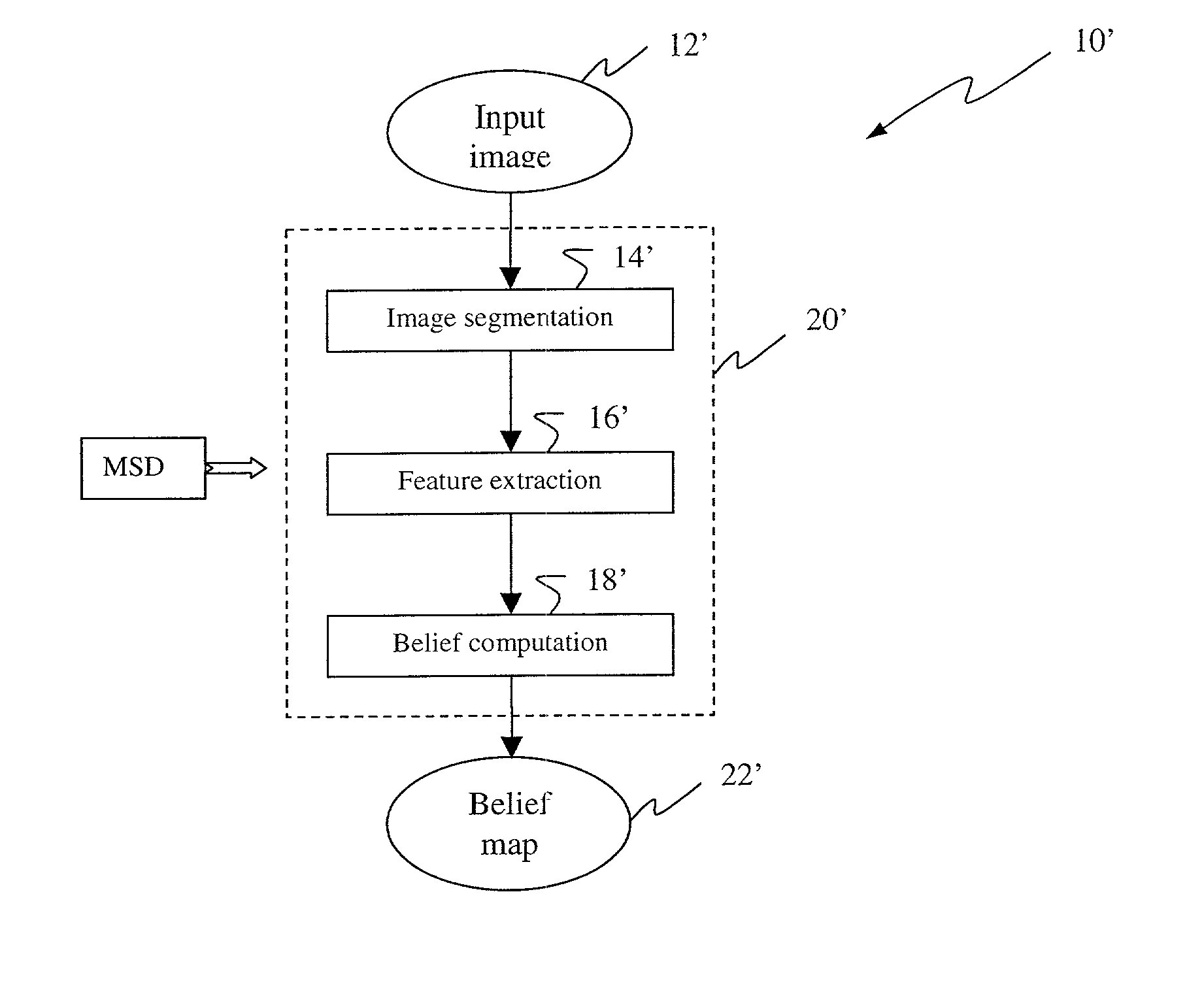

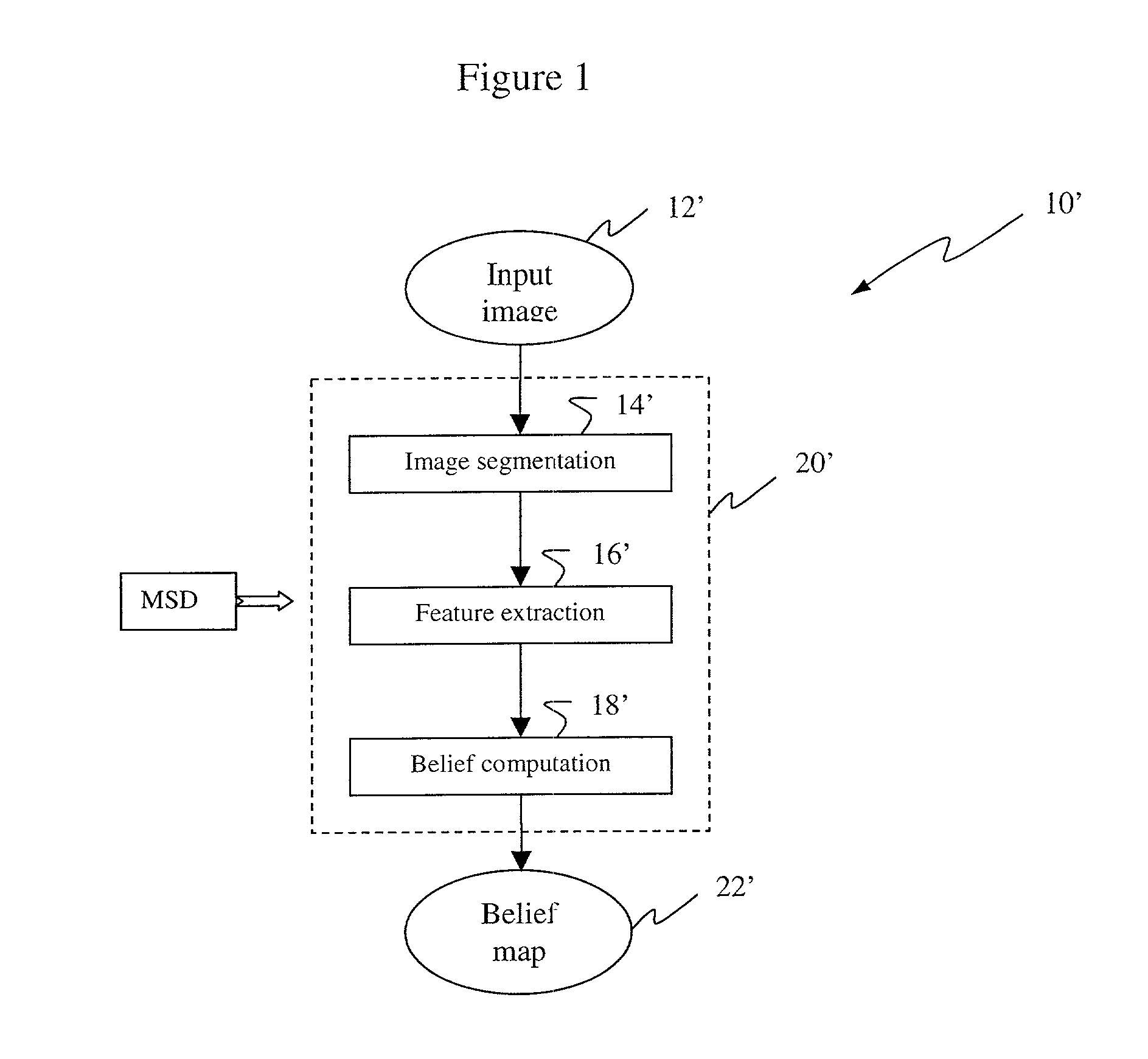

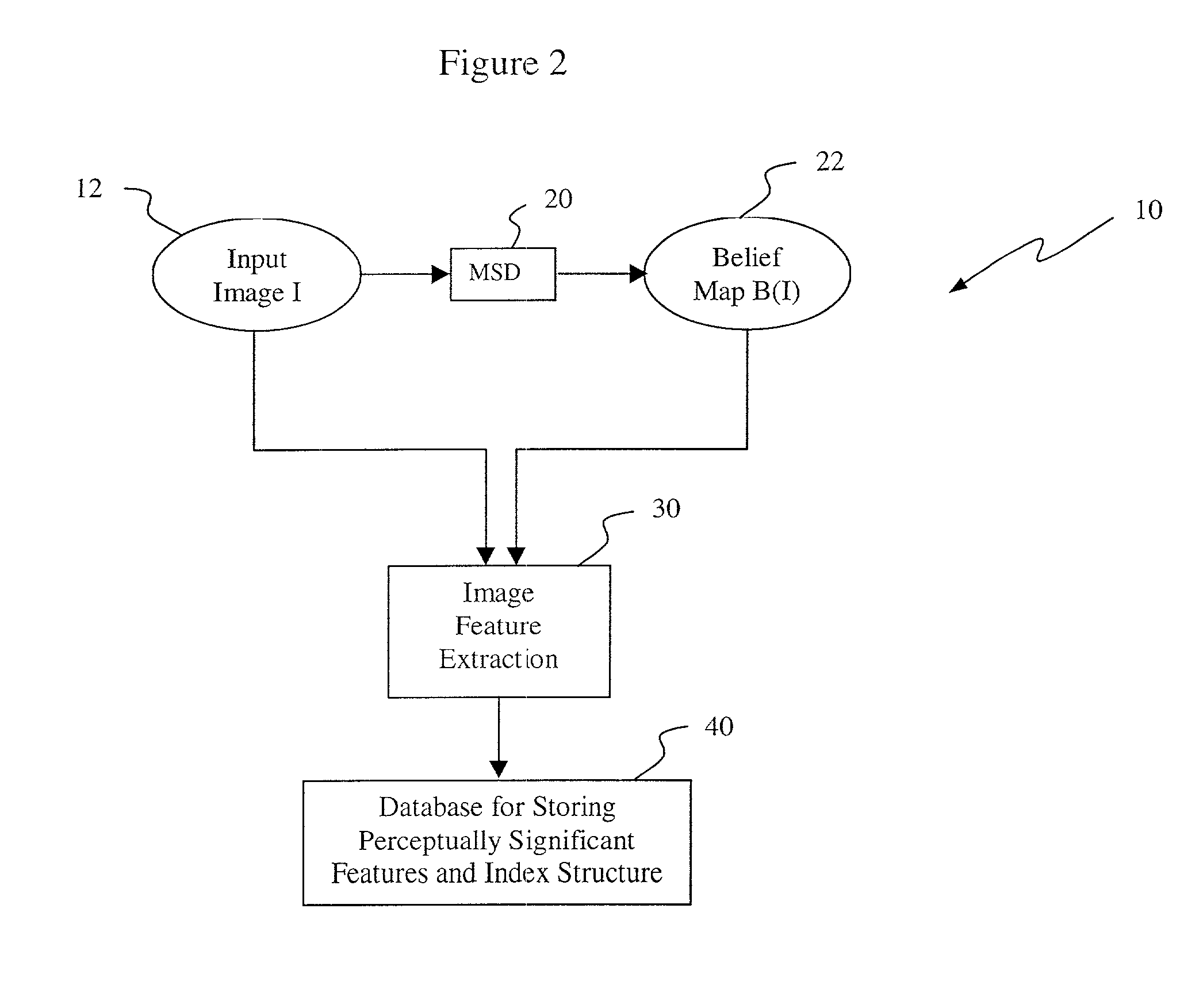

System and method for determining image similarity

A system and method for determining image similarity. The method includes the steps of automatically providing perceptually significant features of the main subject or background of a first image; automatically providing perceptually significant features of the main subject or background of a second image; automatically comparing the perceptually significant features of the main subject or the background of the first image to those of the main subject or the background of the second image; and providing an output in response thereto. In the illustrative implementation, the features are provided by a number of belief levels, where the number of belief levels is preferably greater than two. The perceptually significant features include color, texture and/or shape. In the preferred embodiment, the main subject is indicated by a continuously valued belief map. The belief values of the main subject are determined by segmenting the image into regions of homogeneous color and texture, computing at least one structure feature and at least one semantic feature for each region, and computing a belief value for all the pixels in the region using a Bayes net to combine the features. In an illustrative application, the inventive method is implemented in an image retrieval system. In this implementation, the inventive method automatically stores perceptually significant features of the main subject or background of a plurality of first images in a database to facilitate retrieval of a target image in response to an input or query image. Features corresponding to each of the plurality of stored images are automatically and sequentially compared to similar features of the query image.
Consequently, the present invention provides an automatic system and method for controlling the feature extraction, representation, and feature-based similarity retrieval strategies of a content-based image archival and retrieval system based on an analysis of main subject and background derived from a continuously valued main subject belief map.

Owner:MONUMENT PEAK VENTURES LLC

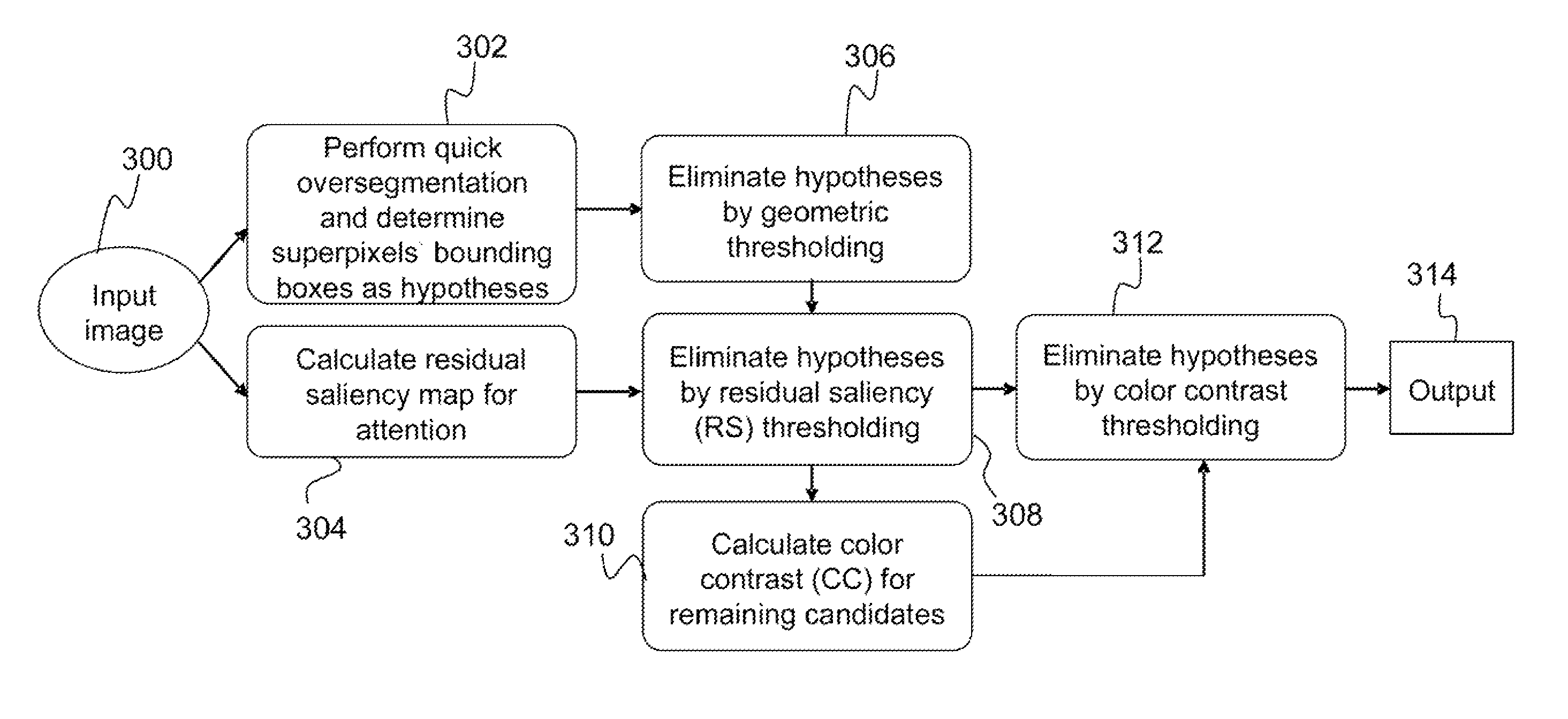

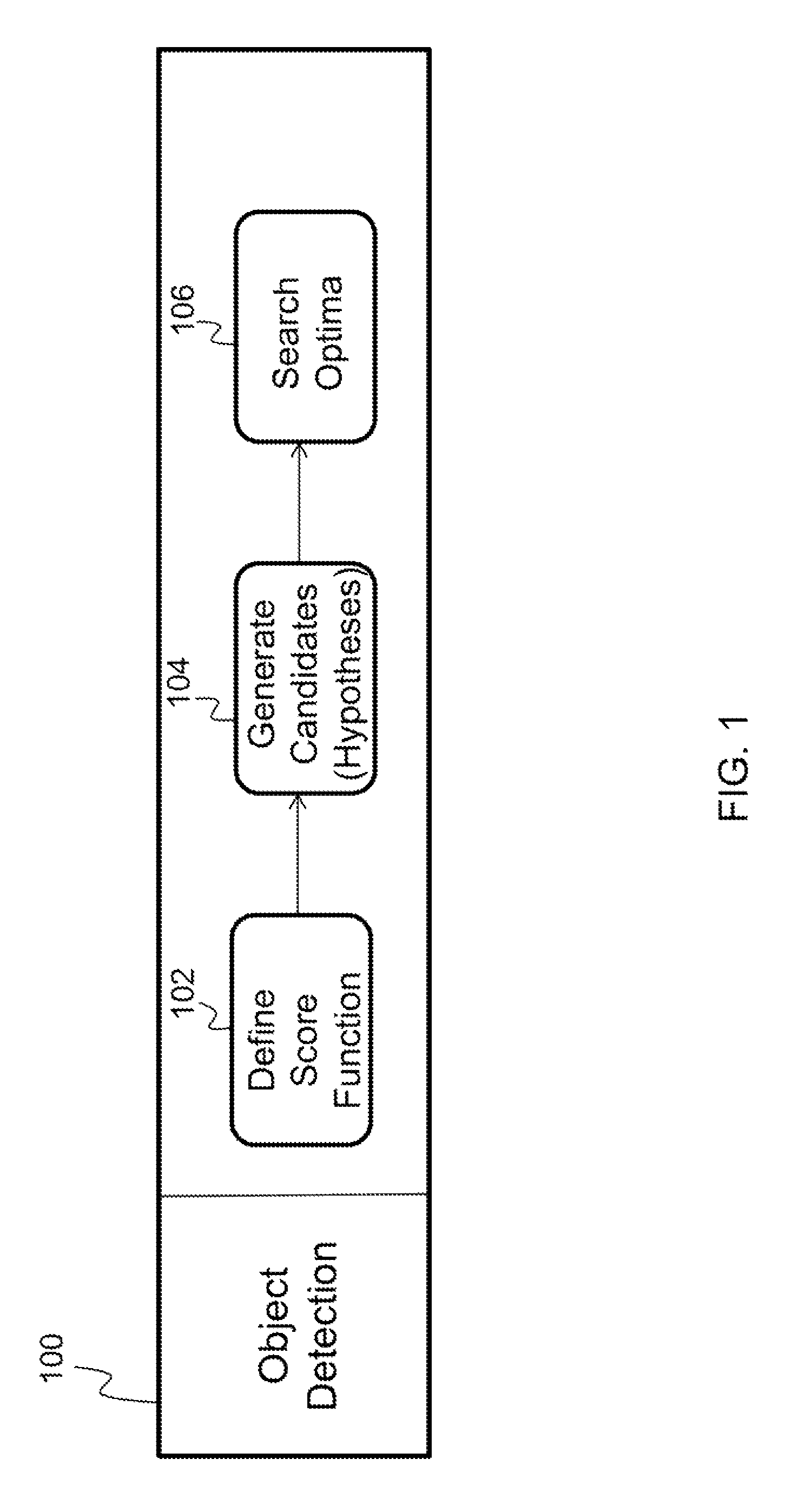

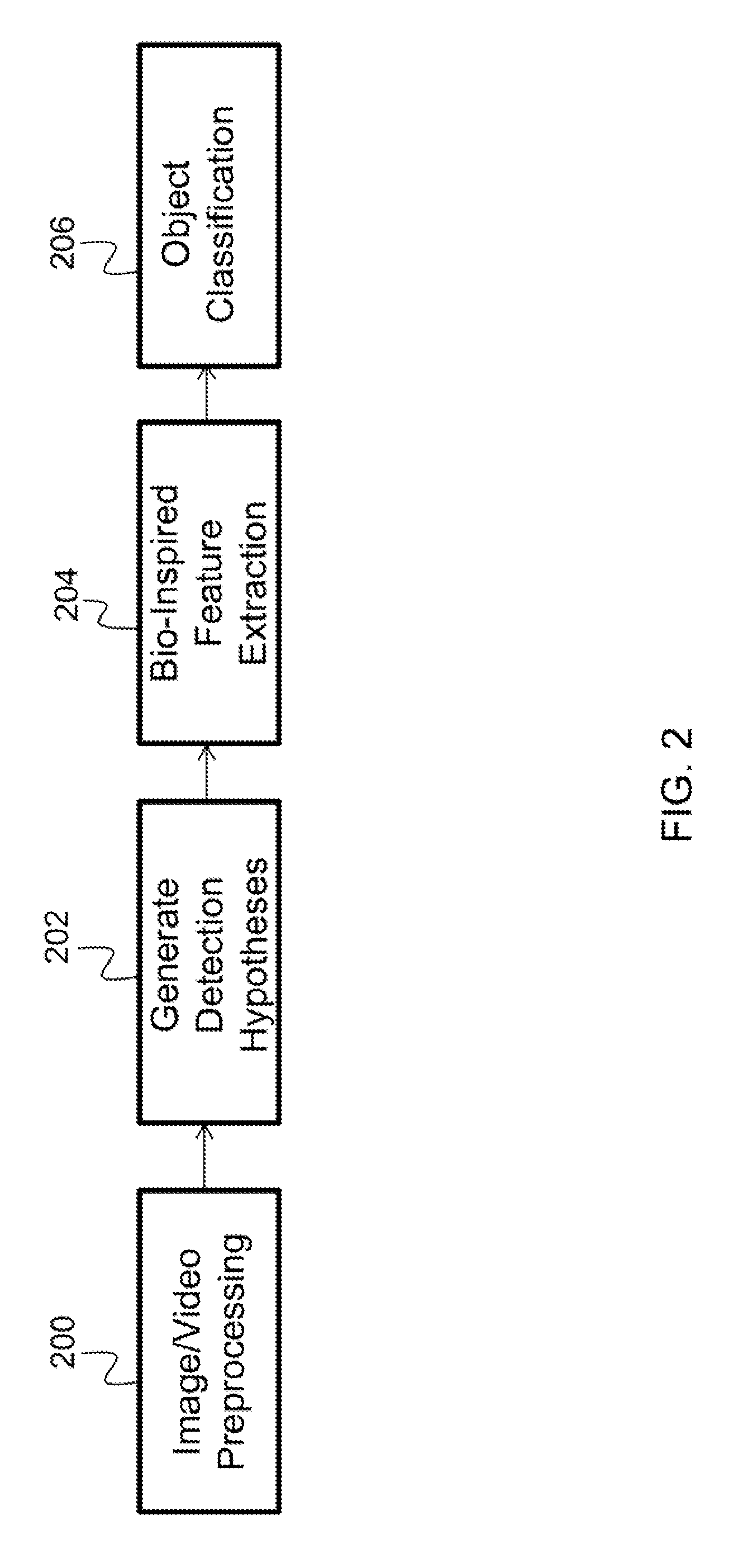

Rapid object detection by combining structural information from image segmentation with bio-inspired attentional mechanisms

Described is a system for rapid object detection combining structural information with bio-inspired attentional mechanisms. The system oversegments an input image into a set of superpixels, where each superpixel comprises a plurality of pixels. For each superpixel, a bounding box defining a region of the input image representing a detection hypothesis is determined. An average residual saliency (ARS) is calculated for the plurality of pixels belonging to each superpixel. Each detection hypothesis that falls outside a predetermined object-size range is eliminated. Next, each remaining detection hypothesis having an ARS below a predetermined threshold value is eliminated. Then, color contrast is calculated for the region defined by the bounding box for each remaining detection hypothesis. Each detection hypothesis having a color contrast below a predetermined threshold is eliminated. Finally, the remaining detection hypotheses are output to a classifier for object recognition.

Owner:HRL LAB
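The elimination cascade in the HRL abstract above (size, then ARS, then color contrast) can be sketched as an ordered filter. This assumes superpixels, bounding boxes and ARS values are already computed, and that "object size" means bounding-box area. The caller supplies `color_contrast` as a hypothetical function that is evaluated only for hypotheses surviving the first two stages. `Hypothesis` and `filter_hypotheses` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Illustrative sketch; Hypothesis, filter_hypotheses and the caller-supplied
# color_contrast function are hypothetical. Size is taken as box area.
Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), x1/y1 exclusive


@dataclass
class Hypothesis:
    box: Box    # bounding box of one superpixel
    ars: float  # average residual saliency of the superpixel's pixels


def filter_hypotheses(hyps: List[Hypothesis], area_range: Tuple[int, int],
                      min_ars: float, min_contrast: float,
                      color_contrast: Callable[[Box], float]) -> List[Hypothesis]:
    """Apply the three elimination stages in order: size, ARS, colour contrast.

    color_contrast is only called for hypotheses that survive the first two
    stages, which is where a cascade saves work.
    """
    lo, hi = area_range
    survivors = []
    for h in hyps:
        x0, y0, x1, y1 = h.box
        if not lo <= (x1 - x0) * (y1 - y0) <= hi:
            continue  # stage 1: implausible object size
        if h.ars < min_ars:
            continue  # stage 2: not salient enough
        if color_contrast(h.box) < min_contrast:
            continue  # stage 3: too little colour contrast
        survivors.append(h)
    return survivors  # to be passed to an object classifier
```

The stages are ordered from cheapest to most expensive, so the region-based contrast computation runs on as few candidates as possible before the classifier sees the rest.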

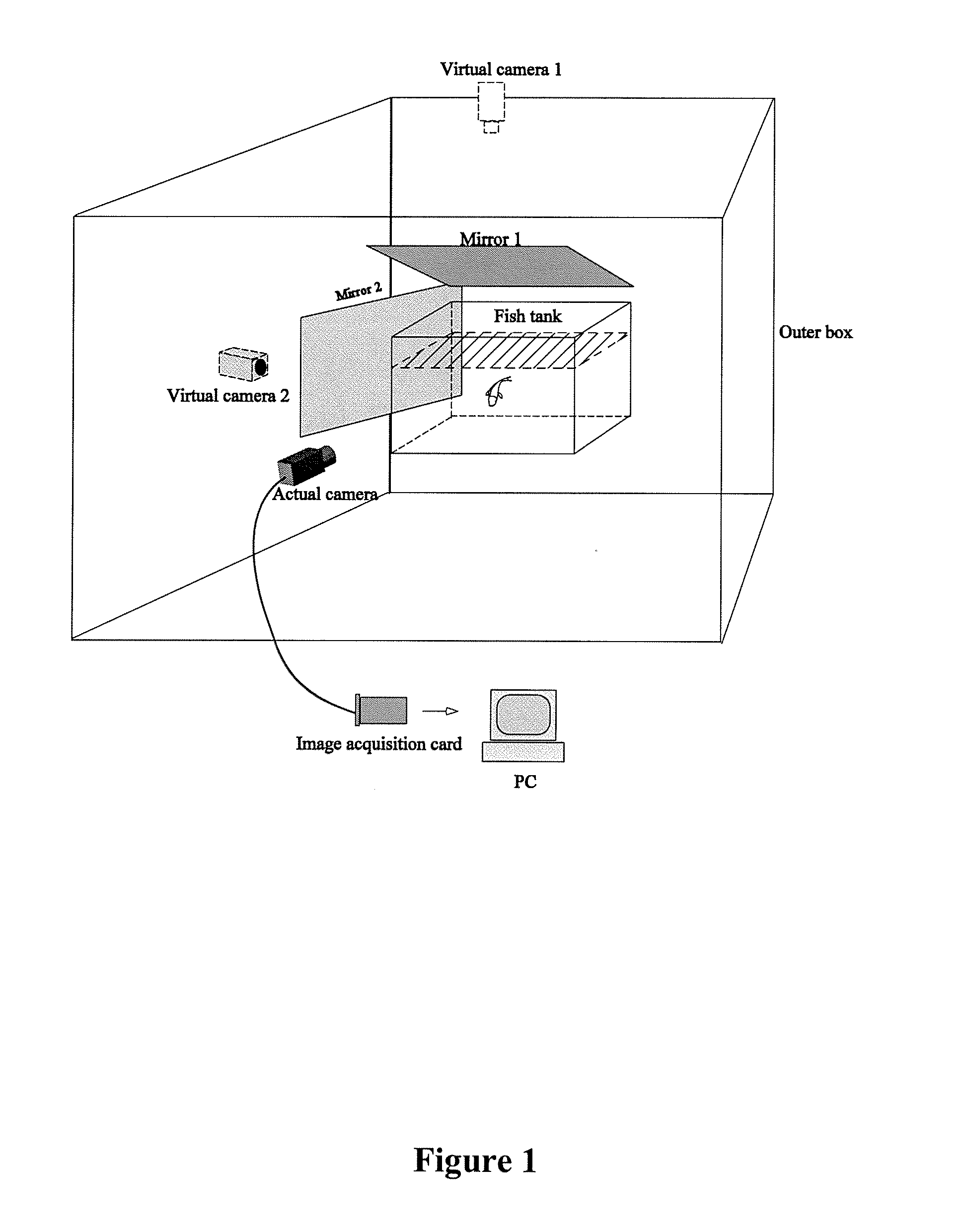

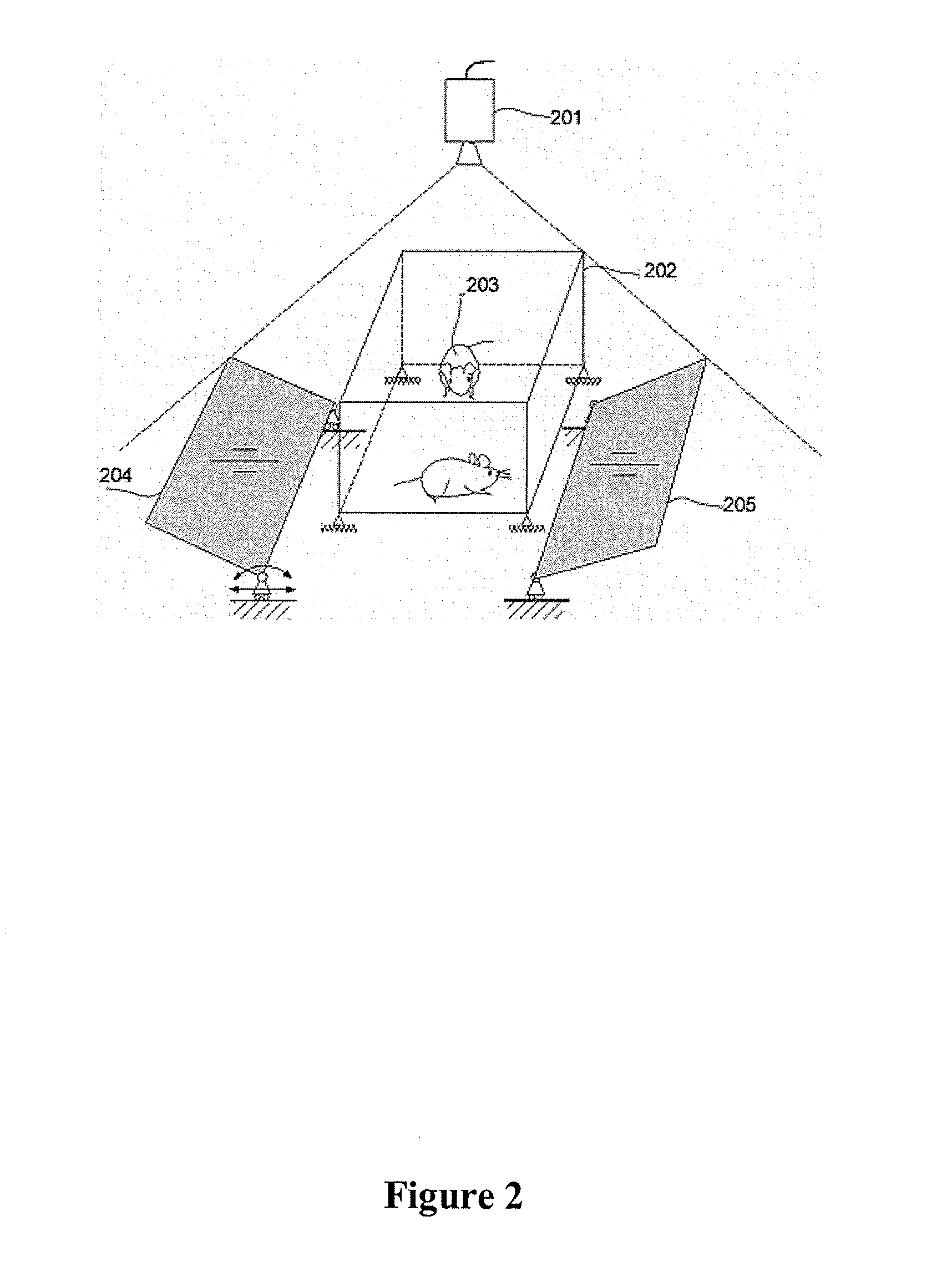

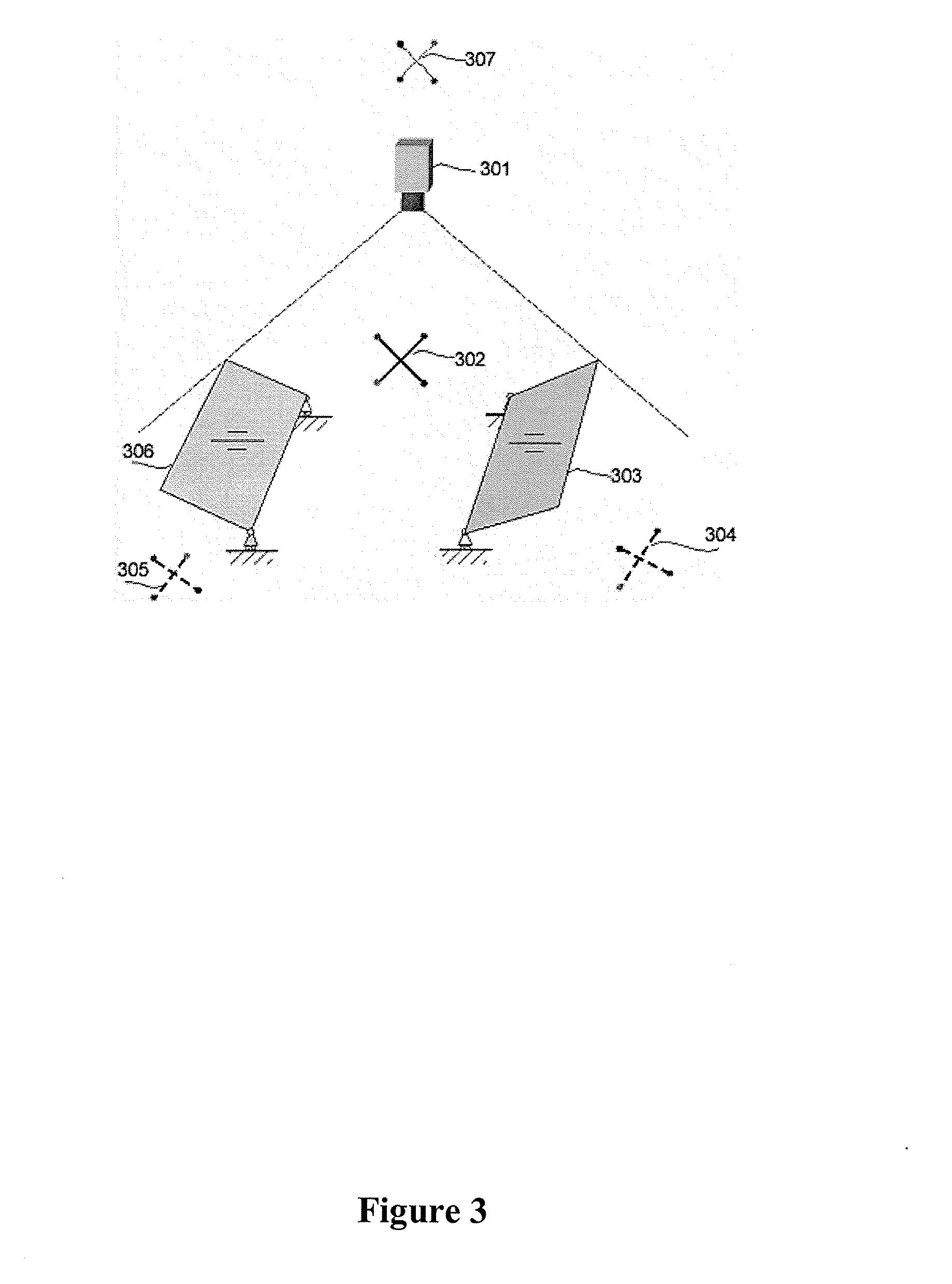

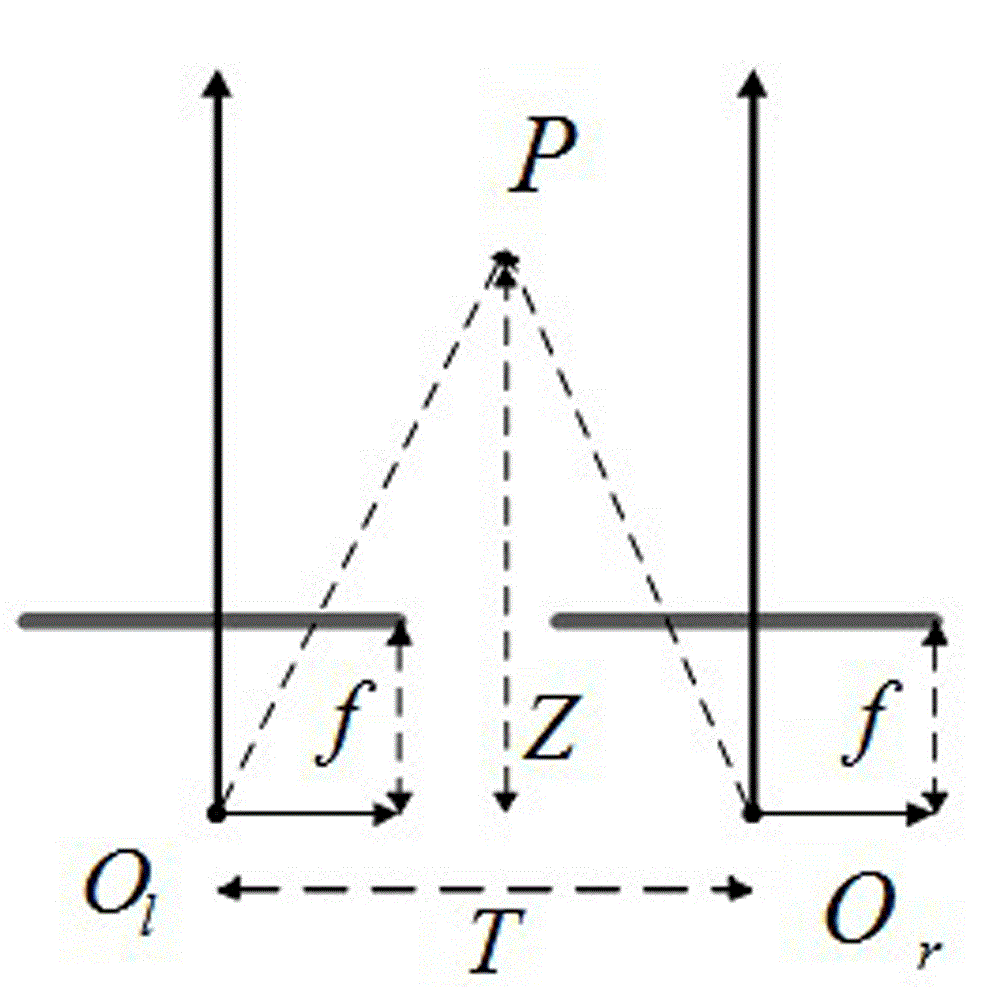

System For 3D Monitoring And Analysis Of Motion Behavior Of Targets

The present invention relates to a system for the 3-D monitoring and analysis of motion-related behavior of test subjects. The system comprises an actual camera, at least one virtual camera, and a computer connected to the actual camera; the computer is preferably installed with software capable of capturing the stereo images associated with the 3-D motion-related behavior of test subjects and of processing these acquired image frames to obtain the 3-D motion parameters of the subjects. The system of the invention comprises hardware components as well as software components. The hardware components preferably comprise a hardware setup or configuration, a hardware-based noise elimination component, an automatic calibration device component, and a lab animal container component. The software components preferably comprise a software-based noise elimination component, a basic calibration component, an extended calibration component, a linear epipolar structure derivation component, a non-linear epipolar structure derivation component, an image segmentation component, an image correspondence detection component, a 3-D motion tracking component, a software-based target identification and tagging component, a 3-D reconstruction component, and a data post-processing component. In a particularly preferred embodiment, the actual camera is a digital video camera and the virtual camera is the reflection of the actual camera in a planar reflective mirror. The preferred system is therefore a catadioptric stereo computer vision system.

Owner:INGENIOUS TARGETING LAB

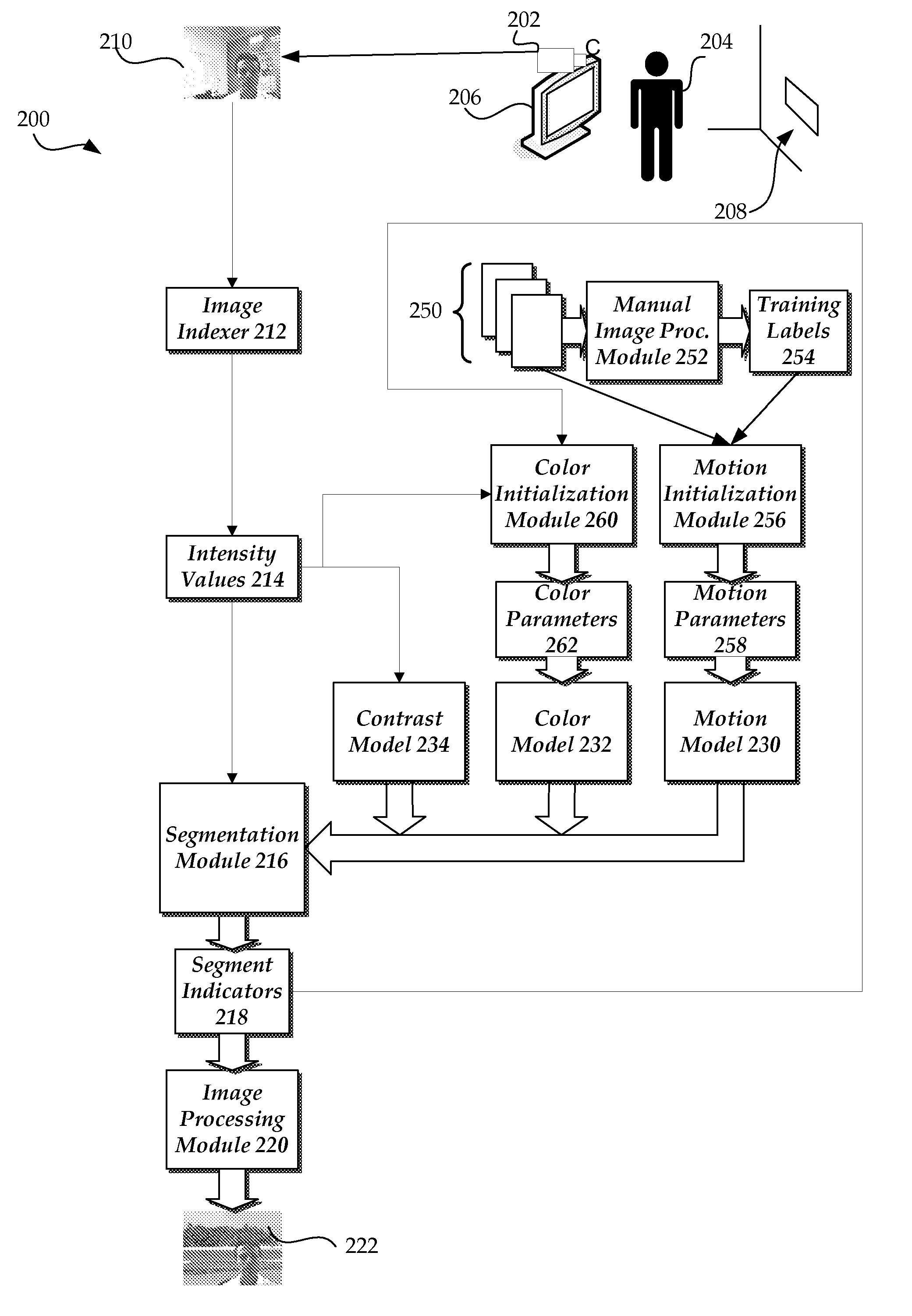

Image segmentation

Active | US20060285747A1 | Reduce segmentation error; Efficient solution; Image enhancement; Television system details; Image segmentation; Computer vision

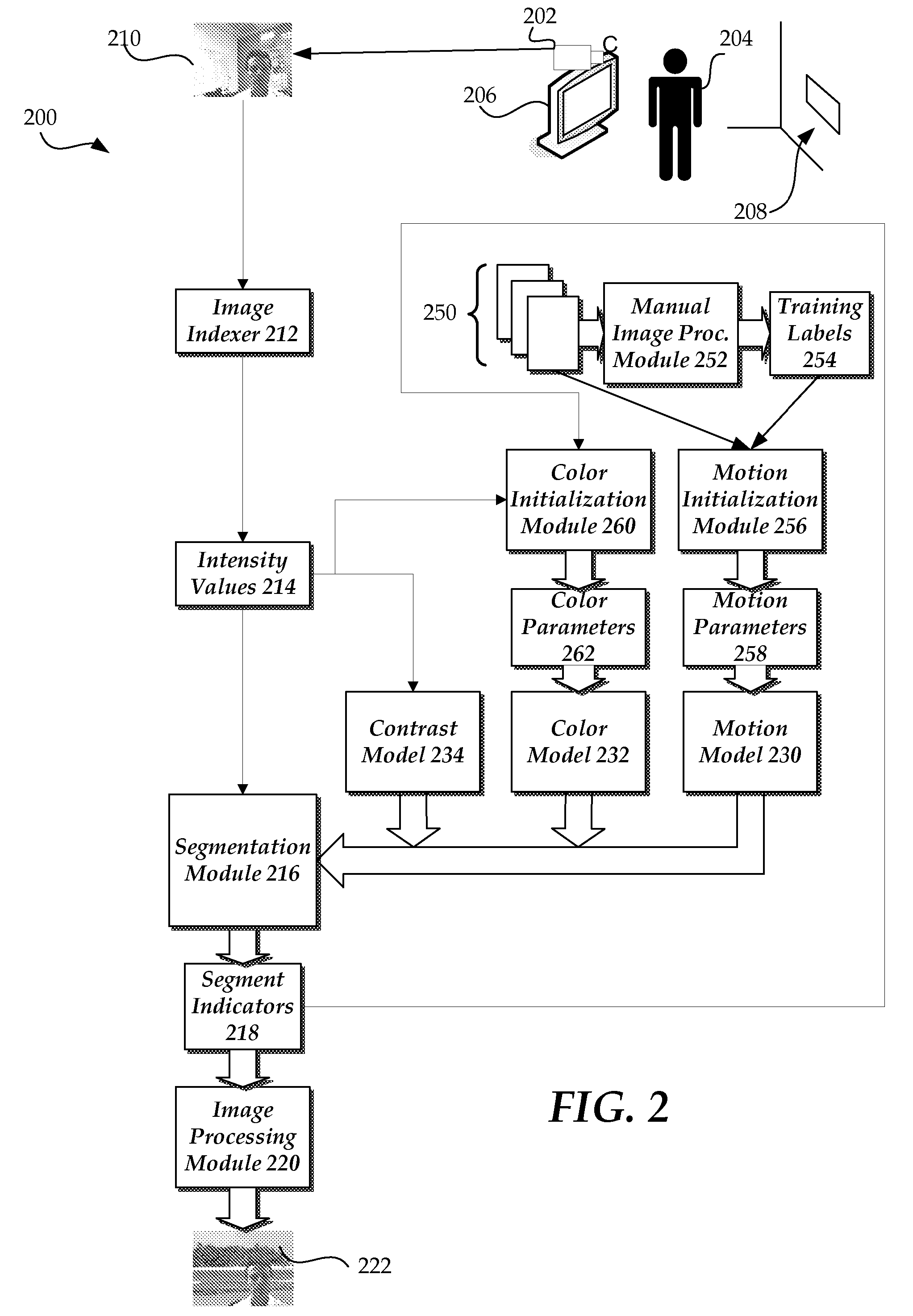

Segmentation of foreground from background layers in an image may be provided by a segmentation process which may be based on one or more factors including motion, color, contrast, and the like. Color, motion, and optionally contrast information may be probabilistically fused to infer foreground and / or background layers accurately and efficiently. A likelihood of motion vs. non-motion may be automatically learned from training data and then fused with a contrast-sensitive color model. Segmentation may then be solved efficiently by an optimization algorithm such as a graph cut.

Owner:MICROSOFT TECH LICENSING LLC

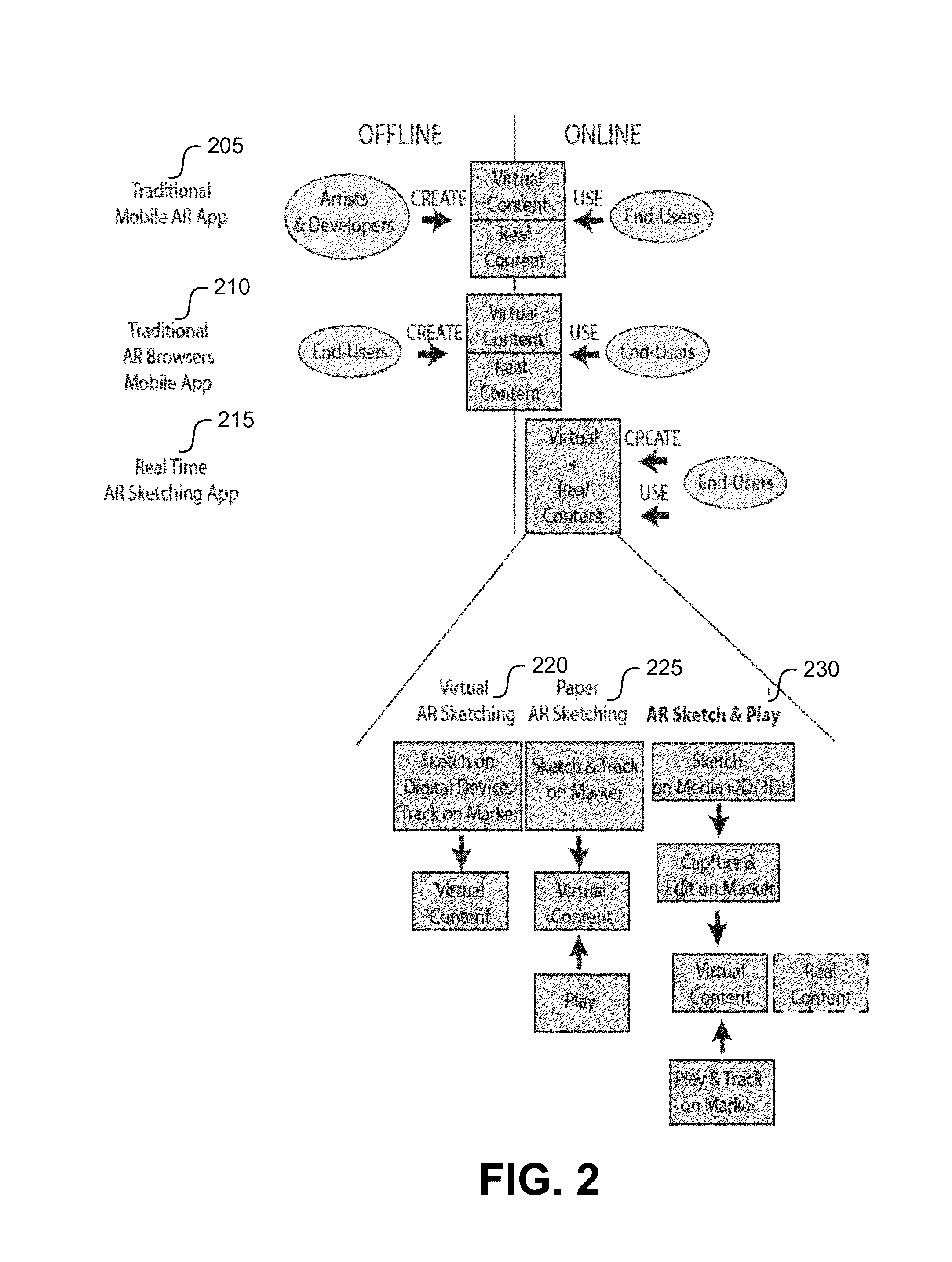

Augmented reality (AR) capture & play

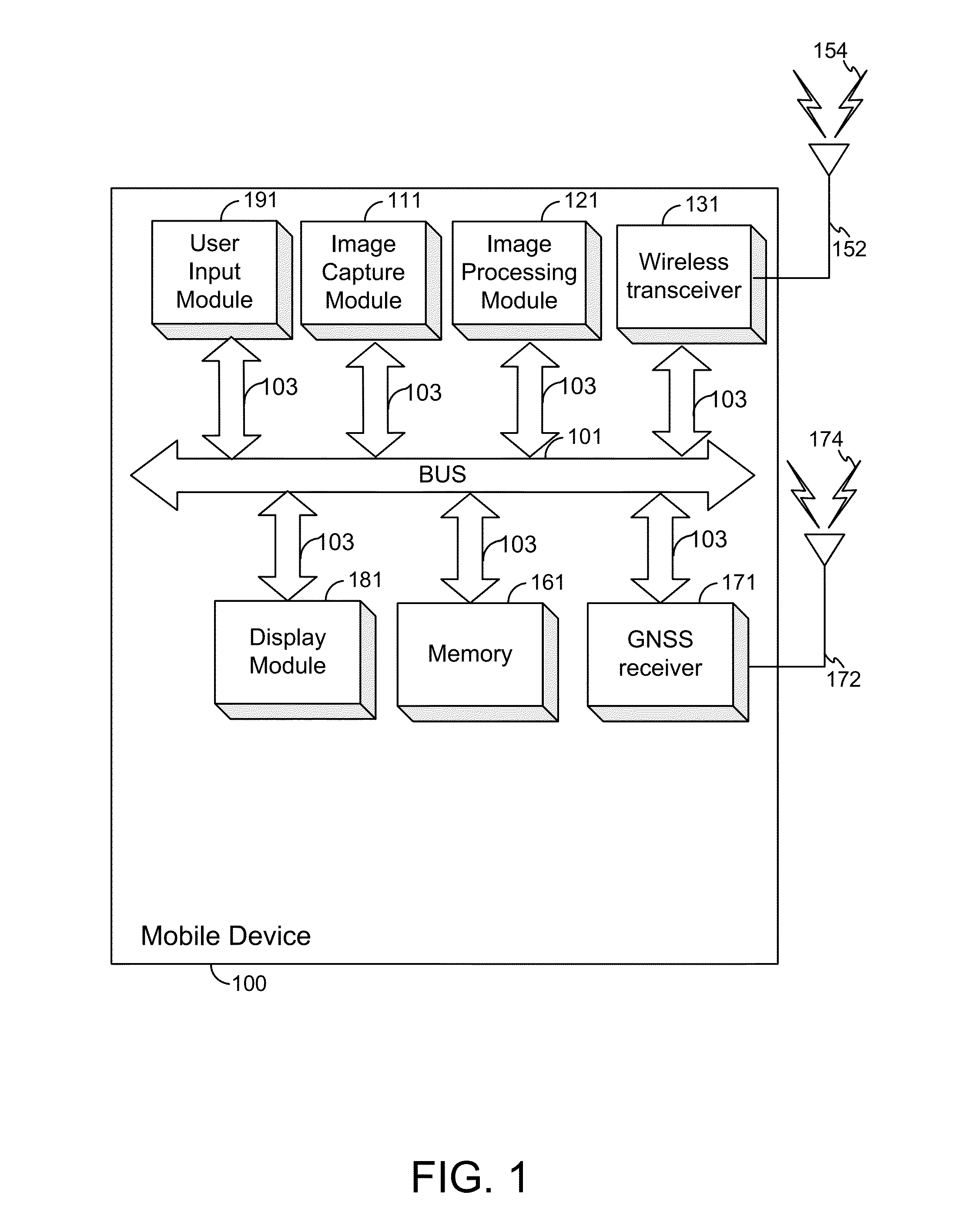

Active | US20140344762A1 | Image data processing; Input/output processes for data processing; Image segmentation; Digital image

Methods, systems, computer-readable media, and apparatuses for generating an Augmented Reality (AR) object are presented. The method may include capturing an image of one or more target objects, wherein the one or more target objects are positioned on a pre-defined background. The method may also include segmenting the image into one or more areas corresponding to the one or more target objects and one or more areas corresponding to the pre-defined background. The method may additionally include converting the one or more areas corresponding to the one or more target objects to a digital image. The method may further include generating one or more AR objects corresponding to the one or more target objects, based at least in part on the digital image.

Owner:QUALCOMM INC
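One simple way to realise the "segment against a pre-defined background" step is chroma keying: because the background is known in advance, any pixel whose color is far enough from it can be treated as part of a target object. The sketch assumes a uniform green background, an RGB Euclidean distance threshold of 60, and images stored as nested lists of (R, G, B) tuples; these values and the function names are illustrative assumptions, not Qualcomm's method. The cropped RGBA result stands in for the "digital image" from which an AR object would be generated.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
RGBA = Tuple[int, int, int, int]


def segment_against_background(image: List[List[RGB]], background: RGB = (0, 255, 0),
                               threshold: float = 60.0) -> List[List[bool]]:
    """True where a pixel is farther than `threshold` from the known background color."""
    def dist(a: RGB, b: RGB) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5
    return [[dist(px, background) > threshold for px in row] for row in image]


def extract_object(image: List[List[RGB]],
                   mask: List[List[bool]]) -> Optional[List[List[RGBA]]]:
    """Crop to the mask's bounding box; background pixels become fully transparent."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        return None                      # only background was captured
    return [[(*image[r][c], 255) if mask[r][c] else (0, 0, 0, 0)
             for c in range(cols[0], cols[-1] + 1)]
            for r in range(rows[0], rows[-1] + 1)]


G, R, NOISY_G = (0, 255, 0), (200, 30, 30), (10, 240, 10)
photo = [[G, G, NOISY_G],
         [G, R, R],
         [G, G, G]]
mask = segment_against_background(photo)
print(extract_object(photo, mask))   # [[(200, 30, 30, 255), (200, 30, 30, 255)]]
```

The threshold is what lets the slightly off-green pixel still count as background; a real capture would likely need lighting-robust color models rather than a fixed distance.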

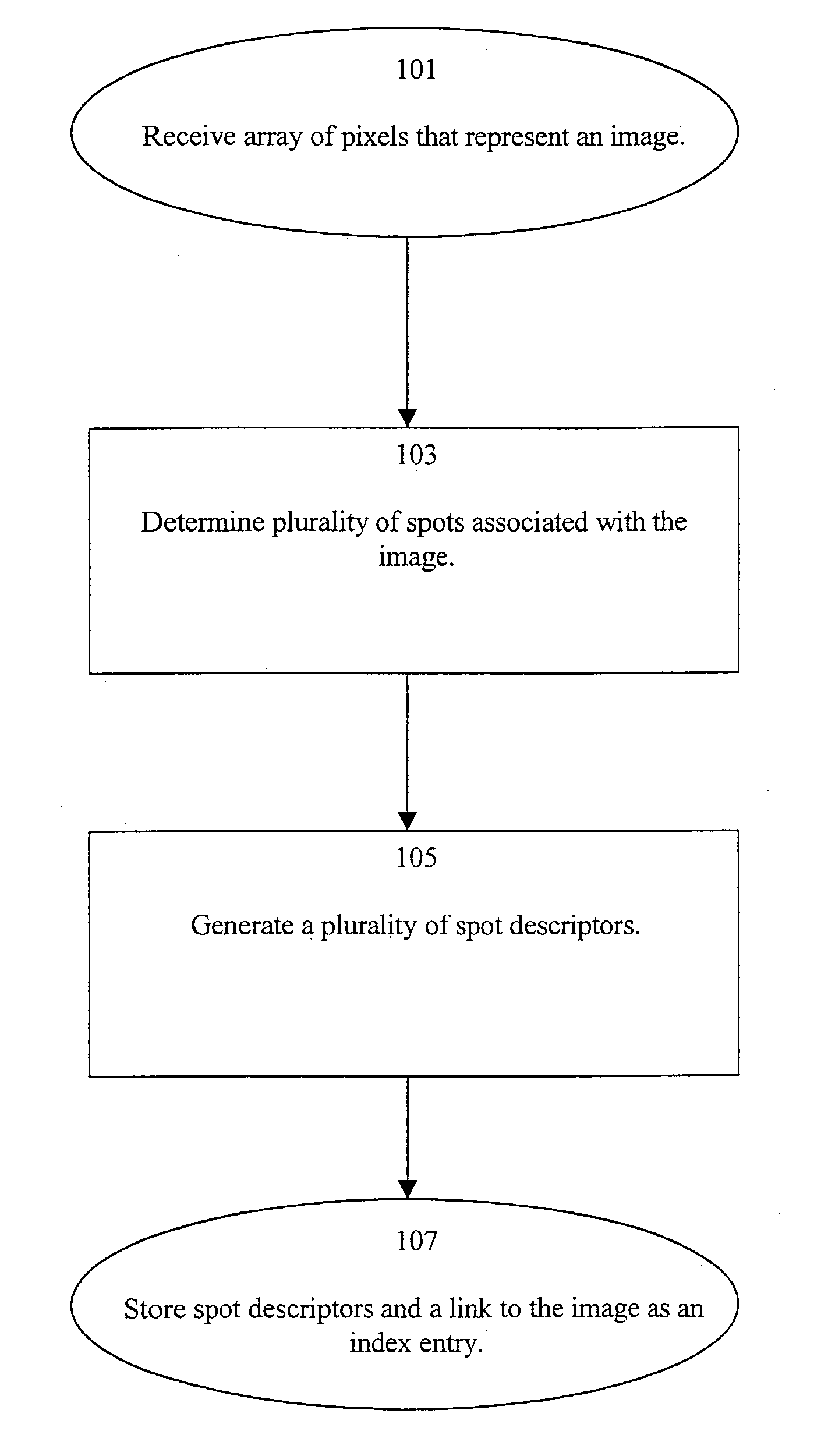

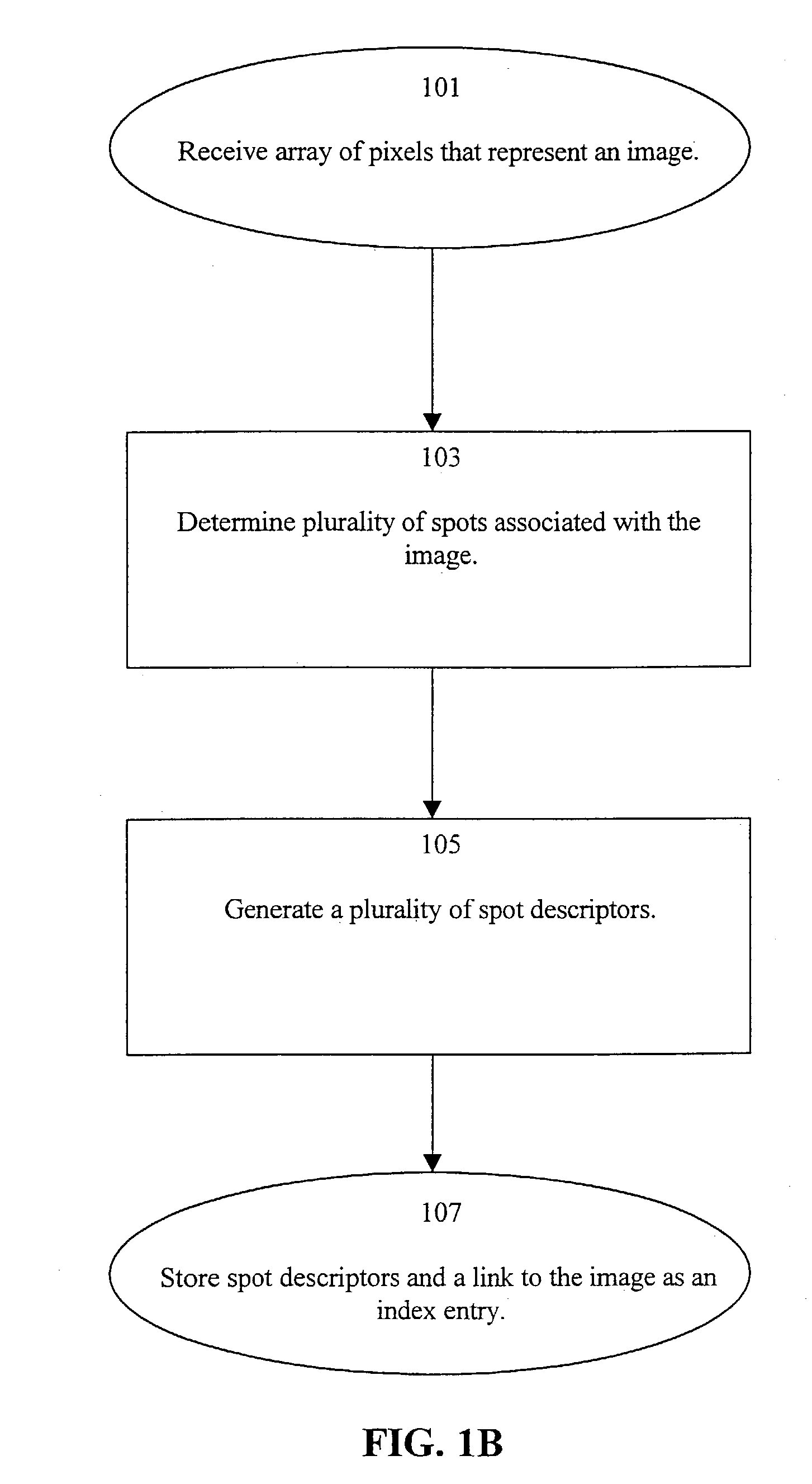

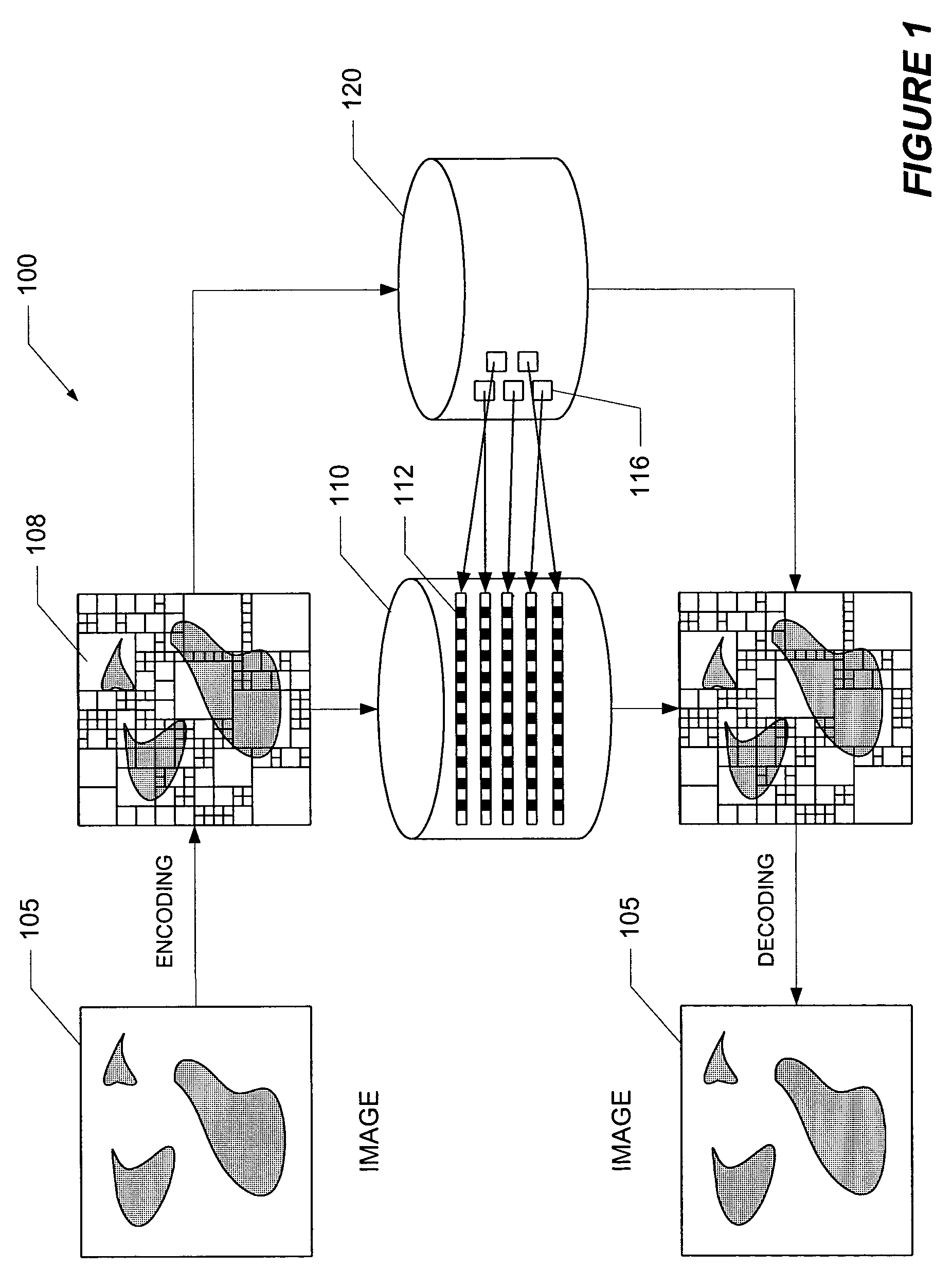

Perceptual similarity image retrieval

Inactive · US7031555B2 · High degree · Noise insensitive · Data processing applications · Digital data information retrieval · Web service · Continuous tone

A system and method index an image database by partitioning each image into a plurality of cells, combining the cells into intervals and then into spots according to perceptual criteria, and generating a set of spot descriptors that characterize the perceptual features of the spots, such as their shape, color, and relative position within the image. The shape descriptor is preferably derived from the coefficients of a Discrete Fourier Transform (DFT) of the spot's perimeter trace. The set of spot descriptors serves as the index entry for the image, and this process is repeated for each image in the database. To search the index, a key comprising a set of spot descriptors for a query image is generated and compared with the index entries according to a perceptual similarity metric, which measures how closely the features of the query image match those of each indexed image. The search results are presented as a scored list of the indexed images. A wide variety of image types can be indexed and searched, including bi-tonal, gray-scale, color, “real scene” originated, and artificially generated images; continuous-tone “real scene” images such as digitized still pictures and video frames are of primary interest. There are stand-alone and networked embodiments. A hybrid embodiment generates keys locally and performs image and index storage and perceptual comparison on a network or web server.

Owner:MIND FUSION LLC
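The DFT-based shape descriptor can be illustrated with a common Fourier-descriptor construction: treat the perimeter trace as a sequence of complex numbers, take its DFT, drop the DC term (position), keep magnitudes (rotation and starting point), and divide by |F1| (scale). The patent only says the shape is derived from the DFT coefficients, so this particular normalisation, the number of coefficients kept, and the Euclidean distance used as a stand-in for the perceptual similarity metric are all assumptions; the function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence, Tuple


def fourier_shape_descriptor(perimeter: Sequence[Tuple[float, float]],
                             k: int = 8) -> List[float]:
    """Normalised DFT magnitudes of a closed perimeter trace (points in traversal order)."""
    z = [complex(x, y) for x, y in perimeter]
    n = len(z)
    coeffs = [sum(z[m] * cmath.exp(-2j * math.pi * u * m / n) for m in range(n))
              for u in range(n)]
    ref = abs(coeffs[1])
    if ref == 0:
        raise ValueError("degenerate perimeter")
    # Skip F0 (translation); magnitudes ignore rotation and start point; /|F1| removes scale.
    return [abs(coeffs[u]) / ref for u in range(2, min(k + 2, n))]


def descriptor_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between descriptors; smaller means more similar shapes."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


rect = [(0, 0), (1, 0), (2, 0), (2, 0.5), (2, 1), (1, 1), (0, 1), (0, 0.5)]
square = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2), (1, 2), (0, 2), (0, 1)]
# The same rectangle rotated 90 degrees, scaled x3, shifted, and traced from another start point.
moved = [3j * complex(x, y) + complex(5, -2) for x, y in rect]
moved = [(p.real, p.imag) for p in moved[3:] + moved[:3]]

d_rect = fourier_shape_descriptor(rect)
print(descriptor_distance(d_rect, fourier_shape_descriptor(moved)))   # ~0 (invariant)
print(descriptor_distance(d_rect, fourier_shape_descriptor(square)))  # clearly > 0
```

Because the descriptor ignores position, size, orientation, and where the trace starts, two spots with the same outline compare as near-identical even when they appear in different places in different images.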

System and methods for image segmentation in N-dimensional space

Active · US20090136103A1 · Improve accuracy · Improve robustness · Image enhancement · Image analysis · Volumetric data · Graph theoretic

A system and methods for the efficient segmentation of globally optimal surfaces representing object boundaries in volumetric datasets are provided. An optimal surface detection system and methods are provided that can simultaneously detect multiple interacting surfaces, where optimality is controlled by cost functions designed for the individual surfaces and by several geometric constraints defining surface smoothness and the surfaces' interrelations. The present invention performs surface segmentation using a layered graph-theoretic approach that optimally segments multiple interacting surfaces of a single object: after a pre-segmentation step, all desired surfaces of the object are segmented simultaneously in a single optimization process.

Owner:UNIV OF IOWA RES FOUND
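To make "cost functions plus geometric smoothness constraints" concrete, here is the simplest special case: one surface in a 2-D image, represented as one height z per column x, with the hard constraint |z(x+1) - z(x)| ≤ delta. In this special case a dynamic program finds the globally optimal surface directly; the patent's layered-graph formulation is what extends the idea to 3-D volumes and to several interacting surfaces, which this sketch does not attempt. The cost array and the function name are hypothetical.

```python
from typing import List, Sequence


def optimal_surface_2d(cost: Sequence[Sequence[float]], delta: int) -> List[int]:
    """cost[x][z] is the cost of putting the surface at height z in column x.

    Returns the heights minimising the total cost subject to |z[x+1] - z[x]| <= delta.
    """
    n_cols, n_z = len(cost), len(cost[0])
    best = [list(cost[0])]   # best[x][z]: cheapest surface over columns 0..x ending at z
    back = [[0] * n_z]       # back-pointers to the height chosen in column x-1
    for x in range(1, n_cols):
        row, ptr = [], []
        for z in range(n_z):
            lo, hi = max(0, z - delta), min(n_z - 1, z + delta)
            prev = min(range(lo, hi + 1), key=lambda k: best[x - 1][k])
            row.append(cost[x][z] + best[x - 1][prev])
            ptr.append(prev)
        best.append(row)
        back.append(ptr)
    z = min(range(n_z), key=lambda k: best[-1][k])
    surface = [z]
    for x in range(n_cols - 1, 0, -1):   # walk the back-pointers from the last column
        z = back[x][z]
        surface.append(z)
    return surface[::-1]


# Boundary evidence favours heights 1, 1, 4, 4 (cost = distance from those heights).
cost = [[abs(z - target) for z in range(5)] for target in (1, 1, 4, 4)]
print(optimal_surface_2d(cost, delta=1))   # [1, 2, 3, 4]: the jump 1 -> 4 is not allowed
print(optimal_surface_2d(cost, delta=3))   # [1, 1, 4, 4]
```

Tightening delta trades fidelity to the per-voxel costs for smoothness, which is the role the smoothness constraints play in the abstract.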

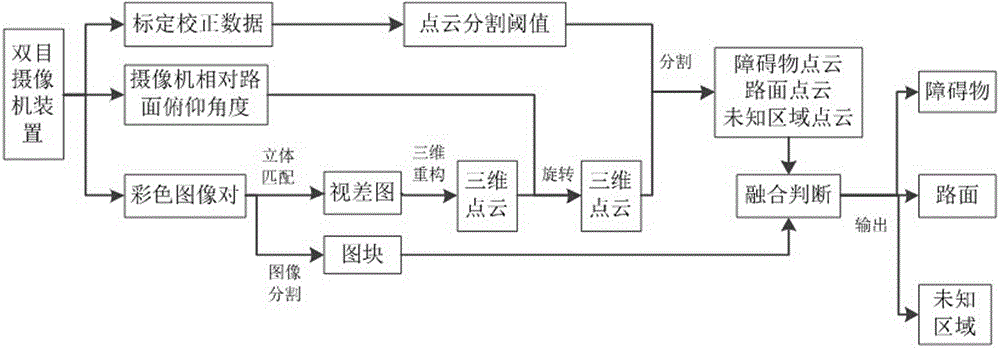

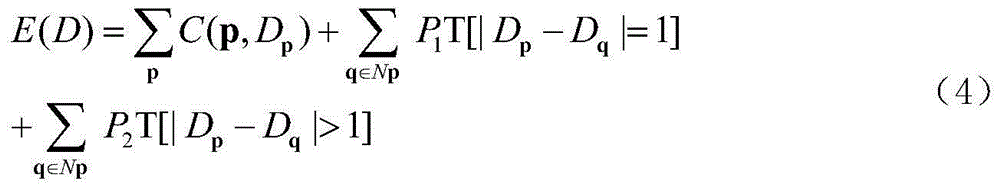

Binocular vision obstacle detection method based on three-dimensional point cloud segmentation

Inactive · CN103955920A · Improve reliability · Improve practicality · Image analysis · 3D modelling · Reference map · Camera image

The invention provides a binocular vision obstacle detection method based on three-dimensional point cloud segmentation. The method comprises the following steps: synchronously capturing images from two cameras of the same specification, calibrating and rectifying the binocular camera, and calculating a three-dimensional point cloud segmentation threshold; obtaining a three-dimensional point cloud through stereo matching and three-dimensional reconstruction, and segmenting the reference image into image blocks; automatically detecting the height of the road surface in the point cloud and applying the segmentation threshold to split the point cloud into a road-surface point cloud, obstacle point clouds at different positions, and unknown-region point clouds; and combining the segmented point clouds with the segmented image blocks to verify the obstacles and the road surface and to determine the position ranges of the obstacles, the road surface, and the unknown regions. With this method, the height of the road surface relative to the camera can still be detected in complex environments, the three-dimensional segmentation threshold is estimated automatically, and the point cloud is segmented into obstacle, road-surface, and unknown-region point clouds. Color image segmentation is incorporated so that color information is used when verifying the obstacles and the road surface and determining their position ranges. The result is highly robust obstacle detection with improved reliability and practicality.

Owner:GUILIN UNIV OF ELECTRONIC TECH +1
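Several of these steps can be sketched under simple assumptions: a rectified stereo pair with focal length f (pixels), baseline B (metres), and principal point (cx, cy), so that depth follows the standard relation Z = f·B/d for disparity d; a camera Y axis pointing down toward the road; the road-surface height taken as the most populated height bin, which assumes the road dominates the valid points; and fixed tolerances for "road" and "obstacle". These thresholds, bin sizes, and function names are hypothetical, and the patent's fusion with color-segmented image blocks is left out.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def disparity_to_points(disparity: Sequence[Sequence[float]], f: float, baseline: float,
                        cx: float, cy: float) -> List[Point]:
    """Back-project a rectified disparity map; pixels with d <= 0 had no stereo match."""
    points = []
    for v, row in enumerate(disparity):
        for u, d in enumerate(row):
            if d <= 0:
                continue
            z = f * baseline / d
            points.append(((u - cx) * z / f, (v - cy) * z / f, z))
    return points


def estimate_road_height(points: Sequence[Point], bin_size: float = 0.05) -> float:
    """Mode of the Y (downward) coordinate histogram, assumed to be the road plane."""
    counts = Counter(round(y / bin_size) for _, y, _ in points)
    return counts.most_common(1)[0][0] * bin_size


def classify_points(points: Sequence[Point], road_y: float,
                    road_tol: float = 0.1, min_obstacle: float = 0.2) -> List[str]:
    """Label each point 'road', 'obstacle' or 'unknown' by its height above the road."""
    labels = []
    for _, y, _ in points:
        height = road_y - y              # Y points down, so smaller Y means higher up
        if abs(height) <= road_tol:
            labels.append("road")
        elif height >= min_obstacle:
            labels.append("obstacle")
        else:
            labels.append("unknown")     # slightly raised, or below the road surface
    return labels


print(disparity_to_points([[0, 10]], f=100, baseline=0.5, cx=1, cy=0))  # [(0.0, 0.0, 5.0)]
pts = [(0.0, 1.50, 5.0), (0.5, 1.52, 6.0), (1.0, 1.49, 7.0), (0.2, 1.0, 5.0), (0.3, 1.35, 5.0)]
road_y = estimate_road_height(pts)
print(classify_points(pts, road_y))   # ['road', 'road', 'road', 'obstacle', 'unknown']
```

The band between `road_tol` and `min_obstacle` is what produces the "unknown" class; the patent instead derives its threshold automatically, which this sketch does not reproduce.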

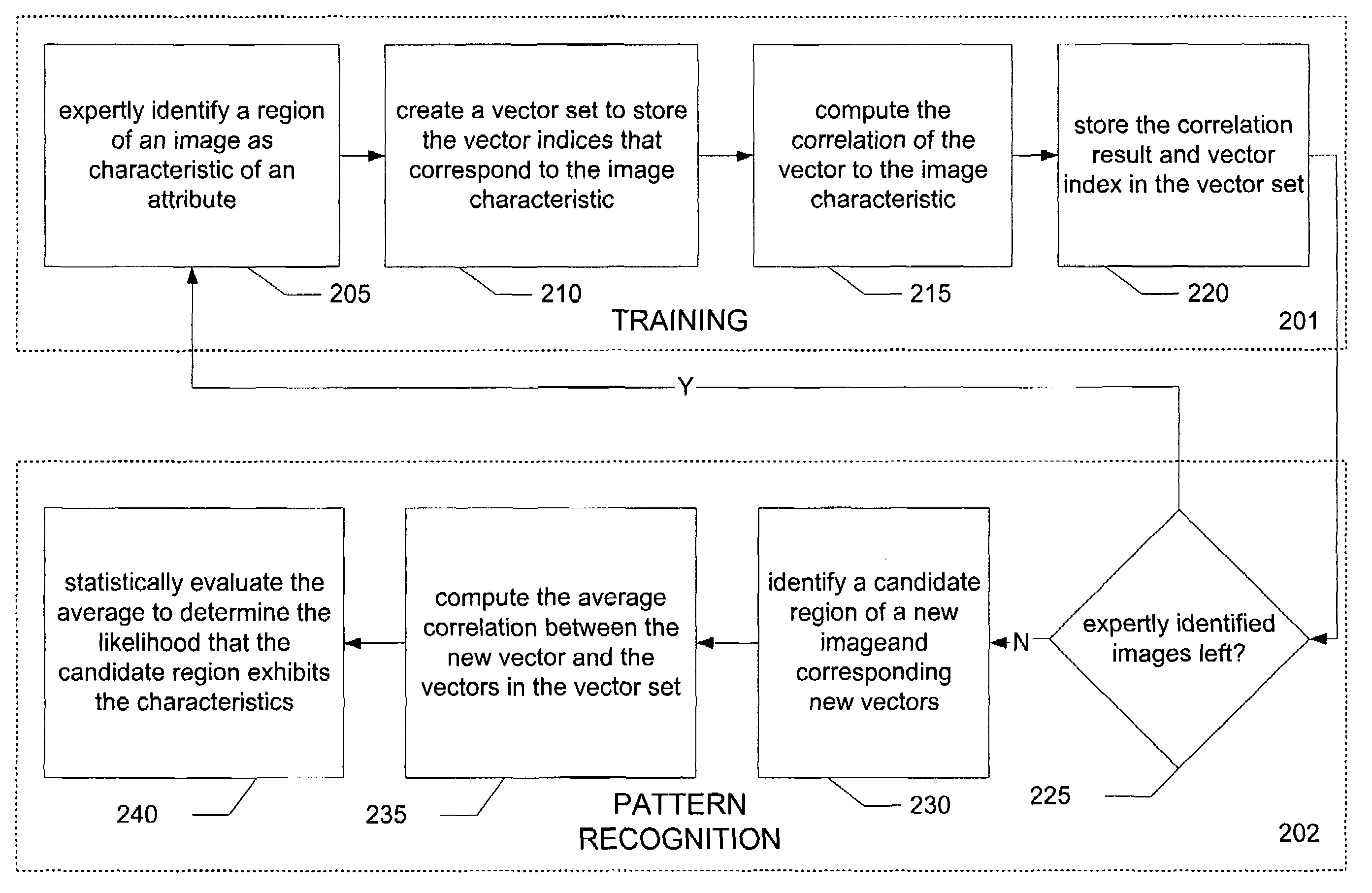

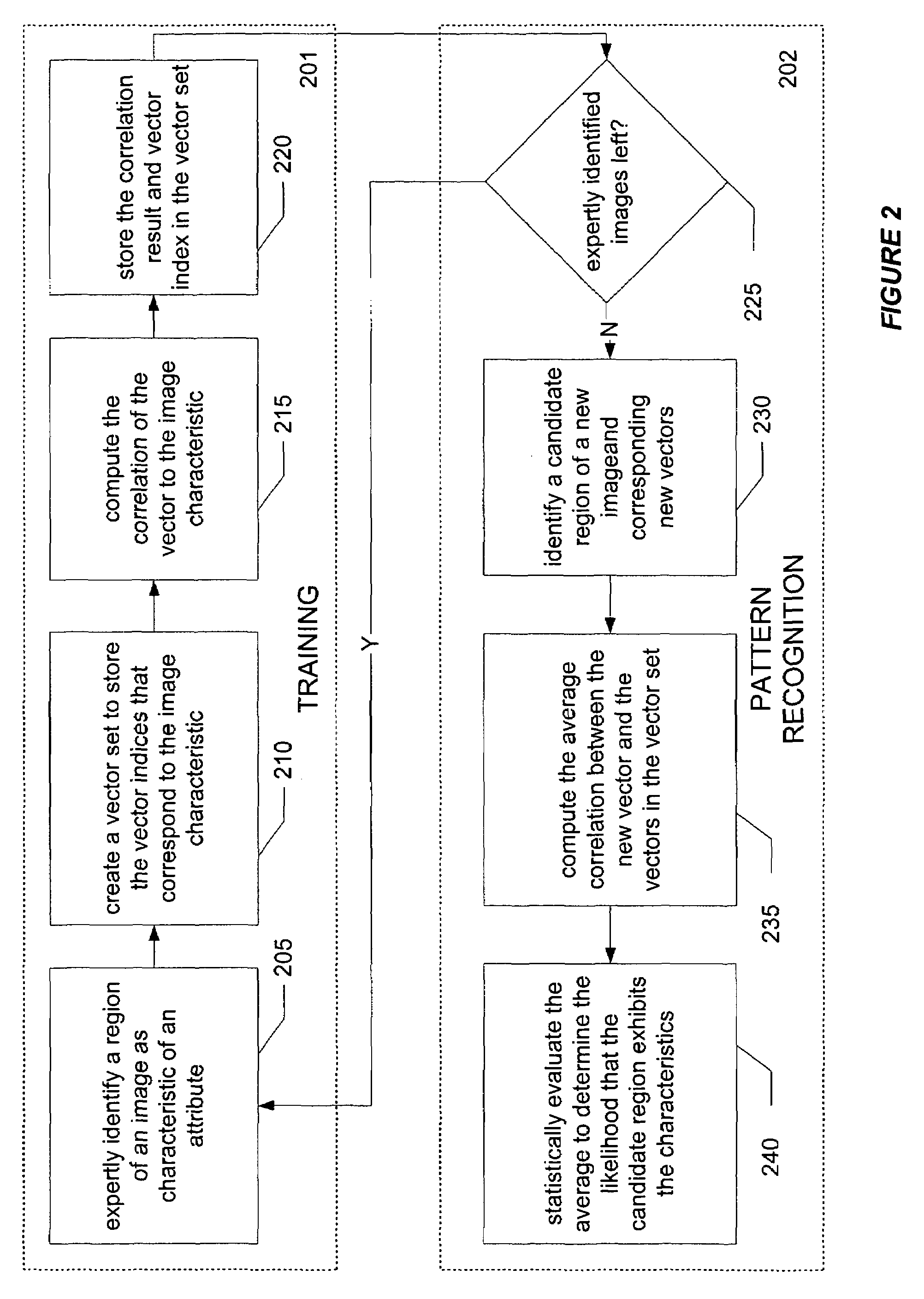

Systems and methods for image pattern recognition

Systems and methods for image pattern recognition comprise digital image capture and encoding using vector quantization (“VQ”) of the image. A vocabulary of vectors is built by segmenting images into kernels and creating vectors corresponding to each kernel. Images are encoded by creating a vector index file having indices that point to the vectors stored in the vocabulary. The vector index file can be used to reconstruct an image by looking up vectors stored in the vocabulary. Pattern recognition of candidate regions of images can be accomplished by correlating image vectors to a pre-trained vocabulary of vector sets comprising vectors that correlate with particular image characteristics. In virtual microscopy, the systems and methods are suitable for rare-event finding, such as detection of micrometastasis clusters, tissue identification, such as locating regions of analysis for immunohistochemical assays, and rapid screening of tissue samples, such as histology sections arranged as tissue microarrays (TMAs).

Owner:LEICA BIOSYST IMAGING
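The vector-quantization encoding described above can be sketched as follows. Assumptions: grayscale images are nested lists whose sides are multiples of the kernel size k; the vocabulary is built simply from the distinct kernels of the training images (practical VQ systems usually cluster kernels, for example with k-means or the LBG algorithm, to keep the vocabulary small); and each kernel is encoded as the index of its nearest vocabulary vector by squared Euclidean distance. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Kernel = Tuple[int, ...]


def image_to_kernels(img: Sequence[Sequence[int]], k: int) -> List[Kernel]:
    """Split an image into k x k kernels (row-major) and flatten each into a vector."""
    h, w = len(img), len(img[0])
    if h % k or w % k:
        raise ValueError("image sides must be multiples of k")
    return [tuple(img[r + i][c + j] for i in range(k) for j in range(k))
            for r in range(0, h, k) for c in range(0, w, k)]


def build_vocabulary(images: Sequence[Sequence[Sequence[int]]], k: int) -> List[Kernel]:
    """Distinct kernels in order of first appearance."""
    vocab, seen = [], set()
    for img in images:
        for vec in image_to_kernels(img, k):
            if vec not in seen:
                seen.add(vec)
                vocab.append(vec)
    return vocab


def encode(img: Sequence[Sequence[int]], vocab: Sequence[Kernel], k: int) -> List[int]:
    """Vector index file: for each kernel, the index of the nearest vocabulary vector."""
    def nearest(vec: Kernel) -> int:
        return min(range(len(vocab)),
                   key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, vocab[i])))
    return [nearest(vec) for vec in image_to_kernels(img, k)]


def decode(indices: Sequence[int], vocab: Sequence[Kernel], k: int,
           h: int, w: int) -> List[List[int]]:
    """Rebuild an image by looking each index up in the vocabulary."""
    img = [[0] * w for _ in range(h)]
    per_row = w // k
    for n, idx in enumerate(indices):
        r0, c0 = (n // per_row) * k, (n % per_row) * k
        for i in range(k):
            for j in range(k):
                img[r0 + i][c0 + j] = vocab[idx][i * k + j]
    return img


img = [[0, 0, 9, 9],
       [0, 0, 9, 9],
       [9, 9, 0, 0],
       [9, 9, 0, 0]]
vocab = build_vocabulary([img], k=2)
index_file = encode(img, vocab, k=2)
print(index_file)                                        # [0, 1, 1, 0]
print(decode(index_file, vocab, k=2, h=4, w=4) == img)   # True
```

Storing only the index file plus a shared vocabulary is what makes the encoding compact; images whose kernels are not in the vocabulary are reconstructed only approximately.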

© 2025 PatSnap. All rights reserved.