Patents

Literature

393 results about "Point cloud segmentation" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

Point cloud segmentation can be straightforward as long as the assumptions can be mathematically modelled. For example, a flat floor can simply be extracted by using an elevation threshold. However, it is not always possible to make such simple assumptions, and the more assumptions that are made, the less generally applicable the approach becomes.

Binocular vision obstacle detection method based on three-dimensional point cloud segmentation

InactiveCN103955920AImprove reliabilityImprove practicalityImage analysis3D modellingReference mapCamera image

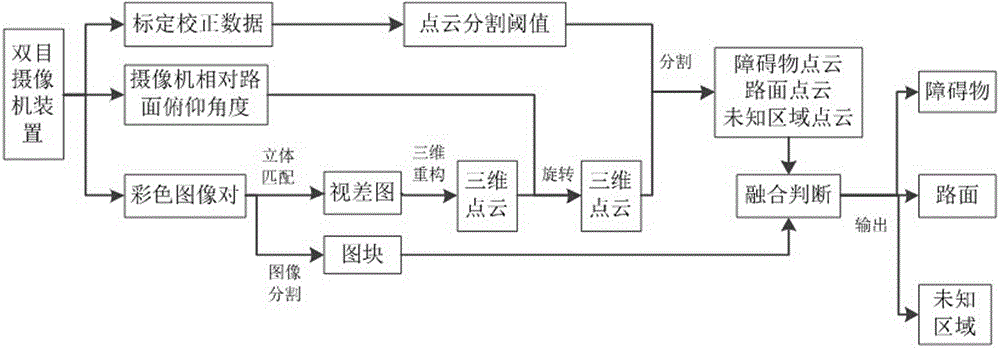

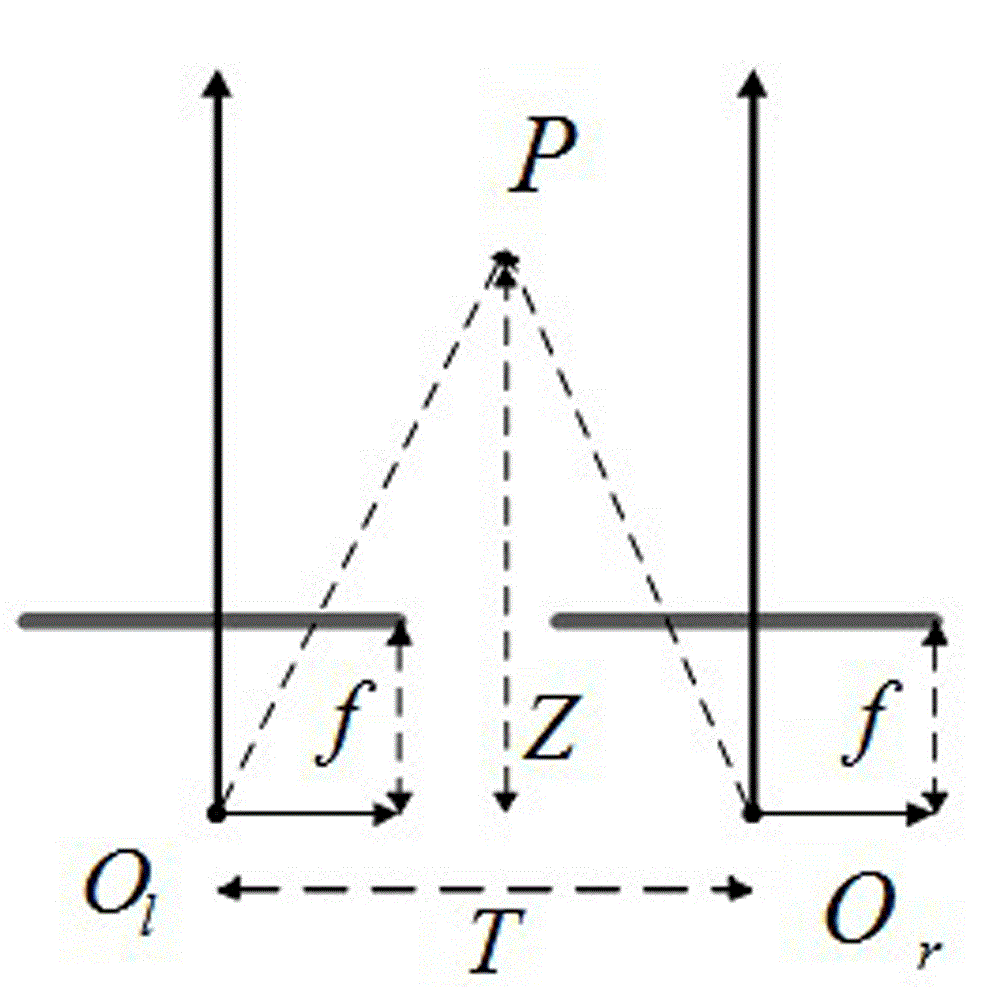

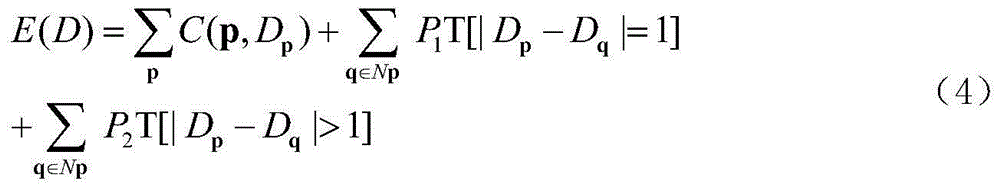

The invention provides a binocular vision obstacle detection method based on three-dimensional point cloud segmentation. The method comprises the steps of synchronously collecting two camera images of the same specification, conducting calibration and correction on a binocular camera, and calculating a three-dimensional point cloud segmentation threshold value; using a three-dimensional matching algorithm and three-dimensional reconstruction calculation for obtaining a three-dimensional point cloud, and conducting image segmentation on a reference map to obtain image blocks; automatically detecting the height of a road surface of the three-dimensional point cloud, and utilizing the three-dimensional point cloud segmentation threshold value for conducting segmentation to obtain a road surface point cloud, obstacle point clouds at different positions and unknown region point clouds; utilizing the point clouds obtained through segmentation for being combined with the segmented image blocks, determining the correctness of obstacles and the road surface, and determining position ranges of the obstacles, the road surface and unknown regions. According to the binocular vision obstacle detection method, the camera and the height of the road surface can be still detected under the complex environment, the three-dimensional segmentation threshold value is automatically estimated, the obstacle point clouds, the road surface point cloud and the unknown region point clouds can be obtained through segmentation, the color image segmentation technology is ended, color information is integrated, correctness of the obstacles and the road surface is determined, the position ranges of the obstacles, the road surface and the unknown regions are determined, the high-robustness obstacle detection is achieved, and the binocular vision obstacle detection method has higher reliability and practicability.

Owner:GUILIN UNIV OF ELECTRONIC TECH +1

Multiline laser radar-based 3D point cloud segmentation method

ActiveCN106204705AThe method of removing floating points is simpleImprove filtering effect3D-image rendering3D modellingVoxelRadar

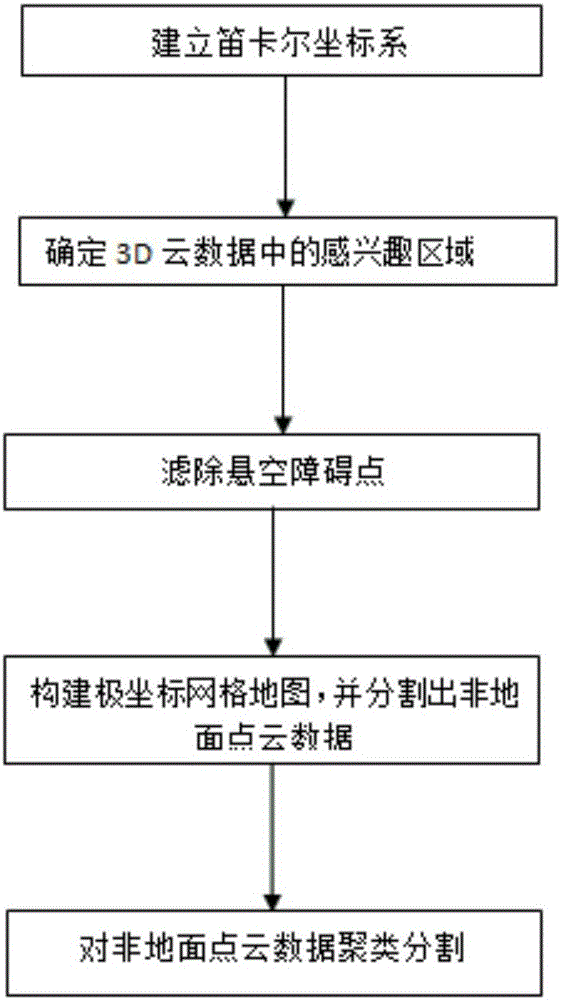

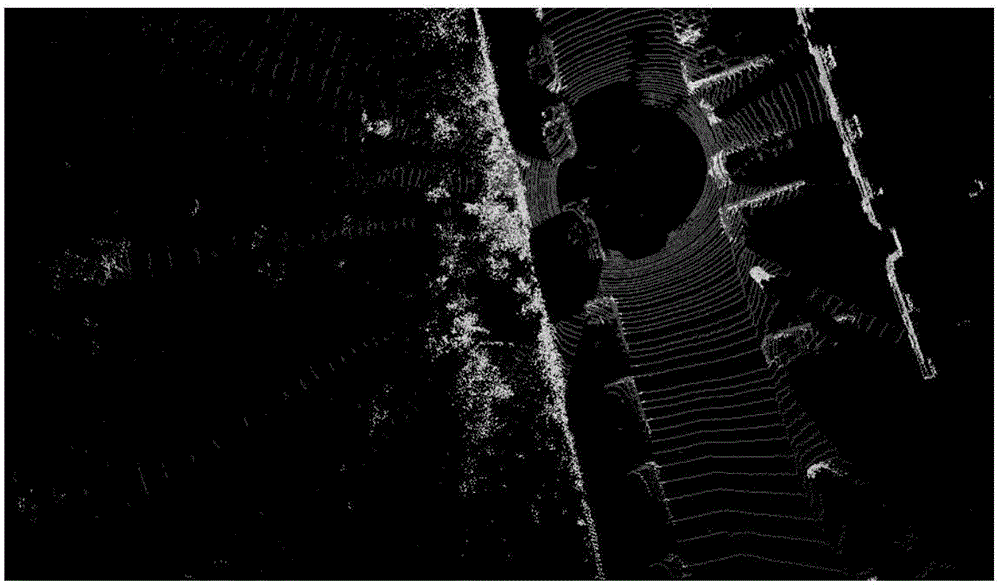

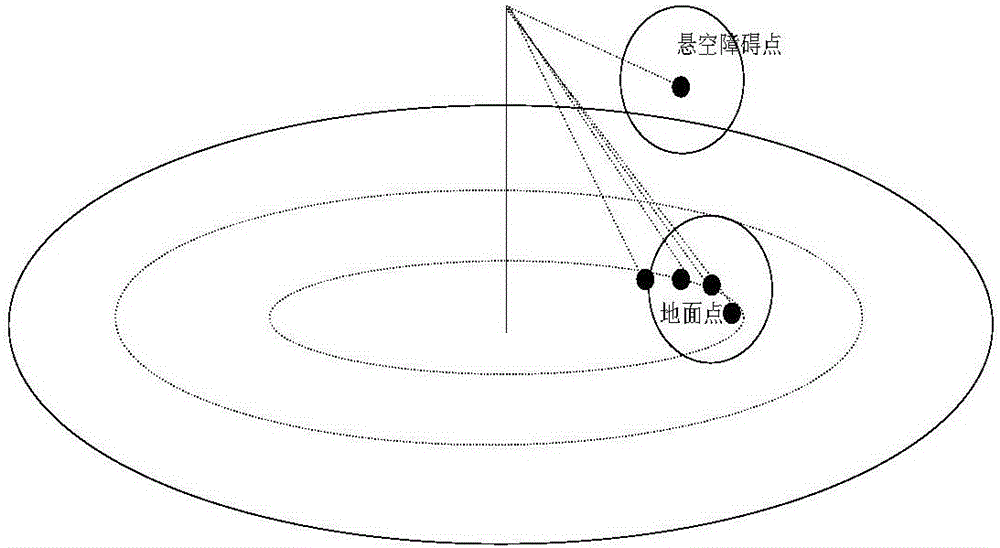

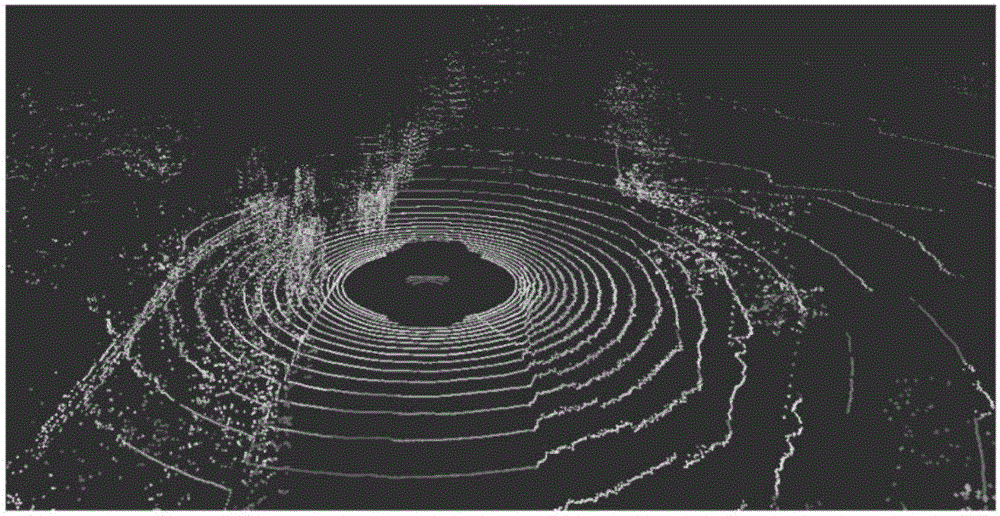

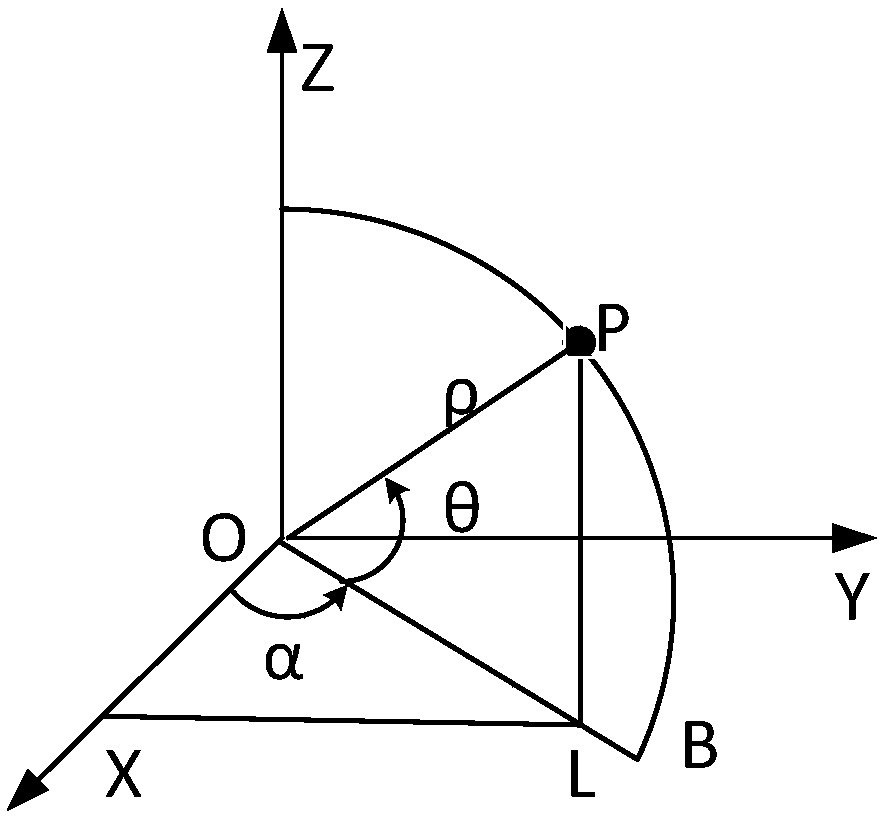

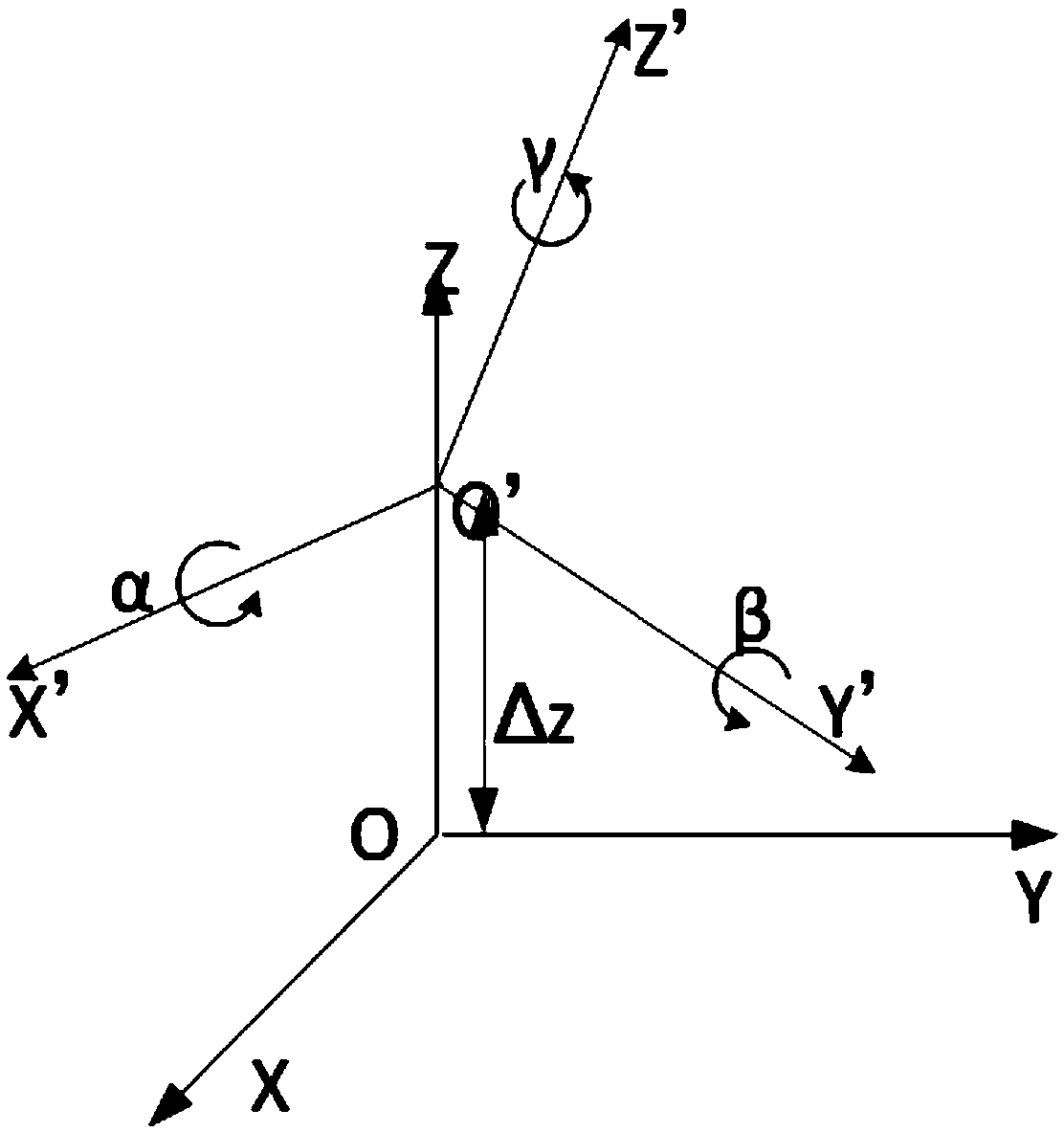

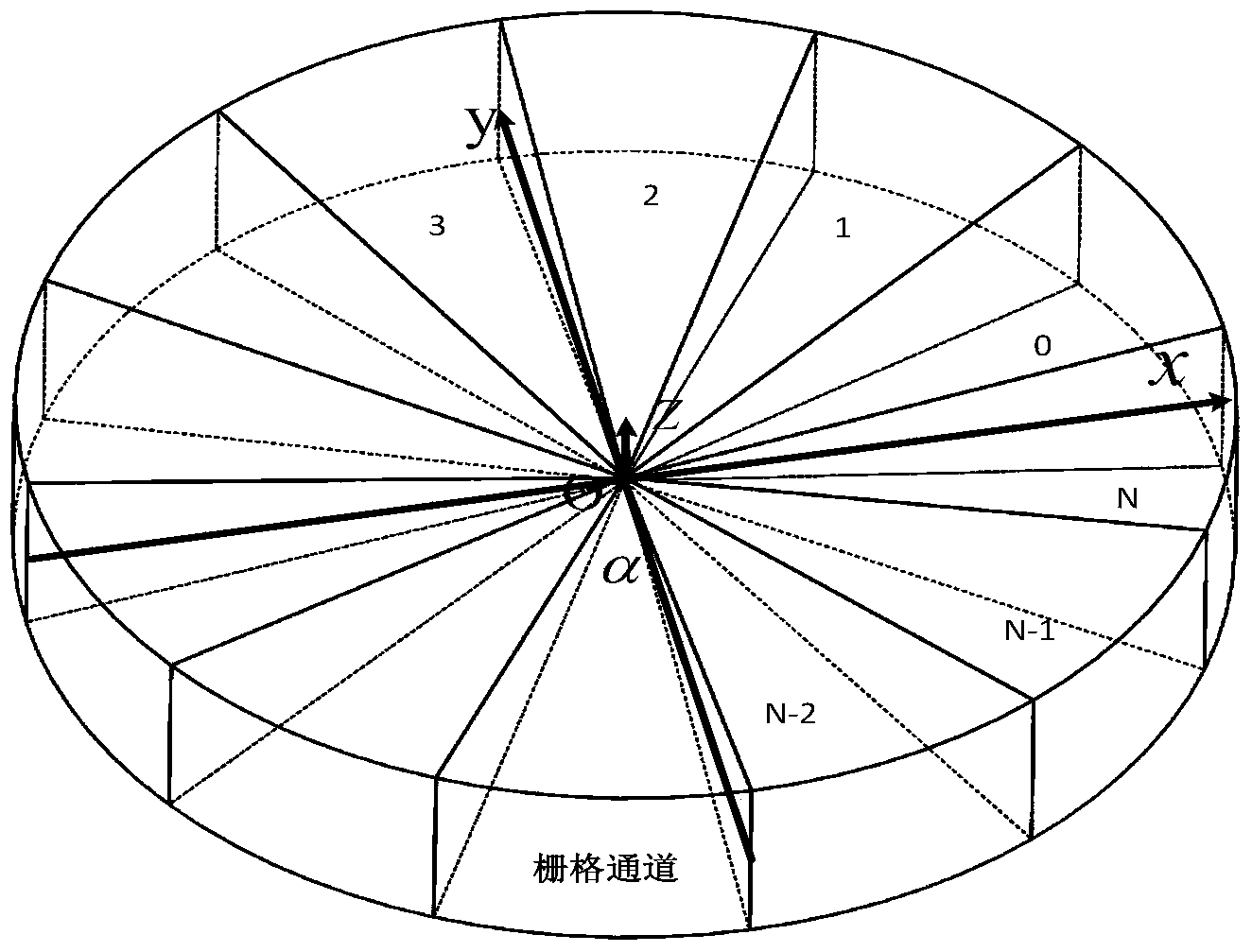

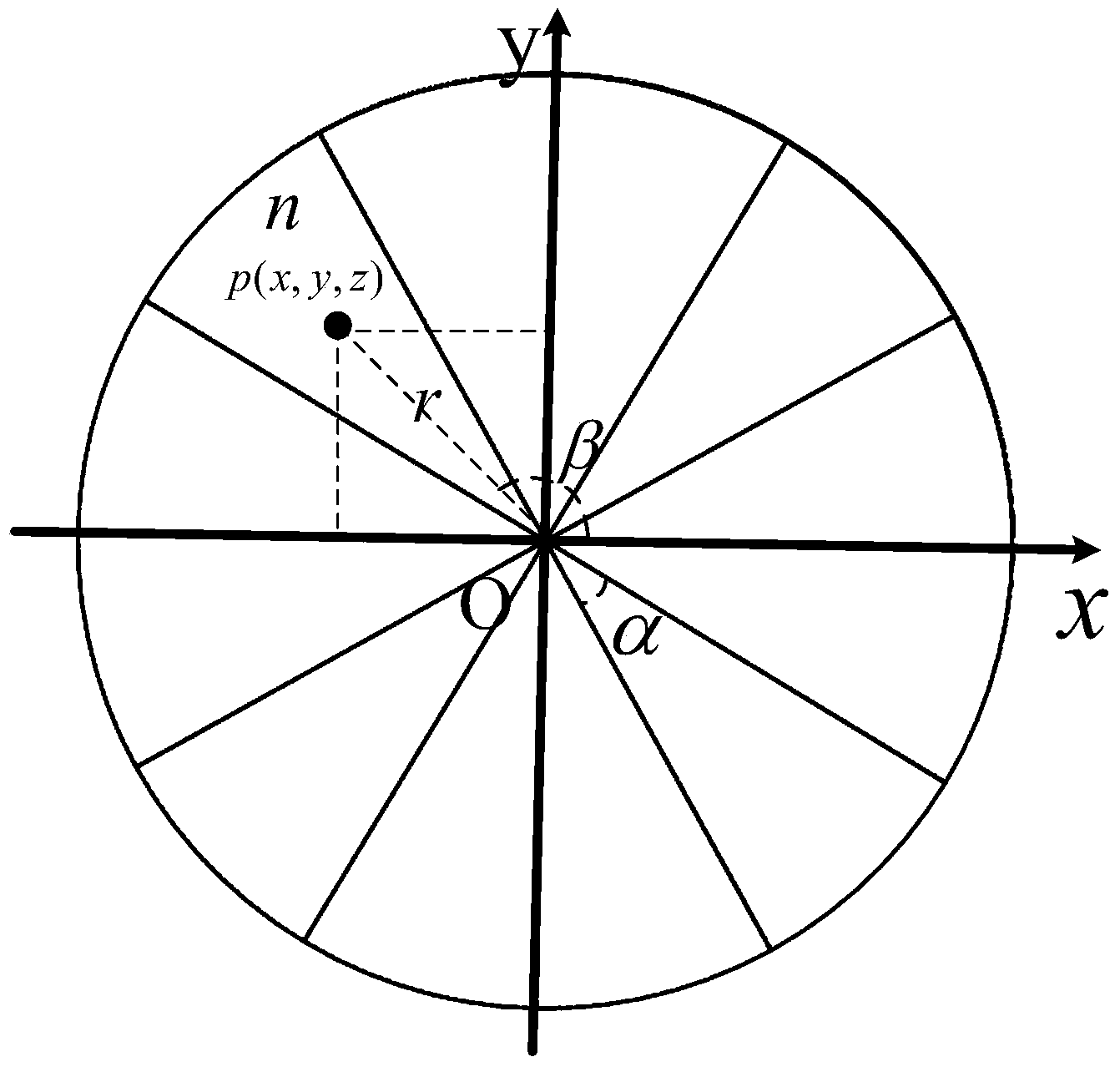

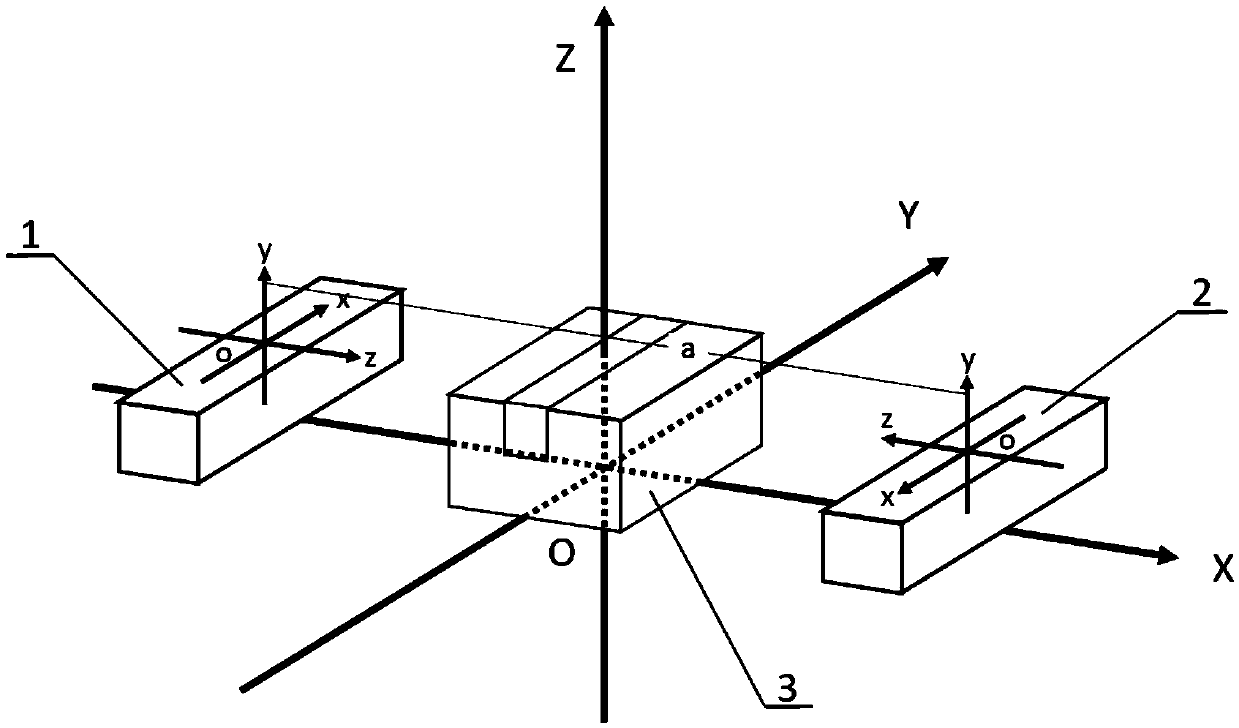

The invention discloses a multiline laser radar-based 3D point cloud segmentation method. The method comprises the following steps of: 1) scanning 3D point cloud data in a 360-degree range by utilizing multiline laser radar, establishing a Cartesian coordinate system OXYZ, converting the 3D point cloud data under the Cartesian coordinate system, and pre-processing the 3D point cloud data under the Cartesian coordinate system so as to determine a region of interest in the 3D point cloud data; 2) filtering suspended obstacle points in the region of interest by utilizing the statistic characteristics of adjacent points; 3) constructing a polar coordinates grid map, mapping the 3D point cloud data, the suspended obstacle points of which are filtered, into the polar coordinates grid map, and segmenting non-ground point cloud data from the 3D point cloud data in the polar coordinates grid map; and 4) voxelizing the non-ground point cloud data by utilizing an octree and carrying out clustering segmentation by adoption of an octree voxel grid-based region growing method. The method can improve the operation efficiency, is high in detection precision and strong in reliability, and can be widely applied to the technical field of vehicle environment perception.

Owner:CHANGAN UNIV

Laser-point-cloud-based urban road identification method and apparatus

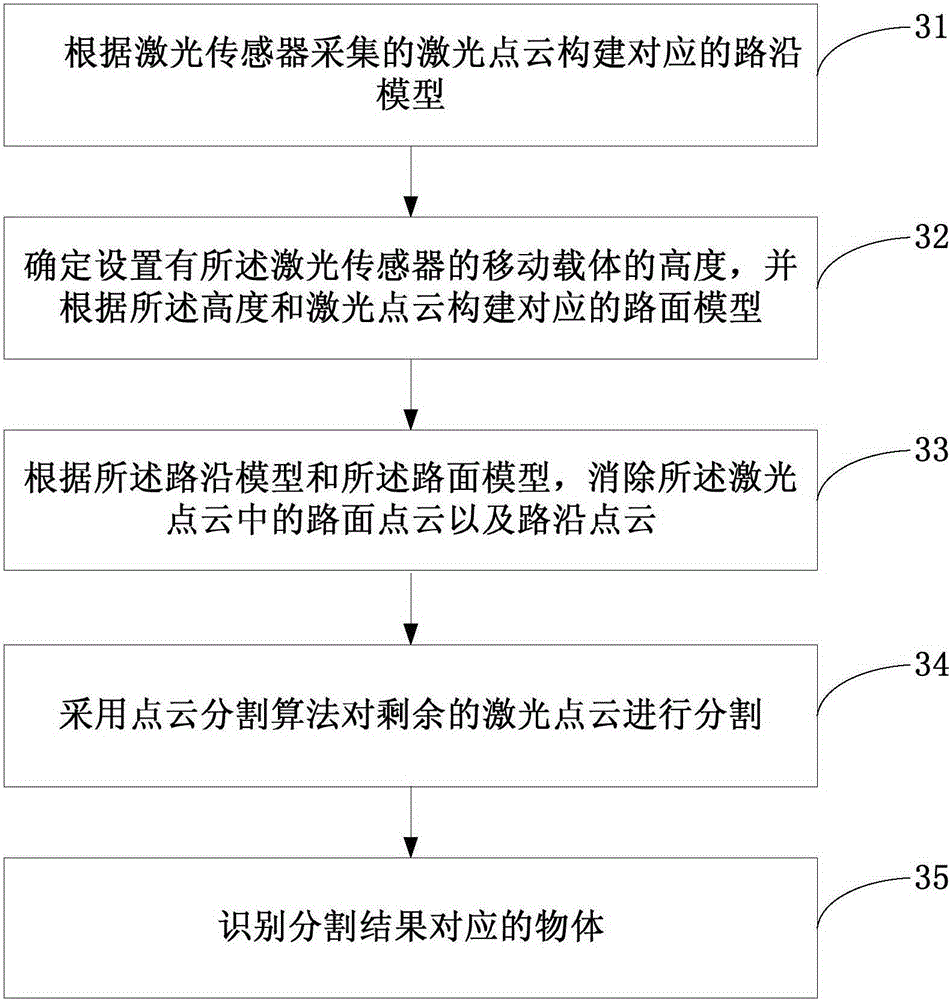

ActiveCN105184852AImprove build efficiencyImprove accuracyImage enhancementImage analysisEngineeringRoad surface

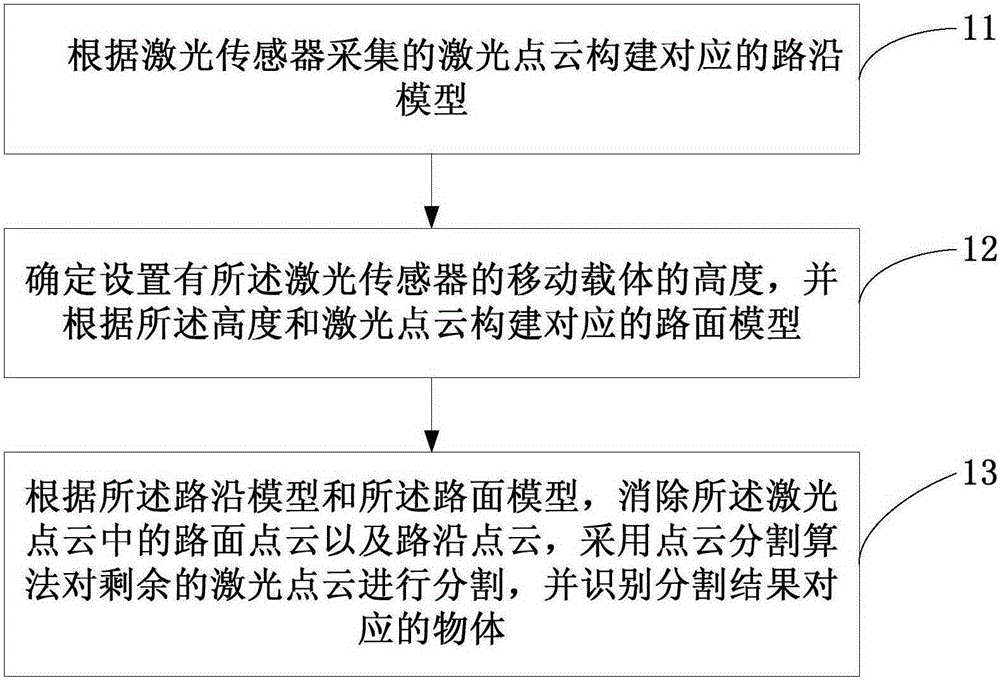

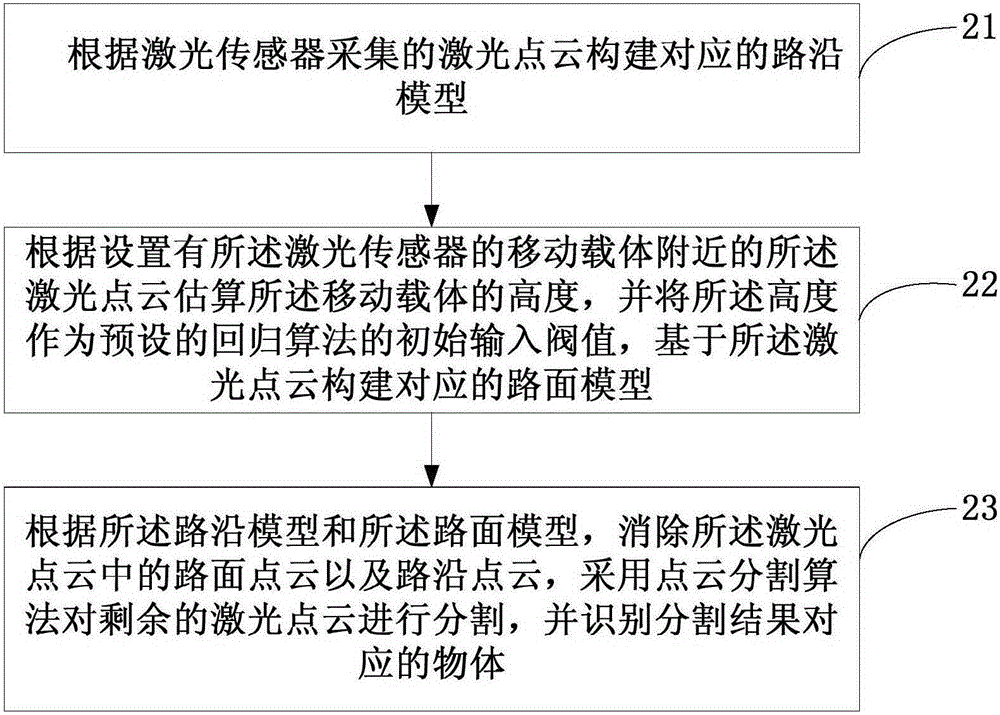

The embodiment of the invention discloses a laser-point-cloud-based urban road identification method and apparatus. The method comprises: a corresponding road edge model is constructed according to laser point clouds collected by a laser sensor; a height of a mobile carrier with the laser sensor is determined and a corresponding road surface model is constructed based on the height and the laser point; and according to the road edge model and the road surface model, a road surface point cloud and a road edge point cloud in the laser point cloud are eliminated, the rest of laser point clouds are segmented by using a point cloud segmentation algorithm, and an object corresponding to the a segmentation result is identified. The height of the mobile carrier is estimated based on the laser point cloud and the road surface model corresponding to the laser point cloud is constructed by using the height, so that the construction efficiency and accuracy of the road surface model are improved. Therefore, the identification efficiency and accuracy of the corresponding object are improved.

Owner:BAIDU ONLINE NETWORK TECH (BEIJIBG) CO LTD

Robot grasp pose estimation method based on object recognition depth learning model

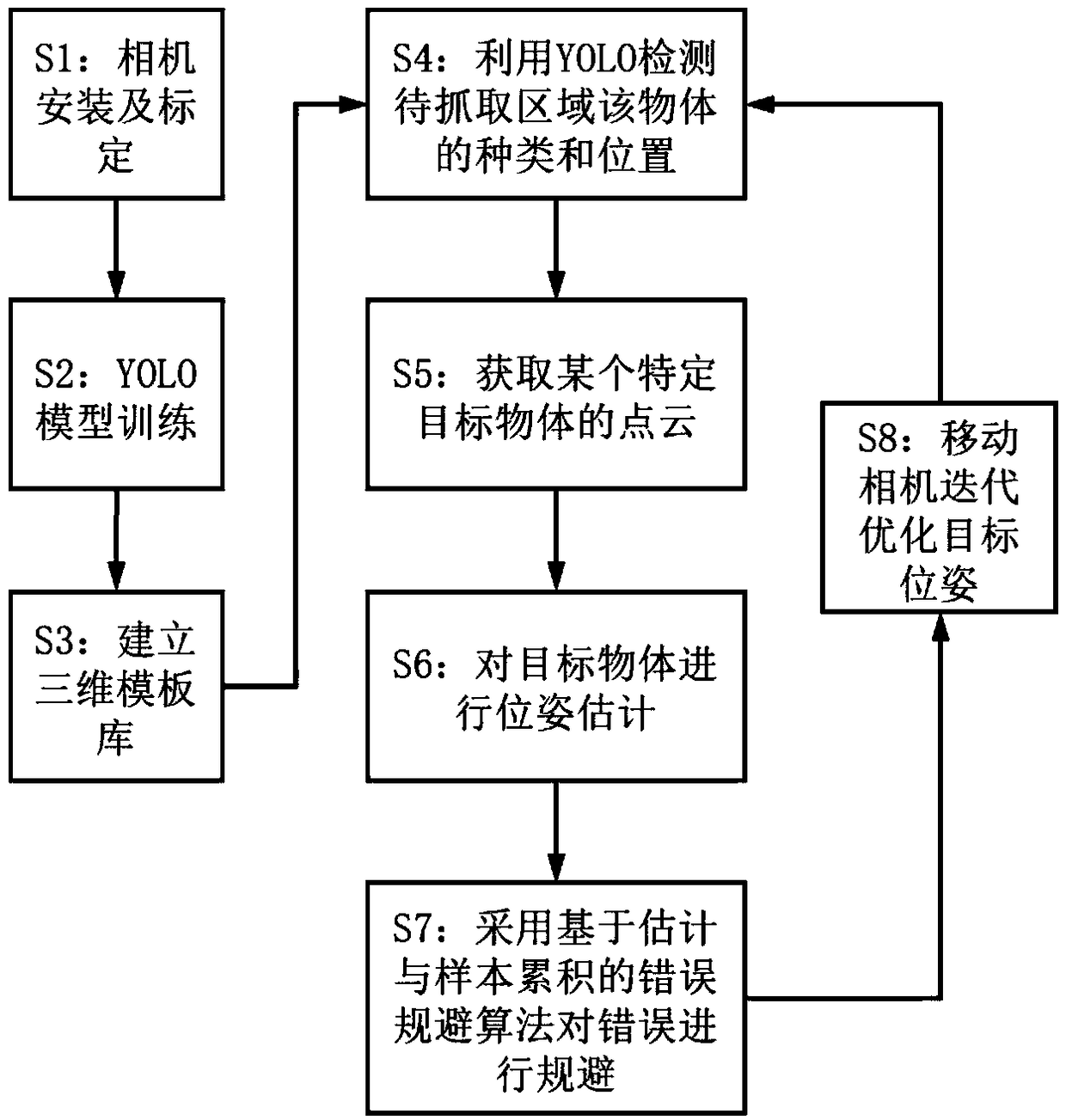

InactiveCN109102547AIncrease success rateImprove computing efficiencyImage analysisEstimation methodsHand eye calibration

The invention discloses a robot grasping pose estimation method based on an object recognition depth learning model, which relates to the technical field of computer vision. The method is based on anRGBD camera and depth learning. The method comprises the following steps: S1, camera parameter calibration and hand-eye calibration being carried out; S2, training an object detection object model; S3, establishing a three-dimensional point cloud template library of the target object; 4, identifying the types and position of each article in the area to be grasped; 5, fusing two-dimensional and three-dimensional vision information and obtaining a point cloud of a specific target object; 6, completing the pose estimation of the target object; S7: adopting an error avoidance algorithm based on sample accumulation to avoid errors; S8: Steps S4 to S7 being continuously repeated by the vision system in the process of moving the robot end toward the target object, so as to realize iterative optimization of the pose estimation of the target object. The algorithm of the invention utilizes a target detection YOLO model to carry out early-stage fast target detection, reduces the calculation amount of three-dimensional point cloud segmentation and matching, and improves the operation efficiency and accuracy.

Owner:SHANGHAI JIEKA ROBOT TECH CO LTD

Two-dimensional laser radar point cloud data processing method and dynamic robot posture calibration method

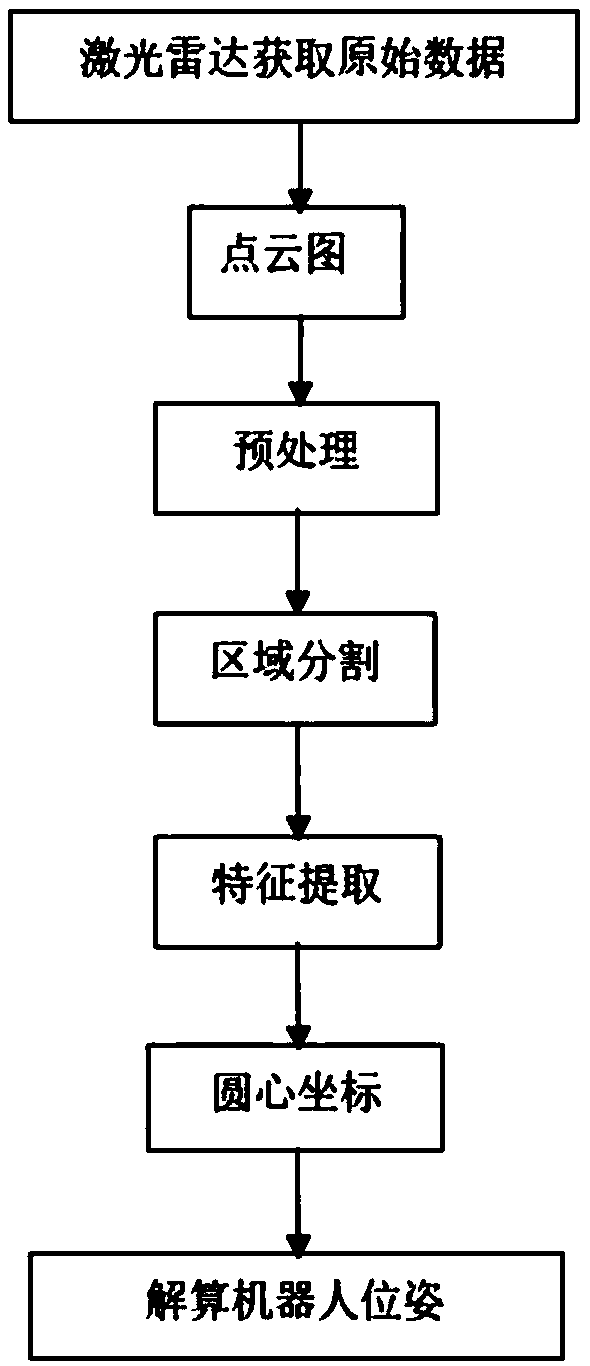

ActiveCN108932736ASolve complexitySolve the accuracy problemImage enhancementImage analysisFeature extractionRectangular coordinates

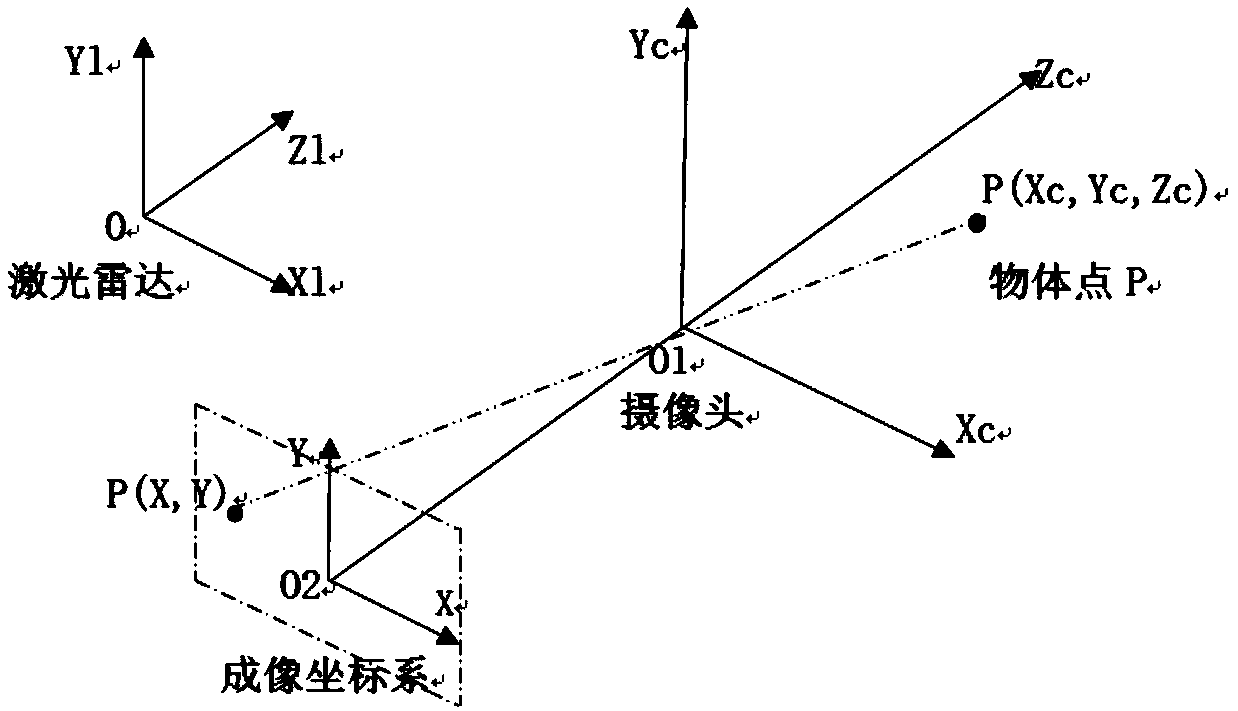

The invention discloses a two-dimensional laser radar point cloud data processing method. Environmental data is collected through laser radar scanning, point cloud data pre-processing is performed, apolar coordinate system with laser radar being a coordinate point is converted into a rectangular coordinate system that is used for posture calibration of a robot, environmental information is effectively detected and recognized through region segmentation, feature extraction, point cloud segmentation, two-dimensional laser radar and a camera fusion detection algorithm, and three-dimensional coordinate fusion is conducted through a vent needle model of the camera and the laser radar, so that the robot calibrates the posture according to a deviation. The invention also discloses a dynamic robot posture calibration method, wherein a camera is adopted as an auxiliary detection sensor and obtains the real-time posture state of the robot together with laser radar, the adjustment amount of a calibration system is obtained by comparing the real-time posture state with a desired posture state, calibration is continuously carried out until the deviation between the robot posture detected by the laser radar and the desired posture is less than a certain threshold value, and thus a whole closed-loop posture calibration process is completed.

Owner:NANCHANG UNIV

Ground point cloud segmentation method based on three-dimensional laser radar

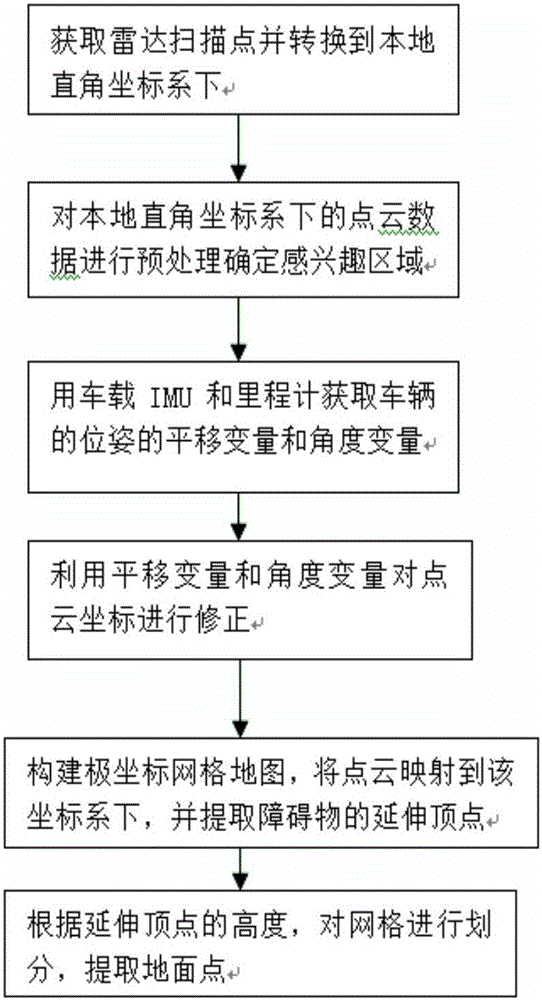

The invention discloses a ground point cloud segmentation method based on a three-dimensional laser radar. The ground point cloud segmentation method comprises the steps of: 1) acquiring polar coordinate data of three-dimensional laser radar scanning point cloud in the surrounding environment of a vehicle and converting the polar coordinate data to a local rectangular coordinate system; 2) correcting radar data by utilizing a vehicle-mounted IMU and an odometer; 3) constructing a polar coordinate grid map, and extracting an extension vertex in each grid according to vertical continuity of point cloud distribution in the grids; 4) extracting ground points in non-marginal grids according to height attributes of the extension vertexes and a ground smooth consistency criterion, and adopting a 3sigma criterion for further extraction of ground points in marginal grids. Compared with the prior art, the ground point cloud segmentation method can reduce ground extraction errors caused by motion of the vehicle, avoids the occurrence of over-segmentation and under-segmentation, is high in precision and high in reliability, has high real-time performance and can be widely used in the field of radar-based environment sensing technologies.

Owner:CHANGAN UNIV

Point cloud partition method and device

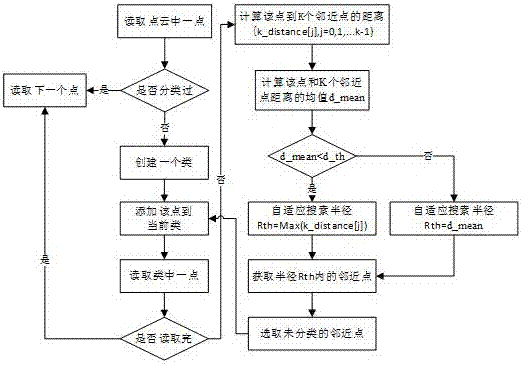

ActiveCN104143194AAvoid undersegmentationPrevent oversegmentationImage analysisTransducerPoint cloud segmentation

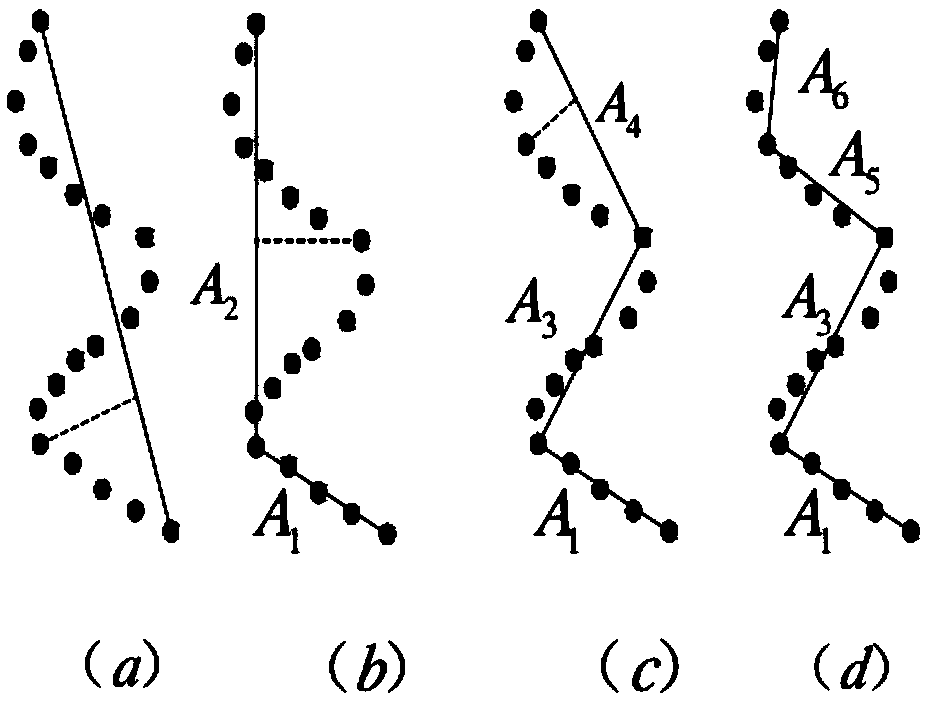

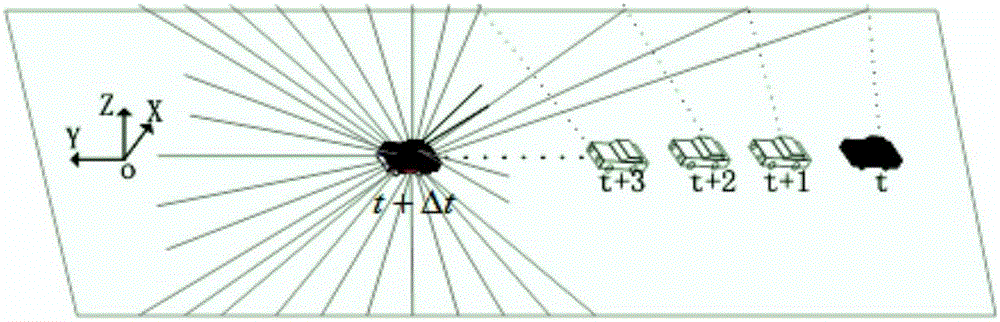

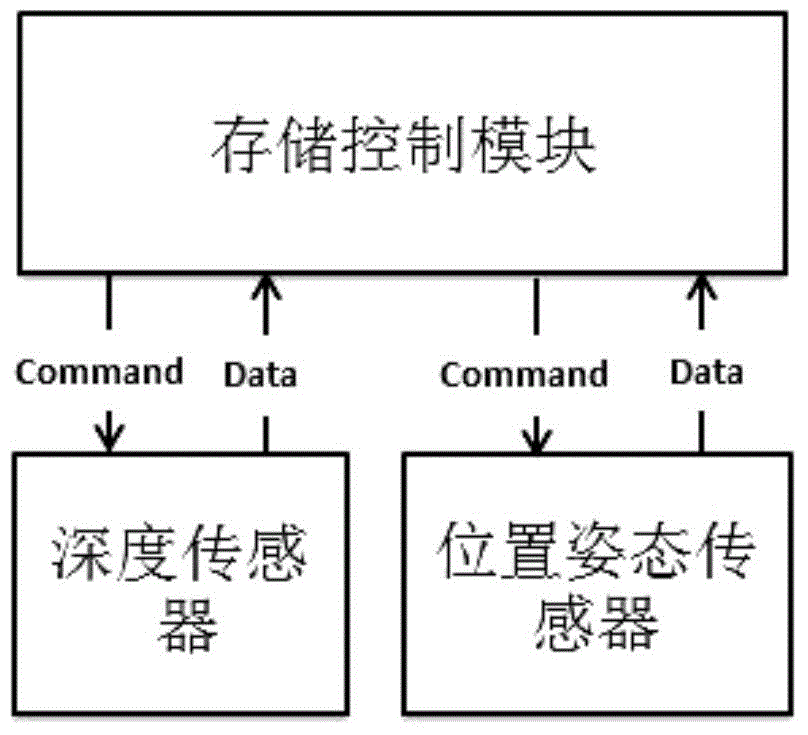

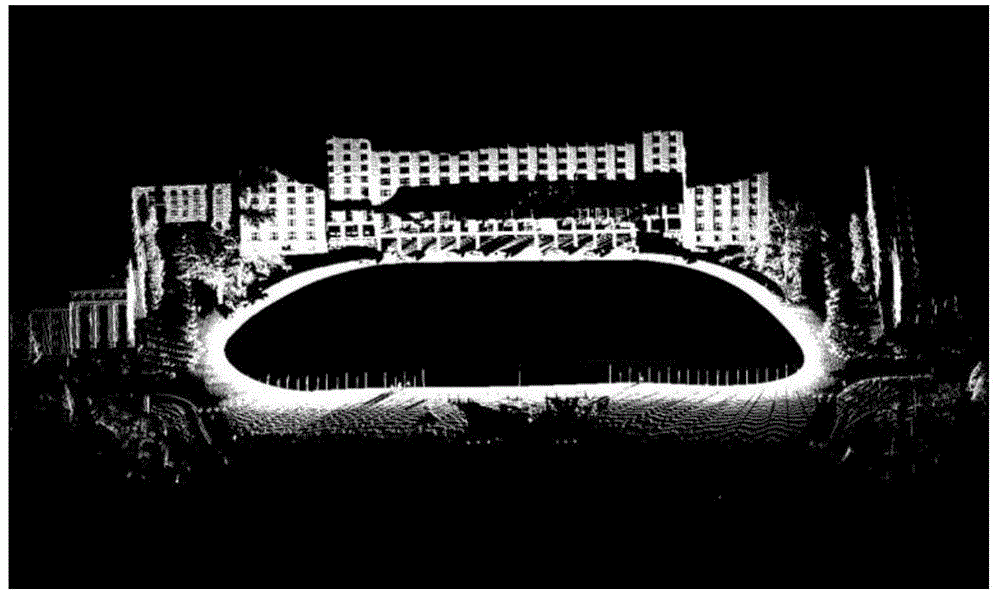

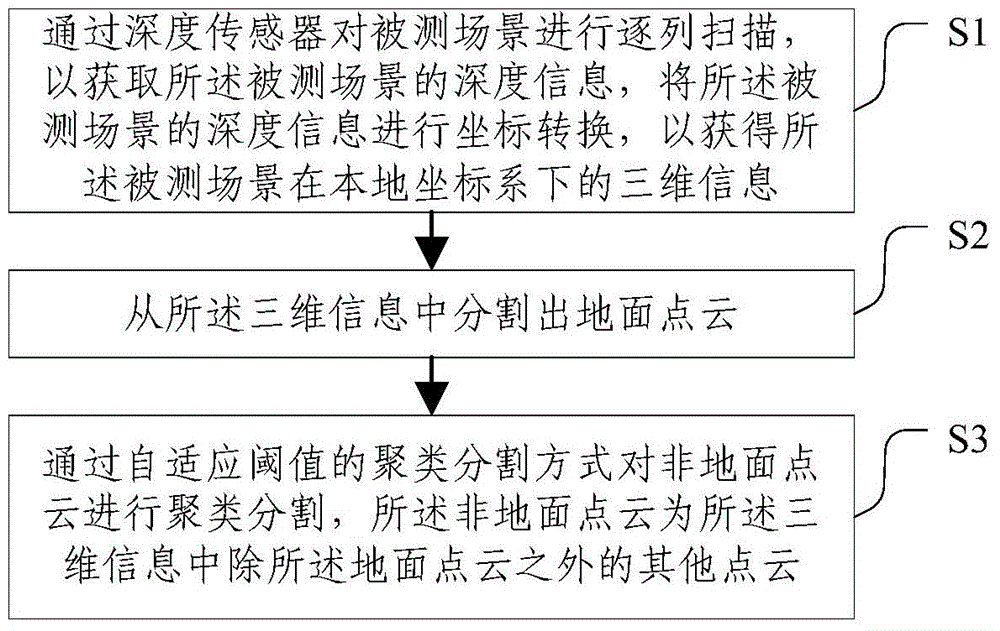

The invention discloses a point cloud partition method and device, and relates to the technical field of three-dimensional reconstruction. The point cloud partition method includes the following steps: (S1) line-by-line scanning is carried out on a measured scene through a depth transducer to obtain depth information of the measured scene, and coordinate transformation is carried out on the depth information of the measured scene to obtain three-dimensional information of the measured scene under a local coordinate system; (S2) ground point clouds are partitioned from the three-dimensional information; (S3) clustering partition is carried out on non-ground point clouds in the self-adaptation threshold value clustering partition mode, wherein the non-ground point clouds are the other point clouds except the ground point clouds in the three-dimensional information. By means of the point cloud partition method and device, clustering partition is carried out on the non-ground point clouds in the self-adaptation threshold value clustering partition mode, and insufficient partition and excessive partition caused by clustering partition on the non-ground point clouds with fixed threshold values are effectively avoided.

Owner:TSINGHUA UNIV

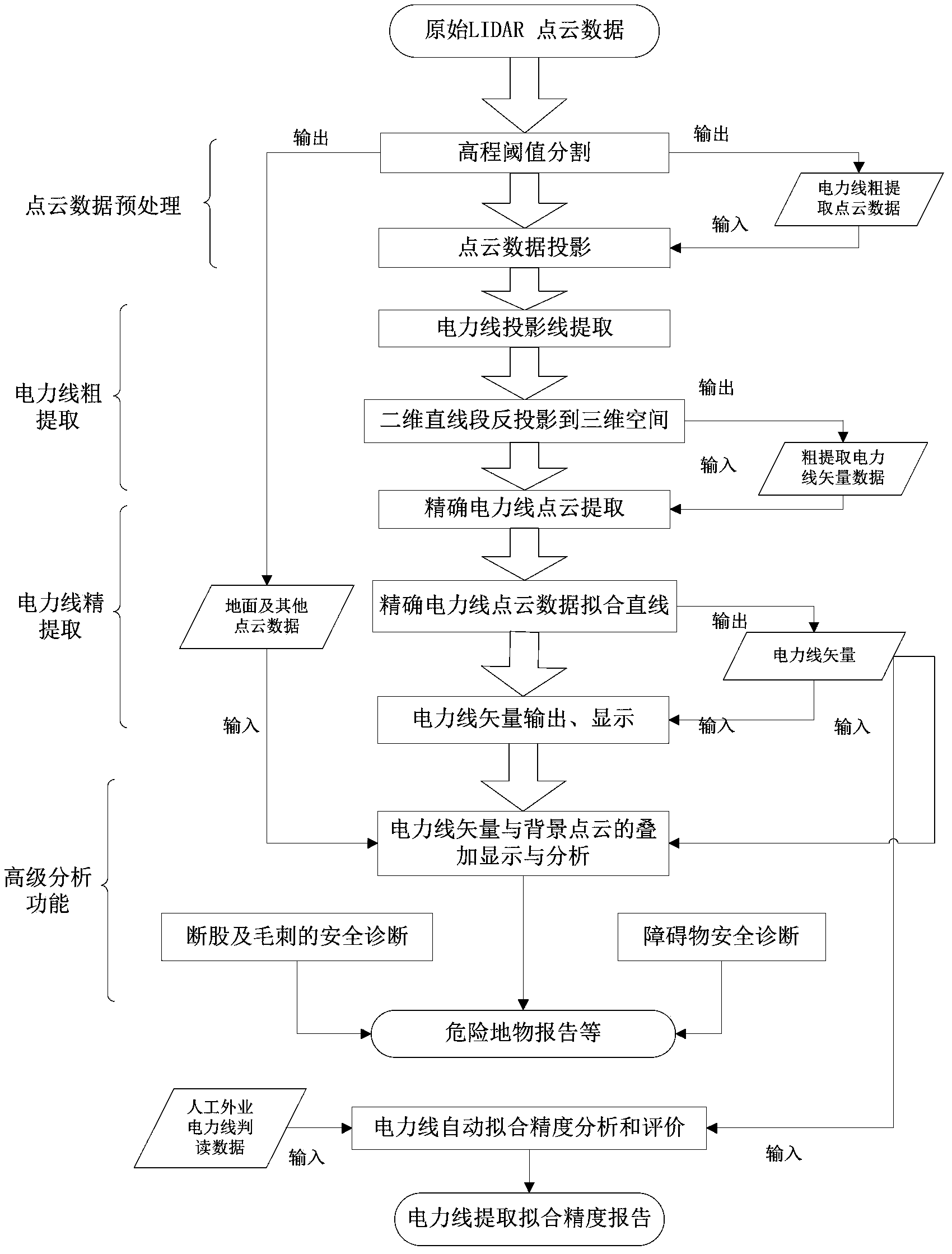

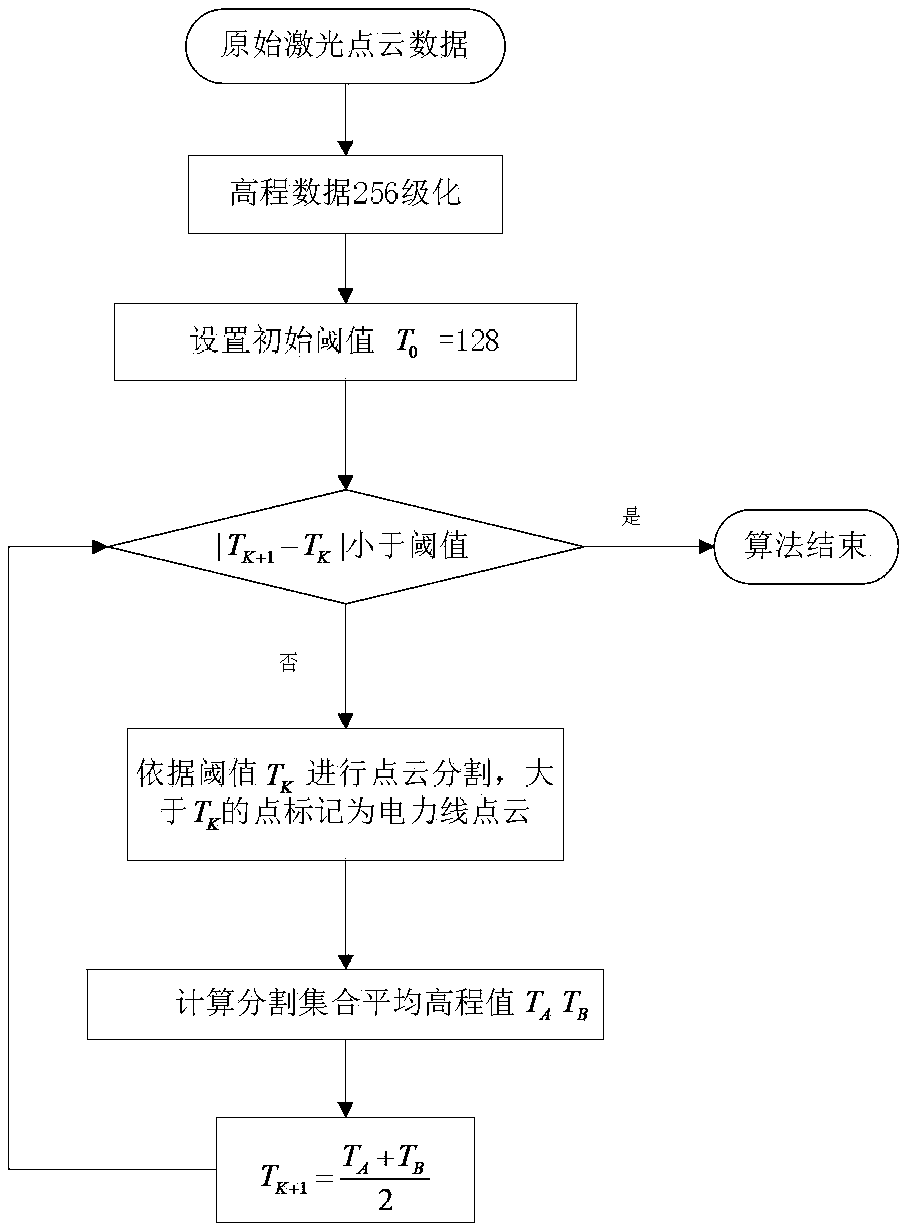

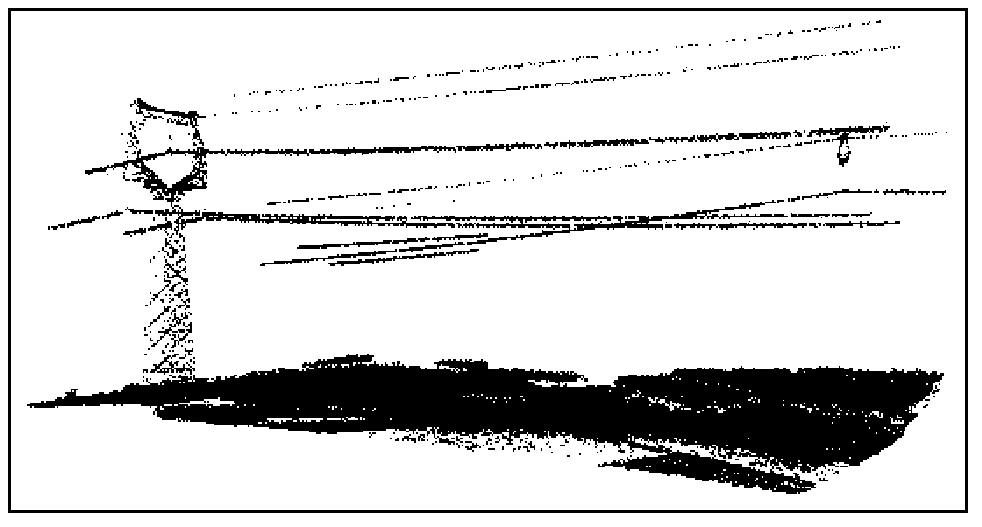

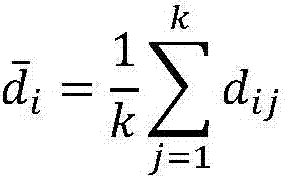

Automatically-extracting power line method in random laser point cloud data

ActiveCN103413133ARealize automatic extractionEasy extractionWave based measurement systemsCharacter and pattern recognitionRandom laserThree-dimensional space

The invention discloses an automatically-extracting power line method in random laser point cloud data. According to the automatically-extracting power line method, a ground point cloud is segmented and filtered through a point cloud of an elevation; on direction projection of the segmented point cloud, a straight line segment is detected and further back-projected to three-dimensional space so as to obtain a three-dimensional line segment; while the three-dimensional line segment serves as a clustering nuclear, a distance clustering method is used to obtain an accurate point cloud of the power line and generates a power line vector node based on the accurate point cloud of the power line; and a power line vector is outputted, so that automatic extraction of the power line is realized. The automatically-extracting power line method in the random laser point cloud data is efficient in extracting, and can automatically extract the power line.

Owner:ELECTRIC POWER RES INST OF GUANGDONG POWER GRID

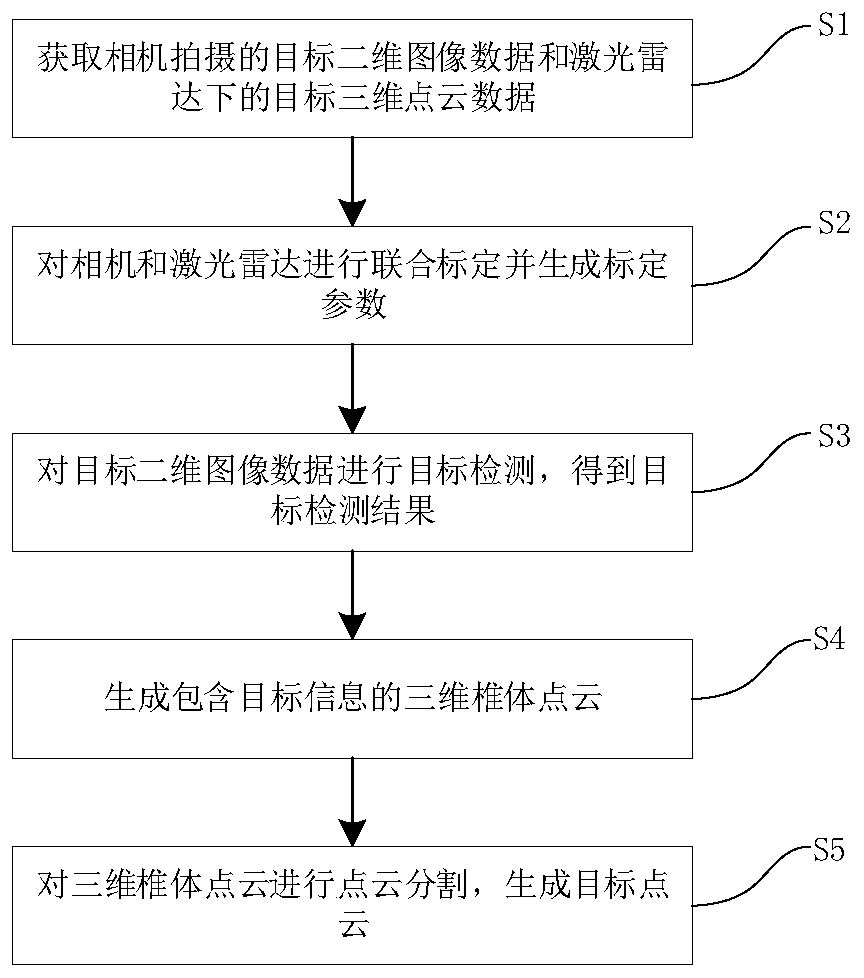

Sparse point cloud segmentation method and device

ActiveCN110264416AHigh precisionImprove efficiencyImage enhancementImage analysisImaging processingRadar

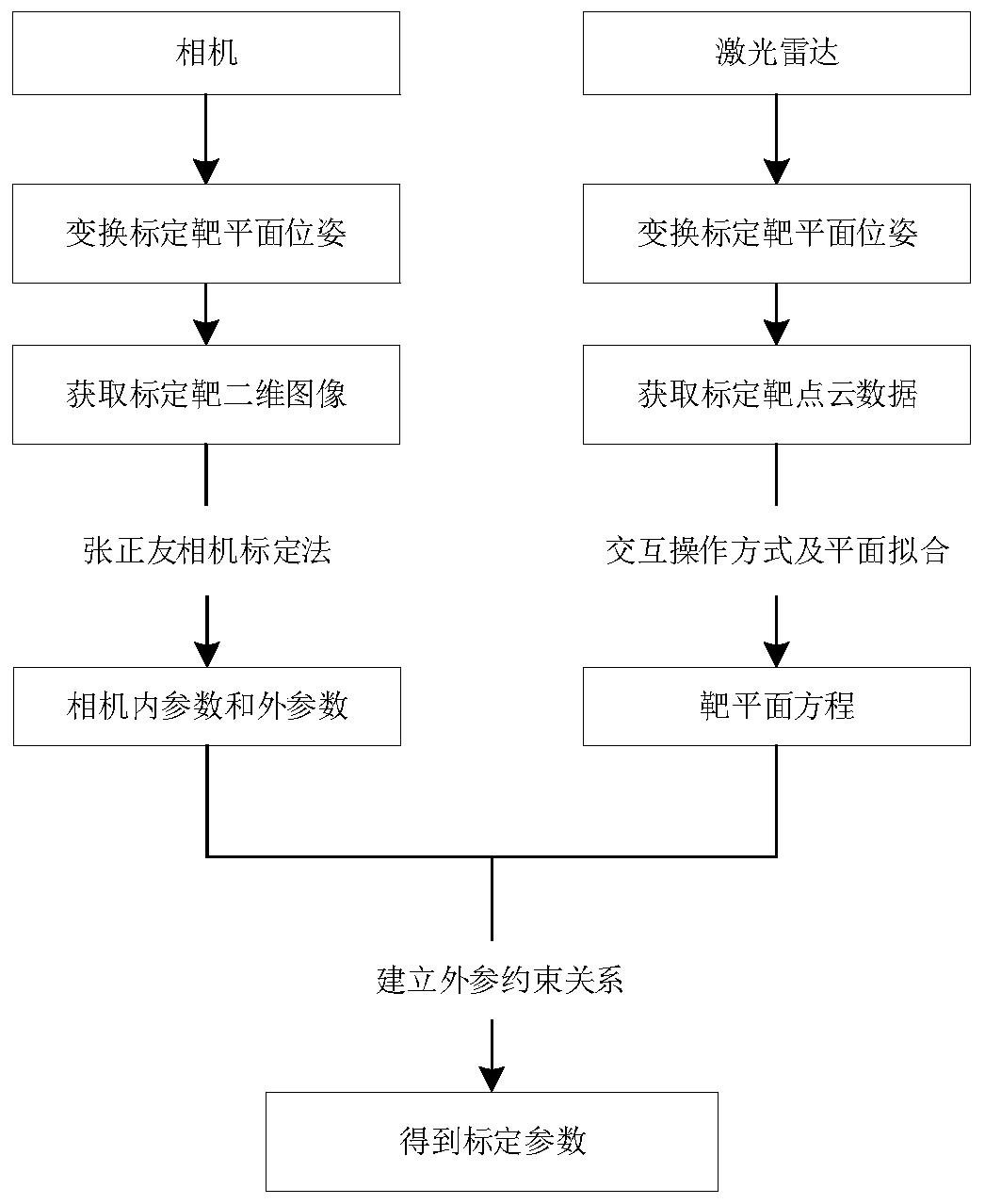

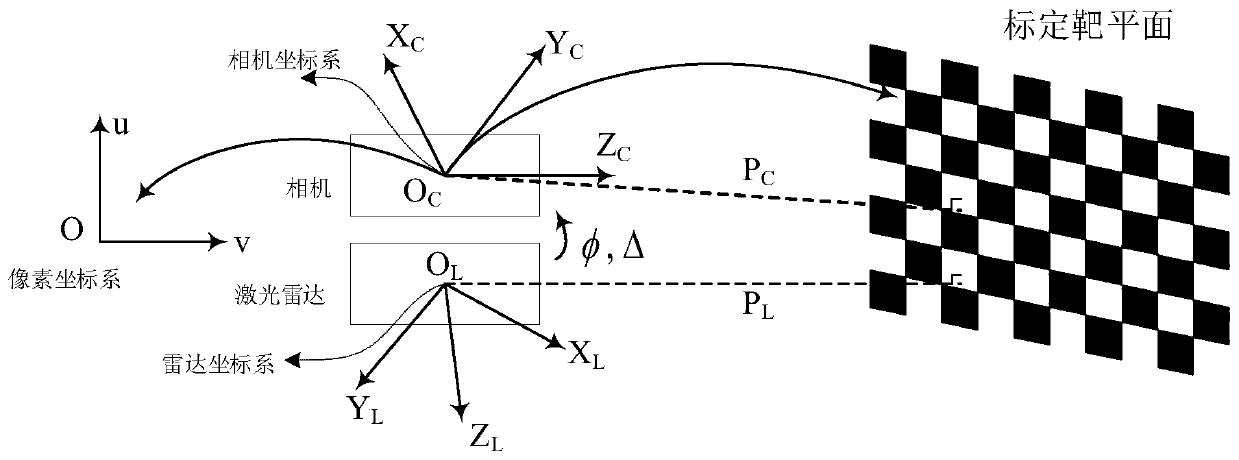

The invention discloses a sparse point cloud segmentation method and device. Relating to the field of image processing, the method comprises the following steps of: obtaining a target object, acquiring target two-dimensional image data shot by a camera and target three-dimensional point cloud data under the laser radar; performing joint calibration on the camera and the laser radar, generating calibration parameters, performing target detection on target two-dimensional image data, extracting three-dimensional points which can be converted to a target two-dimensional boundary frame according to a target detection result and a selection principle, generating three-dimensional cone point cloud containing target information, and finally performing point cloud segmentation to generate target point cloud. In the prior art, a laser radar combination fusion mode is used to carry out point cloud segmentation. According to the method, the equipment cost is reduced, the three-dimensional cone point cloud containing the target information is obtained according to the selection principle, then point cloud segmentation is carried out to remove the noise point cloud, the point cloud segmentation precision and efficiency are improved, and the method has a good practical application value.

Owner:SHENZHEN UNIV

Network-connected vehicle positioning method based on 3D laser radar and V2X

InactiveCN108983248AAvoid crowdingAvoid cloggingElectromagnetic wave reradiationRadarComputer module

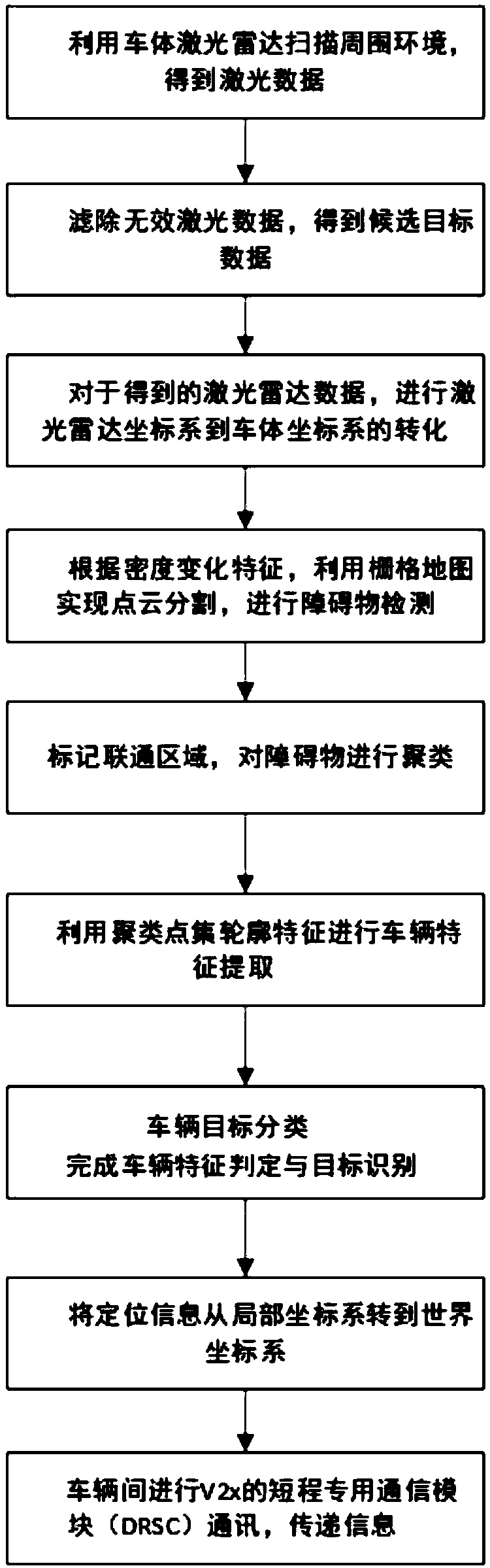

The invention discloses a network-connected vehicle positioning method based on a 3D laser radar and V2X. The method comprises the following steps of using a laser radar installed on a network-connected vehicle to scan an environment around a network-connected vehicle so as to obtain radar data; preprocessing the radar data, acquiring preprocessed laser point cloud data, converting the point clouddata to a network-connected vehicle coordinate system, and then, according to the density change characteristic of a radar point in the point cloud data, using a raster map to carry out point cloud segmentation so as to realize the separation of a pavement and barriers; carrying out the clustering of the barriers, acquiring a radar point set corresponding to each barrier, and then obtaining the contour of the barrier, then carrying out linear fitting to obtain the shape of the barrier, and combining the size of the barrier to realize barrier identification; and finally, realizing the exchangeof barrier information among other network-connected vehicles through a V2X module. In the invention, a problem that an existing vehicle positioning method has low accuracy and poor reliability is solved, and simultaneously problems that a requirement to the speed of a mobile communication network is high and cost is high are alleviated.

Owner:CHANGAN UNIV

Airborne laser point cloud classification method based on high-order conditional random field

ActiveCN110110802ABuild accuratelyAccurate classificationCharacter and pattern recognitionConditional random fieldClassification methods

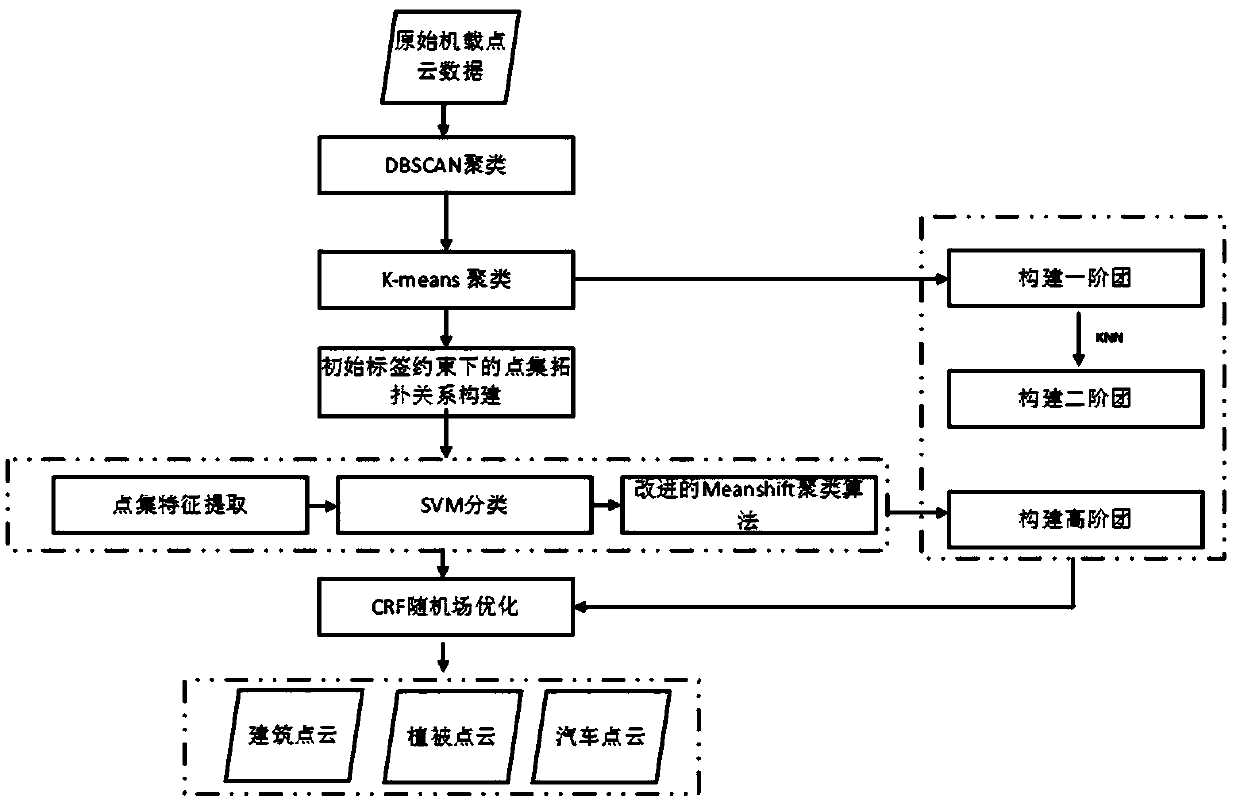

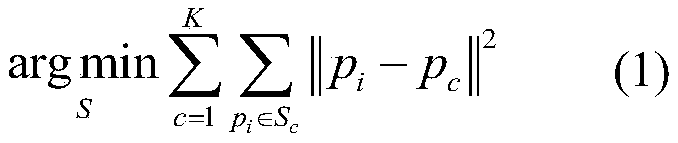

The invention provides an airborne laser point cloud classification method based on a high-order conditional random field. The airborne laser point cloud classification method specifically comprises the following steps: (1) point cloud segmentation based on DBSCAN clustering; (2) point cloud over-segmentation based on the K-means cluster; (3) construction of a point set adjacency relation based onthe Meanshift clustering; and (4) construction of a point cloud classification method of a high-order conditional random field based on the multi-level point set. The method has the advantages that:(1) a multi-layer clustering point set structure construction method is provided, and a connection relation between point sets is constructed by introducing a Meanshift point set cluster constrained by category labels, so that the categories of the point sets can be classified more accurately; (2) a multi-level point set of the non-linear point cloud number can be adaptively constructed, and information such as the structure and the shape of a point cloud target can be more completely represented; and (3) a CRF model is constructed by taking the point set as a first-order item, and higher efficiency and a classification effect are achieved, so that a higher framework is integrated, and a better effect is obtained.

Owner:NANJING FORESTRY UNIV

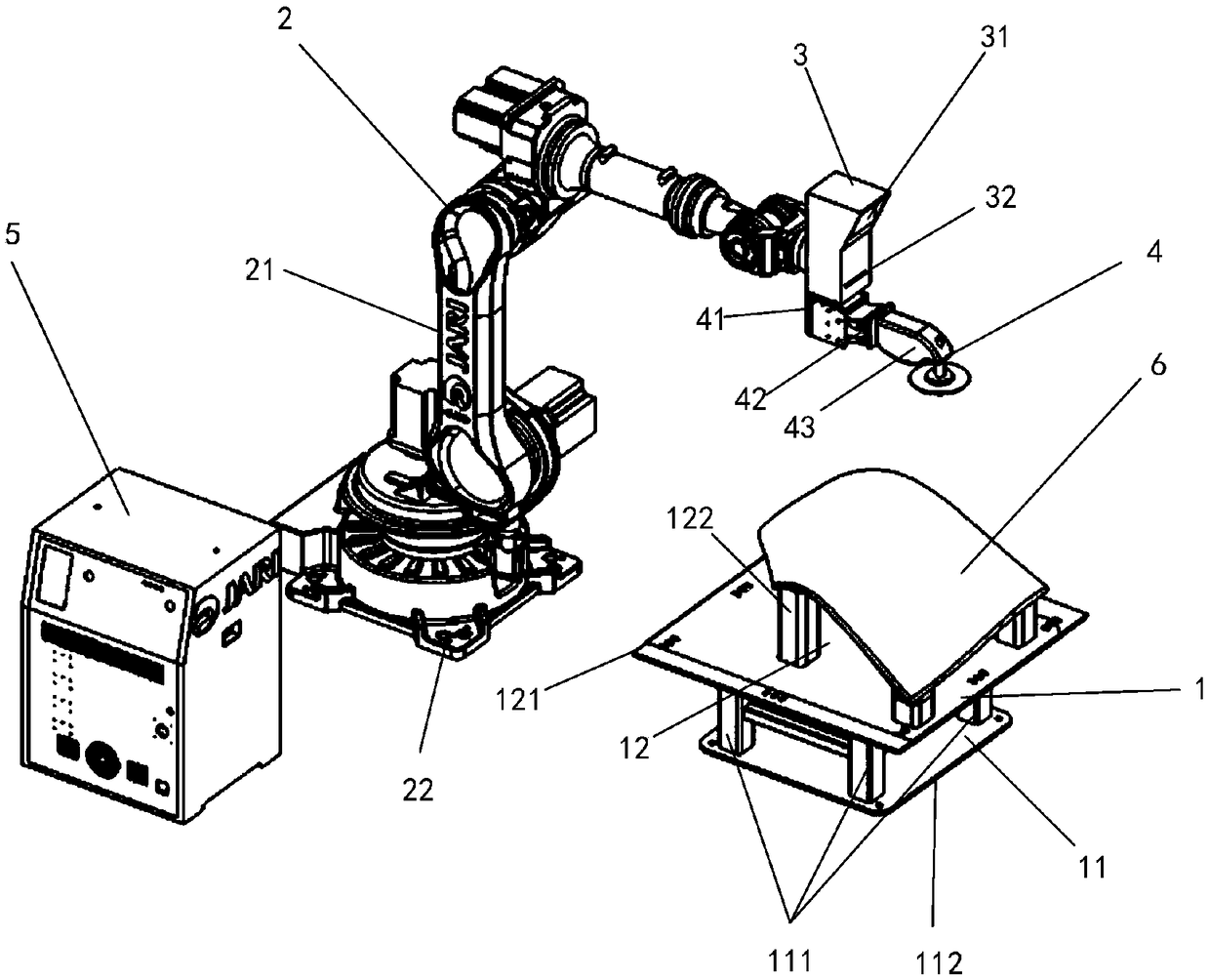

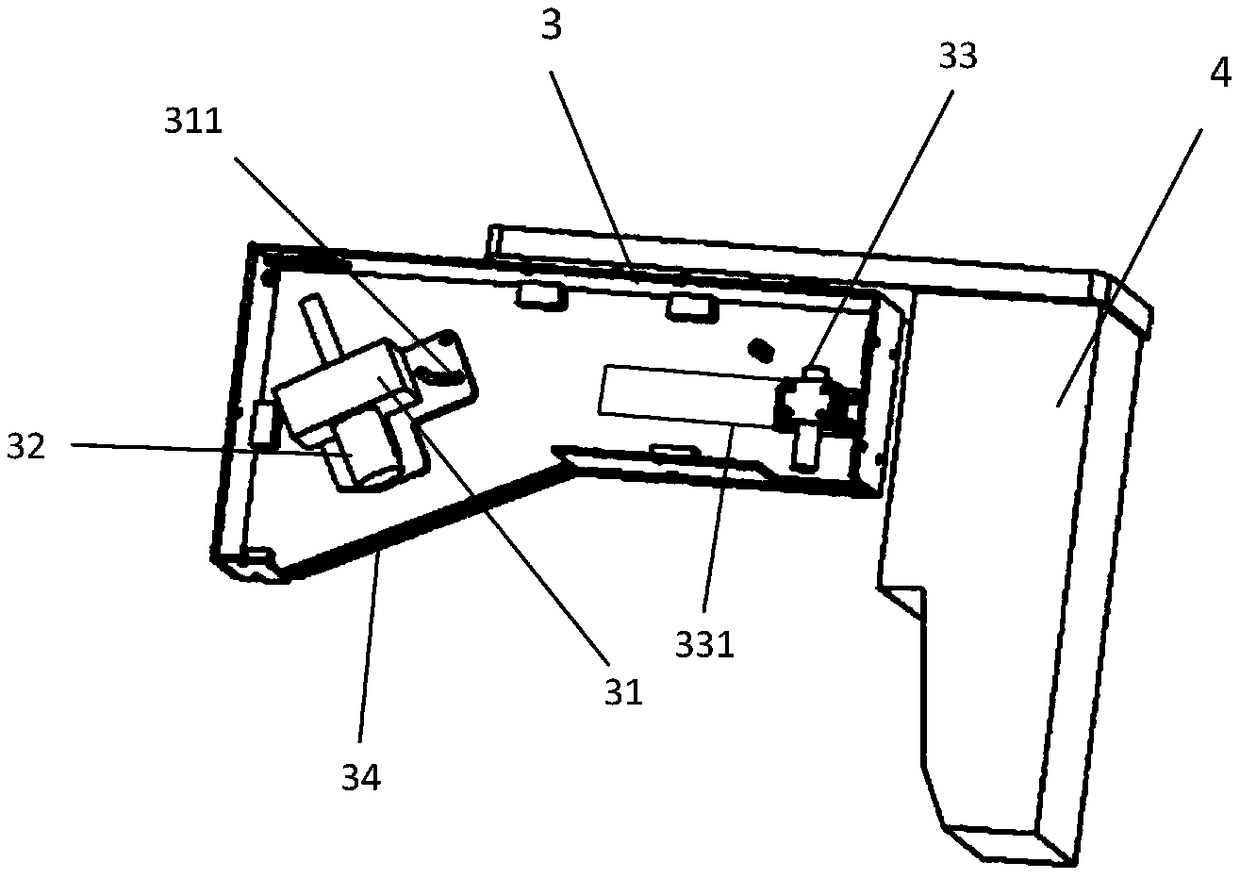

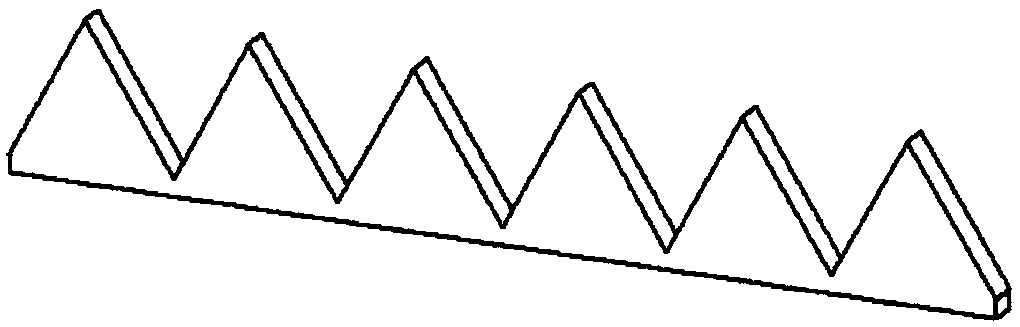

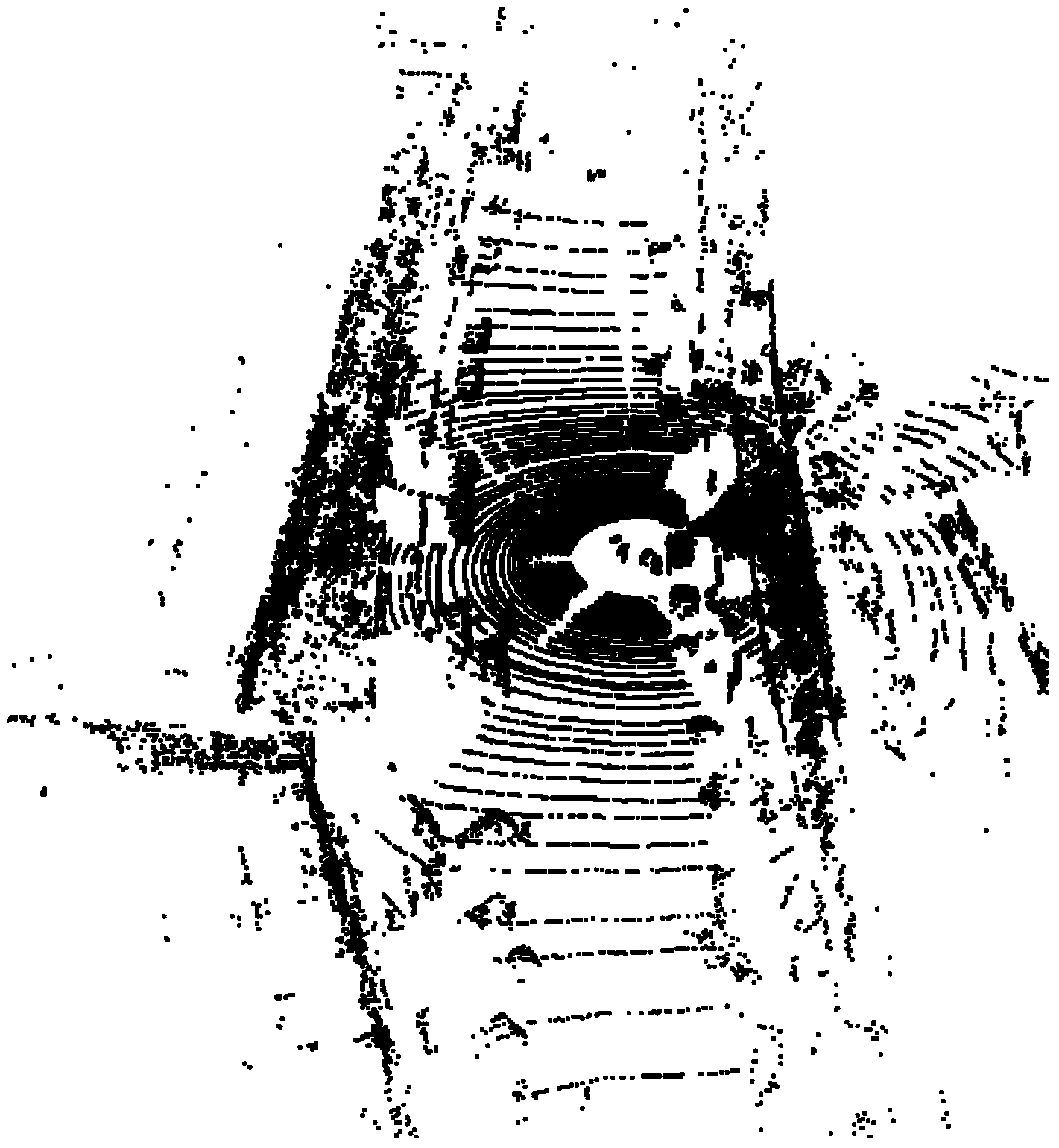

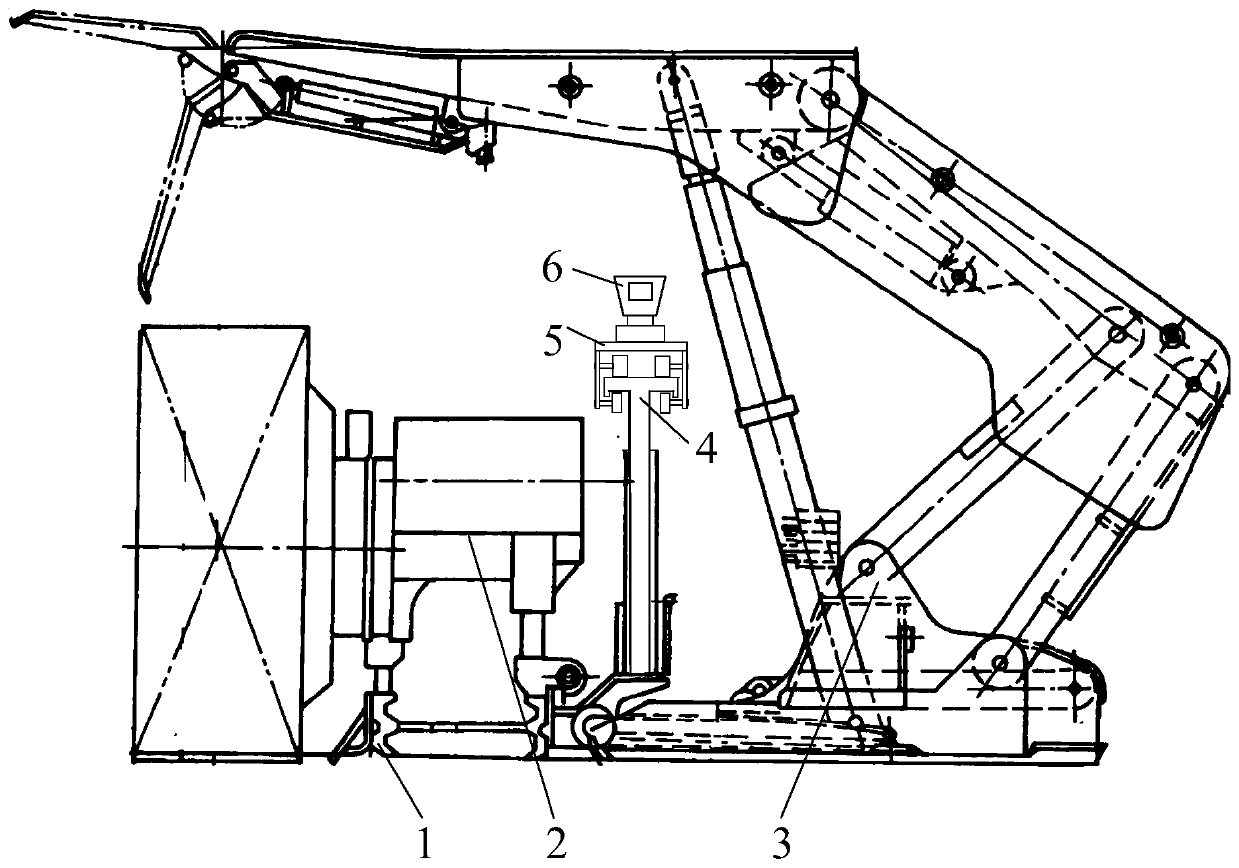

Robot grinding system with three-dimensional vision and control method thereof

PendingCN109483369ASolve the problem of automatic grindingRealize flexible grinding workGrinding feed controlCharacter and pattern recognitionEngineering3d camera

The invention discloses a robot grinding system with three-dimensional vision and a control method thereof. The robot grinding system comprises a workpiece fixing device, a grinding robot, an opticaldevice, pneumatic grinding equipment, a power supply and a control device. The control method of the robot grinding system with the three-dimensional vision comprises the steps that the grinding robotdrives the optical device to horizontally scan a curved surface workpiece; a laser image collected by a 3D camera is transmitted to the control device in the power supply and the control device; thecontrol device performs the three-dimensional point cloud processing to the image obtained by the 3D camera by using a corresponding function, and the preliminary filtering denoising is carried out; the point cloud segmentation and a slice algorithm are used for performing the further processing on the obtained three-dimensional point cloud; and processing results are analyzed and sent to the control device according to rules, and the robot is driven to perform the wholly grinding operation on the curved surface workpiece in the correct attitude. The robot grinding system with three-dimensional vision and the control method thereof are mainly used for automatic grinding in the field of curved surface structure manufacturing, and the production efficiency and production quality are improvedby improving the automation degree of the industrial grinding field.

Owner:716TH RES INST OF CHINA SHIPBUILDING INDAL CORP +1

3D point cloud data processing method

ActiveCN104298998ASimple data structureImprove filtering effectCharacter and pattern recognitionGeographical information databasesGround planeMultiple category

The invention discloses a 3D point cloud data processing method. A ground filtering method for a 3D point cloud is achieved by establishing a 3D raster map and fitting a ground plane curve, a data structure is simple, the obtained ground plane curve is accurate and reliable, and filtering effect and real-timeliness are very good. A provided partition method adopts a method for clustering search windows in the cylindrical coordinate raster map, the calculation amount in the clustering process is greatly decreased, real-timeliness is good, and a clustering result is accurate. A provided training sample marking method is formed by combining point cloud partition with an appropriate display and storage method and is easy to achieve, multiple categories of samples can be marked in point cloud data of each frame, and sample marking efficiency is greatly improved.

Owner:BEIJING INSTITUTE OF TECHNOLOGYGY

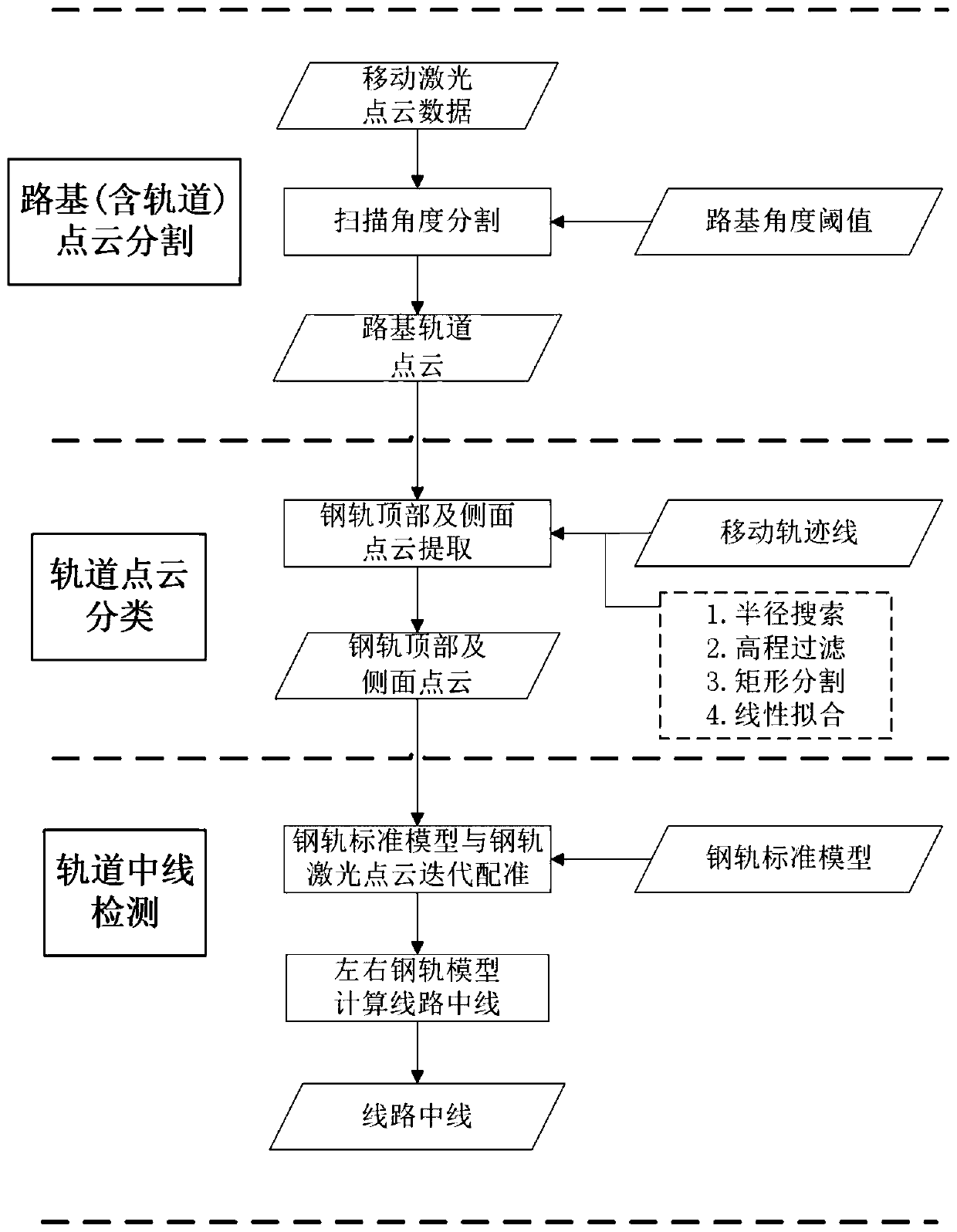

Track center line automatic detection method based on vehicle-mounted mobile laser point cloud

ActiveCN110647798AImprove computing efficiencyQuick splitImage enhancementImage analysisIn vehicleTrackway

The invention discloses a track center line automatic detection method based on vehicle-mounted mobile laser point cloud. The method comprises the following steps: S1, point cloud segmentation of a roadbed and a track; s2, track point cloud classification; s3, automatic track center line detection: according to a cross section design drawing of a standard steel rail, automatically reconstructing astandard three-dimensional geometric model of the steel rail in a segmented manner, carrying out iterative registration on the standard steel rail model and the segmented steel rail point cloud in the S2, and carrying out automatic reconstruction on a segmented three-dimensional model of the steel rail; calculating track geometric parameters by utilizing the reconstructed track three-dimensionalgeometric model, calculating track center line point locations in a segmented manner on the basis of the reference track, and sequentially connecting the track center line point locations extracted inthe segmented manner to form a line center line. According to the method, roadbed and rail point clouds can be rapidly segmented, the area of rail point cloud classification search is reduced, automatic classification of laser point clouds on the surfaces of left and right steel rails is realized, and the precision requirement of existing rail transit midline detection can be met.

Owner:CHINA RAILWAY DESIGN GRP CO LTD

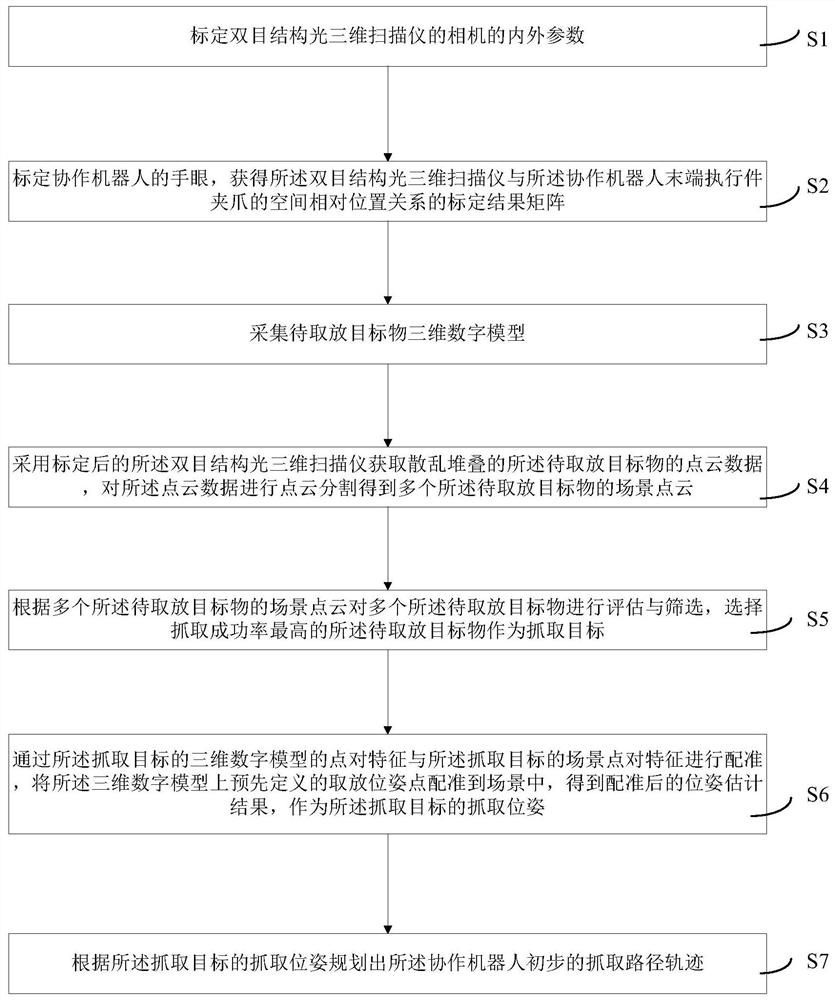

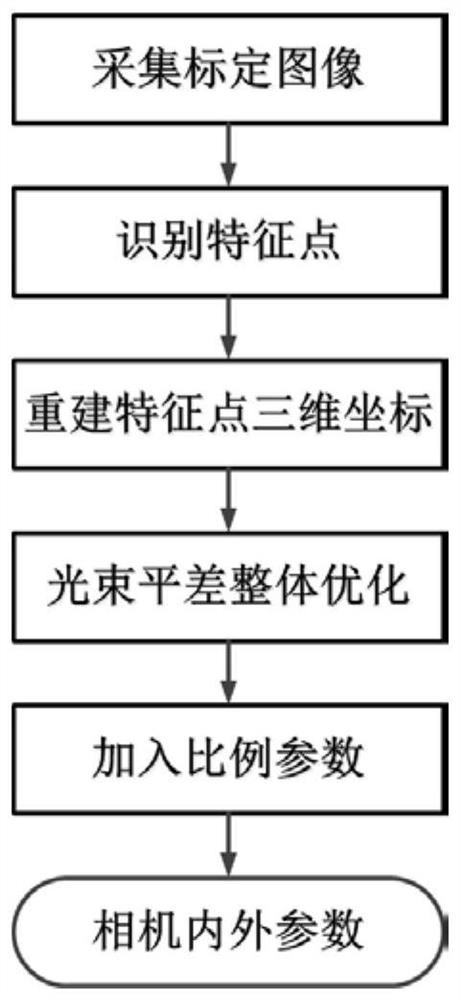

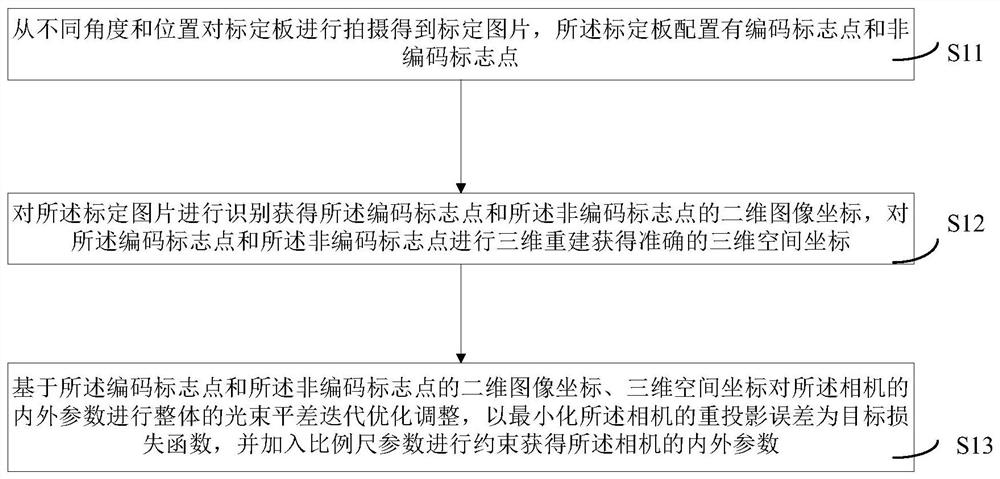

Visual 3D taking and placing method and system based on cooperative robot

ActiveCN112476434AImprove the quality of acquisitionGet fastProgramme-controlled manipulatorImage analysisPattern recognitionComputer graphics (images)

The invention provides a visual 3D taking and placing method and system based on a cooperative robot. The method comprises the steps that internal and external parameters of a camera of a binocular structured light three-dimensional scanner are calibrated; the hands and eyes of the cooperative robot are calibrated, and a calibration result matrix is obtained; a three-dimensional digital model of to-be-taken-and-placed target objects is collected; the calibrated binocular structured light three-dimensional scanner is adopted to obtain point cloud data of the to-be-taken-and-placed target objects which are stacked in a scattered mode, and the point cloud is segmented to obtain scene point clouds of the multiple to-be-taken-and-placed target objects; the to-be-taken-and-placed target object with the highest grabbing success rate is selected as a grabbing target according to the scene point clouds of the multiple to-be-taken-and-placed target objects; the three-dimensional digital model ofthe grabbing target and scene point pair features are registered, pre-defined taking and placing pose points are registered into a scene, and a registered pose estimation result is obtained and serves as a grabbing pose of the grabbing target; and a preliminary grabbing path track of the cooperative robot is planned. The target object can be accurately recognized, and the grabbing positioning precision is high.

Owner:新拓三维技术(深圳)有限公司

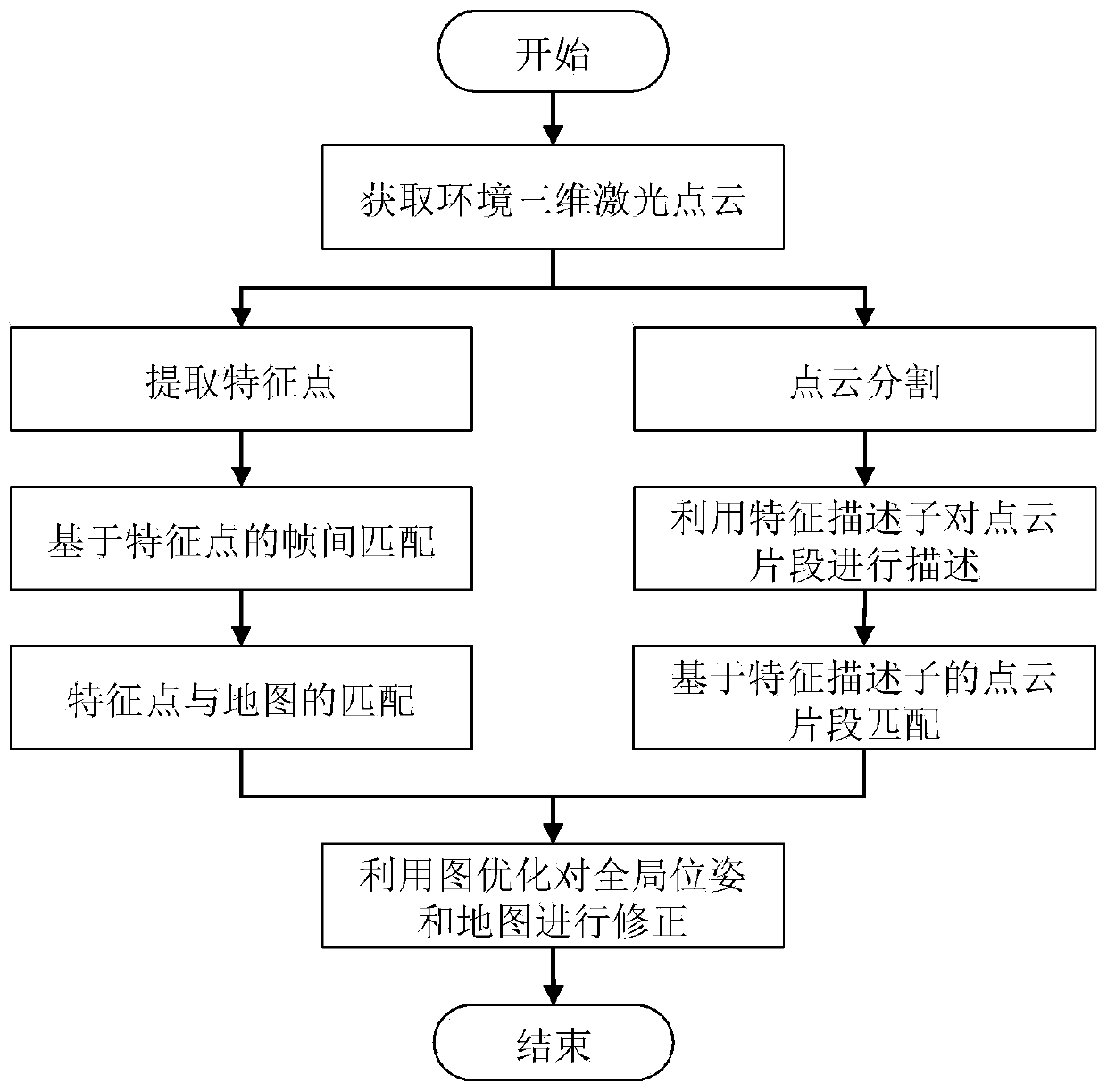

Synchronous positioning and composition algorithm based on point cloud segmentation matching closed-loop correction

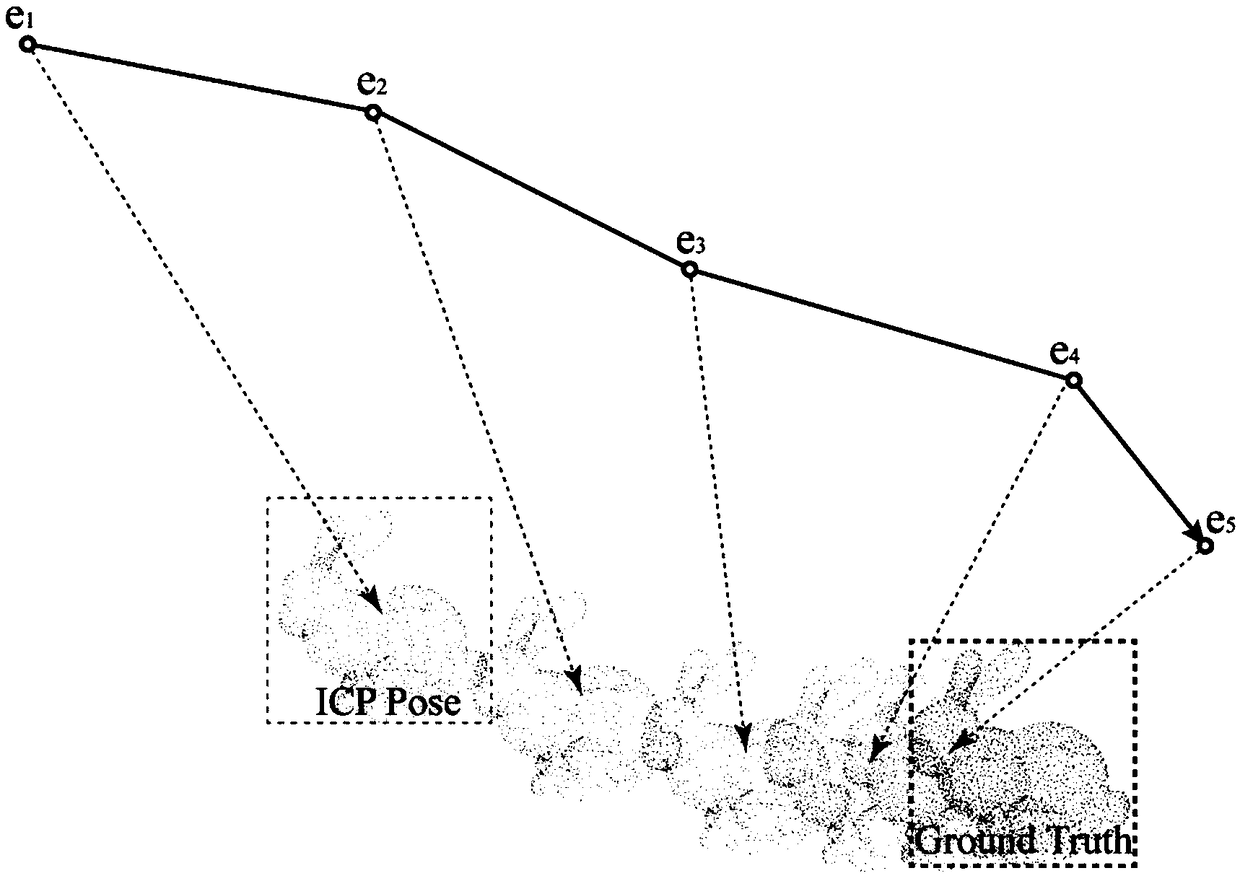

ActiveCN110689622AGood global consistencyEliminate cumulative errorsImage enhancementImage analysisAlgorithmComputer graphics

The invention discloses a synchronous positioning and composition algorithm based on point cloud segmentation matching closed-loop correction, and belongs to the technical field of robot autonomous navigation and computer graphics. According to the algorithm, inter-frame matching is carried out on feature points extracted from three-dimensional point cloud to obtain relative pose transformation ofthe robot, meanwhile, the obtained pose is stored at the rear end in a graph form, and then the point cloud is recorded based on the pose to form a map; the point cloud is fragemented and stored by using a point cloud segmentation and description algorithm, and the point cloud fragments are matched by using a random forest algorithm to form a closed-loop constraint condition; and finally, the historical poses and the map are corrected through a graph optimization algorithm to realize synchronous positioning and composition of the robot. According to the method, while the local positioning precision is ensured, the historical poses and maps are stored and corrected, accumulated errors in an outdoor long-distance environment are effectively reduced, and then synchronous positioning and composition of the robot with good global consistency are achieved.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

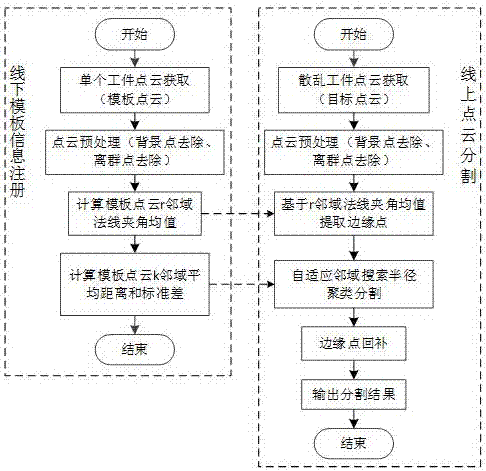

Improved Euclidean clustering-based scattered workpiece point cloud segmentation method

ActiveCN107369161APrevent oversegmentationImprove removal efficiencyImage enhancementImage analysisScene segmentationNeighborhood search

The invention provides an improved Euclidean clustering-based scattered workpiece point cloud segmentation method and relates to the field of point cloud segmentation. According to the method, a corresponding scene segmentation scheme is proposed in view of inherent disorder and randomness of scattered workpiece point clouds. The method comprises the specific steps of preprocessing the point clouds: removing background points by using an RANSAC method, and removing outliers by using an iterative radius filtering method. A parameter selection basis is provided for online segmentation by adopting an information registration method for offline template point clouds, thereby increasing the online segmentation speed; a thought of removing edge points firstly, then performing cluster segmentation and finally supplementing the edge points is proposed, so that the phenomenon of insufficient segmentation or over-segmentation in a clustering process is avoided; during the cluster segmentation, an adaptive neighborhood search radius-based clustering method is proposed, so that the segmentation speed is greatly increased; and surface features of workpieces are reserved in edge point supplementation, so that subsequent attitude locating accuracy can be improved.

Owner:WUXI XINJIE ELECTRICAL

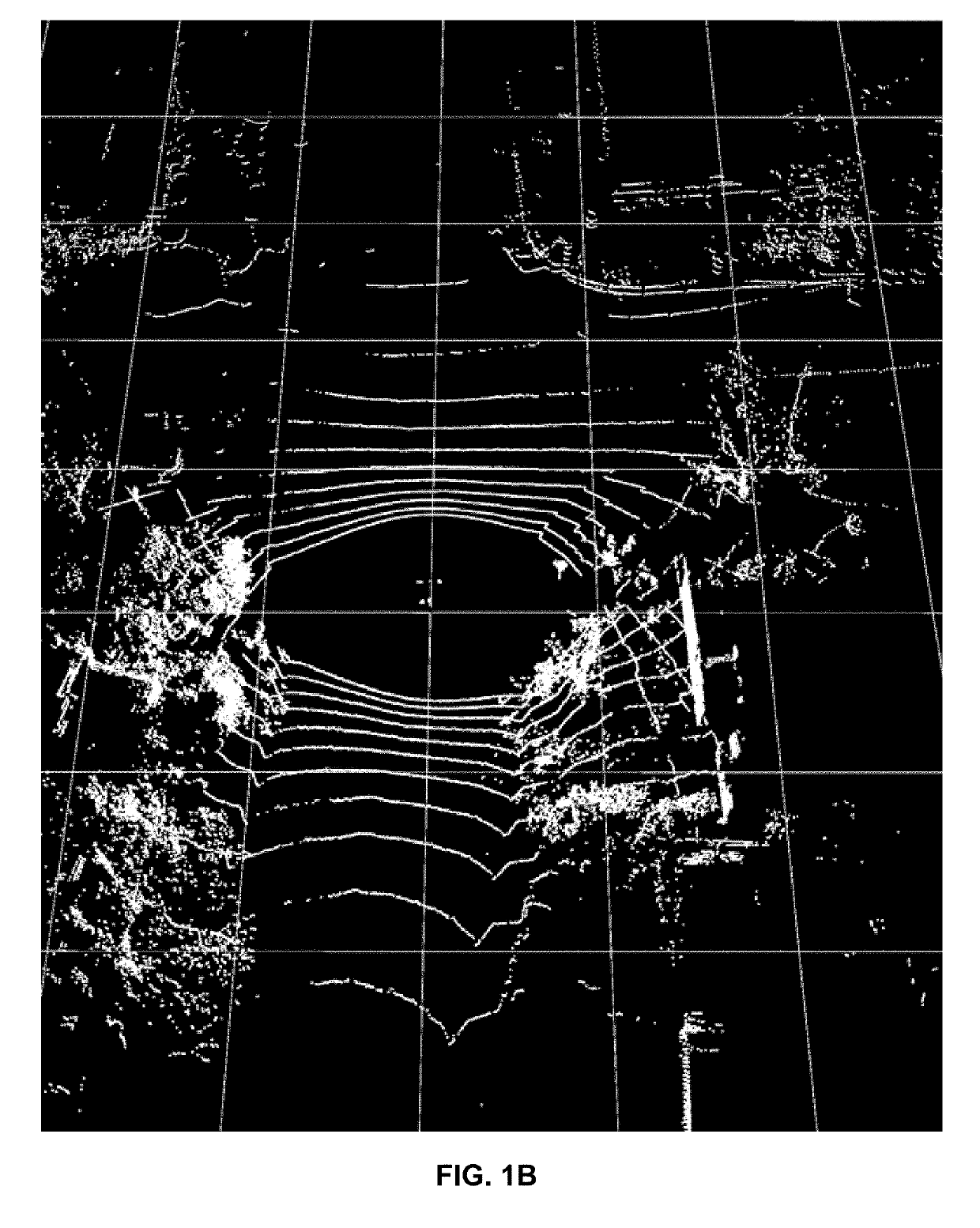

Efficient and scalable three-dimensional point cloud segmentation for navigation in autonomous vehicles

Efficient and scalable three-dimensional point cloud segmentation. In an embodiment, a three-dimensional point cloud is segmented by adding points to a spatial hash. For each unseen point, a cluster is generated, the unseen point is added to the cluster and marked as seen, and, for each point that is added to the cluster, the point is set as a reference, a reference threshold metric is computed, all unseen neighbors are identified based on the reference threshold metric, and, for each identified unseen neighbor, the unseen neighbor is marked as seen, a neighbor threshold metric is computed, and the neighbor is added or not added to the cluster based on the neighbor threshold metric. When the cluster reaches a threshold size, it may be added to a cluster list. Objects may be identified based on the cluster list and used to control autonomous system(s).

Owner:APEX AI INC

Method and device for marking point cloud data

ActiveCN107945198AReduce manual workloadReduce labeling costsImage enhancementImage analysisRadarData acquisition

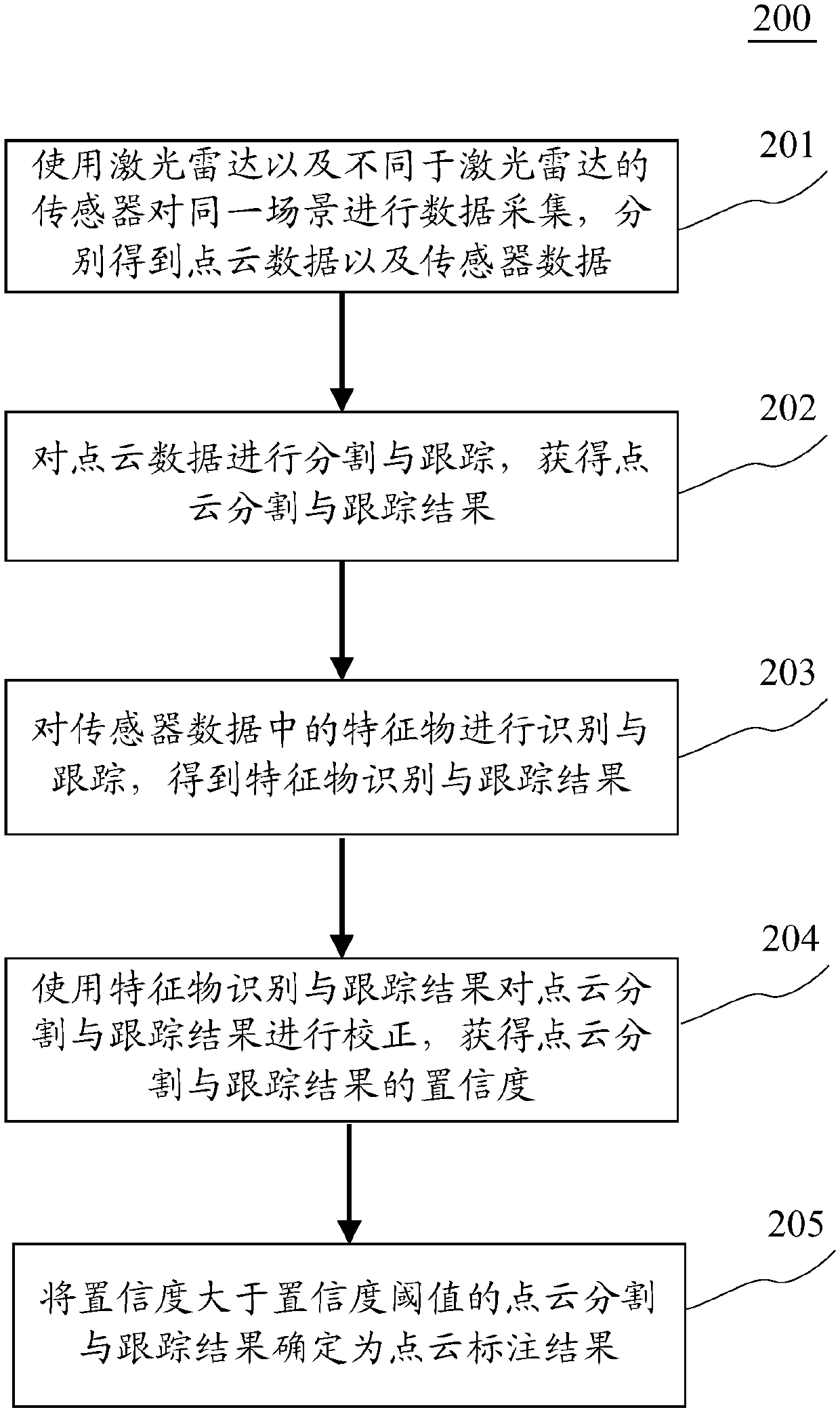

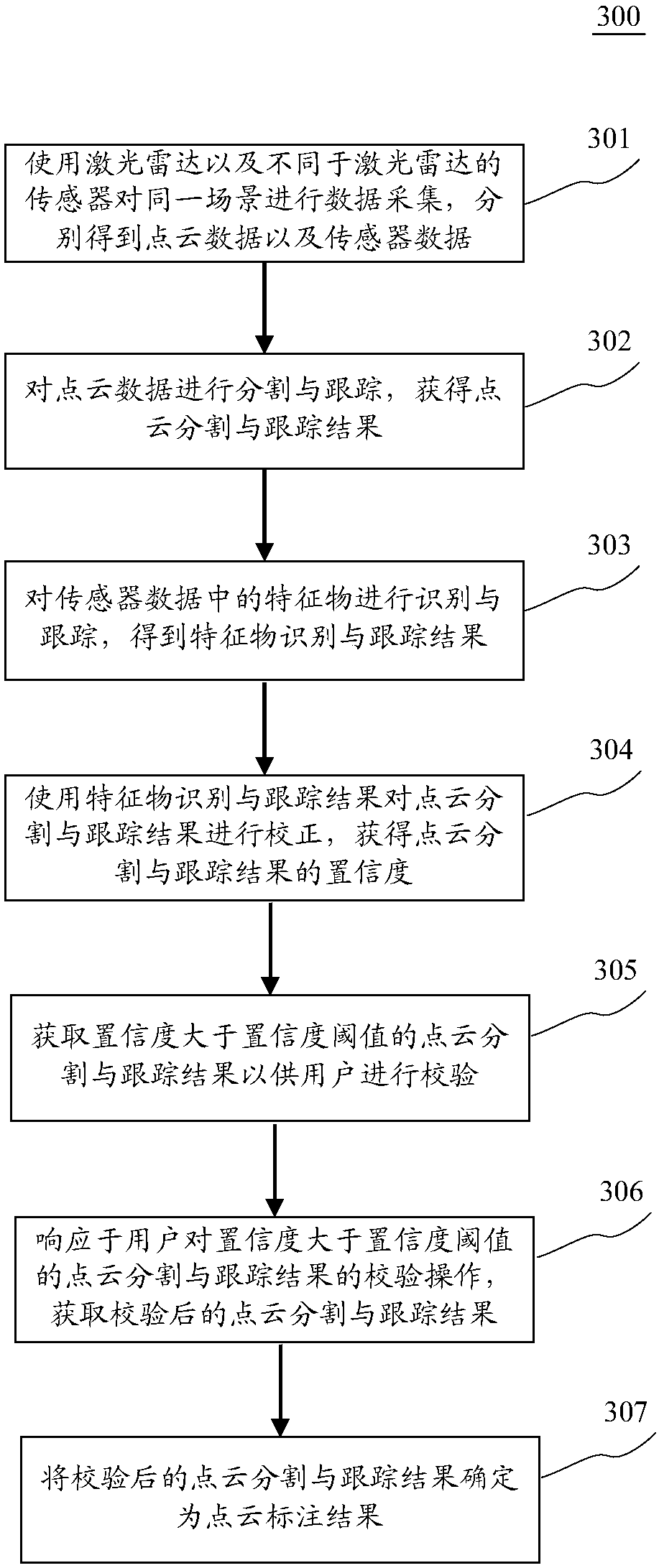

The application discloses a method and a device for marking point cloud data. According to a specific implementation, the method includes the following steps: using a laser radar and a sensor different from the laser radar to collect the data of the same scene to obtain point cloud data and sensor data; segmenting and tracking the point cloud data to obtain a point cloud segmenting and tracking result; identifying and tracking the features in the sensor data to obtain a feature identifying and tracking result; using the feature identifying and tracking result to correct the point cloud segmenting and tracking result to obtain the confidence of the point cloud segmenting and tracking result; and determining a point cloud segmenting and tracking result of which the confidence is greater thana confidence threshold as a point cloud marking result. The implementation reduces the manual workload of point cloud data marking and reduces the cost of marking.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

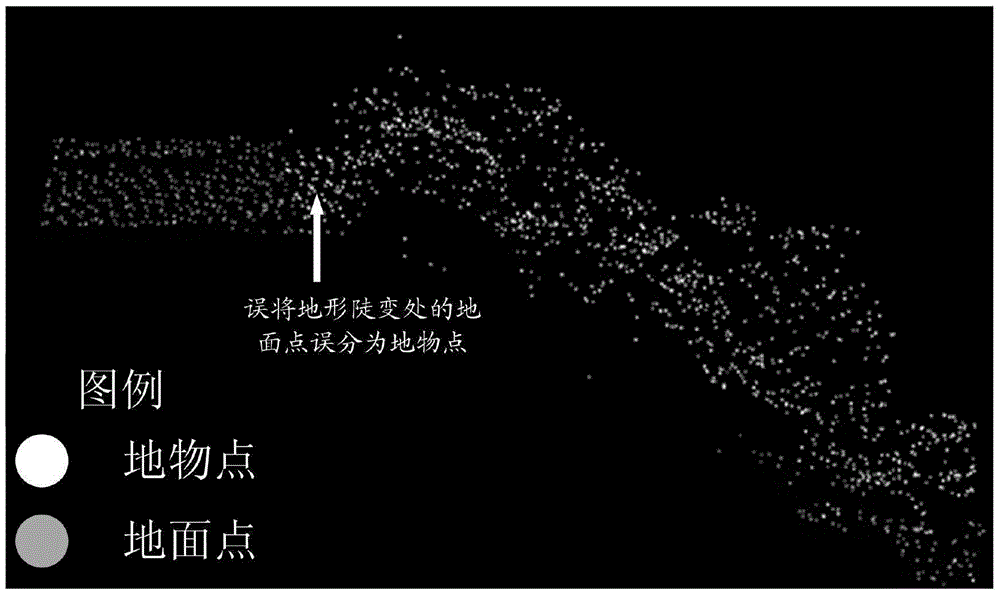

Object-oriented airborne laser radar point cloud filtering method

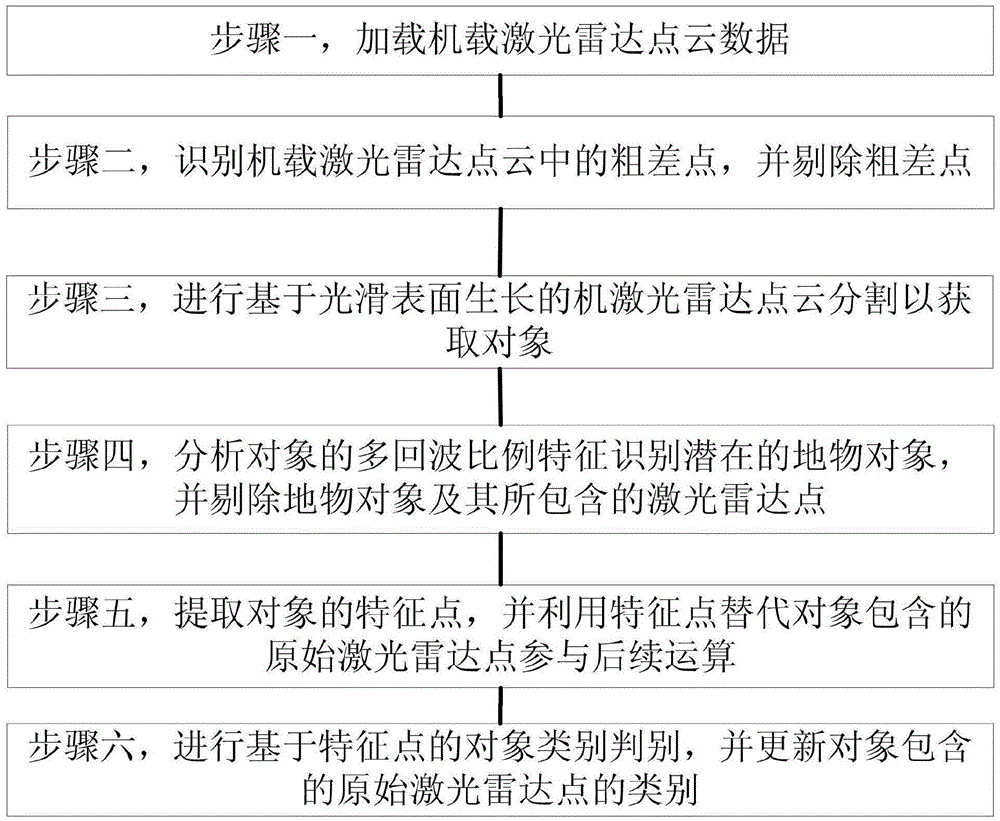

InactiveCN105488770AHigh precisionEffective WaysImage enhancementImage analysisRadarLidar point cloud

The invention discloses an object-oriented airborne laser radar point cloud filtering method. The method comprises the following steps: loading airborne laser radar point cloud data; identifying and removing a gross error point in airborne laser radar point cloud; performing smooth surface growth based airborne laser radar point cloud segmentation to obtain an object; analyzing a multi-echo ratio feature of the object to identify a potential earth object, and removing the earth object and a laser radar point contained in the earth object; extracting a feature point of the object, and performing subsequent operation by utilizing the feature point instead of an original laser radar point contained in the object; performing feature point based object type judgment, and updating the type of the original laser radar point contained in the object. According to the method, a complete object-oriented airborne laser radar point cloud filtering technical process is formed, so that the airborne laser radar point cloud filtering precision is effectively improved; and the method has wide applicability for various geomorphic types such as a mountainous area, an urban area and the like.

Owner:CHINESE ACAD OF SURVEYING & MAPPING

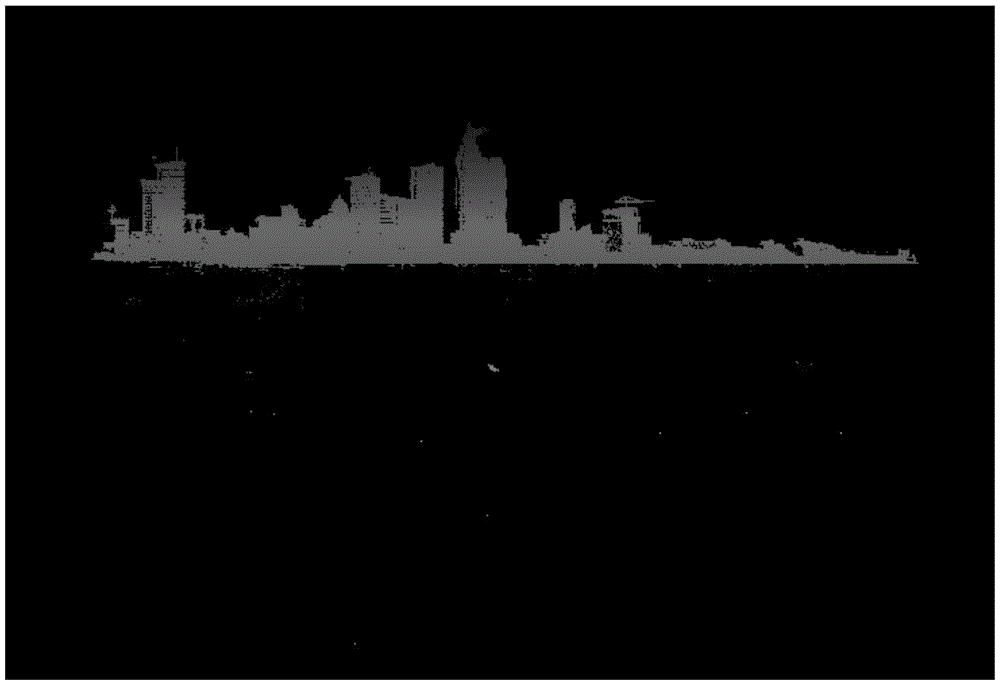

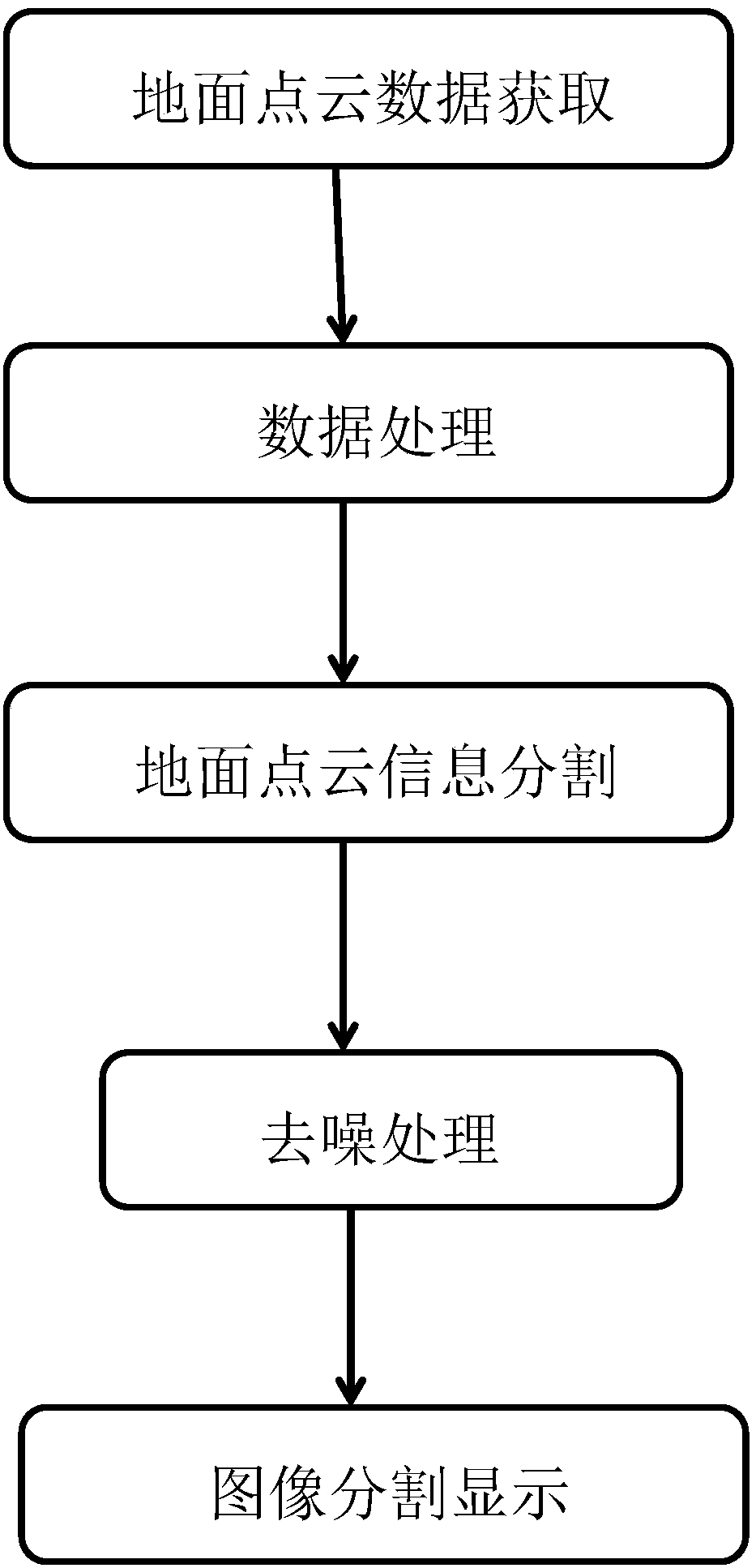

Ground point cloud segmentation method based on three-dimensional laser radar

InactiveCN108596860AAchieve complete acquisitionImprove integrityImage enhancementImage analysisRectangular coordinatesRadar

The invention discloses a ground point cloud segmentation method based on a three-dimensional laser radar. The method comprises the following steps that: S100: obtaining ground point cloud data, carrying out scanning on the basis of the ground three-dimensional laser radar to obtain spatial information data, and meanwhile, combining with a GPS (Global Positioning System) to obtain ground data; S200: carrying out data processing, establishing a rectangular coordinate system, converting the coordinate of the obtained ground data into the rectangular coordinate system, and meanwhile, adopting equal-thickness layering according to contour line and contour interval properties; S300: carrying out ground point cloud information segmentation, and classifying the ground laser point cloud information obtained by scanning; S400: carrying out denoising processing, classifying noise data, and independently carrying out noise processing on the noise data; and S500: carrying out image segmentation display, and directly extracting building elevation information through a gridding way. Through a way of combining positioning with laser scanning, more comprehensive ground point cloud data is obtained, meanwhile, the accuracy of the point cloud data is improved through the ways of classified segmentation and step-by-step denoising, and convenience is brought to subsequent modeling and simulation.

Owner:WUHU HANGFEI SCI & TECH

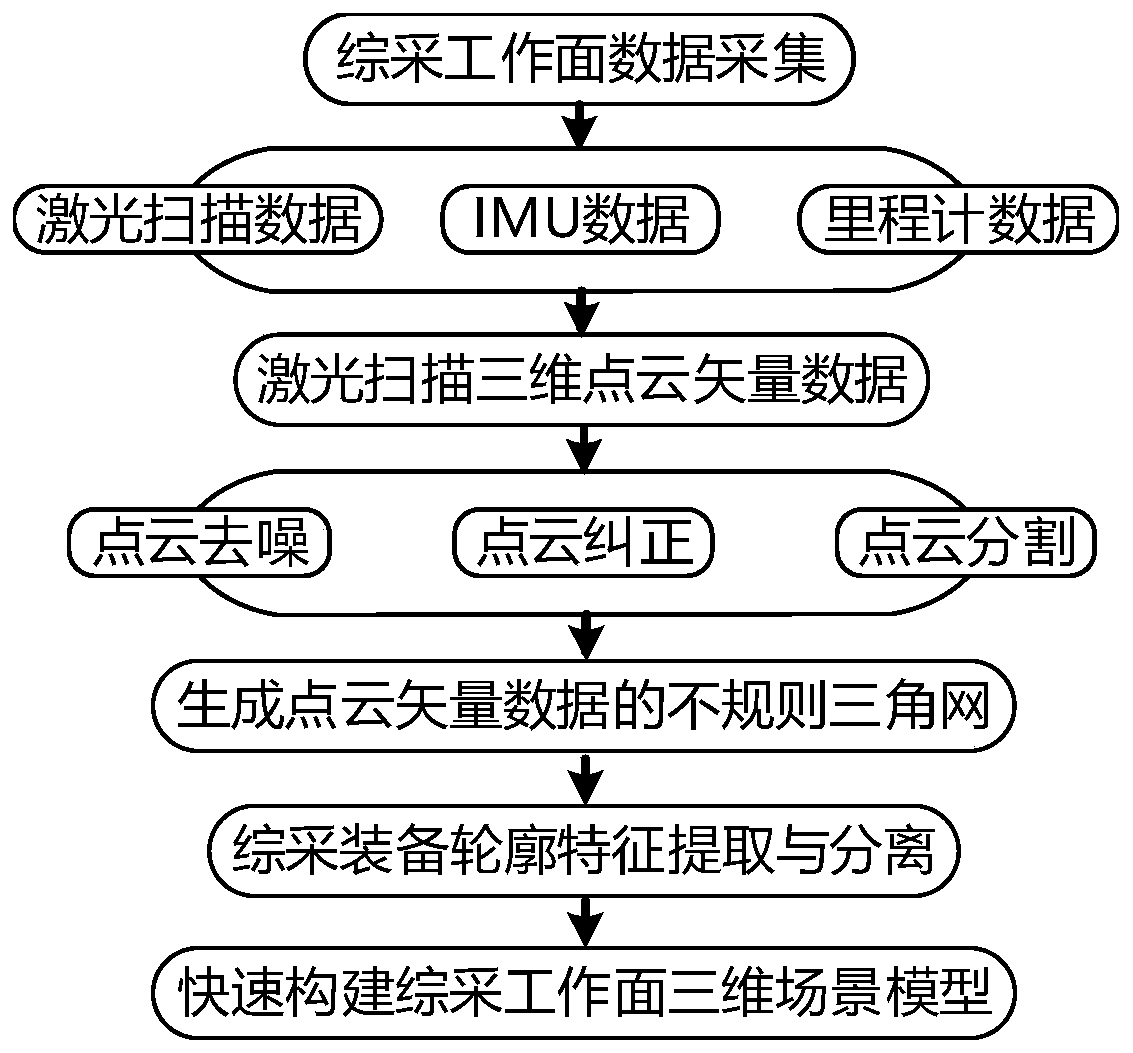

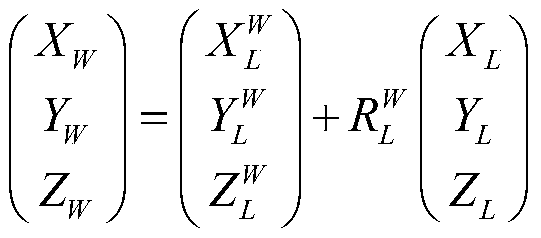

Method for quickly constructing three-dimensional scene of underground fully mechanized coal mining face

ActiveCN110232736AReduce casualtiesFix workImage enhancementDetails involving processing stepsTriangulationRadar

The invention discloses a method for quickly constructing a three-dimensional scene of an underground fully mechanized mining face, which comprises the following steps of: (1) mounting a laser radar,an inertial measurement unit and a speedometer on a movable holder, enabling the movable holder to quickly move on a flexible track, and mounting the flexible track on a track support frame on the outer side of a ledge of a scraper conveyor; (2) acquiring measurement data of the IMU, the odometer and the laser radar in real time and fusing the measurement data to obtain laser scanning three-dimensional point cloud vector data of the fully mechanized coal mining face; (3) respectively performing point cloud denoising, point cloud correction and point cloud segmentation processing on the three-dimensional point cloud vector data; (4) generating an irregular triangulation network of the three-dimensional point cloud vector data; and (5) extracting and separating the contour features of the fully mechanized mining equipment, and quickly constructing a fully mechanized mining face three-dimensional scene model. According to the method, the three-dimensional scene information of the fully mechanized coal mining face can be rapidly constructed, and the fully mechanized coal mining face production full view can be rapidly displayed in a simple and visual mode.

Owner:CHINA UNIV OF MINING & TECH

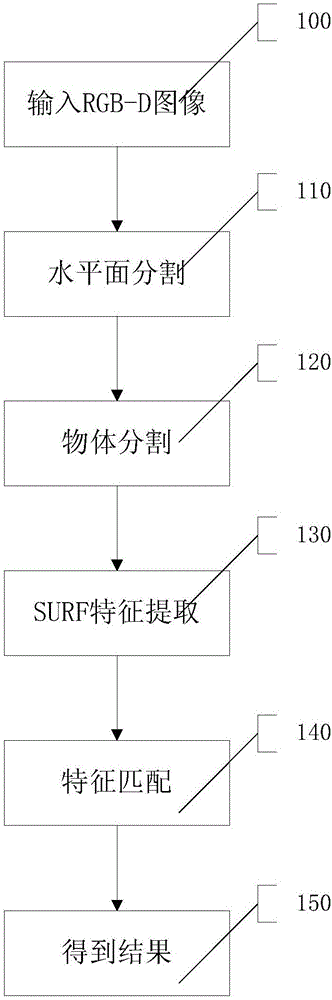

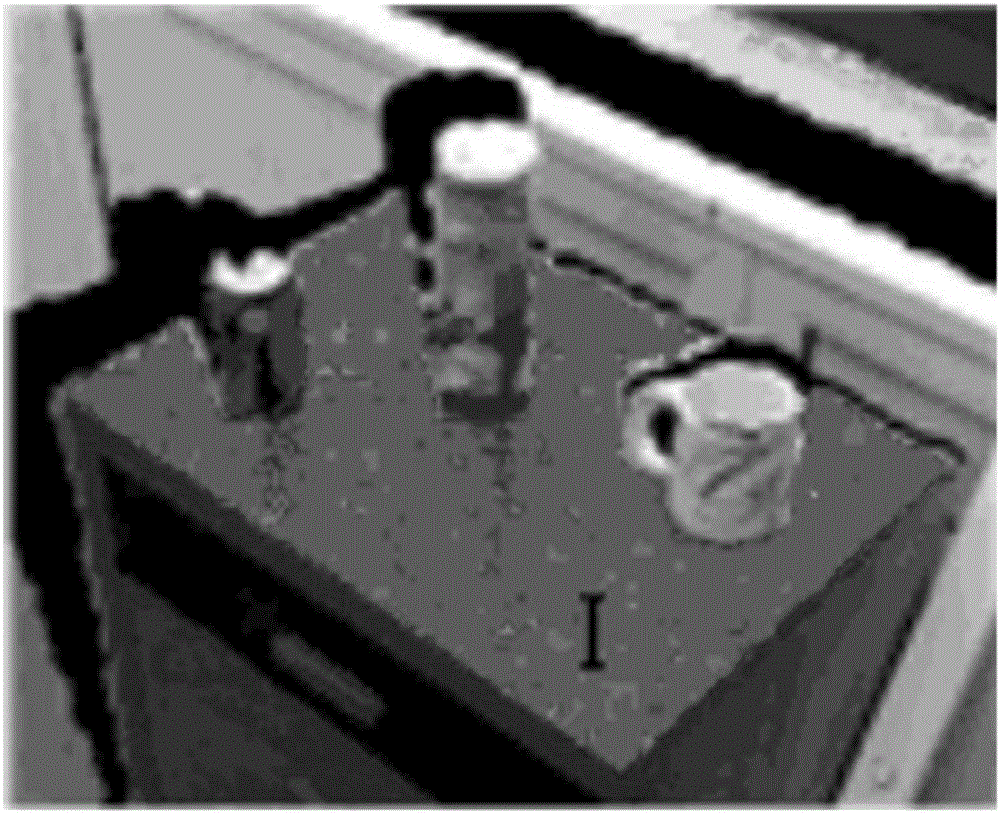

Real-time object detection method based on combination of three-dimensional point cloud segmentation and local feature matching

InactiveCN106529573AFew parametersSimple calculationCharacter and pattern recognitionPlane segmentationPoint cloud segmentation

The invention provides a real-time object detection method based on combination of three-dimensional point cloud segmentation and local feature matching. The method comprises the following steps that: a normal vector of each point in a three-dimensional point cloud is calculated rapidly by combining an integral image so as to carry out horizontal plane segmentation on an object; the object is segmented; target object feature extraction is carried out; and the extracted target object feature is compared with an object feature database. According to the method provided by the invention, object detection and positioning are realized by rapid segmentation of the three-dimensional point cloud; and with an SURF-feature-matching-based method, target object identification is realized. Because of the integrated structure characteristics of orderly point clod data, a method combining the integral image with regional growth is provided, so that rapid three-dimensional object detection is realized.

Owner:BEIJING UNION UNIVERSITY

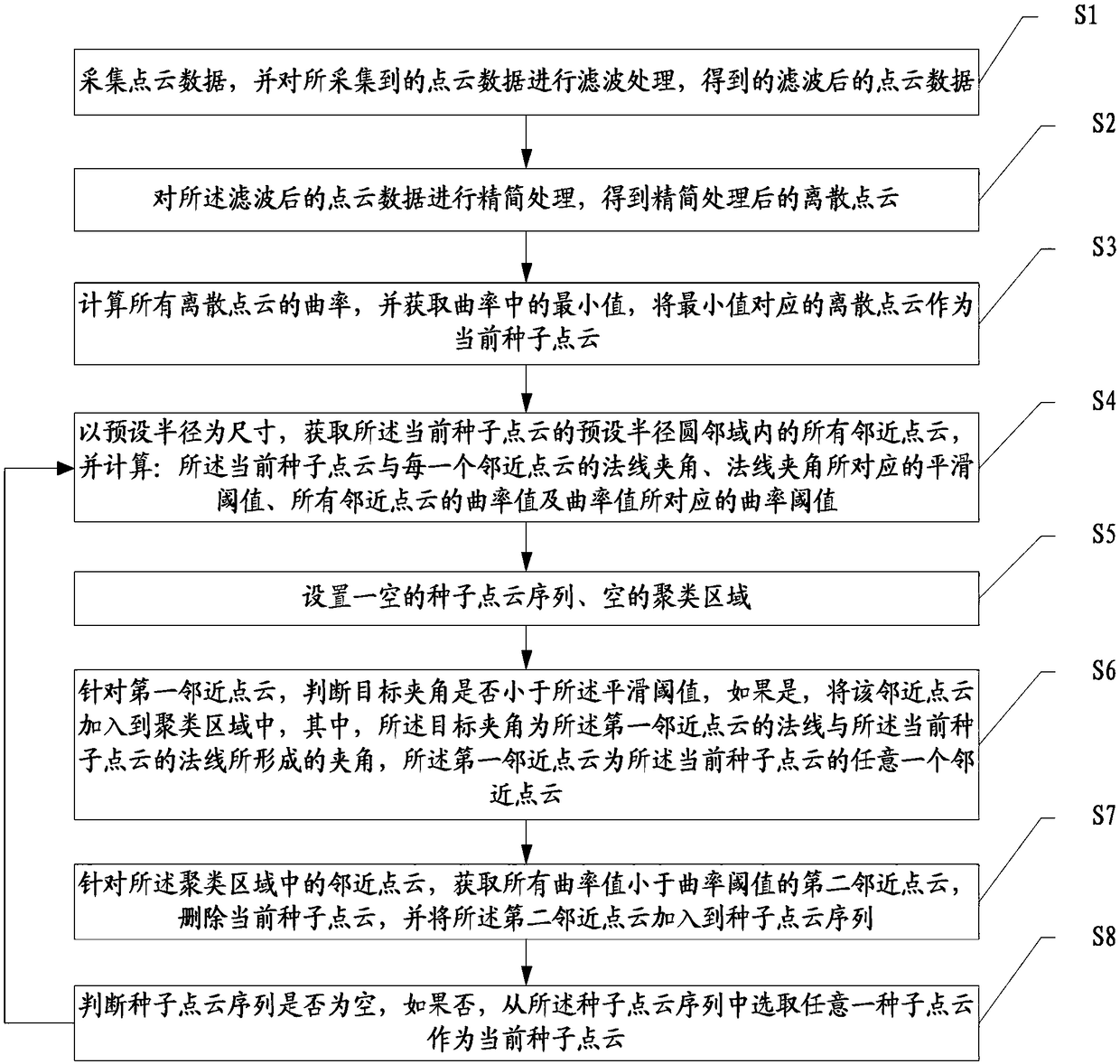

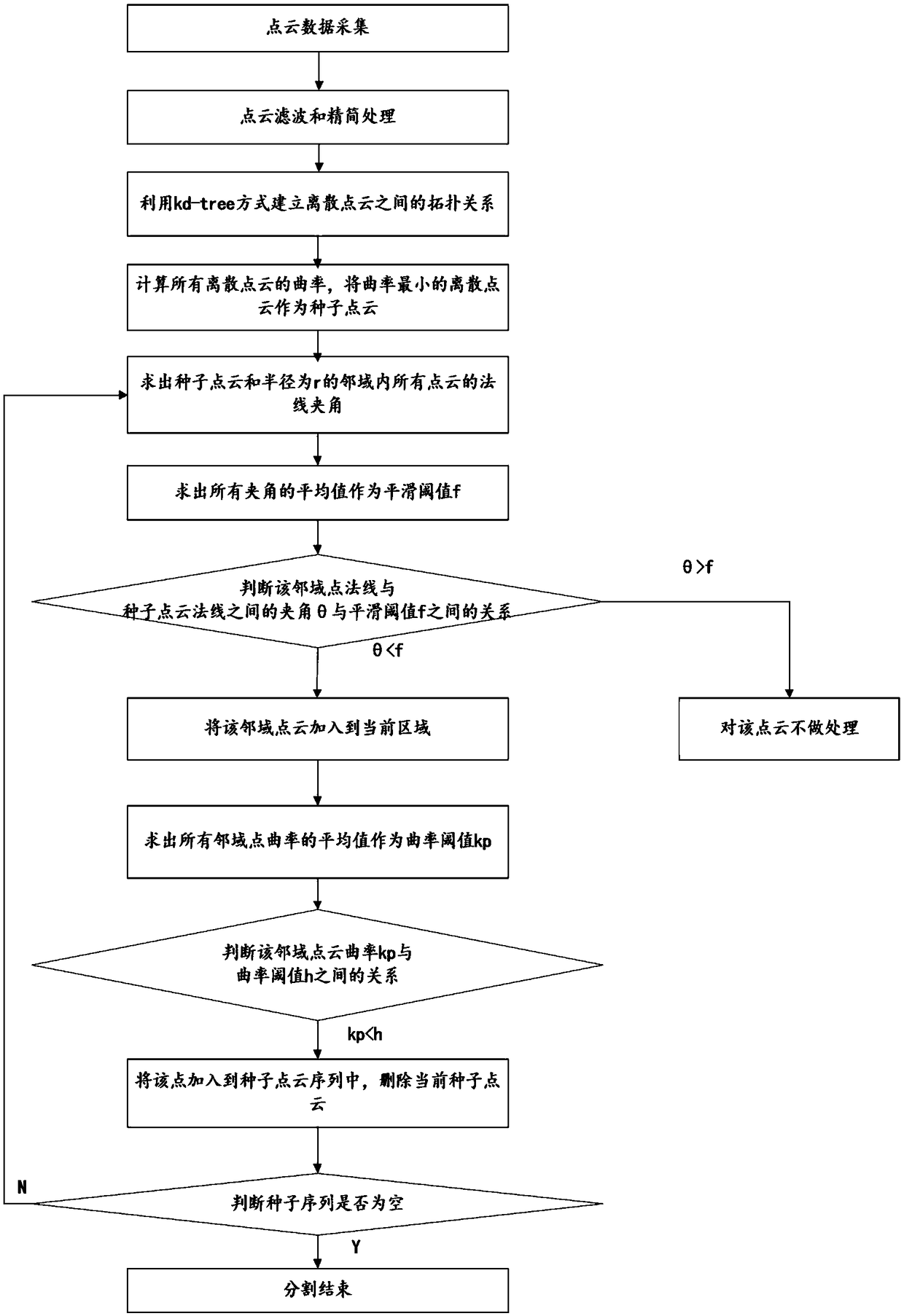

Point cloud segmentation method and device, computer readable storage medium and terminal

ActiveCN109409437AEfficient removalDoes not affect data characteristicsImage enhancementImage analysisOutlierComputer terminal

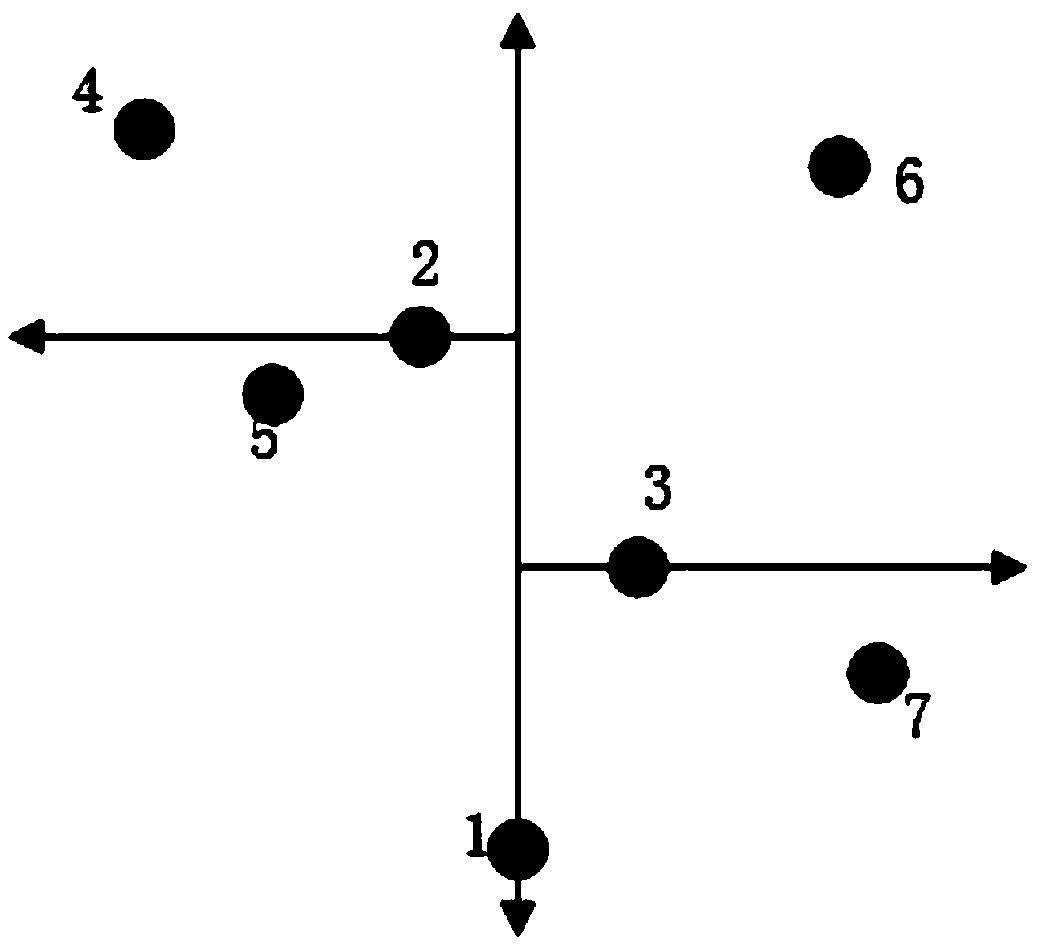

The invention belongs to the technical field of point cloud data segmentation, and particularly relates to a point cloud segmentation method and device, a computer readable storage medium and a terminal. The invention discloses a point cloud segmentation method. The method comprises the following steps: carrying out filtering processing on collected point cloud data, filtering the point cloud datato obtain filtered point cloud data; the discrete point cloud after the simplification processing is obtained; calculating curvatures of all discrete point clouds; acquiring a minimum value in the curvature; taking the discrete point cloud corresponding to the minimum value as the current seed point cloud; and by taking a preset radius as a size, obtaining all adjacent point clouds in a preset radius circle neighborhood of the current seed point cloud, and calculating a normal included angle between the current seed point cloud and each adjacent point cloud, a smooth threshold corresponding to the normal included angle, curvature values of all adjacent point clouds and a curvature threshold corresponding to the curvature values. According to the method, some outliers far away from the main body can be effectively removed, and the data processing speed is greatly increased on the premise that point cloud data characteristics are not affected.

Owner:ANHUI AGRICULTURAL UNIVERSITY

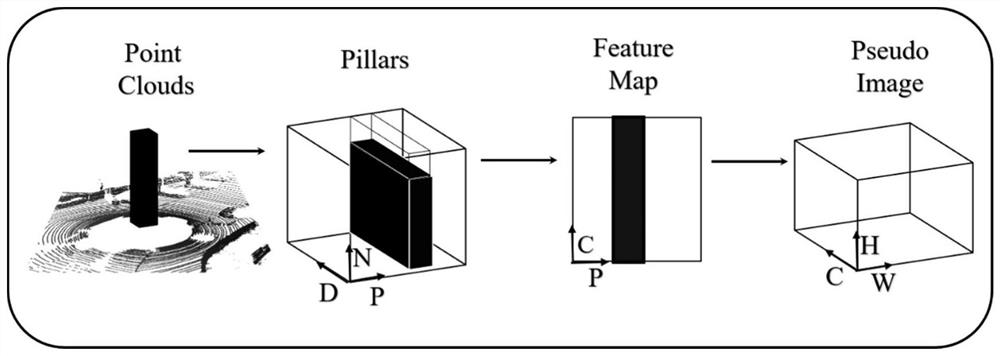

3D point cloud semantic segmentation method under bird's-eye view coding view angle

InactiveCN111862101AImprove segmentation efficiencyGuaranteed Segmentation AccuracyImage enhancementImage analysisPattern recognitionVoxel

The invention discloses a 3D point cloud semantic segmentation method under a bird's-eye view coding view angle. The method is applied to an input 3D point cloud. The method comprises: converting a voxel-based coding mode into a view angle of a bird's-eye view; extracting a feature of each voxel through a simplified Point Net network; converting the feature map into a feature map which can be directly processed by utilizing a 2D convolutional network; and processing the encoded feature map by using a full convolutional network structure composed of residual modules reconstructed through decomposition convolution and hole convolution, so that an end-to-end pixel-level semantic segmentation result is obtained, point cloud network semantic segmentation can be accelerated, and a point cloud segmentation task in a high-precision real-time large scene can be achieved under the condition that hardware is limited. The method can be directly used for tasks of robots, unmanned driving, disordered grabbing and the like, and due to the design of the method on a coding mode and a network structure, the system overhead is lower while high-precision point cloud semantic segmentation is achieved,and the method is more suitable for hardware-limited scenes of robots, unmanned driving and the like.

Owner:XI AN JIAOTONG UNIV

Three-dimensional point cloud semantic segmentation method and device, equipment and medium

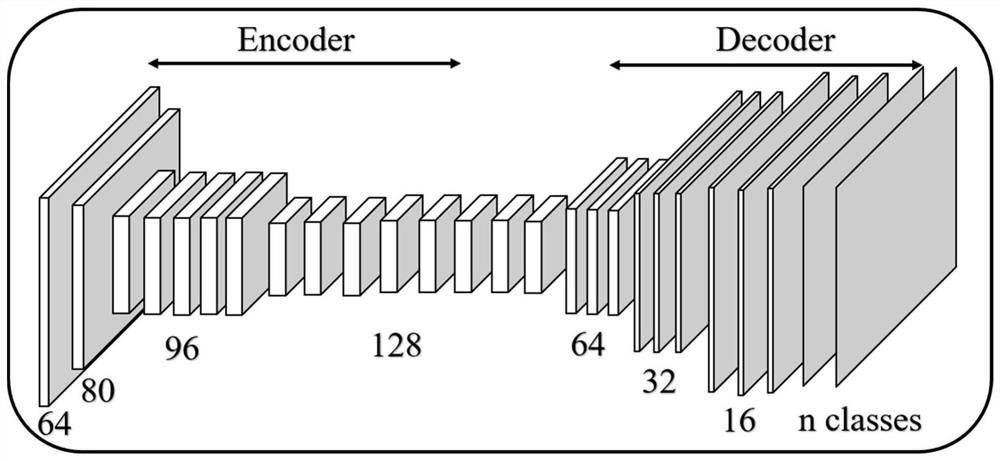

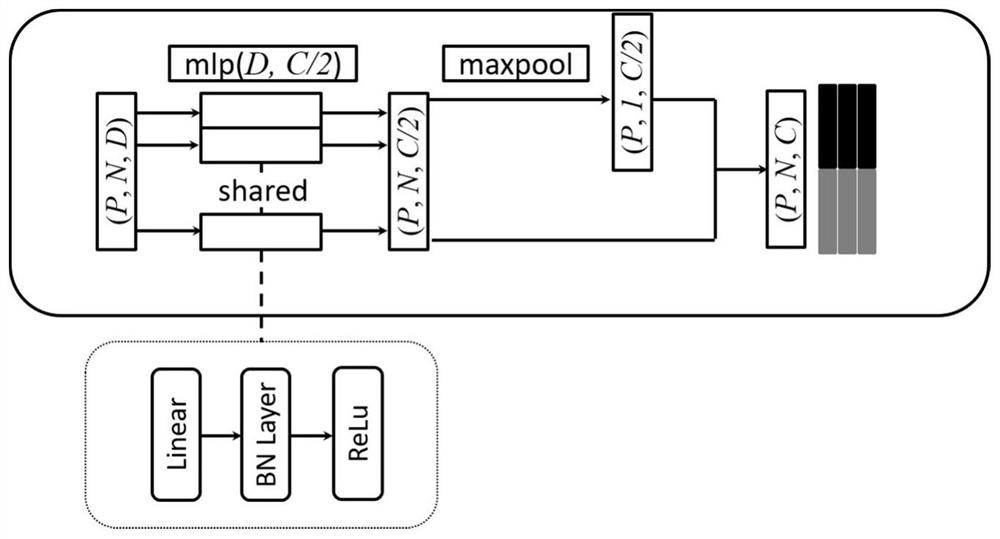

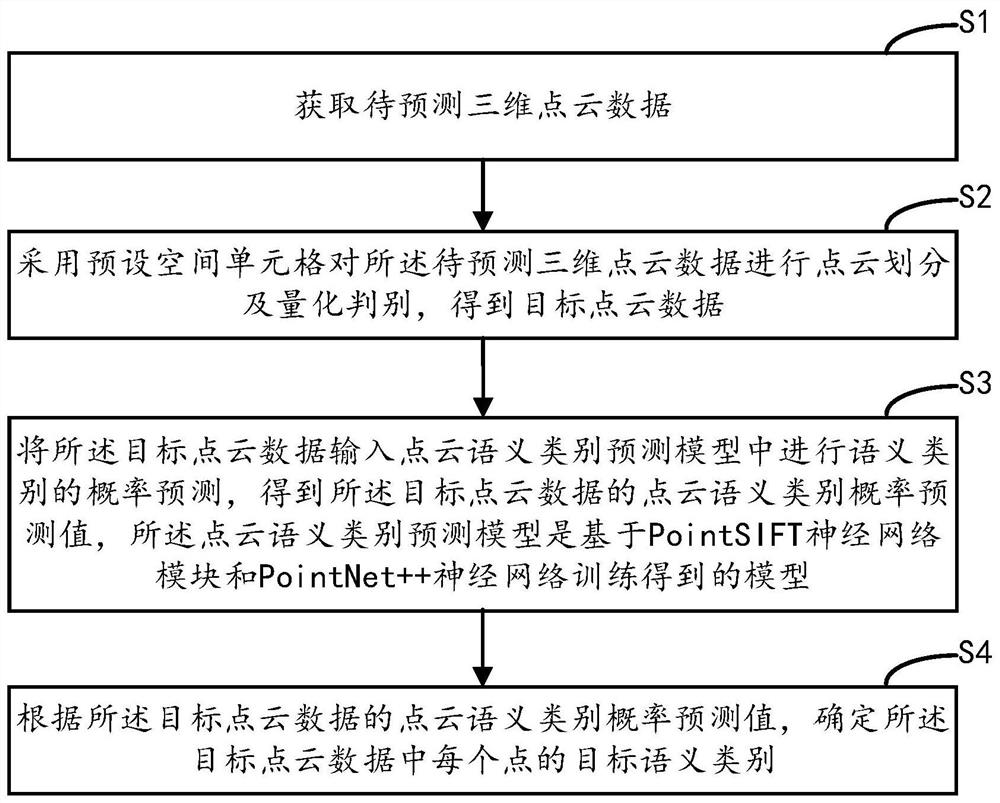

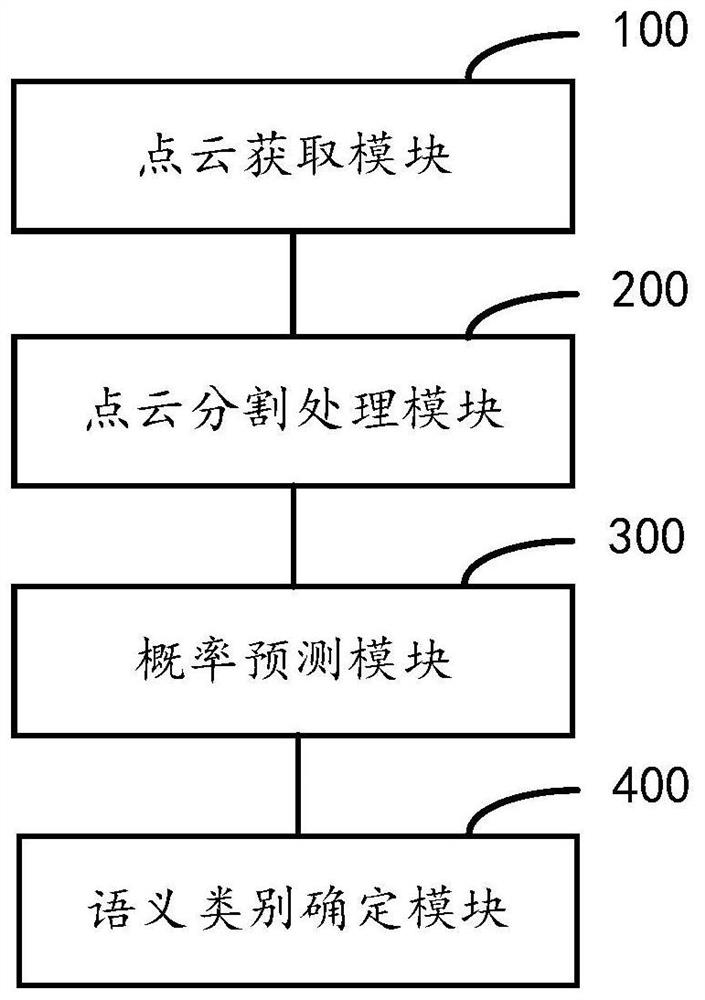

PendingCN112287939AImprove recognition accuracyFast and accurate logical divisionCharacter and pattern recognitionNeural architecturesEngineeringCloud data

The invention relates to the technical field of artificial intelligence, and discloses a three-dimensional point cloud semantic segmentation method, device and equipment and a medium, and the method comprises the steps: carrying out the point cloud division and quantitative discrimination of to-be-predicted three-dimensional point cloud data through employing a preset space cell, and obtaining target point cloud data; inputting the target point cloud data into a point cloud semantic category prediction model to perform semantic category probability prediction to obtain a point cloud semantic category probability prediction value of the target point cloud data, wherein the point cloud semantic category prediction model is a model obtained by training based on a Point SIFT neural network module and a Point Net + + neural network; and determining a target semantic category of each point in the target point cloud data according to the point cloud semantic category probability prediction value. According to the method, rapid and accurate logic division is carried out on the point cloud of the complex large-scale target object, the recognition precision of point cloud segmentation is improved, fine features of the complex target object can be well processed, and the accuracy of semantic category prediction is improved.

Owner:PING AN TECH (SHENZHEN) CO LTD

Outdoor large-scale scene three-dimensional mapping method fusing multiple sensors

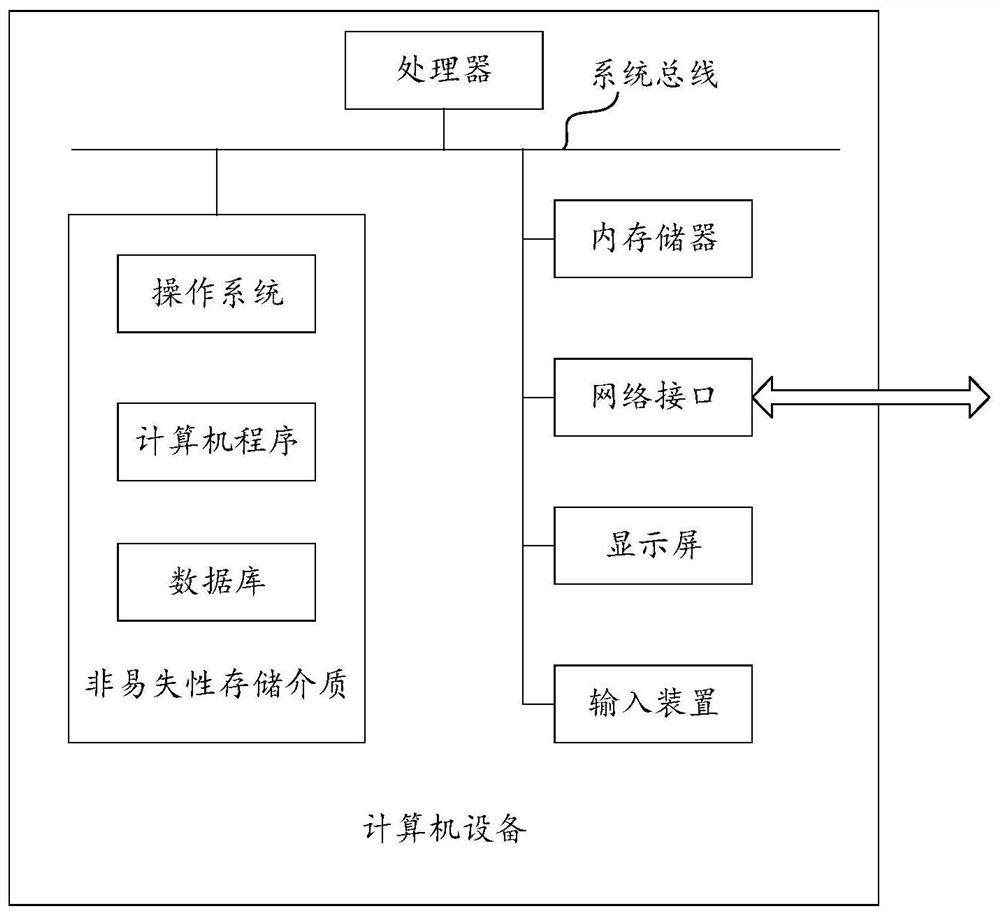

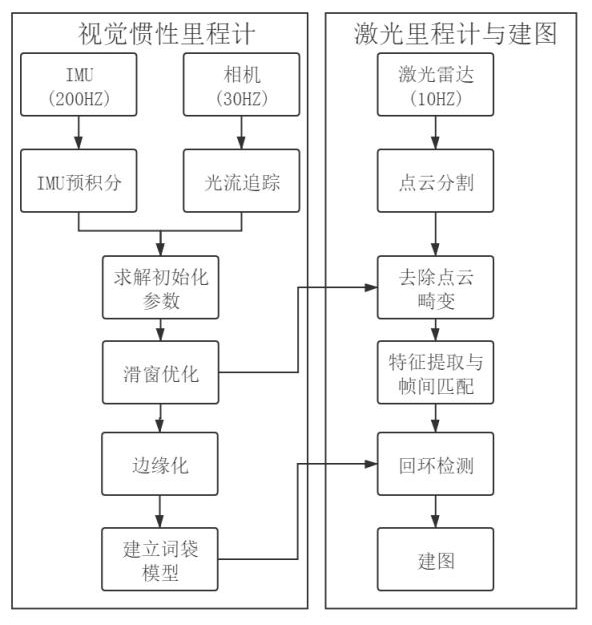

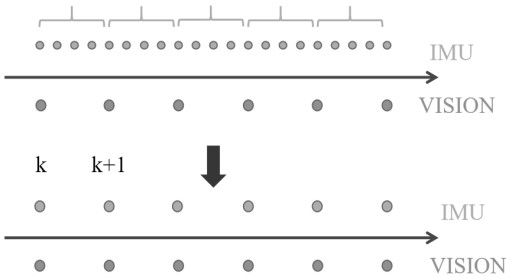

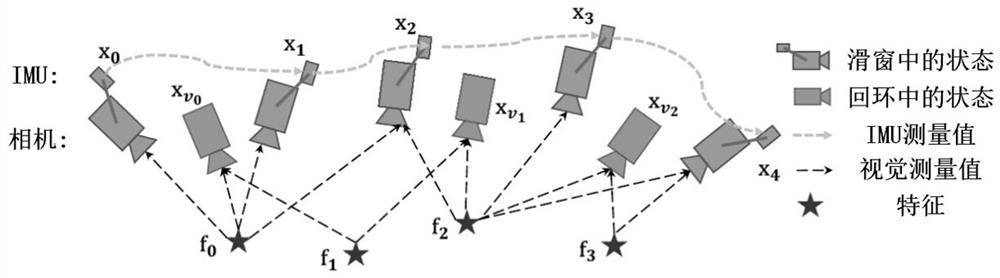

ActiveCN112634451AEasy to adaptHigh loop detection accuracyImage enhancementImage analysisFeature extractionMultiple sensor

The invention provides an outdoor large-scale scene three-dimensional mapping method fusing multiple sensors. The implementation process is divided into two modules, namely a visual inertia odometer module, and a laser odometer and mapping module. The visual inertia odometer module comprises optical flow tracking, IMU pre-integration, initialization, sliding window optimization, marginalization and word bag model establishment. The laser odometer and mapping module comprises point cloud segmentation, point cloud distortion removal, feature extraction and inter-frame matching, loopback detection and mapping. Compared with a single radar mapping scheme, the high-frequency pose of the visual inertia odometer is fused, and the method has the advantages of being good in point cloud distortion removal effect, high in loopback detection precision and high in mapping precision. The problem that an outdoor large-scene three-dimensional map is low in precision is solved, and a breakthrough is provided for further development of unmanned driving.

Owner:FUZHOU UNIV

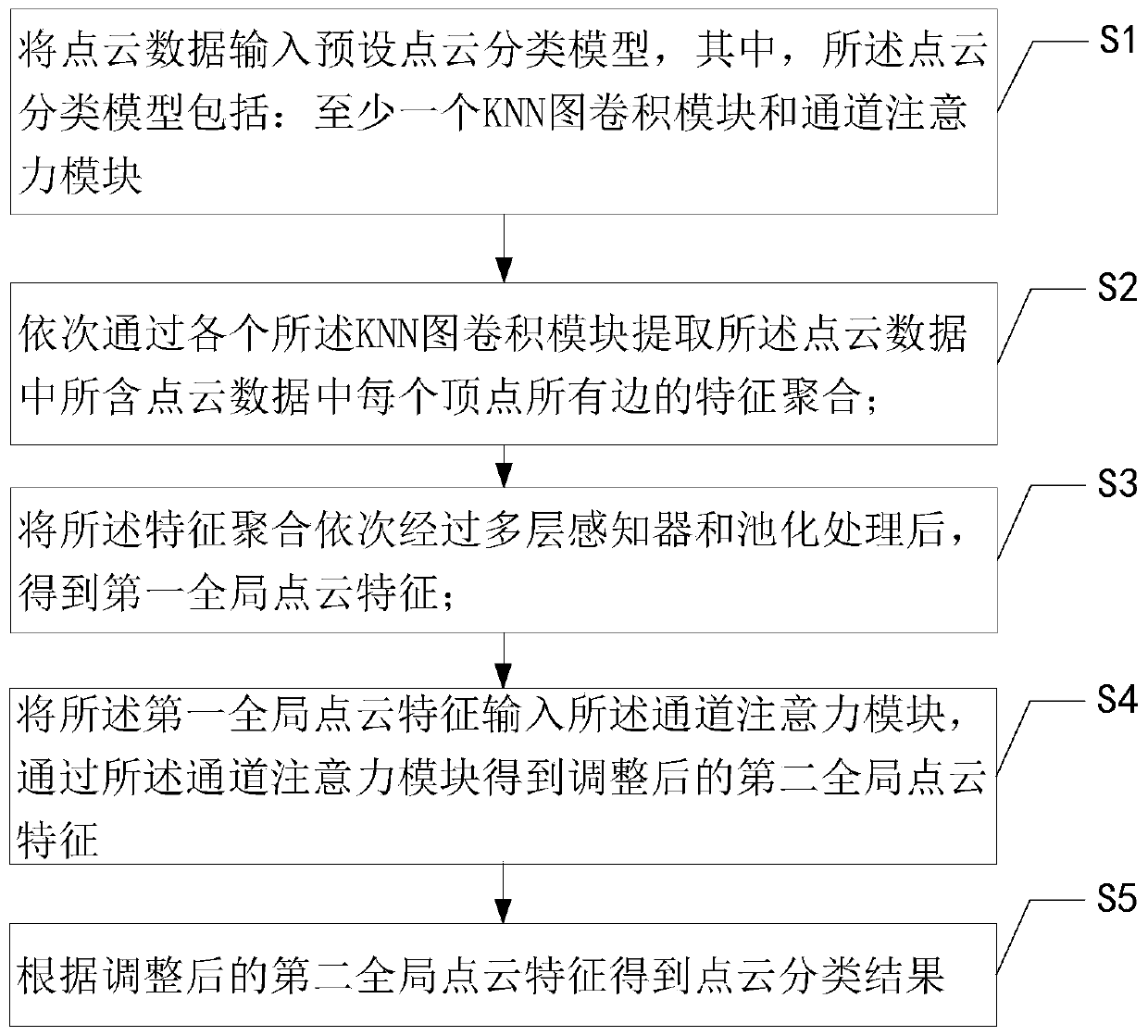

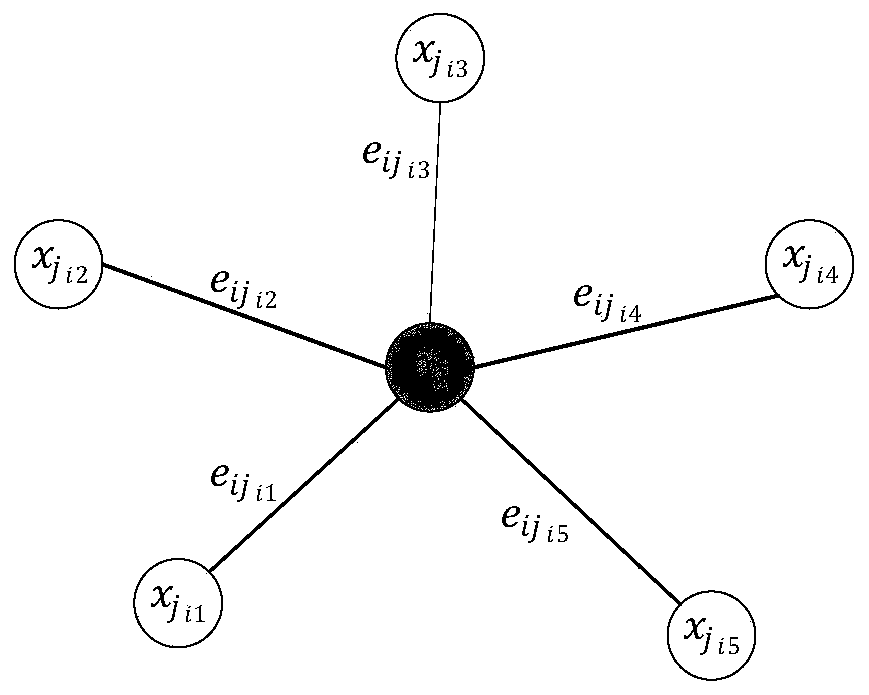

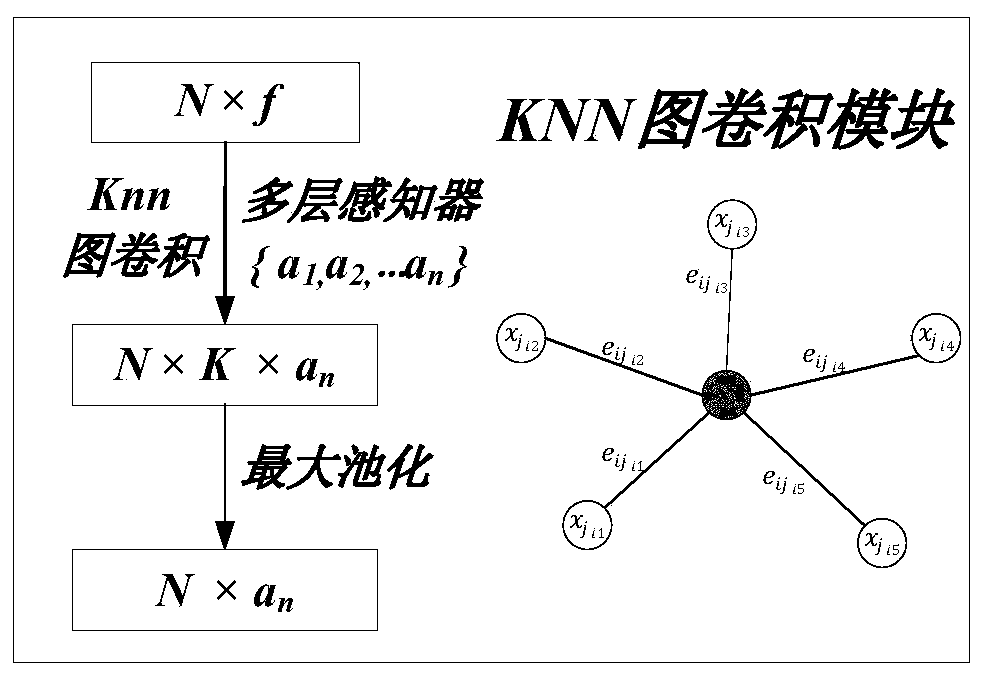

Point cloud classification method, point cloud segmentation method and related equipment

ActiveCN111242208AIncrease interdependenceEnhancing Global Feature RepresentationCharacter and pattern recognitionNeural architecturesAlgorithmNetwork structure

Owner:SHENZHEN UNIV

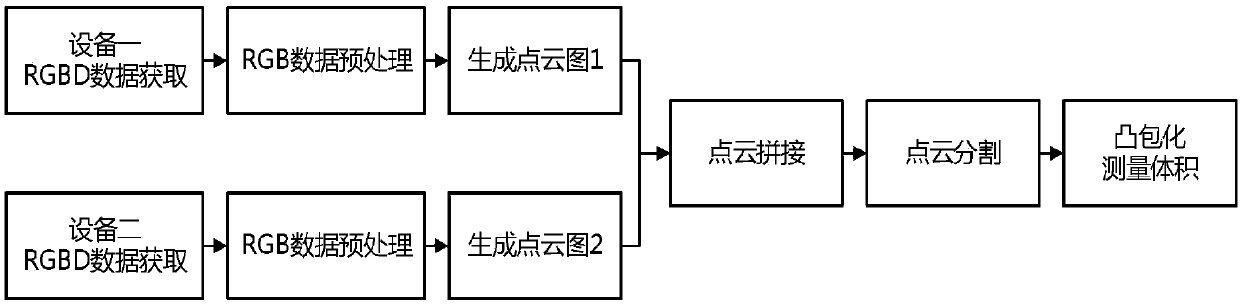

Cargo volume measurement method based on deep learning and three-dimensional reconstruction

ActiveCN109655019AIncreased complexityHigh precisionMeasurement devicesNeural architecturesData acquisitionPoint cloud segmentation

The invention relates to a cargo volume measurement method based on deep learning and three-dimensional reconstruction. The method comprises the steps of RGBD data acquisition, RGB data preprocessing,point cloud generation, point cloud splicing, point cloud segmentation, convex hull generation and volume detection, wherein the RGB data preprocessing adopts a deep learning method, and the deep learning method can accurately identify and segment a target object from a three-dimensional scene, so that the volume measurement precision of the target object is improved. Meanwhile, when the volume of a cargo is measured, a convex hull is used for replacing target point cloud data triangularization, the problems that the volume of an unsealed three-dimensional model cannot be measured and the measurement error of the actual space occupied by a concave cargo is large can be solved, and therefore practicability is further improved.

Owner:NORTH CHINA UNIVERSITY OF TECHNOLOGY

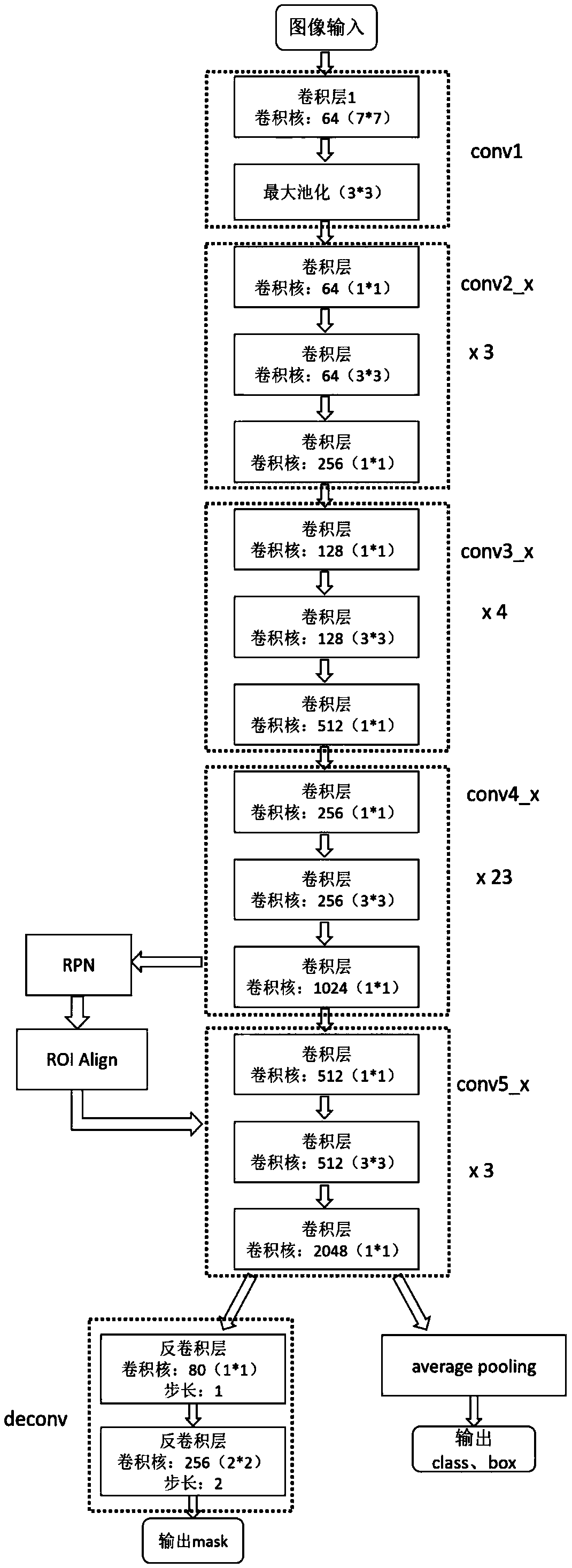

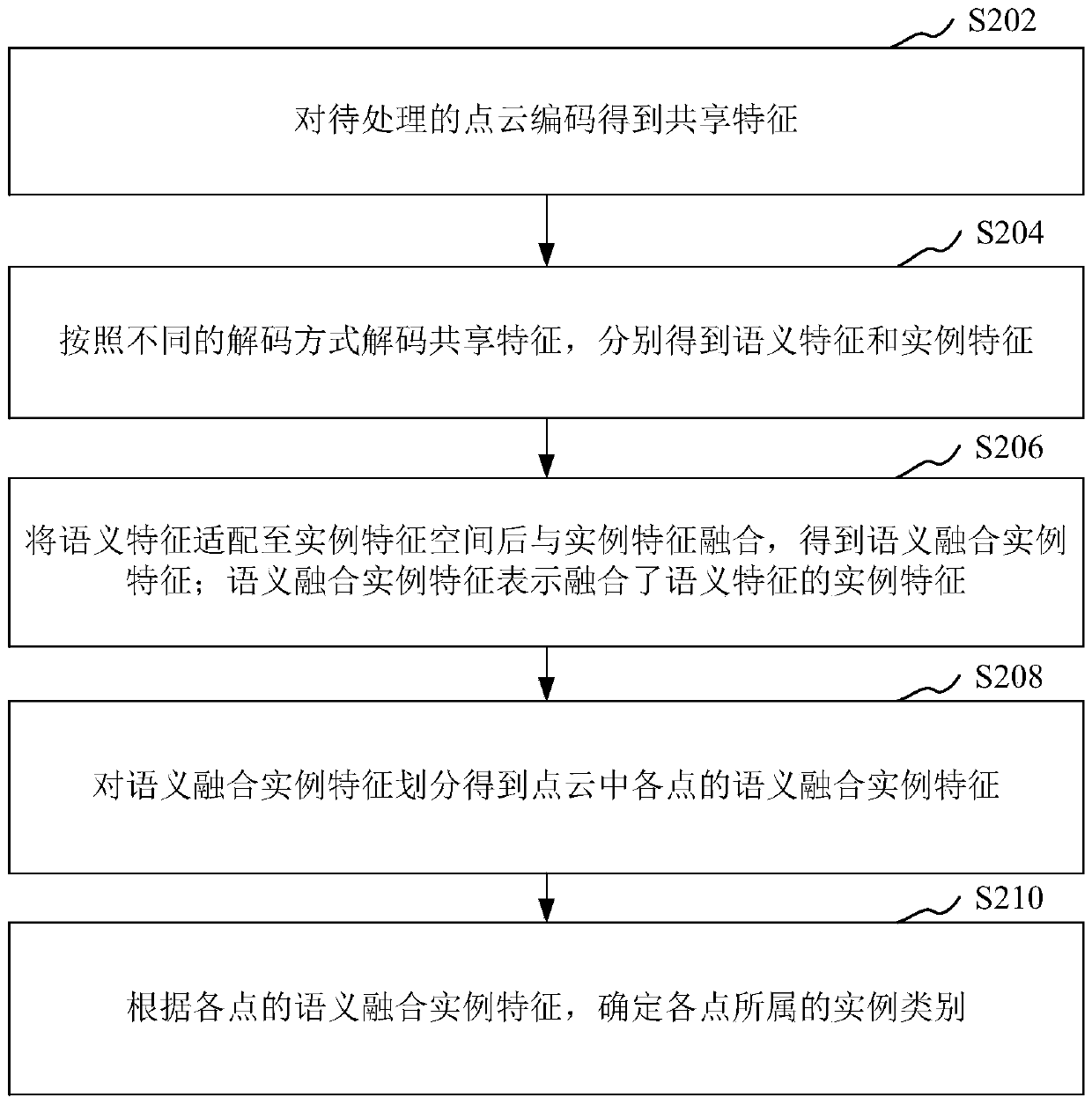

Point cloud segmentation method and device, computer readable storage medium and computer equipment

ActiveCN109886272AImprove efficiencyImprove accuracyImage codingThree-dimensional object recognitionPattern recognitionSemantic feature

Owner:TENCENT TECH (SHENZHEN) CO LTD

Features

- R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

Why Patsnap Eureka

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Social media

Patsnap Eureka Blog

Learn More Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com