318 results for "Scene segmentation" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

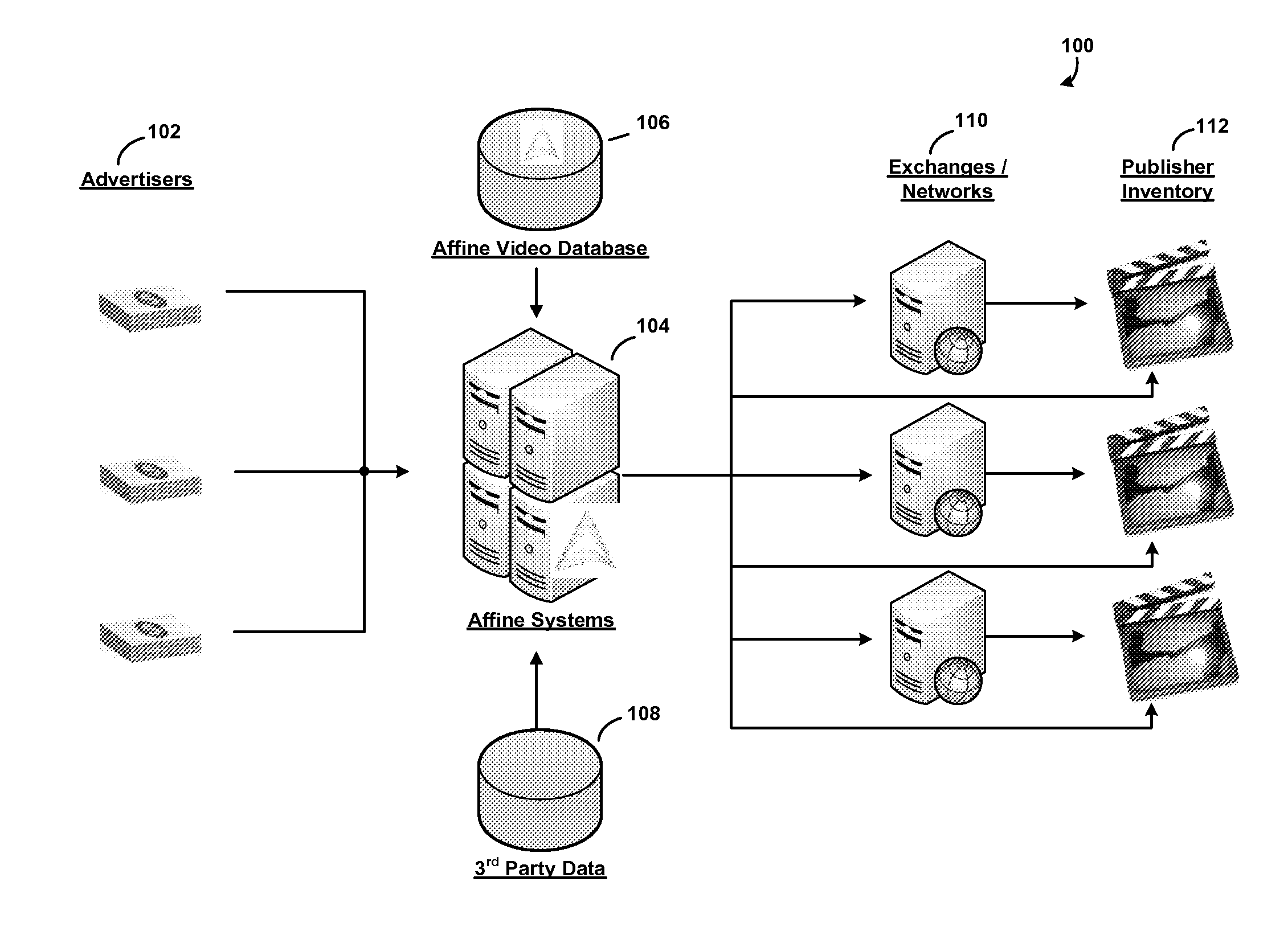

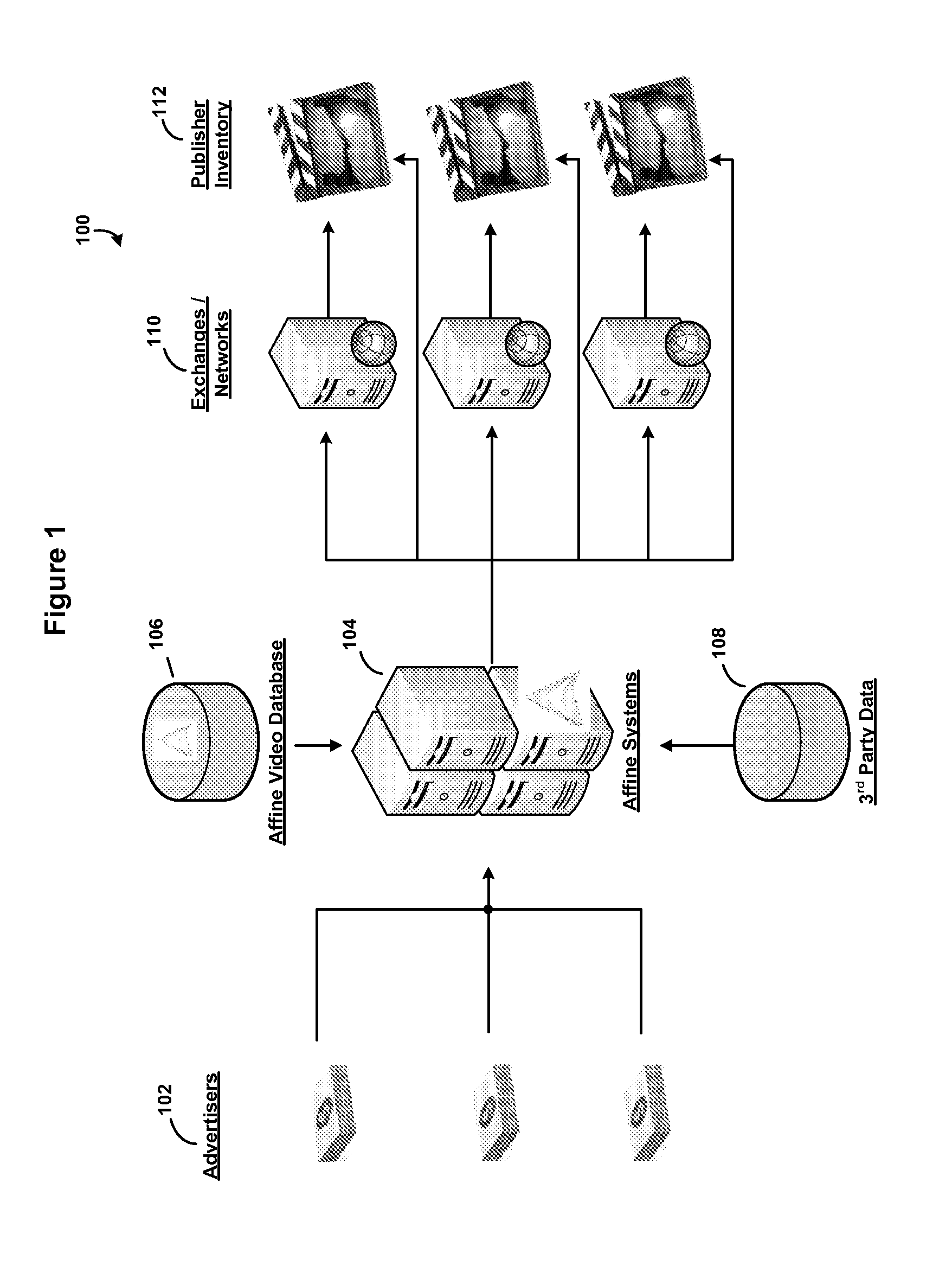

Systems and methods for matching an advertisement to a video

Status: Inactive | Publication No.: US20110251896A1 | Tags: Accurate signature; Advertisements; Specific information broadcast systems; Hyperlink; Scene segmentation

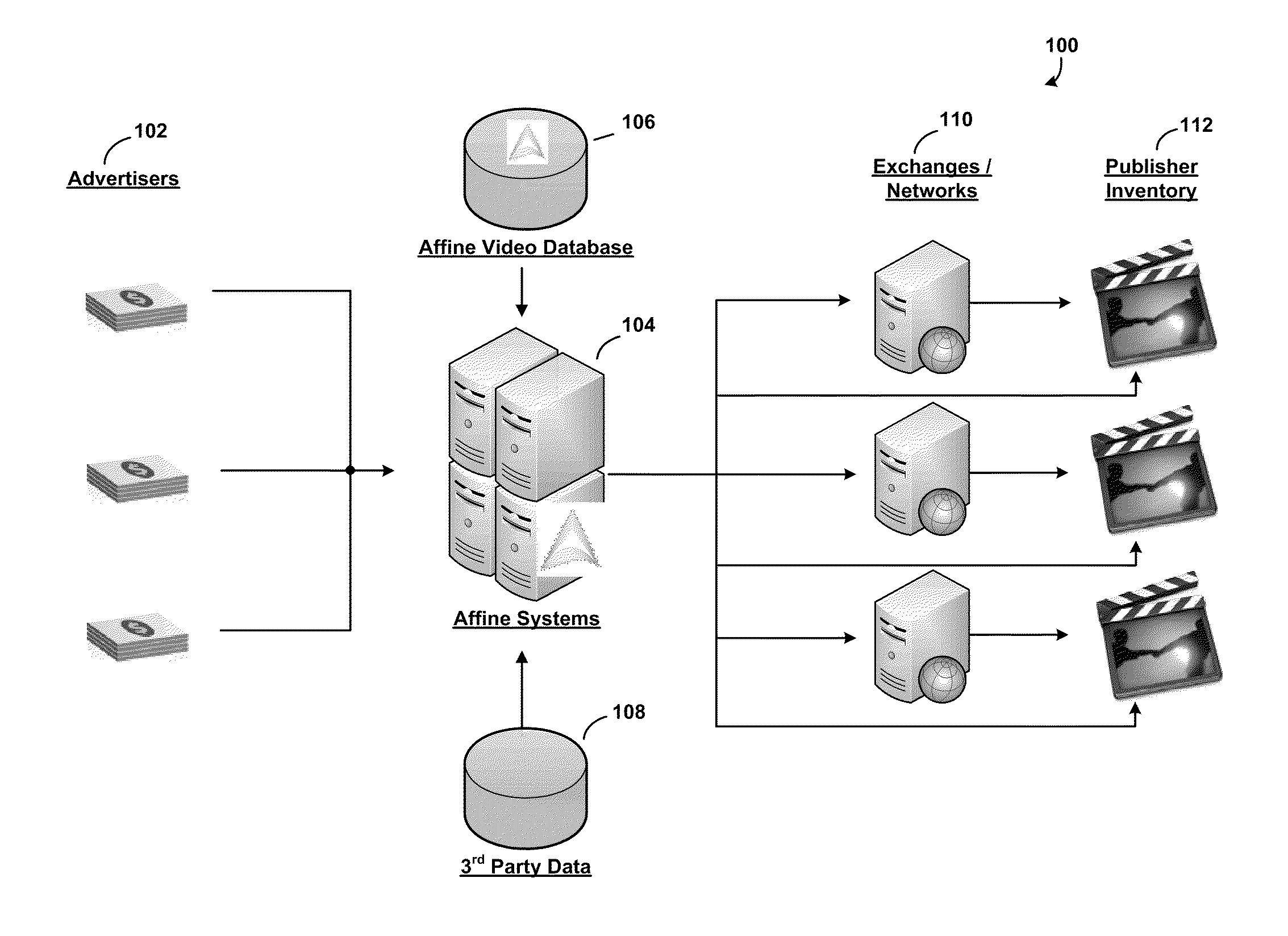

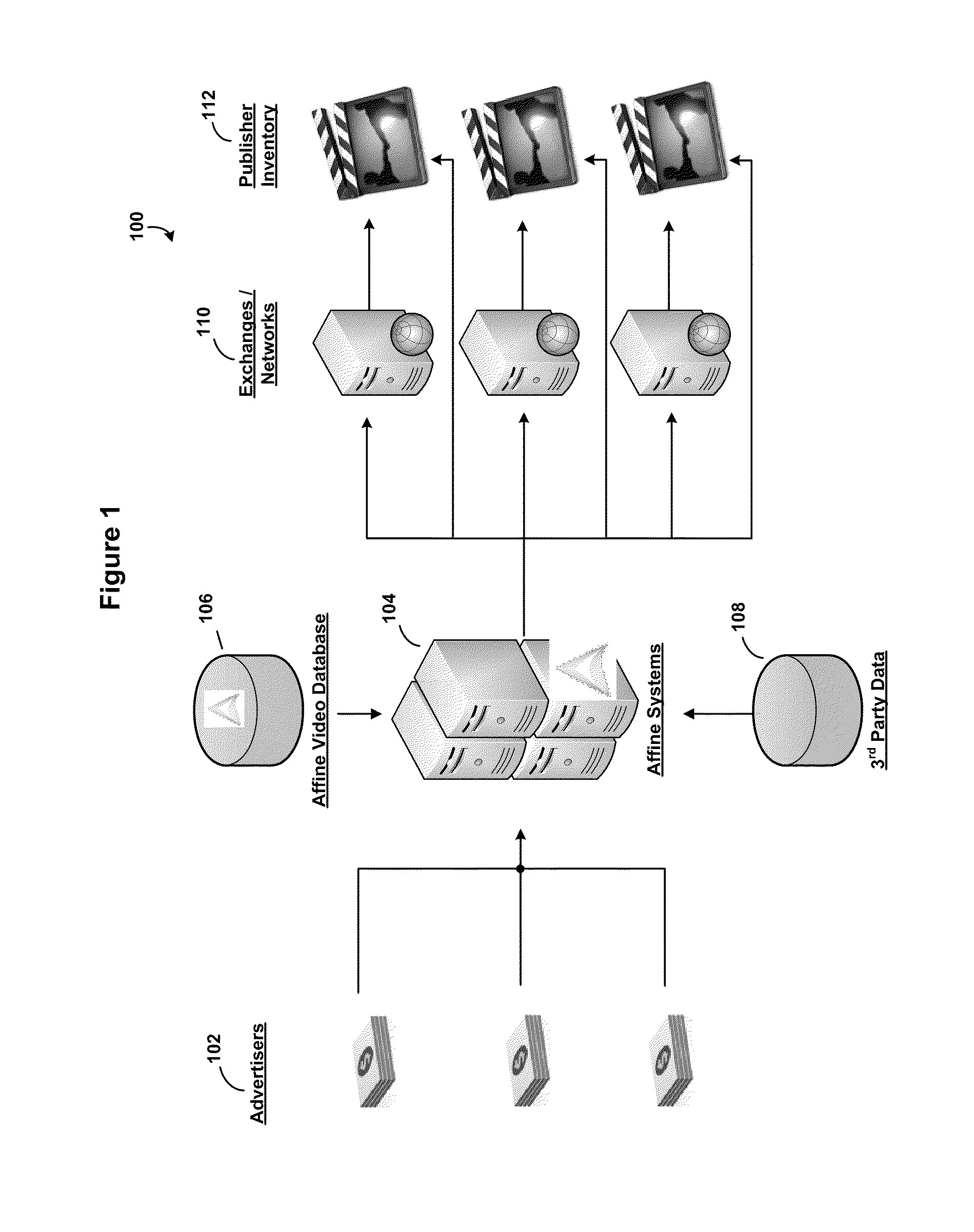

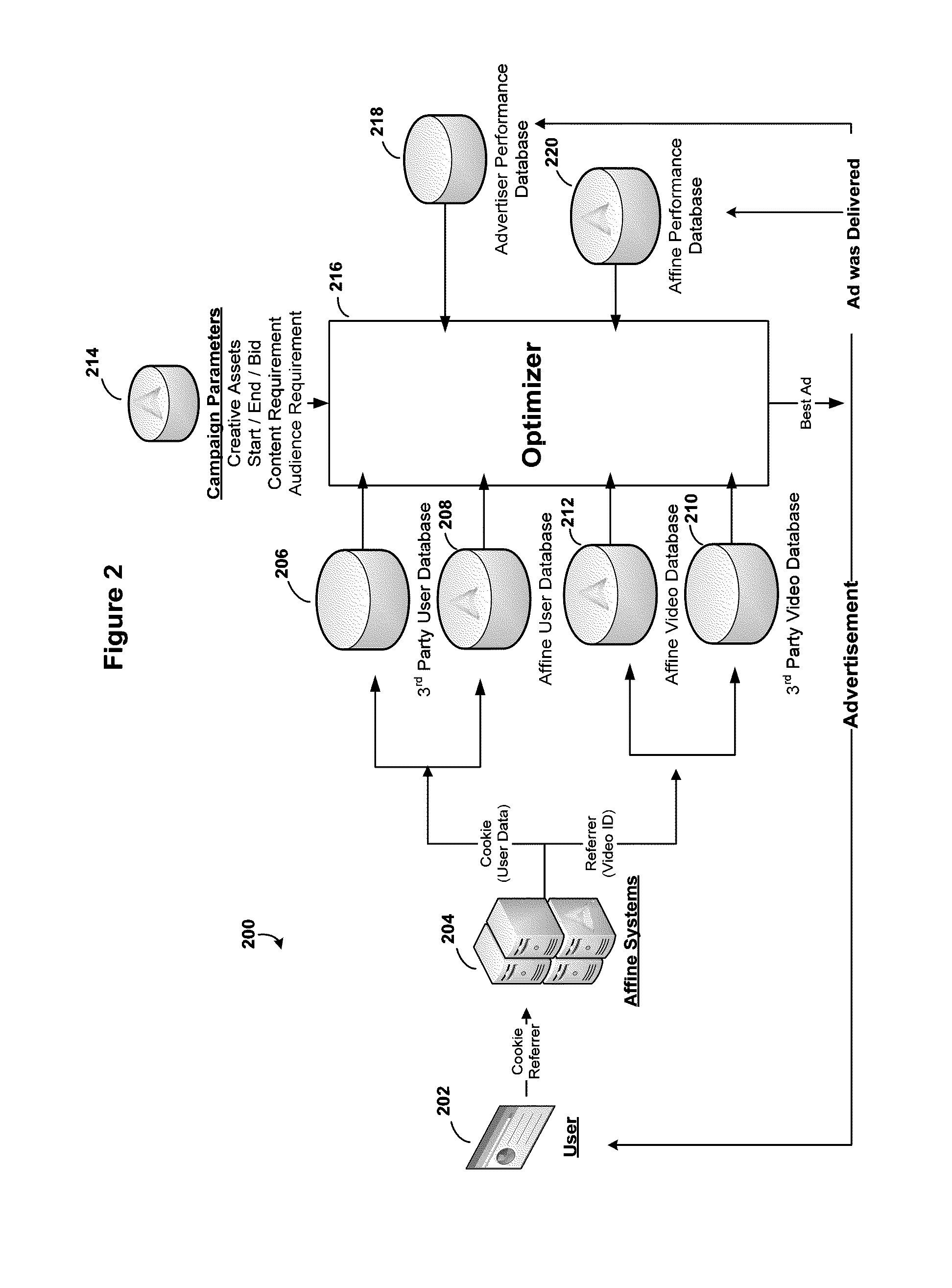

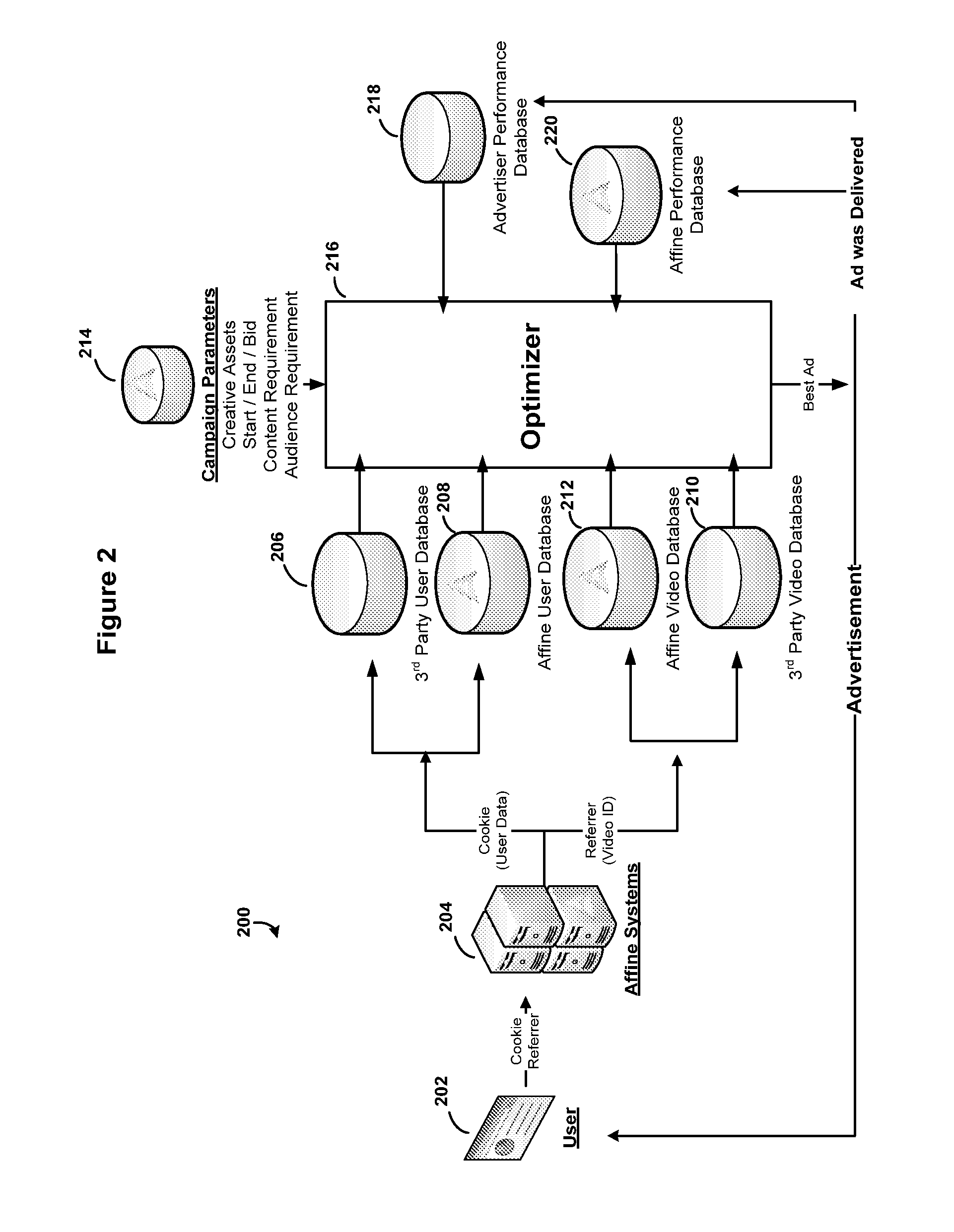

Systems and methods for automatically matching in real-time an advertisement with a video desired to be viewed by a user are provided. A database is created that stores one or more attributes (e.g., visual metadata relating to objects, faces, scene classifications, pornography detection, scene segmentation, production quality, fingerprinting) associated with a plurality of videos. Supervised machine learning can be used to create signatures that uniquely identify particular attributes of interest, which can then be used to generate the attributes associated with the plurality of videos. When a user requests to view an on-line video having associated with it an advertisement, an advertisement can be selected for display with the video based on matching an advertiser's requirements or campaign parameters with the stored attributes associated with the requested video, with the user's information, or a combination thereof. The displayed advertisement can function as a hyperlink that allows a user to select to receive additional information about the advertisement. The performance or effectiveness of the selected advertisements can be measured and recorded.

Owner:CONVERSANT

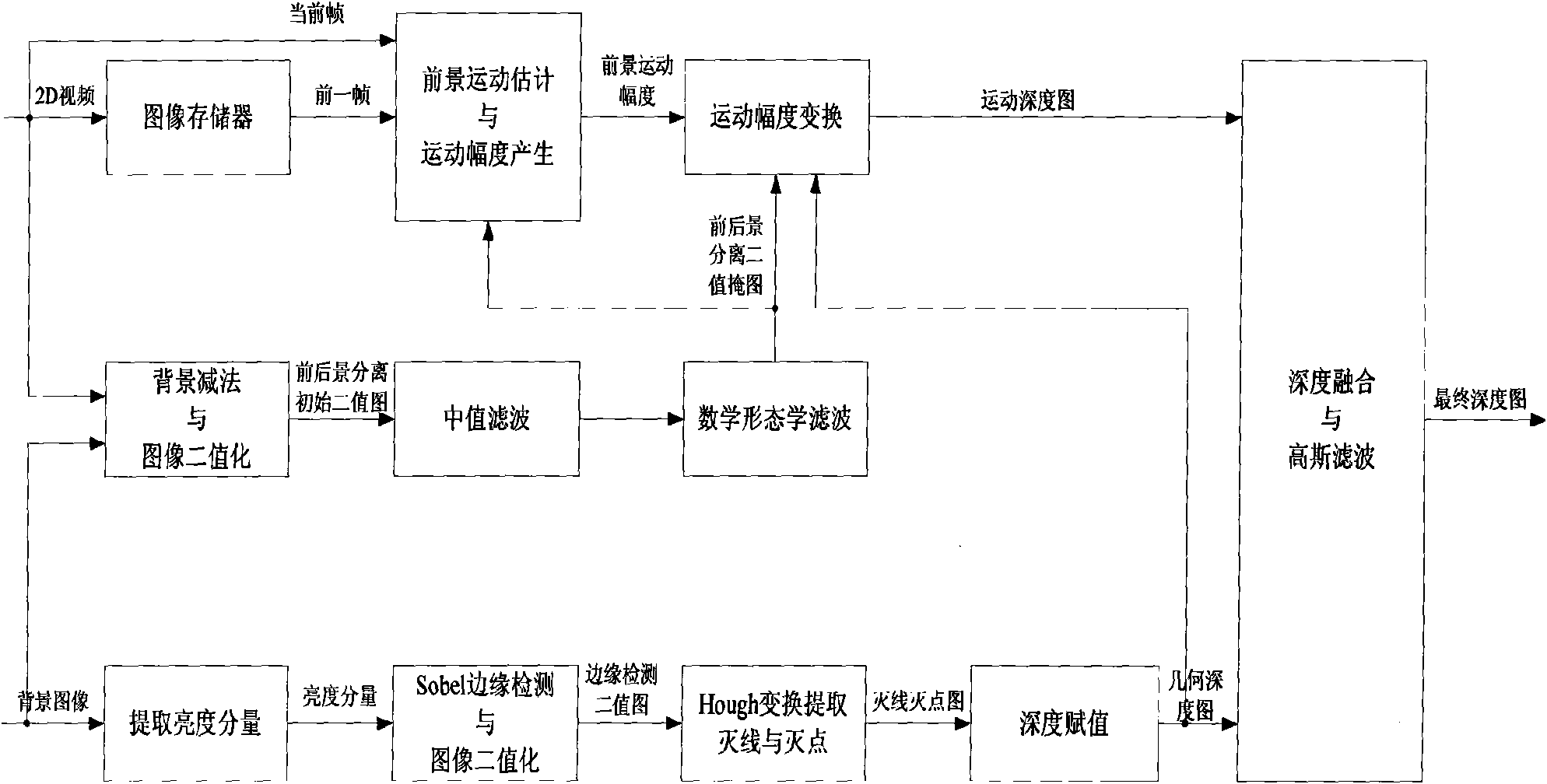

Depth extraction method of merging motion information and geometric information

Status: Active | Publication No.: CN101640809A | Tags: Quality improvement; Suppress background noise; Image analysis; Stereoscopic systems; Scene segmentation; Motion vector

The invention discloses a depth extraction method that merges motion information and geometric information. It comprises the following steps: (1) performing scene segmentation on each frame of a two-dimensional video to separate the static background from the dynamic foreground; (2) binarizing and filtering the scene segmentation map; (3) generating a geometric depth map of the static background from geometric information; (4) calculating the motion vectors of the foreground object and converting them into motion amplitudes; (5) linearly transforming the foreground object's motion amplitude according to the object's position to obtain a motion depth map; and (6) merging the motion depth map with the geometric depth map and filtering the result to obtain the final depth map. Because motion vectors are calculated only for the separated dynamic foreground object, mismatched background points are eliminated and the amount of computation is reduced. In addition, linearly transforming the foreground motion amplitude according to the object's position merges it consistently into the background depth, improving the overall quality of the depth map.

Owner:万维显示科技(深圳)有限公司
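
Steps (4) to (6) of CN101640809A can be illustrated with a minimal pure-Python sketch. It assumes the foreground mask from steps (1) and (2) is already available, uses a hypothetical ground-plane ramp as the geometric depth of step (3), and uses an invented linear rule for step (5) (`gain` times motion amplitude, offset by the ground depth at the object's lowest row). The patent's actual transform and the final filtering are not reproduced. Depth follows the common convention 0 = far, 255 = near.

```python
import math

def geometric_depth(height, width, near=255.0, far=0.0):
    """Hypothetical geometric prior: depth grows linearly from the top row
    (far) to the bottom row (near), a simple ground-plane assumption."""
    rows = []
    for y in range(height):
        d = far + (near - far) * y / max(height - 1, 1)
        rows.append([d] * width)
    return rows

def motion_depth(mask, vectors, bg_depth, gain=10.0, near=255.0):
    """Convert foreground motion vectors into depth values.

    The amplitude |(dx, dy)| is linearly transformed and offset by the
    background depth at the object's lowest row, so the object sits in front
    of the ground it stands on. `gain` is an assumed parameter."""
    h, w = len(mask), len(mask[0])
    fg_rows = [y for y in range(h) for x in range(w) if mask[y][x]]
    out = [[0.0] * w for _ in range(h)]
    if not fg_rows:
        return out
    base = bg_depth[max(fg_rows)][0]  # ramp is constant along each row
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                dx, dy = vectors[y][x]
                out[y][x] = min(near, base + gain * math.hypot(dx, dy))
    return out

def merge(mask, fg_depth, bg_depth):
    """Foreground pixels take the motion depth; all others keep the geometric depth."""
    return [[fg_depth[y][x] if mask[y][x] else bg_depth[y][x]
             for x in range(len(mask[0]))] for y in range(len(mask))]
```

For a 3 x 3 frame with a single foreground pixel moving by (3, 4), the amplitude is 5, so the merged depth of that pixel is 127.5 + 10 x 5 = 177.5, while background pixels keep their ramp values.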

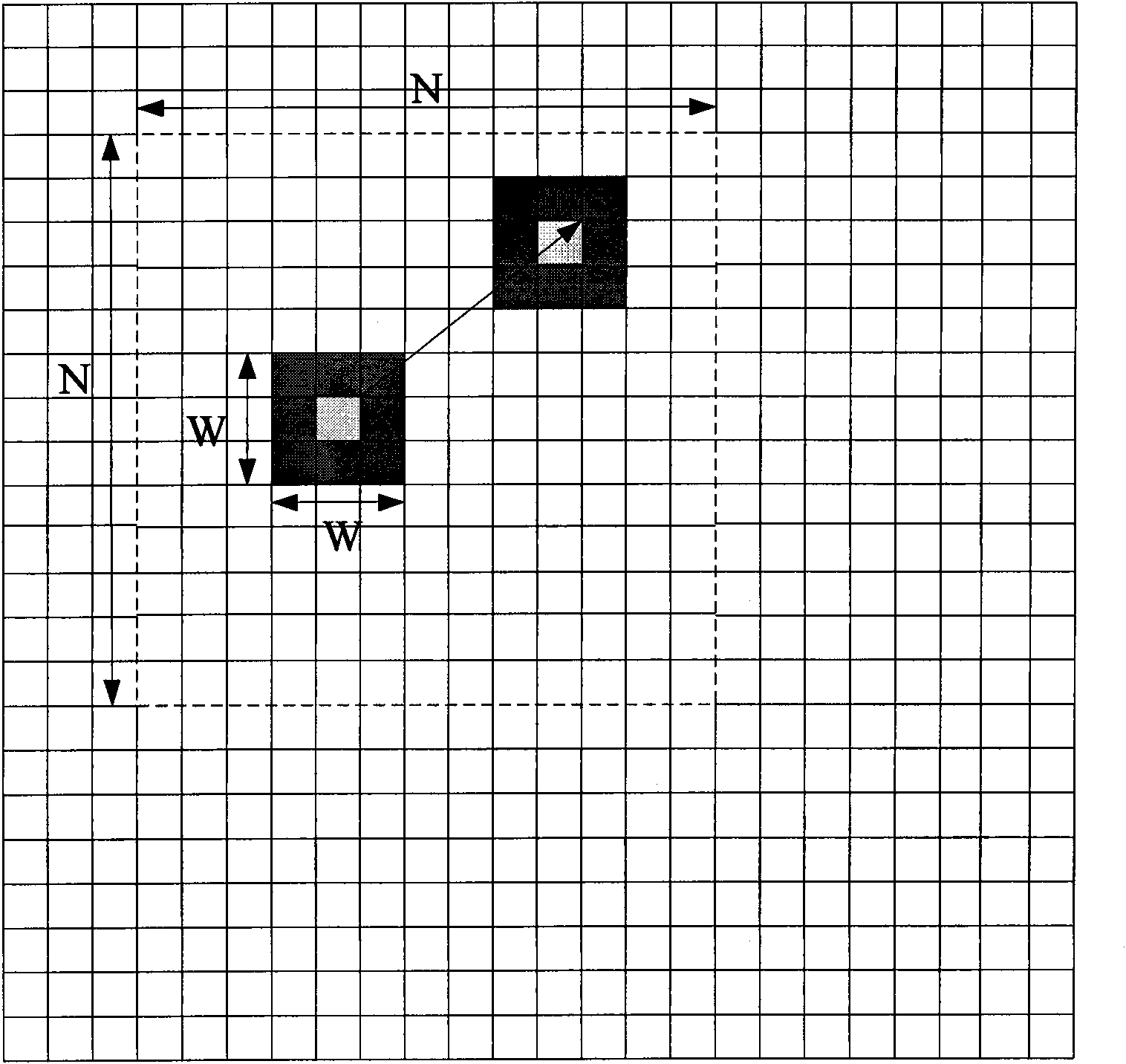

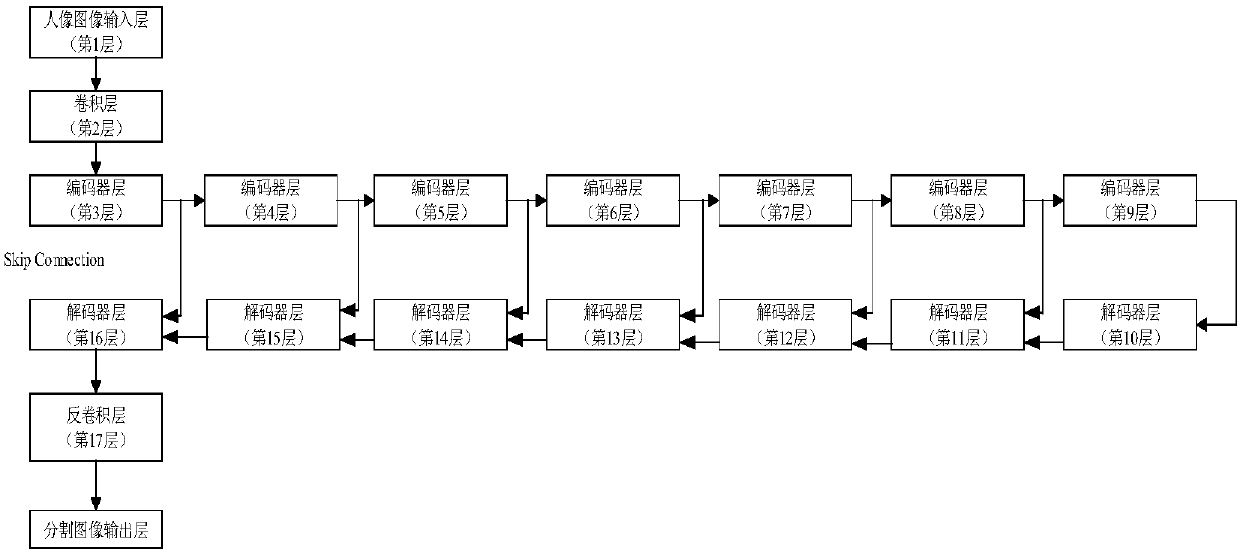

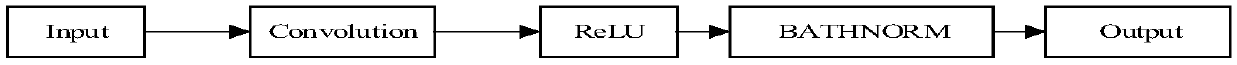

Generative adversarial network-based pixel-level portrait cutout method

Status: Active | Publication No.: CN107945204A | Tags: Improve segmentation accuracy; Good segmentation effect; Image enhancement; Image analysis; Conditional random field; Data set

The invention discloses a pixel-level portrait matting (cutout) method based on a generative adversarial network (GAN), addressing the problem that training and optimizing a network for automatic matting normally requires massive datasets that are very costly to build. The method comprises: presetting a generator network and a discriminator network that learn adversarially, where the generator is a deep neural network with skip connections; inputting a real image containing a portrait into the generator, which outputs a person/scene segmentation image; inputting first and second image pairs into the discriminator, which outputs a discrimination probability, and defining the loss functions of the generator and the discriminator; adjusting the parameters of both networks to minimize their loss values, thereby completing training of the generator; and inputting a test image into the trained generator to produce a person/scene segmentation image, randomizing the generated image, and finally feeding the resulting probability matrix into a conditional random field for further refinement. The method substantially reduces the number of training images required and improves both efficiency and segmentation precision.

Owner:XIDIAN UNIV

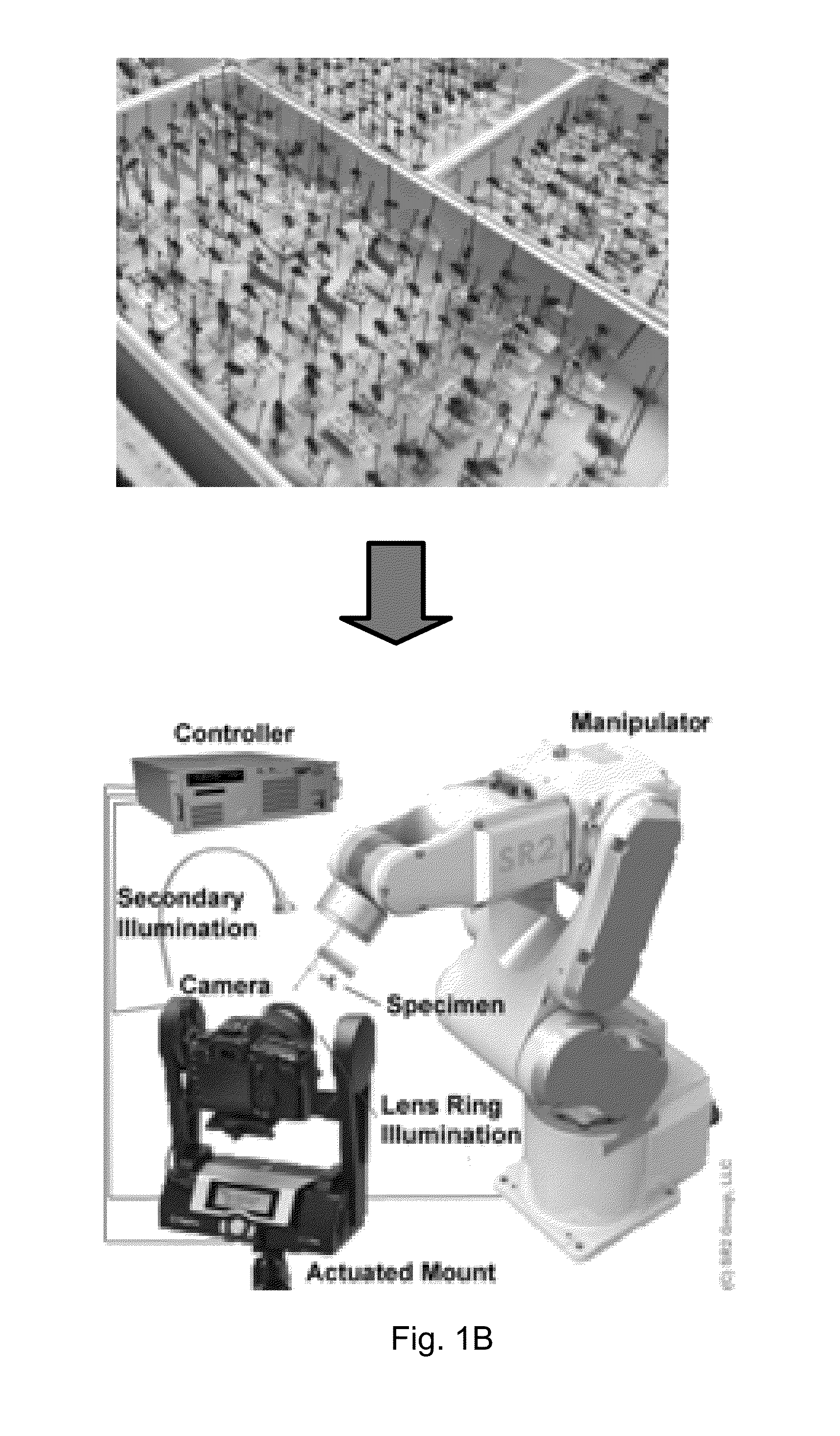

System and method for adaptively conformed imaging of work pieces having disparate configuration

Status: Active | Publication No.: US9258550B1 | Tags: Using optical means; Biometric pattern recognition; Relative displacement; Scene segmentation

A system is provided for adaptively conformed imaging of work pieces having disparate configurations, which comprises a mount unit for holding at least one work piece, and an imaging unit for capturing images of the work piece held by the mount unit. At least one manipulator unit is coupled to selectively manipulate at least one of the mount and imaging units for relative displacement therebetween. A controller coupled to automatically actuate the manipulator and imaging units executes scene segmentation about the held work piece, which spatially defines at least one zone of operation in peripherally conformed manner about the work piece. The controller also executes an acquisition path mapping for the work piece, wherein a sequence of spatially offset acquisition points are mapped within the zone of operation, with each acquisition point defining a vantage point for the imaging unit to capture an image of the work piece from.

Owner:REALITY ANALYTICS INC

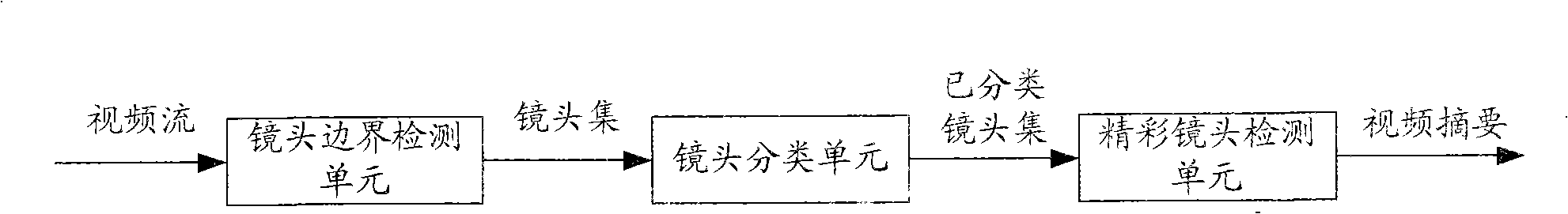

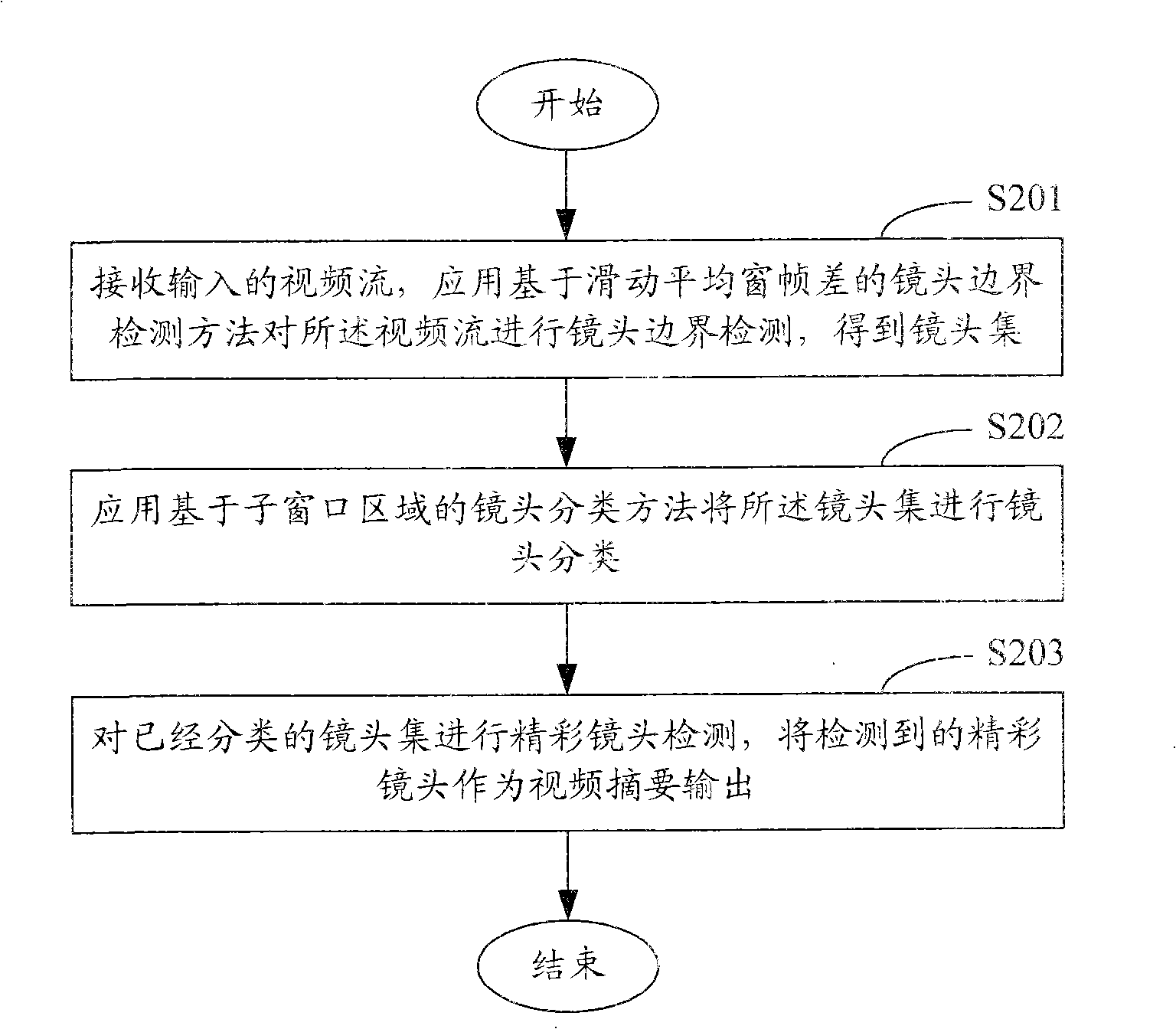

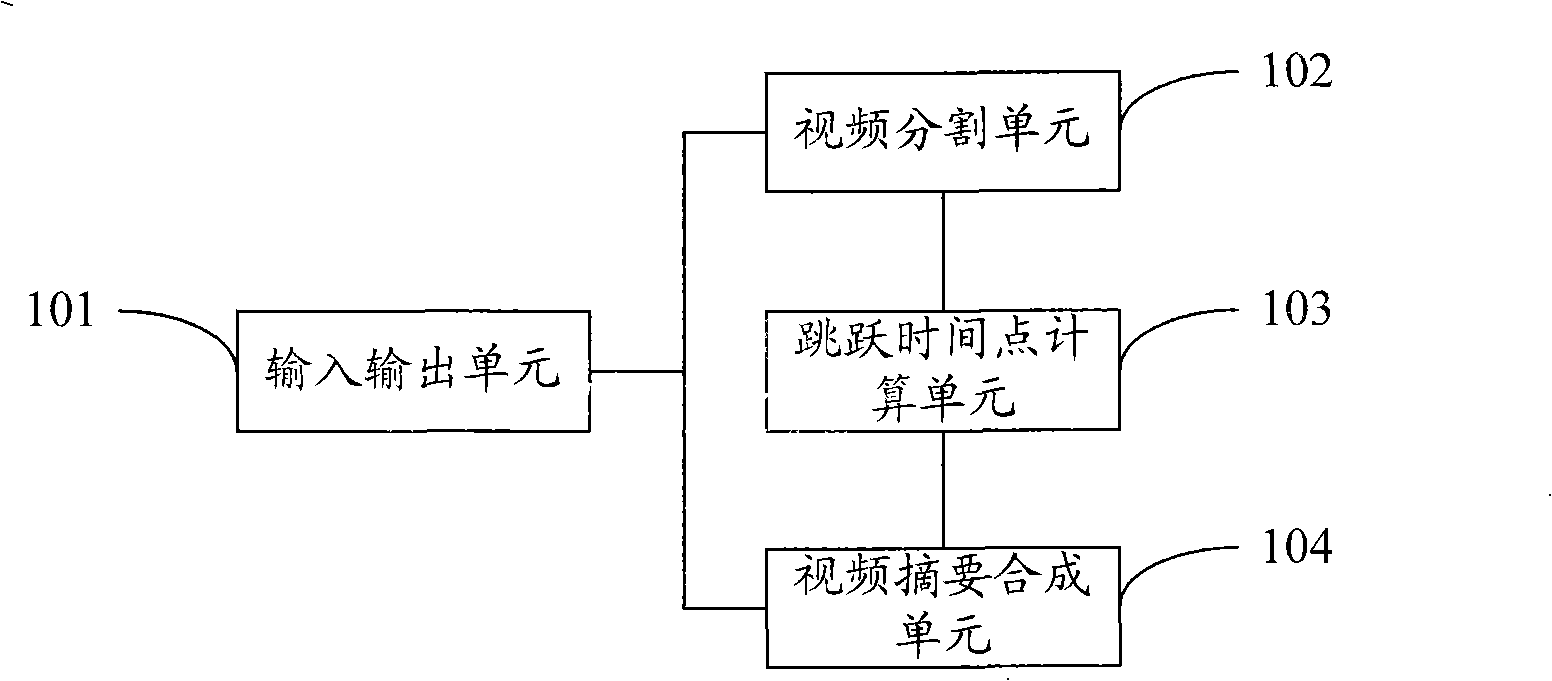

Method, system and device for generating a video summary

Status: Active | Publication No.: CN101308501A | Tags: Improve completeness; Improve universality; Character and pattern recognition; Special data processing applications; Generation process; Feature vector

The invention relates to electronic communications and video image processing and provides a video summary generation method, system and device. The method comprises the following steps: A, receiving the input video and dividing it to obtain a sequence of candidate time points; B, applying a scene segmentation algorithm to select a sequence of jump time points from the candidate time points; C, extracting the video segment corresponding to each jump time point, combining all of the segments into a video summary, and outputting it. During generation, the invention first obtains the feature vector of each video frame, selects the jump time point sequence by hierarchical clustering, and finally extracts the corresponding video frames based on that sequence to form the summary. This covers as many scenes as possible and maximizes the differences among the selected frames, making the summary's information more complete. In addition, the method places no requirements on the video type, which improves its general applicability.

Owner:TENCENT TECH (SHENZHEN) CO LTD
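
Step B and the clustering described for CN101308501A might look roughly like the sketch below: single-linkage agglomerative clustering merges the closest pair of clusters until `k` remain, and the earliest candidate time in each cluster is kept as a jump point. The linkage rule, the Euclidean distance and the fixed `k` are assumptions; the abstract only states that hierarchical clustering is used.

```python
import math

def euclid(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def jump_points(times, features, k):
    """Single-linkage agglomerative clustering of frame features into k
    clusters; the earliest candidate time in each cluster is a jump point."""
    clusters = [[i] for i in range(len(times))]
    while len(clusters) > k:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # single linkage: distance between the closest members
                d = min(euclid(features[i], features[j])
                        for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a].extend(clusters[b])
        del clusters[b]  # b > a, so index a is unaffected
    return sorted(min(times[i] for i in c) for c in clusters)
```

With candidate times 0, 10, 20, 30 and 40 s whose features form three well-separated groups, `jump_points(..., k=3)` returns `[0, 20, 40]`; step C would then extract a segment at each of those times.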

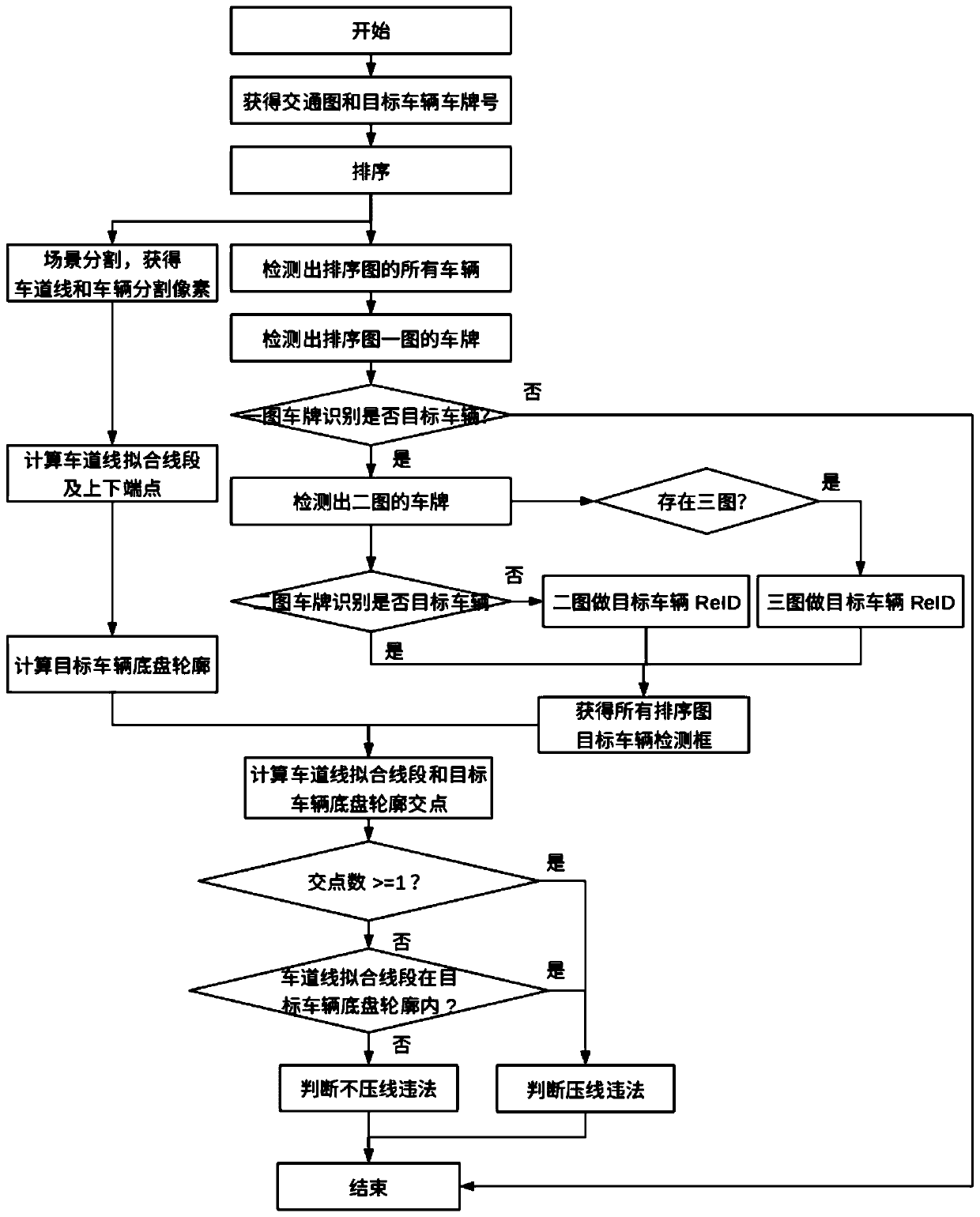

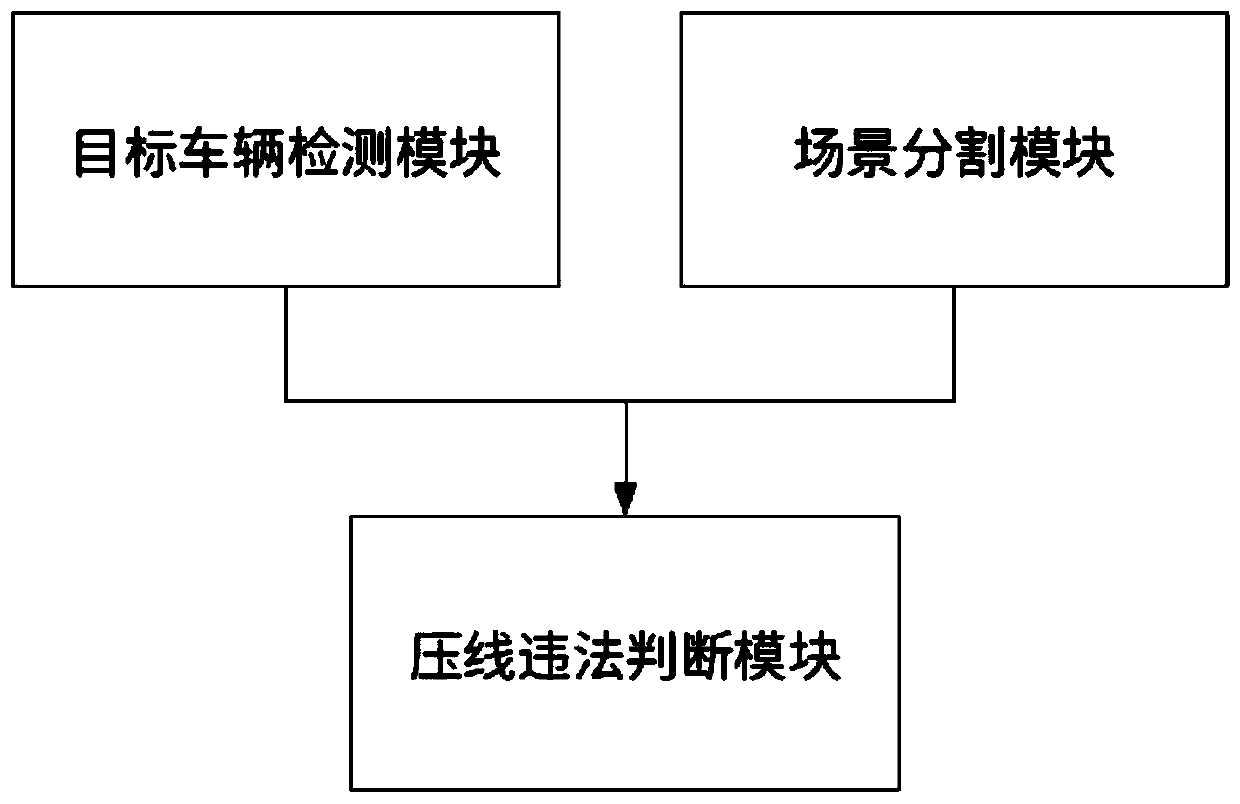

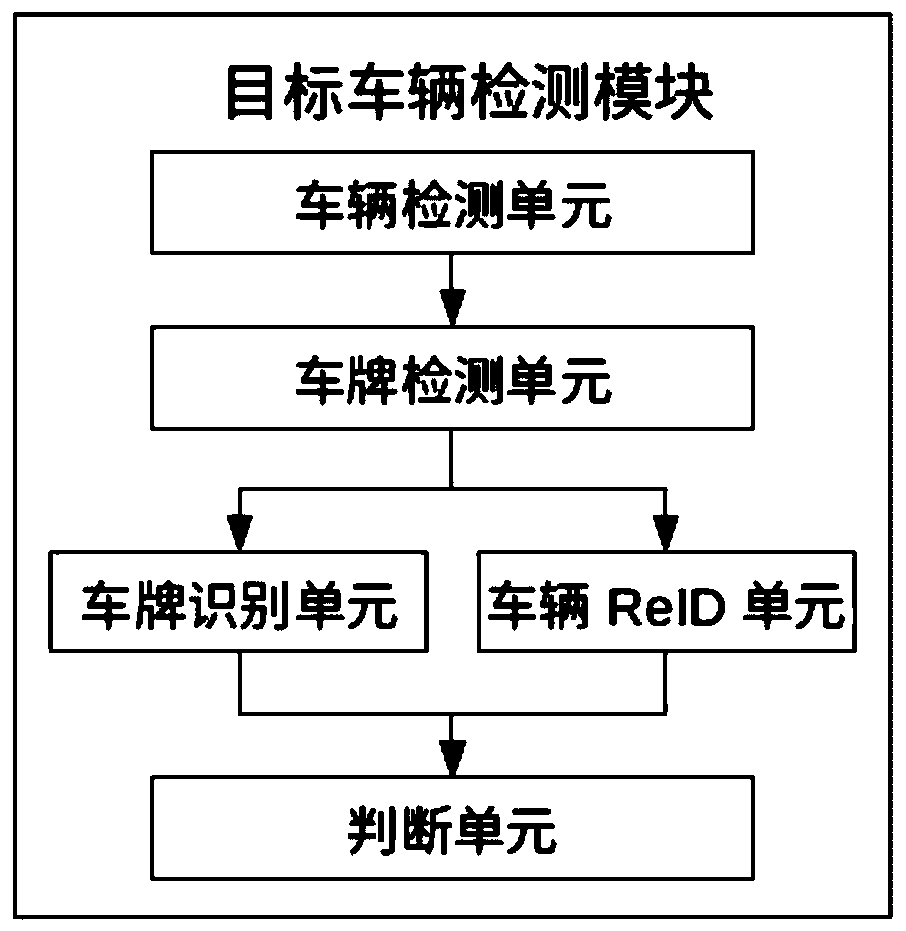

Deep learning based automatic checking method against vehicle lane-pressing illegal behavior

Status: Active | Publication No.: CN109949578A | Tags: Meet the characteristics; Realize fully automatic detection; Road vehicles traffic control; Character and pattern recognition; Learning based; Computer graphics (images)

The invention discloses a deep learning-based method for automatically checking whether a vehicle illegally presses a solid lane line. The method comprises the following steps: snapshot pictures from a camera are obtained, cropped and ordered; the license plate number of the target vehicle is obtained; a deep learning-based target vehicle detection module detects the target vehicle in each ordered image and outputs a detection box; a deep learning-based scene segmentation module segments each ordered image to obtain the solid-line pixels; in each ordered image, a lane-pressing determination module calculates whether the straight line fitted to the solid-line pixels intersects the straight line through the bottom edge of the target vehicle's detection box; and whether the target vehicle in the group of ordered images has illegally pressed the lane line is determined from the position of the intersection point. The method is therefore suitable for checking violations in pictures taken by traffic cameras in real scenes.

Owner:上海眼控科技股份有限公司
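
The final decision in CN109949578A reduces to a small geometric test. The sketch below fits the solid-line pixels with least squares and checks whether the fitted line crosses the bottom edge of the detection box between its left and right ends. Fitting x as a function of y and treating a crossing inside the box edge as "pressing" are assumptions about how the intersection position is interpreted.

```python
def fit_line_x_of_y(pixels):
    """Least-squares fit of x = a*y + b to (x, y) lane pixels.
    Parameterising x by y avoids the near-infinite slope of lane markings."""
    n = len(pixels)
    sy = sum(y for _, y in pixels)
    sx = sum(x for x, _ in pixels)
    syy = sum(y * y for _, y in pixels)
    sxy = sum(x * y for x, y in pixels)
    denom = n * syy - sy * sy
    if denom == 0:
        raise ValueError("pixels must span more than one image row")
    a = (n * sxy - sx * sy) / denom
    b = (sx - a * sy) / n
    return a, b

def presses_line(box, pixels):
    """box = (x1, y1, x2, y2), with y2 the bottom edge in image coordinates.
    Returns (flag, x_cross): flag is True when the fitted lane line crosses
    the bottom edge between x1 and x2."""
    x1, _, x2, y2 = box
    a, b = fit_line_x_of_y(pixels)
    x_cross = a * y2 + b
    return x1 <= x_cross <= x2, x_cross
```

In the patent the check runs on every ordered image, so a per-image flag like this one would still need to be combined across the group before a violation is reported.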

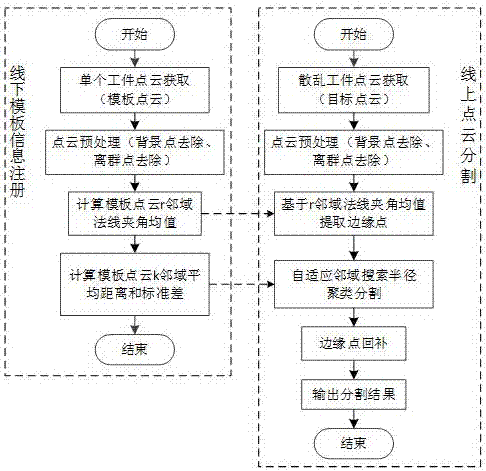

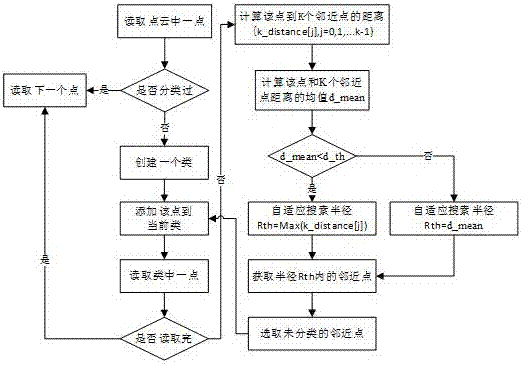

Improved Euclidean clustering-based scattered workpiece point cloud segmentation method

Status: Active | Publication No.: CN107369161A | Tags: Prevent over-segmentation; Improve removal efficiency; Image enhancement; Image analysis; Scene segmentation; Neighborhood search

The invention provides a scattered-workpiece point cloud segmentation method based on improved Euclidean clustering and relates to the field of point cloud segmentation. It proposes a scene segmentation scheme suited to the inherent disorder and randomness of point clouds of scattered workpieces. The specific steps are as follows. The point clouds are preprocessed: background points are removed using RANSAC, and outliers are removed by iterative radius filtering. Registration information from offline template point clouds provides the basis for selecting online segmentation parameters, which increases online segmentation speed. Edge points are removed first, cluster segmentation is then performed, and the edge points are finally added back, which avoids under-segmentation or over-segmentation during clustering. For the cluster segmentation itself, a clustering method with an adaptive neighborhood search radius is proposed, which greatly increases segmentation speed. Adding the edge points back preserves the surface features of the workpieces, which can improve the accuracy of subsequent pose estimation.

Owner:WUXI XINJIE ELECTRICAL
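
The core of CN107369161A is Euclidean cluster extraction. The sketch below shows the plain version with a fixed search radius and brute-force neighbour search; the patent's adaptive radius, RANSAC background removal and edge-point handling are not reproduced, and `min_size` is an assumed filter for discarding leftover outliers.

```python
import math
from collections import deque

def euclidean_clusters(points, radius, min_size=1):
    """Region growing: a point joins a cluster if it lies within `radius`
    of any member. Neighbour search is brute force (O(n^2)); a real
    implementation would use a k-d tree."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, cluster = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= radius]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                cluster.append(j)
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters
```

Because the seed is taken from a set, cluster order is arbitrary; sort the result if a stable order is needed.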

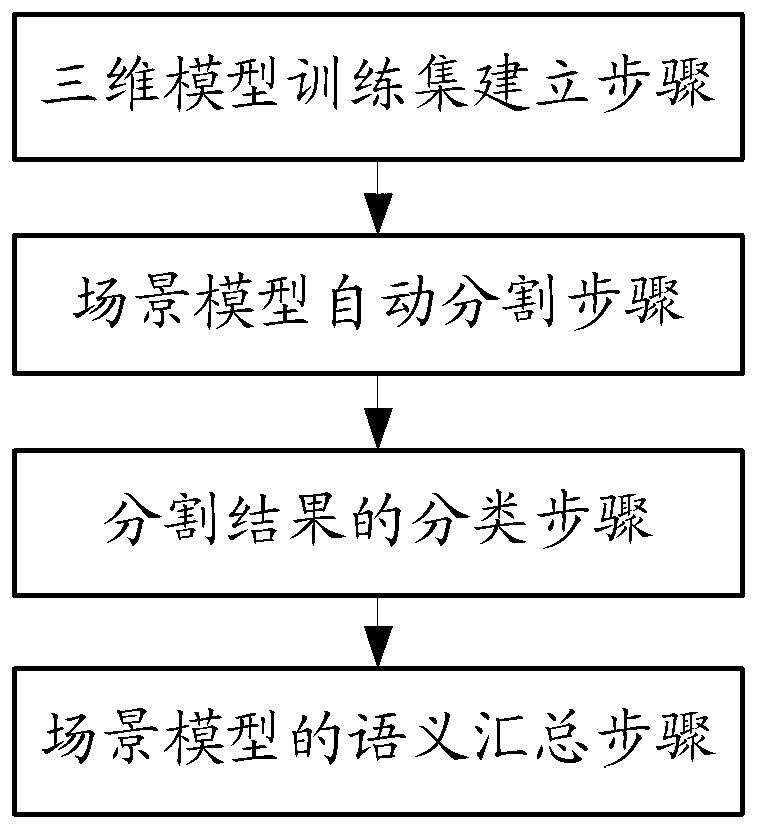

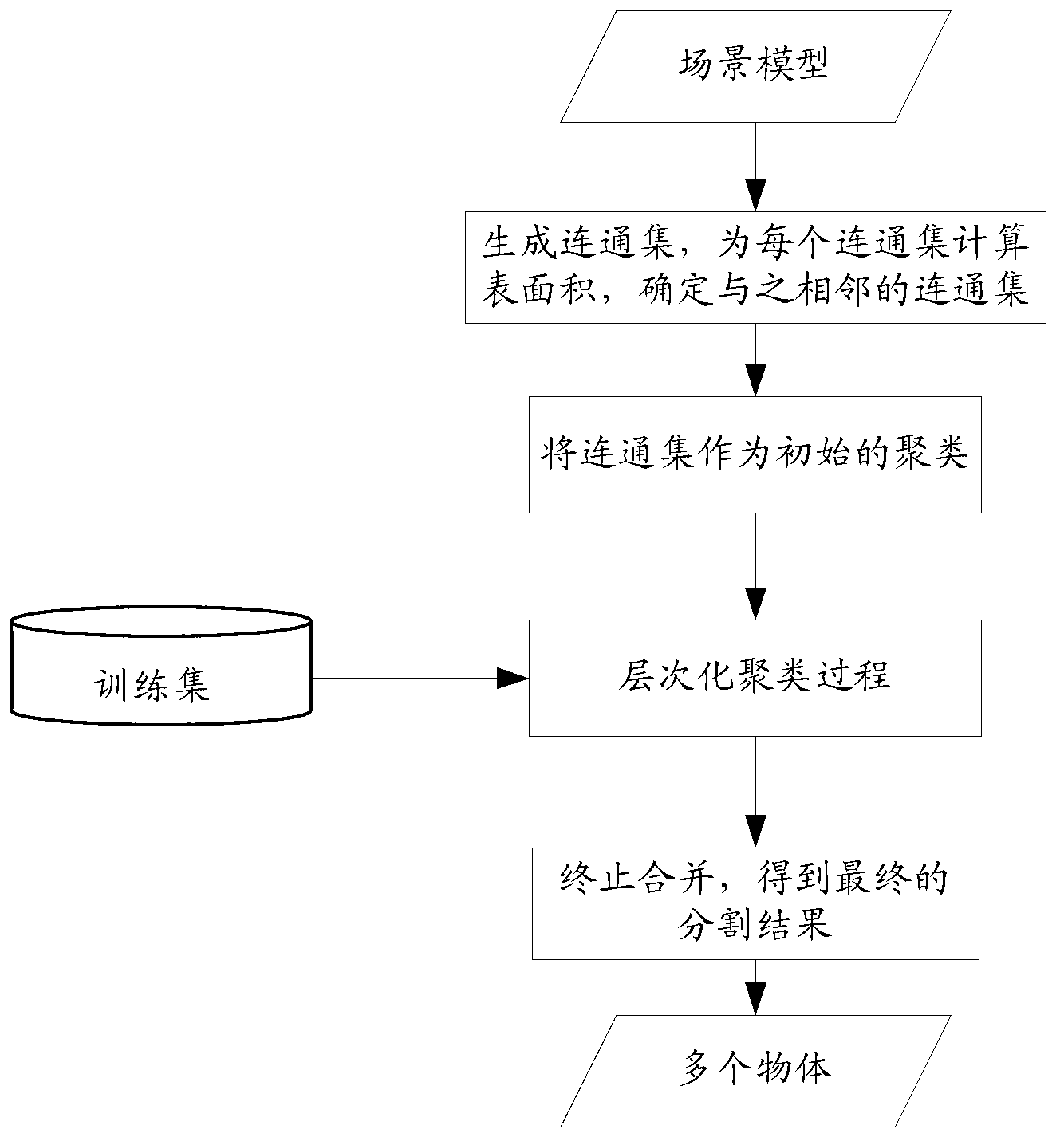

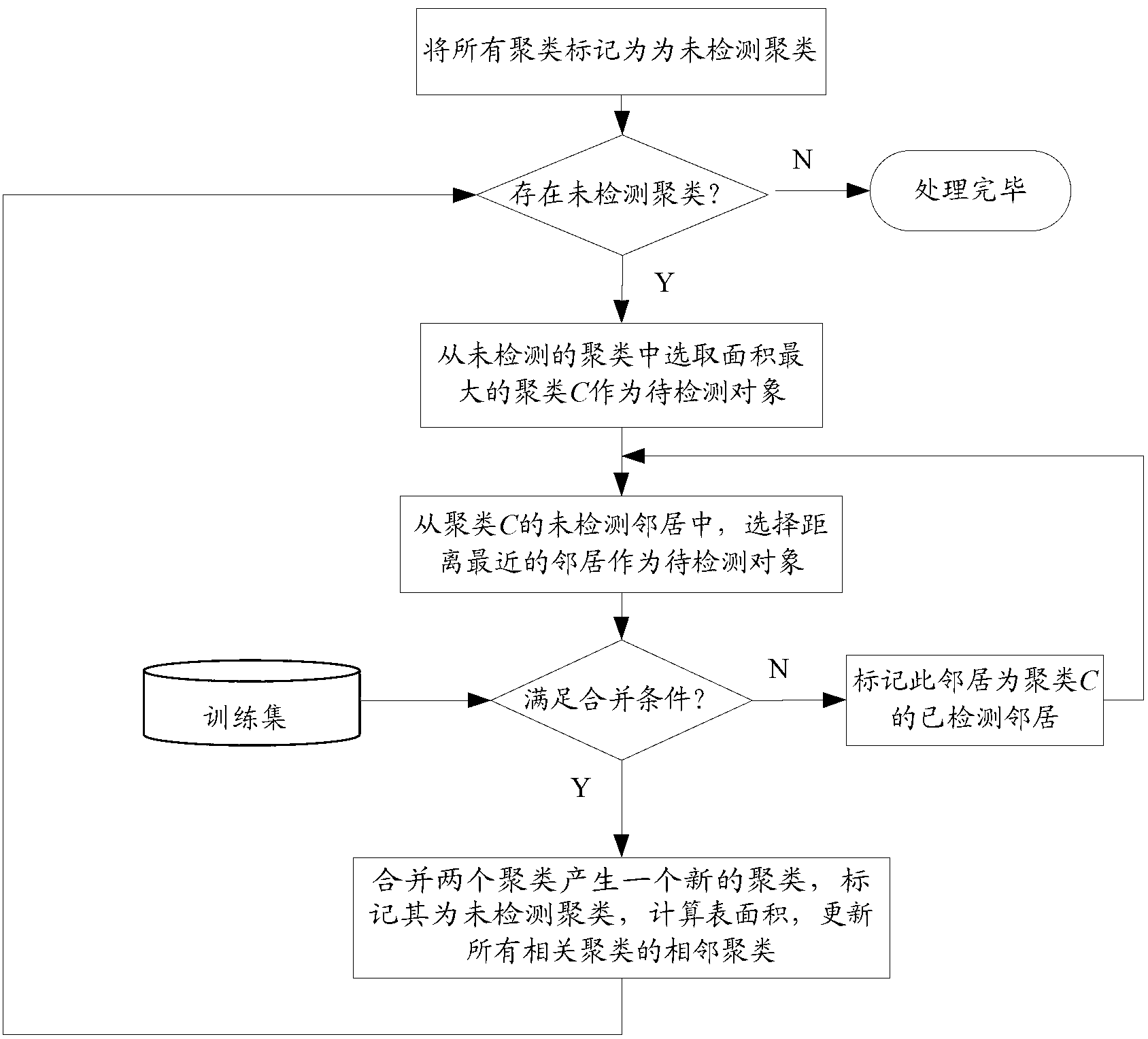

Segmentation and semantic annotation method for a geometric mesh scene model

Status: Inactive | Publication No.: CN103268635A | Tags: Solves the difficulty of handling touching objects; Semantic annotation; Character and pattern recognition; 3D modelling; Cluster algorithm; Automatic segmentation

The invention relates to computer graphics, in particular to a segmentation and semantic annotation method for a geometric mesh scene model. The method comprises the following steps: building a three-dimensional training set in which each model must be a single object; automatically segmenting the scene model into multiple objects using the training set and a hierarchical clustering algorithm; classifying the segmentation results by extracting the shape features of each segmented object and assigning its class label with a classification algorithm; and collecting the semantics of the scene model by gathering the objects' class labels into a semantic label set. Compared with the prior art, the method uses the known shape knowledge in the training set to assist decision-making during automatic segmentation of the scene model. This solves the difficulty of handling objects in contact during scene segmentation, and the resulting semantic annotation better matches how people perceive scenes.

Owner:BEIJING JIAOTONG UNIV

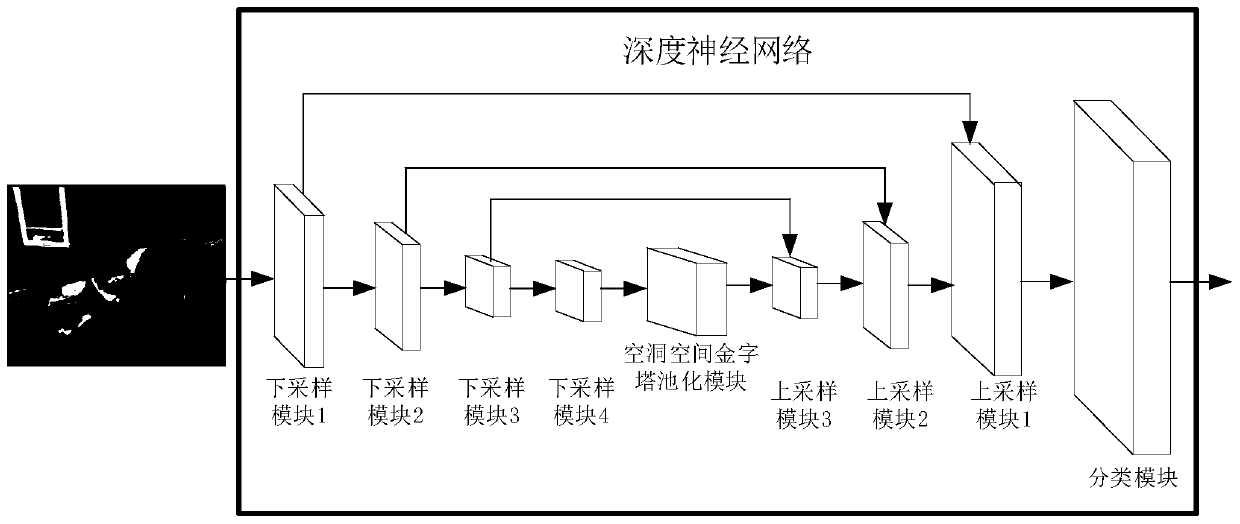

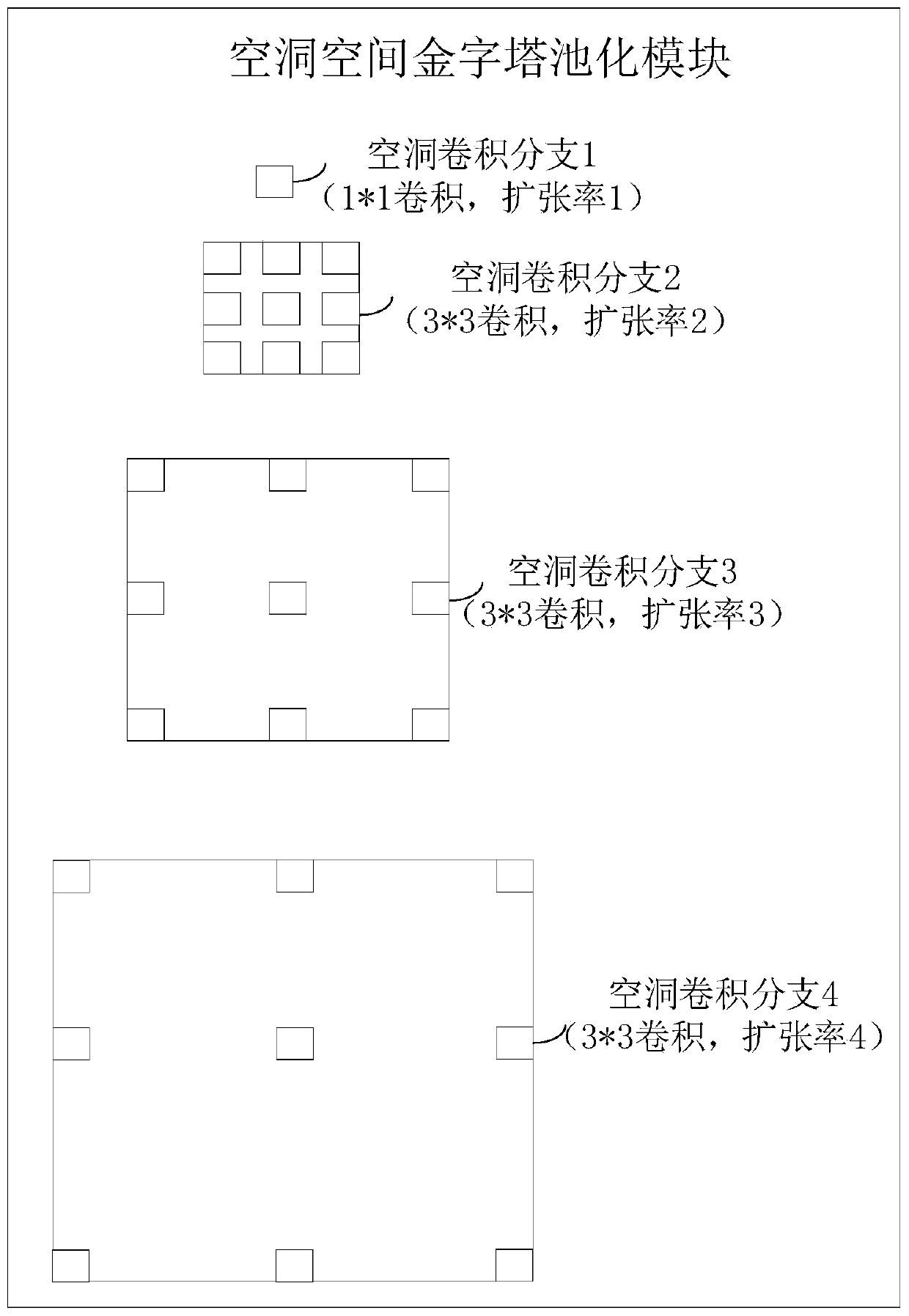

Scene segmentation method and device, computer equipment and storage medium

Status: Active | Publication No.: CN110136136A | Tags: Small amount of calculation; Reduce the amount of parameters; Image enhancement; Image analysis; Scene segmentation; Computer module

The invention relates to a scene segmentation method and device, computer equipment and a storage medium, in the technical field of machine learning. The method includes: inputting the image to be identified into a deep neural network; performing depthwise separable convolution on the image through a down-sampling module to obtain a first feature map smaller than the image; performing atrous (dilated) convolution on the first feature map through an atrous spatial pyramid pooling module to obtain second feature maps at different scales; performing depthwise separable convolution on the second feature maps through an up-sampling module to obtain a third feature map of the same size as the image; and classifying each pixel of the third feature map through a classification module to obtain the scene segmentation result. The invention reduces the computation required for scene segmentation with a deep neural network while maintaining segmentation accuracy.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD
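
The computational savings claimed for CN110136136A follow from standard arithmetic on convolution weights and dilation. The helpers below count weights (bias ignored) and compute the spatial extent of a dilated kernel; the channel counts and the dilation rates 6, 12 and 18 in the usage note are illustrative values borrowed from common ASPP configurations, not from the patent.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k convolution from c_in to c_out channels (no bias)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """One k x k depthwise filter per input channel, then a 1 x 1 pointwise
    convolution that mixes channels (no bias)."""
    return k * k * c_in + c_in * c_out

def dilated_kernel_extent(k, rate):
    """Spatial extent spanned by a k x k kernel with the given dilation rate."""
    return k + (k - 1) * (rate - 1)
```

For a 3 x 3 layer mapping 64 to 128 channels, a standard convolution needs 73,728 weights while the depthwise separable version needs 576 + 8,192 = 8,768, about 8.4 times fewer. With rates 6, 12 and 18, a 3 x 3 atrous kernel spans 13, 25 and 37 pixels, which is how a pyramid pooling module can gather context at several scales without adding weights.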

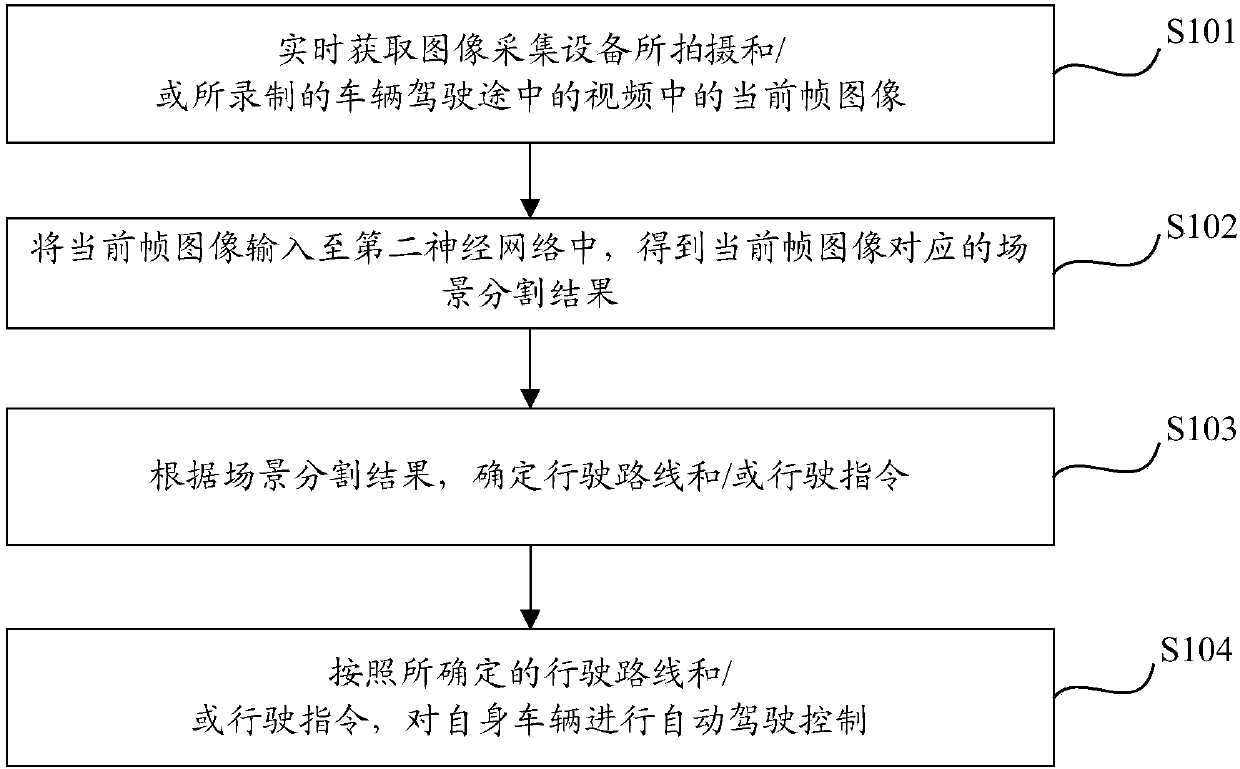

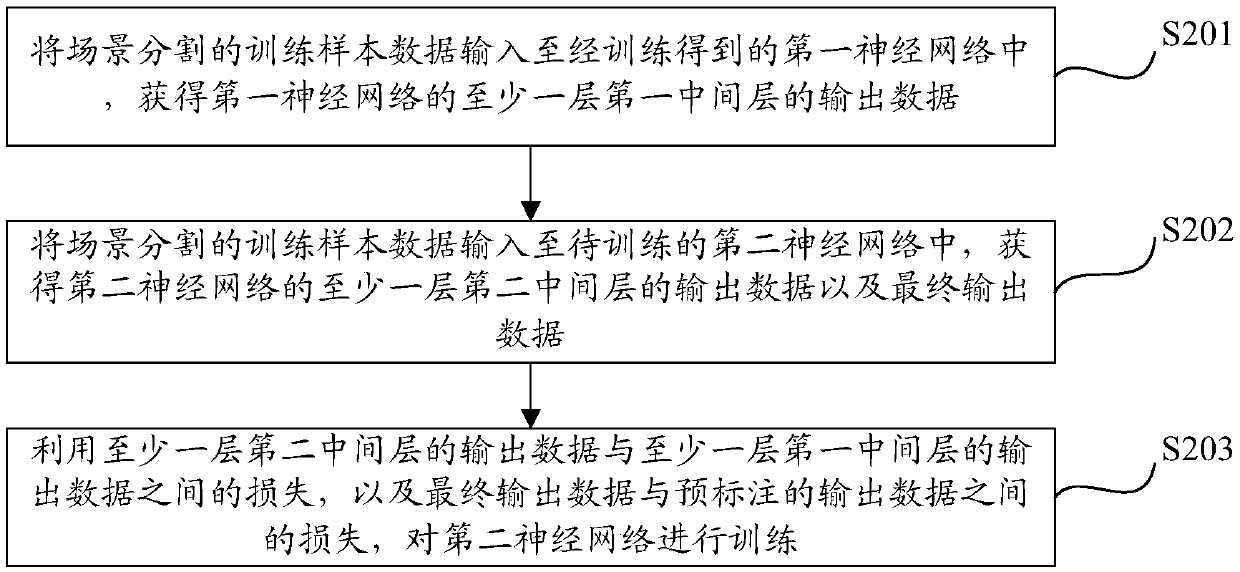

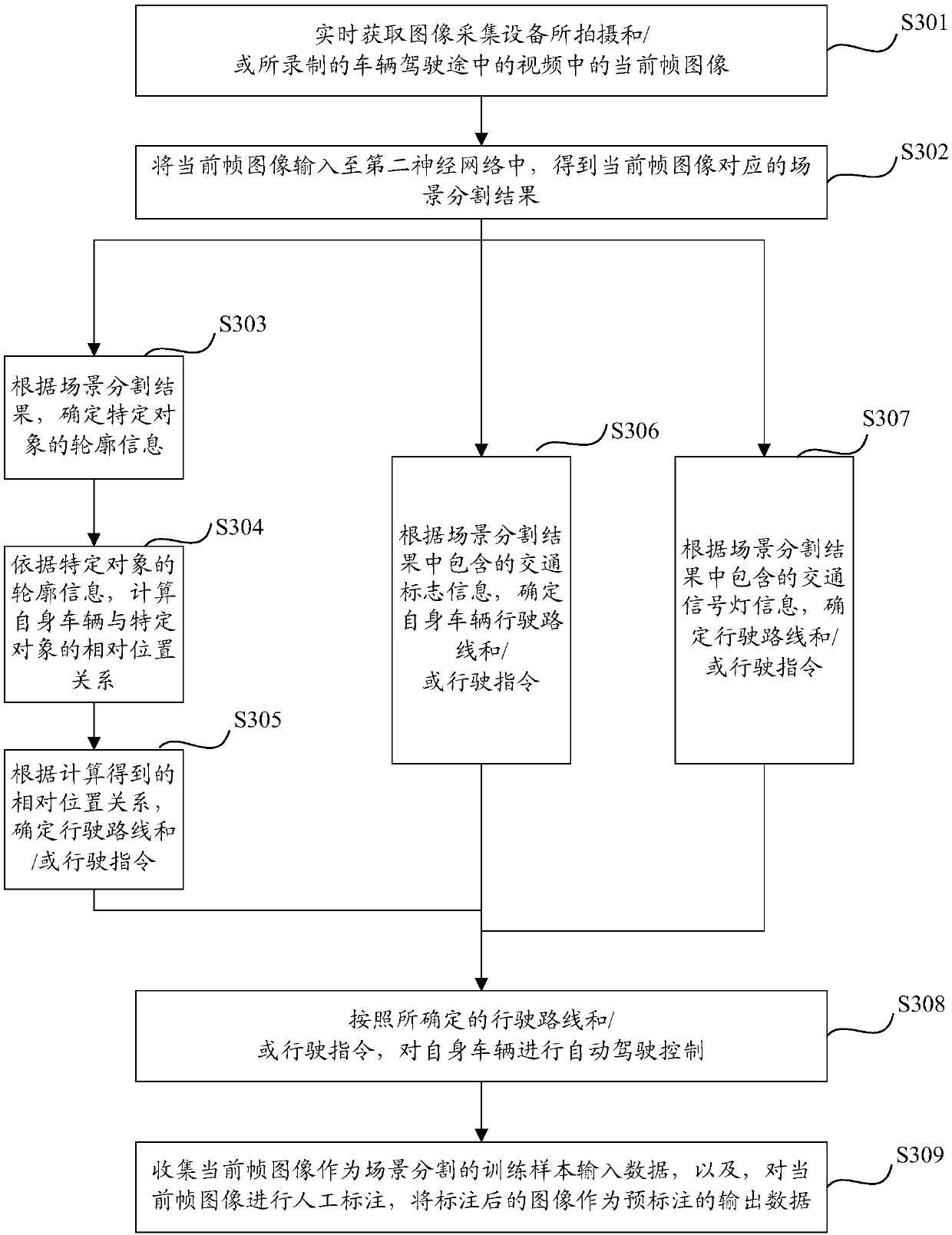

Automatic driving processing method and apparatus based on scene segmentation and computing device

Status: Inactive | Publication No.: CN107944375A | Tags: Improve security; Quick fix; Character and pattern recognition; Position/course control in two dimensions; Pattern recognition; Scene segmentation

The invention discloses an automatic driving processing method and apparatus based on scene segmentation, and a computing device. The method comprises: obtaining in real time, while the vehicle is driving, the current frame of a video shot and/or recorded by an image collection device; inputting the current frame into a second neural network to obtain its scene segmentation result, where the second neural network is obtained by guided training using the output of at least one intermediate layer of a pre-trained first neural network, and the first neural network has more layers than the second; determining a driving route and/or driving instruction according to the scene segmentation result; and exerting autonomous driving control over the vehicle according to the determined route and/or instruction. Because the trained network has few layers, the scene segmentation result is computed quickly and accurately, so the driving route and/or instruction can be determined accurately, which helps improve the safety of automatic driving.

Owner:BEIJING QIHOO TECH CO LTD
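
The guided training step of CN107944375A resembles hint-based knowledge distillation, in which a smaller network is trained to match an intermediate representation of a larger one. The sketch below assumes that reading: the loss is pixel-wise cross-entropy on the segmentation output plus a weighted mean-squared error between shape-matched intermediate features. The `weight` value and the flattening of features into lists are assumptions, not details from the patent.

```python
import math

def pixel_cross_entropy(probs, labels):
    """Mean negative log-likelihood of the true class over all pixels.
    probs[i] is the predicted class distribution for pixel i."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)

def hint_loss(student_feat, teacher_feat):
    """Mean squared error between flattened, shape-matched intermediate
    features of the small (student) and large (teacher) networks."""
    return sum((s - t) ** 2 for s, t in zip(student_feat, teacher_feat)) / len(teacher_feat)

def guided_training_loss(probs, labels, student_feat, teacher_feat, weight=0.5):
    """Segmentation loss plus a weighted term pulling the student's
    intermediate features toward the teacher's."""
    return (pixel_cross_entropy(probs, labels)
            + weight * hint_loss(student_feat, teacher_feat))
```

Only the student's parameters would be updated with this loss; the teacher stays frozen, which is what lets the smaller network inherit guidance from the deeper one.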

Systems and methods for matching an advertisement to a video

Status: Inactive | Publication No.: US20130247083A1 | Tags: Improve accuracy; Verify accuracy; Advertisements; Specific information broadcast systems; Hyperlink; Scene segmentation

Systems and methods for automatically matching in real-time an advertisement with a video desired to be viewed by a user are provided. A database is created that stores one or more attributes (e.g., visual metadata relating to objects, faces, scene classifications, pornography detection, scene segmentation, production quality, fingerprinting) associated with a plurality of videos. Supervised machine learning can be used to create signatures that uniquely identify particular attributes of interest, which can then be used to generate the attributes associated with the plurality of videos. When a user requests to view an on-line video having associated with it an advertisement, an advertisement can be selected for display with the video based on matching an advertiser's requirements or campaign parameters with the stored attributes associated with the requested video, with the user's information, or a combination thereof. The displayed advertisement can function as a hyperlink that allows a user to select to receive additional information about the advertisement. The performance or effectiveness of the selected advertisements can be measured and recorded.

Owner:CONVERSANT

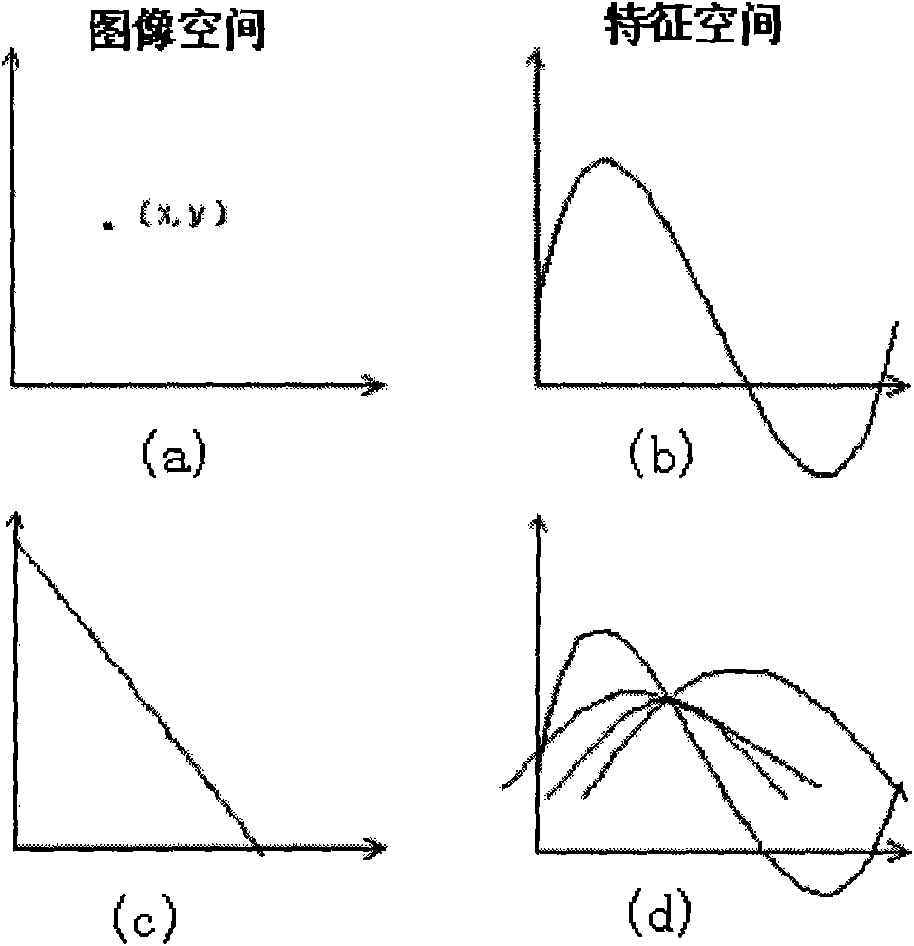

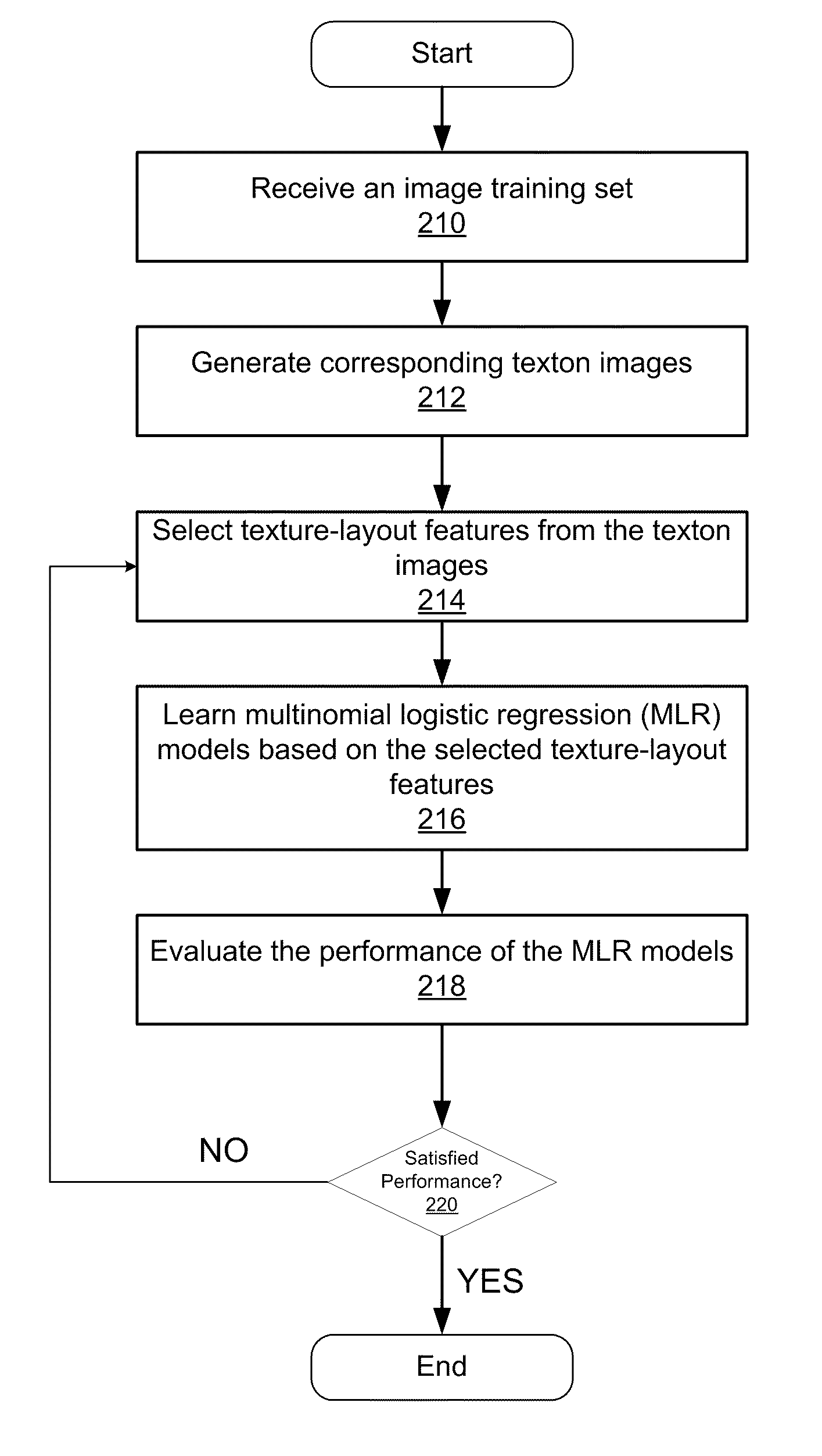

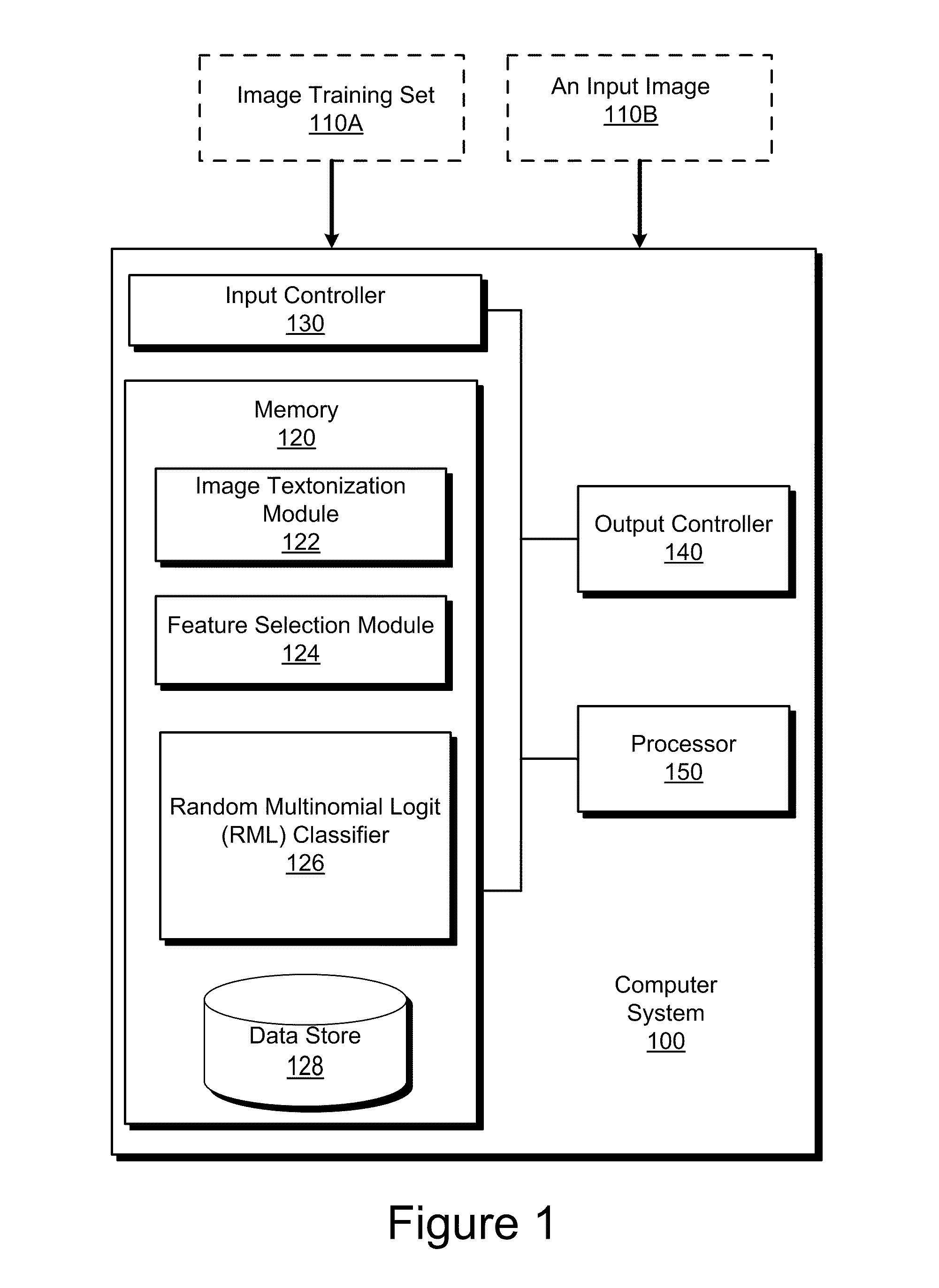

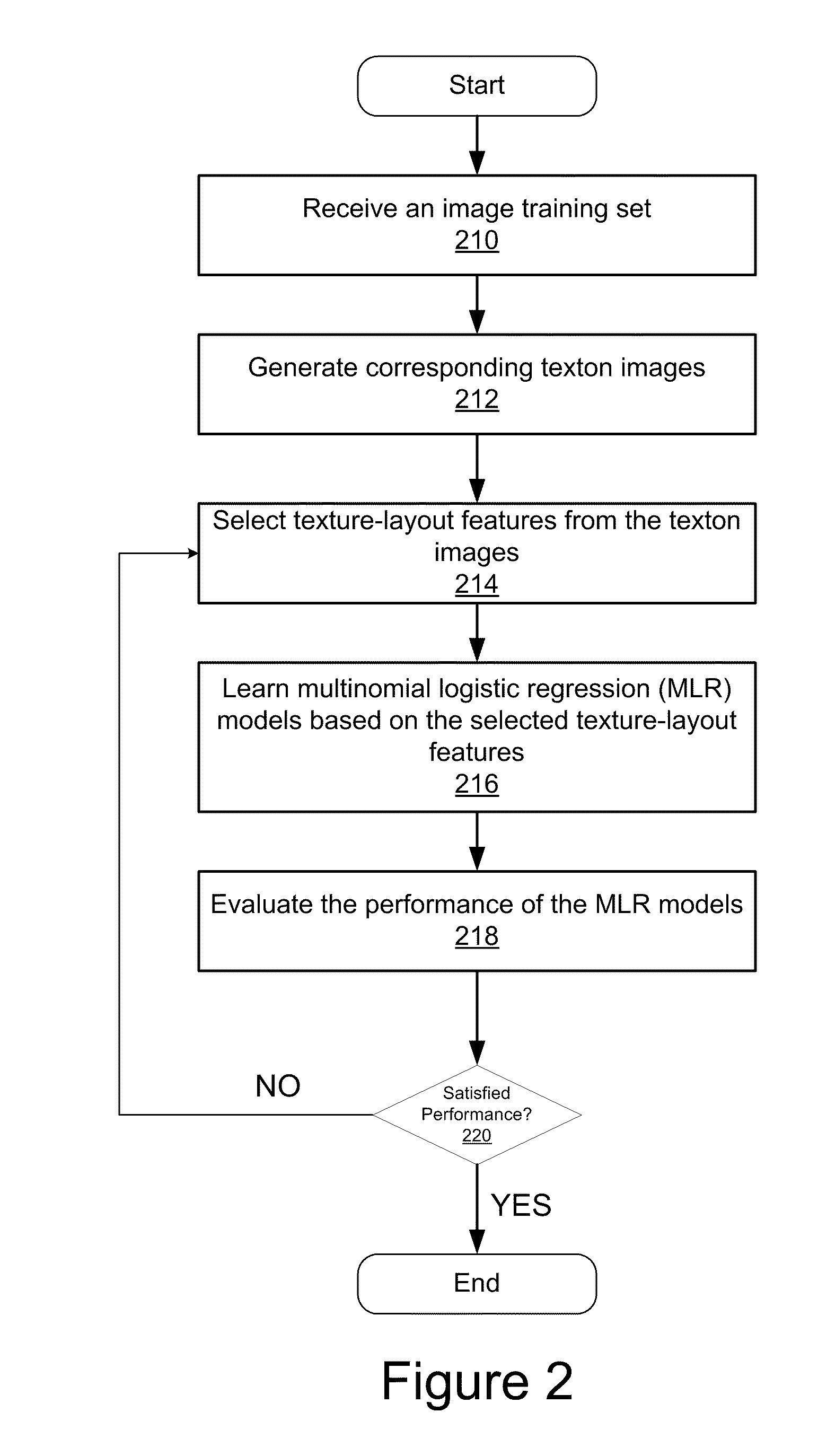

Semantic scene segmentation using random multinomial logit (RML)

A system and method are disclosed for learning a random multinomial logit (RML) classifier and applying the RML classifier for scene segmentation. The system includes an image textonization module, a feature selection module and an RML classifier. The image textonization module is configured to receive an image training set in which the objects in the images are pre-labeled, and to generate corresponding texton images from the training set. The feature selection module is configured to randomly select one or more texture-layout features from the texton images. The RML classifier comprises multiple multinomial logistic regression models. It is configured to learn each multinomial logistic regression model using the selected texture-layout features, and to apply the learned regression models to an input image for scene segmentation.

Owner:HONDA MOTOR CO LTD
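
Applying a learned RML classifier, as described for the Honda system, can be sketched as an ensemble of multinomial logistic regression models, each reading its own randomly selected subset of features. The sketch assumes the member models are combined by averaging their class probabilities and shows inference only; texton generation and training are omitted, and the `(feature_idx, W, b)` model layout is hypothetical.

```python
import math

def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def mnl_predict(model, features):
    """model = (feature_idx, W, b): W[c] holds class c's weights for the
    selected features only, b[c] its bias."""
    idx, W, b = model
    x = [features[i] for i in idx]
    logits = [sum(w * v for w, v in zip(Wc, x)) + bc for Wc, bc in zip(W, b)]
    return softmax(logits)

def rml_predict(models, features):
    """Average the class distributions of all member models (assumed
    combiner) and return (best_class, averaged_distribution)."""
    probs = [mnl_predict(m, features) for m in models]
    n_cls = len(probs[0])
    avg = [sum(p[c] for p in probs) / len(probs) for c in range(n_cls)]
    return max(range(n_cls), key=avg.__getitem__), avg
```

For segmentation, `rml_predict` would be called once per pixel (or region) with its texture-layout feature vector, and the returned labels form the segmentation map.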

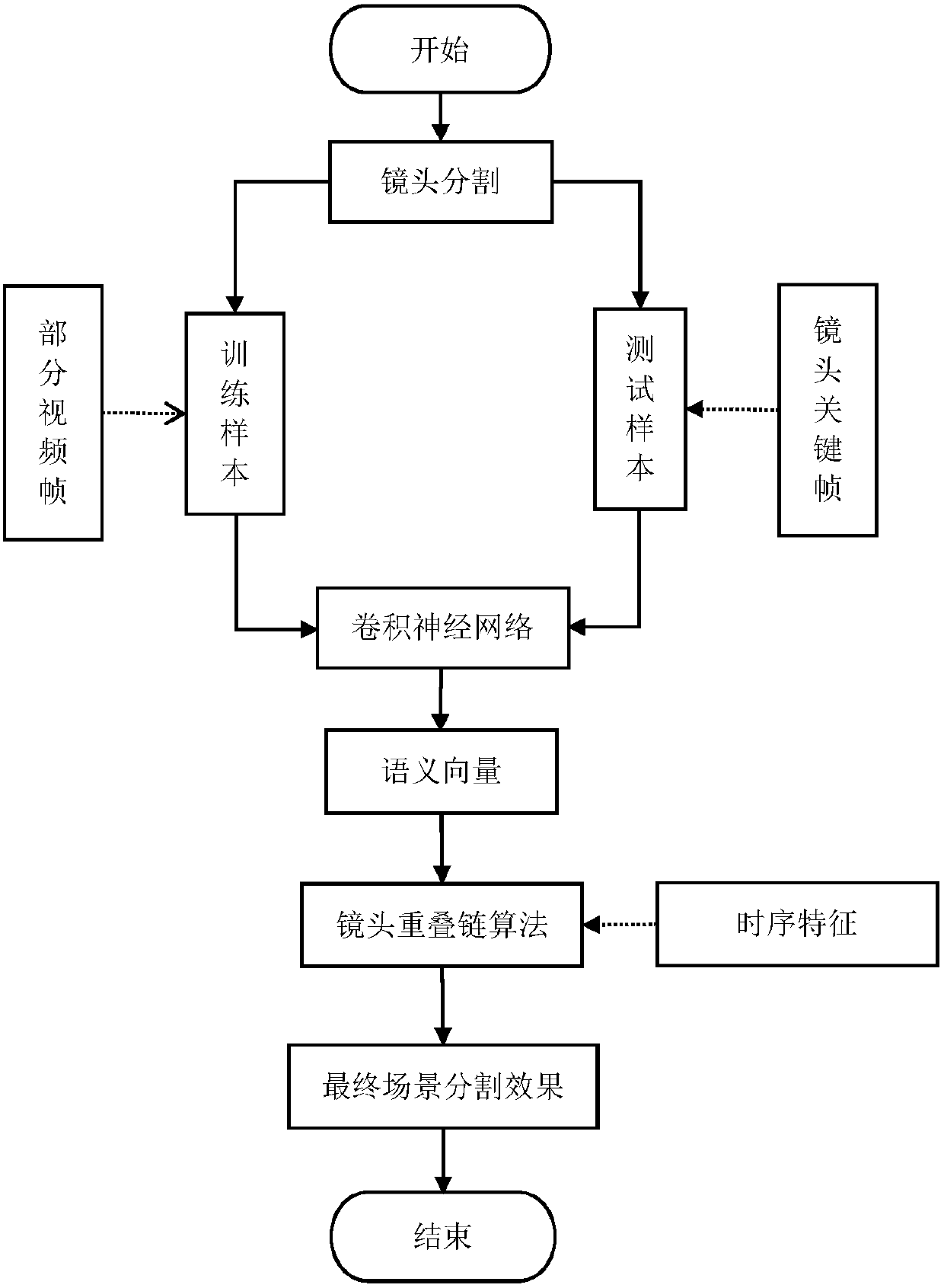

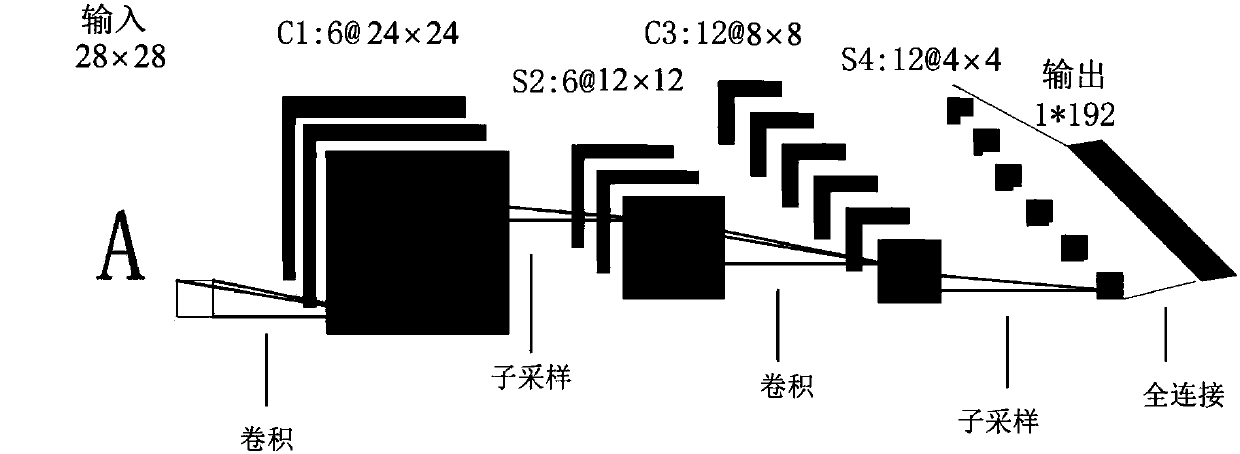

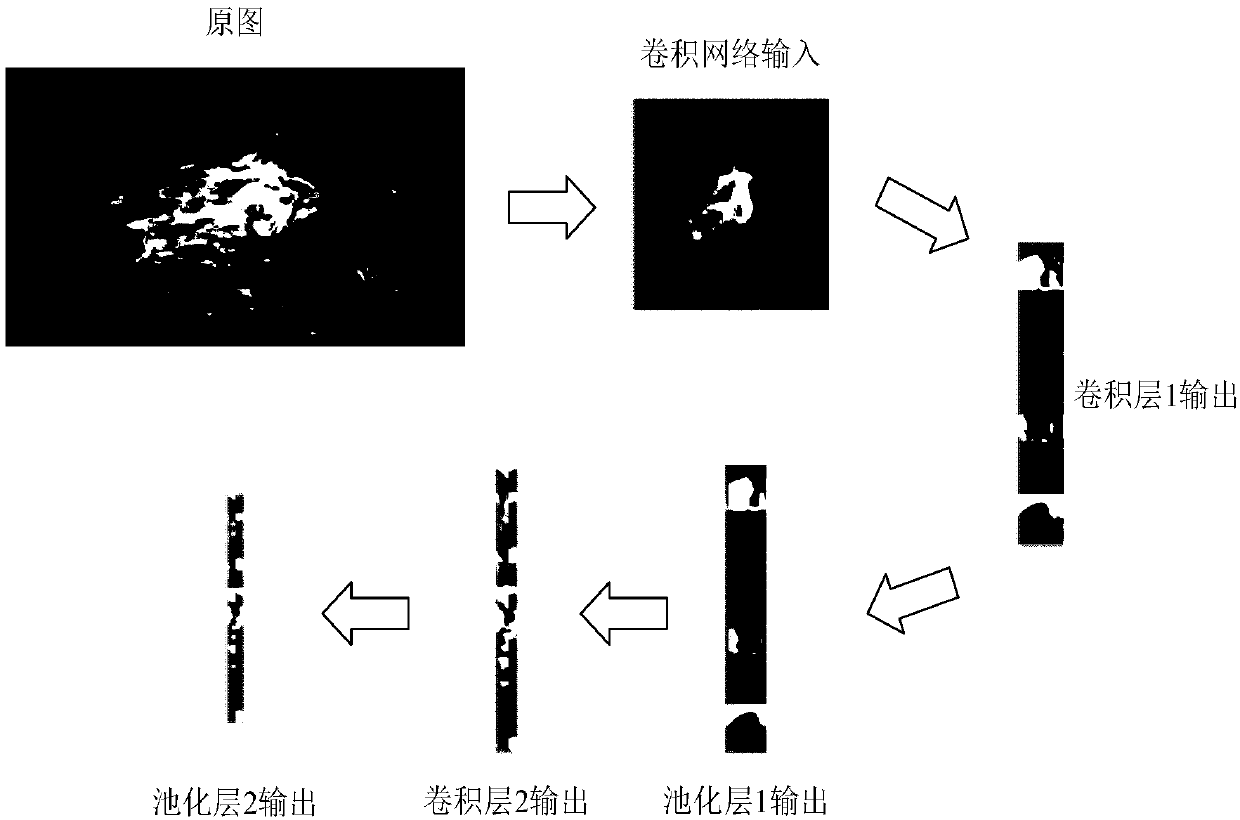

Video semantic scene segmentation method based on convolutional neural network

Status: Inactive | Publication No.: CN107590442A | Tags: Guaranteed completeness; Avoid loss; Character and pattern recognition; Probability estimation; Scene segmentation

The invention discloses a video semantic scene segmentation method based on a convolutional neural network, which has two main parts. In the first part, a convolutional neural network is built on the basis of shot segmentation and used to obtain semantic feature vectors of the video key frames. In the second part, exploiting the temporal continuity of consecutive key frames, the Bhattacharyya distance between the semantic feature vectors of two shot key frames is calculated, and the semantic similarity of the shot key frames is measured by this distance. The probability estimates of the different semantic classes output by the convolutional neural network serve as the semantic feature vector of a frame. Because scene partitioning is a problem over continuous time, shot similarity is assessed by combining the semantic features of the two shot key frames with the temporal distance between the shots, which yields the final scene segmentation result. The method has a degree of generality and segments scenes well when sufficient training data are available.

Owner:HUAZHONG UNIV OF SCI & TECH
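
The shot-similarity test of CN107590442A can be sketched with the Bhattacharyya distance between two key frames' class-probability vectors, D_B(p, q) = -ln(sum_i sqrt(p_i * q_i)). The sketch assumes that consecutive shots are compared, that the time-sequence term is a hypothetical linear penalty (`time_weight` times the gap between key frames), and that a fixed `threshold` decides whether a shot starts a new scene.

```python
import math

def bhattacharyya_distance(p, q):
    """D_B = -ln(sum_i sqrt(p_i * q_i)) for two discrete distributions."""
    bc = sum(math.sqrt(a * b) for a, b in zip(p, q))
    return -math.log(bc) if bc > 0 else math.inf

def group_shots(key_probs, key_times, threshold=0.1, time_weight=0.0):
    """Assign shots to scenes: a shot joins the current scene when its
    combined distance to the previous shot is at most `threshold`."""
    scenes = [[0]]
    for i in range(1, len(key_probs)):
        d = bhattacharyya_distance(key_probs[i - 1], key_probs[i])
        d += time_weight * (key_times[i] - key_times[i - 1])  # assumed time term
        if d <= threshold:
            scenes[-1].append(i)
        else:
            scenes.append([i])
    return scenes
```

Two nearly identical distributions such as (0.9, 0.1) and (0.85, 0.15) give a distance of about 0.003, while (0.85, 0.15) and (0.1, 0.9) give about 0.42, so with the default threshold the third shot opens a new scene.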

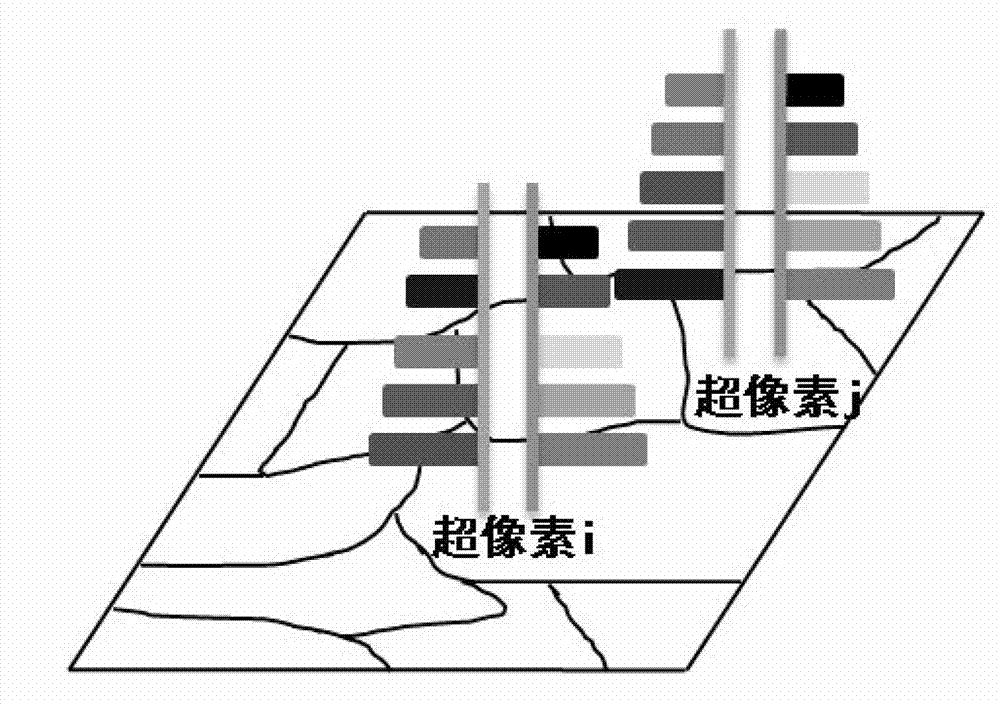

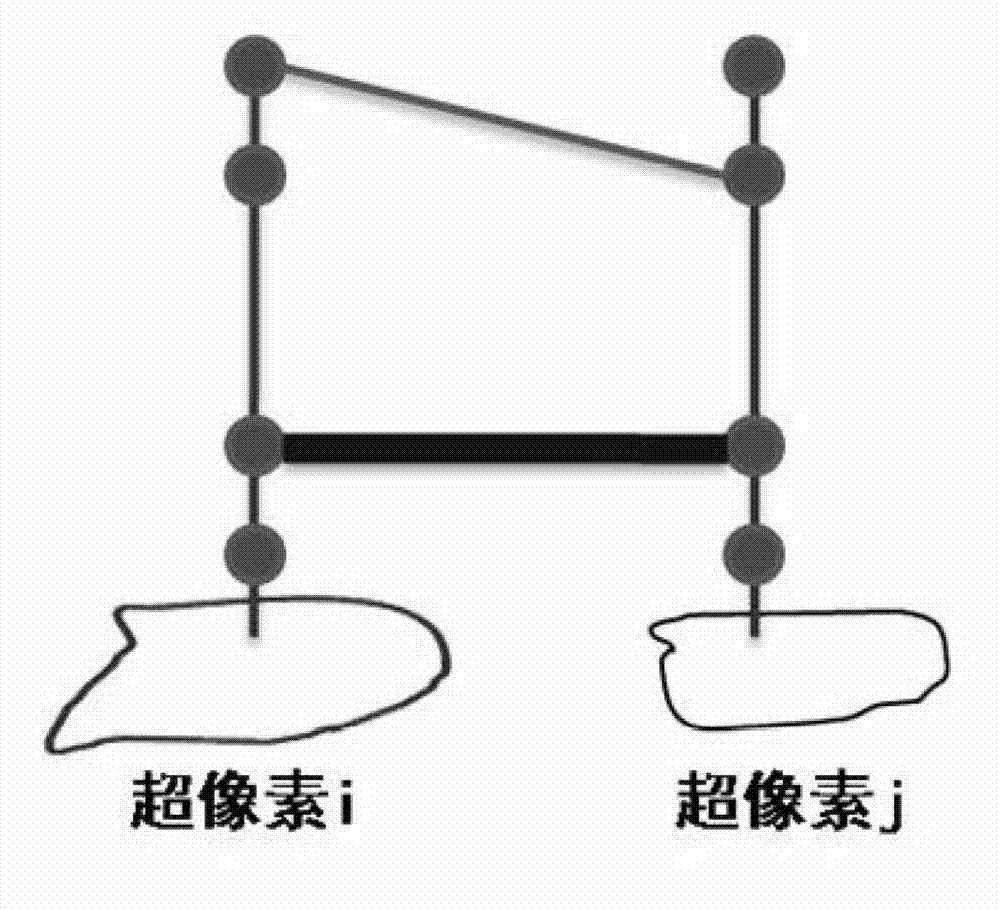

Image scene segmentation and layering joint solution method based on component set sampling

Status: Active | Publication No.: CN103177450A | Tags: Increase three-dimensional understanding; Reduce technical difficulty; Image analysis; Aviation; Discriminant

The invention discloses a method that jointly solves image scene segmentation and layering based on component set sampling. The method comprises: over-segmenting the input image to obtain its set of superpixels; training, on a training dataset, a discriminative model of semantic categories and a discriminative model of layer categories, and using the two models to obtain, for each superpixel of the input image, the probability of belonging to each semantic category and to each layer category; constructing a candidate graph structure for the input image and calculating its node weights, positive edge weights and negative edge weights; and, based on the candidate graph, inferring the optimal solution with a component set sampling algorithm, where the optimal solution gives the exact semantic category and layer category of each superpixel of the input image. The method can be widely used to label semantic and layer information in computer vision systems for military, aviation, aerospace, surveillance, manufacturing and other applications.

Owner:BEIHANG UNIV

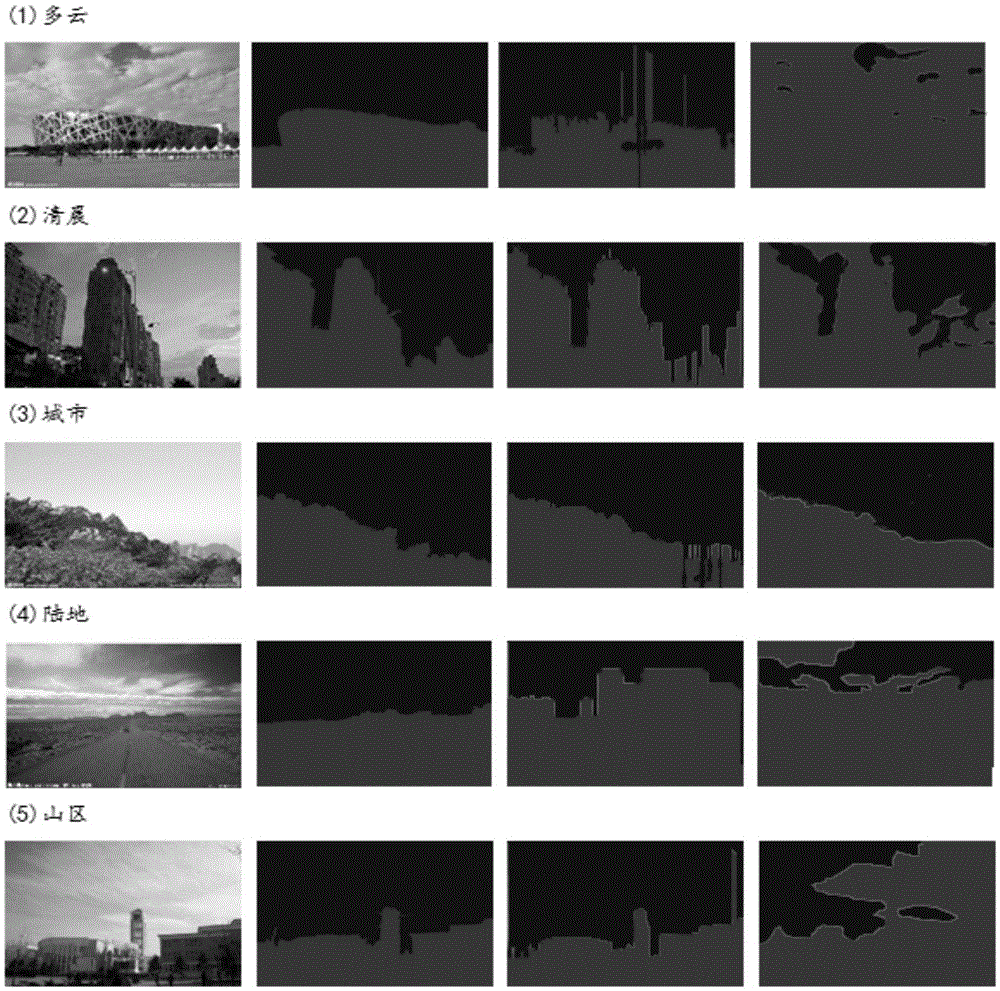

Sky detection algorithm based on context inference

Status: Active | Publication No.: CN105528575A | Tags: High precision; Improve detection accuracy; Image enhancement; Image analysis; Sky; Data set

The invention discloses a sky detection algorithm based on context inference. The algorithm comprises building a sky sample dataset, a scene segmentation algorithm, extraction of superpixel features, classifier training and construction of a context inference model. The trained classifier performs a preliminary detection of the sky region; a CRF-based context inference model is then built so that context constraints further improve detection precision, giving precision higher than existing algorithms. The algorithm balances detection precision against detection speed and meets practical requirements.

Owner:CAPITAL NORMAL UNIVERSITY

Video semantic scene segmentation and labeling method

Status: Inactive | Publication No.: CN108537134A | Tags: Improve processing efficiency; Improve experience; Character and pattern recognition; Video retrieval; Scene segmentation

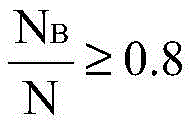

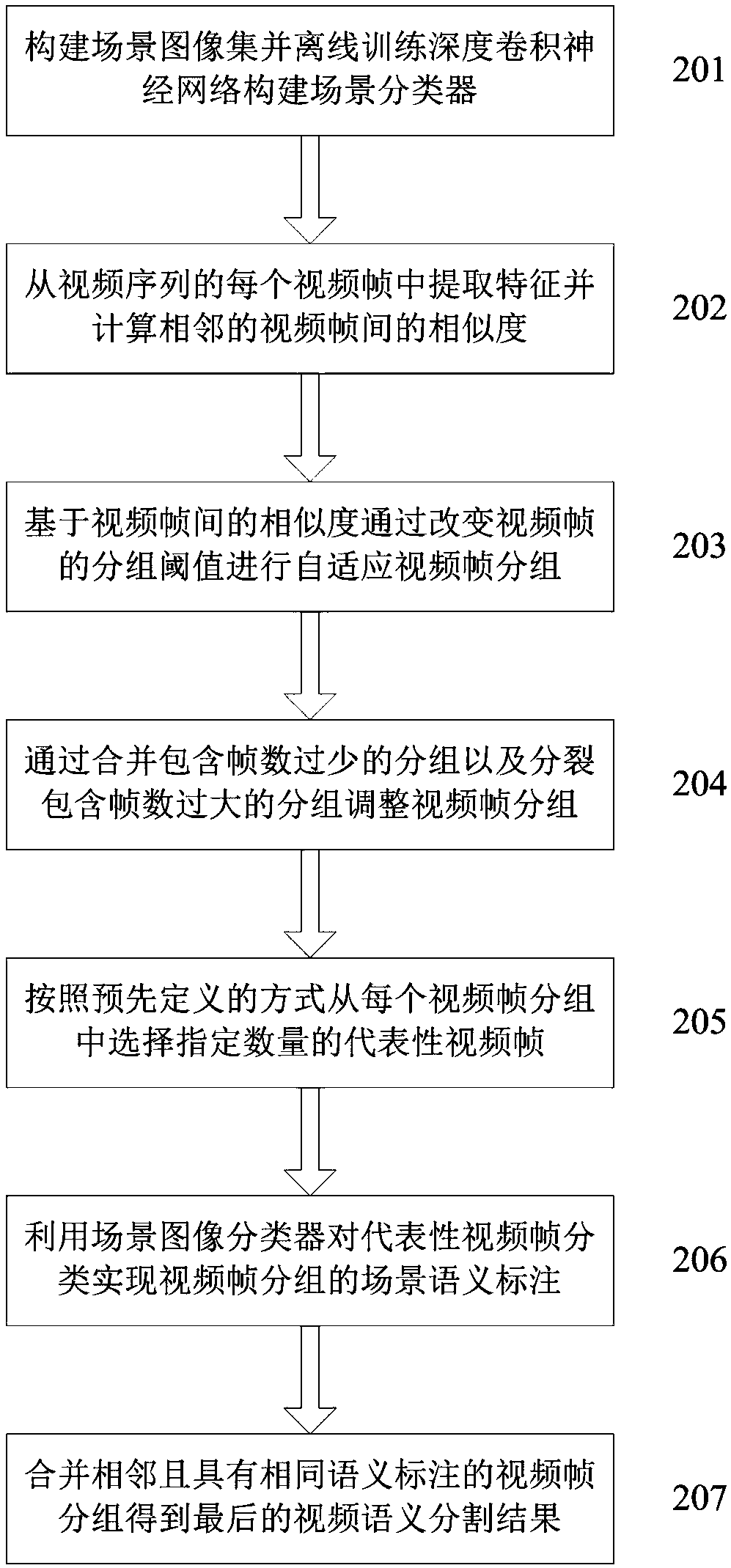

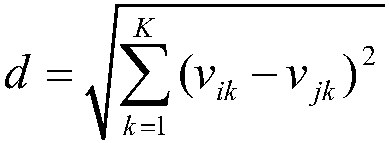

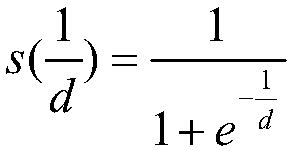

The video semantic scene segmentation and labeling method of the present invention comprises the following steps: training a deep convolutional neural network offline on a labeled set of scene images to construct a scene classifier; calculating the similarity between adjacent frames in a video sequence and grouping the frames according to that similarity; adaptively adjusting the similarity threshold to obtain frame groups containing a uniformly distributed number of frames; readjusting the grouping by merging groups with too few frames and splitting groups with too many; selecting a representative frame for each group; using the scene classifier to identify the scene category of each frame group; and performing semantic scene segmentation and labeling on the video sequence. The method provides an effective means for video retrieval and management, and makes watching videos a better and more enjoyable experience for users.

Owner:BEIJING JIAOTONG UNIV
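
The frame-grouping steps of CN108537134A can be approximated as follows. The adaptive threshold is modelled here as cutting at the `target_groups - 1` least-similar transitions, followed by merging groups shorter than `min_size` into their predecessor and splitting groups longer than `max_size`; all three parameters and the cut rule are assumptions rather than the patented procedure.

```python
def group_frames(sims, target_groups, min_size=2, max_size=8):
    """sims[i] is the similarity between frame i and frame i + 1, so n frames
    have n - 1 similarities. Returns lists of frame indices."""
    n = len(sims) + 1
    # Assumed adaptive rule: cut at the (target_groups - 1) least similar transitions.
    order = sorted(range(len(sims)), key=sims.__getitem__)
    cut_after = set(order[:target_groups - 1])
    groups, cur = [], [0]
    for i in range(1, n):
        if (i - 1) in cut_after:
            groups.append(cur)
            cur = []
        cur.append(i)
    groups.append(cur)
    # Merge groups that are too small into the preceding group.
    merged = []
    for g in groups:
        if merged and len(g) < min_size:
            merged[-1].extend(g)
        else:
            merged.append(g)
    # Split groups that are too large into chunks of at most max_size frames.
    out = []
    for g in merged:
        out.extend(g[j:j + max_size] for j in range(0, len(g), max_size))
    return out
```

A representative frame for each group could then be taken as its middle frame, `g[len(g) // 2]`, before it is passed to the scene classifier.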

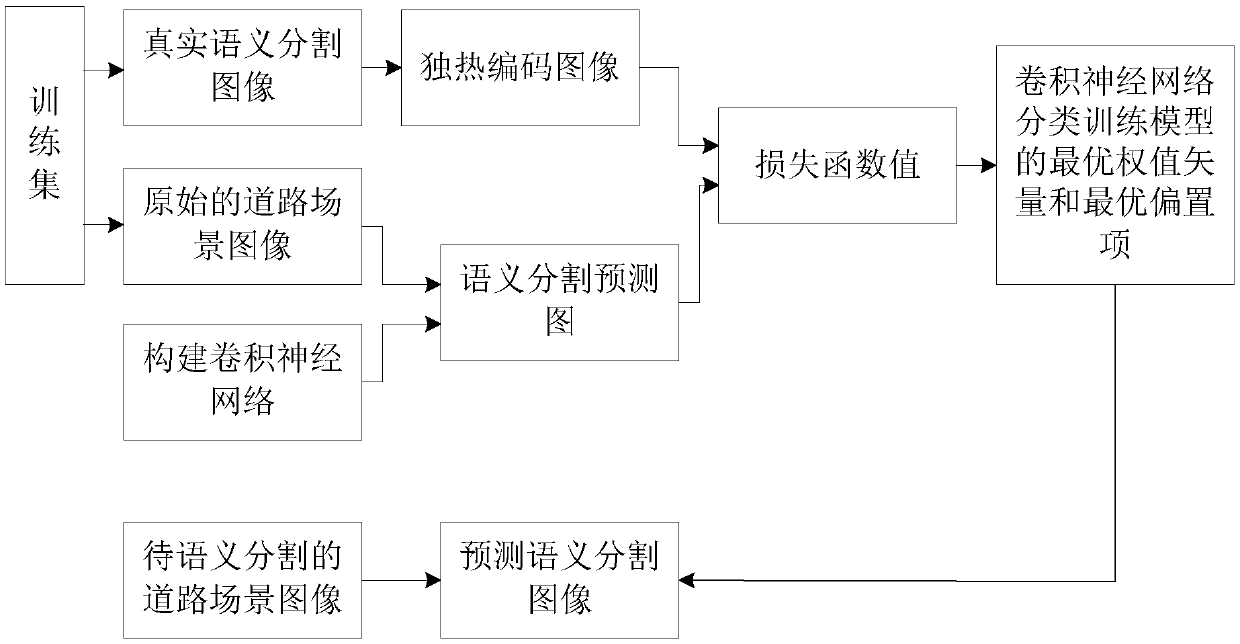

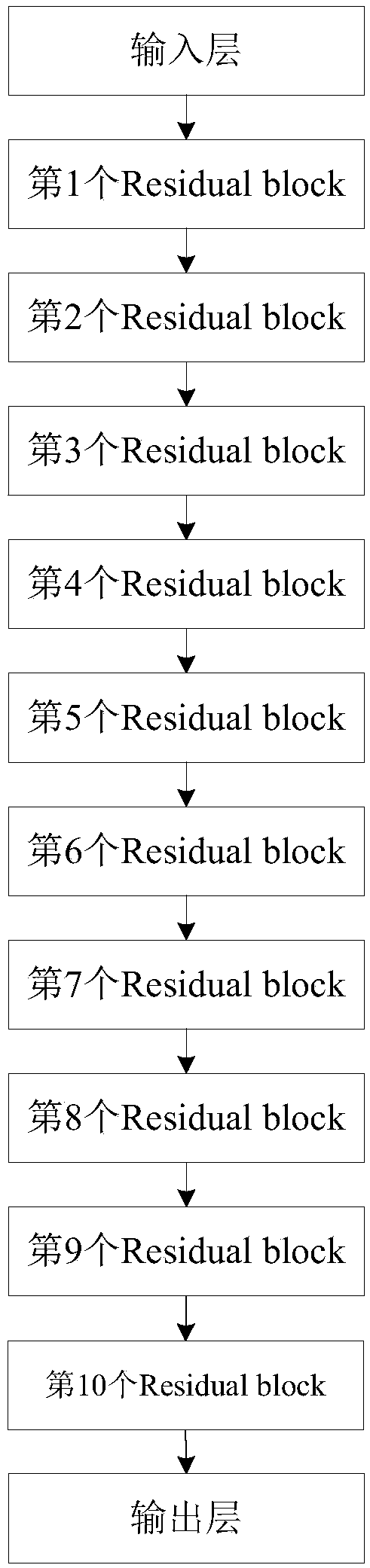

Road scene segmentation method based on residual network and expanded convolution

Status: Inactive | Publication No.: CN109635642A | Tags: Improve the extraction effect; Fully absorbing structural efficiency; Character and pattern recognition; Hidden layer; Computation complexity

The invention discloses a road scene segmentation method based on a residual network and dilated (expanded) convolution. In the training stage, a convolutional neural network is constructed whose hidden layer consists of ten residual blocks arranged in sequence. Each original road scene image in the training set is input into the network for training to obtain 12 semantic segmentation prediction maps for that image. A loss function value is calculated between the set of 12 prediction maps for each original road scene image and the set of 12 one-hot encoded maps derived from the corresponding ground-truth semantic segmentation image, which yields the optimal weight vector of the trained classification model. In the test stage, the optimal weight vector is used for prediction, producing a predicted semantic segmentation image for each road scene image to be segmented. The method has low computational complexity, high segmentation efficiency, high segmentation precision and good robustness.

Owner:ZHEJIANG UNIVERSITY OF SCIENCE AND TECHNOLOGY
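
The one-hot encoded maps in CN109635642A turn each ground-truth label image into 12 binary maps, one per class, against which the 12 predicted maps are scored. The sketch below shows that encoding and a pixel-averaged cross-entropy; the abstract does not name the loss function, so cross-entropy is an assumption.

```python
import math

NUM_CLASSES = 12  # the patent predicts 12 semantic maps per image

def one_hot(label_map, num_classes=NUM_CLASSES):
    """Turn an H x W map of class ids into num_classes binary H x W maps."""
    return [[[1 if v == c else 0 for v in row] for row in label_map]
            for c in range(num_classes)]

def cross_entropy(pred_maps, target_maps, eps=1e-12):
    """Mean over pixels of -sum_c target_c * log(pred_c).
    pred_maps[c][y][x] is the predicted probability of class c at (y, x)."""
    h, w = len(target_maps[0]), len(target_maps[0][0])
    total = 0.0
    for c in range(len(target_maps)):
        for y in range(h):
            for x in range(w):
                if target_maps[c][y][x]:
                    total -= math.log(max(pred_maps[c][y][x], eps))
    return total / (h * w)
```

A uniform prediction of 1/12 for every class gives a loss of ln 12 (about 2.48), a useful sanity check for an untrained network.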

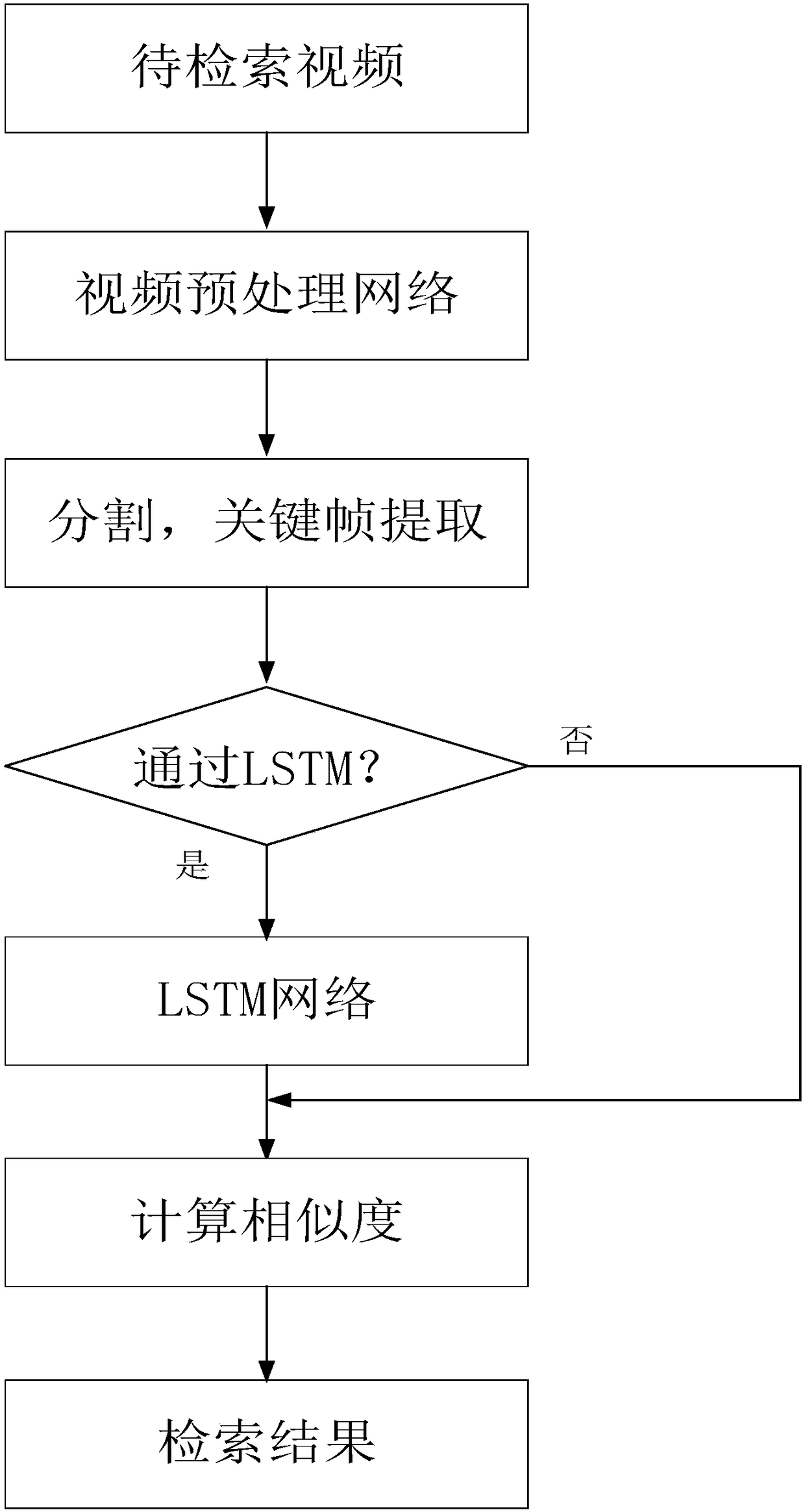

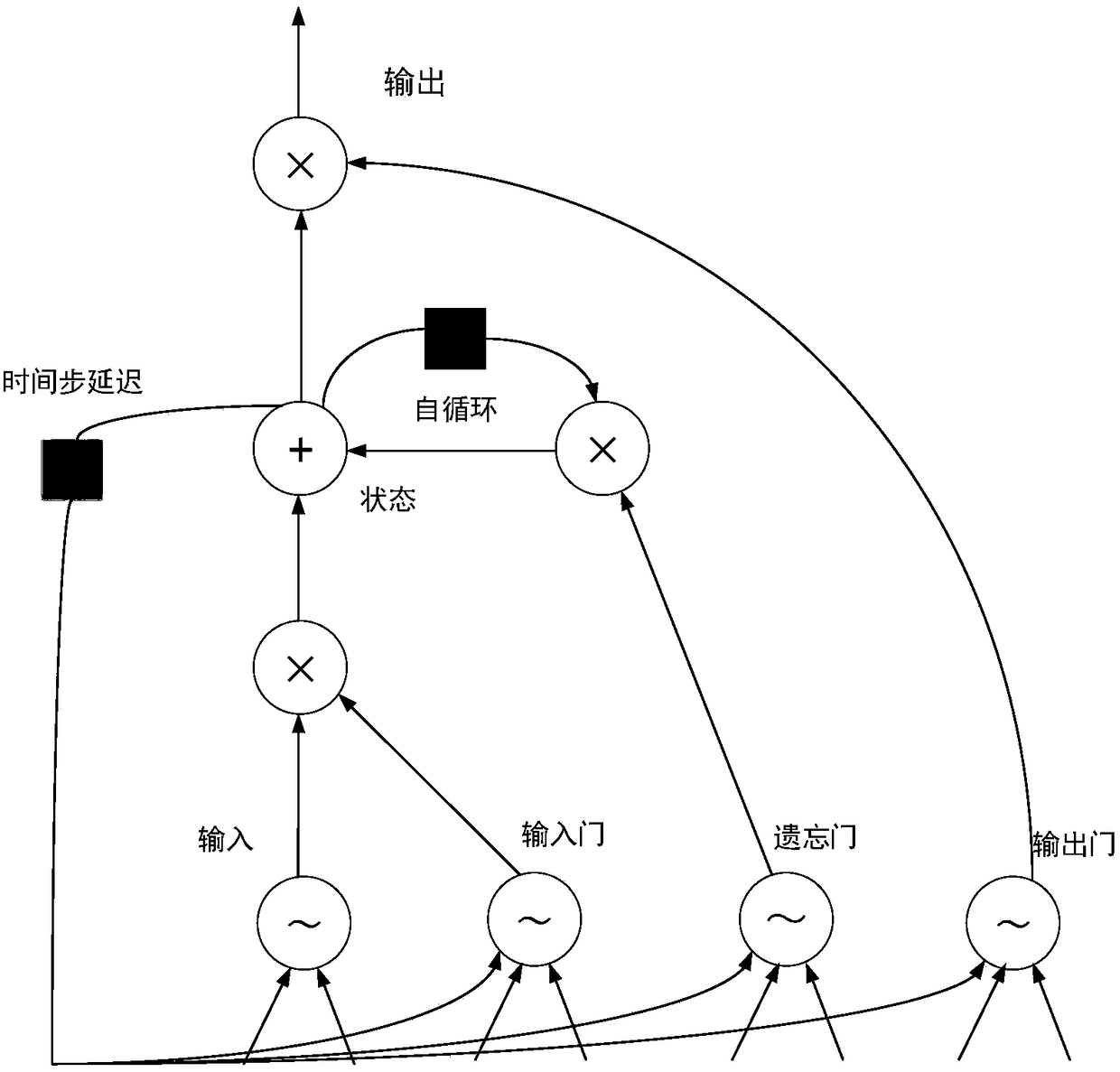

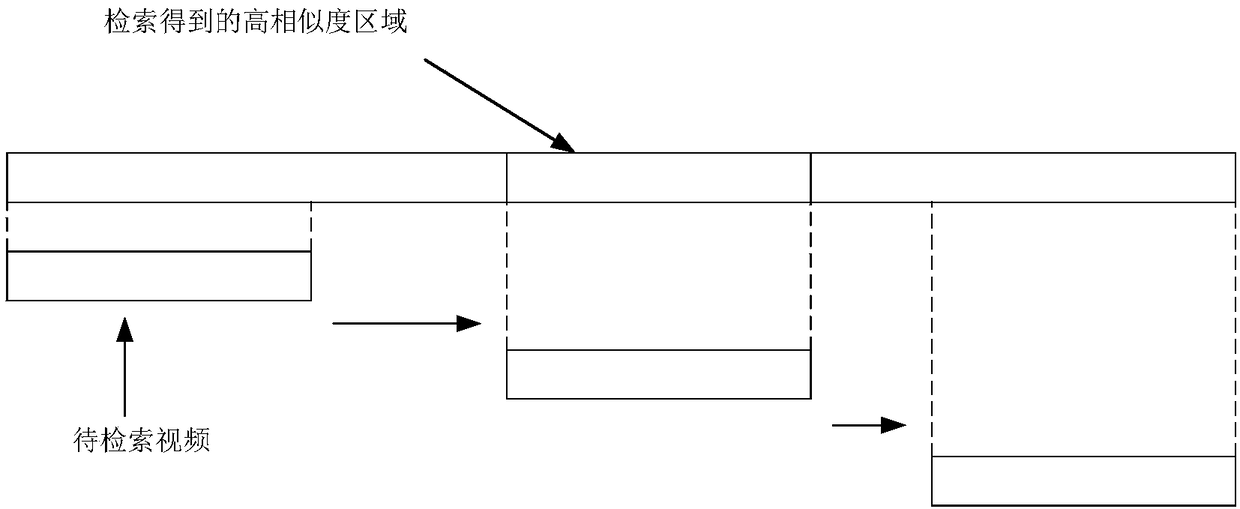

Deep learning based video retrieval method

Status: Active | Publication No.: CN108228915A | Tags: Avoid false detection; Avoid missing detection; Neural architectures; Special data processing applications; Video retrieval; False detection

The invention discloses a deep learning-based video retrieval method with three main parts: preprocessing the video with a convolutional neural network; extracting feature vectors from the preprocessed video with a long short-term memory (LSTM) network; and finally obtaining a distance metric through a similarity learning algorithm, computing similarities with that metric and ranking them to produce the retrieval result. In this method, the convolutional neural network performs scene segmentation and key frame selection and extracts high-level semantics representing the video, so that an appropriate number of key frames is obtained and the false detections and missed detections of shot segmentation are effectively avoided. The LSTM extracts the temporal characteristics of the video, giving more accurate retrieval results. Similarity learning combined with text-based matching improves the accuracy of the similarity measure. The method enables accurate retrieval over large-scale video collections.

Owner:SOUTH CHINA UNIV OF TECH

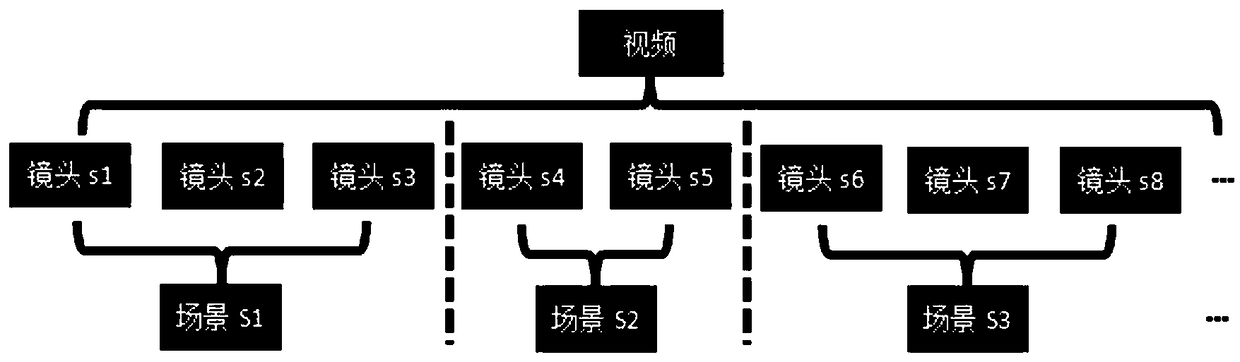

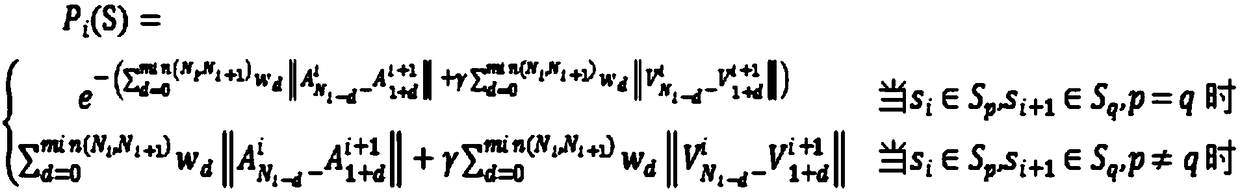

A multimodal video scene segmentation method based on sound and vision

Status: Inactive | Publication No.: CN109344780A | Tags: Efficient combination; Improve the accuracy of scene segmentation; Speech analysis; Character and pattern recognition; Feature vector; Scene segmentation

The invention discloses a multimodal video scene segmentation method based on sound and vision. The method comprises the following steps: step S1, performing shot segmentation on the input video to obtain the individual shot segments; step S2, extracting visual and sound features from each shot segment to obtain the visual and sound feature vectors of that shot; step S3, merging adjacent shots that belong to the same semantics into the same scene according to their visual and sound features, obtaining new scene time boundaries.

Owner:SHANGHAI JILIAN NETWORK TECH CO LTD

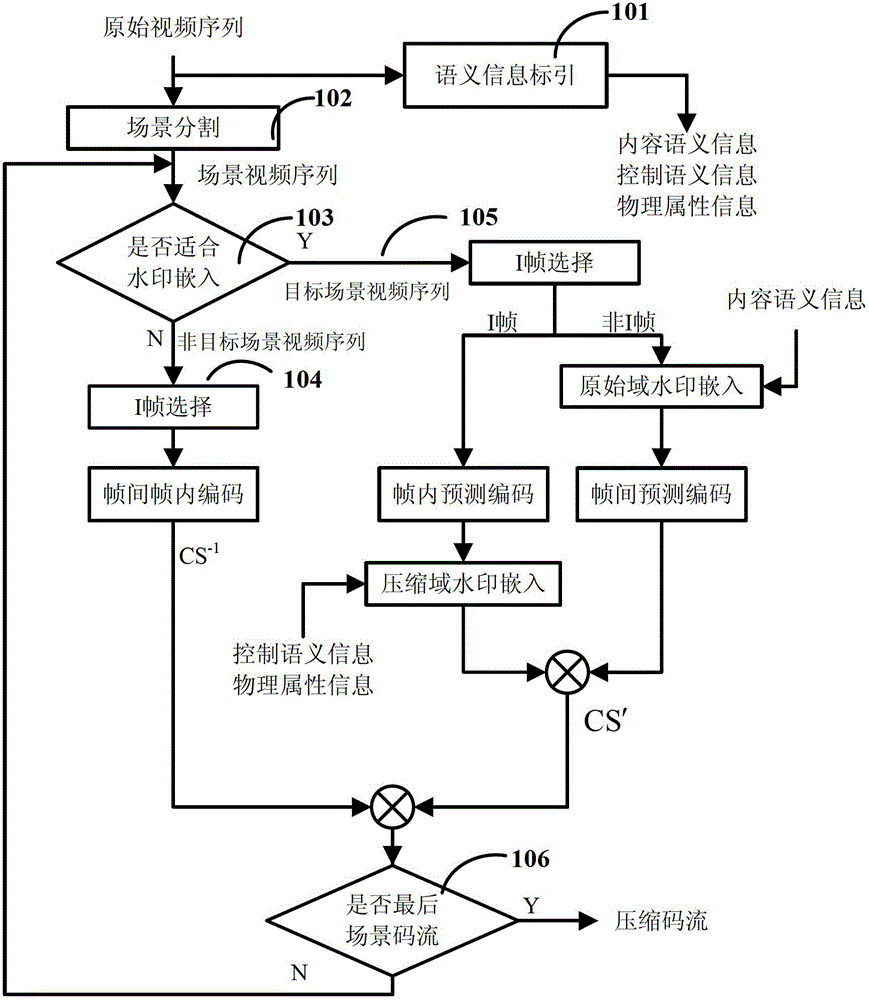

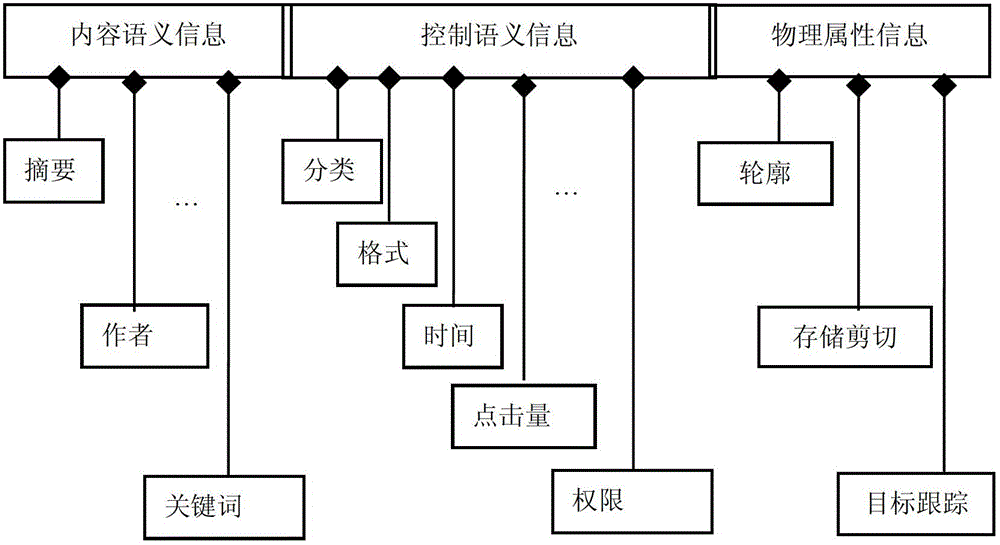

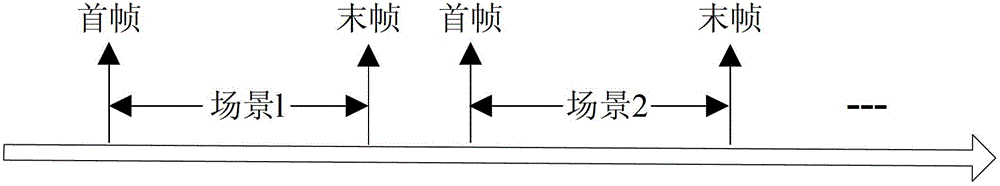

Scene-segmentation-based semantic watermark embedding method for video resource

Status: Inactive | Publication No.: CN102724554A | Tags: Resource quality degradation; Easy to embed; Selective content distribution; Scene segmentation; Group of pictures

The invention discloses a scene-segmentation-based semantic watermark embedding method for a video resource. First, a video semantic information set is generated that contains content semantic information, control semantic information and, optionally, physical attribute information. The original video sequence of the resource is then segmented into scenes, and a scene video sequence with higher texture complexity and more pronounced inter-frame change is selected as the target scene video sequence. When the target scene video sequence is compression-coded, the control semantic information and physical attribute information are embedded into the I frames of each group of pictures (GOP), and the content semantic information is embedded into the non-I frames, producing a compressed bitstream that contains semantic watermarks. The semantic information is represented as plain text and as mapping codes, which are embedded into the non-I frames and the I frames of the GOPs, respectively. This increases the watermark embedding capacity and robustness without significantly degrading the quality of the video resource.

Owner:SOUTHWEST UNIV OF SCI & TECH

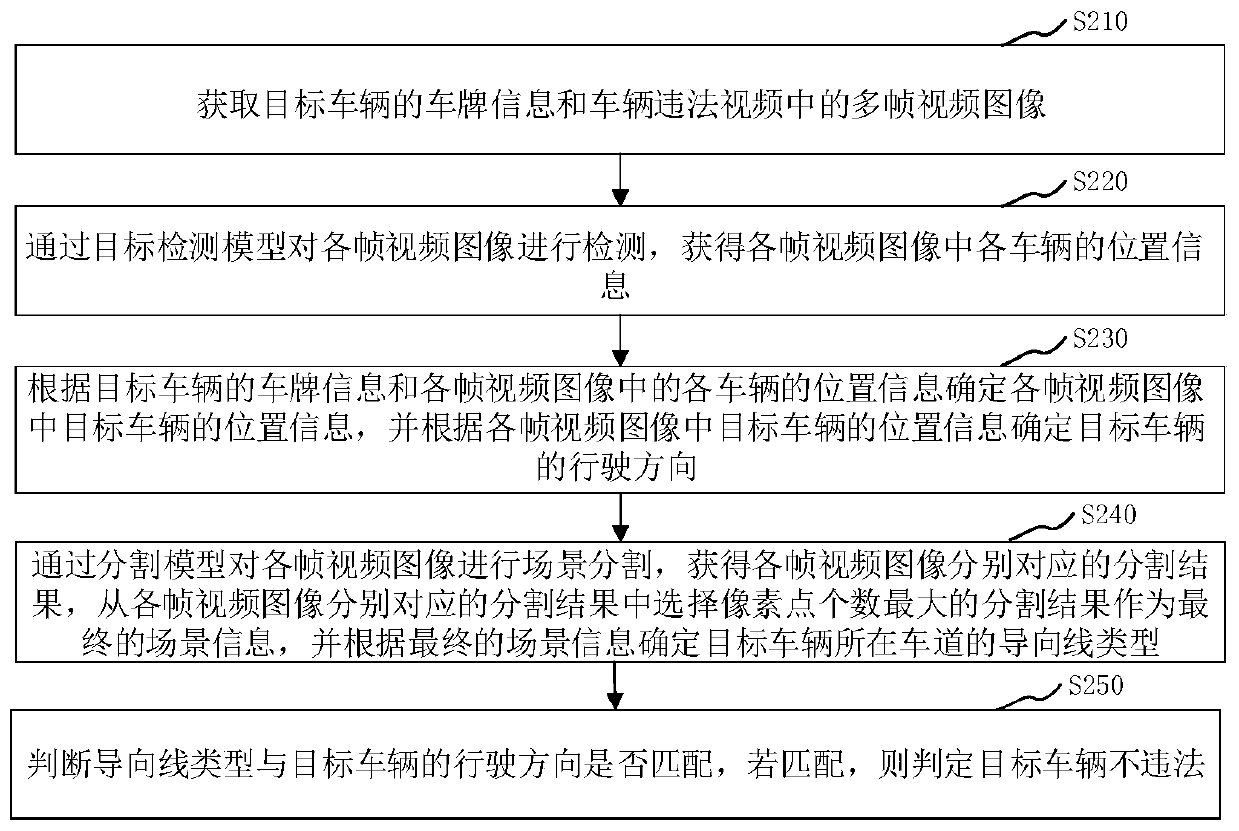

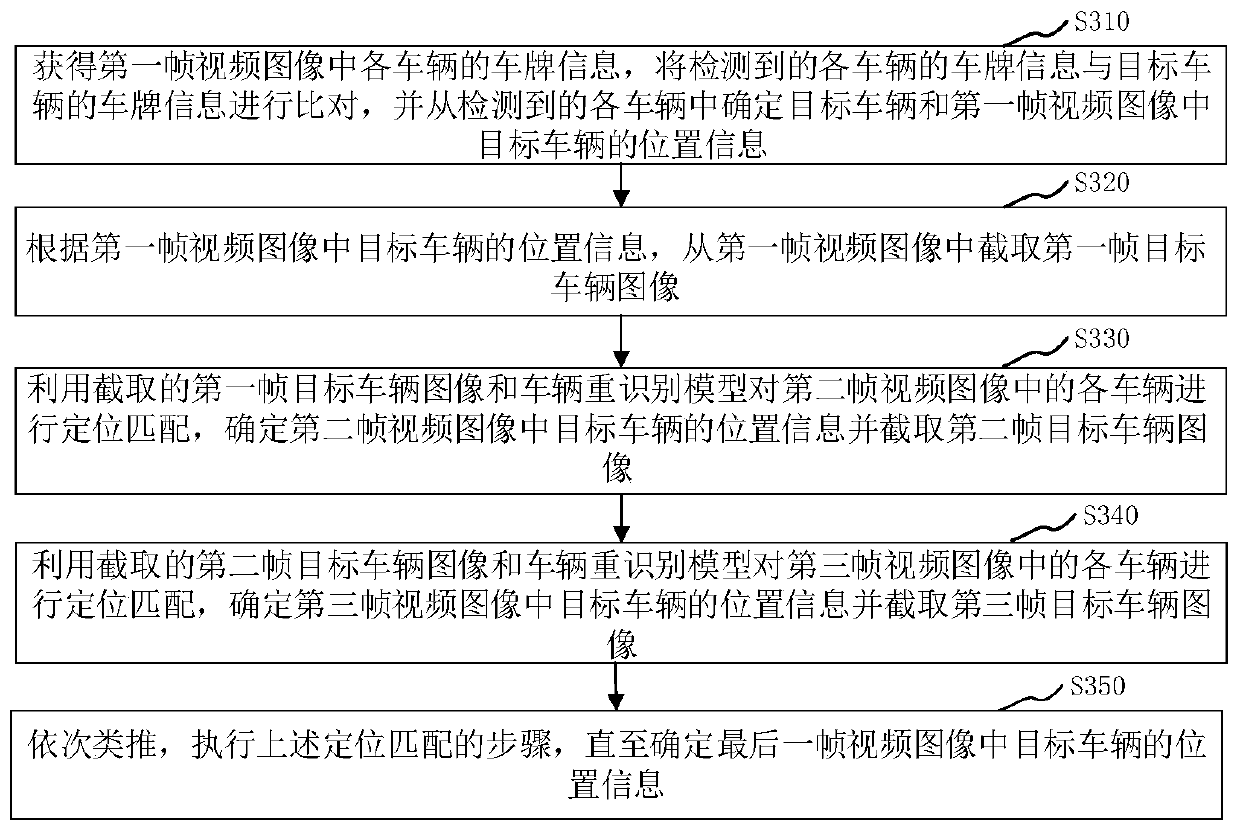

Vehicle violation video processing method and device, computer equipment and storage medium

Status: Inactive | Publication No.: CN110533925A | Tags: Improve audit accuracy; Road vehicles traffic control; Character and pattern recognition; Scene segmentation; Multiple frame

The application relates to a vehicle violation video processing method and device, computer equipment and a storage medium. The method comprises: acquiring the license plate information of a target vehicle and multiple frames of video images from a vehicle violation video; detecting each frame with a target detection model to obtain the position of each vehicle in that frame; determining the driving direction of the target vehicle from its license plate information and the vehicle positions in each frame; performing scene segmentation on each frame with a segmentation model, fusing the per-frame segmentation results to determine the final scene information, and determining from it the guide line type of the lane in which the target vehicle is located; and determining whether the guide line type matches the driving direction of the target vehicle, and if so, determining that the target vehicle has not broken traffic regulations. Because the decision uses multiple frames of video, the accuracy of violation review is improved.

Owner:上海眼控科技股份有限公司
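
The last two steps of CN110533925A, fusing the per-frame results and matching the guide line to the driving direction, can be sketched as a majority vote followed by a lookup. The arrow categories in `ALLOWED` and the majority-vote fusion are hypothetical; the abstract lists neither the guide line types nor the fusion rule.

```python
from collections import Counter

# Hypothetical mapping from guide-arrow type to the manoeuvres it permits.
ALLOWED = {
    "straight": {"straight"},
    "left": {"left"},
    "right": {"right"},
    "straight_left": {"straight", "left"},
    "straight_right": {"straight", "right"},
}

def fuse_guide_line(per_frame_types):
    """Majority vote over frames; frames where segmentation found no guide
    line are passed as None and ignored. Raises IndexError if all are None."""
    votes = Counter(t for t in per_frame_types if t is not None)
    return votes.most_common(1)[0][0]

def is_violation(per_frame_types, driving_direction):
    """A violation is flagged only when the fused guide line type does not
    permit the vehicle's driving direction."""
    guide = fuse_guide_line(per_frame_types)
    return driving_direction not in ALLOWED[guide]
```

Voting across frames means a single frame with an occluded or mis-segmented arrow does not decide the outcome on its own, which matches the abstract's motivation for using multiple frames.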

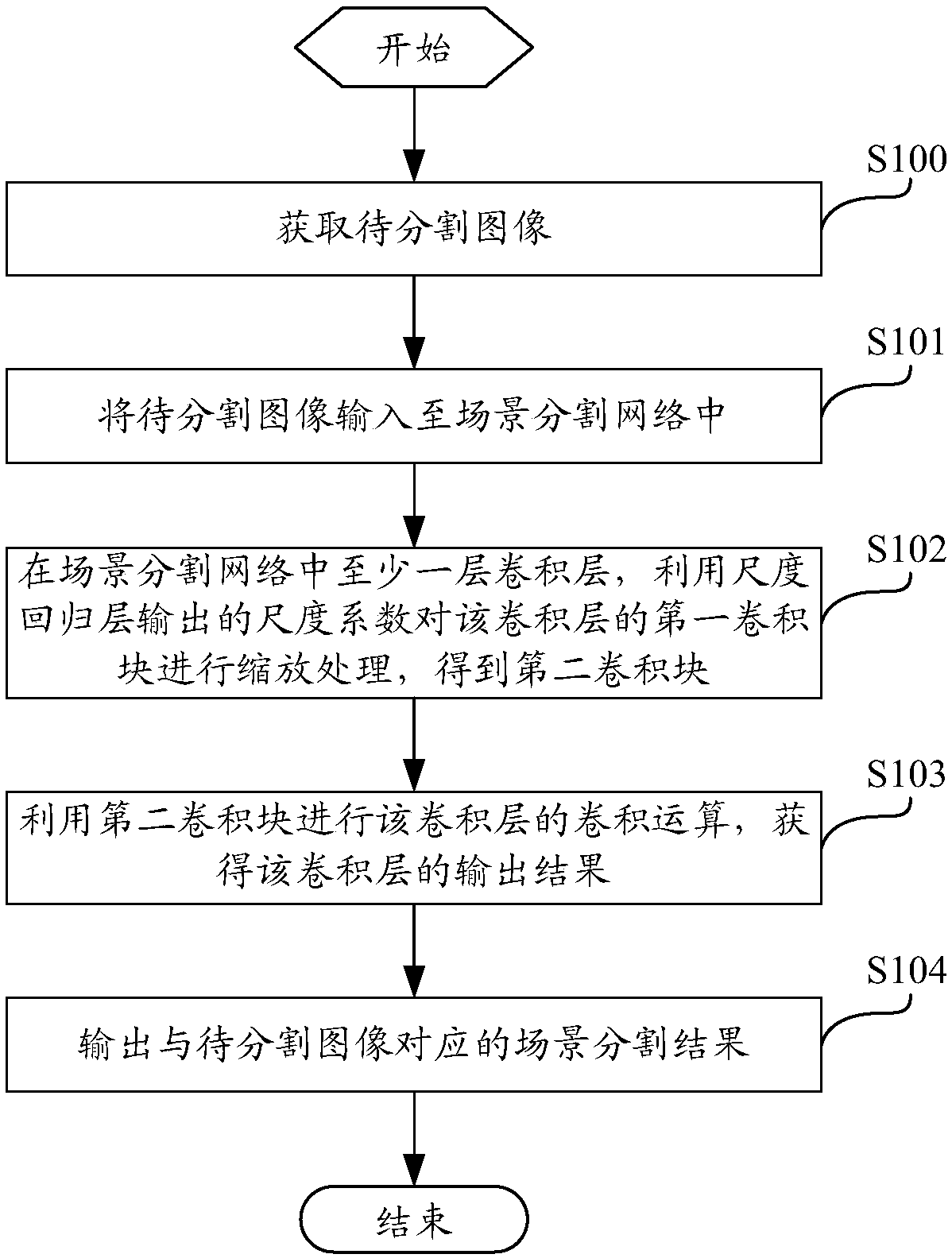

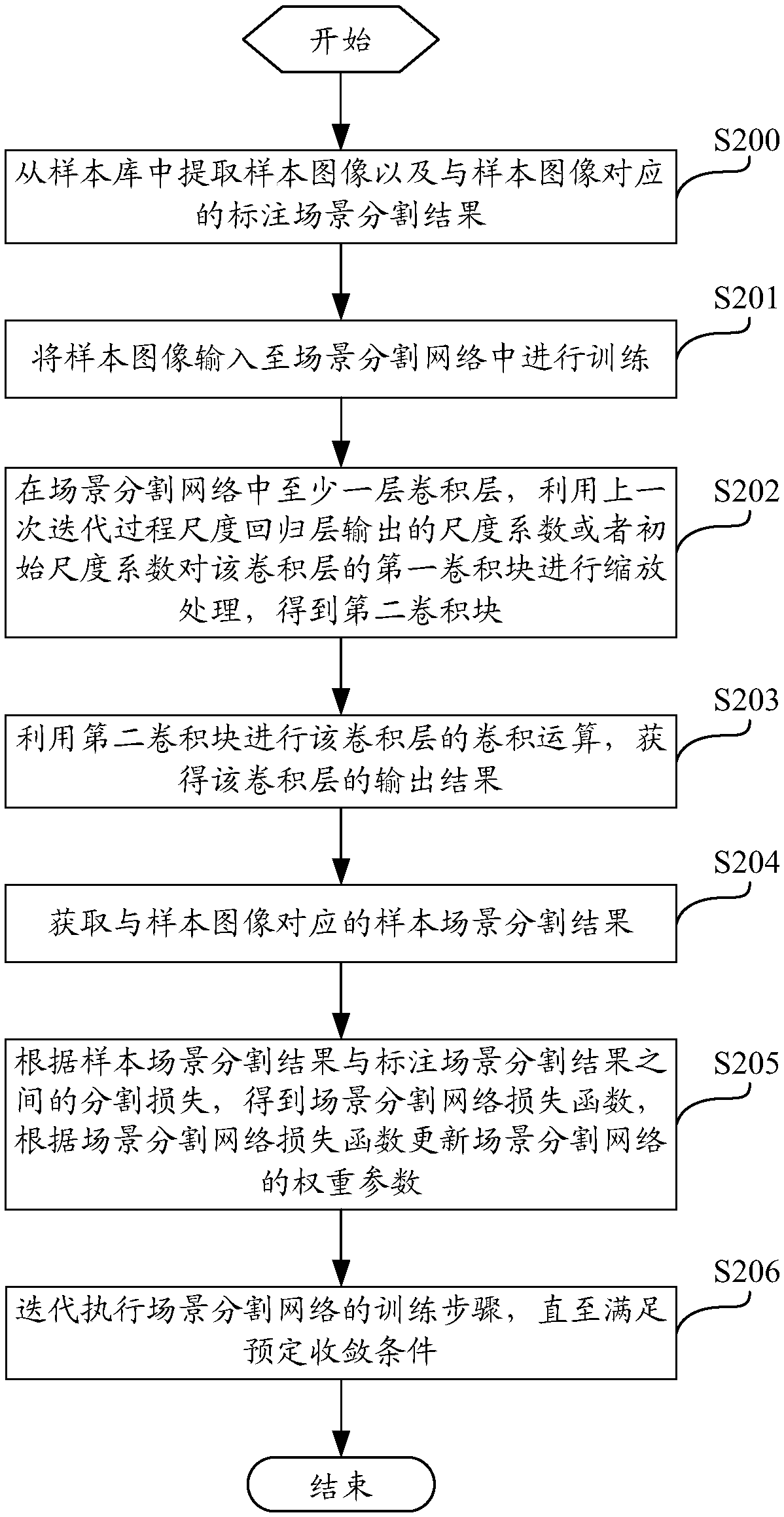

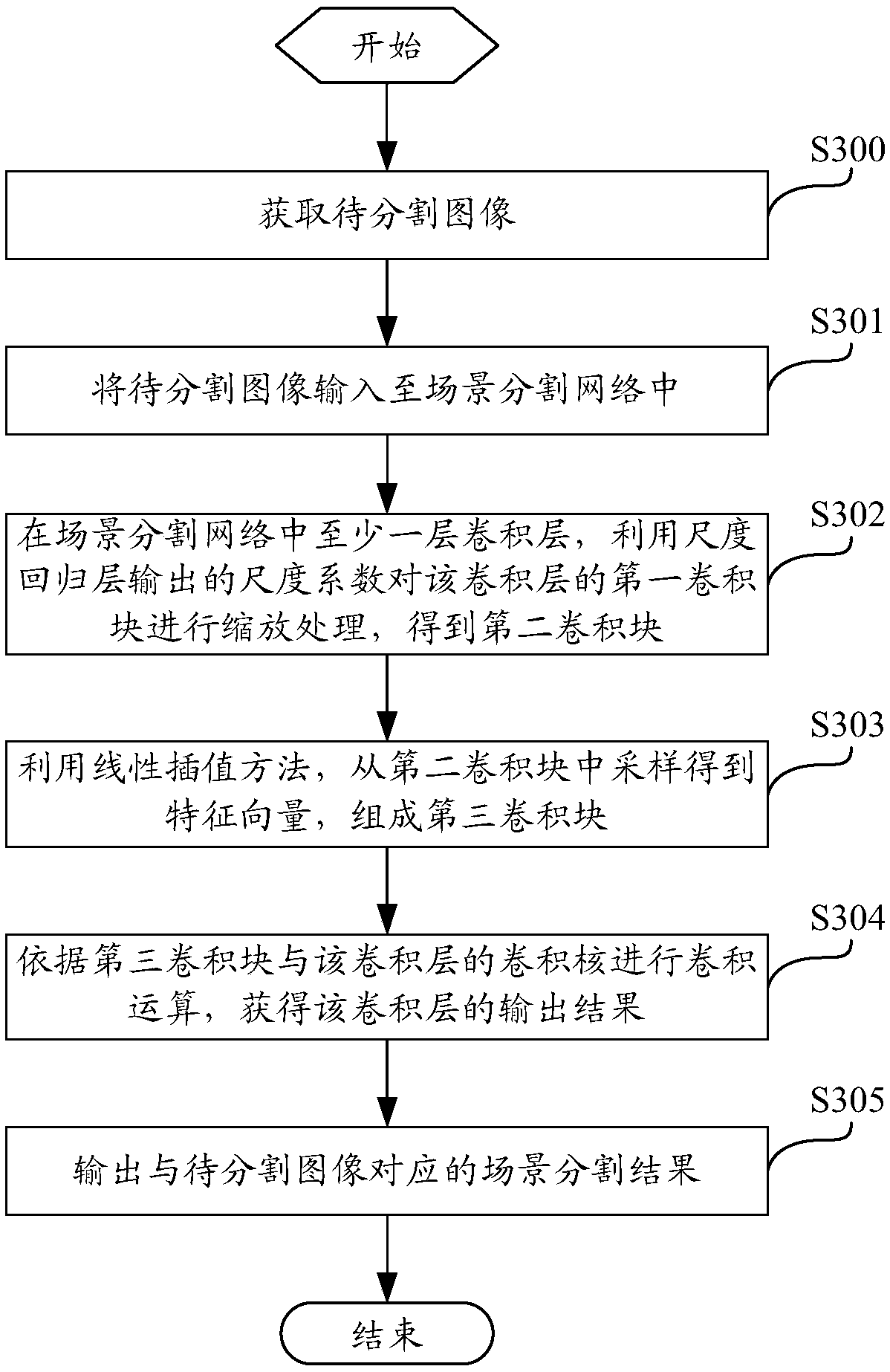

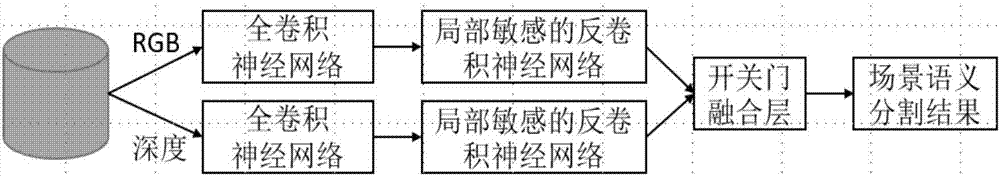

Image scene segmentation method, device, computing device and computer storage medium

Status: Active | Publication No.: CN107610146A | Tags: Improve accuracy; Improve processing efficiency; Image analysis; Neural architectures; Scene segmentation; Computer vision

The invention discloses an image scene segmentation method and device, a computing device and a computer storage medium. The method is executed with a trained scene segmentation network and comprises: acquiring an image to be segmented; inputting it into the scene segmentation network, which contains at least one convolution layer; scaling the first convolution block of the convolution layer by a scale coefficient output by a scale regression layer to obtain a second convolution block, where the scale regression layer is an intermediate convolution layer of the network; performing the convolution operation of the layer with the second convolution block to obtain the layer's output; and outputting the scene segmentation result for the image. The technical solution achieves adaptive scaling of the receptive field. The scene segmentation result is obtained quickly with the trained network, improving both the accuracy and the processing efficiency of image scene segmentation.

Owner:BEIJING QIHOO TECH CO LTD
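
One way to read the scaling step is as a scale-adaptive convolution: the sampling offset of each kernel tap is multiplied by a per-pixel coefficient and the input is read with bilinear interpolation, so a coefficient above 1 enlarges the effective receptive field and one below 1 shrinks it. In the NumPy sketch below the `scale` array stands in for the output of the scale regression layer. It assumes a single-channel input, an odd square kernel and zero padding; the function names are hypothetical, and this is an interpretation of the abstract rather than the patented network.

```python
import numpy as np


def bilinear_sample(img, y, x):
    """Bilinearly sample a 2-D image at float coordinates, treating outside pixels as 0."""
    h, w = img.shape
    y0 = np.floor(y).astype(int)
    x0 = np.floor(x).astype(int)
    wy, wx = y - y0, x - x0

    def at(yy, xx):
        valid = (yy >= 0) & (yy < h) & (xx >= 0) & (xx < w)
        out = np.zeros(yy.shape, dtype=float)
        out[valid] = img[yy[valid], xx[valid]]
        return out

    return ((1 - wy) * (1 - wx) * at(y0, x0) + (1 - wy) * wx * at(y0, x0 + 1)
            + wy * (1 - wx) * at(y0 + 1, x0) + wy * wx * at(y0 + 1, x0 + 1))


def scale_adaptive_conv(img, kernel, scale):
    """Correlate img with an odd k x k kernel whose tap offsets are scaled per pixel.

    scale has the same shape as img and plays the role of the (hypothetical)
    scale-regression output; 1.0 everywhere gives an ordinary zero-padded
    'same' cross-correlation, the operation CNN layers call convolution.
    """
    h, w = img.shape
    r = kernel.shape[0] // 2
    ys, xs = np.meshgrid(np.arange(h, dtype=float), np.arange(w, dtype=float), indexing="ij")
    out = np.zeros((h, w))
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            # Stretch or shrink the sampling offset of this tap by the local coefficient.
            sampled = bilinear_sample(img, ys + scale * dy, xs + scale * dx)
            out += kernel[dy + r, dx + r] * sampled
    return out
```

With `scale` set to 2 at a pixel, the taps there are spaced two pixels apart, similar to a dilation of 2 but chosen pixel by pixel; fractional values are handled by the interpolation.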

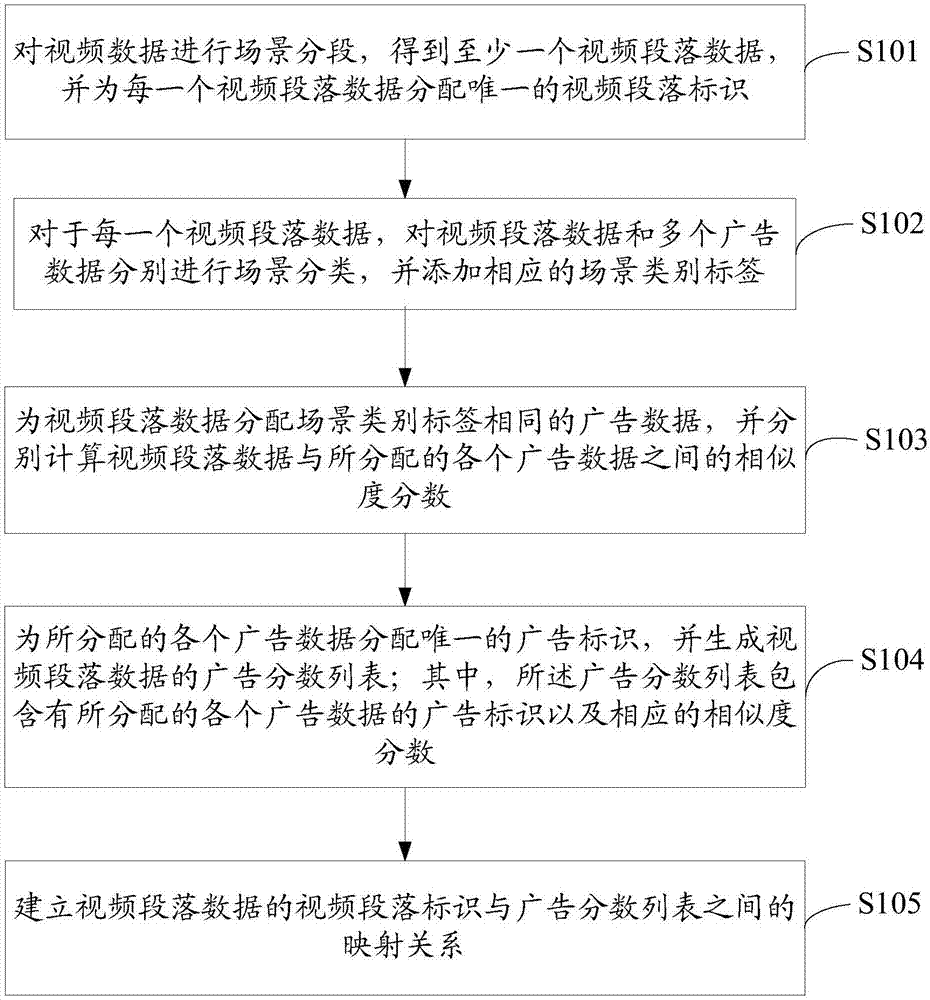

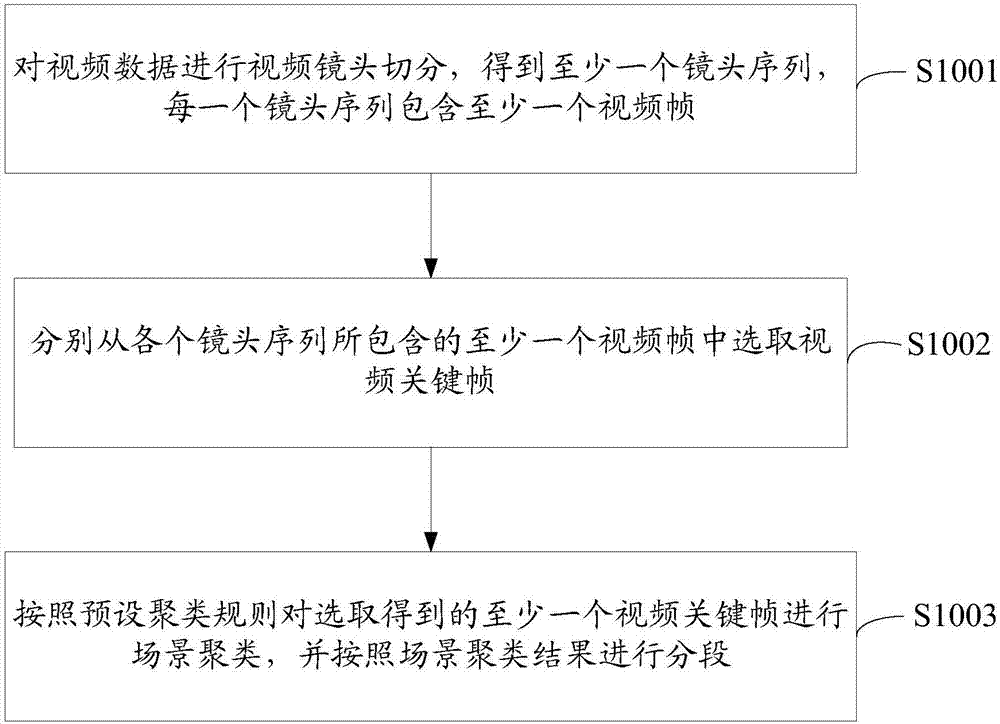

Advertisement data processing method and device, and advertisement putting method and device

The present invention provides an advertisement data processing method and device, and an advertisement putting method and device. The advertisement data processing method comprises: performing scene segmentation on video data to obtain at least one video paragraph (segment), and assigning a unique video paragraph identifier to each one; for each video paragraph, performing scene classification on the paragraph and on a plurality of advertisements, and attaching the corresponding scene class labels; assigning to the video paragraph the advertisements that carry the same scene class label, and calculating a similarity score between the video paragraph and each assigned advertisement; assigning a unique advertisement identifier to each assigned advertisement and generating the advertisement score list of the video paragraph; and establishing a mapping between the video paragraph identifier and its advertisement score list. The resulting degree of association between each video paragraph and each advertisement provides a basis for subsequently placing highly relevant advertisements.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD
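
The indexing step reduces to grouping by scene label and ranking by similarity. The sketch below assumes that scene classification has already produced a label for every video paragraph and advertisement, that each item carries a feature vector, and that cosine similarity serves as the similarity score; the `Item` type and the `build_ad_index` name are hypothetical.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Item:
    item_id: str          # video paragraph or advertisement identifier
    scene_label: str      # output of a scene classifier (assumed already computed)
    features: np.ndarray  # embedding used for similarity (assumed)


def cosine(a, b):
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def build_ad_index(paragraphs, ads):
    """Map each paragraph id to its same-label ads, ranked by similarity score."""
    index = {}
    for para in paragraphs:
        same_label = [ad for ad in ads if ad.scene_label == para.scene_label]
        scores = [(ad.item_id, cosine(para.features, ad.features)) for ad in same_label]
        scores.sort(key=lambda pair: pair[1], reverse=True)  # highest score first
        index[para.item_id] = scores
    return index
```

Filtering by label before scoring keeps the similarity computation limited to advertisements that already share the paragraph's scene class.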

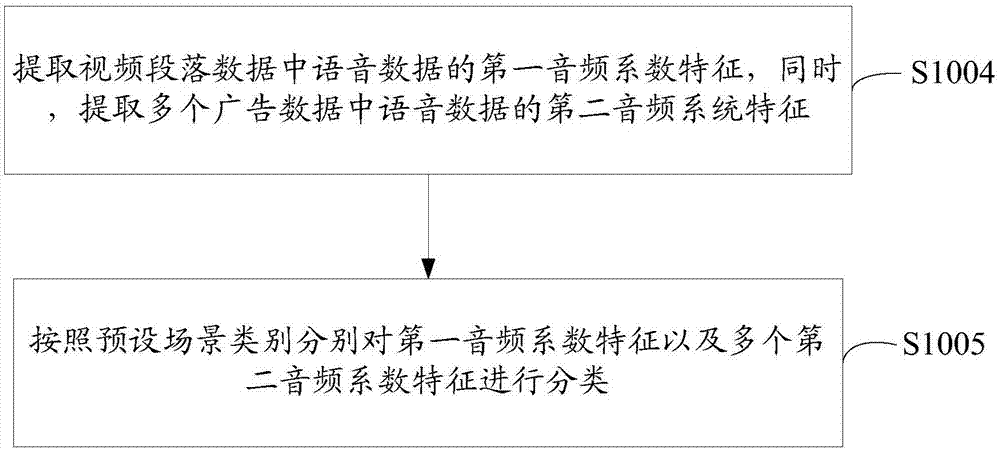

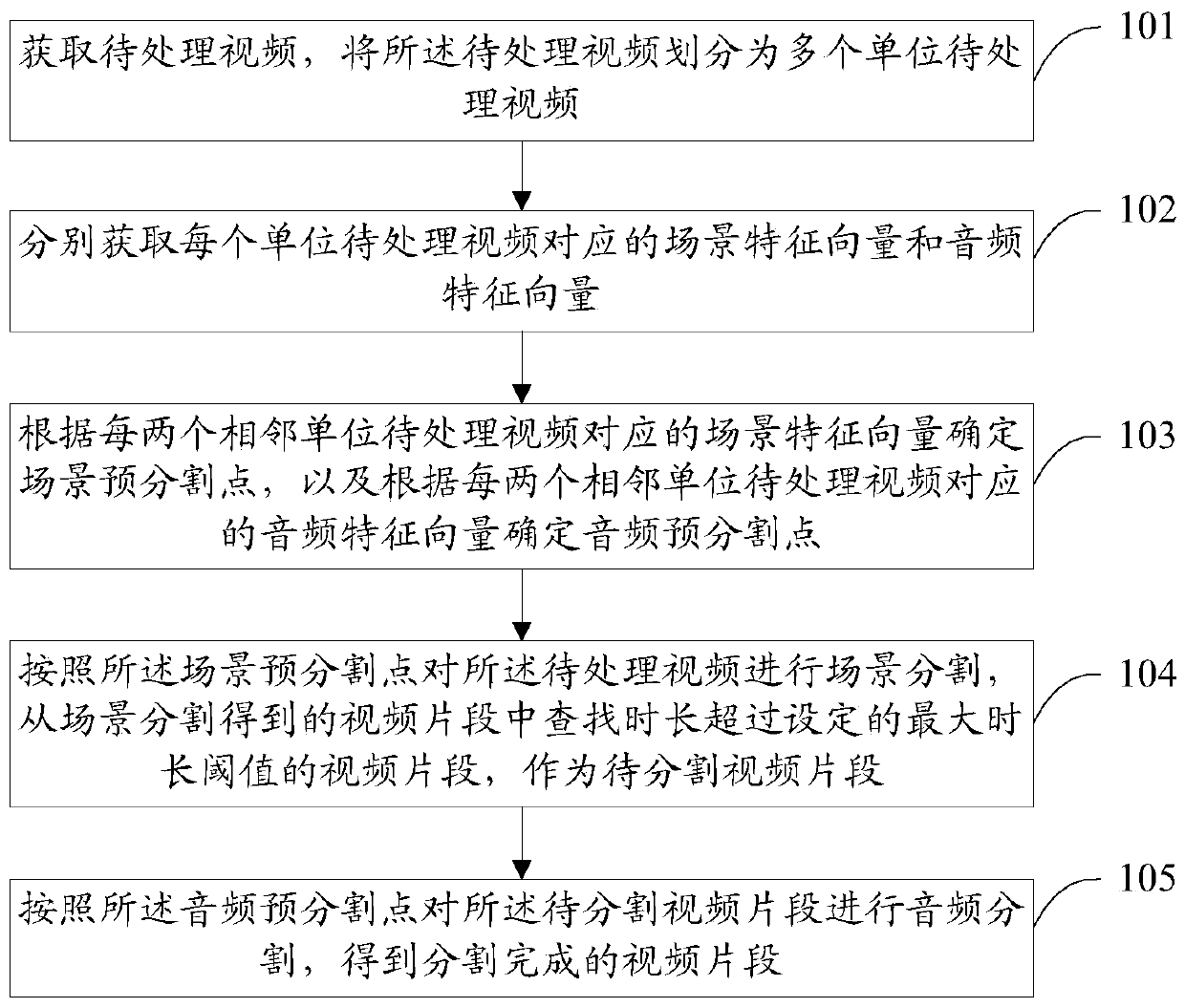

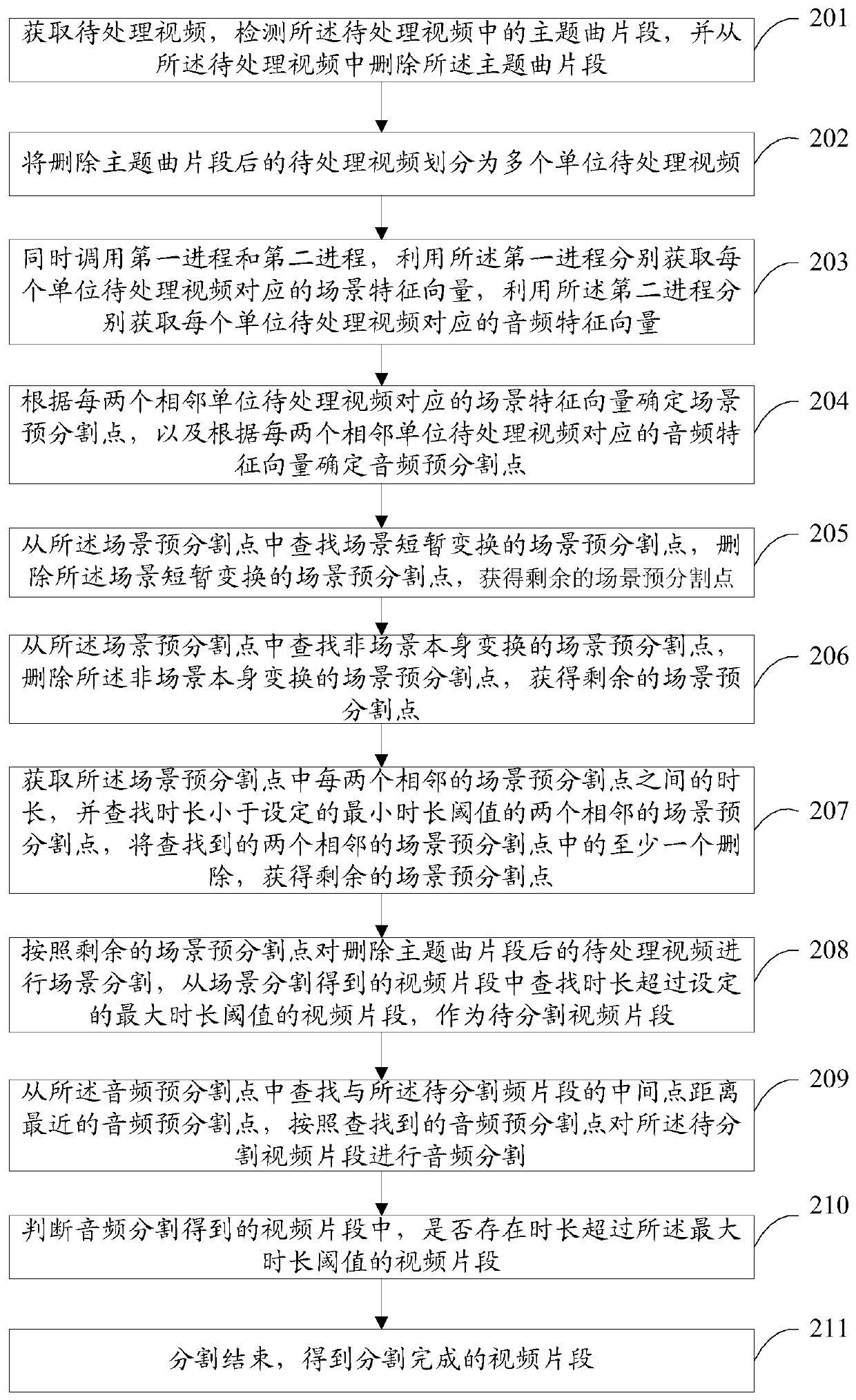

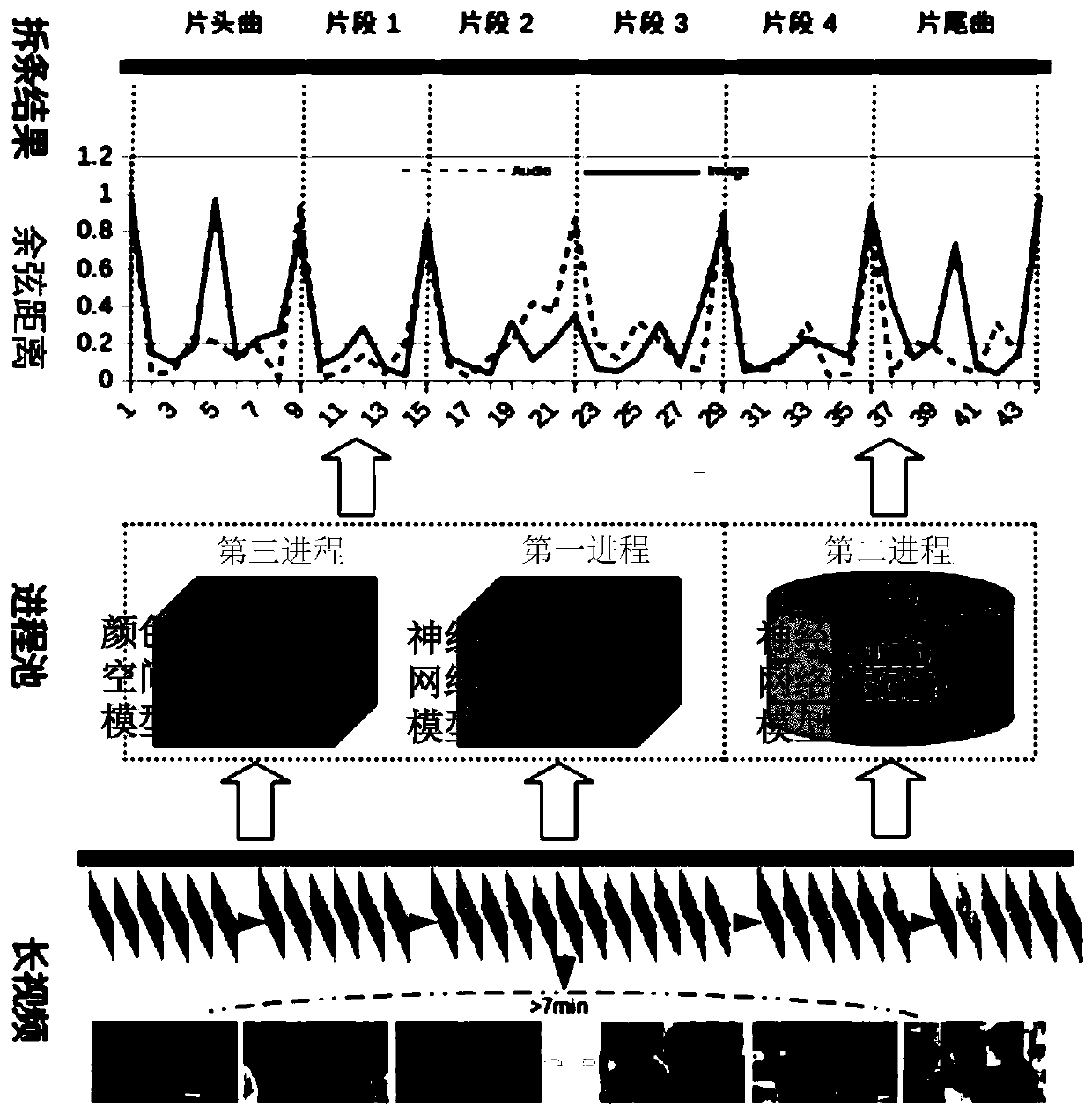

Video processing method and device, electronic equipment and storage medium

Active | CN110213670A | Avoid too long questions | Avoid long questions | Selective content distribution | Feature vector | Scene segmentation

The invention provides a video processing method and device, electronic equipment and a storage medium. The video processing method comprises the following steps: obtaining a video to be processed and dividing it into a plurality of unit videos; obtaining a scene feature vector and an audio feature vector for each unit video; determining scene pre-segmentation points from the scene feature vectors of every two adjacent unit videos, and determining audio pre-segmentation points from their audio feature vectors; performing scene segmentation on the video at the scene pre-segmentation points, and selecting from the resulting clips any clip whose duration exceeds a set maximum duration threshold as a clip to be further segmented; and performing audio segmentation on that clip at the audio pre-segmentation points to obtain the final segmented clips. The invention improves splitting accuracy and better meets user requirements.

Owner:BEIJING QIYI CENTURY SCI & TECH CO LTD
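
The two-stage splitting rule can be sketched in Python as follows. Assumptions not stated in the abstract: a pre-segmentation point is placed between adjacent units whenever the cosine distance of their feature vectors exceeds a threshold, every unit has the same duration `unit_sec`, and clips are represented as half-open `(start, end)` ranges of unit indices. All names are hypothetical.

```python
import numpy as np


def cosine_distance(a, b):
    return 1.0 - float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def pre_split_points(features, thresh):
    """Point i means 'cut between unit i-1 and unit i'."""
    return [i for i in range(1, len(features))
            if cosine_distance(features[i - 1], features[i]) > thresh]


def split_at(start, end, points):
    """Split the unit range [start, end) at every point strictly inside it."""
    cuts = [start] + [p for p in points if start < p < end] + [end]
    return list(zip(cuts[:-1], cuts[1:]))


def segment_video(scene_feats, audio_feats, unit_sec, max_sec, scene_thresh, audio_thresh):
    scene_pts = pre_split_points(scene_feats, scene_thresh)
    audio_pts = pre_split_points(audio_feats, audio_thresh)
    clips = []
    for start, end in split_at(0, len(scene_feats), scene_pts):
        if (end - start) * unit_sec > max_sec:
            # Too long after scene segmentation: refine with audio pre-split points.
            clips.extend(split_at(start, end, audio_pts))
        else:
            clips.append((start, end))
    return clips
```

A clip that is still too long but contains no audio pre-split point is returned unchanged, since `split_at` then yields the original range.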

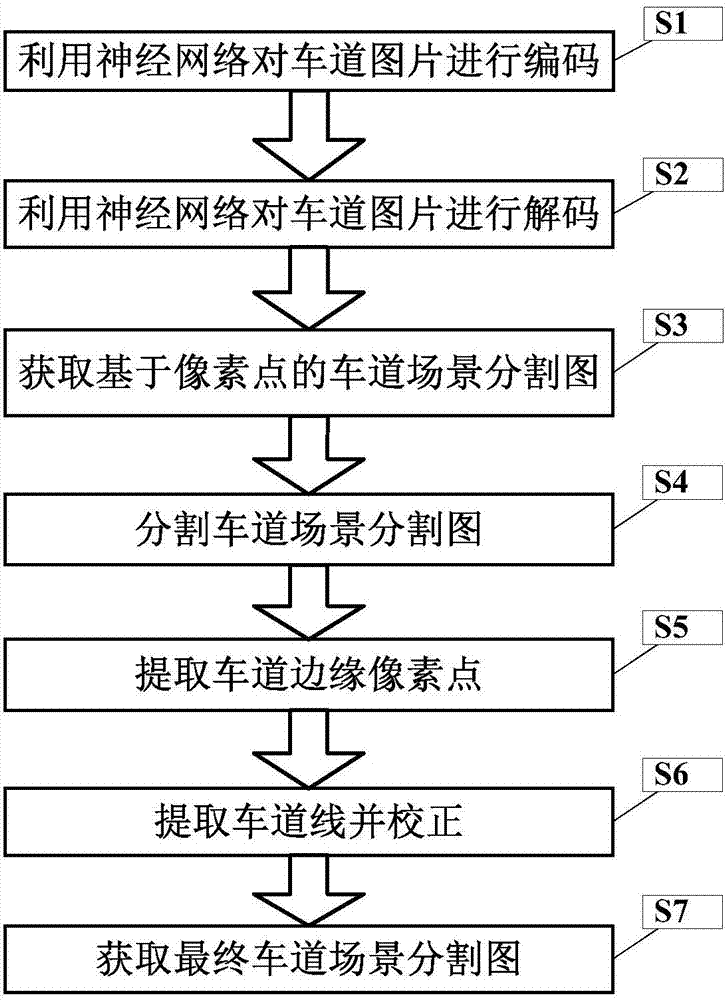

Height information-based unmanned vehicle lane scene segmentation method

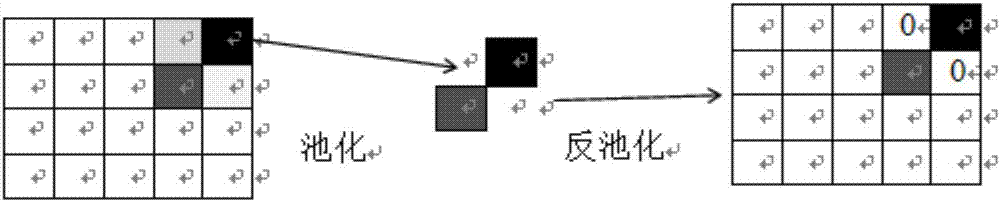

Active | CN106971155A | Achieve division | Reduce problems such as unclear boundary recognition | Character and pattern recognition | Scene segmentation | Error processing

The invention discloses a height information-based unmanned vehicle lane scene segmentation method. A neural network encodes and decodes a lane image to obtain a thickened feature map; the pixels of that feature map are classified with a softmax classifier to obtain a pixel-level lane scene segmentation map; and road and non-road regions are then separated by applying height information-based error correction. This reduces the noise produced during segmentation and mitigates problems such as blurred boundaries between road and non-road regions caused by that noise.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
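
A minimal sketch of the classification and height-based correction stages, assuming the decoder yields per-class logits of shape `(C, H, W)` and that a per-pixel height map (for example from a depth sensor, in metres above the ground plane) is available. The correction rule here, which relabels road pixels lying above a height threshold as non-road, is an illustrative assumption: the abstract does not specify the rule, and the threshold and class index are hypothetical.

```python
import numpy as np


def softmax(logits, axis=0):
    z = logits - logits.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def road_mask_with_height(logits, height, road_class=1, max_road_height=0.2):
    """Return a boolean road mask from (C, H, W) logits, corrected by an (H, W) height map.

    road_class and max_road_height (metres above the ground plane) are hypothetical.
    """
    labels = softmax(logits, axis=0).argmax(axis=0)  # pixel-level class map
    road = labels == road_class
    # Height-based correction: a pixel raised well above the ground is not drivable road.
    return road & (height <= max_road_height)
```

This only removes false road pixels; a fuller correction could also promote low, flat non-road pixels to road, which the sketch deliberately leaves out.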

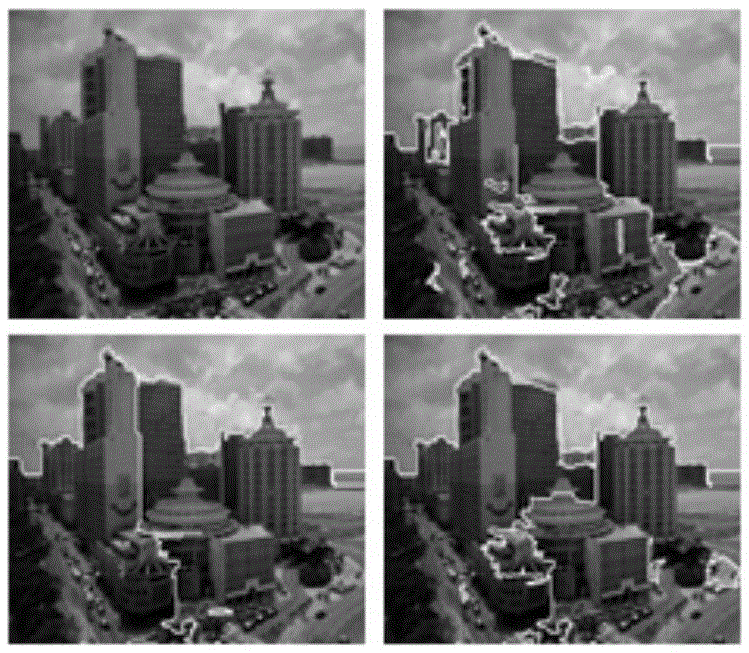

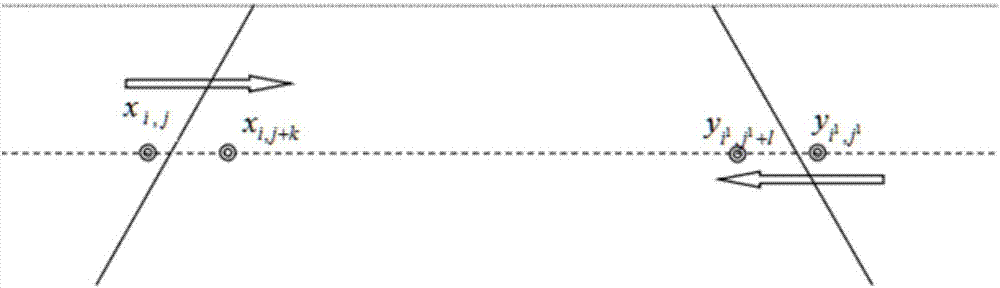

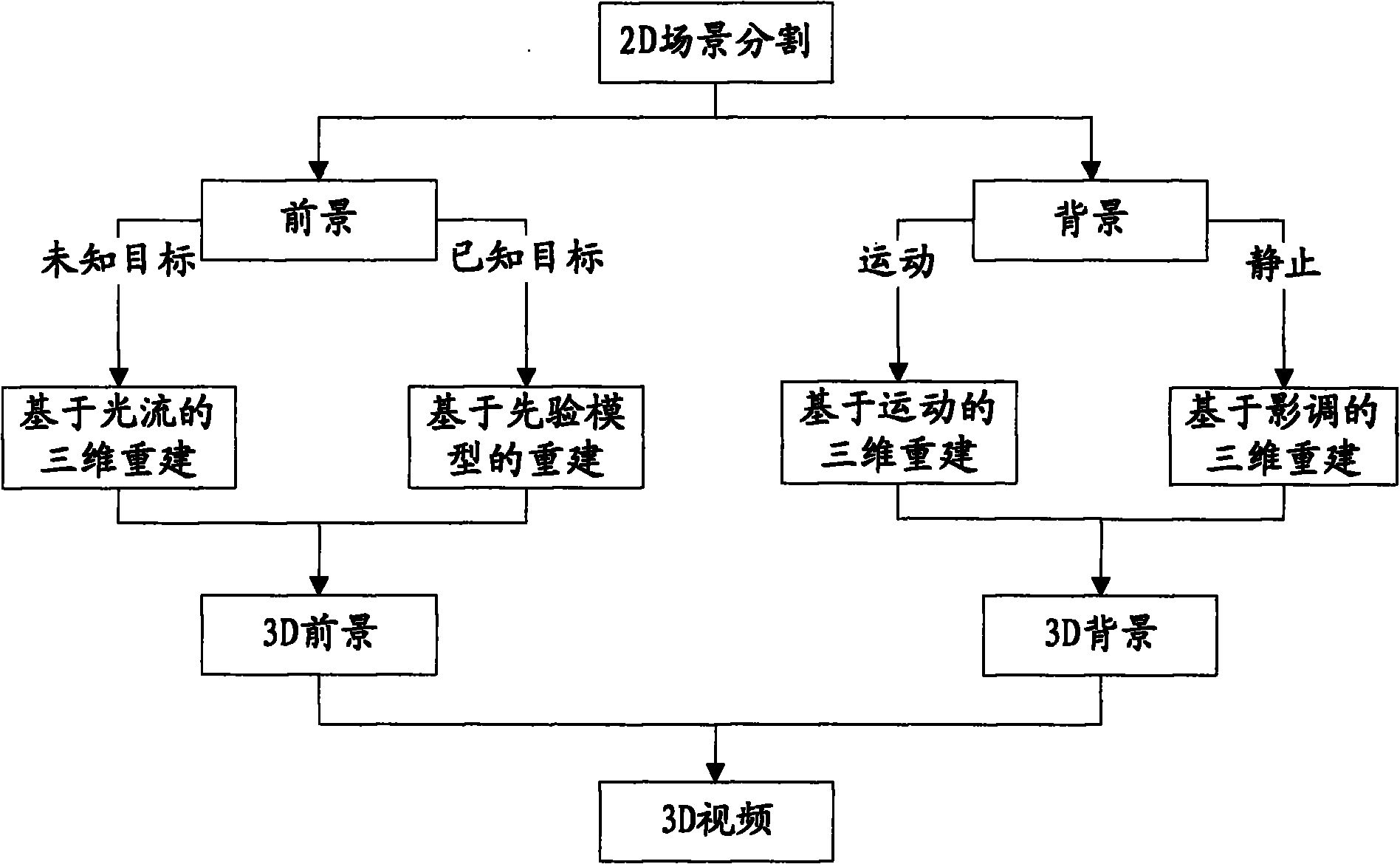

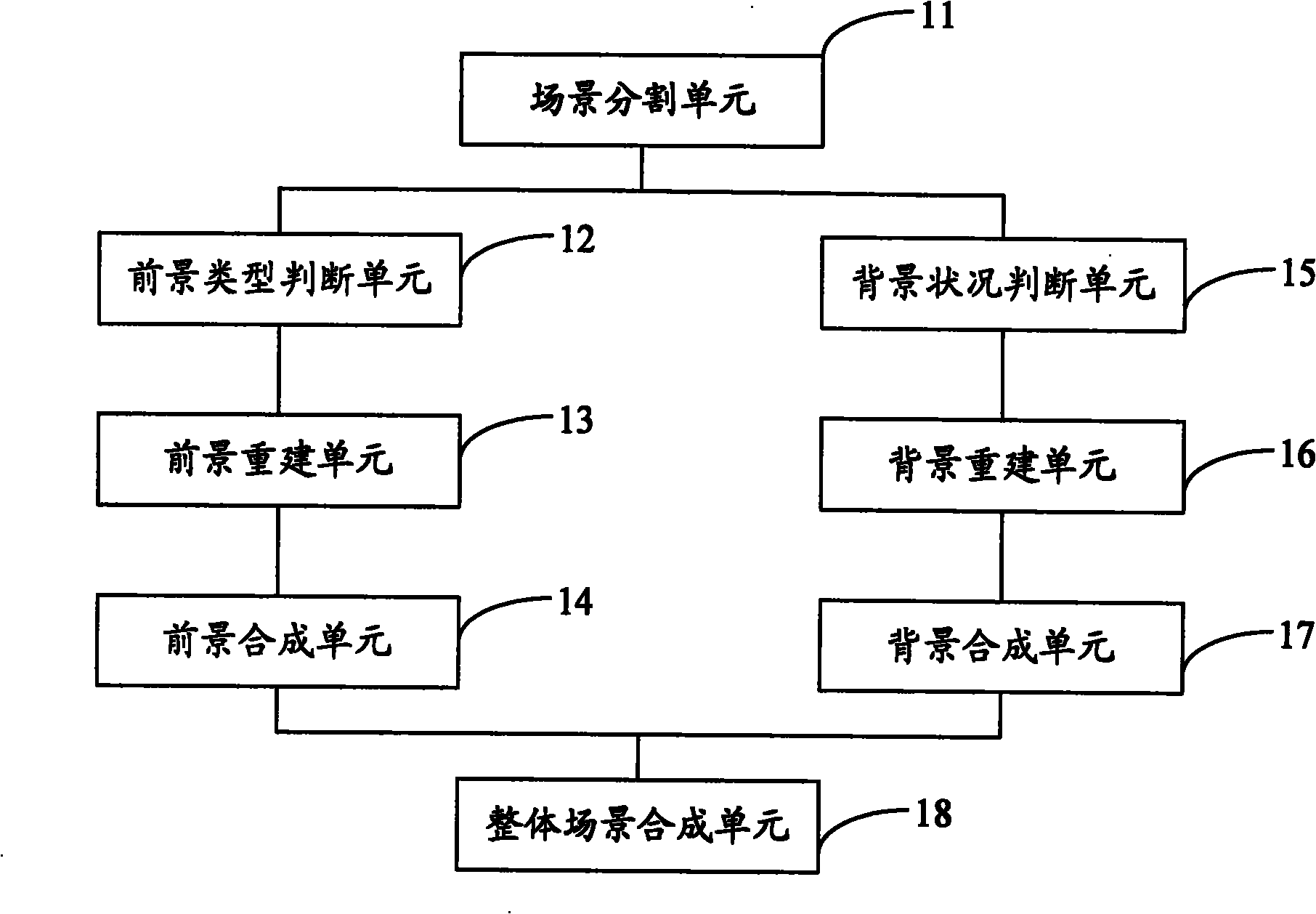

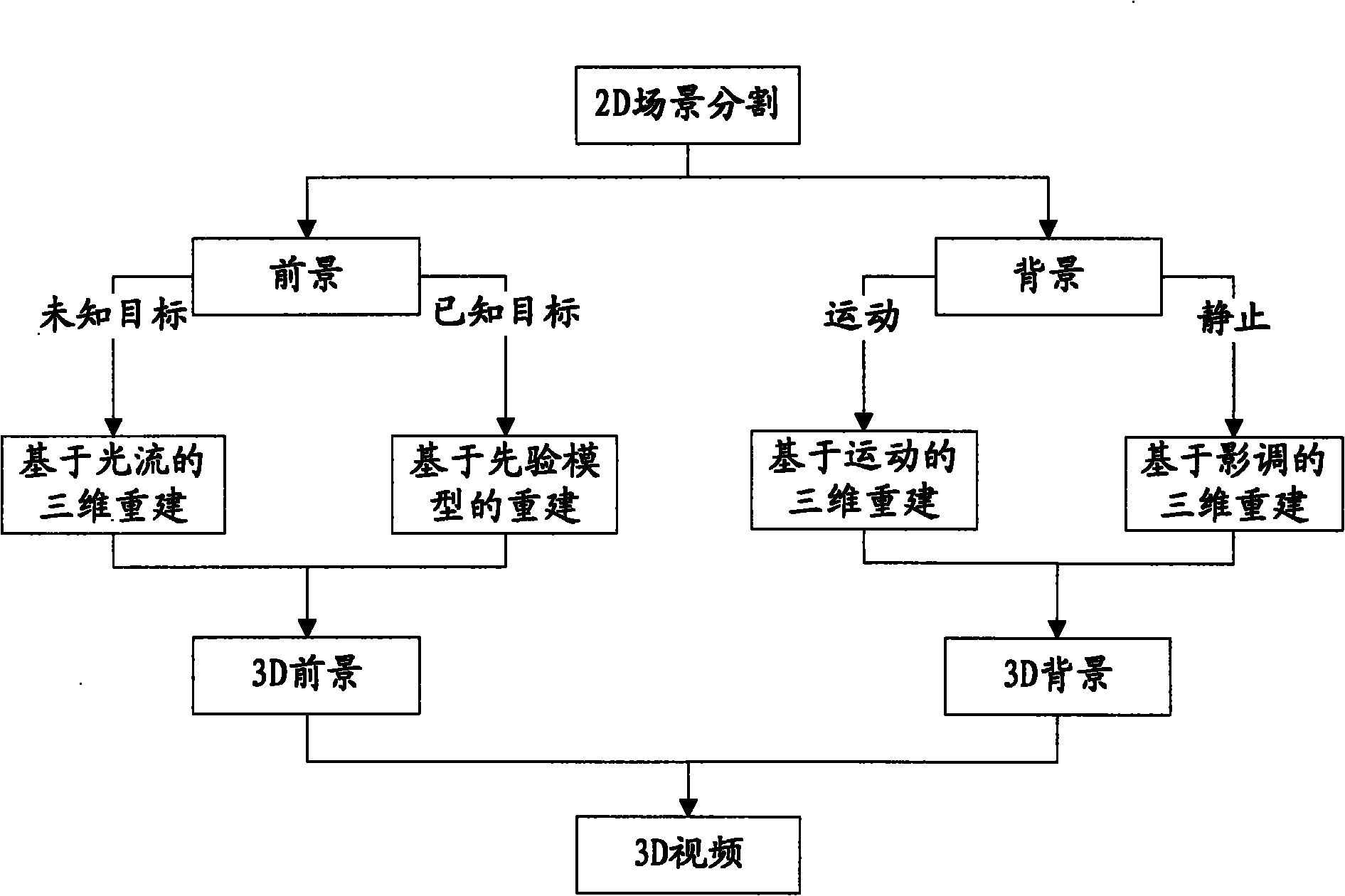

Method and system for converting two-dimensional video of complex scene into three-dimensional video

Active | CN101917636A | Realistic effect | Achieve high-precision conversion | Stereoscopic systems | Scene segmentation | Computer graphics (images)

The invention discloses a method and a system for converting a two-dimensional video of a complex scene into a three-dimensional video. The method comprises the steps of: performing scene segmentation on the input two-dimensional video, dividing each frame into a foreground part and a background part; determining the type of the foreground target and performing three-dimensional reconstruction of the foreground according to that type; determining whether the background moves and, if so, reconstructing the background in three dimensions with a motion-based method, or otherwise with a tone-based method; synthesizing the foreground reconstruction results into a three-dimensional foreground and the background reconstruction results into a three-dimensional background; and combining the two into the output three-dimensional video. The invention achieves high-accuracy three-dimensional conversion of two-dimensional videos of complex scenes, handles different types of targets and videos, and produces three-dimensional video with a realistic effect.

Owner:上海易维视科技有限公司
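
The background branch and the final compositing step can be sketched as follows. The motion test (mean absolute difference of background pixels between consecutive frames, compared with a threshold) and the per-pixel depth merge are assumptions chosen for illustration. The abstract does not describe the motion-based and tone-based reconstruction methods themselves, so the sketch only reports which branch would be selected.

```python
import numpy as np


def background_is_moving(prev, curr, bg_mask, thresh=2.0):
    """Hypothetical motion test: mean absolute intensity change over background pixels."""
    diff = np.abs(curr.astype(float) - prev.astype(float))
    return bool(diff[bg_mask].mean() > thresh)


def choose_background_method(prev, curr, bg_mask):
    """Select the reconstruction branch named in the abstract."""
    return "motion-based" if background_is_moving(prev, curr, bg_mask) else "tone-based"


def merge_depth(fg_depth, bg_depth, fg_mask):
    """Composite the reconstructed foreground over the background, pixel by pixel."""
    return np.where(fg_mask, fg_depth, bg_depth)
```

Keeping the foreground and background reconstructions separate until `merge_depth` mirrors the abstract's order of steps: each part is synthesized independently and only combined at the end.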

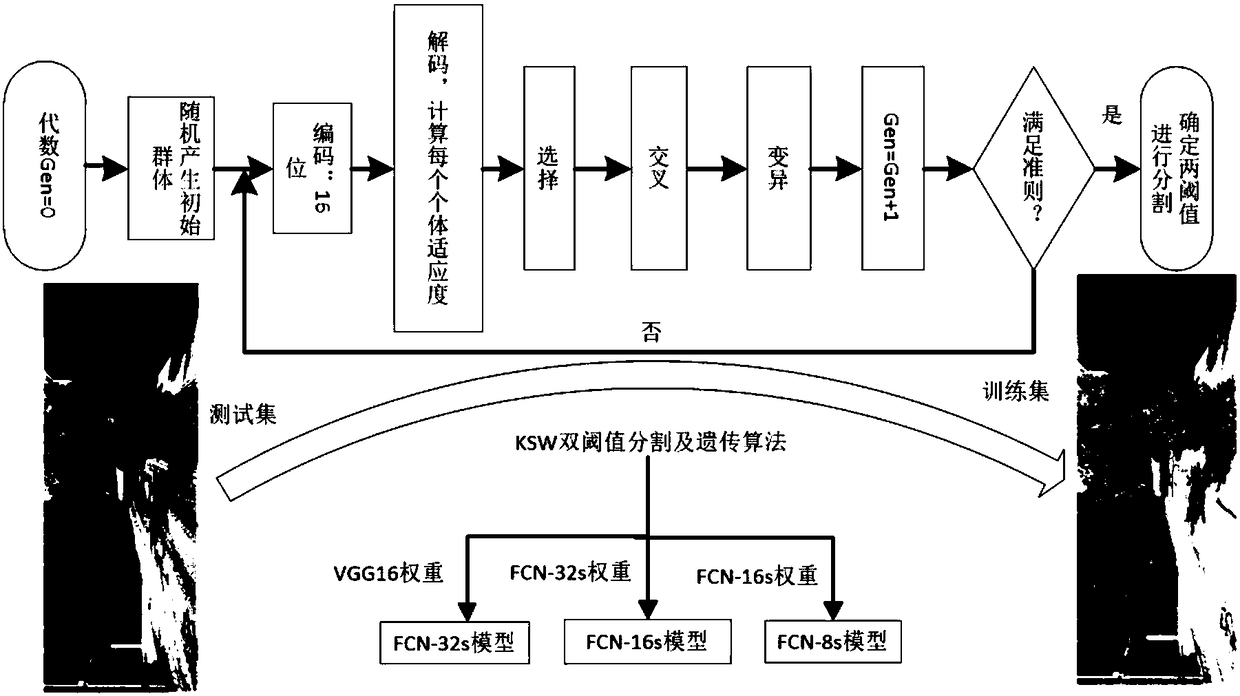

Road scene segmentation method based on fully convolutional neural network

Active | CN108416783A | Prevent oversegmentation | Improve segmentation accuracy | Image analysis | Neural architectures | Scene segmentation | Genetic algorithm

The invention relates to a road scene segmentation method based on a fully convolutional neural network. The method comprises the following steps: 1, processing an original road scene image with median filtering, a KSW two-dimensional threshold and a genetic algorithm to obtain a training set; 2, constructing a fully convolutional neural network framework; 3, using the training samples from step 1, together with manually segmented images annotated by human inspection, as input to the fully convolutional network, and training it to obtain a deep learning segmentation model with higher robustness and accuracy; 4, feeding the road scene test images to be segmented into the trained model to obtain the final segmentation result. Experimental results indicate that the method effectively solves the road scene image segmentation problem, achieves higher robustness and segmentation precision than conventional road scene image segmentation methods, and can be extended to road image segmentation in more complex scenes.

Owner:HUBEI UNIV OF TECH
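
The preprocessing stage names median filtering and KSW (Kapur-Sahoo-Wong) maximum-entropy thresholding. The sketch below is a deliberate simplification: it applies a 3x3 median filter and then the one-dimensional Kapur entropy threshold, found by exhaustive search over gray levels, in place of the two-dimensional threshold and genetic algorithm the abstract describes. It assumes an 8-bit grayscale image and NumPy 1.20 or later for `sliding_window_view`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter3(img):
    """3x3 median filter with edge replication."""
    padded = np.pad(img, 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))  # shape (H, W, 3, 3)
    return np.median(windows, axis=(-2, -1)).astype(img.dtype)


def kapur_threshold(img, levels=256):
    """1-D Kapur (KSW) maximum-entropy threshold by exhaustive search over gray levels."""
    hist = np.bincount(img.ravel(), minlength=levels).astype(float)
    p = hist / hist.sum()
    best_t, best_h = 0, -np.inf
    for t in range(levels - 1):
        lo, hi = p[: t + 1], p[t + 1 :]
        w0, w1 = lo.sum(), hi.sum()
        if w0 == 0 or w1 == 0:
            continue  # one class would be empty
        q0 = lo[lo > 0] / w0
        q1 = hi[hi > 0] / w1
        h = -(q0 * np.log(q0)).sum() - (q1 * np.log(q1)).sum()  # sum of class entropies
        if h > best_h:
            best_t, best_h = t, h
    return best_t


def preprocess(img):
    """Median-filter an 8-bit image, then binarize it at the Kapur threshold."""
    smoothed = median_filter3(img)
    t = kapur_threshold(smoothed)
    return smoothed > t, t
```

The exhaustive search is cheap for 256 gray levels in one dimension; a genetic algorithm becomes attractive in the two-dimensional case, where the search space grows to every pair of gray-level and neighborhood-mean thresholds.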

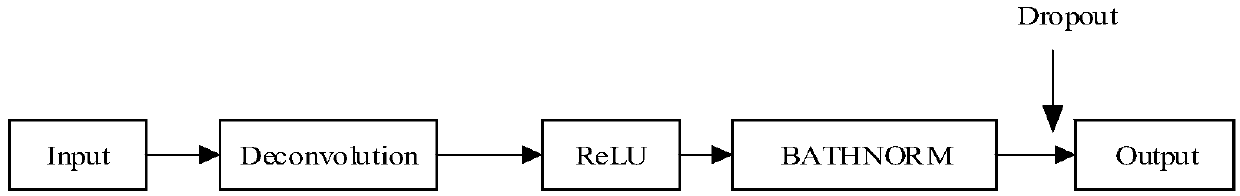

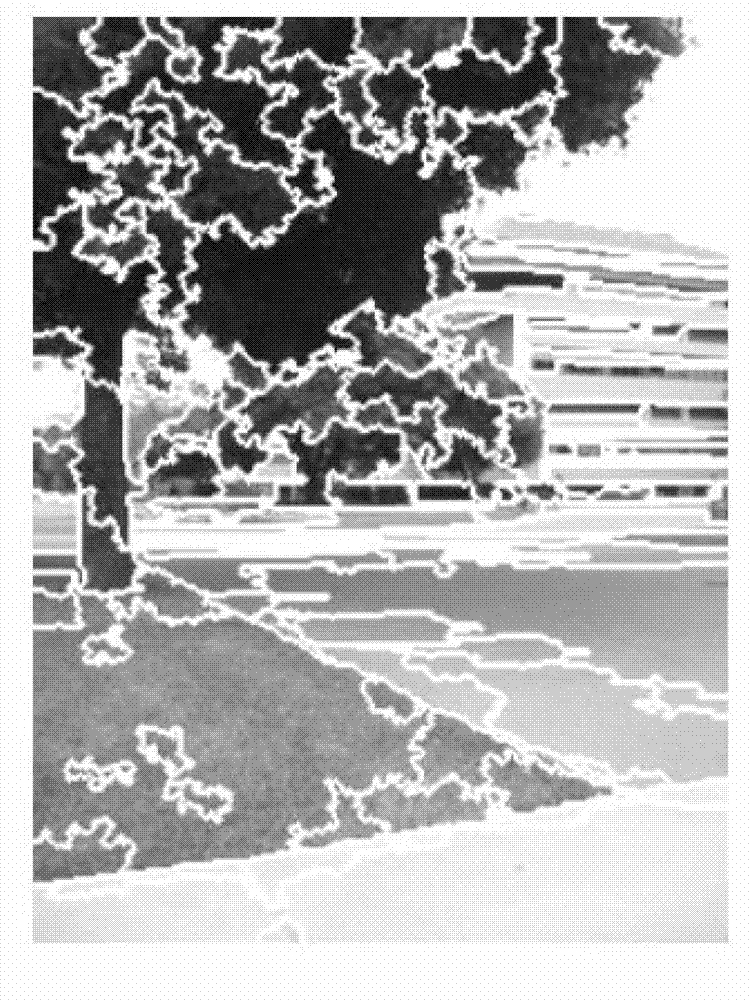

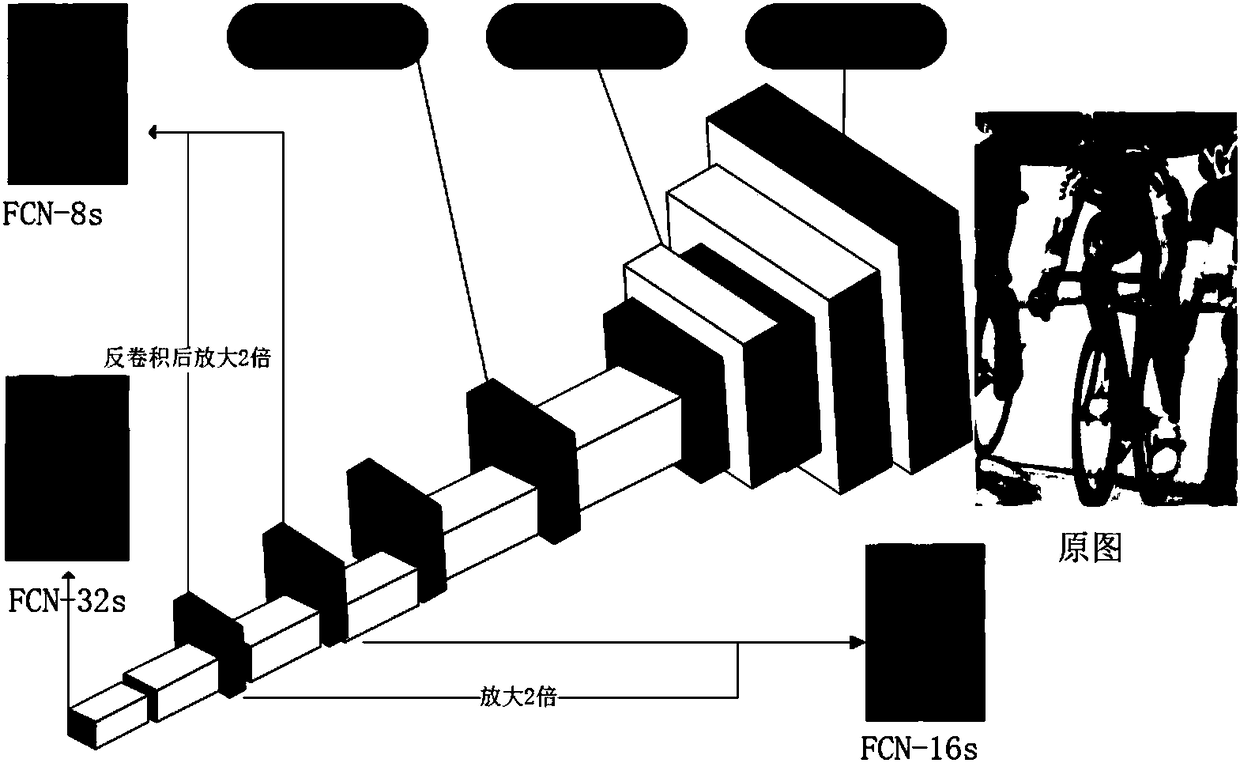

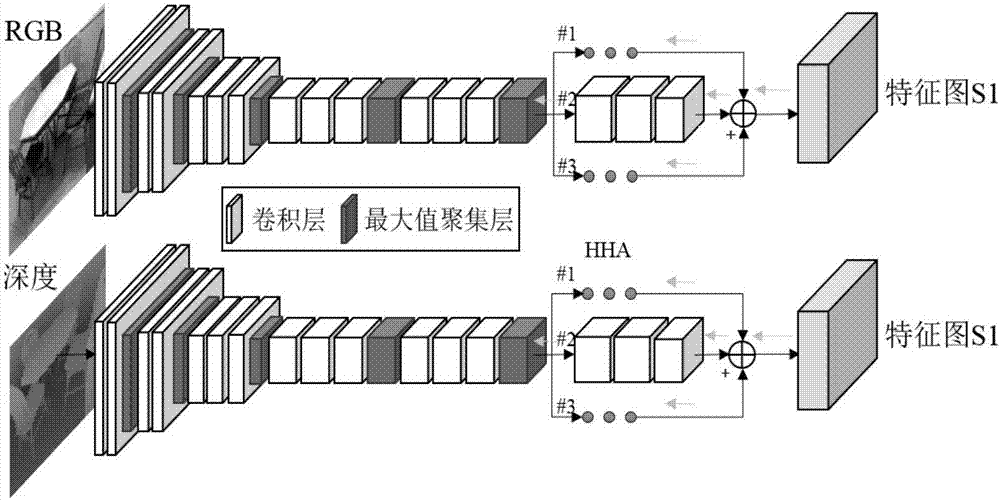

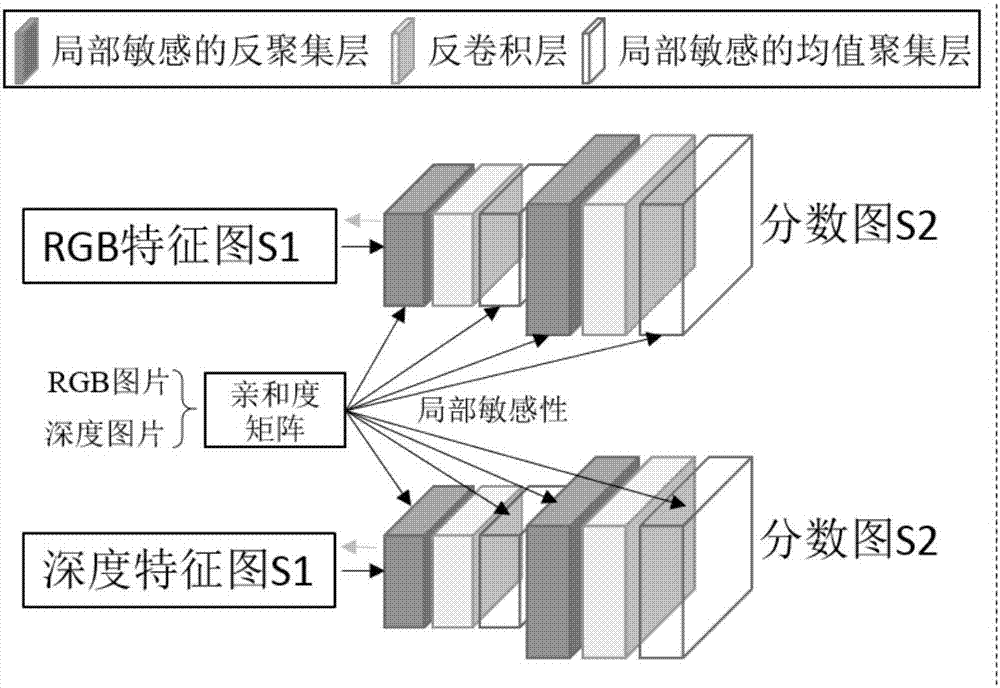

De-convolutional neural network-based scene semantic segmentation method

Active | CN107066916A | Overcome inherent flaws | Effective automatic learning | Character and pattern recognition | Scene segmentation | Affinity matrix

The invention discloses a de-convolutional neural network-based scene semantic segmentation method. The method comprises the following steps: S1, extracting a dense feature representation of a scene image with a fully convolutional neural network; and S2, applying a locally sensitive de-convolutional neural network, guided by a local affinity matrix of the image, to perform upsampling learning and object edge refinement on the dense features from S1, yielding a score map of the image and hence a refined scene semantic segmentation. By exploiting low-level local information, the locally sensitive de-convolutional network strengthens the fully convolutional network's sensitivity to local edges, giving higher-precision scene segmentation.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
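
As a rough illustration of edge-aware refinement with a local affinity matrix, the sketch below upsamples a coarse score map and then replaces each score with an affinity-weighted average over its 3x3 neighborhood, where the affinity between two pixels is a Gaussian of their intensity difference. This hand-crafted filter stands in for the learned, locally sensitive de-convolutional network and is an assumption for illustration; `sigma`, the window size and the function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def upsample_nearest(coarse, factor):
    """Nearest-neighbor upsampling of a 2-D score map."""
    return np.repeat(np.repeat(coarse, factor, axis=0), factor, axis=1)


def affinity_refine(scores, image, sigma=0.1):
    """Replace each score with an affinity-weighted mean over its 3x3 neighborhood.

    scores, image: (H, W) arrays; image is grayscale in [0, 1].
    """
    s_win = sliding_window_view(np.pad(scores, 1, mode="edge"), (3, 3))  # (H, W, 3, 3)
    i_win = sliding_window_view(np.pad(image, 1, mode="edge"), (3, 3))
    # Local affinity: Gaussian of the intensity difference to the center pixel.
    affinity = np.exp(-((i_win - image[..., None, None]) ** 2) / (2 * sigma ** 2))
    return (affinity * s_win).sum(axis=(-2, -1)) / affinity.sum(axis=(-2, -1))
```

Across a strong intensity edge the affinity falls to nearly zero, so scores on one side of an object boundary are not averaged with scores from the other side; this is the property the locally sensitive network exploits to sharpen edges.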

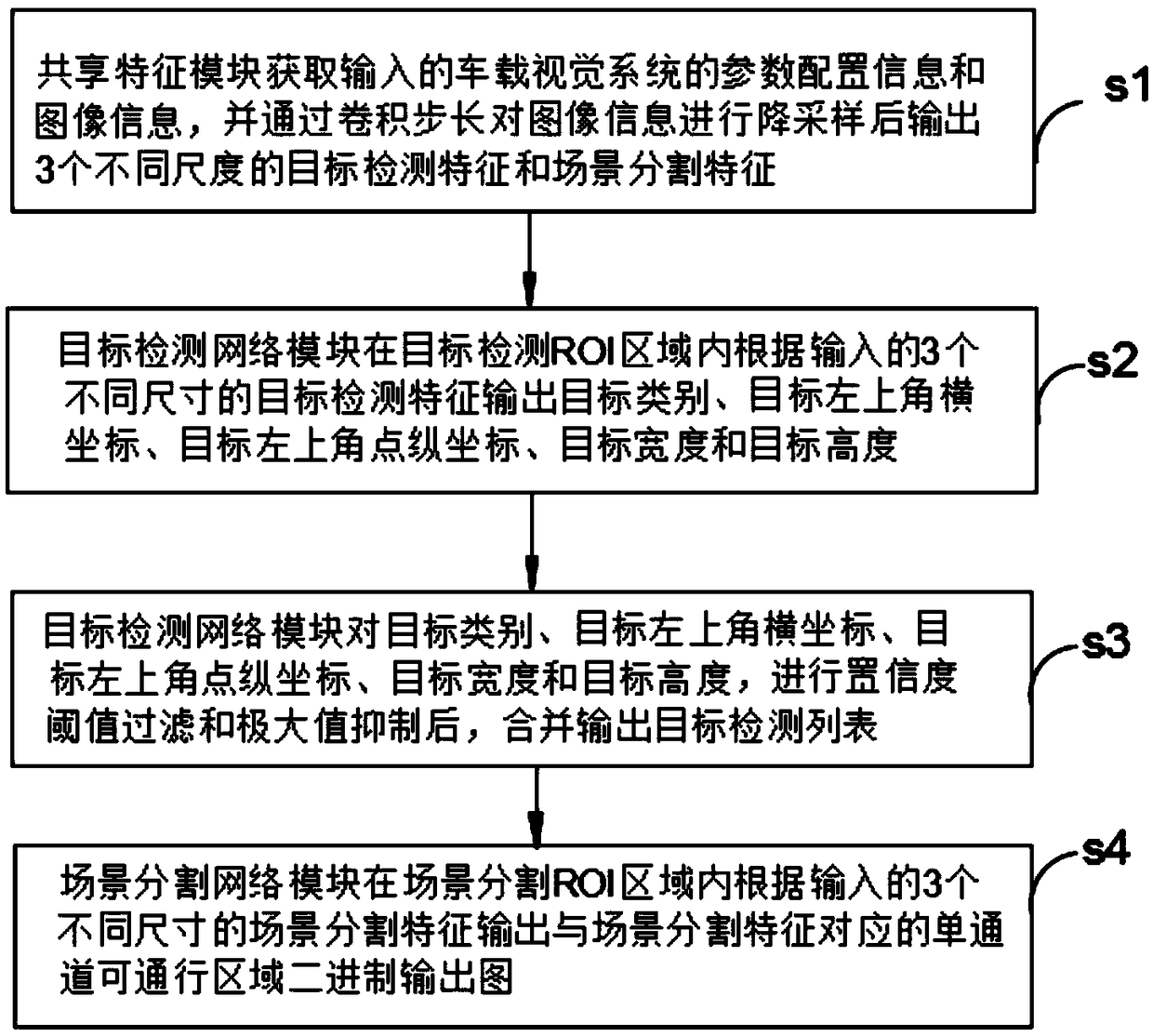

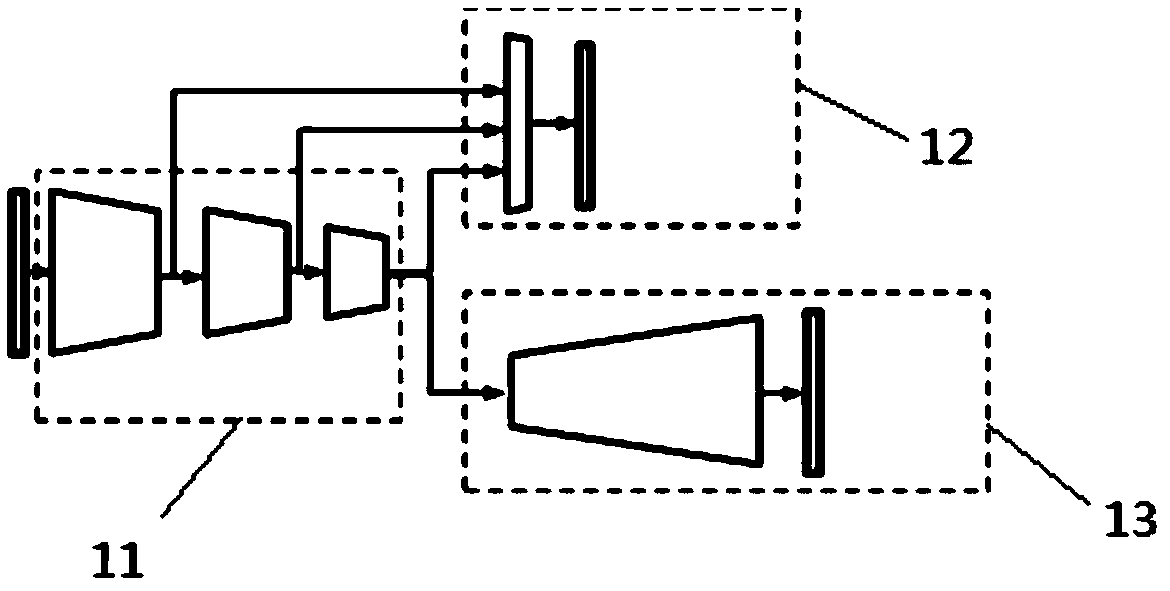

A method for integrating driving scene target recognition and traveling area segmentation

Active | CN109145798A | Improve robustness | Improve accuracy | Character and pattern recognition | Neural architectures | Scene segmentation | Goal recognition

The invention discloses a method integrating driving scene target recognition and traveling area segmentation. The method comprises the following steps: S1, a shared feature module obtains the parameter configuration information and image information of the input vehicle vision system; S2, a target detection network module outputs, from three target detection features of different sizes input from the target detection ROI, the target category and the target's top-left x coordinate, top-left y coordinate, width and height; S3, the target detection network module applies confidence-threshold filtering and non-maximum suppression to these outputs and merges them into a target detection list; S4, a scene segmentation network module outputs, from three scene segmentation features of different sizes input from the scene segmentation ROI, a single-channel binary map of the passable area. By adopting the invention, robustness and accuracy are greatly improved.

Owner:ZHEJIANG LEAPMOTOR TECH CO LTD
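
Step S3 (confidence-threshold filtering followed by non-maximum suppression and merging into one detection list) is a standard post-processing pattern. The sketch below applies it per category to boxes in the abstract's format: top-left x, top-left y, width and height. The thresholds are hypothetical defaults, and the `passable_map` helper for S4 assumes the segmentation head outputs a per-pixel passability probability.

```python
import numpy as np


def iou(a, b):
    """IoU of two boxes given as (x, y, w, h) with (x, y) the top-left corner."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets, conf_thresh=0.5, iou_thresh=0.5):
    """dets: (category, x, y, w, h, confidence) tuples -> merged detection list."""
    kept = []
    for cat in sorted({d[0] for d in dets}):
        # Confidence-threshold filtering, then sort by confidence (highest first).
        cands = sorted((d for d in dets if d[0] == cat and d[5] >= conf_thresh),
                       key=lambda d: d[5], reverse=True)
        while cands:
            best = cands.pop(0)
            kept.append(best)
            # Non-maximum suppression: drop same-category boxes overlapping the kept one.
            cands = [d for d in cands if iou(best[1:5], d[1:5]) < iou_thresh]
    return kept


def passable_map(prob, thresh=0.5):
    """S4: single-channel binary passable-area map from per-pixel probabilities (assumed)."""
    return (np.asarray(prob) >= thresh).astype(np.uint8)
```

Running suppression per category keeps, for example, a pedestrian box that overlaps a car box, since boxes of different classes never suppress each other.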

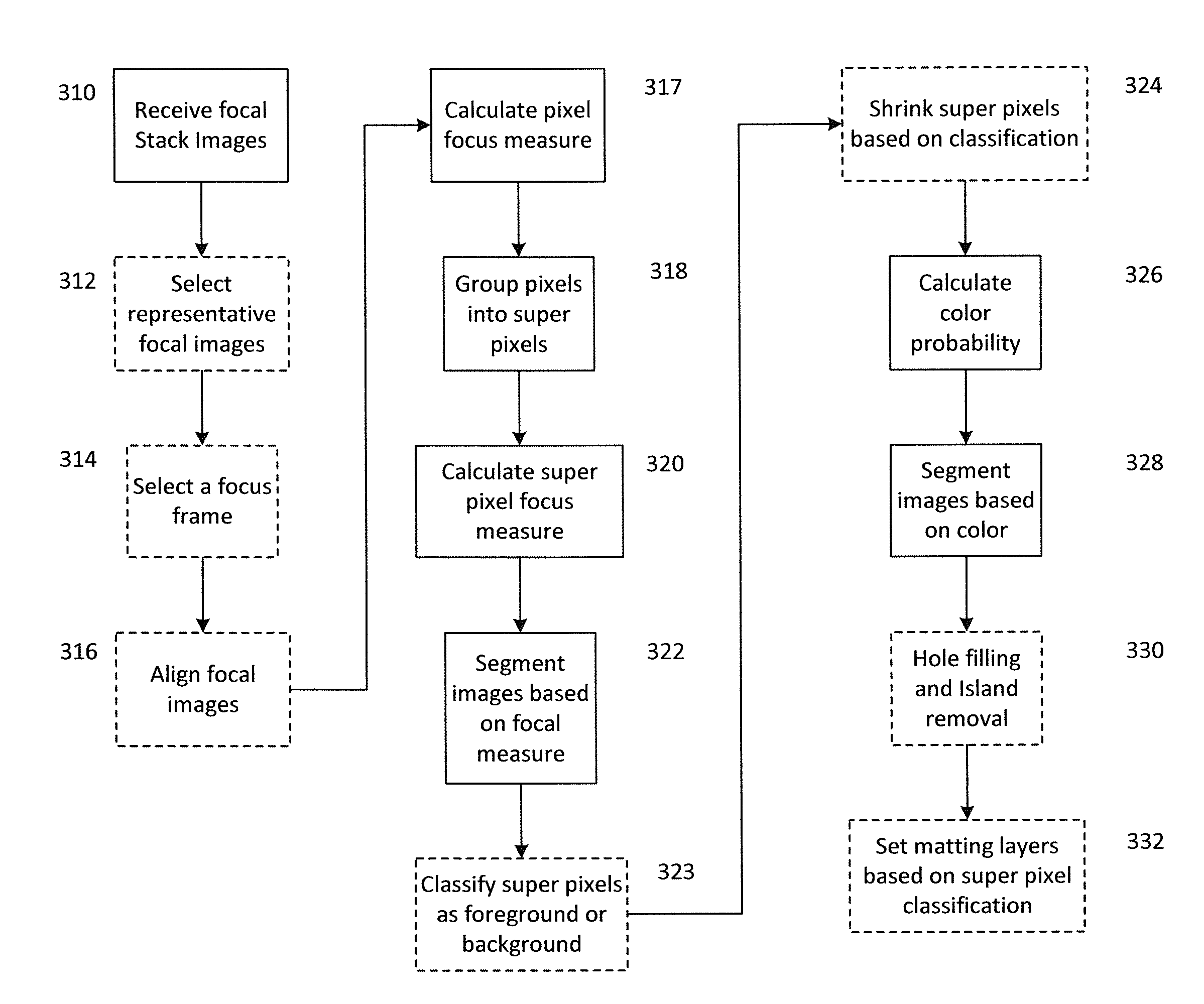

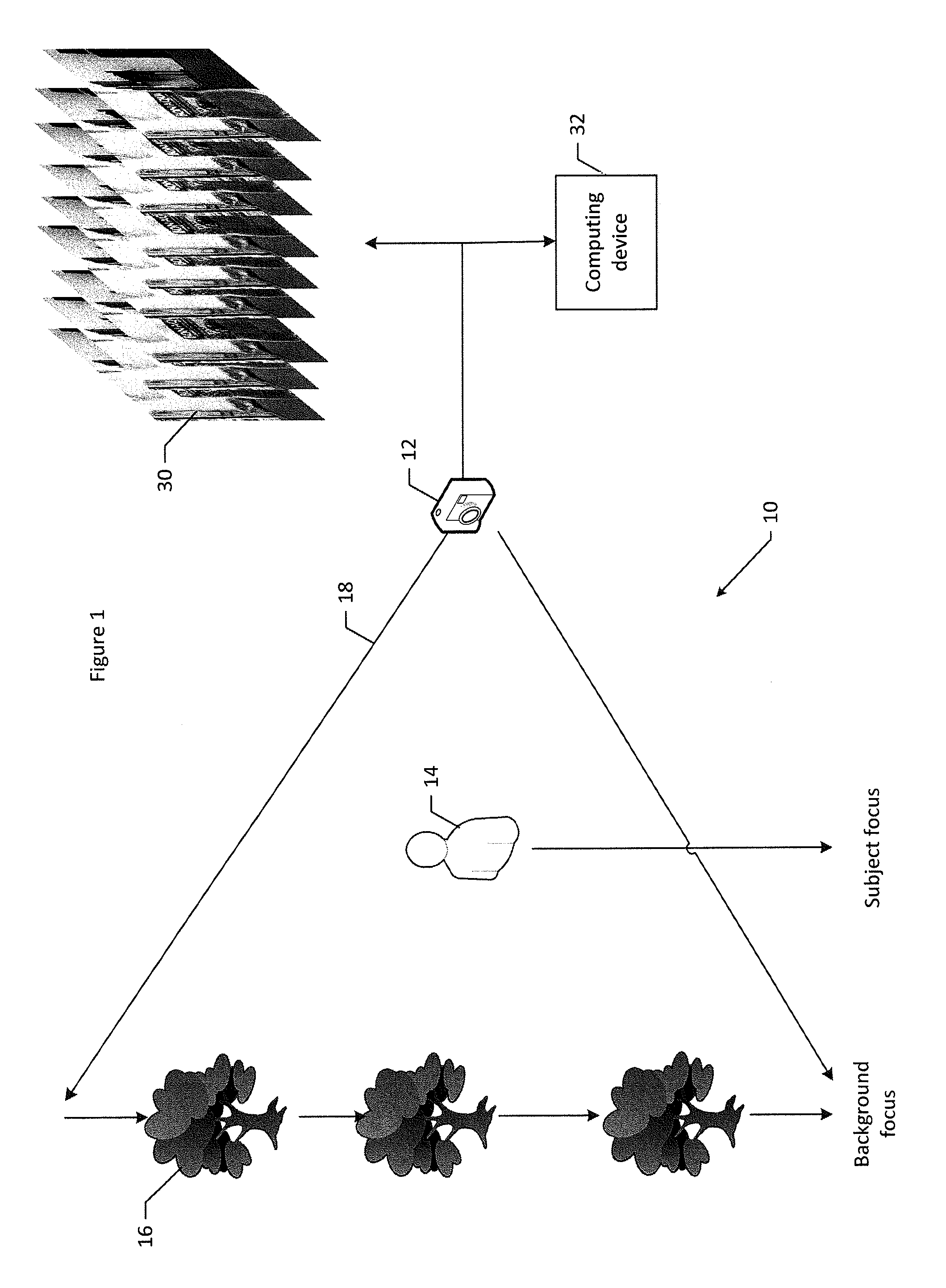

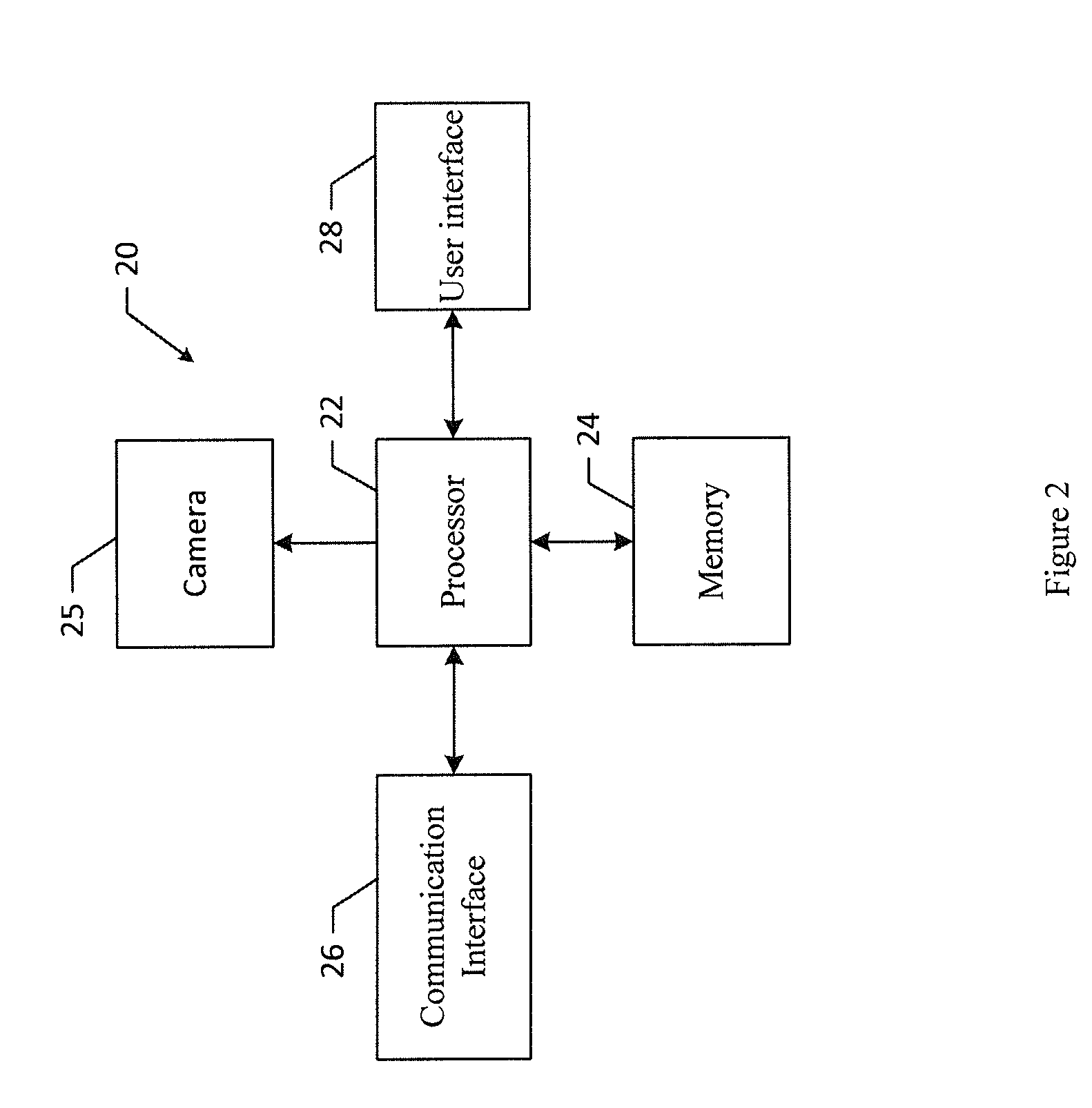

Method and apparatus for scene segmentation from focal stack images

Active | US20150110391A1 | Facilitate scene segmentation | Image enhancement | Image analysis | Scene segmentation | Computer program

A method, apparatus and computer program product are provided to facilitate scene segmentation from focal stack images. The method may include receiving a set of focal stack images, calculating a focal measure for each of a plurality of pixels of the set of focal stack images, and grouping each of a plurality of pixels for which the focal measure was calculated into a plurality of super pixels. The method may also include calculating a focal measure for each of the plurality of super pixels, segmenting a respective focal stack image based on the focal measure of each of the plurality of super pixels, calculating a color probability for each of the plurality of super pixels, and segmenting each focal stack image based on color probability of each of the plurality of super pixels.

Owner:NOKIA TECHNOLOGIES OY
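
The first half of the abstract (per-pixel focus measure, grouping into superpixels, per-superpixel focus measure, segmentation) can be sketched as follows. Assumptions: the focus measure is the squared discrete Laplacian, fixed square blocks stand in for true superpixels, and each block is labeled with the index of the focal slice in which it is sharpest. The color-probability refinement step is omitted.

```python
import numpy as np


def laplacian_energy(img):
    """Per-pixel focus measure: squared 4-neighbor discrete Laplacian (edge-replicated)."""
    p = np.pad(img, 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4 * img
    return lap ** 2


def segment_focal_stack(stack, block=4):
    """Label each block with the index of the focal slice in which it is sharpest.

    stack: (N, H, W) grayscale slices, H and W divisible by block.
    Square blocks are a crude stand-in for superpixels.
    """
    n, h, w = stack.shape
    focus = np.stack([laplacian_energy(s) for s in stack])  # (N, H, W)
    # Sum the pixel focus measure inside each block to get a per-block focus measure.
    per_block = focus.reshape(n, h // block, block, w // block, block).sum(axis=(2, 4))
    labels = per_block.argmax(axis=0)  # (H/block, W/block)
    return labels.repeat(block, axis=0).repeat(block, axis=1)
```

Aggregating the focus measure over a region before comparing slices is what makes the decision robust: a single pixel's Laplacian is noisy, whereas a region's summed energy reliably indicates which slice is in focus.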

© 2025 PatSnap. All rights reserved.