2869 results about "Hidden layer" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

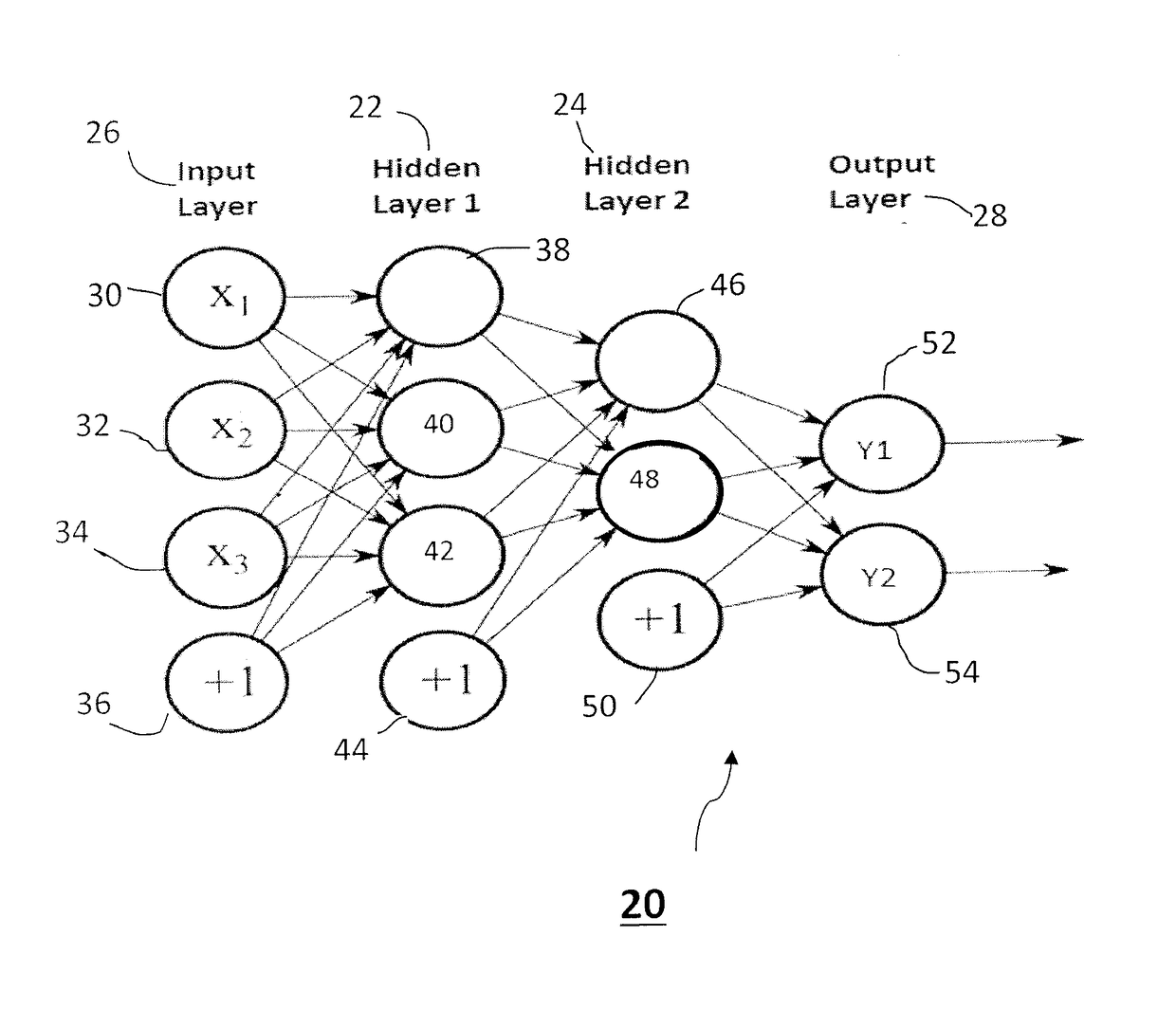

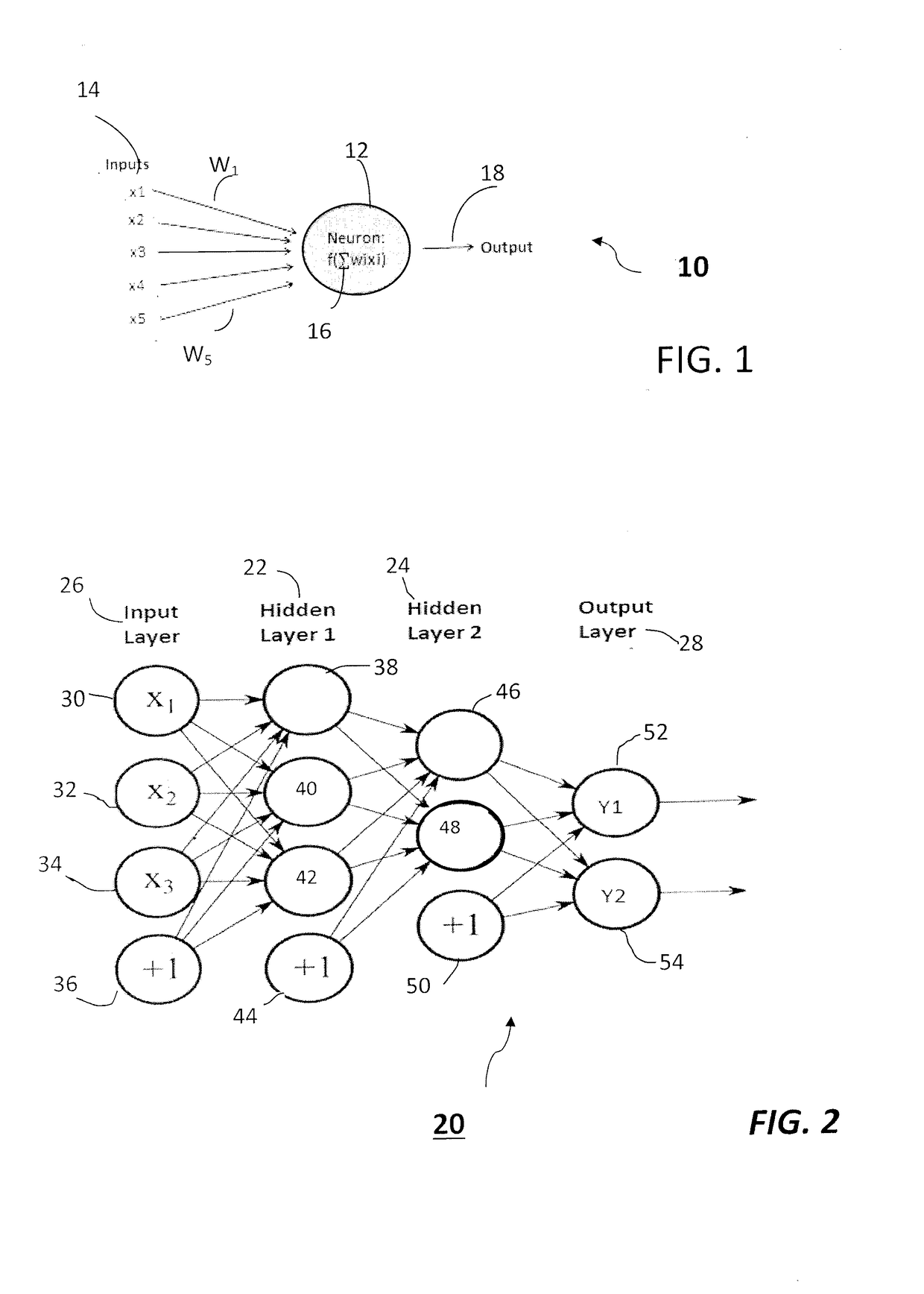

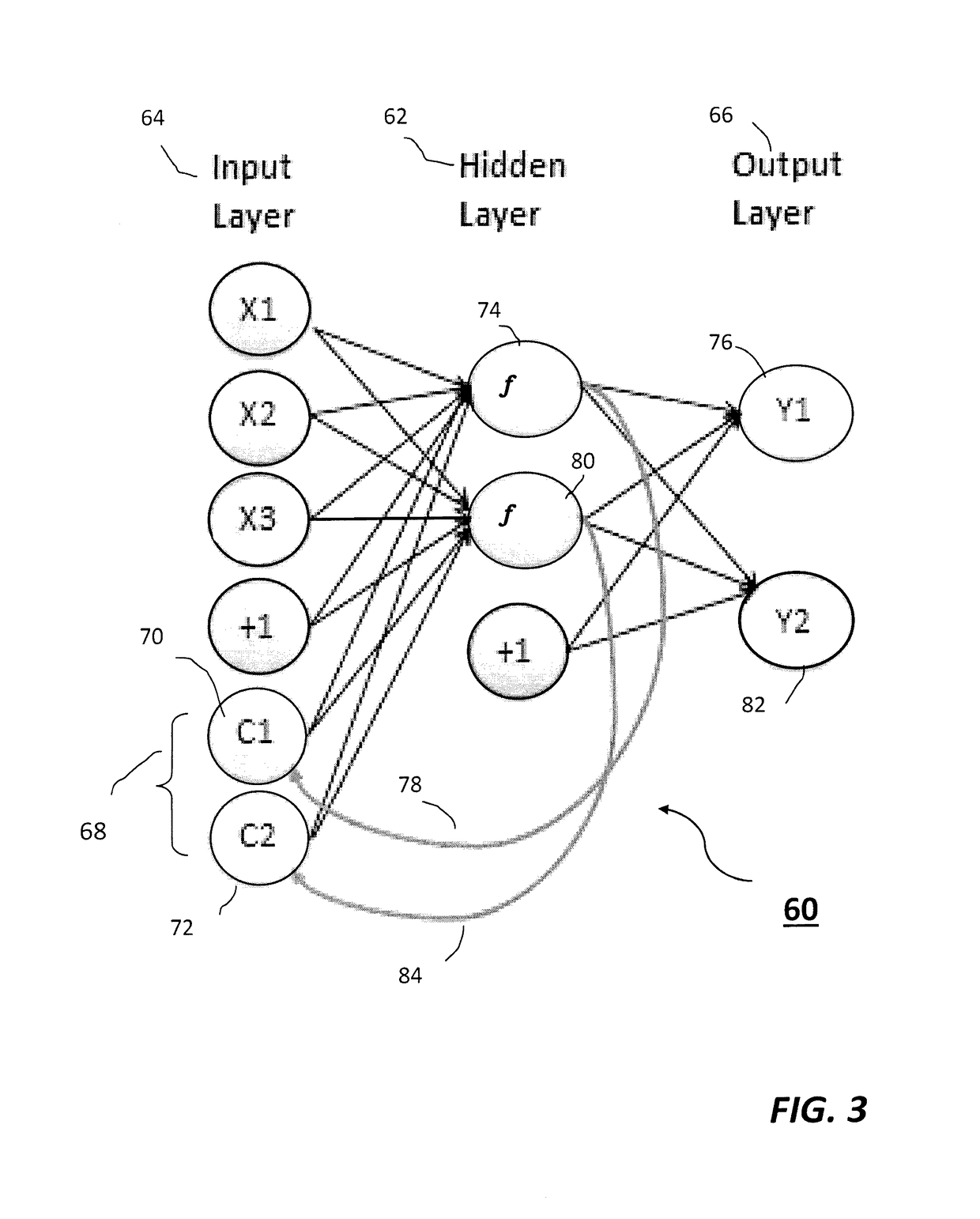

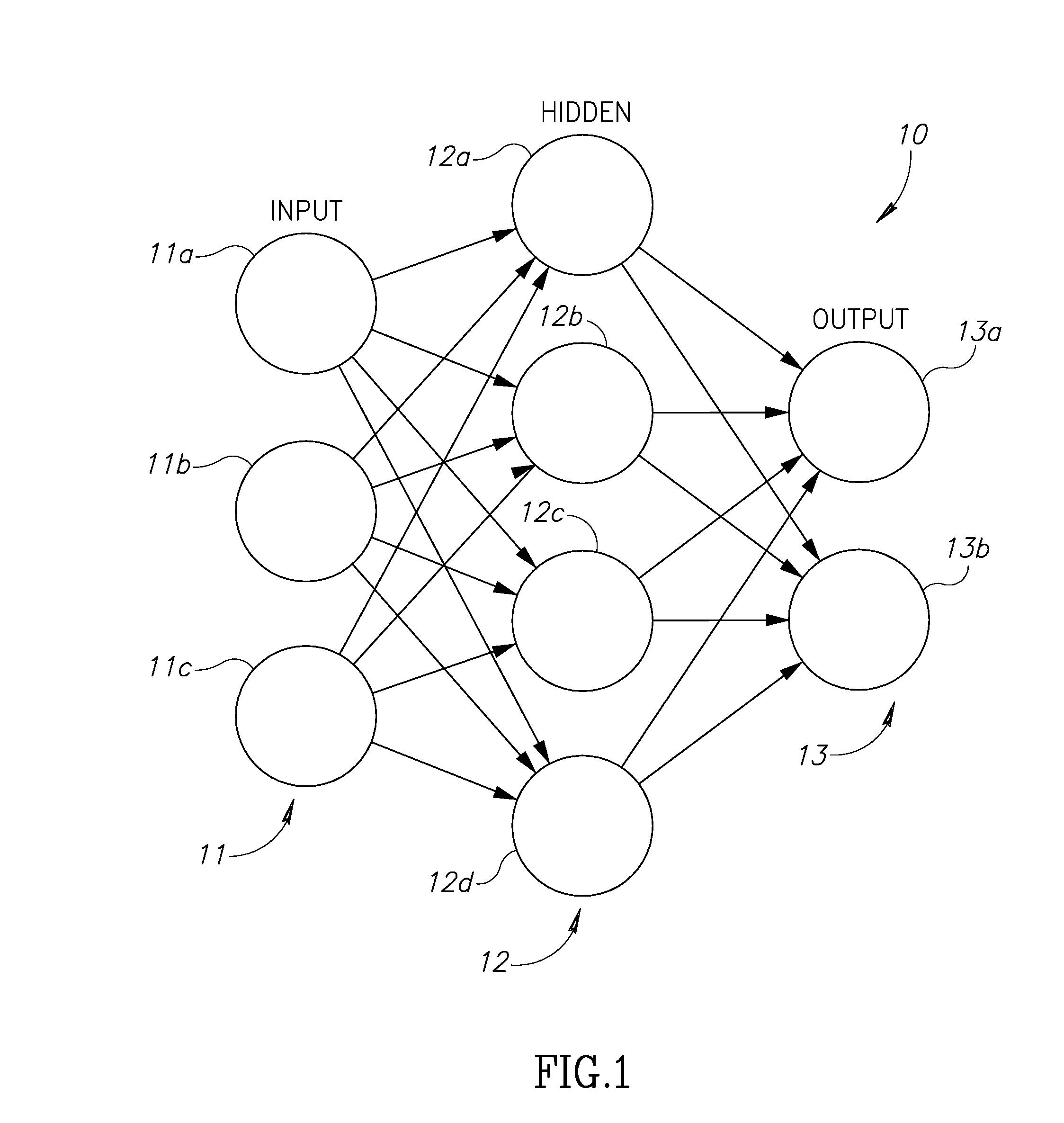

Hidden layer: a hidden layer in an artificial neural network is a layer between the input layer and the output layer, in which artificial neurons take in a set of weighted inputs and produce an output through an activation function.
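As a rough illustration of this definition, the following minimal sketch passes an input vector through one hidden layer and one output layer. The sigmoid activation, the function names and the weight layout (`weights[j]` holds the weights of neuron `j`) are assumptions chosen for the example, not taken from any of the patents listed here.

```python
import math


def sigmoid(z):
    """Logistic activation function (an assumed choice for this sketch)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """Each neuron forms a weighted sum of its inputs plus a bias,
    then applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, hidden_w, hidden_b, out_w, out_b):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense_layer(inputs, hidden_w, hidden_b, sigmoid)
    return dense_layer(hidden, out_w, out_b, sigmoid)


if __name__ == "__main__":
    # Two inputs, two hidden neurons, one output neuron.
    print(forward([1.0, 0.5],
                  [[0.2, -0.4], [0.7, 0.1]], [0.0, 0.0],
                  [[0.5, -0.5]], [0.0]))
```

The hidden activations never leave `forward`; only the output layer's values are returned, which is the sense in which the layer is "hidden".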

Multi-domain motion estimation and plethysmographic recognition using fuzzy neural-nets

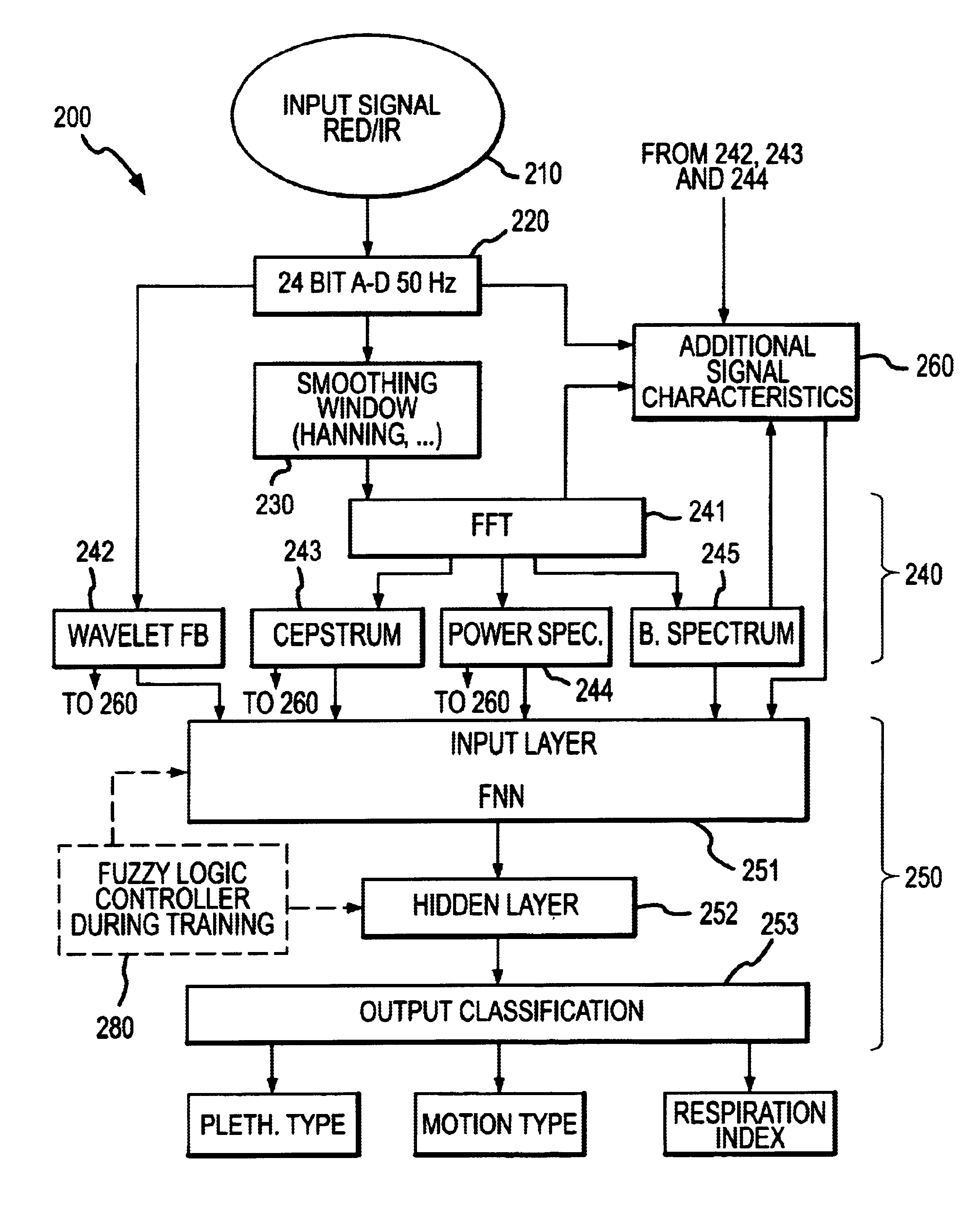

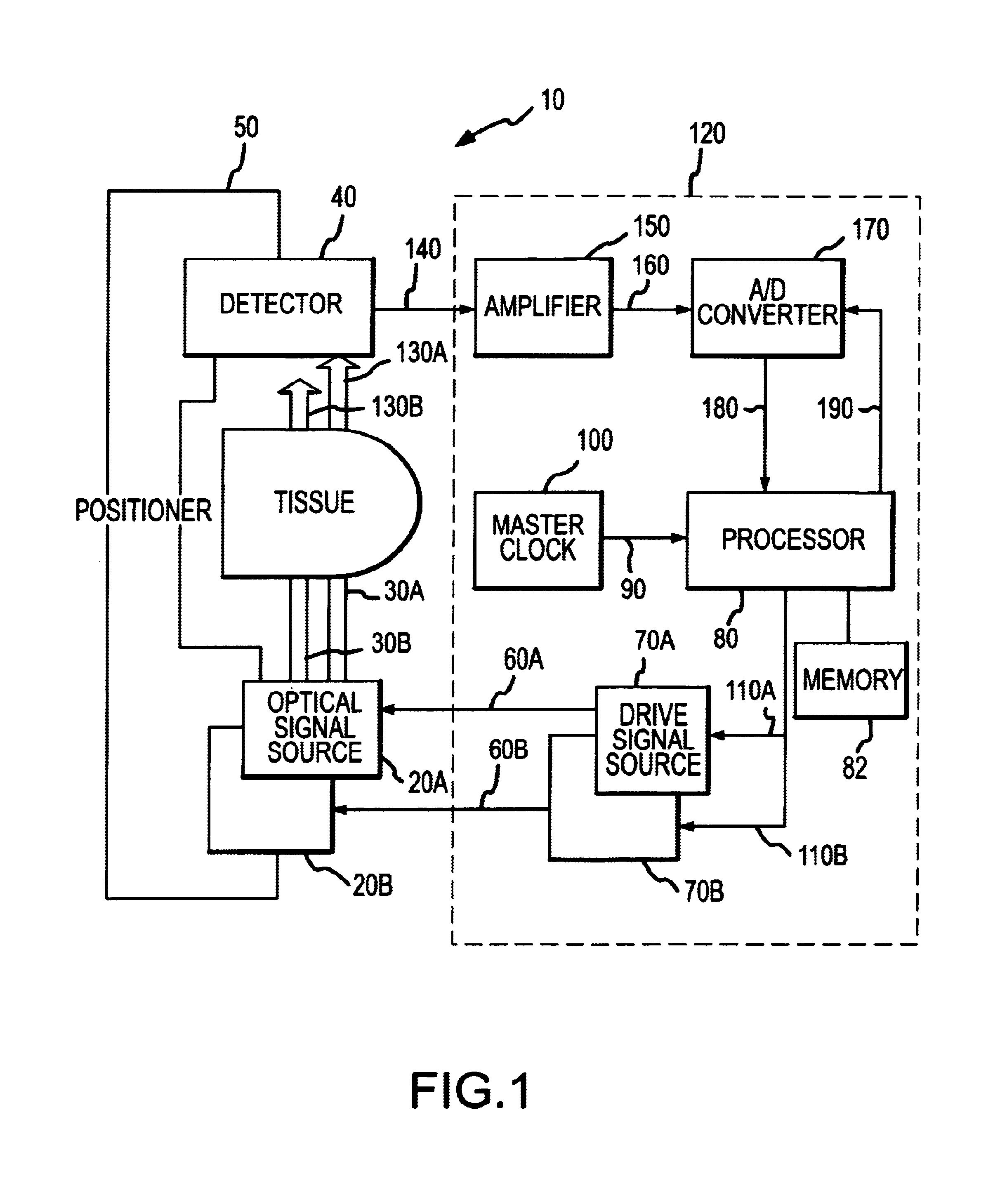

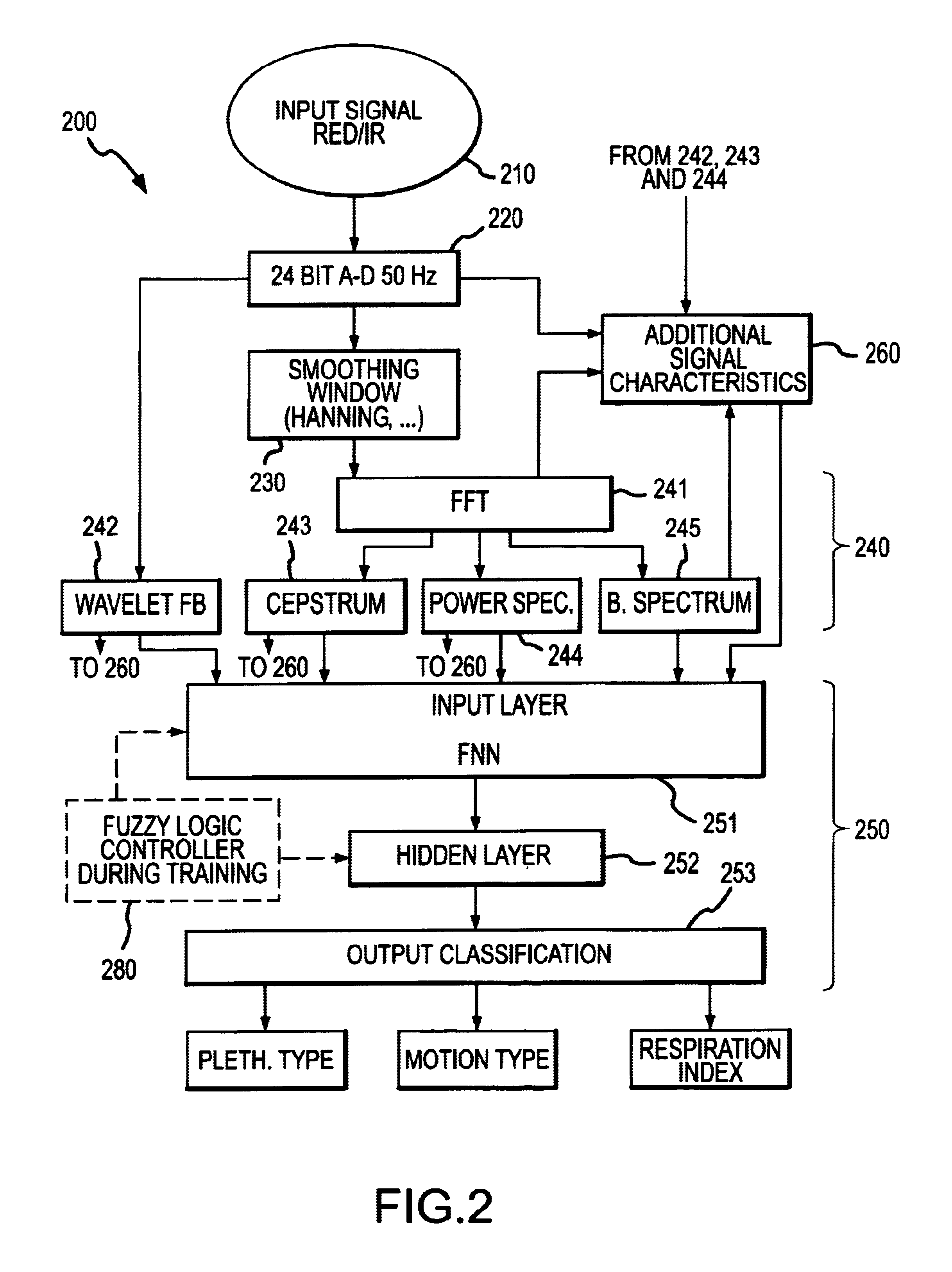

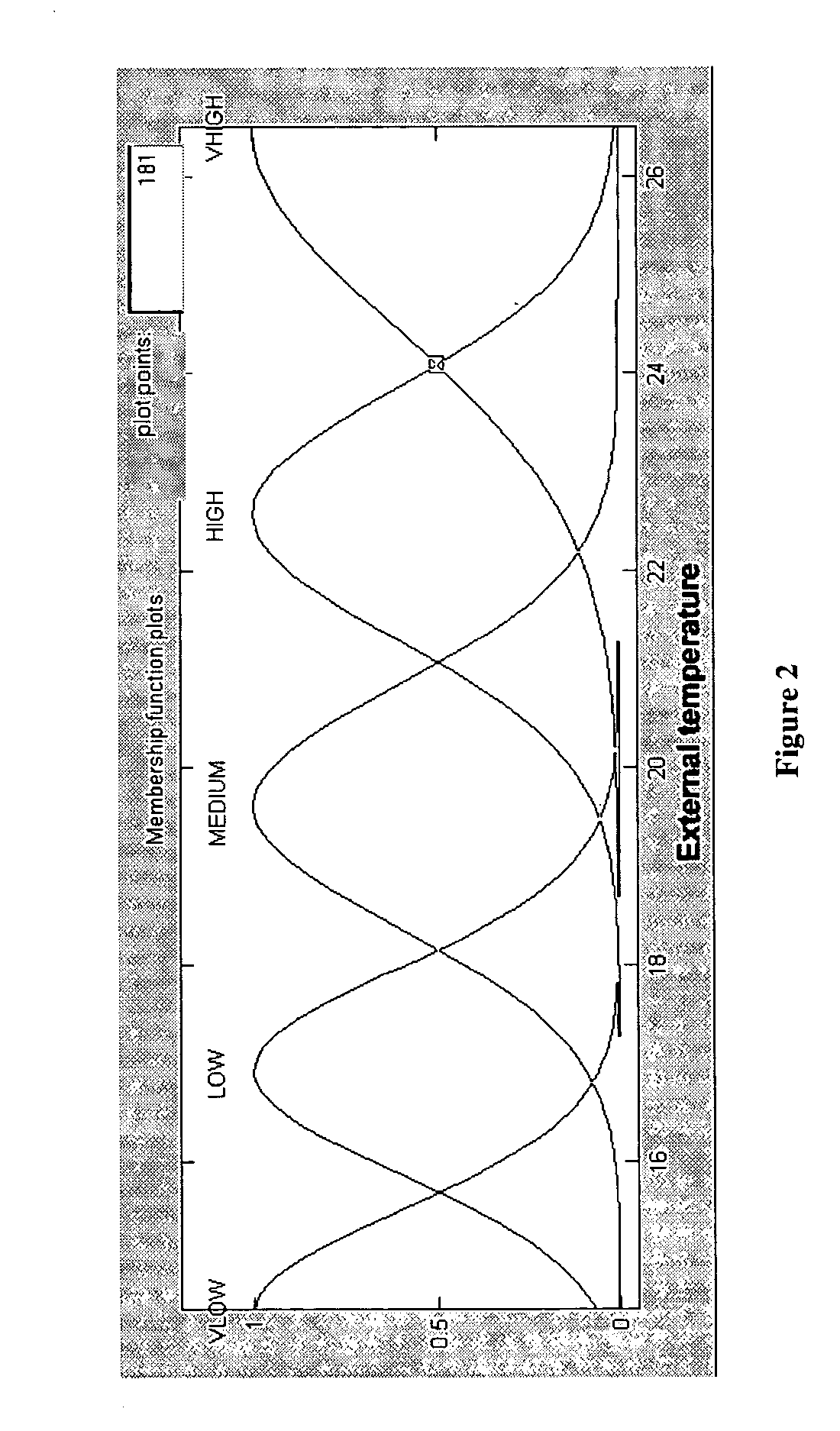

Active | US6931269B2 | Enhanced signal | Improve filtering effect | Surgery | Catheter | Pattern recognition | Hidden layer

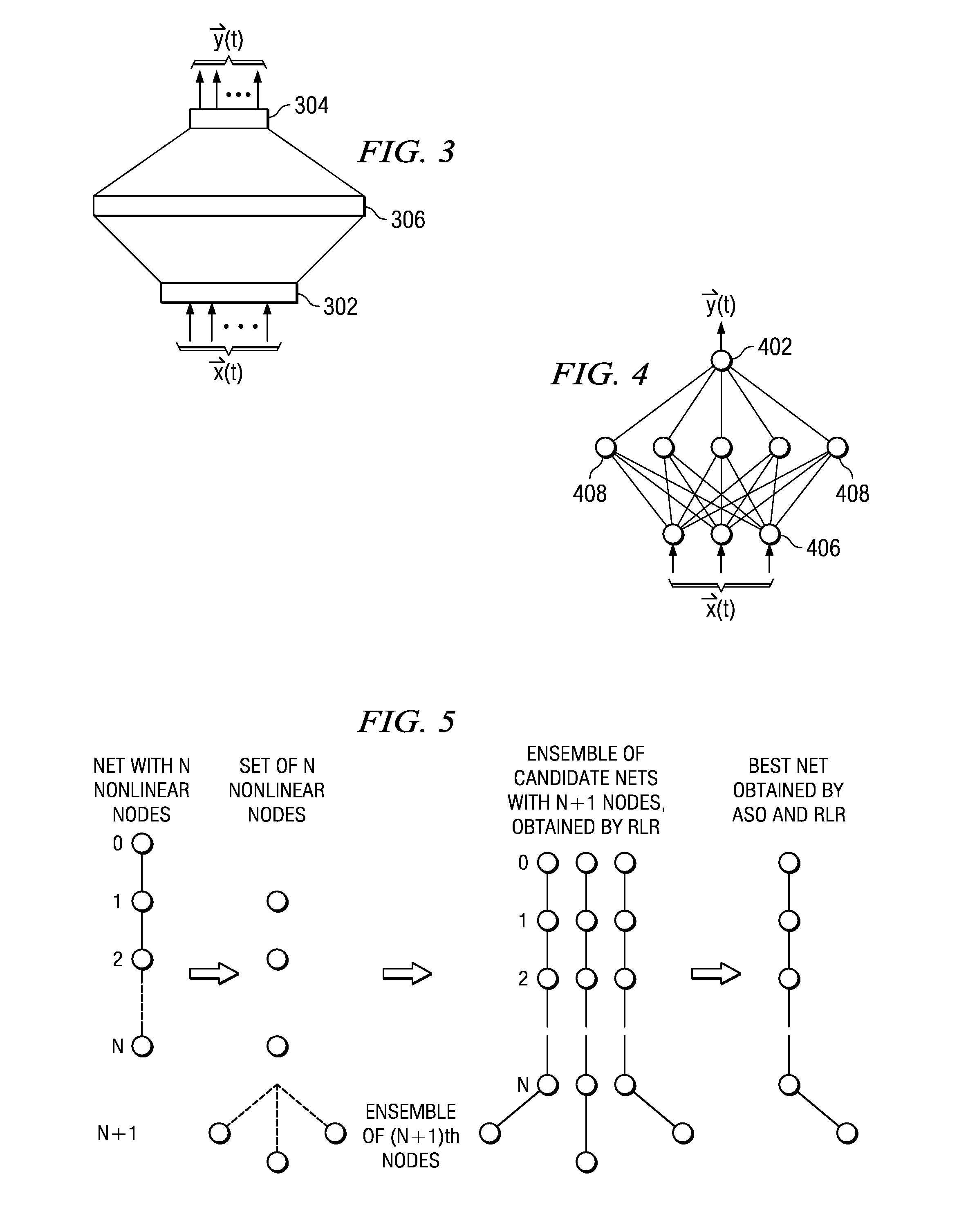

Pulse oximetry is improved through classification of plethysmographic signals by processing the plethysmographic signals using a neural network that receives input coefficients from multiple signal domains including, for example, spectral, bispectral, cepstral and Wavelet filtered signal domains. In one embodiment, a plethysmographic signal obtained from a patient is transformed (240) from a first domain to a plurality of different signal domains (242, 243, 244, 245) to obtain a corresponding plurality of transformed plethysmographic signals. A plurality of sets of coefficients derived from the transformed plethysmographic signals are selected and directed to an input layer (251) of a neural network (250). The plethysmographic signal is classified by an output layer (253) of the neural network (250) that is connected to the input layer (251) by one or more hidden layers (252).

Owner:DATEX OHMEDA

System and method for predicting power plant operational parameters utilizing artificial neural network deep learning methodologies

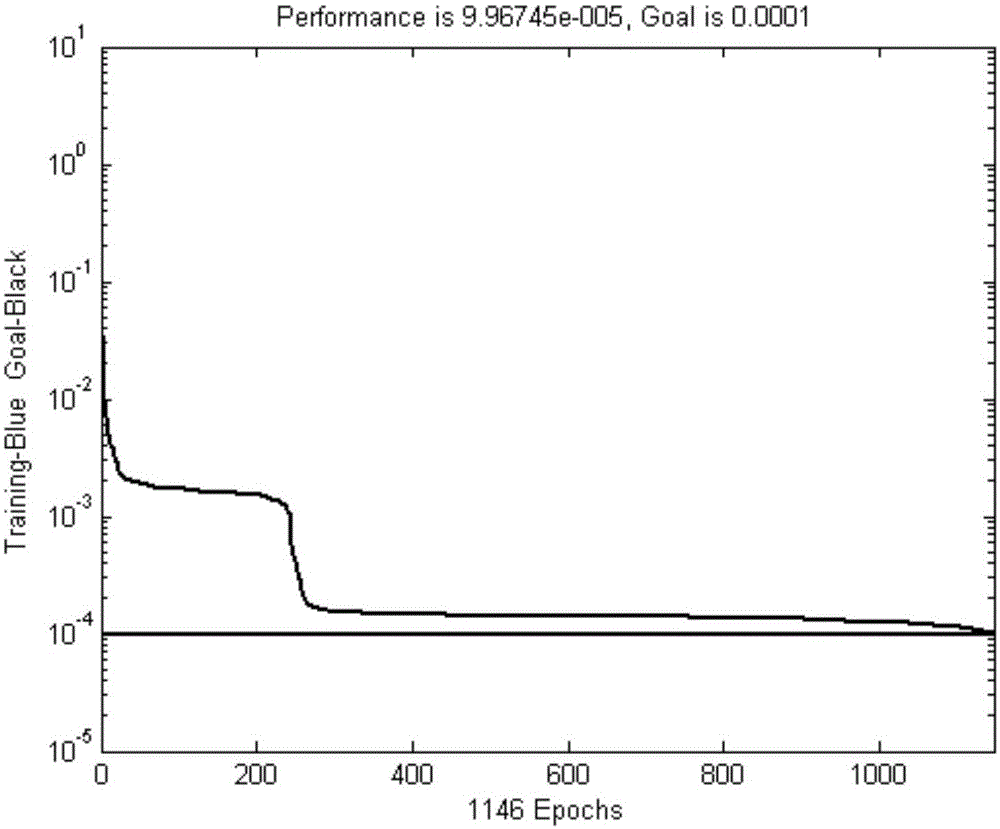

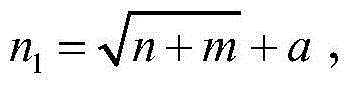

A system and method of predicting future power plant operations is based upon an artificial neural network model including one or more hidden layers. The artificial neural network is developed (and trained) to build a model that is able to predict future time series values of a specific power plant operation parameter based on prior values. By accurately predicting the future values of the time series, power plant personnel are able to schedule future events in a cost-efficient, timely manner. The scheduled events may include providing an inventory of replacement parts, determining a proper number of turbines required to meet a predicted demand, determining the best time to perform maintenance on a turbine, etc. The inclusion of one or more hidden layers in the neural network model creates a prediction that is able to follow trends in the time series data, without overfitting.
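Predicting future values of a time series from prior values is usually framed as supervised learning over sliding windows. The sketch below shows only that data preparation step; the function name `make_windows` and the window length `n_lags` are hypothetical, and the abstract does not say how the patented system builds its training pairs.

```python
def make_windows(series, n_lags):
    """Turn a time series into (inputs, targets) pairs:
    each input holds n_lags prior values, each target is the next value."""
    inputs, targets = [], []
    for t in range(n_lags, len(series)):
        inputs.append(series[t - n_lags:t])
        targets.append(series[t])
    return inputs, targets


if __name__ == "__main__":
    # Hypothetical sensor readings for one operational parameter.
    readings = [1, 2, 3, 4, 5]
    x, y = make_windows(readings, n_lags=2)
    print(x)  # [[1, 2], [2, 3], [3, 4]]
    print(y)  # [3, 4, 5]
```

Each row of `x` would be fed to the network's input layer, and the matching entry of `y` would be the value the output layer is trained to predict.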

Owner:SIEMENS AG

Hardware accelerator and method for realizing sparse GRU neural network based on FPGA

The invention provides a hardware accelerator and a method for implementing a sparse GRU neural network on an FPGA. The apparatus includes: an input receiving unit that receives a plurality of input vectors and distributes them to a plurality of computing units; the computing units (PEs), which acquire input vectors from the input receiving unit, read the weight matrix data of the neural network, decode it, perform matrix calculations on the decoded weight matrix data and the input vectors, and output the results to a hidden layer state computing module; the hidden layer state computing module, which acquires the matrix calculation results from the computing units and computes the state of the hidden layer; and a control unit for global control. The invention also provides a method for implementing a sparse GRU neural network through iteration.

Owner:XILINX INC

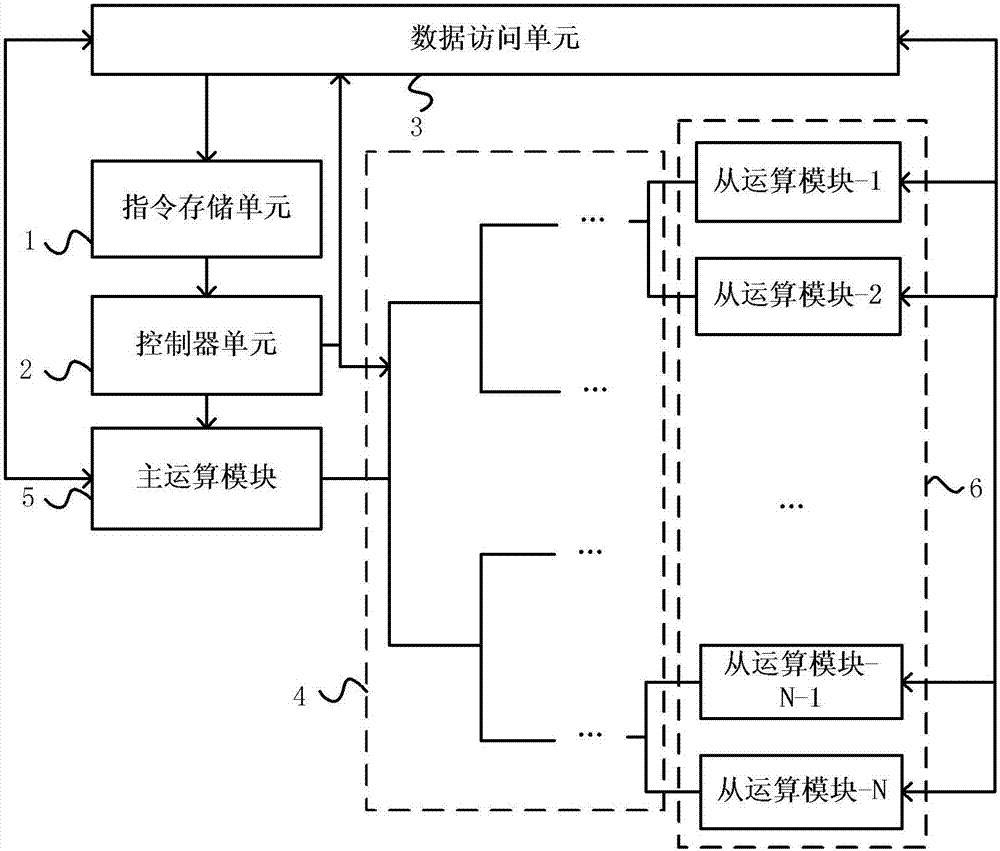

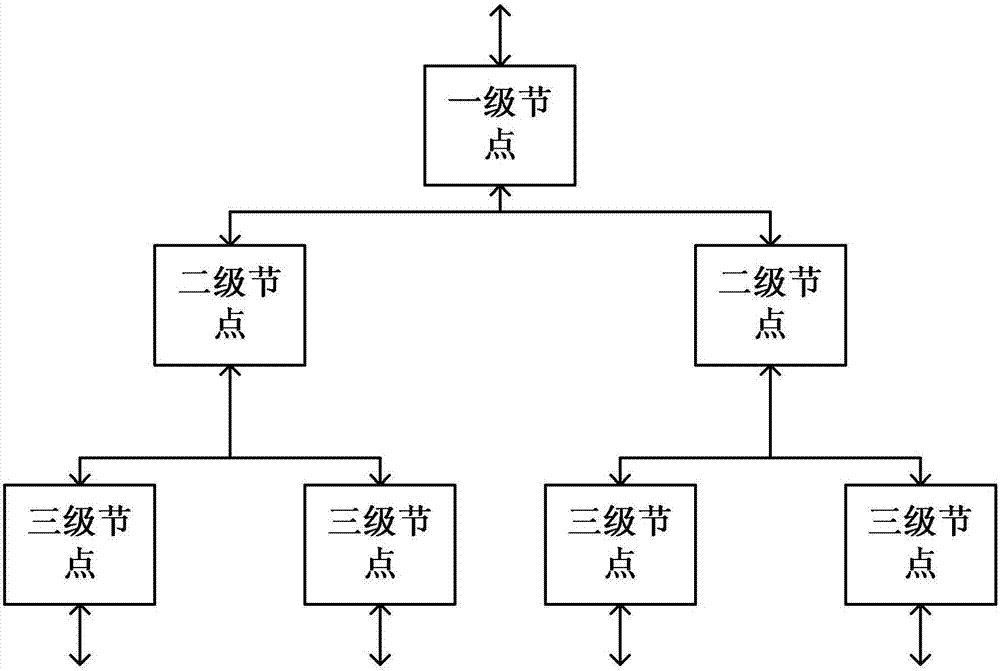

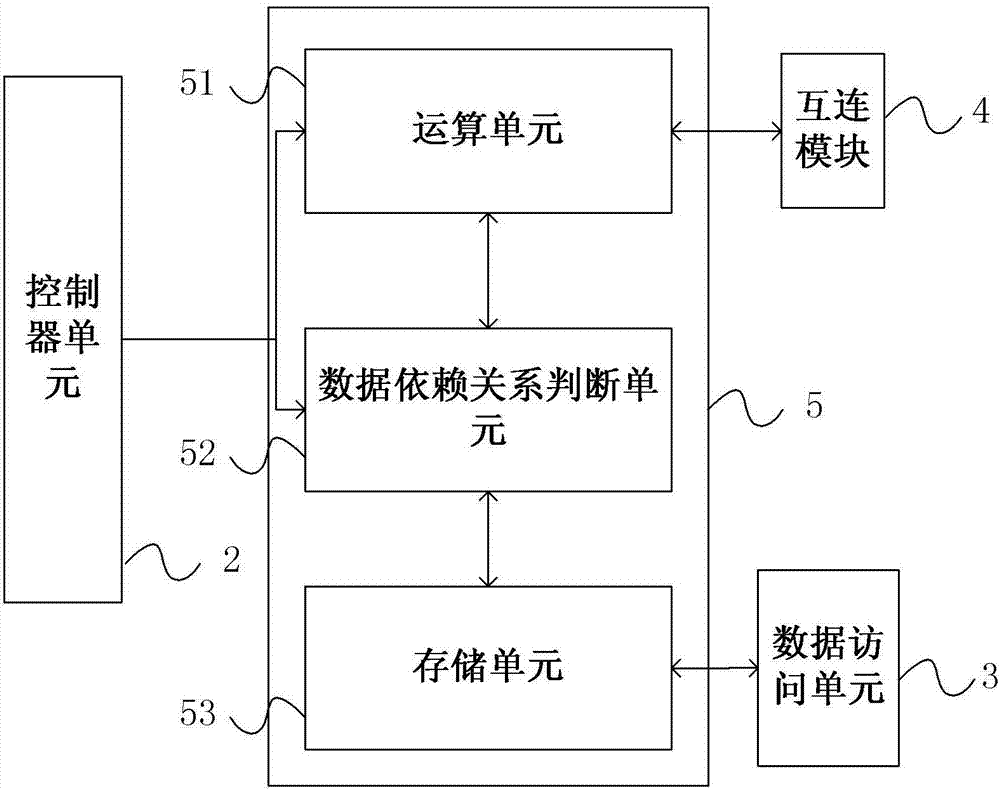

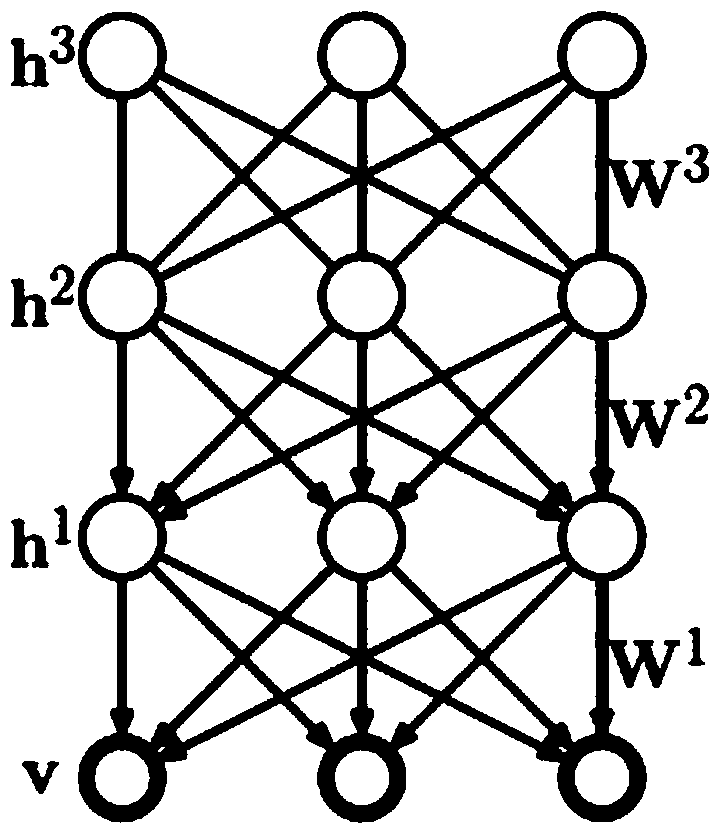

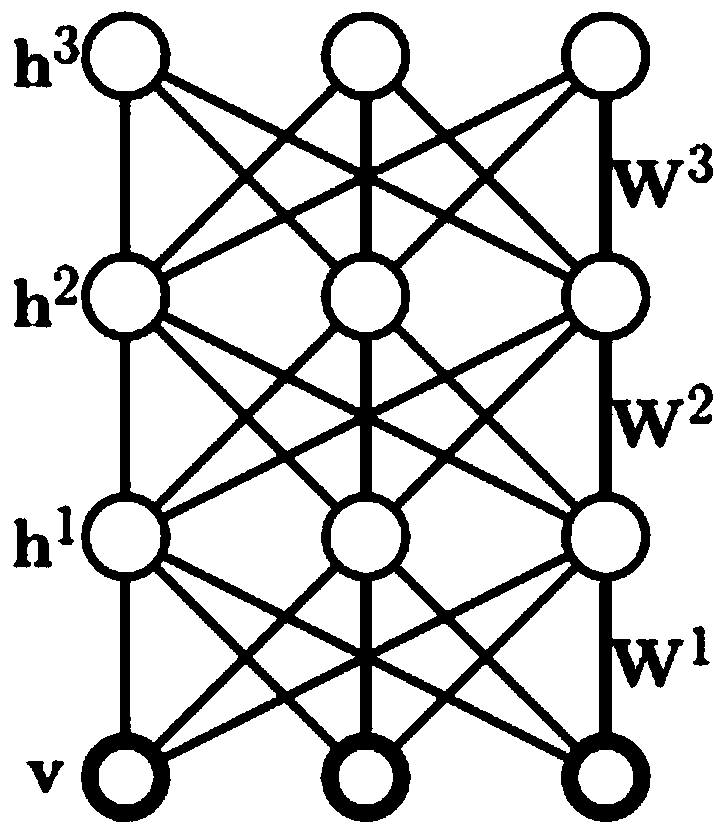

Device and method for executing artificial neural network self-learning operation

Active | CN107316078A | Simplified front-end decoding overhead | High performance per watt | Physical realisation | Neural learning methods | Hidden layer | Nerve network

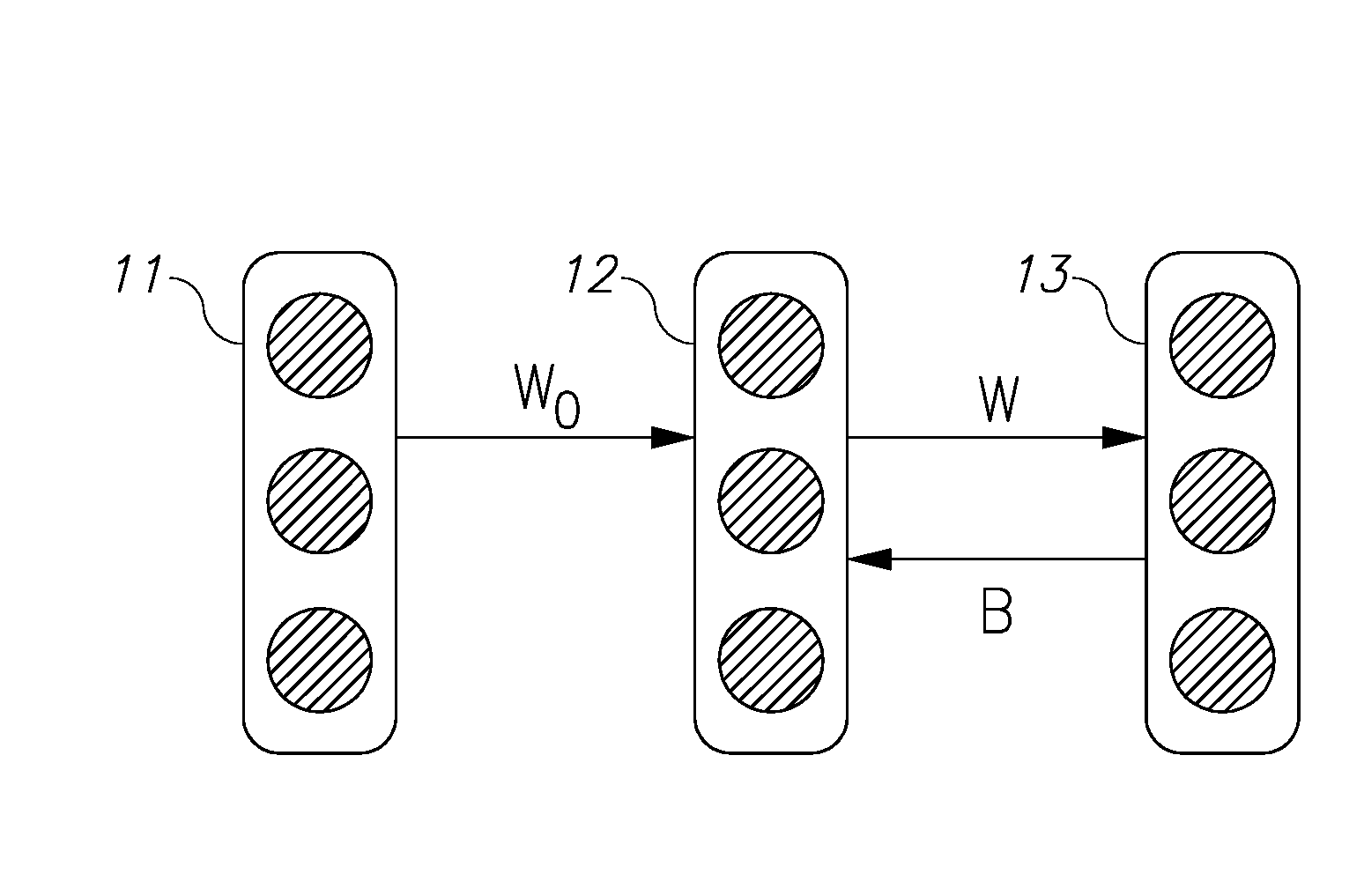

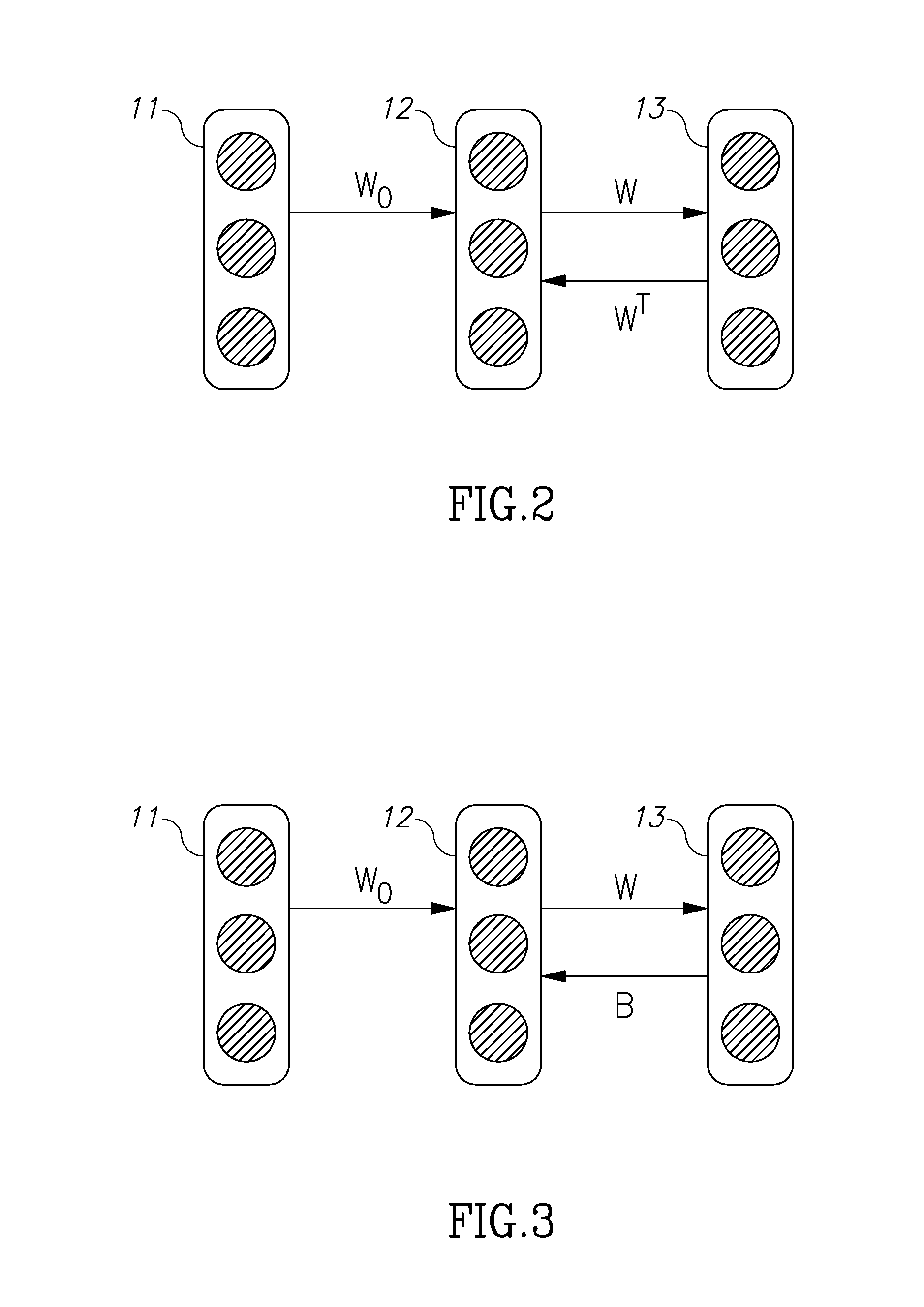

The invention discloses a device and method for executing artificial neural network self-learning operations. The device comprises an instruction storage unit, a controller unit, a data access unit, an interconnection module, a primary operation module and a plurality of secondary operation modules. The self-learning pre-training of a multilayer neural network can proceed layer by layer; for each layer, pre-training is complete once repeated iterations bring the weight update below a certain threshold. Each iteration is divided into four stages: the first three stages compute a first-order hidden layer intermediate value, a first-order visible layer intermediate value and a second-order hidden layer intermediate value, respectively, and the last stage updates the weights using the intermediate values from the first three stages.
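The four stages resemble one step of contrastive divergence (CD-1) for a restricted Boltzmann machine, although the abstract does not name that algorithm. The sketch below is written under that assumption: it uses unit activation probabilities instead of sampled binary states so the result is deterministic, leaves the biases fixed, and all names (`cd1_step`, `lr`) are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_from_visible(v, W, c):
    # W[i][j] connects visible unit i to hidden unit j; c holds hidden biases.
    return [sigmoid(sum(v[i] * W[i][j] for i in range(len(v))) + c[j])
            for j in range(len(c))]


def visible_from_hidden(h, W, b):
    # b holds visible biases.
    return [sigmoid(sum(h[j] * W[i][j] for j in range(len(h))) + b[i])
            for i in range(len(b))]


def cd1_step(v0, W, b, c, lr=0.1):
    """One iteration; updates W in place and returns the largest weight change."""
    h0 = hidden_from_visible(v0, W, c)   # stage 1: first-order hidden values
    v1 = visible_from_hidden(h0, W, b)   # stage 2: first-order visible values
    h1 = hidden_from_visible(v1, W, c)   # stage 3: second-order hidden values
    max_delta = 0.0
    for i in range(len(v0)):             # stage 4: weight update
        for j in range(len(h0)):
            delta = lr * (v0[i] * h0[j] - v1[i] * h1[j])
            W[i][j] += delta
            max_delta = max(max_delta, abs(delta))
    return max_delta


if __name__ == "__main__":
    W = [[0.0, 0.0], [0.0, 0.0]]
    b, c = [0.0, 0.0], [0.0, 0.0]
    # Iterate until the weight update falls below a threshold (or a step cap).
    for _ in range(1000):
        if cd1_step([1.0, 0.0], W, b, c) < 1e-4:
            break
    print(W)
```

The returned `max_delta` is what a layer-by-layer pre-training loop would compare against the stopping threshold before moving on to the next layer.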

Owner:CAMBRICON TECH CO LTD

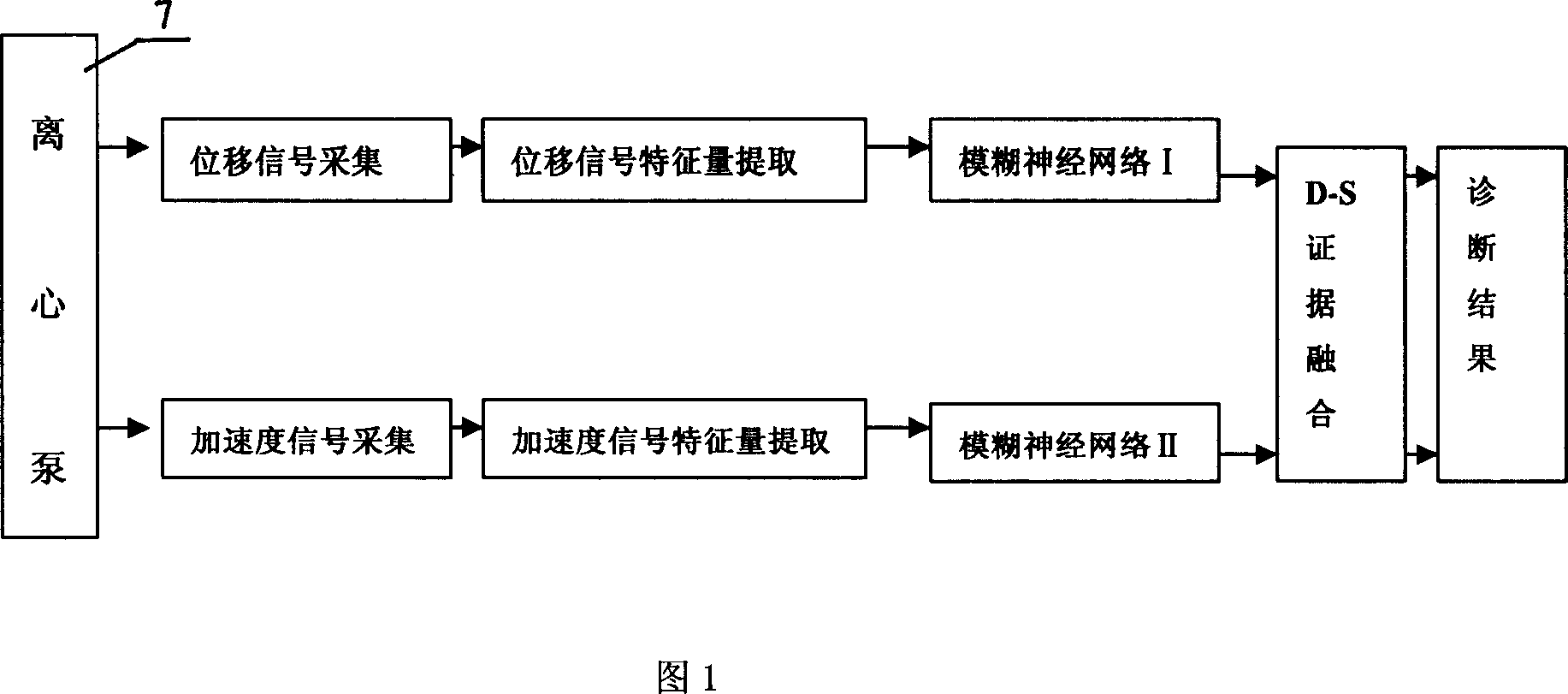

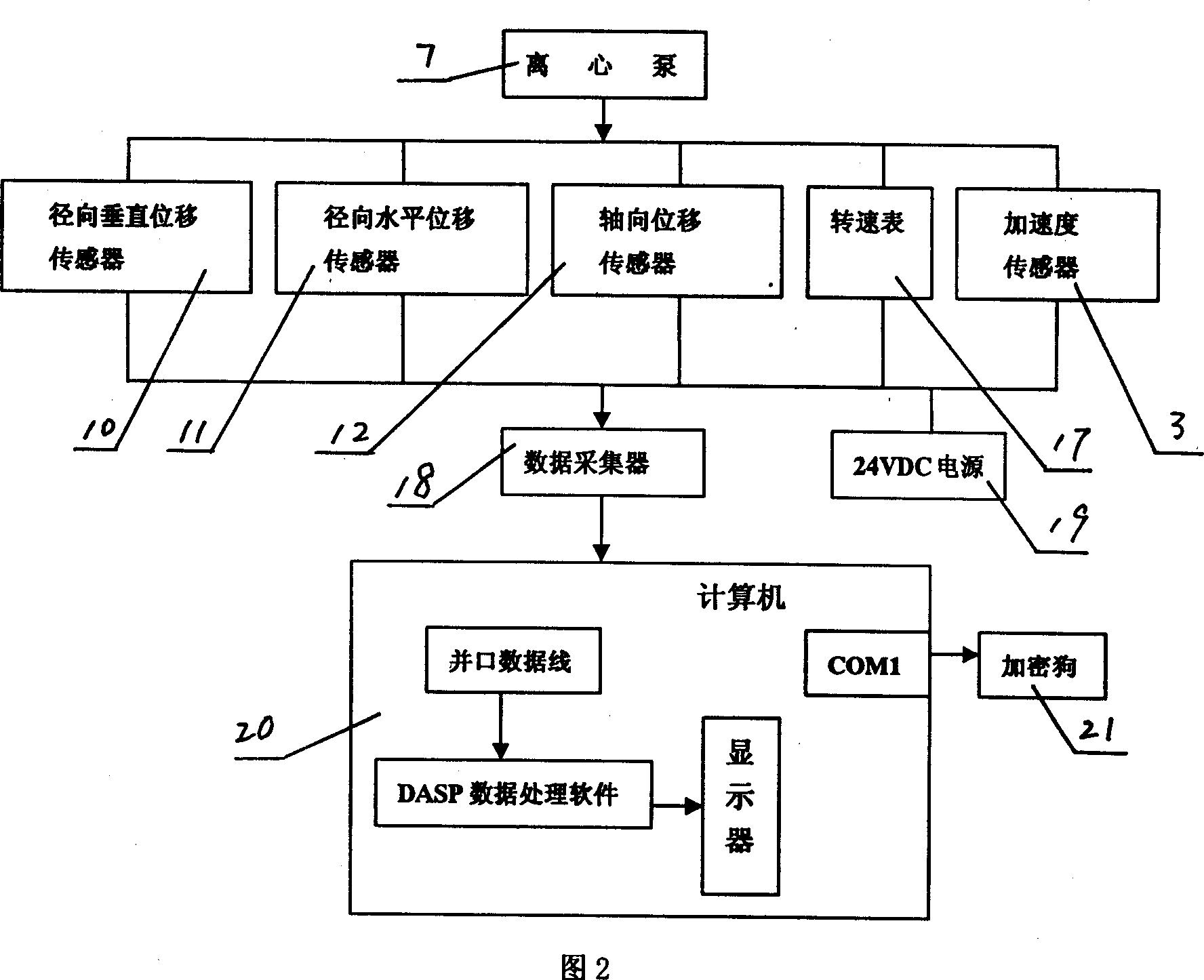

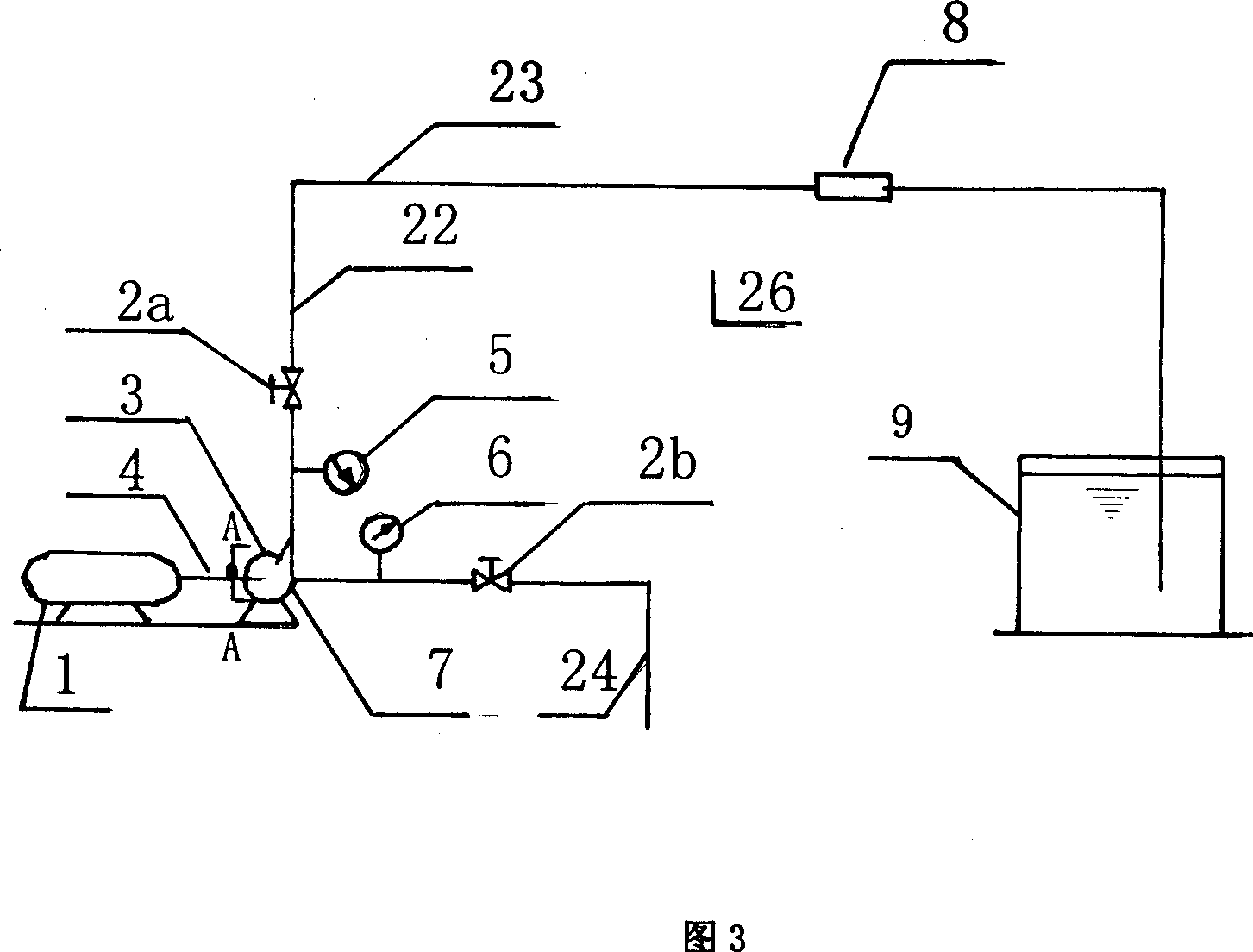

Fusion diagnosing method of centrifugal pump vibration accidents and vibration signals sampling device

Inactive | CN1920511A | Implementing a normal status signal | Comprehensive signal acquisition | Machine part testing | Pump testing | Engineering | Normal state

The invention relates to a fusion diagnosis method for centrifugal pump vibration faults and an associated vibration signal acquisition device. The acquisition device collects vibration signals from the pump in four conditions: normal state, mass imbalance, rotor misalignment and loose base. Wavelet decomposition and reconstruction extract the features of the vibration signals, and the resulting feature vectors are input into fuzzy neural sub-networks I and II, whose processed outputs are used as the relation factors matched with each sensor's signal function. The overall fuzzy neural network comprises a data fuzzification layer, an input layer, a hidden layer and an output layer. D-S evidence theory is then used to obtain the fused signal function distribution, giving a fused diagnosis of the normal state, mass imbalance, rotor misalignment and loose base. The invention has a simple structure and is highly effective.

Owner:NORTHEAST DIANLI UNIVERSITY

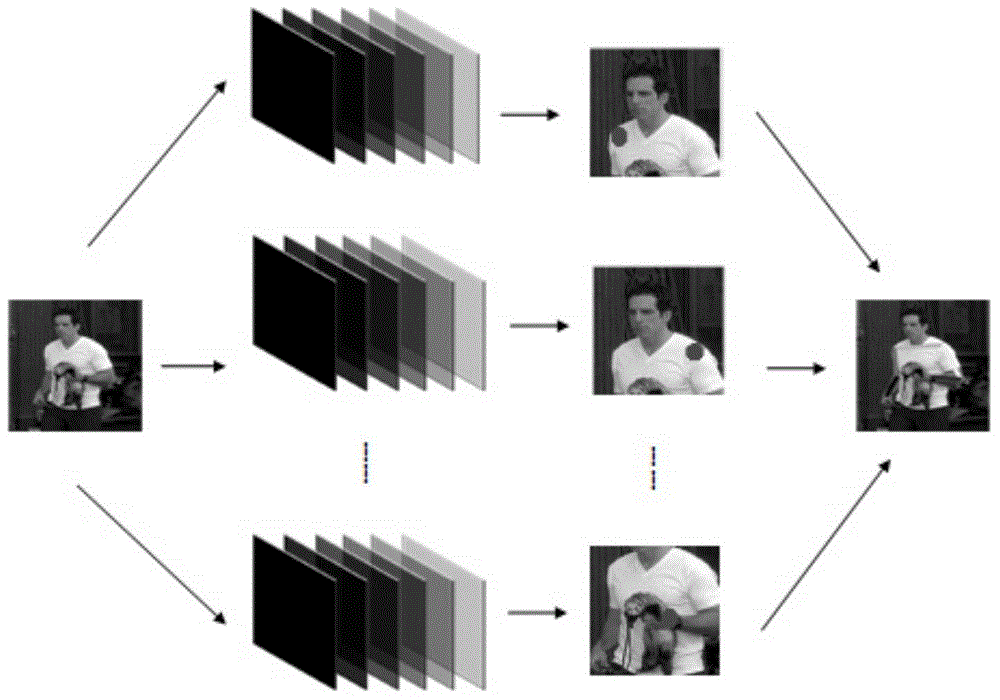

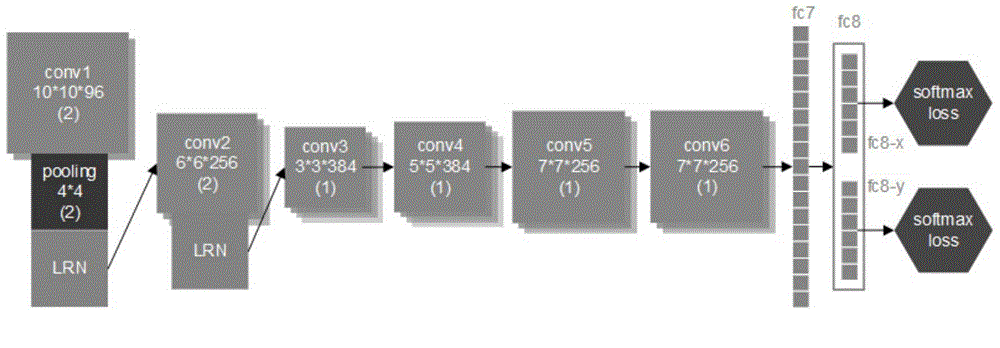

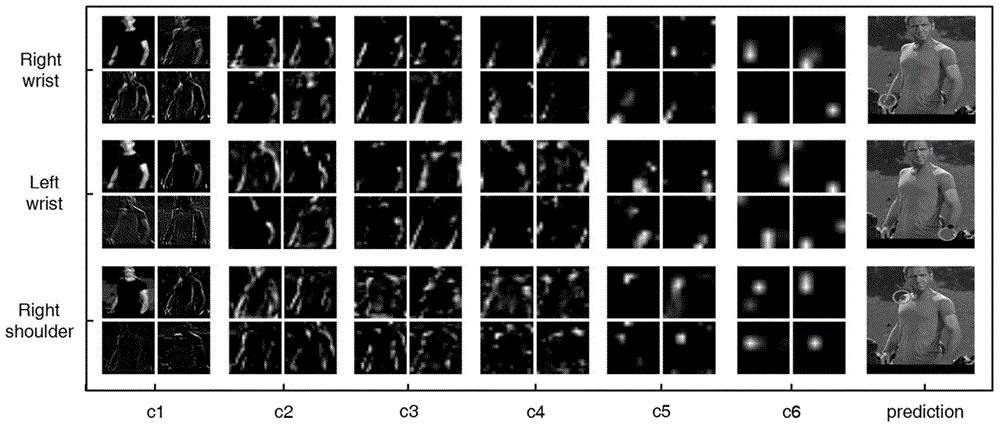

Human body gesture identification method based on depth convolution neural network

Inactive | CN105069413A | Overcome limitations | Easy to train | Biometric pattern recognition | Human body | Information processing

The invention discloses a human body gesture identification method based on a deep convolutional neural network. It belongs to the technical field of pattern recognition and information processing, relates to behavior recognition tasks in computer vision, and in particular covers the design and implementation of a human body gesture estimation system based on a deep convolutional neural network. The neural network comprises independent output layers and independent loss functions designed for locating human body joints. ILPN consists of an input layer, seven hidden layers and two independent output layers. The first to sixth hidden layers are convolutional layers used for feature extraction. The seventh hidden layer (fc7) is a fully connected layer. The output layers consist of two independent parts, fc8-x and fc8-y: fc8-x predicts the x coordinate of a joint and fc8-y predicts the y coordinate. During model training, each output has its own softmax loss function to guide learning. The method offers simple and fast training, a small computation load and high accuracy.
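One way to read "each output is provided with an independent softmax loss" is that each coordinate is discretized into bins and each head is trained with its own cross-entropy term. The sketch below follows that reading; the binning and the summation of the two losses are assumptions, and the names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target_bin):
    """Softmax loss for one output head."""
    return -math.log(softmax(logits)[target_bin])


def joint_loss(fc8_x_logits, fc8_y_logits, x_bin, y_bin):
    """Sum of the two independent losses (summation is an assumed choice)."""
    return (cross_entropy(fc8_x_logits, x_bin)
            + cross_entropy(fc8_y_logits, y_bin))


if __name__ == "__main__":
    # Hypothetical head outputs over 4 x-bins and 3 y-bins for one joint.
    print(joint_loss([2.0, 0.1, -1.0, 0.3], [0.0, 1.5, 0.2], x_bin=0, y_bin=1))
```

Because the two terms share only the hidden layers, the gradient of each loss flows through its own head and then into the common feature extractor.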

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

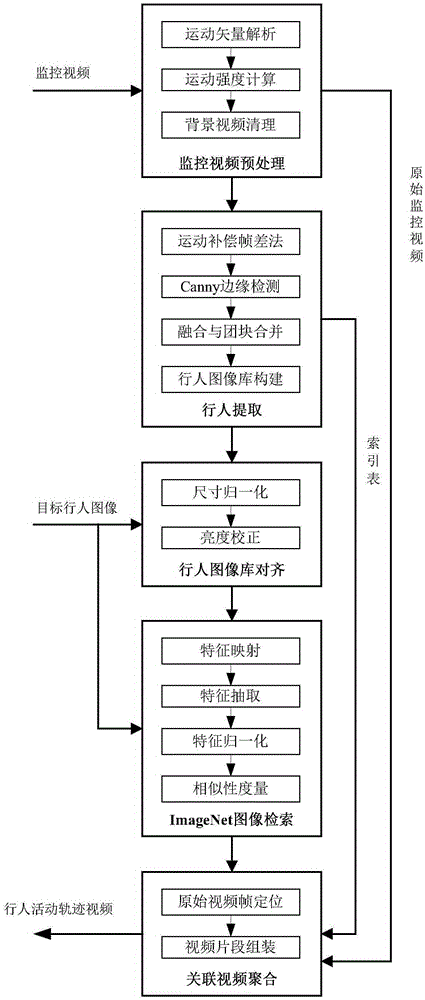

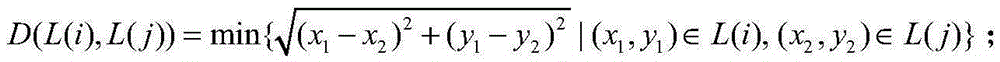

Surveillance video pedestrian re-recognition method based on ImageNet retrieval

Active | CN105354548A | Overcoming adaptability | Overcoming perspective | Image analysis | Character and pattern recognition | Hidden layer | Frame difference

The present invention discloses a surveillance video pedestrian re-recognition method based on ImageNet retrieval. The pedestrian re-recognition problem is transformed into a retrieval problem over a moving-target image database so as to exploit the strong classification ability of ImageNet hidden layer features. The method comprises the steps of: preprocessing the surveillance video and removing the large amount of irrelevant static background footage; separating moving targets from the dynamic video frames with a motion-compensated frame difference method and forming a pedestrian image database and an organization index table; aligning the size and brightness of the images in the pedestrian image database with the target pedestrian image; training hidden features of the target pedestrian image and of the database images with an ImageNet deep learning network, and performing image retrieval based on cosine distance similarity; and, in time order, merging the relevant videos containing recognition results into a video clip that reproduces the pedestrian's activity trace. The method adapts better to changes in lighting, perspective, gesture and scale, and so effectively improves the accuracy and robustness of pedestrian recognition in a cross-camera environment.
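The retrieval step can be sketched as ranking database feature vectors by cosine similarity to the query's feature vector. The feature vectors here are made-up placeholders for hidden layer activations, and `rank_gallery` is a hypothetical name; the abstract does not describe the actual index structure.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_gallery(query, gallery):
    """Return gallery indices ordered from most to least similar to the query."""
    scored = [(cosine_similarity(query, feat), idx)
              for idx, feat in enumerate(gallery)]
    scored.sort(key=lambda pair: -pair[0])
    return [idx for _, idx in scored]


if __name__ == "__main__":
    target = [1.0, 0.0]                                # query pedestrian features
    database = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]    # database image features
    print(rank_gallery(target, database))  # [1, 2, 0]
```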

Owner:WUHAN UNIV

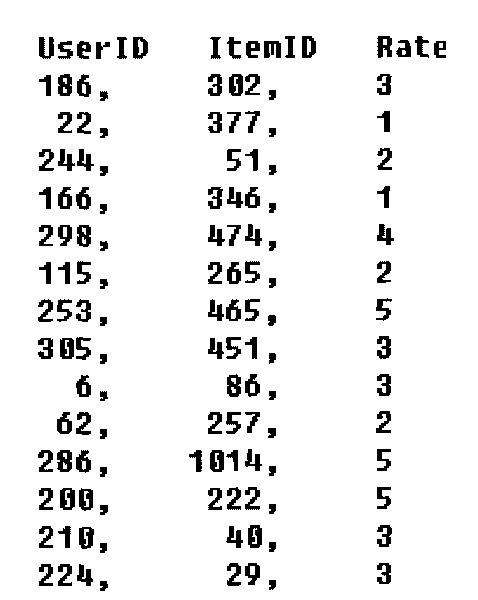

Network resource personalized recommended method based on ultrafast neural network

Inactive | CN101694652A | Local minima boost | There is no local minimum problem | Special data processing applications | Neural learning methods | Network resource management | Learning machine

Owner:XI AN JIAOTONG UNIV

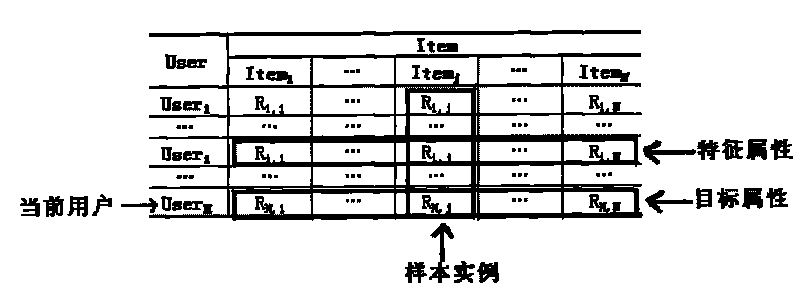

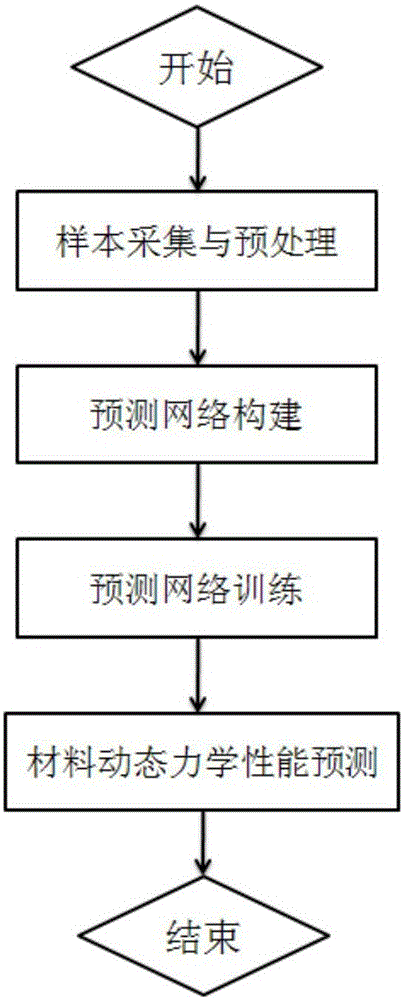

Method for predicting dynamic mechanical property of material based on BP artificial neural network

Active | CN105095962A | Improve simulation accuracy | Accurate and fast dynamic mechanical properties | Biological neural network models | Hidden layer | Flow curve

The invention relates to a method for predicting the dynamic mechanical property of a material based on a BP artificial neural network, aims at achieving the prediction of the dynamic mechanical property of the material through the BP artificial neural network, and belongs to the testing field of the dynamic mechanical property of the material. The principle of the method comprises the steps: collecting stress-strain data through employing a high-speed tensile test method, and obtaining a training sample set after normalization preprocessing; building a BP artificial neural network model through designing an input layer, a hidden layer and an output layer, and selecting a proper transfer function, a training function, and a learning function; carrying out the iterative training of the BP artificial neural network through employing the training sample set, and obtaining an optimal prediction network. The above prediction method can be used for the prediction of the dynamic mechanical property of the material, can achieve the quick prediction of a flow curve of the material at different strain rates in a short time, and can provide enough sample data for automobile safety simulation.

Owner:CHINA AUTOMOTIVE ENG RES INST

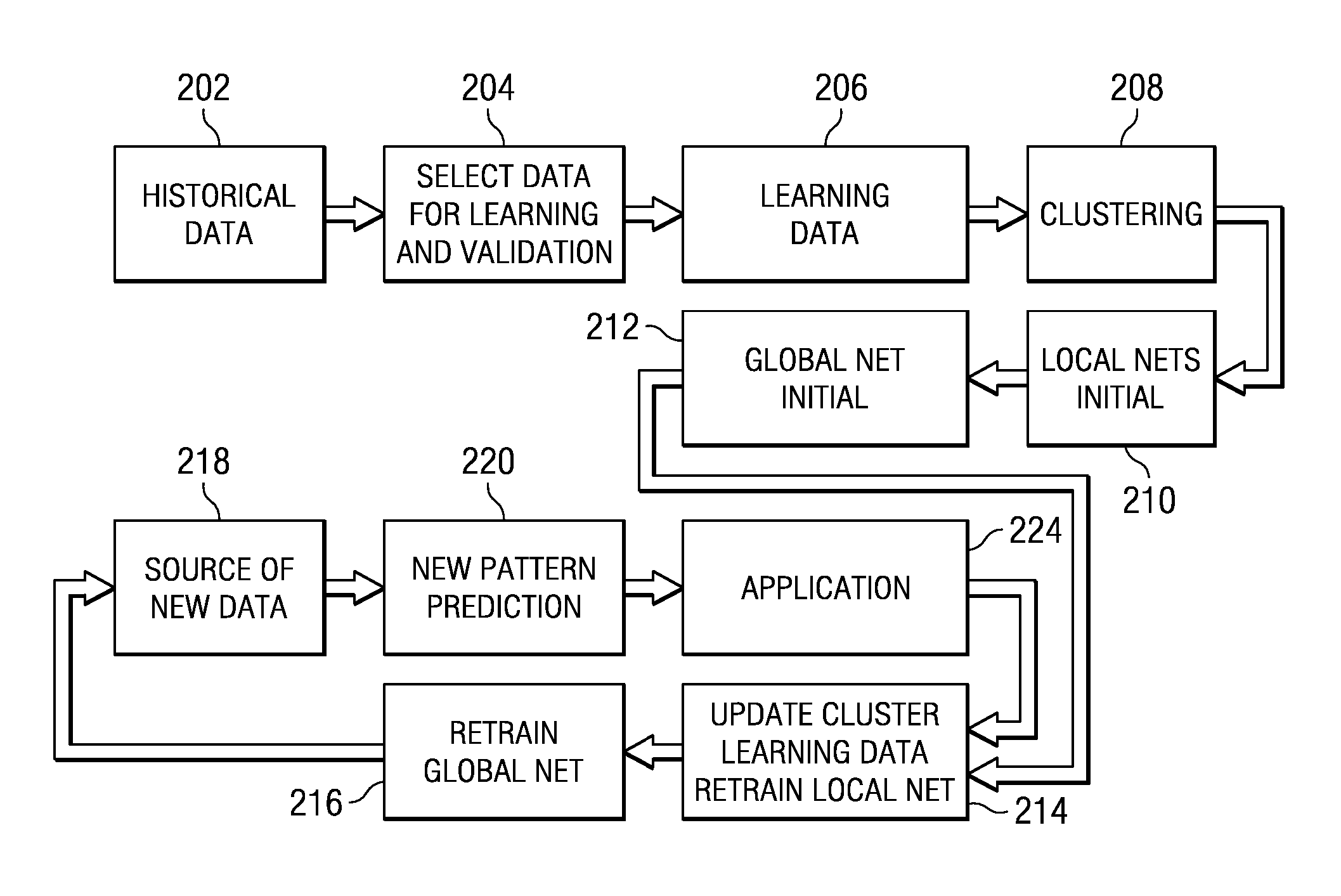

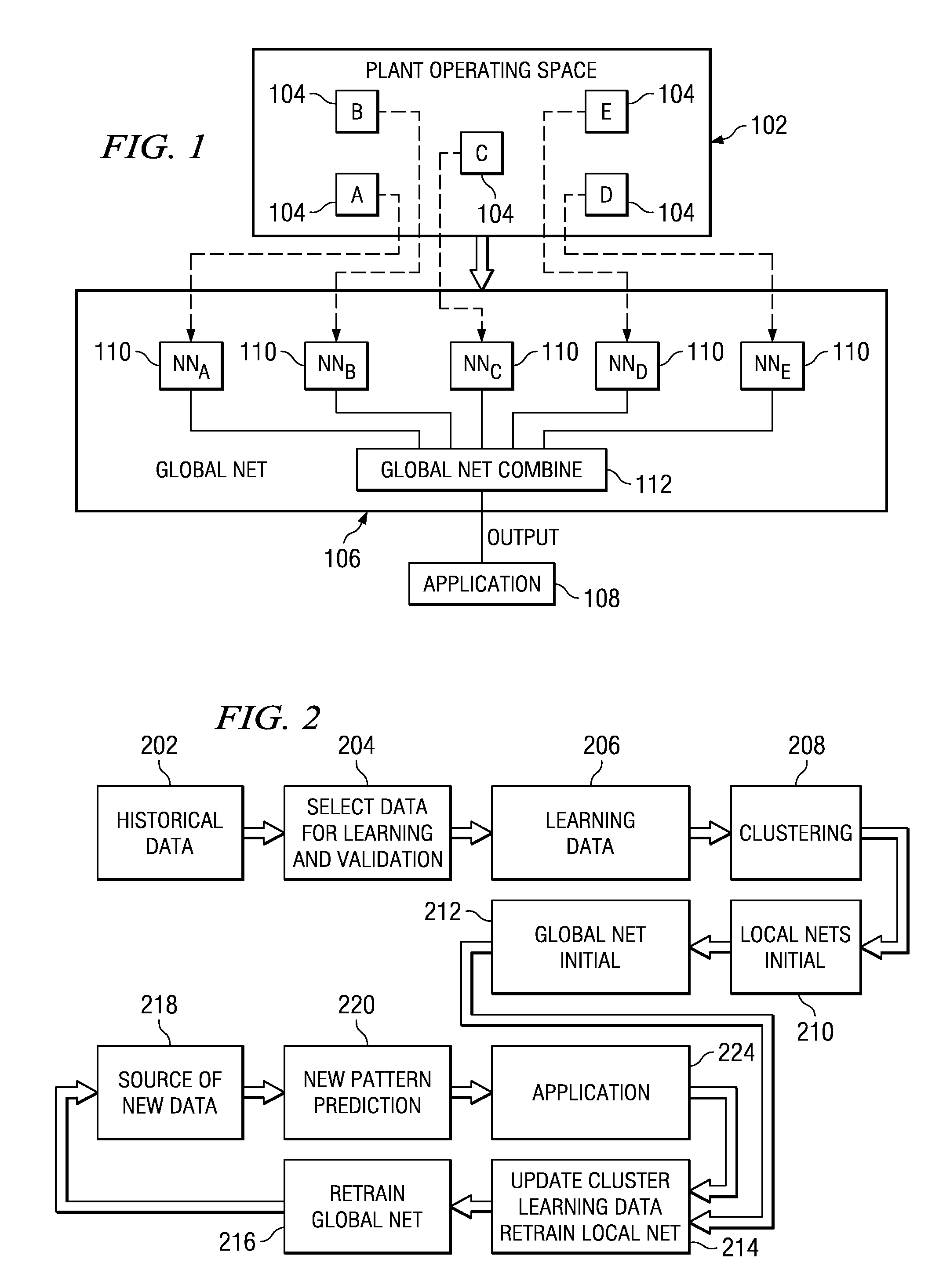

Neural network model with clustering ensemble approach

A predictive global model for modeling a system includes a plurality of local models, each having: an input layer for mapping into an input space, a hidden layer and an output layer. The hidden layer stores a representation of the system that is trained on a set of historical data, wherein each of the local models is trained on only a select and different portion of the set of historical data. The output layer is operable for mapping the hidden layer to an associated local output layer of outputs, wherein the hidden layer is operable to map the input layer through the stored representation to the local output layer. A global output layer is provided for mapping the outputs of all of the local output layers to at least one global output, the global output layer generalizing the outputs of the local models across the stored representations therein.

Owner:PEGASUS TECH INC

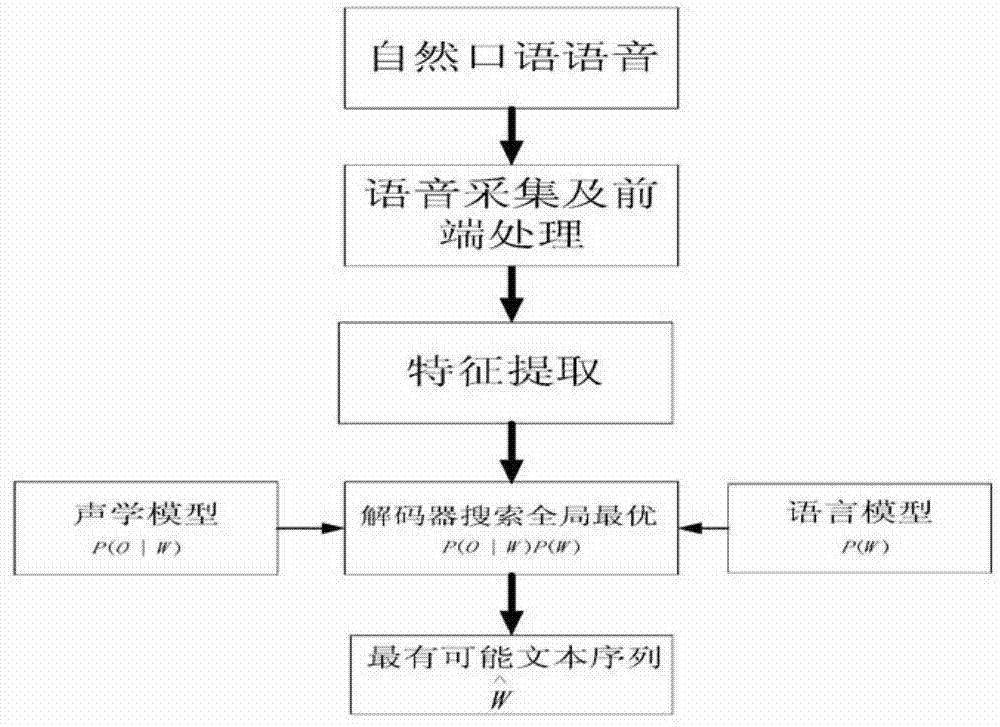

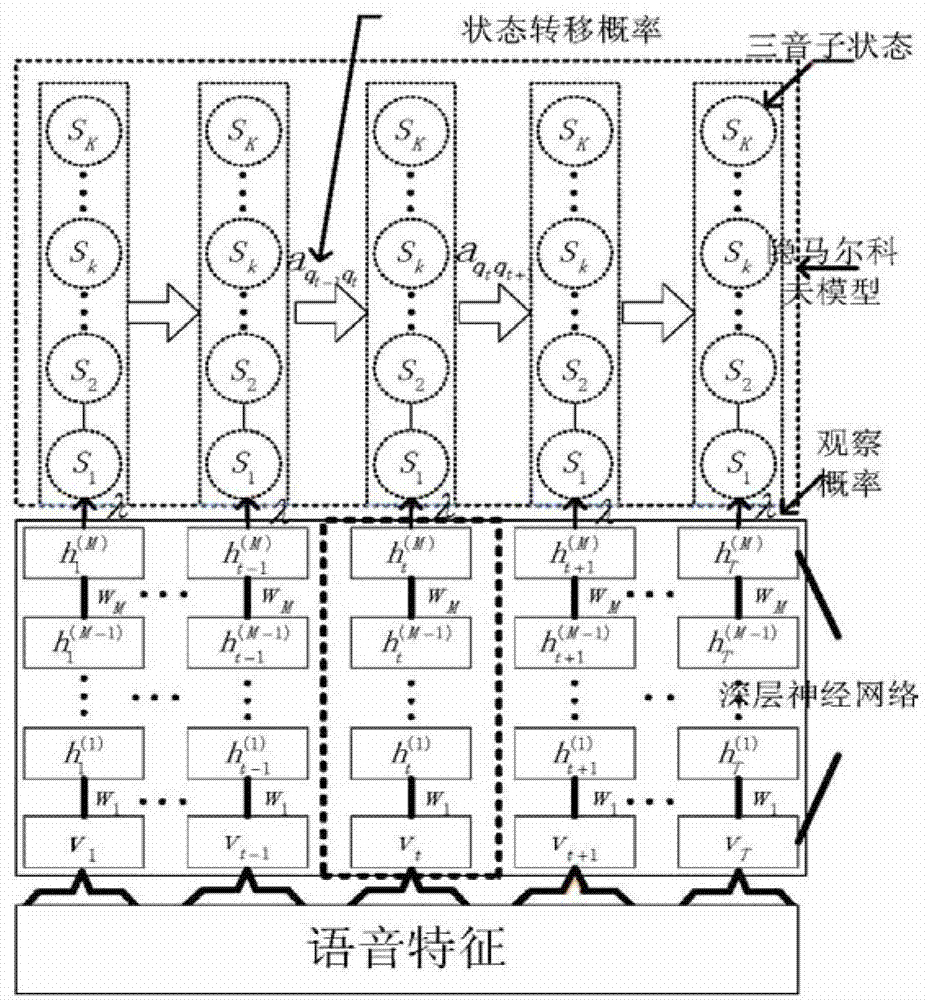

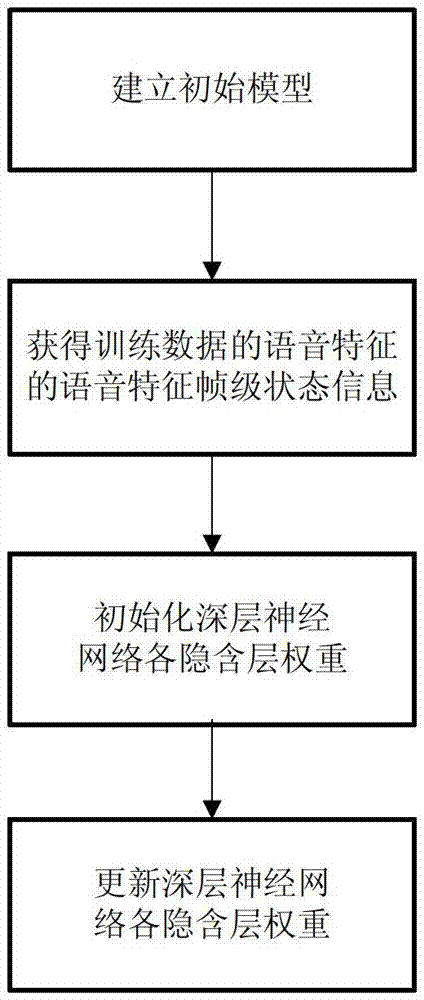

Modeling approach and modeling system of acoustic model used in speech recognition

Active | CN103117060A | Mitigate the risk of being easily trapped in local extrema | Improve modeling accuracy | Speech recognition | Hidden layer | Propagation of uncertainty

The invention relates to a modeling approach and a modeling system for an acoustic model used in speech recognition. The modeling approach includes the steps of: S1, training an initial model, in which the modeling unit is a tri-phone state clustered by a phoneme decision tree and the model provides the state transition probabilities; S2, obtaining frame-level state information by using the initial model to force-align the phonetic features of the training data to tri-phone states; S3, pre-training a deep neural network to obtain initial weights for each hidden layer; S4, training the initialized network with the error back-propagation algorithm on the frame-level state information and updating the weights. The approach uses context-dependent tri-phone states as the modeling unit and builds the model on a deep neural network: the weights of each hidden layer are initialized by a restricted Boltzmann machine algorithm and then updated by error back propagation. The pre-training effectively reduces the risk of the network becoming trapped in a local extremum, and the modeling accuracy of the acoustic model is greatly improved.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI +1

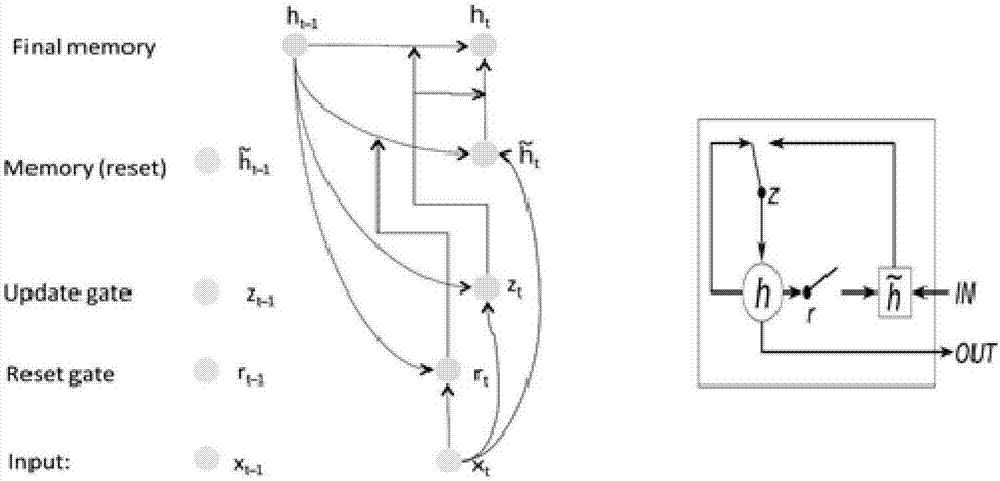

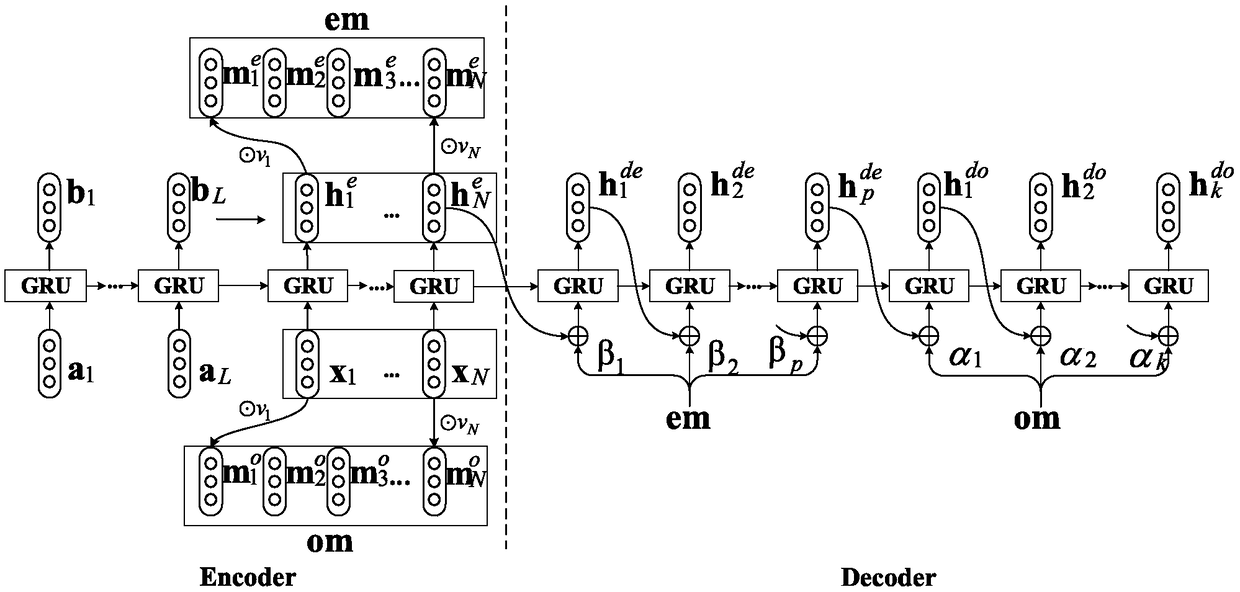

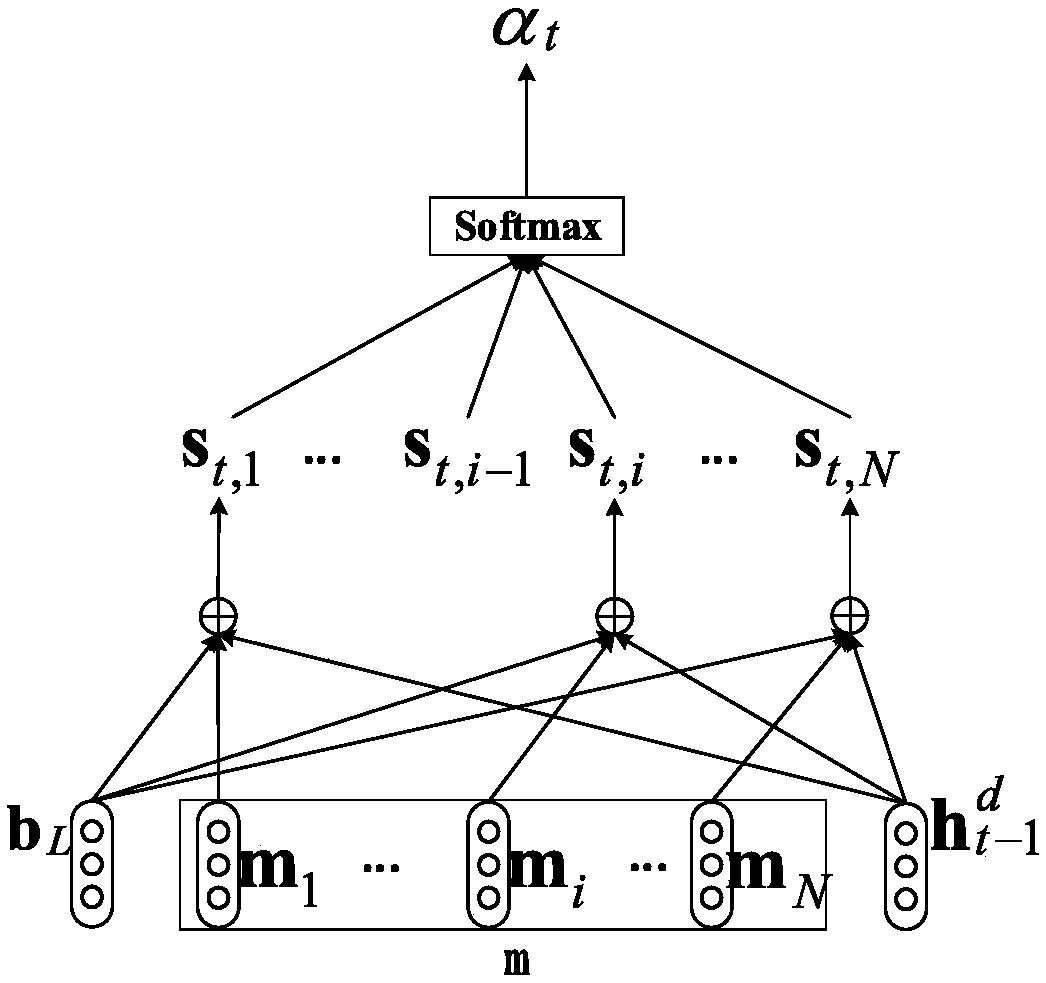

An aspect-level emotion classification model and method based on dual-memory attention

Active | CN109472031A | Improve robustness | Improve accuracy | Semantic analysis | Neural architectures | Hidden layer | Pattern recognition

The invention discloses an aspect-level emotion classification model and method based on dual-memory attention, belonging to the technical field of text emotion classification. The model mainly comprises three modules: an encoder composed of a standard GRU recurrent neural network, a GRU recurrent neural network decoder that introduces a feedforward neural network attention layer, and a softmax classifier. The model treats input sentences as sequences. Based on attention to the position of the aspect-level words in the sentence, two memory modules are constructed, one from the original text sequence and one from the hidden layer states of the encoder. The randomly initialized attention distribution is fine-tuned through the feedforward attention layer to capture the important emotional features in the sentences, and the encoder-decoder classification model relies on the sequence learning ability of the GRU recurrent neural network to achieve aspect-level emotion classification. The invention can markedly improve the robustness and accuracy of text emotion classification.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

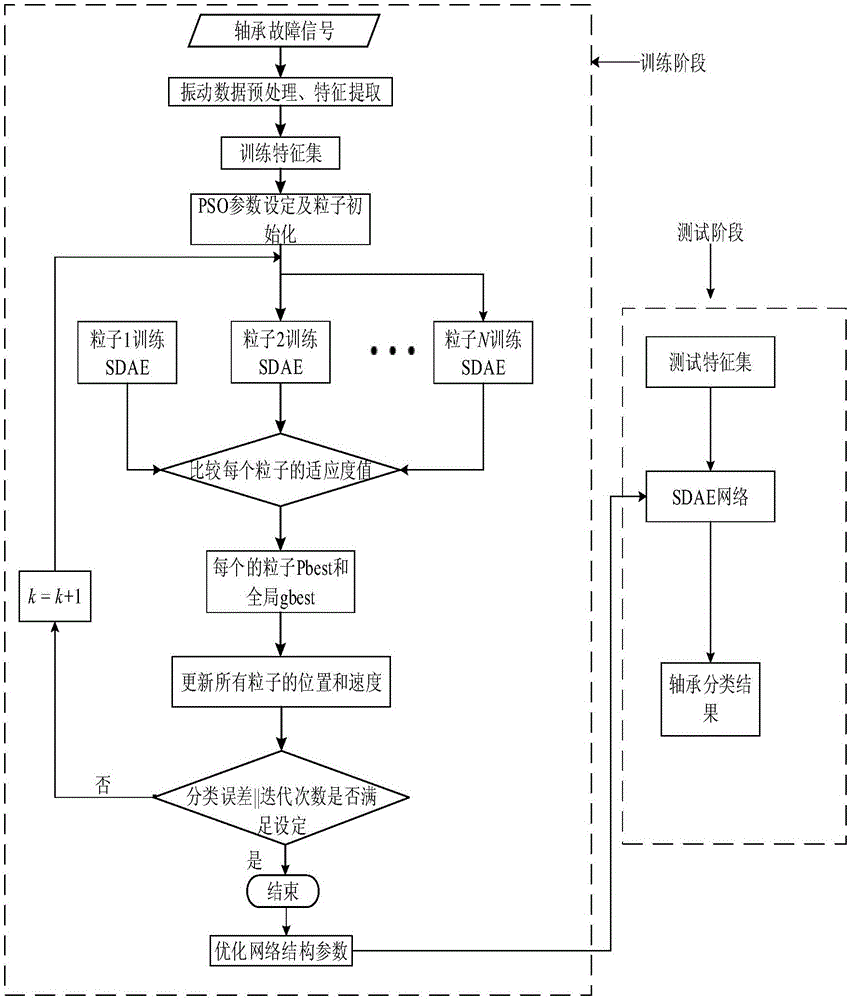

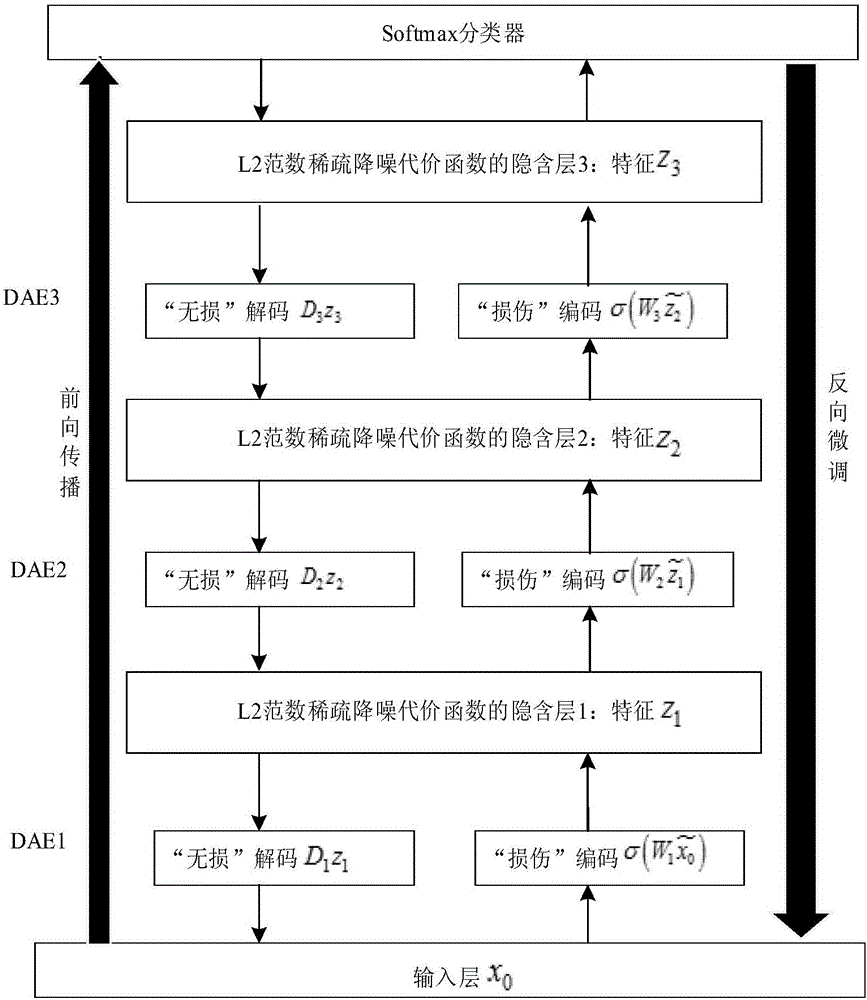

Pile-up noise reduction own coding network bearing fault diagnosis method based on particle swarm optimization

Active | CN106682688A | Robust | Good feature learning ability | Machine bearings testing | Character and pattern recognition | Hidden layer | Diagnosis methods

The invention discloses a bearing fault diagnosis method based on a stacked denoising autoencoder (SDAE) network optimized by particle swarm optimization (PSO). In this improved SDAE method, PSO adaptively selects the SDAE hyper-parameters, such as the number of hidden layer nodes, the sparsity parameter and the fraction of input data randomly set to zero, and thereby determines the SDAE network structure. The top-level feature representation of the fault signal is then fed into a soft-max classifier to identify the fault class. The method learns features better and more robustly than an ordinary sparse autoencoder; by using PSO to optimize the hyper-parameters of the deep denoising autoencoder structure, it builds an SDAE diagnostic model with multiple hidden layers and improves fault classification accuracy.

Owner:SOUTH CHINA UNIV OF TECH

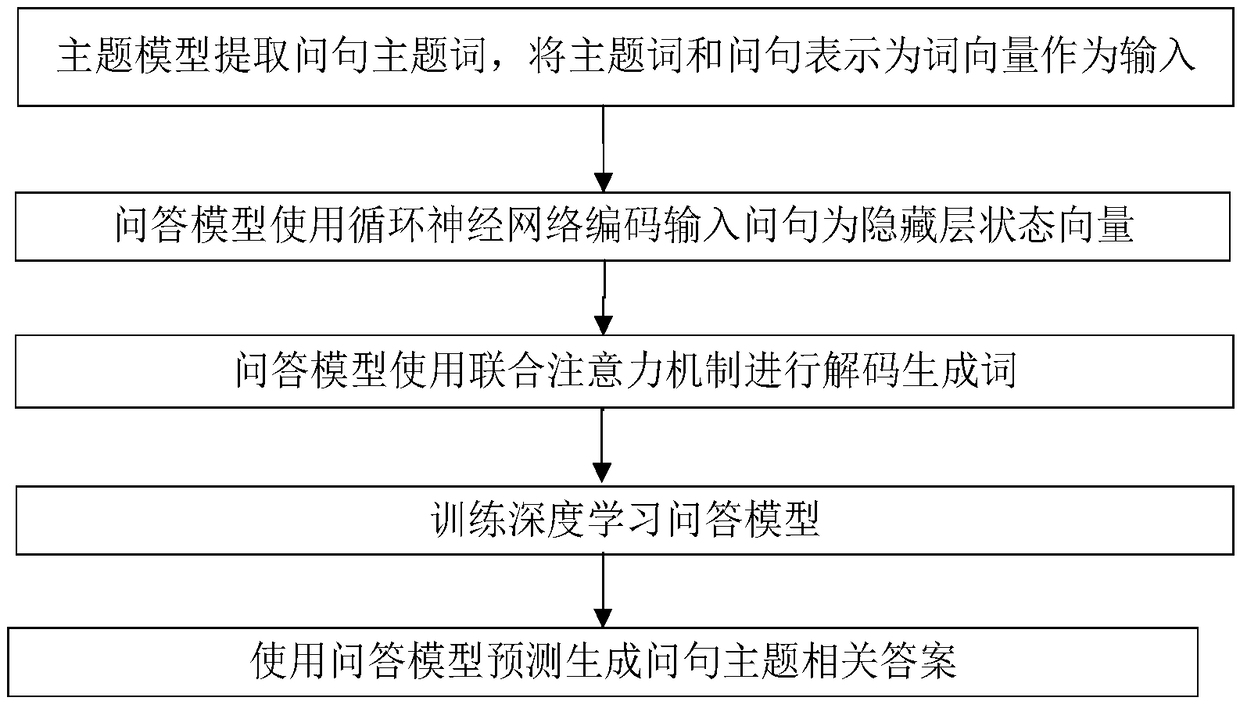

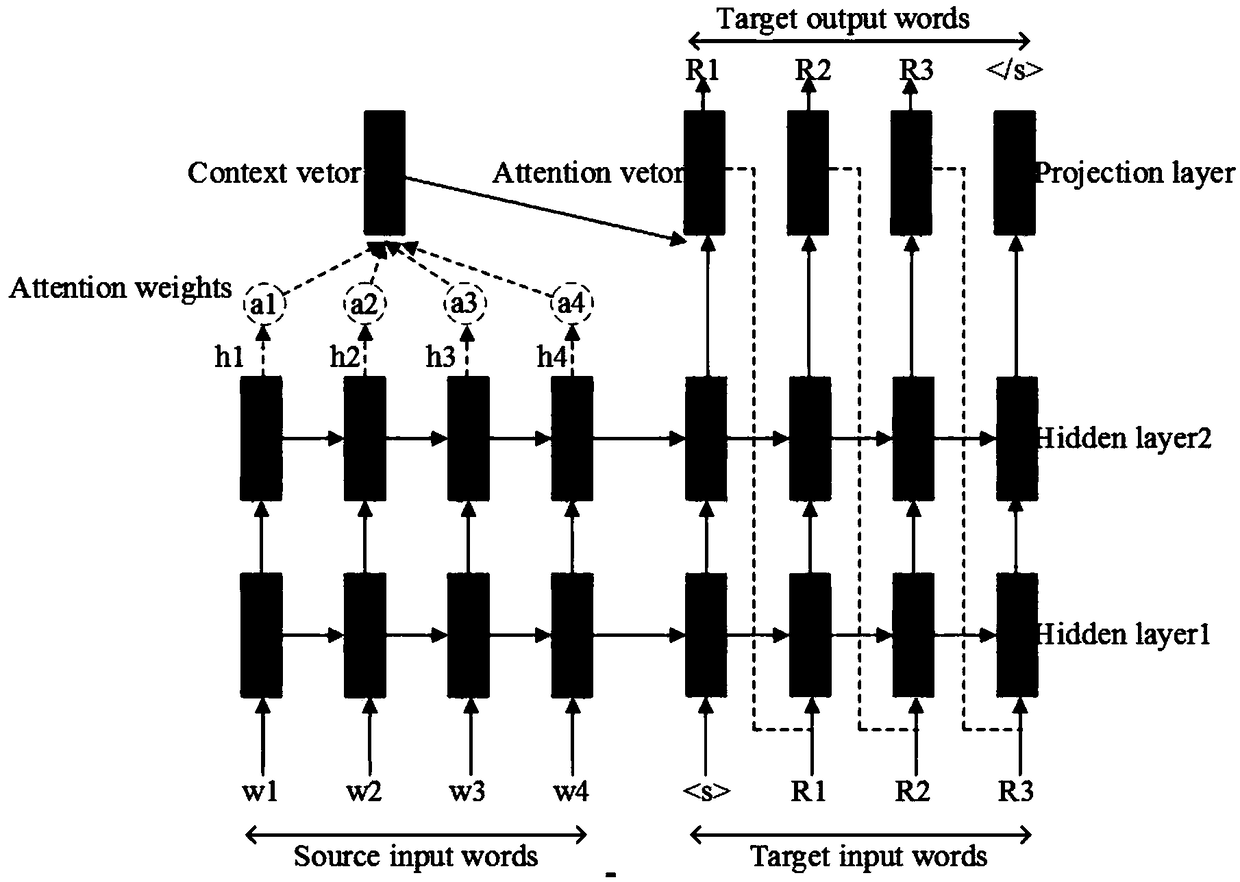

Question and answering (QA) system realization method based on deep learning and topic model

Active | CN108763284A | Rich diversity | Promote generation | Semantic analysis | Neural architectures | Hidden layer | Semantic vector

The invention discloses a question and answering (QA) system realization method based on deep learning and a topic model. The method comprises the steps of: S1, inputting a question sentence to the Twitter LDA topic model to obtain the topic type of the question sentence, extracting the corresponding topic words, and representing the input question sentence and the topic words as word vectors; S2, inputting the word vectors of the question sentence to a recurrent neural network (RNN) for encoding to obtain an encoded hidden-layer state vector of the question sentence; S3, using a joint attention mechanism and combining local and global hybrid semantic vectors of the question sentence in a decoding recurrent neural network to generate words; S4, using a large-scale conversation corpus to train a deep-learning topic question and answering model based on an encoding-decoding framework; and S5, using the trained question and answering model to predict an answer to the input question sentence and generate answers related to the topic of the question sentence. The method makes up for the lack of exogenous knowledge in question and answering models and increases the richness and diversity of answers.

Owner:SOUTH CHINA UNIV OF TECH

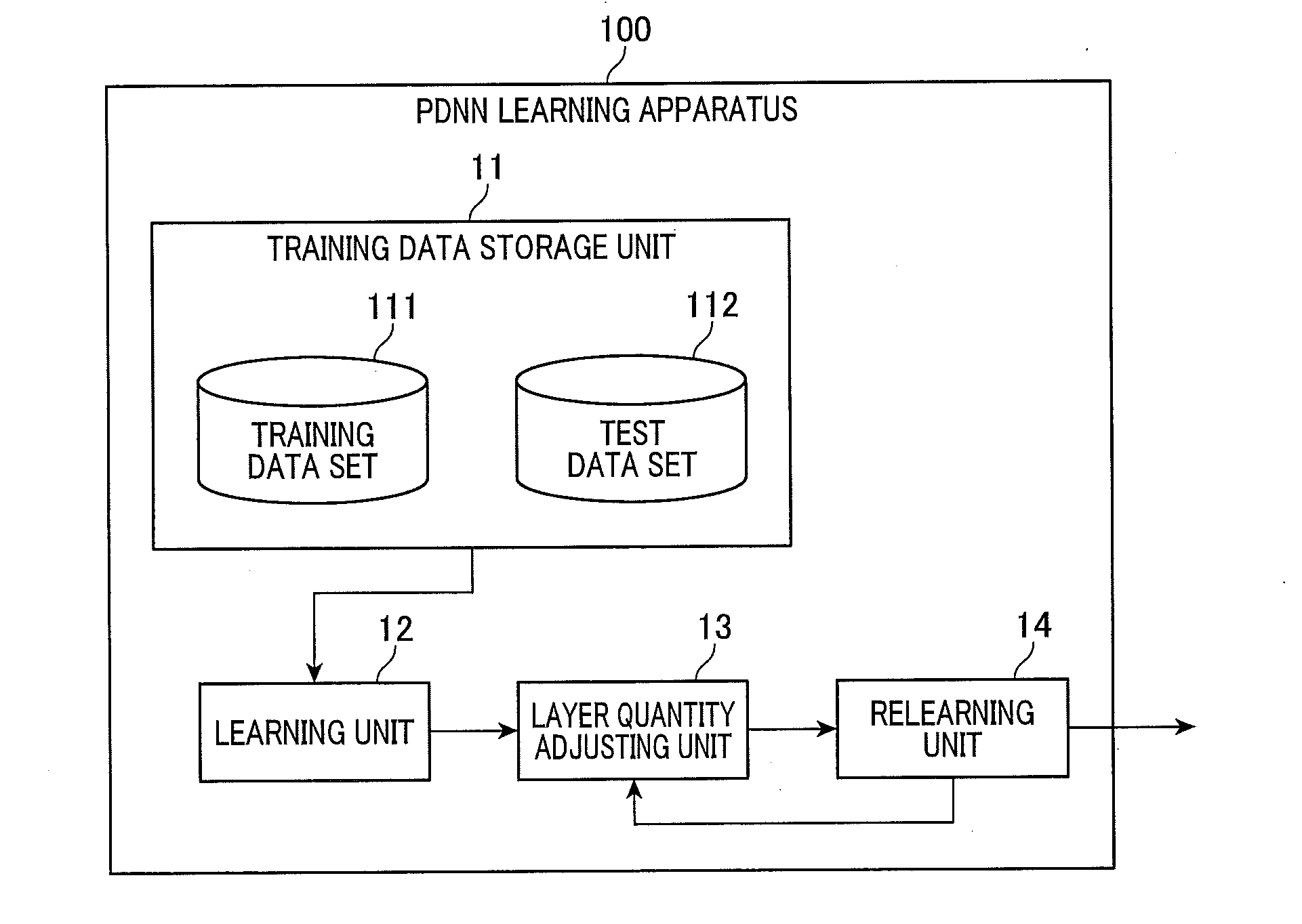

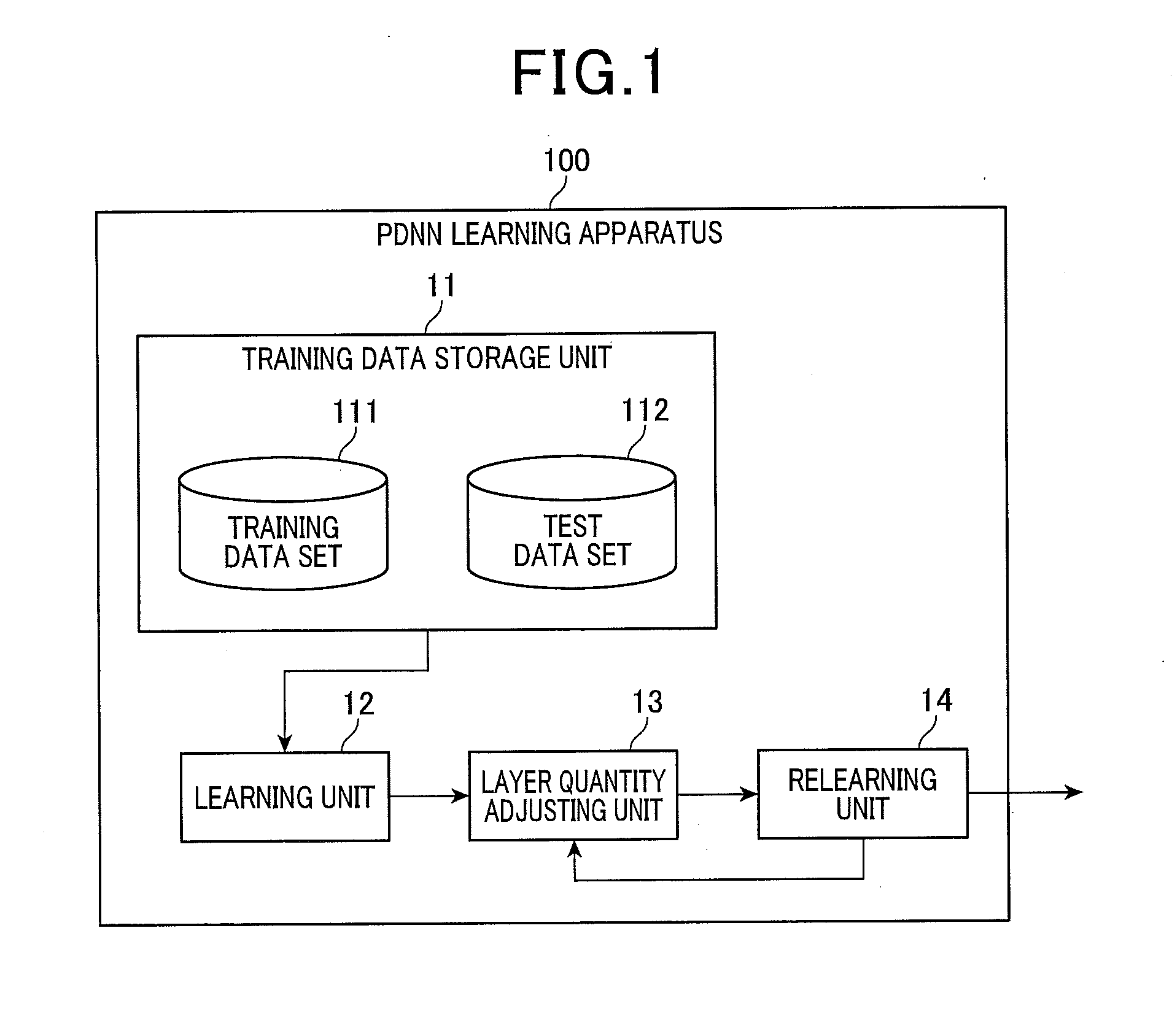

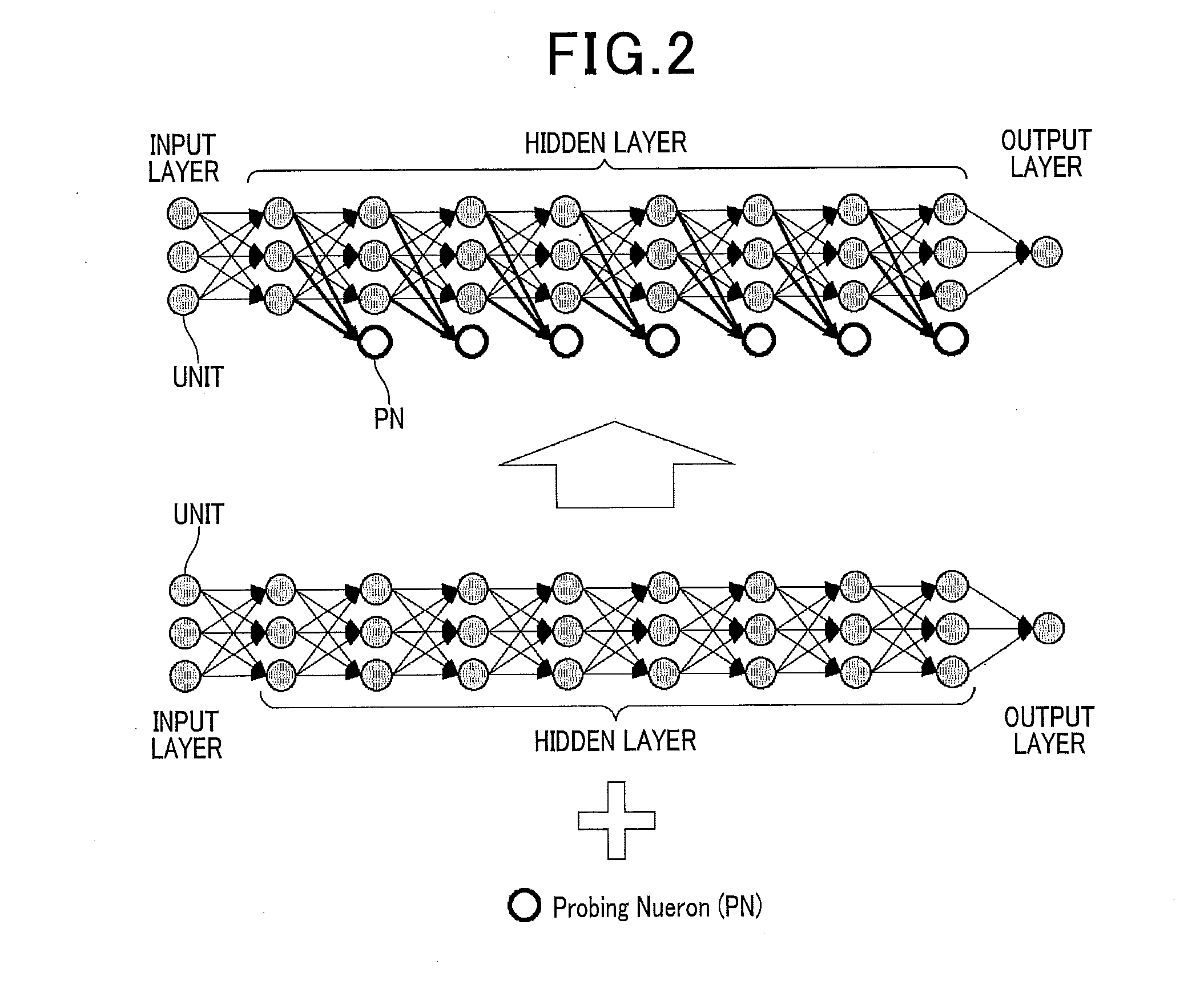

Learning apparatus, learning program, and learning method

Active | US20150134583A1 | Sufficient generalization capability | Reduce the amount required | Digital computer details | Neural architectures | Hidden layer | Data set

A learning apparatus performs a learning process for a feed-forward multilayer neural network with supervised learning. The network includes an input layer, an output layer, and at least one hidden layer having at least one probing neuron that does not transfer an output to an uppermost layer side of the network. The learning apparatus includes a learning unit and a layer quantity adjusting unit. The learning unit performs a learning process by calculation of a cost derived by a cost function defined in the multilayer neural network using a training data set for supervised learning. The layer quantity adjusting unit removes at least one uppermost layer from the network based on the cost derived by the output from the probing neuron, and sets, as the output layer, the probing neuron in the uppermost layer of the remaining layers.

Owner:DENSO CORP

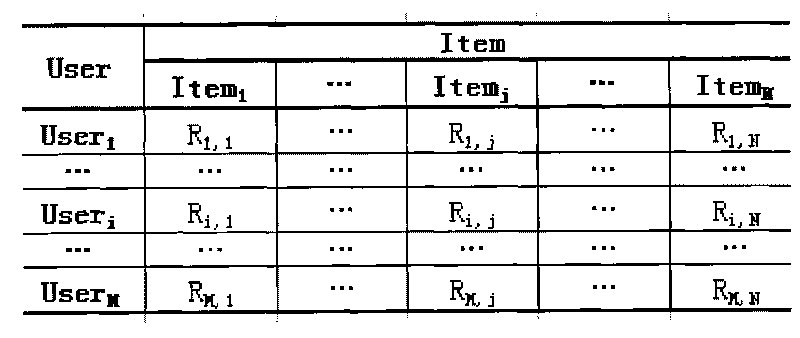

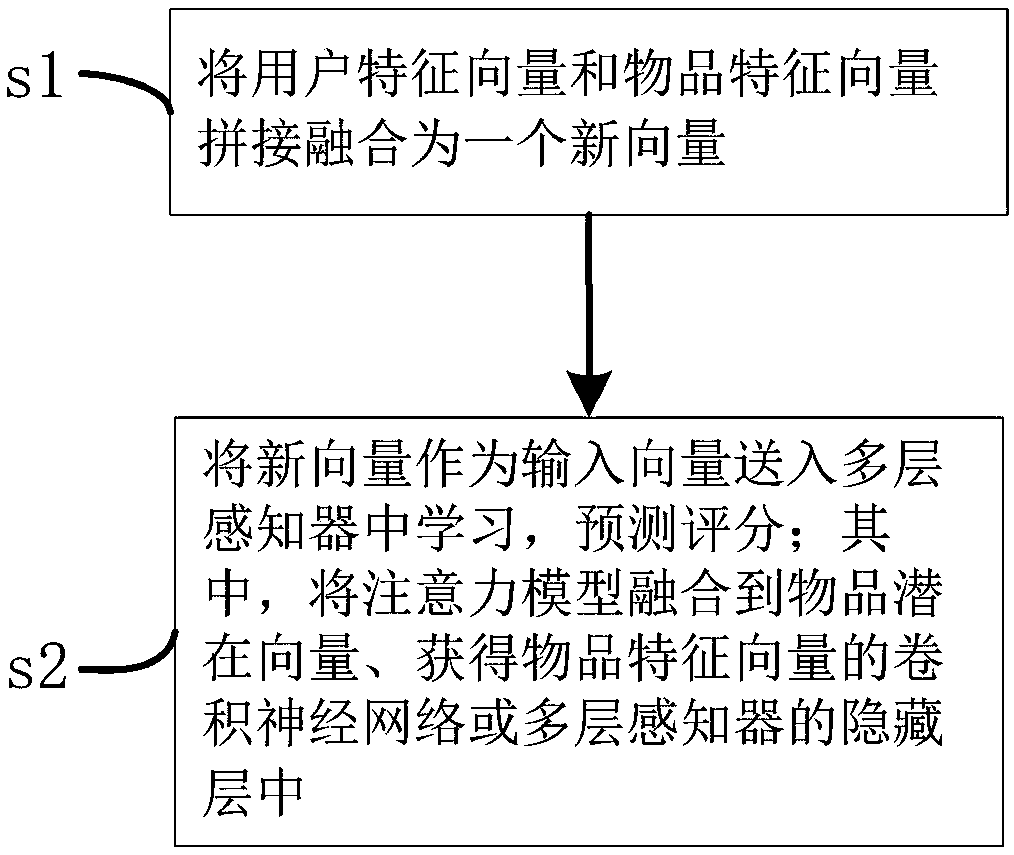

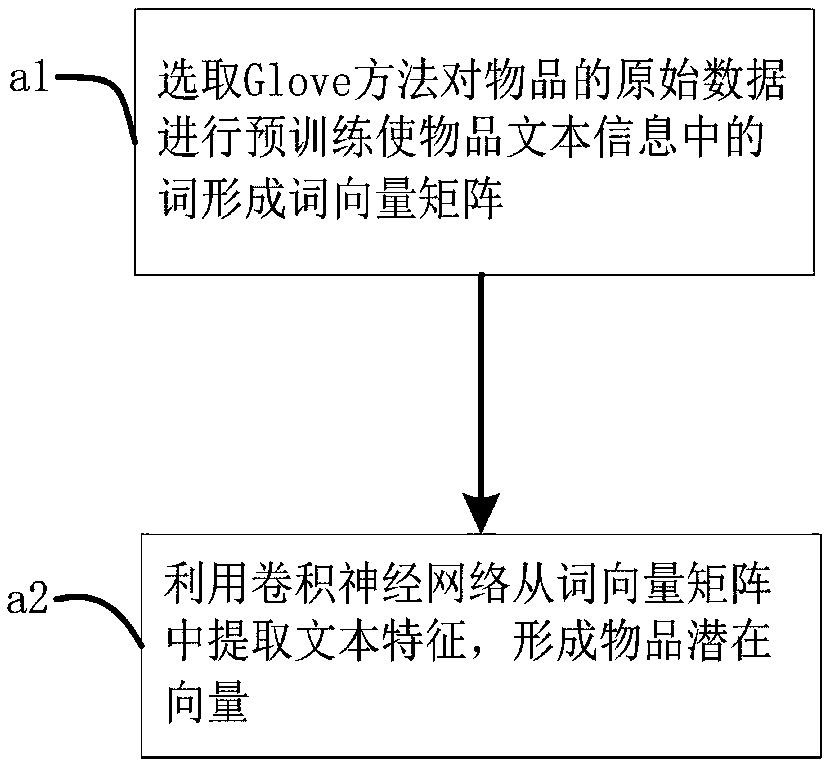

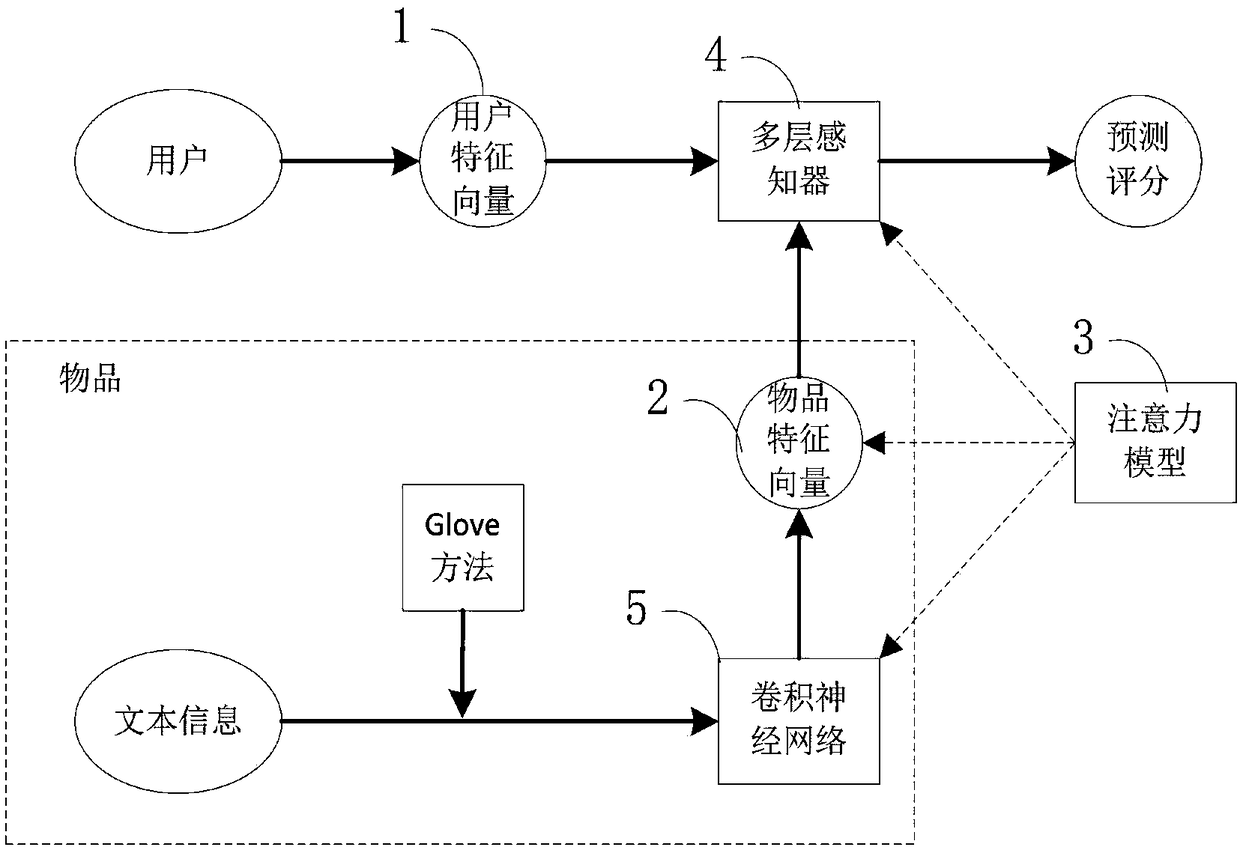

Convolution neural network collaborative filtering recommendation method and system based on attention model

Active | CN109299396A | Efficient extraction | Improve rating prediction accuracy | Digital data information retrieval | Neural architectures | Hidden layer | Feature vector

The invention discloses a collaborative filtering recommendation method and system using a convolutional neural network that integrates an attention model. It relates to the technical field of data mining recommendation, improves feature extraction efficiency and rating prediction accuracy, reduces operation and maintenance cost, simplifies cost management, and is convenient for joint operation and large-scale promotion and application. The method comprises the following steps: S1, splicing a user feature vector and an item feature vector into a new vector; S2, sending the new vector as an input vector into a multi-layer perceptron to learn and predict the score. The attention model is fused either into the convolutional neural network that derives the item feature vector from the item latent vector, or into the hidden layer of the multi-layer perceptron.

Owner:NORTHEAST NORMAL UNIVERSITY

Method and device for speech recognition by use of LSTM recurrent neural network model

Active | CN105513591A | Improve accuracy | Solve the "after-tail effect" | Speech recognition | Pattern recognition | Hidden layer

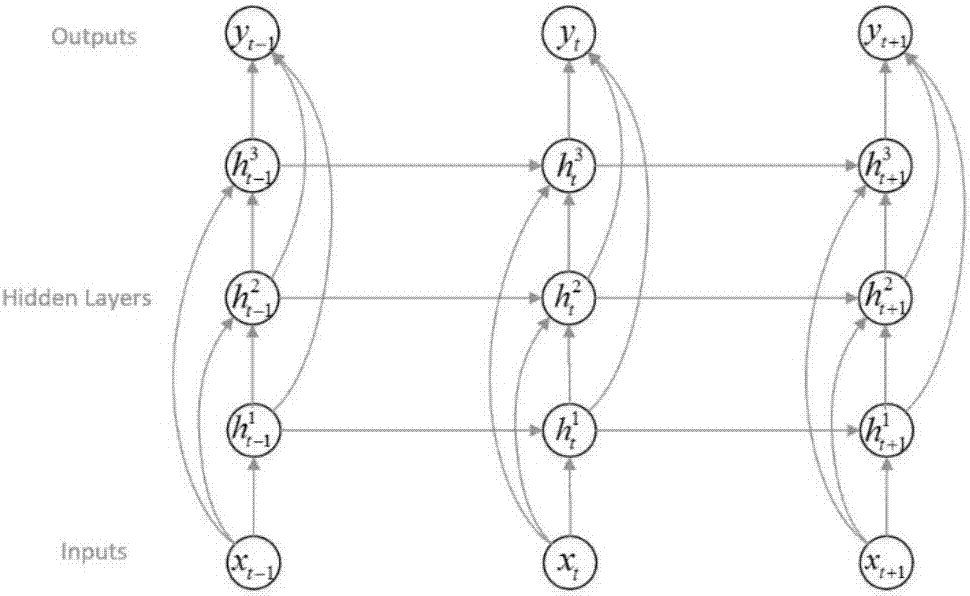

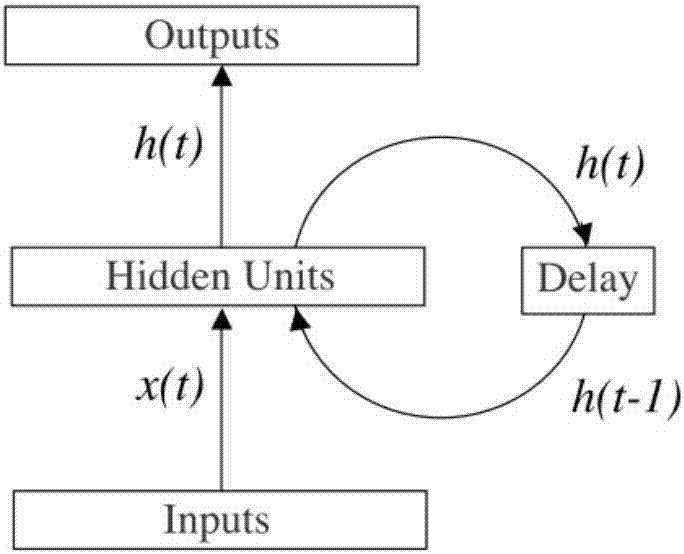

The invention discloses a method and a device for speech recognition using a long short-term memory (LSTM) recurrent neural network model. The method comprises the following steps: receiving speech input data at time t; selecting at least one LSTM hidden layer state from time t-1 to time t-n, where n is a positive integer; and generating the LSTM result for time t from the selected LSTM hidden layer state or states, the input data at time t and the LSTM recurrent neural network model. The method and device address the 'tail effect' of the deep neural network and improve the accuracy of speech recognition.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

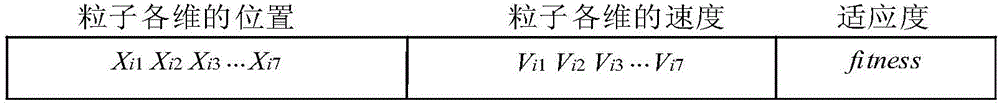

Method for using improved neural network model based on particle swarm optimization for data prediction

Active | CN104361393A | Reduce Model Complexity | Improve robustness | Biological neural network models | Hidden layer | Nerve network

The invention relates to the technical field of computer application engineering, in particular to a method for using an improved neural network model based on particle swarm optimization for data prediction. The method includes the steps of: first, representing the data samples; second, pre-processing the data; third, initializing the parameters of an RBF neural network; fourth, using binary particle swarm optimization to determine the number of hidden layer neurons and the centers of the hidden layer's radial basis functions; fifth, initializing the parameters of the local particle swarm optimization. With this method, the number of hidden layer neurons in the RBF neural network model can be determined easily, RBF neural network performance is improved, and data prediction accuracy is increased. In addition, the improved model has low complexity, high robustness and good expandability.
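For context, the hidden layer of an RBF network computes one radial basis response per center, which is why the number of hidden neurons and their centers are the quantities being searched. The sketch below uses a Gaussian basis with a single shared width; both choices, and all names, are assumptions, and the particle swarm search itself is not shown.

```python
import math


def rbf_hidden(x, centers, width):
    """Gaussian response of each hidden neuron to input x.
    There is one hidden neuron per center."""
    return [
        math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2.0 * width ** 2))
        for c in centers
    ]


def rbf_predict(x, centers, width, out_weights, bias=0.0):
    """Linear output layer on top of the radial basis hidden layer."""
    hidden = rbf_hidden(x, centers, width)
    return sum(w * h for w, h in zip(out_weights, hidden)) + bias


if __name__ == "__main__":
    centers = [[0.0, 0.0], [1.0, 1.0]]   # candidate centers, e.g. from an optimizer
    print(rbf_predict([0.5, 0.5], centers, width=1.0, out_weights=[1.0, -1.0]))
```

A search procedure would evaluate prediction error for different choices of `centers` (and therefore different hidden layer sizes) and keep the best one.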

Owner:NORTH CHINA ELECTRIC POWER UNIV (BAODING) +2

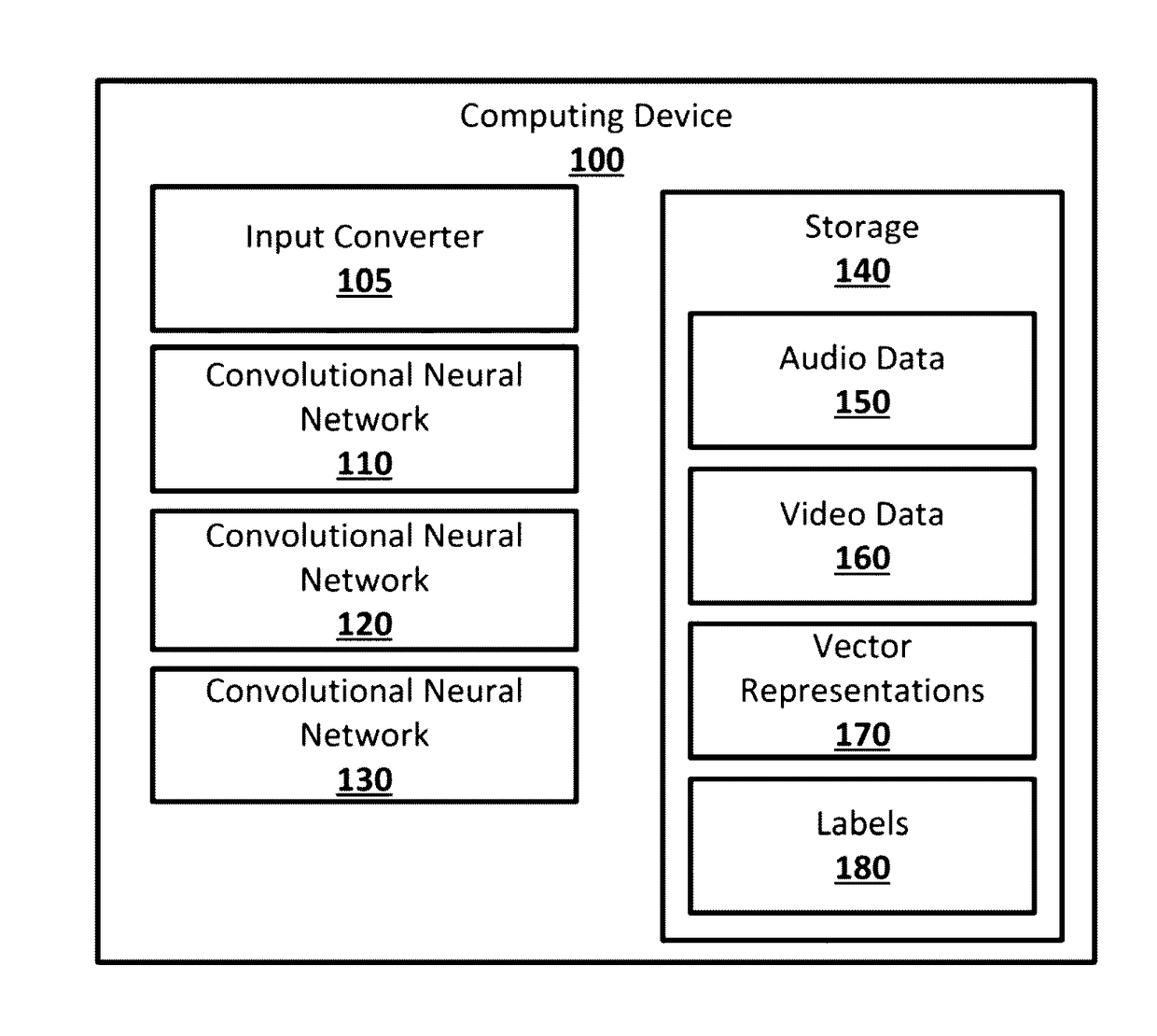

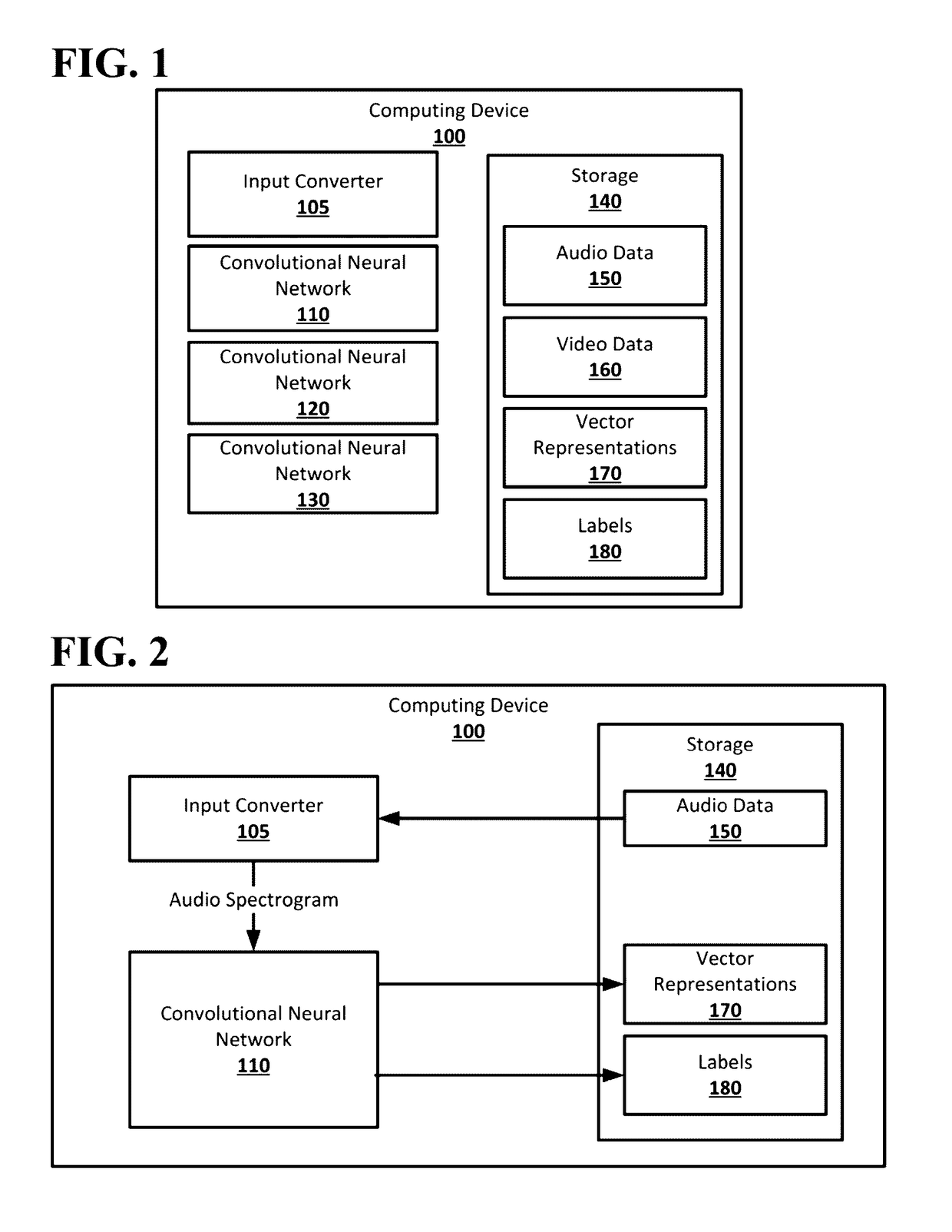

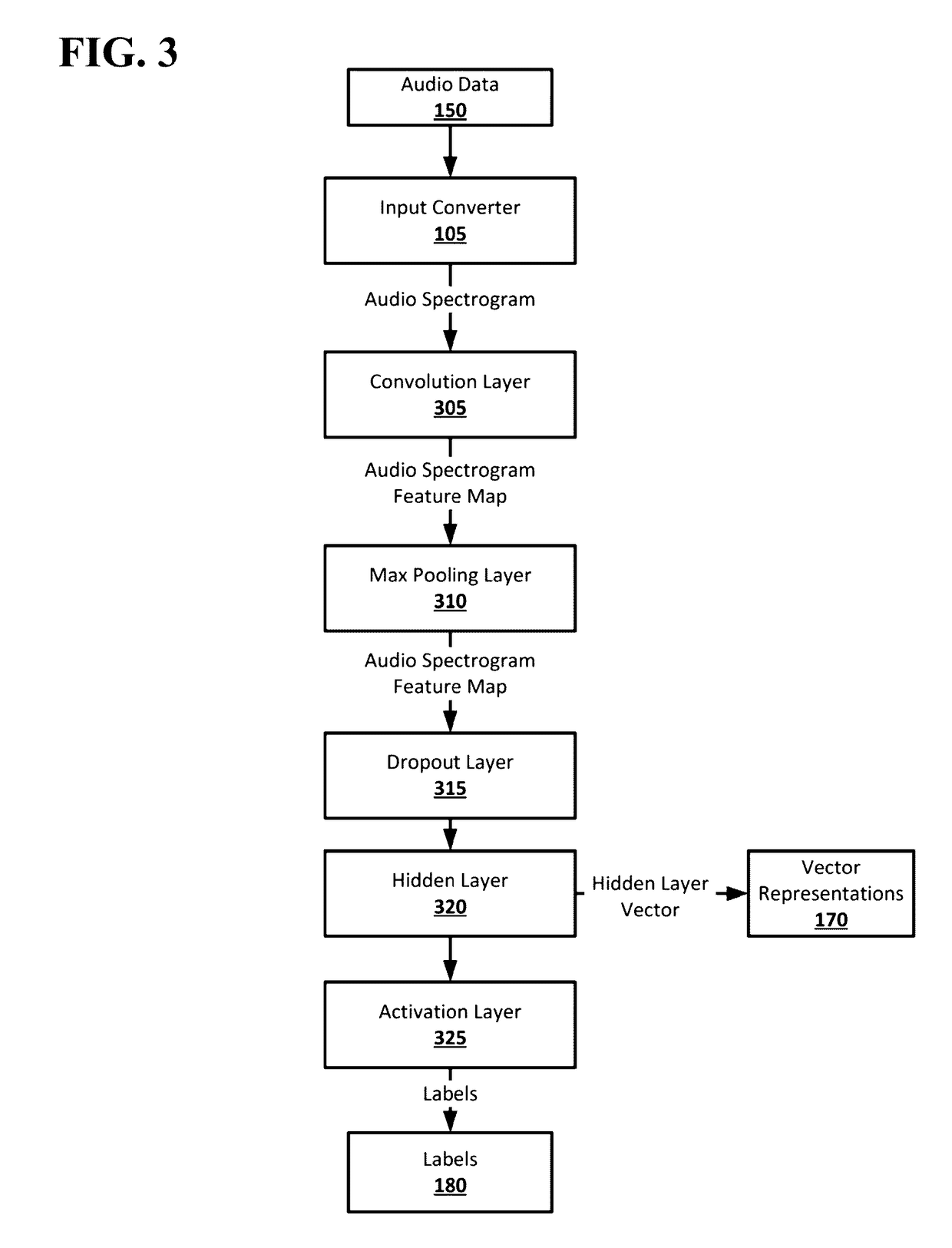

Content filtering with convolutional neural networks

Inactive | US20170140260A1 | Digital data information retrieval | Speech analysis | Hidden layer | Frequency spectrum

Systems and techniques are provided for content filtering with convolutional neural networks. A spectrogram generated from audio data may be received. A convolution may be applied to the spectrogram to generate a feature map. Values for a hidden layer of a neural network may be determined based on the feature map. A label for the audio data may be determined based on the determined values for the hidden layer of the neural network. The hidden layer may include a vector including the values for the hidden layer. The vector may be stored as a vector representation of the audio data.

Owner:RCRDCLUB

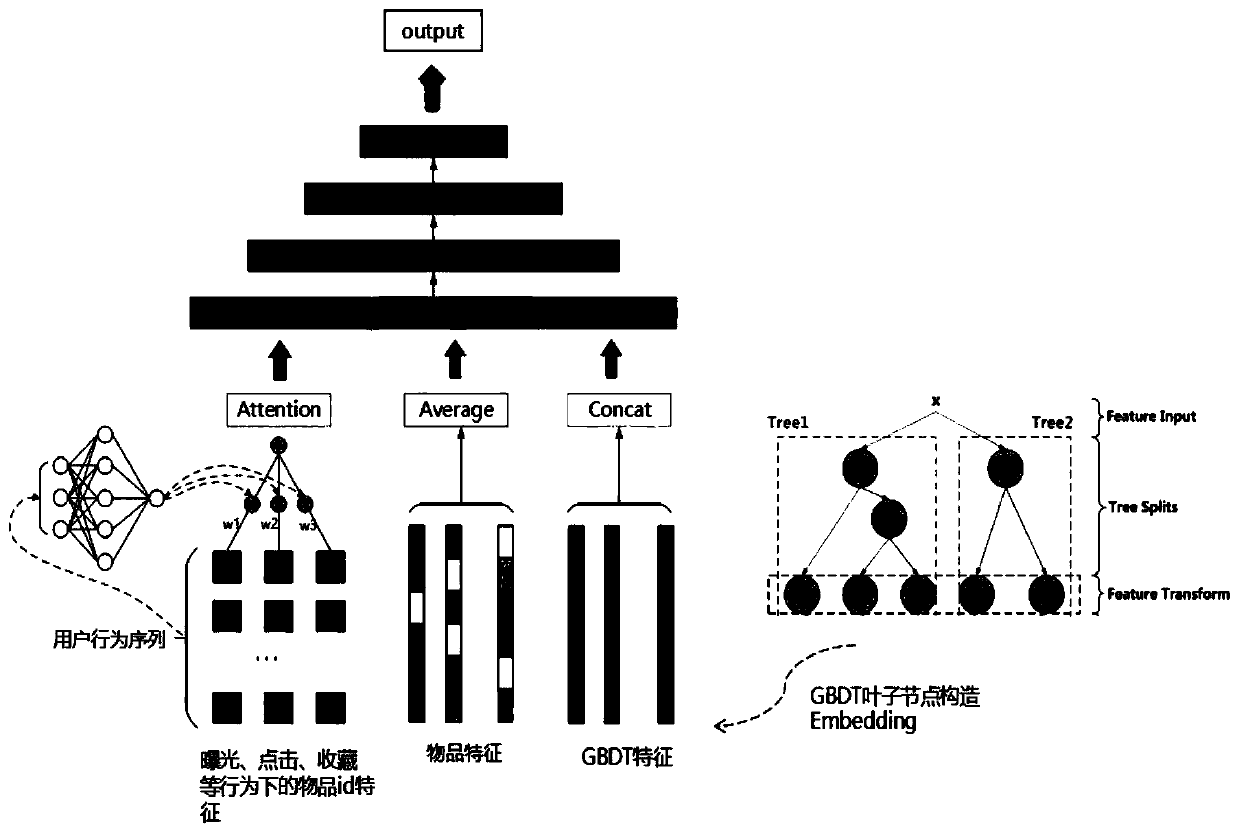

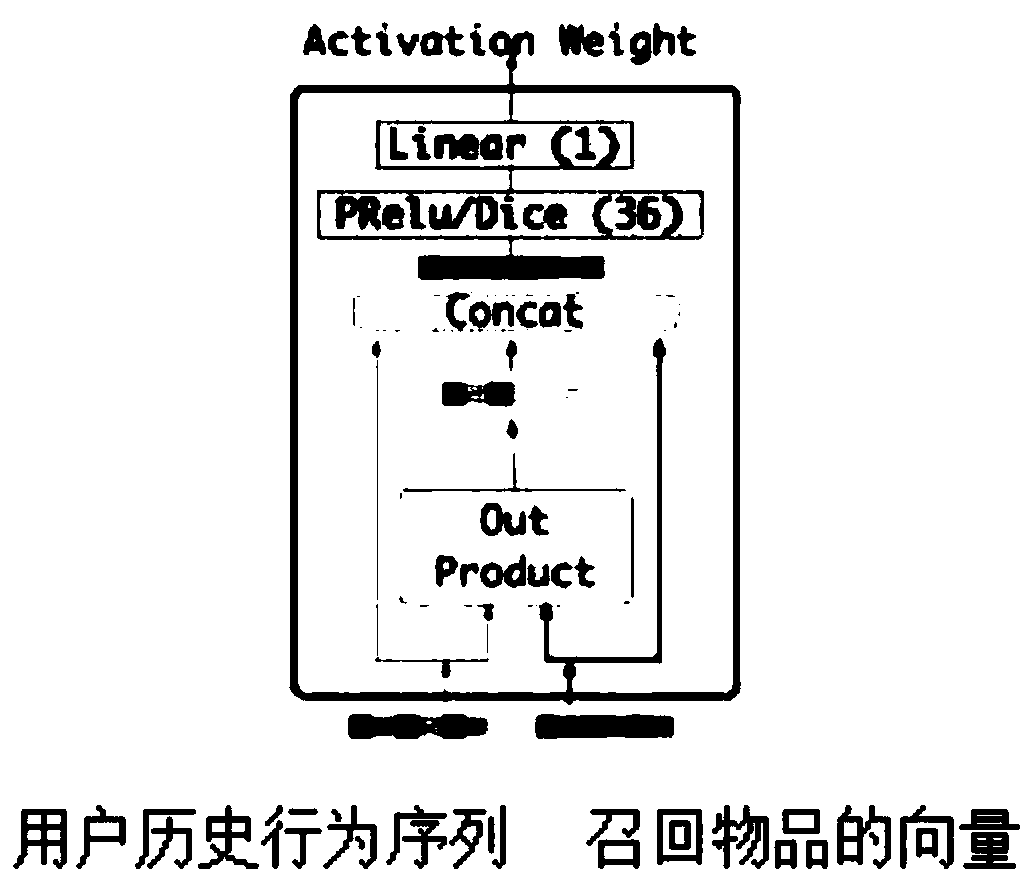

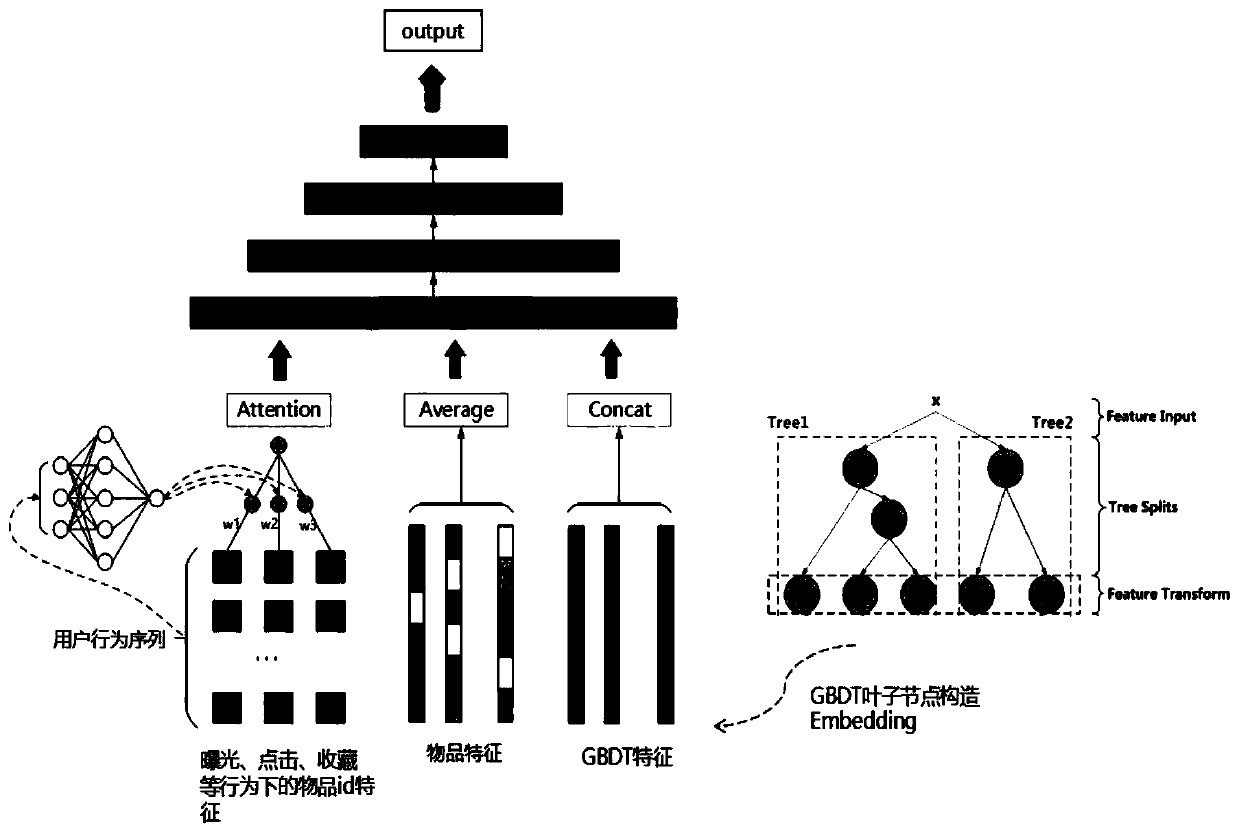

Recommendation system click rate prediction method based on deep neural network

Active | CN109960759A | High degree of generalization | Improve scalability | Digital data information retrieval | Forecasting | Hidden layer | Feature vector

The invention discloses a recommendation system click rate prediction method based on a deep neural network. The method comprises the steps of: collecting user click behavior as samples, extracting the numerical features of the samples that have a numerical relation, and inputting them into a GBDT tree model for training to obtain a GBDT leaf node matrix E1; inputting the behavior sequences formed by all users' clicks on articles into an Attention network to obtain an interest intensity matrix E2 of all users for the articles; summing and averaging the feature vectors of the articles each user clicked to obtain a click interaction matrix E3 for that user; and splicing E1, E2 and E3 and inputting the result into a deep neural network model with three hidden layers and one output layer to output a prediction result. In this method, user click behavior is decomposed into attribute features, the GBDT tree model, the Attention network and the deep neural network model perform nonlinear fitting, and a click rate prediction model for the recommendation system is constructed and trained to obtain the prediction result. The method mines users' recent interests in depth and has a high degree of generalization and high extensibility.

Owner:SUN YAT SEN UNIV

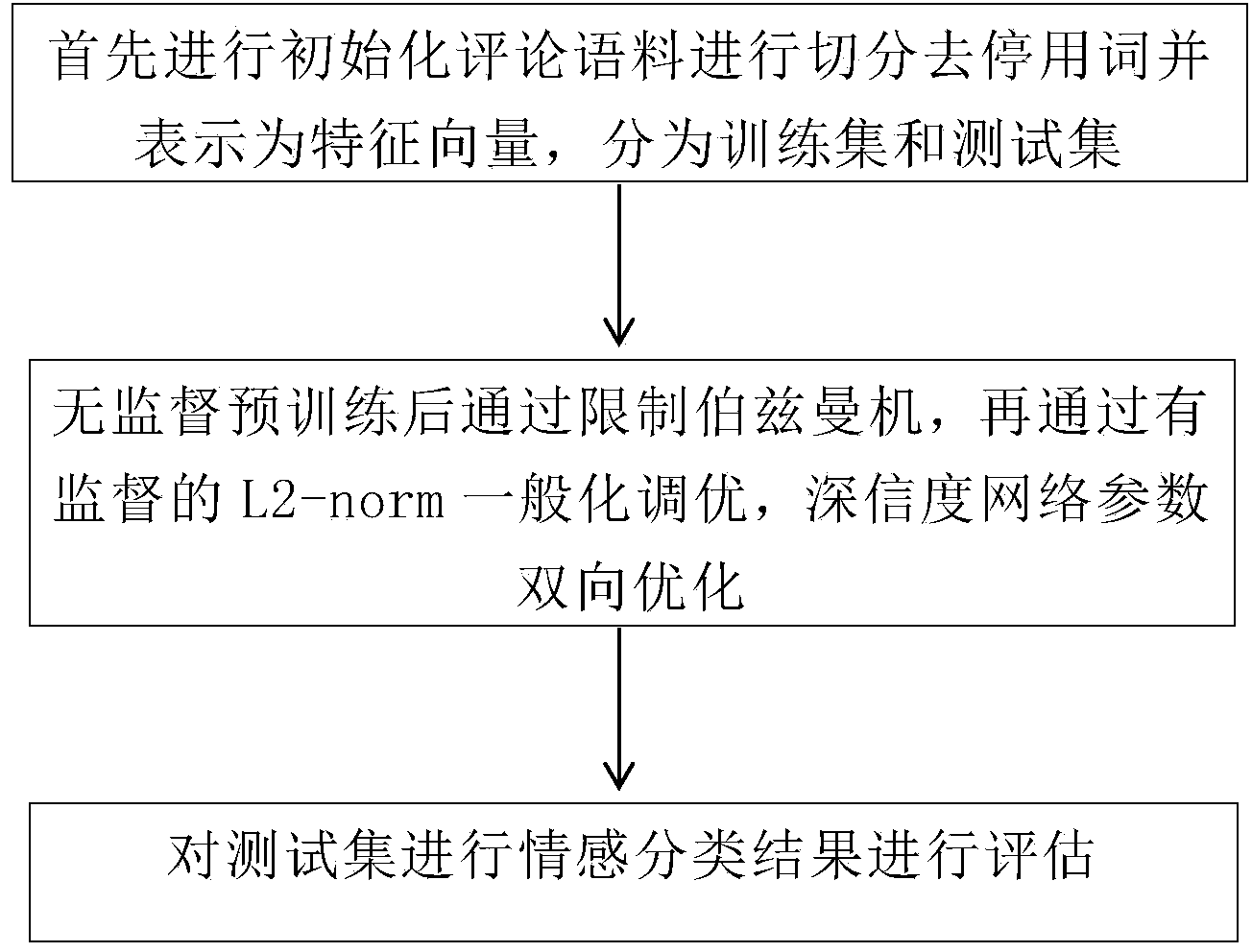

Method for establishing sentiment classification model

Inactive | CN103729459A | Improved Log Probability | Reduce training time | Neural learning methods | Special data processing applications | Hidden layer | Network on

The invention provides a sentiment classification method based on the deep belief network, a probabilistic generative model from deep learning. In the technical scheme, a plurality of Boltzmann machine layers are stacked, so that the output of one layer serves as the input of the next. In this way the input information is represented hierarchically and abstracted layer by layer. A multilayer perceptron containing a plurality of hidden layers is the basic learning structure of the method. More abstract high-level representations are formed by combining lower-level features and express attribute categories or features, so that distributed feature representations of the data can be discovered. The method is a form of unsupervised learning, and the main model used is the deep belief network. It enables a machine to abstract features better and thereby improves the accuracy of sentiment classification.

Owner:BEIJING UNIV OF POSTS & TELECOMM

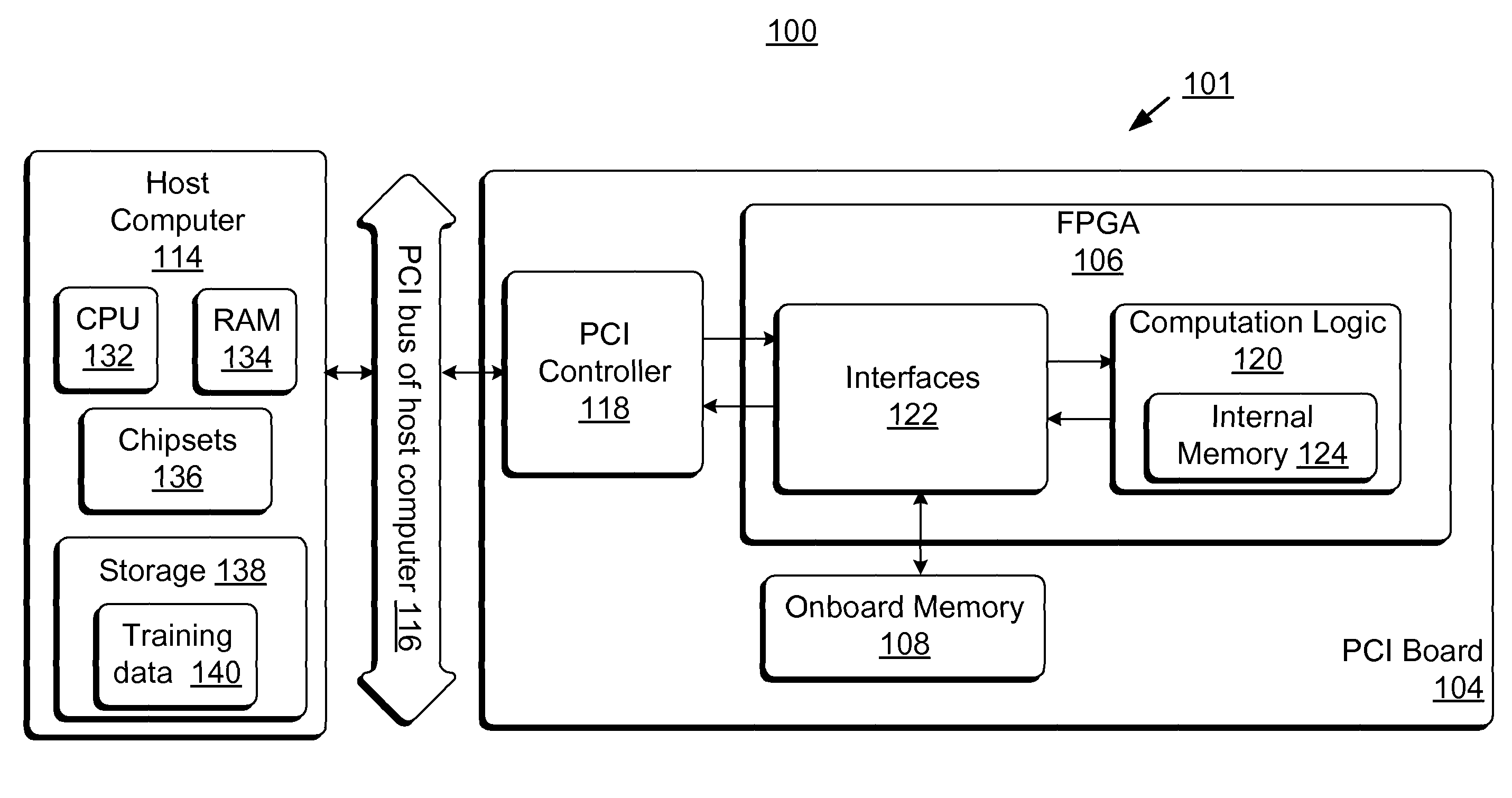

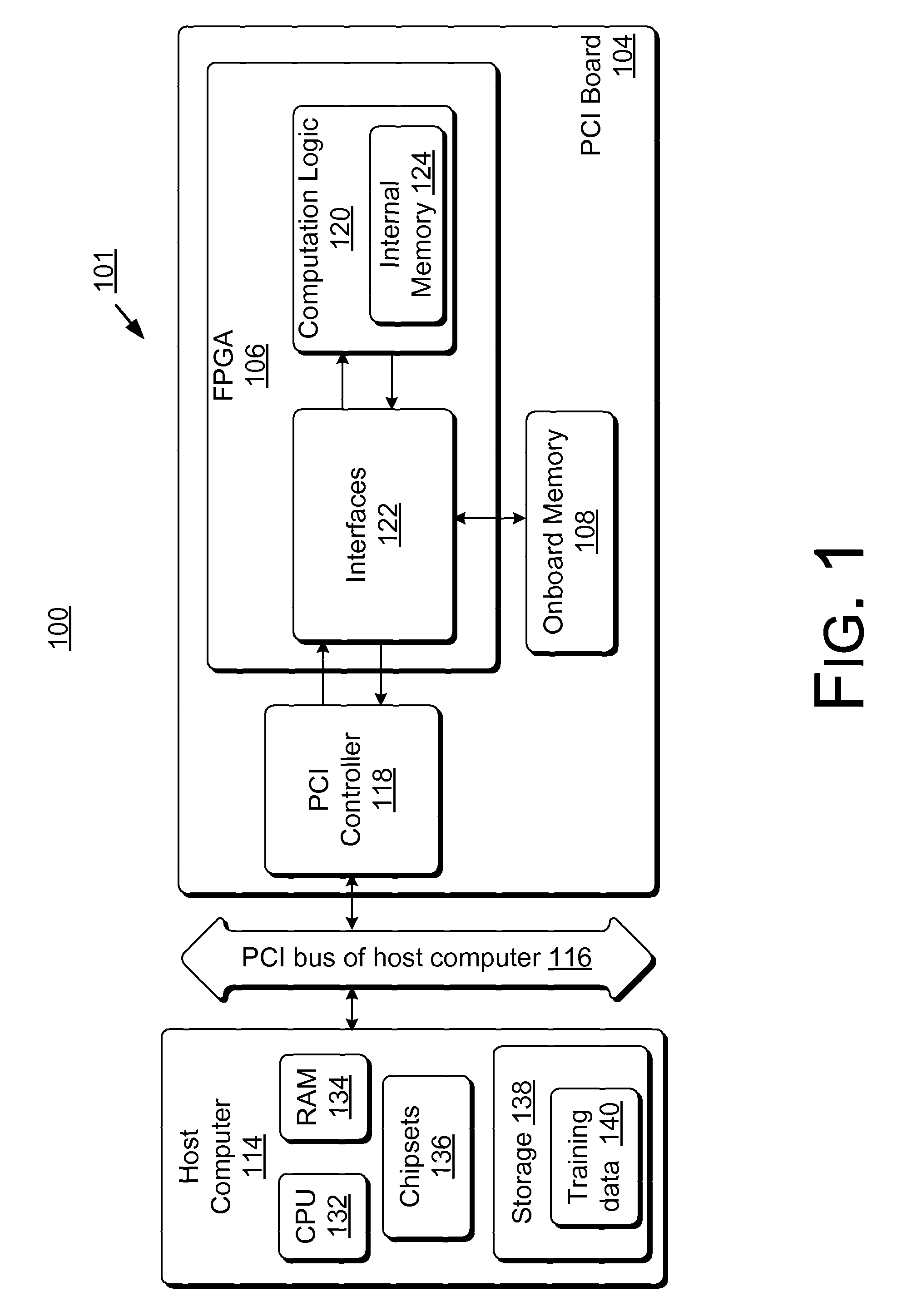

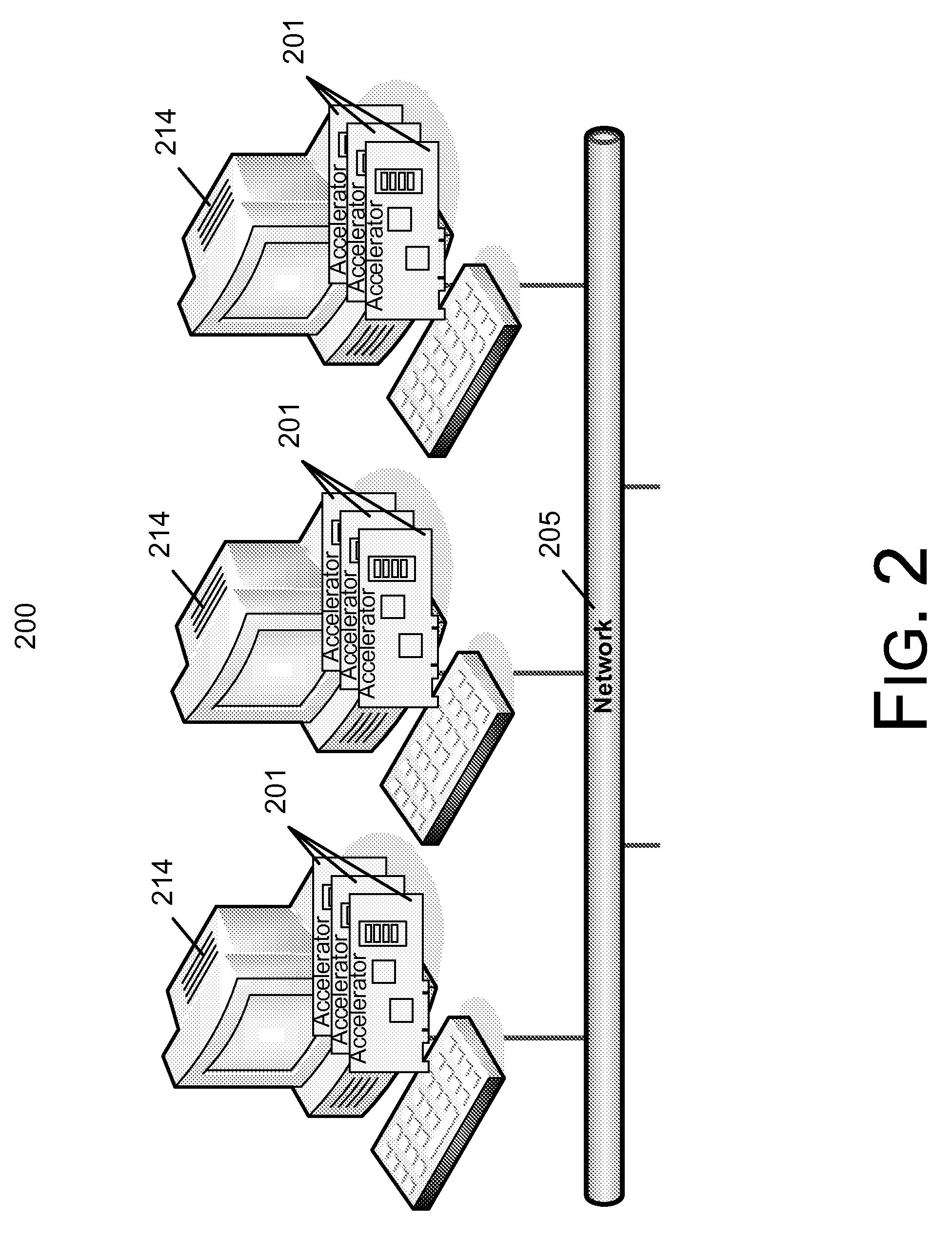

Field-programmable gate array based accelerator system

Active | US8131659B2 | Less time | Less cost | Digital computer details | Digital data | Hidden layer | Arithmetic logic unit

Accelerator systems and methods are disclosed that utilize FPGA technology to achieve better parallelism and processing speed. A Field Programmable Gate Array (FPGA) is configured with hardware logic that performs computations associated with a neural network training algorithm, especially a Web relevance ranking algorithm such as LambdaRank. The training data is first processed and organized by a host computing device and then streamed to the FPGA for direct access, so that the FPGA can perform high-bandwidth computation with increased training speed. Large data sets, such as those related to Web relevance ranking, can thus be processed. The FPGA may include a processing element that performs the computations of a hidden layer of the neural network training algorithm. Parallel computing may be realized using a single instruction, multiple data (SIMD) architecture with multiple arithmetic logic units in the FPGA.

Owner:MICROSOFT TECH LICENSING LLC

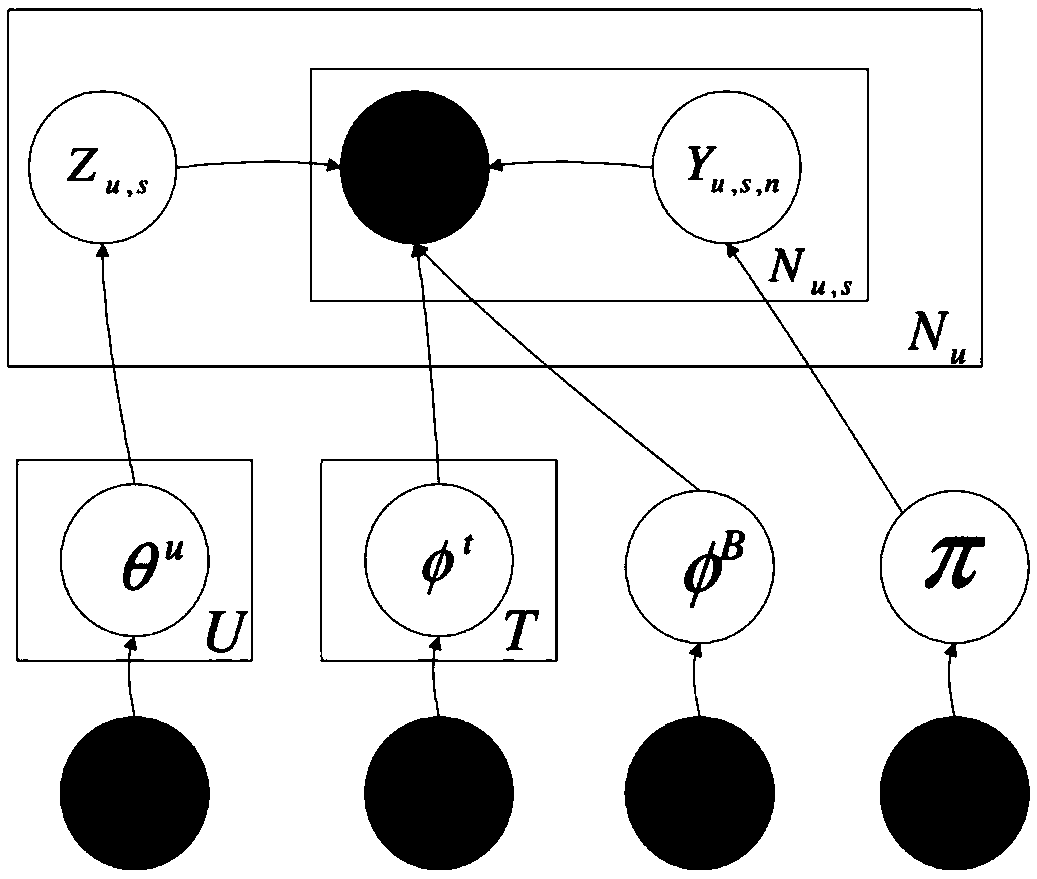

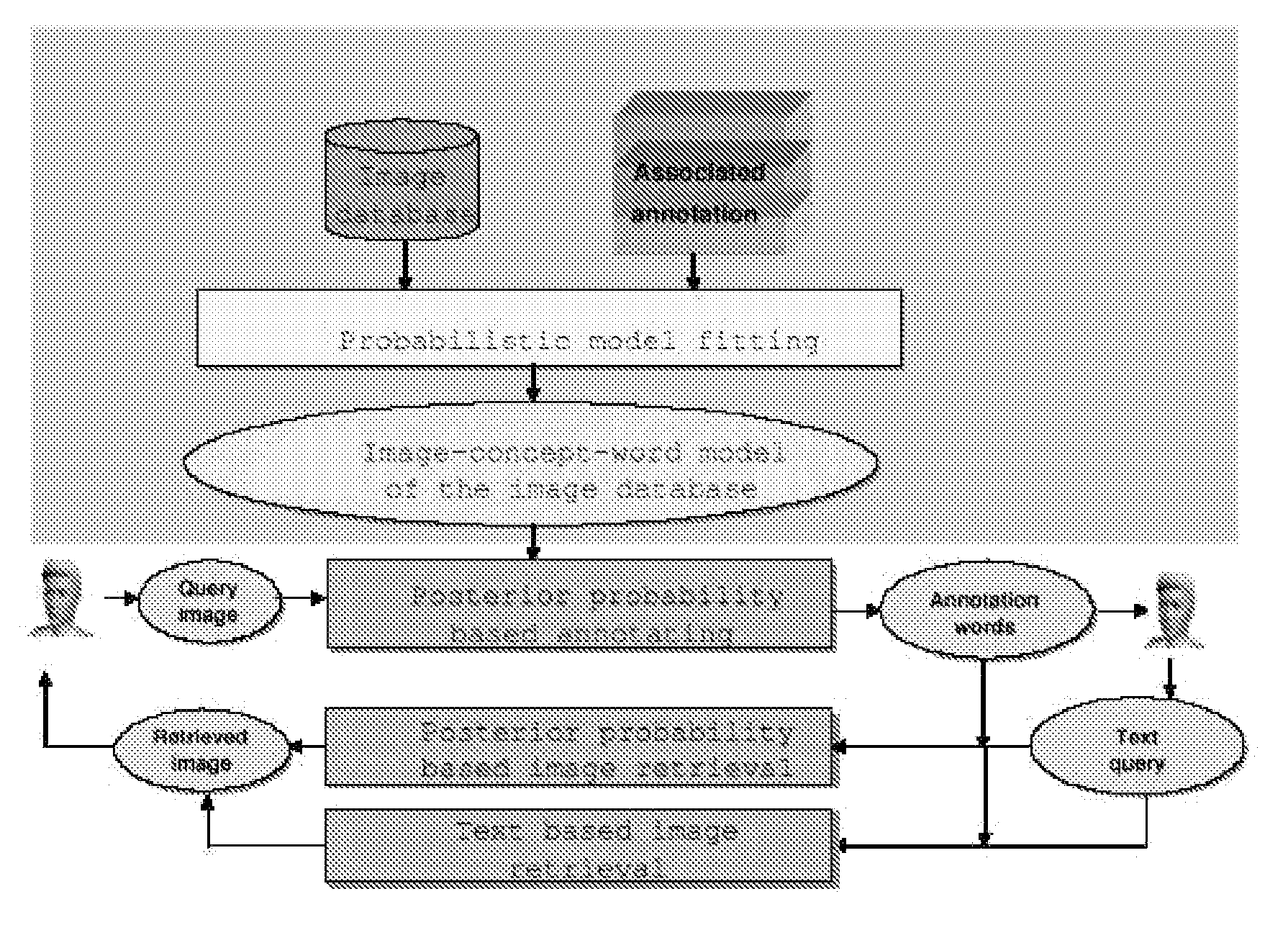

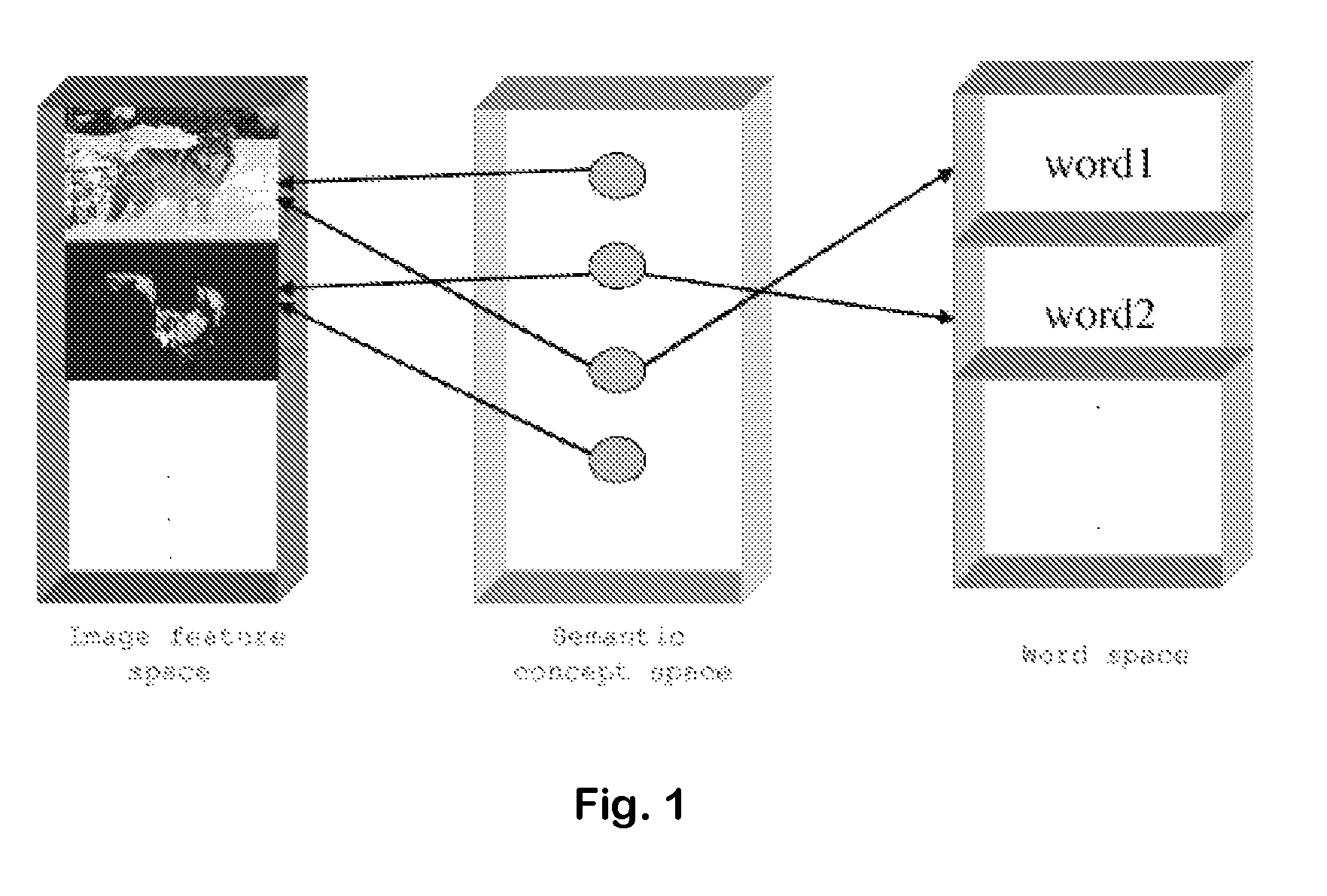

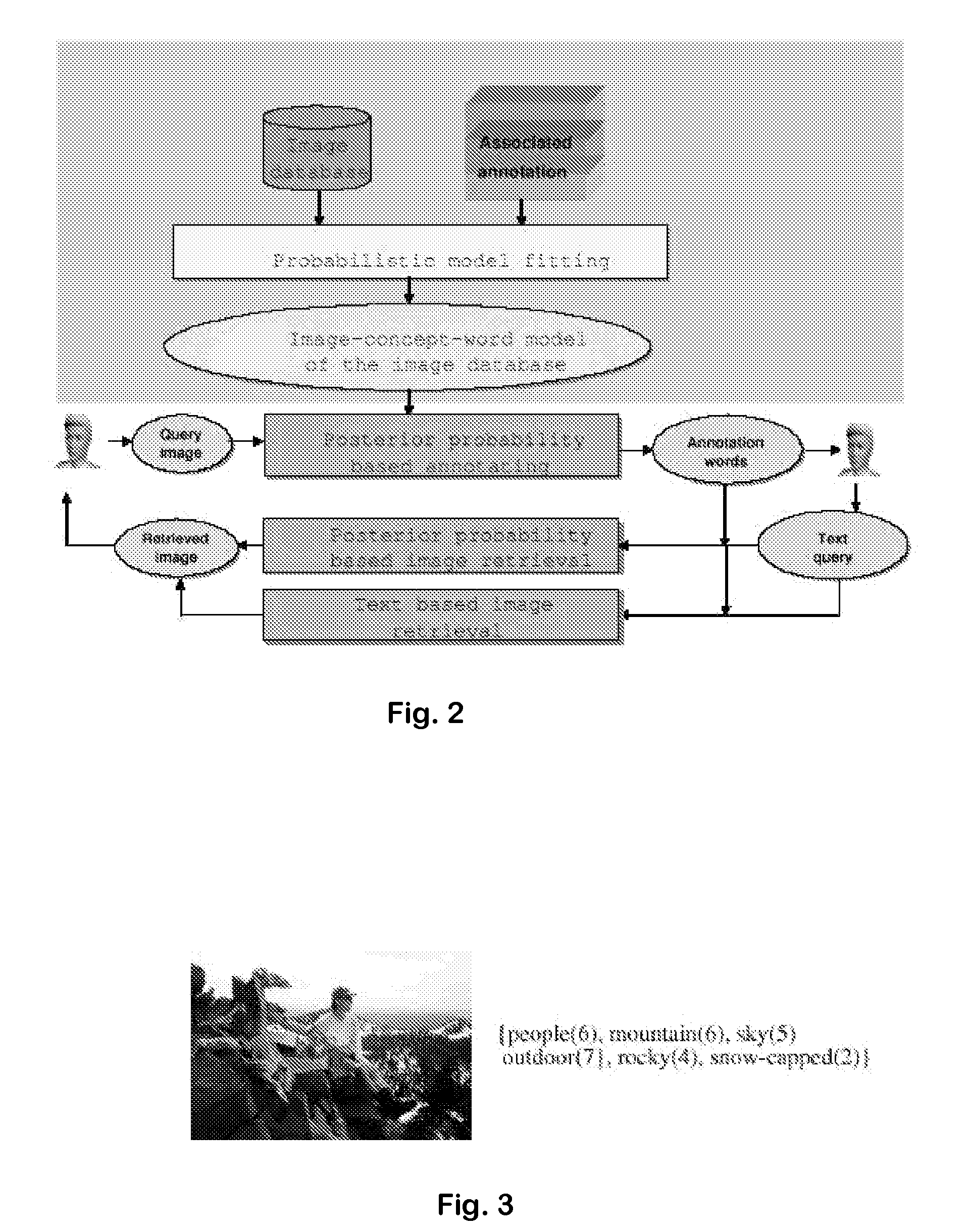

System and method for image annotation and multi-modal image retrieval using probabilistic semantic models

Active | US7814040B1 | Easy to annotate and retrieve | Evaluation less | Mathematical models | Digital data information retrieval | Algorithm | Corresponding conditional

Systems and Methods for multi-modal or multimedia image retrieval are provided. Automatic image annotation is achieved based on a probabilistic semantic model in which visual features and textual words are connected via a hidden layer comprising the semantic concepts to be discovered, to explicitly exploit the synergy between the two modalities. The association of visual features and textual words is determined in a Bayesian framework to provide confidence of the association. A hidden concept layer which connects the visual feature(s) and the words is discovered by fitting a generative model to the training image and annotation words. An Expectation-Maximization (EM) based iterative learning procedure determines the conditional probabilities of the visual features and the textual words given a hidden concept class. Based on the discovered hidden concept layer and the corresponding conditional probabilities, the image annotation and the text-to-image retrieval are performed using the Bayesian framework.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

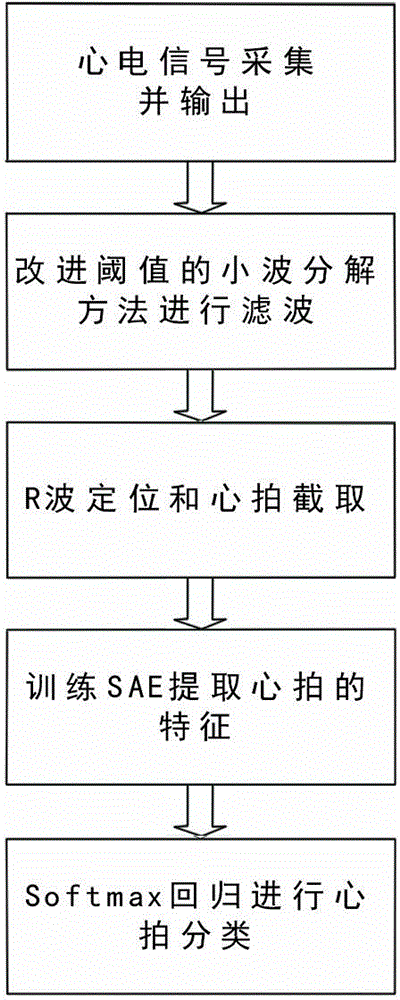

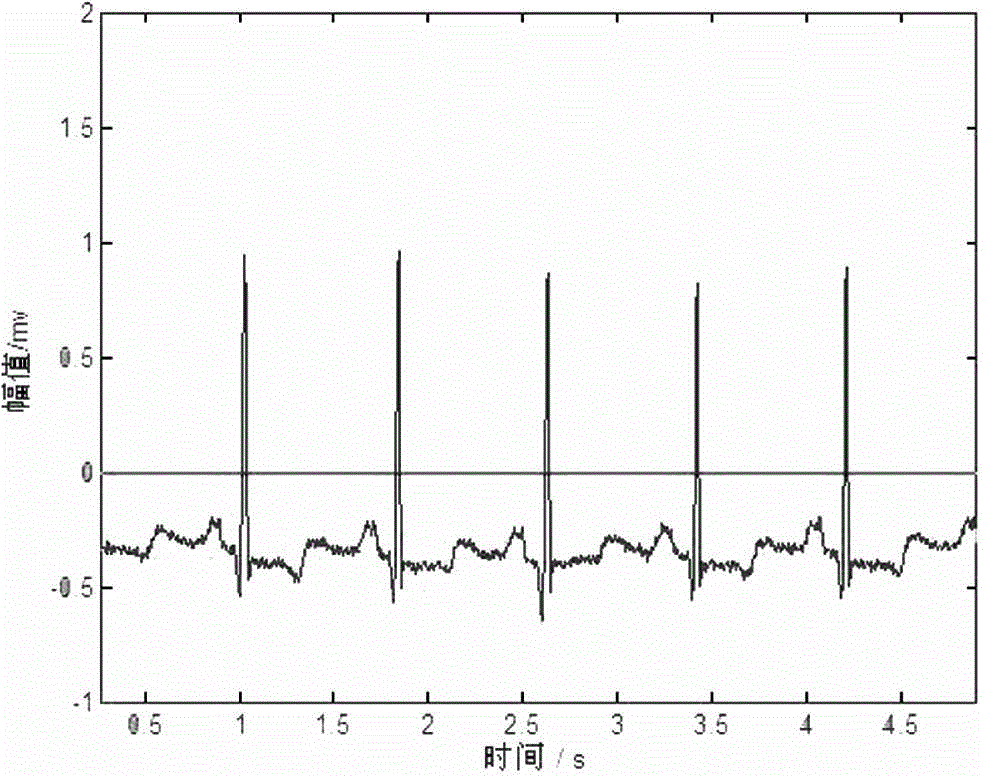

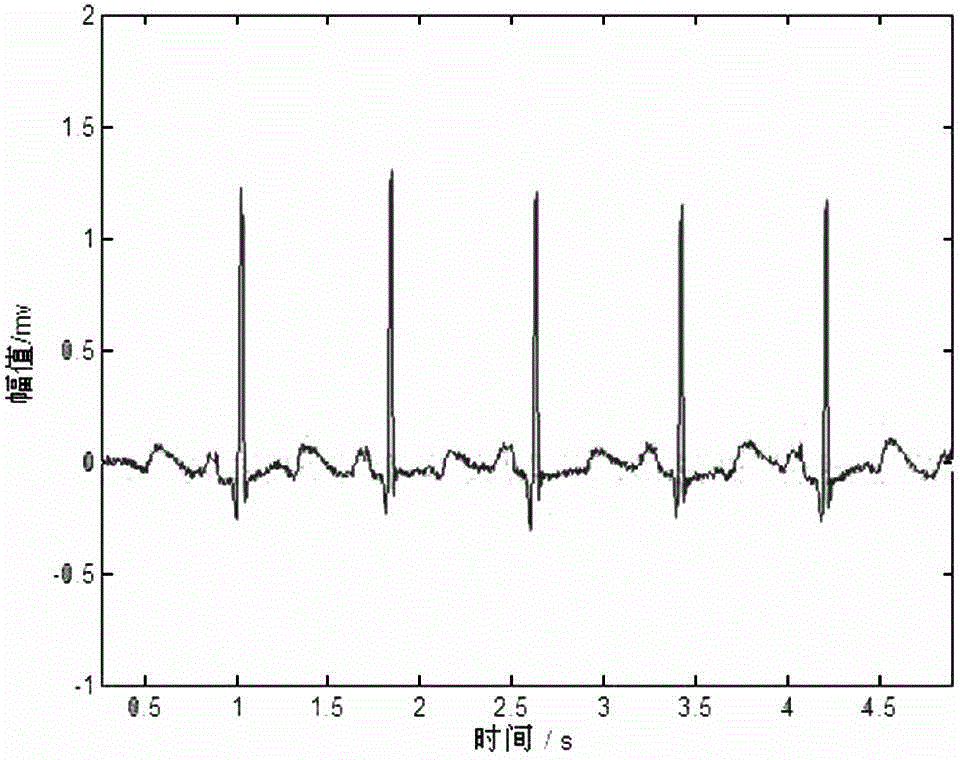

Automatic classification method for electrocardiogram signals

Active | CN104523266A | Improve stability | Realize precise identification | Diagnostic signal processing | Sensors | Ecg signal | Hidden layer

Owner:HEBEI UNIVERSITY

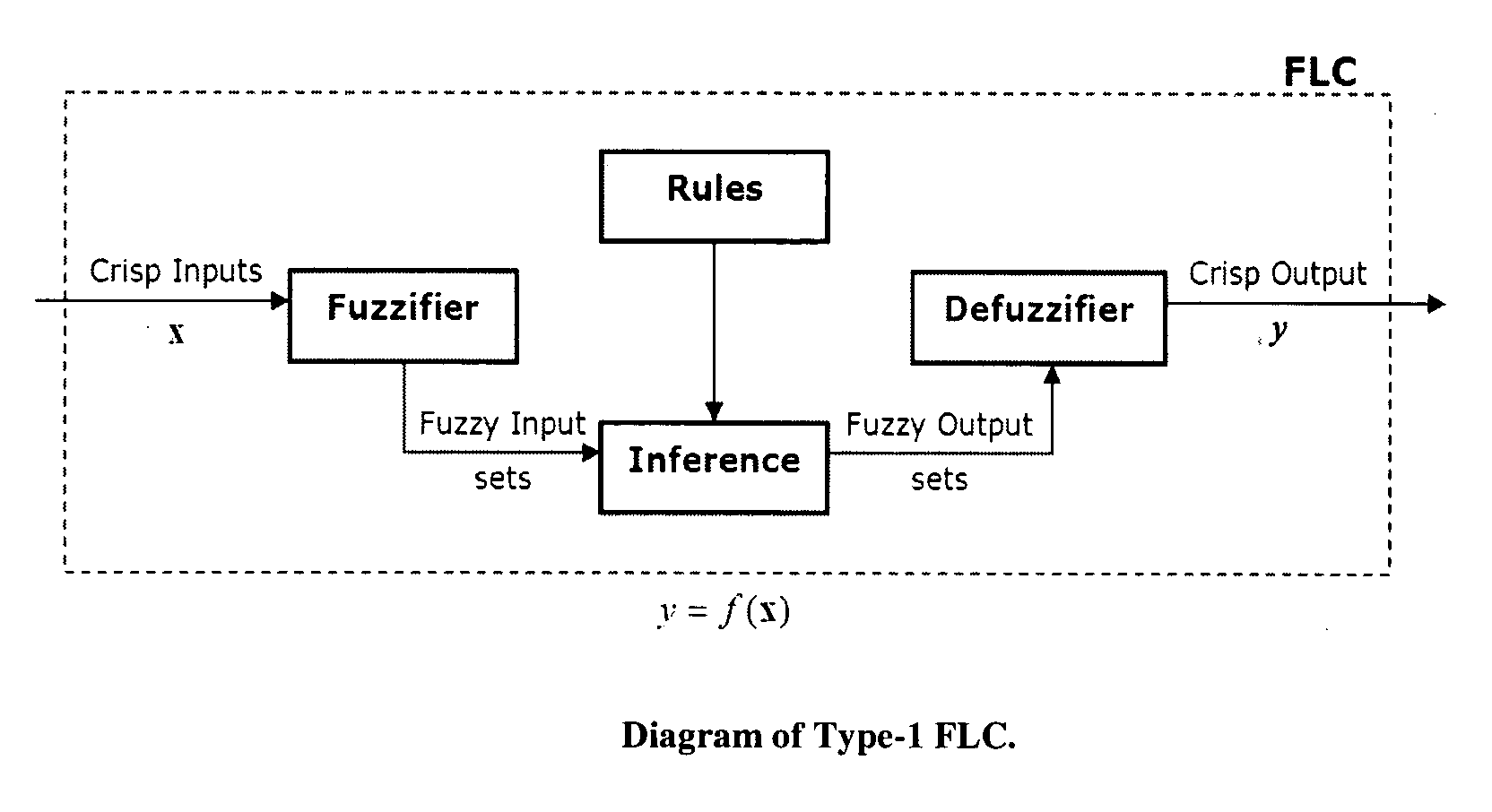

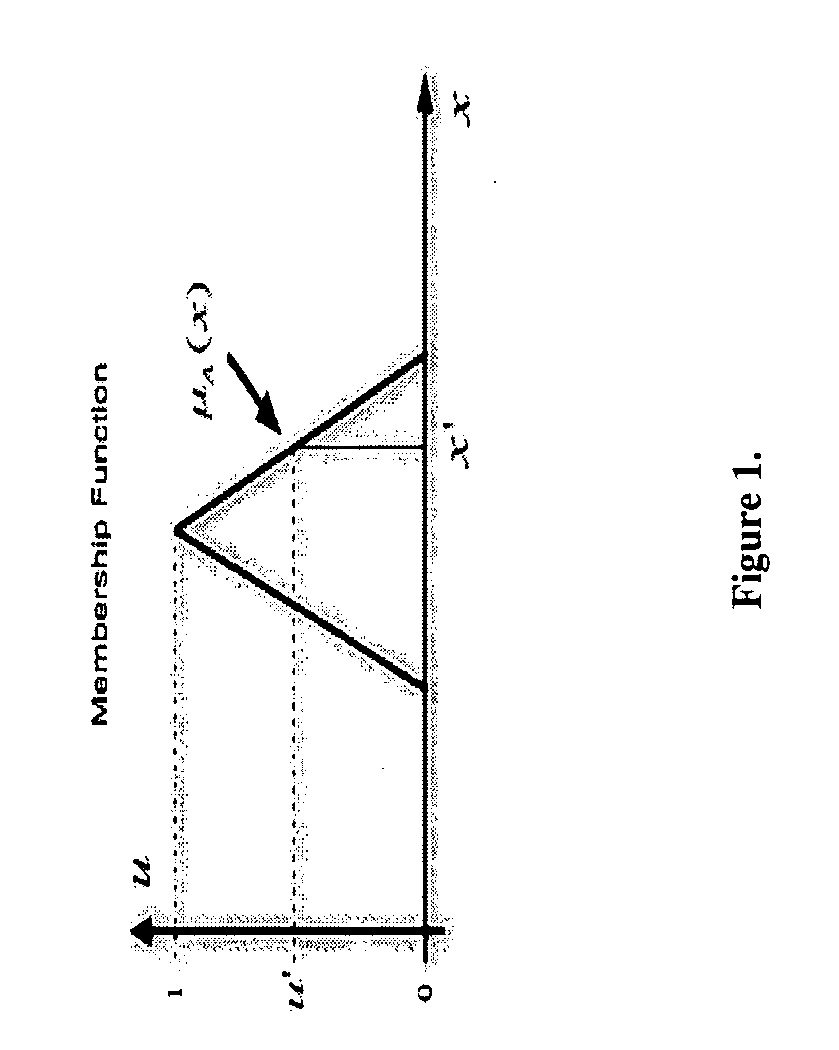

Neuro type-2 fuzzy based method for decision making

Active | US20110071969A1 | Easy to use | Computation lot | Digital computer details | Digital data | Hidden layer | Data source

According to a first aspect of the invention there is provided a method of decision-making comprising: a data input step to input data from a plurality of first data sources into a first data bank, analysing said input data by means of a first adaptive artificial neural network (ANN), the neural network including a plurality of layers having at least an input layer, one or more hidden layers and an output layer, each layer comprising a plurality of interconnected neurons, the number of hidden neurons utilised being adaptive, the ANN determining the most important input data and defining therefrom a second ANN, deriving from the second ANN a plurality of Type-1 fuzzy sets for each first data source representing the data source, combining the Type-1 fuzzy sets to create Footprint of Uncertainty (FOU) for type-2 fuzzy sets, modelling the group decision of the combined first data sources; inputting data from a second data source, and assigning an aggregate score thereto, comparing the assigned aggregate score with a fuzzy set representing the group decision, and producing a decision therefrom. A method employing a developed ANN as defined in Claim 1 and extracting data from said ANN, the data used to learn the parameters of a normal Fuzzy Logic System (FLS).

Owner:TEMENOS HEADQUARTERS SA

Weld surface defect identification method based on image texture

Inactive | CN105938563A | Effectively distinguish texture features | Realize classification recognition | Character and pattern recognition | Optically investigating flaws/contamination | Color image | Sample image

The invention provides a weld surface defect identification method based on image texture. The photographing parameters of a miniature CCD camera are set according to the image acquisition standard; an acquired true color image is converted into a grey-scale image, and a grey-level co-occurrence matrix is created; six characteristic parameters (energy, contrast, correlation, homogeneity, entropy and variance) are extracted in each of four directions (0, 45, 90 and 135 degrees), giving 24 characteristic parameters in total, and the extracted parameters are normalized; a BP neural network is trained using training sample images, and the number of neurons of the neural network, a hidden layer transfer function, an output layer transfer function and a training algorithm transfer function are set; the characteristic parameters of the test sample are input to the trained BP neural network for classification and identification; and the matching degree between the test sample and the different types of surface welding quality training samples is calculated, so that automatic classification and identification of the surface welding quality of the test sample can be completed.
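The feature extraction step can be sketched as below: six texture statistics from a grey-level co-occurrence matrix in each of four directions, giving 24 values. This is a minimal sketch under stated assumptions, since the abstract fixes neither the pixel distance (1 is assumed), the symmetric counting, nor the exact formula for each statistic. All function names are hypothetical, and the image must be at least 2x2 with integer grey levels below `levels`.

```python
import math

# Pixel offsets (row, col) for the four directions at an assumed distance of 1.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def glcm(image, offset, levels):
    """Return the normalized, symmetric co-occurrence matrix for one offset."""
    dr, dc = offset
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count each pixel pair in both orders
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Energy, contrast, correlation, homogeneity, entropy, variance of a GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)  # symmetric matrix: row mean = column mean
    var = sum((i - mean) ** 2 * v for i, _, v in cells)
    energy = sum(v * v for _, _, v in cells)  # angular second moment
    contrast = sum((i - j) ** 2 * v for i, j, v in cells)
    homogeneity = sum(v / (1 + abs(i - j)) for i, j, v in cells)
    entropy = -sum(v * math.log(v) for _, _, v in cells if v > 0)
    # Convention assumed here: a constant image has correlation 1.
    covariance = sum((i - mean) * (j - mean) * v for i, j, v in cells)
    correlation = covariance / var if var > 0 else 1.0
    return [energy, contrast, correlation, homogeneity, entropy, var]


def feature_vector(image, levels):
    """Concatenate the six statistics over the four directions (24 values)."""
    vec = []
    for angle in (0, 45, 90, 135):
        vec.extend(texture_features(glcm(image, OFFSETS[angle], levels)))
    return vec


image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
features = feature_vector(image, levels=4)
```

In the workflow the abstract describes, the 24 values would then be normalized across the training set before being fed to the BP network.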

Owner:BEIJING UNIV OF TECH

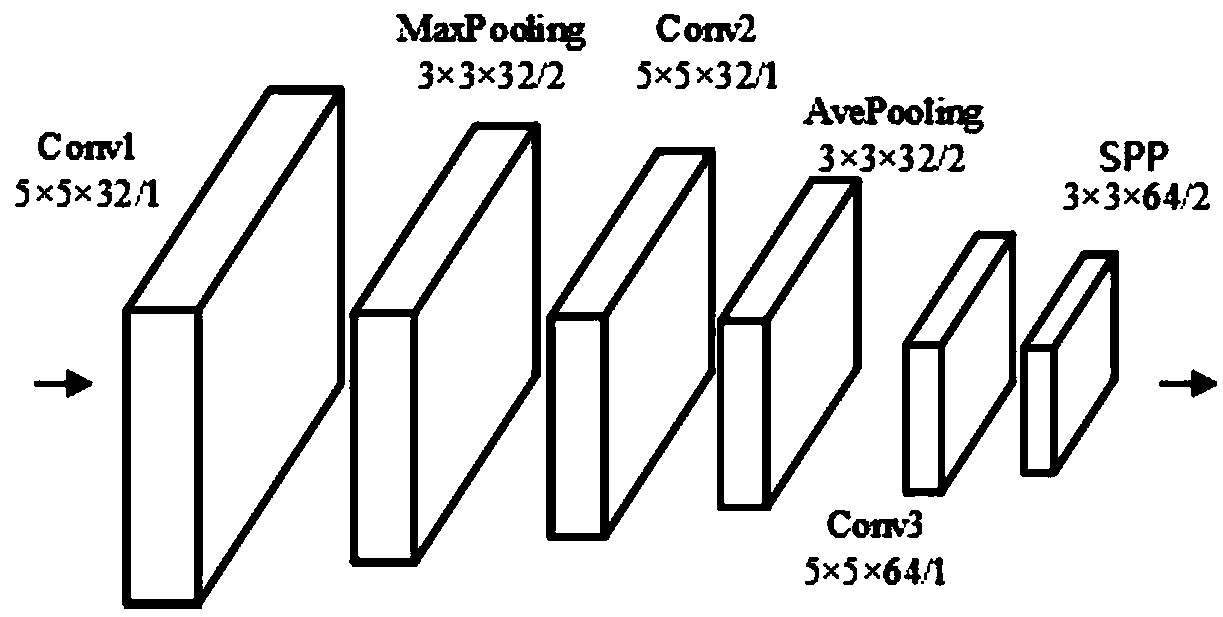

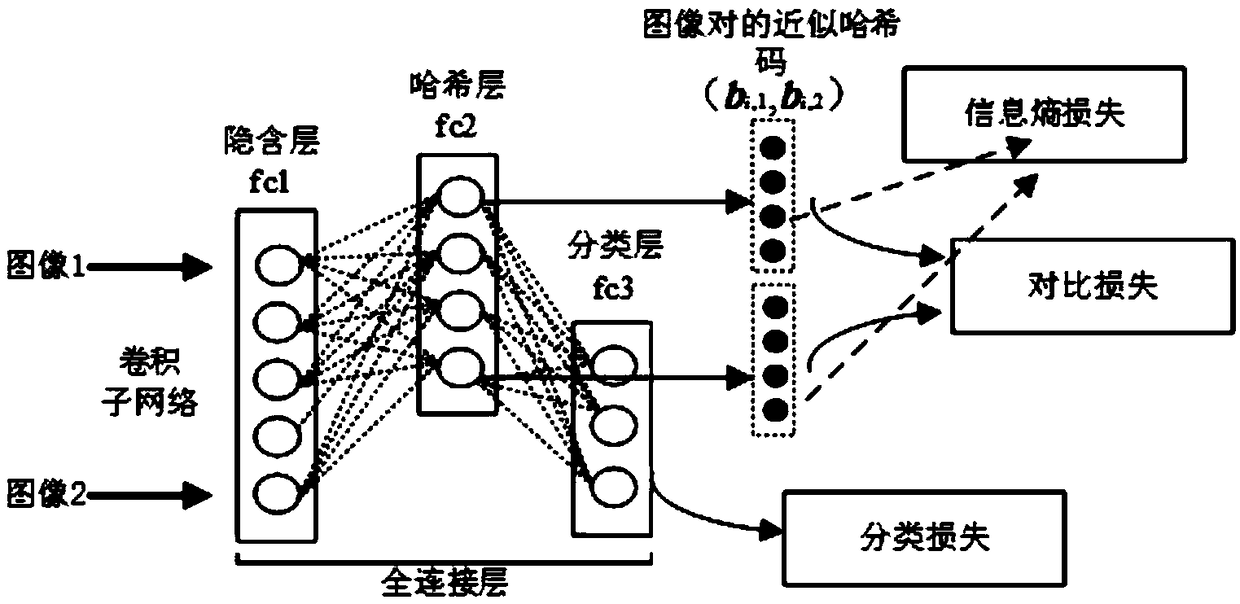

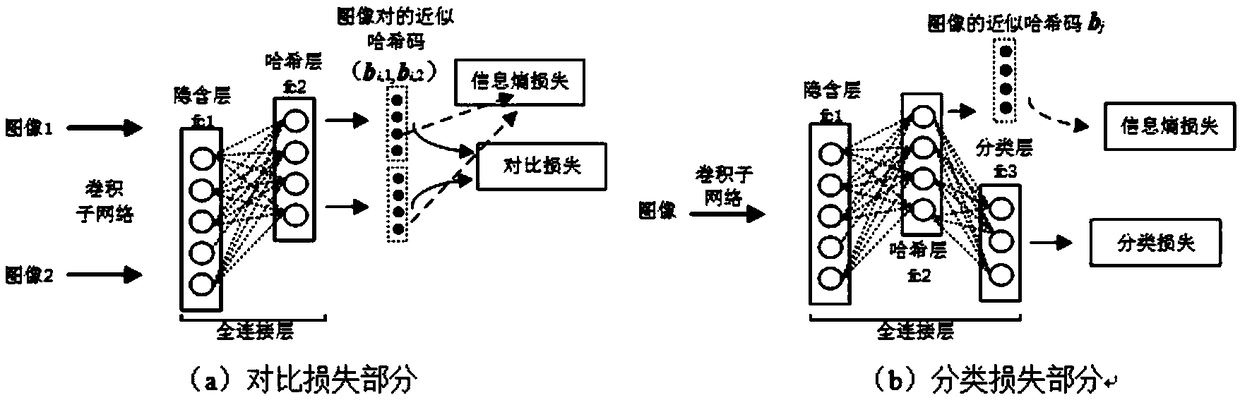

Image retrieval method based on multi-task hash learning

Active | CN109165306A | Reduce redundancy | Good search accuracy | Still image data querying | Neural architectures | Hidden layer | Multi-task learning

The invention discloses an image retrieval method based on multi-task hash learning. First, a deep convolutional neural network model is determined. Second, the loss function is designed using a multi-task learning mechanism. Then, the training method of the convolutional neural network model is determined and, in combination with the loss function, back propagation is used to optimize the model. Finally, an image is input to the convolutional neural network model, and the output of the model is transformed into a hash code for image retrieval. The convolutional neural network model is composed of a convolutional sub-network and a fully connected layer. The convolutional sub-network consists of a first convolutional layer, a maximum pooling layer, a second convolutional layer, an average pooling layer, a third convolutional layer and a spatial pyramid pooling layer. The fully connected layer is composed of a hidden layer, a hash layer and a classification layer. The model can be trained in two ways: a combined training method and a separated training method. The method of the invention can effectively retrieve single-tag and multi-tag images, and its retrieval performance is better than that of other deep hashing methods.
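The final step, turning the model output into a hash code and retrieving by code similarity, might look like the following sketch. The abstract does not specify the binarization rule or the distance measure; thresholding at 0.5 and ranking by Hamming distance are assumptions, and all names and activation values are hypothetical.

```python
def to_hash_code(activations, threshold=0.5):
    """Binarize hash-layer activations (assumed to lie in [0, 1])."""
    return tuple(1 if a >= threshold else 0 for a in activations)


def hamming(a, b):
    """Number of bit positions in which two codes differ."""
    return sum(x != y for x, y in zip(a, b))


def retrieve(query_code, database, top_k=2):
    """Return the names of the top_k database images closest to the query."""
    ranked = sorted(database.items(), key=lambda item: hamming(query_code, item[1]))
    return [name for name, _ in ranked[:top_k]]


# Toy hash-layer outputs for three stored images and one query image.
database = {
    "img_a": to_hash_code([0.9, 0.1, 0.8, 0.2]),
    "img_b": to_hash_code([0.2, 0.7, 0.1, 0.9]),
    "img_c": to_hash_code([0.8, 0.3, 0.6, 0.7]),
}
query = to_hash_code([0.95, 0.05, 0.7, 0.4])
result = retrieve(query, database)
```

Short binary codes keep the index compact and make comparison cheap, which is the usual motivation for hashing-based retrieval.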

Owner:CHANGSHA UNIVERSITY OF SCIENCE AND TECHNOLOGY

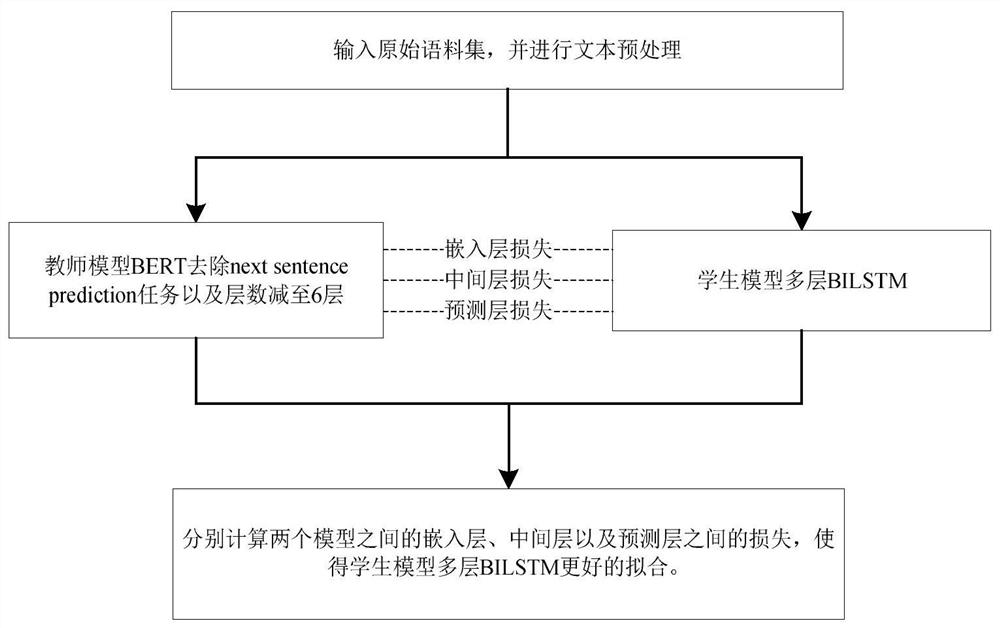

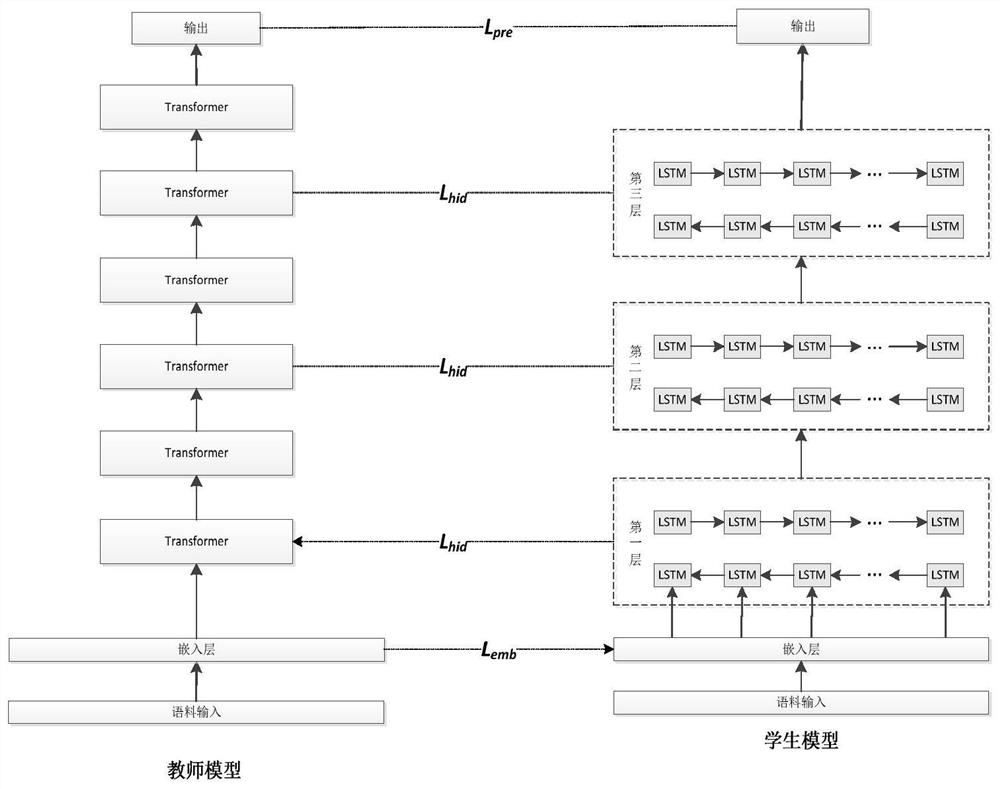

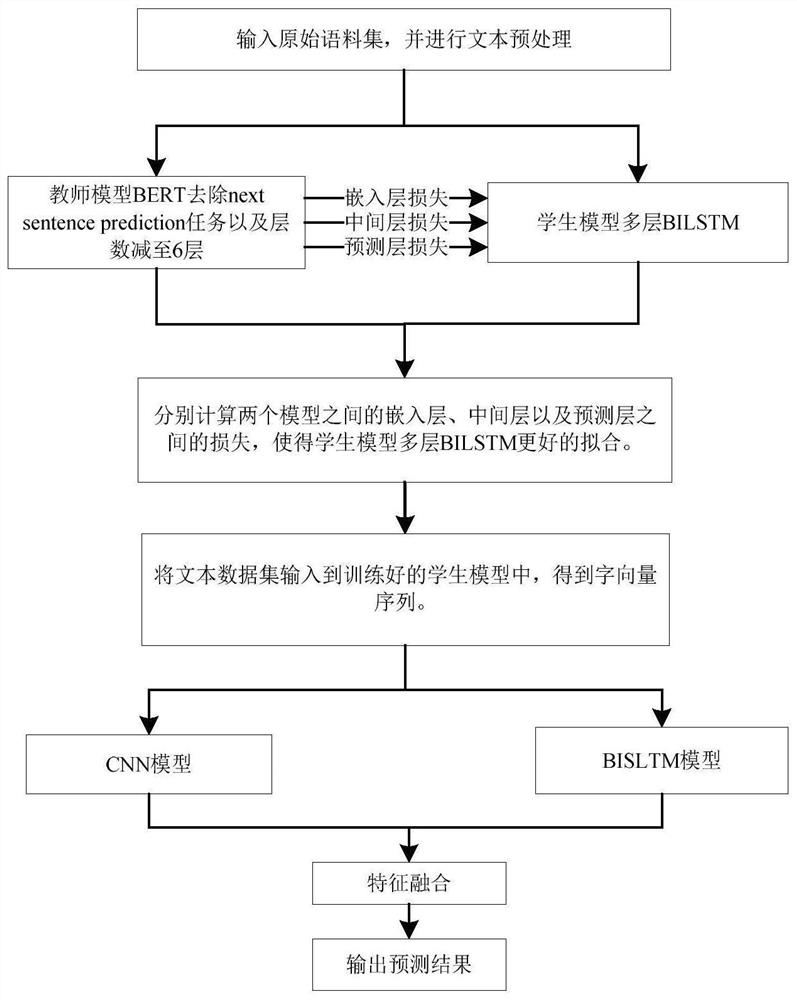

Multilayer neural network language model training method and device based on knowledge distillation

Active | CN111611377A | High precision | Improve learning effect | Semantic analysis | Neural architectures | Hidden layer | Linguistic model

The invention discloses a multilayer neural network language model training method and device based on knowledge distillation. First, a BERT language model and a multi-layer BiLSTM model are constructed to serve as the teacher model and the student model, respectively; the BERT language model comprises six Transformer layers, and the multi-layer BiLSTM model comprises three BiLSTM layers. Then, after the text corpus set is preprocessed, the BERT language model is trained to obtain a trained teacher model. The preprocessed text corpus set is input into the multi-layer BiLSTM model to train the student model using knowledge distillation, and representations in different spaces are mapped to one another through linear transformation when the embedding layer, hidden layer and output layer of the teacher model are learned. Based on the trained student model, text can be converted into vectors, and a downstream network is then trained to better classify the text. According to the method, the text pre-training efficiency and the accuracy of the text classification task can be effectively improved.
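The role of the linear transformation can be illustrated with a small sketch: the student's hidden vector is projected into the teacher's representation space before the two are compared. The mean squared error used here is an assumption, as the abstract does not name the loss, and the dimensions, matrix and function names are hypothetical.

```python
def project(weights, vector):
    """Apply a linear map (list of rows) to a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def hidden_distillation_loss(student_hidden, teacher_hidden, projection):
    """Compare the projected student state with the teacher state."""
    return mse(project(projection, student_hidden), teacher_hidden)


# A 2-dimensional student state mapped into a 3-dimensional teacher space.
student_hidden = [1.0, 2.0]
teacher_hidden = [1.0, 2.0, 3.0]
projection = [
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
]
loss = hidden_distillation_loss(student_hidden, teacher_hidden, projection)
```

In training, the projection matrix would be learned together with the student, and analogous terms could be added for the embedding and output layers.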

Owner:HUAIYIN INSTITUTE OF TECHNOLOGY

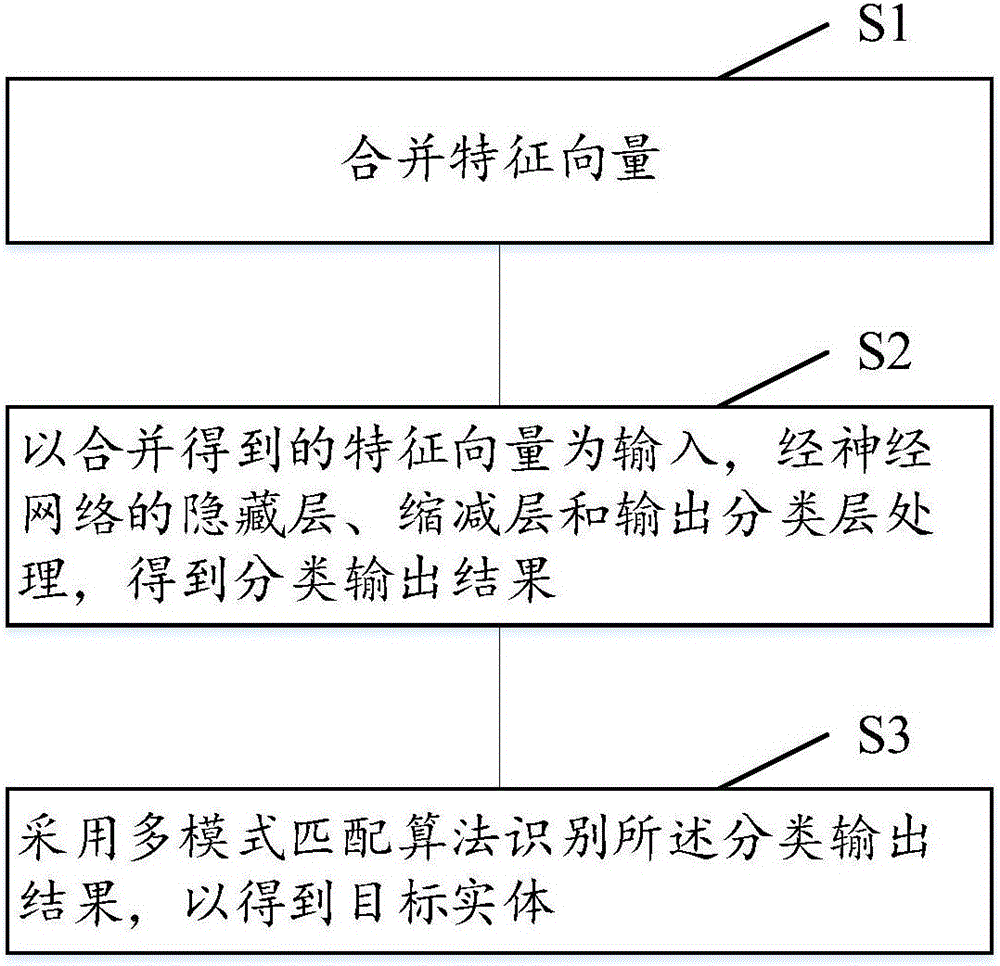

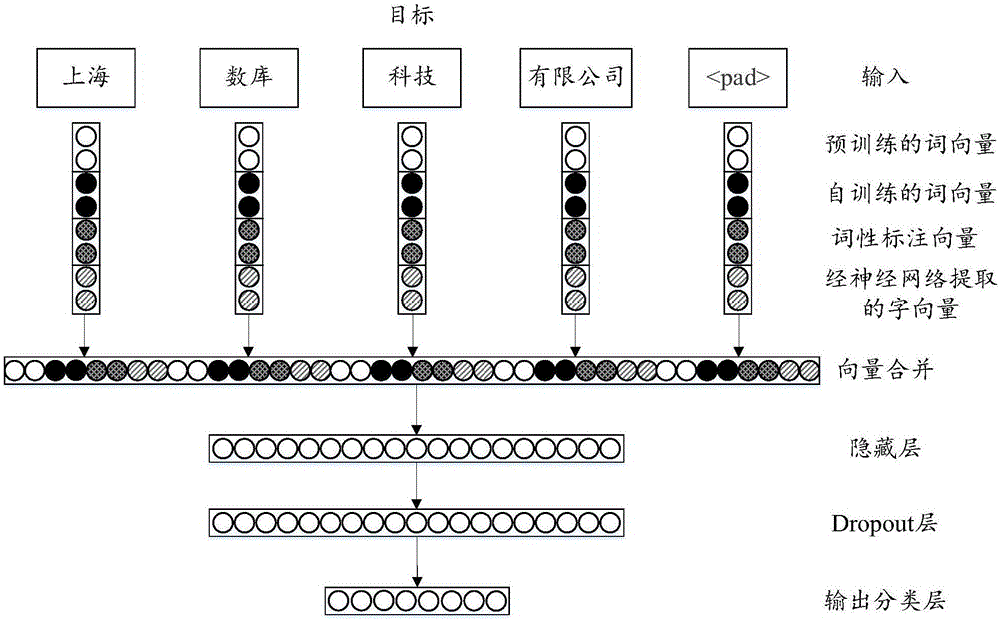

Method and system for identifying named entity

Inactive | CN106557462A | Make full use of "prior knowledge | Solve predictive power | Biological neural network models | Natural language data processing | Hidden layer | Feature vector

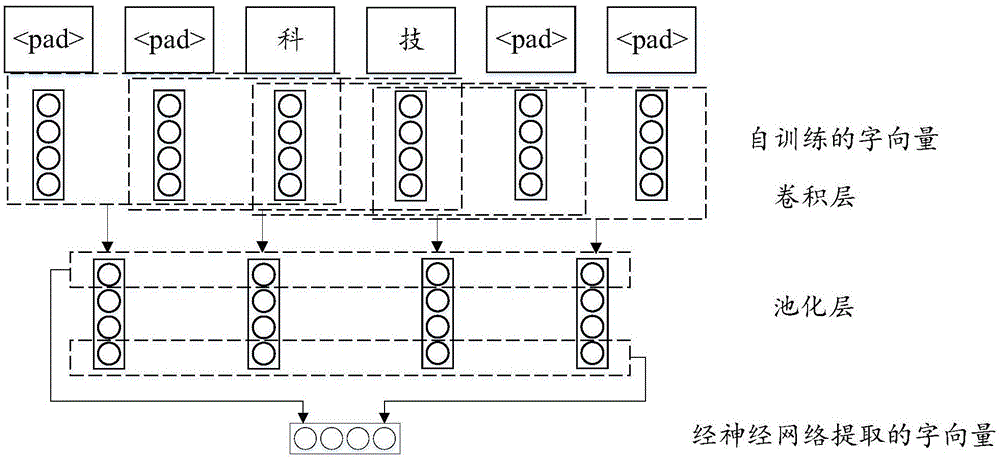

The technical scheme of the invention discloses a method and a system for identifying a named entity. The method comprises the following steps: merging feature vectors, wherein the feature vectors comprise pre-trained word vectors, self-training word vectors and part-of-speech tagging vectors; using the merged feature vectors as input to a neural network, which is a convolutional neural network or a deep belief neural network, and obtaining a classified output result through processing by a hidden layer, a reducing layer and an output classification layer of the neural network; and adopting a multi-mode matching algorithm to identify the classified output result so as to obtain the target entity. Because the merged feature vectors serve as the input features of the neural network, the method can be applied to specific classification scenes through neural network processing and multi-mode matching.

Owner:数库(上海)科技有限公司

Method of training a neural network

A method of training a neural network having at least an input layer, an output layer and a hidden layer, and a weight matrix encoding connection weights between two of the layers, the method comprising the steps of (a) providing an input to the input layer, the input having an associated expected output, (b) receiving a generated output at the output layer, (c) generating an error vector from the difference between the generated output and expected output, (d) generating a change matrix, the change matrix being the product of a random weight matrix and the error vector, and (e) modifying the weight matrix in accordance with the change matrix.
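Steps (a) to (e) can be sketched as follows. This is one possible reading, not the claimed implementation: the error vector is sent back through a fixed random matrix instead of the transposed forward weights. The abstract does not say how the change matrix is applied, so the tanh hidden layer, the outer product with the input, the learning rate, and the ordinary delta-rule update of the output weights are all assumptions, and every name is hypothetical.

```python
import math
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def forward(w1, w2, x):
    """Input -> tanh hidden layer -> linear output layer."""
    h = [math.tanh(a) for a in matvec(w1, x)]
    return h, matvec(w2, h)


def train_step(w1, w2, b, x, expected, lr=0.1):
    h, generated = forward(w1, w2, x)                     # steps (a) and (b)
    error = [g - t for g, t in zip(generated, expected)]  # step (c)
    feedback = matvec(b, error)                           # step (d): random matrix times error
    # Assumed: scale by the tanh derivative and take the outer product with the input.
    change1 = [[f * (1 - hi * hi) * xj for xj in x] for f, hi in zip(feedback, h)]
    # Assumed: the output weights use the ordinary delta rule.
    change2 = [[e * hi for hi in h] for e in error]
    # Step (e): modify the weights in accordance with the change matrices.
    new_w1 = [[w - lr * c for w, c in zip(wr, cr)] for wr, cr in zip(w1, change1)]
    new_w2 = [[w - lr * c for w, c in zip(wr, cr)] for wr, cr in zip(w2, change2)]
    return new_w1, new_w2, error


rng = random.Random(0)
w1 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]  # input -> hidden
w2 = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(2)]  # hidden -> output
b = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(4)]   # fixed random feedback
x = [0.5, -0.2, 0.1]
new_w1, new_w2, error = train_step(w1, w2, b, x, expected=[1.0, -1.0])
```

The feedback matrix `b` is drawn once and never trained, so the backward pass needs no access to the forward weights.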

Owner:OXFORD UNIV INNOVATION LTD
