847 results about "Image texture" patented technology

An image texture is a set of metrics calculated in image processing designed to quantify the perceived texture of an image. Image texture gives us information about the spatial arrangement of color or intensities in an image or selected region of an image.

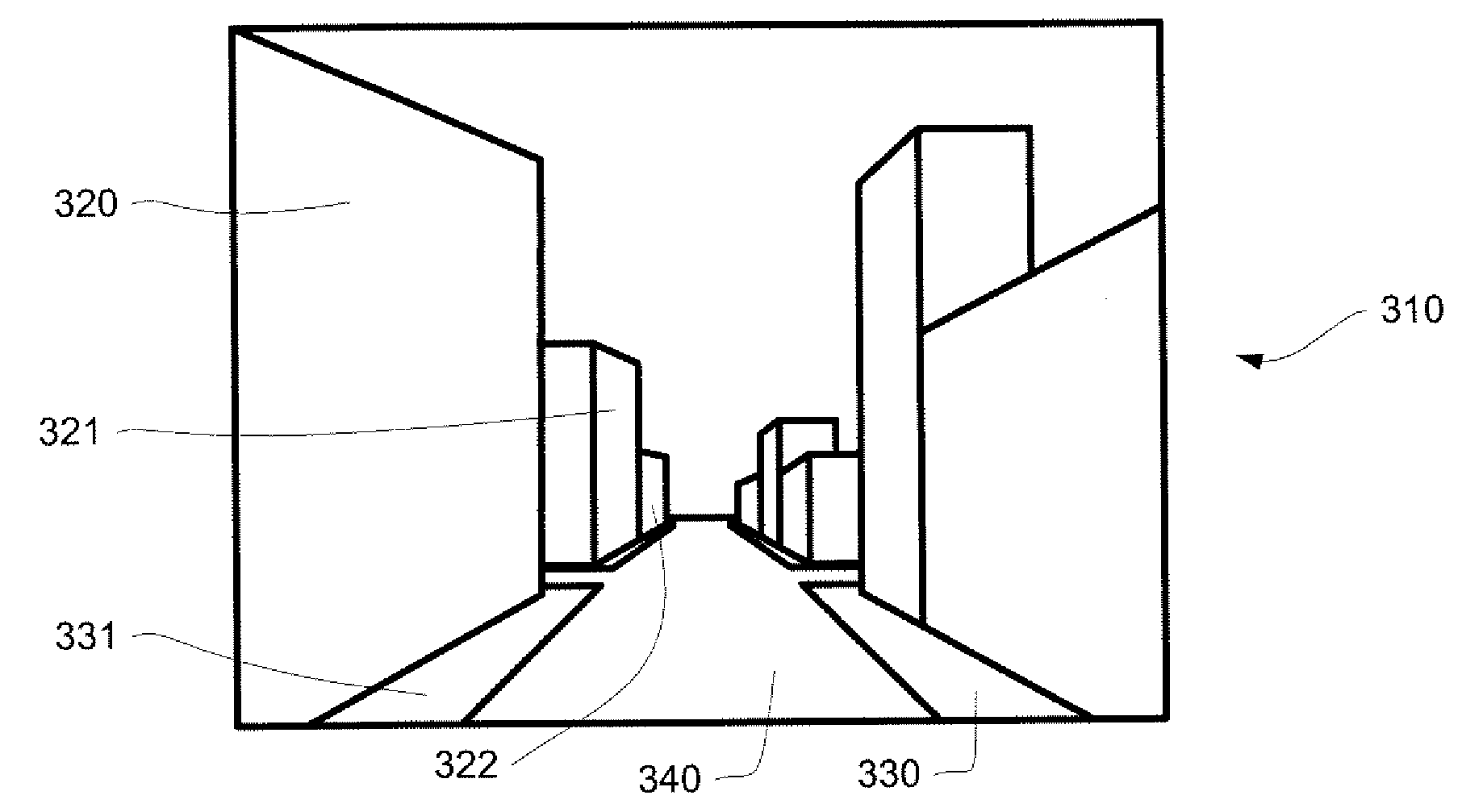

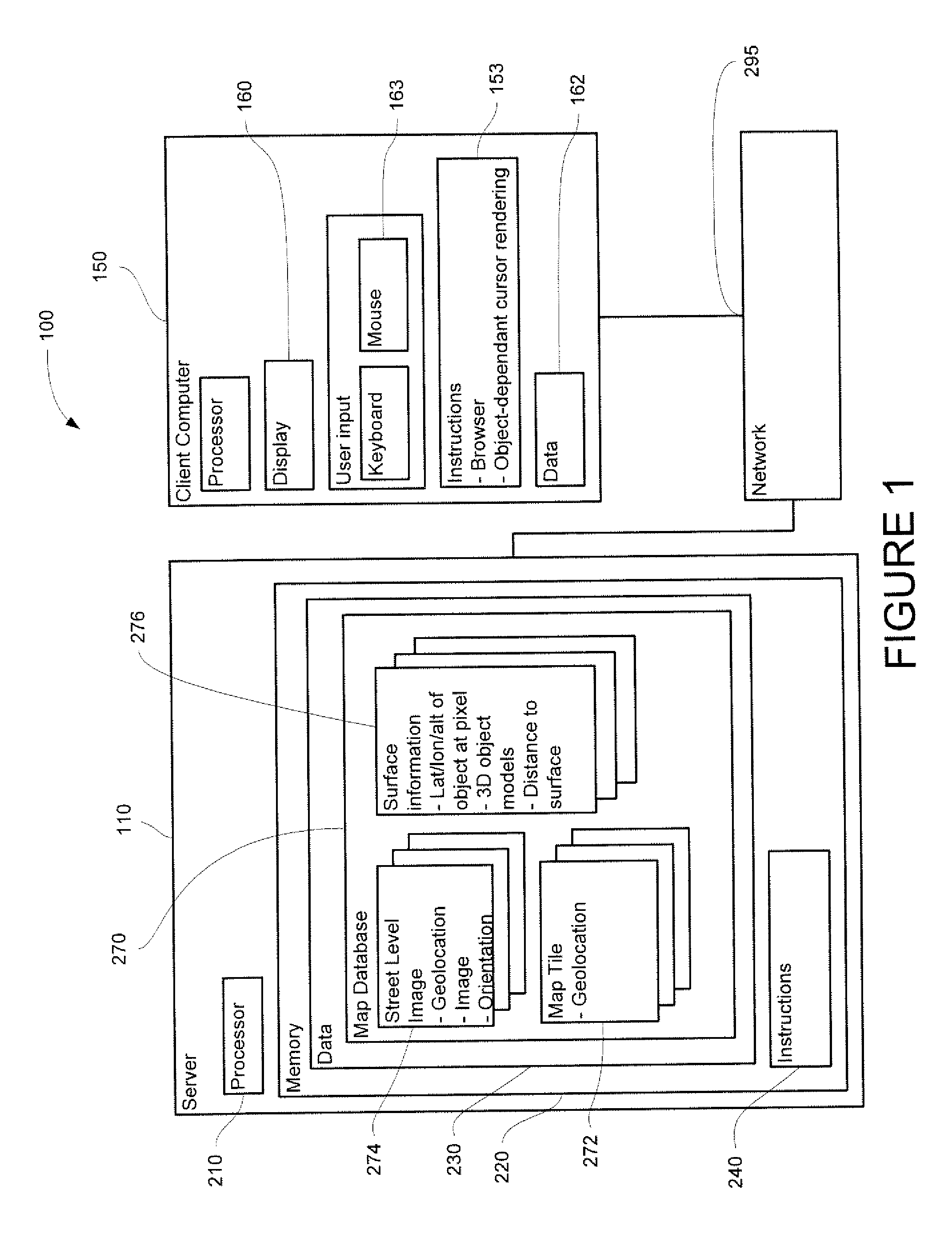

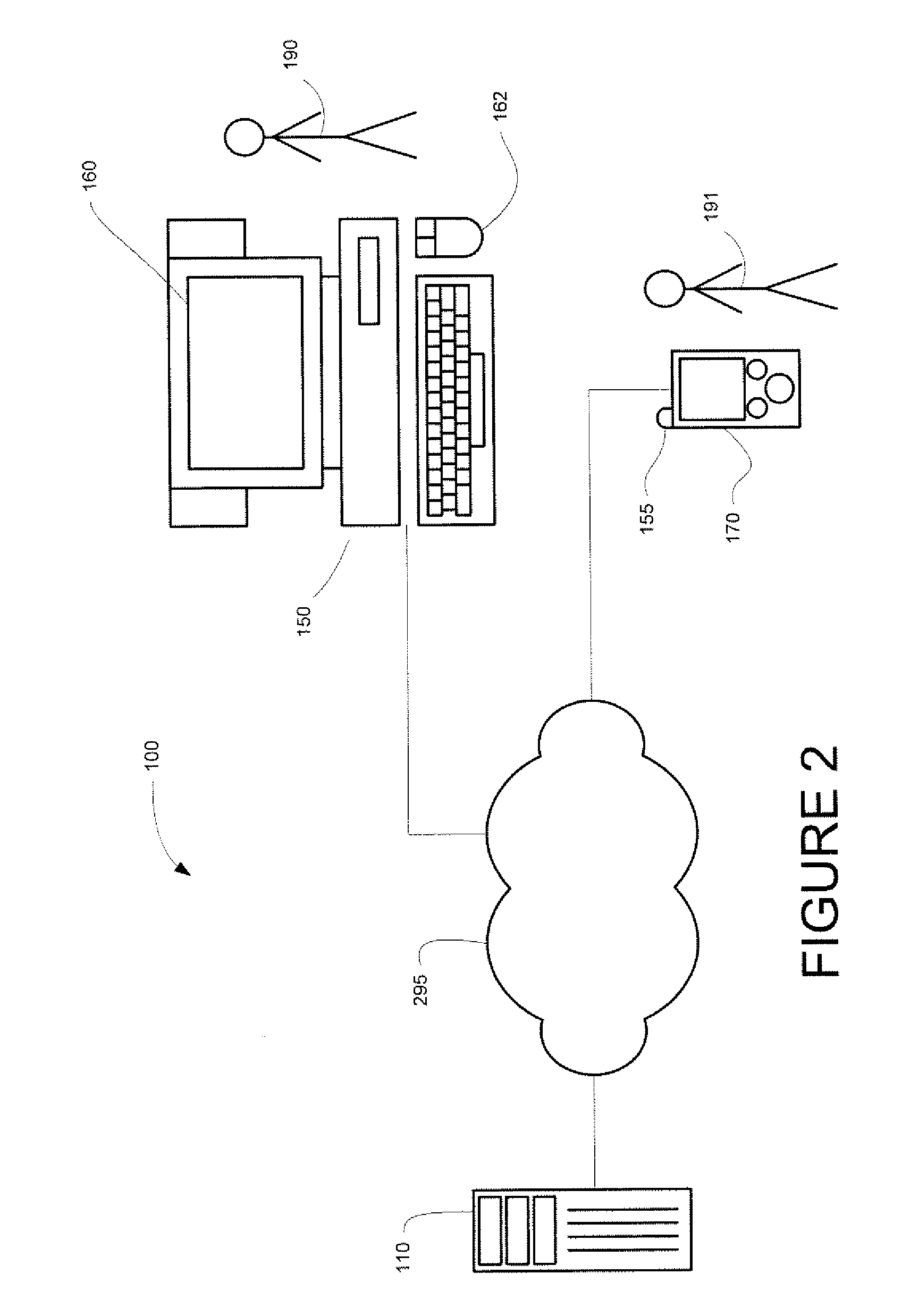

System and method of indicating transition between street level images

Inactive | US20100215250A1 | Details involving 3D image data | Character and pattern recognition | Computer graphics (images) | Angle of view

A system and method of displaying transitions between street level images is provided. In one aspect, the system and method creates a plurality of polygons that are both textured with images from a 2D street level image and associated with 3D positions, where the 3D positions correspond with the 3D positions of the objects contained in the image. These polygons, in turn, are rendered from different perspectives to convey the appearance of moving among the objects contained in the original image.

Owner:GOOGLE LLC

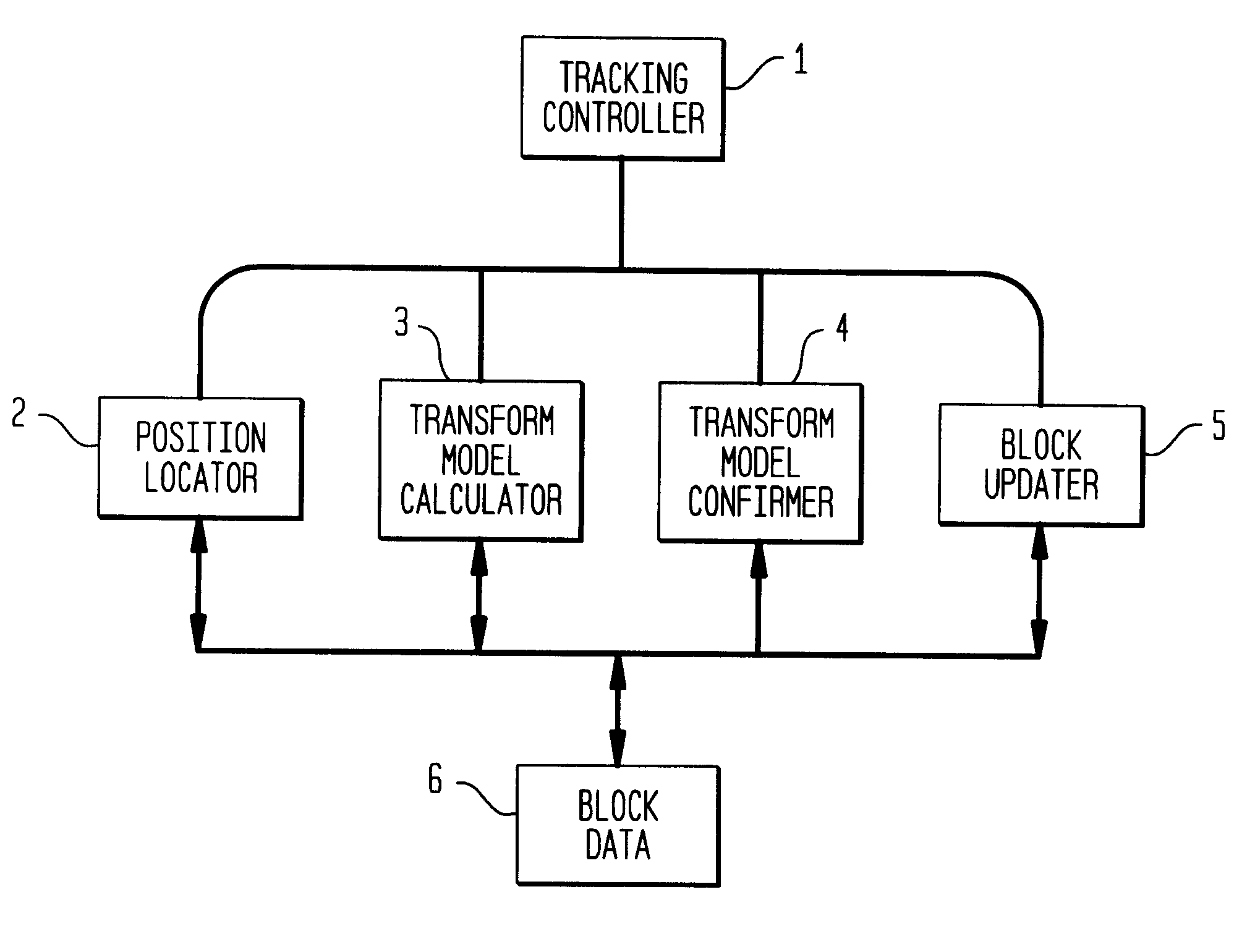

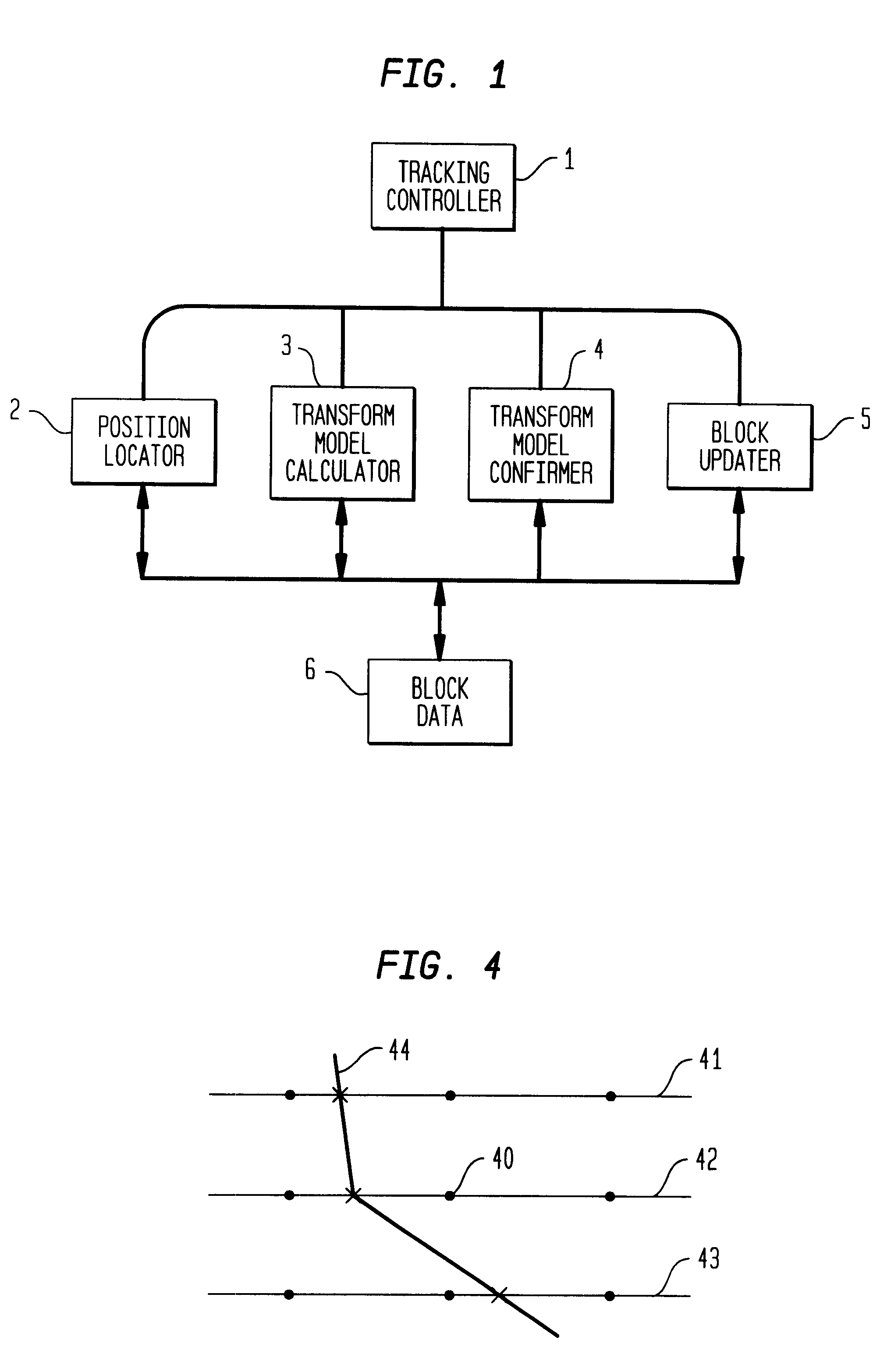

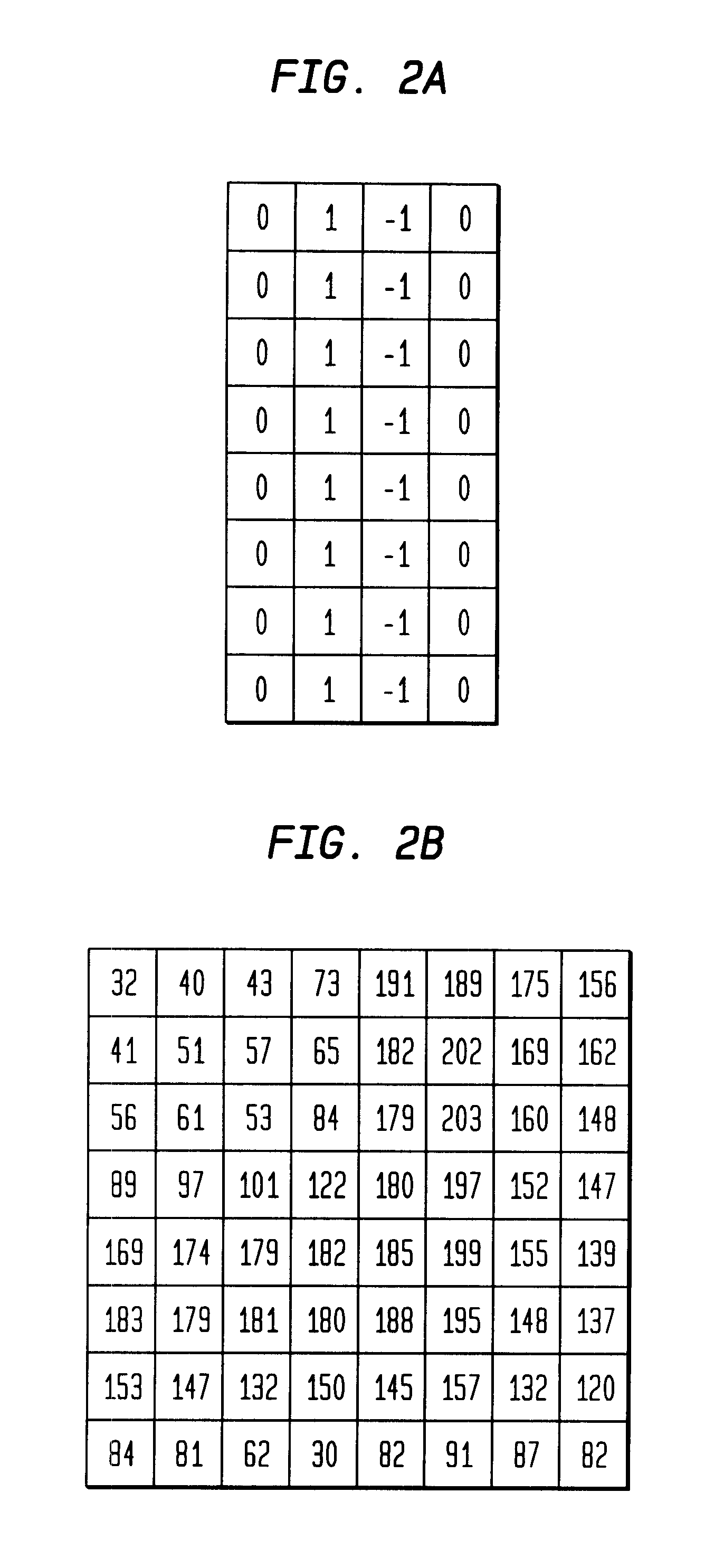

Motion tracking using image-texture templates

Image templates are extracted from video images in real-time and stored in memory. Templates are selected on the basis of their ability to provide useful positional data and compared with regions of subsequent images to find the position giving the best match. From the position data a transform model is calculated. The transform model tracks the background motion in the current image to accurately determine the motion and attitude of the camera recording the current image. The transform model is confirmed by examining pre-defined image templates. Transform model data and camera sensor data are then used to insert images into the live video broadcast at the desired location in the correct perspective. Stored templates are periodically updated to purge those that no longer give valid or significant positional data. New templates extracted from recent images are used to replace the discarded templates.

Owner:DISNEY ENTERPRISES INC
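The template-matching step described in the abstract above can be sketched as follows. This is a minimal illustration, not the patented method: it assumes grayscale frames held in NumPy arrays, uses a brute-force sum-of-squared-differences search, and reduces the "transform model" to a pure translation. The function names `match_template` and `estimate_translation` are hypothetical.

```python
import numpy as np

def match_template(image, template):
    """Return (row, col) of the best match by sum of squared differences."""
    ih, iw = image.shape
    th, tw = template.shape
    best_pos, best_score = (0, 0), np.inf
    for r in range(ih - th + 1):
        for c in range(iw - tw + 1):
            window = image[r:r + th, c:c + tw]
            score = np.sum((window - template) ** 2)
            if score < best_score:
                best_pos, best_score = (r, c), score
    return best_pos

def estimate_translation(prev_positions, new_positions):
    """Average displacement over all templates (translation-only model, an assumption)."""
    prev = np.asarray(prev_positions, dtype=float)
    new = np.asarray(new_positions, dtype=float)
    return (new - prev).mean(axis=0)

# Synthetic example: the second frame is the first one shifted by (3, 5) pixels.
rng = np.random.default_rng(0)
frame0 = rng.random((40, 40))
frame1 = np.roll(frame0, shift=(3, 5), axis=(0, 1))

origins = [(5, 5), (20, 10)]
templates = [frame0[r:r + 8, c:c + 8] for r, c in origins]
found = [match_template(frame1, t) for t in templates]
shift = estimate_translation(origins, found)
```

A real-time system would likely restrict the search to a small neighbourhood around each template's previous position and fit a richer model (for example affine or projective) to the matched positions.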

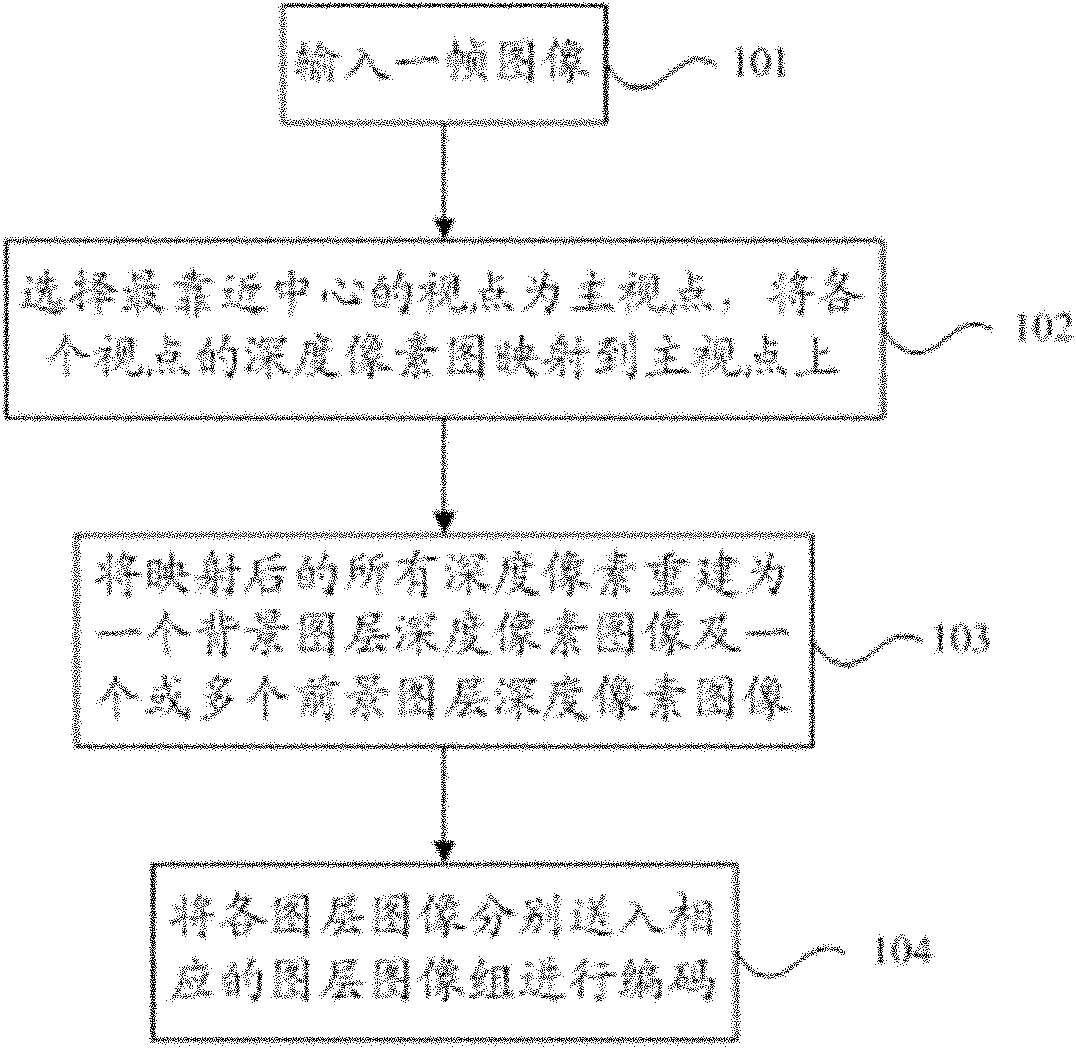

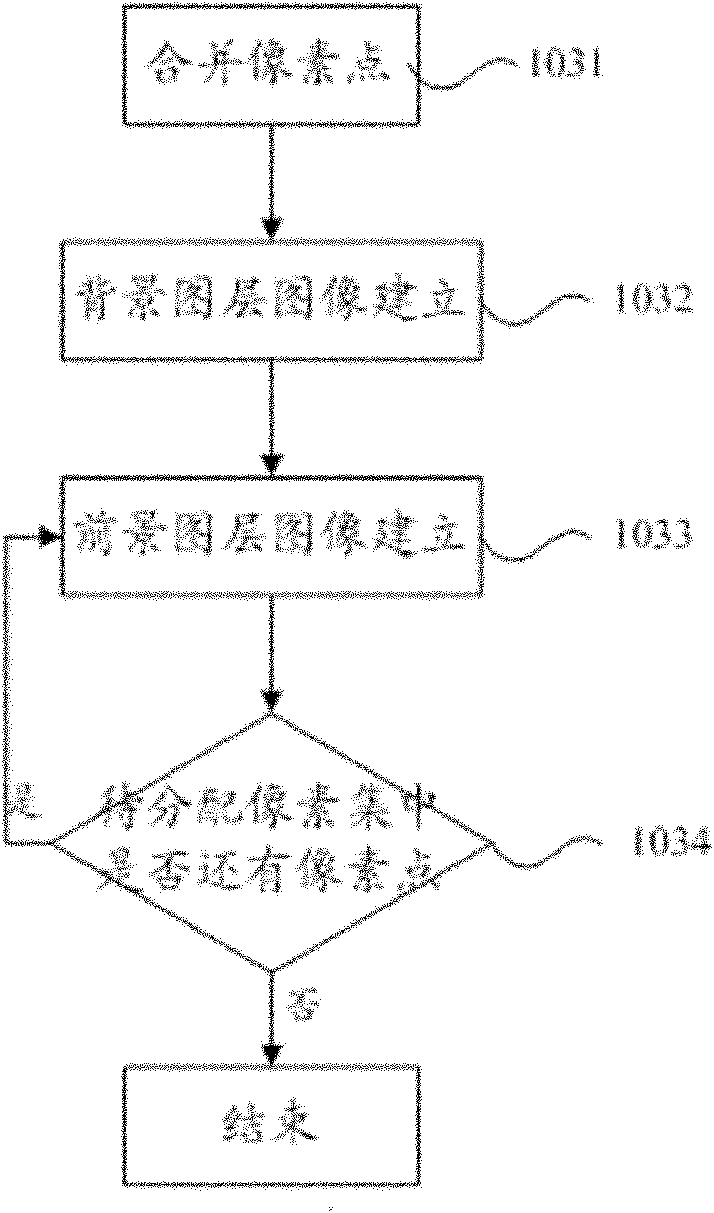

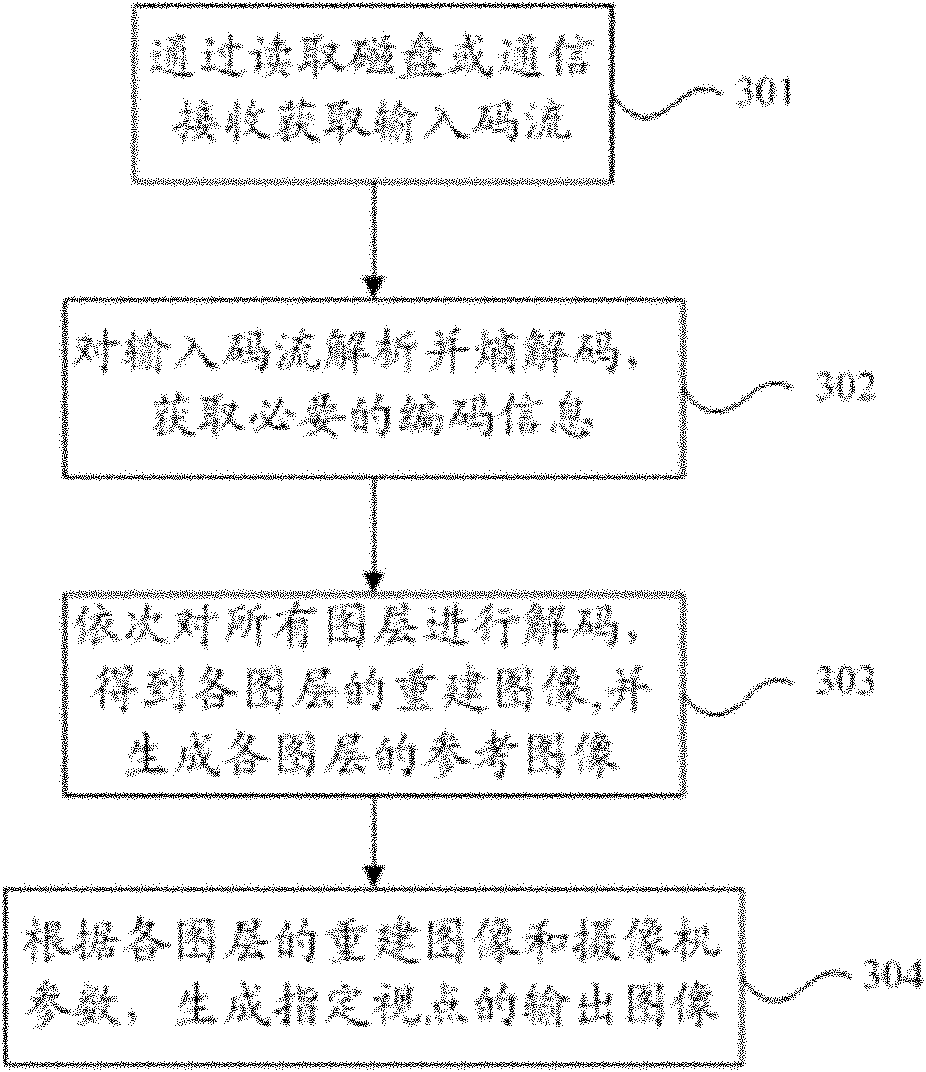

Coding and decoding methods and devices for three-dimensional video

Active | CN102055982A | Improve coding efficiency | Improve compression efficiency | Television systems | Digital video signal modification | Decoding methods | Viewpoints

The invention discloses a coding method for a three-dimensional video. The method comprises the following steps of: inputting a first frame image which comprises image texture information and depth information at a plurality of different viewpoints at the same time so as to form depth pixel images of the plurality of the viewpoints; selecting a viewpoint which is closest to a center as a main viewpoint and mapping the depth pixel image of each viewpoint onto the main viewpoint; acquiring motion information from the texture information by a motion target detection method, rebuilding all depth pixel points in the mapped depth pixel images by using the depth information and / or the motion information to acquire a background image layer image and one or more foreground image layer images; and coding the background image layer image and the foreground image layer images respectively, wherein the depth information and the texture information are coded respectively. The invention also discloses a decoding method for the three-dimensional video, a coder and a decoder. The coding method is particularly suitable for coding a multi-viewpoint video sequence with a stationary background, can enhance prediction compensation accuracy and decreases code rate on the premise of ensuring subjective quality.

Owner:华雁智科(杭州)信息技术有限公司

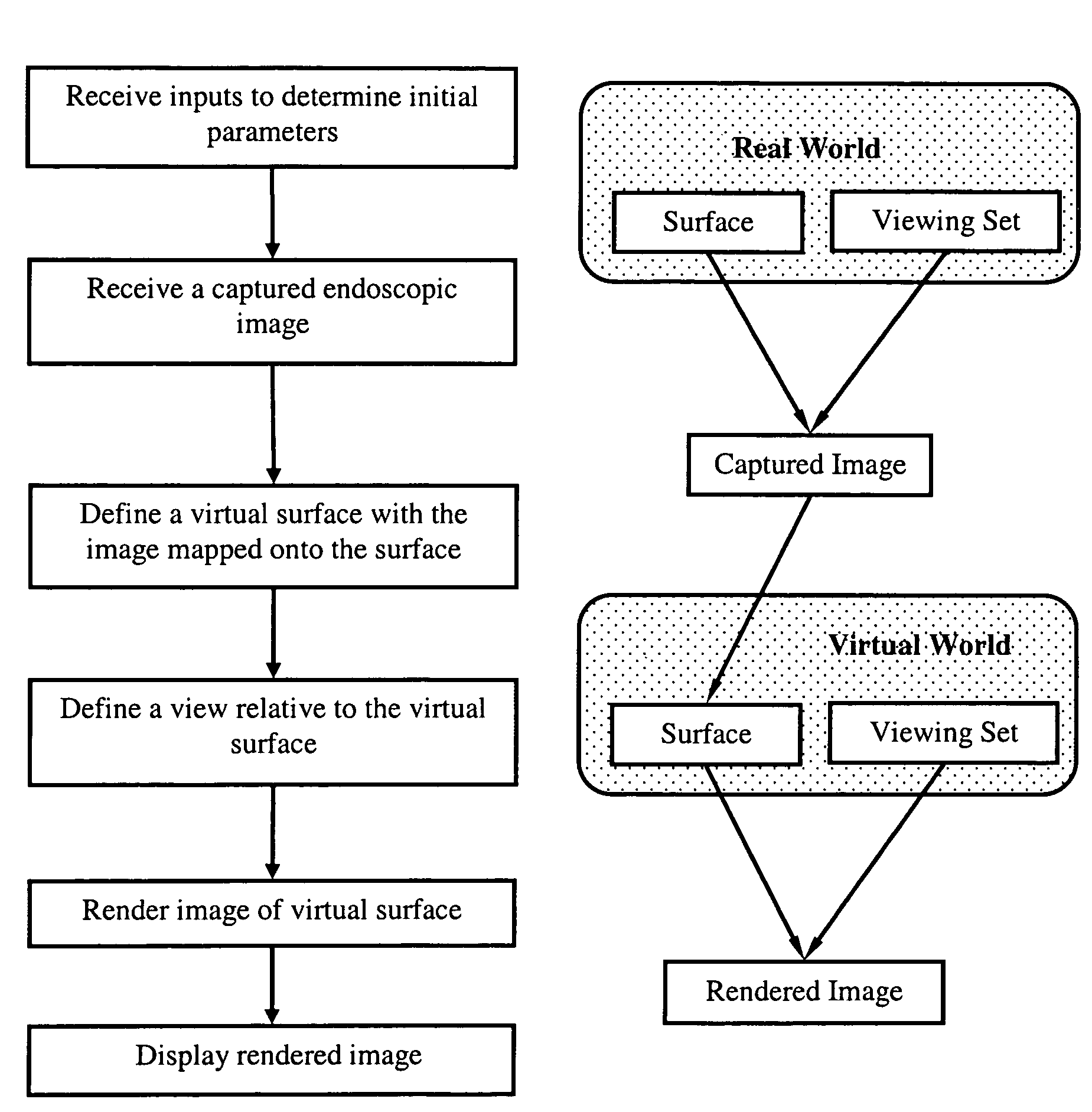

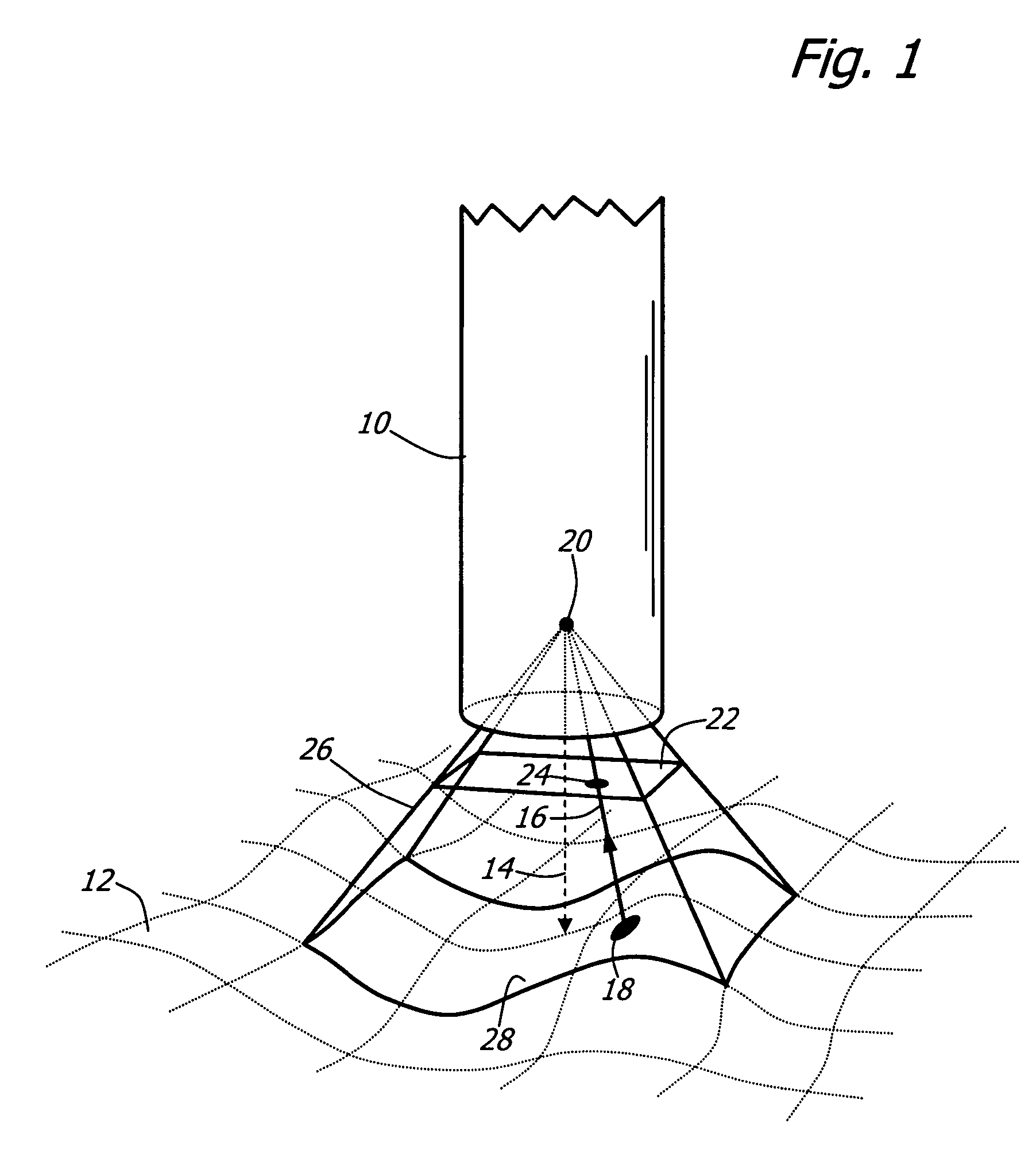

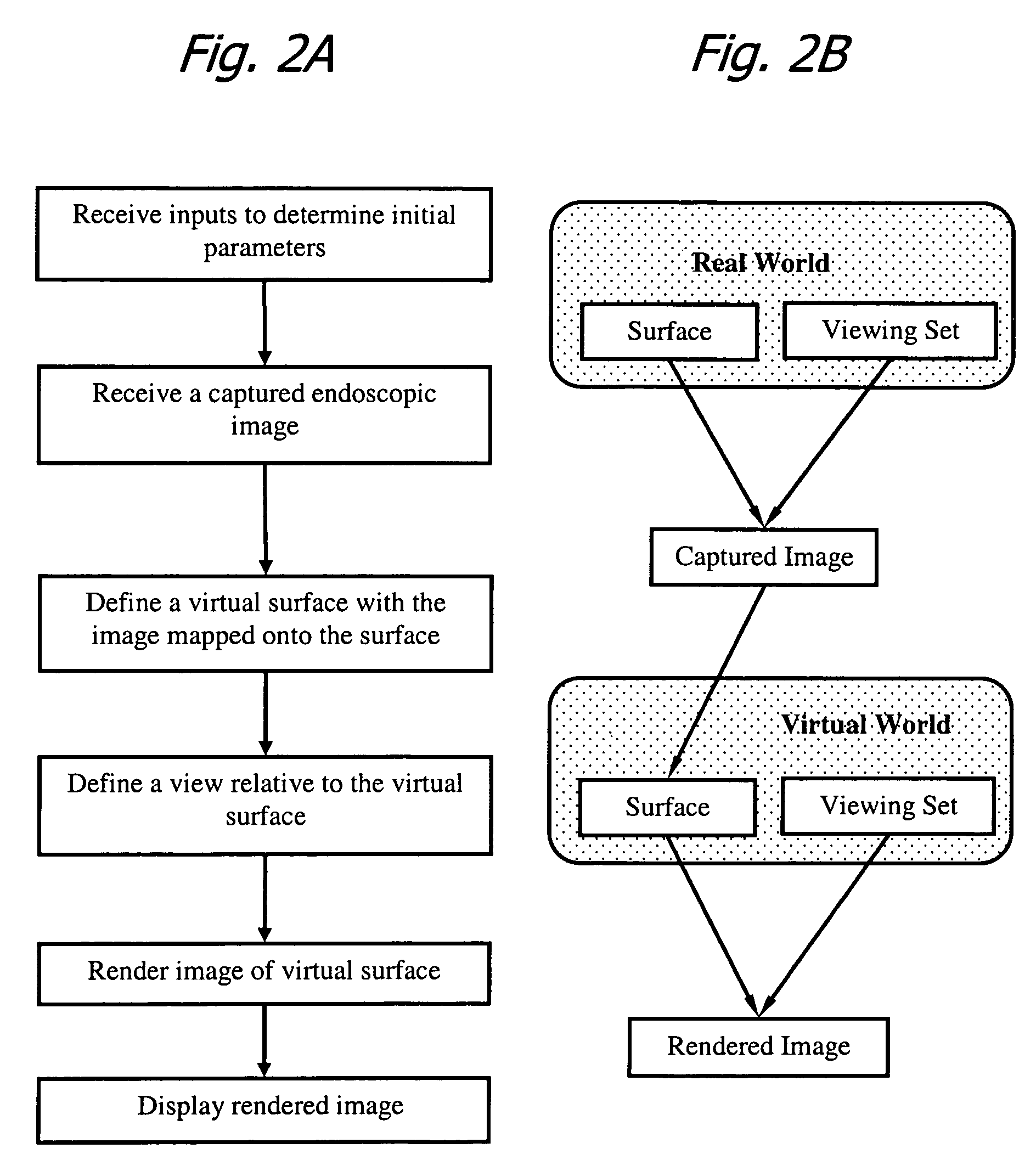

Method and apparatus for displaying endoscopic images

Owner:KARL STORZ IMAGING INC

Apparatus for transmitting point cloud data, a method for transmitting point cloud data, an apparatus for receiving point cloud data and/or a method for receiving point cloud data

In accordance with embodiments, a method for transmitting point cloud data includes generating a geometry image for a location of point cloud data; generating a texture image for an attribute of the point cloud data; generating an occupancy map for a patch of the point cloud data; and / or multiplexing the geometry image, the texture image and the occupancy map. In accordance with embodiments, a method for receiving point cloud data includes demultiplexing a geometry image for a location of point cloud data, a texture image for an attribute of the point cloud data and an occupancy map for a patch of the point cloud data; decompressing the geometry image; decompressing the texture image; and / or decompressing the occupancy map.

Owner:LG ELECTRONICS INC

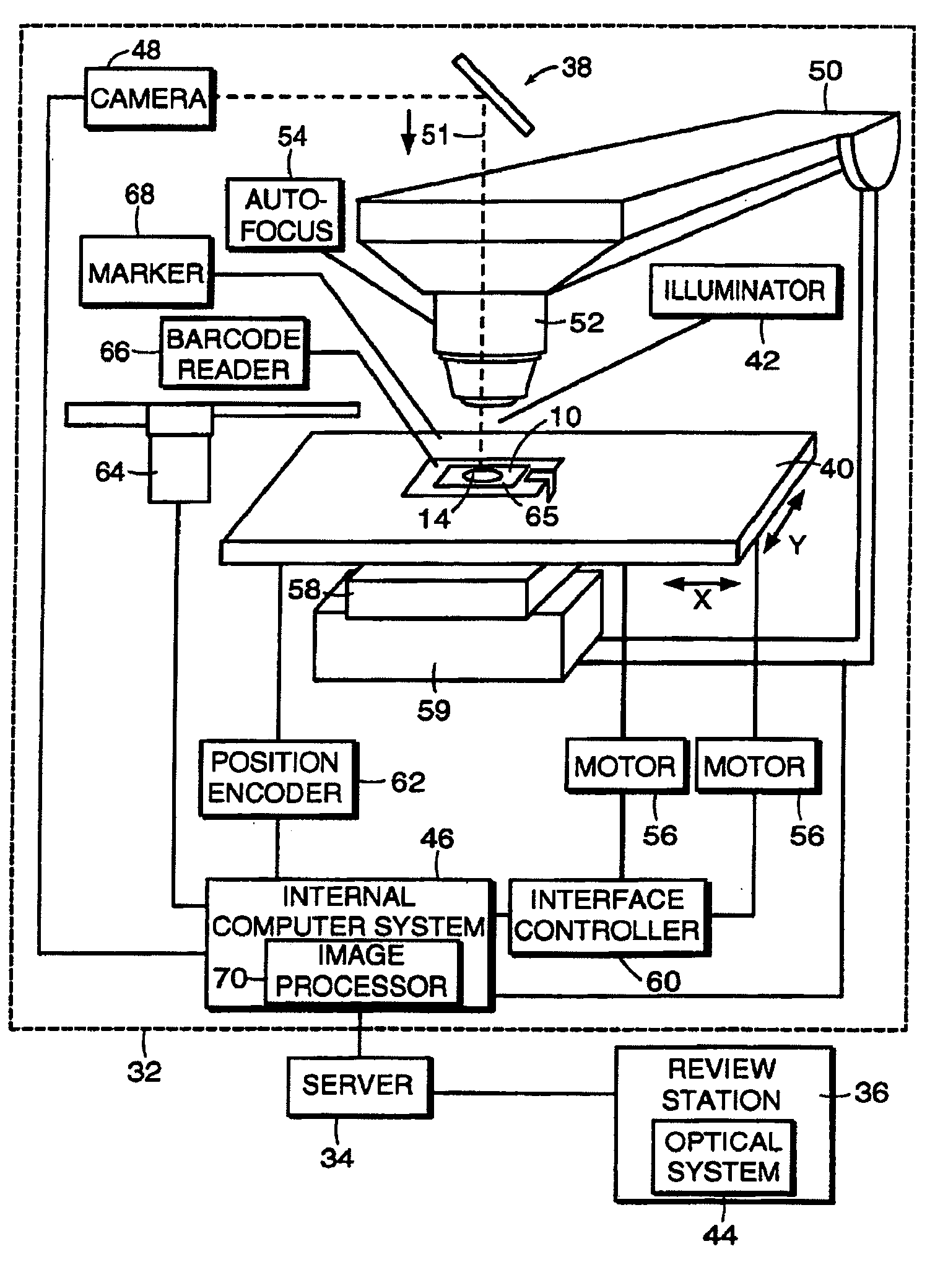

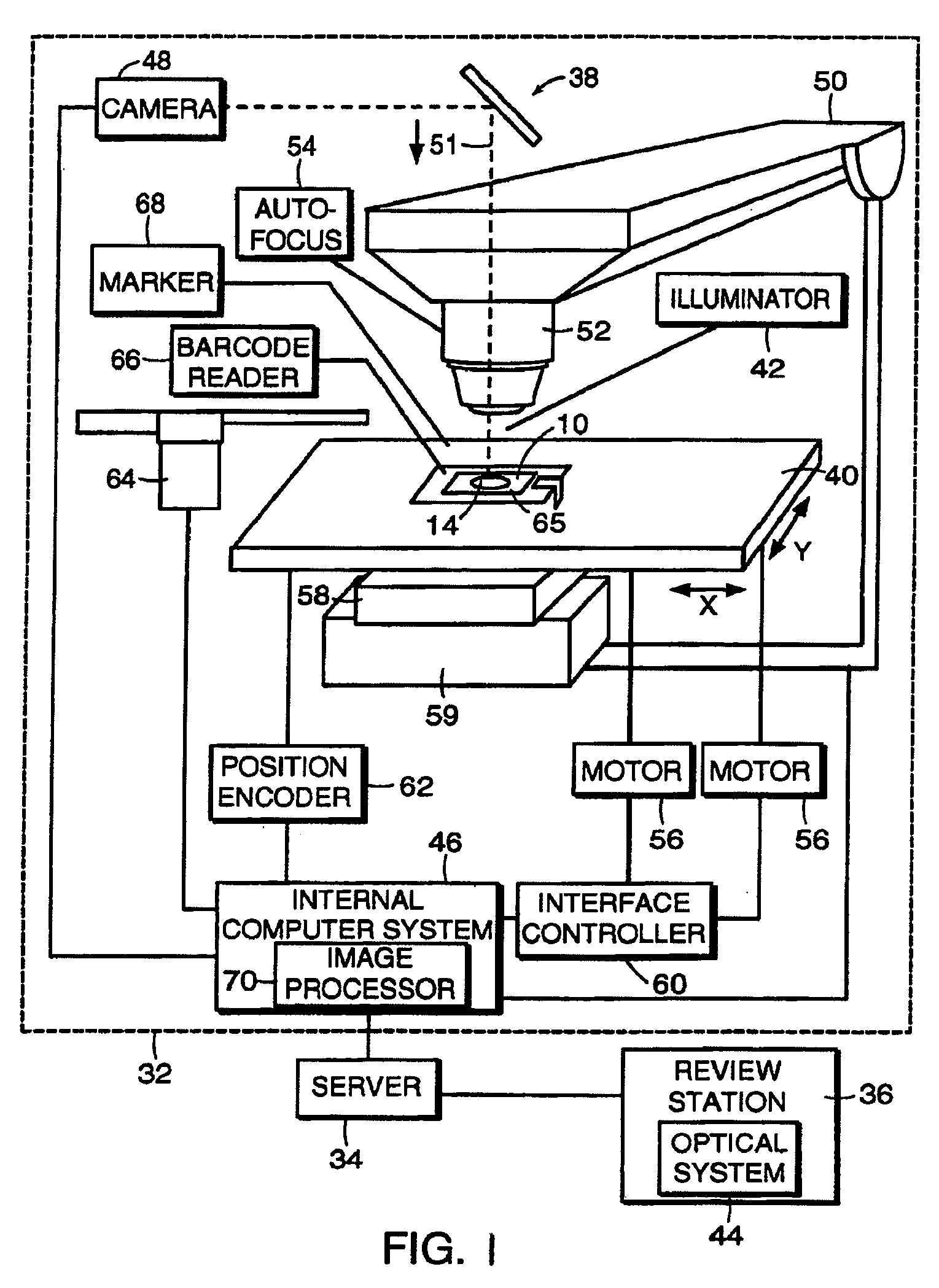

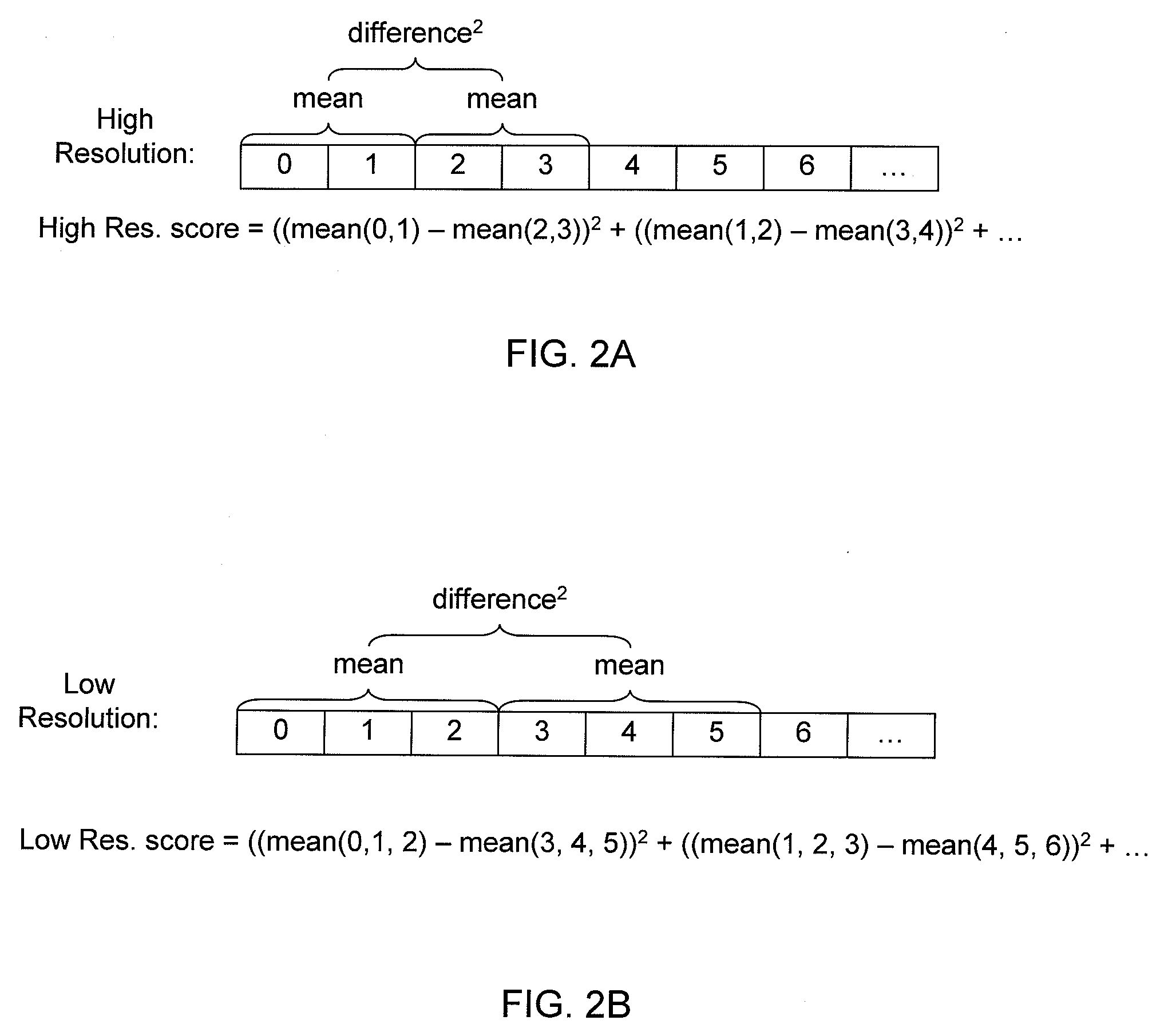

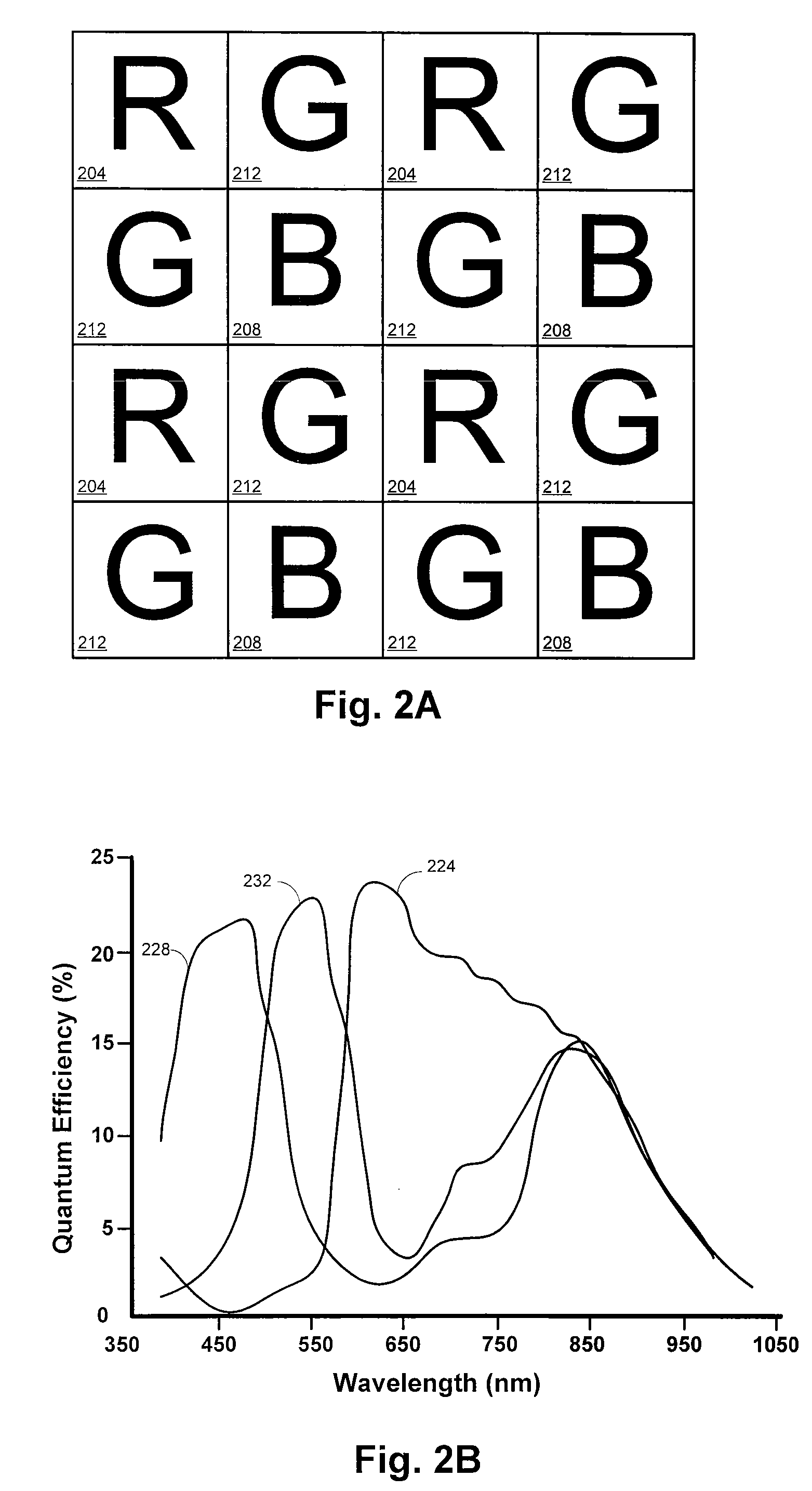

Method for assessing image focus quality

A method for determining the quality of focus of a digital image of a biological specimen includes obtaining a digital image of a specimen using a specimen imaging apparatus. A measure of image texture is calculated at two different scales, and the measurements are compared to determine how much high-resolution data the image contains compared to low-resolution data. The texture measurement may, for example, be a Brenner auto-focus score calculated from the means of adjacent pairs of pixels for the high-resolution measurement and from the means of adjacent triples of pixels for the low-resolution measurement. A score indicative of the quality of focus is then established based on a function of the low-resolution and high-resolution measurements. This score may be used by an automated imaging device to verify that image quality is acceptable. The device may adjust the focus and acquire new images to replace any that are deemed unacceptable.

Owner:CYTYC CORP
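The two-scale comparison in the abstract above can be sketched as follows. This is only an illustration under stated assumptions: the Brenner score is taken as the mean squared difference between values two columns apart, the averaging is done over non-overlapping horizontal pairs and triples, and the final score is a plain ratio. The patent only says the score is "a function of" the two measurements, so `focus_ratio` is a hypothetical choice.

```python
import numpy as np

def block_mean(image, n):
    """Average each run of n horizontally adjacent pixels (leftover columns are dropped)."""
    h, w = image.shape
    w_trim = (w // n) * n
    return image[:, :w_trim].reshape(h, w_trim // n, n).mean(axis=2)

def brenner(image):
    """Brenner-style score: mean squared difference between values two columns apart."""
    diff = image[:, 2:] - image[:, :-2]
    return float(np.mean(diff ** 2))

def focus_ratio(image):
    """High-resolution score (pairs) divided by low-resolution score (triples)."""
    image = np.asarray(image, dtype=float)
    high = brenner(block_mean(image, 2))
    low = brenner(block_mean(image, 3))
    return high / low if low > 0 else 0.0

# A smooth horizontal ramp contains no fine detail, so the ratio is small.
ramp = np.tile(np.arange(12, dtype=float), (4, 1))
ramp_ratio = focus_ratio(ramp)
```

Under these assumptions an image with more fine detail tends to give a larger ratio than a smooth one, which is the kind of comparison an automated imager could threshold.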

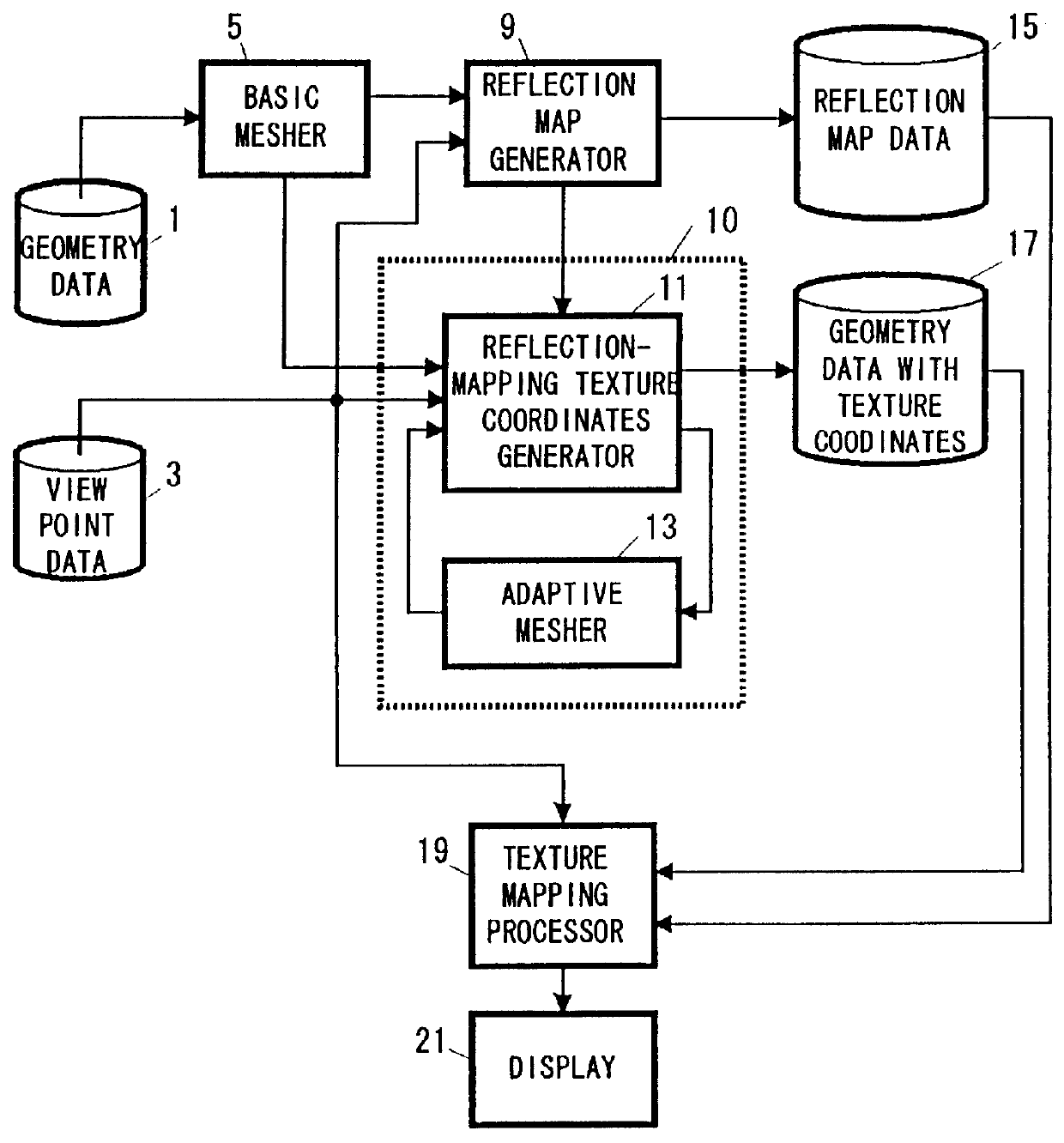

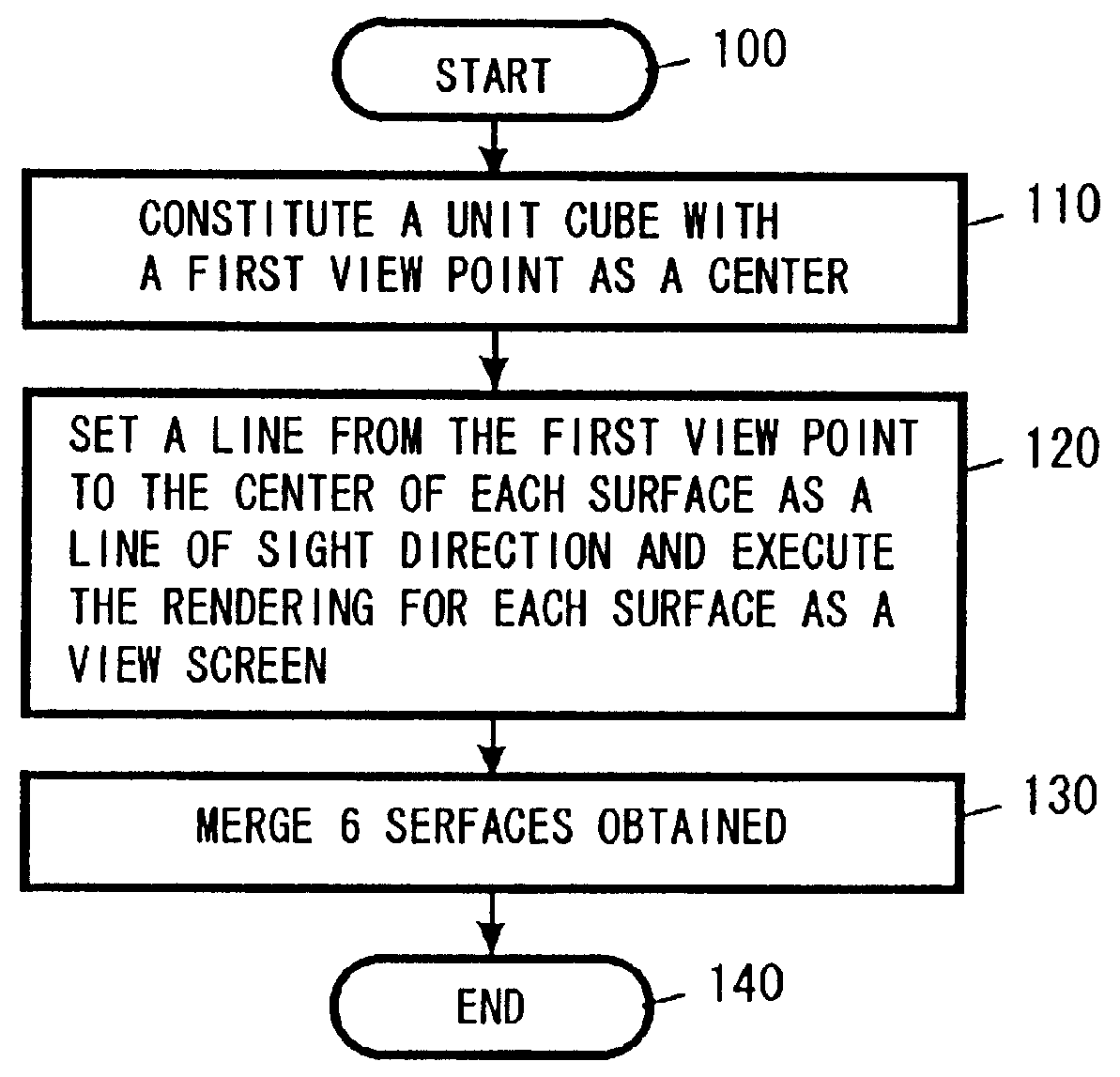

Rendering method and apparatus

Inactive | US6034691A | Quality improvement | Cathode-ray tube indicators | 3D-image rendering | Three-dimensional space | Computer graphics (images)

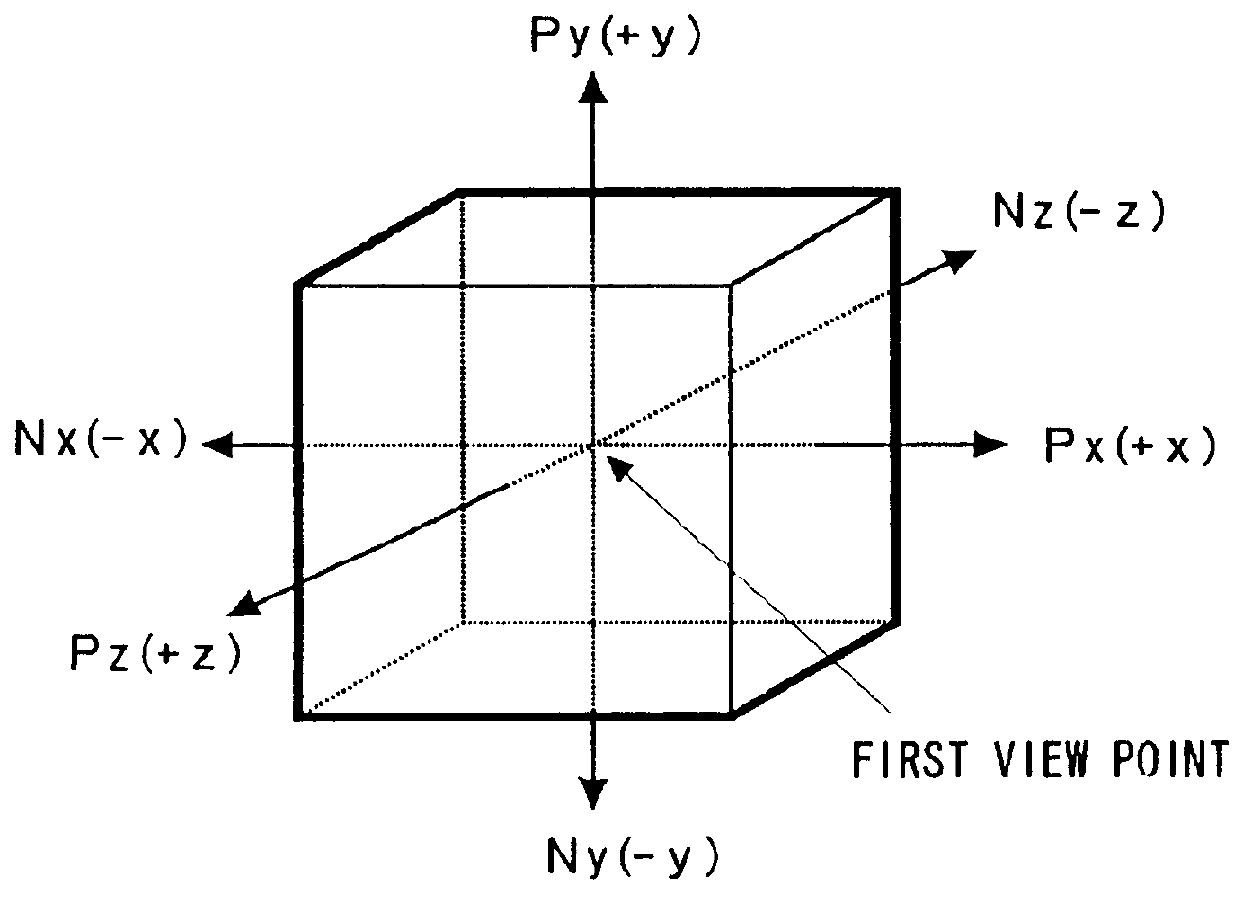

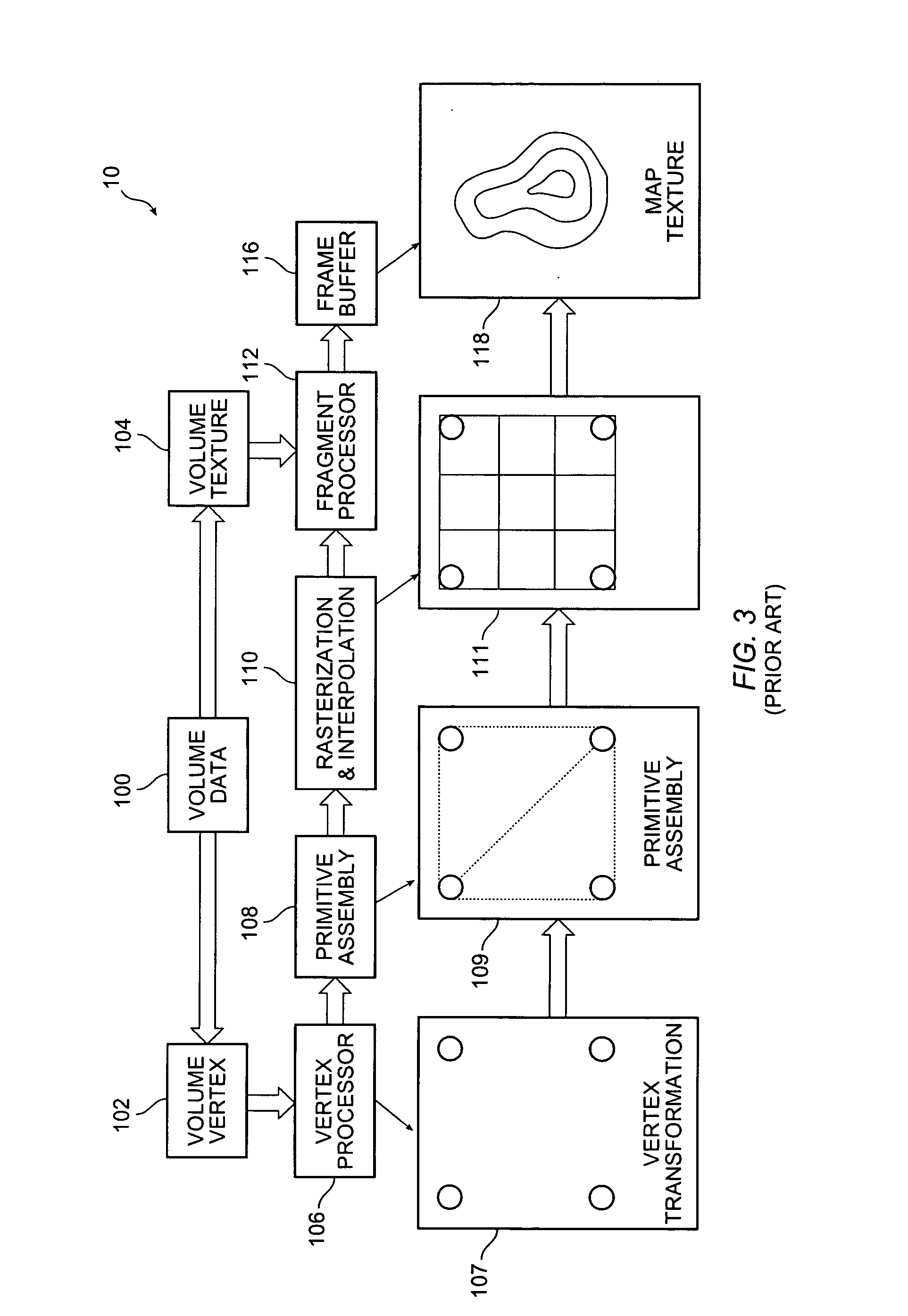

The present invention is directed to a real-time controllable reflection mapping function which covers all stereoscopic directions of space. More specifically, the surface of a mirrored object is segmented into a plurality of polygonal elements (for example, triangles). Then, a polyhedron, which includes a predetermined point (for example, the center of the mirrored object) in a three-dimensional space (for example, a cube) in the interior thereof, is generated and a rendering process is performed for each surface of the polyhedron with the predetermined point as a view point. The rendered image is stored. Thereafter, a reflection vector is calculated between each vertex of the polygonal elements and a view point used when the entire three-dimensional space is rendered. Next, the surface of the polyhedron, in which an intersecting point between the reflection vector with the predetermined point as a start point and the polyhedron exists, is obtained. The coordinate in the image, which corresponds to each vertex of the polygonal elements, is calculated by using the surface where the intersecting point exists and the reflection vector. The image is texture-mapped onto the surface of the object by using the coordinate in the image which corresponds to each vertex of the polygonal elements. Finally, the result of the texture mapping is displayed.

Owner:GOOGLE LLC
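The reflection-vector and face-lookup steps in the rendering abstract above can be sketched as follows, assuming the polyhedron is an axis-aligned cube centred on the predetermined point. The standard mirror formula r = i - 2(n·i)n is used; the face names and the (u, v) orientation on each face are arbitrary conventions chosen for this sketch, not taken from the patent.

```python
import numpy as np

def reflect(incident, normal):
    """Mirror the incident direction about the surface normal: r = i - 2(n.i)n."""
    n = normal / np.linalg.norm(normal)
    return incident - 2.0 * np.dot(n, incident) * n

def cube_face_and_uv(direction):
    """Pick the cube face hit by a ray from the cube centre and its (u, v) in [0, 1].

    The direction must be non-zero.
    """
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:
        face, major, a, b = ("+x" if x > 0 else "-x"), ax, y, z
    elif ay >= az:
        face, major, a, b = ("+y" if y > 0 else "-y"), ay, x, z
    else:
        face, major, a, b = ("+z" if z > 0 else "-z"), az, x, y
    # Project onto the face plane, then map [-1, 1] to [0, 1].
    return face, (0.5 * (a / major + 1.0), 0.5 * (b / major + 1.0))

# A ray travelling along -z that hits a surface facing +z bounces straight back.
r = reflect(np.array([0.0, 0.0, -1.0]), np.array([0.0, 0.0, 2.0]))
face, uv = cube_face_and_uv(r)
```

Evaluating this per vertex and letting the rasteriser interpolate the resulting texture coordinates matches the per-vertex approach the abstract describes.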

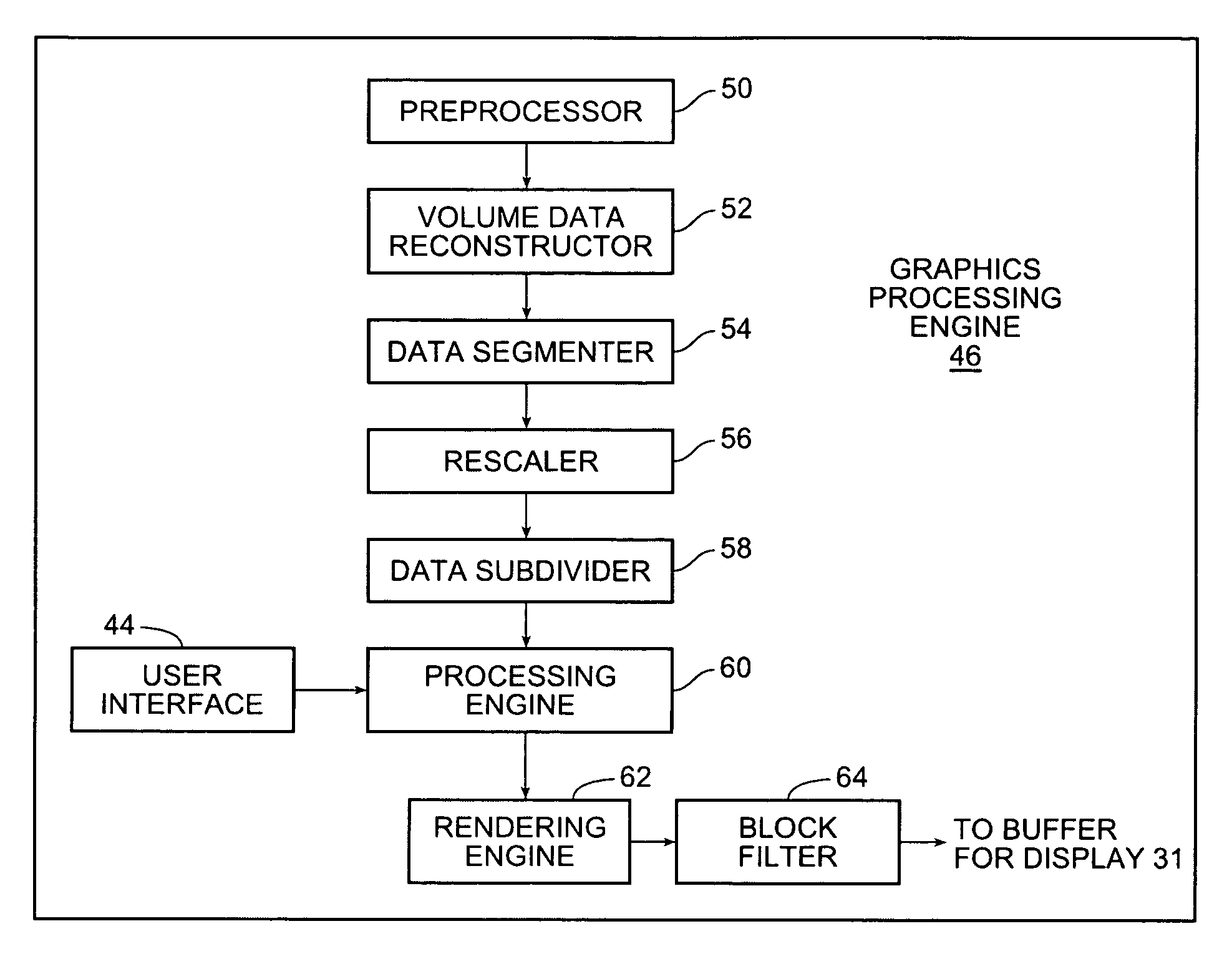

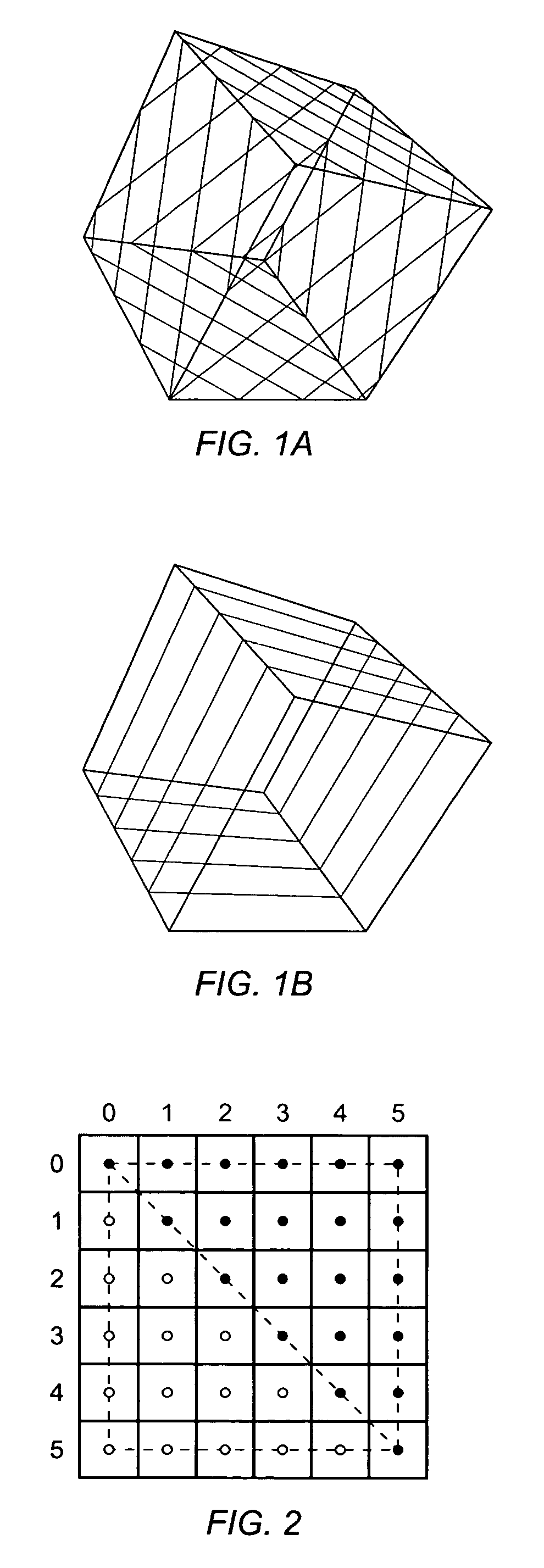

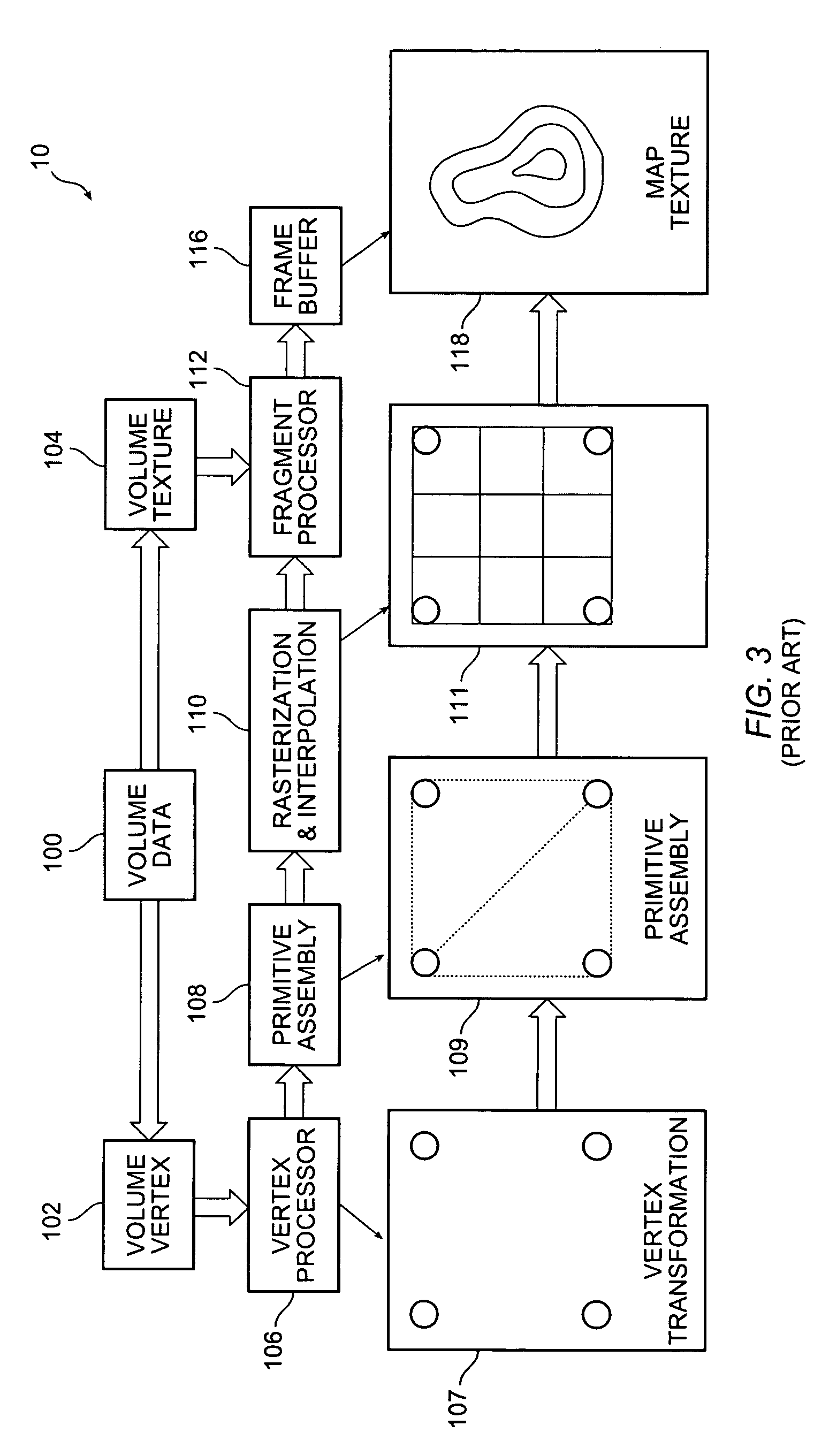

Block-based fragment filtration with feasible multi-GPU acceleration for real-time volume rendering on conventional personal computer

Active | US20050231503A1 | Reduce the burden on | Details involving 3D image data | Cathode-ray tube indicators | Voxel | Filtration

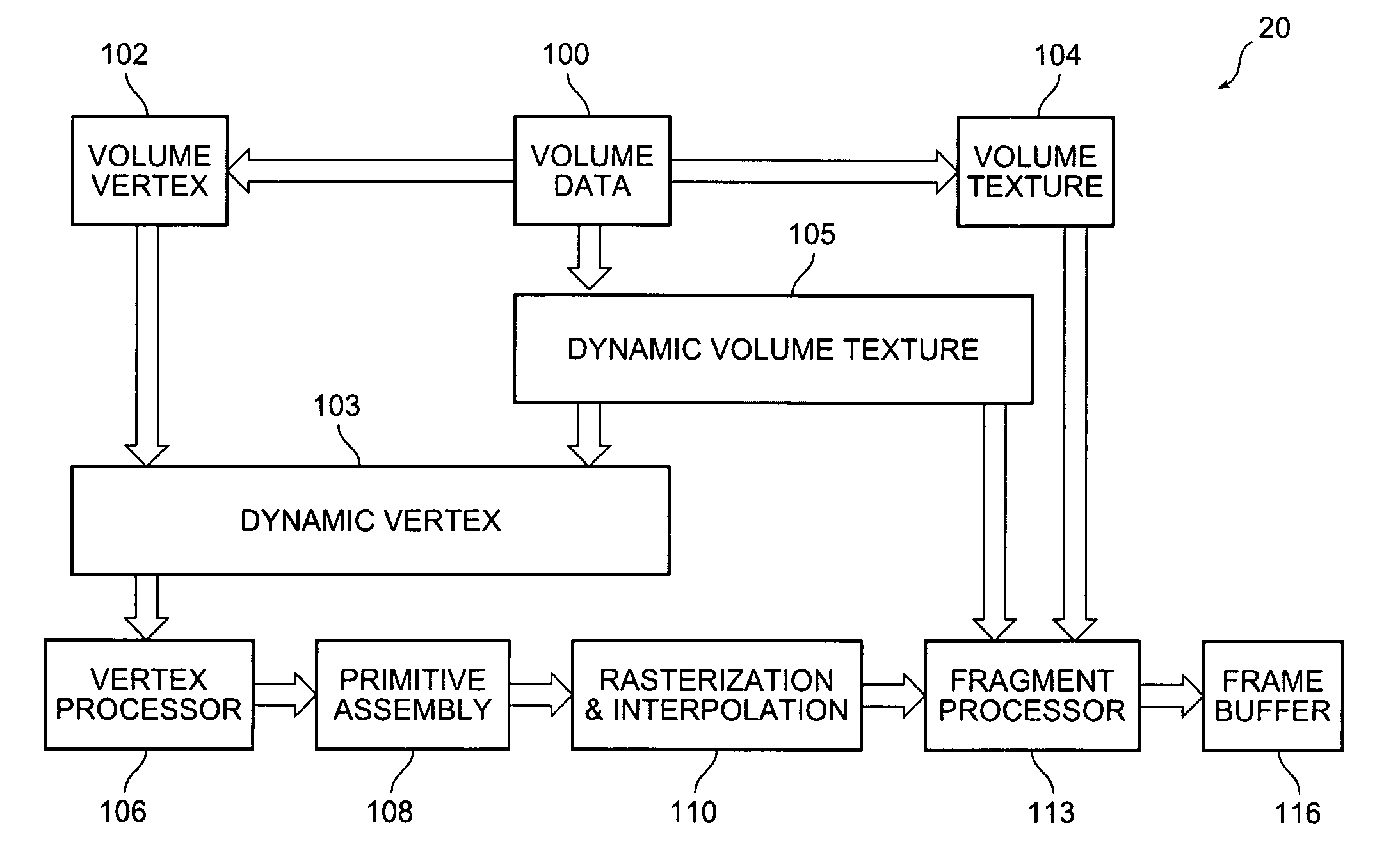

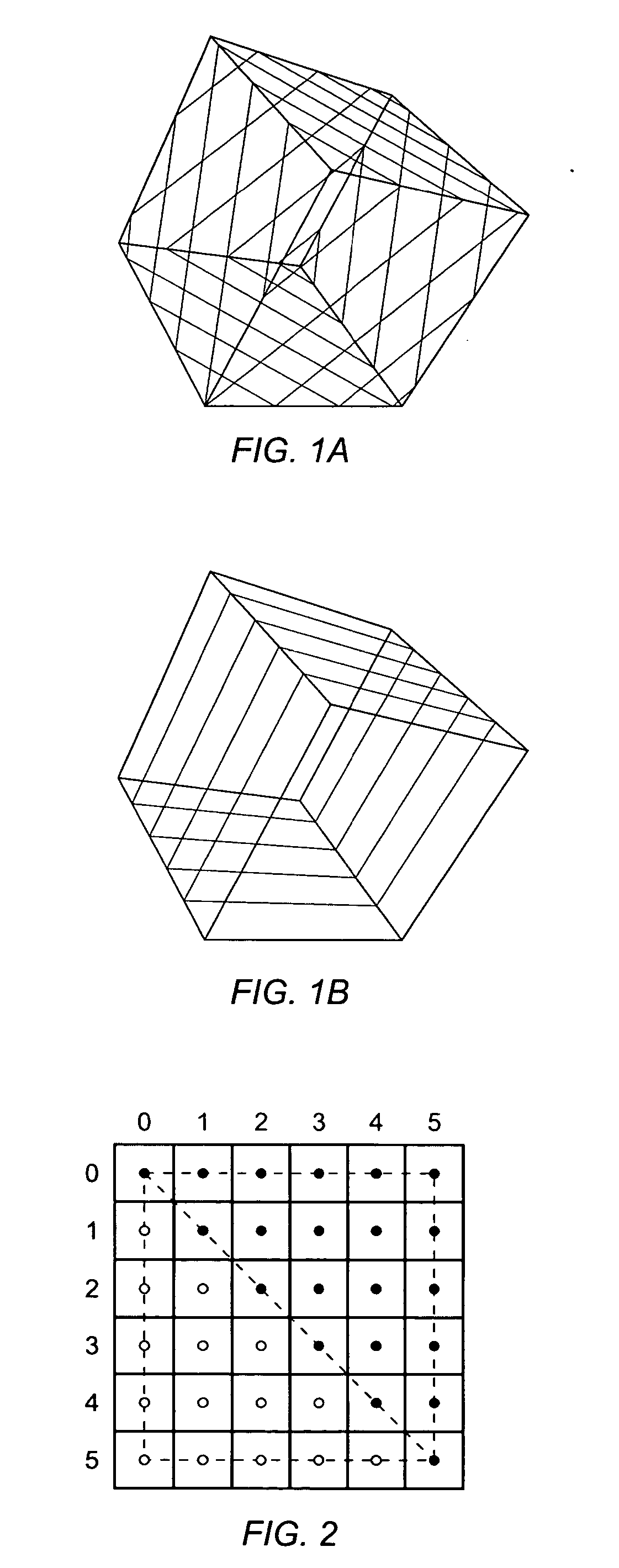

A computer-based method and system for interactive volume rendering of a large volume data on a conventional personal computer using hardware-accelerated block filtration optimizing uses 3D-textured axis-aligned slices and block filtration. Fragment processing in a rendering pipeline is lessened by passing fragments to various processors selectively in blocks of voxels based on a filtering process operative on slices. The process involves generating a corresponding image texture and performing two-pass rendering, namely a virtual rendering pass and a main rendering pass. Block filtration is divided into static block filtration and dynamic block filtration. The static block filtration locates any view-independent unused signal being passed to a rasterization pipeline. The dynamic block filtration determines any view-dependent unused block generated due to occlusion. Block filtration processes utilize the vertex shader and pixel shader of a GPU in conventional personal computer graphics hardware. The method is for multi-thread, multi-GPU operation.

Owner:THE CHINESE UNIVERSITY OF HONG KONG

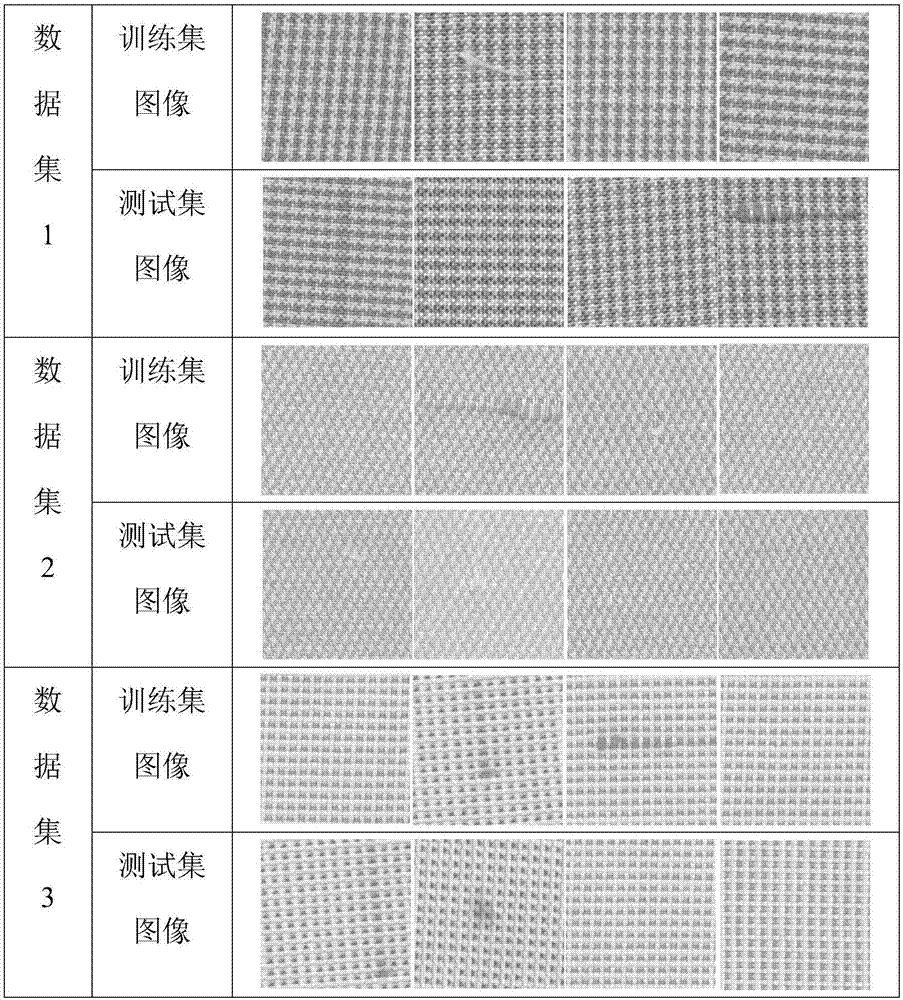

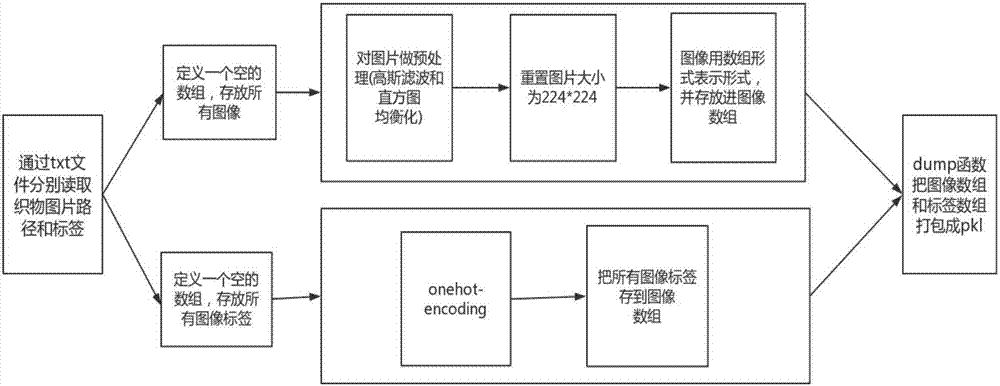

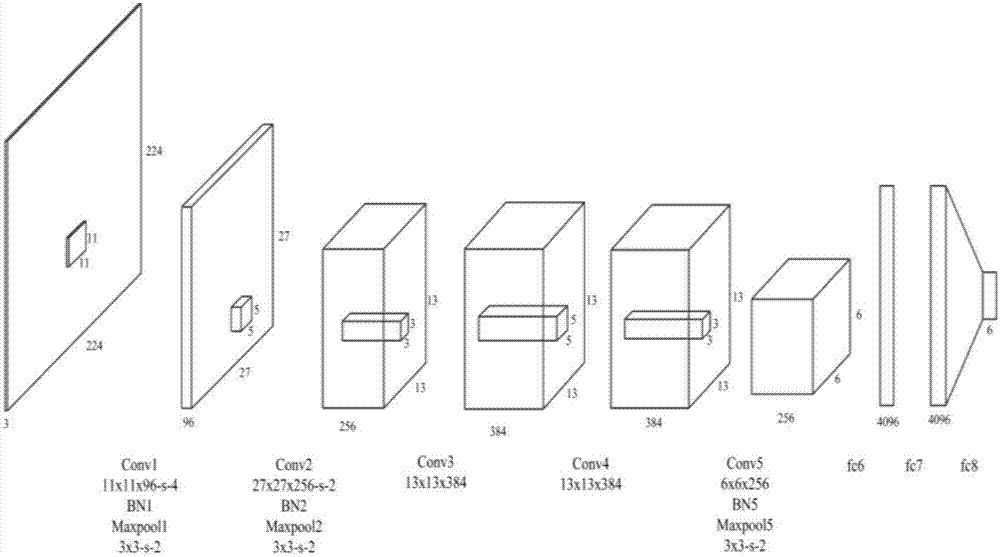

Convolutional neural network-based yarn dyed fabric defect detection method

Active | CN107169956A | Intuitive changes | Fast defect detection | Image enhancement | Image analysis | Yarn | Network structure

The invention discloses a convolutional neural network-based yarn dyed fabric defect detection method. The method comprises a training stage and a detection stage. In the training stage, a yarn dyed fabric defect image library is first established, and the images are preprocessed to reduce the influence of noise and image texture; the images and image labels are packaged; an AlexNet-based convolutional neural network defect detection model is built; operations such as image convolution, pooling, batch normalization and full connection are carried out; defect features in the images are extracted; and the number of convolution kernels, the number of layers, the network structure and other aspects of the network model are improved, so that the accuracy of the model in predicting test images is further improved. A deep learning method is thus used to build a convolutional neural network model for detecting yarn dyed fabric image defects. Compared with conventional methods, the detection result is more accurate and the defects can be detected more efficiently.

Owner:XIAN HUODE IMAGE TECH CO LTD

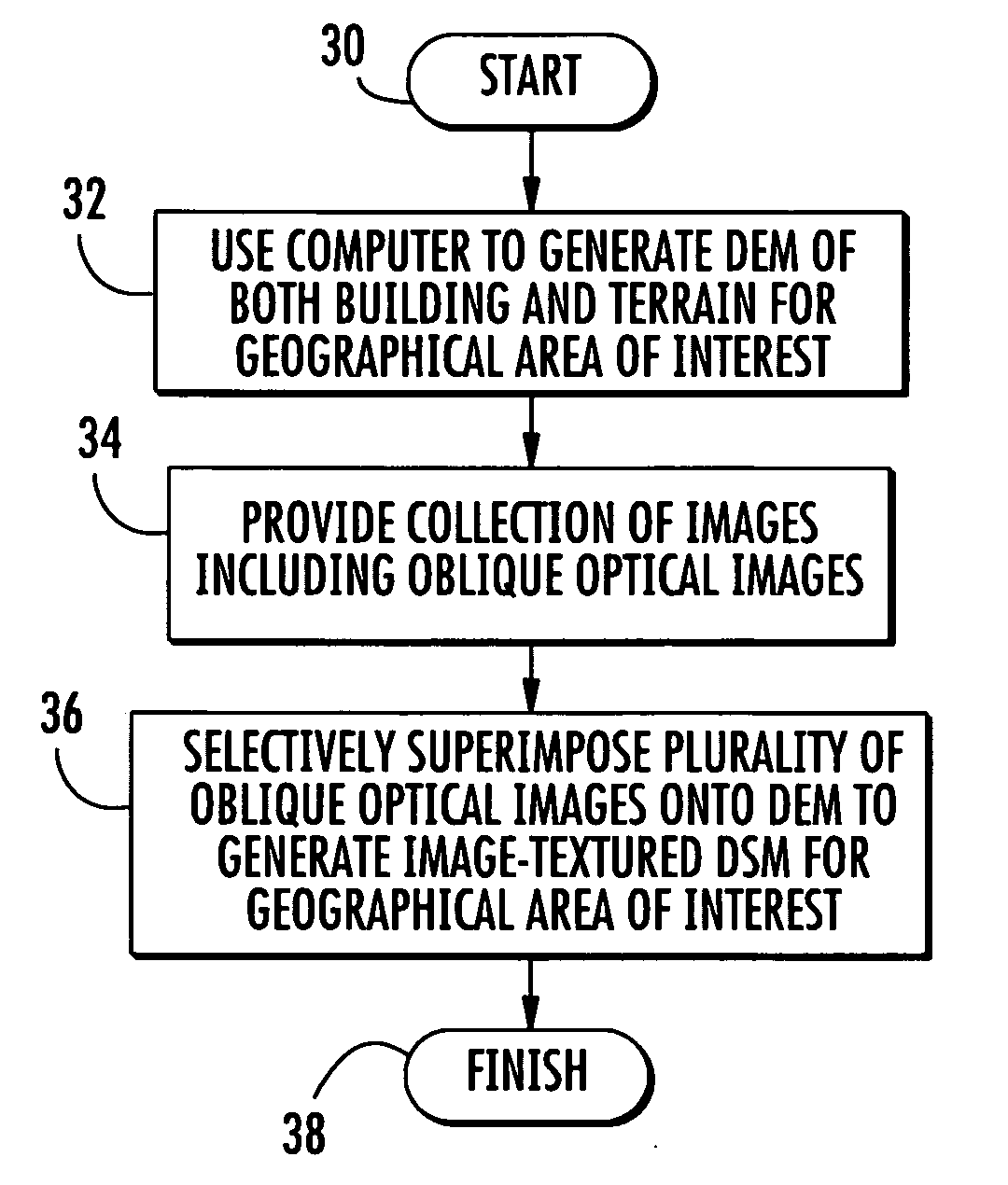

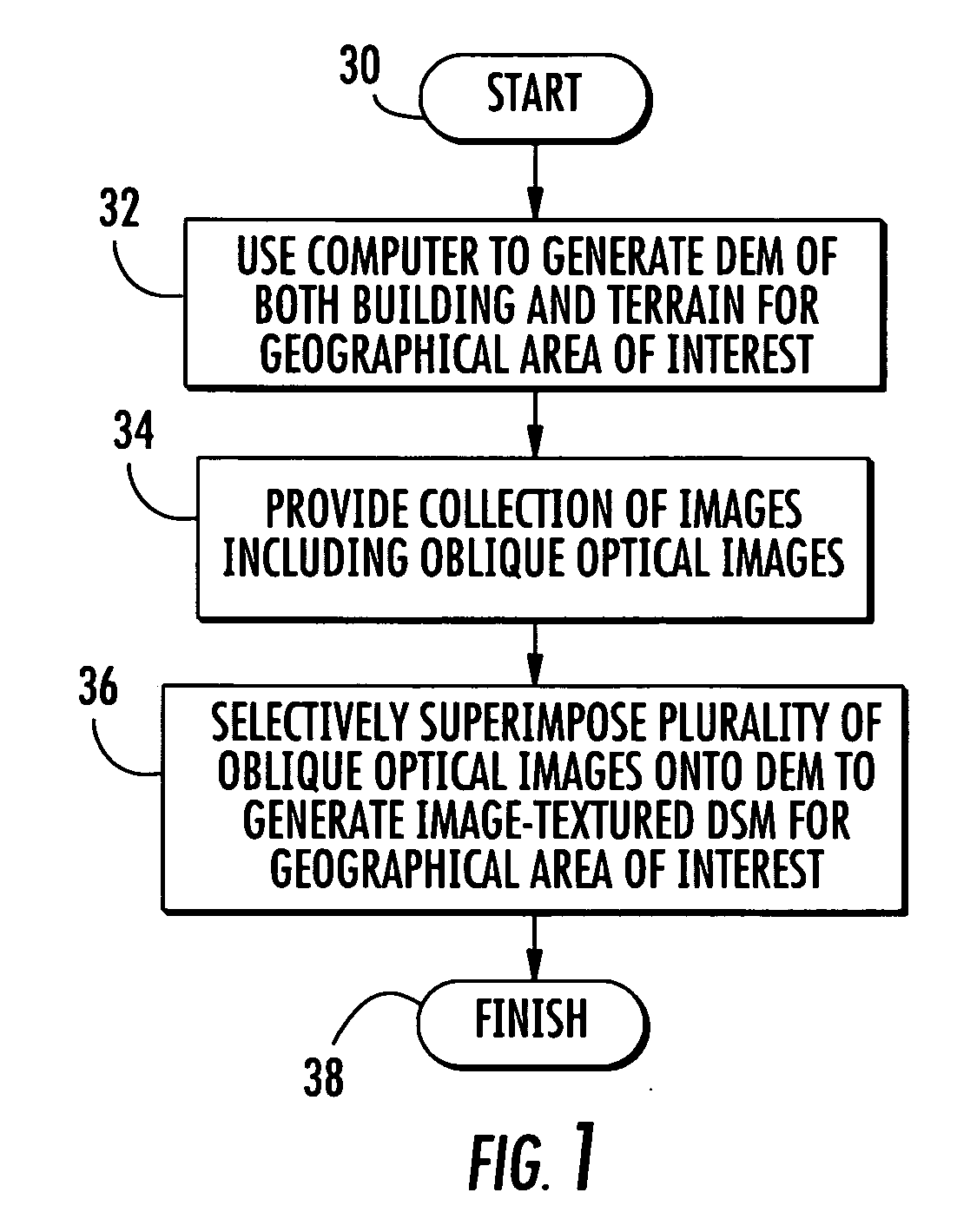

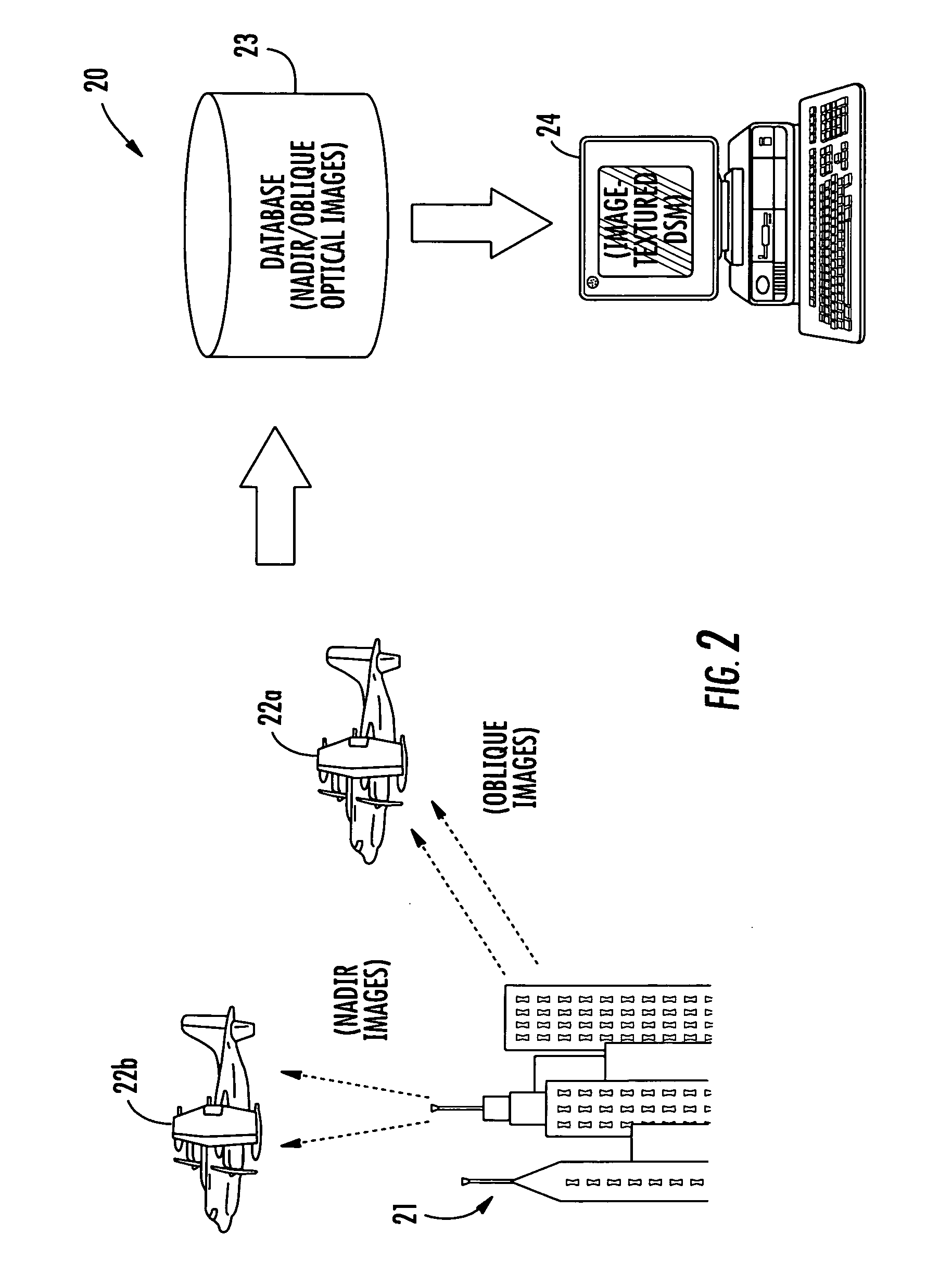

Method and System for Generating an Image-Textured Digital Surface Model (DSM) for a Geographical Area of Interest

A computer-implemented method for generating an image-textured digital surface model (DSM) for a geographical area of interest including both buildings and terrain may include using a computer to generate a digital elevation model (DEM) of both the buildings and terrain for the geographical area of interest. The method may further include providing a collection of optical images including oblique optical images for the geographical area of interest including both buildings and terrain. The computer may also be used to selectively superimpose oblique optical images from the collection of optical images onto the DEM of both the buildings and terrain for the geographical area of interest and to thereby generate the image-textured DSM for the geographical area of interest including both buildings and terrain.

Owner:HARRIS CORP

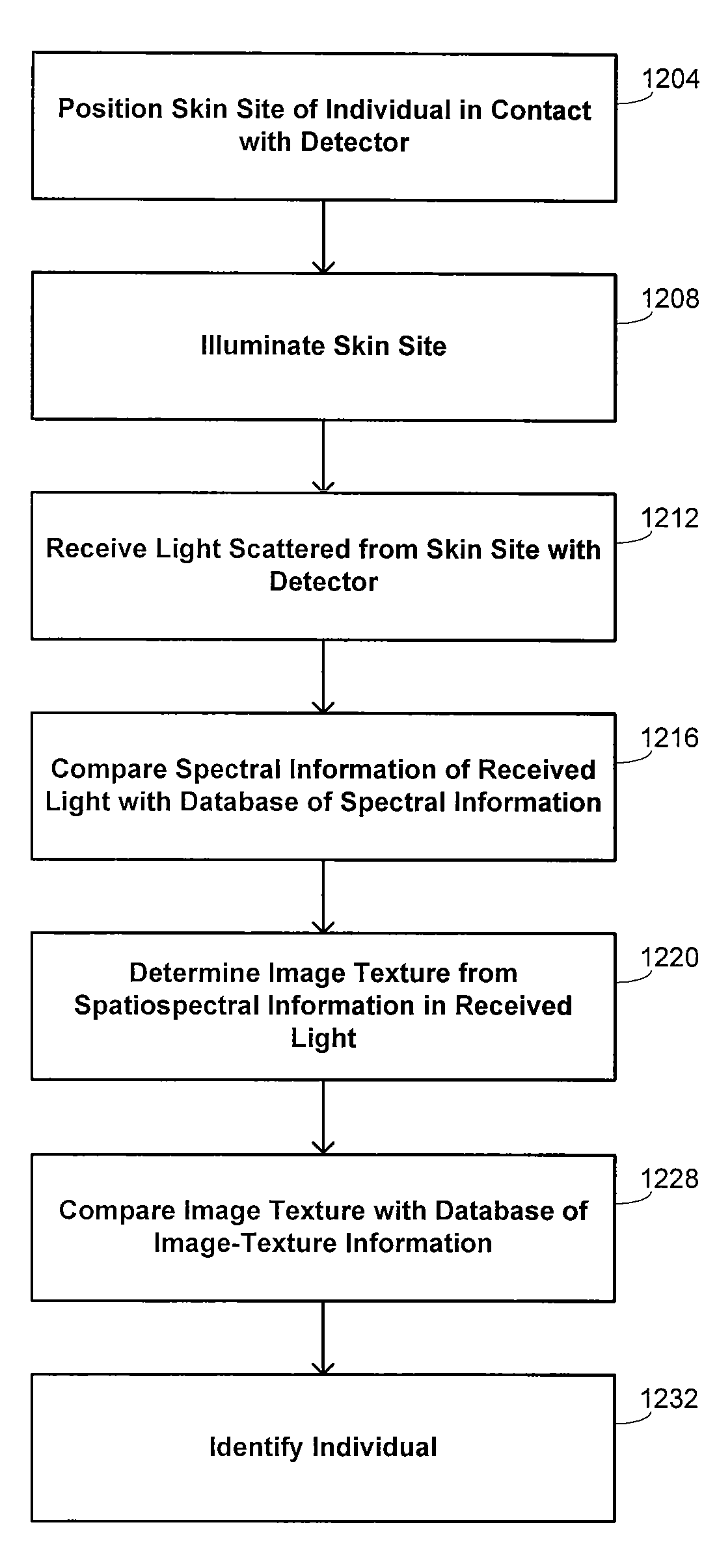

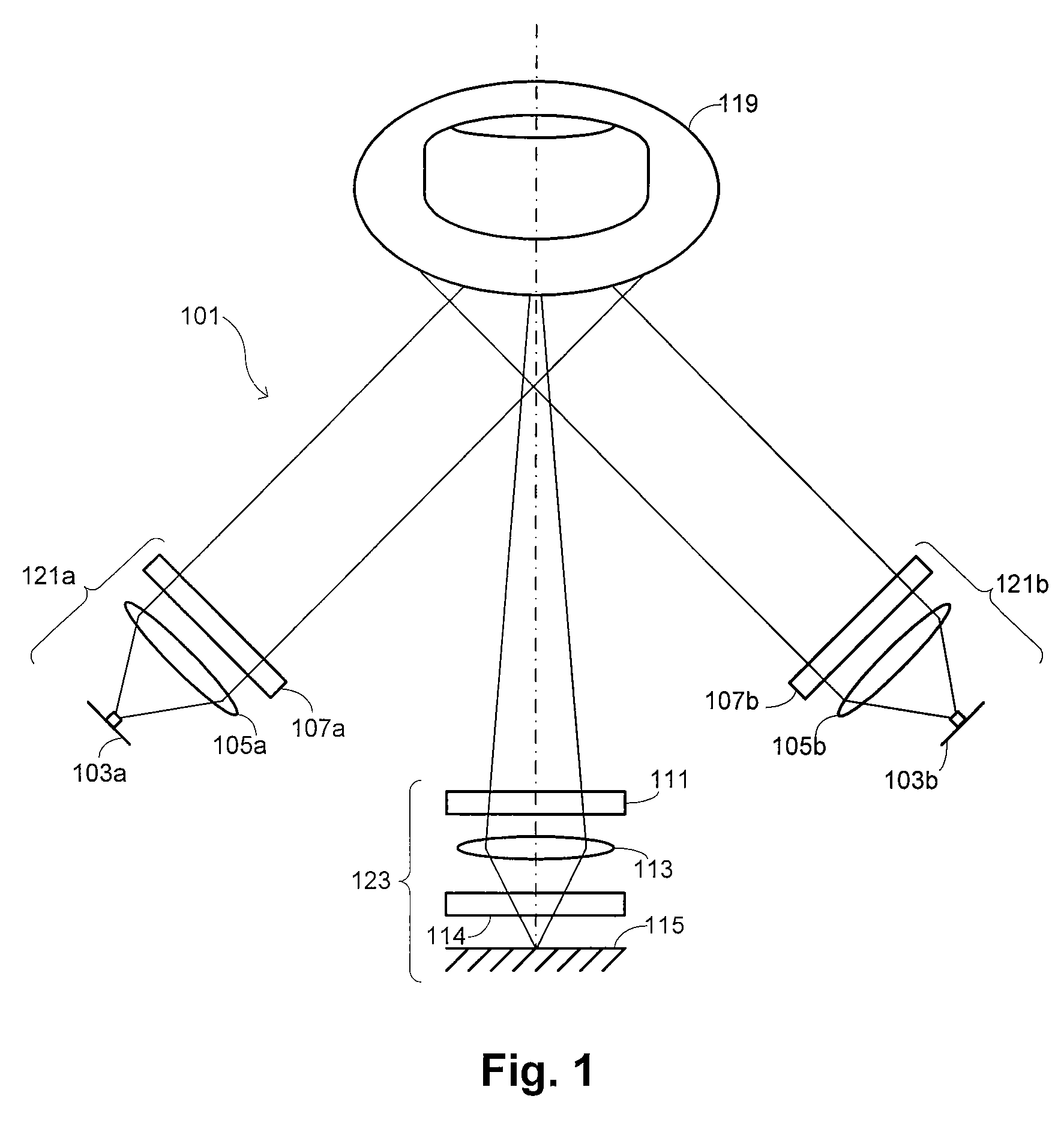

Texture-biometrics sensor

Methods and systems are provided for performing a biometric function. A purported skin site of an individual is illuminated with illumination light. The purported skin site is in contact with a surface. Light scattered from the purported skin site is received in a plane that includes the surface. An image is formed from the received light. An image-texture measure is generated from the image. The generated image-texture measure is analyzed to perform the biometric function.

Owner:HID GLOBAL CORP

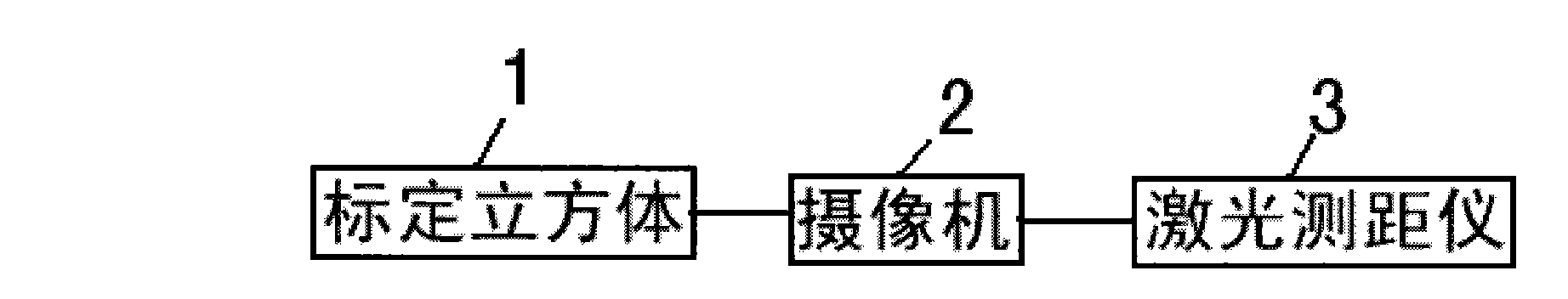

Three-dimensional rebuilding method based on laser and camera data fusion

Inactive | CN101581575A | Real-time acquisition | Reduce mistakes | Photogrammetry/videogrammetry | Using optical means | Point cloud | Radar

A three-dimensional rebuilding method based on laser and camera data fusion, characterized in that it comprises the following steps: (1) an automatic laser data collecting platform based on a radar principle is written in C++; (2) a rotation matrix R and a translation vector T are determined between the camera coordinate system and the laser coordinate system; (3) a moving object is extracted and segmented by an optical flow field region merging algorithm, and the point cloud corresponding to the moving object is segmented accordingly; and (4) the precise alignment of point cloud and image texture is ensured for three-dimensional image texture mapping, thus realizing a three-dimensional rebuilding system based on data fusion. The method has the advantages of quickly and automatically collecting and processing laser data, quickly completing the external labeling of the laser and CCD, effectively extracting and segmenting the valid point clouds of an object in a complex environment, and accurately completing the three-dimensional rebuilding of the object from the laser and CCD data.

Owner:NANCHANG HANGKONG UNIVERSITY
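Step (2) of the abstract above relates the laser and camera coordinate systems through a rotation matrix R and a translation vector T. A minimal sketch of how such a pair could be applied is shown below; the ideal pinhole projection used to find the matching texture pixel, and the parameters `focal`, `cx` and `cy`, are assumptions for illustration and are not described in the source.

```python
import numpy as np

def laser_to_camera(points_laser, R, T):
    """Apply p_cam = R @ p_laser + T to an (N, 3) array of laser points."""
    return points_laser @ R.T + T

def project_pinhole(points_cam, focal, cx, cy):
    """Project camera-frame points to pixel coordinates (ideal pinhole, no distortion)."""
    x, y, z = points_cam[:, 0], points_cam[:, 1], points_cam[:, 2]
    return np.stack([focal * x / z + cx, focal * y / z + cy], axis=1)

# Example: a 90 degree rotation about z followed by a 2 m shift along the optical axis.
R = np.array([[0.0, -1.0, 0.0],
              [1.0,  0.0, 0.0],
              [0.0,  0.0, 1.0]])
T = np.array([0.0, 0.0, 2.0])
points = np.array([[1.0, 0.0, 0.0]])

cam = laser_to_camera(points, R, T)
pixels = project_pinhole(cam, focal=100.0, cx=50.0, cy=50.0)
```

Each projected pixel gives the image location whose colour could be attached to the corresponding laser point during texture mapping.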

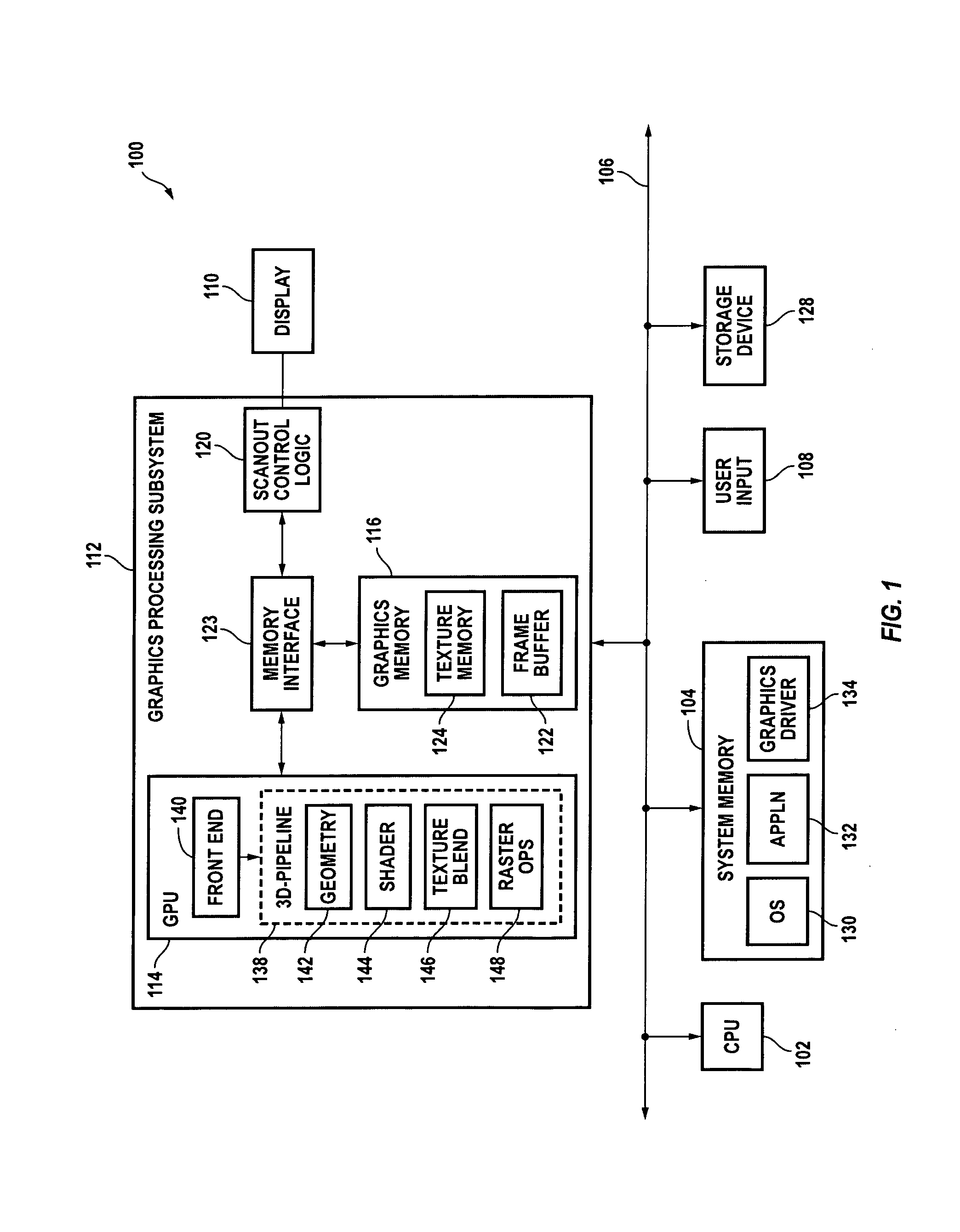

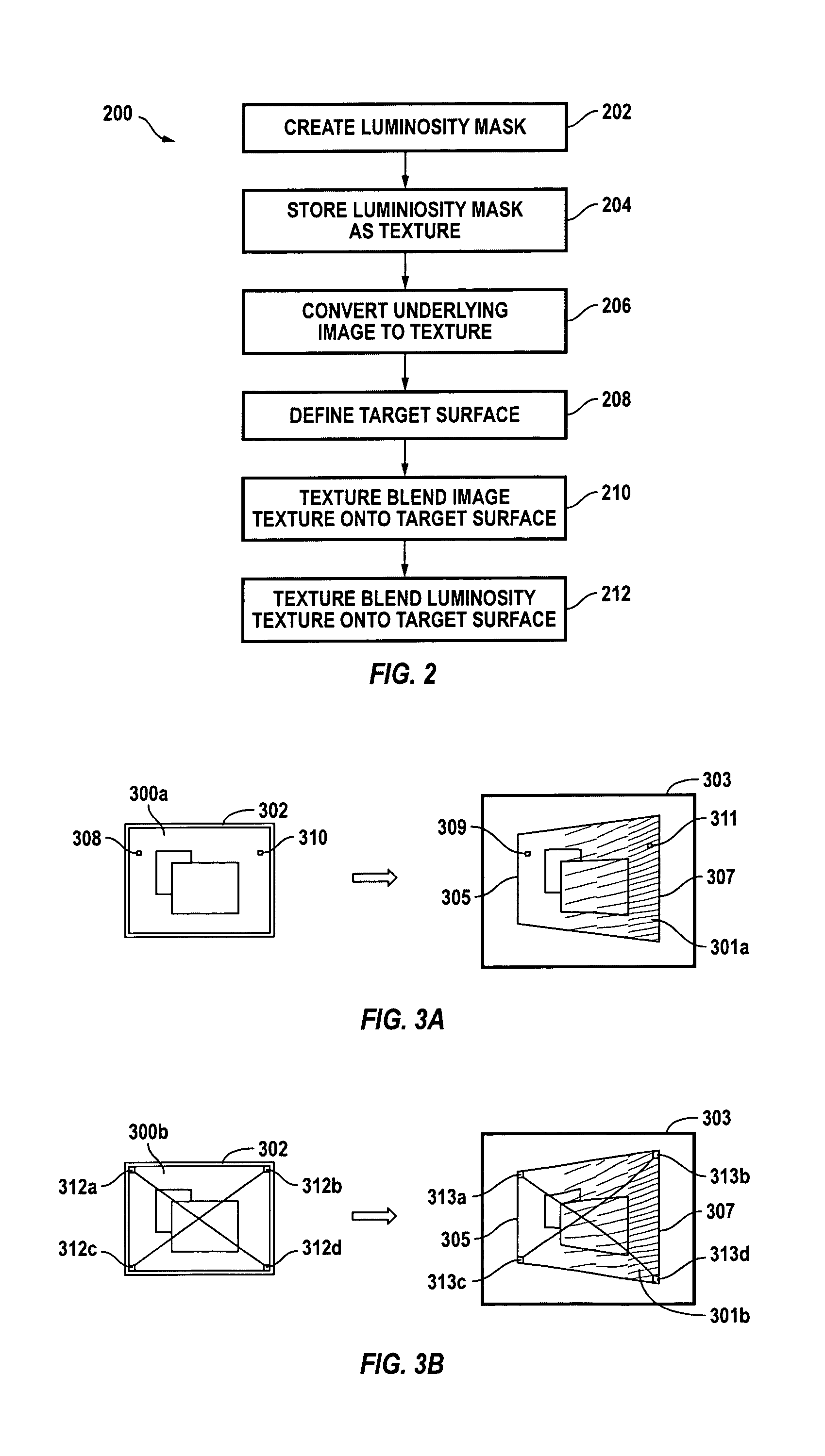

Per-pixel output luminosity compensation

Active | US7336277B1 | Television system scanning details | Character and pattern recognition | Target surface | Computer graphics (images)

Per-pixel luminosity adjustment uses a luminosity mask applied as a texture. In one embodiment, a luminosity texture is defined. Pixel data of an underlying image is converted to an image texture. The image texture is blended onto a target surface. The luminosity texture is also blended onto the target surface, thereby generating luminosity compensated pixel data for the image.

Owner:NVIDIA CORP
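The effect of applying a luminosity mask as a texture, as in the abstract above, can be approximated on the CPU with a per-pixel multiply. This is a sketch only: it assumes a multiplicative (modulate) blend and 8-bit pixel data, neither of which the abstract specifies, and `compensate` is a hypothetical name.

```python
import numpy as np

def compensate(image, luminosity_mask):
    """Scale each pixel by its mask value (multiplicative blend), clipped to 8 bits."""
    img = image.astype(np.float32)
    mask = luminosity_mask.astype(np.float32)
    if img.ndim == 3:
        # Broadcast a 2-D mask over the colour channels.
        mask = mask[..., None]
    return np.clip(np.rint(img * mask), 0, 255).astype(np.uint8)

# Example: dim three of the four pixels of a uniform 2x2 RGB image by different amounts.
image = np.full((2, 2, 3), 200, dtype=np.uint8)
mask = np.array([[1.0, 0.5],
                 [0.25, 0.0]])
out = compensate(image, mask)
```

On graphics hardware the same result would come from blending two textures onto the target surface rather than from array arithmetic.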

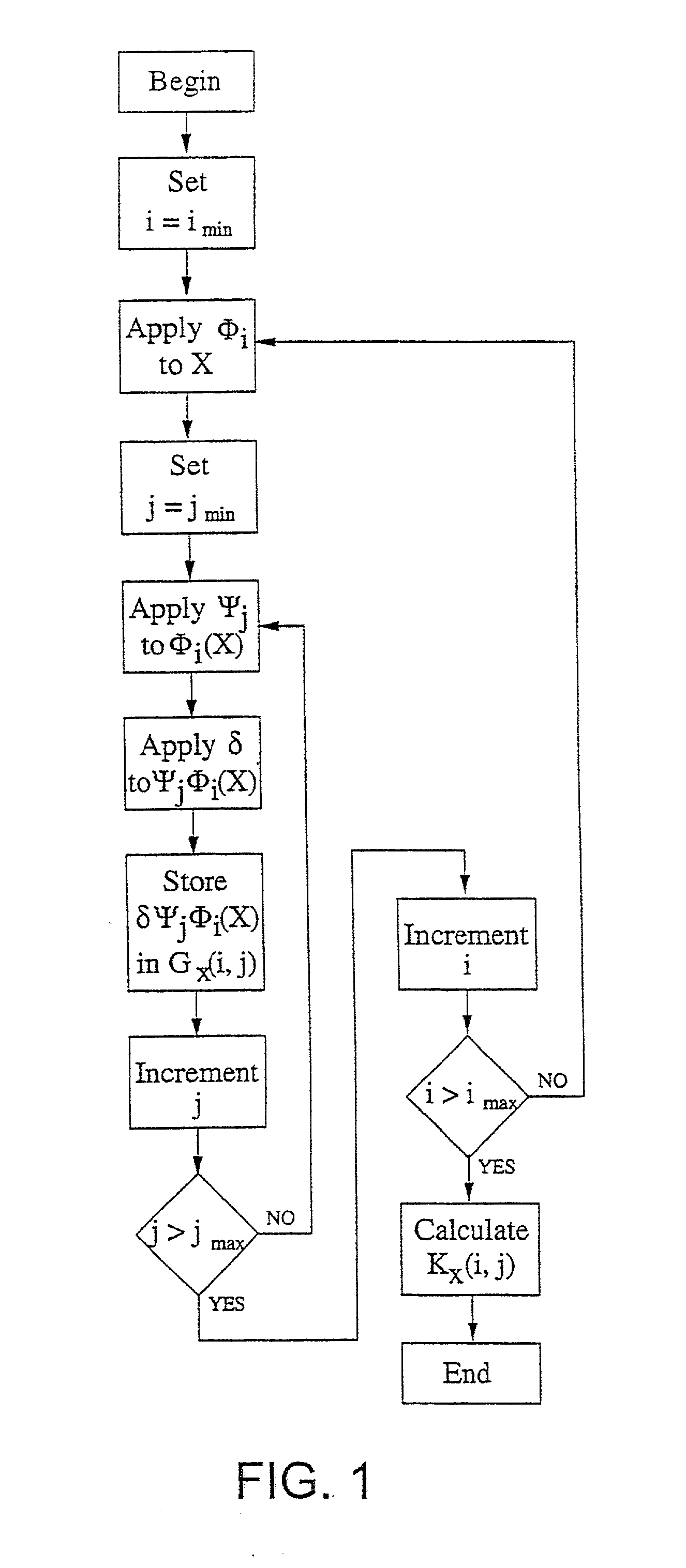

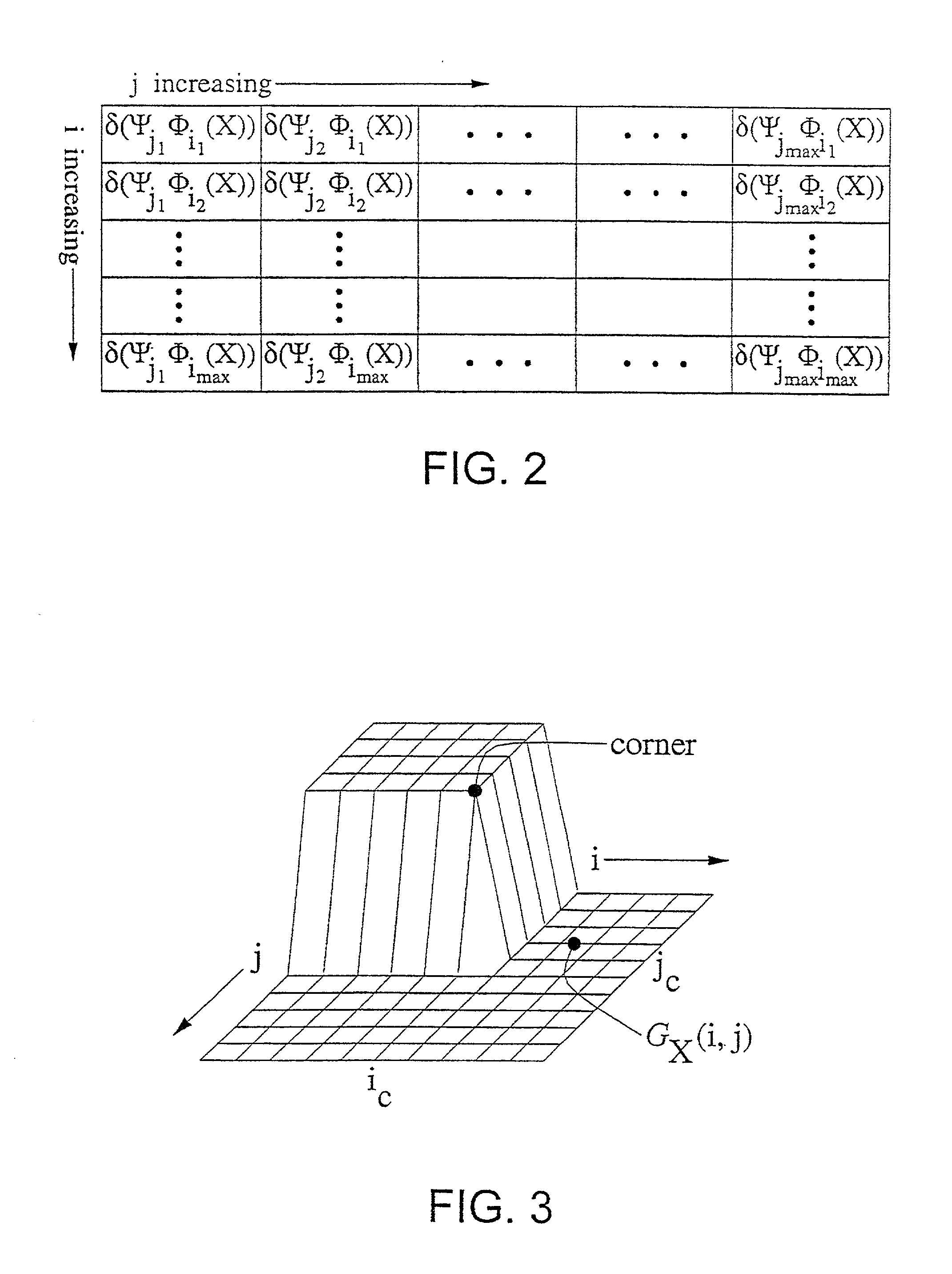

Method for image texture analysis

A method and apparatus for image texture analysis in which the image is mapped into a first set of binary representations by a monotonically varying operator, such as a threshold operator. Each binary representation in the first set is mapped to a further set of binary representations using a second monotonically varying operator, such as a spatial operator. The result of the two mappings is a matrix of binary image representations. Each binary image representation is allocated a scalar value to form an array of scalar values which may be analysed to identify defined texture characteristics.

Owner:UNIVERSITY OF ADELAIDE +9
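The two-operator mapping in the abstract above can be sketched as follows. The choices here are assumptions made for illustration: the first operator is a grey-level threshold, the second is binary erosion by a square of increasing (odd) size, and the scalar assigned to each binary image is the fraction of pixels still set. The names `erode` and `texture_signature` are hypothetical.

```python
import numpy as np

def erode(binary, size):
    """Binary erosion with a size x size square (odd size); outside the image counts as 0."""
    if size == 1:
        return binary.copy()
    pad = size // 2
    padded = np.pad(binary, pad, constant_values=False)
    h, w = binary.shape
    out = np.ones((h, w), dtype=bool)
    for dr in range(size):
        for dc in range(size):
            out &= padded[dr:dr + h, dc:dc + w]
    return out

def texture_signature(image, thresholds, sizes):
    """Rows index thresholds, columns index erosion sizes; entries are surviving fractions."""
    sig = np.zeros((len(thresholds), len(sizes)))
    for i, t in enumerate(thresholds):
        level = image >= t                       # first monotonic operator
        for j, s in enumerate(sizes):
            sig[i, j] = erode(level, s).mean()   # second monotonic operator, then a scalar
    return sig

# Example: a bright 3x3 square on a dark 5x5 background.
image = np.zeros((5, 5))
image[1:4, 1:4] = 10
sig = texture_signature(image, thresholds=[5, 20], sizes=[1, 3])
```

Because both operators are monotonic, the entries can only decrease along each row and column, and how quickly they fall off is what characterises the texture.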

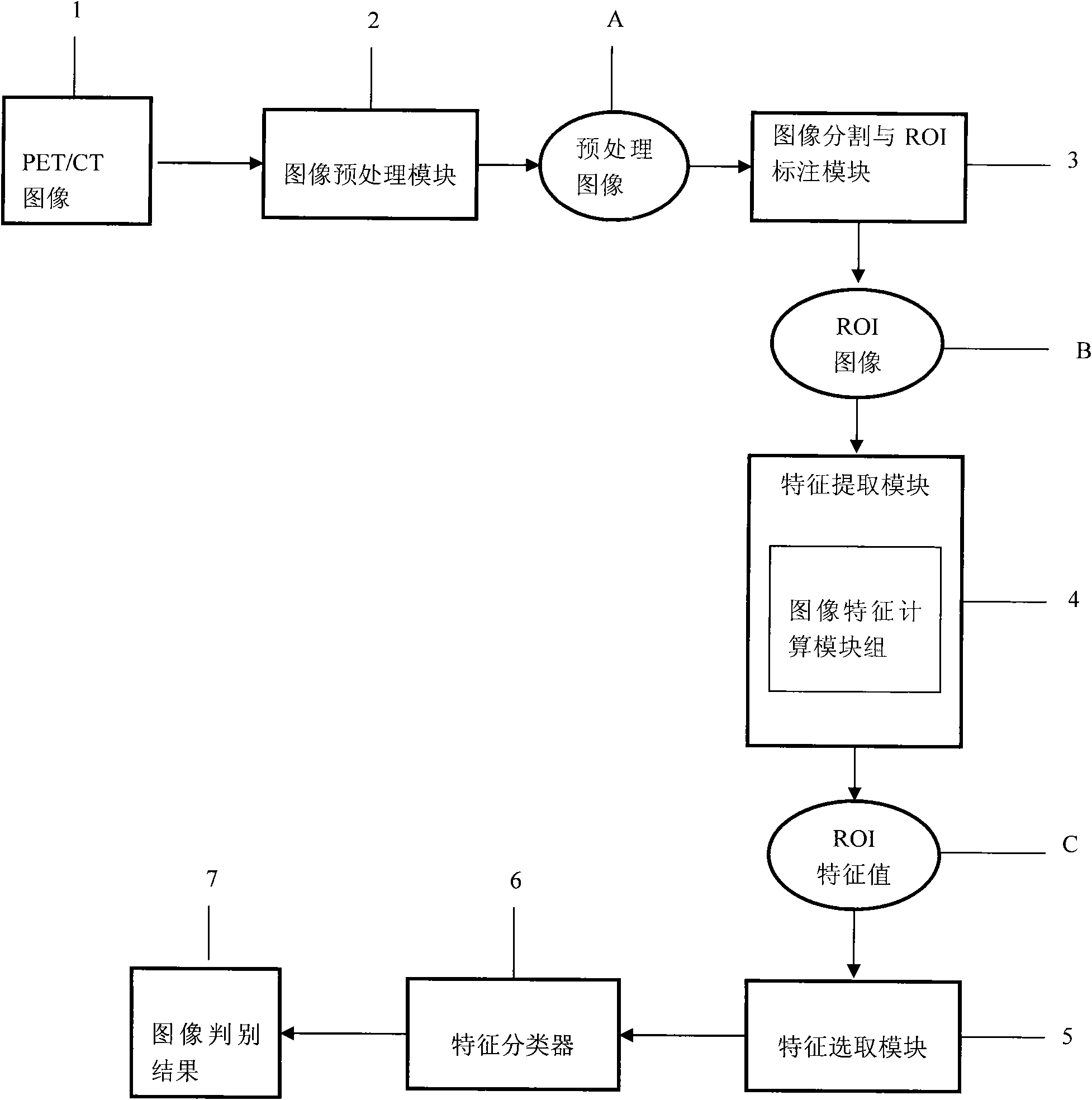

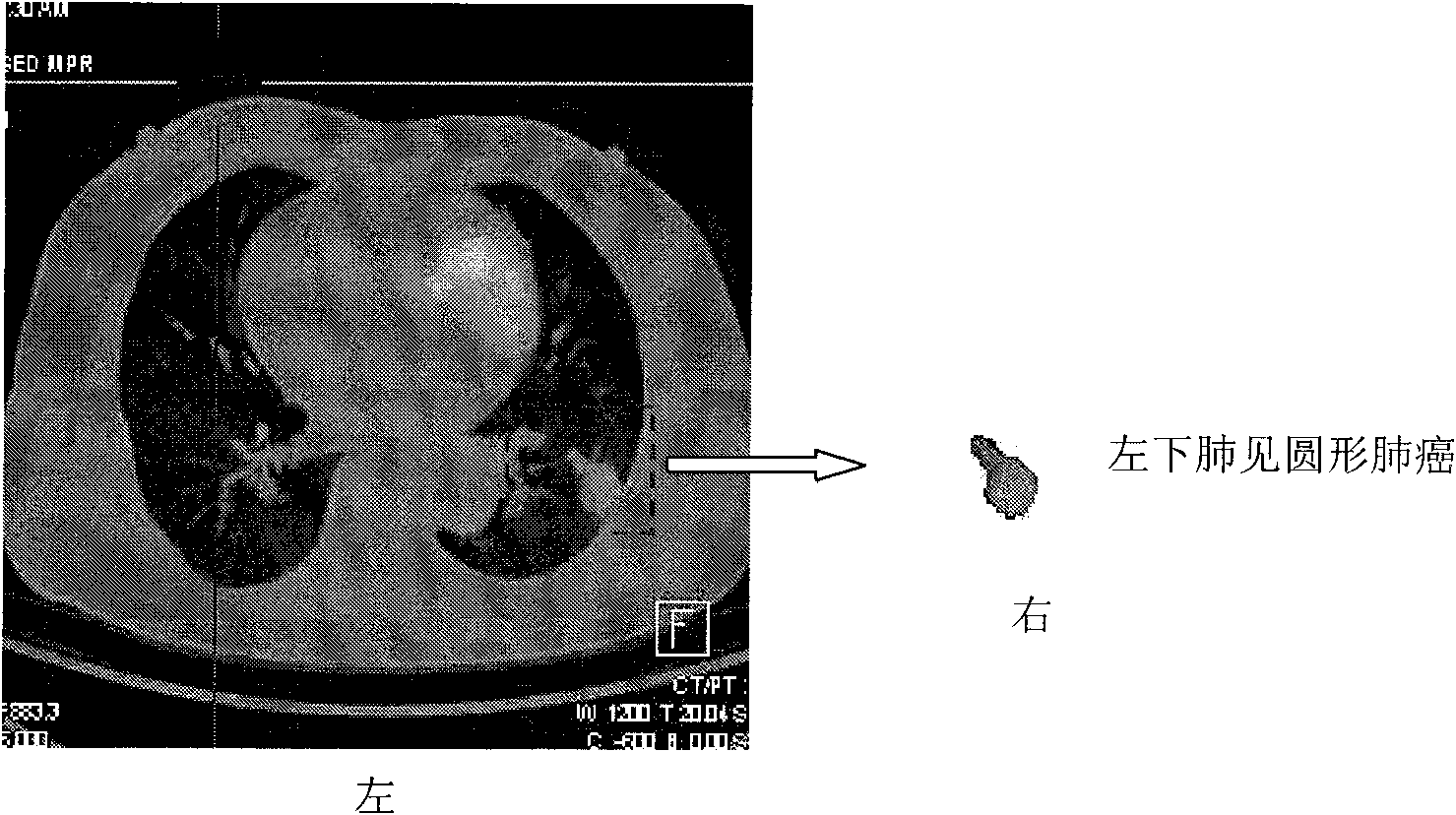

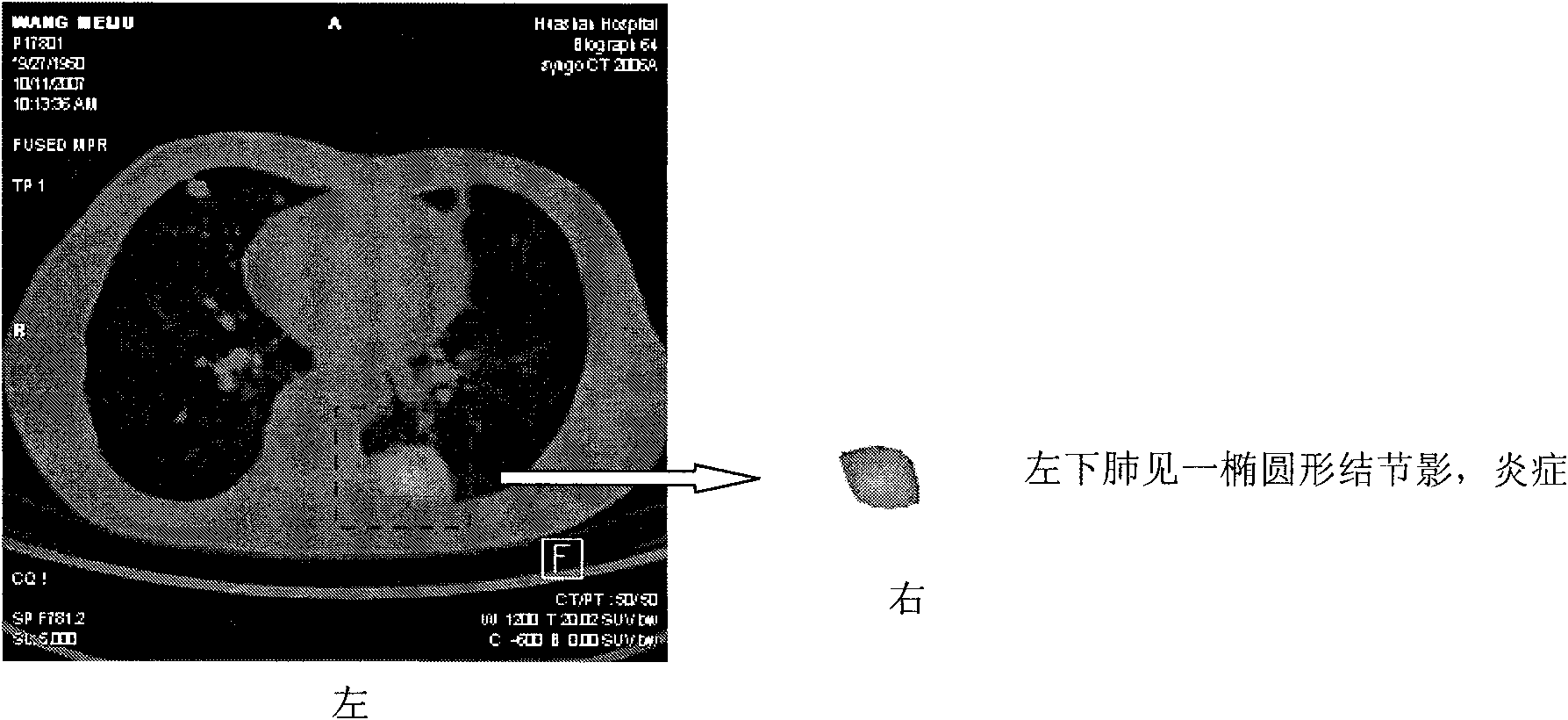

System for detecting pulmonary malignant tumour and benign protuberance based on PET/CT image texture characteristics

Inactive | CN101669828A | Improve processing efficiency | Improve user experience | Image analysis | Computerised tomographs | Tumour tissue | Fungating tumour

The invention relates to a system for detecting a pulmonary malignant tumour and a benign protuberance based on PET / CT image texture characteristics, belonging to the technical field of medical digital image processing. The invention has the major function of searching useful texture characteristics from a PET / CT picture to better distinguish a pulmonary tumour tissue and a benign tissue. The system comprises the following steps: firstly, dividing a region of interest ROI from the PET / CT image and then extracting five texture characteristics of roughness, contrast, busyness, complexity and intensity of the ROI; then, carrying out classifying discrimination on the characteristics by distance calculation and a characteristic classifier and distinguishing the malignant tumour and the benign protuberance effectively by the combined data of various characteristics.

Owner:FUDAN UNIV

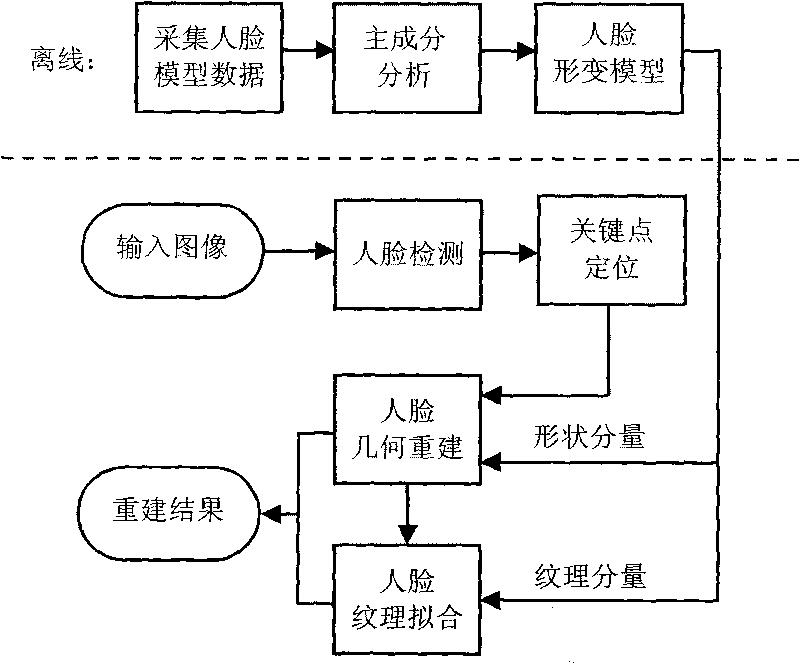

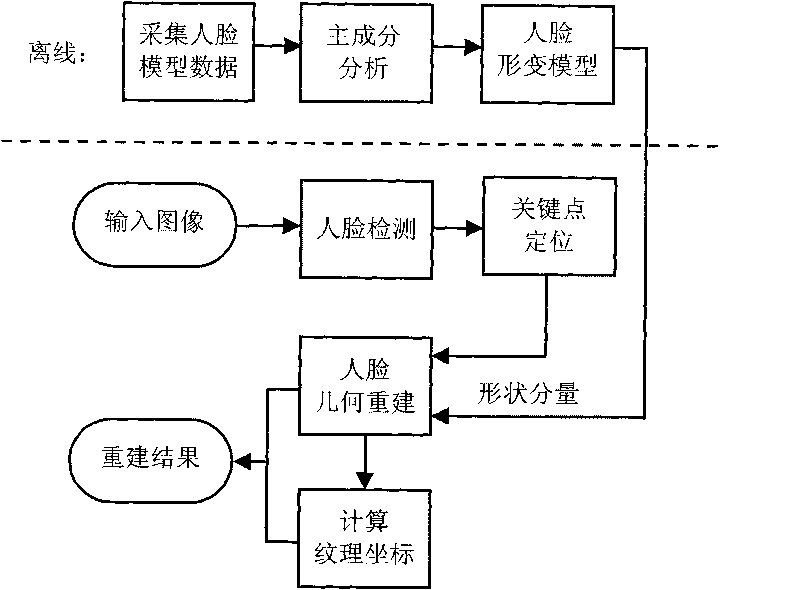

Three-dimensional facial reconstruction method

Inactive | CN101751689A | Geometry reconstruction speed reduced | Implement automatic rebuild | 3D-image rendering | 3D modelling | AdaBoost | Face model

The invention relates to a three-dimensional facial reconstruction method, which can automatically reconstruct a three-dimensional facial model from a single front face image and puts forward two schemes. The first scheme is as follows: a deformable face model is generated off line; Adaboost is utilized to automatically detect face positions in the inputted image; an active appearance model is utilized to automatically locate key points on the face in the inputted image; based on the shape components of the deformable face model and the key points of the face on the image, the geometry of a three-dimensional face is reconstructed; with a shape-free texture as a target image, the texture components of the deformable face model are utilized to fit face textures, so that a whole face texture is obtained; and after texture mapping, a reconstructed result is obtained. The second scheme has the following differences from the first scheme: after the geometry of the three-dimensional face is reconstructed, face texture fitting is not carried out, but the inputted image is directly used as a texture image as a reconstructed result. The first scheme is applicable to fields such as film and television making and three-dimensional face recognition, and the reconstruction speed of the second scheme is high.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI +1

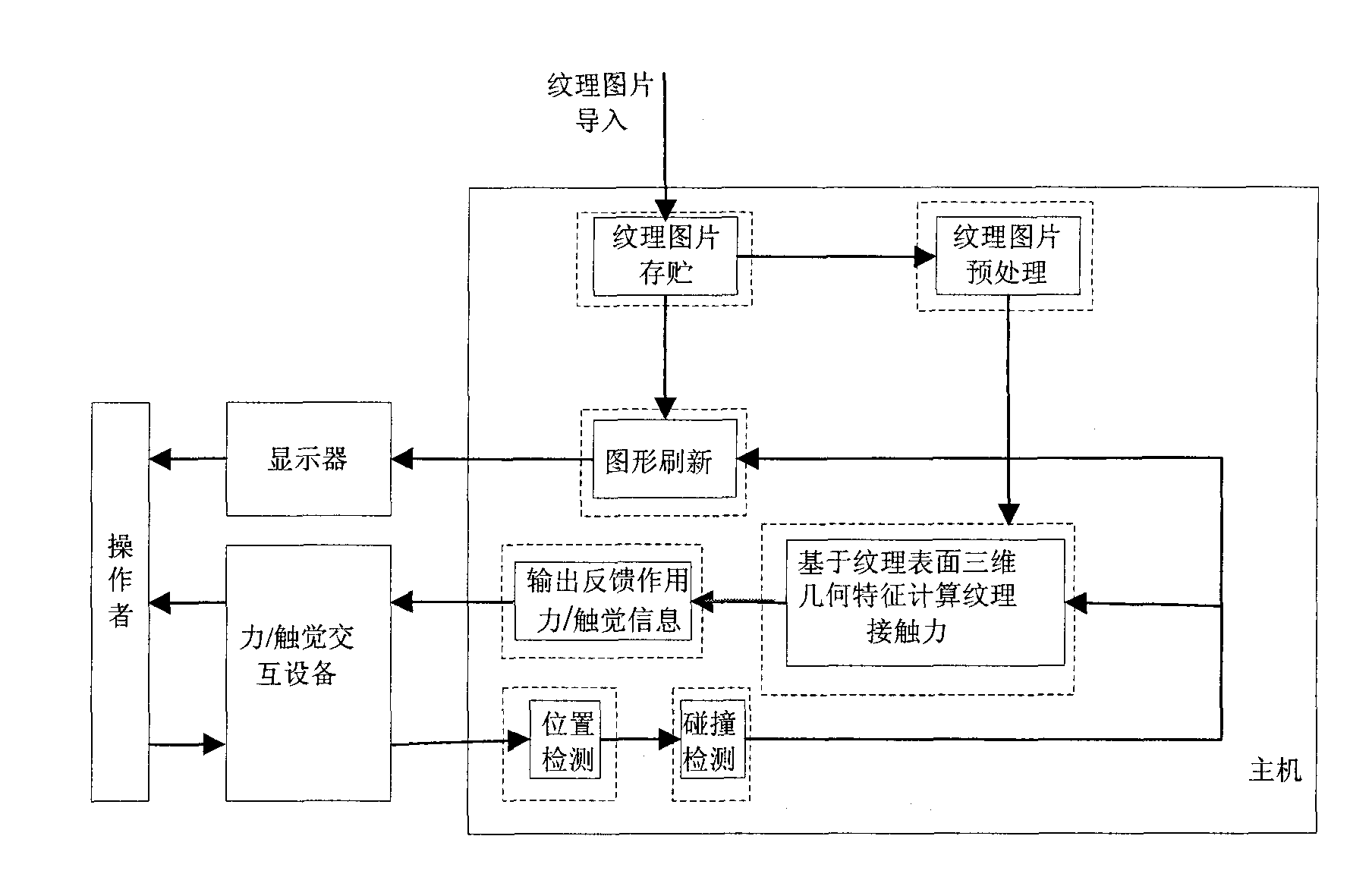

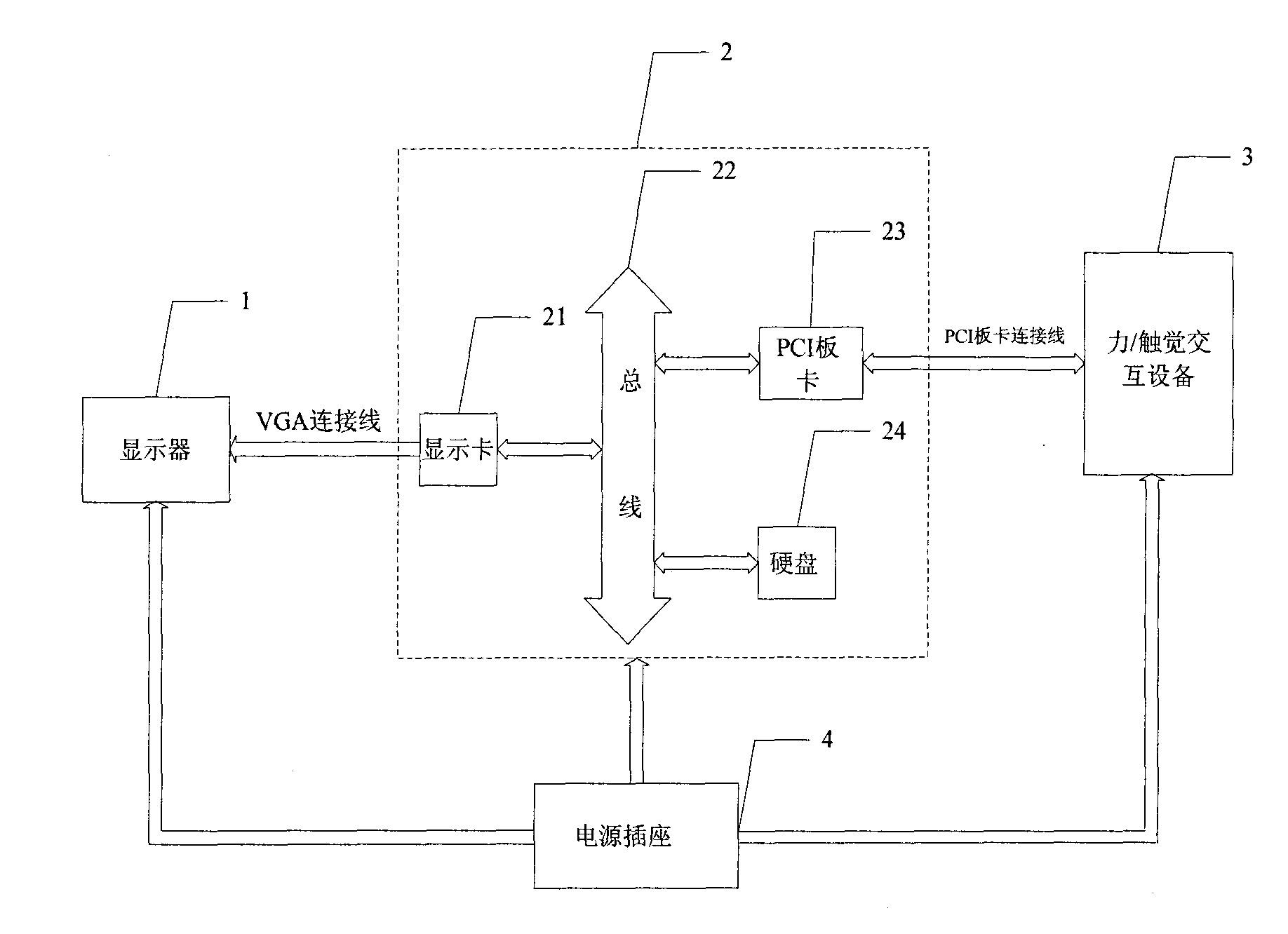

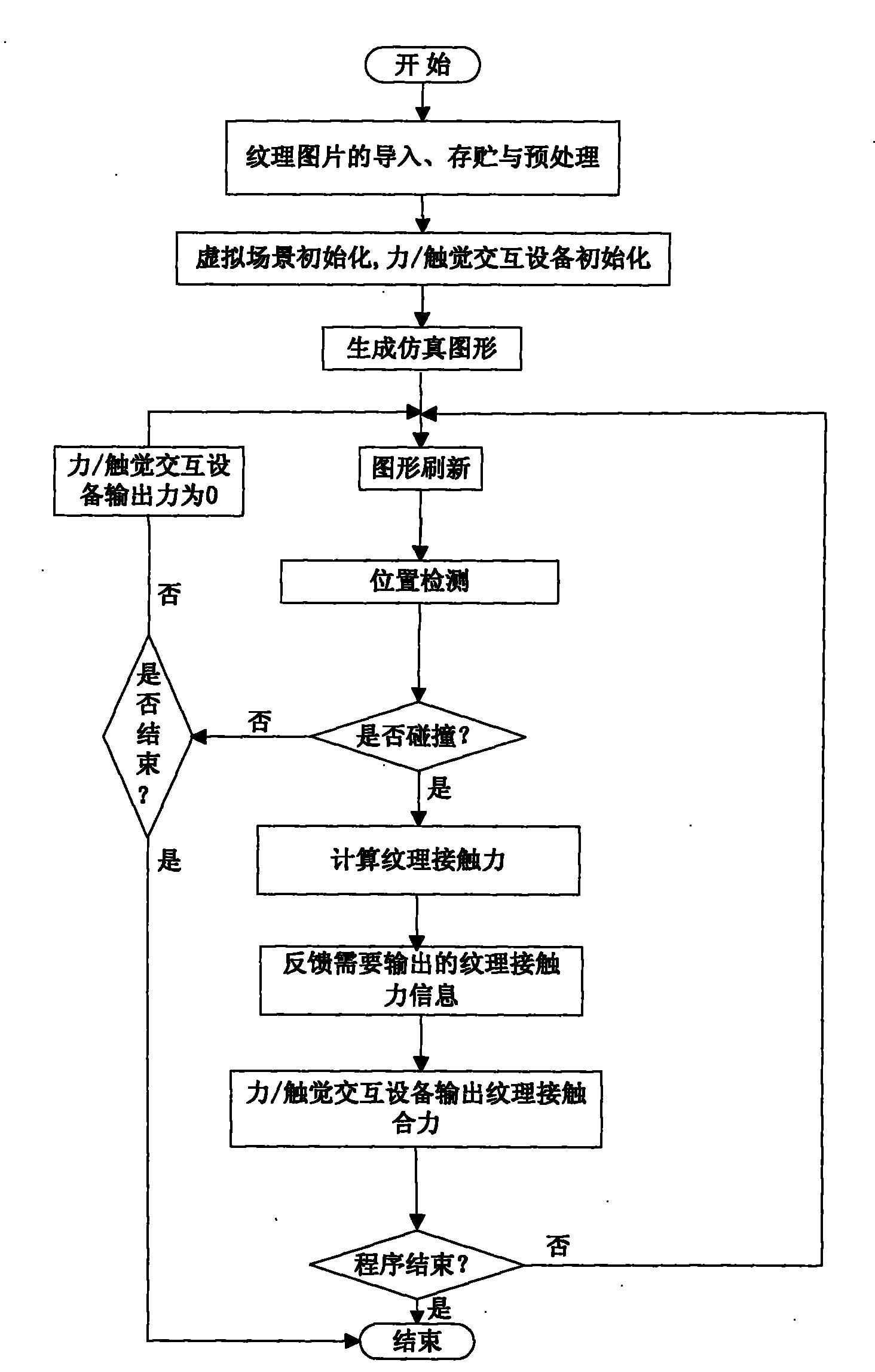

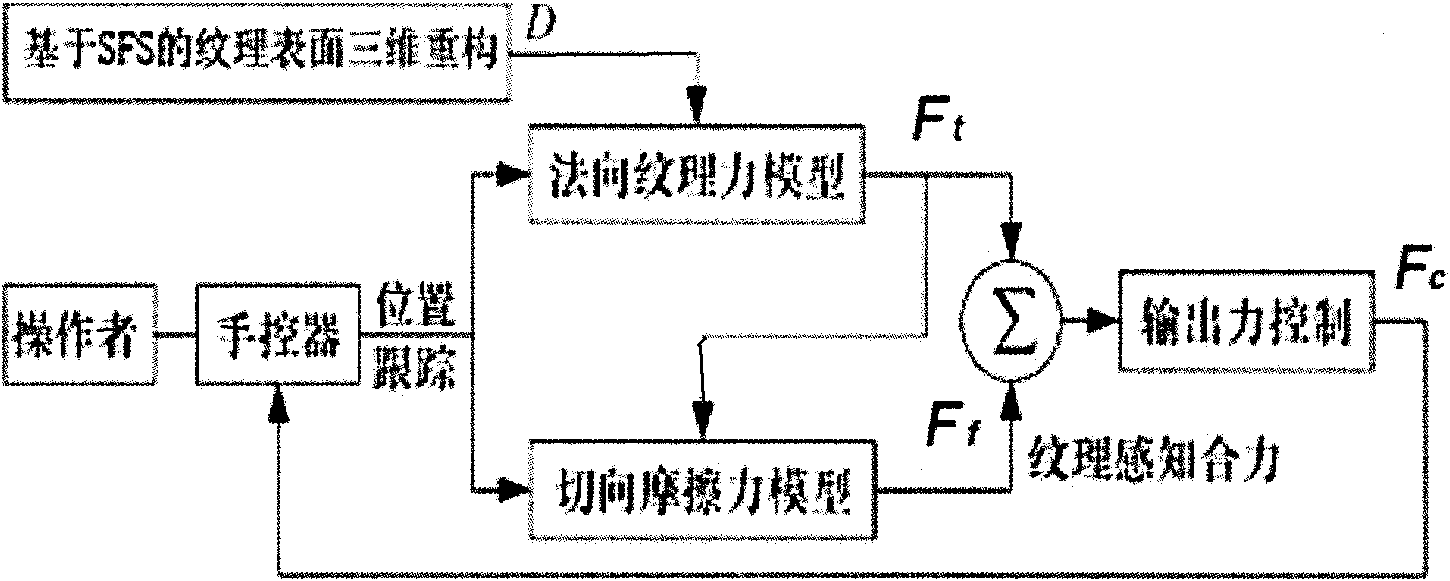

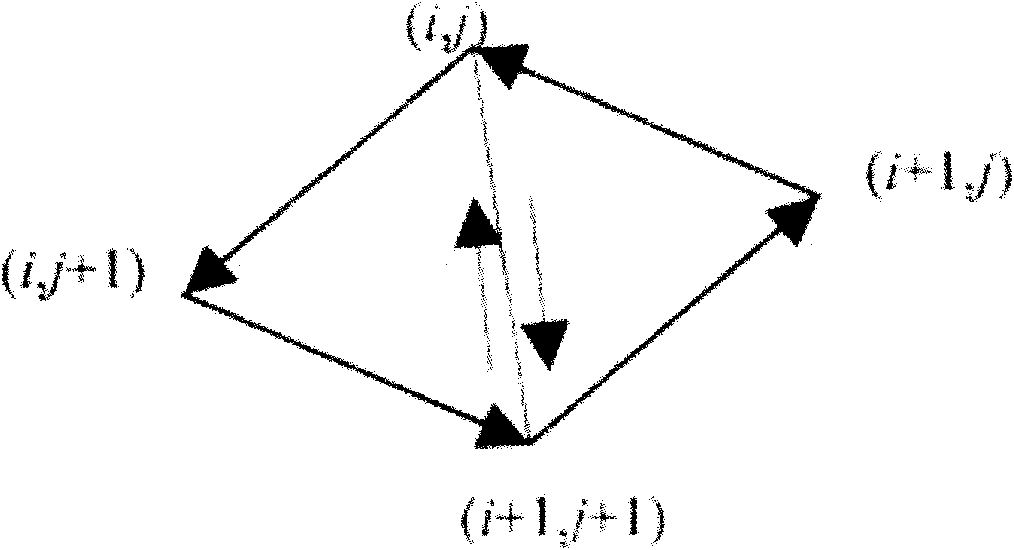

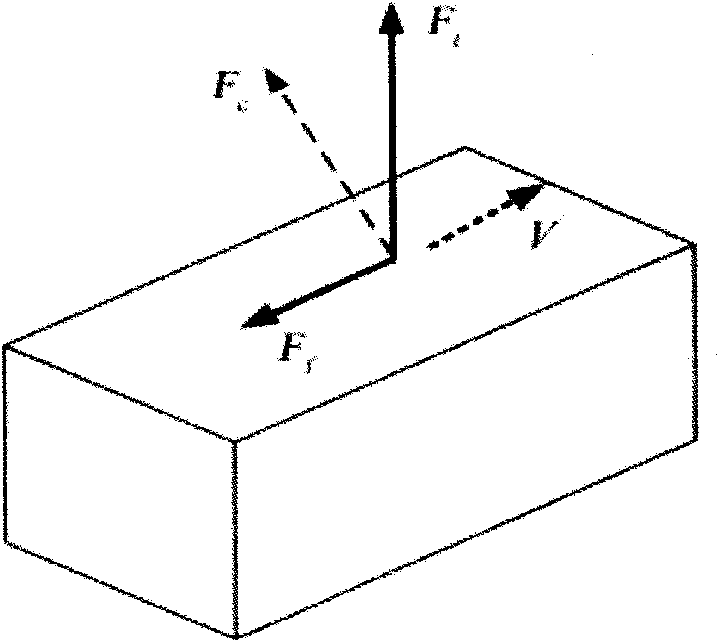

Image texture tactile representation system based on force/haptic interaction equipment

Active | CN101819462A | Improve realism | Improve stability | Input/output for user-computer interaction | Graph reading | Interaction systems | Imaging processing

The invention discloses an image texture tactile representation system based on force / haptic interaction equipment for virtual reality human-computer interaction. When the virtual proxy of the force / haptic interaction equipment slides over the textured surface of a virtual object in a virtual environment, the surface height of the object texture at the contact point and a coefficient of kinetic friction reflecting the roughness at the contact point are first obtained by an image processing method. A continuous normal contact force model reflecting the unevenness at the contact point and a tangential friction model reflecting its roughness are then established. Finally, the texture contact force is fed back to the operator in real time through the force / haptic interaction equipment, reproducing the haptic sensation of fingers sliding over the surface texture of the virtual object. The continuously varying normal force that is fed back makes the human-computer interaction more realistic and the interaction system more stable, and the fed-back friction, which depends on the roughness at the contact point, further enhances the sense of reality of the reproduced texture.

Owner:NANTONG MINGXIN CHEM +1
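The two force models named in the abstract above (a normal contact force tied to surface height and a tangential friction tied to roughness) can be sketched as follows. Everything concrete here is an assumption for illustration: height is taken as scaled grey level, roughness as normalised gradient magnitude, the normal force as a linear spring, and friction as Coulomb kinetic friction. The parameters `max_height`, `mu_max` and `stiffness` are hypothetical.

```python
import numpy as np

def height_and_friction(gray, max_height=0.002, mu_max=0.8):
    """Derive a height map (metres) and a friction-coefficient map from a grey image."""
    g = gray.astype(float) / 255.0
    height = g * max_height
    gy, gx = np.gradient(g)
    rough = np.hypot(gx, gy)
    peak = rough.max()
    mu = mu_max * rough / peak if peak > 0 else np.zeros_like(g)
    return height, mu

def contact_force(height, proxy_height, mu, stiffness=500.0, velocity=(1.0, 0.0)):
    """Return (normal_force, friction_vector) for one contact point."""
    penetration = max(height - proxy_height, 0.0)
    normal = stiffness * penetration             # spring-like normal force
    v = np.asarray(velocity, dtype=float)
    speed = np.linalg.norm(v)
    if speed == 0.0 or normal == 0.0:
        return normal, np.zeros_like(v)
    friction = -mu * normal * v / speed          # kinetic friction opposes the motion
    return normal, friction

# Example: the proxy sits 1 mm below the surface and slides along +x.
normal, friction = contact_force(0.002, 0.001, mu=0.5, stiffness=1000.0, velocity=(2.0, 0.0))
```

Because the normal force varies continuously with penetration depth, small changes in the height map produce small changes in force, which is consistent with the stability benefit the abstract claims.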

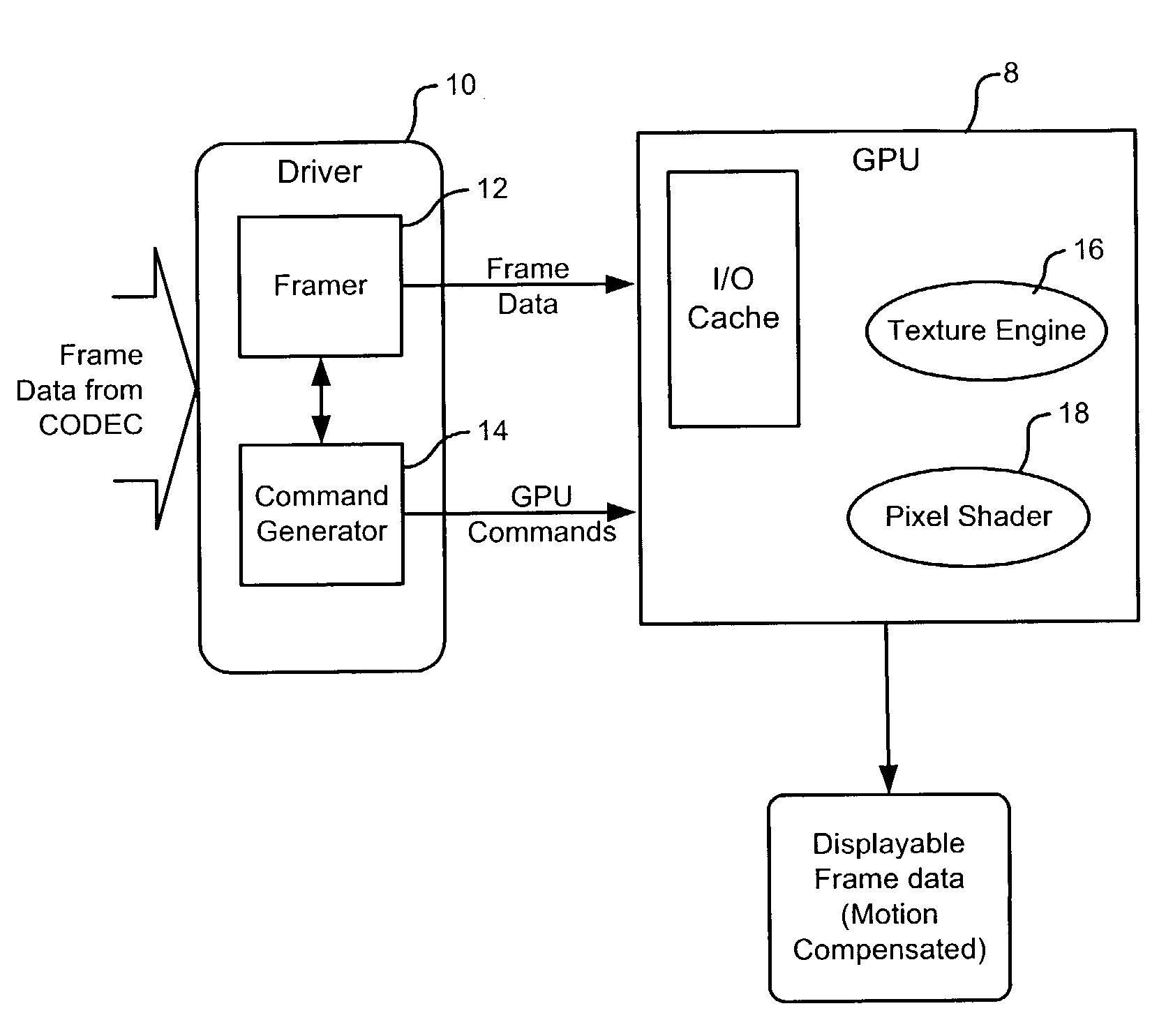

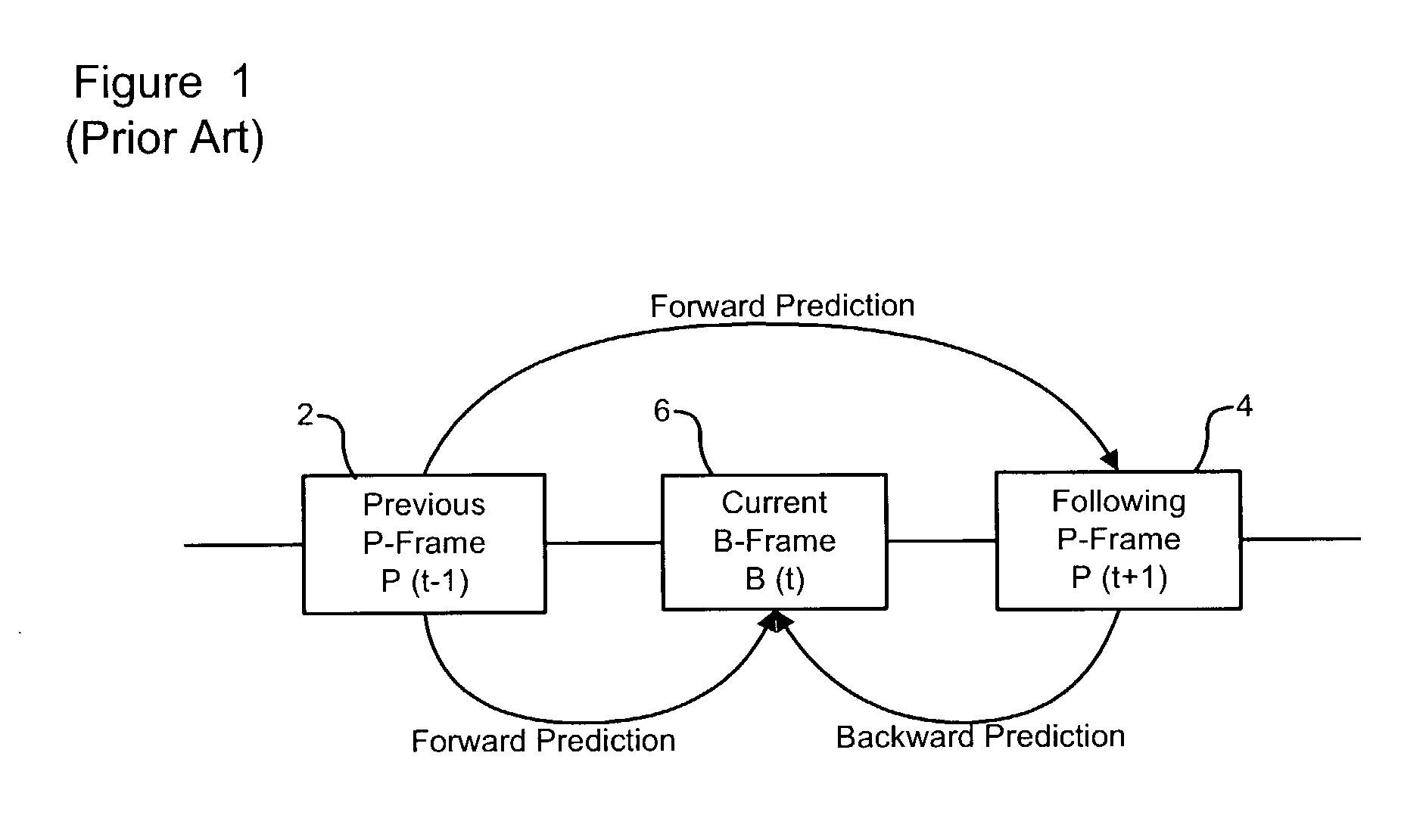

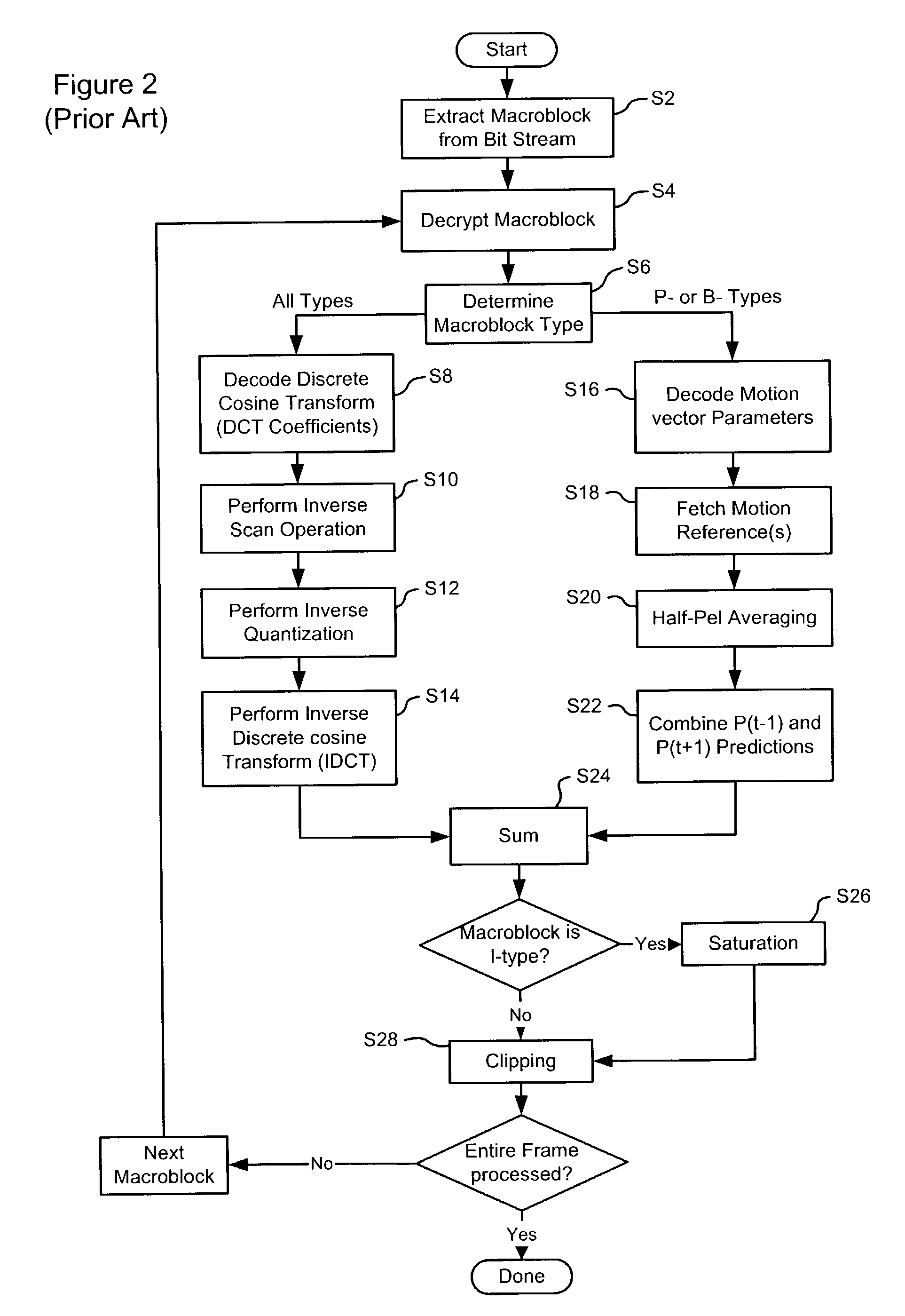

Motion compensation using shared resources of a graphics processor unit

Active | US6952211B1 | Picture reproducers using cathode ray tubes | Picture reproducers with optical-mechanical scanning | Graphics | Motion vector

A method of motion compensation within a displayable video stream using shared resources of a Graphics Processor Unit (GPU). Image data including a sequential series of image frames is received. Each frame includes any one or more of: frame-type; image texture; and motion vector information. At least a current image frame is analysed, and the shared resources of the GPU are controlled to generate a motion compensated image frame corresponding to the current image frame, using one or more GPU commands.

Owner:MATROX

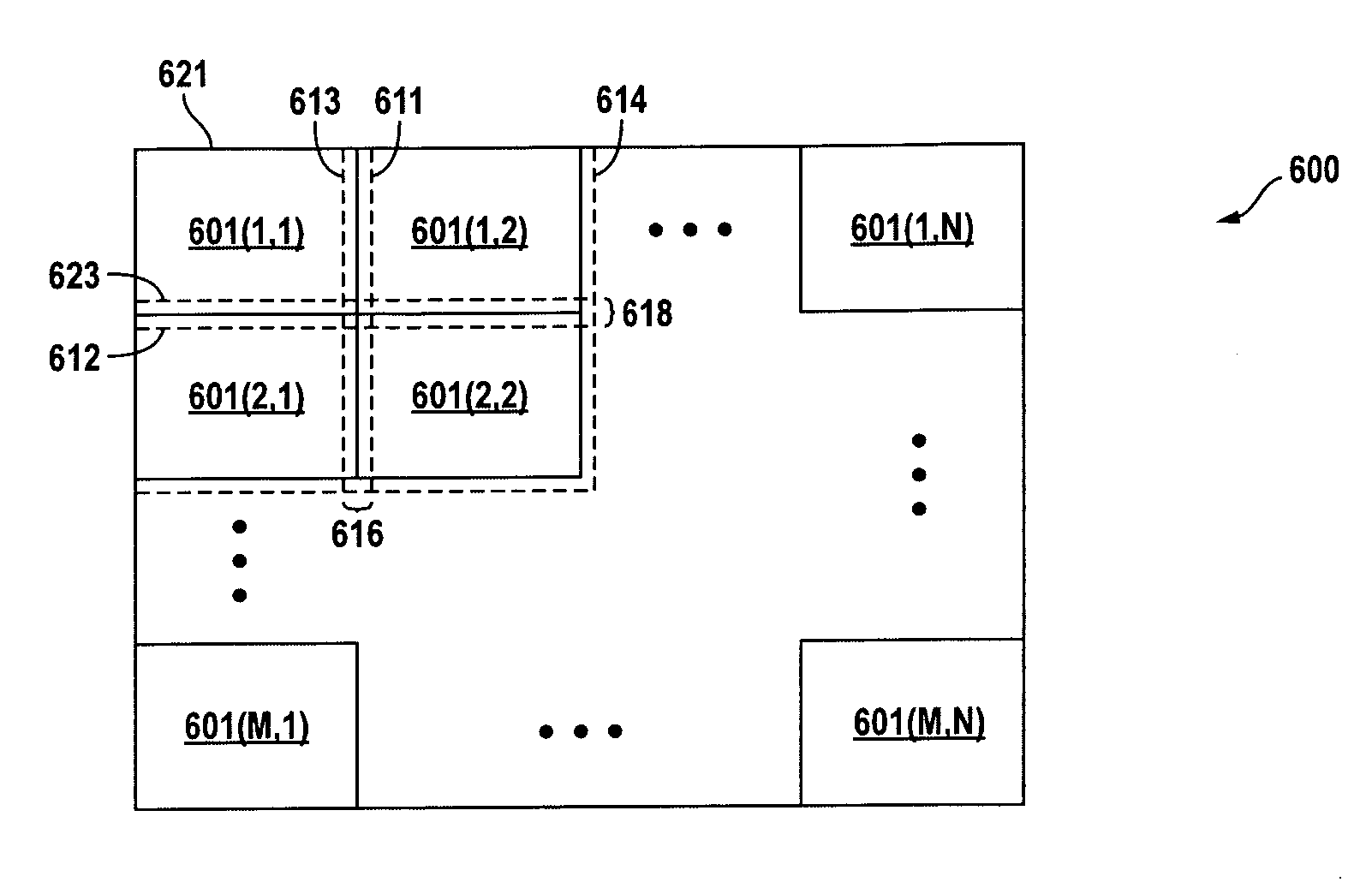

Block-based fragment filtration with feasible multi-GPU acceleration for real-time volume rendering on conventional personal computer

Active | US7154500B2 | Reduce the burden on | Details involving 3D image data | Cathode-ray tube indicators | Voxel | Filtration

A computer-based method and system for interactive volume rendering of a large volume data on a conventional personal computer using hardware-accelerated block filtration optimizing uses 3D-textured axis-aligned slices and block filtration. Fragment processing in a rendering pipeline is lessened by passing fragments to various processors selectively in blocks of voxels based on a filtering process operative on slices. The process involves generating a corresponding image texture and performing two-pass rendering, namely a virtual rendering pass and a main rendering pass. Block filtration is divided into static block filtration and dynamic block filtration. The static block filtration locates any view-independent unused signal being passed to a rasterization pipeline. The dynamic block filtration determines any view-dependent unused block generated due to occlusion. Block filtration processes utilize the vertex shader and pixel shader of a GPU in conventional personal computer graphics hardware. The method is for multi-thread, multi-GPU operation.

Owner:THE CHINESE UNIVERSITY OF HONG KONG

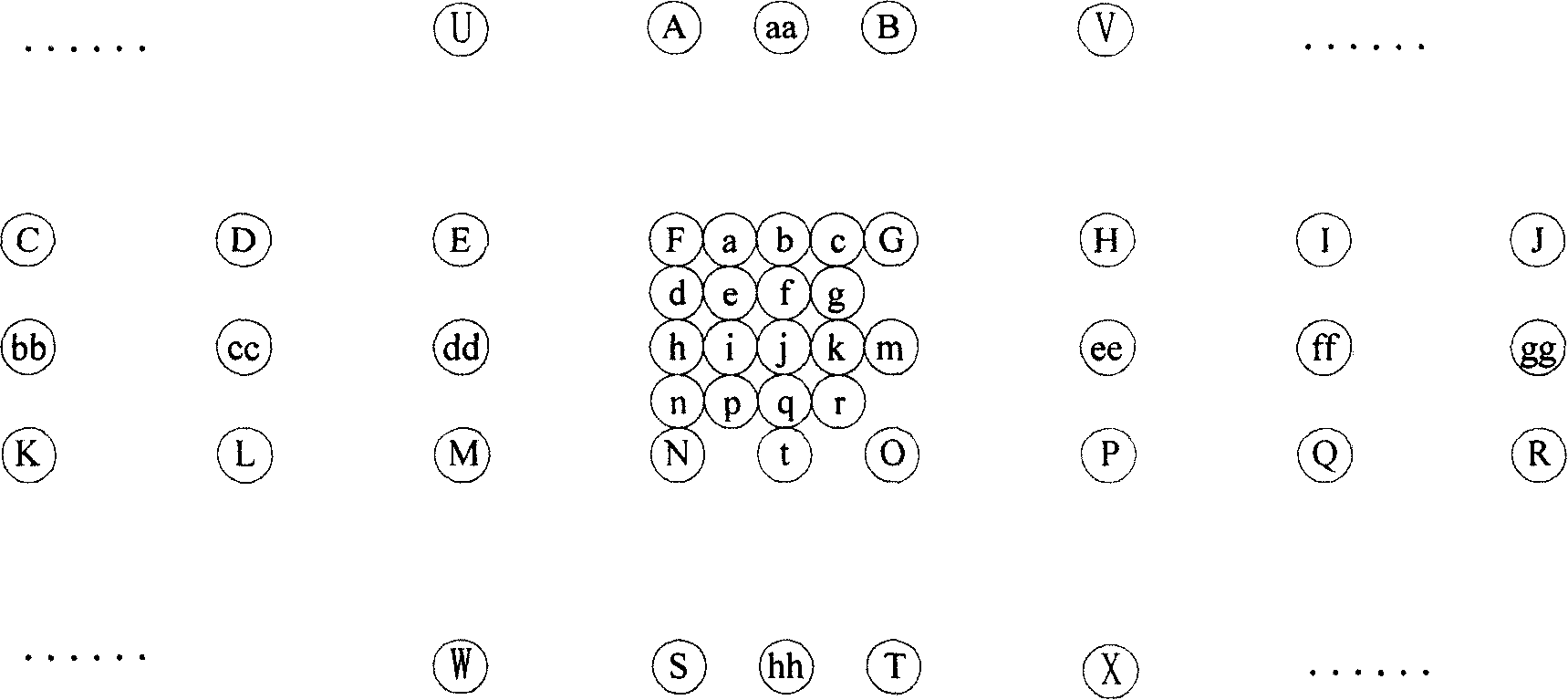

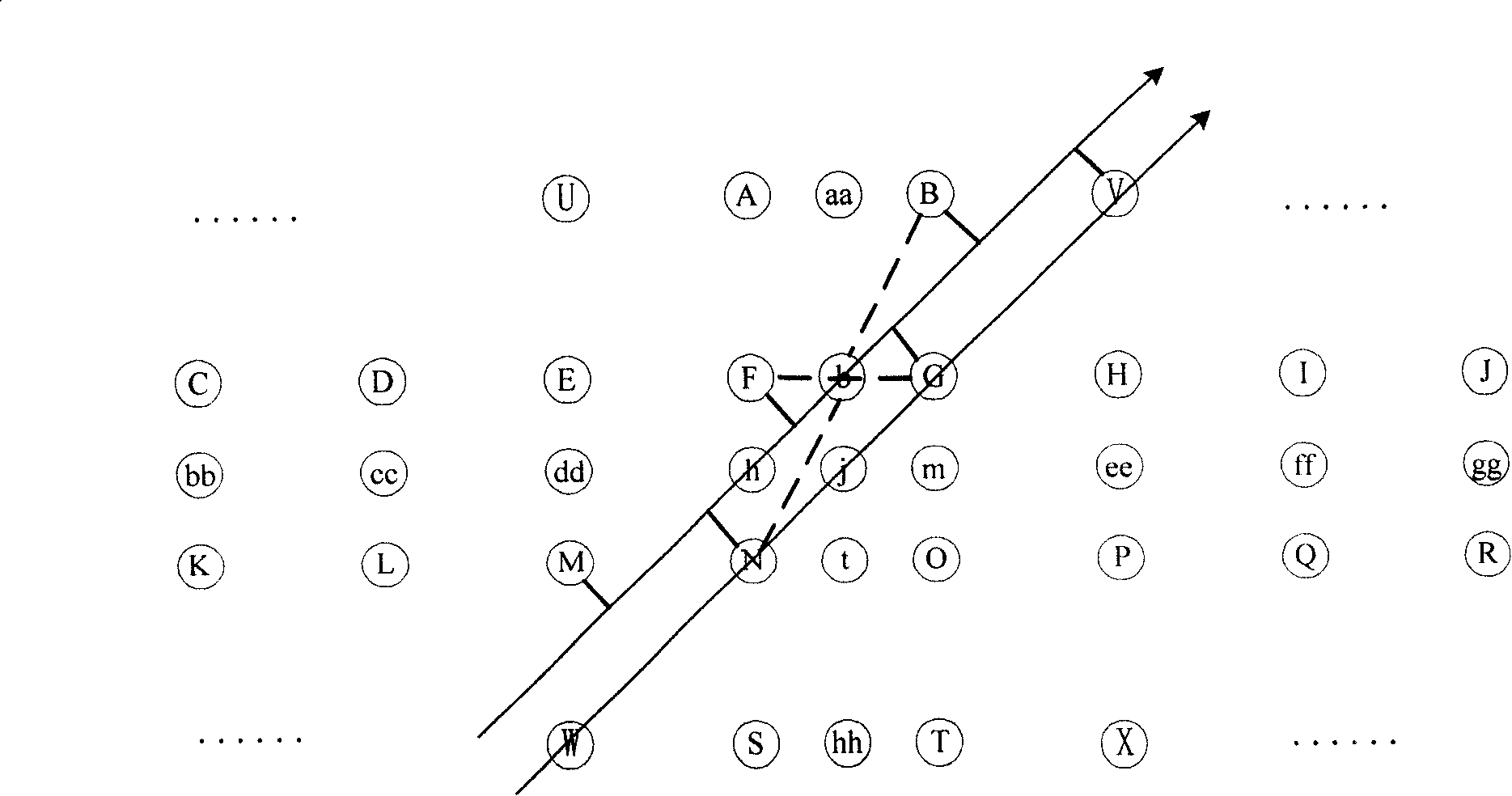

Encoding and decoding method and device, image element interpolation processing method and device

Active | CN101198063A | Improve encoding rate | Conforms to texture distribution characteristics | Pulse modulation television signal transmission | Geometric image transformation | Decoding methods | Reference sample

The invention discloses an inter-frame prediction coding method, a decoding method, a coder, a decoder, a sub-pixel interpolation processing method and a sub-pixel interpolation processing device. In the inter-frame prediction coding and decoding scheme provided by the invention, the effect of the texture distribution direction of images on the precision of sub-pixel reference samples is taken into account: sub-pixel interpolation is performed several times on the integer pixel samples of the image blocks to be coded, each time with a different interpolation direction, so that multiple groups of sub-pixel reference sample values with different precisions are obtained; the reference sample values with the highest precision are then selected from the integer pixel sample values and the various sub-pixel reference sample values, thereby improving the encoding rate at the coding end. In the sub-pixel interpolation scheme provided by the invention, at least two different interpolation directions are set for each sub-pixel to be interpolated and the corresponding predicted values are computed; the optimal value is then selected from all the predicted values and taken as the optimal predicted value of the sub-pixel sample to be interpolated, thereby improving the precision of the sub-pixel reference sample values.

Owner:HONOR DEVICE CO LTD

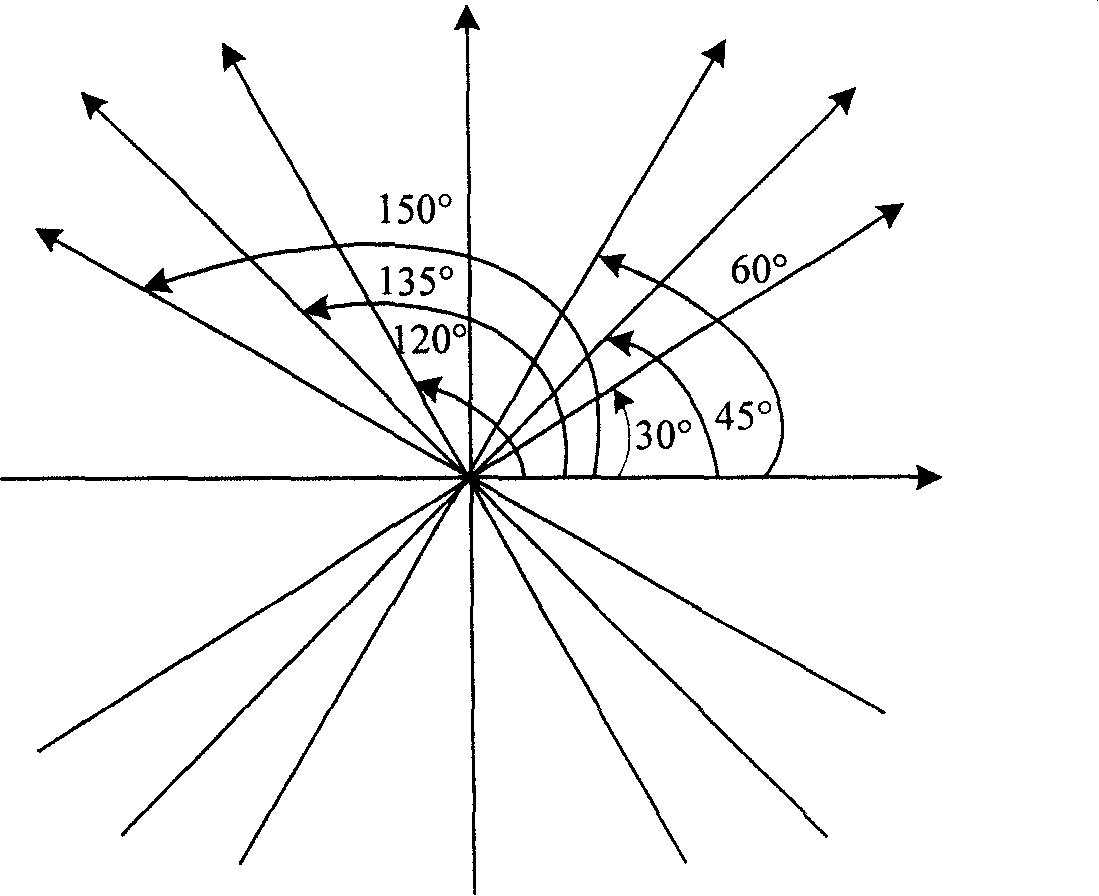

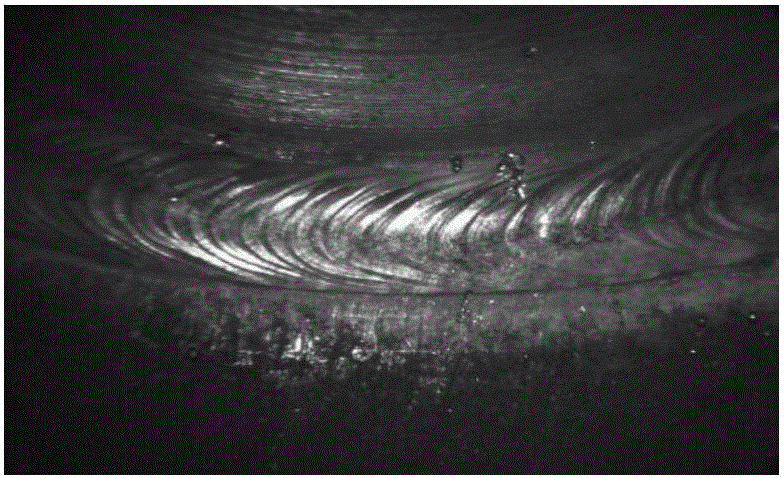

Weld surface defect identification method based on image texture

Inactive | CN105938563A | Effectively distinguish texture features | Realize classification recognition | Character and pattern recognition | Optically investigating flaws/contamination | Color image | Sample image

The invention provides a weld surface defect identification method based on image texture. Miniature CCD camera photographing parameters are set according to the image acquisition standard; an acquired true color image is converted into a grey-scale map, and a gray scale co-occurrence matrix is created; 24 characteristic parameters in total, namely energy, contrast, correlation, homogeneity, entropy and variance in each of the 0, 45, 90 and 135 degree directions, are extracted, and normalization processing is performed on the extracted characteristic parameters; a BP neural network is trained using training sample images, and the number of neurons of the neural network, a hidden layer transfer function, an output layer transfer function and a training algorithm transfer function are set; test sample characteristic parameters are fed to the trained BP neural network to perform classification and identification; and the matching degree of the test sample and different types of surface welding quality training samples is calculated so that automatic classification and identification of the test sample surface welding quality can be completed.

Owner:BEIJING UNIV OF TECH
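The 24-parameter feature vector in the weld-defect abstract above (six co-occurrence statistics in four directions) can be sketched with NumPy as follows. The exact feature definitions vary between texts, so the formulas below are one common set and should be read as assumptions; the pixel distance of 1, the symmetric matrix and the names `glcm`, `glcm_features` and `texture_vector` are likewise choices made for this sketch.

```python
import numpy as np

# Pixel offsets (row, col) for 0, 45, 90 and 135 degrees at distance 1.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}
FEATURES = ("energy", "contrast", "correlation", "homogeneity", "entropy", "variance")

def glcm(gray, offset, levels):
    """Symmetric, normalised grey-level co-occurrence matrix for one offset."""
    dr, dc = offset
    h, w = gray.shape
    r0, r1 = max(0, -dr), min(h, h - dr)
    c0, c1 = max(0, -dc), min(w, w - dc)
    a = gray[r0:r1, c0:c1]
    b = gray[r0 + dr:r1 + dr, c0 + dc:c1 + dc]
    m = np.zeros((levels, levels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)      # count each (a, b) grey-level pair
    m = m + m.T
    return m / m.sum()

def glcm_features(p):
    """Six statistics of a normalised co-occurrence matrix p."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    var_i = ((i - mu_i) ** 2 * p).sum()
    var_j = ((j - mu_j) ** 2 * p).sum()
    denom = np.sqrt(var_i * var_j)
    nz = p[p > 0]
    return {
        "energy": (p ** 2).sum(),
        "contrast": ((i - j) ** 2 * p).sum(),
        "correlation": ((i - mu_i) * (j - mu_j) * p).sum() / denom if denom > 0 else 1.0,
        "homogeneity": (p / (1.0 + (i - j) ** 2)).sum(),
        "entropy": -(nz * np.log2(nz)).sum(),
        "variance": var_i,
    }

def texture_vector(gray, levels):
    """Concatenate the six features for the four directions (24 values)."""
    vec = []
    for angle in (0, 45, 90, 135):
        feats = glcm_features(glcm(gray, OFFSETS[angle], levels))
        vec.extend(feats[name] for name in FEATURES)
    return np.array(vec)

# Example: a 4x4 checkerboard quantised to two grey levels.
board = np.indices((4, 4)).sum(axis=0) % 2
vec = texture_vector(board, levels=2)
```

Before training a classifier, each of the 24 columns would typically be normalised across the training set, as the abstract notes; that step is omitted here because it needs more than one sample.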

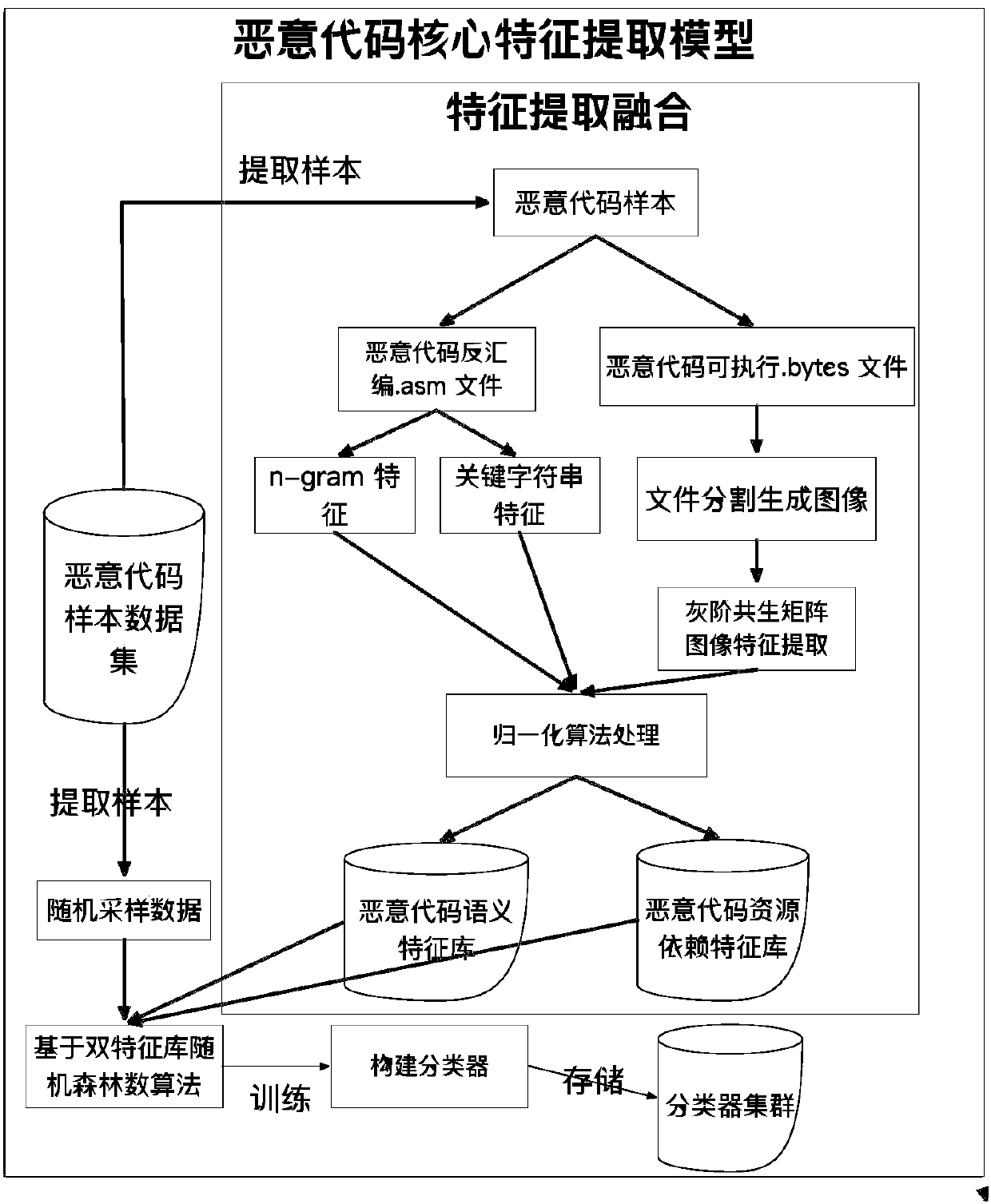

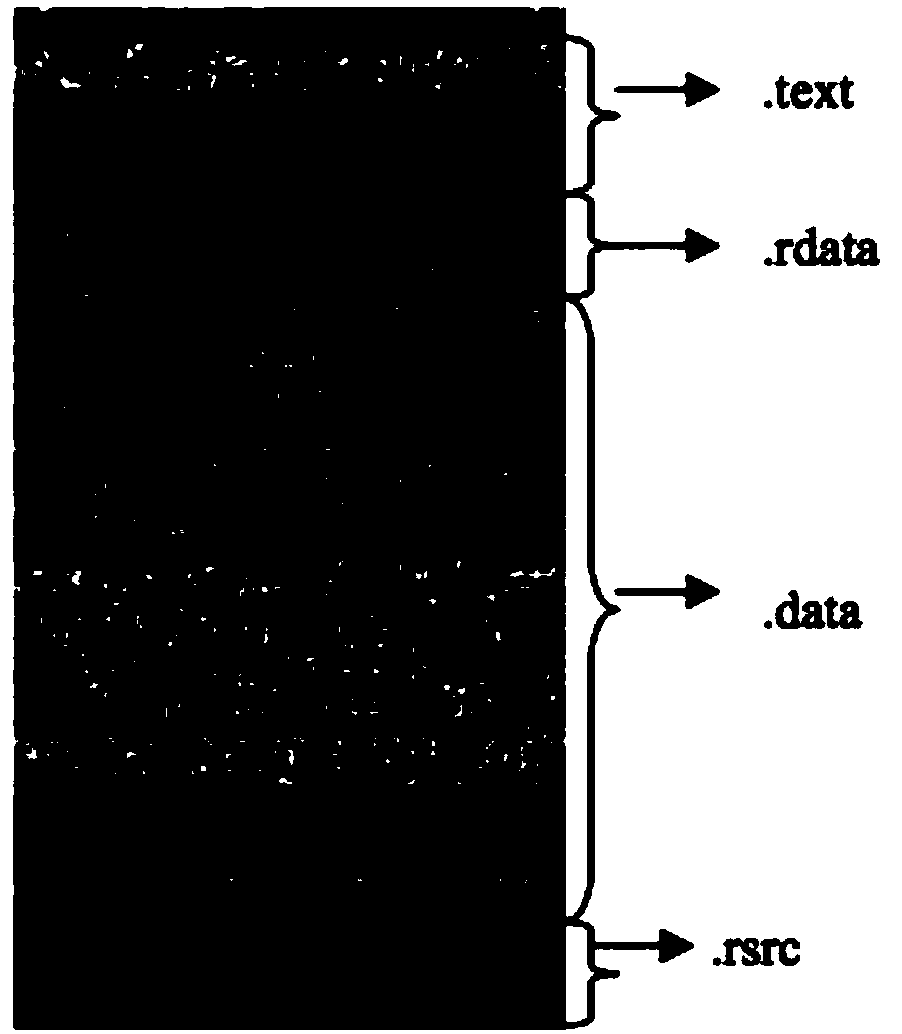

Method for automatically detecting core characteristics of malicious code

Active | CN107908963A | Efficient detection ability | Avoid interference | Platform integrity maintenance | Machine learning | Feature vector | Computerized system

The invention discloses a method for automatically detecting core characteristics of a malicious code, and belongs to overall design of computer system security. The method detects the core characteristics of the malicious code based on a machine learning algorithm. Through static analysis, from the perspective of the actual security significance of the malicious code, image texture, key API calling and key character string characteristics of the malicious code are extracted; the extracted characteristics are learned through a random forest algorithm based on a normalized bicharacteristic library; a family core characteristic library of the malicious code is thereby obtained. Because the image characteristics of malicious code have better expressive force, a bicharacteristic sub-library is constructed and the image characteristics of the malicious code are stored independently; this ensures that certain characteristic values in the image characteristic vectors can be selected for training in every round of characteristic fusion, so that the classifier obtained by training has a certain accuracy rate.

Owner:BEIJING UNIV OF TECH
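The abstract above does not say how an image is obtained from malicious code. One commonly used approach, assumed here purely for illustration, is to treat each byte of the binary as one grey pixel and lay the bytes out row by row; texture descriptors can then be computed on the result. The function name `bytes_to_image` and the default `width` are hypothetical.

```python
import numpy as np

def bytes_to_image(data, width=16):
    """Lay the bytes out row by row as an 8-bit grey image, zero-padding the last row."""
    buf = np.frombuffer(data, dtype=np.uint8)
    rows = -(-len(buf) // width)                 # ceiling division
    padded = np.zeros(rows * width, dtype=np.uint8)
    padded[:len(buf)] = buf
    return padded.reshape(rows, width)

# Example: 20 bytes laid out 8 per row give a 3x8 image with 4 padding pixels.
img = bytes_to_image(bytes(range(20)), width=8)
```

The resulting array could be passed to any grey-level texture extractor, and the resulting feature vector combined with API-call and string features before training a random forest.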

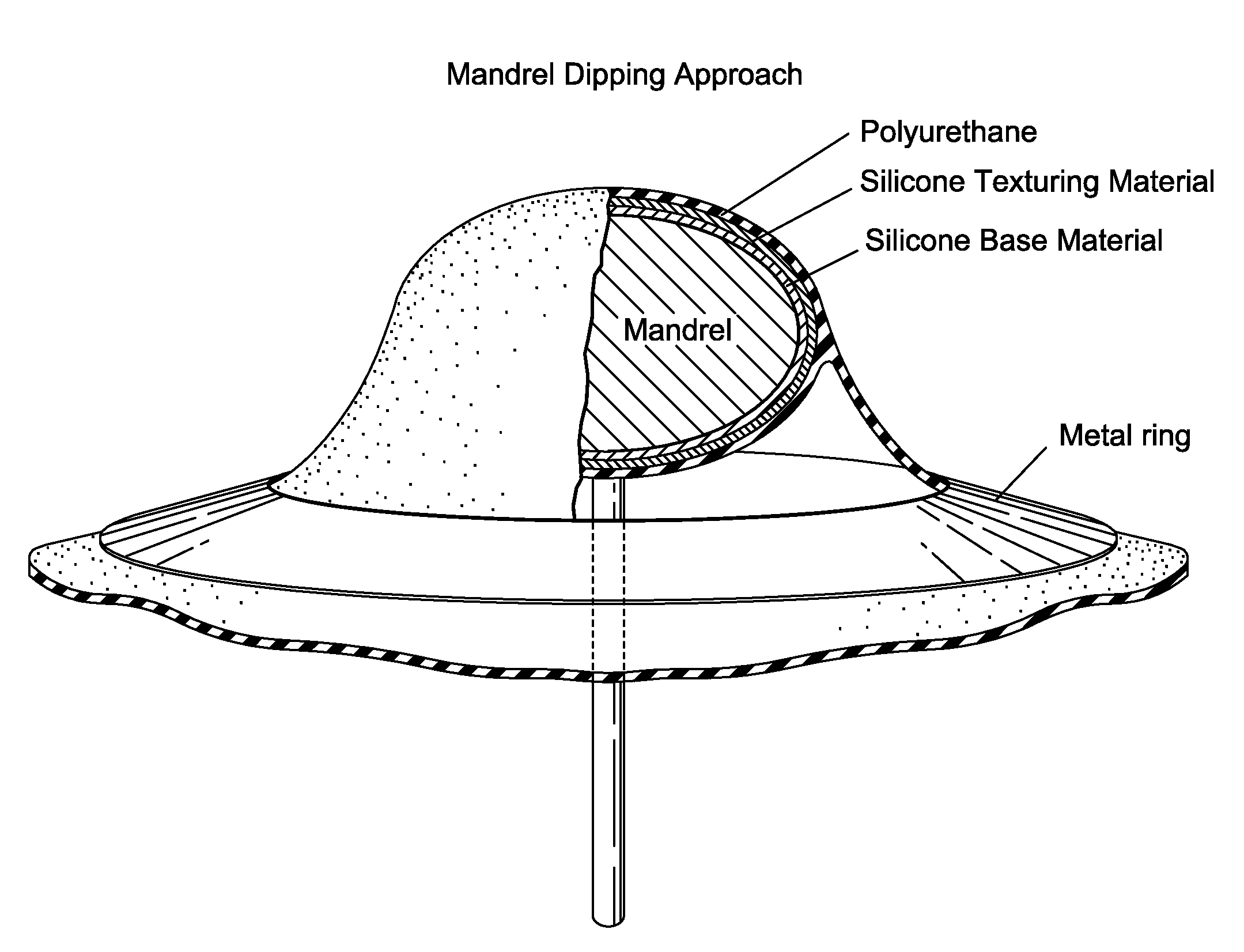

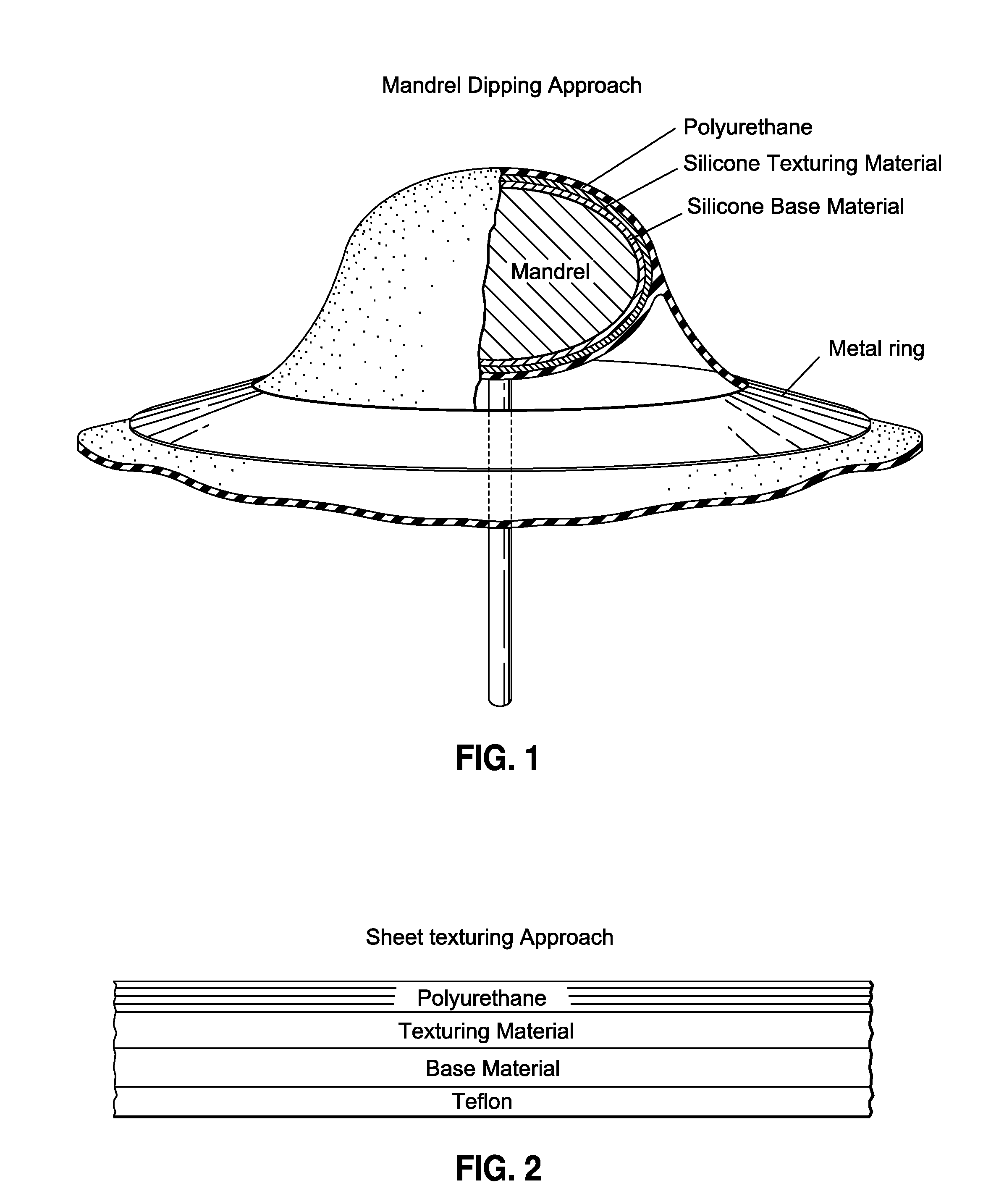

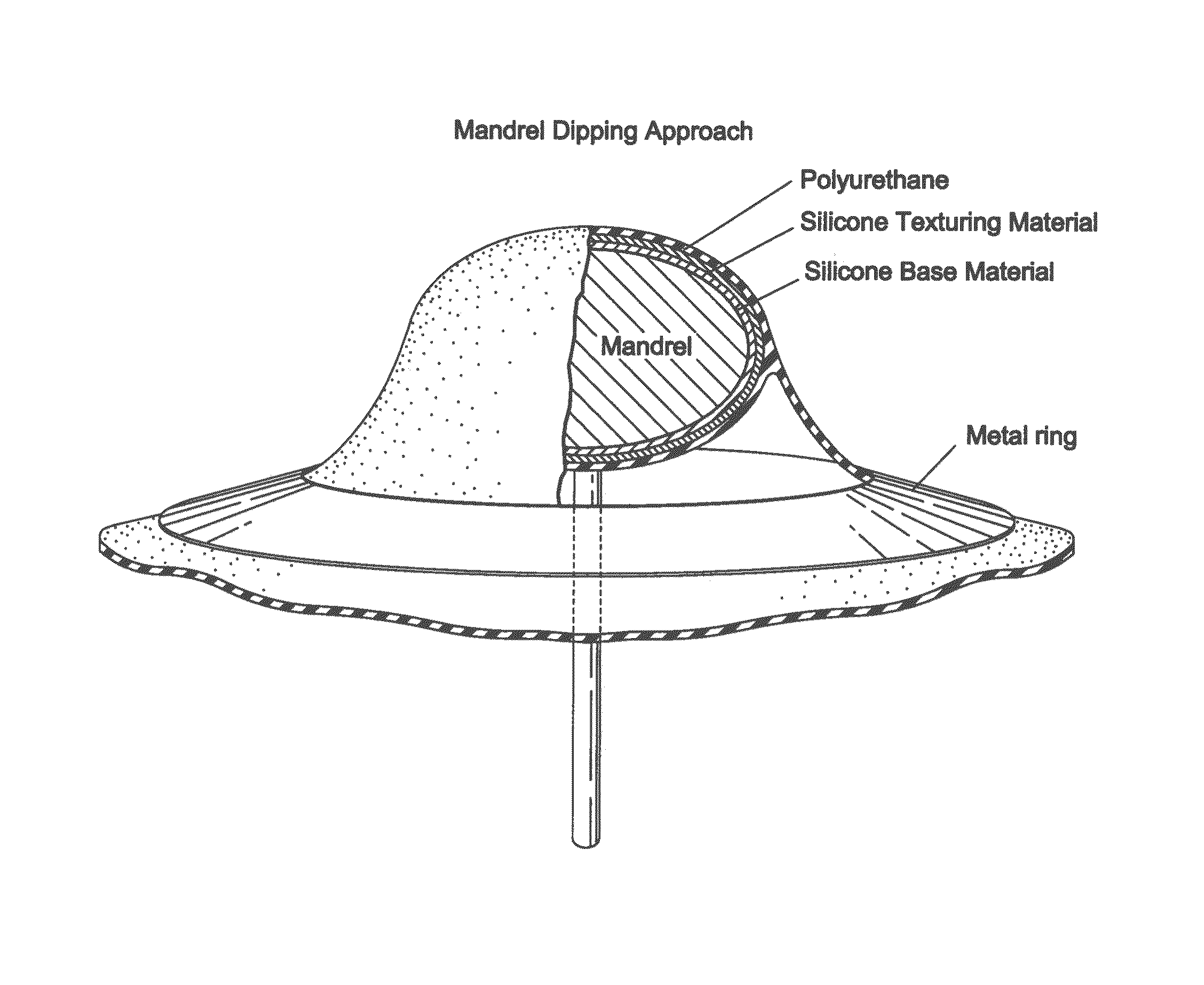

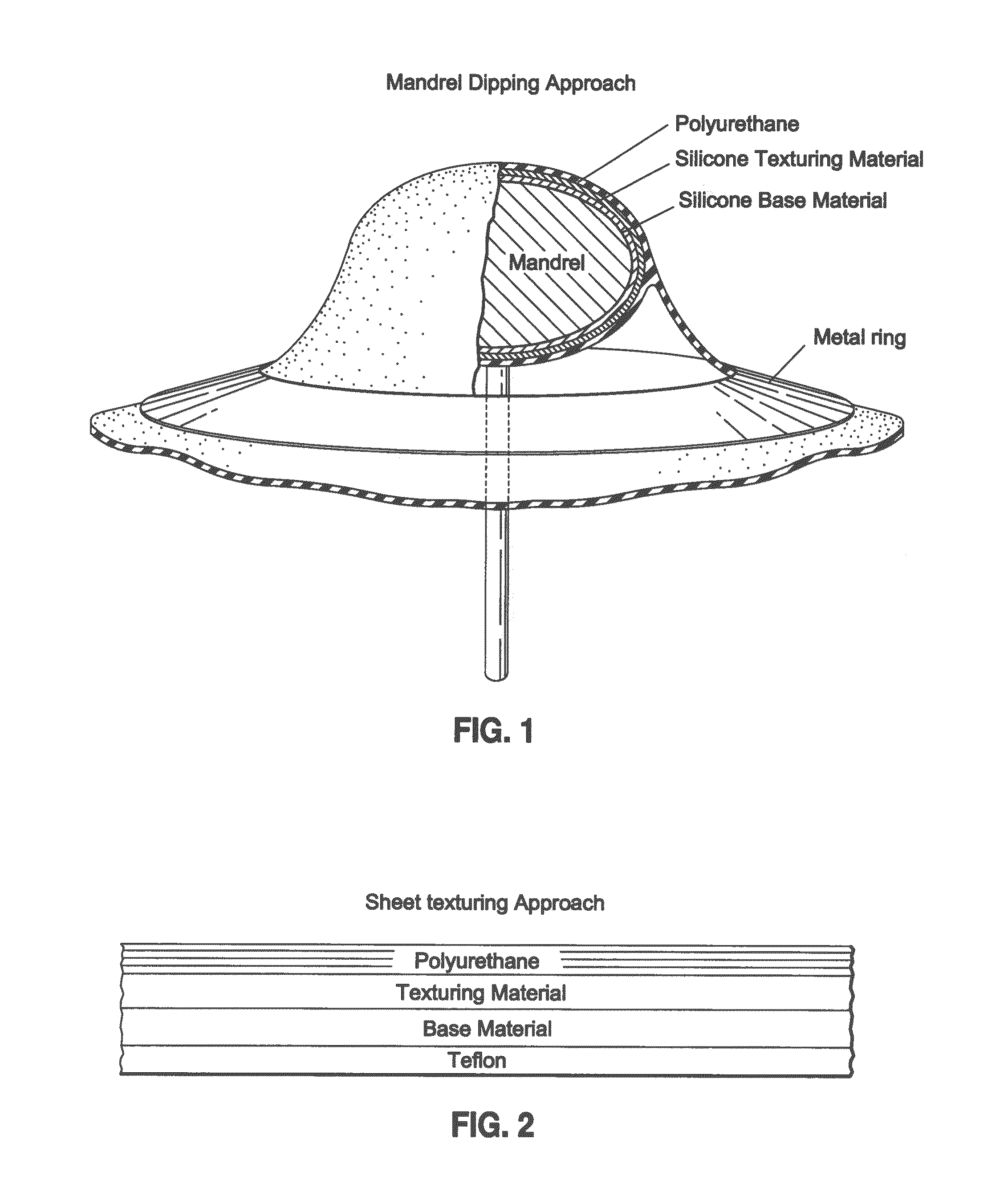

Methods for creating foam-like texture

Active | US20140156002A1 | Pharmaceutical delivery mechanism | Pretreated surfaces | Image texture | Materials science

Methods for creating a foam-like texture on an implantable material are provided. More particularly, methods for creating foam-like texture on implantable silicone materials are provided.

Owner:ALLERGAN INC

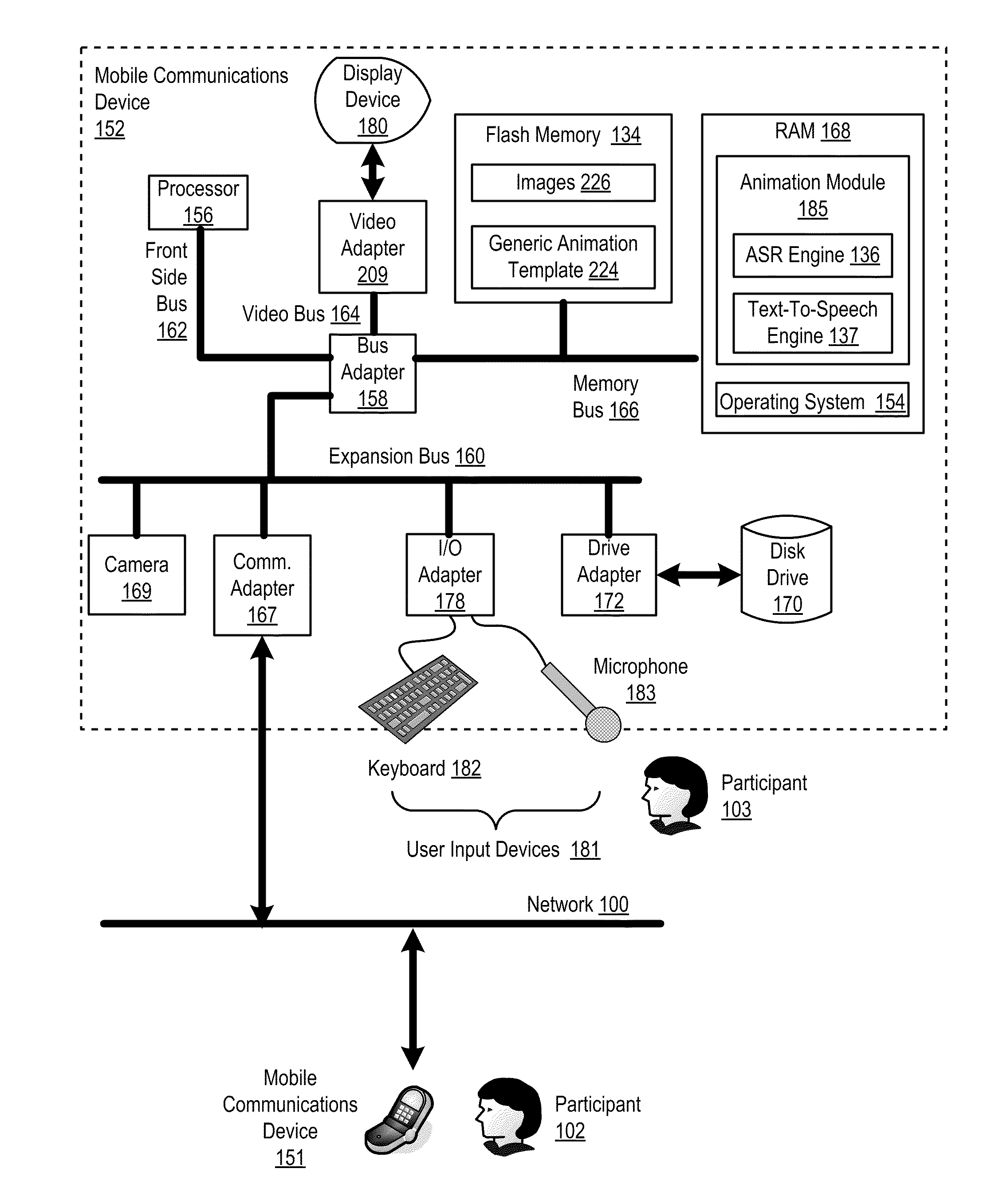

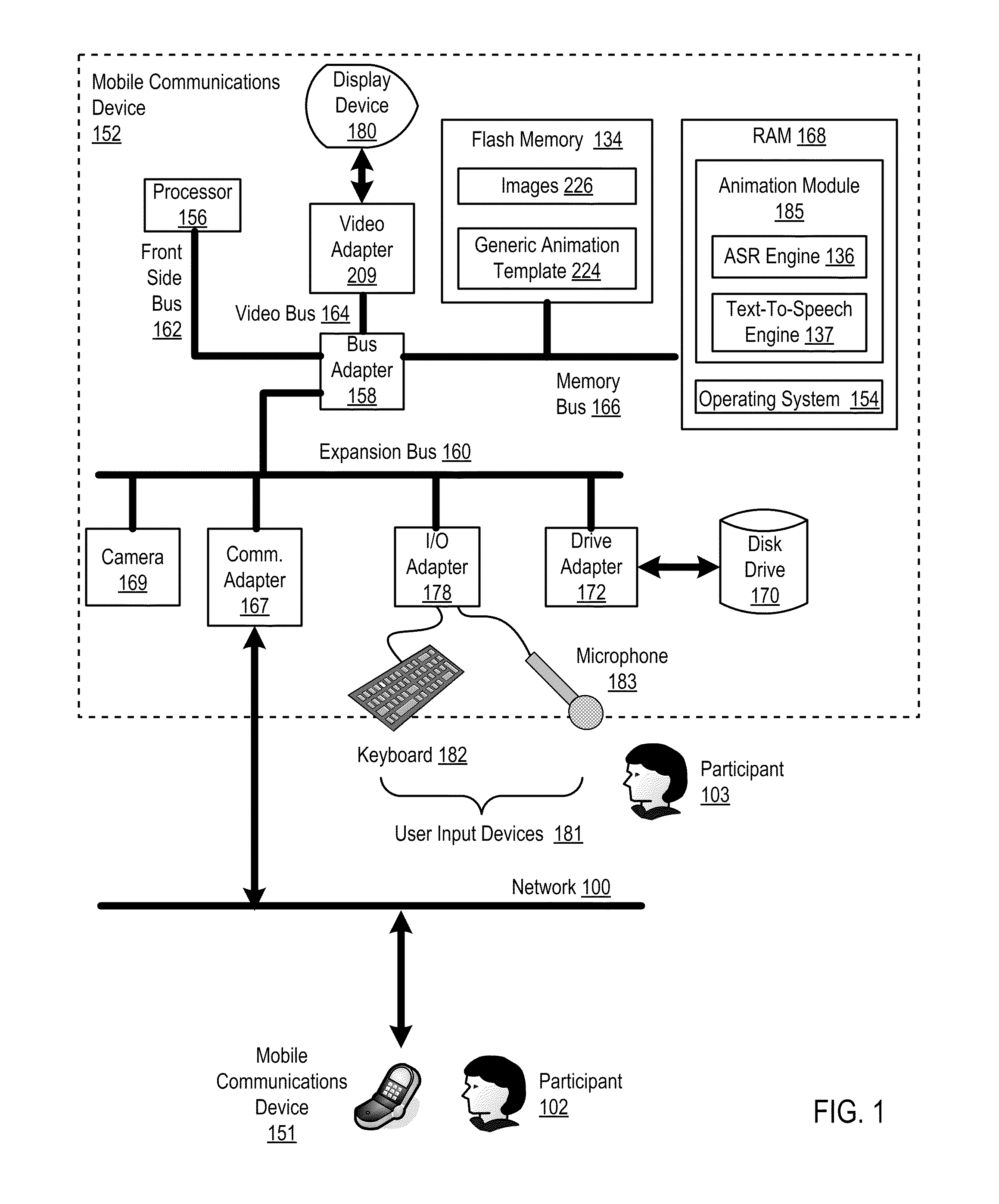

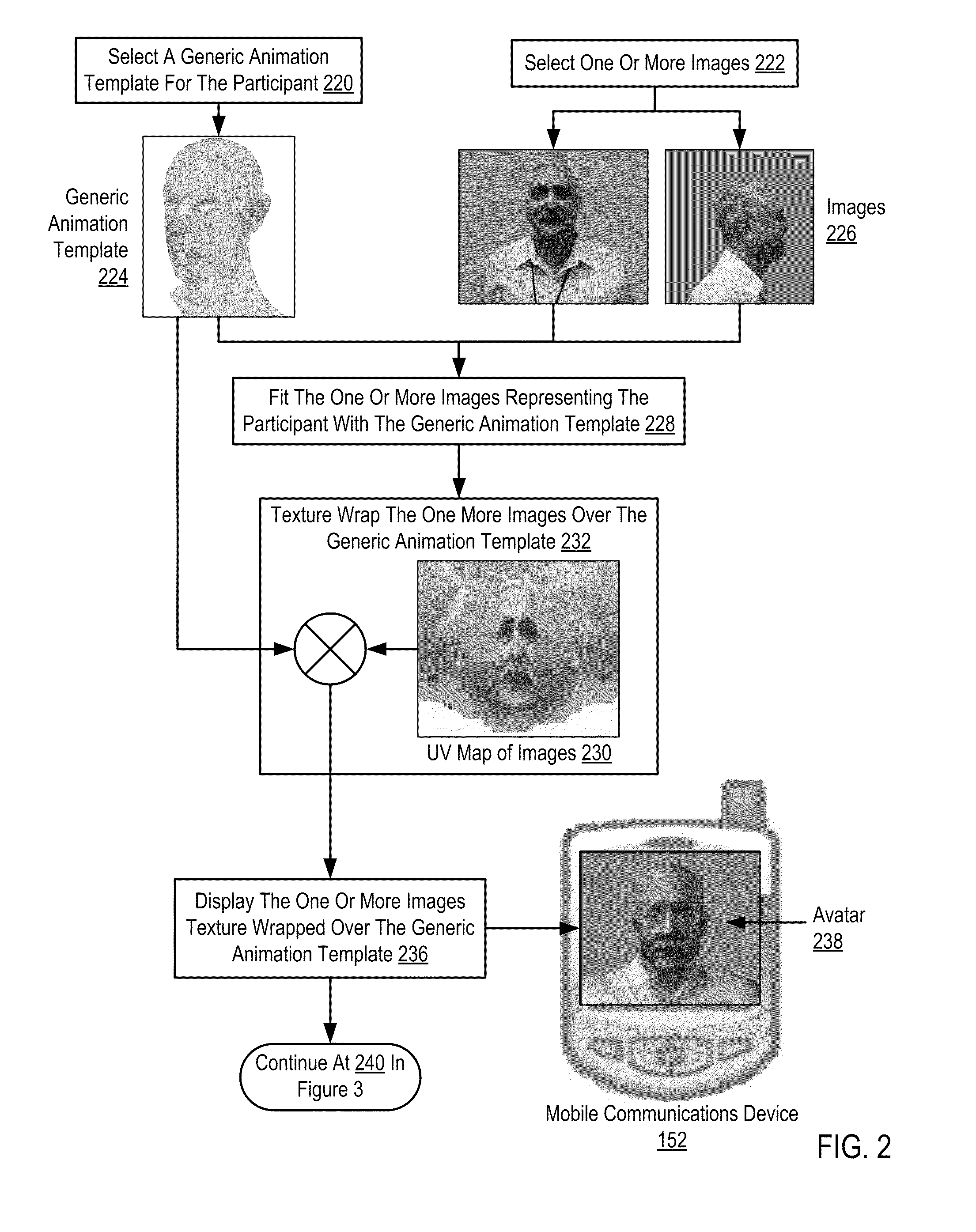

Animating Speech Of An Avatar Representing A Participant In A Mobile Communication

Animating speech of an avatar representing a participant in a mobile communication including selecting one or more images; selecting a generic animation template; fitting the one or more images with the generic animation template; texture wrapping the one or more images over the generic animation template; and displaying the one or more images texture wrapped over the generic animation template. Receiving an audio speech signal; identifying a series of phonemes; and for each phoneme: identifying a new mouth position for the mouth of the generic animation template; altering the mouth position to the new mouth position; texture wrapping a portion of the one or more images corresponding to the altered mouth position; displaying the texture wrapped portion of the one or more images corresponding to the altered mouth position of the mouth of the generic animation template; and playing the portion of the audio speech signal represented by the phoneme.

Owner:ACTIVISION PUBLISHING

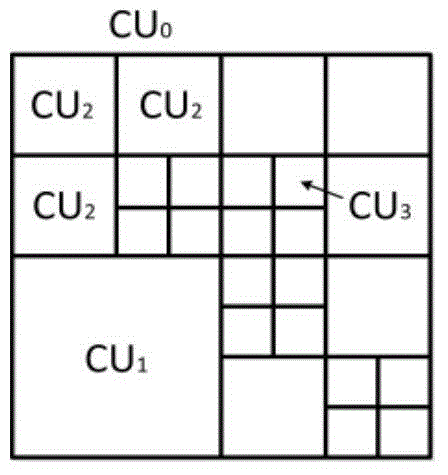

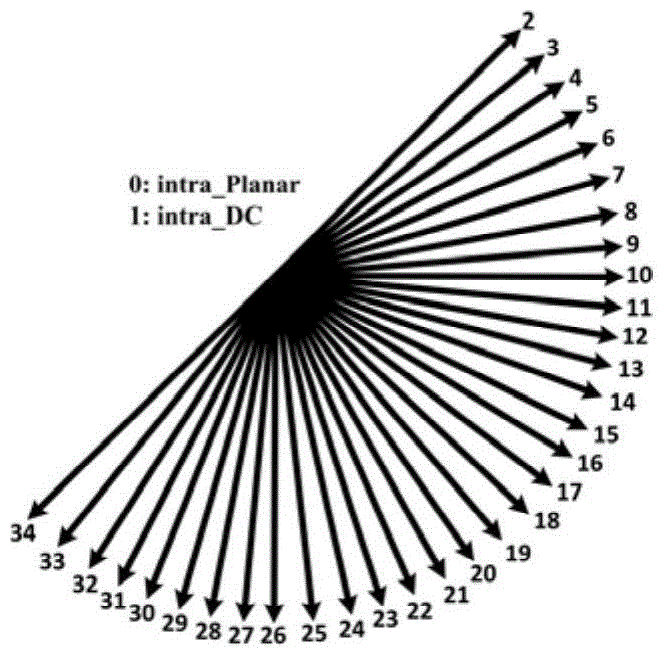

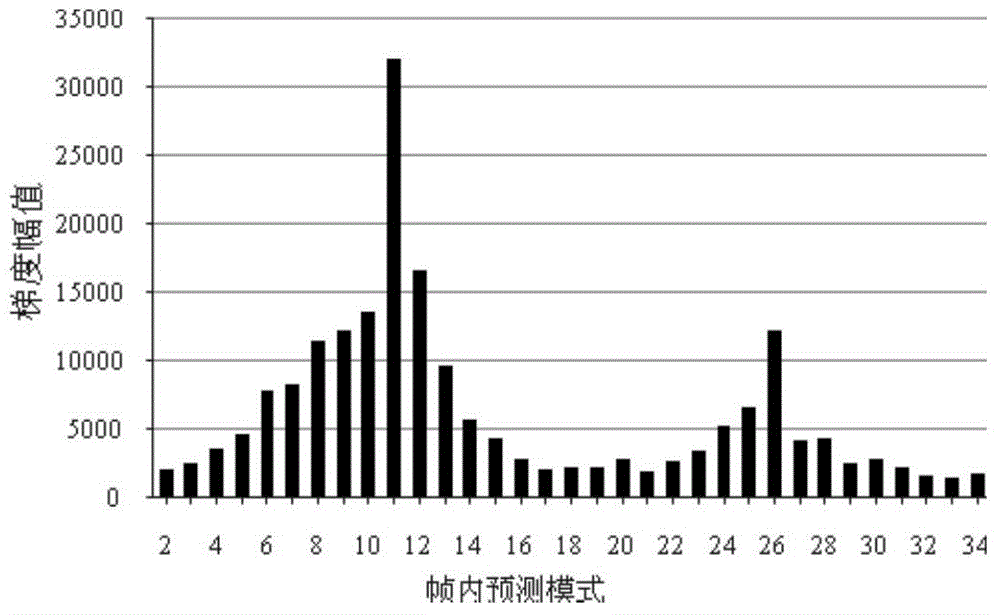

Video coding intra-frame prediction method based on image texture features

Active | CN105120292A | Flexible control compromise | Reduce coding complexity | Digital video signal modification | Digital video | Information processing

The invention relates to a video coding intra-frame prediction method based on image texture features, relating to video information processing in the field of digital video communication. The method comprises the following steps: first, using a gradient histogram of the current CU (Coding Unit) to calculate the number of strong edges of the current CU, an angle prediction mode list related to the gradient amplitude, and two strong-edge threshold values for the CU division process; performing CU depth skipping or early termination of CU division when the strong edges of the current CU satisfy a selected threshold value in the intra-frame prediction process; and, in the best candidate mode selecting stage, directly selecting a suitable number of modes from the prediction mode list as candidate prediction modes according to image texture conditions. By pruning CU depths and prediction modes, the HEVC (High Efficiency Video Coding) intra-frame prediction coding complexity can be effectively reduced, which facilitates real-time application of an HEVC coder.

Owner:XIAMEN UNIV
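The strong-edge test in the intra-prediction abstract above can be sketched as follows. This is an illustration under assumptions, not the patented procedure: gradients come from `np.gradient`, a pixel counts as a strong edge when its gradient magnitude exceeds `edge_threshold`, directions are binned into 8 bins over 180 degrees, and the mapping from the two thresholds `low` and `high` to decisions is a guess at the intent. All names are hypothetical.

```python
import numpy as np

def strong_edge_stats(block, edge_threshold):
    """Count strong-edge pixels and build a magnitude-weighted direction histogram."""
    gy, gx = np.gradient(block.astype(float))
    magnitude = np.hypot(gx, gy)
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0
    strong = magnitude > edge_threshold
    hist, _ = np.histogram(angle[strong], bins=8, range=(0.0, 180.0),
                           weights=magnitude[strong])
    return int(strong.sum()), hist

def split_decision(num_strong, low, high):
    """Map the strong-edge count to a coding-unit partition decision."""
    if num_strong <= low:
        return "terminate"   # smooth block: stop splitting early
    if num_strong >= high:
        return "skip"        # heavily textured: skip this depth and split further
    return "evaluate"        # undecided: fall back to the full search

# Example: an 8x8 block with a single vertical step edge in the middle.
block = np.zeros((8, 8))
block[:, 4:] = 255
count, hist = strong_edge_stats(block, edge_threshold=50.0)
decision = split_decision(count, low=4, high=12)
```

The dominant histogram bins could then be used to shortlist angular prediction modes, although the mapping from gradient direction to specific HEVC mode indices is not given in the abstract and is left out here.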

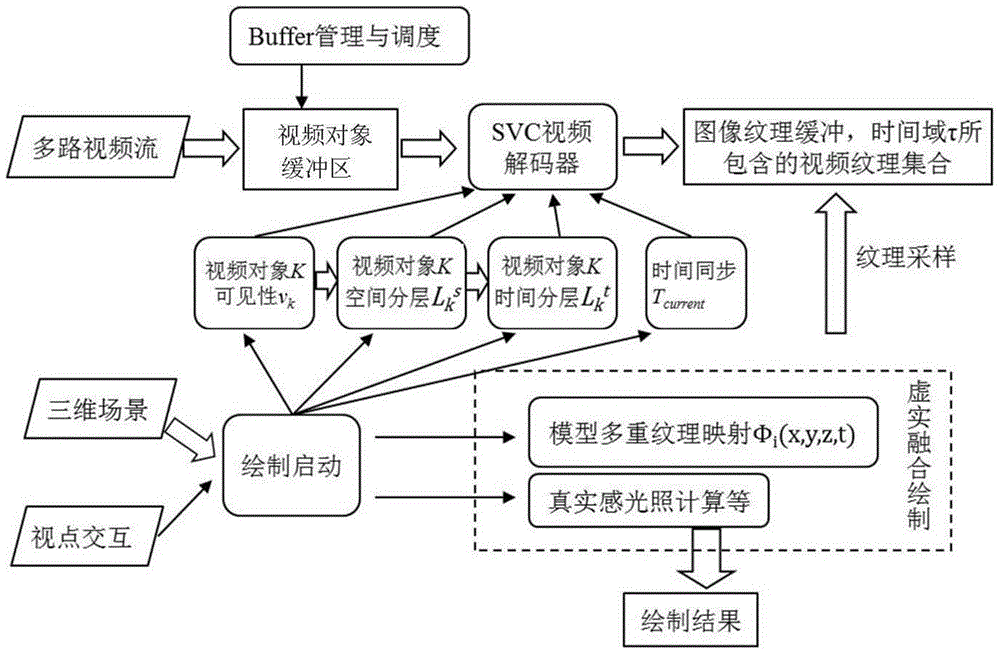

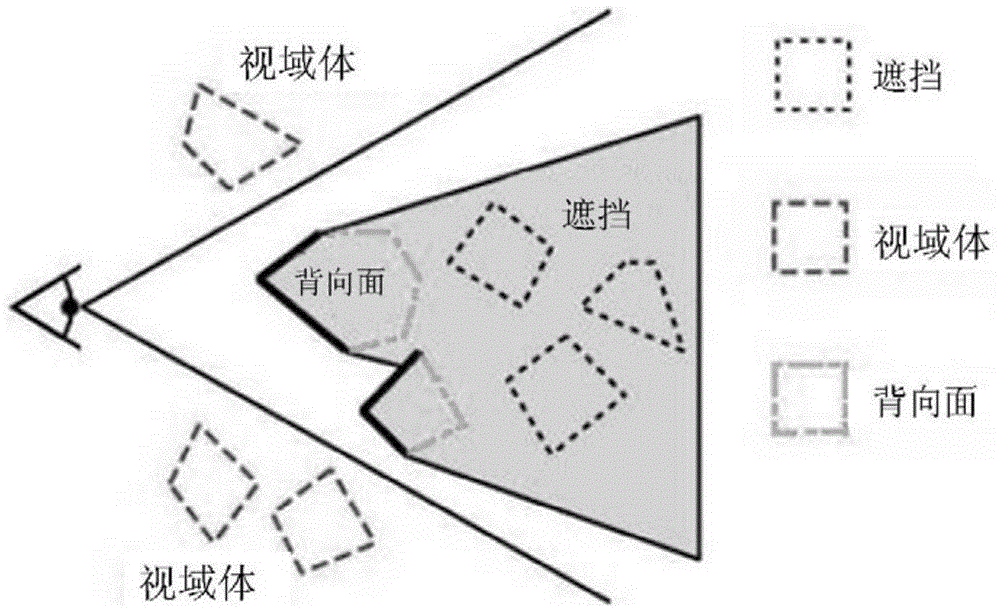

Effective GPU three-dimensional video fusion drawing method

Active | CN104616243A | Meet the need for effectiveness | Meet needs | Image data processing details | Stereoscopic systems | Visibility | Computer graphics (images)

The invention relates to an effective GPU three-dimensional video fusion drawing method. The method includes the steps of: acquiring the video data of multiple input video streams through a video object buffer region; carrying out scalable layered decoding on the acquired video data in a GPU, wherein each decoding thread is controlled and driven by the visual characteristics of the part of the three-dimensional scene to which the corresponding video object is attached, and the visual characteristics comprise visibility, layered attributes and time consistency; after decoding, binding all the decoded image sequences of the corresponding time slices to texture IDs according to a synchronization time, and storing them in an image texture buffer region; and using spatio-temporal texture mapping functions to sample the textures in the image texture buffer region, mapping them onto the surfaces of objects in the three-dimensional scene, finishing the other operations relevant to three-dimensional drawing, and outputting a video-based virtual-real fusion drawing result. The method can meet the effectiveness, precision and reliability demands of virtual-real three-dimensional video fusion.

Owner:北京道和智信科技发展有限公司

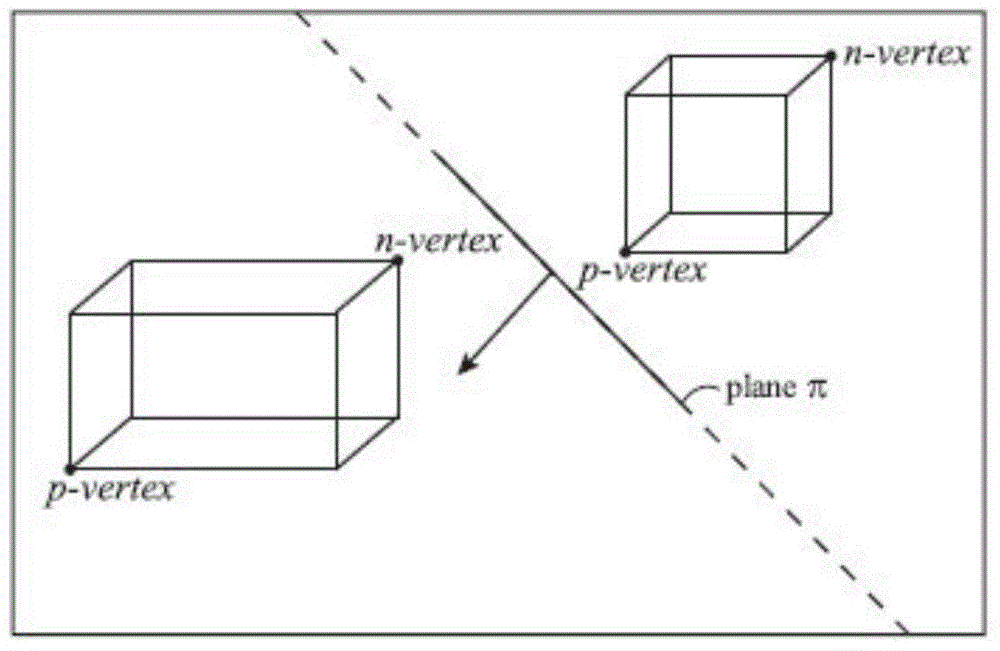

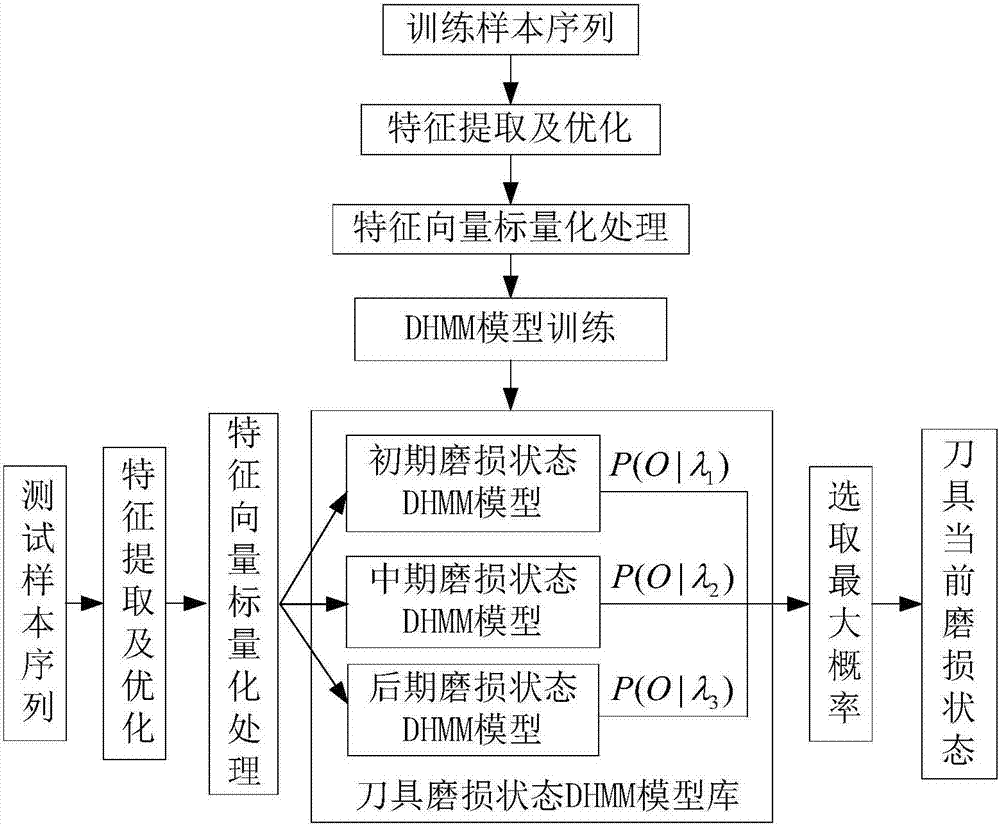

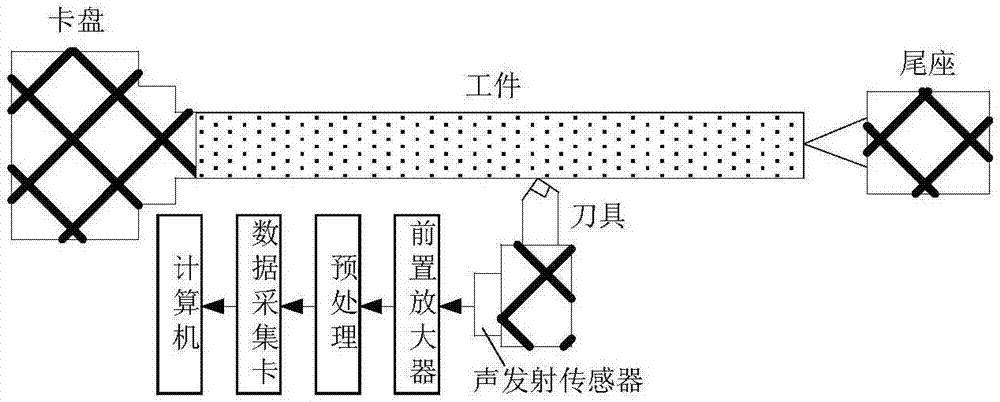

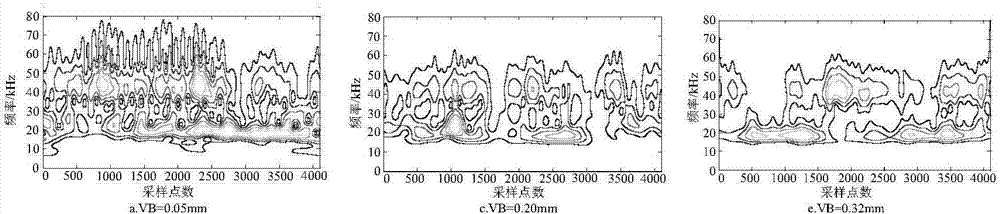

Monitoring method based on image features and LLTSA algorithm for tool wear state

Active | CN107378641A | Realization of wear status monitoring | Fully automated | Measurement/indication equipments | Time–frequency analysis | Tool wear

The invention relates to a method for monitoring the tool wear state based on image features and the LLTSA algorithm. The method introduces image texture feature extraction into the field of tool wear fault diagnosis and monitors the tool wear state through three stages: 'signal denoising', 'feature extraction and optimization' and 'pattern recognition'. The method comprises the steps of: first, acquiring an acoustic emission signal during tool cutting with an acoustic emission sensor and denoising the signal with the EEMD method; second, carrying out time-frequency analysis of the denoised signal with the S-transform, converting the time-frequency image into a contour gray-level map, extracting image texture features with the gray-level co-occurrence matrix method, and then applying dimensionality reduction and optimization to the extracted feature vector with a scatter matrix and the LLTSA algorithm to obtain a fusion feature vector; and finally, training a discrete hidden Markov model of the tool wear state on the fusion feature vector and establishing a classifier, thereby realizing automatic monitoring and recognition of the tool wear state.

Owner:NORTHEAST DIANLI UNIVERSITY
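
The gray-level co-occurrence matrix step named in this abstract can be sketched as follows. This is a generic textbook-style computation, not the patent's implementation: the offset, the symmetric counting, the three features chosen and the function names are assumptions, and the input is assumed to be already quantized to integer levels in `range(levels)`.

```python
def glcm(image, levels, dx=1, dy=0):
    """Normalized symmetric gray-level co-occurrence matrix for offset (dx, dy)."""
    counts = [[0] * levels for _ in range(levels)]
    height, width = len(image), len(image[0])
    for y in range(height):
        for x in range(width):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < height and 0 <= x2 < width:
                i, j = image[y][x], image[y2][x2]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, counts))
    if total == 0:
        raise ValueError("image too small for the requested offset")
    return [[c / total for c in row] for row in counts]

def glcm_features(p):
    """Contrast, energy and homogeneity of a normalized co-occurrence matrix."""
    n = len(p)
    cells = [(i, j) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(p[i][j] * (i - j) ** 2 for i, j in cells),
        "energy": sum(p[i][j] ** 2 for i, j in cells),
        "homogeneity": sum(p[i][j] / (1 + (i - j) ** 2) for i, j in cells),
    }
```

Features computed this way for several offsets could be concatenated into the feature vector that the abstract passes on to dimensionality reduction.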

Method for reproducing texture force touch based on shape-from-shading technology

InactiveCN101615072AImprove realismStrong real-timeInput/output for user-computer interactionGraph readingComputer graphics (images)Robot control

The invention addresses the problem of reproducing the force touch of image textures and provides a method for reproducing texture force touch from the gray scales of an image, based on shape-from-shading technology. The method is characterized by processing the gray scales of a texture image; extracting the micro three-dimensional contour information of the real texture from the gray-scale information of the image and drawing it onto a virtual surface; rendering the texture of the virtual surface with a force touch model; and outputting the calculated force to the operator through a general-purpose hand controller. The advantages of the invention include that the micro contour of an object surface does not need to be measured with a special instrument and that no special texture force touch display device needs to be designed. The invention can be used for simulating virtual environments, controlling remotely operated robots, browsing remote museums and online shops, simulating surgery, and similar applications.

Owner:JIANGSU HONGBO MACHINERY MFG +1
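
The pipeline in this abstract (gray scale to micro contour to force) can be pictured with a deliberately simplified sketch. It does not implement shape-from-shading: the height map is taken as directly proportional to gray level, which is a crude stand-in, and the spring-type force model, `stiffness`, `max_height` and the function names are assumptions made for illustration.

```python
def height_map(gray, max_height=1.0):
    """Crude stand-in for shape-from-shading: height proportional to gray level (0-255)."""
    return [[max_height * v / 255.0 for v in row] for row in gray]

def texture_force(height, x, y, probe_z, stiffness=1.0):
    """Spring-type force (fx, fy, fz) on a probe tip at grid cell (x, y), height probe_z."""
    depth = height[y][x] - probe_z          # penetration below the virtual surface
    if depth <= 0:
        return (0.0, 0.0, 0.0)              # probe is above the surface: no contact
    rows, cols = len(height), len(height[0])
    x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
    y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
    # Finite-difference slopes, with indices clamped at the image border.
    gx = (height[y][x1] - height[y][x0]) / (x1 - x0) if x1 > x0 else 0.0
    gy = (height[y1][x] - height[y0][x]) / (y1 - y0) if y1 > y0 else 0.0
    # Push along the unnormalized surface normal (-dh/dx, -dh/dy, 1).
    magnitude = stiffness * depth
    return (-magnitude * gx, -magnitude * gy, magnitude)
```

On a flat region the force is purely vertical; on a slope the lateral components push the probe downhill, which is what lets an operator feel ridges in the texture.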

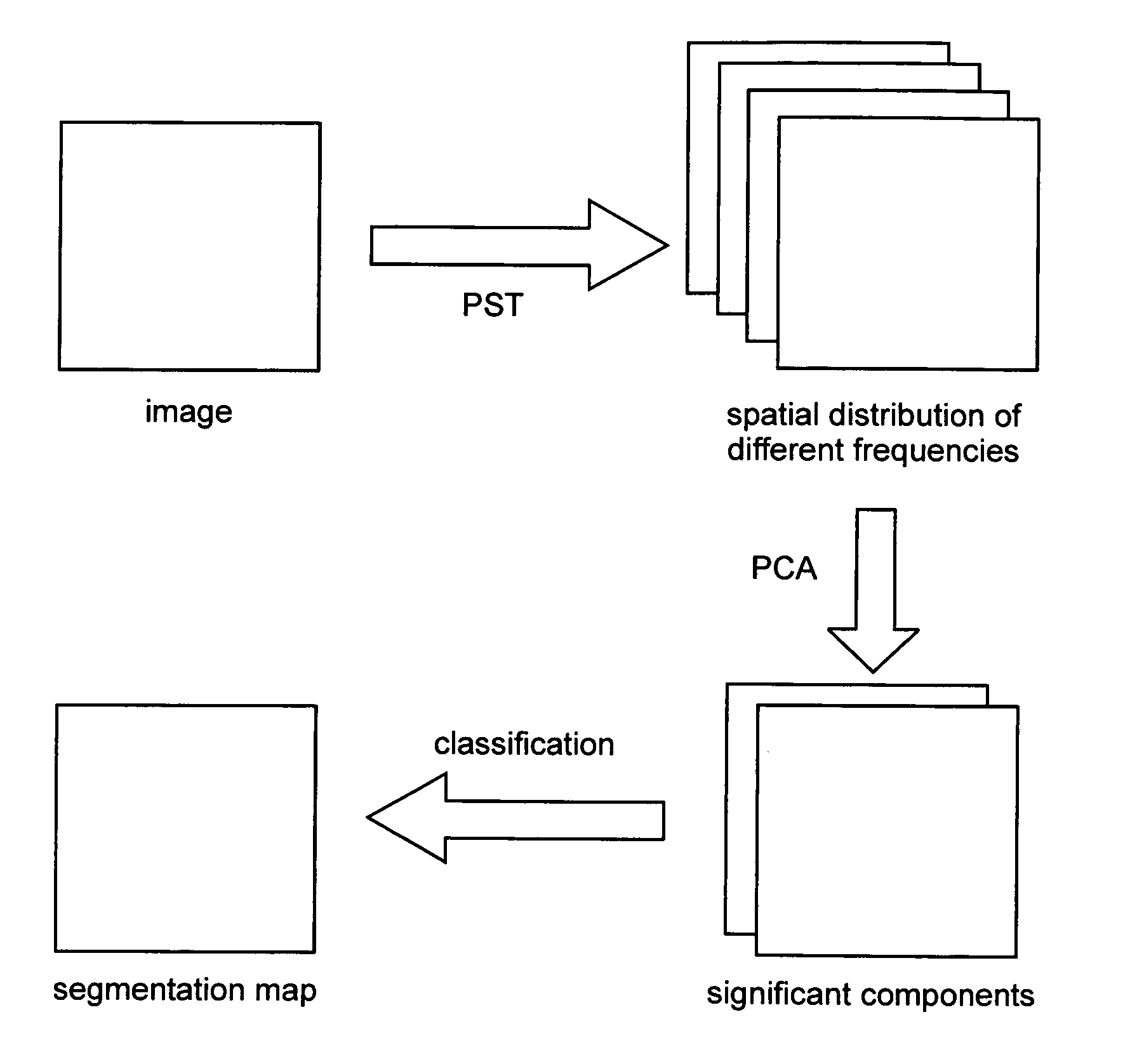

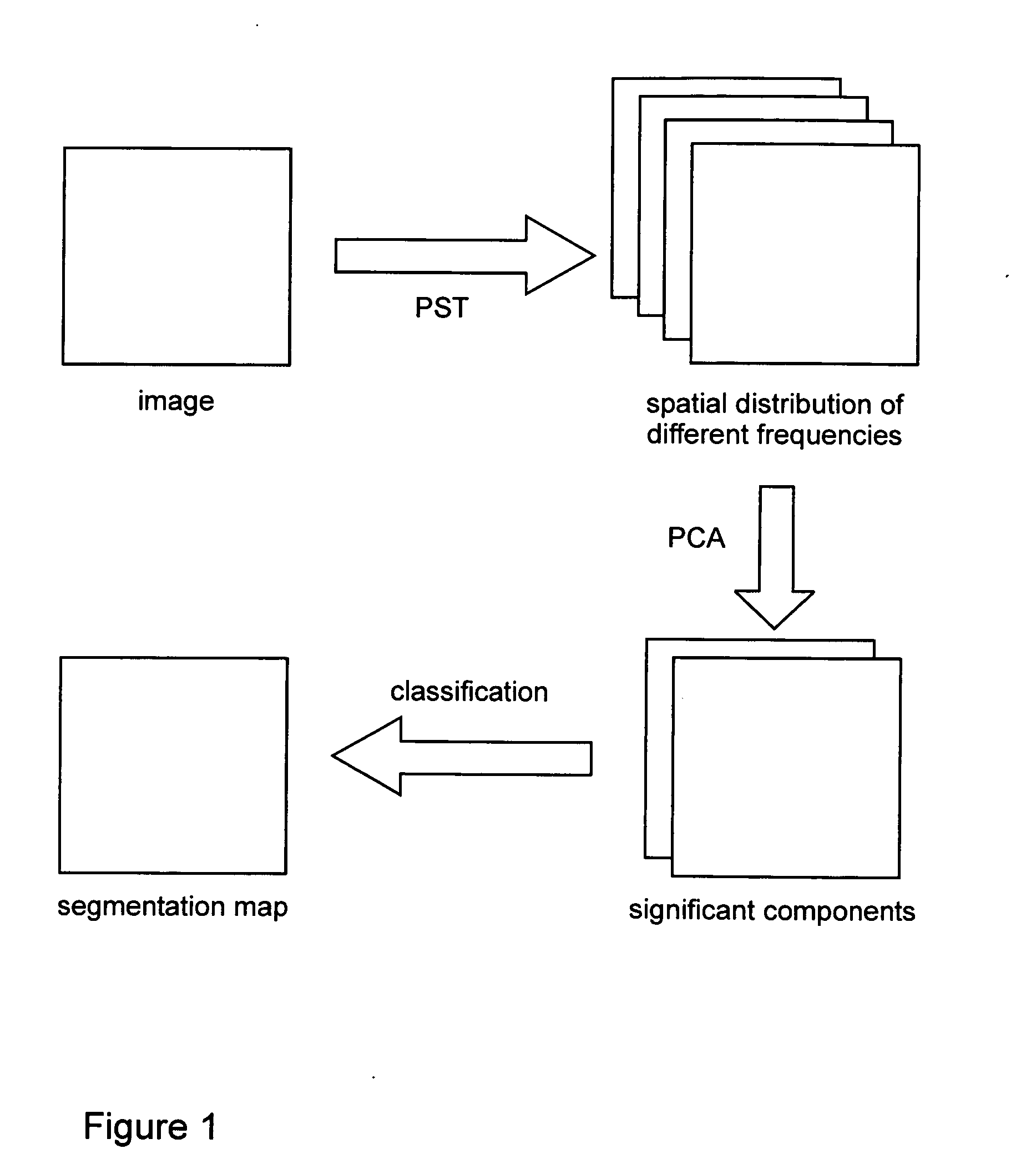

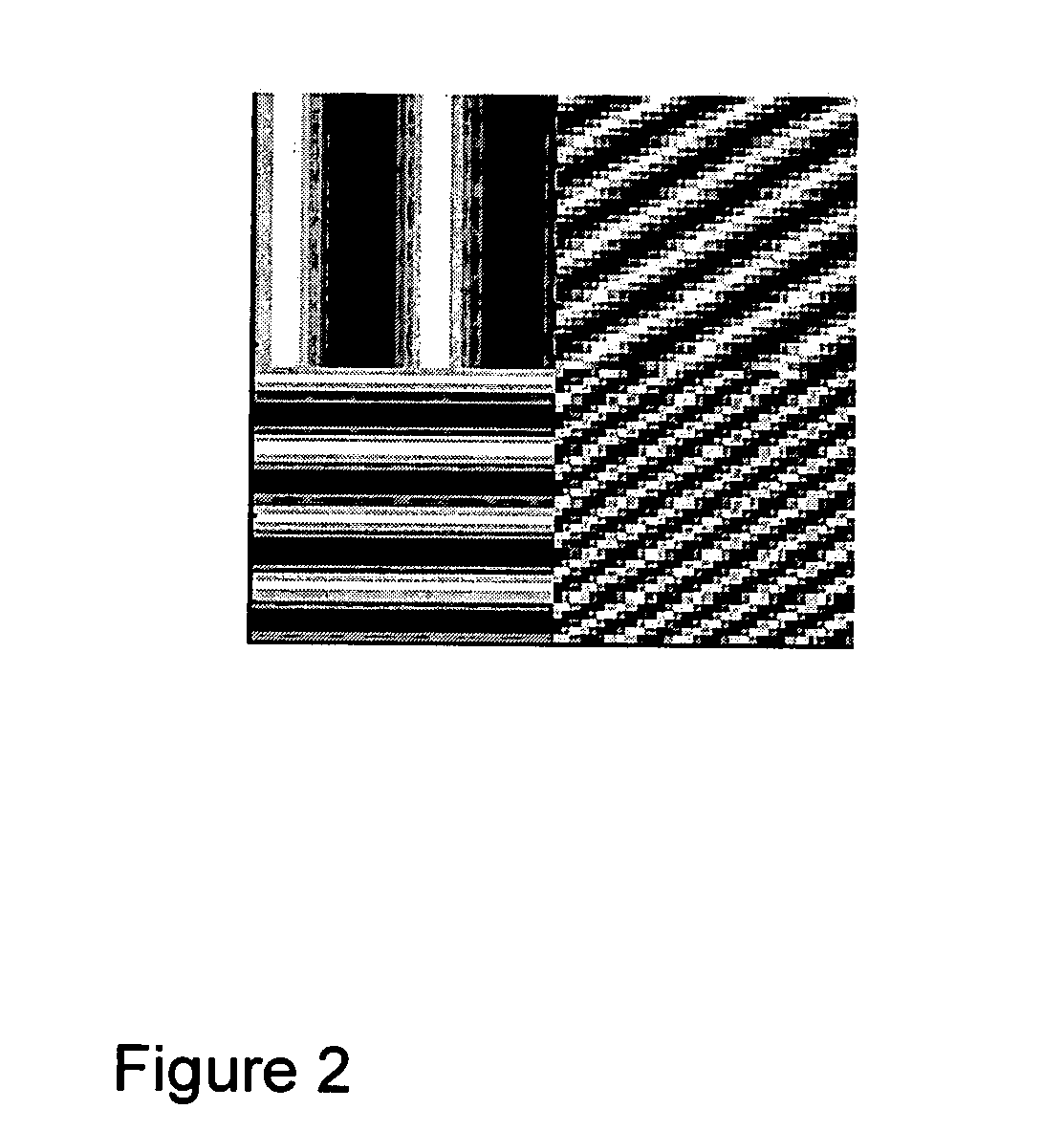

Image texture segmentation using polar S-transform and principal component analysis

ActiveUS20050253863A1Reduce redundancyMaximal data variationImage enhancementImage analysisPrincipal component analysisS transform

The present invention relates to a method and system for segmenting texture of multi-dimensional data indicative of a characteristic of an object. Received multi-dimensional data are transformed into second multi-dimensional data within a Stockwell domain based upon a polar S-transform of the multi-dimensional data. Principal component analysis is then applied to the second multi-dimensional data for generating texture data characterizing texture around each data point of the multi-dimensional data. Using a classification process the data points of the multi-dimensional data are partitioned into clusters based on the texture data. Finally, a texture map is produced based on the partitioned data points. The present invention provides image texture segmentation based on the polar S-transform having substantially reduced redundancy while keeping maximal data variation.

Owner:CALGARY SCIENTIFIC INC
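
The last three steps of this abstract (principal component analysis, classification, texture map) can be sketched on precomputed per-point feature vectors. The polar S-transform itself is not implemented here; `rows` is a hypothetical stand-in for the Stockwell-domain data at each point. Using only the first principal component, finding it by power iteration, and clustering with a one-dimensional two-means loop are illustrative choices, not the patent's method.

```python
import math

def first_component_scores(rows, iterations=100):
    """Project feature vectors onto their first principal component (power iteration)."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[k] for r in rows) / n for k in range(d)]
    centered = [[r[k] - mean[k] for k in range(d)] for r in rows]
    cov = [[sum(c[a] * c[b] for c in centered) / n for b in range(d)] for a in range(d)]
    # Start from the largest centered row so the first product is non-zero.
    v = max(centered, key=lambda c: sum(x * x for x in c))
    for _ in range(iterations):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0:
            break  # no variance in the data
        v = [x / norm for x in w]
    return [sum(c[k] * v[k] for k in range(d)) for c in centered]

def two_means(values, iterations=20):
    """Split scalar values into two clusters; returns a 0/1 label per value."""
    lo, hi = min(values), max(values)
    labels = [0] * len(values)
    for _ in range(iterations):
        labels = [0 if abs(v - lo) <= abs(v - hi) else 1 for v in values]
        g0 = [v for v, l in zip(values, labels) if l == 0]
        g1 = [v for v, l in zip(values, labels) if l == 1]
        if g0:
            lo = sum(g0) / len(g0)
        if g1:
            hi = sum(g1) / len(g1)
    return labels

def texture_map(rows, width):
    """Cluster per-point features and reshape the labels into image rows."""
    labels = two_means(first_component_scores(rows))
    return [labels[i:i + width] for i in range(0, len(labels), width)]
```

Because the sign of a principal component is arbitrary, which region receives label 0 and which receives label 1 carries no meaning; only the partition does.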


© 2025 PatSnap. All rights reserved.