160 results for "Video fusion" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

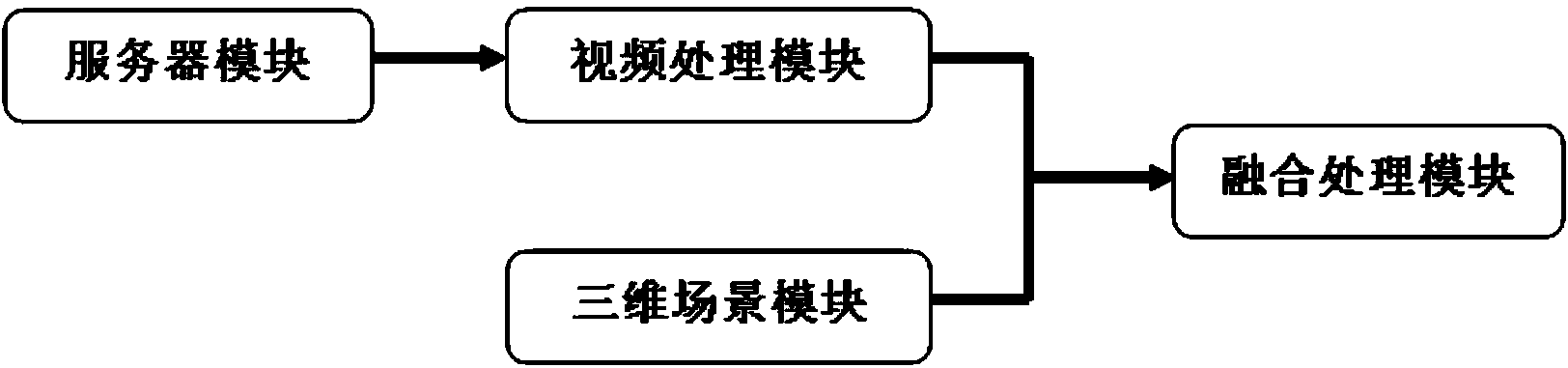

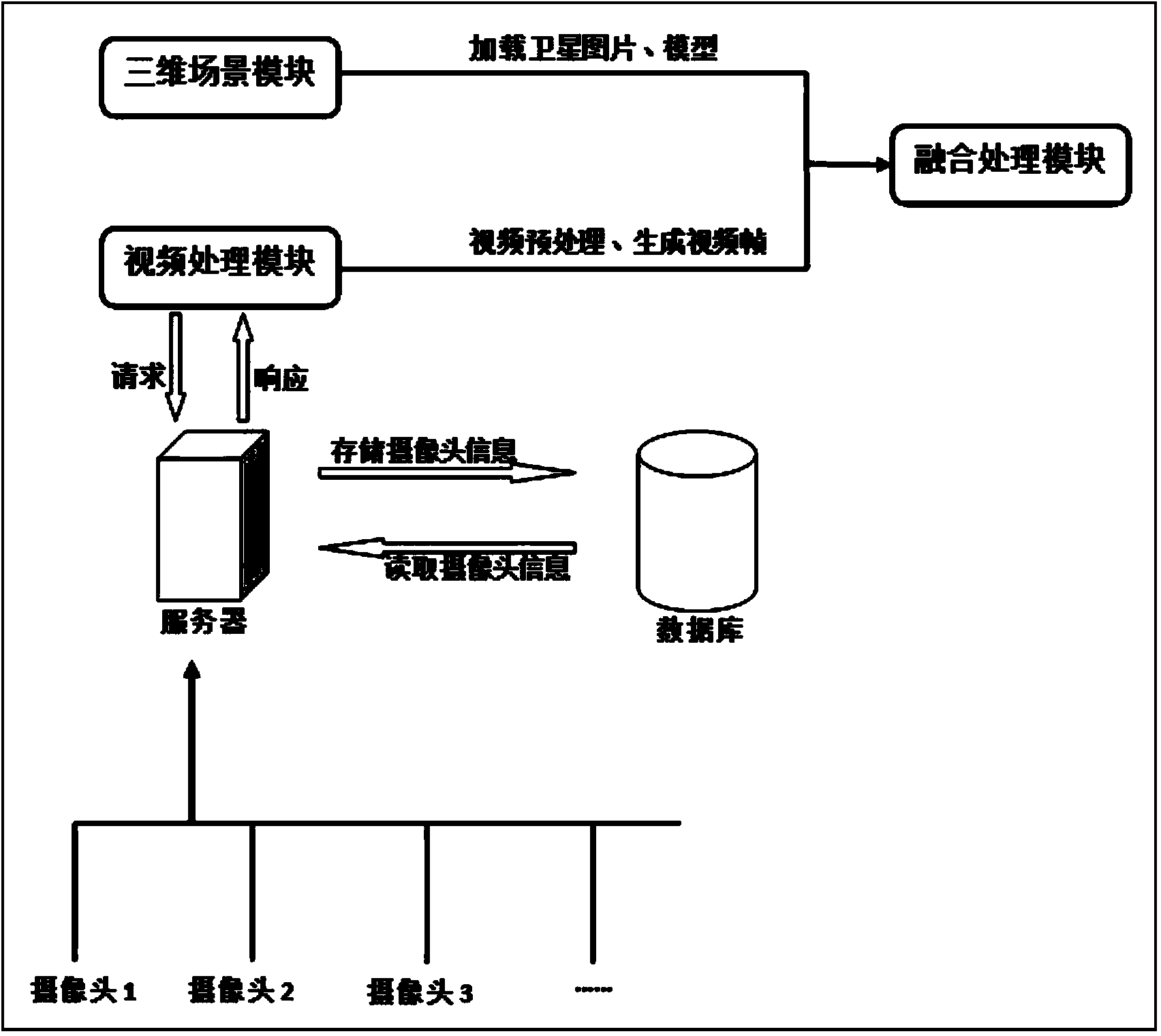

Monitoring video fusion system and monitoring video fusion method based on three-dimension space scene

The invention provides a monitoring video fusion system and method based on a three-dimensional space scene. Video frames are extracted from a monitoring video data stream and projected into a three-dimensional space scene, achieving full spatio-temporal stereoscopic fusion of video data with three-dimensional scene data and replacing the traditional mode in which a map application can only be displayed statically. Multiple channels of real-time monitoring video deployed at different geographic locations are registered and fused with a three-dimensional model of the monitoring area to generate a large-range, three-dimensional, panoramic, dynamic monitoring picture, enabling real-time global control of the overall security situation in the monitoring area.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI
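
Projecting video frames into a 3D scene usually comes down to a pinhole-camera projection of each scene vertex into the surveillance camera, which yields the texture coordinate at which the video frame is sampled. The sketch below is a minimal illustration under assumed conventions (intrinsics `K`, rotation `R`, translation `t`, with `X_cam = R X + t`); the helper names are hypothetical and this is not the patented implementation.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def project_point(K, R, t, X):
    """Pinhole projection of world point X, using X_cam = R @ X + t.

    Returns pixel coordinates (u, v), or None if the point is behind the camera.
    """
    Xc = [a + b for a, b in zip(mat_vec(R, X), t)]
    if Xc[2] <= 0:
        return None
    x = mat_vec(K, Xc)
    return (x[0] / x[2], x[1] / x[2])


def texture_coords(K, R, t, X, width, height):
    """Normalized texture coordinates for a scene vertex X.

    Uses the image-row convention (v grows downward); returns None when the
    vertex falls outside the video frame, so it keeps the model's own texture.
    """
    uv = project_point(K, R, t, X)
    if uv is None:
        return None
    u, v = uv
    if not (0 <= u < width and 0 <= v < height):
        return None
    return (u / width, v / height)


# Example camera: 800 px focal length, principal point at the center of a 640x480 frame.
K = [[800, 0, 320], [0, 800, 240], [0, 0, 1]]
R = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
t = [0, 0, 0]
print(texture_coords(K, R, t, [0, 0, 10], 640, 480))  # (0.5, 0.5)
```

In a real renderer the same projection runs per fragment on the GPU; the Python form only shows the arithmetic.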

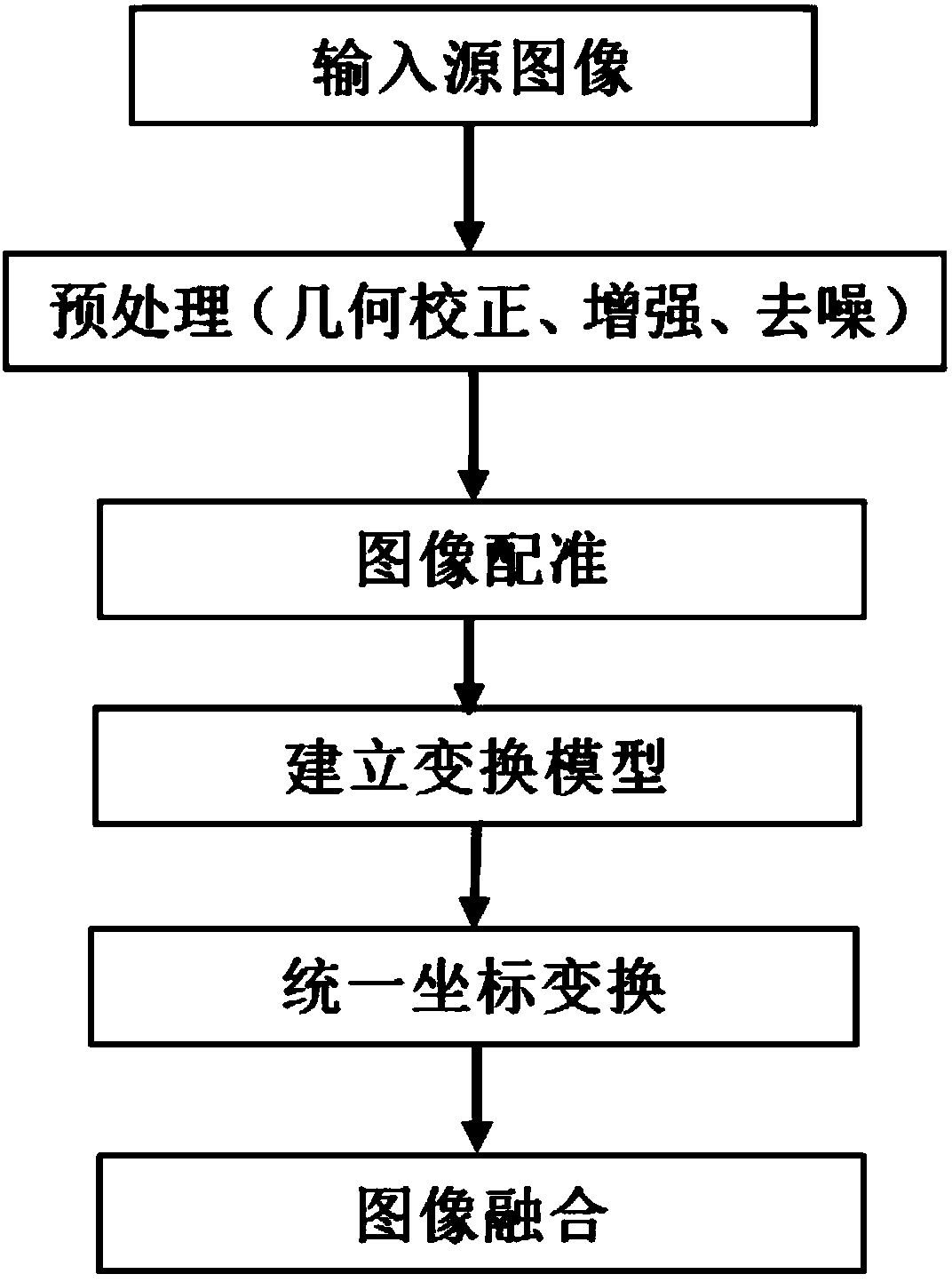

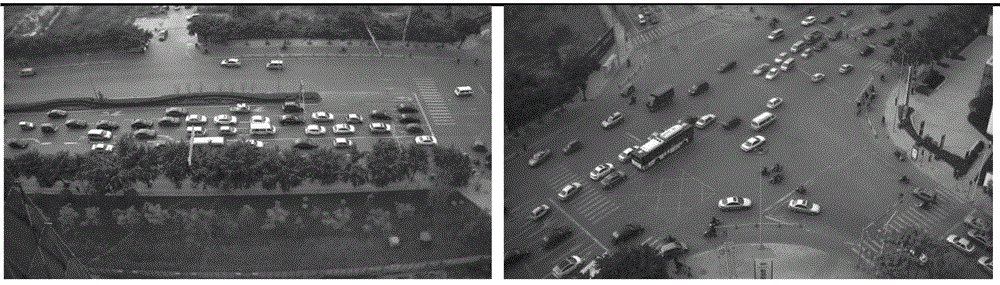

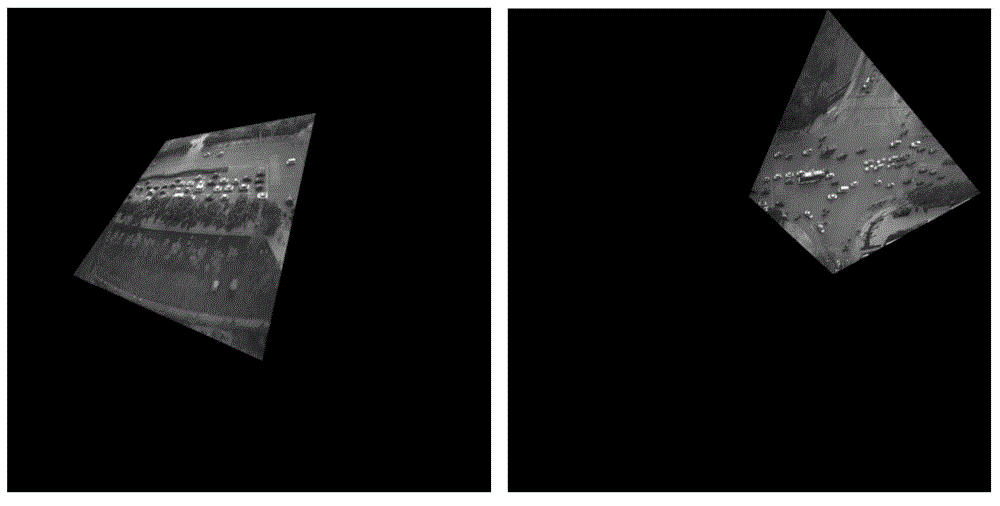

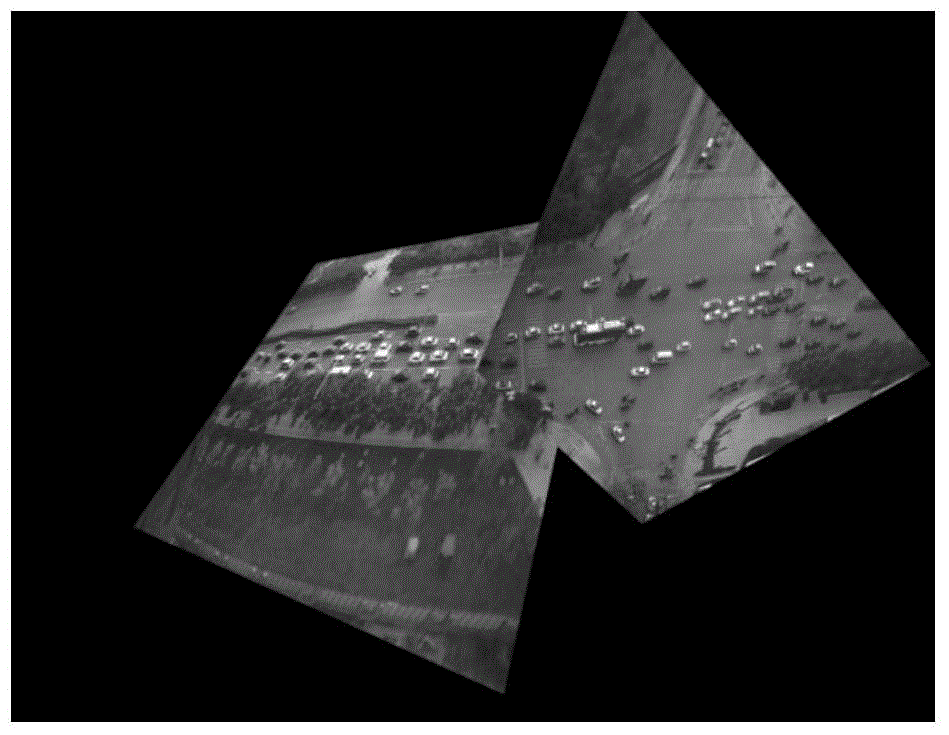

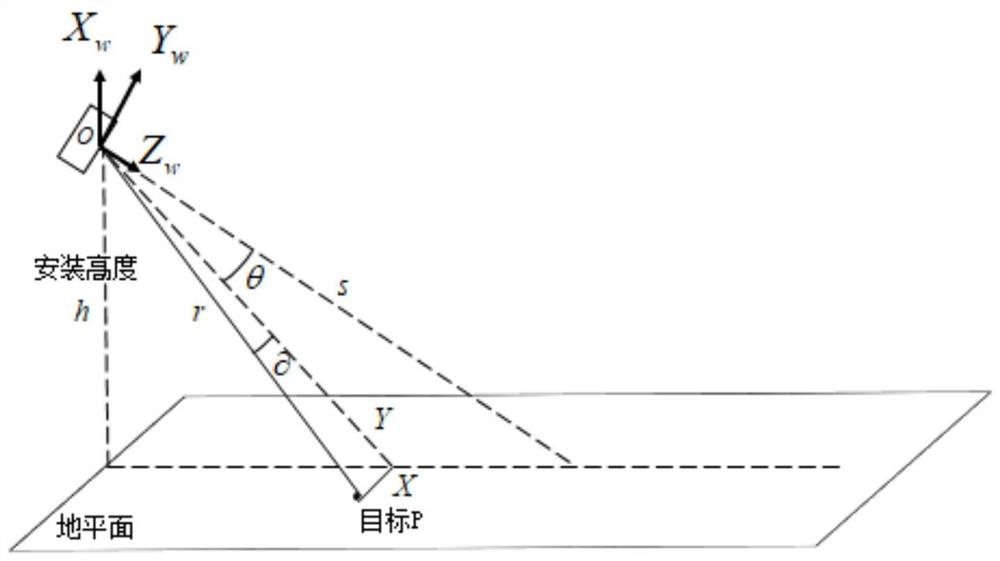

Multi-view video fusion and traffic parameter collecting method for large-scale scene traffic monitoring

The invention relates to the fields of computer application technology and traffic management, in particular to a multi-view video fusion and traffic parameter collection method for large-scale scene traffic monitoring. The fusion method comprises: collecting and decoding the video from each viewpoint source; transforming and stitching adjacent-view frame images; and rendering the fused frame images. Real-time collection, decoding, frame transformation and registration, and texture mapping are performed on multiple parallel video streams to obtain a fused video of the large-scale traffic scene. Texture features of the roads in the resulting image sequence are then analyzed to obtain real-time traffic parameters for traffic analysis and evaluation, such as queue length, non-motor vehicle density, traffic flow, and travel time on the roads in each direction of the area.

Owner:WISESOFT CO LTD +1
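
The "transformation and splice fusion of adjacent view frame images" step can be illustrated with a common approach: map pixels between views with a 3x3 homography, then blend the overlap with linear feathering so the seam is not visible. This is a minimal sketch assuming a homography per view pair and a known overlap width; the abstract specifies neither the transform model nor the blending rule.

```python
def apply_homography(H, x, y):
    """Map pixel (x, y) of one view into the reference view via a 3x3 homography H."""
    xp = H[0][0] * x + H[0][1] * y + H[0][2]
    yp = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return xp / w, yp / w


def feather_blend(left_row, right_row, overlap):
    """Stitch two registered pixel rows whose last/first `overlap` samples coincide.

    Across the overlap, the left image's weight falls linearly toward 0 and the
    right image's weight rises toward 1, hiding the seam.
    """
    if overlap <= 0:
        return list(left_row) + list(right_row)
    start = len(left_row) - overlap
    blended = []
    for i in range(overlap):
        w = 1.0 - (i + 1) / (overlap + 1)  # weight of the left image
        blended.append(w * left_row[start + i] + (1 - w) * right_row[i])
    return list(left_row[:start]) + blended + list(right_row[overlap:])


# A pure translation homography shifts pixels by (+5, -3).
print(apply_homography([[1, 0, 5], [0, 1, -3], [0, 0, 1]], 2, 4))  # (7.0, 1.0)
```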

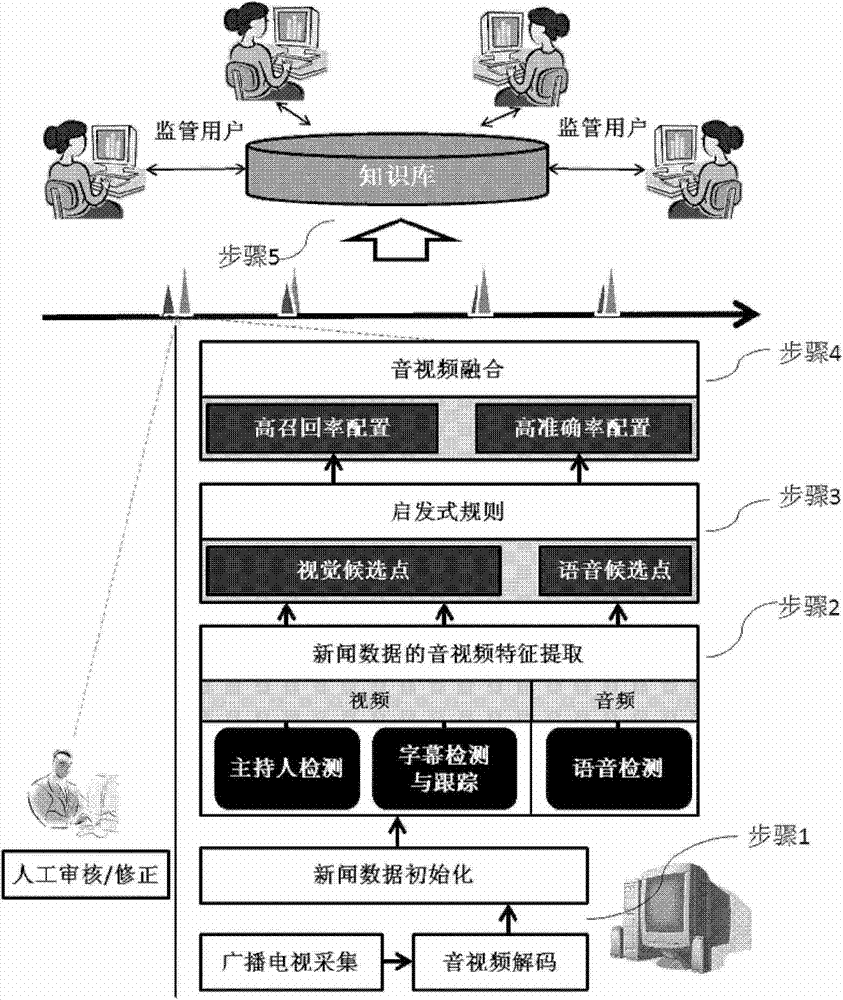

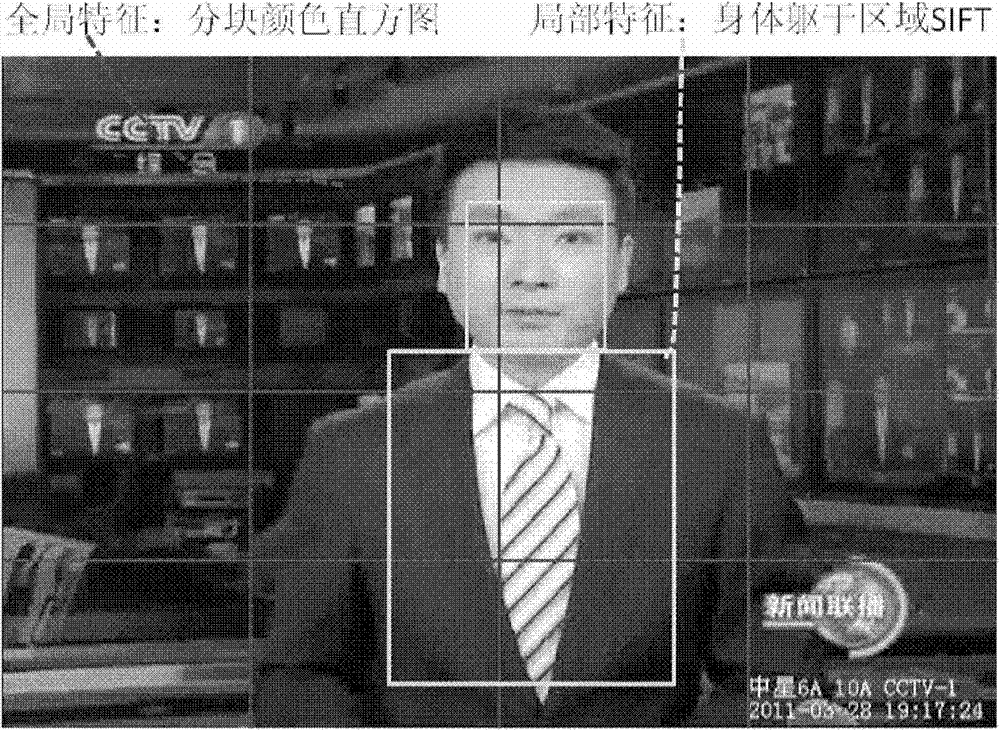

Automatic news splitting method for volume broadcast television supervision

Active | CN103546667A | Tags: Efficiency; Play immediacy; Television system details; Character and pattern recognition; Video image; Broadcasting

The invention discloses an automatic news splitting method for volume broadcast television supervision. The method comprises the following steps: broadcast television data are initialized to automatically obtain the audio waveform and video images of a news program; audio and video features of the news data are extracted, including anchor detection, subtitle detection and tracking, and voice detection; visual candidate points and audio candidate points for news item boundaries are obtained through heuristic rules; the news item boundaries are located by fusing the audio and video evidence; and, after manual checking, the results of the preceding steps are stored in a knowledge base as knowledge resources supporting supervision requirements. The constructed audio and video features match the characteristics of news item boundaries, and the audio-video fusion strategy fits the organizational structure of news items, so compared with existing methods the splitting process runs more efficiently, is more robust across different news programs, and gives better results.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
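
One simple way to fuse visual and audio boundary candidates is to accept a boundary only where both modalities agree within a time tolerance. The sketch below assumes sorted candidate timestamps in seconds and a hypothetical `tolerance` parameter; the patent's actual fusion rule is not given in the abstract, so this is only an illustrative stand-in.

```python
def fuse_boundaries(visual, audio, tolerance):
    """Return news-item boundary times supported by both modalities.

    visual, audio: sorted candidate times in seconds. A visual candidate is
    accepted when an audio candidate lies within `tolerance` seconds of it;
    the fused boundary is the midpoint of the matched pair.
    """
    fused = []
    j = 0
    for v in visual:
        # Skip audio candidates that are too early to match this visual candidate.
        while j < len(audio) and audio[j] < v - tolerance:
            j += 1
        if j < len(audio) and abs(audio[j] - v) <= tolerance:
            fused.append((v + audio[j]) / 2)
            j += 1  # each audio candidate is used at most once
    return fused


print(fuse_boundaries([10.0, 50.0, 90.0], [11.0, 70.0, 89.0], 2.0))  # [10.5, 89.5]
```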

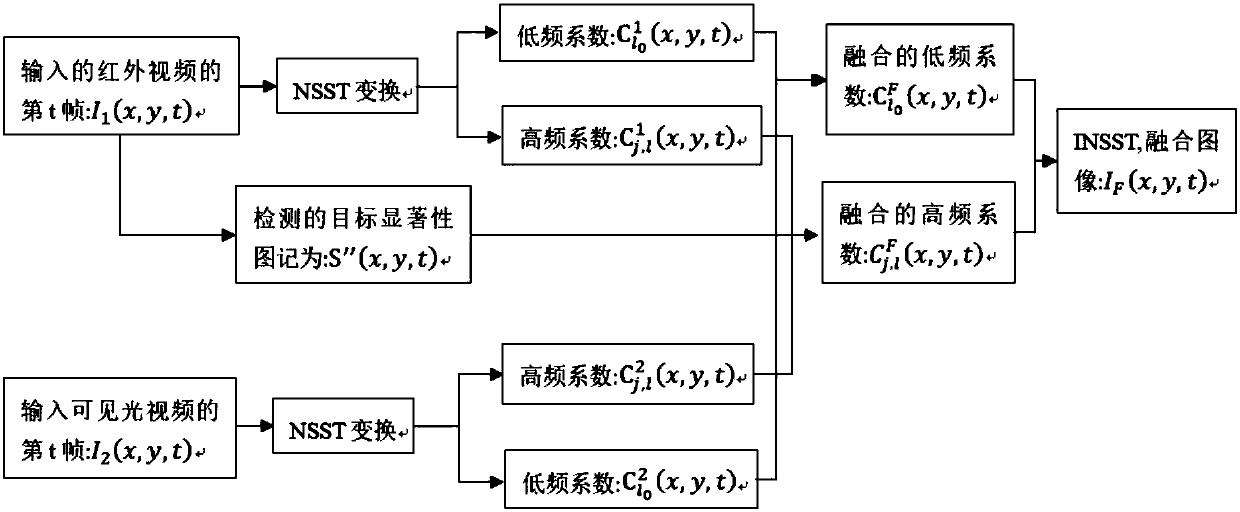

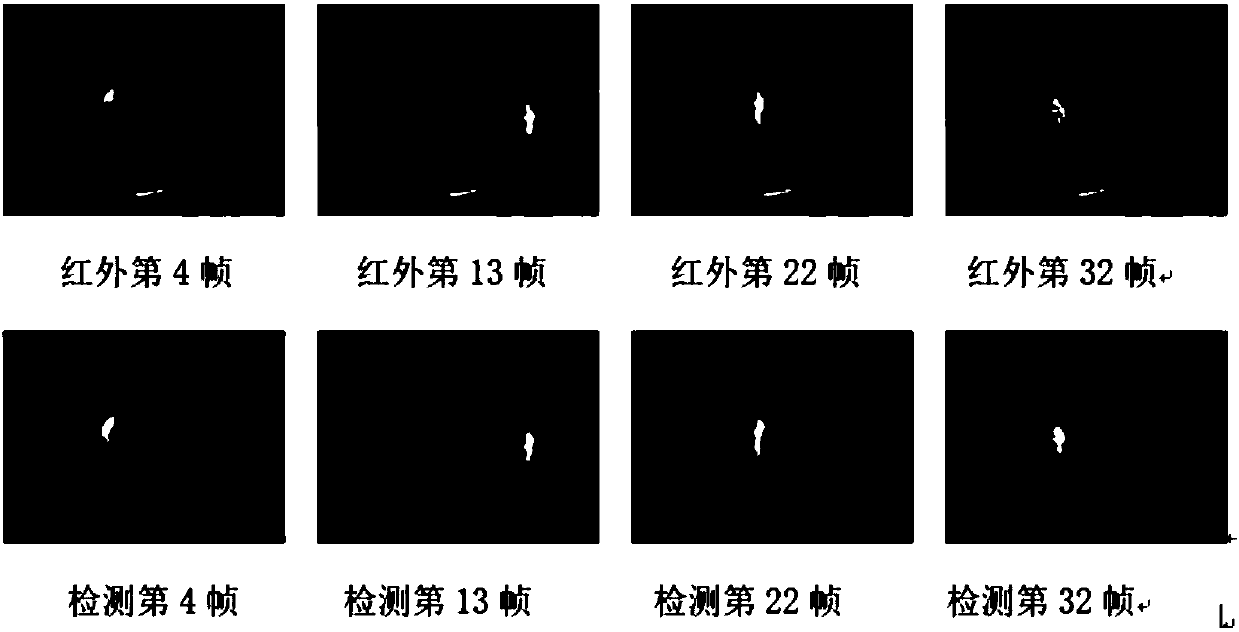

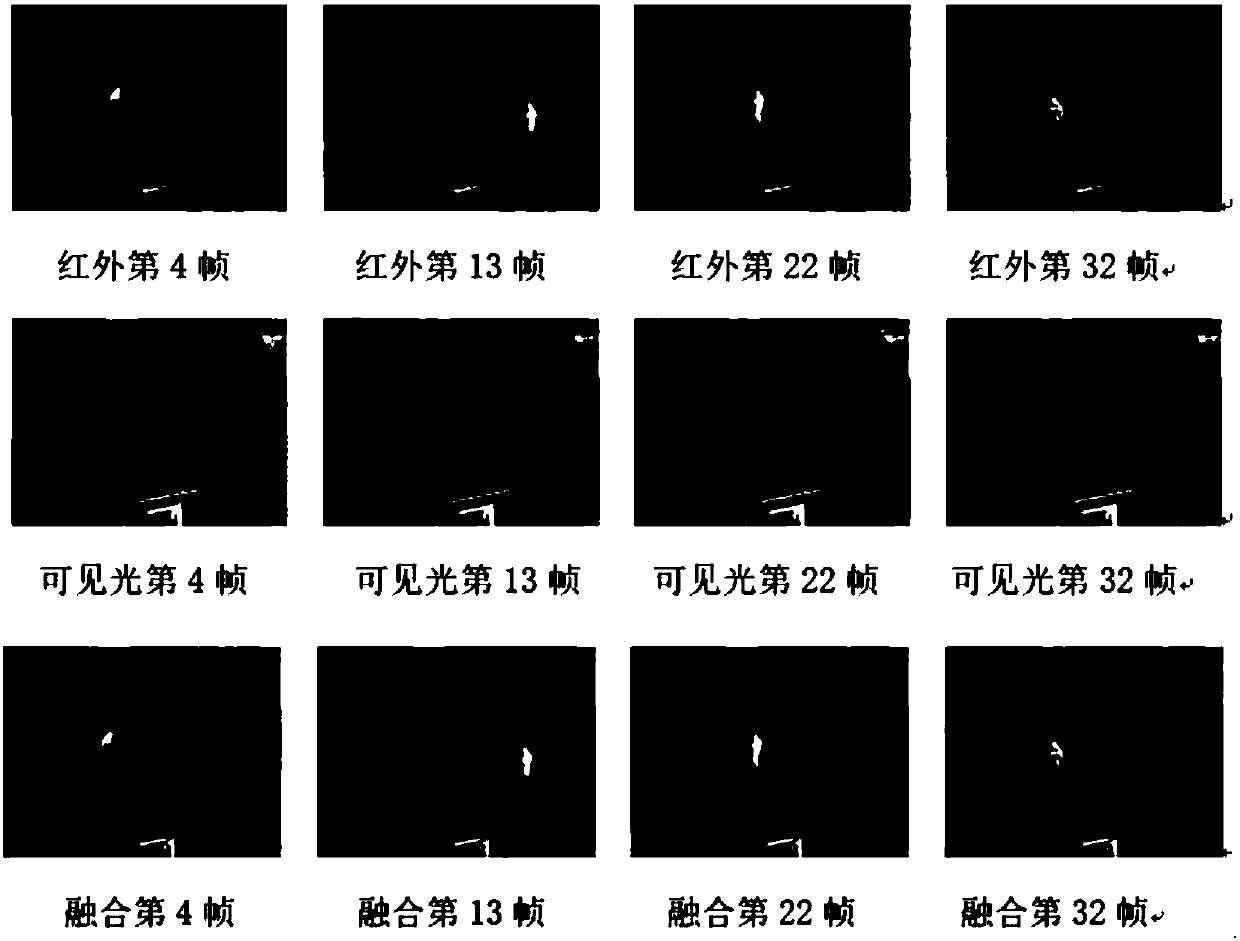

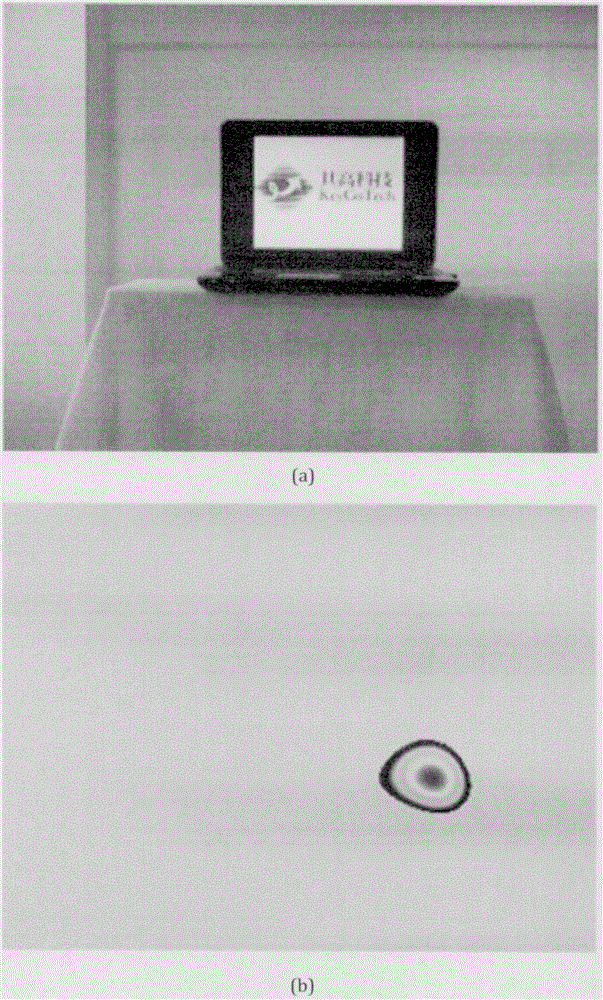

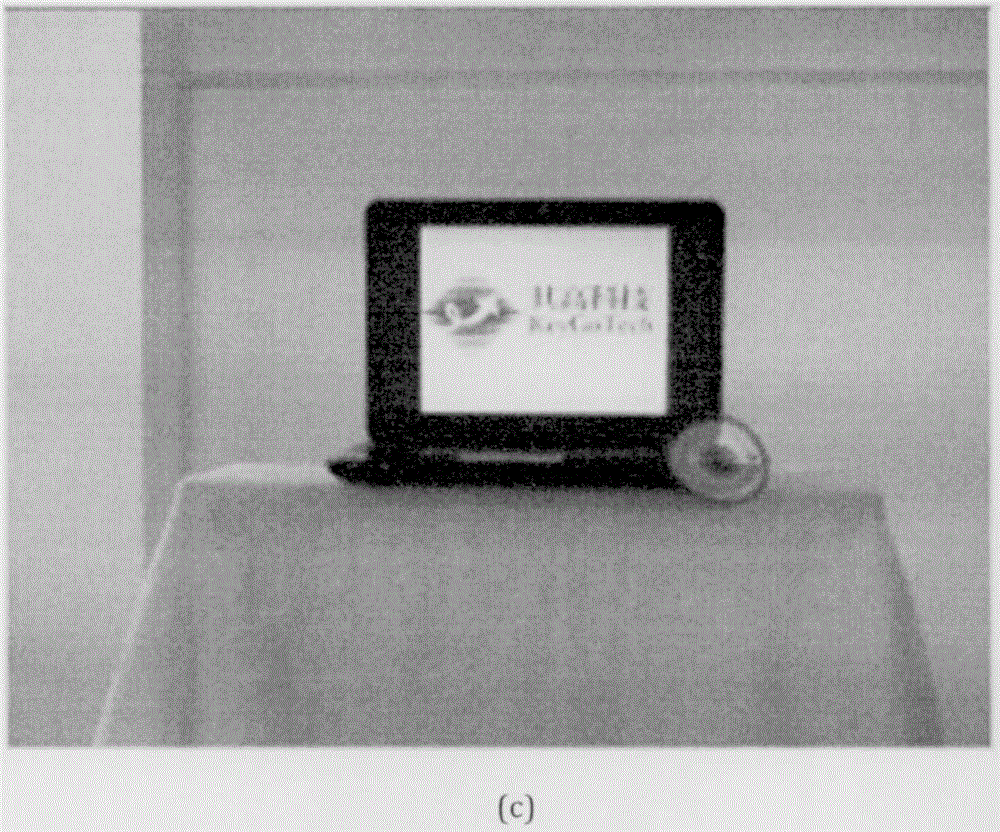

Infrared and visible light video fusion method based on moving target detection

Active | CN107657217A | Tags: Improve robustness; Improve computing power; Character and pattern recognition; Saliency map; Computer vision

An infrared and visible light video fusion method based on moving target detection, in the technical field of video fusion. A moving target is detected through low-rank sparse representation: the target is extracted using low-rank and sparse time slices along the X-T and Y-T planes, spatial information ensures the integrity of the detected target, and the robustness and accuracy of detection are improved. The images are then decomposed with the non-subsampled shearlet transform (NSST), which describes image structure well and fuses effectively; it offers high computational efficiency, an unrestricted number of filtering directions, and translation invariance. The low-frequency sub-band coefficients are fused according to a sparse representation rule, and the high-frequency sub-band coefficients are fused according to a weighting rule that uses a target saliency map as the weighting factor. As a result, the method is more robust and achieves a better fusion effect.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
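
The saliency-weighted rule for high-frequency sub-bands can be sketched as a per-coefficient convex combination. This is a minimal illustration that assumes the NSST sub-bands are already available as 2D lists (the transform itself is not shown) and that the normalized saliency weights the infrared coefficients. Which source receives the saliency weight is an assumption, not stated in the abstract.

```python
def normalize(saliency):
    """Rescale a 2D saliency map to [0, 1]; a flat map gets equal weights of 0.5."""
    flat = [s for row in saliency for s in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.5 for _ in row] for row in saliency]
    return [[(s - lo) / (hi - lo) for s in row] for row in saliency]


def fuse_high_freq(ir, vis, saliency):
    """Fuse high-frequency coefficients: F = w * IR + (1 - w) * VIS, w = normalized saliency."""
    w = normalize(saliency)
    return [
        [wi * a + (1 - wi) * b for wi, a, b in zip(w_row, ir_row, vis_row)]
        for w_row, ir_row, vis_row in zip(w, ir, vis)
    ]


# Salient pixel takes the infrared coefficient; non-salient pixel takes the visible one.
print(fuse_high_freq([[4.0, 4.0]], [[0.0, 2.0]], [[1.0, 0.0]]))  # [[4.0, 2.0]]
```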

Video fusion and video linkage method based on three-dimensional scene and electronic equipment

Active | CN111836012A | Tags: Improve intelligence; Good application effect; Image enhancement; Television system details; Computer graphics (images); Engineering

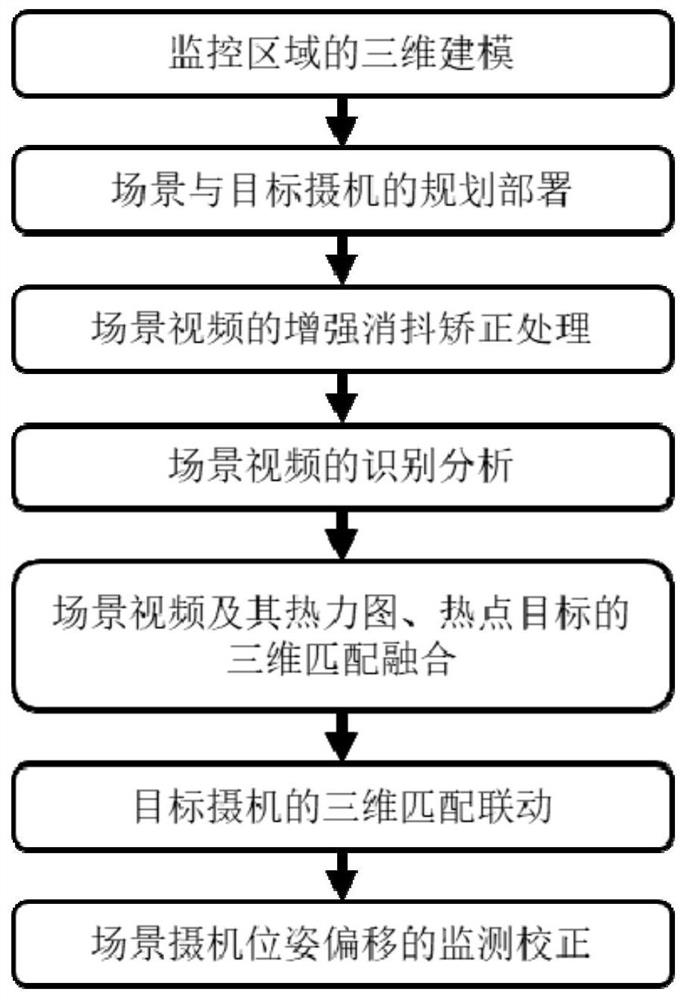

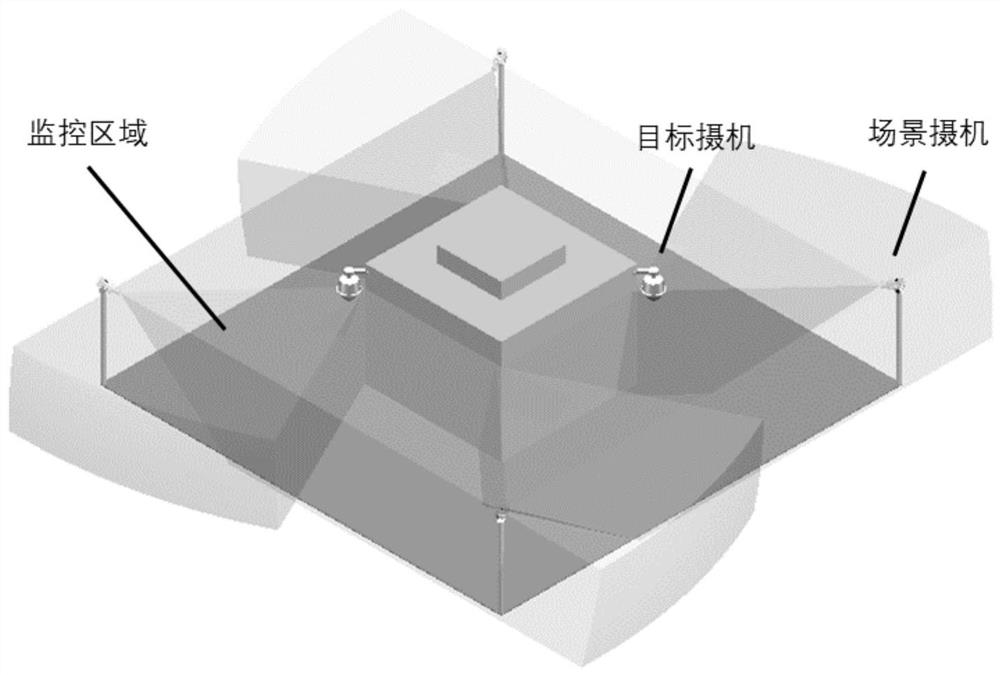

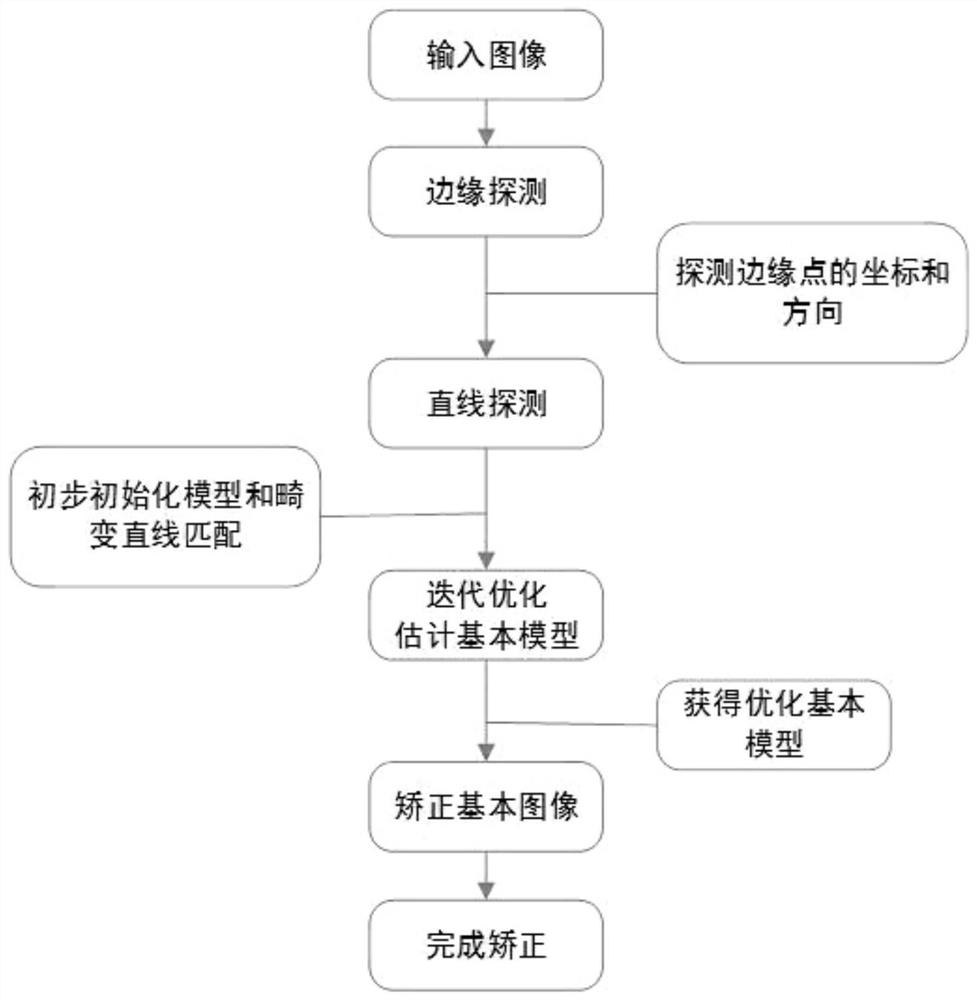

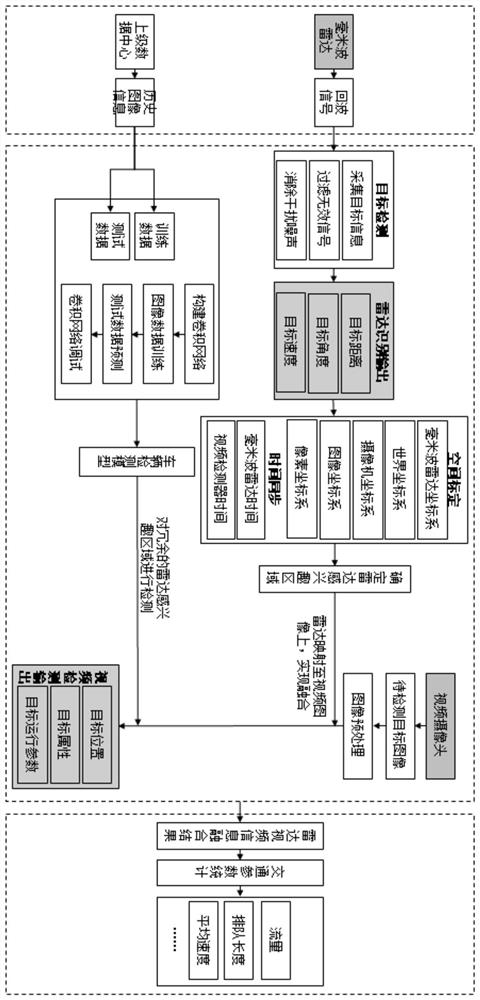

The invention relates to the technical field of comprehensive situation monitoring and command, and in particular to a video fusion and video linkage method based on a three-dimensional scene, and to electronic equipment. The method comprises: three-dimensional modeling of the monitoring area; planning and deployment of scene cameras and target cameras; enhancement, jitter removal, and correction of the scene video; recognition and analysis of the scene video; three-dimensional matching and fusion of the scene video, its heat map, and hotspot targets; three-dimensional matching and linkage of the target cameras; and monitoring and correction of scene camera pose offset. This achieves accurate spatial matching and fused display of the scene camera video image, its heat map, hotspot targets, and the three-dimensional scene model; the target camera is dispatched intelligently to obtain a detailed image of a hotspot target; and other situational awareness information, equipment and facility information, and task management and control information are likewise spatially matched and displayed with the three-dimensional scene model. The result is full-dimensional fused situation monitoring based on the three-dimensional scene, together with intelligent spatial positioning analysis and fused display of associated information driven by hotspot inspection, planned tasks, and detection alarms, which markedly improves the intelligence and application efficiency of a comprehensive situation monitoring and command system.

Owner:航天图景(北京)科技有限公司

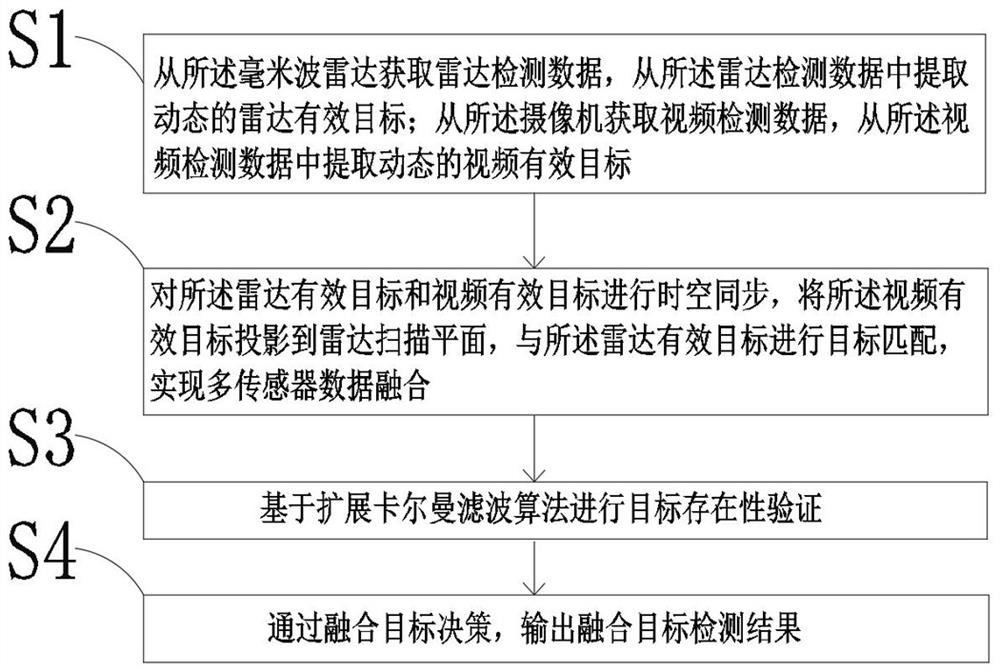

Road running state detection method and system based on radar and video fusion

Inactive | CN112946628A | Tags: Improve robustness; Rich and accurate traffic operation status information; Detection of traffic movement; Neural architectures; Neural network; Engineering

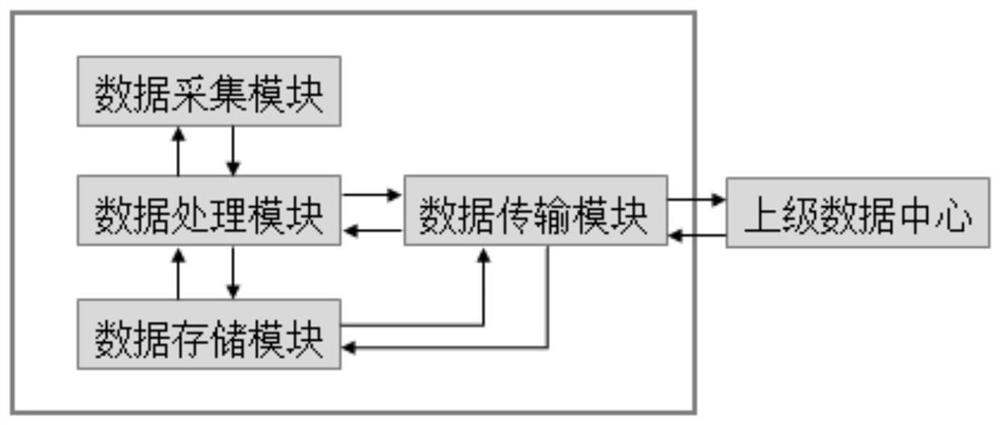

The invention relates to the technical field of road traffic state detection, and in particular to a method and system for detecting the road running state based on radar and video fusion. The method comprises the following steps: 1) collecting data on moving targets with a millimeter-wave radar and a video detector, and preprocessing the data; 2) converting the millimeter-wave radar data coordinates into the video pixel coordinate system and registering the radar and video detector times, so that the radar's redundant vehicle regions of interest are mapped onto the video image, completing spatial and temporal fusion; 3) performing vehicle identification on the regions of interest with a convolutional neural network trained on historical image data, confirming the vehicle regions of interest, and eliminating radar false alarms; and 4) computing traffic statistics from the vehicle identification results to obtain traffic operation state parameters over a given time period, including traffic flow, average speed, and queue length.

Owner:JIANGSU SINOROAD ENG TECH RES INST CO LTD
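
Step 2 (spatial and temporal registration) can be sketched as follows, under assumptions that the abstract does not state: the radar reports range and azimuth, a ground-plane-to-image homography `H` has been calibrated offline, and time registration pairs each radar sample with the nearest video frame timestamp. All function names are hypothetical.

```python
import math


def radar_to_ground(range_m, azimuth_deg):
    """Polar radar measurement -> ground-plane (x lateral, y forward) in metres."""
    a = math.radians(azimuth_deg)
    return range_m * math.sin(a), range_m * math.cos(a)


def ground_to_pixel(H, x, y):
    """Project a ground-plane point into the video image with a calibrated 3x3 homography."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w


def nearest_frame(radar_t, frame_times):
    """Index of the video frame whose timestamp is closest to the radar timestamp."""
    return min(range(len(frame_times)), key=lambda i: abs(frame_times[i] - radar_t))


H = [[2, 0, 100], [0, -2, 500], [0, 0, 1]]  # illustrative calibration, not real data
x, y = radar_to_ground(10, 0)               # target 10 m straight ahead
print(ground_to_pixel(H, x, y))             # (100.0, 480.0)
print(nearest_frame(0.07, [0.0, 0.04, 0.08, 0.12]))  # 2
```

A pinhole model with lens distortion would be more accurate than a single homography, but the homography is adequate when all targets lie on a flat road plane.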

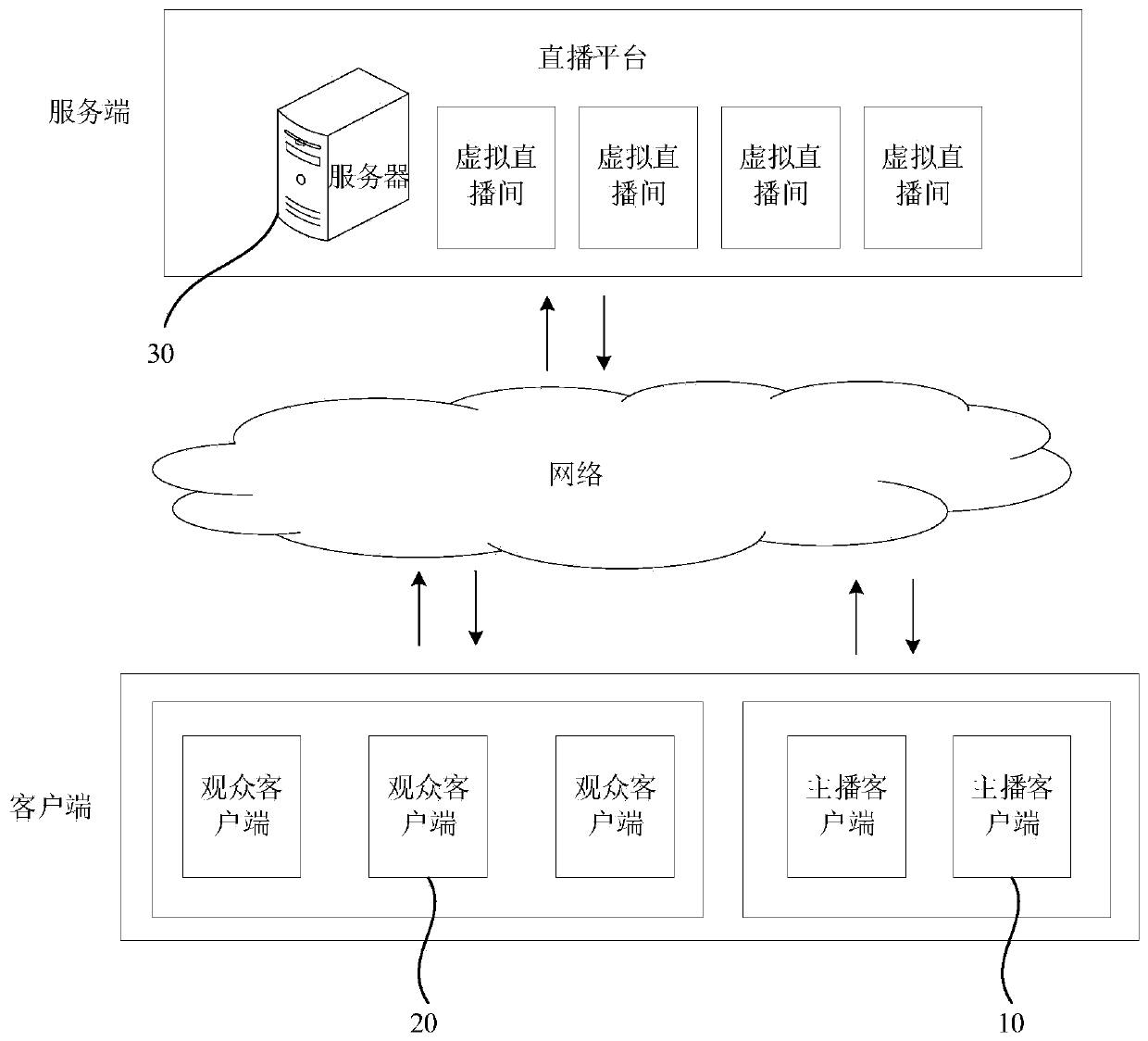

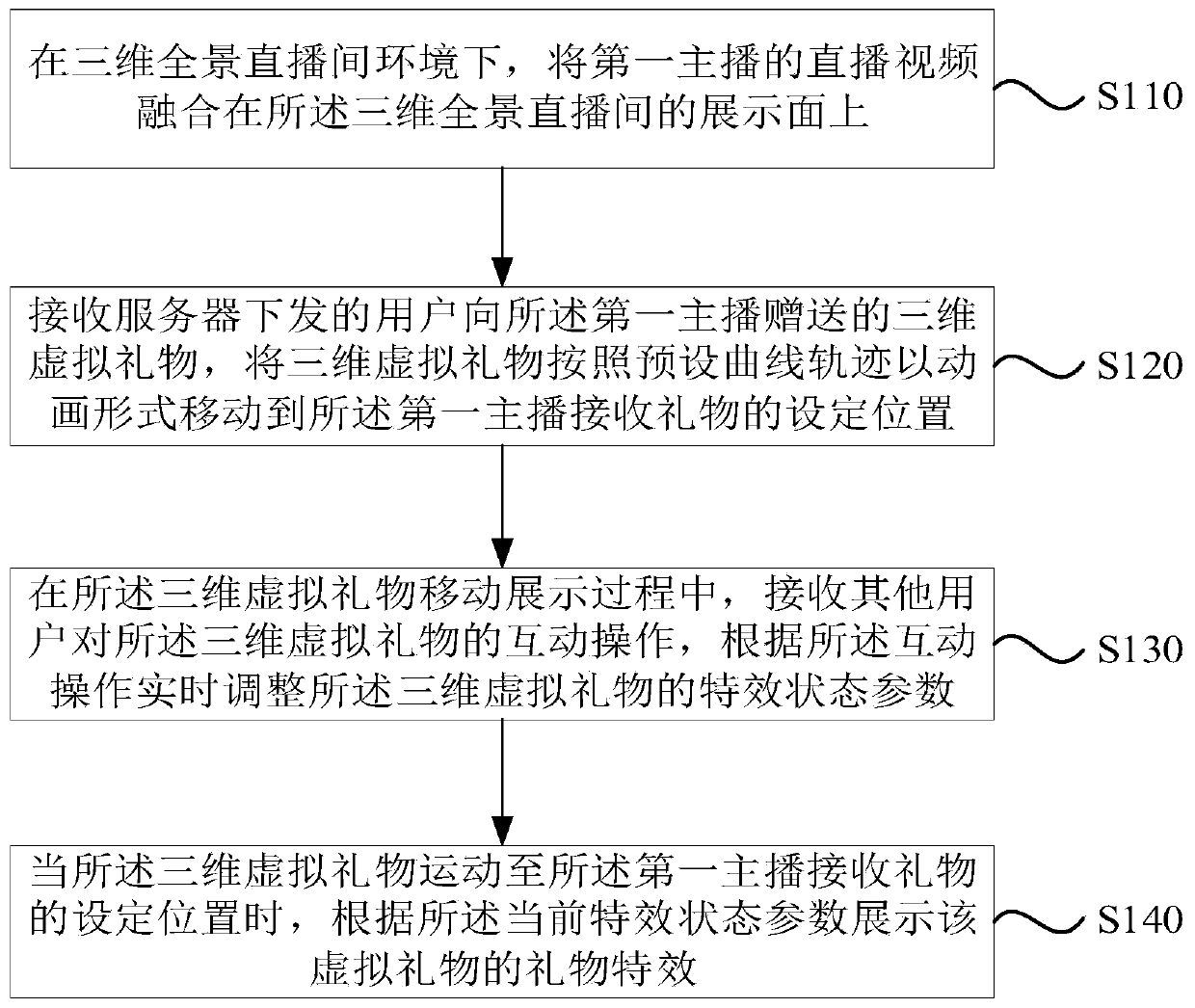

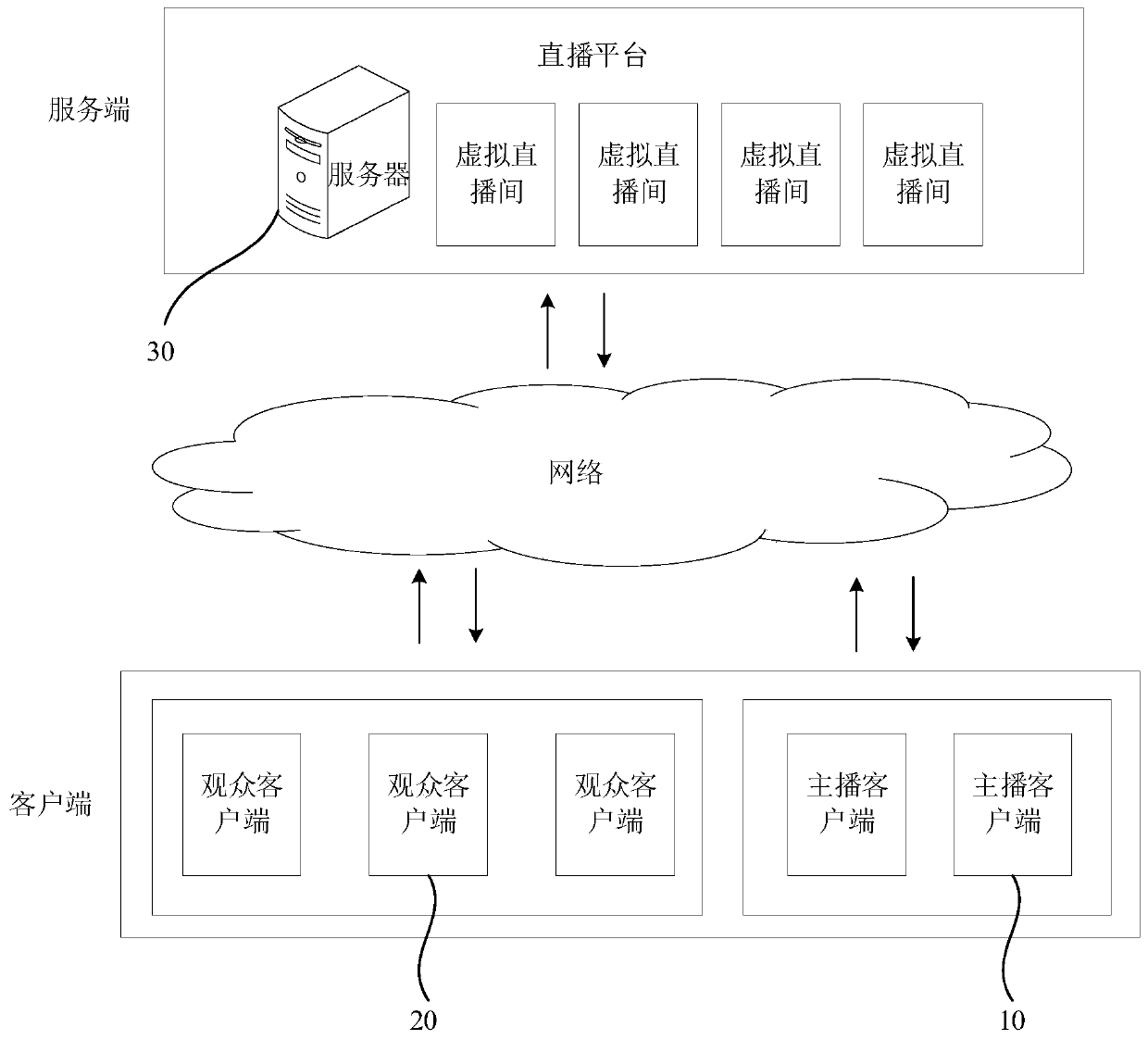

Panoramic live broadcast gift sending method and device, equipment and storage medium

Inactive | CN111541909A | Tags: Improve retention; Real-time adjustment of special effect status parameters; Animation; Selective content distribution; Telecommunications

The invention provides a panoramic live broadcast gift sending method and device, equipment, and a storage medium, relating to the field of live broadcasting. The gift sending method comprises: fusing the anchor video onto a display surface in a three-dimensional panoramic live broadcast room; receiving a three-dimensional virtual gift given to the anchor by a user, and moving the gift in animated form along a preset curved trajectory to a set position where the anchor receives it; and, while the gift is moving, receiving interactive operations from other users on the gift, adjusting its special-effect state parameters in real time, and, when the gift reaches the set position, displaying its special effect according to the current state parameters. The invention enriches the presentation of live broadcast gift sending, makes gift sending more engaging, improves interactivity between the anchor and the audience, and increases user retention and users' enthusiasm for sending gifts.

Owner:广州方硅信息技术有限公司

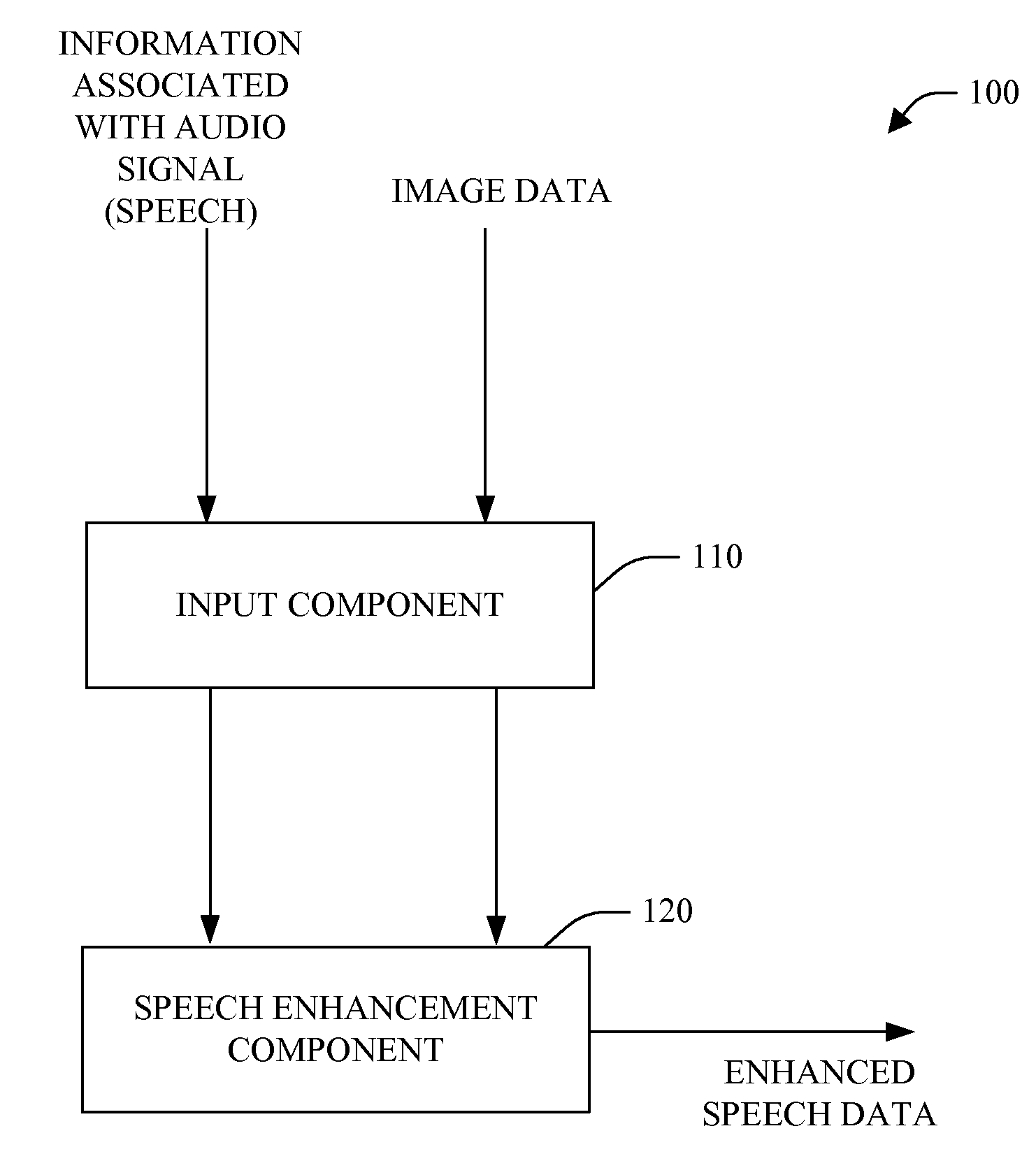

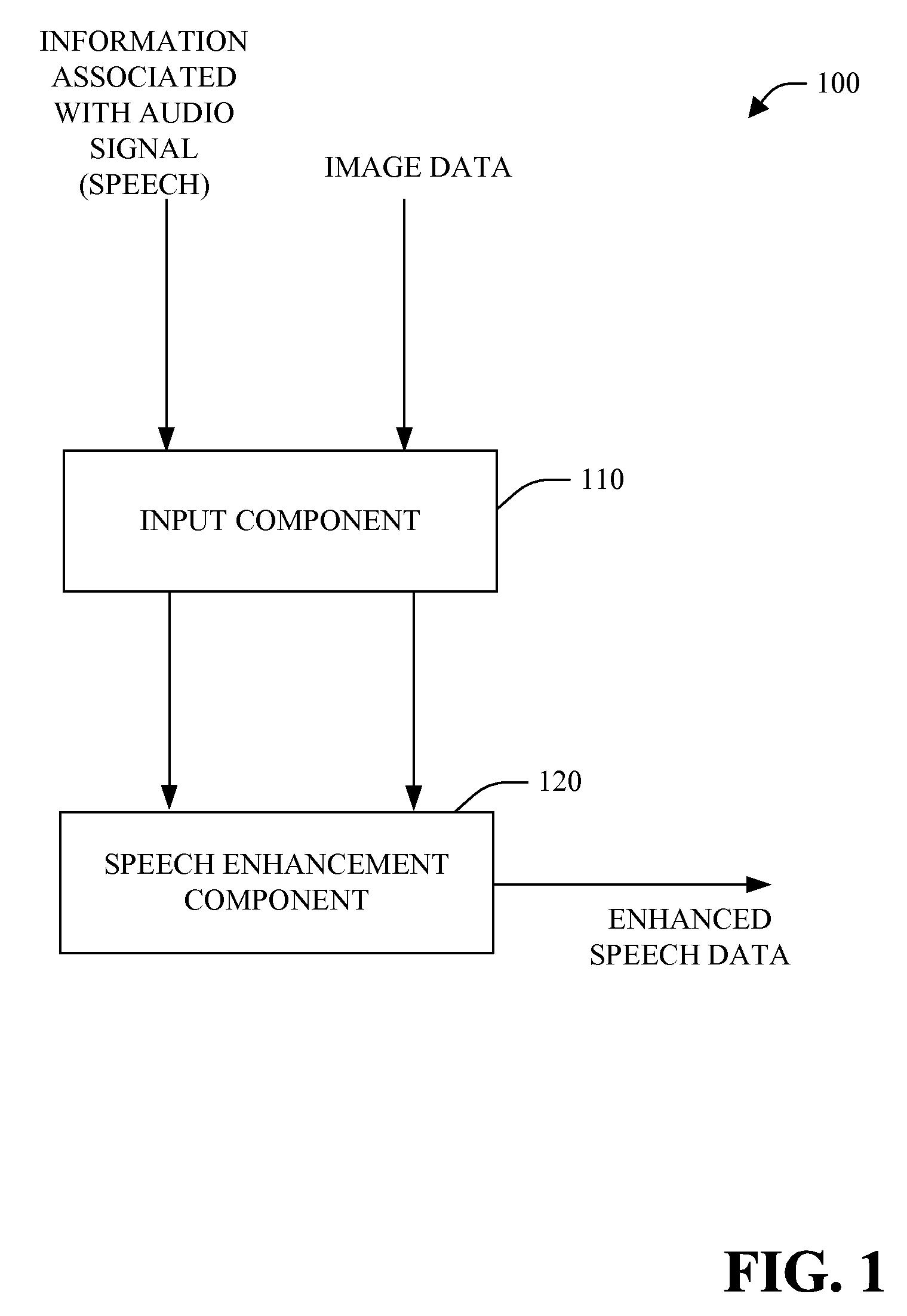

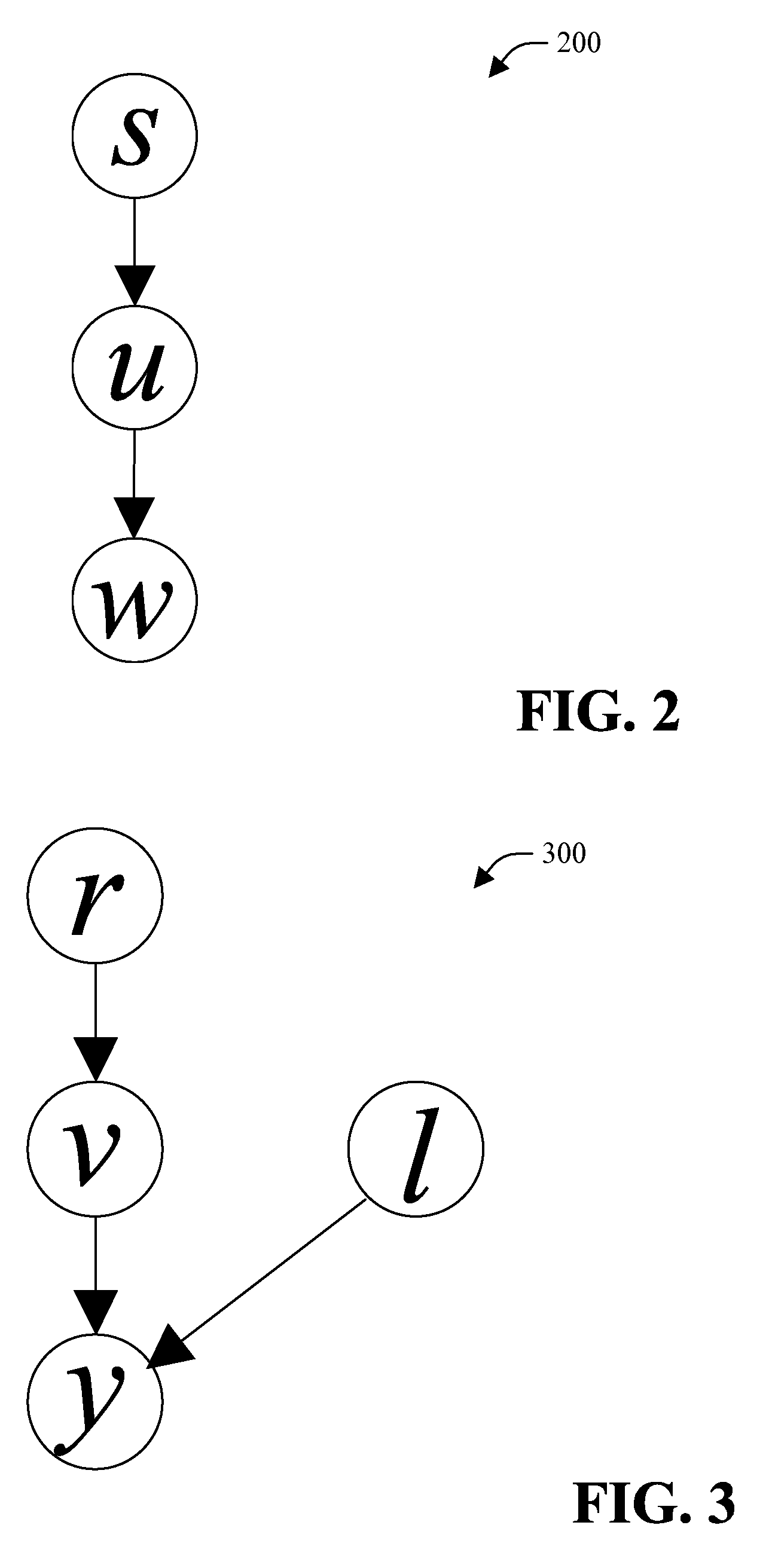

Speech detection and enhancement using audio/video fusion

Inactive | US20080059174A1 | Tags: Adapt quickly; Detect and enhance speech; Digital computer details; Character and pattern recognition; Cross model; Video fusion

Owner:MICROSOFT TECH LICENSING LLC

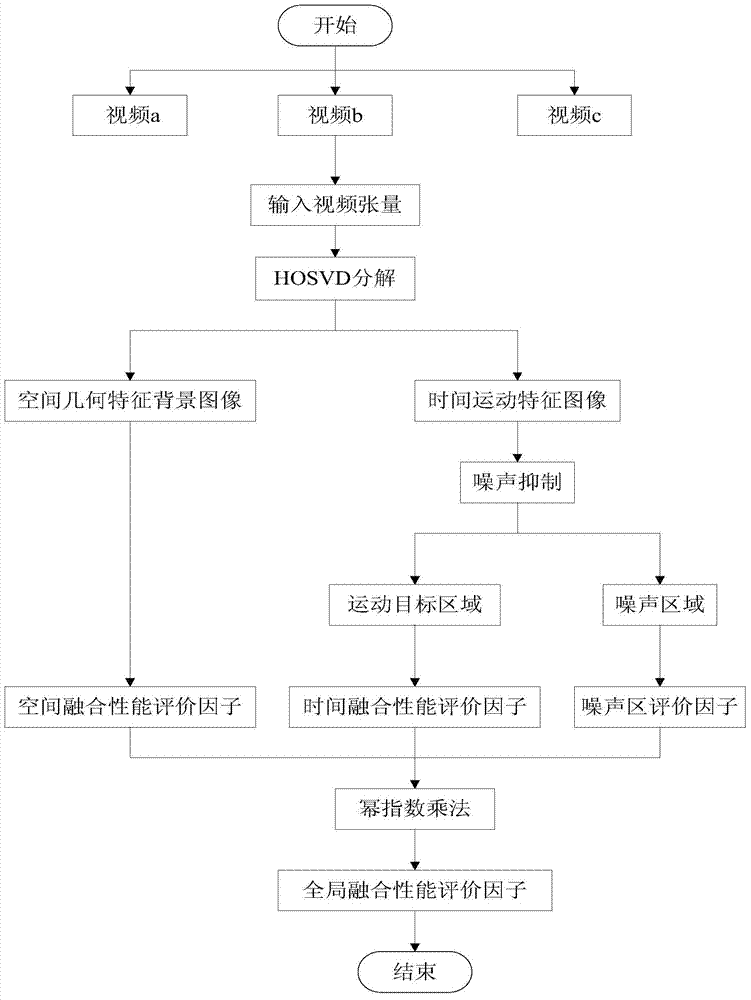

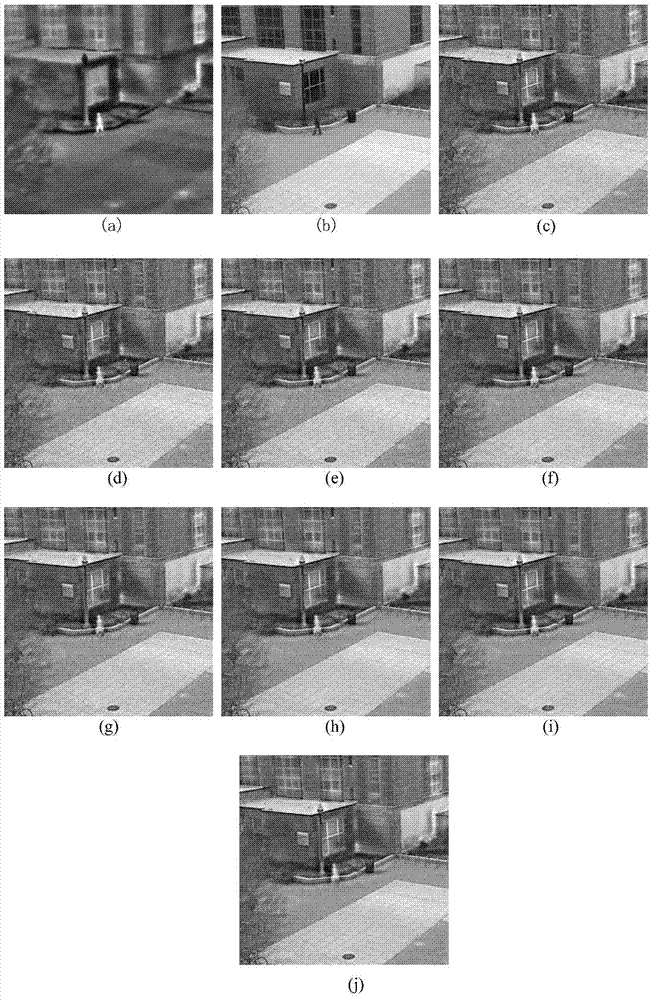

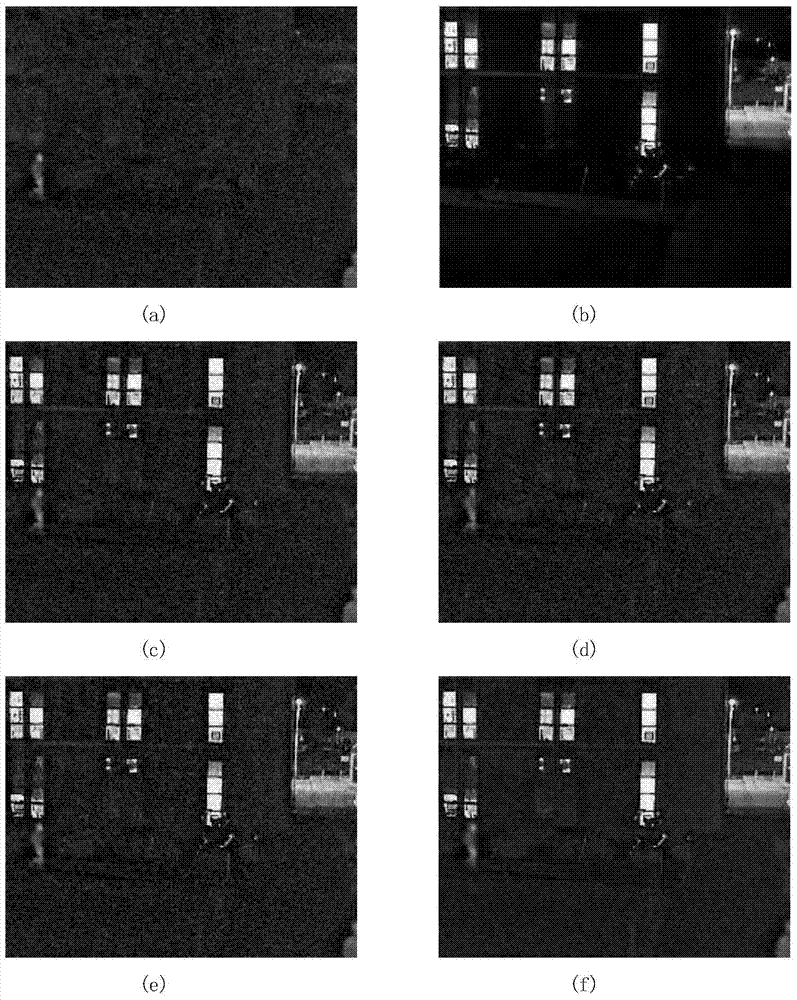

Video fusion performance evaluating method based on high-order singular value decomposition

Inactive | CN103905815A | Tags: Improve extraction performance evaluation results; Efficient extraction; Television systems; Pattern recognition; Background image

The invention discloses a video fusion performance evaluation method based on high-order singular value decomposition (HOSVD), which mainly addresses the prior-art problem that the fusion performance of noisy video images cannot be evaluated. The implementation steps are: input two registered reference videos and a registered fused video; form a fourth-order tensor from the input videos and apply HOSVD to it to obtain, for each video, a background image characterizing spatial geometric features and an image characterizing temporal motion features; divide the temporal motion feature images into moving target regions and noise regions by thresholding; design a separate evaluation index for each type of region; and finally construct an overall performance evaluation index by multiplying the individual indices raised to power exponents, thereby evaluating the overall fusion performance of the video images. The method can evaluate video fusion performance effectively, accurately, and objectively in a noisy environment, and can be used to monitor the image quality of fused video.

Owner:XIDIAN UNIV
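
The last two steps, thresholding the motion-feature image into target and noise regions and combining region indices by power-exponent multiplication, can be written as $Q = \prod_i q_i^{\alpha_i}$. The sketch below is a minimal illustration. The HOSVD step is omitted, and the per-region indices, the exponents, and the magnitude-based threshold rule are assumptions, not values from the patent.

```python
def split_regions(motion, threshold):
    """Label each motion-feature value as moving target (True) or noise (False) by magnitude."""
    return [[abs(m) > threshold for m in row] for row in motion]


def overall_index(indices, exponents):
    """Combine per-region quality indices q_i as the product of q_i ** alpha_i."""
    if len(indices) != len(exponents):
        raise ValueError("one exponent per index")
    result = 1.0
    for q, a in zip(indices, exponents):
        result *= q ** a
    return result


print(split_regions([[0.1, -2.0, 3.0]], 1.0))   # [[False, True, True]]
print(overall_index([0.81, 0.5], [0.5, 1.0]))   # approximately 0.45
```

A multiplicative combination penalizes a poor score in any single region more strongly than a weighted sum would, and the exponents set each region's relative importance.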

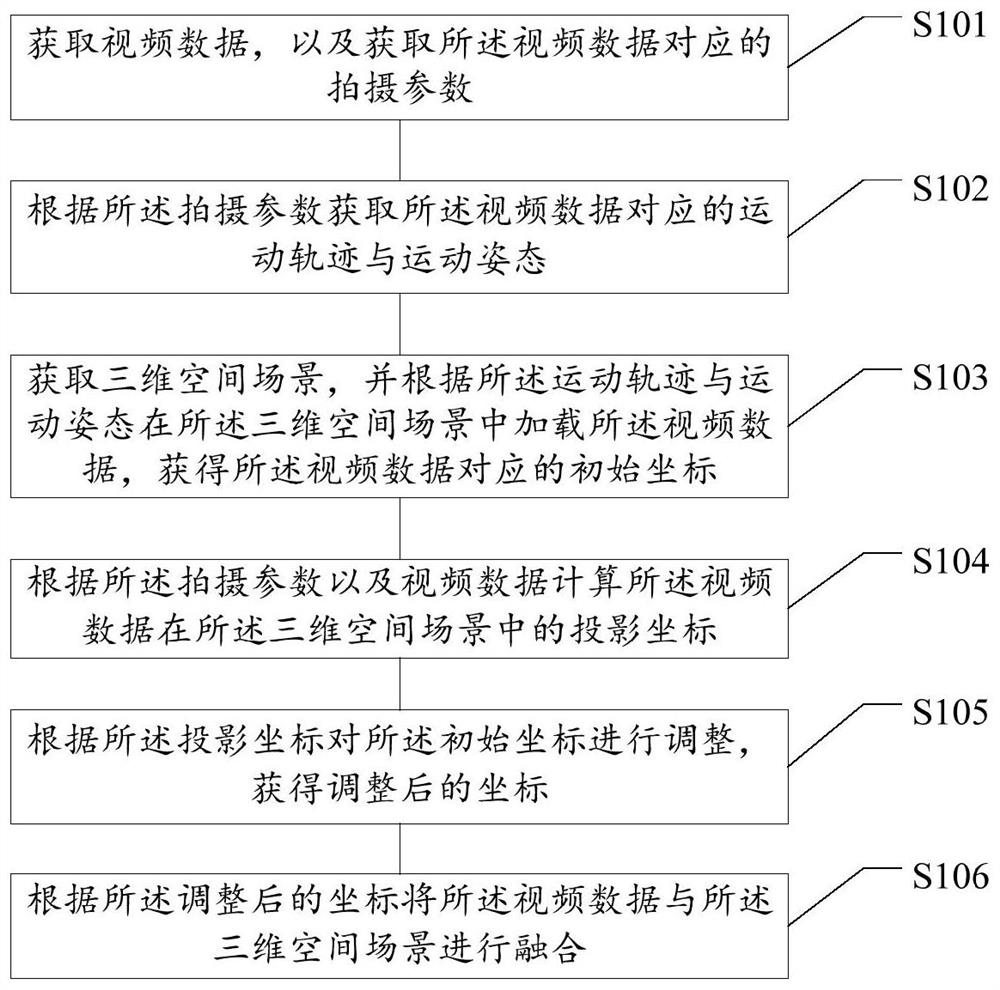

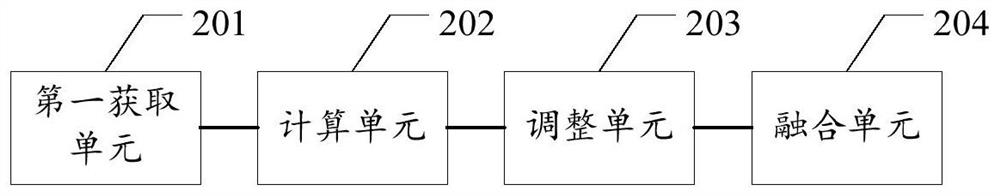

Video fusion method, device and equipment and storage medium

Pending | CN112489121A | Tags: Improve efficiency; Enhanced information; Image enhancement; Image analysis; Computer graphics (images); Three-dimensional space

The embodiment of the invention discloses a video fusion method, device, equipment, and computer-readable storage medium. The method comprises: obtaining video data and the shooting parameters corresponding to the video data; obtaining the motion trajectory and motion attitude corresponding to the video data according to the shooting parameters; obtaining a three-dimensional space scene and loading the video data into it according to the motion trajectory and motion attitude to obtain initial coordinates for the video data; calculating projection coordinates of the video data in the three-dimensional space scene according to the shooting parameters and the video data; adjusting the initial coordinates according to the projection coordinates to obtain adjusted coordinates; and fusing the video data with the three-dimensional space scene according to the adjusted coordinates. Fusing the moving video with the three-dimensional scene improves the accuracy and efficiency of locating specific positions in the video data.

Owner:丰图科技(深圳)有限公司

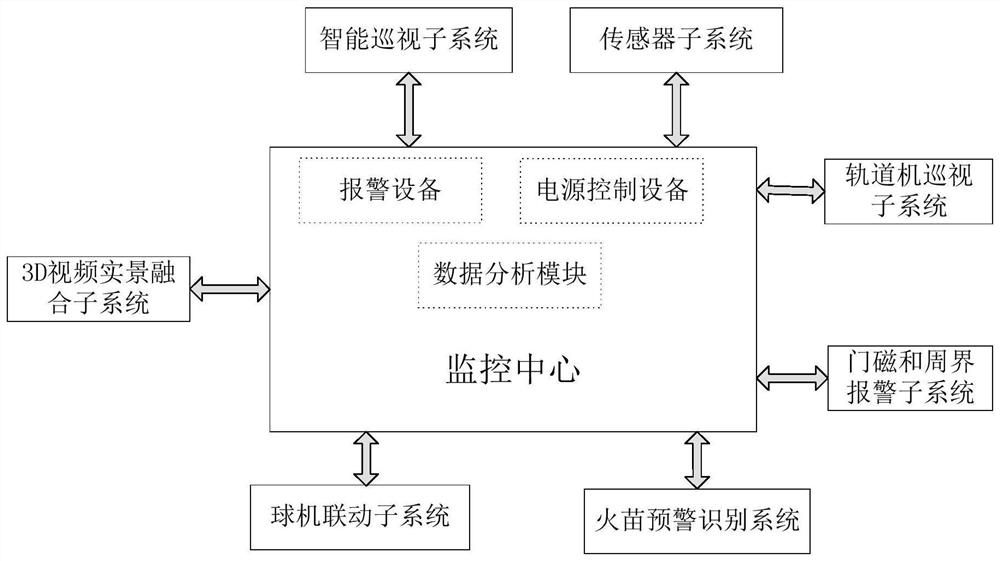

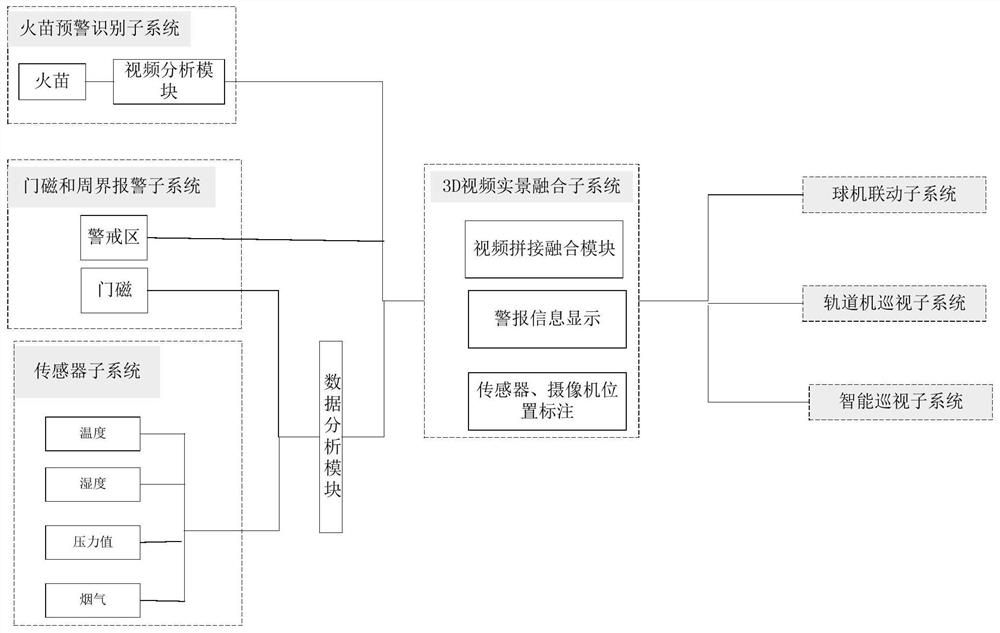

Three-dimensional visual unattended transformer substation intelligent linkage system based on video fusion

Pending | CN112288984A | Tags: Realize intelligent monitoring; Real-time; Closed circuit television systems; Fire alarm electric actuation; Transformer; Smart surveillance

The invention relates to a three-dimensional visual unattended substation intelligent linkage system based on video fusion. The system comprises a 3D video live-action fusion subsystem, an intelligent inspection subsystem, a sensor subsystem, a rail-mounted inspection subsystem, a door magnetic sensor and perimeter alarm subsystem, a dome camera linkage subsystem, a flame recognition and early warning subsystem, and a monitoring center, with all subsystems interconnected with the monitoring center. By fusing three-dimensional video, the system provides all-weather, omnidirectional, uninterrupted 24-hour intelligent monitoring of a substation, free browsing of a three-dimensional real-scene view of the substation, and alarm prompts for faults within it. Staff can watch the video from the monitoring room and carry out real-time monitoring and dispatch command for multiple substations under their administration, without inspecting the power transformation facilities and equipment in person every day, which improves monitoring capacity and working efficiency.

Owner:刘禹岐

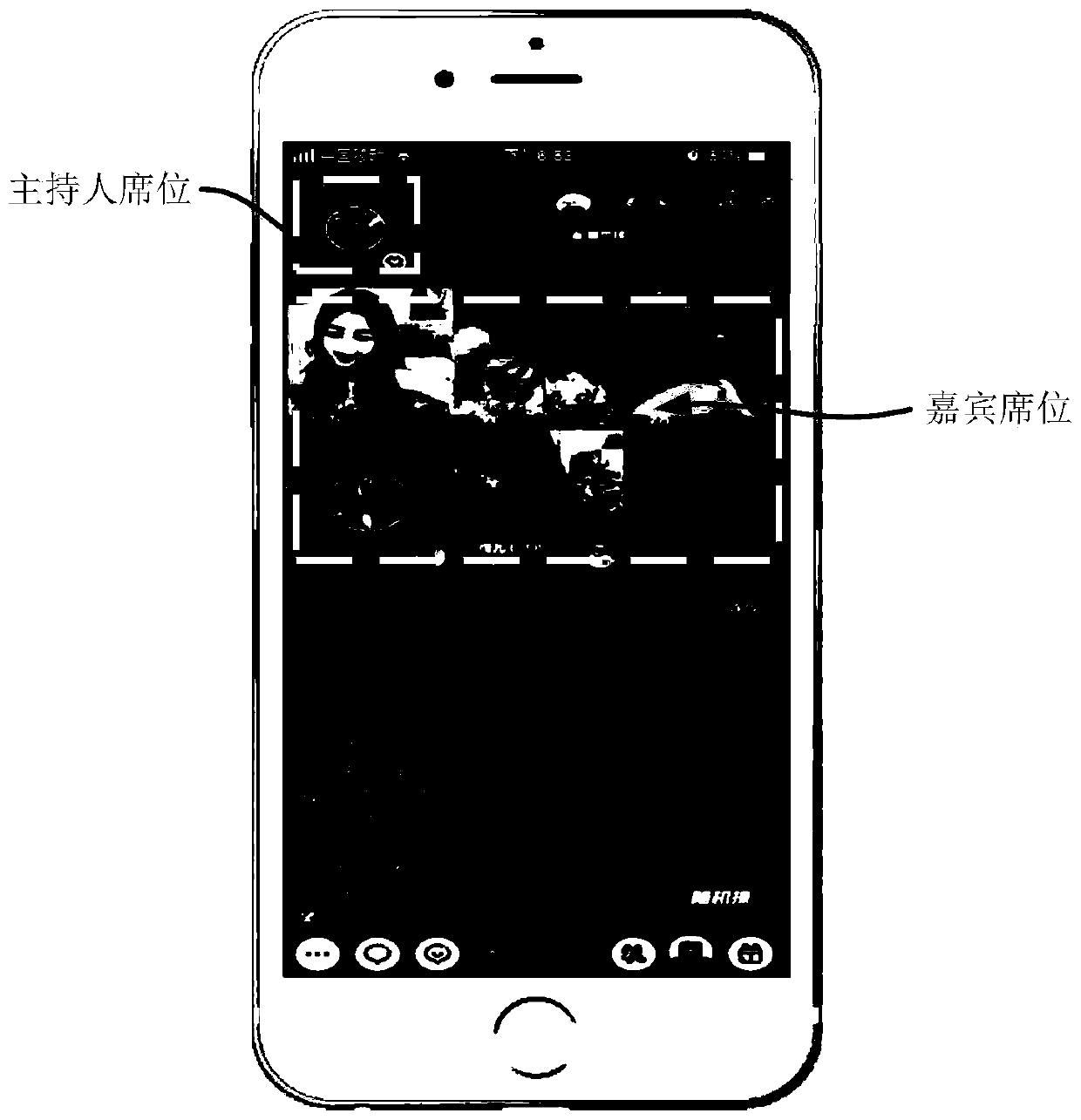

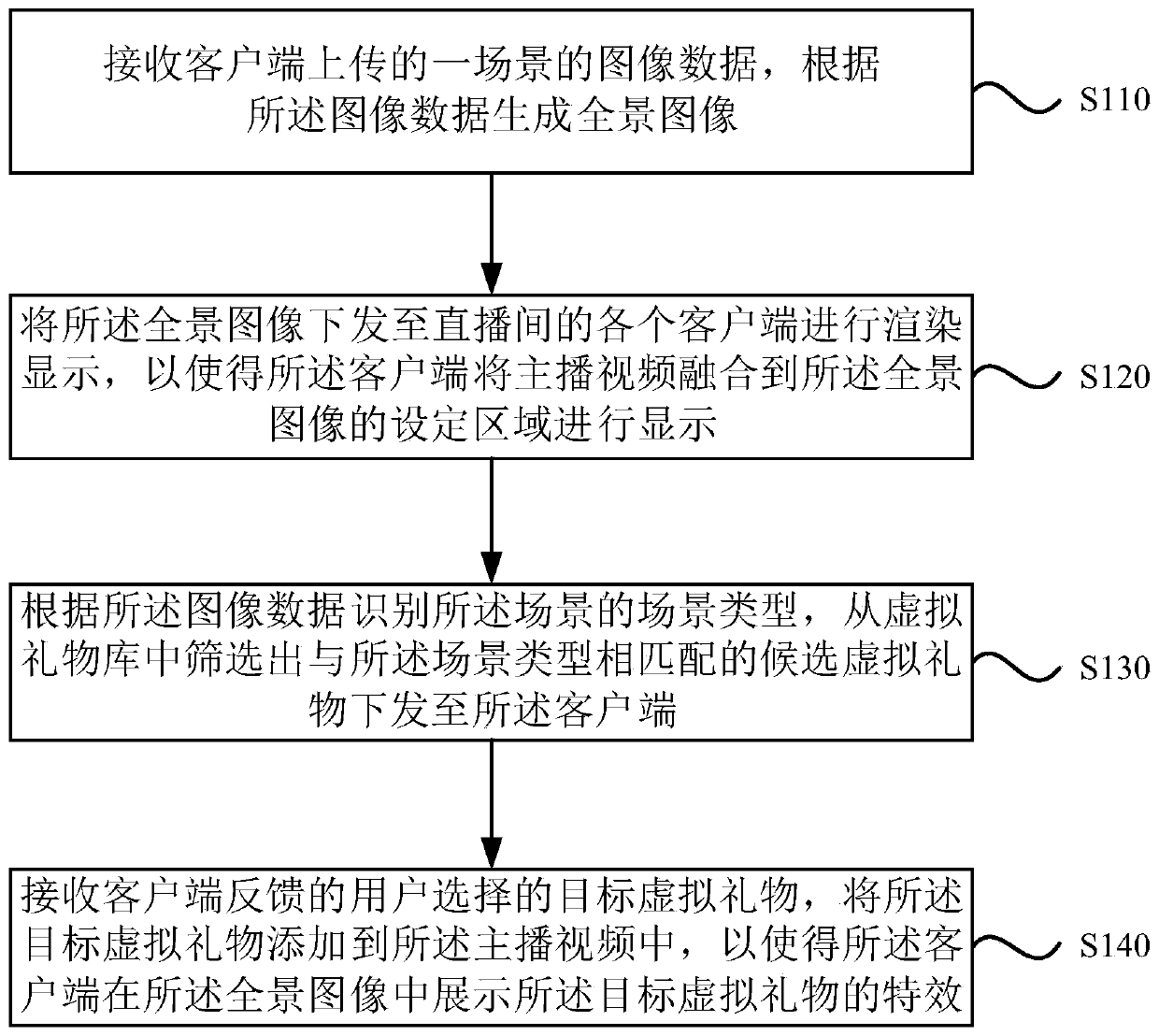

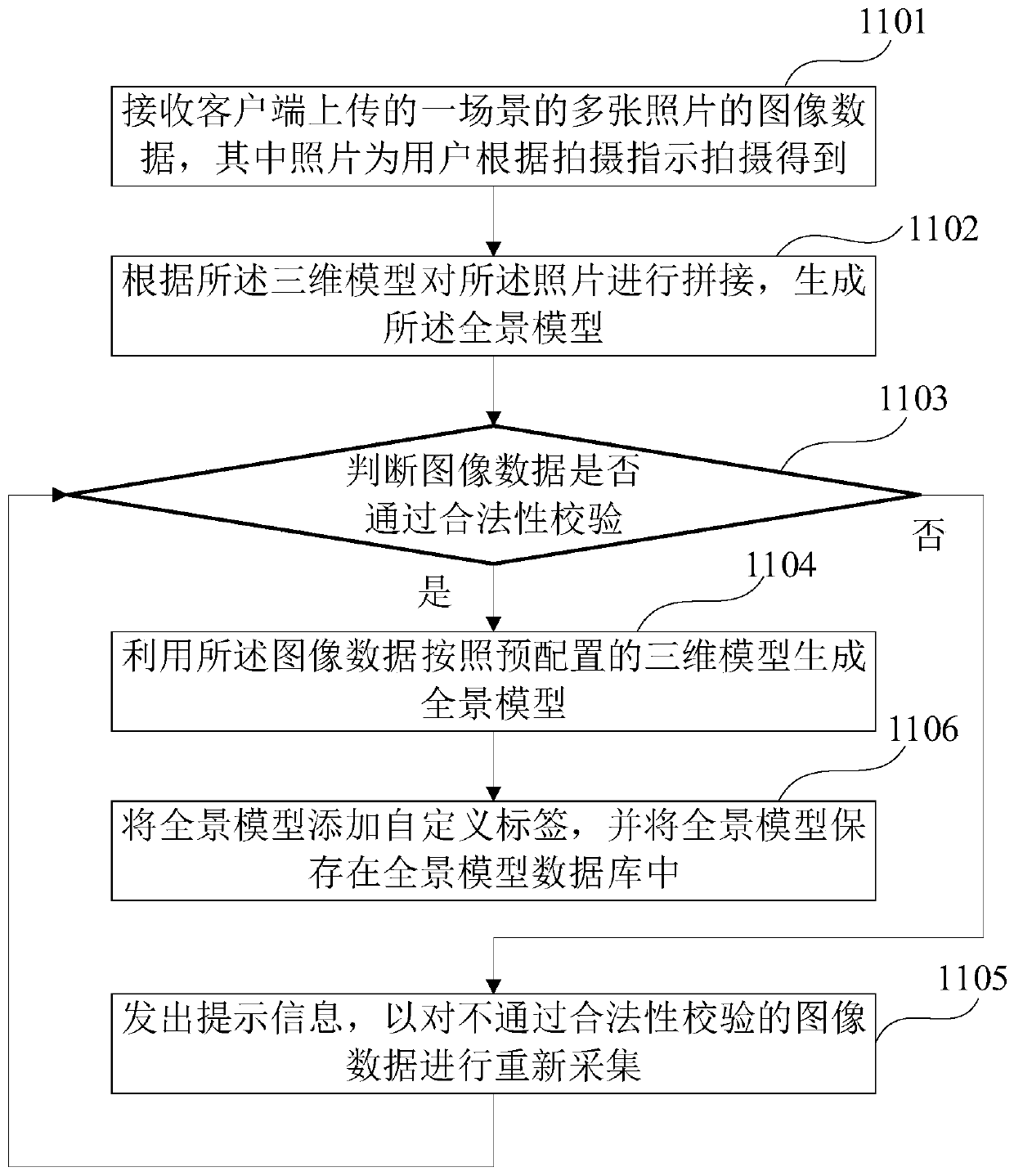

Virtual gift display method, device and equipment and storage medium

Active | CN111225231A | Tags: Increase motivation; Improve expressiveness; Selective content distribution; Stereoscopic systems; Computer graphics (images); Engineering

The embodiment of the invention provides a virtual gift display method, device, equipment, and storage medium, relating to the technical field of video. The method comprises: receiving image data of a scene uploaded by a client and generating a panoramic image; sending the panoramic image to the client for rendering and display, so that the anchor video is fused into a set area of the panoramic image; identifying the scene type from the image data, screening candidate virtual gifts matching that scene type from a virtual gift library, and sending the candidates to the client; and receiving the target virtual gift selected by the user and returned by the client, adding it to the anchor video, and displaying its special effect in the panoramic image. This technical solution gives the audience the visual impression of being present in the anchor's scene. Because gifts are recommended according to the scene, their content and special effects better match the scene of the current anchor video, which makes the gift effects more expressive and increases the audience's enthusiasm for giving gifts to the anchor.

Owner:广州方硅信息技术有限公司

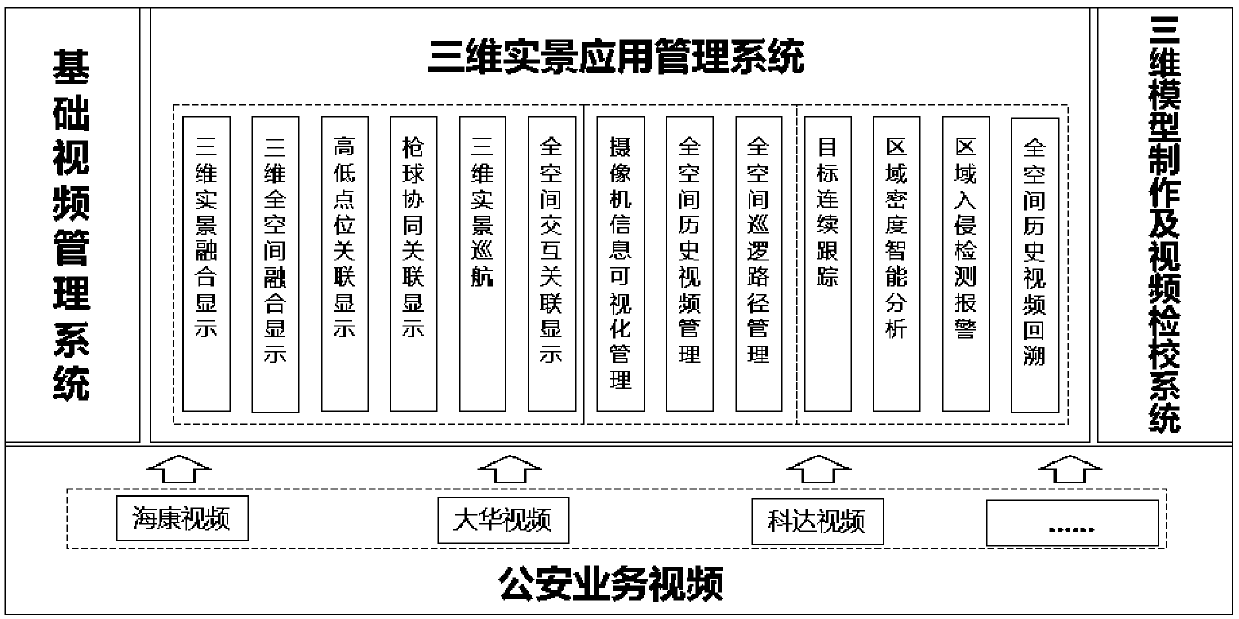

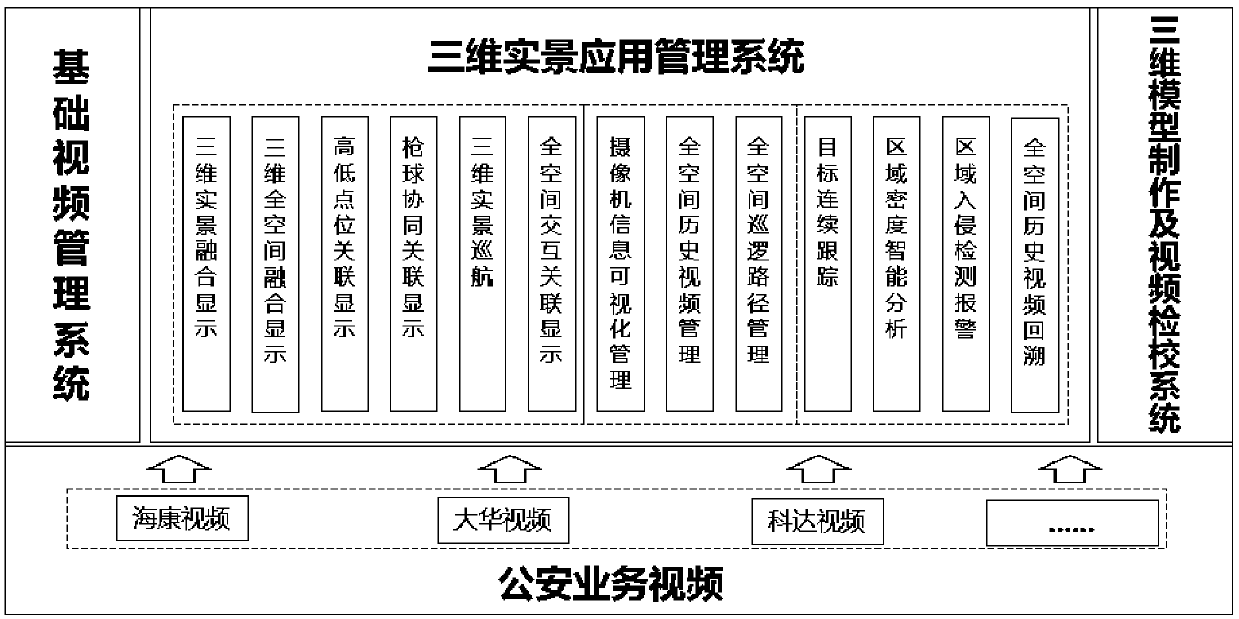

Panoramic monitoring system based on mixed reality and video intelligent analysis technology

Inactive | CN111163286A | Tags: Achieve view; Realize all-round; Television system details; Character and pattern recognition; Video monitoring; Mixed reality

The invention relates to a panoramic monitoring system based on mixed reality and intelligent video analysis technology. Real-time fusion of a three-dimensional model with surveillance video provides both control of the overall situation and close-up tracking of detailed targets. The system comprises four platforms: three-dimensional video fusion, two-dimensional panoramic stitching, historical video playback, and three-dimensional contingency plan deployment. Its main functions are panoramic monitoring, high-low association, bullet-dome camera linkage, crowd density analysis, boundary-crossing alarms, three-dimensional contingency plan deployment, and historical video playback.

Owner:CHINA CHANGFENG SCI TECH IND GROUPCORP

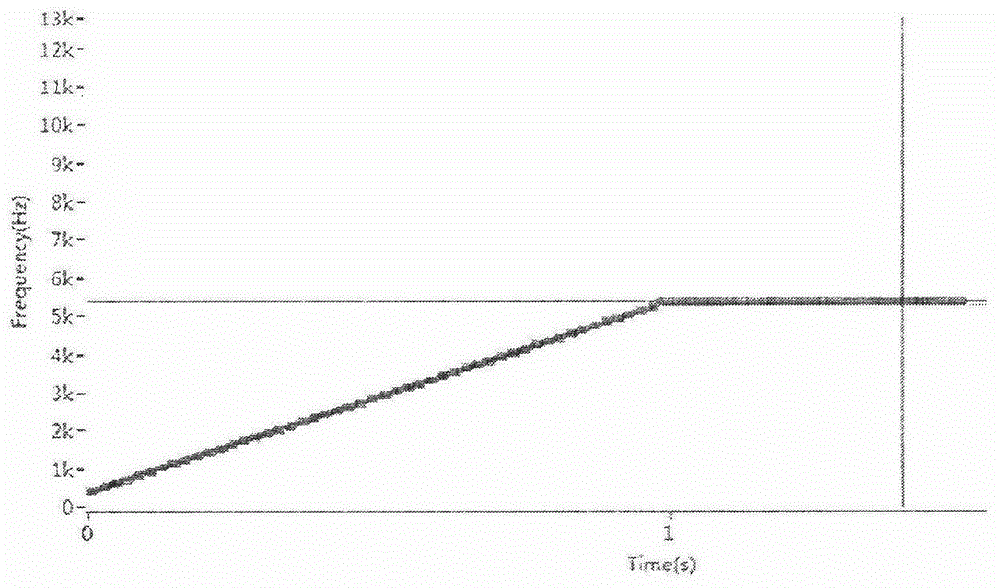

Optimized sound field imaging positioning method and system

Active | CN106488358A | Tags: Increase diversity; Improve accuracy; Position fixation; Transducer circuits; Time information; Sound sources

The invention discloses an optimized sound field imaging and positioning method and system. A microphone array collects multi-channel sound field samples synchronized with a video; time-frequency distribution characteristics are obtained from the sound field samples; a correspondence between time information and analysis frequency is derived from the time-frequency distribution; the sound field state corresponding to the analysis time and analysis frequency is obtained from this correspondence; and the sound field state is overlaid on the video as a cloud chart (color map), achieving sound field imaging. The method and system allow the specific positions of multiple sound sources, and the attributes of their different frequency bands, to be identified visually and quickly.

Owner:SHANGHAI KEYGO TECH CO LTD

Radar and video fusion traffic target tracking method and system, equipment and terminal

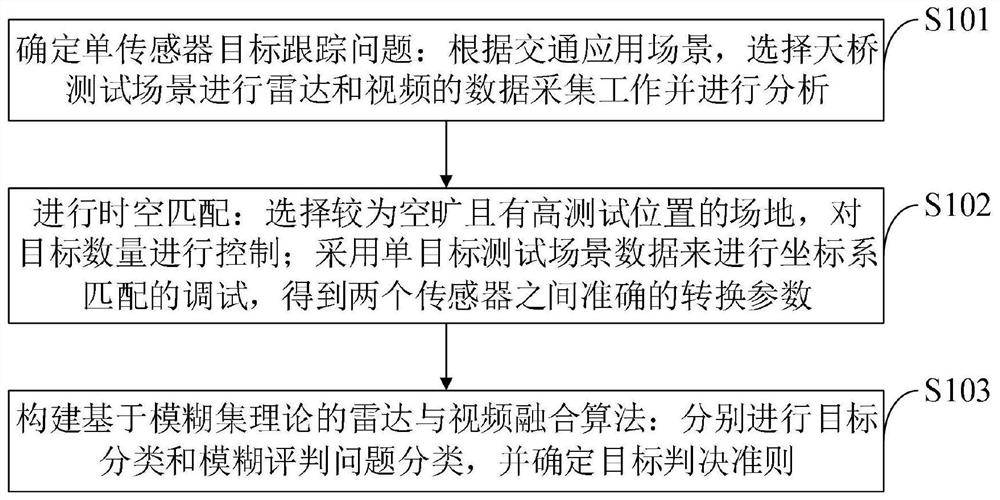

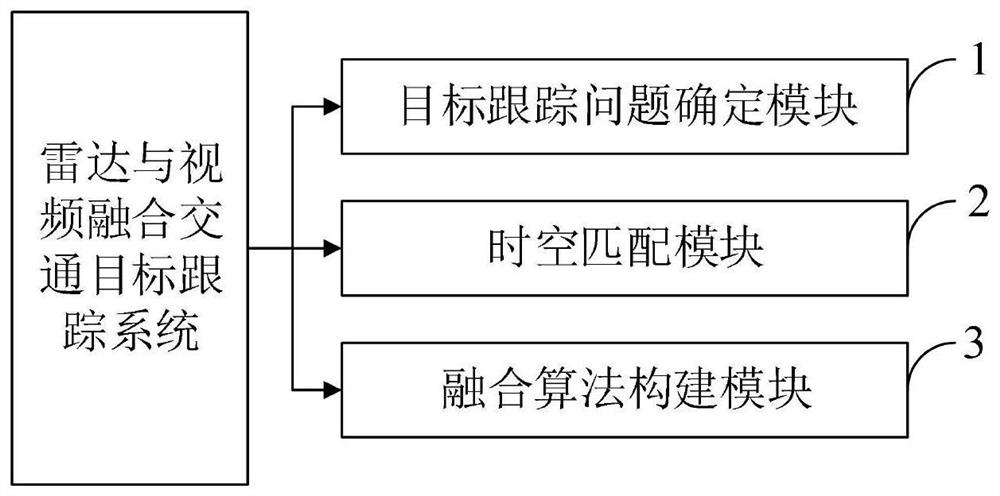

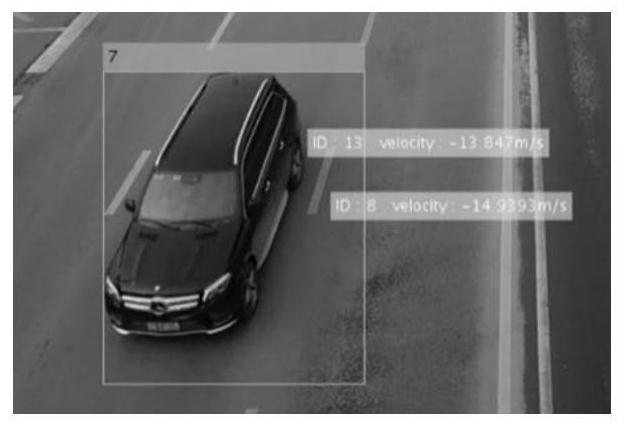

Pending | CN113671480A | Tags: High precision; Improve accuracy; Detection of traffic movement; Radio wave reradiation/reflection; Object tracking algorithm; Data acquisition

The invention belongs to the technical field of traffic target tracking and discloses a radar and video fusion traffic target tracking method, system, equipment, and terminal. The method comprises: defining the single-sensor target tracking problem, that is, selecting a platform bridge test scene for radar and video data collection and analysis; performing spatio-temporal matching, that is, selecting an open site with an elevated test position and controlling the number of targets; debugging the coordinate system matching using single-target test scene data; and constructing a radar and video fusion algorithm based on fuzzy set theory, that is, classifying targets and fuzzy evaluation problems and determining target decision criteria. Millimeter-wave radar and cameras, two common traffic information acquisition sensors, are used, and a fuzzy-set-based radar and video fusion tracking algorithm is proposed, achieving high-precision, high-accuracy target tracking through multi-sensor fusion while reducing the complexity of the single-sensor tracking algorithm.

Owner:亿太特陕西科技有限公司
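
A fuzzy-set association step can be sketched as follows. Each radar/video detection pair receives a membership degree for "same target" that decreases with their distance, and pairs above a threshold are matched greedily. The linear membership function, `max_dist`, and `threshold` are hypothetical choices for illustration. The abstract does not describe the patent's actual membership functions or decision criteria.

```python
import math


def membership(d, max_dist):
    """Degree (0..1) to which distance d means 'same target'; assumed linear fall-off."""
    return max(0.0, 1.0 - d / max_dist)


def associate(radar_targets, video_targets, max_dist=3.0, threshold=0.5):
    """Greedily pair radar and video detections whose fuzzy membership meets threshold.

    Both inputs are lists of (x, y) positions in a shared coordinate system.
    Returns (radar_index, video_index, membership) tuples sorted by radar index.
    """
    candidates = []
    for i, r in enumerate(radar_targets):
        for j, v in enumerate(video_targets):
            mu = membership(math.dist(r, v), max_dist)
            if mu >= threshold:
                candidates.append((mu, i, j))
    candidates.sort(reverse=True)  # strongest evidence first
    used_r, used_v, pairs = set(), set(), []
    for mu, i, j in candidates:
        if i not in used_r and j not in used_v:
            pairs.append((i, j, mu))
            used_r.add(i)
            used_v.add(j)
    return sorted(pairs)


radar = [(0.0, 0.0), (10.0, 0.0)]
video = [(0.3, 0.4), (10.0, 1.0), (50.0, 50.0)]
print([(i, j) for i, j, _ in associate(radar, video)])  # [(0, 0), (1, 1)]
```

`math.dist` requires Python 3.8 or later.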

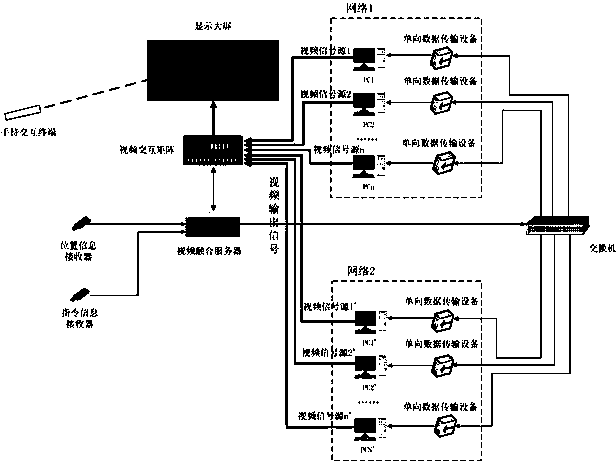

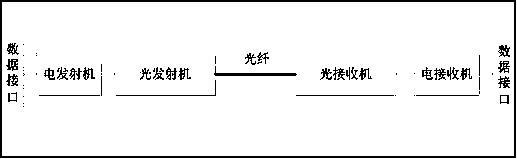

Method for carrying out security fusion interaction on multi-network multi-system application interface

Inactive | CN110851063A | Tags: Television system details; Optical transmission adaptations; Computer hardware; Large screen

The invention discloses a method for secure fusion interaction across the application interfaces of multiple networks and systems. In a preferred embodiment, the system comprises a video signal source, a large display screen, a video interaction matrix, a video fusion server, a switch, one-way data transmission equipment, a handheld interaction terminal, a position information receiver, and an instruction information receiver. In the interaction method, the display interfaces of several application systems on different networks are output as video signals to the video interaction matrix and shown on the large screen, and a user interacts with these interfaces on the large screen through the handheld interaction terminal. Because the digital display is separated and interaction instructions are transmitted one way only, application systems on different networks can neither communicate with one another nor access the video fusion server or video interaction matrix of the interaction system. This guarantees network and information security between the application systems while achieving secure fusion interaction and resource sharing among the application interfaces of multiple systems on different networks.

Owner:丁建华

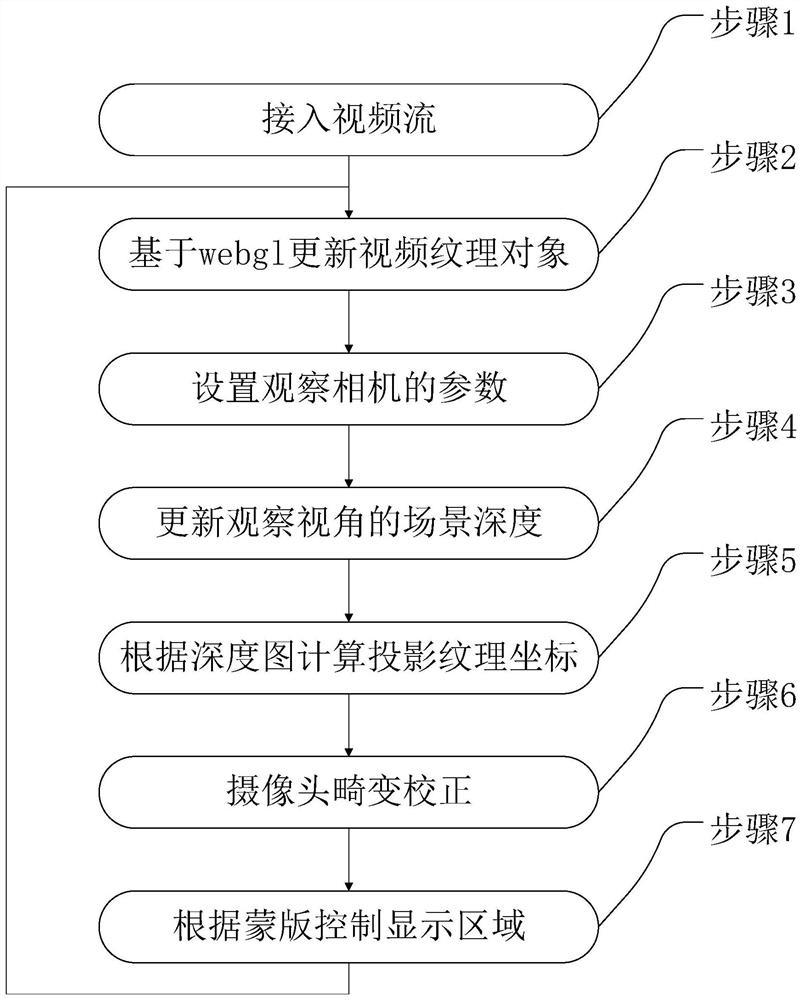

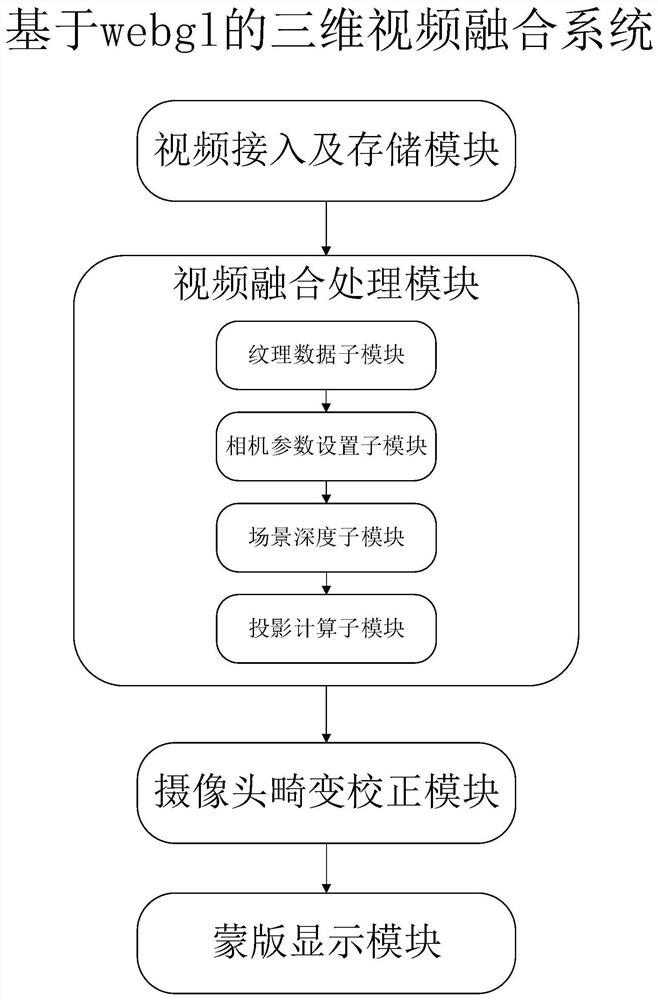

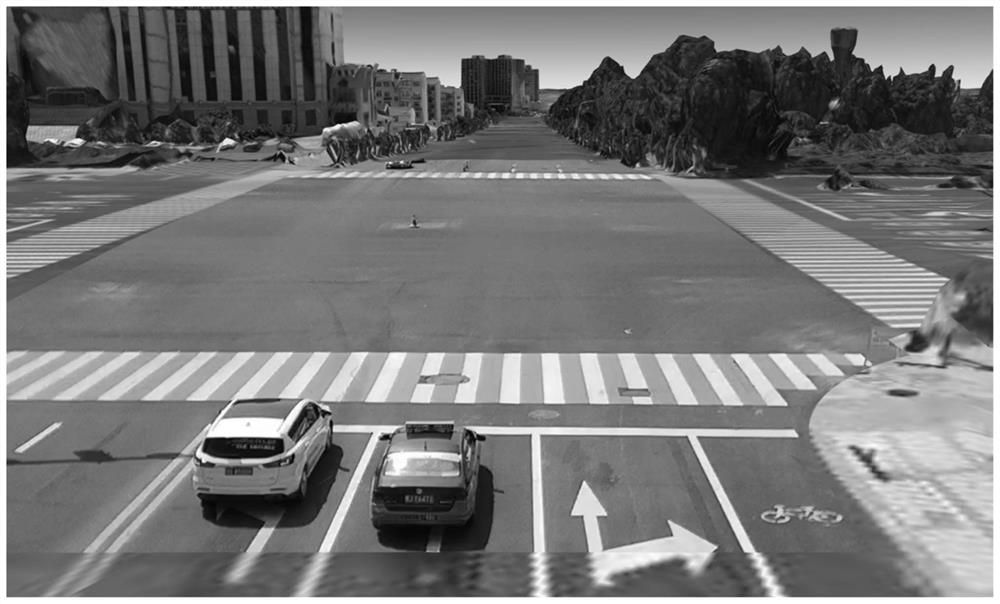

Three-dimensional video fusion method and system based on WebGL

Active | CN112437276A | Tags: Avoid overlapping display; Improve the display effect; Image enhancement; Television system details; Viewing frustum; Radiology

The invention provides a three-dimensional video fusion method and system based on WebGL that requires no processing of the video source. The method comprises: accessing an HTTP video stream; updating a video texture object with WebGL; updating and setting the near clipping plane, the far clipping plane, and the position and orientation of the observation camera's view frustum; updating the scene depth from the observation viewpoint and projecting it back into the observer camera coordinate system; fusing the video with the live-action model; performing distortion correction for the camera; and finally clipping the video area with a mask. This solves problems in the prior art: three-dimensional video fusion is achieved with WebGL, the projection area is clipped so that adjacent videos do not overlap on display, and camera distortion is corrected, so a good display effect is obtained even for cameras with strong distortion or low mounting positions.

Owner:埃洛克航空科技(北京)有限公司
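
The depth and clipping steps resemble the standard shadow-map test used in projective texture mapping. A scene point receives video only if it lies between the near and far planes, projects inside the frame, passes the mask, and is not hidden behind a nearer surface in the depth map rendered from the video camera. In WebGL this would run in a GLSL fragment shader; the Python below only illustrates the logic, and its parameter names and `bias` value are assumptions.

```python
def is_textured(depth, u, v, depth_map, near, far, mask=None, bias=0.05):
    """Decide whether a scene point should receive the projected video texture.

    depth: the point's distance along the projecting camera's view axis.
    (u, v): the pixel the point projects to in that camera.
    depth_map: per-pixel nearest depth rendered from the projecting camera.
    mask: optional per-pixel booleans; False pixels are clipped (for example,
          to stop adjacent videos from overlapping).
    """
    height, width = len(depth_map), len(depth_map[0])
    if not (near <= depth <= far):  # outside the frustum's depth range
        return False
    if not (0 <= u < width and 0 <= v < height):  # outside the video frame
        return False
    col, row = int(u), int(v)
    if mask is not None and not mask[row][col]:
        return False
    # Occluded if a nearer surface was recorded at this pixel; bias absorbs depth precision error.
    return depth <= depth_map[row][col] + bias


depth_map = [[5.0, 5.0], [2.0, 5.0]]
print(is_textured(5.0, 0, 0, depth_map, 0.1, 100.0))  # True: visible
print(is_textured(5.0, 0, 1, depth_map, 0.1, 100.0))  # False: hidden behind depth 2.0
```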

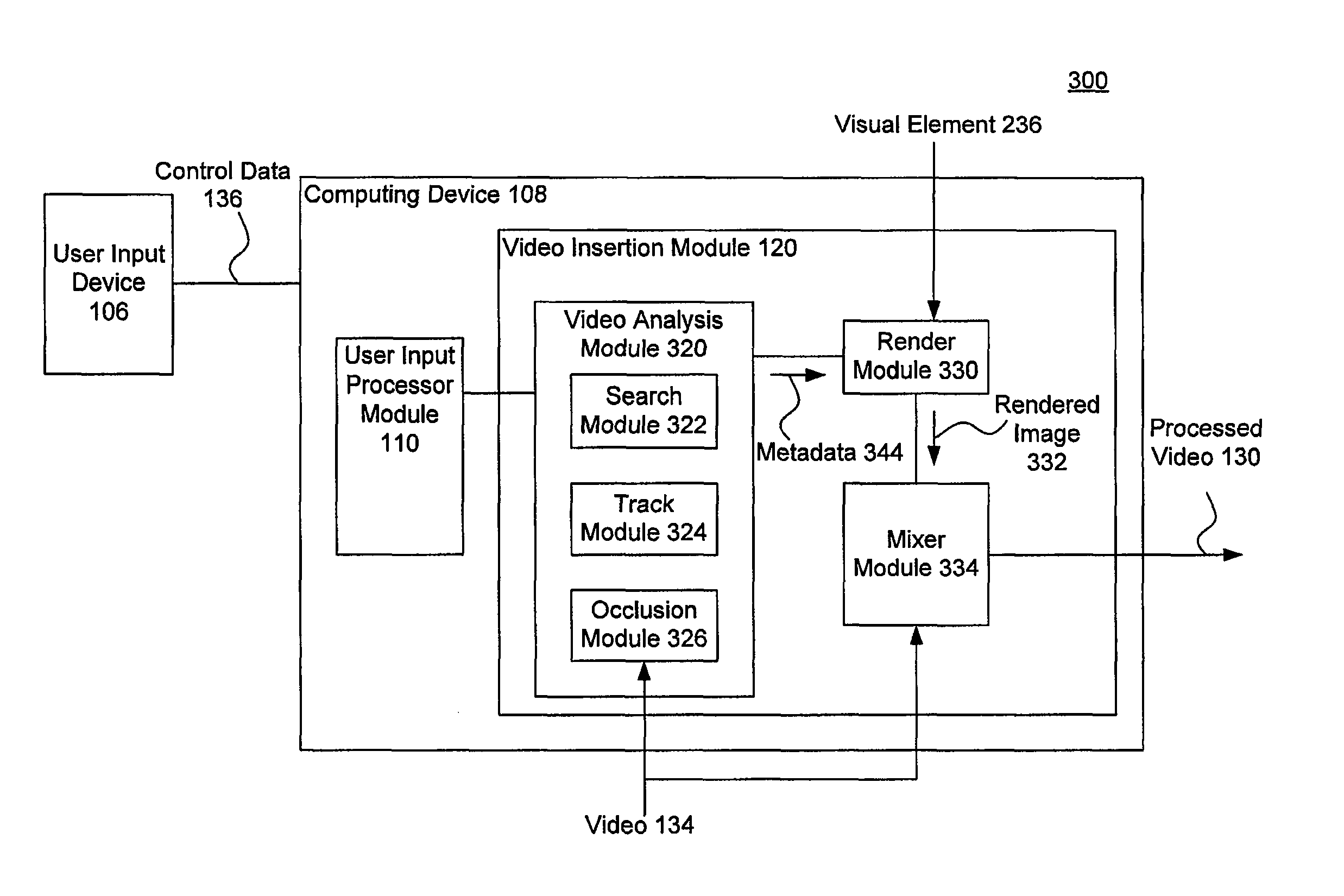

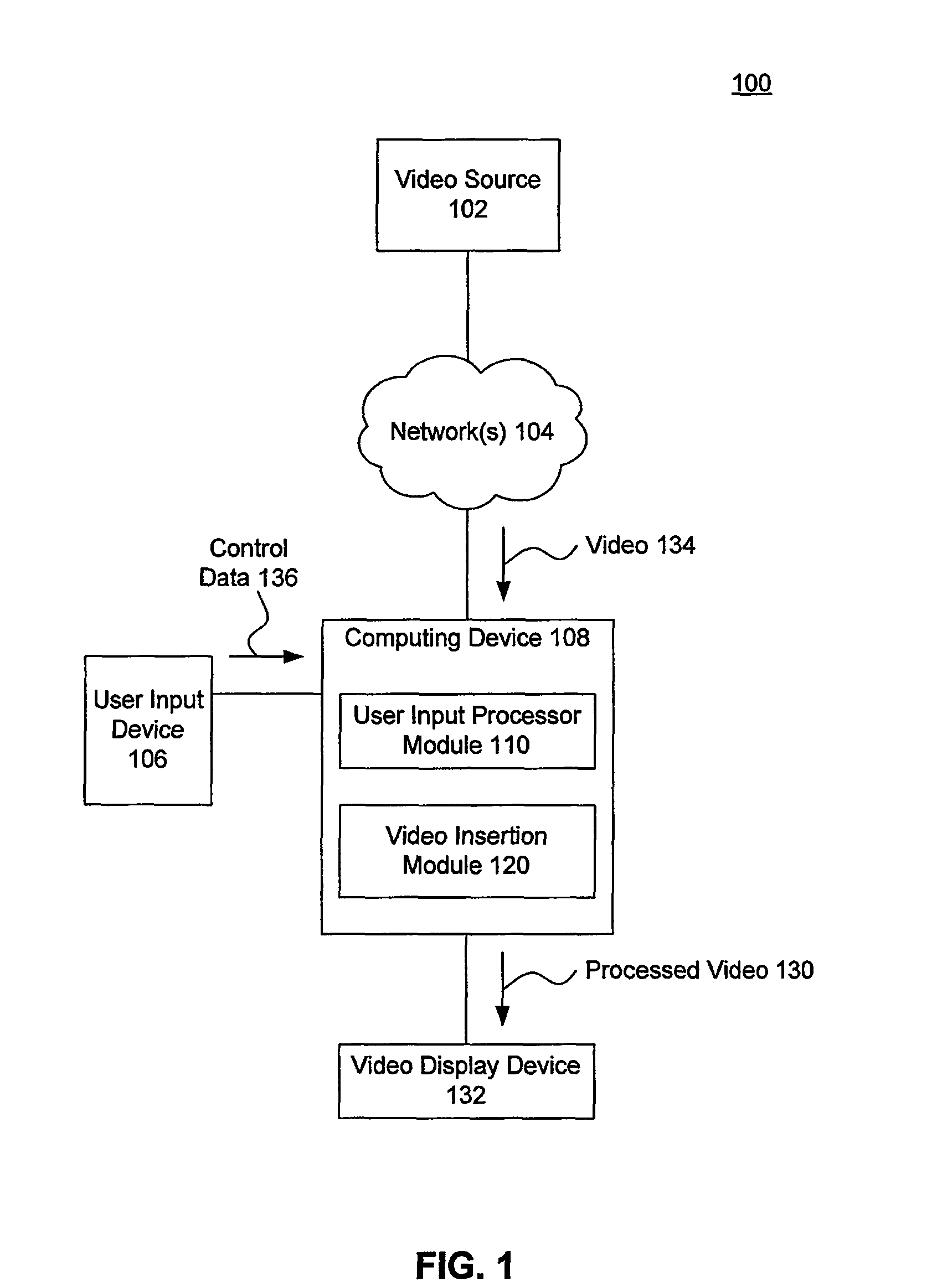

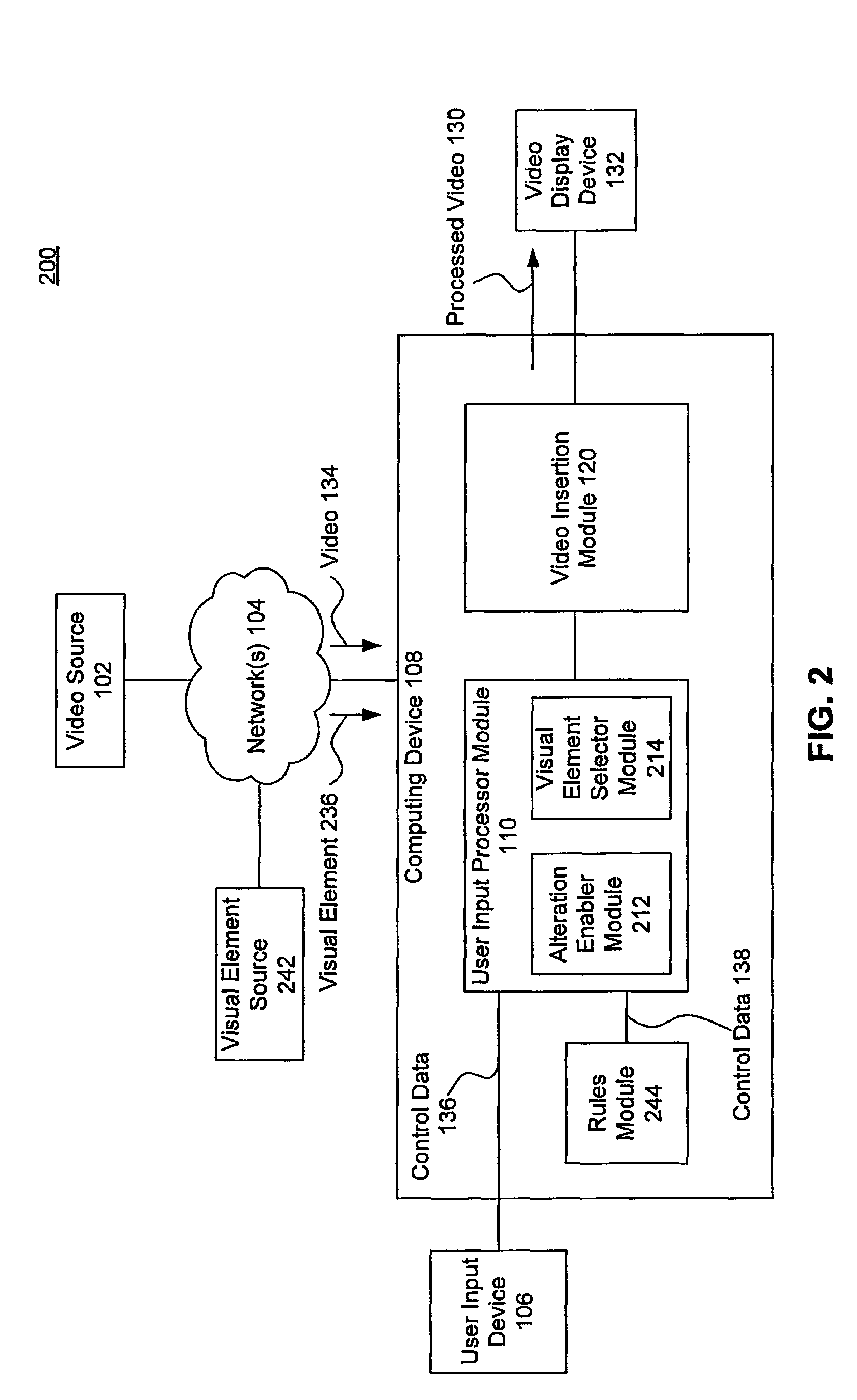

Interactive video insertions, and applications thereof

Active | US8665374B2 | Tags: Television system details; Color signal processing circuits; Computer graphics (images); Interactive video

Embodiments of this invention relate to controlling insertion of visual elements integrated into video. In an embodiment, a method enables control of insertions in a video. In the embodiment, control data is received from a user input device. Movement of at least one point of interest in a video is analyzed to determine video metadata. Finally, a visual element is inserted into a video according to the control data, and the visual element changes or moves with the video as specified by the video metadata to appear integrated with the video.

Owner:DISNEY ENTERPRISES INC

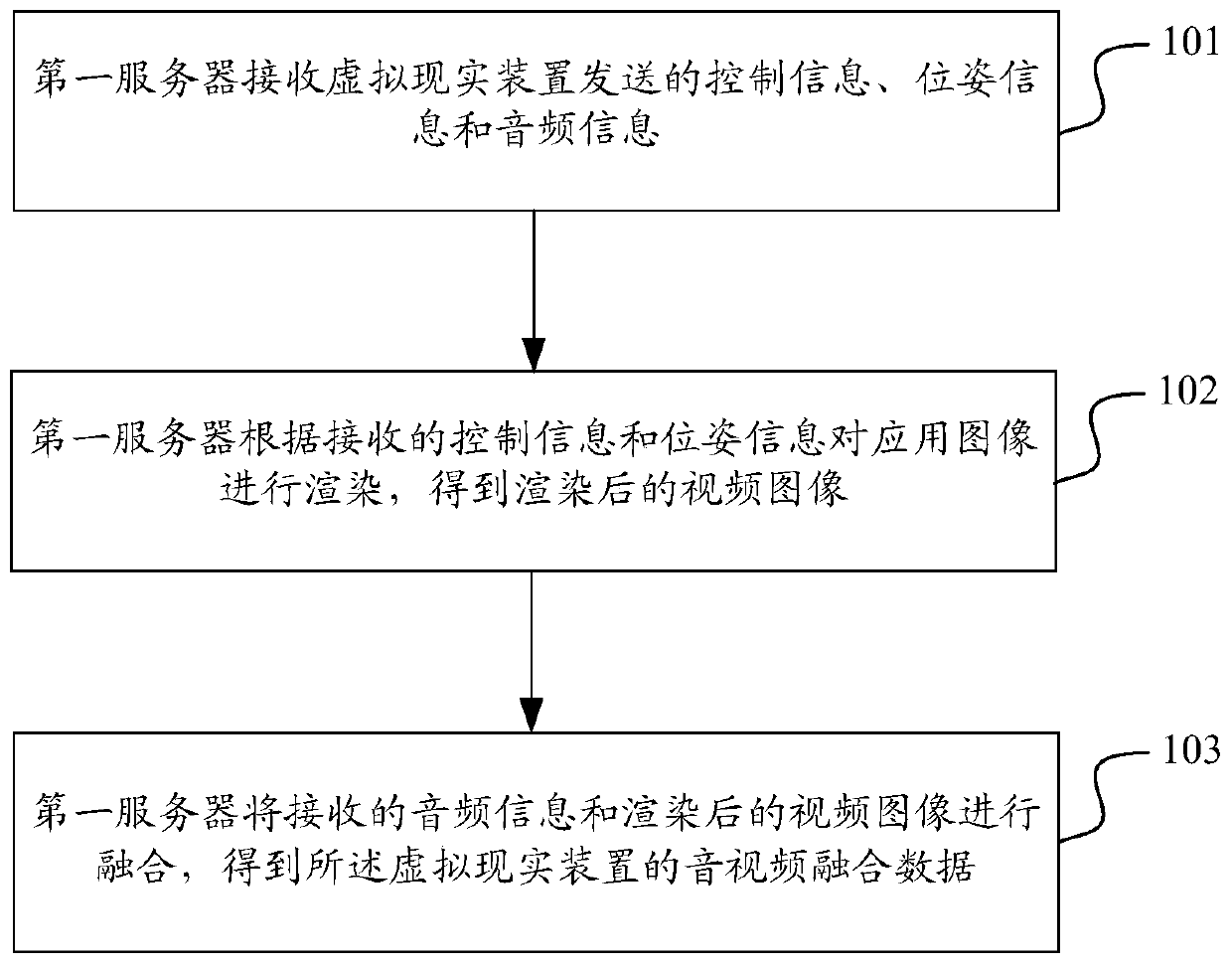

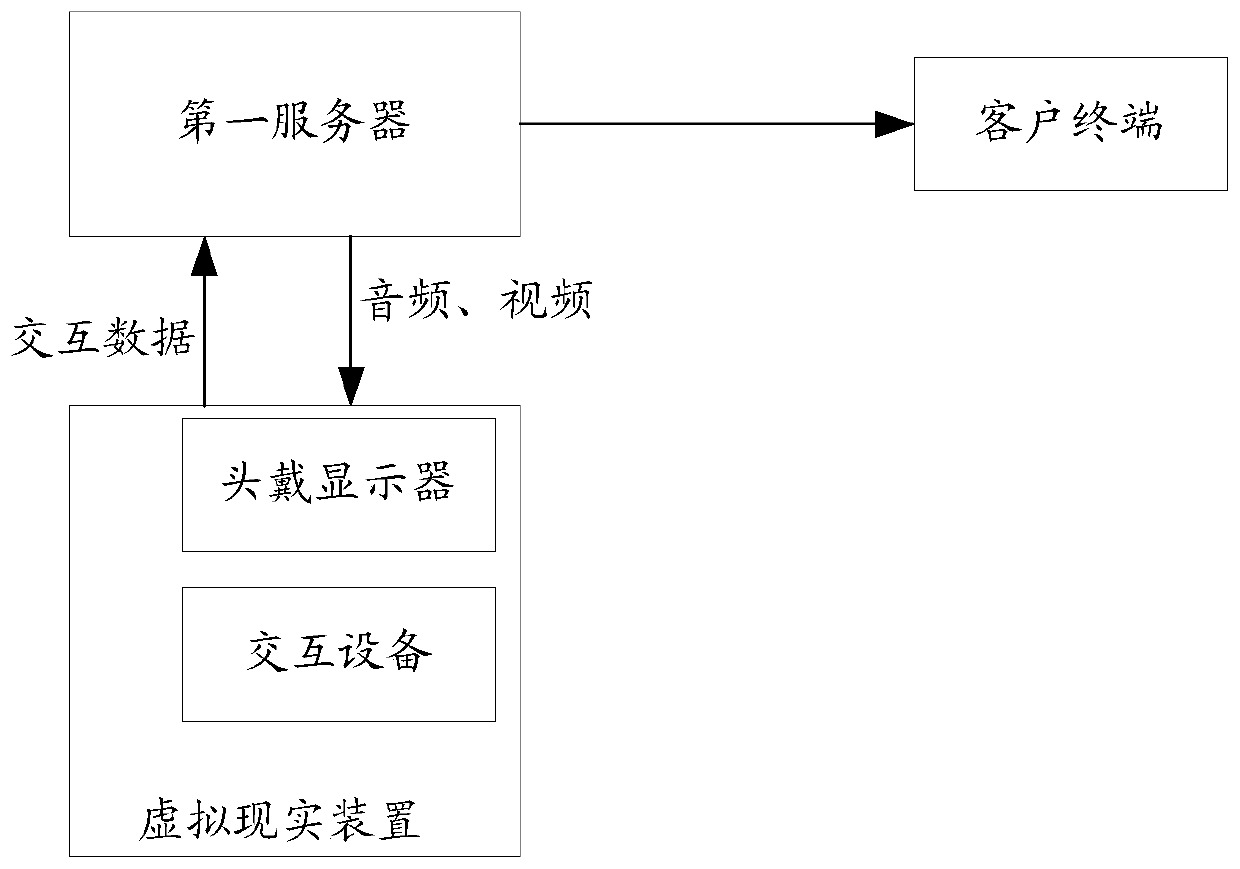

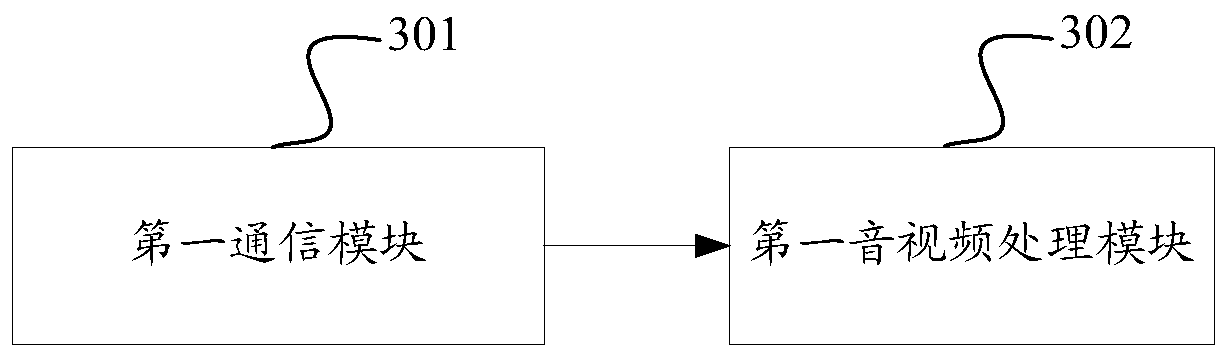

Data processing method, first server, second server and storage medium

Pending | CN111459267A | Tags: With audio interaction function; Improve experience; Input/output for user-computer interaction; Sound input/output; Computer graphics (images); Video fusion

The invention discloses a data processing method, a first server, a second server, and a storage medium. In the method, the first server receives control information, pose information, and audio information sent by a virtual reality device; renders the application image according to the received control and pose information to obtain a rendered video image; and fuses the received audio information with the rendered video image to obtain audio and video fusion data for the virtual reality device. This gives the virtual reality device an audio interaction function and improves the user experience.

Owner:杭州嘉澜创新科技有限公司

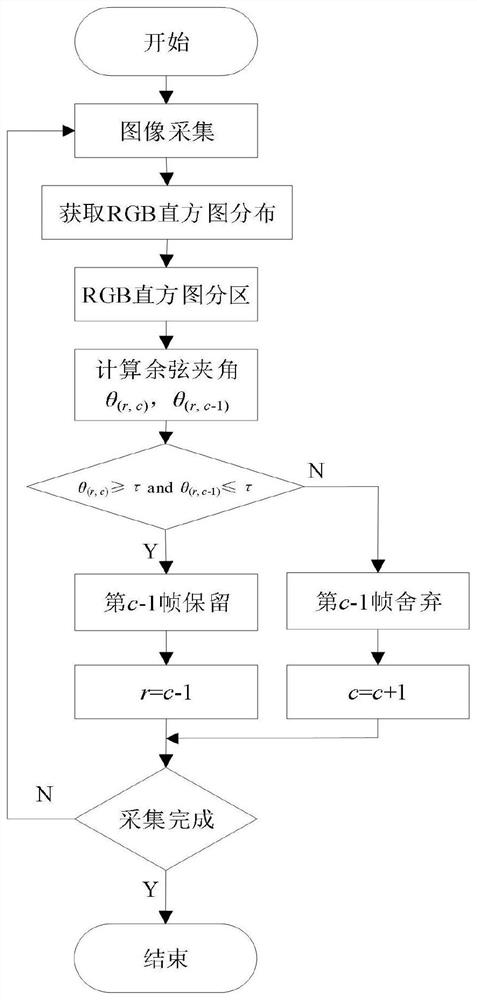

Night vision anti-corona video processing method based on heterogeneous image fusion

Active | CN112053313A | Tags: Reduce computation; Solve the data bloat problem; Image enhancement; Image analysis; Data expansion; Motion vector

The invention discloses a night vision anti-corona video processing method based on heterogeneous image fusion. By studying how the cosine angle θ between the feature vectors of two frames and the nonlinear correlation information entropy (NCIE) relate to the frame removal rate, the method determines an optimal cosine angle threshold for continuous video content. Redundant frames in the video sequence are discarded and anti-corona fusion is applied only to the retained frames, which greatly improves processing efficiency and solves the data expansion problem that night vision anti-corona video fusion causes during information fusion. The original frame numbers of the extracted frames are kept as time marks, the number of frames to be inserted between extracted frames is determined, and the original frame rate is restored after frame removal. Object motion vectors are calculated from inter-frame content differences, and the motion vector between each frame to be interpolated and its reference frame is obtained by assigning different adaptive weights to the reference motion vector. This constructs interpolated frames synchronized with the original video and solves the problem of interpolated video content being out of sync with the original.

Owner:XIAN TECHNOLOGICAL UNIV
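
The frame-removal idea can be sketched with the cosine angle $\theta = \arccos\frac{a \cdot b}{\lVert a\rVert\,\lVert b\rVert}$ between frame feature vectors. A frame is kept only when its angle to the last kept frame exceeds a threshold, and the original indices serve as time marks that determine how many frames to interpolate later. The choice of feature vector and the threshold value are assumptions, and the NCIE-based threshold selection is not shown.

```python
import math


def cosine_angle(a, b):
    """Angle in degrees between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0 or nb == 0:
        return 0.0 if na == nb else 90.0  # convention for degenerate (all-zero) features
    c = max(-1.0, min(1.0, dot / (na * nb)))  # guard acos against rounding
    return math.degrees(math.acos(c))


def select_key_frames(features, threshold_deg):
    """Return original indices of frames kept after removing redundant ones."""
    if not features:
        return []
    kept = [0]
    for i in range(1, len(features)):
        if cosine_angle(features[kept[-1]], features[i]) > threshold_deg:
            kept.append(i)
    return kept


def frames_to_insert(kept):
    """Frames to interpolate between consecutive kept frames to restore the frame rate."""
    return [b - a - 1 for a, b in zip(kept, kept[1:])]


feats = [[1, 0], [1, 0.01], [0, 1], [0, 1], [1, 1]]
kept = select_key_frames(feats, 5.0)
print(kept, frames_to_insert(kept))  # [0, 2, 4] [1, 1]
```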

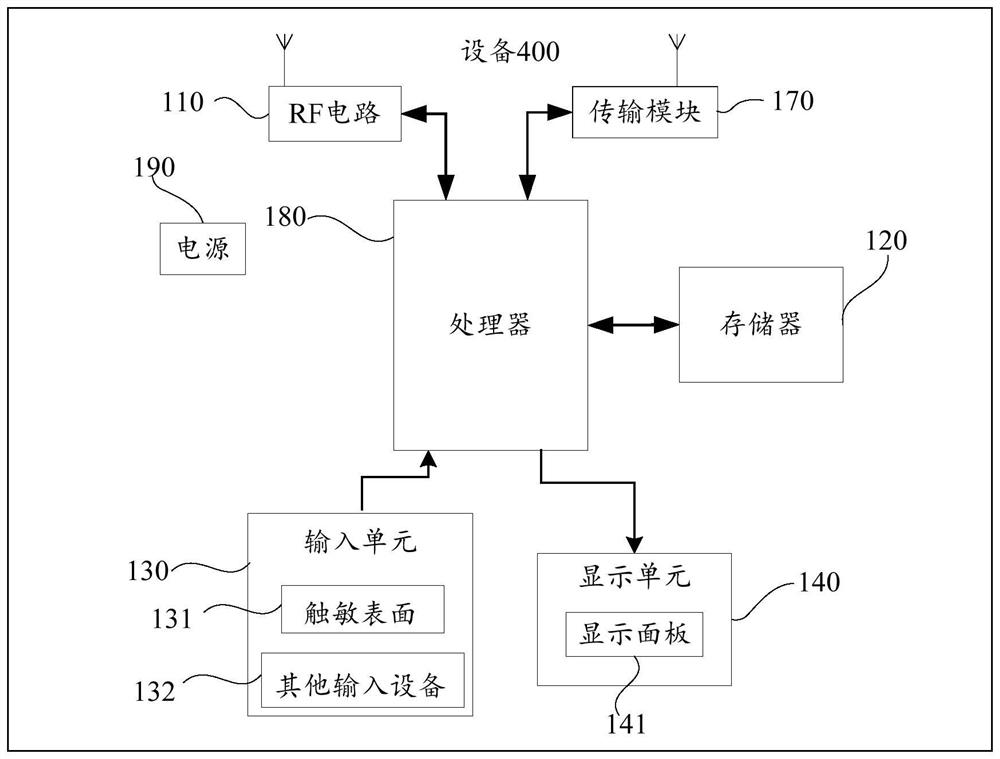

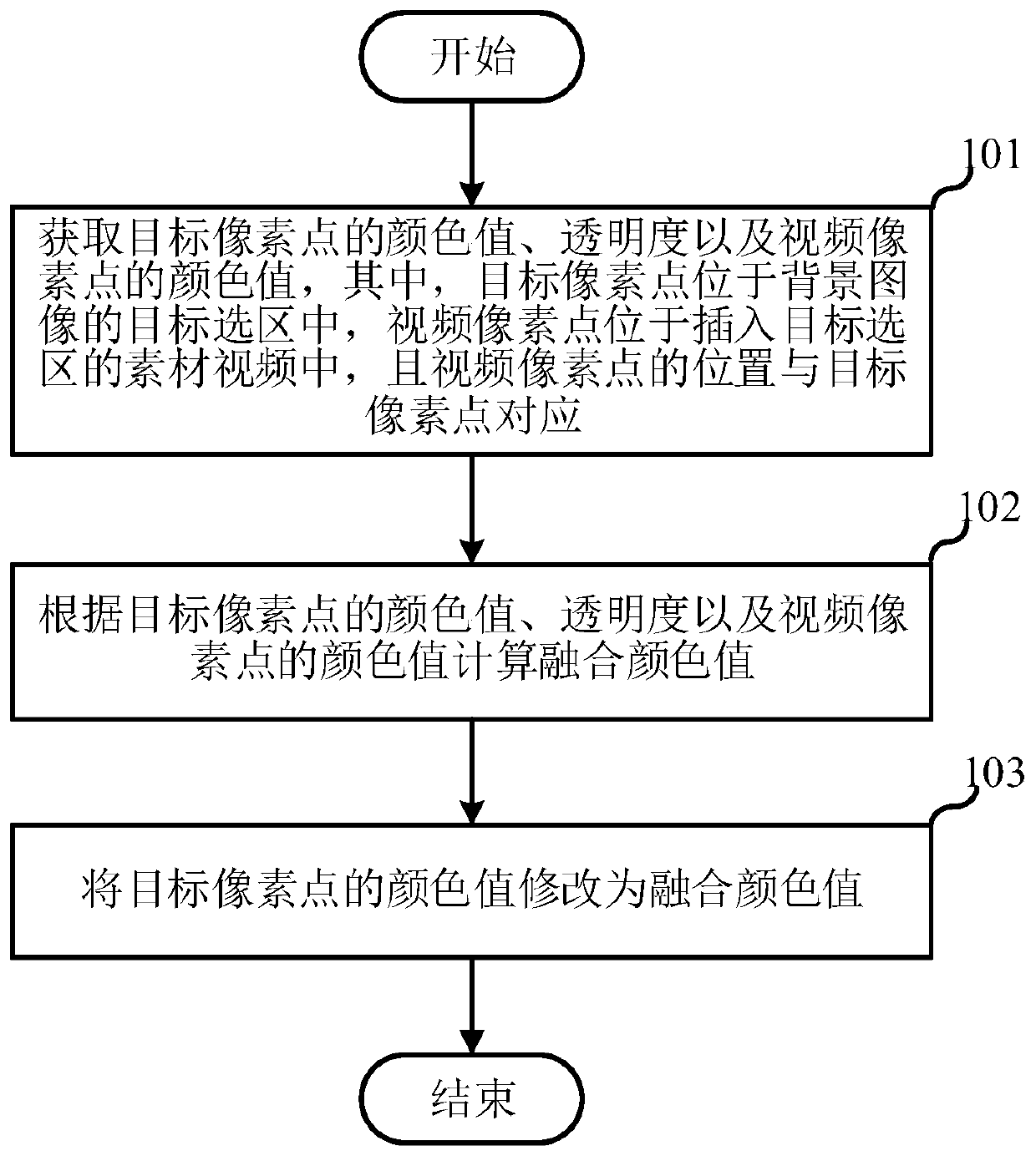

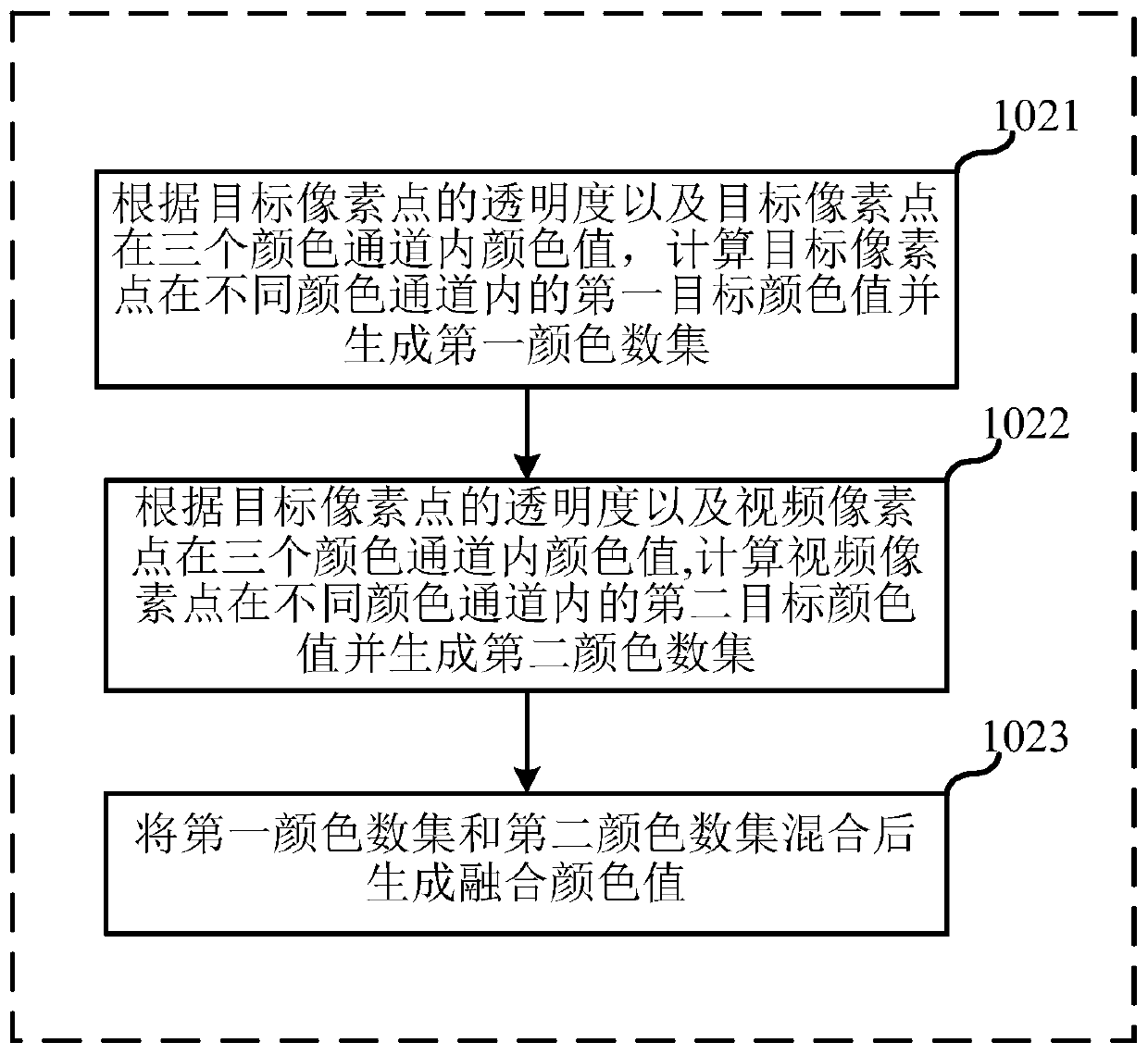

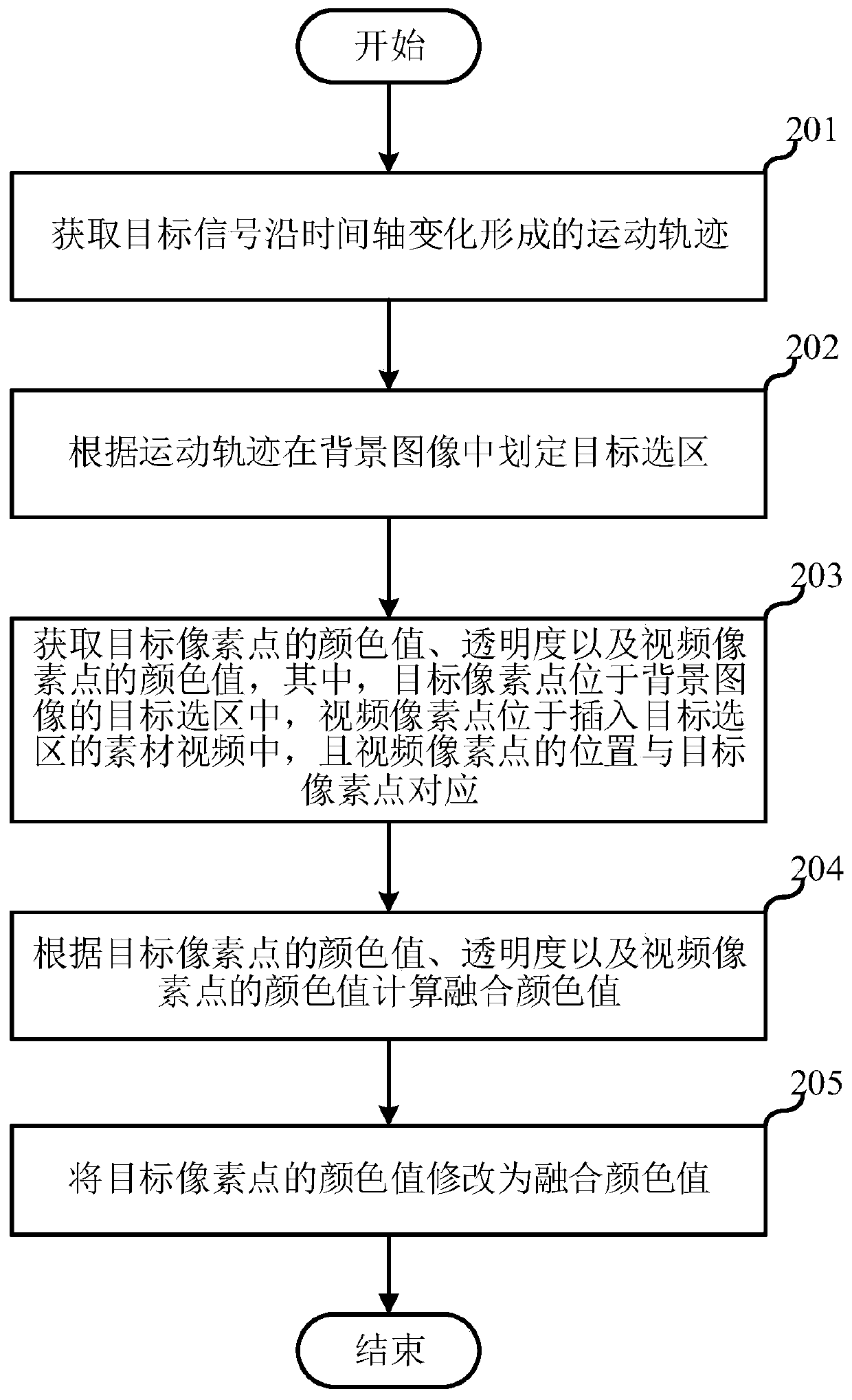

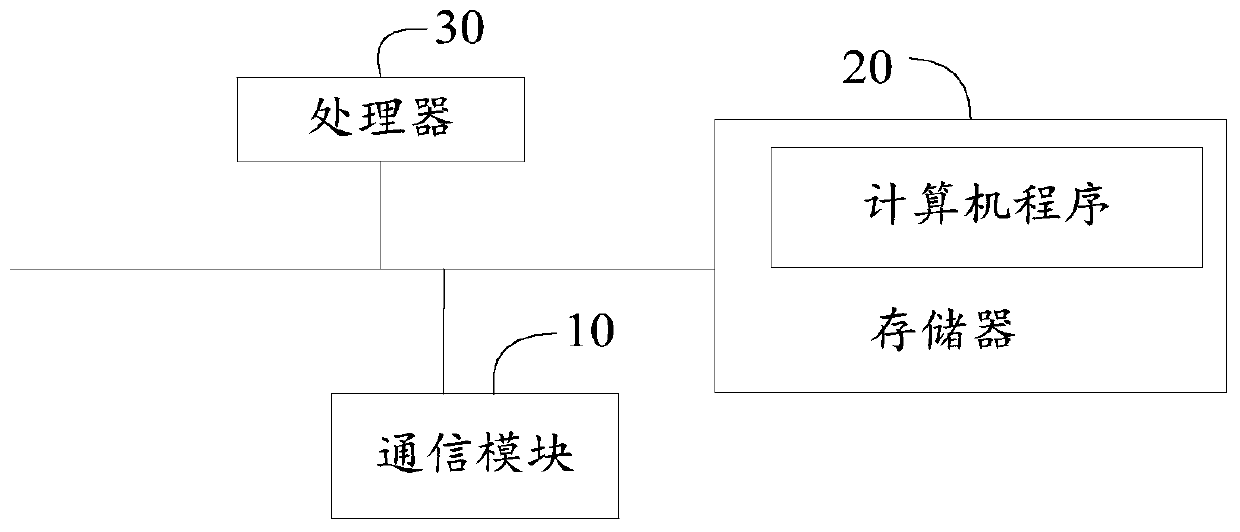

Video fusion method, electronic equipment and storage medium

Active | CN110971839A | Tags: Image enhancement; Television system details; Imaging processing; Computer graphics (images)

The embodiment of the invention relates to the technical field of image processing and discloses a video fusion method, electronic equipment, and a storage medium. The method comprises: acquiring the color value and transparency of a target pixel, and the color value of a video pixel, where the target pixel lies in a target selection area of a background image, the video pixel lies in a material video inserted into that area, and the position of the video pixel corresponds to the target pixel; calculating a fused color value from the color value and transparency of the target pixel and the color value of the video pixel; and replacing the color value of the target pixel with the fused color value. Doing so prevents jagged edges at the boundary of the target selection area of the background image and at the edge of the material video inserted into it.

Owner:MIGU COMIC CO LTD +2
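
A common way to compute a fused color from a background pixel's color and transparency plus a video pixel's color is standard alpha blending, $C = \alpha C_{\text{target}} + (1 - \alpha) C_{\text{video}}$. The sketch assumes the background pixel's alpha is an opacity in [0, 1] (1 hides the video, 0 shows it fully). That convention and the 8-bit RGB representation are assumptions; the abstract does not give the formula.

```python
def fuse_pixel(target_rgb, target_alpha, video_rgb):
    """Blend one background (target) pixel over the corresponding video pixel.

    target_alpha is the background pixel's opacity in [0, 1]. Fractional
    values at anti-aliased selection edges yield a smooth transition instead
    of a hard, jagged boundary.
    """
    a = min(1.0, max(0.0, target_alpha))
    return tuple(round(a * t + (1 - a) * v) for t, v in zip(target_rgb, video_rgb))


print(fuse_pixel((200, 100, 0), 0.5, (0, 100, 200)))  # (100, 100, 100)
```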

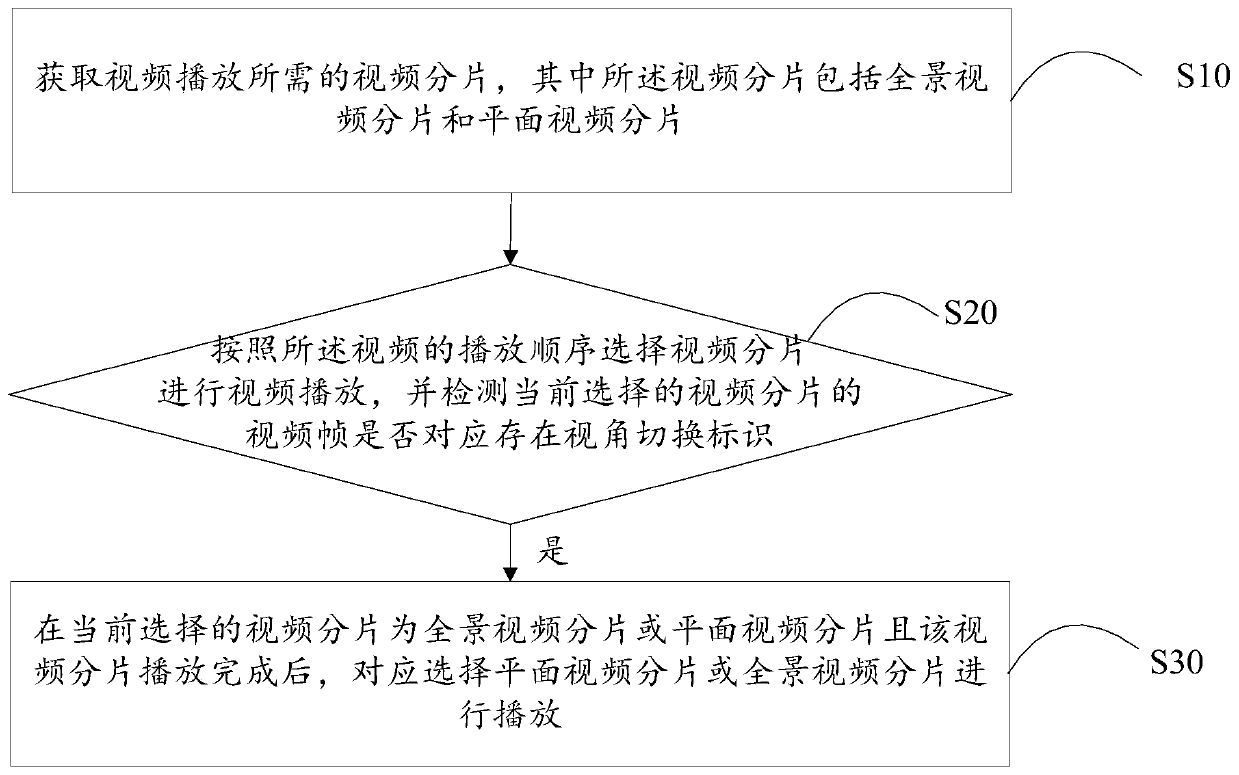

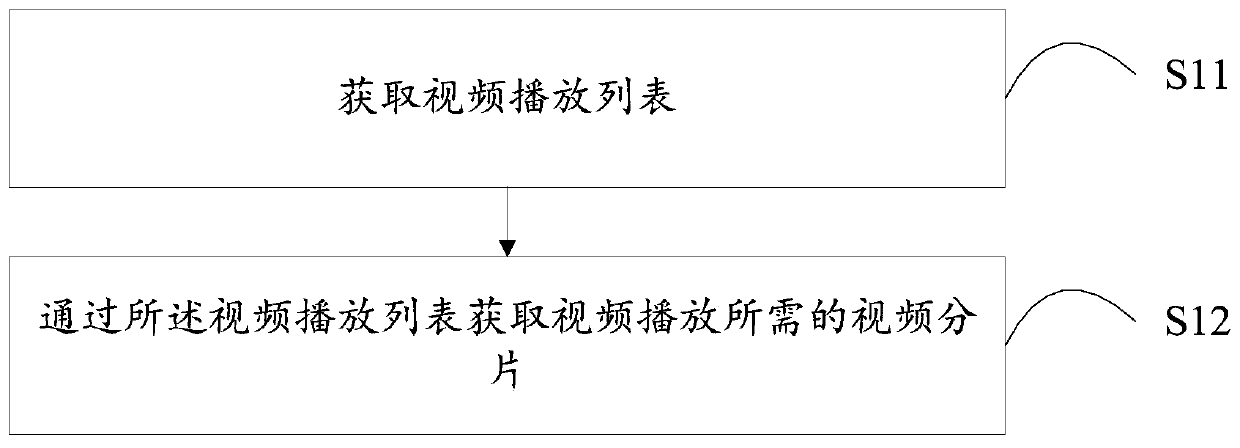

Video playing method, display terminal and storage medium

Active | CN110913278A | Tags: Guaranteed adjustability; Play switching is not abrupt; Selective content distribution; Computer graphics (images); Panorama

The invention provides a video playing method, a display terminal, and a storage medium. The method comprises: obtaining the video fragments needed for playback, including panoramic video fragments and plane video fragments; selecting fragments in the playing order of the video and detecting whether a video frame of the currently selected fragment carries a view angle switching identifier; and, if it does, once the current fragment has finished playing, selecting a plane fragment next if the current one is panoramic, or a panoramic fragment next if the current one is planar. Panoramic and plane fragments can thus be switched according to the view angle switching identifier, so that, through a video fusion playing technique, planar video is always interposed between two panoramic videos whose view angles must be switched. This keeps the playing view angle adjustable while making the switch smooth rather than abrupt.

Owner:SHENZHEN SKYWORTH NEW WORLD TECH CO LTD

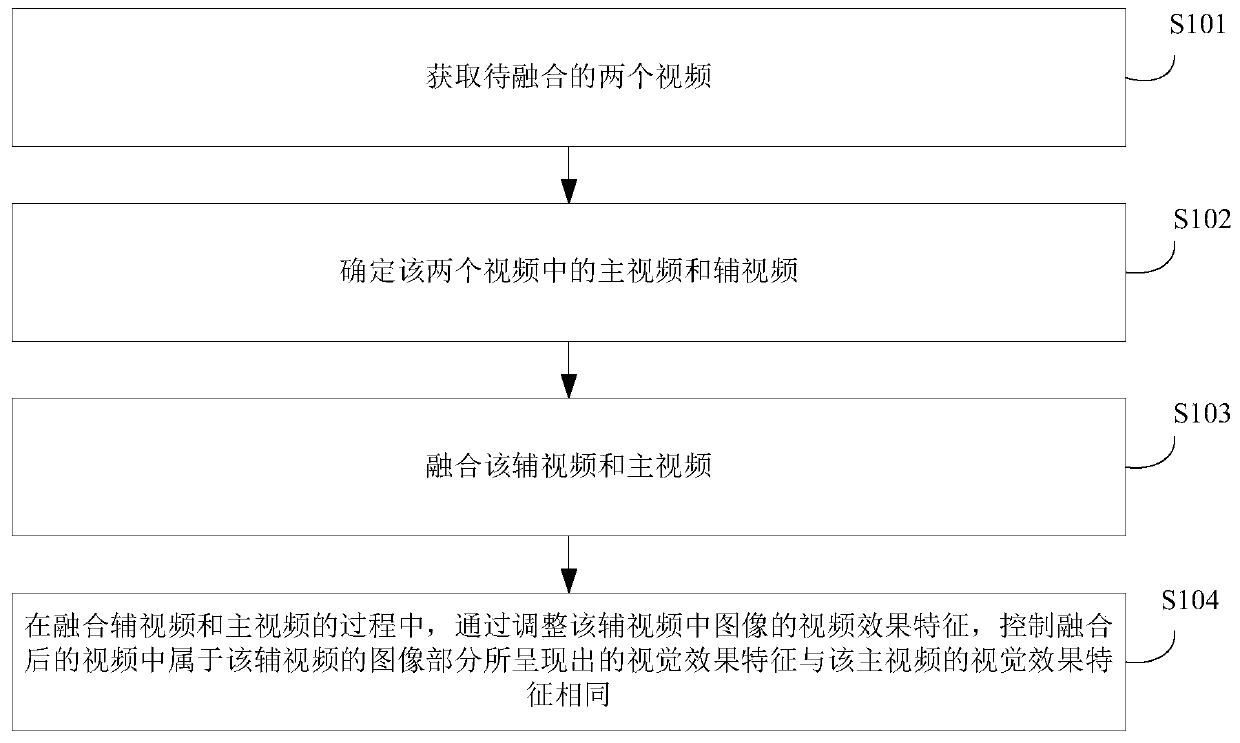

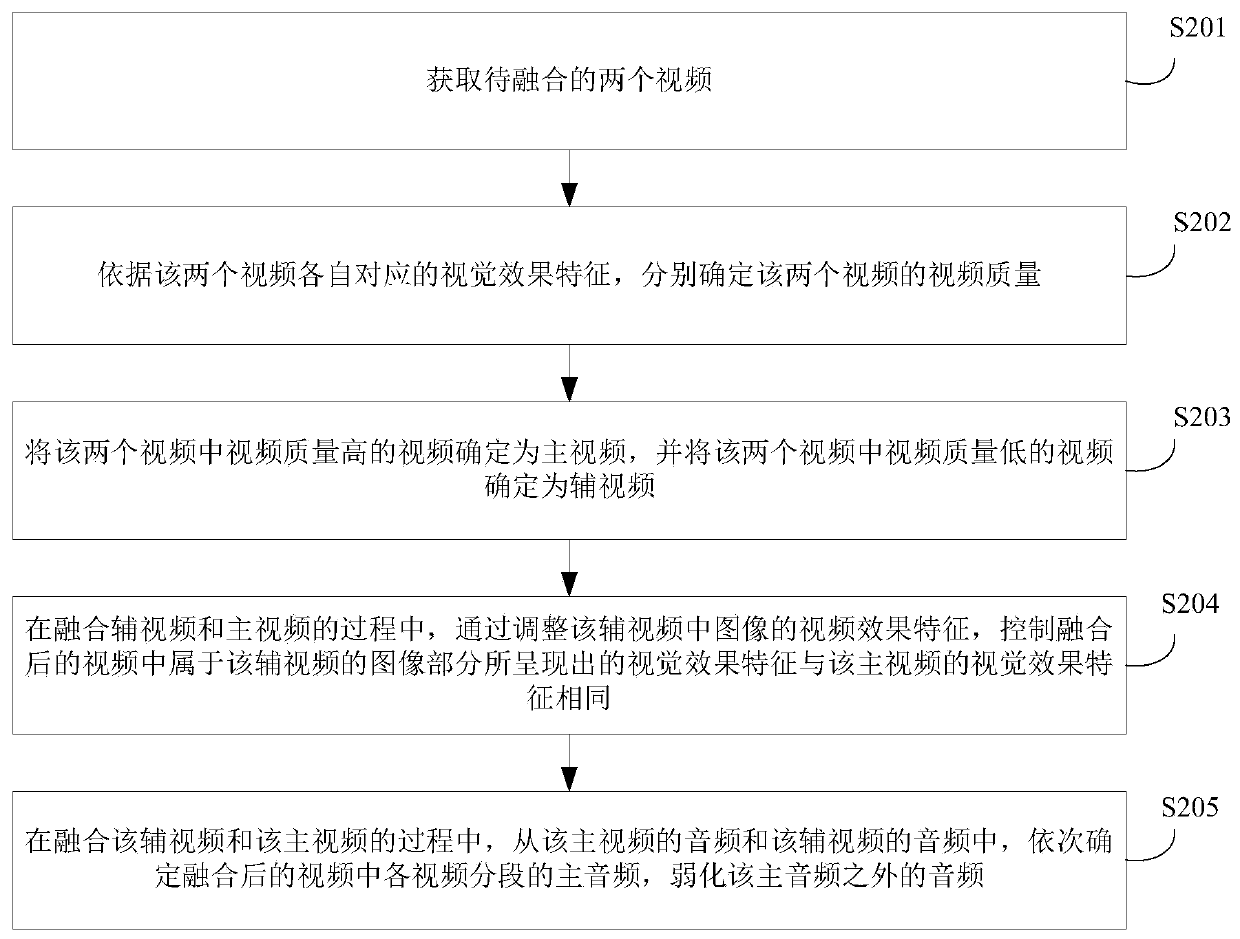

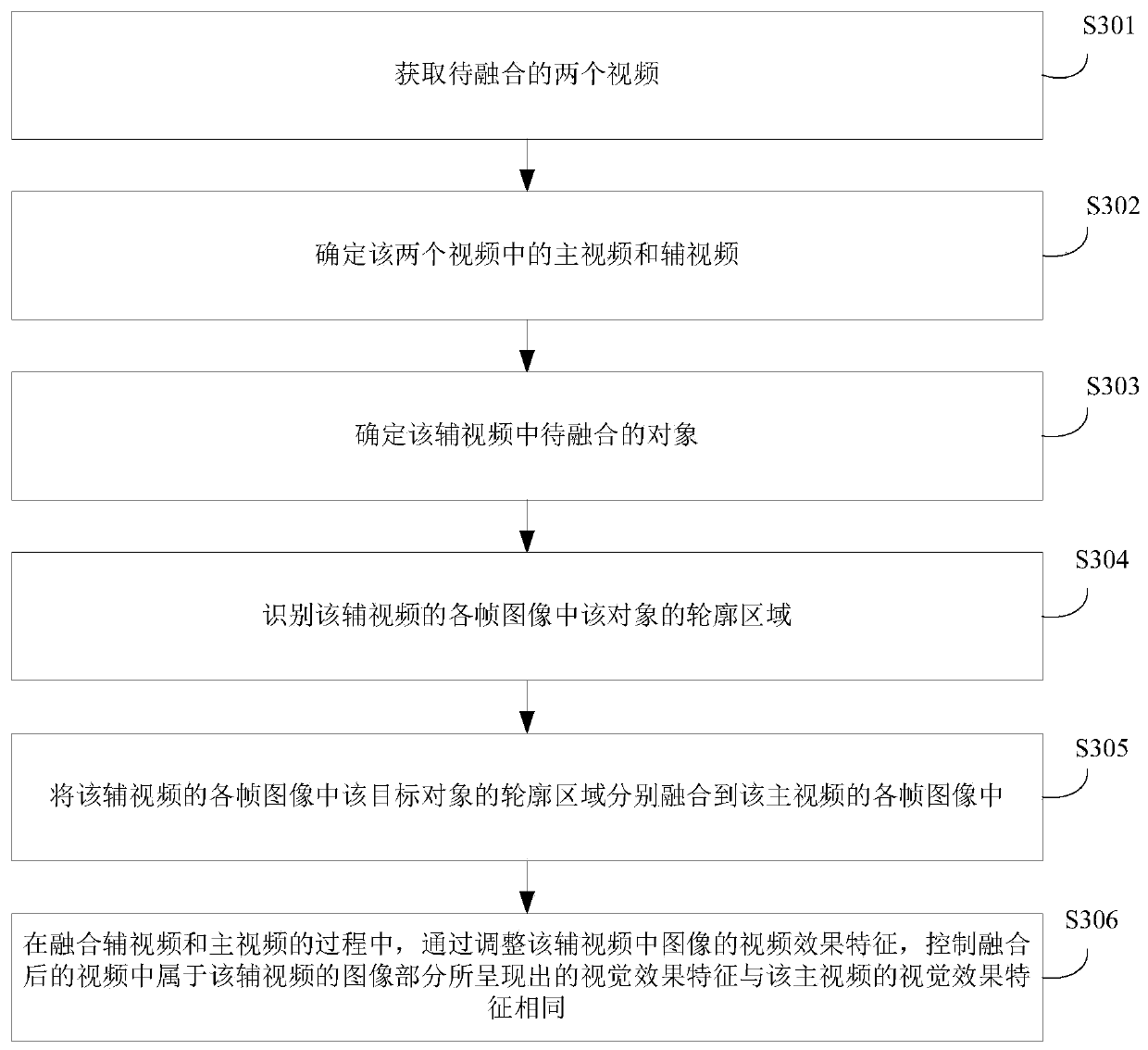

Video processing method and device and electronic device

Active | CN110855905A | Tags: Unity of visual effects; Improve video quality; Television system details; Color television details; Pattern recognition; Computer graphics (images)

The invention discloses a video processing method and device and an electronic device. The method comprises: obtaining two videos to be fused whose images differ in visual effect features; determining which of the two is the main video and which is the auxiliary video; and fusing the auxiliary video with the main video. During fusion, the visual effect features of the auxiliary video's images are adjusted so that the part of the fused video coming from the auxiliary video presents the same visual effect features as the main video. This improves the image quality of the fused video.

Owner:LENOVO (BEIJING) CO LTD
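
The abstract does not say which visual effect features are adjusted or how. As a hypothetical stand-in, the sketch below treats the feature as the per-channel mean and standard deviation of pixel values and shifts the auxiliary video's channels to match the main video's, a common colour-transfer heuristic rather than the patented method. Frames are assumed to be plain lists of channel values in the 0 to 255 range.

```python
from statistics import fmean, pstdev
from typing import List, Sequence


def match_channel(aux: Sequence[float], main: Sequence[float]) -> List[float]:
    """Shift and scale one auxiliary-video channel so its mean and standard
    deviation equal those of the same channel in the main video, clamped to 0-255."""
    mu_a, sd_a = fmean(aux), pstdev(aux)
    mu_m, sd_m = fmean(main), pstdev(main)
    scale = sd_m / sd_a if sd_a > 0 else 1.0  # flat channel: shift only
    return [min(255.0, max(0.0, (v - mu_a) * scale + mu_m)) for v in aux]


def match_frame(aux_frame: Sequence[Sequence[float]],
                main_frame: Sequence[Sequence[float]]) -> List[List[float]]:
    """Frames are lists of channels (e.g. R, G, B), each a flat list of pixel values."""
    return [match_channel(a, m) for a, m in zip(aux_frame, main_frame)]
```

Matching first- and second-order statistics is cheap and needs no correspondence between the two videos, which is why it is a common baseline; richer features (tone curves, grain, sharpness) would need their own adjustment.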

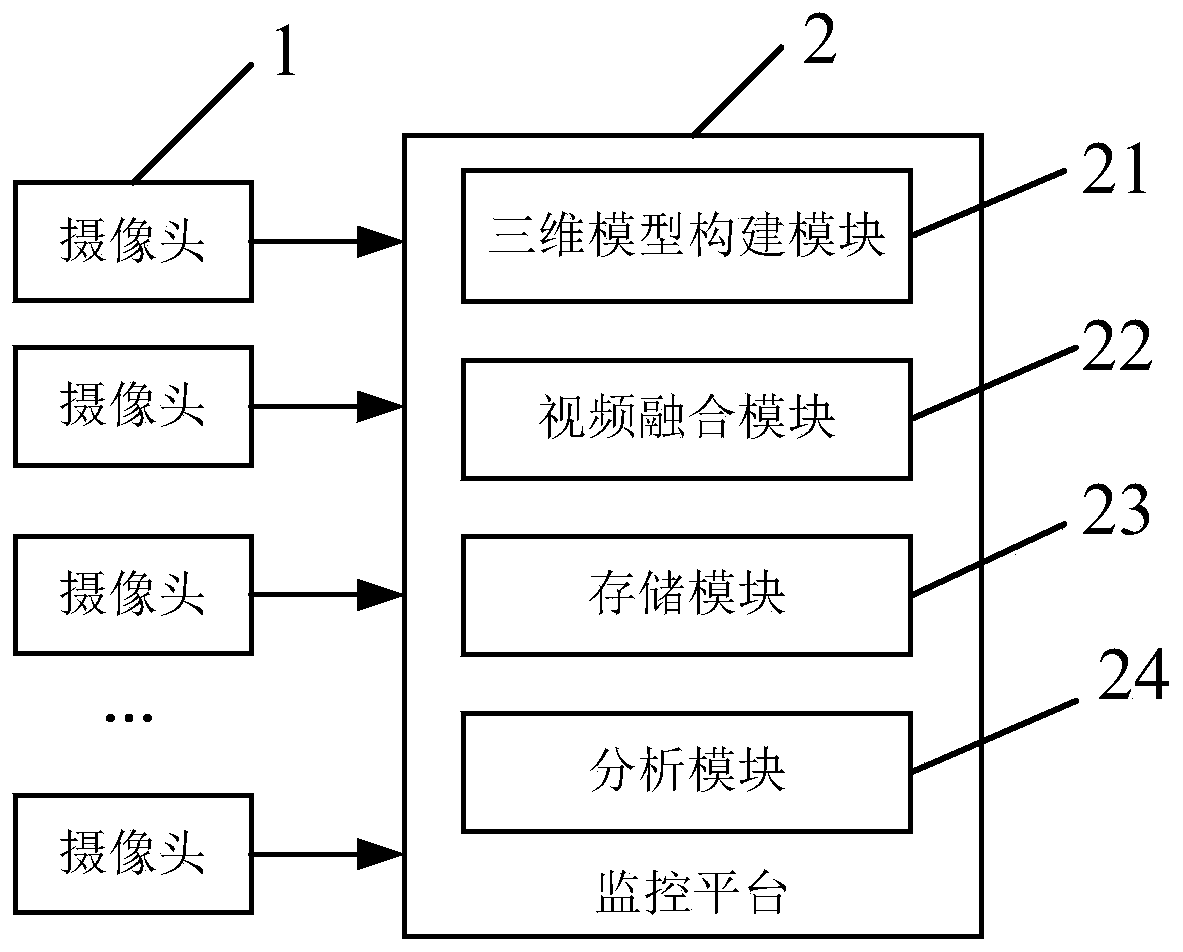

Stadium three-dimensional video fusion visual monitoring system and method

Inactive | CN111586351A | Command in time; Timely disposal; Details involving 3D image data; Closed circuit television systems; Video monitoring; Visual monitoring

The invention relates to a stadium three-dimensional video fusion visual monitoring system. A plurality of cameras are arranged in each area of the stadium, and a monitoring platform is provided; each camera captures video of the range in front of it within its area and transmits the video images and video tags to a video fusion module. The monitoring platform includes a three-dimensional model construction module that builds a three-dimensional scene model of the stadium based on 3D GIS technology. The video fusion module determines, from the video tag, the area to which each transmitted video image belongs, fuses the video images from cameras in the same area, and visually embeds the fused image into that area of the three-dimensional scene model. In this way, surveillance videos from individual cameras at different positions and viewing angles are fused into the three-dimensional scene model in real time, giving real-time global monitoring of the whole large scene within the monitored area without switching between individual camera feeds. Emergencies can therefore be commanded and handled promptly, and the practical effectiveness of video monitoring is greatly improved.

Owner:SHANGHAI SECURITY SERVICE
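
The tag-based routing step can be sketched as grouping frames by area tag before fusing each group. This is an illustrative sketch only: the `(area_tag, frame)` input format and the pluggable `fuse` callback are assumptions, and the actual image fusion and 3D GIS embedding are out of scope.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, Iterable, List, Tuple, TypeVar

F = TypeVar("F")  # a video frame of any representation
R = TypeVar("R")  # whatever the fusion step produces


def fuse_by_area(tagged_frames: Iterable[Tuple[Hashable, F]],
                 fuse: Callable[[List[F]], R]) -> Dict[Hashable, R]:
    """Group frames by their area tag, then fuse each group separately.

    The returned mapping (area -> fused result) is what would be embedded
    into the matching region of the 3D scene model.
    """
    groups: Dict[Hashable, List[F]] = defaultdict(list)
    for area, frame in tagged_frames:
        groups[area].append(frame)
    return {area: fuse(frames) for area, frames in groups.items()}
```

For example, `fuse_by_area([("north", "c1"), ("south", "c2"), ("north", "c3")], sorted)` returns `{"north": ["c1", "c3"], "south": ["c2"]}`; a real `fuse` would stitch or blend the images instead of sorting labels.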

Video fusion method, electronic equipment and storage medium

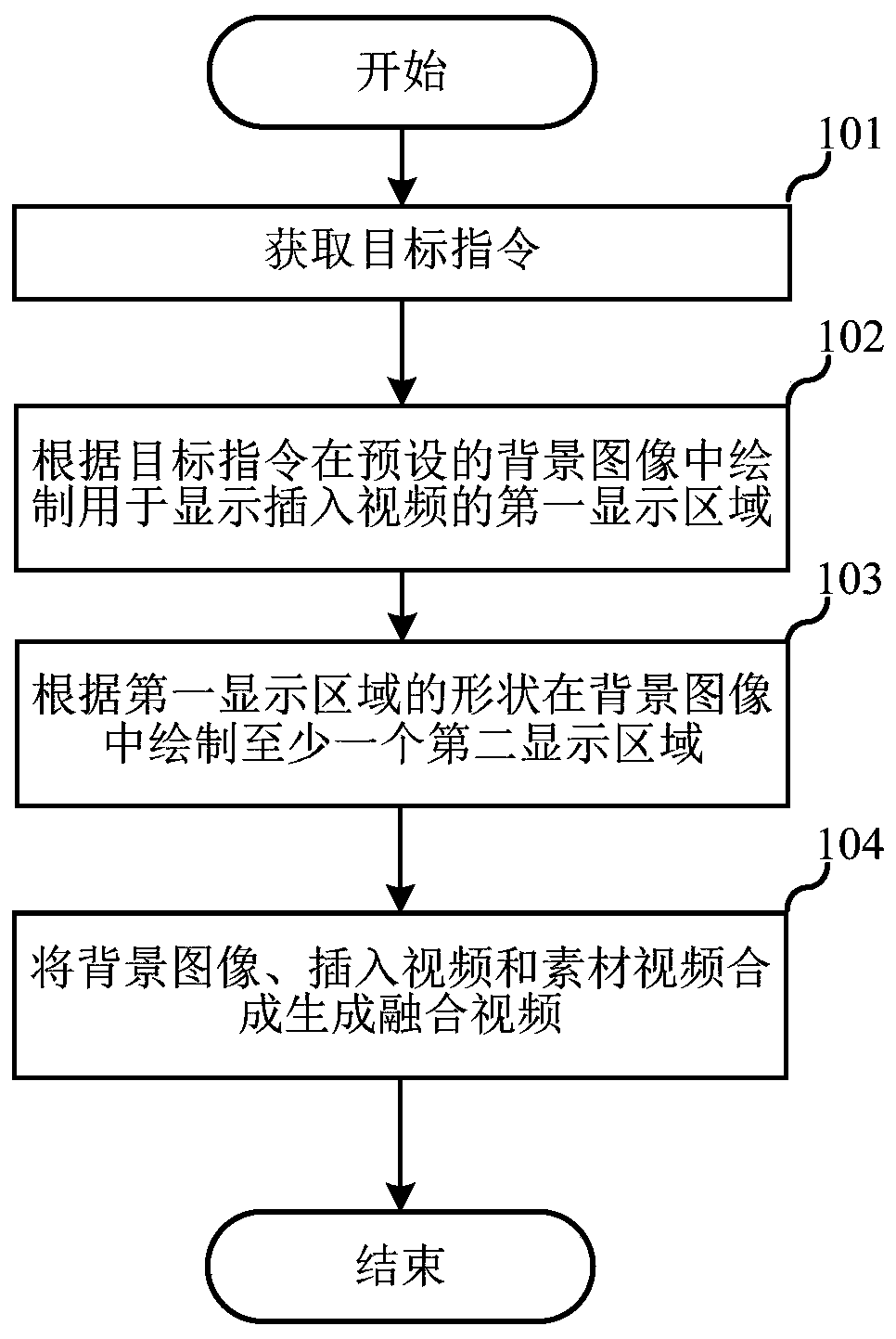

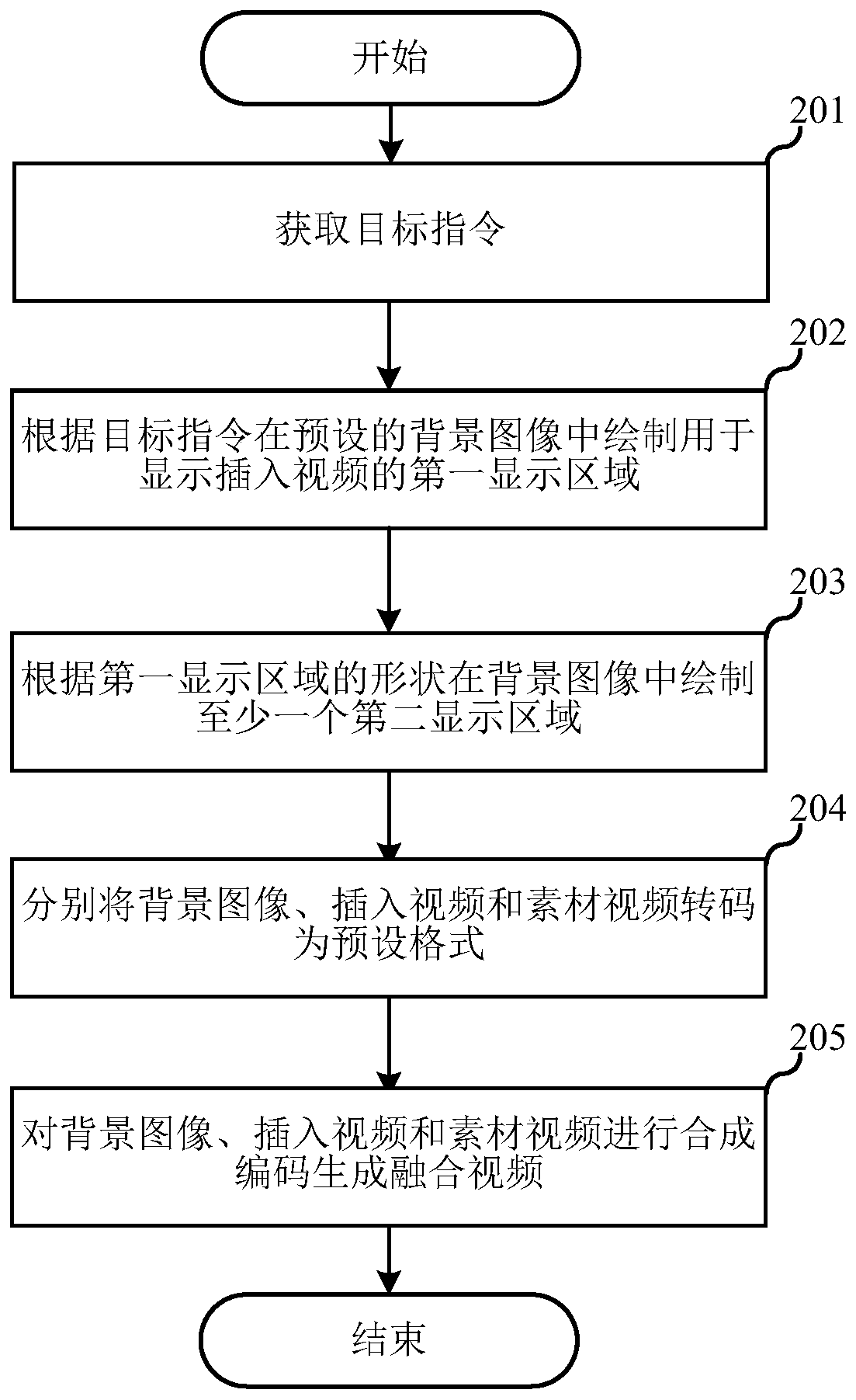

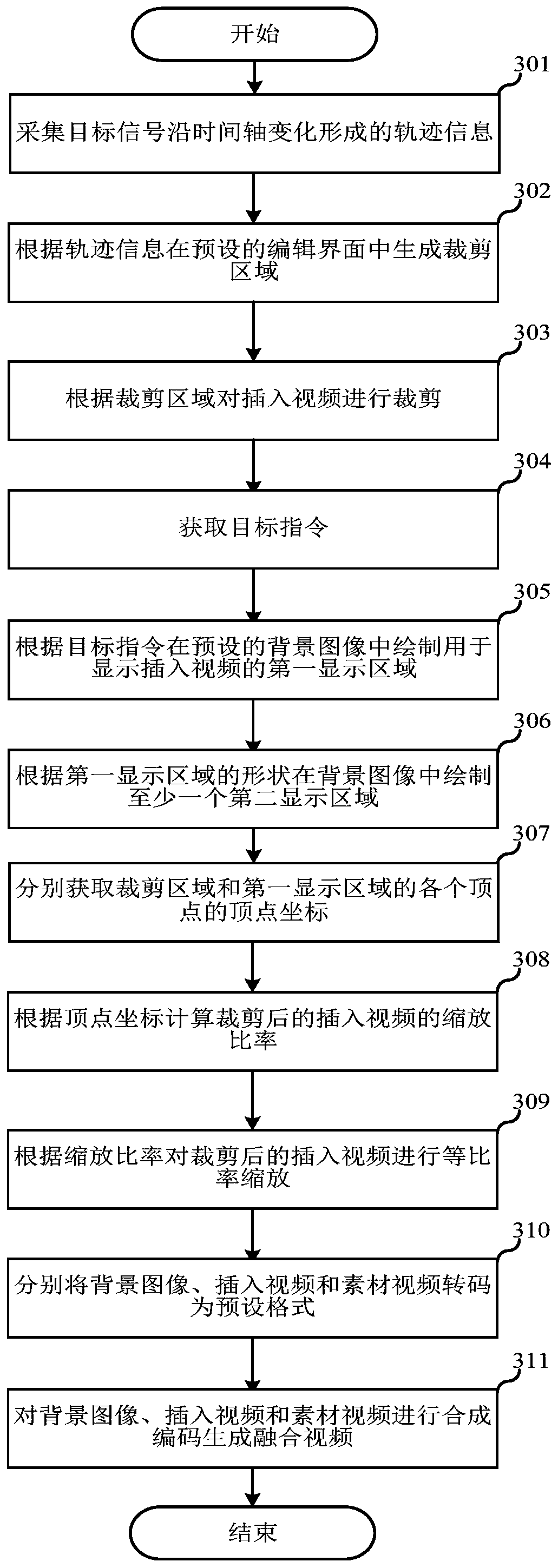

Inactive | CN110996150A | Improve fusion fun; Fusion coordination; Selective content distribution; Information processing; Personalization

The embodiment of the invention relates to the field of information processing and discloses a video fusion method, electronic equipment and a storage medium. The video fusion method comprises: acquiring a target instruction; drawing, according to the target instruction, a first display area in a preset background image for displaying the inserted video; drawing at least one second display area in the background image according to the shape of the first display area, the second display area being used to display the material video; and compositing the background image, the inserted video and the material video to generate a fused video. Because playing areas are set for the inserted video in the preset background image, and the shapes of the playing areas for the material video to be fused are set according to the shape of the inserted video's playing area, the two playing areas blend more harmoniously, the fused video has a personalized background and playing areas, and the fusion is more engaging.

Owner:MIGU COMIC CO LTD +2
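
The abstract leaves open how the second display area is derived from the shape of the first. One hypothetical rule, shown purely as an illustration, gives the second area the same size and shape as the first and mirrors it about the vertical centre line of the background image; axis-aligned rectangles in background-image pixels are an assumption.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x: int  # left edge, in background-image pixels
    y: int  # top edge
    w: int  # width
    h: int  # height


def mirrored_area(first: Rect, bg_w: int) -> Rect:
    """Hypothetical rule: same shape as the first area, mirrored left-right
    about the background image's vertical centre line."""
    return Rect(bg_w - first.x - first.w, first.y, first.w, first.h)


def overlaps(a: Rect, b: Rect) -> bool:
    """True if two rectangles share any interior area."""
    return a.x < b.x + b.w and b.x < a.x + a.w and a.y < b.y + b.h and b.y < a.y + a.h
```

On a 1920-pixel-wide background, `Rect(100, 50, 400, 300)` mirrors to `Rect(1420, 50, 400, 300)`; a first area centred horizontally mirrors onto itself, which `overlaps` detects so a caller could choose another placement.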

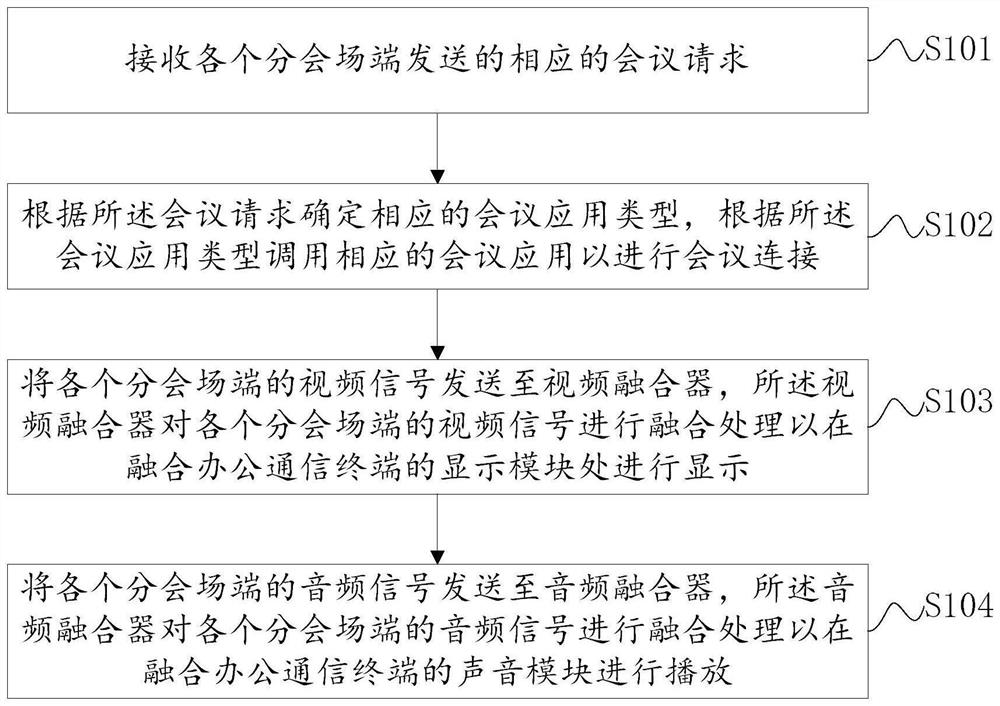

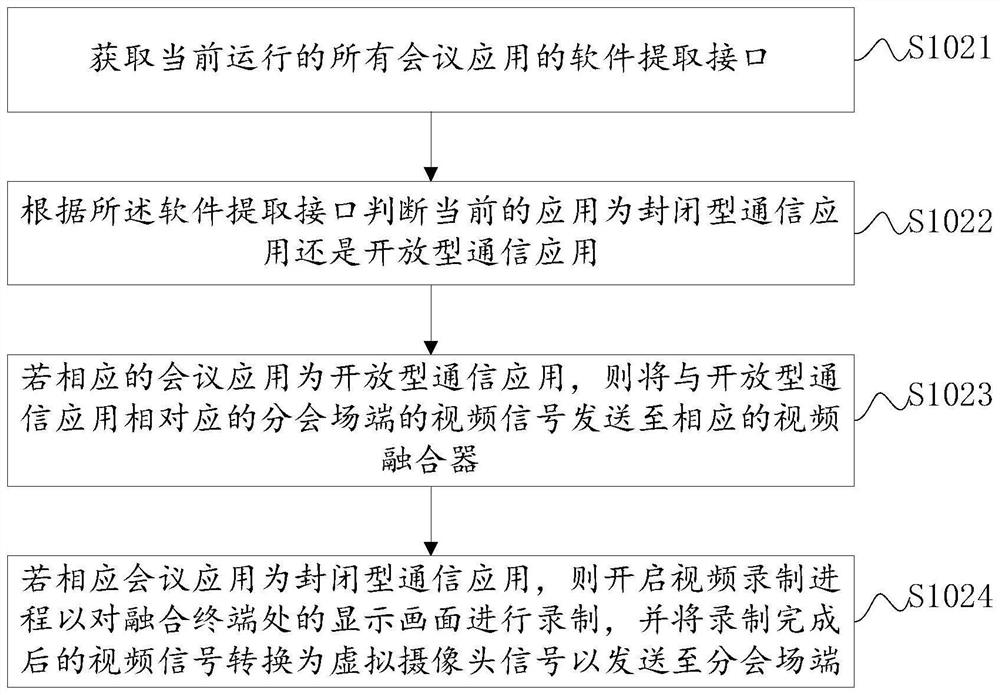

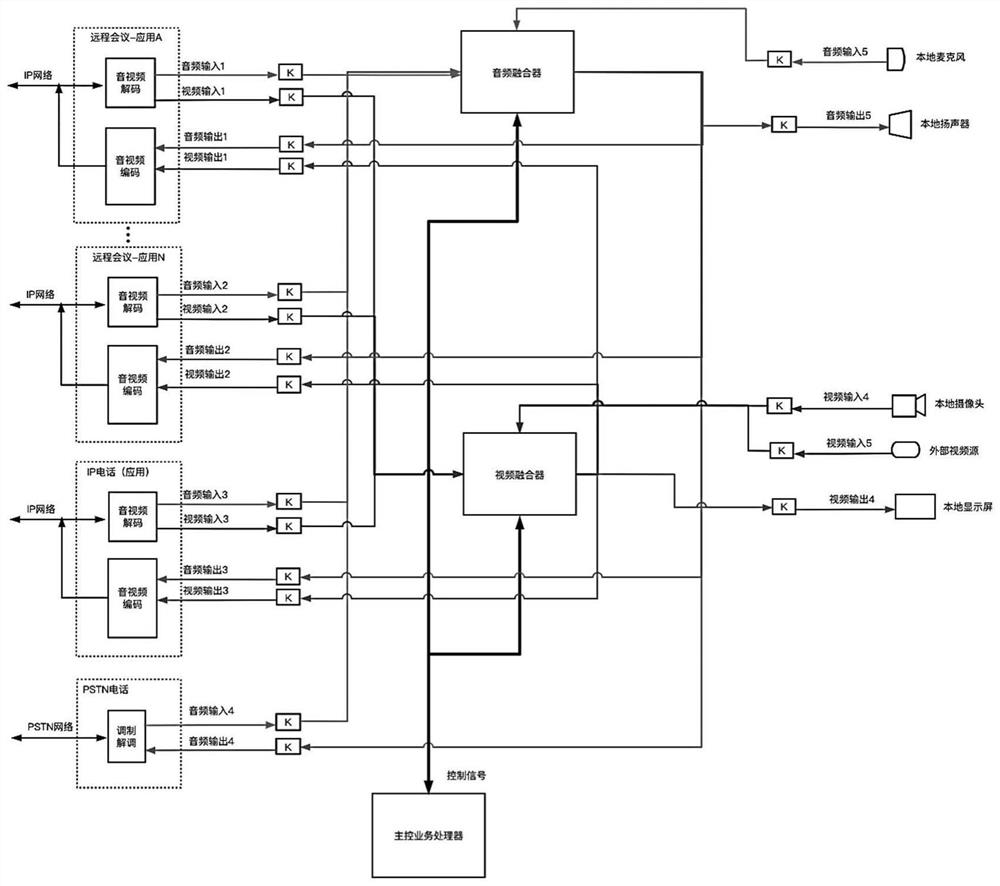

Information fusion method and device for different platforms

Pending | CN114125360A | Improve collaboration; Television conference systems; Two-way working systems; Telecommunications; Software engineering

The embodiment of the invention relates to the technical field of conference systems and discloses an information fusion method for different platforms, comprising: receiving the conference request sent by each branch venue terminal; determining the corresponding conference application type from each request, and calling the matching conference application to establish the conference connection; sending the video signals of each branch venue terminal to a video fusion device, which fuses them; and sending the audio signals of all branch venue terminals to an audio fusion device, which fuses them so that they can be played through the sound module of the fusion office communication terminal. The method unifies communication applications from various platforms, so that users at different locations who use different communication applications can join the same conference, greatly improving collaboration among teams.

Owner:广州迷听科技有限公司
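
The abstract does not describe how the audio fusion device combines the branch venues' signals. A minimal sketch, assuming time-aligned 16-bit PCM streams at a common sample rate, is plain additive mixing with clipping; production mixers usually add gain control or limiting, which is omitted here.

```python
from typing import List, Sequence

INT16_MIN, INT16_MAX = -32768, 32767


def mix_pcm16(streams: Sequence[Sequence[int]]) -> List[int]:
    """Sum time-aligned 16-bit PCM streams sample by sample, clipping the
    result to the int16 range. Shorter streams count as silence once they end."""
    length = max((len(s) for s in streams), default=0)
    out: List[int] = []
    for i in range(length):
        total = sum(s[i] for s in streams if i < len(s))
        out.append(max(INT16_MIN, min(INT16_MAX, total)))
    return out
```

For example, mixing `[1000, 30000]` with `[2000, 10000, 5]` gives `[3000, 32767, 5]`: the second sample clips at the int16 maximum, and the third comes from the longer stream alone.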

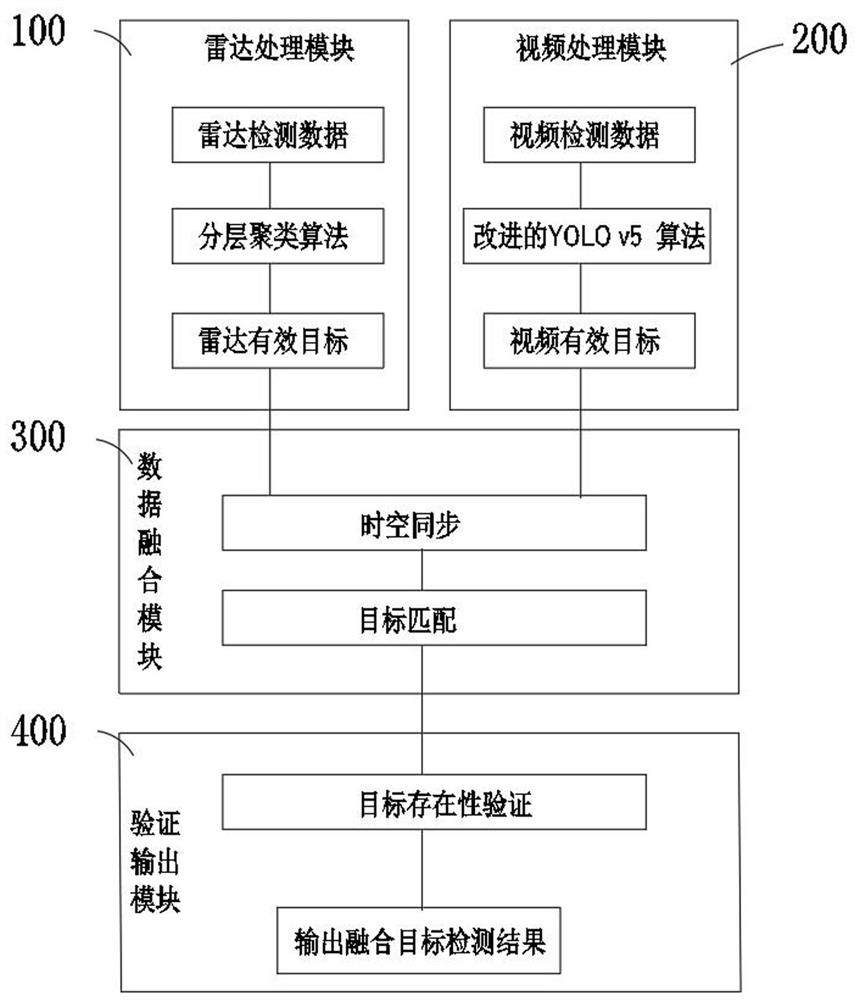

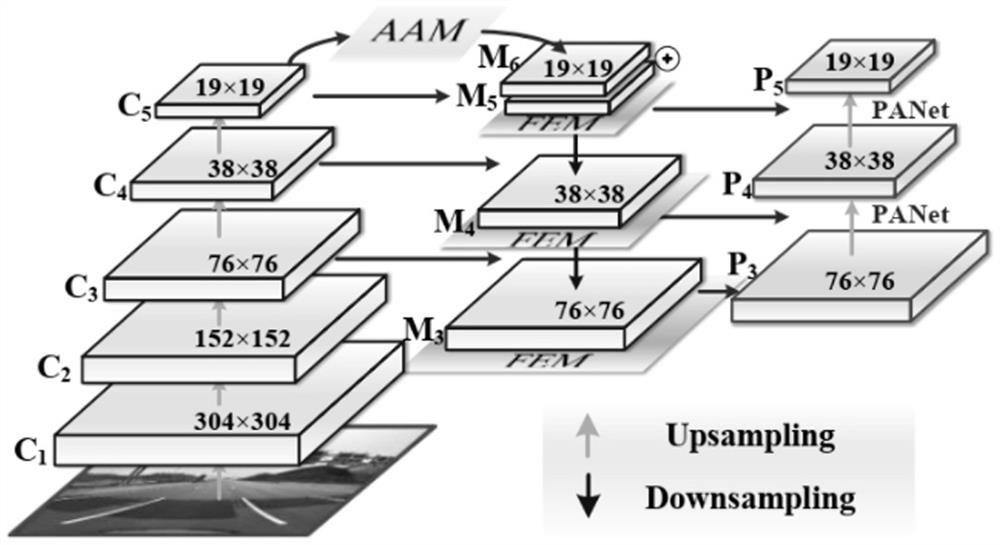

Target detection method and system based on millimeter wave radar and video fusion

Active | CN114236528A | Reduce missed detection rate; Narrow down the range of detection targets; Radio wave reradiation/reflection; Near neighbor; Engineering

The invention provides a target detection method and system based on millimeter-wave radar and video fusion. The method first filters out invalid targets obtained by the millimeter-wave radar and the camera, narrowing the range of detection targets. With spatial synchronization between the radar and the camera ensured, the detection results of the two sensors are matched. The intersection over union (IoU) of the target detections is then computed and used to judge whether a match is accurate; where the match cannot be judged from the IoU, a second round of matching is performed with a global nearest neighbor (GNN) data association algorithm, so that as many radar and camera detections as possible are matched. This reduces the missed detection rate of target objects while maintaining the accuracy of identification matching.

Owner:浙江高信技术股份有限公司
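
The two-stage matching can be sketched as follows. Assumptions not taken from the patent: radar detections have already been projected into the image as boxes (the spatial synchronization step), a pair is accepted in the first stage when its IoU reaches a hypothetical threshold of 0.5, and leftovers are matched by global nearest neighbor on box centres with a hypothetical 50-pixel gate. The GNN step is solved by brute force over permutations for clarity; a real system would use an assignment solver such as the Hungarian algorithm.

```python
import math
from itertools import permutations
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels
Pt = Tuple[float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def gnn_assign(r_pts: Sequence[Pt], c_pts: Sequence[Pt], gate: float) -> List[Tuple[int, int]]:
    """Global nearest neighbour: pick the one-to-one assignment with the lowest
    total distance, where a pair farther than `gate` costs `gate` and is dropped.
    Brute force, so only practical for a handful of leftover targets."""
    n, m = len(r_pts), len(c_pts)
    if n == 0 or m == 0:
        return []
    if n <= m:
        candidates = (list(enumerate(p)) for p in permutations(range(m), n))
    else:
        candidates = ([(i, j) for j, i in enumerate(p)] for p in permutations(range(n), m))
    best_cost, best = math.inf, []
    for pairs in candidates:
        dists = [math.dist(r_pts[i], c_pts[j]) for i, j in pairs]
        cost = sum(min(d, gate) for d in dists)
        if cost < best_cost:
            best_cost, best = cost, [p for p, d in zip(pairs, dists) if d <= gate]
    return best


def _centre(b: Box) -> Pt:
    return ((b[0] + b[2]) / 2, (b[1] + b[3]) / 2)


def match(radar: Sequence[Box], camera: Sequence[Box],
          iou_thresh: float = 0.5, gate: float = 50.0) -> List[Tuple[int, int]]:
    """Return sorted (radar_index, camera_index) pairs."""
    pairs: List[Tuple[int, int]] = []
    used_r, used_c = set(), set()
    # Stage 1: accept the highest-IoU pairs that clear the threshold.
    scored = sorted(((iou(r, c), i, j) for i, r in enumerate(radar)
                     for j, c in enumerate(camera)), reverse=True)
    for score, i, j in scored:
        if score < iou_thresh:
            break
        if i not in used_r and j not in used_c:
            pairs.append((i, j))
            used_r.add(i)
            used_c.add(j)
    # Stage 2: GNN on box centres for whatever stage 1 left unmatched.
    rest_r = [i for i in range(len(radar)) if i not in used_r]
    rest_c = [j for j in range(len(camera)) if j not in used_c]
    extra = gnn_assign([_centre(radar[i]) for i in rest_r],
                       [_centre(camera[j]) for j in rest_c], gate)
    pairs += [(rest_r[a], rest_c[b]) for a, b in extra]
    return sorted(pairs)
```

In the usage case `match([(0, 0, 10, 10), (100, 100, 120, 120)], [(1, 1, 11, 11), (130, 100, 150, 120)])`, the first pair overlaps well and is matched by IoU, while the second pair does not overlap but has centres 30 pixels apart, so GNN pairs it; the result is `[(0, 0), (1, 1)]`.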

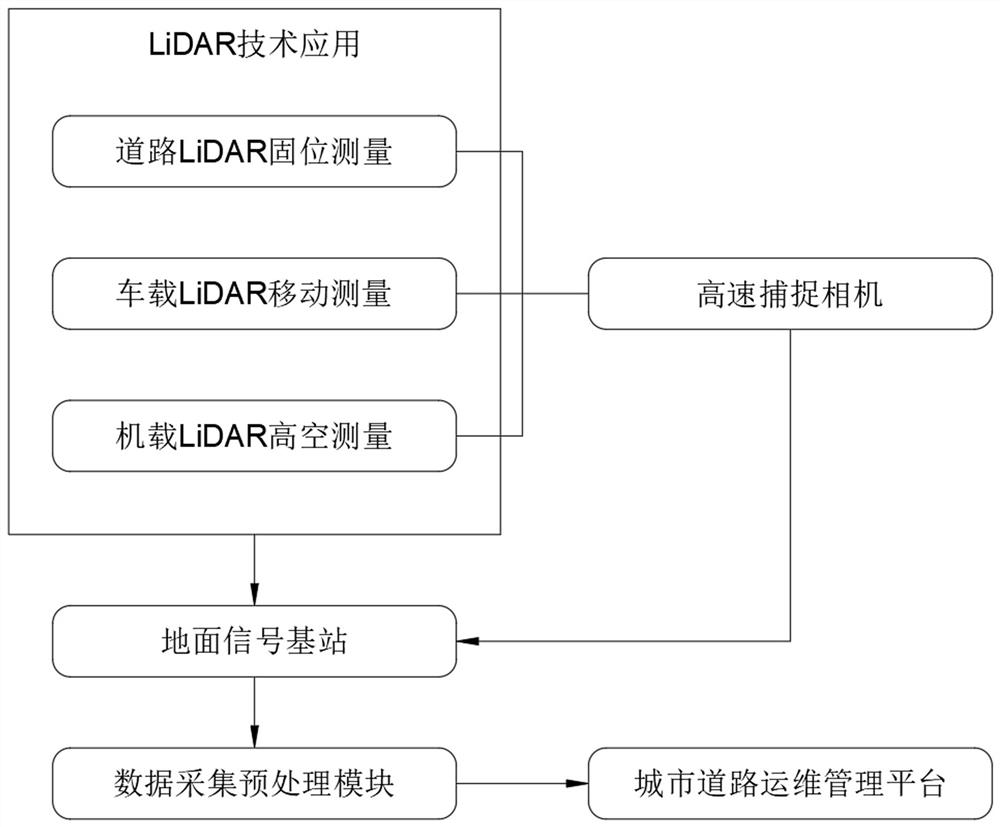

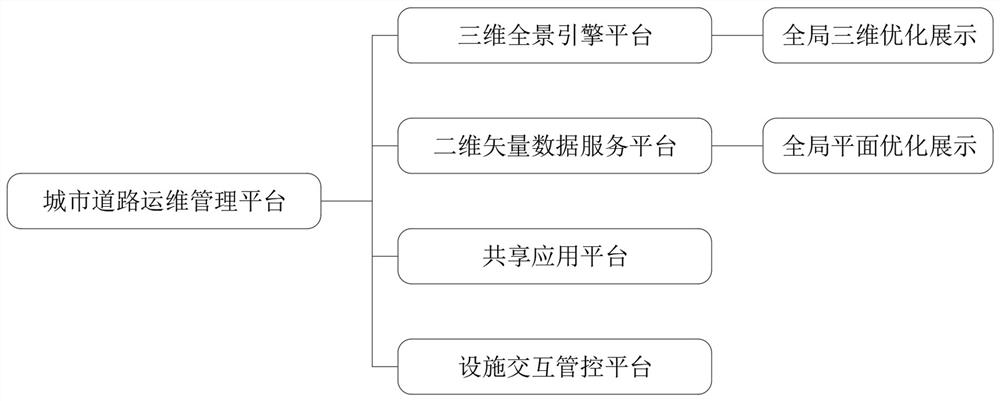

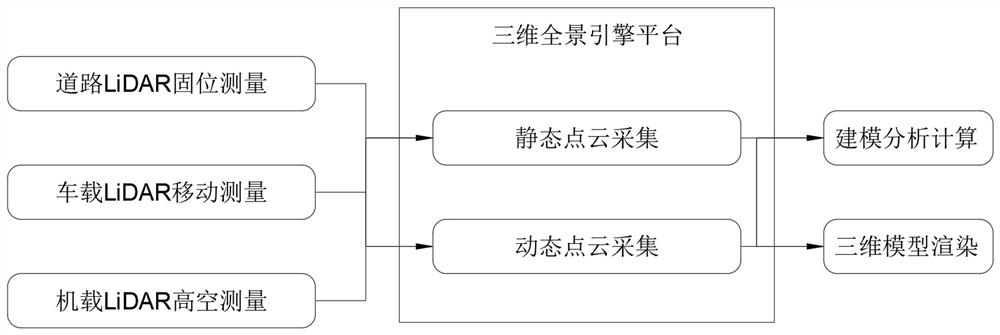

Traffic facility vector recognition, extraction and analysis system based on point cloud data and AI technology

Active | CN114419231A | Precise detection; Realize holographic depiction; Special data processing applications; 3D-image rendering; Point cloud; Simulation

The invention discloses a traffic facility vector recognition, extraction and analysis system based on point cloud data and AI technology, and belongs to the technical field of intelligent traffic. It addresses the limitations of existing traffic control departments, which monitor road vehicles and traffic facilities only through pictures or planar signals: staff cannot directly see the actual data of facilities and vehicles, so errors can occur in some applications. The system's three-dimensional live-action modeling engine is an autonomous three-in-one engine combining a data engine, a map engine and a video engine. Holographic depiction and immersive command are achieved through video fusion, multi-map integrated multi-view applications, first-person-view multi-lens fusion, linkage between high- and low-point cameras and between indoor and outdoor views, and real-time structured analysis of face, vehicle and behavior features in live video.

Owner:幂元科技有限公司

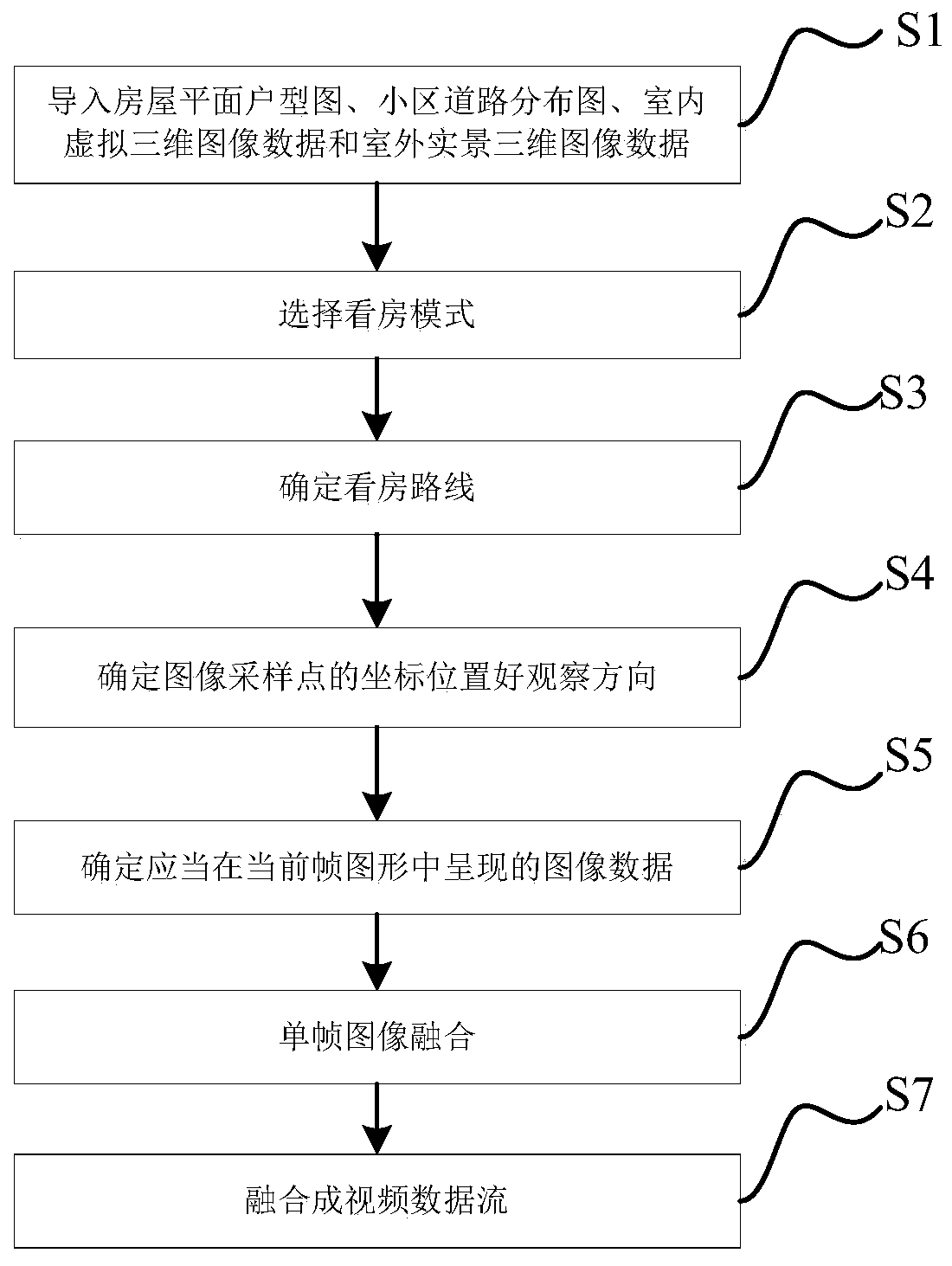

Indoor and outdoor panoramic house viewing video fusion method

Active | CN110751616A | Enhance viewing experience; Improve viewing experience; Image enhancement; Image analysis; Computer graphics (images); Panorama

The invention discloses an indoor and outdoor panoramic house viewing video fusion method. The method comprises: importing a house floor plan, a community road distribution map, indoor virtual three-dimensional image data and outdoor live-action three-dimensional image data; the user then selects a house viewing mode and plans a house viewing route, and a panoramic house viewing video fusing virtual and real imagery is automatically generated along that route. The method makes effective use of aerial triangulation from existing surveying and mapping technology: users define their own routes, videos are generated automatically, and indoor and outdoor panoramas fuse virtual and real content, providing an online naked-eye 3D indoor and outdoor panoramic house viewing experience and noticeably improving that experience.

Owner:睿宇时空科技(重庆)股份有限公司
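
One plausible first step toward generating a video along a user-planned route is sampling evenly spaced viewpoints along it, each of which would then be rendered from the indoor or outdoor 3D data. The sketch below is hypothetical: the route is assumed to be a 2D polyline in map coordinates, and the rendering step is not shown.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # planar map coordinates, e.g. metres


def resample_route(route: List[Point], step: float) -> List[Point]:
    """Return viewpoints spaced `step` apart along a polyline route,
    always including the start and end points."""
    if step <= 0:
        raise ValueError("step must be positive")
    if not route:
        return []
    out = [route[0]]
    to_next = step  # distance still to travel before the next viewpoint
    for (x0, y0), (x1, y1) in zip(route, route[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        pos = to_next
        while pos <= seg:  # zero-length segments never enter this loop
            t = pos / seg
            out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
            pos += step
        to_next = pos - seg  # carry the leftover distance into the next segment
    if out[-1] != route[-1]:
        out.append(route[-1])
    return out
```

Carrying the leftover distance across segment boundaries keeps the spacing even around corners, so a constant frame rate would give a constant walking speed in the generated video.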

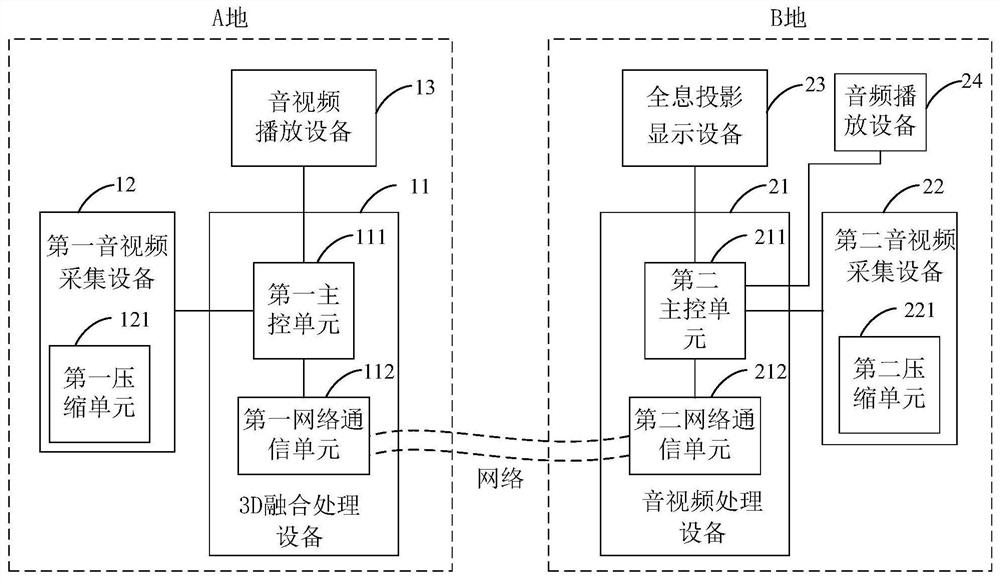

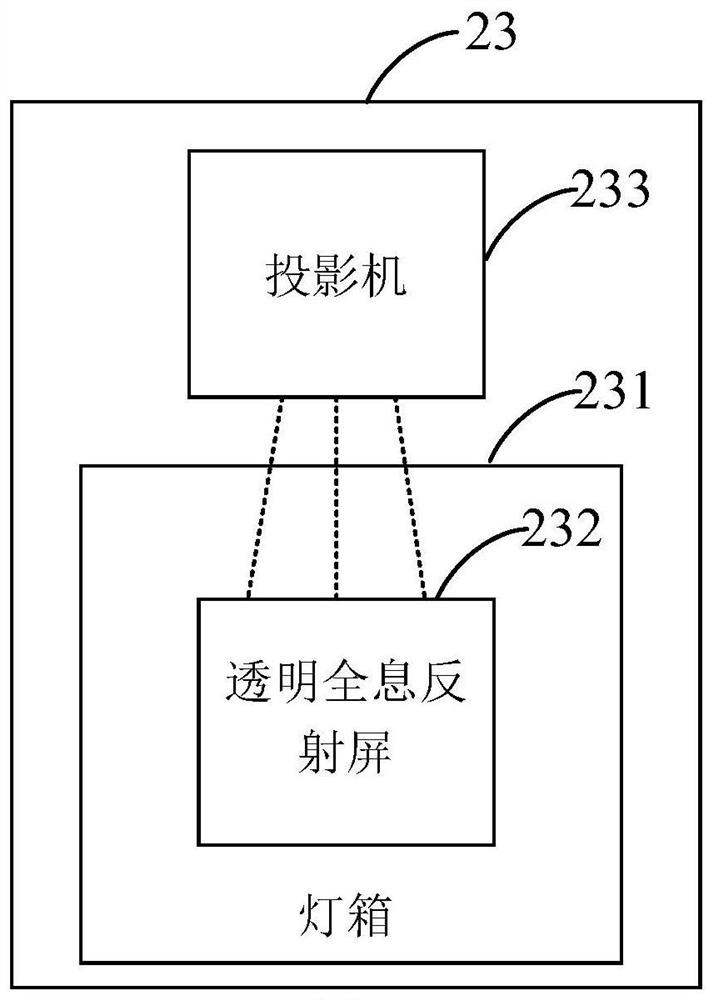

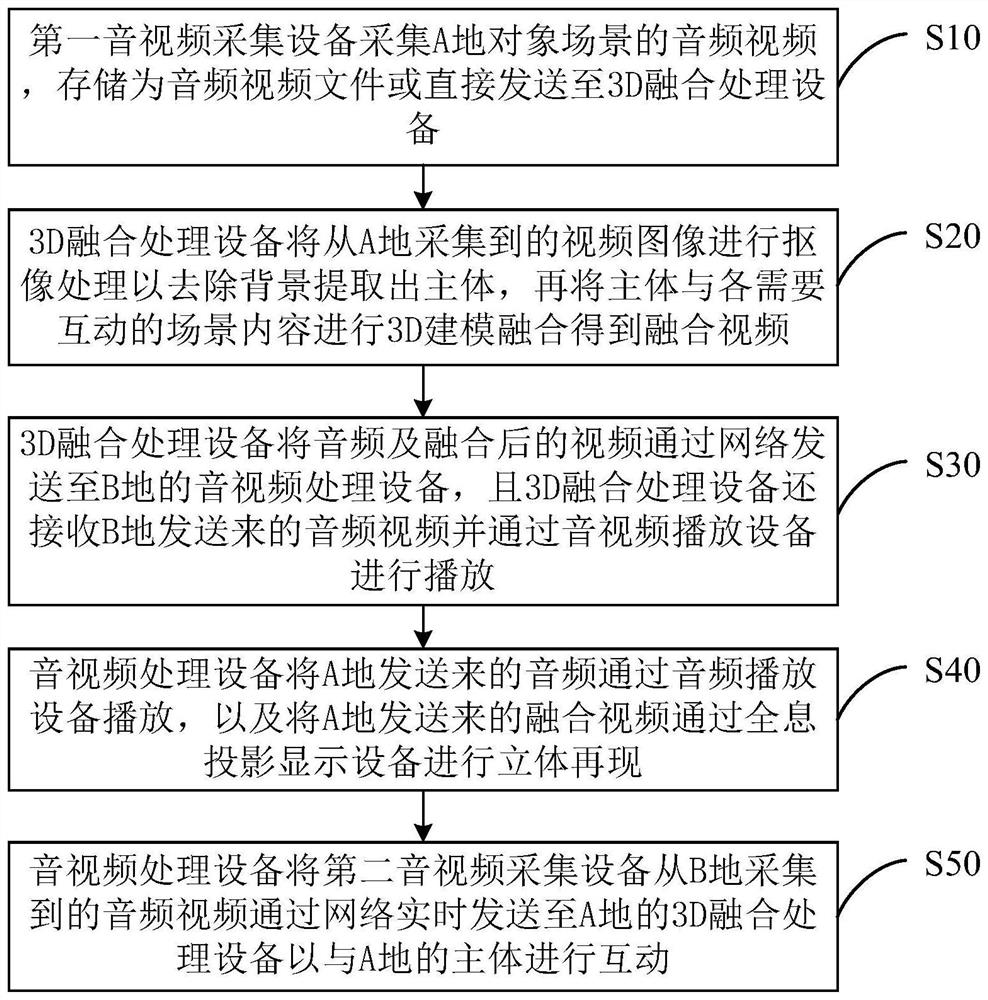

AR-based three-dimensional holographic real-time interaction system and method

Active | CN112532963A | Improve interactive experience; Input/output for user-computer interaction; Image enhancement; Interaction systems; Acquisition apparatus

The invention discloses an AR-based three-dimensional holographic real-time interaction system and method. The system comprises 3D fusion processing equipment arranged at place A, together with first audio and video acquisition equipment and audio and video playing equipment: the first acquisition equipment captures audio and video of the scene at place A, and the 3D fusion processing equipment performs 3D fusion on the video captured at place A to form a fused video that can be displayed stereoscopically. It further comprises audio and video processing equipment arranged at place B, together with second audio and video acquisition equipment, audio playing equipment and holographic projection display equipment: the second acquisition equipment captures audio and video of the scene at place B, the audio and video processing equipment sends that audio and video to place A, the audio playing equipment plays the audio sent from place A, and the holographic projection display equipment displays the fused video sent from place A stereoscopically with a holographic projection effect. The subject at place A can thus be reproduced stereoscopically at place B by holographic projection, improving the experience of interactive communication.

Owner:深圳臻像科技有限公司

© 2025 PatSnap. All rights reserved.