175 results about "Viewing frustum" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

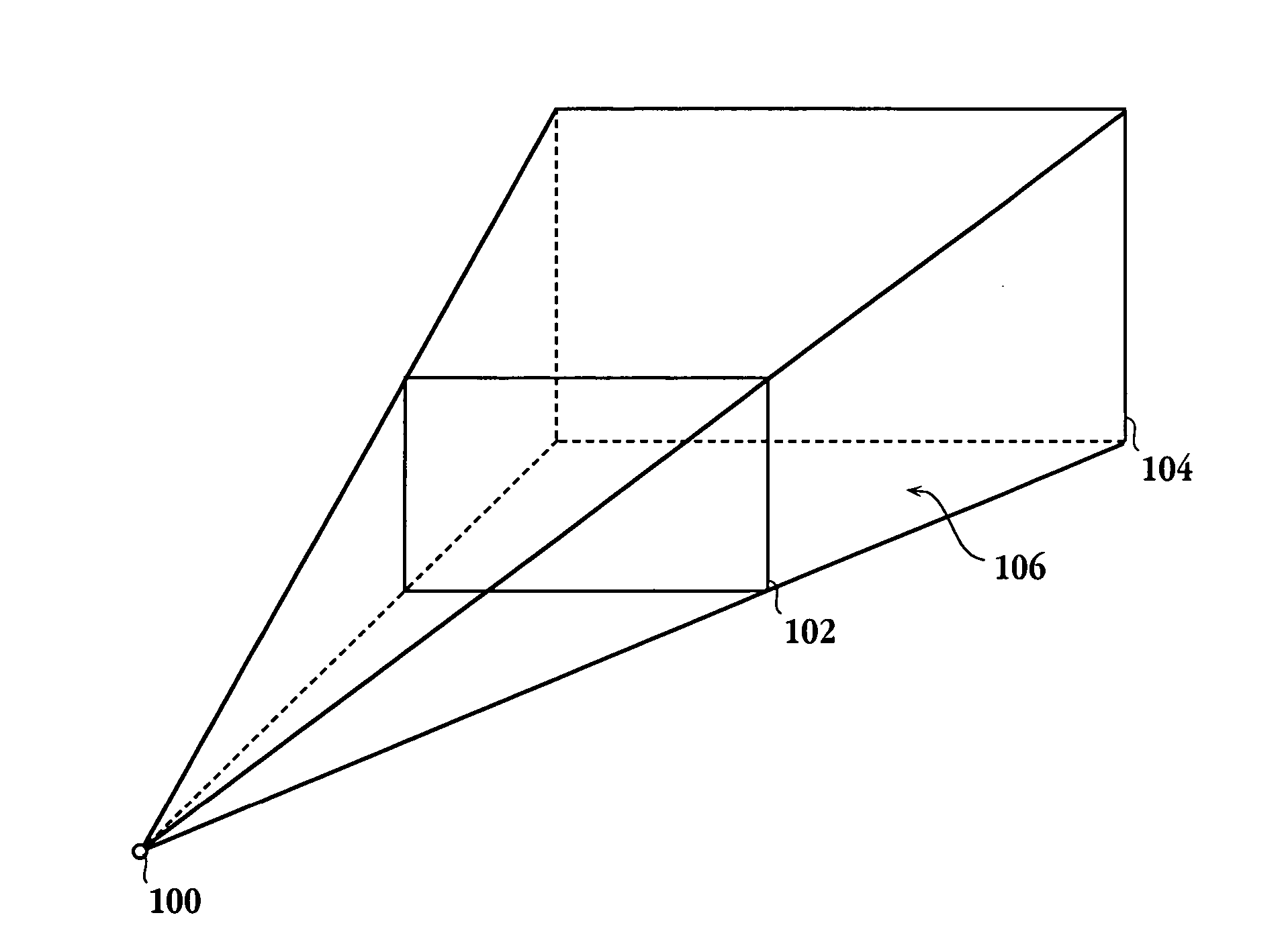

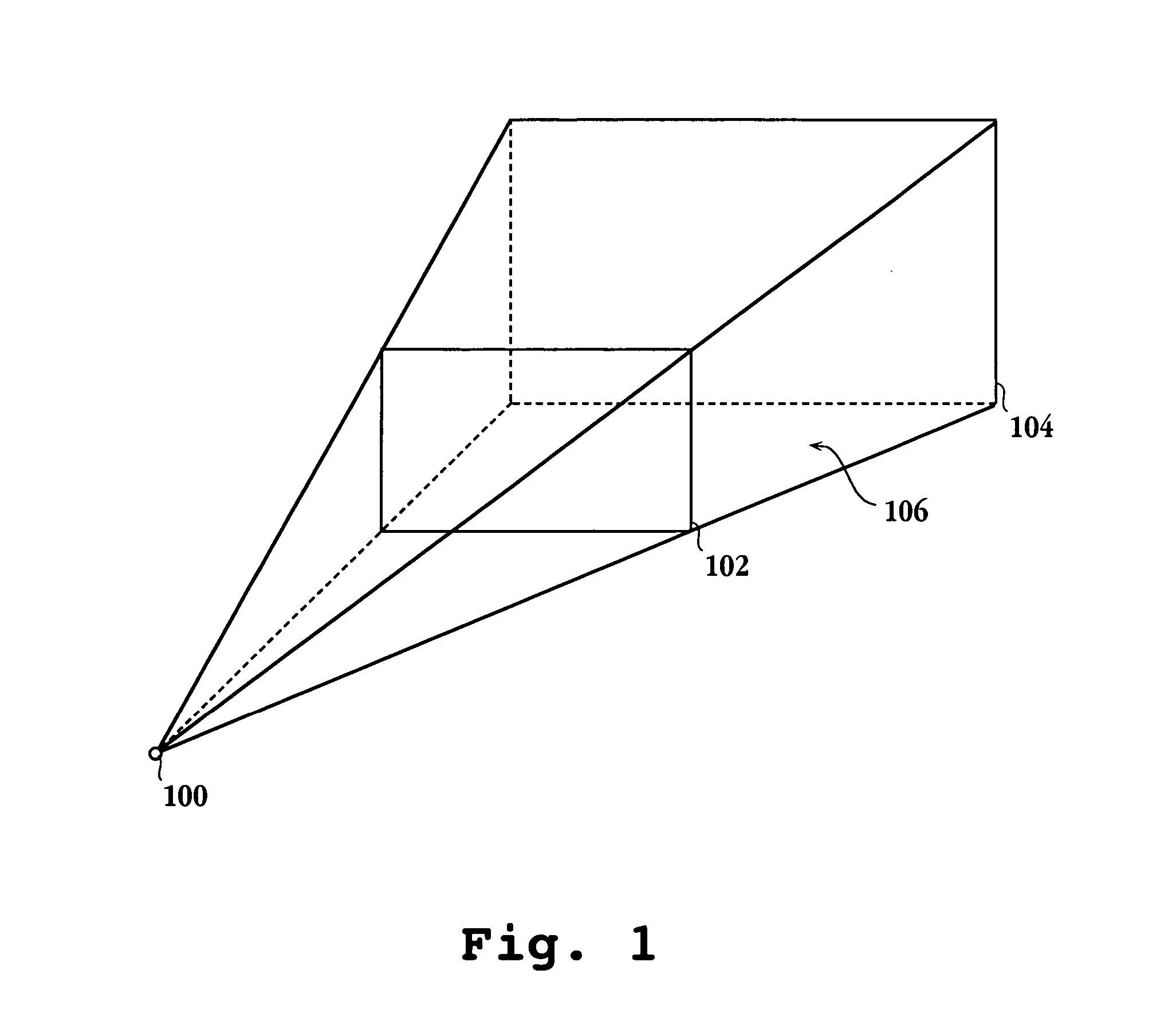

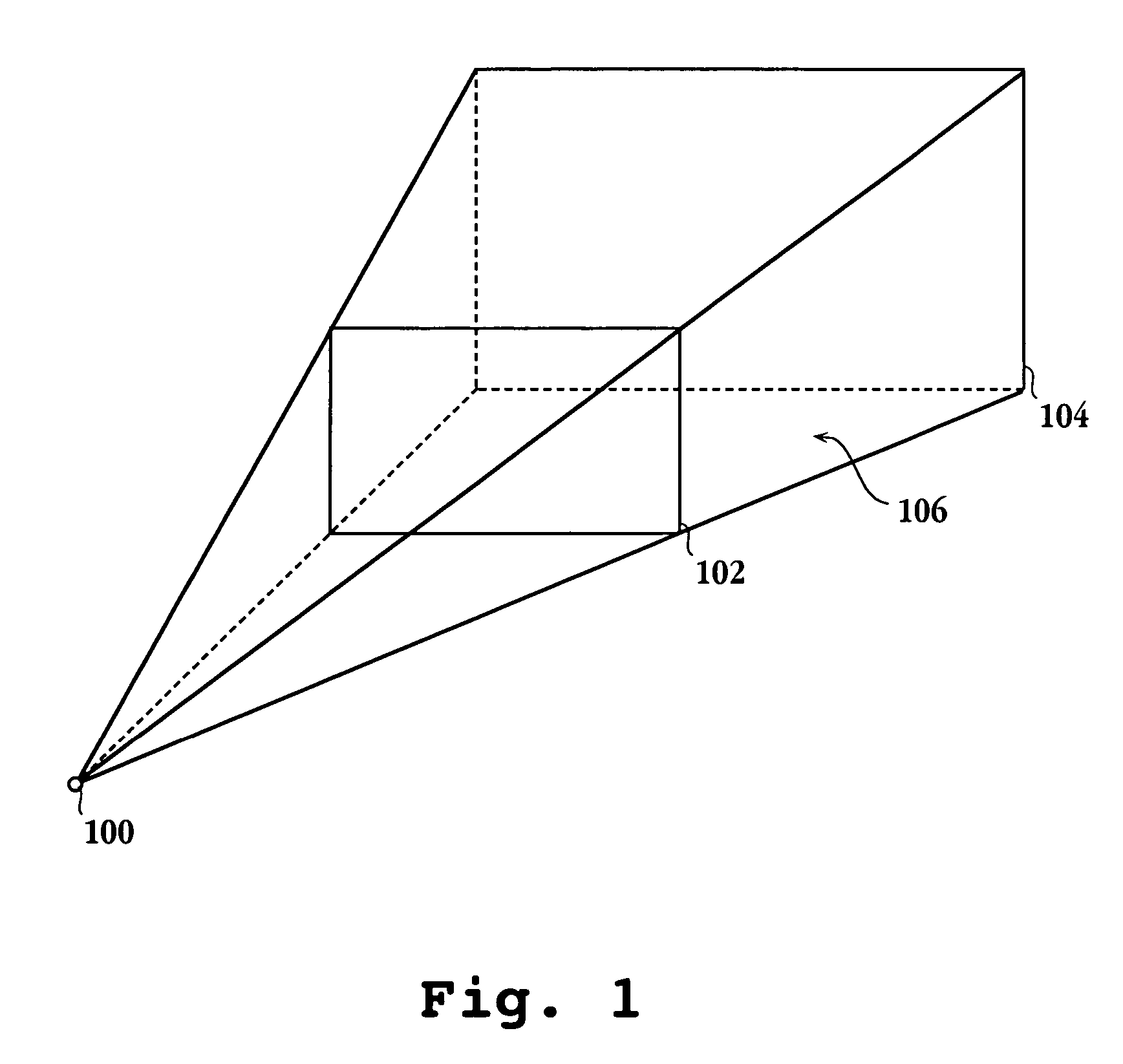

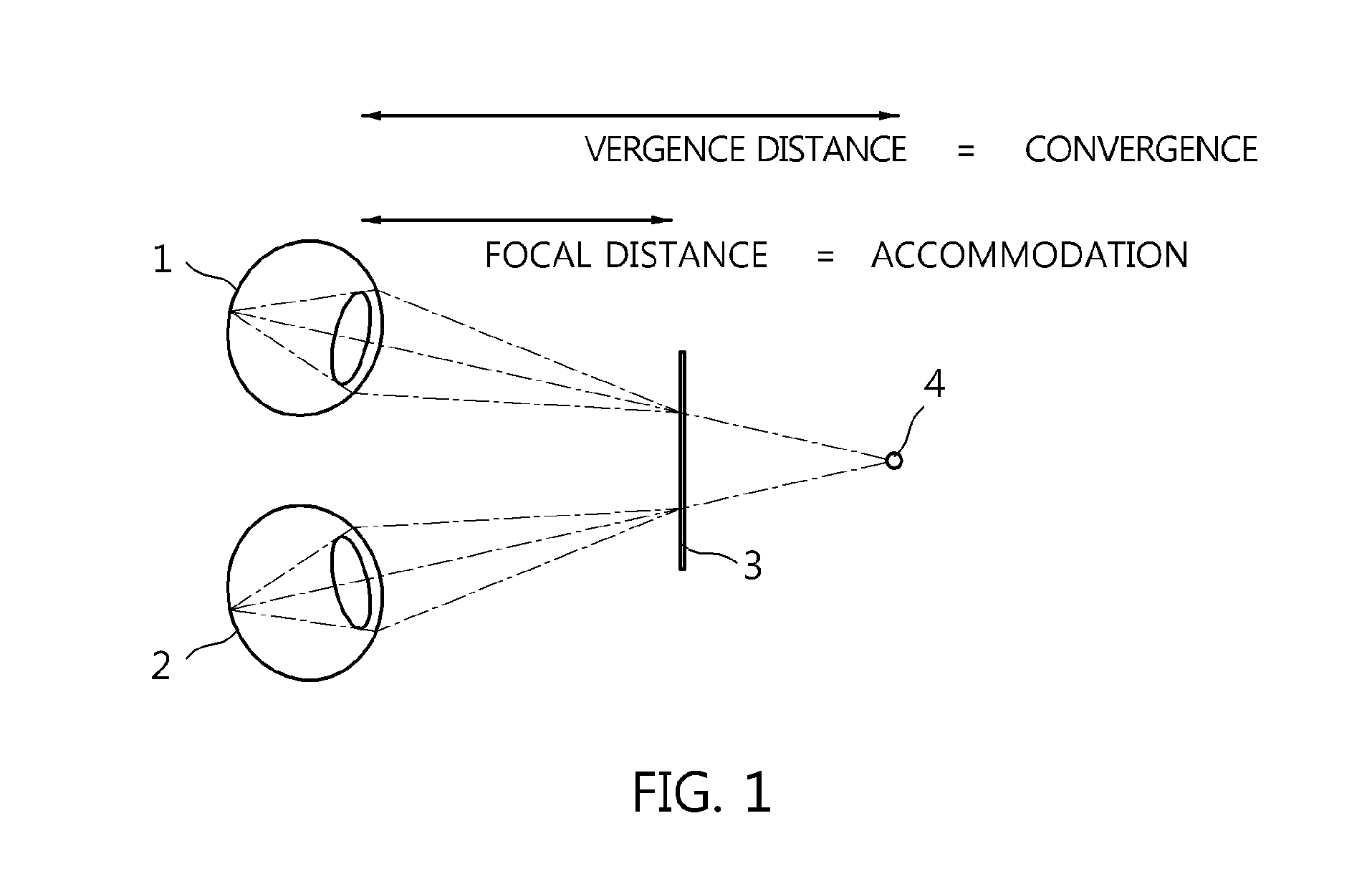

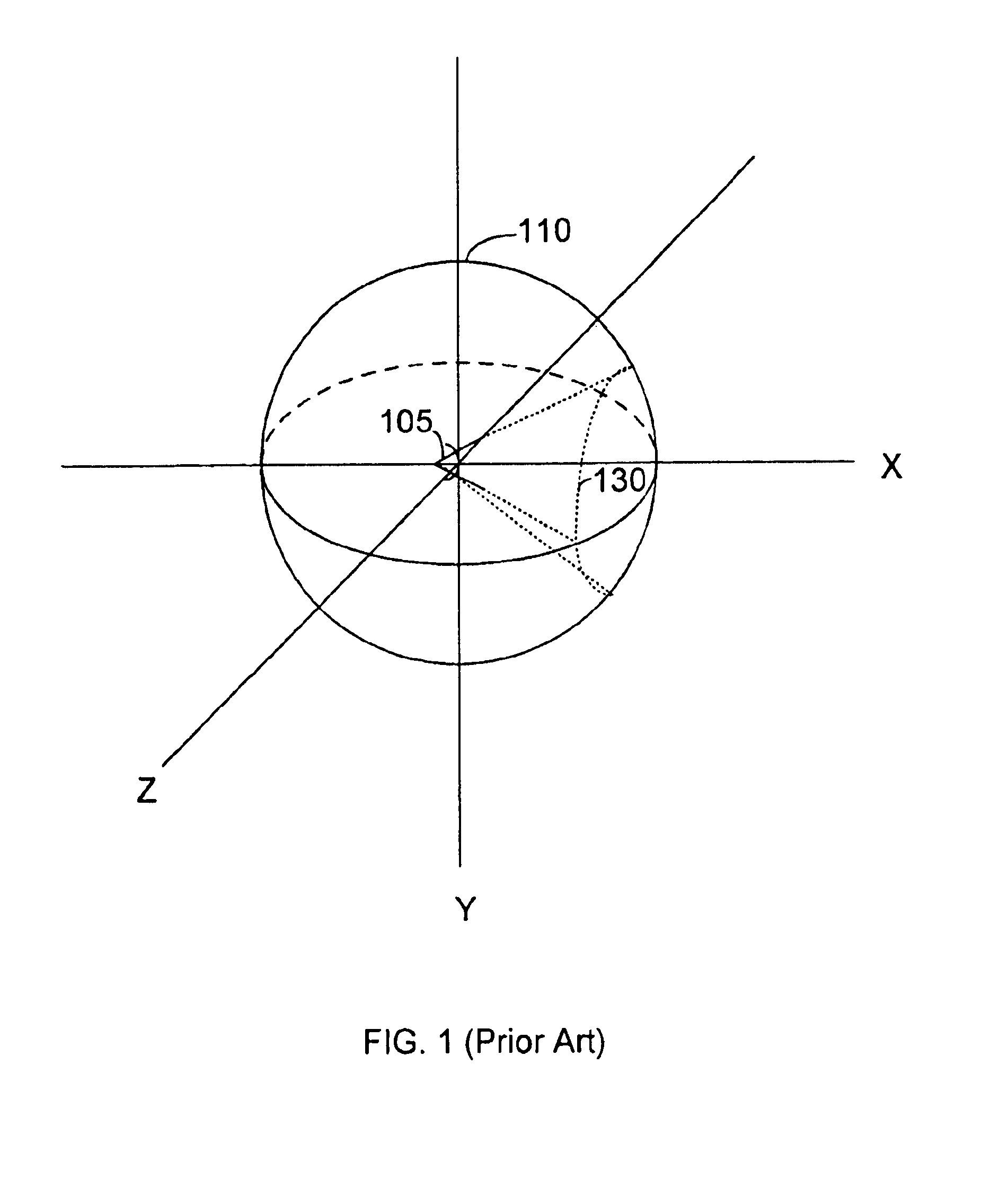

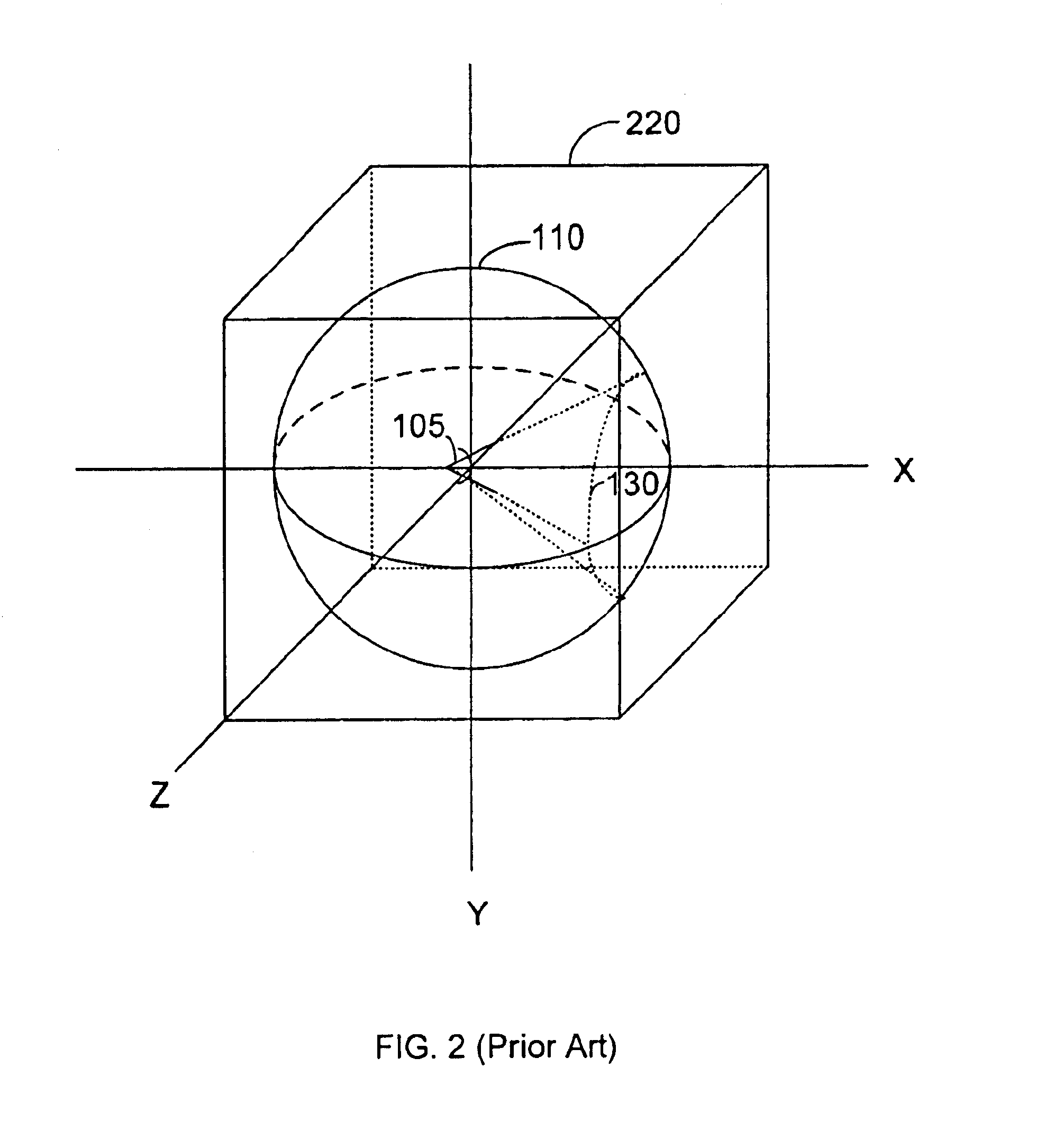

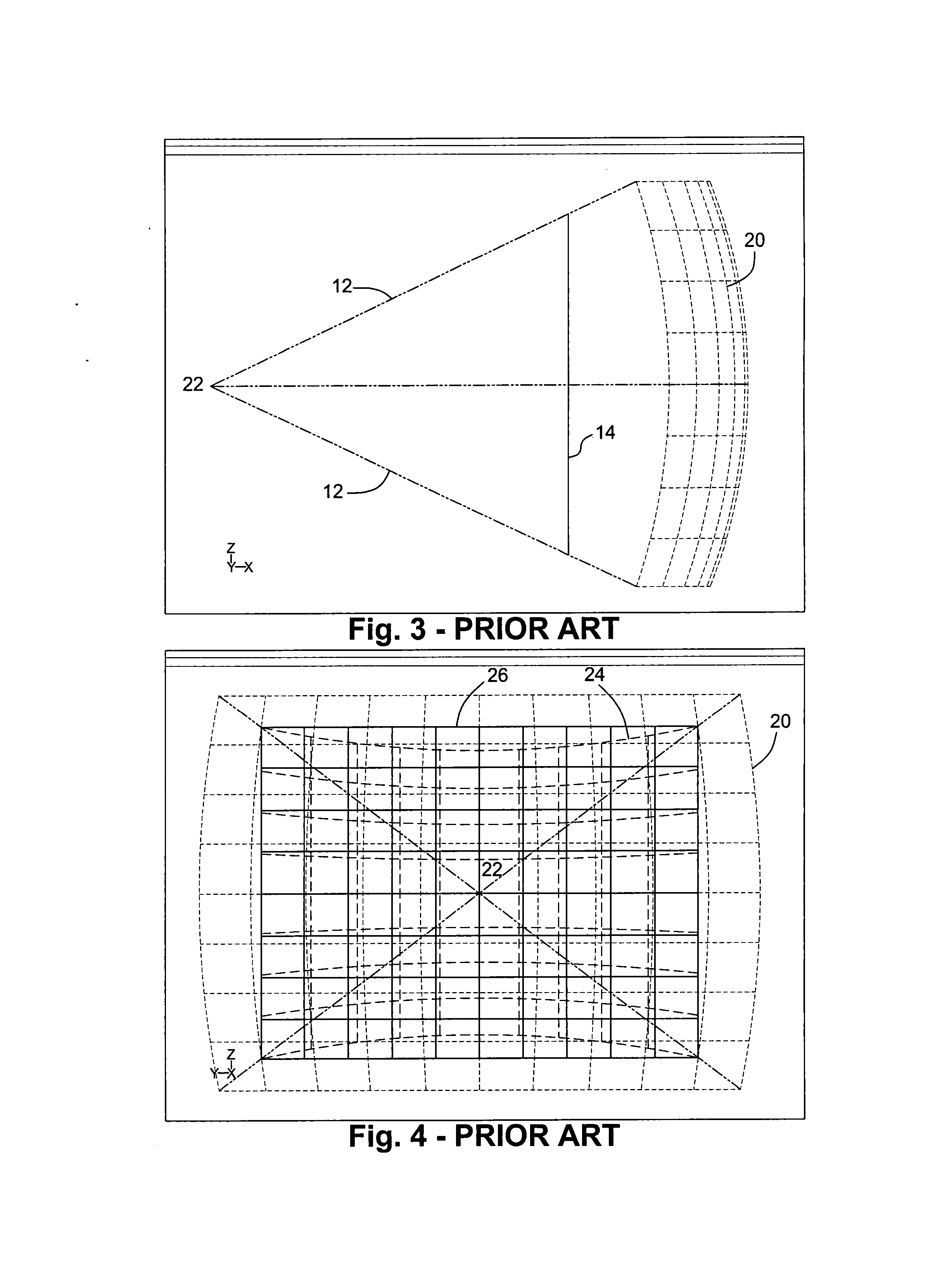

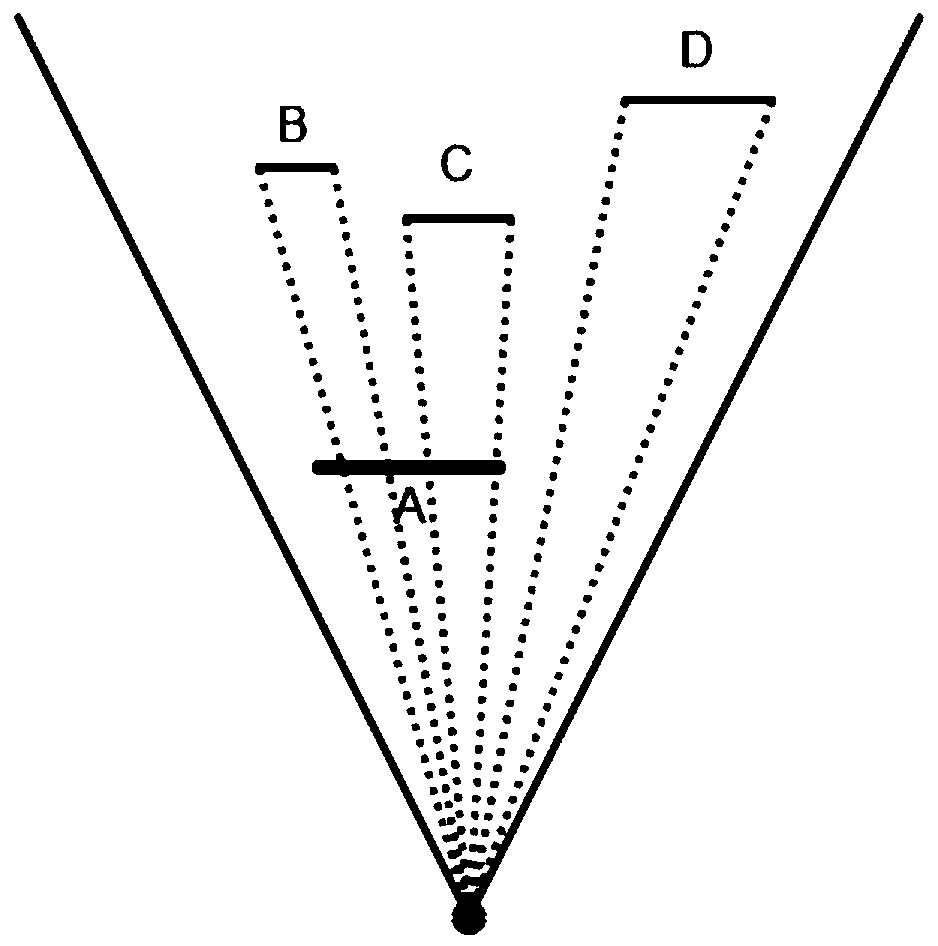

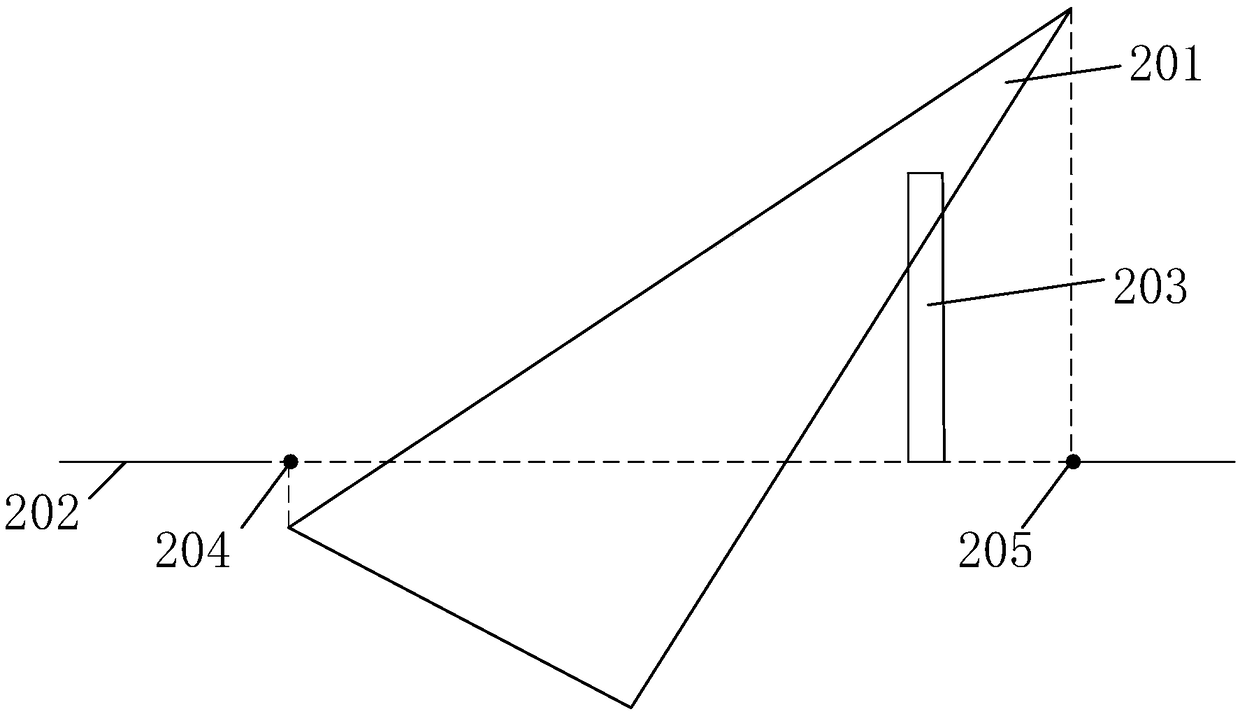

In 3D computer graphics, the view frustum (also called the viewing frustum) is the region of space in the modeled world that may appear on the screen; it is the field of view of the notional camera. The view frustum is typically obtained by taking a frustum (that is, a truncation with parallel planes) of the pyramid of vision, which is the adaptation of the idealized cone of vision that a camera or eye would have to the rectangular viewports typically used in computer graphics. Some authors use "pyramid of vision" as a synonym for the view frustum itself, i.e. they consider it already truncated.
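
To make the definition concrete, here is a minimal Python sketch that tests whether a camera-space point lies inside a symmetric perspective frustum. It assumes an OpenGL-style convention (camera at the origin looking down -Z), and the class and parameter names are chosen for illustration; production engines usually test against six plane equations instead.

```python
import math
from dataclasses import dataclass


@dataclass
class Frustum:
    """Symmetric perspective view frustum in camera space.

    Assumes an OpenGL-style camera at the origin looking down -Z.
    """

    fov_y_deg: float  # vertical field of view, in degrees
    aspect: float     # viewport width / height
    near: float       # distance to the near clipping plane (> 0)
    far: float        # distance to the far clipping plane (> near)

    def contains(self, x: float, y: float, z: float) -> bool:
        depth = -z  # distance in front of the camera
        # The near and far planes are the two parallel truncating planes.
        if depth < self.near or depth > self.far:
            return False
        # The four side planes of the pyramid of vision widen with depth.
        half_h = depth * math.tan(math.radians(self.fov_y_deg) / 2)
        half_w = half_h * self.aspect
        return abs(x) <= half_w and abs(y) <= half_h
```

For example, `Frustum(90.0, 1.0, 0.1, 100.0).contains(0.0, 0.0, -5.0)` is true, while a point at x = 6 at the same depth lies outside the side planes.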

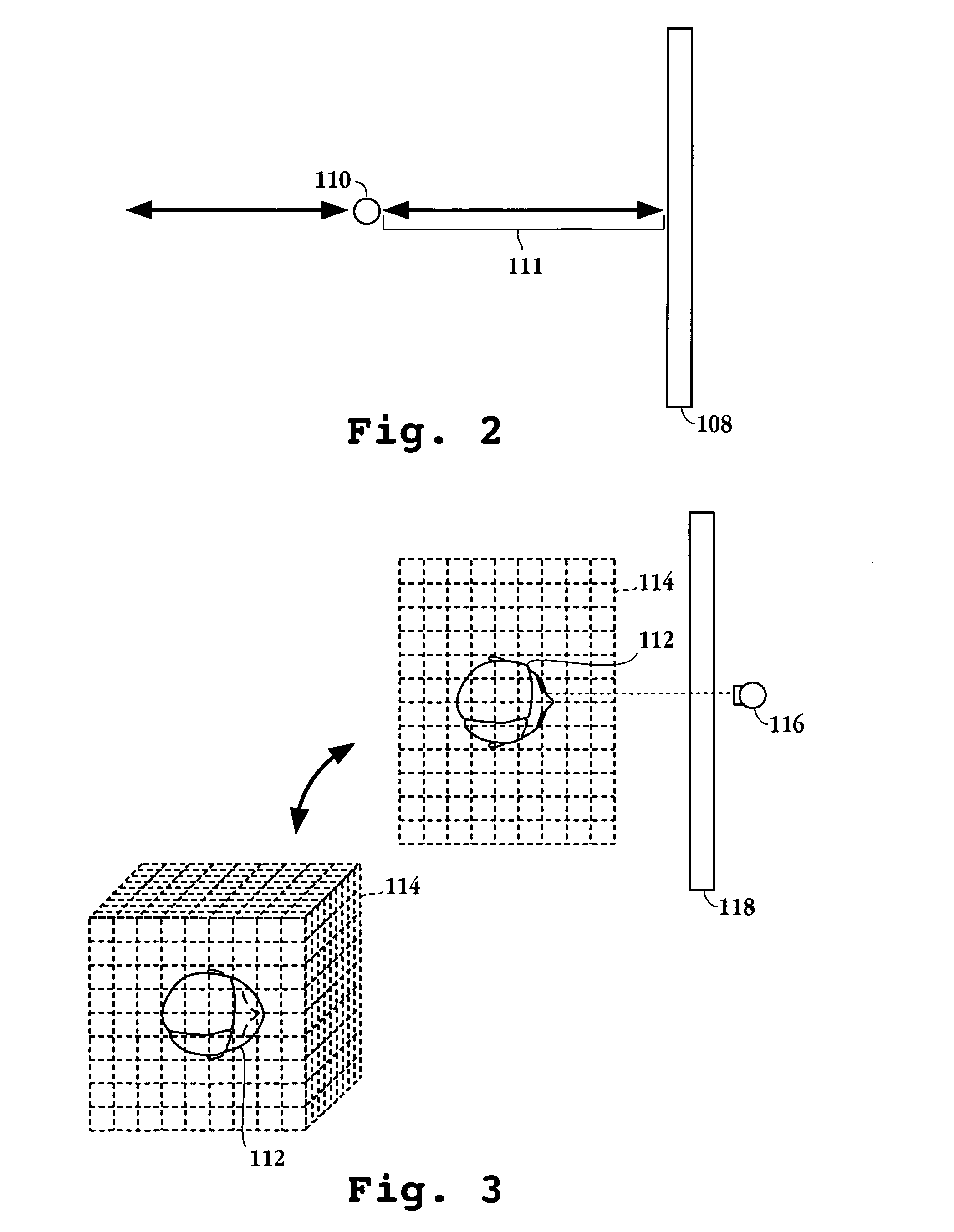

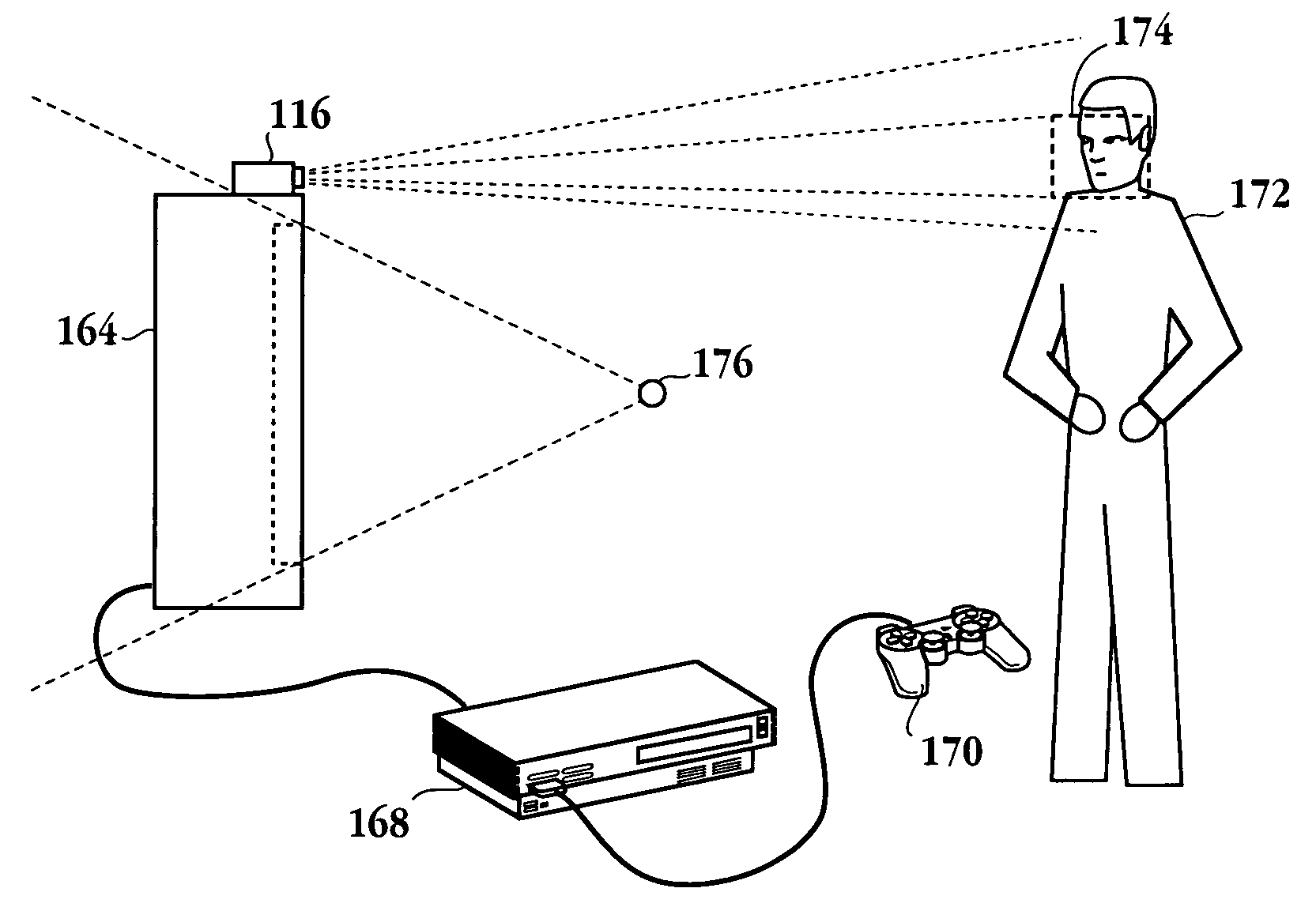

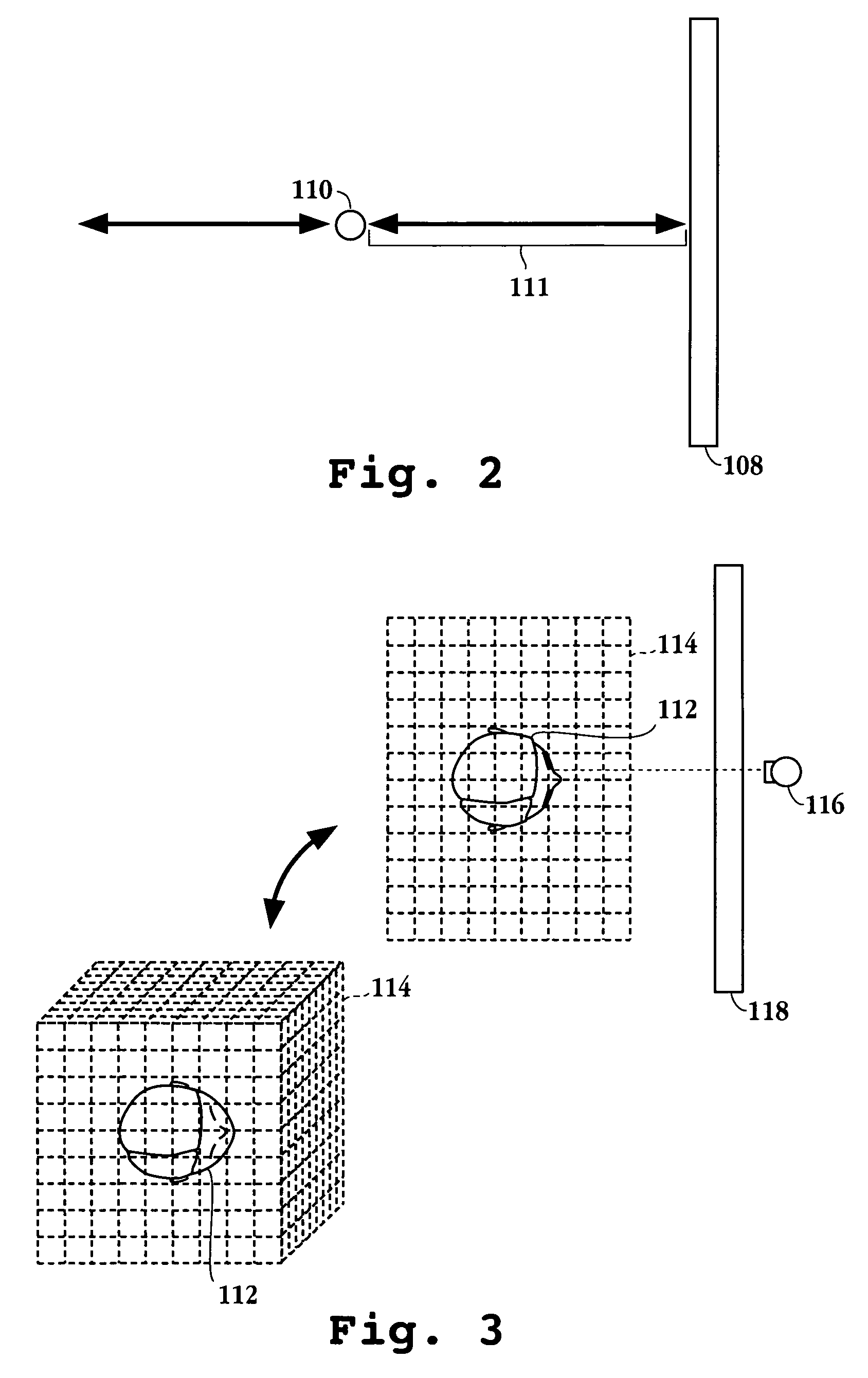

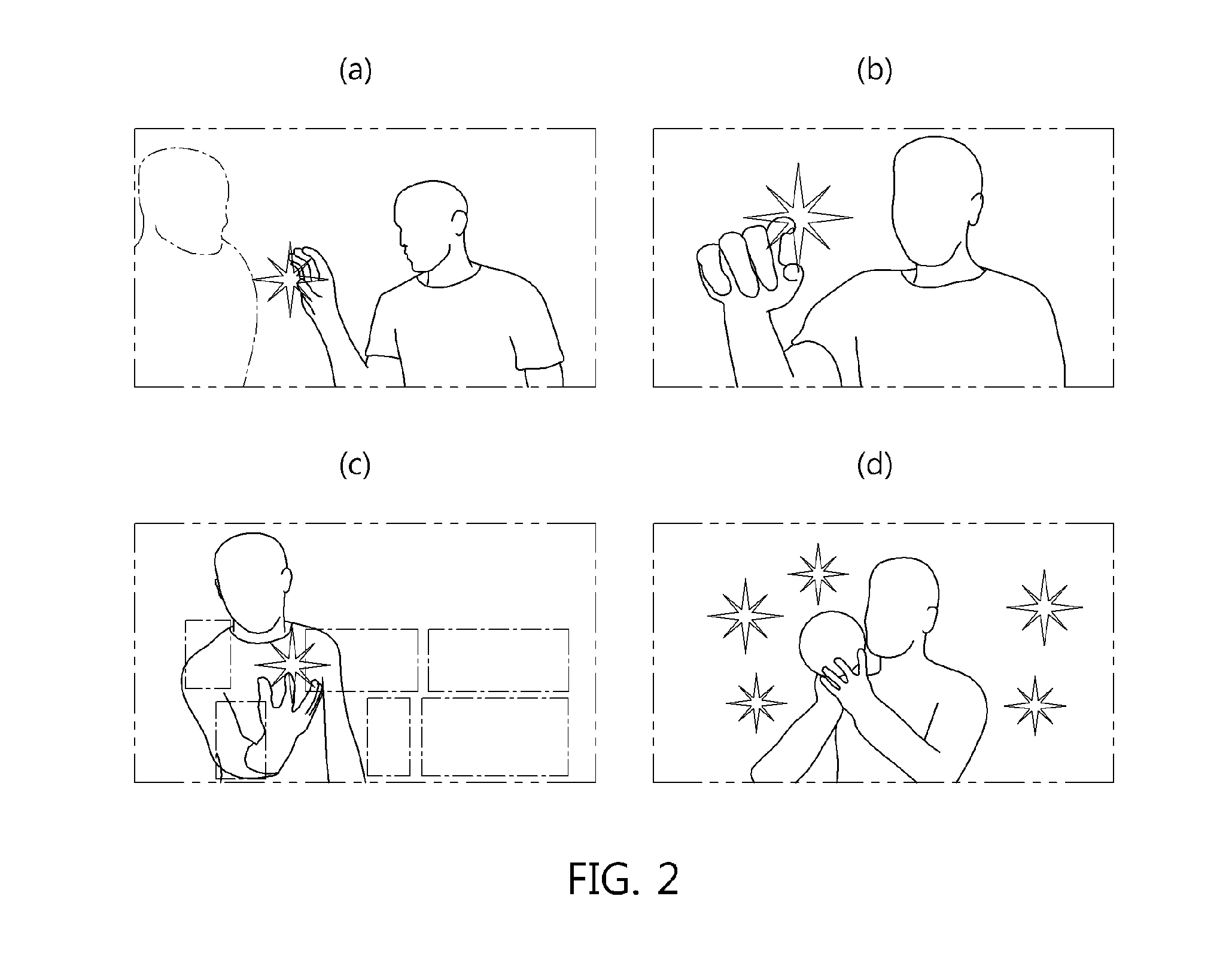

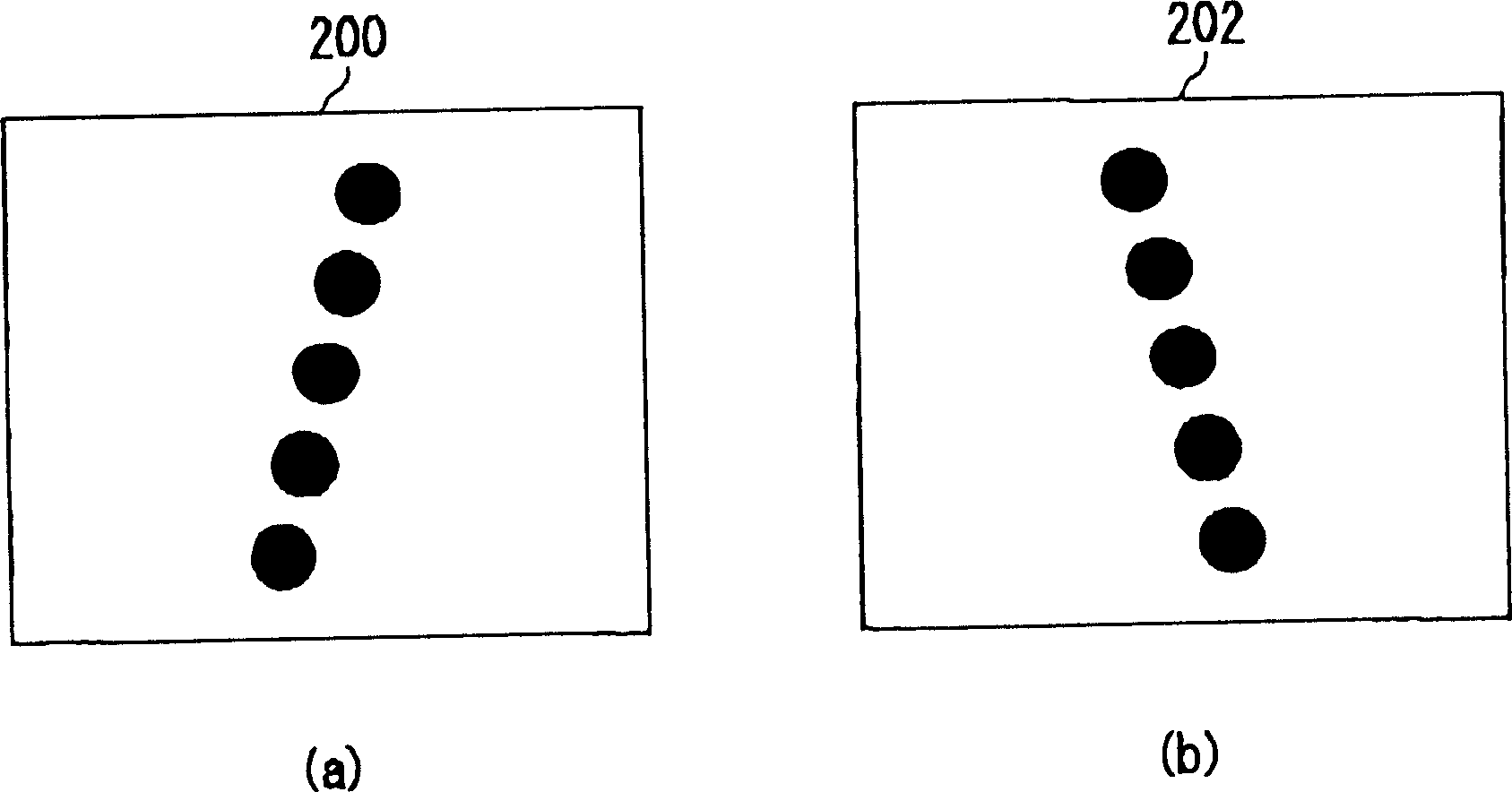

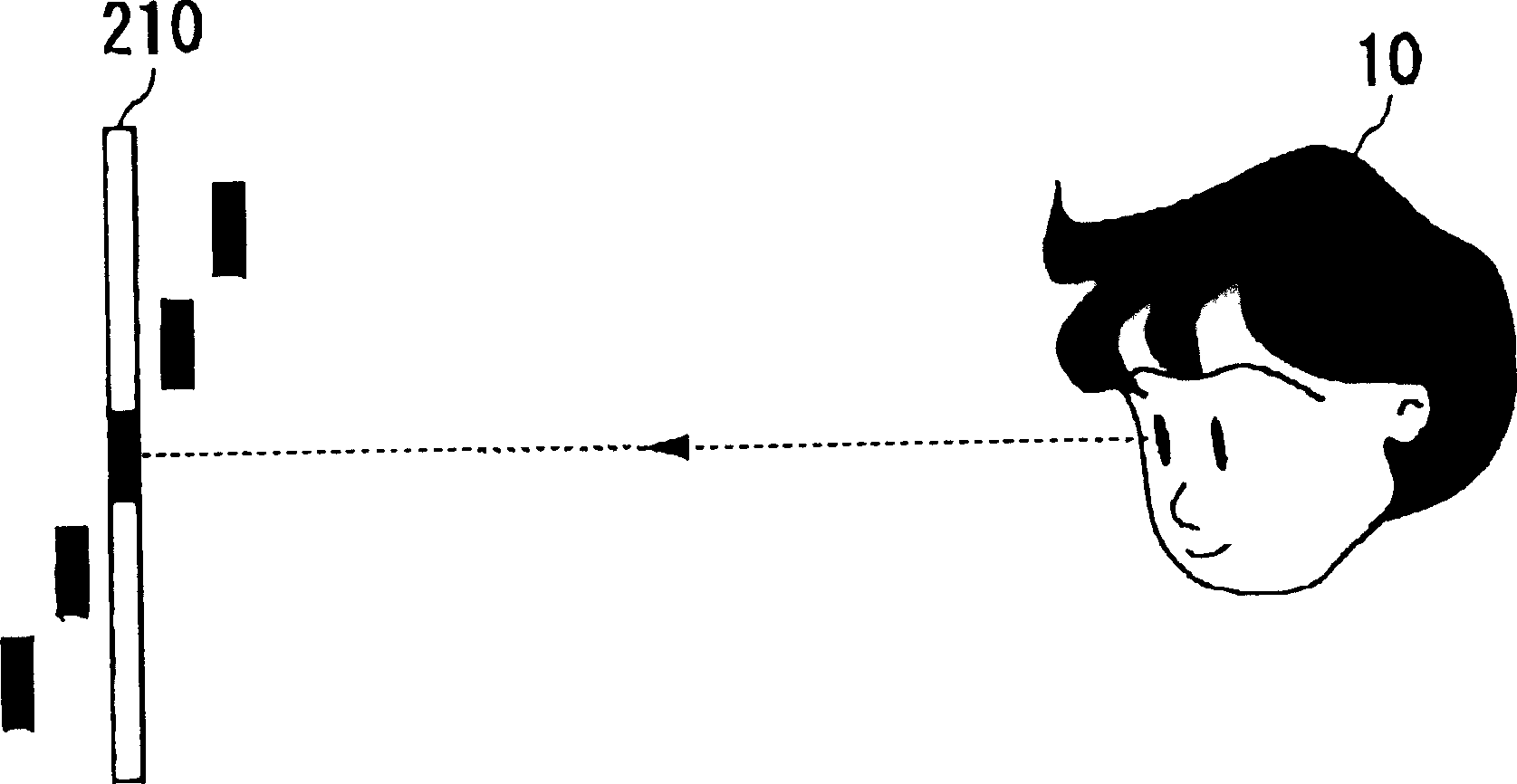

Method and apparatus for adjusting a view of a scene being displayed according to tracked head motion

A method for processing interactive user control with a scene of a video clip is provided. The method initiates with identifying a head of a user that is to interact with the scene of the video clip. Then, the identified head of the user is tracked during display of the video clip, where the tracking enables detection of a change in position of the head of the user. Next, a view-frustum is adjusted in accordance with the change in position of the head of the user. A computer readable media, a computing device and a system for enabling interactive user control for defining a visible volume being displayed are also included.

Owner:SONY COMPUTER ENTERTAINMENT INC
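
The abstract does not say how the view frustum is adjusted. One widely used technique for head-coupled displays is an off-axis (asymmetric) frustum whose near-plane bounds are recomputed from the tracked head position every frame. The sketch below assumes the physical screen is a `screen_w` x `screen_h` rectangle centered at the origin in the z = 0 plane, with the viewer at z > 0; `head_coupled_frustum` and `FrustumBounds` are hypothetical names, and this is not presented as the patented method.

```python
from typing import NamedTuple, Tuple


class FrustumBounds(NamedTuple):
    """glFrustum-style bounds, measured on the near plane."""
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def head_coupled_frustum(head: Tuple[float, float, float],
                         screen_w: float, screen_h: float,
                         near: float, far: float) -> FrustumBounds:
    """Asymmetric frustum for a viewer whose head is at `head` = (x, y, z).

    Assumption: the screen is centered at the origin in the z = 0 plane
    and the viewer looks toward -Z from z > 0.
    """
    ex, ey, ez = head
    if ez <= 0:
        raise ValueError("head must be in front of the screen (z > 0)")
    # Similar triangles: scale the screen edges (relative to the head)
    # from the screen plane at distance ez down to the near plane.
    scale = near / ez
    return FrustumBounds(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
        far=far,
    )
```

When the head moves right, the frustum becomes skewed to the left. The view matrix must also translate the scene by the negative head position so that the apex of the frustum follows the tracked head.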

Method and apparatus for adjusting a view of a scene being displayed according to tracked head motion

A method for processing interactive user control with a scene of a video clip is provided. The method initiates with identifying a head of a user that is to interact with the scene of the video clip. Then, the identified head of the user is tracked during display of the video clip, where the tracking enables detection of a change in position of the head of the user. Next, a view-frustum is adjusted in accordance with the change in position of the head of the user. A computer readable media, a computing device and a system for enabling interactive user control for defining a visible volume being displayed are also included.

Owner:SONY COMPUTER ENTERTAINMENT INC

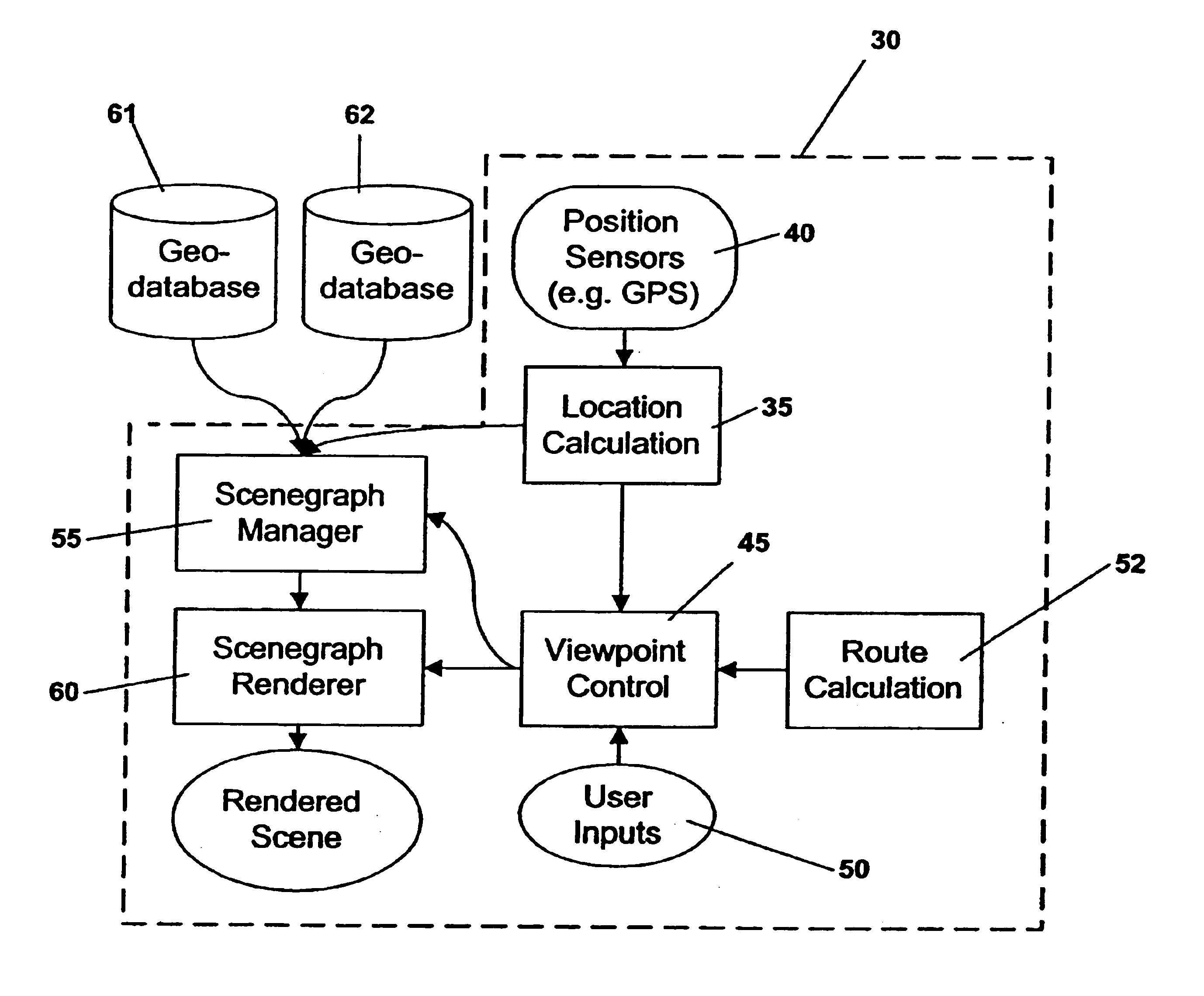

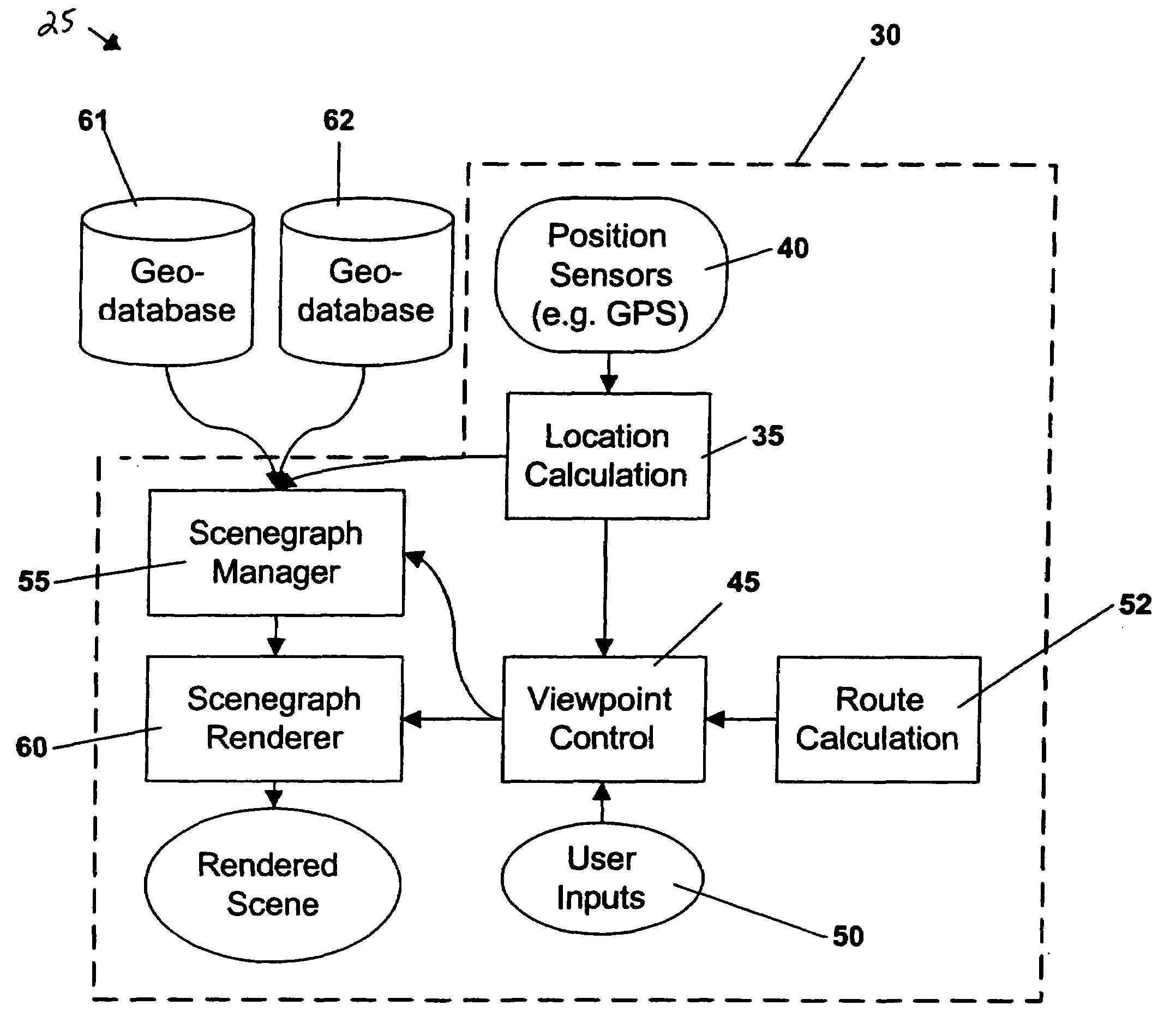

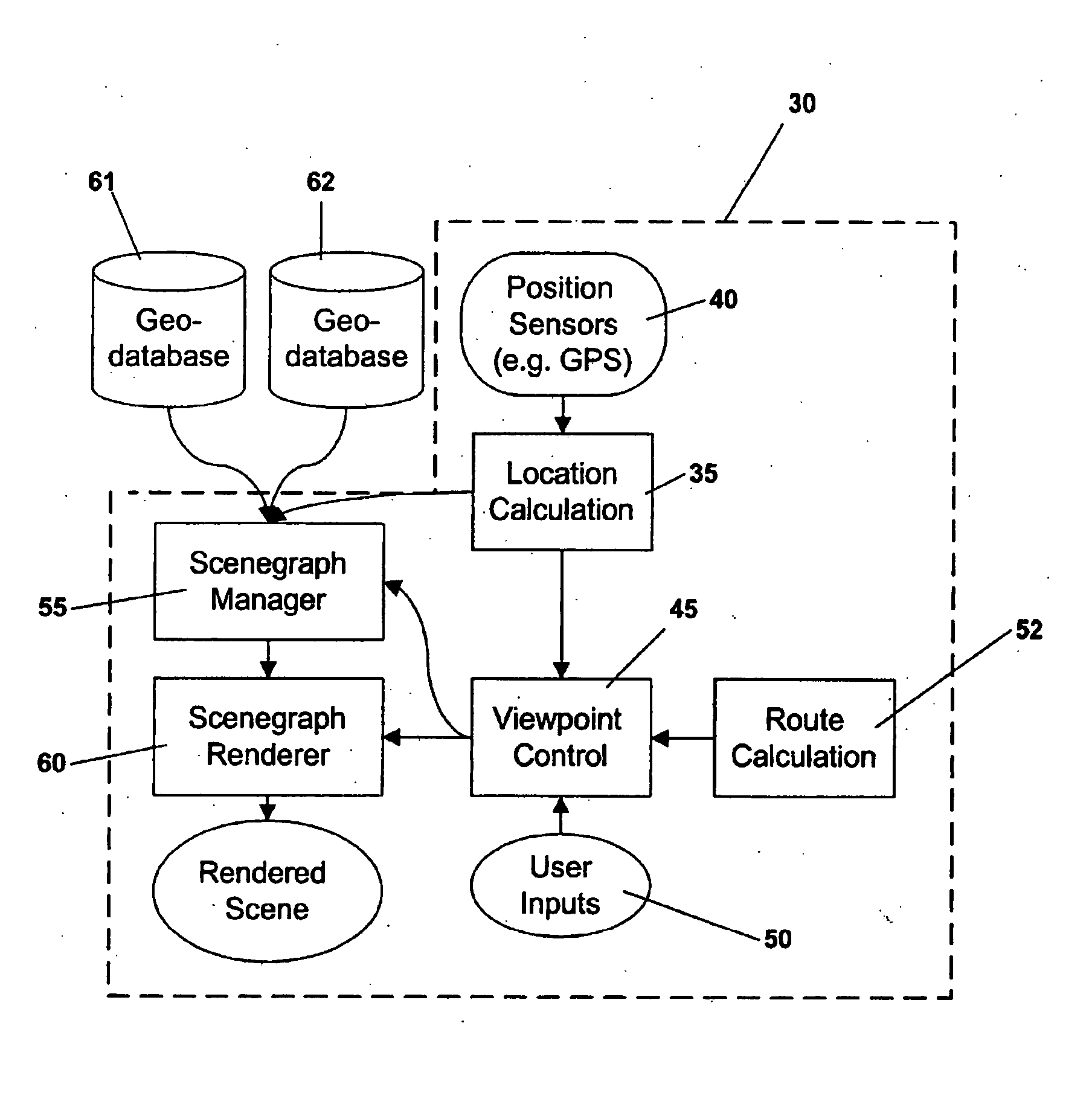

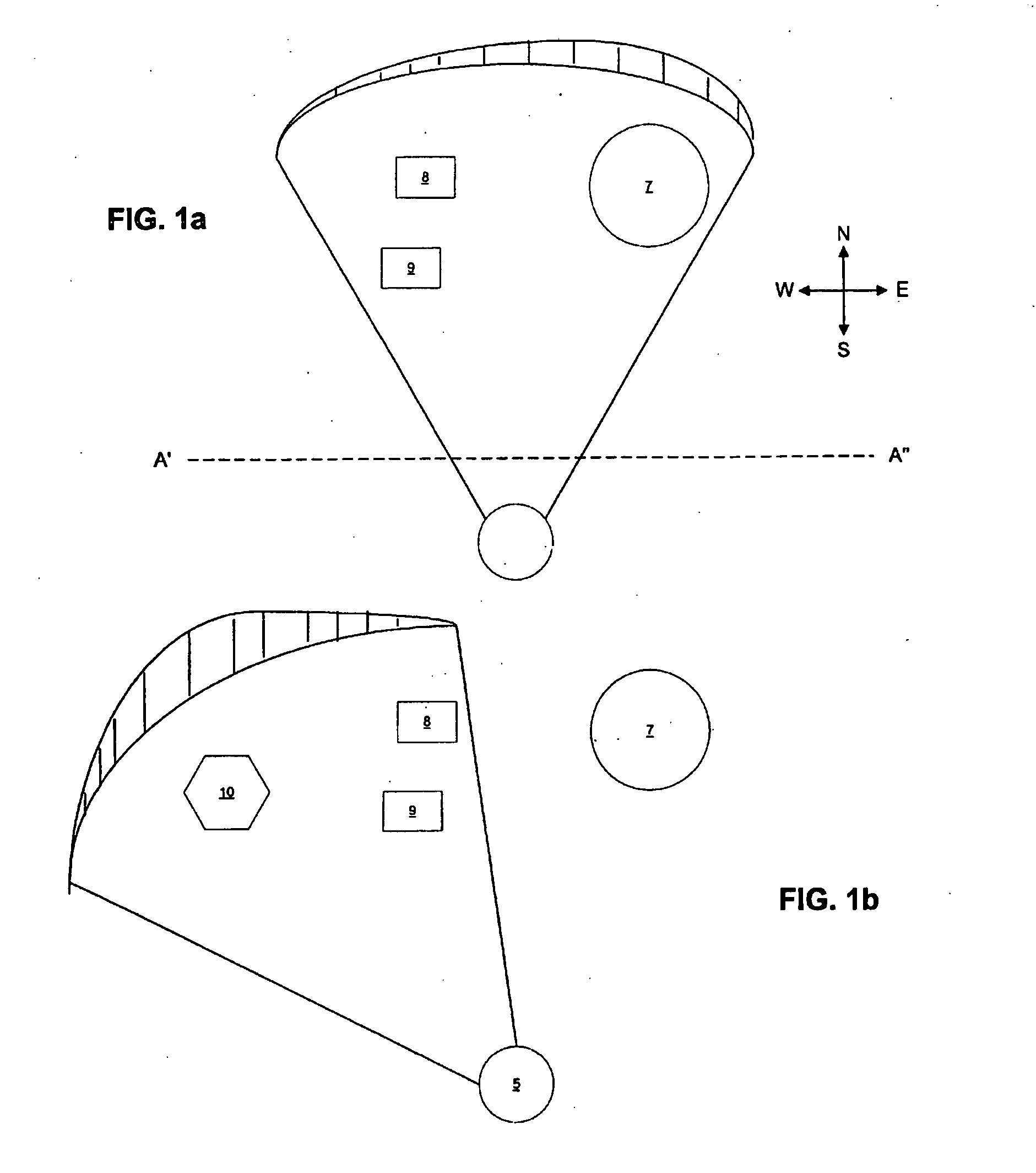

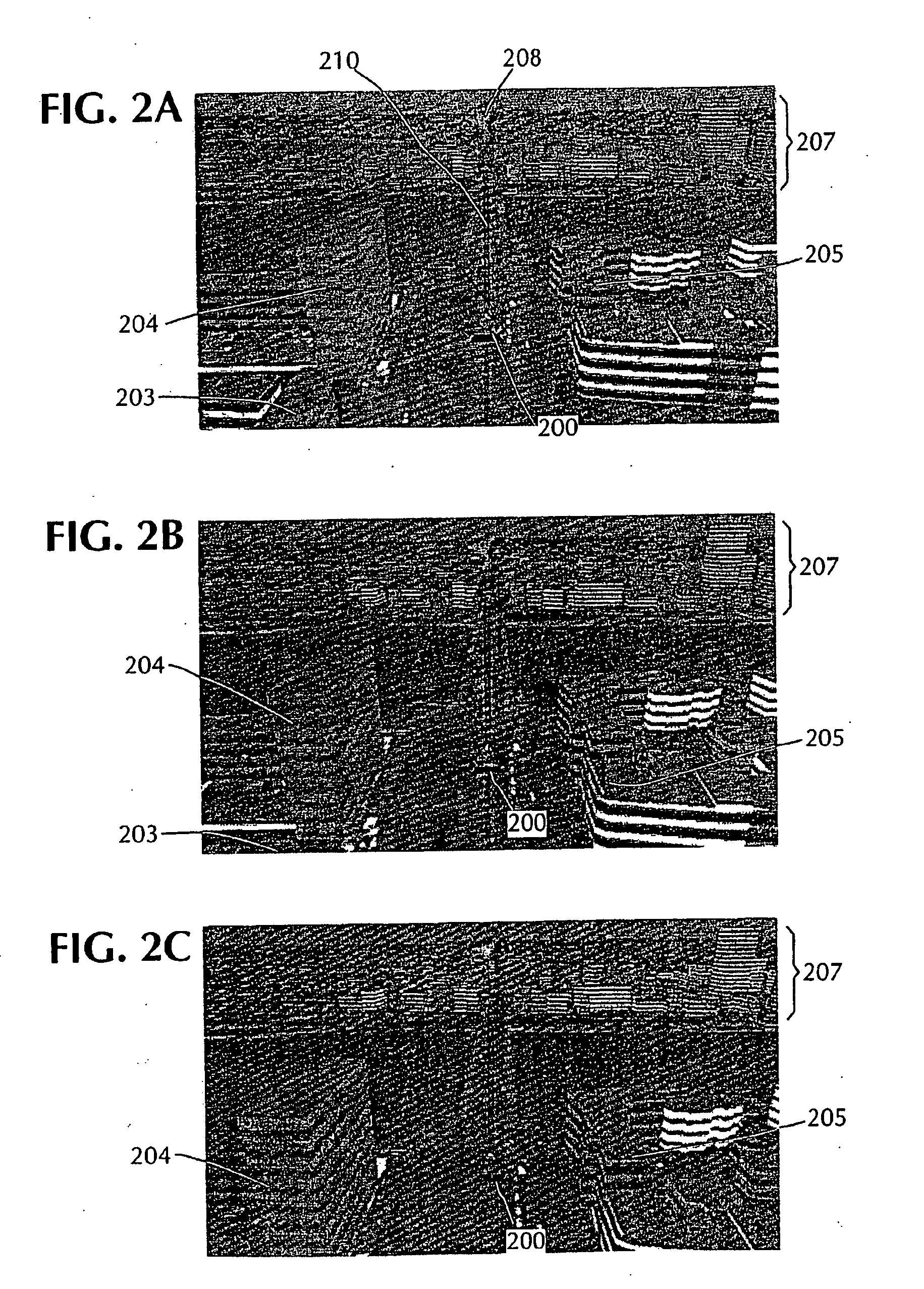

System and method for advanced 3D visualization for mobile navigation units

Inactive | US6885939B2 | Improve realism; Reduce unevenness; Instruments for road network navigation; Road vehicles traffic control; Mobile navigation; Viewpoints

A system providing three-dimensional visual navigation for a mobile unit includes a location calculation unit for calculating an instantaneous position of the mobile unit, a viewpoint control unit for determining a viewing frustum from the instantaneous position, a scenegraph manager in communication with at least one geo-database to obtain geographic object data associated with the viewing frustum and generating a scenegraph organizing the geographic object data, and a scenegraph renderer which graphically renders the scenegraph in real time. To enhance depiction, a method for blending images of different resolutions in the scenegraph reduces abrupt changes as the mobile unit moves relative to the depicted geographic objects. Data structures for storage and run-time access of information regarding the geographic object data permit on-demand loading of the data based on the viewing frustum and allow the navigational system to dynamically load, on-demand, only those objects that are visible to the user.

Owner:ROBERT BOSCH GMBH

System and method of reducing transmission bandwidth required for visibility-event streaming of interactive and non-interactive content

Inactive | US20120229445A1 | High precision; Reduce computing cost; Special data processing applications; Details involving image processing hardware; Visibility; Interactive content

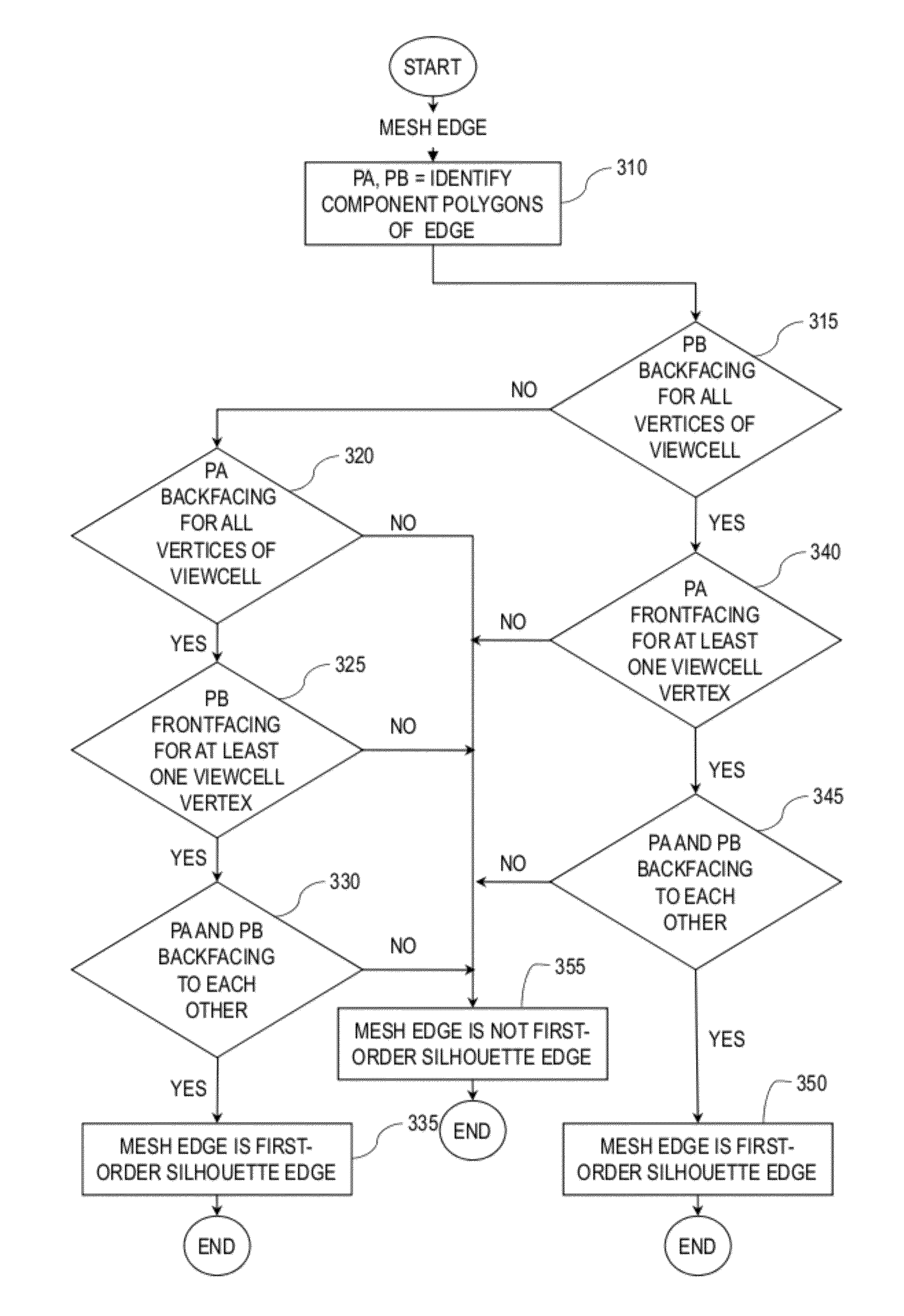

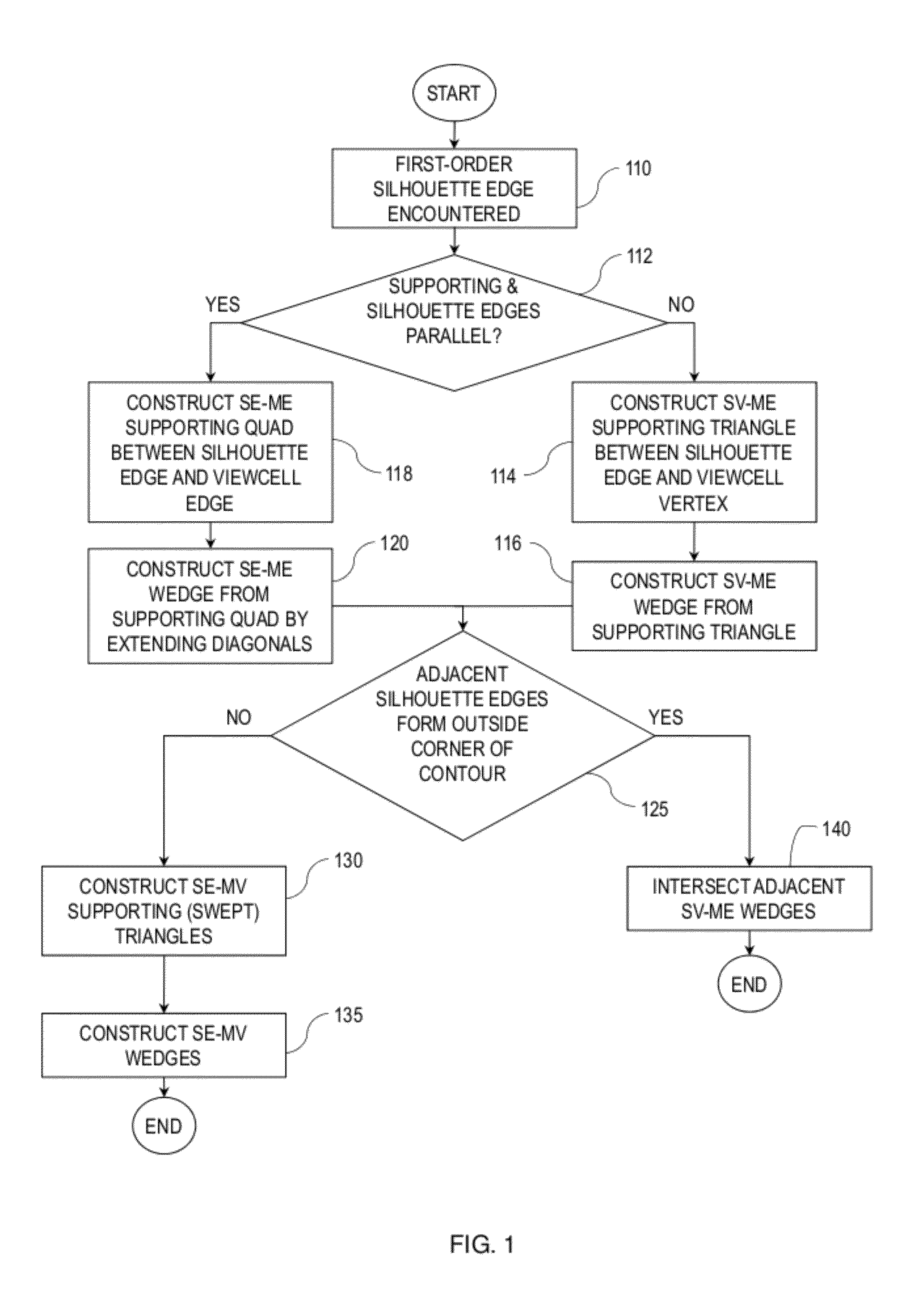

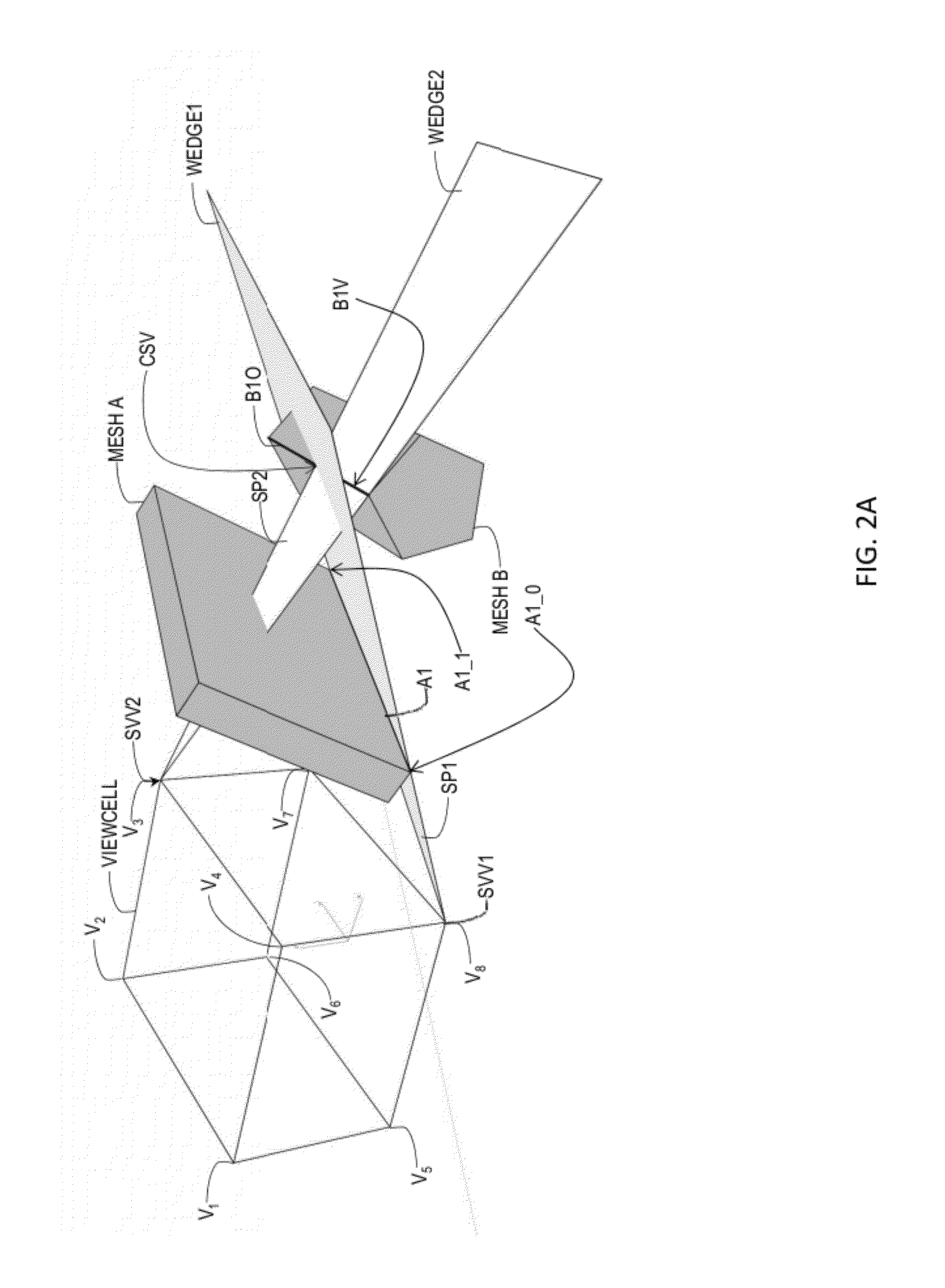

In an exemplary embodiment, a computer-implemented method determines a set of mesh polygons or fragments of the mesh polygons visible from a navigation cell. The method includes determining a composite view frustum containing predetermined view frusta and determining mesh polygons contained in the composite view frustum. The method includes determining at least one supporting polygon between the navigation cell and the contained mesh polygons. The method further includes constructing at least one wedge from the at least one supporting polygon, the at least one wedge extending away from the navigation cell beyond at least the contained mesh polygons. The method includes determining one or more intersections of the at least one wedge with the contained mesh polygons. The method also includes determining the set of the contained mesh polygons or fragments of the contained mesh polygons visible from the navigation cell using the determined one or more intersections.

Owner:JENKINS BARRY L

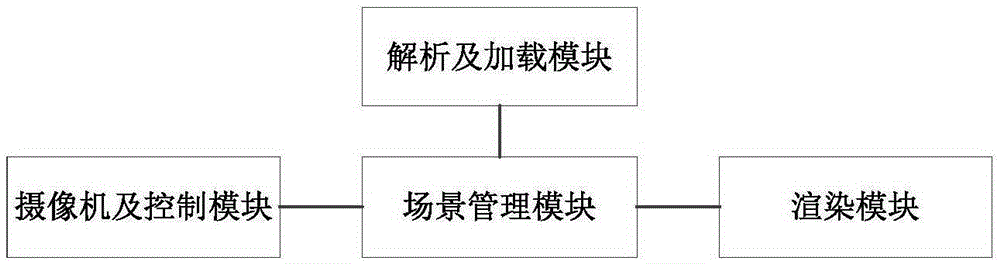

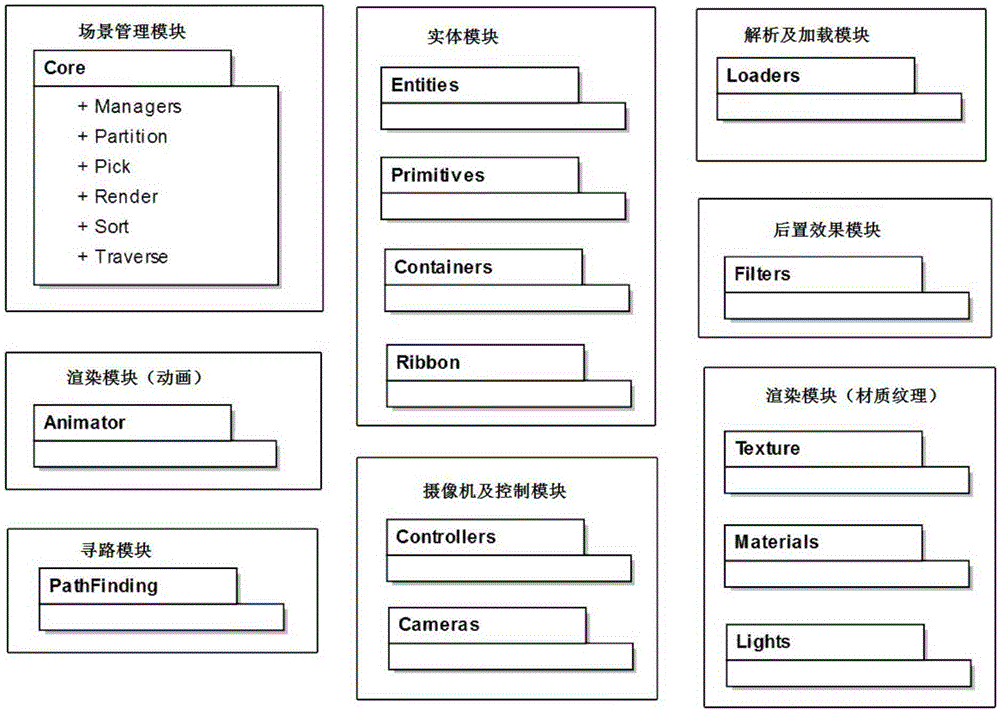

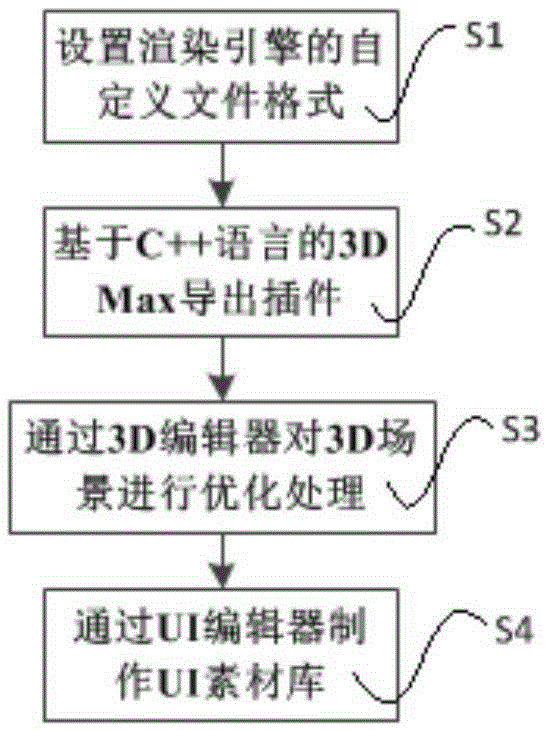

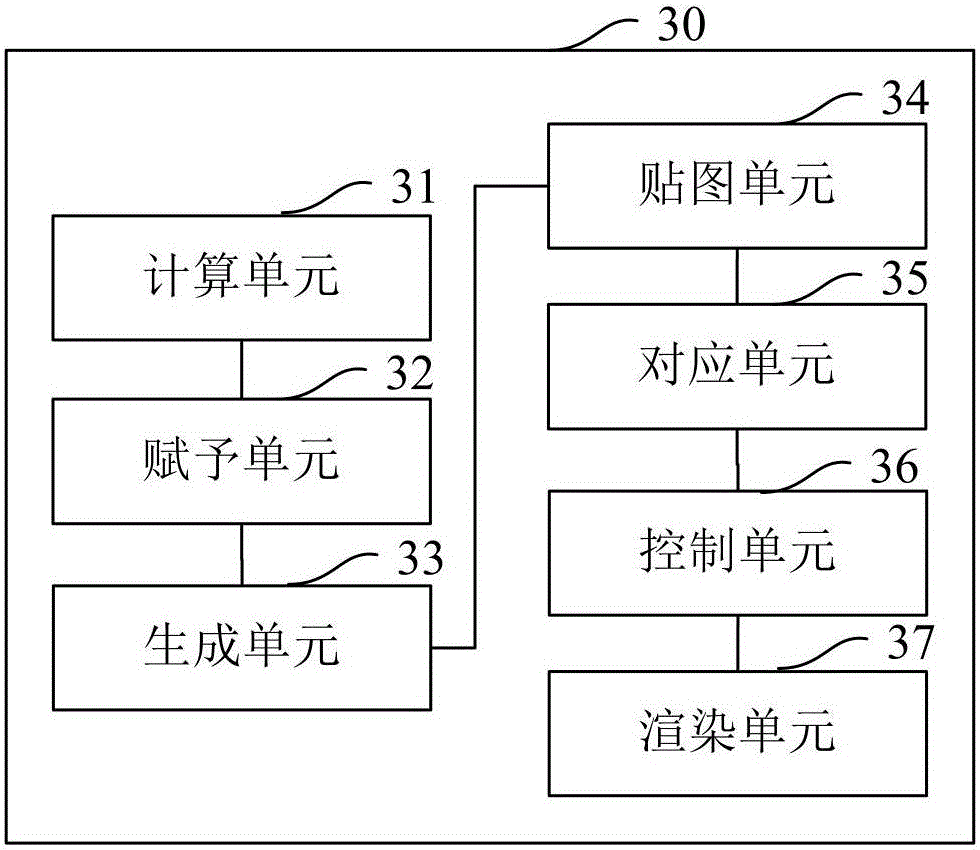

Rendering engine, implementation method and production tools for a 3D web game

Active | CN105354872A | Shorten the development cycle; Improve performance; Animation; Viewing frustum; Parsing

The present invention provides a rendering engine, an implementation method, and production tools for a 3D web game. The rendering engine comprises: a parsing and loading module, which reads, decompresses, and parses a custom file format that is the final output of the workflow, obtaining the information required for rendering and implementing non-blocking parsing; a scene management module, which implements view frustum culling, traverses and classifies the objects in a scene, dispatches picking and mouse events, and manages the rendering process; a camera and control module, which handles scene roaming and camera animation; and a rendering module, which renders all the information an object requires, including geometry, materials, animation, and transformation matrices. The production tools comprise an export plug-in, the custom file format, a 3D editor, a special-effects production tool, and a UI production tool. The rendering engine, implementation method, and production tools provided by the present invention offer good cross-platform performance and scalability, and can greatly shorten the game development cycle and lower the barrier to development.

Owner:深圳墨麟科技股份有限公司
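
The abstract lists view frustum culling among the scene management module's duties without specifying the test. A common approach, sketched here as an assumption rather than the engine's actual code, stores the frustum as six inward-facing planes and culls an object whose bounding sphere lies entirely behind any one of them. The planes are built for a symmetric camera-space frustum (camera looking down -Z); all function names are hypothetical.

```python
import math


def make_planes(fov_y_deg, aspect, near, far):
    """Six inward-facing planes (unit normal, offset) of a camera-space frustum.

    A point p is inside a plane when dot(normal, p) + offset >= 0.
    """
    ty = math.tan(math.radians(fov_y_deg) / 2)  # vertical half-angle tangent
    tx = ty * aspect                            # horizontal half-angle tangent
    raw = [
        ((0.0, 0.0, -1.0), -near),  # near: depth >= near
        ((0.0, 0.0, 1.0), far),     # far: depth <= far
        ((1.0, 0.0, -tx), 0.0),     # left side plane
        ((-1.0, 0.0, -tx), 0.0),    # right side plane
        ((0.0, 1.0, -ty), 0.0),     # bottom side plane
        ((0.0, -1.0, -ty), 0.0),    # top side plane
    ]
    planes = []
    for (nx, ny, nz), d in raw:
        length = math.sqrt(nx * nx + ny * ny + nz * nz)
        # Normalize so the plane test yields a true signed distance.
        planes.append(((nx / length, ny / length, nz / length), d / length))
    return planes


def sphere_visible(planes, center, radius):
    """Conservative test: False only if the sphere is fully outside a plane."""
    cx, cy, cz = center
    for (nx, ny, nz), d in planes:
        if nx * cx + ny * cy + nz * cz + d < -radius:
            return False
    return True


def cull(objects, planes):
    """Keep the names of (name, center, radius) objects that may be visible."""
    return [name for name, center, radius in objects
            if sphere_visible(planes, center, radius)]
```

Spheres that straddle a plane are kept, so the test never rejects a visible object; it only occasionally keeps an invisible one.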

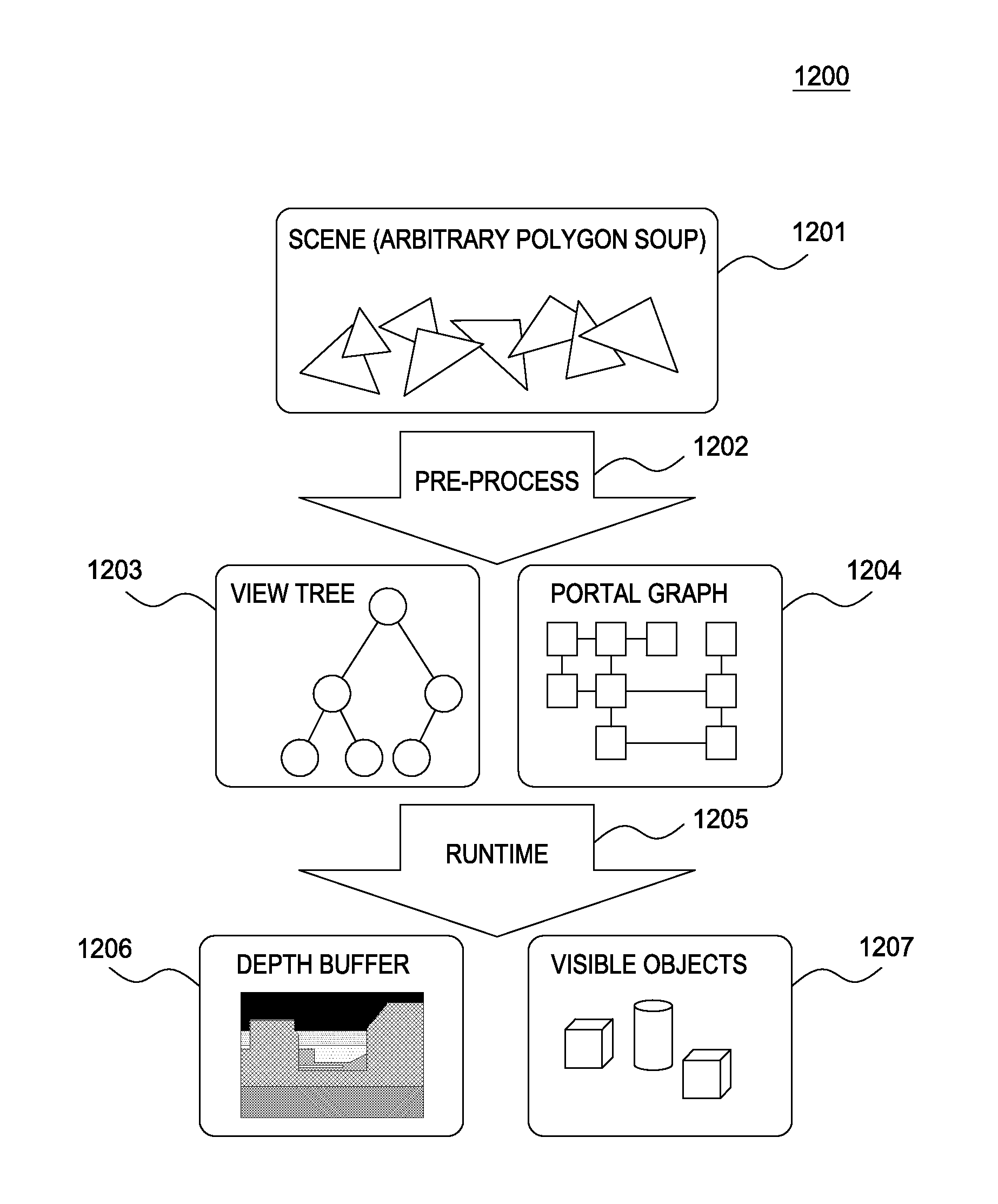

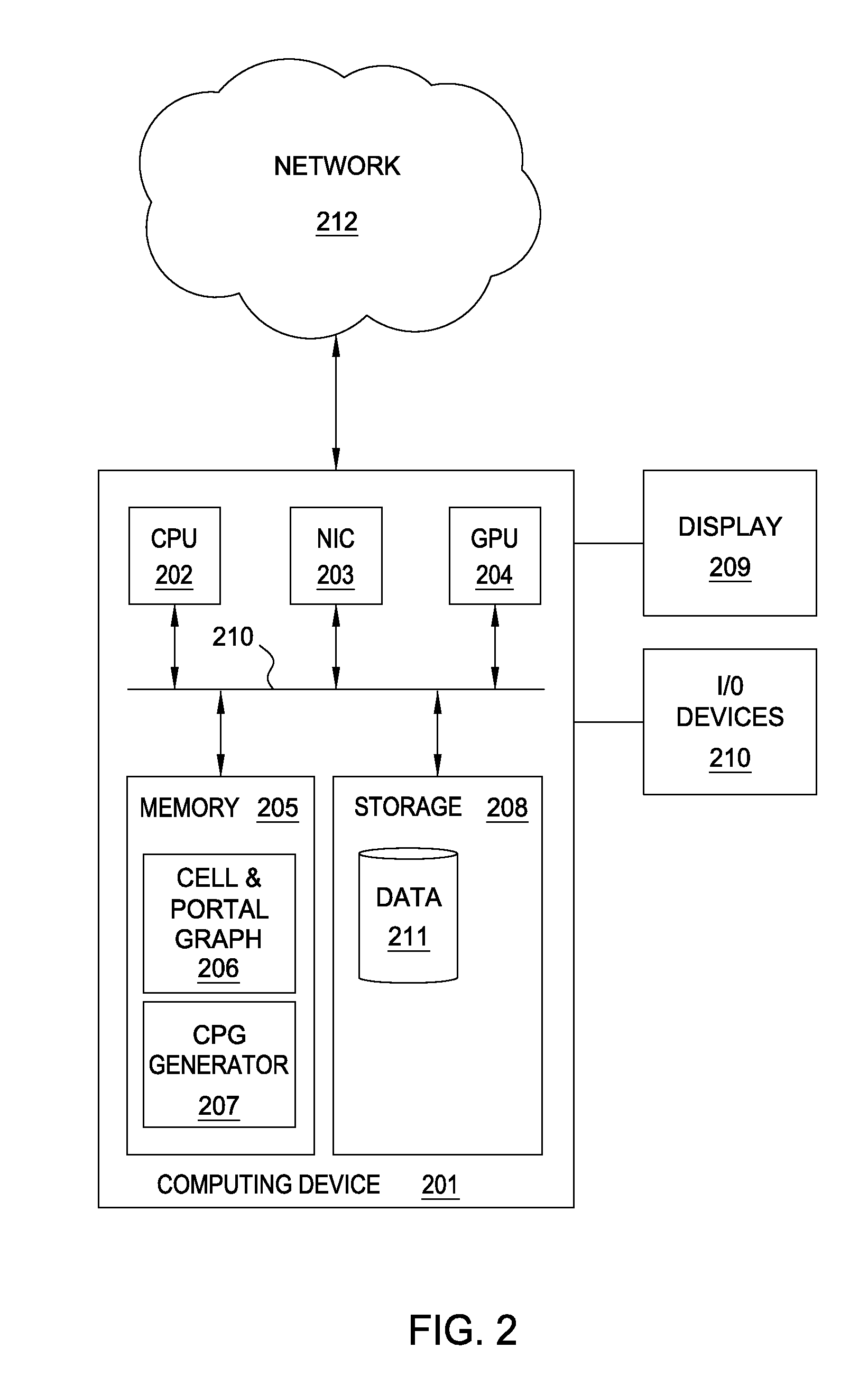

Conservative cell and portal graph generation

Embodiments presented herein provide techniques for creating and simplifying a cell and portal graph. The simplified cell and portal graph may be used to make a conservative determination of whether an element of geometry is visible for a given view frustum (and therefore needs to be rendered). That is, the simplified cell and portal graph retains the encoded visibility for a given set of geometry. The simplified graph provides a “conservative” determination of visibility: it may indicate that some objects are visible when they are not (resulting in unneeded rendering), but never the other way around. Further, this approach allows cell and portal graphs to be generated dynamically, so they can be used for scenes where the geometry can change (e.g., the 3D world of a video game).

Owner:A9 COM INC

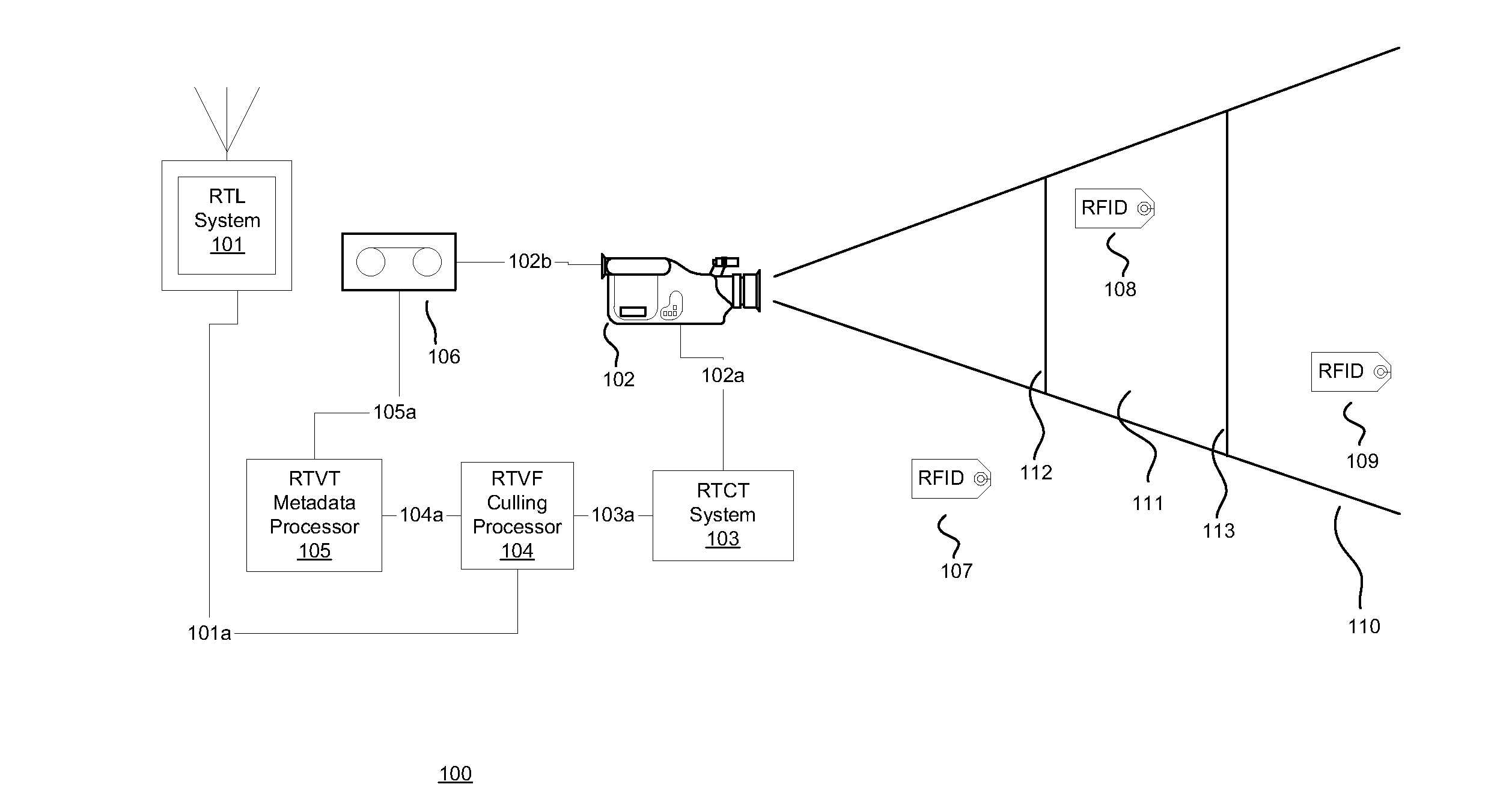

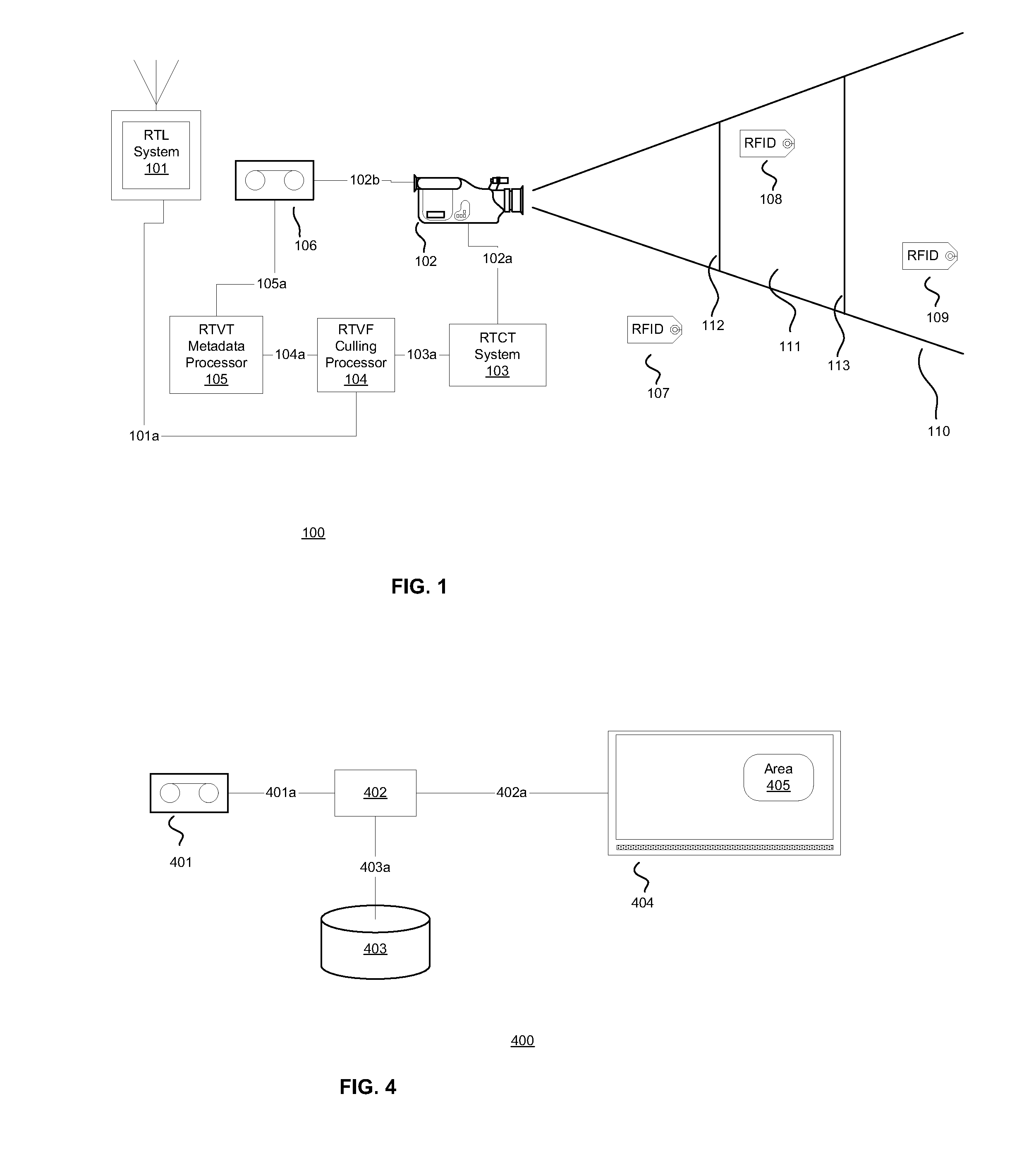

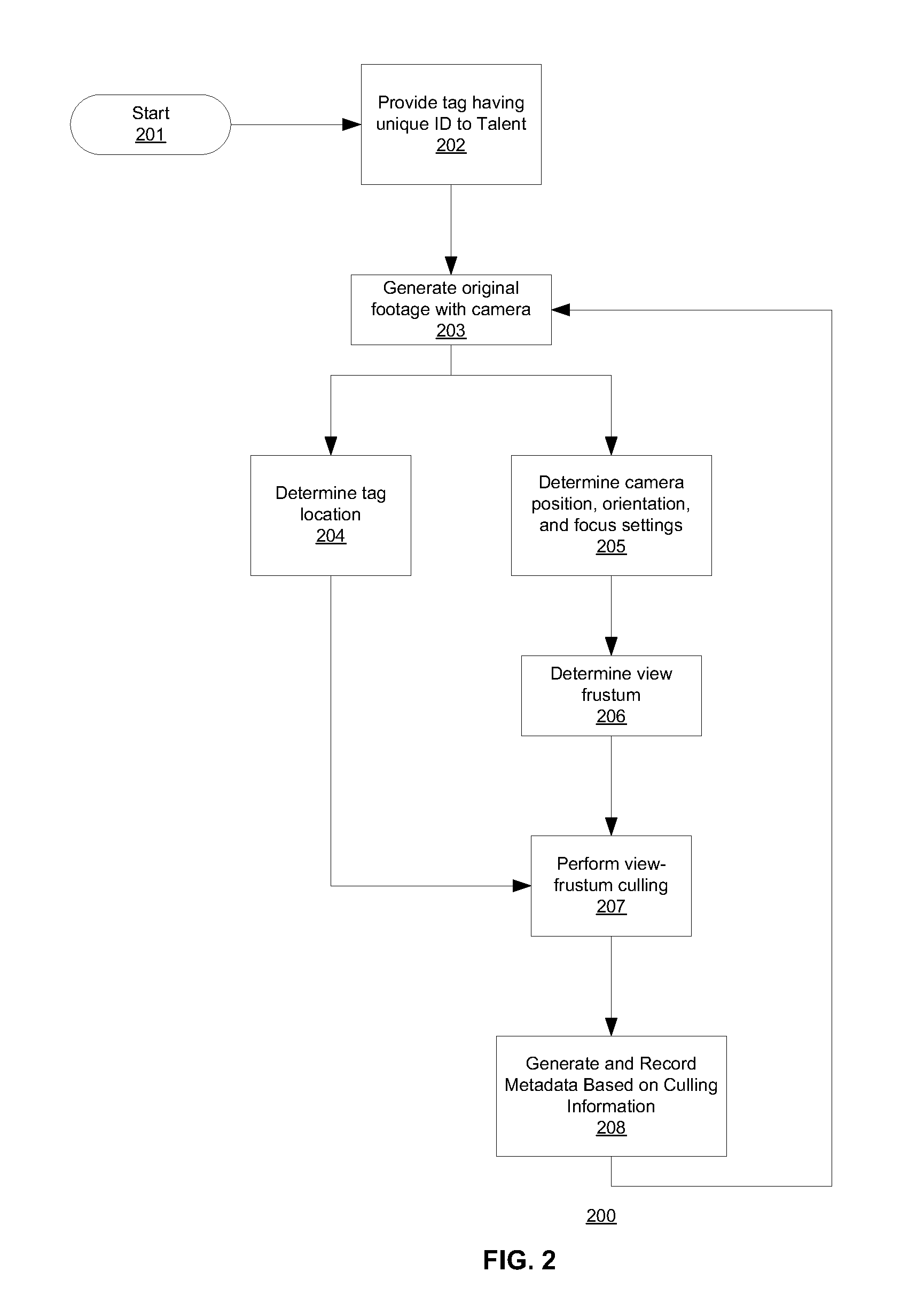

Real-time visible-talent tracking system

Active | US20120195574A1 | Television system details; Recording carrier details; Pattern recognition; Computer graphics (images)

In one embodiment, a movie set includes a motion-picture camera and a visible-talent tracking system having several elements. Based on the camera's characteristics, items in a portion of the movie set called the view frustum will appear in focus in the film. The camera and a camera-tracking system provide camera-location, orientation, and settings information to a culling processor. The culling processor delineates the location and dimensions of the view frustum based on the received camera information. Wireless tags are attached to talent on set. A tag-locating system tracks the real-time respective locations of the wireless tags and provides real-time spatial information regarding the tags to the culling processor, which determines which tags, if any, are considered to be within the view frustum, and provides information associated with the intra-frustum tags to a track recorder for recording along with the corresponding film frames. That information is variously used after editing.

Owner:HOME BOX OFFICE INC

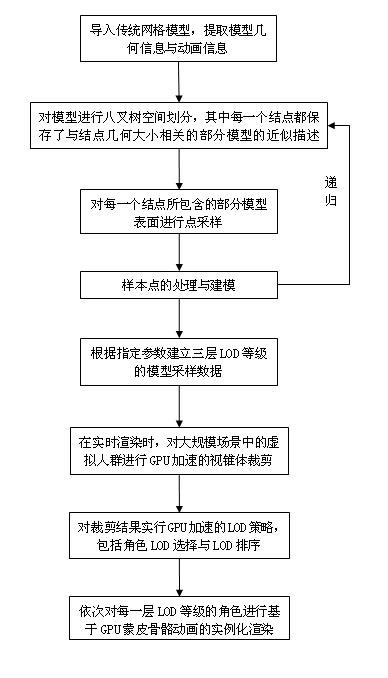

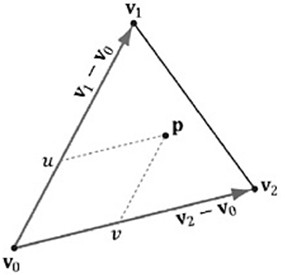

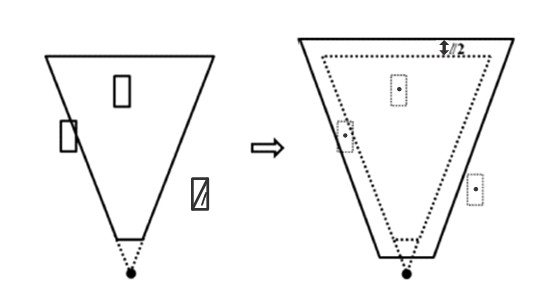

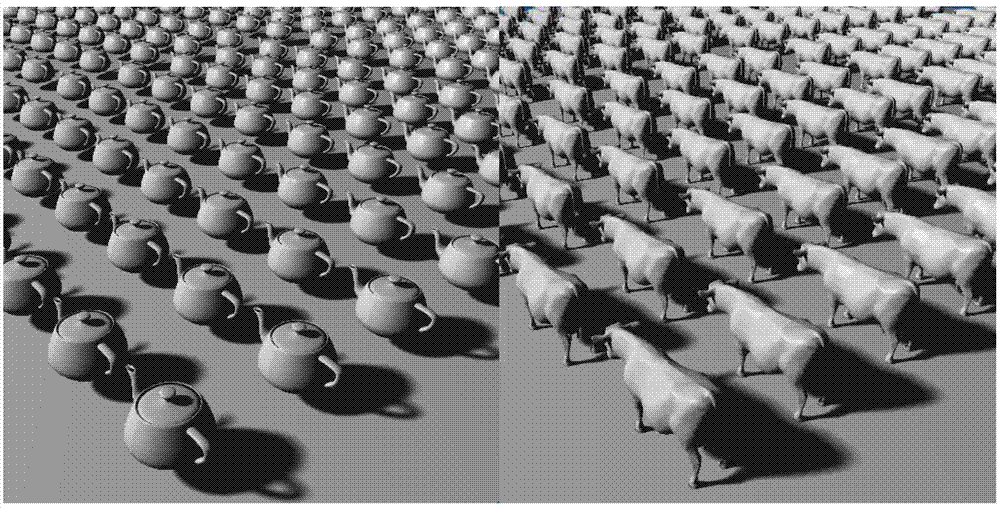

Large-scale virtual crowd real-time rendering method

The invention relates to a method for rendering large-scale virtual crowds in real time, comprising the following steps: 1, importing a conventional mesh model and extracting its geometric and animation information; 2, performing octree spatial subdivision of the model, where each node stores an approximate description of the part of the model corresponding to the node's geometric size; 3, point-sampling the surface of the part of the model contained in each node; 4, processing and modeling the sample points, including computing sample-point information by interpolation, selecting sample-point animation information, oversampling, and removing redundancy; 5, building three levels of LOD (level of detail) model sampling data according to specified parameters; 6, during real-time rendering, performing GPU-accelerated (graphics processing unit) view frustum culling on the virtual crowd in the large-scale scene; 7, applying a GPU-accelerated LOD strategy to the culling result, including selecting a LOD for each character and ordering by LOD; and 8, performing GPU instanced rendering with skinned skeletal animation for the characters at each LOD level in turn. The method enables fast real-time rendering of large-scale virtual crowds.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
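
Step 7 applies an LOD strategy to the culling result. The patent performs this on the GPU; the CPU sketch below only illustrates the idea under stated assumptions: LOD is chosen by distance to the camera, the two distance thresholds are hypothetical parameters, and each level's list is ordered near to far so that every level could then be drawn as one instanced batch.

```python
import math


def select_lod(camera_pos, positions, thresholds=(10.0, 30.0)):
    """Bucket visible characters into three LOD levels by camera distance.

    Level 0 is the finest model. `thresholds` is a hypothetical pair of
    distances separating level 0 from 1 and level 1 from 2. Returns three
    lists of indices into `positions`, each sorted from near to far.
    """
    levels = [[], [], []]
    dists = [math.dist(camera_pos, p) for p in positions]
    for i in sorted(range(len(positions)), key=lambda i: dists[i]):
        if dists[i] < thresholds[0]:
            levels[0].append(i)
        elif dists[i] < thresholds[1]:
            levels[1].append(i)
        else:
            levels[2].append(i)
    return levels
```

With characters at distances 50, 5, 20 and 2 from the camera, the result is `[[3, 1], [2], [0]]`.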

Apparatus and method for designing display for user interaction

Active | US20150061998A1 | Avoid negative effects; Inhibition; Input/output for user-computer interaction; Cathode-ray tube indicators; 3D image; Virtual screen

The present invention relates to an apparatus and method for designing a display for user interaction. The proposed apparatus includes an input unit for receiving physical information of a user and a condition depending on a working environment. A space selection unit selects an optimal near-body work space corresponding to the condition received by the input unit. A space search unit calculates an overlapping area between a viewing frustum space, defined by a relationship between a gaze of the user and an optical system of a display enabling a 3D image to be displayed, and the optimal near-body work space selected by the space selection unit. A location selection unit selects a location of a virtual screen based on results of calculation. An optical system production unit produces an optical system in which the virtual screen is located at the location selected by the location selection unit.

Owner:ELECTRONICS & TELECOMM RES INST

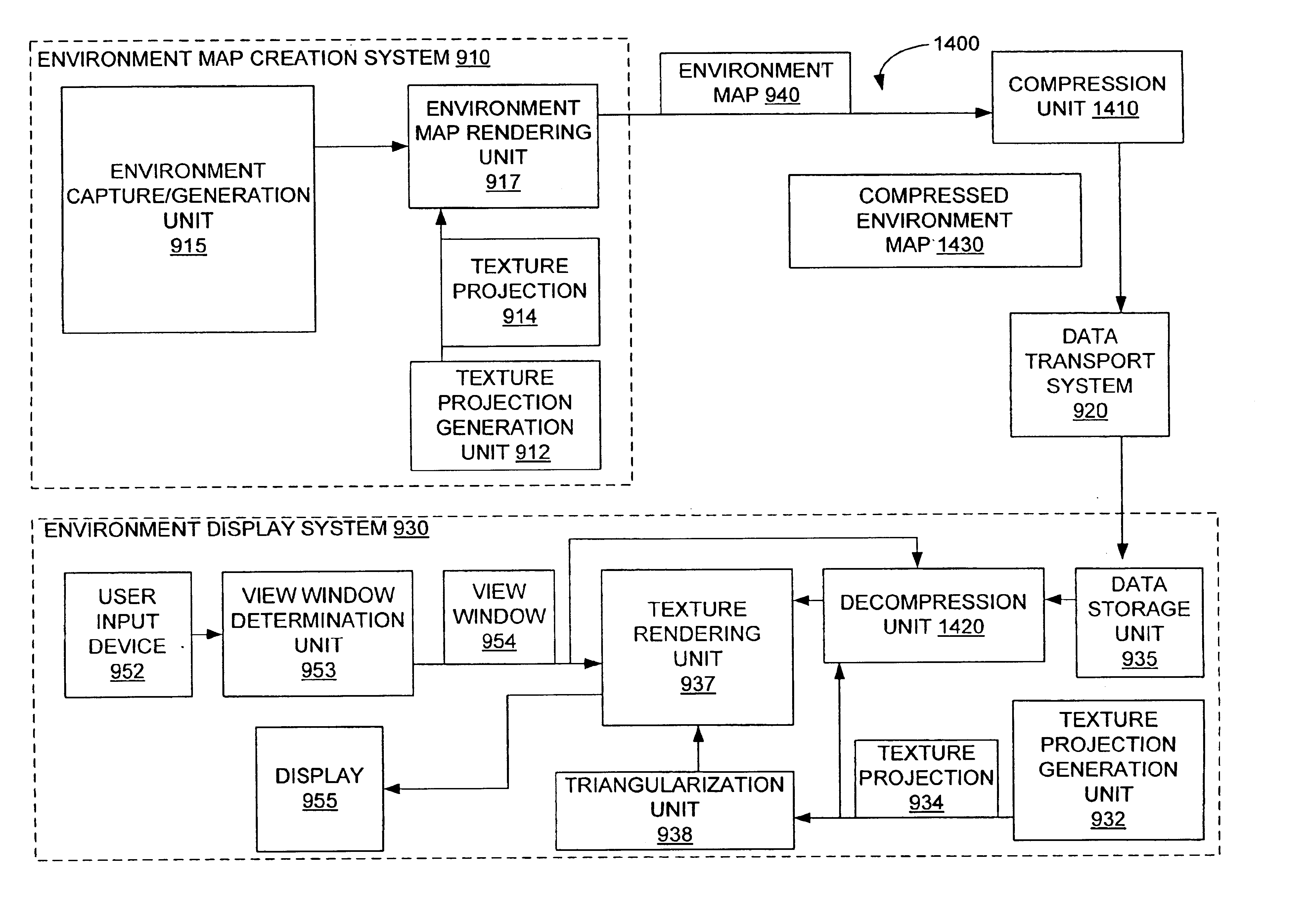

Partial image decompression of a tiled image

Inactive | US6897858B1 | Ideally suited; Image coding; Cathode-ray tube indicators; Image decompression; Viewing frustum

A decompression unit is configured to partially decompress a compressed image formed of compressed tiles, each of which corresponds to a tile of the uncompressed image. The decompression unit selects the subset of relevant tiles that are visible in a view window or a view frustum. Specifically, it includes a tile selector that selects the relevant tiles and a tile decompressor that decompresses them. By decompressing only a subset of the compressed tiles, the decompression unit reduces the processing time required to generate the contents of the view window.

Owner:DIGIMEDIA TECH LLC
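
The tile selector and tile decompressor described above can be sketched for the 2D view-window case as follows. This is an illustration under assumptions: tiles form a regular grid addressed by `(column, row)`, the view window is a half-open pixel rectangle, and `zlib` stands in for whatever tile codec is actually used; the function names are hypothetical.

```python
import zlib


def visible_tiles(view, tile_w, tile_h, img_w, img_h):
    """(column, row) indices of tiles overlapping the view window.

    `view` is a half-open pixel rectangle (x0, y0, x1, y1).
    """
    x0, y0, x1, y1 = view
    # Clamp the window to the image bounds.
    x0, y0 = max(x0, 0), max(y0, 0)
    x1, y1 = min(x1, img_w), min(y1, img_h)
    if x0 >= x1 or y0 >= y1:
        return []  # window lies entirely outside the image
    first_col, last_col = x0 // tile_w, (x1 - 1) // tile_w
    first_row, last_row = y0 // tile_h, (y1 - 1) // tile_h
    return [(c, r)
            for r in range(first_row, last_row + 1)
            for c in range(first_col, last_col + 1)]


def decompress_visible(compressed, view, tile_w, tile_h, img_w, img_h):
    """Decompress only the tiles needed for the view window."""
    return {t: zlib.decompress(compressed[t])
            for t in visible_tiles(view, tile_w, tile_h, img_w, img_h)}
```

For a 1024 x 1024 image with 256-pixel tiles, a 400 x 220 window touches 6 of the 16 tiles, so only those six are decompressed.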

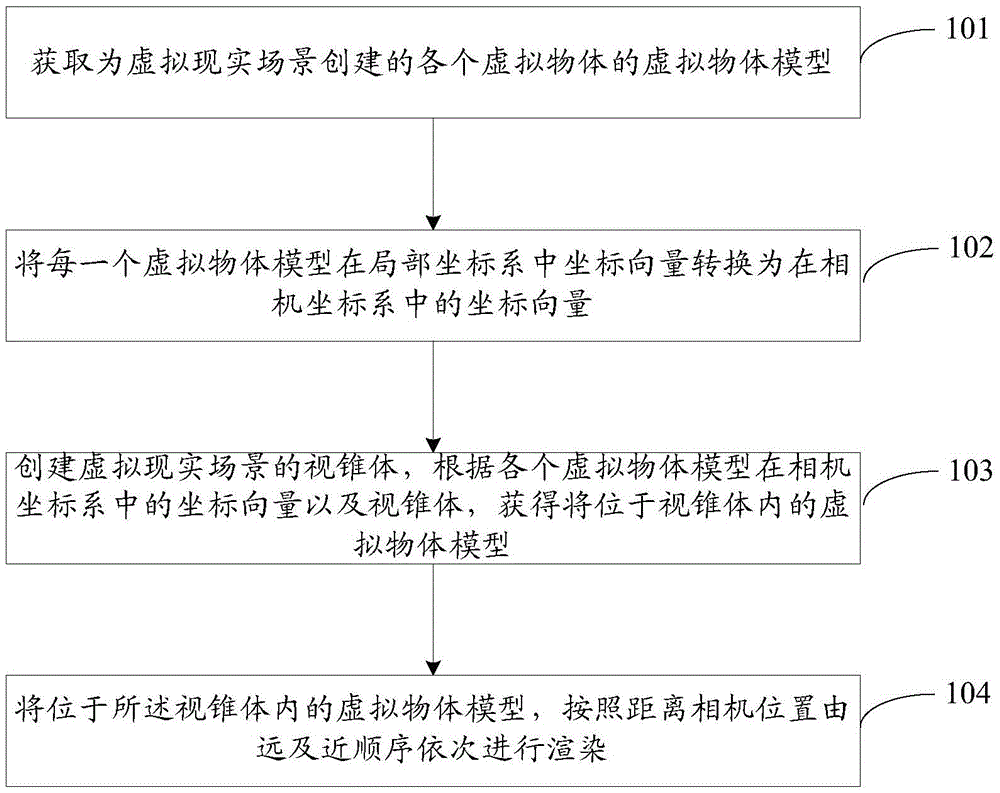

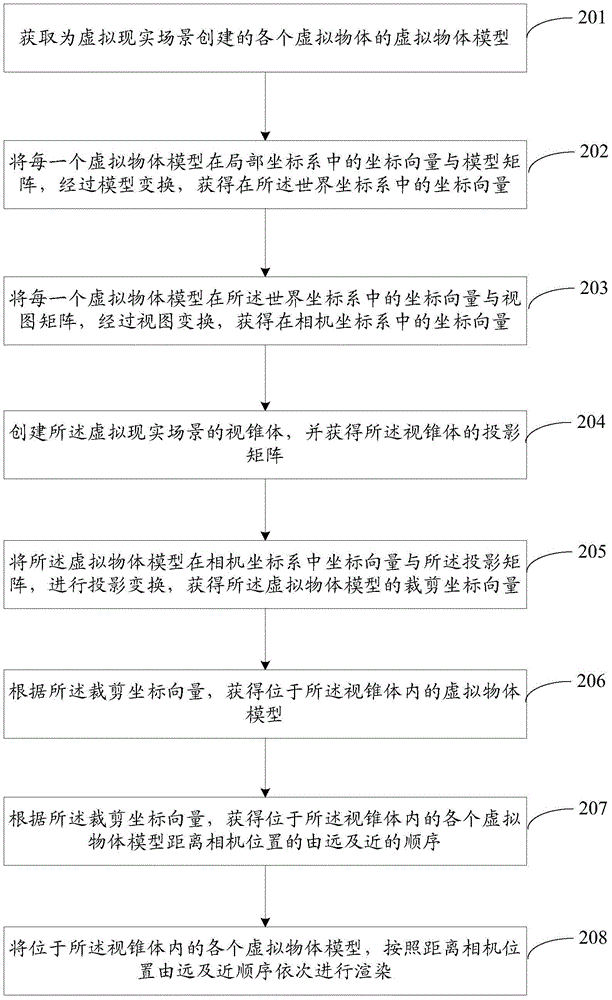

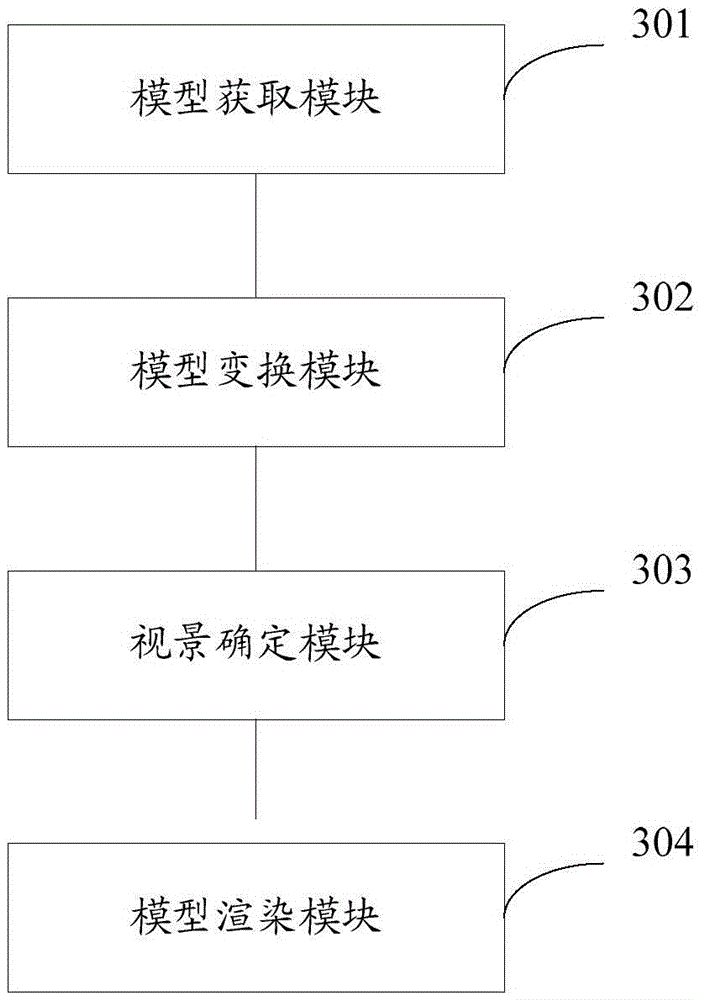

Model rendering method and device

The invention provides a model rendering method and device. The method comprises: acquiring the virtual object model of each virtual object, the models having been created for a virtual reality scene; converting the coordinate vector of each virtual object model from its local coordinate system to the camera coordinate system; creating the scene cone (view frustum) of the virtual reality scene; determining, from each model's camera-space coordinate vector and the scene cone, which virtual object models lie inside the scene cone; and rendering those models in order from farthest from the camera to nearest, to display the virtual reality scene. The invention improves both model rendering efficiency and the displayed rendering result.

Owner:LE SHI ZHI ZIN ELECTRONIC TECHNOLOGY (TIANJIN) LTD
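
The steps above (local to camera coordinates, frustum test, far-to-near ordering) can be sketched in plain Python. The assumptions are: each object is represented by one local-space reference point and a 4 x 4 model matrix, the view matrix maps world to camera space with the camera looking down -Z, and the default frustum parameters are arbitrary. All names are hypothetical.

```python
import math


def mat_vec(m, v):
    """Multiply a 4x4 row-major matrix by a 4-component vector."""
    return [sum(m[i][j] * v[j] for j in range(4)) for i in range(4)]


def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def in_frustum(p, fov_y_deg, aspect, near, far):
    """Is camera-space point p inside a symmetric frustum looking down -Z?"""
    x, y, z = p
    depth = -z
    if not (near <= depth <= far):
        return False
    half_h = depth * math.tan(math.radians(fov_y_deg) / 2)
    return abs(y) <= half_h and abs(x) <= half_h * aspect


def render_order(objects, view, fov_y_deg=60.0, aspect=16 / 9,
                 near=0.1, far=1000.0):
    """objects: (name, model_matrix, local_point). Returns names far to near."""
    visible = []
    for name, model, local in objects:
        world = mat_vec(model, [*local, 1.0])  # local -> world
        cam = mat_vec(view, world)             # world -> camera
        p = (cam[0], cam[1], cam[2])
        if in_frustum(p, fov_y_deg, aspect, near, far):
            visible.append((-p[2], name))      # depth in front of the camera
    visible.sort(reverse=True)                 # farthest first
    return [name for _, name in visible]
```

Drawing farthest first is the painter's-algorithm order, which matters mainly for correctly blending semi-transparent models.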

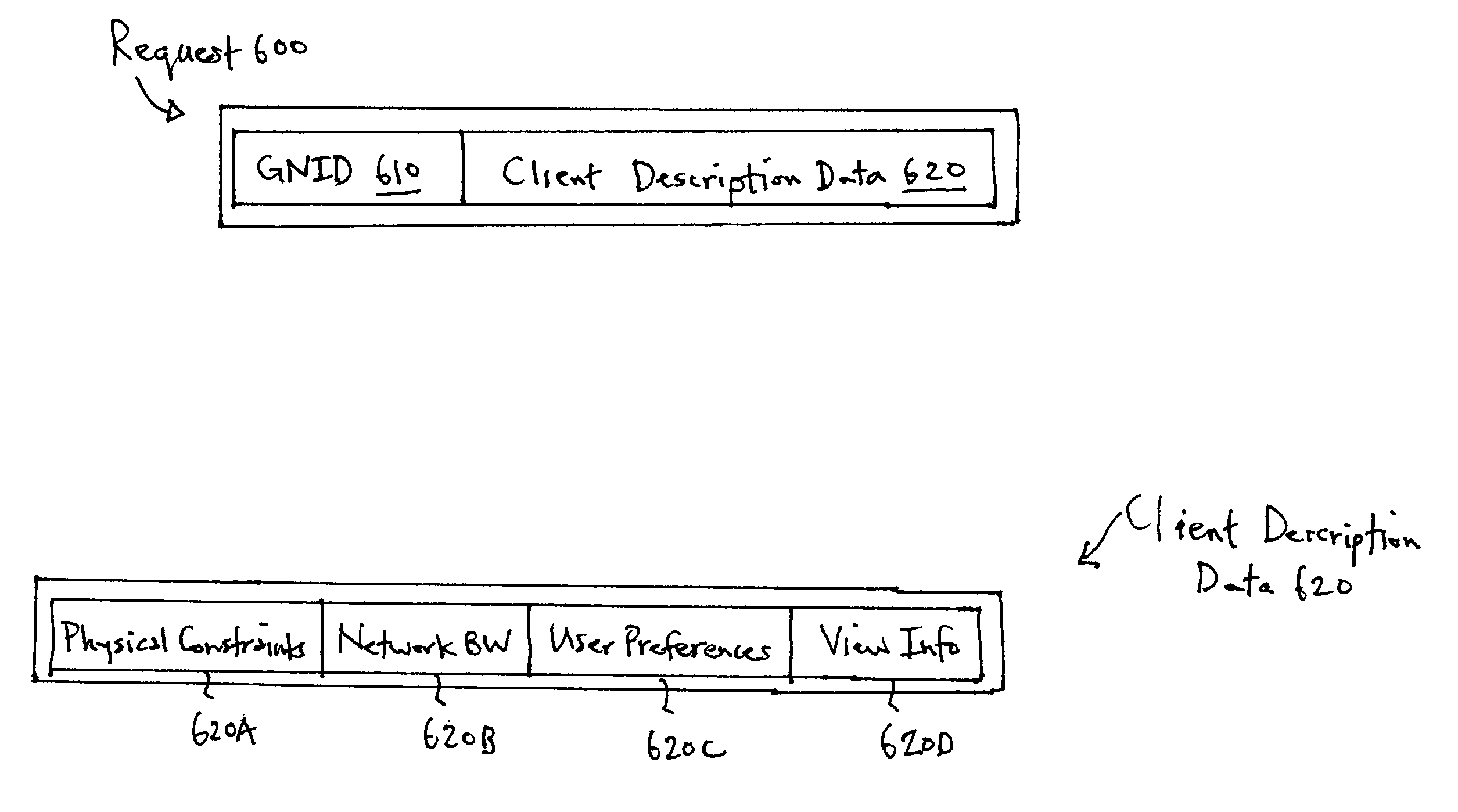

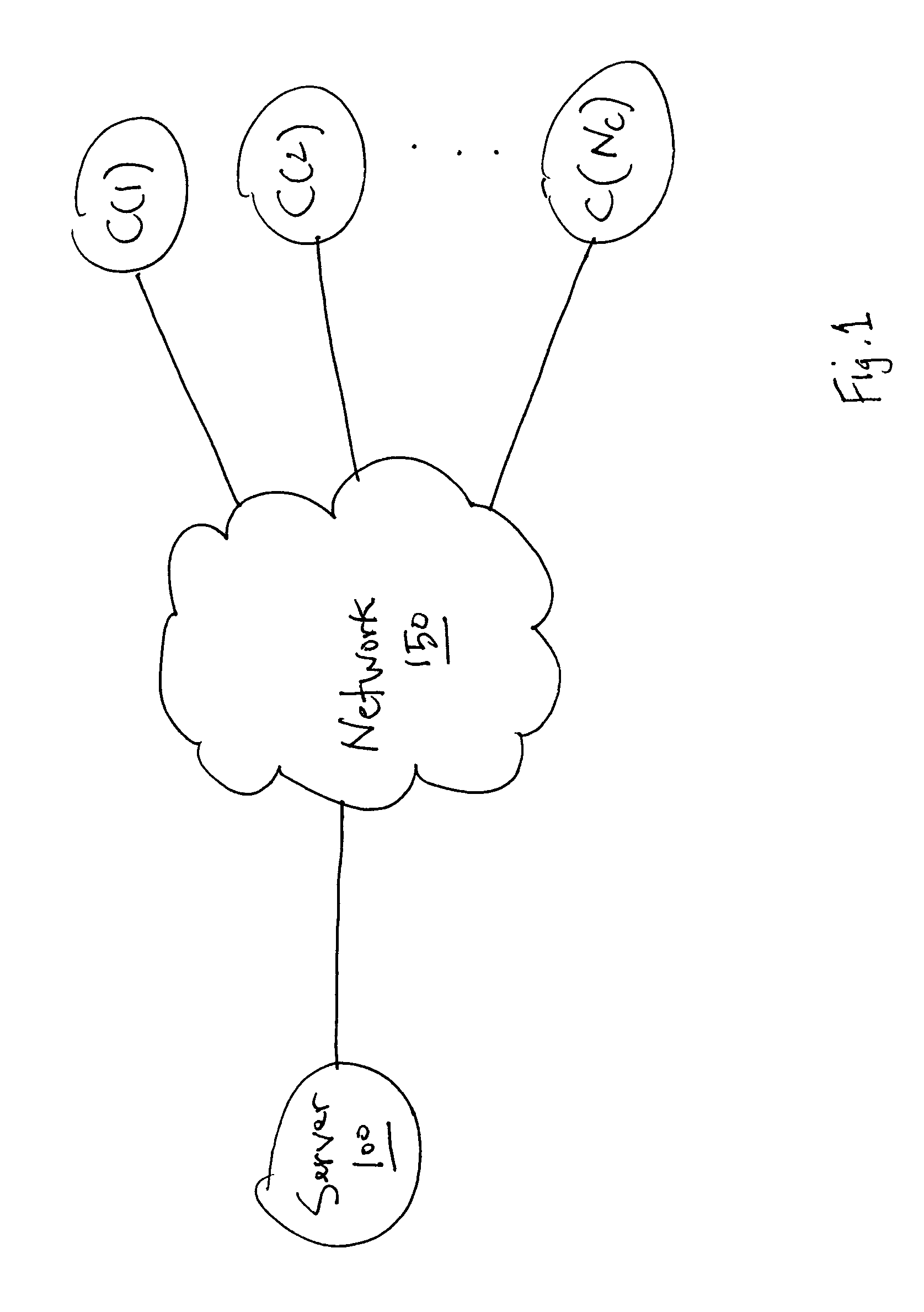

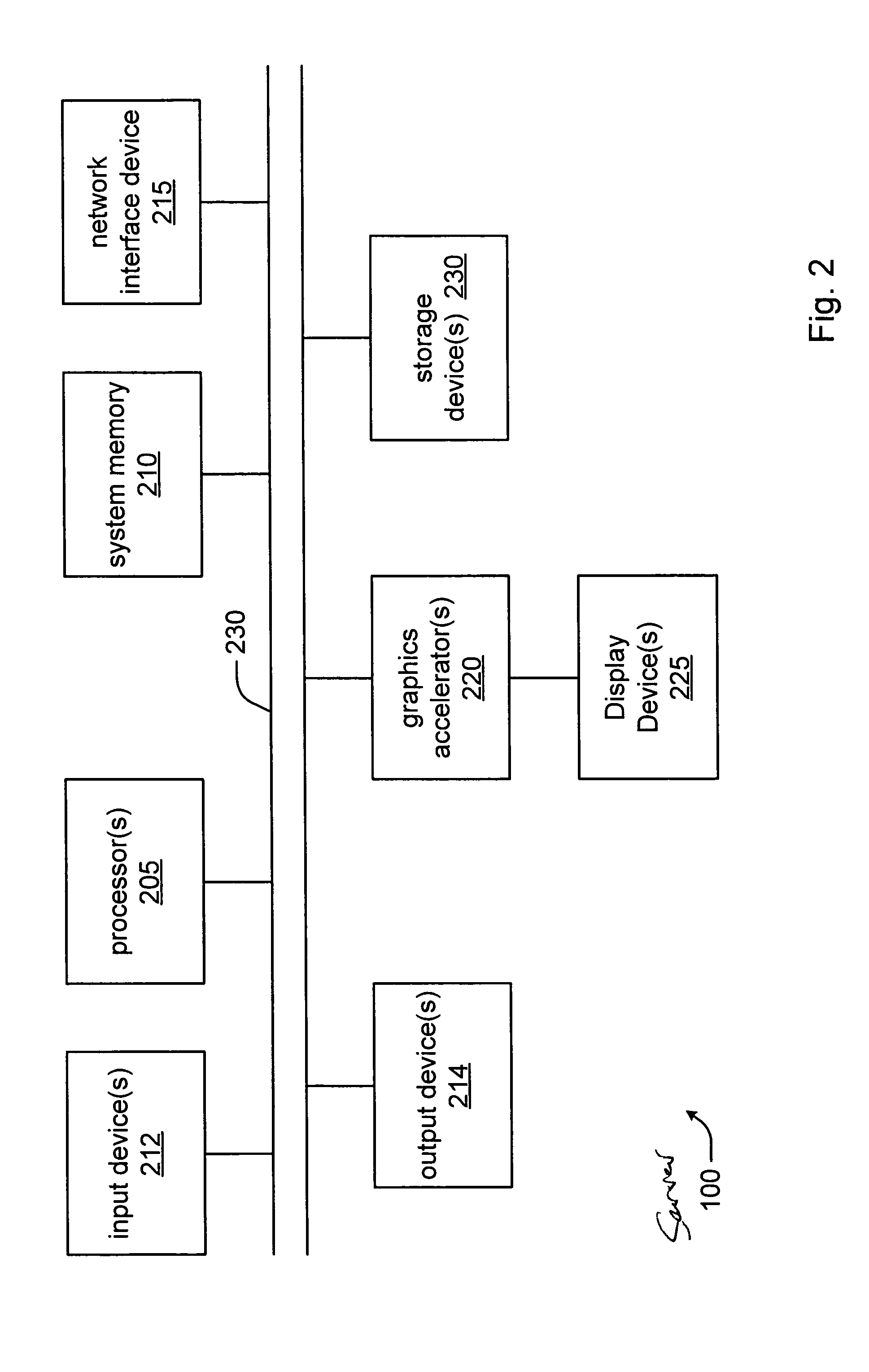

Software system for efficient data transport across a distributed system for interactive viewing

Active | US8035636B1 | Small size; Fast internet; Image data processing details; Animation; Graphics; Software system

A server receives a graphics data request from a client system through a network. The request includes client description data describing the capabilities of the client system. The server accesses the requested graphics data from an augmented scene graph and, based on the client description data, determines which of a set of operations to perform on the graphics data. The set of operations includes: (a) compiling the graphics data; (b) removing nodes that are not visible to a user from the compiled graphics data to obtain view-limited compiled graphics data; (c) culling the view-limited compiled graphics data with a view frustum to obtain state-sorted graphics data; and (d) rendering the state-sorted graphics data to obtain images. The server performs the selected operations on the graphics data to obtain resultant graphics data and transmits the resultant graphics data to the client system.

Owner:ORACLE INT CORP

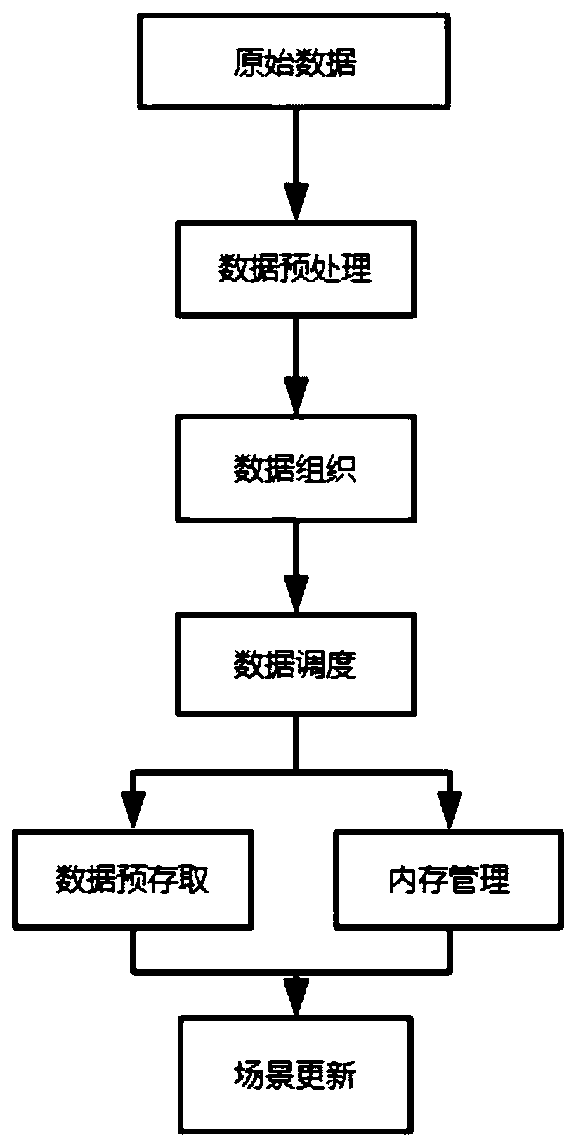

Method for dynamic scheduling and real-time asynchronous loading of massive model data in virtual reality

Inactive | CN103914868A | Solve bottlenecks; The ability to expand the scale of the scene; 3D modelling; Computational science; Viewing frustum

The invention discloses a method for dynamic scheduling and real-time asynchronous loading of massive model data in virtual reality. The method comprises the following steps: first, preprocessing the 3D model scene data; second, cutting and partitioning the whole model scene; third, performing multithreaded parallel distributed loading; and fourth, view cone (view frustum) clipping. A view cone clipping algorithm that computes the intersection of the view cone with the terrain region enables real-time, terrain-region-based clipping during dynamic scheduling. By adopting a multithreaded processing mechanism comprising a model data partitioning, scheduling, and rendering pipeline, a dynamic scheduling and drawing pipeline, and a data processing and scheduling pipeline, data on different media can be loaded asynchronously. This achieves a dynamic balance between loading and rendering performance for massive scene model data on hardware with limited memory, limited processing power, and similar constraints.

Owner:柳州腾龙煤电科技股份有限公司

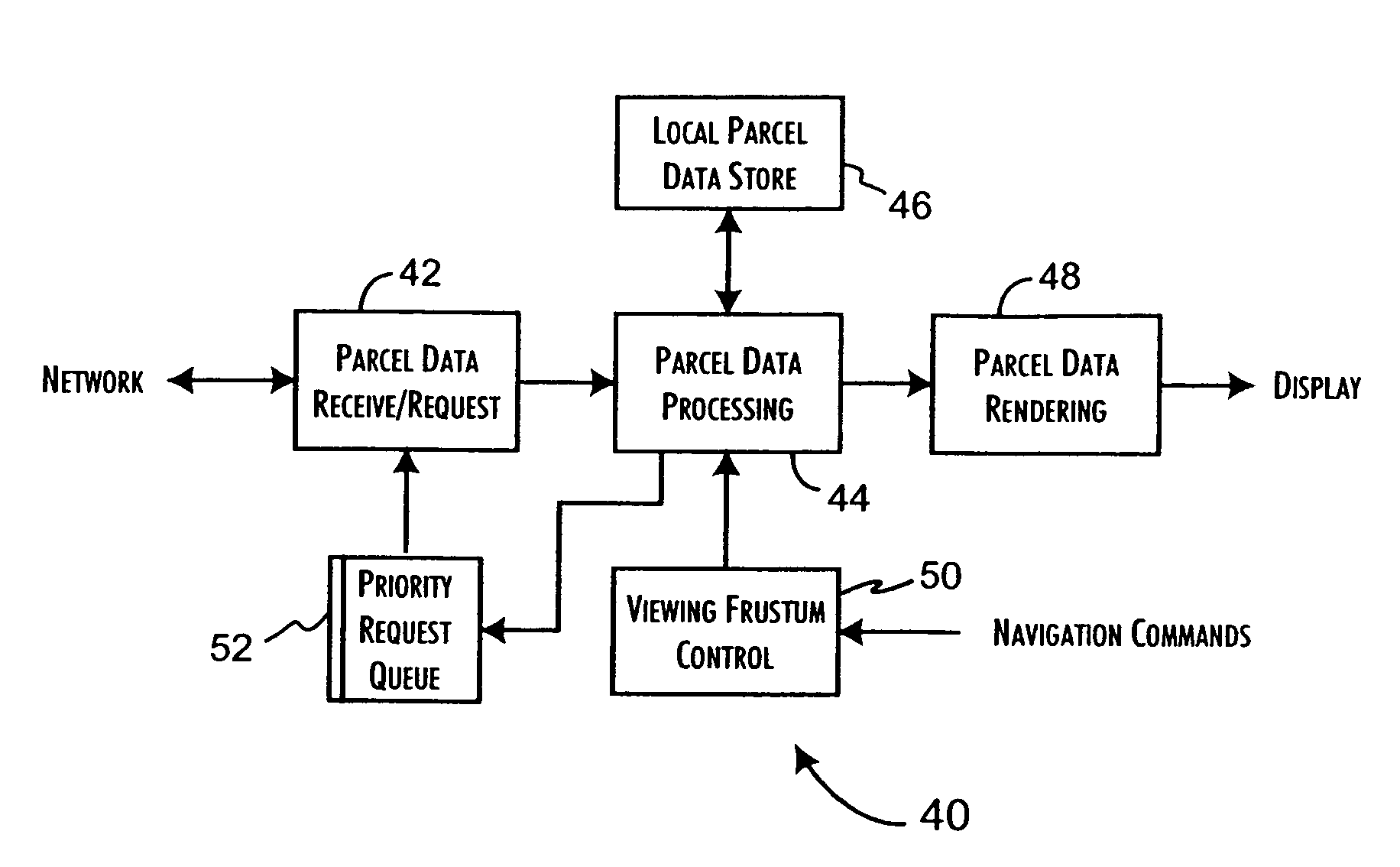

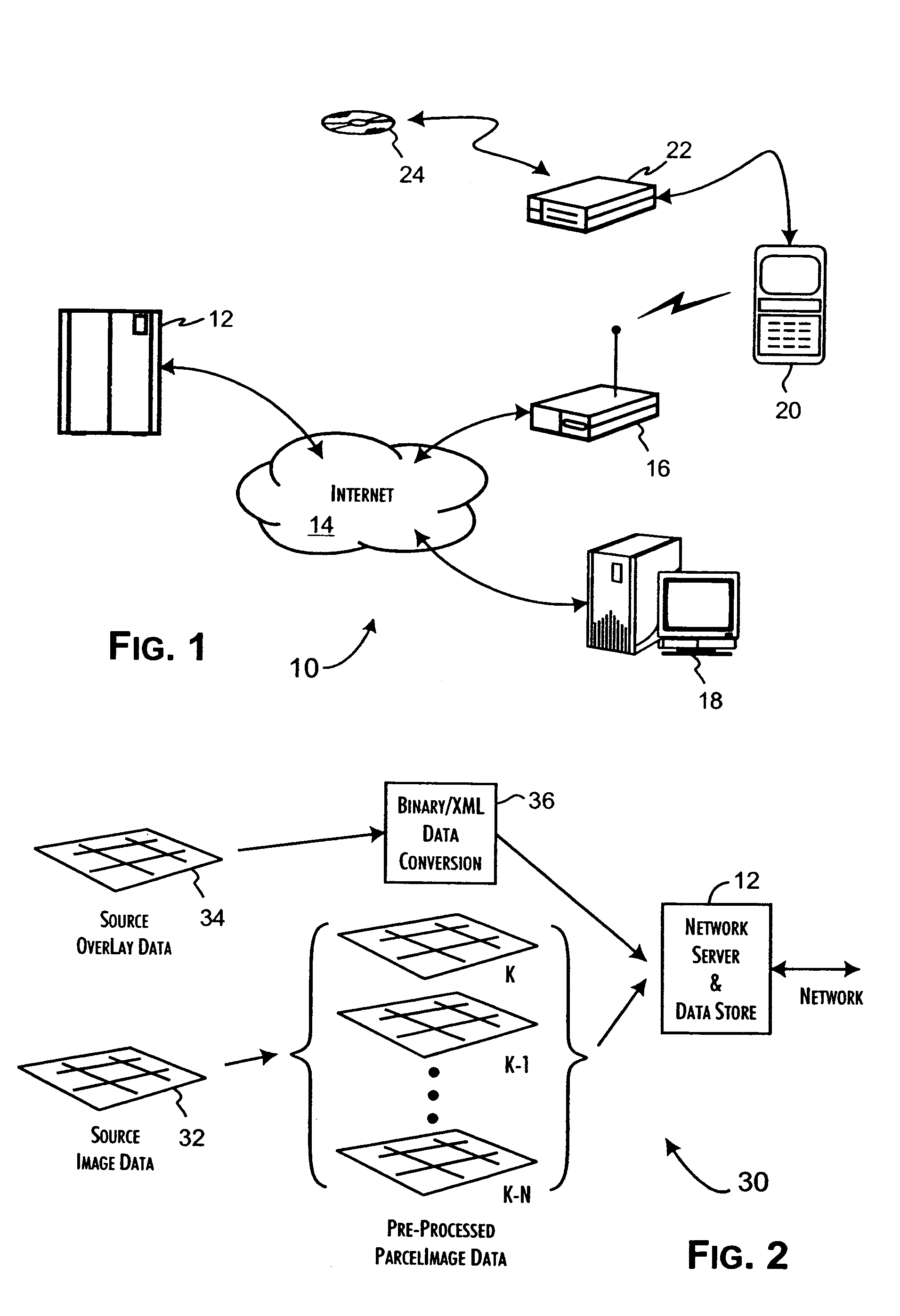

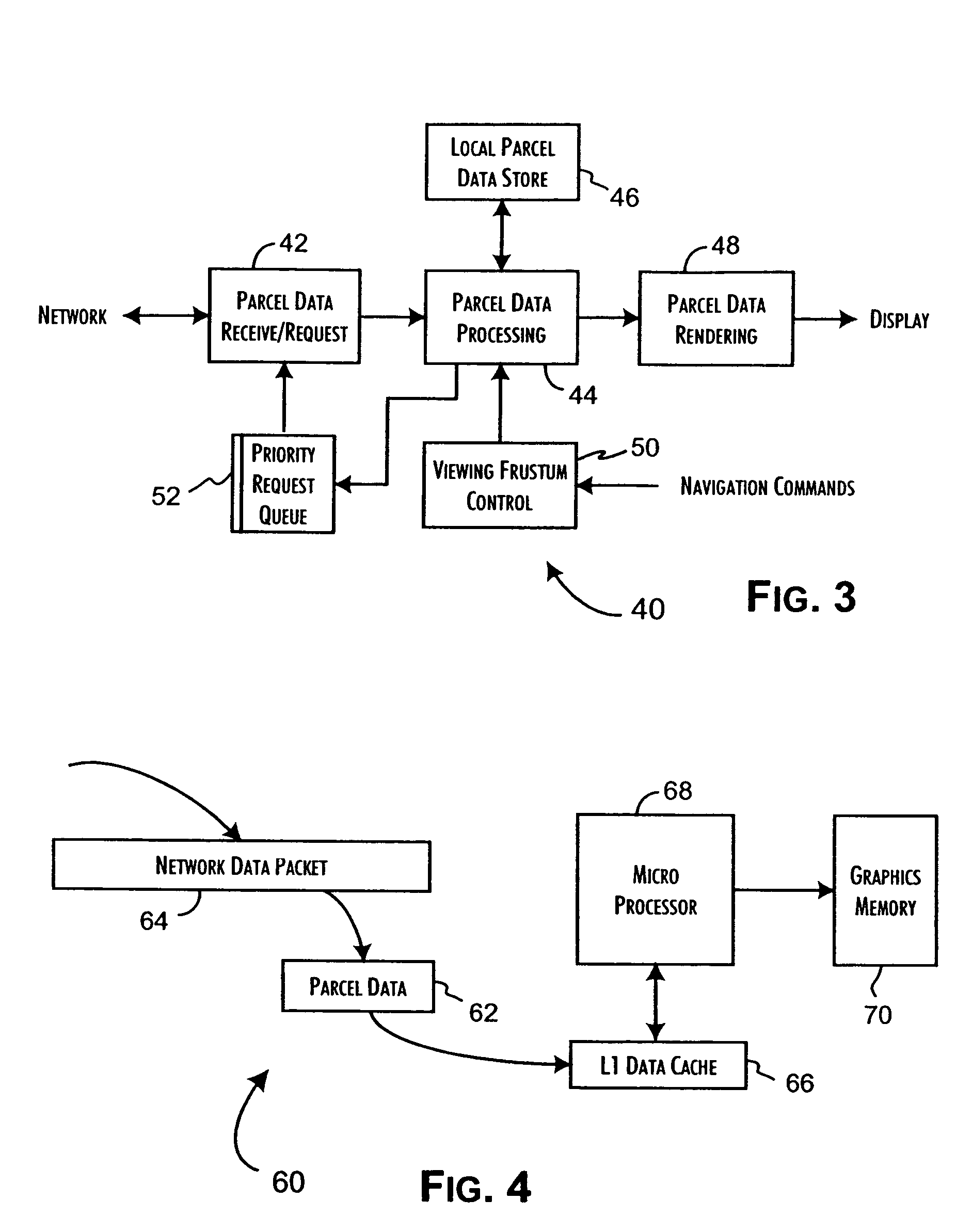

System and methods for network image delivery with dynamic viewing frustum optimized for limited bandwidth communication channels

Active | US7139794B2 | Minimizes computational complexity; Increase display resolution; Geometric image transformation; Character and pattern recognition; Viewing frustum; Network communication

Dynamic visualization of image data provided through a network communications channel is performed by a client system including a parcel request subsystem and a parcel rendering subsystem. The parcel request subsystem includes a parcel request queue and is operative to request discrete image data parcels in a priority order and to store received image data parcels in a parcel data store. The parcel request subsystem is responsive to an image parcel request of assigned priority to place the image parcel request in the parcel request queue ordered in correspondence with the assigned priority. The parcel rendering subsystem is coupled to the parcel data store to selectively retrieve and render received image data parcels to a display memory. The parcel rendering system provides the parcel request subsystem with the image parcel request of the assigned priority.

Owner:BRADIUM TECHOLOGIES LLC
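
The parcel request queue described above orders requests by assigned priority. A minimal sketch using Python's standard `heapq` module follows; it assumes a lower number means a higher priority, that duplicate requests for a parcel already in the queue are ignored, and that `fetch` is a hypothetical callable standing in for the network transfer. `ParcelRequestQueue` and `drain` are illustrative names, not the patent's interfaces.

```python
import heapq
import itertools


class ParcelRequestQueue:
    """Priority-ordered queue of image parcel requests (lower = sooner)."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # FIFO tie-break for equal priority
        self._pending = set()

    def request(self, parcel_id, priority):
        if parcel_id in self._pending:
            return  # already queued; ignore the duplicate request
        heapq.heappush(self._heap, (priority, next(self._counter), parcel_id))
        self._pending.add(parcel_id)

    def next_request(self):
        """Pop the highest-priority parcel id, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, parcel_id = heapq.heappop(self._heap)
        self._pending.discard(parcel_id)
        return parcel_id


def drain(queue, fetch, store):
    """Fetch every queued parcel in priority order into the parcel data store."""
    while (parcel_id := queue.next_request()) is not None:
        store[parcel_id] = fetch(parcel_id)
```

The sequence counter keeps the heap from ever comparing parcel ids directly and preserves arrival order among requests of equal priority.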

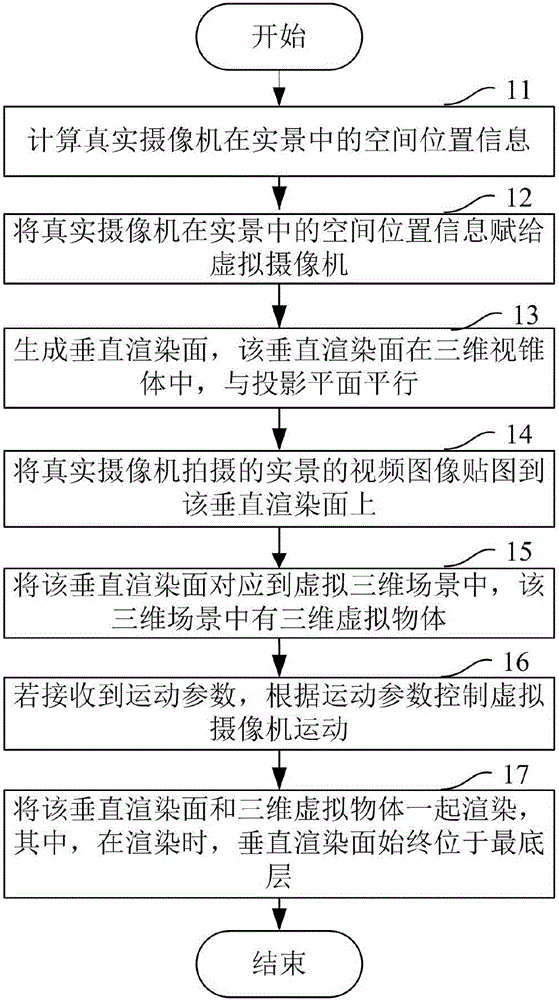

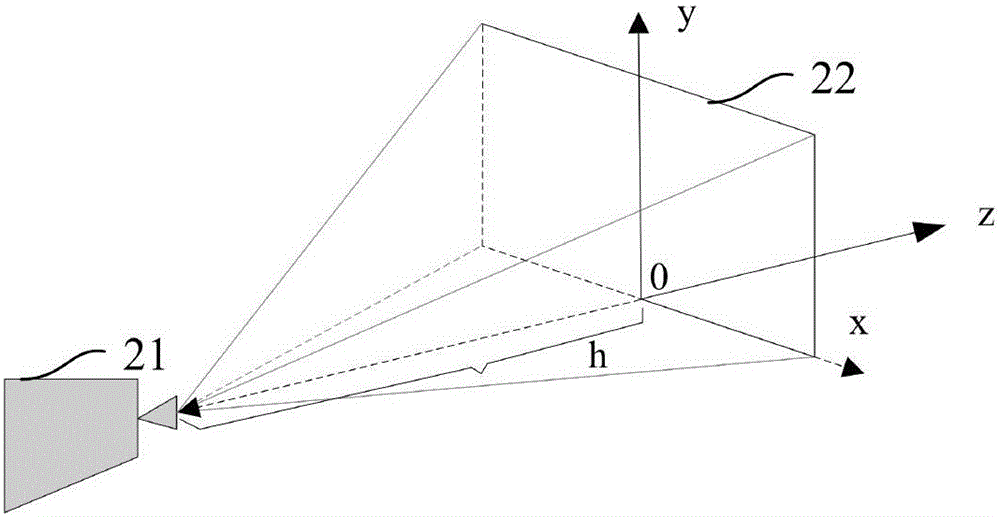

Method and terminal for realizing a virtual rocker arm

Active | CN106027855A | Realize virtual rocker effect; Easy to operate; Television system details; Color television details; Viewing frustum; Motion parameter

The invention provides a method and a terminal for realizing a virtual rocker arm. The method comprises the following steps: calculating the spatial position parameters of a real camera in a real scene; assigning these parameters to a virtual camera; generating a vertical rendering surface parallel to the projection plane of the three-dimensional view frustum; mapping the video image of the real scene captured by the real camera onto the vertical rendering surface; placing the vertical rendering surface in a virtual three-dimensional scene that contains a three-dimensional virtual object; when a motion parameter is received, moving the virtual camera according to that parameter; and rendering the vertical rendering surface together with the three-dimensional virtual object, with the vertical rendering surface always kept on the bottom-most layer. The method and terminal achieve the virtual rocker arm effect for a virtual implantation system, are simple to operate and convenient to use, and produce no visible compositing errors ("goofs").

Owner:SHENZHEN DLP DIGITAL TECH
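
A rendering surface parallel to the projection plane that exactly fills the view at a chosen distance has a simple size: its half-height is the distance times the tangent of half the vertical field of view. The sketch below computes its corners in camera space; the distance, the OpenGL-style -Z viewing convention and the function name are assumptions for illustration, not details from the patent.

```python
import math


def backdrop_quad(distance, fov_y_deg, aspect):
    """Camera-space corners of a plane parallel to the projection plane that
    exactly fills the view frustum at `distance` in front of the camera.

    Assumes the camera looks down -Z. Corners are ordered counter-clockwise:
    bottom-left, bottom-right, top-right, top-left.
    """
    half_h = distance * math.tan(math.radians(fov_y_deg) / 2)
    half_w = half_h * aspect
    z = -distance
    return [(-half_w, -half_h, z), (half_w, -half_h, z),
            (half_w, half_h, z), (-half_w, half_h, z)]
```

Texturing this quad with the live video frame and drawing it before the virtual objects (for example with depth writes disabled) is one way to keep it on the bottom-most layer.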

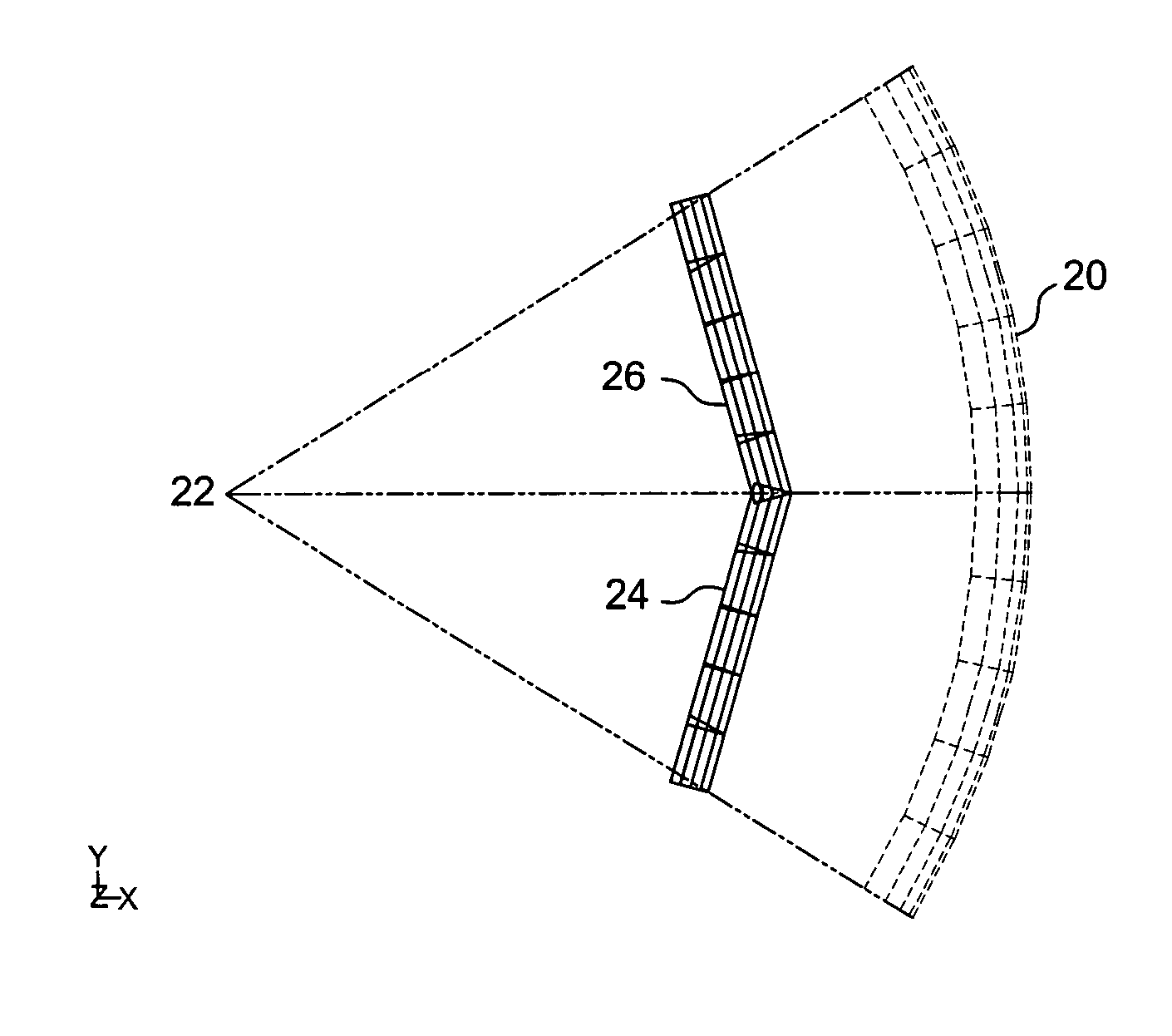

Hierarchical system and method for on-demand loading of data in a navigation system

Active | US7305396B2 | Improve realism; Reduce unevenness and abrupt change; Instruments for road network navigation; Data processing applications; Viewpoints; Viewing frustum

A system providing three-dimensional visual navigation for a mobile unit includes a location calculation unit for calculating an instantaneous position of the mobile unit, a viewpoint control unit for determining a viewing frustum from the instantaneous position, a scenegraph manager in communication with at least one geo-database to obtain geographic object data associated with the viewing frustum and generating a scenegraph organizing the geographic object data, and a scenegraph renderer which graphically renders the scenegraph in real time. To enhance depiction, a method for blending images of different resolutions in the scenegraph reduces abrupt changes as the mobile unit moves relative to the depicted geographic objects. Data structures for storage and run-time access of information regarding the geographic object data permit on-demand loading of the data based on the viewing frustum and allow the navigational system to dynamically load, on-demand, only those objects that are visible to the user.

Owner:ROBERT BOSCH GMBH

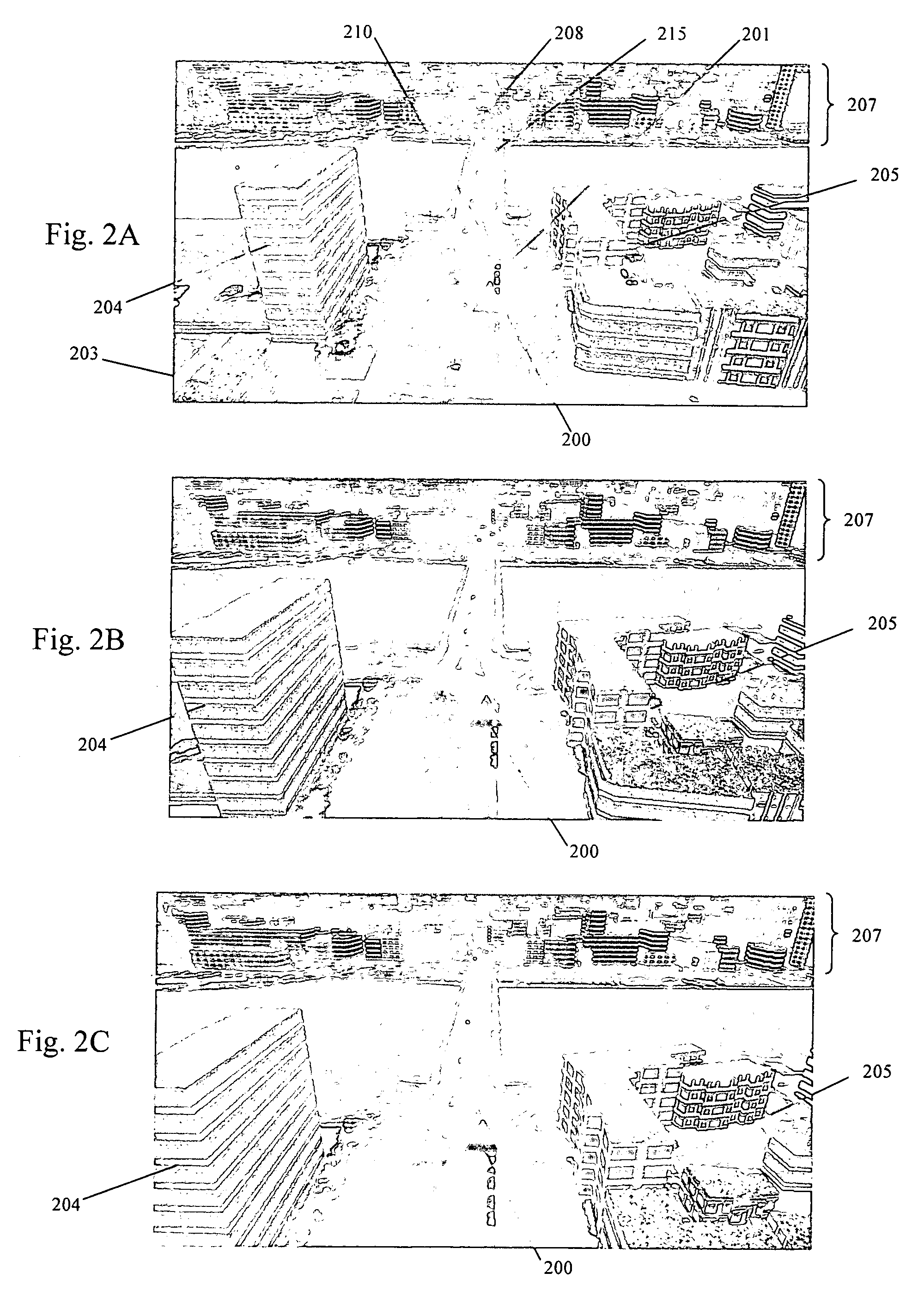

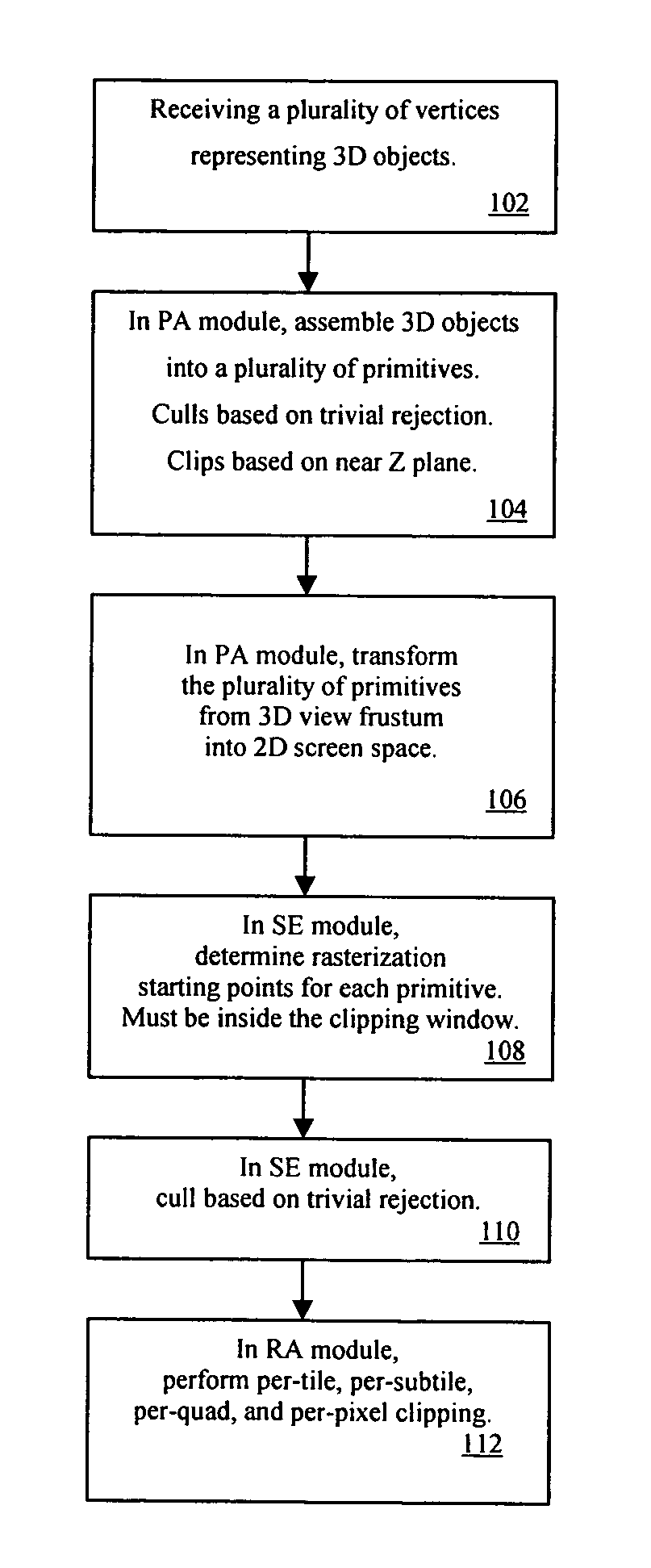

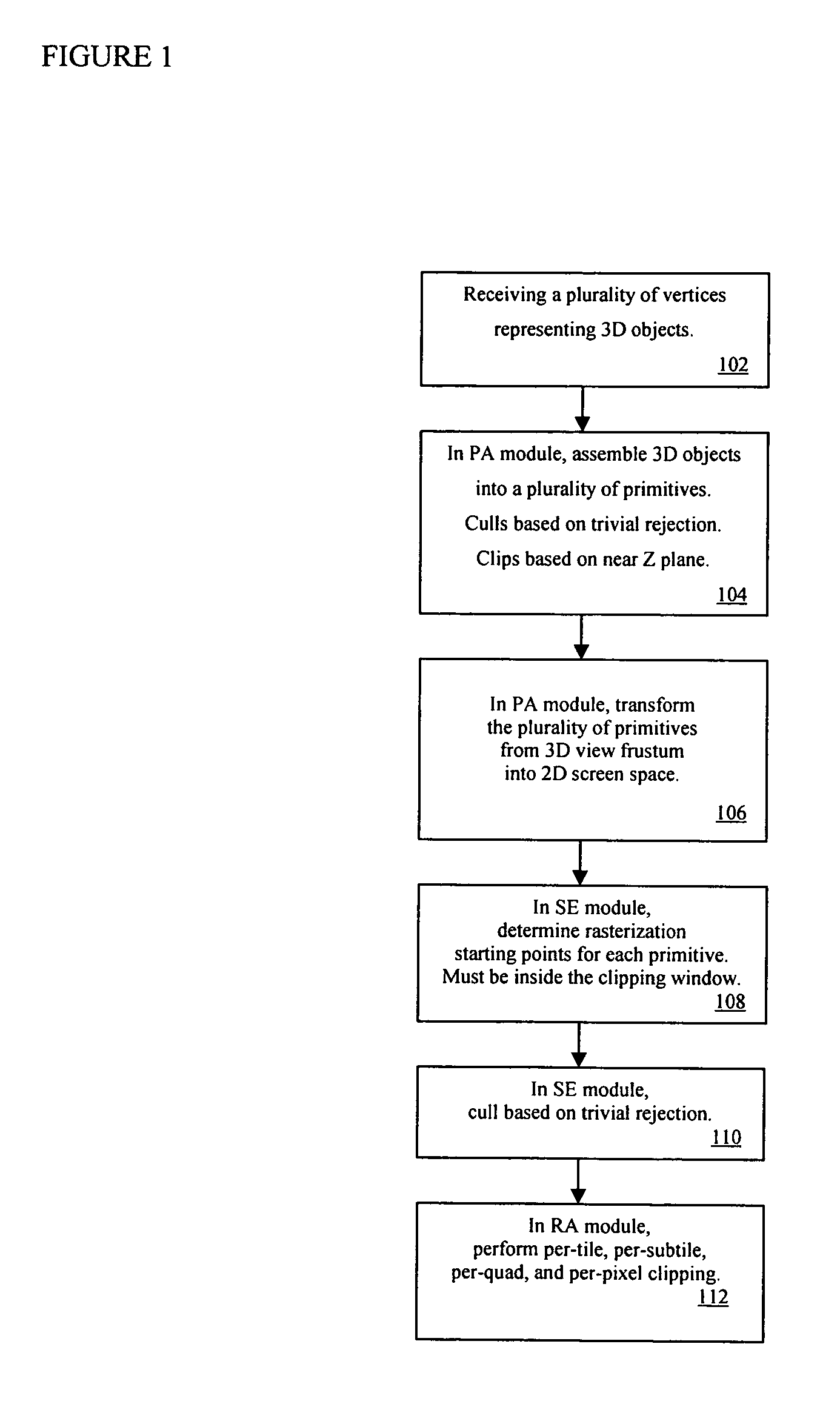

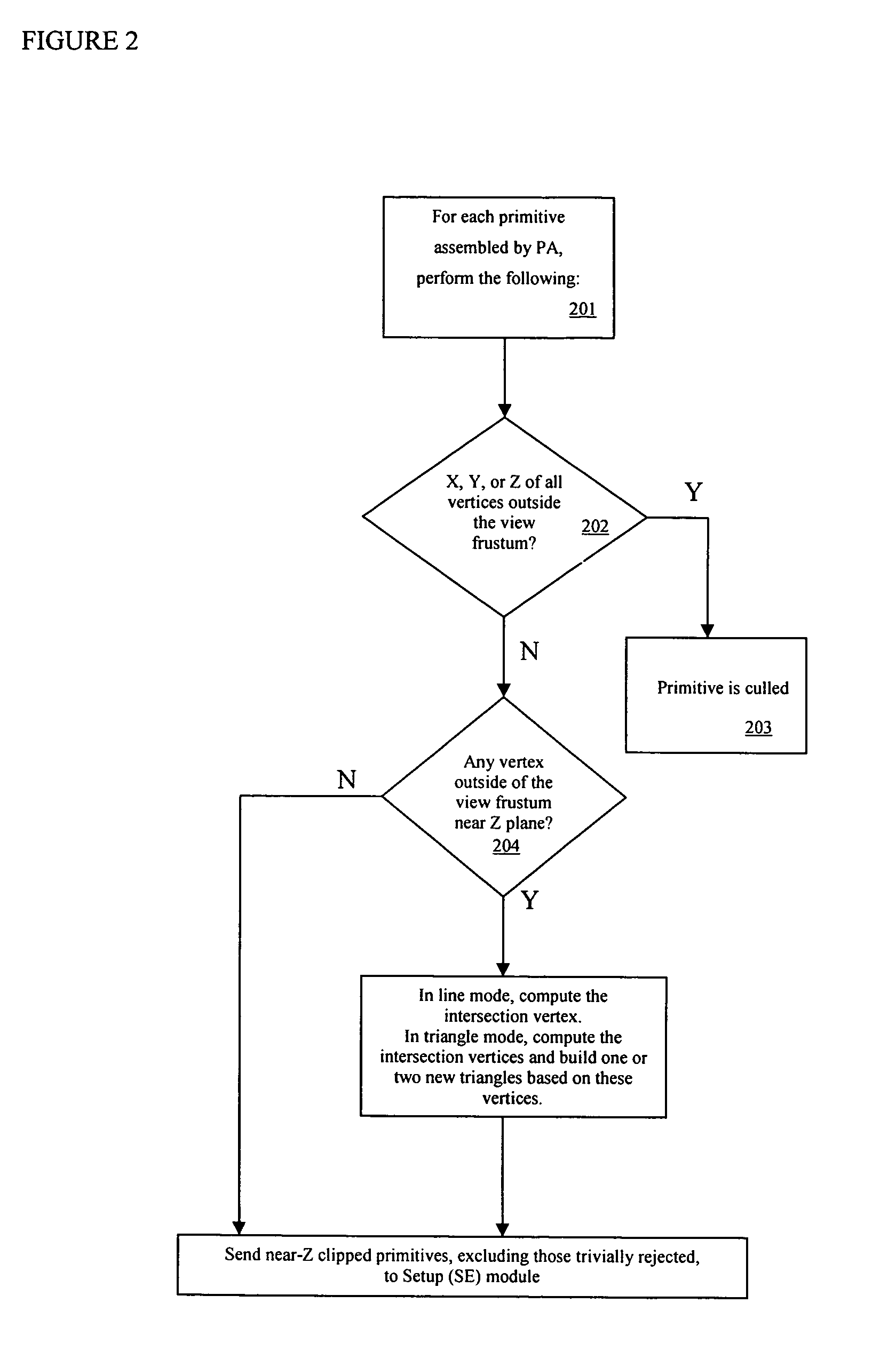

Method for distributed clipping outside of view volume

A distributed clipping scheme is provided in which view frustum culling is distributed across several places in a graphics processing pipeline to simplify the hardware implementation and improve performance. In general, many 3D objects lie outside the viewing frustum. In one embodiment, the PA module clips these objects with simple algorithms such as near-Z clipping, trivial rejection, and trivial acceptance. In one embodiment, the SE and RA modules perform the rest of the clipping, such as X, Y, and far-Z clipping. In one embodiment, the SE module performs clipping by computing an initial point of rasterization. In one embodiment, the RA module performs clipping while carrying out the rendering step of the rasterization process. Distributing the work in this way spreads the complexity across the graphics processing pipeline and makes the design simpler and faster, so design complexity, cost, and performance can all be improved in a hardware implementation.

Owner:VIVANTE CORPORATION
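
Trivial rejection and trivial acceptance are classically implemented with per-vertex outcodes in homogeneous clip space. The sketch assumes the OpenGL clip-volume convention (-w <= x, y, z <= w); the bit names and `classify` are illustrative, not the patent's hardware design. Triangles classified as `"clip"` are the ones that would be handed to the later clipping stages.

```python
# One outcode bit per clip-volume face (OpenGL convention: -w <= x, y, z <= w).
LEFT, RIGHT, BOTTOM, TOP, NEAR, FAR = (1 << i for i in range(6))


def outcode(v):
    """Bitmask of the clip-volume faces that vertex (x, y, z, w) lies outside."""
    x, y, z, w = v
    code = 0
    if x < -w:
        code |= LEFT
    if x > w:
        code |= RIGHT
    if y < -w:
        code |= BOTTOM
    if y > w:
        code |= TOP
    if z < -w:
        code |= NEAR
    if z > w:
        code |= FAR
    return code


def classify(triangle):
    """'reject', 'accept', or 'clip' for a triangle of clip-space vertices."""
    a, b, c = (outcode(v) for v in triangle)
    if a & b & c:
        return "reject"  # all vertices outside the same face
    if not (a | b | c):
        return "accept"  # all vertices inside every face
    return "clip"        # straddles at least one face
```

Both tests are a handful of comparisons and bitwise operations per vertex, which is why they suit an early pipeline stage.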

Hierarchical system and method for on-demand loading of data in a navigation system

Inactive | US20080091732A1 | Improve realism; Reduce unevenness and abrupt change; Instruments for road network navigation; Data processing applications; Viewpoints; Viewing frustum

A system providing three-dimensional visual navigation for a mobile unit includes a location calculation unit for calculating an instantaneous position of the mobile unit, a viewpoint control unit for determining a viewing frustum from the instantaneous position, a scenegraph manager in communication with at least one geo-database to obtain geographic object data associated with the viewing frustum and generating a scenegraph organizing the geographic object data, and a scenegraph renderer which graphically renders the scenegraph in real time. To enhance depiction, a method for blending images of different resolutions in the scenegraph reduces abrupt changes as the mobile unit moves relative to the depicted geographic objects. Data structures for storage and run-time access of information regarding the geographic object data permit on-demand loading of the data based on the viewing frustum and allow the navigational system to dynamically load, on-demand, only those objects that are visible to the user.

Owner:ROBERT BOSCH GMBH

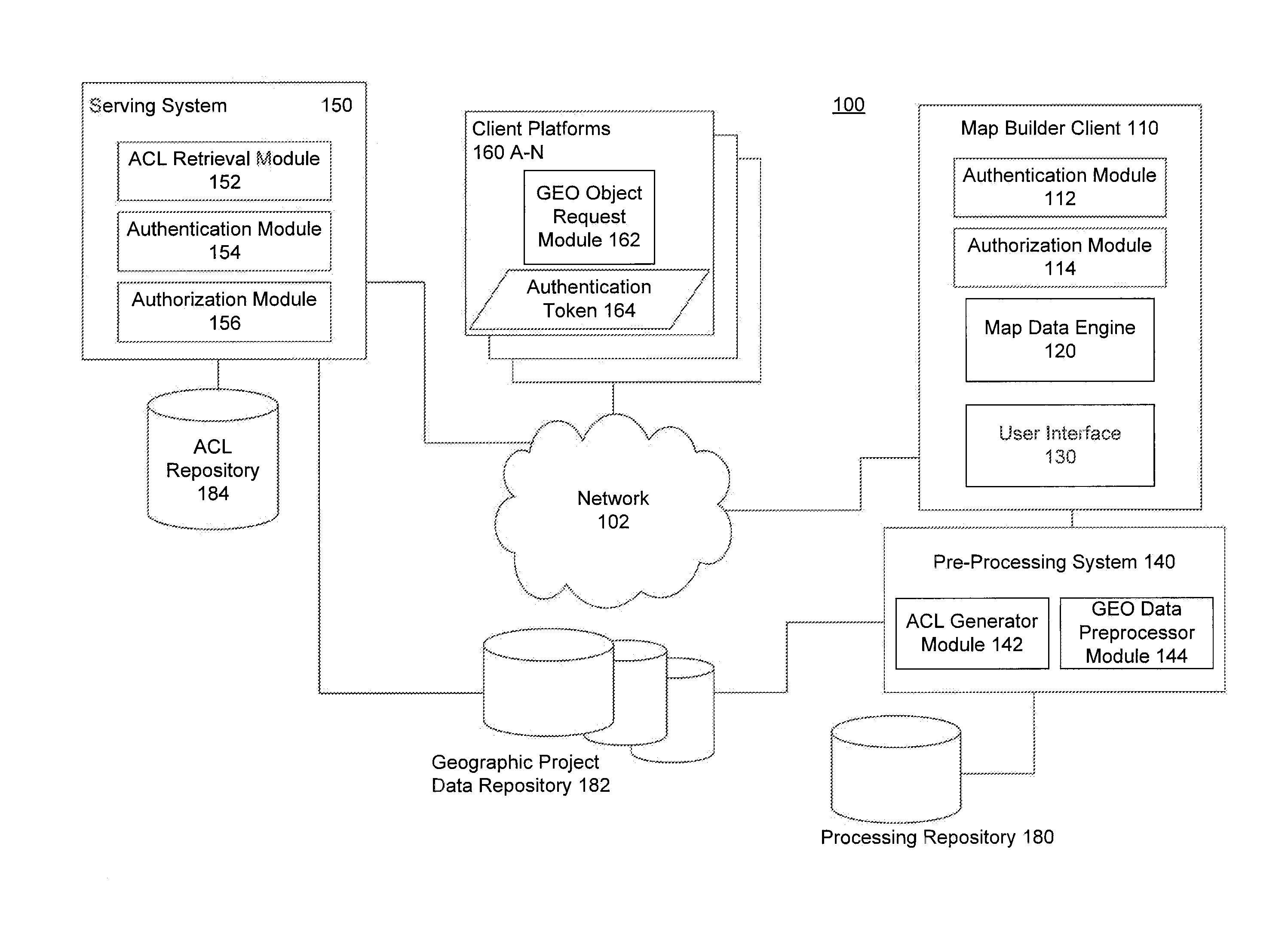

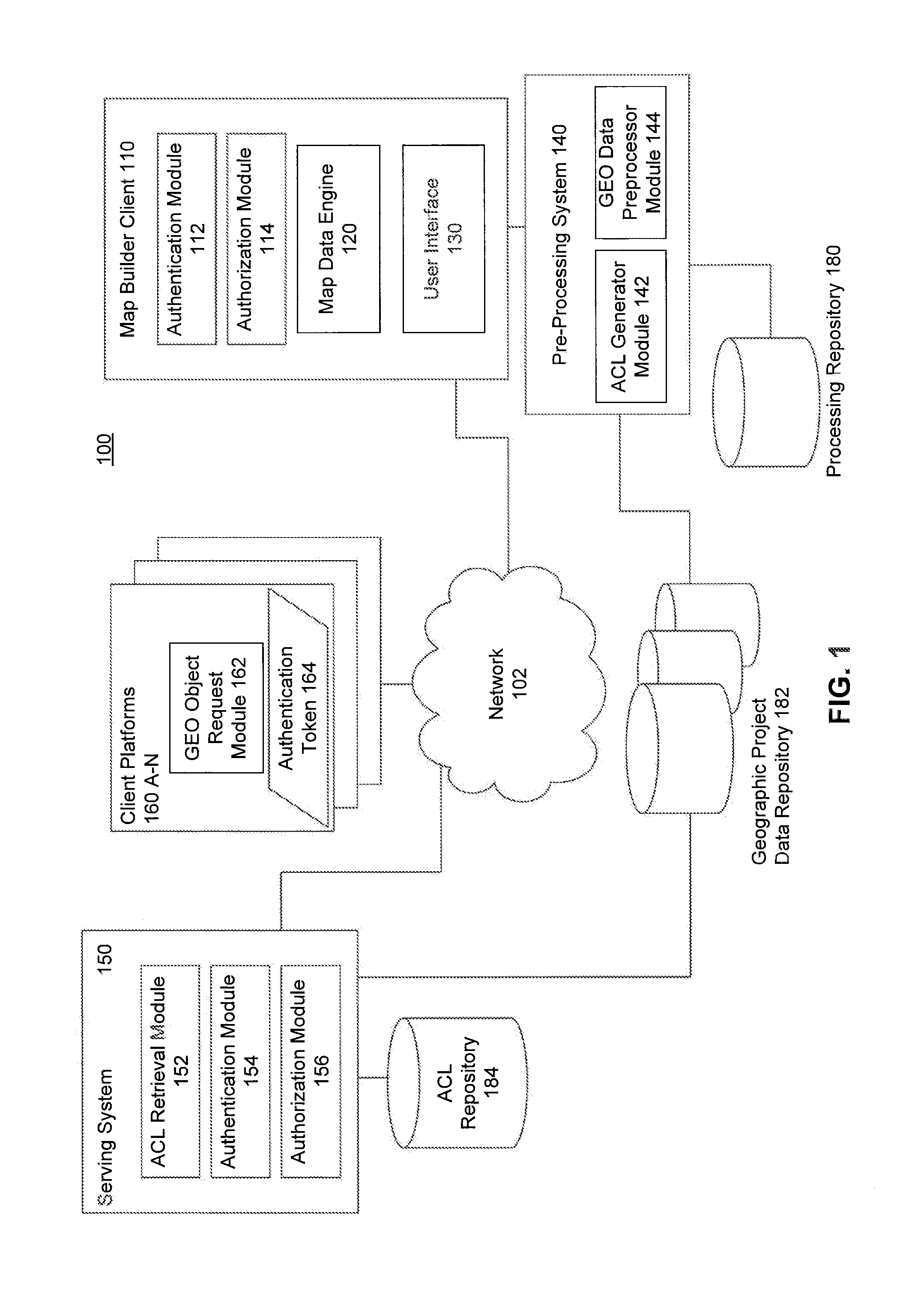

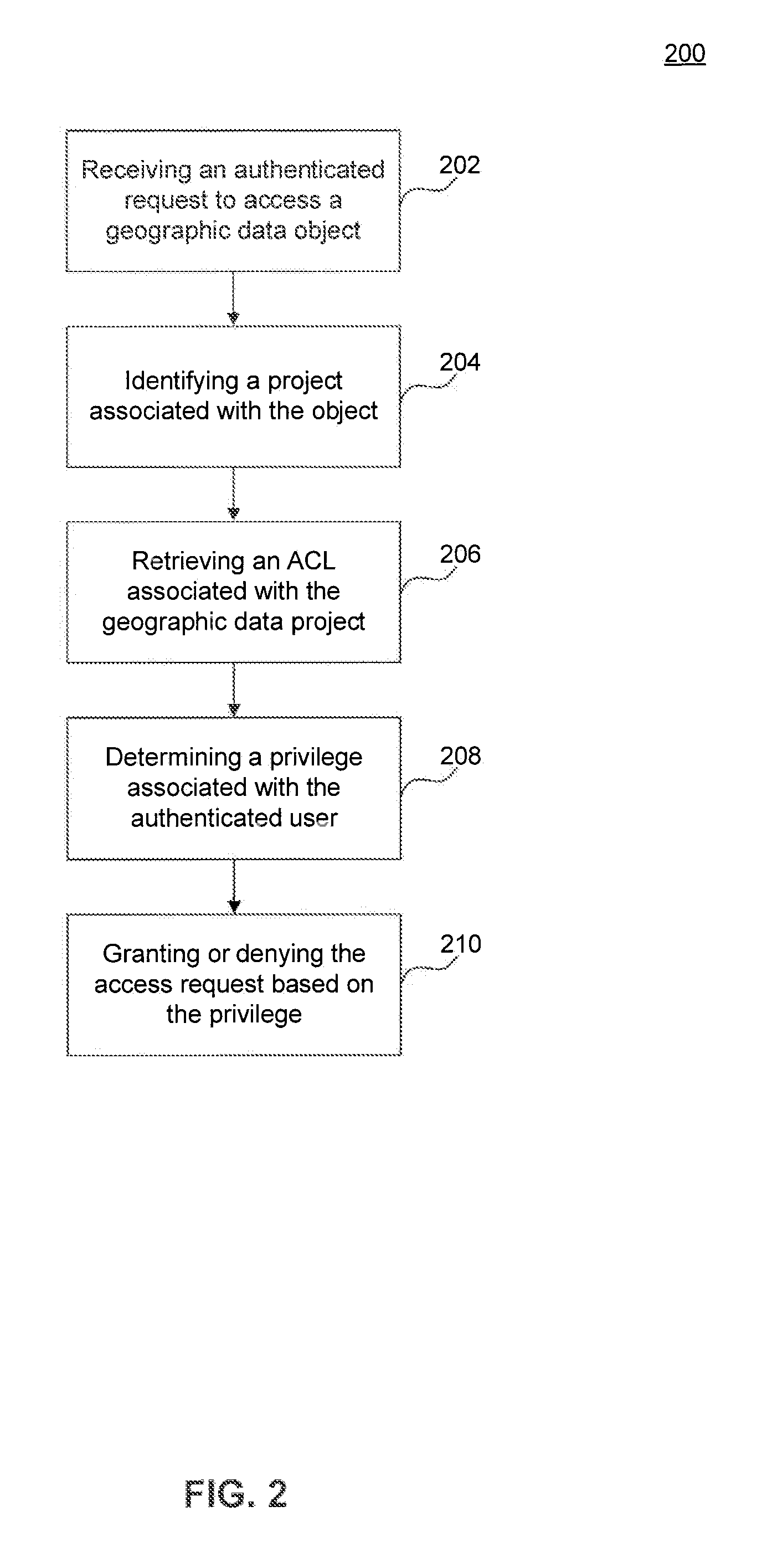

Object-Based Access Control for Map Data

Inactive | US20120246705A1 | Digital data processing details; Computer security arrangements; Object based; Data access control

Embodiments allow access to geographic data objects on a per-object basis. A client may send a plurality of requests for geographic data to display within a view frustum. Map data may include a layer with a plurality of assets. Each request may be authenticated by an access control filter, which determines whether the user is authorized to view the data requested.

Owner:GOOGLE LLC

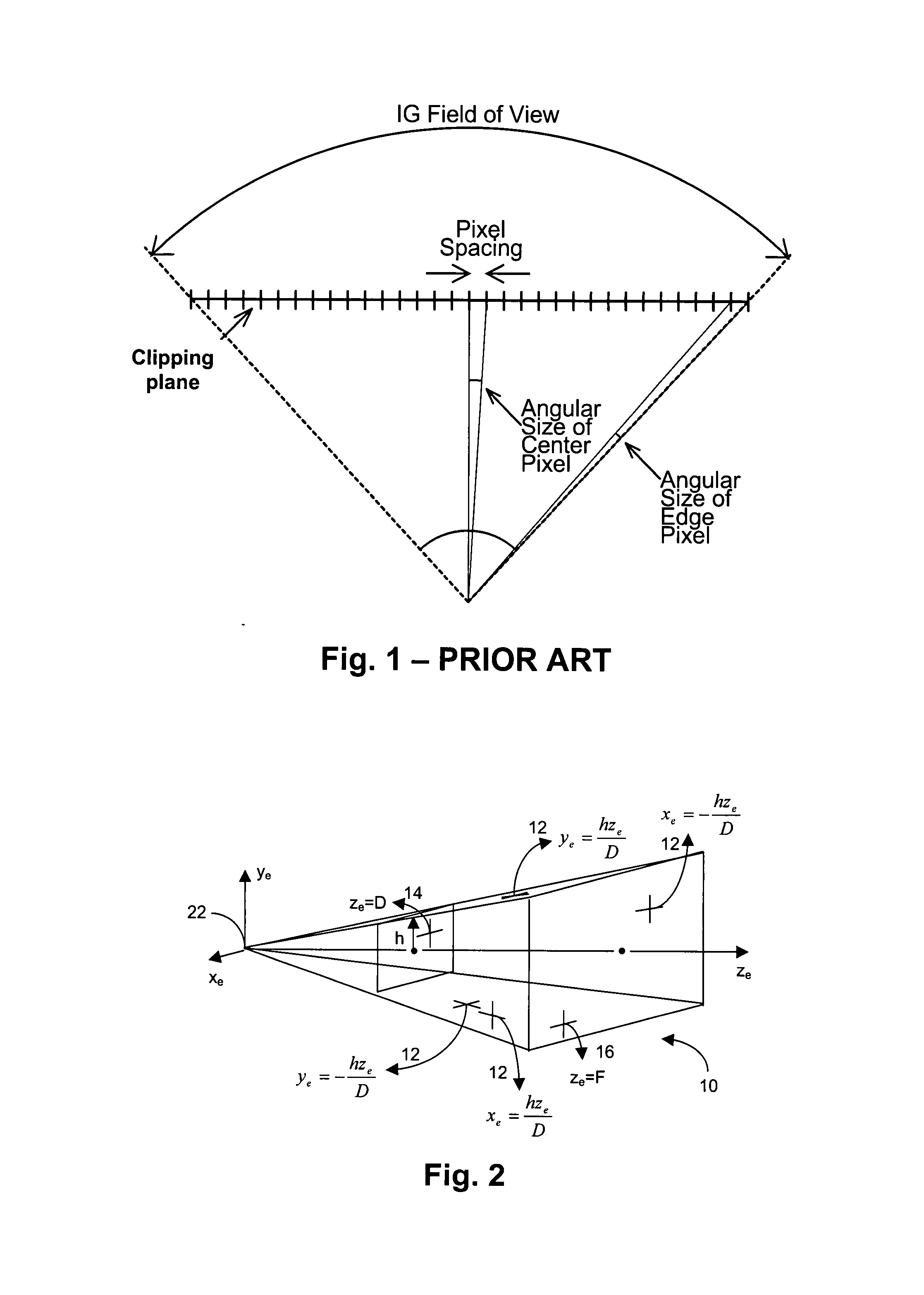

Non-linear image mapping using a plurality of non-coplanar clipping planes

Active | US20080012879A1 | Lower latency; Quality improvement; Cathode-ray tube indicators; Picture reproducers; Image resolution; Viewing frustum

A display processing system for providing video signals for displaying an image is provided. The display processing system comprises input channels for receiving a plurality of component images, each component image being a portion of the complete image for display and the image data of each component image being defined by a view frustum having a clipping plane, and a combiner for combining the image data of the component images. According to the present invention, at least two clipping planes of two of the view frustums are non-coplanar. Such a display processing system corrects for the defect of driving angular resolution to the edges of the field of view of a display system, in particular for large field-of-view display systems, and balances the overall system angular resolution by allowing each image generator channel to render to an optimal view frustum for its portion of the complete image.

Owner:ESTERLINE BELGIUM BVBA

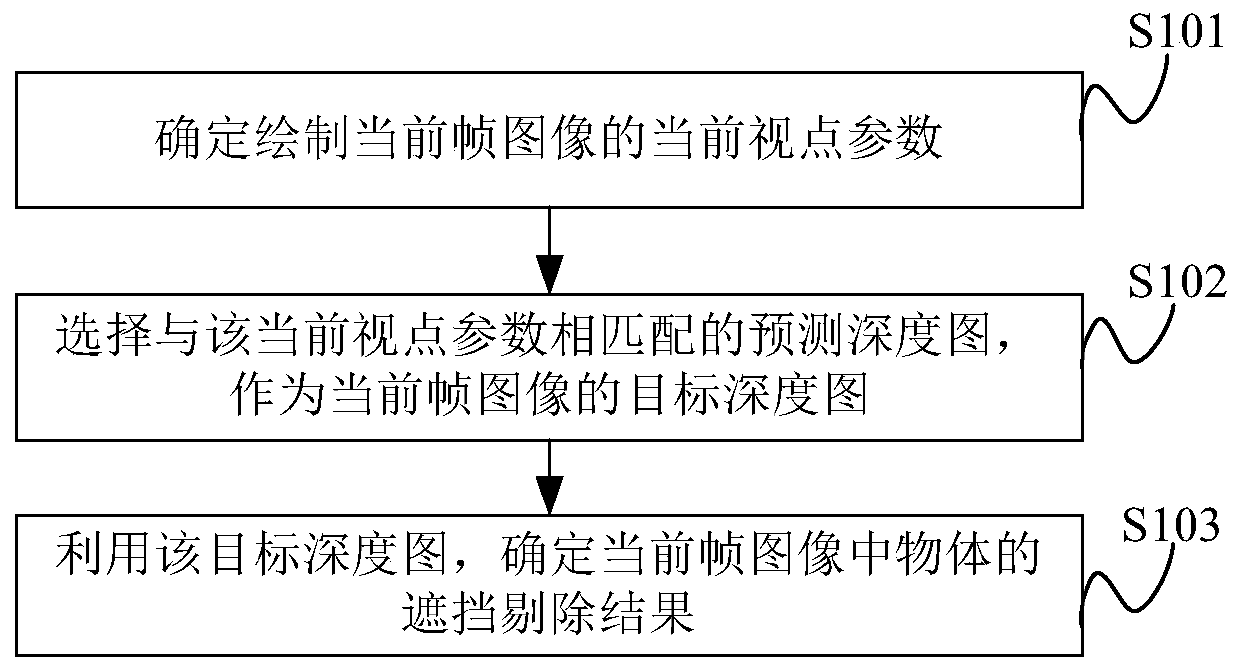

Occlusion culling (shielding removal) method and device, and computer equipment

Active | CN110136082A | Improve the efficiency of occlusion judgment; Reduce acquisition time; Image enhancement; 3D-image rendering; Viewpoints; Viewing frustum

The invention provides an occlusion culling method and device and computer equipment. When the occluded objects in the current frame image need to be determined while that frame is drawn, a predicted depth map matching the current viewpoint parameters can be selected directly from pre-stored predicted depth maps and used as the target depth map of the current frame image. The target depth map is then used to determine the occlusion culling result for the objects in the current frame quickly and accurately. Because the depth map for the current view frustum does not need to be drawn in the current frame, the wait to obtain the depth map for the current frame image is greatly shortened and the efficiency of occlusion determination is improved. This reduces the cost of the occlusion culling operation, making the method suitable for mobile platforms with limited computing power.

Owner:TENCENT TECH (SHENZHEN) CO LTD

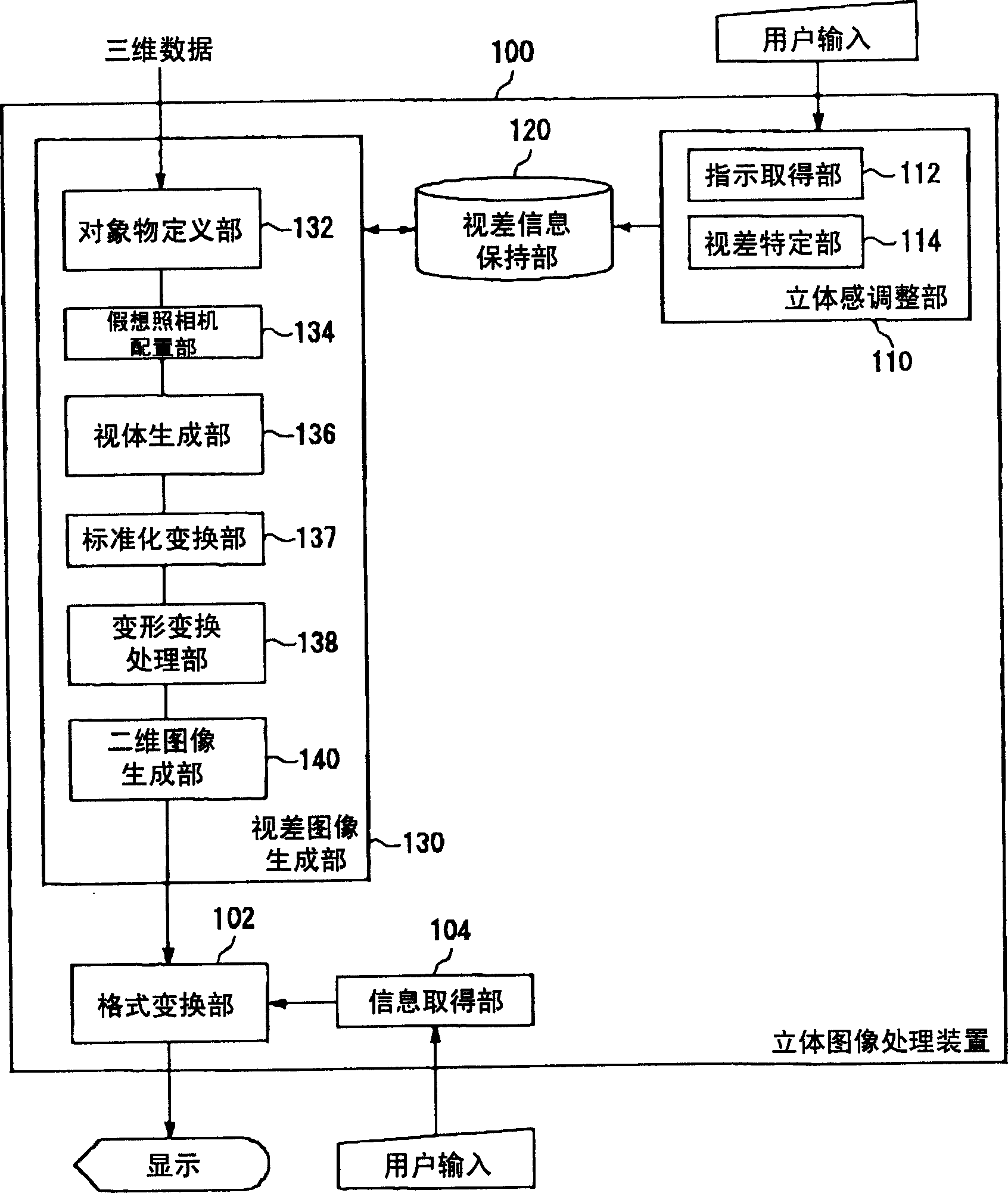

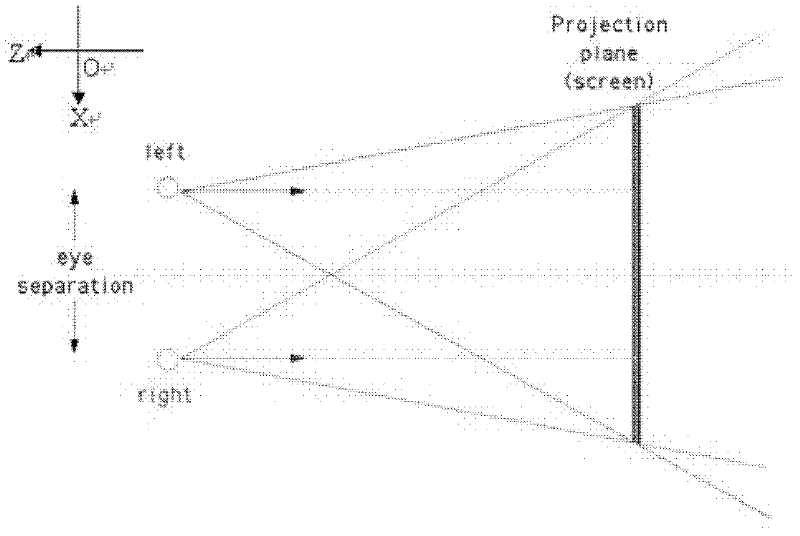

Method and apparatus for processing three-dimensional images

Inactive | CN1696978A | Efficient Stereo Image Processing; 2D-image generation; Parallax; Computer graphics (images)

The present invention provides a 3D image processing apparatus and method. The apparatus first generates, based on a single temporary camera placed in a virtual 3D space, a combined view volume that contains the view volumes set respectively by a plurality of real cameras. It then applies a skewing transformation to the combined view volume to obtain a view volume for each of the real cameras. Finally, the view volumes acquired for the real cameras are projected onto a projection plane to produce 2D images with parallax. Using the temporary camera alone, the 2D images that serve as base points for a parallax image can be produced by acquiring the view volume for each real camera. As a result, the step of actually placing the real cameras can be skipped, and the processing as a whole runs at high speed.

Owner:SANYO ELECTRIC CO LTD

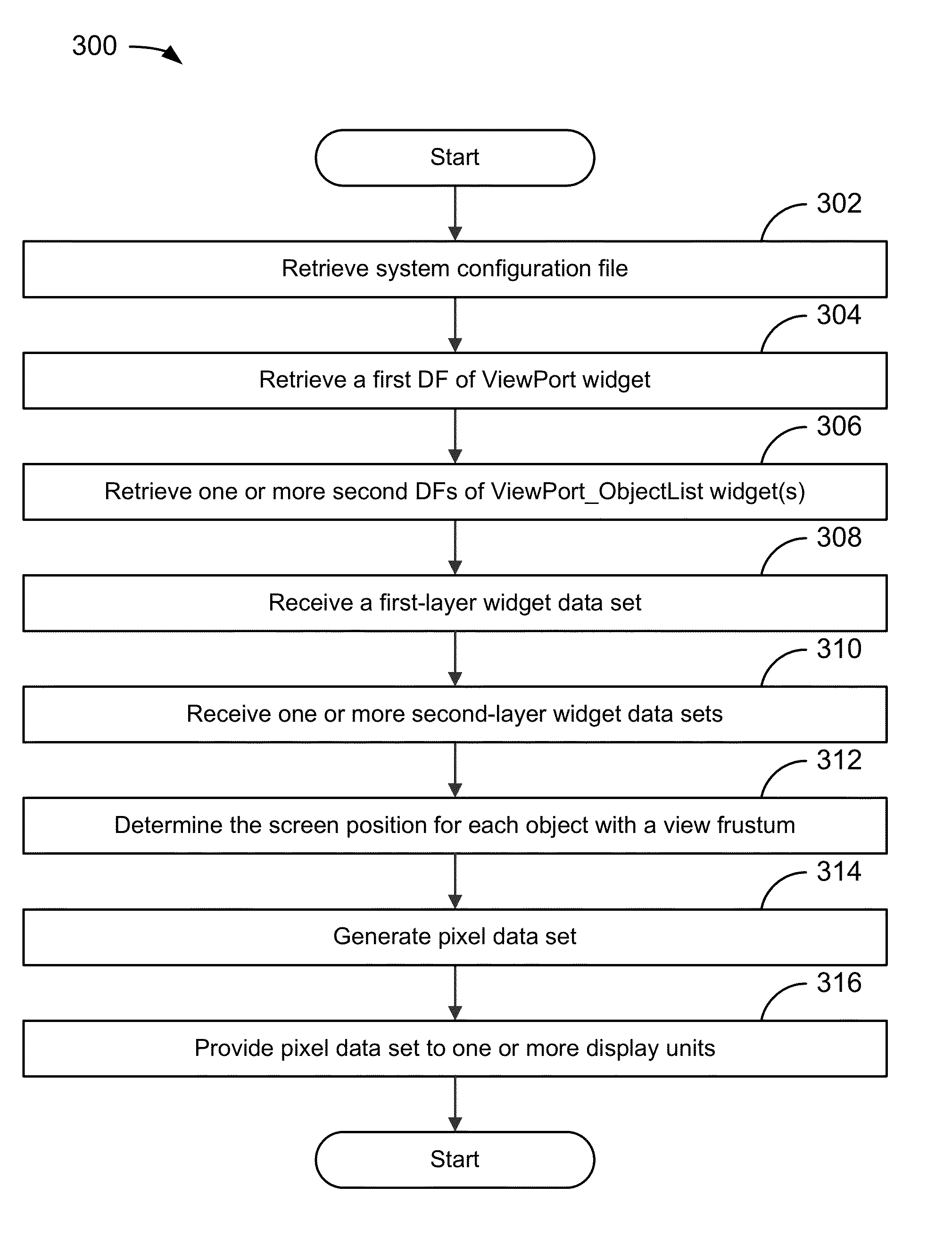

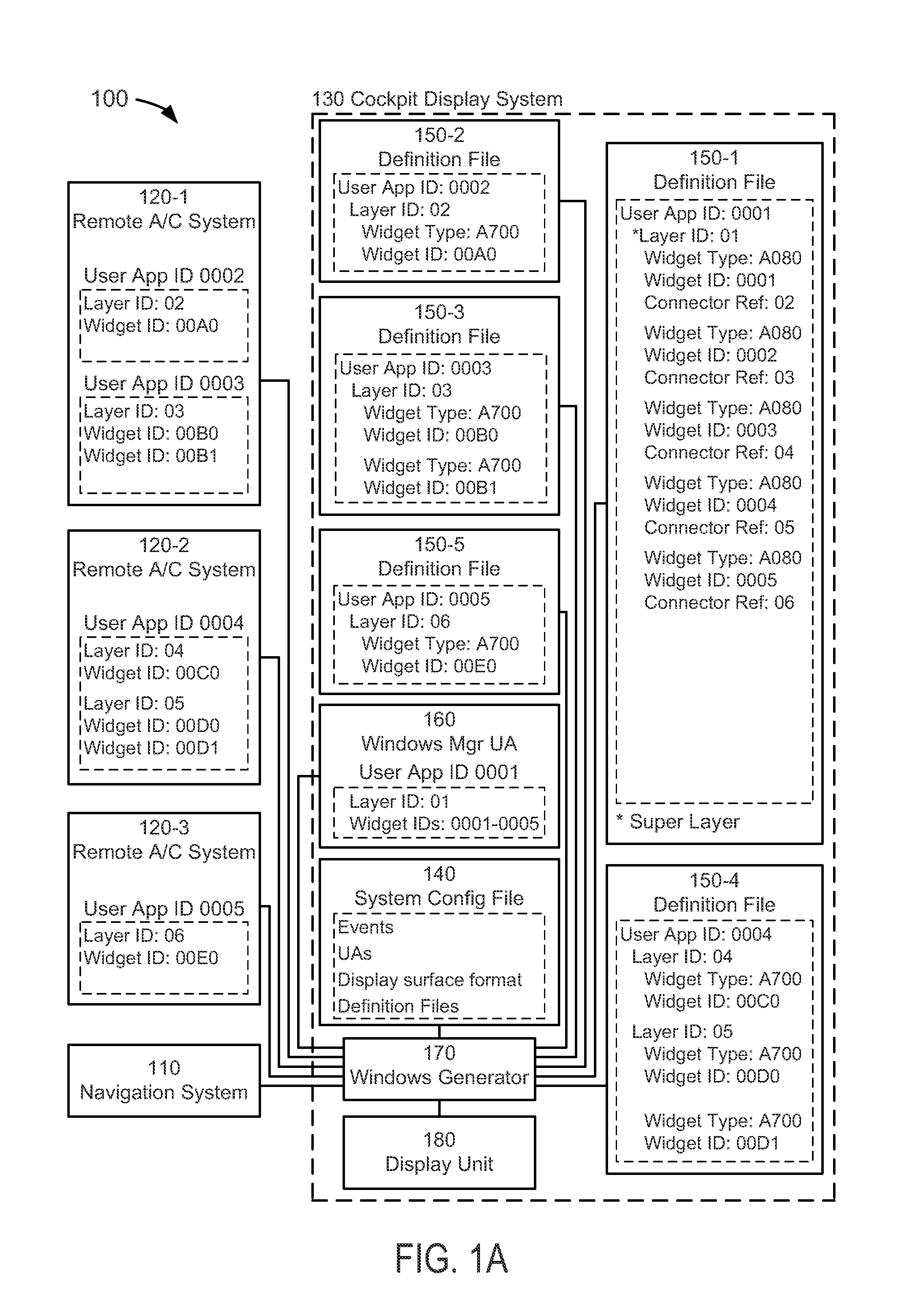

Object symbology generating system, device, and method

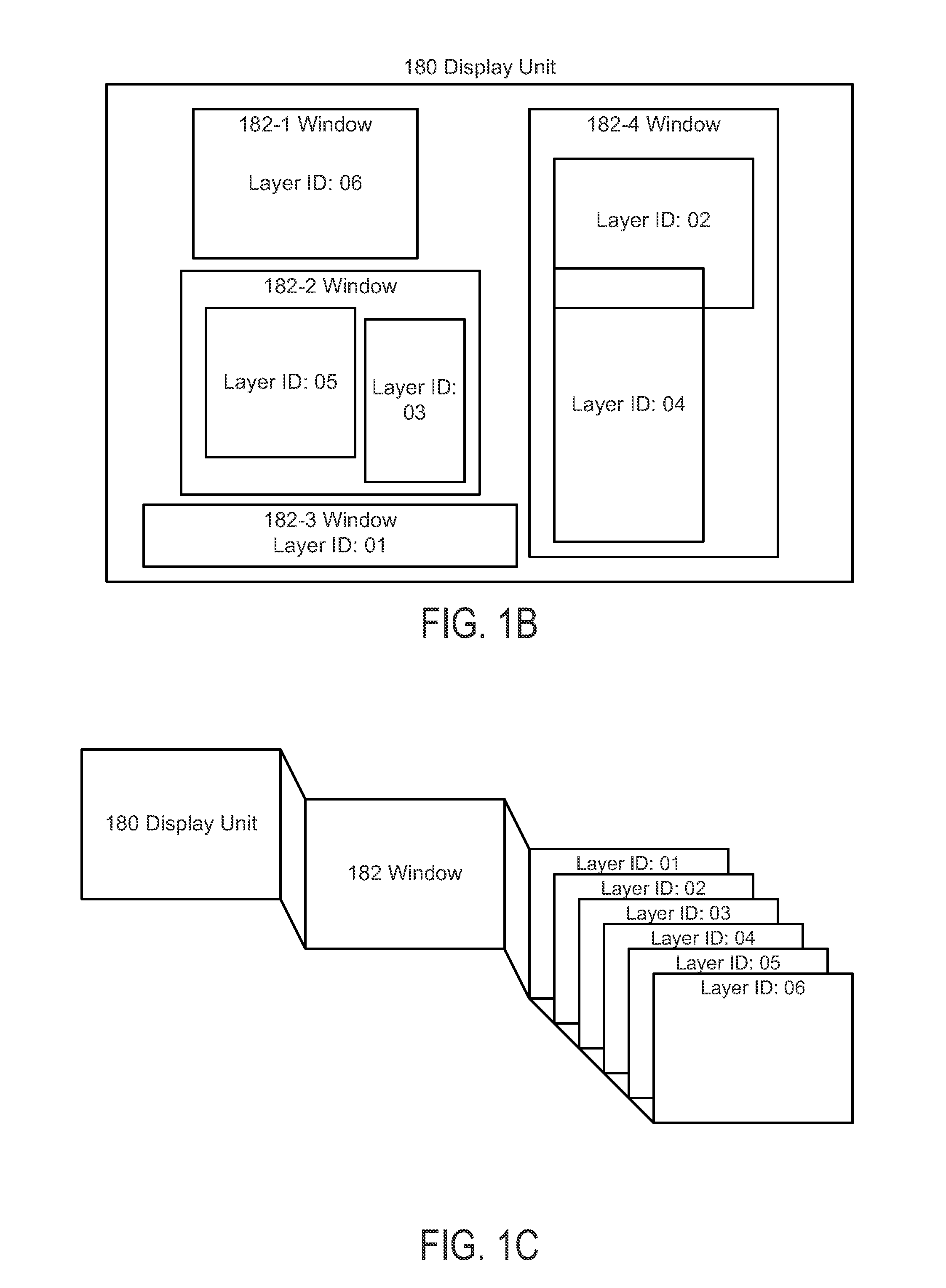

A novel and non-trivial system, device, and method for generating object symbology are disclosed. A system may comprise one or more aircraft systems and a cockpit display system comprising a system configuration file, a plurality of definition files, and a windows generator (“WG”). The WG may be configured to perform initialization and run-time operations. The initialization operation may comprise retrieving the system configuration file, retrieving a first definition file comprising a first layer, and retrieving one or more second definition files comprising one or more second layers. The run-time operation may comprise receiving a first-layer widget data set, receiving one or more second-layer widget data sets, determining the screen position of each object located within a view frustum, and generating a pixel data set in response to that determination.

Owner:ROCKWELL COLLINS INC
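
Determining the screen position of an object inside a view frustum amounts to a perspective projection followed by a viewport transform. The sketch below assumes a symmetric perspective frustum with the camera looking down -Z, object positions already in camera space, and pixel rows that grow downward; `to_screen` is a hypothetical name and none of this is taken from the patent.

```python
import math


def to_screen(p_cam, fov_y_deg, aspect, near, far, width, height):
    """Pixel position of camera-space point p_cam, or None if outside the frustum."""
    x, y, z = p_cam
    depth = -z
    if not (near <= depth <= far):
        return None  # in front of the near plane or beyond the far plane
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2)
    # Perspective divide gives normalized device coordinates in [-1, 1].
    ndc_x = (f / aspect) * x / depth
    ndc_y = f * y / depth
    if abs(ndc_x) > 1 or abs(ndc_y) > 1:
        return None  # outside one of the four side planes
    # Viewport transform; flip y because pixel rows grow downward.
    px = (ndc_x + 1) * 0.5 * width
    py = (1 - ndc_y) * 0.5 * height
    return px, py
```

A point straight ahead of the camera maps to the center of the display, for example `(400.0, 300.0)` on an 800 x 600 viewport.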

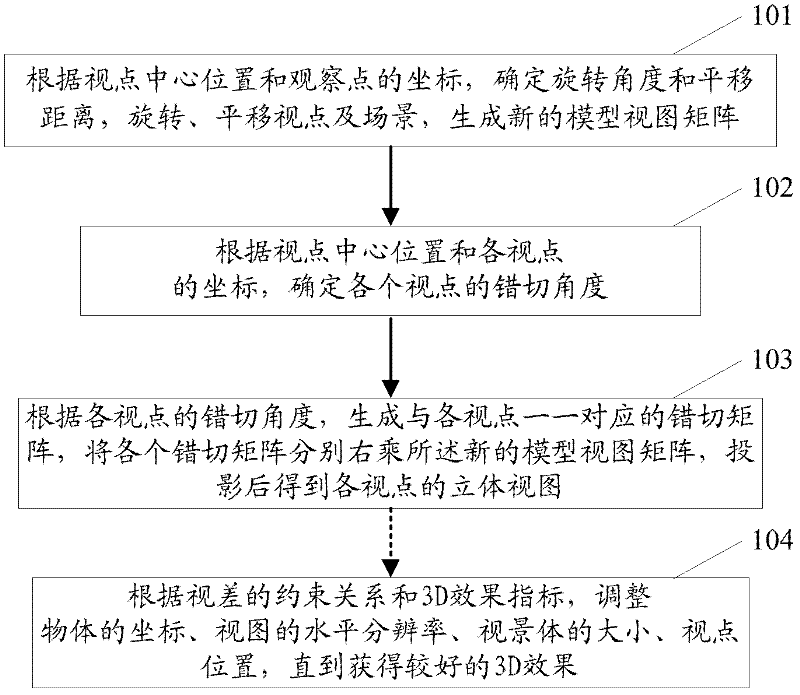

Method for converting a virtual 3D (three-dimensional) scene into a 3D view

The invention discloses a method for converting a virtual 3D (three-dimensional) scene into a 3D view. The method can convert any virtual 3D scene in OpenGL into a stereoscopic view through the following steps: rotating and translating the world coordinate system so that the observation point becomes the origin of the new coordinate system and the line connecting the center of the viewpoints and the observation point becomes the positive Z axis, with the rotation angle and translation distance determined from the coordinates of the viewpoint center and of the observation point; determining the shear mapping angle of each viewpoint from the viewpoint center and that viewpoint's coordinates, generating the corresponding shear mapping matrix, right-multiplying it onto the viewpoint's model-view matrix, and projecting to obtain the image data for each viewpoint; and adjusting the coordinates of the 3D scene, the horizontal resolution of the view, the size of the view frustum, and the viewpoint positions according to parallax constraints and the perceived 3D effect, to improve the 3D effect of the view. Because the shear transformation and parameter adjustment are inserted into the OpenGL processing flow, the problems of a weak 3D effect and vertical parallax are solved and an optimal 3D effect is achieved.

Owner:BEIJING JETSEN TECH
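
The abstract does not give the shear matrix. One standard form, shown here as an assumption, handles a viewpoint displaced by `offset` along x with zero parallax at distance `convergence`: it maps eye-space x to `x - offset - (offset / convergence) * z`, which fixes points on the plane z = -convergence and reproduces an off-axis frustum while the projection matrix stays symmetric. This sketch applies the matrix directly to eye-space points; how it is composed with the model-view matrix depends on the matrix convention in use, and the function names are hypothetical.

```python
def viewpoint_shear(offset, convergence):
    """4x4 eye-space matrix for a viewpoint displaced by `offset` along x.

    Points on the plane z = -convergence keep their x coordinate
    (zero parallax); nearer and farther points shift in opposite directions.
    """
    if convergence <= 0:
        raise ValueError("convergence distance must be positive")
    s = -offset / convergence
    return [[1.0, 0.0, s, -offset],
            [0.0, 1.0, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


def apply(m, p):
    """Transform a 3D point by a 4x4 affine matrix (row-major)."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[i][j] * v[j] for j in range(4)) for i in range(3))


def viewpoint_matrices(n_views, spacing, convergence):
    """Shear matrices for n_views viewpoints spaced symmetrically about the center."""
    center = (n_views - 1) / 2
    return [viewpoint_shear((i - center) * spacing, convergence)
            for i in range(n_views)]
```

Only x is sheared, so the construction introduces no vertical parallax, which is the artifact the abstract says the method eliminates.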

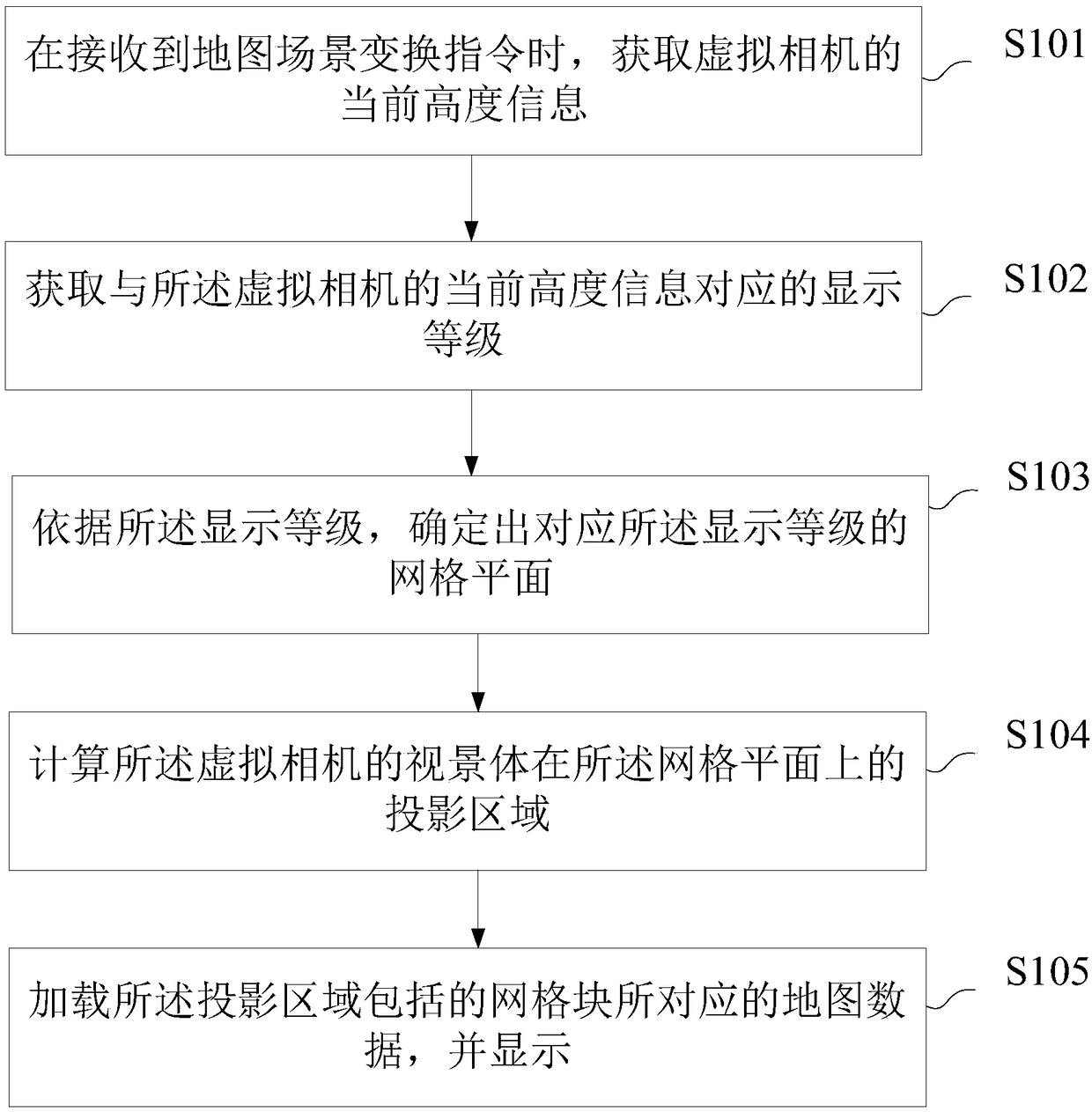

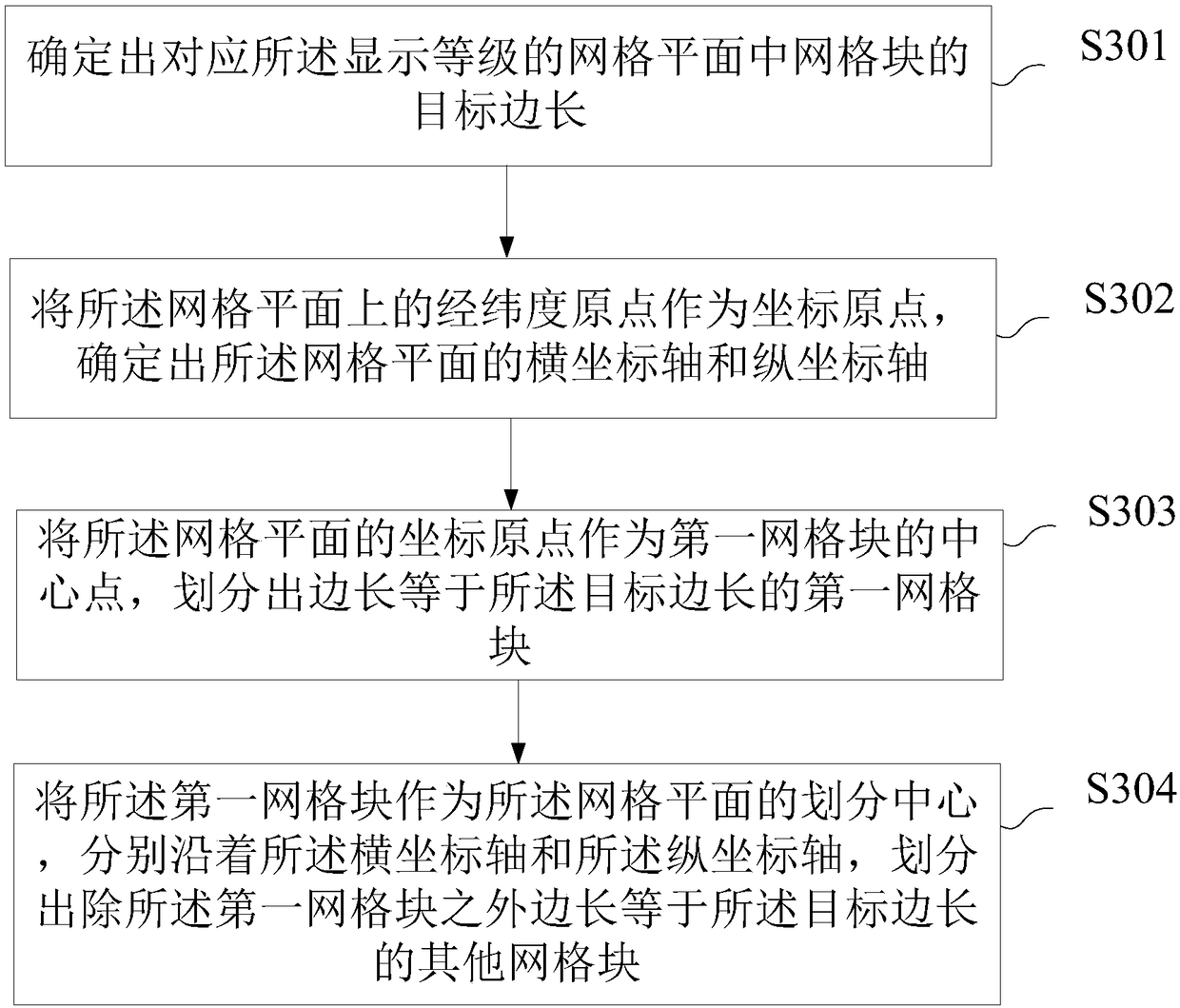

Map display method and device

Active | CN108267154A | Accurate display; Improve intuitiveness; Instruments for road network navigation; Maps/plans/charts; Viewing frustum; Virtual camera

The invention provides a map display method and device. Using a grid plane determined according to the display level, the projection area on that plane of the intersection between the virtual camera's view frustum and the grid plane can be calculated. When the map data corresponding to the grid blocks in the projection area are then loaded and displayed, map data containing complete elevation information are guaranteed to display correctly, the 3D geographic scene corresponding to each map element can be shown accurately to the user on the electronic map, and the intuitiveness and accuracy of the electronic map are improved.

Owner:城市生活(北京)信息有限公司
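
The projection area can be approximated by intersecting the four corner rays of the view frustum with the grid plane and then listing the grid blocks that cover the resulting quadrilateral. This sketch assumes a z-up world, corner ray directions that are already known in world space, a horizontal plane at `plane_z`, and a conservative bounding-box cover; the function names are hypothetical and not taken from the patent.

```python
import math


def footprint(camera, corner_dirs, plane_z=0.0):
    """Intersect frustum corner rays with the horizontal plane z = plane_z.

    `corner_dirs` are world-space ray directions for the four frustum corners.
    Raises if a ray points at or above the horizon (no intersection); a real
    system would clamp such rays to the far distance instead.
    """
    cx, cy, cz = camera
    points = []
    for dx, dy, dz in corner_dirs:
        if dz >= 0:
            raise ValueError("corner ray does not reach the plane")
        t = (plane_z - cz) / dz  # ray parameter at the plane
        points.append((cx + t * dx, cy + t * dy))
    return points


def grid_blocks(points, block_size):
    """Grid blocks (i, j) covering the bounding box of the footprint."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    i0, i1 = math.floor(min(xs) / block_size), math.floor(max(xs) / block_size)
    j0, j1 = math.floor(min(ys) / block_size), math.floor(max(ys) / block_size)
    return [(i, j) for j in range(j0, j1 + 1) for i in range(i0, i1 + 1)]
```

The bounding box over-covers a tilted (trapezoidal) footprint, so a few unneeded blocks may be loaded, but no visible block is missed.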

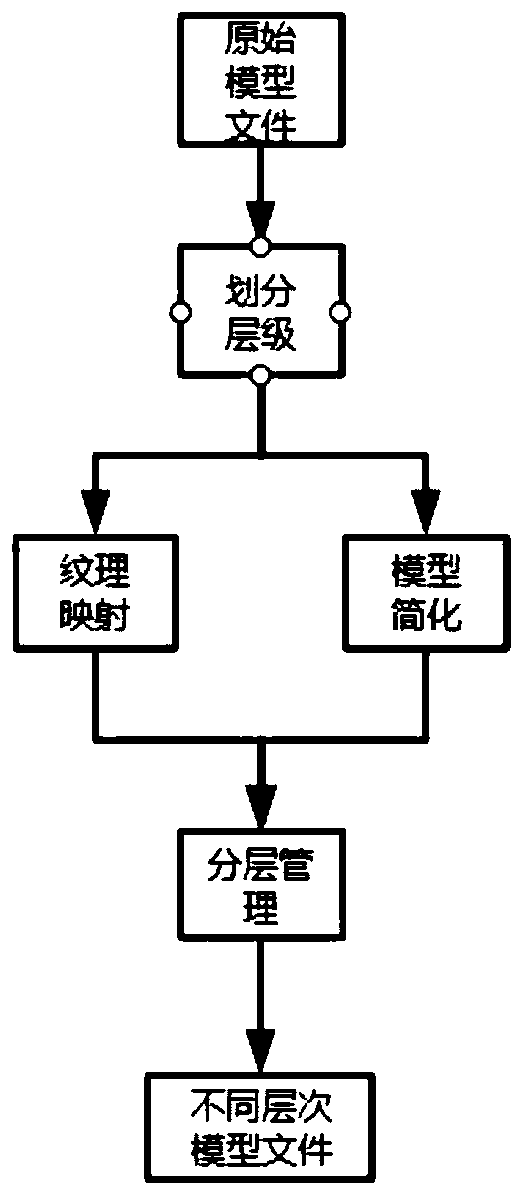

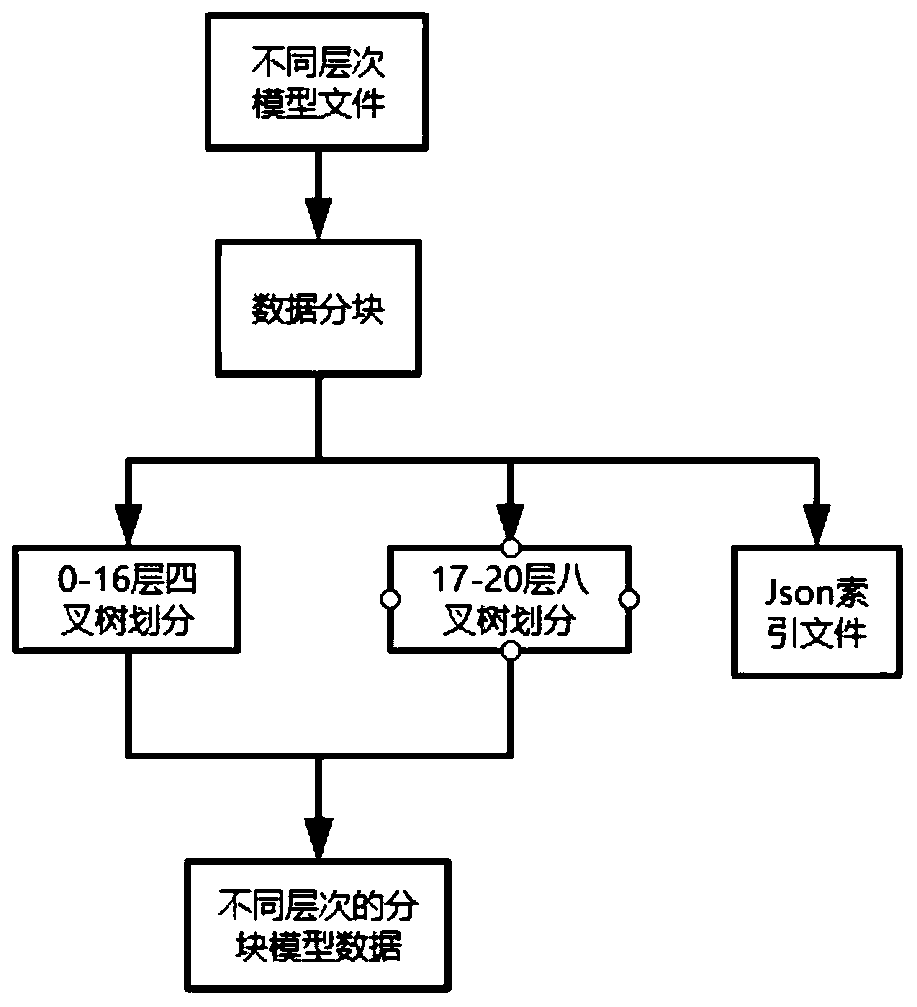

Large-scale oblique photography model organization and scheduling method

Pending | CN110992458A | Rapid positioning; Efficient management; 3D-image rendering; Theoretical computer science; Viewing frustum

The invention discloses an organization and scheduling method for large-scale oblique photography models. The method comprises: dividing the oblique photography model data into hierarchy levels, determining the simplified model data and model material files for each level according to its degree of simplification, and generating model files for the different levels; partitioning the model file of each level into blocks to generate partitioned model data for each level, recording the tiles with OCT coding, and generating a JSON index file; generating a quadrangular view cone region according to the selected view angle and viewpoint, and loading the block model data inside the view cone region with the help of the index file; and, as the viewpoint and view angle change, matching the current view cone region and updating the model data for that region. Because a data index and a tree-shaped data structure are built after the data are layered and partitioned, the data can be located quickly and managed effectively; scheduling strategies such as pre-access and three-level caching improve model data loading and rendering efficiency and expand the data loading and visualization capacity of 3D GIS.

Owner:中国科学院电子学研究所苏州研究院 (Suzhou Research Institute of the Institute of Electronics, Chinese Academy of Sciences)
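The view-cone scheduling step can be illustrated with frustum culling over an octree of tiles. This is a sketch under stated assumptions:

- The abstract does not define "OCT coding", so the code assumes an octree path code with one digit (0 to 7) per level.
- The quadrangular view cone is given as six inward-facing planes.
- Pre-access and caching are omitted.

`Tile`, `child_tiles`, `box_in_frustum`, and `tiles_to_load` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
# A plane (nx, ny, nz, d); a point p is inside when n . p + d >= 0.
Plane = Tuple[float, float, float, float]


@dataclass
class Tile:
    code: str  # hypothetical octree path code: one digit 0-7 per level
    lo: Vec3   # minimum corner of the tile's bounding box
    hi: Vec3   # maximum corner of the tile's bounding box


def child_tiles(tile: Tile) -> List[Tile]:
    """Split a tile into its 8 octree children; bits of the digit pick the x, y, z halves."""
    mid = tuple((a + b) / 2 for a, b in zip(tile.lo, tile.hi))
    children = []
    for digit in range(8):
        bits = (digit & 1, (digit >> 1) & 1, (digit >> 2) & 1)
        lo = tuple(m if b else l for l, m, b in zip(tile.lo, mid, bits))
        hi = tuple(h if b else m for h, m, b in zip(tile.hi, mid, bits))
        children.append(Tile(tile.code + str(digit), lo, hi))
    return children


def box_in_frustum(lo: Vec3, hi: Vec3, planes: List[Plane]) -> bool:
    """Conservative box test: reject only if the box lies fully outside some plane."""
    for nx, ny, nz, d in planes:
        # "Positive vertex": the box corner farthest along the plane normal.
        px = hi[0] if nx >= 0 else lo[0]
        py = hi[1] if ny >= 0 else lo[1]
        pz = hi[2] if nz >= 0 else lo[2]
        if nx * px + ny * py + nz * pz + d < 0:
            return False
    return True


def tiles_to_load(root: Tile, planes: List[Plane], level: int) -> List[str]:
    """Codes of the tiles at the given hierarchy level that intersect the view frustum."""
    frontier = [root] if box_in_frustum(root.lo, root.hi, planes) else []
    for _ in range(level):
        # Descend one level, keeping only children that may be visible.
        frontier = [c for t in frontier for c in child_tiles(t)
                    if box_in_frustum(c.lo, c.hi, planes)]
    return [t.code for t in frontier]
```

The box test rejects only tiles that lie entirely outside one plane. It may therefore keep a few tiles near the frustum corners that are not actually visible, which is the usual trade-off for a cheap per-tile test.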

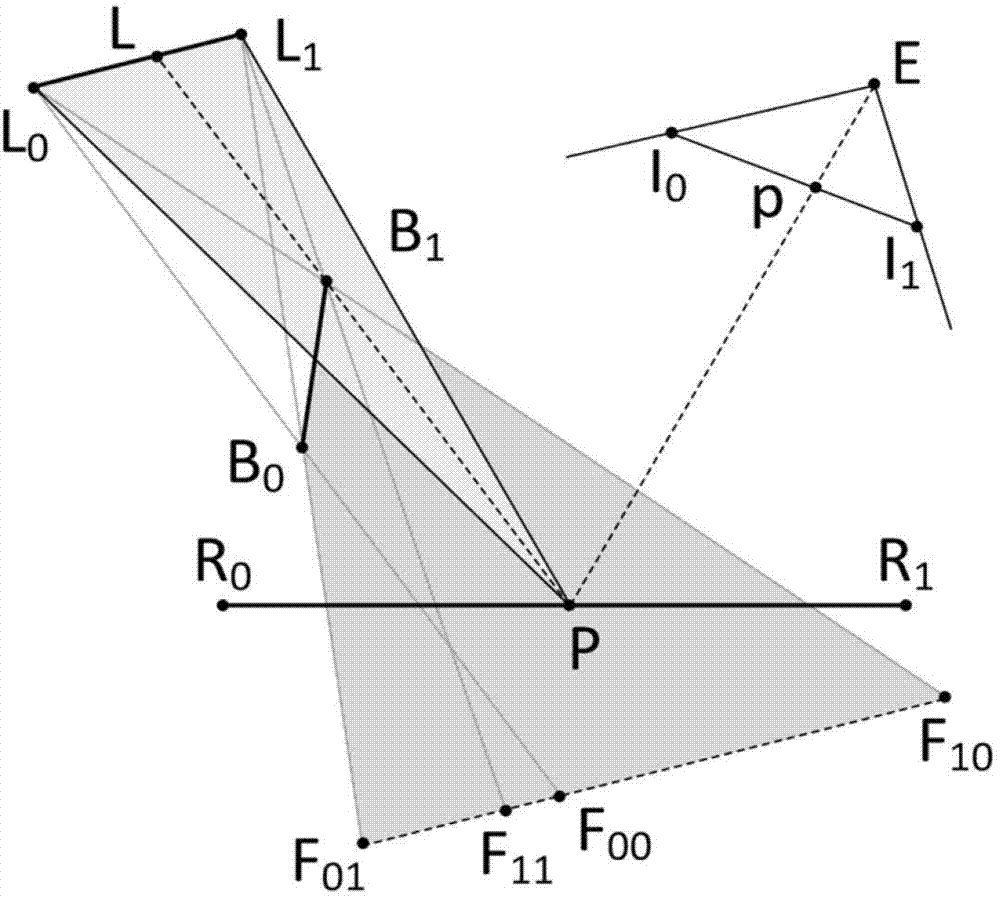

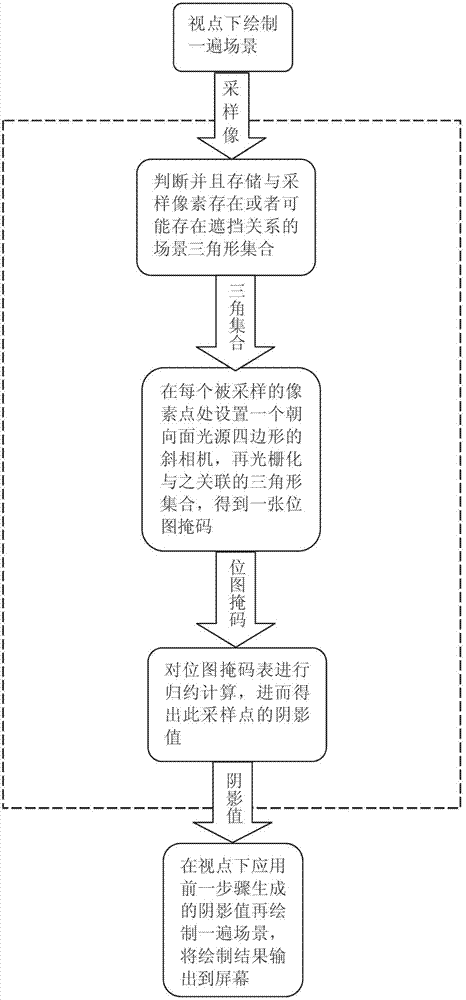

Efficient accurate general soft shadow generation method

Inactive · CN102831634A · Drawn precisely · Realistic, efficient and versatile · 3D-image rendering · Visibility · Viewpoints

The invention provides an efficient, accurate, general-purpose soft shadow generation method. The framework proceeds in three passes. First, the scene is rendered from the viewpoint, and the three-dimensional coordinates of each sampled pixel are written to a floating-point texture. Second, an oblique camera is placed at each sampled pixel, facing the quadrilateral area light source. The scene triangles that may fall within that camera's view frustum are rasterized to obtain the visibility of the light source from the sample point, from which the sample's shadow value is derived. Third, the scene is rendered again from the viewpoint using the shadow values from the previous pass, and the result is output to the screen. The method supports dynamic area light sources and dynamic deformable scenes and requires no precomputation. It is realistic, efficient, and general.

Owner:BEIHANG UNIV
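The patent obtains per-sample visibility by rasterizing triangles in an oblique camera aimed at the area light. The sketch below swaps in a simpler technique, ray casting with the Möller-Trumbore triangle test, to show what the resulting shadow value means: the fraction of a grid of points on the quadrilateral light that a sample point cannot see. The light is assumed to be a parallelogram given by a corner and two edge vectors. All function names are hypothetical.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def segment_hits_triangle(p: Vec3, q: Vec3, tri: Triangle, eps: float = 1e-9) -> bool:
    """Moller-Trumbore ray/triangle test restricted to the open segment p -> q."""
    v0, v1, v2 = tri
    d = sub(q, p)
    e1, e2 = sub(v1, v0), sub(v2, v0)
    h = cross(d, e2)
    a = dot(e1, h)
    if abs(a) < eps:
        return False  # segment parallel to the triangle's plane
    f = 1.0 / a
    s = sub(p, v0)
    u = f * dot(s, h)
    if u < 0.0 or u > 1.0:
        return False
    qv = cross(s, e1)
    v = f * dot(d, qv)
    if v < 0.0 or u + v > 1.0:
        return False
    t = f * dot(e2, qv)
    return eps < t < 1.0 - eps  # hit strictly between the sample point and the light


def shadow_value(point: Vec3, light_corner: Vec3, edge_u: Vec3, edge_v: Vec3,
                 triangles: Sequence[Triangle], n: int = 4) -> float:
    """Fraction of an n x n grid of light samples blocked from `point` (0 = lit, 1 = umbra)."""
    blocked = 0
    for i in range(n):
        for j in range(n):
            # Sample at the center of cell (i, j) on the light parallelogram.
            su, sv = (i + 0.5) / n, (j + 0.5) / n
            sample = tuple(light_corner[k] + su * edge_u[k] + sv * edge_v[k] for k in range(3))
            if any(segment_hits_triangle(point, sample, tri) for tri in triangles):
                blocked += 1
    return blocked / (n * n)
```

A return value of 0 means the point is fully lit and 1 means it is in the umbra. Values in between form the penumbra. A larger `n` gives smoother penumbrae at a higher cost.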

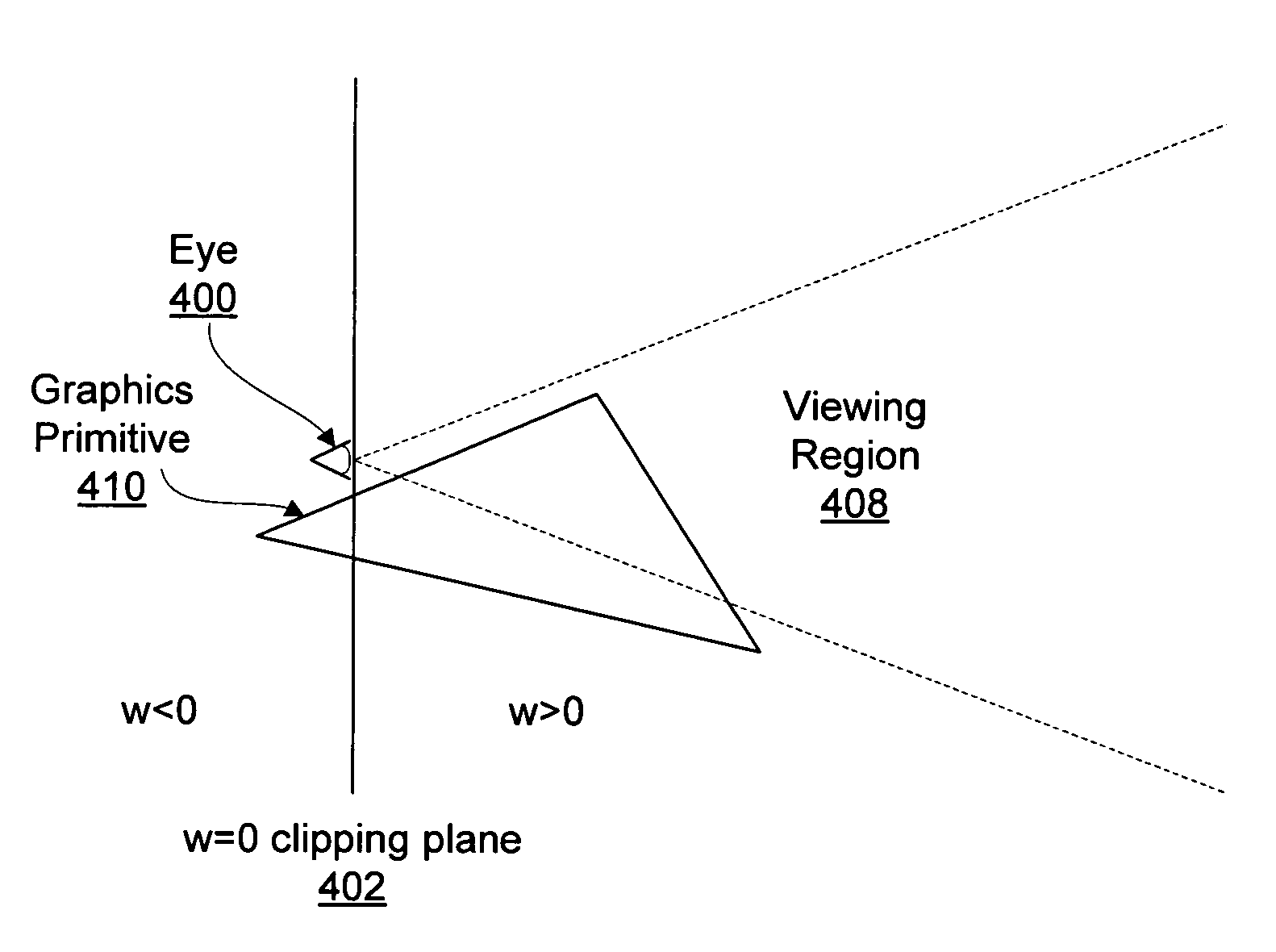

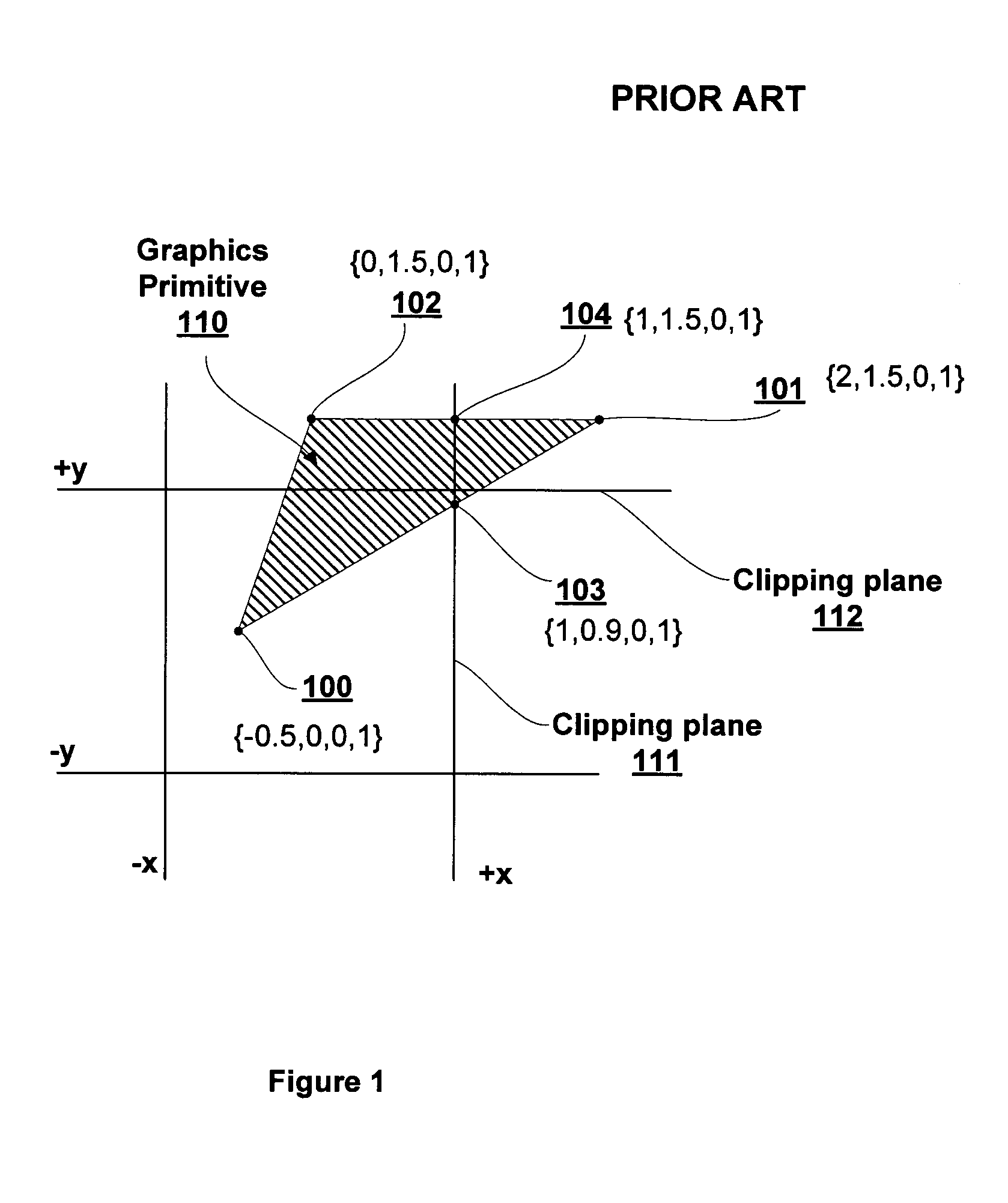

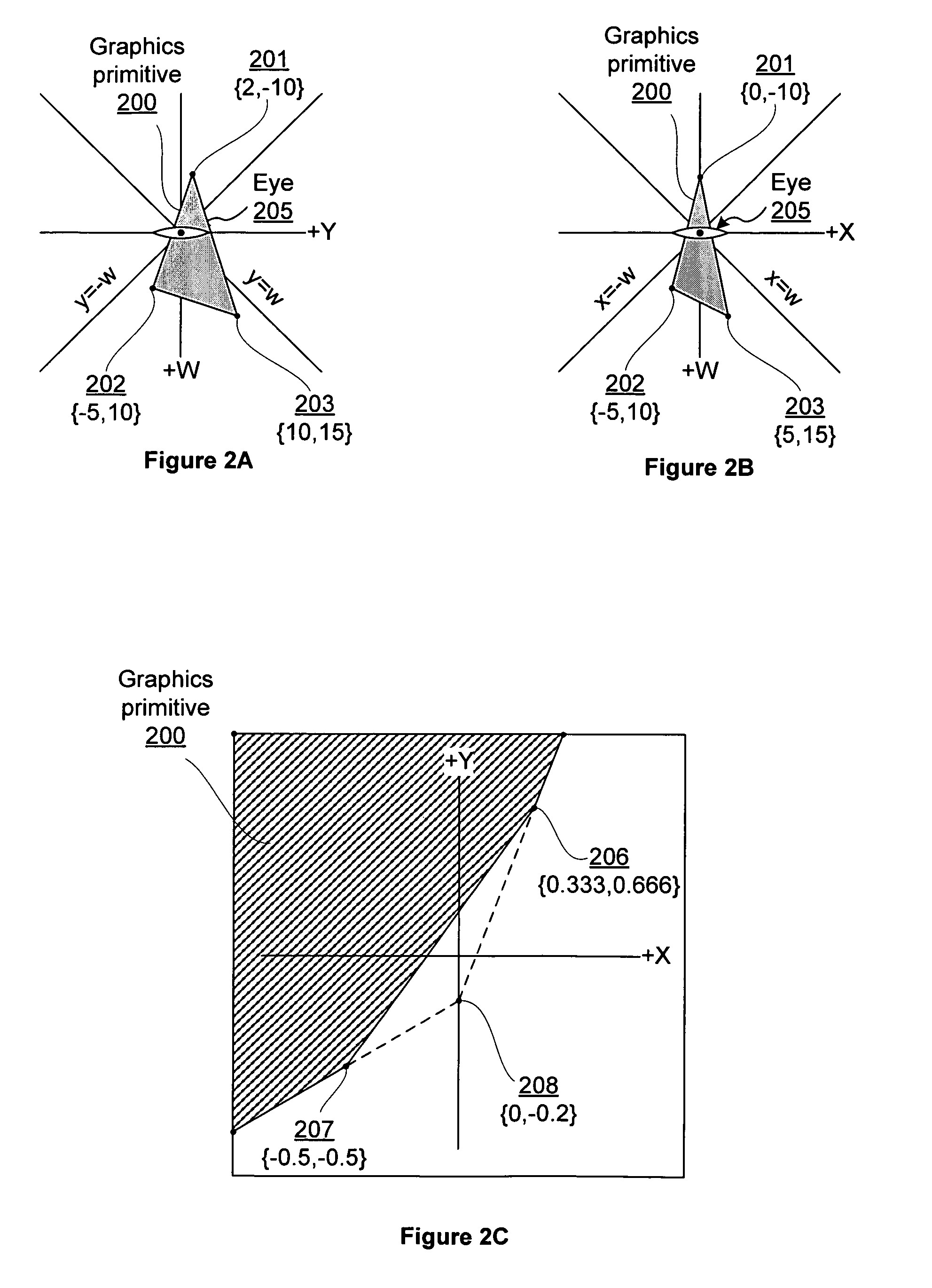

Clipping graphics primitives to the w=0 plane

Active · US7466322B1 · Simplifies rasterization computation · Less complex · Cathode-ray tube indicators · Filling planar surface with attributes · Graphics · Viewing frustum

Vertices defining a graphics primitive are converted into homogeneous space and clipped against a single clipping plane, the w=0 plane. This produces a clipped graphics primitive whose vertices all have w coordinates greater than or equal to zero. Such a primitive is less complex to rasterize than one that has a vertex with a w coordinate less than zero. Clipping against the w=0 plane is also less complex than conventional clipping, which may require the graphics primitive to be clipped against each of the six faces of the viewing frustum.

Owner:NVIDIA CORP
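Clipping against the single plane w = 0 amounts to one pass of Sutherland-Hodgman polygon clipping in homogeneous space. The sketch below is a generic illustration under that assumption, not NVIDIA's hardware method. It keeps the half-space w >= 0 and interpolates new vertices linearly in (x, y, z, w). The function name is hypothetical.

```python
from typing import List, Tuple

Vec4 = Tuple[float, float, float, float]  # homogeneous (x, y, z, w)


def clip_to_w_nonnegative(poly: List[Vec4]) -> List[Vec4]:
    """Sutherland-Hodgman clipping of a convex polygon against the single plane w = 0.

    Keeps the half-space w >= 0, so every output vertex has w >= 0.
    """
    out: List[Vec4] = []
    n = len(poly)
    for i in range(n):
        cur, nxt = poly[i], poly[(i + 1) % n]
        if cur[3] >= 0.0:
            out.append(cur)  # vertex on the kept side (or on the plane)
        if (cur[3] > 0.0 > nxt[3]) or (cur[3] < 0.0 < nxt[3]):
            # The edge strictly crosses w = 0: interpolate at the crossing parameter t.
            t = cur[3] / (cur[3] - nxt[3])
            x, y, z = (c + t * (m - c) for c, m in zip(cur[:3], nxt[:3]))
            out.append((x, y, z, 0.0))  # w is exactly 0 on the plane
    return out
```

Vertices that land exactly on w = 0 cannot be perspective-divided. A rasterizer consuming this output must still handle them, and the sketch does not address that step.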

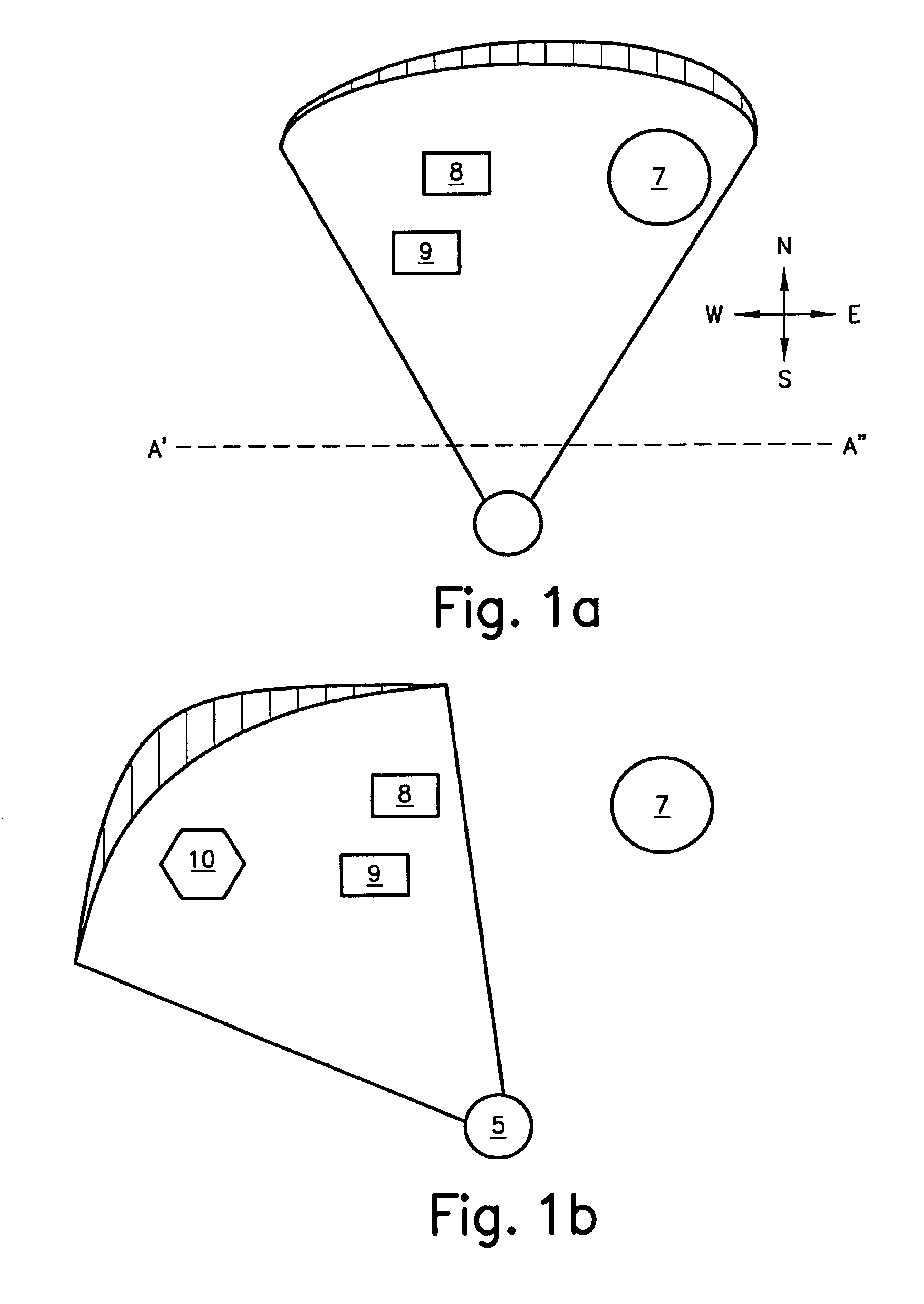

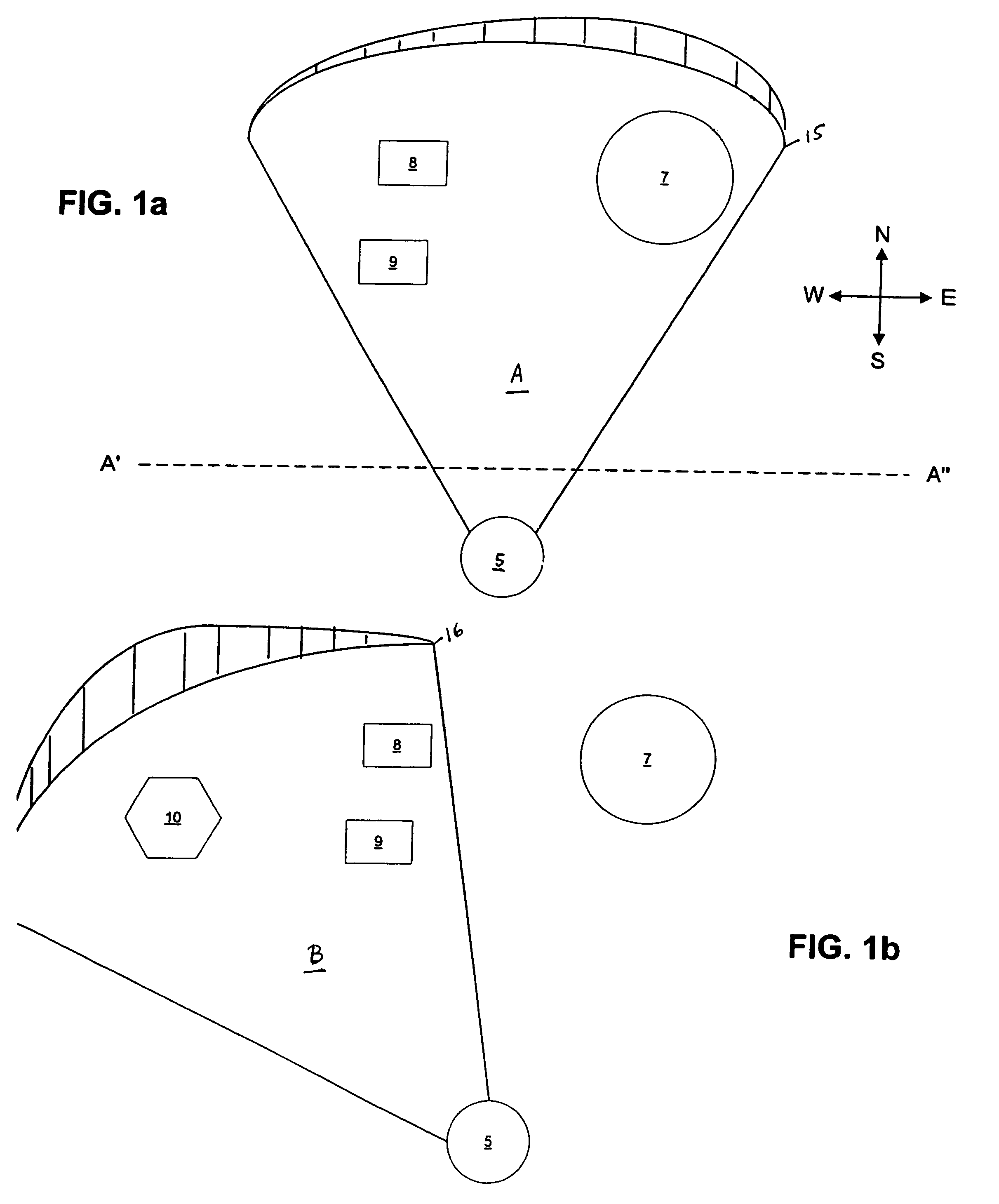

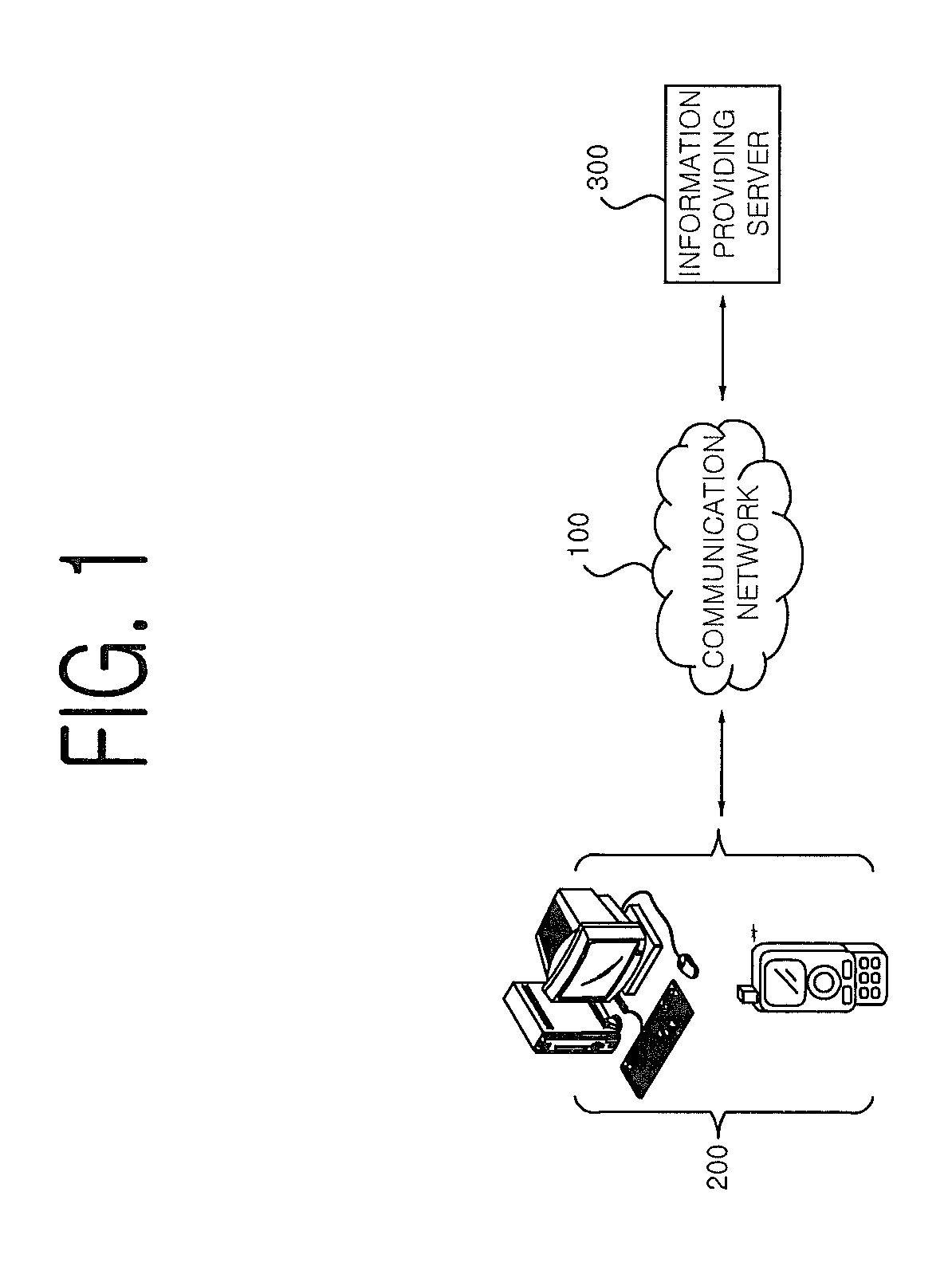

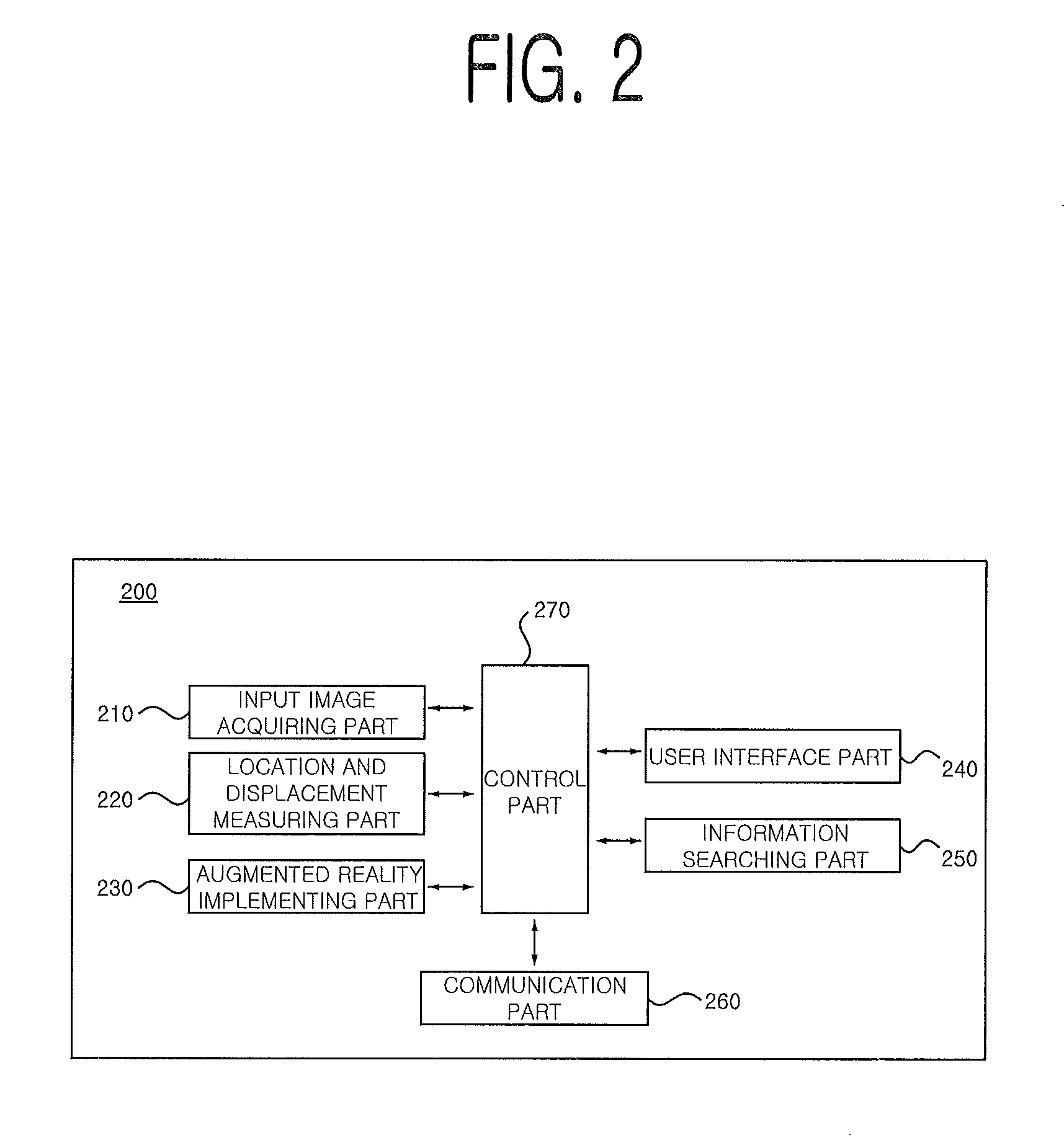

Method for providing information on object which is not included in visual field of terminal device, terminal device and computer readable recording medium

Inactive · US8373725B2 · Lavatory sanitory · Character and pattern recognition · Visual field loss · Viewing frustum

The present invention relates to a method for providing information, in the form of augmented reality (AR), on objects excluded from the visual field of a terminal. It uses an image input to the terminal and information related to that image. The method includes the steps of:

(a) specifying the visual field of the terminal corresponding to the input image by referring to at least one of the terminal's location, displacement, and viewing angle;
(b) searching for one or more objects excluded from the visual field of the terminal; and
(c) displaying guiding information on the objects found, together with the input image, in the form of augmented reality.

The visual field is specified by a viewing frustum whose vertex corresponds to the terminal.

Owner:INTEL CORP
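Steps (a) and (b) can be sketched in a simplified horizontal form. The viewing frustum is reduced to a horizontal field-of-view angle centered on the terminal's heading, and an object counts as excluded when its bearing falls outside that angle. This minimal Python sketch rests on several assumptions:

- positions are in a local planar x/y frame;
- headings are in degrees, counterclockwise from +x;
- there is no vertical angle and no far limit.

The function names and the "turn left"/"turn right" guidance strings are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def relative_bearing(terminal: Tuple[float, float], heading_deg: float,
                     target: Tuple[float, float]) -> float:
    """Angle of the target relative to the heading, in degrees within [-180, 180).

    Positive values are counterclockwise, i.e. to the viewer's left.
    """
    dx, dy = target[0] - terminal[0], target[1] - terminal[1]
    absolute = math.degrees(math.atan2(dy, dx))
    return (absolute - heading_deg + 180.0) % 360.0 - 180.0


def objects_outside_view(terminal: Tuple[float, float], heading_deg: float, fov_deg: float,
                         objects: Dict[str, Tuple[float, float]]) -> List[Tuple[str, str, float]]:
    """Return (name, turn direction, distance) for objects outside the horizontal field of view."""
    guides = []
    for name, pos in objects.items():
        rel = relative_bearing(terminal, heading_deg, pos)
        if abs(rel) > fov_deg / 2.0:  # outside the view cone's horizontal extent
            direction = "turn left" if rel > 0 else "turn right"
            guides.append((name, direction, math.dist(terminal, pos)))
    return guides
```

Facing +x with a 60-degree field of view, an object at (0, 10) lies 90 degrees to the left and is reported with "turn left". An object at (10, 1) is inside the view and is skipped.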

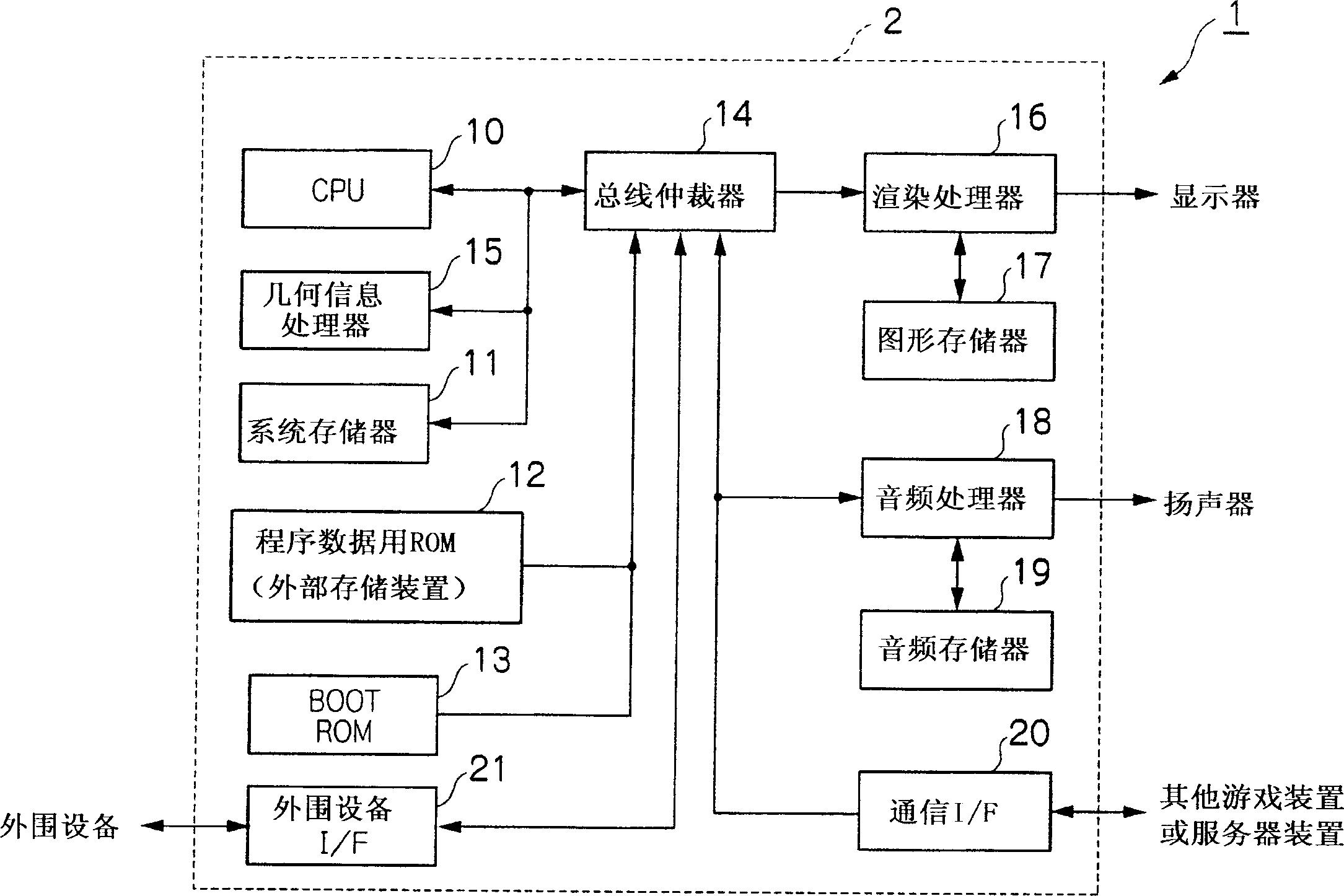

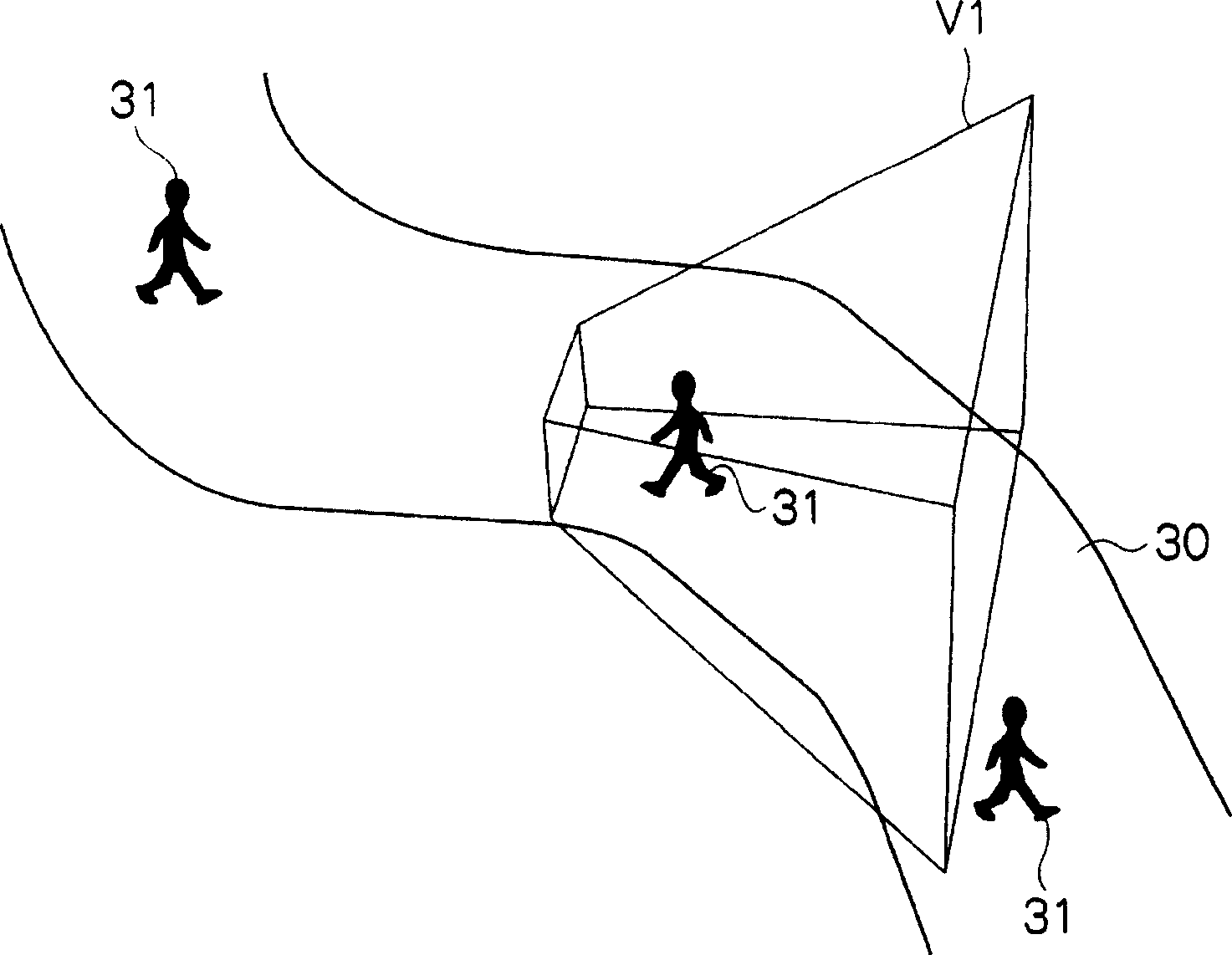

Image generating program, storage medium, image processing method, and image processing device

Inactive · CN1908949A · Avoid wasting · Video games · Image data processing · Imaging processing · Computer graphics (images)

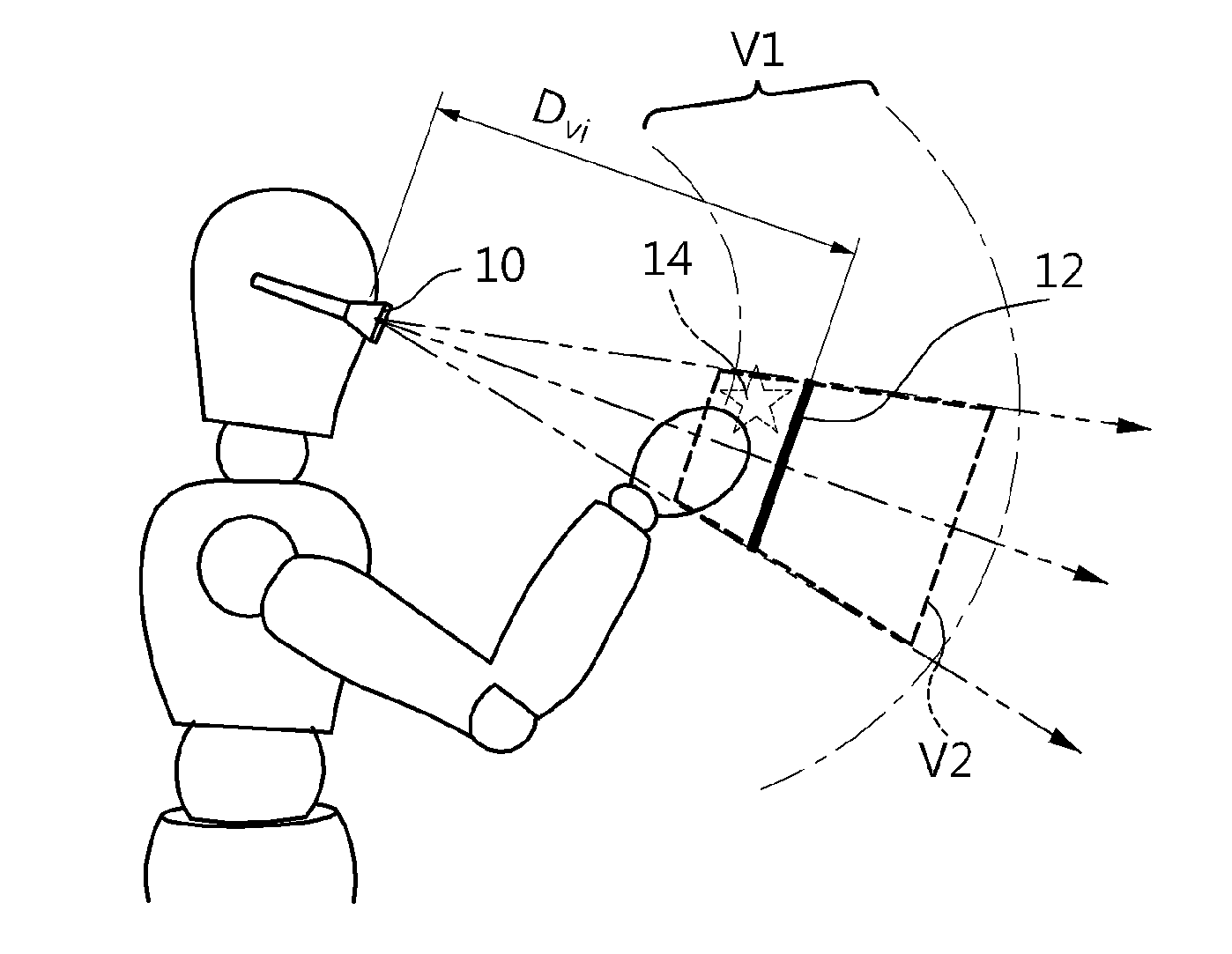

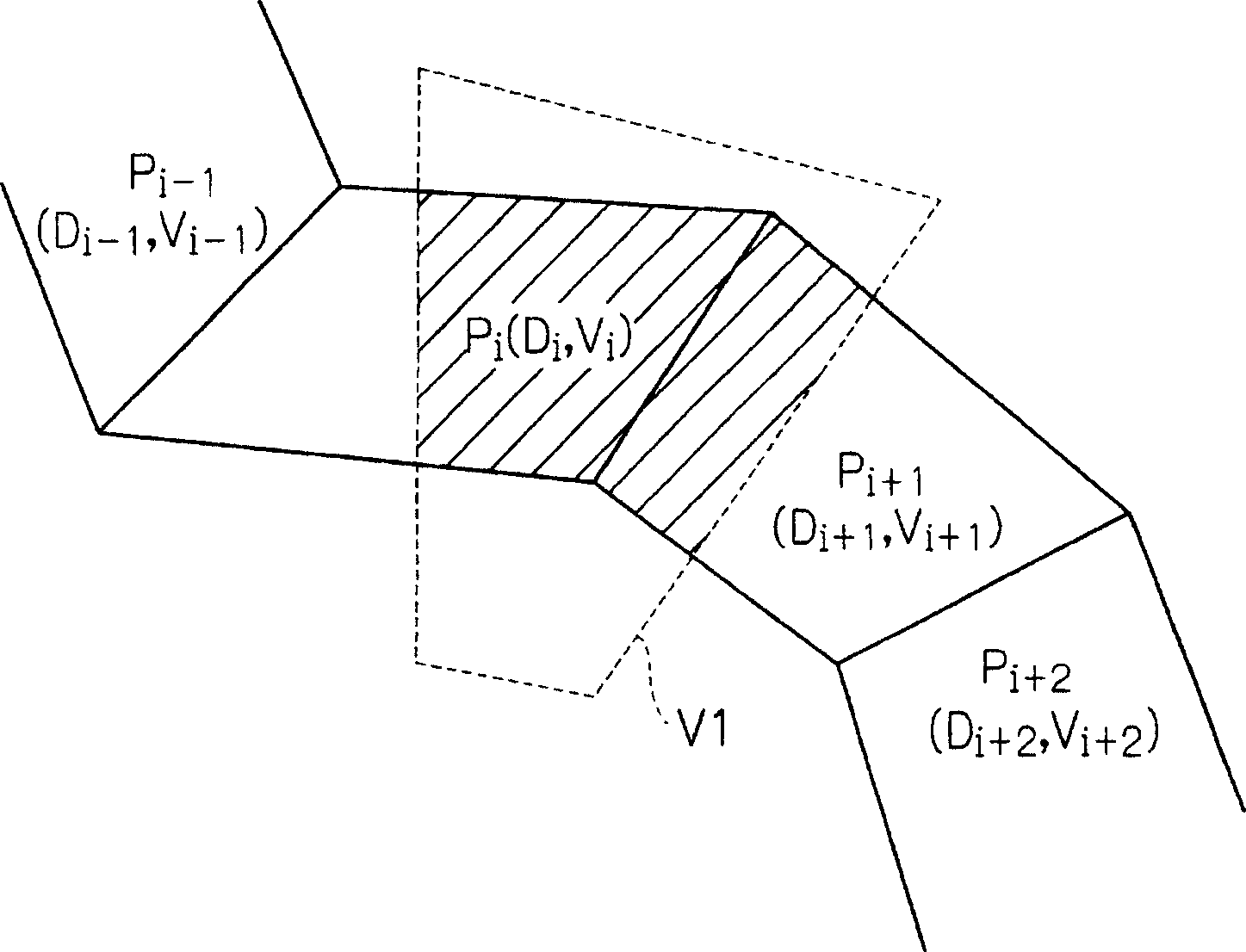

An image generating program, a storage medium, an image processing method, and an image processing device are provided that can carry out very detailed image control while avoiding wasted resources. First, the area of the movement path (30) present in a viewing frustum (V1) is calculated. Based on that area (30), it is determined whether moving objects (31) are to be generated. When they are, information on the moving objects (31) to be generated is stored in a storage means (12). The positions of the moving objects (31) present in the viewing frustum (V1) are then updated based on the information on the plurality of moving objects (31) stored in the storage means (12), and images of those moving objects (31) are generated.

Owner:SEGA CORP
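The area-based spawning decision can be sketched in a top-down 2D view. The sketch assumes the movement path is a polygonal region and the viewing frustum's ground footprint is a convex, counterclockwise polygon. It clips one against the other with Sutherland-Hodgman, measures the visible area with the shoelace formula, and spawns a number of objects proportional to that area. The proportional rule and its `density` parameter are hypothetical, since the abstract only says the decision is based on the area.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def clip_convex(subject: List[Point], clip: List[Point]) -> List[Point]:
    """Sutherland-Hodgman: clip polygon `subject` by the convex, counterclockwise polygon `clip`."""
    def inside(p: Point, a: Point, b: Point) -> bool:
        # p is on or to the left of the directed edge a -> b.
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0.0

    def intersect(p: Point, q: Point, a: Point, b: Point) -> Point:
        # Intersection of segment p -> q with the infinite line through a and b.
        dx, dy = q[0] - p[0], q[1] - p[1]
        ex, ey = b[0] - a[0], b[1] - a[1]
        t = (ex * (a[1] - p[1]) - ey * (a[0] - p[0])) / (ex * dy - ey * dx)
        return (p[0] + t * dx, p[1] + t * dy)

    output = list(subject)
    for i in range(len(clip)):
        a, b = clip[i], clip[(i + 1) % len(clip)]
        source, output = output, []
        for j in range(len(source)):
            p, q = source[j], source[(j + 1) % len(source)]
            if inside(q, a, b):
                if not inside(p, a, b):
                    output.append(intersect(p, q, a, b))
                output.append(q)
            elif inside(p, a, b):
                output.append(intersect(p, q, a, b))
        if not output:
            break  # nothing of the path is visible
    return output


def polygon_area(poly: List[Point]) -> float:
    """Shoelace formula; returns the unsigned area."""
    n = len(poly)
    s = sum(poly[i][0] * poly[(i + 1) % n][1] - poly[(i + 1) % n][0] * poly[i][1] for i in range(n))
    return abs(s) / 2.0


def objects_to_spawn(path_region: List[Point], frustum_footprint: List[Point],
                     density: float) -> int:
    """Hypothetical rule: spawn count proportional to the visible area of the movement path."""
    visible = clip_convex(path_region, frustum_footprint)
    area = polygon_area(visible) if len(visible) >= 3 else 0.0
    return math.floor(area * density)
```

Take a road strip spanning x from -10 to 10 and y from -1 to 1, and a footprint covering x from 0 to 4. The visible area is 8, so a density of 0.6 yields 4 objects.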

© 2025 PatSnap. All rights reserved.