645 results about "Visual navigation" patented technology

Visual electronic library

Inactive · US20050102610A1 · Digital computer details · Natural language data processing · Documentation procedure · Information resource

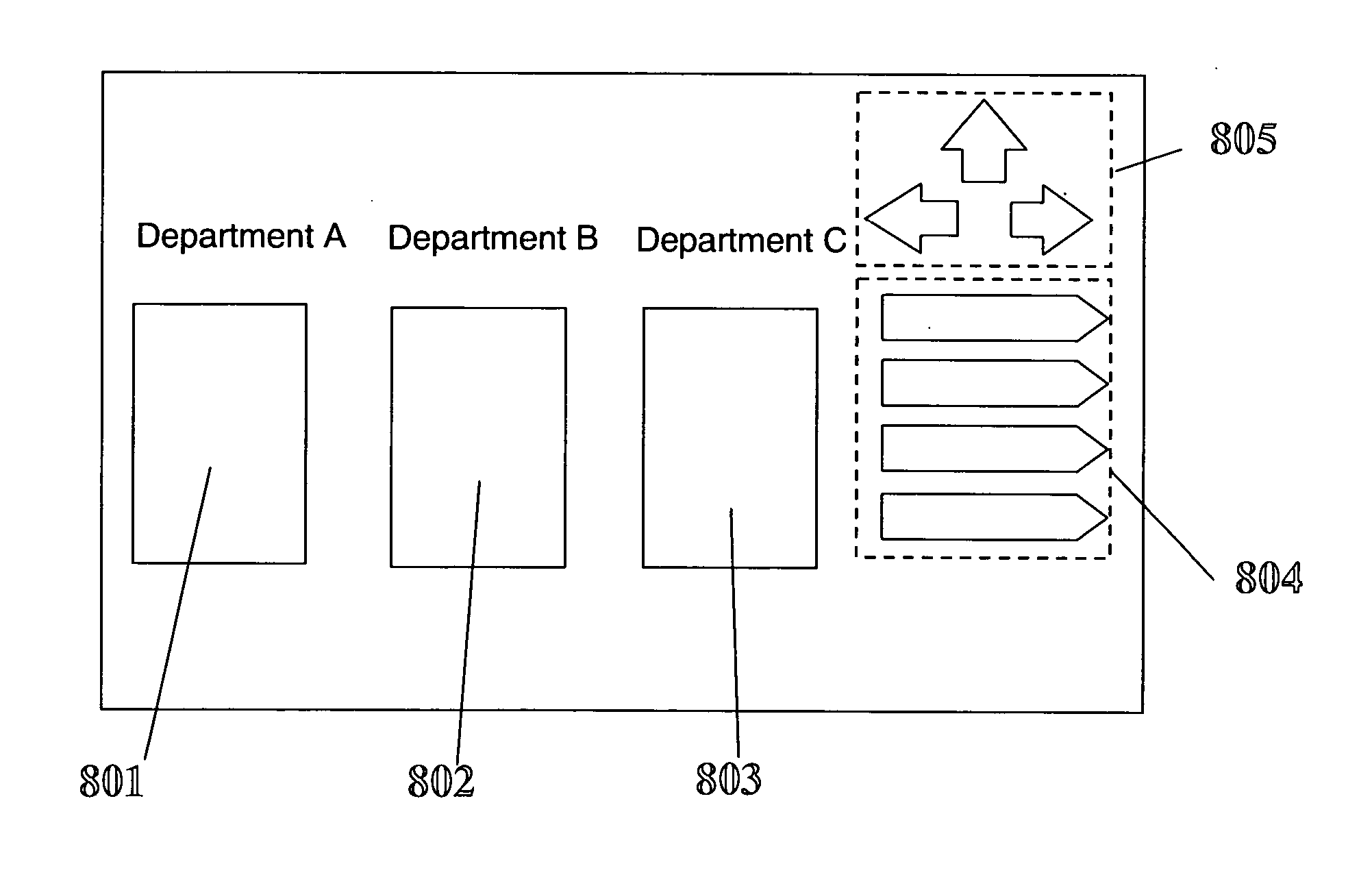

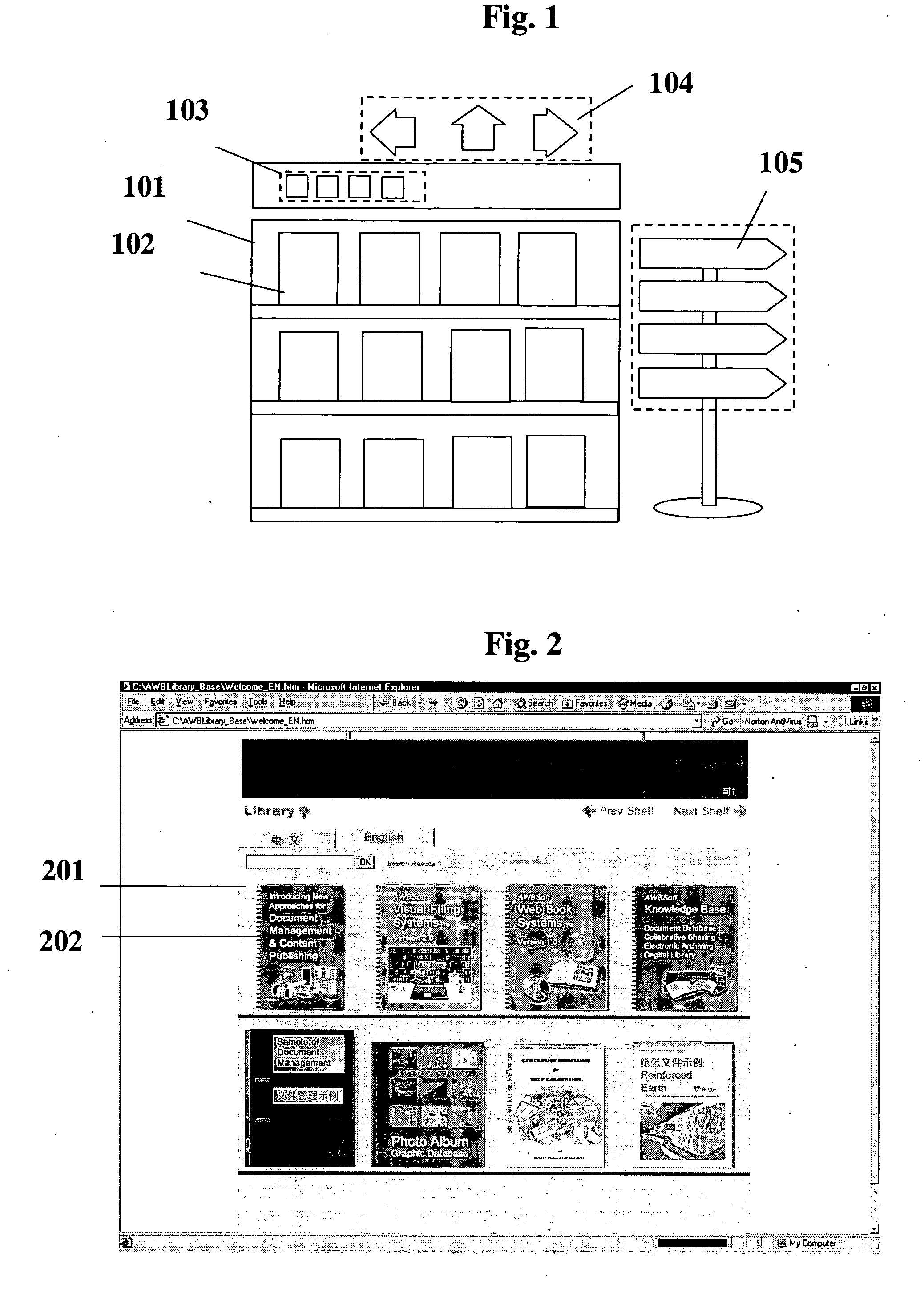

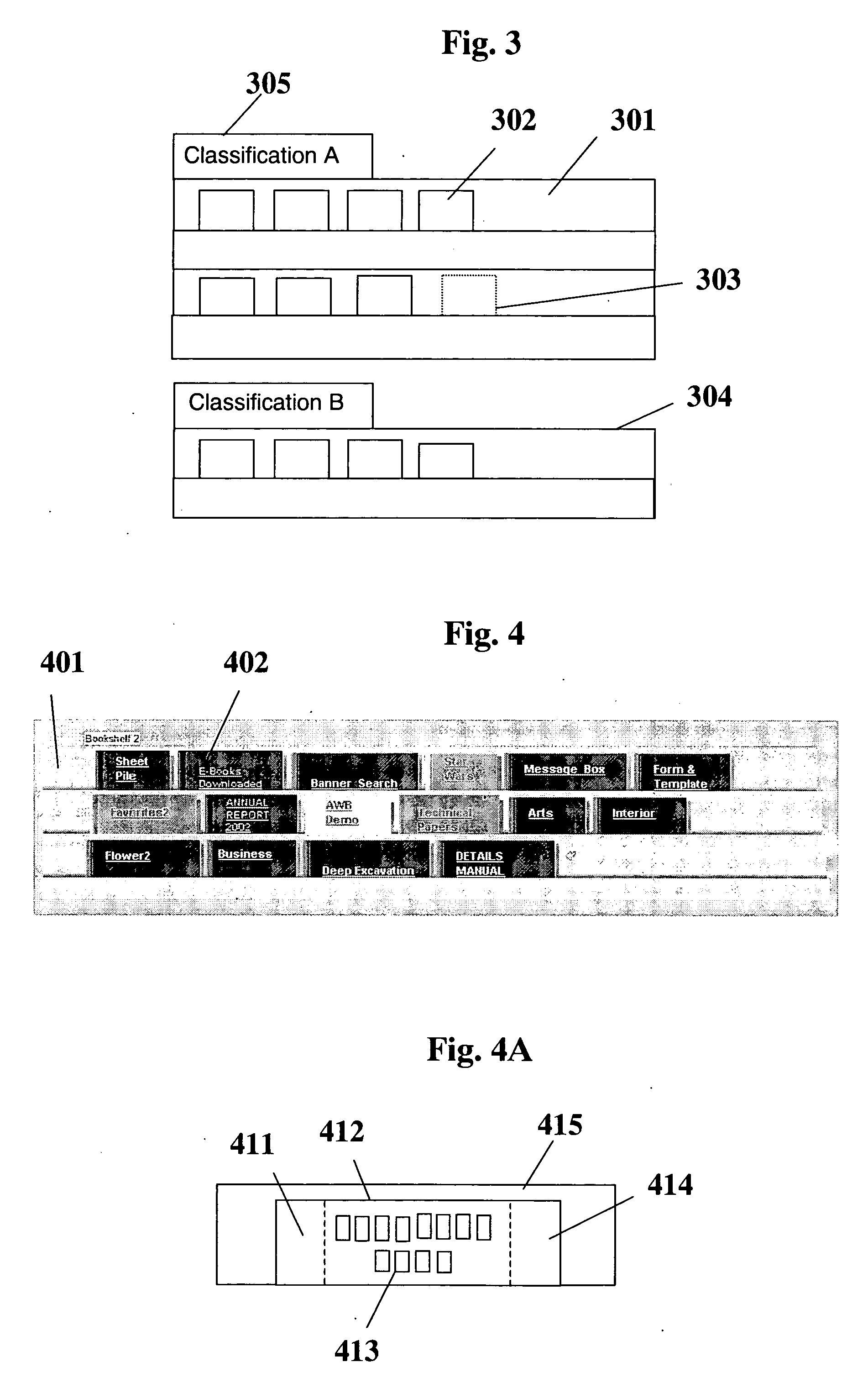

A method and system of generation, organization and presentation of web pages or computer graphic user interfaces on computer, computer peripheral devices, electronic equipment, digital processing systems, and other information resources are disclosed. Modeling physical books, bookshelves and libraries, Visual Library comprises Visual Bookshelves, while Visual Bookshelf comprises Visual Book Covers that represent and link to Visual Books, and Visual Books comprise a set of Visual Navigation Tabs that represent and link a set of web pages or a set of graphic user interfaces. With look-and-feel, the linked Visual Library, Visual Bookshelves and Visual Books in hierarchical structures provide a friendly environment in which the documents, contents can be well organized and easily navigated.

Owner:JIE WEI

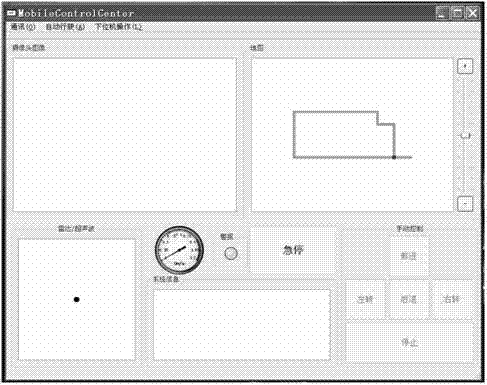

Vision-based combined navigation robot and navigation method

Active · CN102789233A · Implement combined navigation · Improve reliability · Navigation instruments · Position/course control in two dimensions · Visual navigation · Digital camera

The invention relates to a vision-based combined navigation robot and a navigation method. The robot comprises a four-wheel drive trolley and a color digital camera arranged on the body of the trolley; a plurality of ultrasonic sensors which are arranged at the front and the rear ends of the trolley body and used for detecting the distance information of obstacles around the robot; a gyroscope mounted inside the trolley body and used for detecting the attitude information of the robot; four photoelectric encoders which are arranged on four sets of servo drive motors respectively and used as speedometers; a motor controller; and a robot trolley body control system computer used for ensuring the real time performance of image processing and control. The navigation method provided by the invention mainly works based on the visual navigation in combination with the related information of the speedometer, the gyroscope and the ultrasonic sensors, resulting in combined navigation, so as to improve the reliability and navigation precision of the system to the greatest extent.

Owner:HUBEI SANJIANG AEROSPACE HONGFENG CONTROL

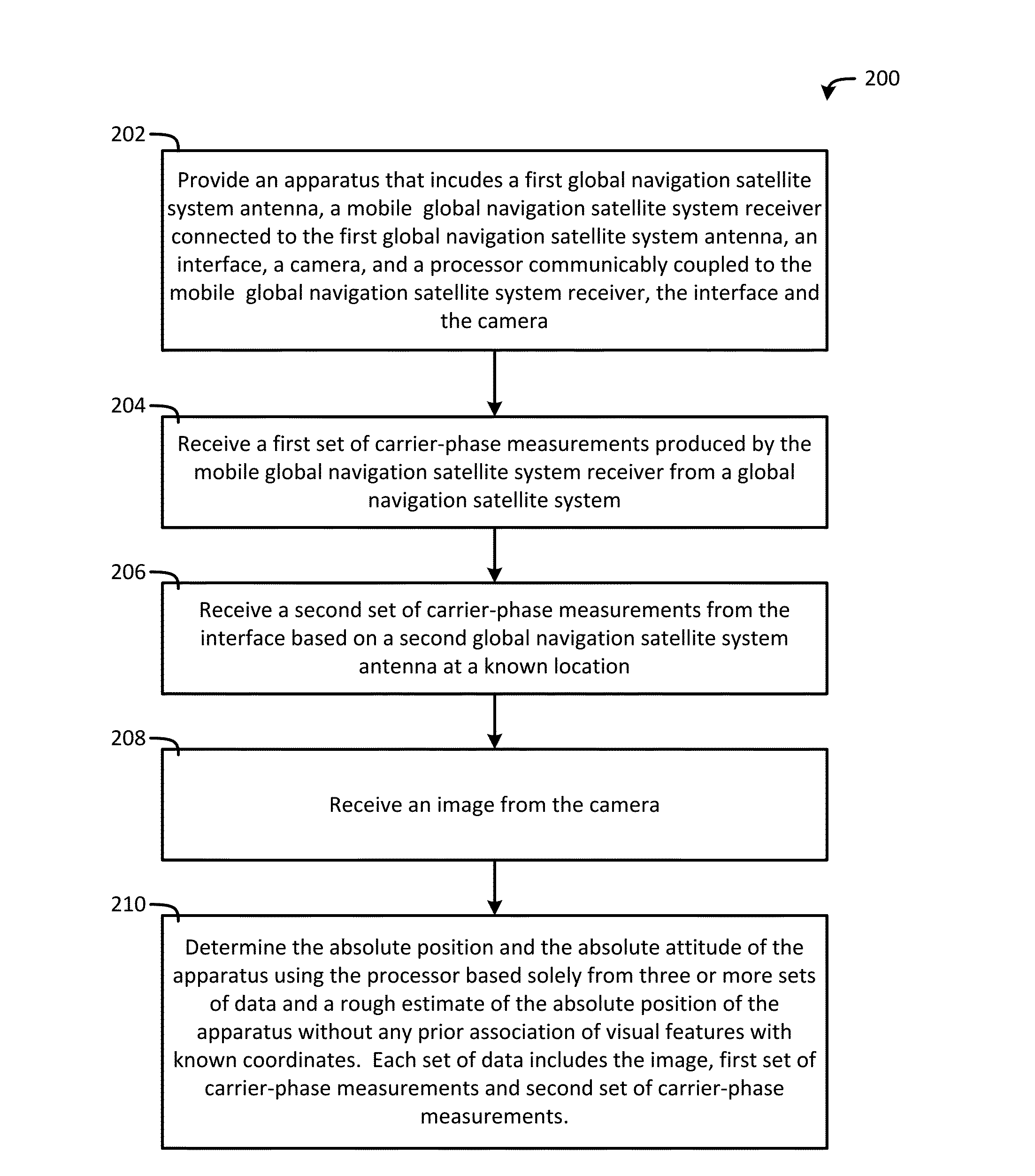

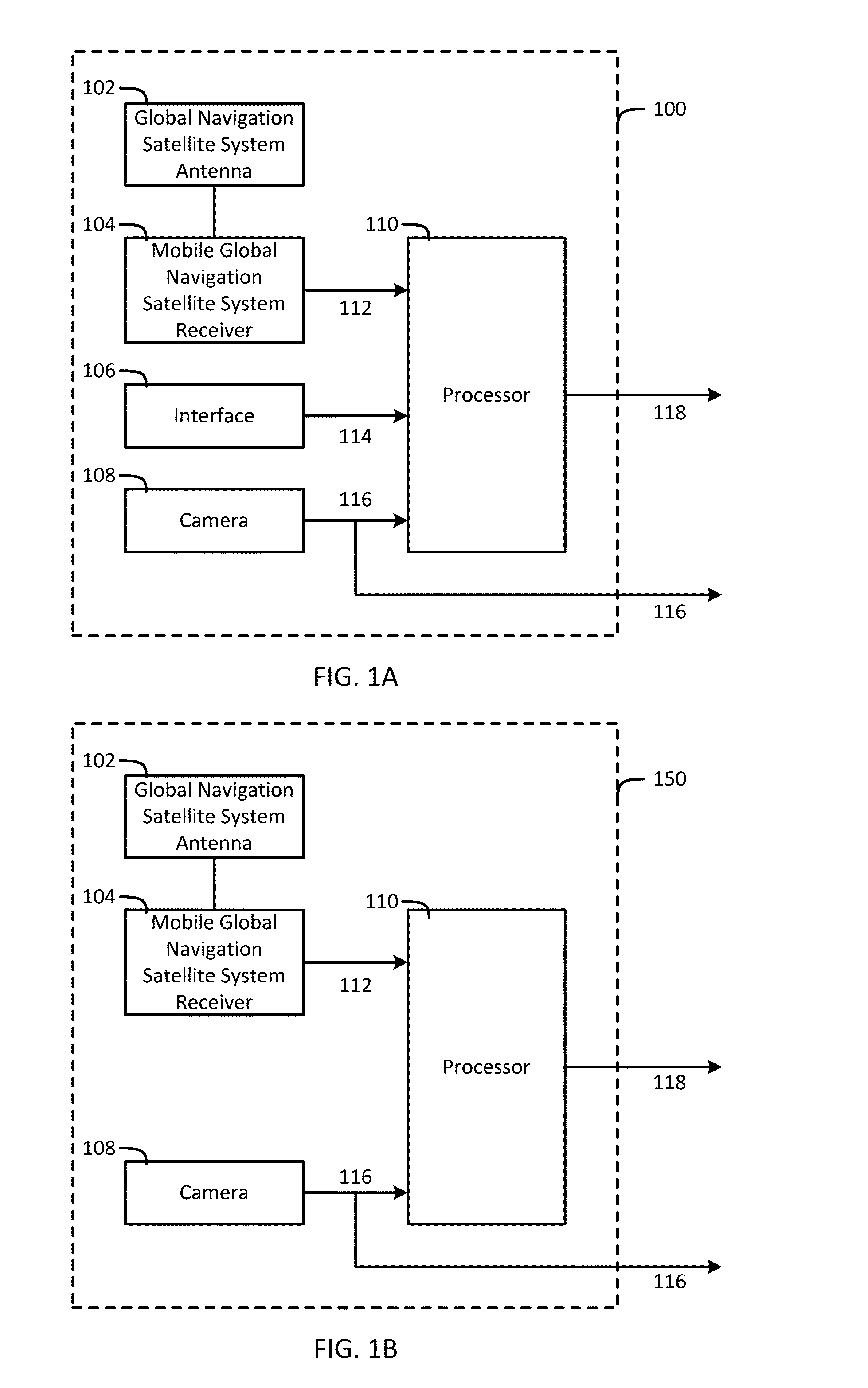

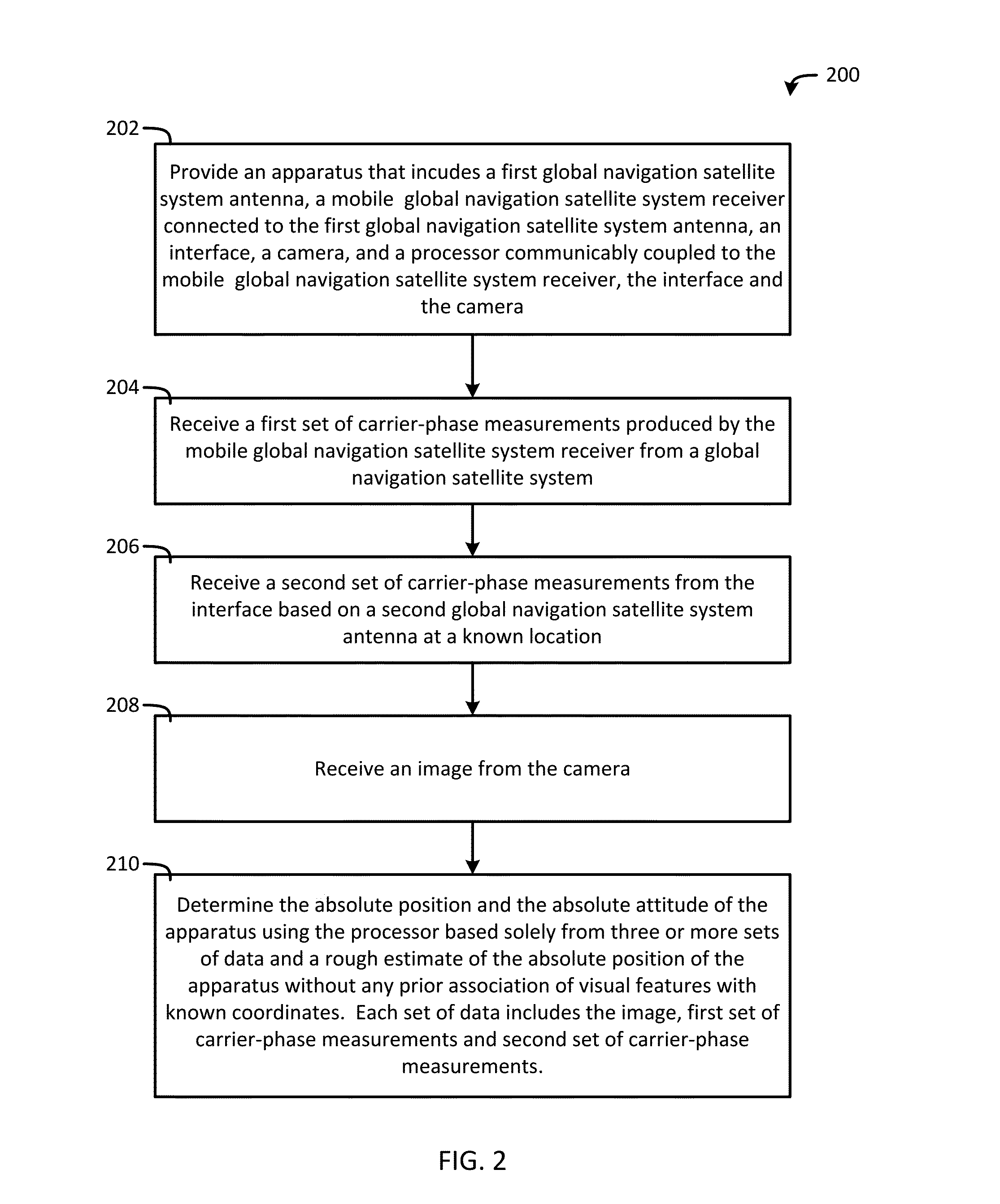

System and method for using global navigation satellite system (GNSS) navigation and visual navigation to recover absolute position and attitude without any prior association of visual features with known coordinates

Inactive · US20150219767A1 · Precise and absolute position · Precise and absolute and orientation · Satellite radio beaconing · Orbit · Visual perception

An apparatus includes a global navigation satellite system antenna, a global navigation satellite system receiver, a camera, and a processor. The mobile global navigation satellite system receiver produces a set of carrier-phase measurements from a global navigation satellite system. The camera produces an image. The processor determines an absolute position and an absolute attitude of the apparatus solely from three or more sets of data and a rough estimate of the absolute position of the apparatus without any prior association of visual features with known coordinates. Each set of data includes the image and the set of carrier-phase measurements. In addition, the processor uses either a precise orbit and clock data for the global navigation satellite system or another set of carrier-phase measurements from another global navigation satellite system antenna at a known location in each set of data.

Owner:BOARD OF RGT THE UNIV OF TEXAS SYST

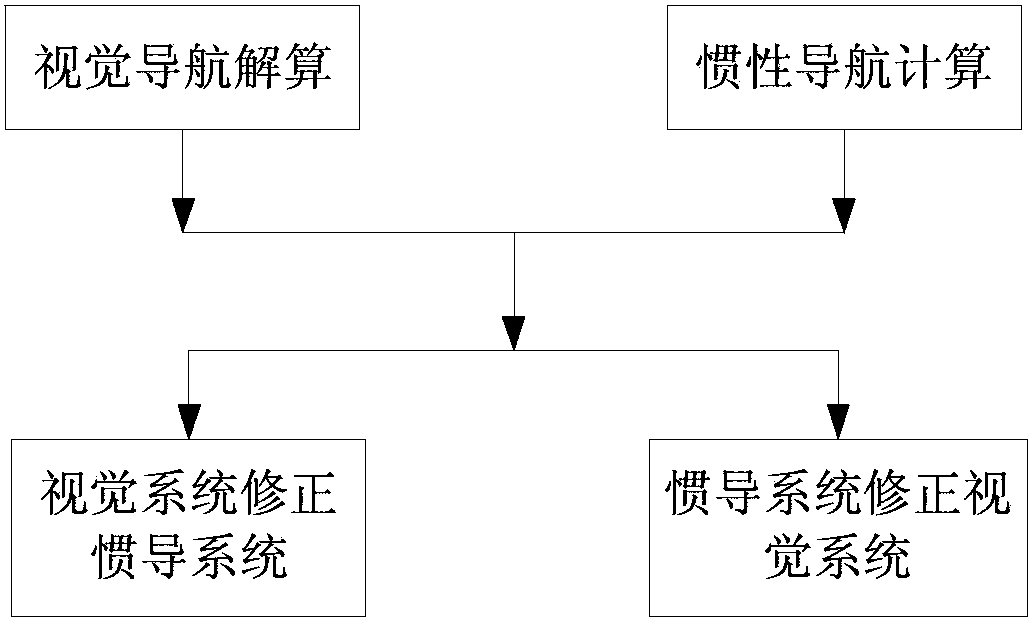

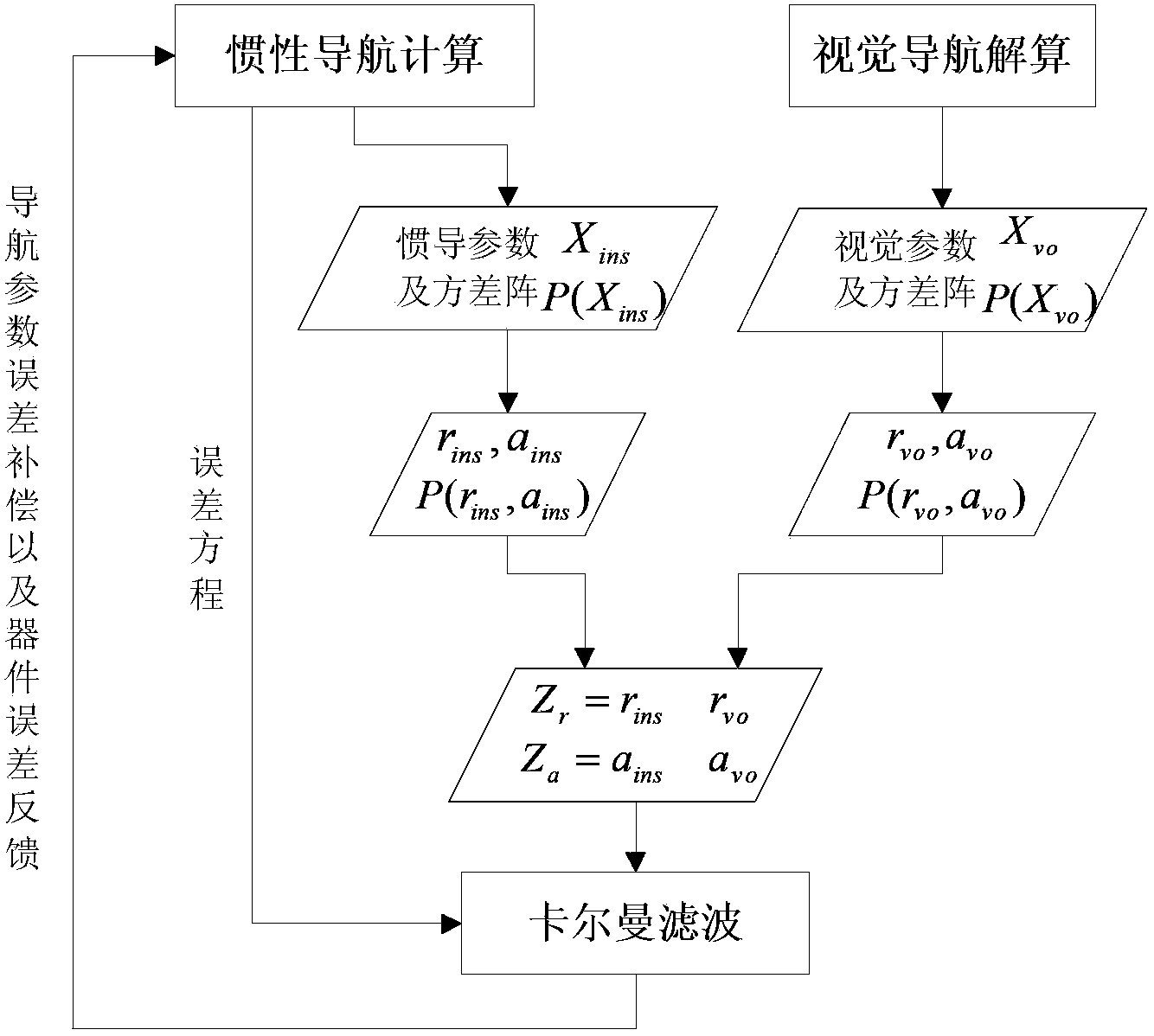

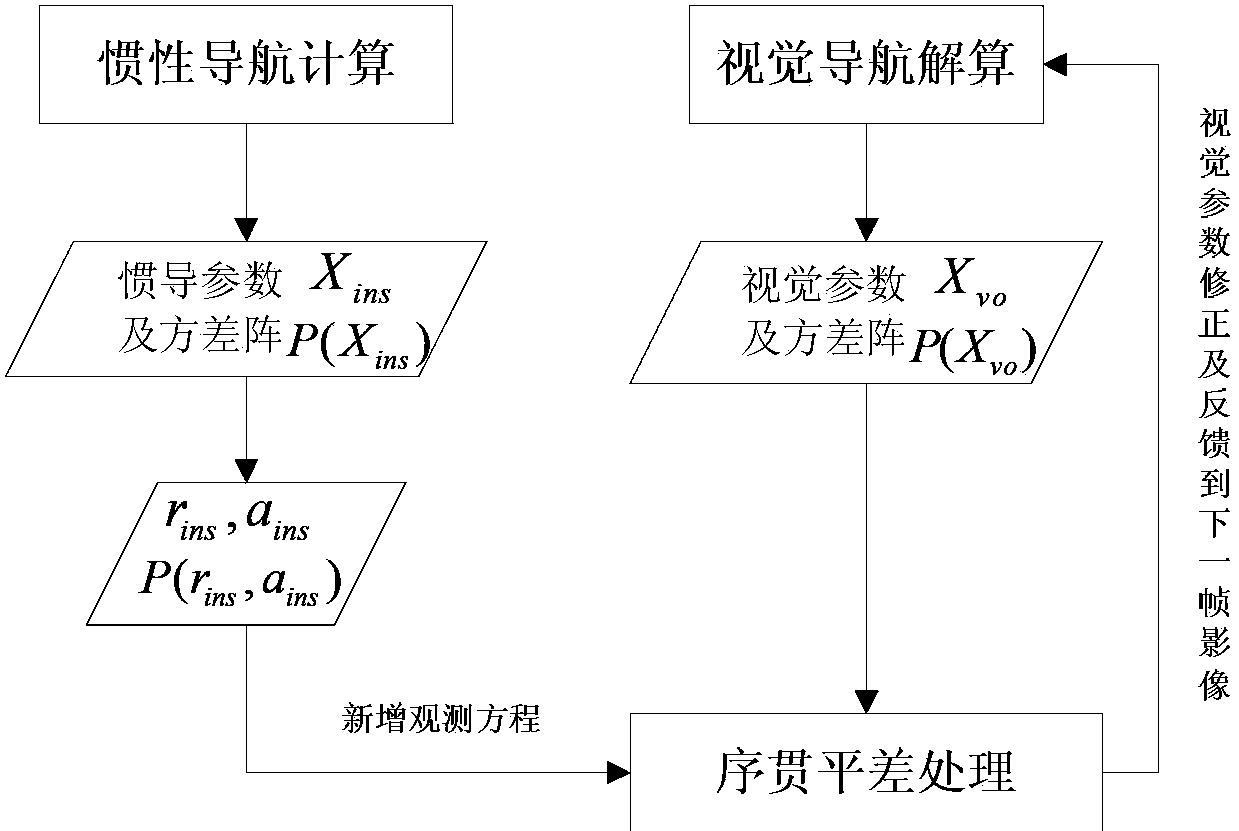

Visual navigation/inertial navigation full combination method

Inactive · CN103424114A · High precision · Reduce precision · Navigation by speed/acceleration measurements · Computer vision · Least squares

The invention relates to a visual navigation/inertial navigation full combination method. The method comprises the following steps: first, calculation of visual navigation: observation equations are formed from the collinearity equations, carrier positions and attitude parameters are obtained through least-squares adjustment, and the variance-covariance matrices among the parameters are calculated; second, calculation of inertial navigation: navigation calculation is carried out in the local horizontal coordinate system, carrier positions, speeds and attitude parameters at each moment are obtained, and the variance-covariance matrices among the parameters are calculated; third, correction of the inertial navigation system through the visual system: by means of Kalman filtering, navigation parameter errors and device errors of the inertial navigation system are estimated and subjected to compensation and feedback correction, so that the optimal estimated values of all the parameters of the inertial navigation system are obtained; fourth, correction of the visual system through the inertial navigation system: all the parameters of the visual system are corrected through sequential adjustment. Compared to the prior art, the method has the advantages of rigorous theory, stable performance, high efficiency and the like.

Owner:TONGJI UNIV
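The third step of this abstract uses Kalman filtering to correct the inertial solution with visual measurements. As a minimal sketch only, and not the patented formulation, the scalar measurement update below shows the basic mechanism. The function name `kalman_update` and the one-dimensional state are illustrative assumptions; the abstract describes a full set of navigation and device error states.

```python
def kalman_update(x_pred, p_pred, z, r):
    """One scalar Kalman measurement update (illustrative assumption).

    x_pred, p_pred: predicted value and its variance (e.g. an inertial position)
    z, r:           measurement and its variance (e.g. a visual position fix)
    Returns the corrected value, its variance, and the Kalman gain.
    """
    k = p_pred / (p_pred + r)          # gain: how much to trust the measurement
    x_new = x_pred + k * (z - x_pred)  # correct the prediction with the innovation
    p_new = (1.0 - k) * p_pred         # variance shrinks after the update
    return x_new, p_new, k


# Equal variances: the corrected value lands halfway between the two inputs.
x, p, k = kalman_update(x_pred=10.0, p_pred=4.0, z=12.0, r=4.0)
print(x, p, k)
```

With equal variances the gain is 0.5, so neither source dominates; a more precise visual fix (smaller `r`) pulls the result closer to `z`.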

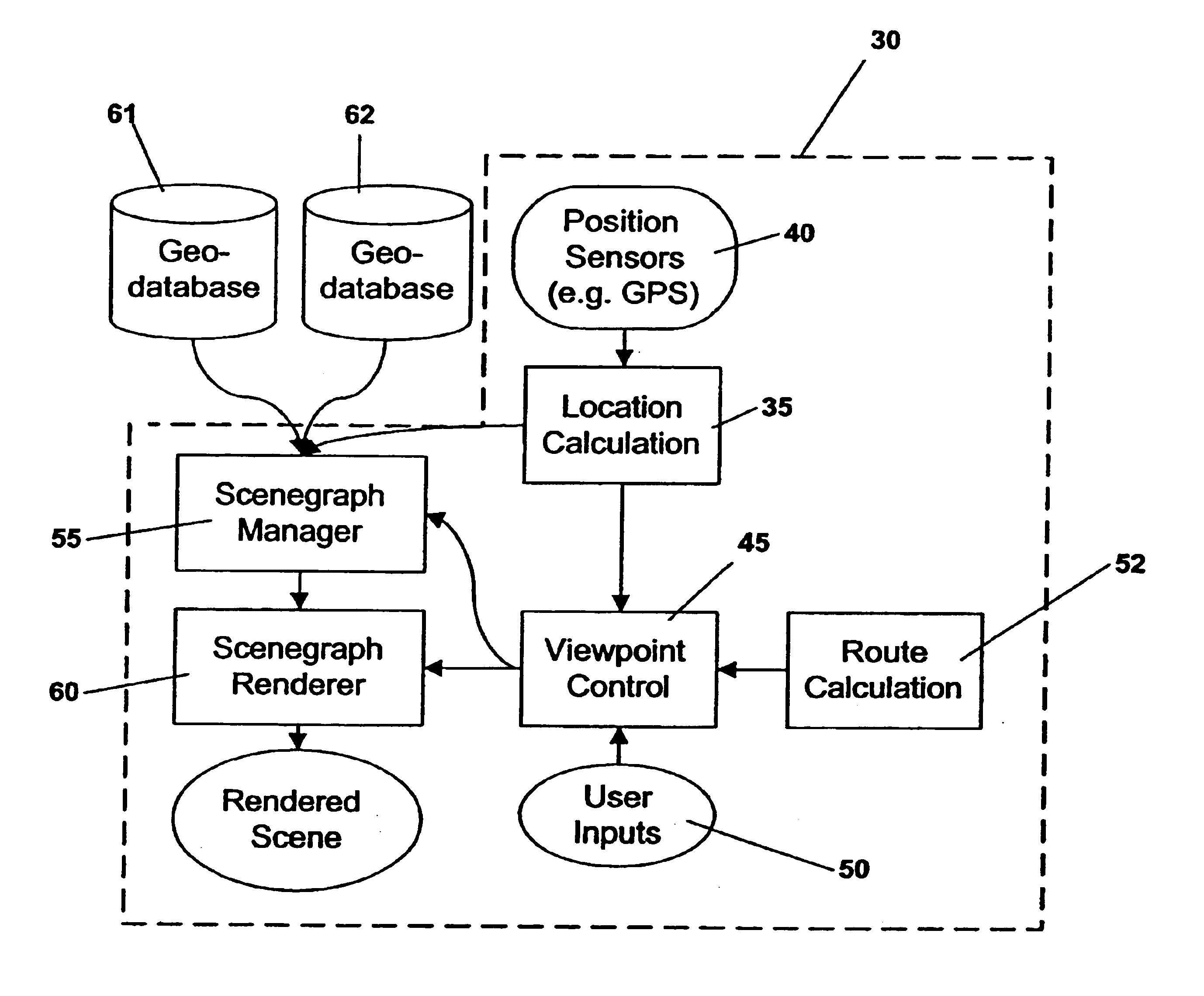

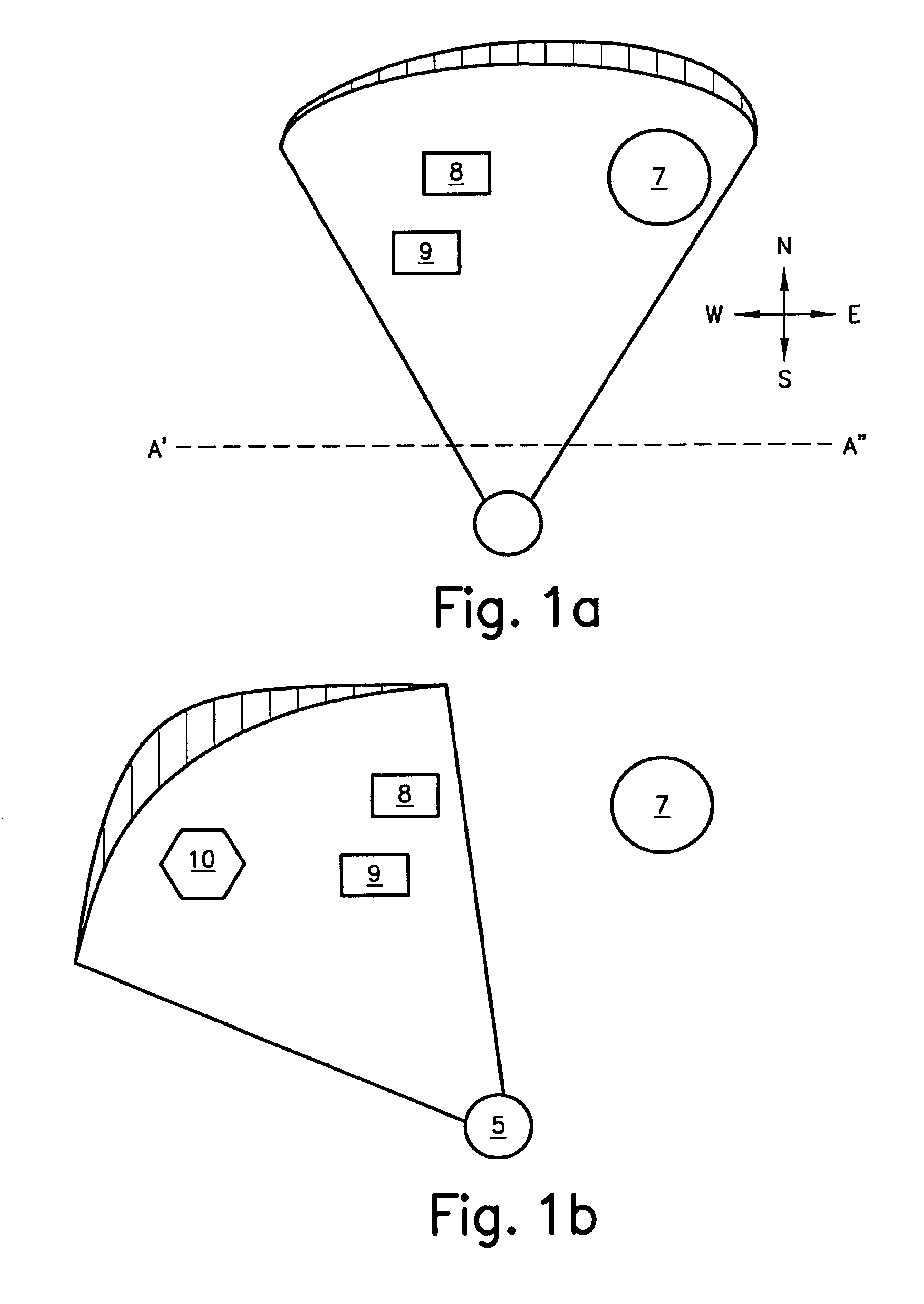

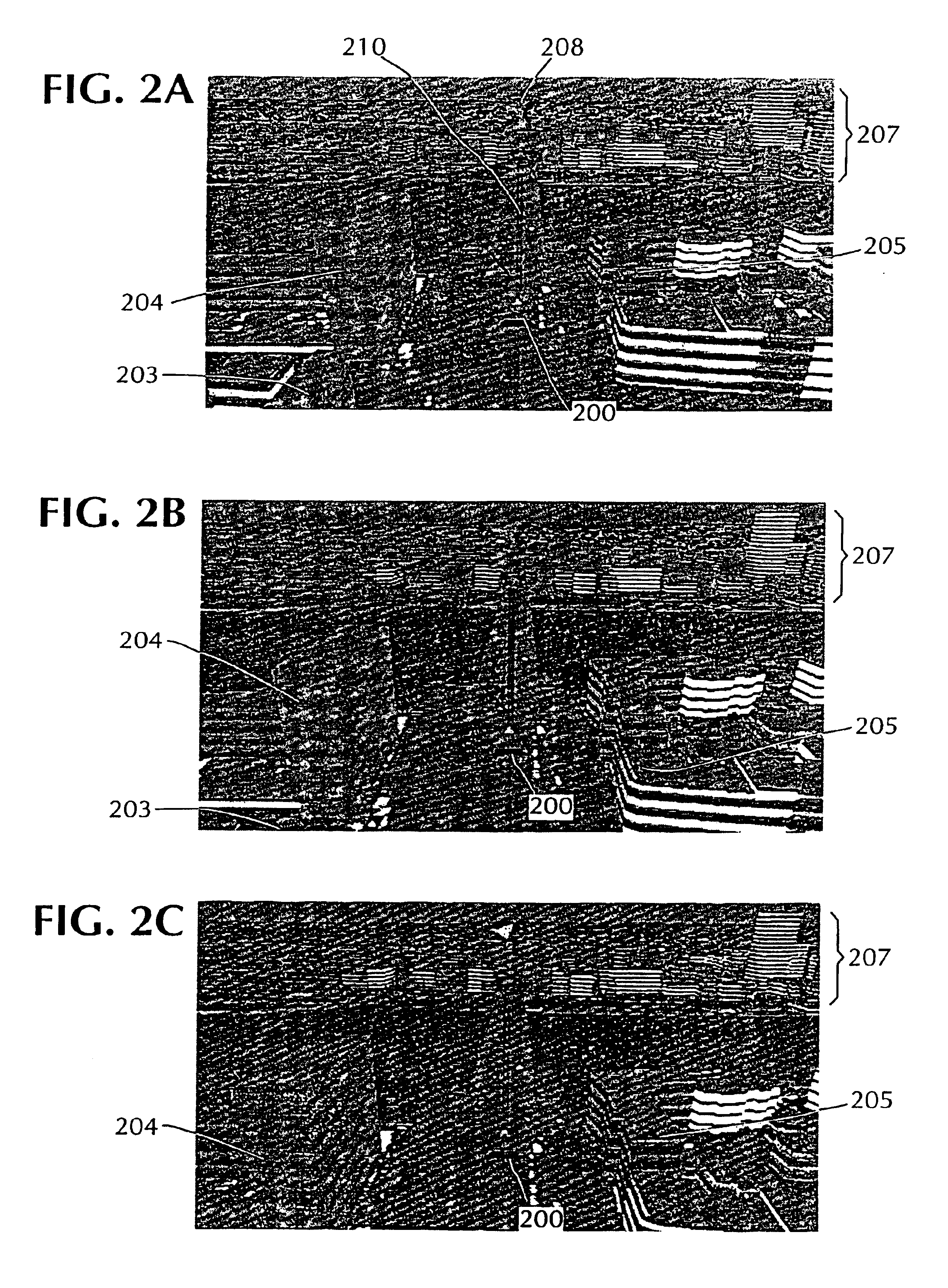

System and method for advanced 3D visualization for mobile navigation units

Inactive · US6885939B2 · Improve realism · Reduce unevenness · Instruments for road network navigation · Road vehicles traffic control · Mobile navigation · Viewpoints

A system providing three-dimensional visual navigation for a mobile unit includes a location calculation unit for calculating an instantaneous position of the mobile unit, a viewpoint control unit for determining a viewing frustum from the instantaneous position, a scenegraph manager in communication with at least one geo-database to obtain geographic object data associated with the viewing frustum and generating a scenegraph organizing the geographic object data, and a scenegraph renderer which graphically renders the scenegraph in real time. To enhance depiction, a method for blending images of different resolutions in the scenegraph reduces abrupt changes as the mobile unit moves relative to the depicted geographic objects. Data structures for storage and run-time access of information regarding the geographic object data permit on-demand loading of the data based on the viewing frustum and allow the navigational system to dynamically load, on-demand, only those objects that are visible to the user.

Owner:ROBERT BOSCH GMBH

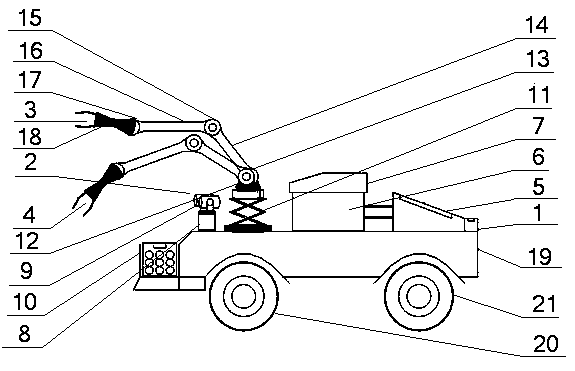

Double-manipulator fruit and vegetable harvesting robot system and fruit and vegetable harvesting method thereof

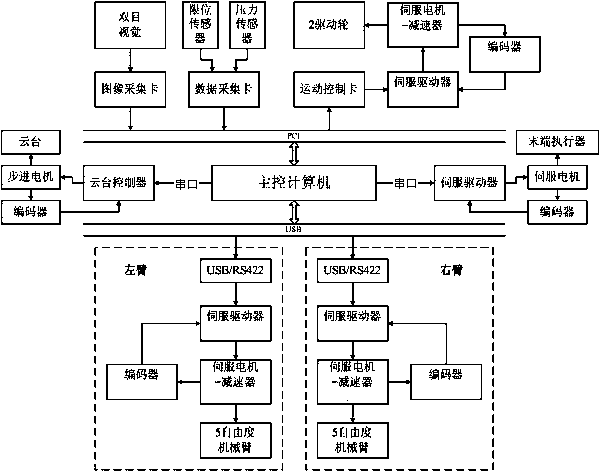

Active · CN103503639A · Realize automatic harvesting · Improve work efficiency · Programme-controlled manipulator · Picking devices · Simulation · Actuator

The invention discloses a double-manipulator fruit and vegetable harvesting robot system and a fruit and vegetable harvesting method of the double-manipulator fruit and vegetable harvesting robot system. According to the system, a binocular stereoscopic vision system is used for visual navigation of the walking motion of the robot and for obtaining position information of harvested targets and obstacles; a manipulator device is used for grasping and separating according to the positions of the harvested targets and the obstacles; a robot movement platform is used for autonomous moving in the operating environment; a main control computer is the control center, integrates a control interface and all software modules, and controls the whole system. The binocular stereoscopic vision system comprises two color cameras, an image acquisition card and an intelligent pan-tilt head; the manipulator device comprises two five-degree-of-freedom manipulator bodies, a joint servo driver, an actuator motor and the like; the robot movement platform comprises a wheel-type body, a power source and power control device and a fruit and vegetable harvesting device. Binocular vision and human-like dual manipulators are used to build the fruit and vegetable harvesting robot, and autonomous navigation walking and automatic harvesting of the fruit and vegetable targets are achieved.

Owner:溧阳常大技术转移中心有限公司

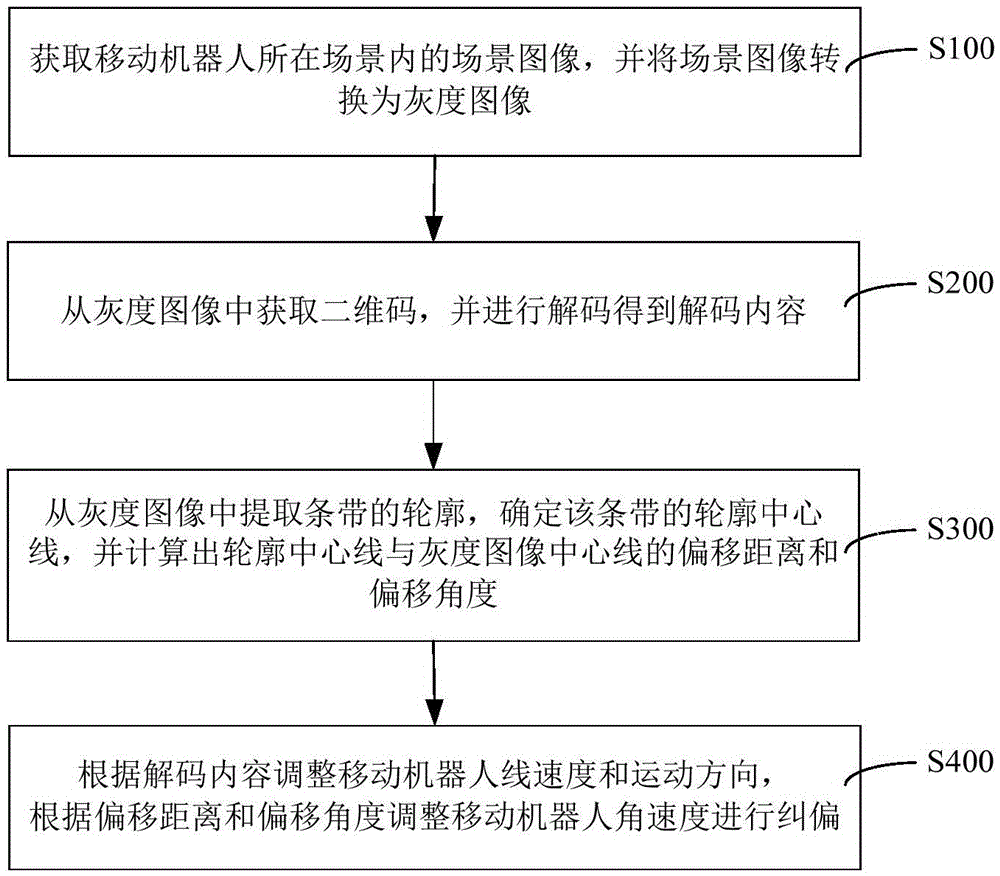

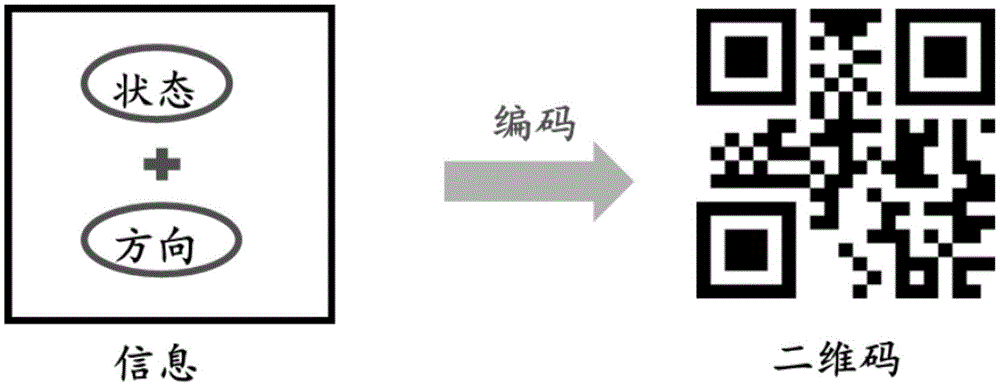

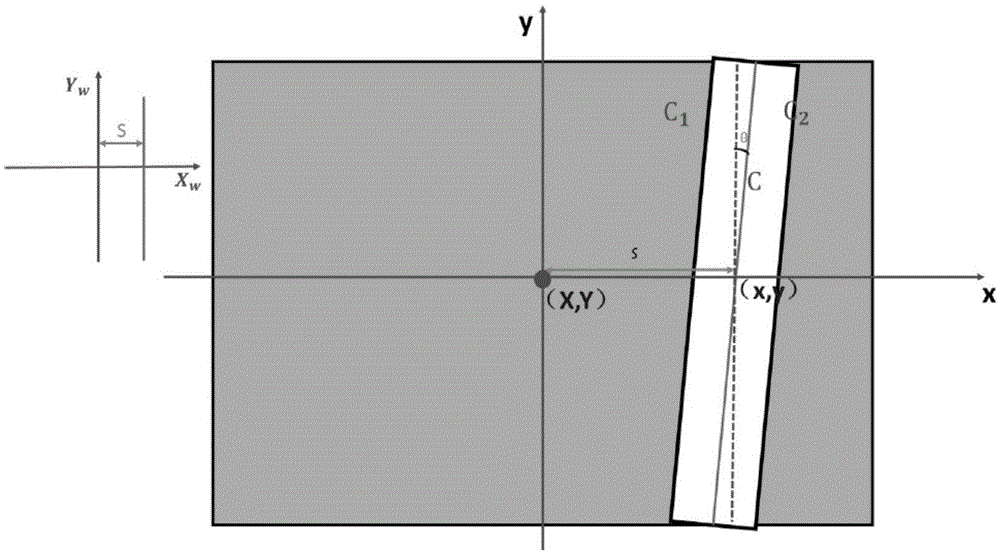

Visual navigation method and system of mobile robot as well as warehouse system

Active · CN105651286A · Real-time acquisition · Navigational calculation instruments · Position/course control in two dimensions · Offset distance · Angular velocity

The invention discloses a visual navigation method and system of a mobile robot. According to the method, a scene image of the scene where the mobile robot is located is acquired in real time and converted into a grayscale image; a two-dimensional code in the grayscale image is identified and decoded, and state transition information and speed change information are obtained; meanwhile, the outline center line of a stripe in the same frame of the grayscale image is determined, and the offset distance and the offset angle between the outline center line of the stripe and the center line of the grayscale image are calculated; the linear velocity and the motion direction of the mobile robot are adjusted according to the state transition information and the speed change information, and meanwhile, the angular velocity of the mobile robot is corrected in real time according to the offset distance and the offset angle. The two-dimensional code on the stripe and the scene image used for correction are acquired simultaneously as a single image, the two-dimensional code and the stripe in the same frame are processed together, and the preset motion and real-time correction of the robot are controlled at the same time, so the control and correction method can be significantly simplified, the velocity can be increased, and the system can be more stable.

Owner:NINGBO INST OF MATERIALS TECH & ENG CHINESE ACADEMY OF SCI
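The abstract above computes an offset distance and an offset angle between the stripe center line and the image center line, then corrects the angular velocity from them. It does not state the control law, so the sketch below assumes a simple proportional correction. The function names, the gains `k_d` and `k_a`, and the sign convention (positive angular velocity means turning left) are all hypothetical.

```python
import math


def stripe_offset(p_top, p_bottom, image_width):
    """Offset of a stripe center line from the image's vertical center line.

    p_top, p_bottom: (x, y) pixel points on the stripe center line, y pointing down.
    Returns (offset_distance_px, offset_angle_rad); both are positive when the
    stripe lies or leans to the right of the image center line.
    """
    mid_x = (p_top[0] + p_bottom[0]) / 2.0
    distance = mid_x - image_width / 2.0
    angle = math.atan2(p_top[0] - p_bottom[0], p_bottom[1] - p_top[1])
    return distance, angle


def angular_velocity_correction(distance, angle, k_d=0.005, k_a=1.0):
    """Assumed proportional law: steer toward the stripe (negative = turn right)."""
    return -(k_d * distance + k_a * angle)


# Stripe is vertical but 100 px right of center, so the robot should turn right.
d, a = stripe_offset((420, 0), (420, 480), image_width=640)
print(d, a, angular_velocity_correction(d, a))
```

A real system would tune the gains and convert pixels to metric units through camera calibration; the sketch only shows how the two offsets can feed one correction term.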

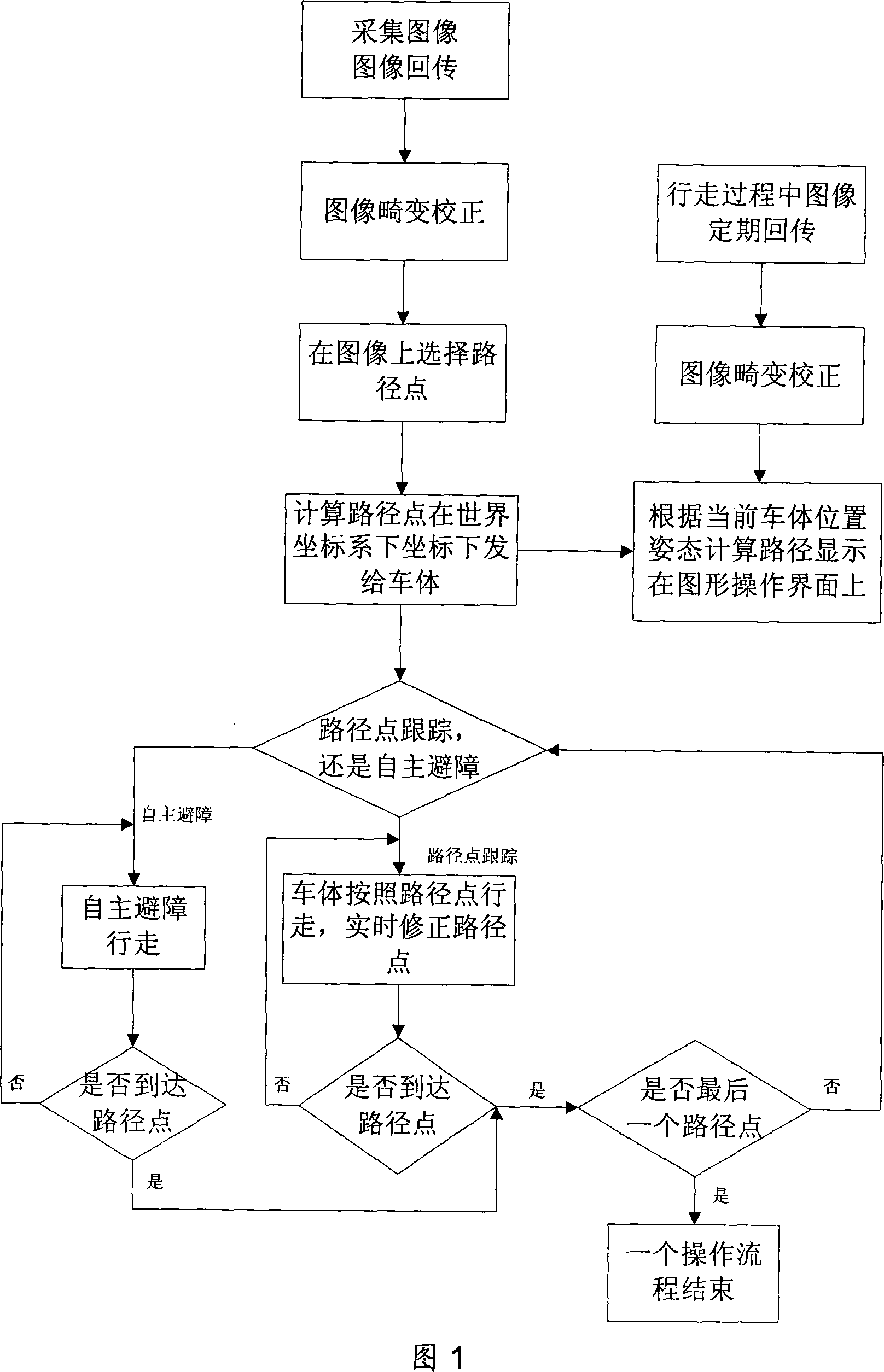

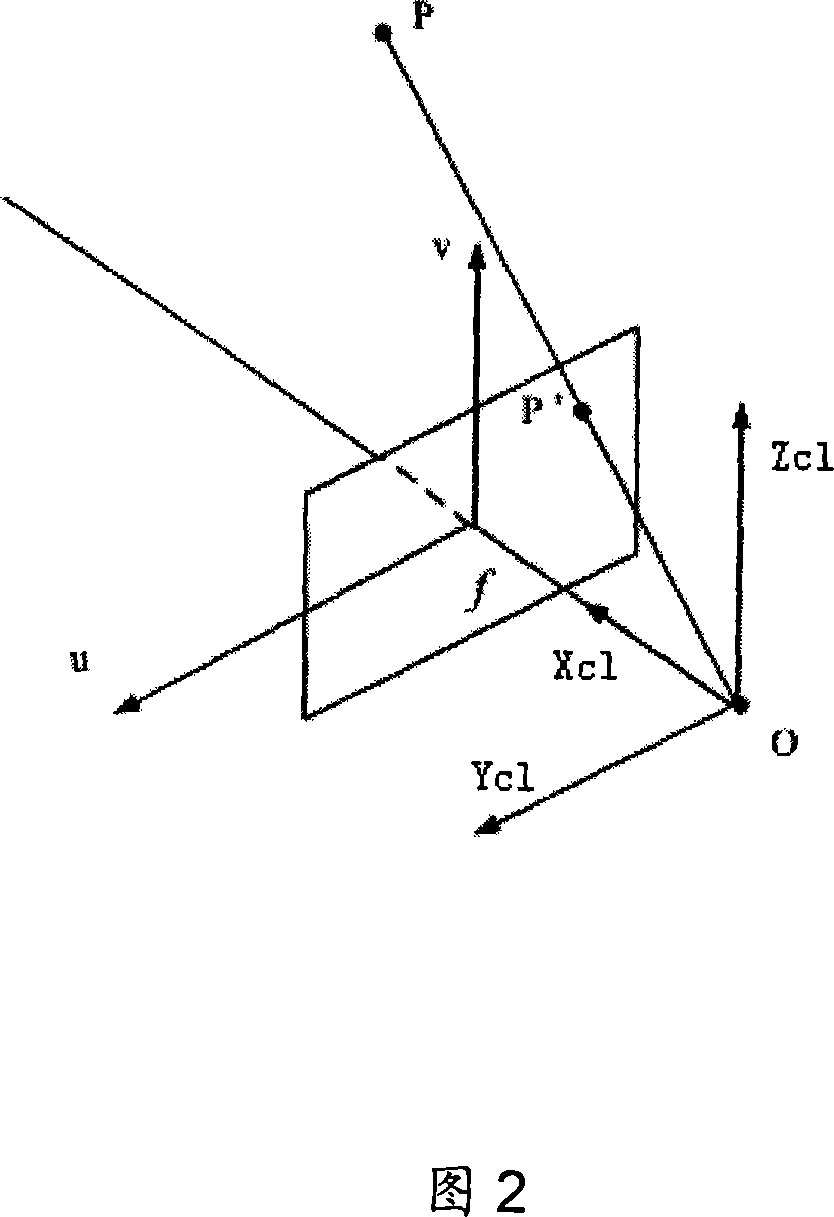

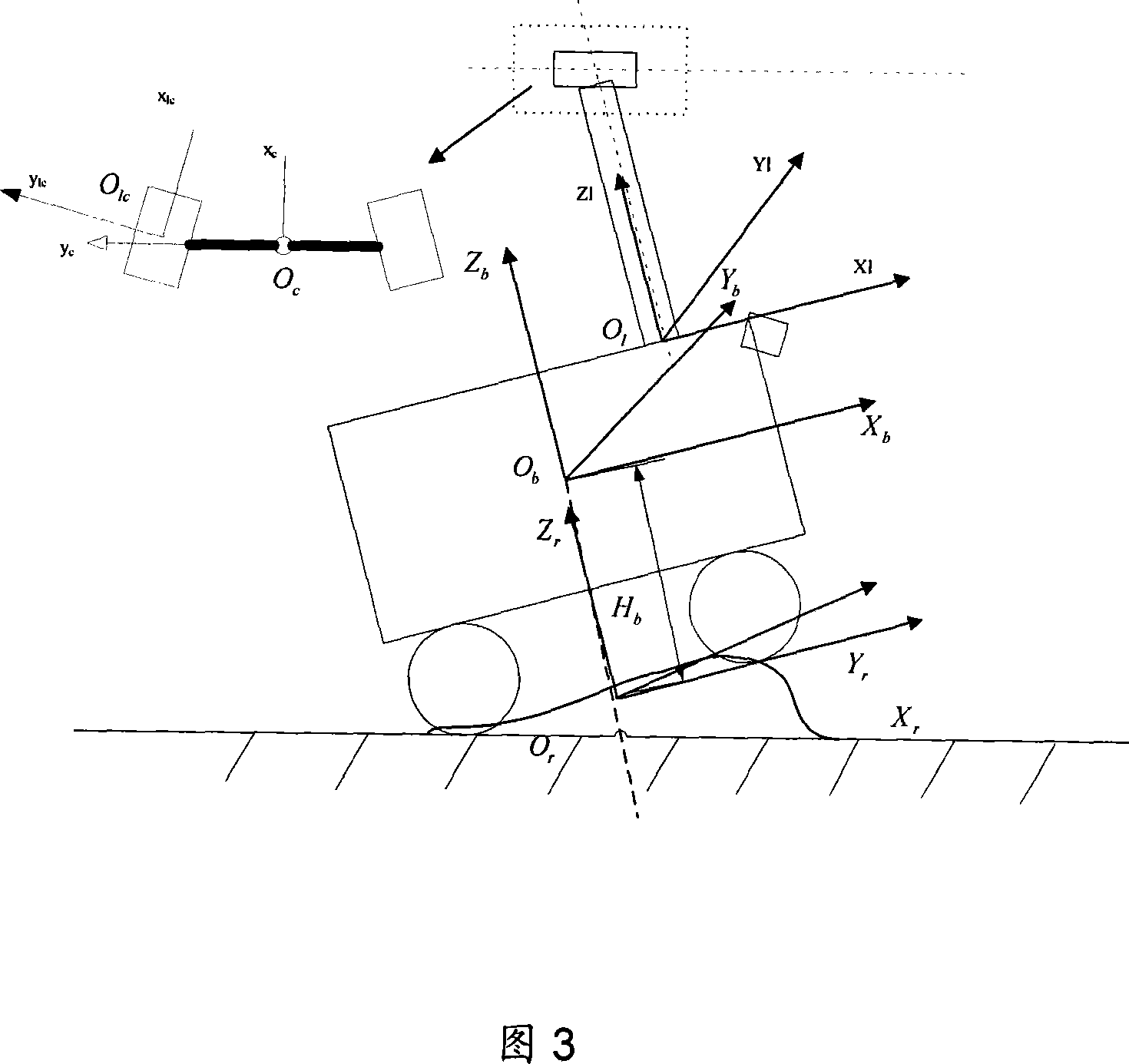

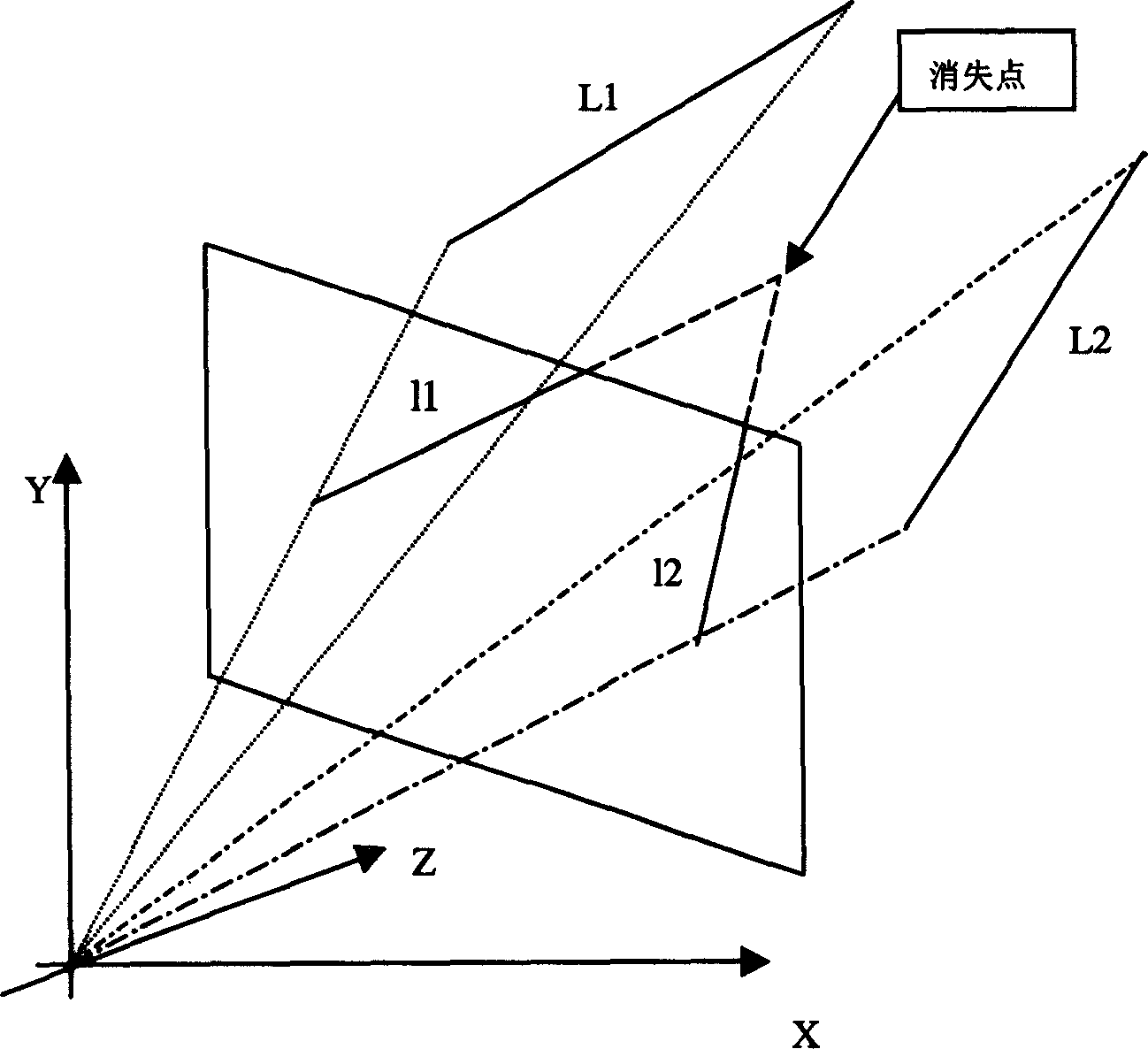

Environment sensing one-eye visual navigating method adapted to self-aid moving vehicle

Active · CN101067557A · Simple calculation · Meet real-time requirements · Instruments for road network navigation · Character and pattern recognition · Graph operations · Mobile vehicle

The invention is a monocular visual navigation method with environment sensing, suited to autonomous moving vehicles. First, the camera's distortion parameters are measured, the camera is mounted on the autonomous vehicle, and the transformation from the image plane coordinate system to the world coordinate system is determined. Then the current vehicle body attitude is recorded, an image of the scene within the vehicle's current field of view is captured and fed back to the graphical operation interface, the vehicle's travel mode is determined, and distortion correction is applied to the captured image. A path and the path points on it are selected; using the coordinate transformation, the current position and the selected path are converted to the world coordinate system, sent to the vehicle body, and displayed on the graphical operation interface at the vehicle body width. The vehicle travels in either autonomous obstacle-avoidance mode or path-tracking mode until it reaches the last path point in the current field of view, and the cycle then repeats from image capture until the final target point is reached. The invention applies monocular measurement to visual navigation in unknown environments.

Owner:BEIJING INST OF CONTROL ENG

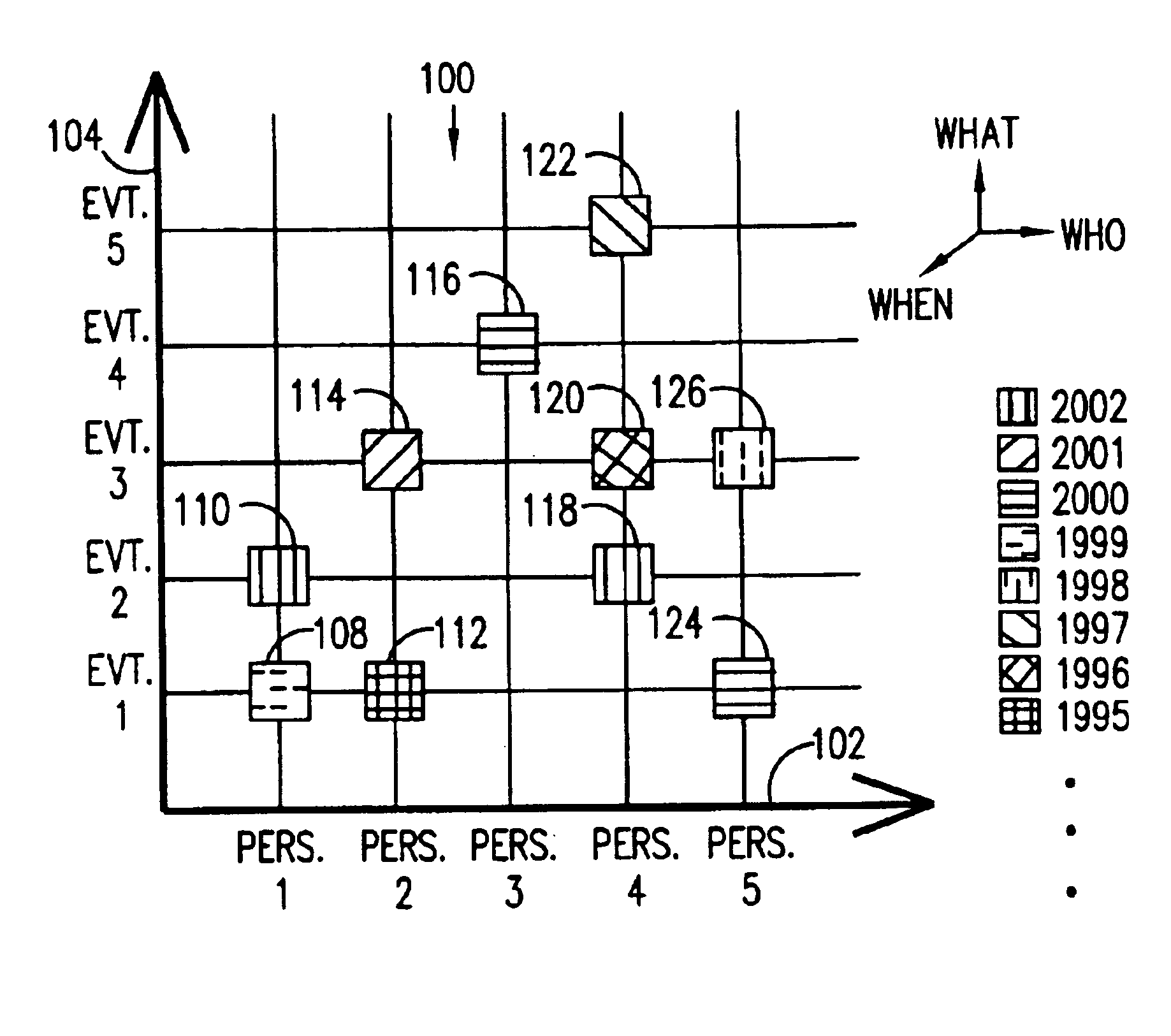

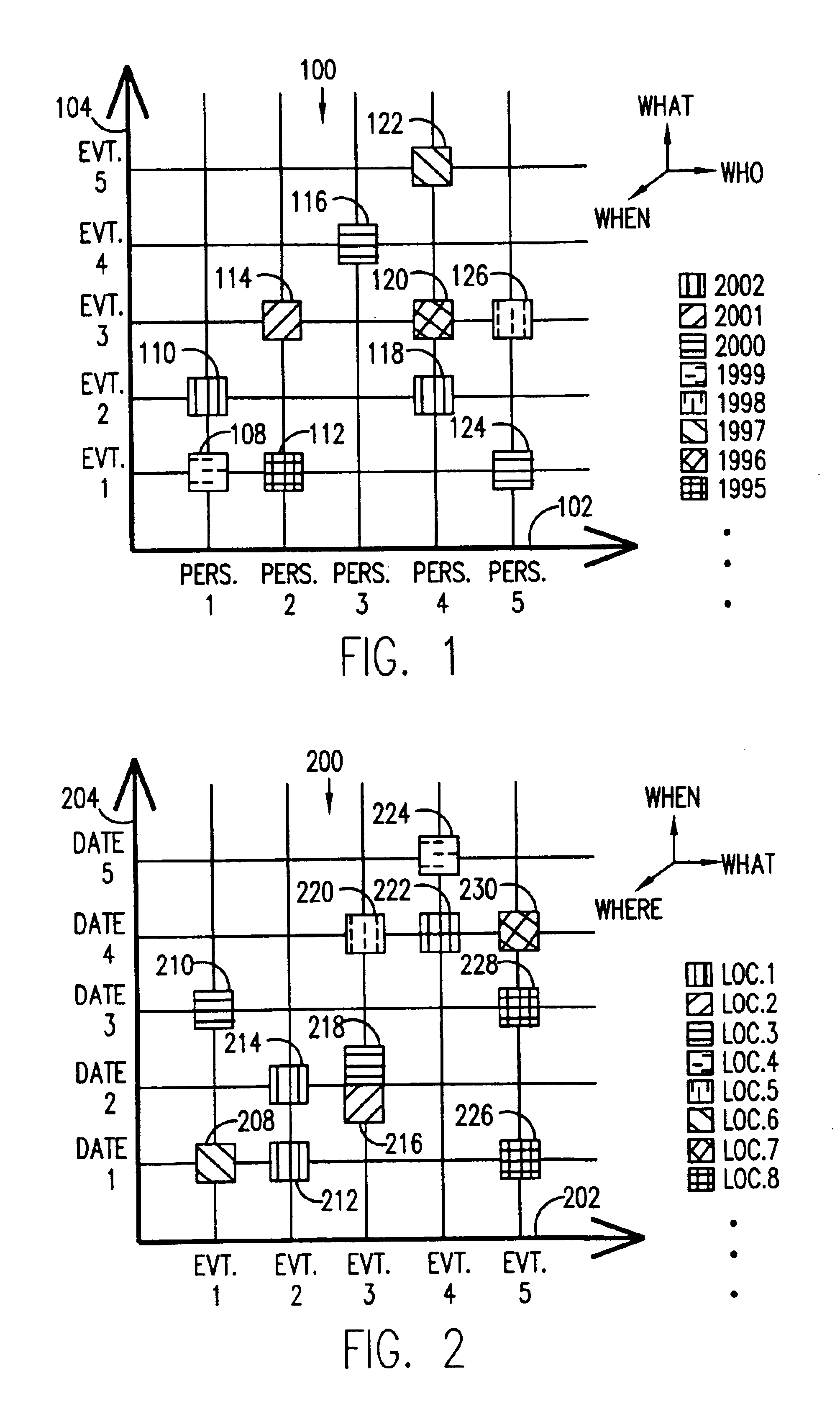

Graphical user interface utilizing three-dimensional scatter plots for visual navigation of pictures in a picture database

Inactive · US6948124B2 · Metadata still image retrieval · Special data processing applications · Graphics · Graphical user interface

A novel graphical user interface (GUI) using metadata generates three-dimensional scatter plots (100, 200, 300, 400) for the efficient and aesthetic navigation and retrieval of pictures in a picture database. The first and second dimensions (102, 104, 202, 204, 302, 304, 402, 404) represent abscissas and ordinates corresponding to two picture characteristics chosen by the user. Distinguishing characteristics of icons (108-126, 208-230, 308-326, 408-430) in the scatter plot (100, 200, 300, 400), which icons represent groups of pictures, indicate the third dimension, also chosen by the user. In the preferred embodiment, the third dimension is indicated by the color of the icon (108-126, 208-230, 308-326, 408-430). Along with many other possibilities, the three dimensions of a scatter plot (100, 200, 300, 400) can represent combinations of “Who,” “What,” “When,” “Where,” and “Why” picture characteristic information contained in the picture metadata. Activating an icon (108-126, 208-230, 308-326, 408-430) produces a thumbnail of the pictures in the group represented by the particular icon (108-126, 208-230, 308-326, 408-430). Updating one display dimension dynamically updates the other display dimensions.

Owner:MONUMENT PEAK VENTURES LLC

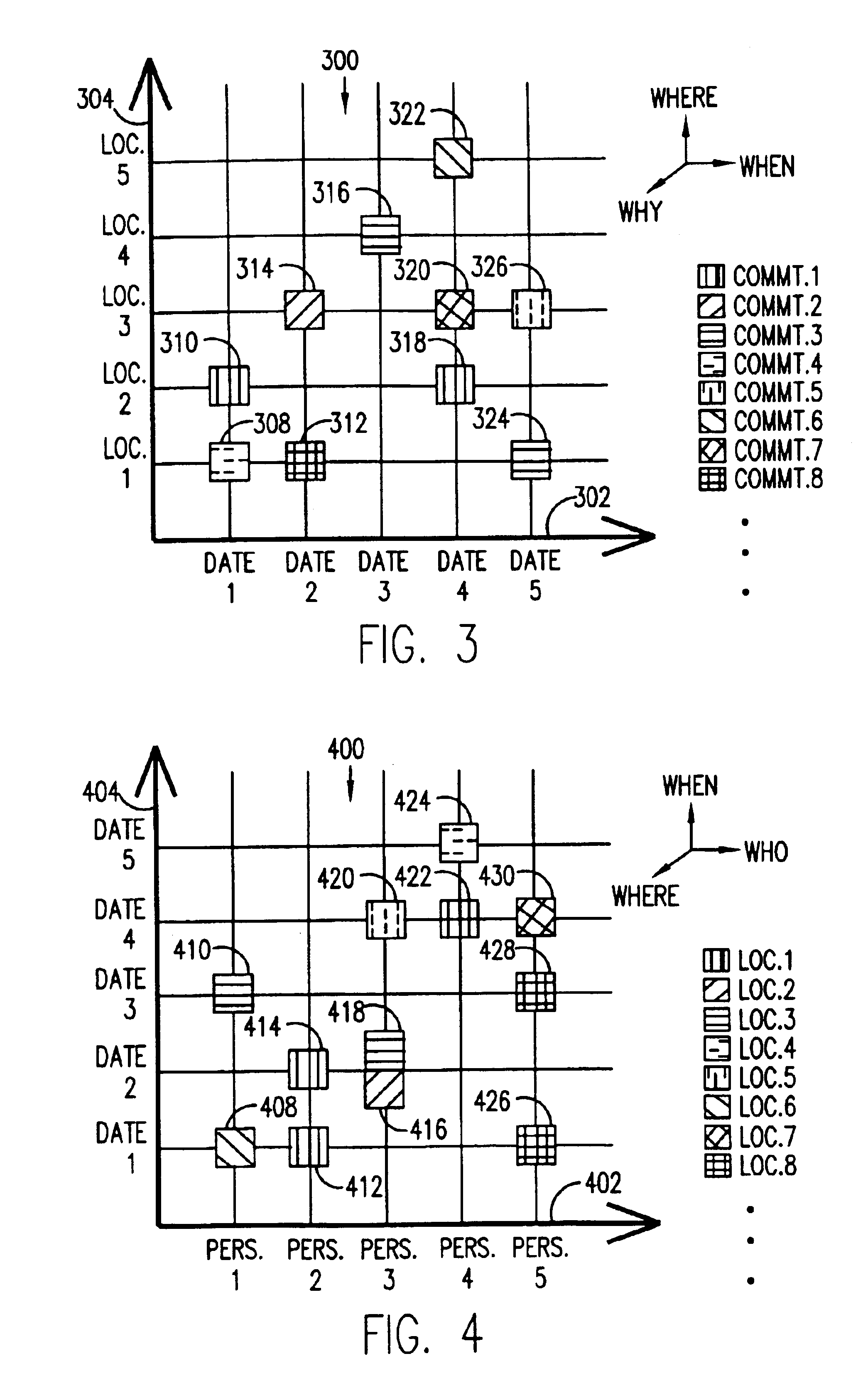

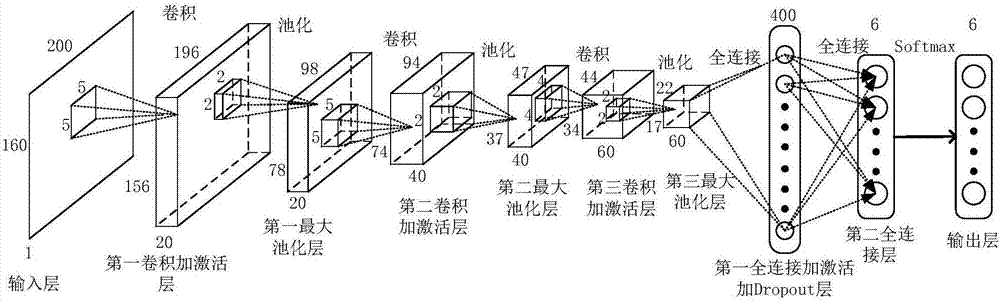

Robot positioning method with fusion of visual features and IMU information

Inactive · CN110345944A · Improve robustness · Accurate estimate · Image analysis · Navigational calculation instruments · Slide window · Visual perception

The invention relates to a robot positioning method with fusion of visual features and IMU information. The invention puts forward a method for fusing monocular vision with an IMU. Visual front-end pose tracking is performed and the pose of the robot is estimated by using feature points; an IMU bias model, an absolute scale and a gravity acceleration direction are estimated by using pure visual information; IMU solution is performed to obtain high-precision pose information, which provides an initial reference to optimize the search process, and the initial reference, a state quantity and visual navigation information participate in the optimization; and the back end employs a sliding-window-based tightly coupled nonlinear optimization method to realize pose and map optimization. Because the odometry is computed with a sliding window method, the computational complexity is fixed, so that the robustness of the algorithm is enhanced.

Owner:ZHEJIANG UNIV OF TECH

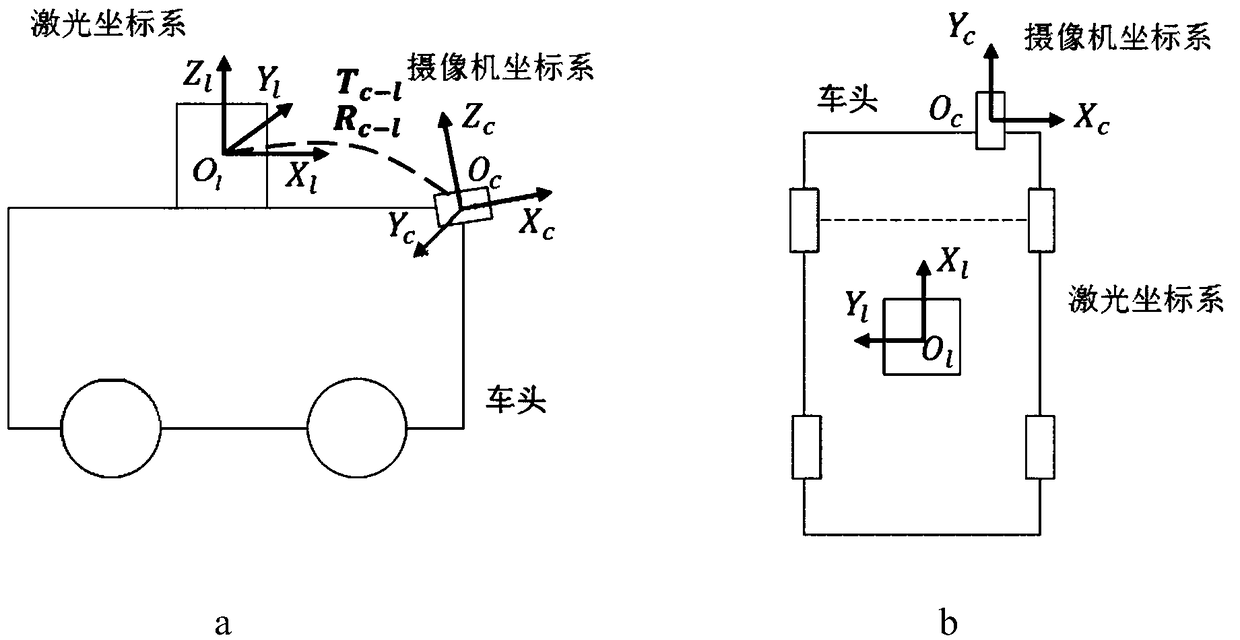

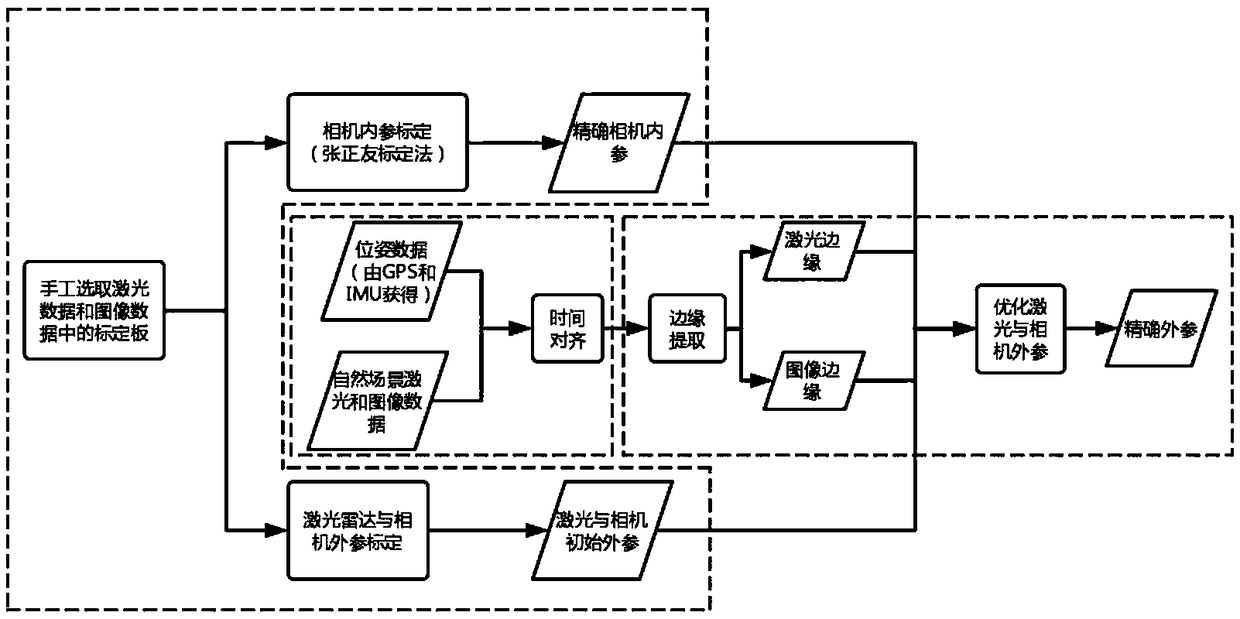

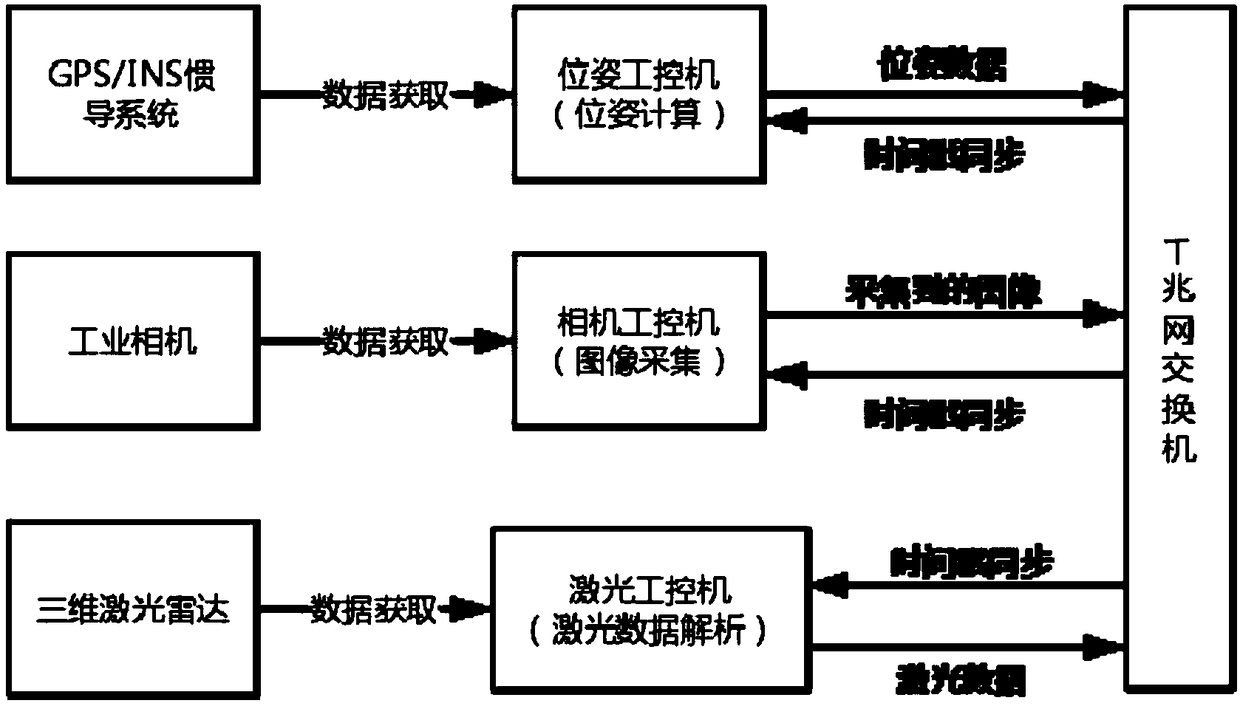

Intelligent vehicle laser sensor and online camera calibration method

Active · CN109270534A · Achieve high-precision calibration · Navigation by speed/acceleration measurements · Radio wave reradiation/reflection · Laser data · Time alignment

The invention discloses an intelligent vehicle laser sensor and an online camera calibration method. Laser data and image data are accurately calibrated via the steps of camera calibration, offline calibration of a three-dimensional laser sensor and an image sensor, time alignment of laser data and image data, and online alignment of the laser sensor and the image sensor. The calibration system can be applied to multiple different road conditions and scenes to achieve online high-precision calibration of laser and image. After the laser and the image are calibrated, the information of the two sensors can be used for comprehensively analyzing an obstacle and making an accurate decision, so the method is significant for the sensing technology of intelligent vehicles. Therefore, the technology can be widely applied to the fields of driverless vehicle visual navigation and intelligent vehicle visual assistance driving.

Owner:XI AN JIAOTONG UNIV

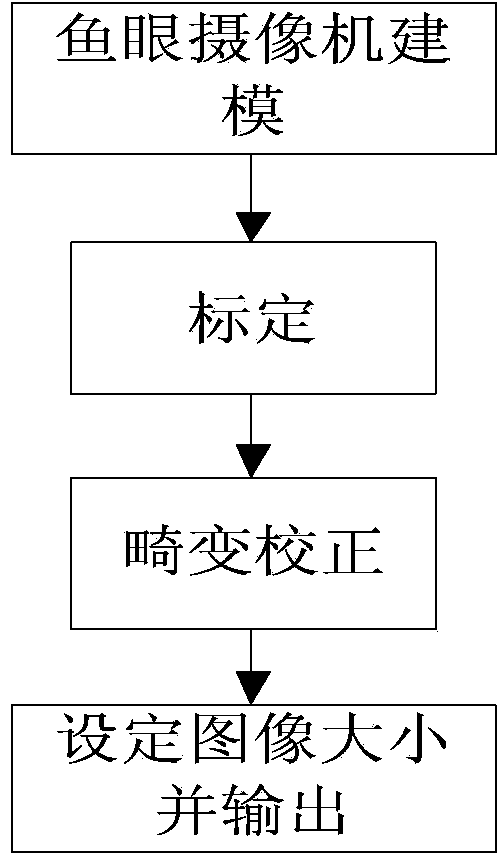

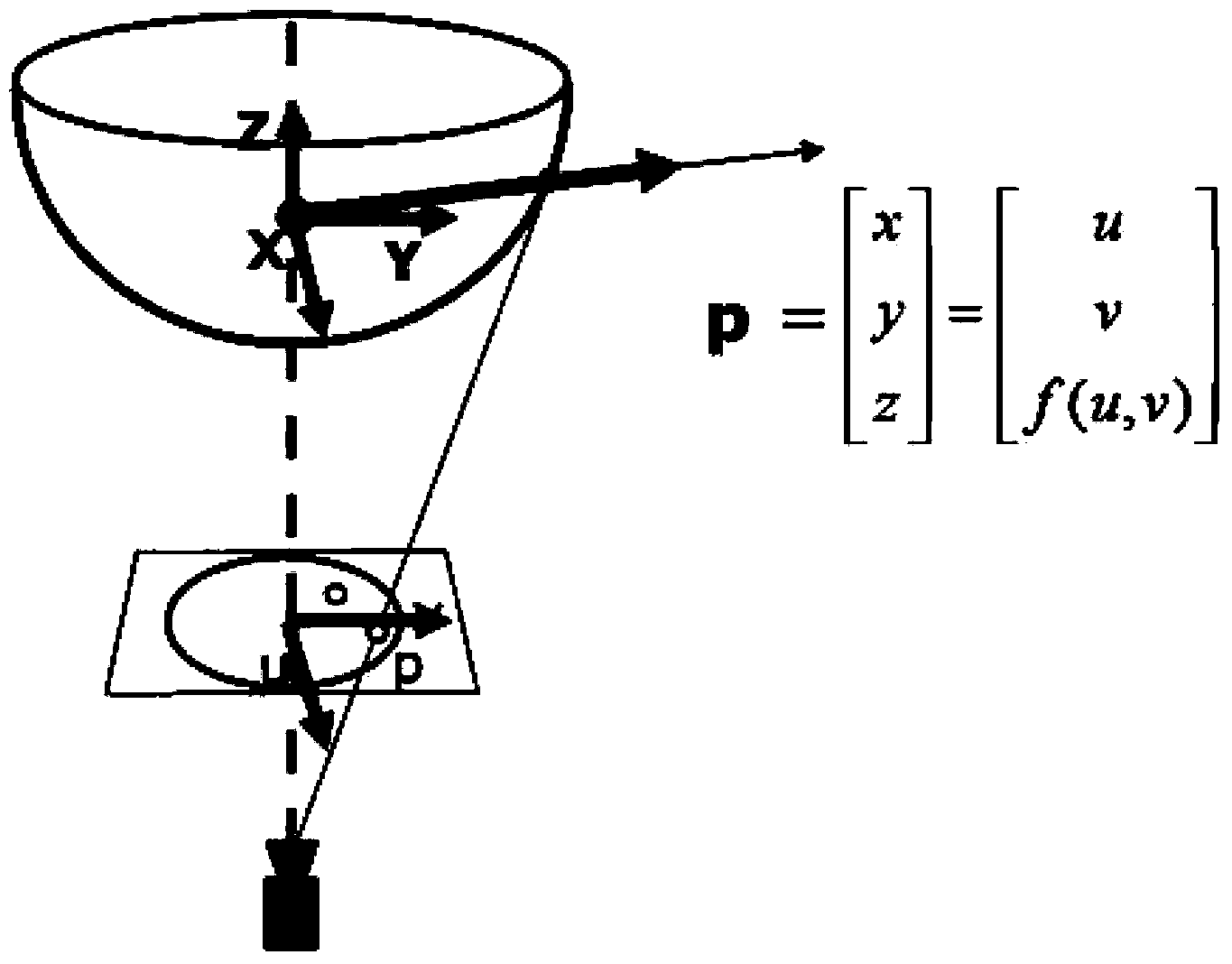

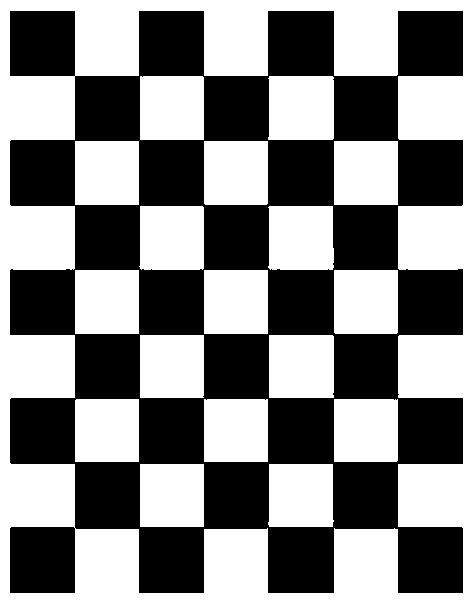

Fisheye image correction method after calibration conducted by fisheye lens

Active · CN104240236A · Easy to operate · Improve efficiency · Image enhancement · Image analysis · Camera lens · Image correction

The invention belongs to the field of digital image processing and discloses a fisheye image correction method after calibration conducted by a fisheye lens. The method includes the following steps: first, a complete fisheye camera projection and distortion model is established, using a commonly-used fisheye model; a self-made planar calibration board and the commonly-used fisheye model are used for calibrating the model parameters of a fisheye camera; and the calibrated lens distortion parameters are used for restoring circular distorted fisheye images to perspective images that meet the requirements of human visual perception. The fisheye lens calibration and fisheye distortion correction method based on a planar checkerboard is well suited to occasions such as visual navigation and mobile monitoring. The method is suitable for circular fisheye images, and incomplete fisheye images can be corrected as well.

Owner:SUN YAT SEN UNIV +1
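The abstract above restores fisheye images to perspective images using a calibrated "commonly-used fisheye model" that it does not name. As a hedged illustration only, the sketch below assumes the equidistant model, in which the image radius is `r = f * theta`, and remaps a pixel to the radius a perspective (pinhole) camera would give, `r = f * tan(theta)`. The function names and the single focal length `f` in pixels are assumptions, and no extra distortion coefficients are modeled.

```python
import math


def fisheye_to_perspective_radius(r_fish, f):
    """Map an equidistant fisheye radius (pixels) to a perspective radius."""
    theta = r_fish / f                       # incidence angle under r = f * theta
    if theta >= math.pi / 2:
        raise ValueError("ray is at or beyond 90 degrees; no perspective image point")
    return f * math.tan(theta)               # pinhole projection r = f * tan(theta)


def undistort_point(x, y, cx, cy, f):
    """Move a fisheye pixel radially to its perspective position about (cx, cy)."""
    dx, dy = x - cx, y - cy
    r = math.hypot(dx, dy)
    if r == 0.0:
        return (float(cx), float(cy))        # the image center does not move
    scale = fisheye_to_perspective_radius(r, f) / r
    return (cx + dx * scale, cy + dy * scale)


# A point 45 degrees off-axis maps to a perspective radius of about f.
f = 100.0
print(undistort_point(320 + f * math.pi / 4, 240, 320, 240, f))
```

Points near the rim of the fisheye circle move outward sharply, which is why corrected perspective images usually cover a narrower field of view than the source.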

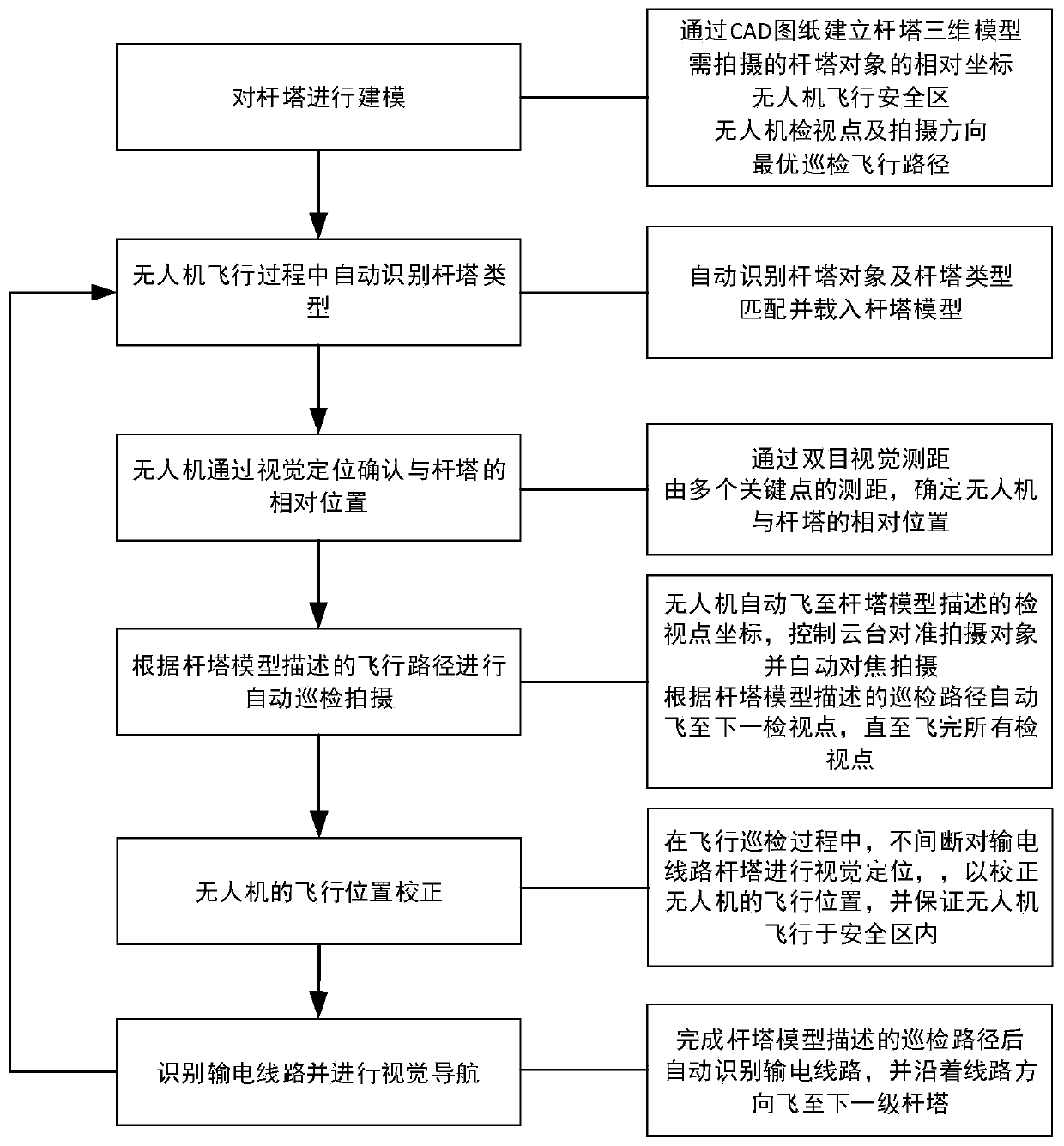

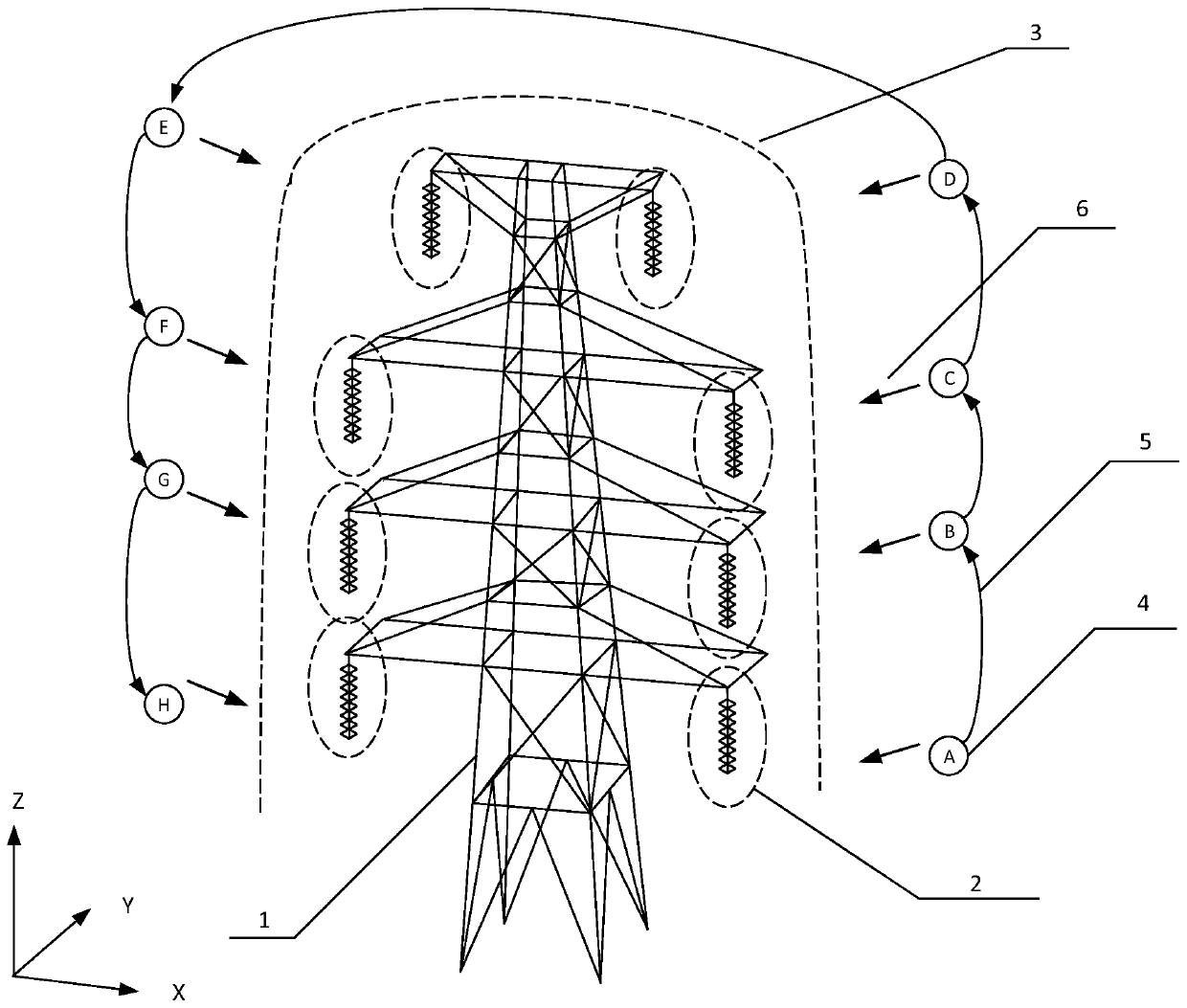

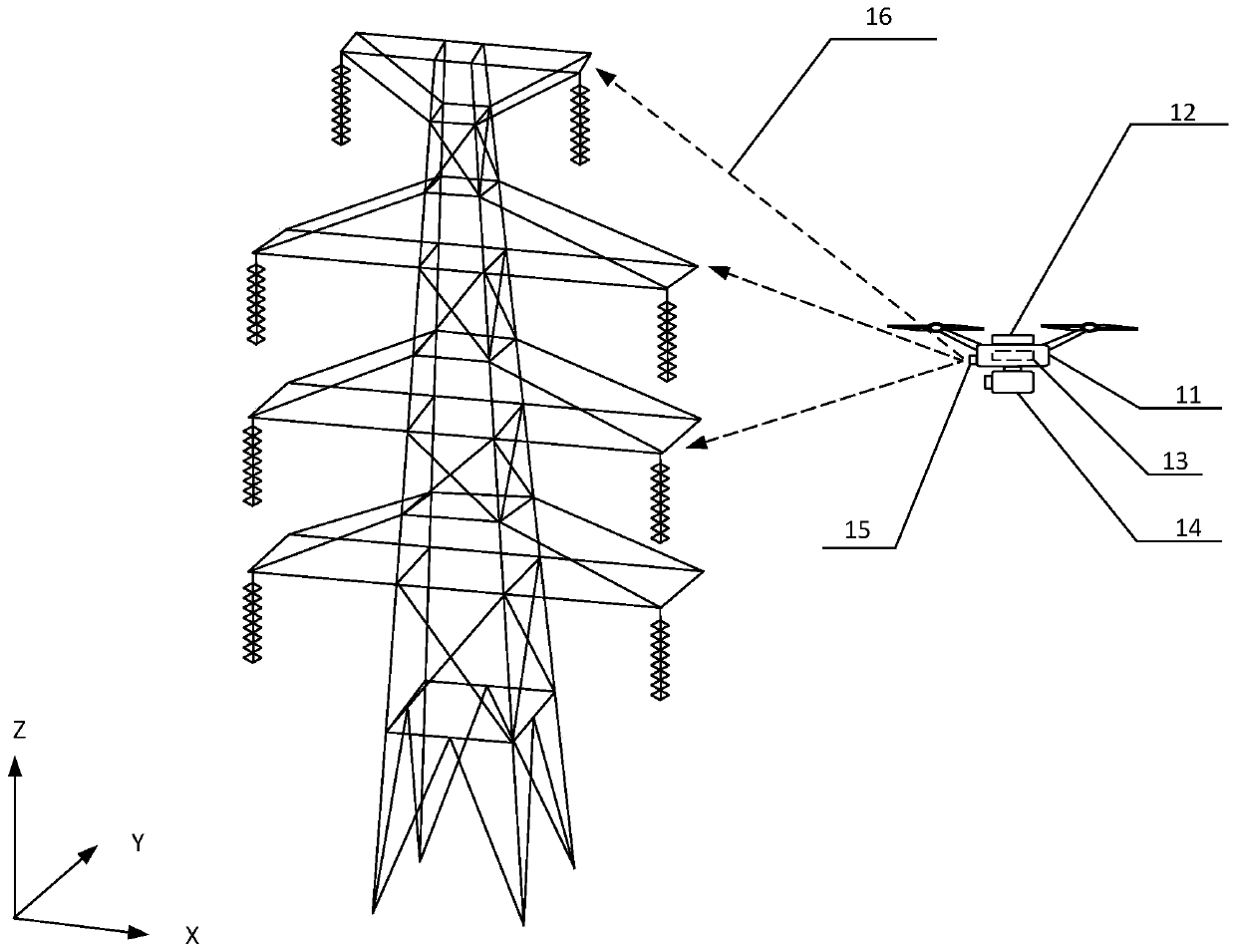

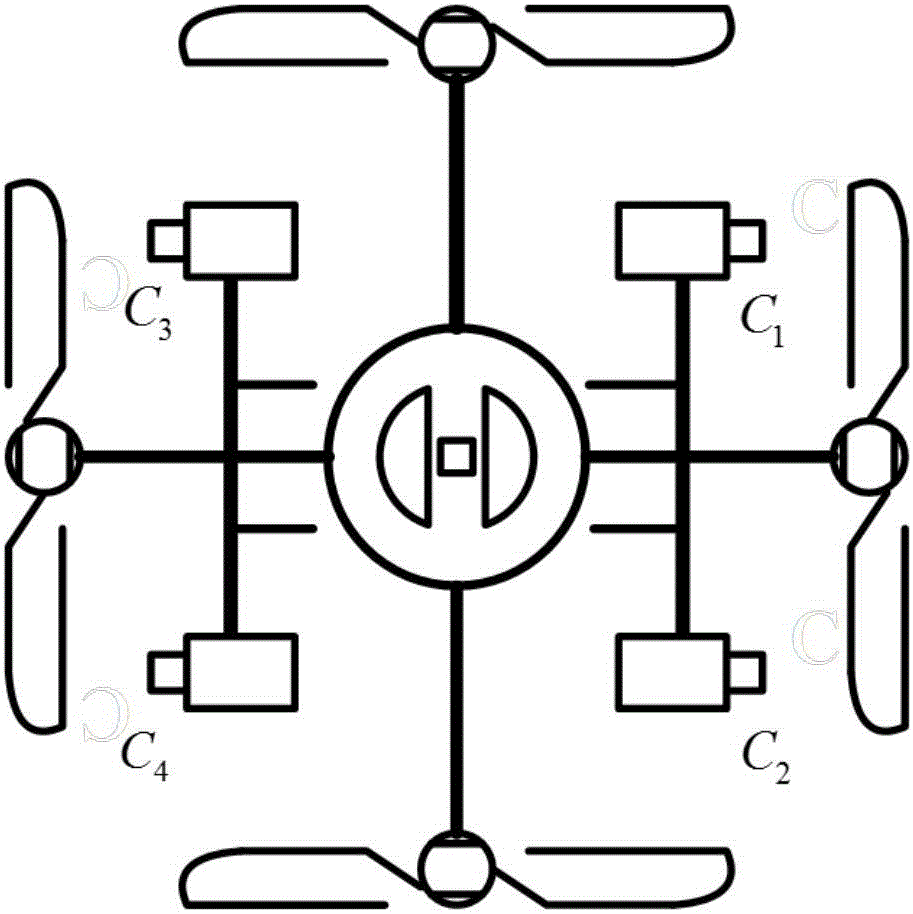

Pole tower model matching and visual navigation-based power unmanned aerial vehicle and inspection method

Active · CN110133440A · Achieve autonomous inspection · Improve inspection efficiency · Fault location by conductor types · Design optimisation/simulation · Uncrewed vehicle · Tower

The invention discloses a pole tower model matching and visual navigation-based power unmanned aerial vehicle and an inspection method. In the unmanned aerial vehicle, a depth image of the area in front of the vehicle is acquired by a binocular vision sensor and the distance between the unmanned aerial vehicle and the object in front is measured; a surrounding image is acquired by a gimbal and a camera and the object is identified; and the flight attitude of the unmanned aerial vehicle is controlled by the flight controller. The method comprises the steps of building pole tower models for different types of power transmission line pole towers; automatically identifying the power transmission line pole towers and pole tower types by the unmanned aerial vehicle during flight, and matching and loading a pre-built pole tower model; performing visual positioning on the power transmission line pole towers by the unmanned aerial vehicle, and acquiring the relative positions of the unmanned aerial vehicle and the pole towers; and performing flight inspection by the unmanned aerial vehicle according to the optimal flight path. With this unmanned aerial vehicle, the modeling workload is greatly reduced and the model universality is improved; the inspection method does not depend on absolute-coordinate flight, so flexibility is greatly improved, cost is reduced, and power facility safety is improved.

Owner:NARI TECH CO LTD

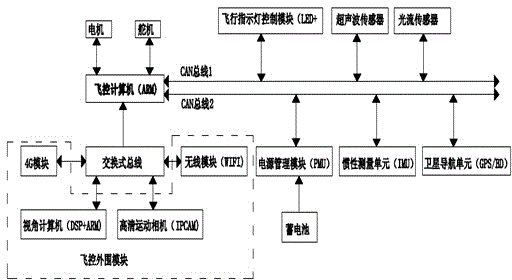

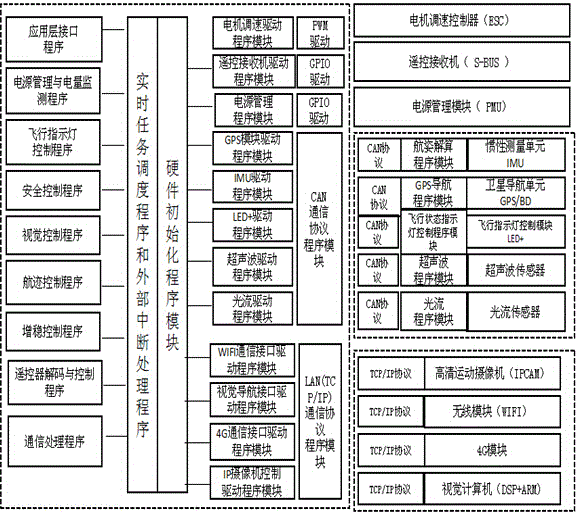

Ethernet-exchange-bus-based unmanned plane flight control system and method

Inactive · CN104977912A · Simplify the communication link · Less investment · Programme total factory control · Ultrasonic sensor · Telecommunications link

The invention provides an Ethernet-exchange-bus-based unmanned plane flight control system and method. The system comprises a flight control computer (ARM), a flight indication lamp control module (LED+), an ultrasonic sensor, an optical flow sensor, a power management unit (PMU), a storage battery, an inertial measurement unit (IMU), a satellite navigation unit (GPS / BD+), a motor, and a servo. An Ethernet exchange chip (LAN switch) is embedded into the flight control computer (ARM), is connected with the flight control computer (ARM) by a LAN, and is connected with a flight control peripheral module by a LAN. The system and method have the following beneficial effects: the communication link between the unmanned plane and the ground is simplified; a high-definition digital image can be returned to the ground in real time, connected to the internet, or passed back to a command center; visual navigation, obstacle avoidance, and image target identification and tracking are supported; the communication demand of unmanned plane formation flight is satisfied; traditional IOSD equipment and its cost are saved; and modular, smooth extension of flight control with new functions is supported.

Owner:深圳大漠大智控技术有限公司

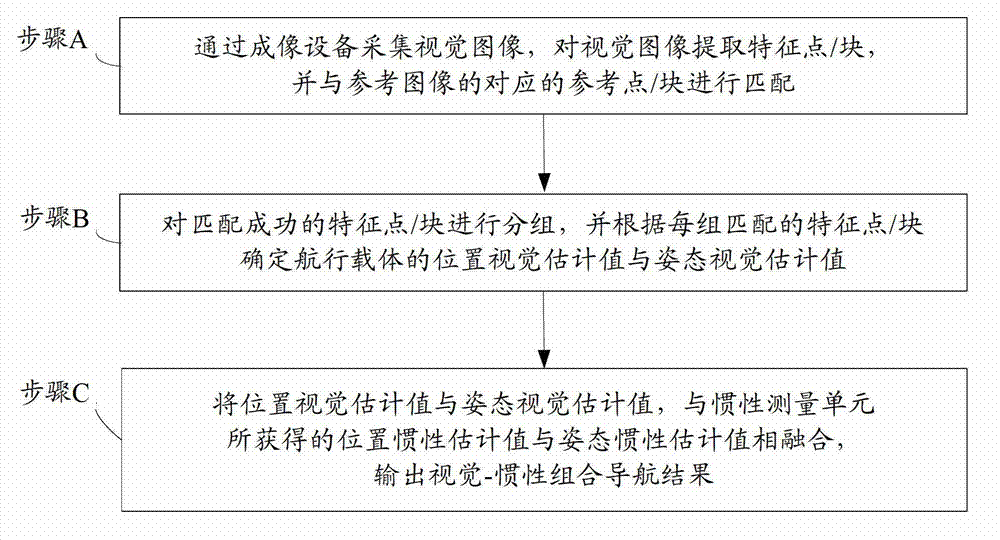

Visual-inertial combined navigation method

Active · CN102768042A · Correction error · Achieving autonomous flight capability · Navigation by speed/acceleration measurements · Inertial measurement unit · Visual navigation

The invention provides a visual-inertial combined navigation method, which comprises the following steps of: acquiring a visual image through imaging equipment, extracting feature points / blocks from the visual image, and matching with corresponding reference points / blocks of a reference image; grouping the feature points / blocks succeeding in matching, and determining a position visual estimation value and a posture visual estimation value of a navigation carrier according to each group of matched feature points / blocks; and fusing the position visual estimation value, the posture visual estimation value and a position inertial estimation value and a posture inertial estimation value which are acquired by an inertial measurement unit, and outputting a visual-inertial combined navigation result. According to the visual-inertial combined navigation method of the embodiment, errors of an inertial navigation system are corrected by using a visual navigation technology, so that the navigation precision is improved, and autonomous flight capability of the navigation carrier is realized.

Owner:TSINGHUA UNIV

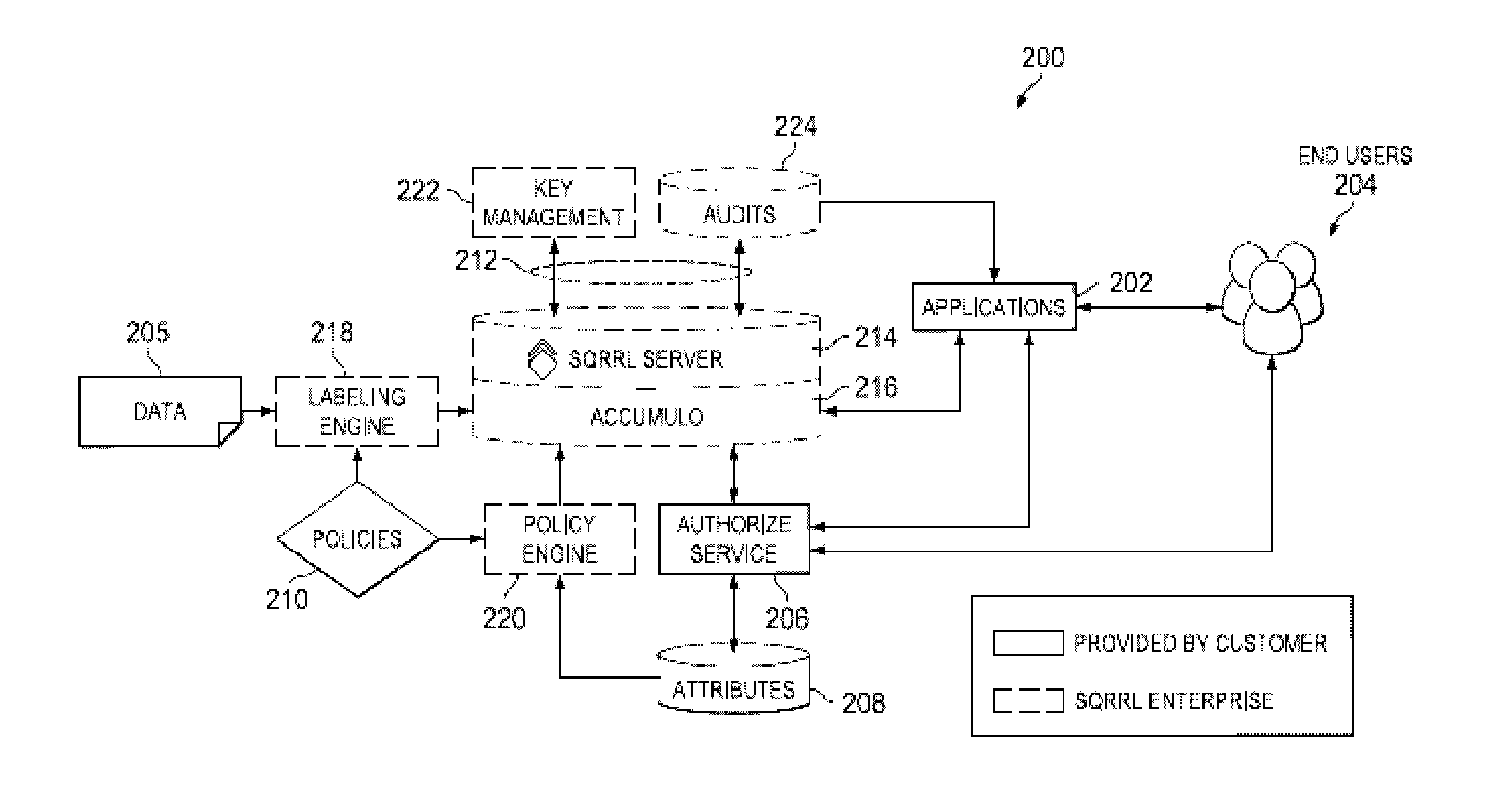

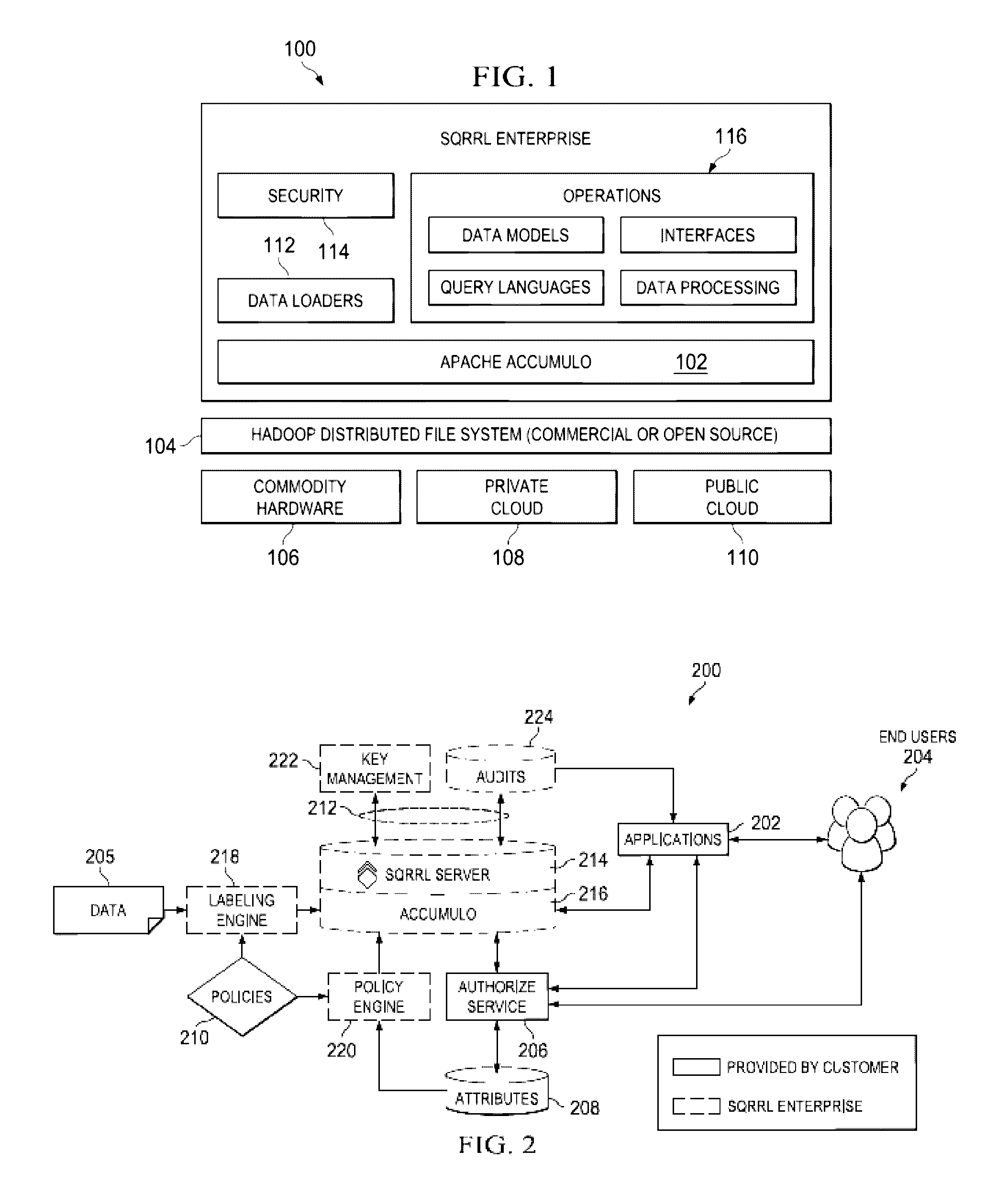

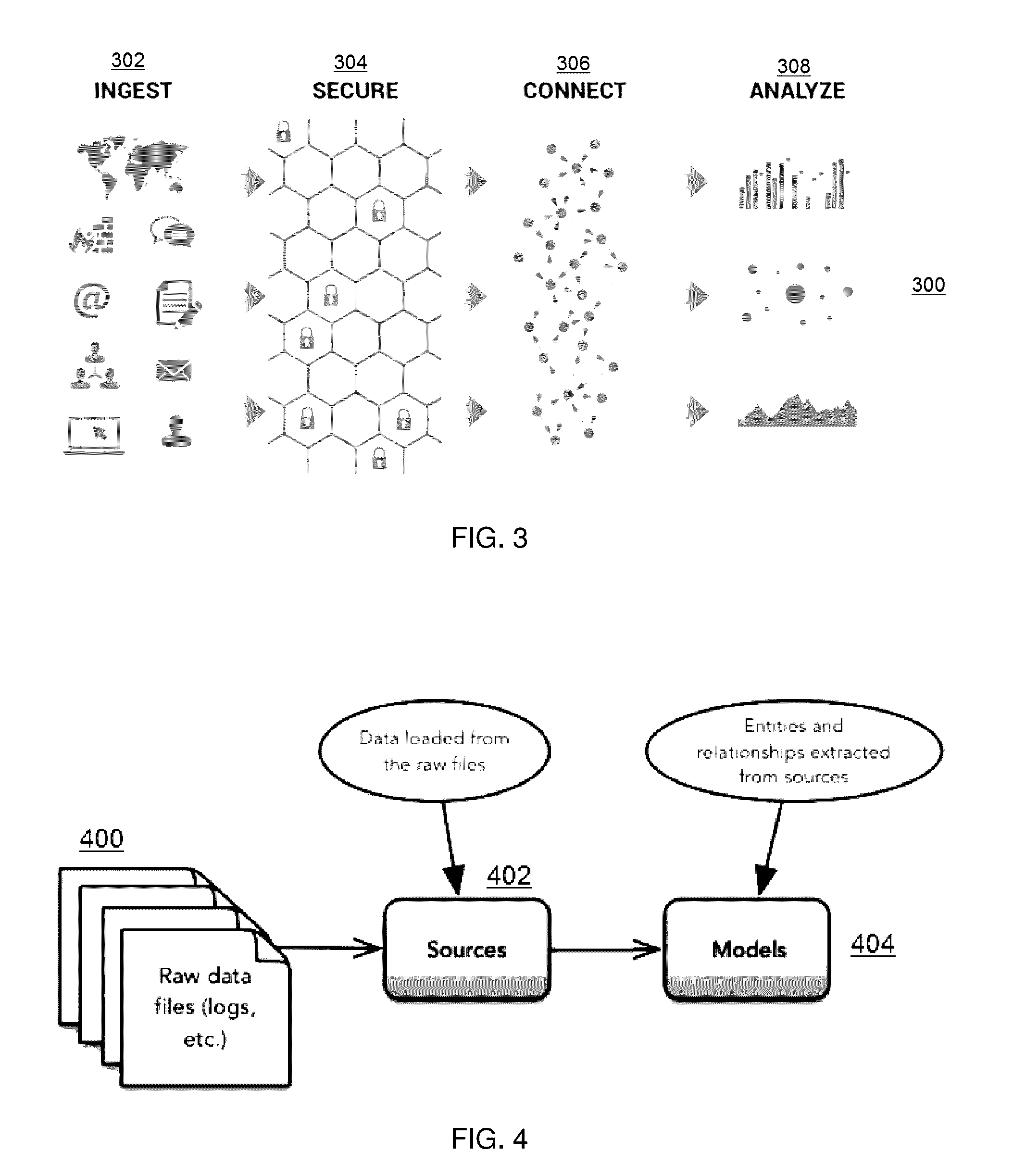

Entity-relationship modeling with provenance linking for enhancing visual navigation of datasets

Inactive · US20170017708A1 · Facilitate data analysis · Visual data mining · Structured data browsing · Entity–relationship model · Data set

A method of data analysis is enabled by receiving raw data records extracted from one or more data sources, and then generating from the data records an entity-relationship model. The entity-relationship model comprises one or more entity instances, and one or more relationships between those entity instances. Data analysis of the model is facilitated using one or more provenance links. A provenance link associates raw data records and one or more entity instances. Using a visual explorer that displays a set of entity instances and relationships from a selected entity-relationship model, a user can display details for an entity instance, and see relationships between and among entity instances. By virtue of the underlying linkage provided by the provenance links, the user can also display source records for an entity instance, and display entity instances for a source record. The technique facilitates Big Data analytics.

Owner:A9 COM INC

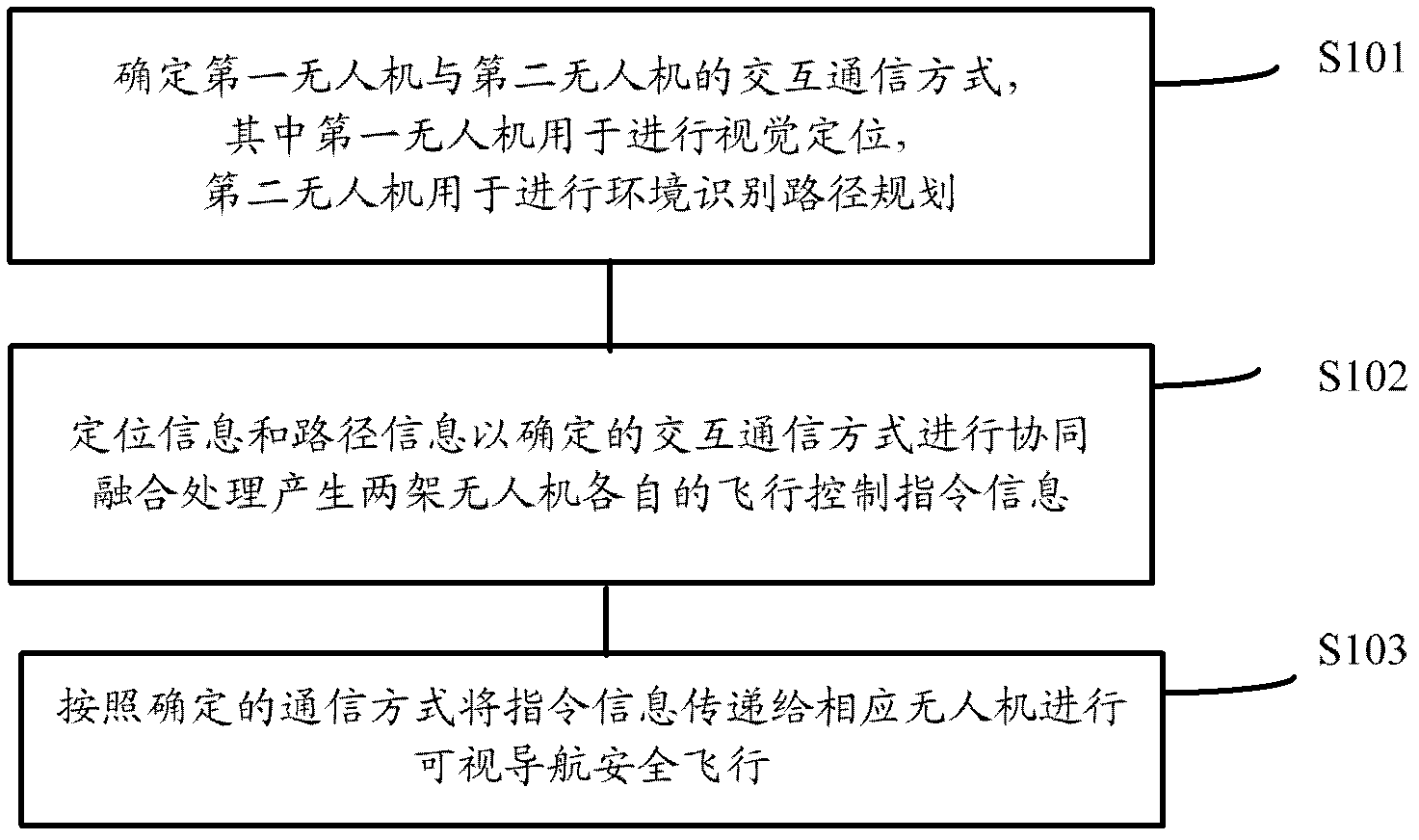

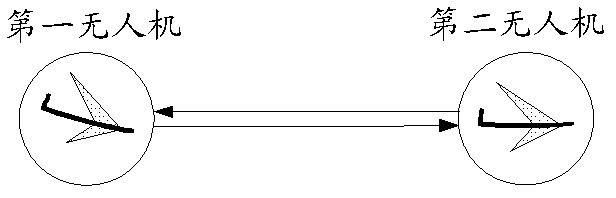

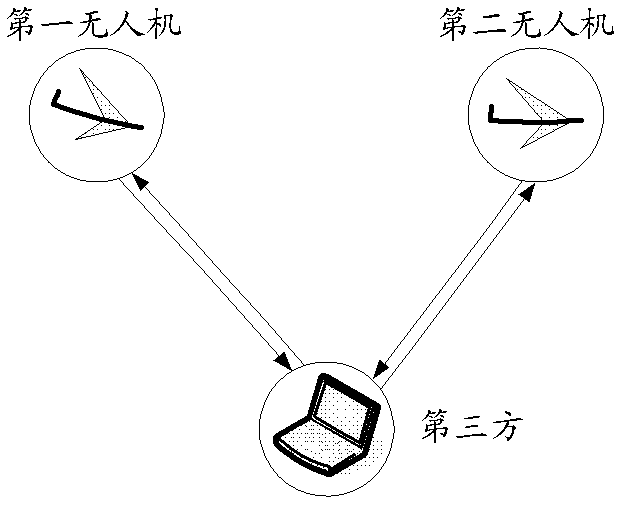

Task collaborative visual navigation method of two unmanned aerial vehicles

Active · CN102628690A · Control the amount of information transmitted · Improve match · Navigation instruments · Information transmission · Uncrewed vehicle

The invention provides a task collaborative visual navigation method of two unmanned aerial vehicles. The method comprises the following steps: determining an interactive communication mode between a first unmanned aerial vehicle and a second unmanned aerial vehicle, wherein the first unmanned aerial vehicle is used to perform visual positioning, and the second unmanned aerial vehicle is used to perform environment identification route planning; performing fusion processing on visual positioning information generated by the first unmanned aerial vehicle and route information generated by the second unmanned aerial vehicle to generate respective flight control instruction information of the first and second unmanned aerial vehicles at all times; transferring the flight control instruction information to the corresponding first and second unmanned aerial vehicles respectively by the interactive communication mode so as to perform visual navigation safe flight. The method provided in the invention can effectively control information transmission volume of real-time videos and image transmission in cooperative visual navigation of the unmanned aerial vehicles, has advantages of good match capability and good reliability, and is an effective technology for implementing cooperative visual navigation of unmanned aerial vehicles cluster to avoid risks, barriers and the like.

Owner:TSINGHUA UNIV

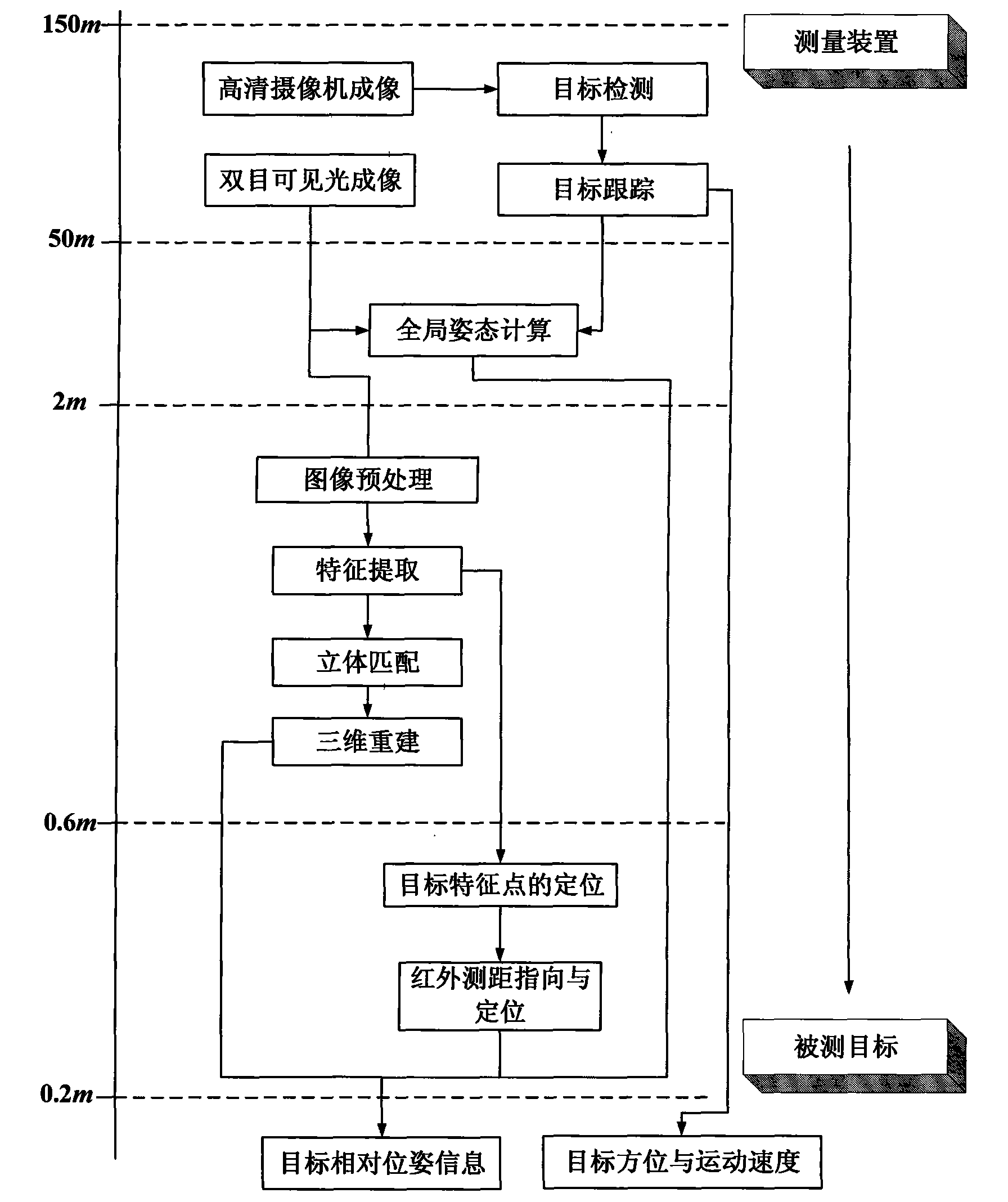

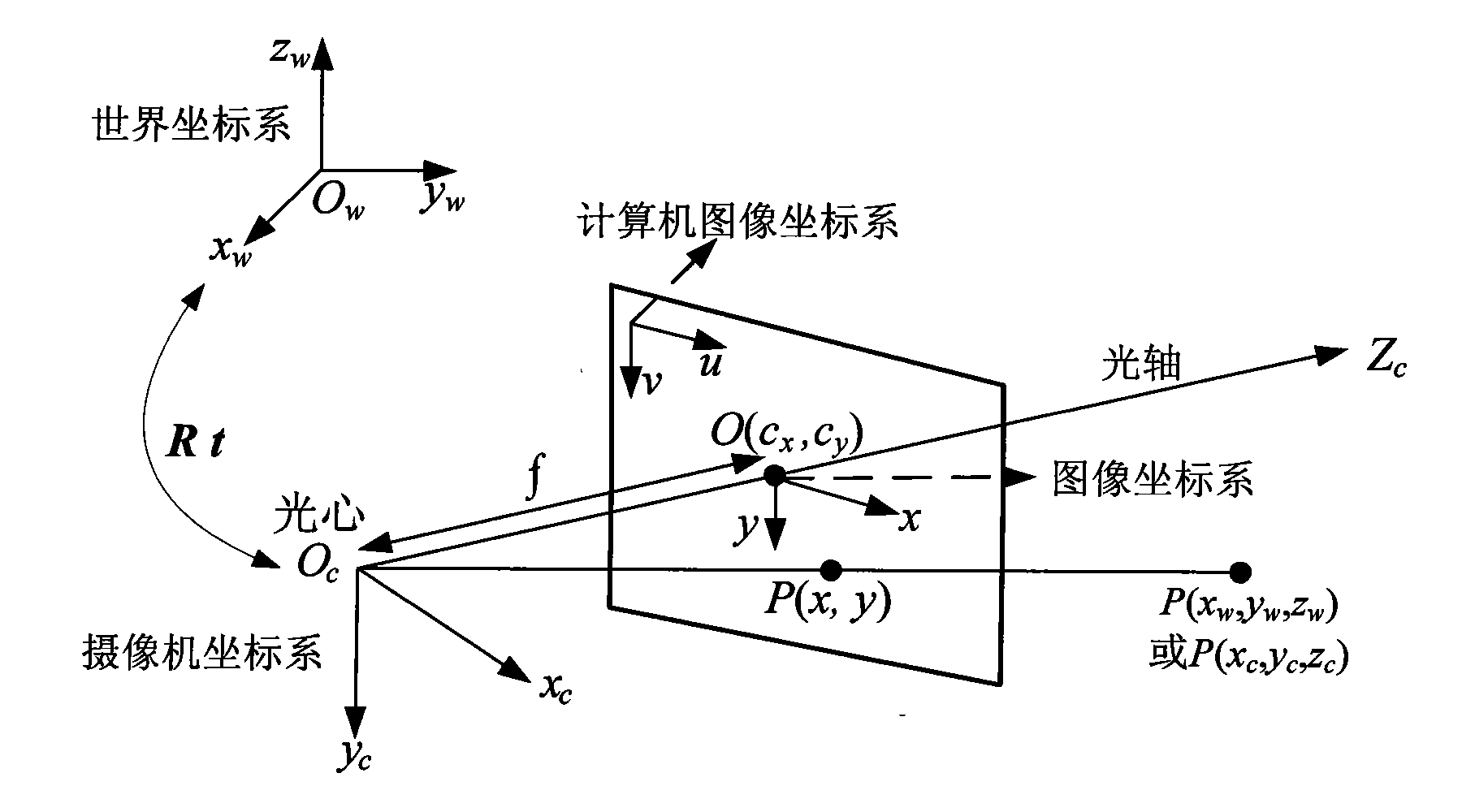

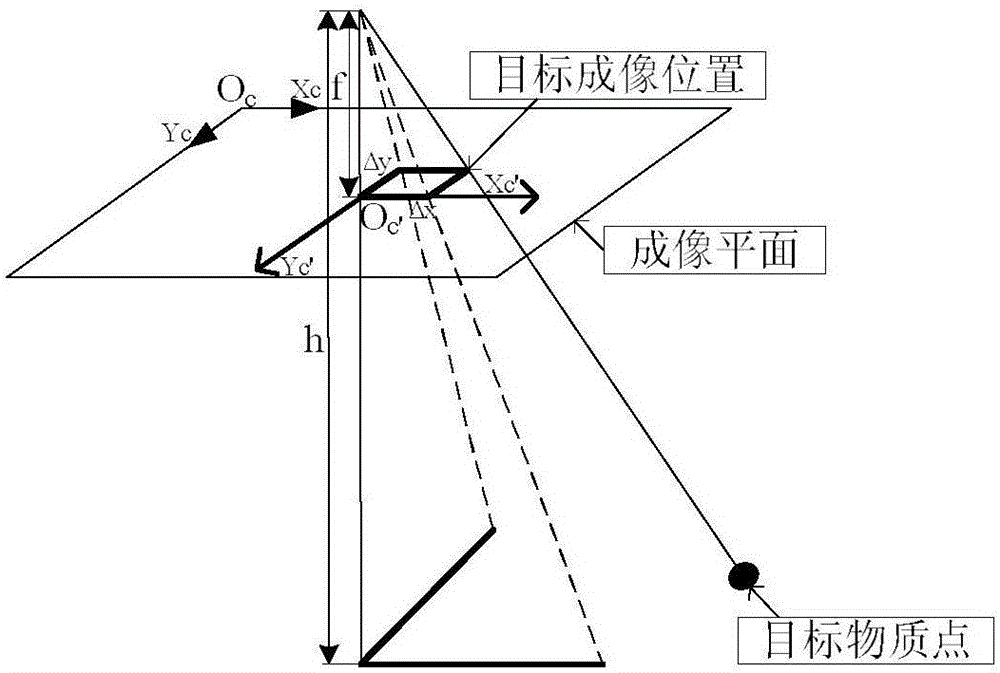

Visual navigation method in autonomous rendezvous and docking

Inactive · CN101839721A · Fix target rotation · Fix scaling issues · Image analysis · Instruments for cosmonautical navigation · Stereo matching · Visual perception

The invention discloses a visual navigation method in autonomous rendezvous and docking, which mainly solves the problem that specific parameters of targets need to be known in advance in the prior art. The method comprises the following specific processes: effectively tracking the targets by utilizing a particle filter at the stage of between 150 and 50 meters; measuring pose information of the targets by adopting stereoscopic vision at the stage of between 50 and 2 meters, and performing quick stereo matching by adopting a box filtering acceleration method; positioning areas and extracting area characteristic points by adopting the Hough transform at the stage of between 2 and 0.6 meters; and eliminating measuring dead zones by adopting infrared auxiliary distance measurement at the stage of between 0.6 and 0.2 meters. In the method, under the condition that the specific parameters of the targets are unknown, robust identification and tracking of the targets and determination of their relative pose are realized using only the geometrical characteristics of the targets, and the method can be used for autonomous navigation while gradually approaching the targets in an unknown environment.

Owner:XIDIAN UNIV
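The abstract above switches measurement technique by range to the target. The sketch below only encodes that range-gated schedule as a lookup. The function name, the stage labels, and the handling of the exact boundary values (each boundary is assigned to the closer-range stage) are illustrative assumptions not stated in the source.

```python
# (upper bound in meters, lower bound in meters, technique) from the abstract.
STAGES = [
    (150.0, 50.0, "particle_filter_tracking"),
    (50.0, 2.0, "stereo_vision_with_box_filter_matching"),
    (2.0, 0.6, "hough_transform_feature_extraction"),
    (0.6, 0.2, "infrared_auxiliary_ranging"),
]


def select_stage(range_m):
    """Return the technique label for a given range, or None outside 150 m to 0.2 m."""
    for upper, lower, technique in STAGES:
        # Assumed convention: upper bound exclusive, lower bound inclusive,
        # except that 150 m itself is accepted as the start of approach.
        if lower <= range_m < upper or (range_m == 150.0 and upper == 150.0):
            return technique
    return None


for r in (100.0, 10.0, 1.0, 0.3, 0.1):
    print(r, select_stage(r))
```

A real controller would likely add hysteresis around each boundary so that measurement noise does not toggle between techniques.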

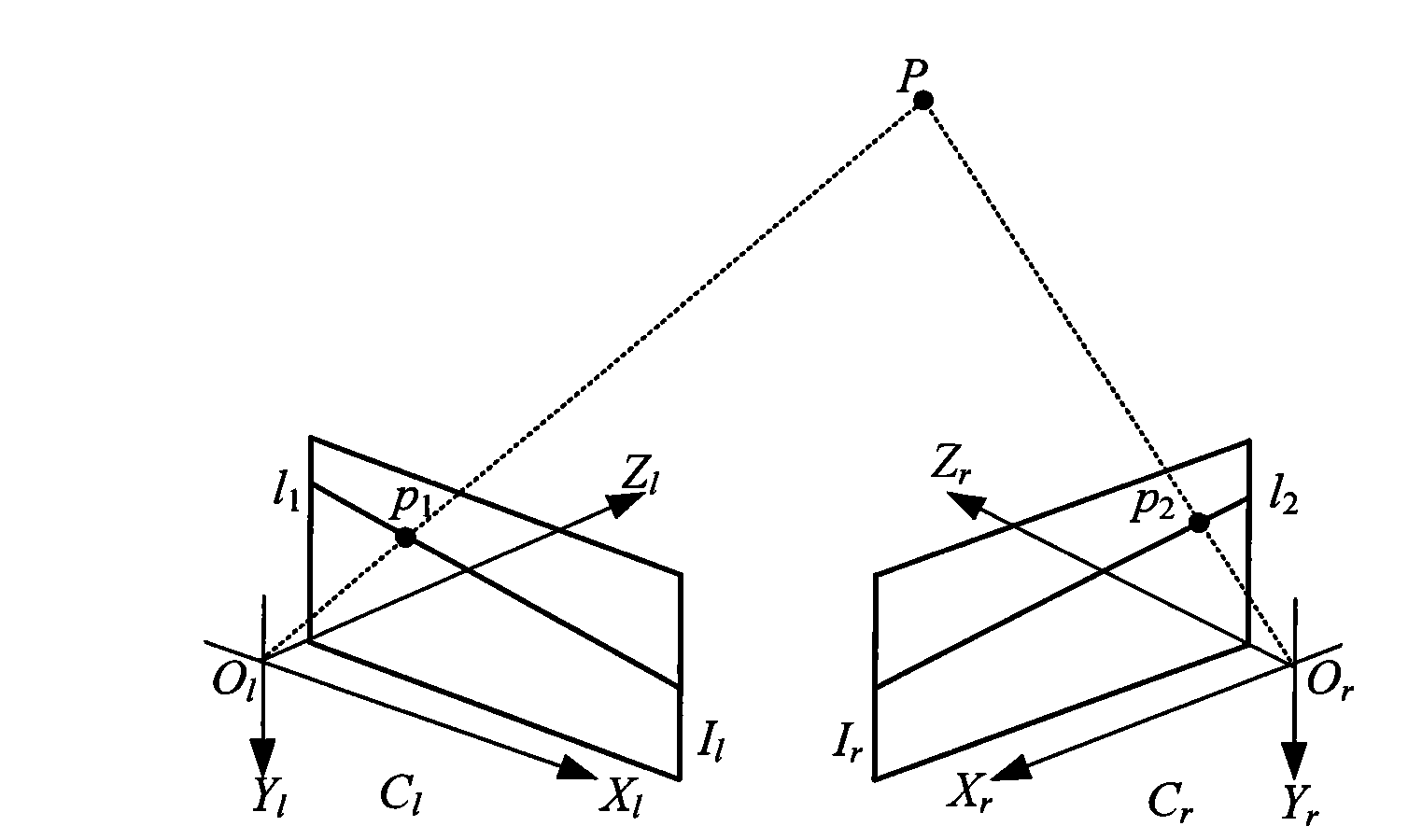

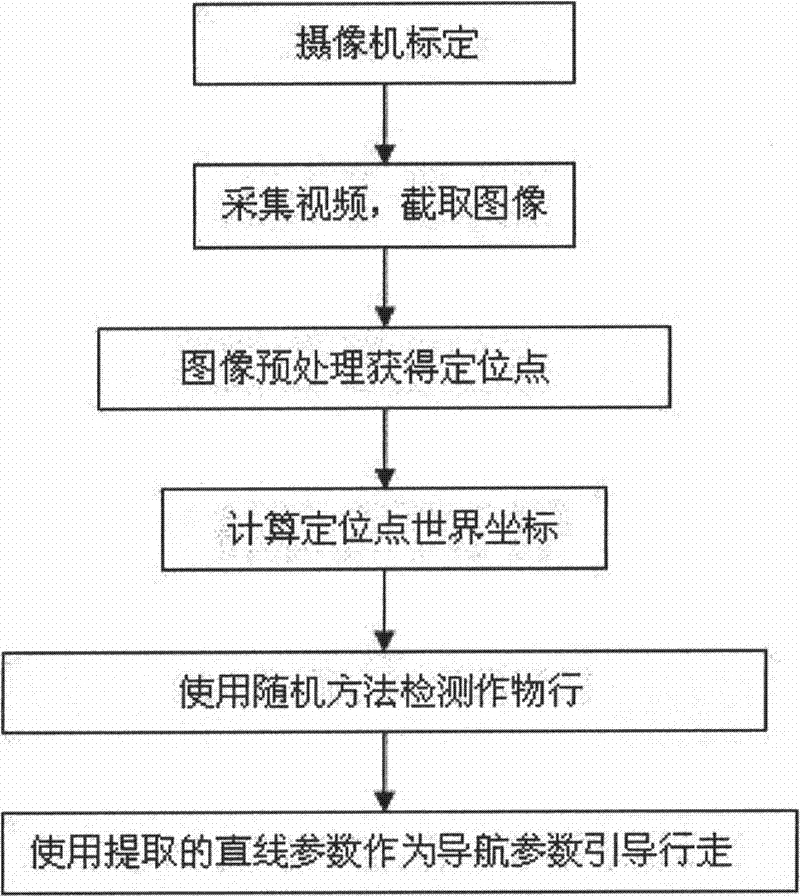

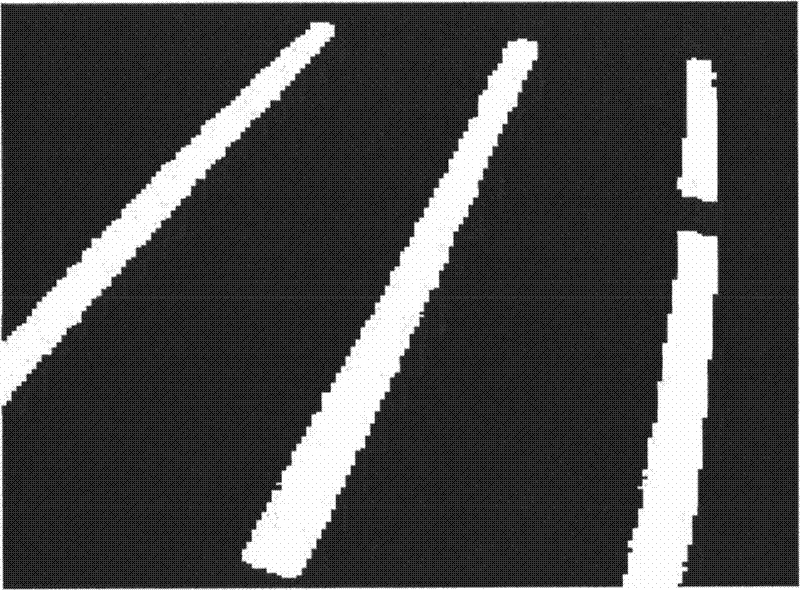

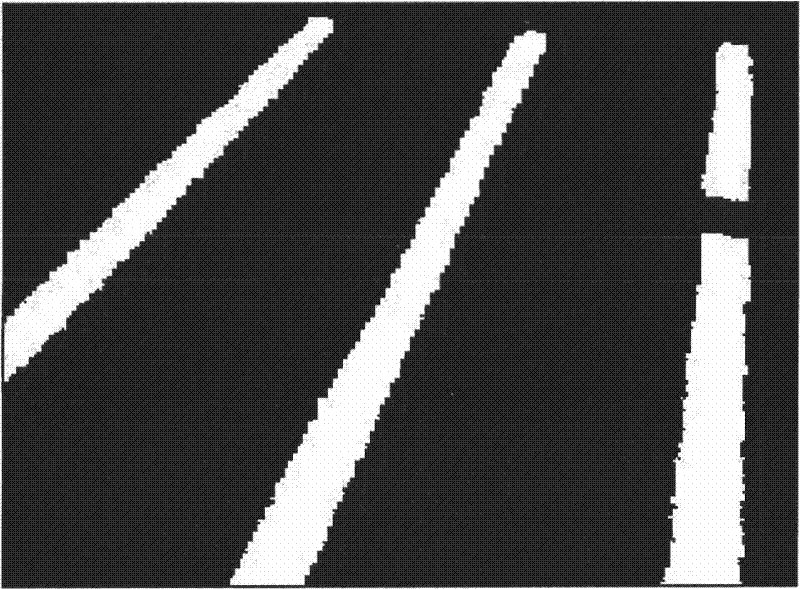

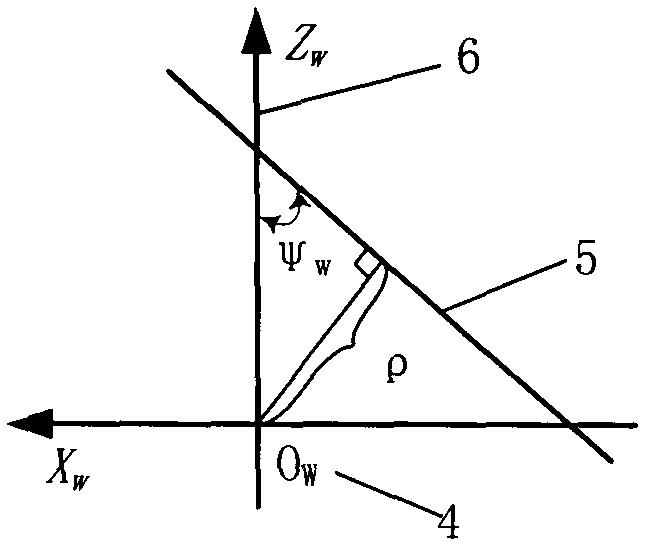

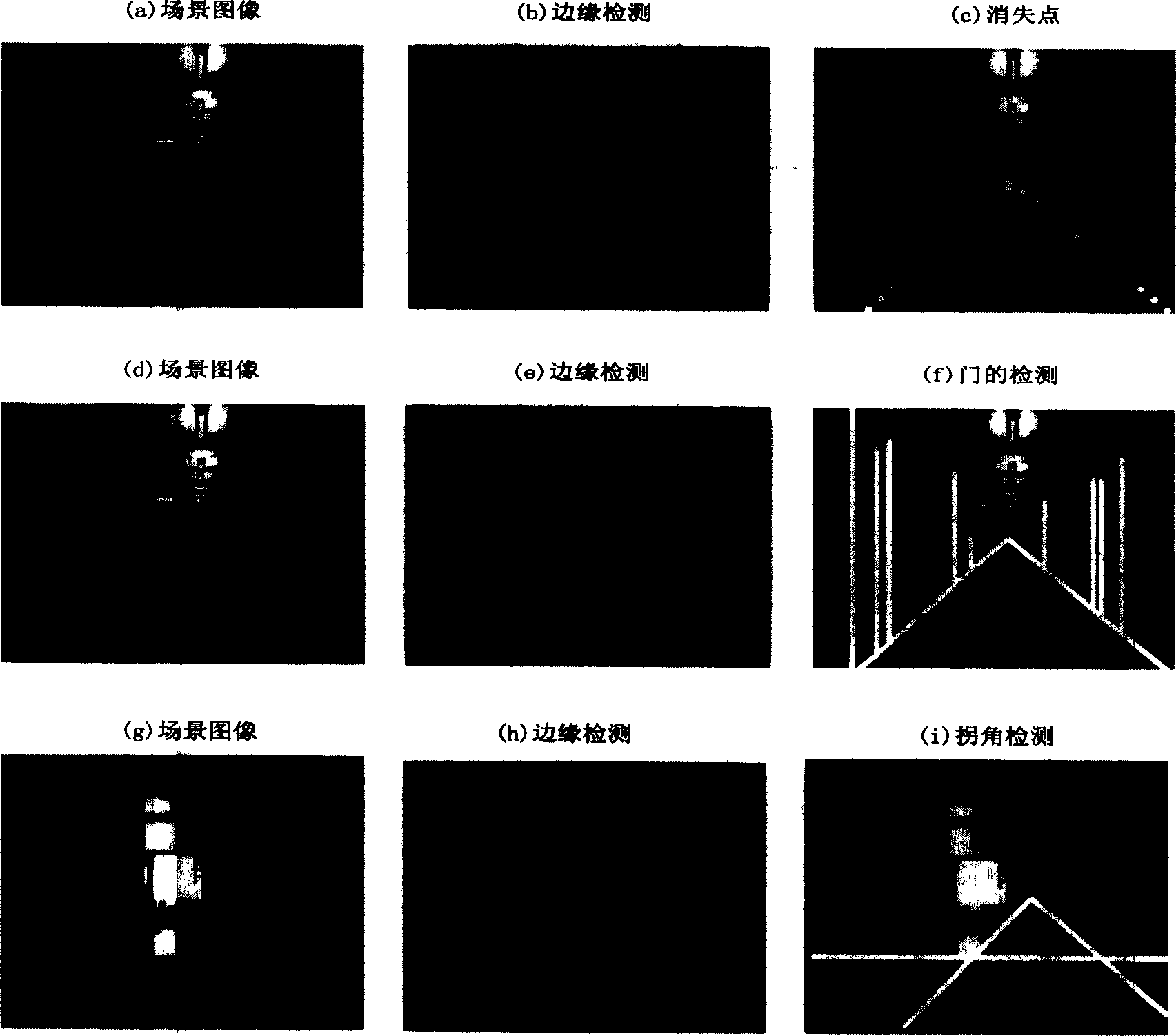

Visual navigation based multi-crop row detection method

Inactive · CN101750051A · Improve real-time performance · Improve accuracy · Image analysis · Photogrammetry/videogrammetry · Vertical projection · Real time navigation

The invention relates to a visual navigation based multi-crop row detection method, which belongs to the field of machine vision navigation and image processing, and aims to quickly and accurately extract a plurality of ridge lines in farmland and meet the requirements of real-time navigation and positioning of agricultural machinery. The invention provides an agricultural machine vision navigation based multi-crop row detection method, which comprises the steps of: calibrating camera parameters, acquiring video and image frames, and carrying out distortion correction on the image; segmenting the crop ridge line area, extracting navigation positioning points by using the vertical projection method, and calculating the world coordinates of the positioning points; applying a random straight line detection method to the positioning points to detect the straight lines on which the crop ridge rows lie; and obtaining the position of each crop ridge row in a world coordinate system relative to the agricultural operation machinery by calculation according to the slope parameters and intercept parameters of the straight lines. Compared with the traditional technology, the technical scheme of the invention greatly reduces time complexity and space complexity, and also improves the accuracy and real-time performance of navigation.

Owner:CHINA AGRI UNIV
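The abstract above extracts navigation positioning points with the vertical projection method. As a minimal sketch under stated assumptions, the code below sums a binary vegetation mask column by column within one horizontal image strip and takes the center of each run of high-count columns as a positioning point. The function names, the `min_count` threshold (assumed to be at least 1), and the run-center rule are hypothetical; the patent does not spell out these details.

```python
def vertical_projection(strip):
    """Column sums of a binary mask strip (list of equal-length rows of 0/1)."""
    return [sum(column) for column in zip(*strip)]


def row_centers(strip, min_count):
    """Centers (x positions) of runs of columns whose sum is >= min_count."""
    projection = vertical_projection(strip)
    centers = []
    start = None
    # A trailing 0 acts as a sentinel that closes a run touching the right edge.
    for x, value in enumerate(projection + [0]):
        if value >= min_count and start is None:
            start = x                               # a run of crop columns begins
        elif value < min_count and start is not None:
            centers.append((start + x - 1) / 2.0)   # midpoint of the finished run
            start = None
    return centers


strip = [
    [0, 1, 1, 0, 0, 0, 1, 1, 1, 0],
    [0, 1, 1, 0, 0, 0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0, 0, 0, 1, 0, 0],
]
print(vertical_projection(strip))   # [0, 2, 3, 0, 0, 0, 2, 3, 2, 0]
print(row_centers(strip, 2))        # [1.5, 7.0]
```

Repeating this over several strips from the bottom to the top of the image gives a set of points per crop row, to which a line can then be fitted as the abstract's later steps describe.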

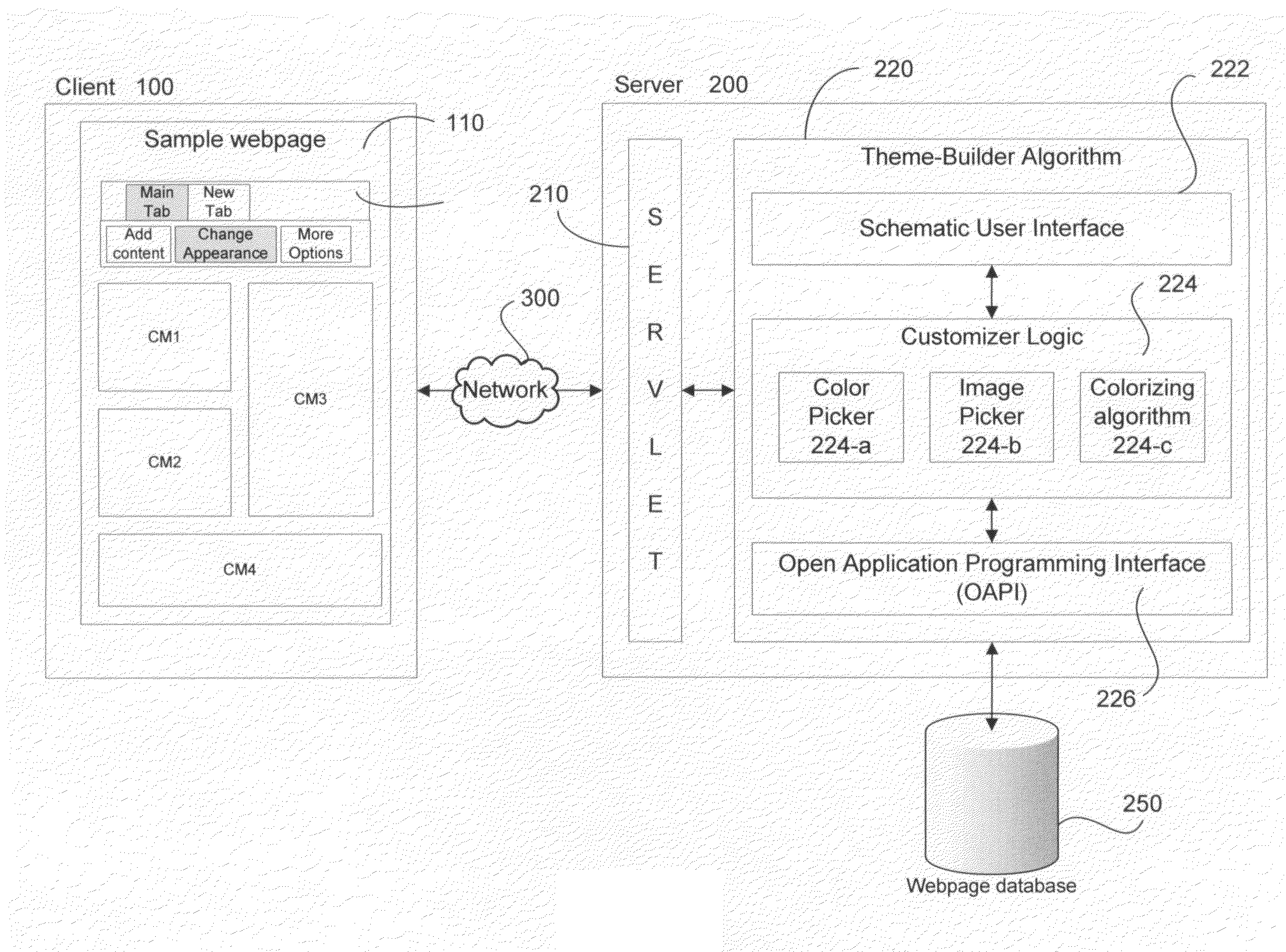

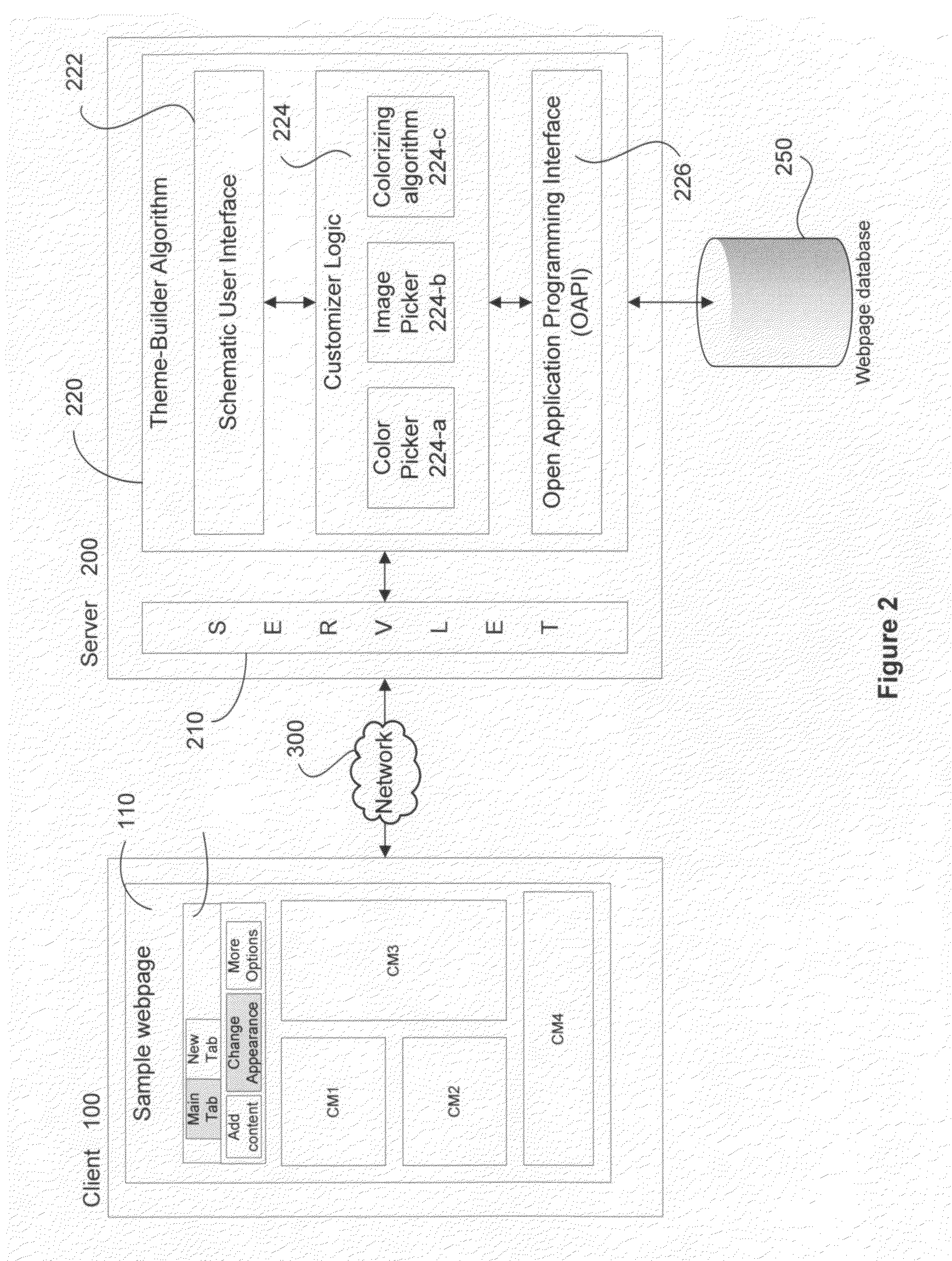

Open Theme Builder and API

Active · US20100299586A1 · Easy to control · Increase flexibility · Natural language data processing · Special data processing applications · Web page · Computer science

Methods and system for customizing a webpage include generating a schematic representation of the webpage. The webpage includes a plurality of section elements that are customizable. The schematic representation identifies a plurality of schematic section elements corresponding to the plurality of section elements of the webpage. The schematic representation is rendered alongside the webpage and provides visual navigation through various section elements of the webpage. A schematic section element is identified and selected from the schematic representation, for customizing. The selection of the schematic section element triggers rendering of one or more navigation links associated with the selected schematic section element. The navigation links provide tools or options enabling customization. Changes to one or more attributes associated with the selected schematic section element representing customization are received and the attributes are updated at the schematic representation in real-time. The changes are cascaded to the corresponding section elements of the webpage in substantial real-time based on user interaction.

Owner:R2 SOLUTIONS

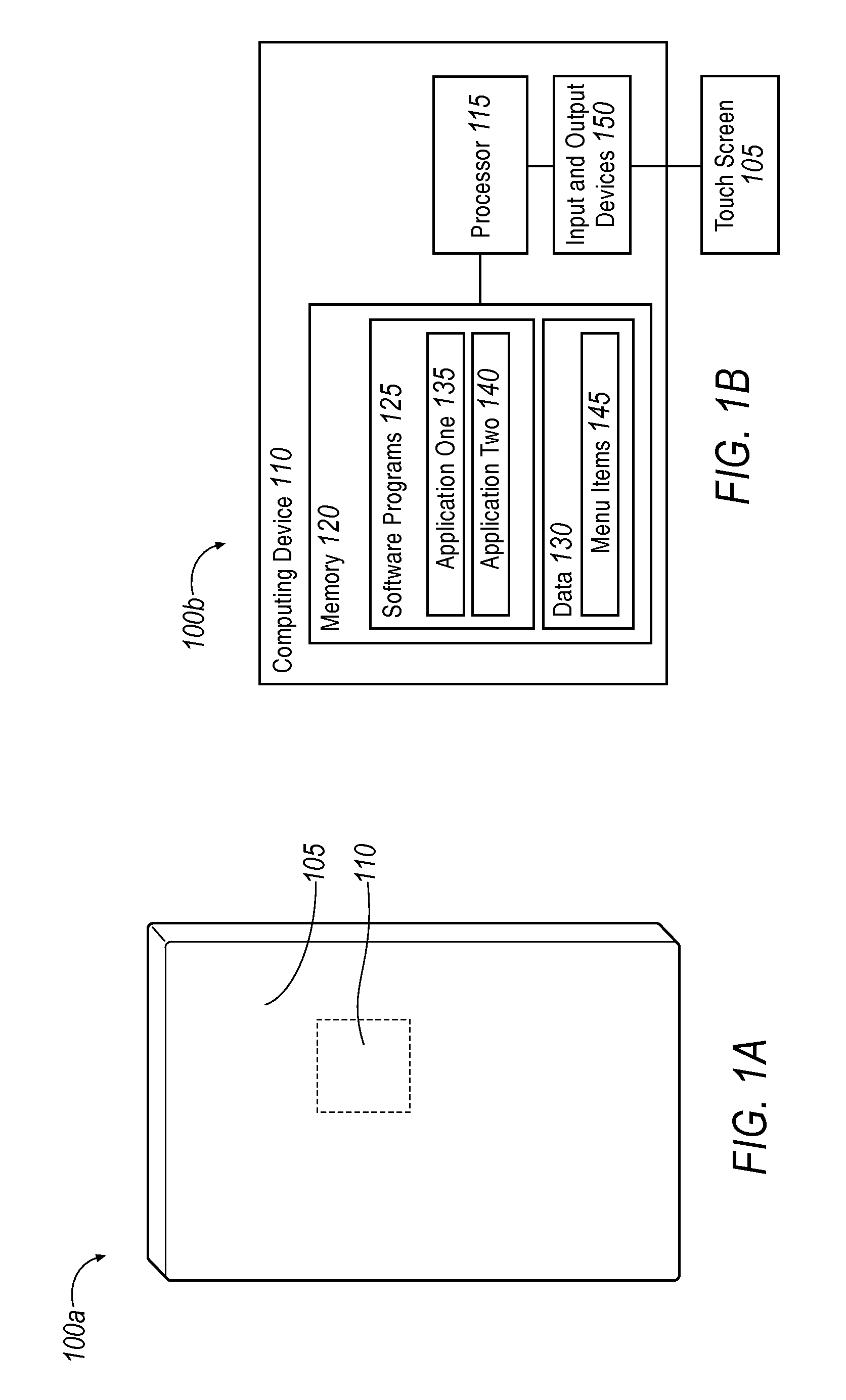

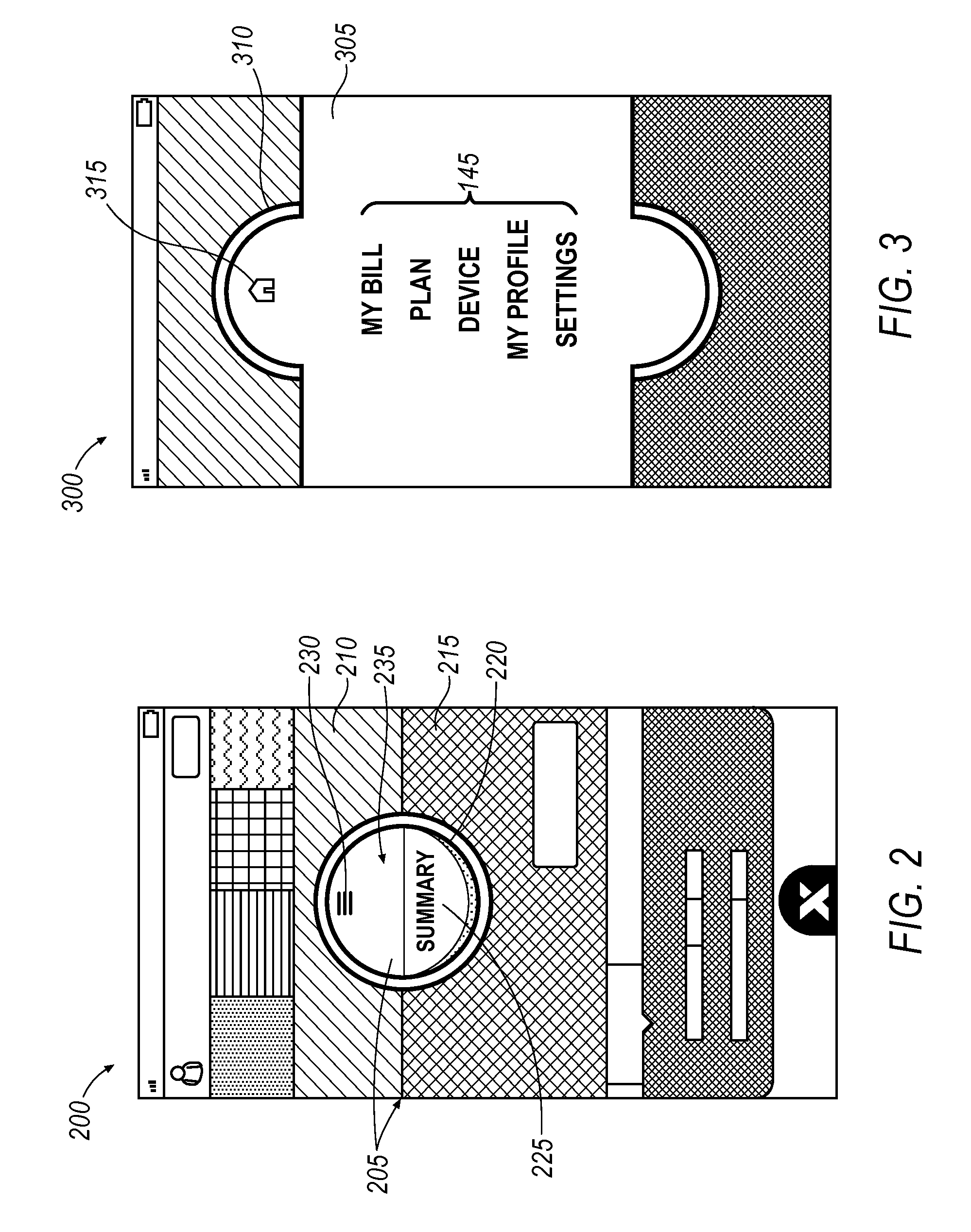

Visual navigation

A system may include a touchscreen and a computing device executing an application. The application displays on the touchscreen a navigation area including a centrally disposed selection element. There is a first display area in the navigation area, wherein the first display area shows a first menu item from a list of menu items. The application changes the first display area, when a next menu select action is detected based on interactions with the touchscreen, to show a second menu item from the list of menu items, wherein the information on the touchscreen is updated to correspond to the second menu item.

Owner:VERIZON PATENT & LICENSING INC

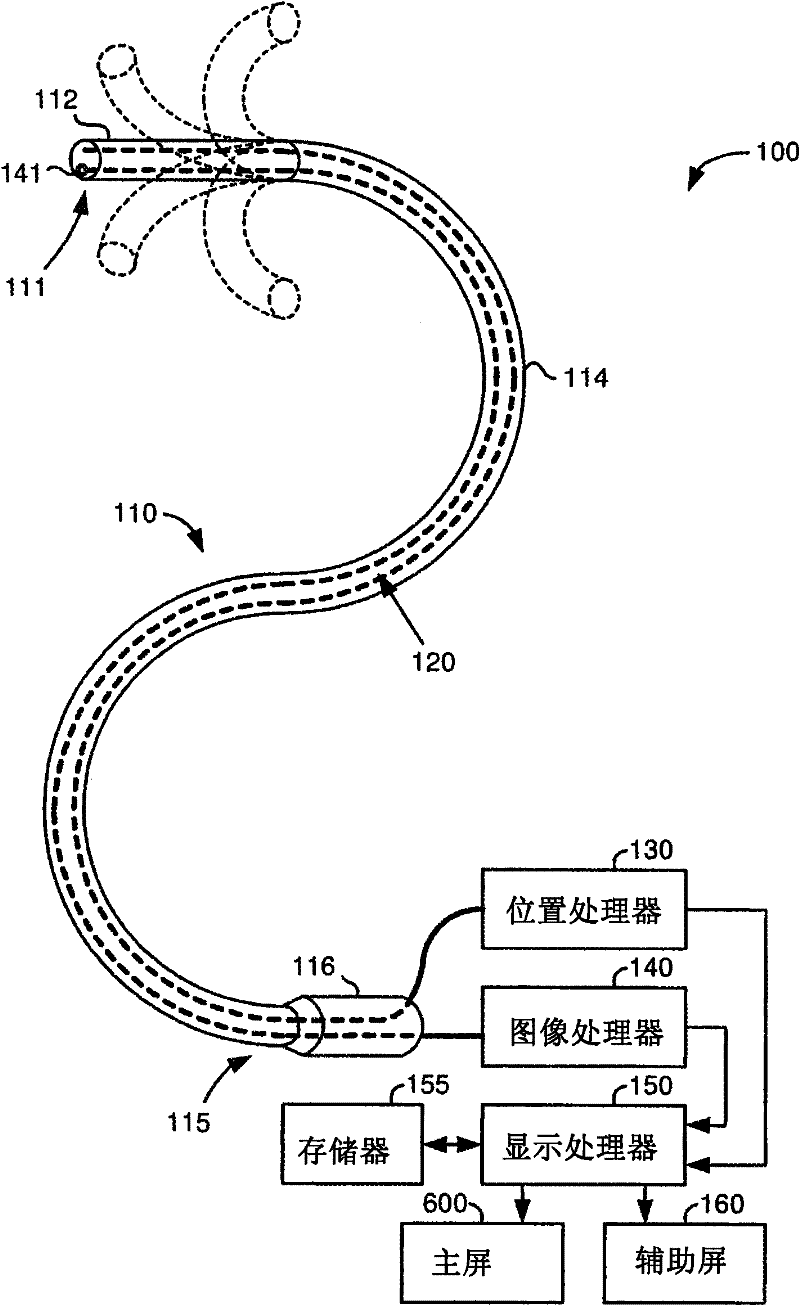

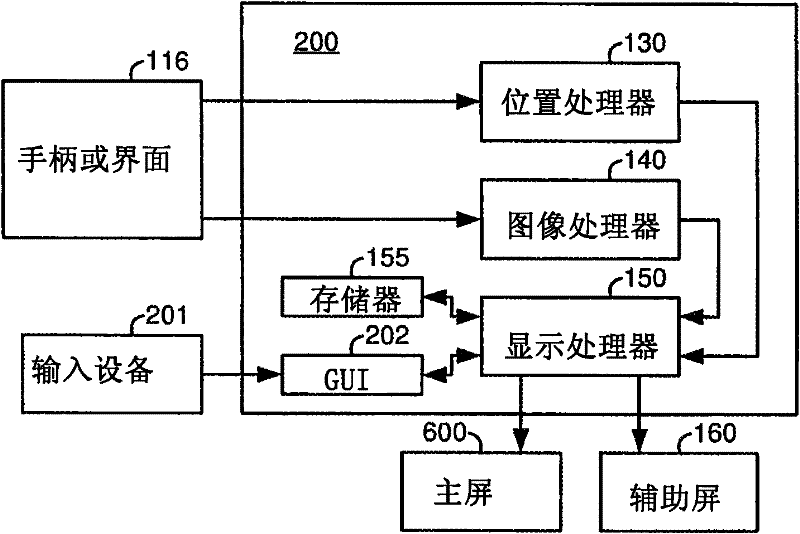

System for providing visual guidance for steering a tip of an endoscopic device towards one or more landmarks and assisting an operator in endoscopic navigation

Landmark directional guidance is provided to an operator of an endoscopic device by displaying graphical representations of vectors adjacent a current image captured by an image capturing device disposed at a tip of the endoscopic device and being displayed at the time on a display screen, wherein the graphical representations of the vectors point in directions that the endoscope tip is to be steered in order to move towards associated landmarks such as anatomic structures. Navigation guidance is provided to the operator by determining a current position and shape of the endoscope relative to a reference frame, generating an endoscope computer model according to the determined position and shape, and displaying the endoscope computer model along with a patient computer model referenced to the reference frame so as to be viewable by the operator while steering the endoscope.

Owner:INTUITIVE SURGICAL OPERATIONS INC

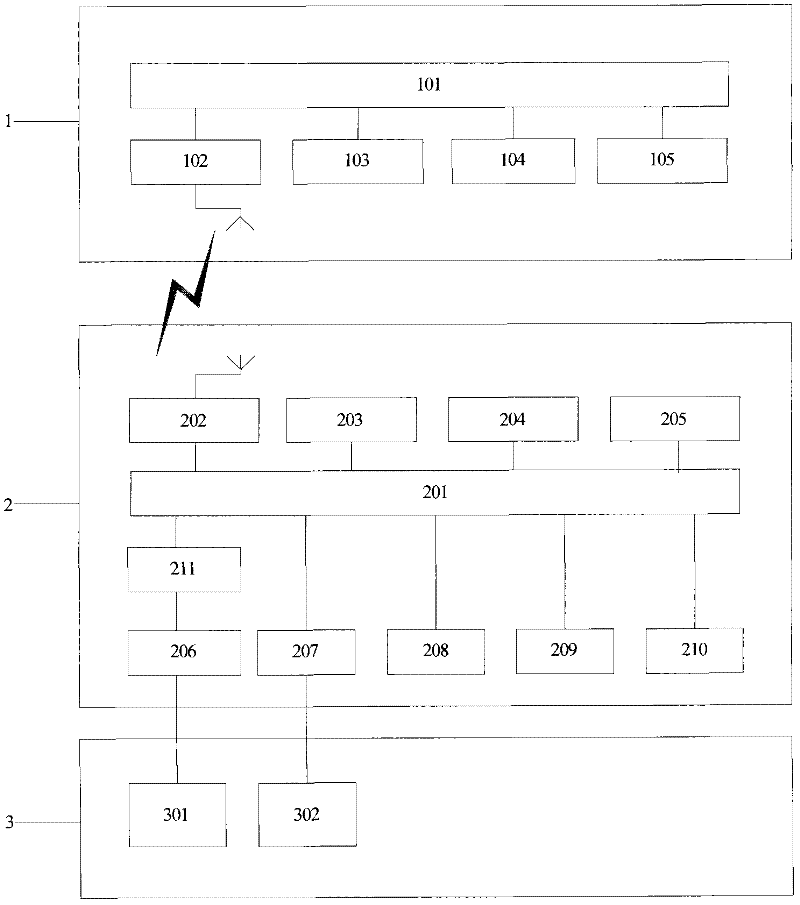

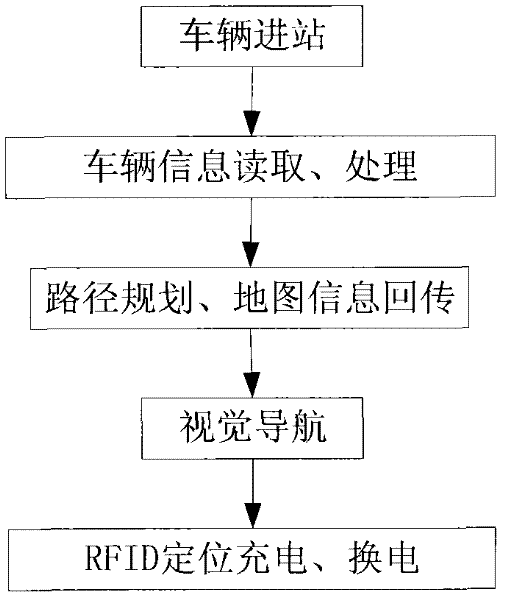

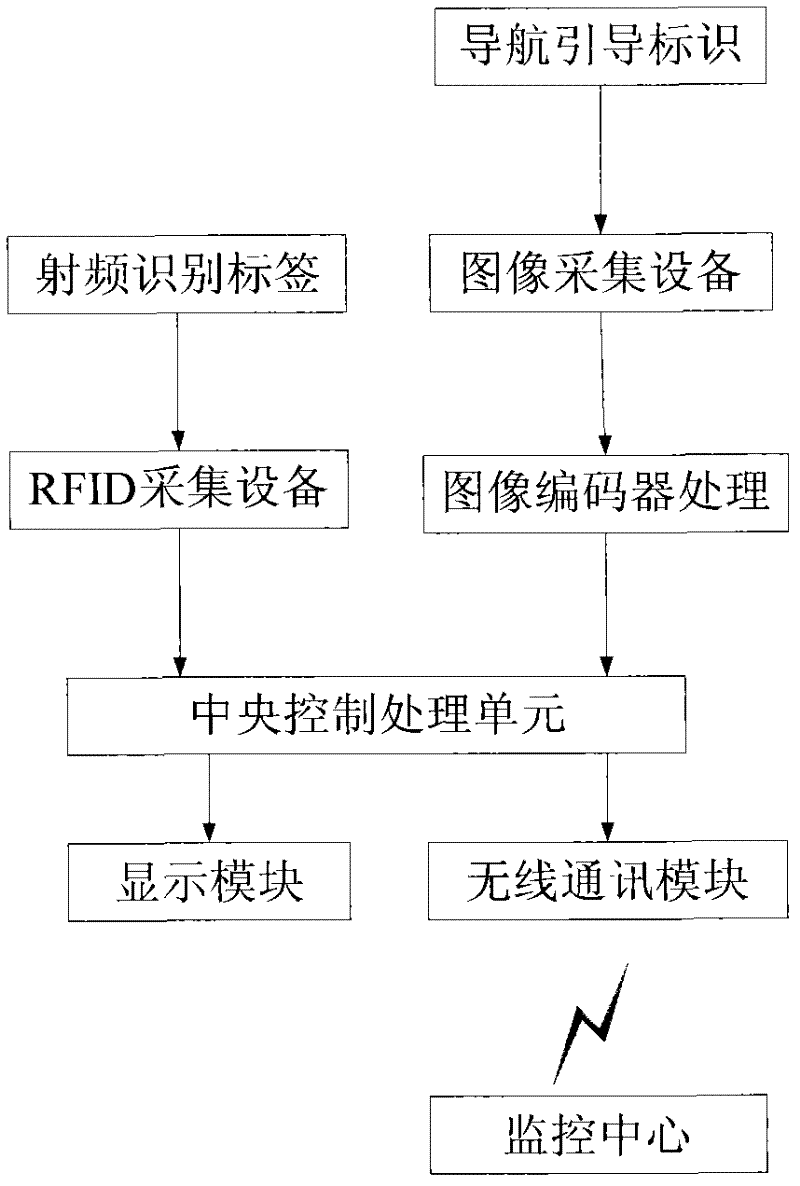

Vehicle guide system of electric vehicle charging station and guide method

Inactive · CN102508489A · Easy to set up · Easy to change · Character and pattern recognition · Sensing record carriers · Data acquisition · Global Positioning System

The invention relates to a vehicle guide system for an electric vehicle charging and battery-replacing station and to its guide method. The guide system comprises a monitor center, one or more vehicle terminals, and one or more ground marks. The monitor center comprises a monitor server. Each vehicle terminal comprises a central control processing unit connected to an RFID (radio frequency identification) module, an image acquisition module, a video encoding module, a vehicle data acquisition module, a vehicle control module, a display module, a GPS (global positioning system) module, a wireless communication module (II), a power supply module, and a storage module. Each ground mark comprises an image mark line and an RFID mark. The guide method comprises the following steps: (1) reading and processing vehicle information; (2) planning a path and returning map information; (3) visual navigation; (4) RFID location for charging and battery replacement. By combining image mark navigation with RFID mark location, the method can guide an electric vehicle to park at a charging or battery-replacing position rapidly and accurately, which supports stable operation of the station.

Owner:STATE GRID CORP OF CHINA +1

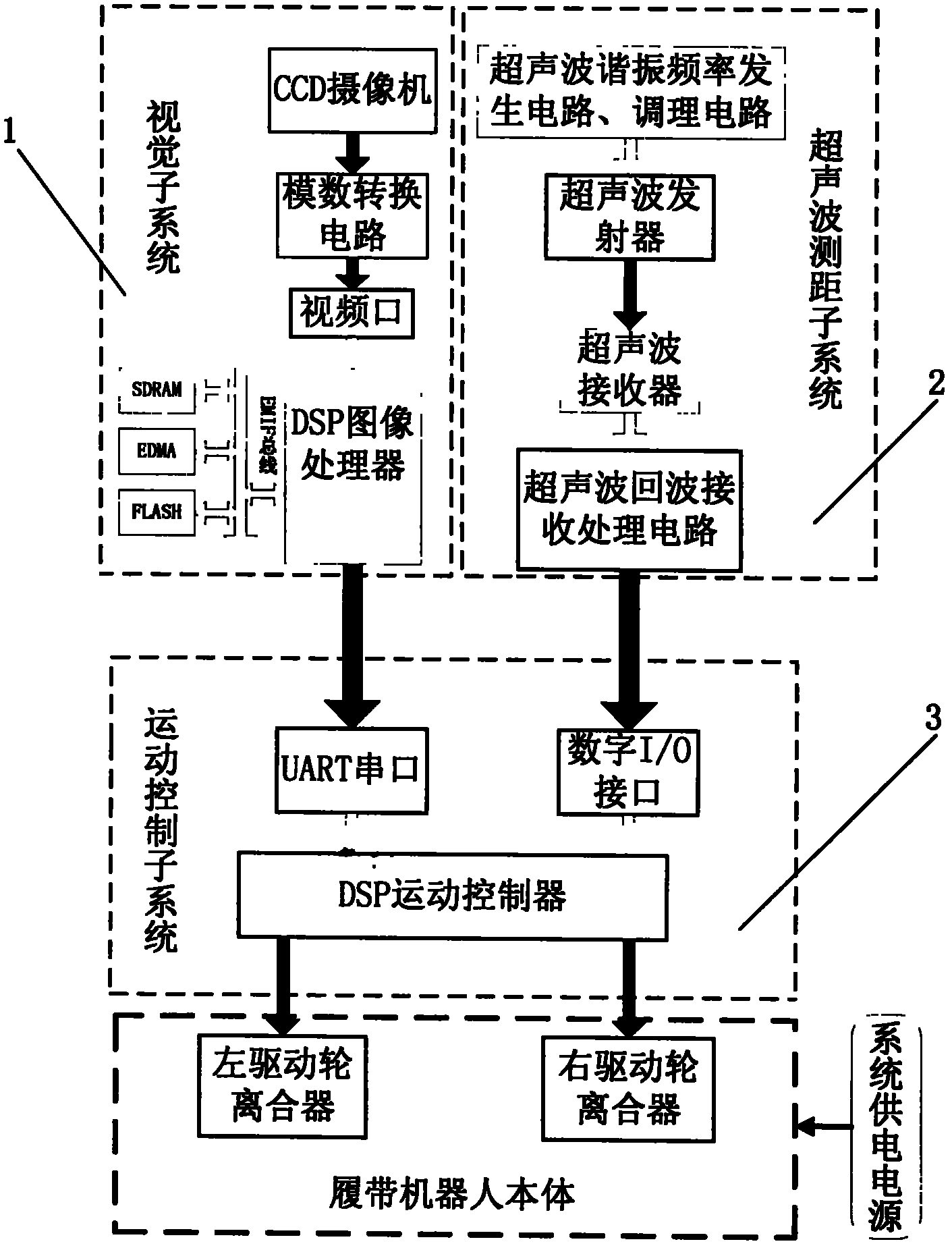

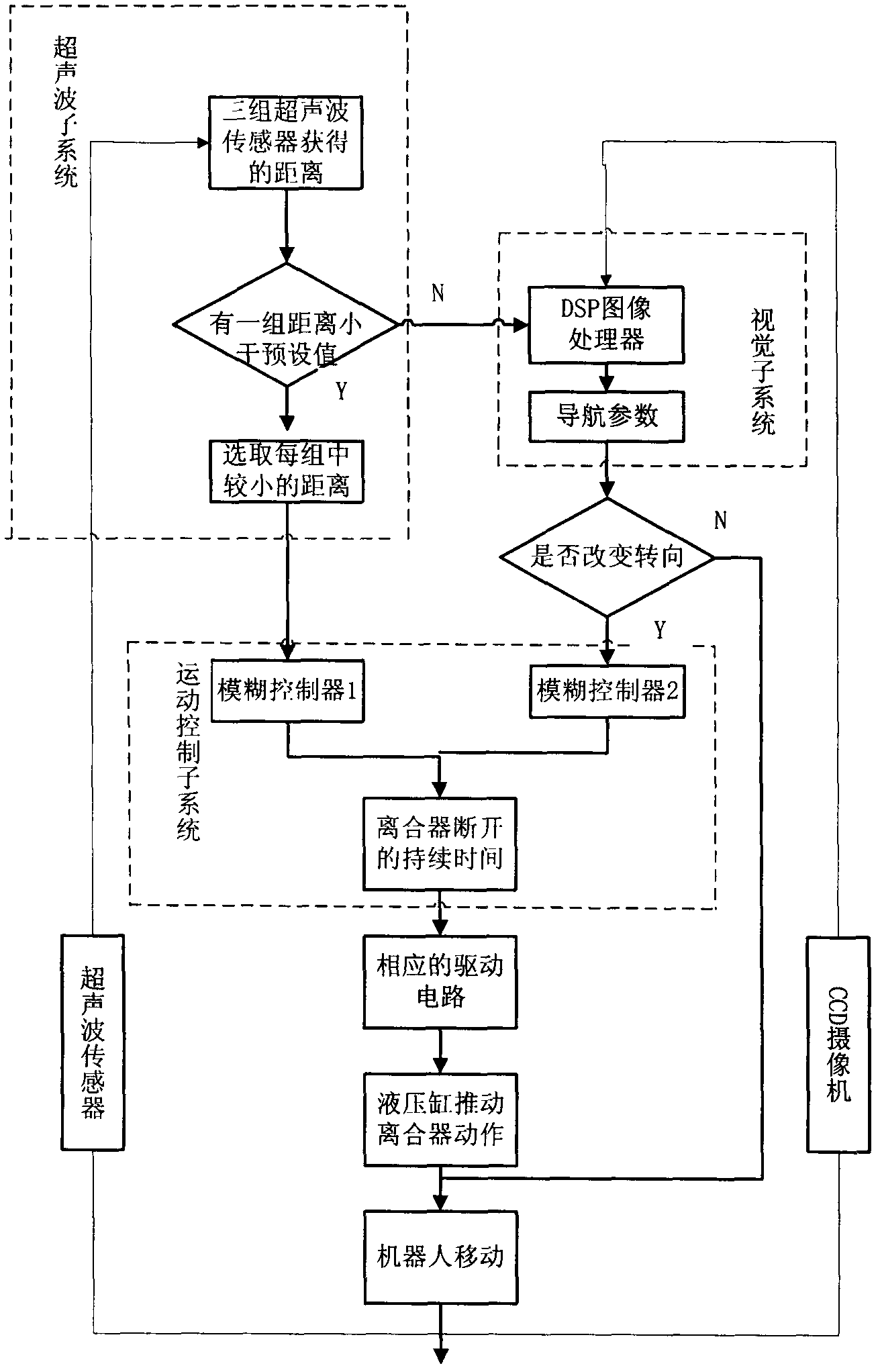

Navigation control system based on vision and ultrasonic waves

Inactive | CN102621986A | Improve navigation accuracy | Improve reliability | Position/course control in two dimensions | Acoustic wave reradiation | Anti jamming | Signal processing circuits

The invention relates to a navigation control system based on vision and ultrasonic waves, which comprises a caterpillar robot body, an ultrasonic ranging subsystem, a vision subsystem and a motion control subsystem, wherein the ultrasonic ranging subsystem is arranged in front of the robot, the vision subsystem is arranged over the robot, and the motion control subsystem is positioned above the robot. The ultrasonic ranging subsystem comprises ultrasonic transmitters, ultrasonic receivers and a signal processing circuit, with a plurality of transmitters and a plurality of receivers distributed at equal spacing. The vision subsystem comprises a CCD (Charge Coupled Device) camera, an analog-to-digital conversion circuit and a DSP (Digital Signal Processor) image processor, and the motion control subsystem comprises a DSP motion controller and corresponding peripheral circuits. Information processed by the vision subsystem and the ultrasonic ranging subsystem is transmitted to a fuzzy controller embedded in the motion control subsystem, and the fuzzy controller outputs control information to control the motion of the robot. The system combines the strengths of visual navigation and ultrasonic navigation, which increases navigation accuracy; the high-speed DSP processor improves real-time performance and expandability; and the fuzzy control method strengthens the anti-jamming capability.

Owner:NORTHWEST A & F UNIV
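To make the fuzzy-control idea above concrete, here is a minimal sketch that fuses a vision-derived lateral offset with an ultrasonic distance. The membership shapes, the sign convention (positive offset means the path lies to the right), the distance thresholds, and the `SLOW`/`FAST` speeds are all assumptions for illustration; the abstract does not disclose the actual rule base.

```python
SLOW, FAST = 0.2, 1.0  # hypothetical normalized speeds


def triangle(x, left, peak, right):
    """Triangular membership: 0 outside (left, right), 1 at peak."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def near_membership(distance_m, full_m=0.3, zero_m=1.0):
    """Degree to which an obstacle counts as 'near' (assumed thresholds)."""
    if distance_m <= full_m:
        return 1.0
    if distance_m >= zero_m:
        return 0.0
    return (zero_m - distance_m) / (zero_m - full_m)


def fuzzy_control(lateral_offset, obstacle_distance_m):
    """Return (steering, speed) from a vision offset and an ultrasonic range."""
    x = max(-1.0, min(1.0, lateral_offset))
    # Keys are the singleton steering outputs of the rules
    # "offset is LEFT/CENTER/RIGHT -> steer left/straight/right".
    memberships = {
        -1.0: triangle(x, -2.0, -1.0, 0.0),
        0.0: triangle(x, -1.0, 0.0, 1.0),
        1.0: triangle(x, 0.0, 1.0, 2.0),
    }
    total = sum(memberships.values())
    # Weighted-average defuzzification over the singleton outputs.
    steering = sum(out * mu for out, mu in memberships.items()) / total
    near = near_membership(obstacle_distance_m)
    speed = near * SLOW + (1.0 - near) * FAST
    return steering, speed
```

With these rules the robot steers toward the detected path and slows smoothly as the ultrasonic range shrinks.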

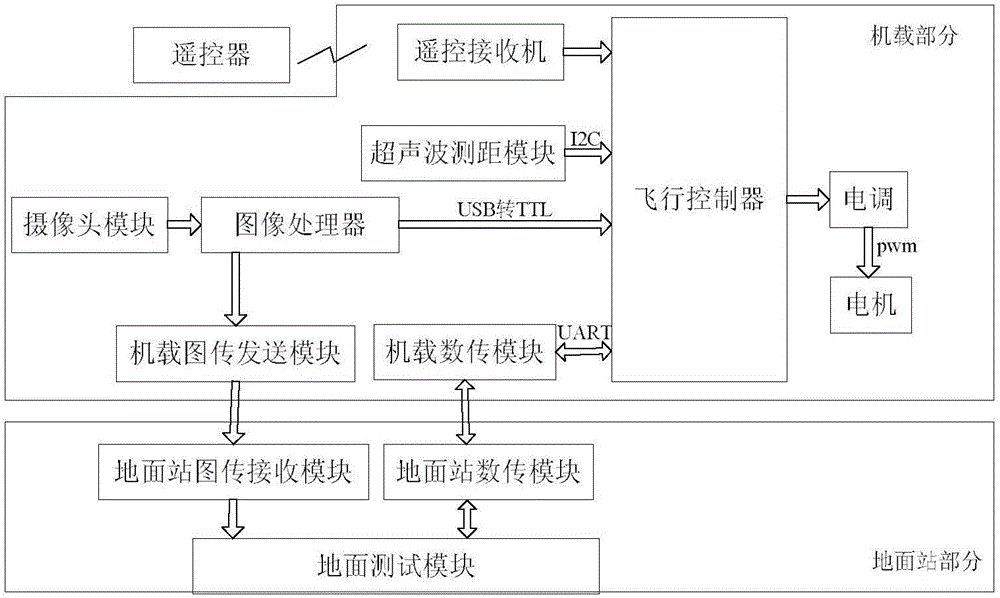

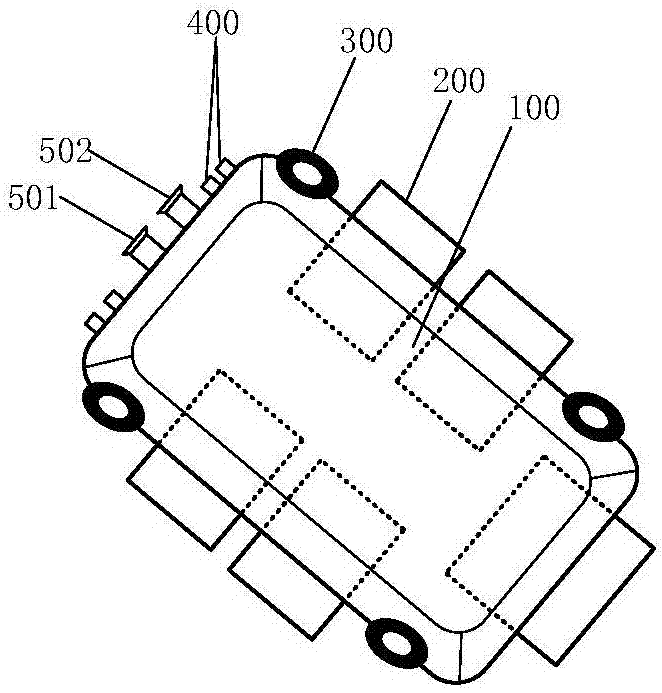

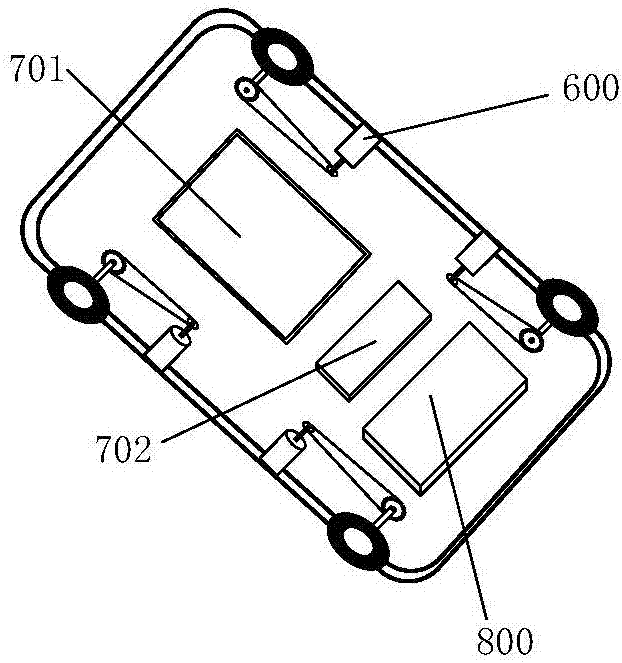

Vision based steady object tracking control system and method for rotor UAV (unmanned aerial vehicle)

Active | CN106774436A | Reduce distractions | Precise positioning | Internal combustion piston engines | Target-seeking control | Control system | Vision based

The invention discloses a vision based steady object tracking control system for a rotor UAV (unmanned aerial vehicle). The system comprises an airborne part, which includes a flight control unit of the rotor UAV, an ultrasonic ranging module and a visual navigation module. The visual navigation module comprises an image processor, a camera module and a two-axis brushless pan-tilt unit fixed under the rotor UAV. The camera module and the ultrasonic ranging module are both fixed on the two-axis brushless pan-tilt unit, and the lens of the camera module and the ultrasonic ranging module are both parallel to the horizontal plane. The invention further discloses a vision based steady object tracking control method for the rotor UAV. The airborne visual navigation module automatically detects a target feature marker and solves for the actual relative error distance between the rotor UAV and a target object. In particular, it addresses the problem that the rotor UAV cannot steadily track a moving object when the target object is occluded.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
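One plausible way to obtain a relative error distance from a downward-looking camera and an ultrasonic height reading is the pinhole camera model, sketched below. This is an assumption about the geometry, not the method disclosed in the patent; the function name and all numeric values are hypothetical.

```python
def ground_offset(pixel, principal_point, focal_length_px, height_m):
    """Convert a target's pixel position into a metric offset on the ground.

    Assumes a pinhole camera looking straight down, with the image plane
    parallel to the ground and height_m measured by the ultrasonic module.
    """
    u, v = pixel
    cx, cy = principal_point
    dx = (u - cx) * height_m / focal_length_px
    dy = (v - cy) * height_m / focal_length_px
    return dx, dy


# Hypothetical values: 640x480 image, 500 px focal length, 2 m altitude.
offset = ground_offset((420.0, 240.0), (320.0, 240.0), 500.0, 2.0)
```

The resulting offset could then serve as the error signal for a position controller that keeps the target centered under the vehicle.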

Unmanned logistics vehicle based on deep learning

Active | CN106873566A | Reduce the difficulty of installation and debugging | Low cost | Programme control | Computer control | Decision model | Motor drive

The invention relates to an unmanned logistics vehicle based on deep learning. The unmanned logistics vehicle comprises a logistics vehicle body, an ultrasonic obstacle avoidance module, a binocular stereo vision obstacle avoidance module, a motor driving module, an embedded system, a power supply module, and a visual navigation processing system. The binocular stereo vision obstacle avoidance module detects distant obstacles in the road scene, and the ultrasonic obstacle avoidance module detects nearby obstacles; the obstacle distances obtained by the two modules are together called obstacle avoidance information. The visual navigation processing system uses a deep learning model trained on a sample set to process the collected road image data and outputs control command information. Finally, a decision model integrates the control command information and the obstacle avoidance information to control the motor driving module, realizing the unmanned driving function of the logistics vehicle. The vehicle needs no auxiliary equipment to be installed, because the deep learning model learns from the sample set to sense and understand the environment surrounding the road.

Owner:NORTHEASTERN UNIV
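The decision step described above, which merges the learned control command with the obstacle avoidance information, might look like the following sketch. The function names, the distance thresholds, and the linear slow-down are assumptions for illustration, not details taken from the patent.

```python
def nearest_obstacle(stereo_distance_m, ultrasonic_distance_m):
    """Fuse the two obstacle readings; None means 'nothing detected'."""
    readings = [d for d in (stereo_distance_m, ultrasonic_distance_m)
                if d is not None]
    return min(readings) if readings else float("inf")


def decide(command, obstacle_m, stop_distance_m=0.5, slow_distance_m=2.0):
    """Combine a (speed, steering) command with the nearest obstacle range."""
    speed, steering = command
    if obstacle_m <= stop_distance_m:
        return (0.0, steering)  # too close: stop
    if obstacle_m < slow_distance_m:
        # Scale speed linearly between the stop and slow-down distances.
        scale = (obstacle_m - stop_distance_m) / (slow_distance_m - stop_distance_m)
        return (speed * scale, steering)
    return (speed, steering)  # path clear: pass the command through


# Hypothetical command from the navigation model: full speed, slight right.
motor_command = decide((1.0, 0.1), nearest_obstacle(8.0, None))
```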

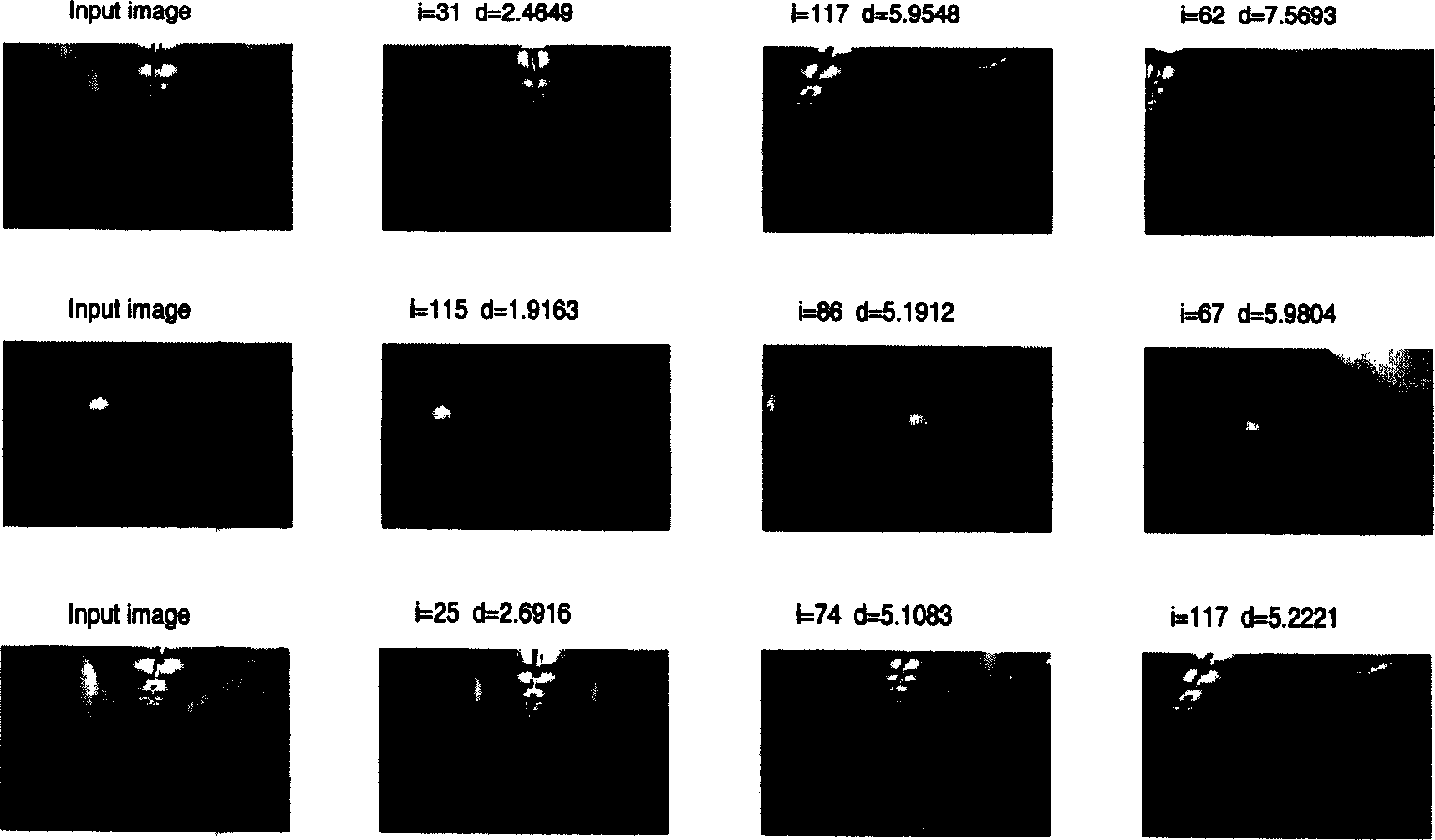

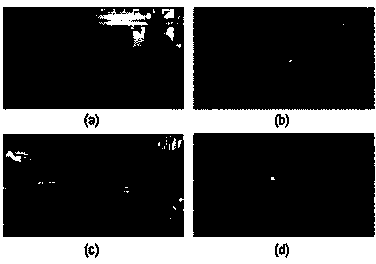

Moving robot's vision navigation method based on image representation feature

Inactive | CN1569558A | Solve hardware problems | Long detection distance | Navigational aid arrangements | Character and pattern recognition | Vision based | Navigation system

The invention is a vision navigation method for a mobile robot based on image representation features. It includes the following steps: the mobile robot automatically detects natural landmarks, then matches the current image against the images and scenes in a sample library to determine its current position. The method solves the hardware problems caused by earlier sensor-based navigation systems, can be applied in non-field environments to which traditional navigation modes adapt poorly, and performs self-localization of the mobile robot. The navigation method combines scene landmark detection with scene image representation analysis.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
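Matching the current image against a sample library, as described above, can be sketched as a nearest-neighbor search over feature vectors. The cosine-similarity measure, the `min_similarity` threshold, and the toy three-element features are assumptions; the patent's actual image representation is not given in the abstract.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def localize(current_features, sample_library, min_similarity=0.8):
    """Return the best-matching place name, or None if nothing is close."""
    best_place, best_score = None, min_similarity
    for place, features in sample_library.items():
        score = cosine_similarity(current_features, features)
        if score >= best_score:
            best_place, best_score = place, score
    return best_place


# Hypothetical library of per-place feature vectors.
library = {"corridor": [0.9, 0.1, 0.0], "lobby": [0.1, 0.8, 0.1]}
place = localize([0.8, 0.2, 0.0], library)
```

Returning `None` below the threshold lets the robot report that it does not recognize the scene instead of forcing a wrong match.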

Multi-sensor fusion-based visual navigation AGV system

Active | CN104166400A | Lower build costs | Improve general performance | Position/course control in two dimensions | Adaptive learning | Road surface

The invention relates to a multi-sensor fusion-based visual navigation AGV system comprising a vehicle body. A remote ultrasonic ranging module and an image acquisition device are installed at the front of the vehicle body, and near-field ultrasonic ranging modules are uniformly distributed on its two sides. A GPS positioning module, a power supply module, a motor drive module and an upper computer are installed on the vehicle body. The AGV visual guiding method has two phases: phase one is executed once during system initialization, or again after a set condition triggers it, and phase two is executed continuously while the system runs. Phase one is an adaptive learning phase, and phase two is a road surface detection and path planning phase. The system has the following advantages: no manual laying of guidance markers is required; the system is flexible to apply and highly general; the overall construction cost of the AGV system is effectively lowered; the system suits a variety of complex road and weather conditions; and the adaptive learning algorithm effectively eliminates the influence of factors such as illumination, shadow and lane lines on road identification.

Owner:浙江科钛机器人股份有限公司

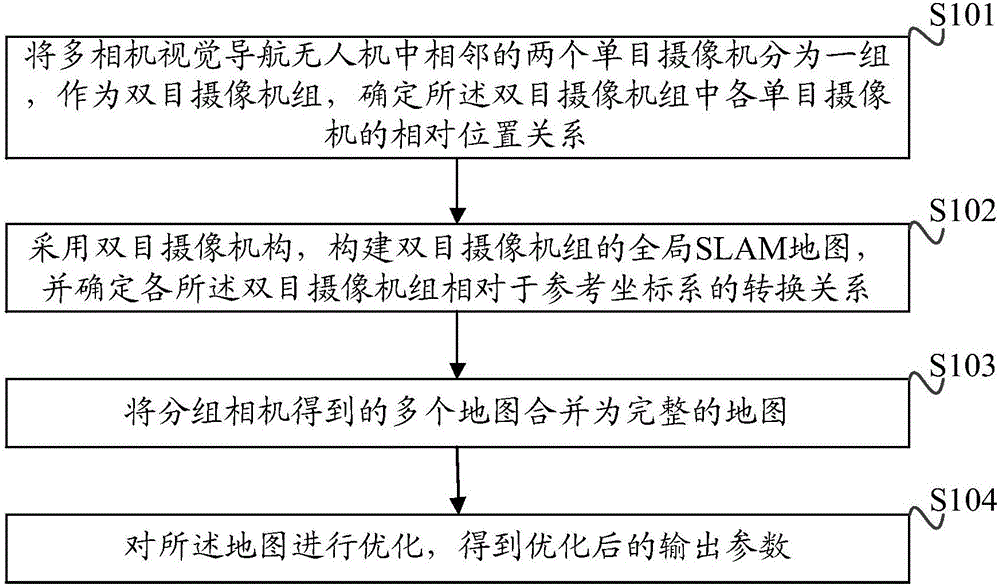

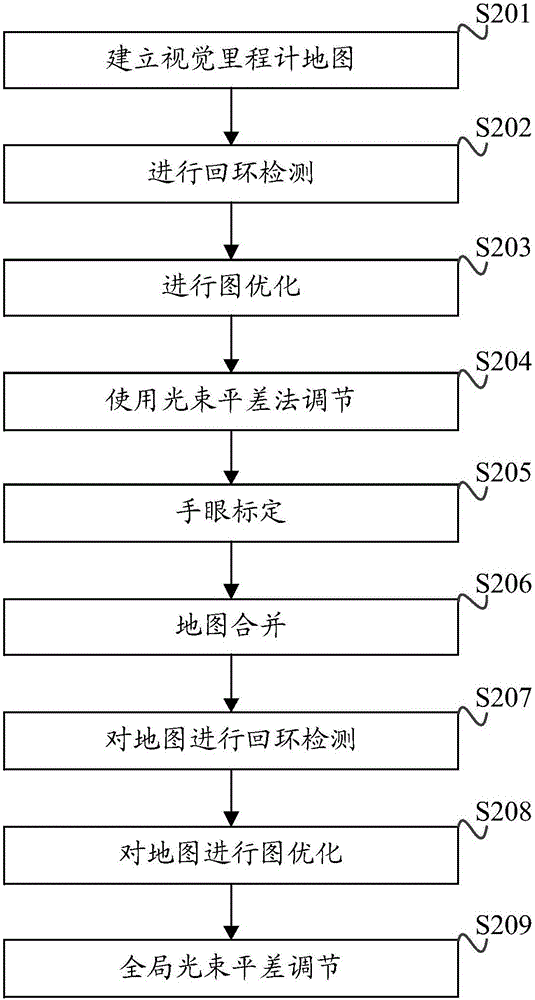

Camera calibration method and device for visual navigation unmanned aerial vehicle

The invention discloses a camera calibration method and device for a visual navigation unmanned aerial vehicle (UAV). The method comprises steps of: grouping two adjacent monocular cameras in a multi-camera visual navigation UAV as a binocular camera group, and determining the relative position relation of respective monocular cameras in the binocular camera group; constructing a global SLAM map of the binocular camera group by using a binocular camera mechanism and determining the conversion relation of each binocular camera group relative to a reference coordinate system; merging maps obtained by the grouped cameras into a complete map; and optimizing the map to obtain an optimized output parameter. The method and the device are simple and easy to implement, and avoid a complex calibration block selection process. A continuous global map established in the calibration process can be used for the visual positioning and navigation of the UAV, reduces algorithm complexity of system real-time computing, and simplifies the operation procedure of a traditional calibration method.

Owner:CHENGDU TOPPLUSVISION TECH CO LTD
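Expressing each camera group relative to a common reference coordinate system, as the abstract above describes, comes down to composing rigid transforms. The sketch below chains two 4x4 homogeneous transforms in plain Python; the frame names and the numeric poses are hypothetical, and the map merging and optimization steps are not shown.

```python
def matmul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def transform_point(t, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    v = [point[0], point[1], point[2], 1.0]
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))


# Hypothetical pose of camera group A in the reference frame:
# a pure translation of 1 unit along x.
ref_from_a = [[1.0, 0.0, 0.0, 1.0],
              [0.0, 1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0, 1.0]]

# Hypothetical pose of group B in group A's frame:
# a 90 degree rotation about z, then a translation of 2 units along y.
a_from_b = [[0.0, -1.0, 0.0, 0.0],
            [1.0, 0.0, 0.0, 2.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

# Chaining the two gives group B's pose in the reference frame.
ref_from_b = matmul(ref_from_a, a_from_b)
point_in_ref = transform_point(ref_from_b, (1.0, 0.0, 0.0))
```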

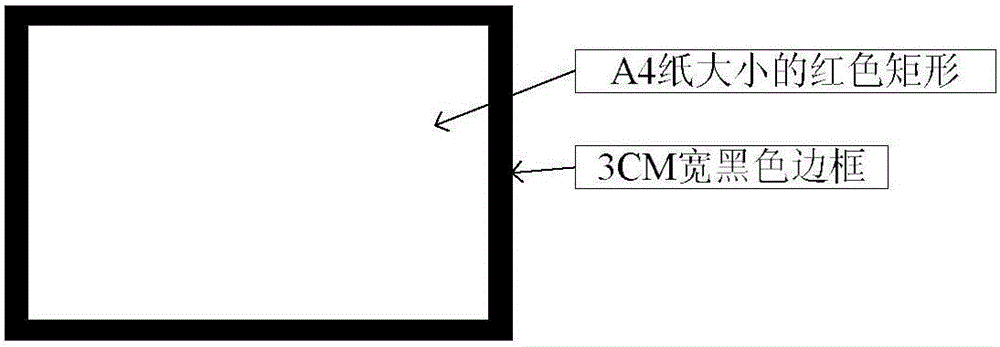

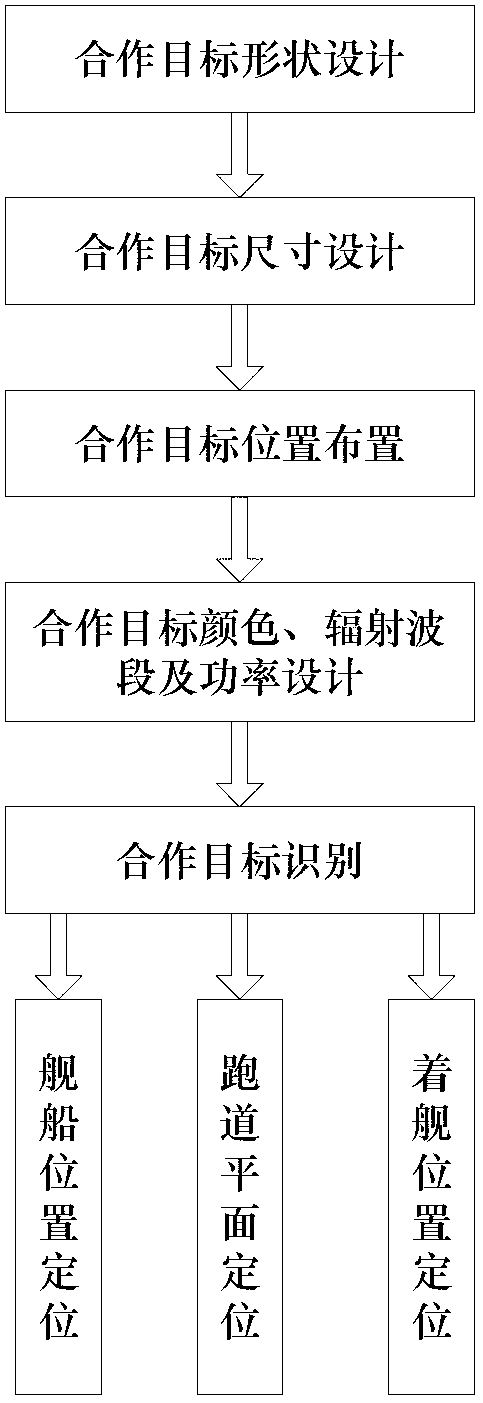

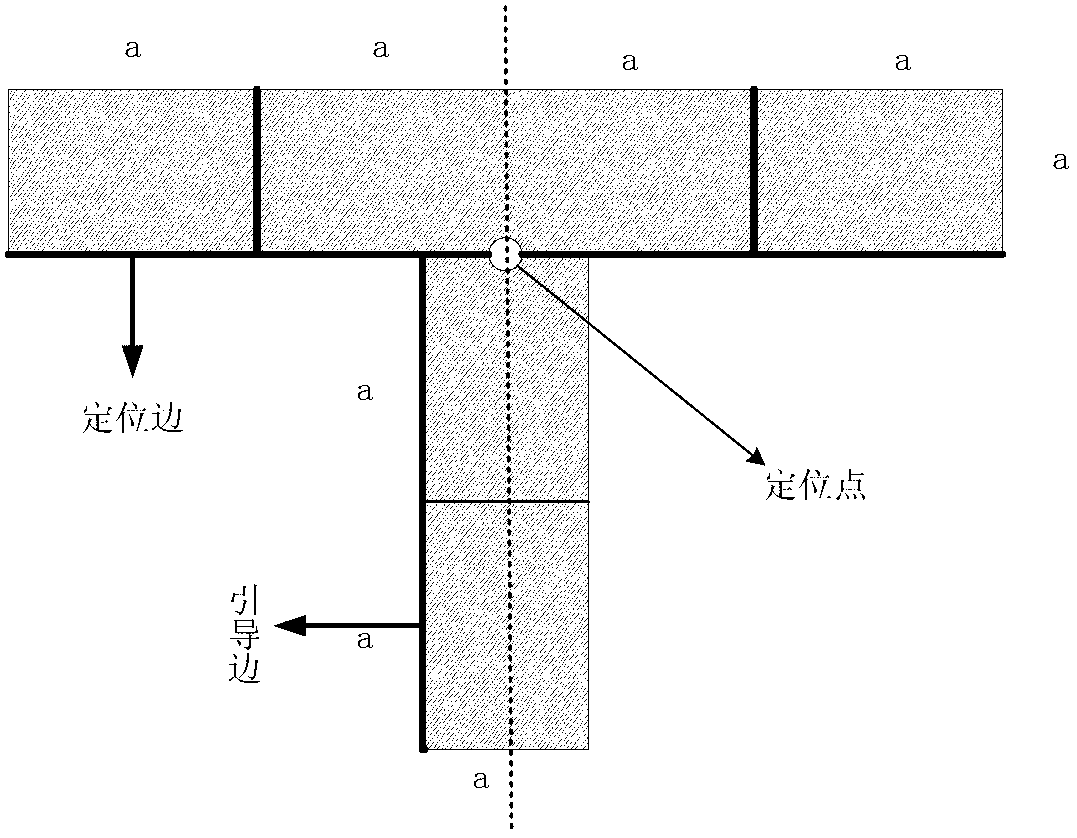

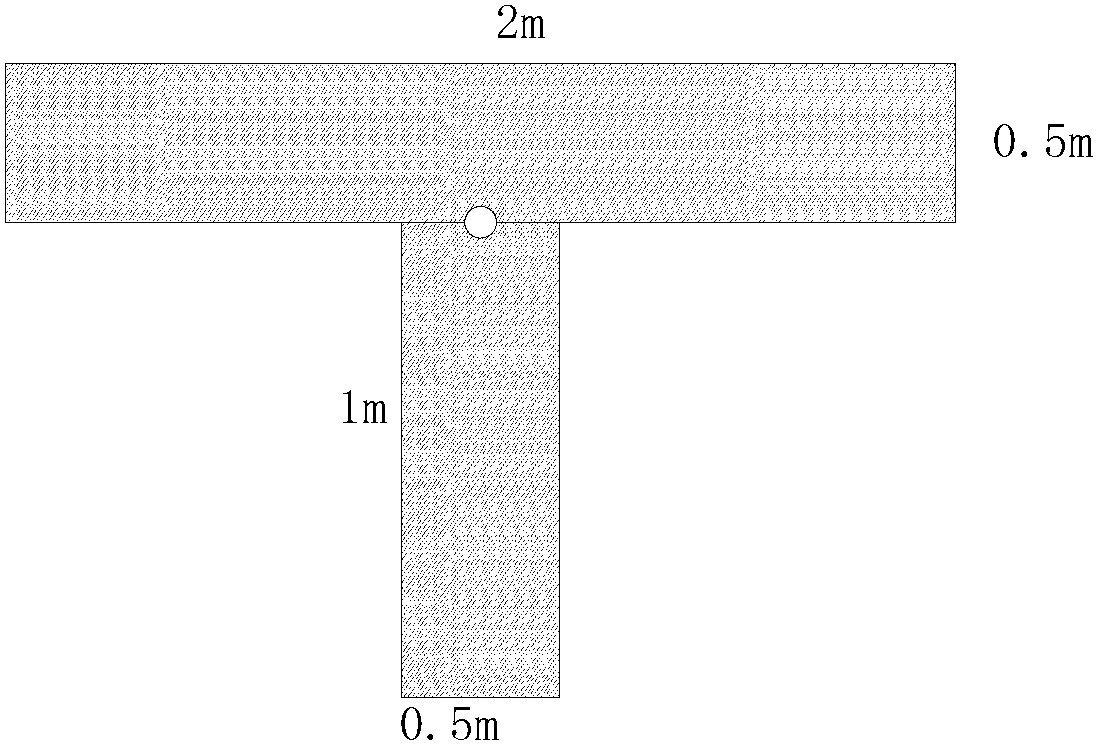

Cooperative target designing and locating method for unmanned aerial vehicle autonomous landing

Active | CN103218607A | Easy to identify | Improve robustness | Character and pattern recognition | Far distance | Uncrewed vehicle

The invention belongs to the field of visual navigation, and discloses a cooperative target designing and locating method for unmanned aerial vehicle autonomous landing. The design follows the principles that the target should be simple in structure, convenient to identify, provide direction guidance, and allow accurate locating under affine transformation. On this basis the shape, size, color, position arrangement, radiation band and power of the cooperative target are designed, and then an identification scheme for the cooperative target is designed. The identification scheme comprises naval vessel position detection at a far distance of 5000 meters, runway plane locating at a middle distance of 1000 meters, and vessel landing position locating at a near distance of 500 meters. The method can improve the safety and success rate of unmanned aerial vehicle autonomous landing, and the designed cooperative target is convenient to identify and locate and highly robust. With computer vision, the cooperative target can be identified quickly and autonomous landing of the unmanned aerial vehicle is achieved.

Owner:北京北航天宇长鹰无人机科技有限公司
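The three-stage identification scheme above can be sketched as a range-based stage selector. The 5000 m, 1000 m and 500 m figures come from the abstract; treating each as the upper range limit at which its stage takes over is an assumption, as is the function name.

```python
# (maximum range in meters, stage), ordered from nearest to farthest.
STAGES = [
    (500.0, "near: vessel landing position locating"),
    (1000.0, "middle: runway plane locating"),
    (5000.0, "far: naval vessel position detection"),
]


def identification_stage(range_m):
    """Pick the identification stage for the current range to the vessel."""
    if range_m < 0:
        raise ValueError("range must be non-negative")
    for max_range_m, stage in STAGES:
        if range_m <= max_range_m:
            return stage
    return None  # beyond 5000 m: no stage is active in this sketch


stage = identification_stage(800.0)
```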


© 2025 PatSnap. All rights reserved.